294 results about "Exact location" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

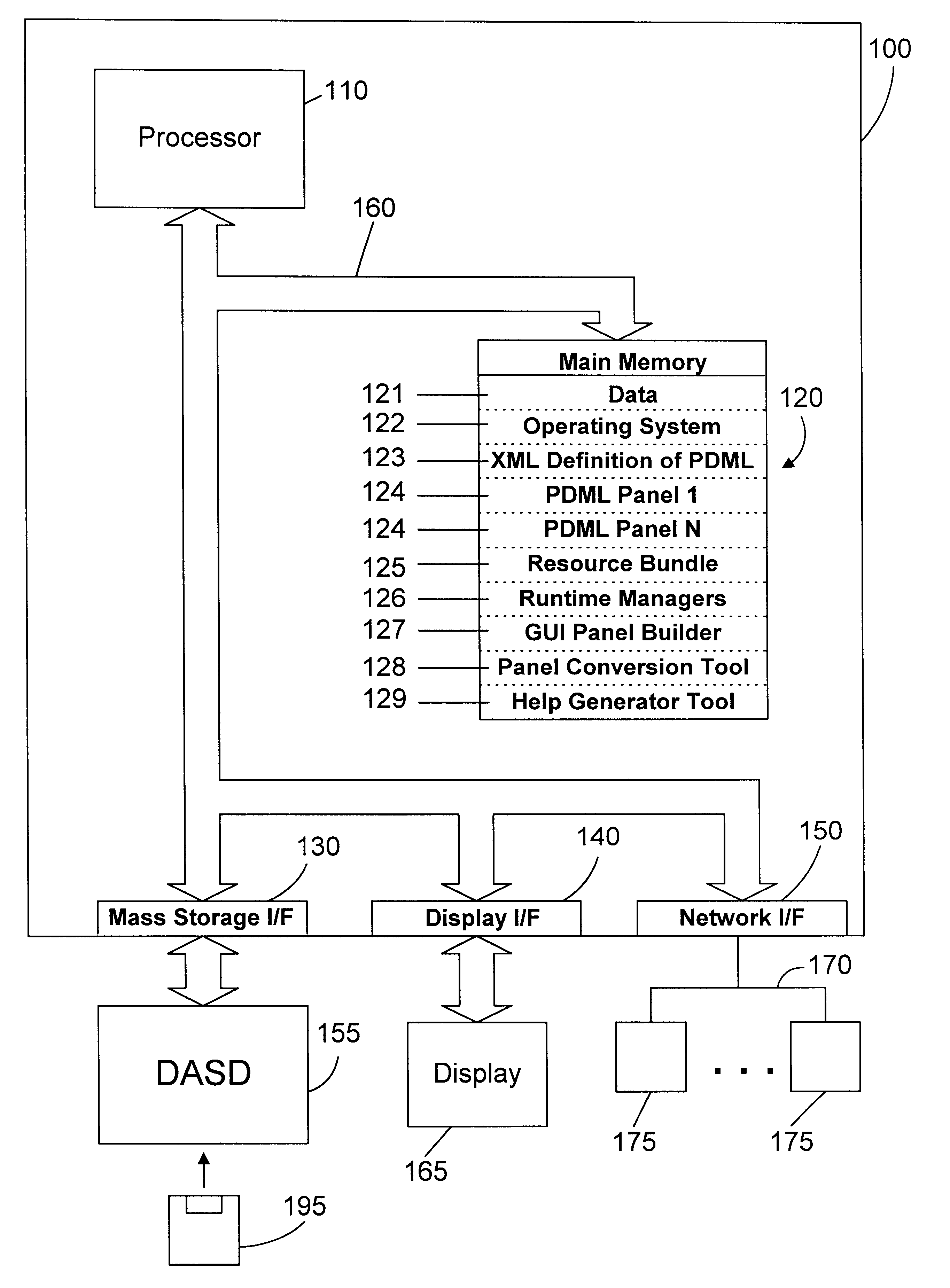

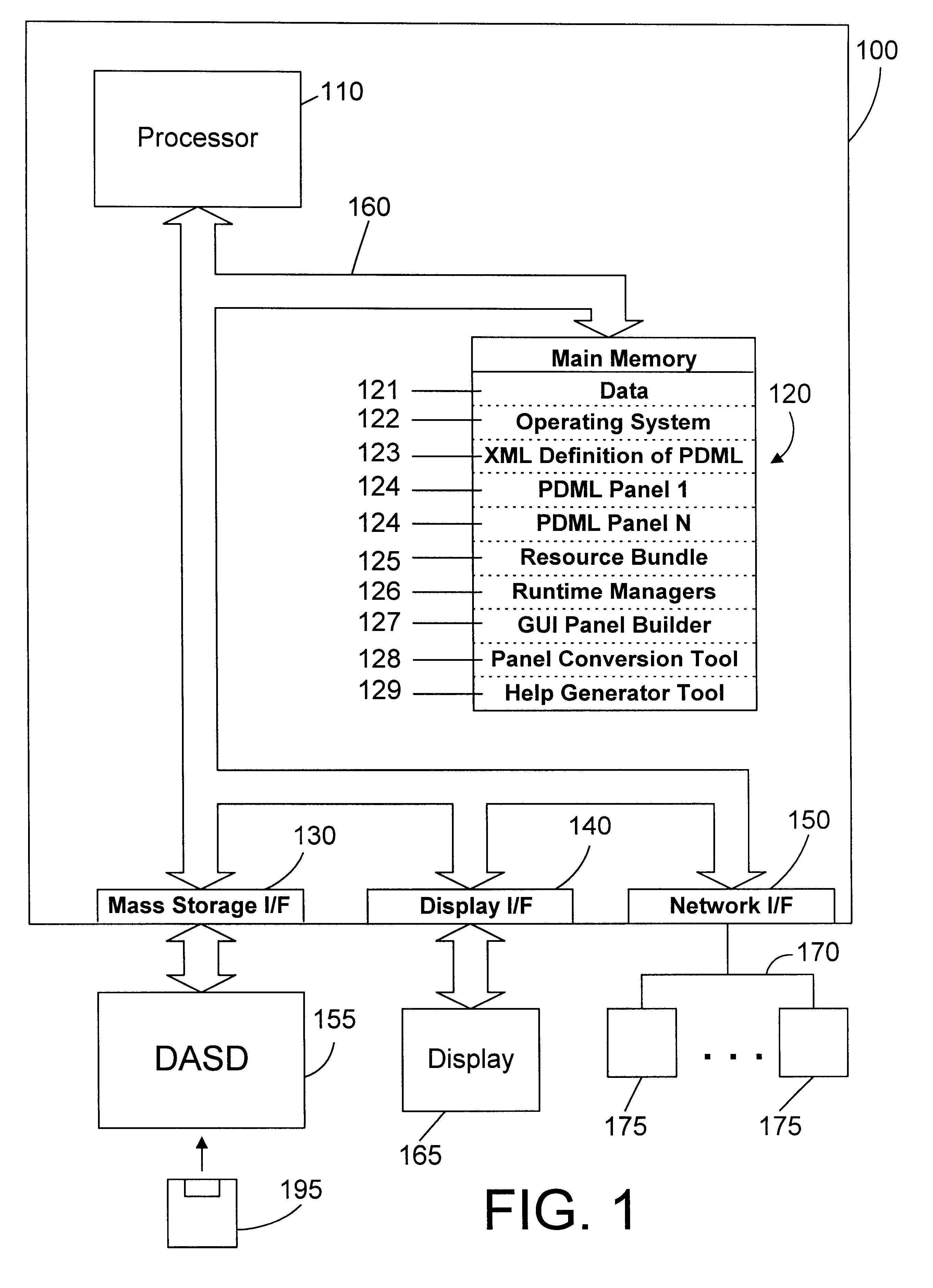

Specification language for defining user interface panels that are platform-independent

Inactive | US6342907B1 | Promote generation, Requirement analysis, Specific program execution arrangements, Graphics, Graph editor

A specification language allows a user to define platform-independent user interface panels without detailed knowledge of complex computer programming languages. The specification language, referred to herein as Panel Definition Markup Language (PDML), defines tags that are used in a similar fashion to those of Hypertext Markup Language (HTML) and that allow a user to specify the exact location of components displayed in the panel. A graphical editor allows platform-independent user interface panels to be created and modified without programming directly in the specification language. A conversion tool may be used to convert platform-specific user interface panels to corresponding platform-independent user interface panels. A help generator tool also facilitates the generation of context-sensitive help for a user interface panel.

Owner:IBM CORP

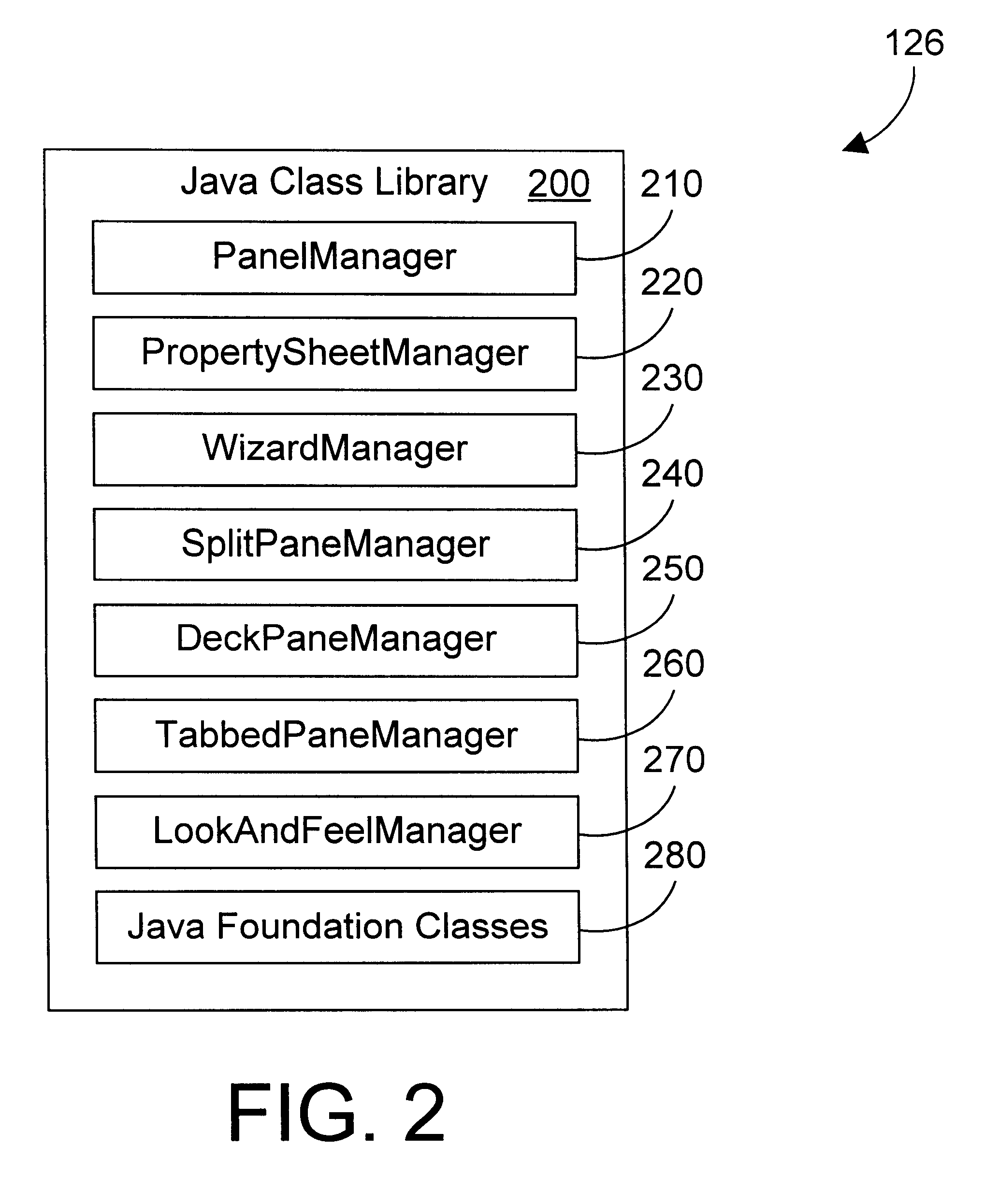

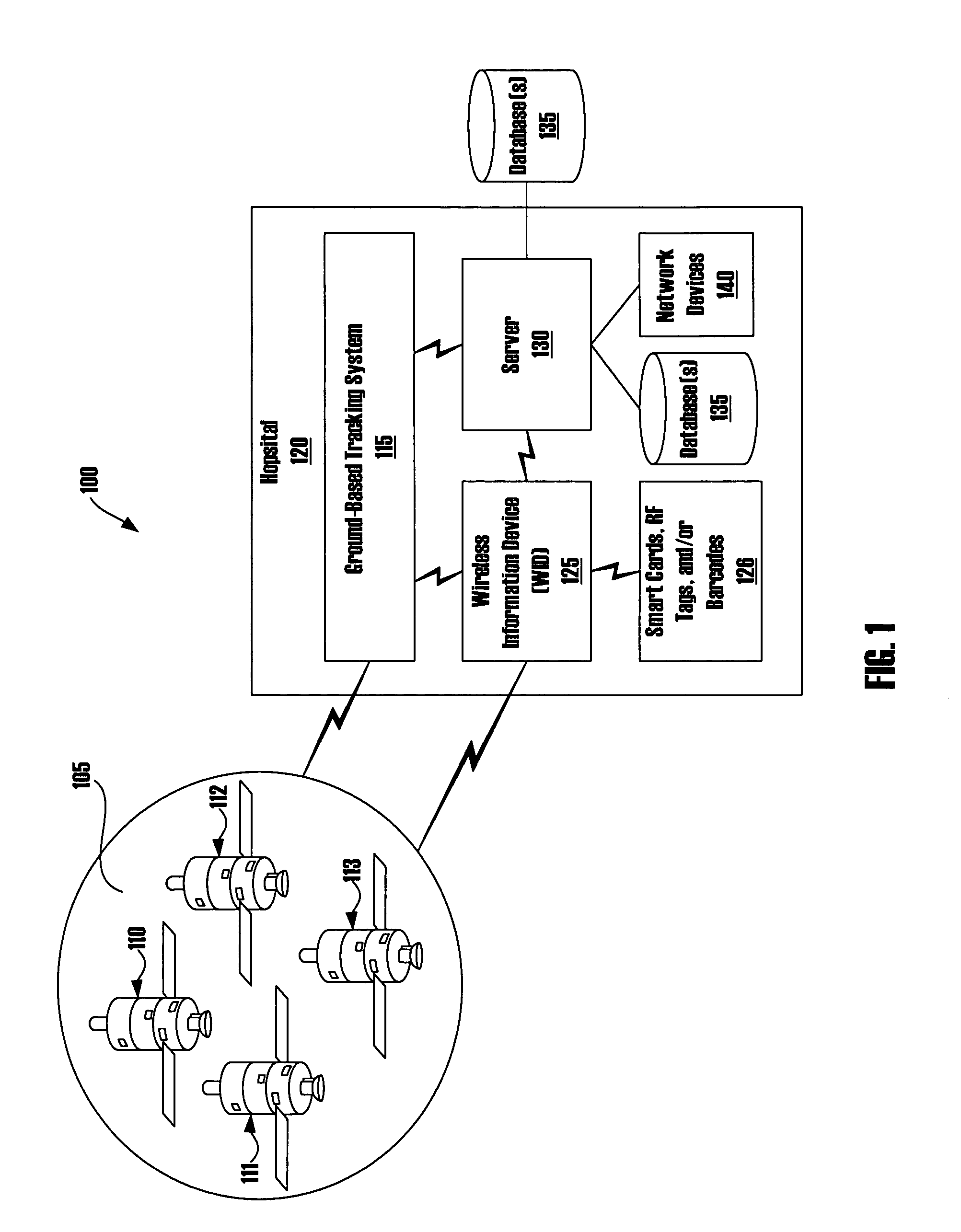

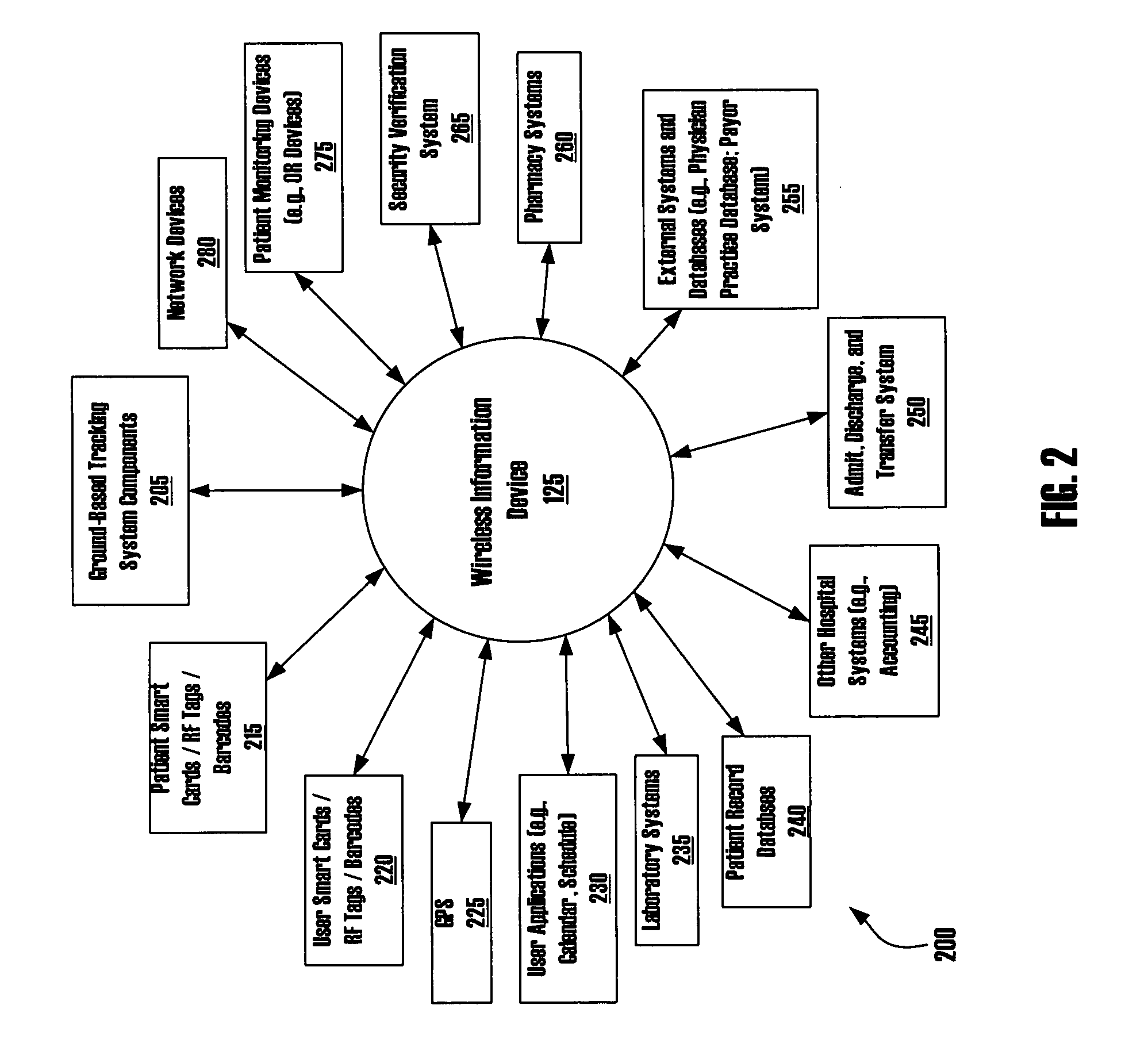

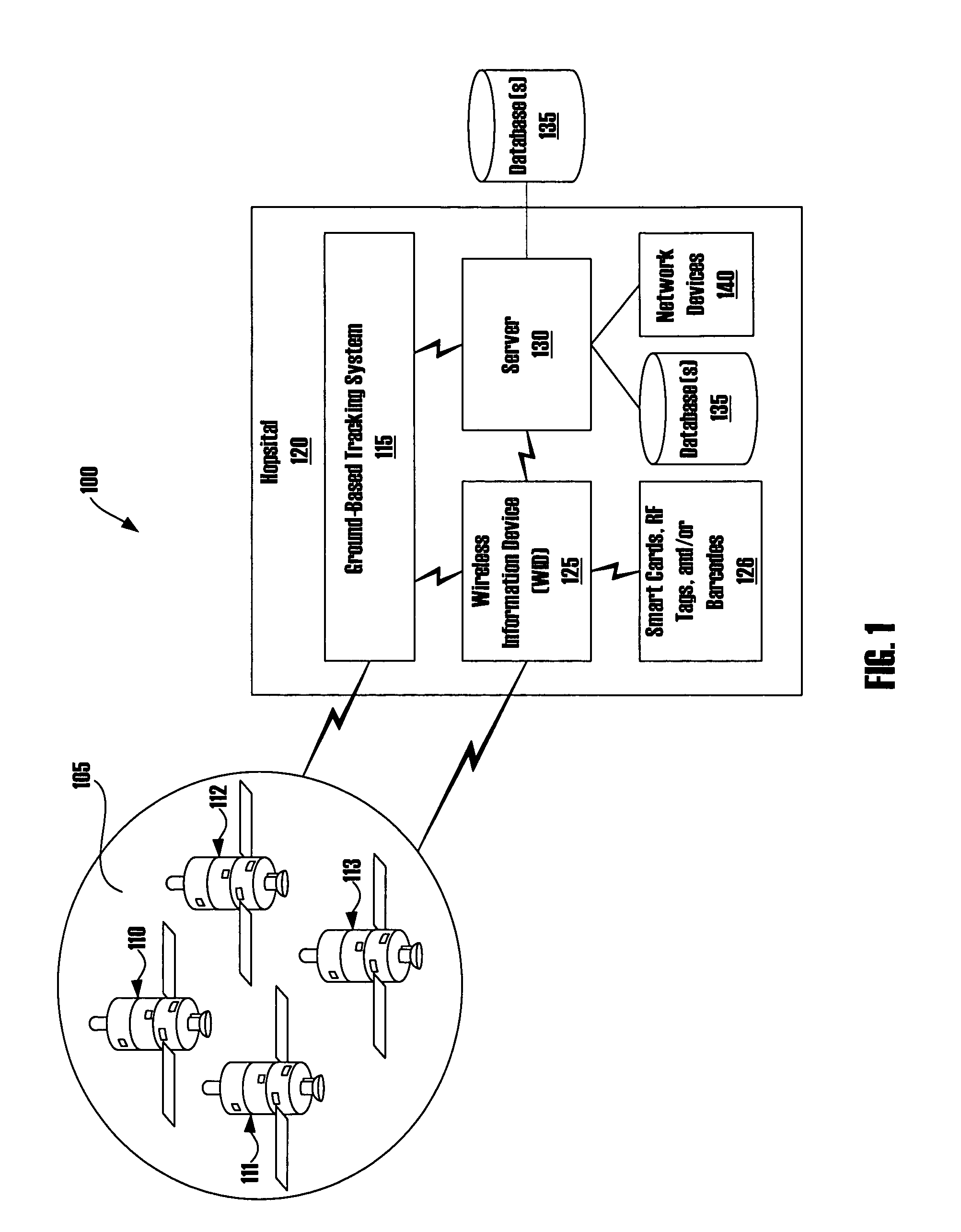

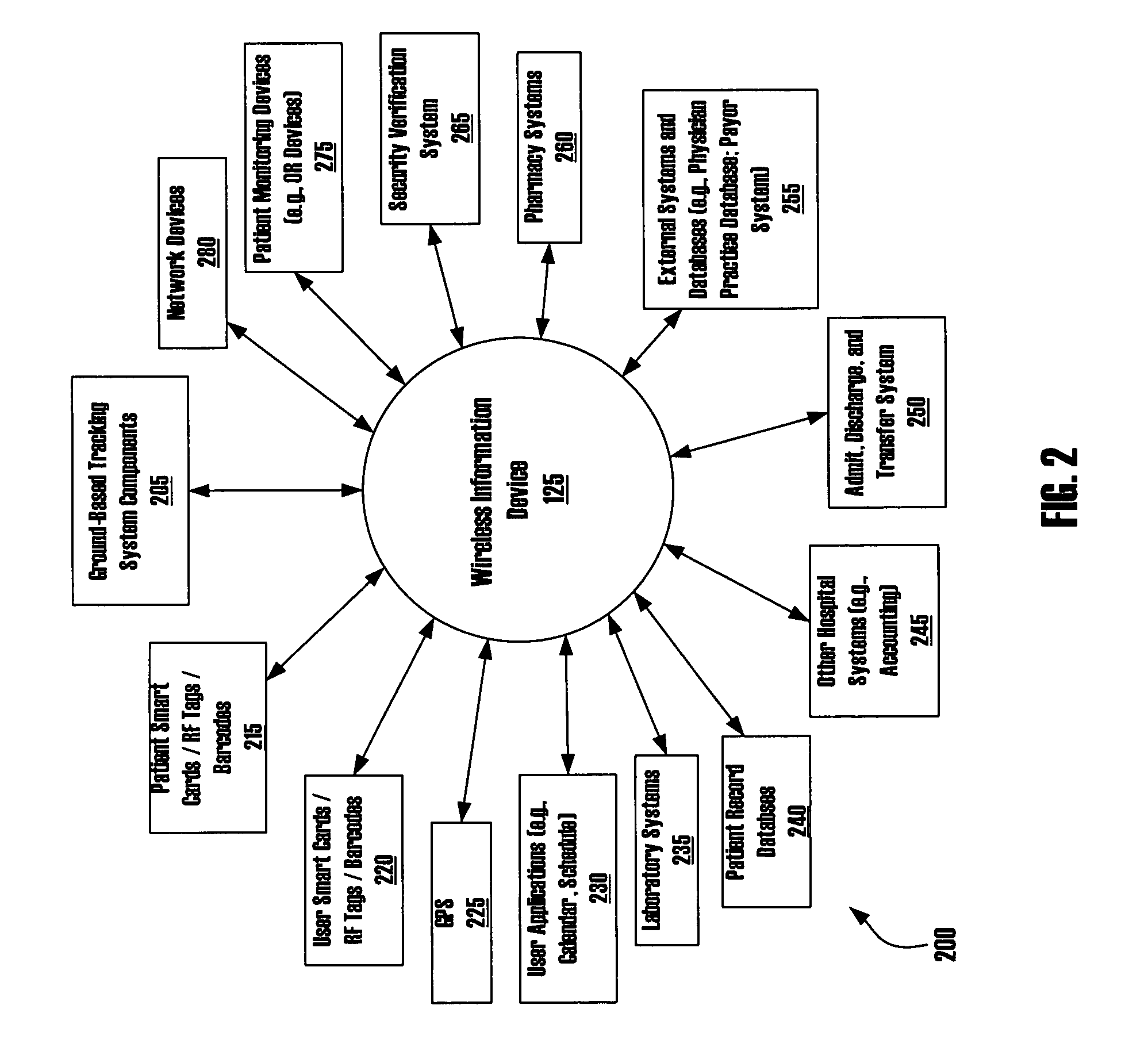

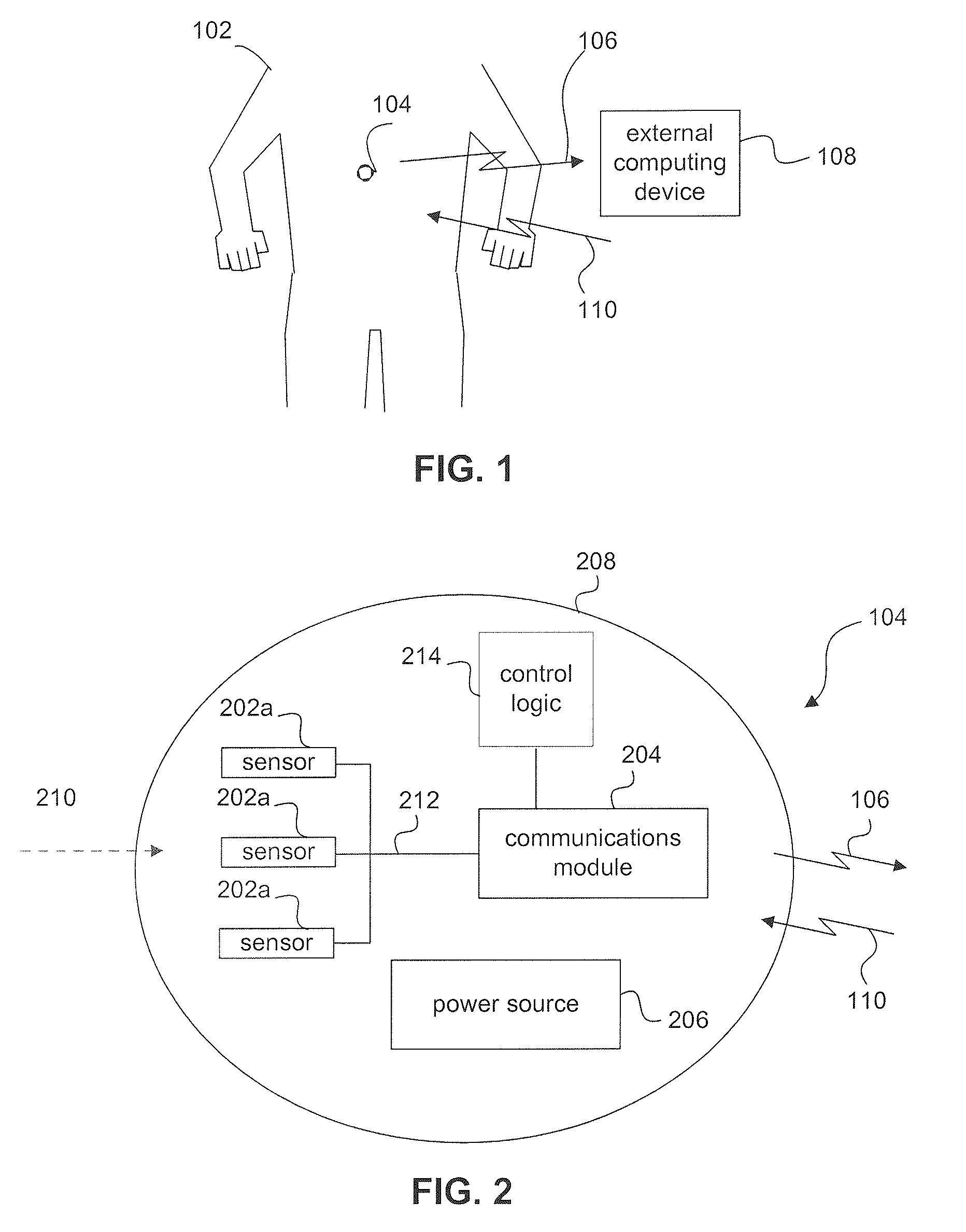

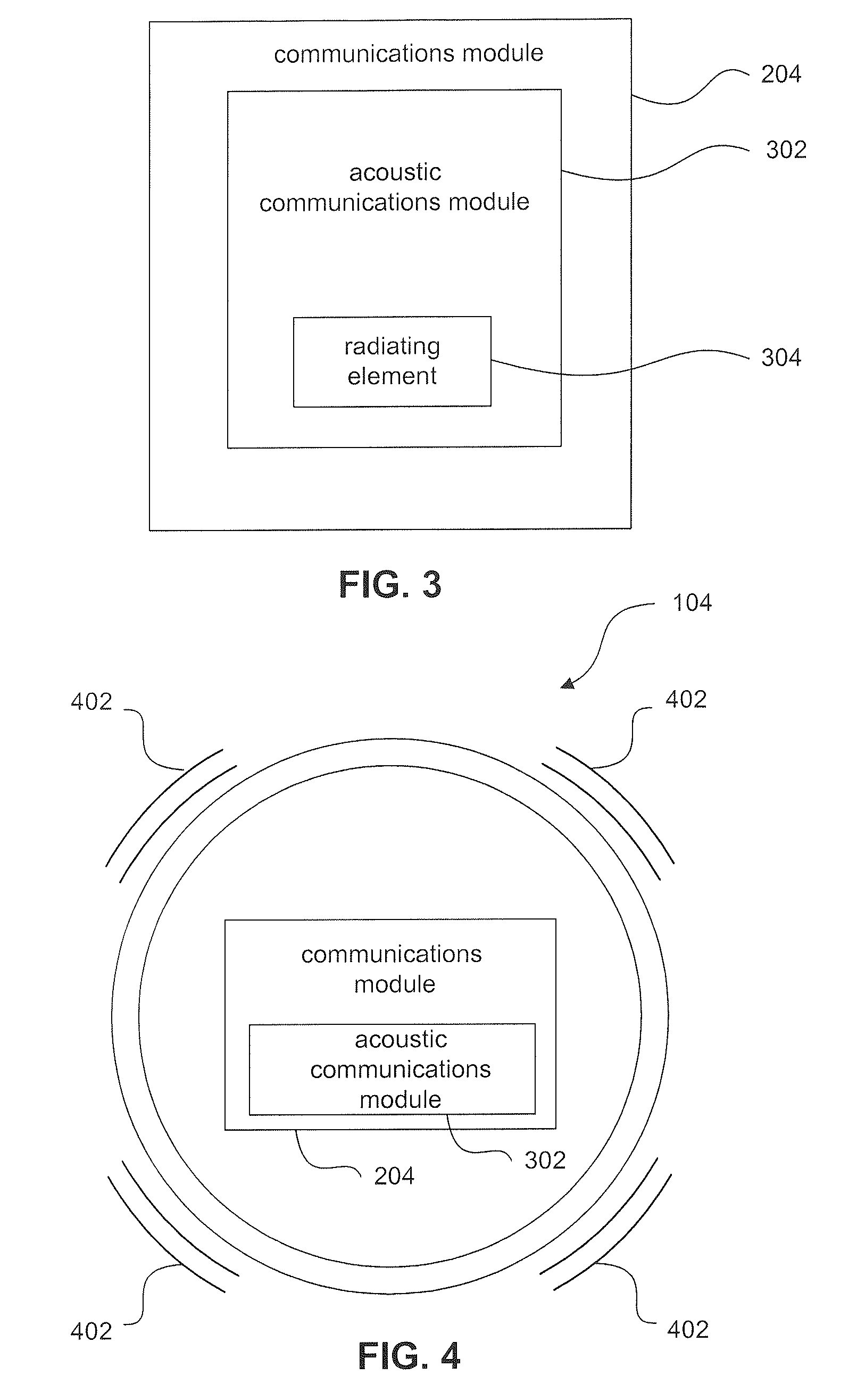

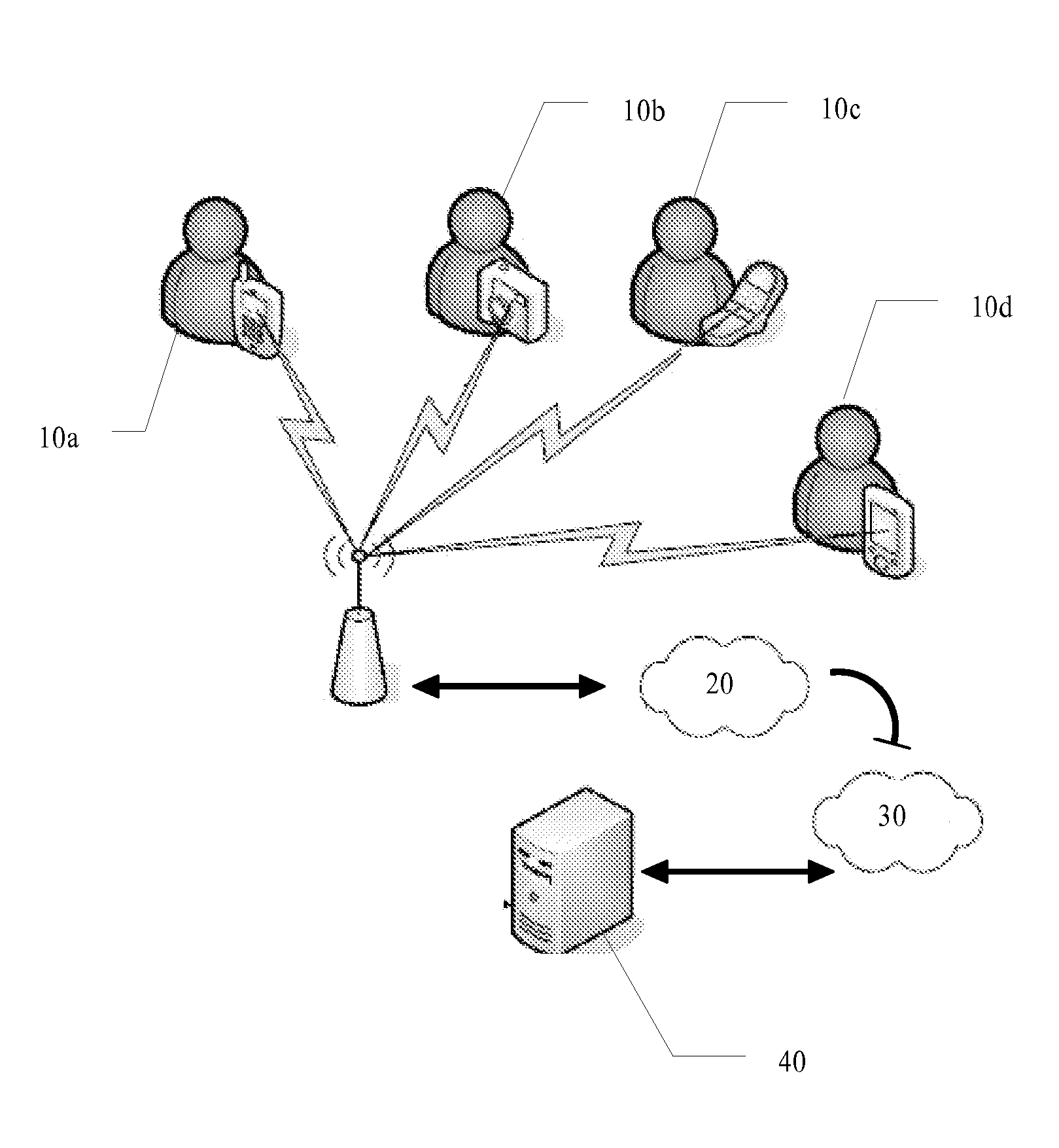

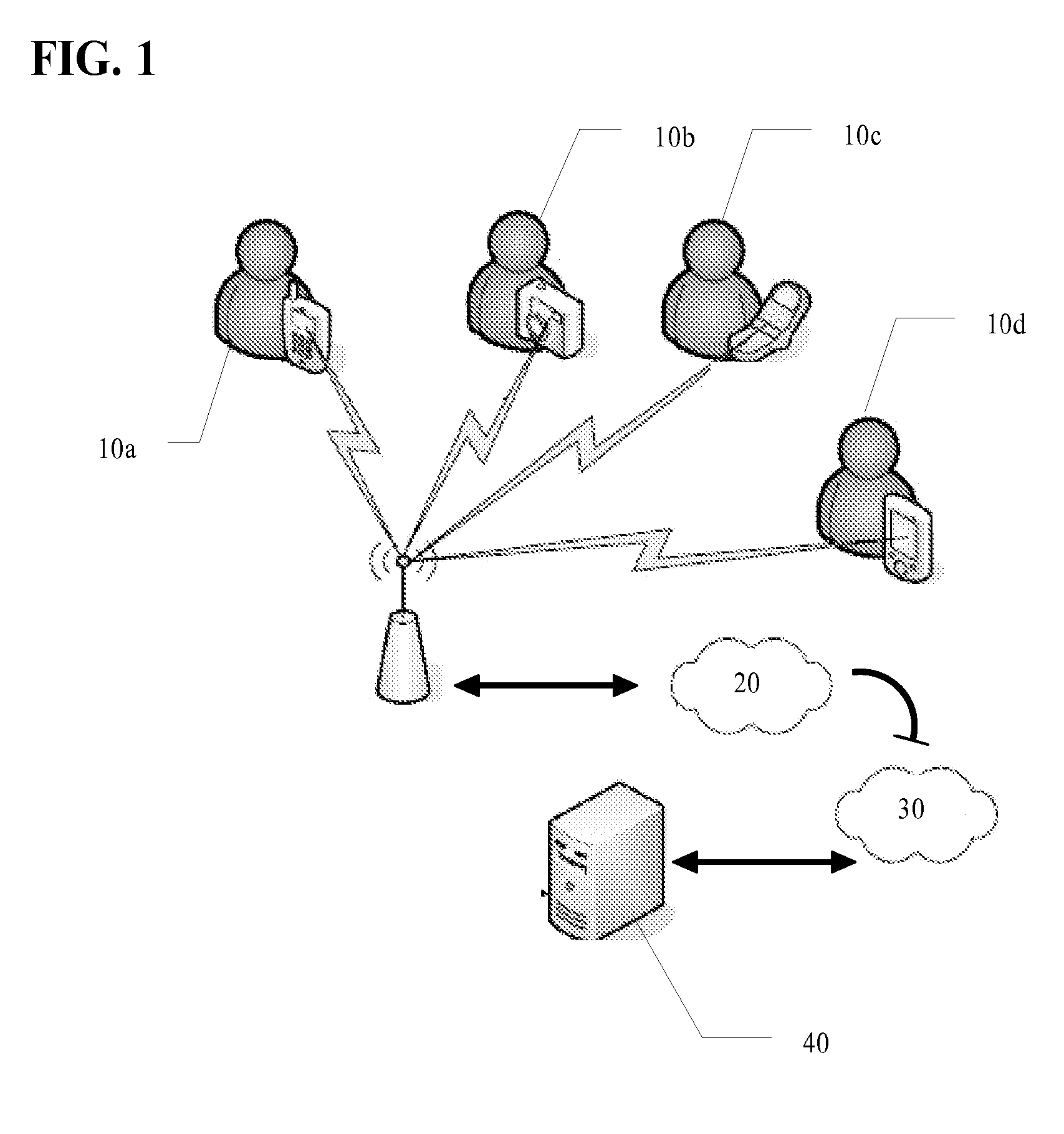

Systems and methods for context relevant information management and display

Inactive | US20050021369A1 | Easy to display, Out of view, Local control/monitoring, Hospital data management, Relevant information, Exact location

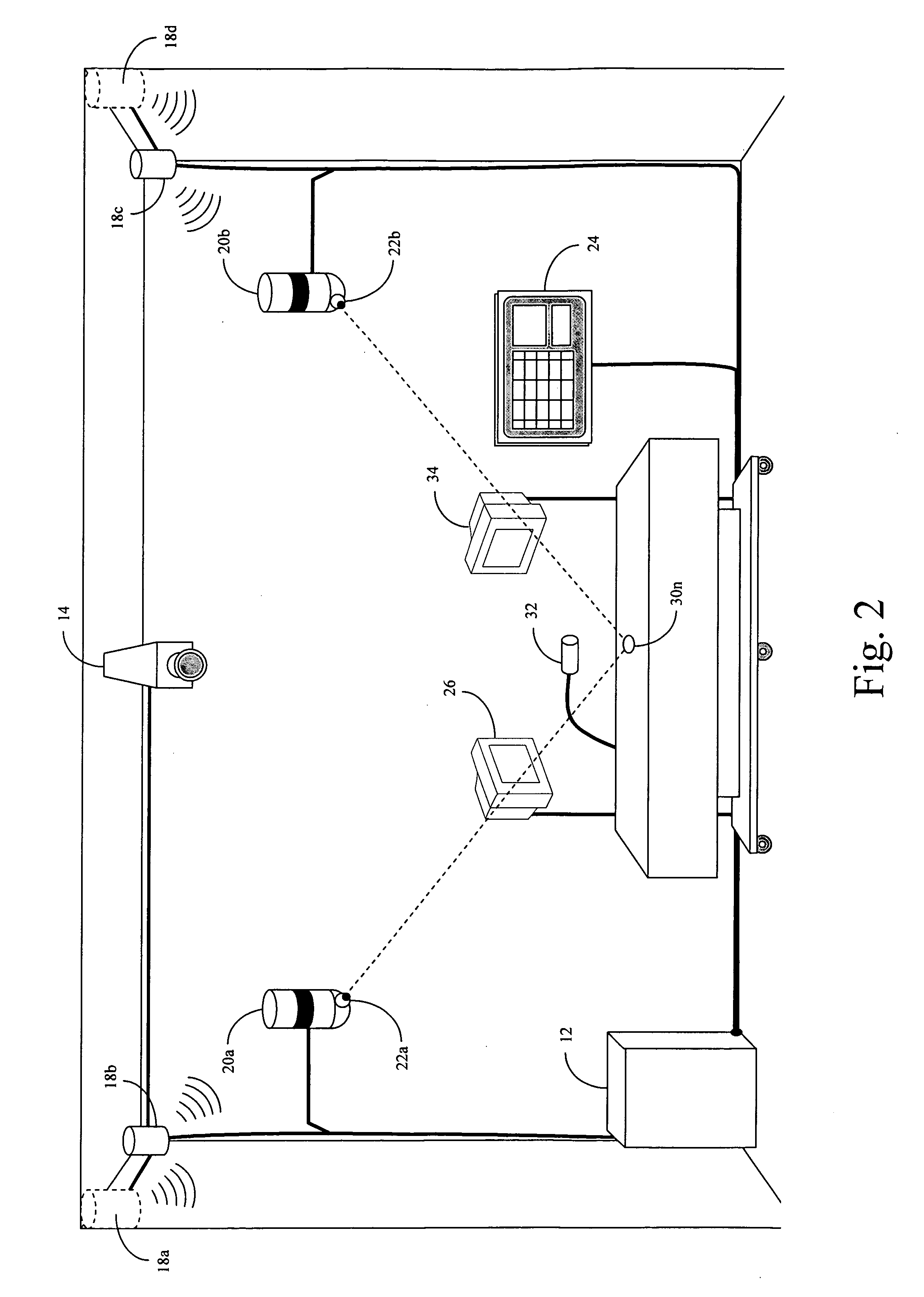

A wireless information device provides a user with context relevant information. The precise location of the wireless device is monitored by a tracking system. Using the location of the wireless device and the identity of the user, context relevant information is transmitted to the device, where the context relevant information is pre-defined, at least in part, by the user. Context relevant information served to the device depends on the identity of the user, the location of the device, and the proximity of the device to persons or objects. The wireless information device may be used by healthcare workers, such as physicians, in hospitals, although other environments are contemplated, such as hotels, airports, zoos, theme parks, and the like.

Owner:STORYMINERS
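
The content-selection rule in this abstract (information depends on the user's identity, the device's location, the device's proximity to persons or objects, and the user's own pre-definitions) can be sketched as a simple filter. This is a minimal illustration under stated assumptions, not the patented implementation: the `Item` fields, the role, zone, and tag strings, and `select_items` are all hypothetical names, and the tracking system is assumed to already supply the current zone and the set of nearby tag IDs.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Set


@dataclass(frozen=True)
class Item:
    text: str
    topic: str                      # category the user can opt into (user pre-definition)
    roles: FrozenSet[str]           # user roles allowed to see the item (identity)
    zone: Optional[str] = None      # only relevant in this location zone, if set
    near_tag: Optional[str] = None  # only relevant near this tracked person/object, if set


def select_items(items: List[Item], role: str, zone: str,
                 nearby_tags: Set[str], subscribed: Set[str]) -> List[str]:
    """Filter items by identity, location, proximity and user preferences."""
    selected = []
    for item in items:
        if role not in item.roles:
            continue  # identity: this user may not see the item
        if item.topic not in subscribed:
            continue  # pre-definition: the user has not opted into this topic
        if item.zone is not None and item.zone != zone:
            continue  # location: the device is in a different zone
        if item.near_tag is not None and item.near_tag not in nearby_tags:
            continue  # proximity: the related person or object is not close by
        selected.append(item.text)
    return selected
```

With this structure, a physician standing next to a tagged patient in a ward sees that patient's items, and the same items disappear as soon as the patient's tag is no longer reported as nearby.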

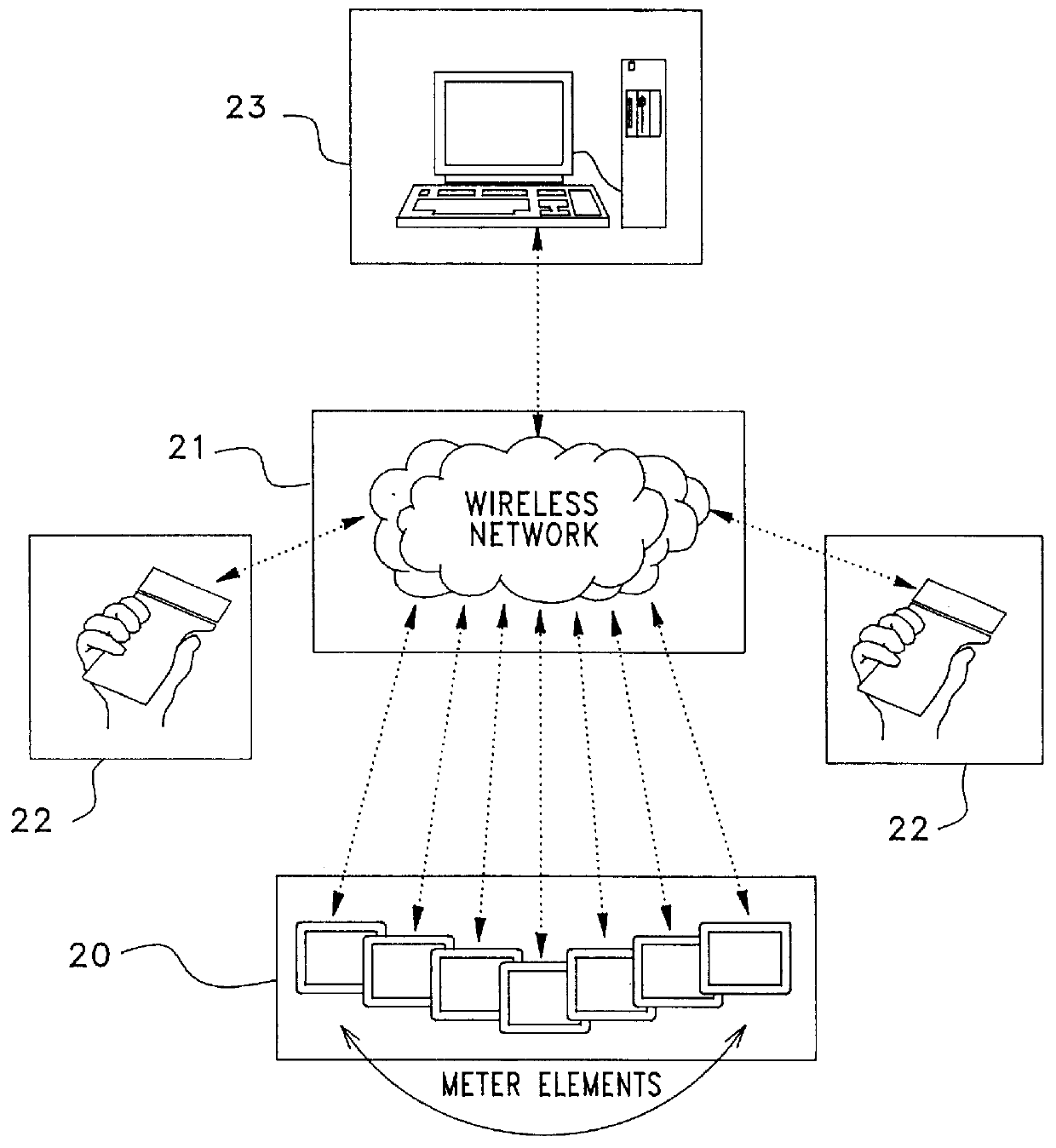

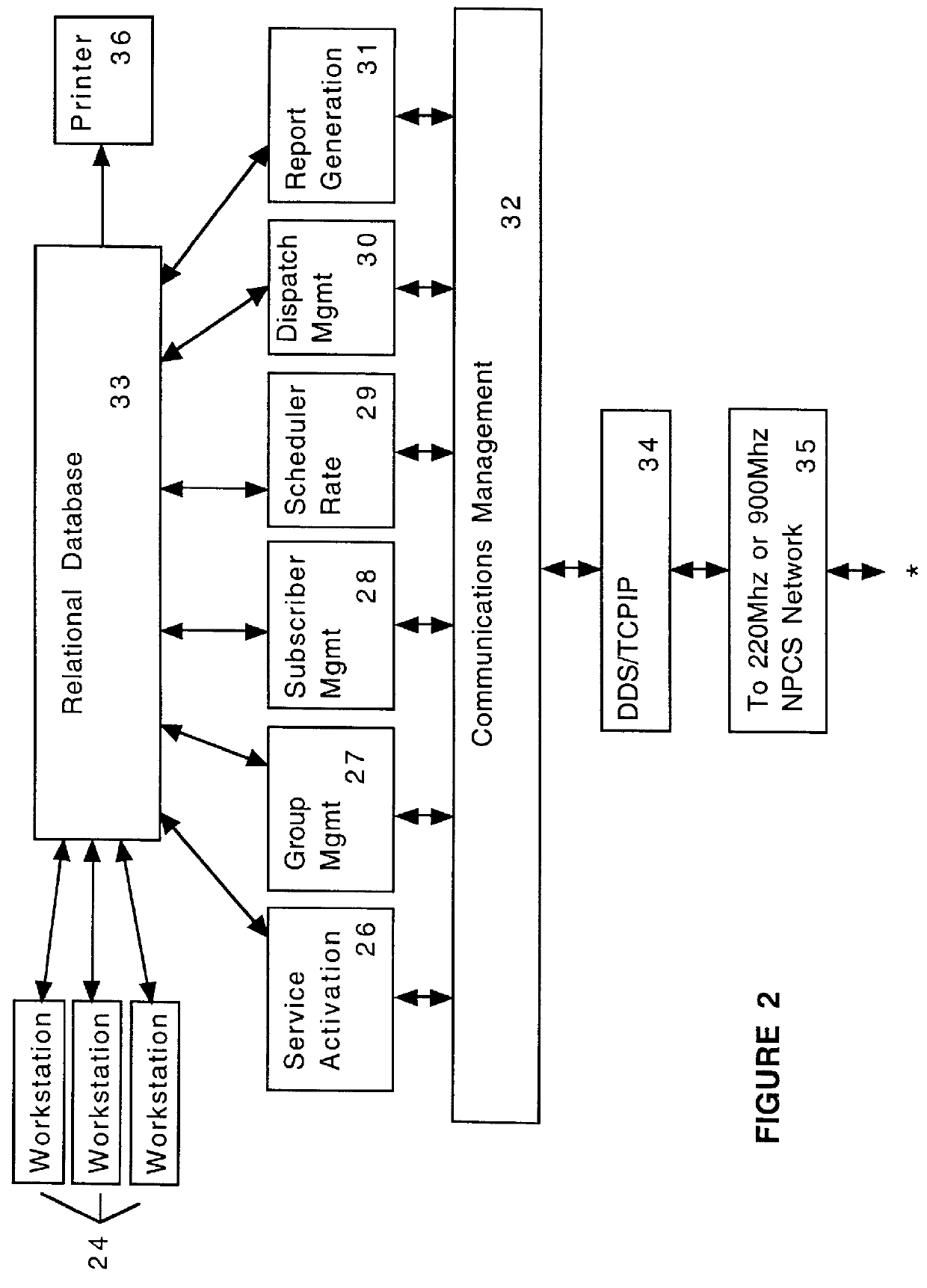

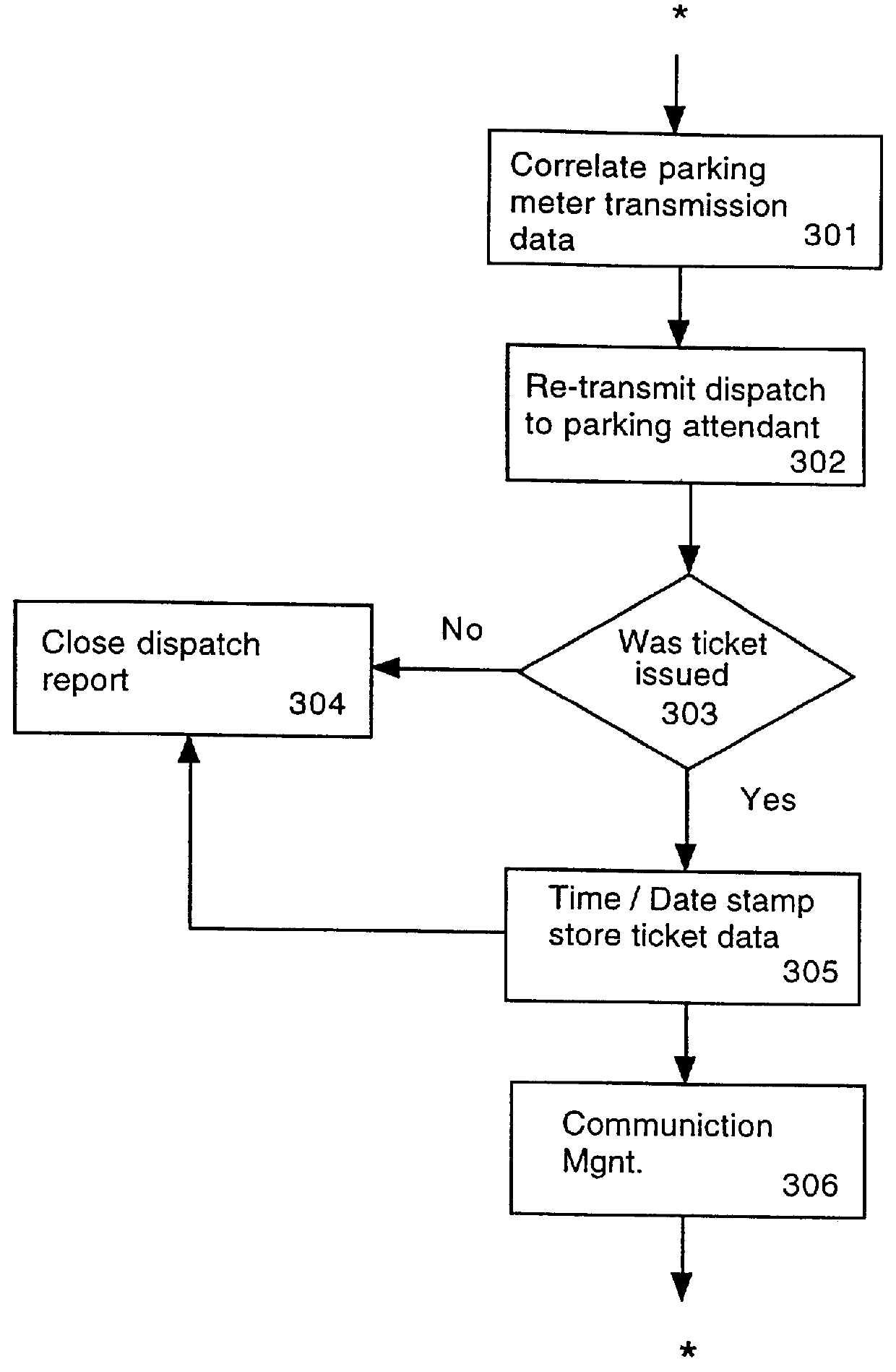

Integrated parking meter system

Inactive | US6037880A | Save lives, Increase income, Electric signal transmission systems, Ticket-issuing apparatus, Parking space, Exact location

An integrated parking meter system automates the issuance of parking citations. Each parking meter is equipped with a sonar range finder, a mercury-type switch and a two-way radio that communicates via N-PCS with a host computer at the control center. When a meter runs out of money, it checks whether a vehicle is present in the parking space. If one is, the meter notifies the host computer that a car is illegally parked in the space. The host computer correlates this information to identify the exact location of the violator and then sends it via a wireless network to the parking attendants' personal communicators. The parking attendant proceeds to the violator and issues the citation. The technology also includes the capability to remotely change the rate structure of any or all electronic parking meters. A meter diagnostic feature alerts repair personnel to specific malfunctioning meters. The meter may also reset the time remaining to zero whenever a car leaves the adjacent space.

Owner:MANION JEFFREY CHARLES
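
The meter-side and host-side logic described in the parking-meter abstract can be sketched roughly as follows. The sketch assumes the sonar range finder reading has already been reduced to a boolean `vehicle_present`. `MeterState`, `check_for_violation`, `on_vehicle_departure`, `locate_violation` and the `METER_LOCATIONS` table are hypothetical names, and the N-PCS radio link is not modeled.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MeterState:
    meter_id: str
    seconds_remaining: int   # paid time left on the meter
    vehicle_present: bool    # assumed result of the sonar range-finder check


def check_for_violation(state: MeterState) -> Optional[str]:
    """Meter-side check: return the meter ID to report to the host, or None."""
    if state.seconds_remaining > 0:
        return None              # still paid: nothing to report
    if not state.vehicle_present:
        return None              # expired, but the space is empty
    return state.meter_id        # expired with a car present: notify the host


def on_vehicle_departure(state: MeterState) -> None:
    """Reset the remaining time to zero when the car leaves the space."""
    state.seconds_remaining = 0
    state.vehicle_present = False


# Hypothetical host-side table correlating meter IDs with street locations.
METER_LOCATIONS = {"M-101": "Main St, space 12"}


def locate_violation(meter_id: str) -> Optional[str]:
    """Host-side lookup of the location to forward to the attendant."""
    return METER_LOCATIONS.get(meter_id)
```

Keeping the meter-side check minimal (it reports only an ID) leaves location correlation to the host, which matches the division of labor the abstract describes.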

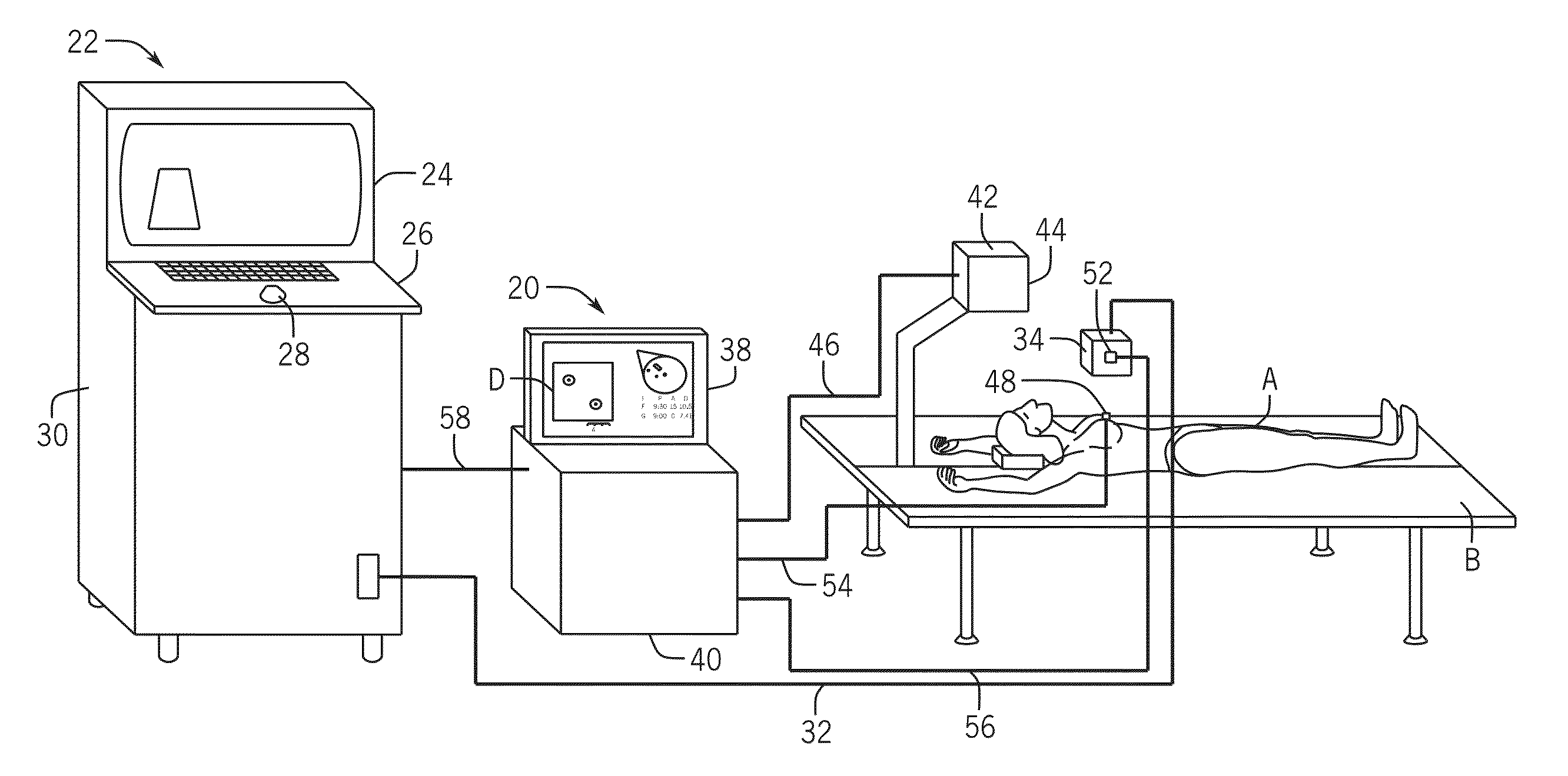

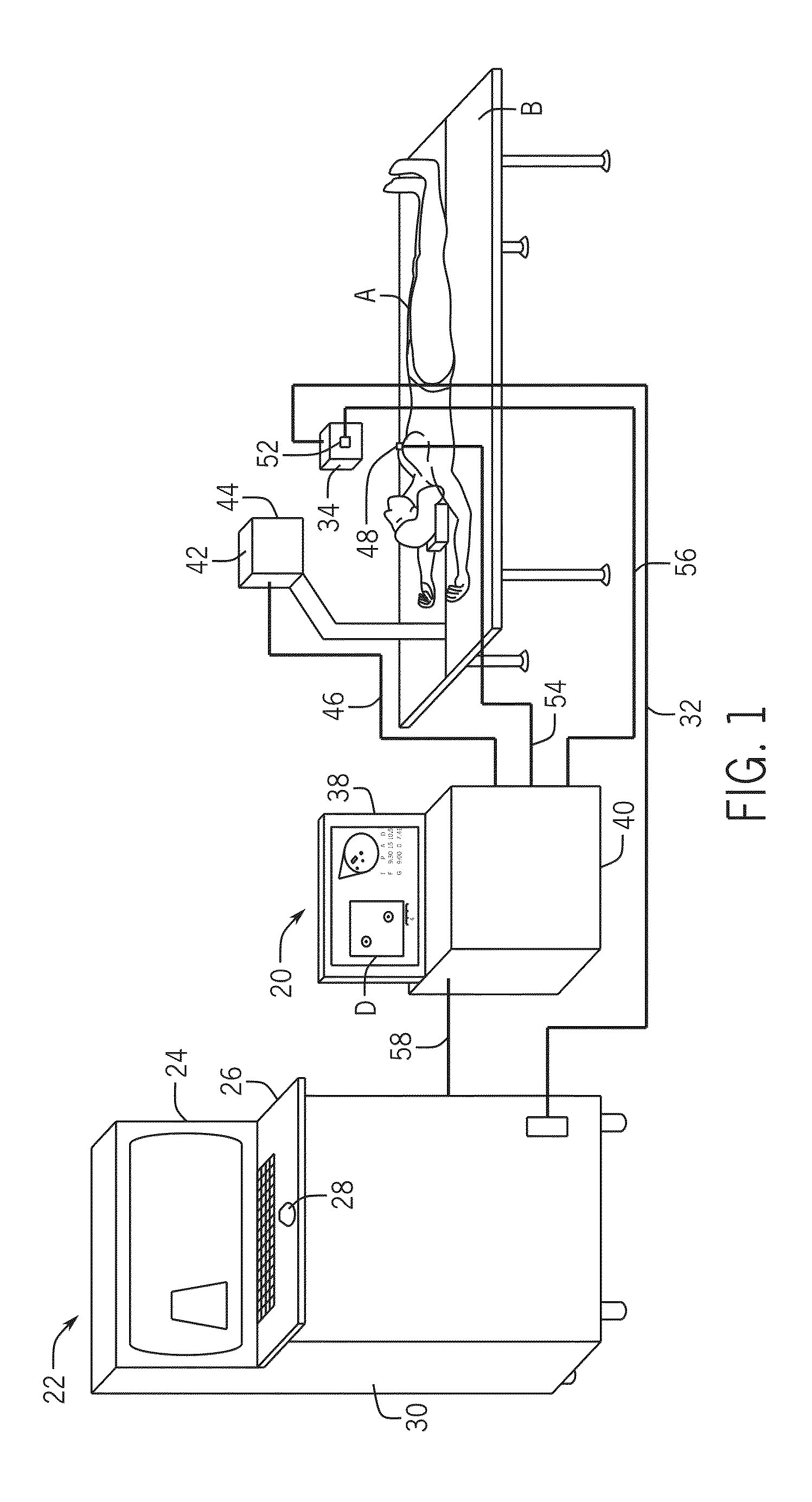

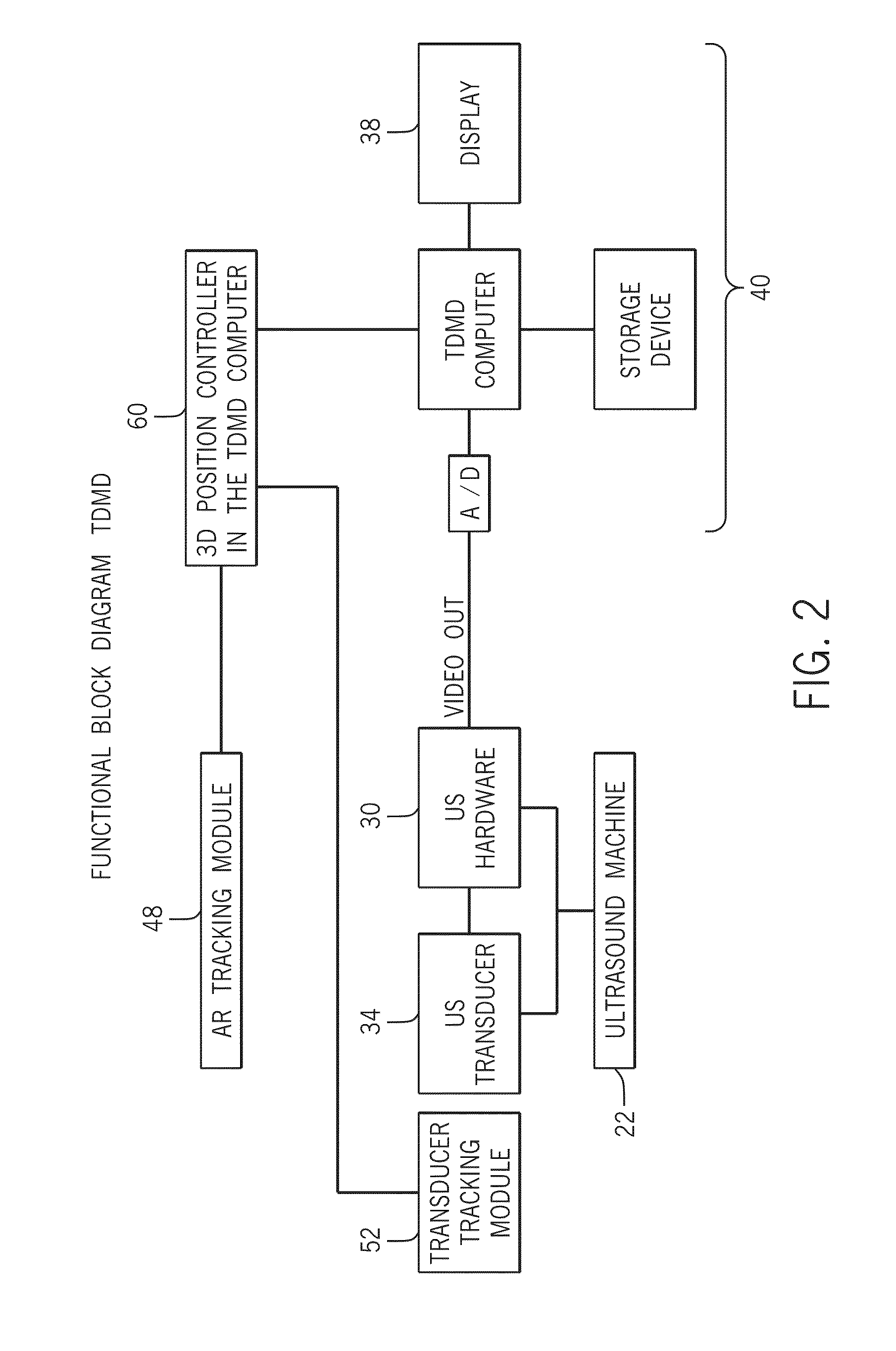

Three Dimensional Mapping Display System for Diagnostic Ultrasound Machines

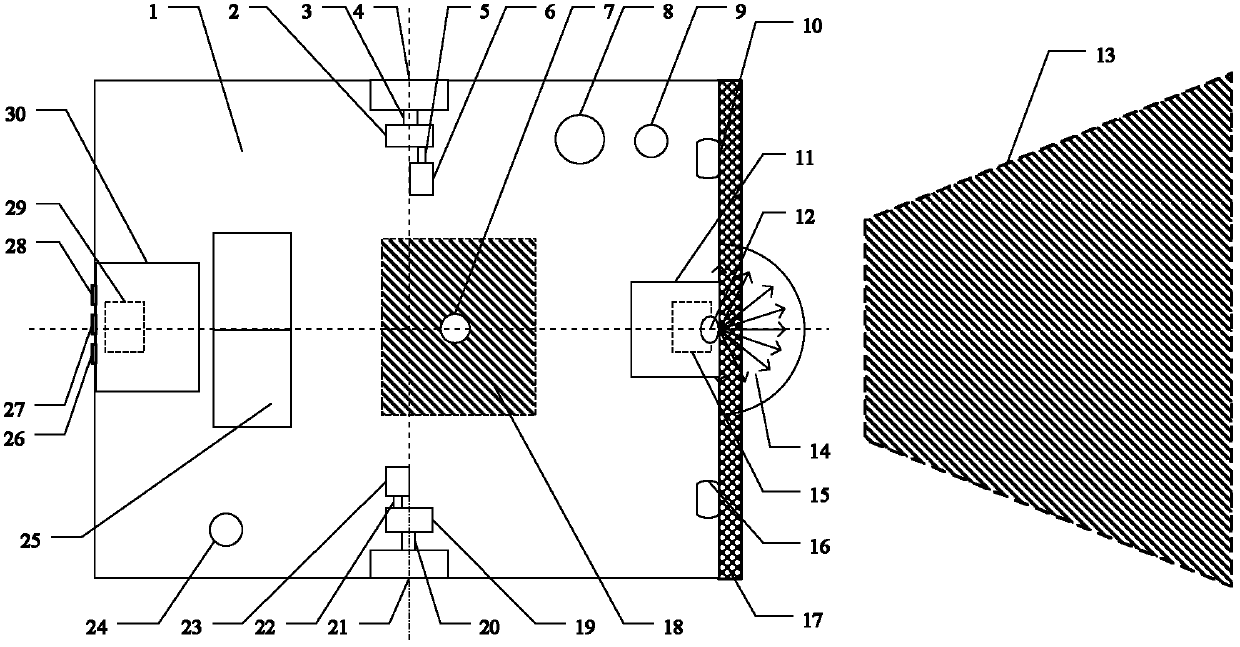

Active | US20150051489A1 | Shorten the time, Time-consuming to eliminate, Organ movement/changes detection, Infrasonic diagnostics, Sonification, Imaging interpretation

An automated three-dimensional mapping and display system for a diagnostic ultrasound system is presented. According to the invention, ultrasound probe position registration is automated, the position of each pixel in the ultrasound image is calculated with reference to selected anatomical references, and specified information is stored on command. During real-time ultrasound scanning, the system enables the ultrasound probe position and orientation to be continuously displayed over a body or body-part diagram, thereby facilitating scanning and the interpretation of images from stored information. The system can then record single or multiple free-hand two-dimensional (2D) ultrasound frames in a video sequence (clip) or cine loop, wherein multiple 2D frames of one or more video sequences corresponding to a scanned volume can be reconstructed into three-dimensional (3D) volume images of the scanned region using known 3D reconstruction algorithms. In later examinations, the exact location and position of the transducer can be recreated along three-dimensional or two-dimensional axis points, enabling known targets to be viewed from an exact, known position.

Owner:METRITRACK

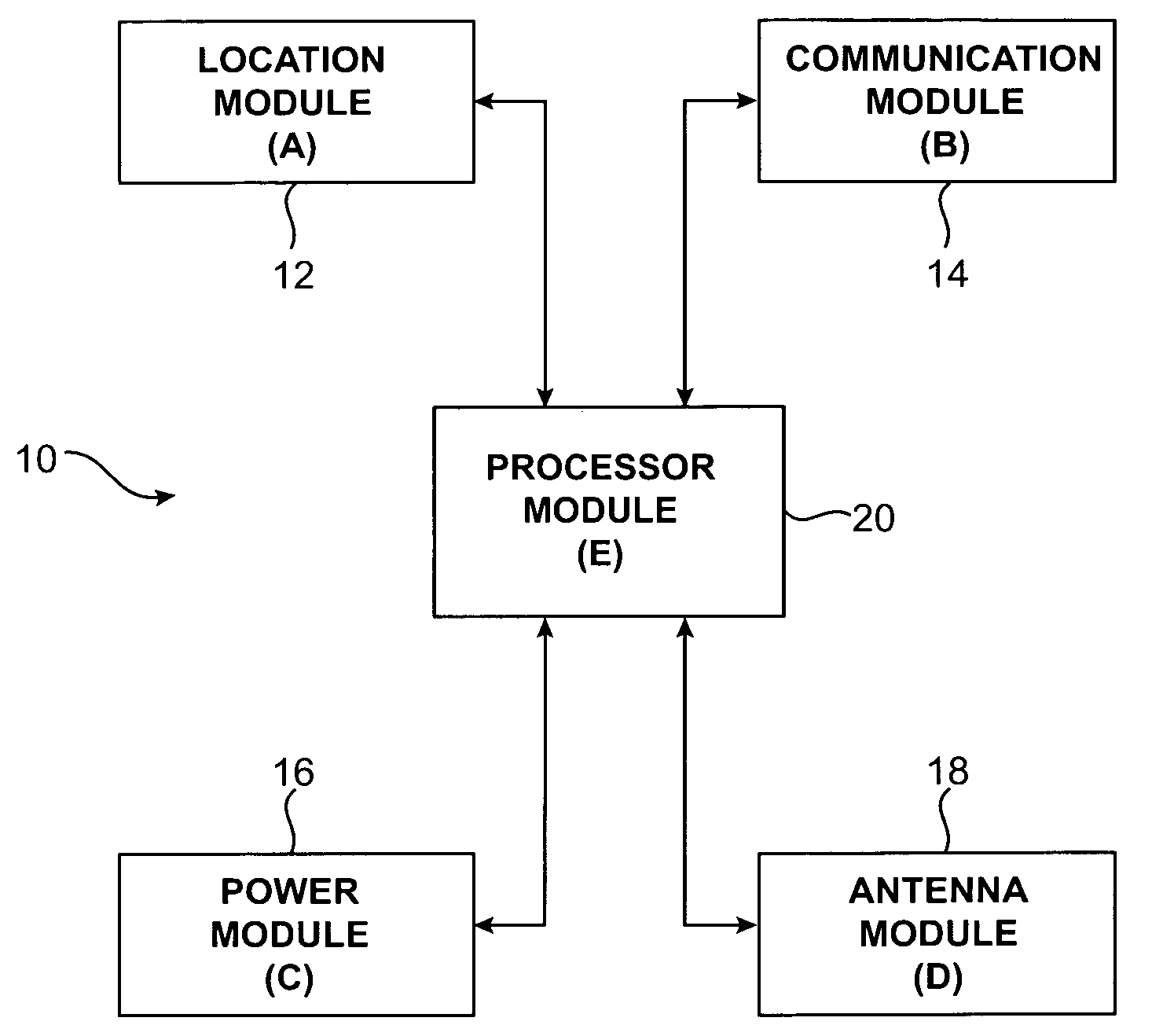

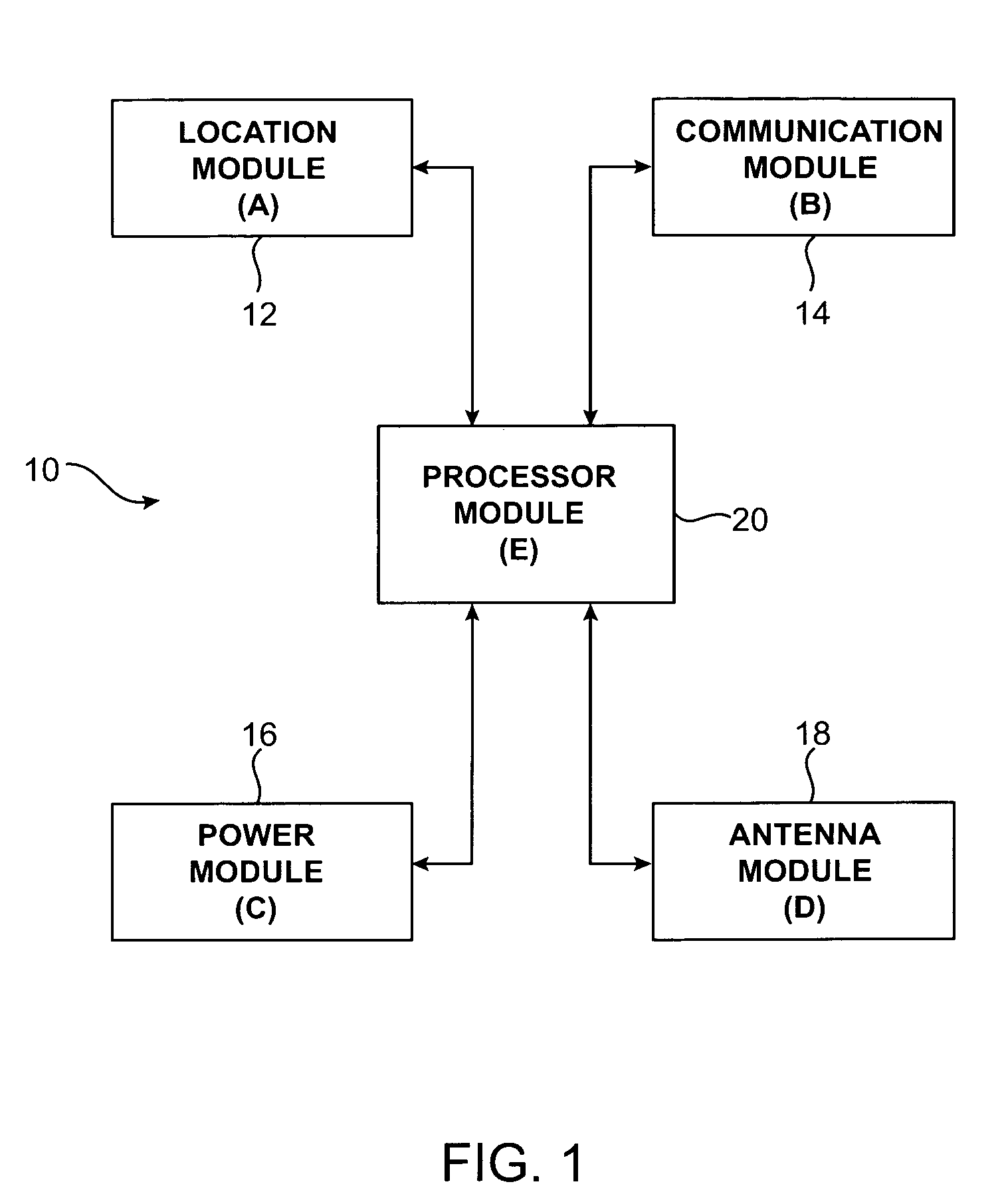

Durable global asset-tracking device and a method of using the same

Inactive | US7072668B2 | Maintain durability, Increase in size, Position fixation, Substation equipment, Constant power, Communications system

A communication system for tracking an asset globally accesses multiple communication networks and switches among them, choosing the most economical available communication mode without the need for a constant power supply. An integrated motion sensor uses a combination of GPS updating and dynamic movement calculation to obtain the most reliable position estimate. The current location is identified within a small radius at all times. Although the system takes advantage of GPS to obtain accurate location information, direct exposure to GPS satellites is not required at all times: the system obtains position information from GPS satellites whenever valid GPS signals are available, and provides its own location tracking capability when GPS signals are not accessible. The position accuracy of the device is preferably within a 20-meter radius of the exact location.

Owner:GEOSPATIAL TECH
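
The GPS-with-fallback behavior in this abstract resembles basic dead reckoning: use a GPS fix when one is valid, otherwise advance the last estimate from motion data. The sketch below is a simplified illustration, not the patented method. It assumes a flat local east/north frame in meters and a motion sensor that reports speed and heading; `AssetTracker` and `within_radius` are hypothetical names, and `within_radius` checks an estimate against the 20-meter figure quoted in the abstract.

```python
import math
from typing import Optional, Tuple

Position = Tuple[float, float]  # (east, north) in meters, local planar frame (assumption)


class AssetTracker:
    def __init__(self, start: Position) -> None:
        self.position = start

    def update(self, gps_fix: Optional[Position], speed_mps: float,
               heading_deg: float, dt_s: float) -> Position:
        if gps_fix is not None:
            # A valid GPS fix replaces the dead-reckoned estimate.
            self.position = gps_fix
        else:
            # No GPS: advance the last estimate using motion-sensor data.
            # Heading is measured clockwise from north.
            h = math.radians(heading_deg)
            east, north = self.position
            step = speed_mps * dt_s
            self.position = (east + step * math.sin(h), north + step * math.cos(h))
        return self.position


def within_radius(estimate: Position, truth: Position, radius_m: float = 20.0) -> bool:
    """True if the estimate lies within radius_m meters of the true position."""
    return math.dist(estimate, truth) <= radius_m
```

Dead-reckoning error grows with time since the last fix, which is why a real device would re-anchor on GPS as soon as valid signals return, as the abstract describes.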

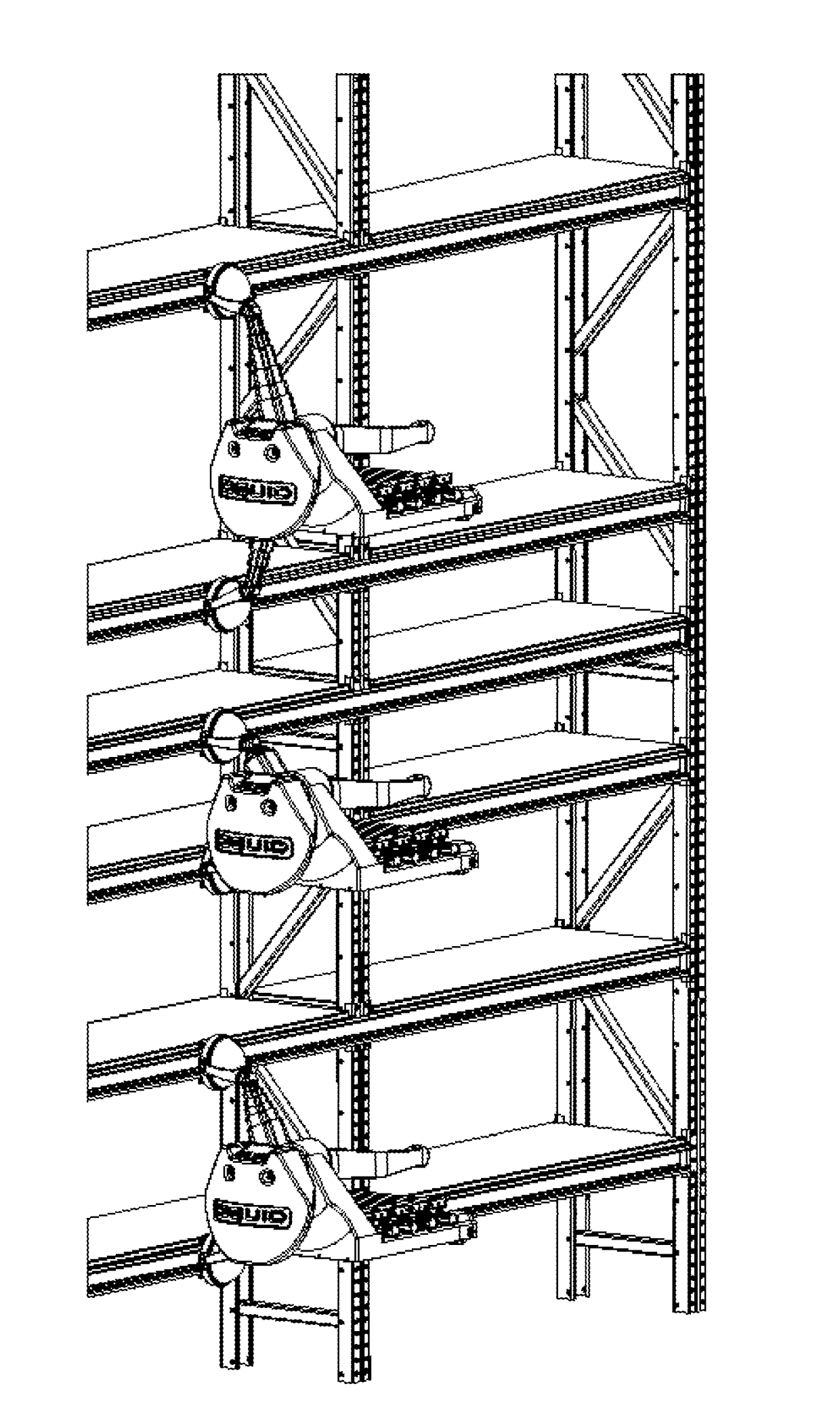

Automatic warehouse system

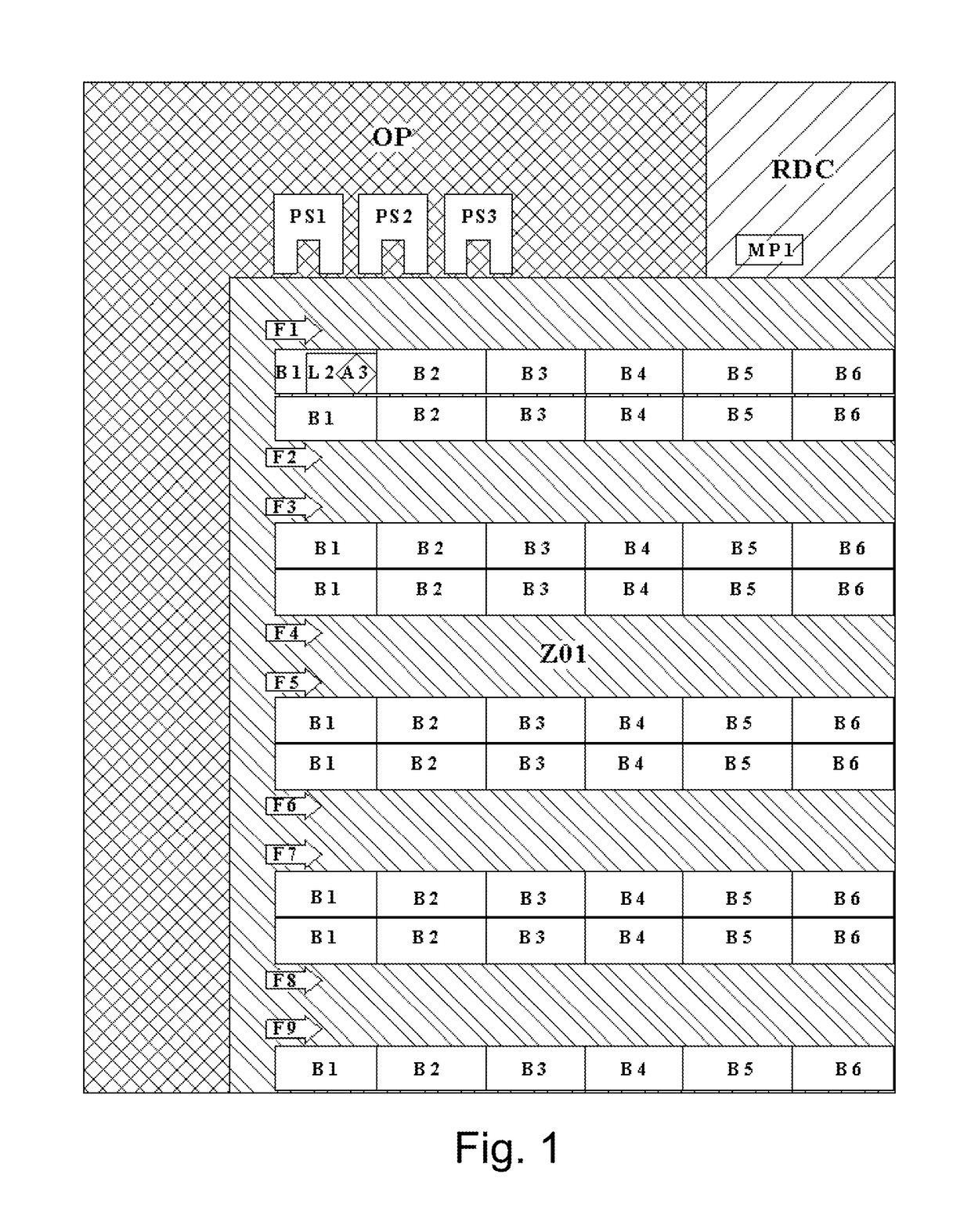

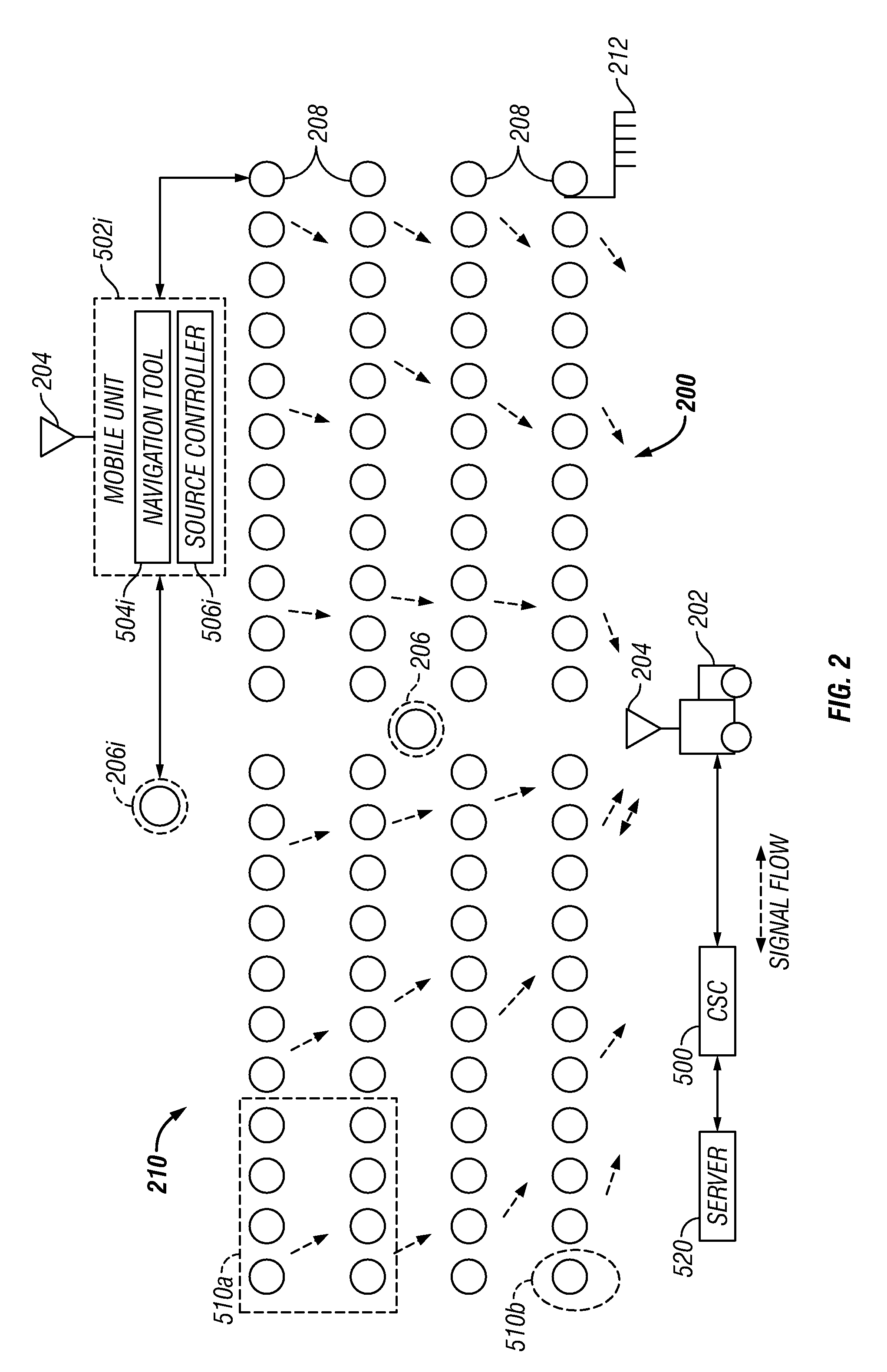

Active | US20170158430A1 | Improve the level of, Ongoing scalability, Logistics, Storage devices, On board, Exact location

The invention is an automatic system for picking and placing boxes on shelves in a warehouse. The system comprises a set of autonomous mobile robots; a network of vertical and horizontal rails that are parallel to the vertical support posts and horizontal shelves of the shelving system in the warehouse; and a Real Time Traffic Management (RTTM) server, which is a central processing server configured to communicate with the robots and other processors and servers in the warehouse. The system is characterized in that the robots comprise a set of on-board sensors, a processor, software, and other electronics configured to provide them with three-dimensional navigation and travel capabilities that enable them to navigate and travel autonomously both along the floor and up the vertical rails and along the horizontal rails of the network of rails to reach an exact location on the floor or shelving system of the warehouse.

Owner:BIONICHIVE

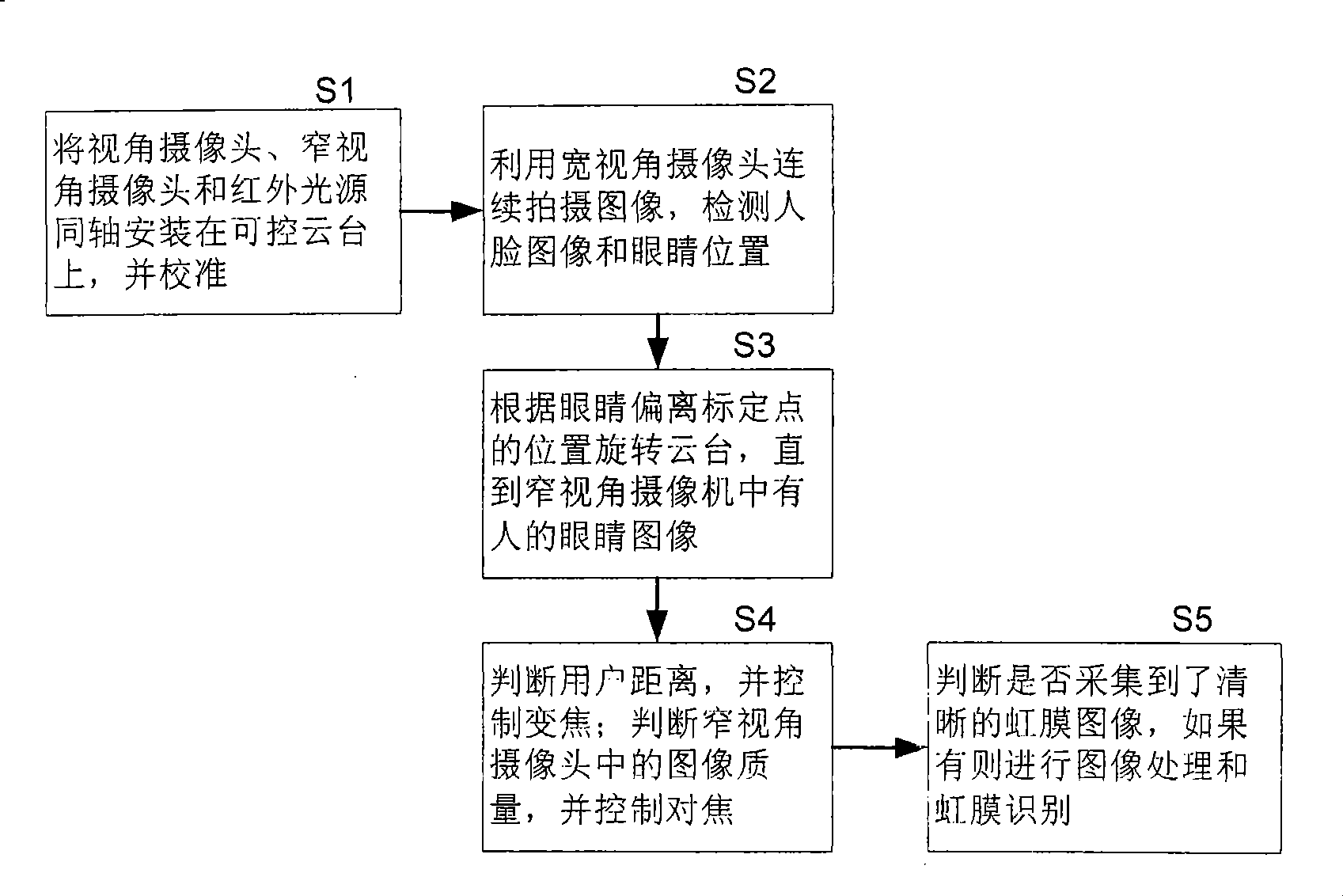

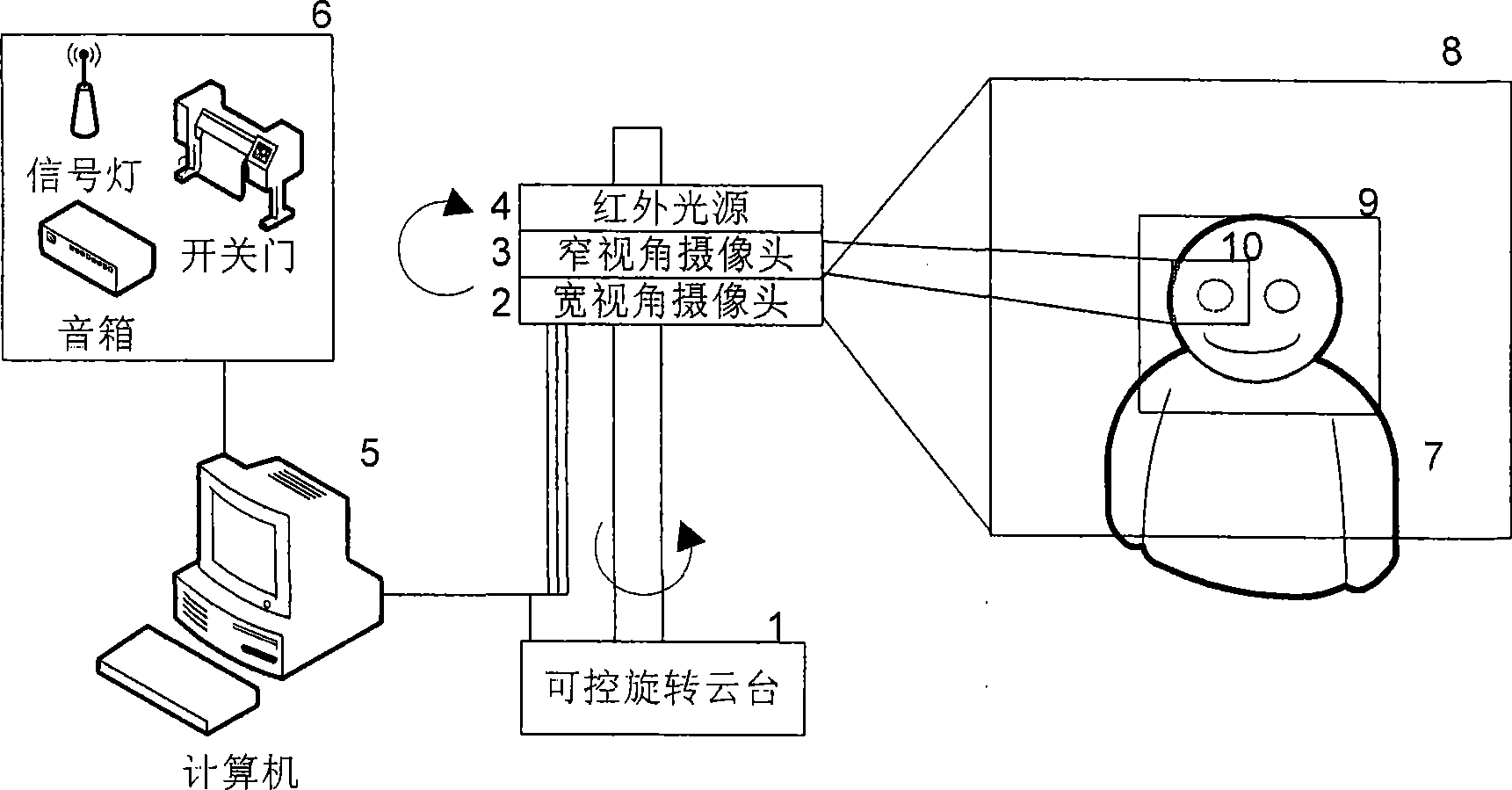

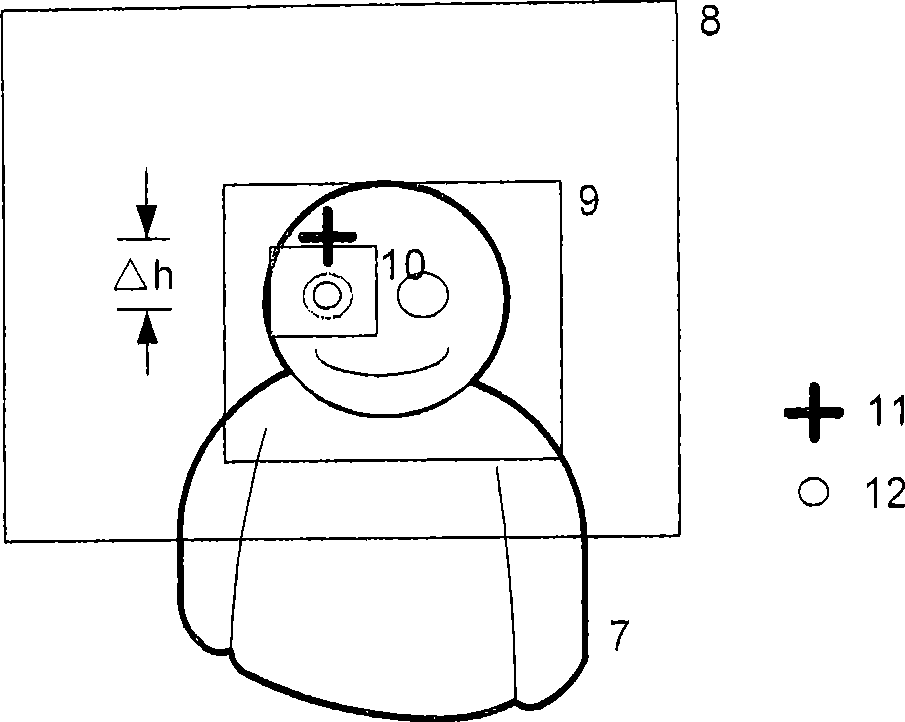

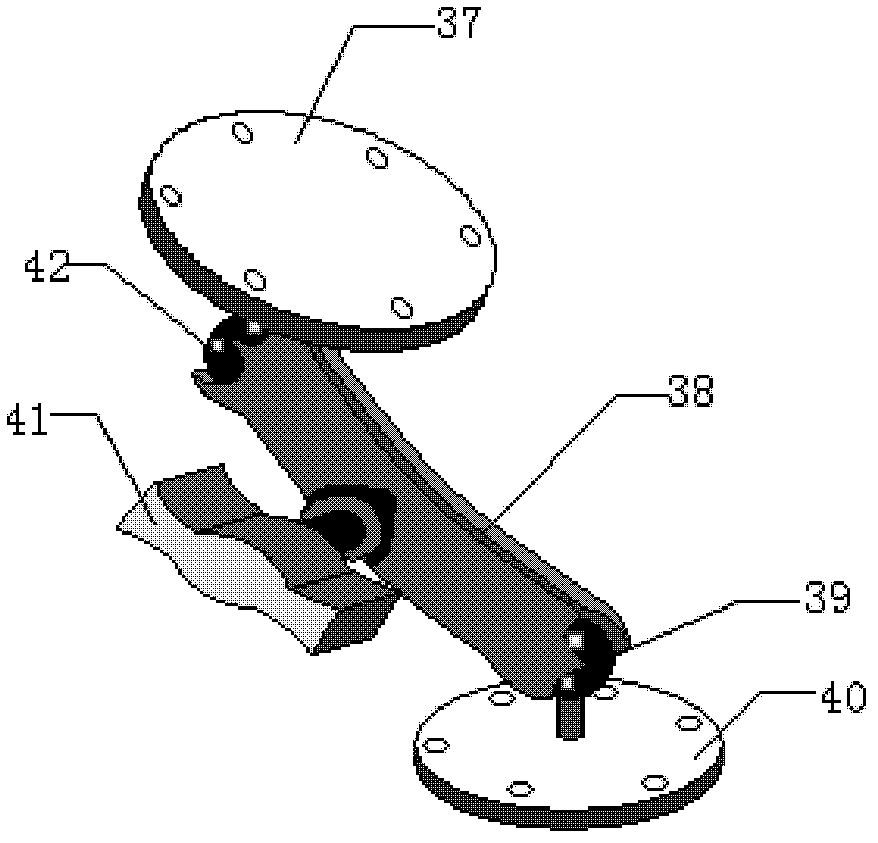

Automatic-tracking and automatic-zooming method for acquiring iris images

Inactive | CN101520838A | Improve efficiency, Television system details, Acquiring/recognising eyes, Imaging processing, Exact location

The invention relates to an automatic-tracking and automatic-zooming method for acquiring iris images. The method includes the following steps: a wide-angle camera, a narrow-angle (long-focus) camera and an infrared light source are mounted close together on a controllable pan-tilt head, which rotates the two cameras and the infrared light source simultaneously; the system uses the wide-angle camera to continuously detect human face images; when a face image is available, eye images are detected to obtain the exact locations of the person's eyes; the pan-tilt head is then rotated up/down or left/right so that the narrow-angle camera and the infrared light source aim at the eyes; the user's distance and the image quality are determined simultaneously to control automatic zooming of the camera; and when clear iris images are acquired, image processing and iris recognition are carried out and the user's identity is determined. The system device comprises a computer, the controllable pan-tilt head, the wide-angle camera, the narrow-angle camera, the infrared light source, a power supply, an image grabbing card and other auxiliary equipment.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
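
The step of rotating the controllable head so that the narrow-angle camera aims at the detected eyes amounts to converting a pixel offset in the wide-angle image into pan and tilt angles. A minimal sketch, assuming an ideal pinhole wide-angle camera with known horizontal and vertical fields of view whose optical center lies on the head's rotation axes, ignoring the small offset between the two cameras, and using a hypothetical function name:

```python
import math
from typing import Tuple


def pan_tilt_correction(eye_px: Tuple[float, float], image_size: Tuple[int, int],
                        hfov_deg: float, vfov_deg: float) -> Tuple[float, float]:
    """Pan/tilt angles (degrees) that bring a detected eye to the image center.

    Assumes an ideal pinhole camera whose optical center lies on the pan and
    tilt axes of the head (an idealization, not taken from the source).
    """
    x, y = eye_px
    w, h = image_size
    # Focal lengths in pixels, derived from the fields of view.
    fx = (w / 2) / math.tan(math.radians(hfov_deg) / 2)
    fy = (h / 2) / math.tan(math.radians(vfov_deg) / 2)
    pan = math.degrees(math.atan((x - w / 2) / fx))    # positive: turn right
    tilt = math.degrees(math.atan((h / 2 - y) / fy))   # positive: tilt up (image y grows downward)
    return pan, tilt
```

For example, an eye detected at the right edge of a 640×480 image from a camera with a 60° horizontal field of view calls for a pan of about 30°.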

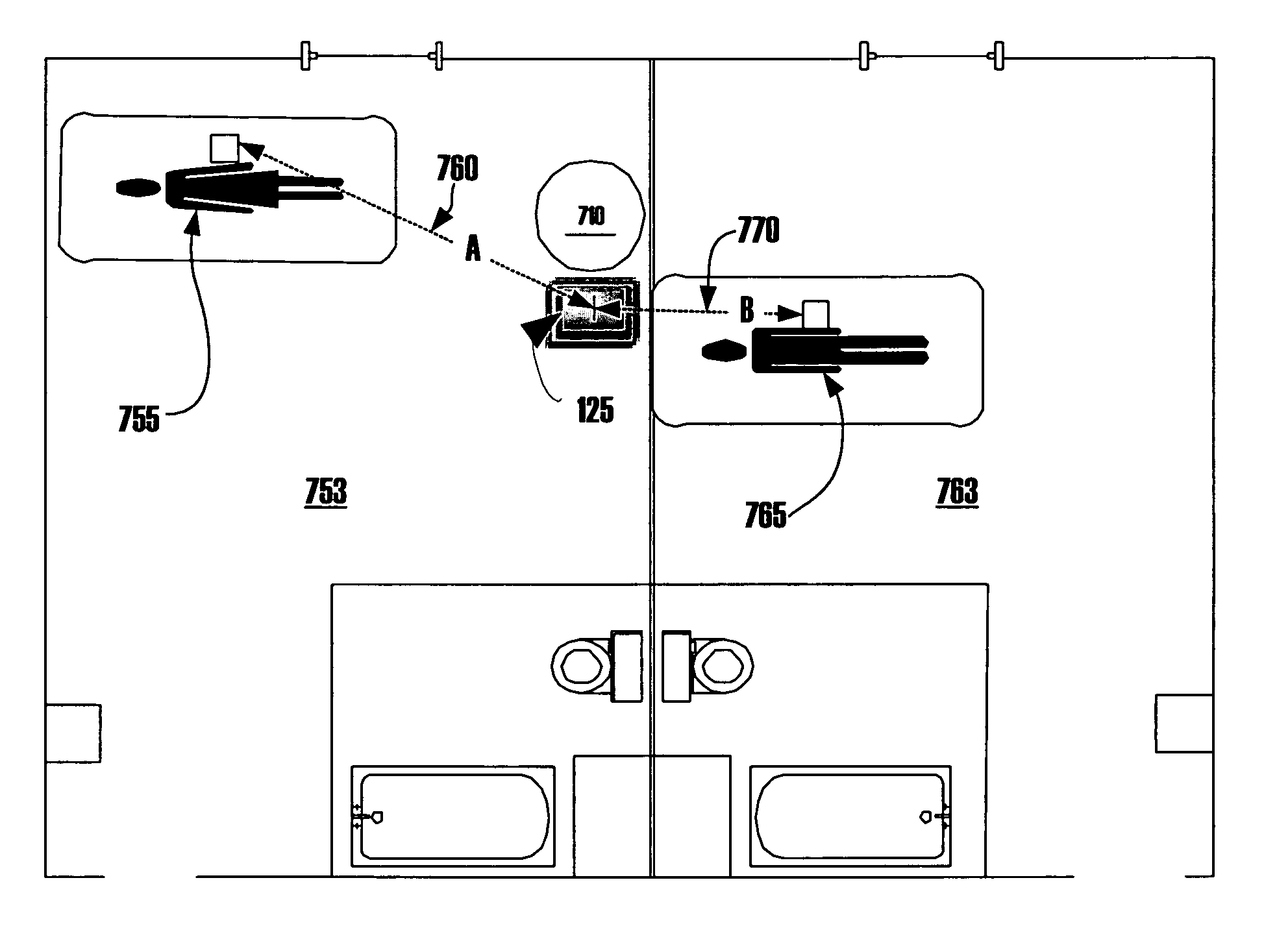

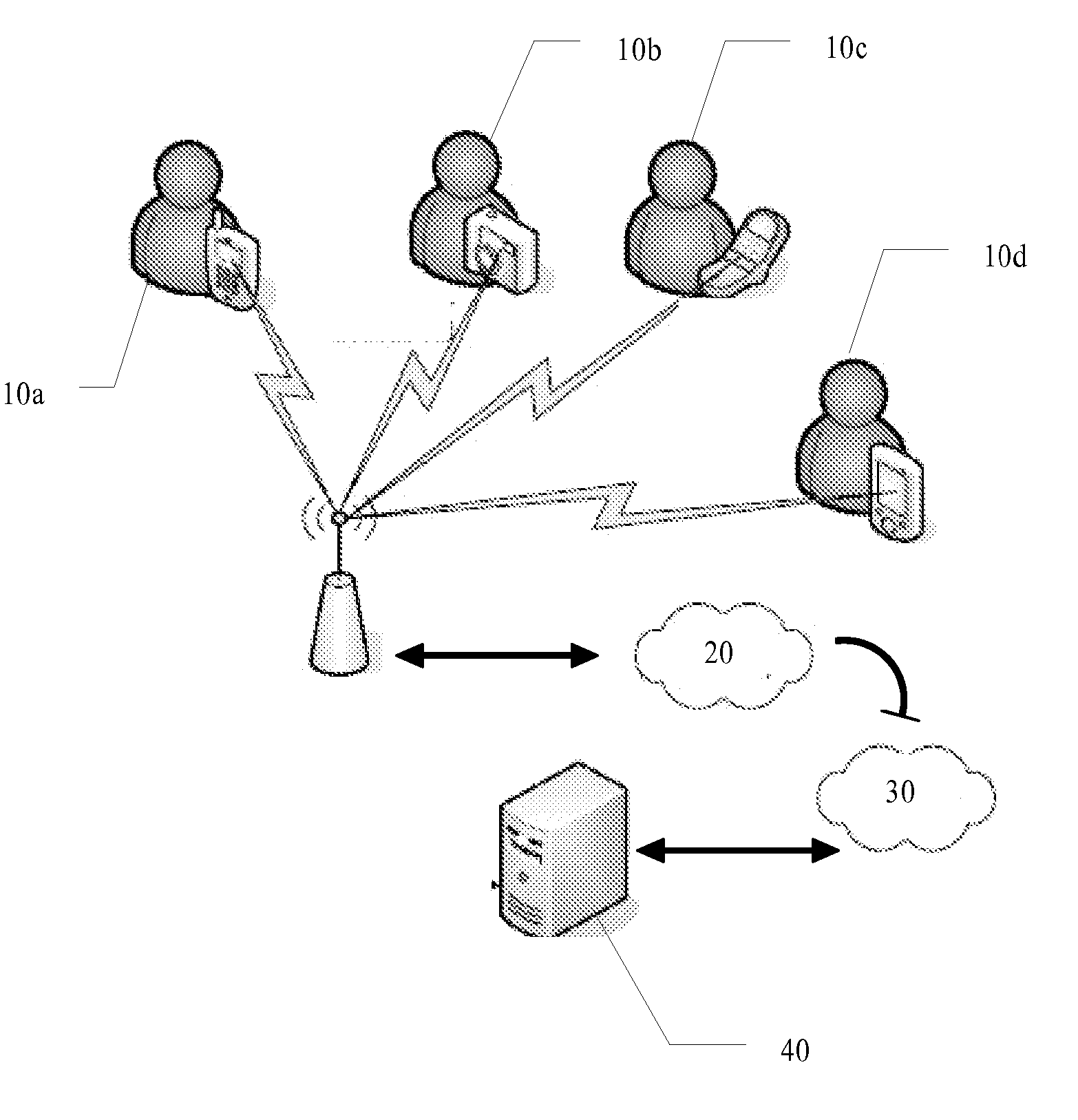

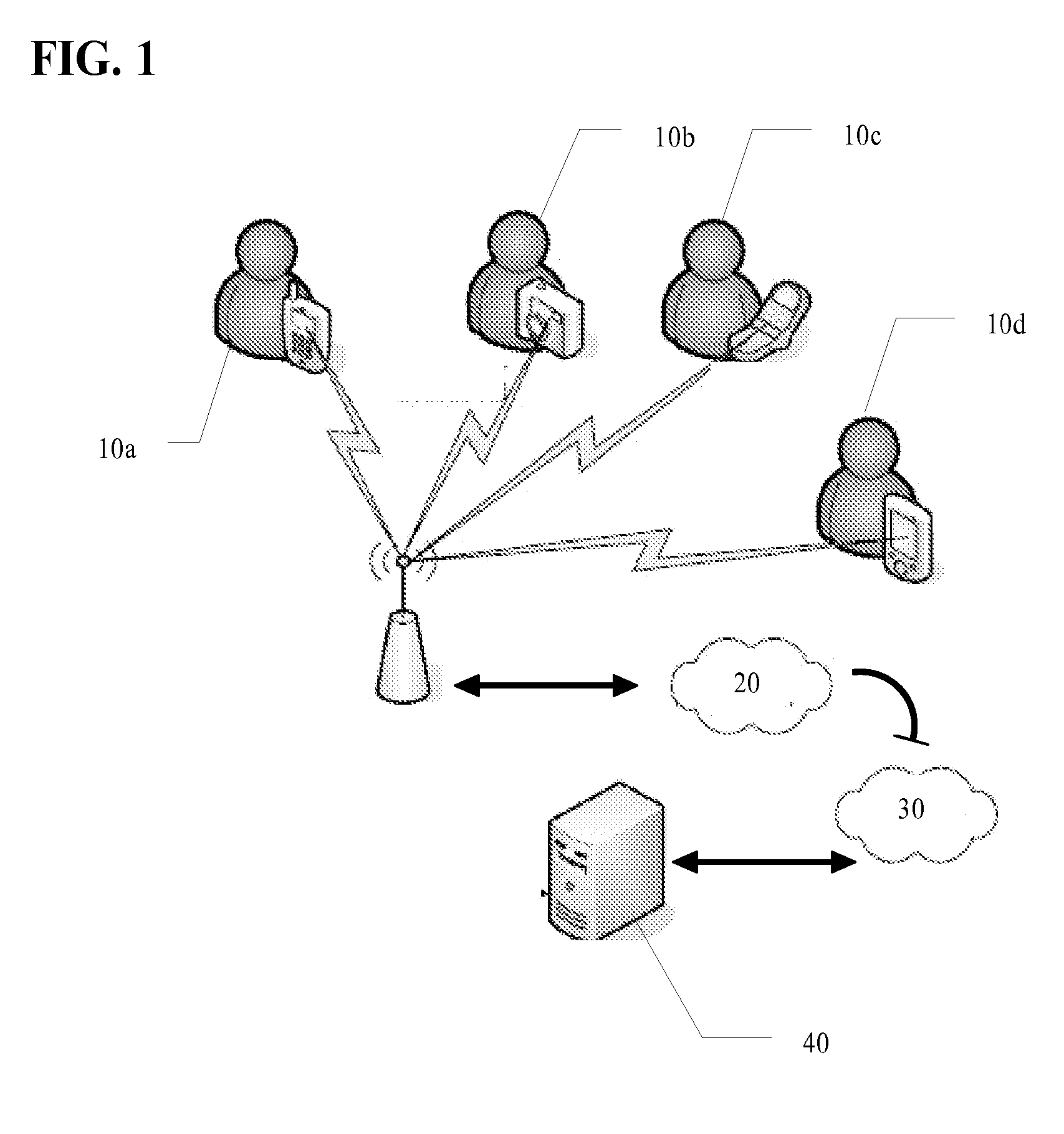

Systems and methods for context relevant information management and display

Inactive | US7627334B2 | Easy to display, Out of view, Local control/monitoring, Diagnostic recording/measuring, Relevant information, Exact location

A wireless information device provides a user with context relevant information. The precise location of the wireless device is monitored by a tracking system. Using the location of the wireless device and the identity of the user, context relevant information is transmitted to the device, where the context relevant information is pre-defined, at least in part, by the user. Context relevant information served to the device depends on the identity of the user, the location of the device, and the proximity of the device to persons or objects. The wireless information device may be used by healthcare workers, such as physicians, in hospitals, although other environments are contemplated, such as hotels, airports, zoos, theme parks, and the like.

Owner:STORYMINERS

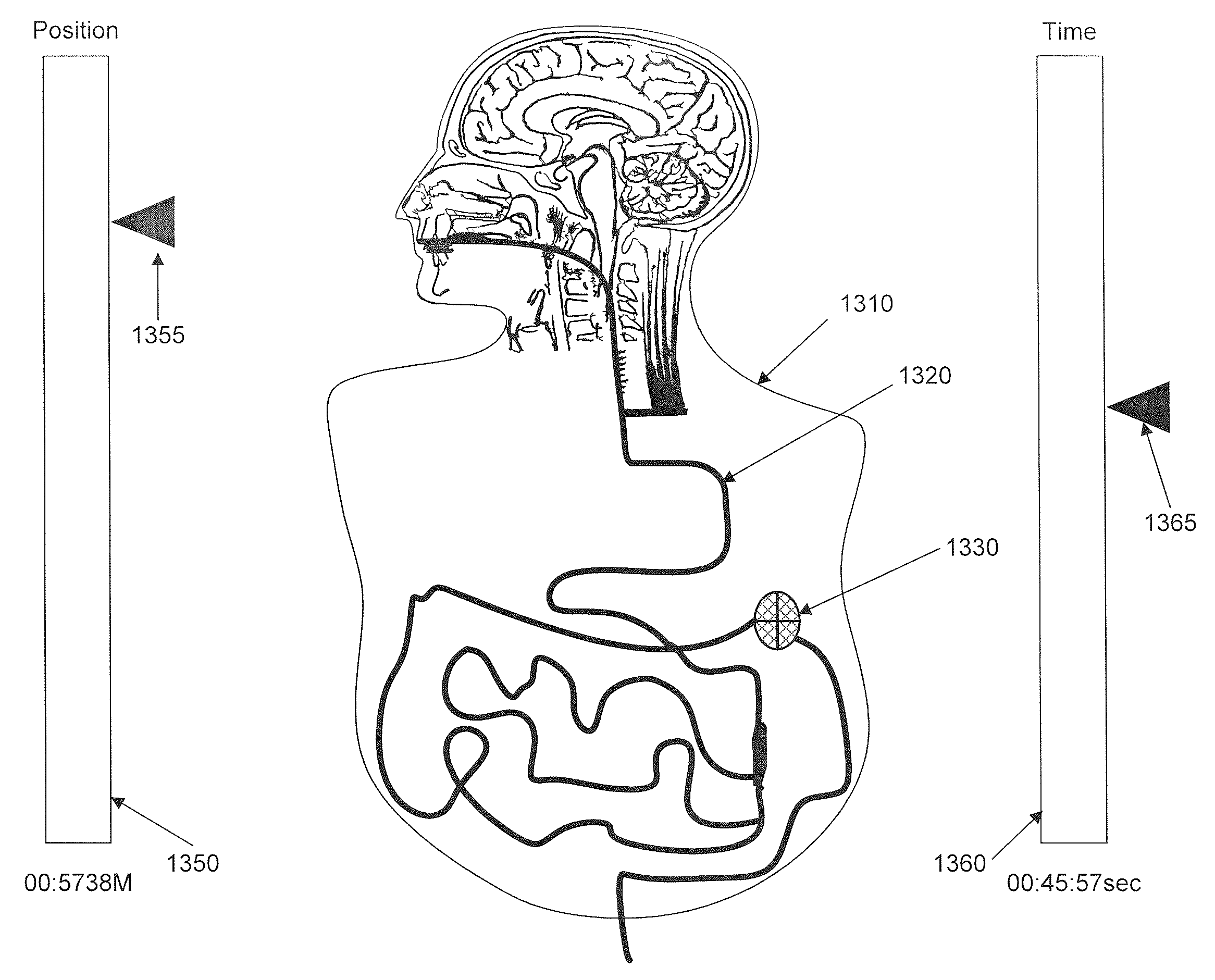

Displaying Image Data From A Scanner Capsule

An ingestible image-scanning pill captures high-resolution images of the GI tract as it passes through. The exact location of each image communicated externally is determined. Image processing software discards duplicate information and stitches the images together, line scan by line scan, to replicate the complete GI tract as if it were stretched out in a straight line. The fully linear image is displayed to a medical professional as if the GI tract had been stretched in a straight line, cut open and laid flat on a bench for viewing, all without making any incisions in a live patient.

Owner:INNURVATION

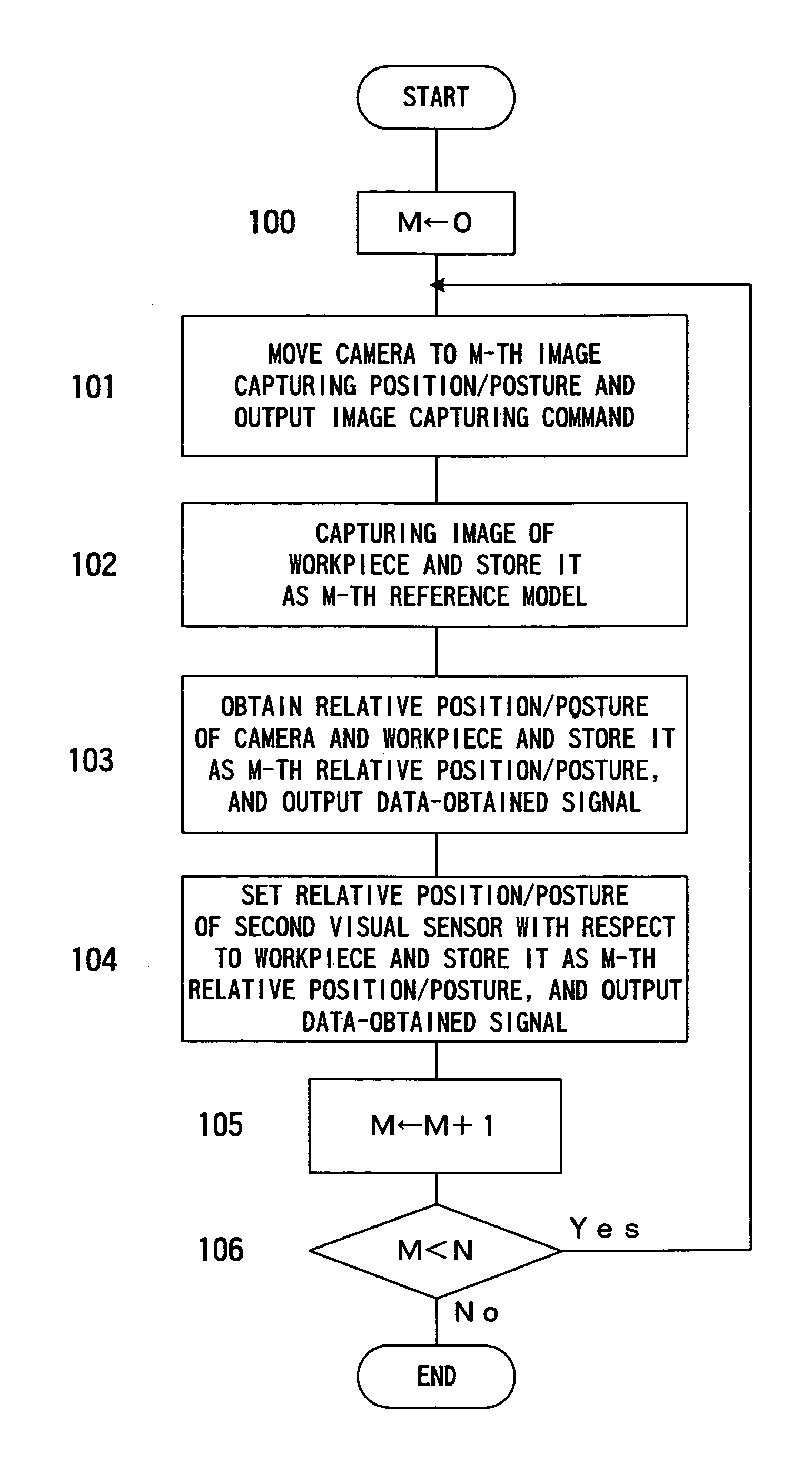

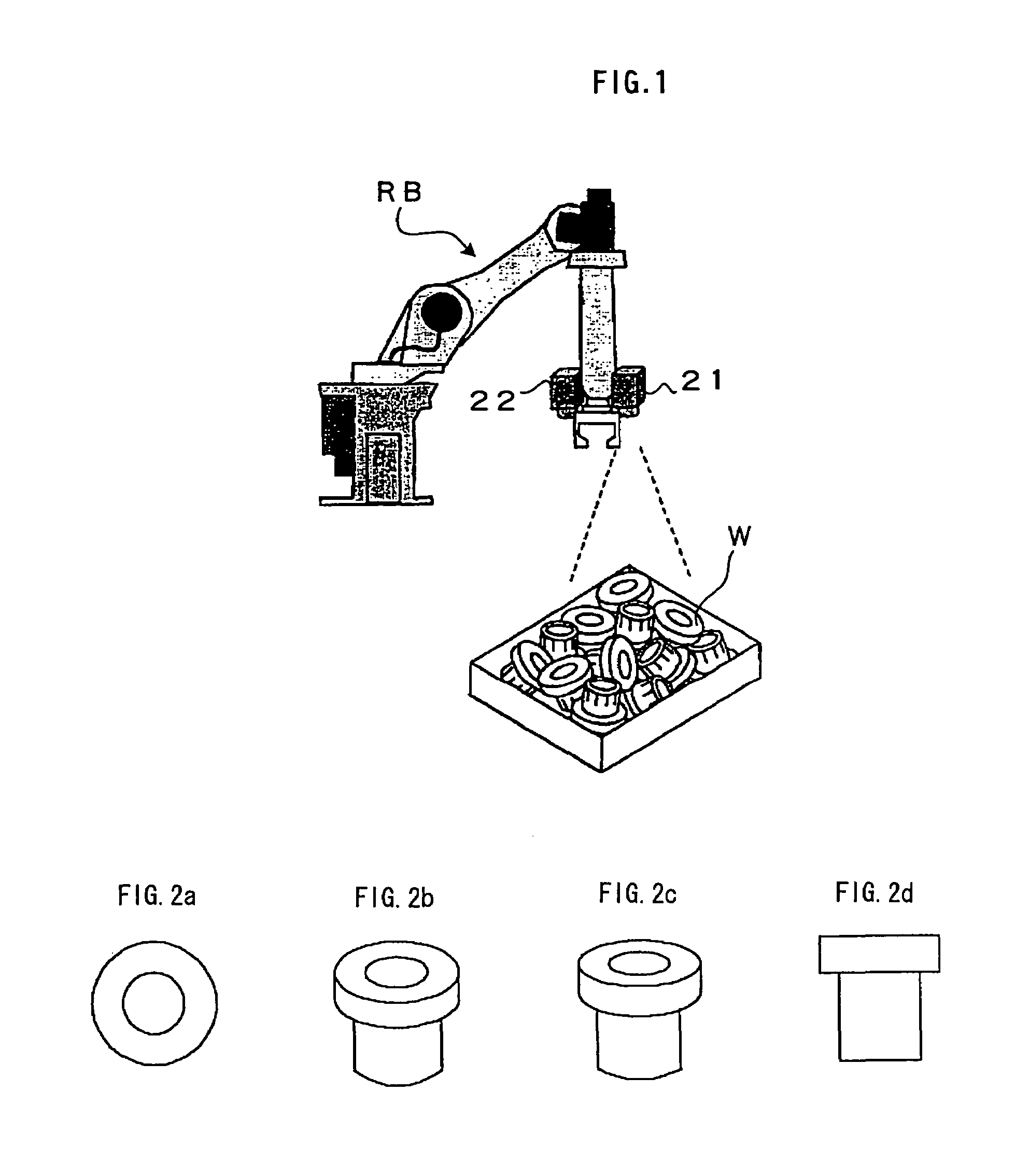

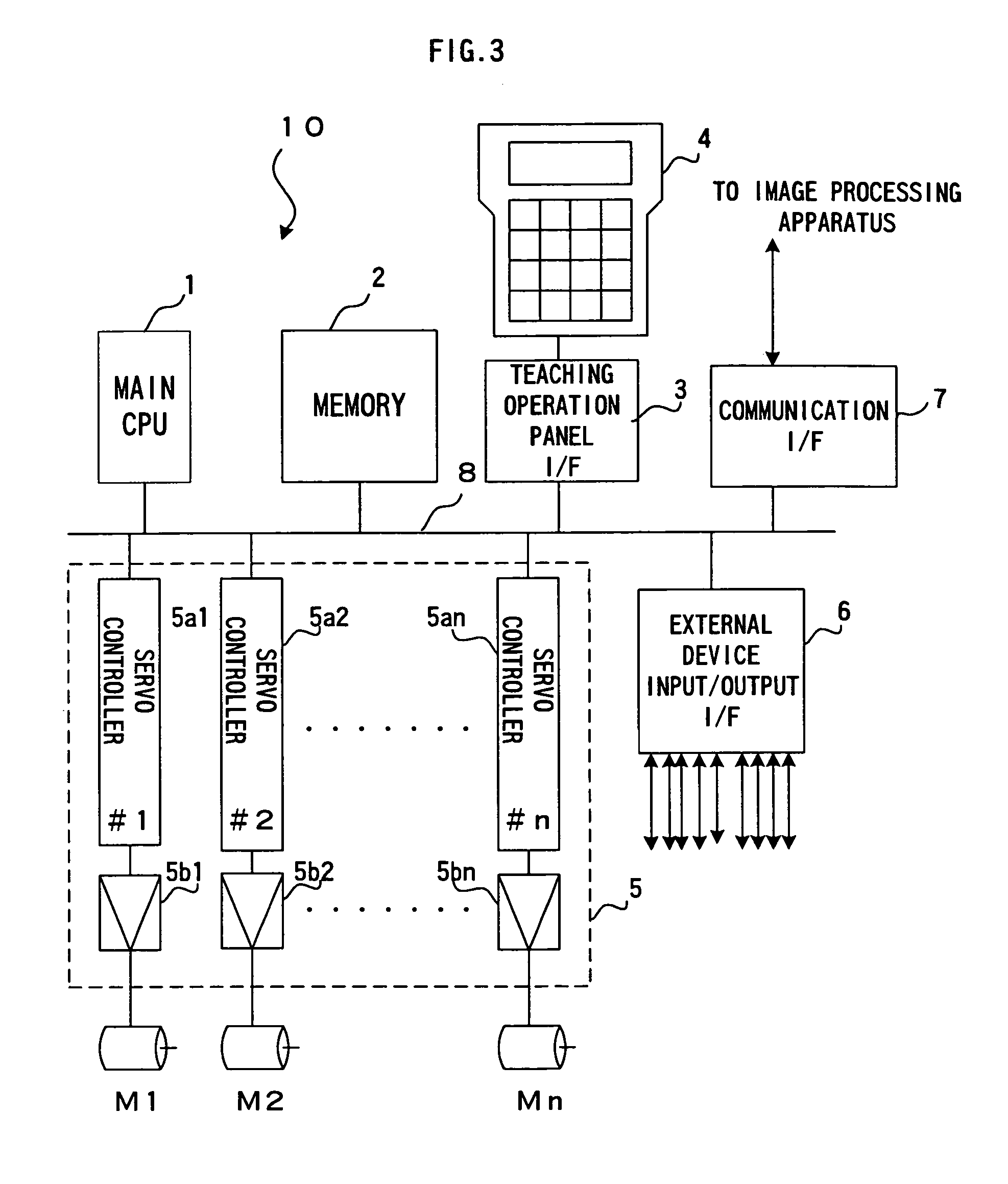

Robot system having image processing function

A robot system having an image processing function detects the position and/or posture of individual workpieces randomly arranged in a stack and determines a robot operation posture, or posture and position, suitable for the detected position and/or posture of the workpiece. Reference models are created from two-dimensional images of a reference workpiece captured from a plurality of directions by a first visual sensor, and are stored. The relative position/posture of the first visual sensor with respect to the workpiece at each image capture, and the relative position/posture that a second visual sensor should take with respect to the workpiece, are also stored. Matching is performed between an image of a stack of workpieces captured by the camera and the reference models, and an image of a workpiece that matches one reference model is selected. A three-dimensional position/posture of the workpiece is determined from the image of the selected workpiece, the selected reference model and the position/posture information associated with that reference model. The position/posture at which the second visual sensor should be placed for measurement is determined from the determined position/posture of the workpiece and the stored relative position/posture of the second visual sensor, and the precise position/posture of the workpiece is then measured by the second visual sensor at that position/posture. Based on the measurement results of the second visual sensor, the robot can pick individual workpieces out of a randomly arranged stack.

Owner:FANUC LTD

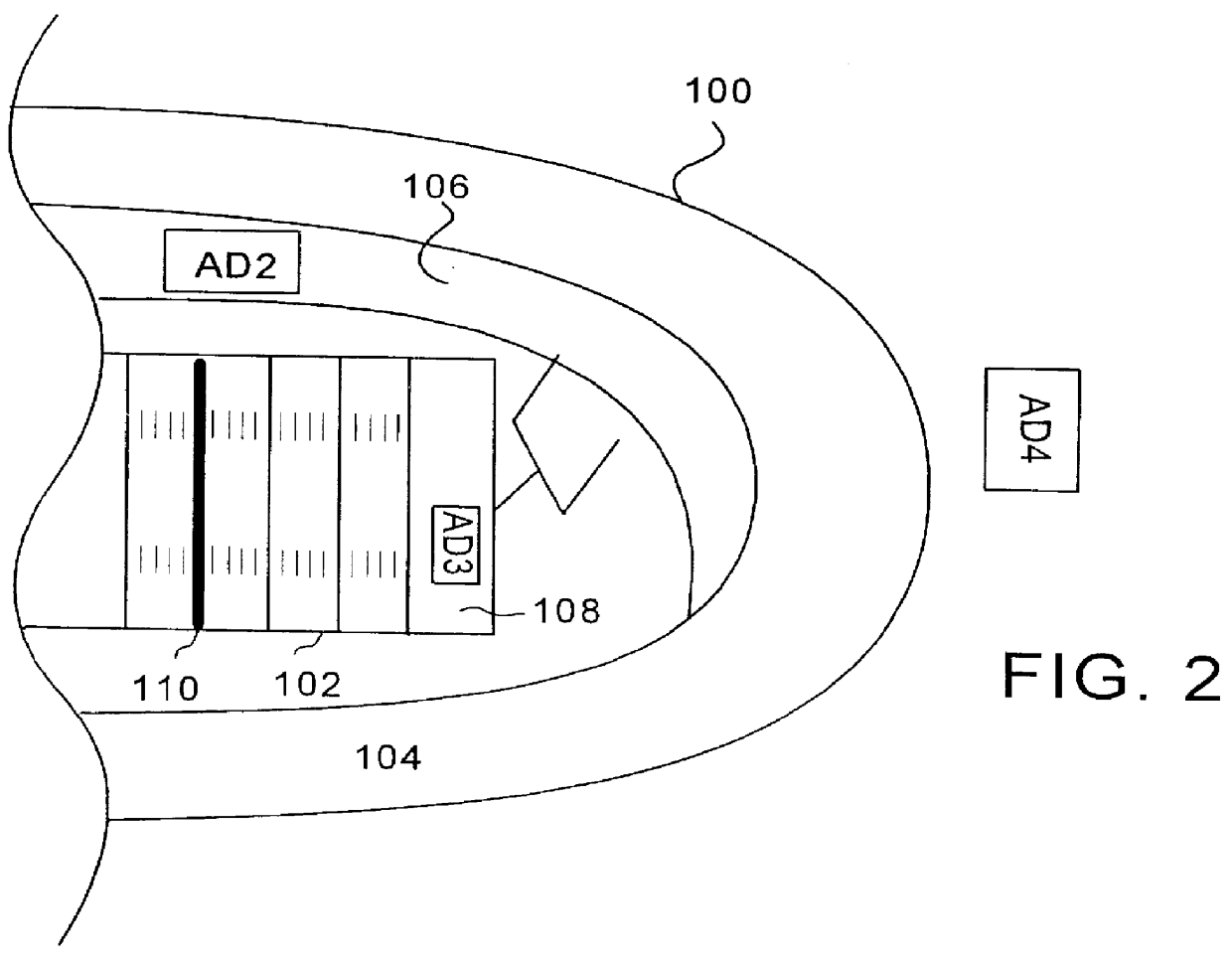

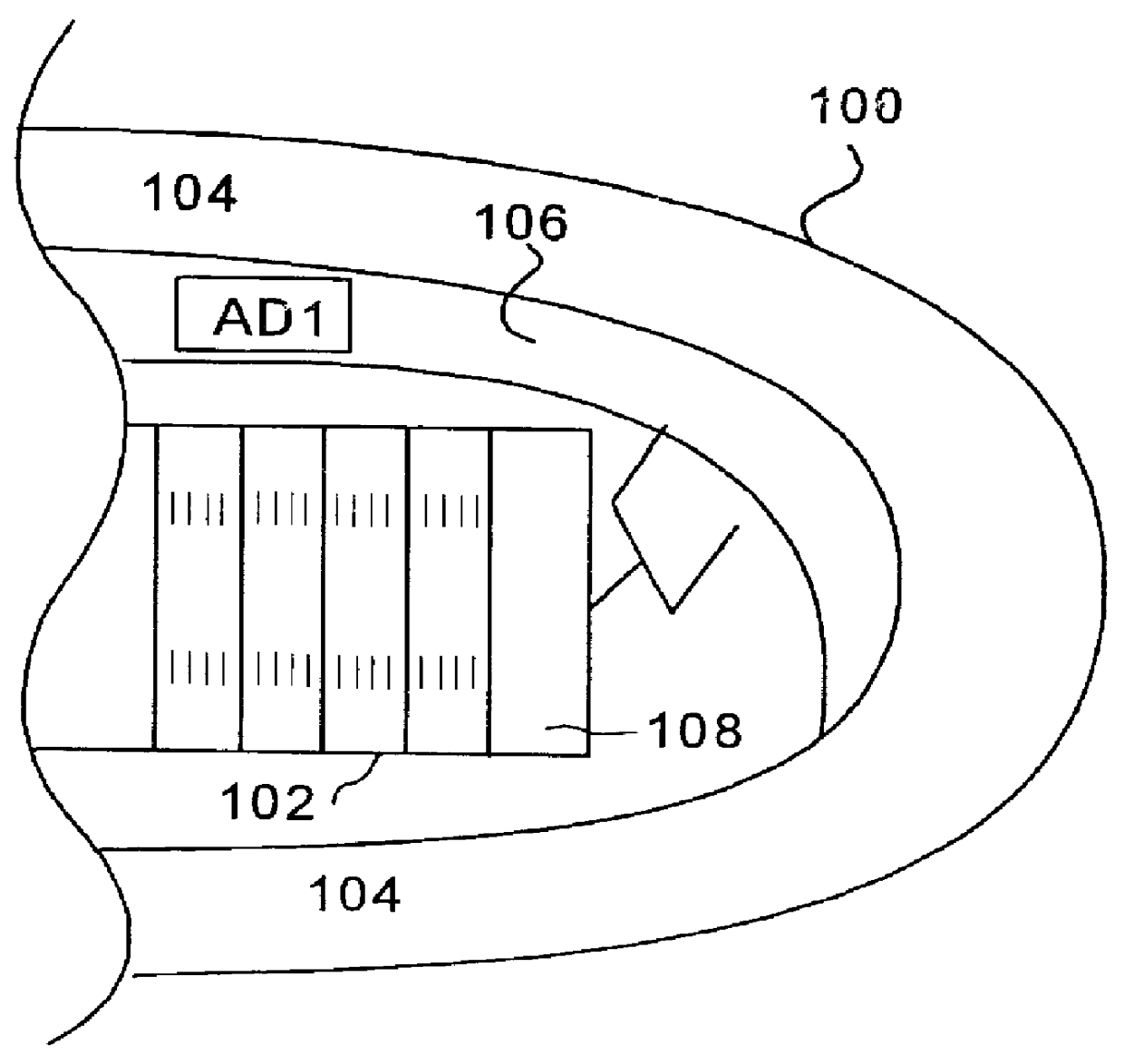

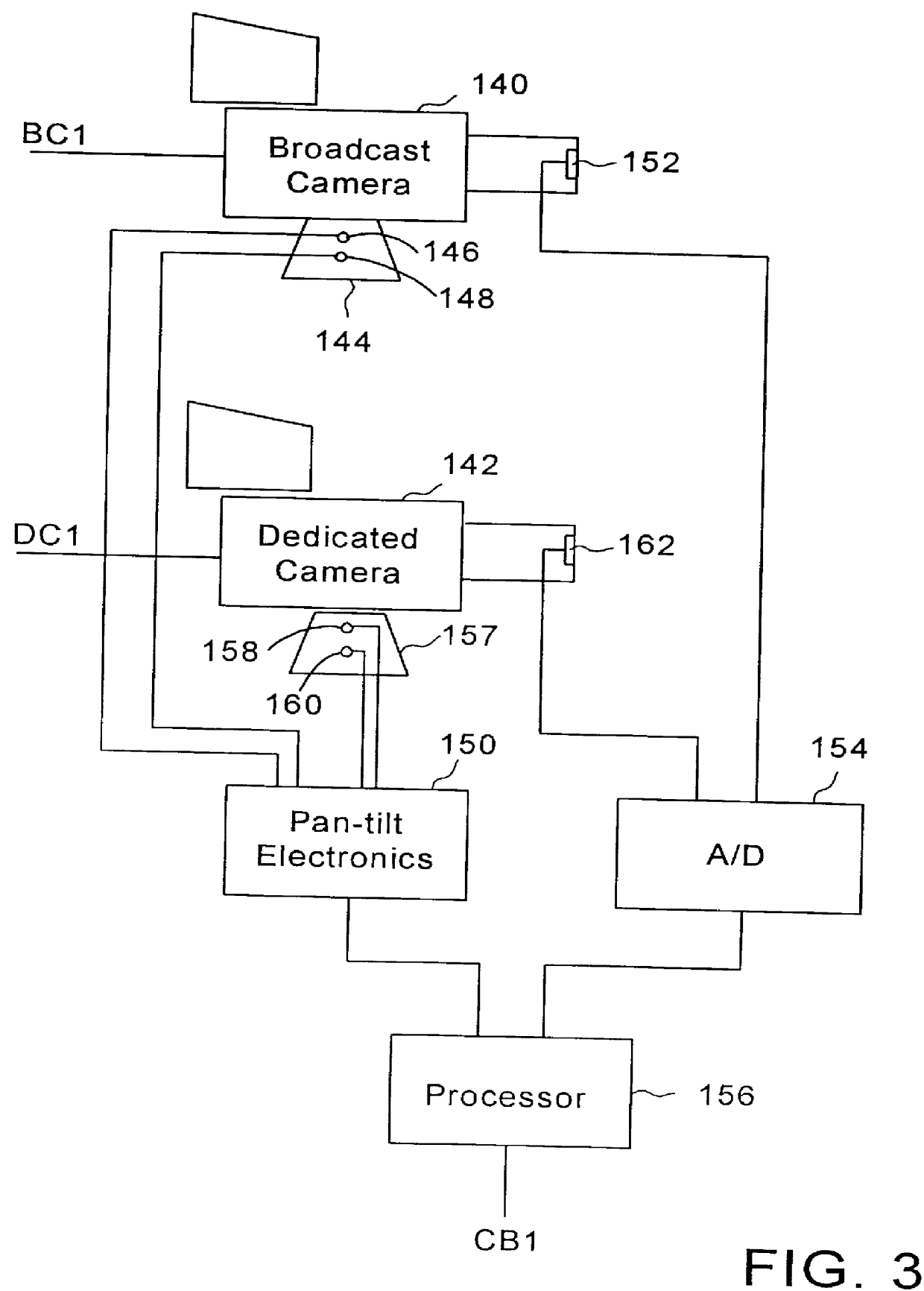

Method and apparatus for adding a graphic indication of a first down to a live video of a football game

Inactive | US6141060A | Increase speed, Lose accuracy, Television system details, Color signal processing circuits, Graphics, Exact location

Pan, tilt and zoom sensors are coupled to a broadcast camera in order to determine the field of view of the broadcast camera and to make a rough estimate of a target's location in the broadcast camera's field of view. Pattern recognition techniques can be used to determine the exact location of the target in the broadcast camera's field of view. If a preselected target is at least partially within the field of view of the broadcast camera, all or part of the target's image is enhanced. The enhancements include replacing the target image with a second image, overlaying the target image or highlighting the target image. Examples of a target include a billboard, a portion of a playing field or another location at a live event. The enhancements made to the target's image can be seen by the television viewer but are not visible to persons at the live event.

Owner:SPORTSMEDIA TECH CORP

Emergency Reporting System

Active | US20070072583A1 | Special service for subscribers, Transmission, Exact location, Global Positioning System

Provided is an emergency locator system adapted for GPS-enabled wireless devices. Global Positioning System (GPS) technology and Location Based Services (LBS) are used to determine the exact location of a user and to communicate information relating to the emergency status of that location. The user initiates the locator application via a wireless device, and their physical location information is automatically transferred to a server. The server then compares the user's location with Geographic Information System (GIS) maps to identify the emergency status associated with that location. Once the server has calculated the current emergency status, the information is automatically returned to the user, along with emergency instructions.

Owner:UNIV OF SOUTH FLORIDA
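
The server-side step of comparing a user's location with GIS maps is, in its simplest form, a point-in-polygon test against emergency-zone boundaries. The sketch below uses the standard even-odd ray-casting test. It treats longitude/latitude as planar coordinates (adequate only for small zones away from the poles and the antimeridian), and the zone list format, `point_in_polygon` and `emergency_status` are hypothetical names rather than anything defined by the source.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]              # (longitude, latitude), treated as planar x, y
Zone = Tuple[str, Sequence[Point], str]  # (status, boundary polygon, instructions)


def point_in_polygon(pt: Point, poly: Sequence[Point]) -> bool:
    """Even-odd ray casting: count edge crossings of a ray going +x from pt."""
    x, y = pt
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]   # wrap around to close the polygon
        if (y1 > y) != (y2 > y):     # edge straddles the horizontal line through pt
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def emergency_status(pt: Point, zones: List[Zone]) -> Tuple[str, str]:
    """Return (status, instructions) for the first zone containing pt."""
    for status, boundary, instructions in zones:
        if point_in_polygon(pt, boundary):
            return status, instructions
    return "none", "No active emergency for this location."
```

A production GIS lookup would normally use a spatial index and geodesic-aware geometry; the linear scan here only illustrates the comparison itself.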

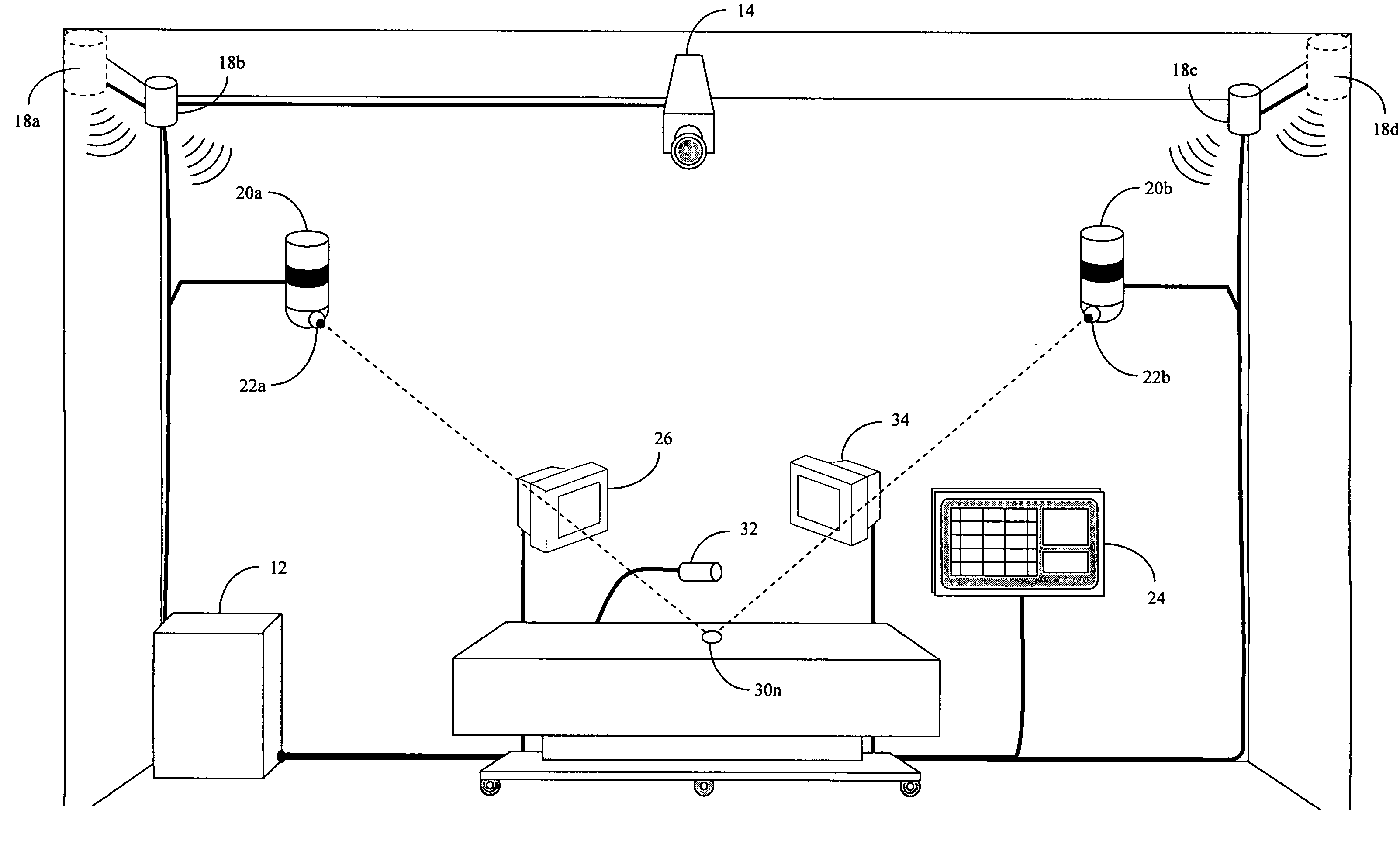

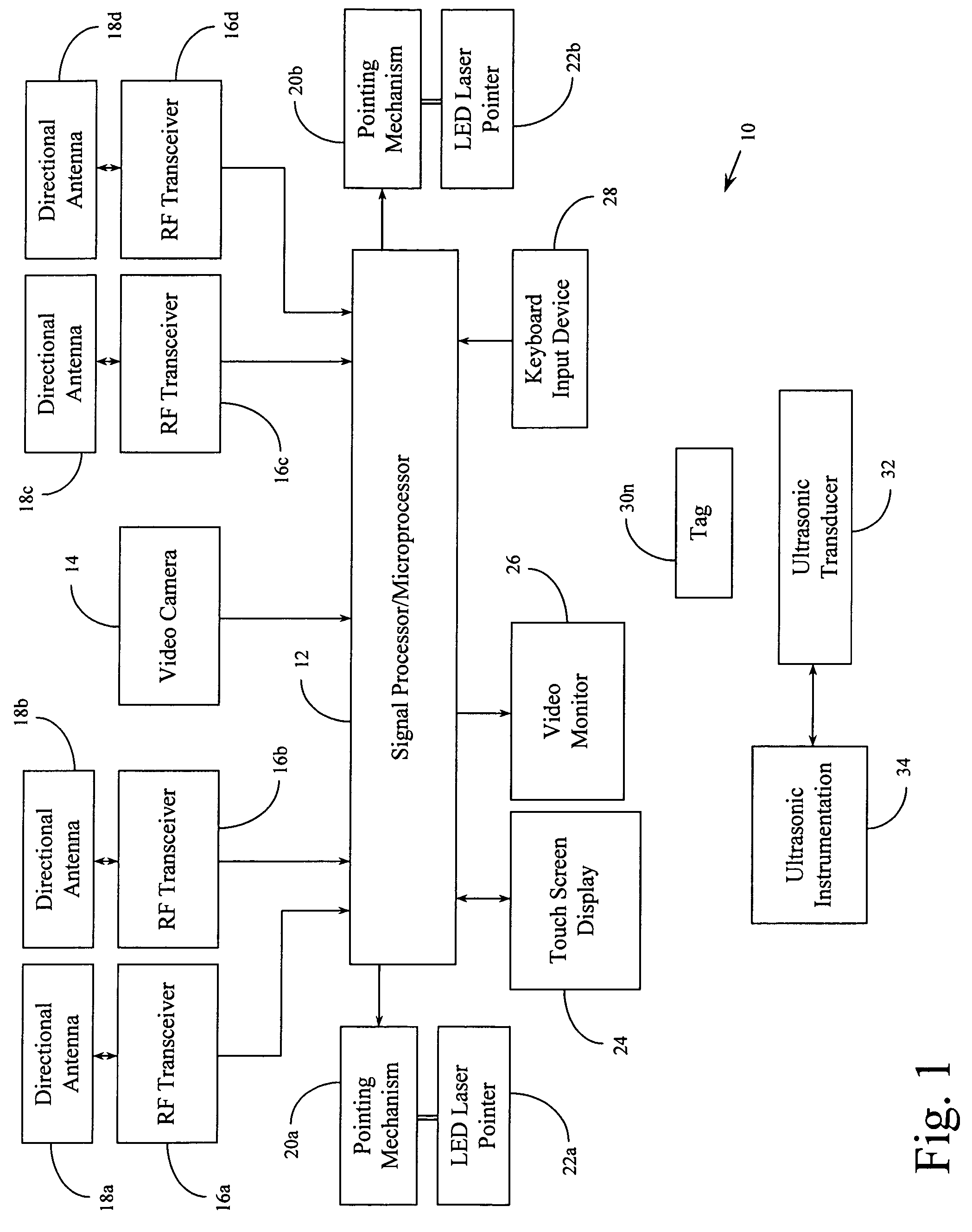

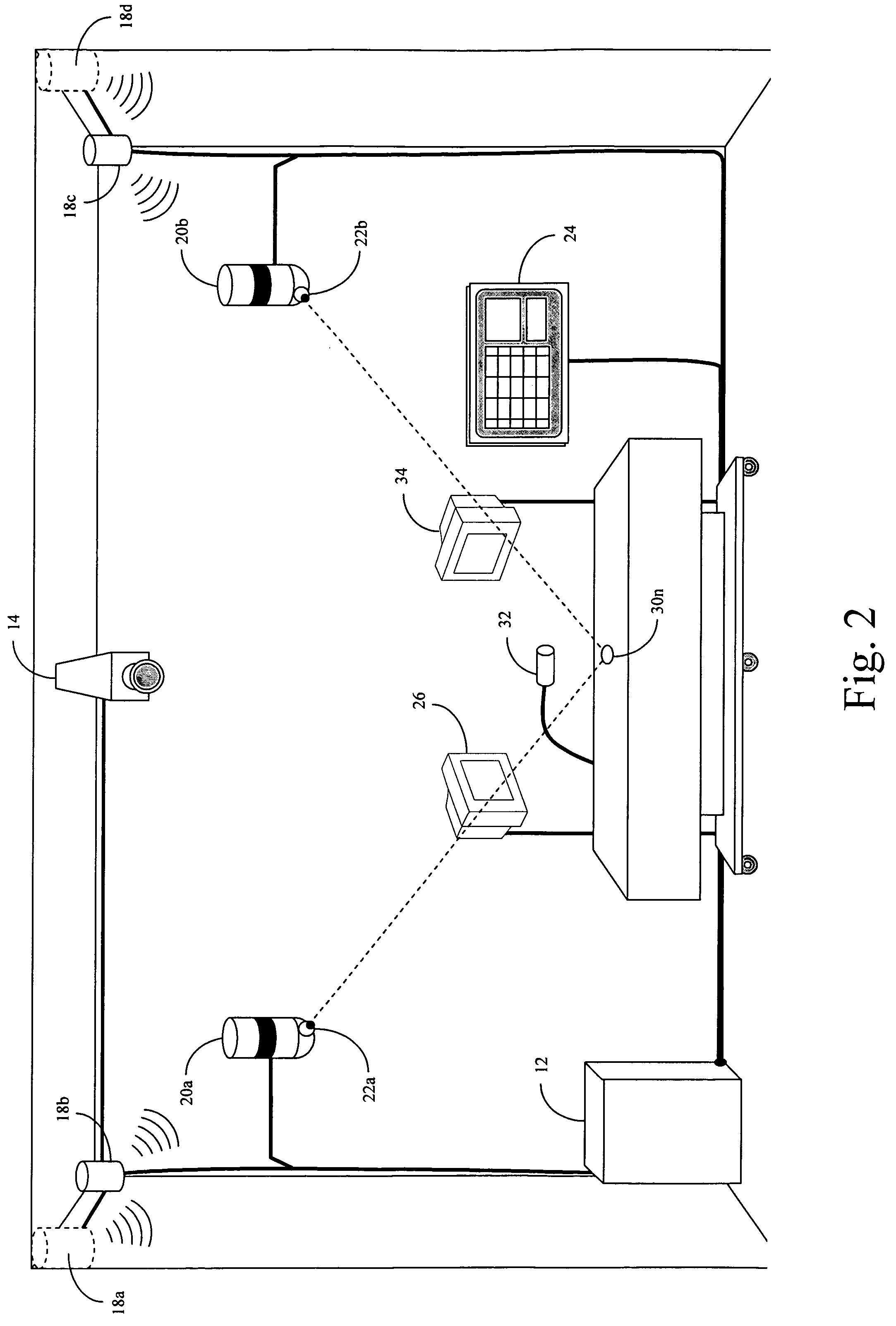

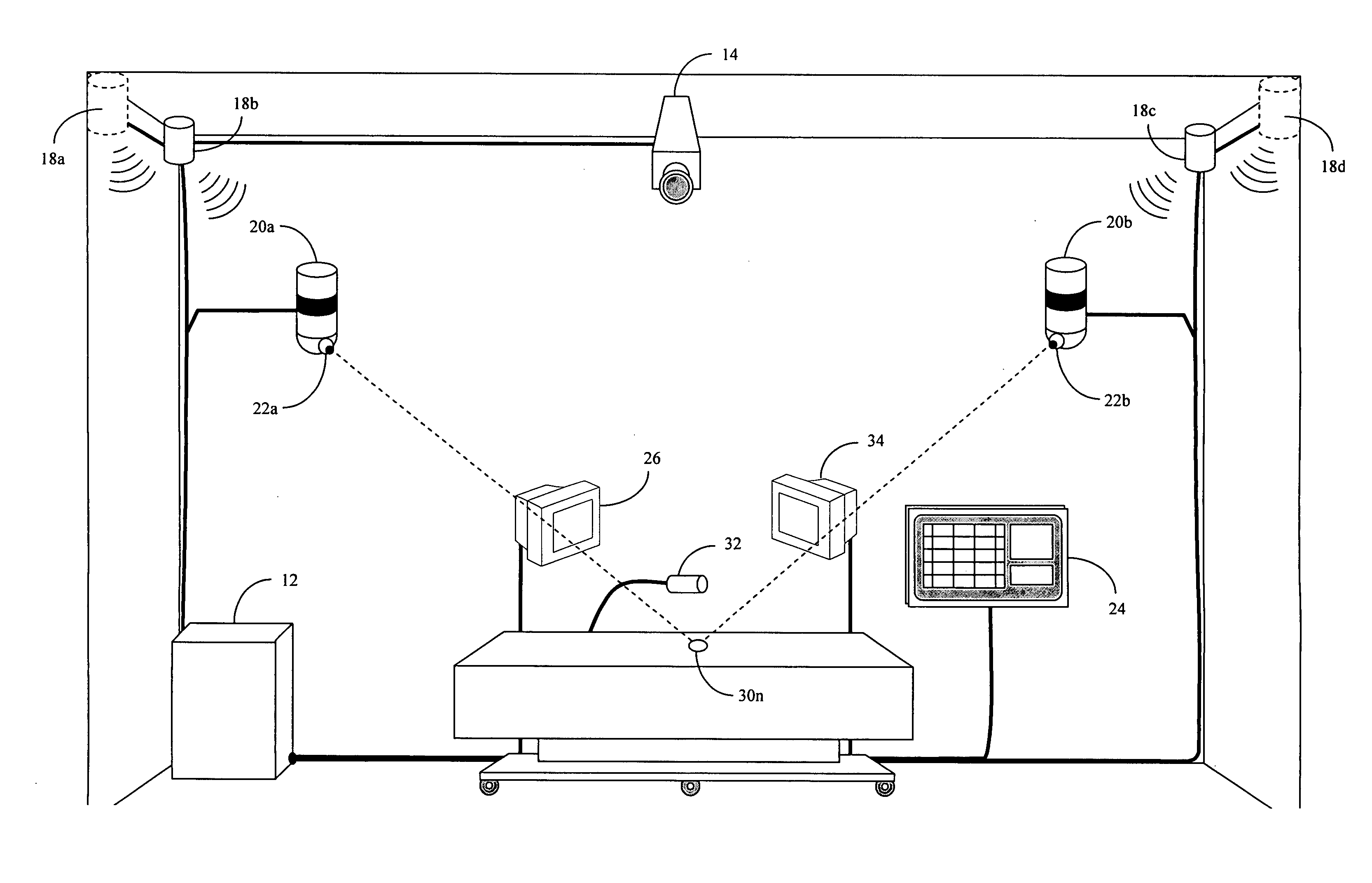

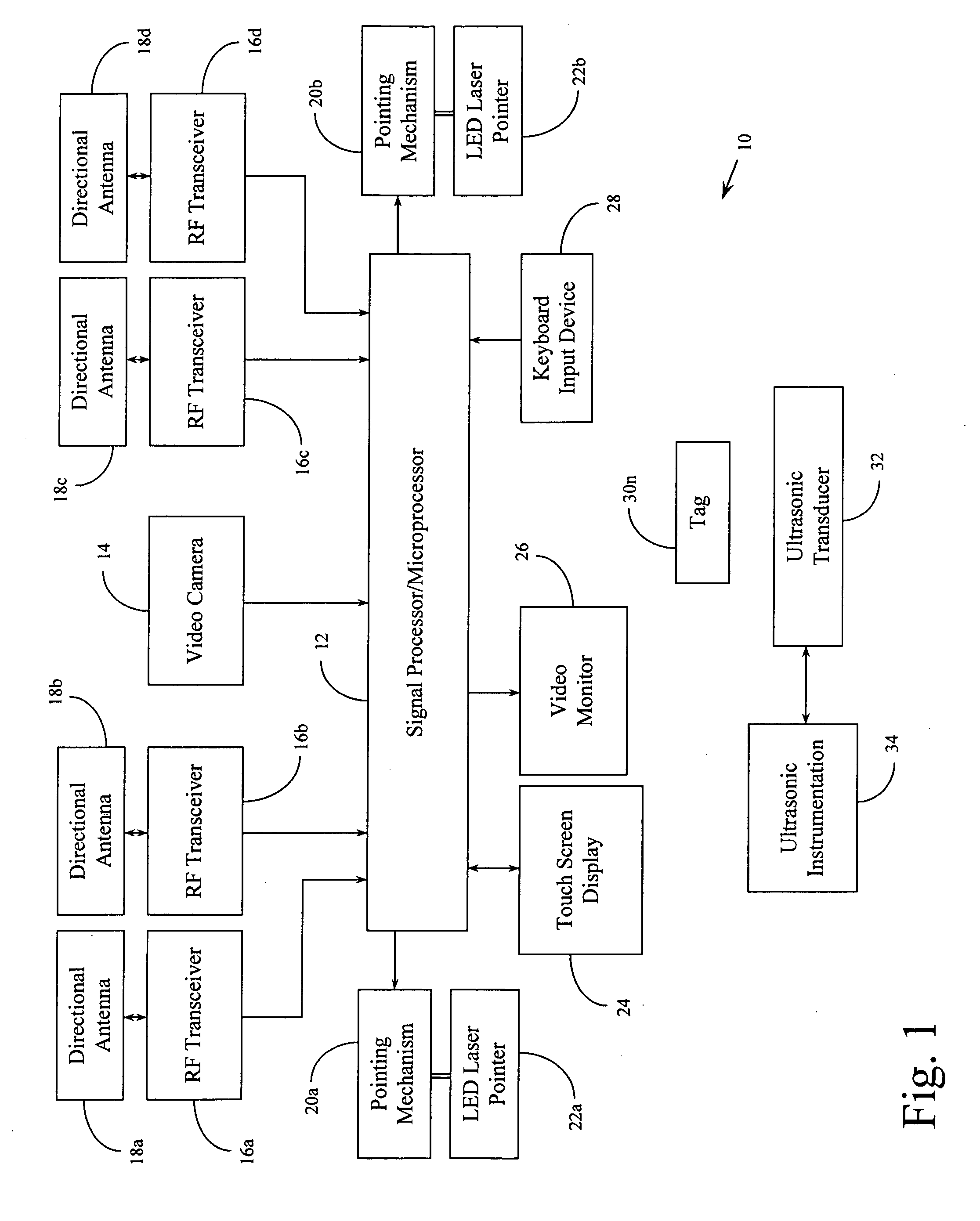

System for tracking surgical items in an operating room environment

Inactive | US7557710B2 | Firmly connected, Digital data processing details, Burglar alarm mechanical actuation, Transceiver, Sonification

A system for tracking and locating surgical items and objects in an operating room environment incorporates two-stage functionality. A first stage provides mechanisms for tracking objects using radio frequency (RF) tags that are positioned on or in conjunction with every surgical item and object so that they can be tracked by a number of RF transceivers located about the operating room. In addition to integrating RFID components, the tags integrate hard spherical components that are easily identifiable by ultrasonic detection. If an object is “lost” from the RF tracking function, the system operator may review its last known location and movement path on a display and then use an ultrasonic subsystem in a localized area to detect the exact location of the missing object or item. Narrowing down the location of a “lost” object is facilitated by one or more LED laser pointers that are directed along the last known path of the object and to its last known location.

Owner:MEDWAVE INC

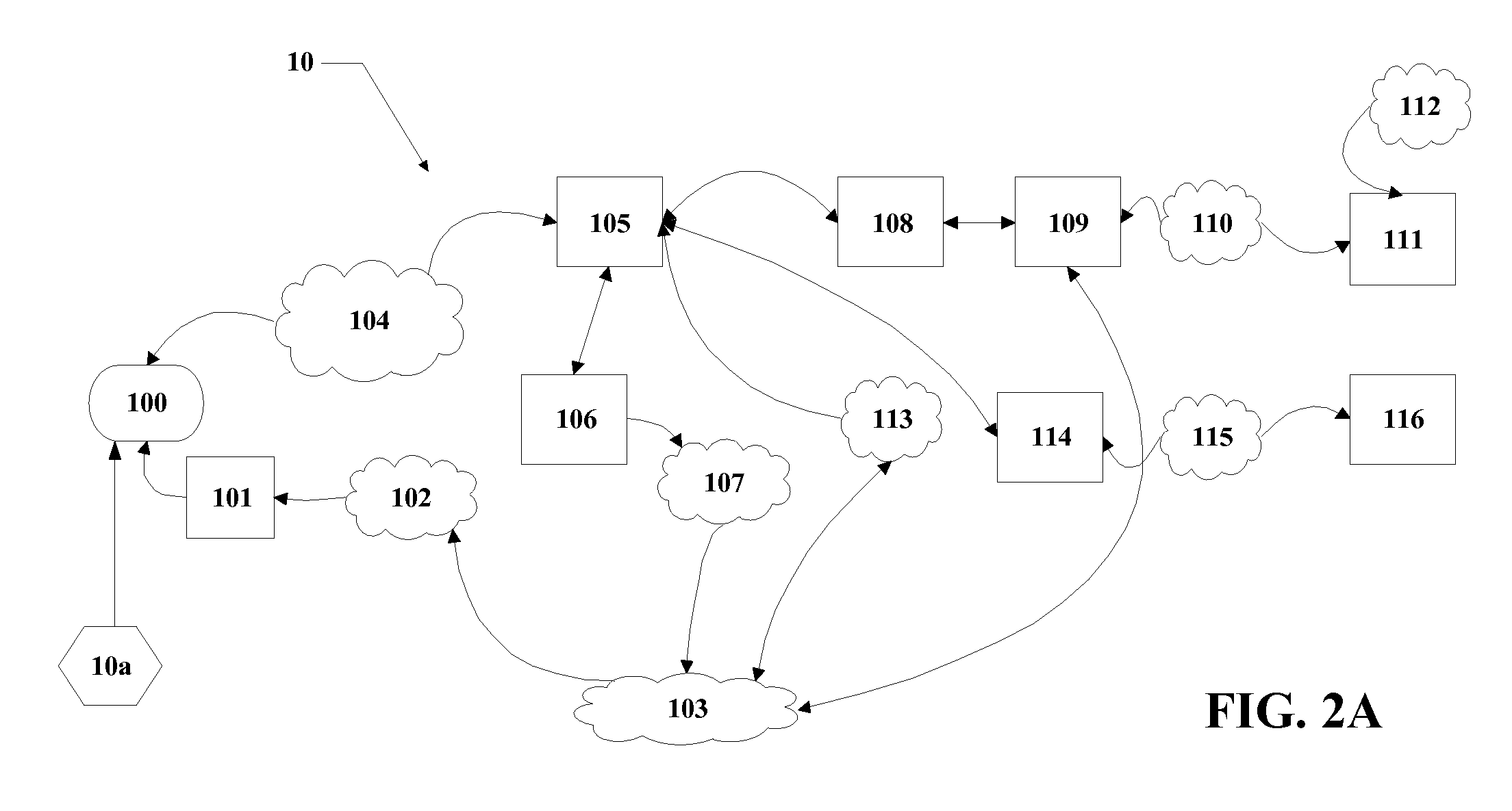

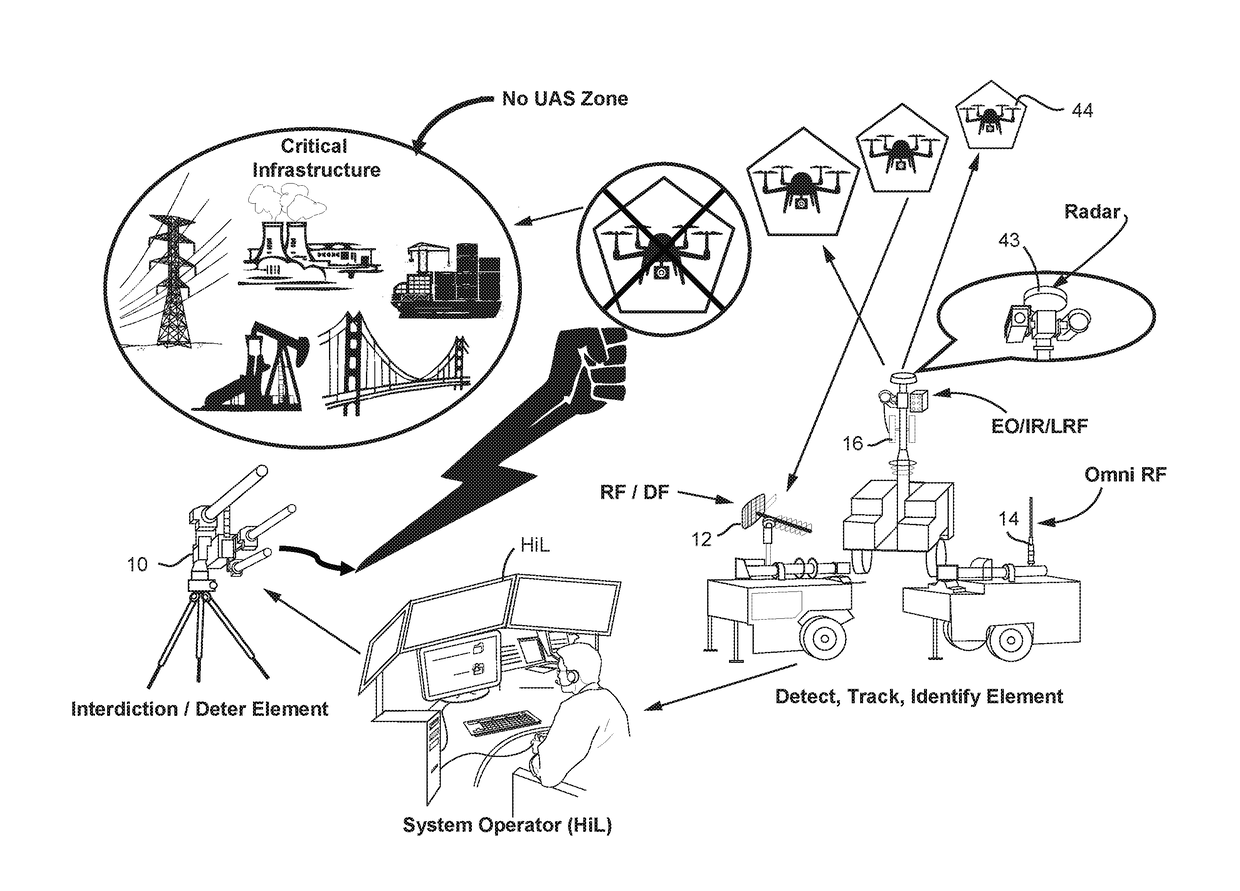

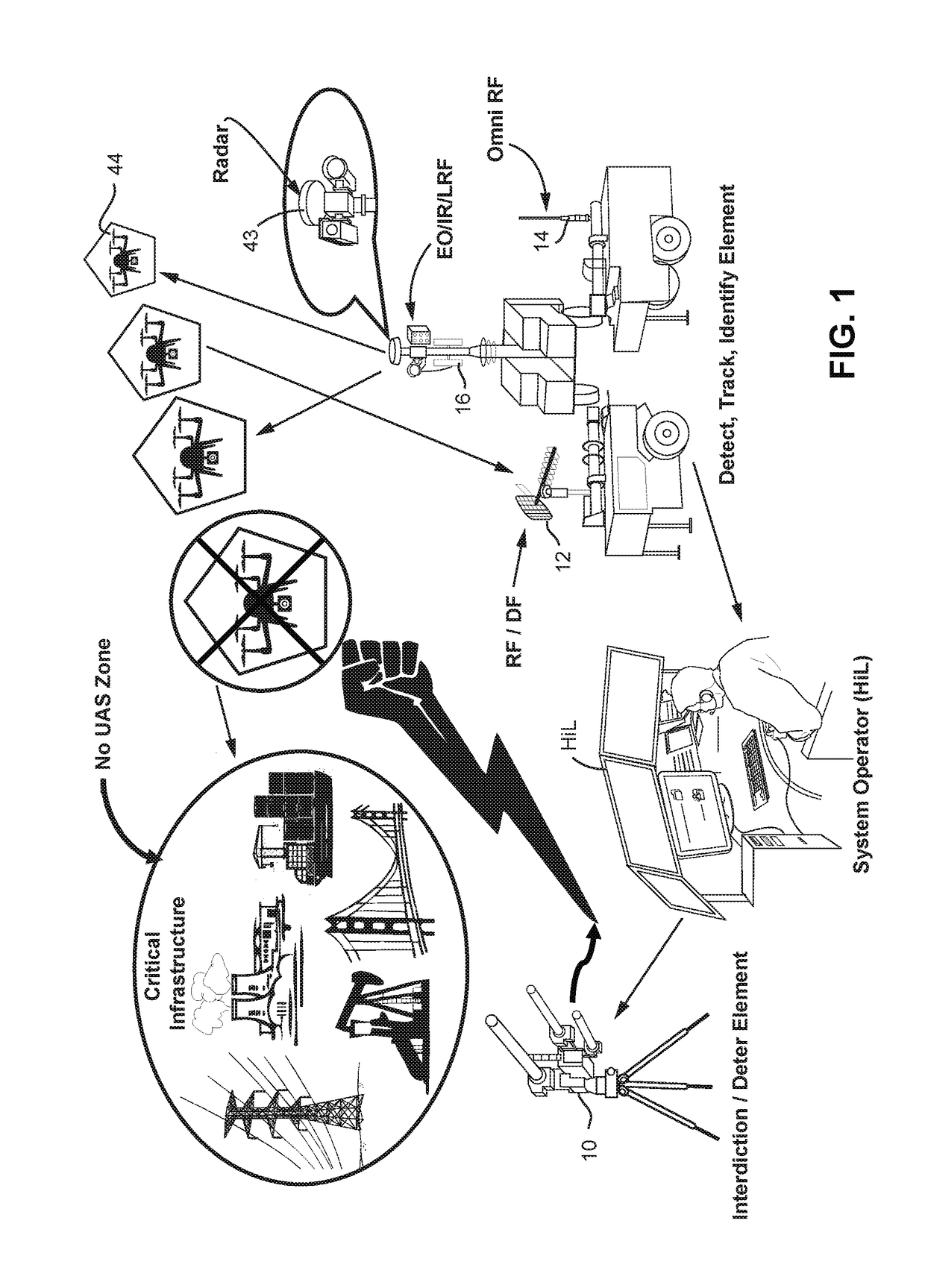

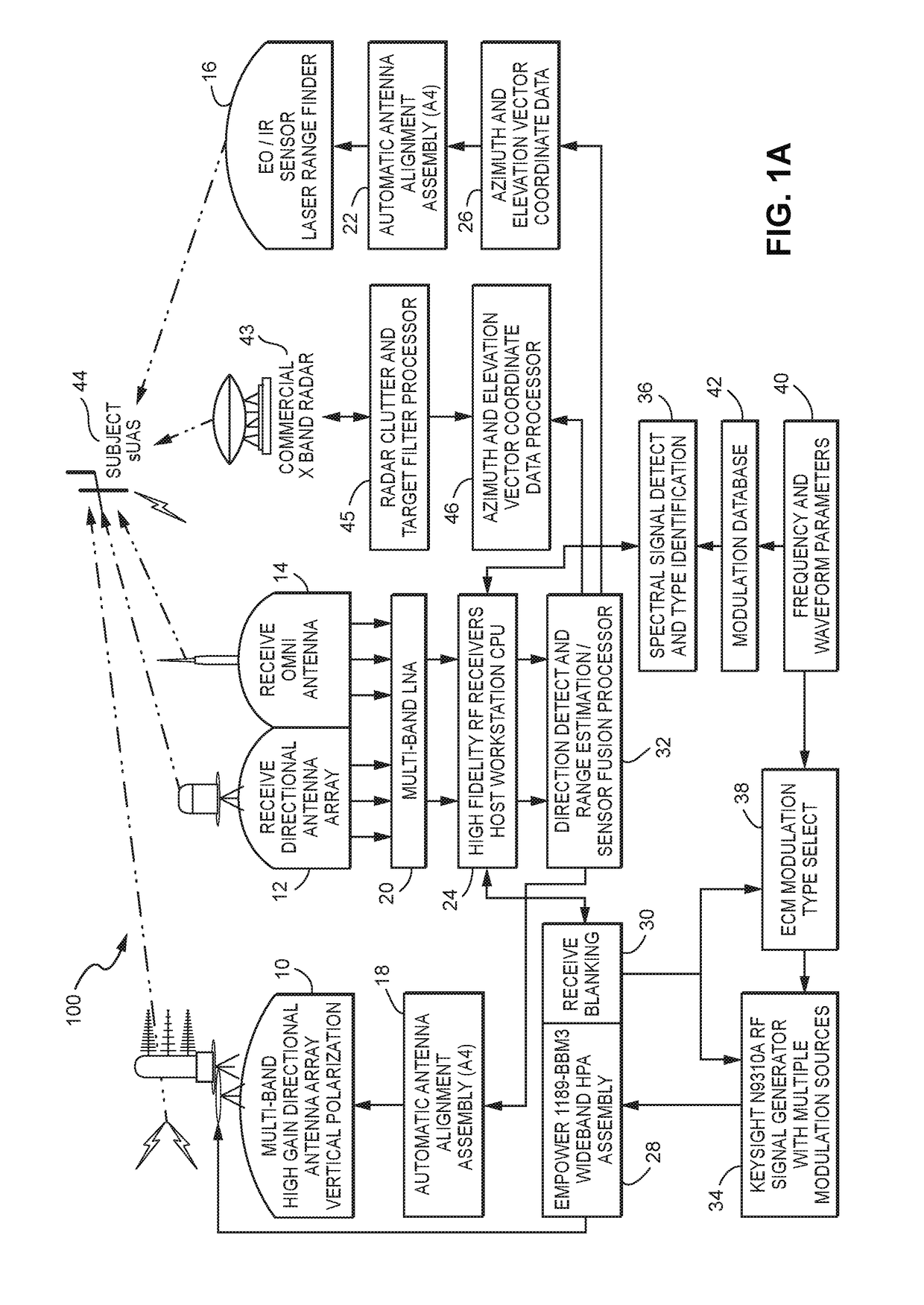

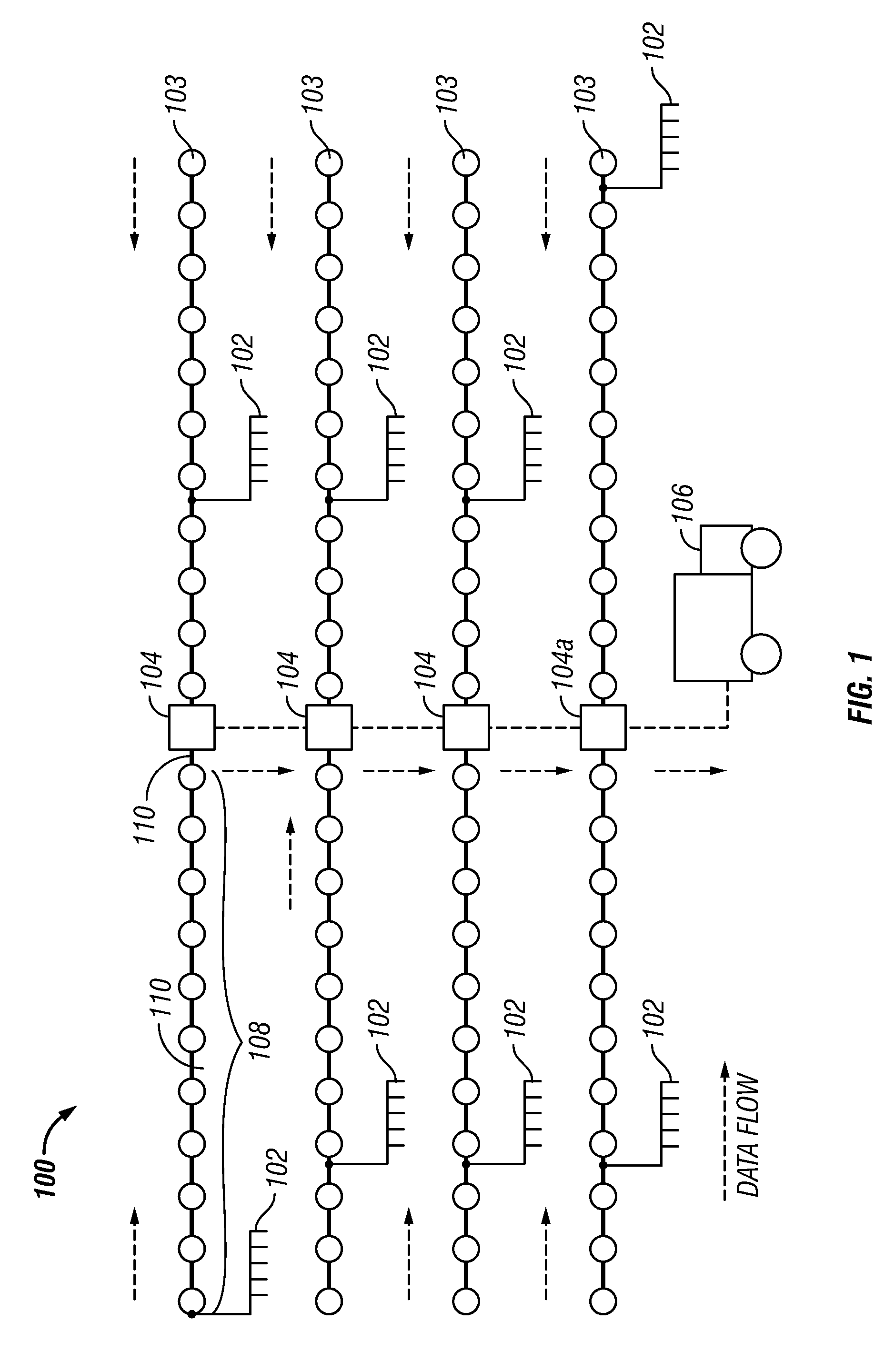

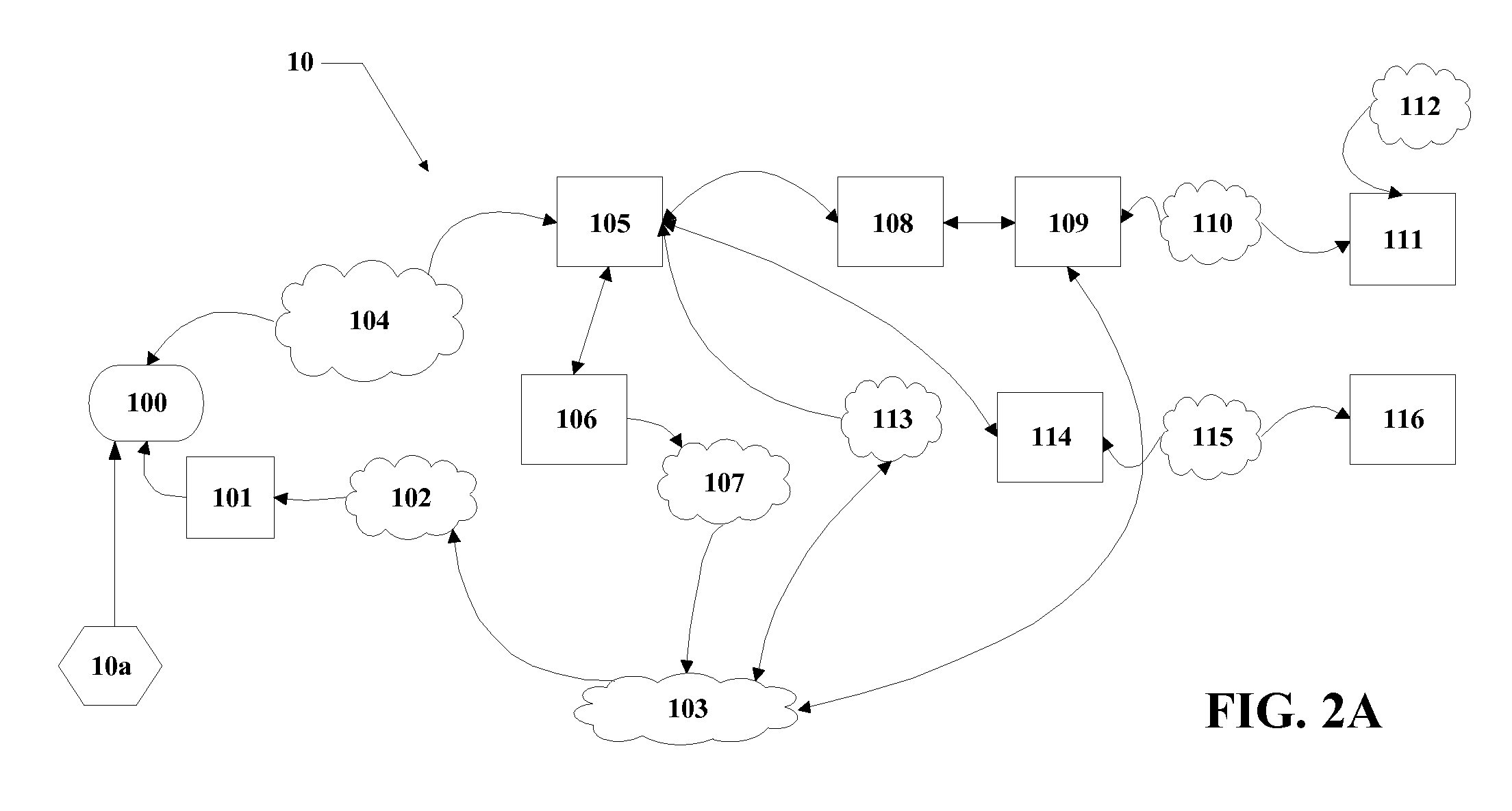

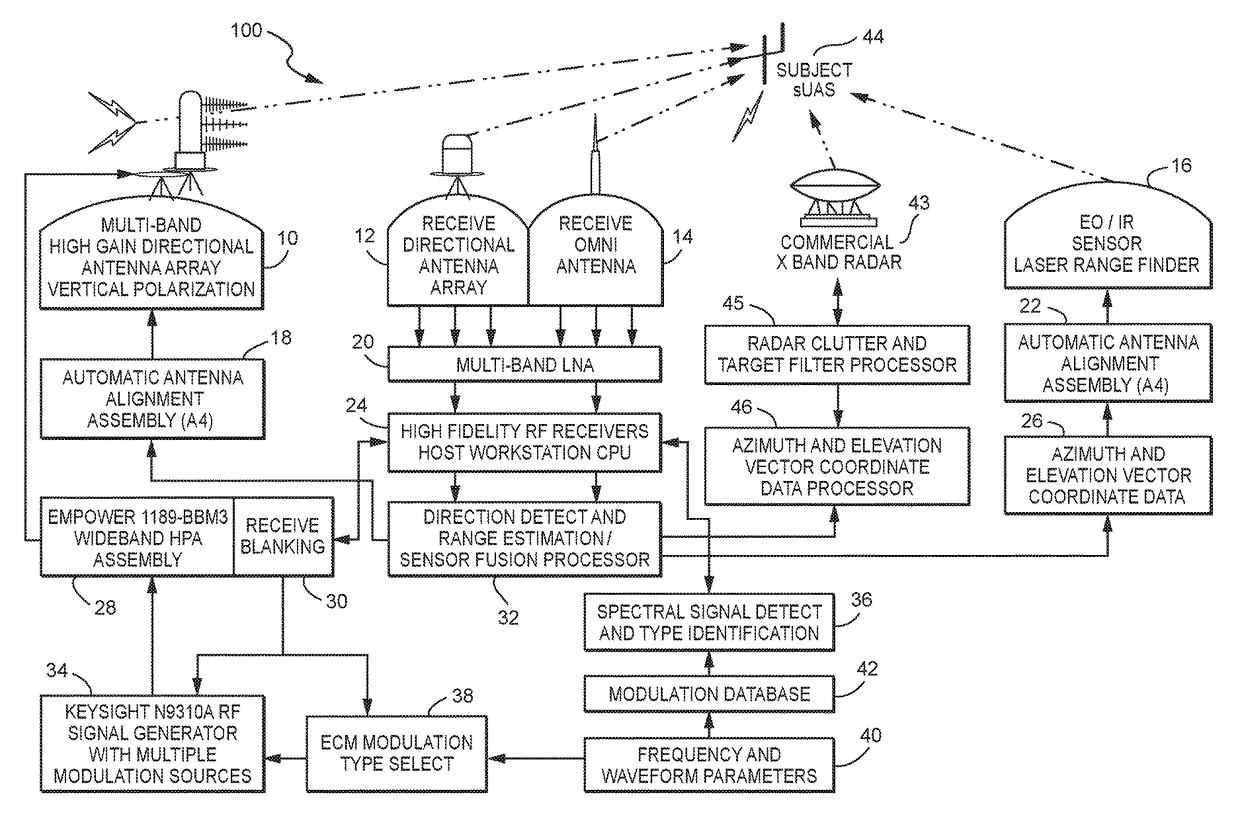

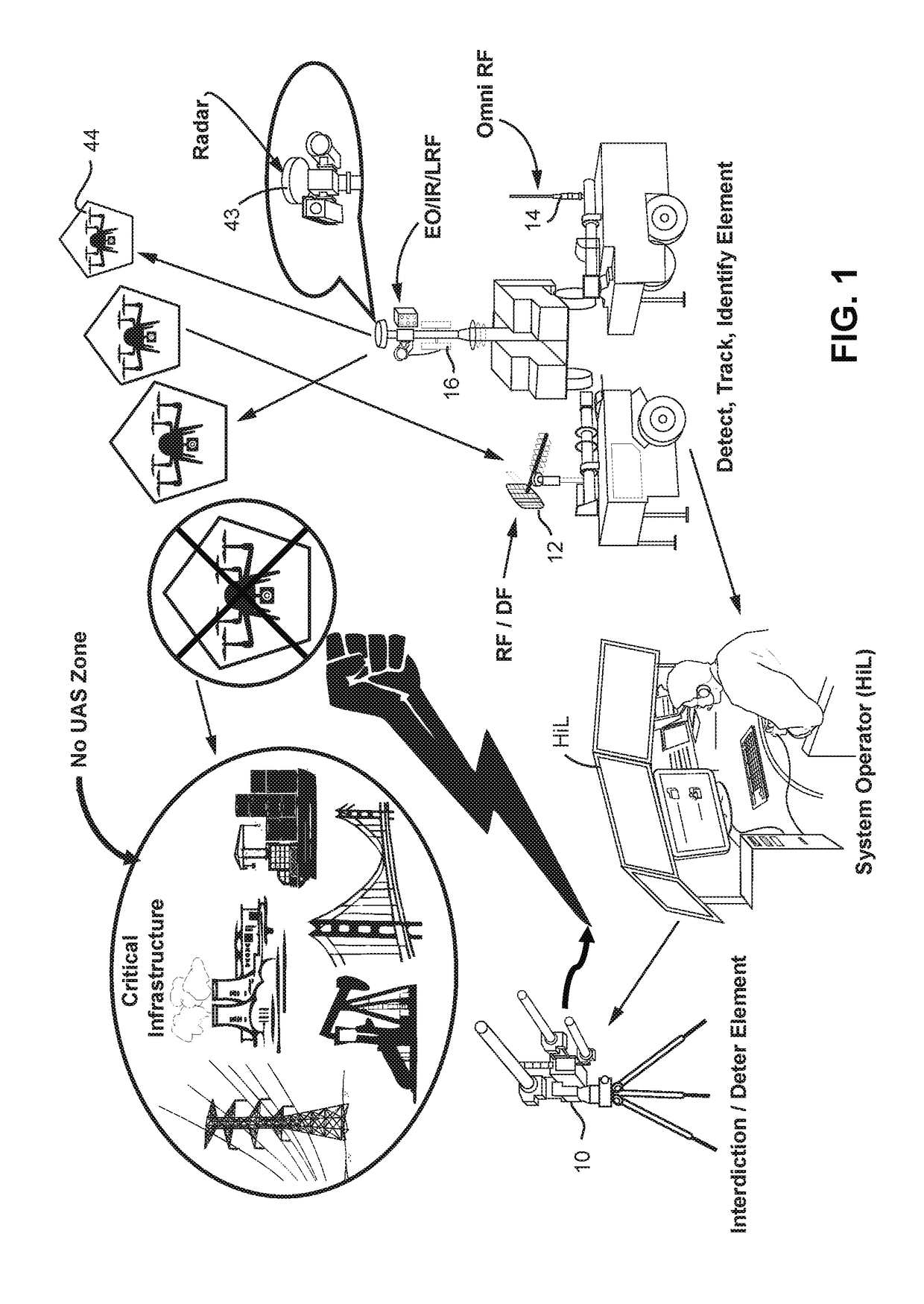

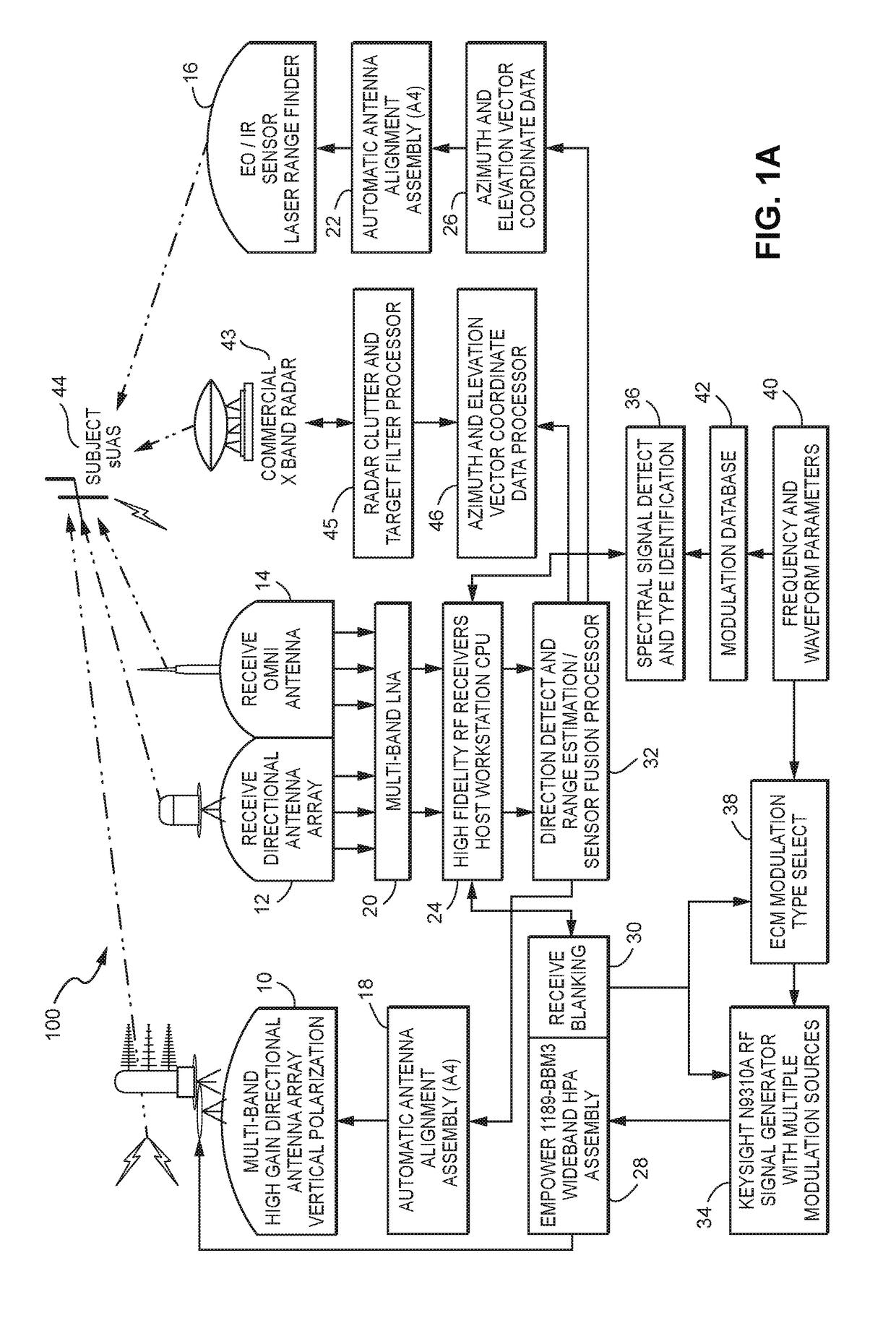

Deterrent for unmanned aerial systems

Active | US20170192089A1 | Defence devices, Direction/deviation determining electromagnetic systems, Non destructive, Automated algorithm

A system (100) provides integrated multi-sensor detection of, and countermeasures against, commercial unmanned aerial systems/vehicles (44), and includes a detecting element (103, 104, 105), a tracking element (103, 104, 105), an identification element (103, 104, 105) and an interdiction element (102). The detecting element detects an unmanned aerial vehicle in flight in the region of, or approaching, a property, place, event or very important person. The tracking element determines the exact location of the unmanned aerial vehicle. The identification/classification element, using data from the other elements, generates the identification and threat assessment of the UAS. The interdiction element, based on automated algorithms, can either direct the unmanned aerial vehicle away from the property, place, event or very important person in a non-destructive manner, or disable the unmanned aerial vehicle in a destructive manner. The interdiction process may be overridden by intervention of a System Operator/HiL.

Owner:XIDRONE SYST INC

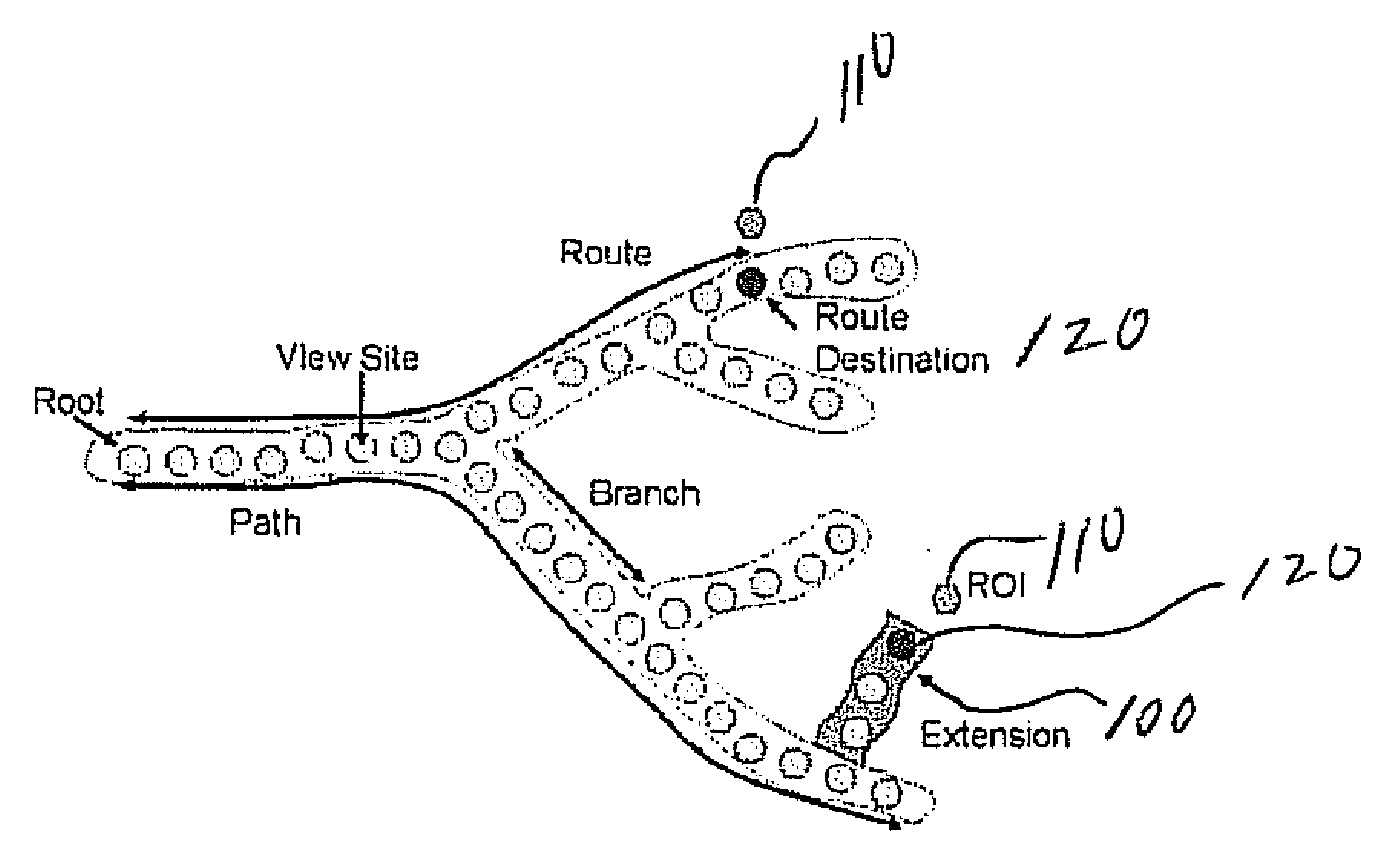

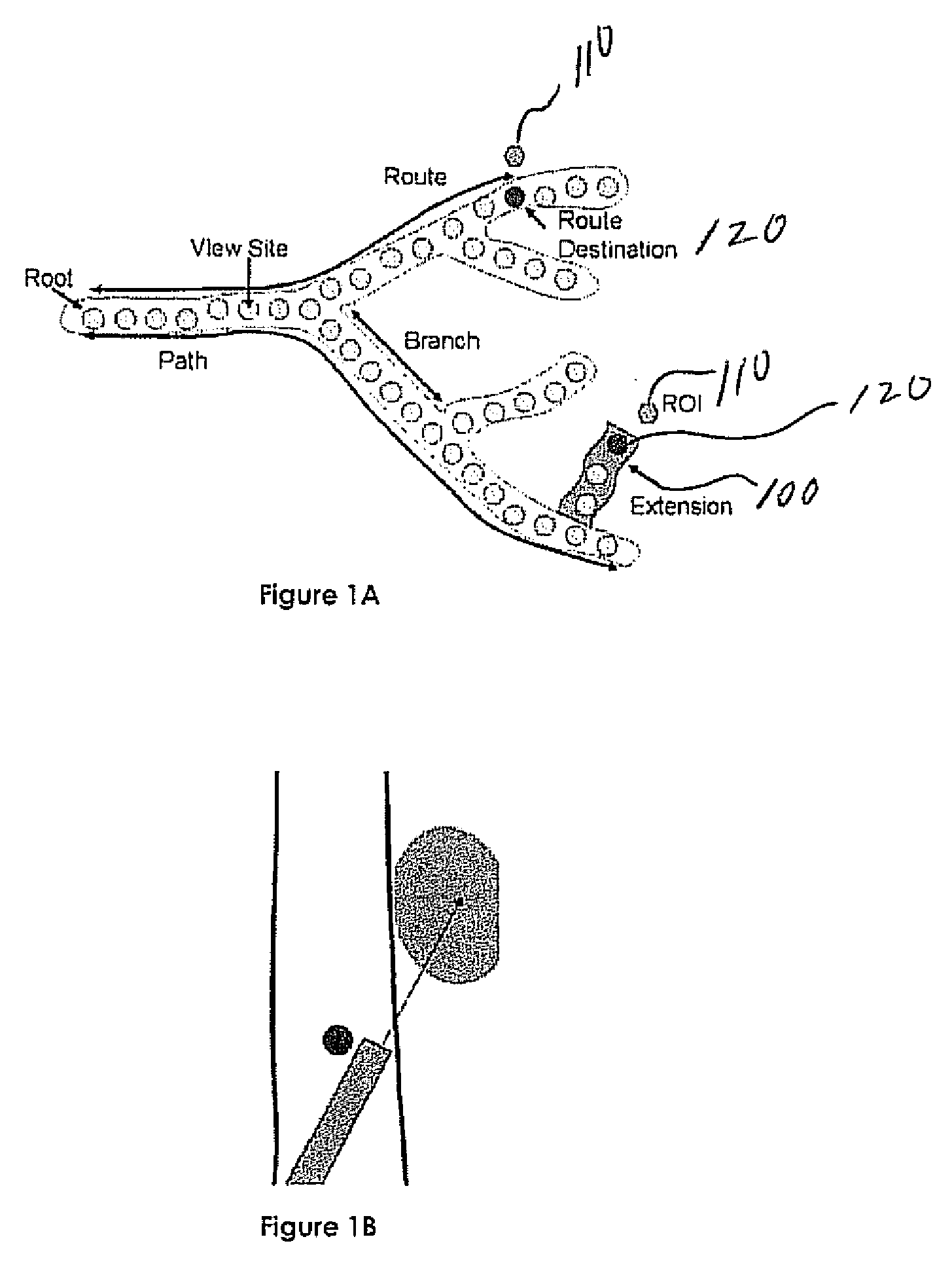

Precise endoscopic planning and visualization

Inactive | US20090156895A1 | Ensure safety, Large depth, Image enhancement, Image analysis, Three vessels, Postural orientation

Endoscopic poses are used to indicate the exact location and direction in which a physician must orient the endoscope to sample a region of interest (ROI) in an airway tree or other luminal structure. Using a patient-specific model of the anatomy derived from a 3D MDCT image, poses are chosen to be realizable given the physical characteristics of the endoscope and the relative geometry of the patient's airways and the ROI. To help ensure the safety of the patient, the calculations also account for obstacles such as the aorta and pulmonary arteries, precluding the puncture of these sensitive blood vessels. A real-time visualization system conveys the calculated pose orientation and the quality of any arbitrary bronchoscopic pose orientation. A suggested pose orientation is represented as an icon within a virtual rendering of the patient's airway tree or other structure. The location and orientation of the icon indicates the suggested pose orientation to which the physician should align during the procedure.

Owner:PENN STATE RES FOUND

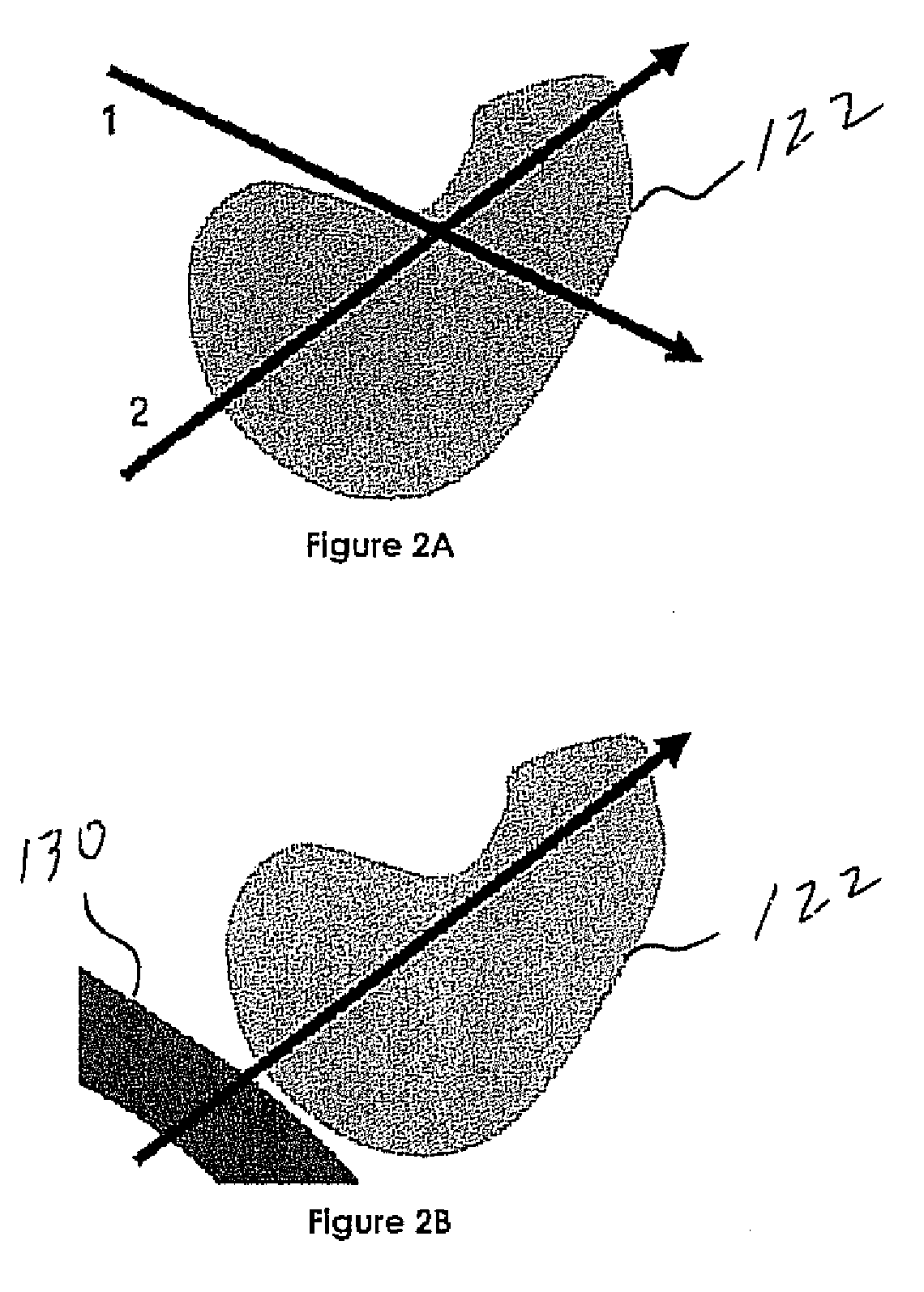

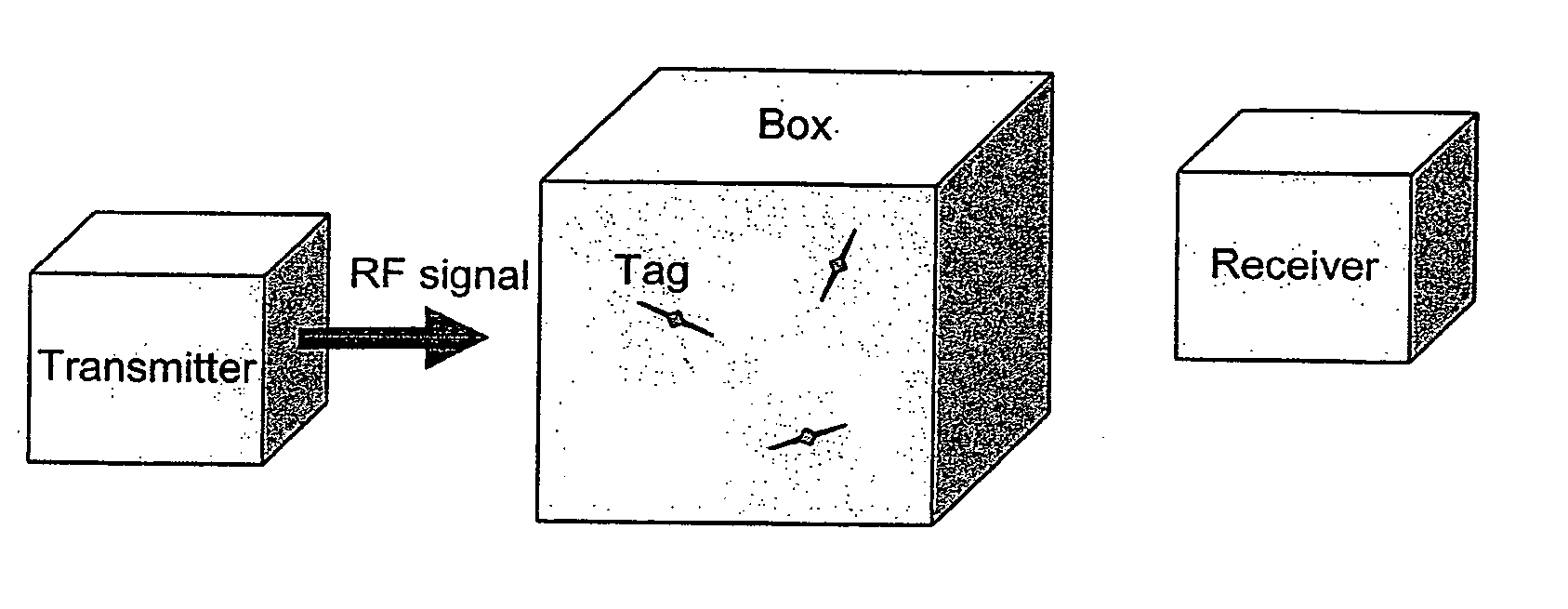

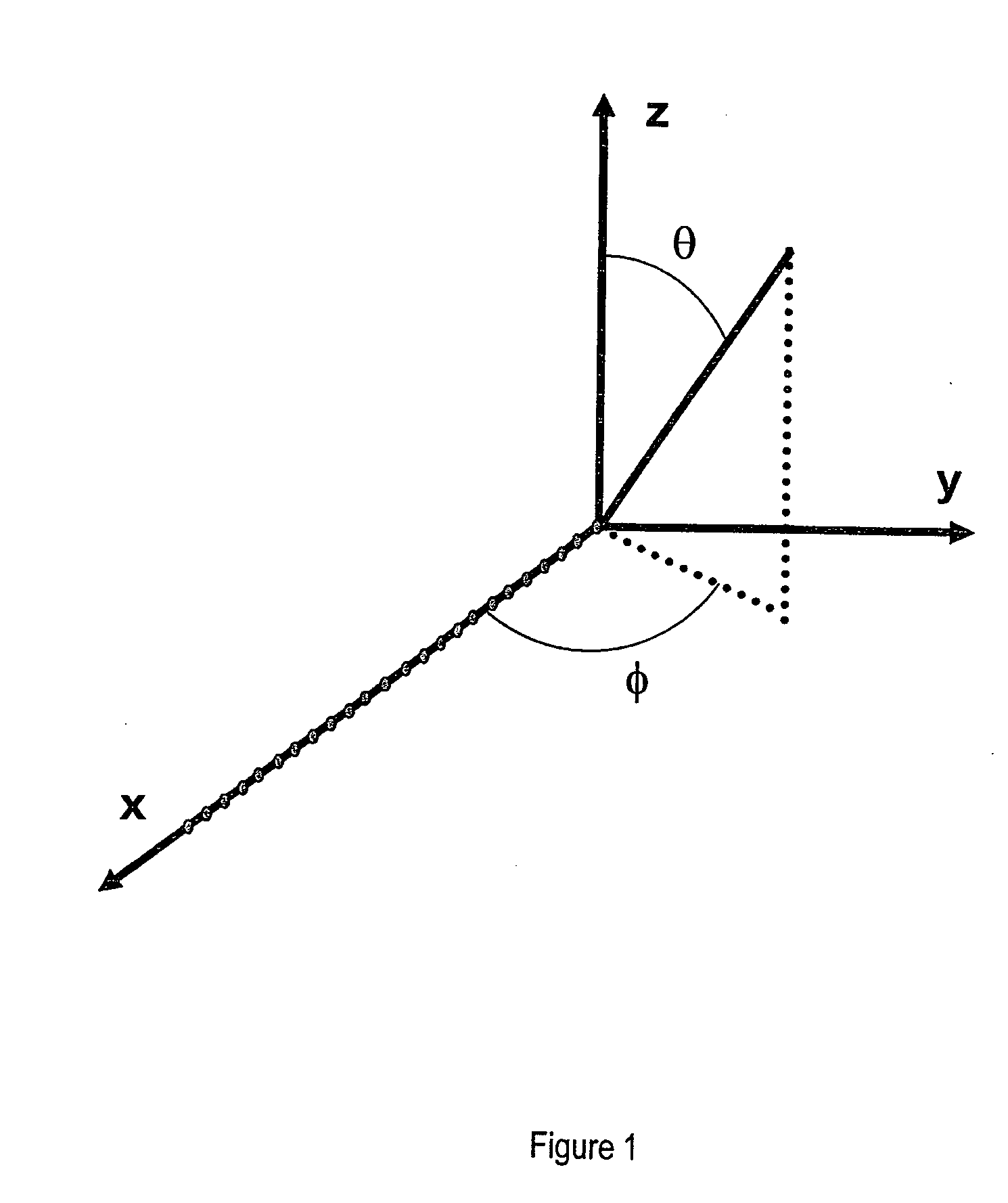

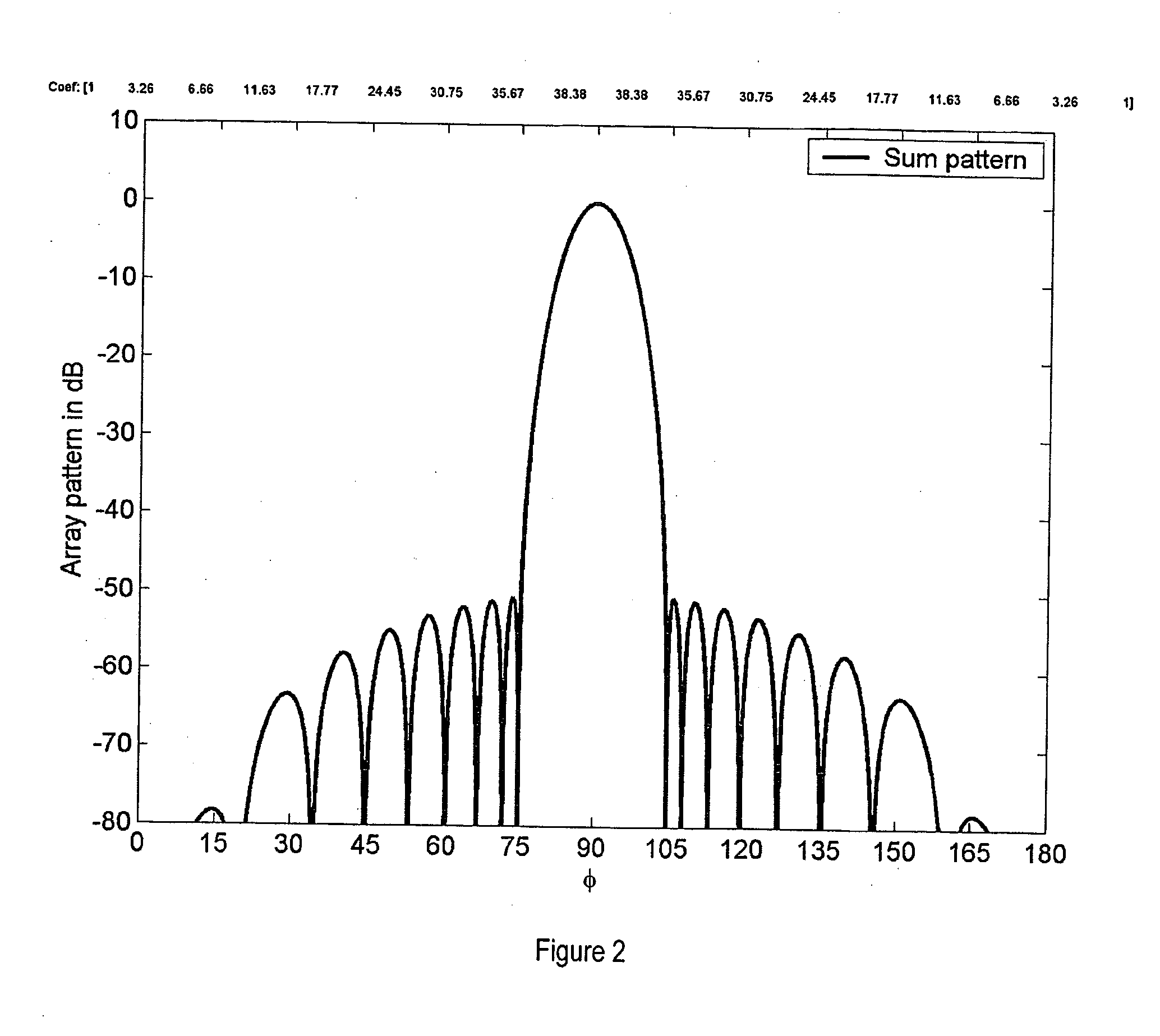

Method and apparatus for improving the efficiency and accuracy of RFID systems

Active | US20050212660A1 | Improve efficiency, Prevent leakage, Memory record carrier reading problems, Digital computer details, Exact location, Engineering

The present invention relates to a method and apparatus for transmitting a narrow signal beam that allows the precise location of RFID tags to be determined and reduces tag collisions. The present invention further relates to a method and apparatus for combining an RFID reader with an optical source to visualize the interrogation zone of the reader. The present invention also relates to a method and apparatus for improving the efficiency of RFID systems.

Owner:SEKNION

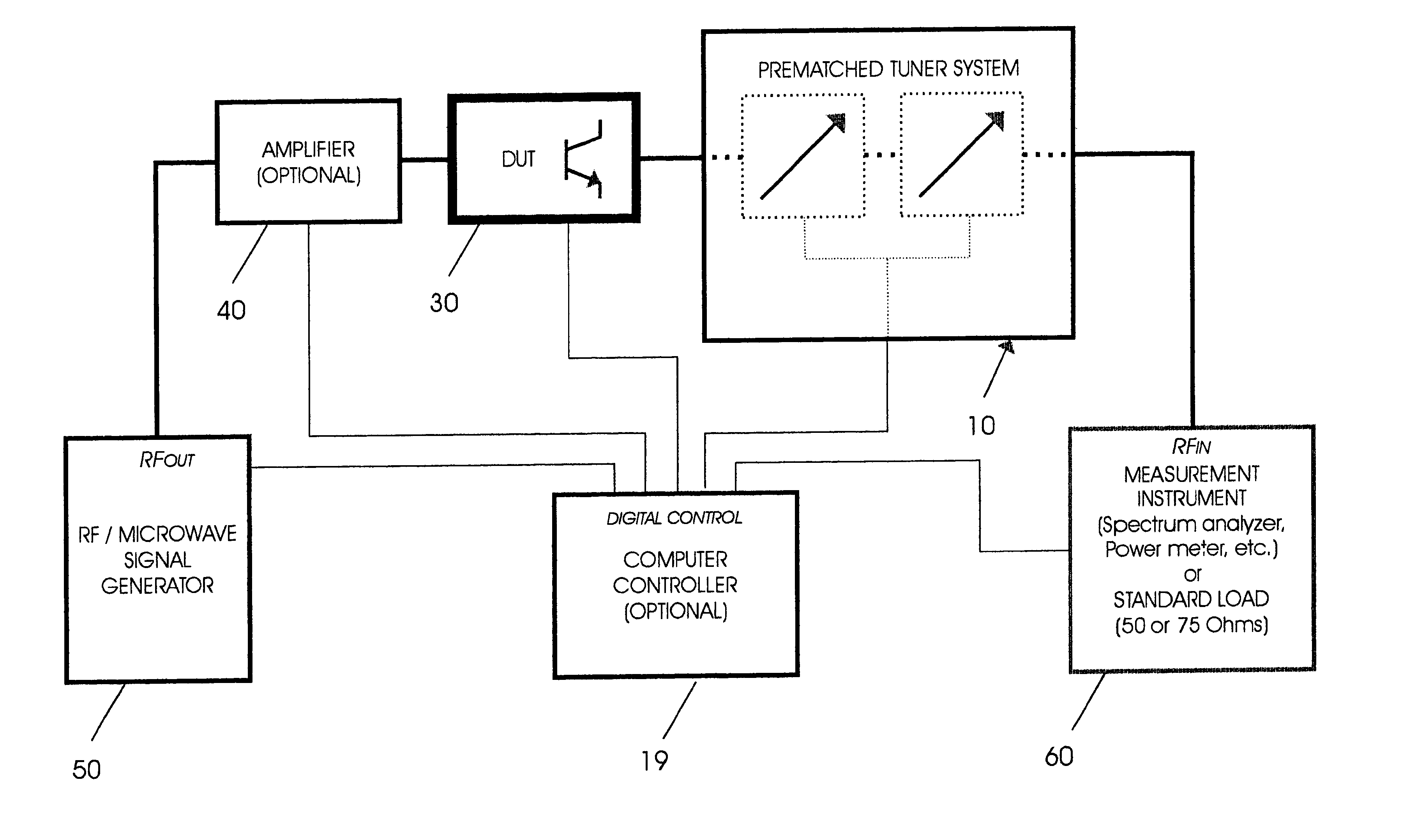

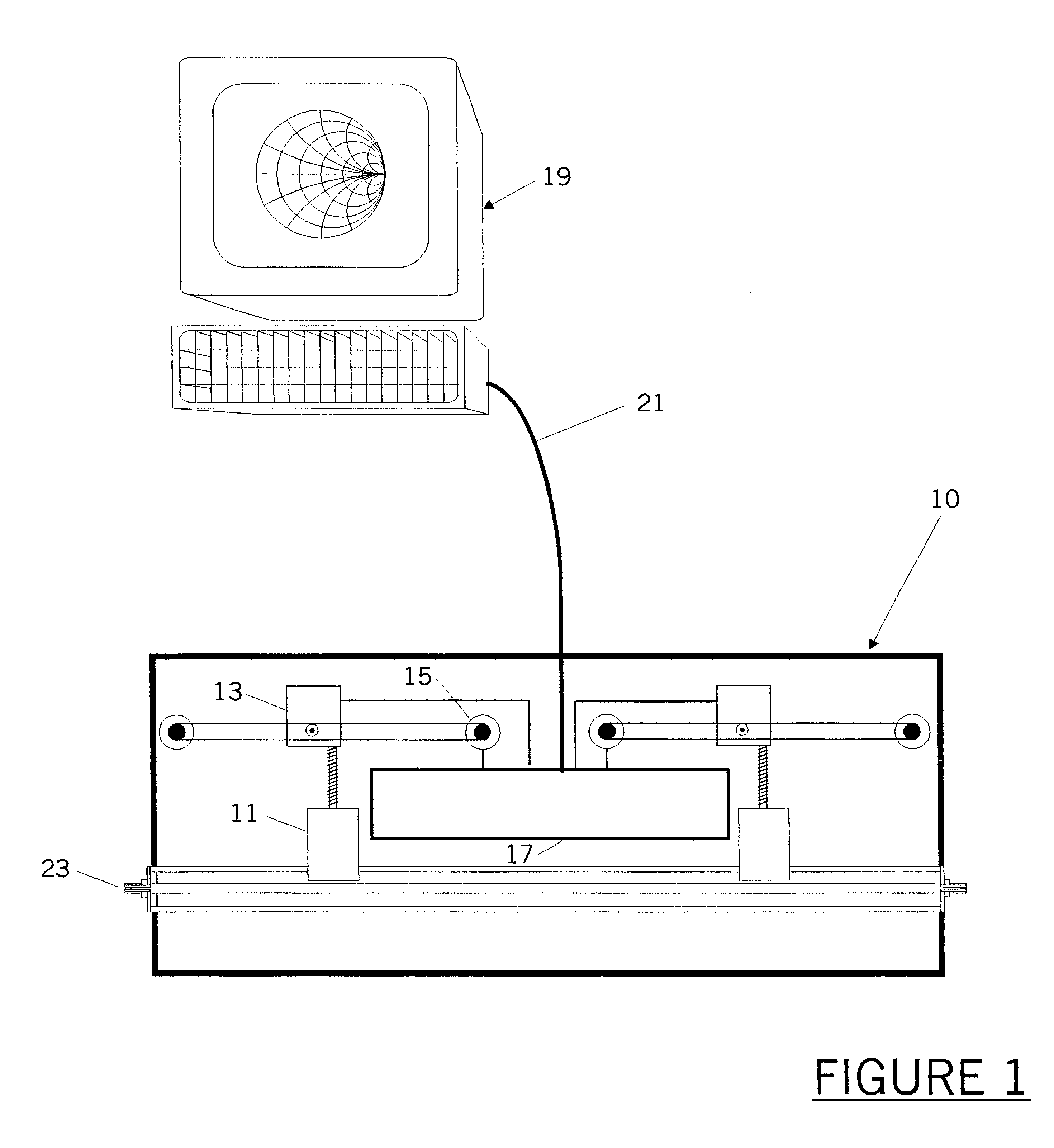

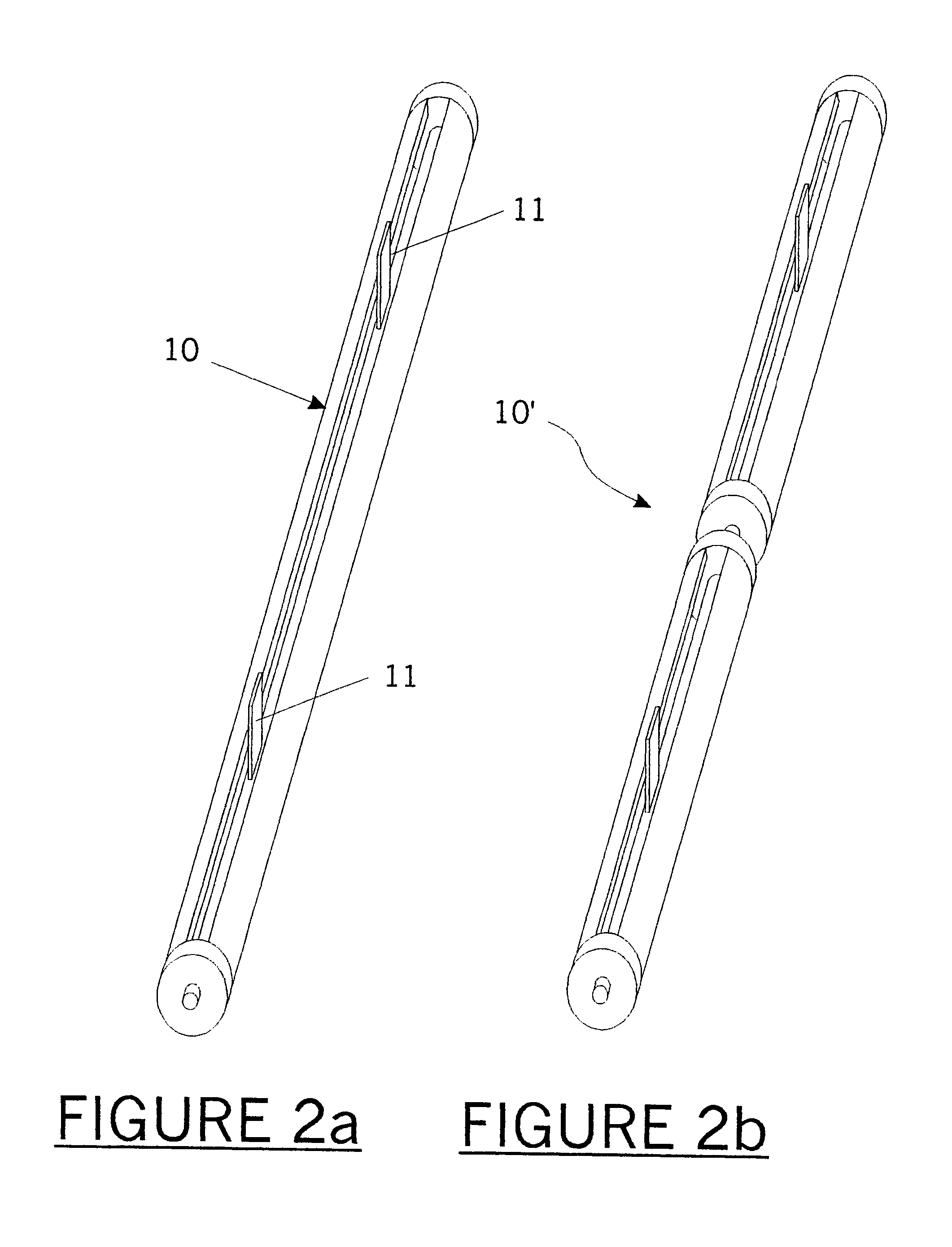

Adaptable pre-matched tuner system and method

The present invention is an adaptable pre-matched tuner system and calibration method for measuring reflection factors above Gamma = 0.85 for a DUT. The system includes first and second large-band microwave tuners connected in series, the two tuners being mechanically and electronically integrated, and a controller for controlling them. The first tuner acts as a pre-matching tuner and the second tuner investigates an area of the Smith Chart that is difficult to characterise with a single tuner, so that the combination of the two large-band tuners permits the measurement of reflection factors above Gamma = 0.85. The pre-matched tuner system allows a very high reflection factor to be generated at any point of the reflection factor plane (Smith Chart). The pre-matched tuner must be properly calibrated so that, using a pre-search algorithm, the search for optimum DUT performance can be concentrated in the exact location of the reflection factor plane where the DUT performs best.

Owner:FOCUS MICROWAVES +1
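
For context on the Gamma = 0.85 figure: the reflection factor of a load Z_L on a line of characteristic impedance Z0 is Γ = (Z_L - Z0)/(Z_L + Z0). In a 50 Ω system, |Γ| = 0.85 corresponds to a purely resistive load of about 4.05 Ω (or about 617 Ω) and a VSWR of about 12.3. The helpers below are a hypothetical sketch of these standard textbook relations and are not part of the described tuner system.

```python
def reflection_coefficient(z_load: complex, z0: float = 50.0) -> complex:
    """Gamma = (Z_L - Z0) / (Z_L + Z0) for a load on a line of impedance Z0."""
    return (z_load - z0) / (z_load + z0)


def impedance_from_gamma(gamma: complex, z0: float = 50.0) -> complex:
    """Inverse mapping: Z_L = Z0 * (1 + Gamma) / (1 - Gamma)."""
    return z0 * (1 + gamma) / (1 - gamma)


def vswr(gamma: complex) -> float:
    """Voltage standing-wave ratio for a given reflection coefficient."""
    m = abs(gamma)
    return (1 + m) / (1 - m)


# Example: a 2.5-ohm load on a 50-ohm line is well beyond |Gamma| = 0.85.
print(abs(reflection_coefficient(2.5)))
```

The steepness of these relations near |Γ| = 1 (small impedance changes move Γ very little) is one reason very high reflection factors are hard to reach and resolve with a single tuner.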

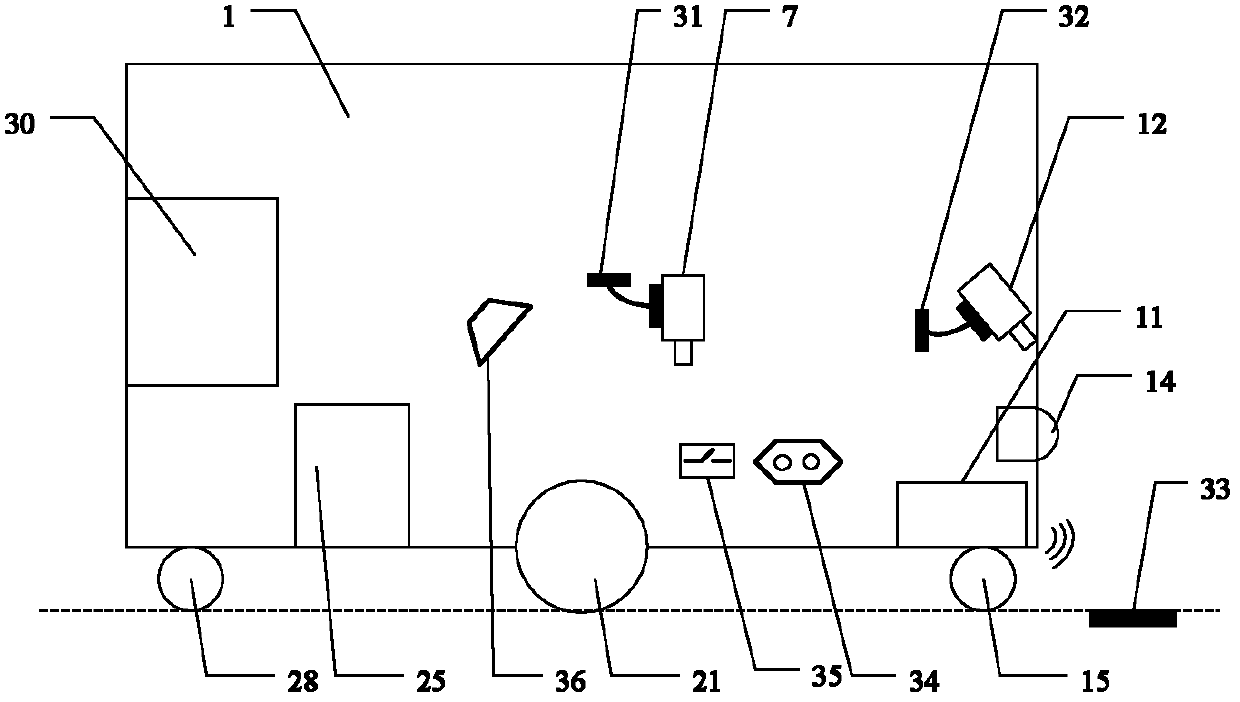

Vision guiding AGV (Automatic Guided Vehicle) system and method of embedded system

Inactive | CN102608998A | Low cost, Reduce power consumption, Position/course control in two dimensions, Laser scanning, Exact location

The invention discloses a vision-guided AGV (Automatic Guided Vehicle) system and method based on an embedded system. Two cameras fixed on a trolley acquire guide-path information in real time: one camera is inclined forward at an angle to the ground and acquires forward-looking images, and the other, mounted in the middle and front part of the trolley's interior perpendicular to the ground, is used for secondary precise positioning. The method comprises the following steps: an anti-metal radio-frequency tag is embedded in the ground surface at key positions, and a vehicle-mounted radio-frequency card reader reads the information in the tag when the trolley passes over it. A laser scanner scans for obstacles ahead in real time, enabling obstacle detection and avoidance. The control box of the embedded system serves as the core for image acquisition, image processing and control strategy; the acquired images undergo Gaussian high-pass filtering, edge detection and a two-step Hough transform so that the position deviation and angle deviation of the trolley relative to the current path can be calculated, and the two-dimensional deviation of the AGV from the corresponding location reference point is fed back at each station point.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
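
The final step in this abstract, computing the trolley's position and angle deviation from the path once a Hough transform has found the guide line, can be sketched as follows. Assumptions: the line is given in Hough normal form x·cos θ + y·sin θ = ρ in image pixel coordinates with θ in [0, π) (the convention used by OpenCV's `HoughLines`), no perspective correction is applied, and the reference point and the function name `path_deviation` are hypothetical.

```python
import math
from typing import Tuple


def path_deviation(rho: float, theta: float,
                   ref_point: Tuple[float, float]) -> Tuple[float, float]:
    """Lateral offset (pixels) and heading error (degrees) from a Hough line.

    The line is x*cos(theta) + y*sin(theta) = rho in image coordinates,
    with theta in [0, pi) as typical Hough implementations report it.
    """
    if theta > math.pi / 2:
        # Re-express the same line with theta in (-pi/2, pi/2] so that a
        # nearly vertical path always gives a heading error near zero.
        theta -= math.pi
        rho = -rho
    x0, y0 = ref_point
    # Signed distance from the reference point to the line; positive means the
    # point lies on the side the normal (cos theta, sin theta) points toward,
    # i.e. to the right of a near-vertical path line.
    lateral = x0 * math.cos(theta) + y0 * math.sin(theta) - rho
    # theta = 0 is a vertical image line, i.e. a path straight ahead.
    heading = math.degrees(theta)
    return lateral, heading
```

Converting the pixel offset into a physical distance would additionally require camera calibration, which this sketch deliberately leaves out.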

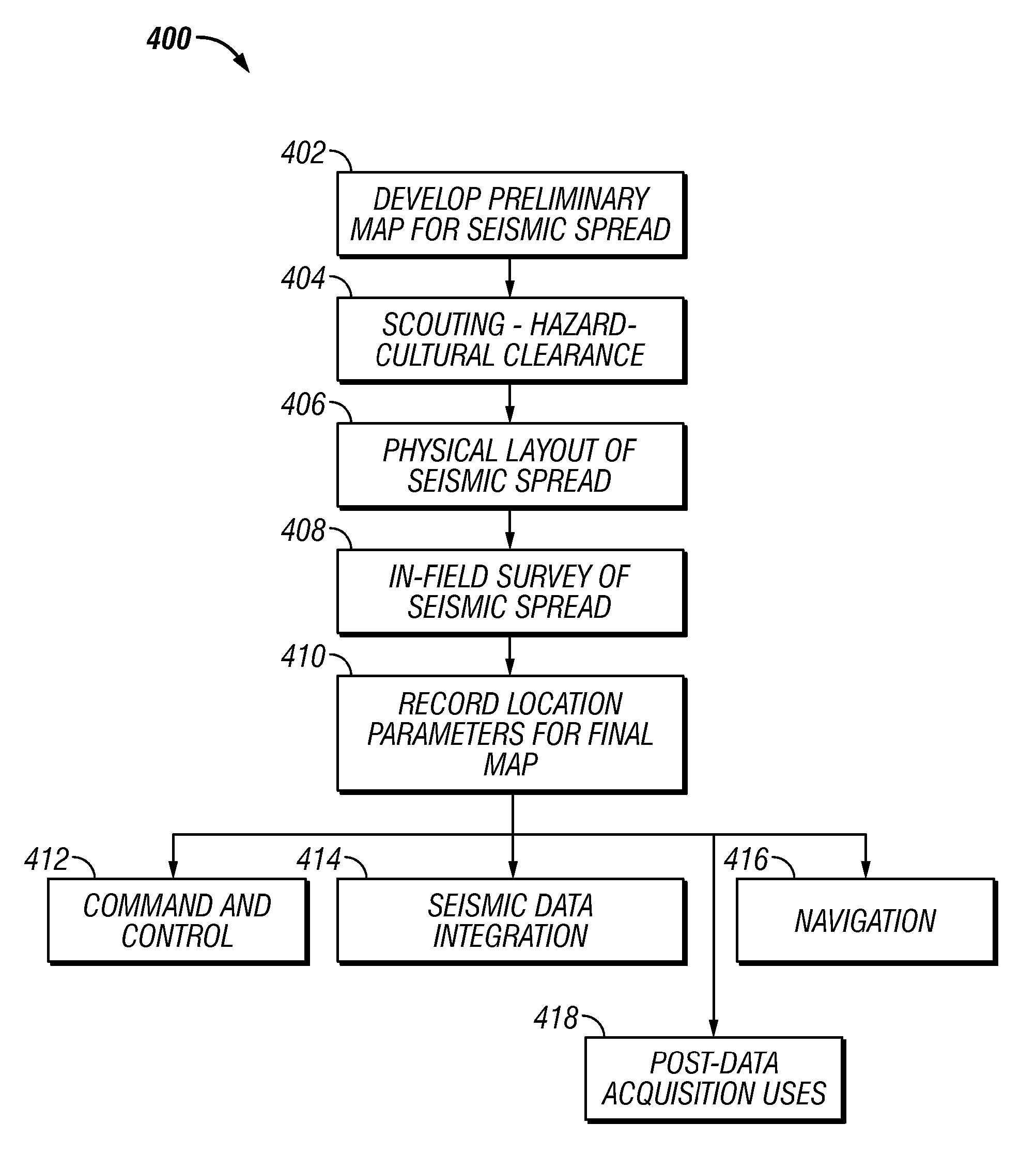

One Touch Data Acquisition

A seismic spread has a plurality of seismic stations positioned over a terrain of interest and a controller programmed to automate the data acquisition activity. In one aspect, the present disclosure provides a method for forming a seismic spread by developing a preliminary map of suggested locations for seismic devices and later forming a final map having in-field determined location data for the seismic devices. Each suggested location is represented by a virtual flag used to navigate to that location. A seismic device is placed at each suggested location, and the precise location of each placed seismic device is determined by a navigation device. The determined locations are used to form a second map based on the determined location of one or more of the placed seismic devices. Using the virtual flag eliminates having to survey the terrain, place physical markers and later remove those markers. It is emphasized that this abstract is provided to comply with the rules requiring an abstract which will allow a searcher or other reader to quickly ascertain the subject matter of the technical disclosure. It is submitted with the understanding that it will not be used to interpret or limit the scope or meaning of the claims. (37 CFR 1.72(b))

Owner:INOVA

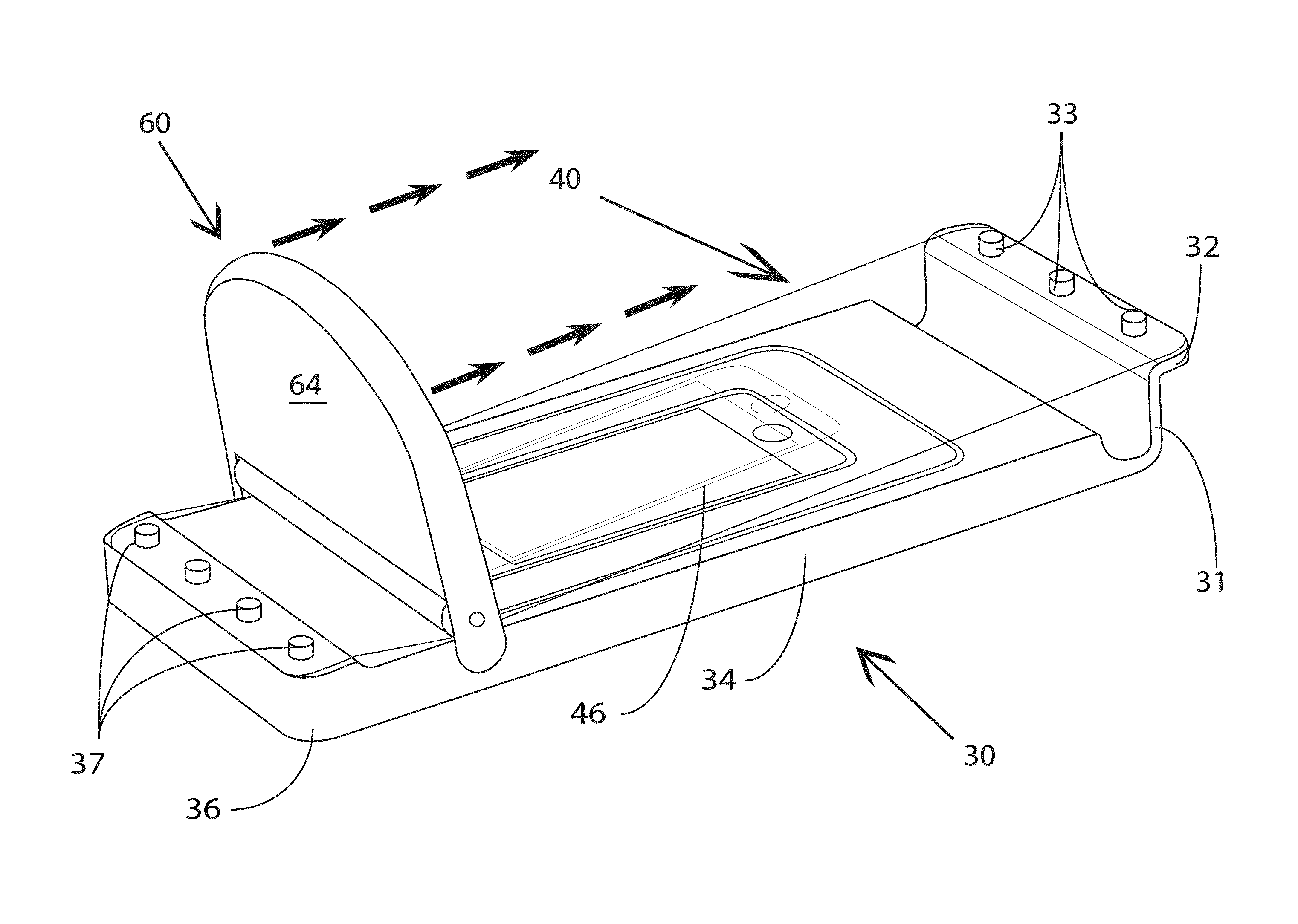

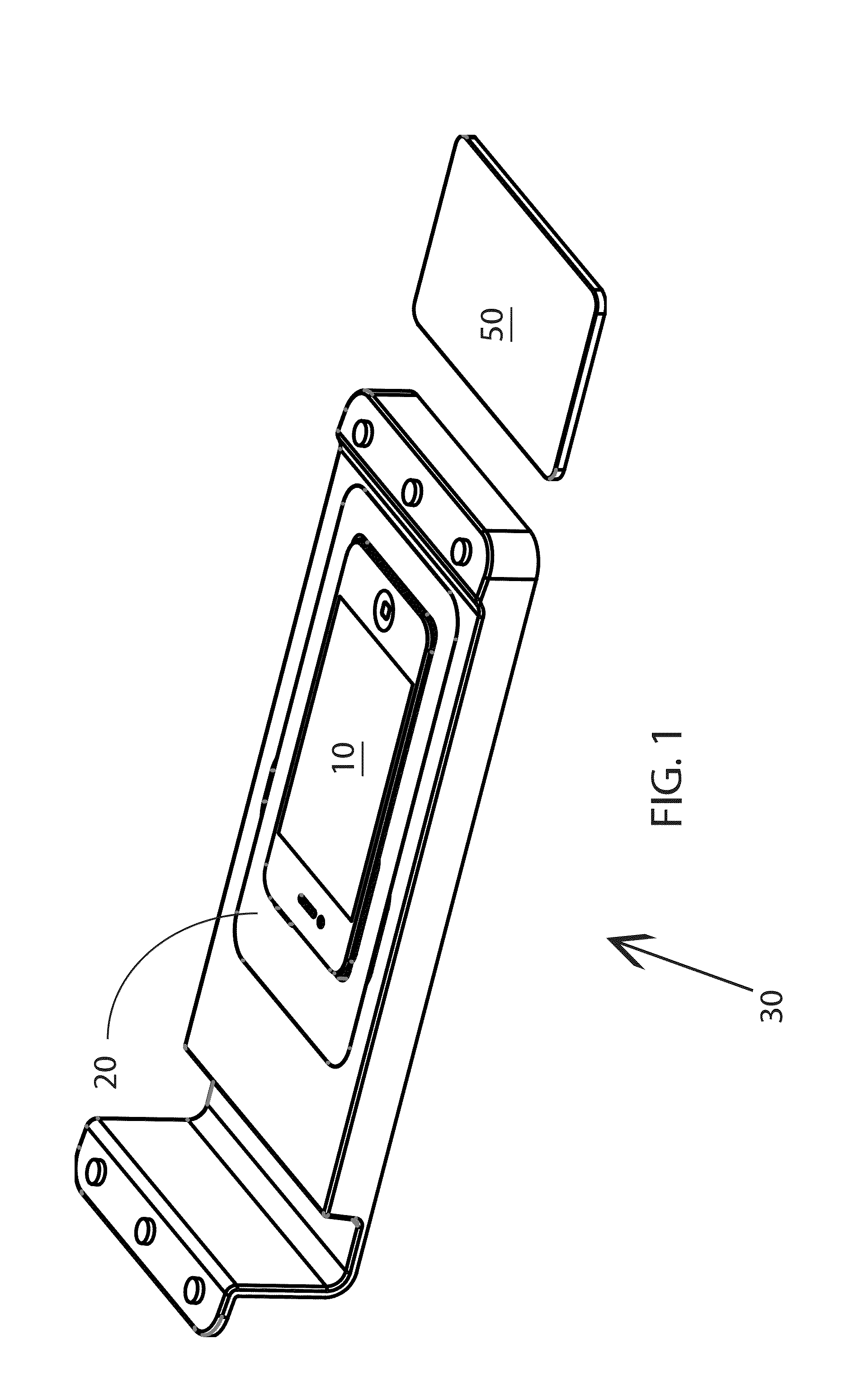

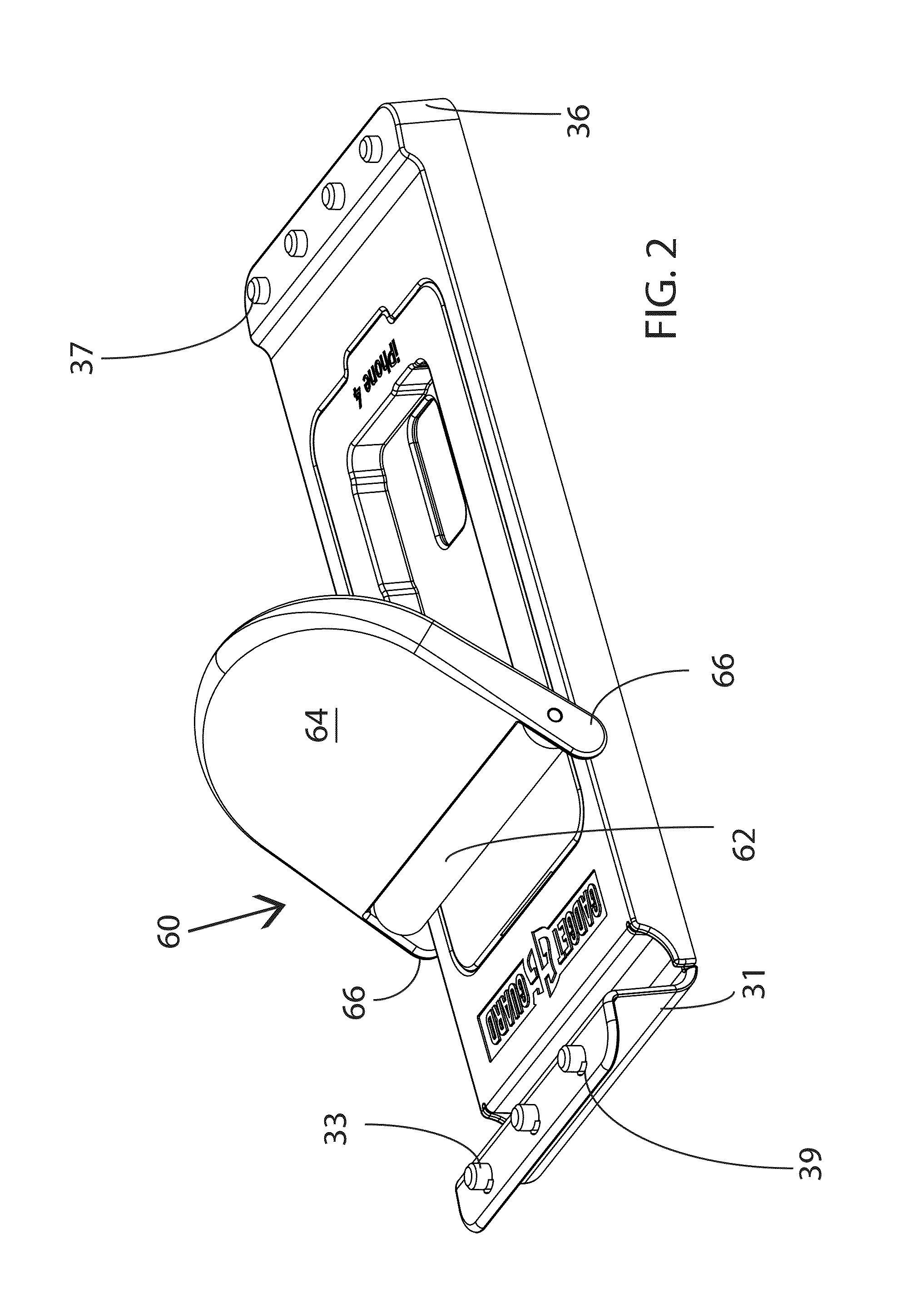

Protective Film Installation Apparatus and Method

Inactive | US20140338829A1 | Cutting time necessary, Improve application accuracy, Lamination ancillary operations, Welding/cutting auxiliary devices, Tectorial membrane, Exact location

Disclosed is an apparatus for applying protective films to electronic devices and a method for applying them. The apparatus uses a base shoe and a modular framing system that is adaptable to many devices and, when fitted with a device and installed in the shoe, precisely holds the device in a known location relative to the shoe. Films are supplied on an application sheet and precisely positioned on it so that the application sheet, when positioned on the shoe, holds the film in the exact location needed for quick and precise application. The application sheet is pressed downward against the device, successively from one edge to the other, thereby applying the film to the device. The shoe features a raised head on a flexible arm, which raises the film above the level of the device before application and allows the sheet to be pressed into position.

Owner:ANTENNA79

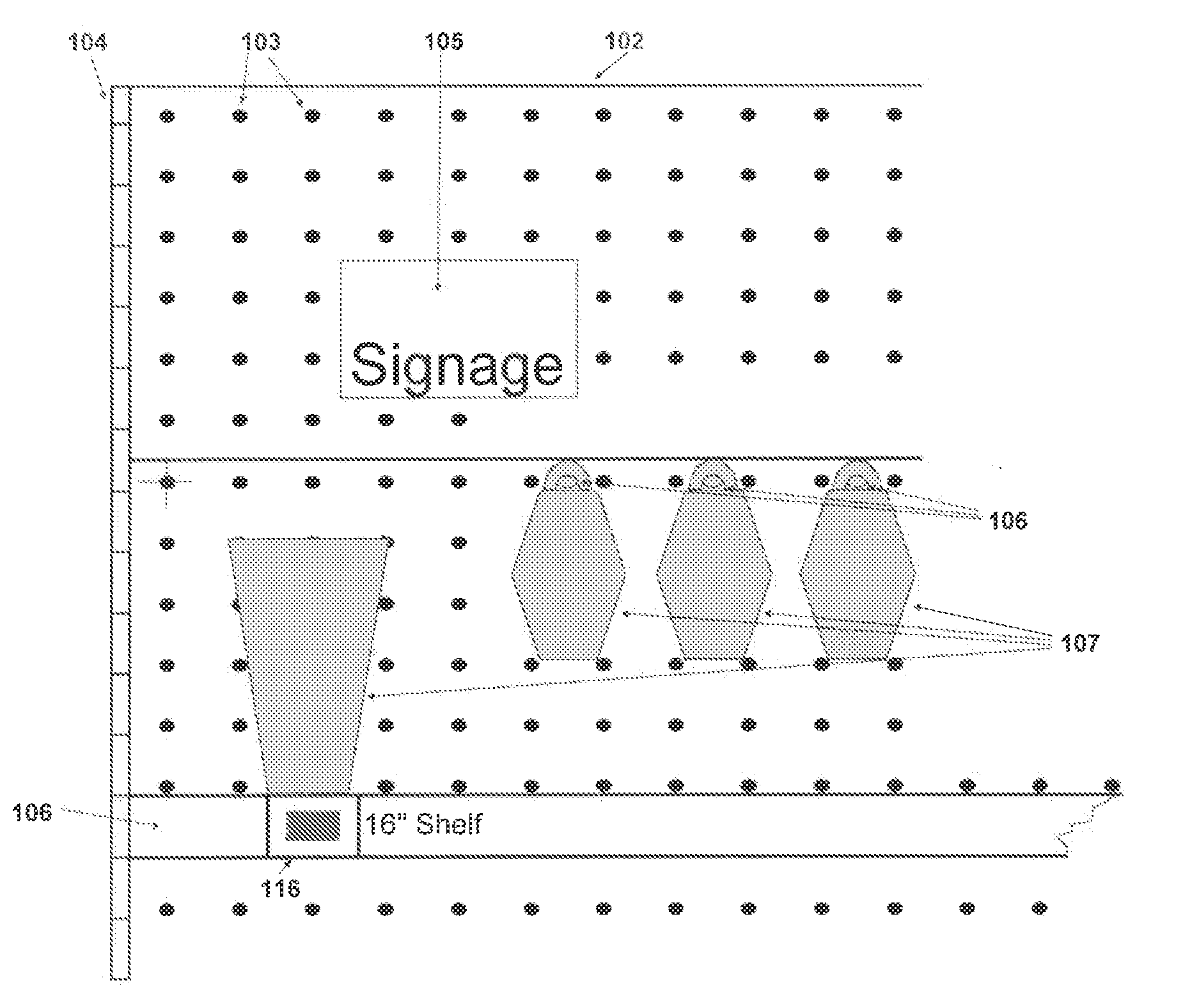

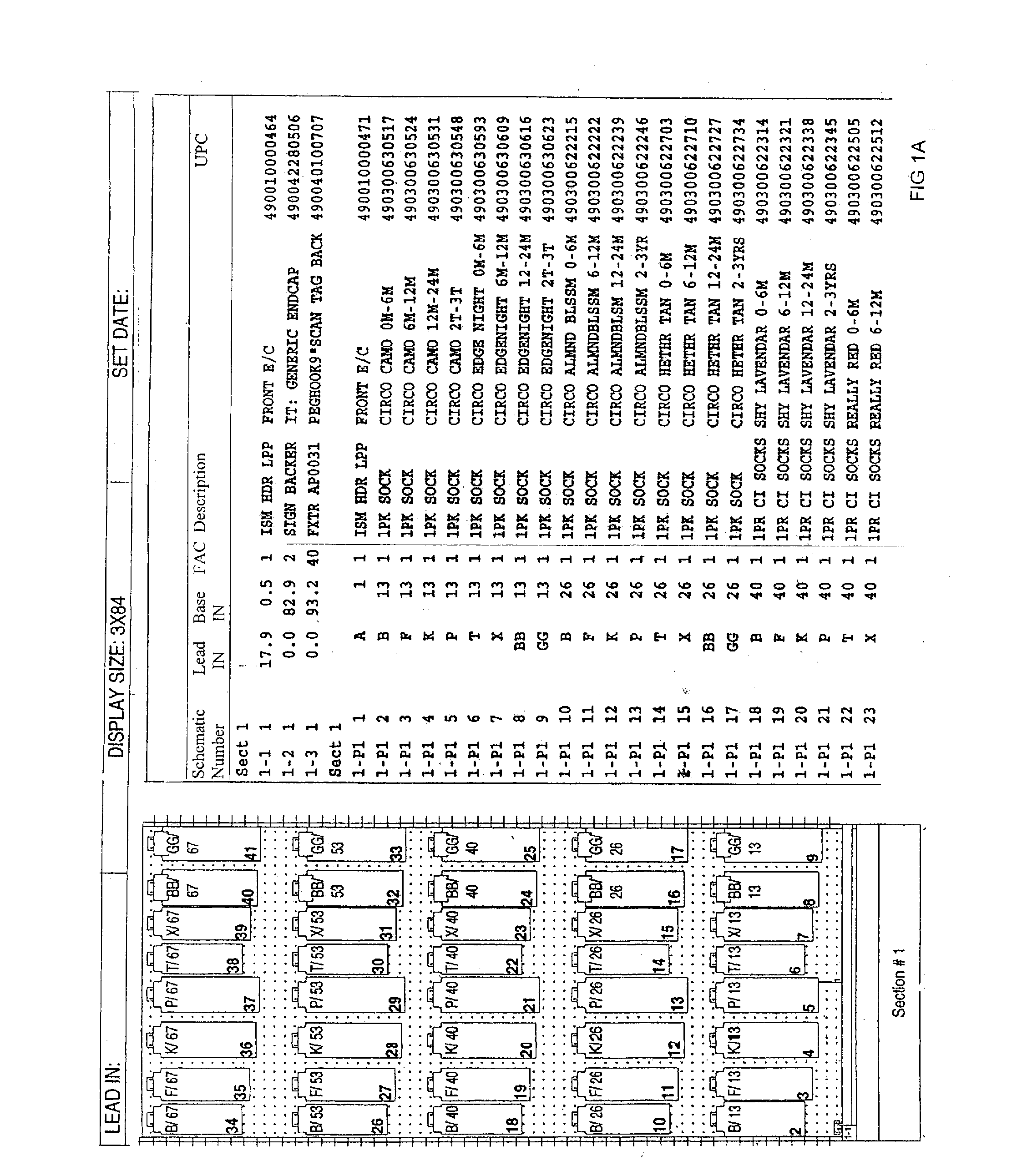

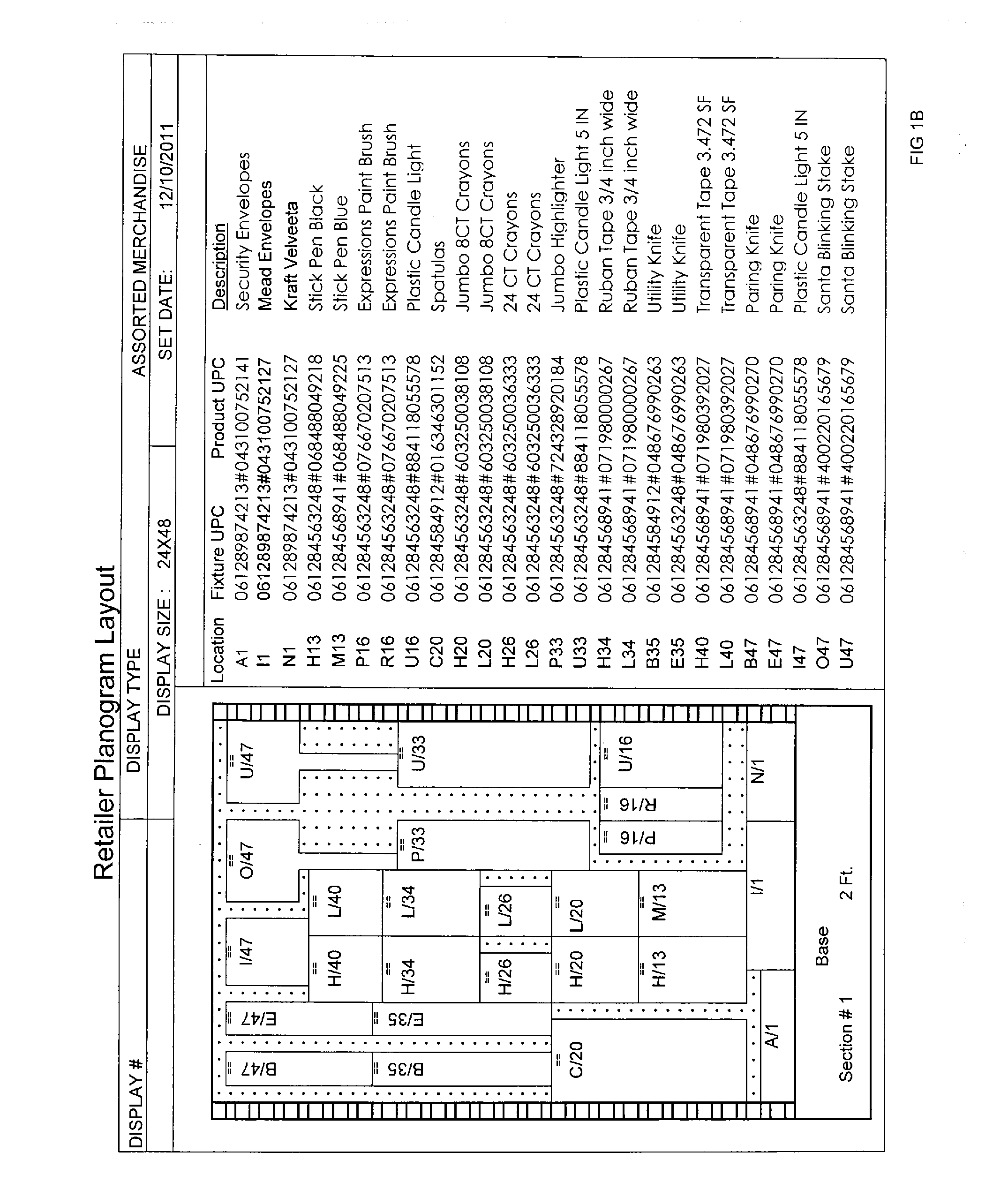

Projected image planogram system

Active | US20130119138A1 | Easy to use, Reduce risk, Character and pattern recognition, Logistics, Planogram, Data information

An automated projected-image display planning and implementation system is used in planogramming. Embodiments include a camera, a projector, a bar code reader, and software to store planograms as designed, communicate these planograms to retail locations, and provide an easy-to-use system ensuring that the planograms are recreated as originally conceived. Embodiments create a unique QR code for storing and communicating fixture and merchandise data as well as the relative placement location within the planogram. Embodiments are configured to project the image of the finished planogram onto the display and, further, to illuminate the exact location within the planogram of the merchandise and fixtures displayed on the planogram.

Owner:STEVNACY LLC

On-demand emergency notification system using GPS-equipped devices

Provided is an emergency locator system adapted for GPS-enabled wireless devices. Global Positioning System (GPS) technology and Location Based Services (LBS) are used to determine the exact location of a user and to communicate information relating to the emergency status of that location. The user initiates the locator application via a wireless device, and their physical location information is automatically transferred to a server. The server then compares the user's location with Geographic Information System (GIS) maps to identify the emergency status associated with that location. Once the server has calculated the current emergency status, the information is automatically returned to the user, along with emergency instructions.

Owner:UNIV OF SOUTH FLORIDA

System for tracking surgical items in an operating room environment

Inactive | US20070268133A1 | Narrow focus, Firmly connected, Digital data processing details, Burglar alarm mechanical actuation, Transceiver, Exact location

A system for tracking and locating surgical items and objects in an operating room environment incorporates two-stage functionality. A first stage provides mechanisms for tracking objects using radio frequency (RF) tags that are positioned on or in conjunction with every surgical item and object so that they can be tracked by a number of RF transceivers located about the operating room. In addition to integrating RFID components, the tags integrate hard spherical components that are easily identifiable by ultrasonic detection. If an object is “lost” from the RF tracking function, the system operator may review its last known location and movement path on a display and then use an ultrasonic subsystem in a localized area to detect the exact location of the missing object or item. Narrowing down the location of a “lost” object is facilitated by one or more LED laser pointers that are directed along the last known path of the object and to its last known location.

Owner:MEDWAVE INC

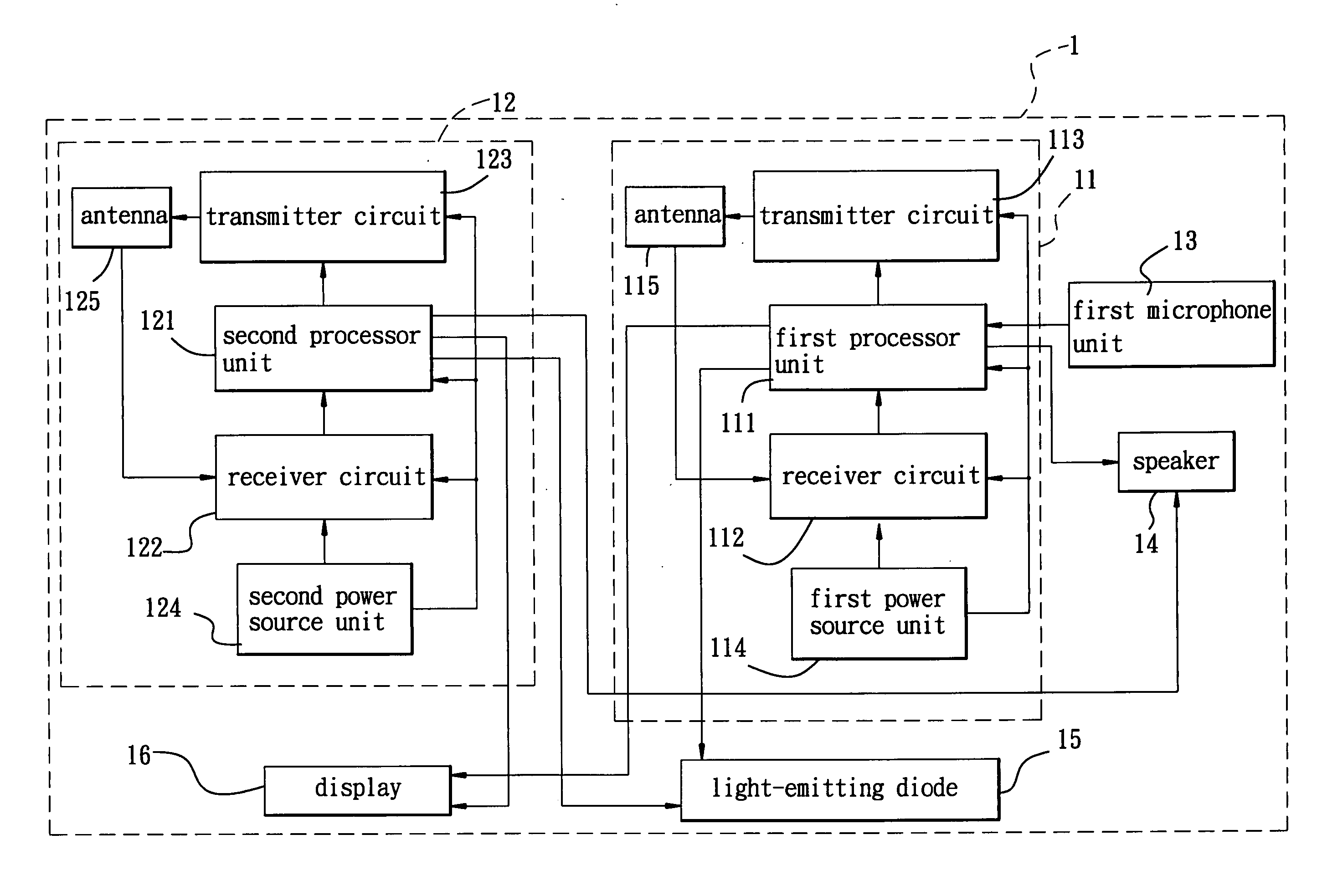

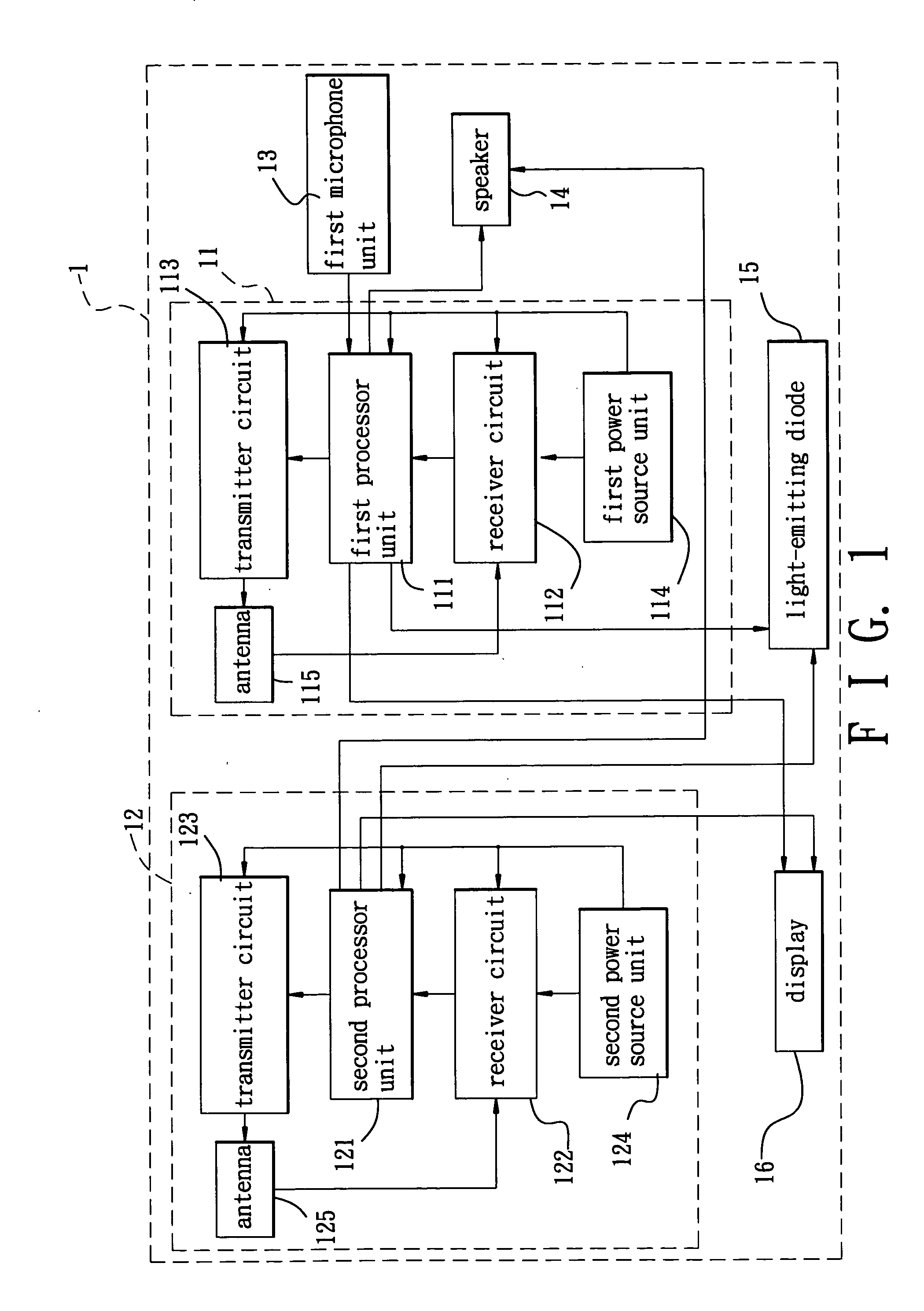

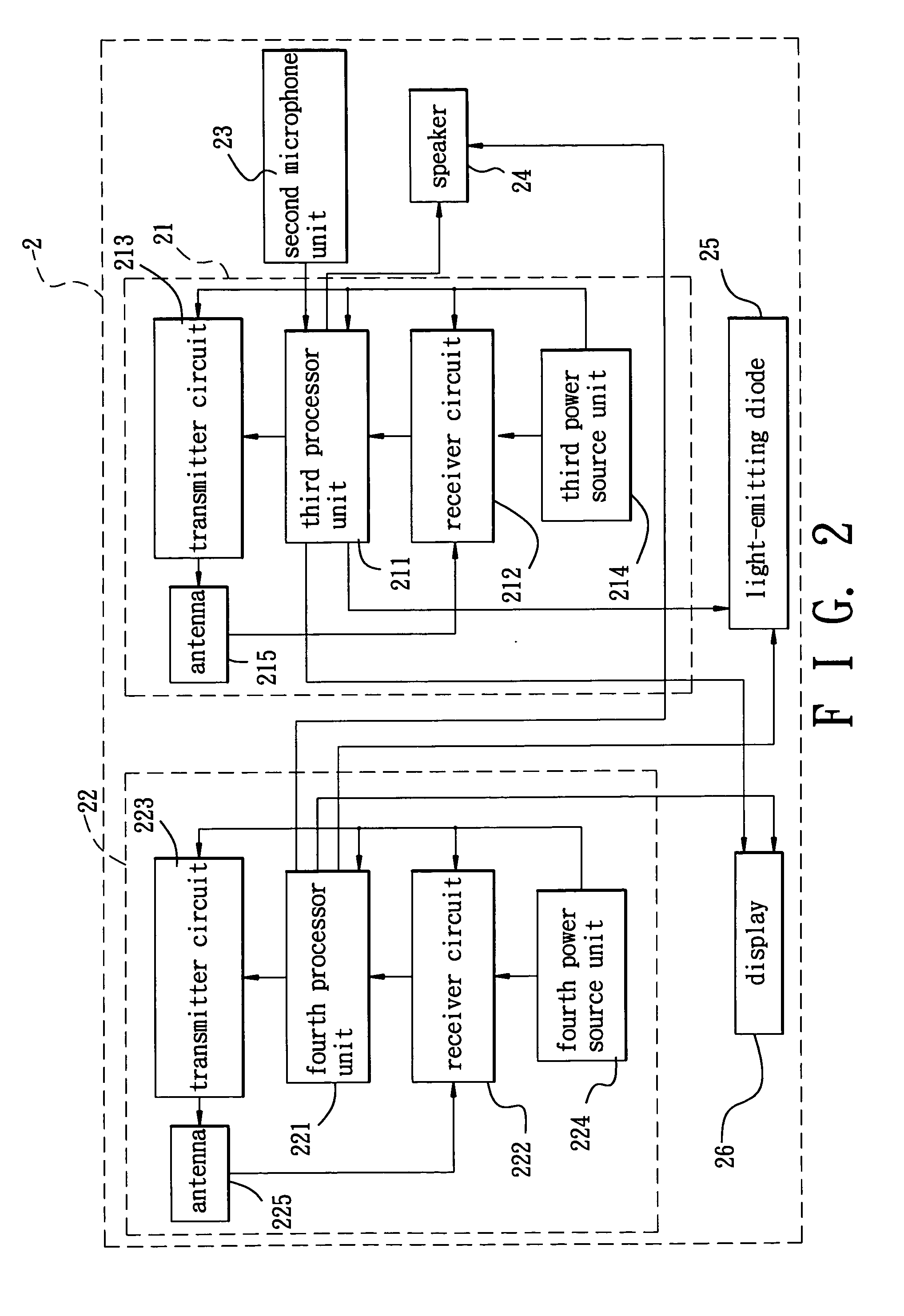

Systems and methods for locating cellular phones and security measures for the same

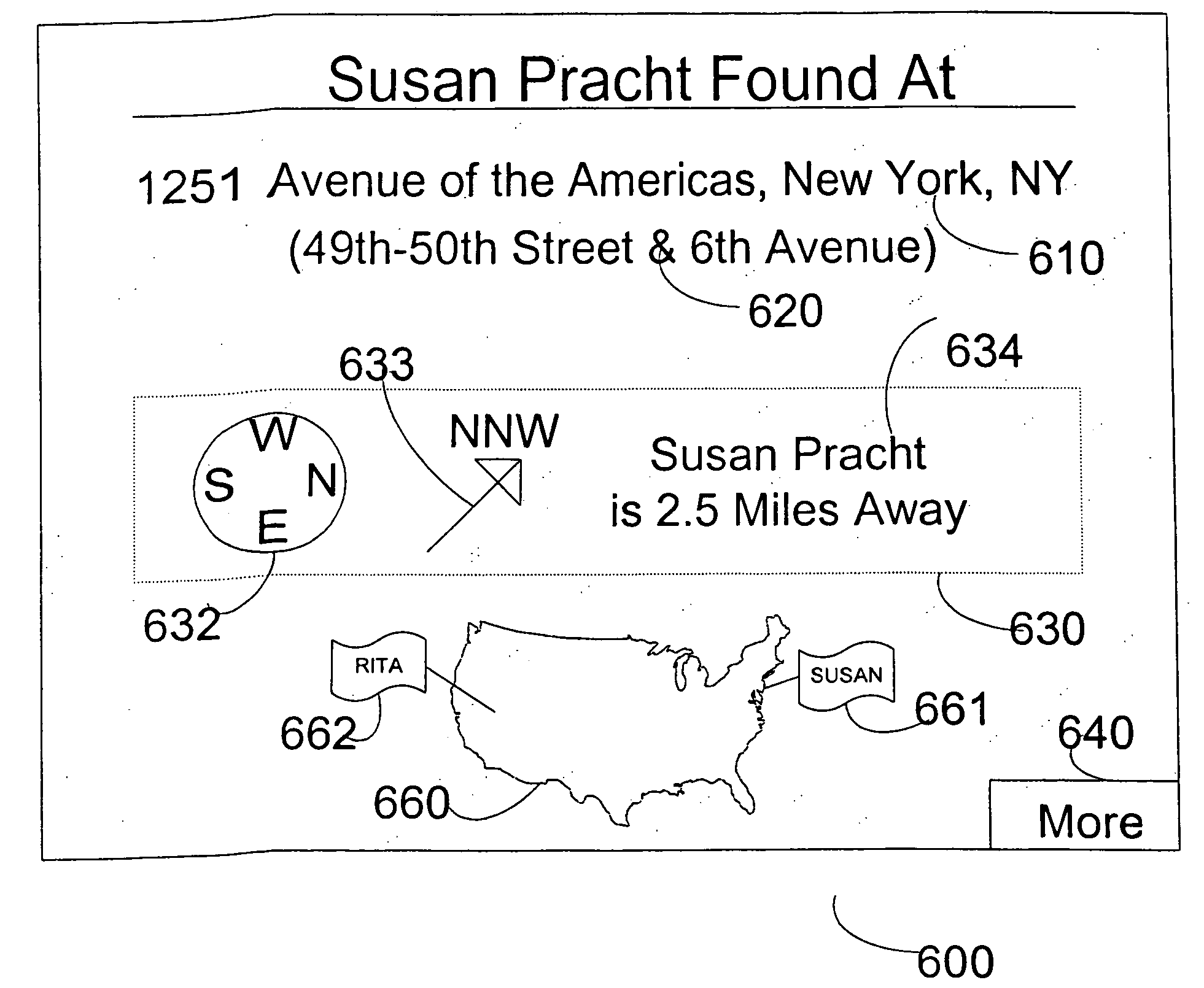

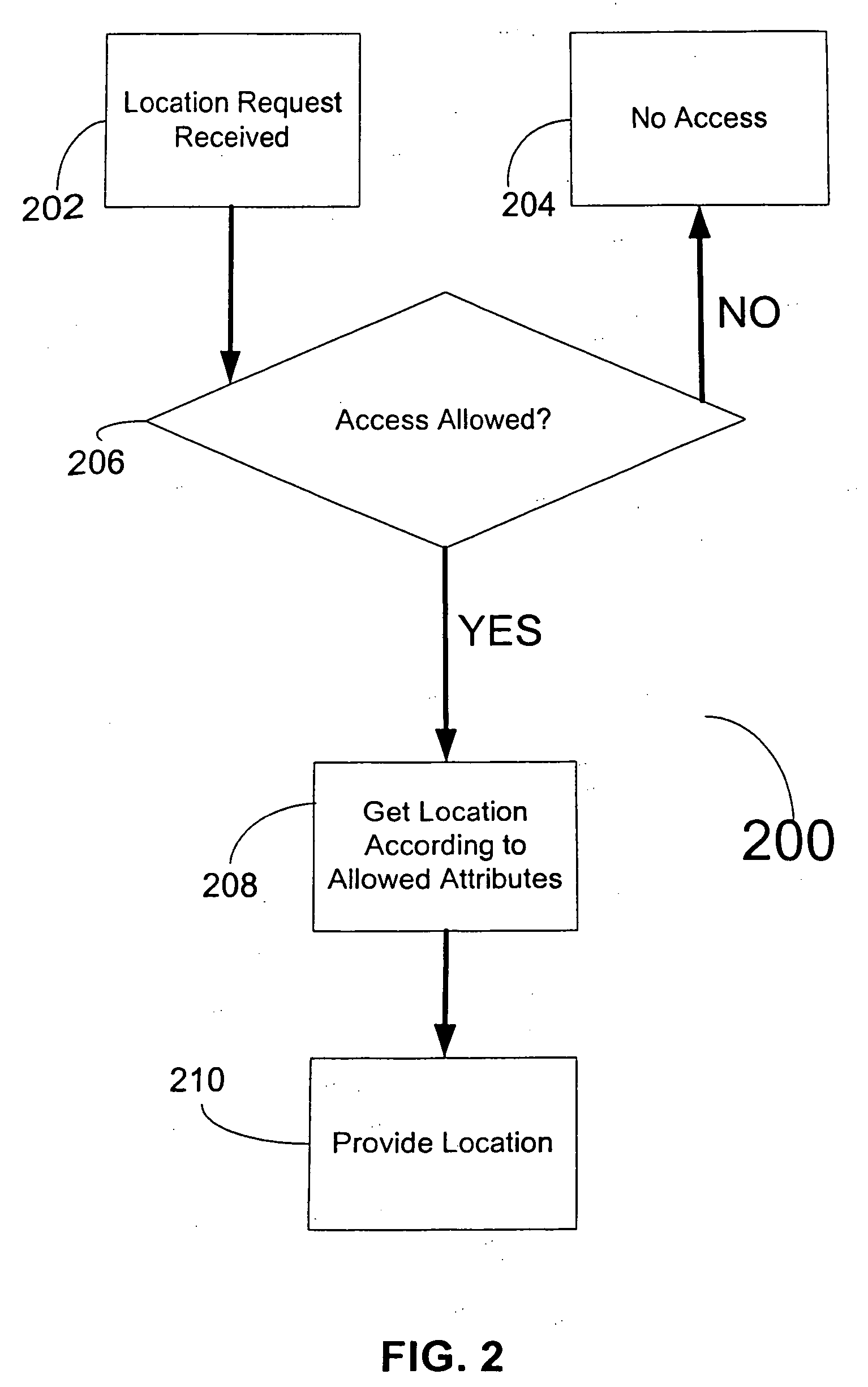

Inactive | US20060183486A1 | Improve security, Present invention, Unauthorised/fraudulent call prevention, Eavesdropping prevention circuits, Exact location, Security Measure

Systems and methods for locating a cellular phone are provided. More particularly, the systems and methods provide the location of a requested user's cellular phone to a requesting user's device (e.g., a second cellular phone) based on access rights defined by the requested user. Location descriptions may be provided at multiple levels. For example, if a cellular phone, or an identity associated with (e.g., logged into) a cellular phone, has been given access rights to another cell phone's exact location for an indefinite amount of time, that cell phone can receive, on command, the exact location of the approved cell phone. Other levels of location information that can be granted include, for example, proximities, states and countries.

Owner:MULLEN IND LLC
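
The tiered access rights described in this abstract can be modeled as ordered granularity levels, where the grant set by the requested user determines how much location detail a requester receives. A minimal sketch with hypothetical names (`Level`, `Location`, `location_view`, `request_location`); in particular, rendering the "proximity" level as coordinates rounded to 0.1 degree is an assumption, since the abstract does not define that level.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Dict, Optional


class Level(IntEnum):
    """Location granularity levels, ordered from least to most detail."""
    NONE = 0
    COUNTRY = 1
    STATE = 2
    PROXIMITY = 3
    EXACT = 4


@dataclass
class Location:
    lat: float
    lon: float
    state: str
    country: str


def location_view(loc: Location, level: Level) -> Optional[str]:
    """Render a location at the granted level of detail (None = no access)."""
    if level >= Level.EXACT:
        return f"{loc.lat:.5f}, {loc.lon:.5f}"
    if level >= Level.PROXIMITY:
        # Assumed meaning of "proximity": coordinates rounded to 0.1 degree.
        return f"near {loc.lat:.1f}, {loc.lon:.1f}"
    if level >= Level.STATE:
        return f"{loc.state}, {loc.country}"
    if level >= Level.COUNTRY:
        return loc.country
    return None


def request_location(loc: Location, grants: Dict[str, Level],
                     requester: str) -> Optional[str]:
    """Apply the grant the requested user gave this requester (default: none)."""
    return location_view(loc, grants.get(requester, Level.NONE))
```

Using an ordered `IntEnum` means each level implies all coarser ones, so a single comparison per branch decides how much detail to reveal.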

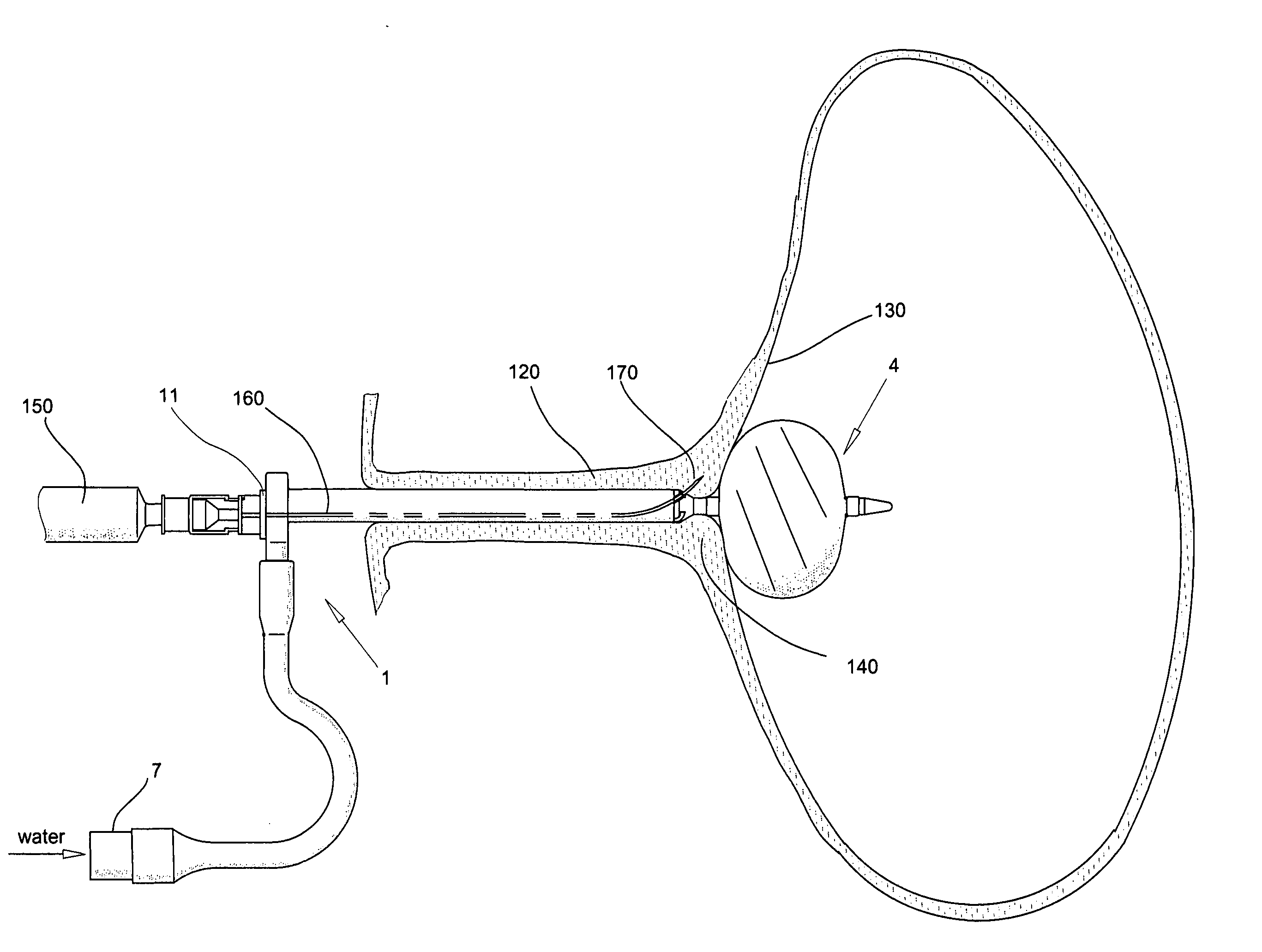

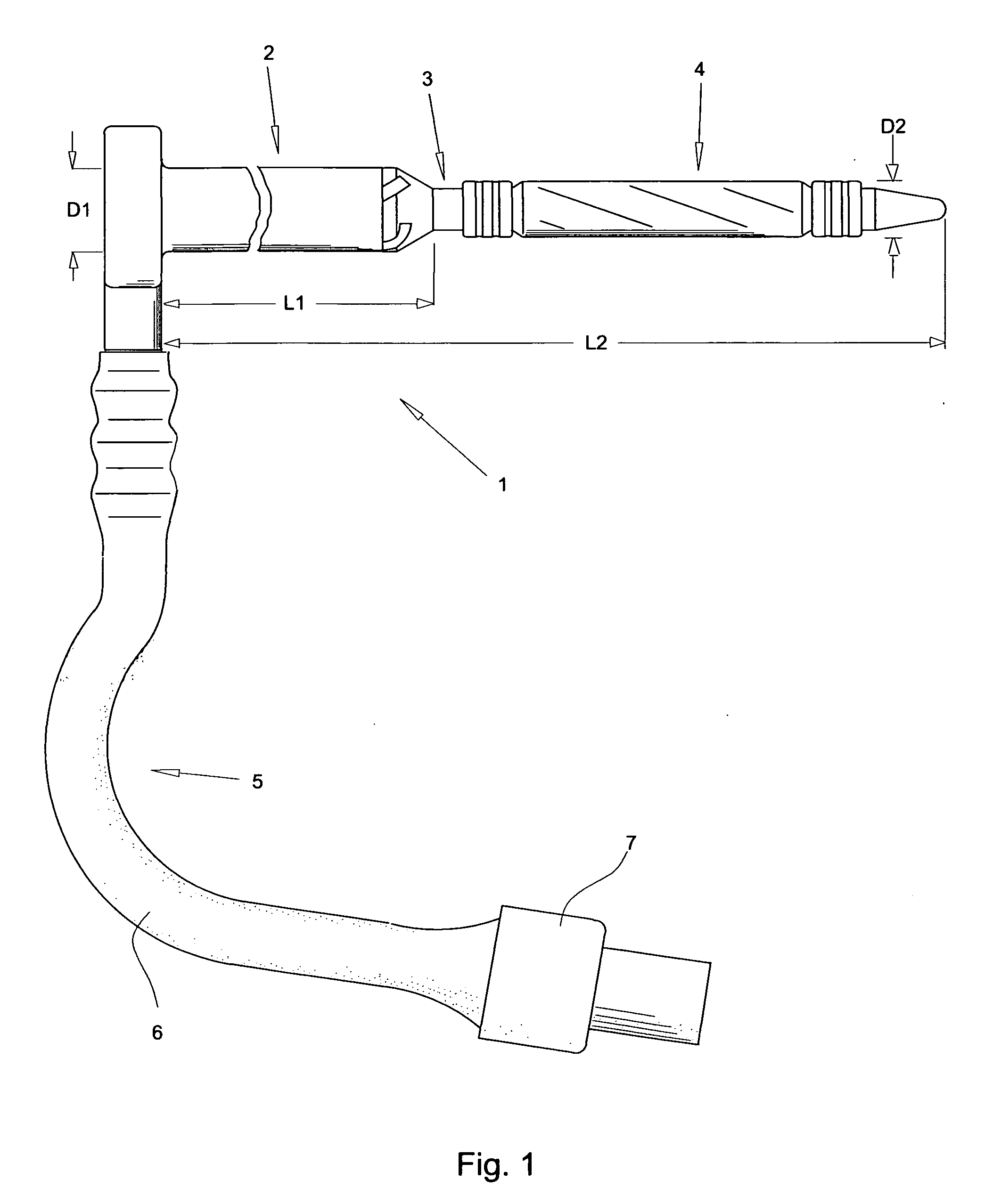

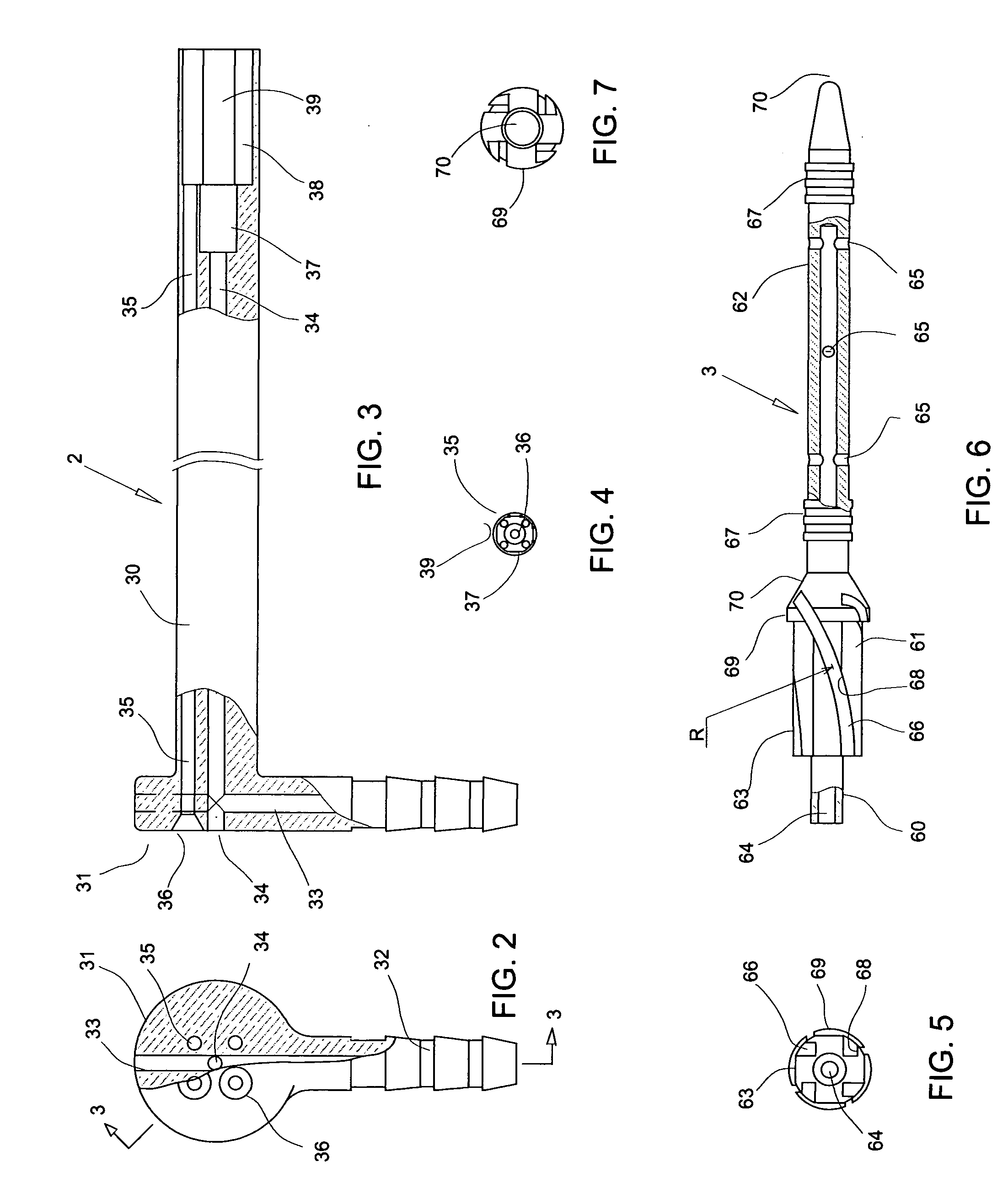

Instrument used in treatment of the urinary incontinence in women

A disposable, inexpensive instrument is provided to facilitate the injection of a bulk-enhancing agent into the urethral sphincter. The instrument exploits the balloon concept of a Foley catheter: the balloon acts as a retainer that immobilizes the instrument in the desired position inside the bladder during the injection. The instrument has the shape of an elongated shaft with multiple curved channels that guide and deflect the needle to the appropriate angle, depth of penetration and exact location on the sphincter during the injection.

Owner:HIBNER MICHAEL CEZARY

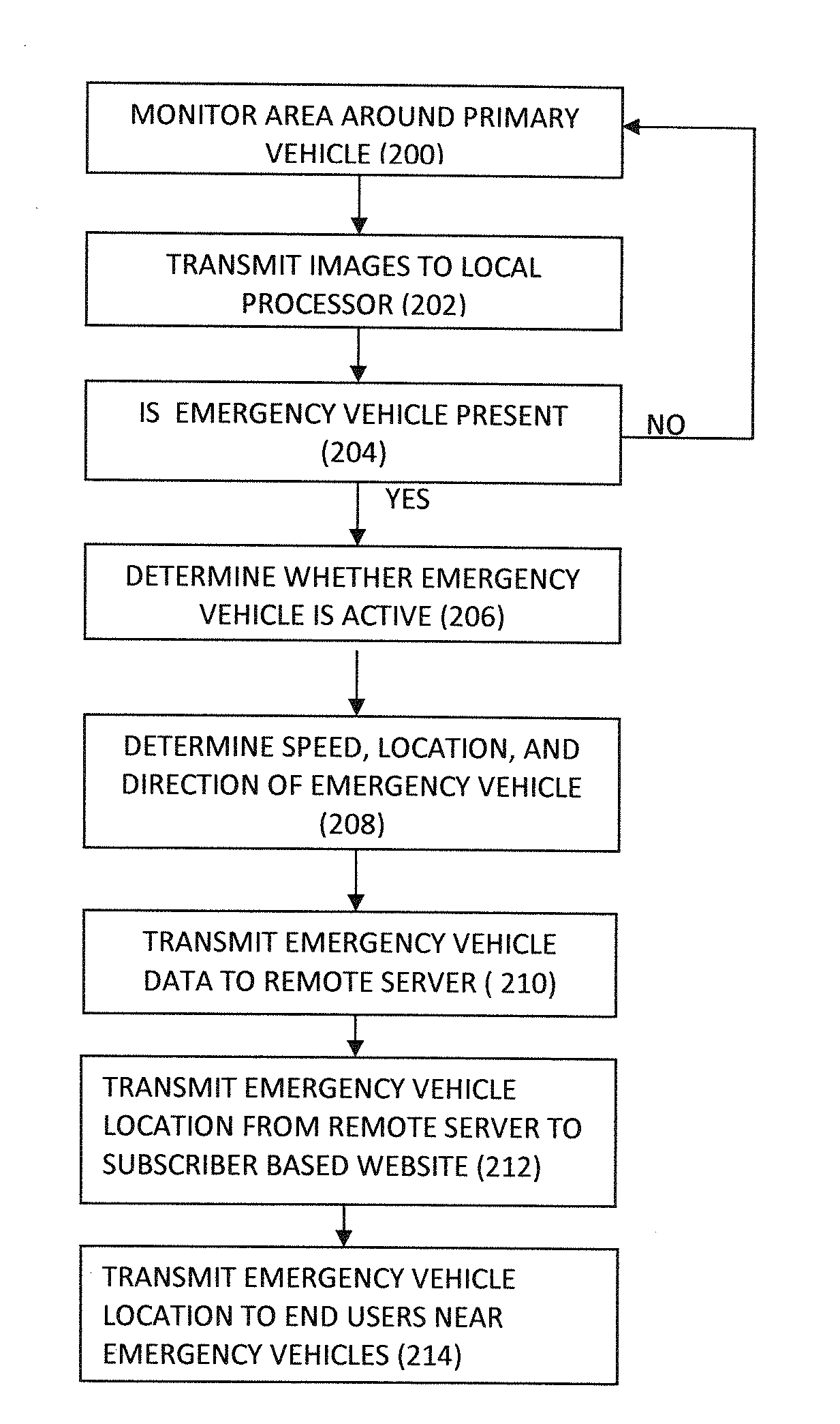

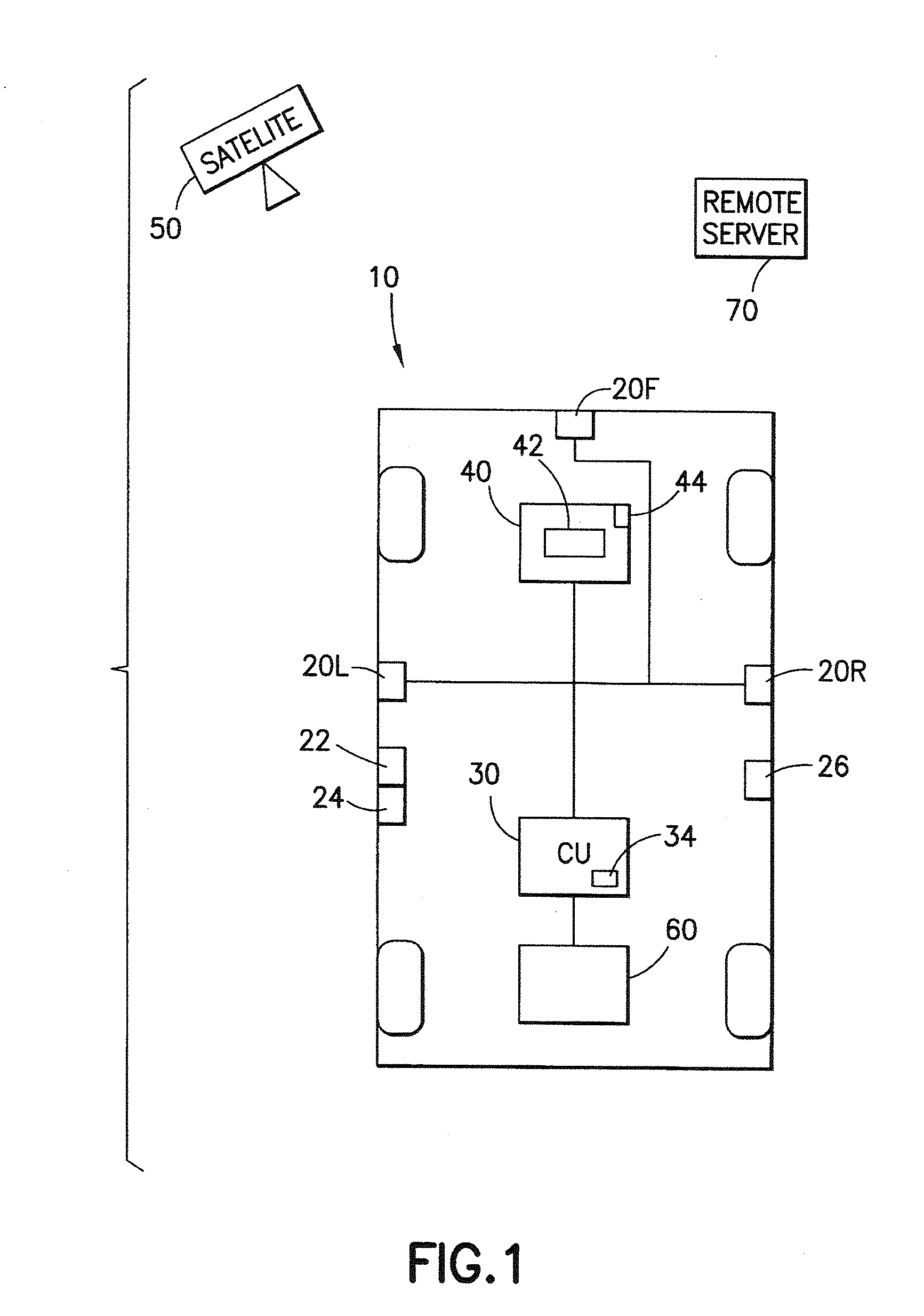

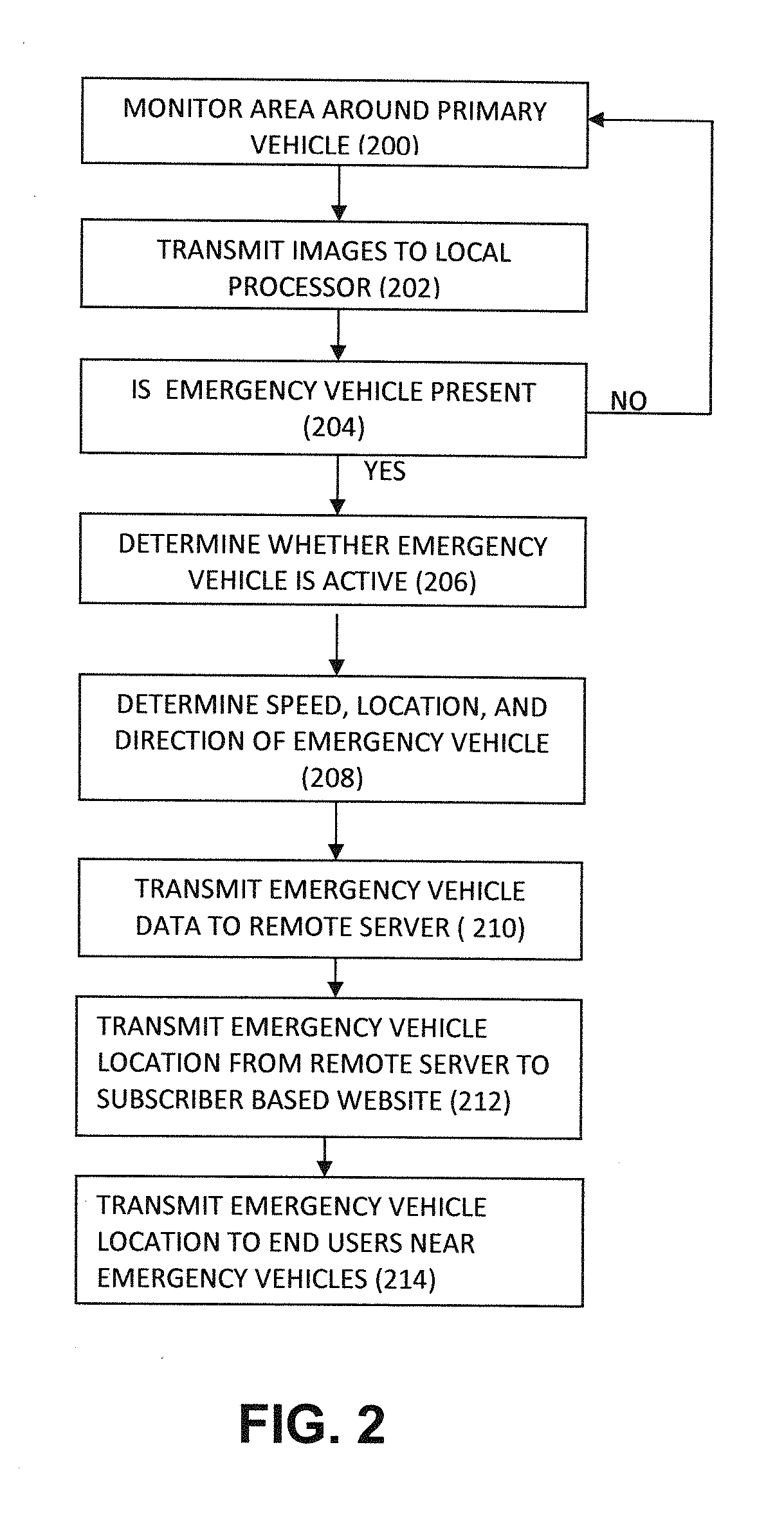

Device and system for identifying emergency vehicles and broadcasting the information

Inactive | US20120136559A1 | Analogue computers for vehicles, Analogue computers for traffic, Exact location, Engineering

A system and method for identifying emergency vehicles and broadcasting their location is provided. The system and method gather and process information and data to identify emergency vehicles such as ambulances, fire trucks and police cars. Further, the system and method are integrated with a locational information system to determine the exact location of the emergency vehicle. The system and method also include auxiliary devices to determine the speed and direction of the vehicle and to broadcast its location to other drivers in the area.

Owner:REAGAN INVENTIONS

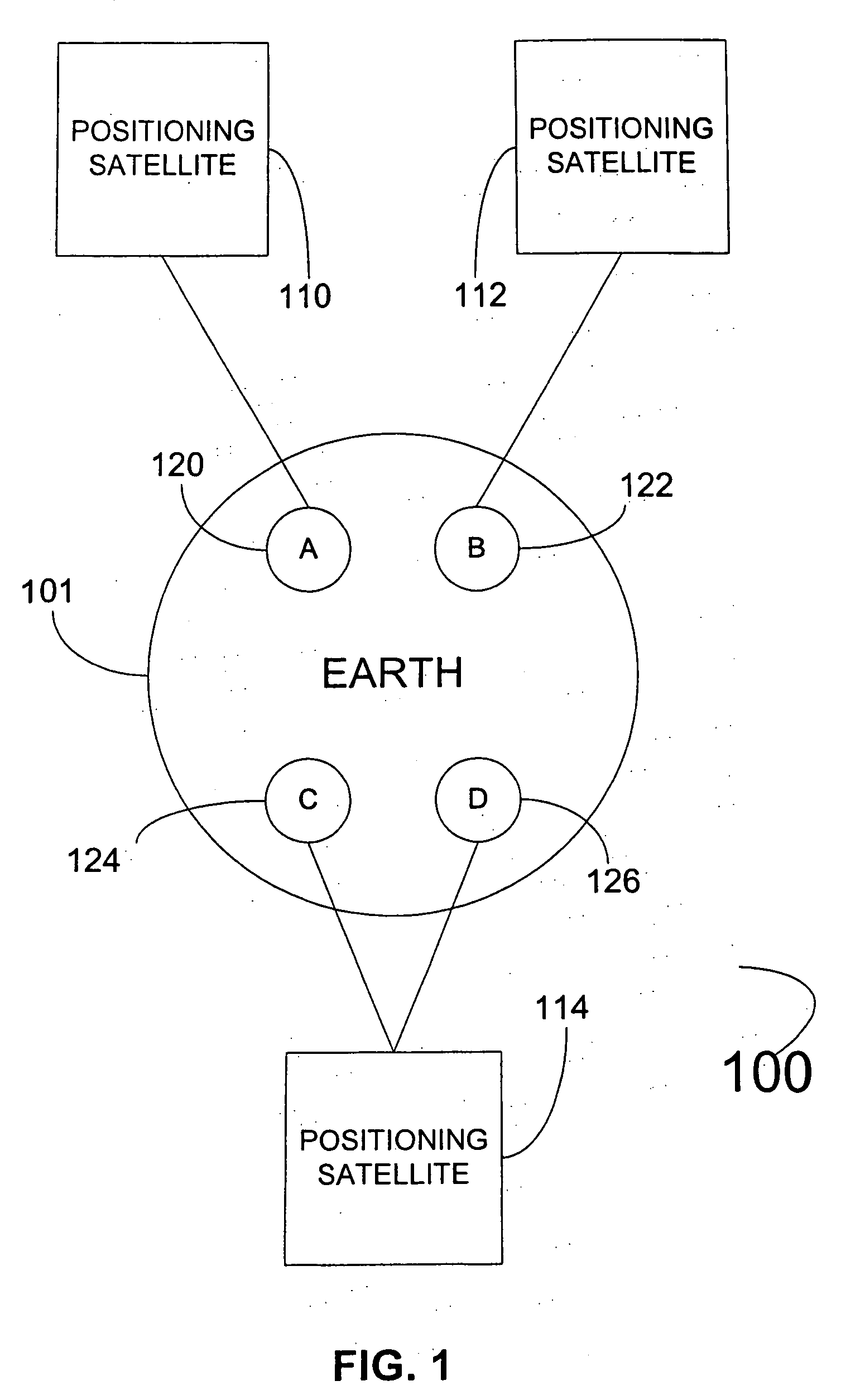

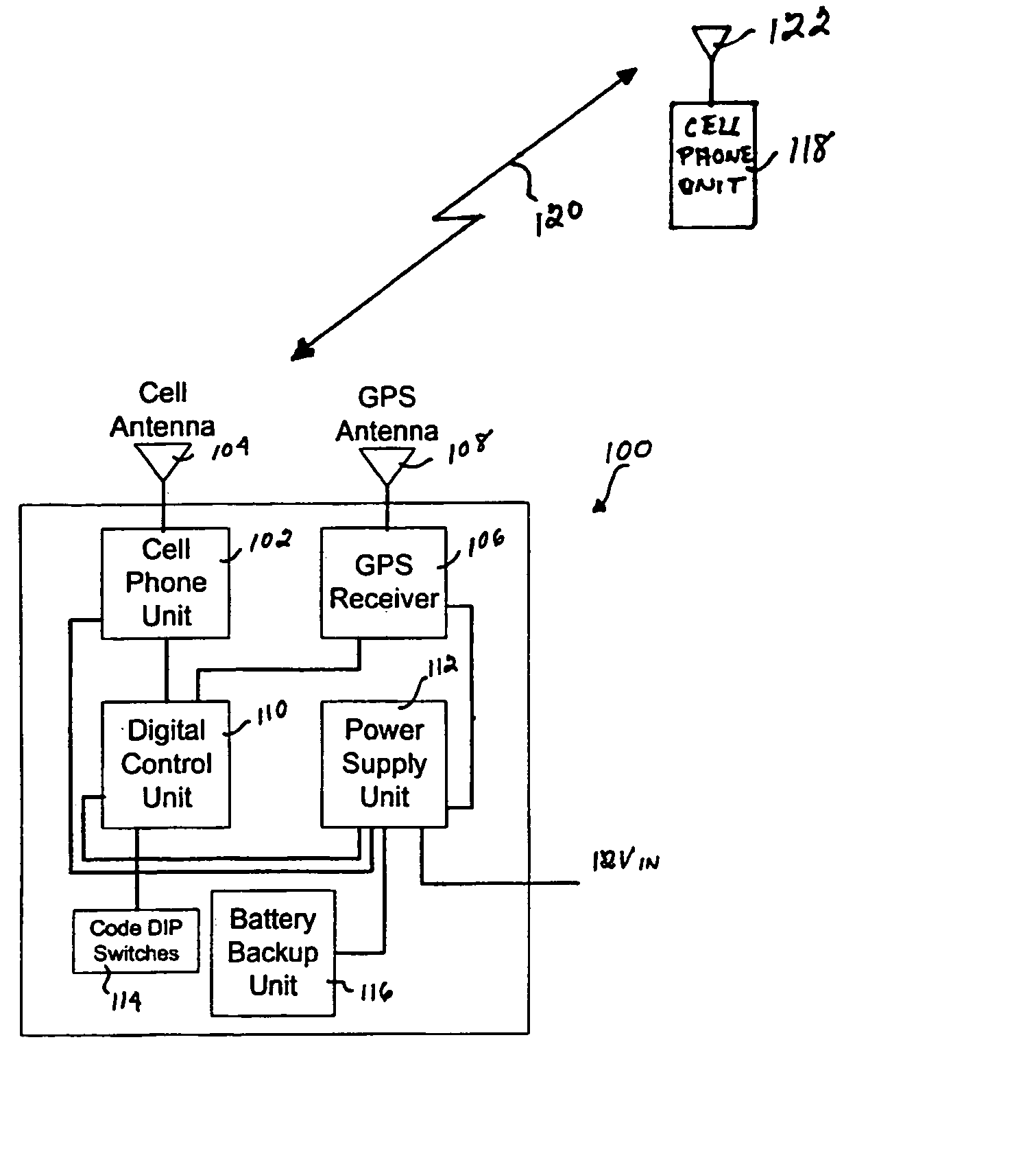

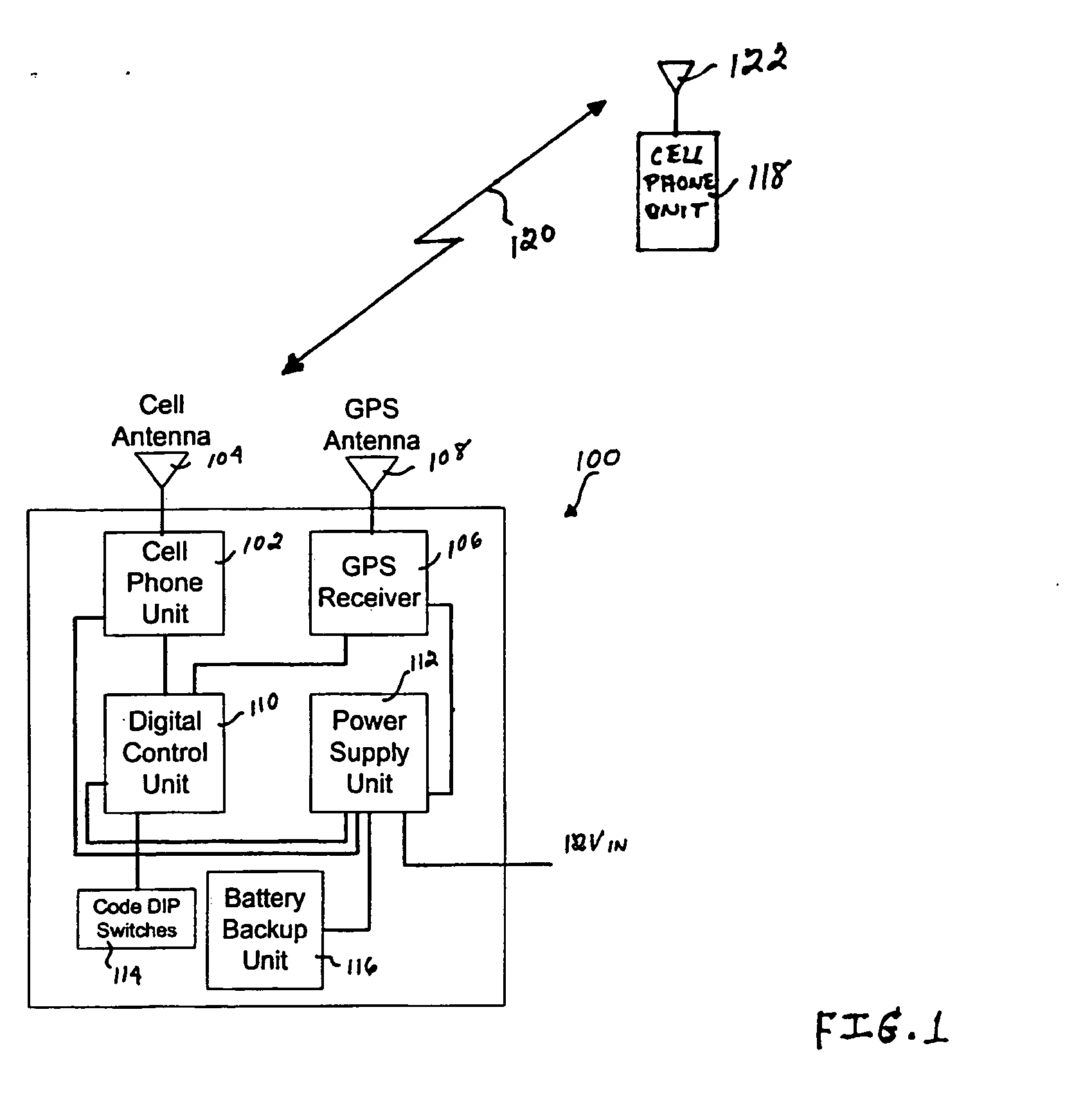

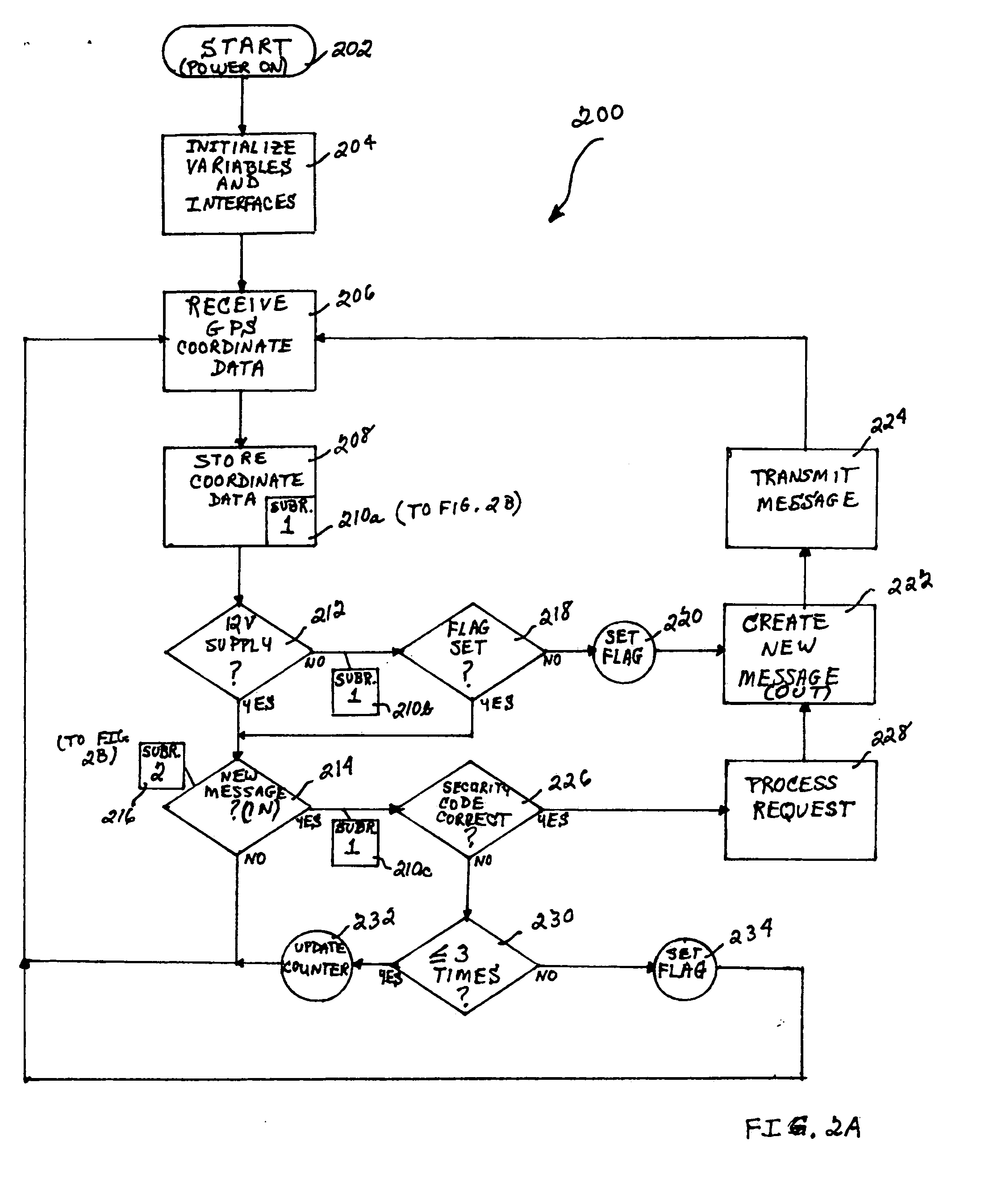

Tracking system and method

A system and method for determining and communicating the precise location of an individual and / or a motor vehicle in real-time is disclosed. As an example, a tracking system is disclosed that includes a Global Positioning System (GPS) receiver, a cellular phone, and a processing unit. The GPS receiver, cellular phone and processing unit are arranged as a single, compact tracking unit. The processing unit receives precise location information (e.g., latitudinal and longitudinal coordinates) for the tracking unit from the GPS receiver. A cellular phone capable of receiving text messages (e.g., and / or voice messages) can be used to call the cellular phone of the tracking unit, which responds (e.g., to an authenticated call) by transmitting a text message (e.g., or synthesized voice message) including the precise coordinates of the tracking unit. Thus, either with or without the knowledge of the individual carrying the tracking unit or driving the motor vehicle containing the tracking unit, the system is capable of providing the exact location of the individual and / or motor vehicle to another at any point in time.

Owner:HONEYWELL INT INC

Tracking method and system to be implemented using a wireless telecommunications network

Inactive | US20050261002A1 | Radio/inductive link selection arrangements, Location information based service, Telecommunications network, Exact location

A tracking method for a tracking system that includes target and tracking devices includes the steps of: in response to a location request issued by the tracking device and transmitted through a wireless telecommunications network, enabling operation of the target device to access a location-based service that is provided by the wireless telecommunications network and to generate a location information signal from the location-based service that is subsequently transmitted to the target device through the wireless telecommunications network such that an immediate vicinity of the target device can be located; and, in response to a tracking request from the tracking device, enabling the target device to transmit a beaconing signal wirelessly such that an exact location of the target device can be determined based on the beaconing signal.

Owner:WINTECRONICS

Deterrent for unmanned aerial systems

Active | US9715009B1 | Defence devices, Direction/deviation determining electromagnetic systems, Non destructive, Countermeasure

Owner:XIDRONE SYST INC

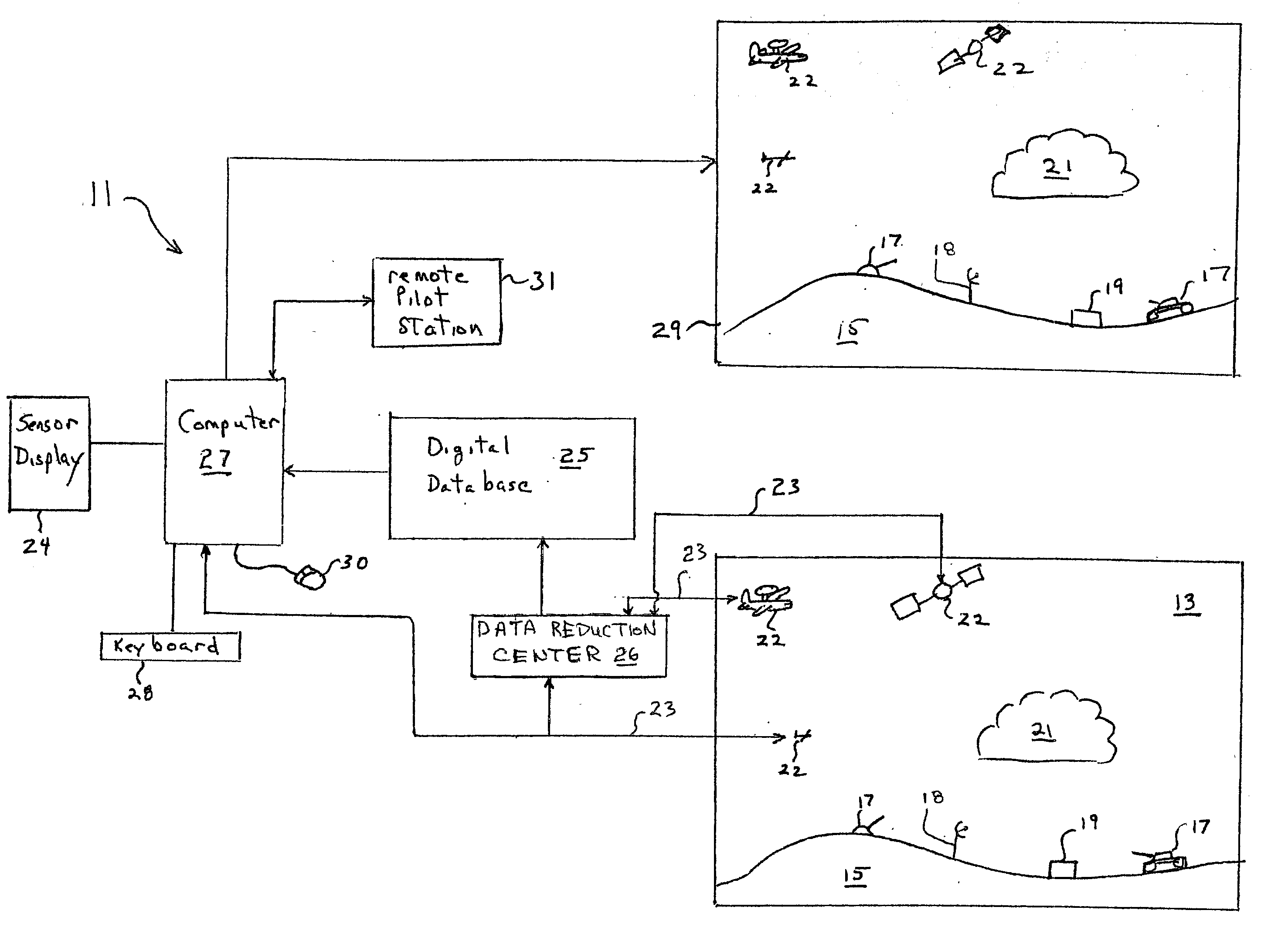

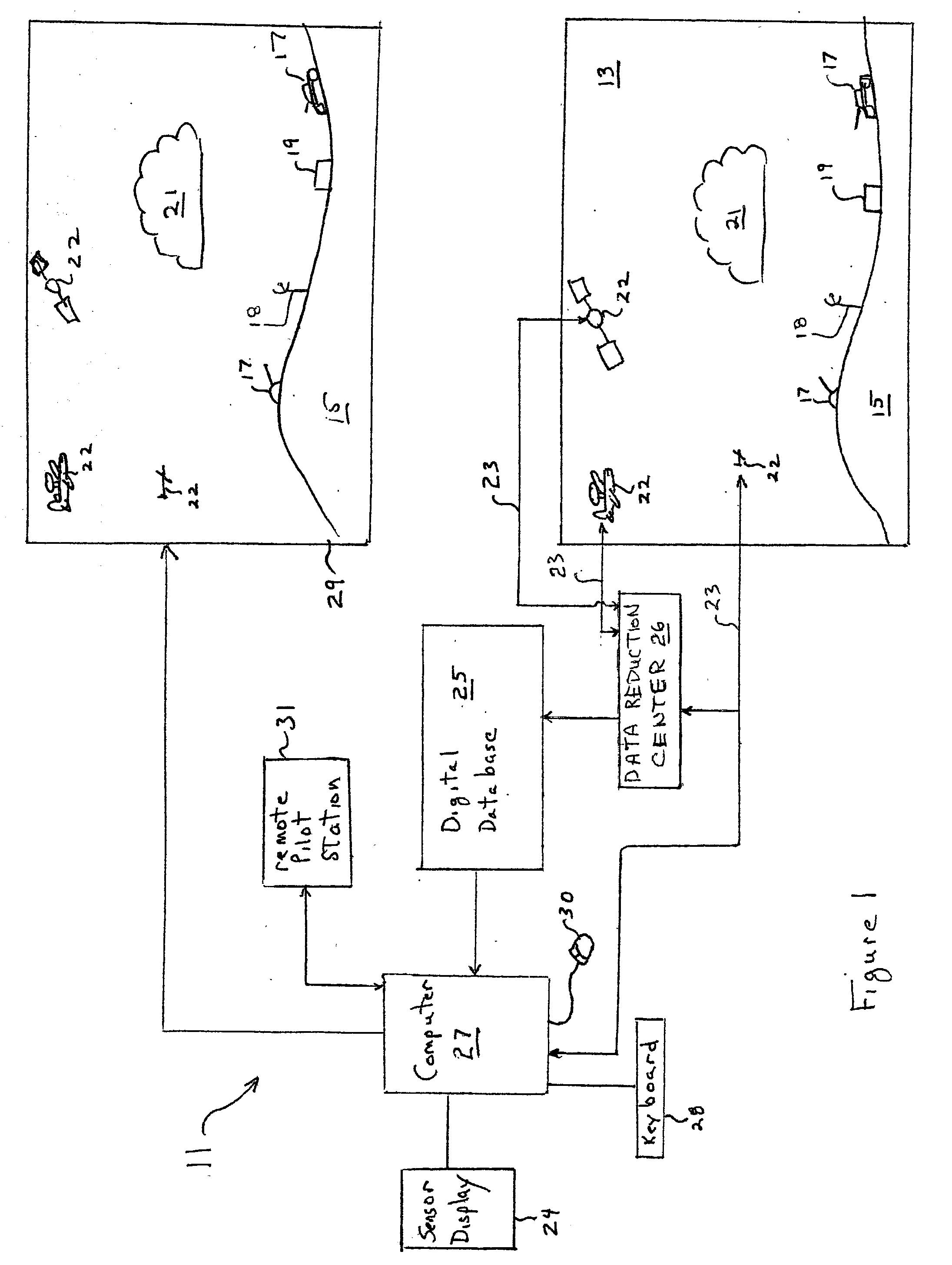

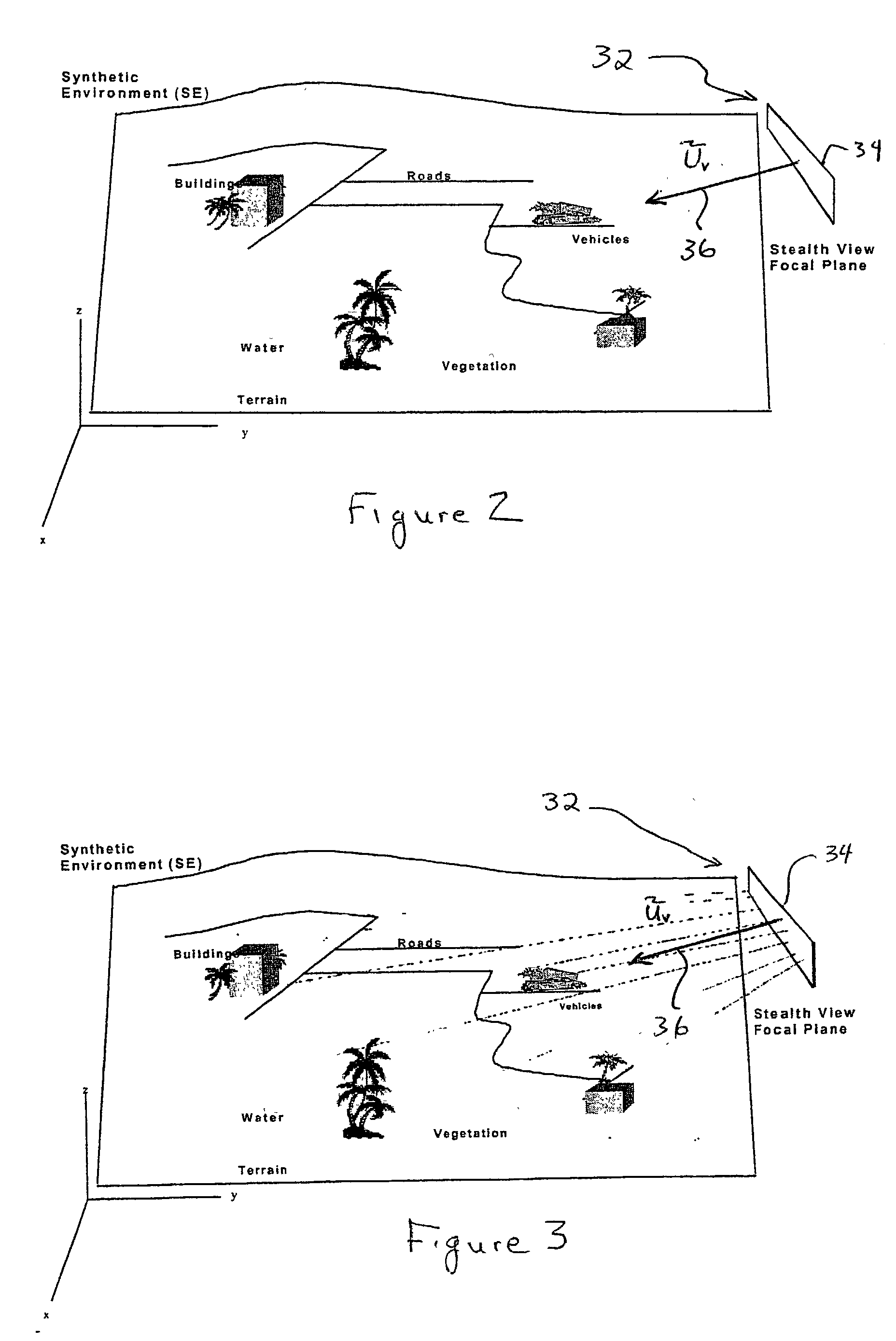

Method and apparatus for determining the geographic location of a target

This invention generally relates to a method and apparatus for locating a target depicted in a real-world image whose slant angle and vantage-point location are only approximately known, using a virtual or synthetic environment representative of the real-world terrain where the target is generally located. More particularly, a set of views of the virtual environment is compared with the real-world image of the target location to find the simulated view that most closely corresponds to the real-world view; the real-world image of the target is then correlated with the selected simulated view to locate the target correctly in the virtual environment and thereby determine its exact location in the real world.

Owner:LOCKHEED MARTIN CORP

© 2025 PatSnap. All rights reserved.