1483 results about "Coprocessor" patented technology

A coprocessor is a computer processor used to supplement the functions of the primary processor (the CPU). Operations performed by the coprocessor may be floating point arithmetic, graphics, signal processing, string processing, cryptography or I/O interfacing with peripheral devices. By offloading processor-intensive tasks from the main processor, coprocessors can accelerate system performance. Coprocessors allow a line of computers to be customized, so that customers who do not need the extra performance do not need to pay for it.

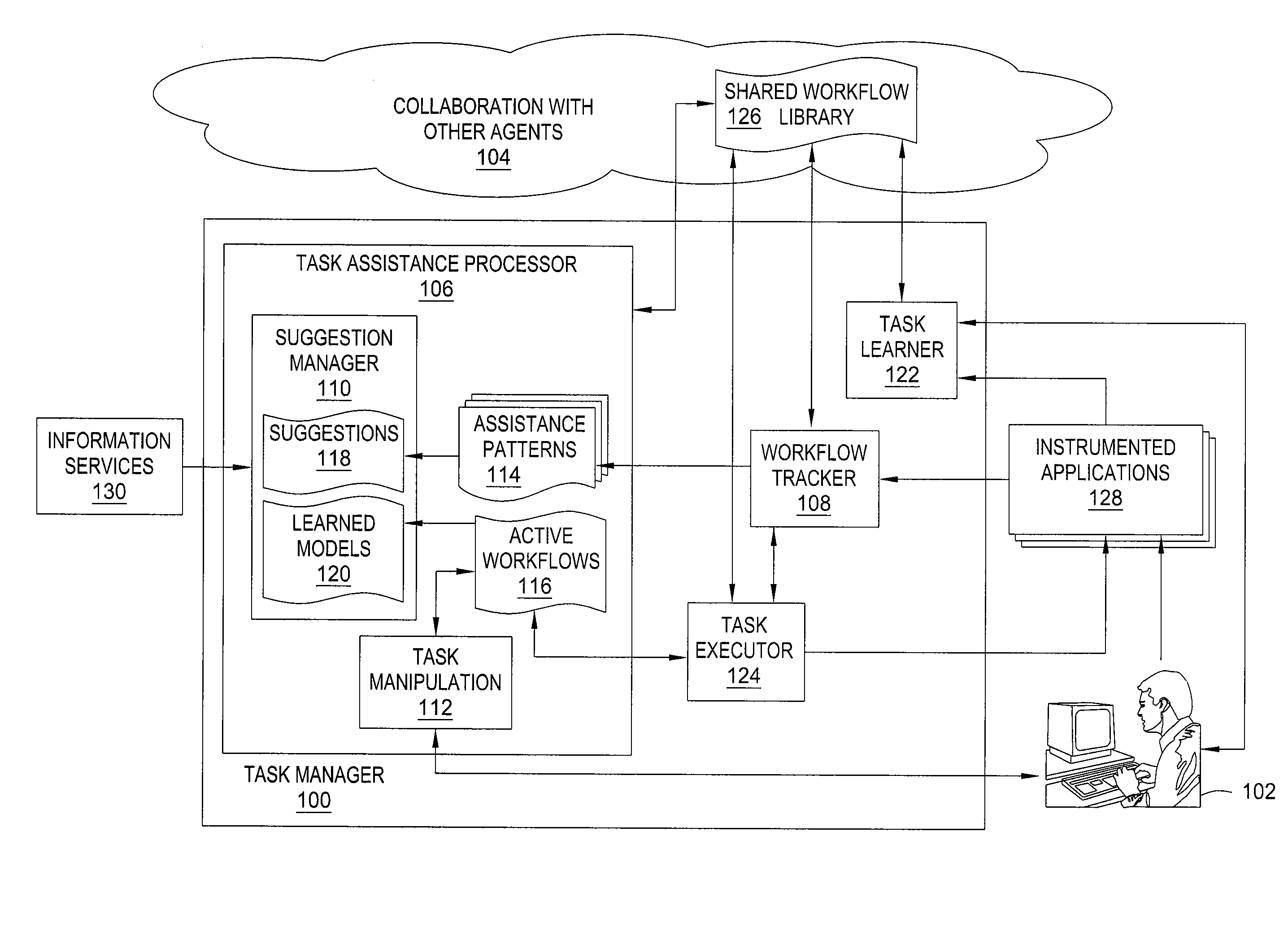

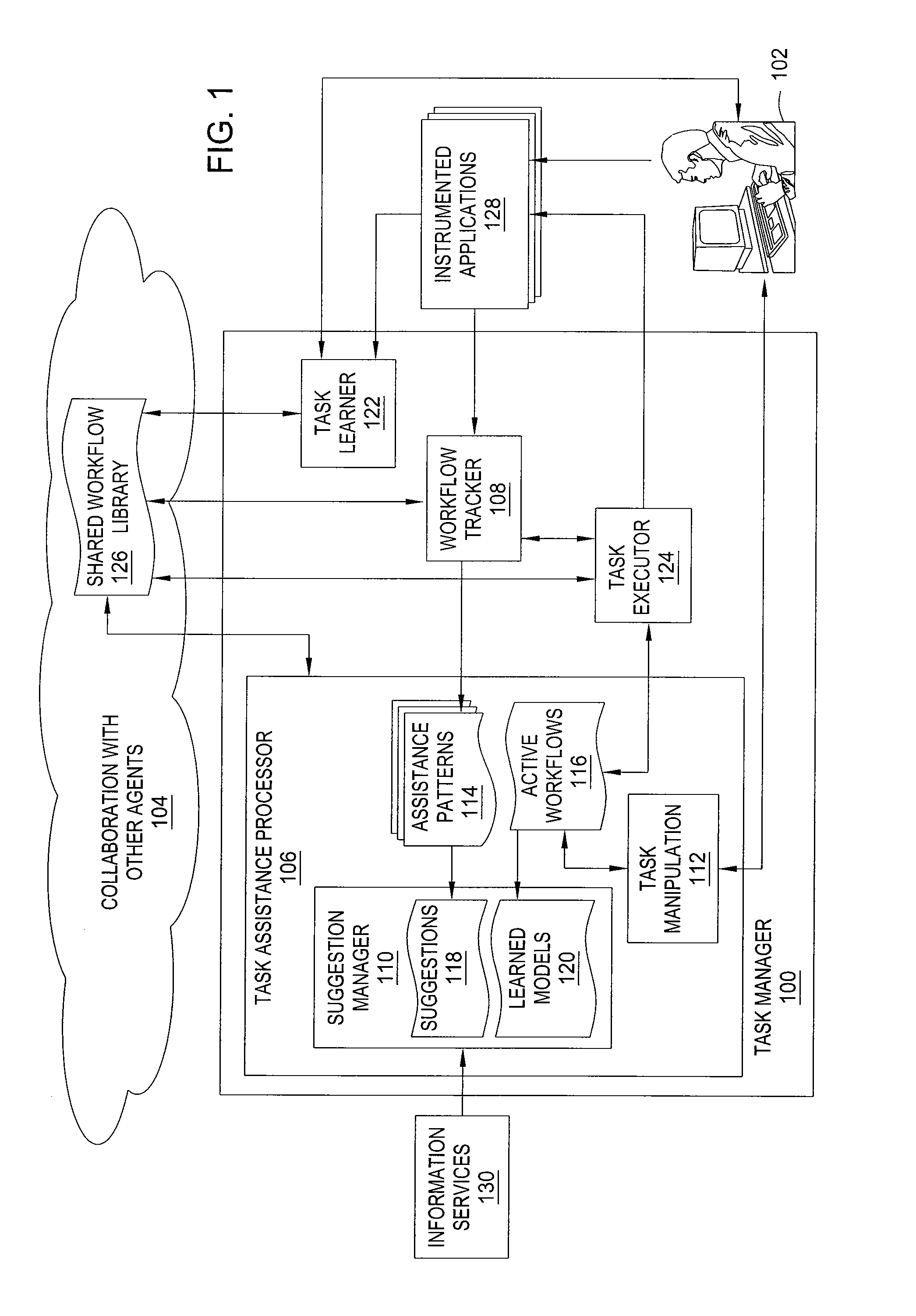

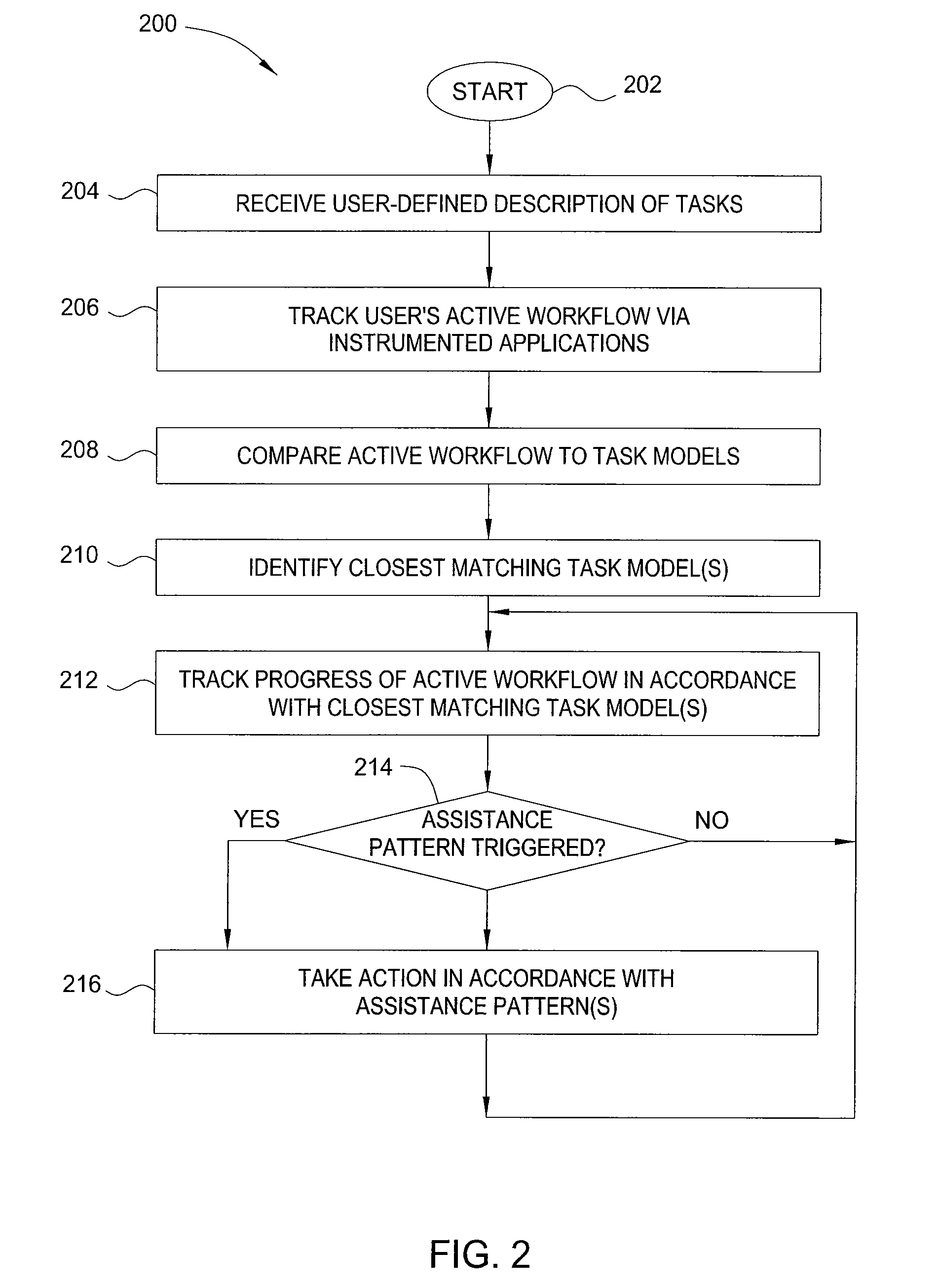

Method and apparatus for automated assistance with task management

Active | US20090307162A1 | Digital computer details | Multiprogramming arrangements | Coprocessor | Application software

The present invention relates to a method and apparatus for assisting with automated task management. In one embodiment, an apparatus for assisting a user in the execution of a task, where the task includes one or more workflows required to accomplish a goal defined by the user, includes a task learner for creating new workflows from user demonstrations, a workflow tracker for identifying and tracking the progress of a current workflow executing on a machine used by the user, a task assistance processor coupled to the workflow tracker, for generating a suggestion based on the progress of the current workflow, and a task executor coupled to the task assistance processor, for manipulating an application on the machine used by the user to carry out the suggestion.

Owner:SRI INTERNATIONAL

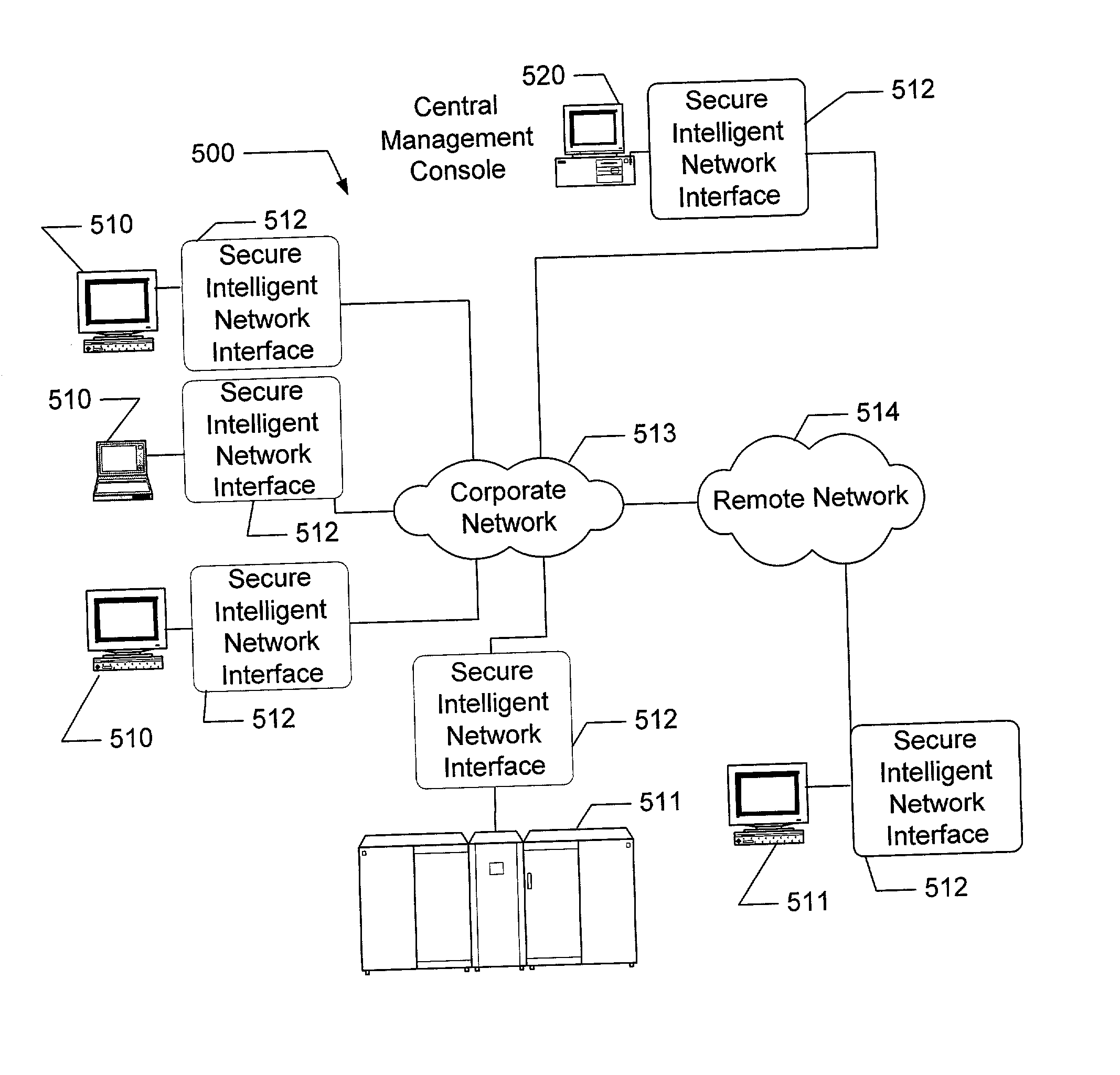

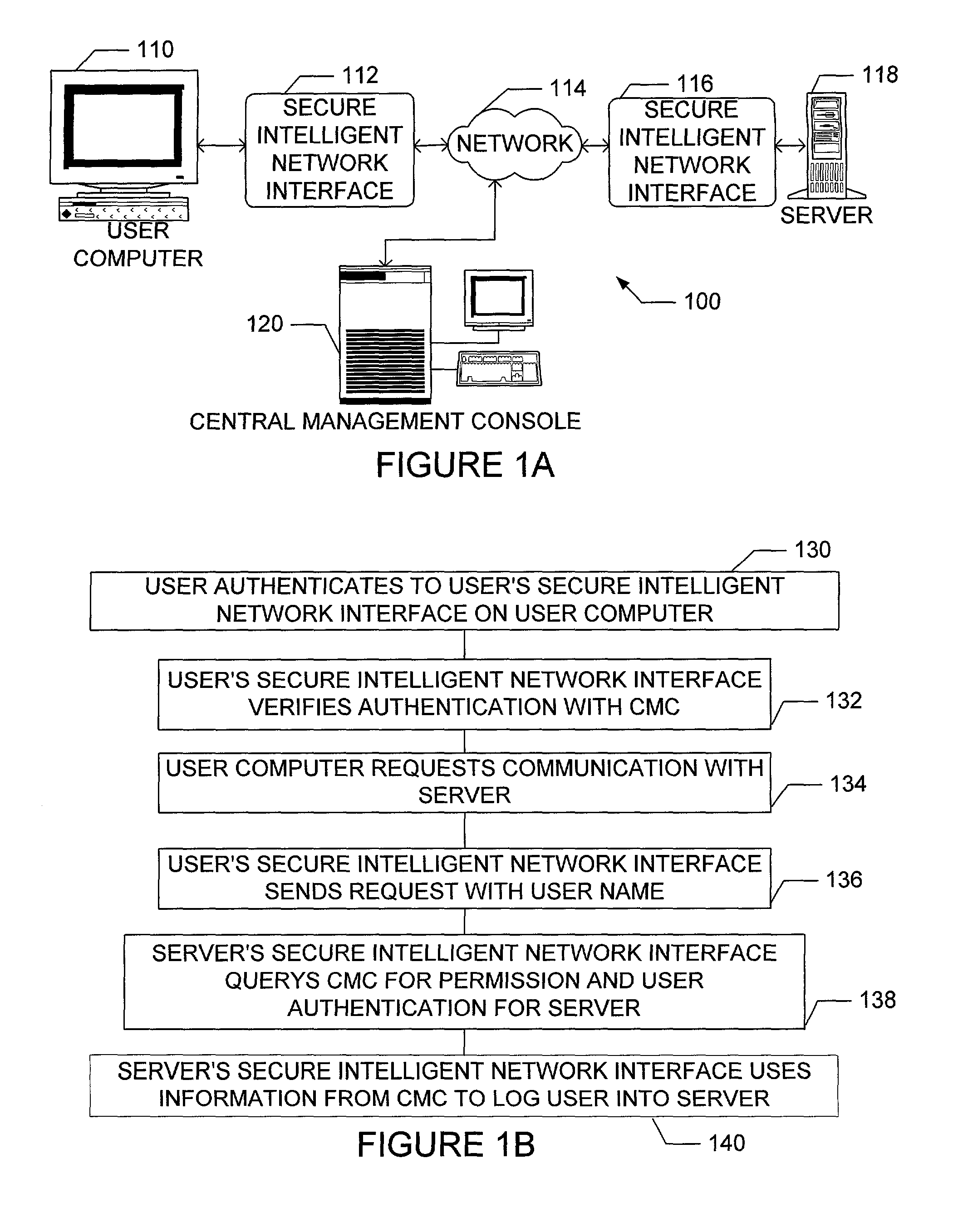

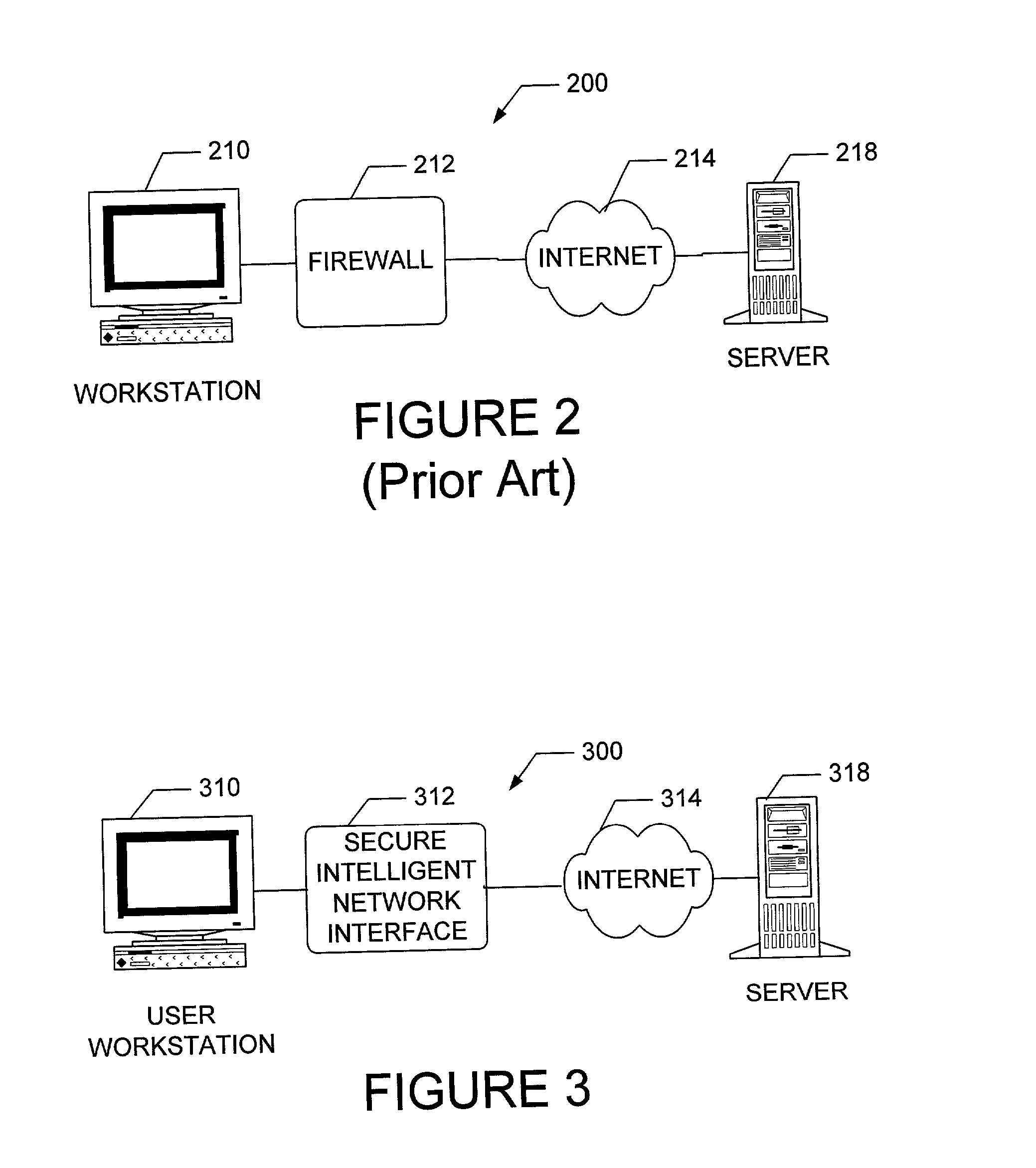

Apparatus and method for providing secure network communication

Inactive | US20020162026A1 | Eliminate attack | Eliminate need | Data taking prevention | Digital data processing details | Fault tolerance | Intelligent Network

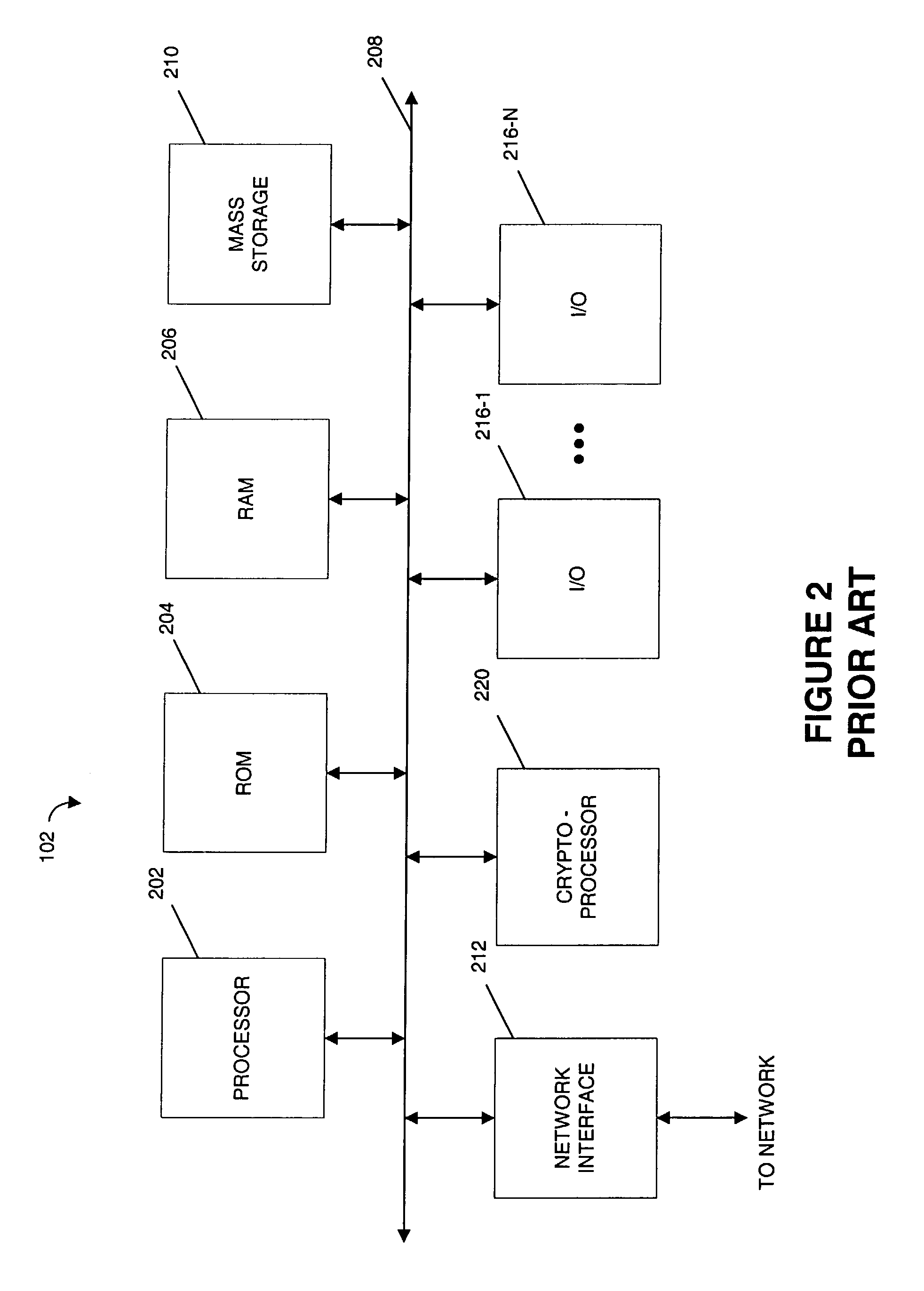

The present invention is drawn to an apparatus and method for providing secure network communication. Each node or computer on the network has a secure, intelligent network interface with a coprocessor that handles all network communication. The intelligent network interface can be built into a network interface card (NIC) or be a separate box between each machine and the network. The intelligent network interface encrypts outgoing packets and decrypts incoming packets from the network based on a key and algorithm managed by a centralized management console (CMC) on the network. The intelligent network interface can also be configured by the CMC with dynamically distributed code to perform authentication functions, protocol translations, single sign-on functions, multi-level firewall functions, distinguished-name based firewall functions, centralized user management functions, machine diagnostics, proxy functions, fault tolerance functions, centralized patching functions, Web-filtering functions, virus-scanning functions, auditing functions, and gateway intrusion detection functions.

Owner:NEUMAN MICHAEL +1

Audio and video decoder circuit and system

Inactive | US6369855B1 | Accelerates memory block move | Avoid conflict | Television system details | Pulse modulation television signal transmission | Coprocessor | Network packet

An improved audio-visual circuit is provided that includes a transport packet parsing circuit for receiving a transport data packet stream, a CPU circuit for initializing said integrated circuit and for processing portions of said data packet stream, a ROM circuit for storing data, a RAM circuit for storing data, an audio decoder circuit for decoding audio portions of said data packet stream, a video decoder circuit for decoding video portions of said data packet stream, an NTSC/PAL encoding circuit for encoding video portions of said data packet stream, an OSD coprocessor circuit for processing OSD portions of said data packets, a traffic controller circuit for moving portions of said data packet stream between portions of said integrated circuit, an extension bus interface circuit, a P1394 interface circuit, a communication coprocessor circuit, an address bus connected to said circuits, and a data bus connected to said circuits.

Owner:TEXAS INSTR INC

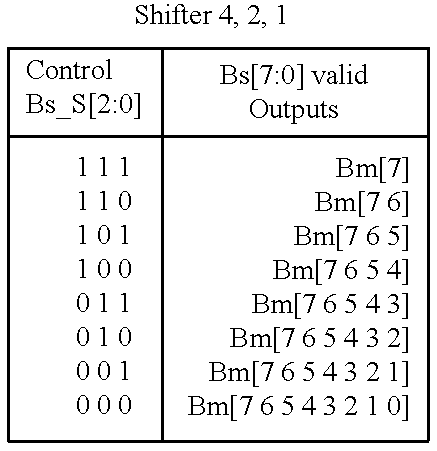

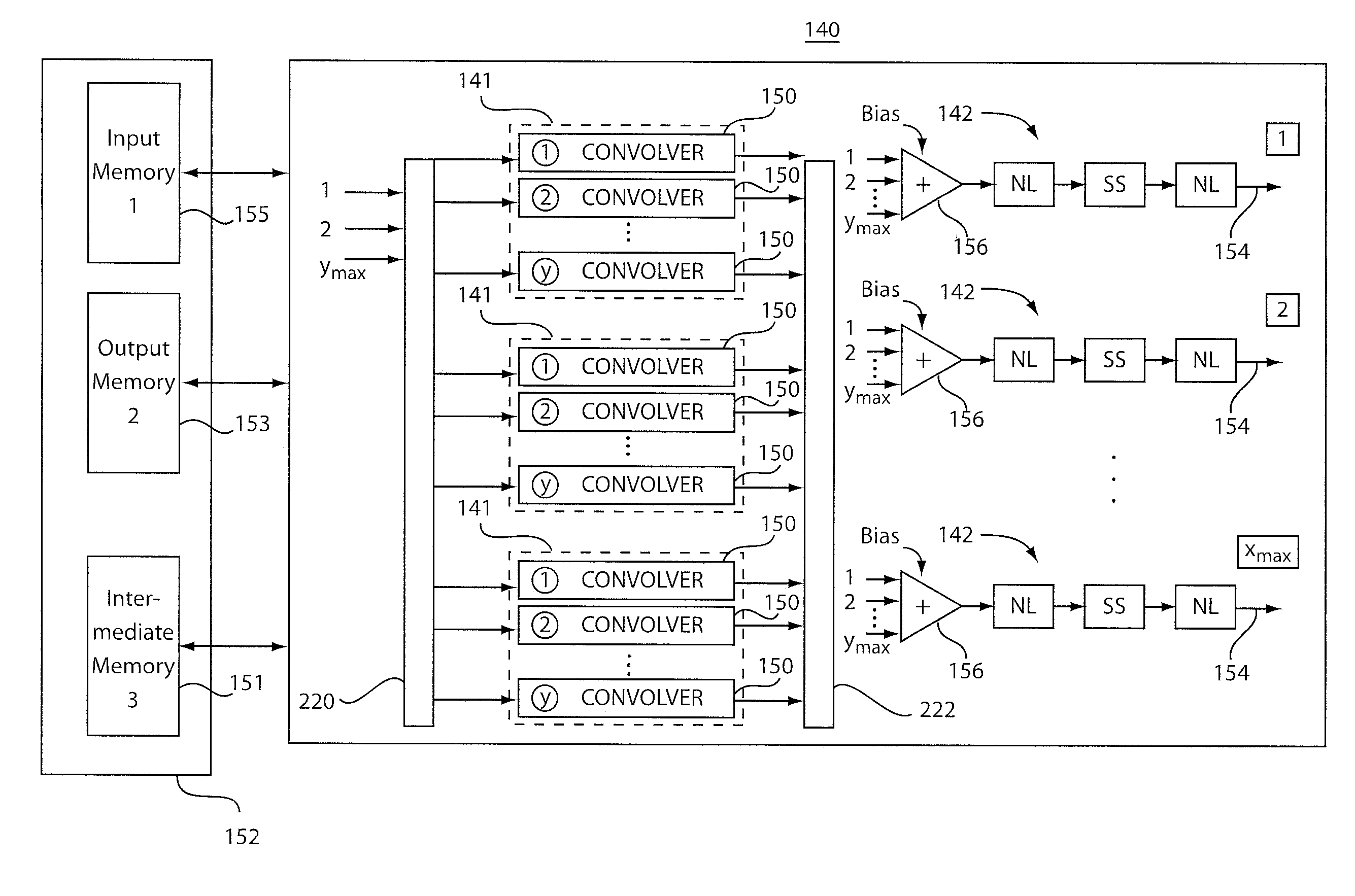

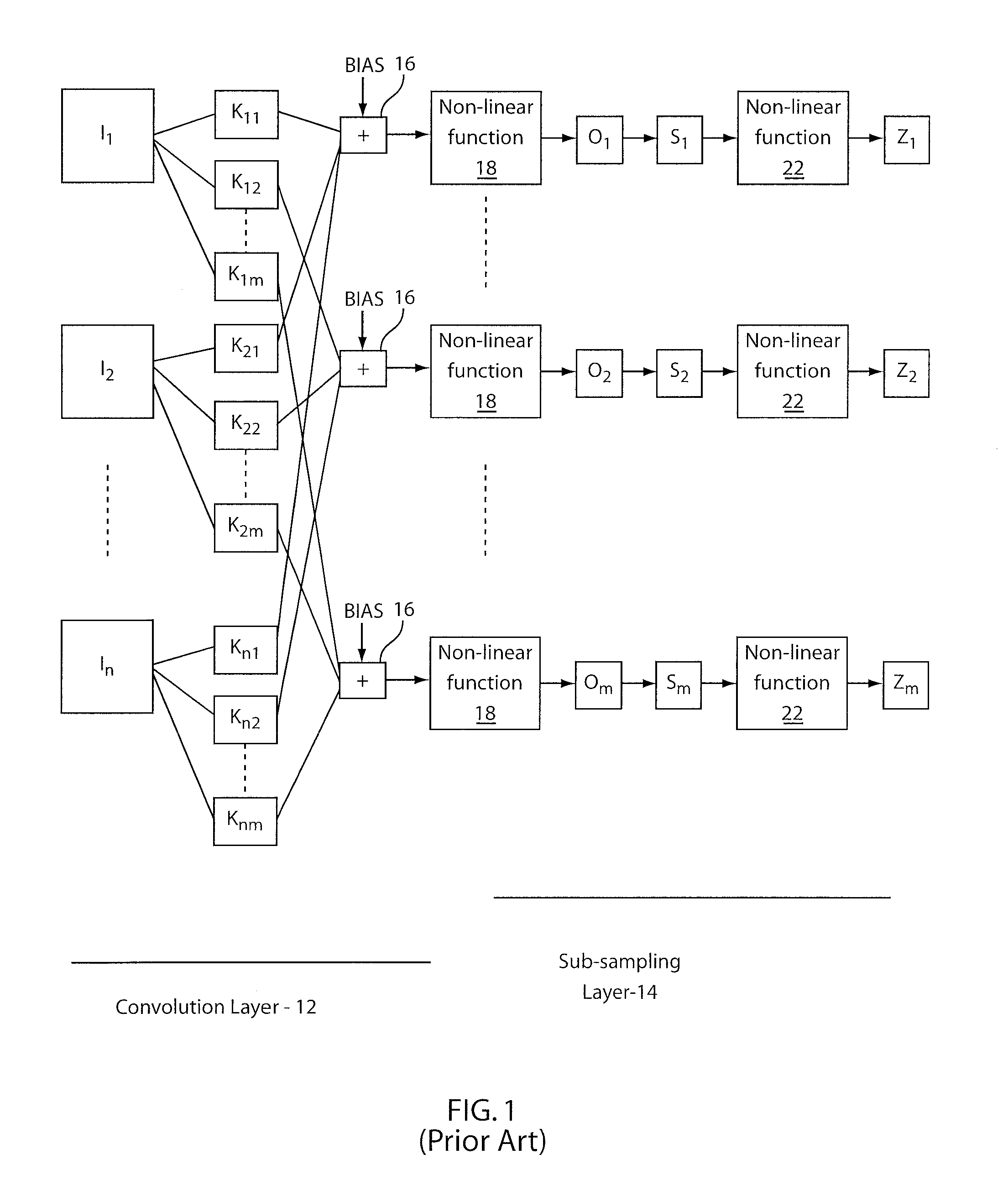

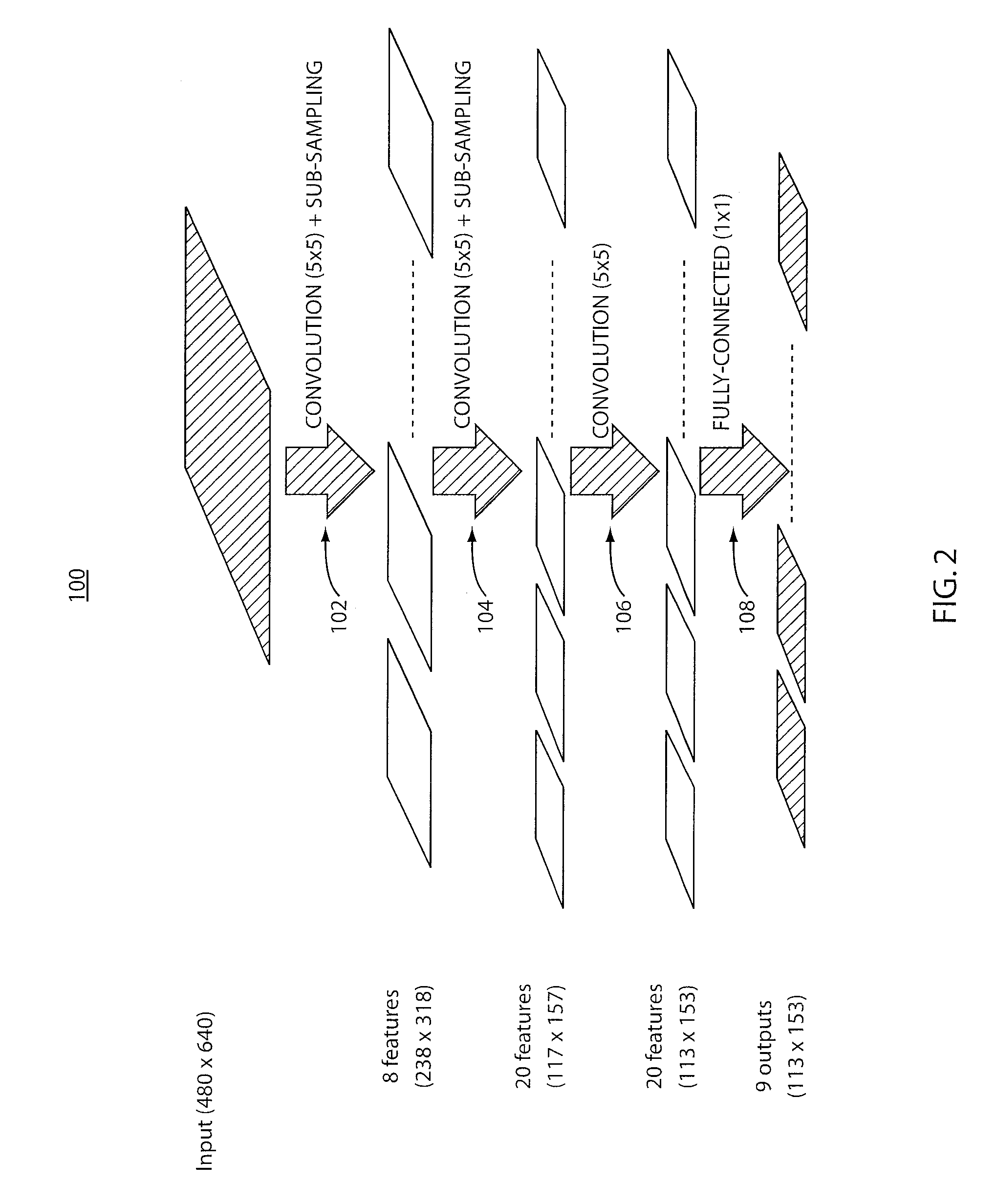

Dynamically configurable, multi-ported co-processor for convolutional neural networks

Active | US20110029471A1 | Improve feed-forward processing speed | General purpose stored program computer | Digital data | Coprocessor | Control signal

A coprocessor and method for processing convolutional neural networks includes a configurable input switch coupled to an input. A plurality of convolver elements are enabled in accordance with the input switch. An output switch is configured to receive outputs from the set of convolver elements to provide data to output branches. A controller is configured to provide control signals to the input switch and the output switch such that the set of convolver elements are rendered active and a number of output branches are selected for a given cycle in accordance with the control signals.

Owner:NEC CORP

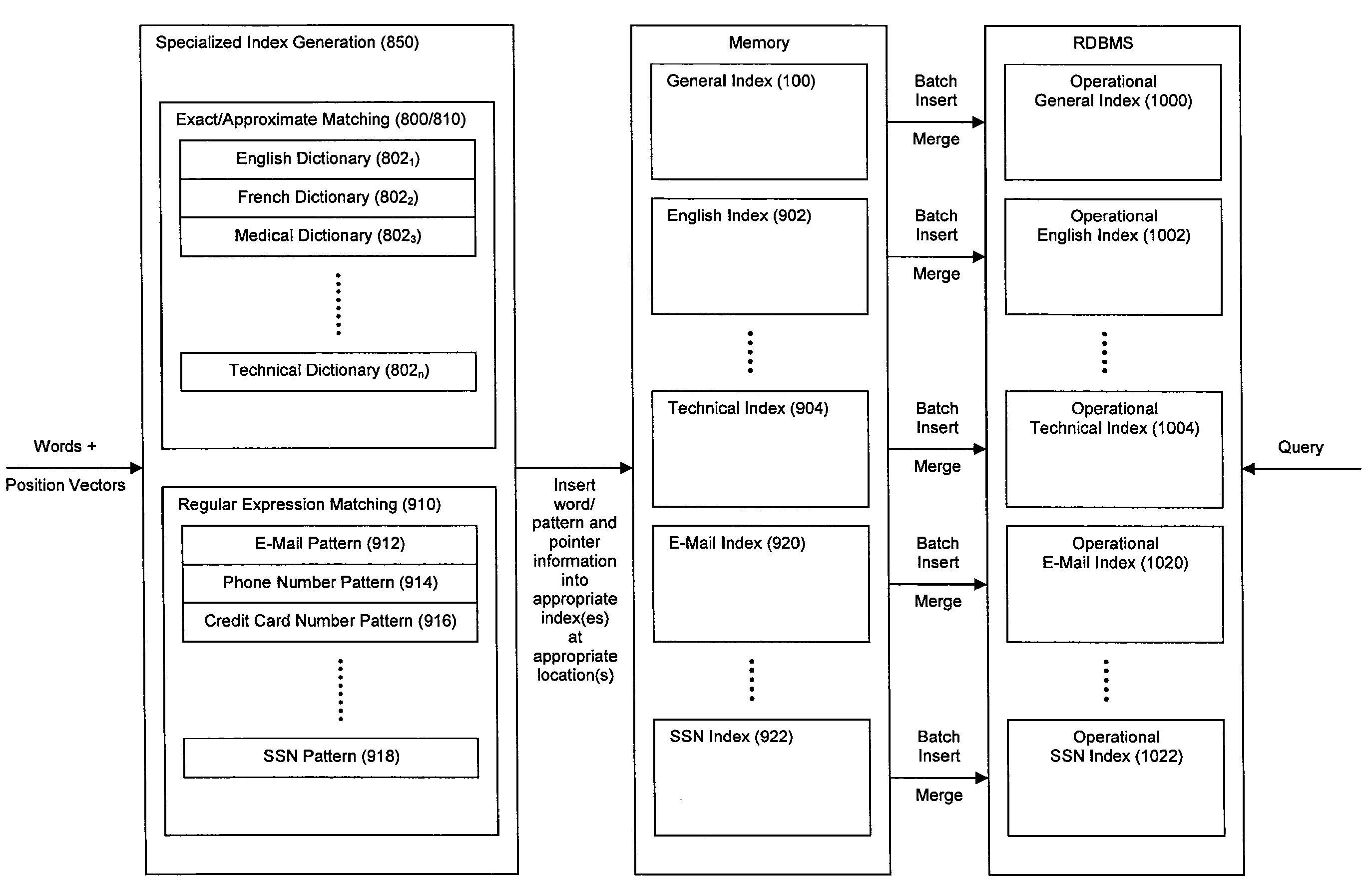

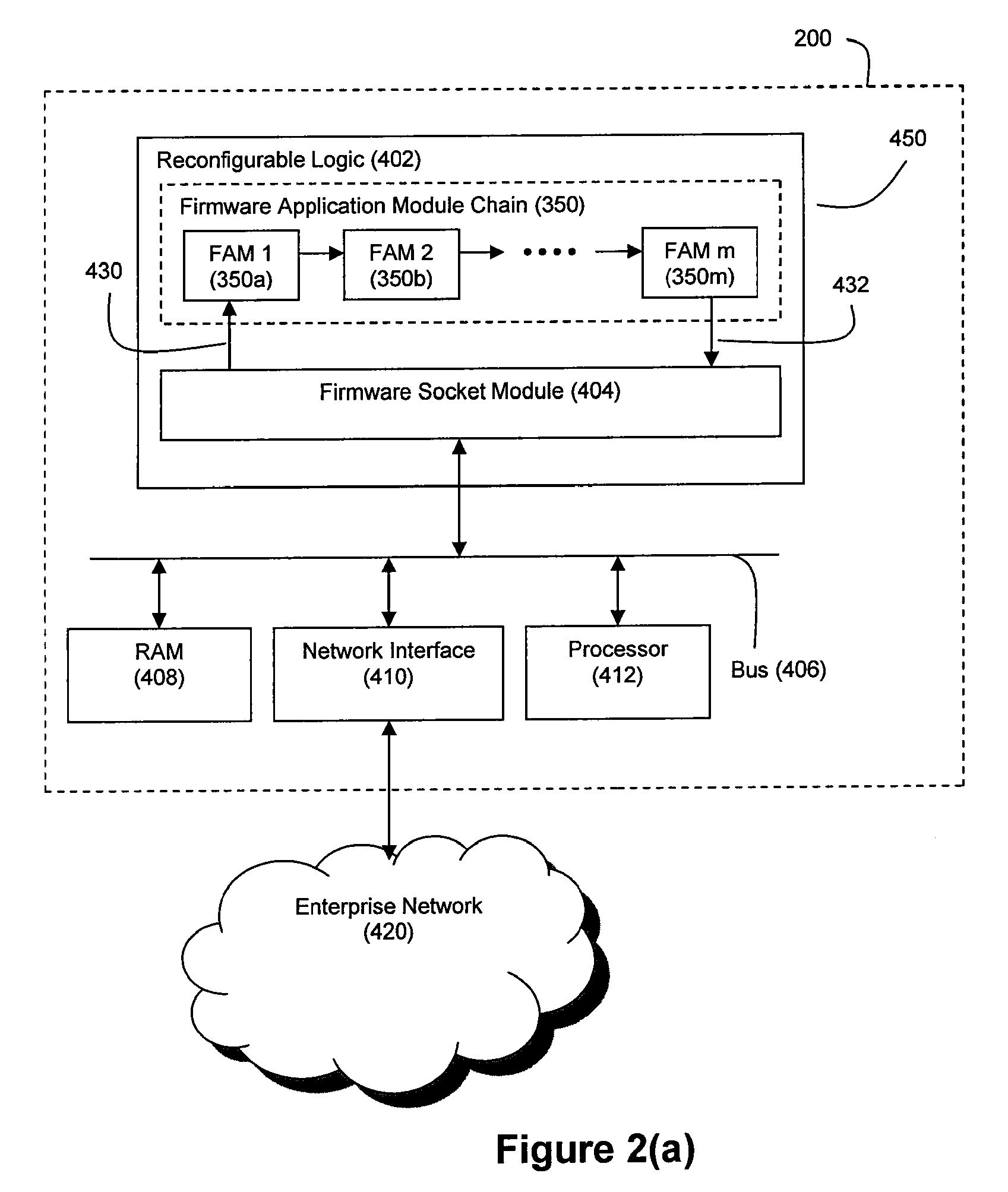

Method and System for High Performance Data Metatagging and Data Indexing Using Coprocessors

Active | US20080114725A1 | Robust and high performance data searching | High index | Web data indexing | File access structures | Data stream | Coprocessor

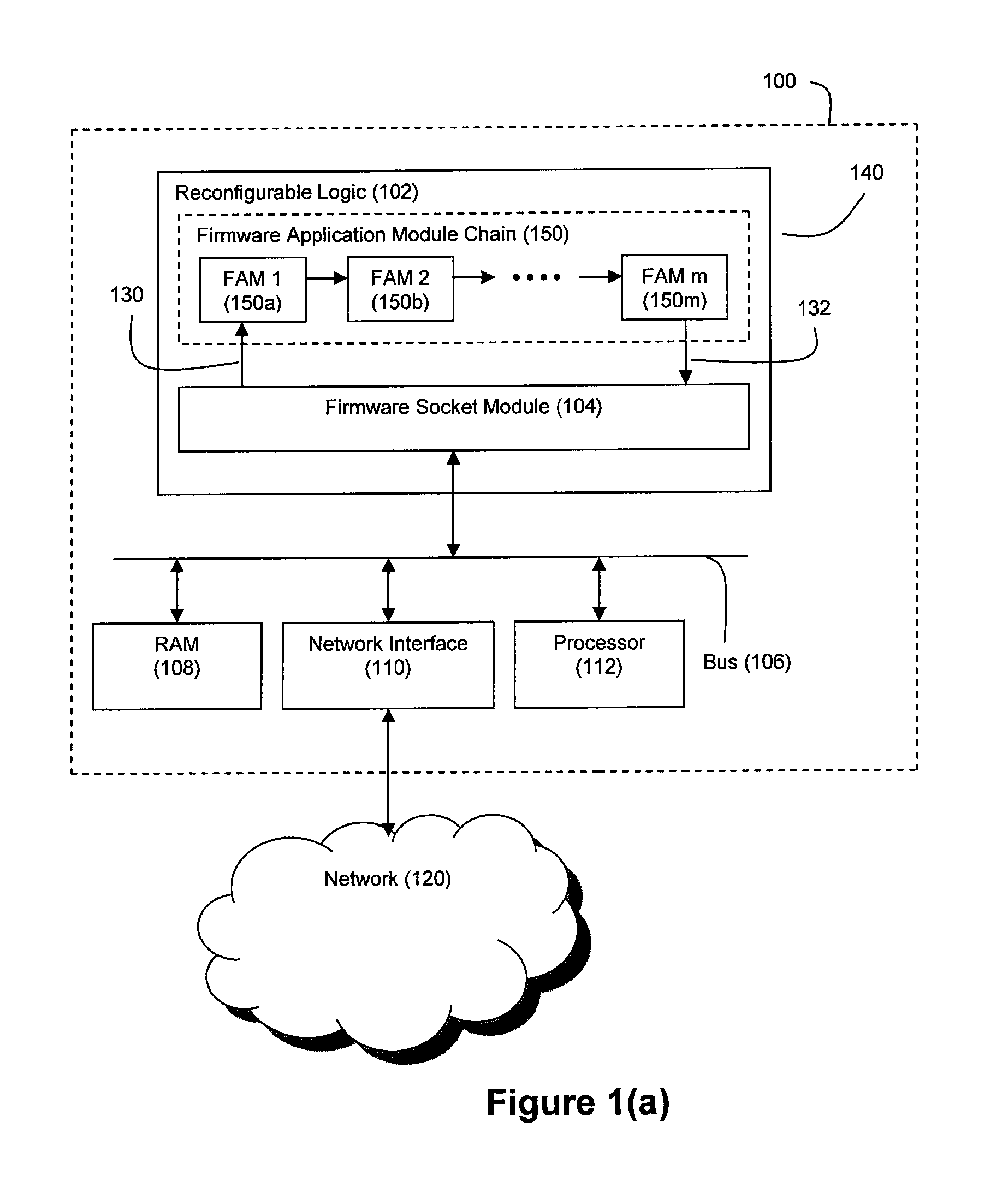

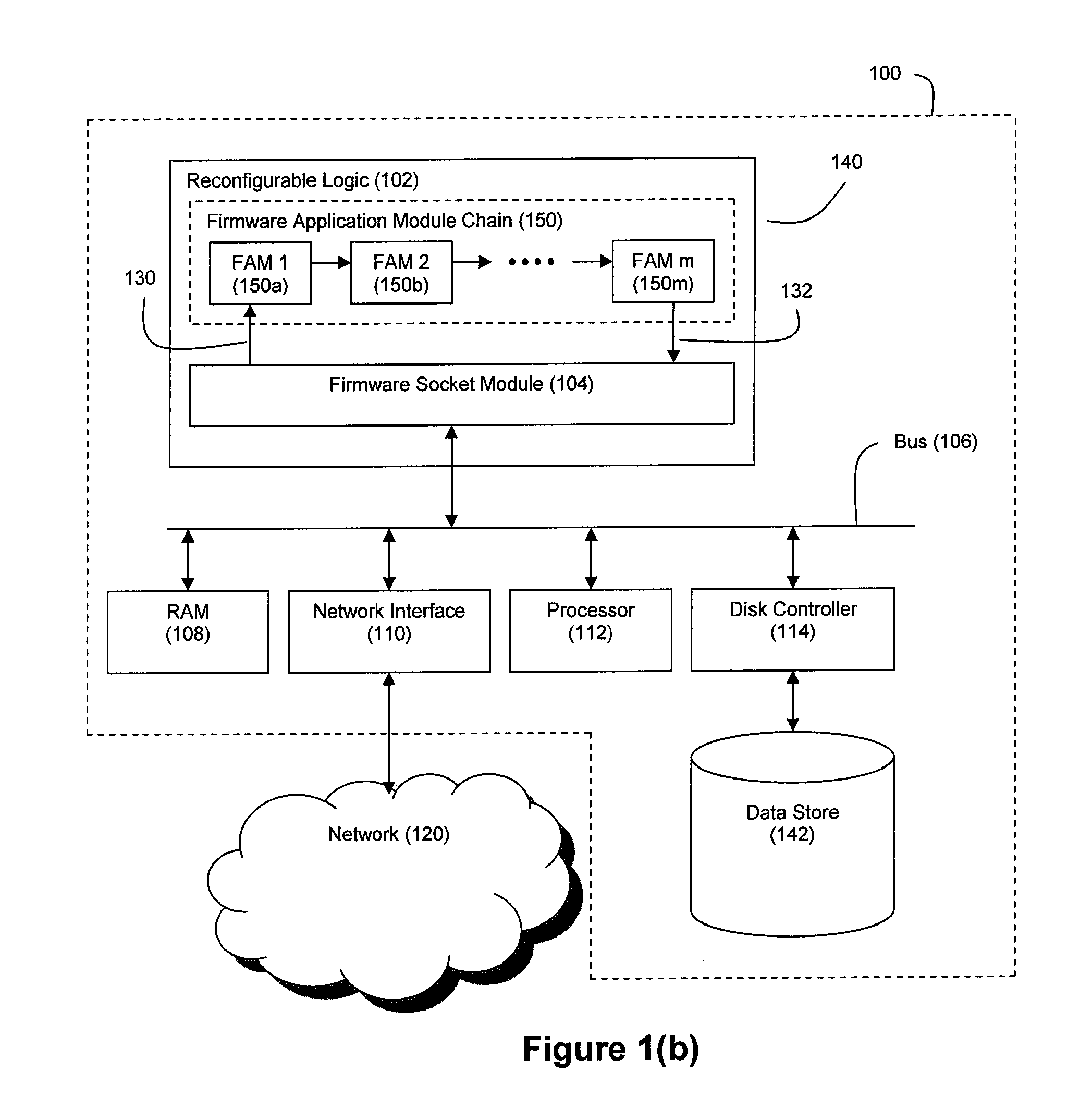

Disclosed herein is a method and system for hardware-accelerating the generation of metadata for a data stream using a coprocessor. Using these techniques, data can be richly indexed, classified, and clustered at high speeds. Reconfigurable logic such as field programmable gate arrays (FPGAs) can be used by the coprocessor for this hardware acceleration. Techniques such as exact matching, approximate matching, and regular expression pattern matching can be employed by the coprocessor to generate desired metadata for the data stream.

Owner:IP RESERVOIR

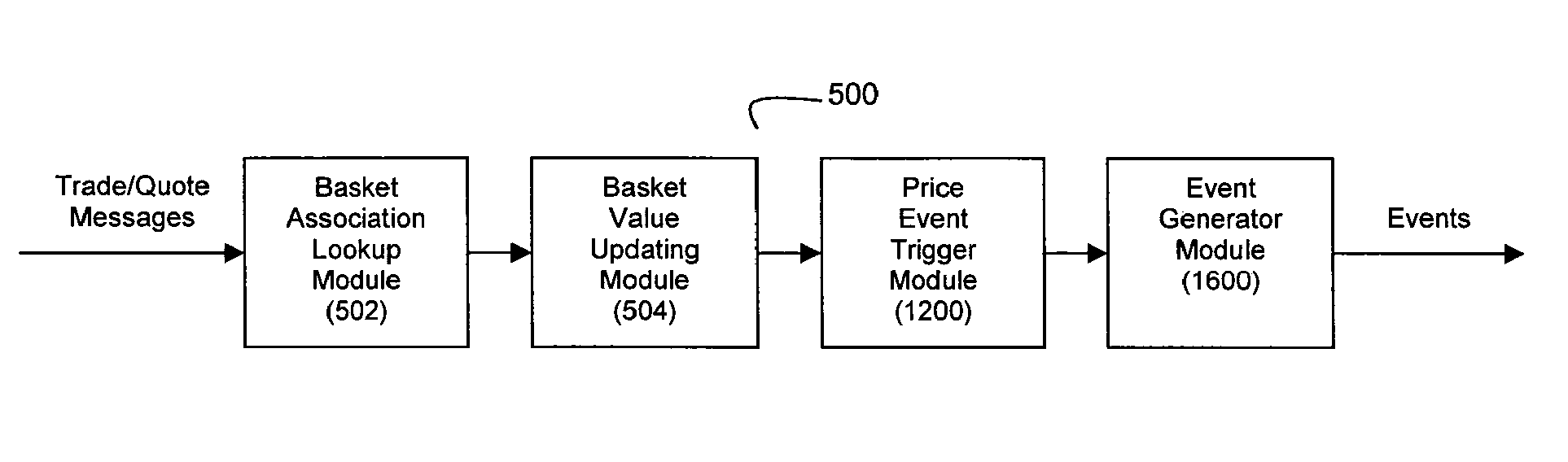

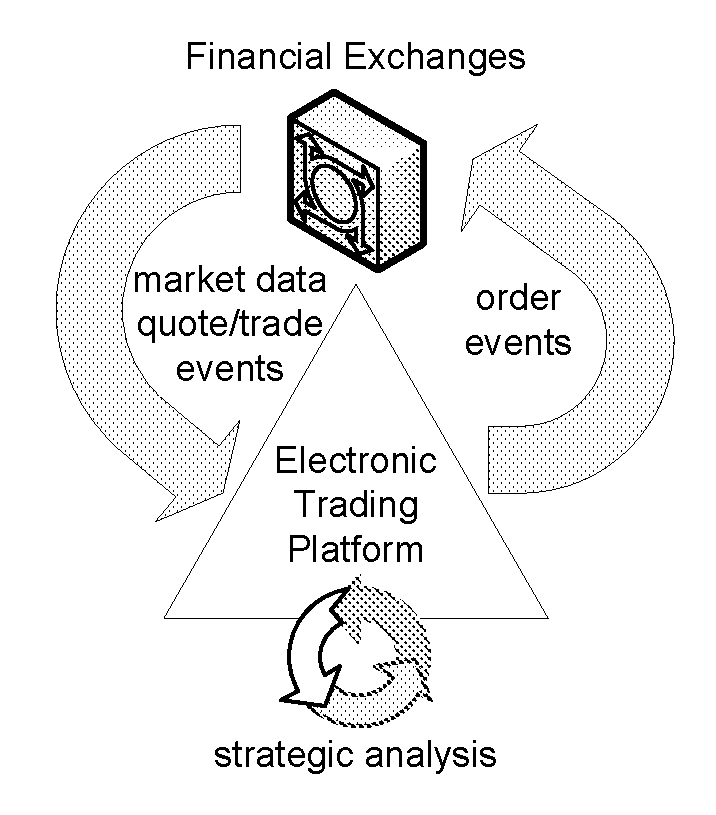

Method and System for Low Latency Basket Calculation

A basket calculation engine is deployed to receive a stream of data and accelerate the computation of basket values based on that data. In a preferred embodiment, the basket calculation engine is used to process financial market data to compute the net asset values (NAVs) of financial instrument baskets. The basket calculation engine can be deployed on a coprocessor and can also be realized via a pipeline, the pipeline preferably comprising a basket association lookup module and a basket value updating module. The coprocessor is preferably a reconfigurable logic device such as a field programmable gate array (FPGA).

Owner:EXEGY INC
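
The two pipeline stages named in the abstract above (a basket association lookup followed by a basket value update) can be modeled in software. This is only a rough sketch: the class `BasketEngine`, its methods `add_basket` and `on_price`, and the weighted-sum value formula are assumptions made for illustration and are not taken from the patent, which describes a reconfigurable-logic implementation.

```python
from collections import defaultdict


class BasketEngine:
    """Toy software model of a two-stage basket calculation pipeline."""

    def __init__(self):
        self.last_price = {}               # symbol -> last seen price
        self.weights = defaultdict(dict)   # symbol -> {basket: weight}
        self.nav = defaultdict(float)      # basket -> current basket value

    def add_basket(self, basket, holdings):
        """Register a basket given as {symbol: weight}; unseen prices count as 0."""
        for symbol, weight in holdings.items():
            self.weights[symbol][basket] = weight
            self.nav[basket] += weight * self.last_price.get(symbol, 0.0)

    def on_price(self, symbol, price):
        """Process one price event and return the baskets whose value changed."""
        delta = price - self.last_price.get(symbol, 0.0)
        self.last_price[symbol] = price
        # Stage 1: basket association lookup (which baskets hold this symbol?).
        affected = self.weights.get(symbol, {})
        # Stage 2: basket value update, applied incrementally from the price delta.
        for basket, weight in affected.items():
            self.nav[basket] += weight * delta
        return {basket: self.nav[basket] for basket in affected}
```

Updating each basket from the price delta, rather than re-summing every holding, keeps the per-event work proportional to the number of baskets that contain the symbol.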

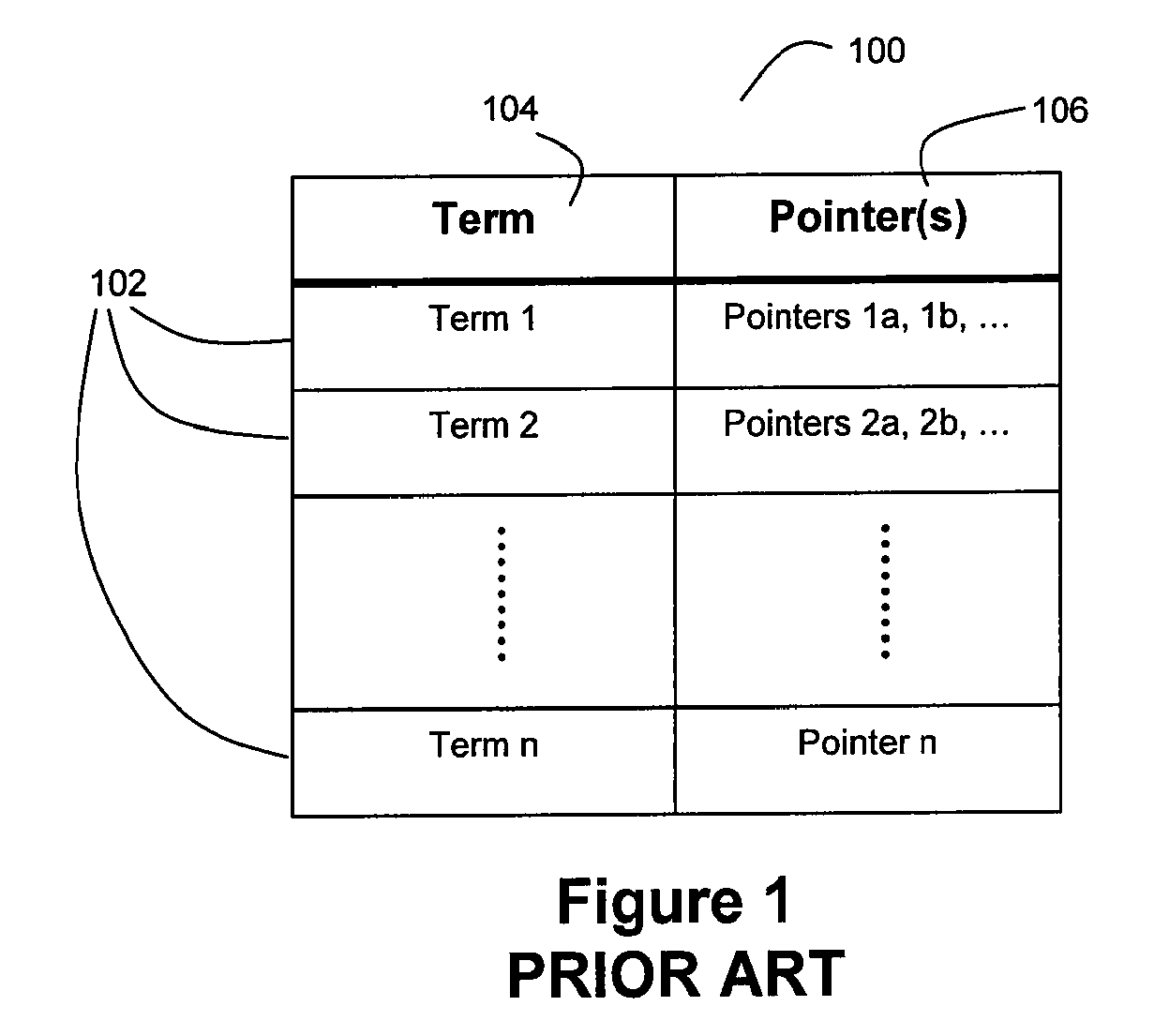

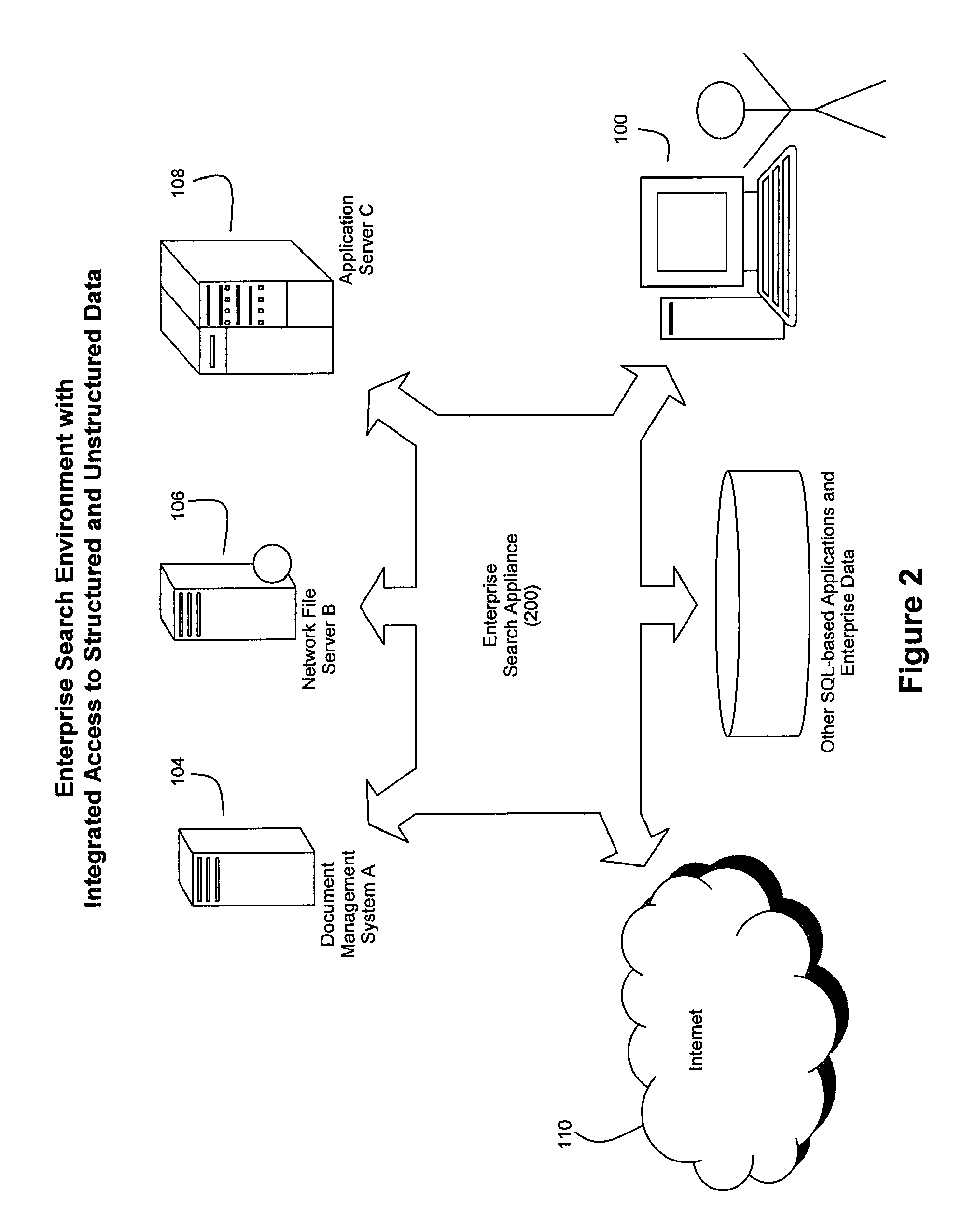

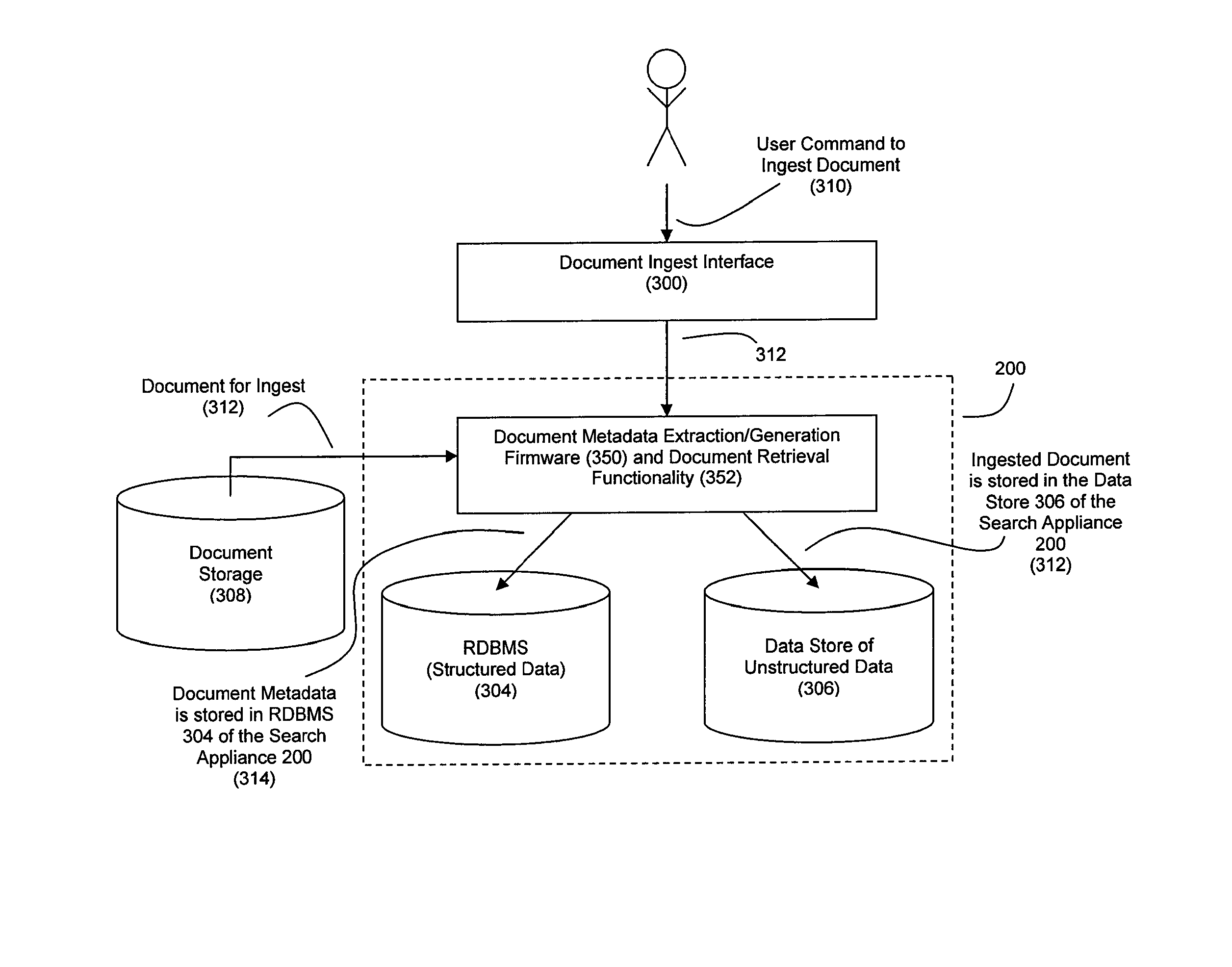

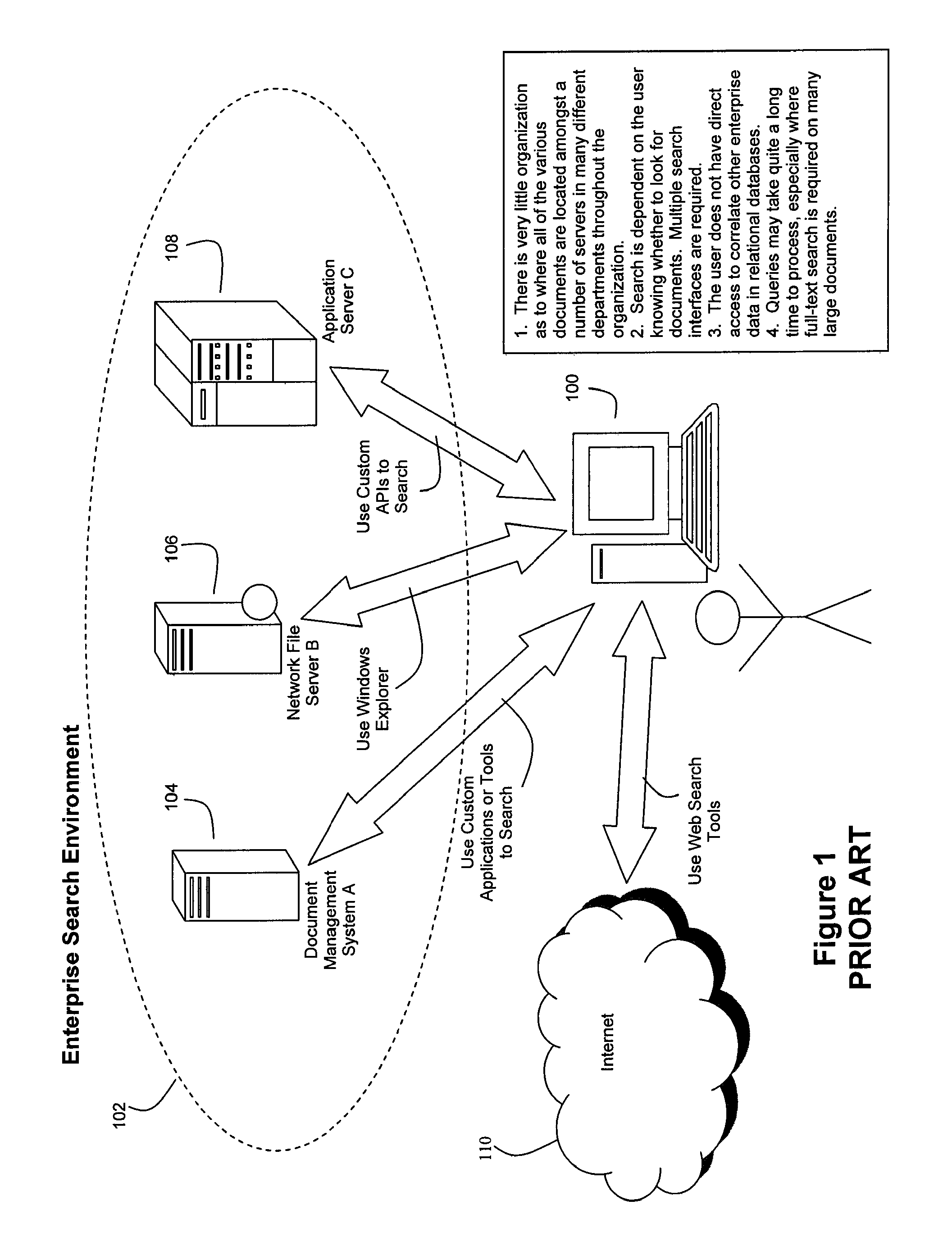

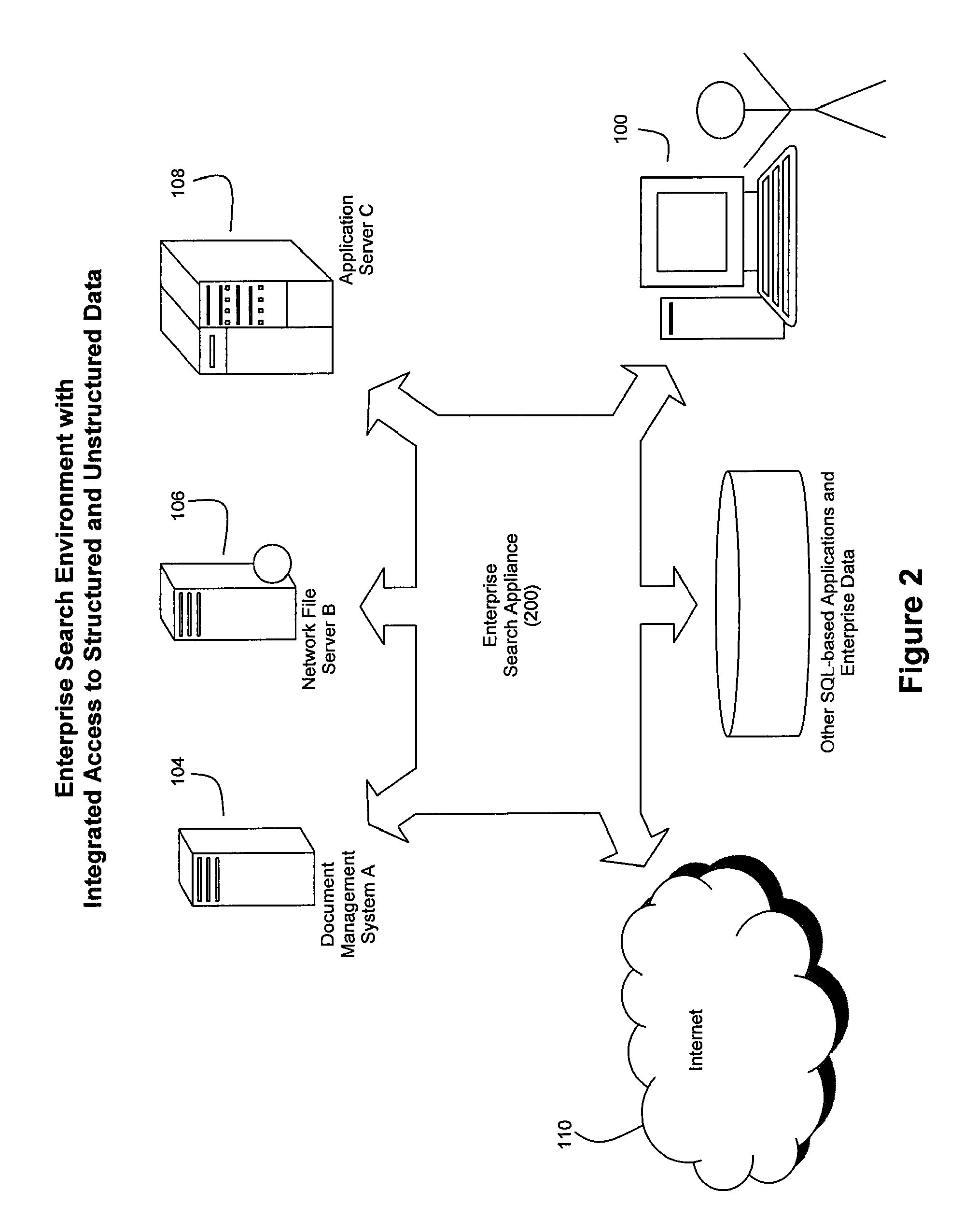

Method and System for High Performance Integration, Processing and Searching of Structured and Unstructured Data Using Coprocessors

Active | US20080114724A1 | Faster and more unified access | Effectively bifurcate query processing | Data processing applications | Digital data processing details | Full text search | Relational database

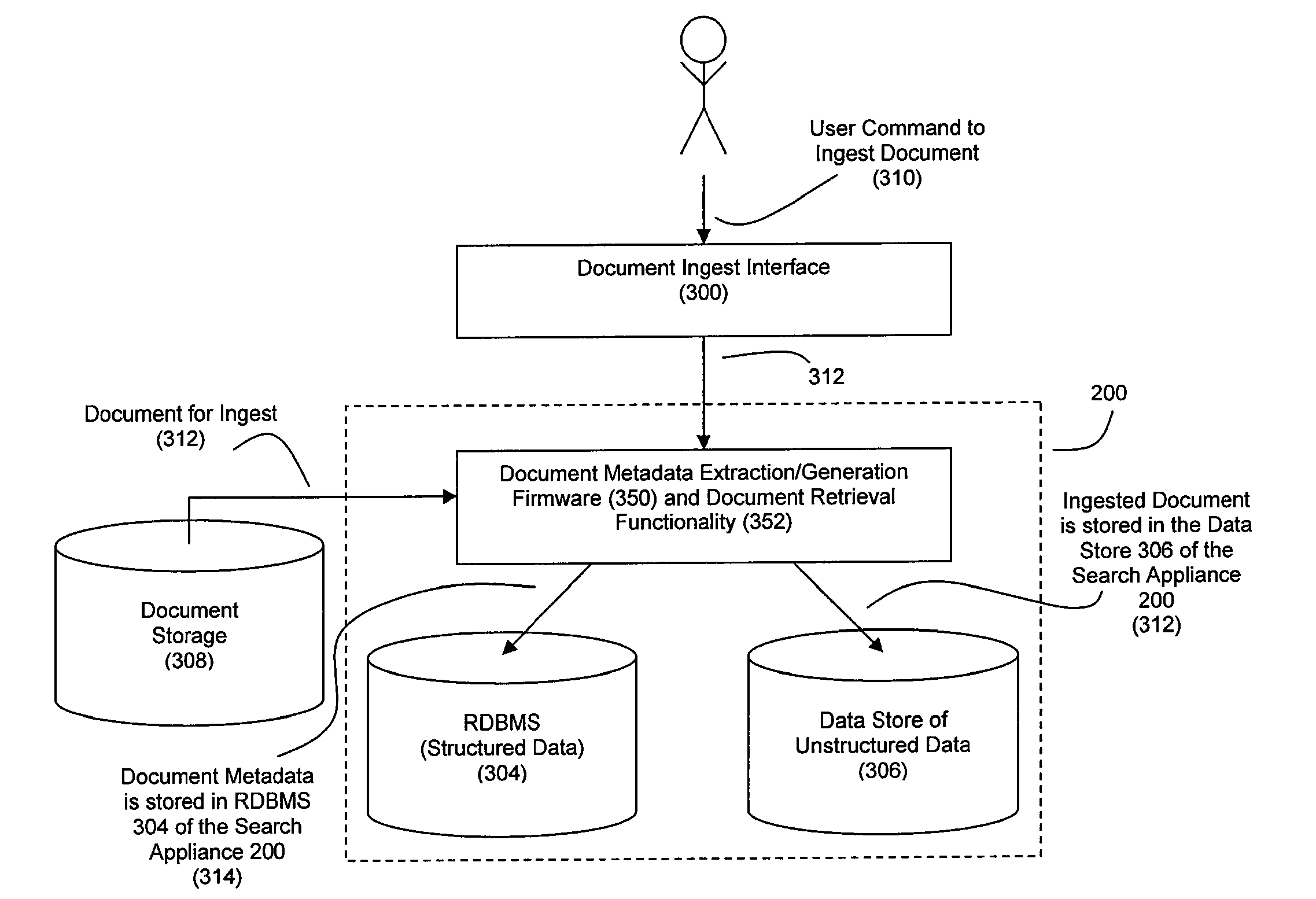

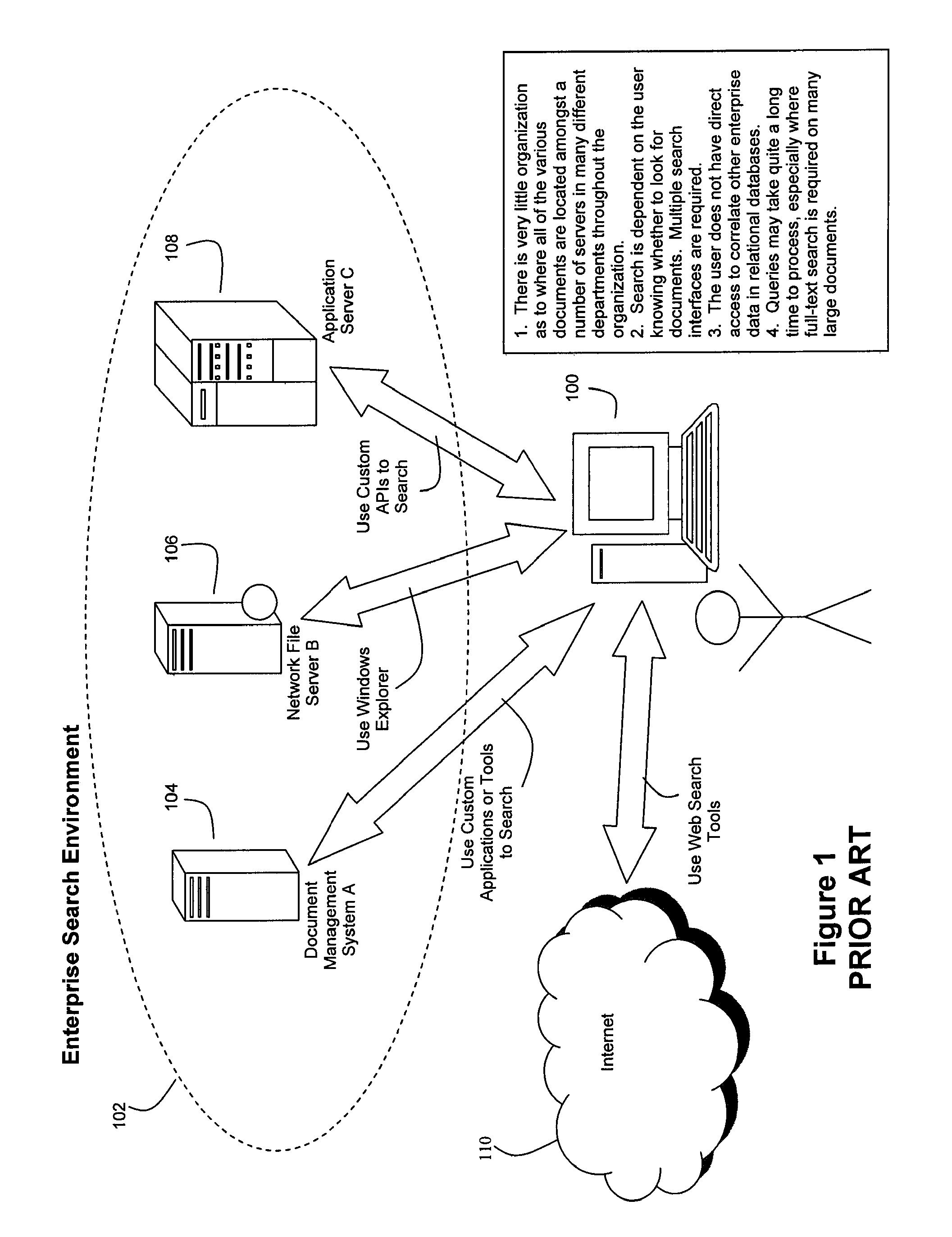

Disclosed herein is a method and system for integrating an enterprise's structured and unstructured data to provide users and enterprise applications with efficient and intelligent access to that data. Queries can be directed toward both an enterprise's structured and unstructured data using standardized database query formats such as SQL commands. A coprocessor can be used to hardware-accelerate data processing tasks (such as full-text searching) on unstructured data as necessary to handle a query. Furthermore, traditional relational database techniques can be used to access structured data stored by a relational database to determine which portions of the enterprise's unstructured data should be delivered to the coprocessor for hardware-accelerated data processing.

Owner:IP RESERVOIR

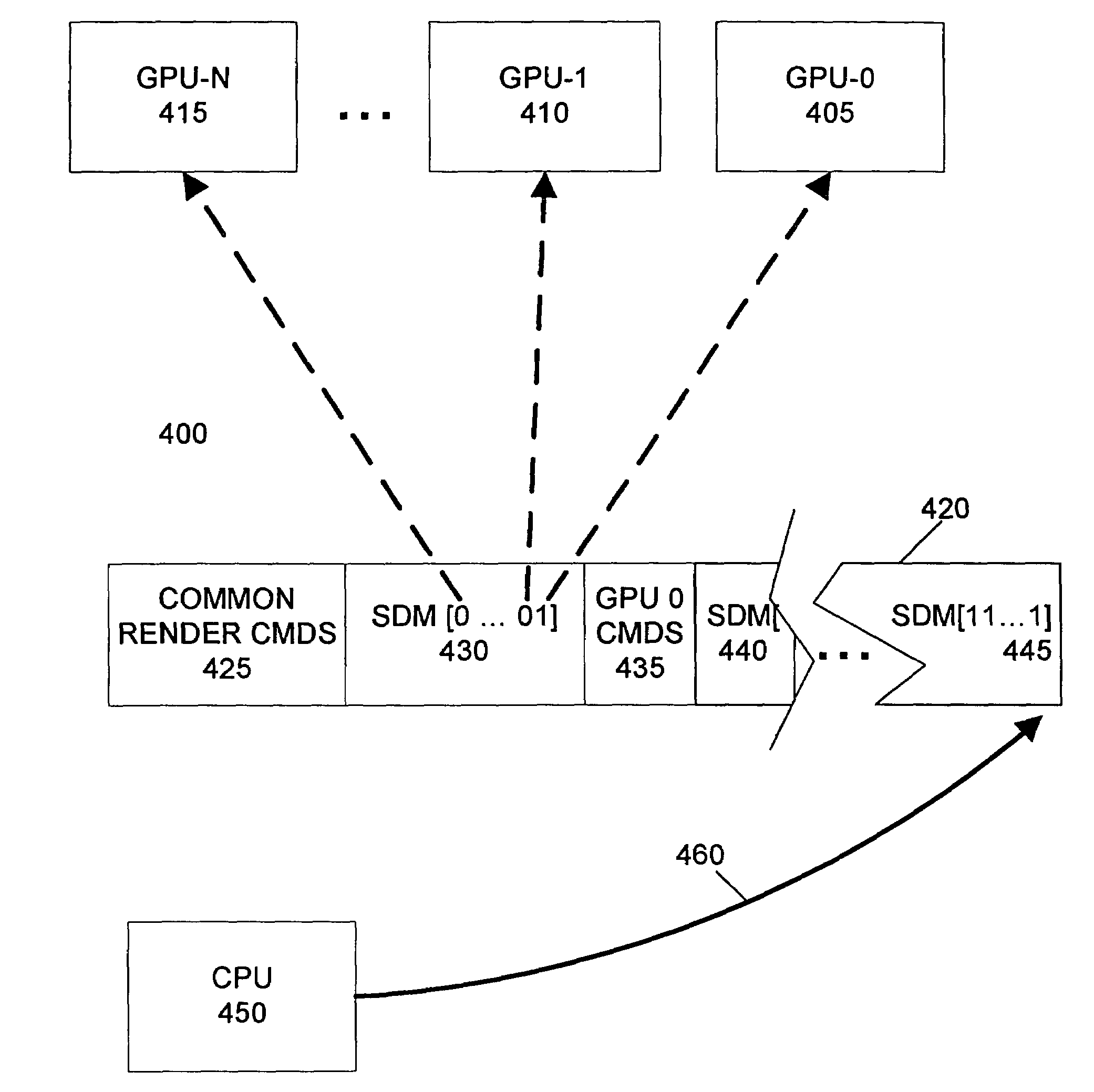

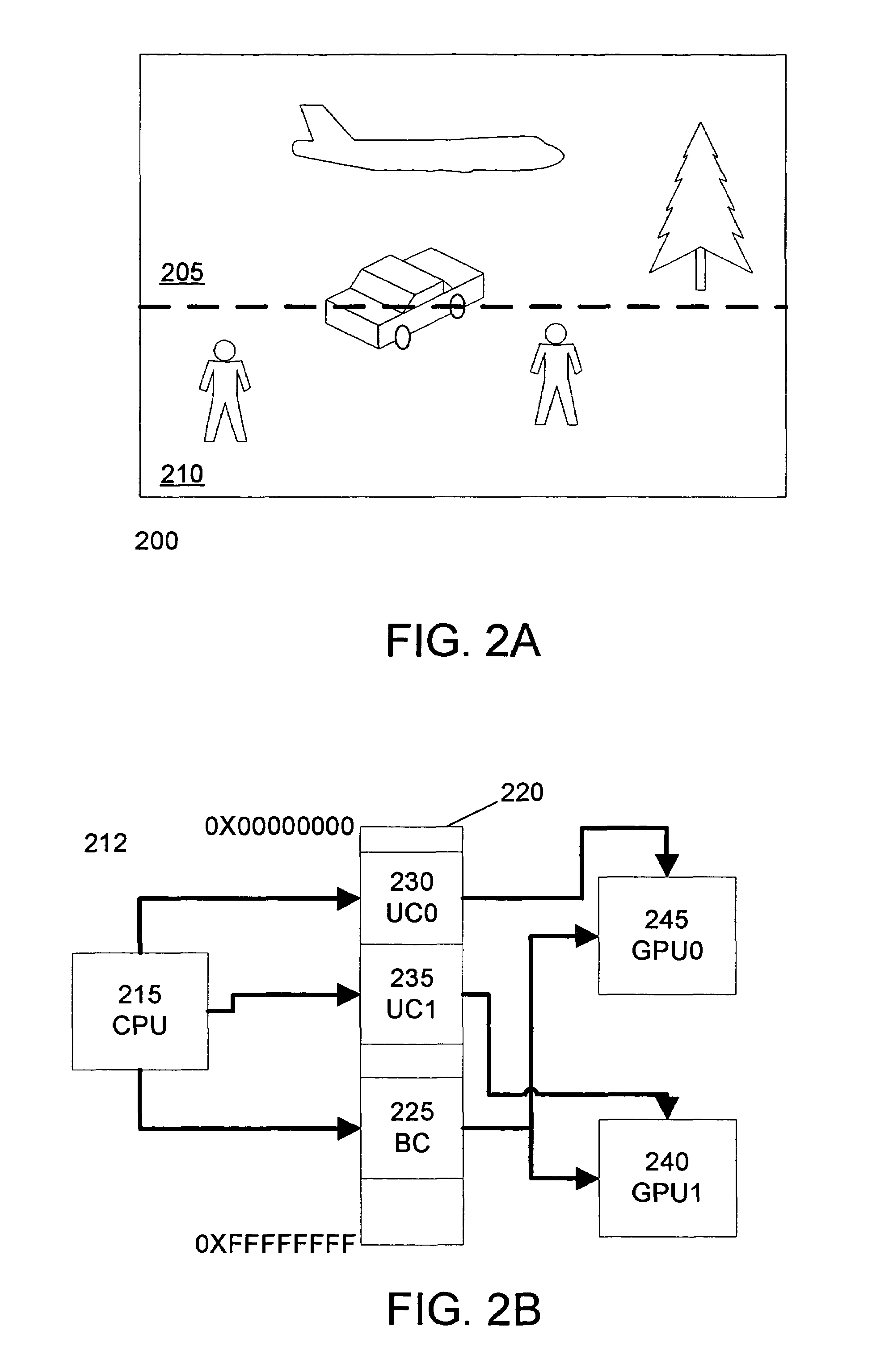

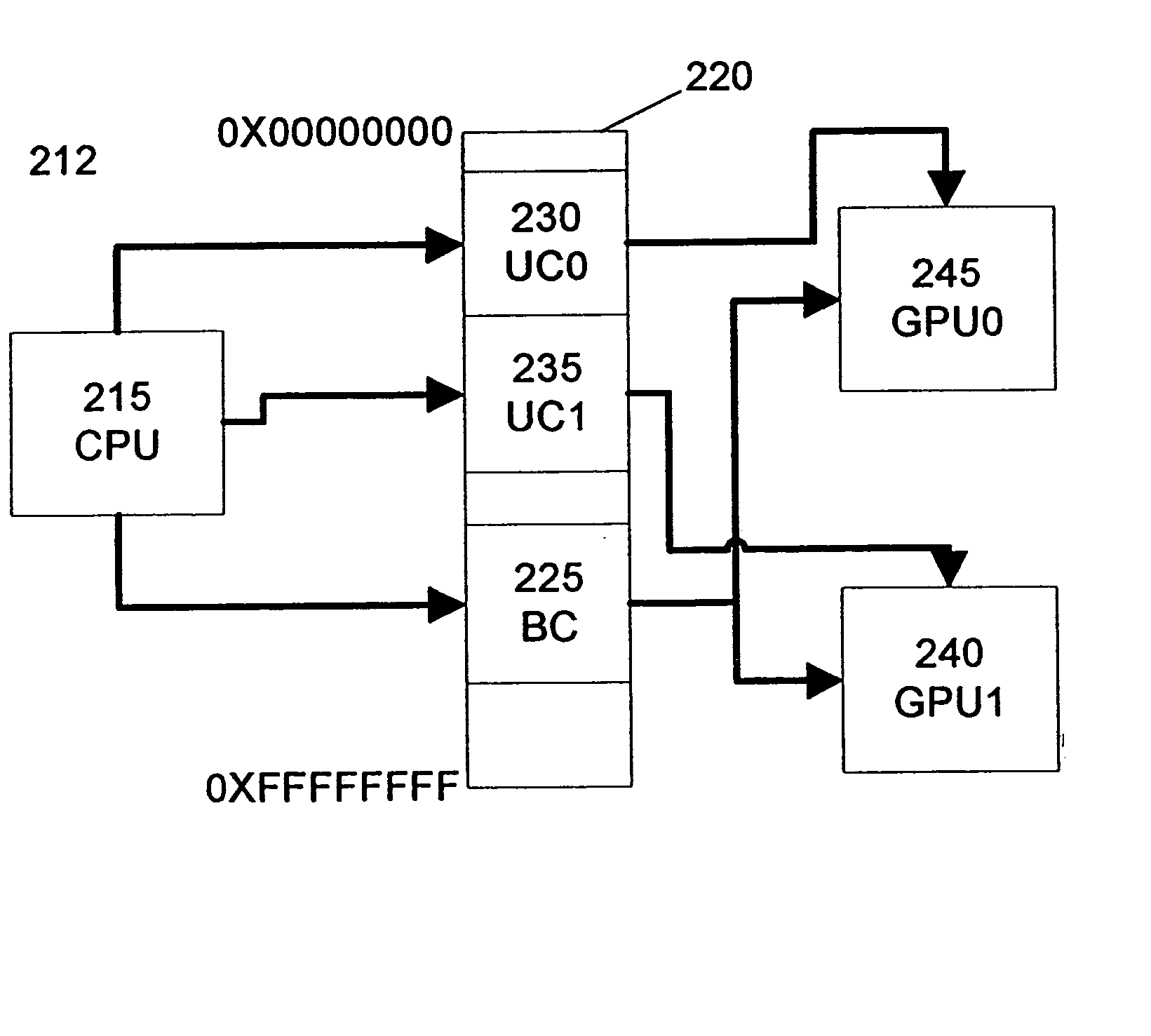

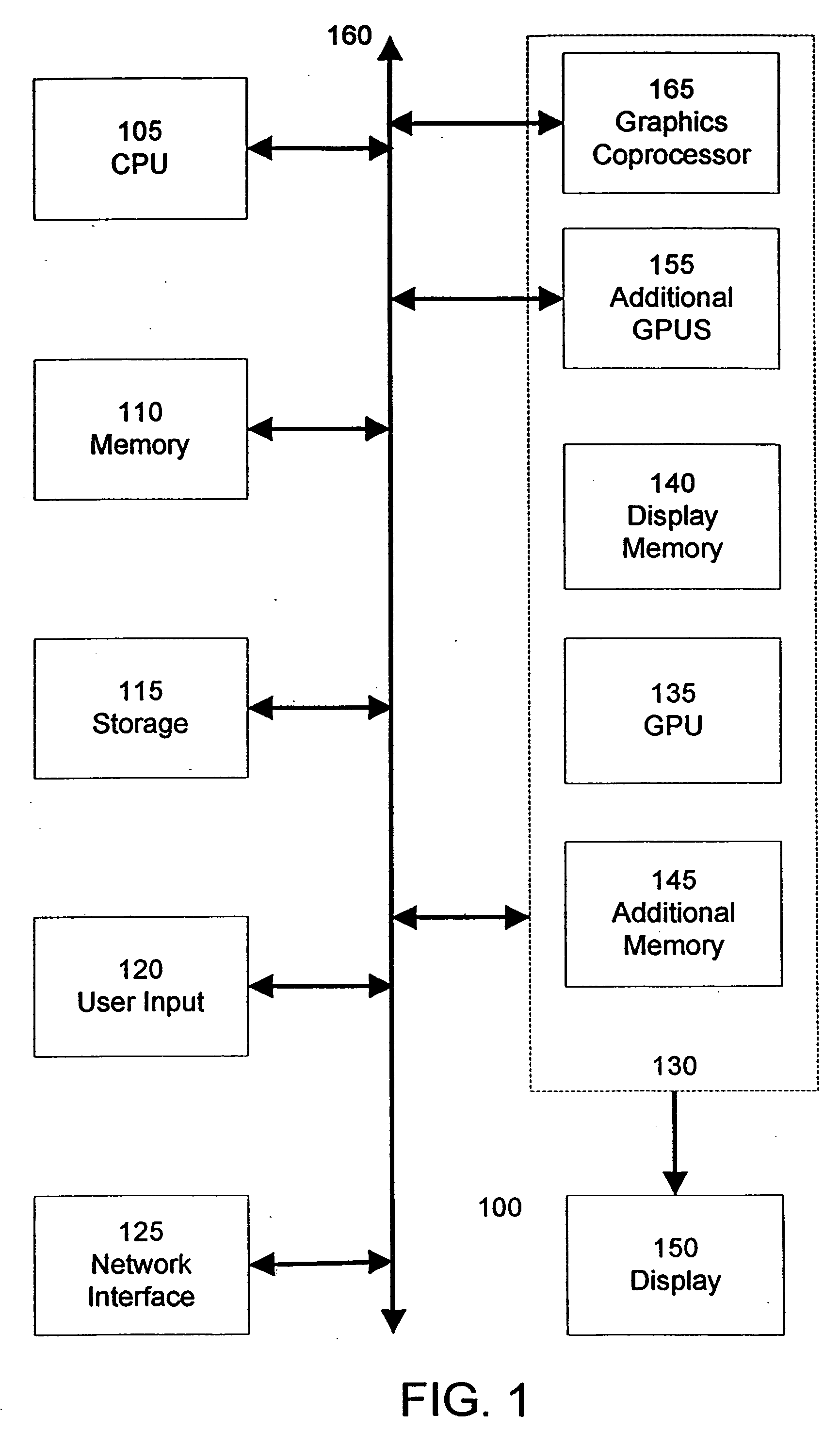

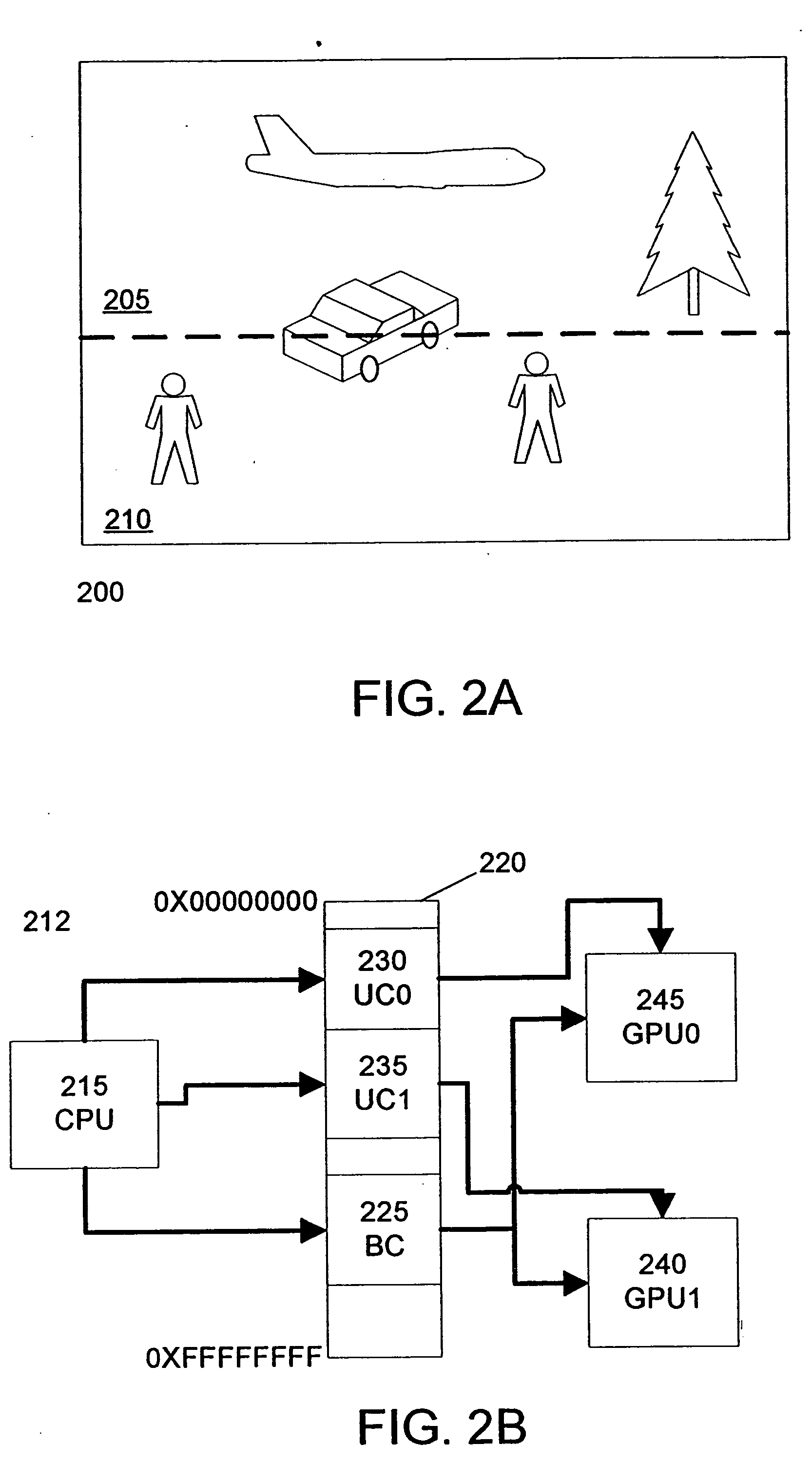

Programming multiple chips from a command buffer

Active | US7015915B1 | Keep in sync | Single instruction multiple data multiprocessors | Processor architectures/configuration | Graphics | Coprocessor

A CPU selectively programs one or more graphics devices by writing a control command to the command buffer that designates a subset of graphics devices to execute subsequent commands. Graphics devices not designated by the control command will ignore the subsequent commands until re-enabled by the CPU. The non-designated graphics devices will continue to read from the command buffer to maintain synchronization. Subsequent control commands can designate different subsets of graphics devices to execute further subsequent commands. Graphics devices include graphics processing units and graphics coprocessors. A unique identifier is associated with each of the graphics devices. The control command designates a subset of graphics devices according to their respective unique identifiers. The control command includes a number of bits. Each bit is associated with one of the unique identifiers and designates the inclusion of one of the graphics devices in the first subset of graphics devices.

Owner:NVIDIA CORP
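
The masking scheme described in the abstract above can be illustrated with a small simulation. This is a sketch under stated assumptions, not the patented hardware: the opcode name `SET_MASK`, the class `GraphicsDevice`, and the `run` helper are hypothetical, and each device's unique identifier is assumed to be its bit position in the mask.

```python
SET_MASK = "SET_MASK"   # hypothetical opcode for the control command


class GraphicsDevice:
    def __init__(self, device_id):
        self.device_id = device_id   # unique identifier, used as a bit position
        self.enabled = True
        self.executed = []           # commands this device actually executed
        self.read_count = 0          # commands this device has read

    def consume(self, command):
        """Read one command; execute it only while this device is designated."""
        self.read_count += 1         # every device keeps reading to stay in sync
        opcode, payload = command
        if opcode == SET_MASK:
            # One bit per device: a set bit designates that device.
            self.enabled = bool(payload & (1 << self.device_id))
        elif self.enabled:
            self.executed.append(command)


def run(command_buffer, devices):
    """Feed the same command buffer to every device, in order."""
    for command in command_buffer:
        for device in devices:
            device.consume(command)
```

With two devices and the buffer `DRAW a, SET_MASK 0b01, DRAW b, SET_MASK 0b10, DRAW c`, device 0 executes `a` and `b`, device 1 executes `a` and `c`, and both devices read all five commands.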

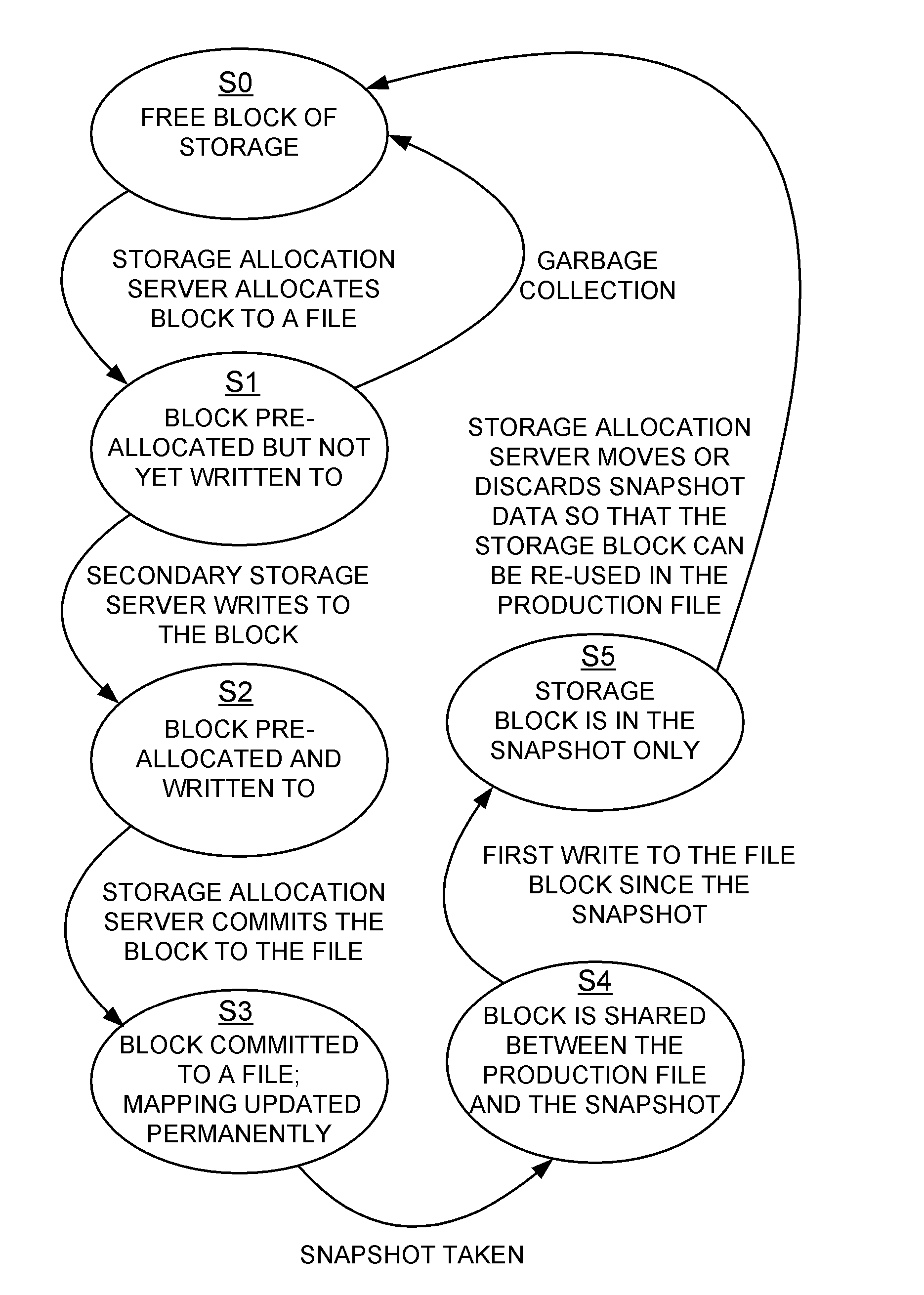

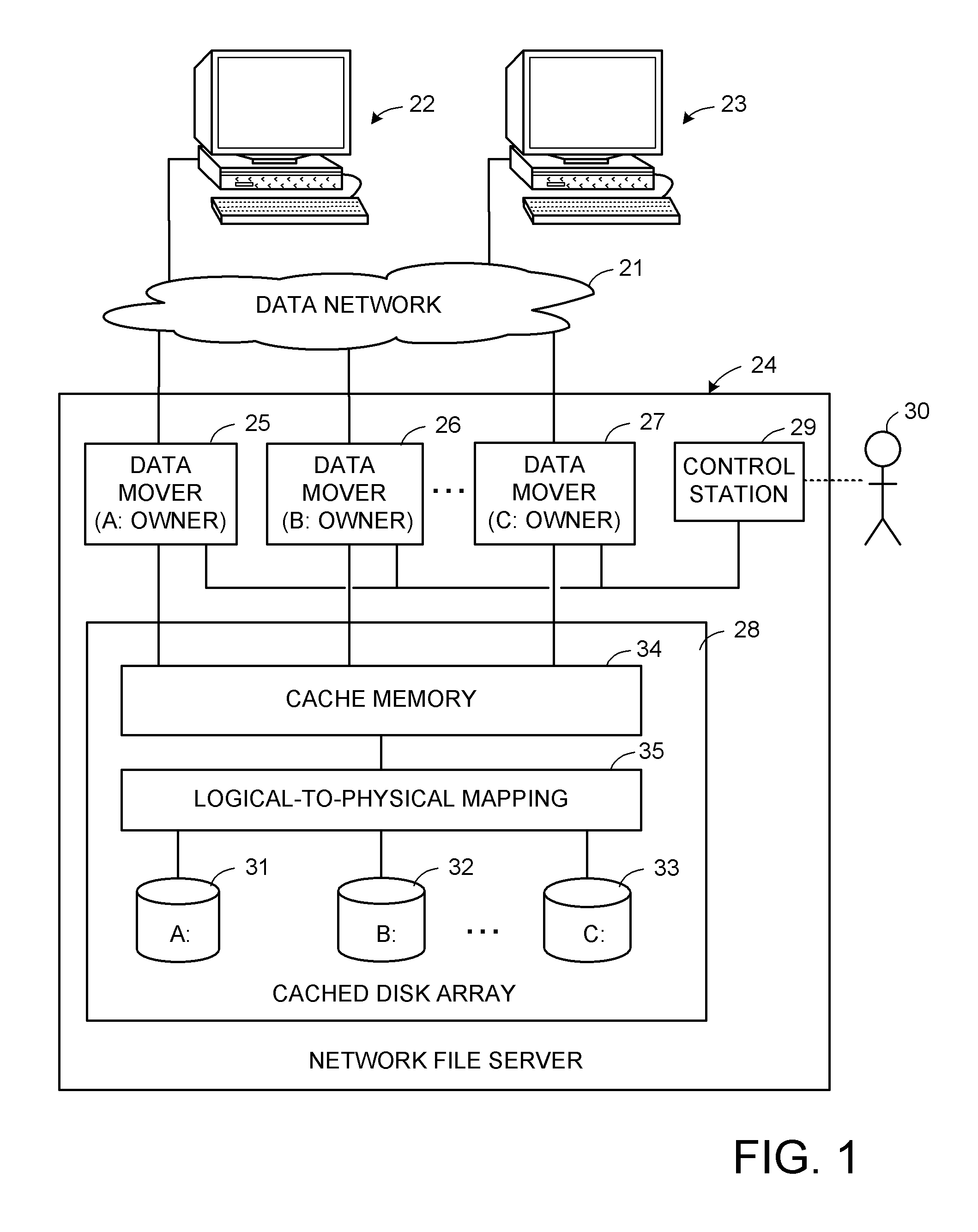

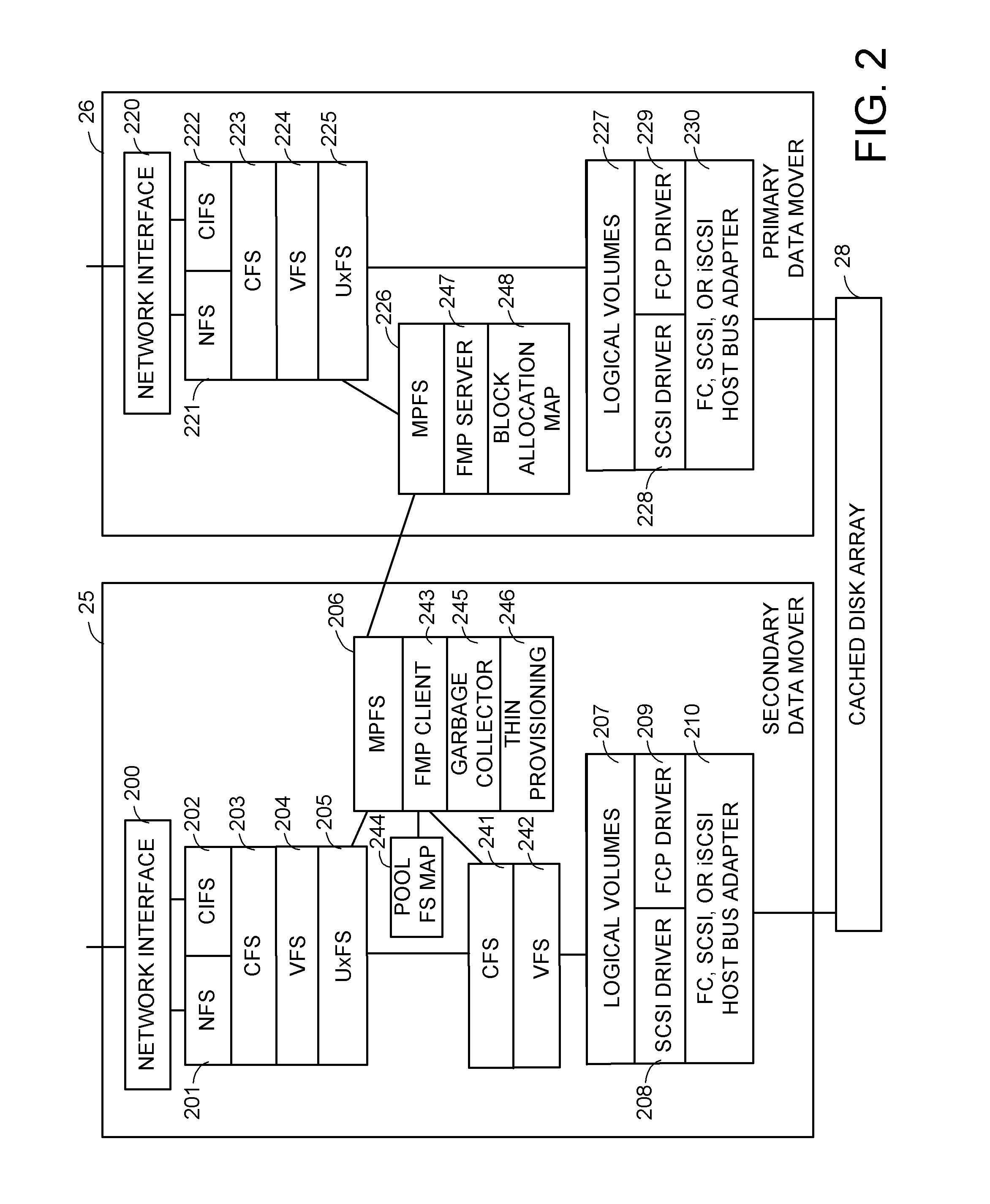

Distributed maintenance of snapshot copies by a primary processor managing metadata and a secondary processor providing read-write access to a production dataset

Active | US20070260830A1 | Degrades I/O performance | Improve I/O performance | Memory loss protection | Digital data processing details | Data set | Coprocessor

A primary processor manages metadata of a production dataset and a snapshot copy, while a secondary processor provides concurrent read-write access to the primary dataset. The secondary processor determines when a first write is being made to a data block of the production dataset, and in this case sends a metadata change request to the primary data processor. The primary data processor commits the metadata change to the production dataset and maintains the snapshot copy while the secondary data processor continues to service other read-write requests. The secondary processor logs metadata changes so that the secondary processor may return a “write completed” message before the primary processor commits the metadata change. The primary data processor pre-allocates data storage blocks in such a way that the “write anywhere” method does not result in a gradual degradation in I/O performance.

Owner:EMC IP HLDG CO LLC

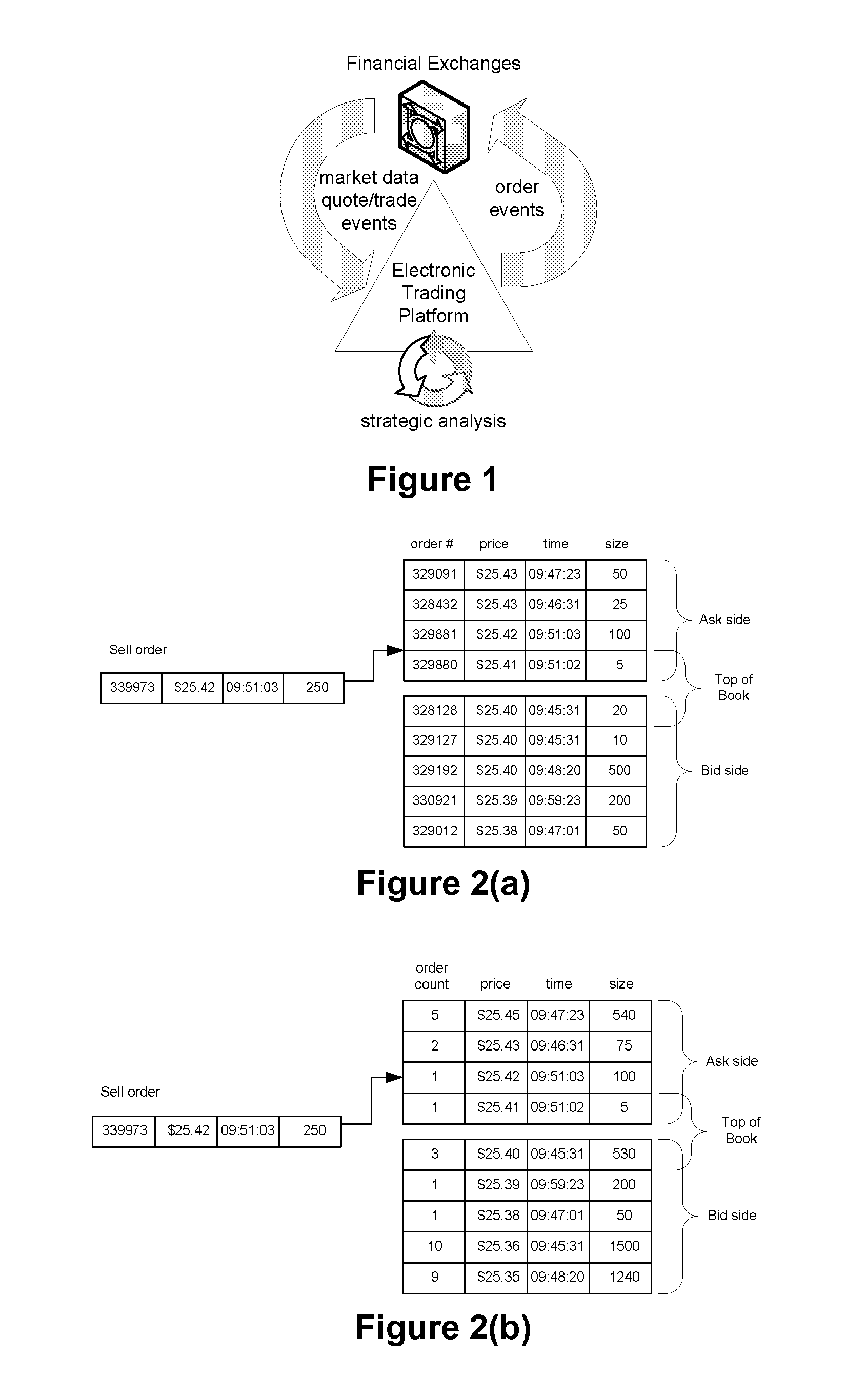

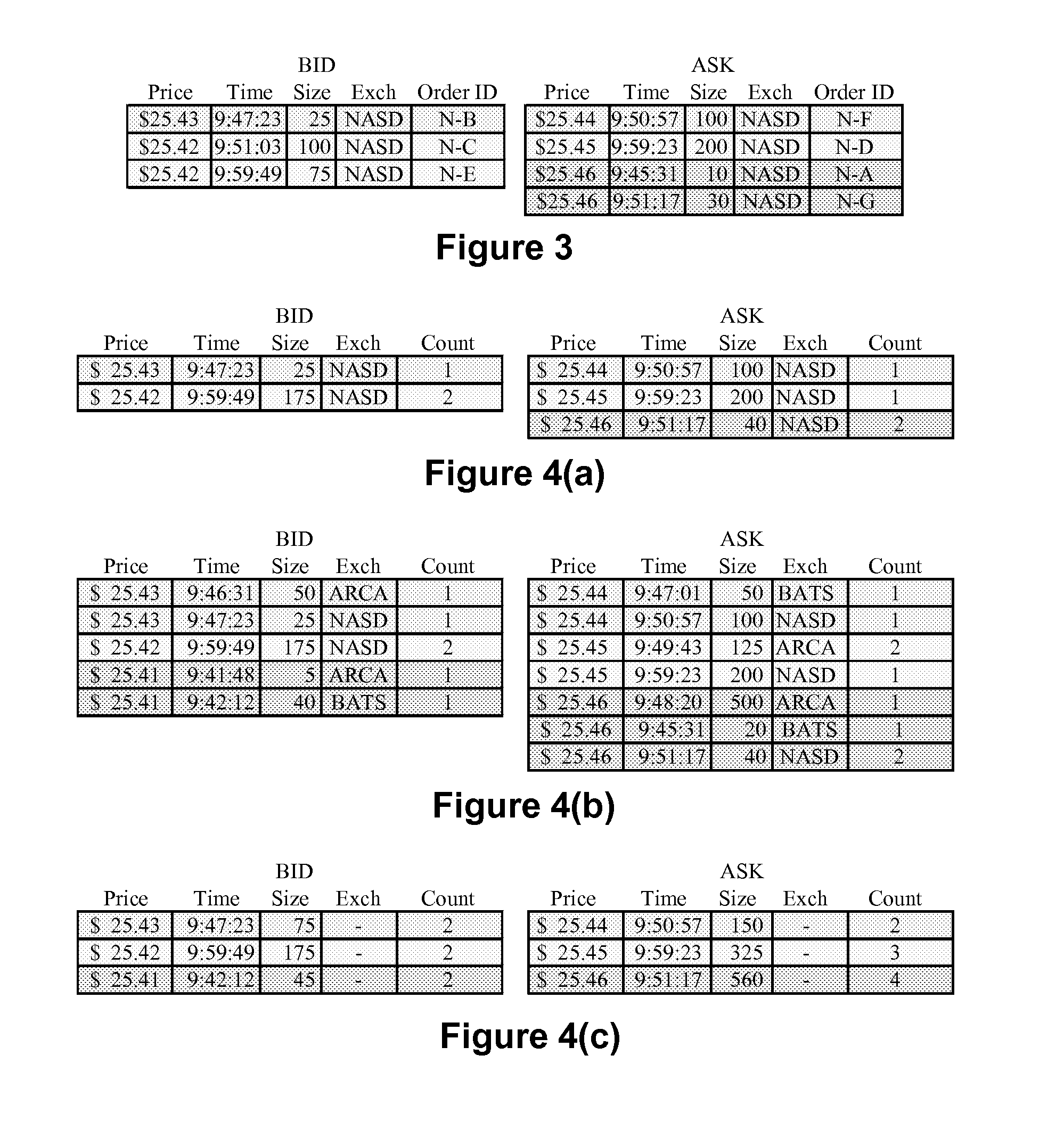

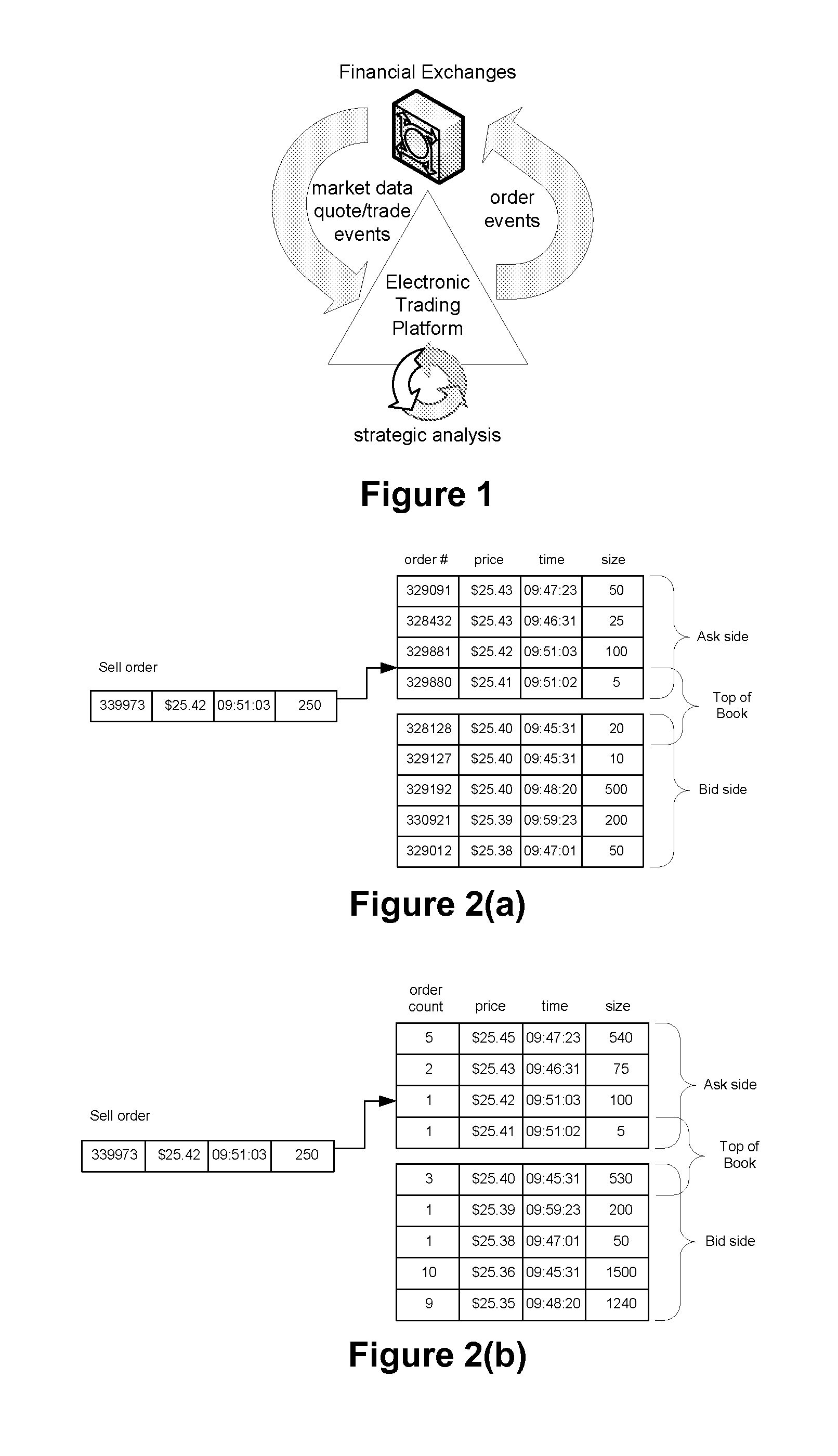

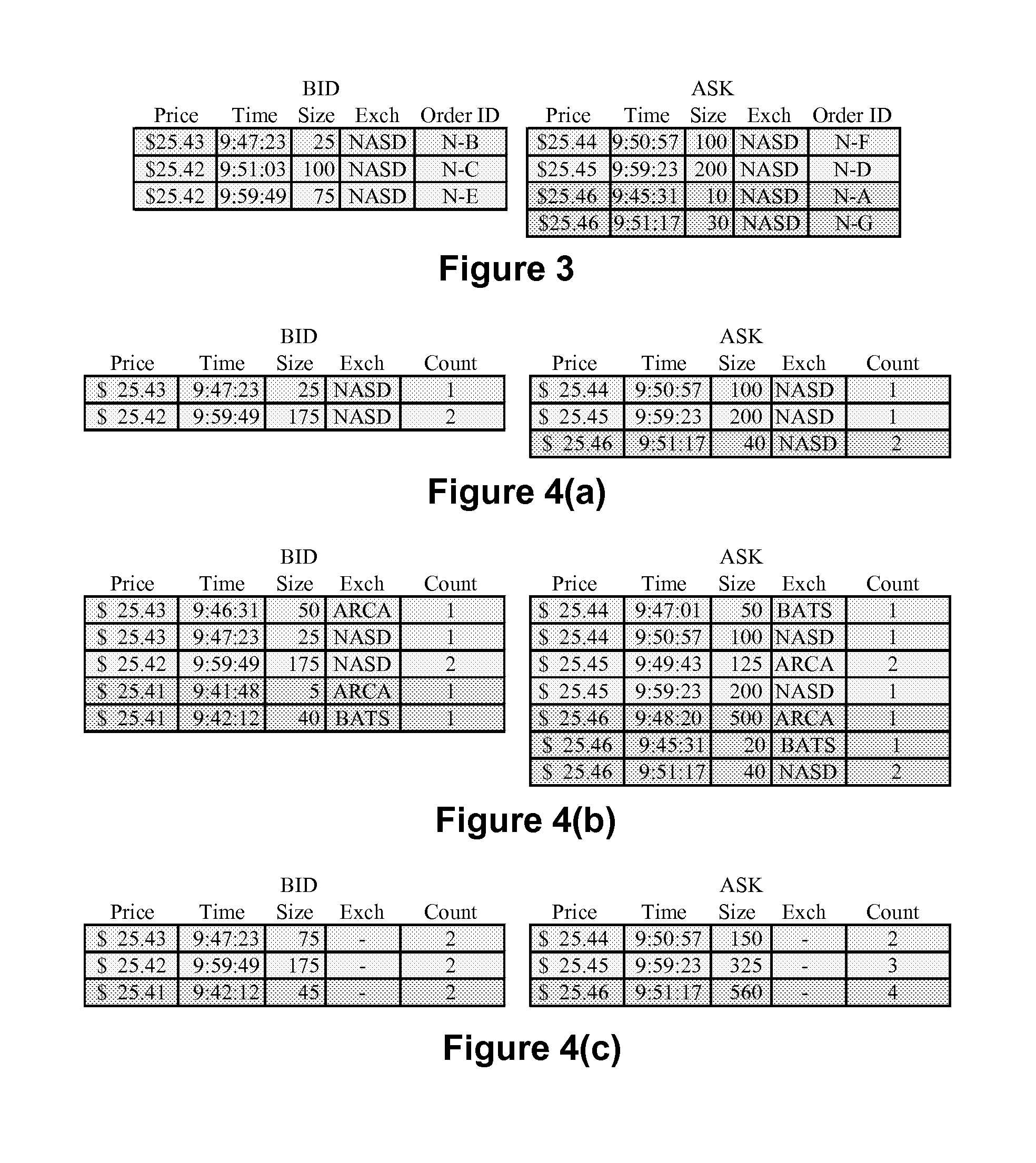

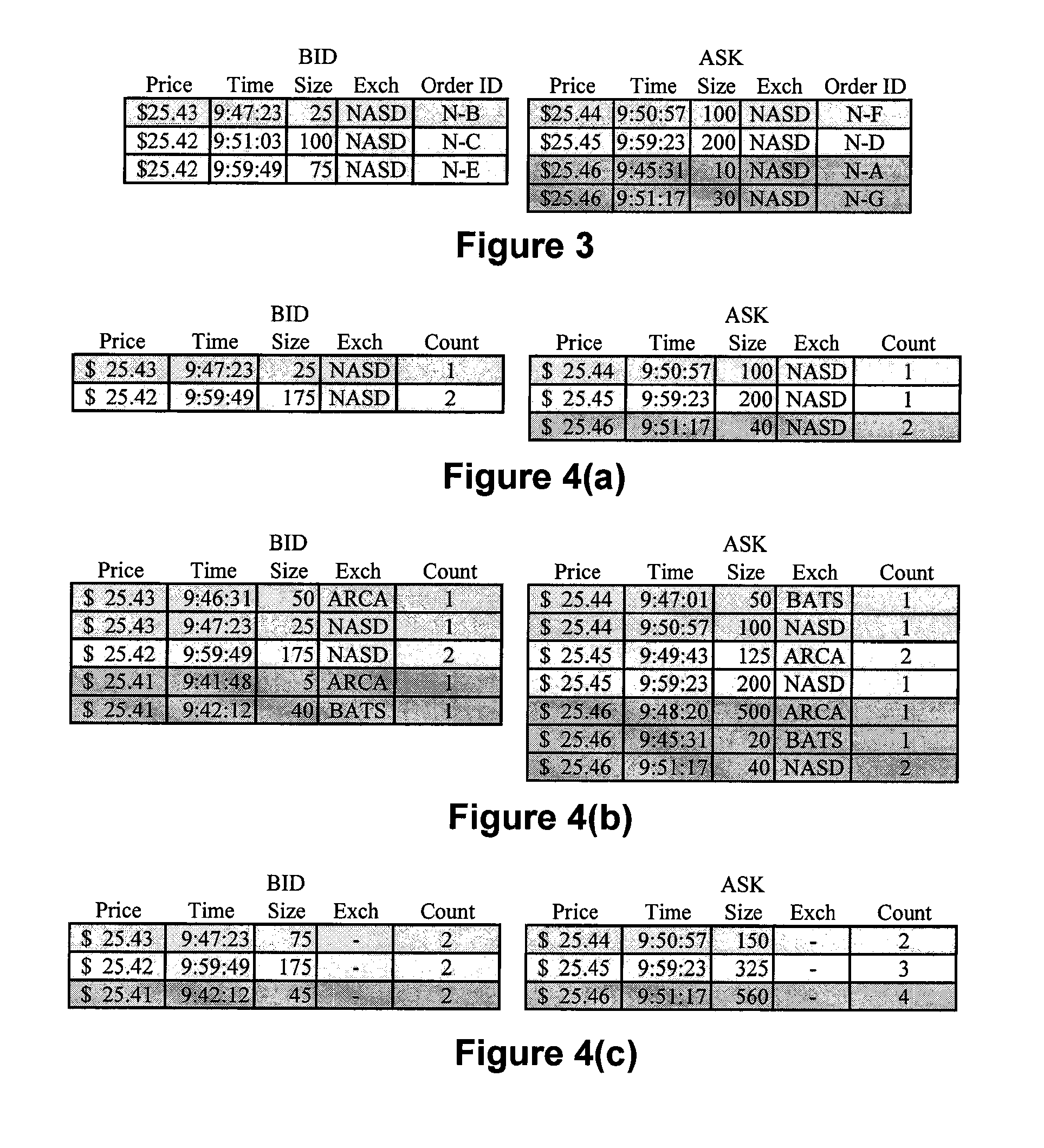

Method and Apparatus for High-Speed Processing of Financial Market Depth Data

Active | US20120089496A1 | Minimize total system latency | Processing latency | Finance | Coprocessor | Latency (engineering)

A variety of embodiments for hardware-accelerating the processing of financial market depth data are disclosed. A coprocessor, which may be resident in a ticker plant, can be configured to update order books based on financial market depth data at extremely low latency. Such a coprocessor can also be configured to enrich a stream of limit order events pertaining to financial instruments with data from a plurality of updated order books.

Owner:EXEGY INC

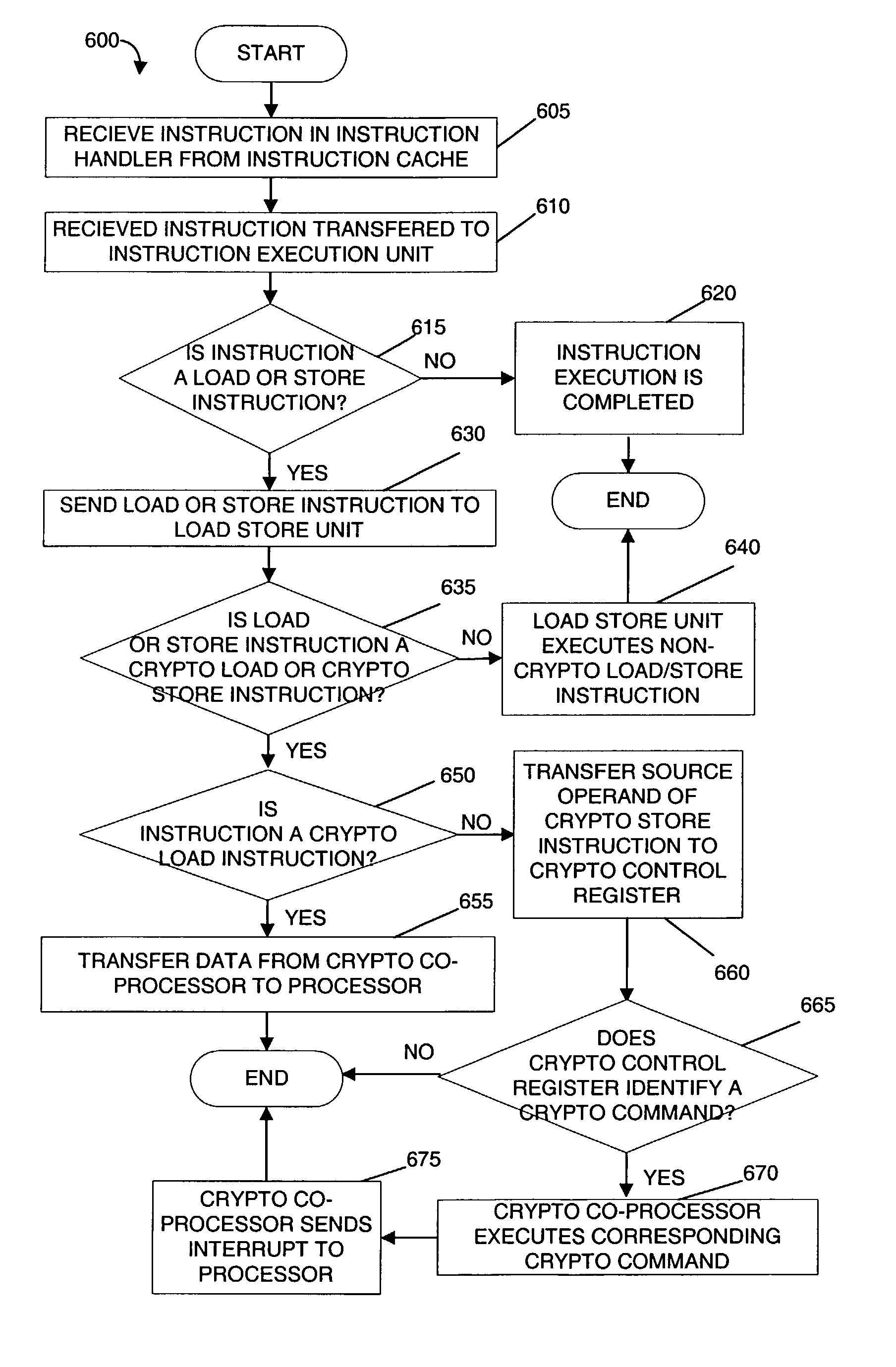

Stream processor with cryptographic co-processor

Inactive | US20030084309A1 | Energy efficient ICT | Static indicating devices | Processing core | Direct memory access

A microprocessor includes a first processing core, a first cryptographic coprocessor and an integer multiplier unit that is coupled to the first processing core and the first cryptographic coprocessor. The first processing core includes an instruction decode unit, an instruction execution unit and a load/store unit. The first cryptographic coprocessor is located on a first die with the first processing core. The first cryptographic coprocessor includes a cryptographic control register, a direct memory access engine that is coupled to the load/store unit in the first processing core and a cryptographic memory.

Owner:SUN MICROSYSTEMS INC

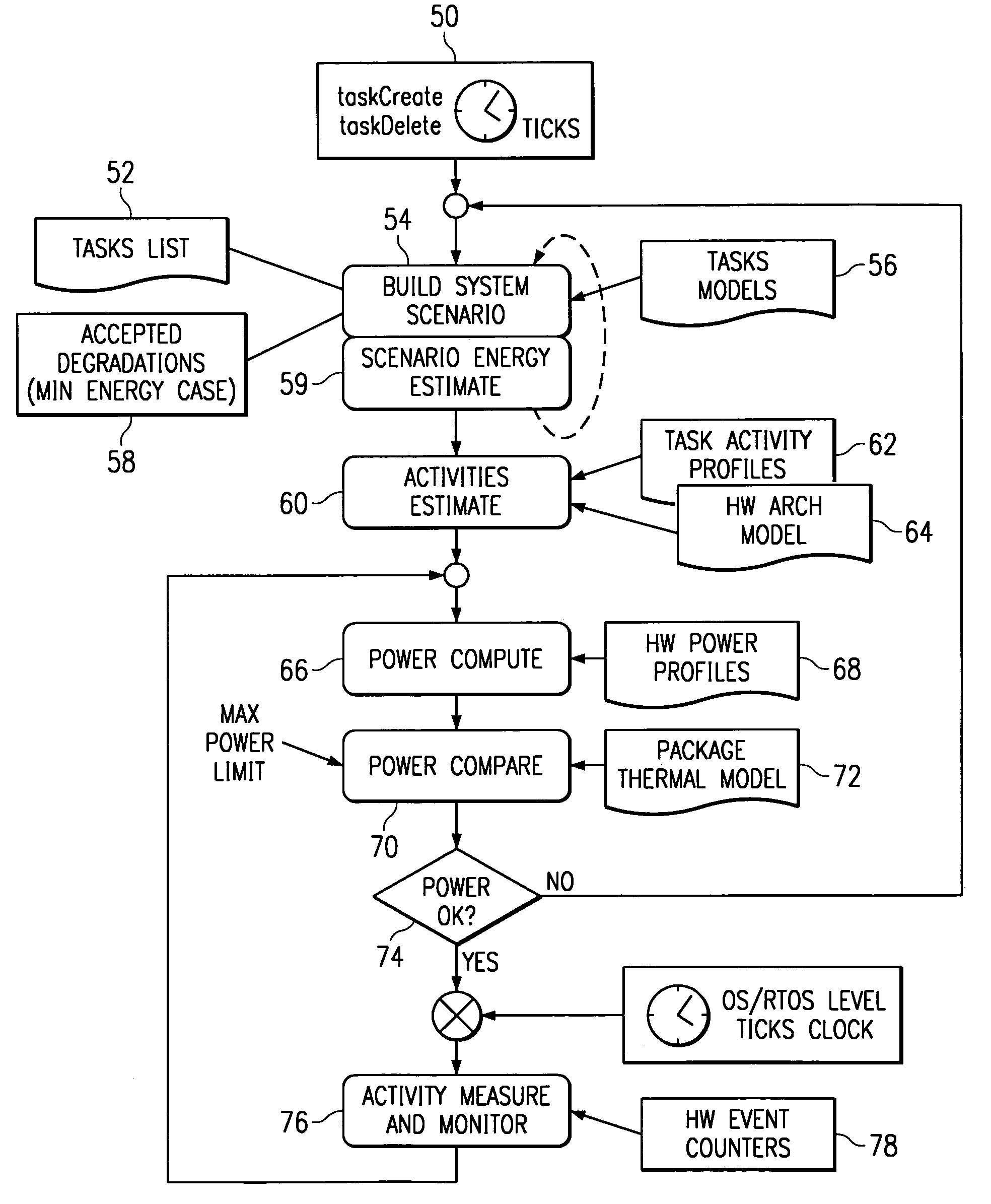

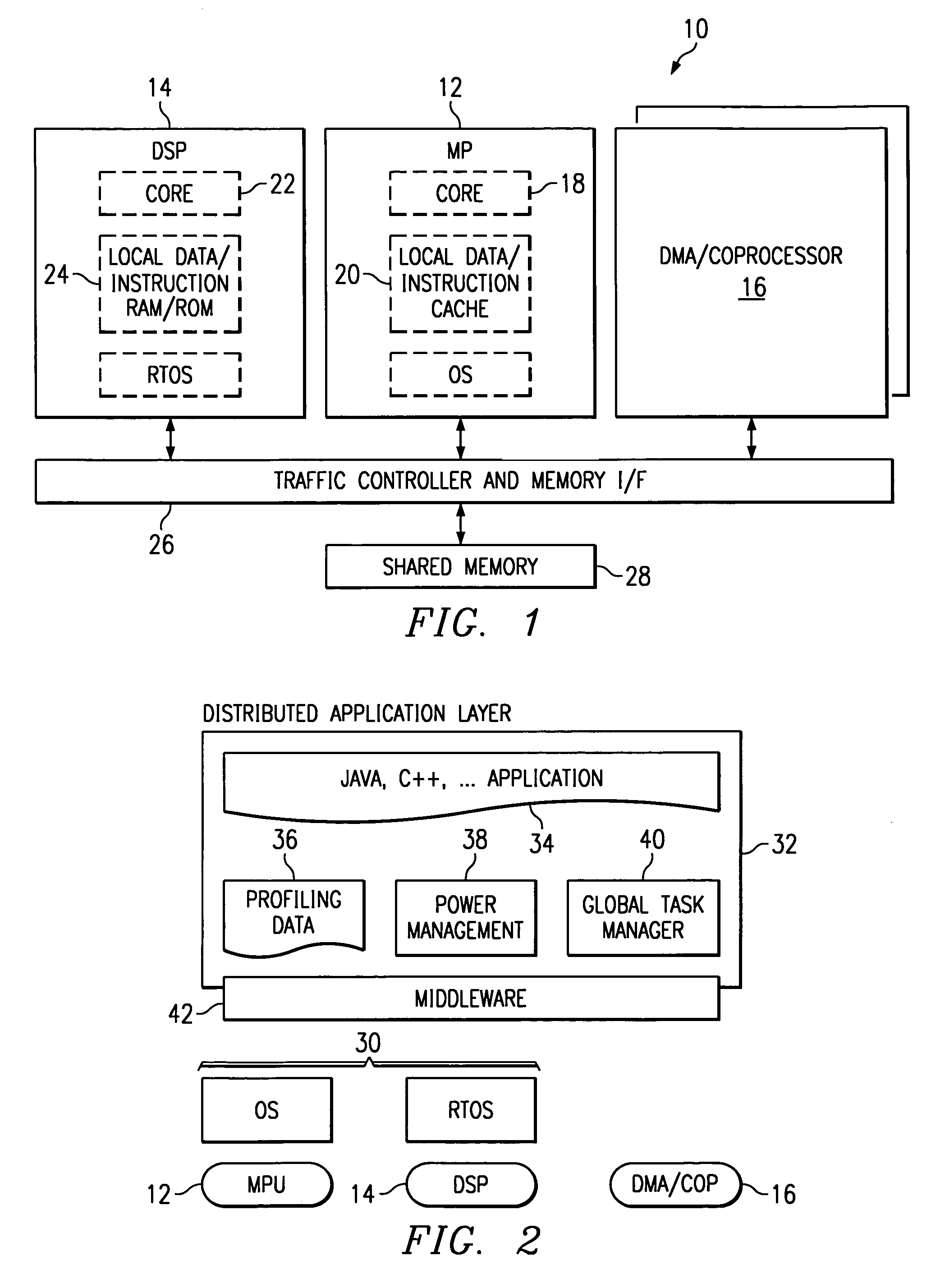

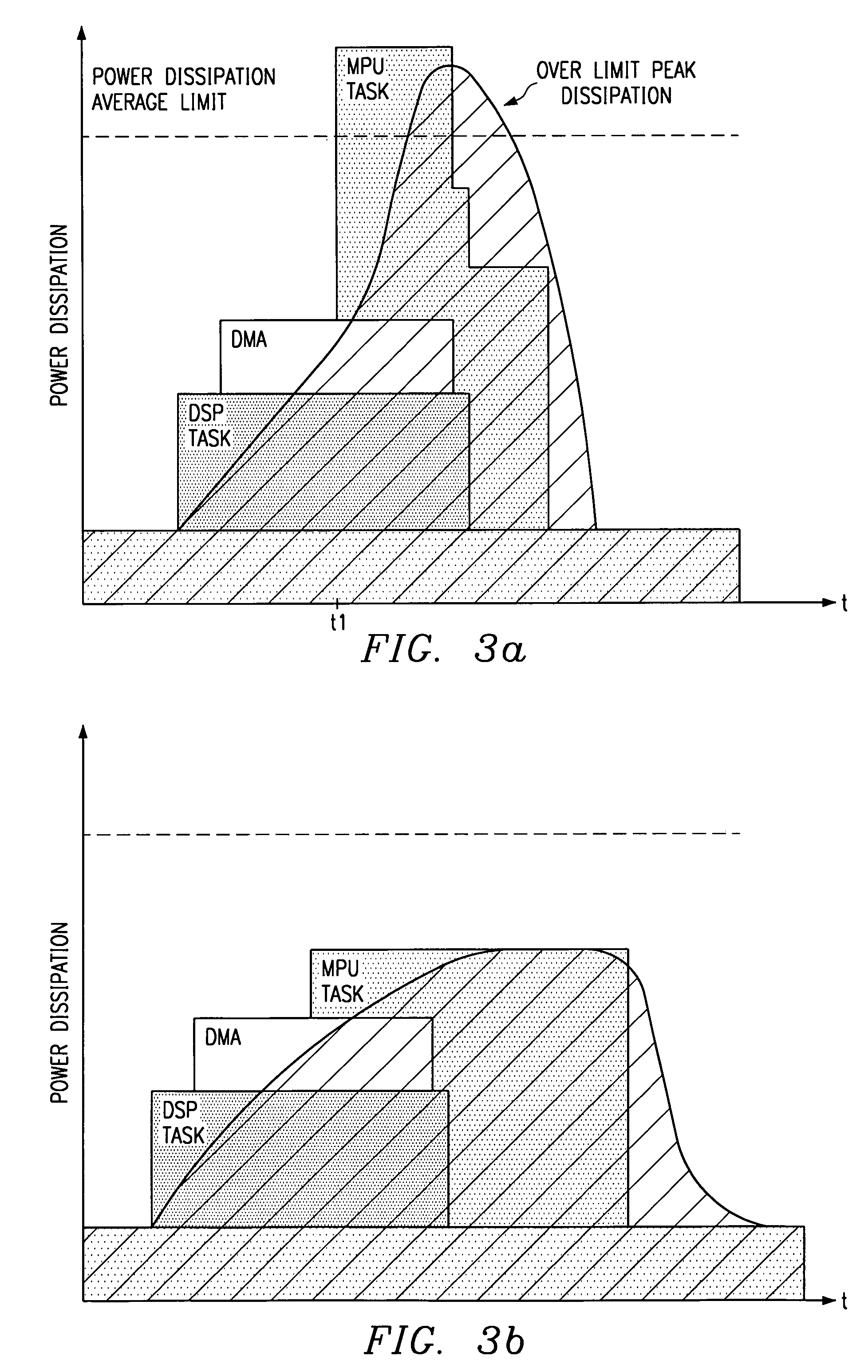

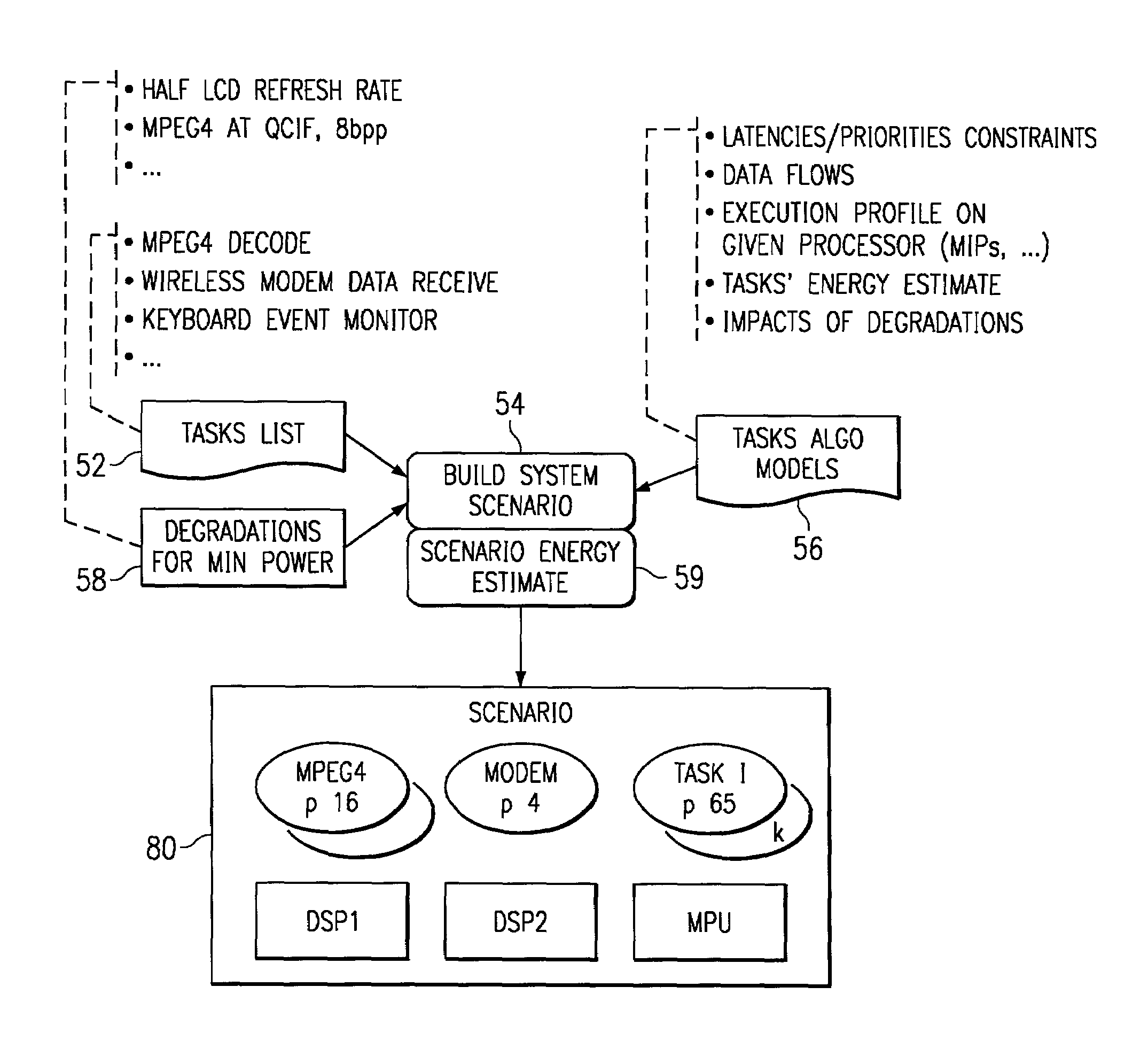

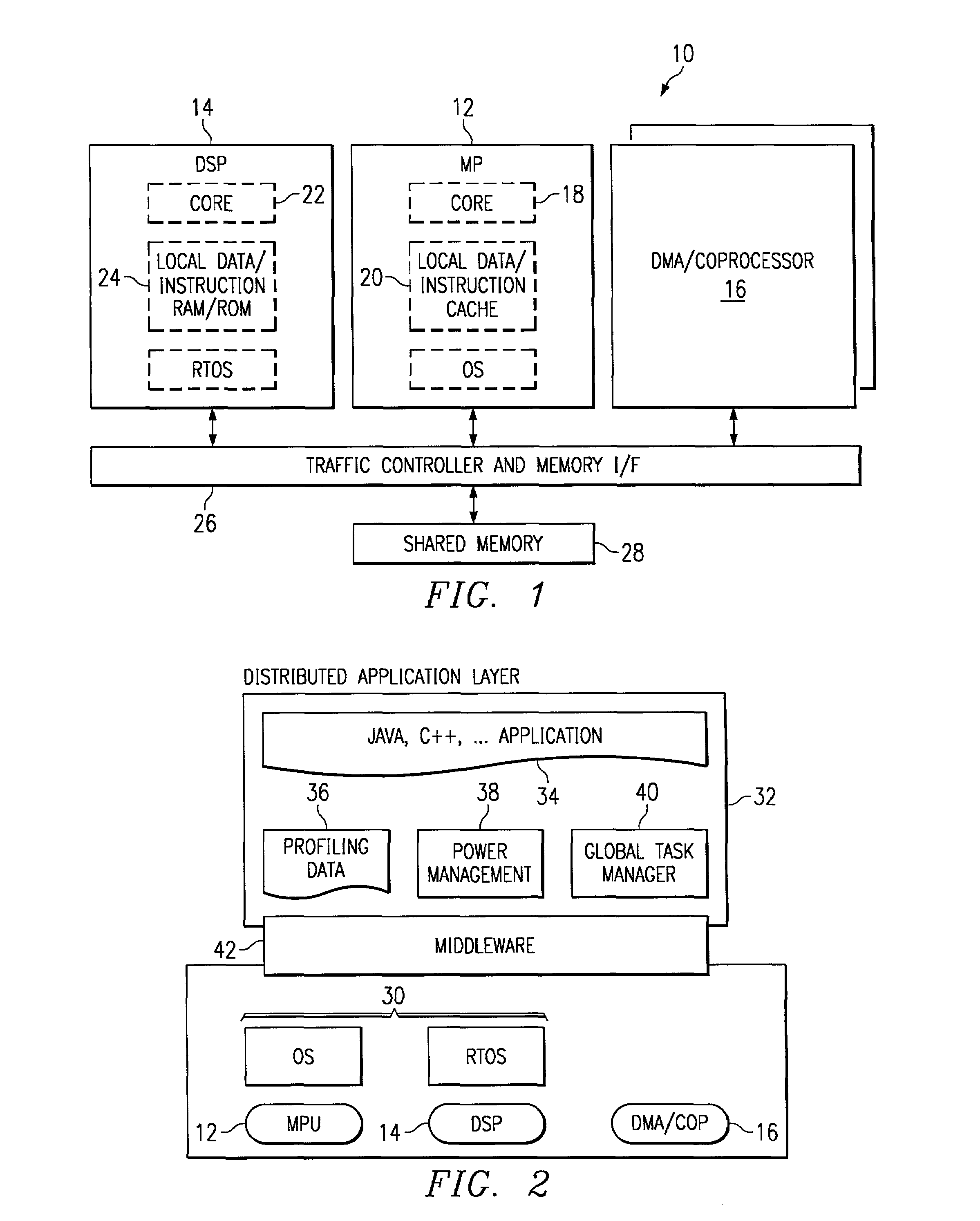

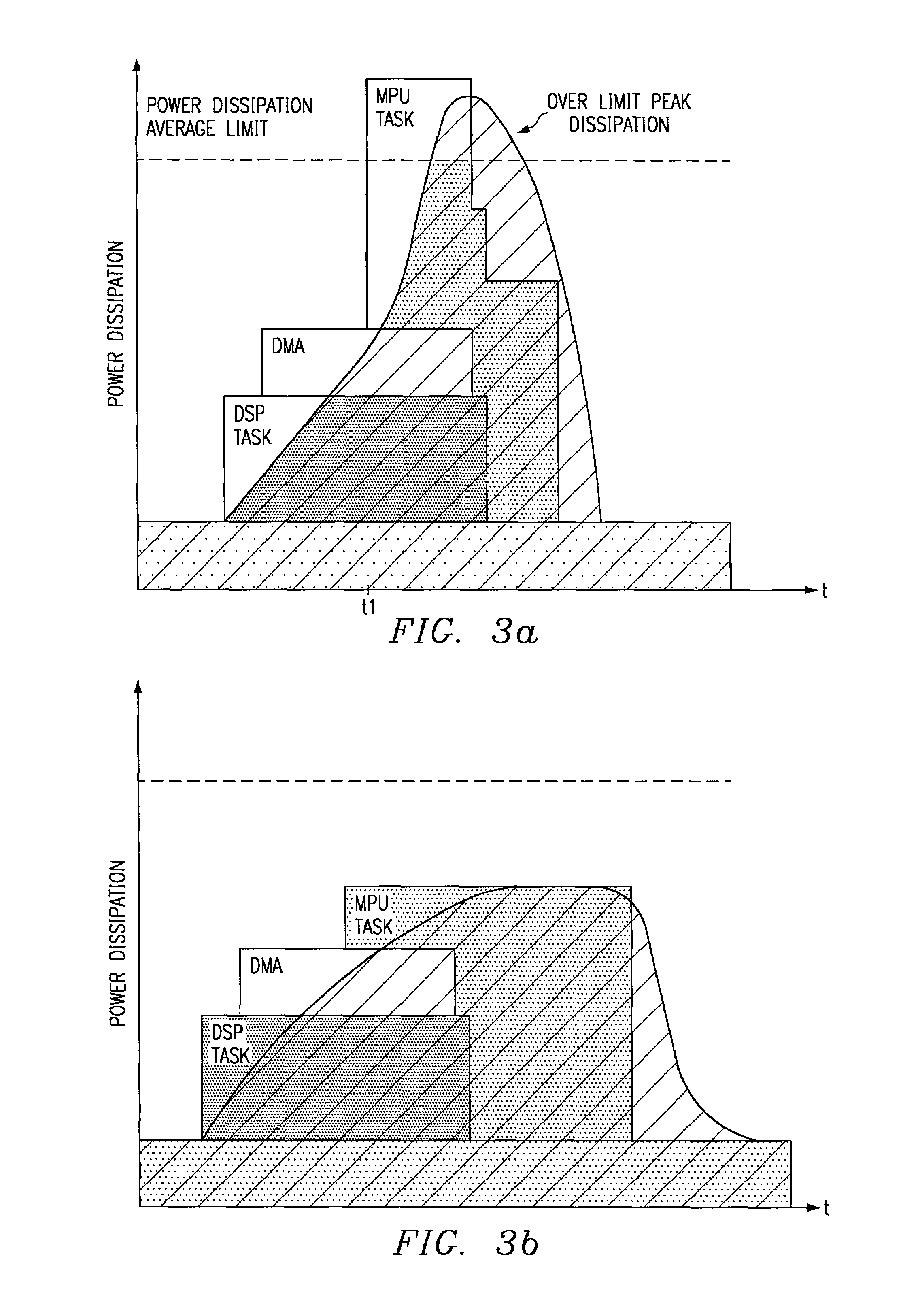

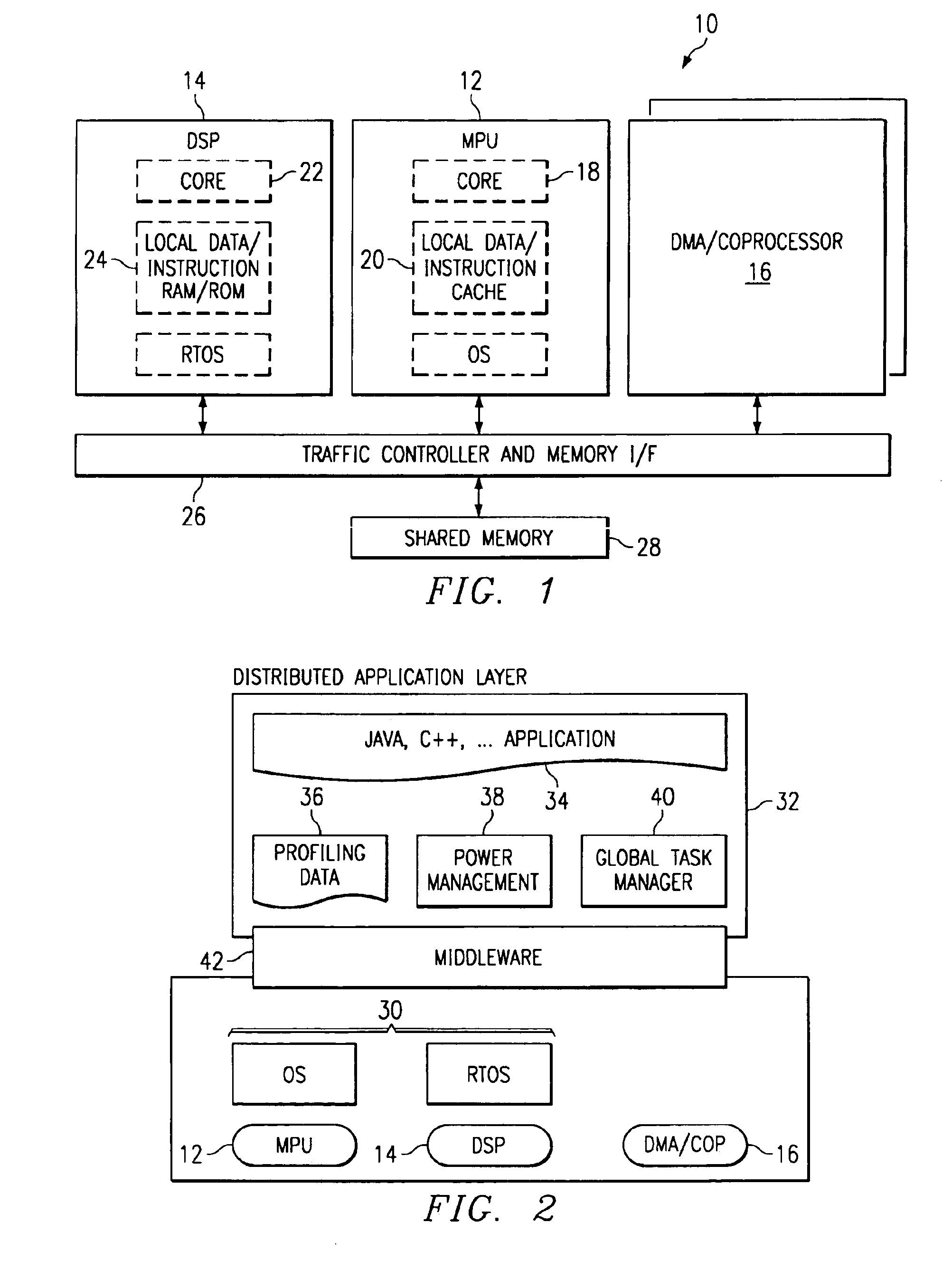

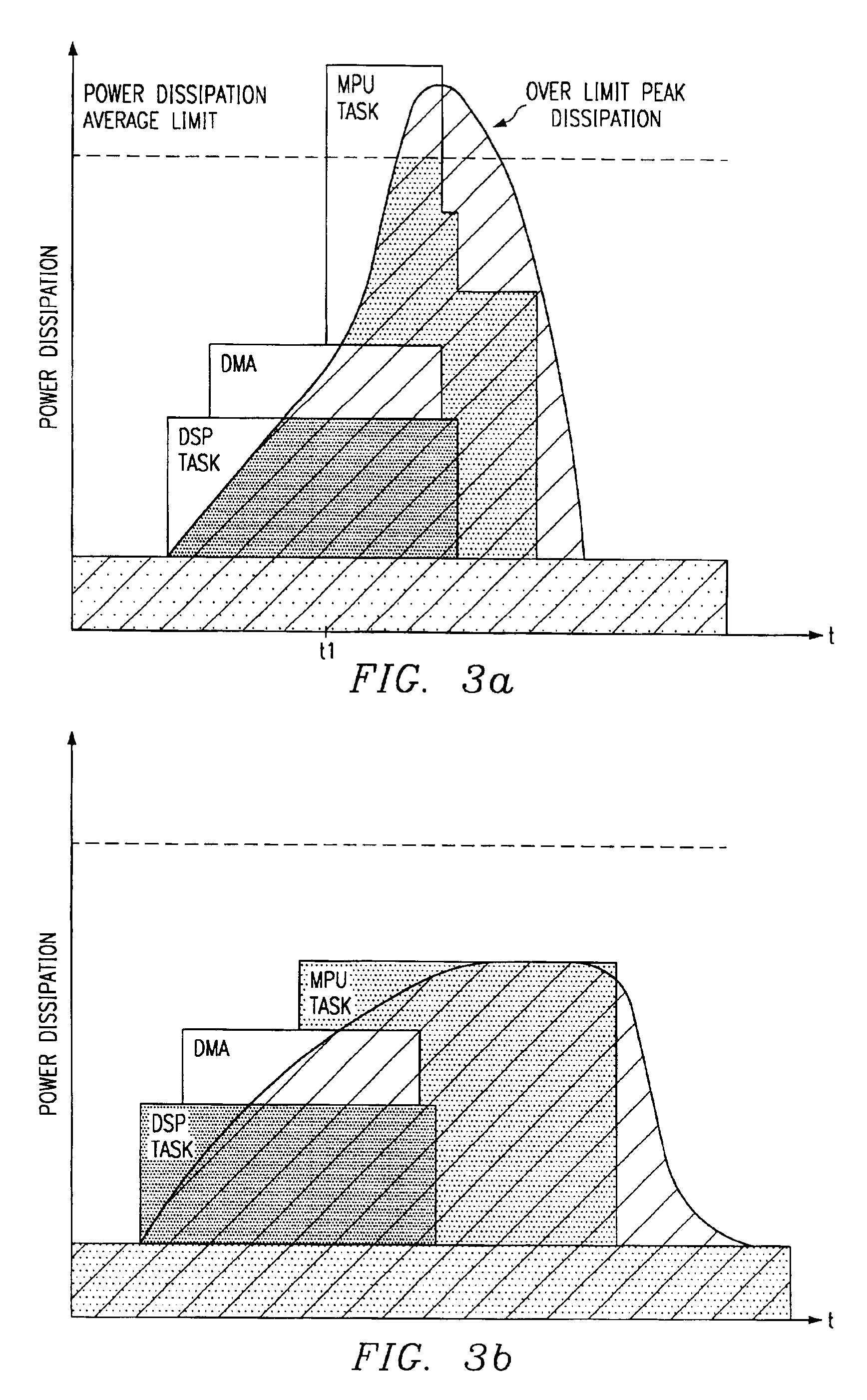

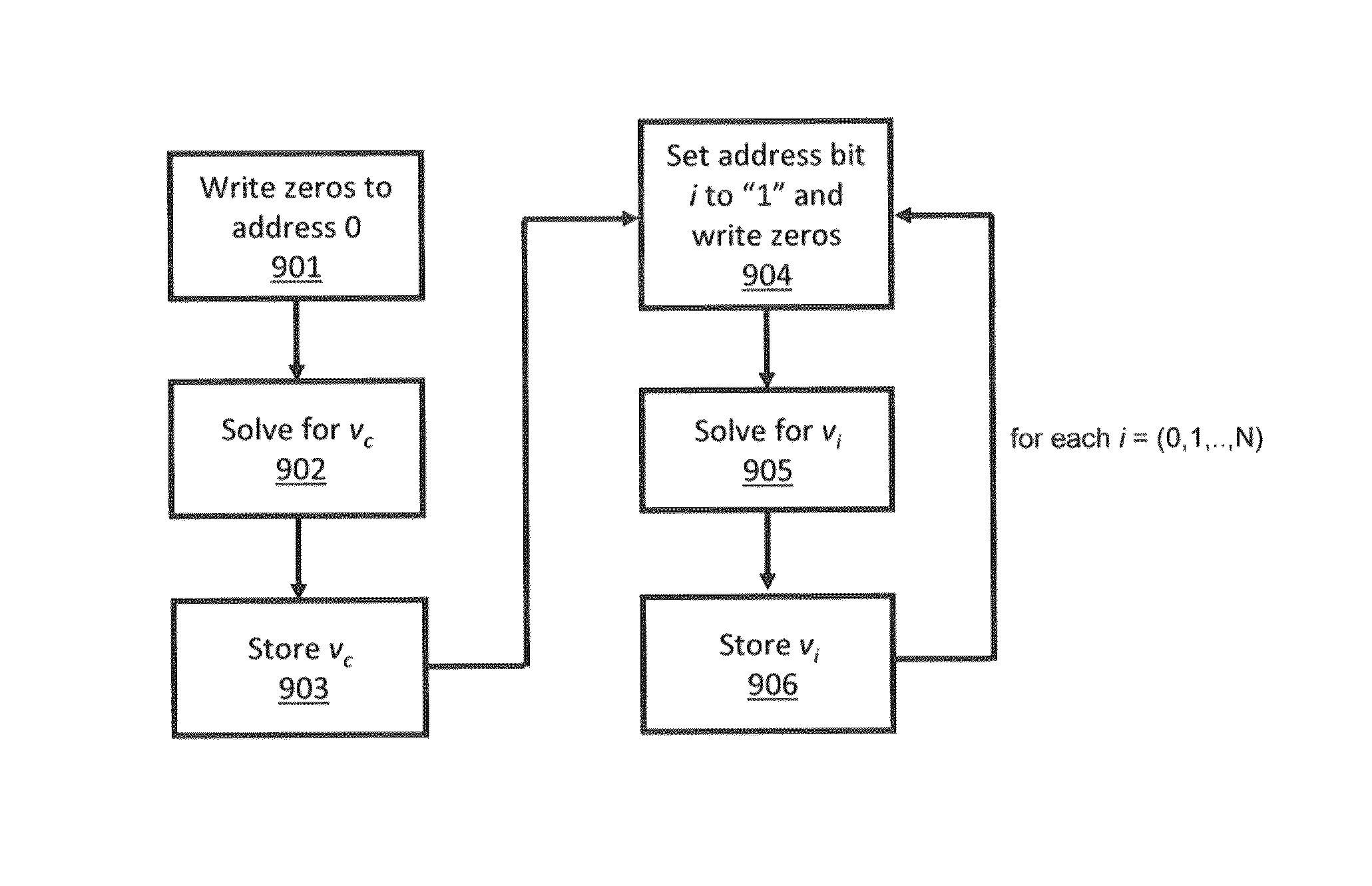

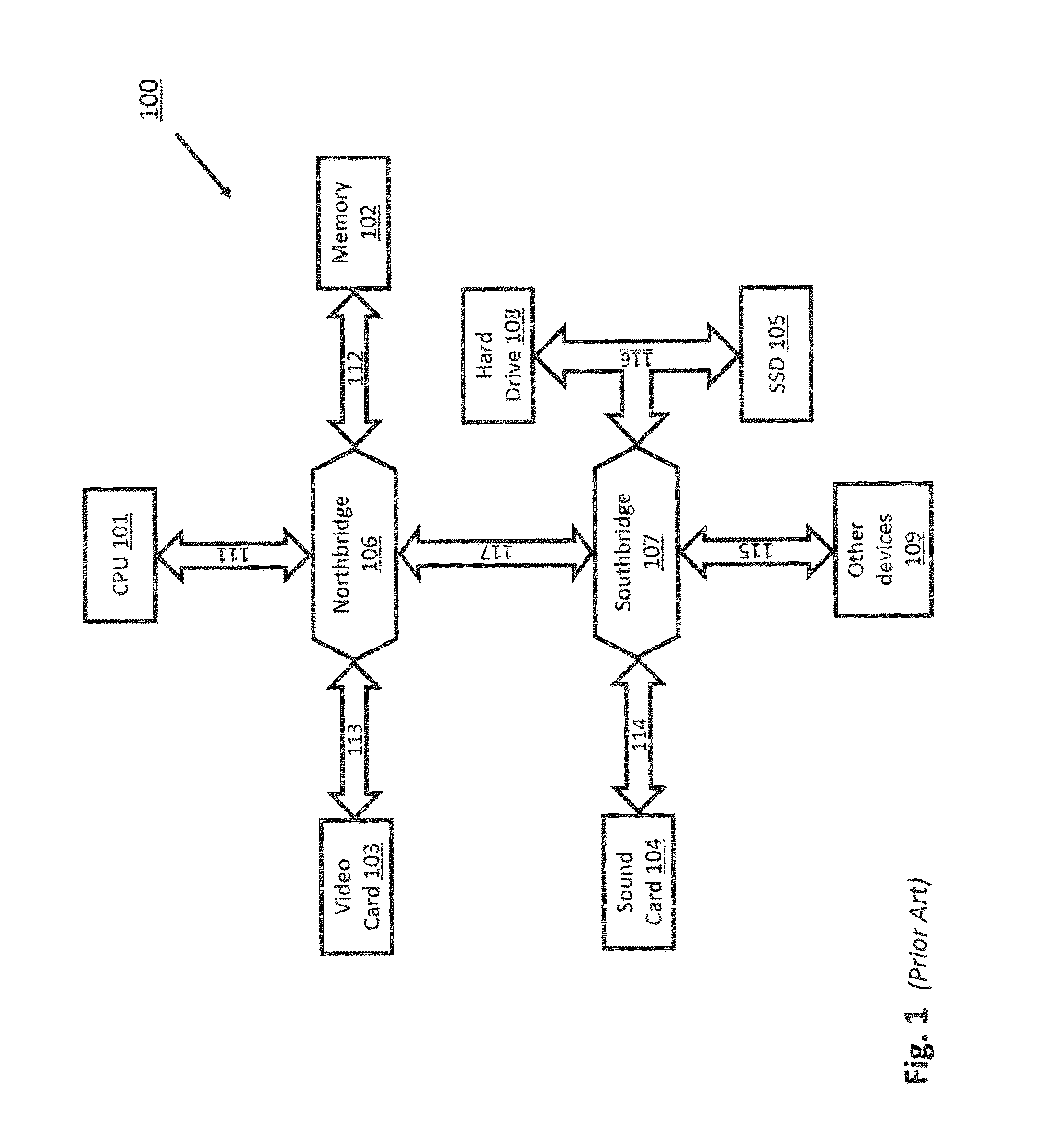

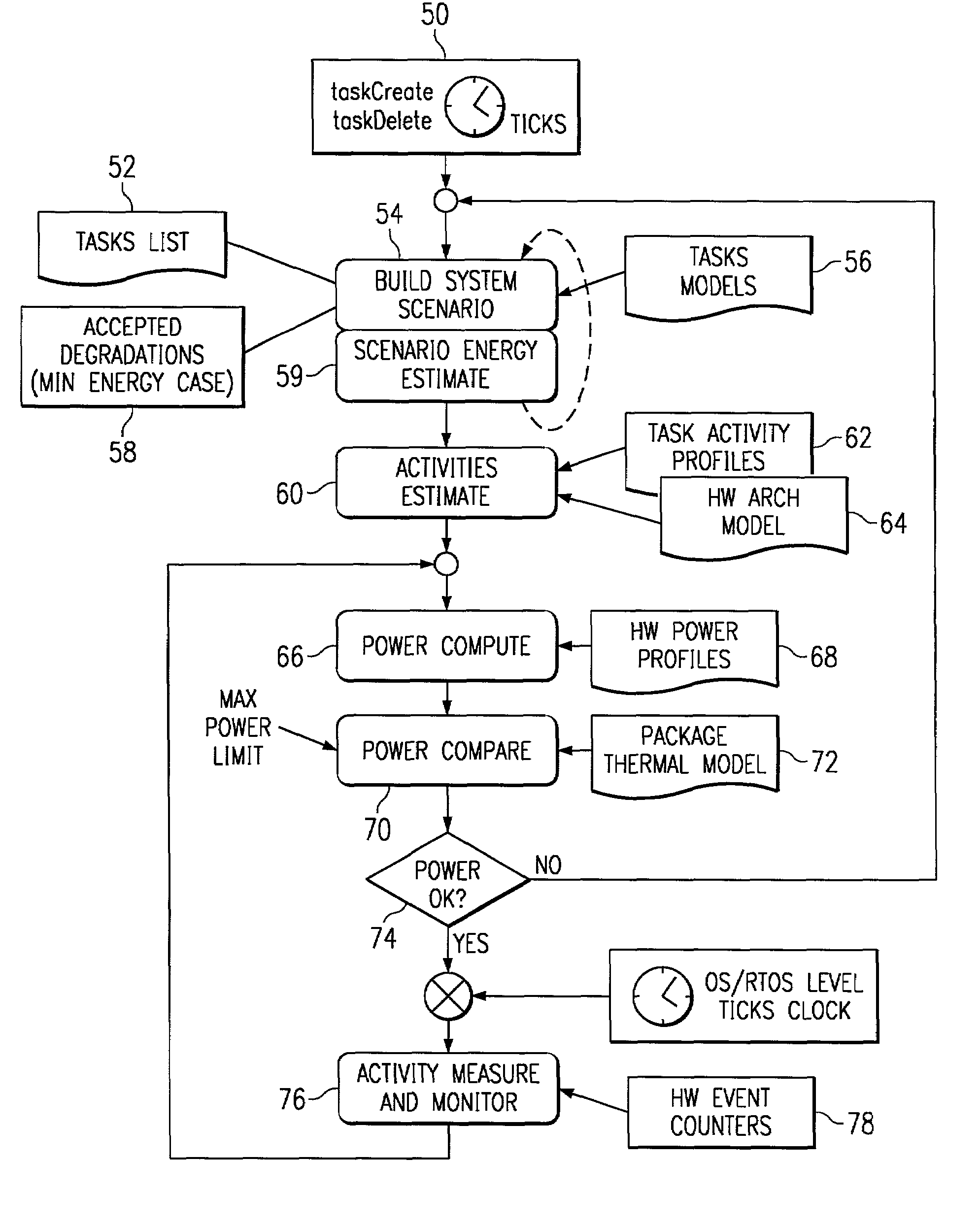

System and method for executing tasks according to a selected scenario in response to probabilistic power consumption information of each scenario

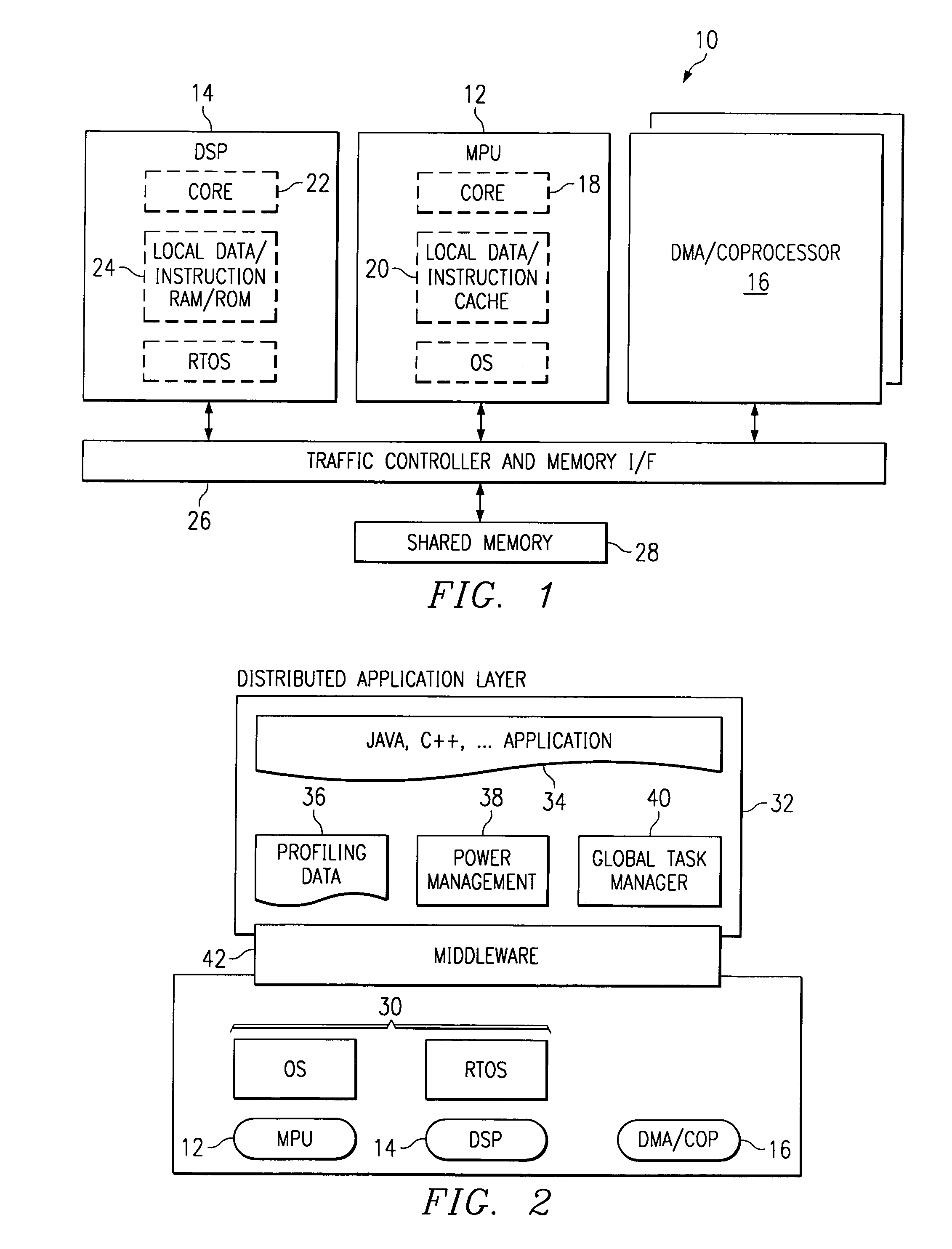

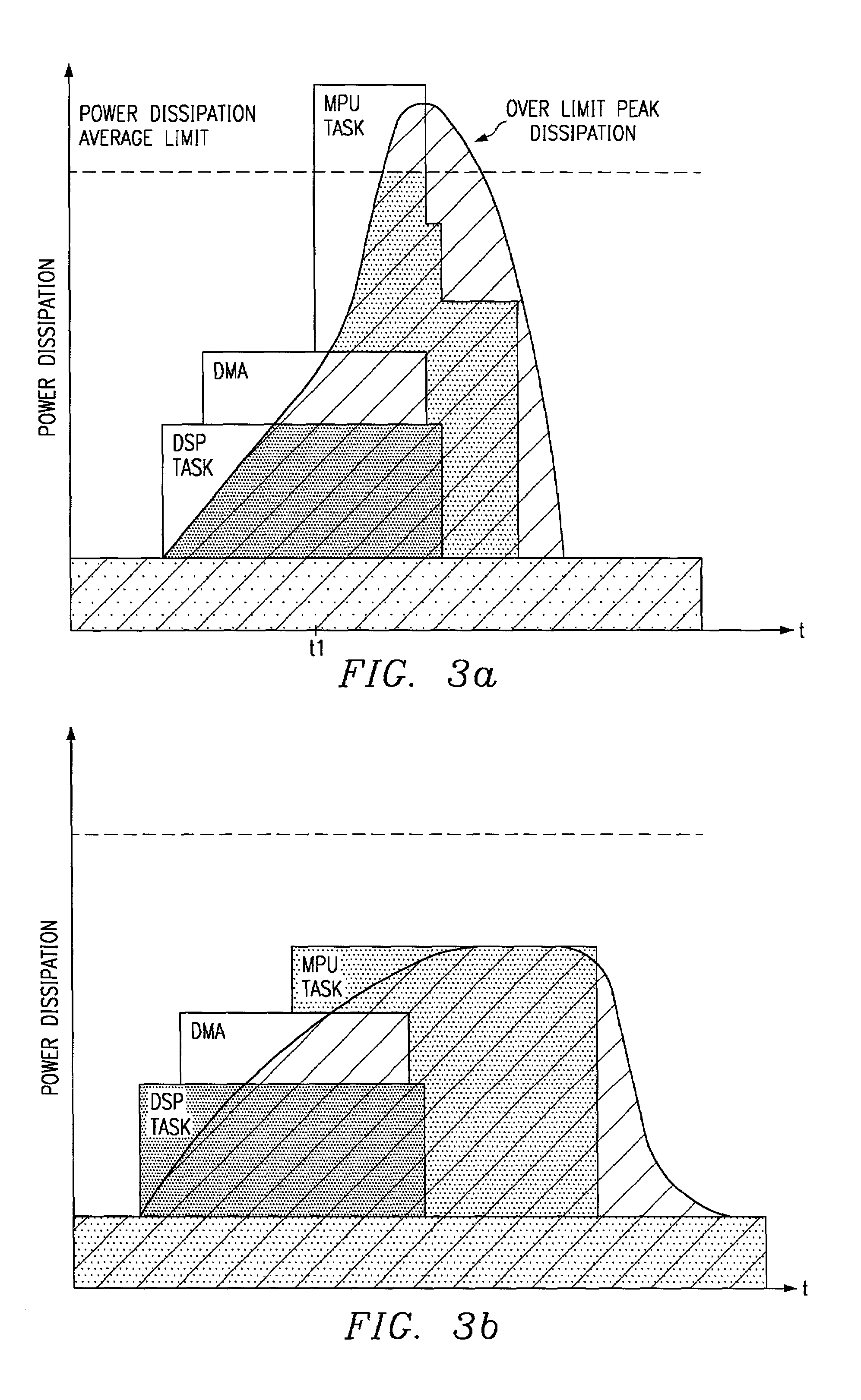

A distributed processing system (10) includes a plurality of processing modules, such as MPUs (12), DSPs (14), and coprocessors/DMA channels (16). Power management software (38) in conjunction with profiles (36) for the various processing modules and the tasks to be executed are used to build scenarios which meet predetermined power objectives, such as providing maximum operation within package thermal constraints or using minimum energy. Actual activities associated with the tasks are monitored during operation to ensure compatibility with the objectives. The allocation of tasks may be changed dynamically to accommodate changes in environmental conditions and changes in the task list.

Owner:TEXAS INSTR INC

Coprocessor opcode division by data type

Inactive | US6247113B1 | Easy to scale | Reduced hardware coprocessor | Register arrangements | General purpose stored program computer | Data processing system | Coprocessor

A data processing system having a main processor and a coprocessor. The main processor responds to coprocessor instructions within its instruction stream by issuing the coprocessor instructions upon a coprocessor bus and detecting if the coprocessor accepts them by returning an accept signal. The coprocessor instructions include a coprocessor number and the coprocessor checks this number to see if it matches its own number(s) to determine whether or not it should accept the coprocessor instruction. A data type field within the coprocessor number in the coprocessor instruction also serves to specify one of multiple data types to be used in the coprocessor operation; particular coprocessors can interpret this part of the coprocessor number to determine data type. If the coprocessor supports multiple data types, then it has multiple coprocessor numbers for which it will issue accept signals and then uses the data type field to control the data type used. If a coprocessor does not support a particular data type then it will not issue an accept signal for coprocessor instructions that specify that data type. The main processor can then use emulation code to provide support for that coprocessor instruction.

Owner:ARM LTD
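
The accept-or-emulate flow in the abstract above can be sketched as follows. The encoding is an assumption made purely for illustration: the lowest bit of the coprocessor number is treated as the data type field, and the names `DATA_TYPE_BIT`, `TYPE_NAMES`, `BusCoprocessor`, and `main_processor_issue` are hypothetical. The "operation" is a plain addition so the example stays small.

```python
DATA_TYPE_BIT = 0x1   # assumed: lowest bit of the coprocessor number selects the data type
TYPE_NAMES = {0: "single", 1: "double"}


class BusCoprocessor:
    def __init__(self, base_number, supported_types):
        self.base_number = base_number & ~DATA_TYPE_BIT
        self.supported_types = set(supported_types)

    def offer(self, cp_number, operands):
        """Return (accepted, result) for an instruction seen on the coprocessor bus."""
        data_type = cp_number & DATA_TYPE_BIT
        matches = (cp_number & ~DATA_TYPE_BIT) == self.base_number
        if not (matches and data_type in self.supported_types):
            return False, None                   # no accept signal is raised
        a, b = operands
        return True, ("hw", TYPE_NAMES[data_type], a + b)


def main_processor_issue(cp_number, operands, coprocessors):
    """Issue an instruction on the bus; fall back to emulation if nobody accepts."""
    for cp in coprocessors:
        accepted, result = cp.offer(cp_number, operands)
        if accepted:
            return result
    a, b = operands                              # emulation path in the main processor
    return ("emulated", TYPE_NAMES[cp_number & DATA_TYPE_BIT], a + b)
```

A coprocessor built with `supported_types={0}` answers only one of its two possible numbers, so an instruction that names the other data type falls through to the emulation path.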

Method and Apparatus for High-Speed Processing of Financial Market Depth Data

Active | US20120089497A1 | Minimize total system latency | Processing latency | Finance | Coprocessor | Latency (engineering)

A variety of embodiments for hardware-accelerating the processing of financial market depth data are disclosed. A coprocessor, which may be resident in a ticker plant, can be configured to update order books based on financial market depth data at extremely low latency. Such a coprocessor can also be configured to generate a quote event in response to a limit order event being determined to modify the top of an order book.

Owner:EXEGY INC
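
The idea of emitting a quote event only when a limit order event changes the top of the book can be sketched in software. This is a simplified model and not the patented design: the `OrderBook` class, the price-aggregated levels, and the dictionary-shaped quote event are all assumptions made for illustration.

```python
from collections import defaultdict


class OrderBook:
    """Price-aggregated book for one instrument (illustrative only)."""

    def __init__(self):
        self.levels = {"bid": defaultdict(int), "ask": defaultdict(int)}

    def top(self, side):
        """Return (price, size) at the best level of a side, or None if empty."""
        prices = self.levels[side]
        if not prices:
            return None
        price = max(prices) if side == "bid" else min(prices)
        return price, prices[price]

    def apply(self, side, price, size_delta):
        """Apply a limit order event; return a quote event if the top changed."""
        before = self.top(side)
        level = self.levels[side]
        level[price] += size_delta
        if level[price] <= 0:
            del level[price]          # the level was emptied by cancels or fills
        after = self.top(side)
        if after != before:
            return {"side": side, "top": after}
        return None
```

Events that only touch deeper levels return `None`, so downstream consumers of quotes see traffic only when the best price or its size actually moves.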

Method and apparatus for high-speed processing of financial market depth data

A variety of embodiments for hardware-accelerating the processing of financial market depth data are disclosed. A coprocessor, which may be resident in a ticker plant, can be configured to update order books based on financial market depth data at extremely low latency. Such a coprocessor can also be configured to enrich a stream of limit order events pertaining to financial instruments with data from a plurality of updated order books.

Owner:EXEGY INC

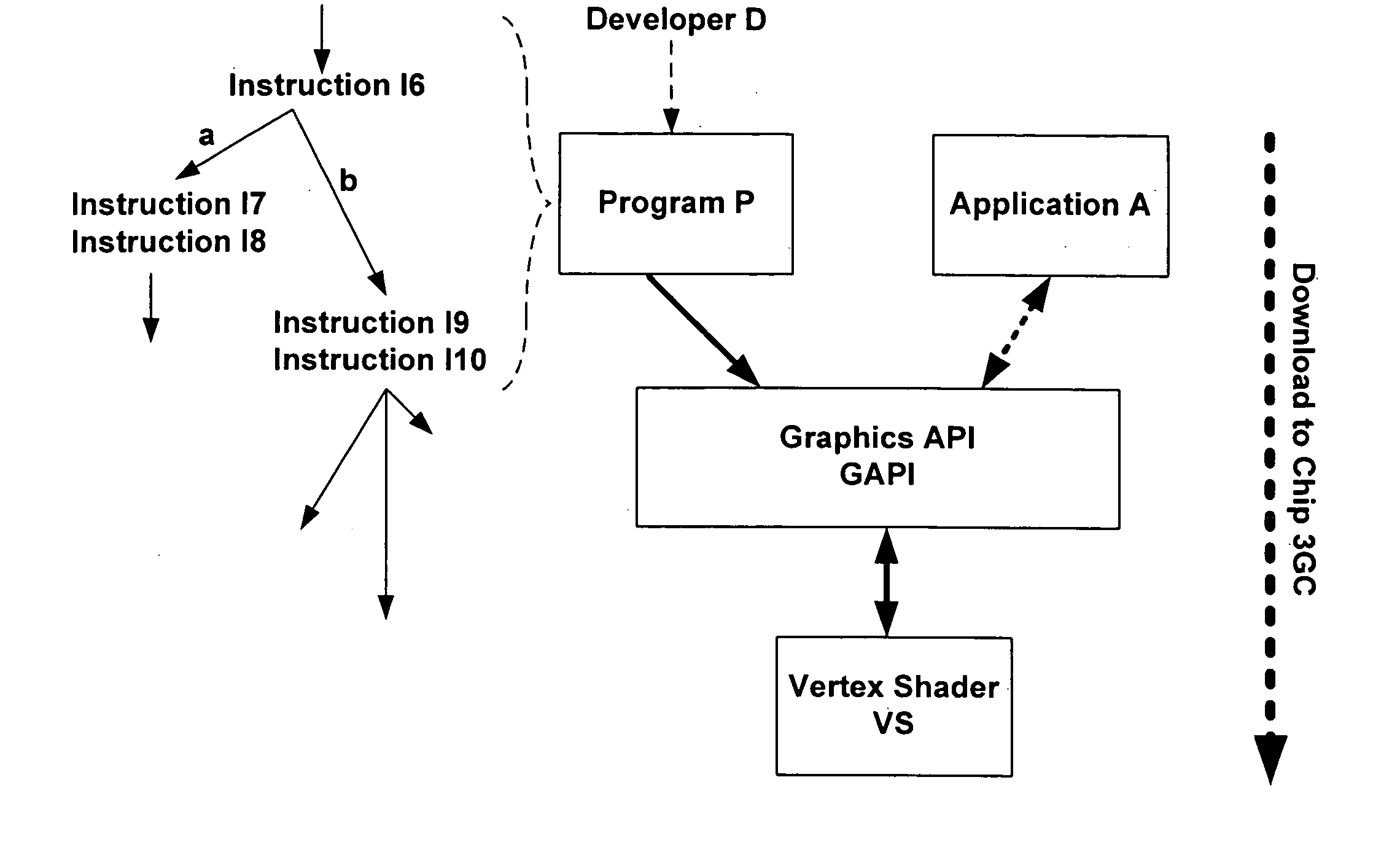

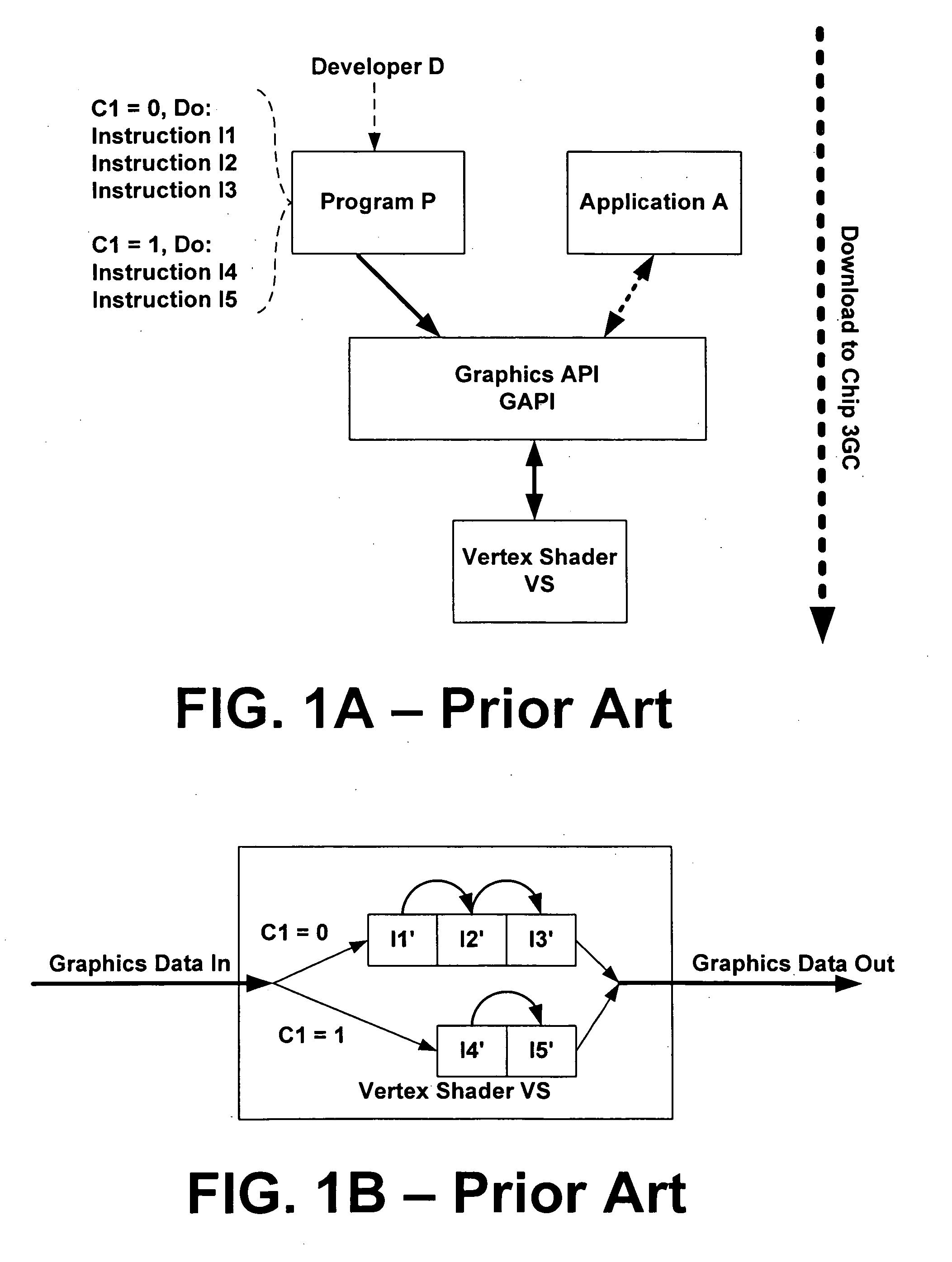

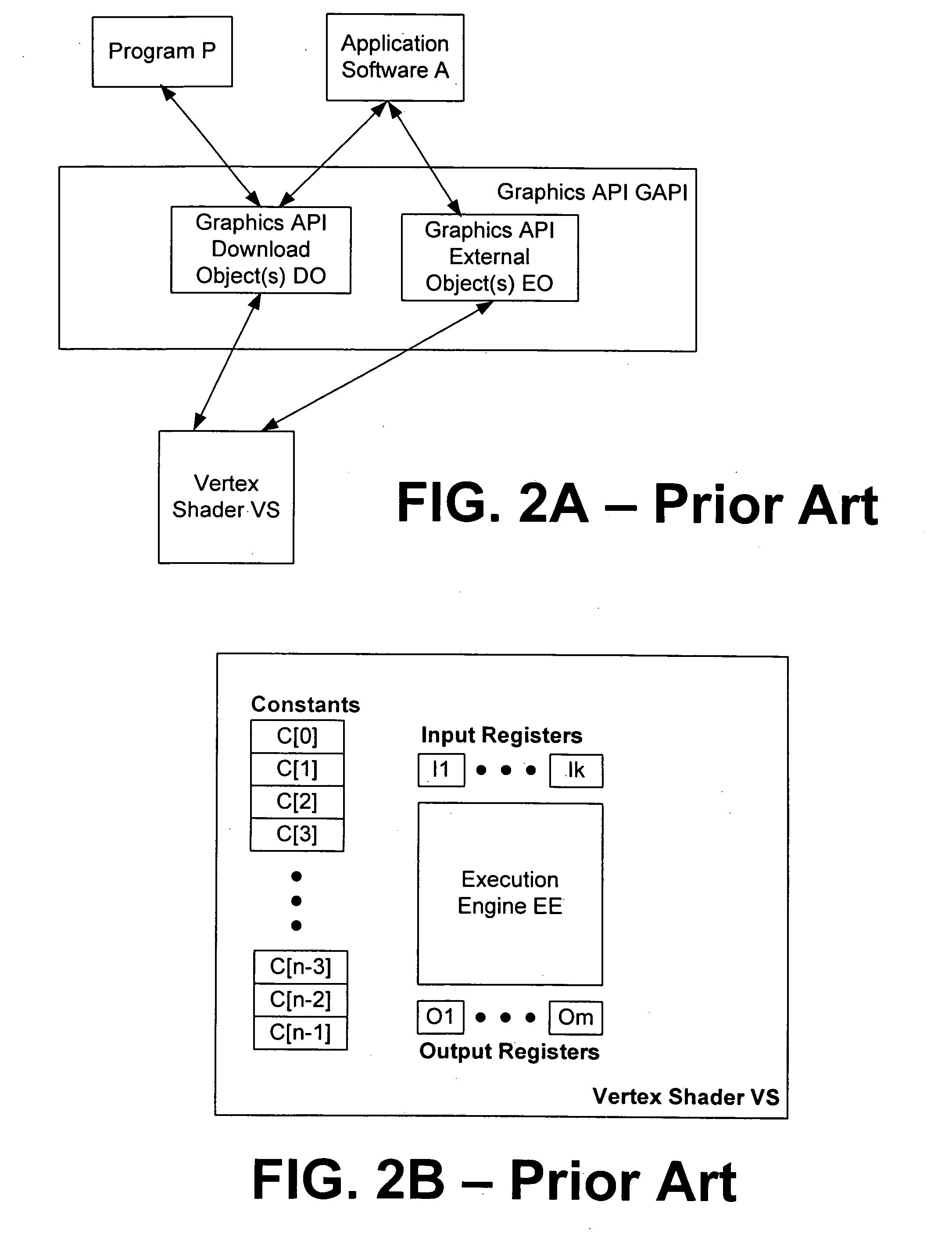

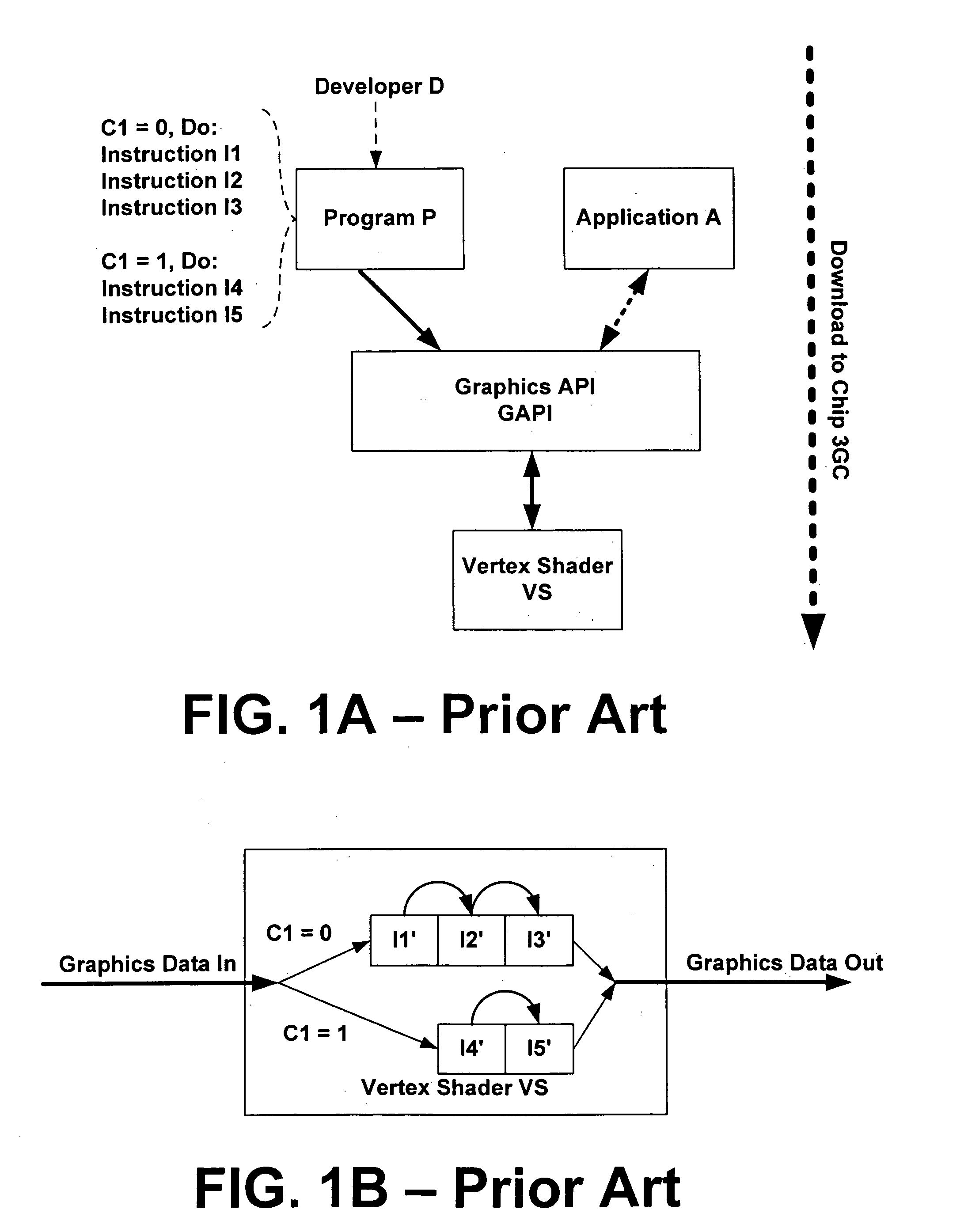

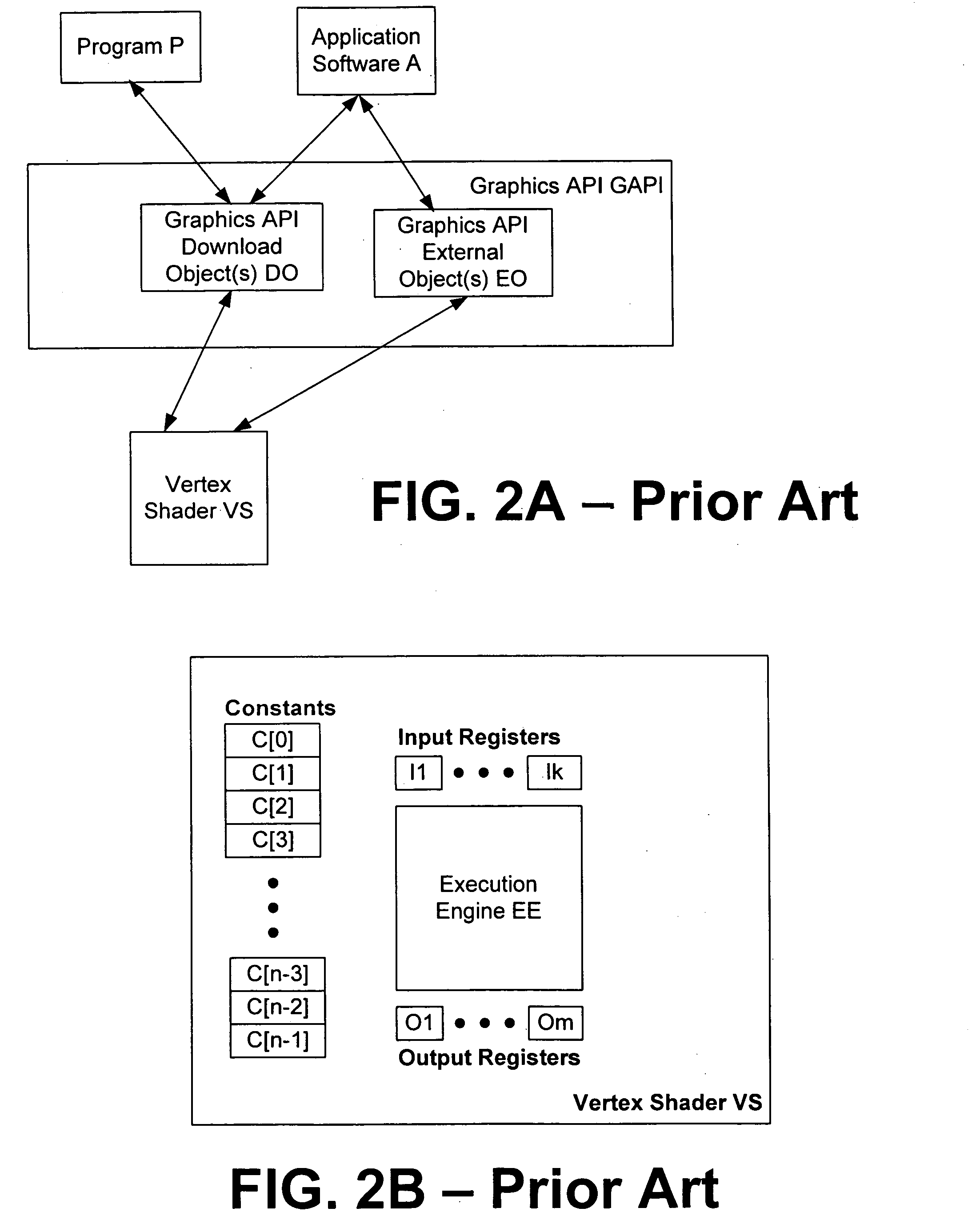

Systems and methods for downloading algorithmic elements to a coprocessor and corresponding techniques

Active | US20050122330A1 | Improve abilities | Sophisticated effect | Digital computer details | Next instruction address formation | Computational science | Coprocessor

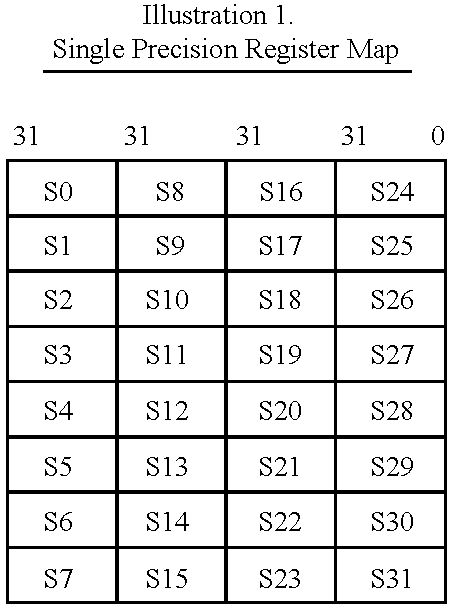

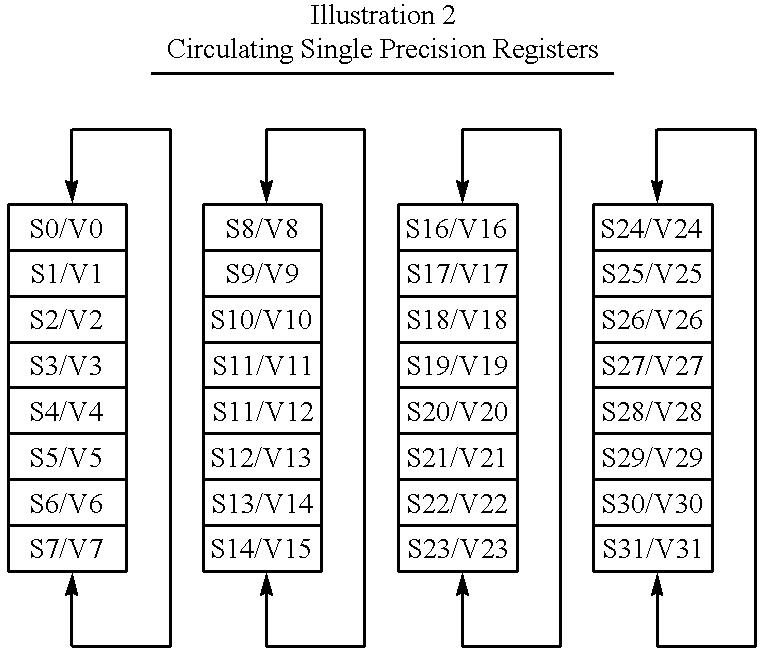

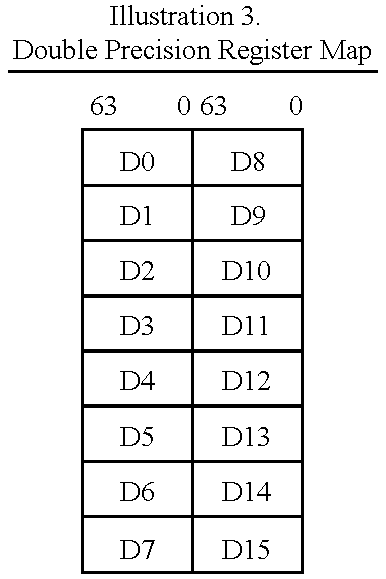

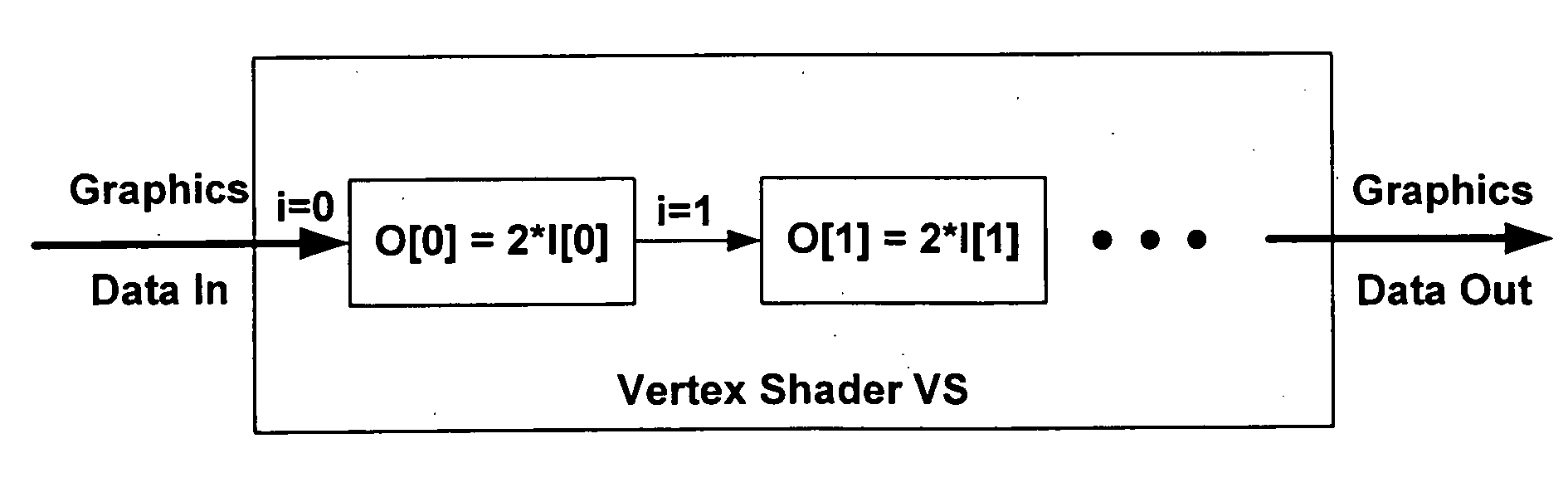

Systems and methods for downloading algorithmic elements to a coprocessor and corresponding processing and communication techniques are provided. For an improved graphics pipeline, the invention provides a class of co-processing device, such as a graphics processor unit (GPU), providing improved capabilities for an abstract or virtual machine for performing graphics calculations and rendering. The invention allows for runtime-predicated flow control of programs downloaded to coprocessors, enables coprocessors to include indexable arrays of on-chip storage elements that are readable and writable during execution of programs, provides native support for textures and texture maps and corresponding operations in a vertex shader, provides frequency division of vertex streams input to a vertex shader with optional support for a stream modulo value, provides a register storage element on a pixel shader and associated interfaces for storage associated with representing the “face” of a pixel, provides vertex shaders and pixel shaders with more on-chip register storage and the ability to receive larger programs than any existing vertex or pixel shaders and provides 32 bit float number support in both vertex and pixel shaders.

Owner:MICROSOFT TECH LICENSING LLC

High performance hybrid micro-computer

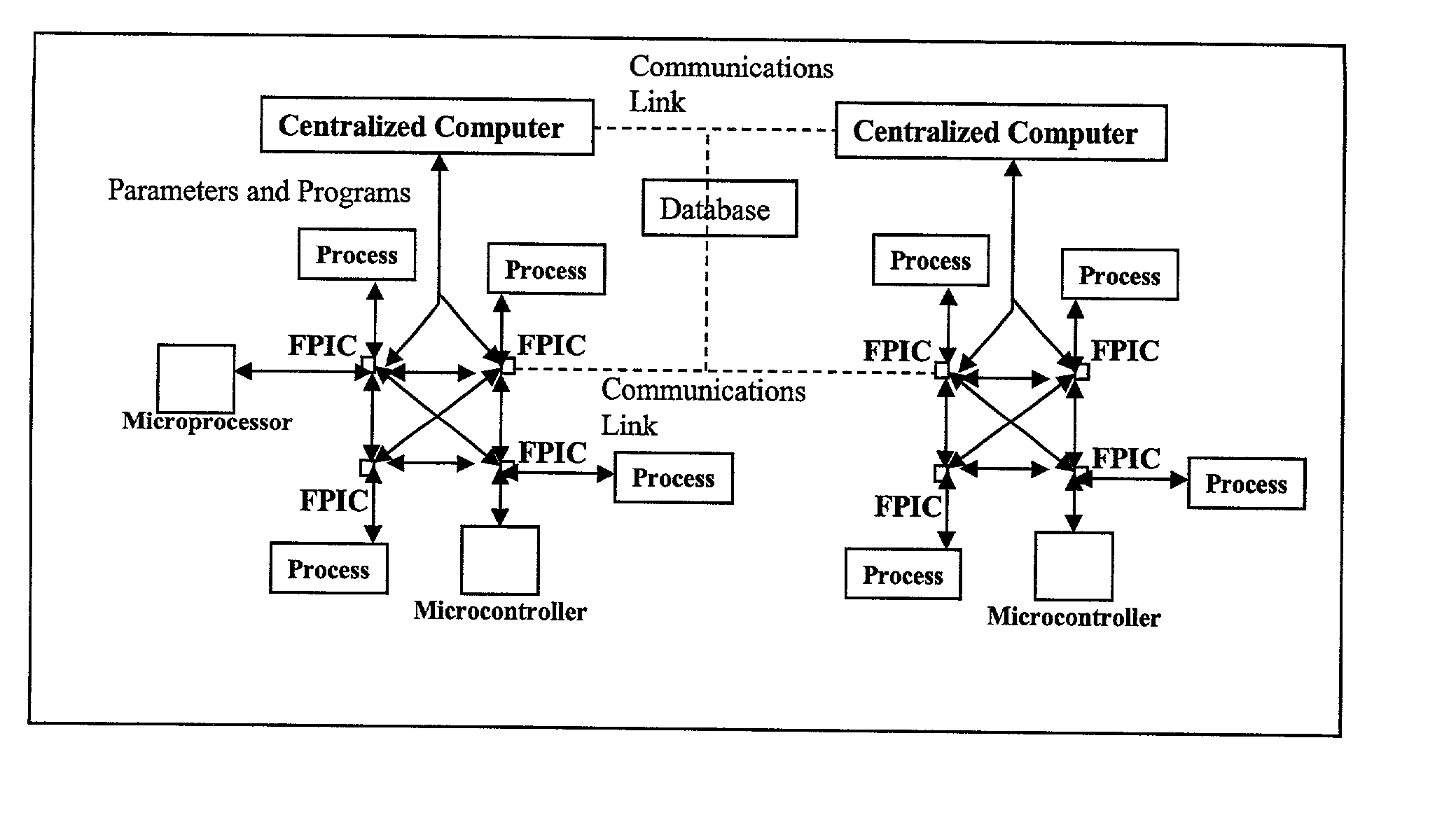

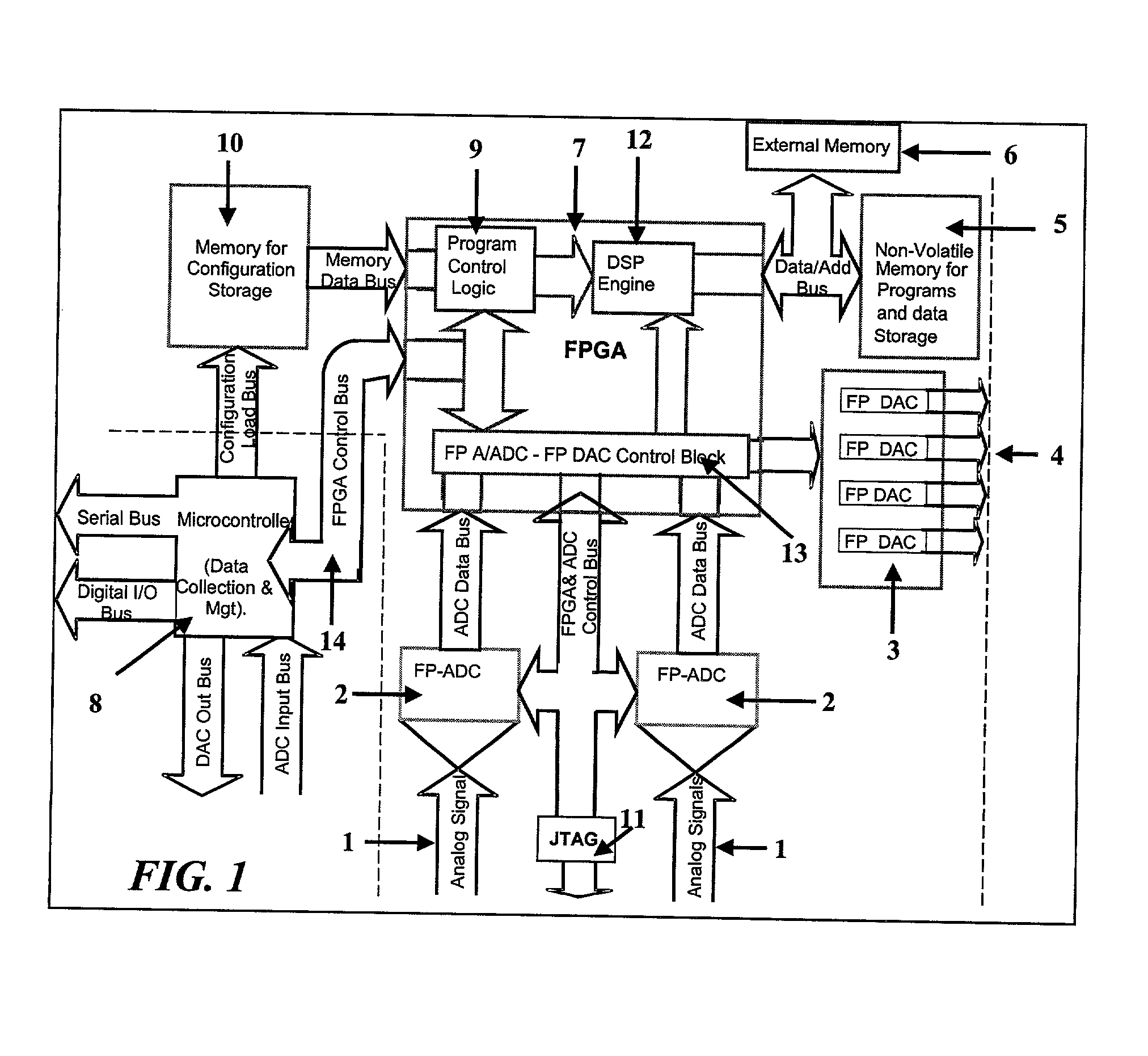

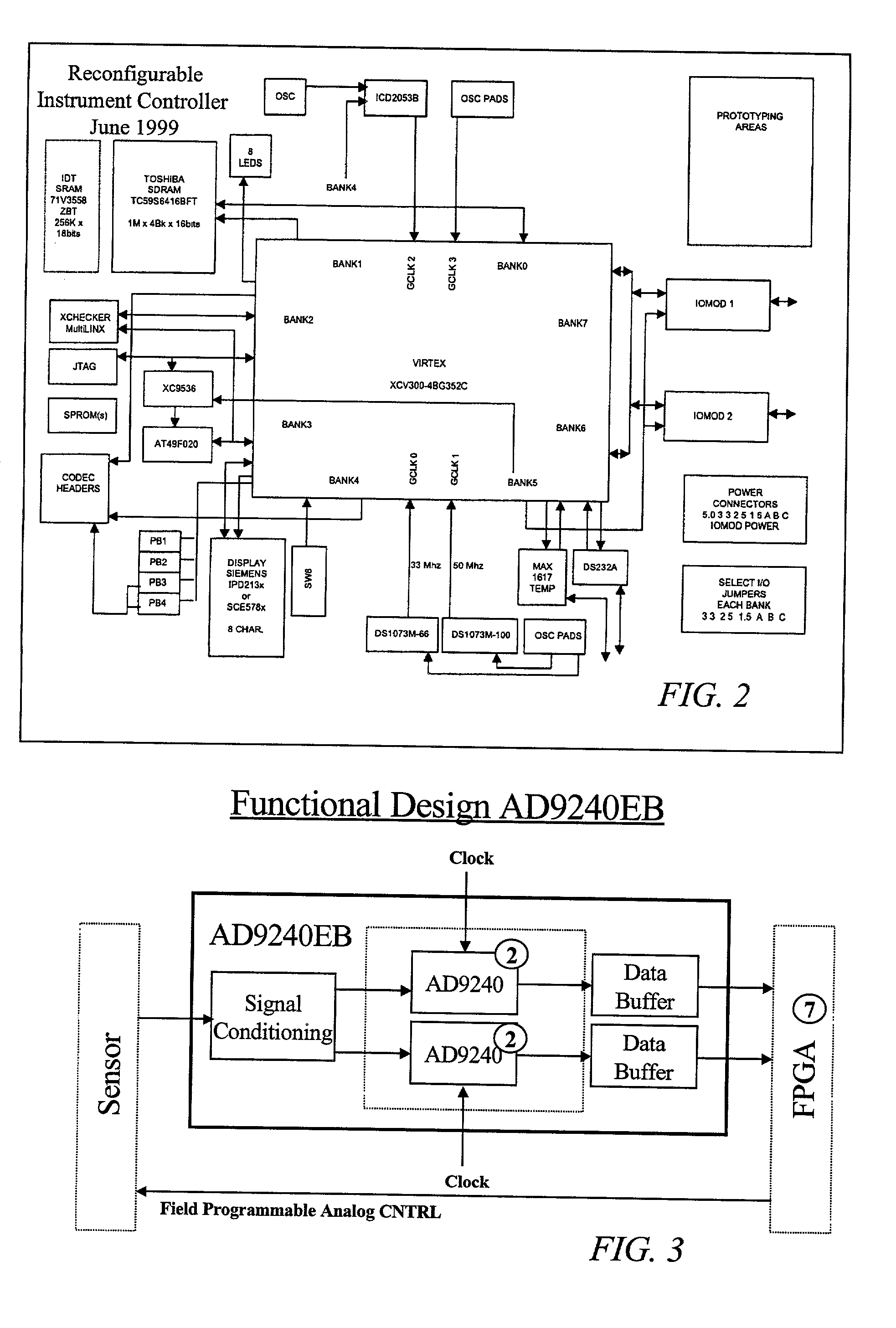

The Field Programmable Instrument Controller (FPIC) is a stand-alone low to high performance, clocked or unclocked multi-processor that operates as a microcontroller with versatile interface and operating options. The FPIC can also be used as a concurrent processor for a microcontroller or other processor. A tightly coupled Multiple Chip Module design incorporates non-volatile memories, a large field programmable gate array (FPGA), field programmable high precision analog to digital converters, field programmable digital to analog signal generators, and multiple ports of external mass data storage and control processors. The FPIC has an inherently open architecture with in-situ reprogrammability and state preservation capability for discontinuous operations. It is designed to operate in multiple roles, including but not limited to high speed parallel digital signal processing; co-processor for precision control feedback during analog or hybrid computing; high speed monitoring for condition based maintenance; and distributed real time process control. The FPIC is characterized by low power with small size and weight.

Owner:BLEMEL KENNETH G

Programming multiple chips from a command buffer

Active | US20060114260A1 | Single instruction multiple data multiprocessors | Processor architectures/configuration | Computational science | Graphics

Owner:NVIDIA CORP

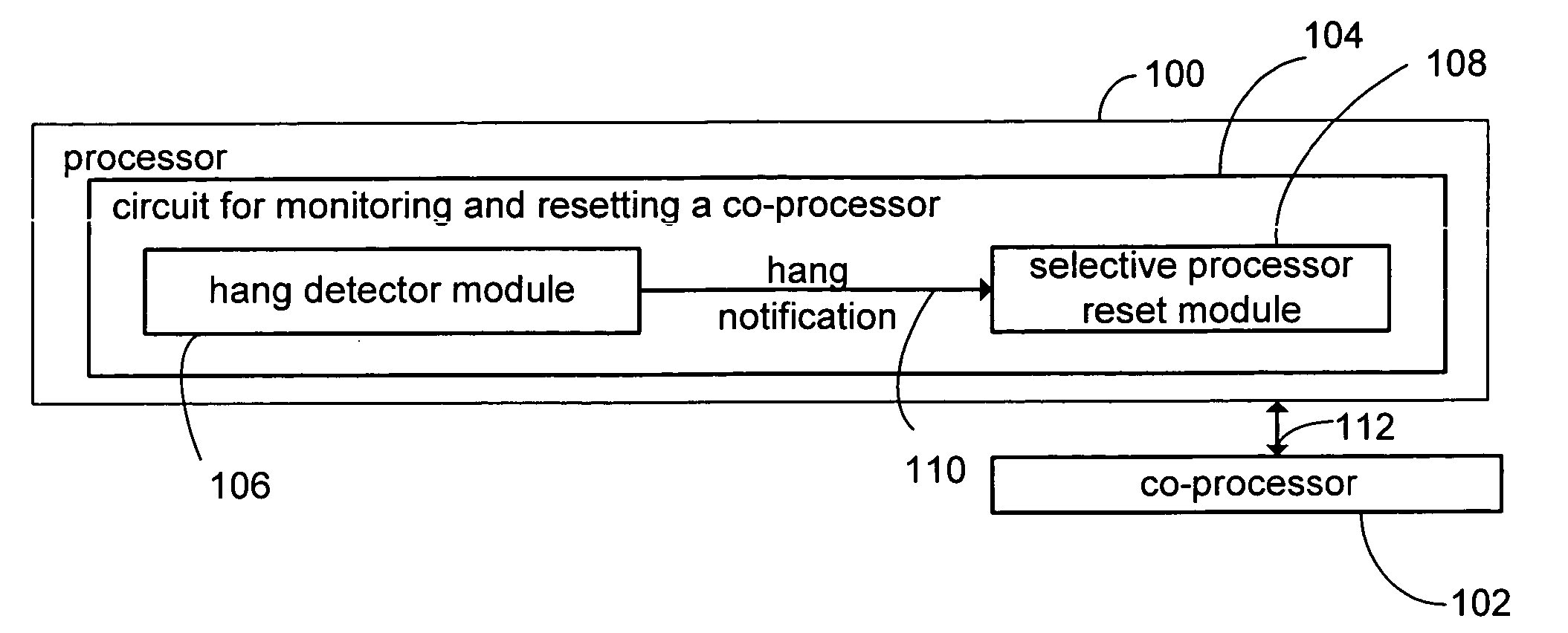

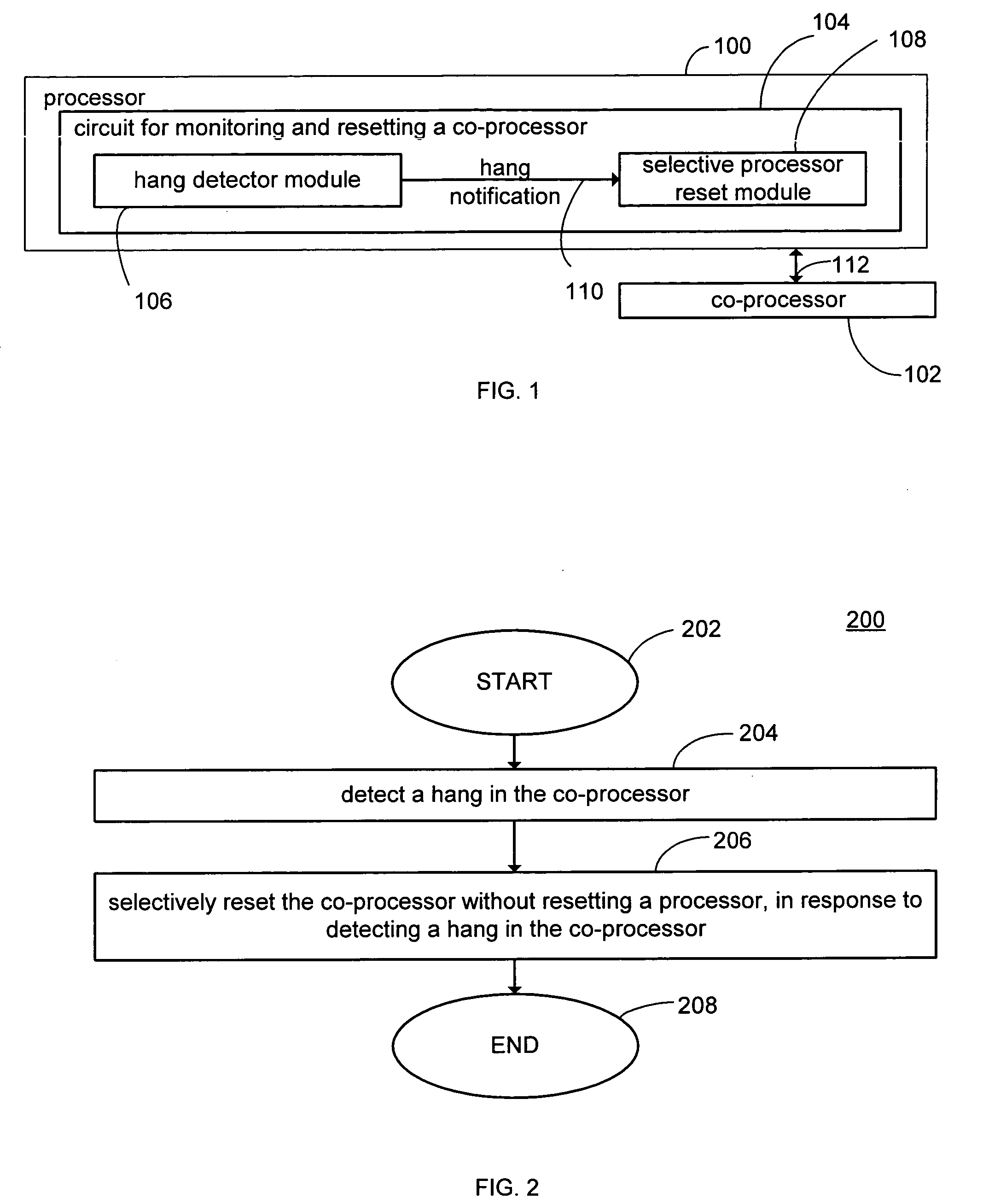

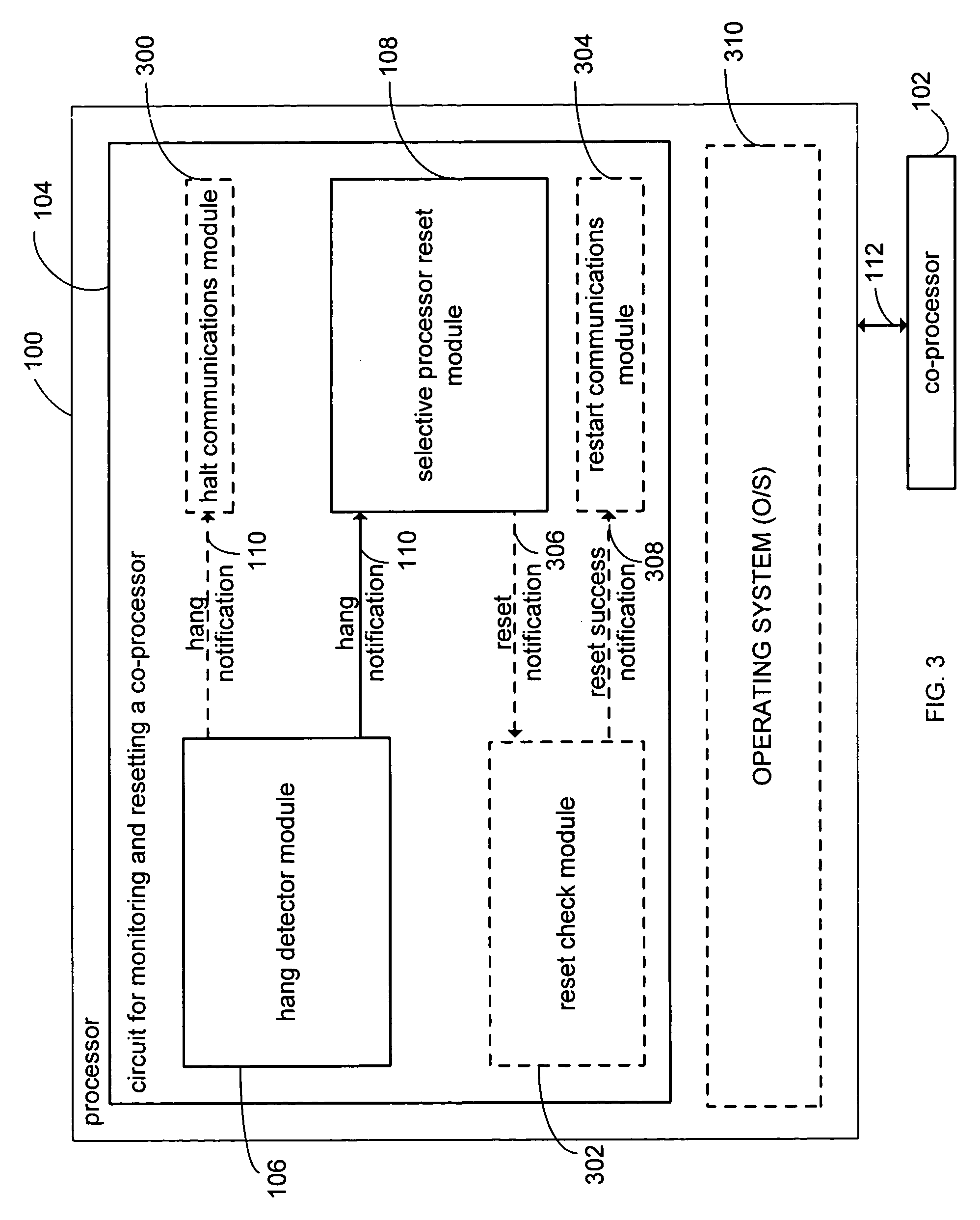

Method and apparatus for monitoring and resetting a co-processor

Active | US20050081115A1 | Multiple digital computer combinations | Non-redundant fault processing | Coprocessor | Computer science

A circuit monitors and resets a co-processor. The circuit includes a hang detector module for detecting a hang in the co-processor. The circuit also includes a selective processor reset module for resetting the co-processor without resetting a processor in response to detecting a hang in the co-processor.

Owner:ATI TECH INC
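
A software analogue of the hang detector and selective reset described above might look like the following. The patent describes a circuit; this sketch only illustrates the control logic, and everything in it is an assumption: the class names `MonitoredCoprocessor` and `HangMonitor`, the progress counter, and the rule that a hang means "busy with no progress for `limit` consecutive polls".

```python
class MonitoredCoprocessor:
    def __init__(self):
        self.progress = 0       # e.g. a count of retired commands
        self.resets = 0

    def reset(self):
        self.progress = 0
        self.resets += 1


class HangMonitor:
    """Flags a hang when a busy coprocessor shows no progress for `limit` polls."""

    def __init__(self, coprocessor, limit=3):
        self.cp = coprocessor
        self.limit = limit
        self.last_progress = coprocessor.progress
        self.stalled_polls = 0

    def poll(self, busy):
        """Call periodically; returns True if the coprocessor was reset."""
        if not busy or self.cp.progress != self.last_progress:
            self.last_progress = self.cp.progress
            self.stalled_polls = 0
            return False
        self.stalled_polls += 1
        if self.stalled_polls < self.limit:
            return False
        self.cp.reset()          # only the coprocessor is reset, not the host
        self.last_progress = self.cp.progress
        self.stalled_polls = 0
        return True
```

The host processor's own state never appears in `poll`, which mirrors the point of the abstract: recovery is confined to the co-processor.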

Temperature field controlled scheduling for processing systems

Inactive | US7174194B2 | Avoid problems | Energy efficient ICT | Error detection/correction | Coprocessor | Computer module

A multiprocessor system (10) includes a plurality of processing modules, such as MPUs (12), DSPs (14), and coprocessors/DMA channels (16). Power management software (38) in conjunction with profiles (36) for the various processing modules and the tasks to be executed are used to build scenarios which meet predetermined power objectives, such as providing maximum operation within package thermal constraints or using minimum energy. Actual activities associated with the tasks are monitored during operation to ensure compatibility with the objectives. The allocation of tasks may be changed dynamically to accommodate changes in environmental conditions and changes in the task list. Temperatures may be computed at various points in the multiprocessor system by monitoring activity information associated with various subsystems. The activity measurements may be used to compute a current power dissipation distribution over the die. If necessary, the tasks in a scenario may be adjusted to reduce power dissipation. Further, activity counters may be selectively enabled for specific tasks in order to obtain more accurate profile information.

Owner:TEXAS INSTR INC

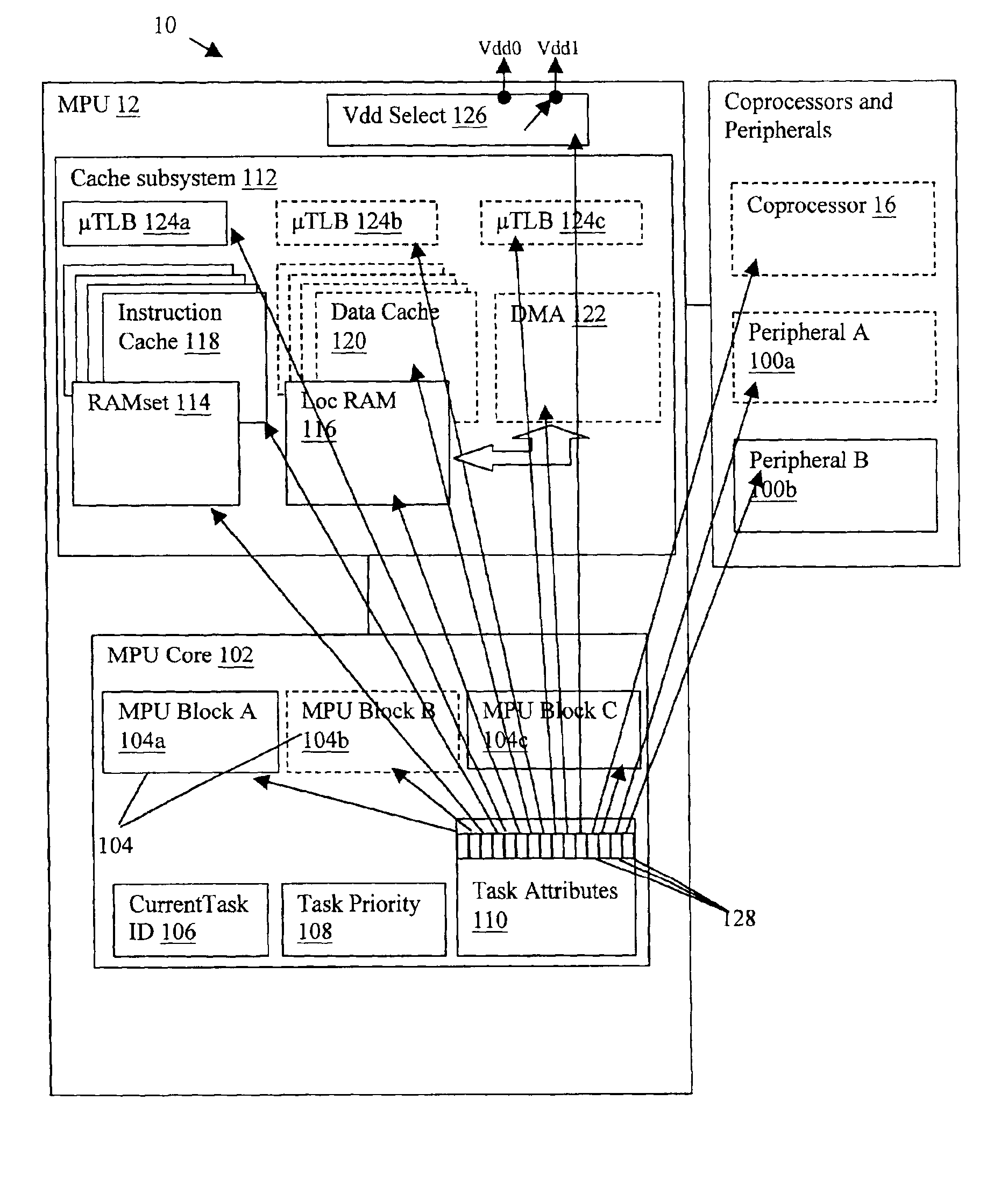

Dynamic hardware control for energy management systems using task attributes

Inactive | US6901521B2 | Save energy | Memory architecture accessing/allocation | Energy efficient ICT | Coprocessor | Multi processor

A multiprocessor system (10) includes a plurality of processing modules, such as MPUs (12), DSPs (14), and coprocessors/DMA channels (16). Power management software (38) in conjunction with profiles (36) for the various processing modules and the tasks to be executed are used to build scenarios which meet predetermined power objectives, such as providing maximum operation within package thermal constraints or using minimum energy. Actual activities associated with the tasks are monitored during operation to ensure compatibility with the objectives. The allocation of tasks may be changed dynamically to accommodate changes in environmental conditions and changes in the task list. As each task in a scenario is executed, a control word associated with the task can be used to enable/disable circuitry, or to set circuits to an optimum configuration.

Owner:TEXAS INSTR INC

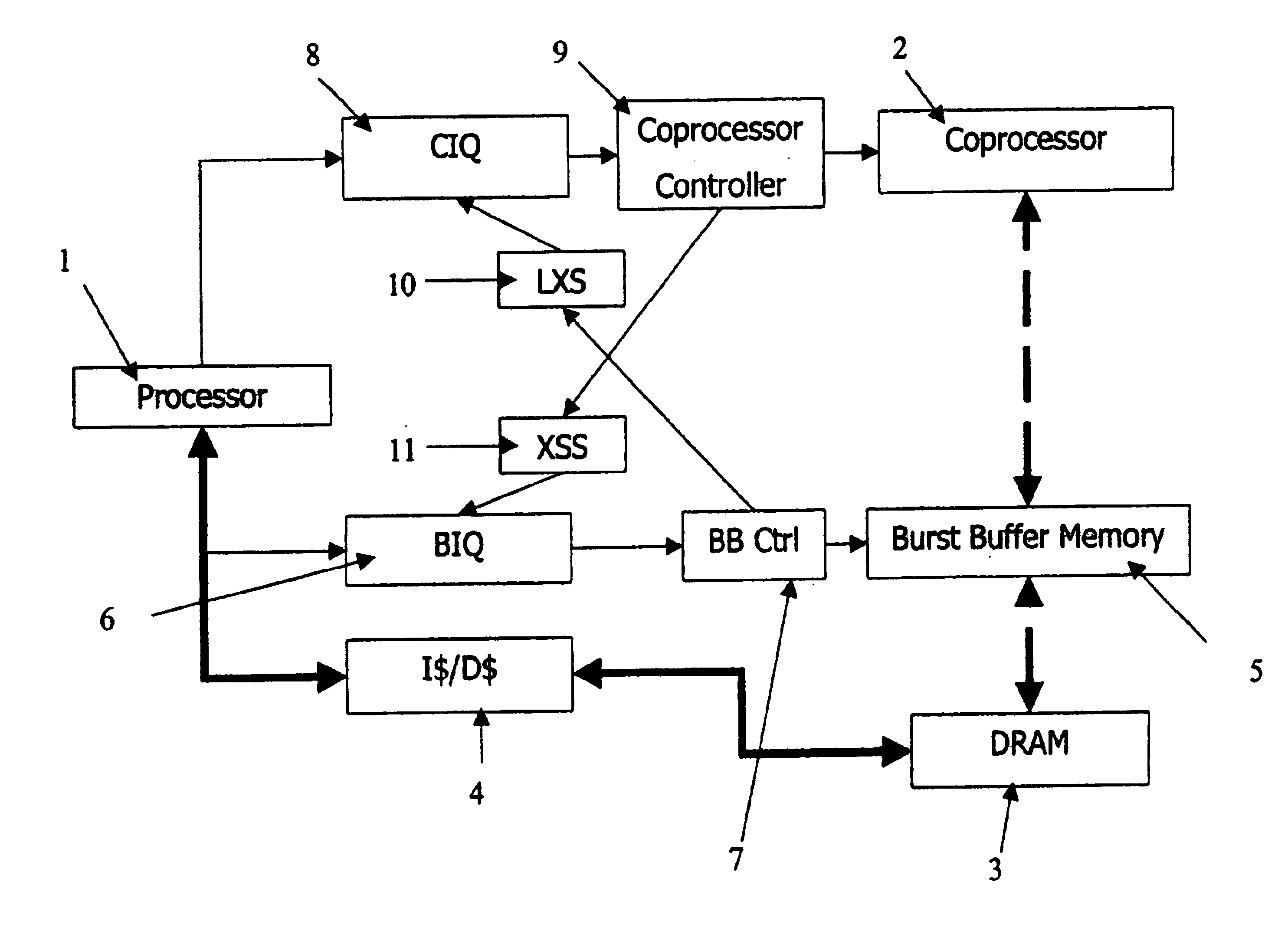

Memory and instructions in computer architecture containing processor and coprocessor

Inactive | US6782445B1 | Effective movement | Minimal involvement | General purpose stored program computer | Multiprogramming arrangements | Coprocessor | Computerized system

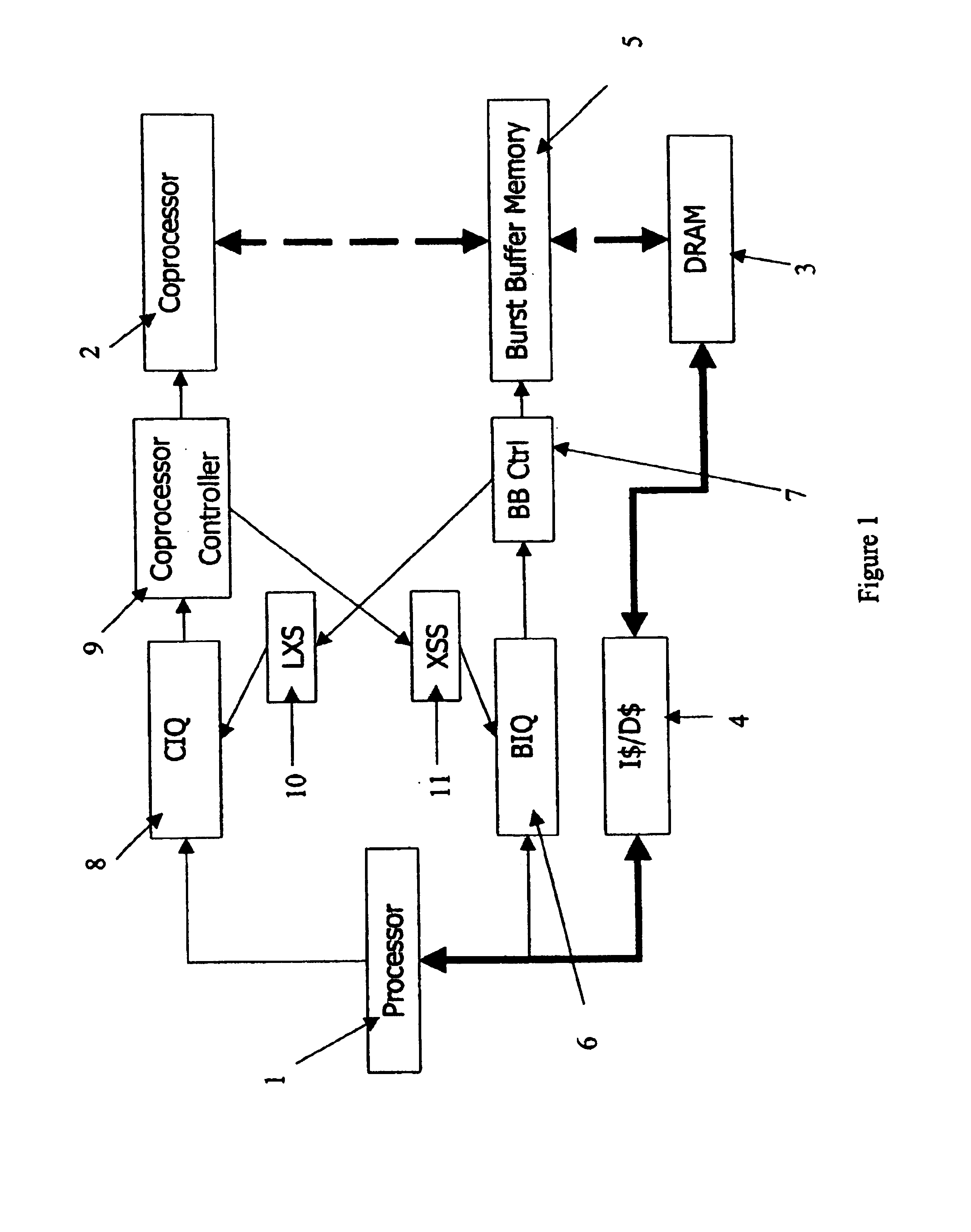

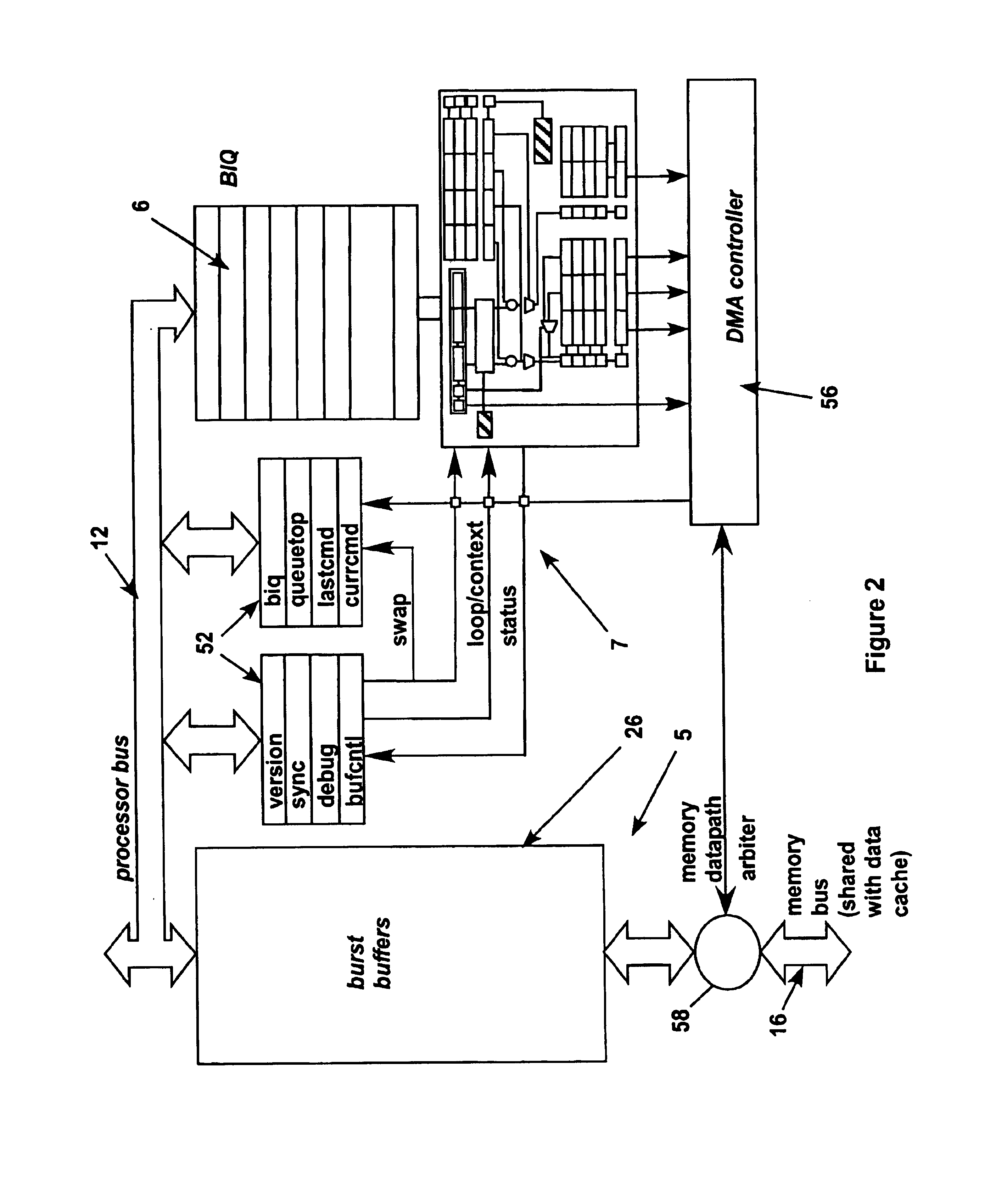

A computer system includes a first processor, a second processor for use as a coprocessor to the first processor, a memory, a data buffer for buffering data to be written to or read from the memory in data bursts in accordance with burst instructions, a burst controller for executing the burst instructions, a burst instructions element for providing burst instructions in a sequence for execution by the burst controller, and a synchronization mechanism for synchronizing execution of coprocessor instructions and burst instructions with availability of data on which said coprocessor instructions and burst instructions are to execute. Burst instructions are provided by the first processor to the burst instructions element and data is read from the memory as input data to the second processor and written to the memory as output data from the second processor through the data buffer in accordance with burst instructions executed by the burst controller.

Owner:HEWLETT PACKARD DEV CO LP

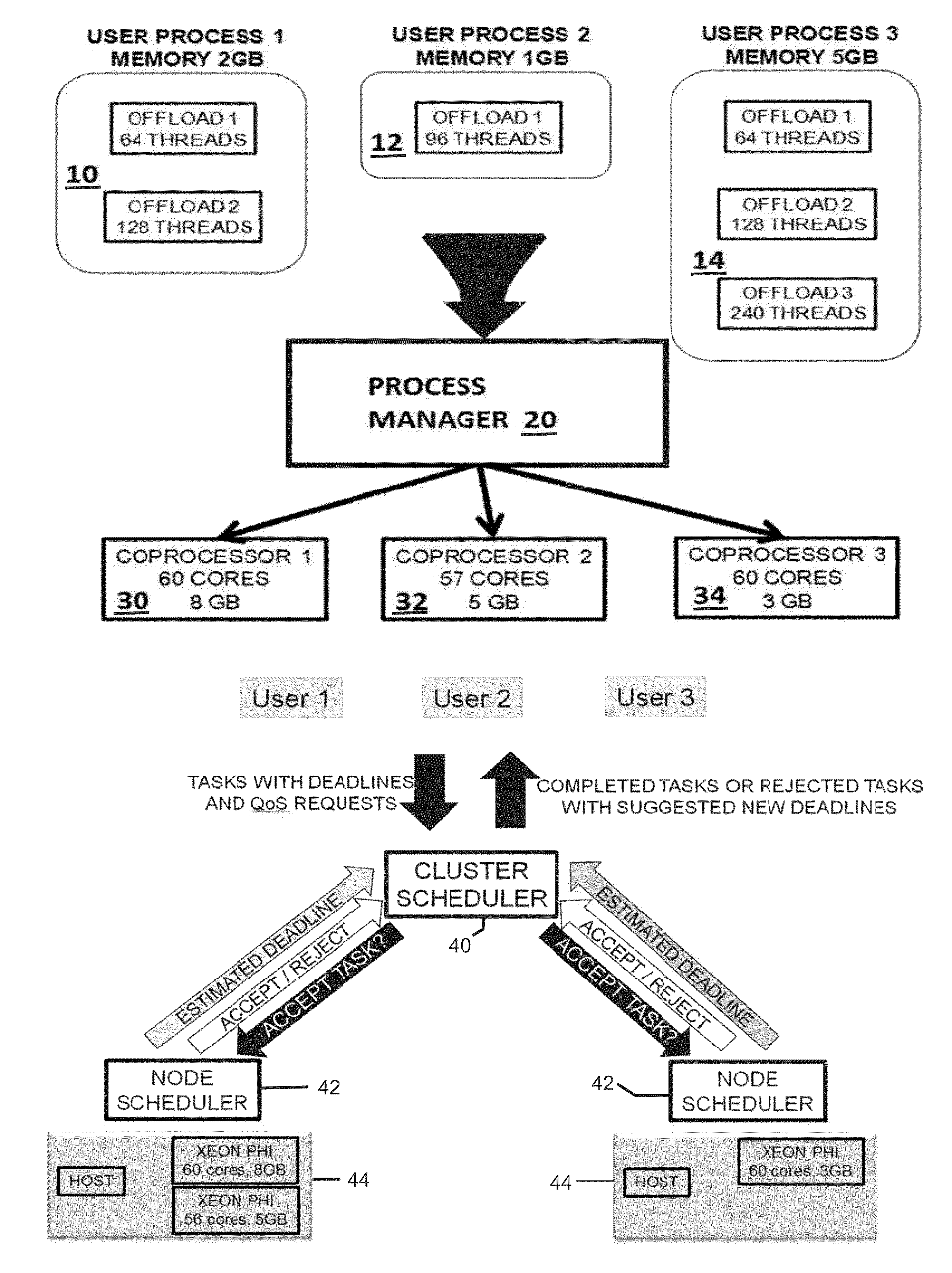

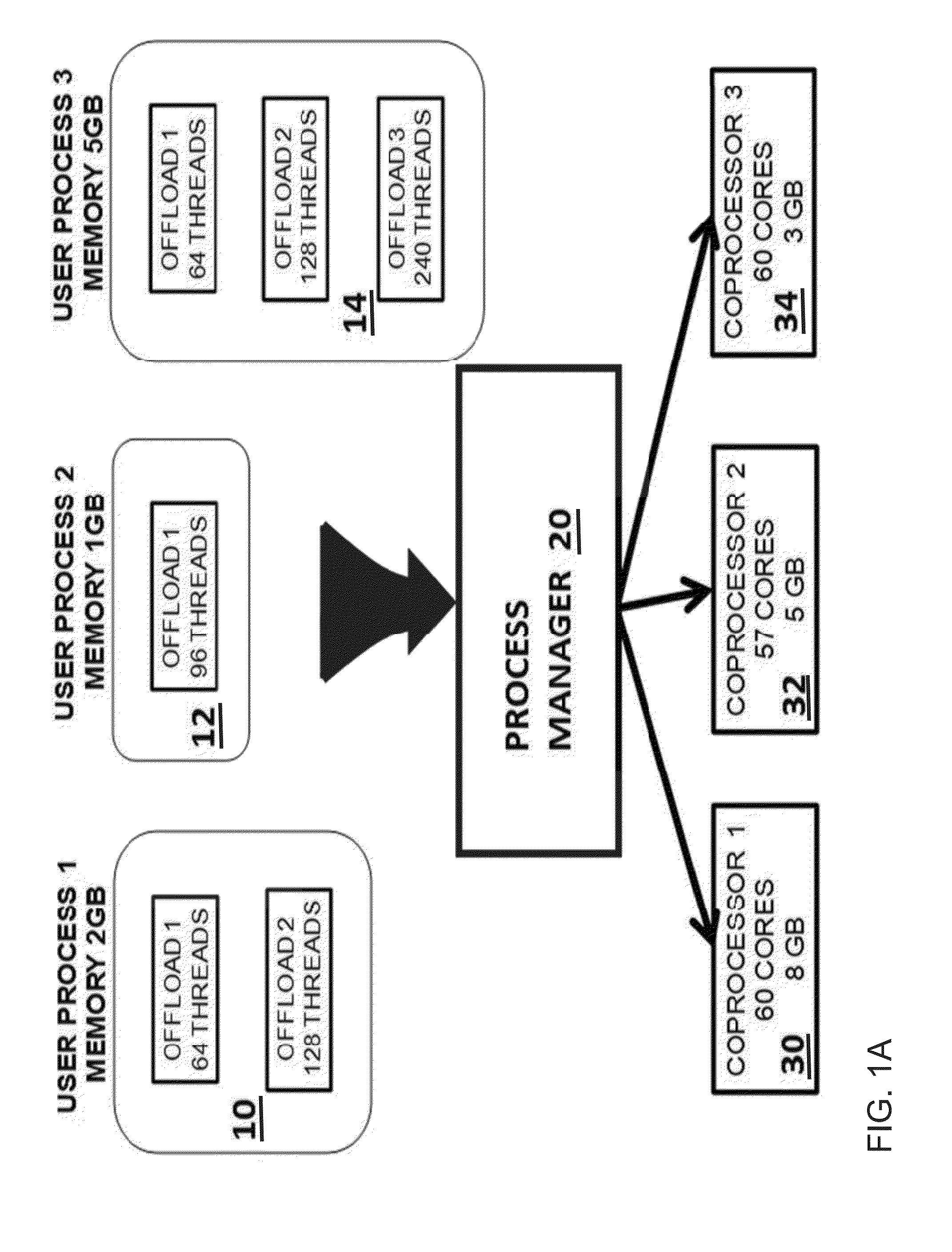

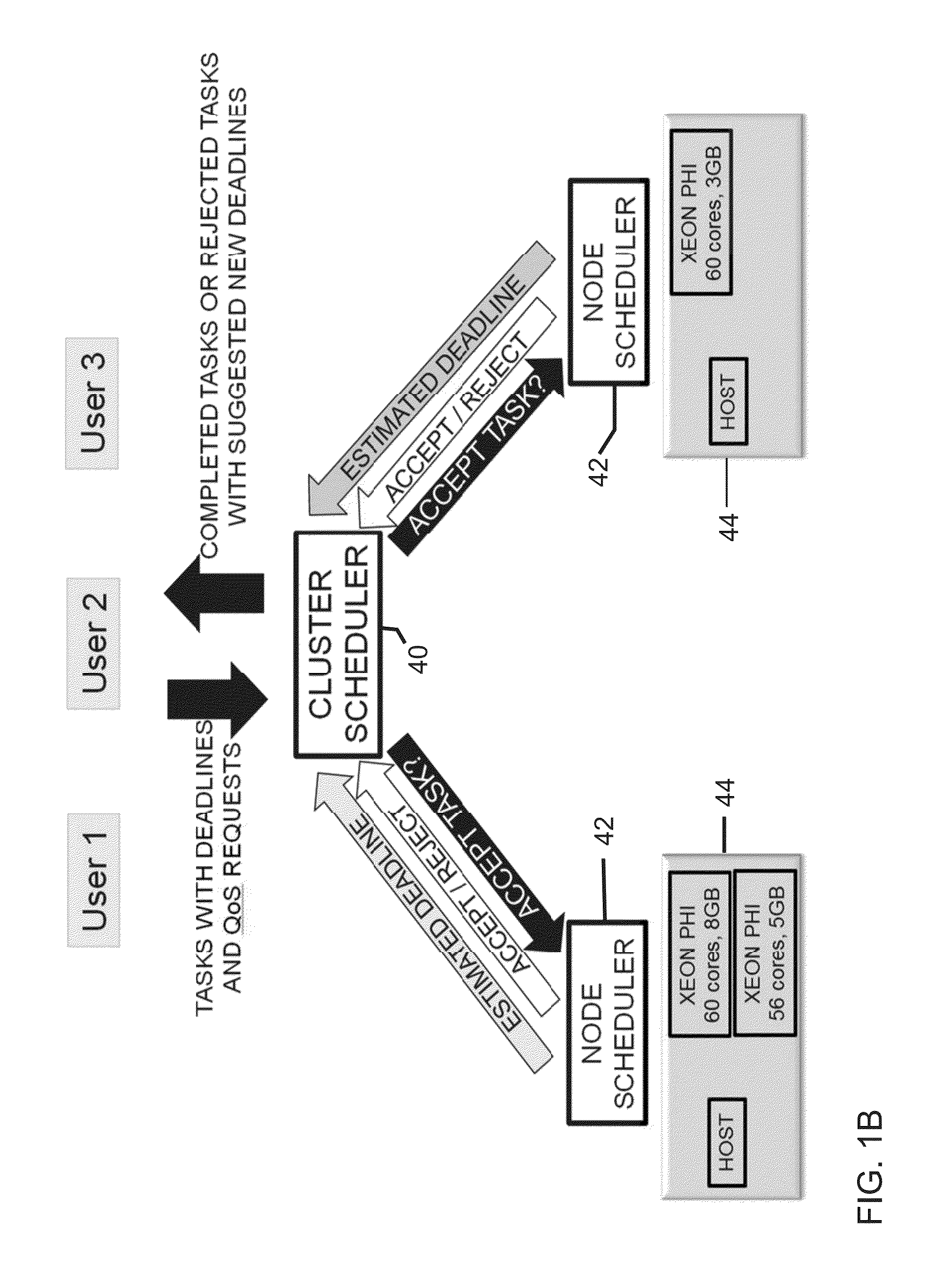

Simultaneous scheduling of processes and offloading computation on many-core coprocessors

Methods and systems for scheduling jobs to manycore nodes in a cluster include selecting a job to run according to the job's wait time and the job's expected execution time; sending job requirements to all nodes in a cluster, where each node includes a manycore processor; determining at each node whether said node has sufficient resources to ever satisfy the job requirements and, if no node has sufficient resources, deleting the job; creating a list of nodes that have sufficient free resources at a present time to satisfy the job requirements; and assigning the job to a node, based on a difference between an expected execution time and associated confidence value for each node and a hypothetical fastest execution time and associated hypothetical maximum confidence value.

Owner:NEC CORP

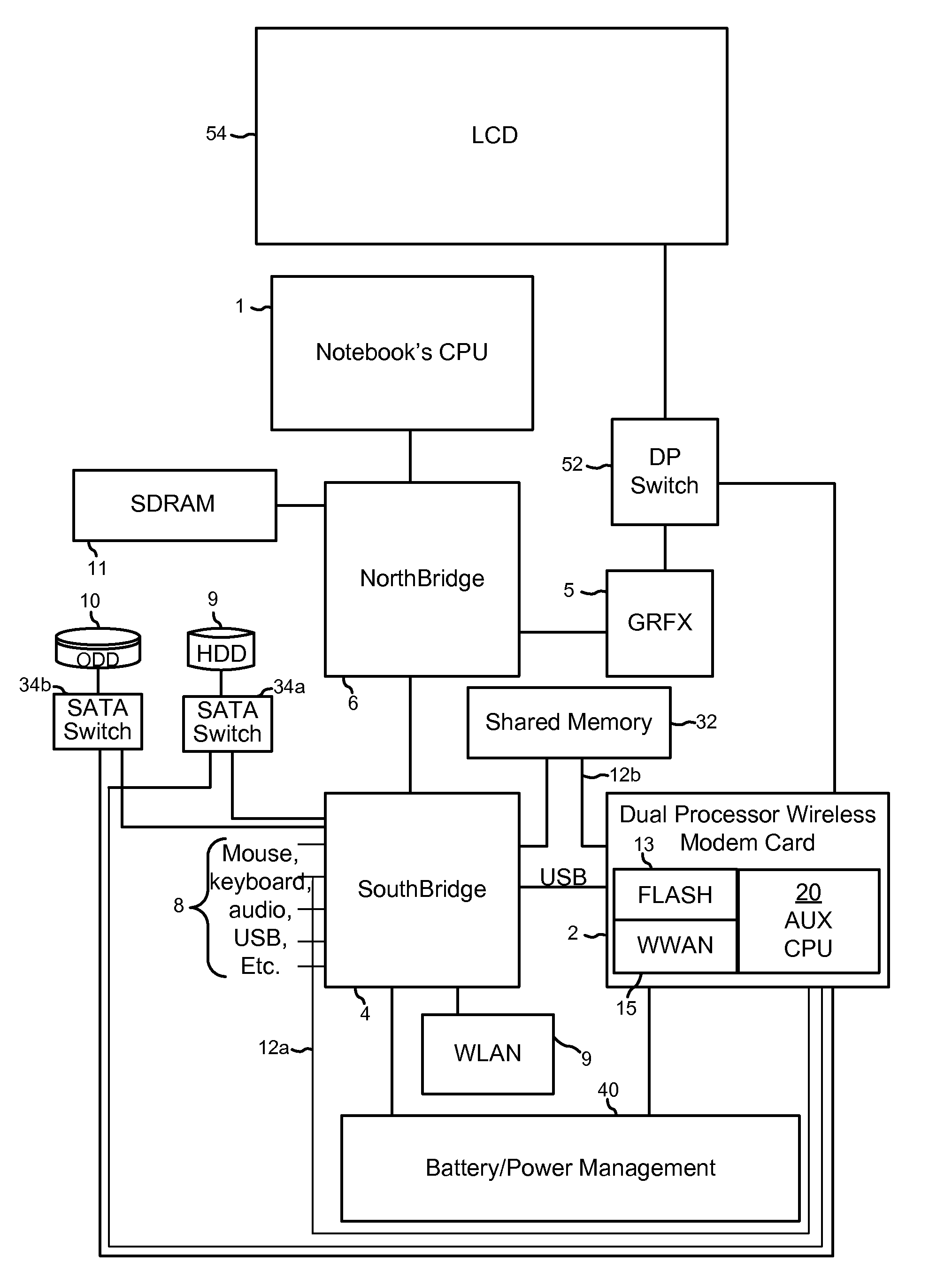

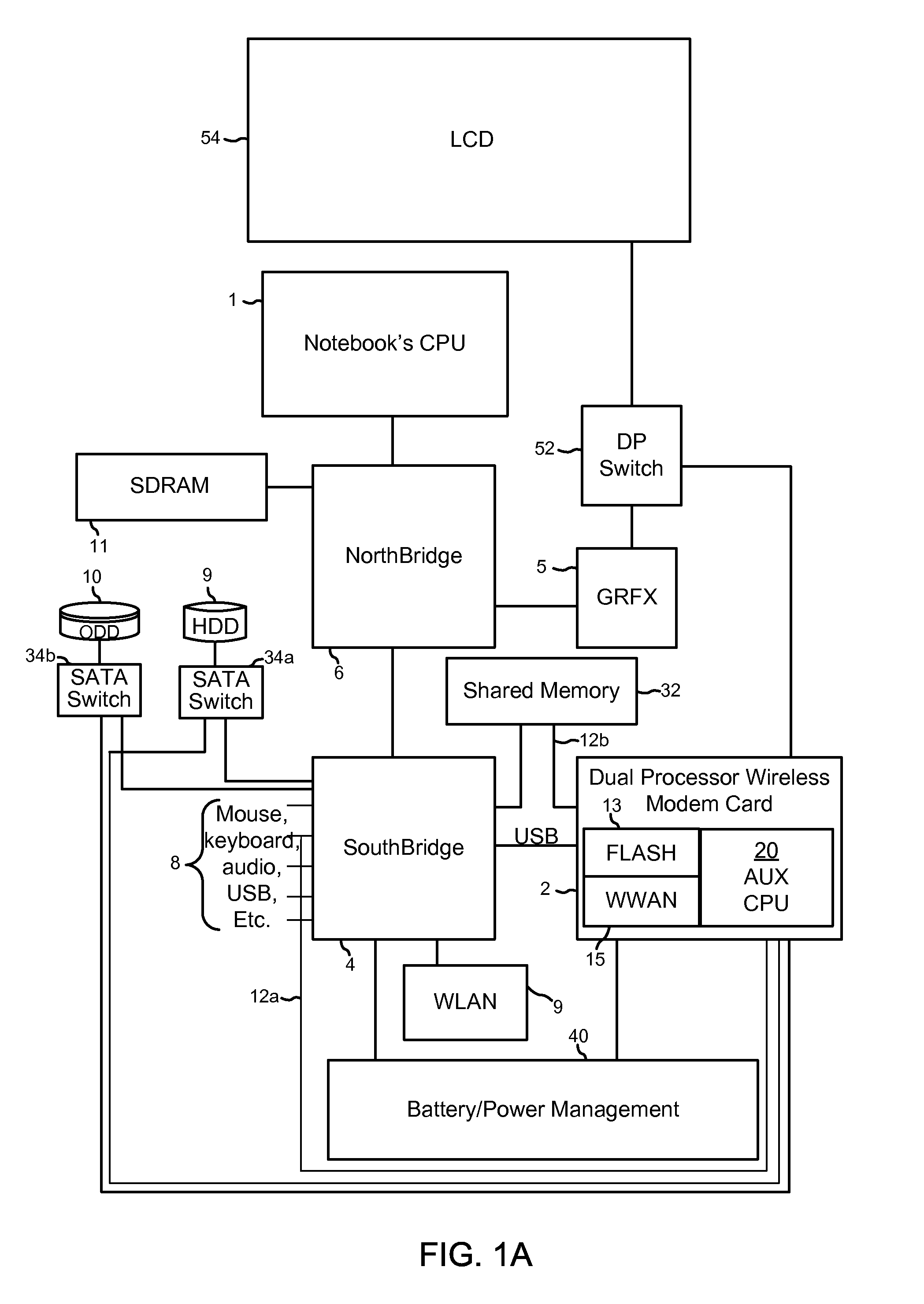

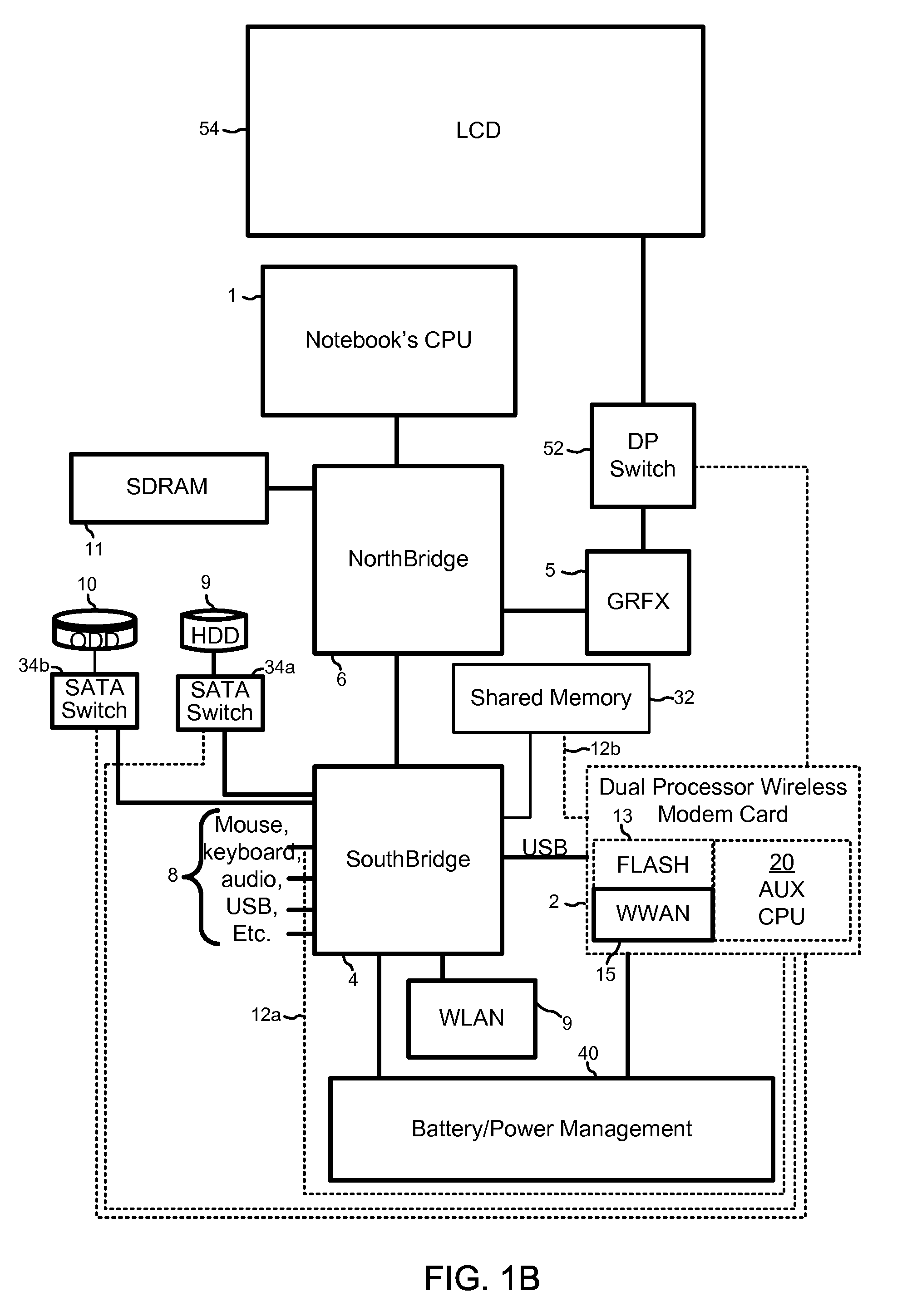

Methods and systems for operating a computer via a low power adjunct processor

Active | US20110055434A1 | Task is limited | Energy efficient ICT | Volume/mass flow measurement | Electronic document | Coprocessor

A computing device includes a low power auxiliary processor, such as a processor on a wireless card or sub-system, which is able to take over processing in place of the computing device's central processing unit (CPU). Operating the computing device on the auxiliary processor draws less power from the computing device battery, enabling extended operation in an auxiliary processor mode. When in this mode, the auxiliary processor controls peripherals and provides the system functionality while the CPU is deactivated, such as in “off,” “standby” or “sleep” modes. In the auxiliary processor mode, the computing device can accomplish useful tasks, such as sending/receiving electronic mail, displaying electronic documents and accessing a network while drawing minimal power from the battery. Transitions between the normal operating mode and auxiliary processor mode may be transparent to users. Such a computer may display instant on, always on and always connected operating features.

Owner:QUALCOMM INC

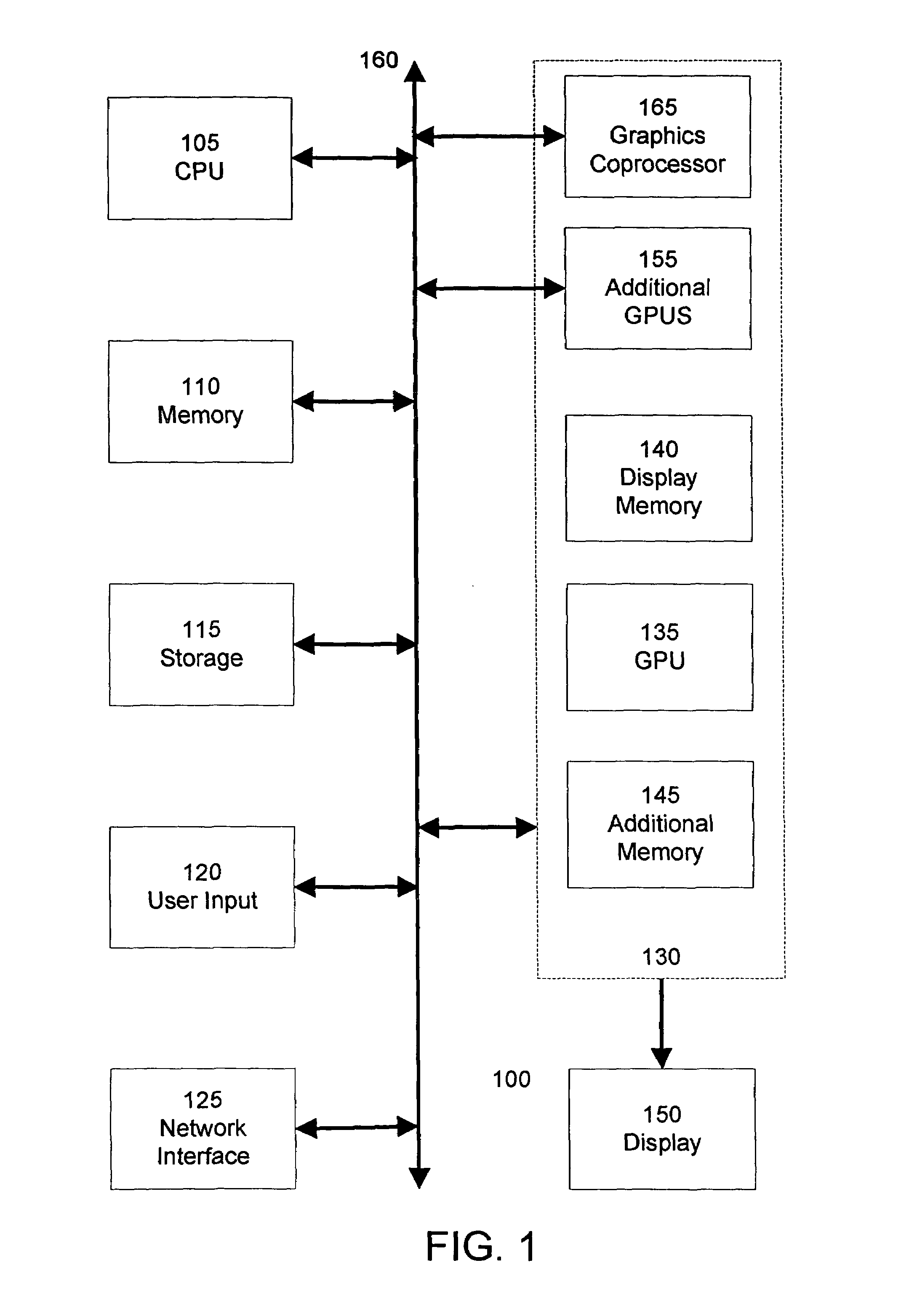

Methods and system for managing computational resources of a coprocessor in a computing system

Active | US7234144B2 | Conducive to efficient execution | Efficient sharing | Program initiation/switching | Resource allocation | Graphics | Operational system

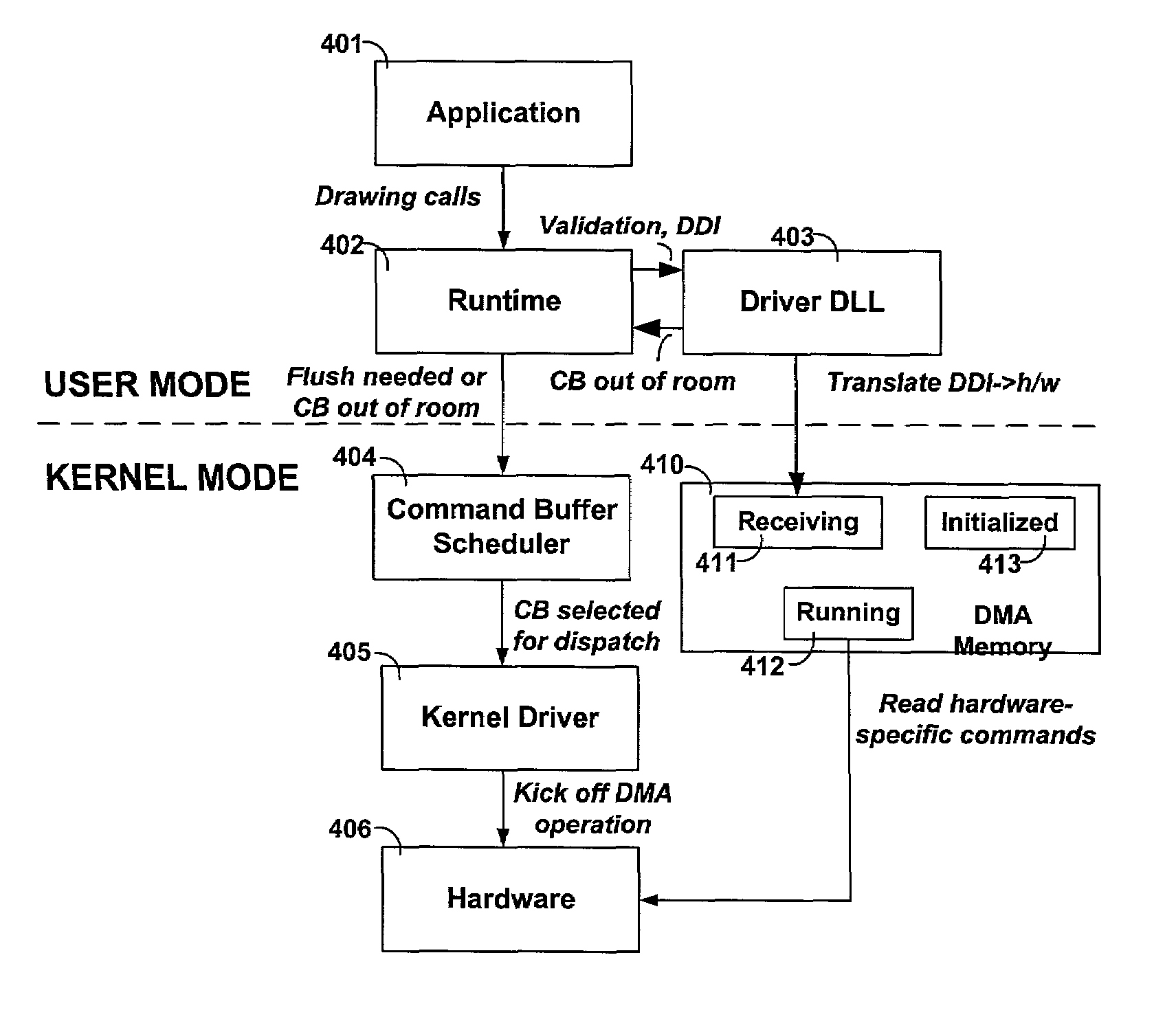

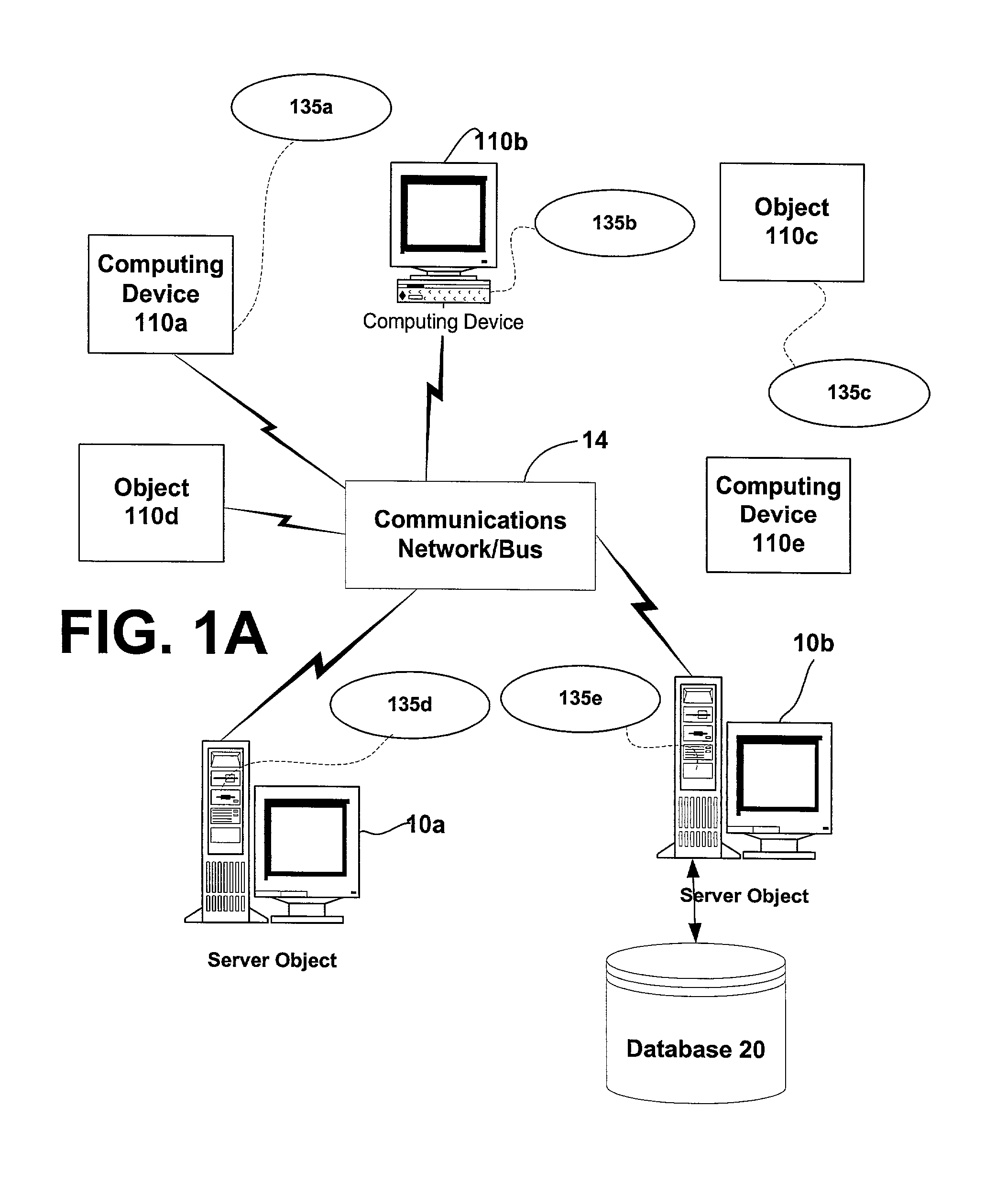

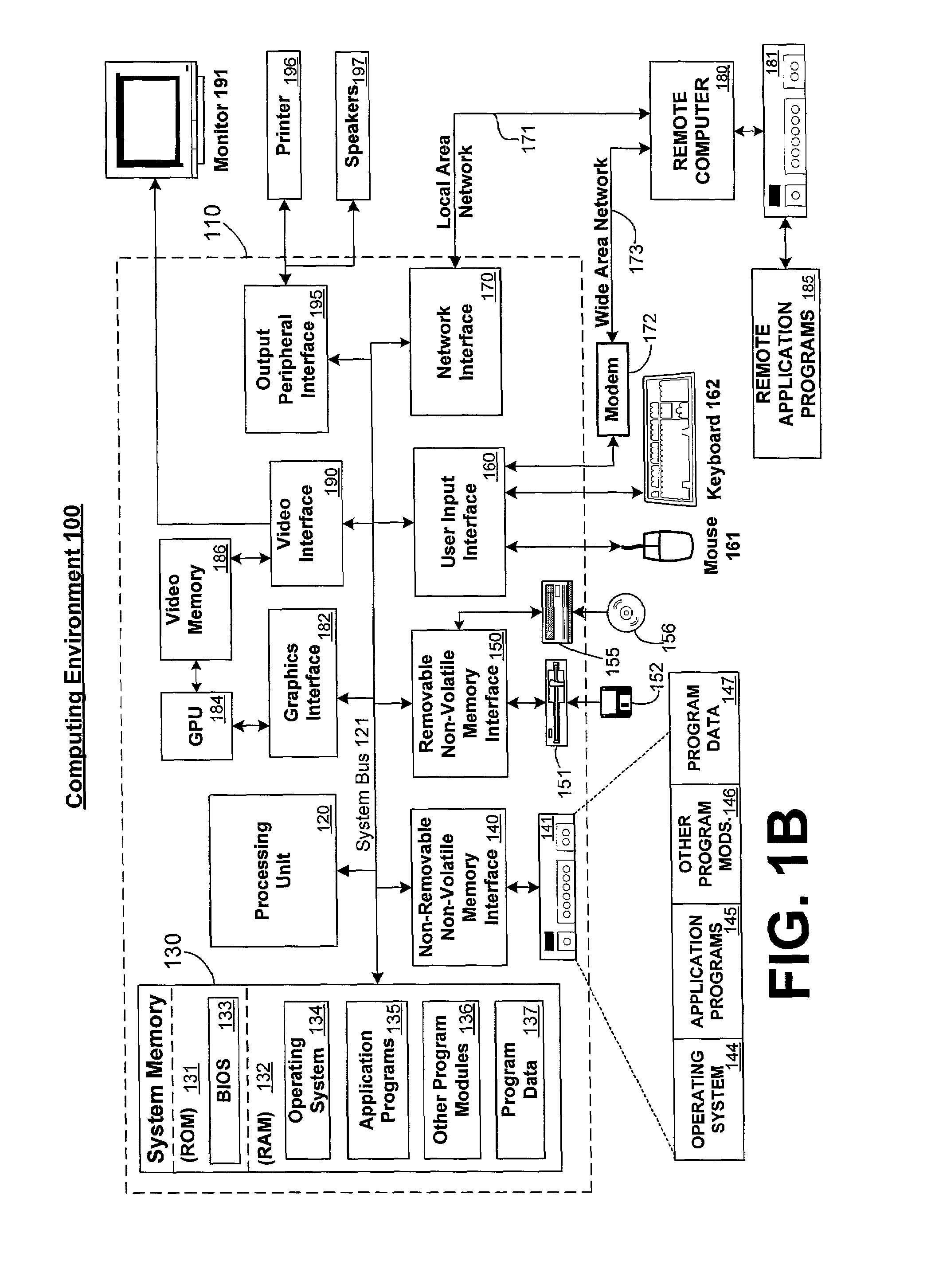

Systems and methods are provided for managing the computational resources of coprocessor(s), such as graphics processor(s), in a computing system. The systems and methods illustrate management of computational resources of coprocessors to facilitate efficient execution of multiple applications in a multitasking environment. By enabling multiple threads of execution to compose command buffers in parallel, submitting those command buffers for scheduling and dispatch by the operating system, and fielding interrupts that notify of completion of command buffers, the system enables multiple applications to efficiently share the computational resources available in the system.

Owner:MICROSOFT TECH LICENSING LLC +1

Systems and methods for downloading algorithmic elements to a coprocessor and corresponding techniques

Active | US20050122334A1 | Improve abilities | Sophisticated effect | Digital computer details | Next instruction address formation | Computational science | Coprocessor

Systems and methods for downloading algorithmic elements to a coprocessor and corresponding processing and communication techniques are provided. For an improved graphics pipeline, the invention provides a class of co-processing device, such as a graphics processor unit (GPU), providing improved capabilities for an abstract or virtual machine for performing graphics calculations and rendering. The invention allows for runtime-predicated flow control of programs downloaded to coprocessors, enables coprocessors to include indexable arrays of on-chip storage elements that are readable and writable during execution of programs, provides native support for textures and texture maps and corresponding operations in a vertex shader, provides frequency division of vertex streams input to a vertex shader with optional support for a stream modulo value, provides a register storage element on a pixel shader and associated interfaces for storage associated with representing the “face” of a pixel, provides vertex shaders and pixel shaders with more on-chip register storage and the ability to receive larger programs than any existing vertex or pixel shaders and provides 32 bit float number support in both vertex and pixel shaders.

Owner:MICROSOFT TECH LICENSING LLC

Method and system for high performance integration, processing and searching of structured and unstructured data using coprocessors

Active | US7660793B2 | Effectively bifurcate query processing | Timely response | Data processing applications | Digital data processing details | Full text search | Relational database

Disclosed herein is a method and system for integrating an enterprise's structured and unstructured data to provide users and enterprise applications with efficient and intelligent access to that data. Queries can be directed toward both an enterprise's structured and unstructured data using standardized database query formats such as SQL commands. A coprocessor can be used to hardware-accelerate data processing tasks (such as full-text searching) on unstructured data as necessary to handle a query. Furthermore, traditional relational database techniques can be used to access structured data stored by a relational database to determine which portions of the enterprise's unstructured data should be delivered to the coprocessor for hardware-accelerated data processing.

Owner:IP RESERVOIR

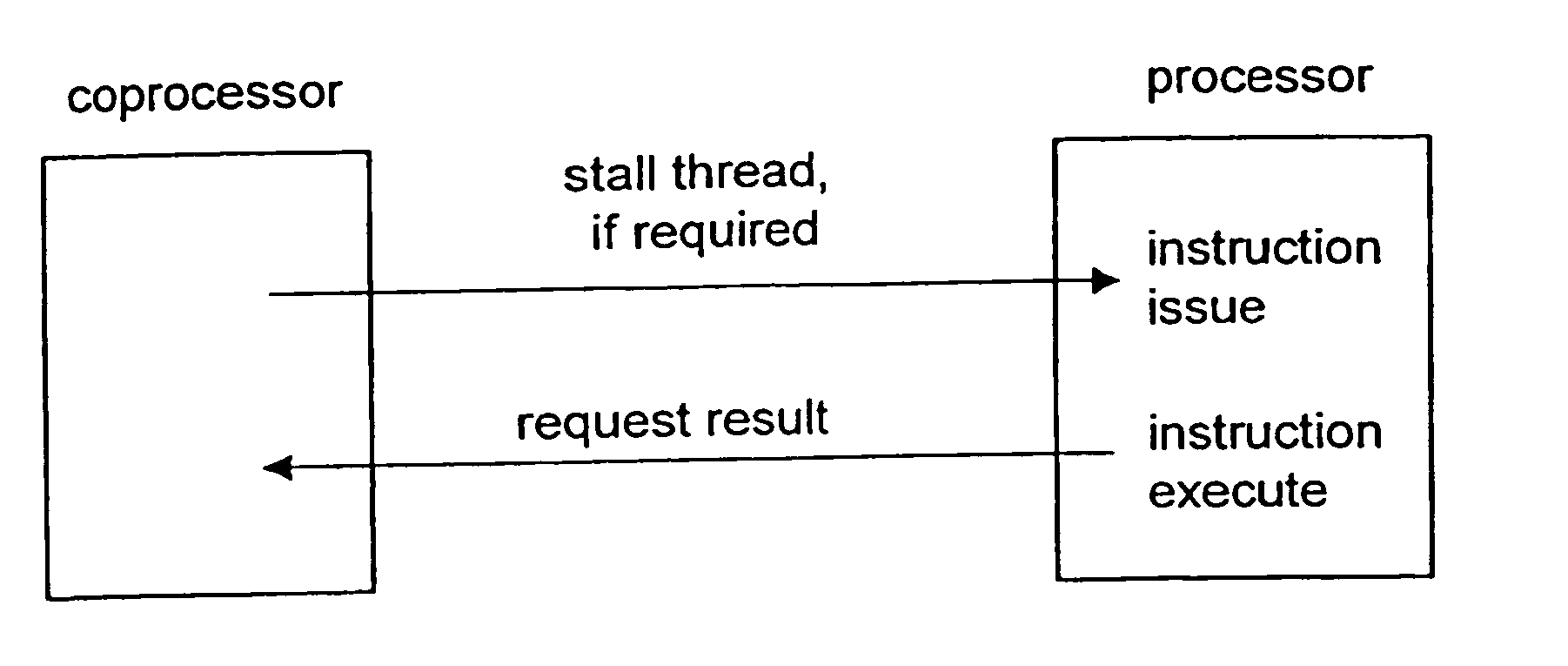

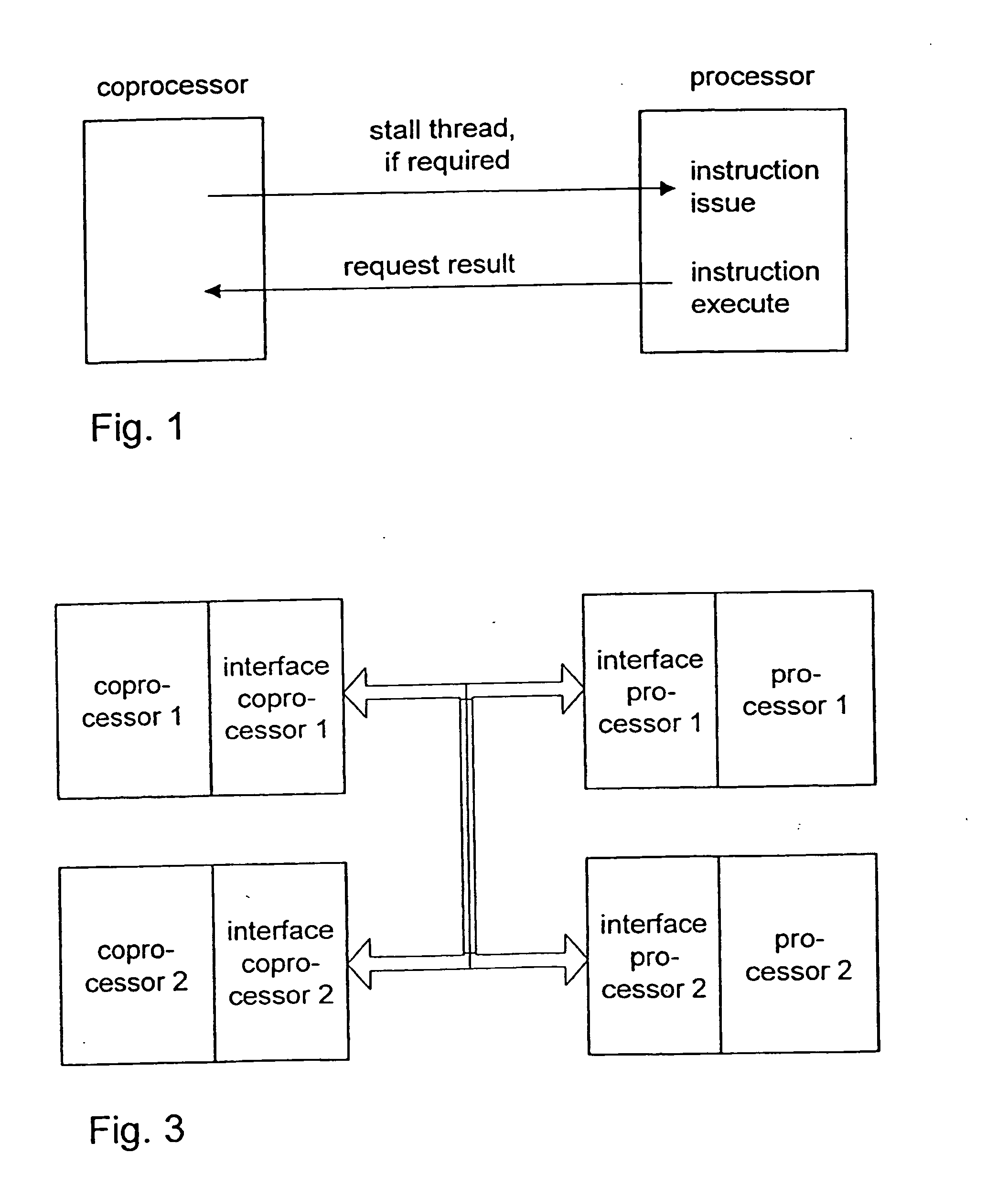

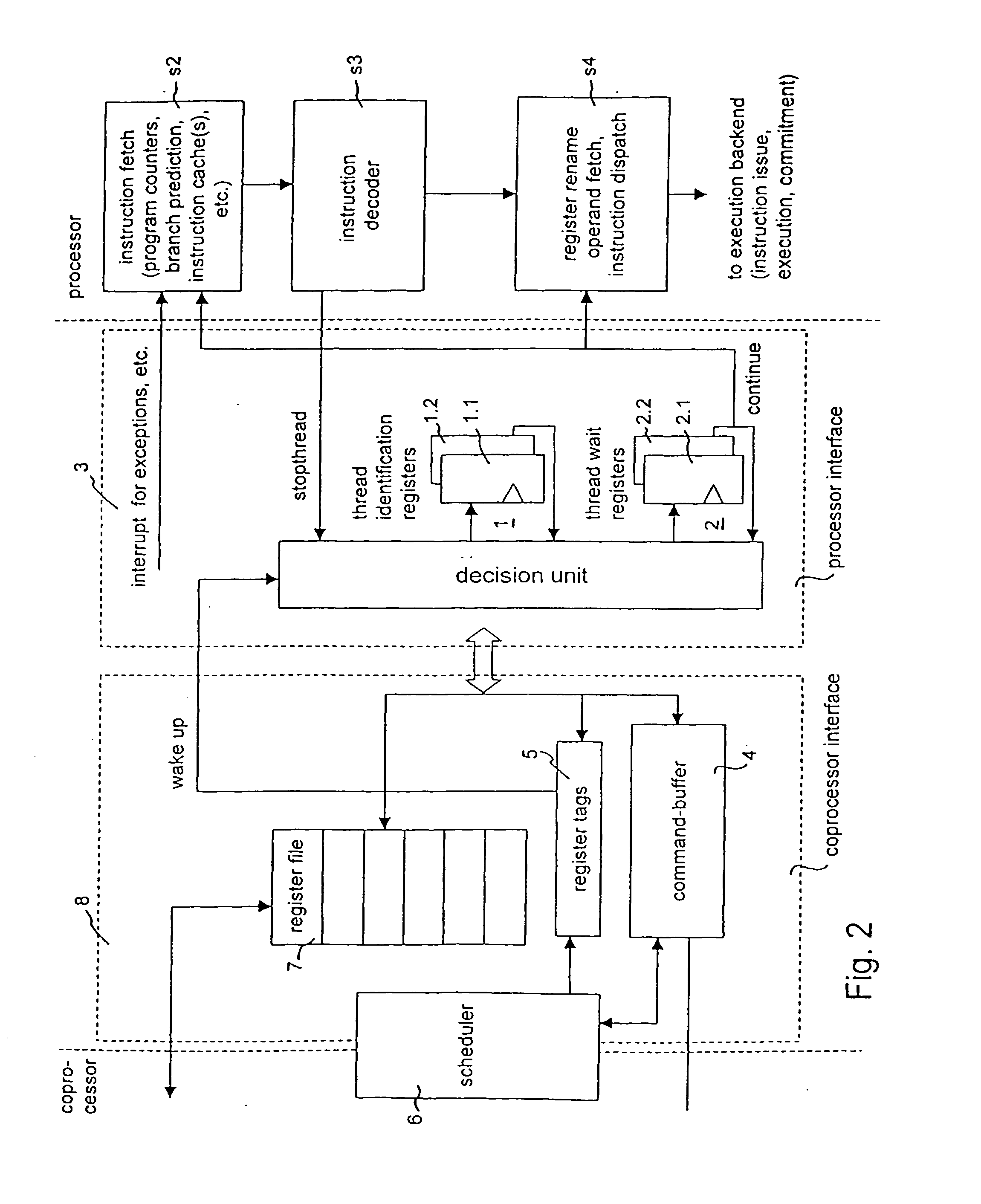

Method and device for synchronizing a processor and a coprocessor

Inactive | US20050055594A1 | Improve reliability | Improve efficiency | General purpose stored program computer | Multiple digital computer combinations | Real-time computing | Coprocessor

A system and method for synchronizing a processor and a coprocessor includes a processor and coprocessor working off a thread, wherein the thread includes a thread control instruction (stopthread) for controlling the timing of this thread. When the processor executes the thread control instruction this thread is stopped with the help of the thread control instruction until a wake up signal from the coprocessor allows the continuation of working off of this thread.

Owner:IBM CORP
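
The stop-and-wake pattern in the abstract above has a close software analogue. In this sketch, which is an illustration and not the patented mechanism, a Python `threading.Event` stands in for the coprocessor's wake-up signal and a blocking `wait()` stands in for the `stopthread` instruction; the function name `run_thread_with_stopthread` and the sum-of-squares workload are hypothetical.

```python
import threading


def run_thread_with_stopthread(values):
    """Offload work, block at a 'stopthread' point, and resume on wake-up."""
    wake_up = threading.Event()          # stands in for the coprocessor's wake-up signal
    result = {}

    def coprocessor():
        result["sum_of_squares"] = sum(v * v for v in values)
        wake_up.set()                    # send the wake-up signal to the processor

    worker = threading.Thread(target=coprocessor)
    worker.start()                       # hand the work to the "coprocessor"
    wake_up.wait()                       # 'stopthread': block until woken
    worker.join()
    return result["sum_of_squares"] + 1  # the thread continues with the result
```

Because the processor side only reads `result` after `wake_up.wait()` returns, it never observes a partially computed value.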

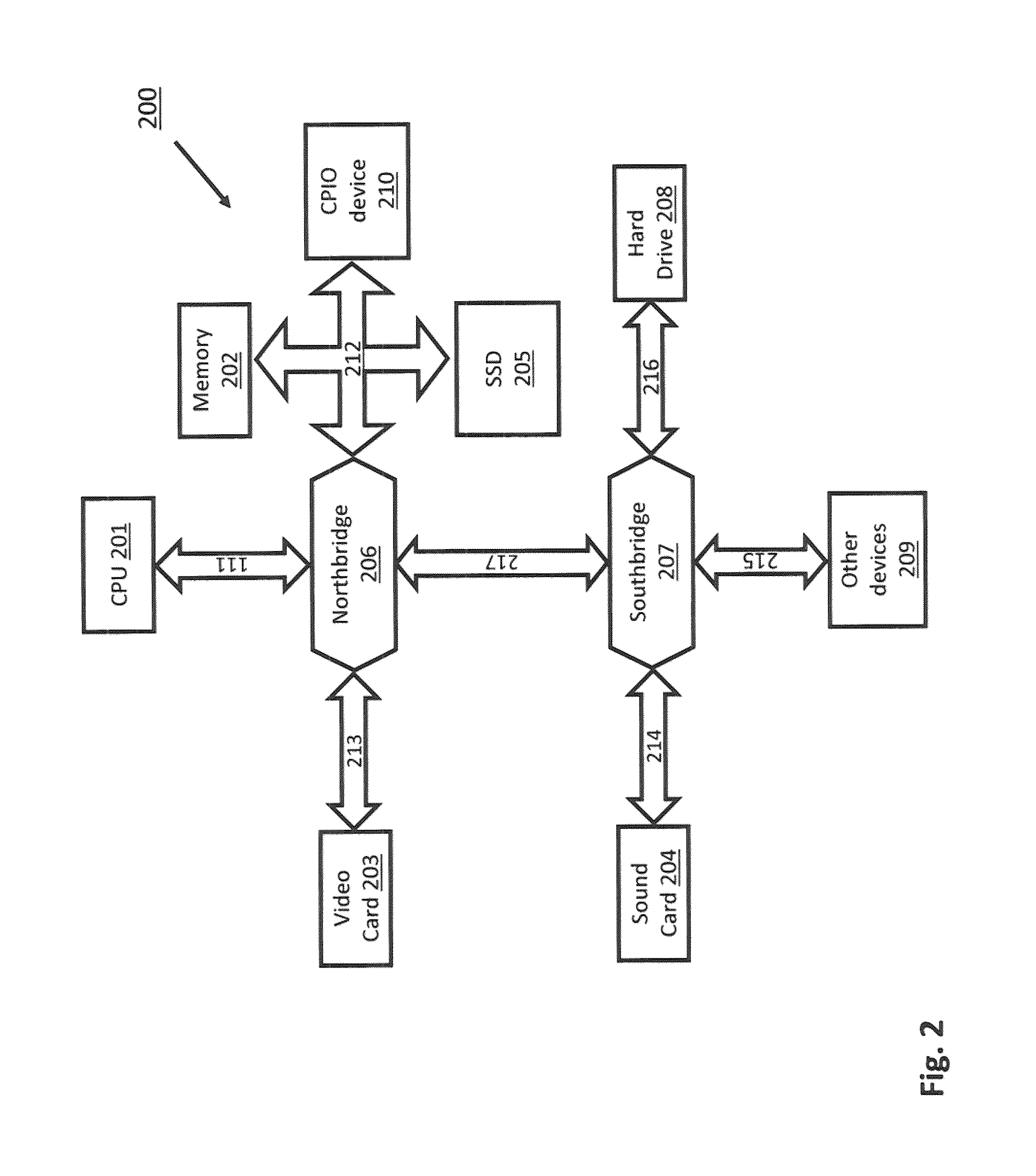

System and method of interfacing co-processors and input/output devices via a main memory system

Active | US8713379B2 | Memory architecture accessing/allocation | Error detection/correction | Coprocessor | Memory bus

A system for interfacing with a co-processor or input/output device is disclosed. According to one embodiment, the system includes a computer processing unit, a memory module, a memory bus that connects the computer processing unit and the memory module, and a co-processing unit or input/output device, wherein the memory bus also connects the co-processing unit or input/output device to the computer processing unit.

Owner:RAMBUS INC

Task based adaptative profiling and debugging

Active | US7062304B2 | Accurate profile data | Increase success rate | Memory architecture accessing/allocation | Energy efficient ICT | Coprocessor | Multi processor

A multiprocessor system (10) includes a plurality of processing modules, such as MPUs (12), DSPs (14), and coprocessors/DMA channels (16). Power management software (38) in conjunction with profiles (36) for the various processing modules and the tasks to be executed are used to build scenarios which meet predetermined power objectives, such as providing maximum operation within package thermal constraints or using minimum energy. Actual activities associated with the tasks are monitored during operation to ensure compatibility with the objectives. The allocation of tasks may be changed dynamically to accommodate changes in environmental conditions and changes in the task list. Temperatures may be computed at various points in the multiprocessor system by monitoring activity information associated with various subsystems. The activity measurements may be used to compute a current power dissipation distribution over the die. If necessary, the tasks in a scenario may be adjusted to reduce power dissipation. Further, activity counters may be selectively enabled for specific tasks in order to obtain more accurate profile information.

Owner:TEXAS INSTR INC

© 2025 PatSnap. All rights reserved.