88 results about "Free list" patented technology

A free list is a data structure used in a scheme for dynamic memory allocation. It operates by connecting unallocated regions of memory together in a linked list, using the first word of each unallocated region as a pointer to the next. It is most suitable for allocating from a memory pool, where all objects have the same size.
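The definition above can be illustrated with a minimal sketch. It assumes a `bytearray` standing in for raw memory, 4-byte link words, and a hypothetical `Pool` class; none of these names come from the listed patents.

```python
import struct


class Pool:
    """Fixed-size block pool whose free blocks form an intrusive linked list."""

    NIL = 0xFFFFFFFF  # sentinel for "no next block" (an assumption of this sketch)

    def __init__(self, block_size: int, block_count: int):
        if block_size < 4:
            raise ValueError("a block must hold at least one 4-byte link word")
        self.block_size = block_size
        self.memory = bytearray(block_size * block_count)
        self.head = self.NIL
        # Thread every block onto the free list, last block first,
        # so that block 0 ends up at the head.
        for index in reversed(range(block_count)):
            self._write_link(index, self.head)
            self.head = index

    def _write_link(self, index: int, next_index: int) -> None:
        # The first word of a free block stores the index of the next free block.
        struct.pack_into("<I", self.memory, index * self.block_size, next_index)

    def _read_link(self, index: int) -> int:
        return struct.unpack_from("<I", self.memory, index * self.block_size)[0]

    def alloc(self) -> int:
        """Pop the head of the free list and return the block's byte offset."""
        if self.head == self.NIL:
            raise MemoryError("pool exhausted")
        index = self.head
        self.head = self._read_link(index)
        return index * self.block_size

    def free(self, offset: int) -> None:
        """Push a block back onto the head of the free list."""
        index = offset // self.block_size
        self._write_link(index, self.head)
        self.head = index
```

Both `alloc` and `free` touch only the list head, which is why the scheme suits pools of equally sized objects: no search for a fitting block is needed.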

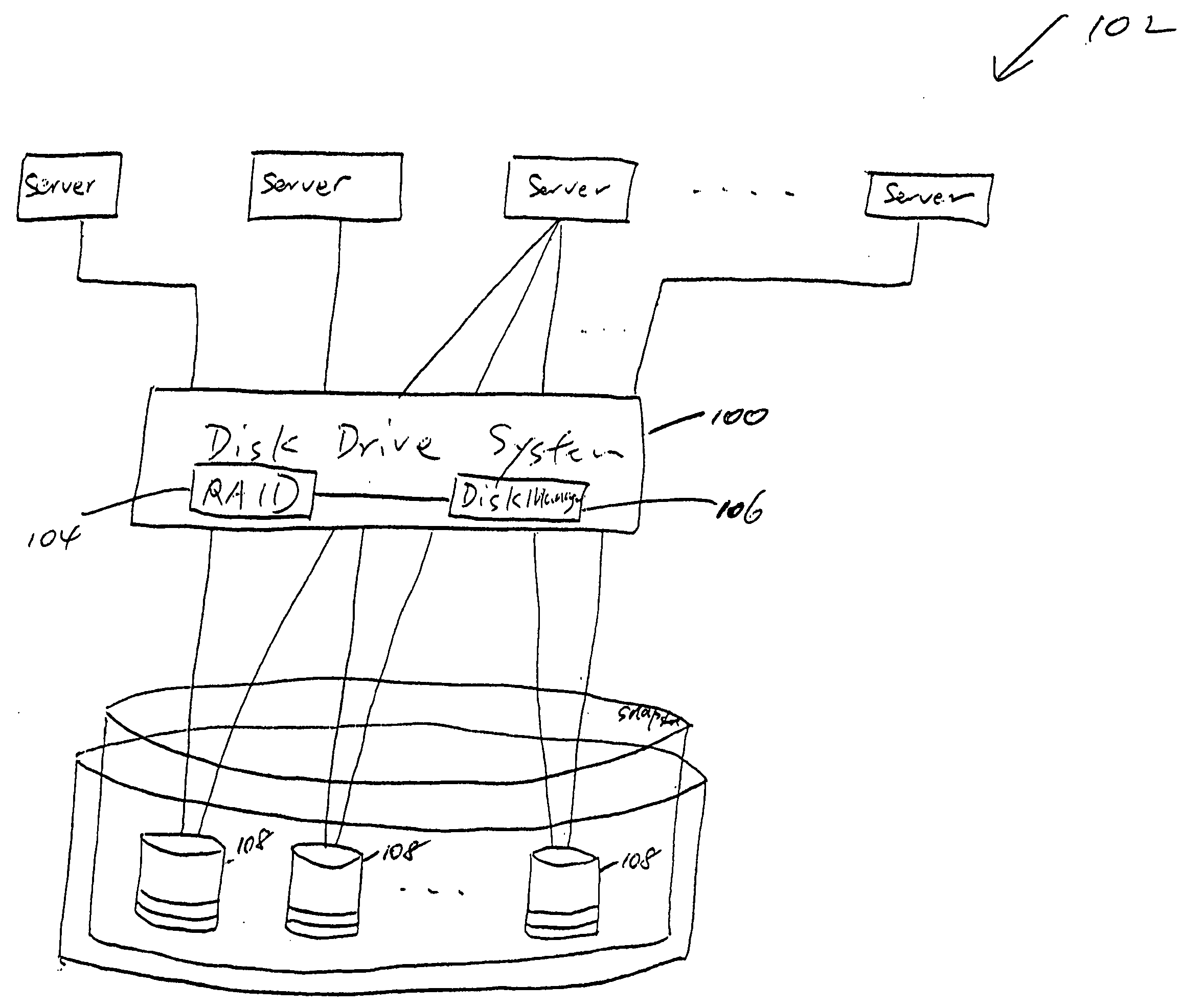

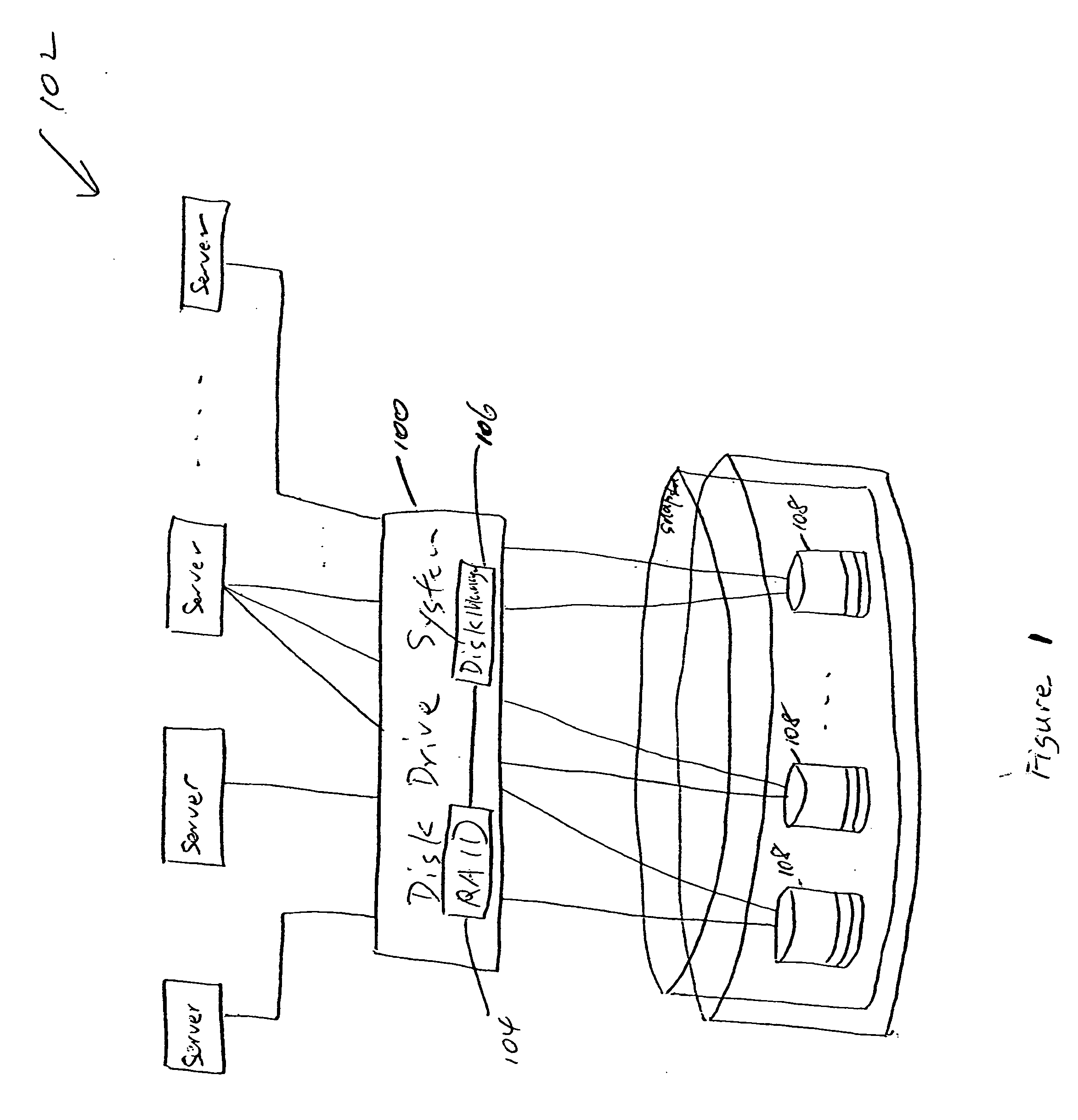

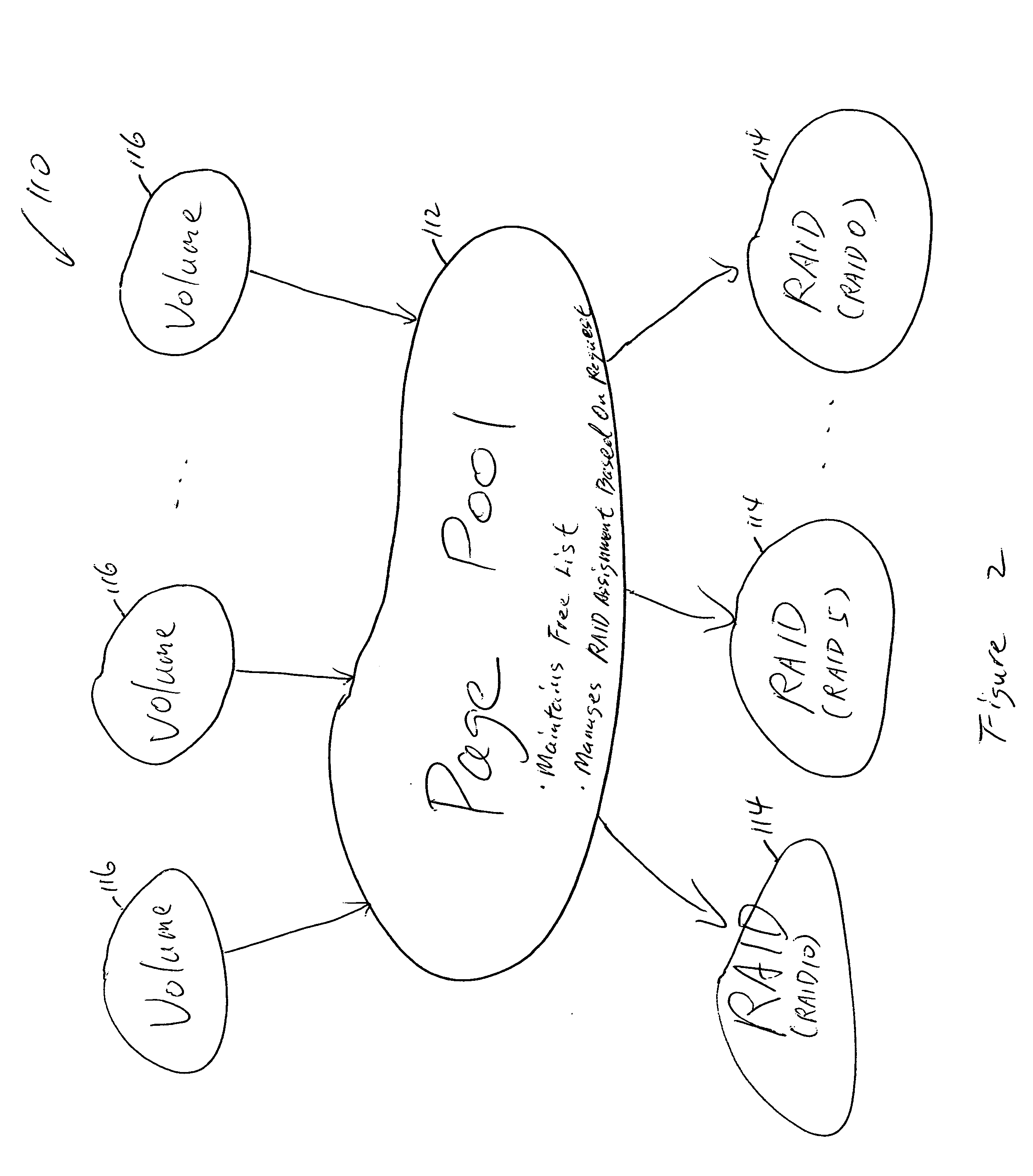

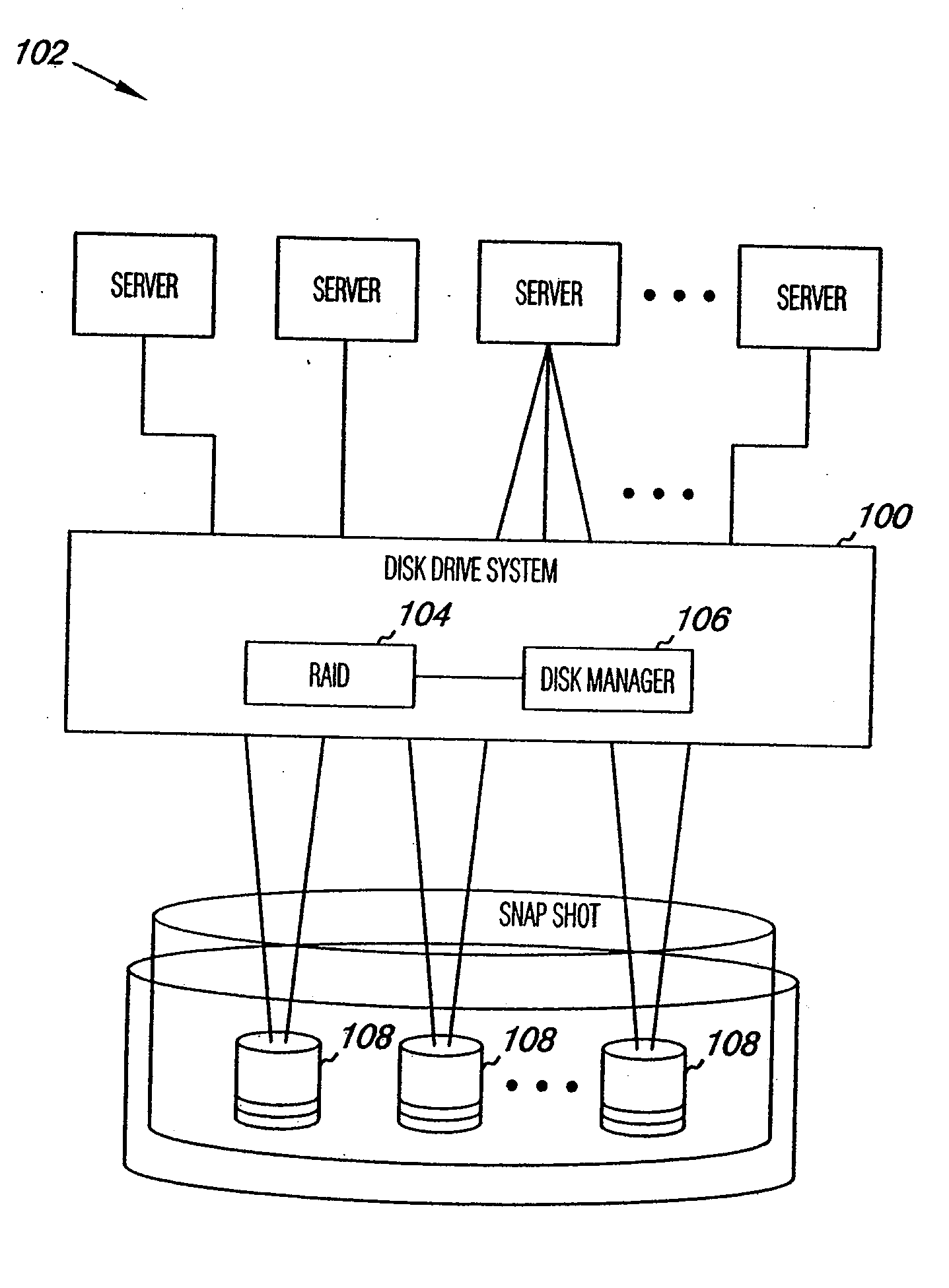

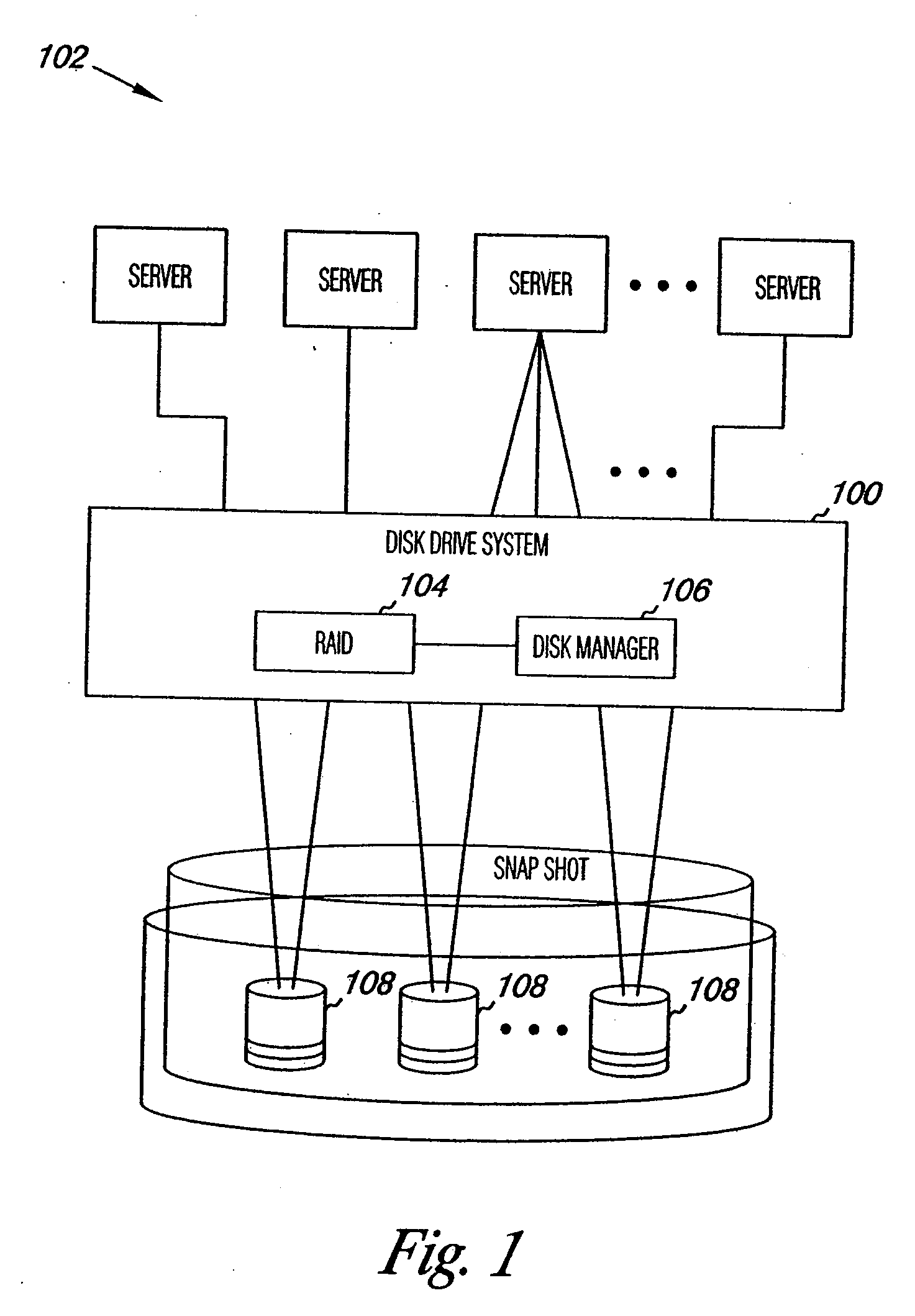

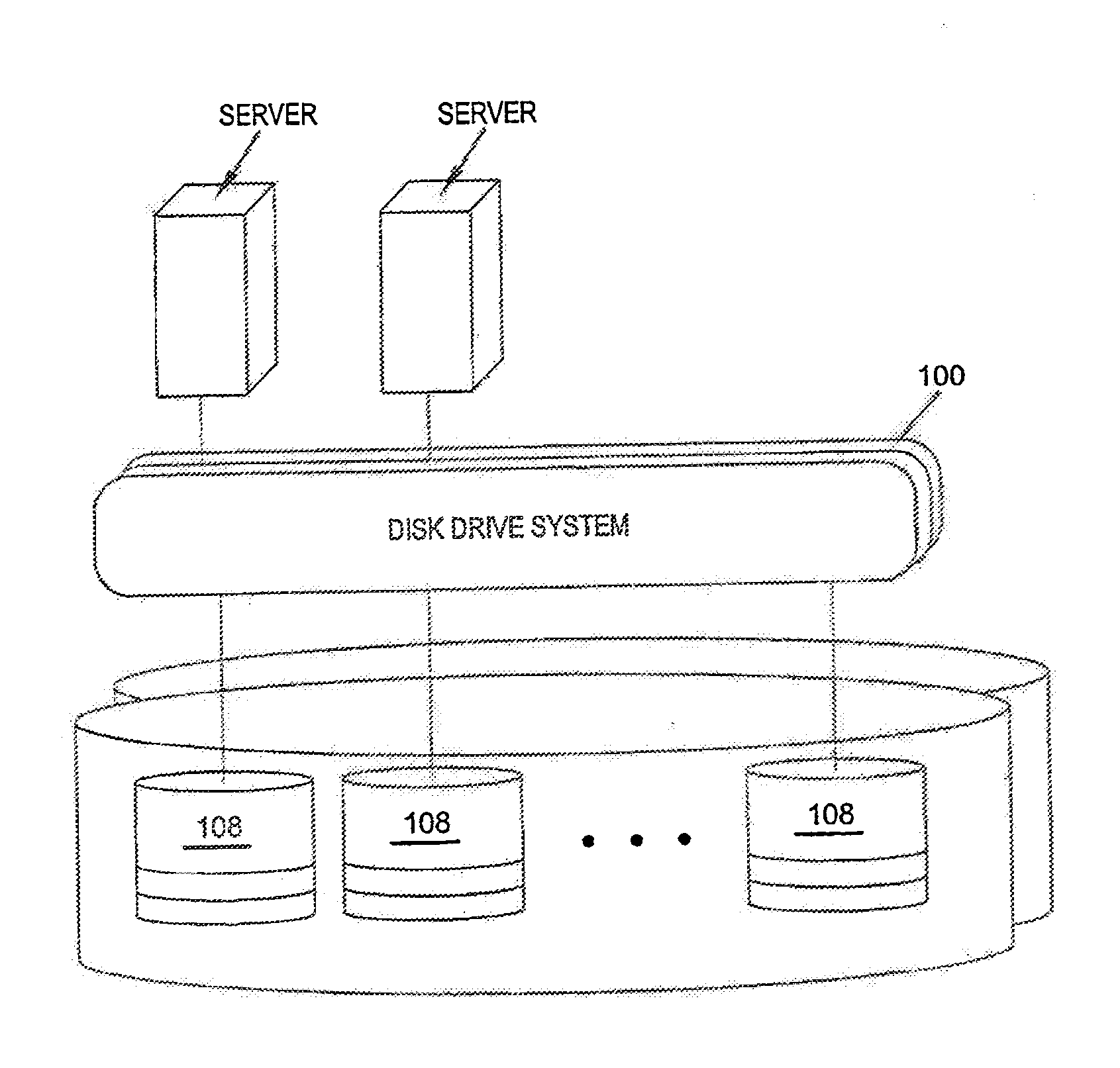

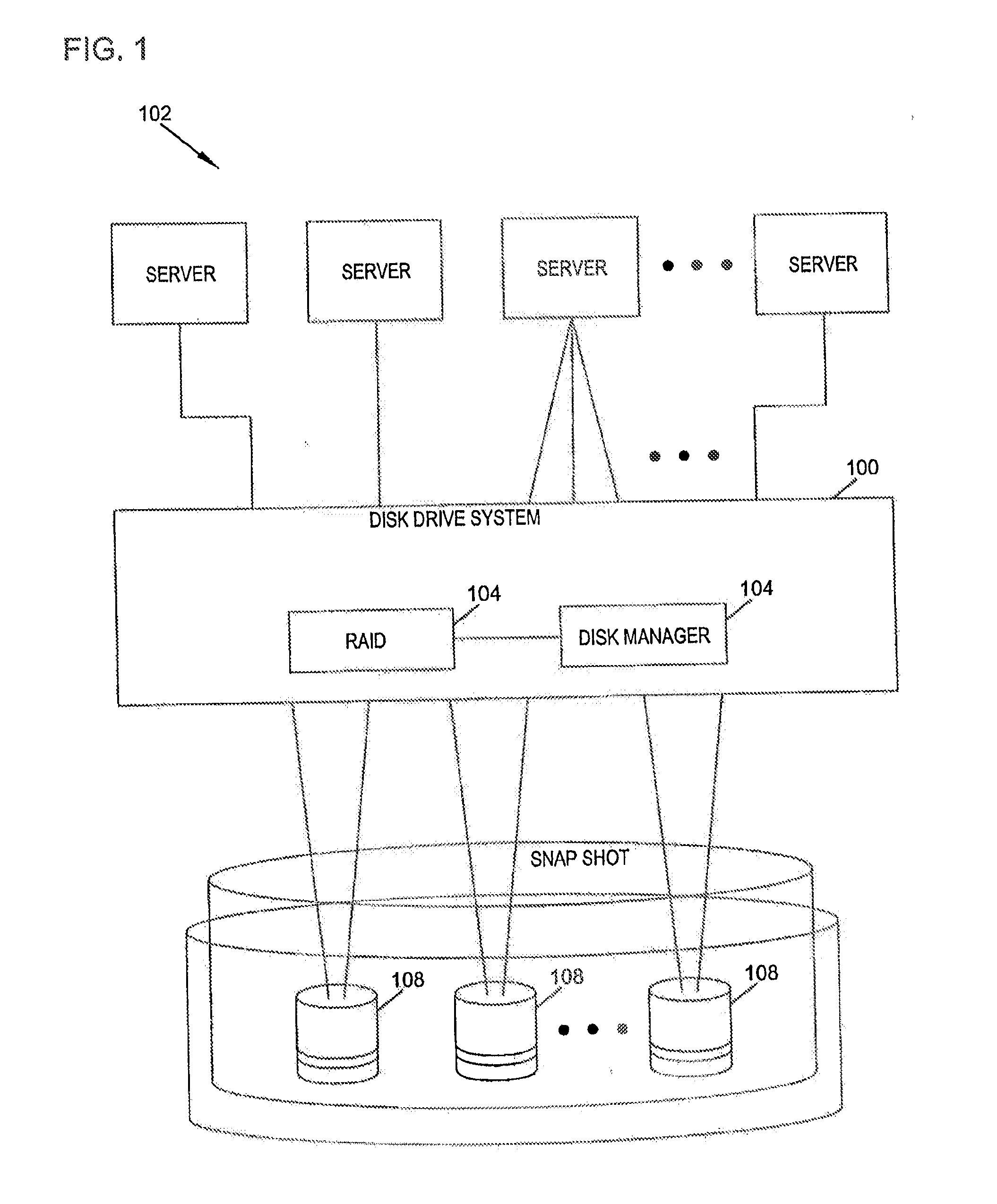

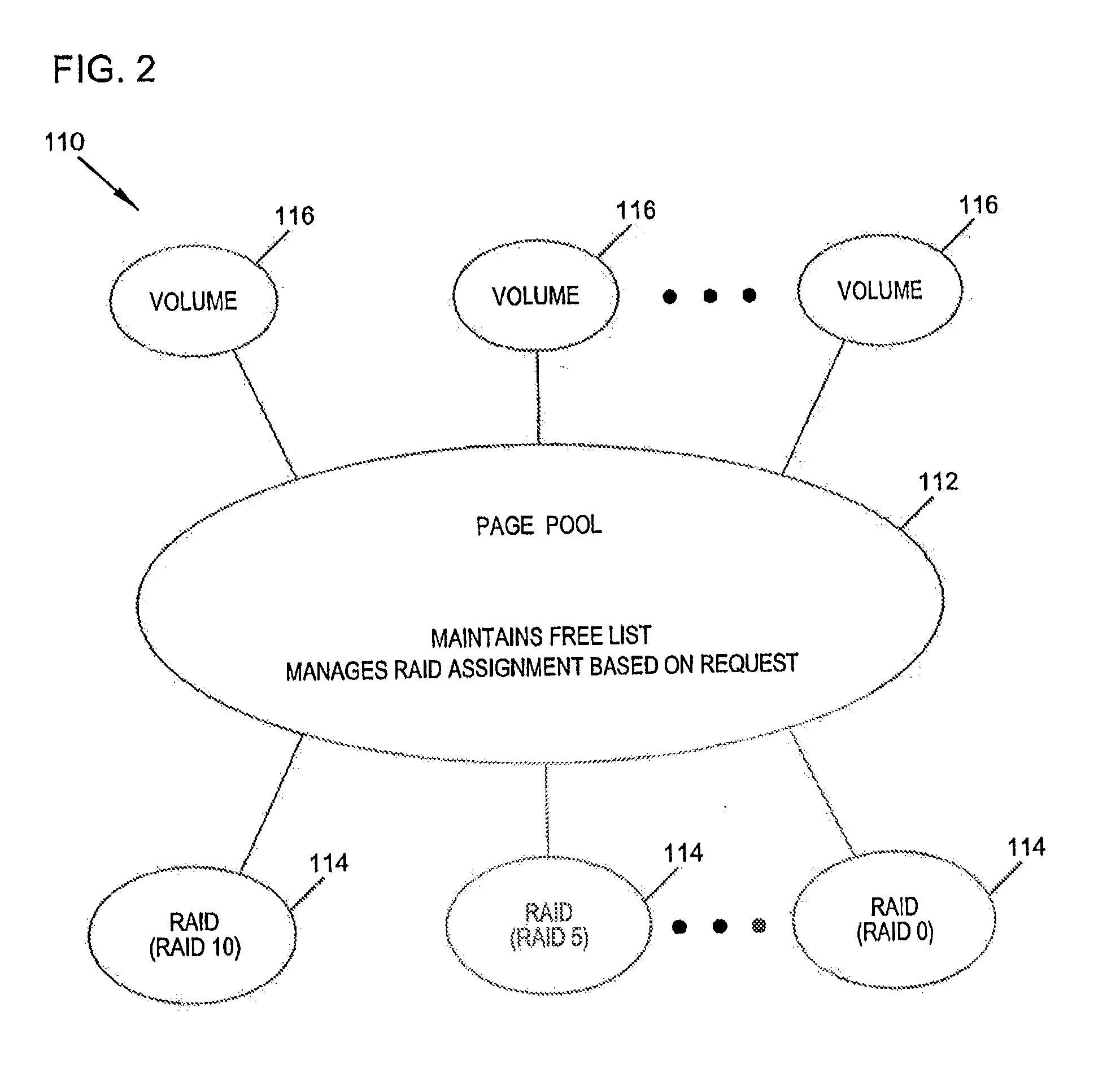

Virtual disk drive system and method

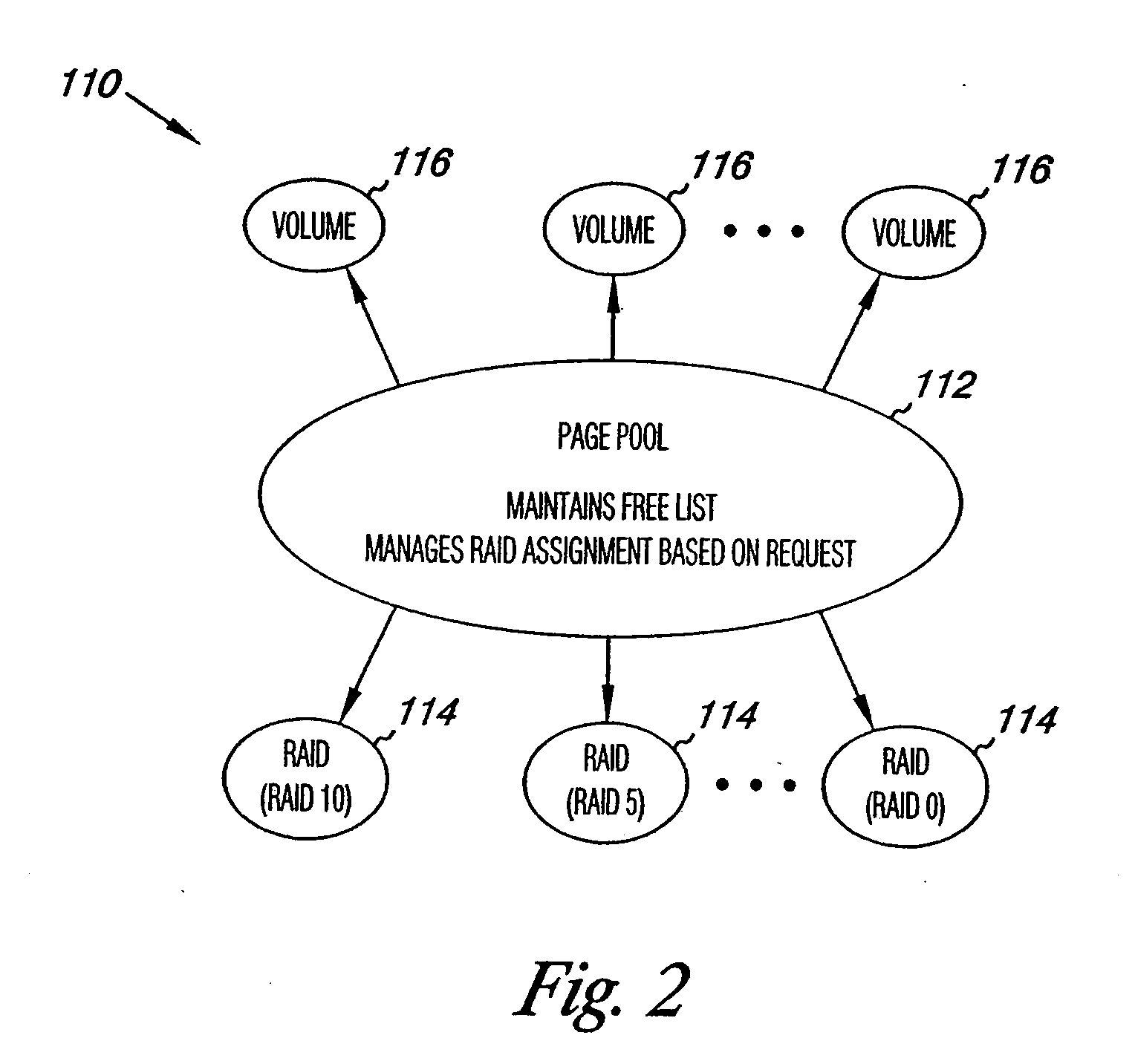

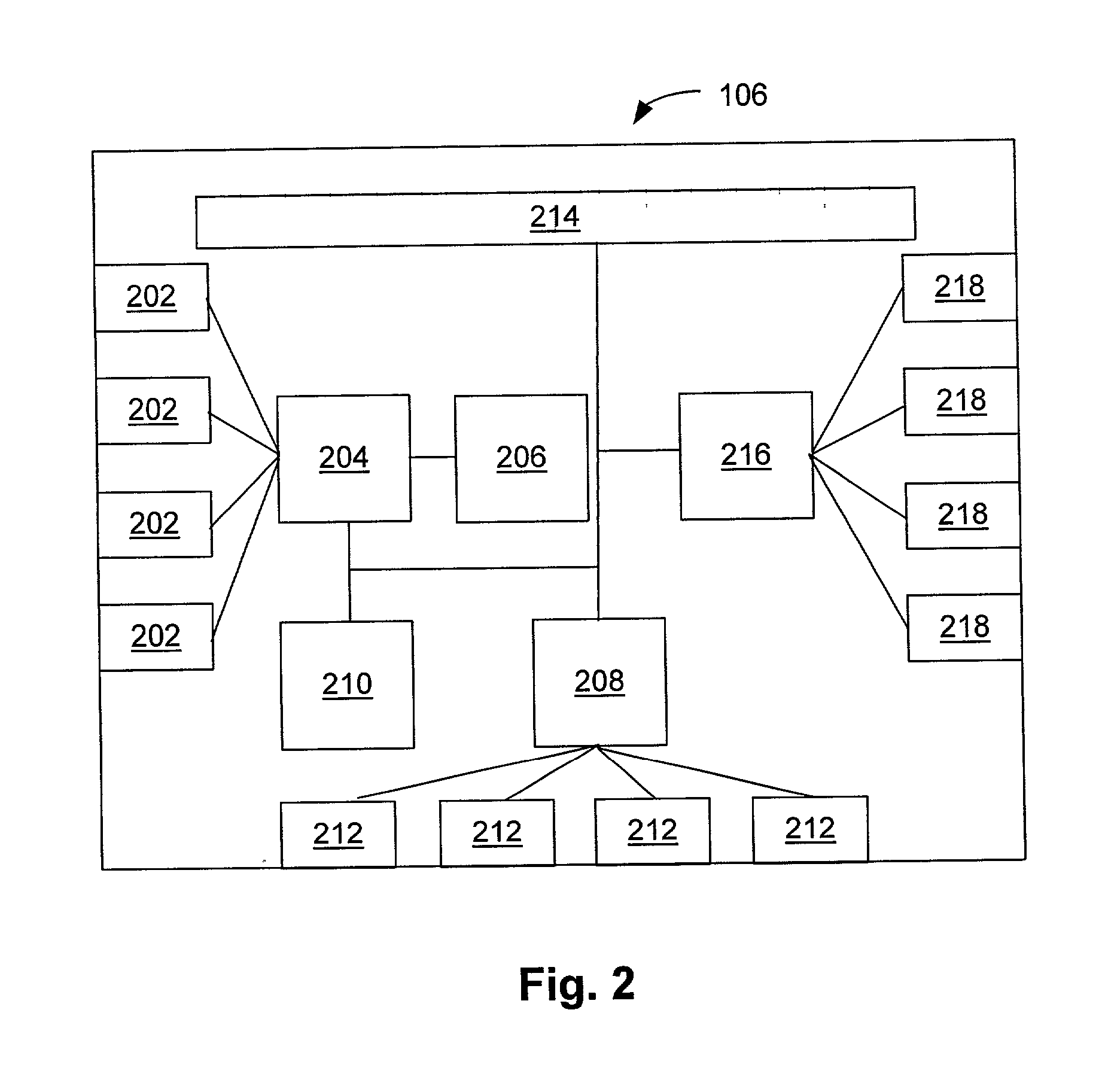

Active | US20050055603A1 | Useful operation | Input/output to record carriers | Memory addressing/allocation/relocation | RAID | Dynamic data

A disk drive system and method capable of dynamically allocating data is provided. The disk drive system may include a RAID subsystem having a pool of storage, for example a page pool of storage that maintains a free list of RAIDs, or a matrix of disk storage blocks that maintain a null list of RAIDs, and a disk manager having at least one disk storage system controller. The RAID subsystem and disk manager dynamically allocate data across the pool of storage and a plurality of disk drives based on RAID-to-disk mapping. The RAID subsystem and disk manager determine whether additional disk drives are required, and a notification is sent if the additional disk drives are required. Dynamic data allocation and data progression allow a user to acquire a disk drive later in time when it is needed. Dynamic data allocation also allows efficient data storage of snapshots/point-in-time copies of the virtual volume pool of storage, instant data replay and data instant fusion for data backup, recovery, etc., remote data storage, and data progression.

Owner:DELL INT L L C

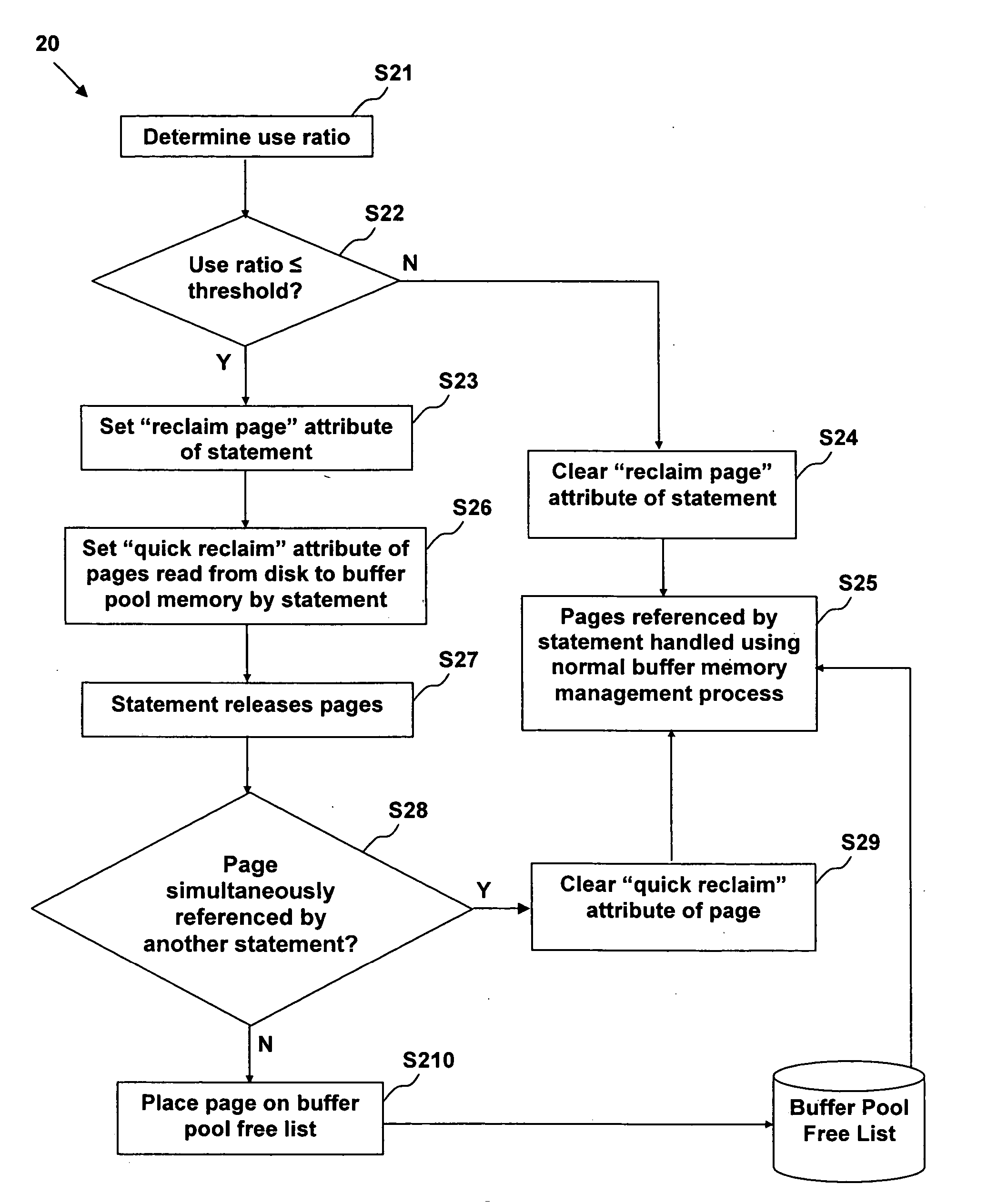

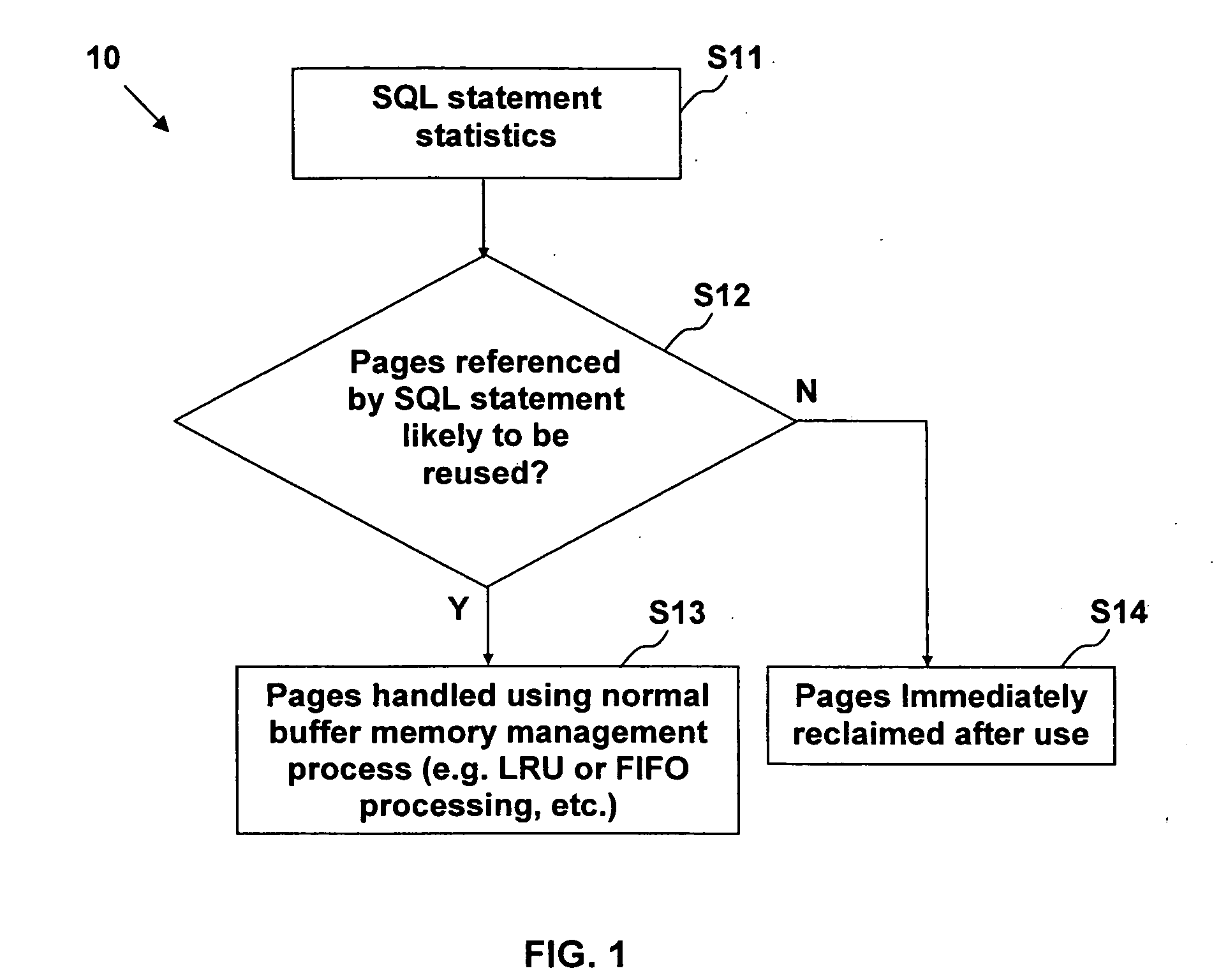

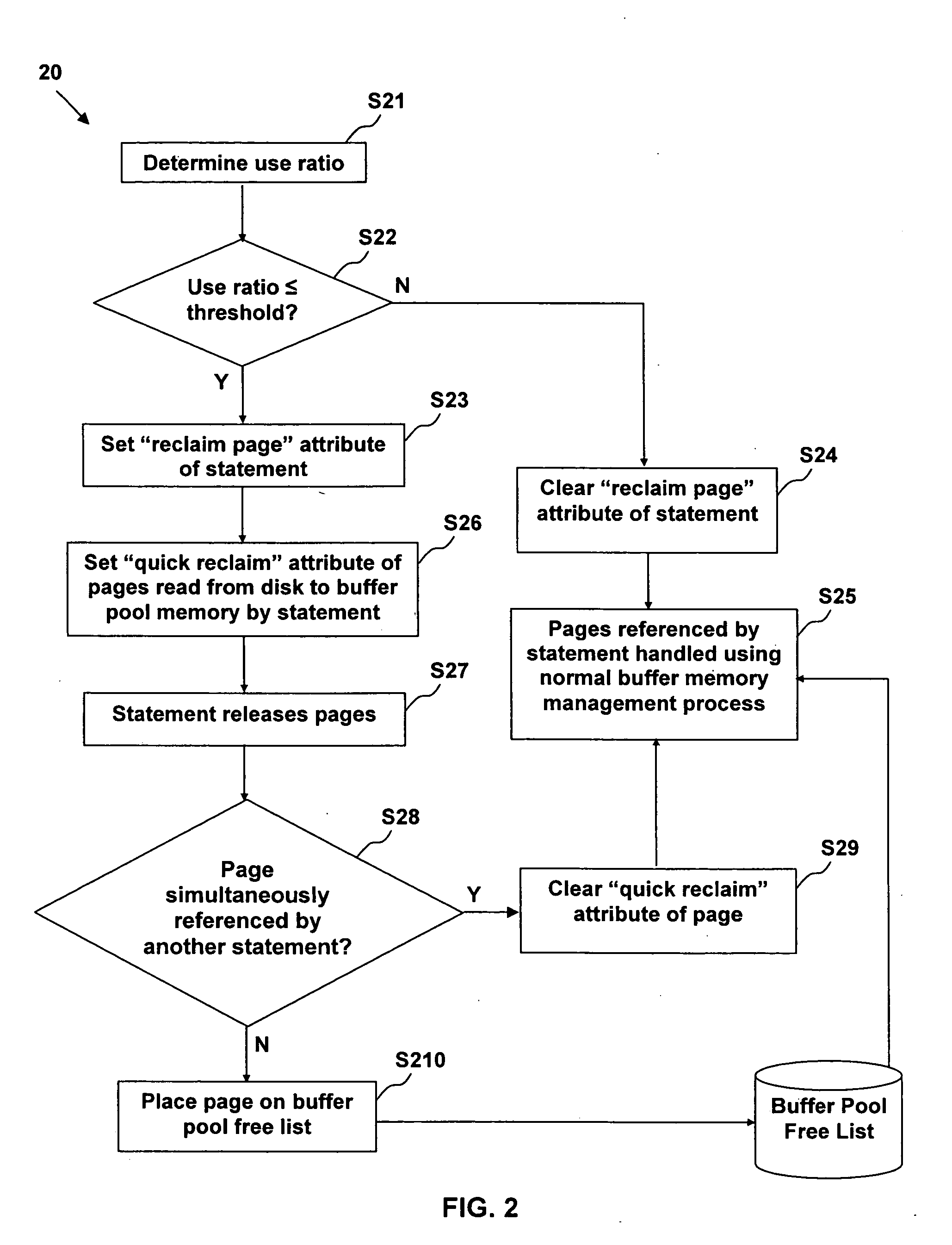

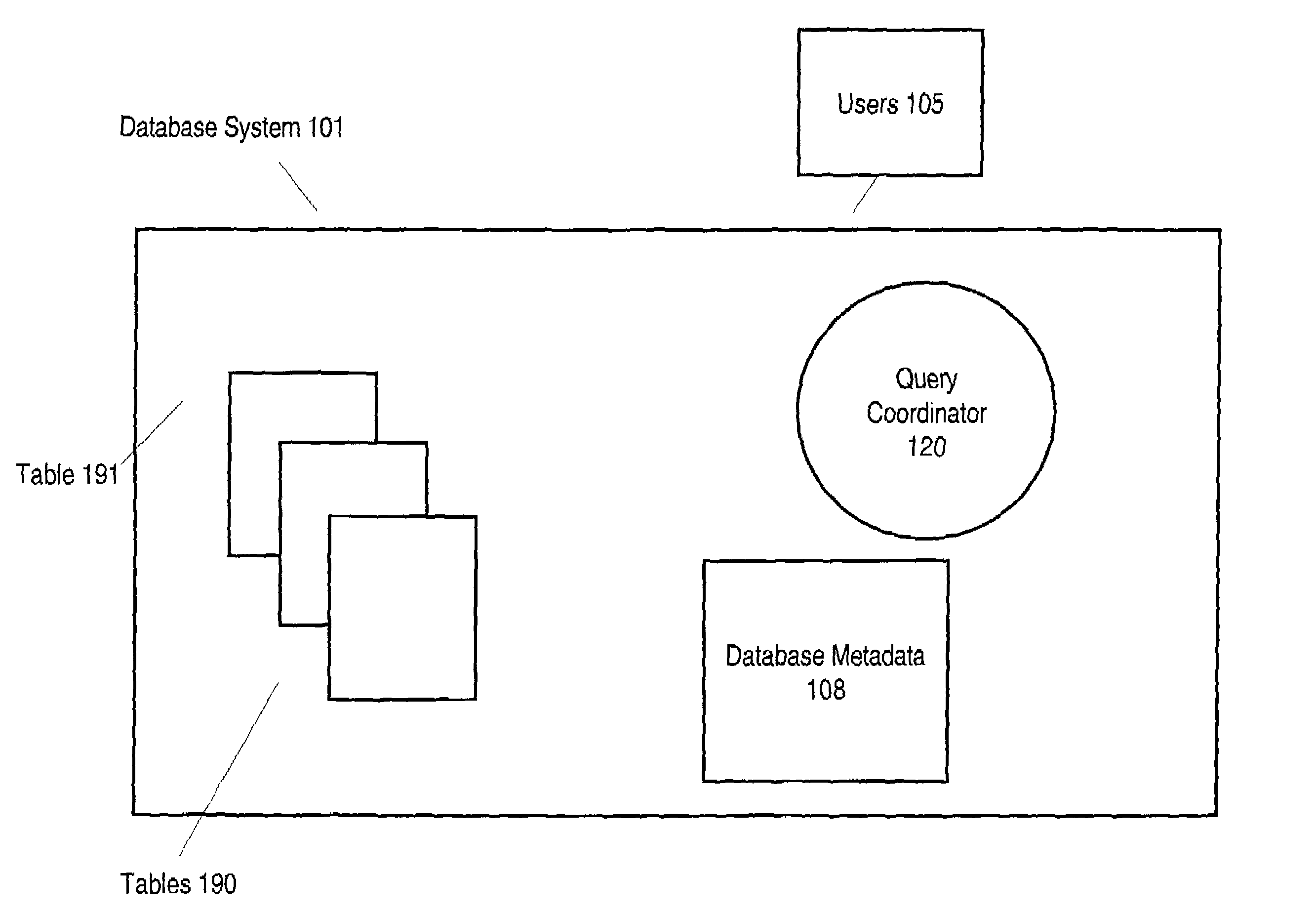

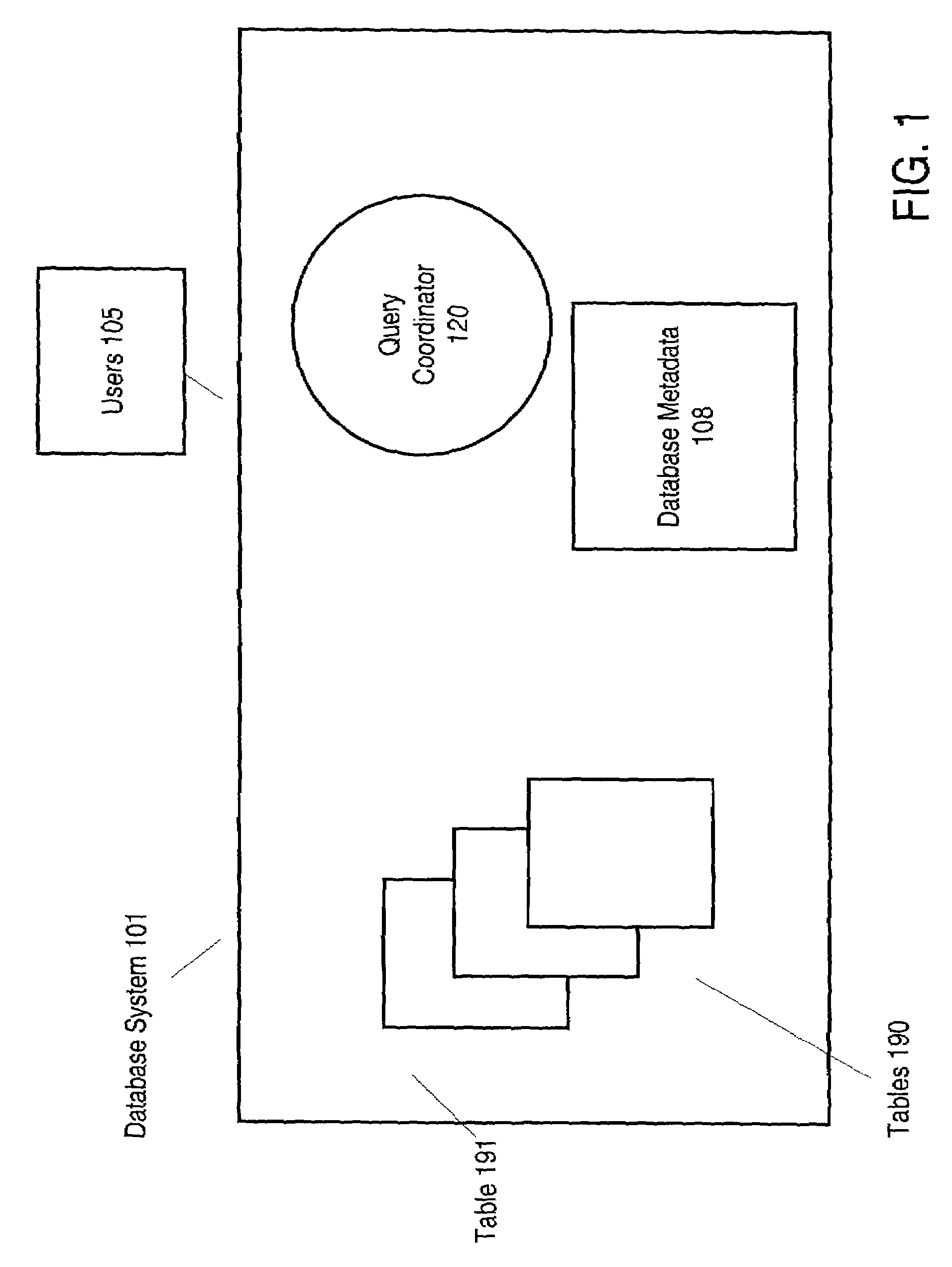

Adaptive database buffer memory management using dynamic SQL statement cache statistics

Inactive | US20060074872A1 | Speed up the process | Digital data information retrieval | Special data processing applications | Term memory | Query language

The present invention provides a method, system, and computer program product for adaptive database buffer memory management using dynamic Structured Query Language (SQL) statement cache statistics. The method comprises: using SQL statement cache statistics to infer page re-use. The method further comprises: determining a use ratio of an SQL statement; comparing the use ratio of the statement to a threshold value; if the use ratio is less than the threshold value, setting a reclaim page attribute of the statement indicating a low likelihood of page re-use of pages referenced by the statement; and, if the reclaim page attribute of the statement is set: setting a quick reclaim attribute of each page read from disk by the statement; and after each page is released by the statement, placing the page in a buffer pool free list, wherein a memory location of the page in a buffer pool memory is immediately available for re-use.

Owner:IBM CORP
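The reclaim logic in the abstract above can be sketched as follows. This is a hedged illustration only: the abstract does not say how the use ratio is computed, so it is passed in as a plain number, and `Statement`, `Page`, `BufferPool`, and their fields are hypothetical names.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Statement:
    use_ratio: float            # how this is derived is not given in the abstract
    reclaim_pages: bool = False


@dataclass
class Page:
    page_id: int
    quick_reclaim: bool = False


class BufferPool:
    def __init__(self, threshold: float):
        self.threshold = threshold
        self.free_list = deque()  # pages whose memory is immediately re-usable
        self.retained = []        # pages kept because re-use is considered likely

    def prepare(self, stmt: Statement) -> None:
        # A low use ratio is taken to mean the statement's pages are unlikely to be re-used.
        stmt.reclaim_pages = stmt.use_ratio < self.threshold

    def read_page(self, stmt: Statement, page_id: int) -> Page:
        # Pages read on behalf of a flagged statement inherit the quick-reclaim mark.
        return Page(page_id, quick_reclaim=stmt.reclaim_pages)

    def release_page(self, page: Page) -> None:
        if page.quick_reclaim:
            self.free_list.append(page)
        else:
            self.retained.append(page)
```

What happens to retained pages (for example, an LRU policy) is outside the abstract and is left out of the sketch.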

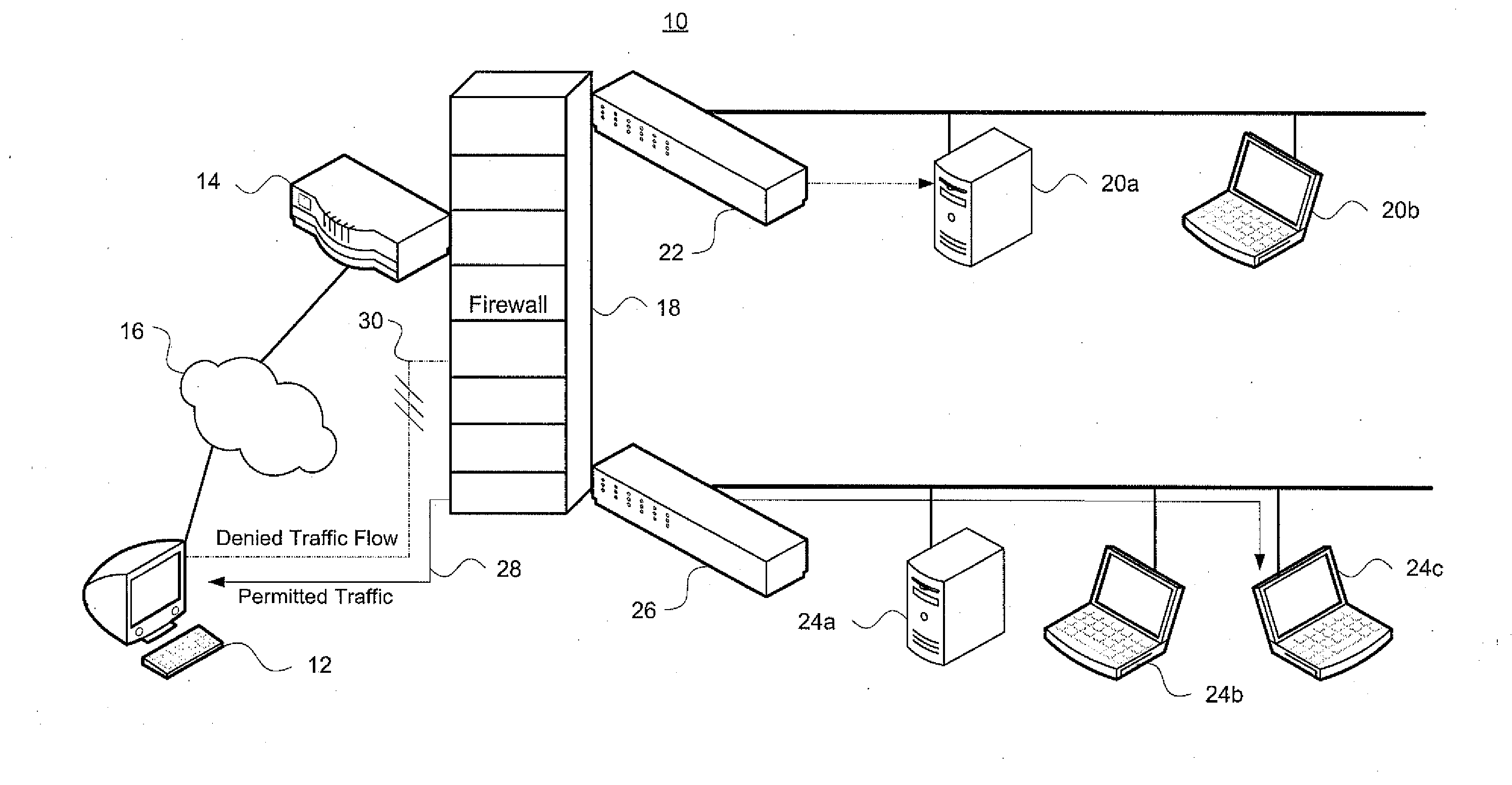

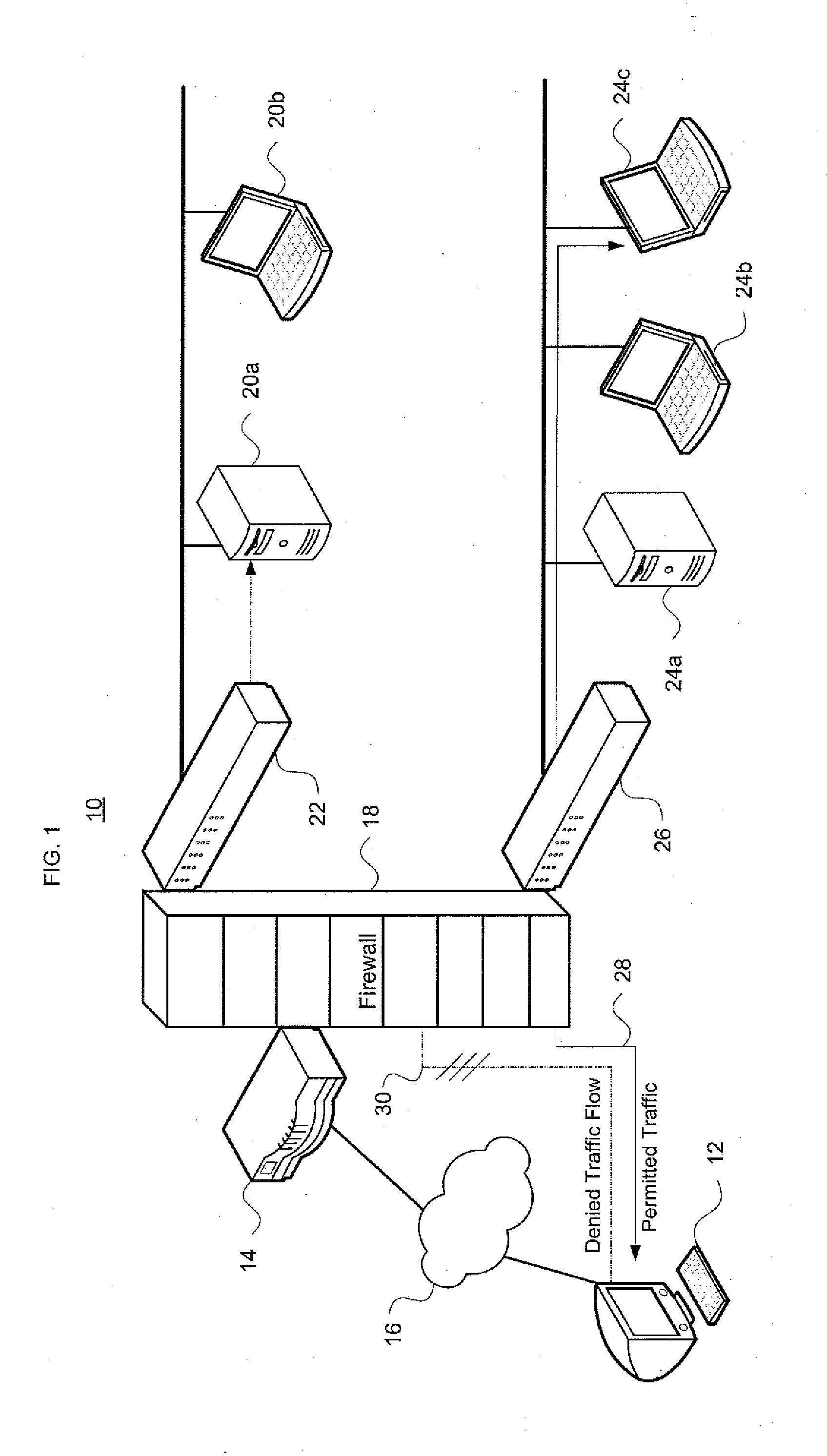

System and method for determining symantic equivalence between access control lists

Inactive | US20100199346A1 | Effective judgment | Efficiently determined | Next instruction address formation | Multiple digital computer combinations | Semantic equivalence | Database

Aspects of the invention pertain to analyzing and modifying access control lists that are used in computer networks. Access control lists may have many individual rules that indicate whether information can be passed between certain devices in a computer network. The access control lists may include redundant or conflicting rules. An aspect of the invention determines whether two or more access control lists are equivalent or not. Order-dependent access control lists are converted into order-independent access control lists, which enable checking of semantic equivalence of different access control lists. Upon conversion to an order-independent access control list, lower-precedence rules in the order-free list are checked for overlap with a current higher precedence entry. If overlap exists, existing order-free rules are modified so that spinoff rules have no overlap with the current entry. This is done while maintaining semantic equivalence.

Owner:TT GOVERNMENT SOLUTIONS

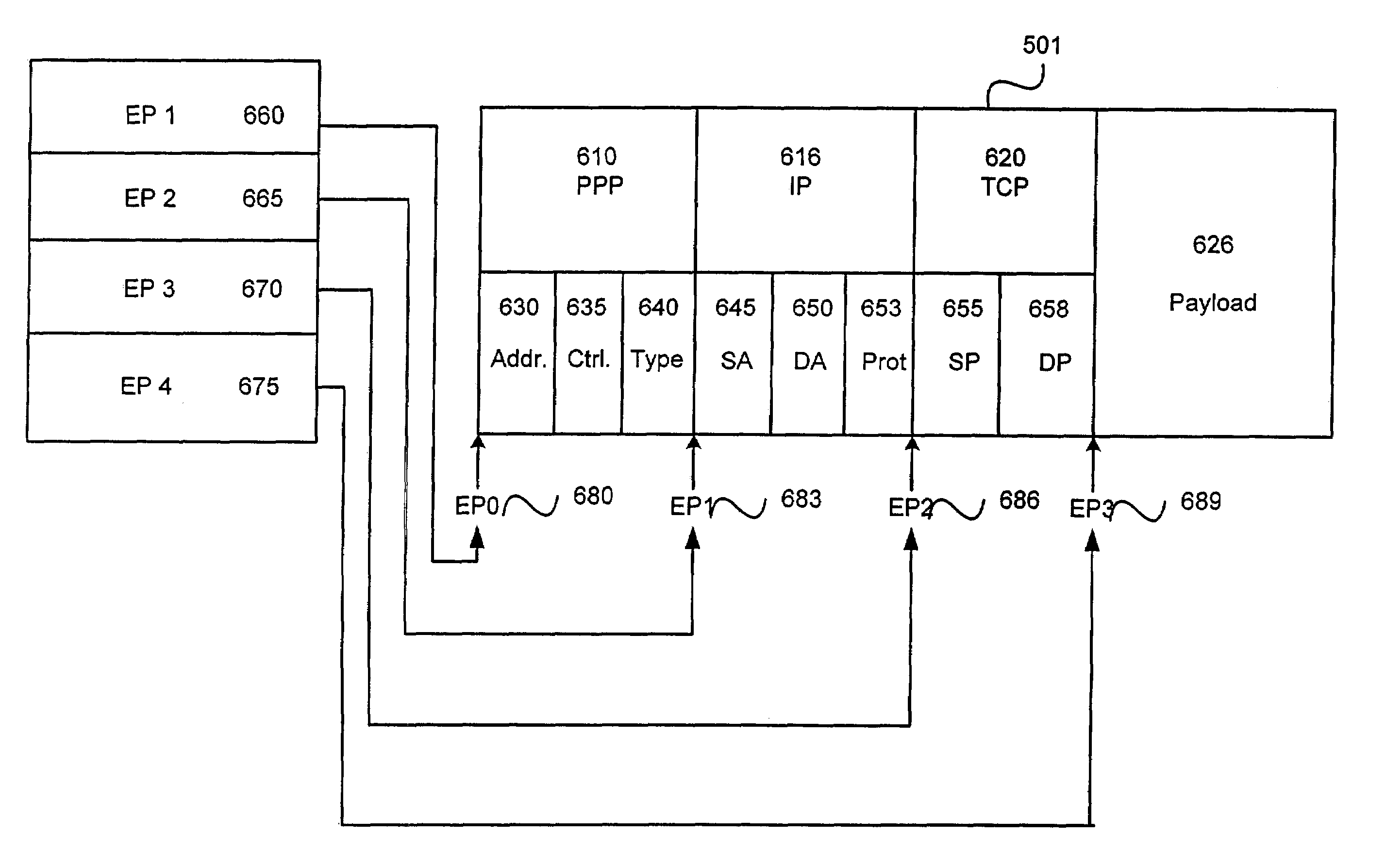

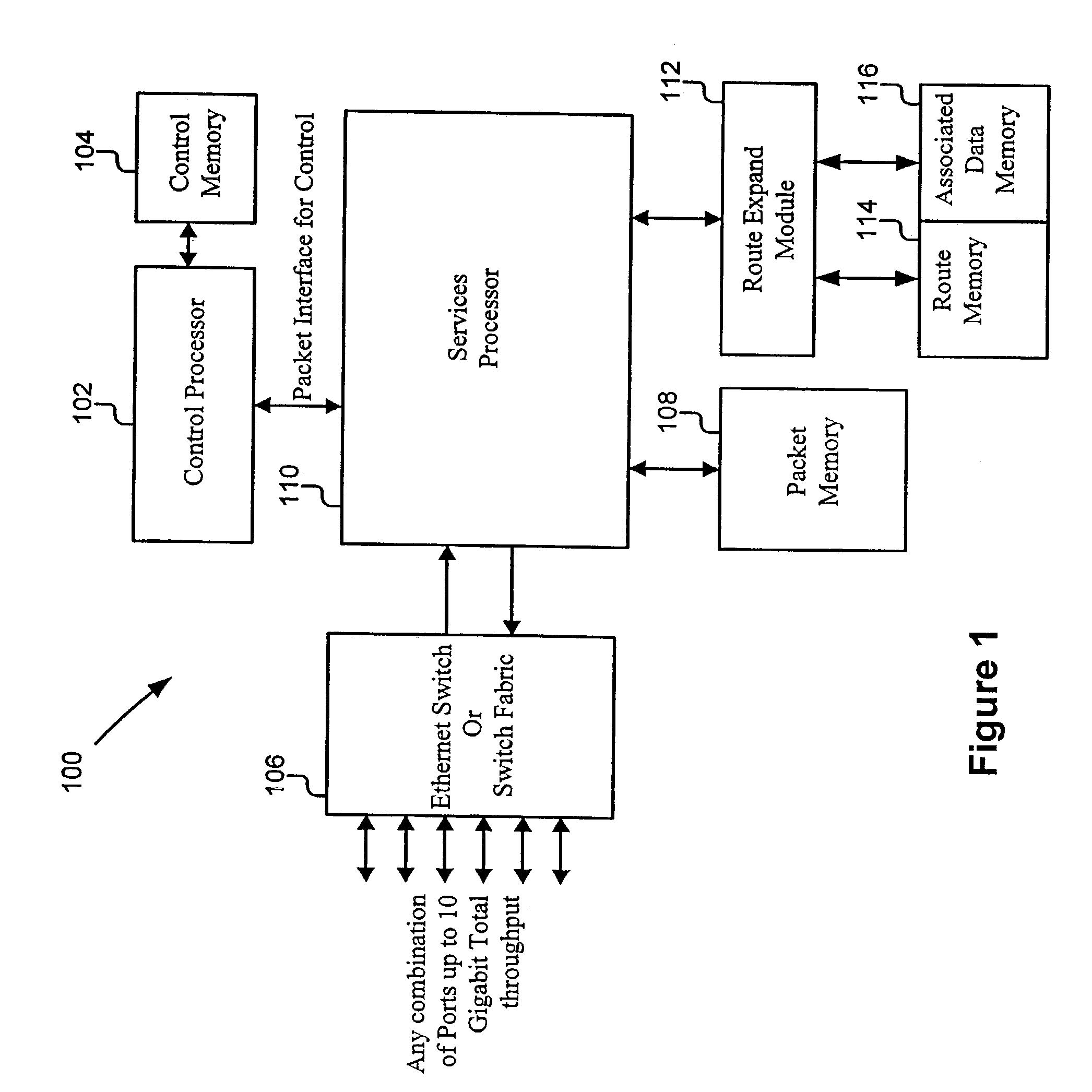

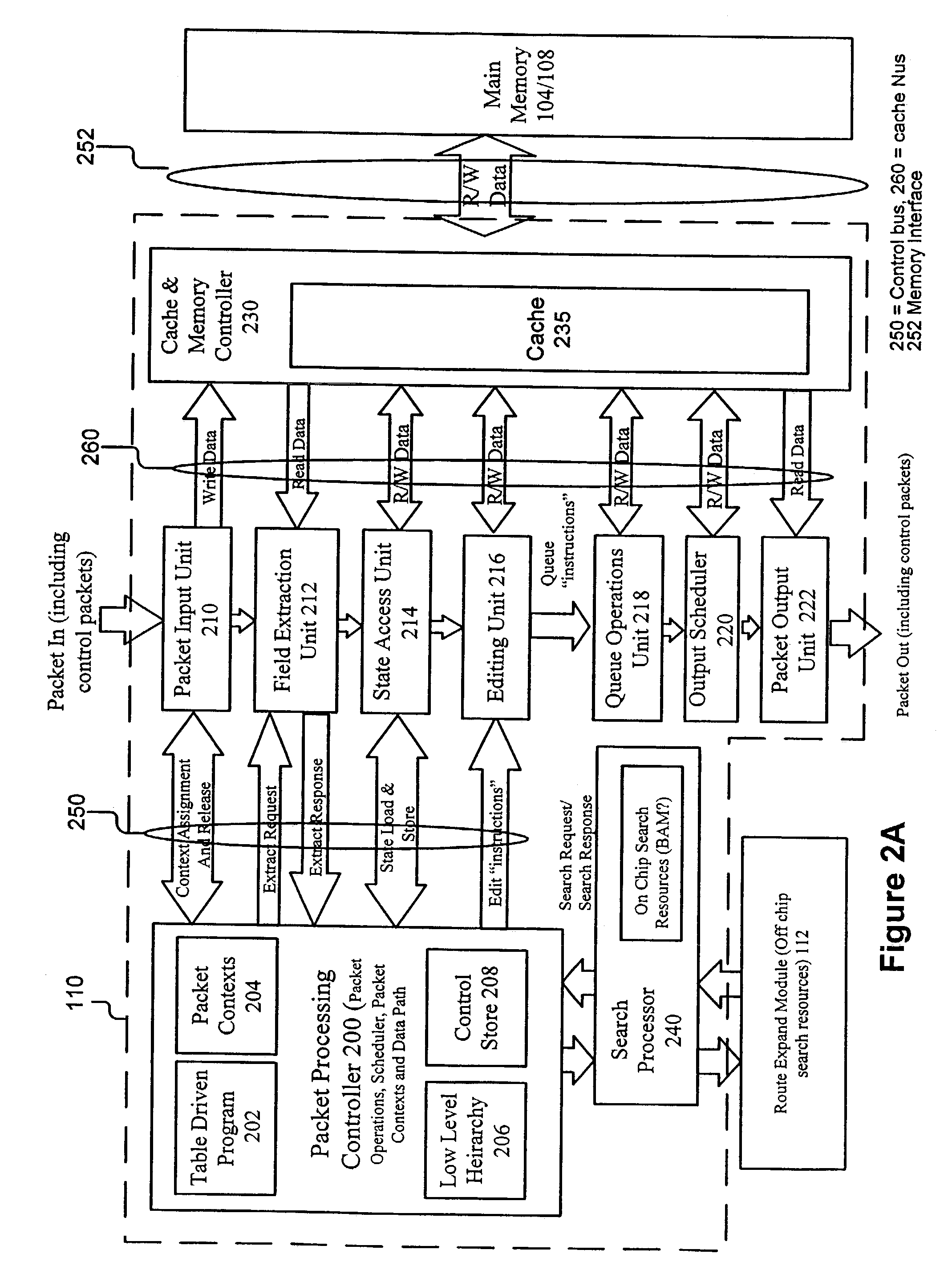

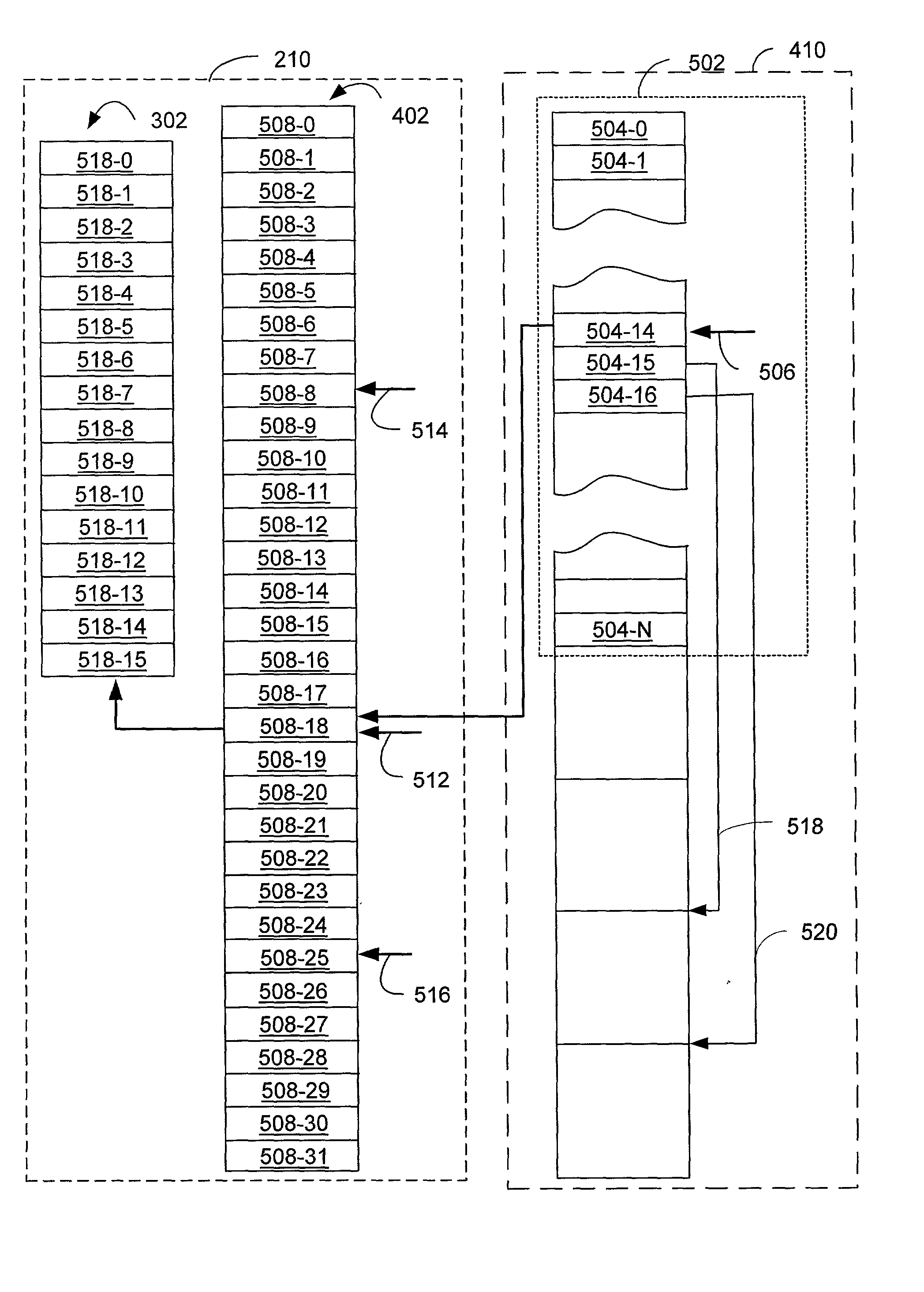

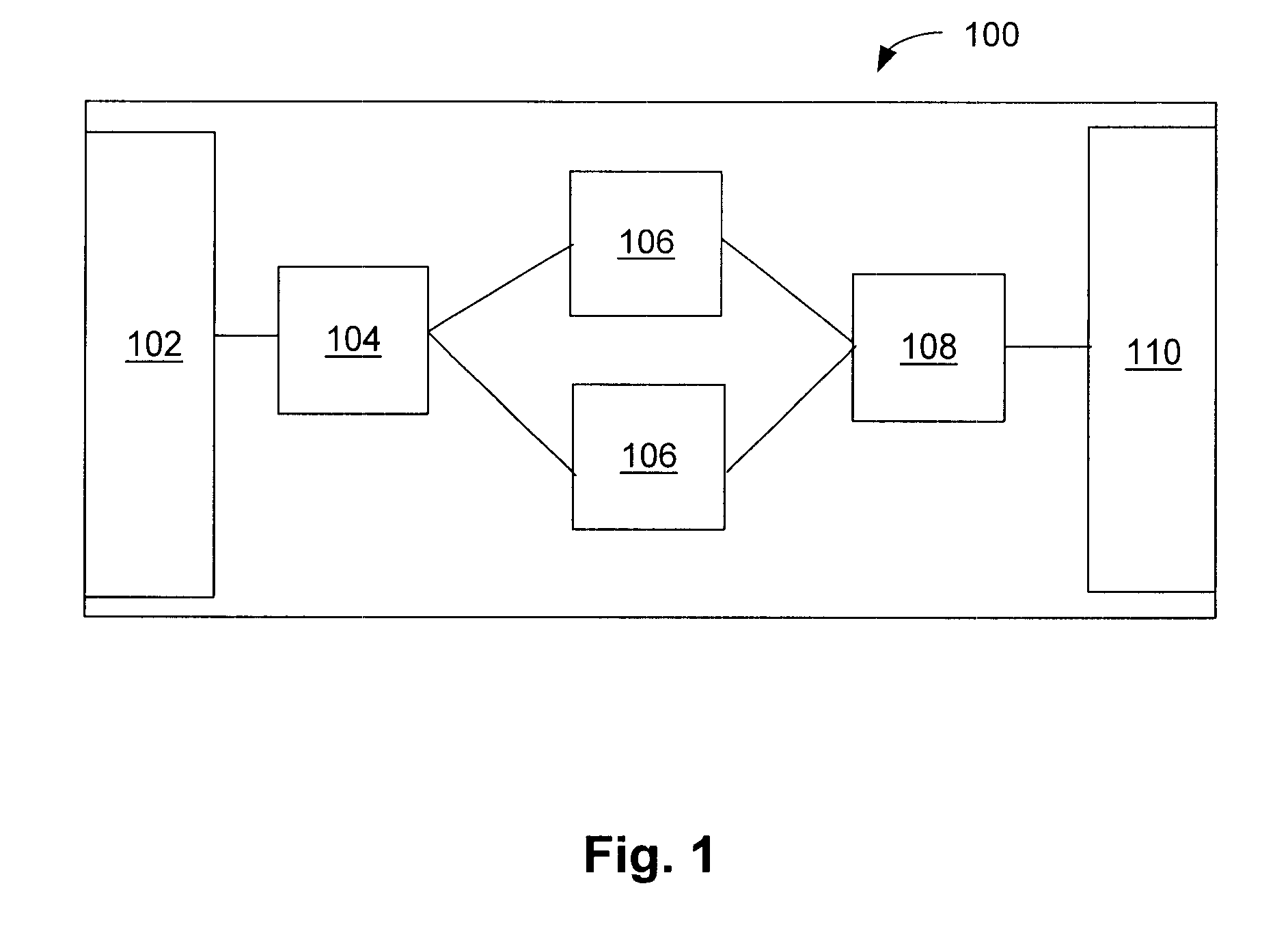

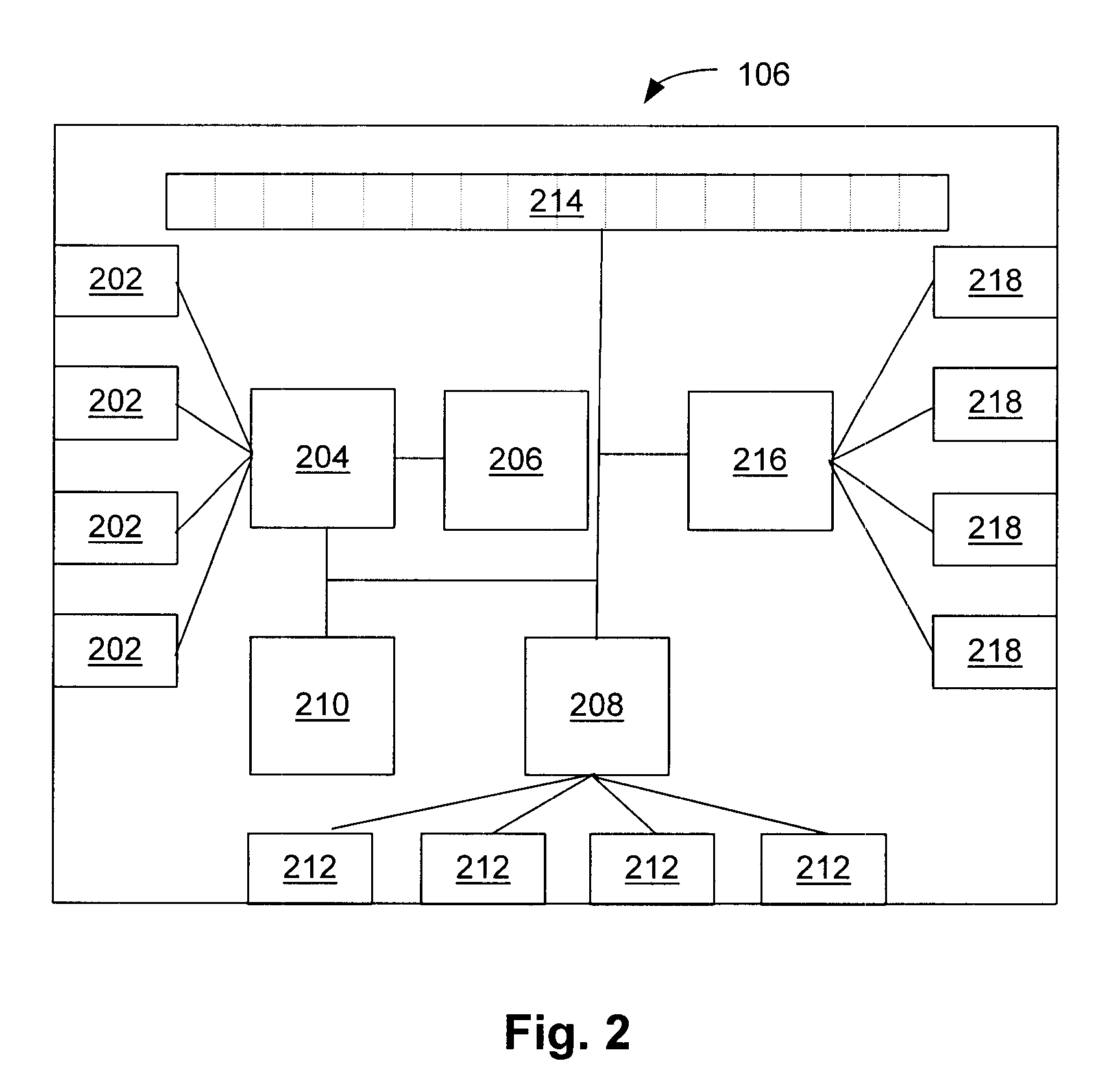

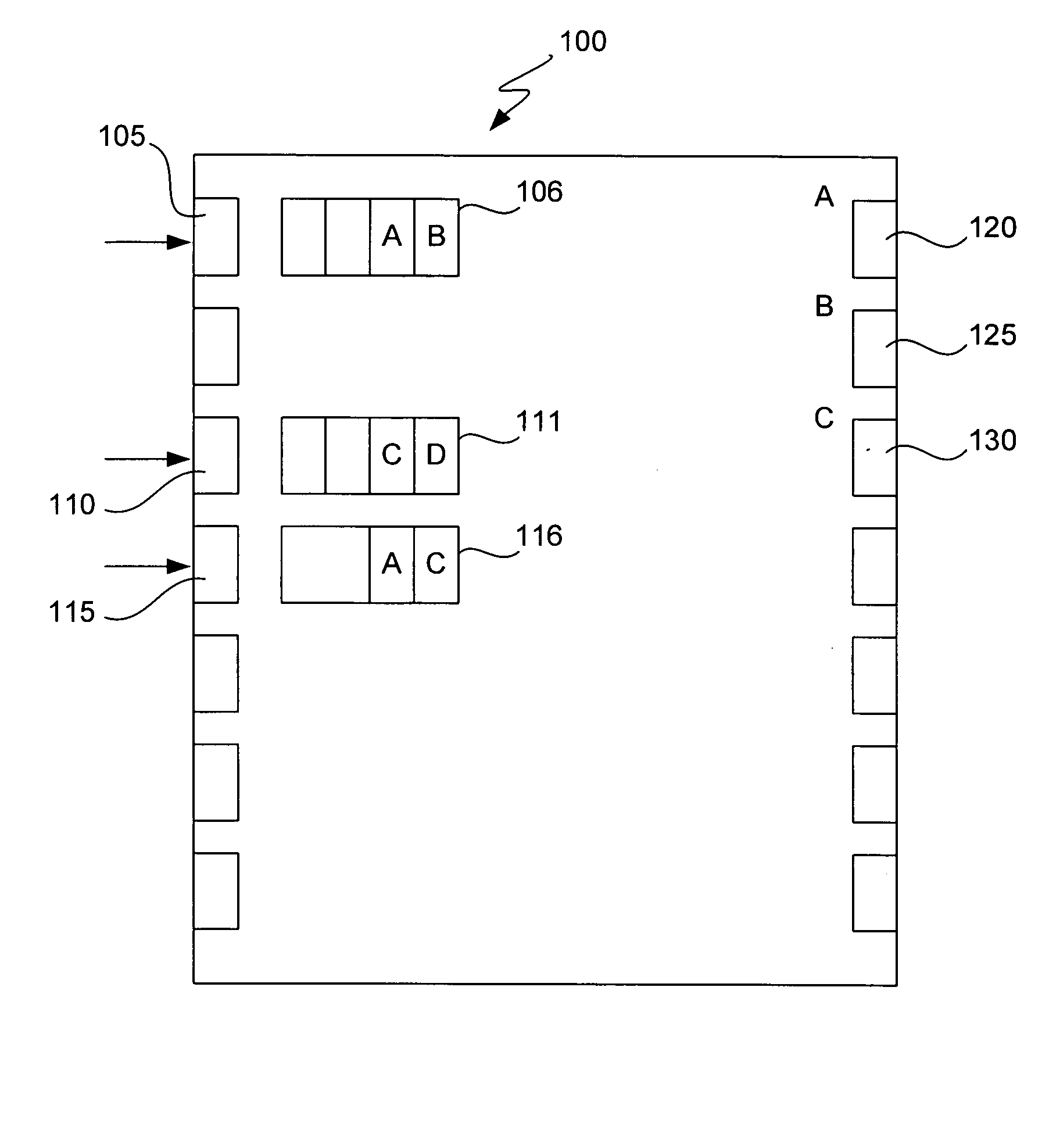

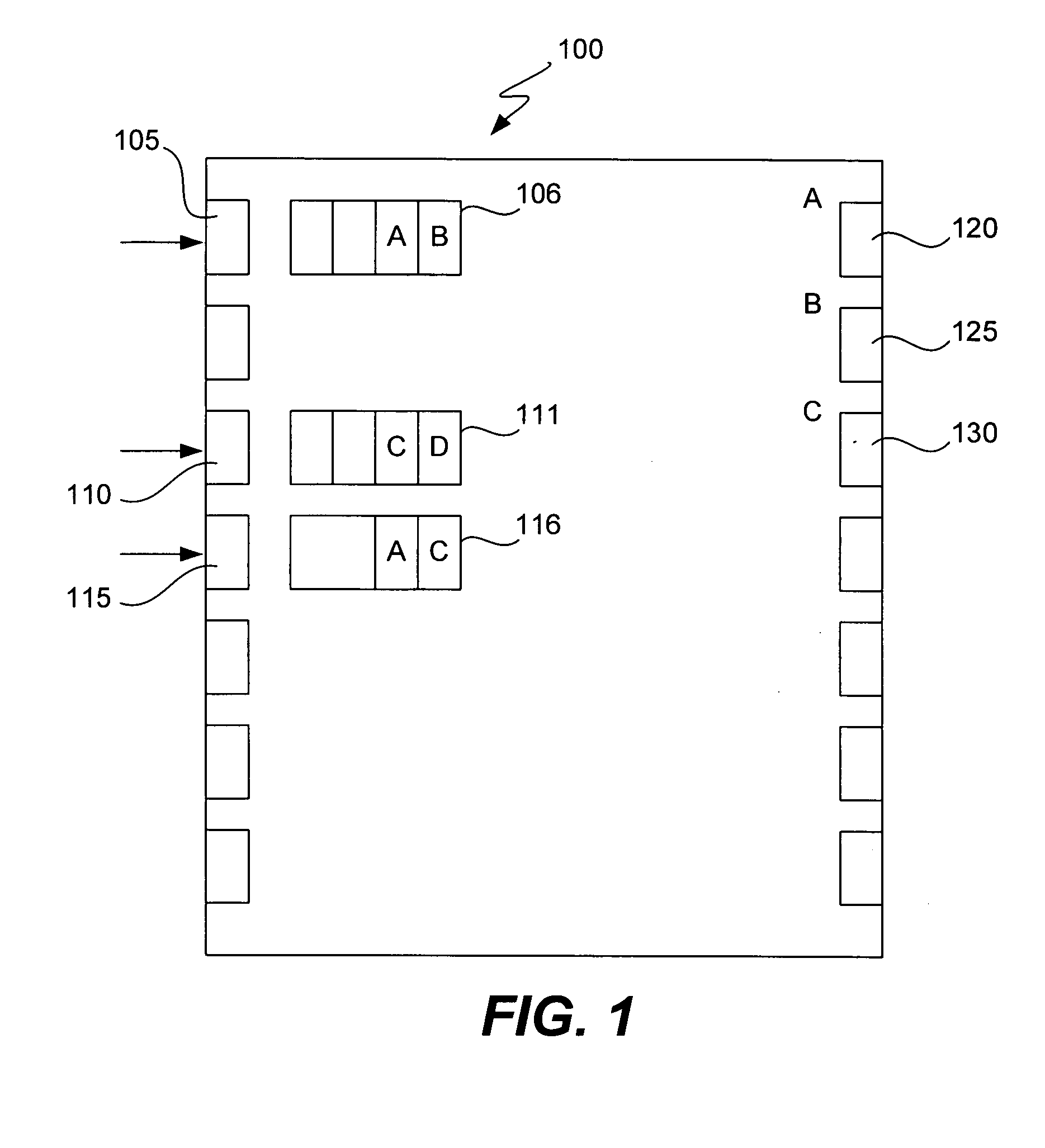

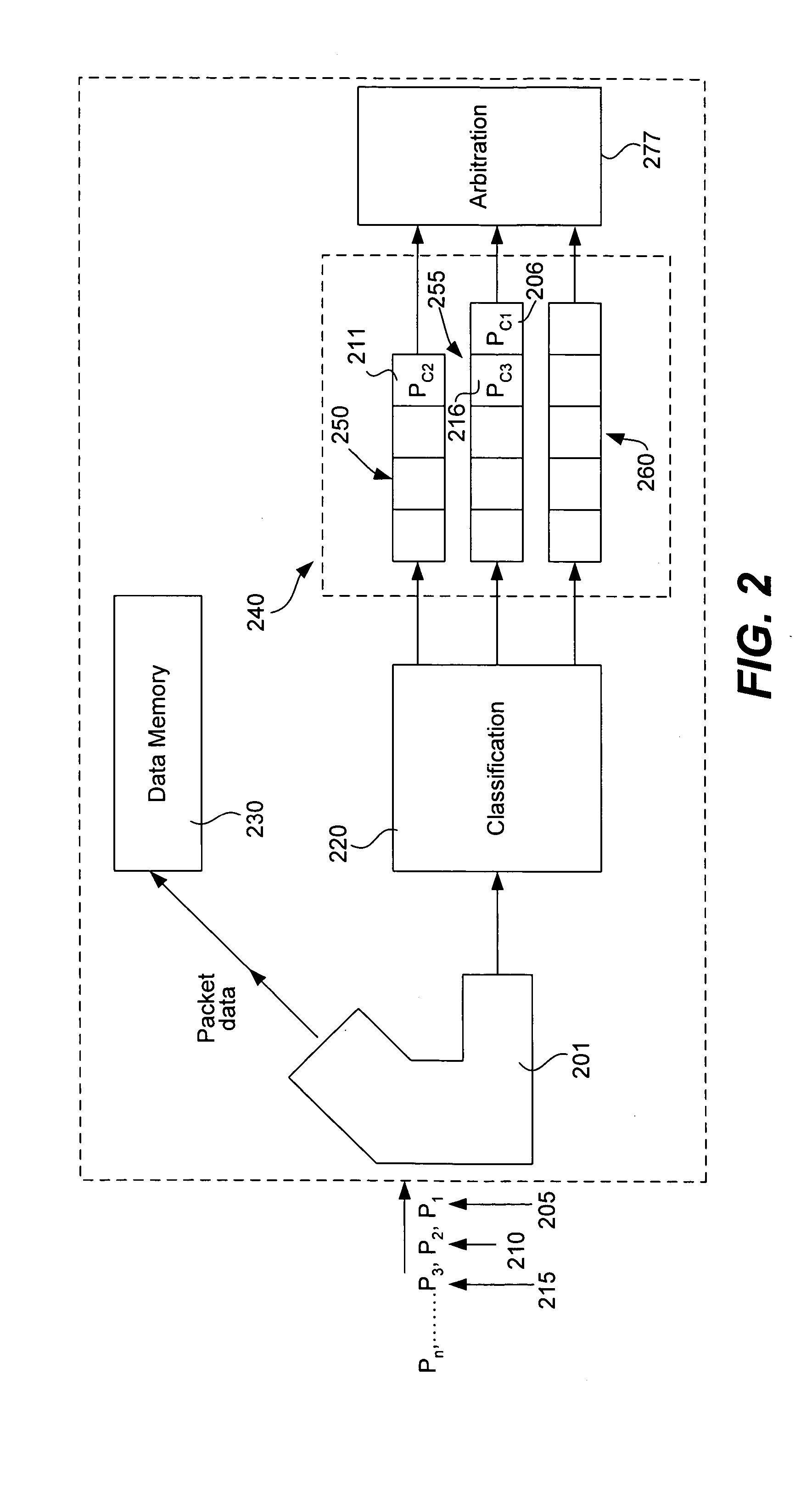

System and method for packet storage and retrieval

Inactive | US7058789B2 | Maximize throughput | Multiplex system selection arrangements | Memory addressing/allocation/relocation | Management unit | Processor register

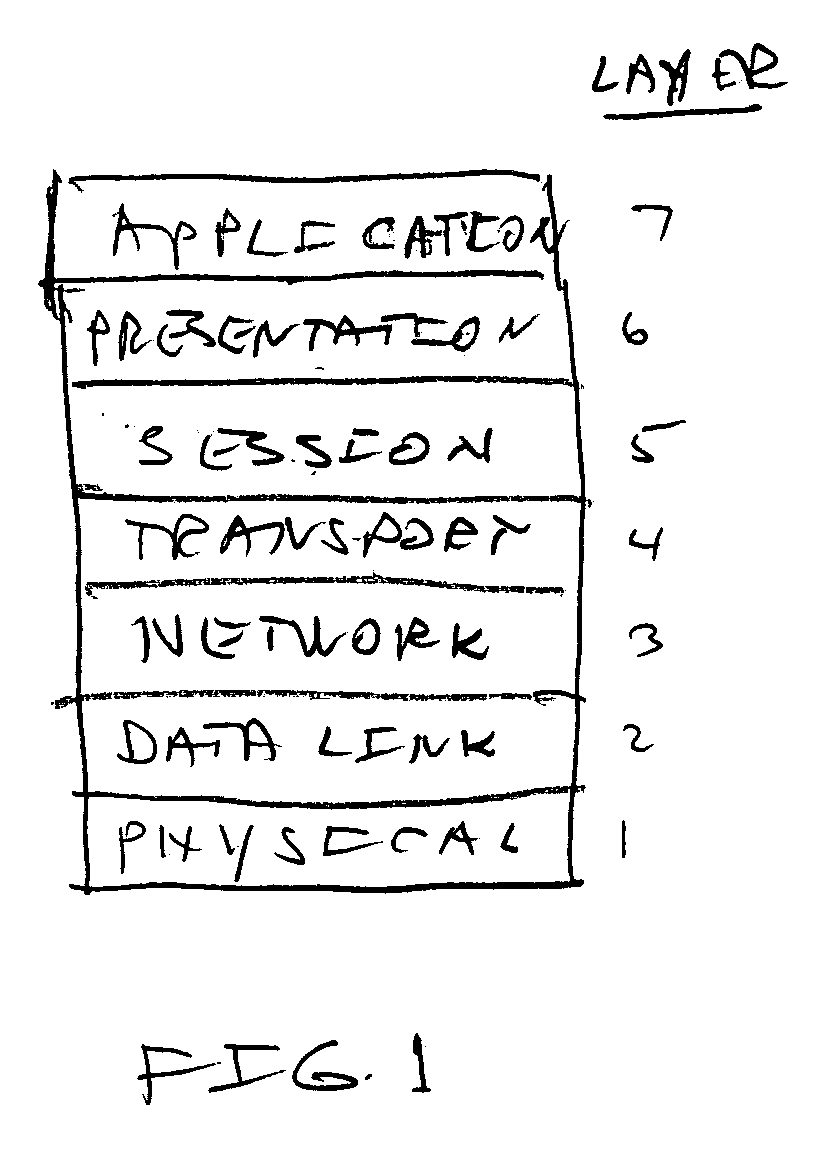

A network services processor receives, stores, and modifies incoming packets and transmits them to their intended destination. The network services processor stores packets as buffers in main and cache memory for manipulation and retrieval. A memory subsystem stores packets as linked lists of buffers. Each bank of memory includes a separate memory management controller for controlling accesses to the memory bank. The memory management controllers, a cache management unit, and free list manager shift the scheduling of read and write operations to maximize overall system throughput. For each packet, packet context registers are assigned, including a packet handle that points to the location in memory of the packet buffer. The contents of individual packets can be accessed through the use of encapsulation pointer registers that are directed towards particular offsets within a packet, such as the beginning of different protocol layers within the packet.

Owner:INTEL CORP

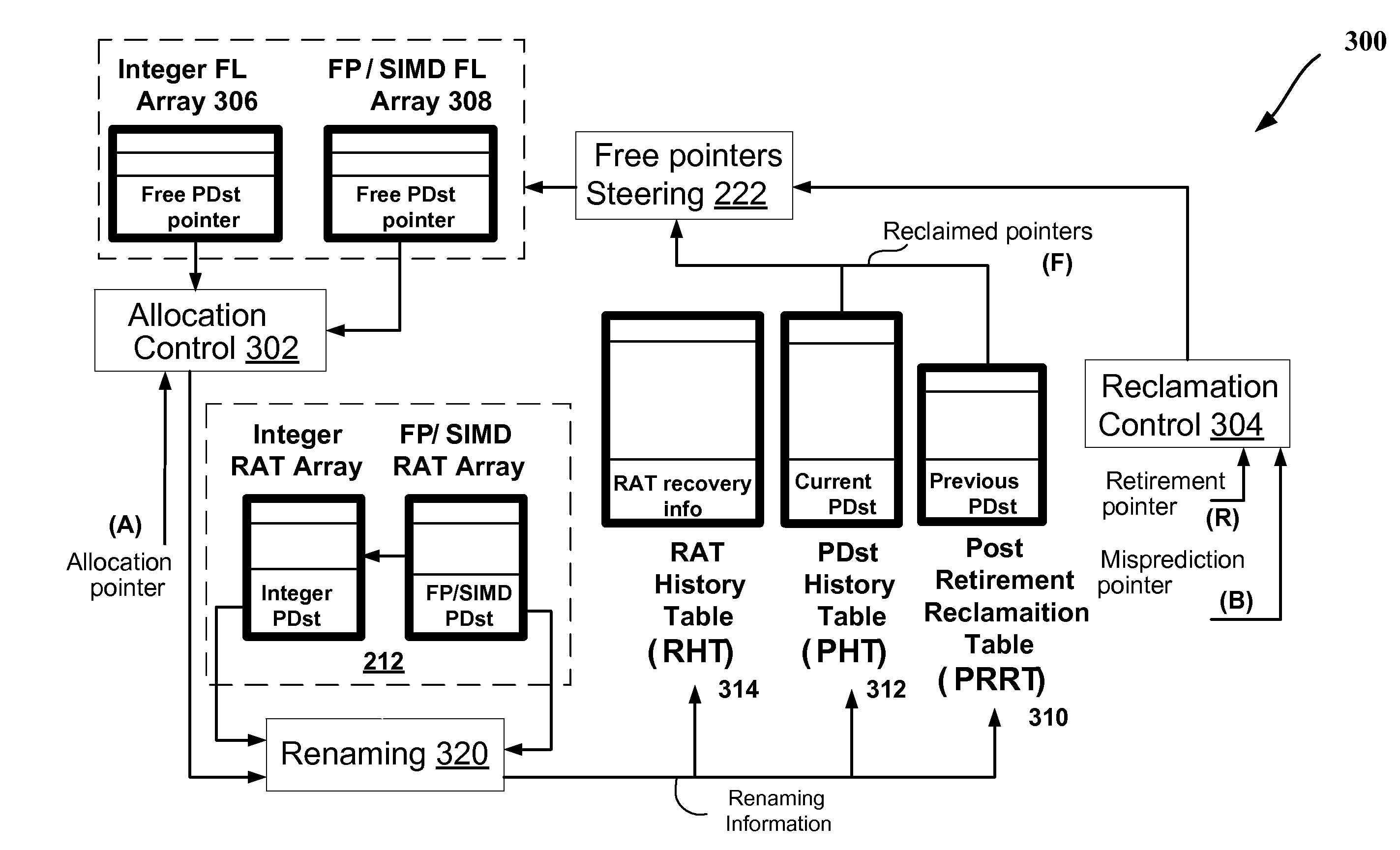

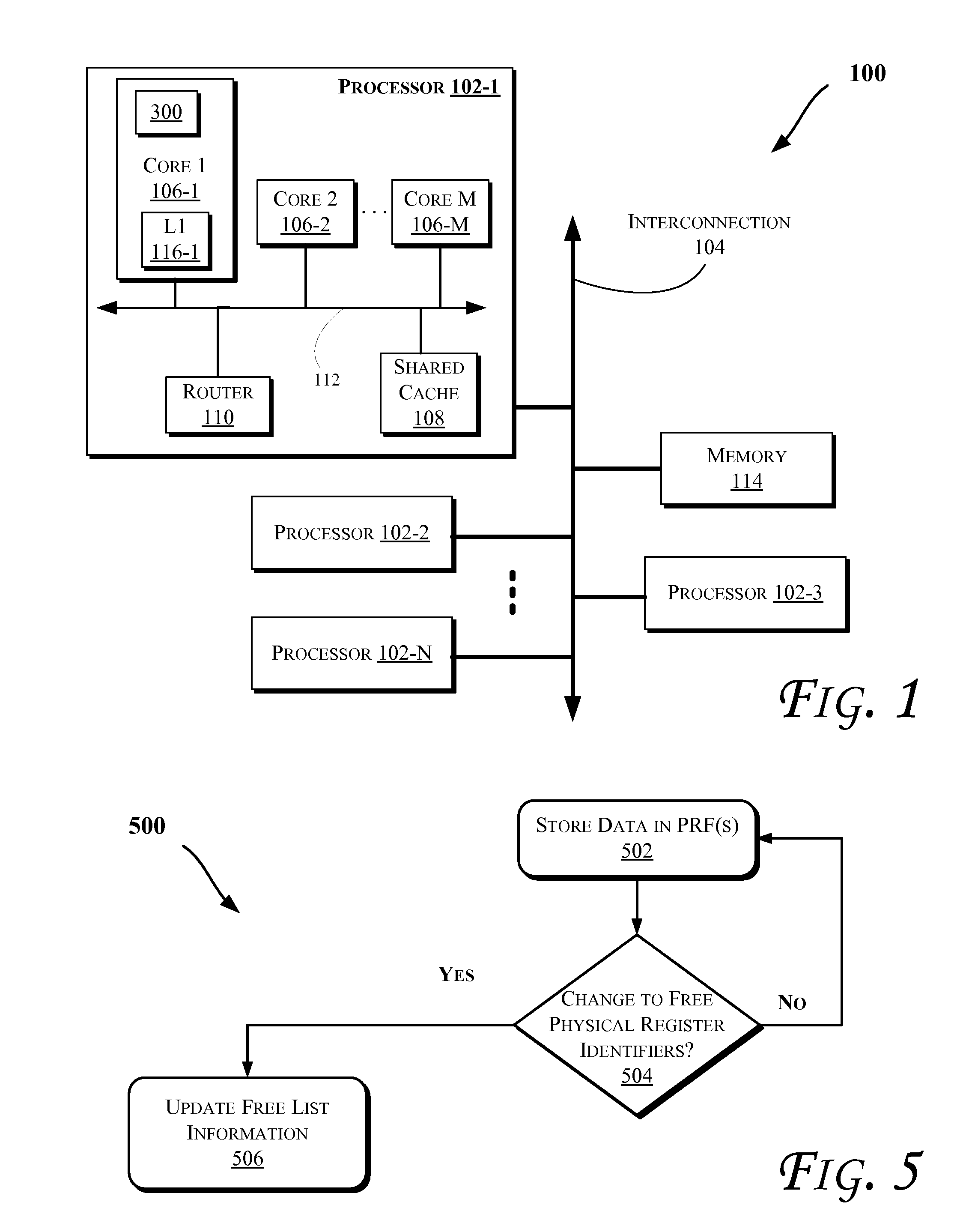

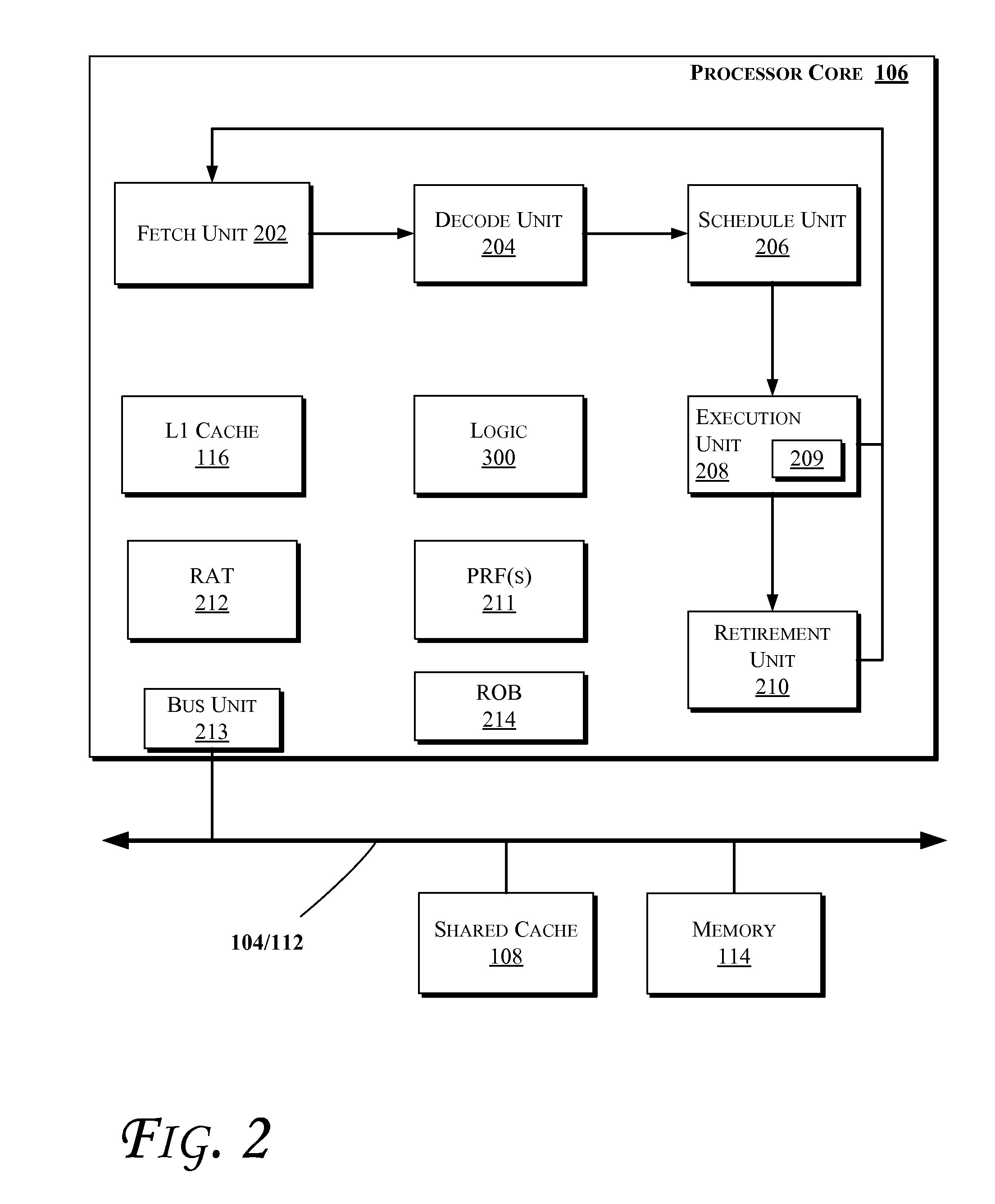

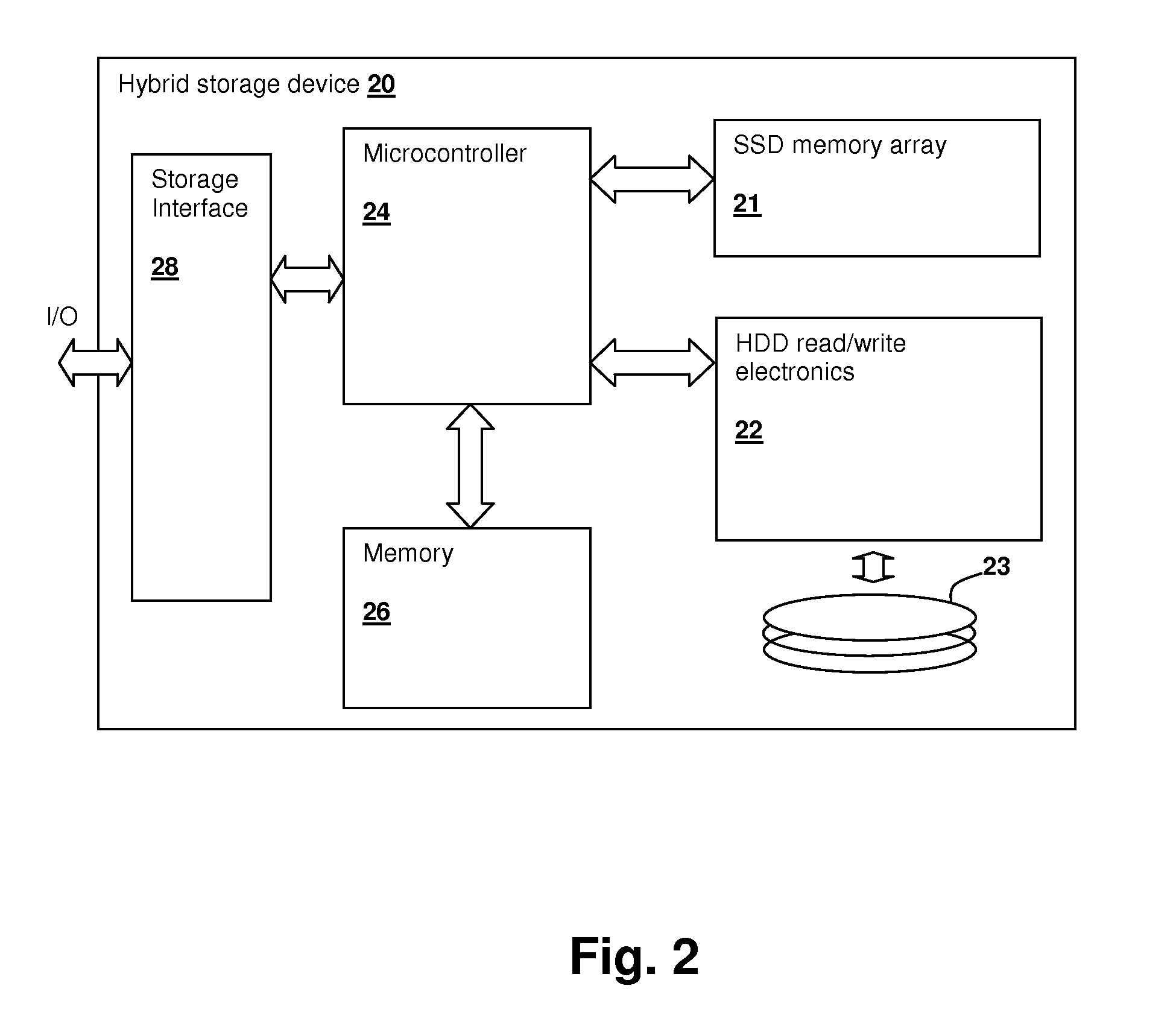

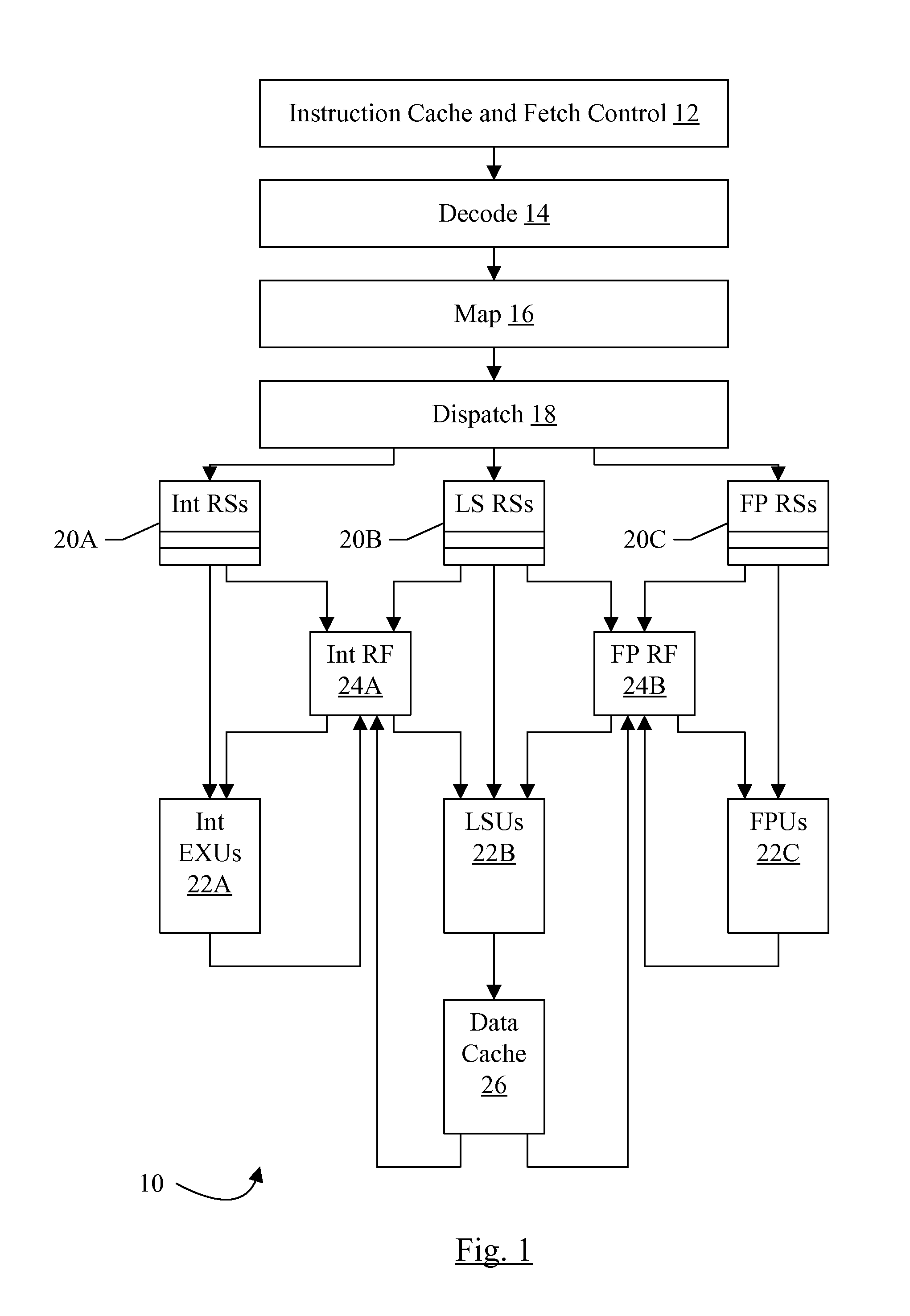

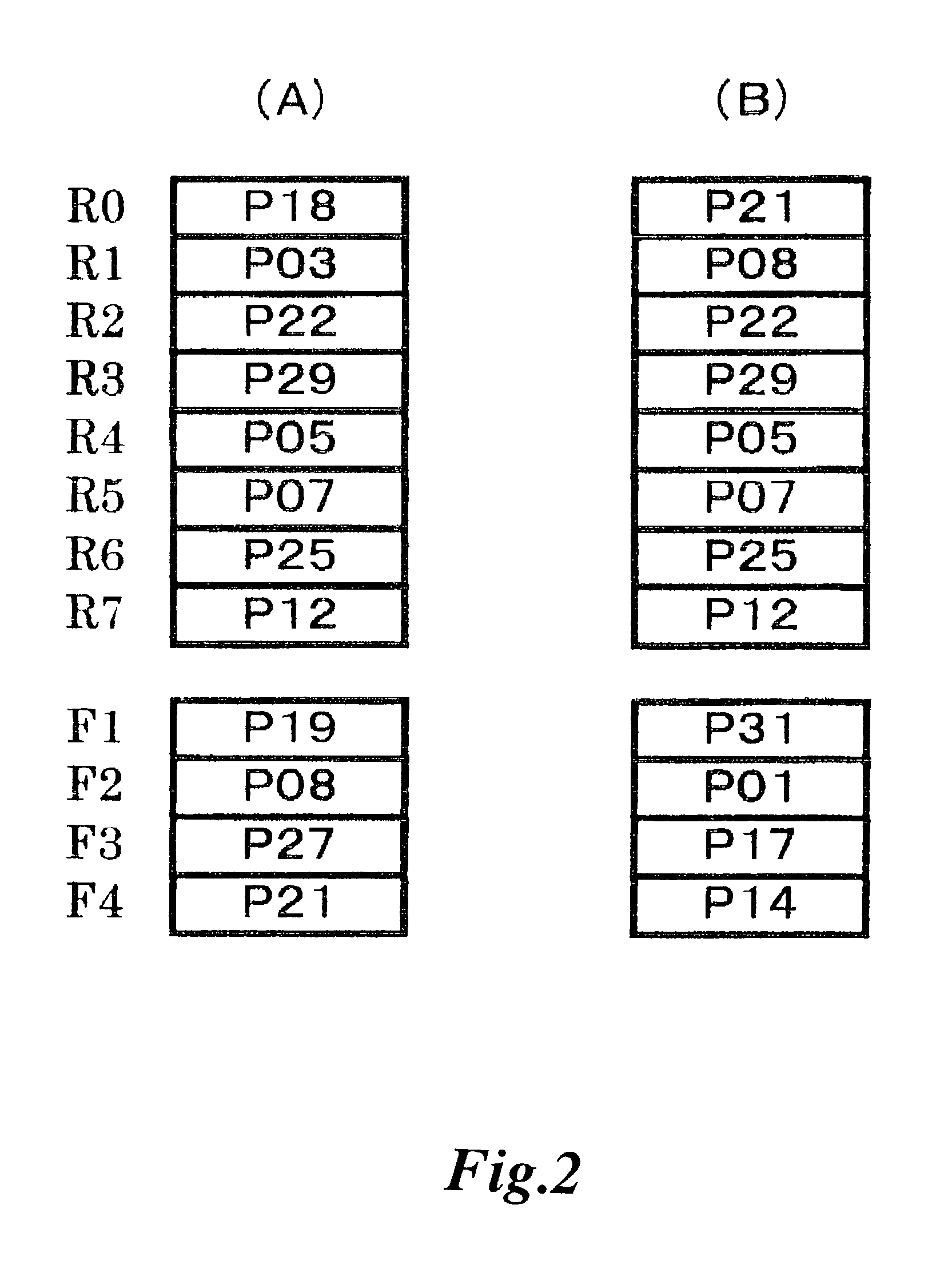

Mechanisms to handle free physical register identifiers for smt out-of-order processors

Inactive | US20090327661A1 | Register arrangements | Digital computer details | Processor register | Simultaneous multithreading

Methods and apparatus relating to mechanisms to handle free physical register identifiers for SMT (Simultaneous Multi-Threading) out-of-order processors are described. In some embodiments, a physical register file stores both speculative data and architectural data corresponding to a plurality of registers. A free list logic may maintain free physical register identifiers corresponding to the plurality of registers. An instruction may read the architectural data from the physical register file at dispatch. Other embodiments are also described and claimed.

Owner:INTEL CORP

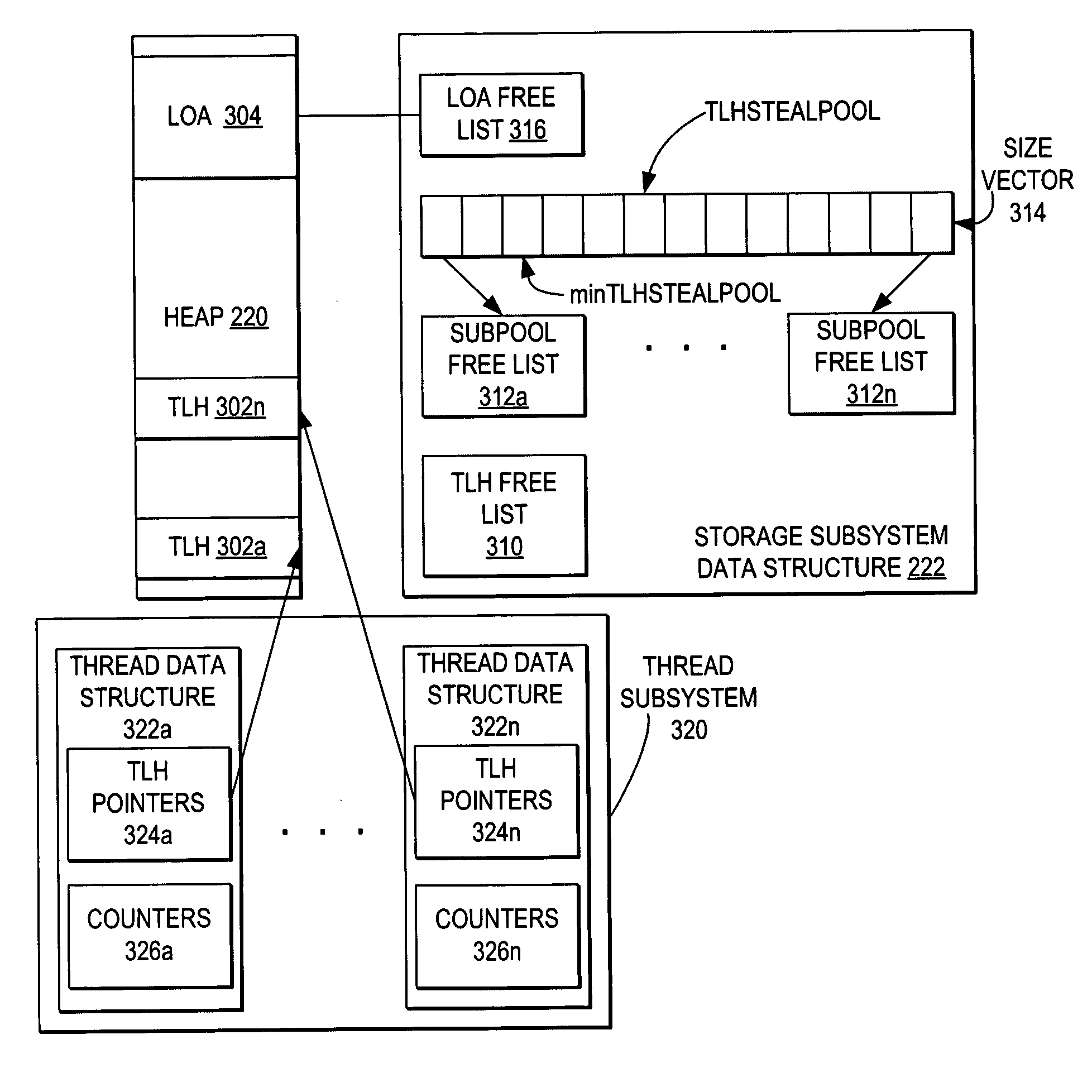

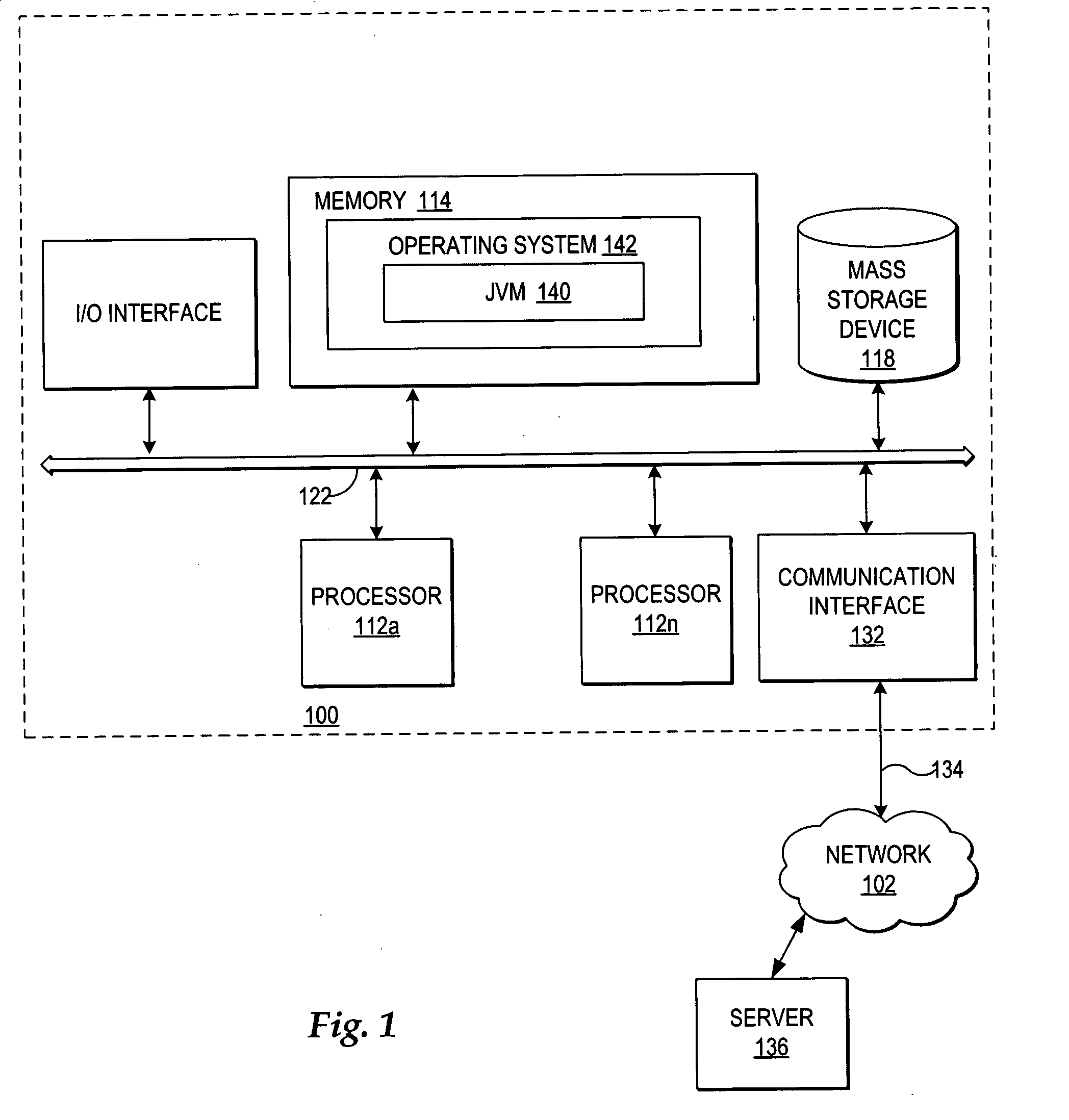

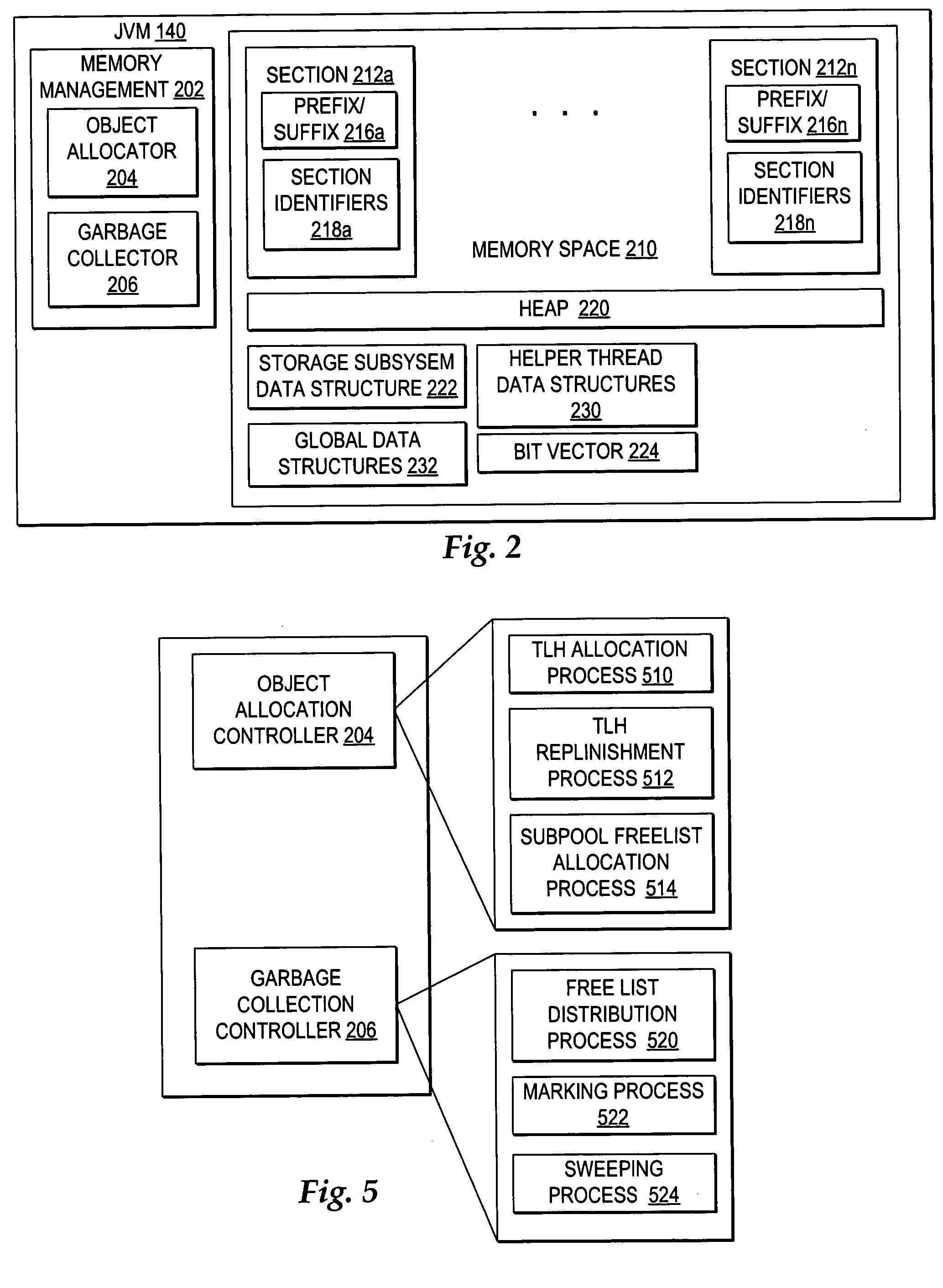

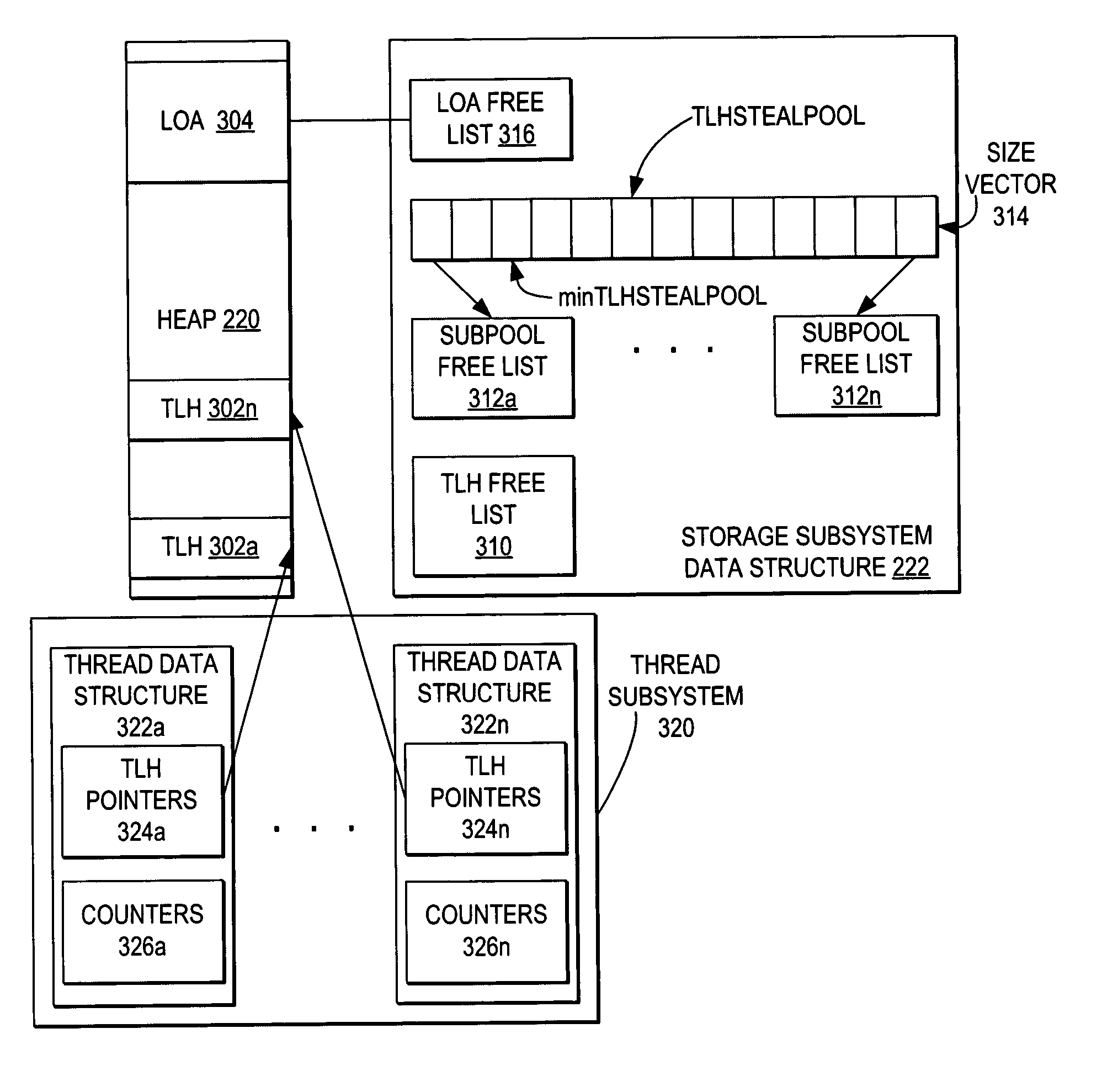

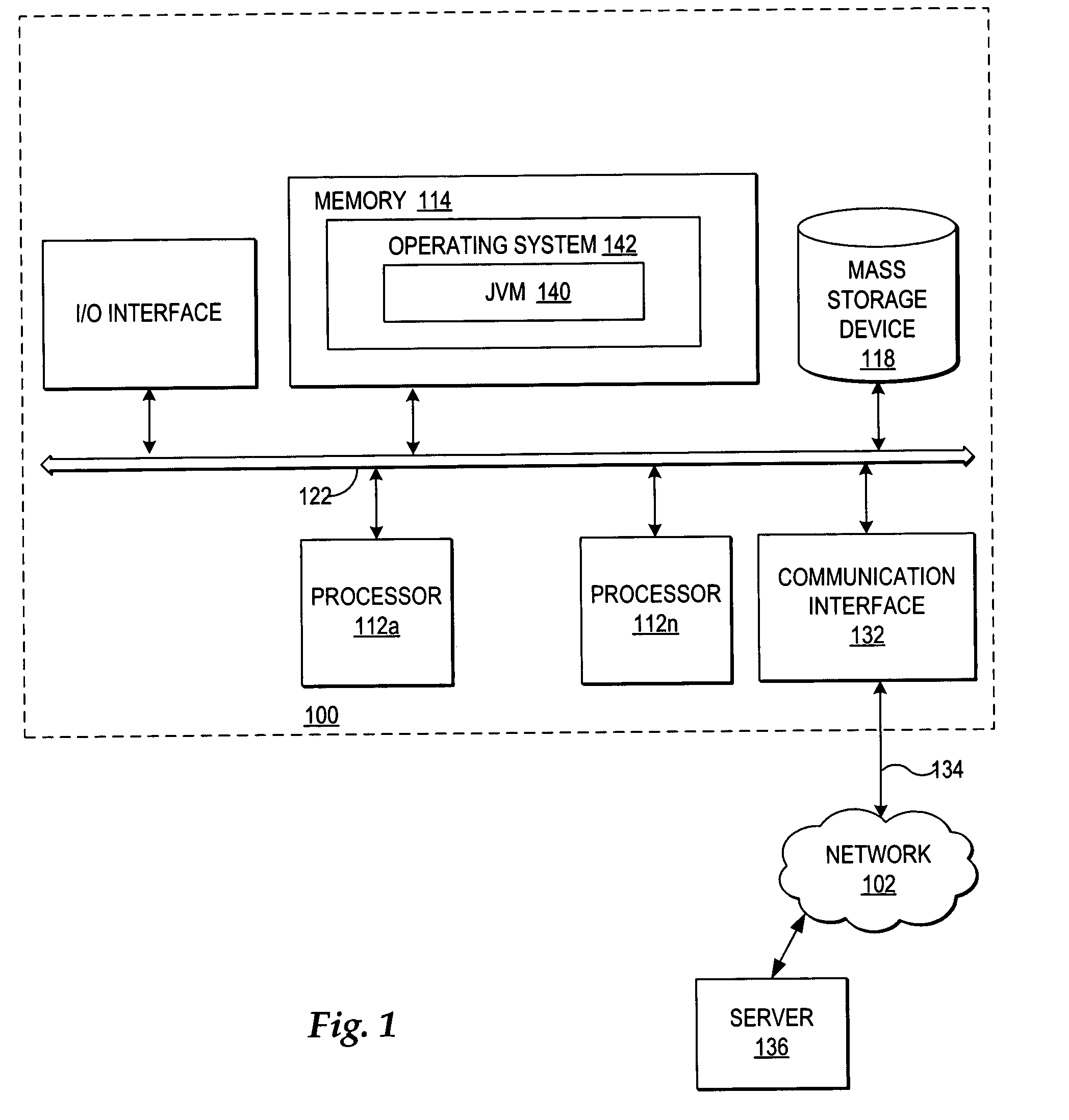

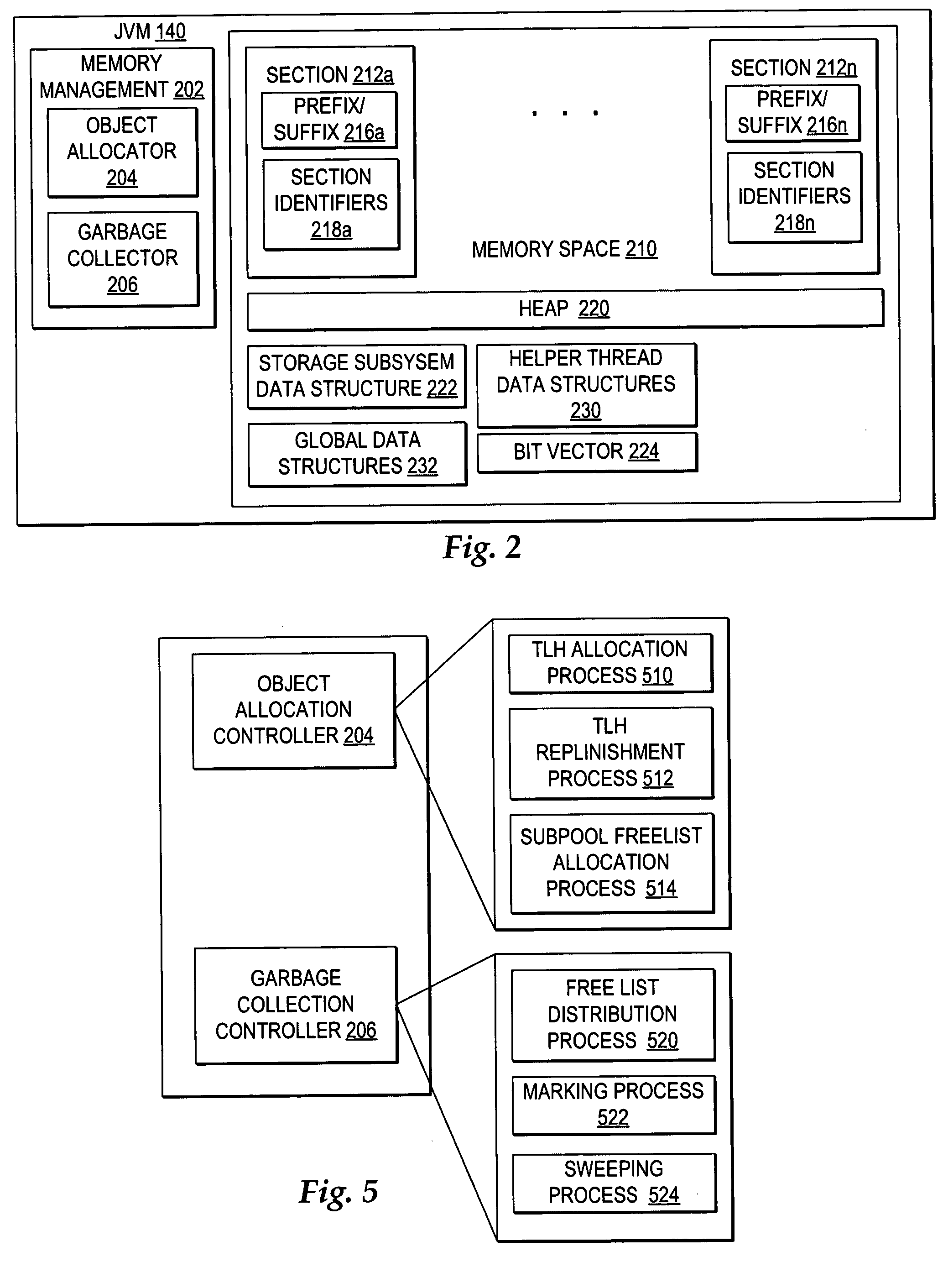

Free item distribution among multiple free lists during garbage collection for more efficient object allocation

Inactive | US20050273568A1 | Easy to manage | Efficient management | Data processing applications | Memory addressing/allocation/relocation | Parallel computing | Computer science

A method, system, and program for improving free item distribution among multiple free lists during garbage collection for more efficient object allocation are provided. A garbage collector predicts future allocation requirements and then distributes free items to multiple subpool free lists and a TLH free list during the sweep phase according to the future allocation requirements. The sizes of subpools and number of free items in subpools are predicted as the most likely to match future allocation requests. In particular, once a subpool free list is filled with the number of free items needed according to the future allocation requirements, any additional free items designated for the subpool free list can be divided into multiple TLH sized free items and placed on the TLH free list. Allocation threads are enabled to acquire free items from the TLH free list and to replenish a current TLH without acquiring heap lock.

Owner:IBM CORP

Virtual Disk Drive System and Method

Active | US20070180306A1 | Useful operation | Input/output to record carriers | Memory addressing/allocation/relocation | RAID | Dynamic data

A disk drive system and method capable of dynamically allocating data is provided. The disk drive system may include a RAID subsystem having a pool of storage, for example a page pool of storage that maintains a free list of RAIDs, or a matrix of disk storage blocks that maintain a null list of RAIDs, and a disk manager having at least one disk storage system controller. The RAID subsystem and disk manager dynamically allocate data across the pool of storage and a plurality of disk drives based on RAID-to-disk mapping. The RAID subsystem and disk manager determine whether additional disk drives are required, and a notification is sent if the additional disk drives are required. Dynamic data allocation and data progression allow a user to acquire a disk drive later in time when it is needed. Dynamic data allocation also allows efficient data storage of snapshots/point-in-time copies of the virtual volume pool of storage, instant data replay and data instant fusion for data backup, recovery, etc., remote data storage, and data progression.

Owner:DELL INT L L C

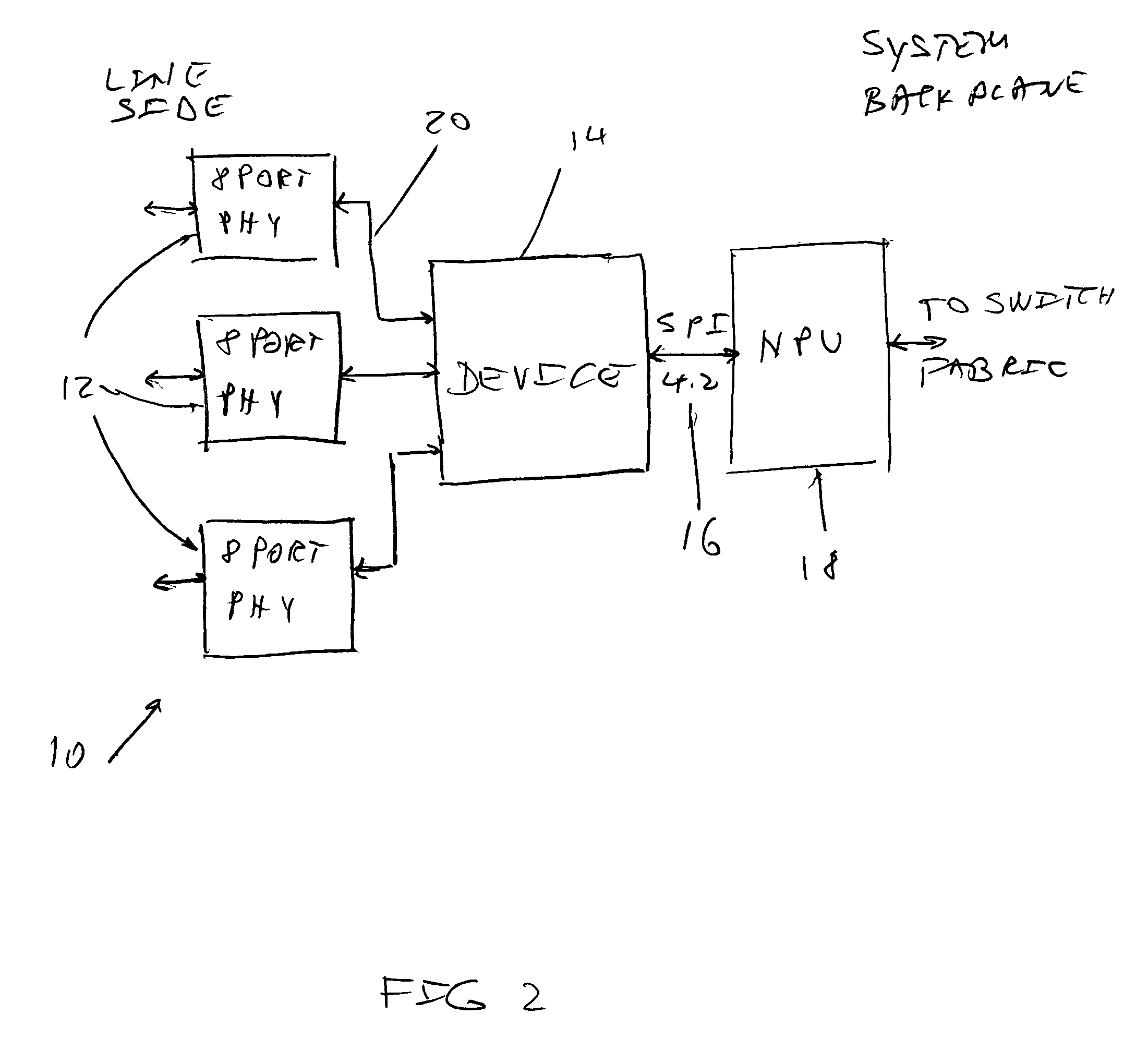

Device and method for managing oversubscription in a network

Owner:MADISON KEN +4

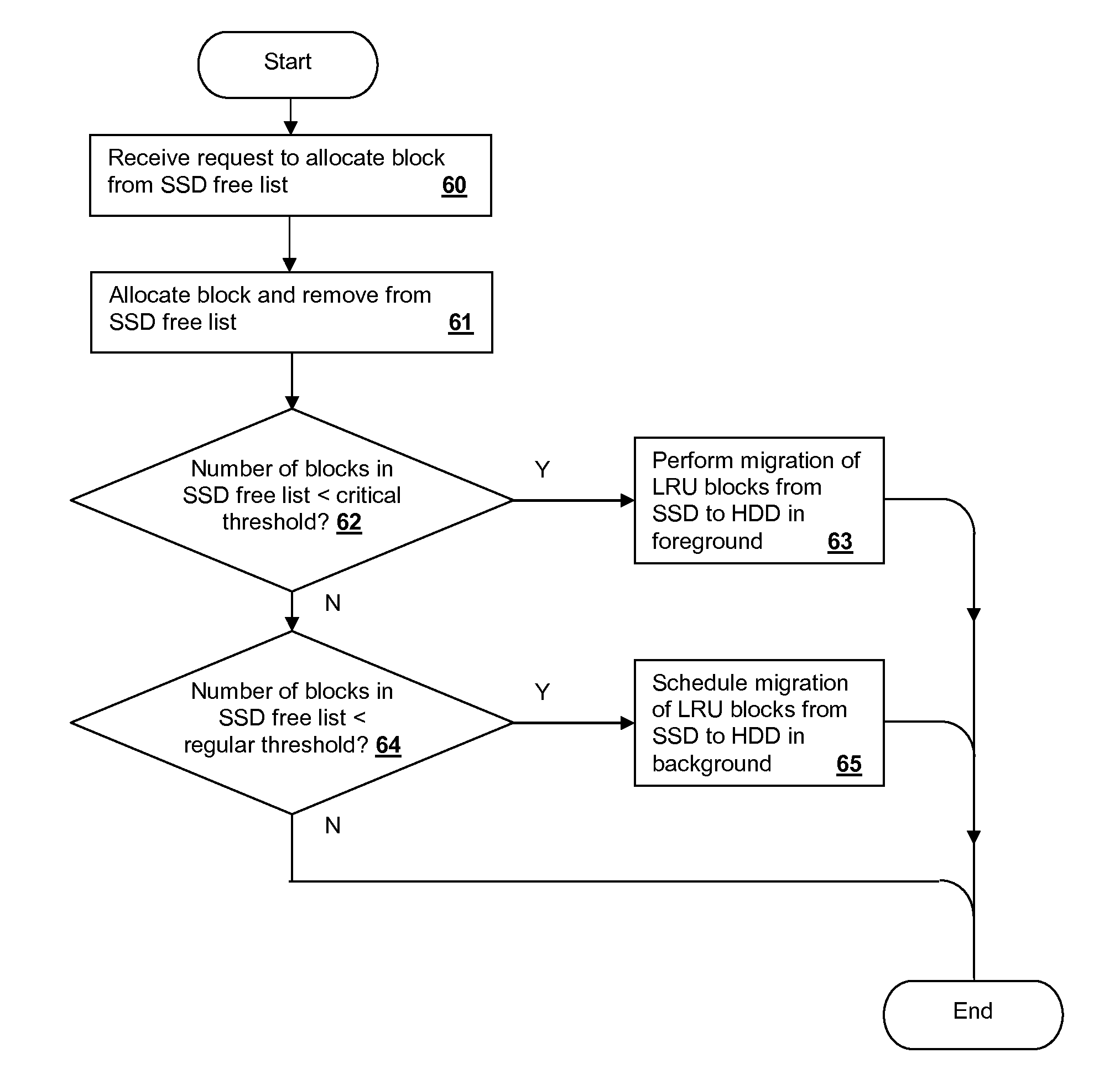

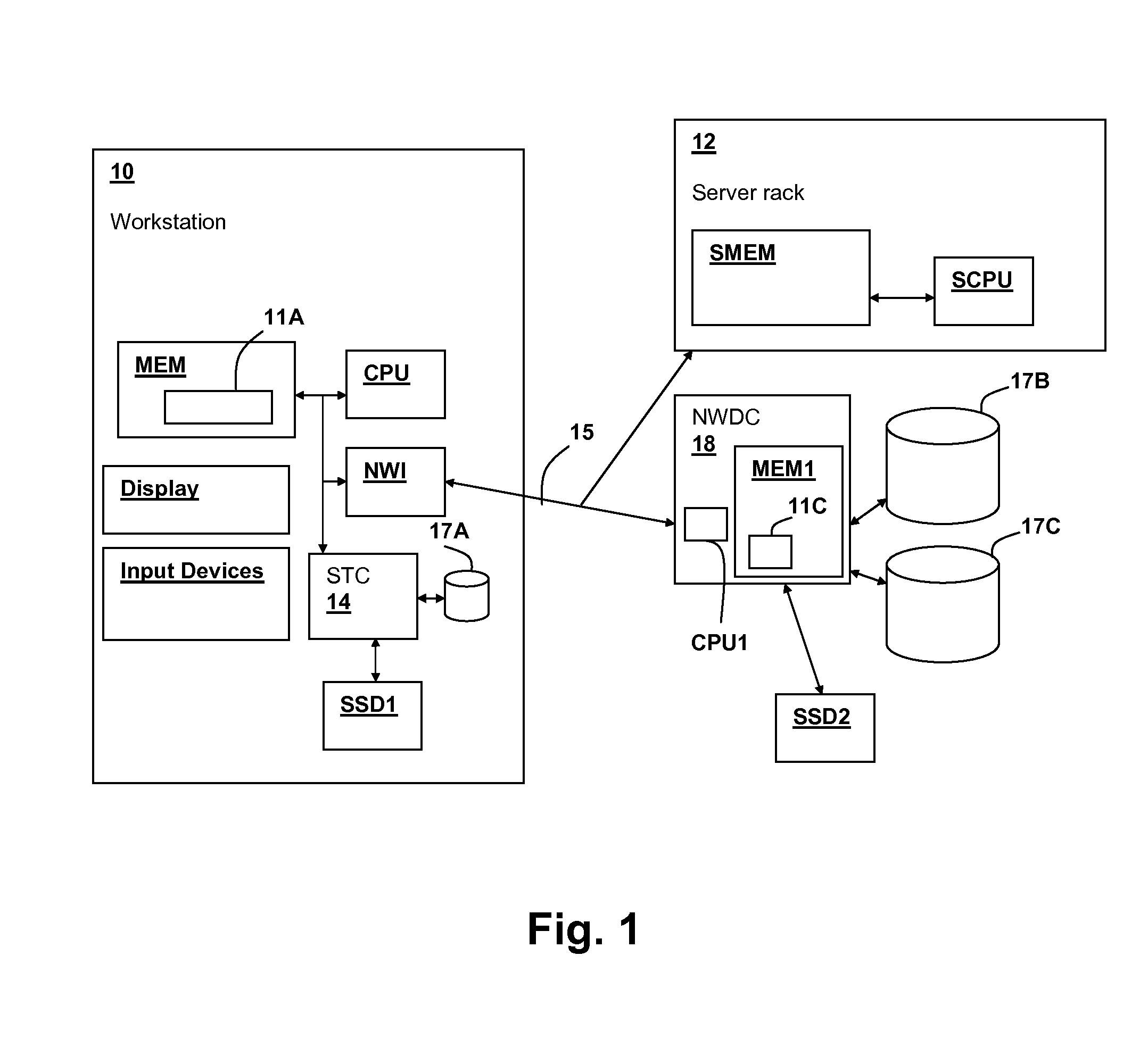

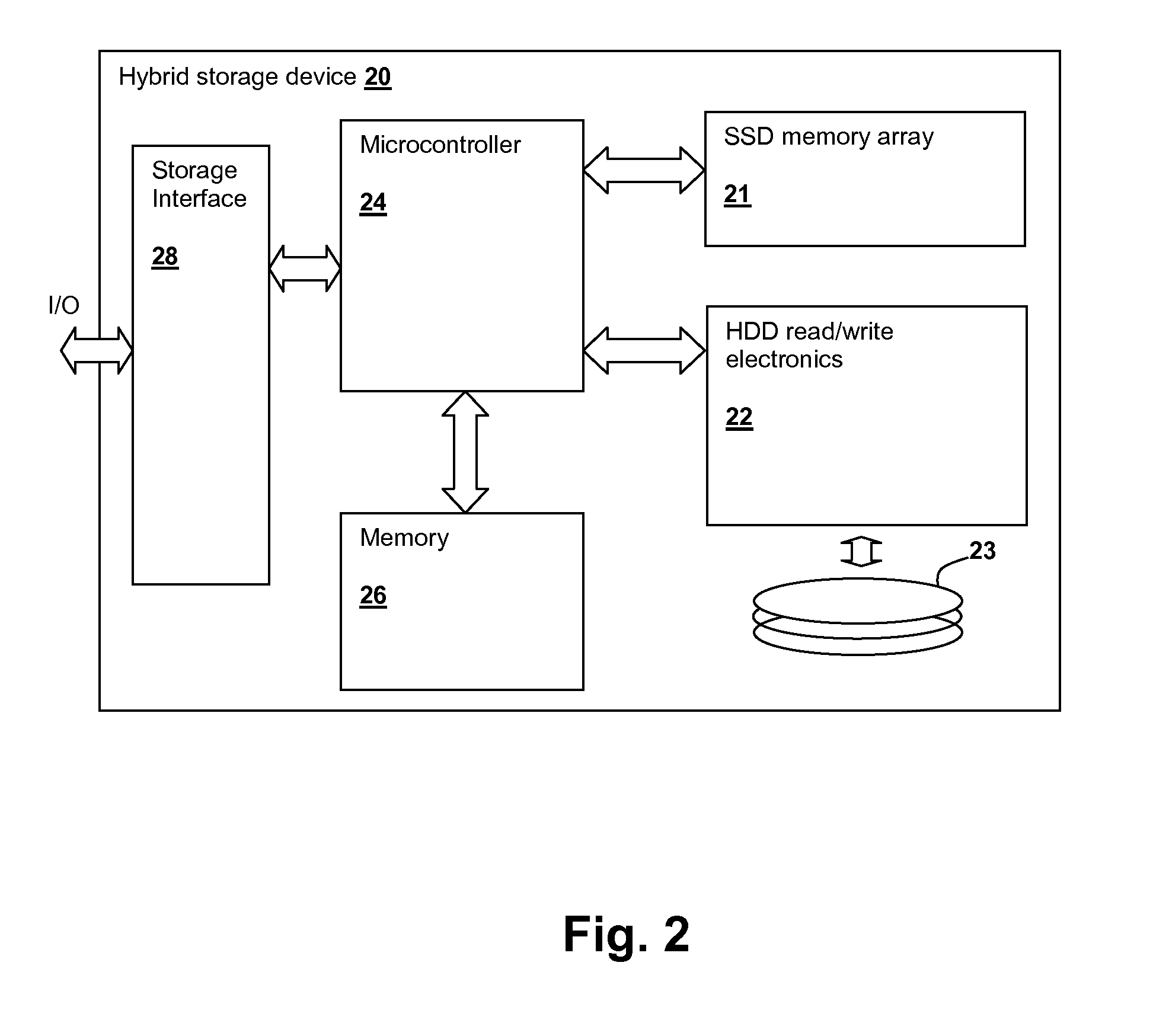

Hybrid storage subsystem with mixed placement of file contents

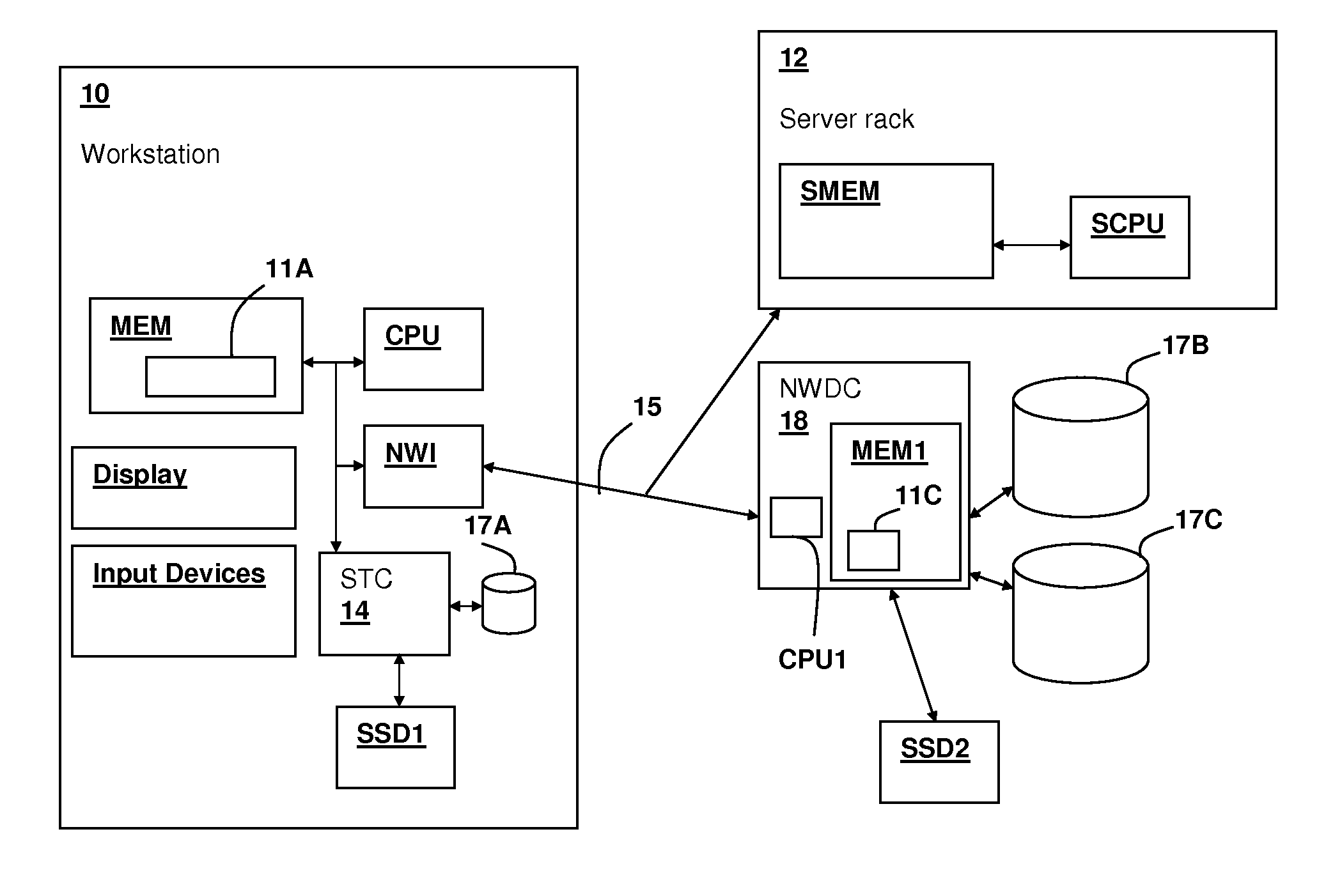

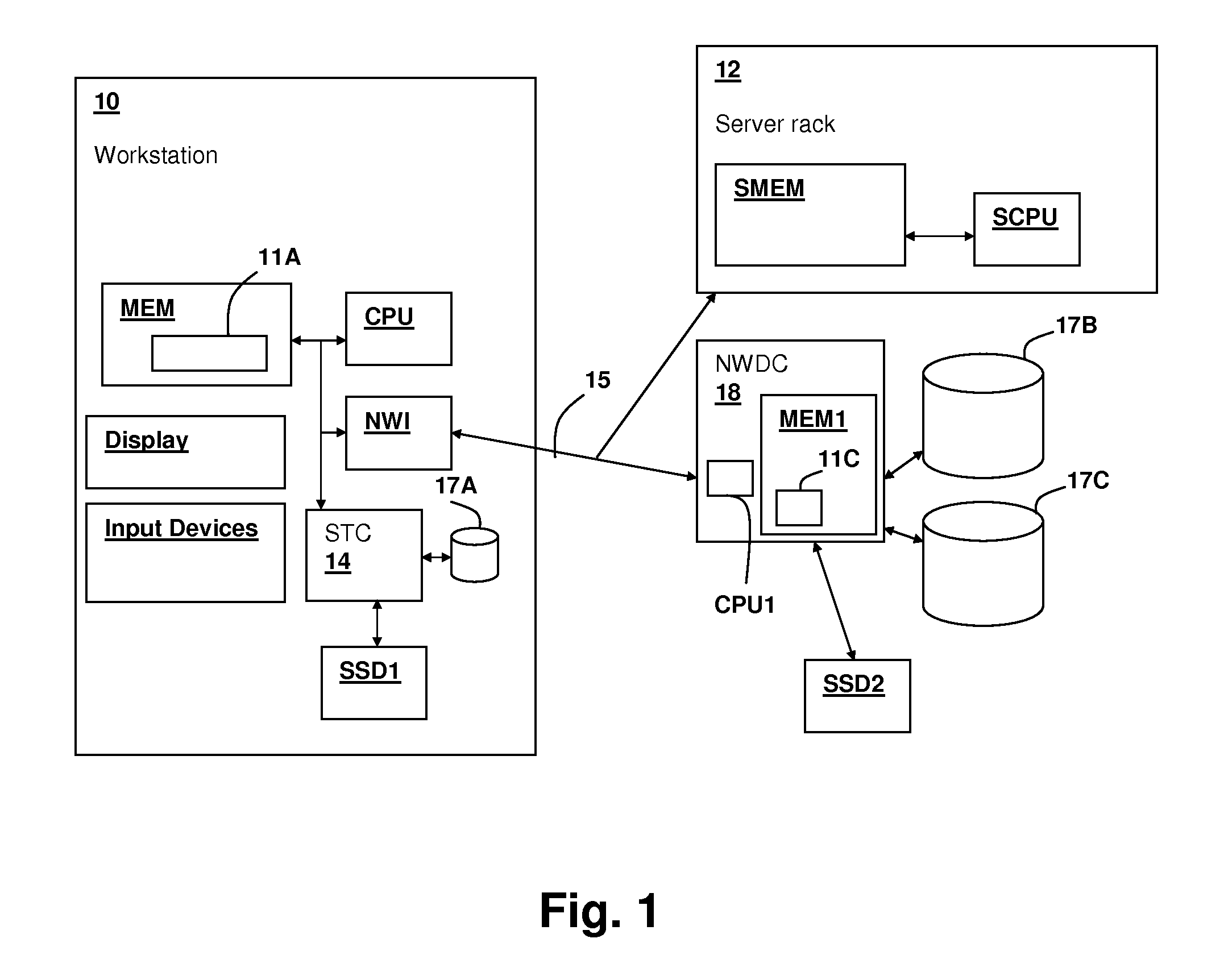

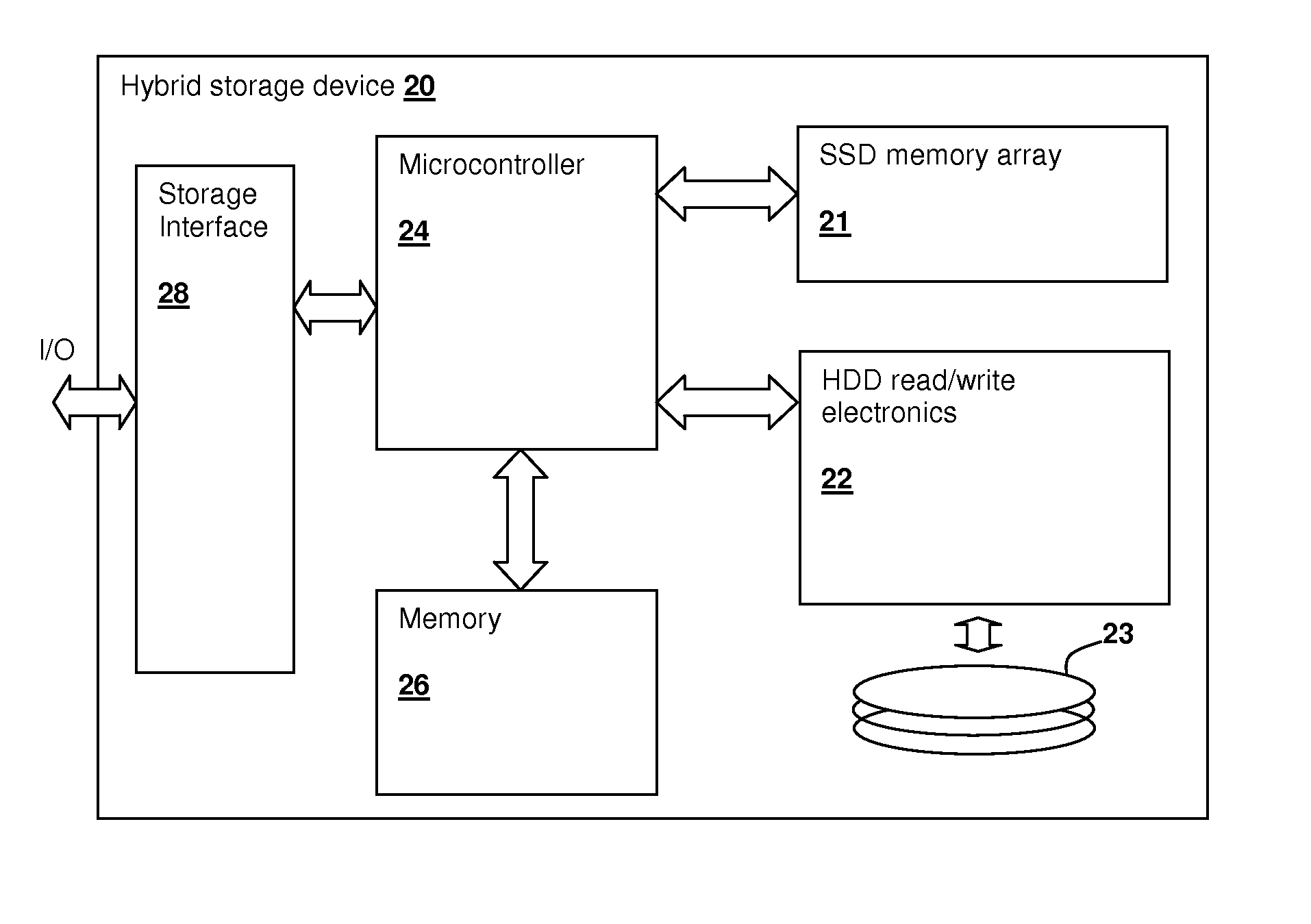

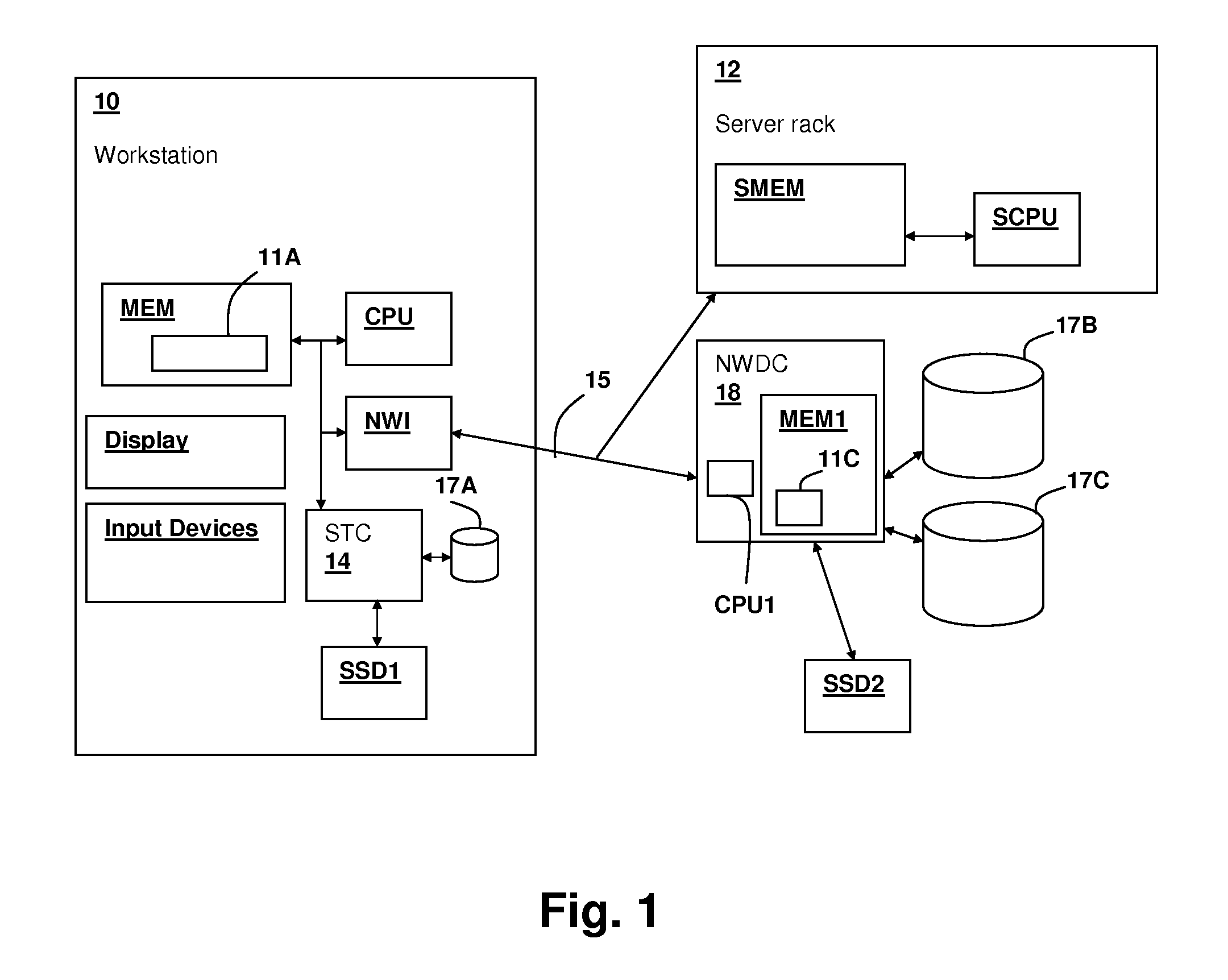

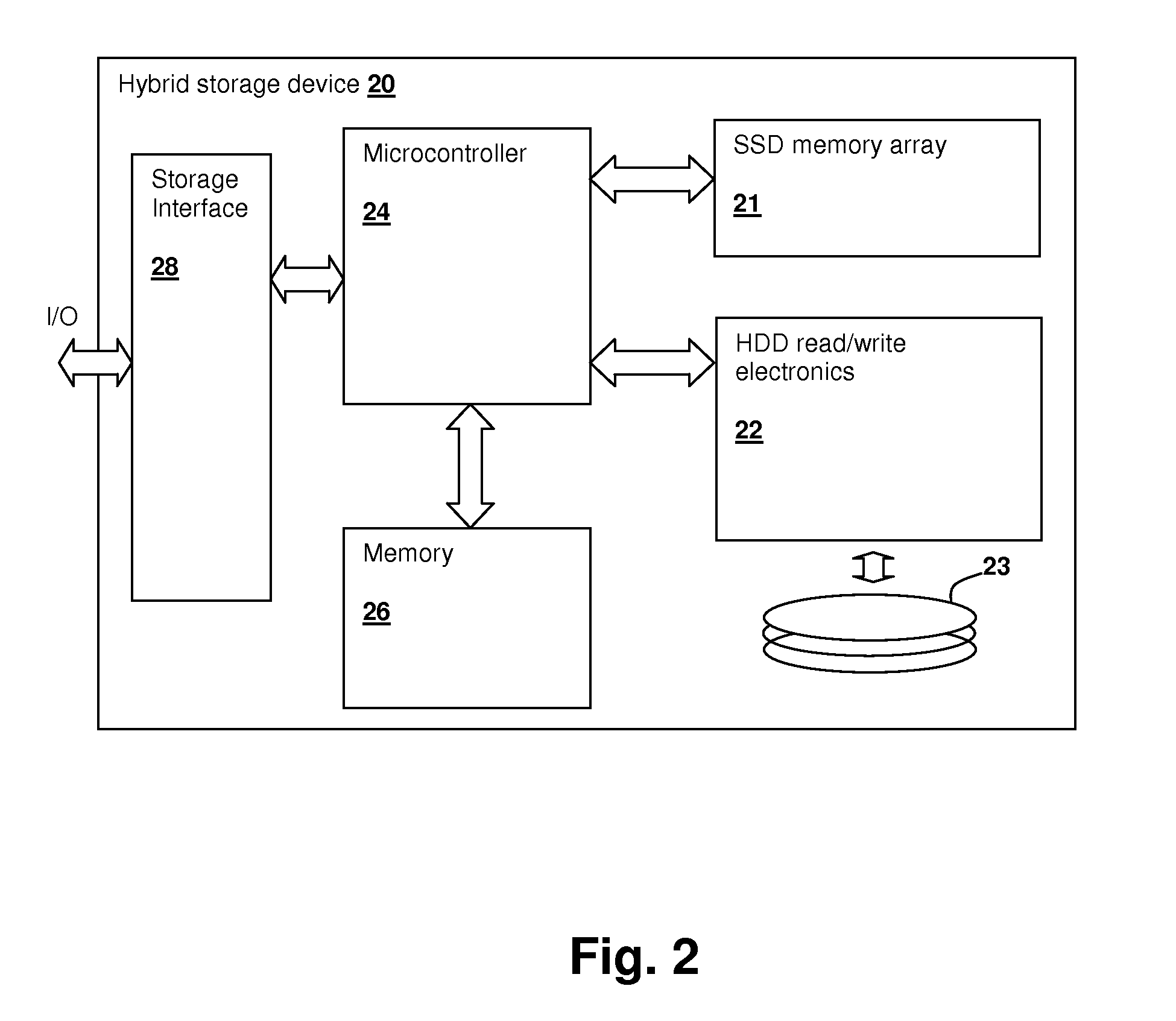

Inactive | US20110153931A1 | Digital data information retrieval | Memory addressing/allocation/relocation | Hard disc drive | File system

A storage subsystem combining solid state drive (SSD) and hard disk drive (HDD) technologies provides low access latency and low complexity. Separate free lists are maintained for the SSD and the HDD, and blocks of file system data are stored uniquely on either the SSD or the HDD. When a read access is made to the subsystem, if the data is present on the SSD, the data is returned, but if the block is present on the HDD, it is migrated to the SSD and the block on the HDD is returned to the HDD free list. On a write access, if the block is present in either the SSD or the HDD, the block is overwritten, but if the block is not present in the subsystem, the block is written to the HDD.

Owner:IBM CORP
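The read and write rules in the hybrid SSD/HDD abstract above can be sketched with two free lists. The class and field names are hypothetical, and serving a read from the HDD without migration when the SSD free list is empty is an assumption of this sketch; the abstract does not cover that case.

```python
class HybridStore:
    def __init__(self, ssd_blocks: int, hdd_blocks: int):
        self.ssd_free = list(range(ssd_blocks))  # free physical slots on the SSD
        self.hdd_free = list(range(hdd_blocks))  # free physical slots on the HDD
        self.ssd = {}  # logical block -> (physical slot, data)
        self.hdd = {}  # logical block -> (physical slot, data)

    def write(self, block, data) -> None:
        if block in self.ssd:
            slot, _ = self.ssd[block]
            self.ssd[block] = (slot, data)      # overwrite in place on the SSD
        elif block in self.hdd:
            slot, _ = self.hdd[block]
            self.hdd[block] = (slot, data)      # overwrite in place on the HDD
        else:
            slot = self.hdd_free.pop()          # new blocks always land on the HDD
            self.hdd[block] = (slot, data)

    def read(self, block):
        if block in self.ssd:
            return self.ssd[block][1]
        hdd_slot, data = self.hdd[block]        # KeyError if the block was never written
        if self.ssd_free:
            # Migrate to the SSD and return the HDD slot to the HDD free list.
            self.ssd[block] = (self.ssd_free.pop(), data)
            del self.hdd[block]
            self.hdd_free.append(hdd_slot)
        return data
```

Because each block lives on exactly one device, no coherence logic between the two copies is needed, which is where the "low complexity" claim in the abstract comes from.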

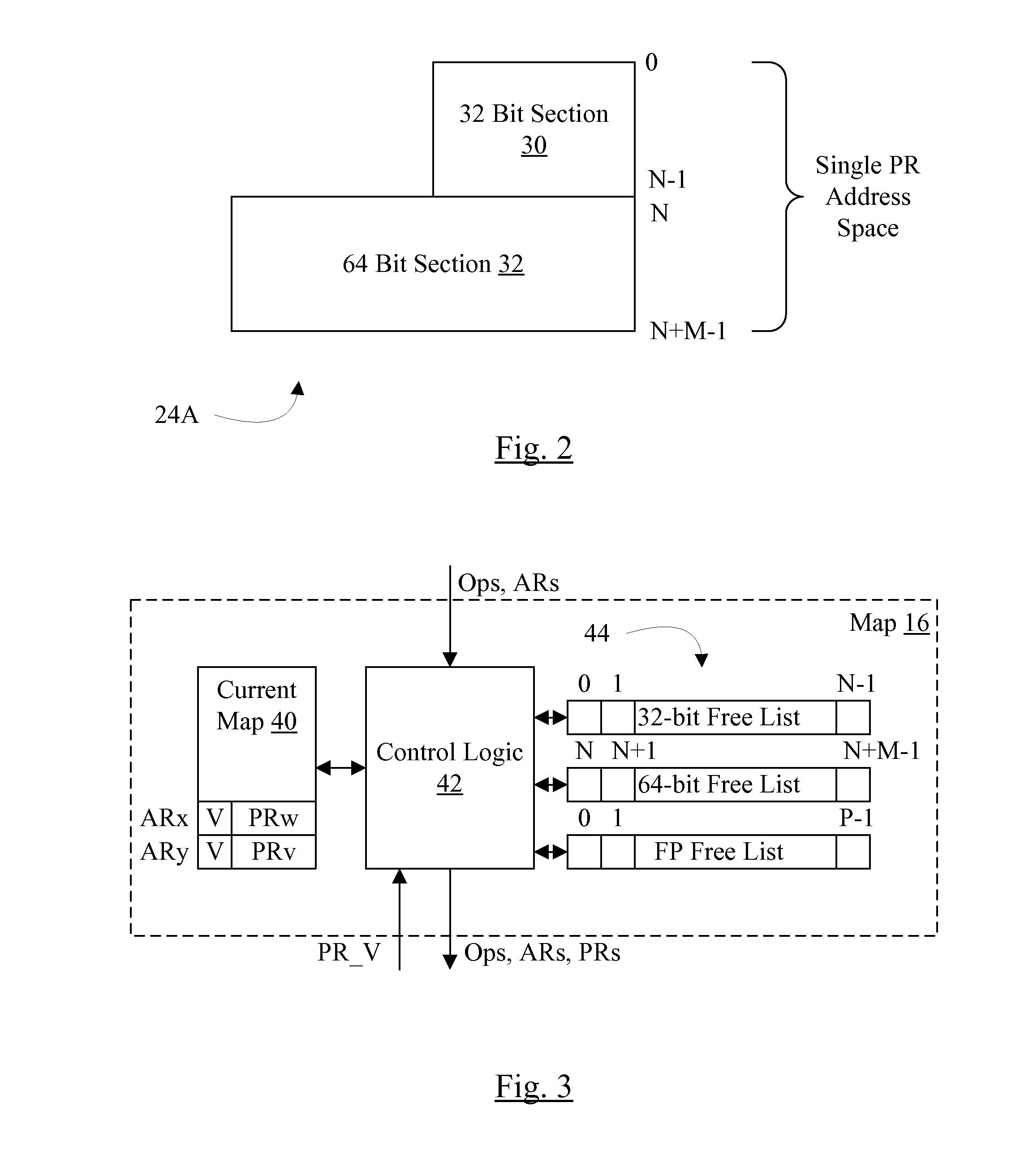

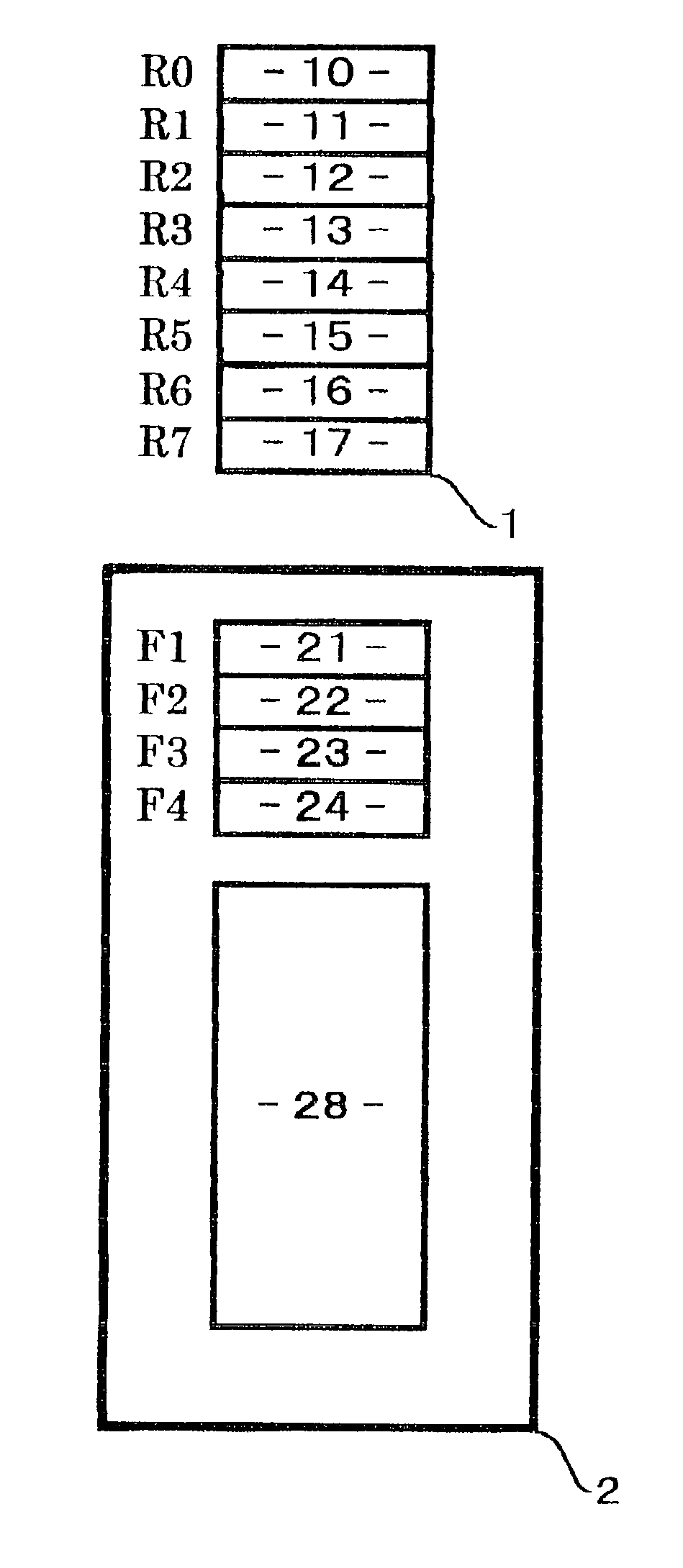

Split Register File for Operands of Different Sizes

Active | US20150134935A1 | Consume integrated circuit area | Digital computer details | Specific program execution arrangements | Processor register | Operand

In an embodiment, a processor includes a register file having multiple widths corresponding to different operands sizes of a given data type implemented by the processor. For example, the integer register file may have 32 bit and 64 bit widths for 32 and 64 bit operand sizes. The register file may have a section of registers for each operand size, and the map unit may allocate registers from the appropriate section for each instruction operation based on the operand size of that instruction operation. The register file may consume less integrated circuit area than another register file having the same number of registers, all of which are implemented at the largest operand size. In some embodiments, only the register file and the map unit (specifically the free list management logic in the map unit) are changed to implement the multiple-width register file.

Owner:APPLE INC

Free memory manager scheme and cache

Free memory can be managed by creating a free list whose entries hold the addresses of free memory locations. A portion of this free list can then be cached in a cache that has an upper threshold and a lower threshold. Additionally, a plurality of free lists are created for a plurality of memory banks in a plurality of memory channels, with one free list for each memory bank in each memory channel. Entries from these free lists are written to a global cache, and the entries written to the global cache are distributed between the memory channels and memory banks.

Owner:TELEFON AB LM ERICSSON (PUBL)
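One plausible reading of the thresholded cache in the abstract above is sketched below. The refill and spill policy (move entries until the cache sits midway between the thresholds) is an assumption of this sketch, as are the name `CachedFreeList` and the use of Python lists for both the backing free list and the cache.

```python
class CachedFreeList:
    def __init__(self, addresses, lower: int, upper: int):
        if not 0 <= lower < upper:
            raise ValueError("need 0 <= lower < upper")
        self.backing = list(addresses)  # full free list, e.g. in slower memory
        self.cache = []                 # cached portion of the free list
        self.lower = lower
        self.upper = upper
        self._refill()

    def _target(self) -> int:
        return (self.lower + self.upper) // 2

    def _refill(self) -> None:
        # Pull entries from the backing list until the cache reaches the midpoint.
        while len(self.cache) < self._target() and self.backing:
            self.cache.append(self.backing.pop())

    def _spill(self) -> None:
        # Push surplus entries back to the backing list.
        while len(self.cache) > self._target():
            self.backing.append(self.cache.pop())

    def alloc(self) -> int:
        if not self.cache:
            self._refill()
        if not self.cache:
            raise MemoryError("no free memory locations")
        address = self.cache.pop()
        if len(self.cache) < self.lower:
            self._refill()
        return address

    def free(self, address: int) -> None:
        self.cache.append(address)
        if len(self.cache) > self.upper:
            self._spill()
```

The two thresholds give hysteresis: most allocations and frees hit only the cache, and the slower backing list is touched in batches.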

Hybrid storage subsystem with mixed placement of file contents

Inactive | US8438334B2 | Digital data information retrieval | Special data processing applications | Hard disc drive | File system

A storage subsystem combining solid state drive (SSD) and hard disk drive (HDD) technologies provides low access latency and low complexity. Separate free lists are maintained for the SSD and the HDD, and blocks of file system data are stored uniquely on either the SSD or the HDD. When a read access is made to the subsystem, if the data is present on the SSD, the data is returned, but if the block is present on the HDD, it is migrated to the SSD and the block on the HDD is returned to the HDD free list. On a write access, if the block is present in either the SSD or the HDD, the block is overwritten, but if the block is not present in the subsystem, the block is written to the HDD.

Owner:IBM CORP

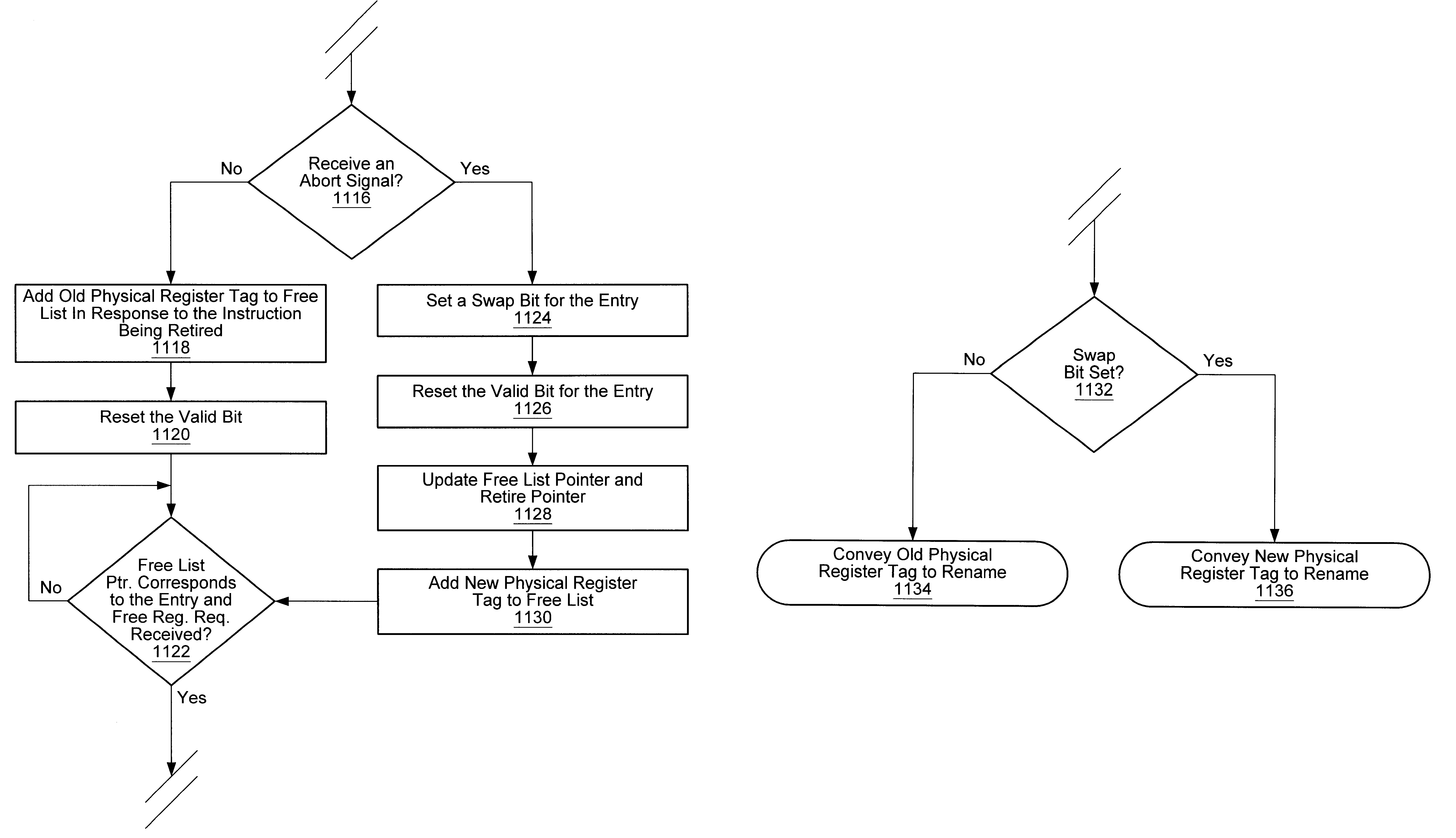

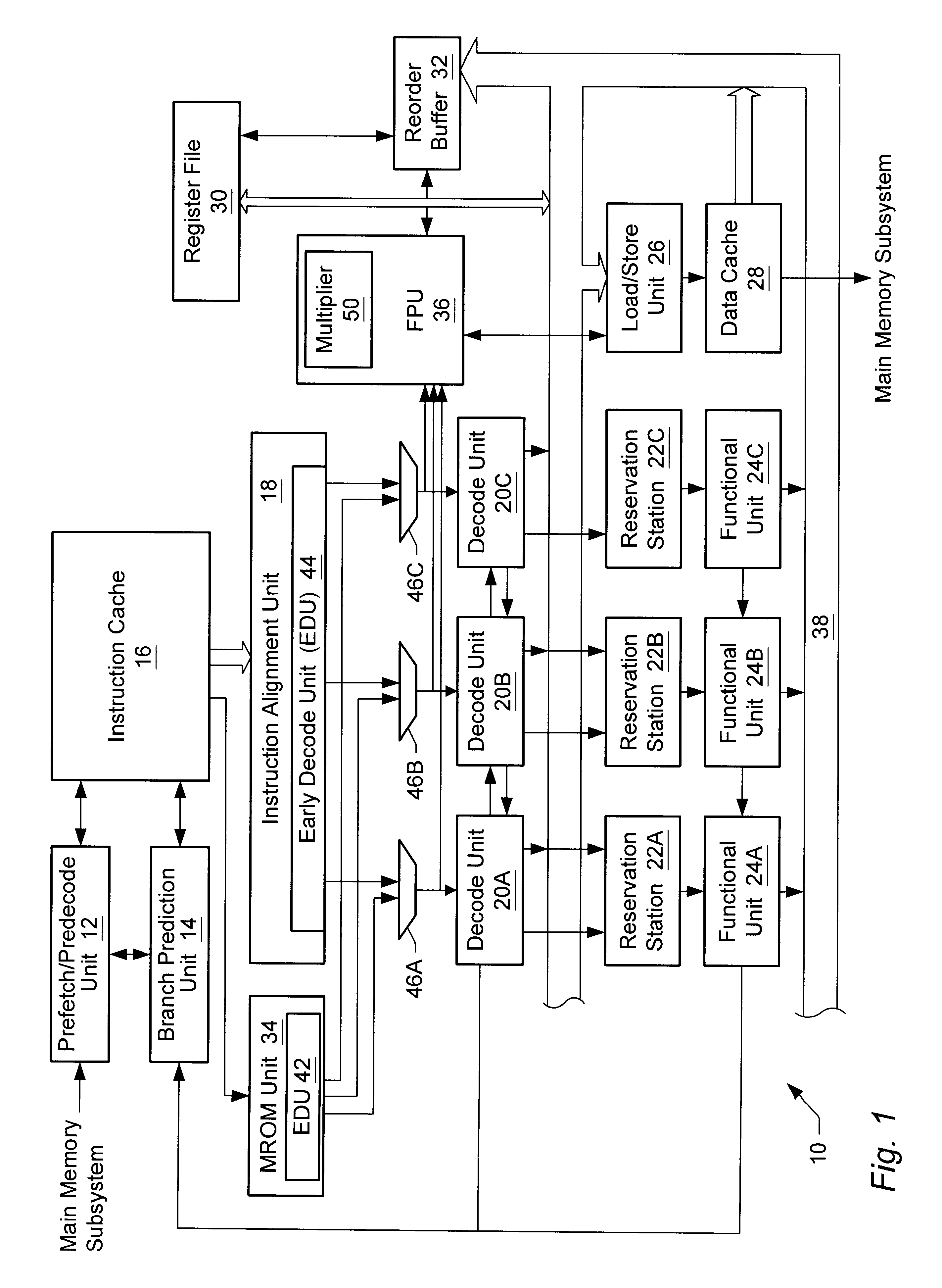

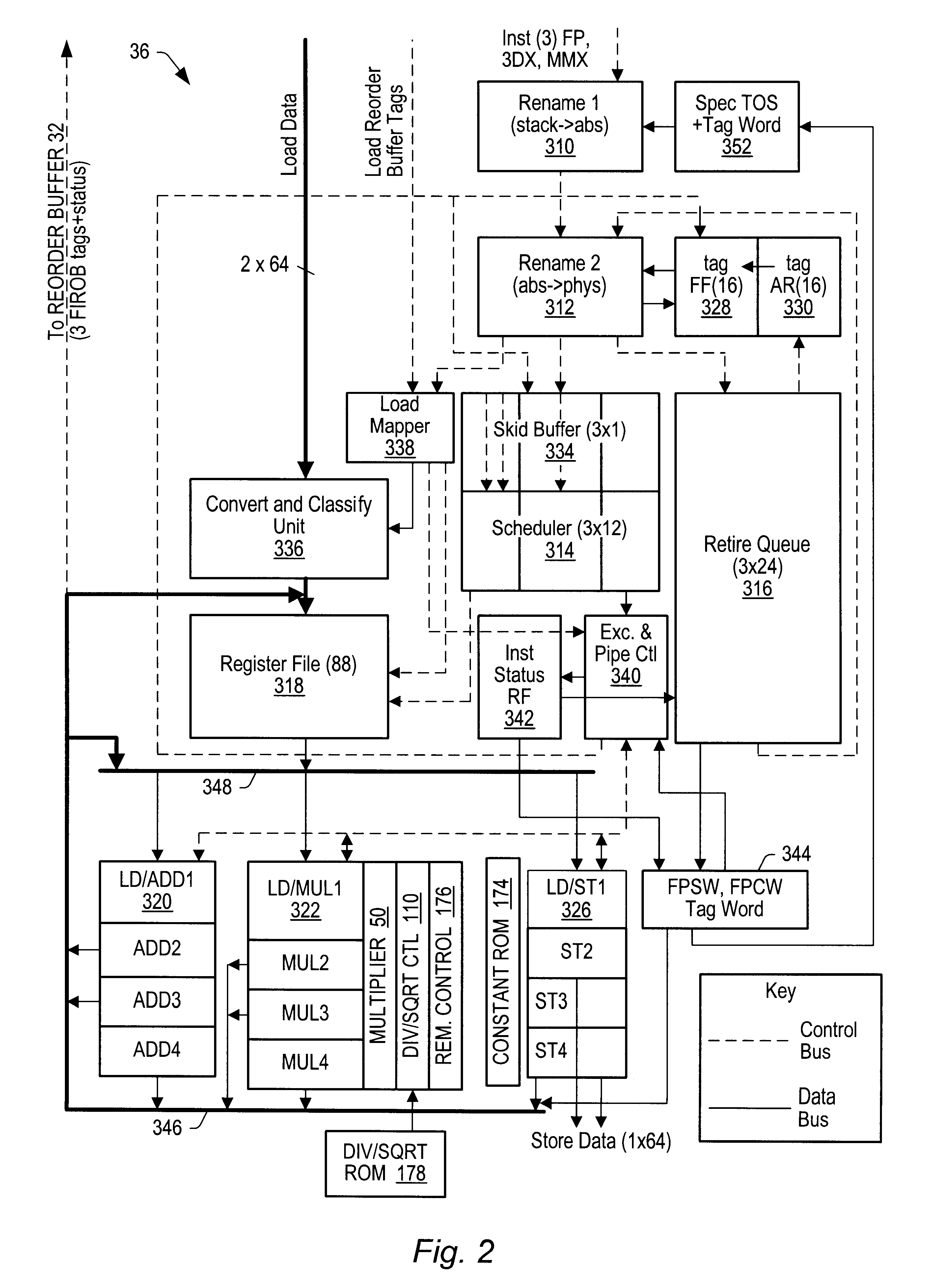

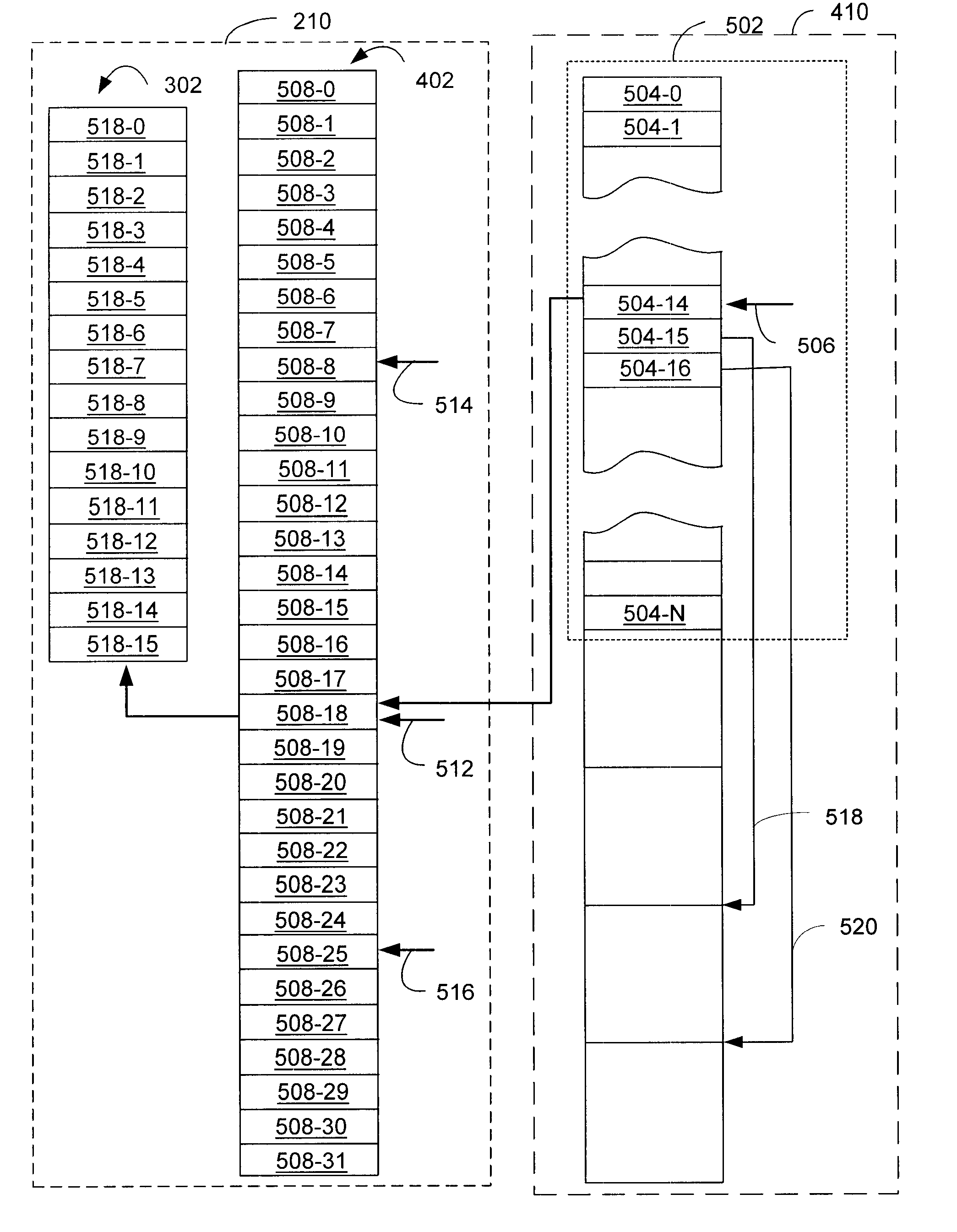

System for implementing a register free-list by using swap bit to select first or second register tag in retire queue

Inactive | US6425072B1 | Digital computer details | Concurrent instruction execution | Processor register | Execution unit

An apparatus and method for implementing a register free list scheme is provided. An instruction received in an execution unit can be assigned an absolute register number as its destination register. A new physical register tag from a free list can be assigned to the absolute register number and a tag future file can be updated with the new physical register tag. The old physical register tag can be read from the tag future file and stored in a retire queue entry corresponding to the instruction along with the new physical register tag and an architectural register identifier corresponding to the absolute register number. A valid bit corresponding to the entry can be set in response to the entry being written. In response to an abort signal, a swap bit corresponding to the entry can be set, the valid bit can be reset, and the new physical register tag can be conveyed to a rename unit in response to receiving a free register request. In response to the entry being retired prior to receiving an abort signal, the valid bit corresponding to the entry can be reset and the old physical register tag can be conveyed to a rename unit in response to receiving a free register request.

Owner:ADVANCED MICRO DEVICES INC

Free memory manager scheme and cache

Owner:TELEFON AB LM ERICSSON (PUBL)

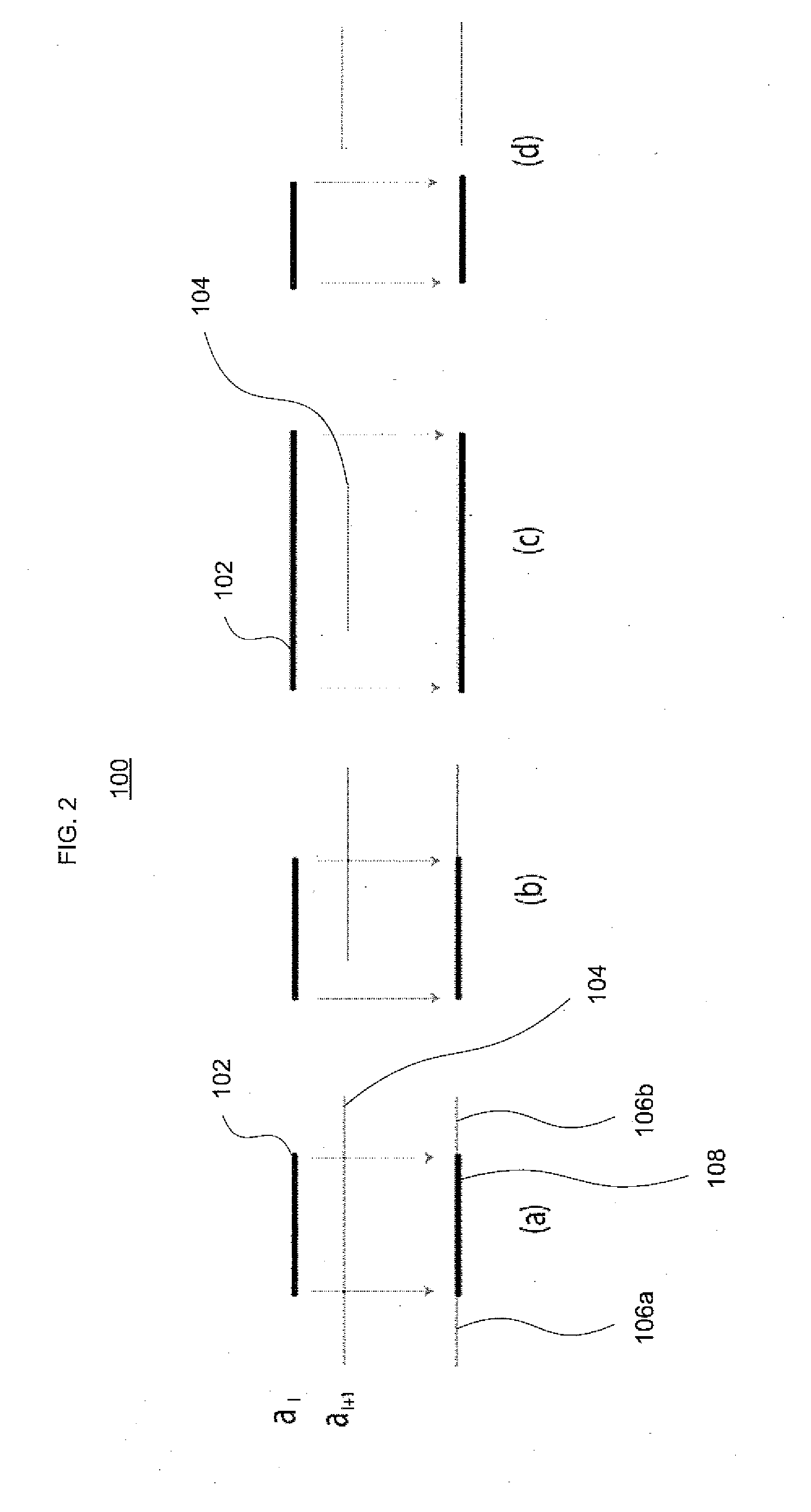

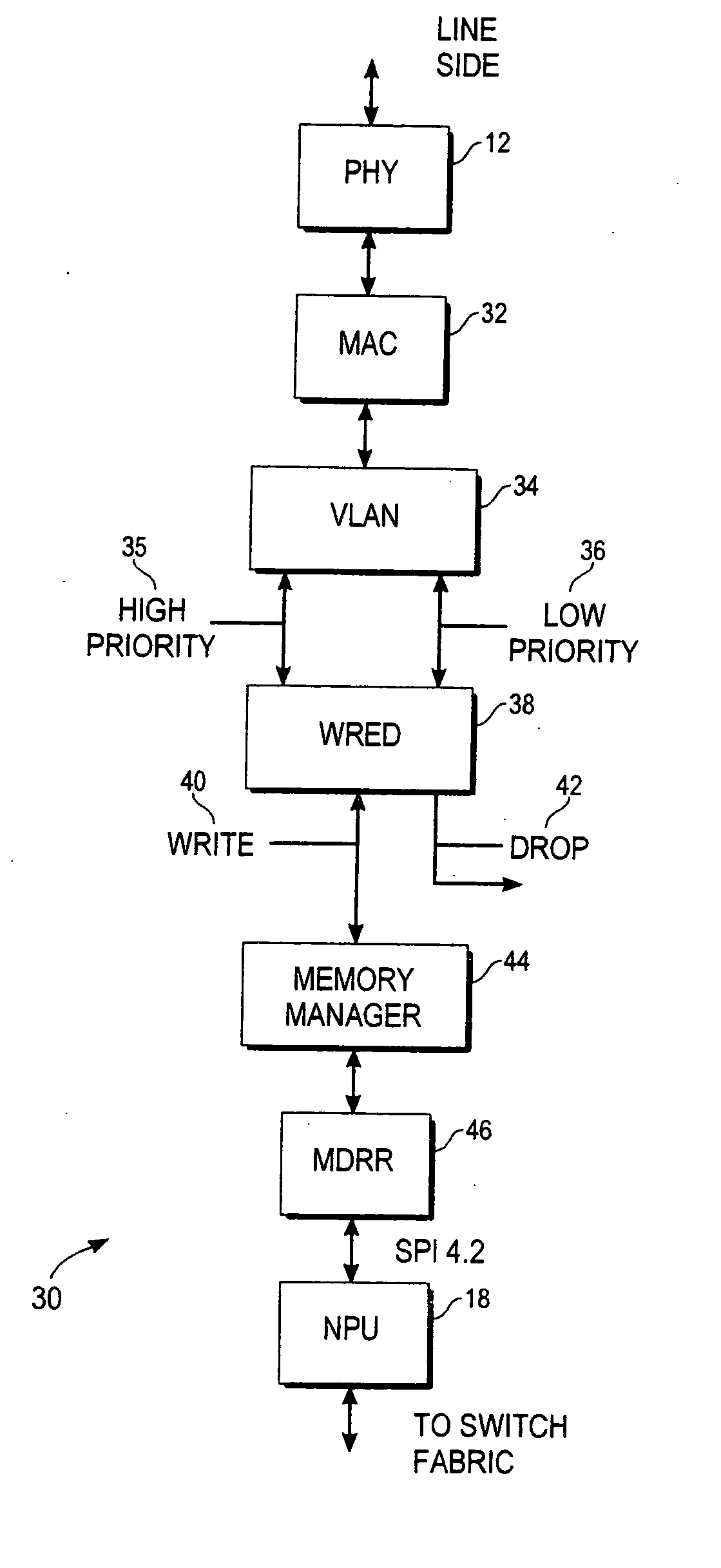

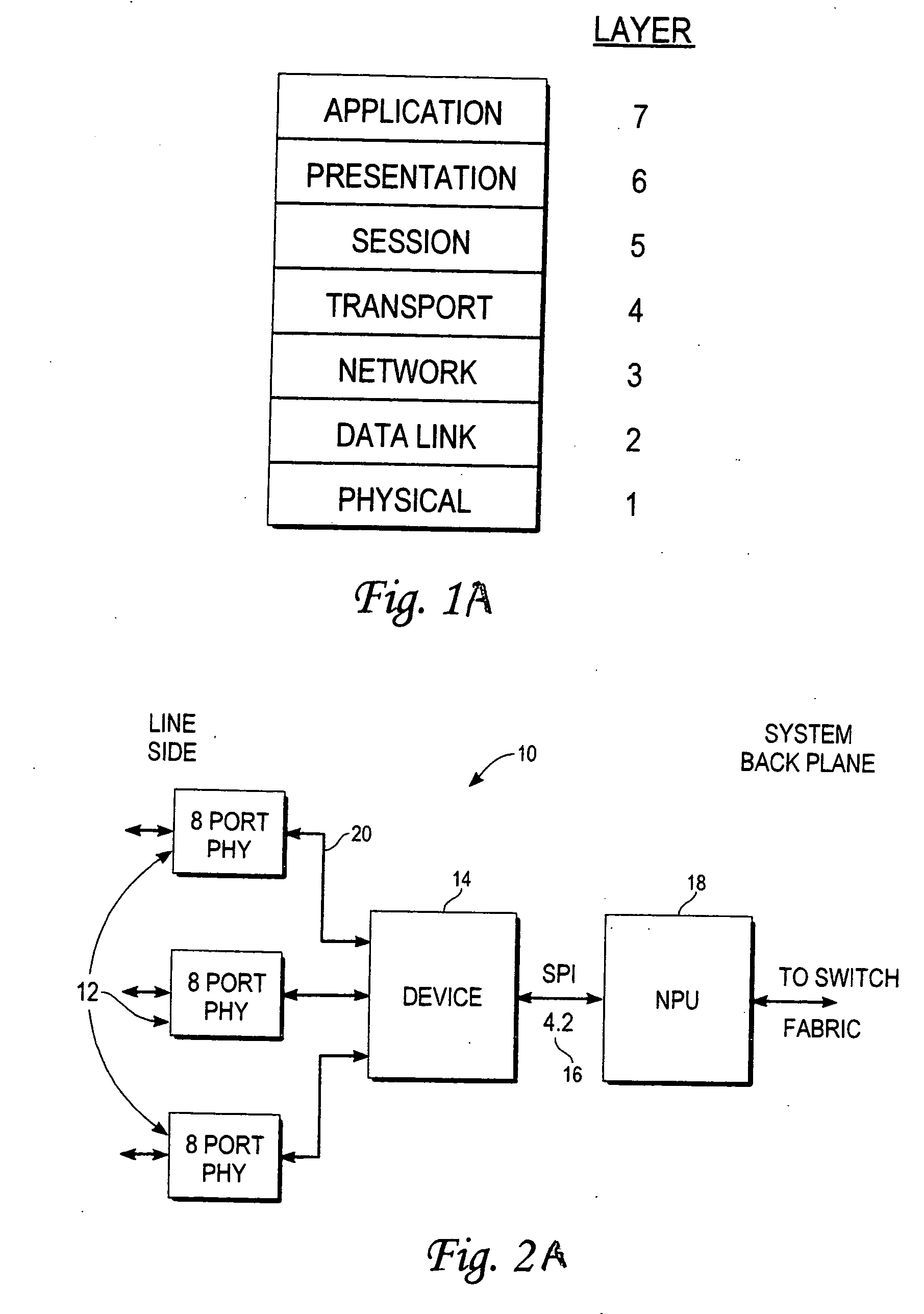

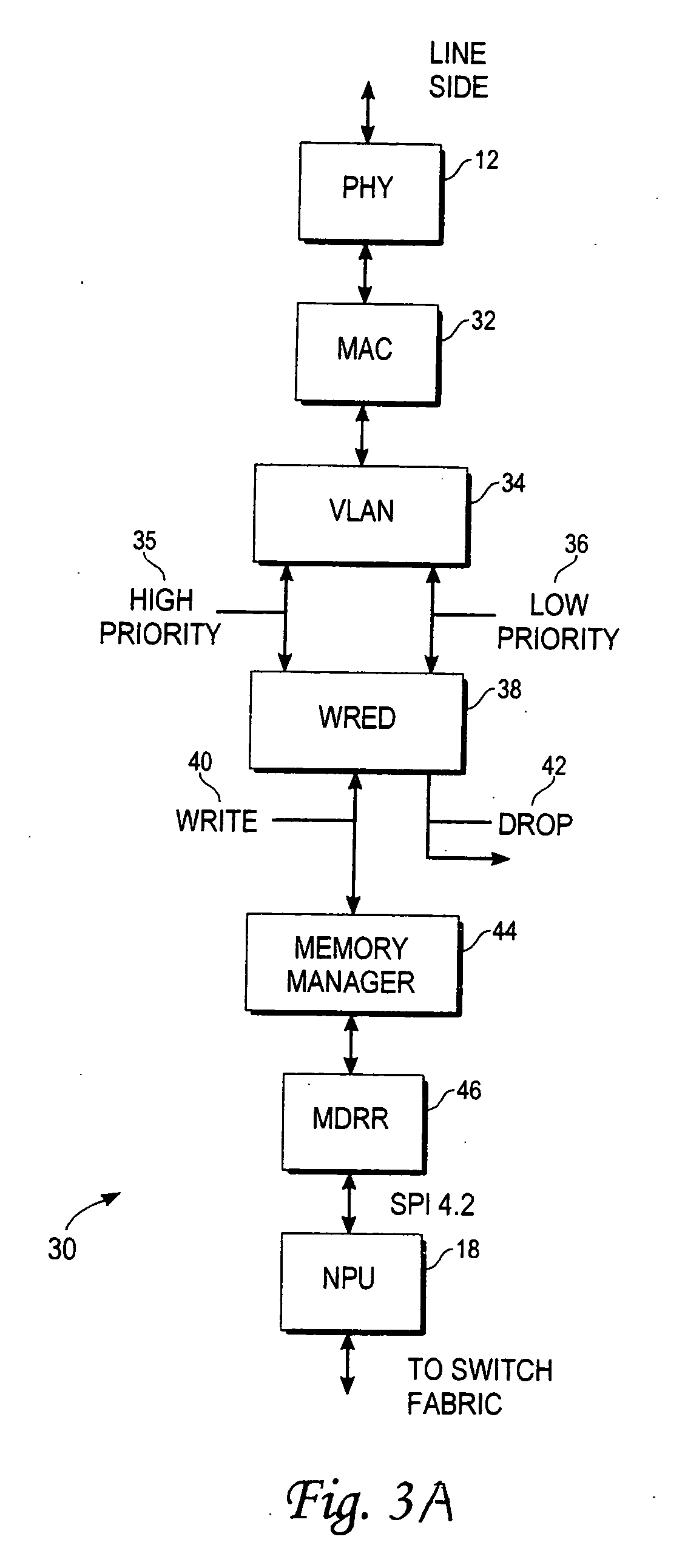

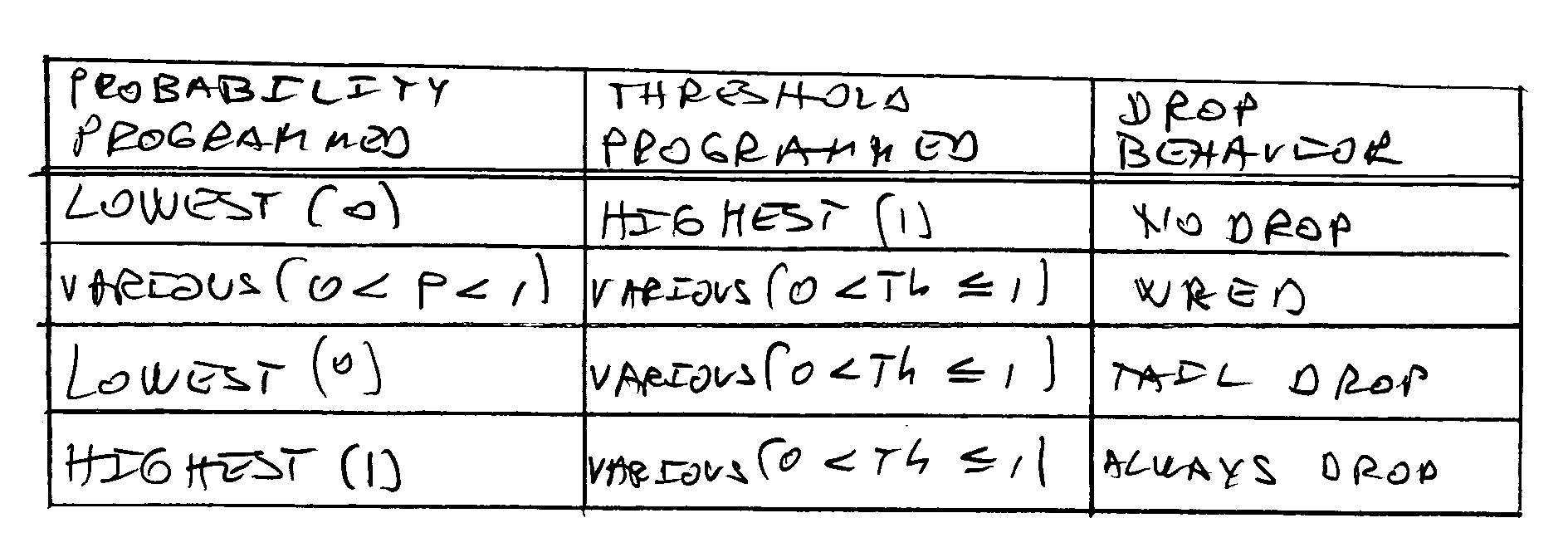

Device and method for managing oversubsription in a network

Inactive | US20060045009A1 | Error prevention | Transmission systems | Deficit round robin | Random early detection

A device and a method for aggregating and managing large quantities of data are disclosed. The received data are prioritized into high- and low-priority queues. The device's receive memory is partitioned into 1-kilobyte (1 KB) blocks, which are further divided into a free list and an allocation list. The low-priority queues occupy between 1 and 48 blocks and the high-priority queues occupy between 1 and 32 blocks. The incoming data are further subjected to a Weighted Random Early Detection (WRED) process that controls congestion before it occurs by dropping some of the queues. The stored data are read using a Modified Deficit Round Robin (MDRR) approach. The transmit memory operates with 240 1 KB blocks, and the data is transmitted out via an SPI 4.2 or similar device.

Owner:SILICON VALLEY BANK AS AGENT

Virtual disk drive system and method with deduplication

Inactive | US20120166725A1 | Useful operation | Memory loss protection | Error detection/correction | RAID | Dynamic data

A disk drive system and method capable of dynamically allocating data is provided. The disk drive system may include a RAID subsystem having a pool of storage, for example a page pool of storage that maintains a free list of RAIDs, or a matrix of disk storage blocks that maintain a null list of RAIDs, and a disk manager having at least one disk storage system controller. The RAID subsystem and disk manager dynamically allocate data across the pool of storage and a plurality of disk drives based on RAID-to-disk mapping. Dynamic data allocation and data progression allow a user to acquire a disk drive later in time when it is needed. Data deduplication is provided to improve storage capacity and system performance.

Owner:COMPELLENT TECH

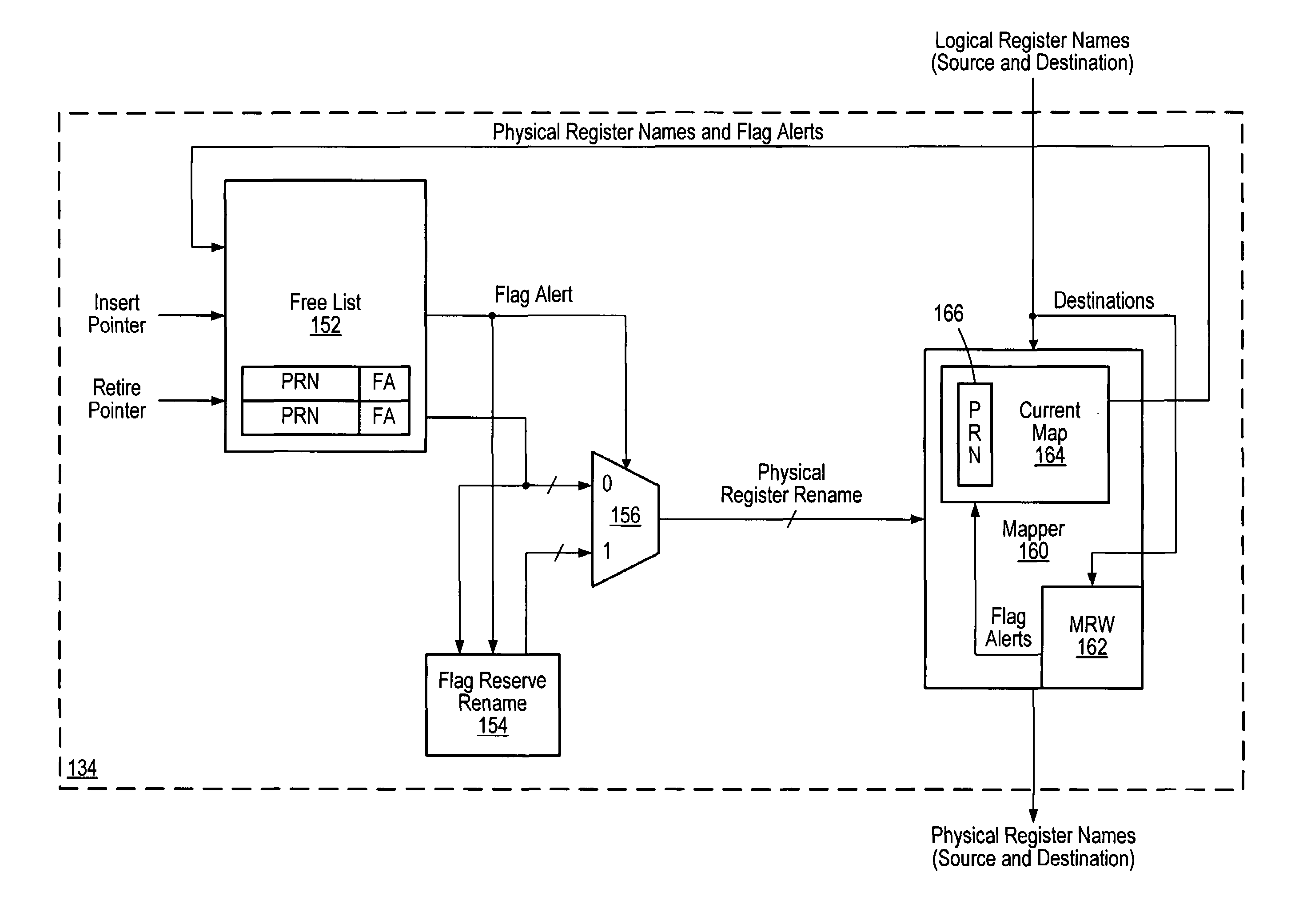

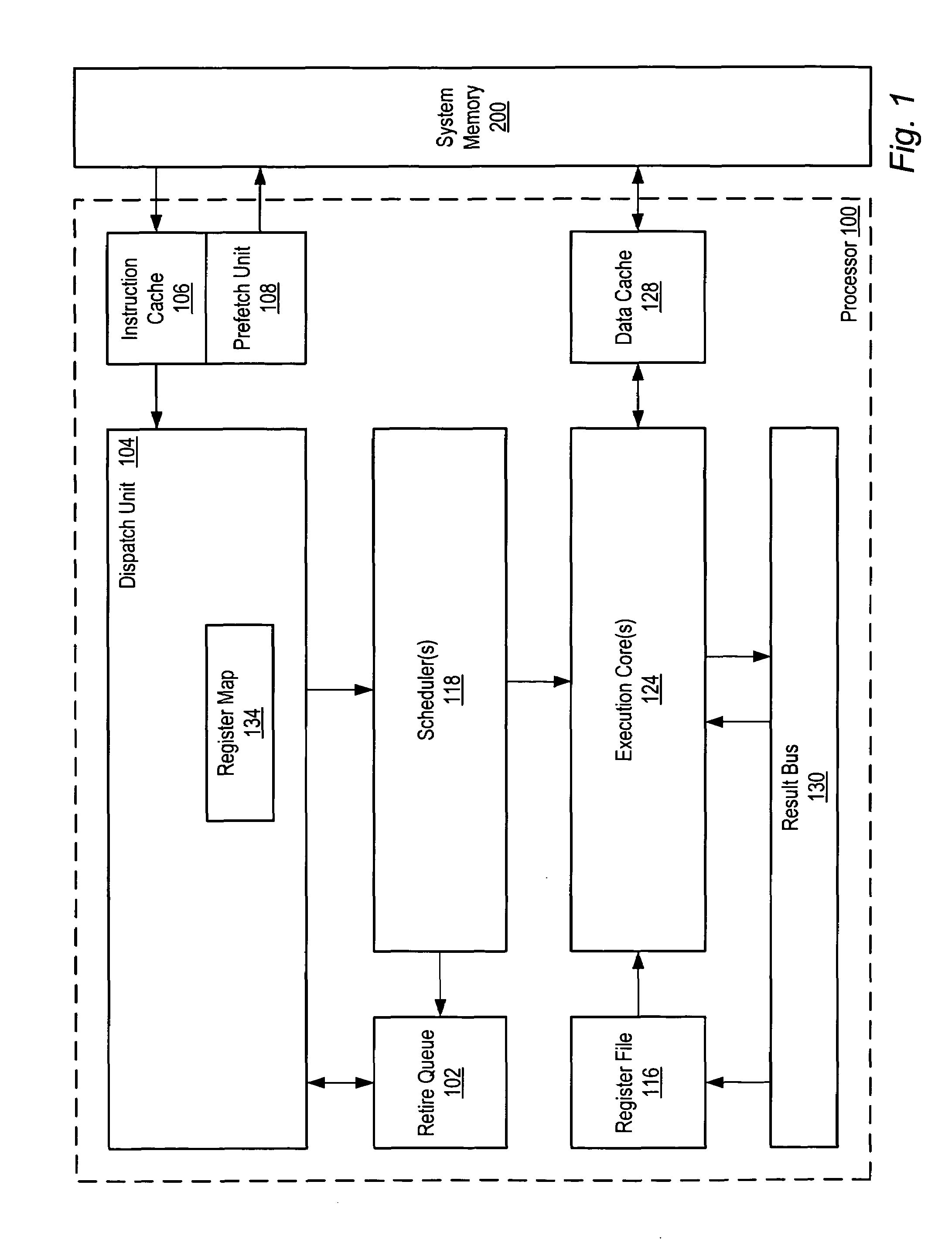

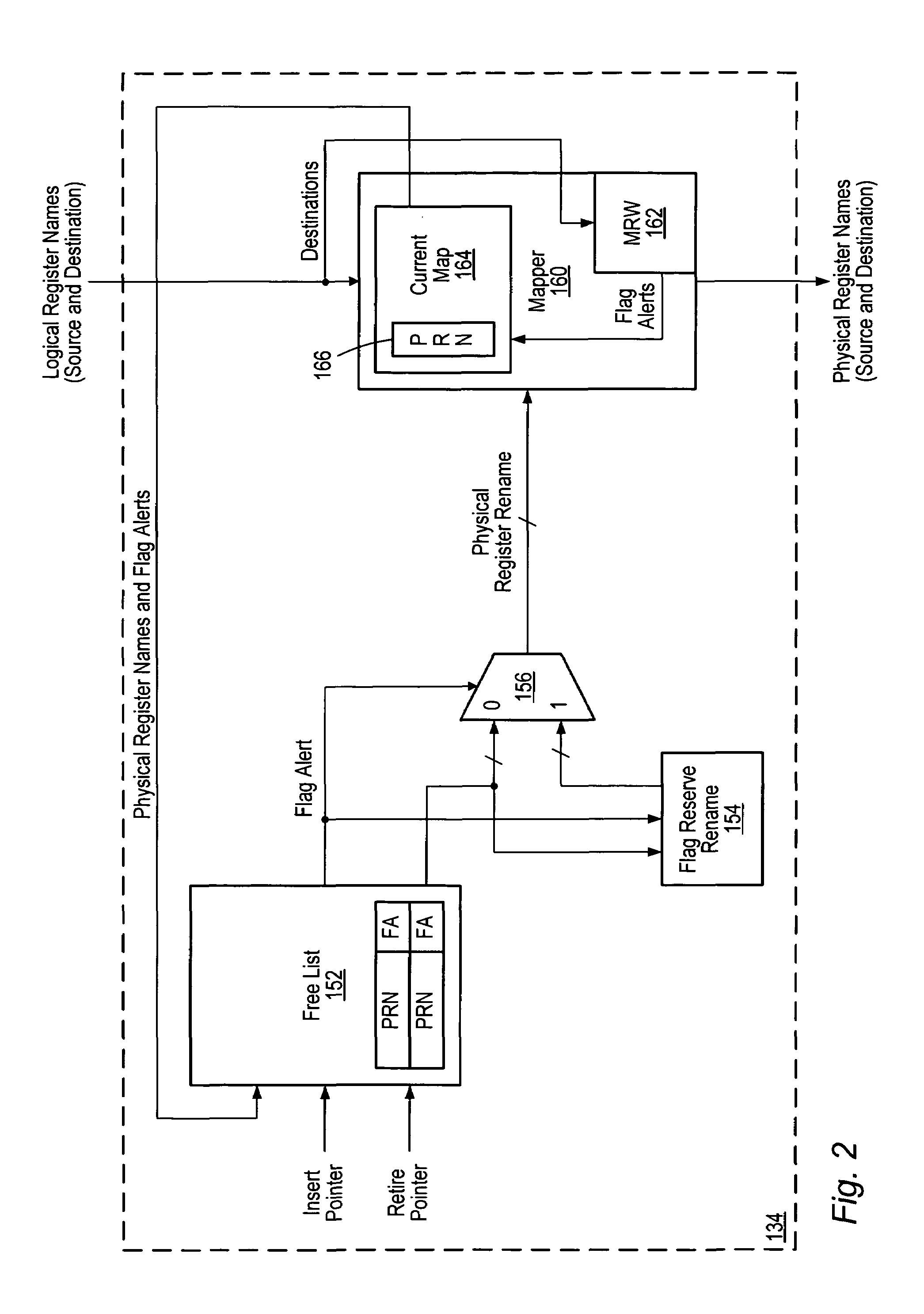

Retaining flag value associated with dead result data in freed rename physical register with an indicator to select set-aside register instead for renaming

Active | US7043626B1 | Digital computer details | Concurrent instruction execution | Computer hardware | Processor register

A method and apparatus for retaining flag values when an associated data value dies. A first storage circuit includes a free list for storing physical register names (PRNs) and indications indicative of whether a physical register associated with a PRN was assigned to store a logical register result and flag results of a first instruction and a logical register result and a subsequent instruction which overwrites the logical register result but not the flags. A second storage circuit stores PRNs separate from the free list. The first and second storage circuits output first and second PRNs to a selection circuit. If the first indication (associated with the first PRN) is in a first state, the selection circuit may provide the first PRN to a mapper for assignment to a logical register. If the first indication is in a second state, the second PRN may be provided to the mapper.

Owner:MEDIATEK INC

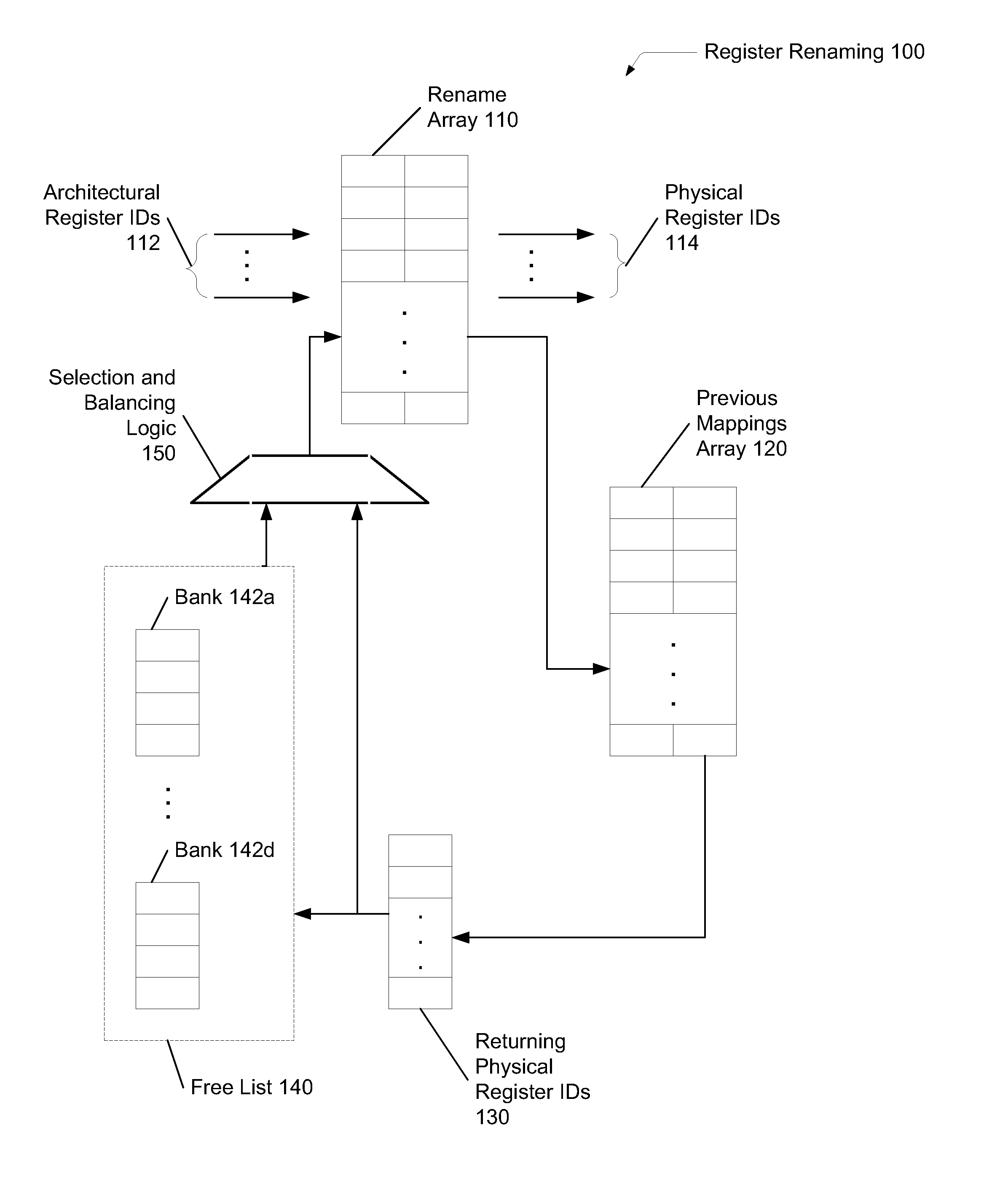

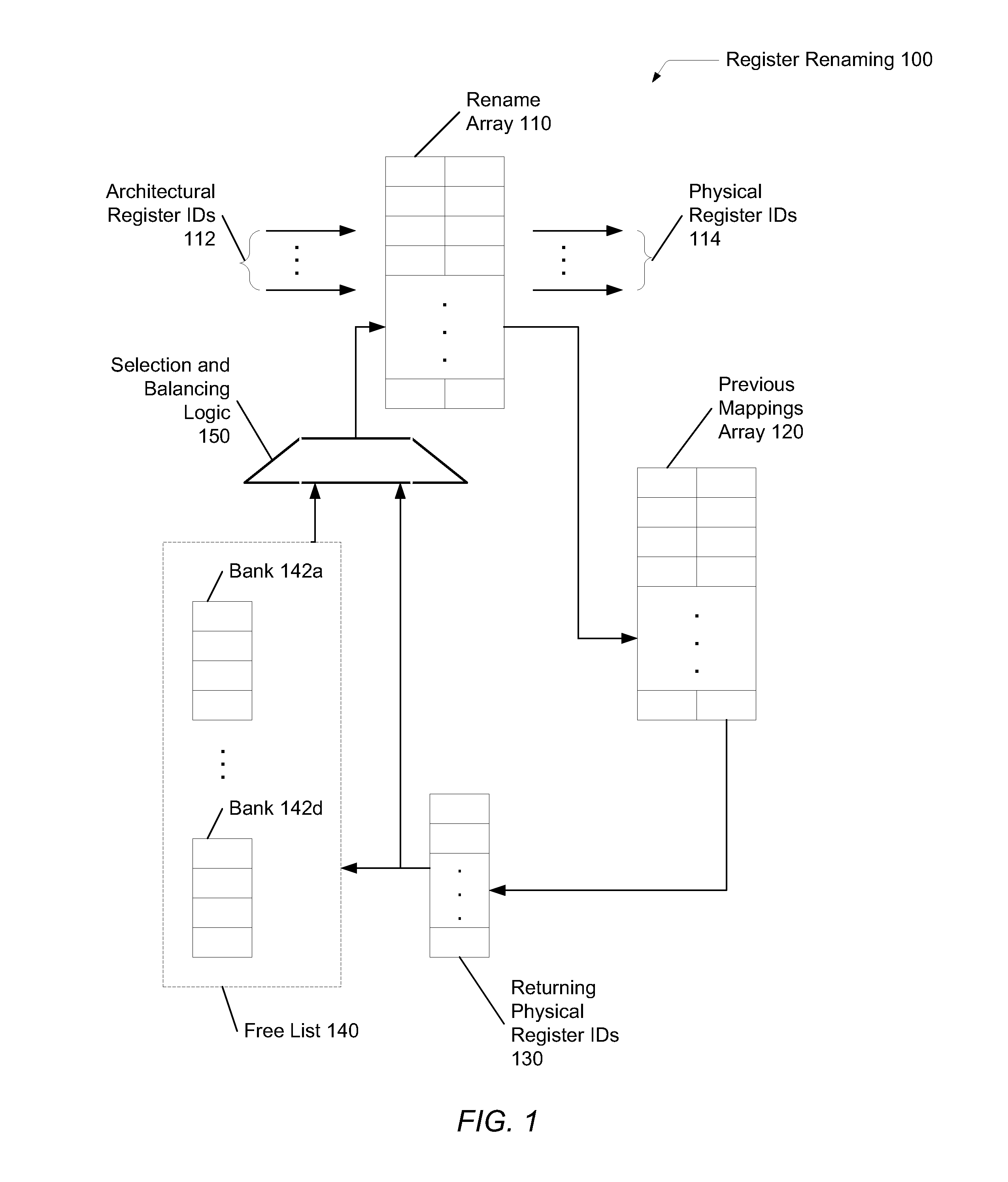

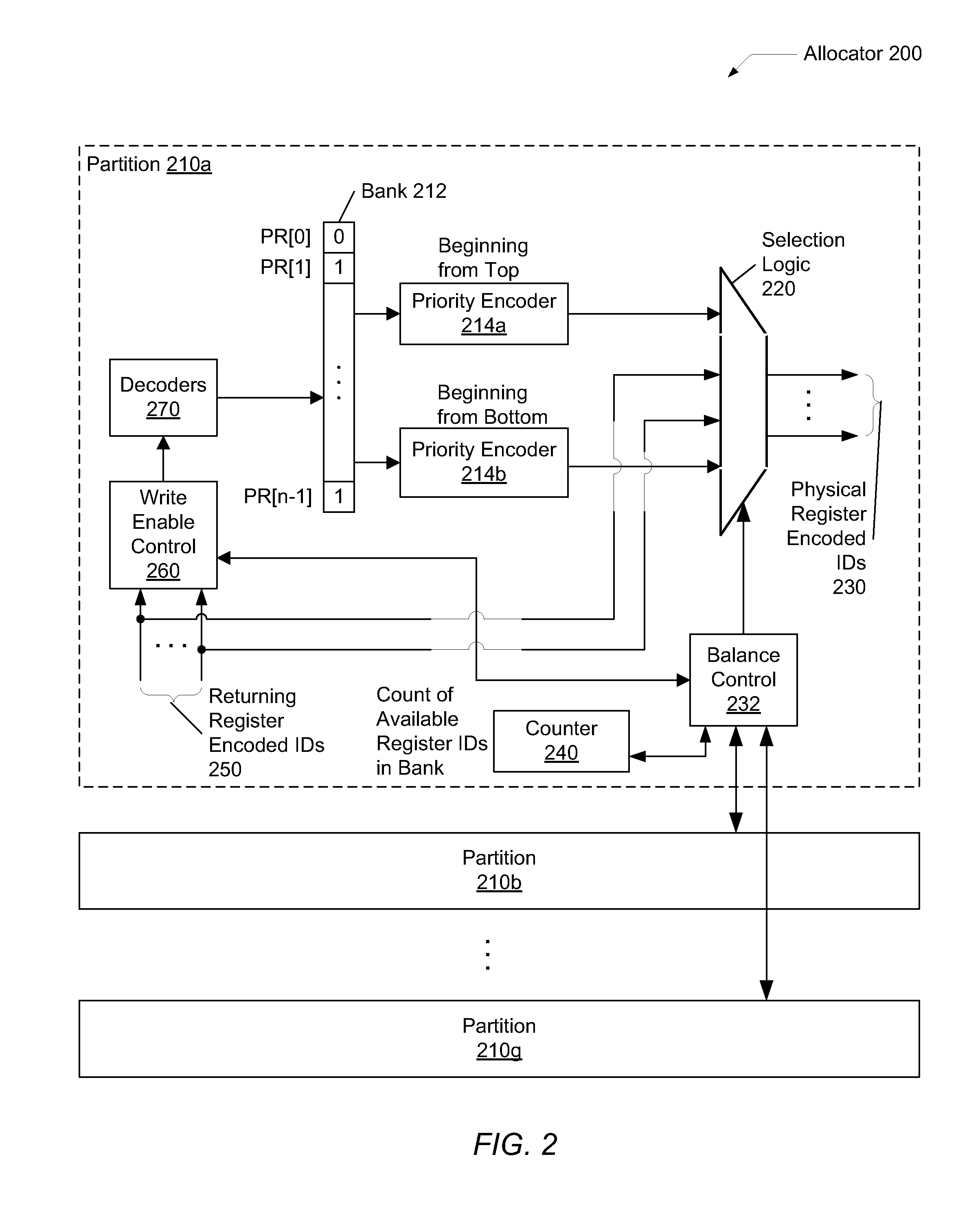

Low power and high performance physical register free list implementation for microprocessors

Active | US20140013085A1 | Reduce latency | Reduce total power | Register arrangements | Digital computer details | Parallel computing | Low power dissipation

A system and method for reducing latency and power of register renaming. A free list in a processor includes multiple banks for indicating availability of register identifiers used for register renaming. A register rename unit receives one or more destination architectural registers to rename with physical register identifiers. Responsive to determining the multiple banks within the free list are unbalanced with available physical register identifiers, one or more returning physical register identifiers are assigned to the destination architectural registers before assigning any physical register identifiers from any bank of the multiple banks with a lowest number of available physical register identifiers. A returning physical register identifier is a physical register identifier that is available again for assignment to a destination architectural register but not yet indicated in the free list as available. Each of the banks includes a single-bit-width decoded vector for indicating availability of given physical register identifiers.

Owner:APPLE INC

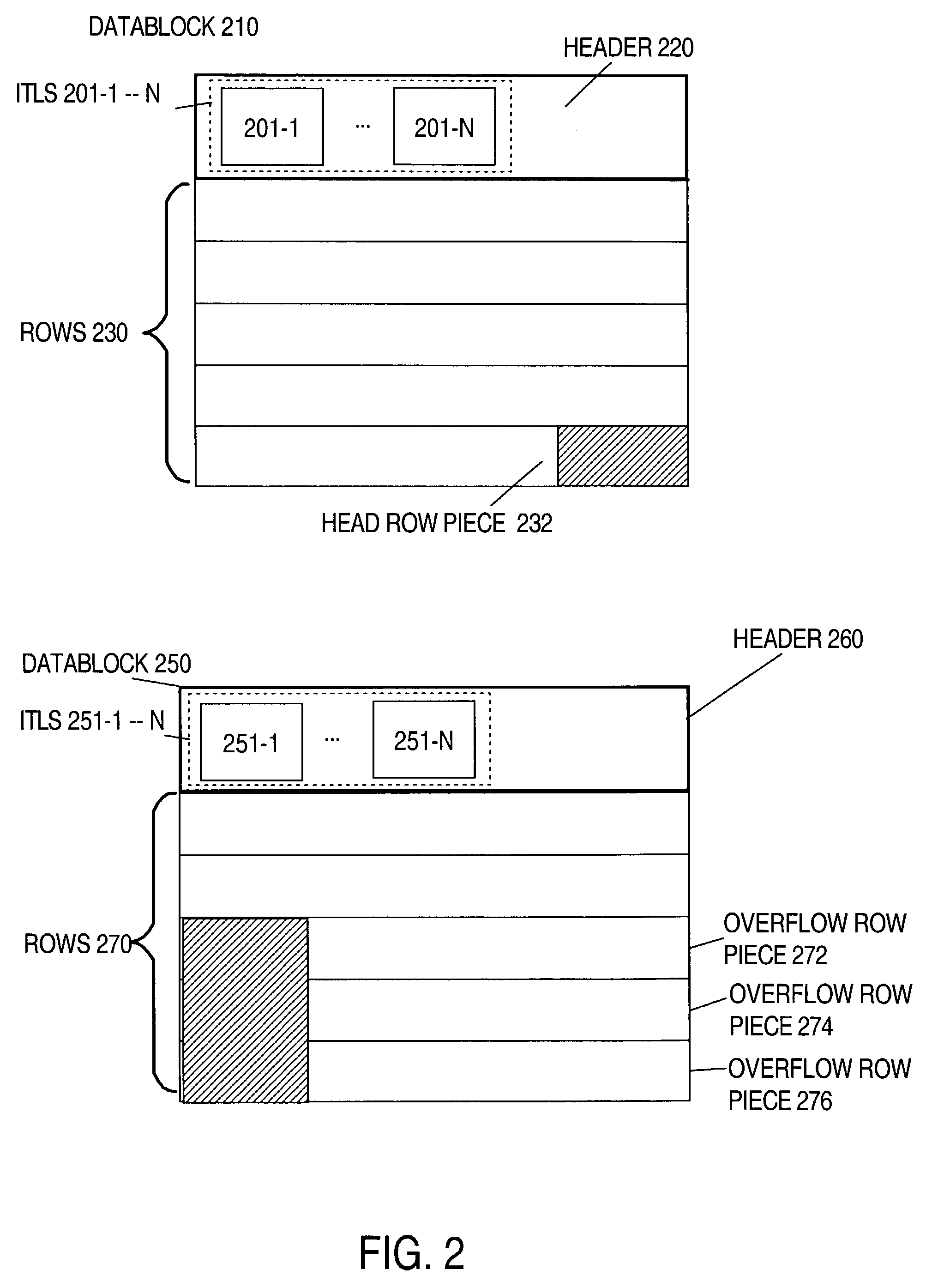

Reducing contention by slaves for free lists when modifying data in a table partition

Active | US7188113B1 | Data processing applications | Digital data processing details | Parallel computing | Data storing

Provided herein are techniques that may be used to dramatically increase parallelism for distributed DML operations. The work of distributed DML operations is distributed in a way that reduces or eliminates contention for free lists. Scalability is improved because a greater number of slaves may be used to modify data stored in partitions without increasing contention for the free lists.

Owner:ORACLE INT CORP

Free item distribution among multiple free lists during garbage collection for more efficient object allocation

Inactive | US7149866B2 | Easy to manage | Efficient management | Data processing applications | Memory addressing/allocation/relocation | Parallel computing | Free list

A method, system, and program for improving free item distribution among multiple free lists during garbage collection for more efficient object allocation are provided. A garbage collector predicts future allocation requirements and then distributes free items to multiple subpool free lists and a TLH free list during the sweep phase according to the future allocation requirements. The sizes of subpools and number of free items in subpools are predicted as the most likely to match future allocation requests. In particular, once a subpool free list is filled with the number of free items needed according to the future allocation requirements, any additional free items designated for the subpool free list can be divided into multiple TLH sized free items and placed on the TLH free list. Allocation threads are enabled to acquire free items from the TLH free list and to replenish a current TLH without acquiring heap lock.

Owner:INT BUSINESS MASCH CORP

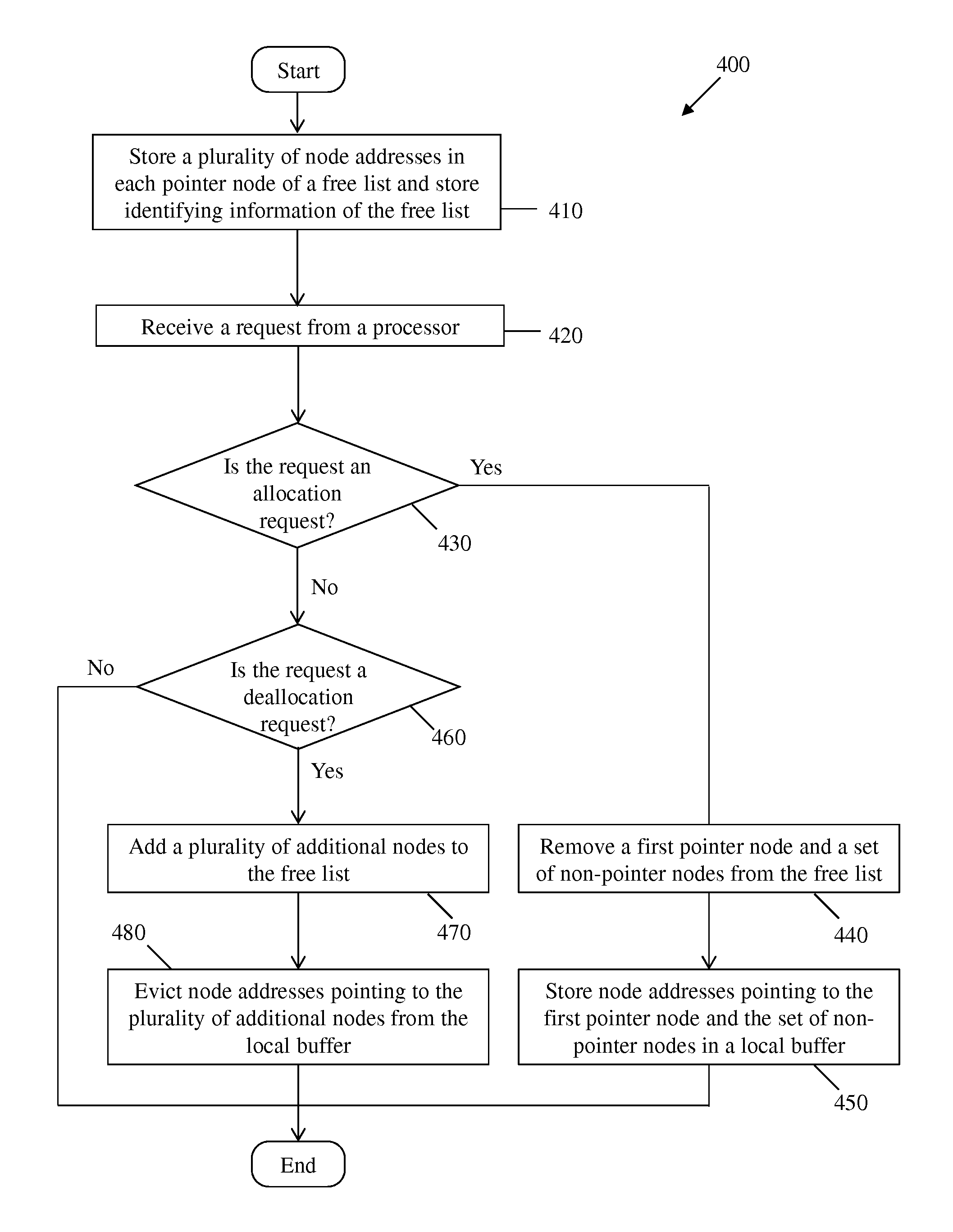

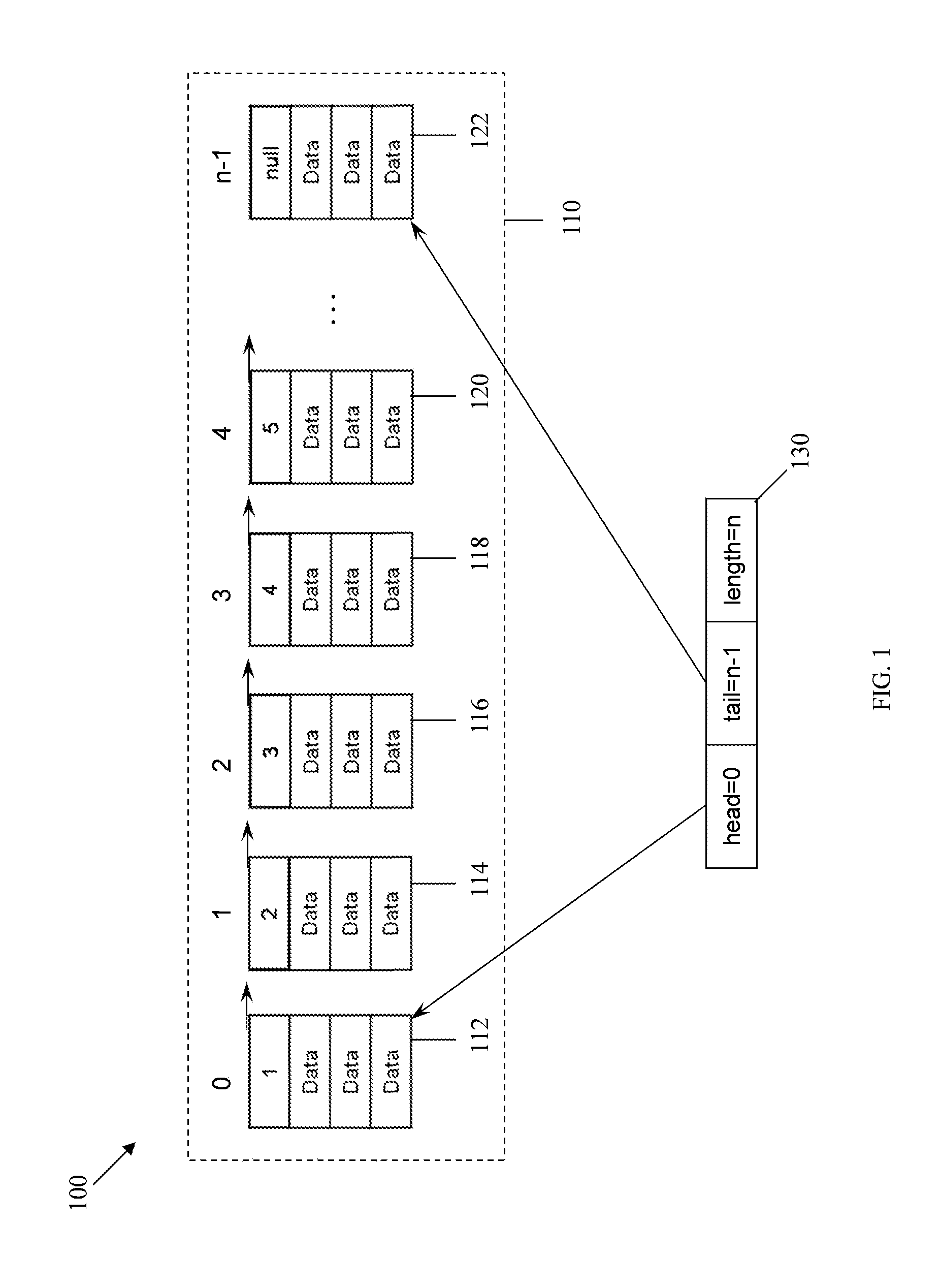

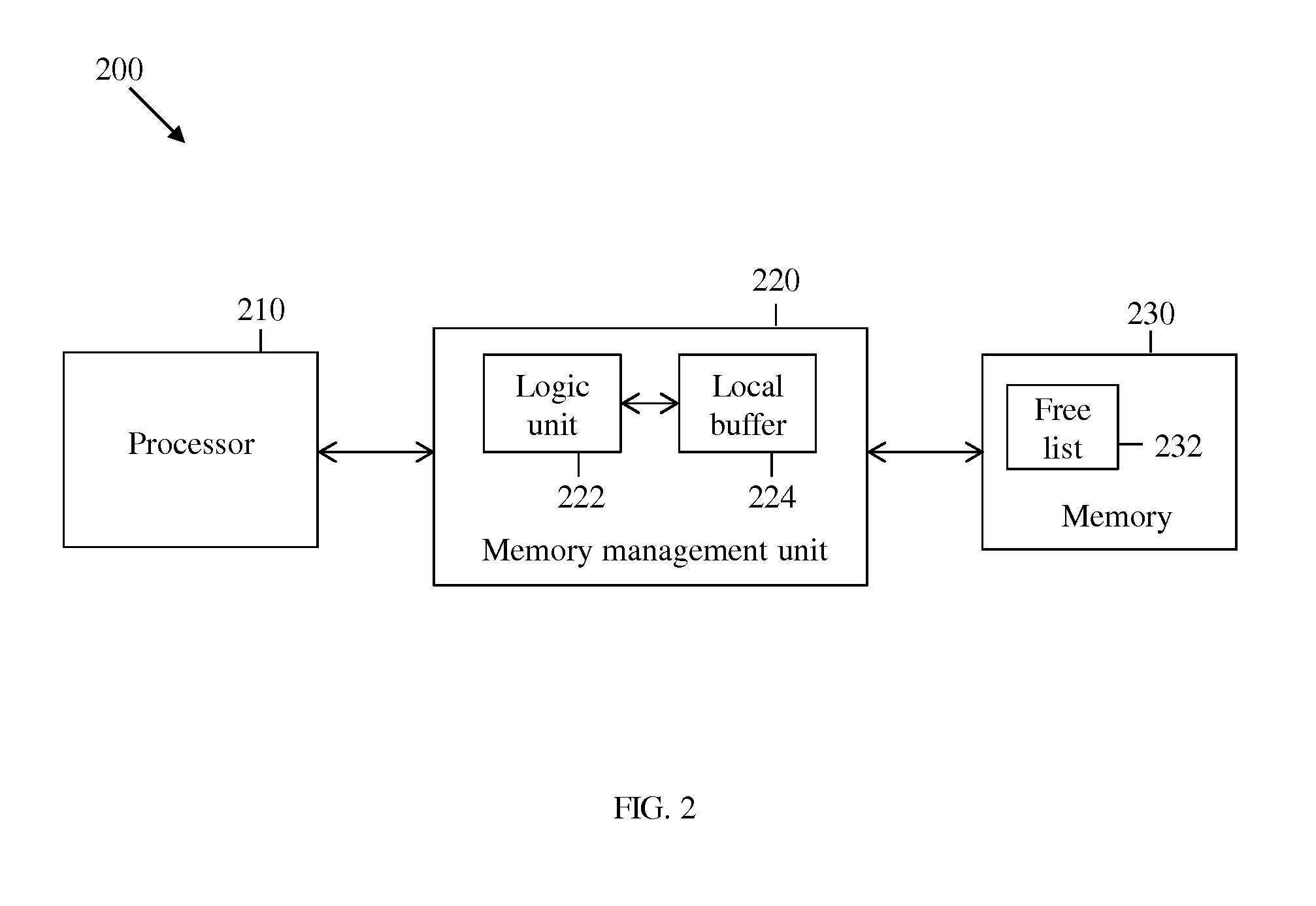

High Performance Free Buffer Allocation and Deallocation

The disclosure includes an apparatus comprising a memory configured to store a free list comprising a plurality of nodes, wherein at least one of the plurality of nodes is configured to store a plurality of node addresses, and wherein each of the plurality of node addresses corresponds to one node in the plurality of nodes. The disclosure further includes a method of memory management comprising using a free list comprising a plurality of nodes and storing a plurality of node addresses in at least one of the plurality of nodes, and wherein each of the plurality of node addresses corresponds to one node in the plurality of nodes.

Owner:FUTUREWEI TECH INC
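The idea in the abstract above, a free-list node that itself stores several node addresses, can be sketched as follows. The layout is an assumption of this sketch: each head node carries one link to the previous head plus up to `fanout` addresses of other free nodes, and a dictionary stands in for the contents of the free buffers. `BatchedFreeList` is a hypothetical name.

```python
class BatchedFreeList:
    def __init__(self, addresses, fanout: int):
        self.fanout = fanout
        # address of a head node -> {"next": previous head, "addrs": stored node addresses}
        self.contents = {}
        self.head = None
        for address in addresses:
            self.free(address)

    def free(self, address: int) -> None:
        if self.head is not None and len(self.contents[self.head]["addrs"]) < self.fanout:
            # Room left in the head node: record the freed node's address there.
            self.contents[self.head]["addrs"].append(address)
        else:
            # No head yet, or the head is full: the freed node becomes the new head.
            self.contents[address] = {"next": self.head, "addrs": []}
            self.head = address

    def alloc(self) -> int:
        if self.head is None:
            raise MemoryError("no free buffers")
        node = self.contents[self.head]
        if node["addrs"]:
            return node["addrs"].pop()
        # The head stores no more addresses, so hand out the head node itself.
        address = self.head
        self.head = node["next"]
        del self.contents[address]
        return address
```

Compared with a classic one-link-per-node list, a single read of the head node here yields several buffer addresses, so fewer memory accesses are needed per allocation.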

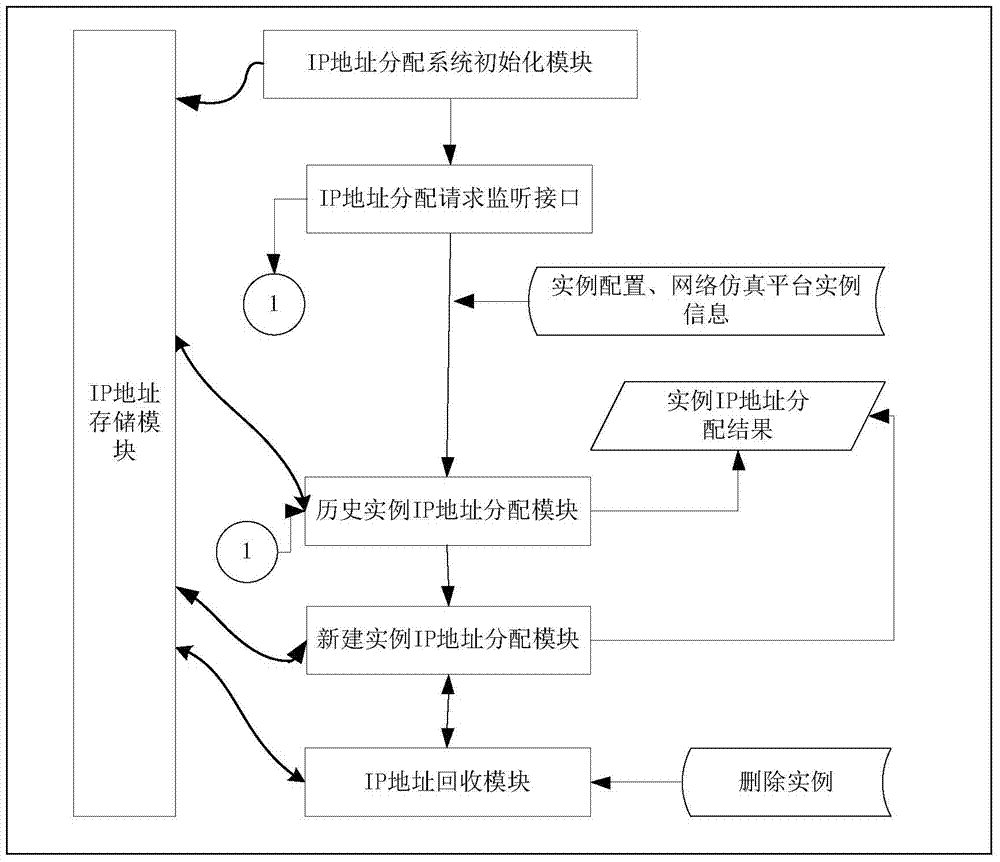

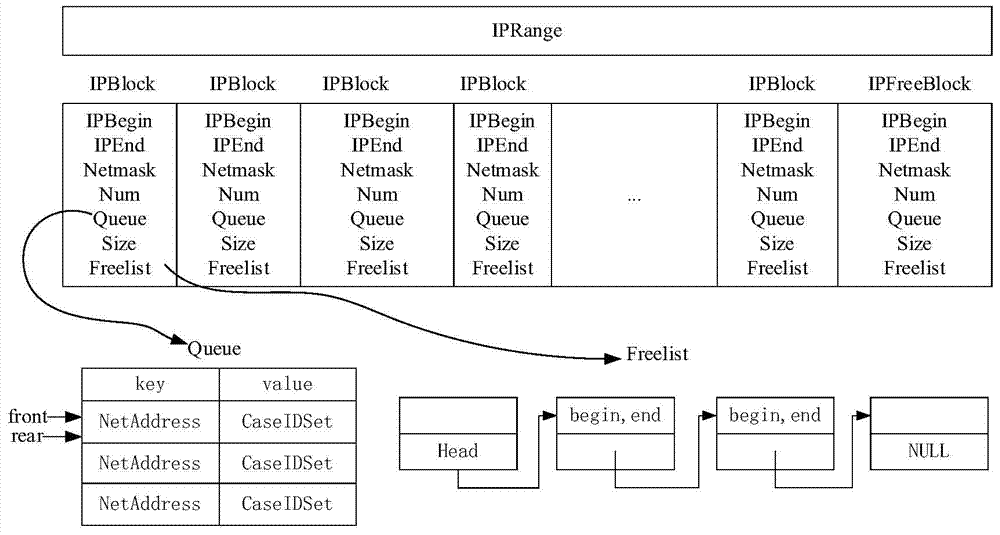

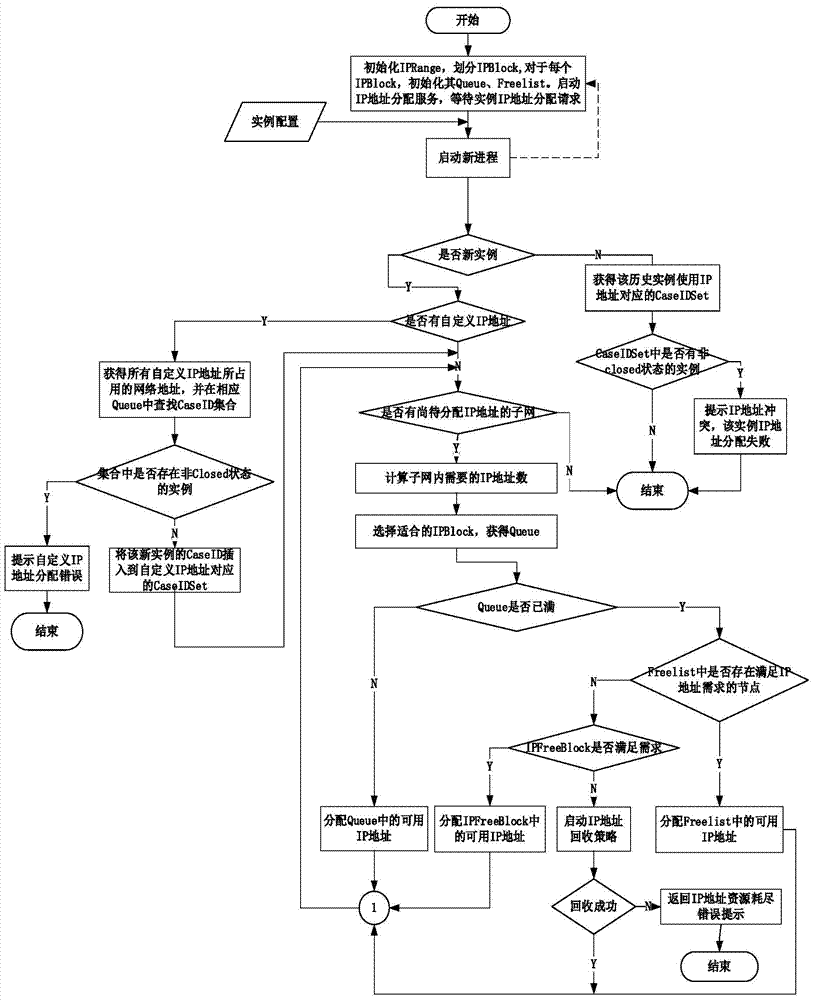

IP address distribution system and method facing distributed network simulation platform

The invention relates to an IP address allocation system and method for a distributed network simulation platform. The method includes: dividing the IP address range into IP blocks; storing the usage state of the IP blocks in a quasi-circular queue and a free list; and allocating and recycling IP addresses. The method has several advantages: customized IP addresses in instances can be handled and IP addresses are allocated automatically, which increases the flexibility of the network simulation platform; a given range can be divided sensibly, so that IP address resources are used to the fullest extent; the IP addresses of instances are kept isolated, which avoids network errors caused by IP address conflicts between different instances when physical network cards cannot be isolated; and the quasi-circular queue stores IP address resources in sequence while the free list stores discrete available IP address resources, so that sequential and discrete allocation are combined.

Owner:INST OF INFORMATION ENG CAS

Register renaming system

Inactive | US7171541B1 | Simple circuit | Memory addressing/allocation/relocation | General purpose stored program computer | Instructions per cycle | Numbering scheme

A register renaming system for a processor based on superscalar architecture that can process a larger number of instructions per cycle by providing a free list to hold unallocated physical-register numbers and a mapping table whose entries are provided in respective correspondence with the logical registers and each designed to hold a physical-register number, and by pipelining where dependency checks among instructions are to be done as a pre-process.

Owner:SEKI HAJIME

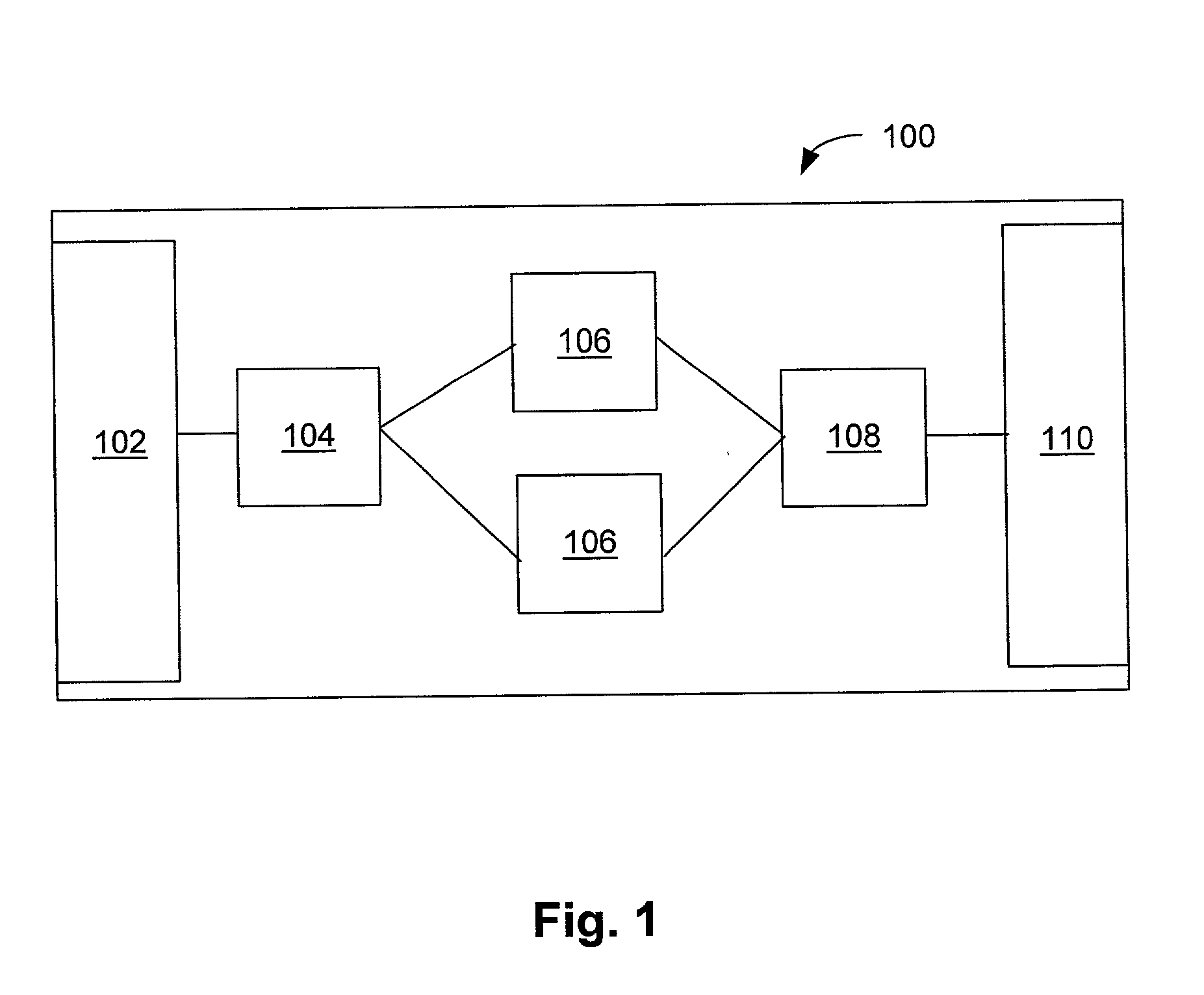

Scalable approach to large scale queuing through dynamic resource allocation

Active | US20050047338A1 | Error prevention | Frequency-division multiplex details | Dynamic resource | Fibre Channel

Methods and devices are provided for the efficient allocation and deletion of virtual output queues. According to some implementations, incoming packets are classified according to a queue in which the packet (or classification information for the packet) will be stored, e.g., according to a “Q” value. For example, a Q value may be a Q number defined as {Egress port number || Priority number || Ingress port number}. Only a single physical queue is allocated for each classification. When a physical queue is empty, the physical queue is preferably de-allocated and added to a “free list” of available physical queues. Accordingly, the total number of allocated physical queues preferably does not exceed the total number of classified packets. Because the input buffering requirements of Fibre Channel (“FC”) and other protocols place limitations on the number of incoming packets, the dynamic allocation methods of the present invention result in a sparse allocation of physical queues.

Owner:CISCO TECH INC
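The queue allocation described in the abstract above can be sketched as follows. The 8-bit width of each field in the Q value and the names `QueueManager` and `q_value` are assumptions of this sketch; the abstract only states that the three numbers are concatenated.

```python
from collections import deque


class QueueManager:
    def __init__(self, physical_queues: int):
        self.free_list = deque(range(physical_queues))  # unallocated physical queues
        self.q_to_phys = {}                             # Q value -> physical queue id
        self.queues = {i: deque() for i in range(physical_queues)}

    @staticmethod
    def q_value(egress: int, priority: int, ingress: int) -> int:
        # Concatenate the three fields; 8 bits per field is assumed here.
        return (egress << 16) | (priority << 8) | ingress

    def enqueue(self, egress: int, priority: int, ingress: int, packet) -> None:
        q = self.q_value(egress, priority, ingress)
        phys = self.q_to_phys.get(q)
        if phys is None:
            if not self.free_list:
                raise RuntimeError("no physical queue available")
            phys = self.free_list.popleft()  # allocate on first use of this classification
            self.q_to_phys[q] = phys
        self.queues[phys].append(packet)

    def dequeue(self, egress: int, priority: int, ingress: int):
        q = self.q_value(egress, priority, ingress)
        phys = self.q_to_phys[q]
        packet = self.queues[phys].popleft()
        if not self.queues[phys]:
            # An empty physical queue is de-allocated and returned to the free list.
            del self.q_to_phys[q]
            self.free_list.append(phys)
        return packet
```

Since a physical queue exists only while it holds at least one packet, the number of allocated queues never exceeds the number of buffered packets, even though the space of possible Q values is far larger.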

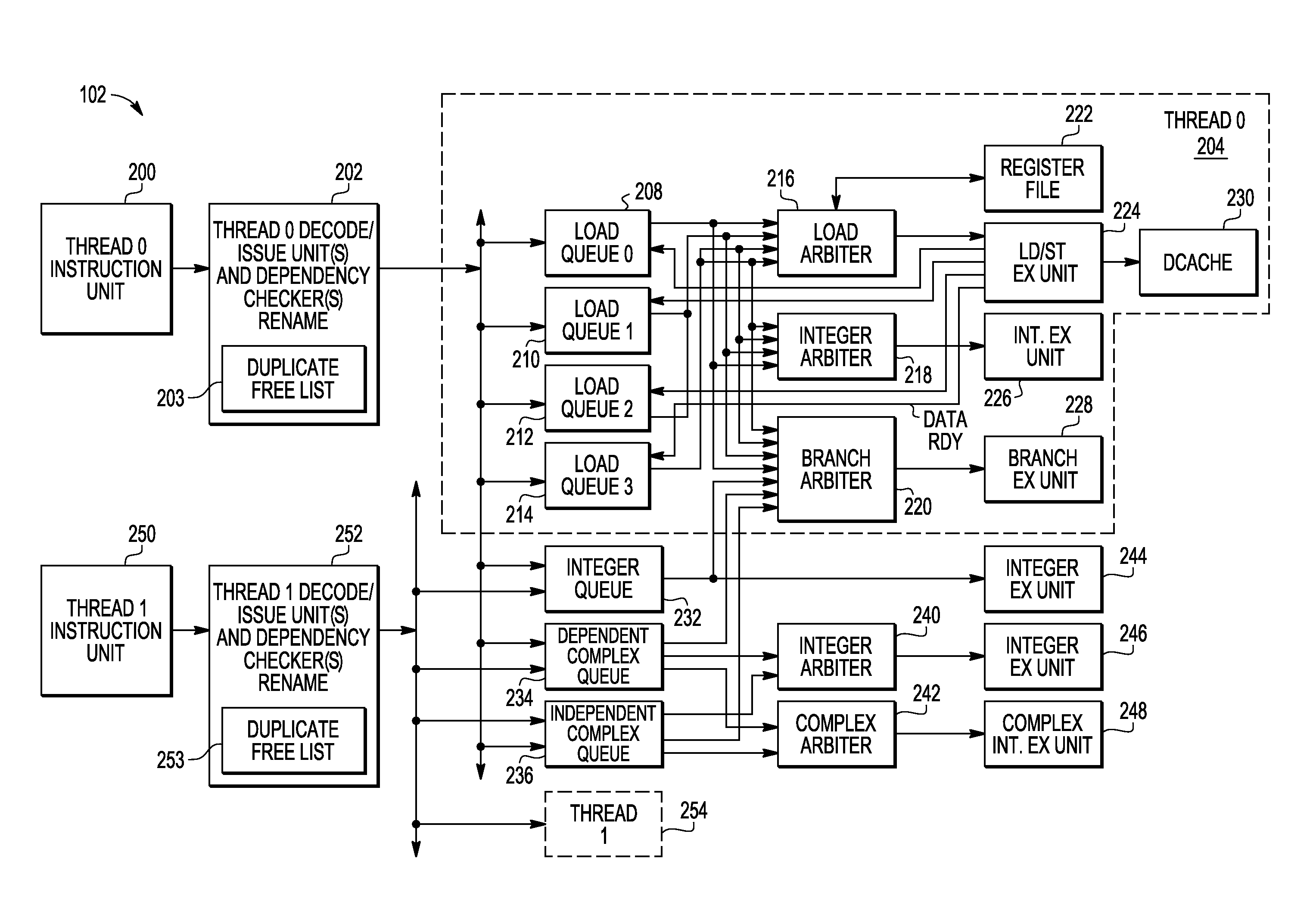

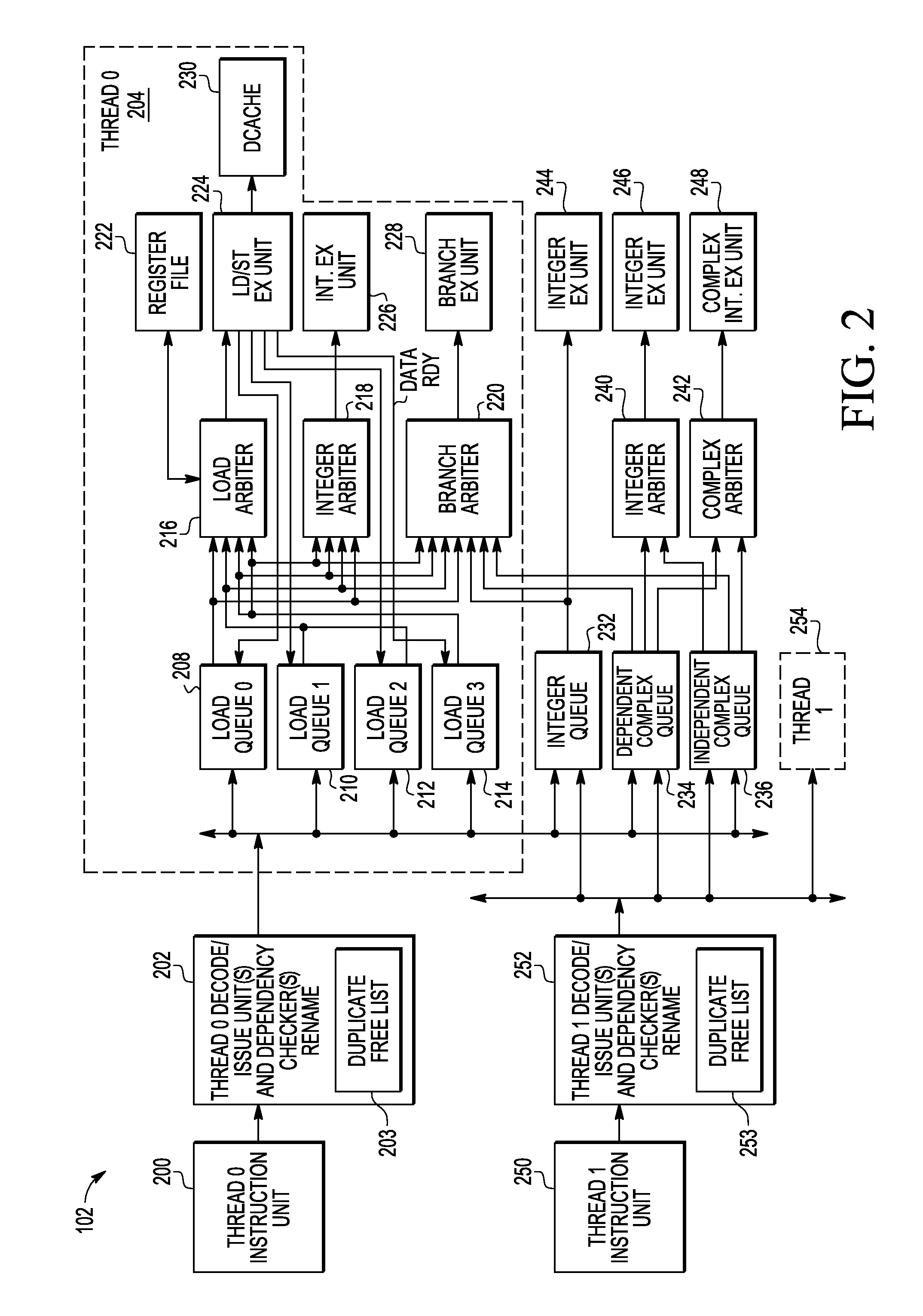

Data processing system with latency tolerance execution

In a processor having an instruction unit, a decode/issue unit, and execution queues configured to provide instructions to correspondingly different types of execution units, a method comprises maintaining a duplicate free list for the execution queues. The duplicate free list includes a plurality of duplicate dependent instruction indicators that indicate when a duplicate instruction for a dependent instruction is stored in at least one of the execution queues. One of the duplicate dependent instruction indicators is assigned to an execution queue for a dependent instruction. The dependent instruction is executed only when the one of the duplicate dependent instruction indicators is reset.

Owner:NXP USA INC

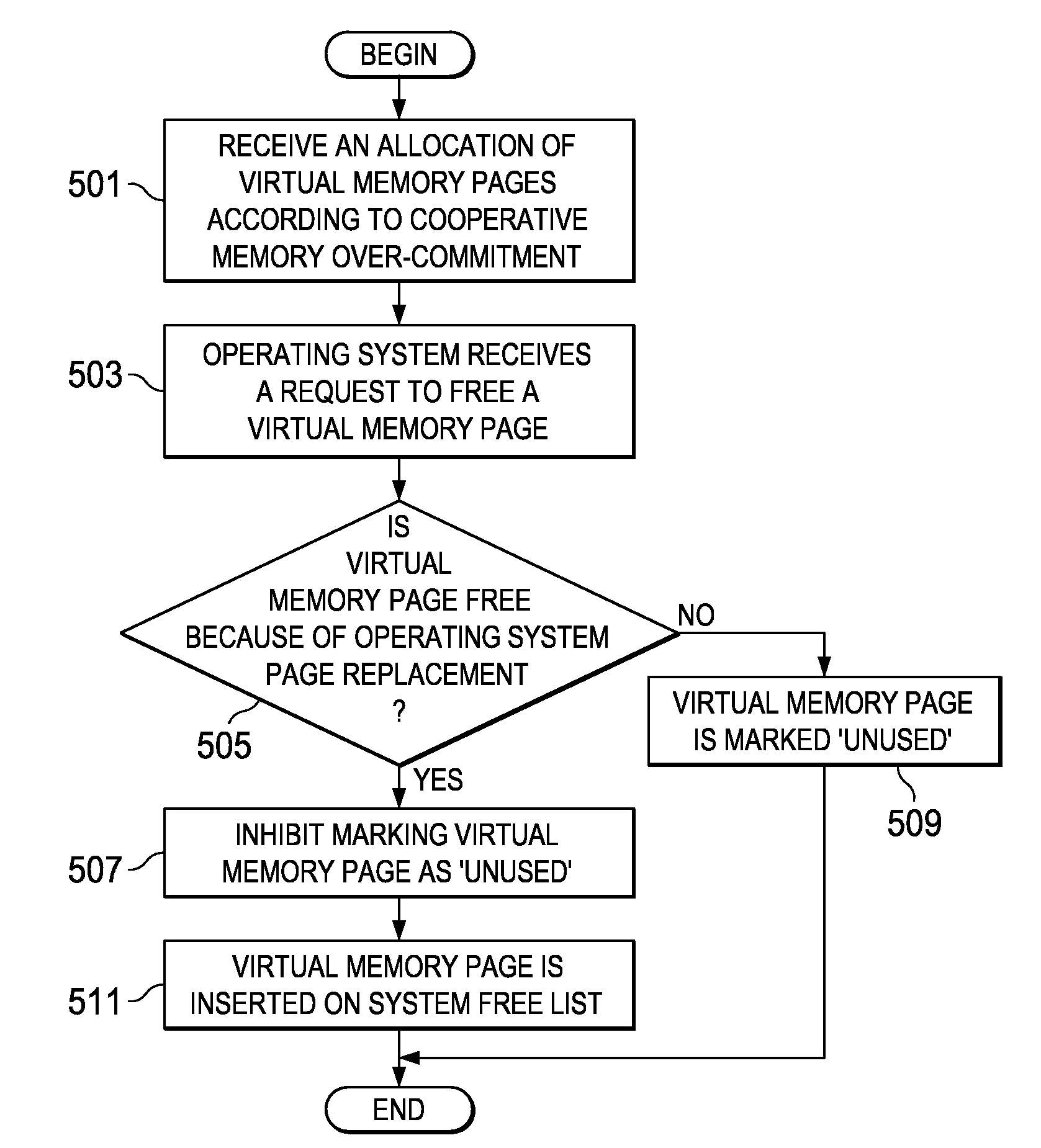

Selectively mark free frames as unused for cooperative memory over-commitment

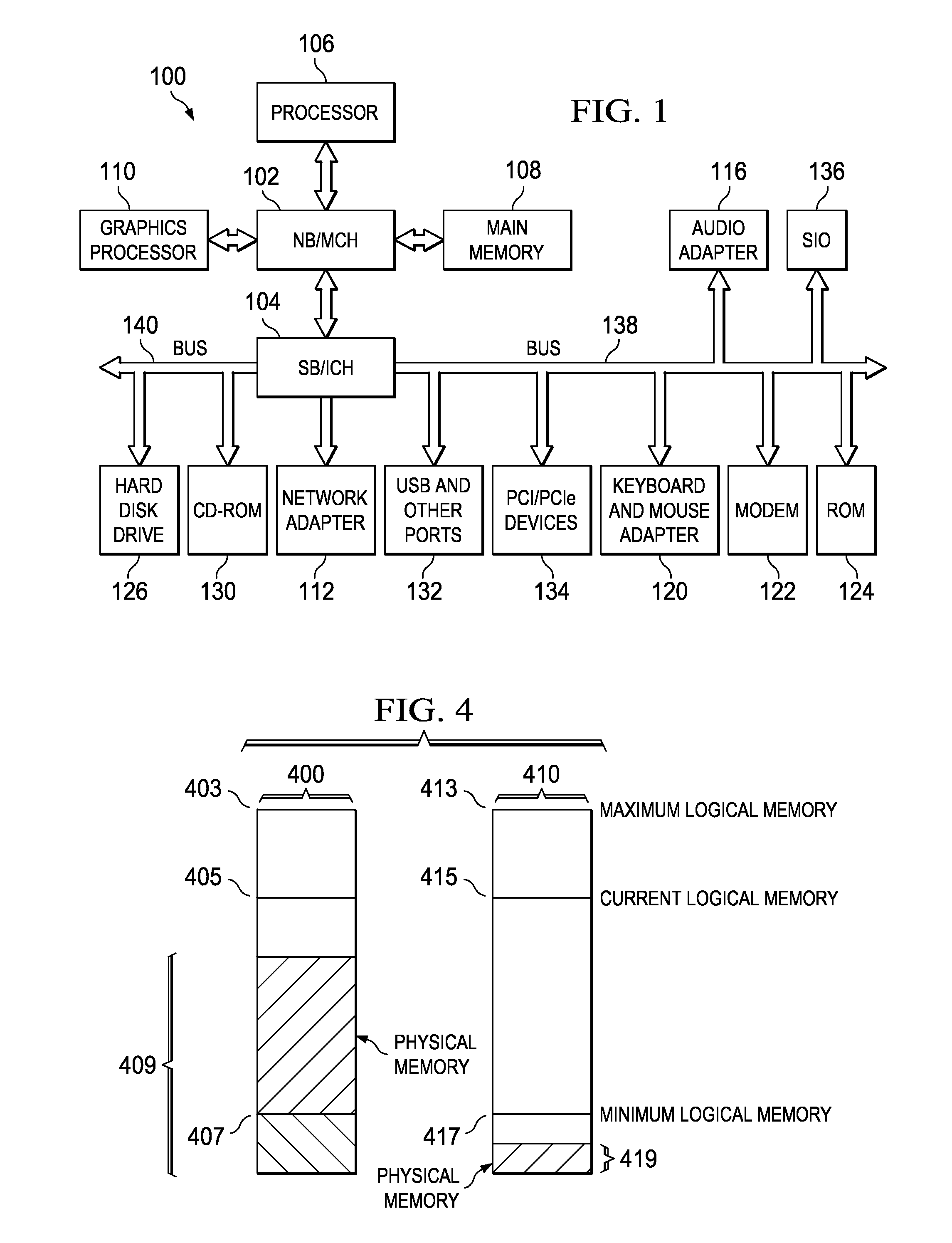

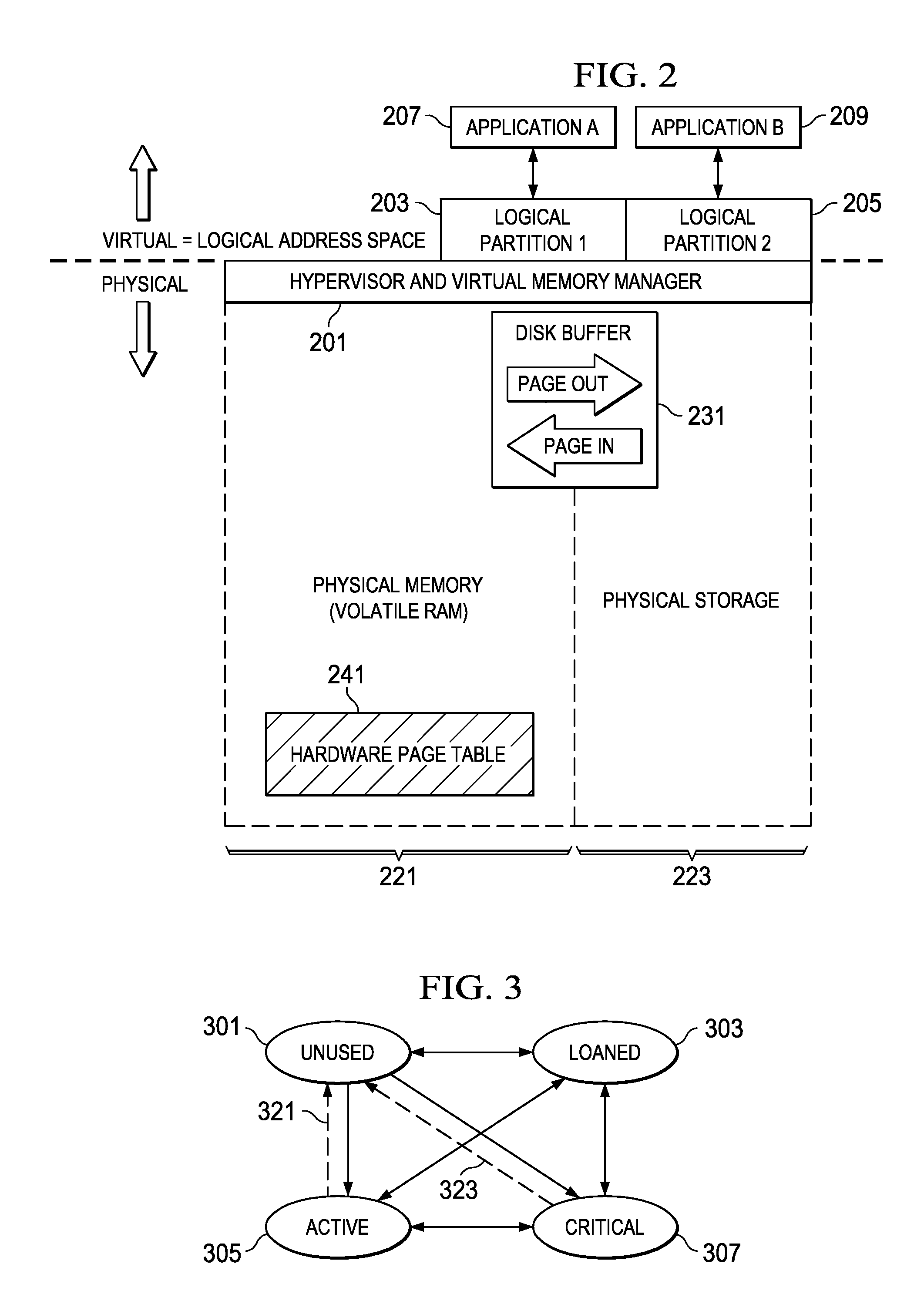

Inactive | US20090307459A1 | Memory addressing/allocation/relocation | Micro-instruction address formation | Free list | Virtual memory

Disclosed is a computer implemented method, apparatus and computer program product for communicating virtual memory page status to a virtual memory manager. An operating system may receive a request to free a virtual memory page from a first application. The operating system determines whether the virtual memory page is free due to an operating system page replacement. Responsive to a determination that the virtual memory page is free due to the operating system page replacement, the operating system inhibits marking the virtual memory page as unused. Finally, the operating system may insert the virtual memory page on an operating system free list.

Owner:IBM CORP

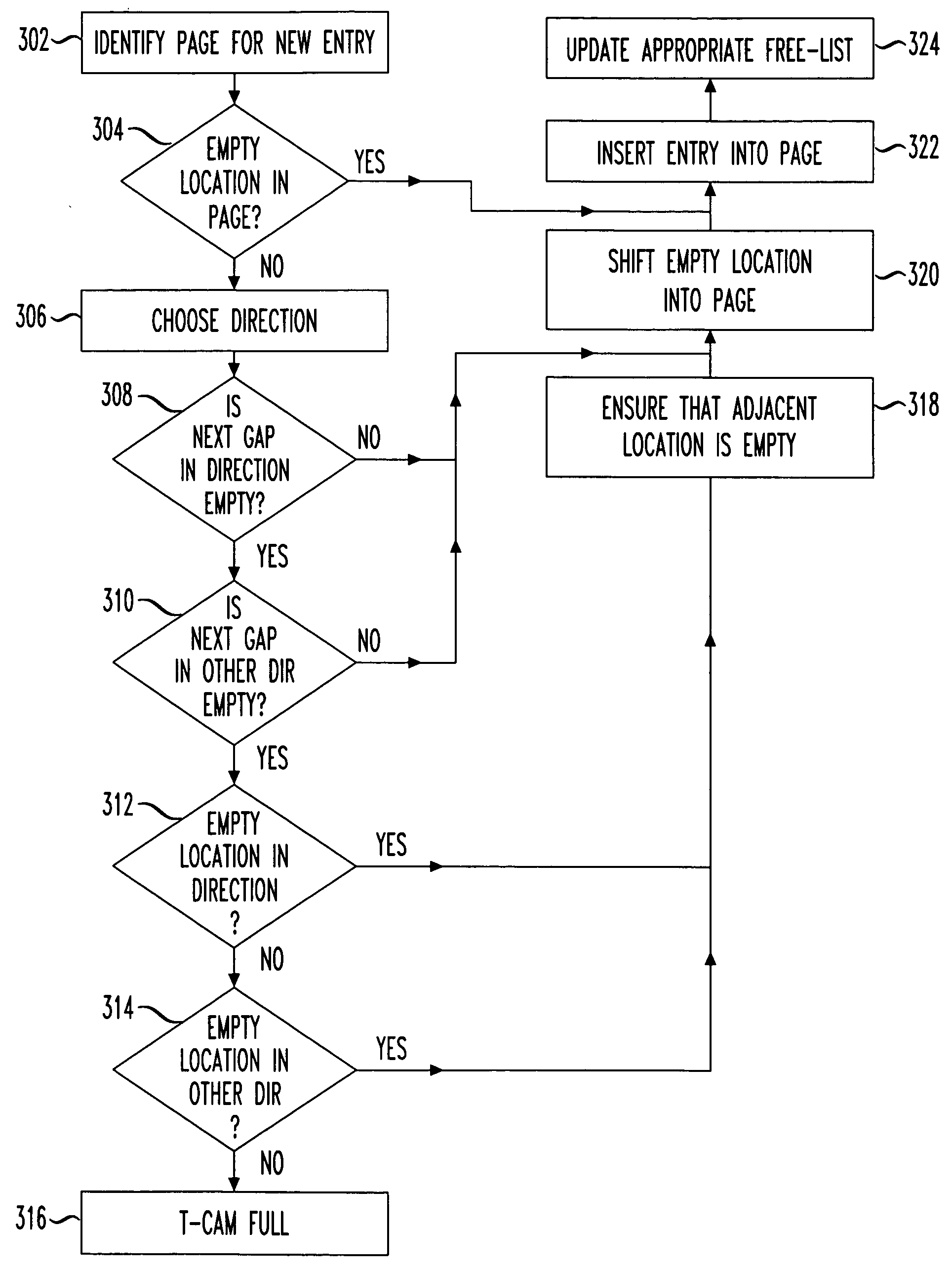

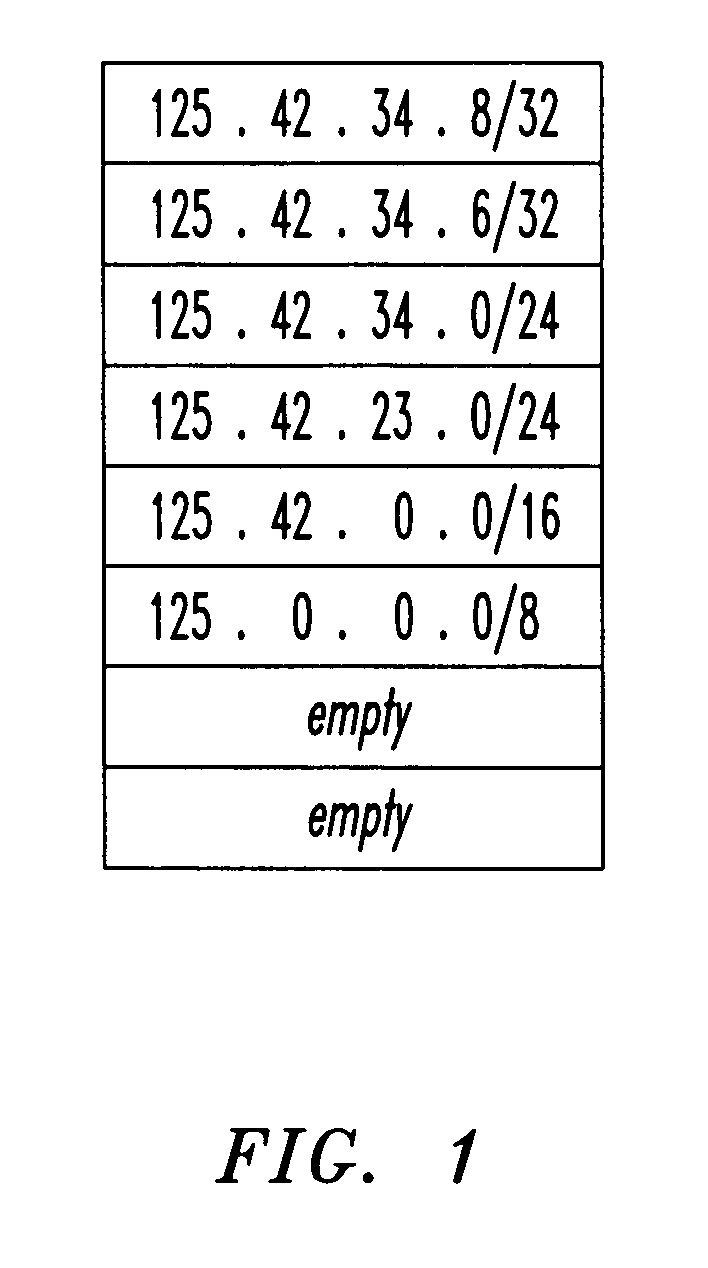

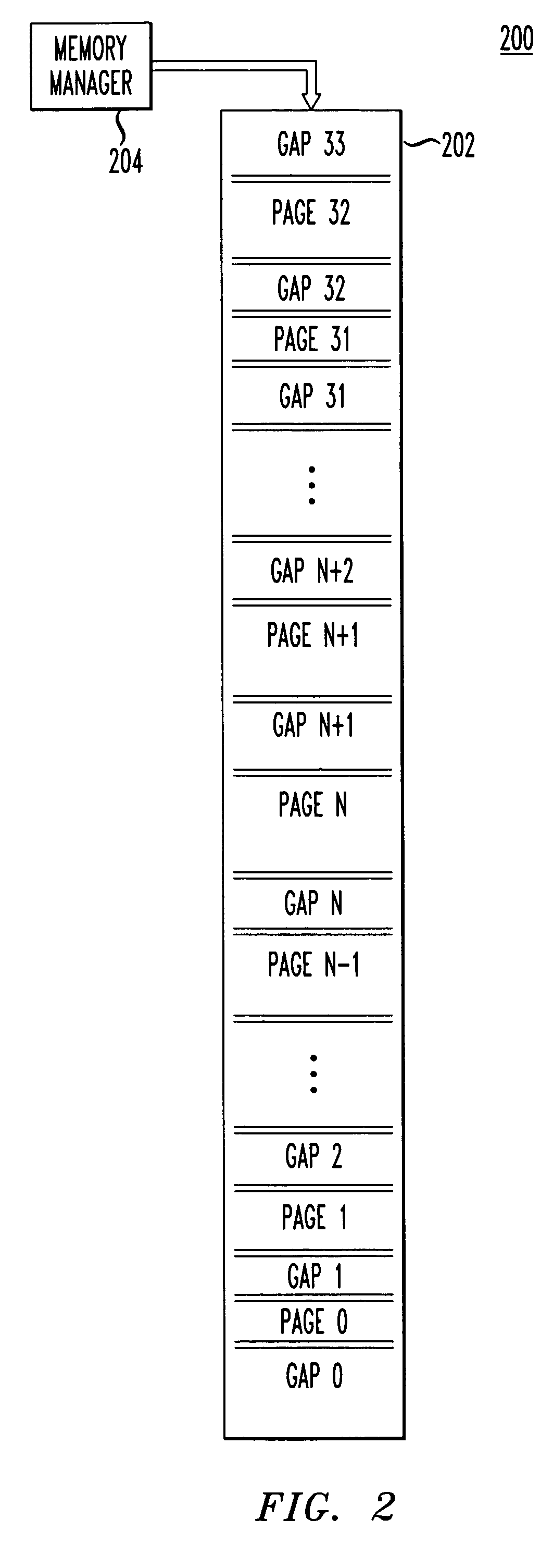

Memory management for ternary CAMs and the like

Inactive | US20050102428A1 | Memory addressing/allocation/relocation | Multiple digital computer combinations | Longest prefix match | Memory management unit

To support IP routing table longest-prefix matching, a (ternary content-addressable) memory is managed by assigning its locations to interleaved pages and gaps. Each page has zero or more locations assigned to it, where all entries in a page have the same prefix length. Each gap has zero or more empty locations assigned to it. The pages are organized by descending prefix length. Associated with each page is a free-list identifying empty locations in that page. An “invariant” rule may dictate that first and last page locations cannot be empty. Whenever an entry is deleted from the first or last location in a page, that location is shifted (i.e., reassigned) to the adjacent gap. The direction chosen to search for an empty location for inserting a new entry is based on a global measure of the relative fullness of memory regions above and below the new entry's page.

Owner:AGERE SYST INC

Hybrid storage subsystem with mixed placement of file contents

Inactive | US20130218892A1 | Digital data information retrieval | Digital data processing details | Hard disc drive | File system

A storage subsystem combining solid state drive (SSD) and hard disk drive (HDD) technologies provides low access latency and low complexity. Separate free lists are maintained for the SSD and the HDD, and blocks of file system data are stored uniquely on either the SSD or the HDD. When a read access is made to the subsystem, if the data is present on the SSD, the data is returned, but if the block is present on the HDD, it is migrated to the SSD and the block on the HDD is returned to the HDD free list. On a write access, if the block is present in either the SSD or the HDD, the block is overwritten, but if the block is not present in the subsystem, the block is written to the HDD.

Owner:INT BUSINESS MASCH CORP

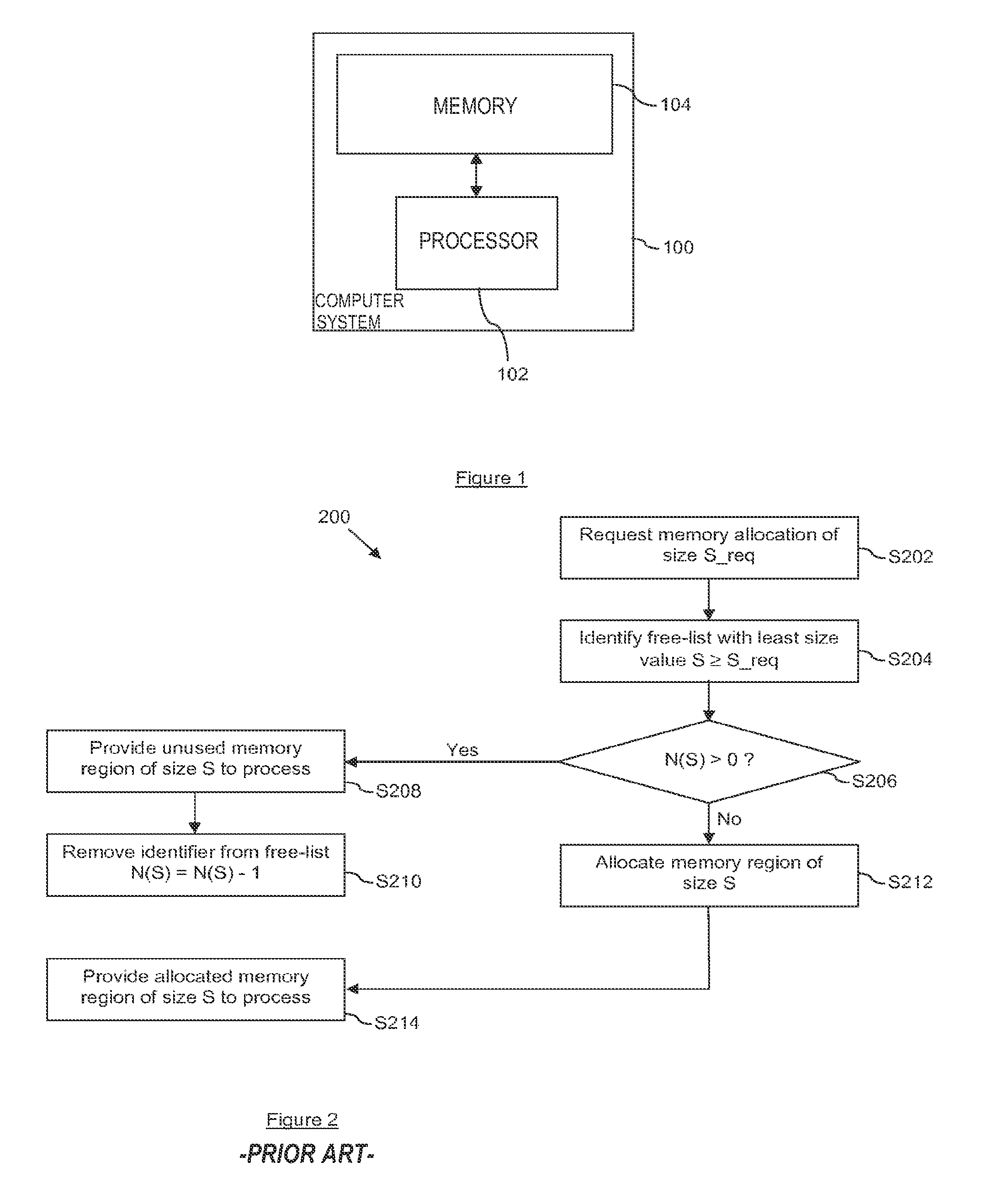

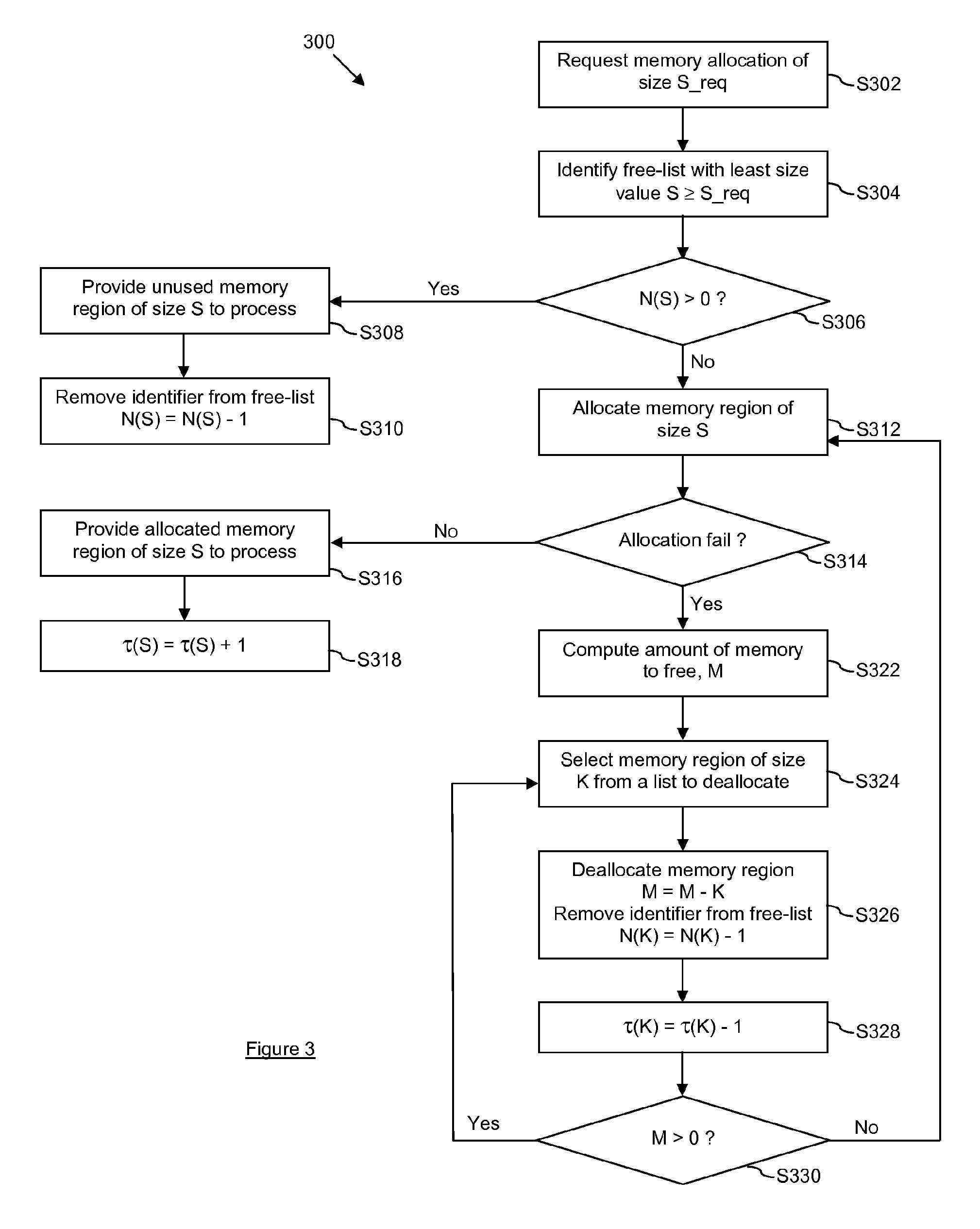

Memory management and method for allocation using free-list

Active | US8838928B2 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Memory management unit | Size value

Owner:NXP USA INC

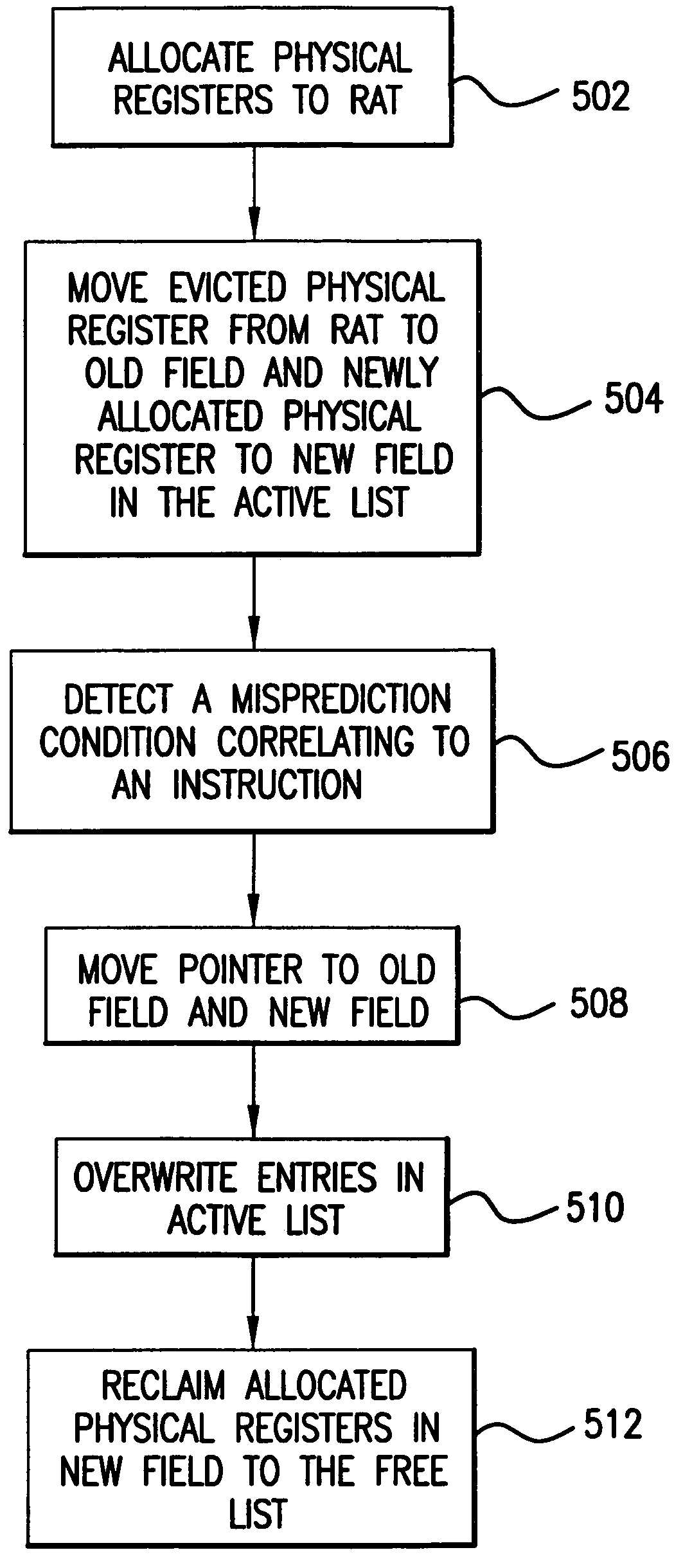

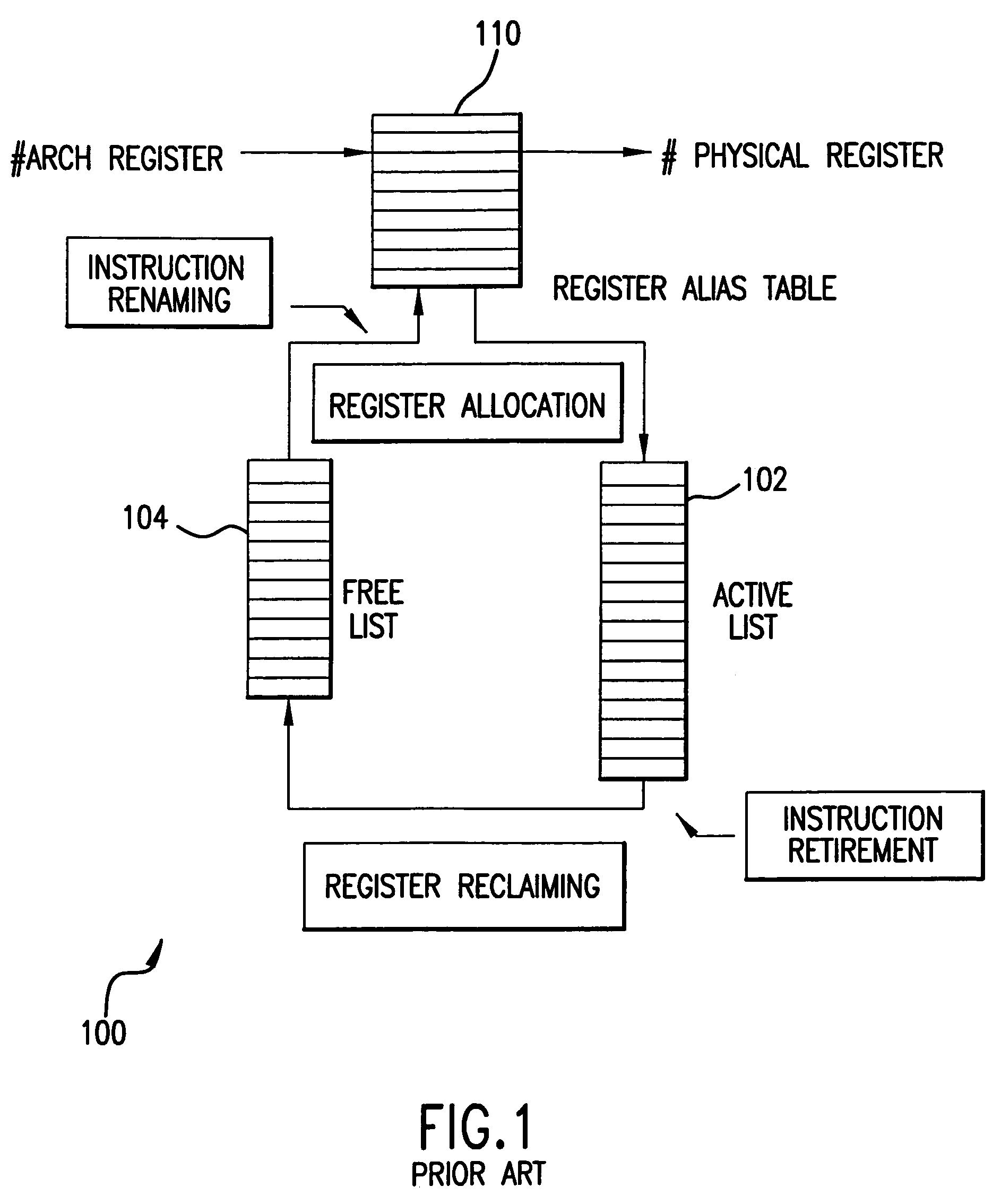

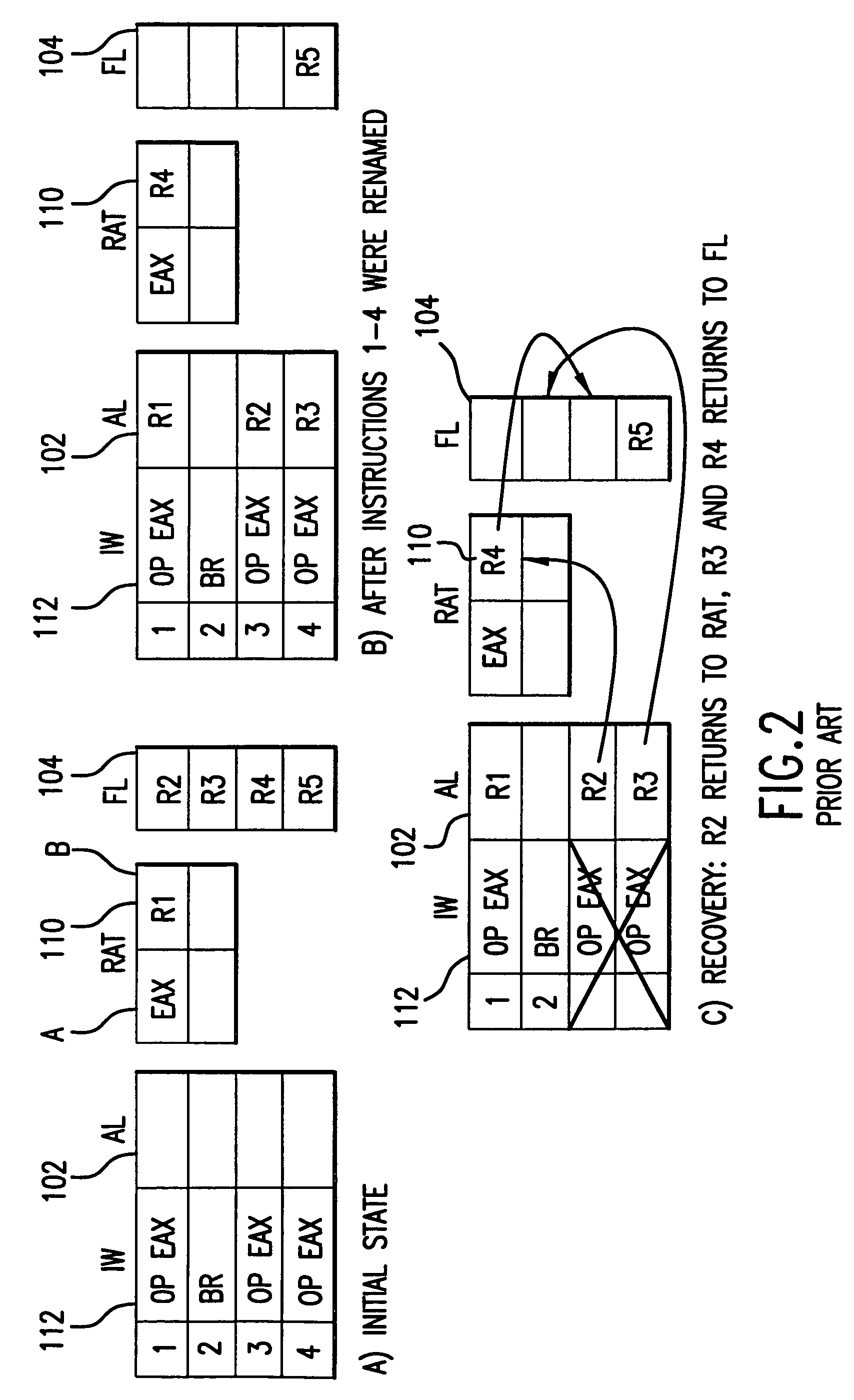

Method and apparatus for a register renaming structure

Inactive | US7155599B2 | Digital computer details | Concurrent instruction execution | Register allocation | Register assignment

A processor having a register renaming structure and method is disclosed to recover a free list. The processor includes a physical register file including physical registers. The processor also includes a decoder to decode an instruction to indicate a destination logical register. The processor also includes a register allocation table to map the destination logical register to an allocated physical register. The processor also includes an active list that includes an old field and a new field. The old field includes at least one evicted physical register from the register alias table. The new field includes the allocated physical register. The processor also includes the free list of unallocated physical registers reclaimed from the active list.

Owner:MICRON TECH INC
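The register renaming abstract above names three structures: a mapping table, an active list with old and new fields, and a free list. A conventional way these interact is sketched below. The retire and squash policies shown are the textbook scheme, not necessarily what the patent claims, and `Renamer` and its method names are hypothetical.

```python
from collections import deque


class Renamer:
    def __init__(self, logical: int, physical: int):
        if physical <= logical:
            raise ValueError("need more physical than logical registers")
        self.table = list(range(logical))                  # logical i starts mapped to physical i
        self.free_list = deque(range(logical, physical))   # unallocated physical registers
        self.active = deque()                              # (logical, old, new), oldest first

    def rename(self, dest: int) -> int:
        """Map a destination logical register to a fresh physical register."""
        if not self.free_list:
            raise RuntimeError("stall: no free physical register")
        new = self.free_list.popleft()
        old = self.table[dest]          # the mapping evicted from the table
        self.table[dest] = new
        self.active.append((dest, old, new))
        return new

    def retire(self) -> None:
        """Commit the oldest instruction: its old register can never be read again."""
        _, old, _ = self.active.popleft()
        self.free_list.append(old)

    def squash(self) -> None:
        """Undo the youngest instruction: restore the old mapping, reclaim the new register."""
        dest, old, new = self.active.pop()
        self.table[dest] = old
        self.free_list.append(new)
```

Either way an active-list entry leaves, exactly one of its two registers returns to the free list, so the total number of physical registers tracked across the table and the free list stays constant.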
