129 results about "Virtual Output Queues" patented technology

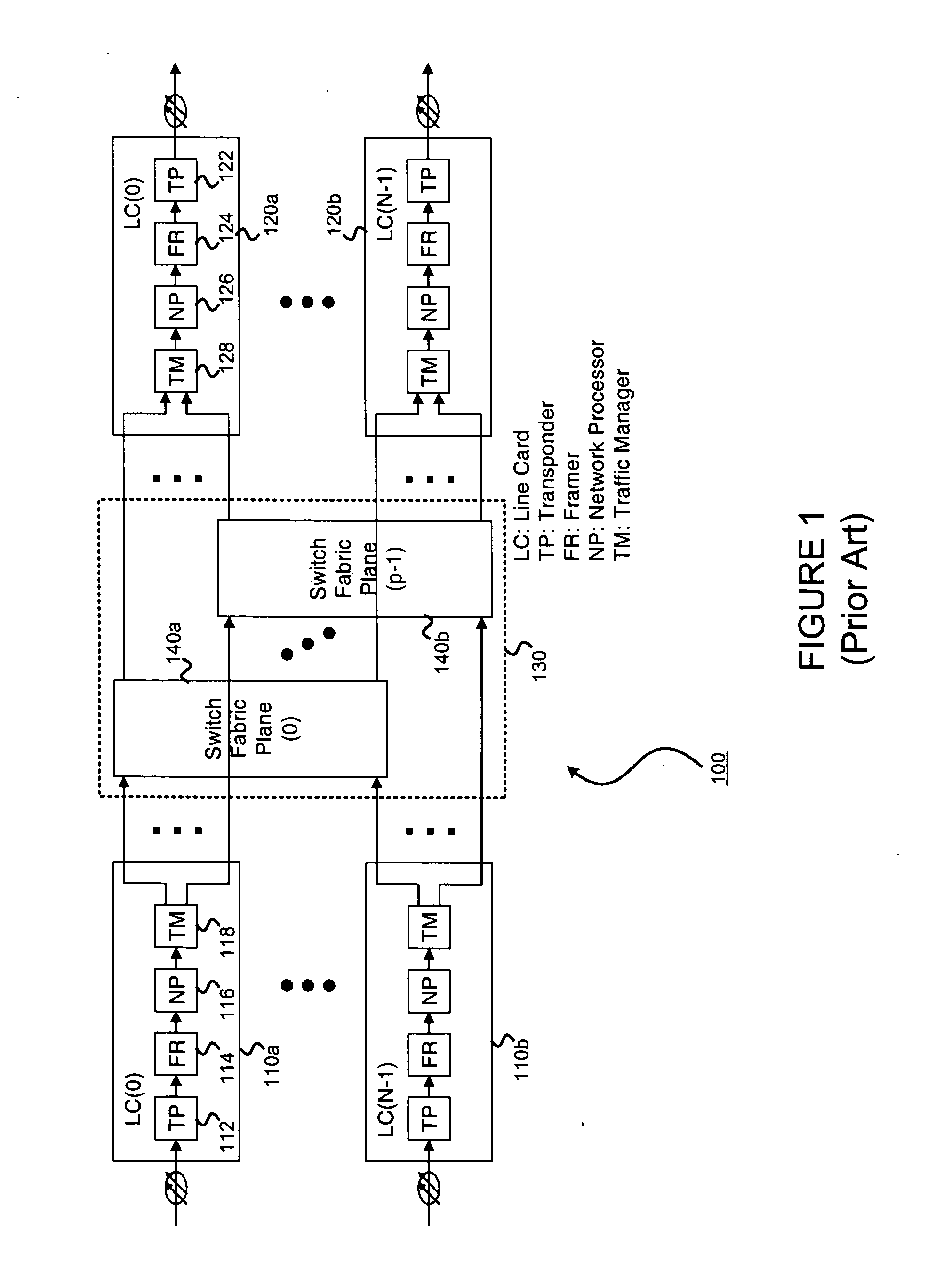

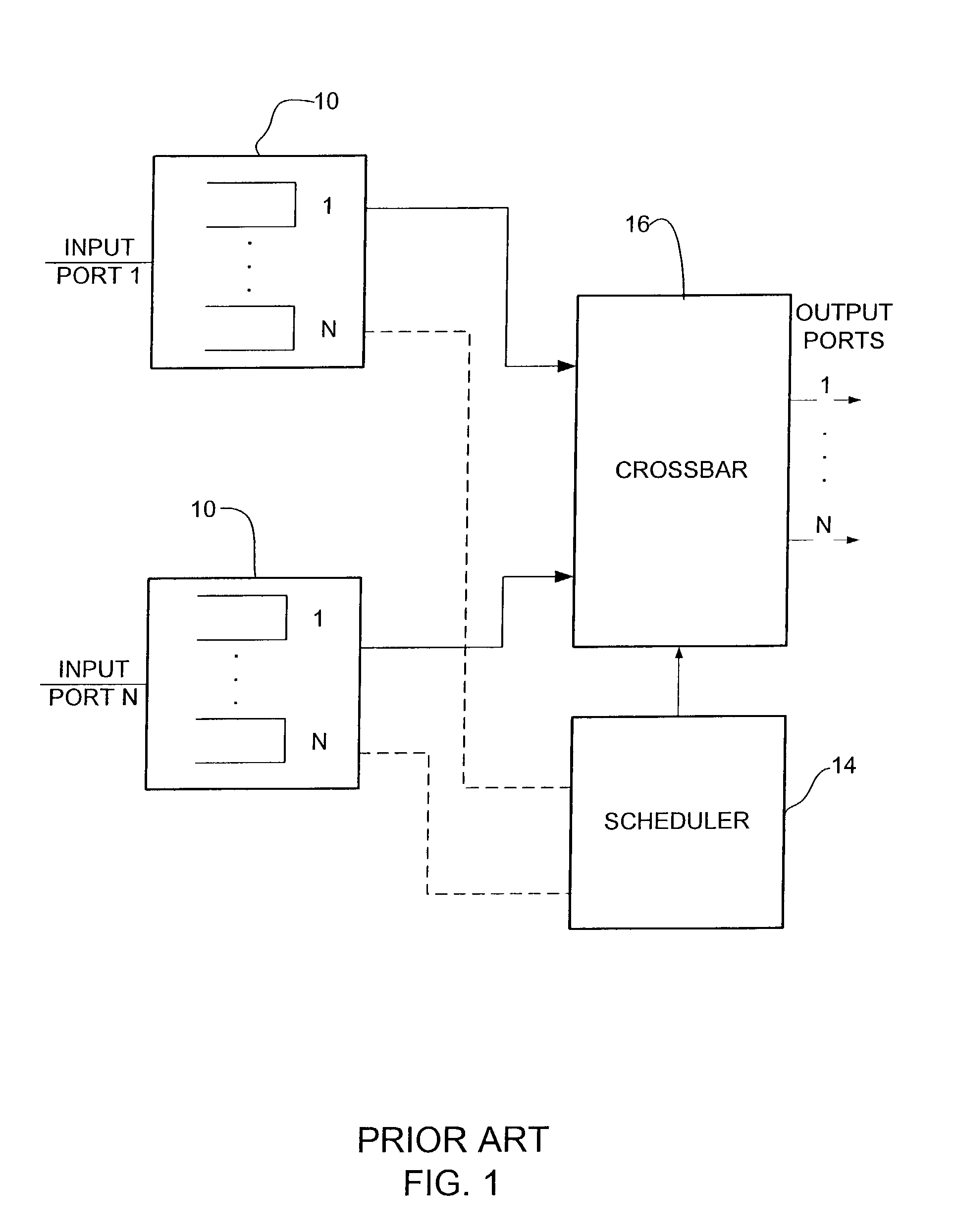

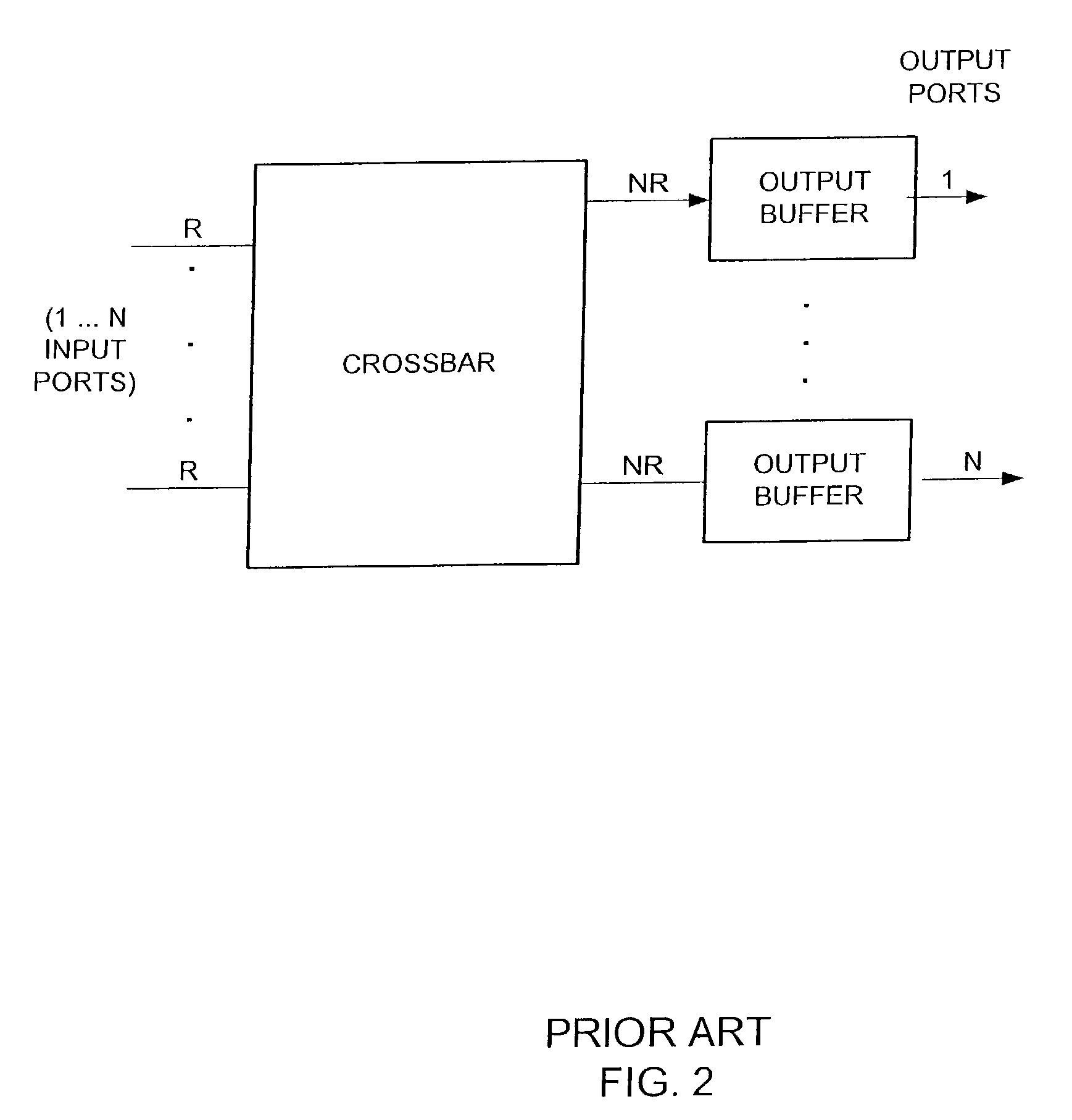

Virtual output queueing (VOQ) is a technique used in certain network switch architectures where, rather than keeping all traffic in a single queue, separate queues are maintained for each possible output location. It addresses a common problem known as head-of-line blocking.
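A minimal sketch of the idea, assuming a two-output switch in which output 0 is momentarily busy. The class `VoqInputPort` and the helper `fifo_dequeue` are hypothetical names chosen for illustration; they do not come from any of the patents listed here.

```python
from collections import deque


class VoqInputPort:
    """Input port holding one FIFO per output port (illustrative only)."""

    def __init__(self, num_outputs):
        self.queues = [deque() for _ in range(num_outputs)]

    def enqueue(self, packet, output):
        self.queues[output].append(packet)

    def dequeue_for(self, free_outputs):
        """Return (output, packet) from the first non-empty VOQ whose output is free."""
        for output, queue in enumerate(self.queues):
            if queue and output in free_outputs:
                return output, queue.popleft()
        return None


def fifo_dequeue(fifo, free_outputs):
    """Single-FIFO baseline: only the head packet is allowed to leave."""
    if fifo and fifo[0][0] in free_outputs:
        return fifo.popleft()
    return None


# Packet "a" wants busy output 0; packet "b" wants free output 1.
fifo = deque([(0, "a"), (1, "b")])
port = VoqInputPort(num_outputs=2)
port.enqueue("a", 0)
port.enqueue("b", 1)

blocked = fifo_dequeue(fifo, free_outputs={1})   # head-of-line blocked
served = port.dequeue_for(free_outputs={1})      # "b" bypasses "a"
```

With the single FIFO, packet "b" waits behind "a" even though its output is idle; with per-output queues it can be sent immediately.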

Packet sequence maintenance with load balancing, and head-of-line blocking avoidance in a switch

Active · US20050002334A1 · Reduce memory size · Improve balance · Error prevention · Frequency-division multiplex details · Load Shedding · Head-of-line blocking

Owner:POLYTECHNIC INST OF NEW YORK

Methods and apparatus for provisioning connection oriented, quality of service capabilities and services

Inactive · US20050089054A1 · Improve efficiency · Reduce the amount required · Data switching by path configuration · Quality of service · Granularity

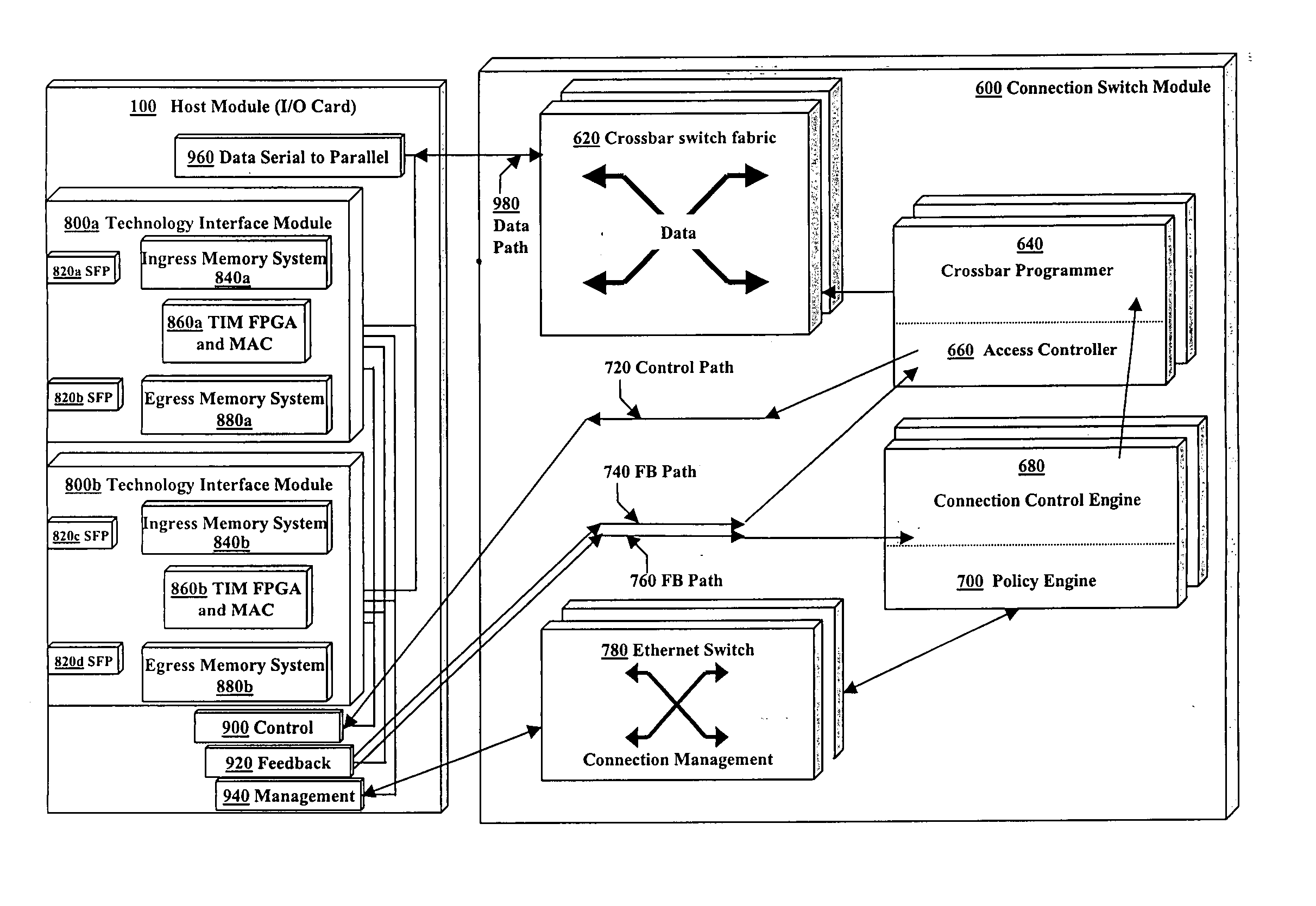

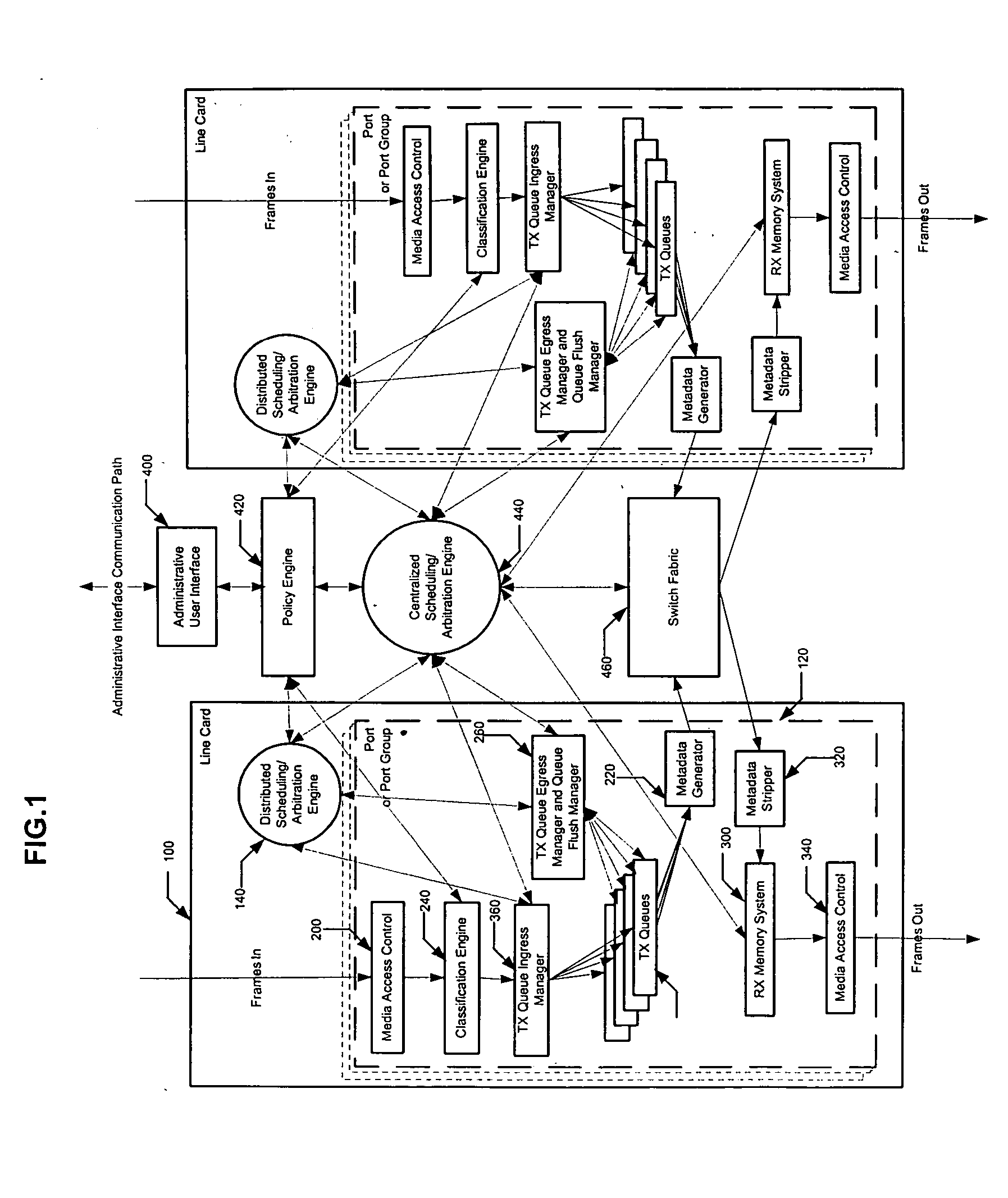

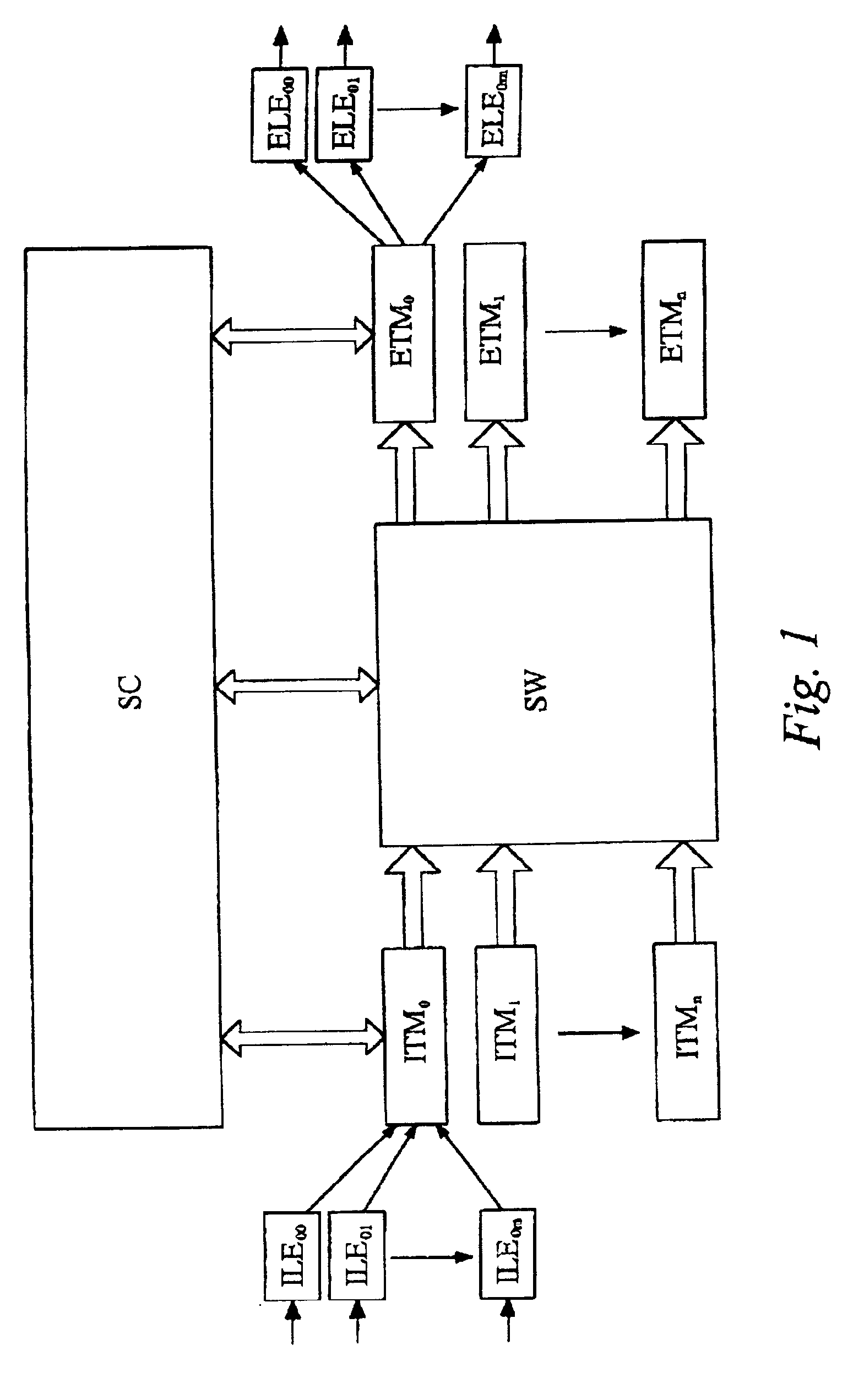

The present invention describes a system for providing quality of service (QoS) features in communications switching devices and routers. The QoS provided by this system need not be intrinsic to the communication protocols being transported through the network. Preferred embodiments also generate statistics with the granularity of the QoS. The system can be implemented in a single application-specific integrated circuit (ASIC), in a chassis-based switch or router, or in a more general distributed architecture. The system architecture is a virtual output queued (VOQed) crossbar. The administrator establishes policies for port pairs within the switch, and optionally with finer granularity. Frames are directed to unique VOQs based on both policy and protocol criteria. Policies are implemented by means of a scheduling engine that allocates time slices (minimum units of crossbar access).

Owner:NETWORK APPLIANCE INC

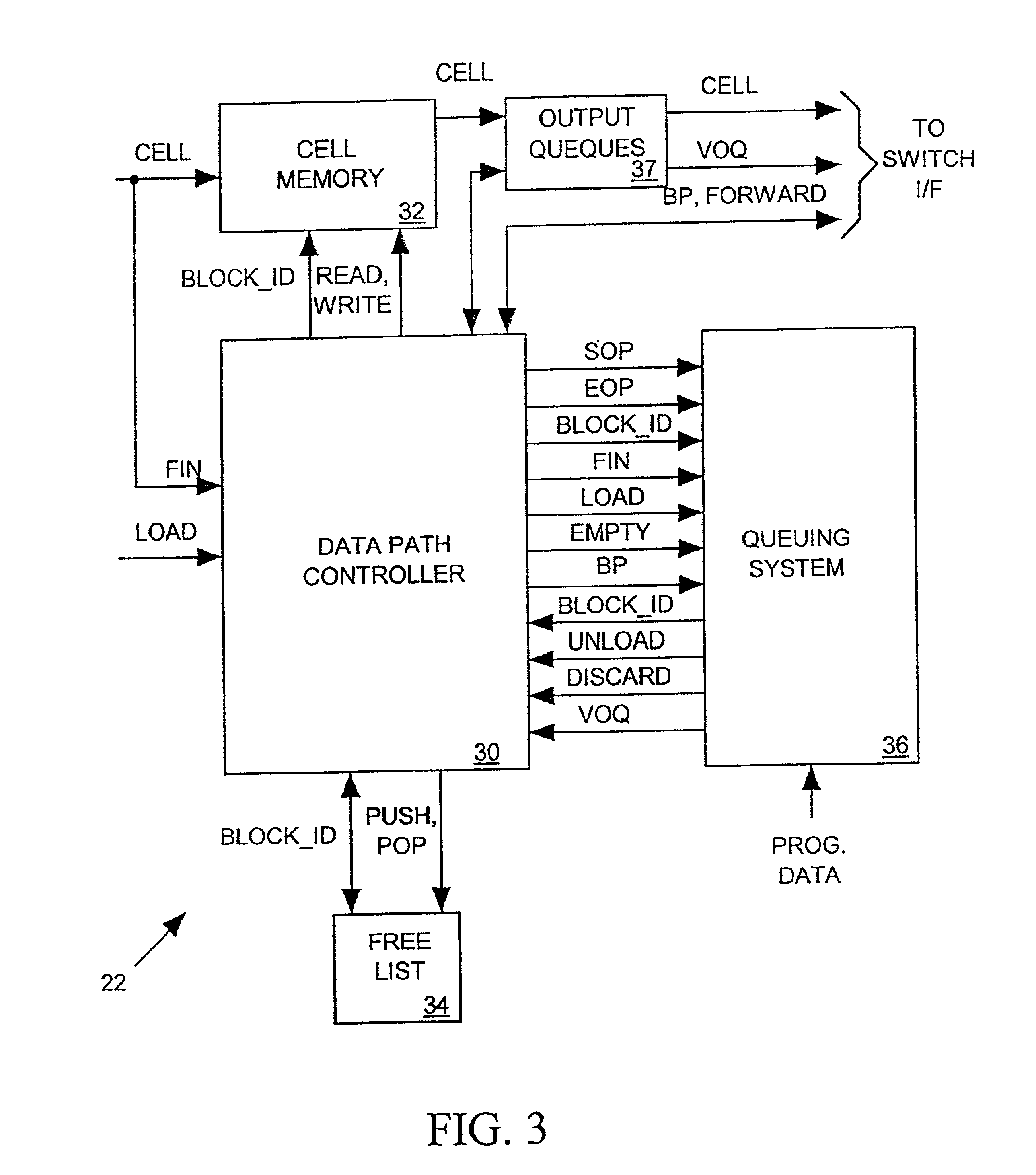

Traffic manager for network switch port

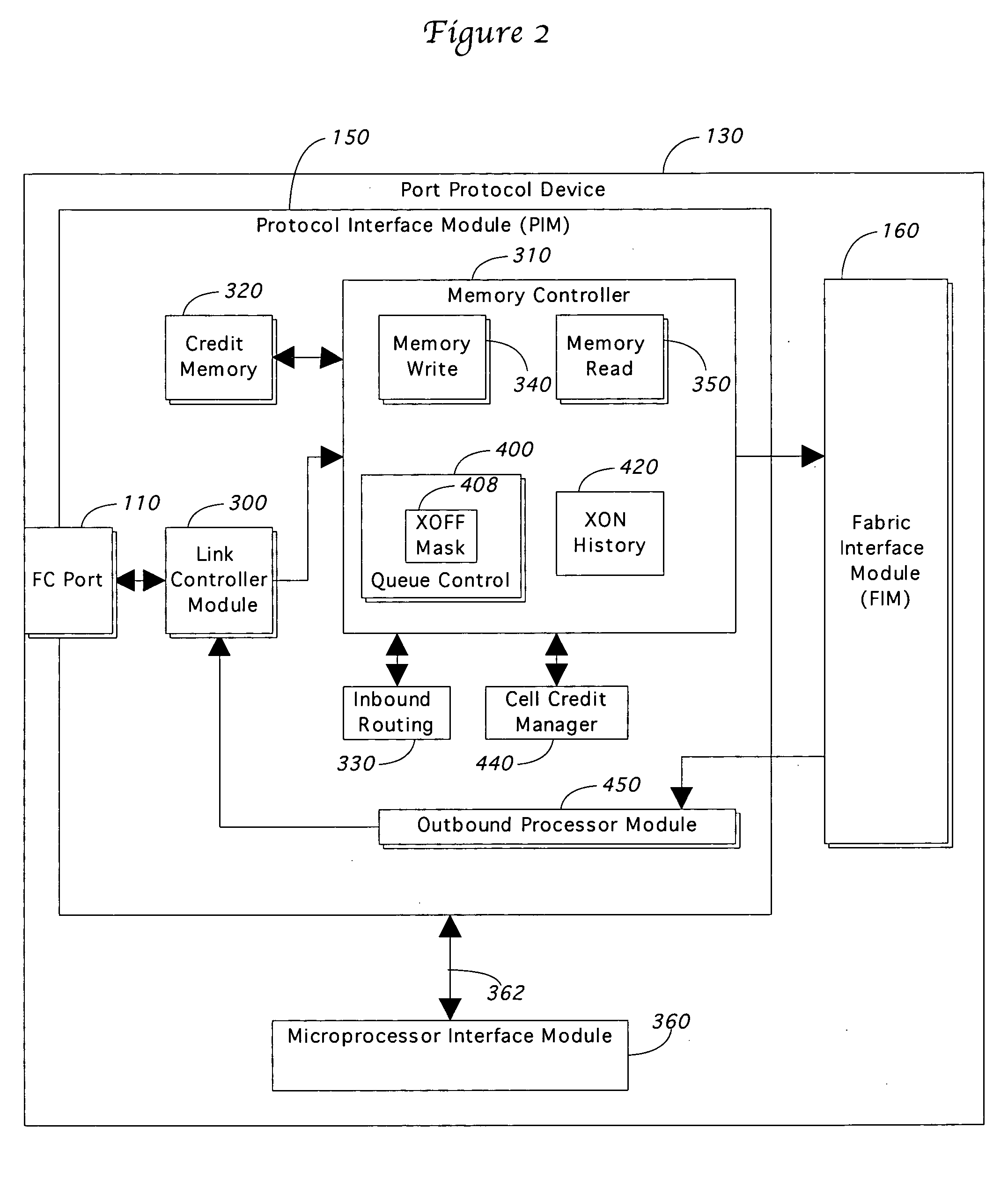

Active · US6959002B2 · Separate control · Data switching by path configuration · Store-and-forward switching systems · Traffic capacity · Network switch

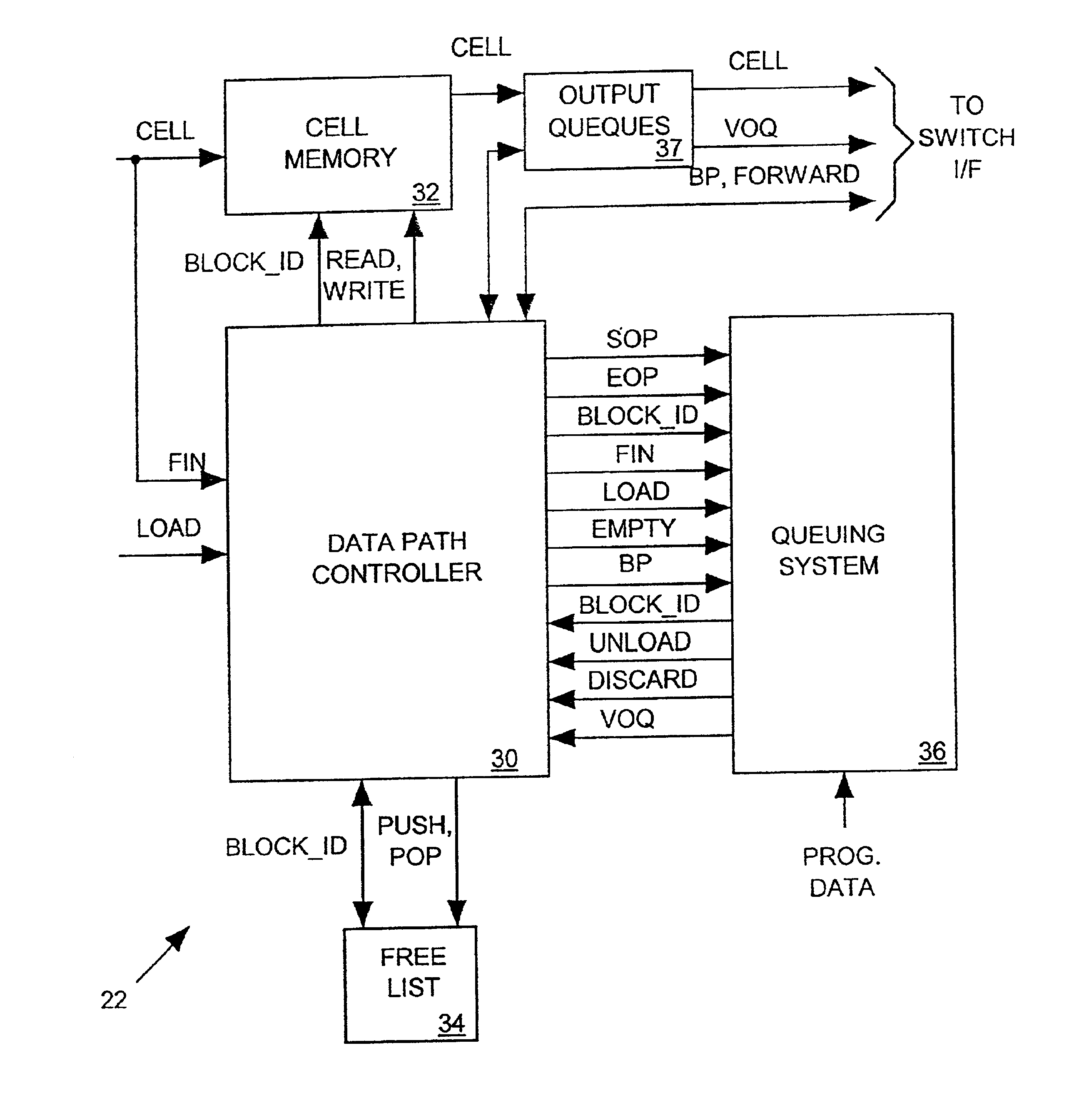

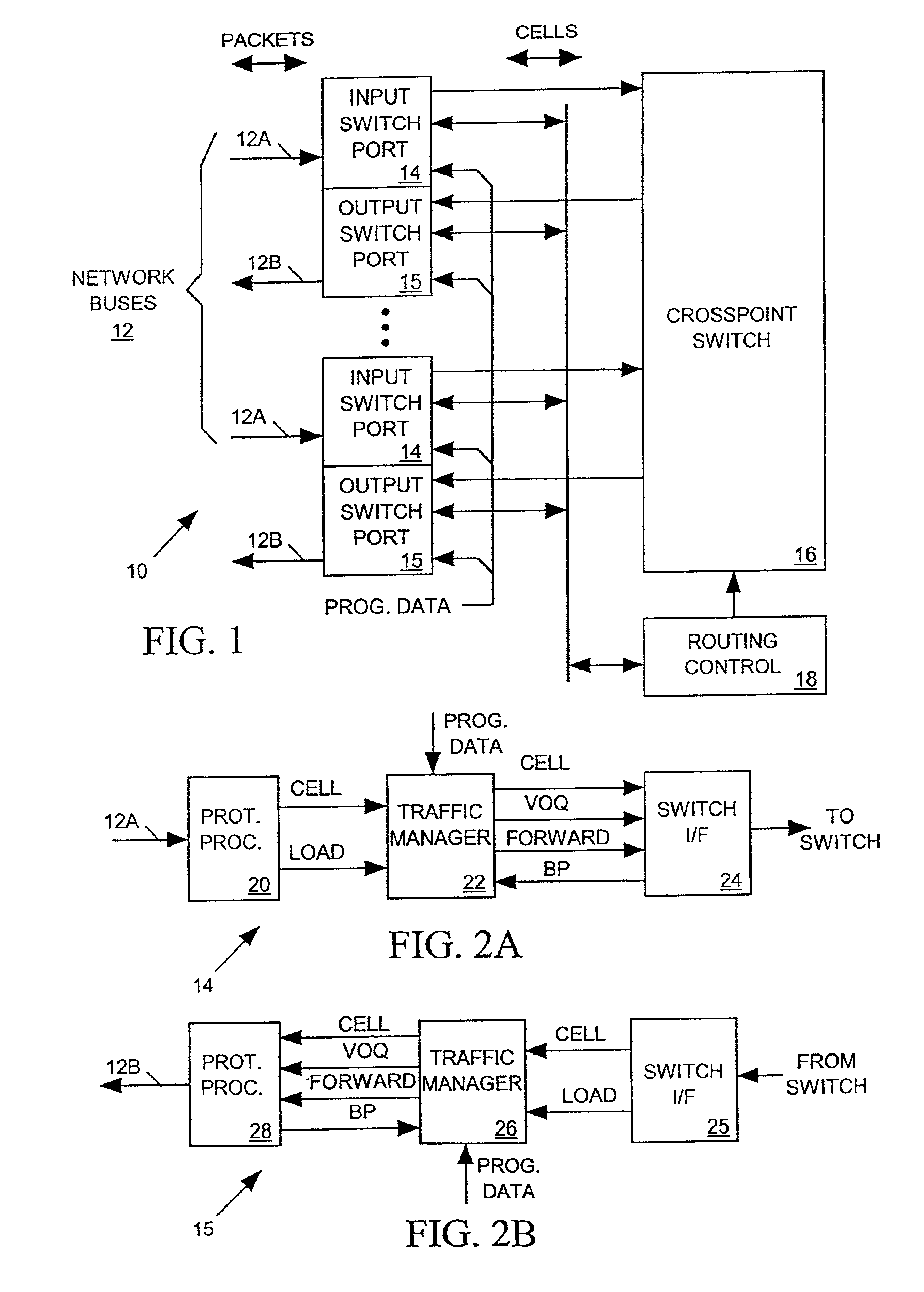

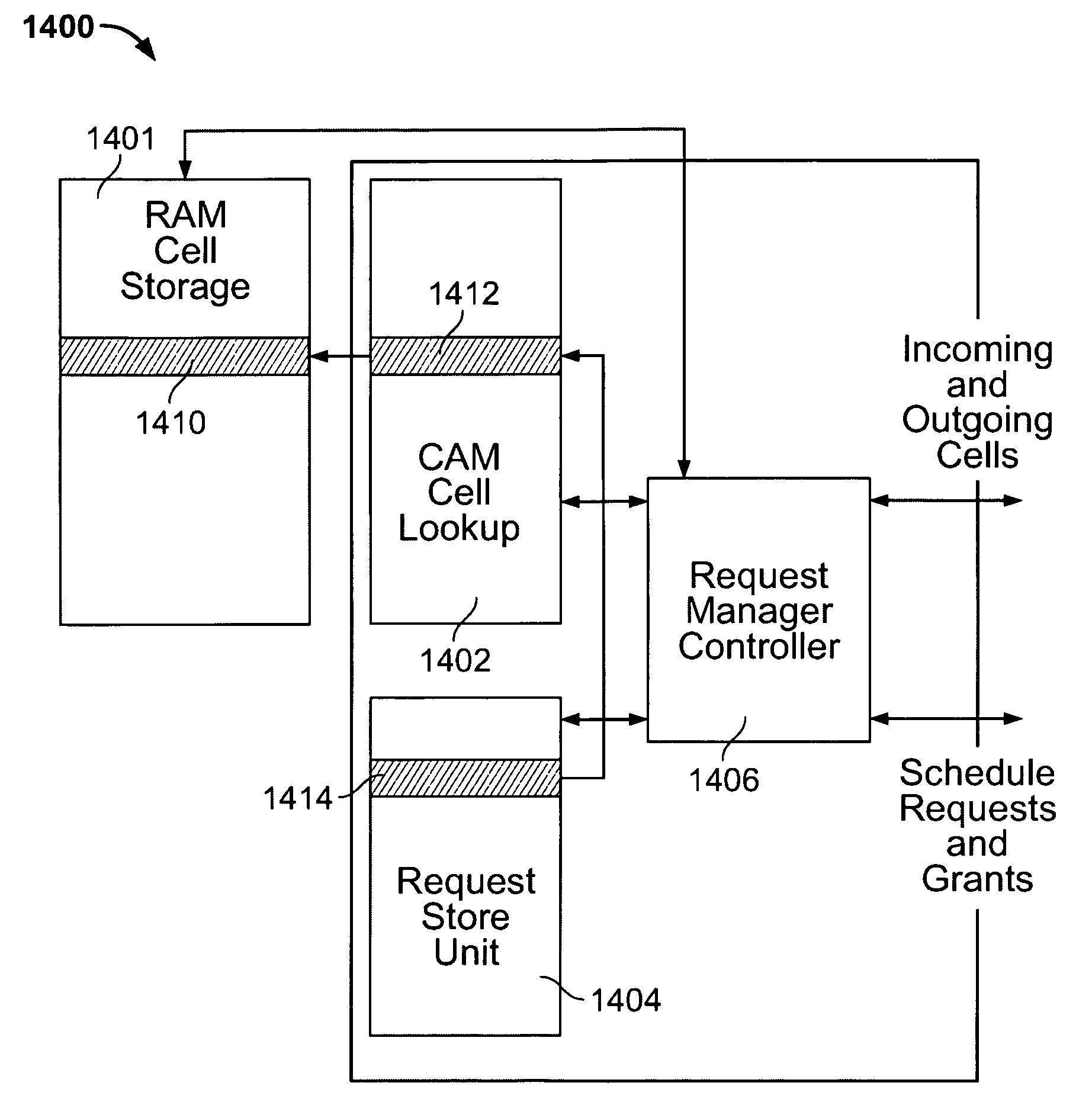

A traffic manager for a network switch input or output port stores incoming cells in a cell memory and later sends each cell out of its cell memory toward one of a set of forwarding resources such as, for example, another switch port or an output bus. Data in each cell references the particular forwarding resource to receive the cell. Each cell is assigned to one of several flow queues such that all cells assigned to the same flow queue are to be sent to the same forwarding resource. The traffic manager maintains a separate virtual output queue (VOQ) associated with each forwarding resource and periodically loads a flow queue (FQ) number identifying each flow queue into the VOQ associated with the forwarding resource that is to receive the cells assigned to that FQ. The traffic manager also periodically shifts an FQ ID out of each non-empty VOQ and forwards the longest-stored cell assigned to that FQ from the cell memory toward its intended forwarding resource. The traffic manager separately determines the rates at which it loads FQ IDs into VOQs and the rates at which it shifts FQ IDs out of each non-empty VOQ. Thus the traffic manager is able to separately control the rate at which cells of each flow queue are forwarded and the rate at which each forwarding resource receives cells.
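The two-level arrangement described in this abstract (cells held in flow queues, with VOQs holding only flow queue IDs) can be sketched roughly as follows. This is an illustration under assumptions: the class and method names are invented, and the rate control that decides when `load_fq_id` and `shift_out` are called is left to the caller.

```python
from collections import deque


class TrafficManager:
    """Toy model: flow queues hold cells, VOQs hold flow queue (FQ) IDs."""

    def __init__(self, fq_to_resource):
        self.fq_to_resource = dict(fq_to_resource)
        self.flow_queues = {fq: deque() for fq in self.fq_to_resource}
        self.voqs = {res: deque() for res in set(self.fq_to_resource.values())}

    def store_cell(self, fq, cell):
        self.flow_queues[fq].append(cell)

    def load_fq_id(self, fq):
        """Load one FQ ID into the VOQ of that flow queue's forwarding resource."""
        self.voqs[self.fq_to_resource[fq]].append(fq)

    def shift_out(self, resource):
        """Shift one FQ ID out of a VOQ and forward that FQ's longest-stored cell."""
        voq = self.voqs[resource]
        if not voq:
            return None
        fq = voq.popleft()
        if not self.flow_queues[fq]:
            return None
        return self.flow_queues[fq].popleft()


tm = TrafficManager({"fq0": "portA", "fq1": "portA"})
tm.store_cell("fq0", "c0")
tm.store_cell("fq0", "c1")
tm.store_cell("fq1", "d0")
for fq in ("fq0", "fq1", "fq0"):
    tm.load_fq_id(fq)
sent = [tm.shift_out("portA") for _ in range(3)]
```

How often `load_fq_id` is called for a given flow queue sets that flow's rate, while how often `shift_out` is called for a resource sets the rate seen by that resource, which is the separation the abstract describes.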

Owner:ZETTACOM +1

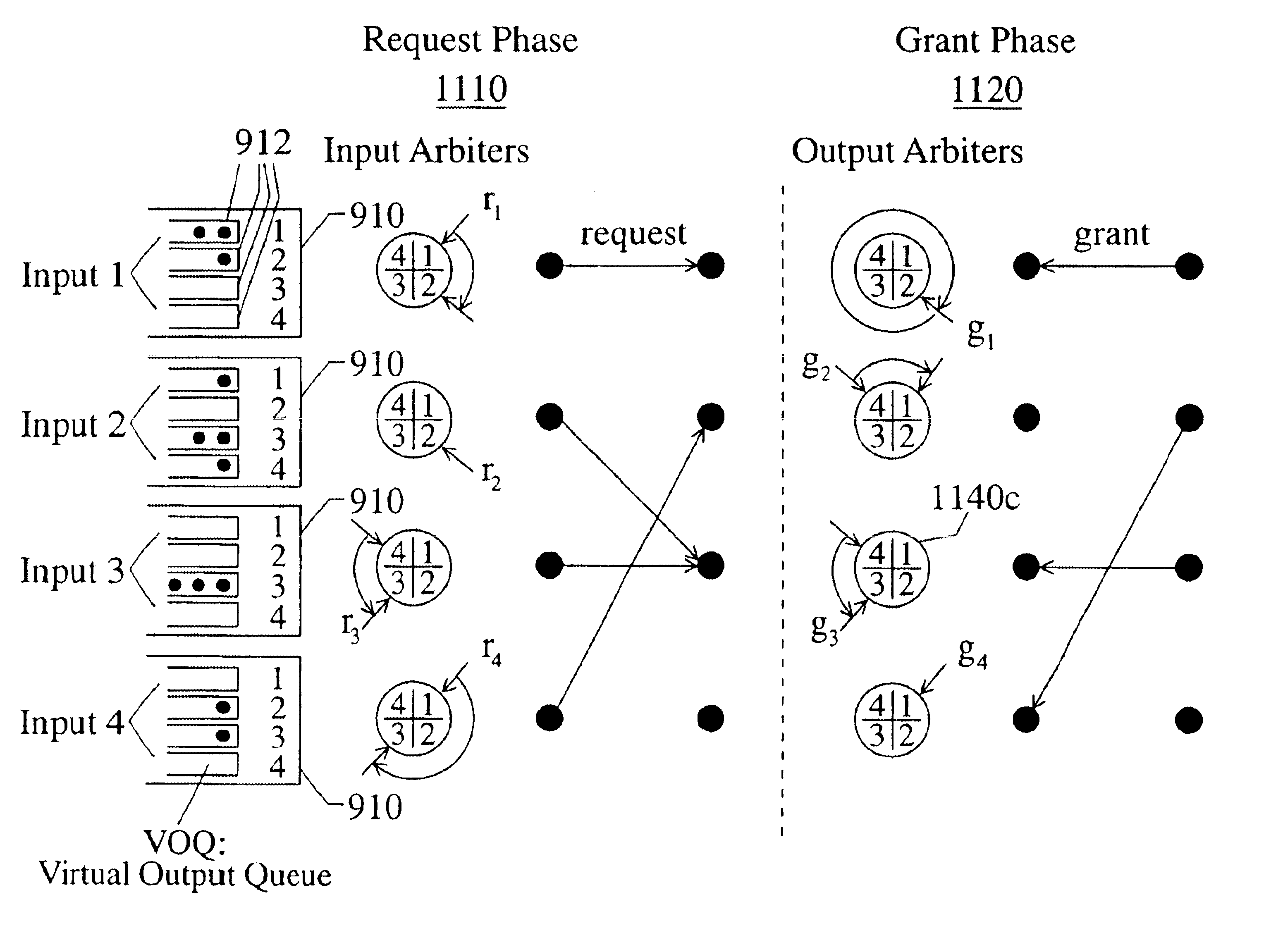

Methods and apparatus for arbitrating output port contention in a switch having virtual output queuing

Inactive · US6667984B1 · Implementation reasonable · Reasonable time · Data switching by path configuration · Time-division multiplexing selection · Computer network · Telecommunications

A dual round robin arbitration technique for a switch in which input ports include virtual output queues. A first arbitration selects, for each of the input ports, one cell from among head of line cells of the virtual output queues to generate a first arbitration winning cell. Then, for each of the output ports, a second arbitration selects one cell from among the first arbitration winning cells requesting the output port.
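The two arbitration phases can be sketched as below. This is a simplified illustration, not the patented implementation: the function names are hypothetical, and the pointer-update rule (advance both pointers one past the matched pair after a grant) is an assumption that the abstract does not spell out.

```python
def rr_pick(candidates, pointer, n):
    """Return the first index at or after `pointer` (wrapping) found in `candidates`."""
    for offset in range(n):
        idx = (pointer + offset) % n
        if idx in candidates:
            return idx
    return None


def drrm_slot(voq_len, in_ptr, out_ptr):
    """Run one time slot of dual round-robin matching; returns sorted (input, output) pairs."""
    n = len(voq_len)
    # First arbitration: each input picks one non-empty VOQ, i.e. one output to request.
    requests = {}  # output -> set of requesting inputs
    for i in range(n):
        nonempty = {j for j in range(n) if voq_len[i][j] > 0}
        j = rr_pick(nonempty, in_ptr[i], n)
        if j is not None:
            requests.setdefault(j, set()).add(i)
    # Second arbitration: each output grants one of the inputs requesting it.
    matches = []
    for j, inputs in requests.items():
        i = rr_pick(inputs, out_ptr[j], n)
        matches.append((i, j))
        voq_len[i][j] -= 1
        in_ptr[i] = (j + 1) % n   # assumed update rule
        out_ptr[j] = (i + 1) % n  # assumed update rule
    return sorted(matches)


voq_len = [[1, 1], [1, 0]]  # voq_len[i][j]: cells at input i destined for output j
in_ptr, out_ptr = [0, 0], [0, 0]
first = drrm_slot(voq_len, in_ptr, out_ptr)
second = drrm_slot(voq_len, in_ptr, out_ptr)
```

In the first slot both inputs request output 0 and only one wins; after the pointers move, the second slot produces a full match.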

Owner:POLYTECHNIC INST OF NEW YORK

Scalable crossbar matrix switching apparatus and distributed scheduling method thereof

Inactive · US20050152352A1 · Multiplex system selection arrangements · Data switching by path configuration · Crossover switch · High velocity

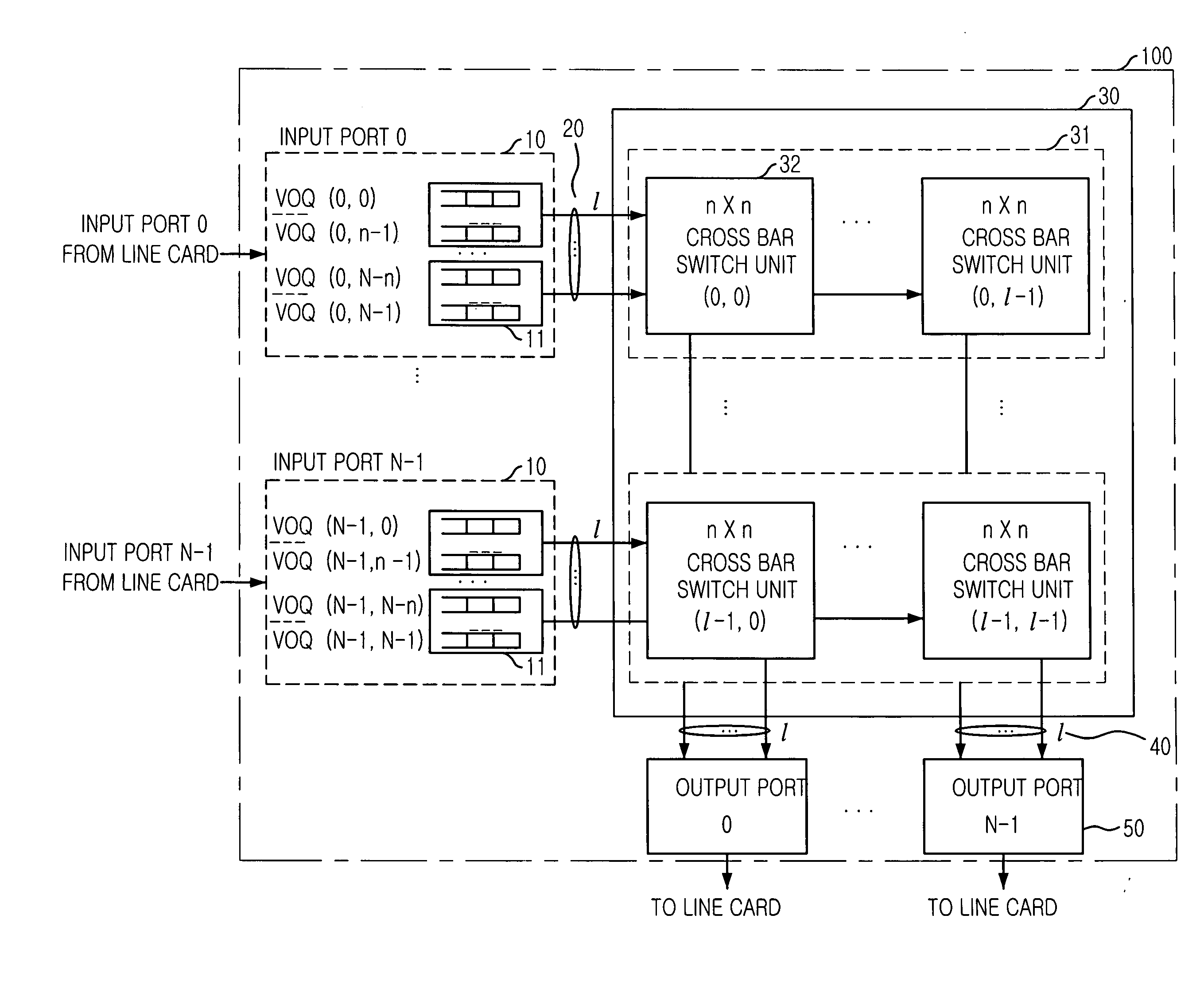

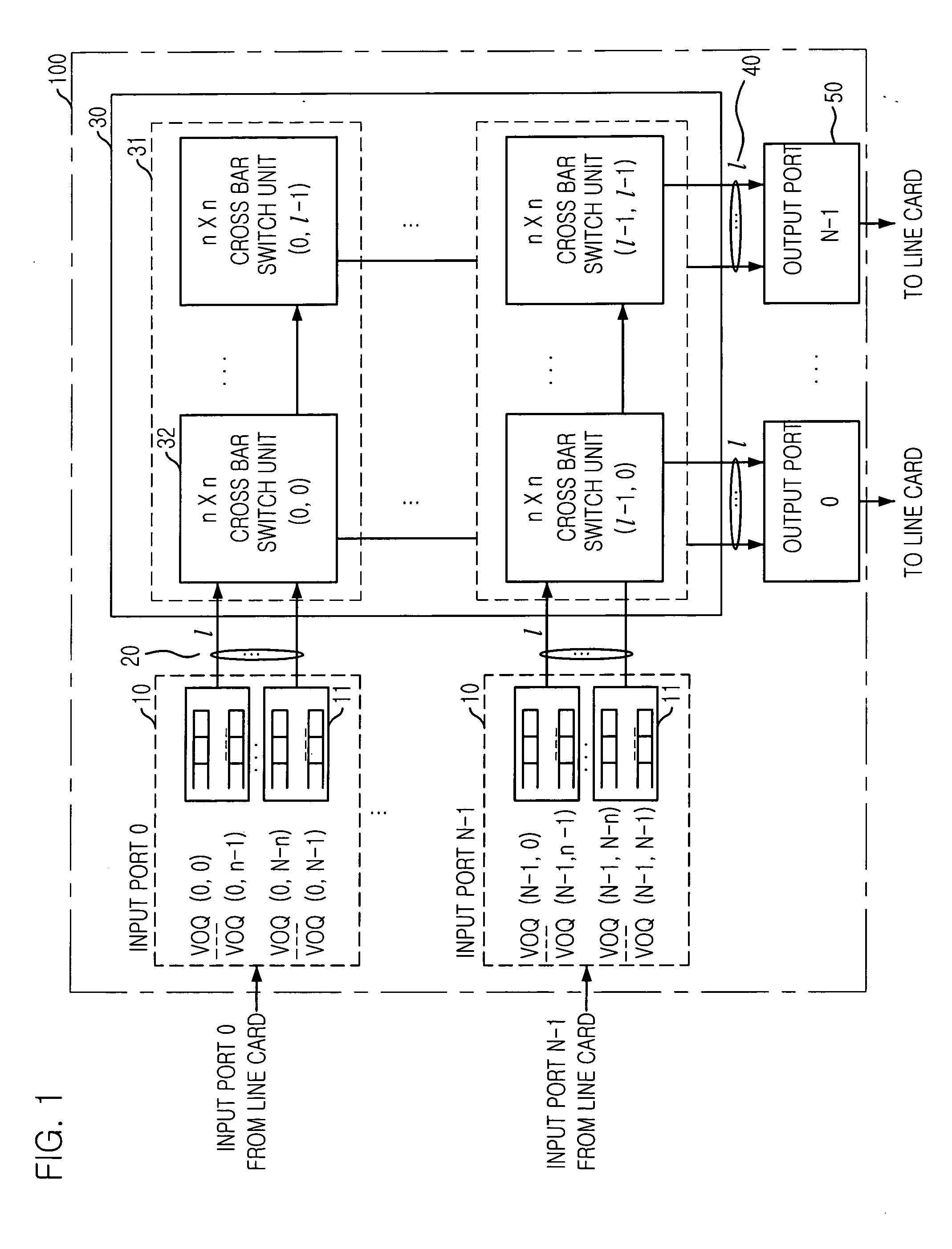

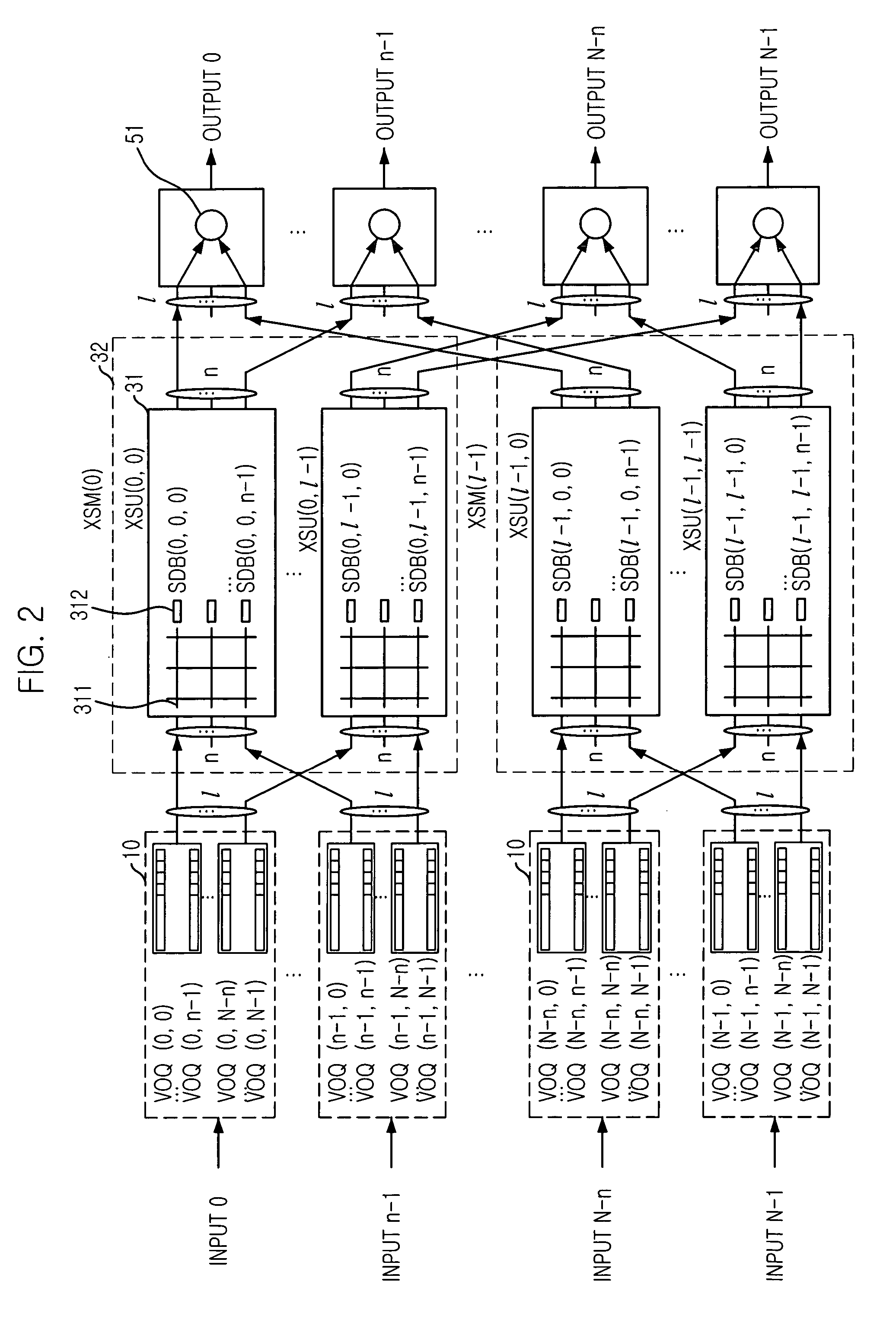

A high-speed, high-capacity switching apparatus is disclosed. The apparatus includes: N input ports, each of which outputs at most l cells per time slot, wherein each input port includes N virtual output queues (VOQs) grouped into l VOQ groups of n VOQs each; an N×N switch fabric having l² crossbar switch units (XSUs) that schedule cells from the N input ports using a first, round-robin arbitration function, wherein the l VOQ groups are connected to l XSUs; and N output ports, each connected to l XSUs, that select one cell from the l XSUs in each cell time slot using a second arbitration function based on backlog-weighted round-robin, which operates independently of the first arbitration function, and transfer the selected cell to the output link.

Owner:ELECTRONICS & TELECOMM RES INST

Configurable virtual output queues in a scalable switching system

Inactive · US7046687B1 · Reduce memory · Reduce logic requirement · Data switching by path configuration · Parallel computing · Virtual Output Queues

Configurable virtual output queues (VOQs) in a scalable switching system and methods of using the queues are provided. The system takes advantage of the fact that not all VOQs are active or need to exist at one time. Thus, the system advantageously uses configurable VOQs and may not dedicate memory space and logic to all possible VOQs at one time.
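One way to picture this is a pool in which a queue exists only while it holds cells. The sketch below is an assumption-laden illustration (the class name, the `max_active` limit, and the allocate-on-first-cell and free-when-empty policy are all invented here), not a description of the patented mechanism.

```python
from collections import deque


class ConfigurableVoqs:
    """Allocates a VOQ on first use and frees it when it drains (illustrative)."""

    def __init__(self, max_active):
        self.max_active = max_active
        self.active = {}  # destination -> deque of cells

    def enqueue(self, dest, cell):
        if dest not in self.active:
            if len(self.active) >= self.max_active:
                return False  # no free queue resources right now
            self.active[dest] = deque()
        self.active[dest].append(cell)
        return True

    def dequeue(self, dest):
        queue = self.active.get(dest)
        if queue is None:
            return None
        cell = queue.popleft()
        if not queue:
            del self.active[dest]  # release the queue for another destination
        return cell


voqs = ConfigurableVoqs(max_active=2)
ok_a = voqs.enqueue(5, "x")
ok_b = voqs.enqueue(900, "y")
rejected = voqs.enqueue(17, "z")   # both queue slots are in use
first_out = voqs.dequeue(5)        # queue for destination 5 drains and is freed
ok_c = voqs.enqueue(17, "z")
```

Memory here scales with the number of simultaneously active destinations rather than with the total number of possible destinations.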

Owner:TAU NETWORKS A CALIFORNIA

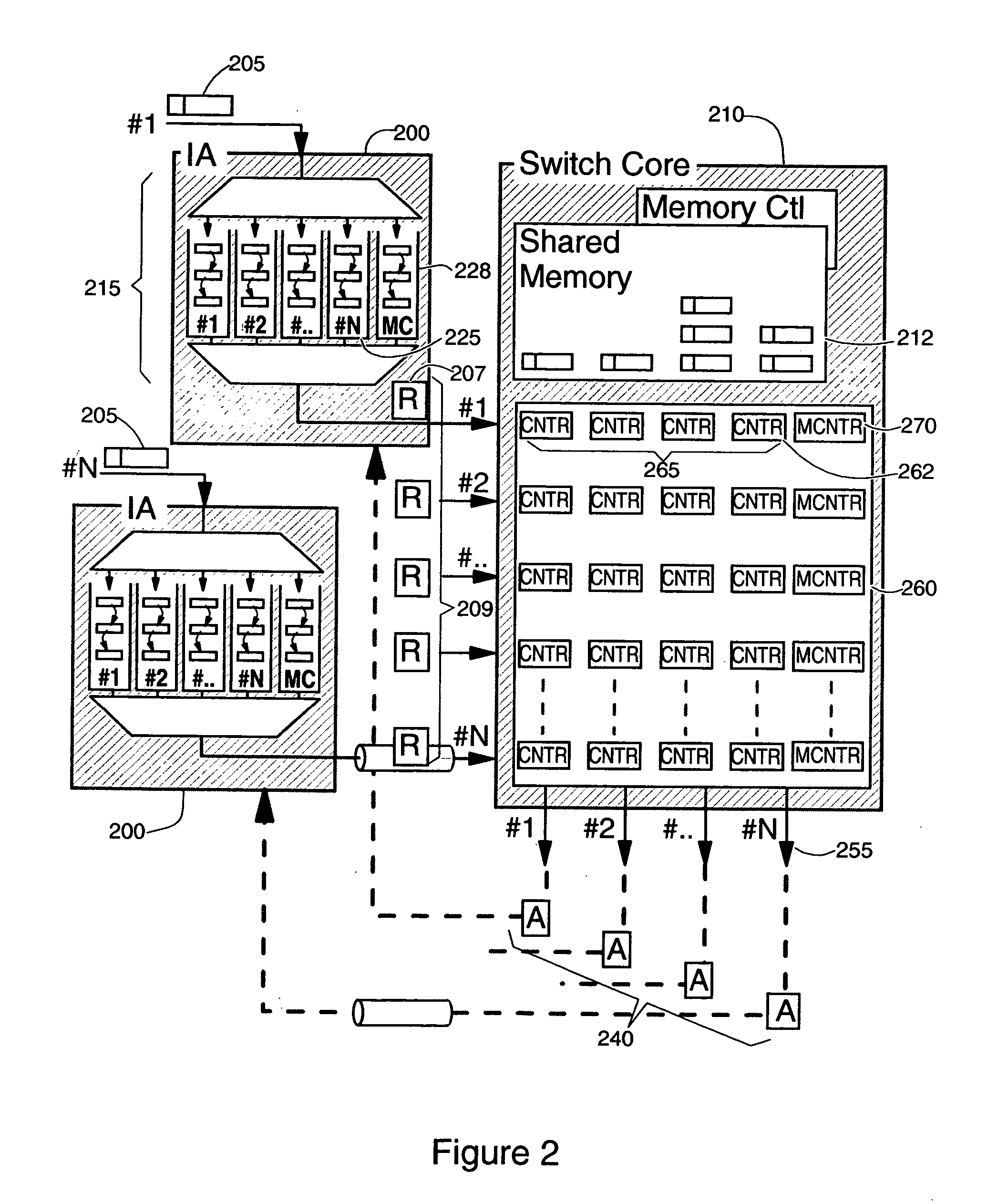

System and method for handling multicast traffic in a shared buffer switch core collapsing ingress VOQ's

Inactive · US20050036502A1 · Maintenance operation · Without impacting performance · Time-division multiplex · Loop networks · Traffic capacity · Traffic congestion

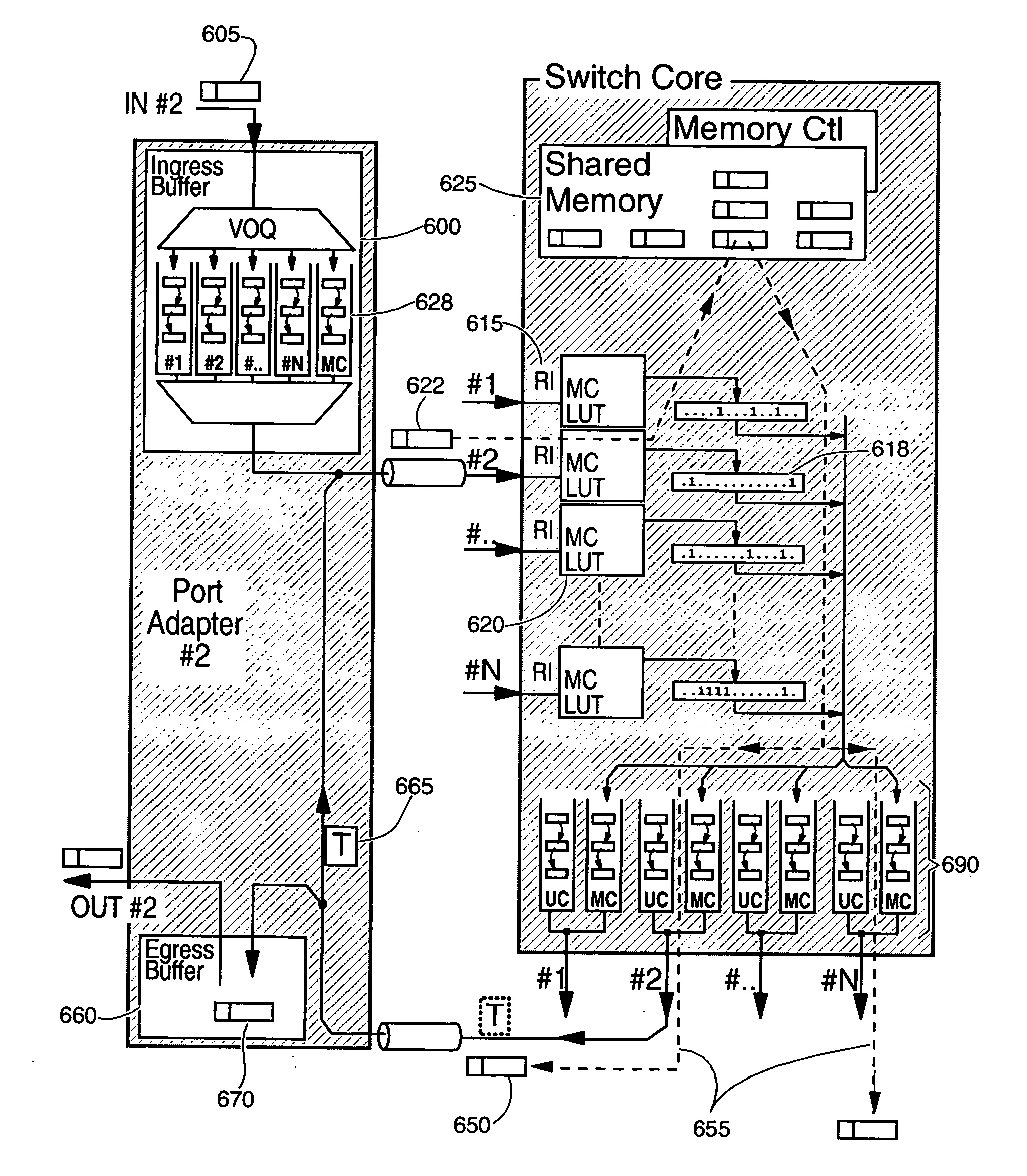

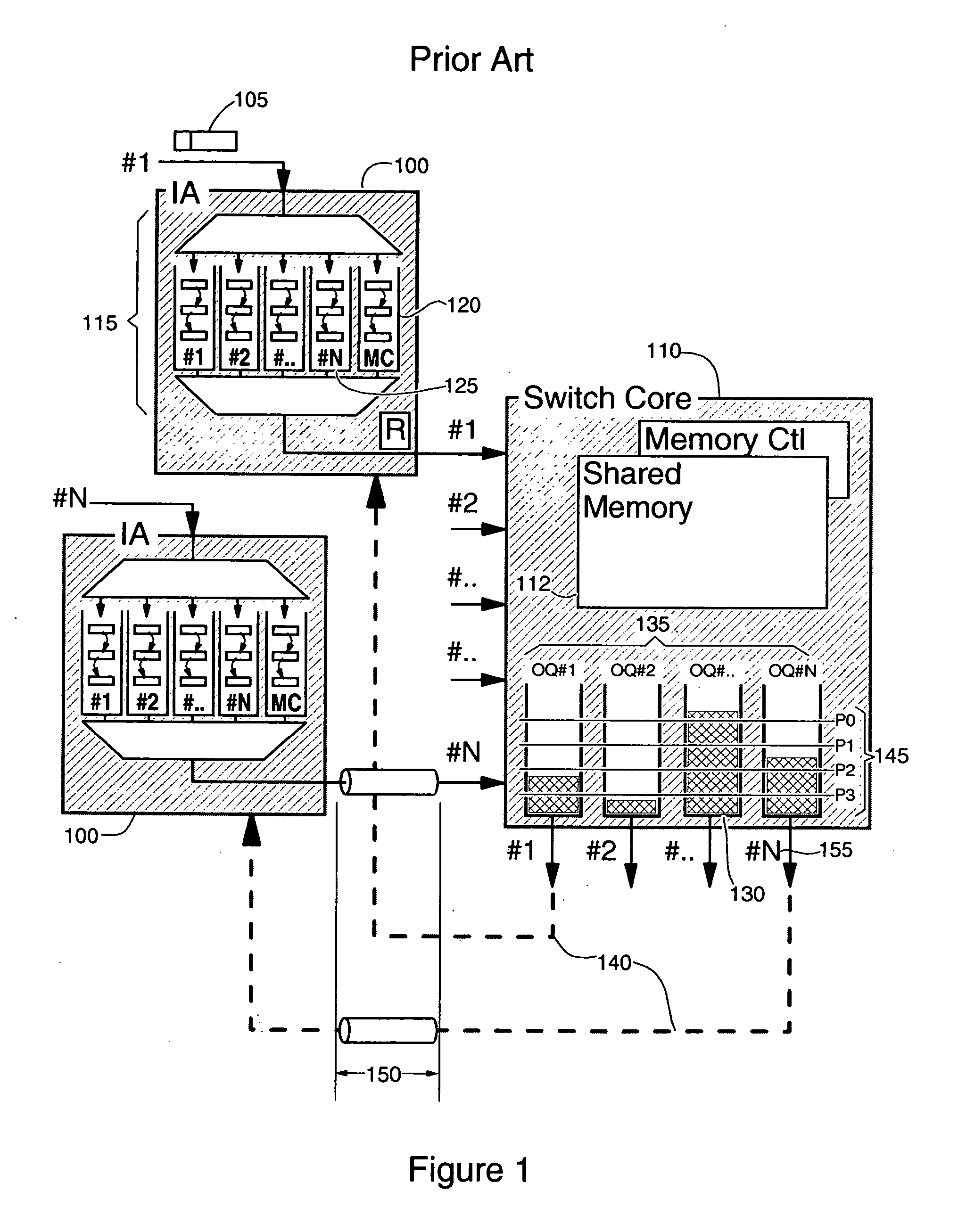

A system and a method are disclosed for avoiding packet traffic congestion in a shared-memory switch core while dramatically reducing the amount of shared memory in the switch core and the associated egress buffers, and while handling unicast as well as multicast traffic. According to the invention, the virtual output queues (VOQs) of all ingress adapters of a packet switch fabric are collapsed into its central switch core to allow efficient flow control. The transmission of data packets from an ingress buffer to the switch core is subject to a request/acknowledgment mechanism. Therefore, a packet is transmitted from a virtual output queue to the shared-memory switch core only if the switch core can send it to the corresponding egress buffer. A token-based mechanism allows the switch core to determine each egress buffer's occupancy level. Therefore, since the switch core knows the states of the input and output adapters, it is able to optimize packet switching and to avoid packet congestion. Furthermore, since a packet is admitted into the switch core only if it can be transmitted to the corresponding egress buffer, the required shared memory is reduced.

Owner:IBM CORP

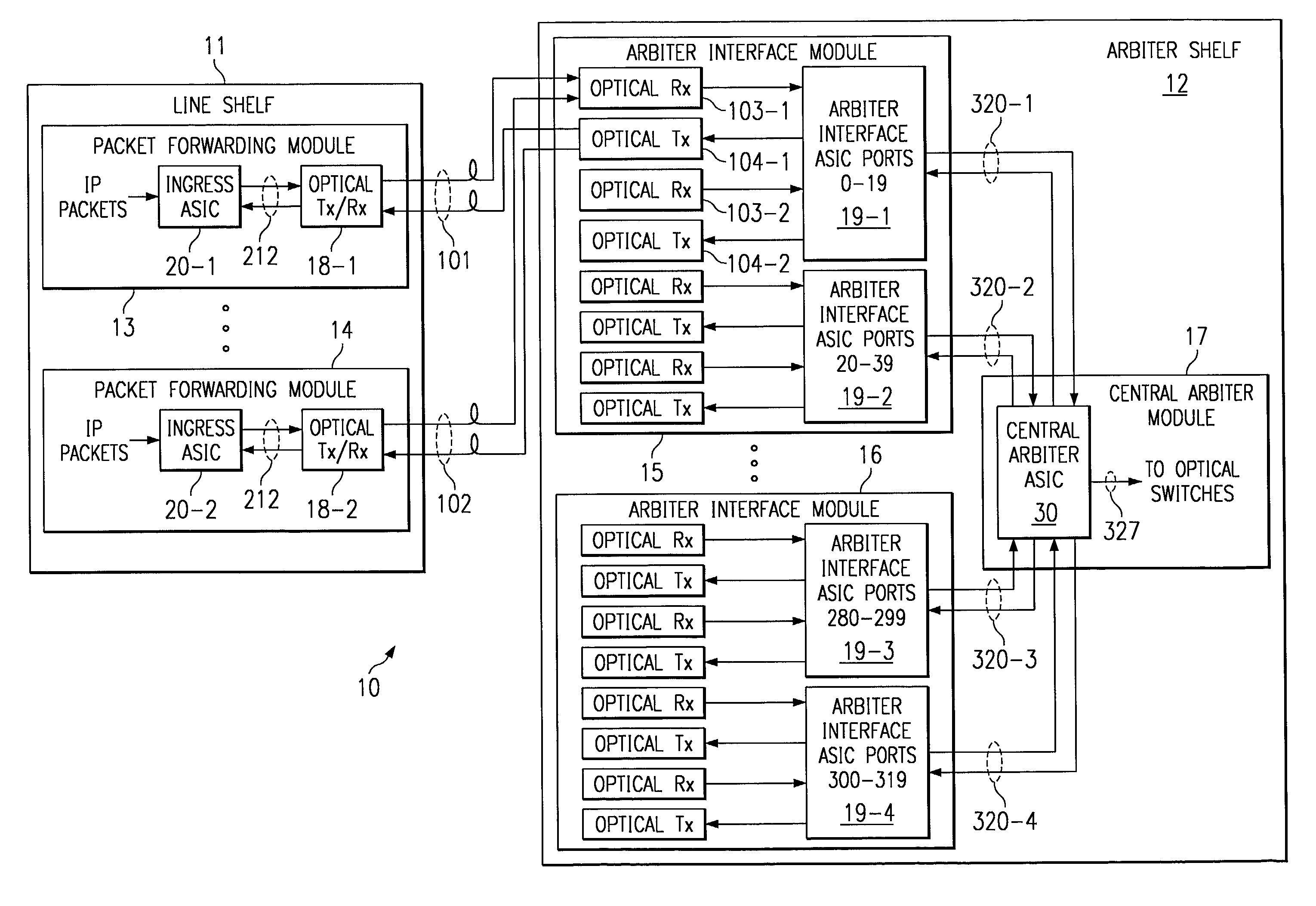

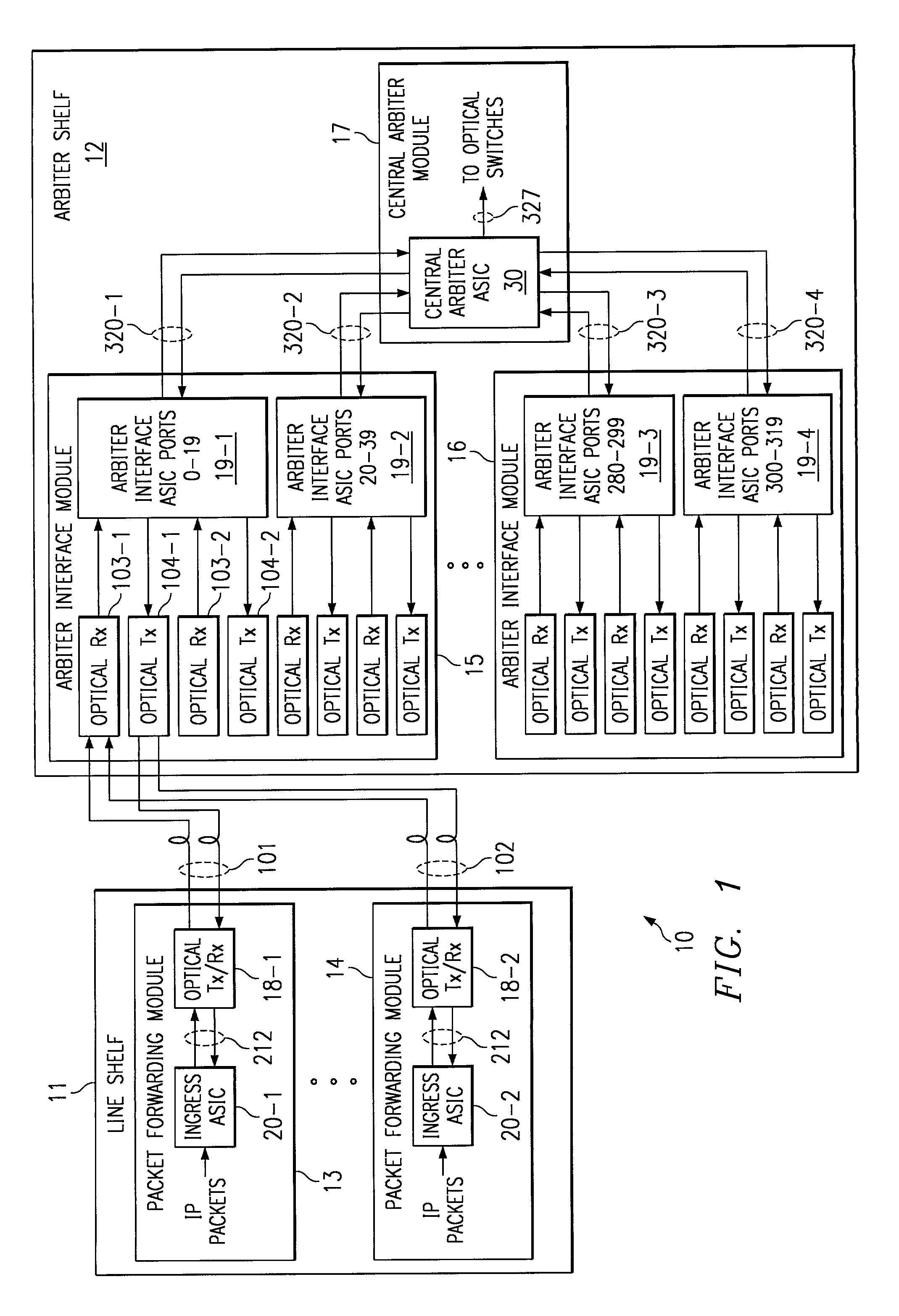

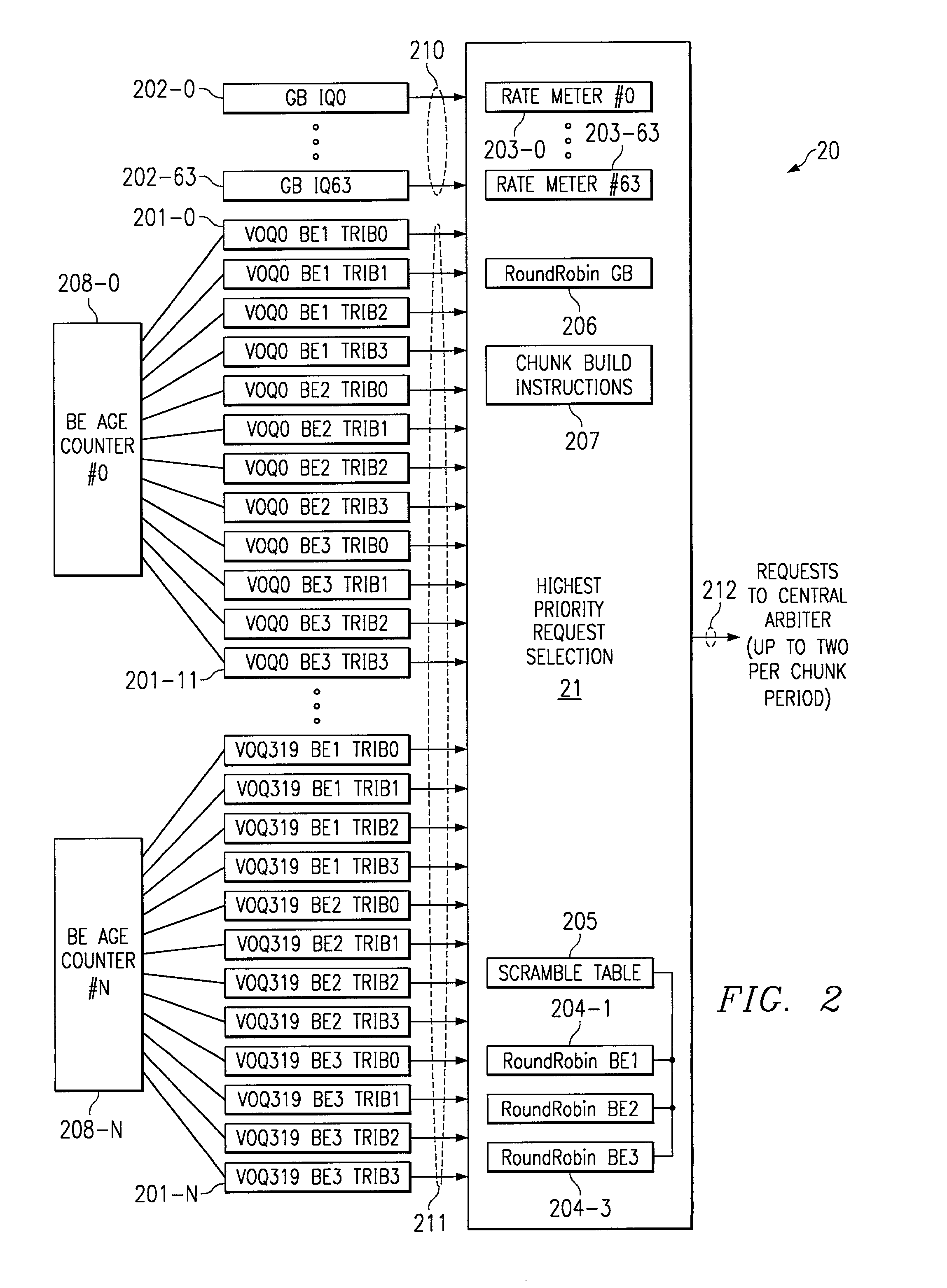

System and method for router central arbitration

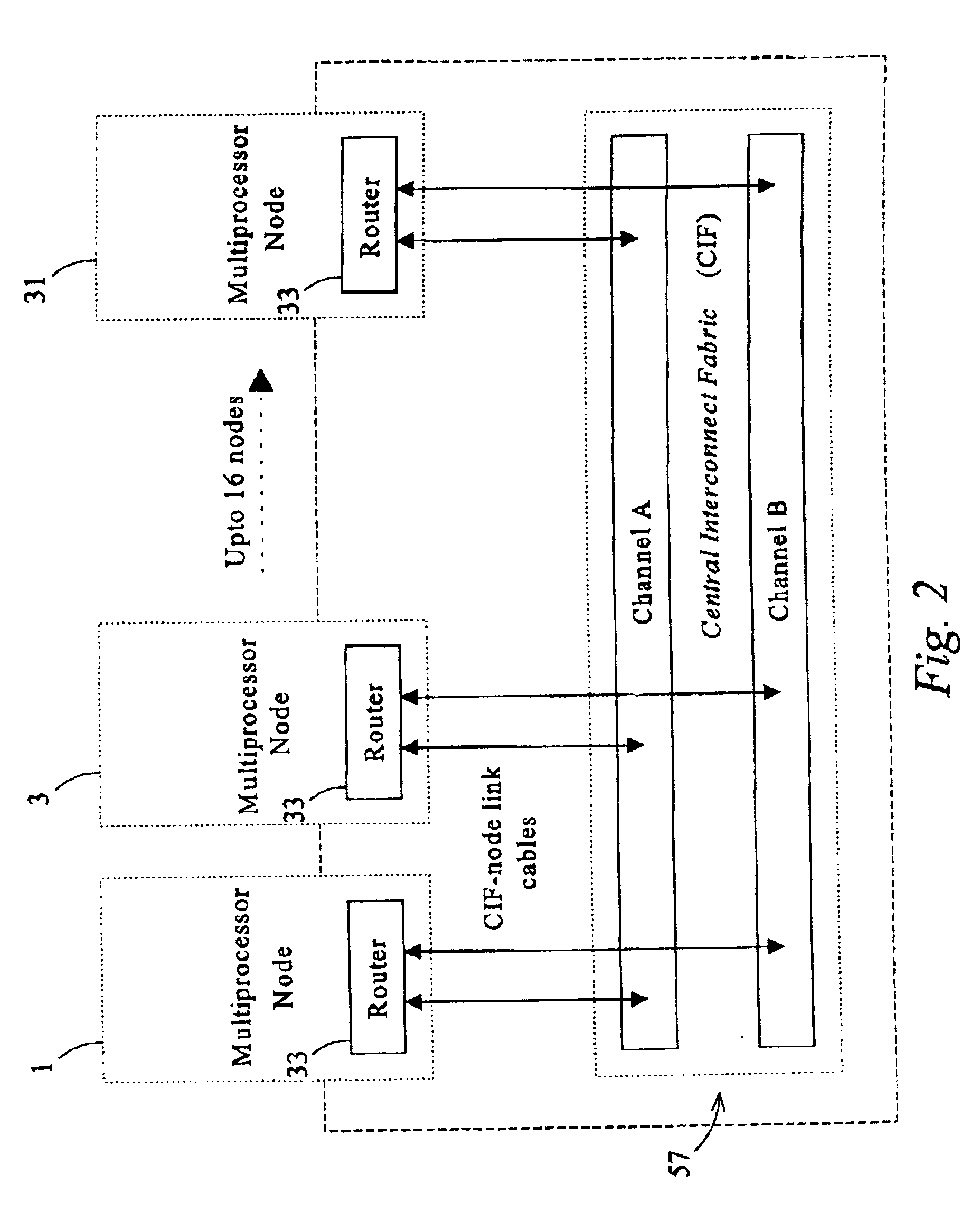

Inactive · US7133399B1 · Maximizing number of connection · Multiplex system selection arrangements · Circuit switching systems · Crossbar switch · Distributed computing

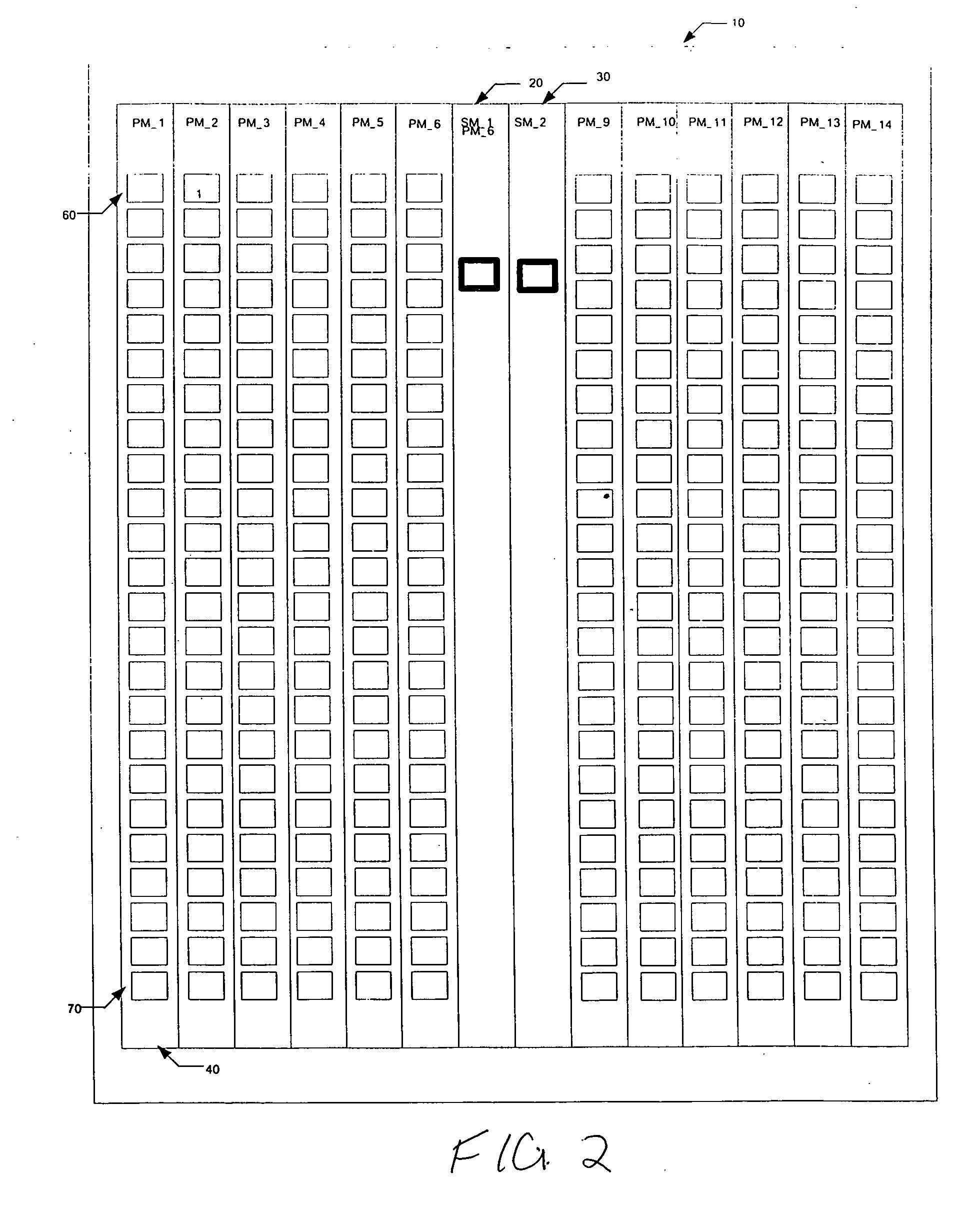

A centralized arbitration mechanism provides that a router switch fabric is configured in a consistent fashion. Remotely distributed packet forwarding modules determine which data chunks are ready to go through the optical switch and communicate this to the central arbiter. Each packet forwarding module has an ingress ASIC containing packet headers in roughly four thousand virtual output queues. Algorithms choose at most two chunk requests per chunk period to be sent to the arbiter, which queues up to roughly 24 requests per output port. Requests are sent through a Banyan network, which models the switch fabric and scales on the order of N log N, where N is the number of router output ports. Therefore a crossbar switch function can be modeled up to the 320 output ports physically in the system, and yet have the central arbiter scale with the number of ports in a much less demanding way. An algorithm grants at most two requests per port in each chunk period and returns the grants to the ingress ASIC. Also, for each chunk period the central arbiter communicates the corresponding switch configuration control information to the switch fabric.

Owner:BROCADE COMMUNICATIONS SYSTEMS

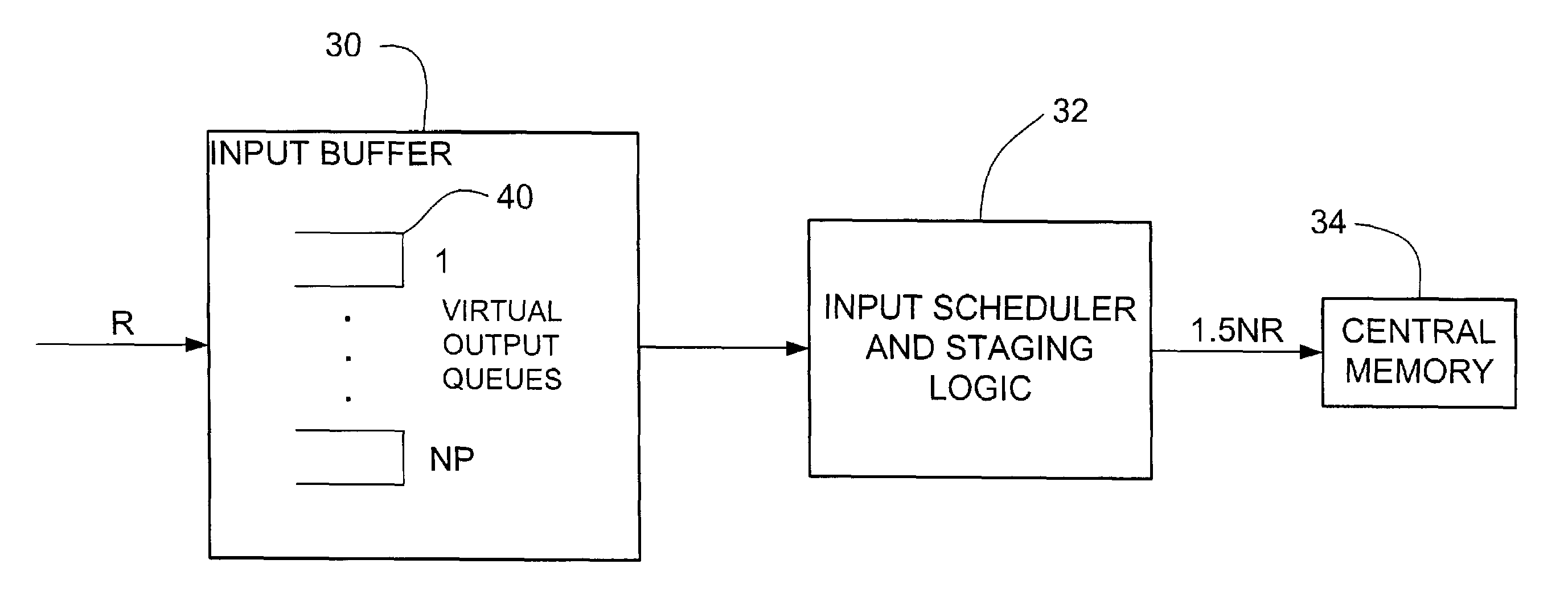

Centralized memory based packet switching system and method

Active · US7391786B1 · Data switching by path configuration · Store-and-forward switching systems · Data buffer · Virtual Output Queues

A packet switching system and method are disclosed. The system includes a plurality of input and output ports and an input buffer at each of the input ports. The system further includes an input scheduler associated with each of the input buffers and a centralized memory shared by the output ports. An output buffer is located at each of the output ports and an output scheduler is associated with each of the output ports. Each of the input buffers comprises a plurality of virtual output queues configured to store a plurality of packets in a packed arrangement.

Owner:CISCO TECH INC

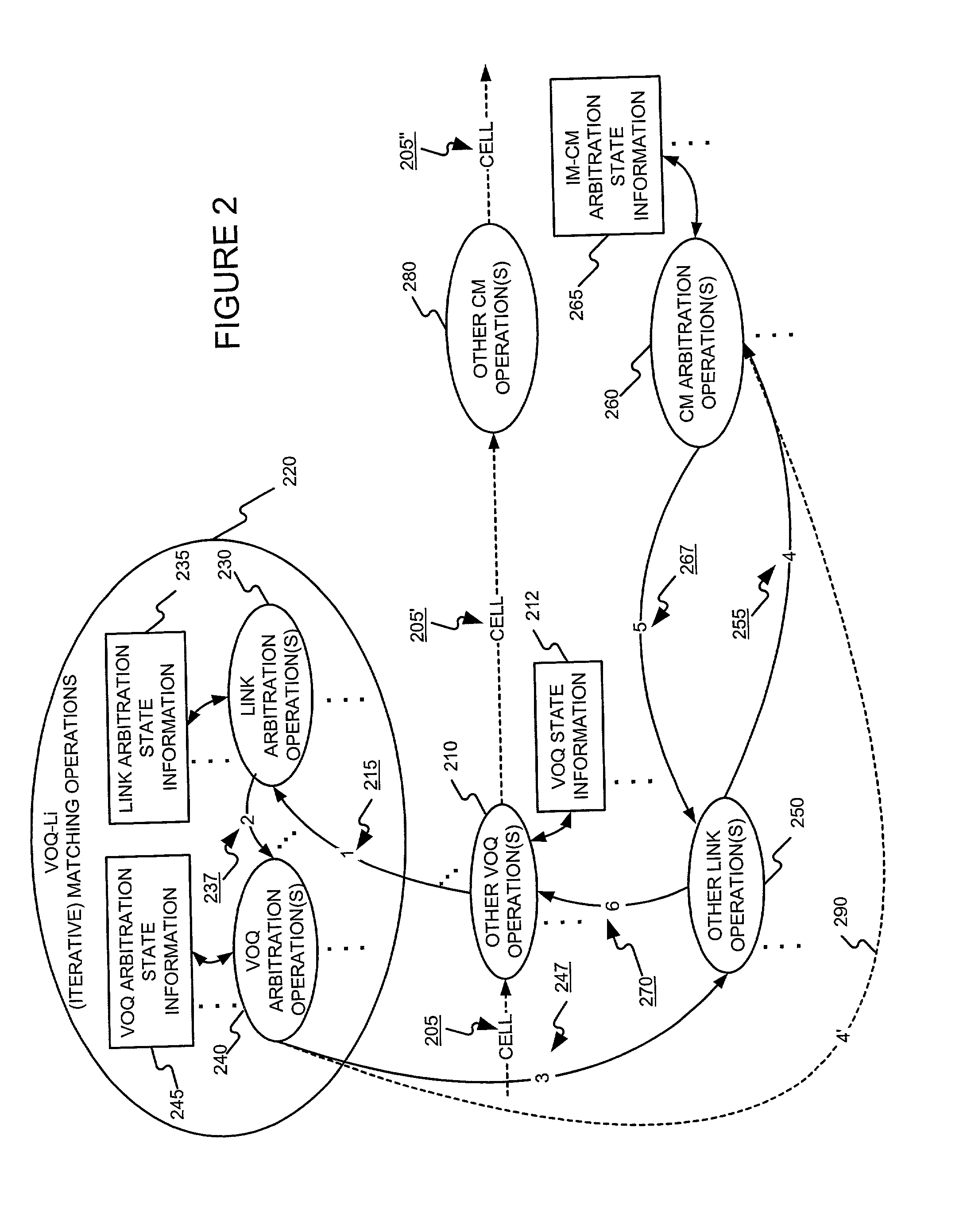

Scheduling the dispatch of cells in non-empty virtual output queues of multistage switches using a pipelined hierarchical arbitration scheme

Inactive · US20030021266A1 · Improve throughput · Minimize time · Data switching by path configuration · Circuit switching systems · Distributed computing · Virtual Output Queues

Owner:POLYTECHNIC INST OF NEW YORK

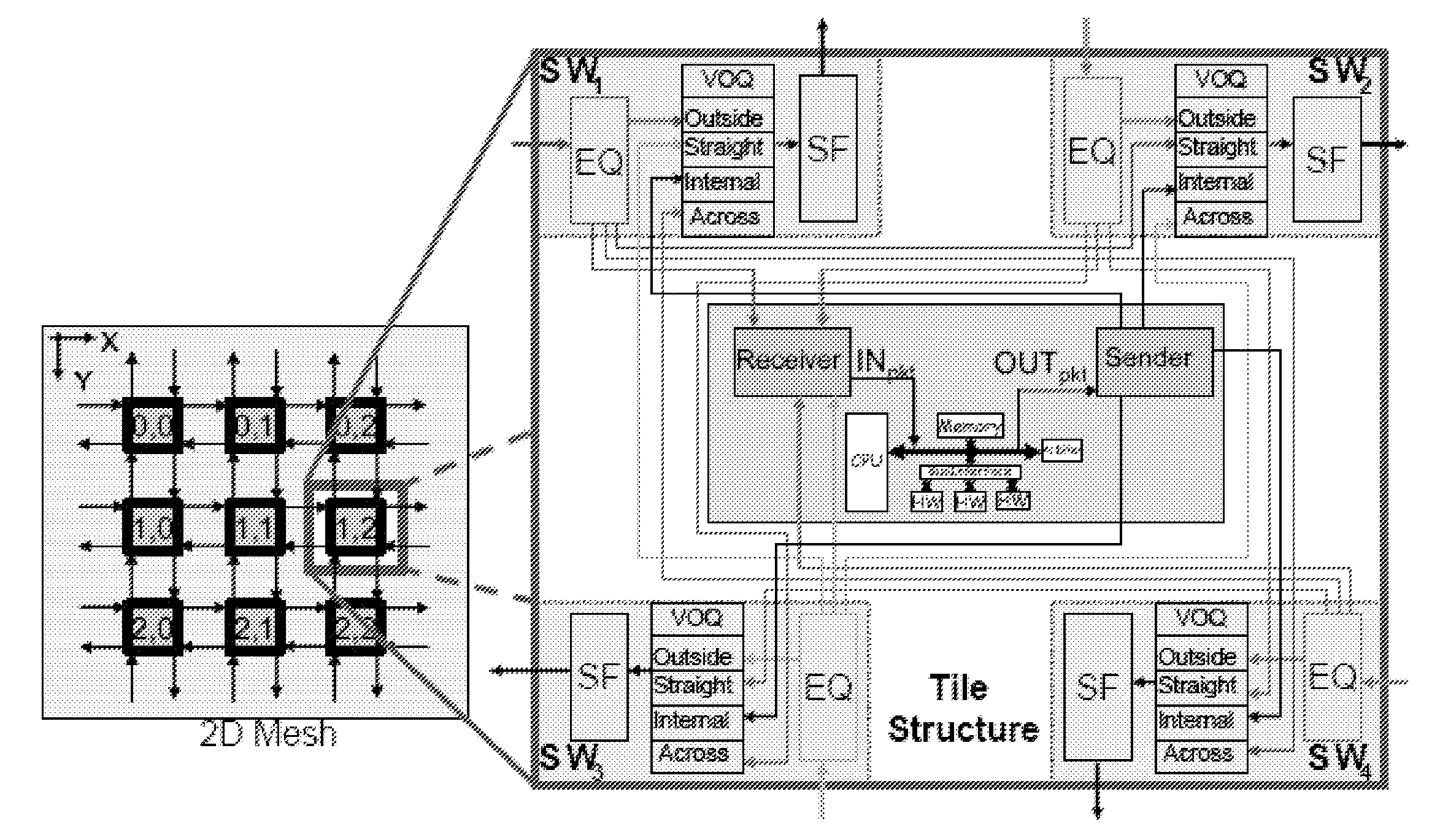

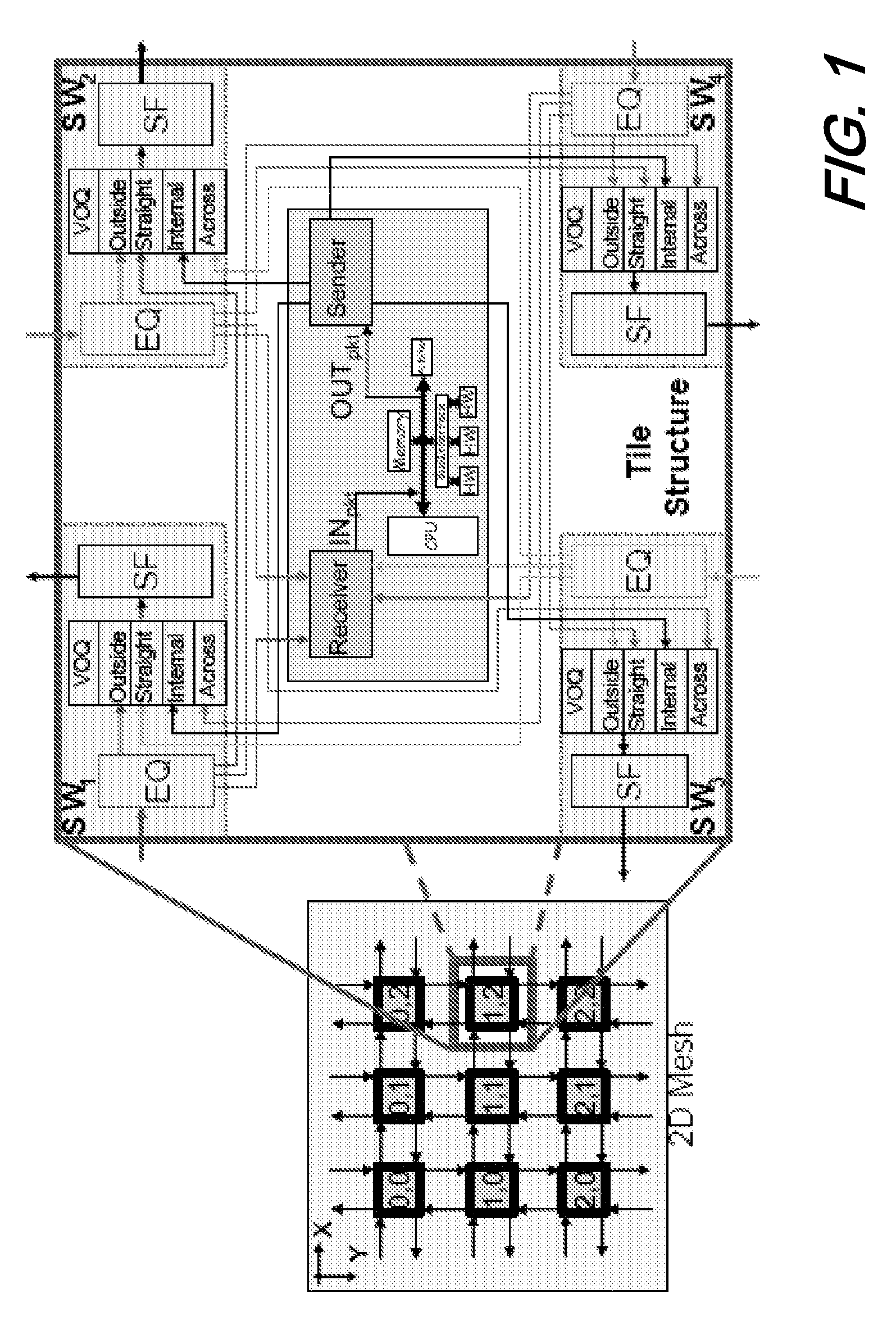

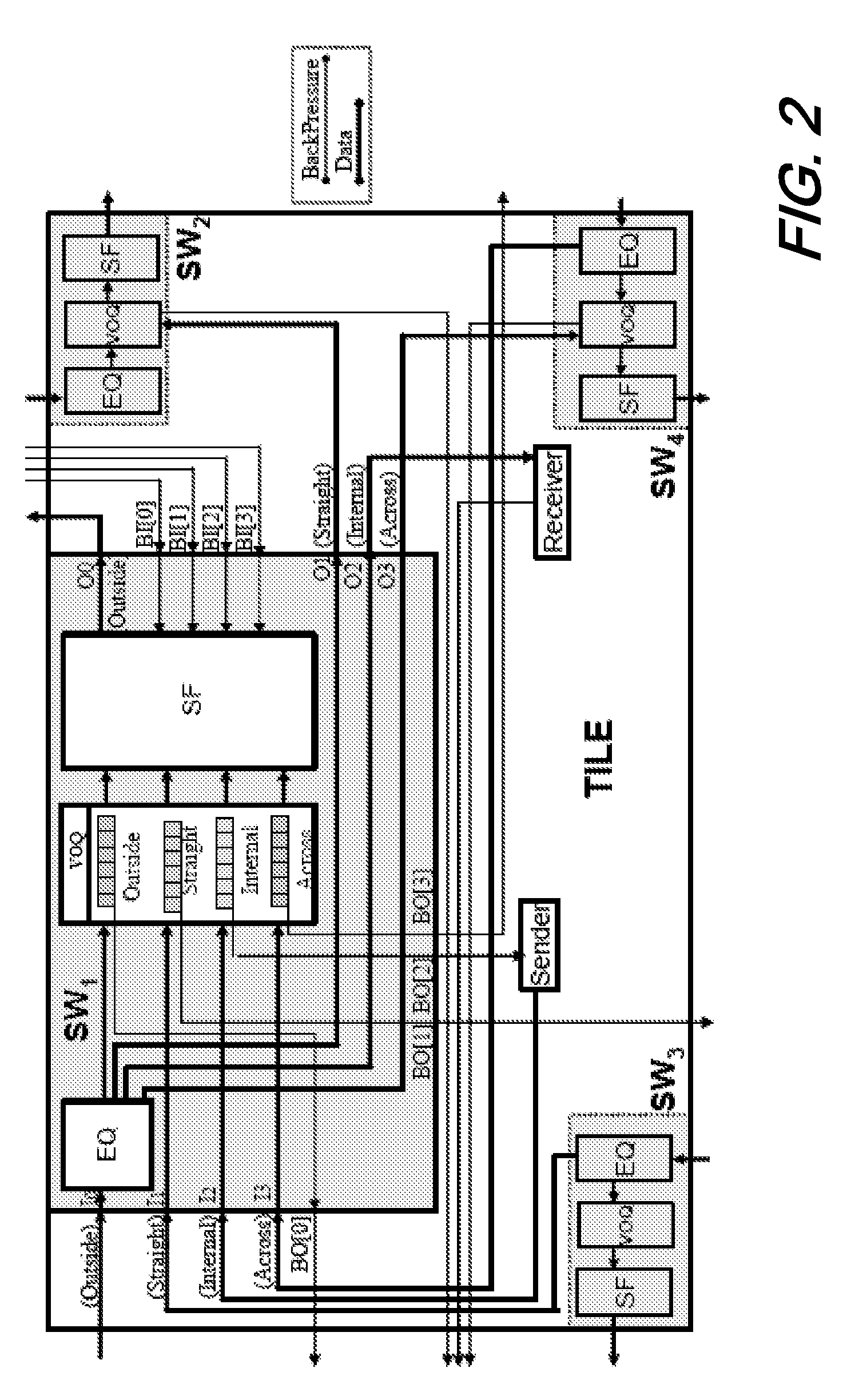

Flexible wrapper architecture for tiled networks on a chip

Inactive · US7502378B2 · Preserving chip space · Improve communication efficiency · Solid-state devices · Data switching by path configuration · Network on · Distributed computing

A wrapper organization and architecture for networks on a chip employing an optimized switch arrangement with virtual output queuing and a backpressure mechanism for congestion control.

Owner:NEC CORP

Scheduling the dispatch of cells in non-empty virtual output queues of multistage switches using a pipelined hierarchical arbitration scheme

Inactive · US7046661B2 · Improve throughput · Minimize time · Data switching by path configuration · Circuit switching systems · Computer science · Distributed computing

Owner:POLYTECHNIC INST OF NEW YORK

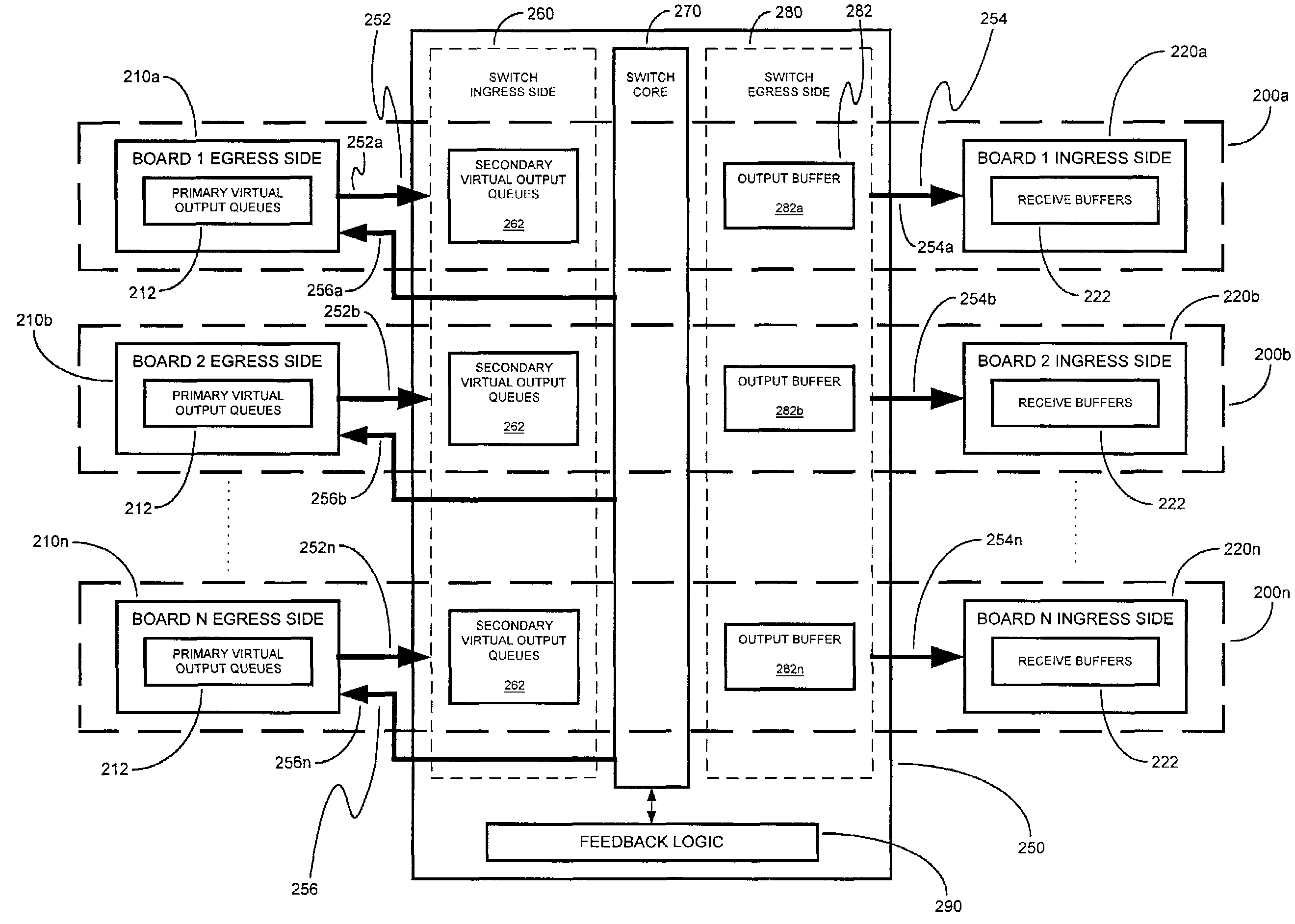

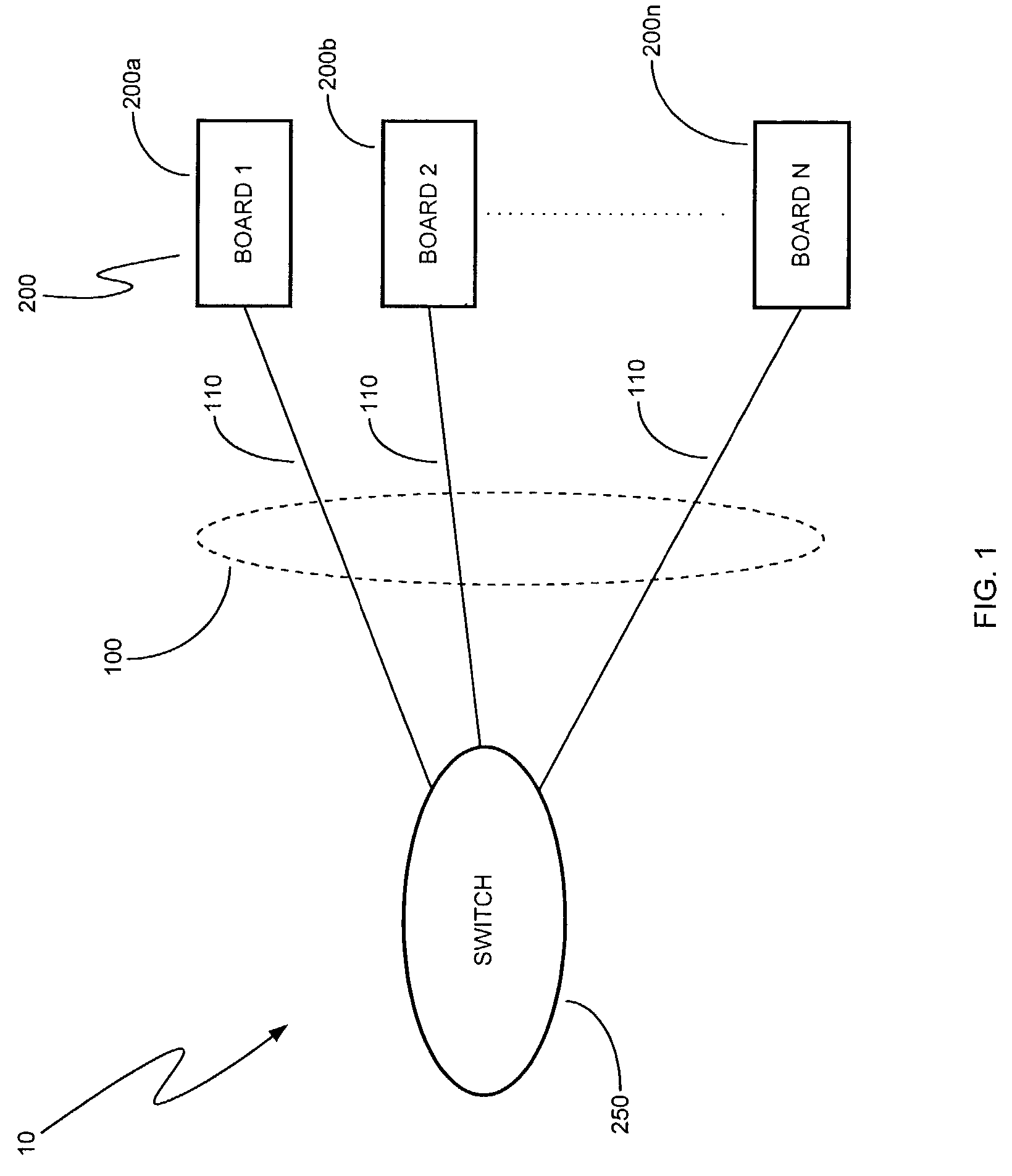

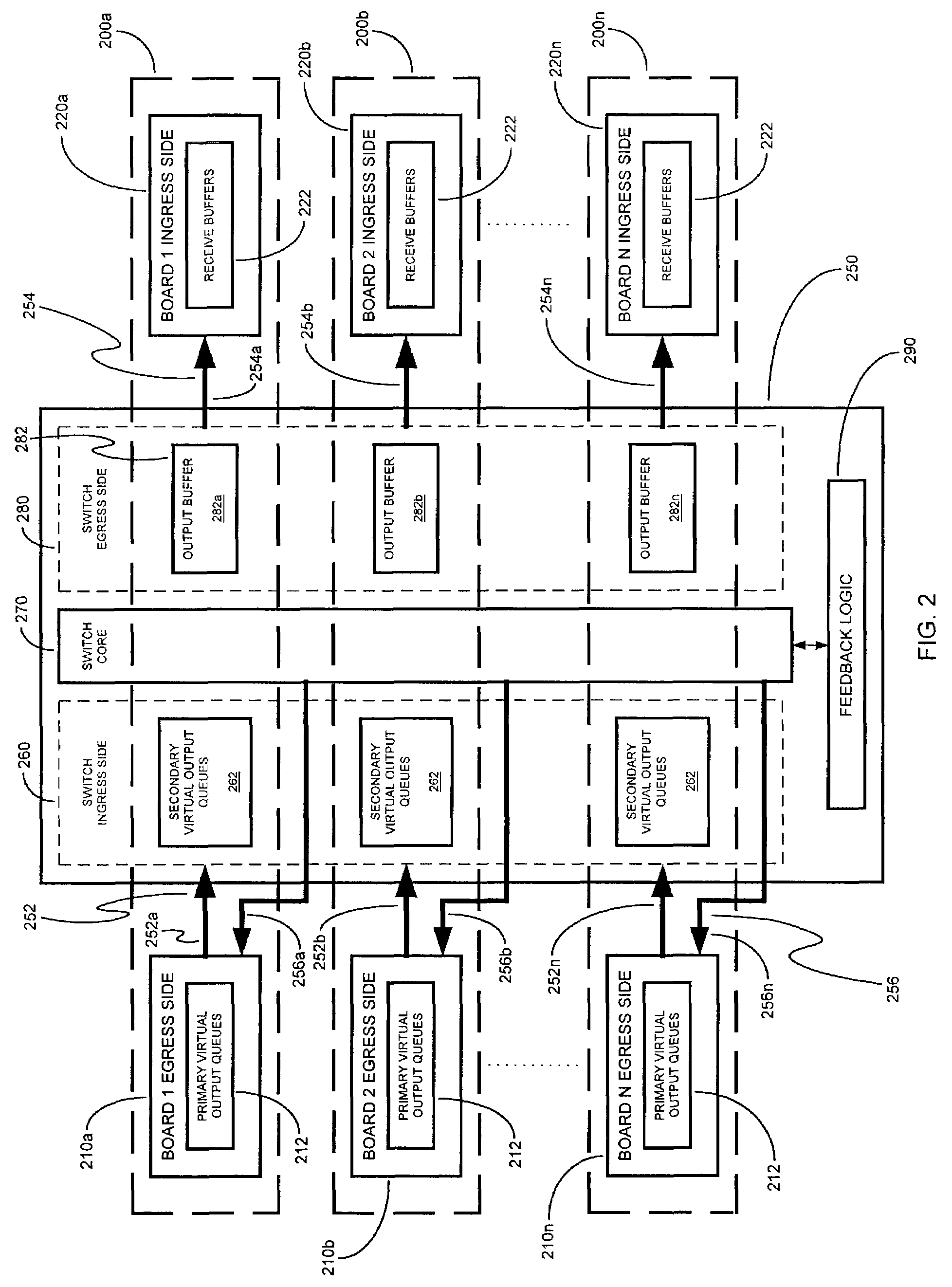

Apparatus and method for virtual output queue feedback

A method of providing virtual output queue feedback to a number of boards coupled with a switch. A number of virtual queues in the switch and / or in the boards are monitored and, in response to one of these queues reaching a threshold occupancy, a feedback signal is provided to one of the boards, the signal directing that board to alter its rate of transmission to another one of the boards. Each board includes a number of virtual output queues, which may be allocated per port and which may be further allocated on a quality of service level basis.
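The threshold check at the heart of this feedback scheme might look like the following sketch. The function name, the dictionary layout, and the `"reduce_rate"` action are assumptions made for illustration; the abstract only says the board is directed to alter its transmission rate.

```python
def feedback_signals(occupancy, threshold):
    """Build one feedback message per queue at or above the threshold.

    `occupancy` maps (source_board, destination_board) to queued cells.
    """
    signals = []
    for (src, dst), depth in sorted(occupancy.items()):
        if depth >= threshold:
            # Tell the source board to change its rate toward this destination.
            signals.append({"notify": src, "destination": dst, "action": "reduce_rate"})
    return signals


occupancy = {(0, 2): 12, (1, 2): 3, (1, 3): 16}
signals = feedback_signals(occupancy, threshold=10)
```

Only the two source/destination pairs whose queues have reached the threshold generate a signal; traffic between other board pairs is unaffected.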

Owner:INTEL CORP

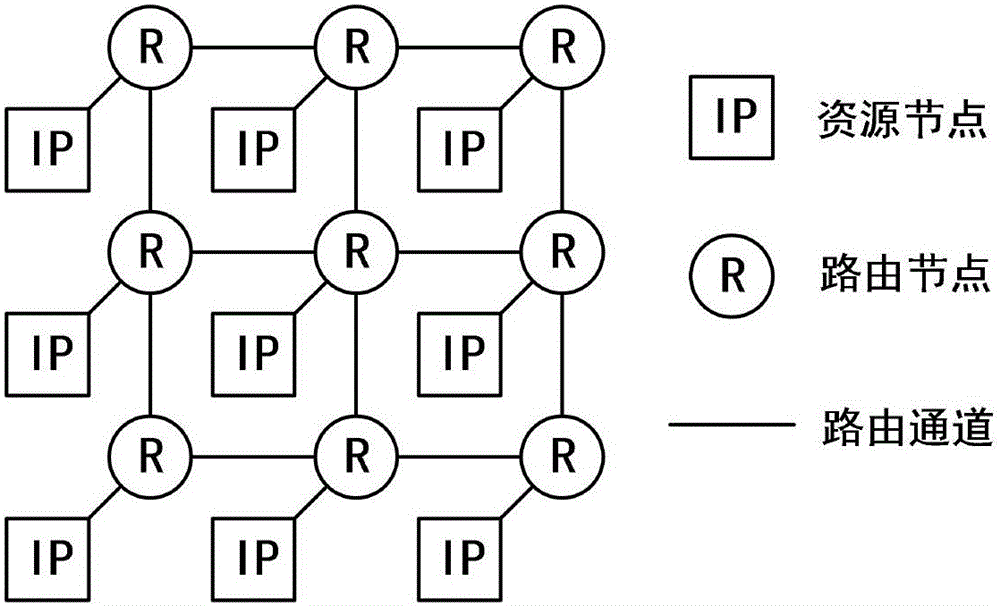

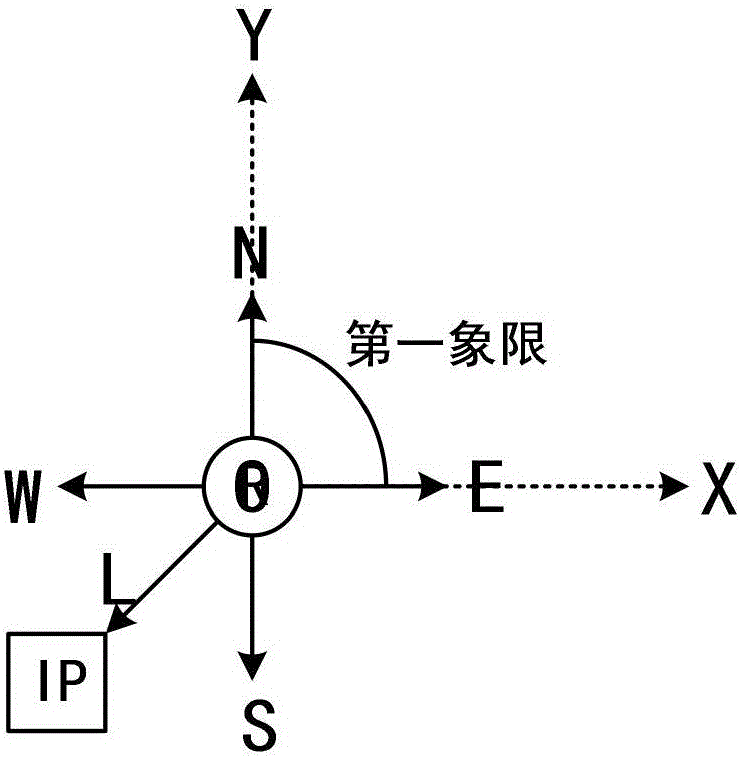

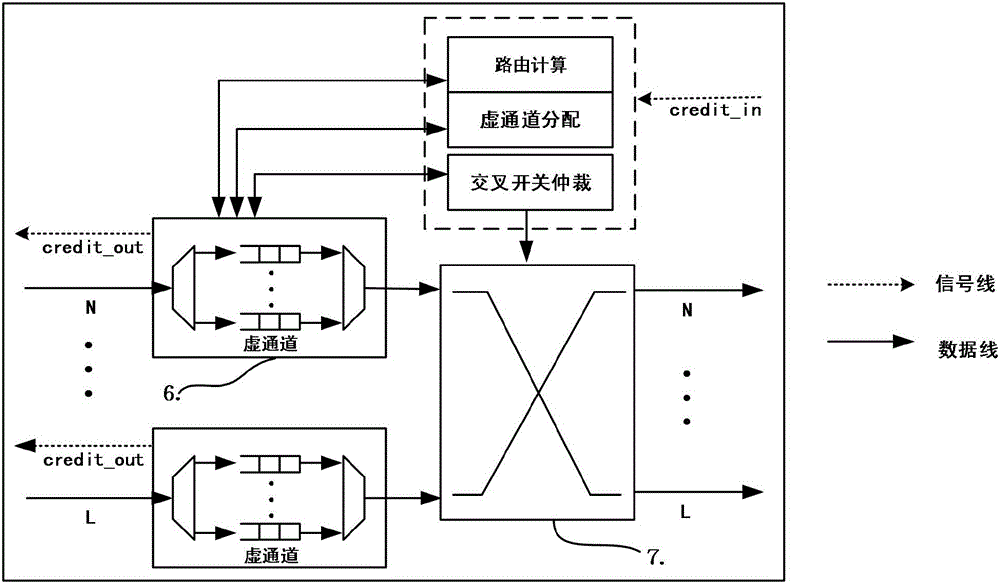

Adaptive router in NoC (network-on-chip) on basis of virtual output queue mechanism

Inactive · CN105871742A · Reduce the possibility · Eliminate the effects of · Architecture with single central processing unit · Data switching networks · Crossbar switch · Traffic capacity

The invention discloses an adaptive router in an NoC (network-on-chip) on the basis of a virtual output queue mechanism. The adaptive router comprises five input ports, a port selection module, a valve judgment module, a storage module, an or-onward routing computation module, a header flit modification module, a crossbar switch judgment module, a crossbar switch and five output ports. The input ports partition the input buffer space according to the output directions of data packets so as to form virtual output queues. An idle bit in the header flit of each data packet is used to piggyback network congestion information. Each virtual channel is divided equally into two storage spaces, each with its own set of read and write pointers. At the routing computation level, the output port is selected adaptively; within a single virtual channel, data packets are read adaptively. Through adaptation at these two levels, traffic distribution across the whole network is balanced and congestion is relieved, so that the likelihood of HOL (head-of-line) blocking is reduced; when HOL blocking does occur, its influence is eliminated, so that network delay is reduced and network throughput is improved.

Owner:HEFEI UNIV OF TECH

Scheduling the dispatch of cells in non-empty virtual output queues of multistage switches using a pipelined arbitration scheme

Active · US6940851B2 · Improve throughput · Minimize time · Error prevention · Frequency-division multiplex details · Distributed computing · Virtual Output Queues

Owner:POLYTECHNIC INST OF NEW YORK

Switching system

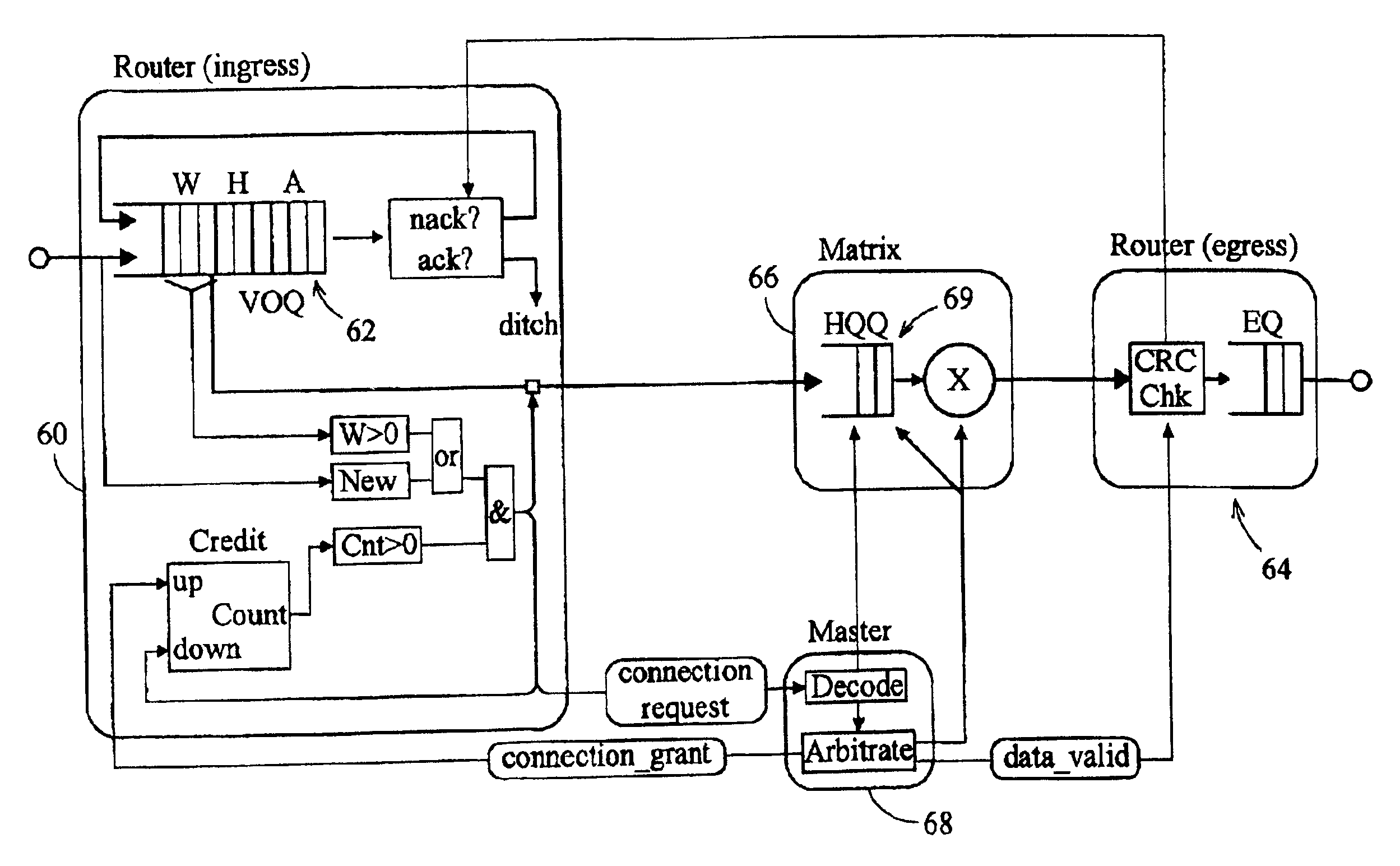

Inactive · US6876663B2 · Data switching by path configuration · Time-division multiplexing selection · Egress router · Data interchange

A data switching device has ingress routers and egress routers interconnected by a switching matrix controlled by a controller. Each ingress router maintains one or more virtual output queues for each egress router. The switching matrix itself maintains head-of-queue buffers of cells which are to be transmitted. Each of these buffers corresponds to one of the virtual output queues, and the cells stored in the switching matrix are replicated from the cells queuing in the respective virtual output queues. Thus, when it is determined that a connection is to be made between a given input and output of the switching matrix, a cell suitable for transmission along that connection is already available to the switching matrix. Upon receipt of a new cell by one of the ingress routers, the cell is stored in the virtual output queue of the ingress router corresponding to the egress router for the cell, and also written to the corresponding head-of-queue buffer, if that buffer has space. If not, the cell is stored, and written to the head-of-queue buffer when that buffer has space for it.
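The replication between a virtual output queue and its head-of-queue buffer can be sketched as follows. This is an illustration only: the class name and the fixed `hoq_capacity` are hypothetical, and the sketch assumes the ingress router keeps its own copy of a cell until the switching matrix transmits it.

```python
from collections import deque


class MirroredVoq:
    """One VOQ in an ingress router plus its head-of-queue buffer in the matrix."""

    def __init__(self, hoq_capacity):
        self.voq = deque()  # full queue held by the ingress router
        self.hoq = deque()  # copy of the oldest cells, held by the switching matrix
        self.hoq_capacity = hoq_capacity

    def _refill(self):
        # The buffer always mirrors the first len(self.hoq) cells of the VOQ,
        # so the next cell to copy sits at index len(self.hoq).
        while len(self.hoq) < self.hoq_capacity and len(self.hoq) < len(self.voq):
            self.hoq.append(self.voq[len(self.hoq)])

    def receive(self, cell):
        self.voq.append(cell)
        self._refill()  # written through immediately if the buffer has space

    def transmit(self):
        if not self.hoq:
            return None
        cell = self.hoq.popleft()
        self.voq.popleft()  # drop the ingress copy of the same cell
        self._refill()      # a waiting cell moves up now that space is free
        return cell


q = MirroredVoq(hoq_capacity=2)
for cell in ("a", "b", "c"):
    q.receive(cell)
before = list(q.hoq)
sent = q.transmit()
after = list(q.hoq)
```

Cell "c" arrives while the buffer is full, so it waits in the VOQ and is copied across only after "a" has been transmitted.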

Owner:MICRON TECH INC

Pipelined scheduling technique

Inactive · US6888841B1 · Suppressed unfairness · Reduced fixed delay time · Multiplex system selection arrangements · Data switching by path configuration · Crossbar switch · Delayed time

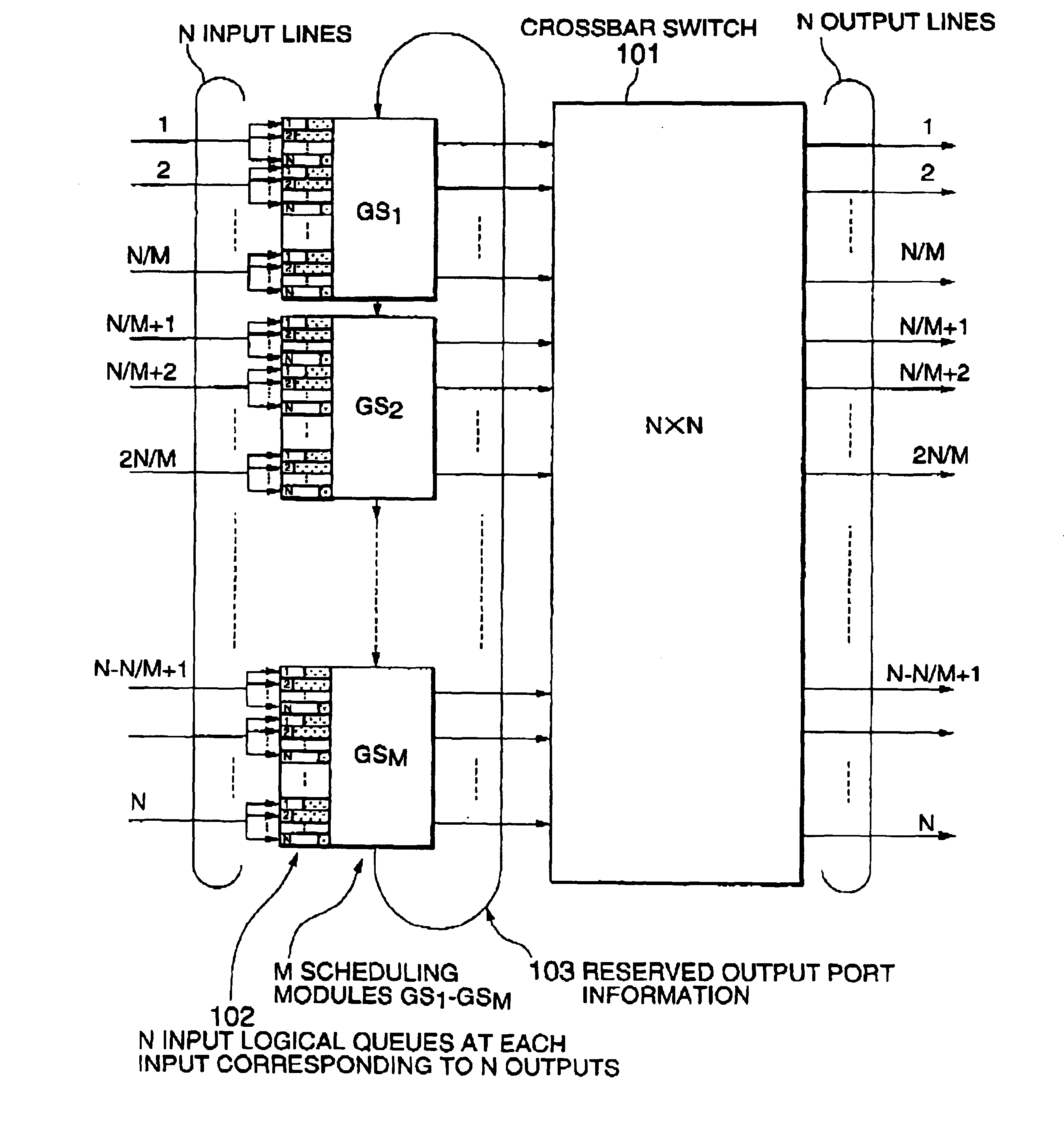

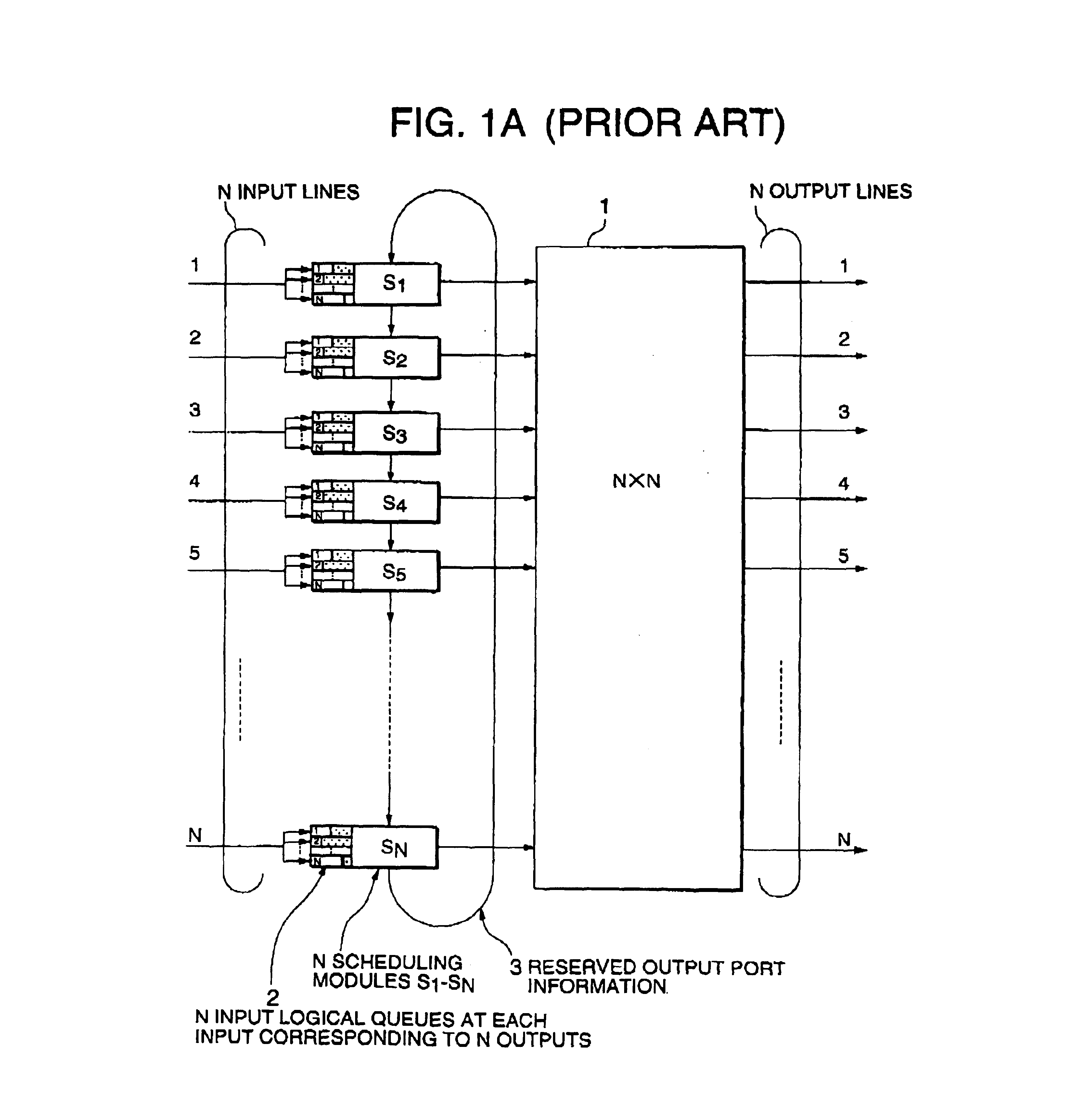

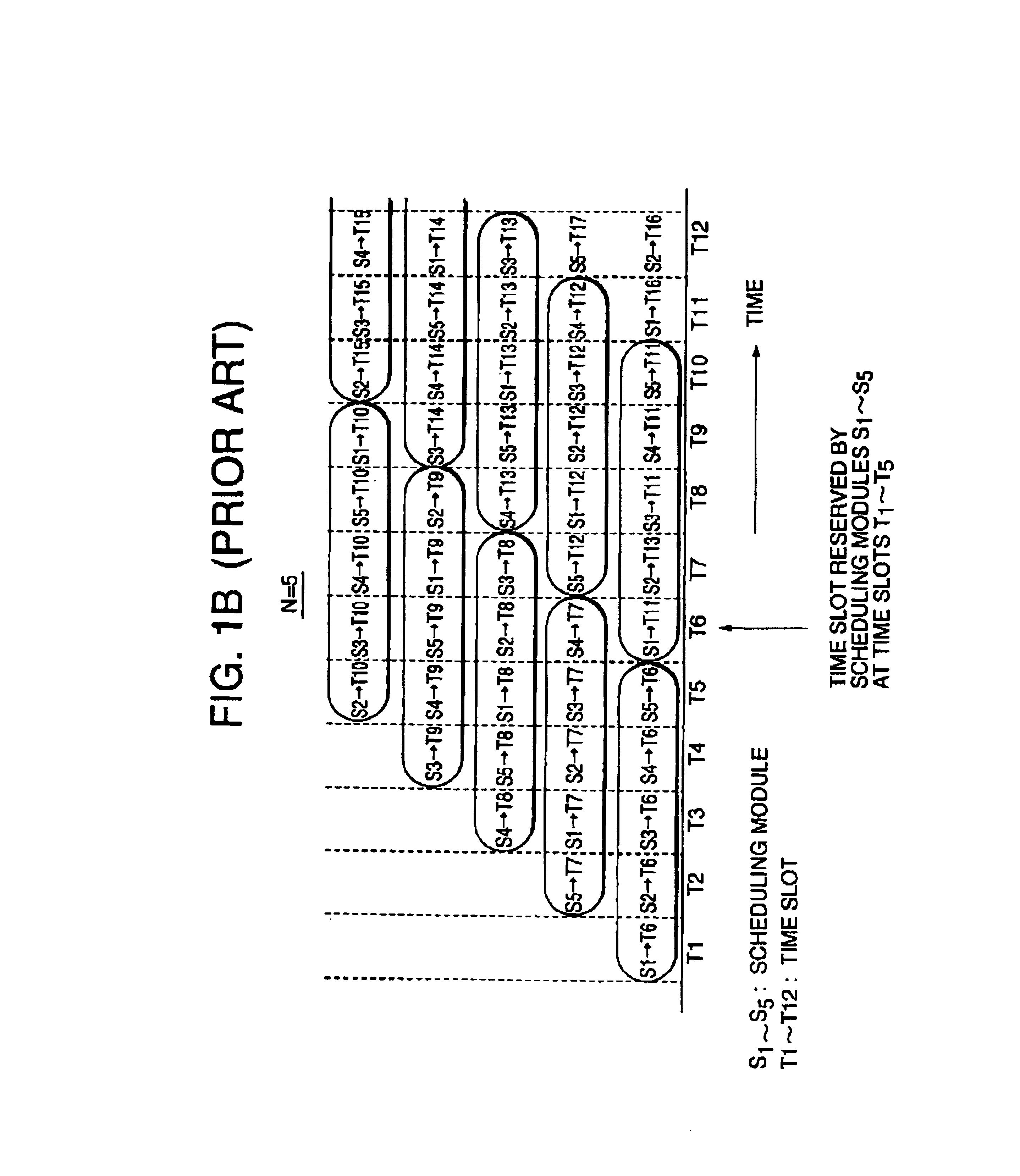

A pipelined scheduling system allowing suppressed unfairness among inputs and reduced fixed delay time is disclosed. Virtual output queues (VOQs) for each input port corresponding to respective ones of the output ports are stored. The VOQs are equally divided into a plurality of groups. Scheduling modules, one for each group, each perform a scheduling operation in a single time slot in a round-robin fashion, such that each scheduling module reserves output ports at a predetermined future time slot for forwarding requests from the logical queues of its group based on a reservation status received from the previous scheduling module, and then transfers an updated reservation status to an adjacent scheduling module. A crossbar switch connects each of the input ports to a selected one of the output ports depending on the reservation status at each time slot.

Owner:NEC CORP

Network data processor having per-input port virtual output queues

Owner:ARISTA NETWORKS

Internal messaging within a switch

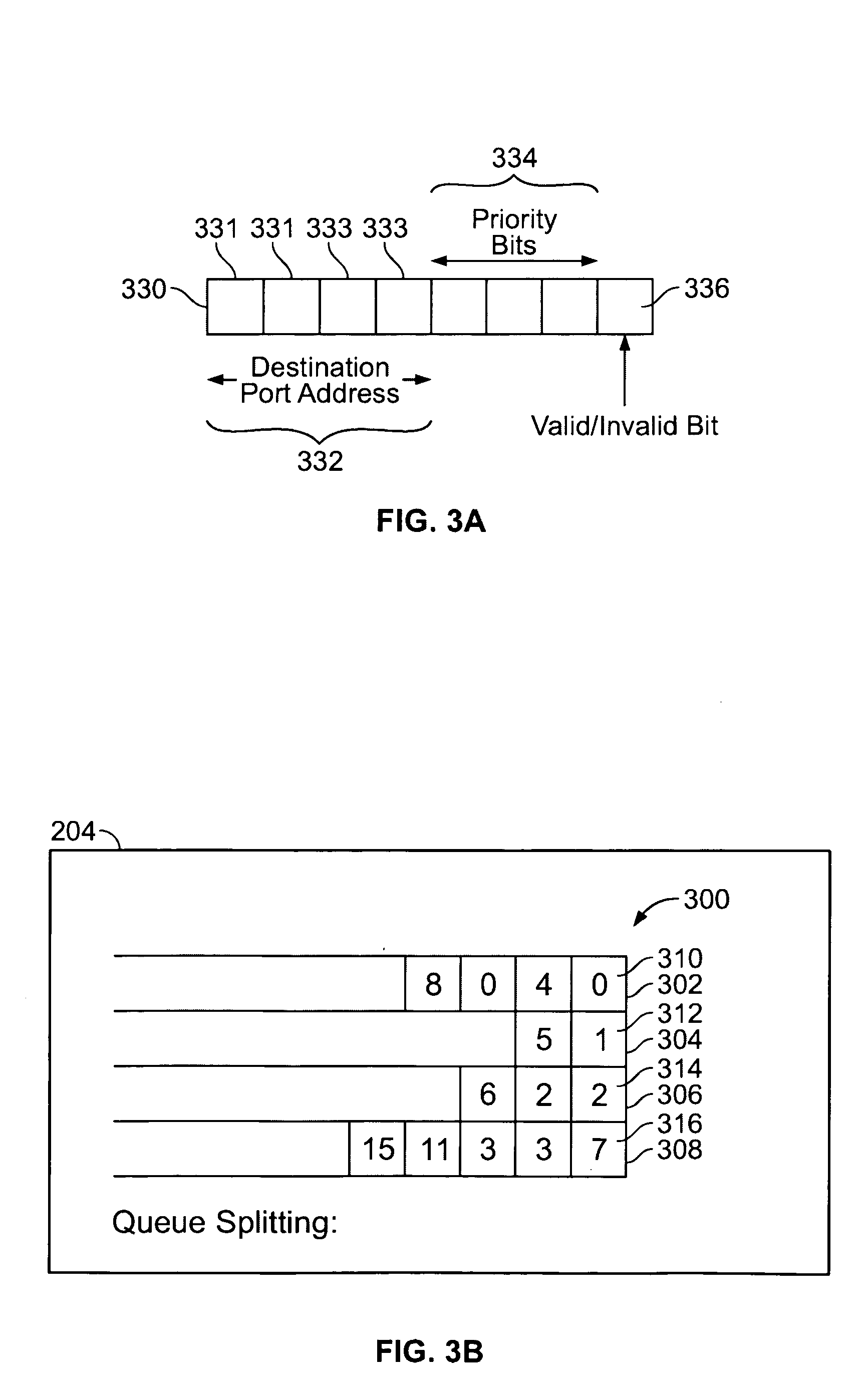

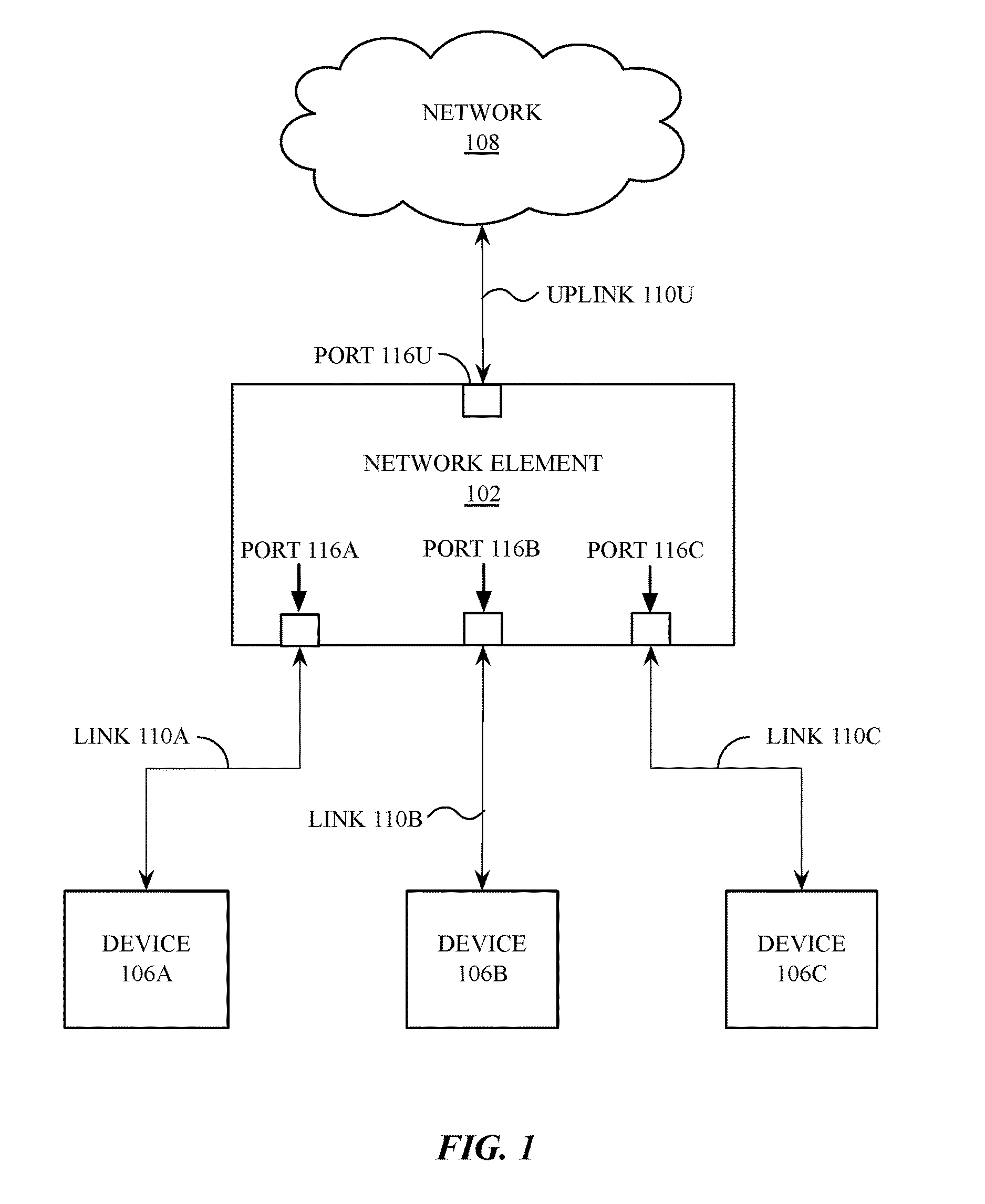

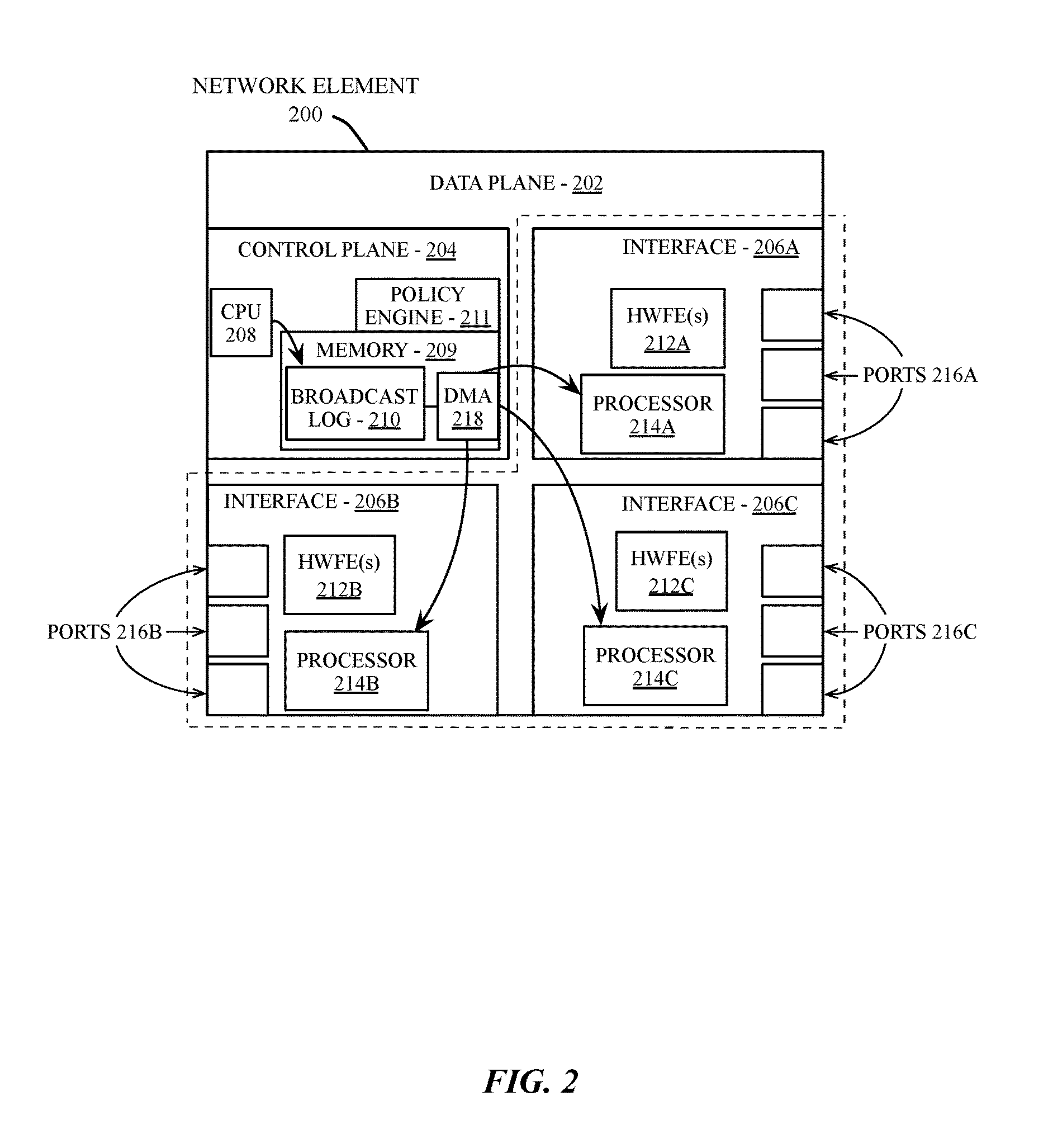

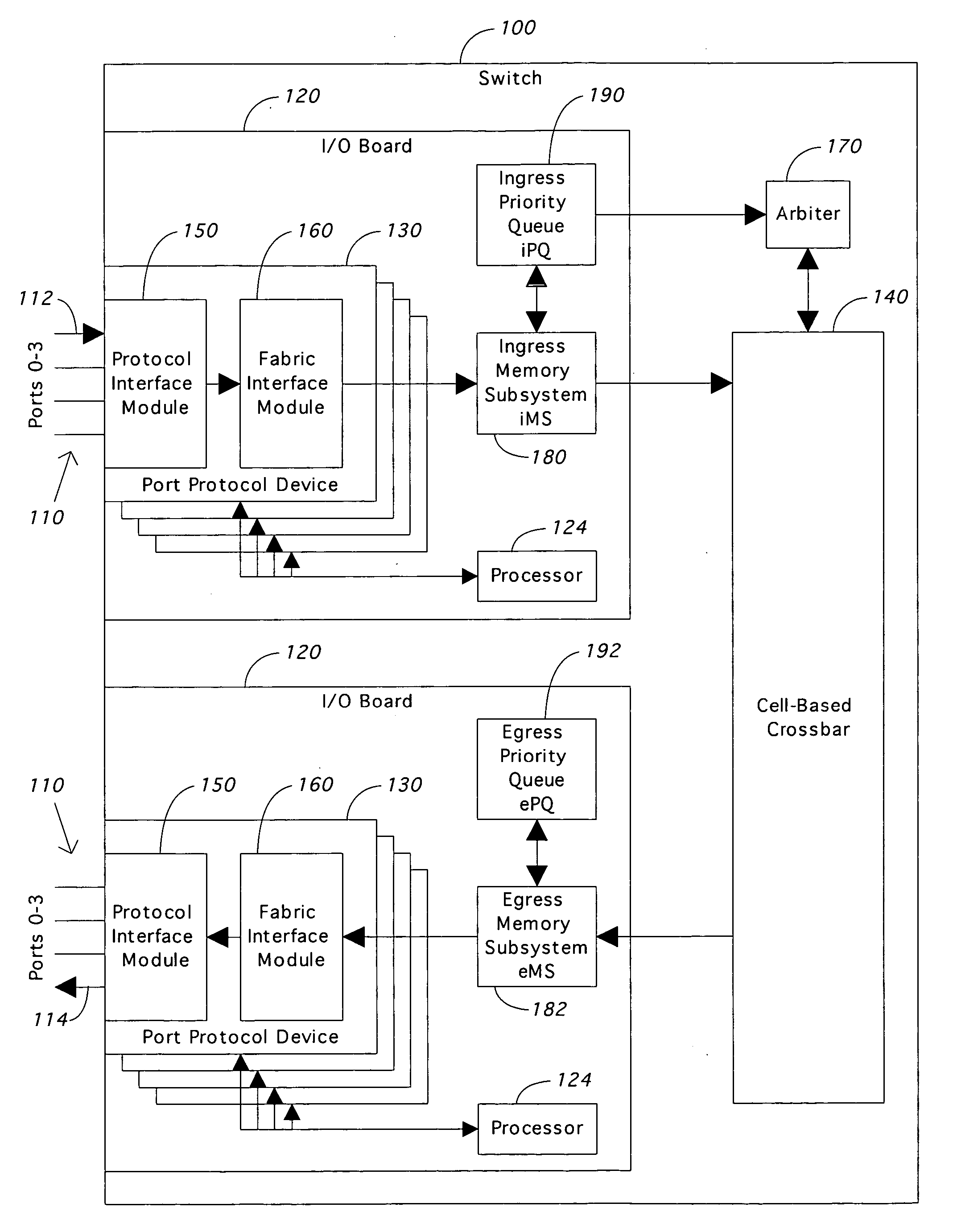

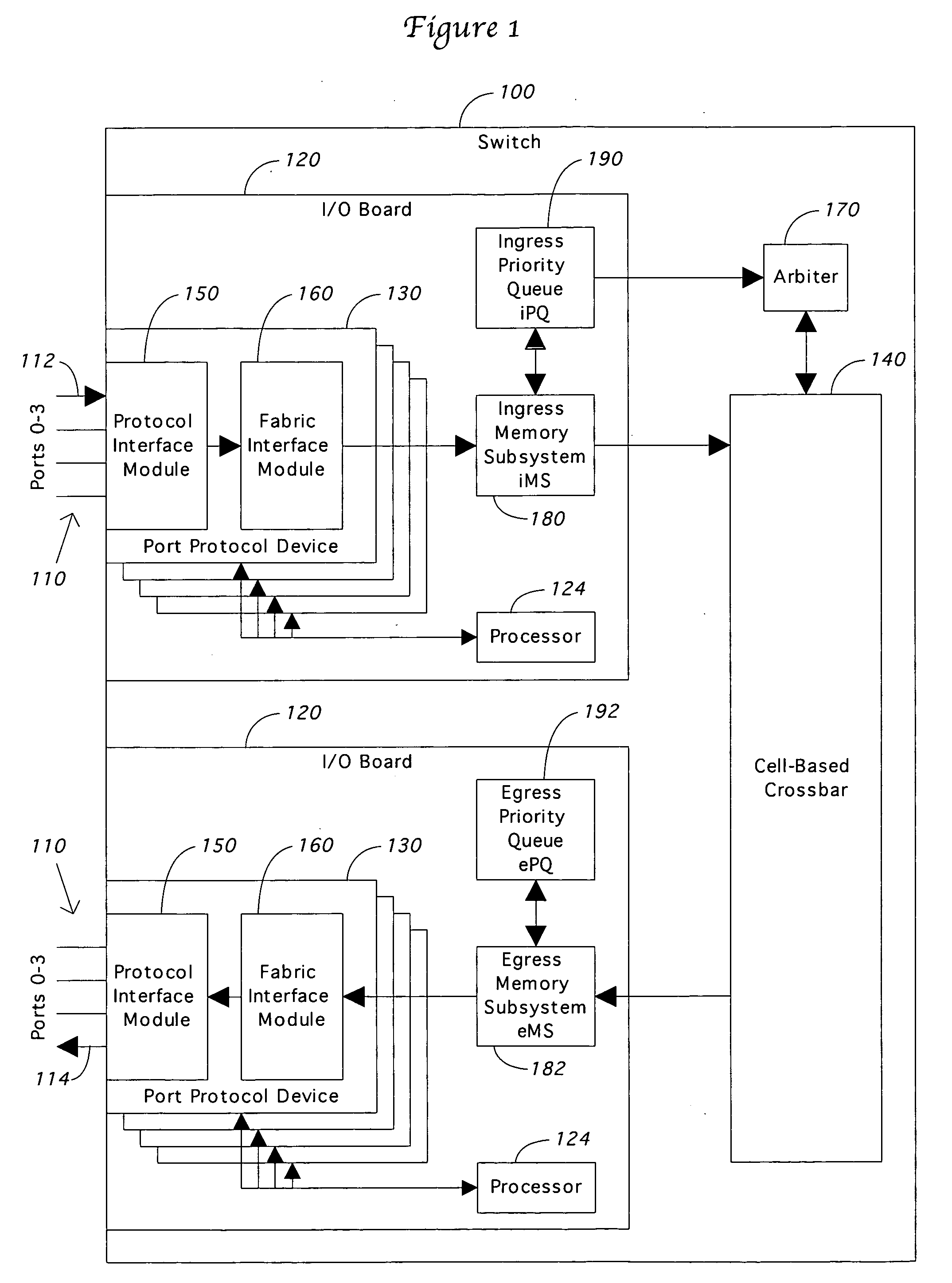

A queuing mechanism is presented that allows port data and processor data to share the same crossbar data pathway without interference. An ingress memory subsystem is divided into a plurality of virtual output queues according to the switch destination address of the data. Port data is assigned to the address of the physical destination port, while processor data is assigned to the address of one of the physical ports serviced by the processor. Different classes of service are maintained in the virtual output queues to distinguish between port data and processor data. This allows flow control to apply separately to these two classes of service, and also allows a traffic shaping algorithm to treat port data differently than processor data.

Owner:COMP NETWORK TECH

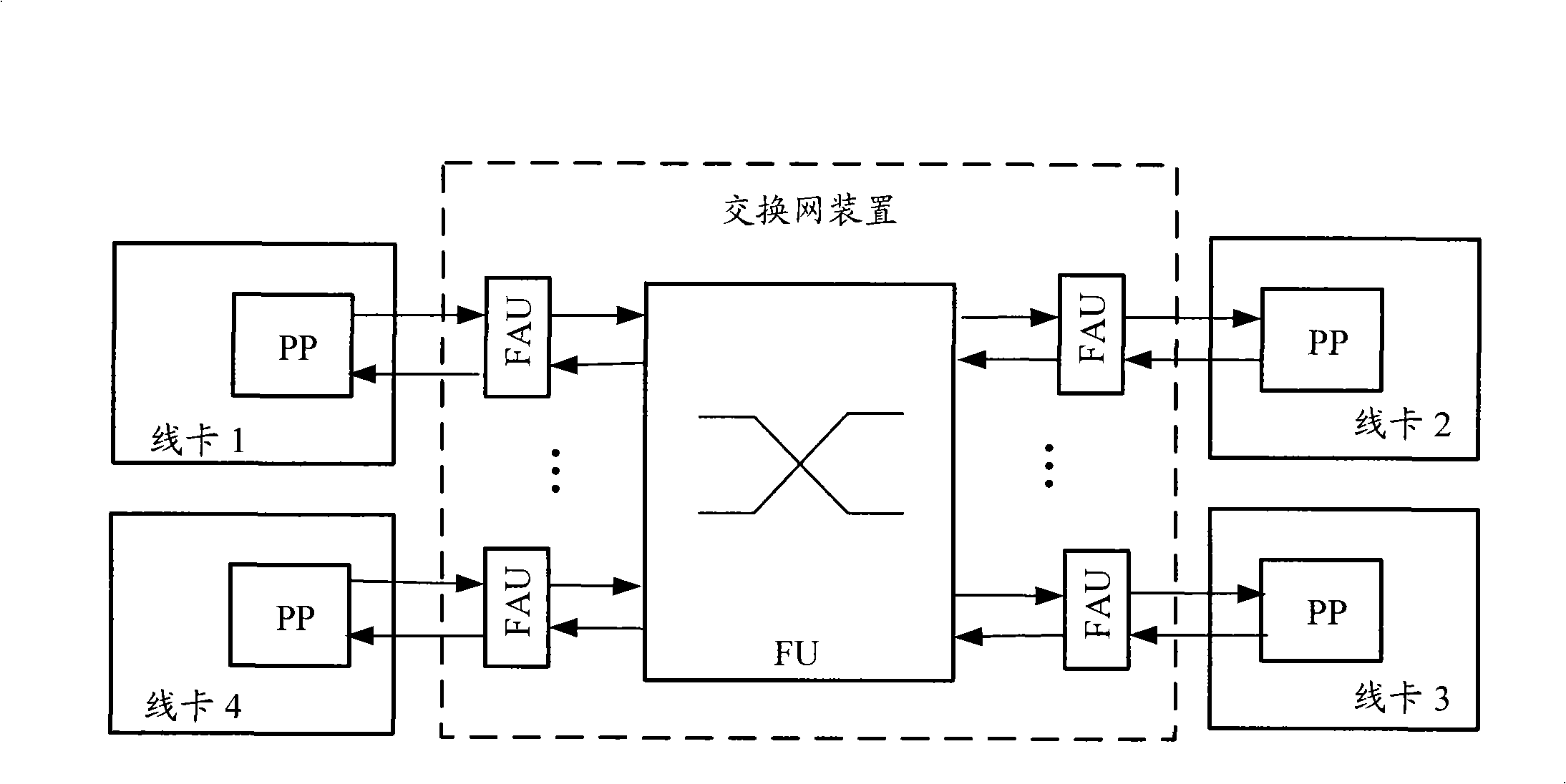

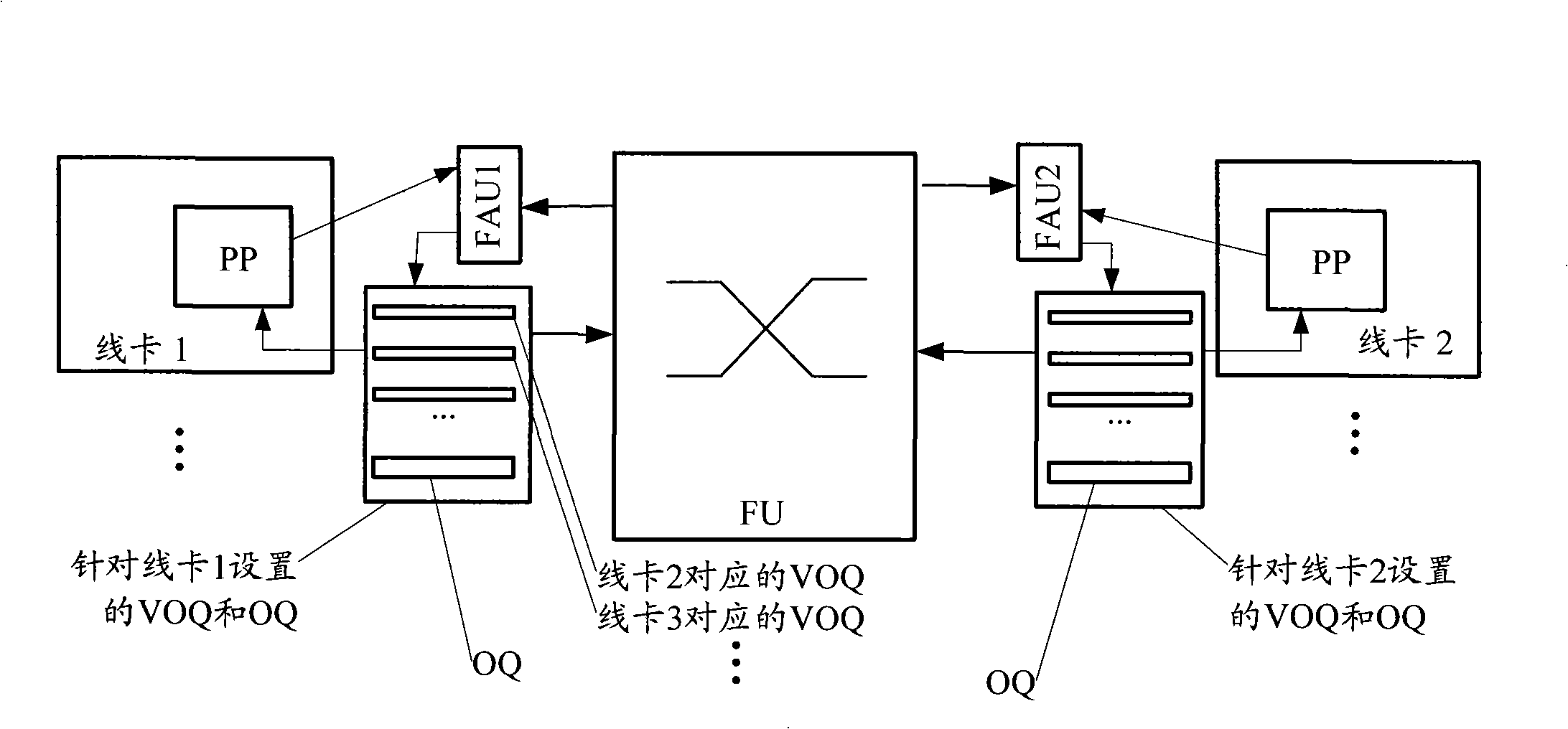

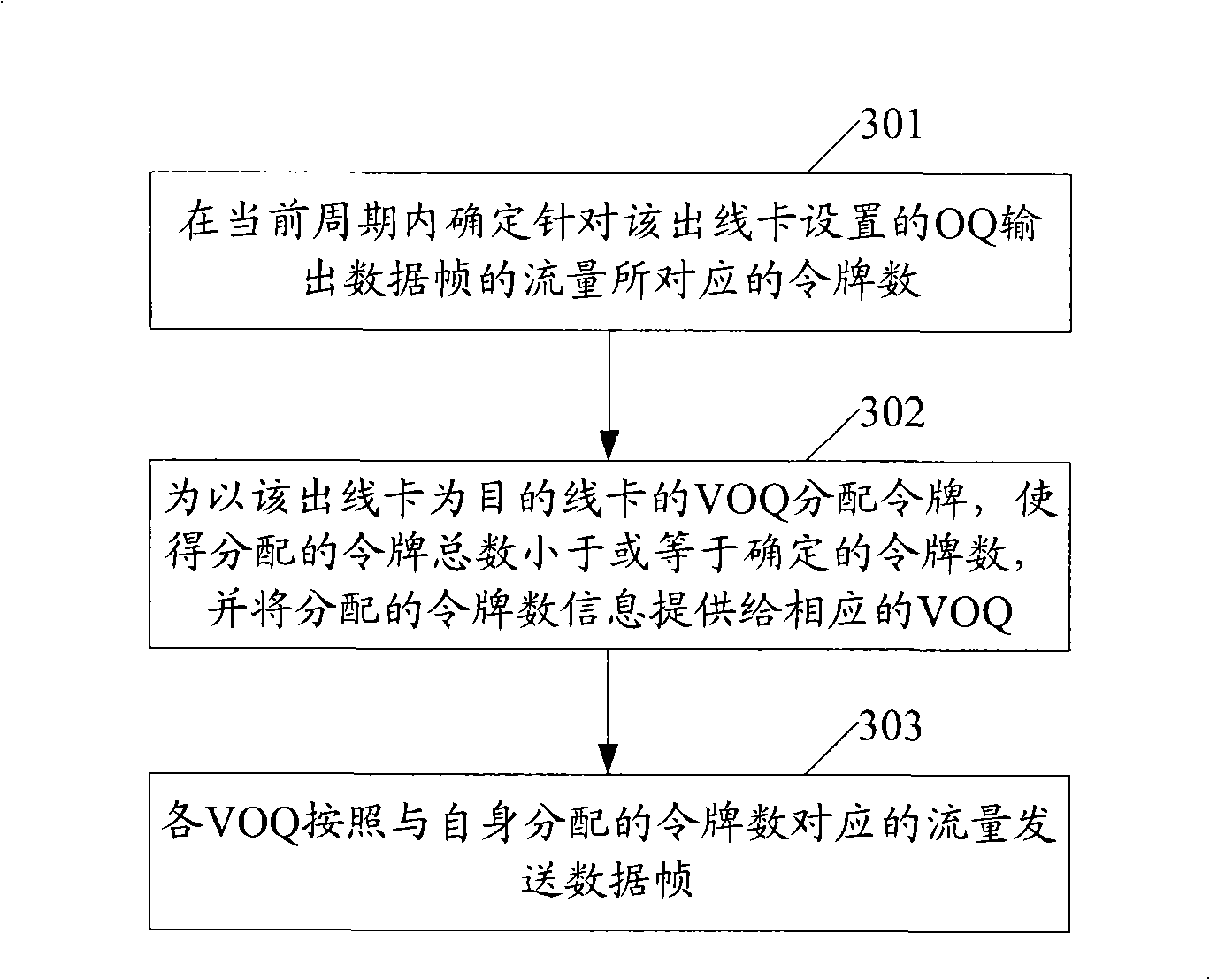

Method, system and device for controlling data flux

The invention provides a method, a system and a device for flow control. The following steps are carried out periodically for each egress line card: in the current period, the number of tokens corresponding to the rate at which the output queue (OQ) set up for that egress line card outputs data frames is determined; the tokens are distributed to the virtual output queues (VOQs) that have the egress card as their target line card, such that the total number of tokens distributed is less than or equal to the determined number; and each VOQ sends data frames at the rate corresponding to the number of tokens allocated to it. In other words, by distributing tokens, the bandwidth that the switching network outputs to the egress card is apportioned among the bandwidths that the ingress line cards input to the switching network. This prevents the input bandwidth of the switching network from exceeding its output bandwidth, avoids data loss caused by buffer overflow in the core switching unit (FU) of the switching network, and ensures the quality of service (QoS) of the data switching service.
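The key constraint is that the tokens handed out for one egress card in a period never exceed that card's budget. A minimal sketch follows, assuming a proportional-to-backlog split with integer rounding down; the abstract does not say how tokens are divided among VOQs, so that policy and the function name are assumptions.

```python
def allocate_tokens(budget, voq_backlog):
    """Split `budget` tokens among VOQs in proportion to backlog (assumed policy).

    Rounding down guarantees the total granted never exceeds `budget`.
    """
    total = sum(voq_backlog.values())
    if total == 0:
        return {voq: 0 for voq in voq_backlog}
    return {
        voq: min(backlog, budget * backlog // total)
        for voq, backlog in voq_backlog.items()
    }


# Backlog of the VOQs on three ingress cards that all target one egress card.
backlog = {"ingress0": 30, "ingress1": 10, "ingress2": 0}
grants = allocate_tokens(budget=10, voq_backlog=backlog)
```

Because each VOQ may send only as many frames as it holds tokens, the sum of ingress rates toward the egress card stays within what the card can drain in the period.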

Owner:NEW H3C TECH CO LTD

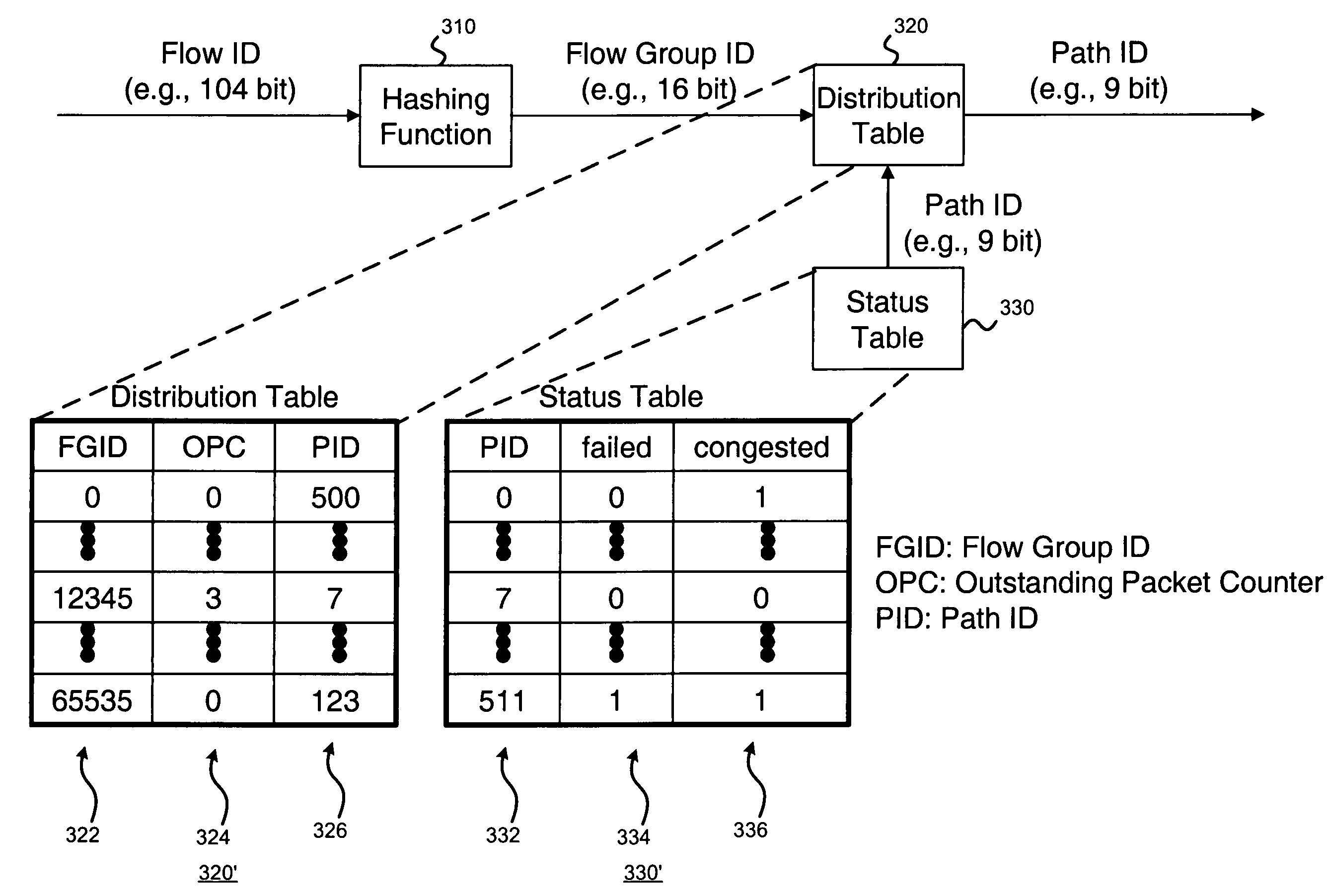

Packet sequence maintenance with load balancing, and head-of-line blocking avoidance in a switch

Active · US7894343B2 · Improve balance · Improve load uniformity · Error prevention · Frequency-division multiplex details · Computer module · Head-of-line blocking

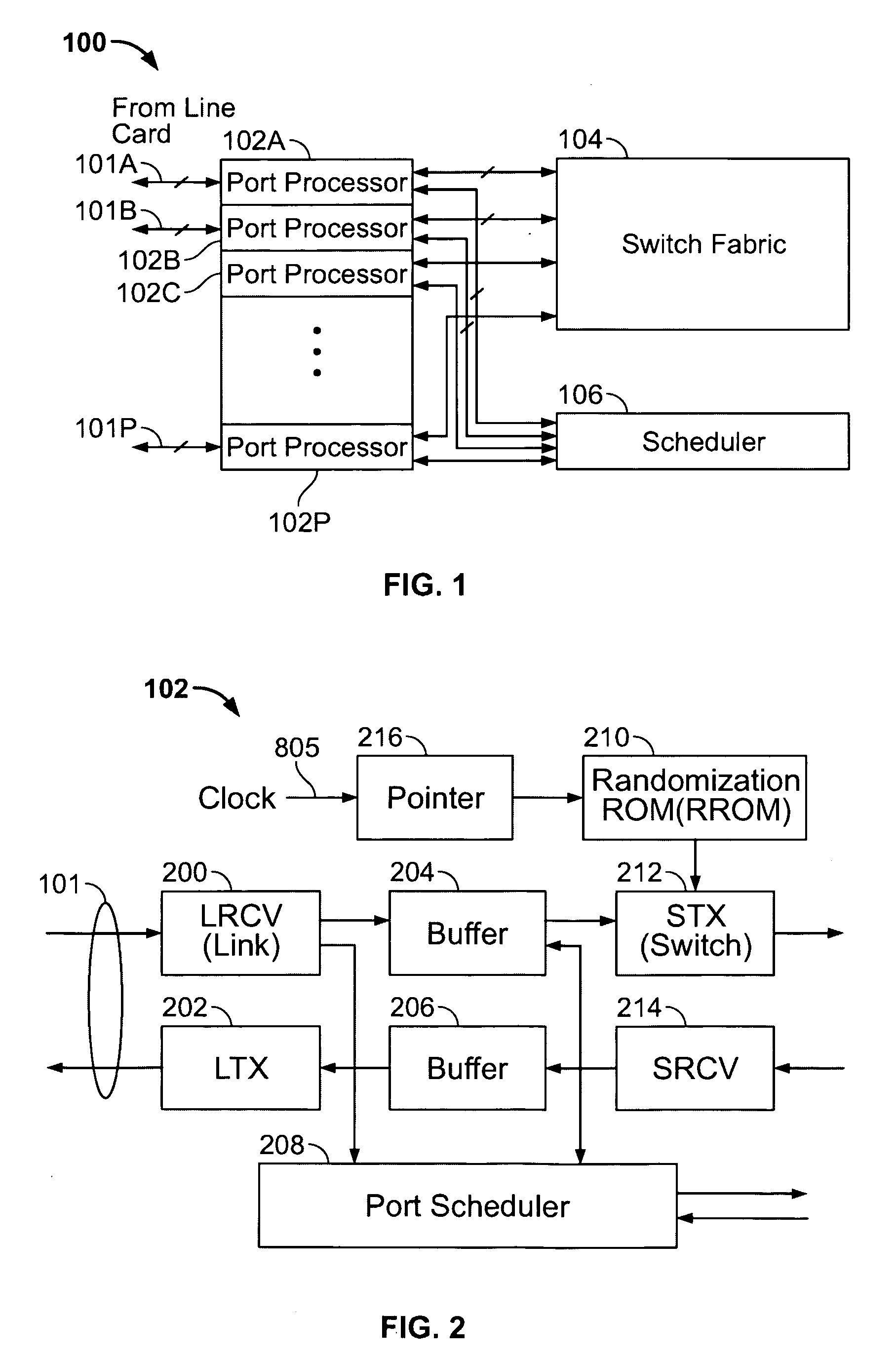

To avoid packet out-of-sequence problems, while providing good load balancing, each input port of a switch monitors the outstanding number of packets for each flow group. If there is an outstanding packet in the switch fabric, the following packets of the same flow group should follow the same path. If there is no outstanding packet of the same flow group in the switch fabric, the (first, and therefore subsequent) packets of the flow can choose a less congested path to improve load balancing performance without causing an out-of-sequence problem. To avoid HOL blocking without requiring too many queues, an input module may include two stages of buffers. The first buffer stage may be a virtual output queue (VOQ) and second buffer stage may be a virtual path queue (VPQ). At the first stage, the packets may be stored at the VOQs, and the HOL packet of each VOQ may be sent to the VPQ. By allowing each VOQ to send at most one packet to VPQ, HOL blocking can be mitigated dramatically.
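The path-selection rule in the first half of this abstract can be sketched as below. The class name is hypothetical, and using the count of outstanding packets per path as the congestion measure is an assumption; the abstract does not say how congestion is estimated.

```python
class PathSelector:
    """Keeps a flow group on one path while it has packets in the fabric."""

    def __init__(self, num_paths):
        self.outstanding = {}   # flow group -> packets still in the fabric
        self.current_path = {}  # flow group -> path those packets took
        self.path_load = [0] * num_paths  # assumed congestion measure

    def send(self, group):
        if self.outstanding.get(group, 0) > 0:
            # Packets in flight: follow them to preserve ordering.
            path = self.current_path[group]
        else:
            # Nothing in flight: free to pick the least loaded path.
            path = min(range(len(self.path_load)), key=self.path_load.__getitem__)
            self.current_path[group] = path
        self.outstanding[group] = self.outstanding.get(group, 0) + 1
        self.path_load[path] += 1
        return path

    def delivered(self, group):
        self.outstanding[group] -= 1
        self.path_load[self.current_path[group]] -= 1


sel = PathSelector(num_paths=2)
paths = [sel.send(g) for g in ("A", "B", "D", "A")]
sel.delivered("A")
sel.delivered("A")
sel.delivered("B")
moved = sel.send("A")  # group A has drained, so it may switch paths
```

Group A stays on path 0 while it has packets outstanding, then moves to the now idle path 1 once the fabric holds none of its packets.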

Owner:POLYTECHNIC INST OF NEW YORK

Scheduling the dispatch of cells in non-empty virtual output queues of multistage switches using a pipelined arbitration scheme

Active · US20020110135A1 · Improve throughput · Minimize time · Error prevention · Transmission systems · Distributed computing · Virtual Output Queues

Owner:POLYTECHNIC INST OF NEW YORK

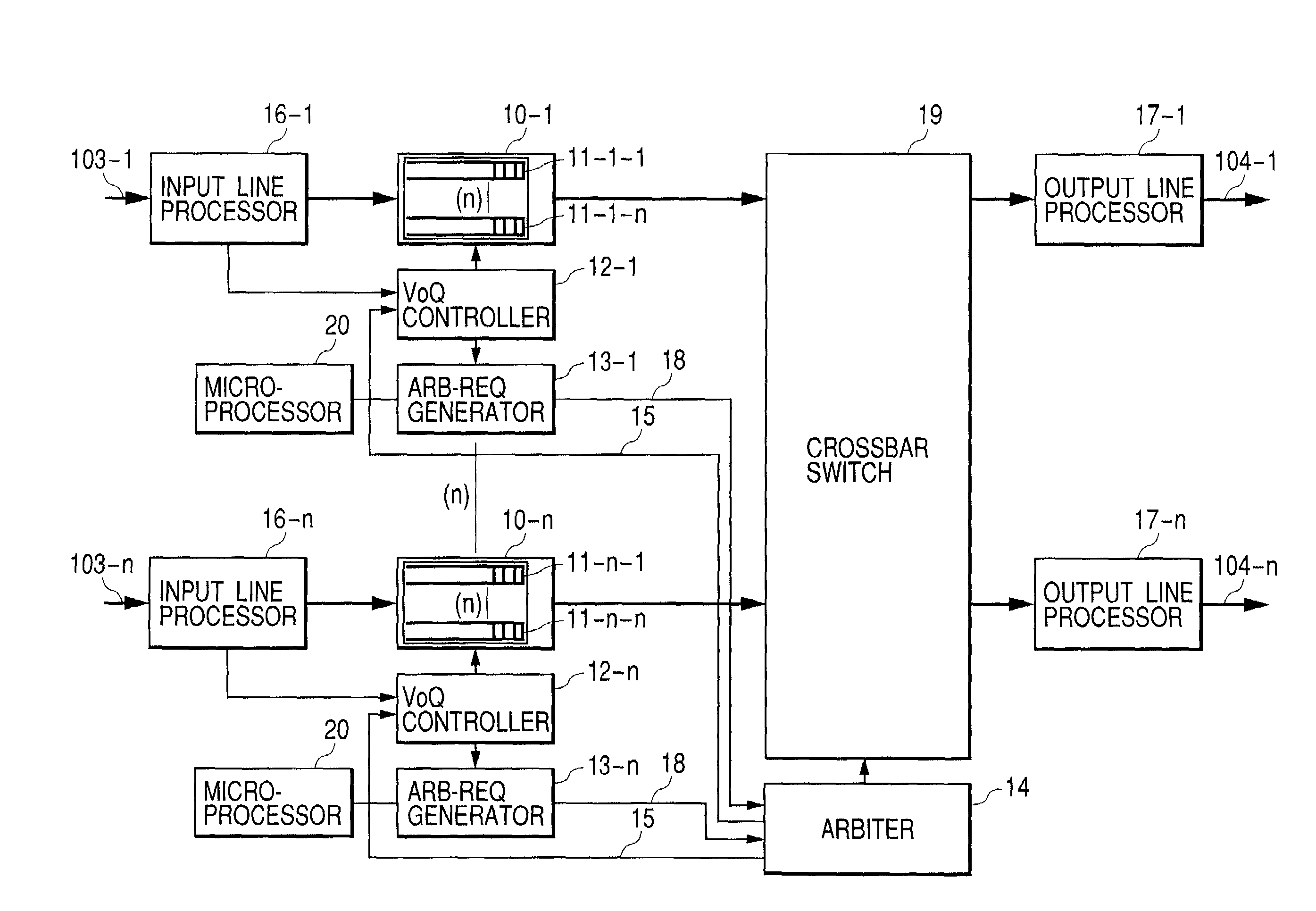

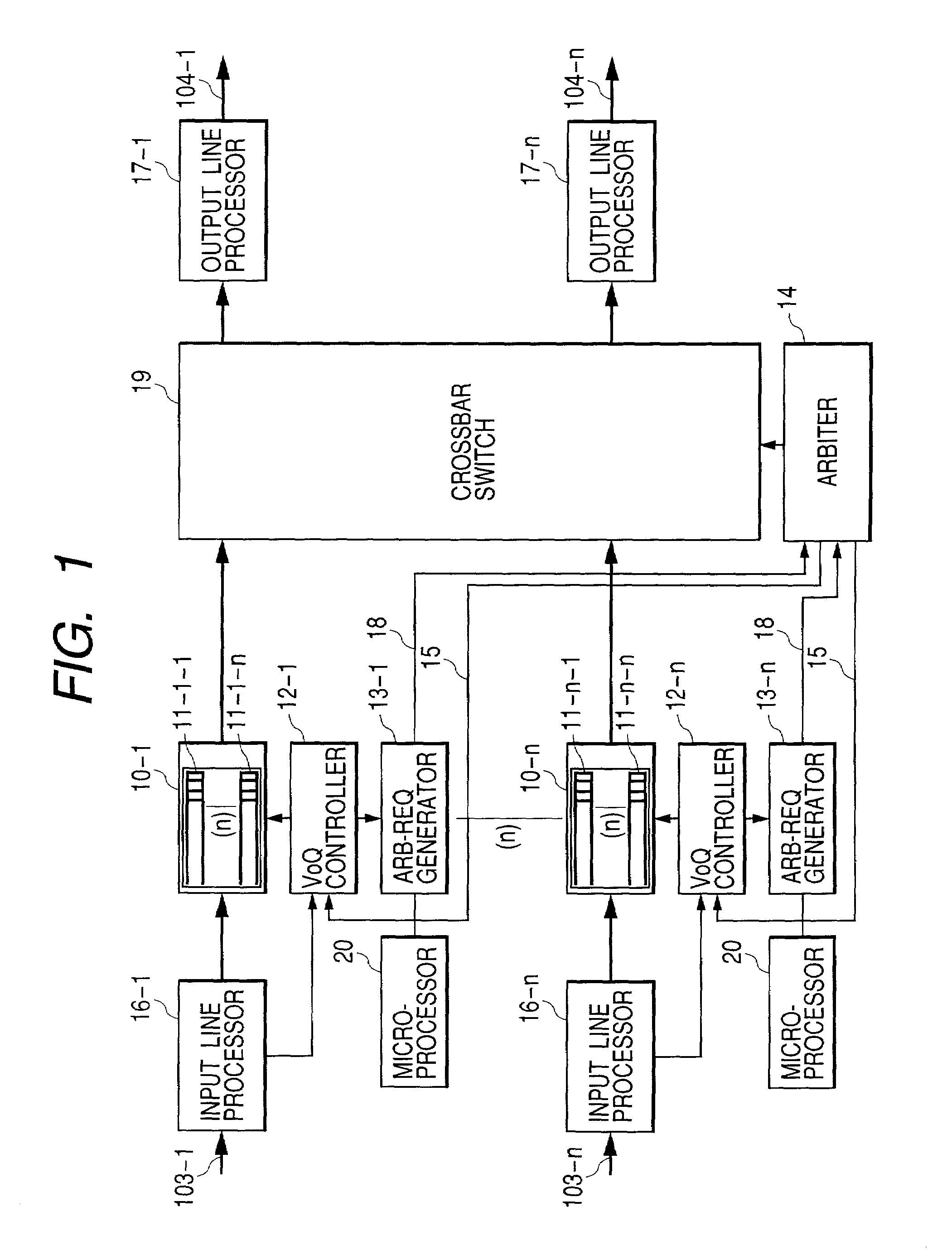

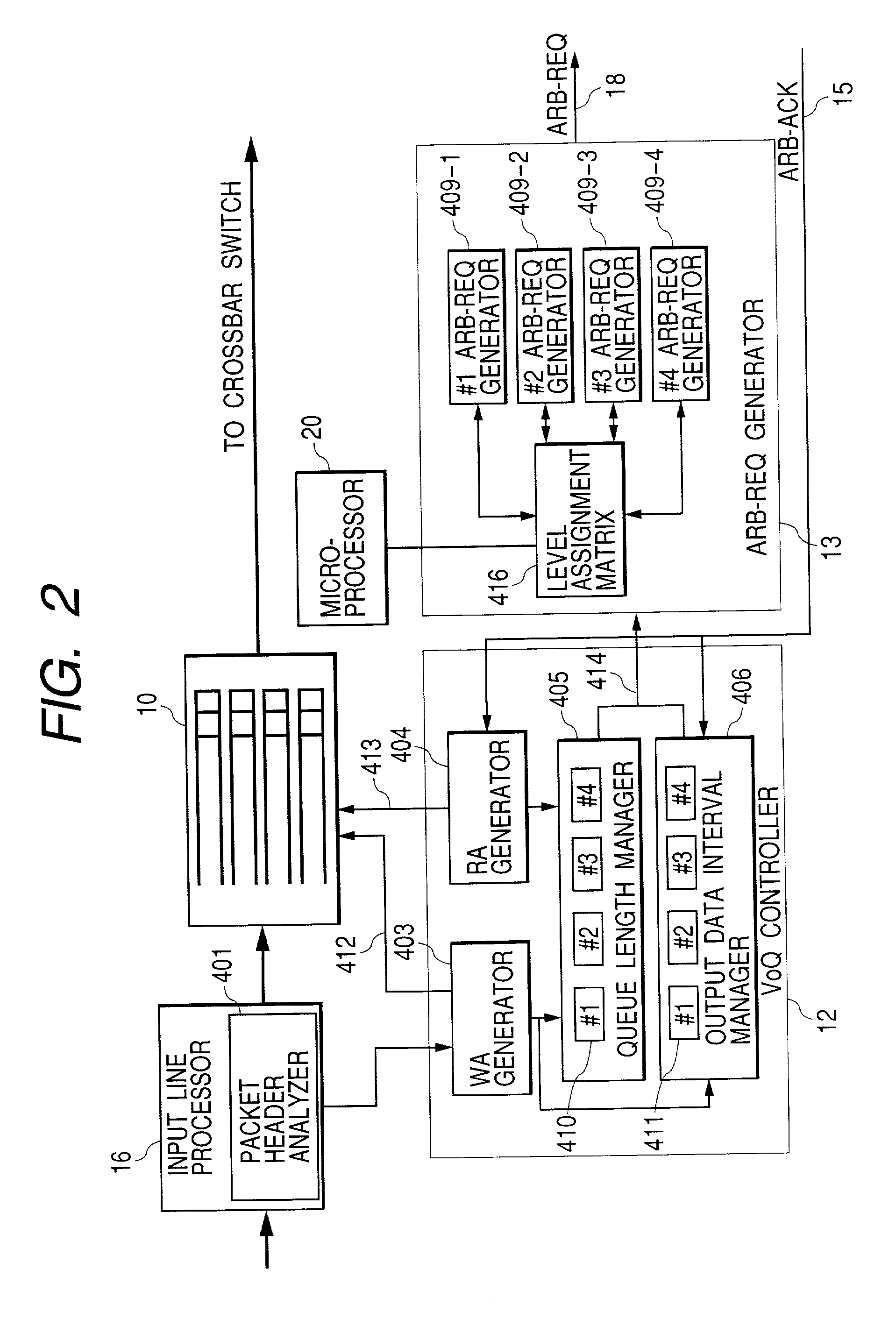

Packet switching system

Inactive · US7120160B2 · Time-division multiplex · Data switching by path configuration · Crossbar switch · Low load

A packet switching system arbitrates between Virtual Output Queues (VoQ) in plural input buffers, so as to grant the right of transmitting data to a crossbar switch to some of the VoQs by taking both an output data interval of a VoQ and the queue length of a VoQ as parameters. The system suppresses the delay time of the segment of a VoQ having a high load, thereby preventing buffers from overflowing; and, also, the system permits a VoQ having a low load to transmit segments under no influence of the VoQ that has a high load and is just reading out the segment.

Owner:HITACHI LTD

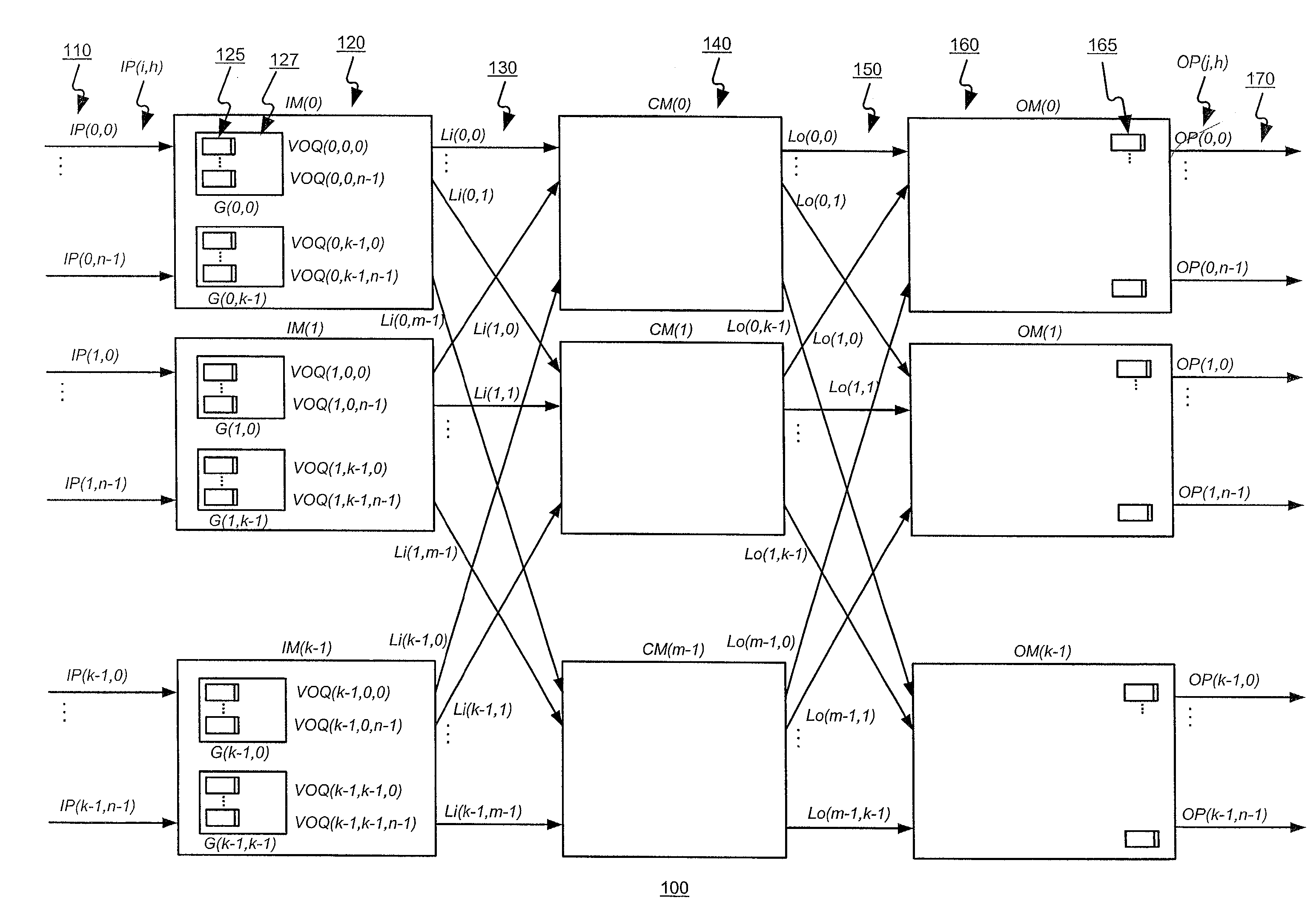

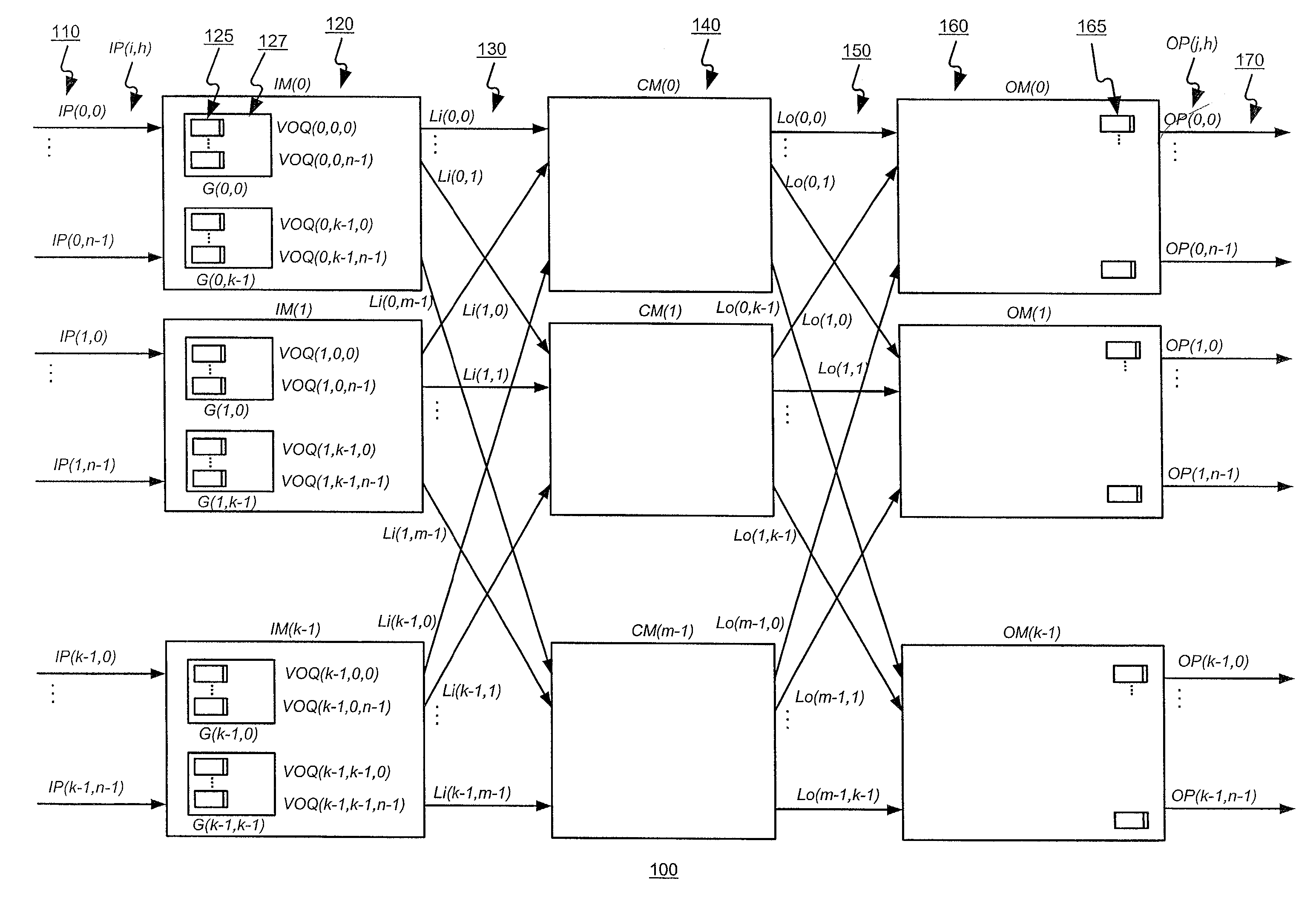

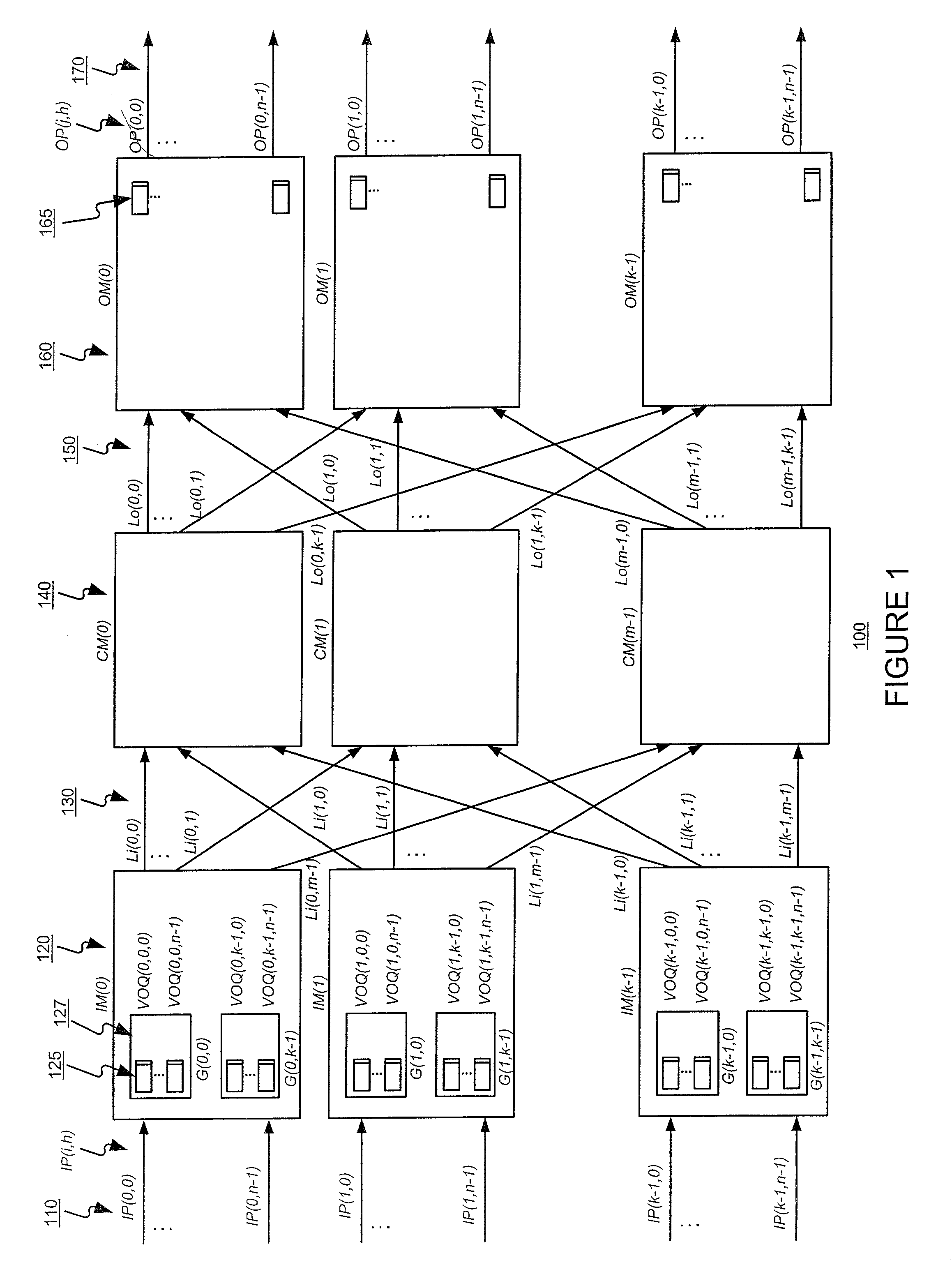

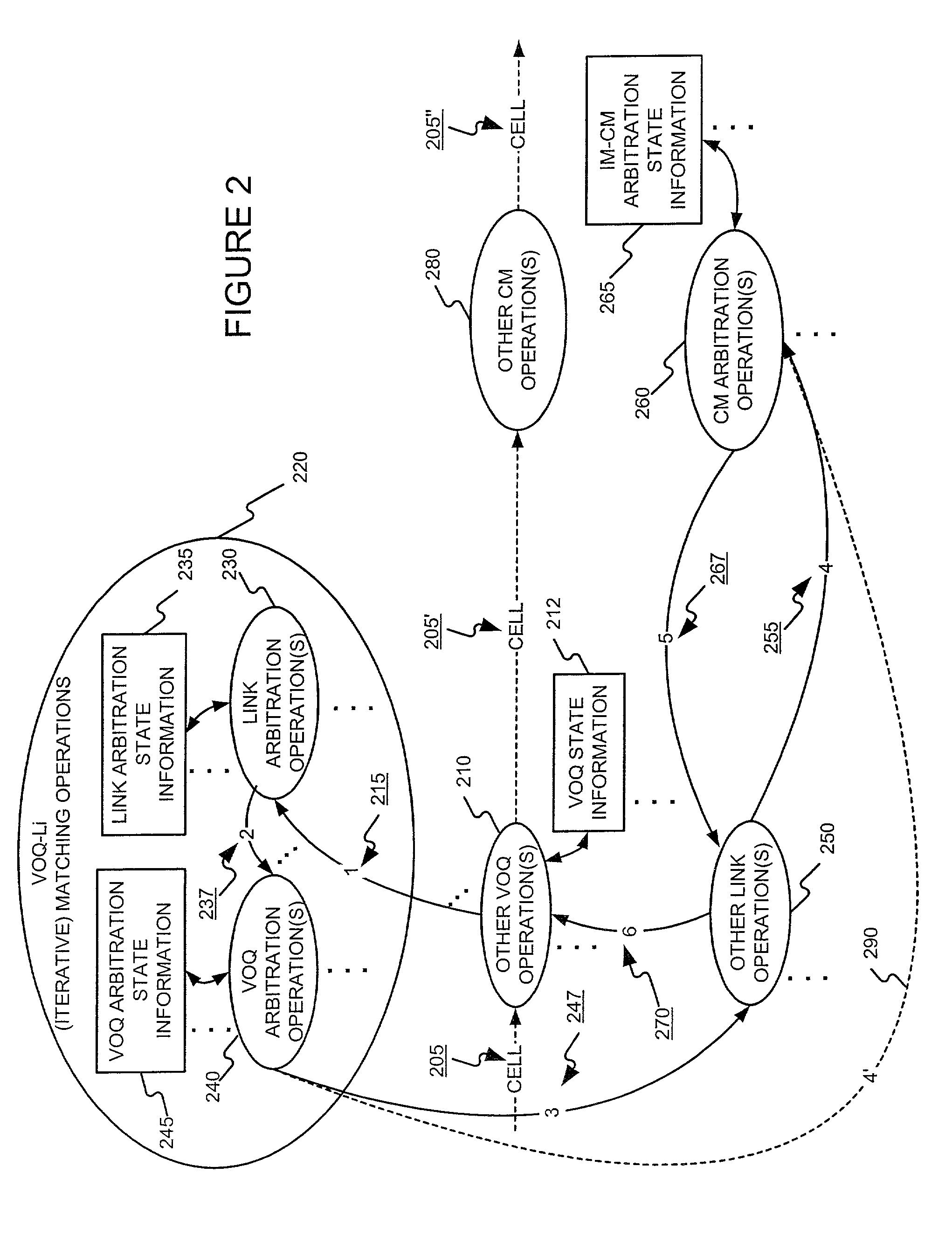

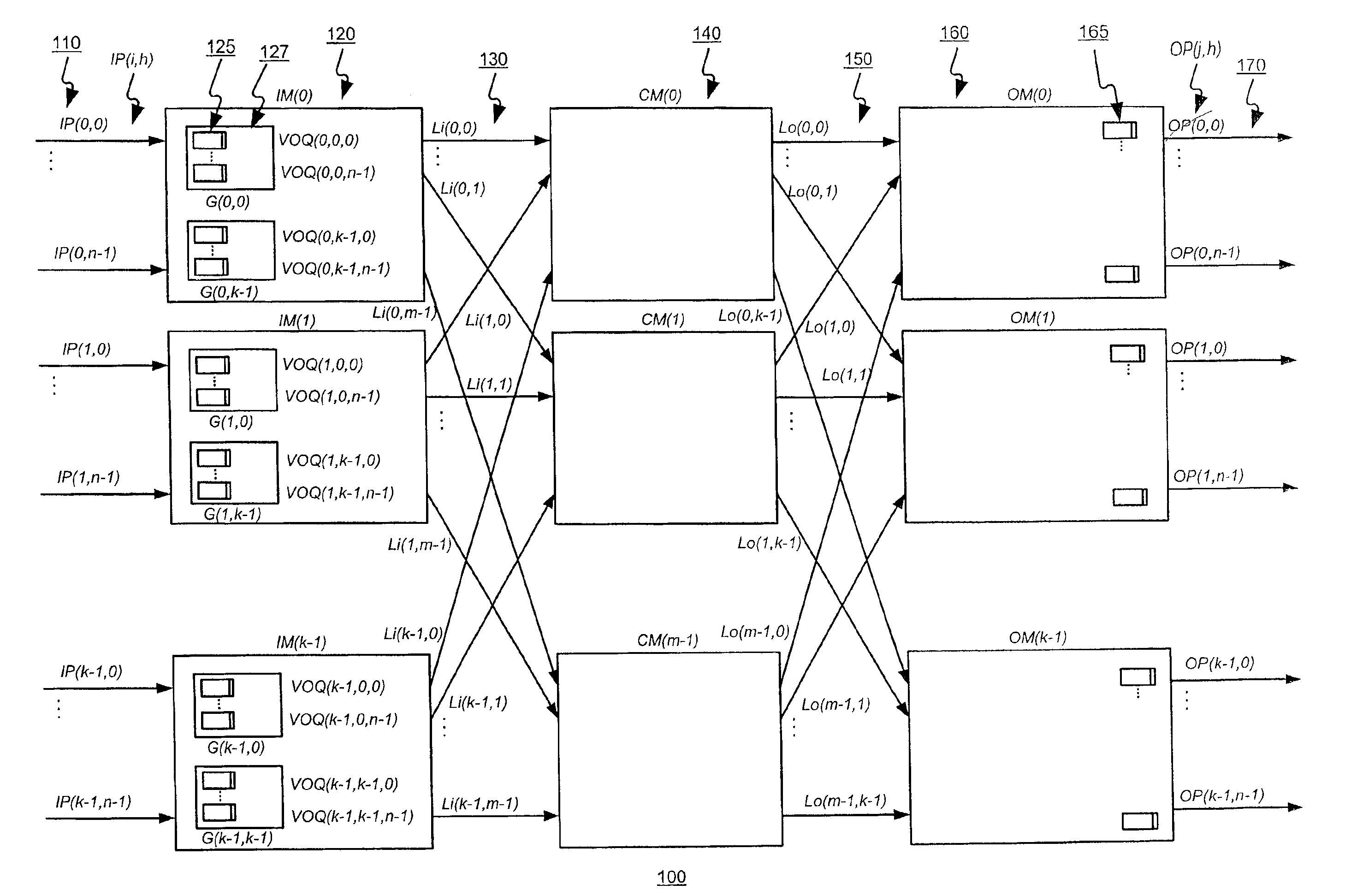

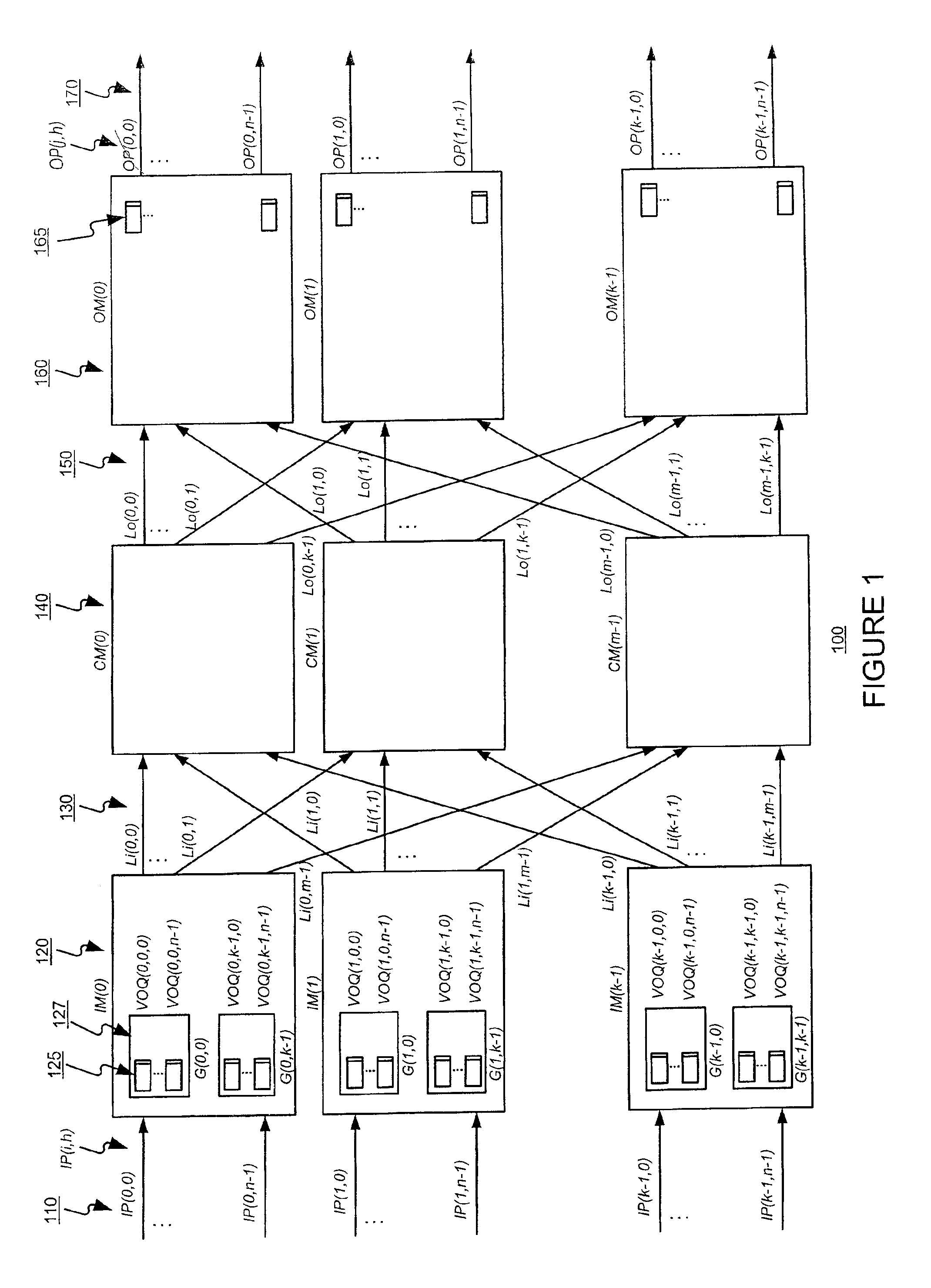

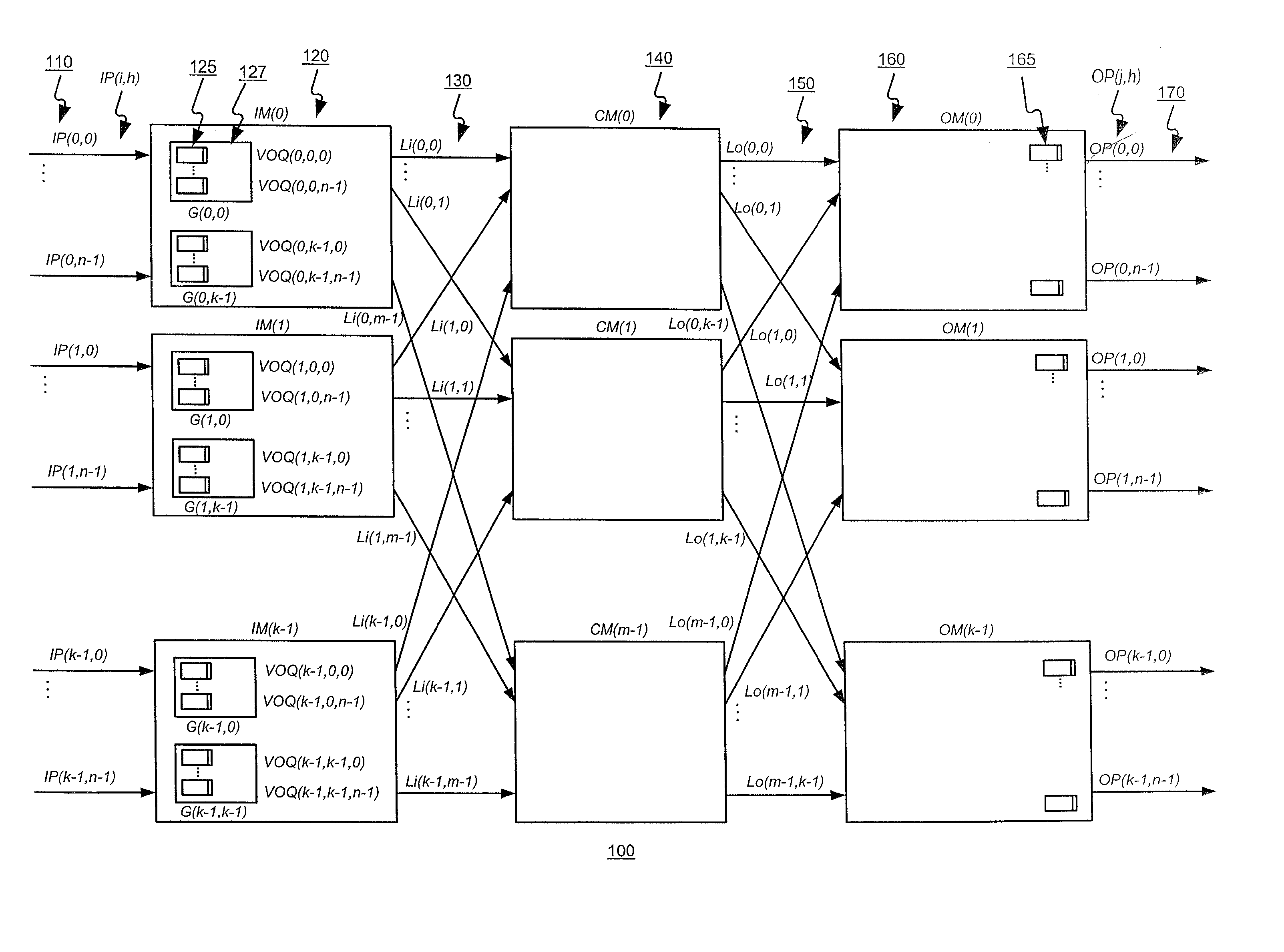

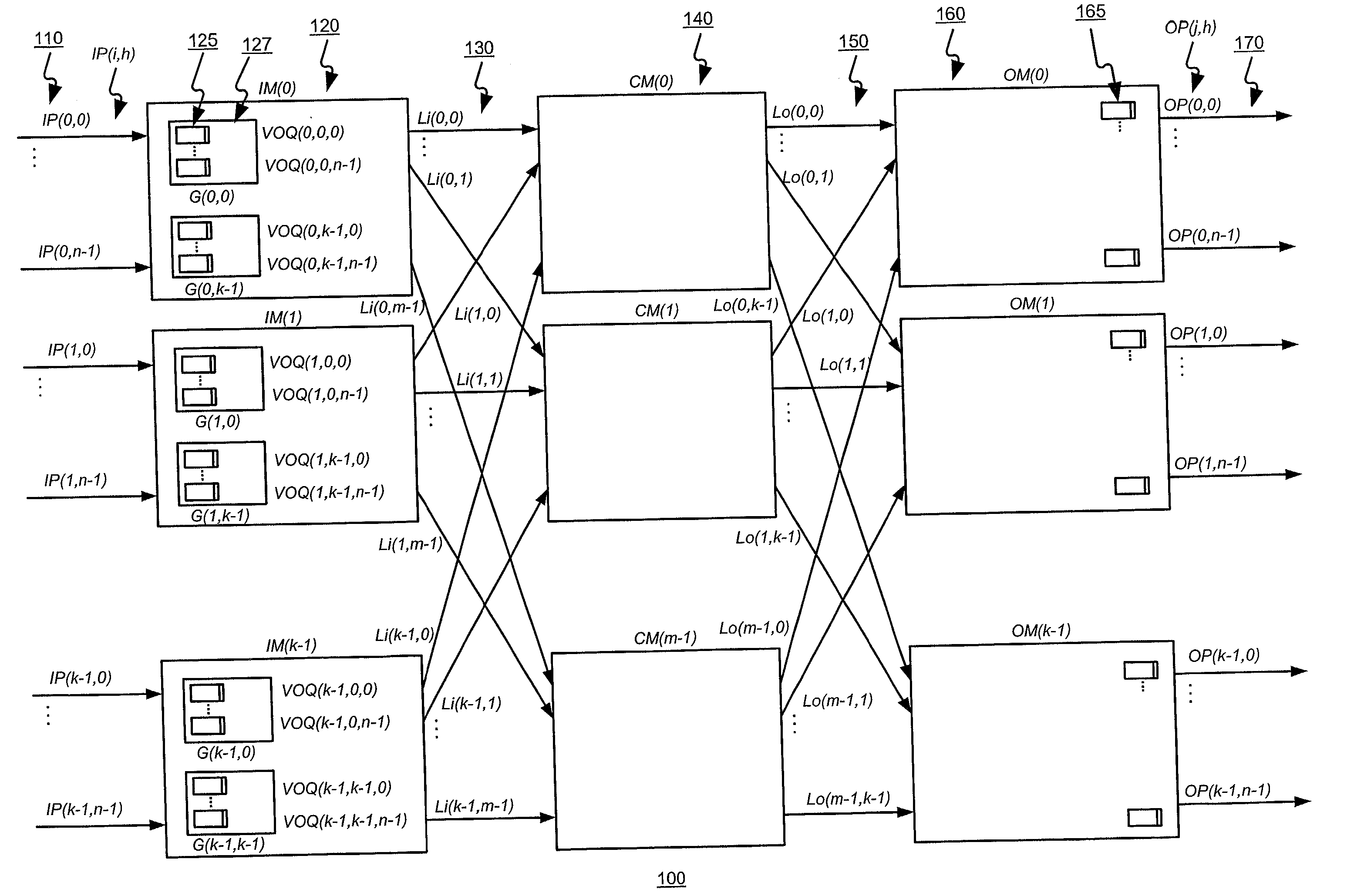

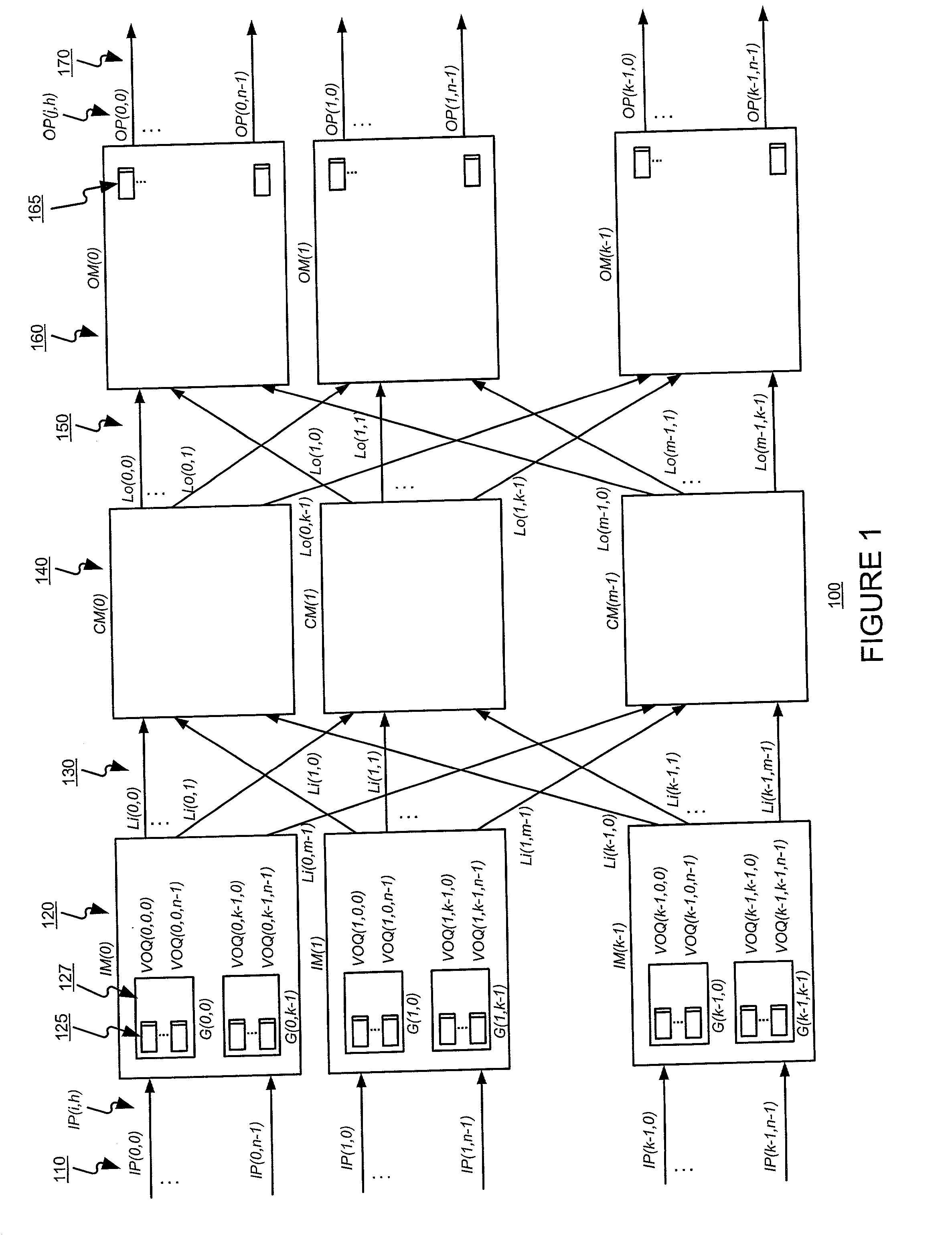

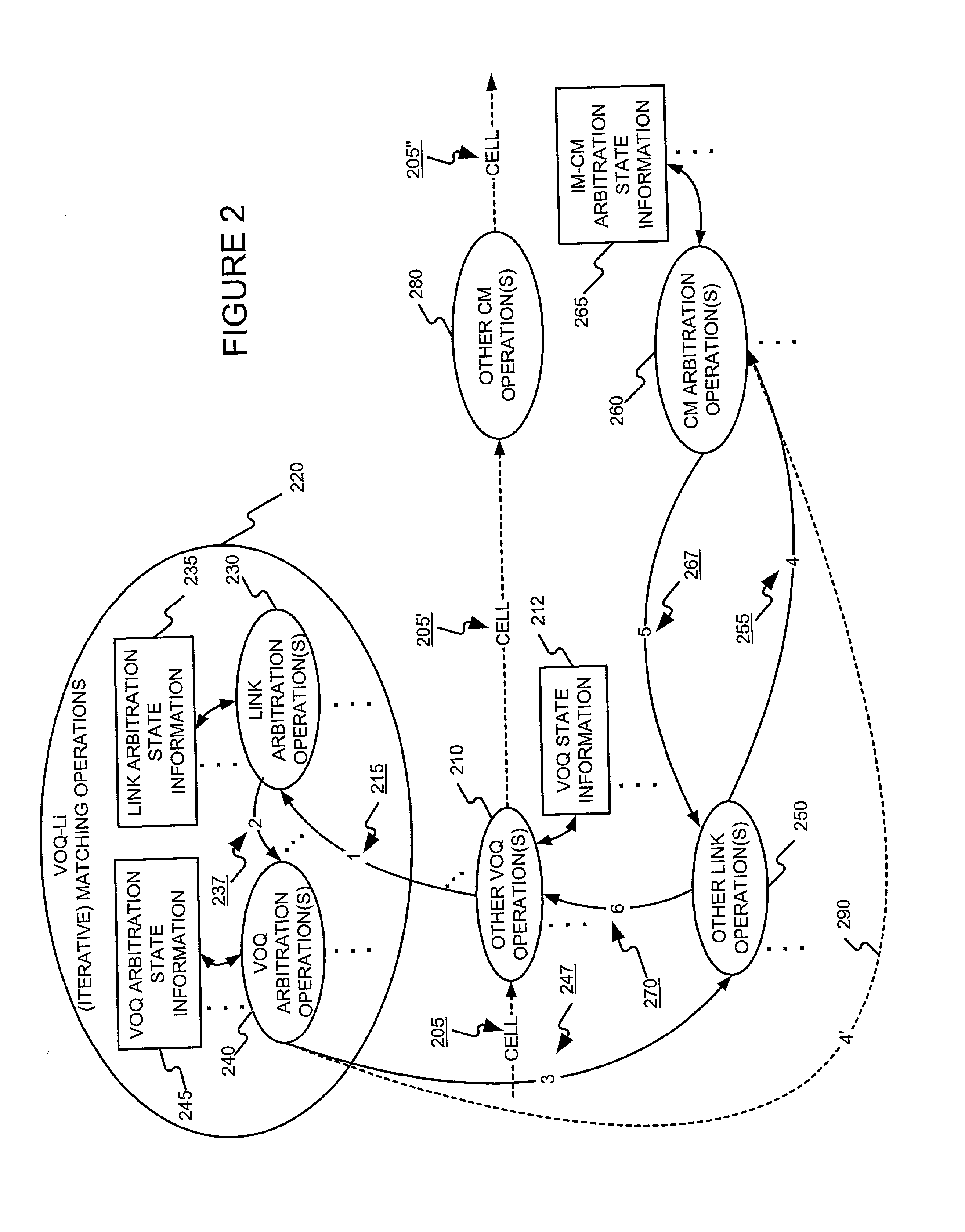

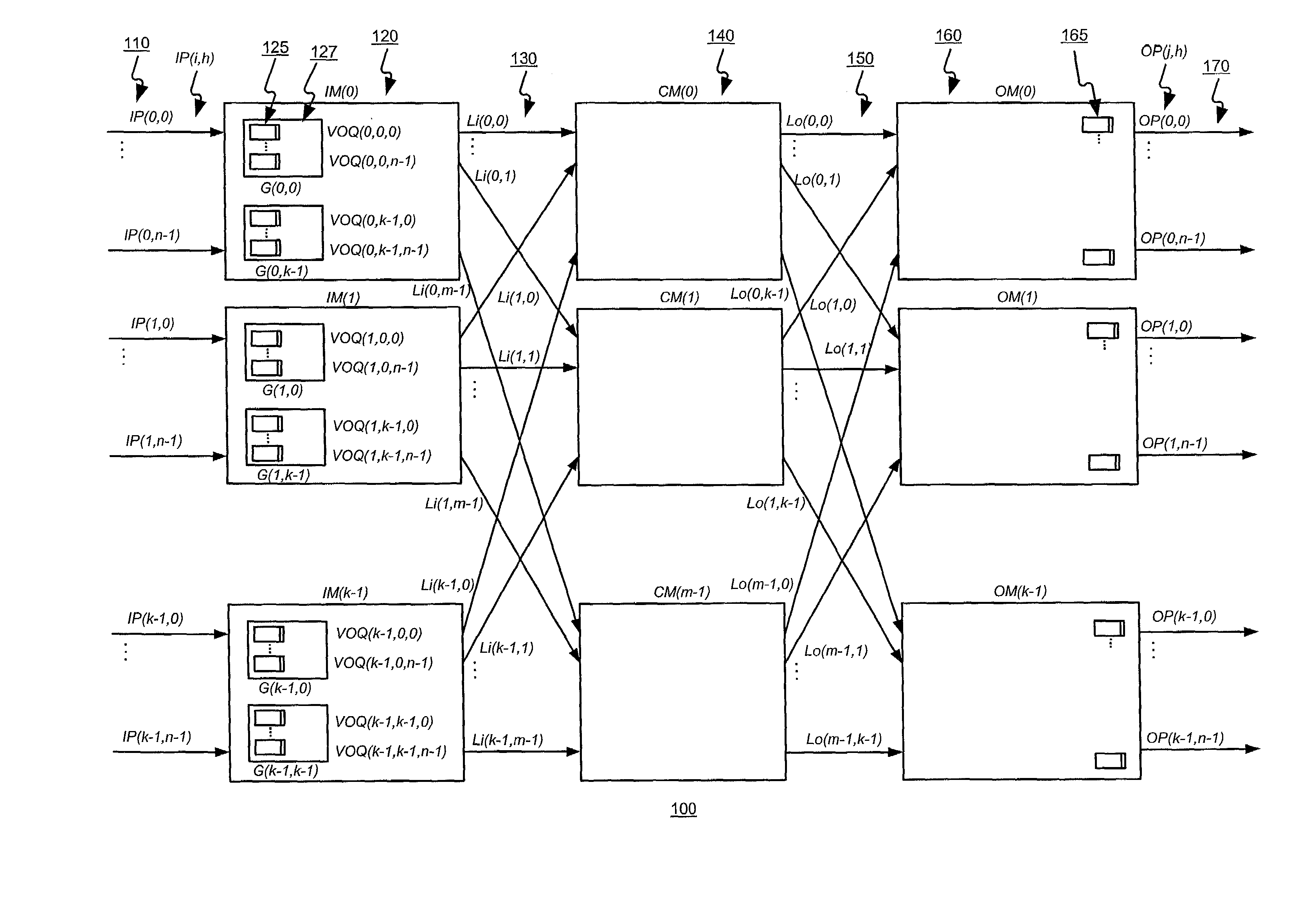

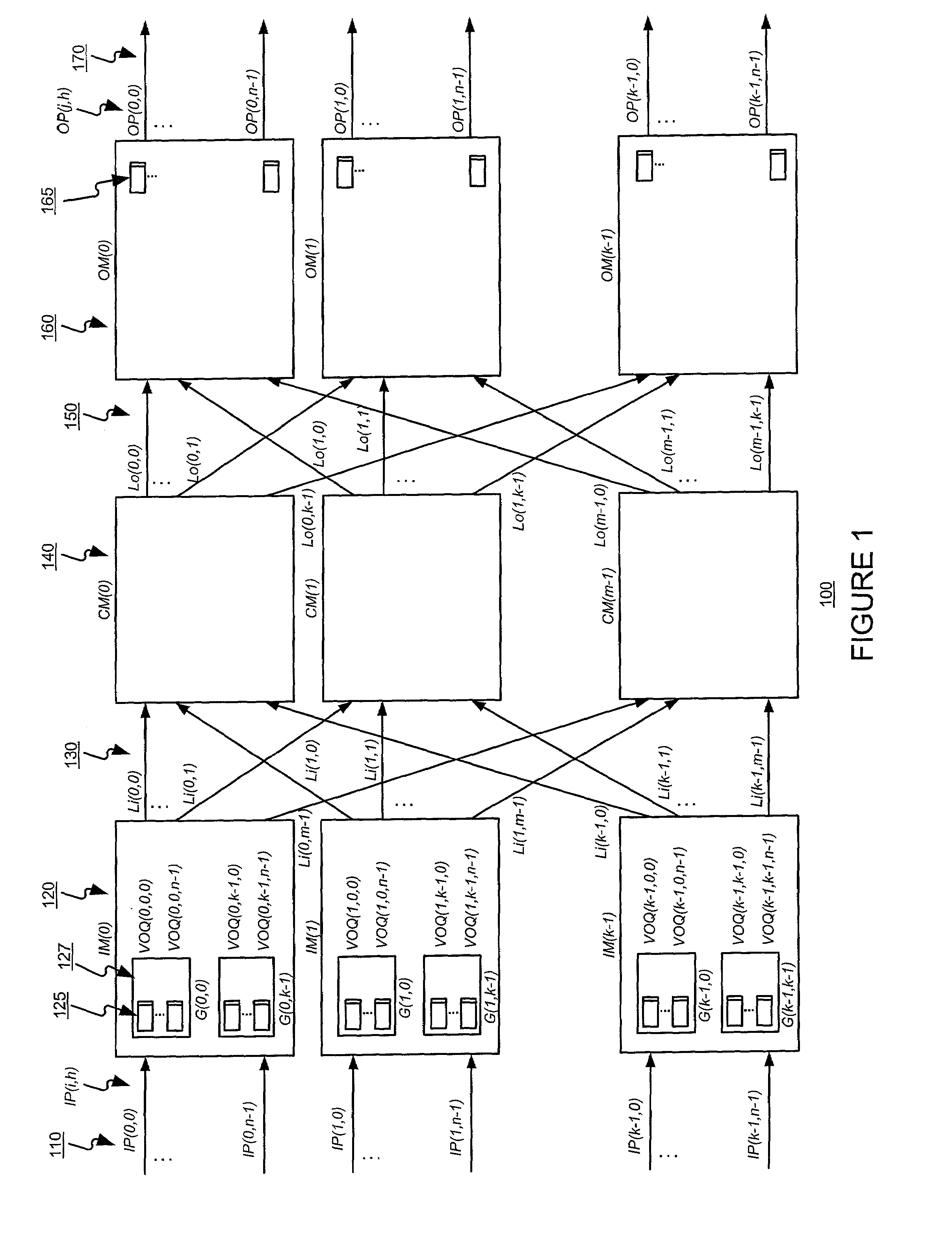

Scheduling the dispatch of cells in multistage switches using a hierarchical arbitration scheme for matching non-empty virtual output queues of a module with outgoing links of the module

Active · US20020061028A1 · Improve throughput · Minimizing scheduling time · Multiplex system selection arrangements · Data switching by path configuration · Computer architecture · Virtual Output Queues

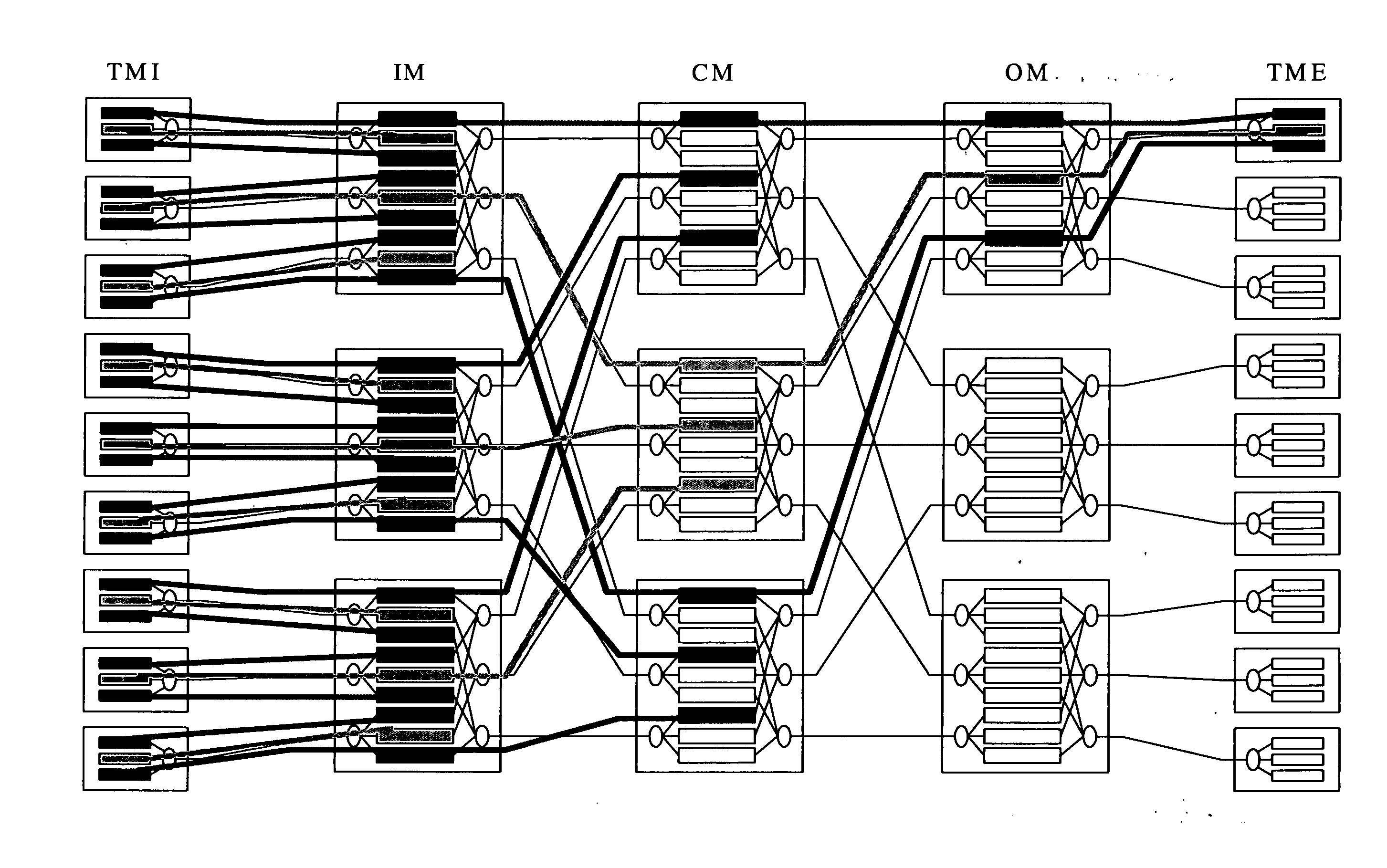

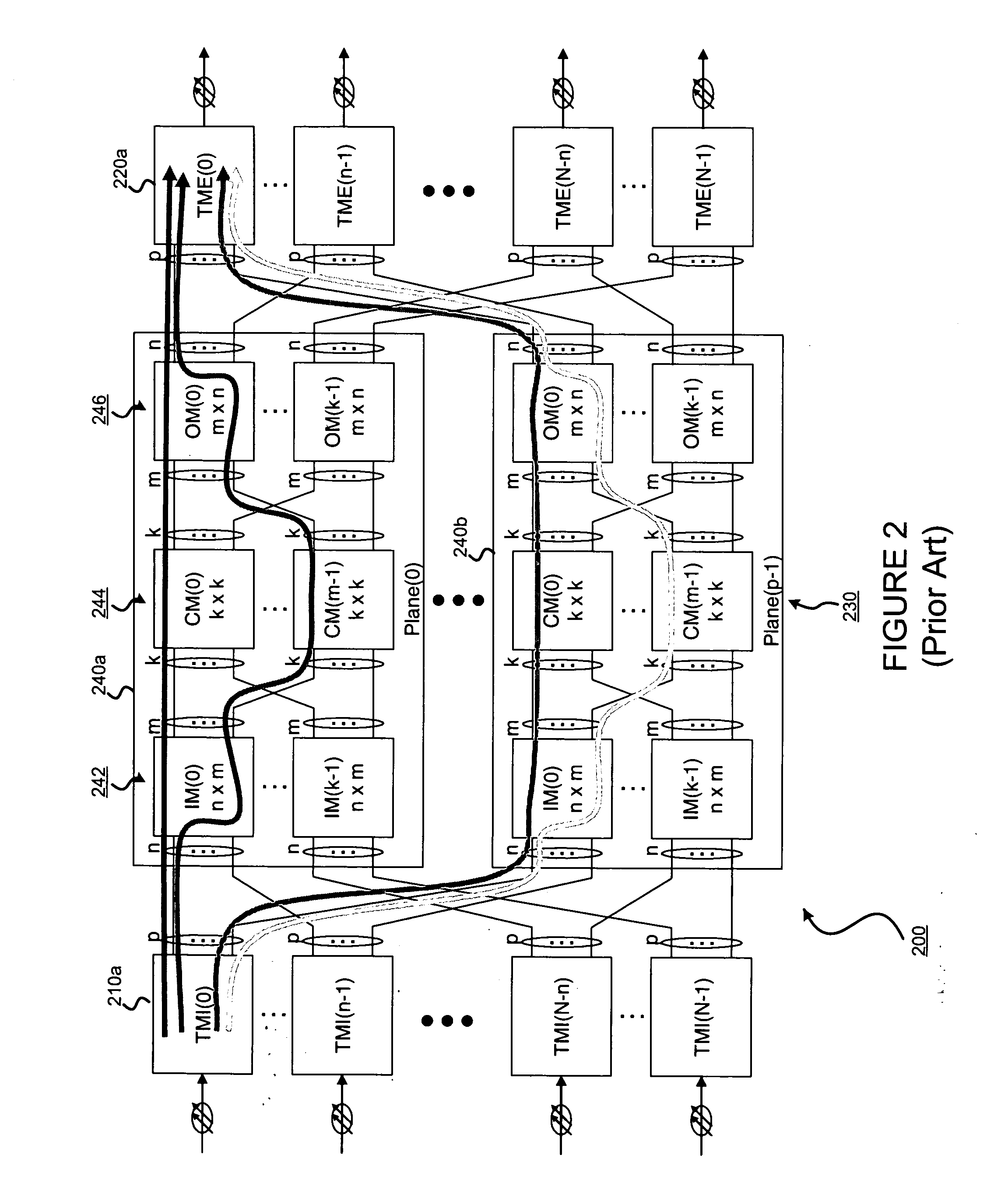

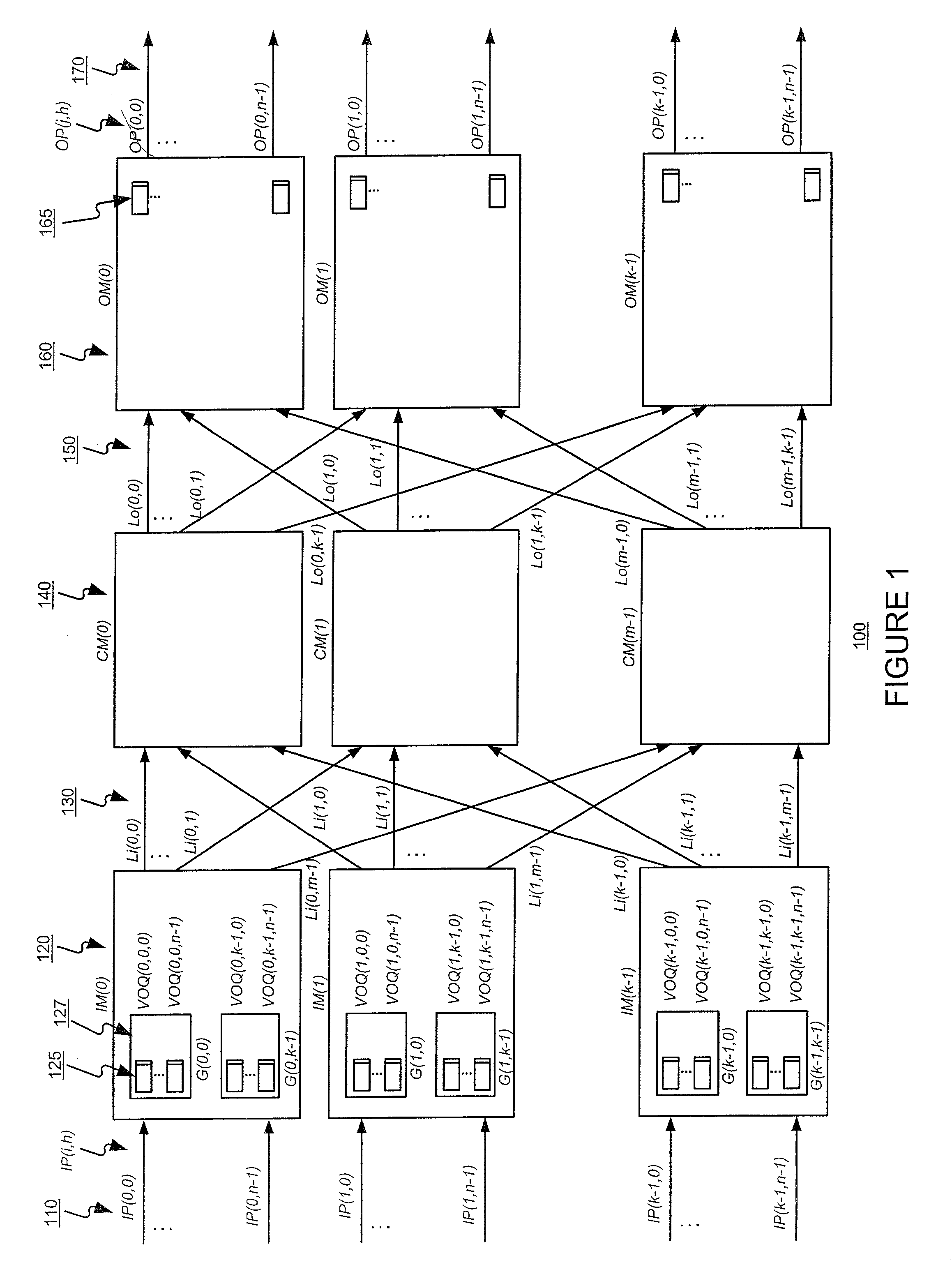

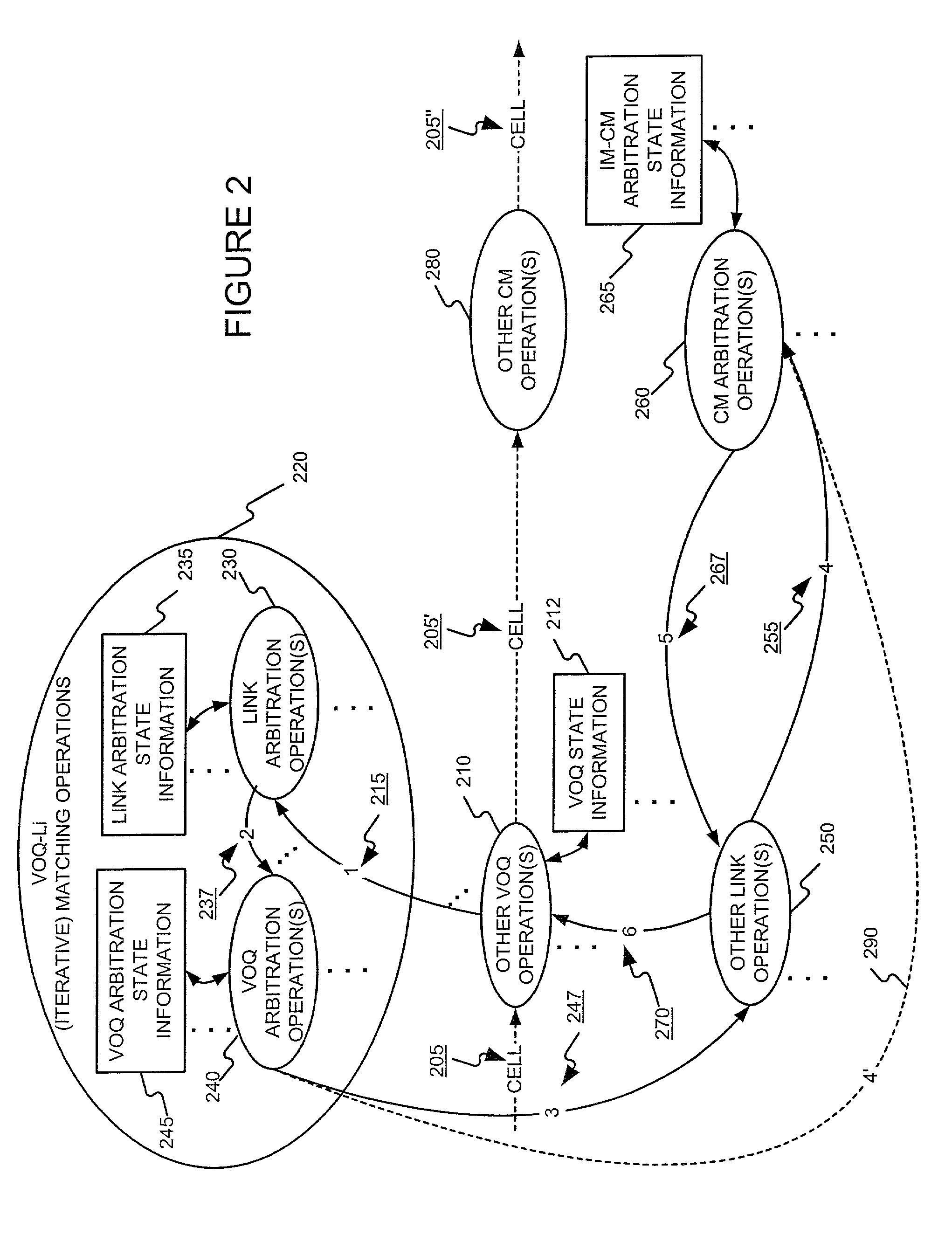

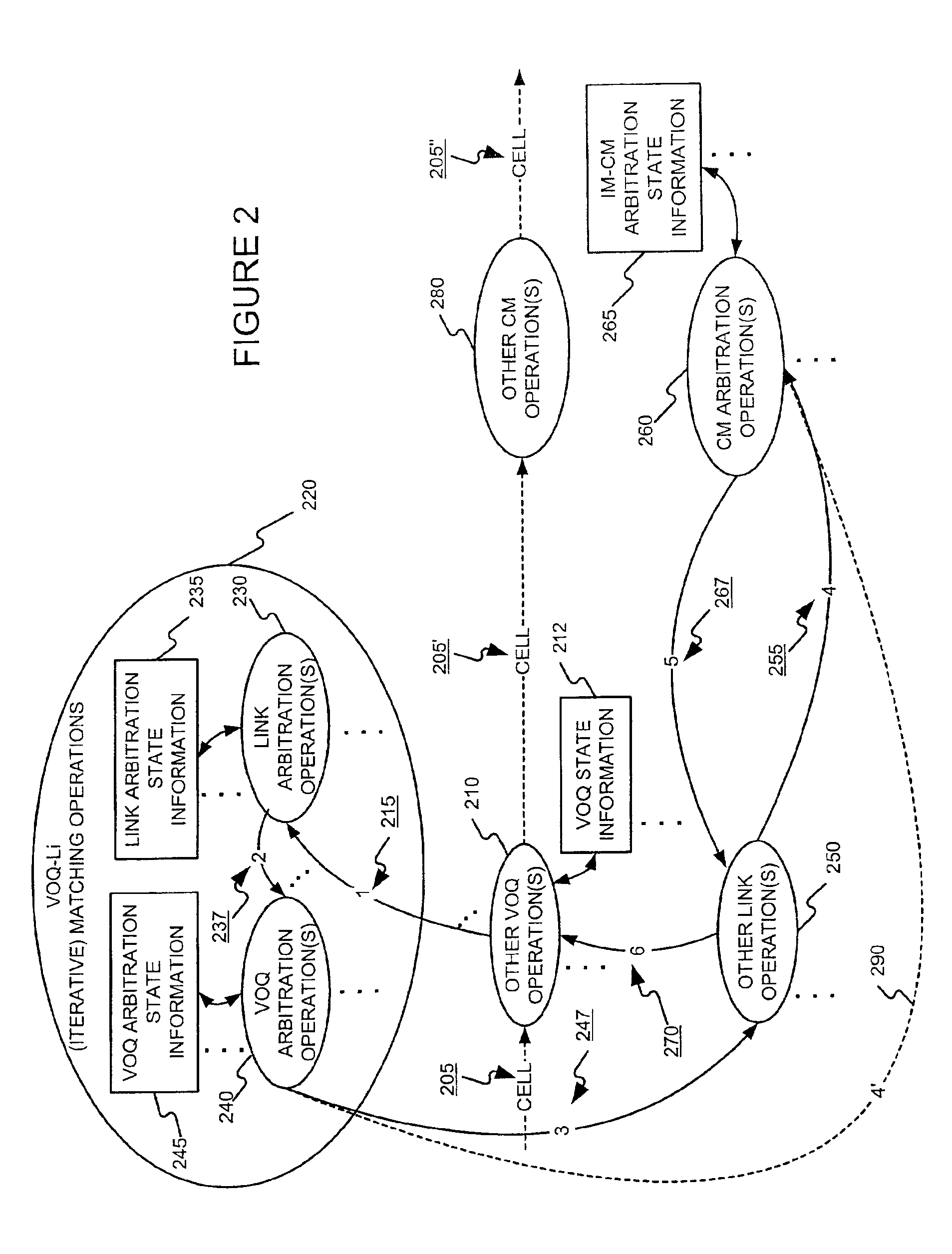

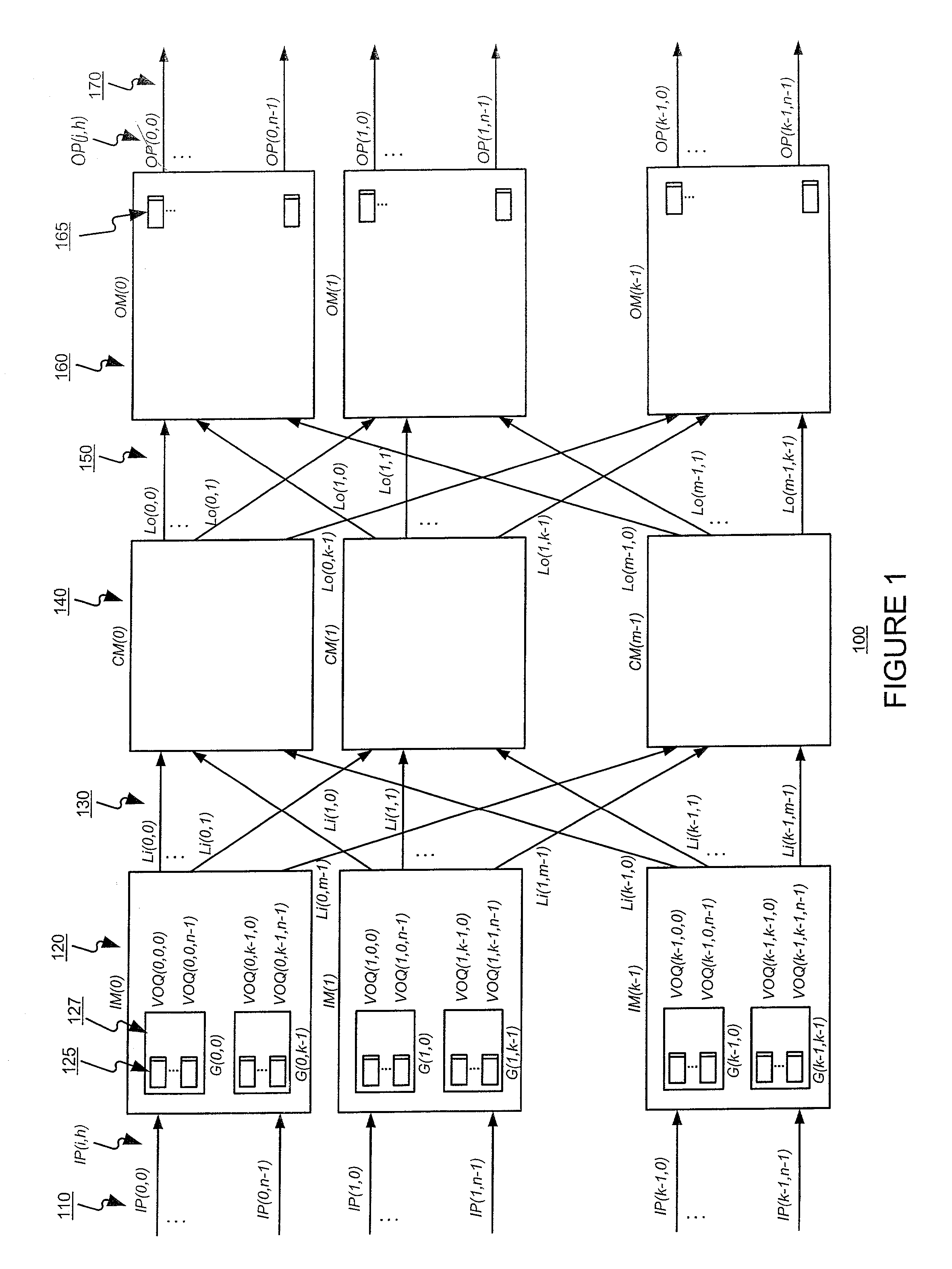

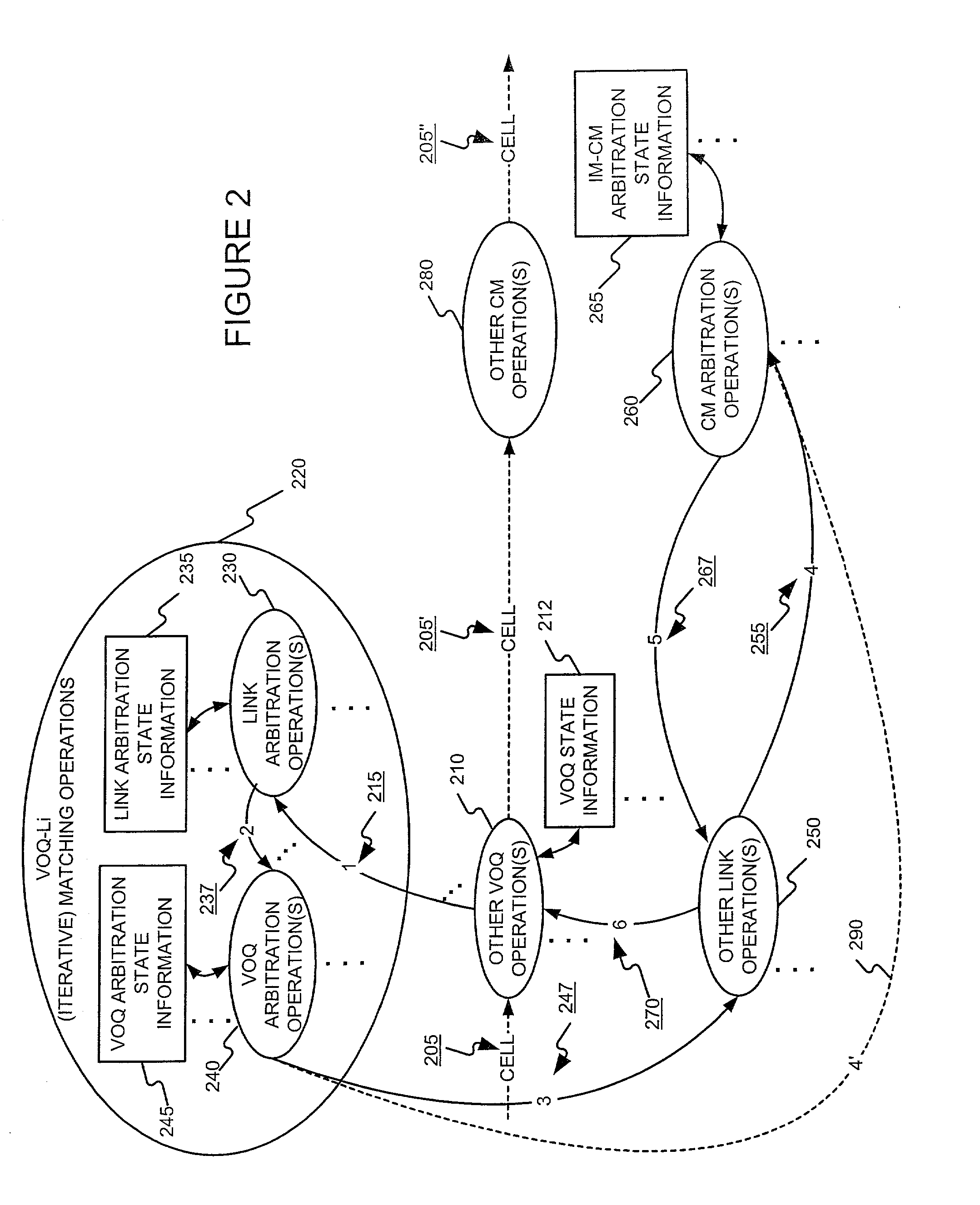

A multiple phase cell dispatch scheme, in which each phase uses a simple and fair (e.g., round robin) arbitration method, is described. VOQs of an input module and outgoing links of the input module are matched in a first phase. An outgoing link of an input module is matched with an outgoing link of a central module in a second phase. The arbiters become desynchronized under stable conditions, which contributes to the switch's high throughput characteristic. Using this dispatch scheme, a scalable multiple-stage switch able to operate at high throughput, without needing to resort to speeding up the switching fabric and without needing to use buffers in the second stage, is possible. The cost of speed-up and the cell out-of-sequence problems that may occur when buffers are used in the second stage are therefore avoided. A hierarchical arbitration scheme used in the input modules reduces the time needed for scheduling and reduces connection lines.

Owner:POLYTECHNIC INST OF NEW YORK

Parallel interchanging switching designing method

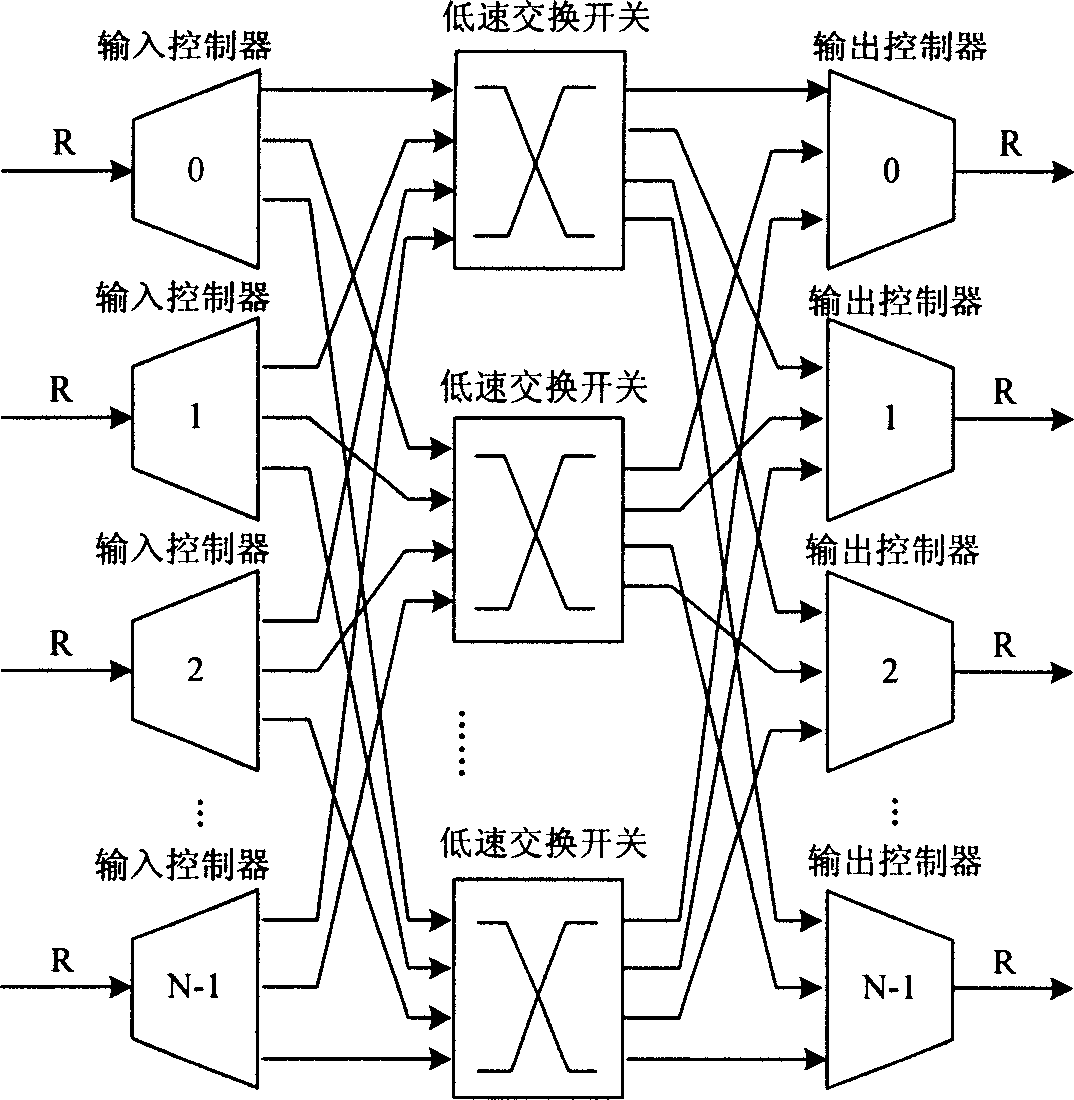

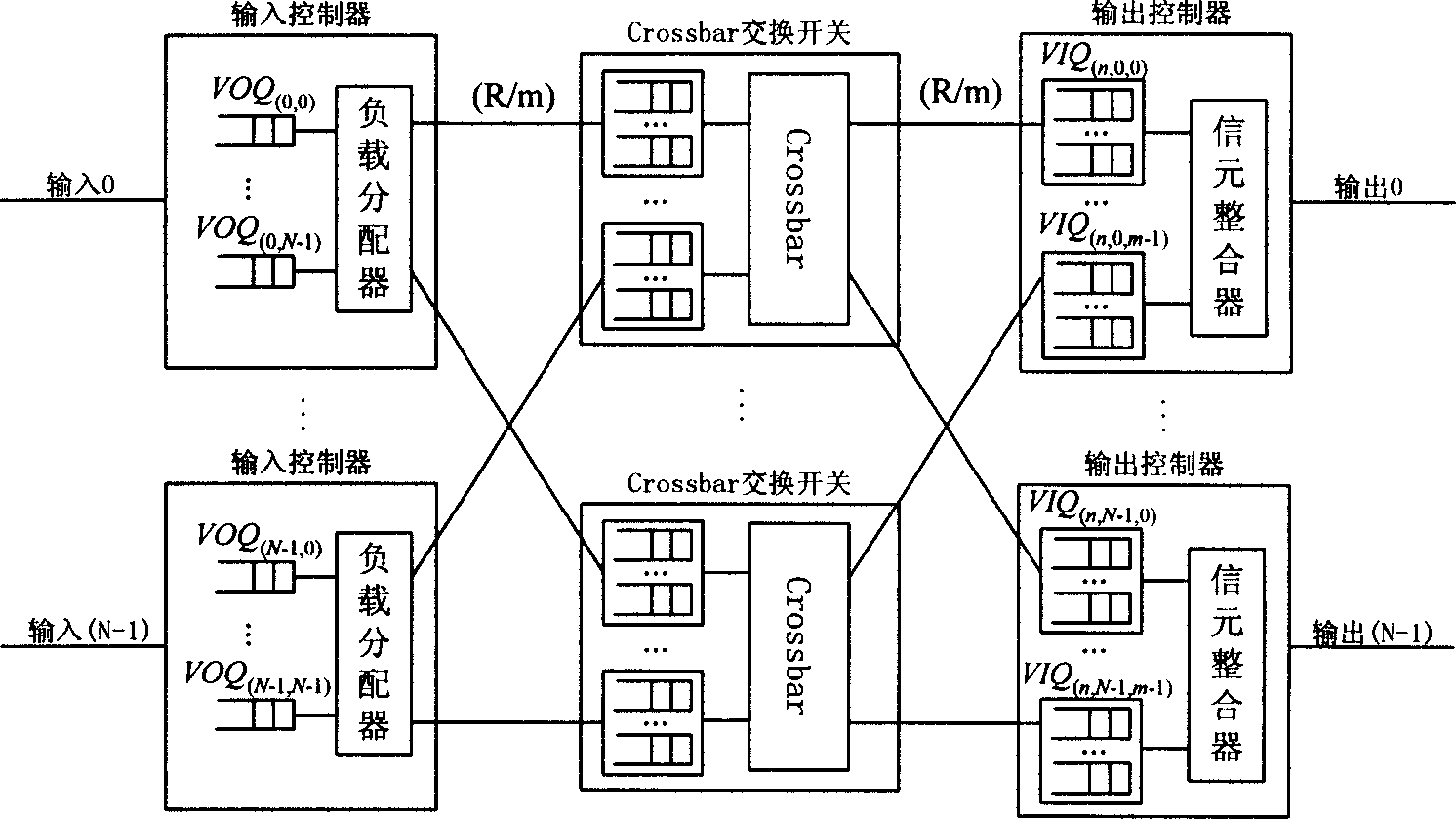

Inactive · CN1819523A · Solve the problem of short arbitration time and high difficulty in implementation · Difficult to solve · Data switching switchboards · Low speed · Integrator

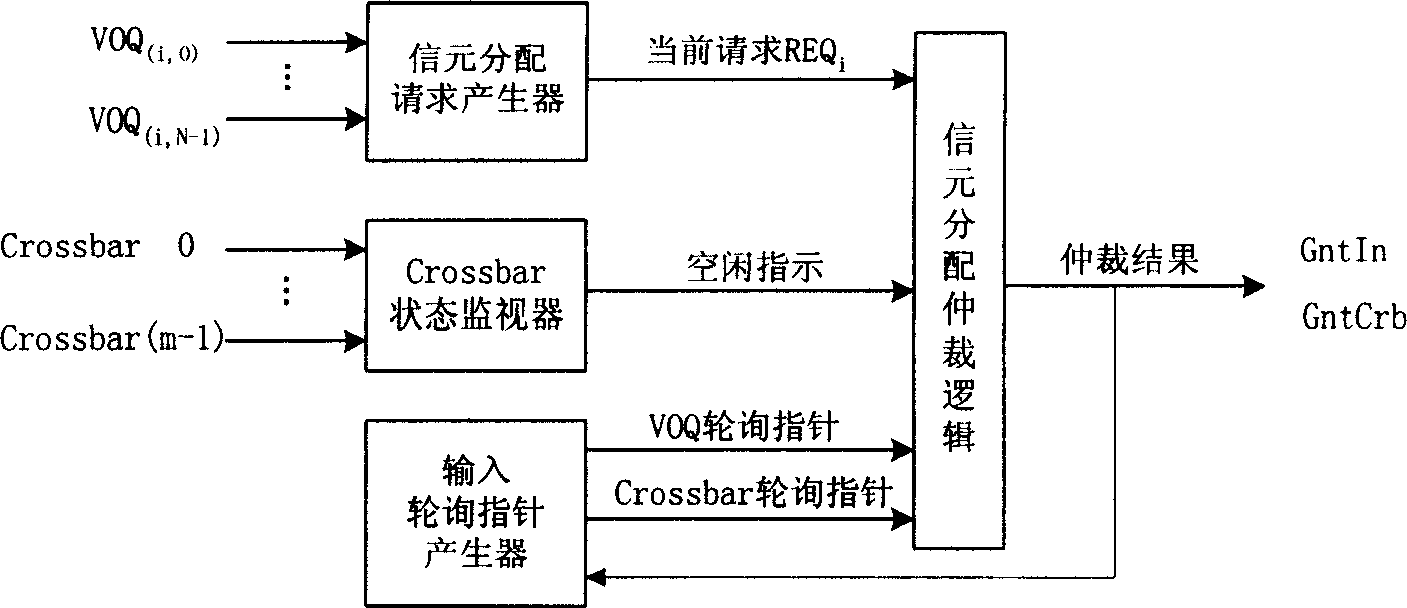

The technical proposal is as follows: the parallel switch consists of N input controllers, m low-speed, input-buffered crossbar switches, and N output controllers. Each input controller contains N virtual output queues and a load divider. Each virtual output queue is a cell buffer used to store input cells. The load divider maintains load balance and determines which cell is sent to which crossbar switch in each time slot; it is composed of a cell dividing request generator, a crossbar state monitor, an input polling pointer generator and cell dividing arbitration logic. Each output controller comprises mN virtual input queues and a cell integrator. Each virtual input queue is a cell buffer; a cell arriving at the output controller first queues in a virtual input queue. The cell integrator reorders the cells and is composed of a cell rearrangement request generator, an output polling pointer generator and cell rearrangement arbitration logic.

Owner:NAT UNIV OF DEFENSE TECH

Two-level switch-based load balanced scheduling method

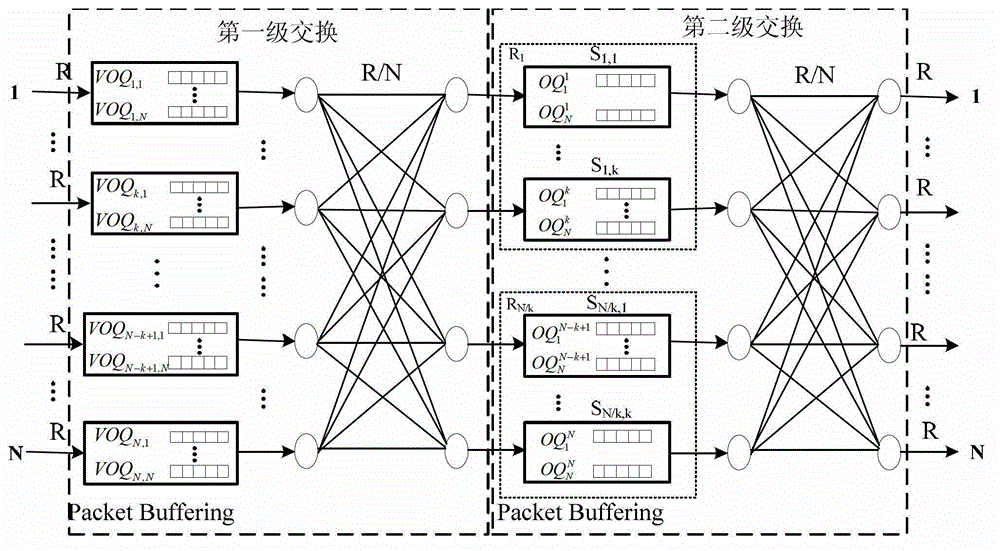

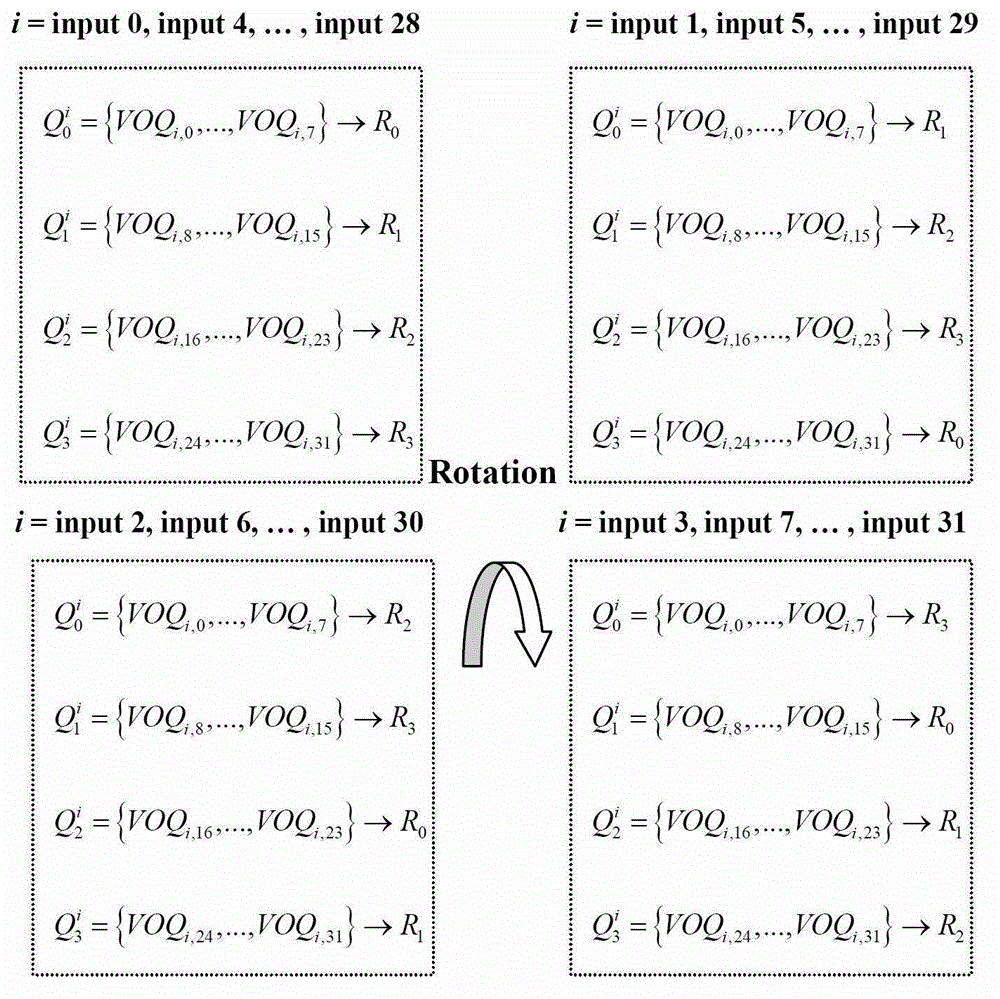

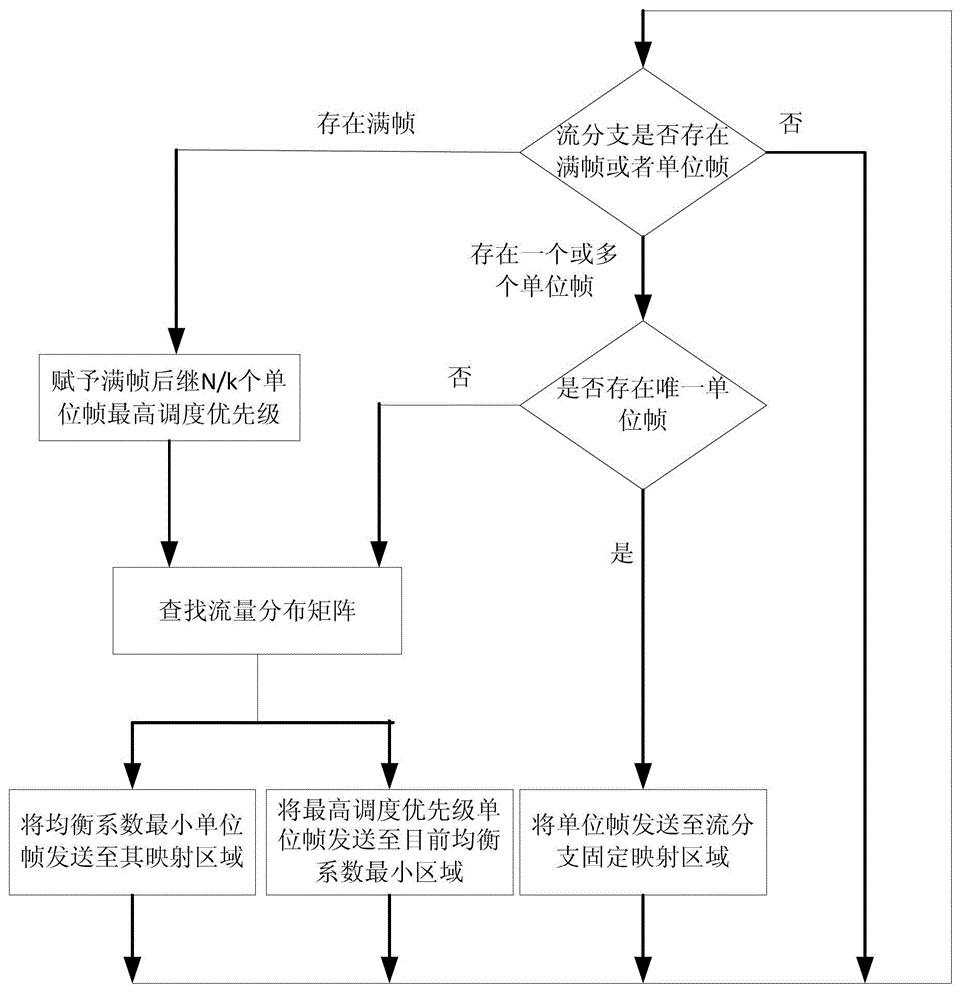

Active · CN103152281A · Guaranteed order · To achieve order · Data switching networks · Traffic capacity · Distribution matrix

The invention discloses a two-level switch-based load balanced scheduling method, which comprises the following steps that first-level input ports buffer arriving cells in a virtual output queue (VOQ) according to destination ports; a scheduler switches messages to second-level input ports through a first-level switching network, wherein k cells from the same stream in the VOQ are called unit frames; each first-level input port executes minimum length scheduling according to a traffic distribution matrix, and transmits the unit frames of the same stream to a fixed mapping area of the stream through the first-level switching network in k continuous external timeslots; and N second-level input ports are sequentially divided into N / k groups of which each comprises k continuous second-level input ports forming an area, and each area buffers the cells in an output queue (OQ) according to the destination ports, and switches the cells to the destination output ports through a second-level switching network. The method has the advantages that a scheduling process is simple, computation or communication is not required, the method is easily implemented by hardware, the throughput of 100 percent is ensured, the sequence of the messages can be ensured, and the like.

Owner:NAT UNIV OF DEFENSE TECH

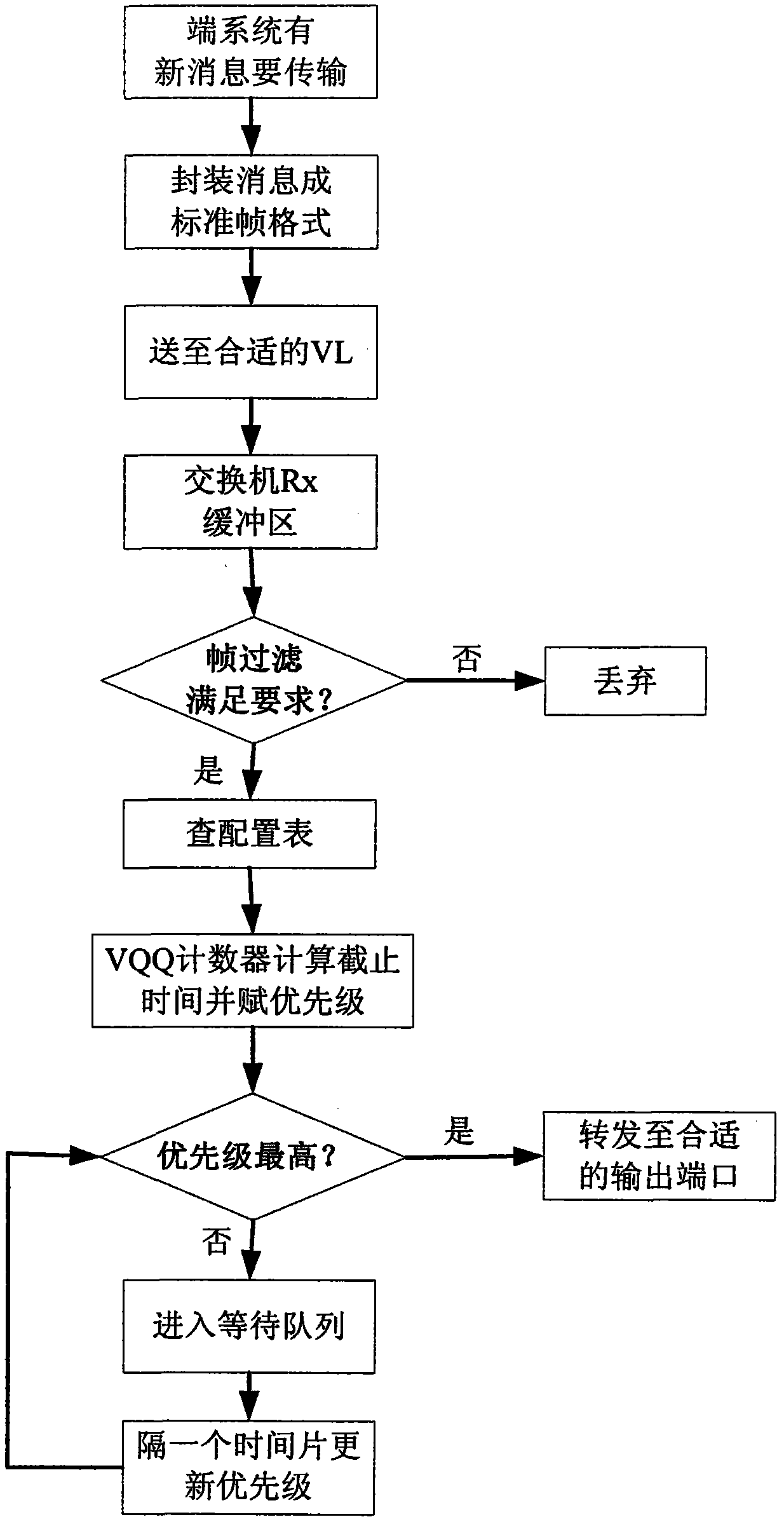

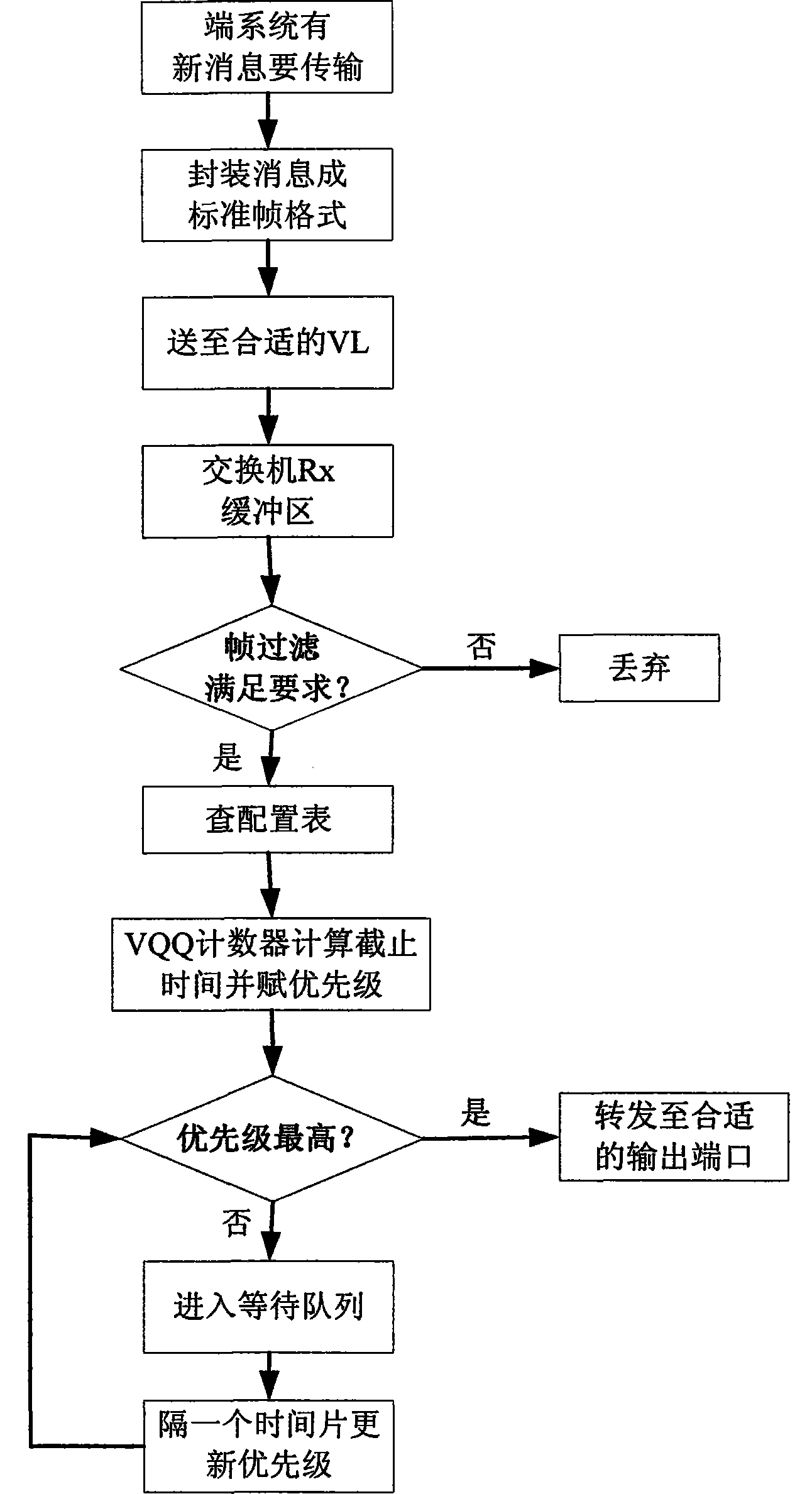

Scheduling method of avionics full duplex switched Ethernet (AFDX) exchanger

Inactive · CN102201988A · Improve service quality · Reduce latency · Data switching networks · Aviation · Qos quality of service

The invention discloses a scheduling method of an avionics full duplex switched Ethernet (AFDX) exchanger. The method comprises the following steps: encapsulating a message to be sent into a message of standard Ethernet format and filtering it; sending the filtered message to an output buffer area of the AFDX exchanger according to a route configuration table and establishing virtual output queues (VOQs); calculating the urgency of each VOQ message and setting an initial priority; and sending messages in order of priority. By adopting the scheduling method, the delay time of high-priority messages is reduced effectively, and the quality of service of an AFDX network is enhanced.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

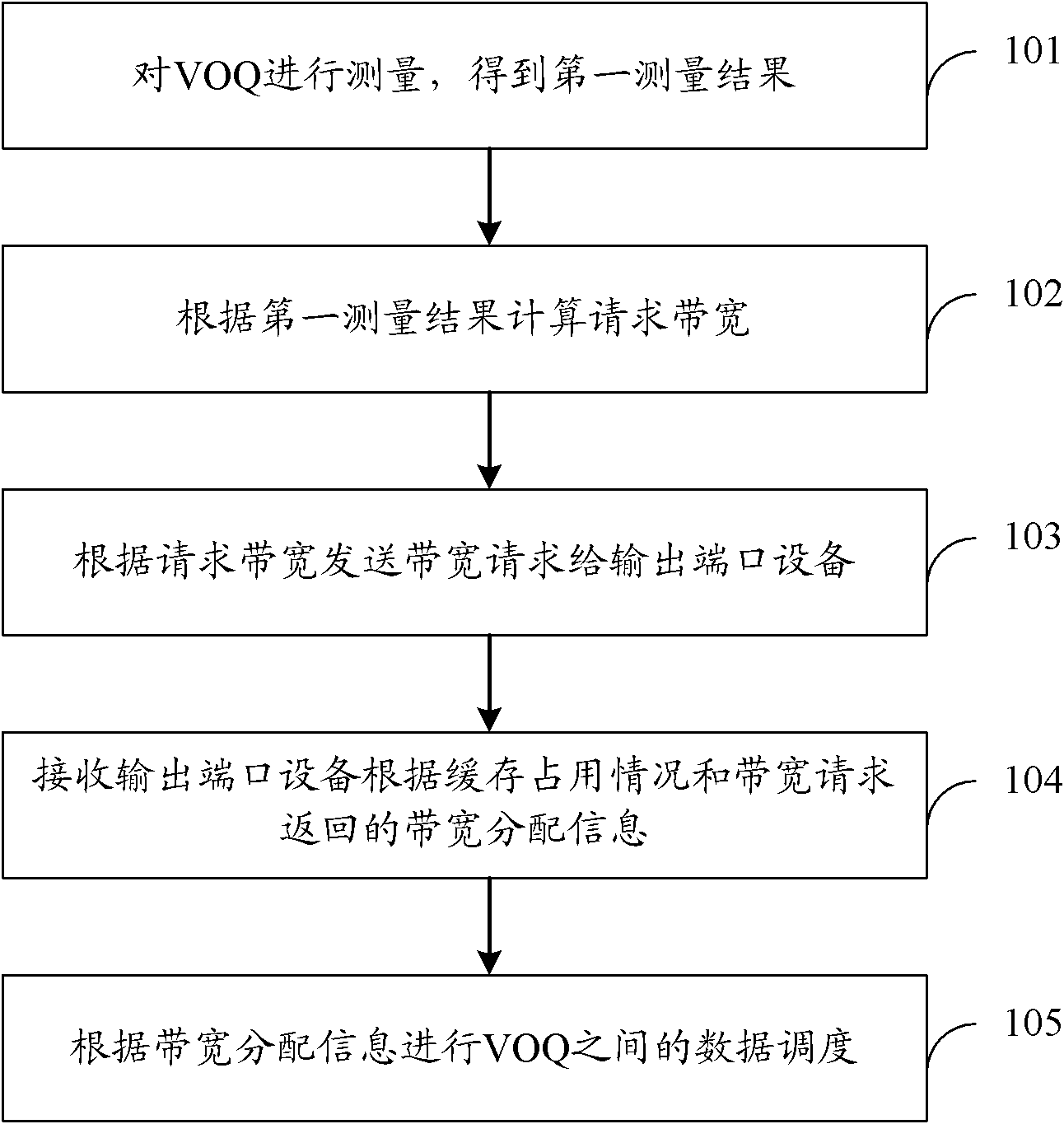

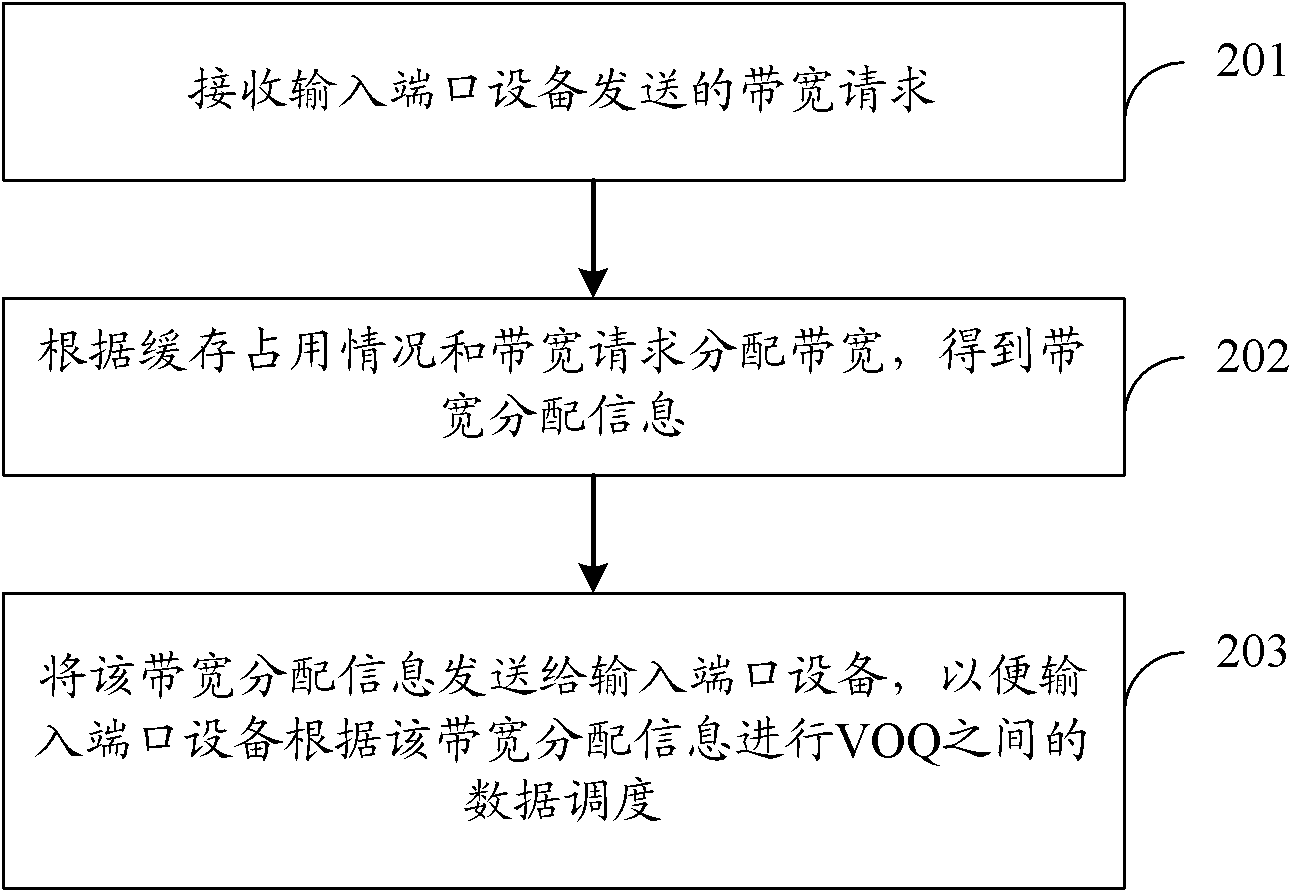

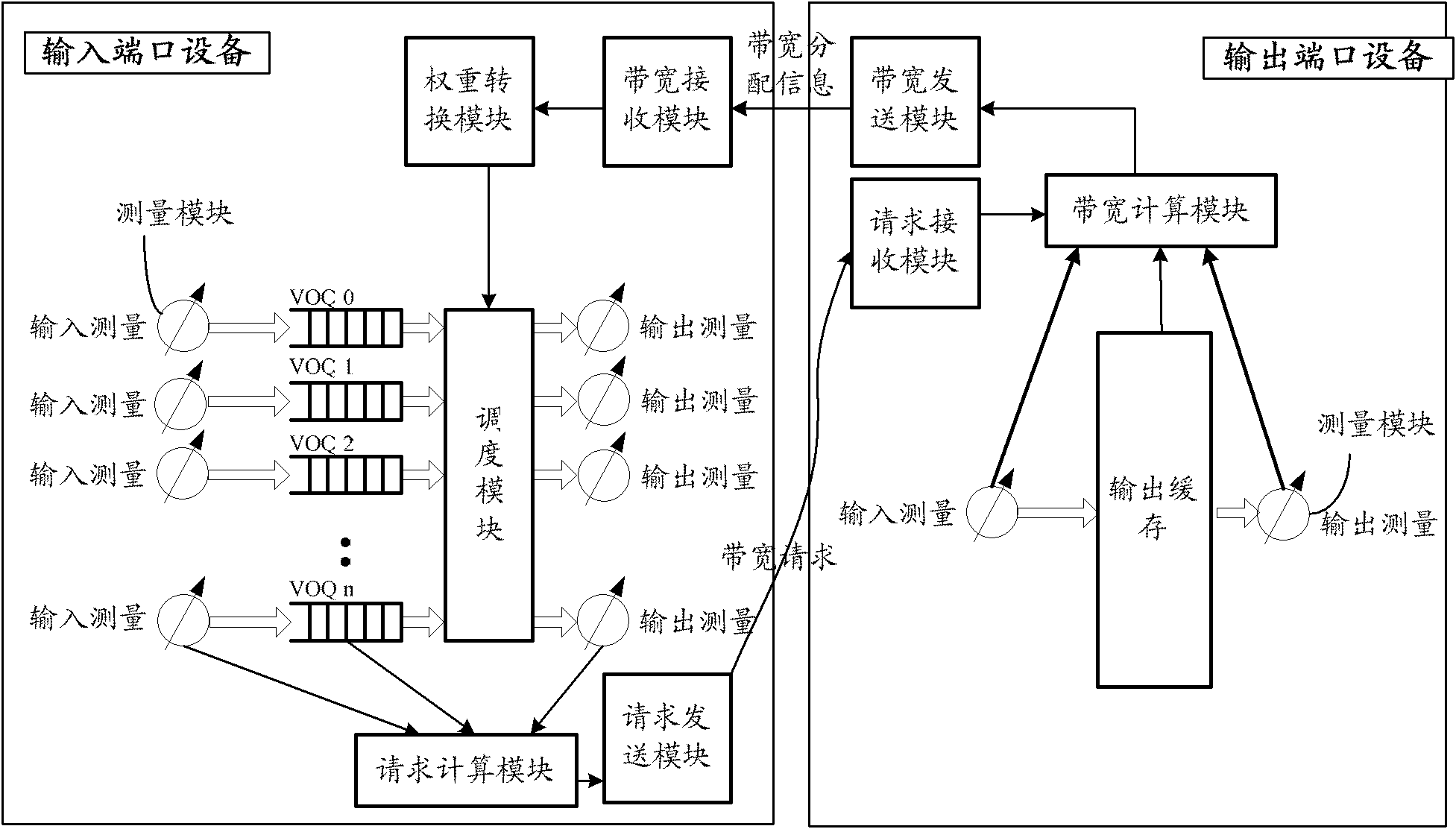

Scheduling method, device and system of data exchange network

Inactive · CN102611605A · Data scheduling is reasonable and effective · Improve accuracy · Data switching networks · Data stream · Exchange network

The invention discloses a scheduling method, device and system of a data exchange network. According to the embodiment of the invention, input port equipment is used for measuring a VOQ (Virtual Output Queue), and then a request bandwidth is calculated according to a measured result. Compared with the method for determining the request bandwidth by taking a priority level of a data flow according to the cache state of the data flow in the prior art, the method for calculating the request bandwidth has the advantages that the current state of the data flow can be more accurately reflected, so that the data scheduling between the input port equipment and the VOQ can be more reasonable and effective, and the data scheduling can be adapted to the actual state of the current network. Therefore, the scheduling method, device and system of the data exchange network can be more suitable for a large-sized, high-speed and long-distance exchange network.

Owner:HUAWEI TECH CO LTD

Scheduling the dispatch of cells in multistage switches using a hierarchical arbitration scheme for matching non-empty virtual output queues of a module with outgoing links of the module

Inactive · US7103056B2 · Improve throughput · Minimize time · Multiplex system selection arrangements · Data switching by path configuration · Computer architecture · Secondary stage

A multiple phase cell dispatch scheme, in which each phase uses a simple and fair (e.g., round robin) arbitration method, is described. VOQs of an input module and outgoing links of the input module are matched in a first phase. An outgoing link of an input module is matched with an outgoing link of a central module in a second phase. The arbiters become desynchronized under stable conditions, which contributes to the switch's high throughput characteristic. Using this dispatch scheme, a scalable multiple-stage switch able to operate at high throughput, without needing to resort to speeding up the switching fabric and without needing to use buffers in the second stage, is possible. The cost of speed-up and the cell out-of-sequence problems that may occur when buffers are used in the second stage are therefore avoided. A hierarchical arbitration scheme used in the input modules reduces the time needed for scheduling and reduces connection lines.

Owner:POLYTECHNIC INST OF NEW YORK

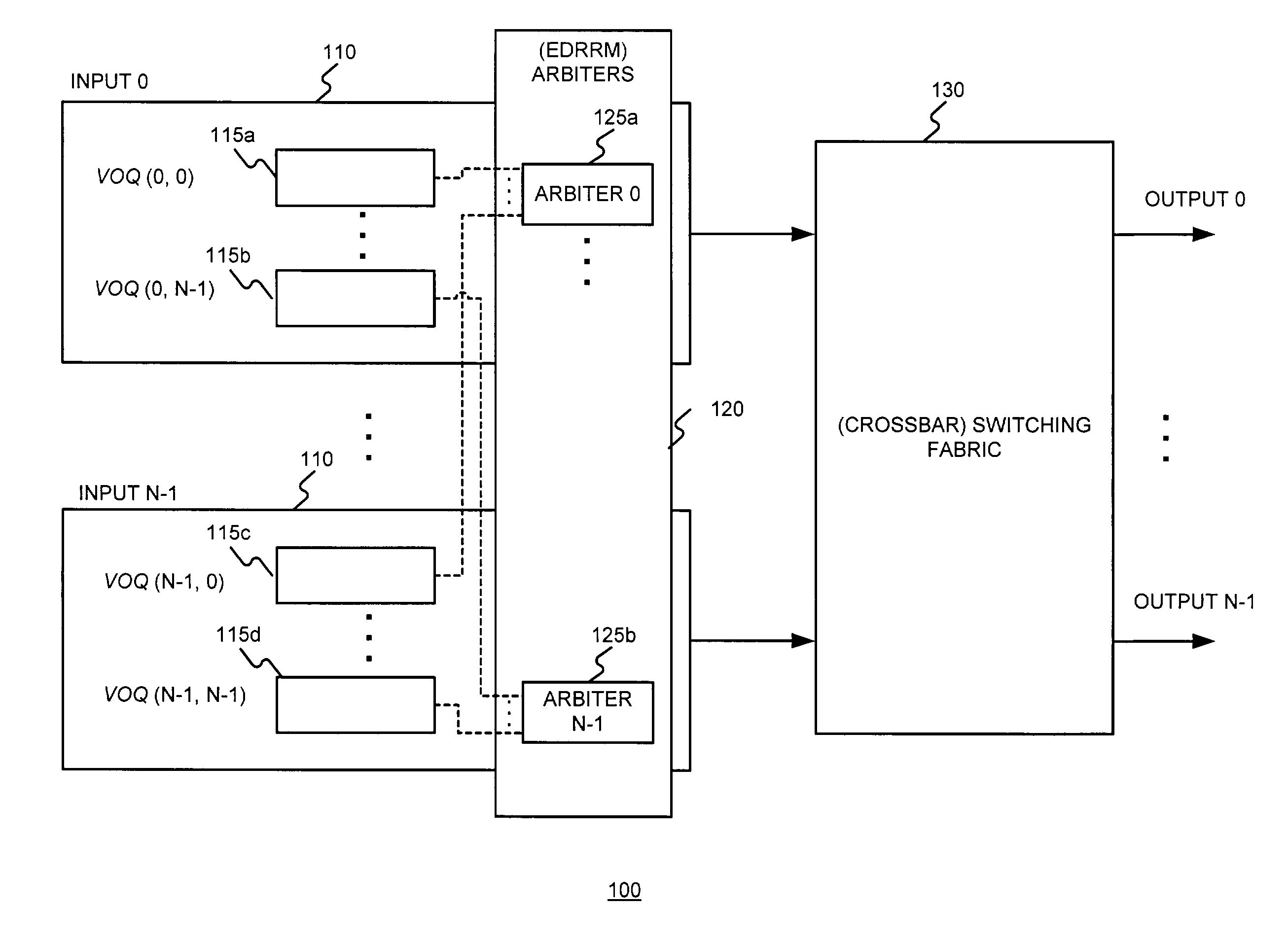

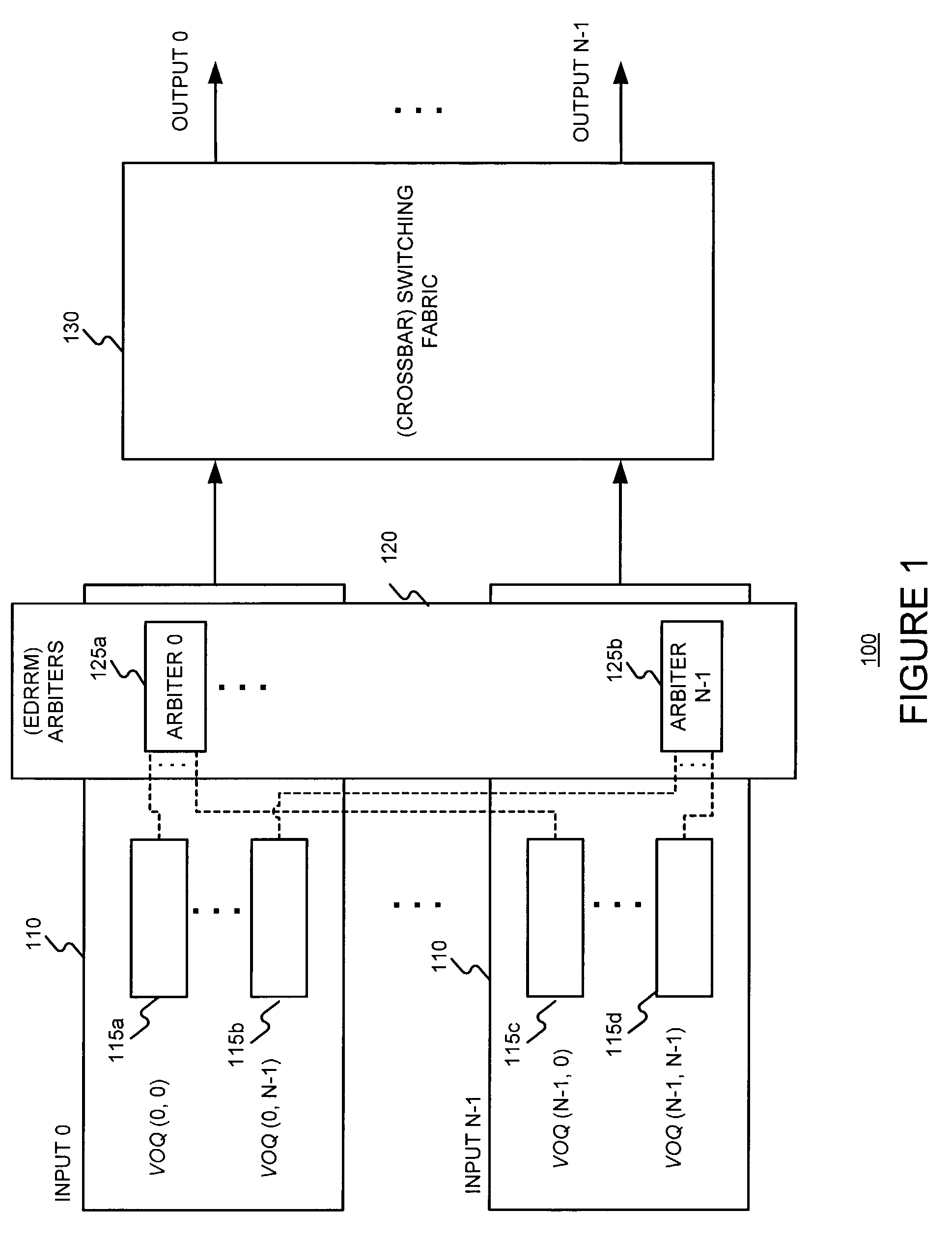

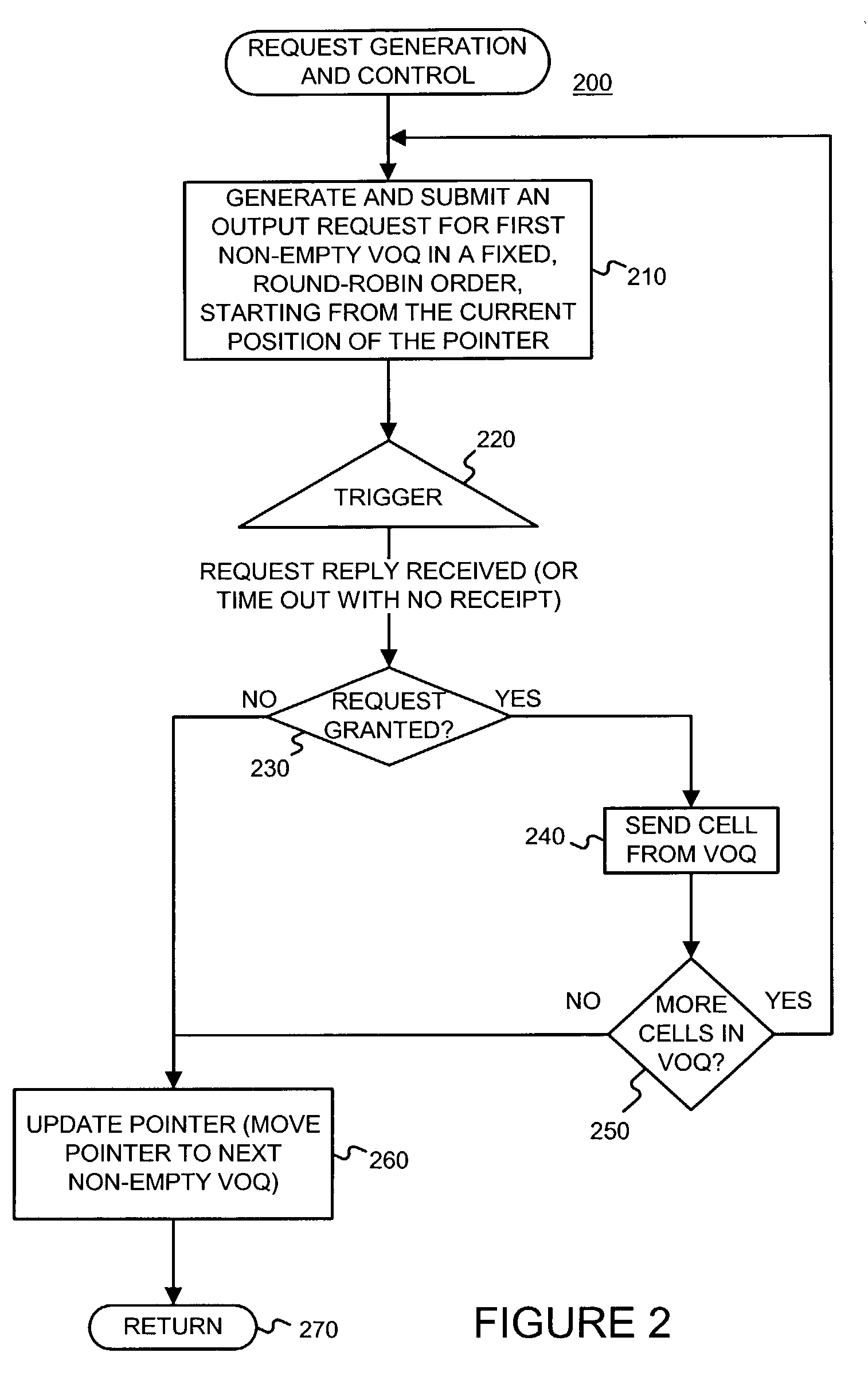

Arbitration using dual round robin matching with exhaustive service of winning virtual output queue

An exhaustive service dual round-robin matching (EDRRM) arbitration process amortizes the cost of a match over multiple time slots. It achieves high throughput under nonuniform traffic. Its delay performance is not sensitive to traffic burstiness, switch size and packet length. Since cells belonging to the same packet are transferred to the output continuously, packet delay performance is improved and packet reassembly is simplified.

Owner:POLYTECHNIC INST OF NEW YORK
