1367 results about "Application-specific integrated circuit" patented technology

An application-specific integrated circuit (ASIC /ˈeɪsɪk/) is an integrated circuit (IC) customized for a particular use, rather than intended for general-purpose use. For example, a chip designed to run in a digital voice recorder or a high-efficiency bitcoin miner is an ASIC. Application-specific standard products (ASSPs) are intermediate between ASICs and industry standard integrated circuits like the 7400 series or the 4000 series.

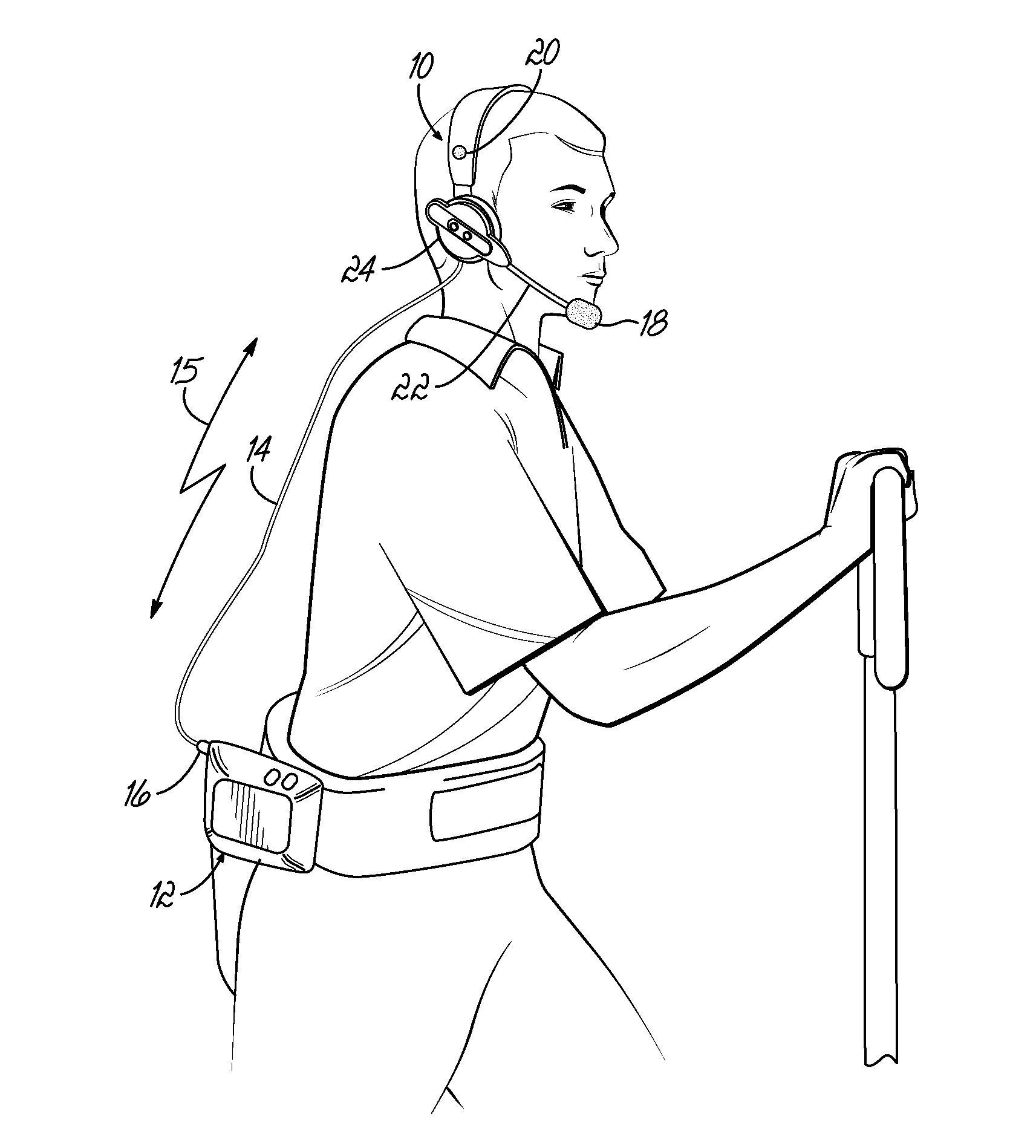

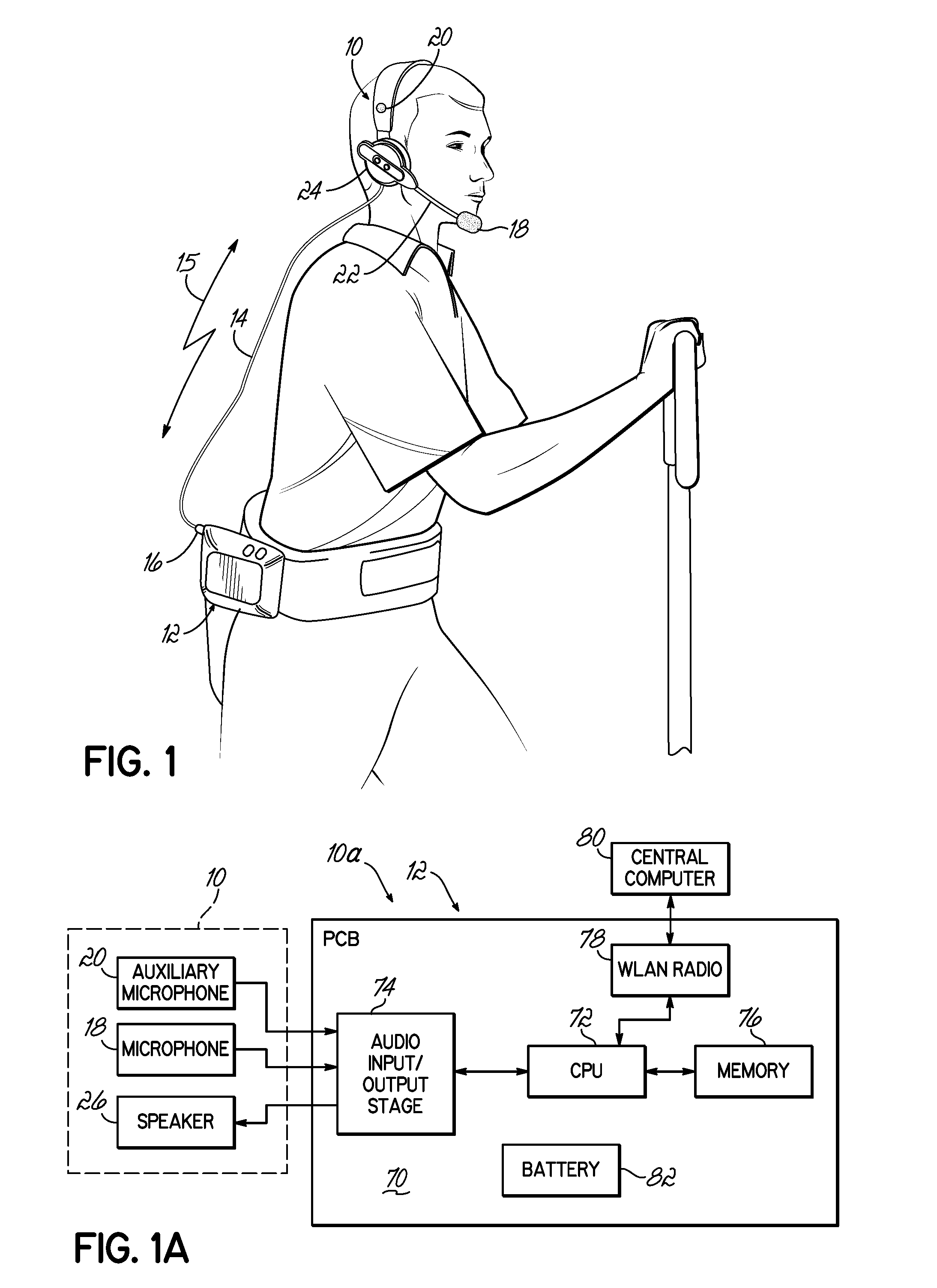

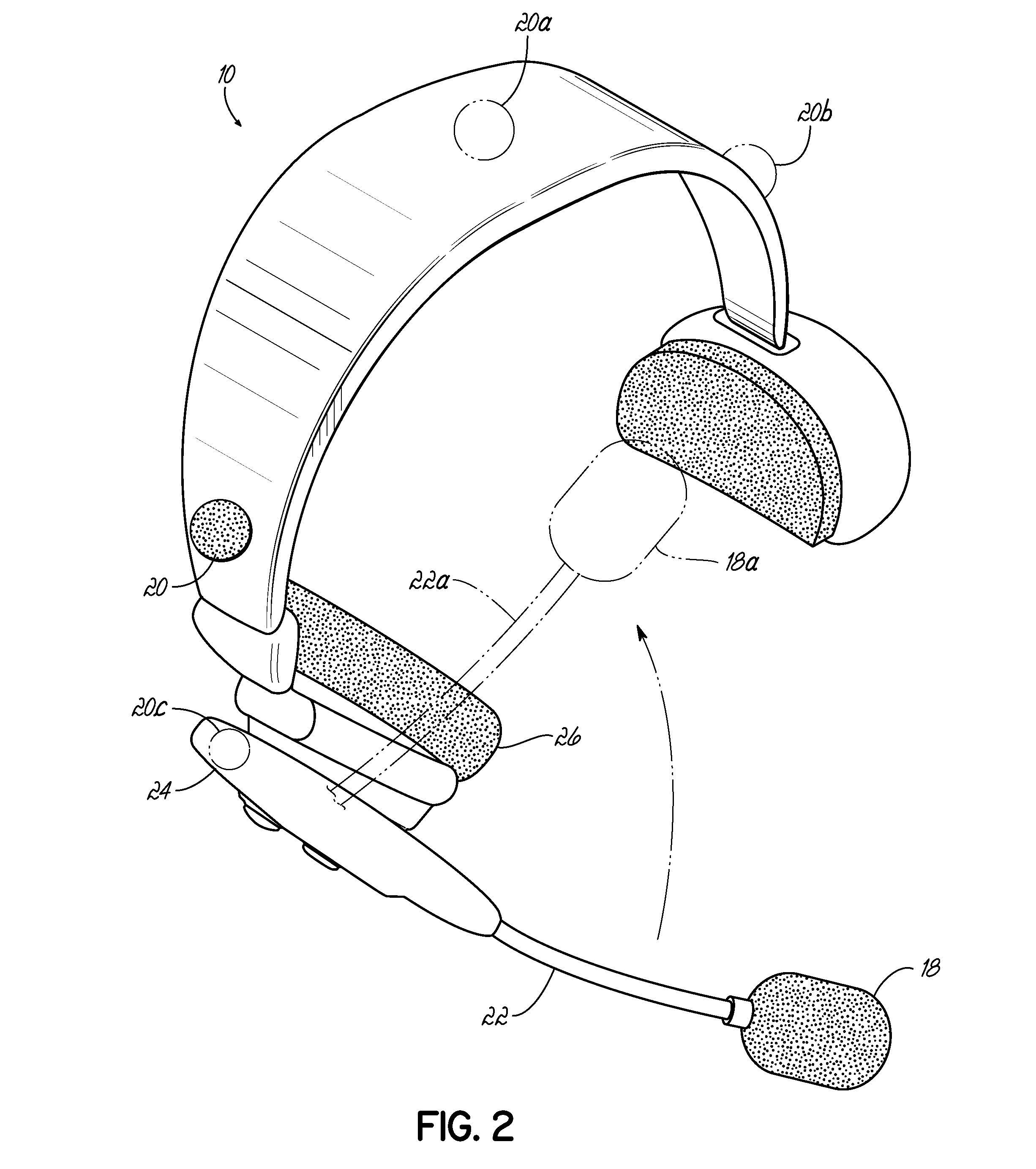

System and method for improving speech recognition accuracy in a work environment

Apparatus and method that improves speech recognition accuracy, by monitoring the position of a user's headset-mounted speech microphone, and prompting the user to reconfigure the speech microphone's orientation if required. A microprocessor or other application specific integrated circuit provides a mechanism for comparing the relative transit times between a user's voice, a primary speech microphone, and a secondary compliance microphone. The difference in transit times may be used to determine if the speech microphone is placed in an appropriate proximity to the user's mouth. If required, the user is automatically prompted to reposition the speech microphone.

Owner:VOCOLLECT
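The transit-time comparison described in this abstract can be illustrated with a small sketch. It assumes both microphones are sampled at the same rate and estimates the delay between them by brute-force cross-correlation. The function names, the `max_lag` search window, and the pass/fail rule in `microphone_in_position` are hypothetical and are not taken from the patent.

```python
def best_lag(primary, secondary, max_lag):
    """Return the lag (in samples) at which `secondary` best matches `primary`.

    A positive result means the sound reached the secondary microphone later
    than the primary one.
    """
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        score = 0.0
        for n, p in enumerate(primary):
            m = n + lag
            if 0 <= m < len(secondary):
                score += p * secondary[m]
        if score > best_score:
            best, best_score = lag, score
    return best


def microphone_in_position(primary, secondary, max_lag, min_lag_samples):
    # Hypothetical rule: the speech microphone counts as well placed when the
    # voice reaches it at least `min_lag_samples` before the secondary one.
    return best_lag(primary, secondary, max_lag) >= min_lag_samples
```

A real system would convert the lag to time using the sample rate and would likely need noise handling that this sketch omits.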

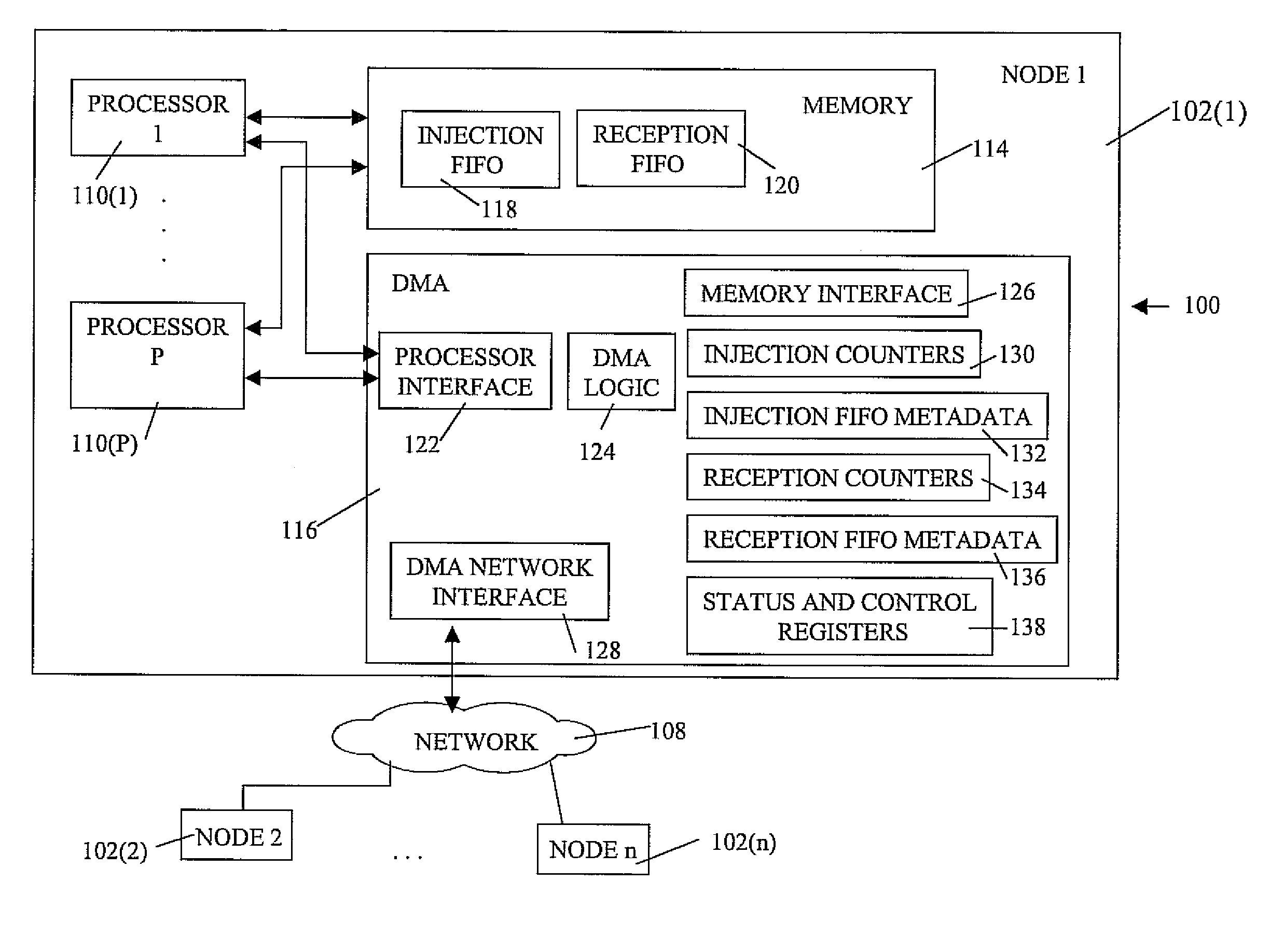

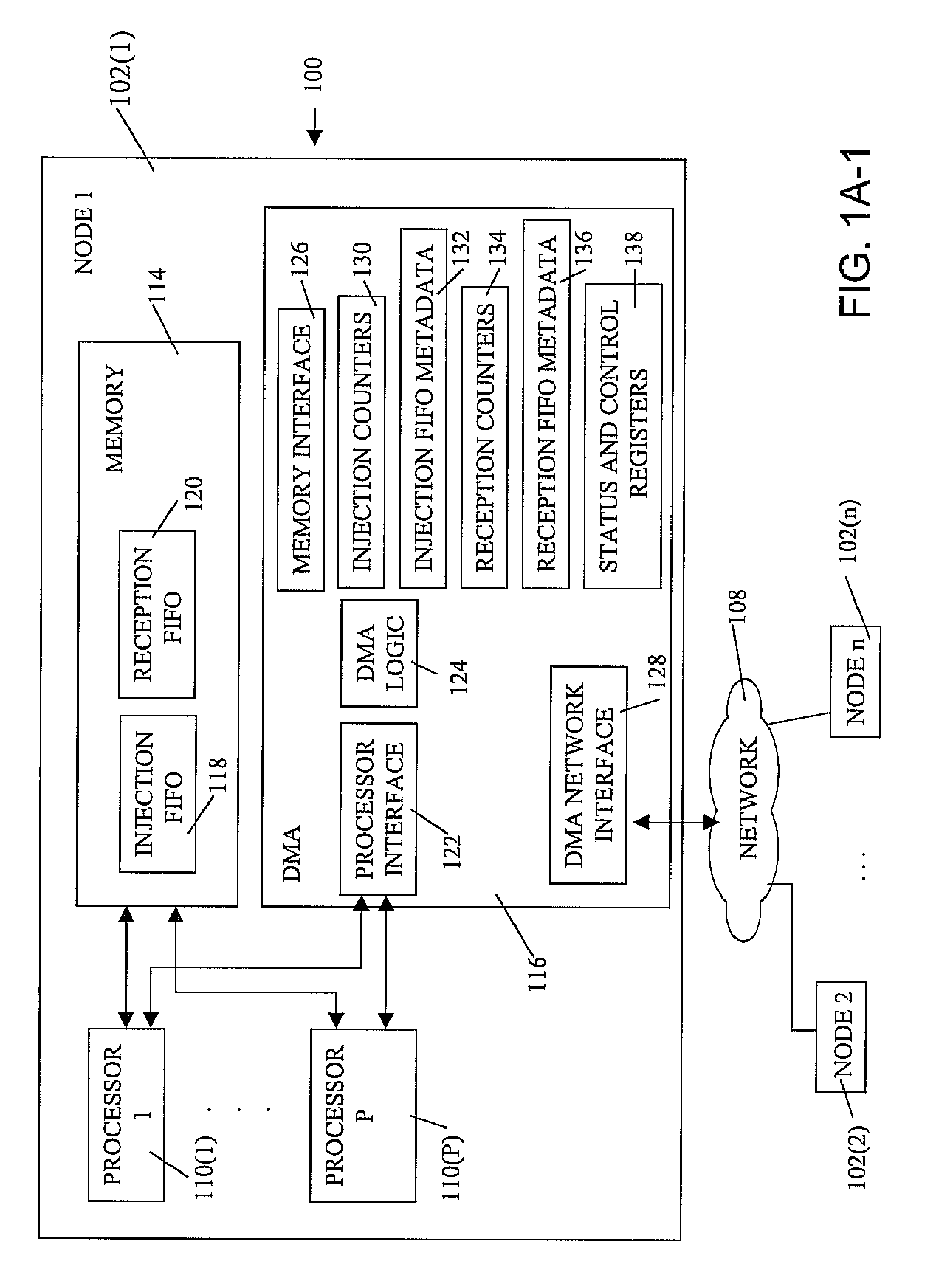

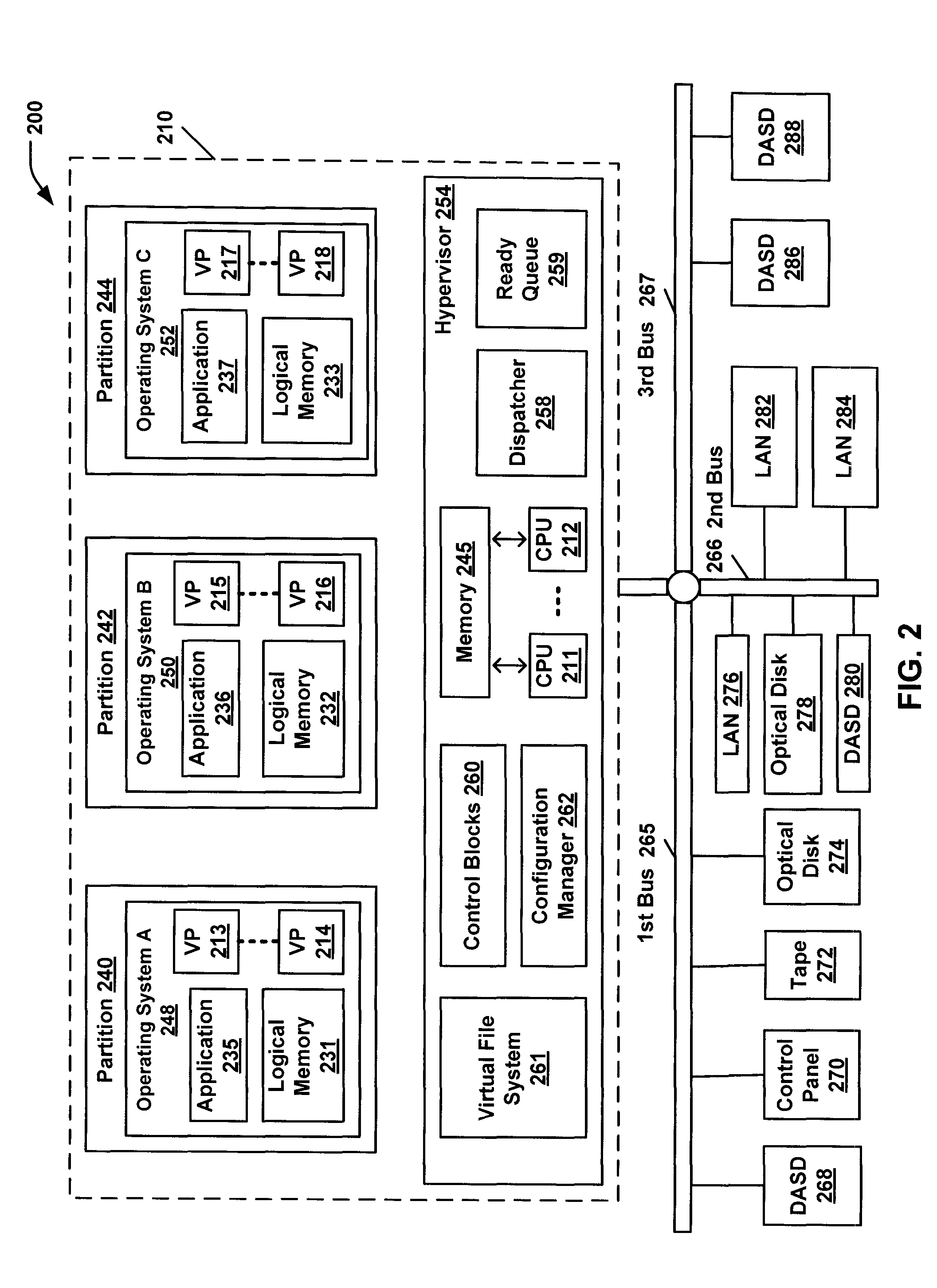

Novel massively parallel supercomputer

Inactive | US20090259713A1 | Low cost | Reduced footprint | Error prevention | Program synchronisation | Supercomputer | Packet communication

Owner:INT BUSINESS MASCH CORP

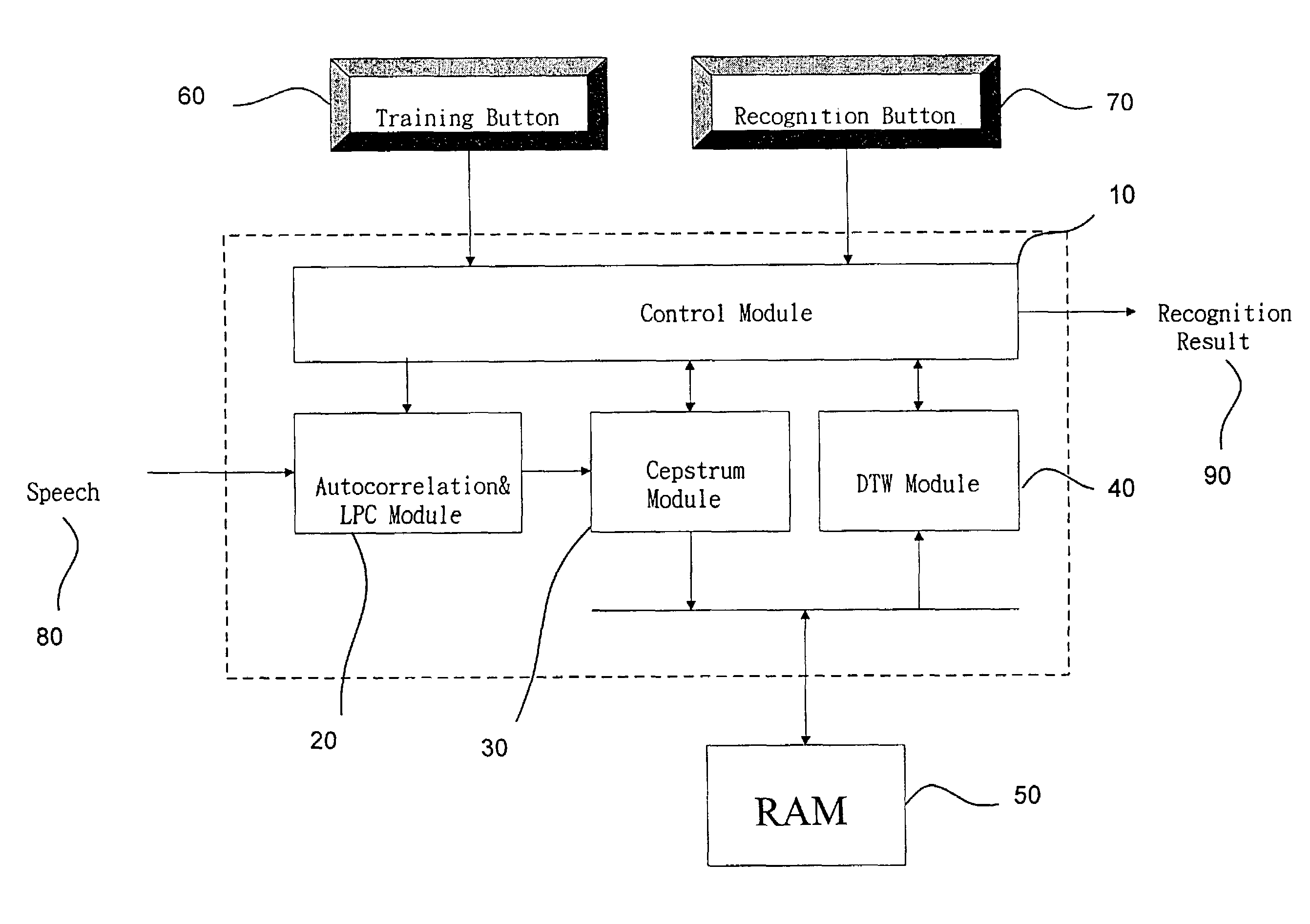

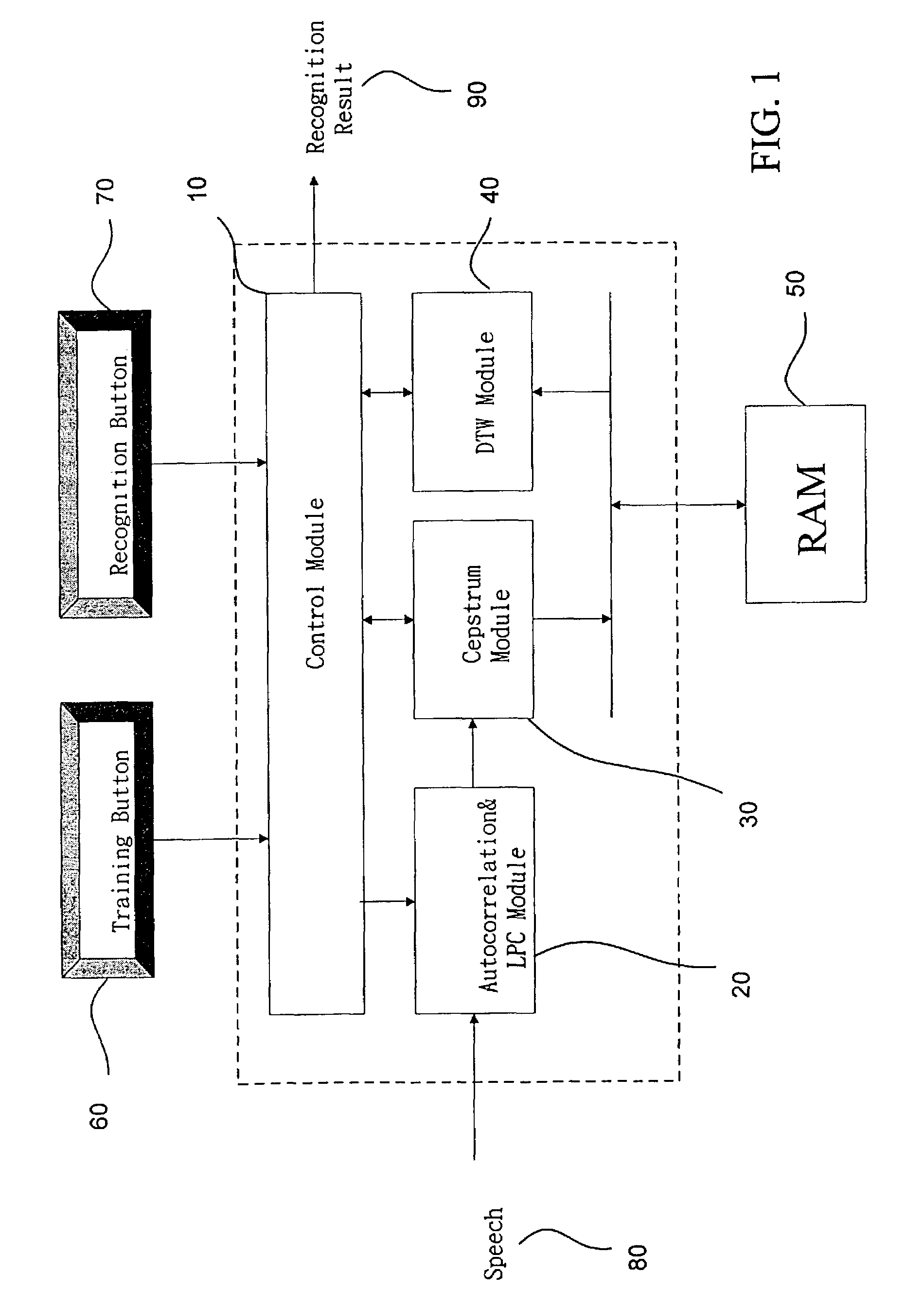

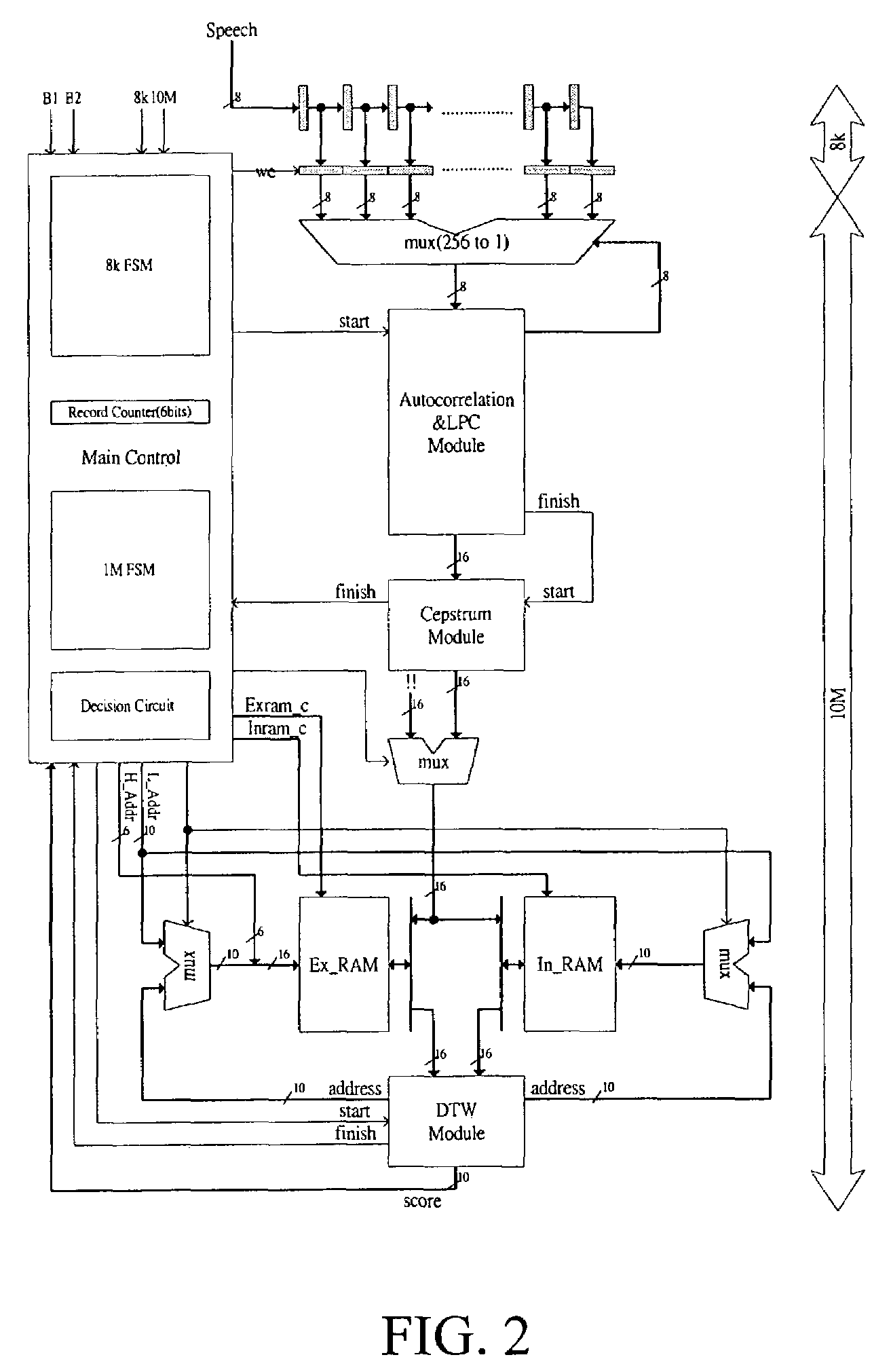

Speech recognition system

Inactive | US7266496B2 | Improve execution speed | Low cost | Speech recognition | Linearity | Linear prediction coefficient

The present invention discloses a complete speech recognition system with a training button and a recognition button. The whole system is designed on an application-specific integrated circuit (ASIC) architecture and uses a modular design that divides speech processing into four modules: a system control module, an autocorrelation and linear predictive coefficient module, a cepstrum module, and a DTW recognition module. Each module forms an intellectual property (IP) component by itself. Each IP component can be reused across various products and application requirements, greatly shortening time to market.

Owner:NAT CHENG KUNG UNIV
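The abstract names a DTW (dynamic time warping) recognition module. As a rough software illustration of that step only, the sketch below computes a classic DTW distance and picks the closest stored template. It assumes one scalar feature per frame for brevity, whereas the system described would compare cepstrum vectors; `dtw_distance`, `recognize`, and the template dictionary are illustrative names, not the patent's design.

```python
def dtw_distance(a, b):
    """Dynamic time warping distance between two 1-D feature sequences."""
    inf = float("inf")
    n, m = len(a), len(b)
    # cost[i][j] = best cumulative cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j],      # stretch b
                                 cost[i][j - 1],      # stretch a
                                 cost[i - 1][j - 1])  # advance both
    return cost[n][m]


def recognize(sample, templates):
    """Return the label of the stored template closest to `sample`."""
    return min(templates, key=lambda word: dtw_distance(sample, templates[word]))
```

Training would correspond to storing a new entry in `templates`, and recognition to calling `recognize` on a fresh utterance.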

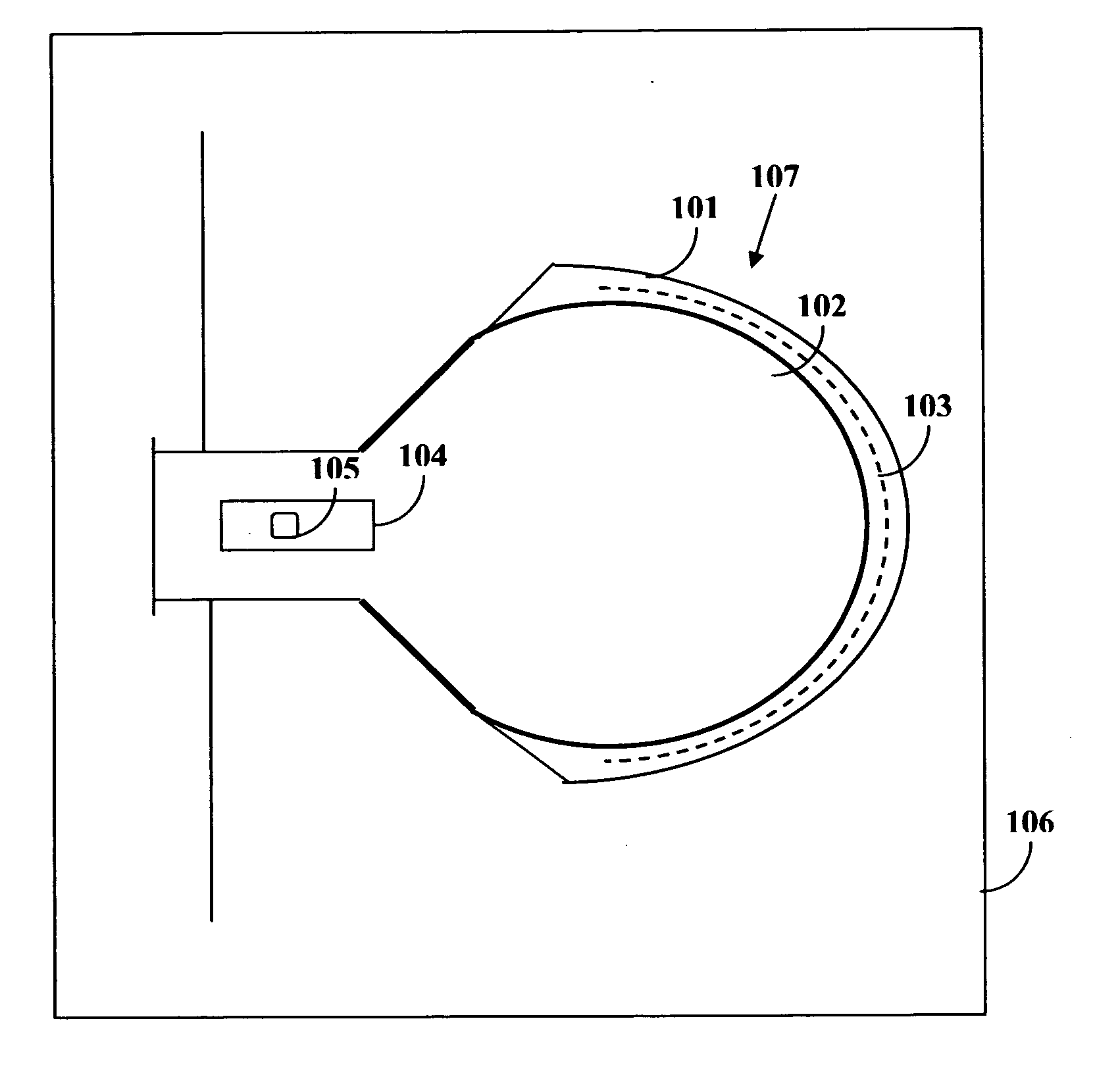

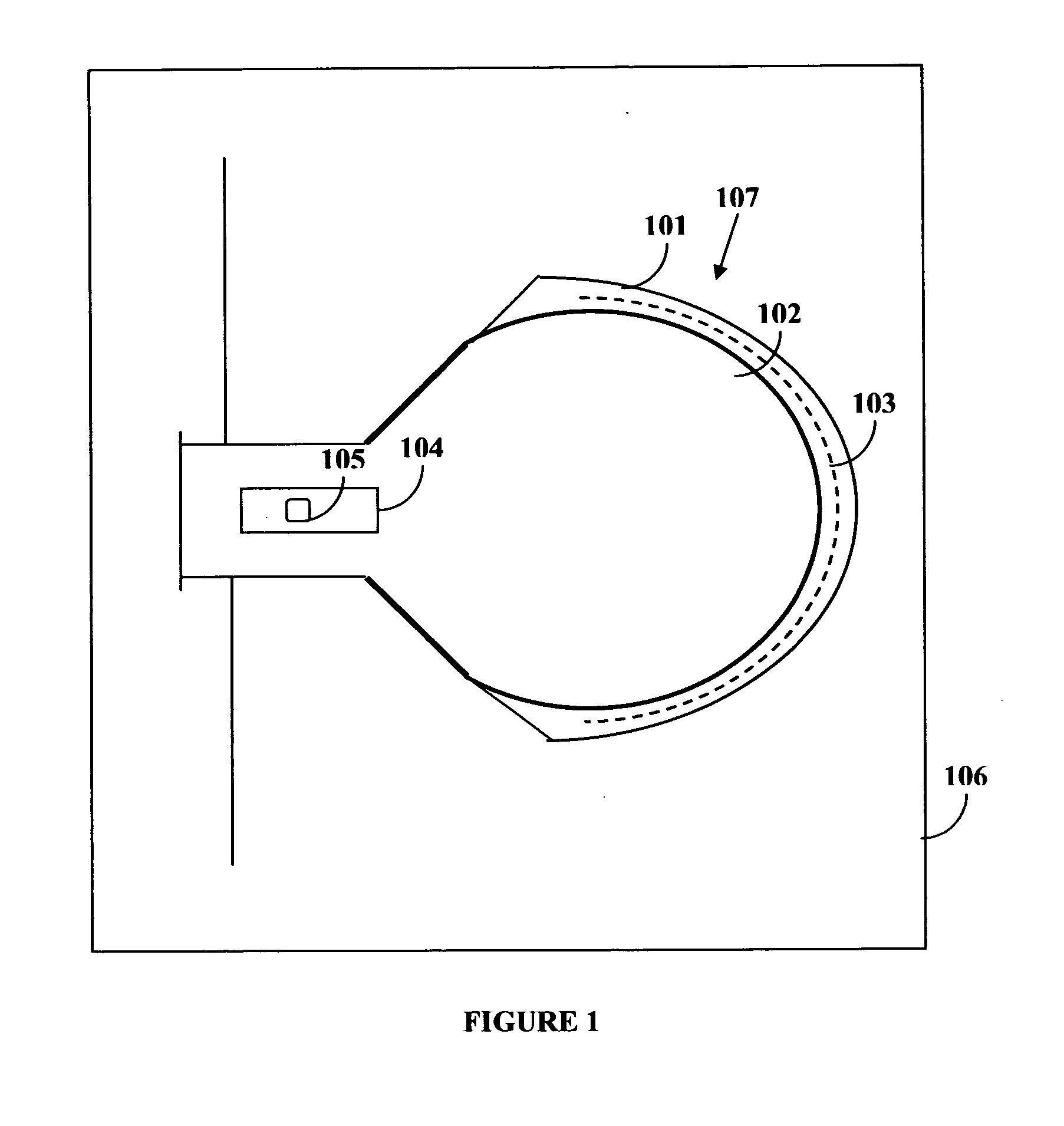

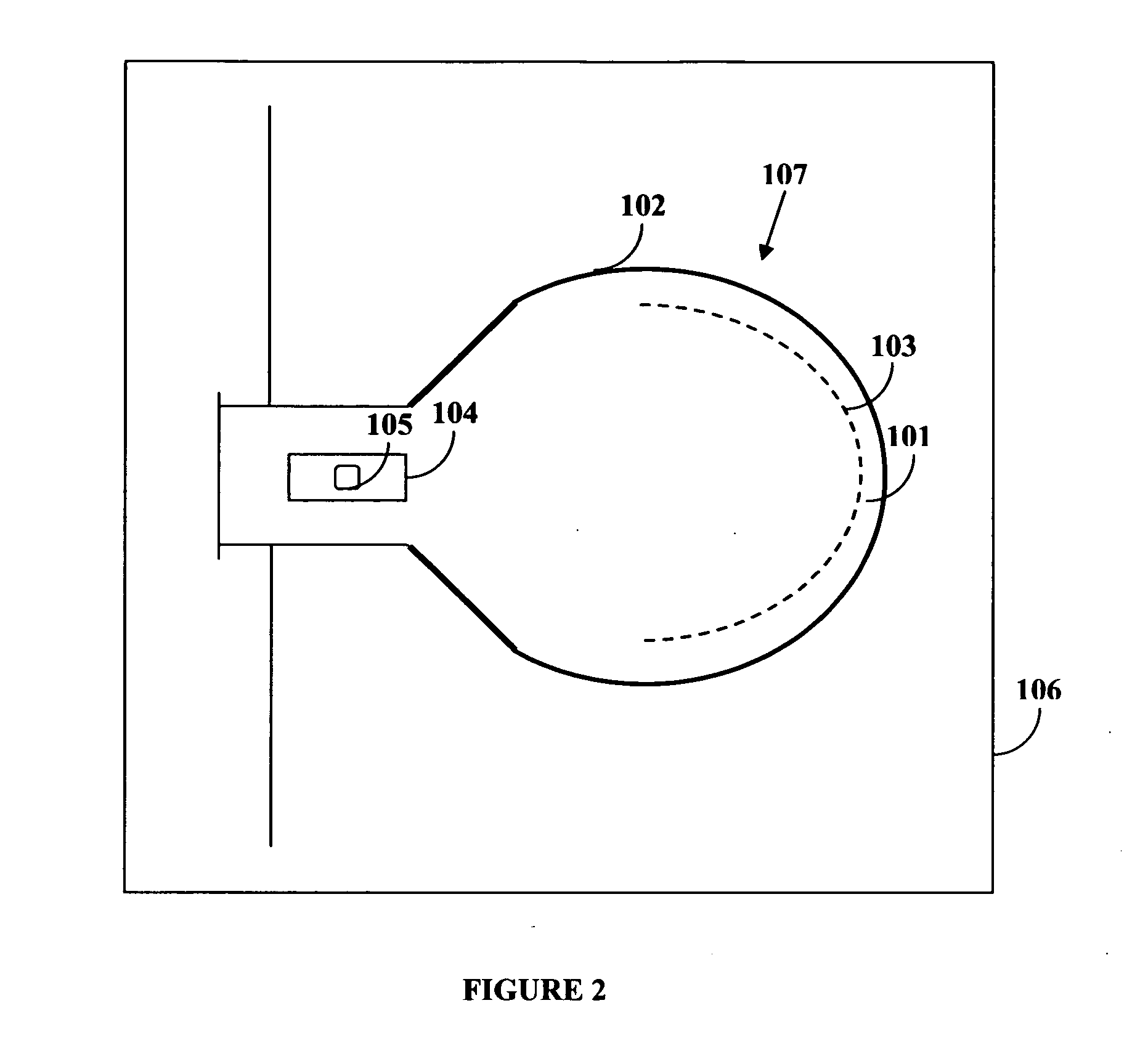

Contoured biometric sensor

Inactive | US20080069412A1 | Natural feeling | Character and pattern recognition | Sensor array | Pattern recognition

Disclosed herein is an apparatus and system for fingerprint recognition and hand-print recognition comprising a scanner with a contoured surface, or a scanner the surface of which deforms to allow more fingerprint or hand-print data to be captured, an application specific circuit for conversion of analog data from a captured fingerprint image from an embedded sensor array into a digital image and a fingerprint security application for verification of said digital image against stored fingerprint templates. The scanner array wrap with the embedded scanner array may be placed over the fixture to be scanned, or it may be formed as a part of the fixture that is scanned to authenticate the identity of a person.

Owner:CHAMPAGNE KATRINA S +2

System and method of sterilizing an implantable medical device

An implantable system having internal circuitry configured to withstand a pre-determined amount of sterilization radiation is provided. In general, the system includes an internal control module in electrical communication with an implantable medical device. The internal control module can include a circuit board configured to withstand radiation and / or any number of integrated circuits (e.g., application specific integrated circuits) wherein the circuits or at least some portion thereof are fabricated so as to withstand some amount of radiation. For example, some portion of the circuitry can be fabricated utilizing radiation compliant material(s), silicon-on-insulator technology, and / or gallium arsenide technology. Additionally, the circuitry can include various components which are inherently resistant to such radiation (e.g., components fabricated utilizing magnetic field based technology, surface acoustical wave devices, etc.). A method of sterilizing an implantable medical device via radiation is also provided.

Owner:ETHICON ENDO SURGERY INC

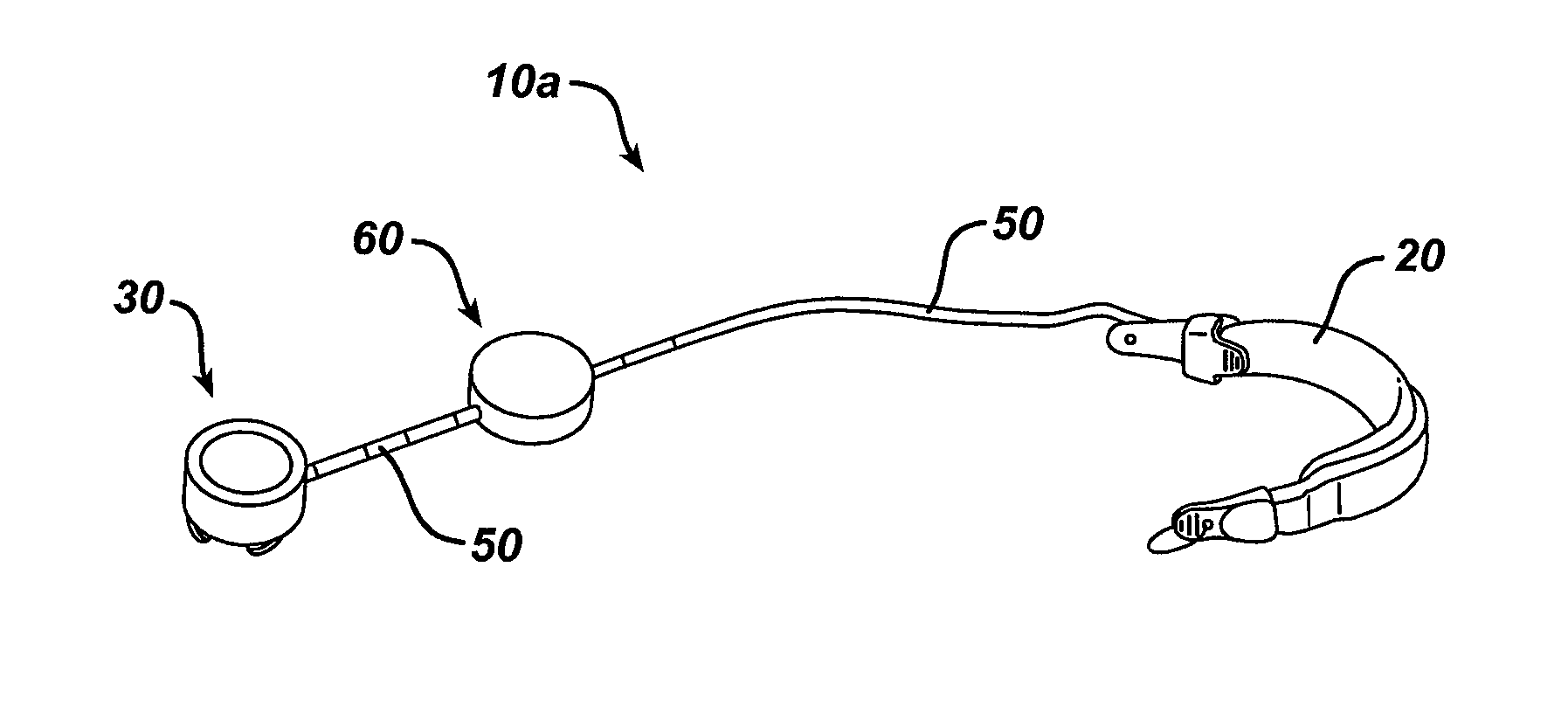

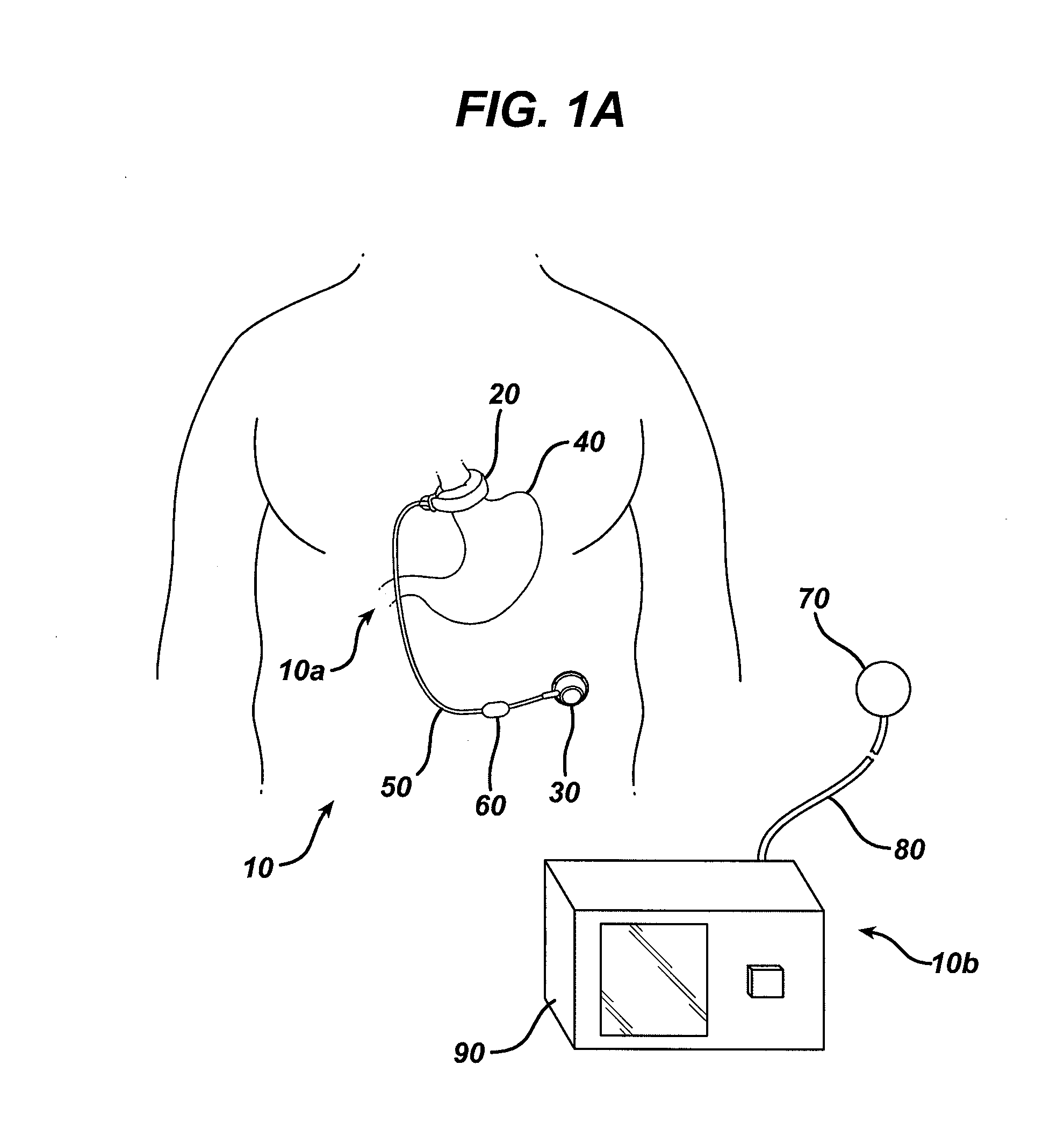

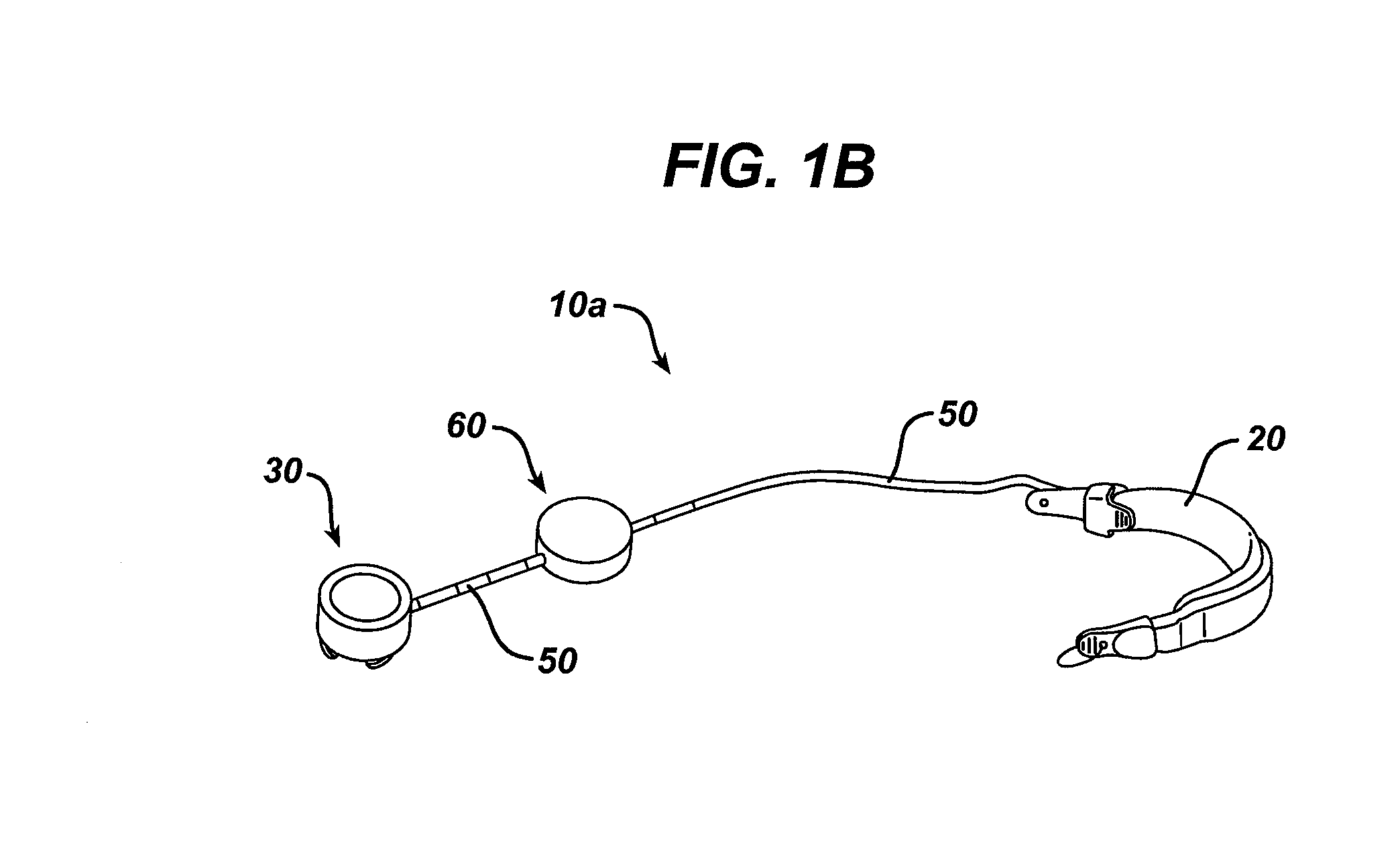

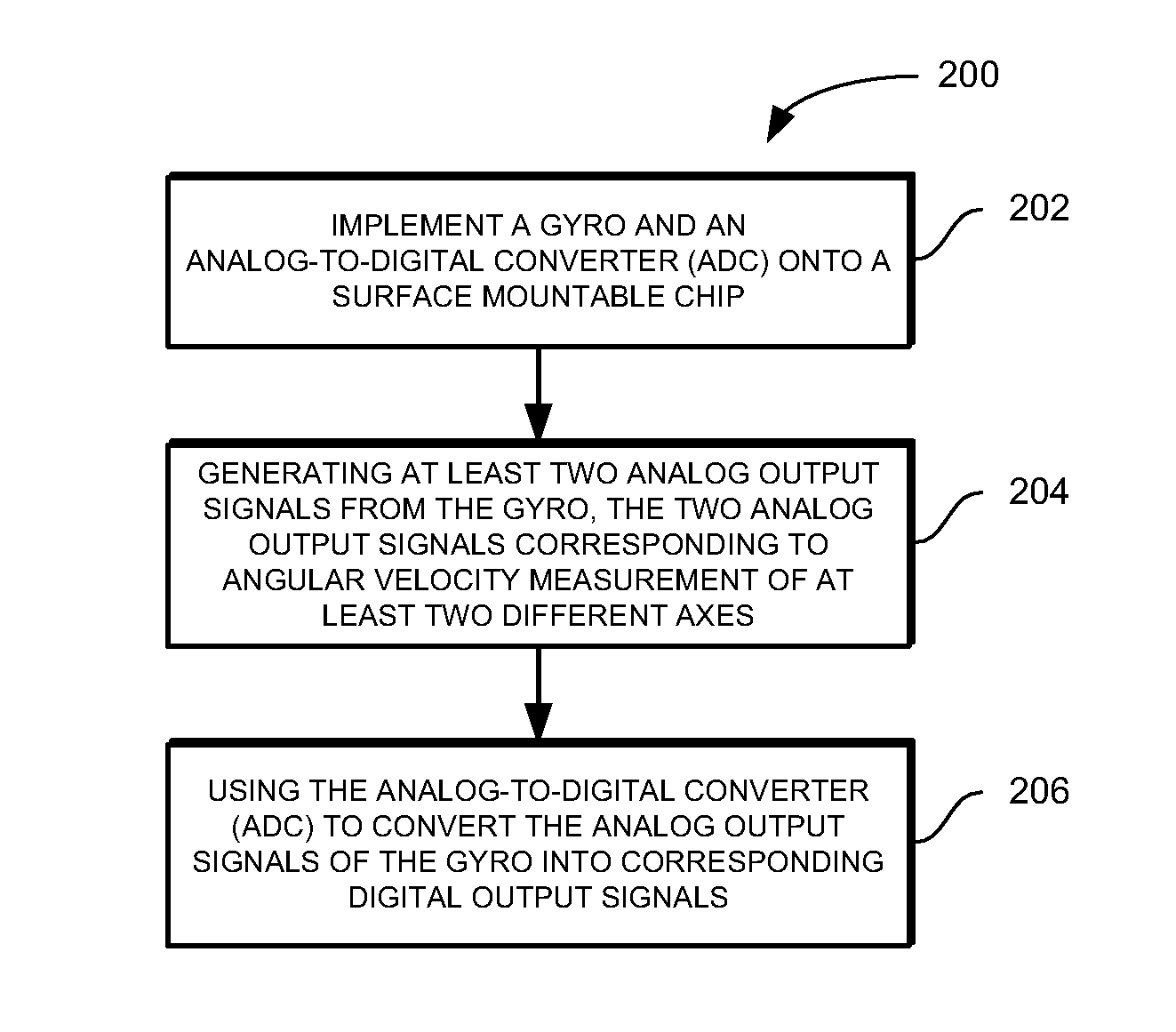

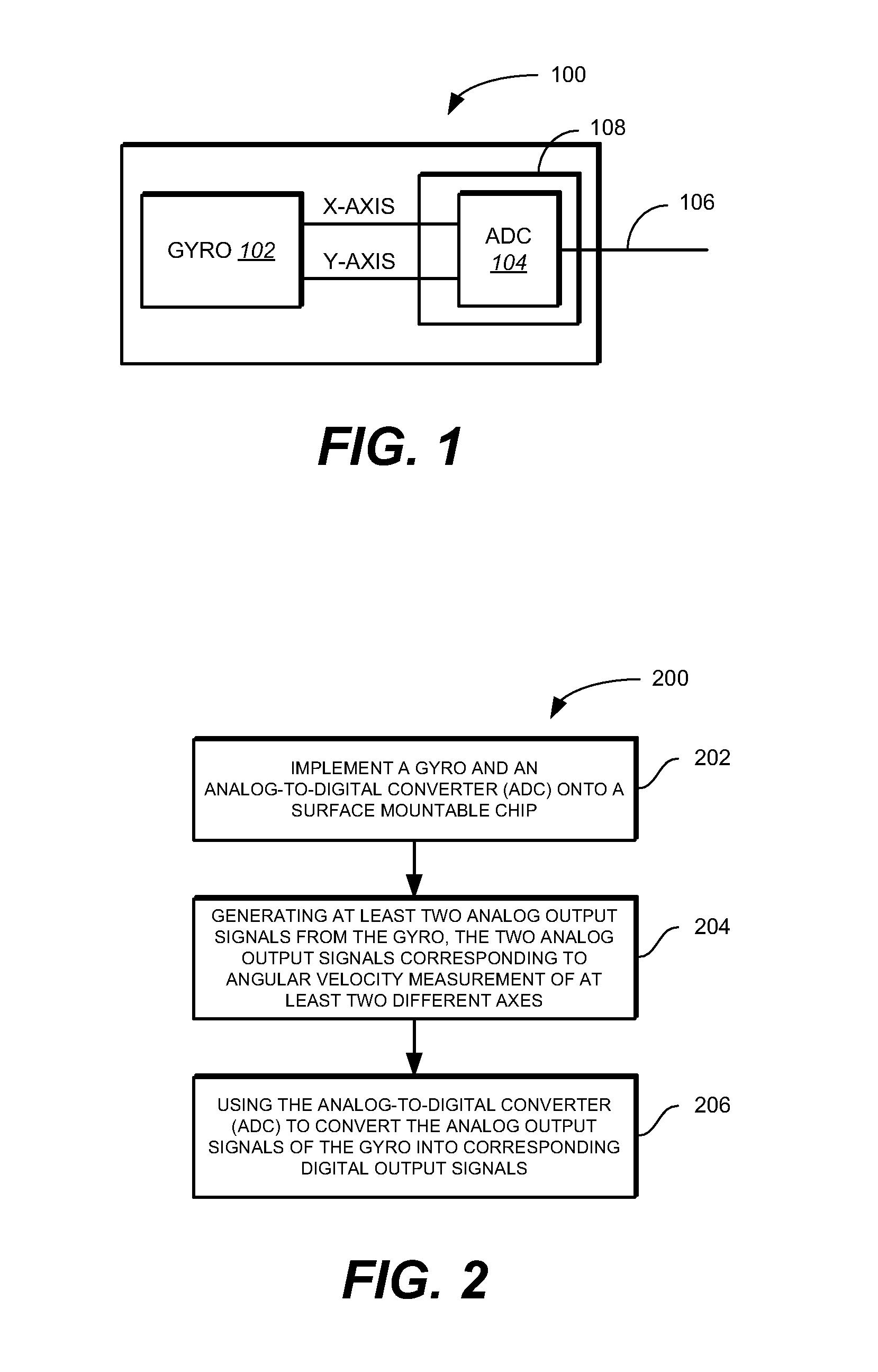

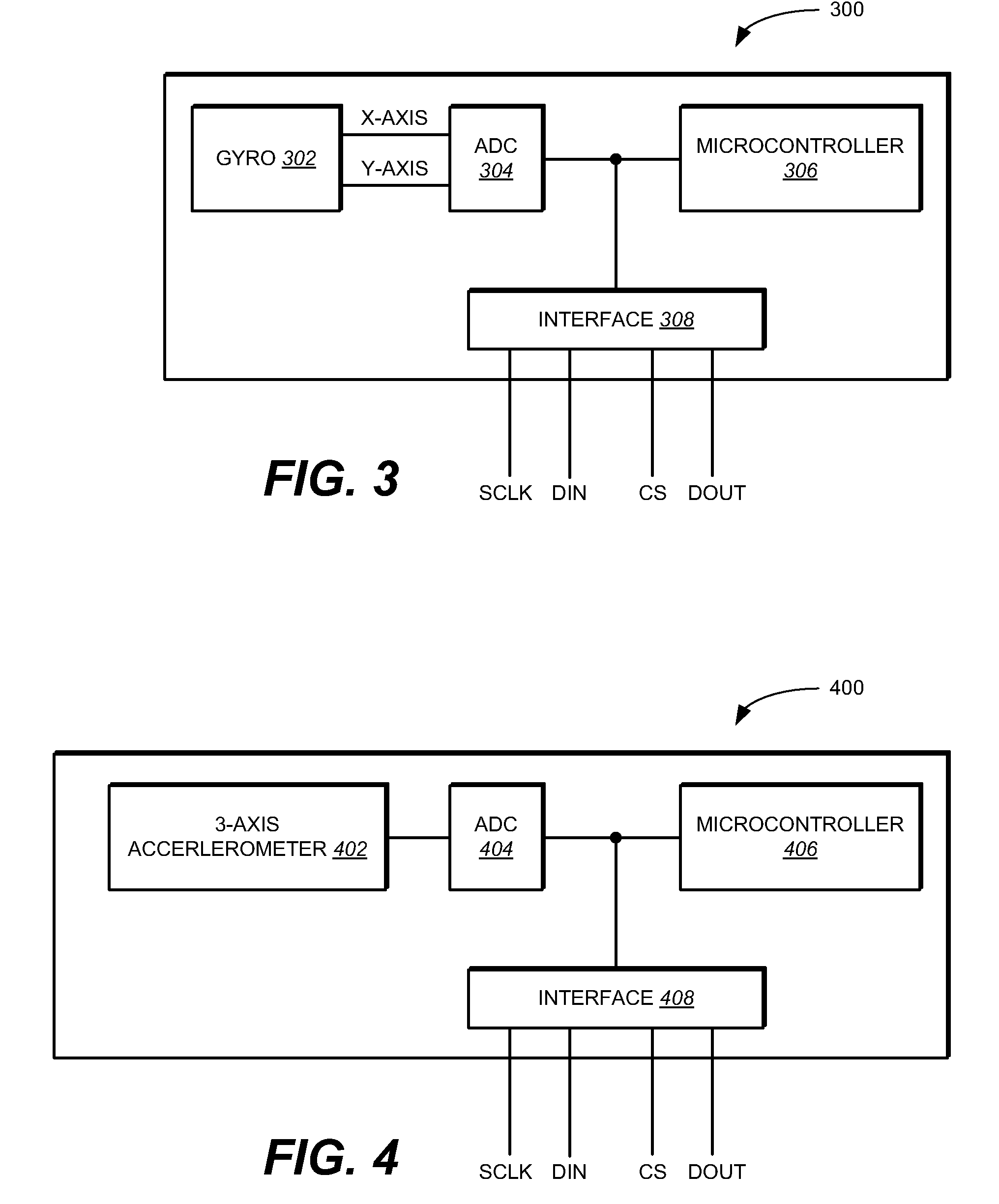

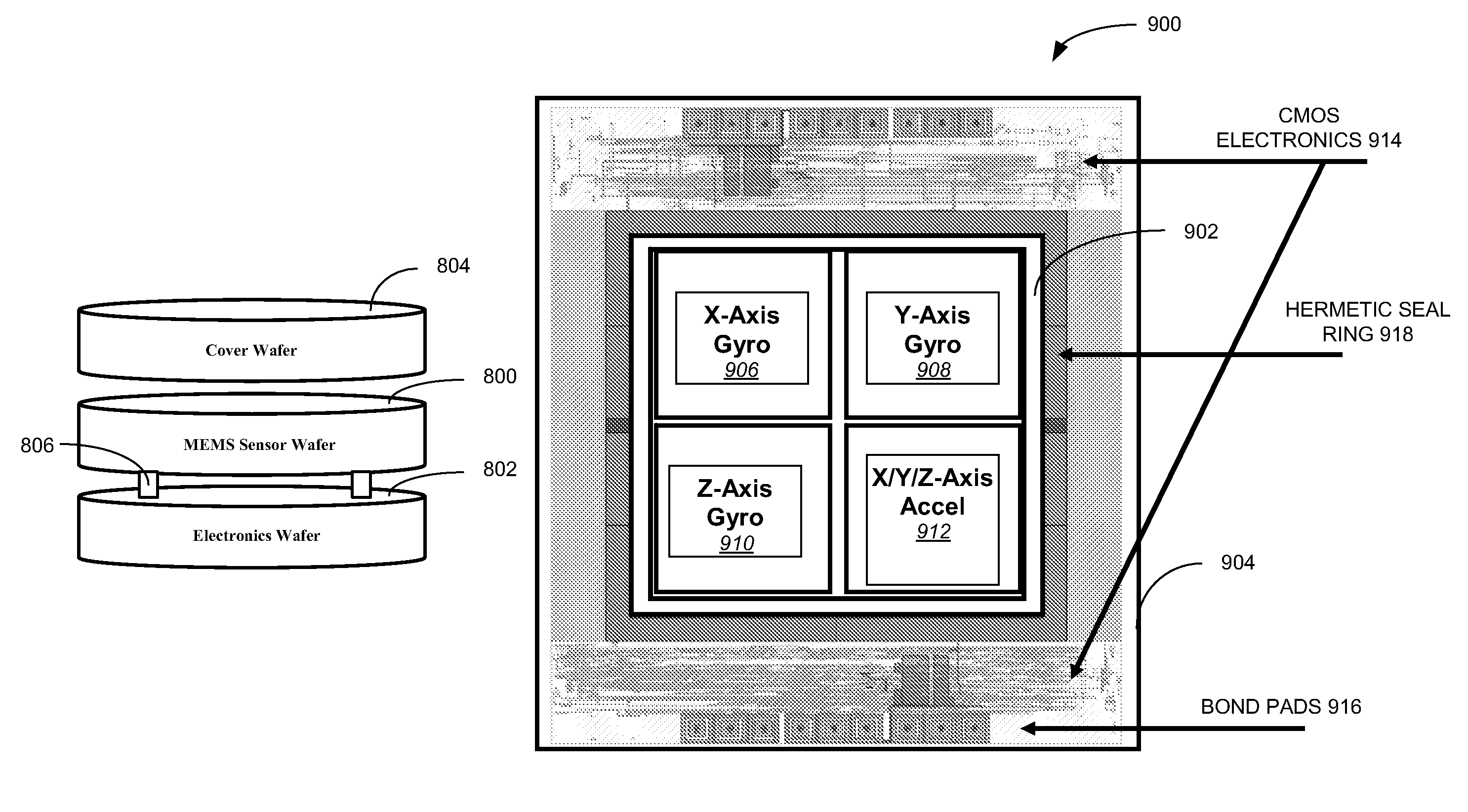

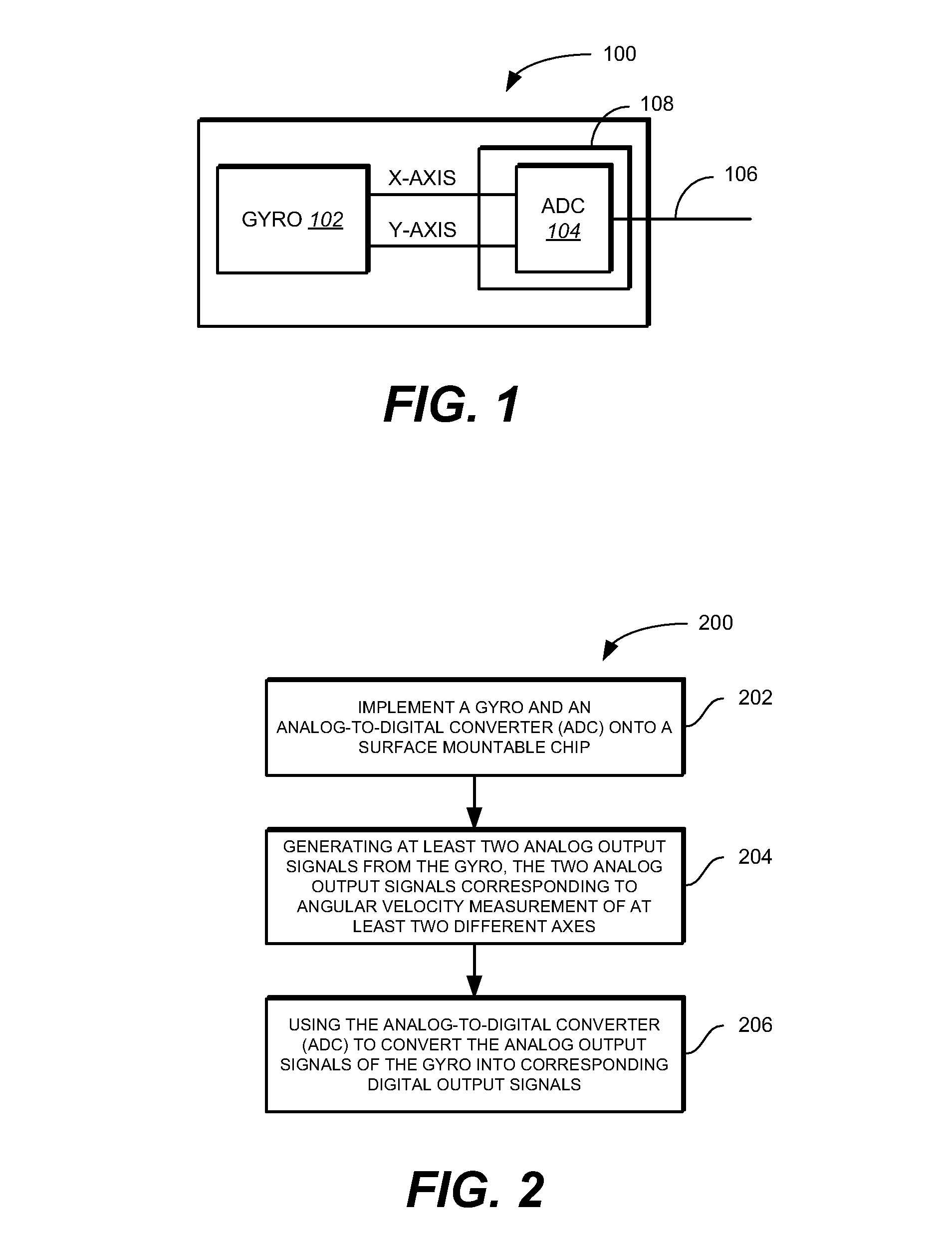

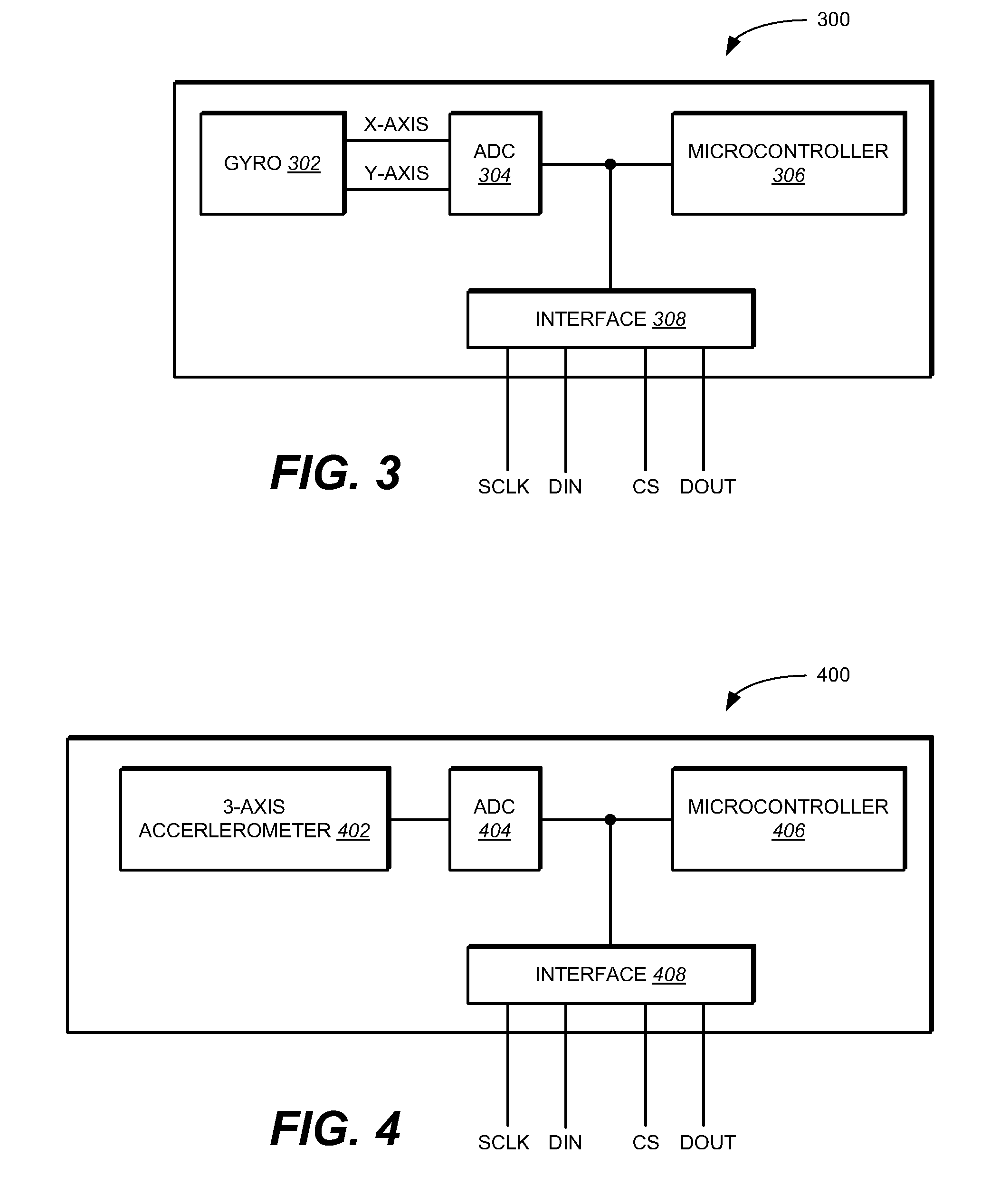

Integrated Motion Processing Unit (MPU) With MEMS Inertial Sensing And Embedded Digital Electronics

Active | US20090007661A1 | Small package | Lower performance requirements | Electric signal transmission systems | Acceleration measurement using inertia forces | Motion processing | Accelerometer

A module operable to be mounted onto a surface of a board. The module includes a linear accelerometer to provide a first measurement output corresponding to a measurement of linear acceleration in at least one axis, and a first rotation sensor operable to provide a second measurement output corresponding to a measurement of rotation about at least one axis. The accelerometer and the first rotation sensor are formed on a first substrate. The module further includes an application specific integrated circuit (ASIC) to receive both the first measurement output from the linear accelerometer and the second measurement output from the first rotation sensor. The ASIC includes an analog-to-digital converter and is implemented on a second substrate. The first substrate is vertically bonded to the second substrate.

Owner:INVENSENSE

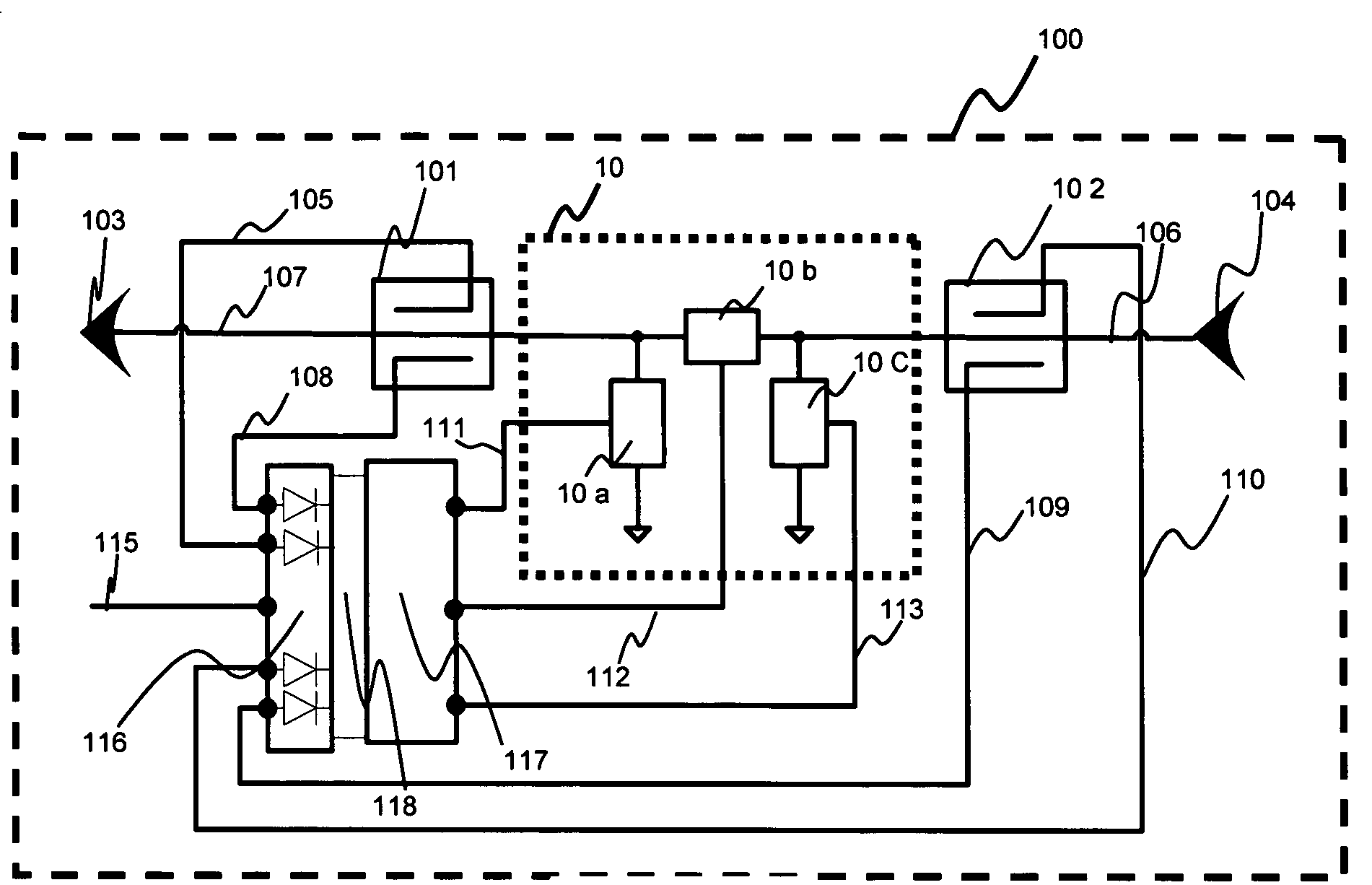

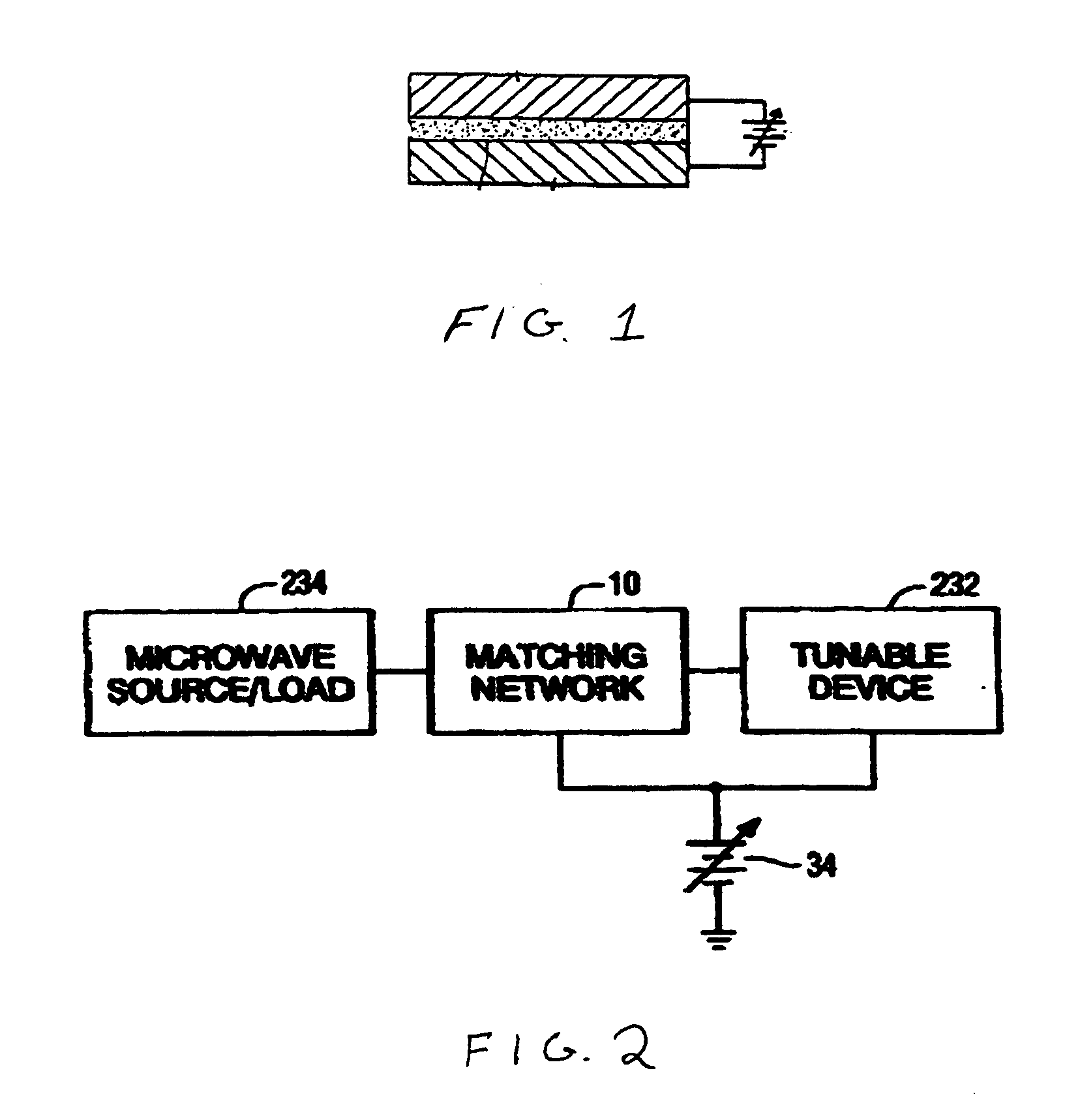

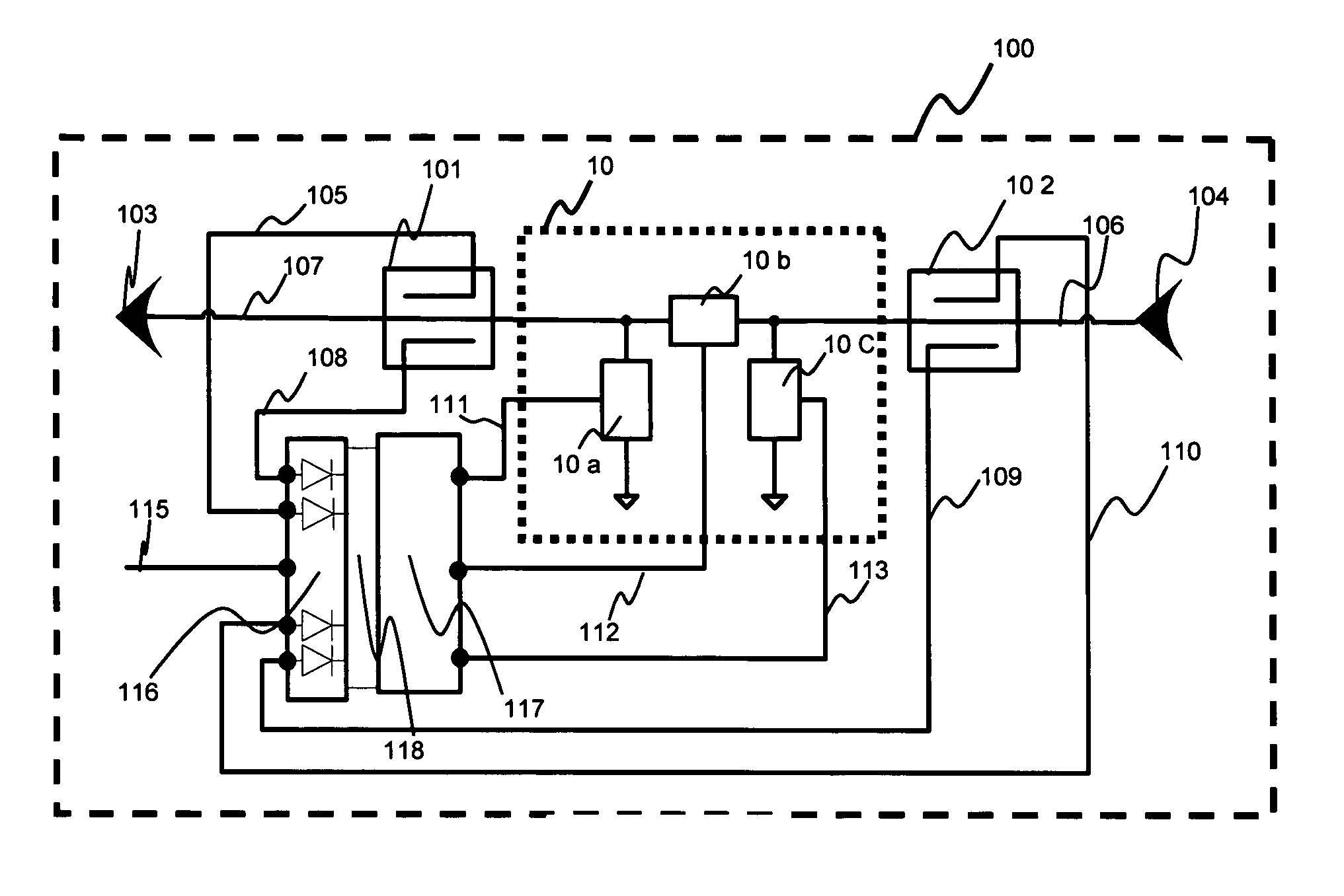

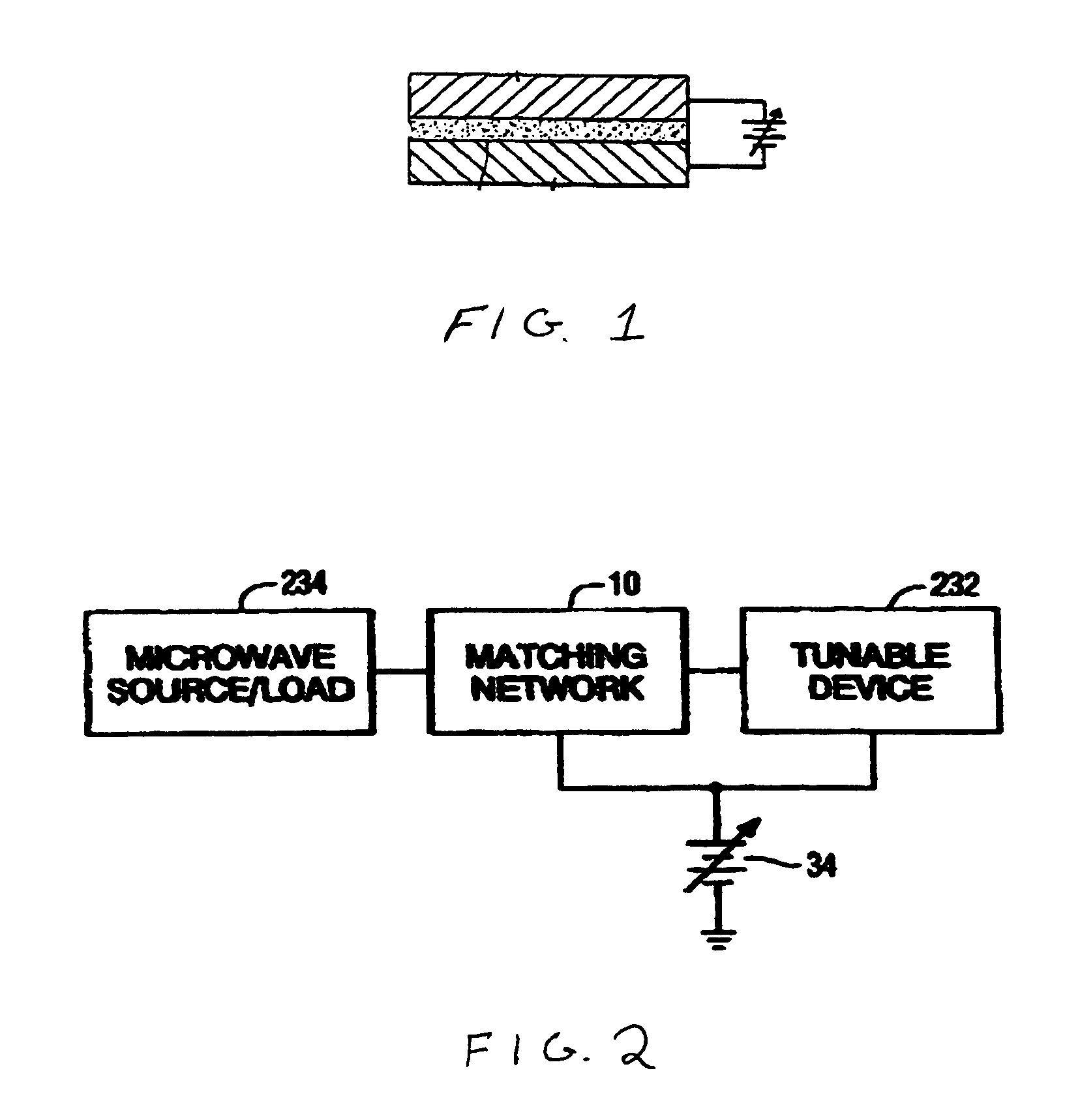

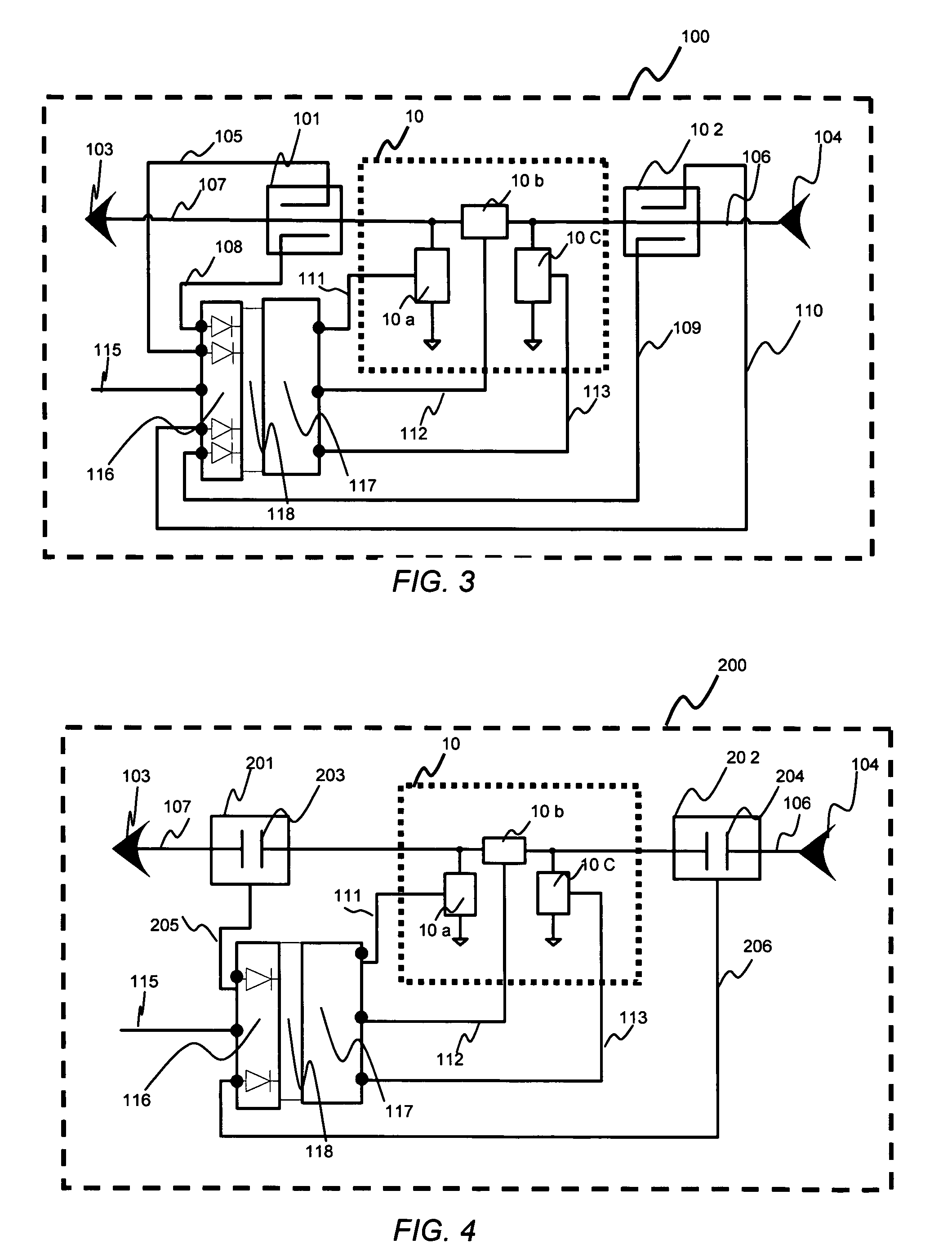

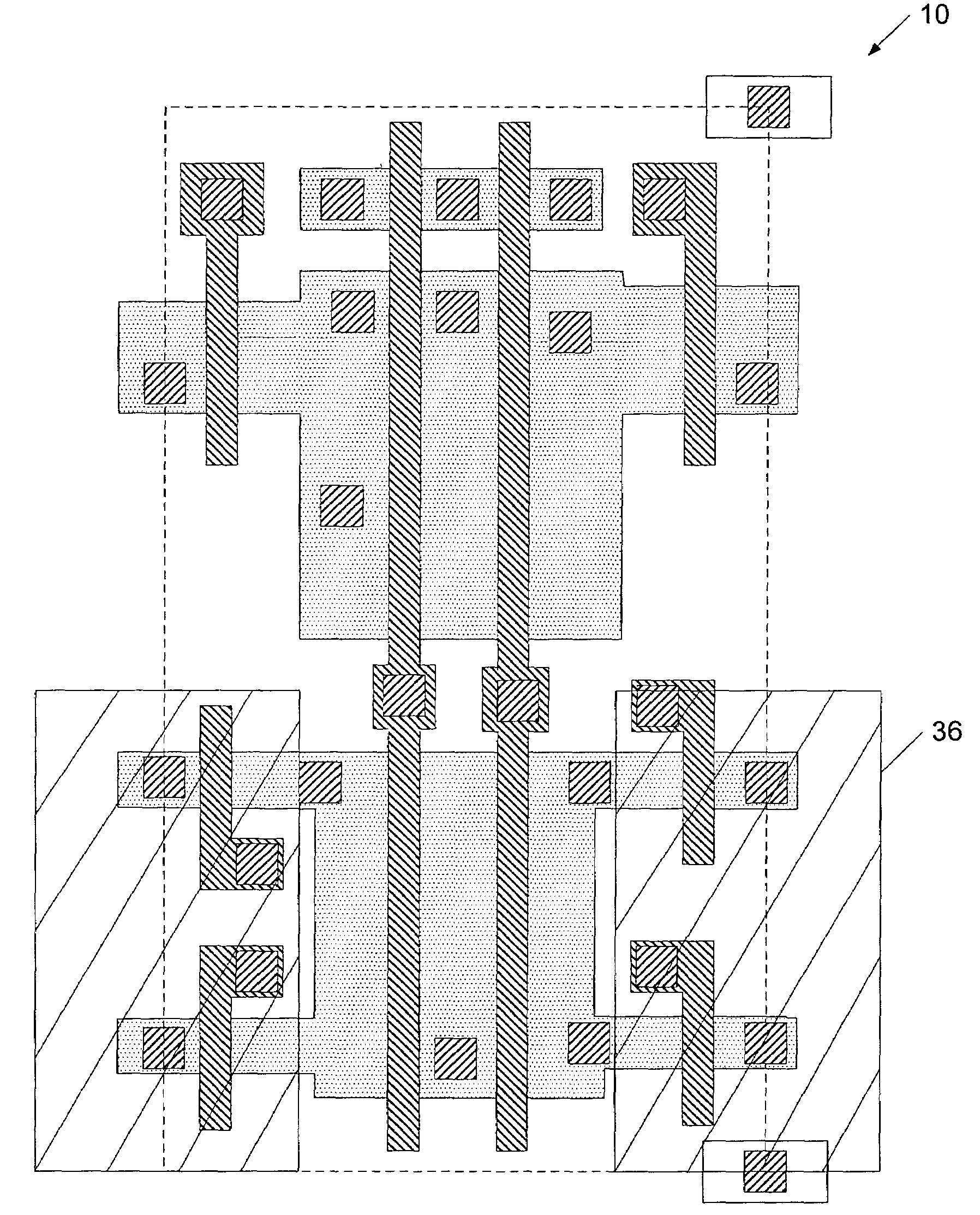

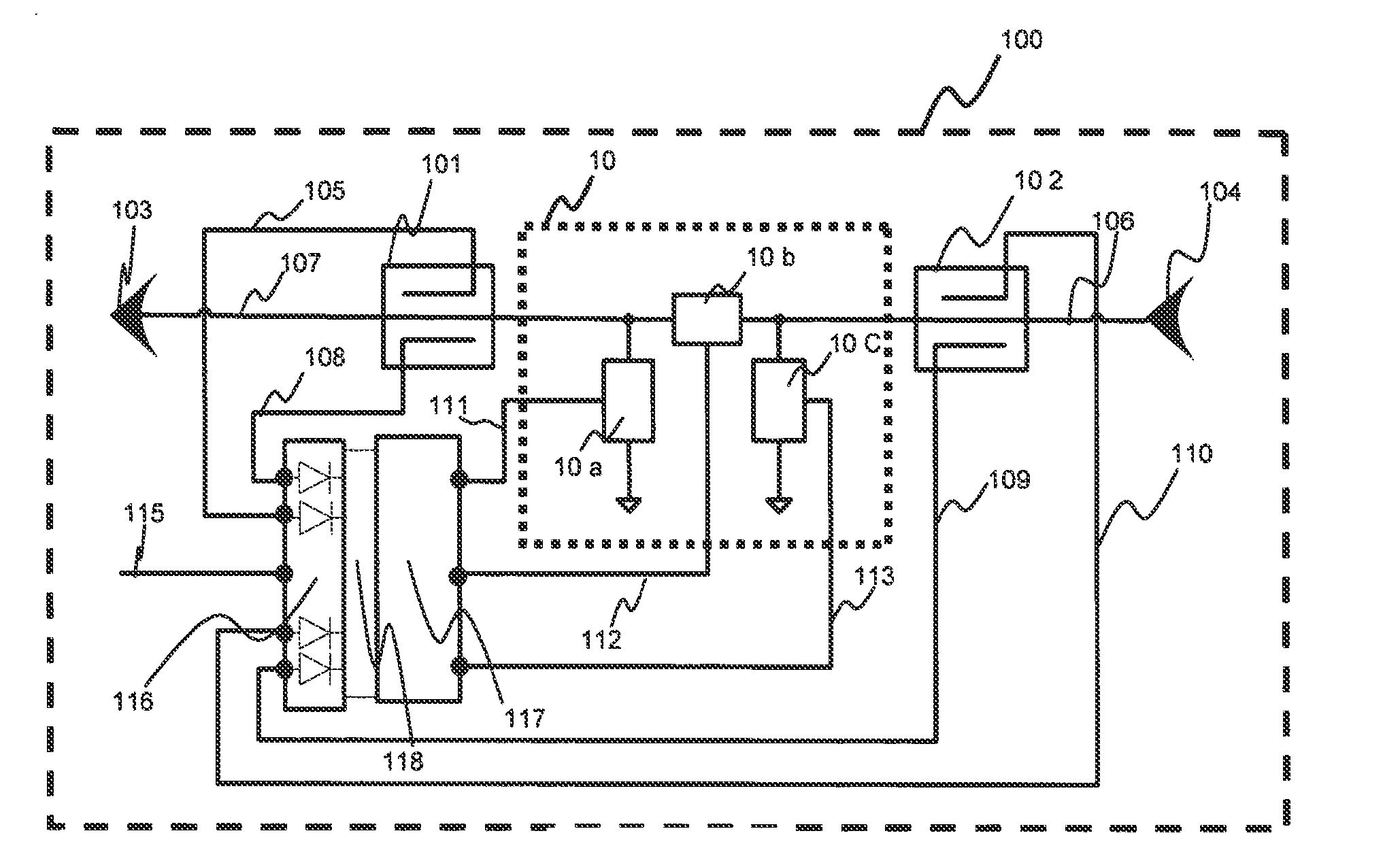

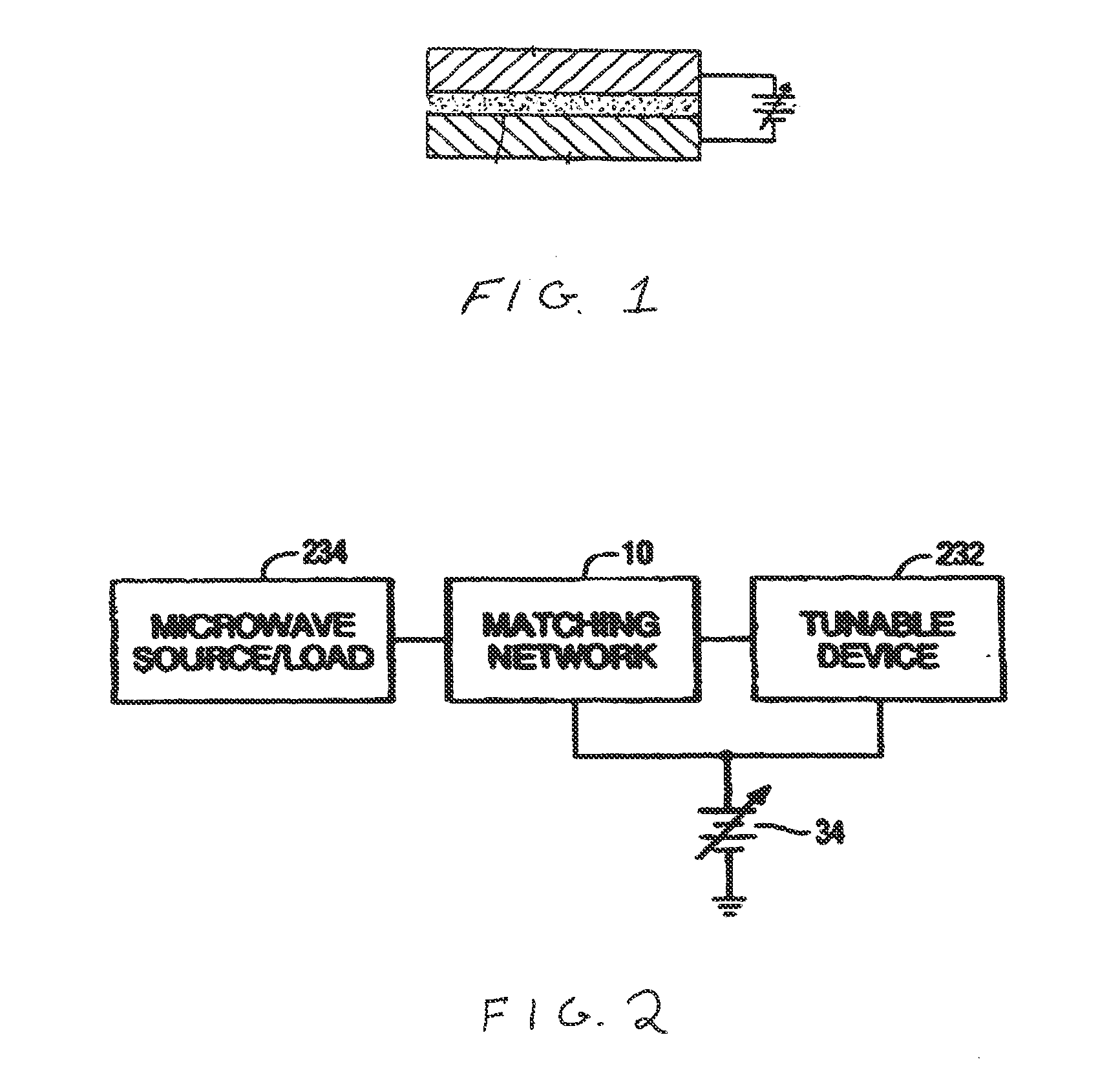

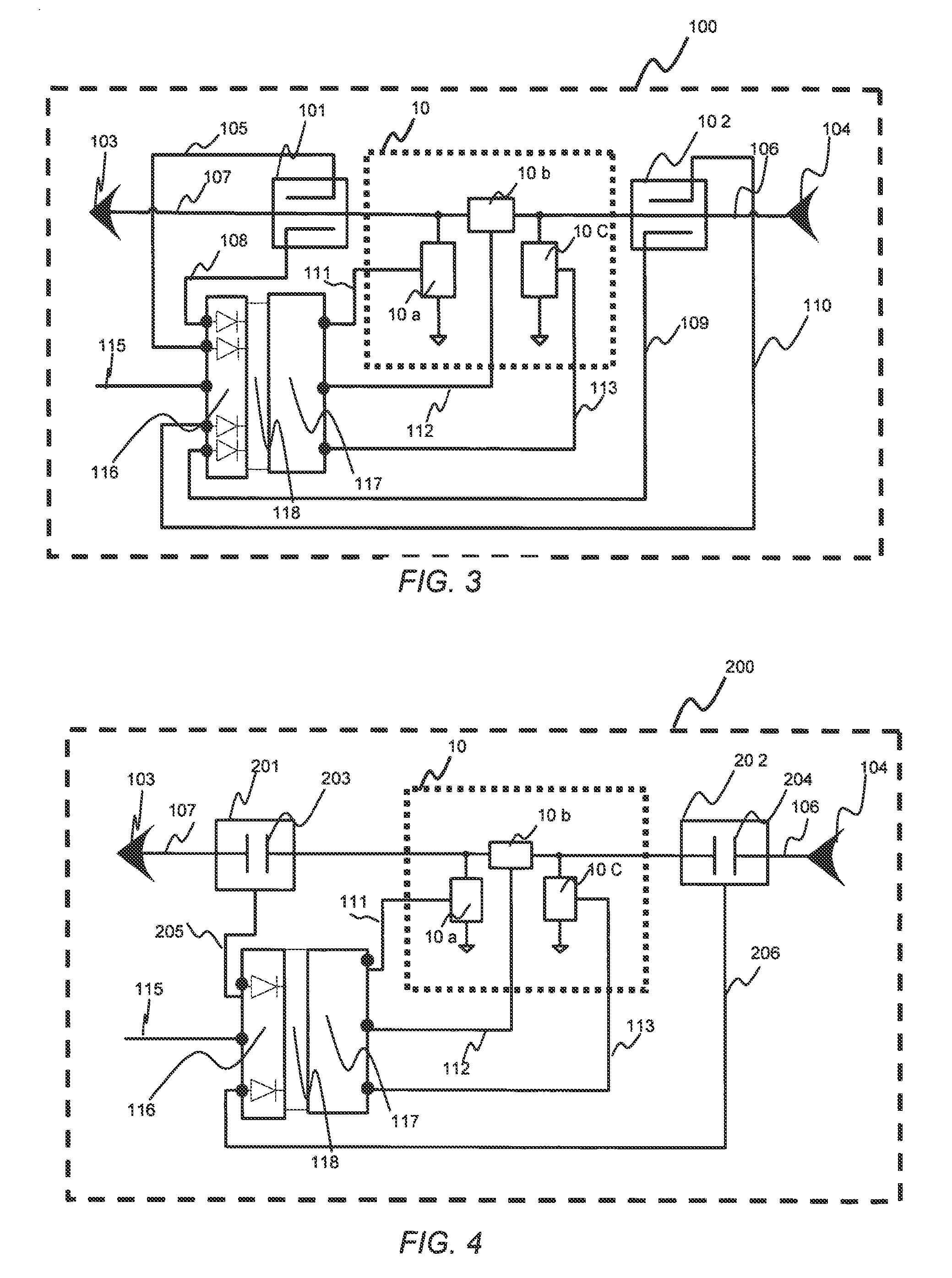

Tunable microwave devices with auto-adjusting matching circuit

Active | US20060160501A1 | Realize automatic adjustment | Multiple-port networks | Resonant long antennas | Engineering | High pressure

An embodiment of the present invention provides an apparatus, comprising an input port and a dynamic impedance matching network capable of determining a mismatch at the input port and dynamically changing the RF match by using at least one matching element that includes at least one voltage tunable dielectric capacitor. The matching network may be a “Pi”, a “T”, or “ladder” type network and the apparatus may further comprise at least one directional coupler capable of signal collection by sampling a portion of an incident signal, a reflected signal or both. In an embodiment of the present invention, the apparatus may also include a control and power control & logic unit (PC LU) to convert input analog signals into digital signals and sensing VSWR phase and magnitude and processing the digital signals using an algorithm to give it a voltage value and wherein the voltage values may be compared to values coming from the coupler and once compared and matched, the values may be passed to a Hi Voltage Application Specific Integrated Circuit (HV ASIC) to transfer and distribute compensatory voltages to the matching network elements.

Owner:NXP USA INC
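The mismatch that such a matching network reacts to is commonly quantified by the reflection coefficient and the VSWR. The sketch below shows only those standard textbook relations; the 50-ohm default and the function names are assumptions, and nothing here reflects the control algorithm of the patent itself.

```python
def reflection_coefficient(z_load, z0=50.0):
    """Gamma = (Z_L - Z_0) / (Z_L + Z_0); z_load may be real or complex."""
    return (z_load - z0) / (z_load + z0)


def vswr(gamma):
    """Voltage standing wave ratio from a reflection coefficient."""
    mag = abs(gamma)
    if mag >= 1.0:
        return float("inf")  # total reflection
    return (1.0 + mag) / (1.0 - mag)
```

For a 100-ohm load on a 50-ohm line, the reflection coefficient is 1/3 and the VSWR is 2; a perfectly matched load gives a VSWR of 1.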

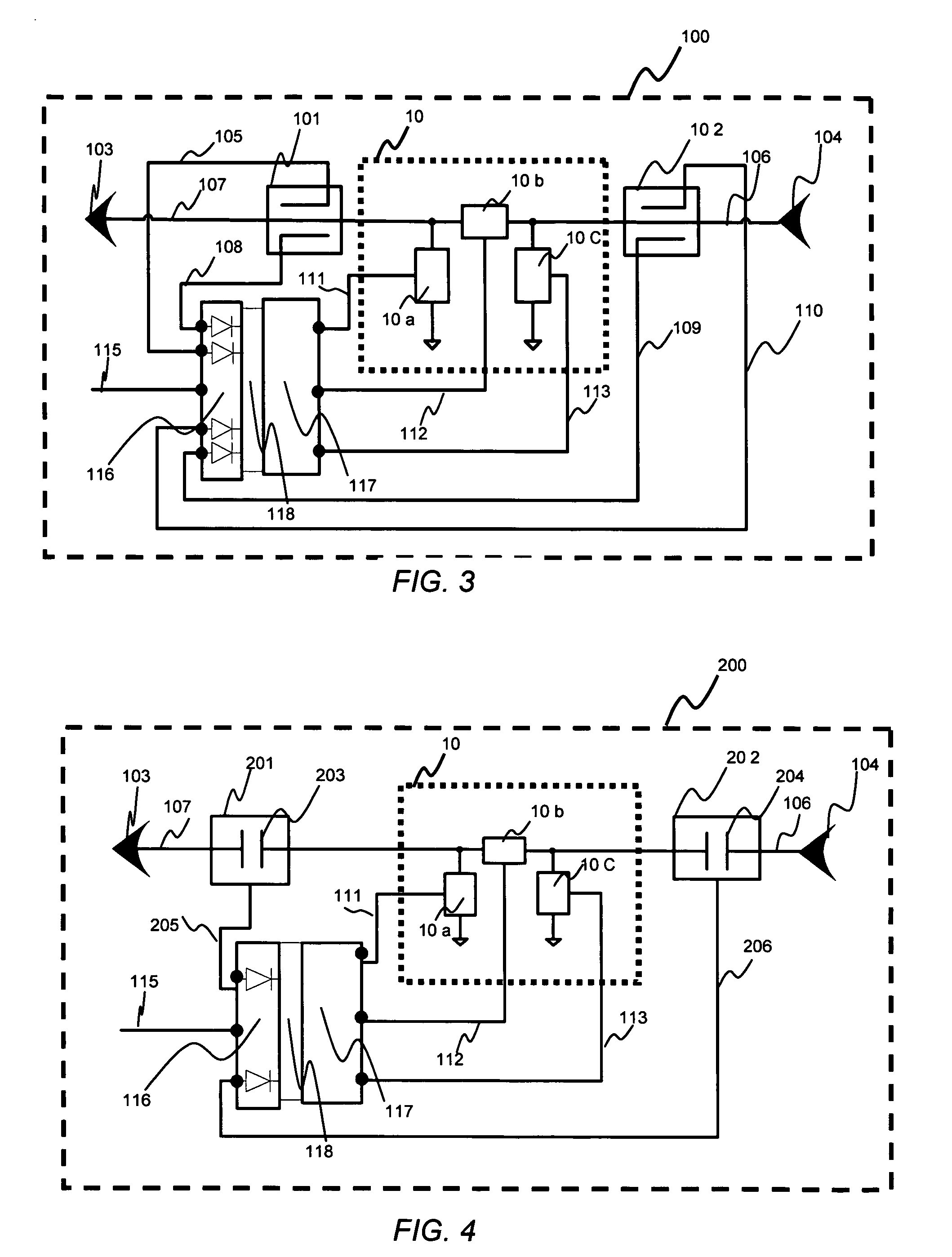

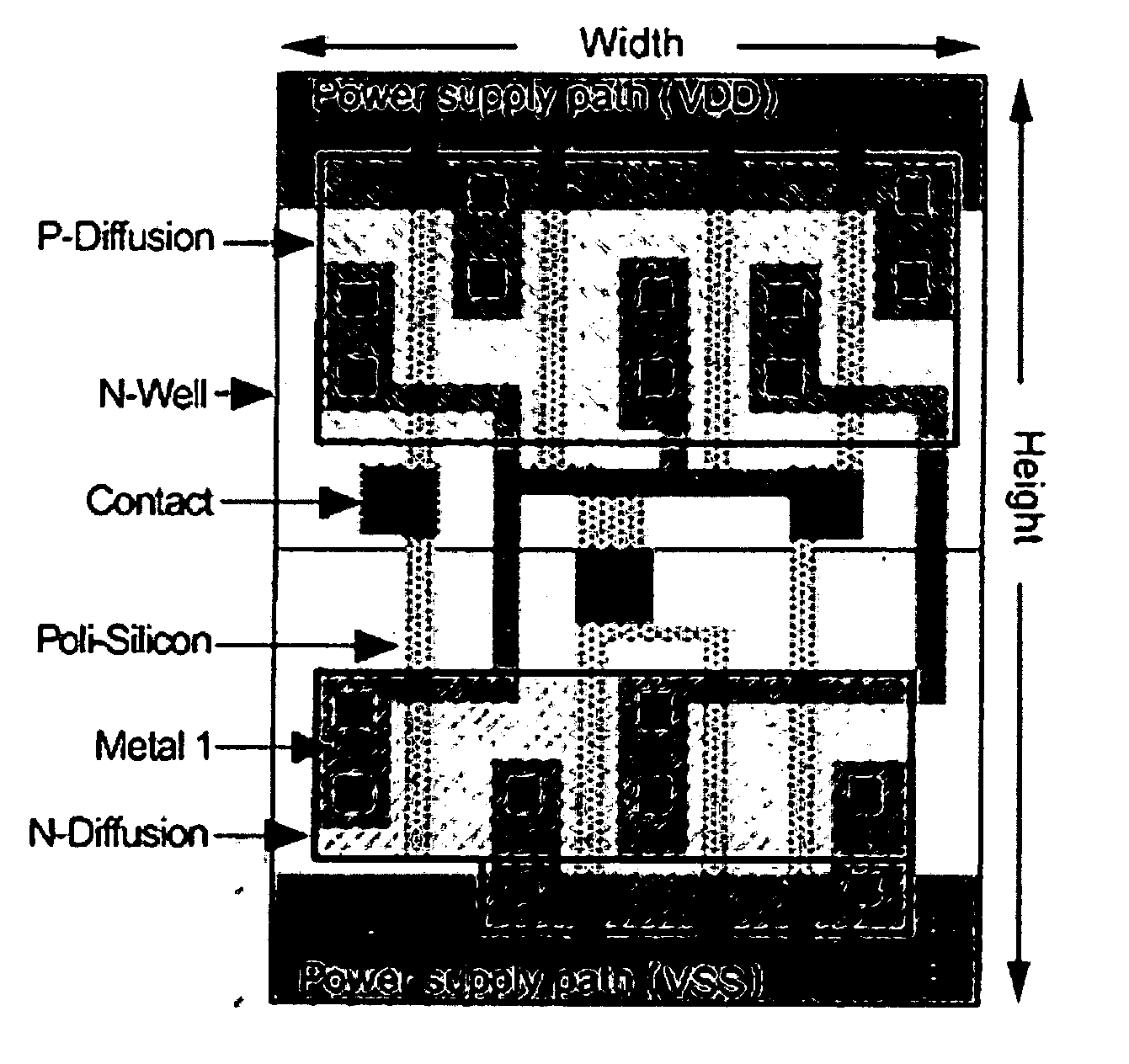

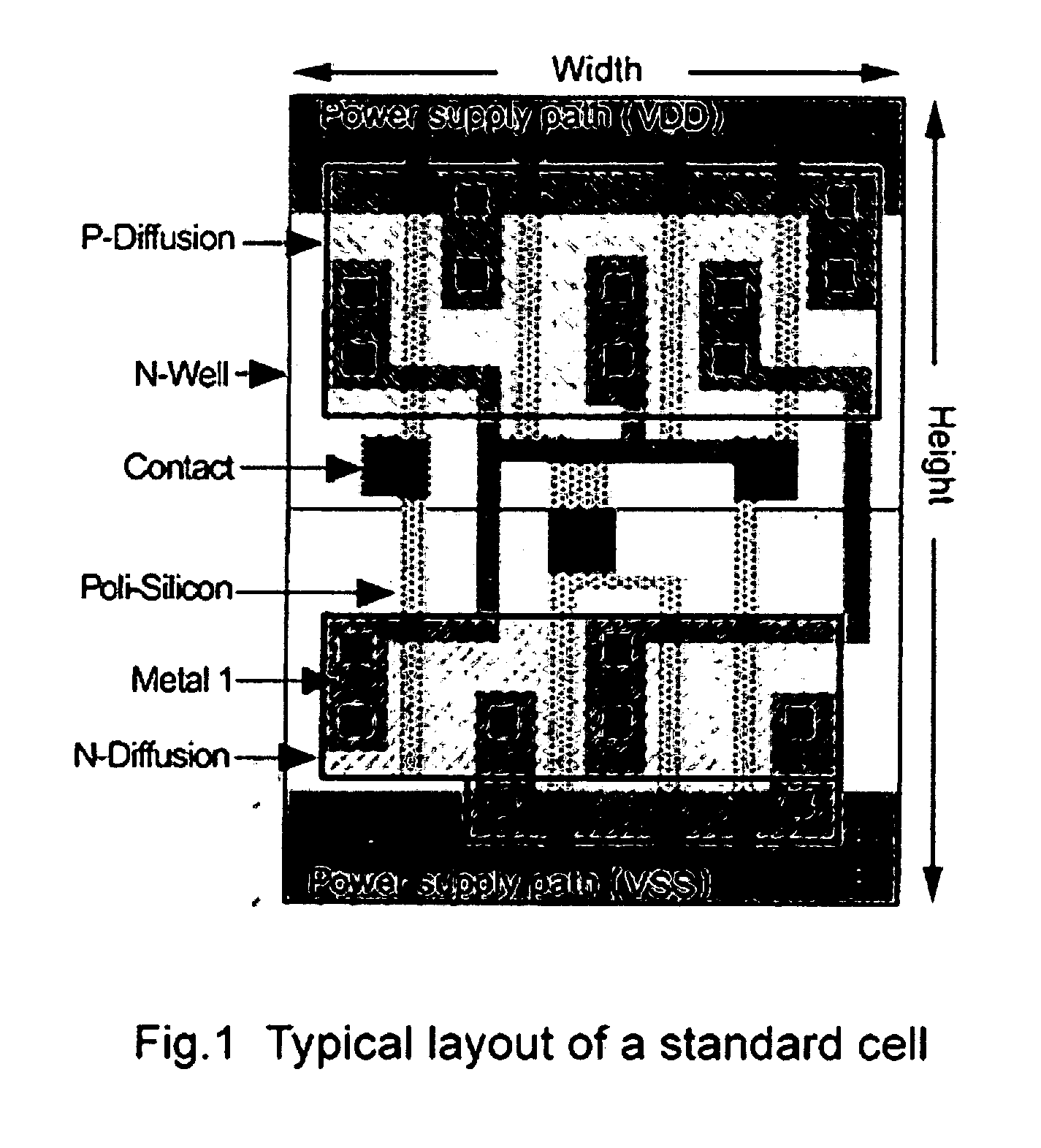

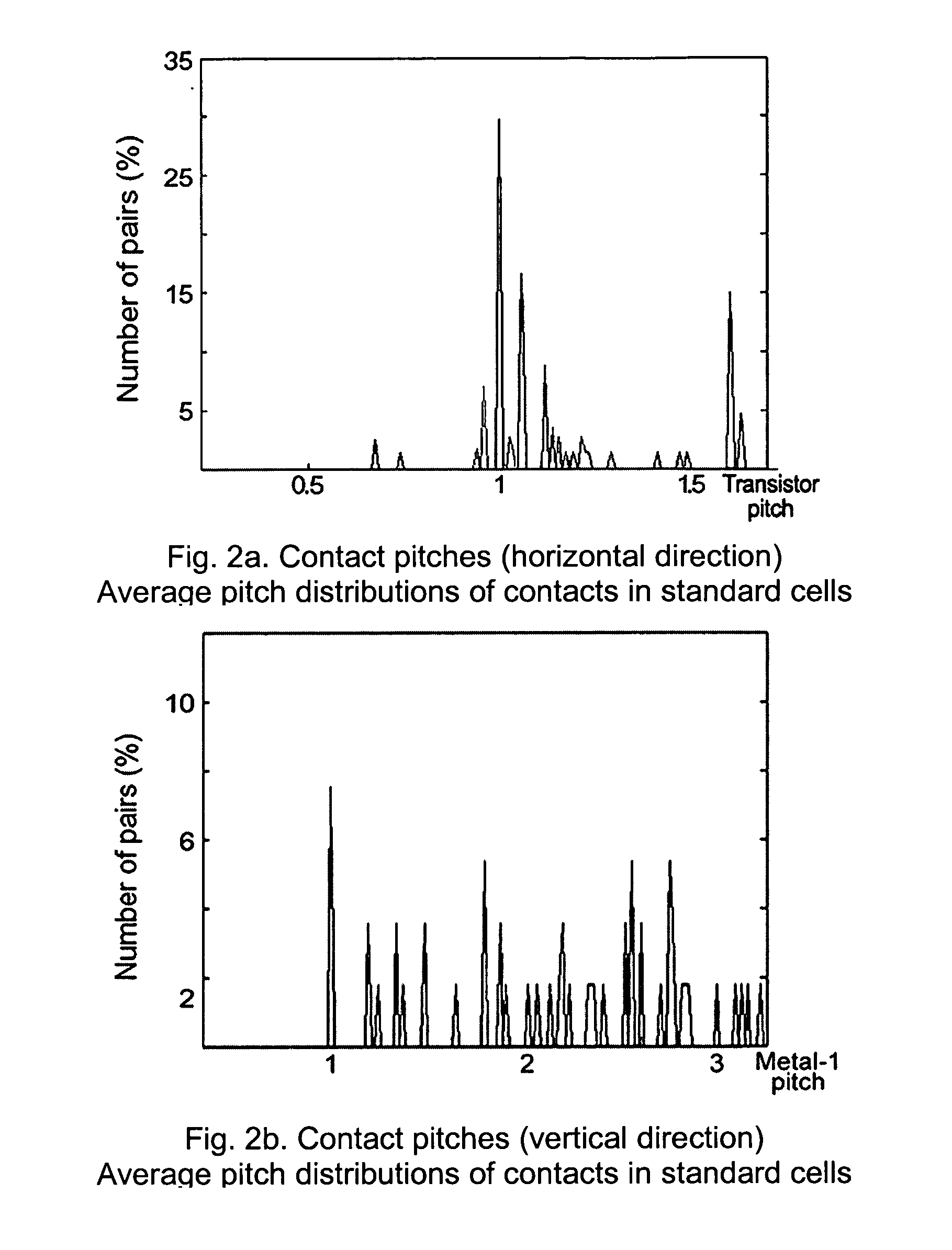

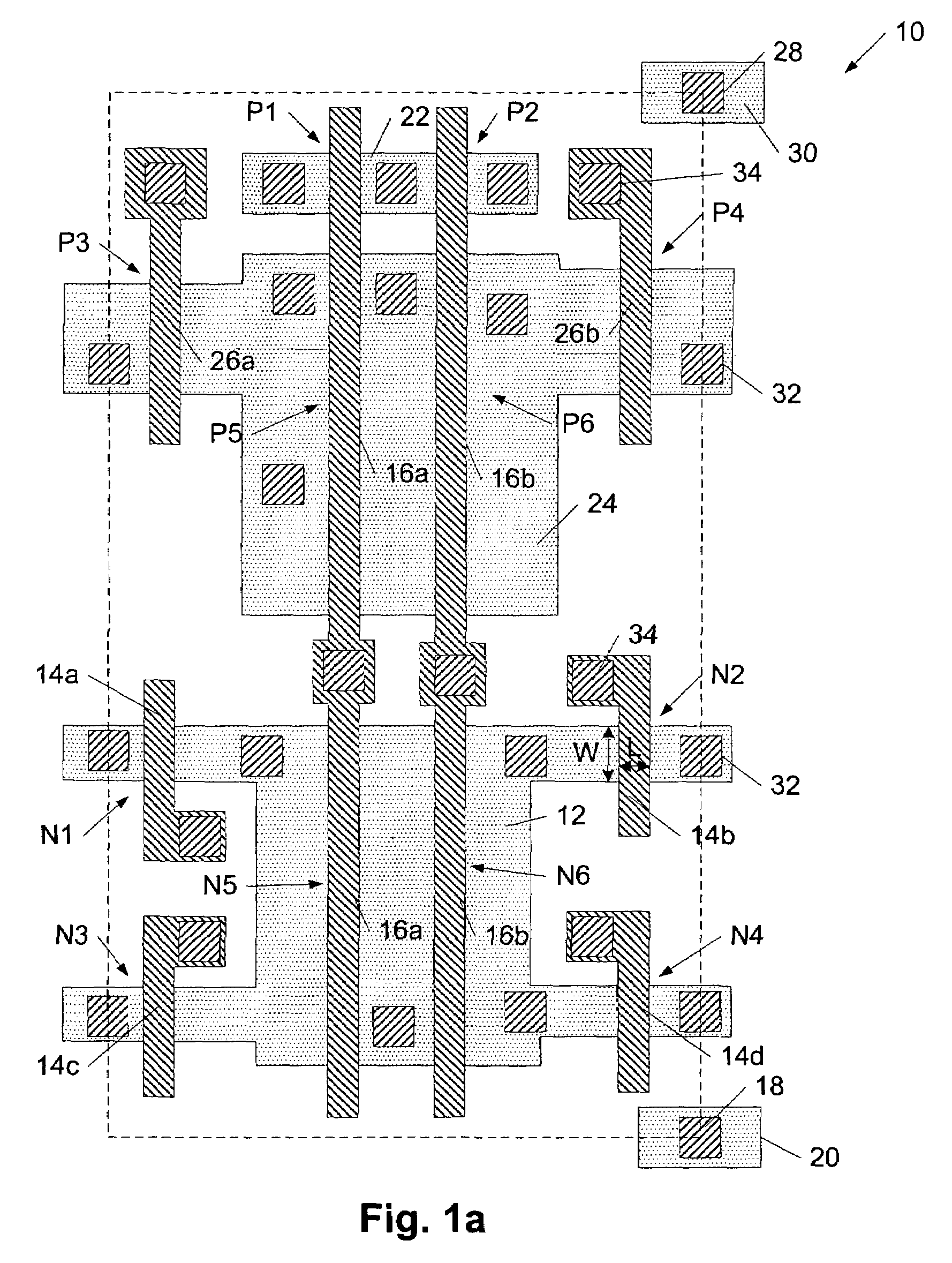

Rectangular contact lithography for circuit performance improvement and manufacture cost reduction

Active | US20050196685A1 | Reduce manufacturing cost | Lot of restriction | Photo-taking processes | Semiconductor/solid-state device manufacturing | Manufacturing cost reduction | Engineering

An optical lithography method is disclosed that uses double exposure of a reusable template mask and a trim mask to fabricate regularly placed rectangular contacts in standard cells of application-specific integrated circuits (ASICs). A first exposure of the reusable template mask with periodic patterns forms periodic dark lines on a wafer. A second exposure of an application-specific trim mask removes the unwanted parts of the dark lines, and the small cuts of the dark lines that remain form the regularly placed rectangular contacts. All contacts are placed regularly in one direction but without restriction in the perpendicular direction. The regular placement of patterns on the template mask enables more effective use of resolution enhancement technologies, which in turn allows a decrease in manufacturing cost and in the minimum contact size and pitch. Since no extra application-specific mask is needed compared with the conventional lithography method for unrestrictedly placed contacts, the extra cost is kept to a minimum. The method of the invention can be used in the fabrication of standard cells in ASICs to improve circuit performance and decrease circuit area and manufacturing cost.

Owner:THE UNIVERSITY OF HONG KONG

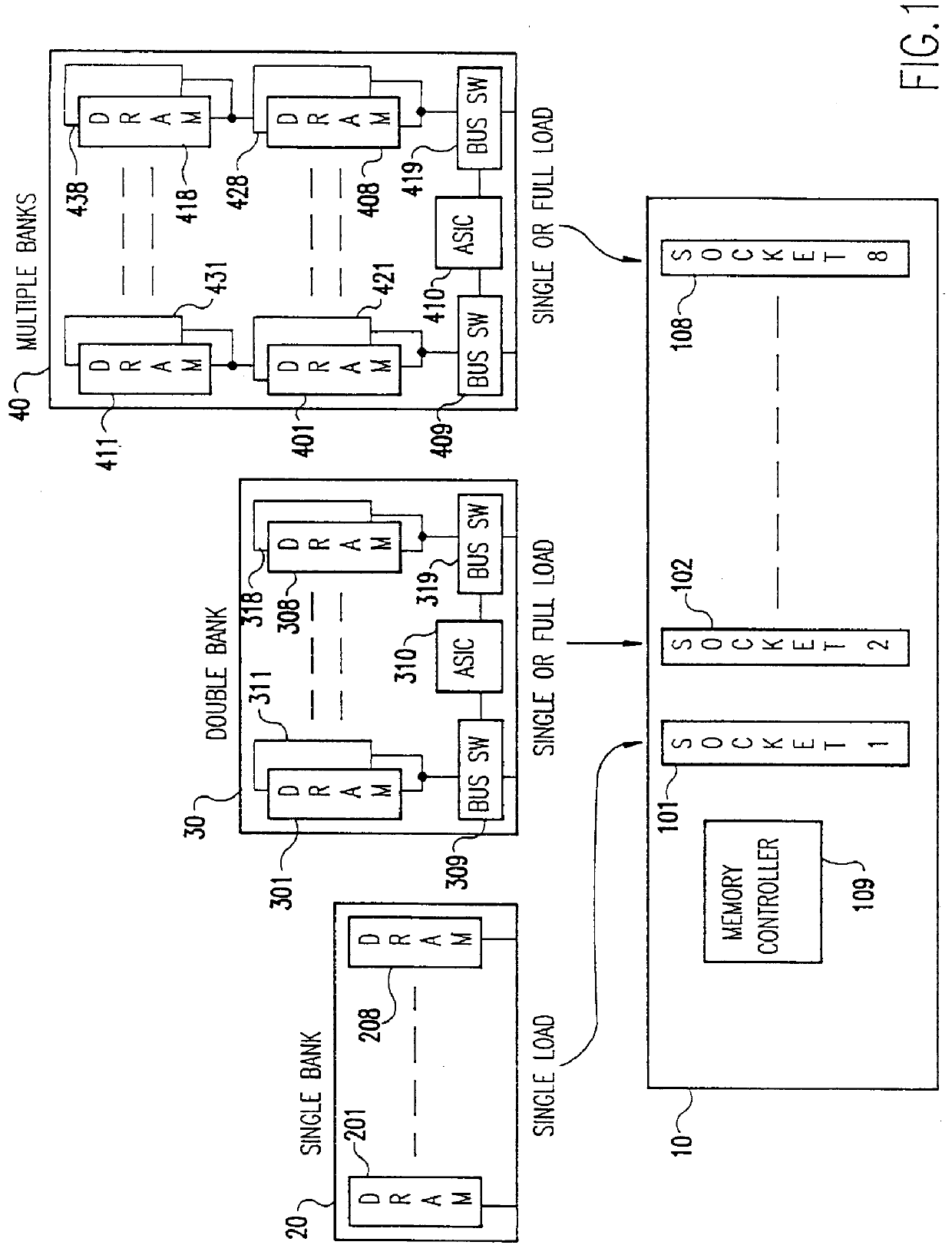

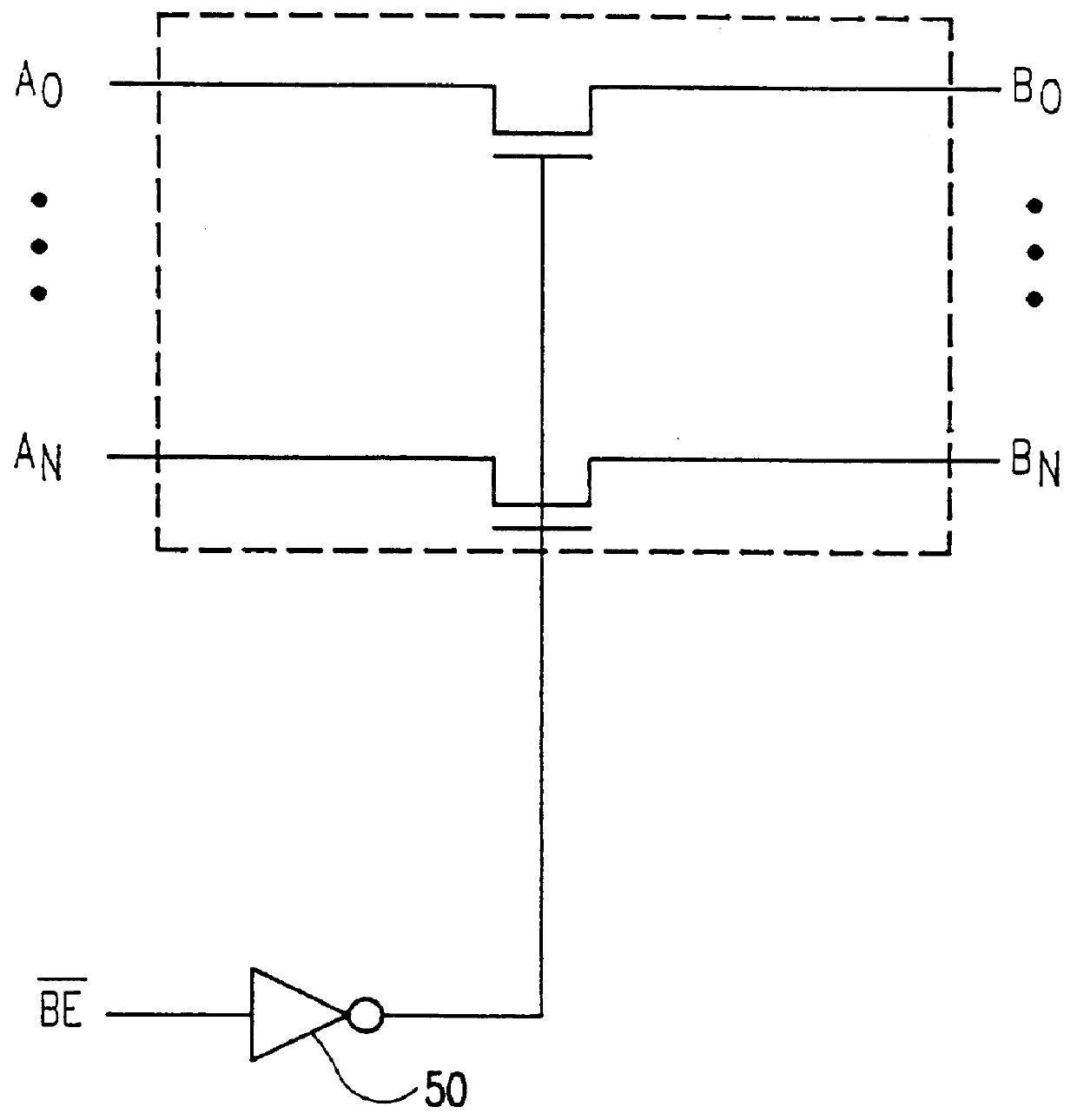

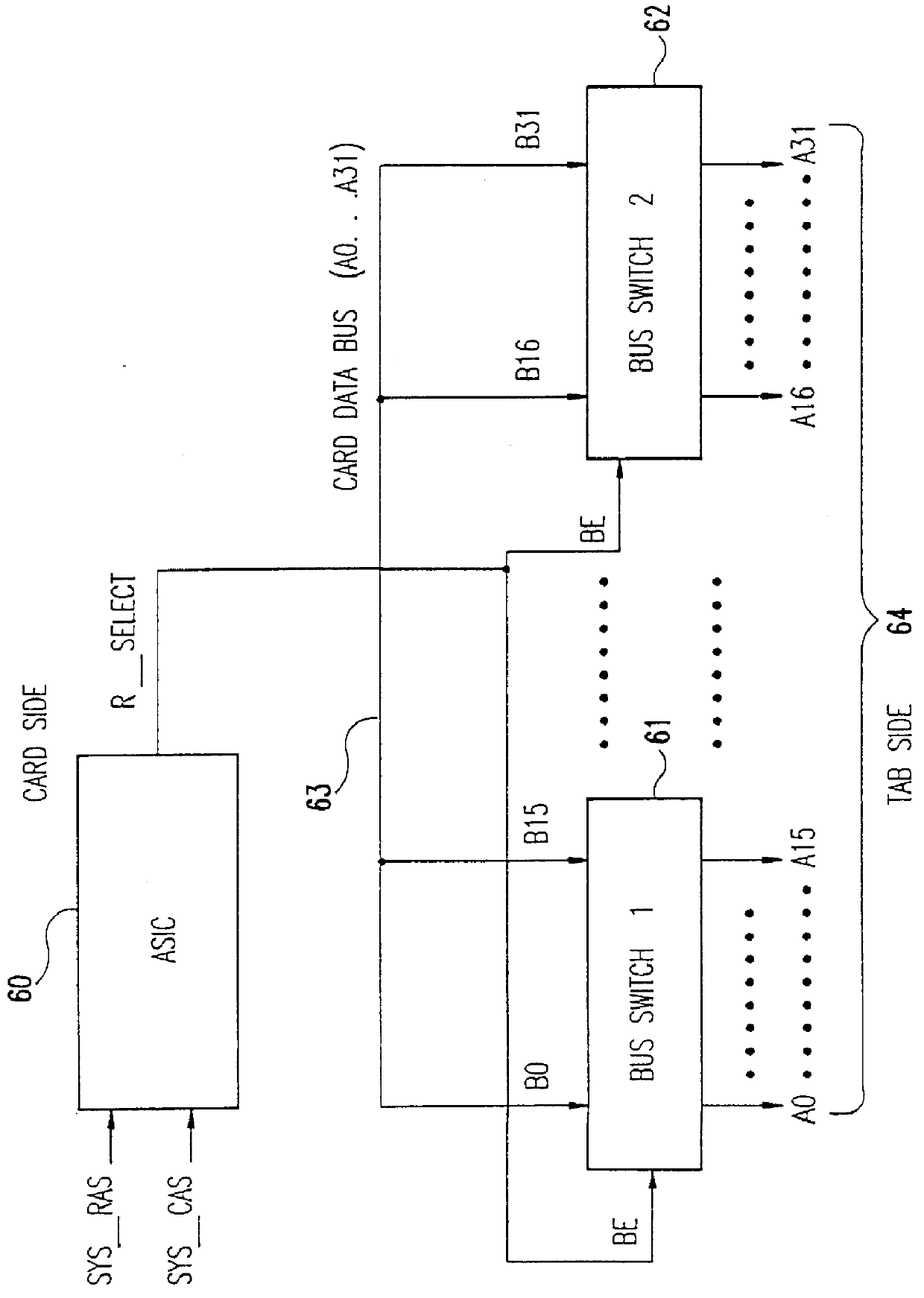

High density memory module with in-line bus switches being enabled in response to read/write selection state of connected RAM banks to improve data bus performance

Inactive | US6070217A | Maximize memory density | Reduce signal reflection | Memory addressing/allocation/relocation | Solid-state devices | Capacitance | Biological activation

Data line loading on high density modules with multiple DRAMs is minimized, permitting the maximum memory density of systems of otherwise limited density to be increased without an ensuing performance degradation due to data line capacitive loading. The single or dual in-line memory module (SIMM or DIMM) includes in-line bus switches. The bus switches sit between the SIMM or DIMM module tabs (system) and the random access memory devices (RAM) and are in either a high impedance (off) state or an active state, depending on the READ / WRITE state of the RAM. When in the high impedance state, the effective loading of the module is that of the bit switch device. The logic for determining the READ / WRITE state may be embedded in an application specific integrated circuit (ASIC) that monitors bus activity and controls activation of the bus switches, be provided by a memory controller, or be generated by the RAM itself. The bus switches are active when the RAM is performing a read or a write and inactive otherwise. The RAM is Fast Page Mode (FPM) and Extended Data Output (EDO) or Synchronous DRAM (SDRAM).

Owner:IBM CORP

Integrated motion processing unit (MPU) with MEMS inertial sensing and embedded digital electronics

Active | US8250921B2 | Low cost | Small package | Electric signal transmission systems | Speed/acceleration/shock instrument details | Motion processing | Accelerometer

Owner:INVENSENSE

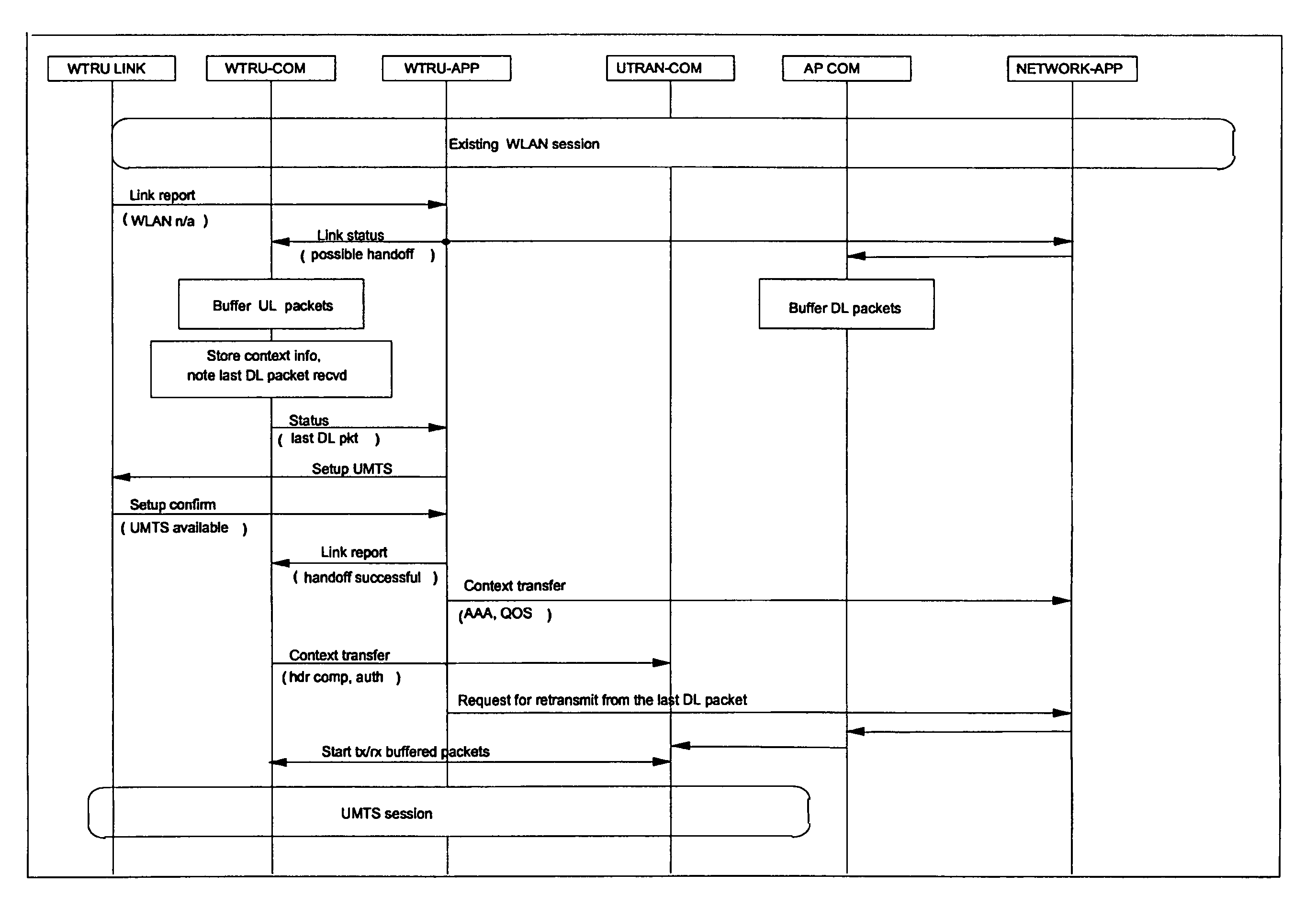

Wireless communication components and methods for multiple system communications

Inactive | US6987985B2 | Devices with wireless LAN interface | Substation equipment | Data stream | Application-specific integrated circuit

A mobile wireless transmit / receive unit (WTRU), and components and methods therefor, provide continuous communications capability while switching from a wireless connection with a first type of wireless system to a wireless connection with a second type of wireless system. Preferably, the WTRU is configured to switch wireless links from a Universal Mobile Telecommunications System (UMTS) to a wireless local area network (WLAN) or vice versa during a continuous communication session. The invention is preferably implemented by providing an Application Broker for control signaling and a Communications Broker for user data flow, which may be embodied in an application specific integrated circuit (ASIC).

Owner:INTERDIGITAL TECH CORP

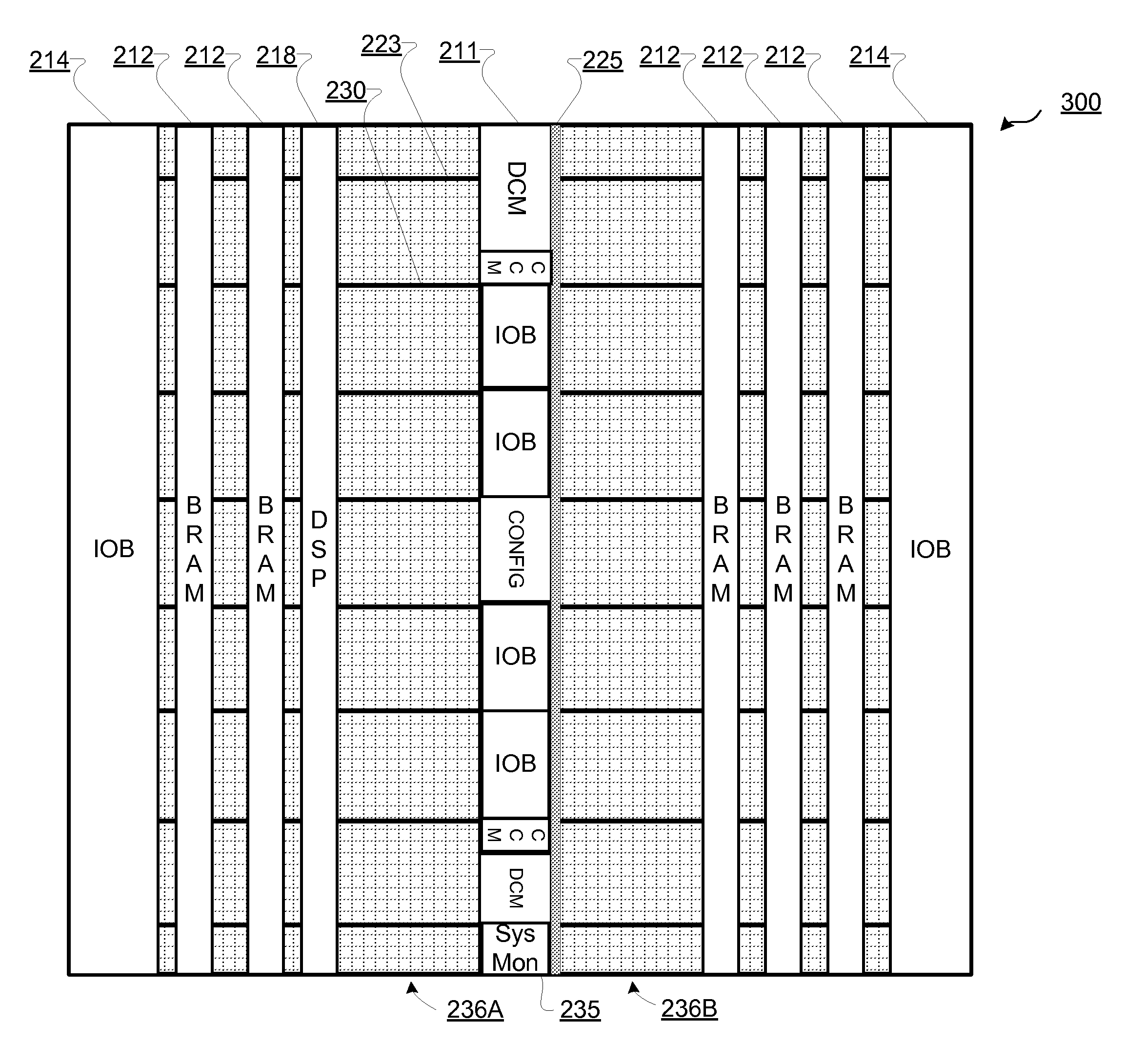

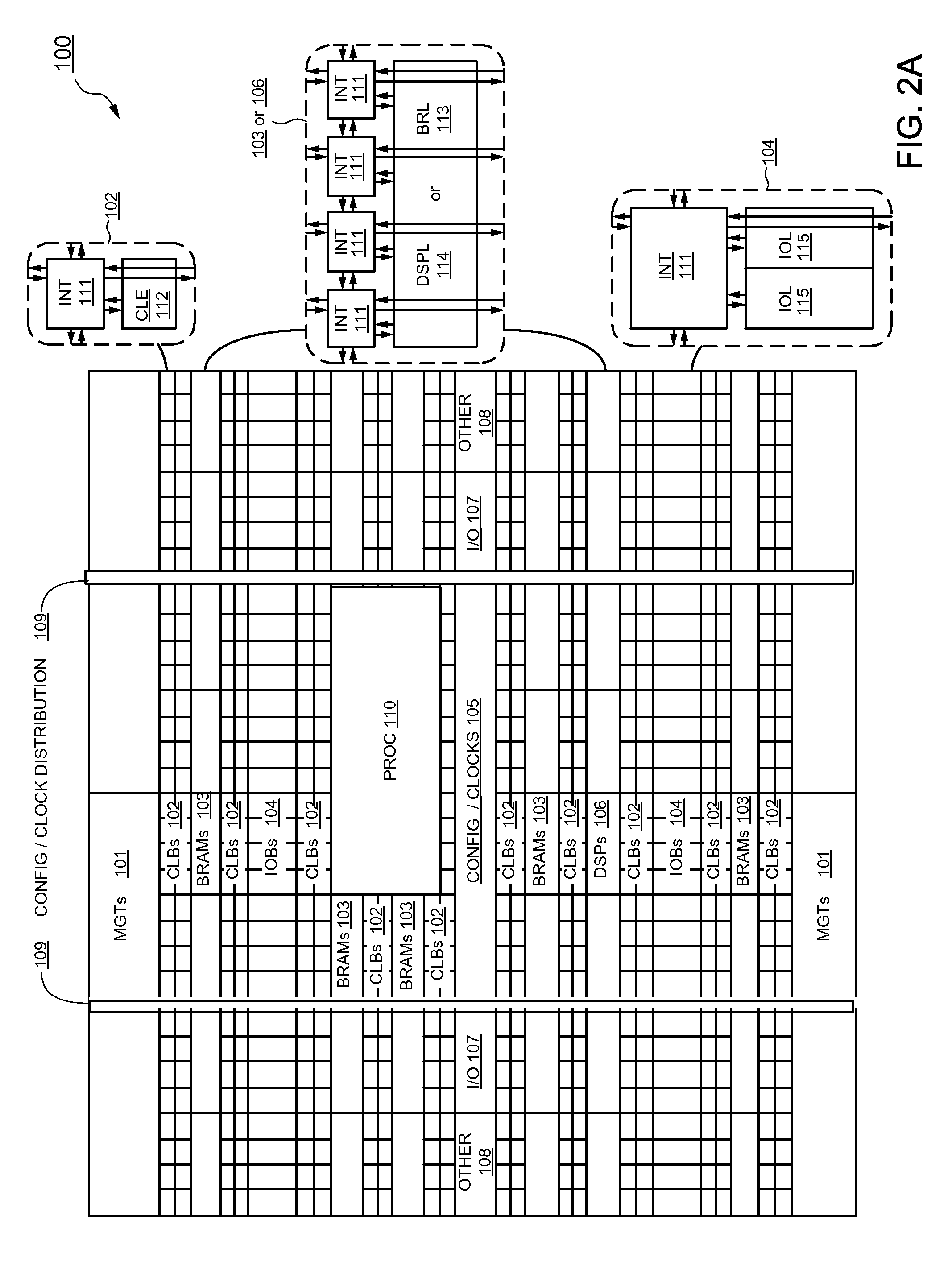

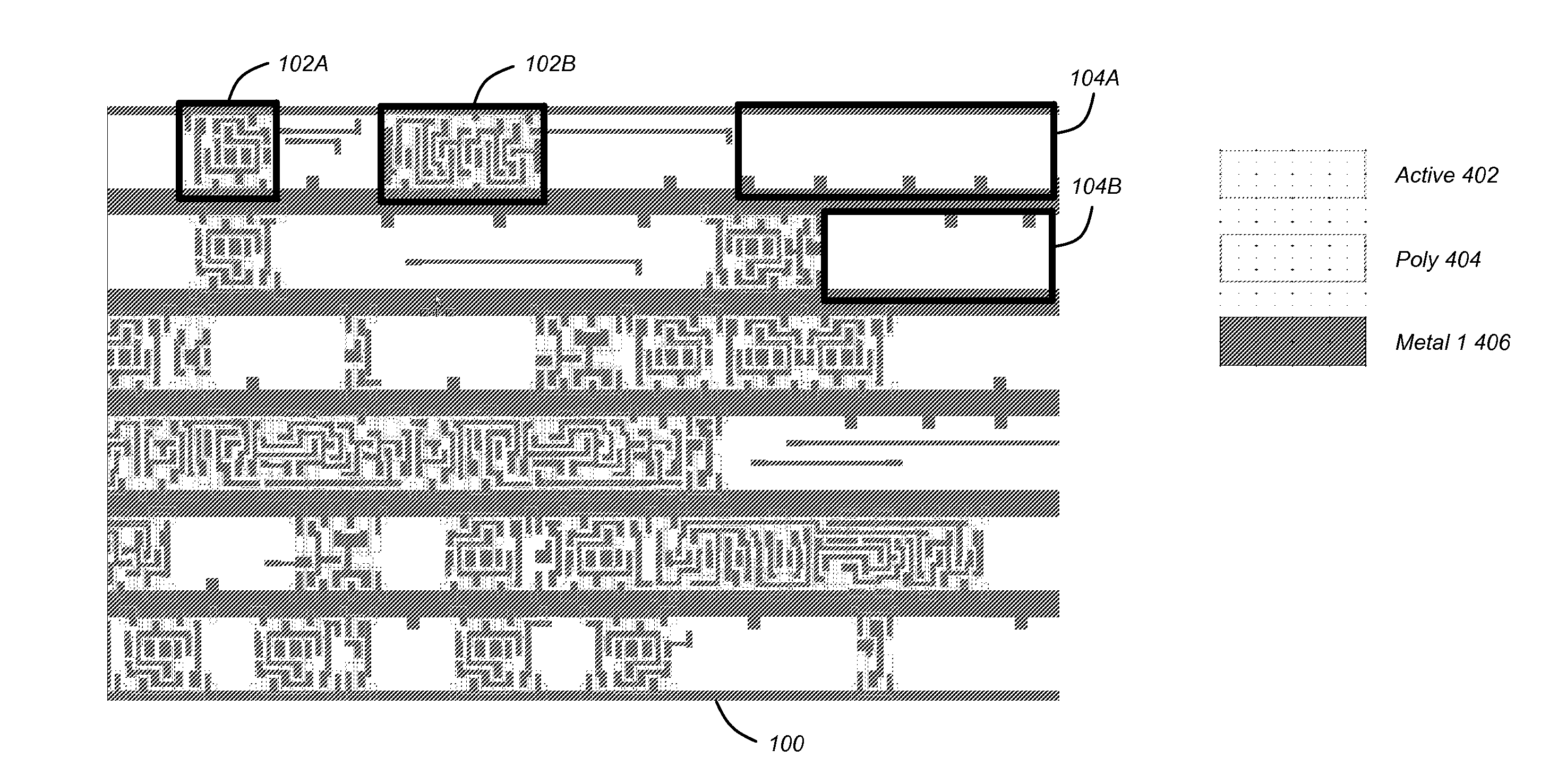

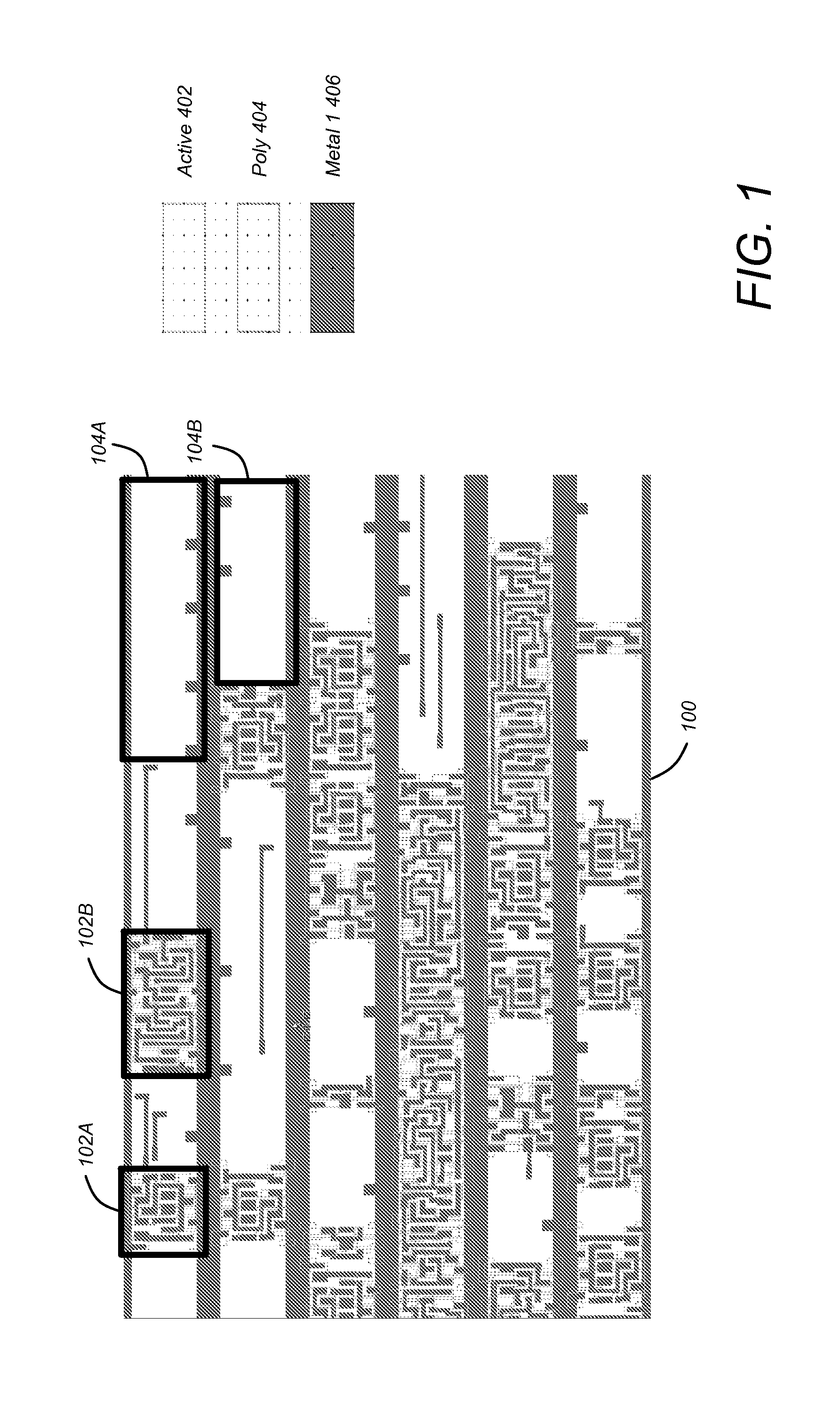

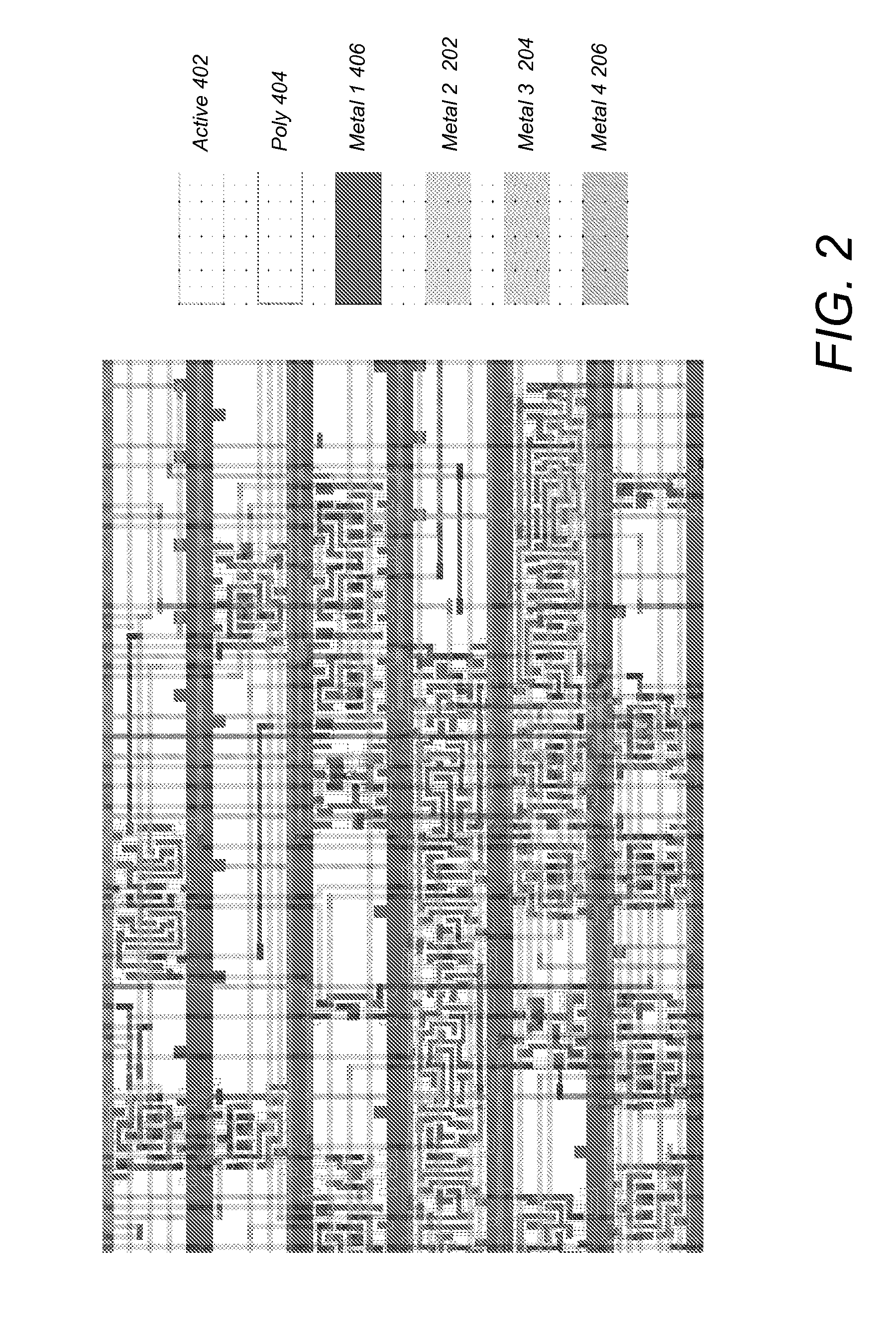

Formation of columnar application specific circuitry using a columnar programmable device

A columnar programmable device (PD) design converted to a columnar application specific integrated circuit-like (ASIC-like) design is described. A user design is instantiated in a PD having a columnar architecture associated with the columnar PD design. The columnar architecture has adjacent columns of circuitry, and one or more of the columns of circuitry as associated with instantiation of the user design in the PD are identified. At least a portion of one or more of the identified columns are swapped with application specific circuitry for implementing all or part of the user design for converting the columnar PD design to the columnar ASIC-like design.

Owner:XILINX INC

Tunable microwave devices with auto-adjusting matching circuit

Owner:NXP USA INC

Basic cell architecture for structured application-specific integrated circuits

A basic cell circuit architecture having a plurality of cells with fixed transistors configurable for the formation of logic devices and / or single / dual port memory devices within a structured ASIC is provided. Different configurations of ensuing integrated circuits are achieved by forming variable interconnect layers above the fixed structures. The circuit architecture can achieve interconnection of transistors within a single cell and / or across multiple cells. The interconnection can be configured to form basic logic gates as well as more complex digital and analog subsystems. In addition, each cell contains a layout of transistors that can be variably coupled to achieve a memory device, such as an SRAM device. By having the capability of forming either a logic circuit element, a memory device, or both, the circuit architecture is both memory-centric and logic-centric, and more fully adaptable to modern-day SoCs.

Owner:AVAGO TECH INT SALES PTE LTD

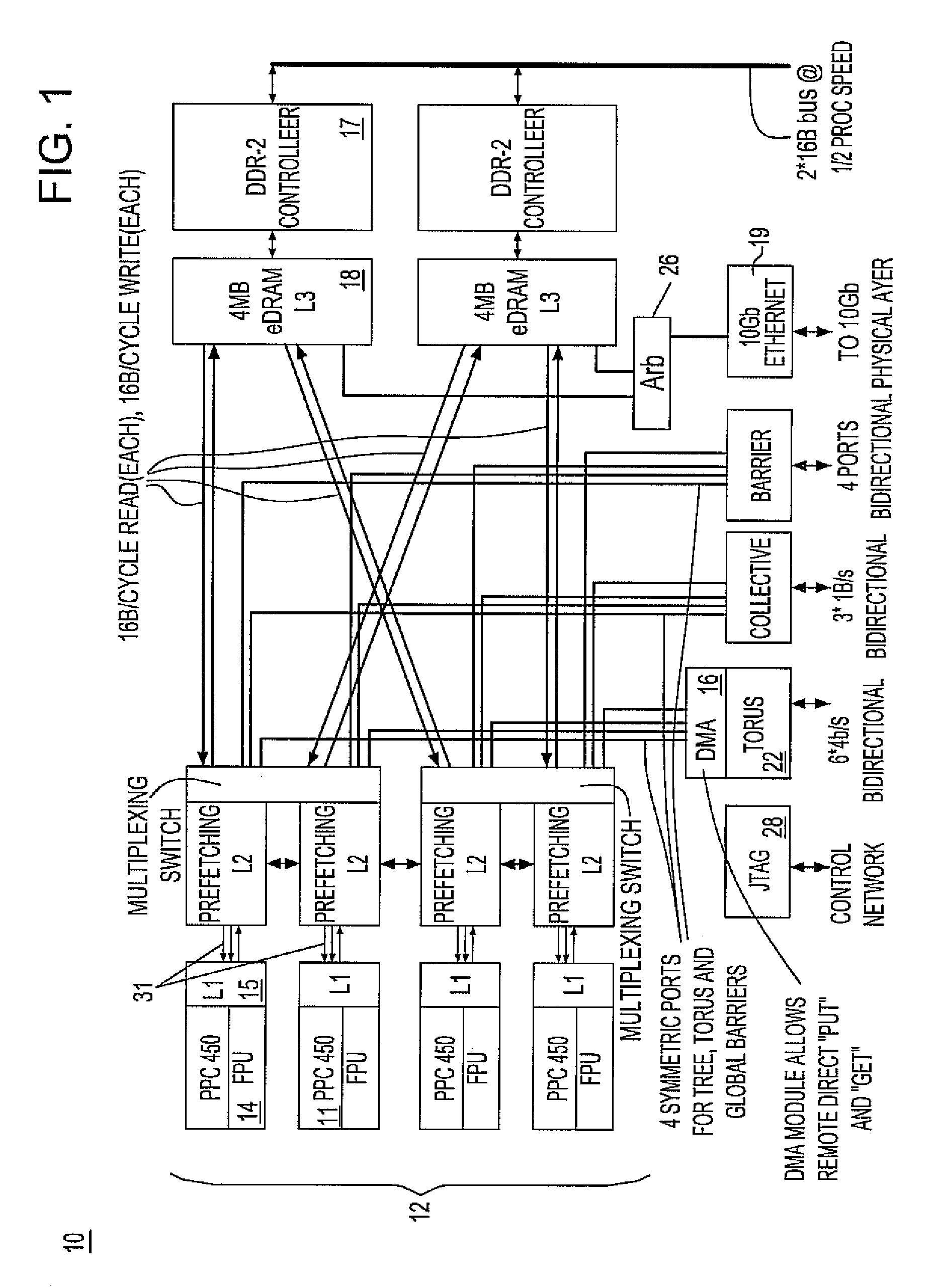

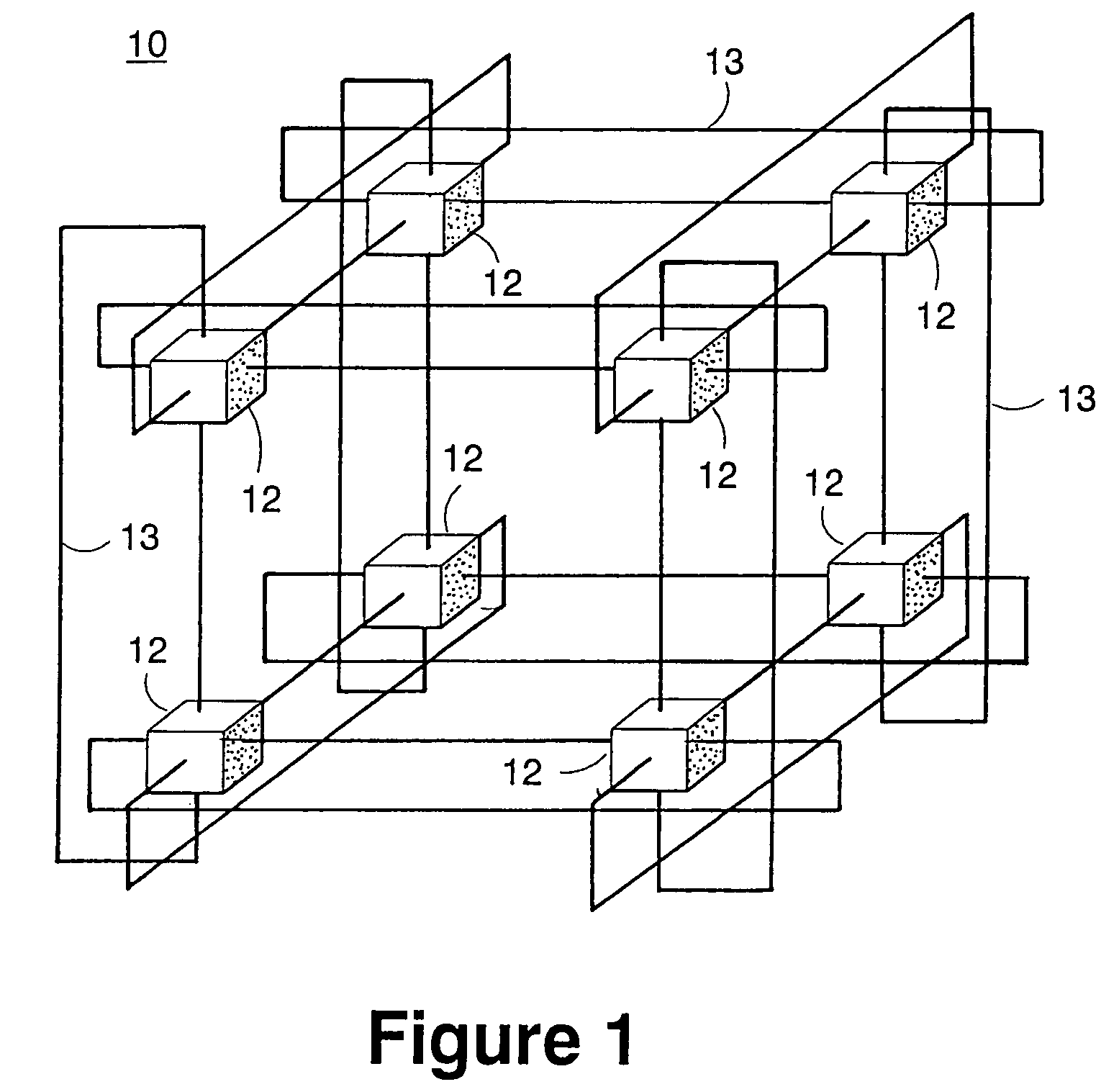

Ultrascalable petaflop parallel supercomputer

Inactive | US7761687B2 | Maximize throughput | Delay minimization | General purpose stored program computer | Electric digital data processing | Supercomputer | Packet communication

A massively parallel supercomputer of petaOPS-scale includes node architectures based upon System-On-a-Chip technology, where each processing node comprises a single Application Specific Integrated Circuit (ASIC) having up to four processing elements. The ASIC nodes are interconnected by multiple independent networks that optimally maximize the throughput of packet communications between nodes with minimal latency. The multiple networks may include three high-speed networks for parallel algorithm message passing including a Torus, collective network, and a Global Asynchronous network that provides global barrier and notification functions. These multiple independent networks may be collaboratively or independently utilized according to the needs or phases of an algorithm for optimizing algorithm processing performance. The use of a DMA engine is provided to facilitate message passing among the nodes without the expenditure of processing resources at the node.

Owner:INT BUSINESS MASCH CORP
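The abstract mentions a Torus network among the interconnects. As a minimal illustration of what torus connectivity means, the sketch below lists the nearest neighbours of a node when coordinates wrap around at the edges. The three-dimensional example and the function name are assumptions for illustration; the abstract does not state the torus dimensions.

```python
def torus_neighbors(node, dims):
    """Return the nearest neighbours of `node` on a torus of shape `dims`.

    Each axis contributes two neighbours (one step down, one step up),
    with coordinates wrapping around at the edges.
    """
    neighbors = []
    for axis, size in enumerate(dims):
        for step in (-1, 1):
            coord = list(node)
            coord[axis] = (coord[axis] + step) % size
            neighbors.append(tuple(coord))
    return neighbors
```

On a 4 x 4 x 4 torus, node `(0, 0, 0)` is adjacent to `(3, 0, 0)` as well as `(1, 0, 0)`, which is what distinguishes a torus from a plain mesh.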

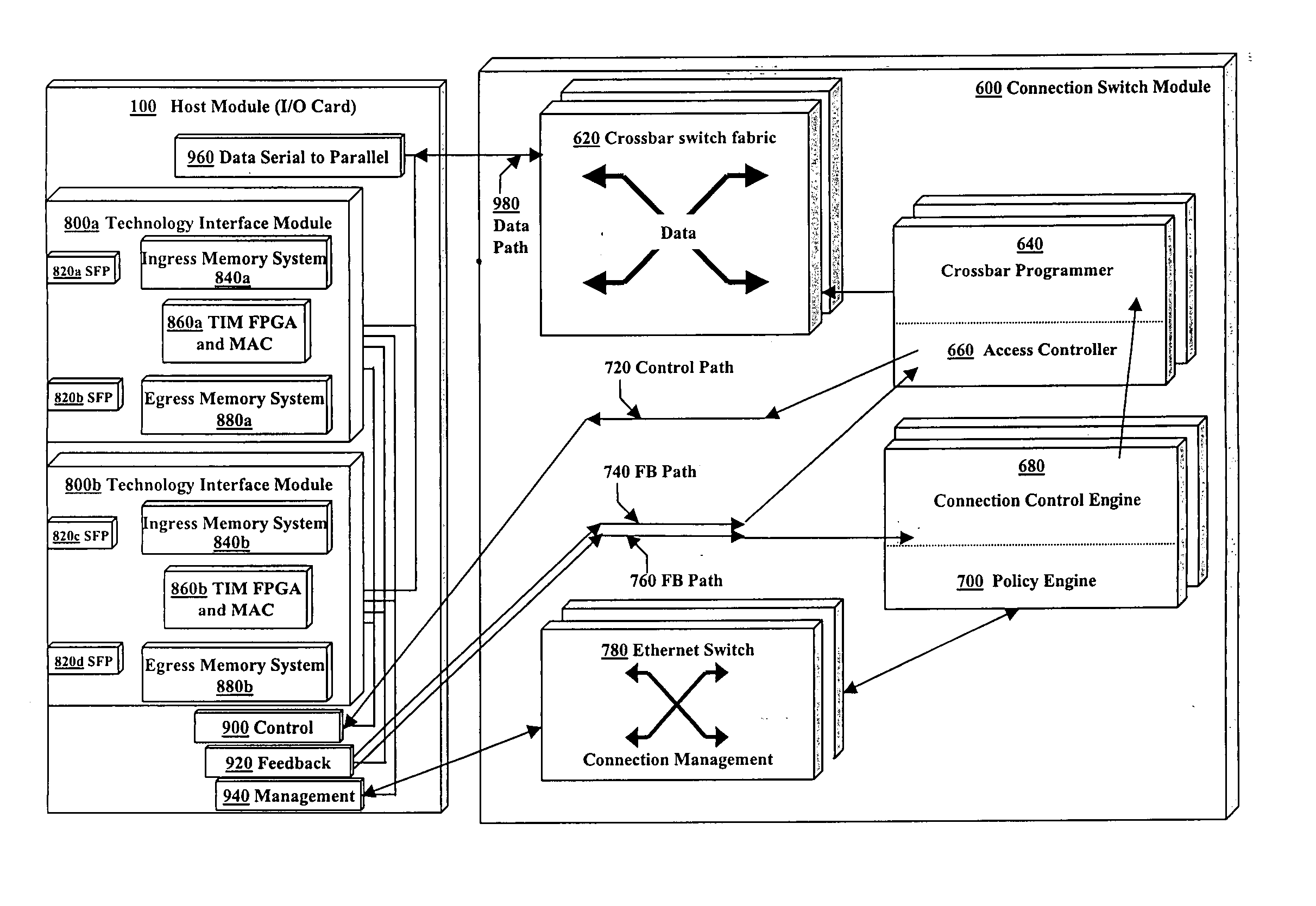

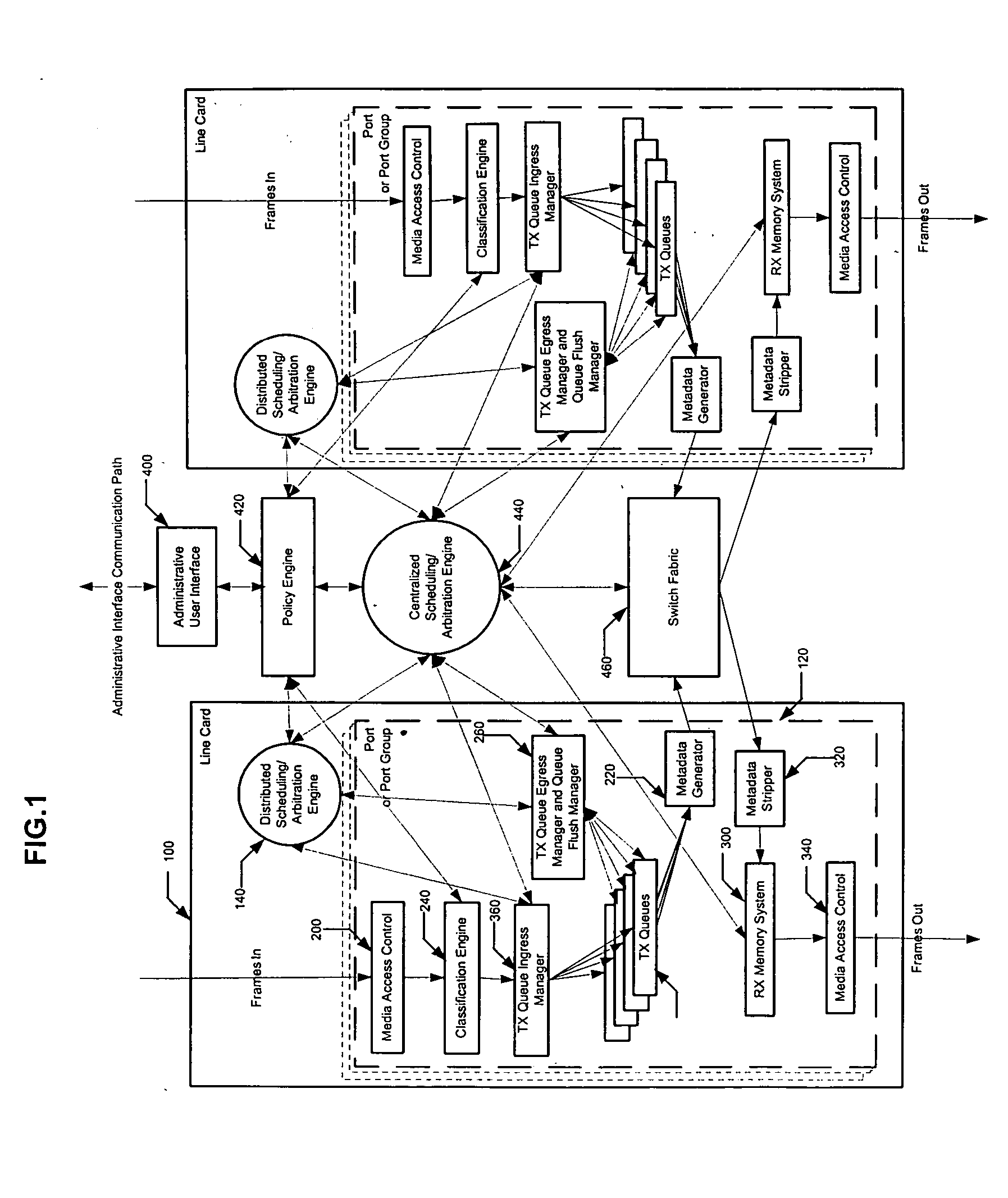

Methods and apparatus for provisioning connection oriented, quality of service capabilities and services

Inactive | US20050089054A1 | Improve efficiency | Reduce the amount required | Data switching by path configuration | Quality of service | Granularity

The present invention describes a system for providing quality of service (QoS) features in communications switching devices and routers. The QoS provided by this system need not be intrinsic to the communication protocols being transported through the network. Preferred embodiments also generate statistics with the granularity of the QoS. The system can be implemented in a single application-specific integrated circuit (ASIC), in a chassis-based switch or router, or in a more general distributed architecture. The system architecture is a virtual output queued (VOQed) crossbar. The administrator establishes policies for port pairs within the switch, and optionally with finer granularity. Frames are directed to unique VOQs based on both policy and protocol criteria. Policies are implemented by means of a scheduling engine that allocates time slices (minimum units of crossbar access).

Owner:NETWORK APPLIANCE INC

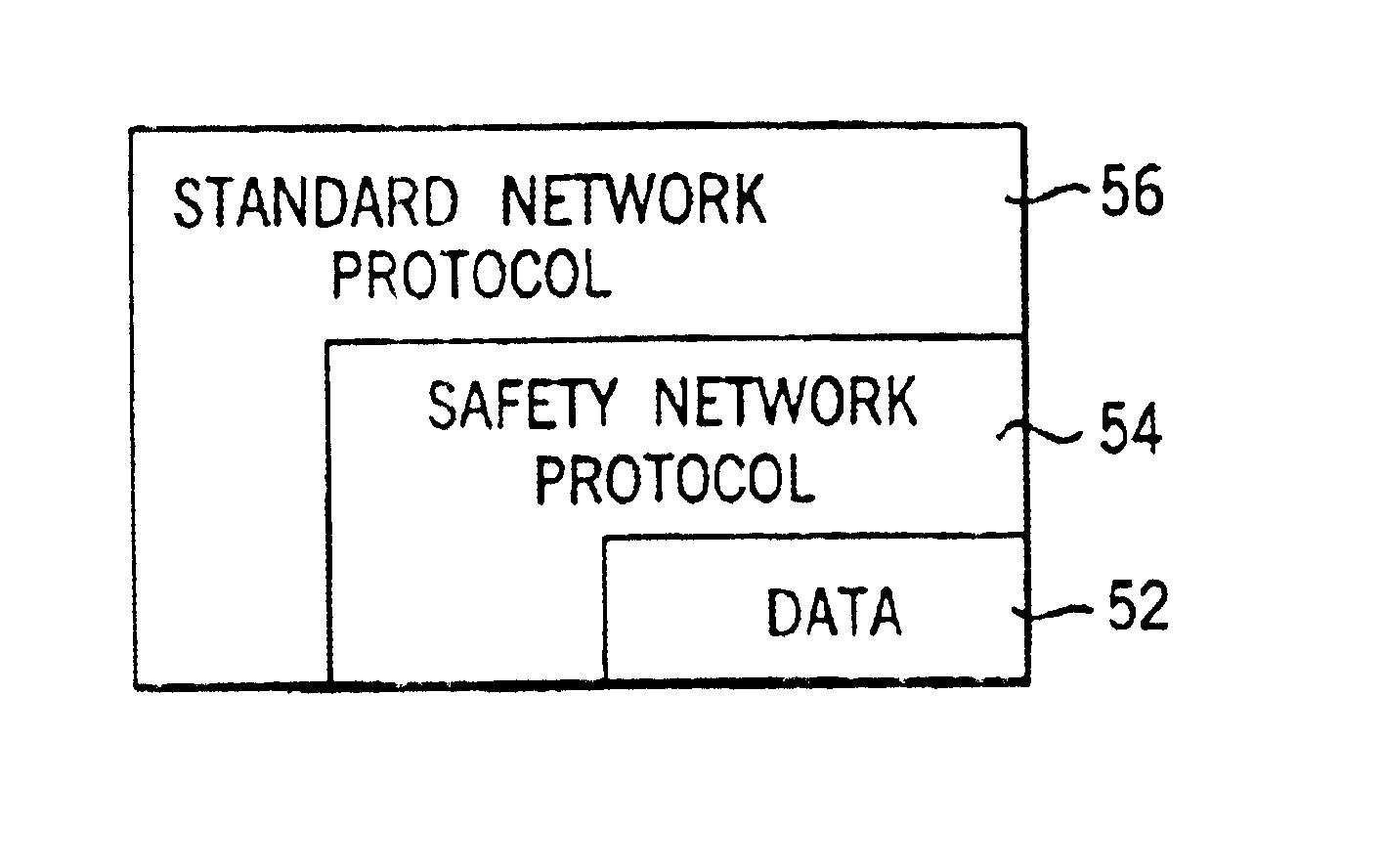

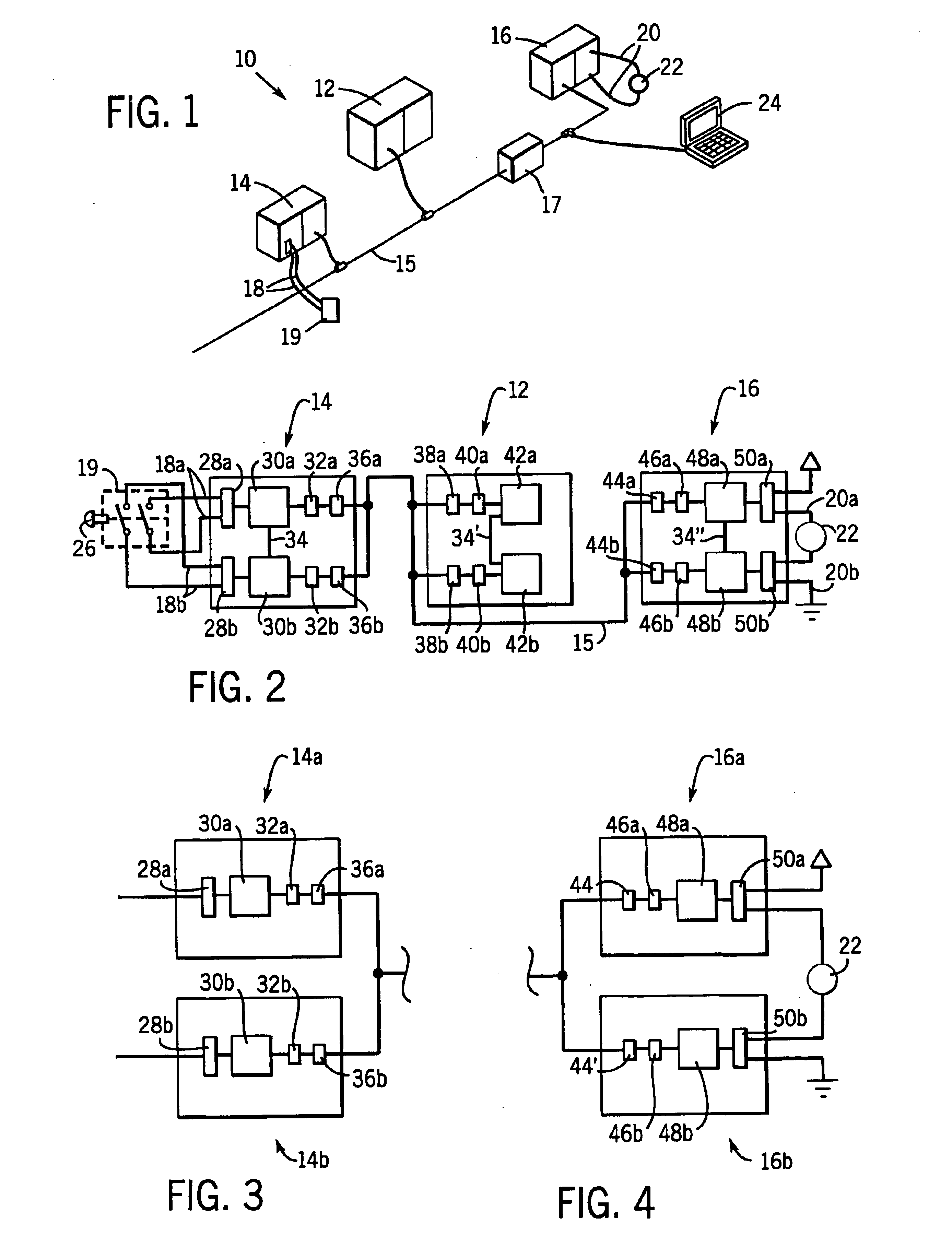

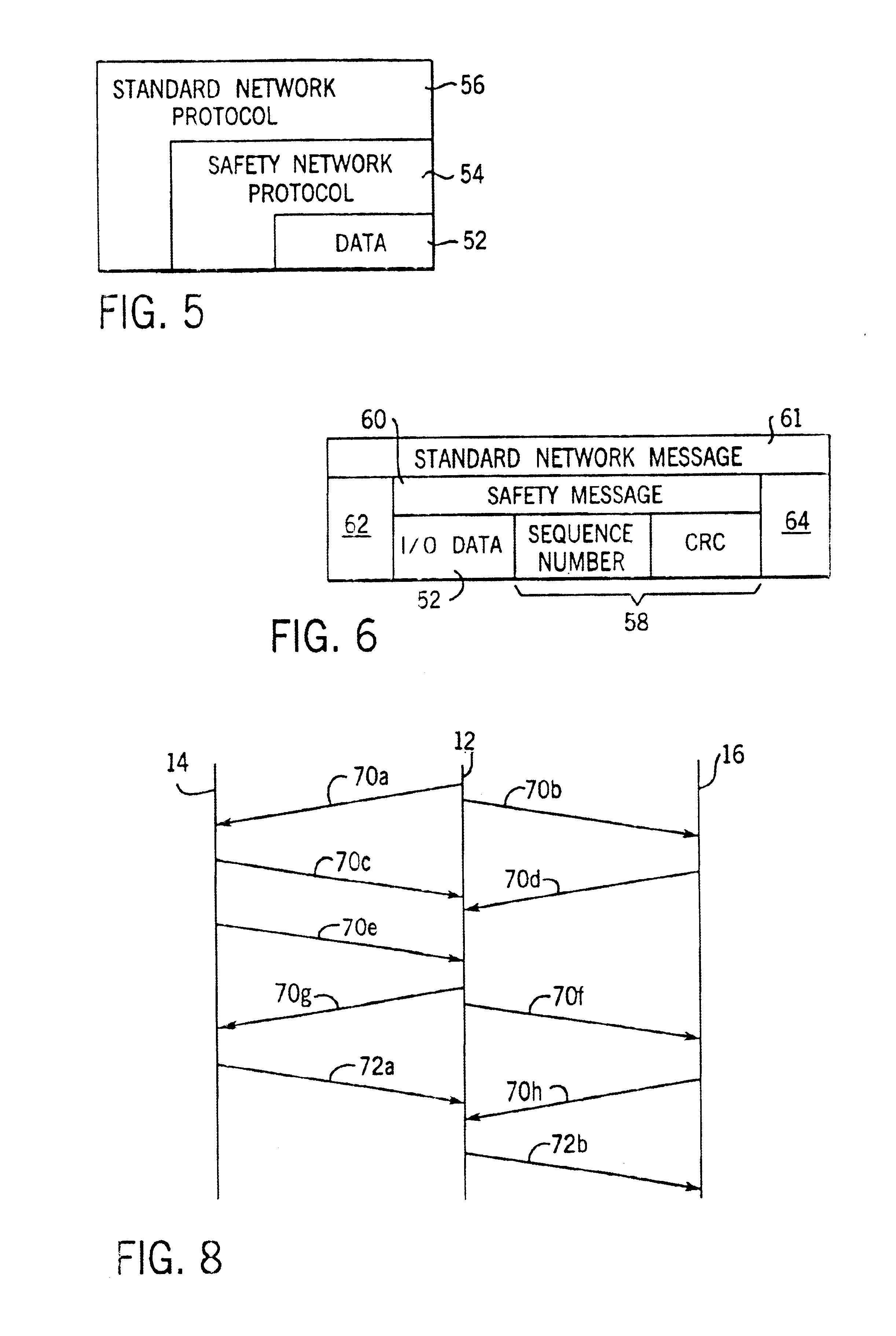

Network independent safety protocol for industrial controller

Inactive | US6891850B1 | Reduce errors | Computer control | Simulator control | Networking protocol | Network generation

A highly reliable industrial control system is produced using a network running a standard serial protocol. A safety protocol is embedded within the standard serial protocol by adding special error-detecting data redundant with the protocol of the standard serial network. In addition, an overarching protocol involving timing of messages is imposed to provide the necessary level of reliability in the standard serial network. This approach allows the safety protocol to be used with a wide variety of commercially available serial communication standards without modification of the media or the specialized integrated circuits used for that communication. The safety protocol may be implemented in an additional level for integrated circuits or through firmware changes in programmable aspects of the industrial controller components.

Owner:ROCKWELL TECH
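The idea of layering extra error detection and message timing on top of an unmodified transport can be sketched as follows. This is a generic illustration, not the protocol in the patent: the frame layout (16-bit sequence number, payload, CRC-32), the function names, and the timing rule are all assumptions.

```python
import struct
import zlib


def wrap_safety_message(sequence, payload):
    """Prefix a 16-bit sequence number and append a CRC-32 over both."""
    body = struct.pack(">H", sequence & 0xFFFF) + payload
    return body + struct.pack(">I", zlib.crc32(body))


def unwrap_safety_message(frame, expected_sequence, elapsed_s, max_interval_s):
    """Return the payload, or None if any safety check fails."""
    if elapsed_s > max_interval_s:
        return None  # message arrived later than the timing rule allows
    if len(frame) < 6:
        return None  # too short to hold sequence number and CRC
    body = frame[:-4]
    (crc,) = struct.unpack(">I", frame[-4:])
    if zlib.crc32(body) != crc:
        return None  # corrupted in transit
    (sequence,) = struct.unpack(">H", body[:2])
    if sequence != expected_sequence:
        return None  # lost, repeated, or reordered message
    return body[2:]
```

Because the wrapped frame is just bytes, it could be carried as the data field of whatever serial protocol the network already uses, which is the property the abstract emphasizes.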

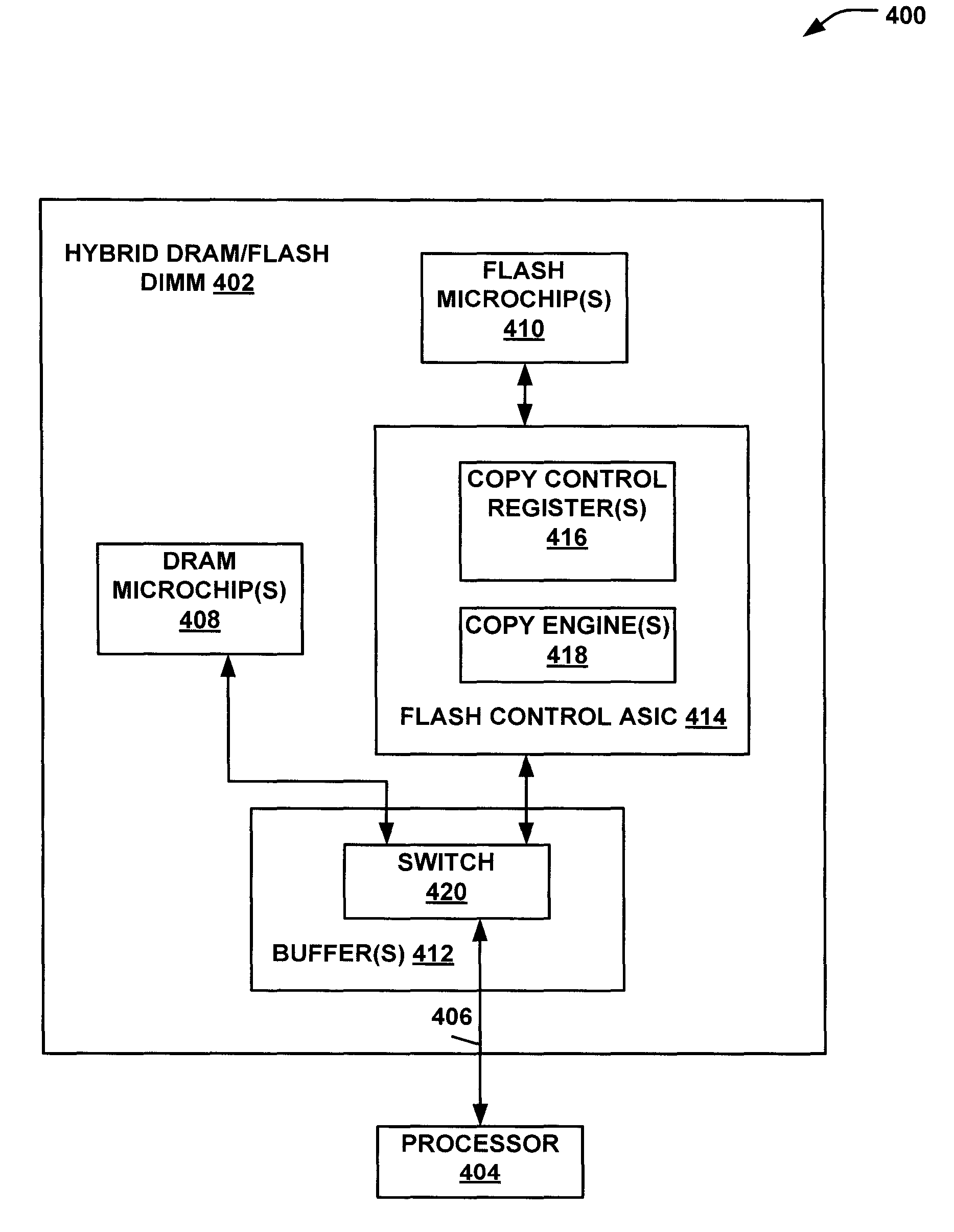

Flash memory dual in-line memory module management

Inactive | US20140095769A1 | Improve work performance | Improve system performance | Read-only memories | Digital storage | DIMM | Computer module

Systems and methods to manage memory on a dual in-line memory module (DIMM) are provided. A particular method may include receiving at a flash application-specific integrated circuit (ASIC) a request from a processor to access data stored in a flash memory of a DIMM. The data may be transferred from the flash memory to a switch of the DIMM. The data may be routed to a dynamic random-access memory (DRAM) of the DIMM. The data may be stored in the DRAM and may be provided from the DRAM to the processor.

Owner:LENOVO ENTERPRISE SOLUTIONS SINGAPORE

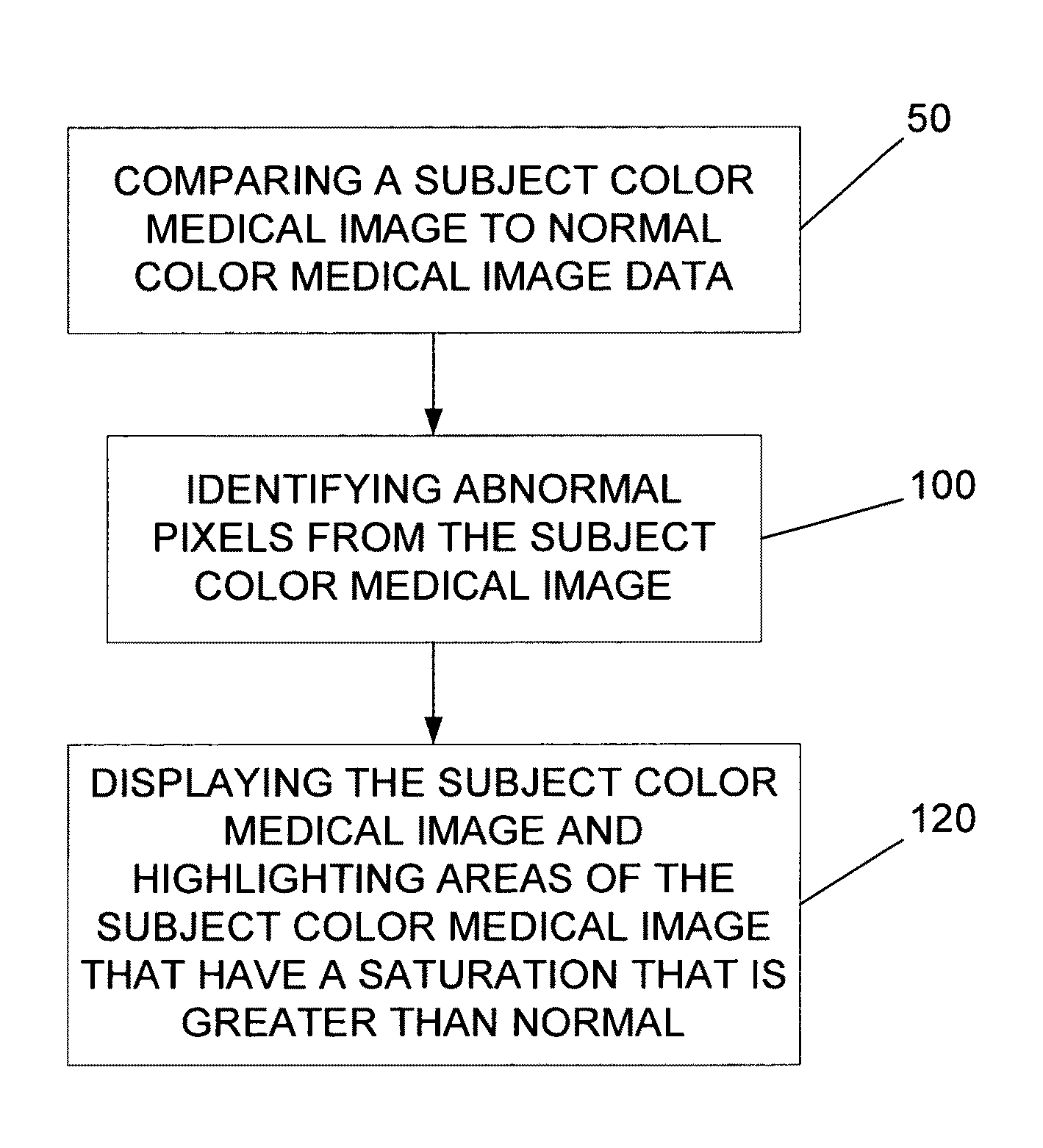

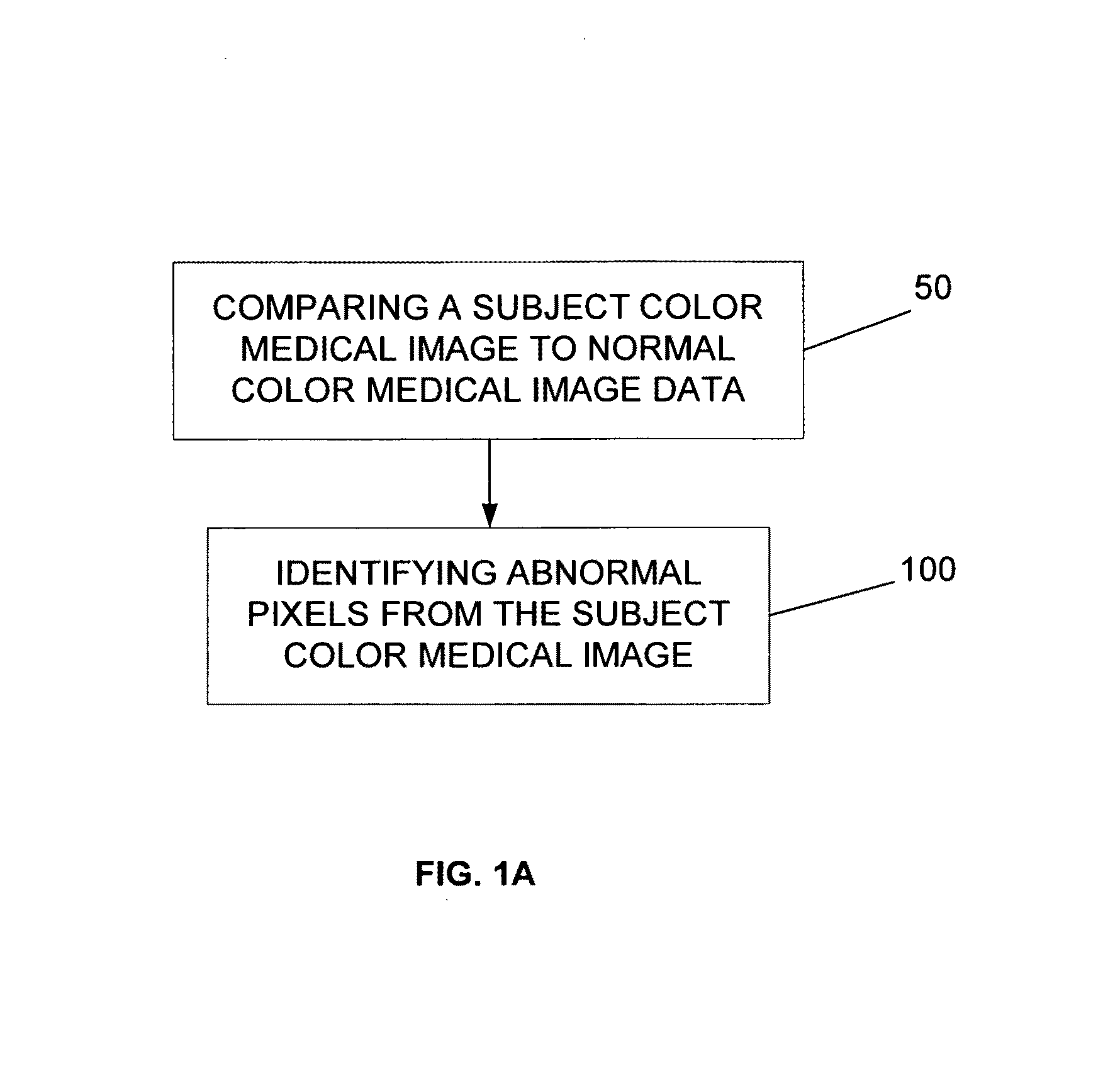

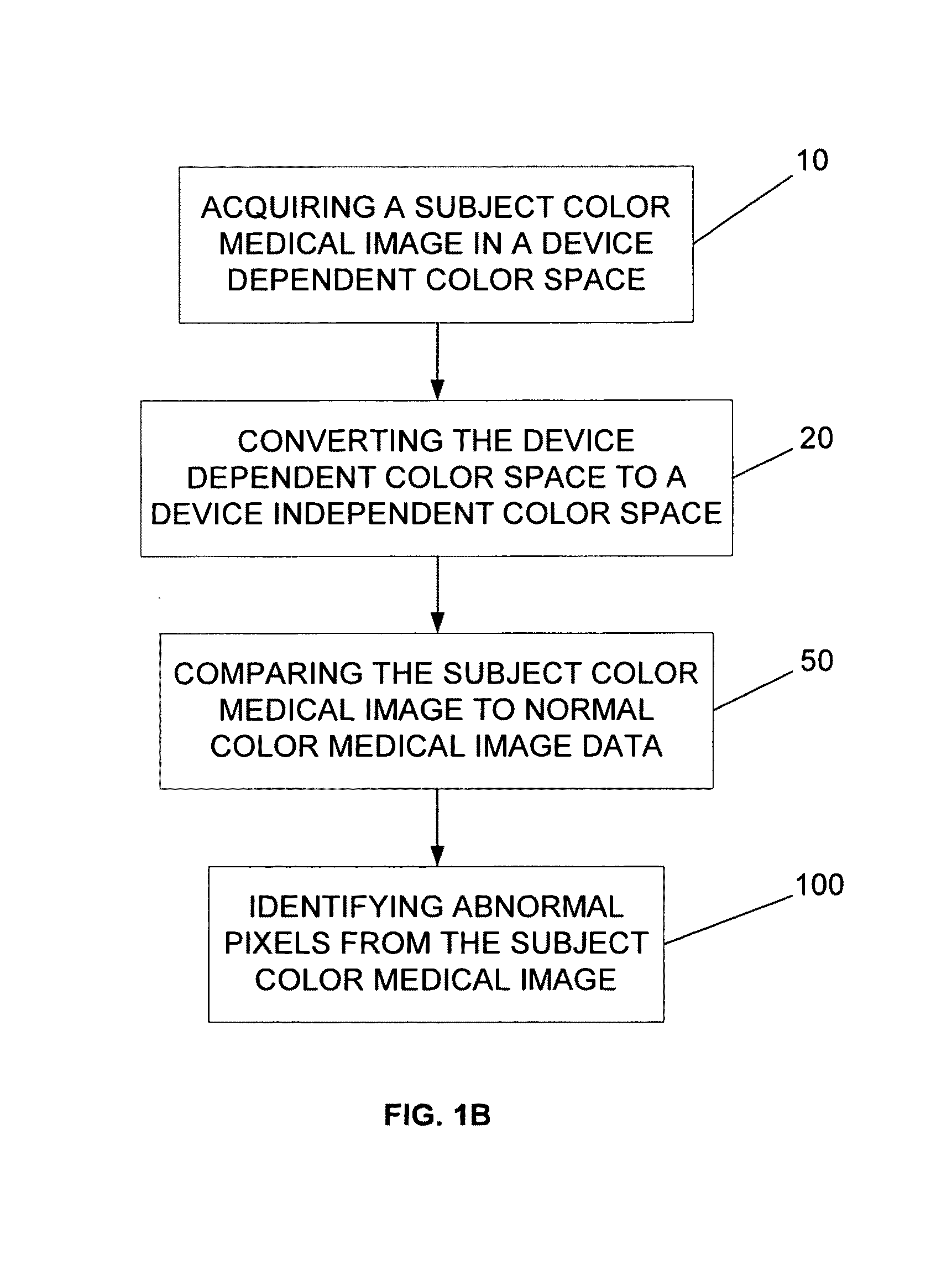

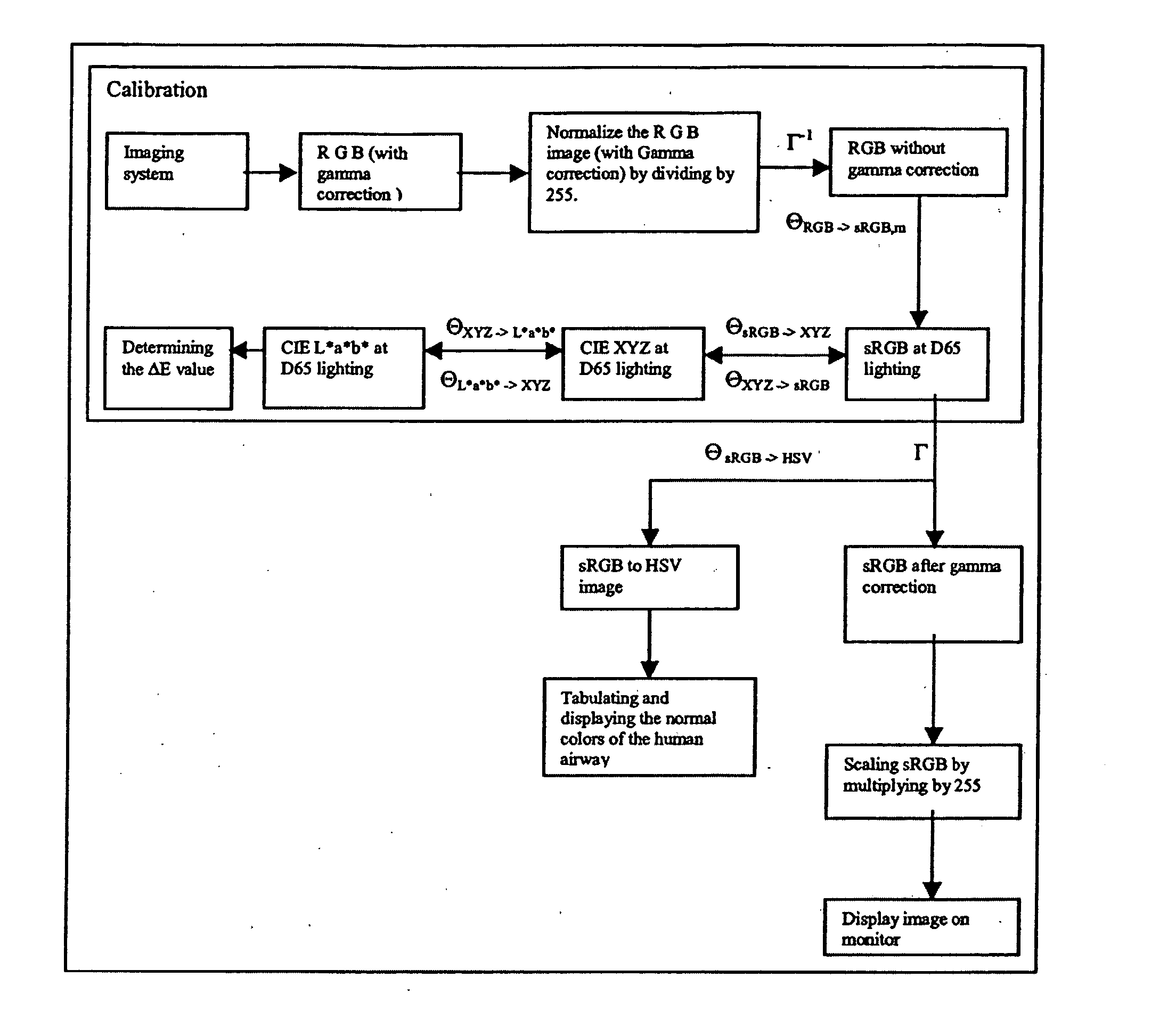

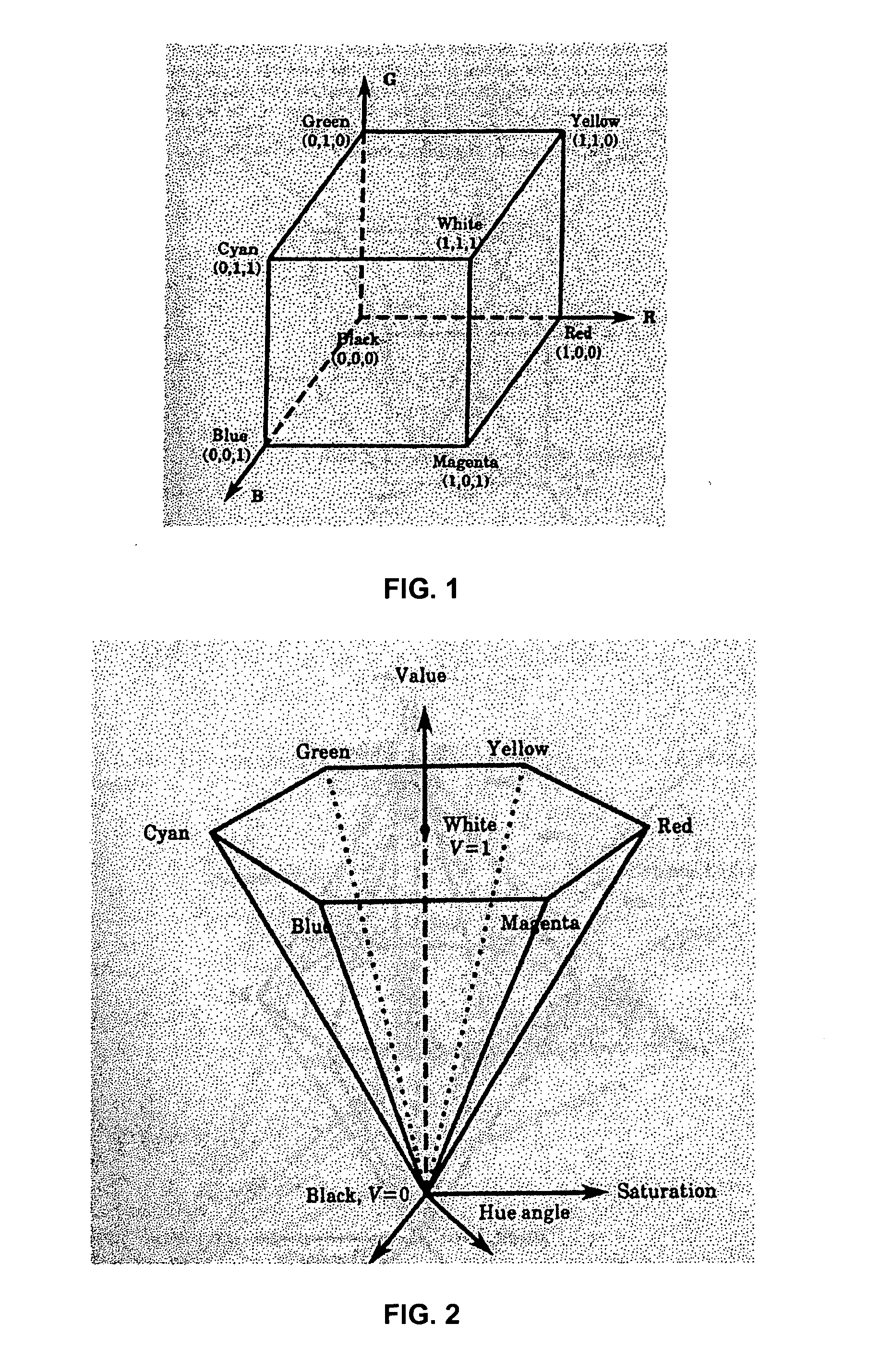

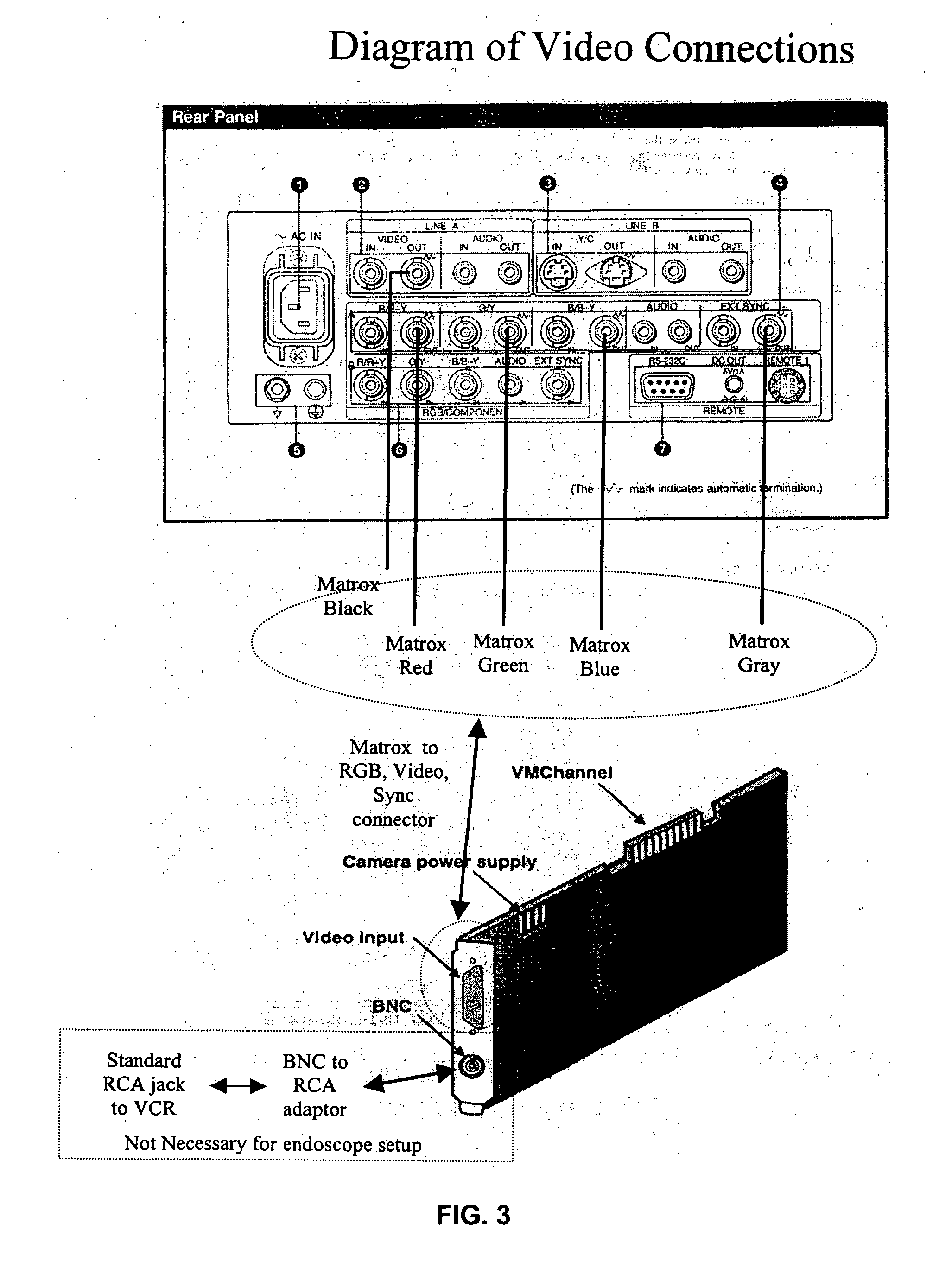

Methods and devices useful for analyzing color medical images

Inactive | US7613335B2 | Image enhancement | Image analysis | Pattern recognition | Application-specific integrated circuit

One embodiment is a method that includes comparing a subject color medical image to normal color medical image data, and identifying abnormal pixels from the subject color medical image. Another embodiment includes a computer readable medium comprising machine readable instructions for implementing one or more steps of that method. Another embodiment includes a device that has a field programmable gate array configured to perform one or more of the steps of that method. Another embodiment includes a device that has an application specific integrated circuit configured to perform one or more of the steps of that method.

Owner:UNIV OF IOWA RES FOUND

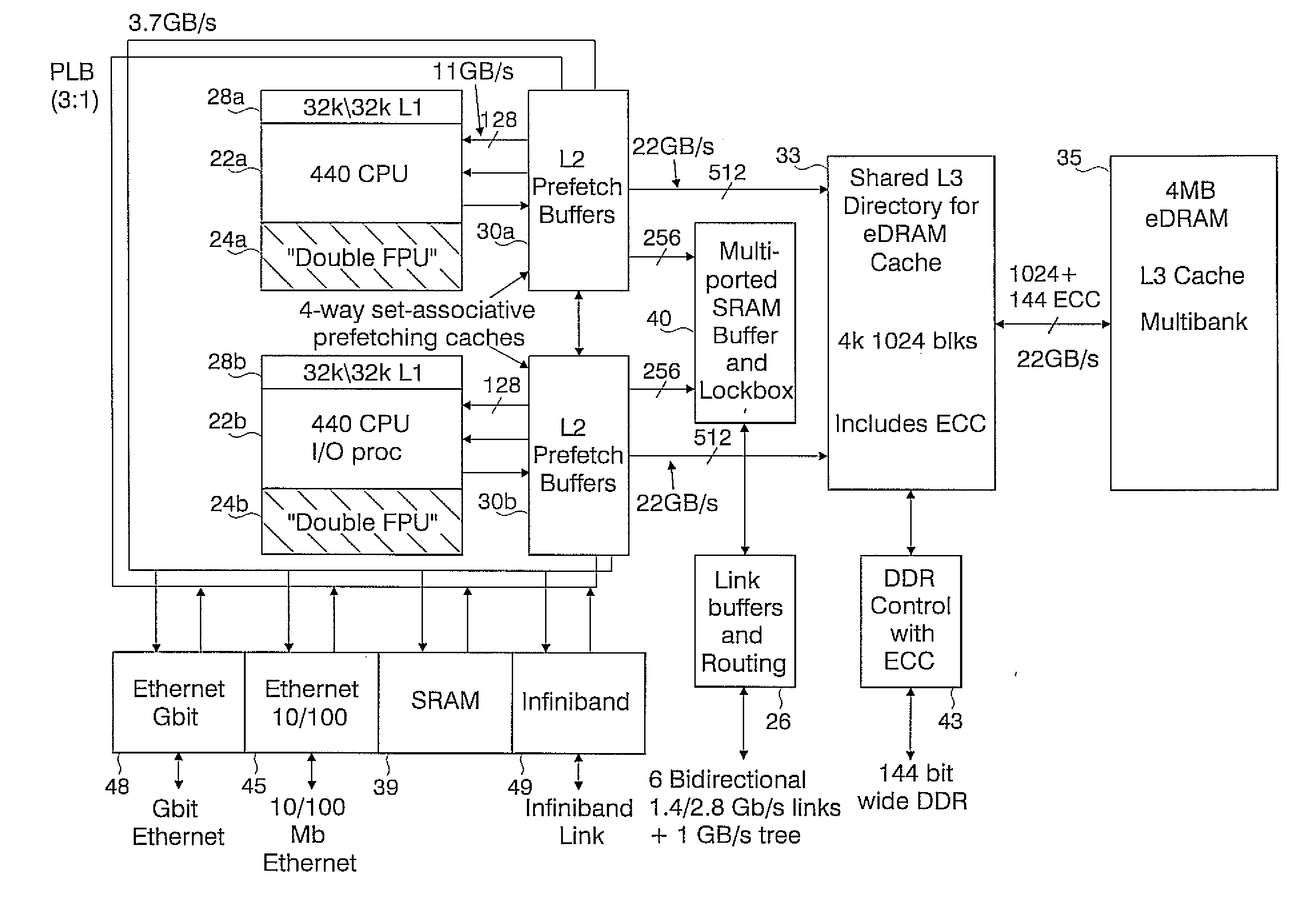

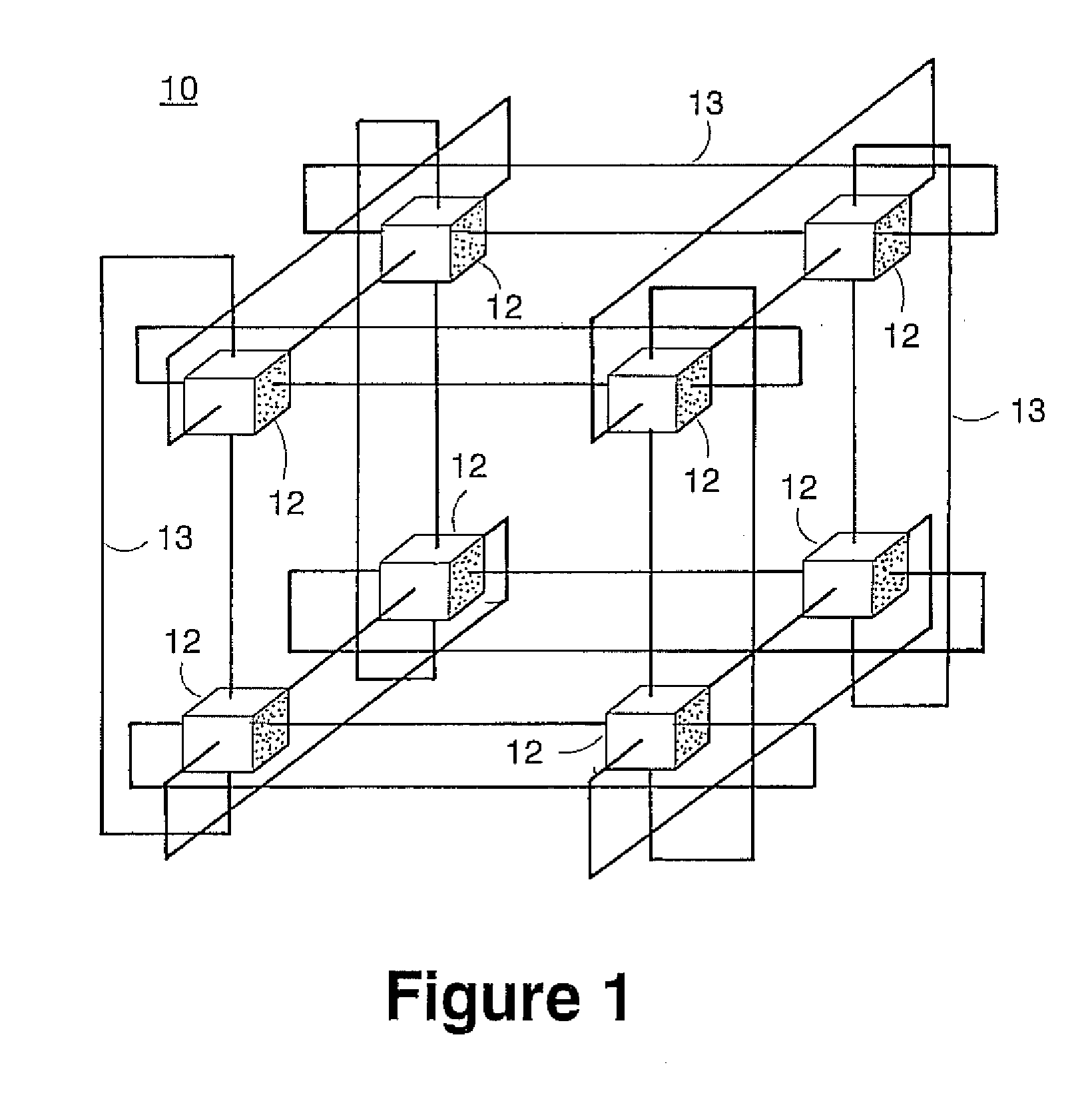

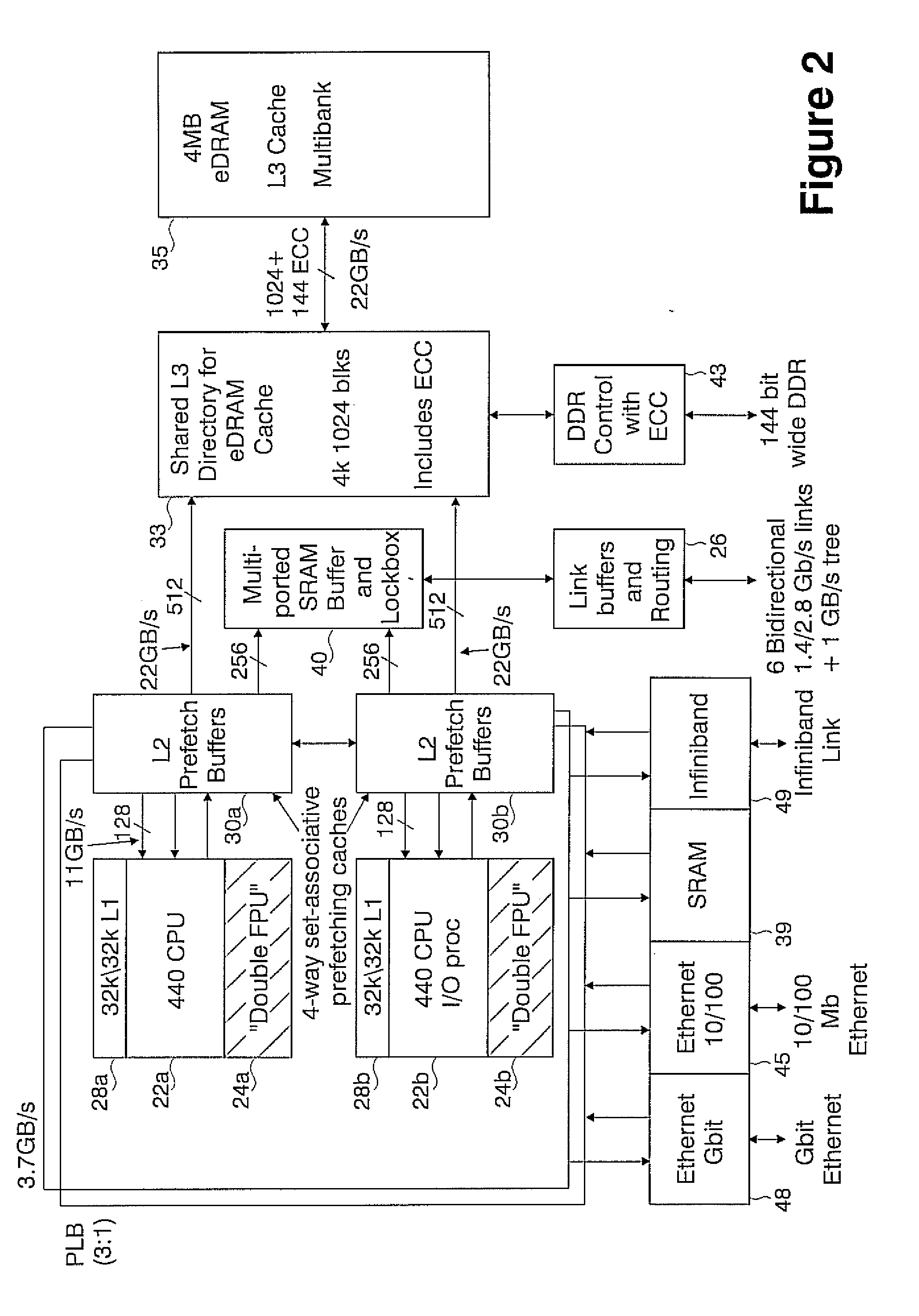

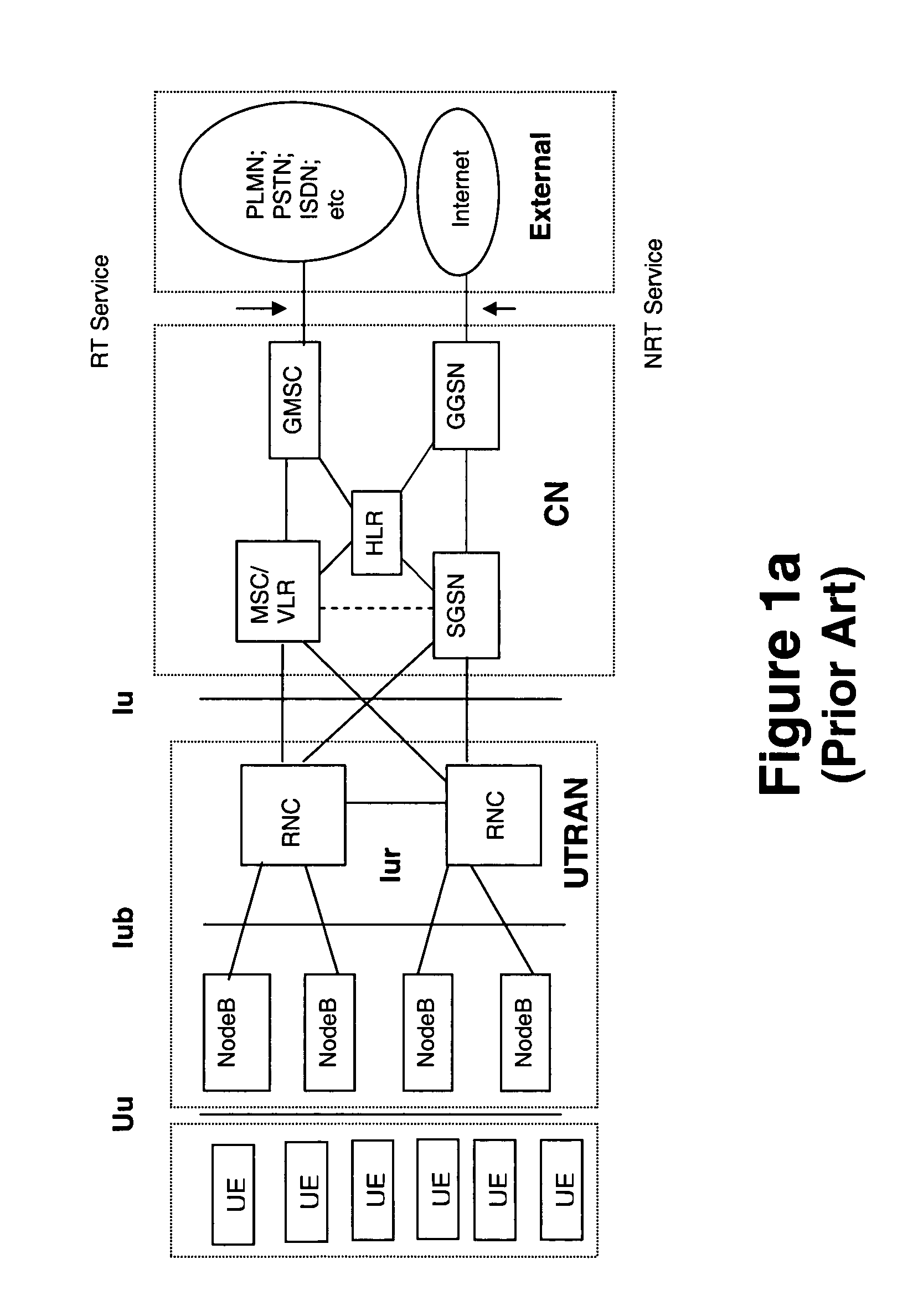

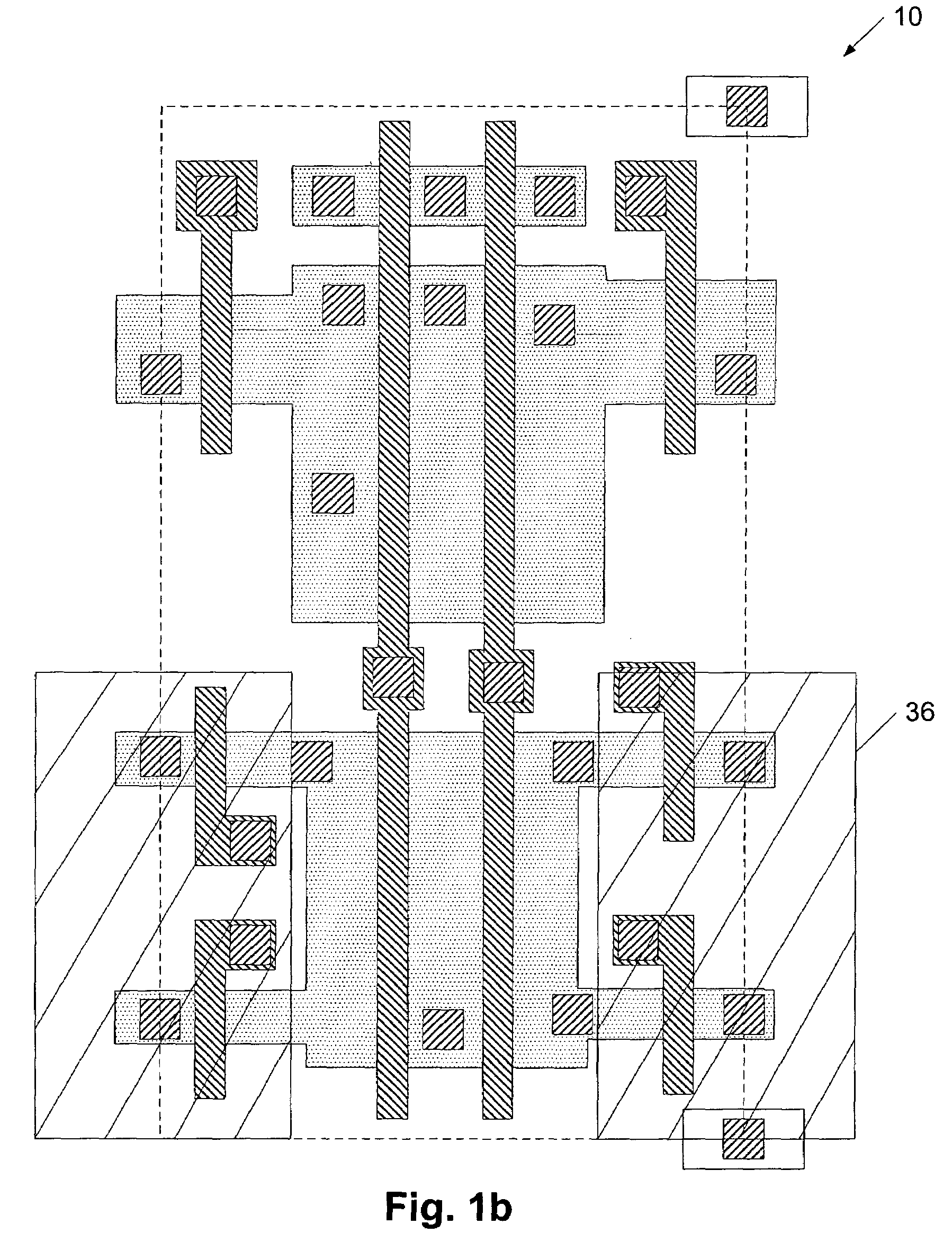

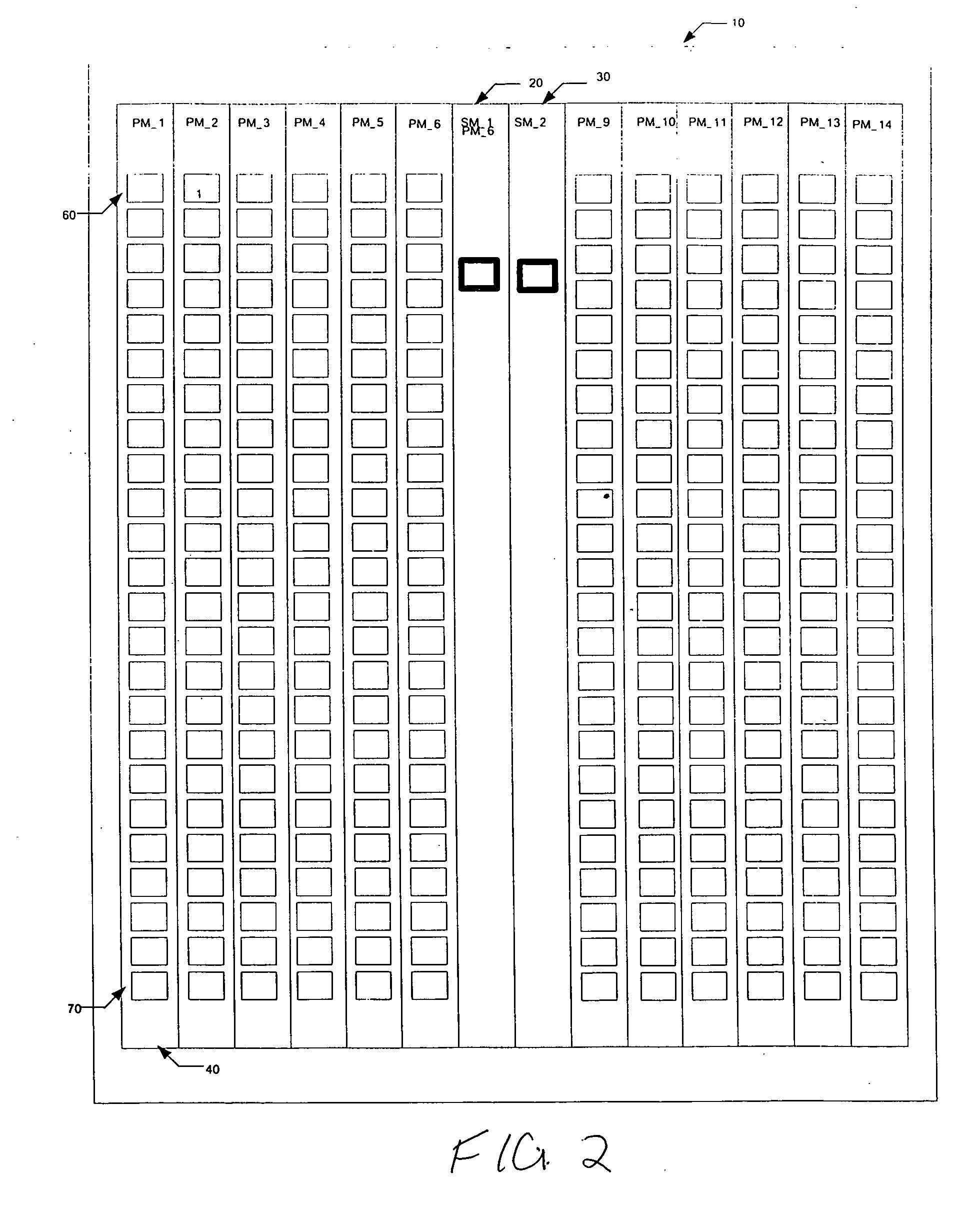

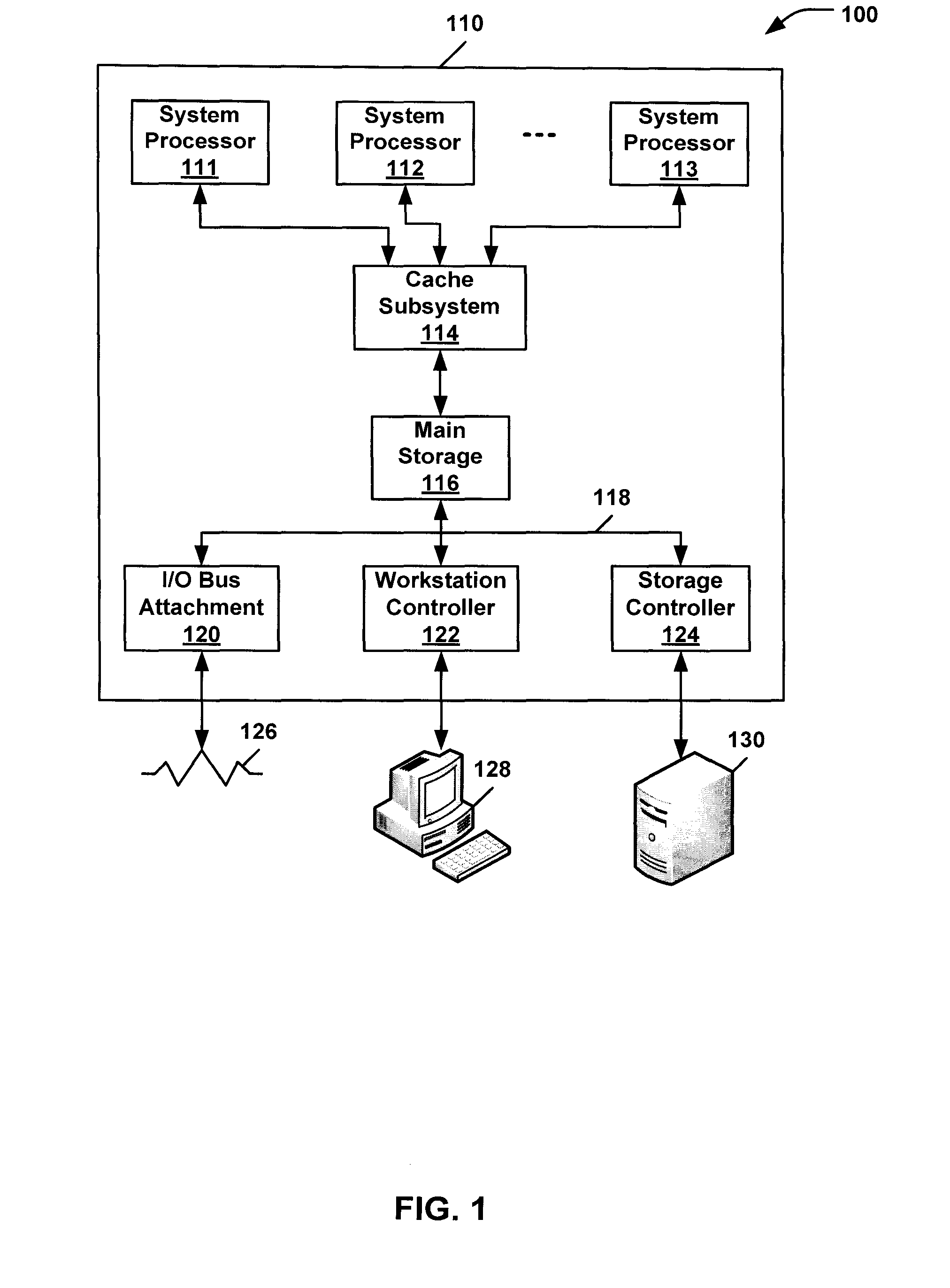

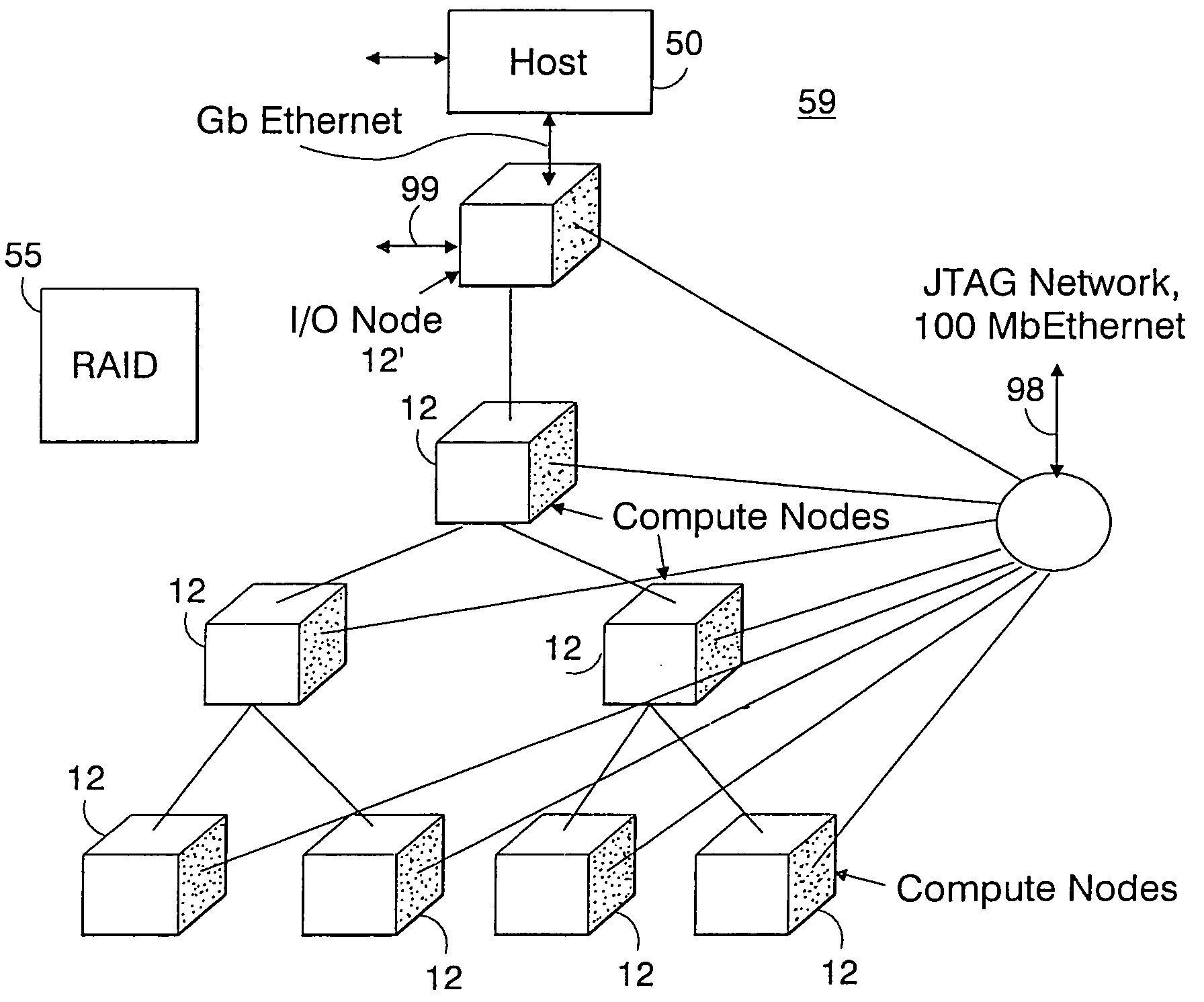

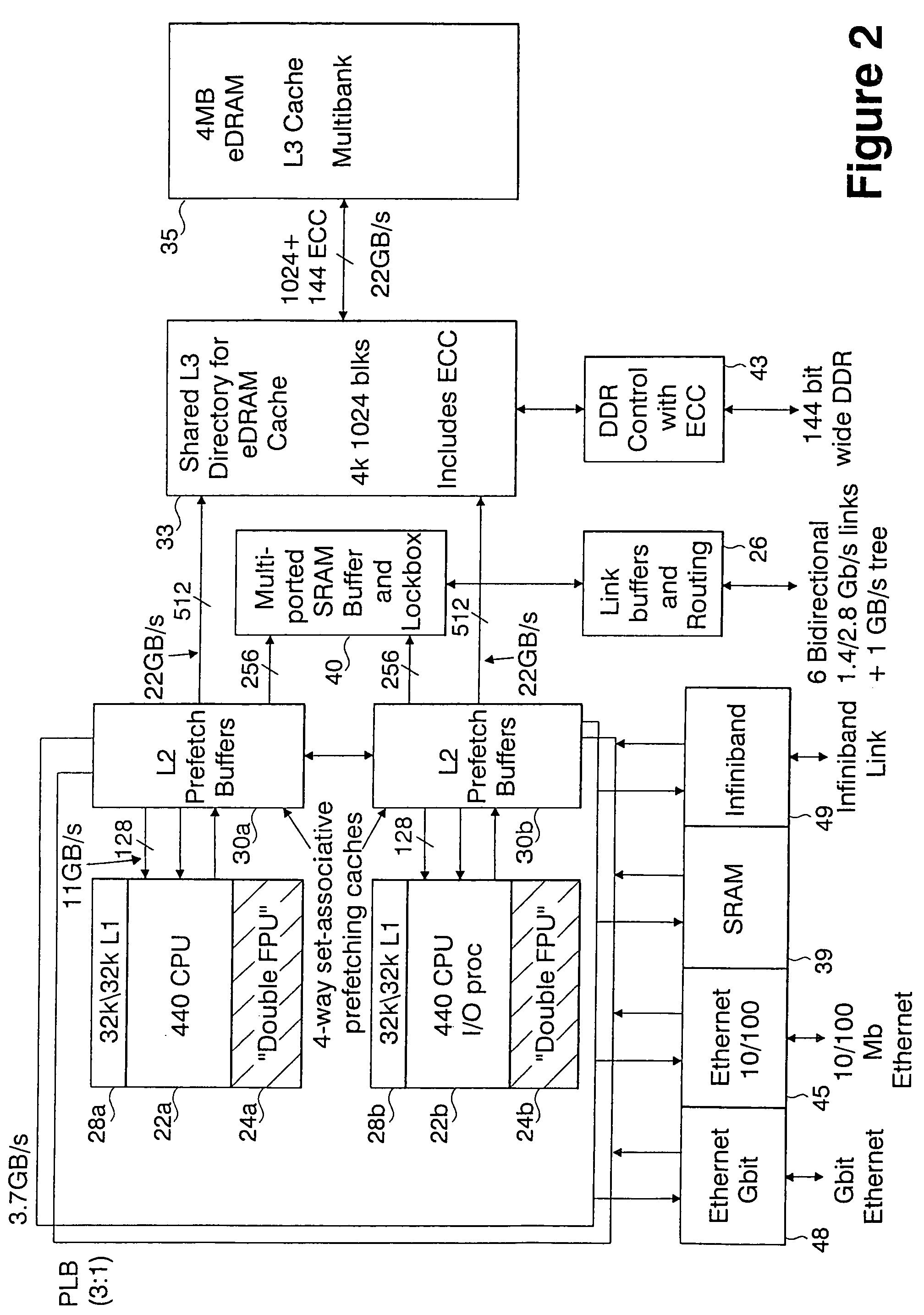

Massively parallel supercomputer

Inactive | US7555566B2 | Massive level of scalability | Unprecedented level of scalability | Error prevention | Program synchronisation | Packet communication | Supercomputer

A novel massively parallel supercomputer of hundreds of teraOPS-scale includes node architectures based upon System-On-a-Chip technology, i.e., each processing node comprises a single Application Specific Integrated Circuit (ASIC). Within each ASIC node is a plurality of processing elements, each of which consists of a central processing unit (CPU) and a plurality of floating point processors to enable optimal balance of computational performance, packaging density, low cost, and power and cooling requirements. The plurality of processors within a single node may be used individually or simultaneously to work on any combination of computation or communication as required by the particular algorithm being solved or executed at any point in time. The system-on-a-chip ASIC nodes are interconnected by multiple independent networks that maximize packet communications throughput and minimize latency. In the preferred embodiment, the multiple networks include three high-speed networks for parallel algorithm message passing including a Torus, Global Tree, and a Global Asynchronous network that provides global barrier and notification functions. These multiple independent networks may be collaboratively or independently utilized according to the needs or phases of an algorithm for optimizing algorithm processing performance. For particular classes of parallel algorithms, or parts of parallel calculations, this architecture exhibits exceptional computational performance, and may be enabled to perform calculations for new classes of parallel algorithms. Additional networks are provided for external connectivity and used for Input / Output, System Management and Configuration, and Debug and Monitoring functions. Special node packaging techniques implementing midplane and other hardware devices facilitate partitioning of the supercomputer in multiple networks for optimizing supercomputing resources.

Owner:IBM CORP

Methods and devices useful for analyzing color medical images

Inactive | US20050036668A1 | Image enhancement | Image analysis | Pattern recognition | Application-specific integrated circuit

One embodiment is a method that includes comparing a subject color medical image to normal color medical image data, and identifying abnormal pixels from the subject color medical image. Another embodiment includes a computer readable medium comprising machine readable instructions for implementing one or more steps of that method. Another embodiment includes a device that has a field programmable gate array configured to perform one or more of the steps of that method. Another embodiment includes a device that has an application specific integrated circuit configured to perform one or more of the steps of that method.

Owner:UNIV OF IOWA RES FOUND

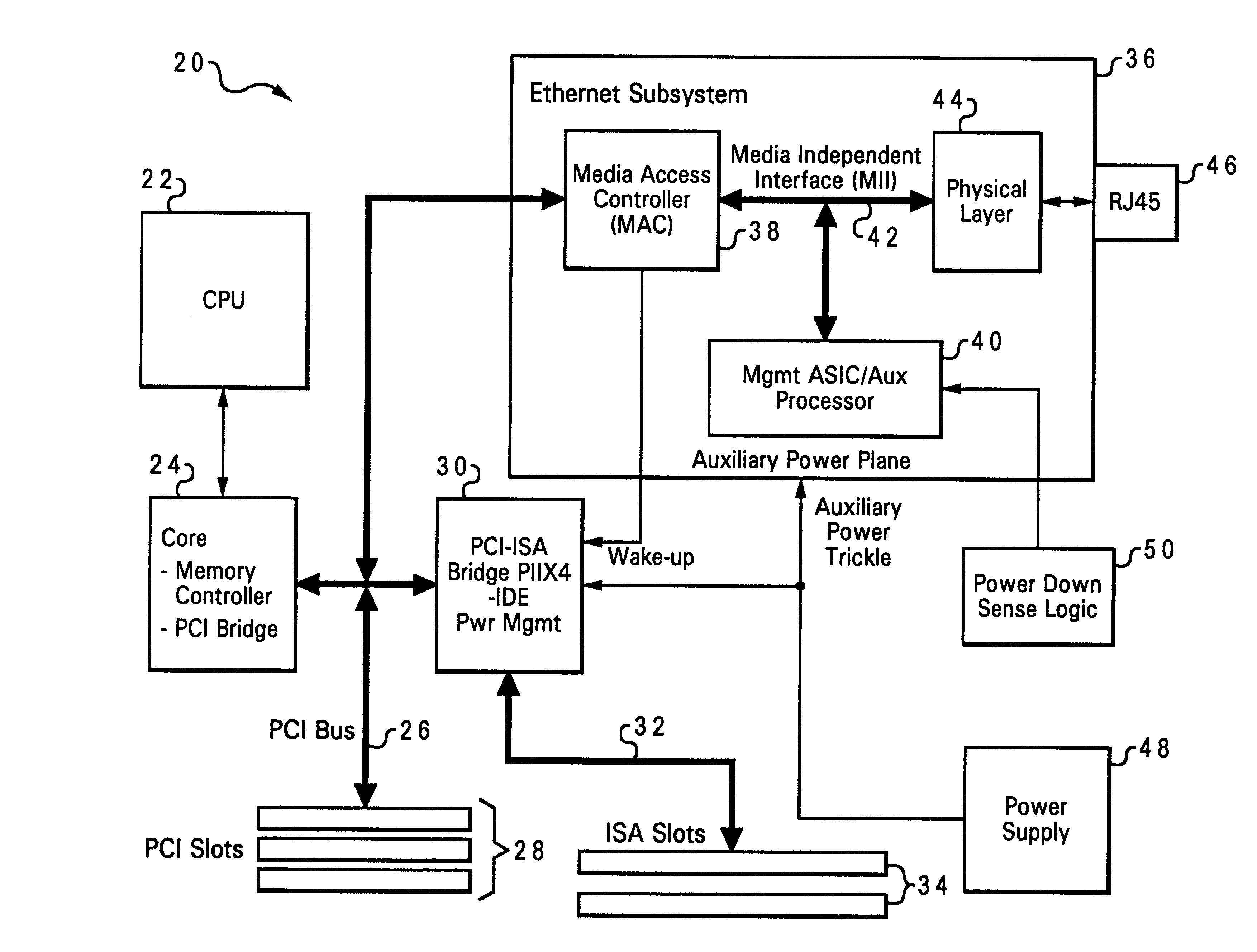

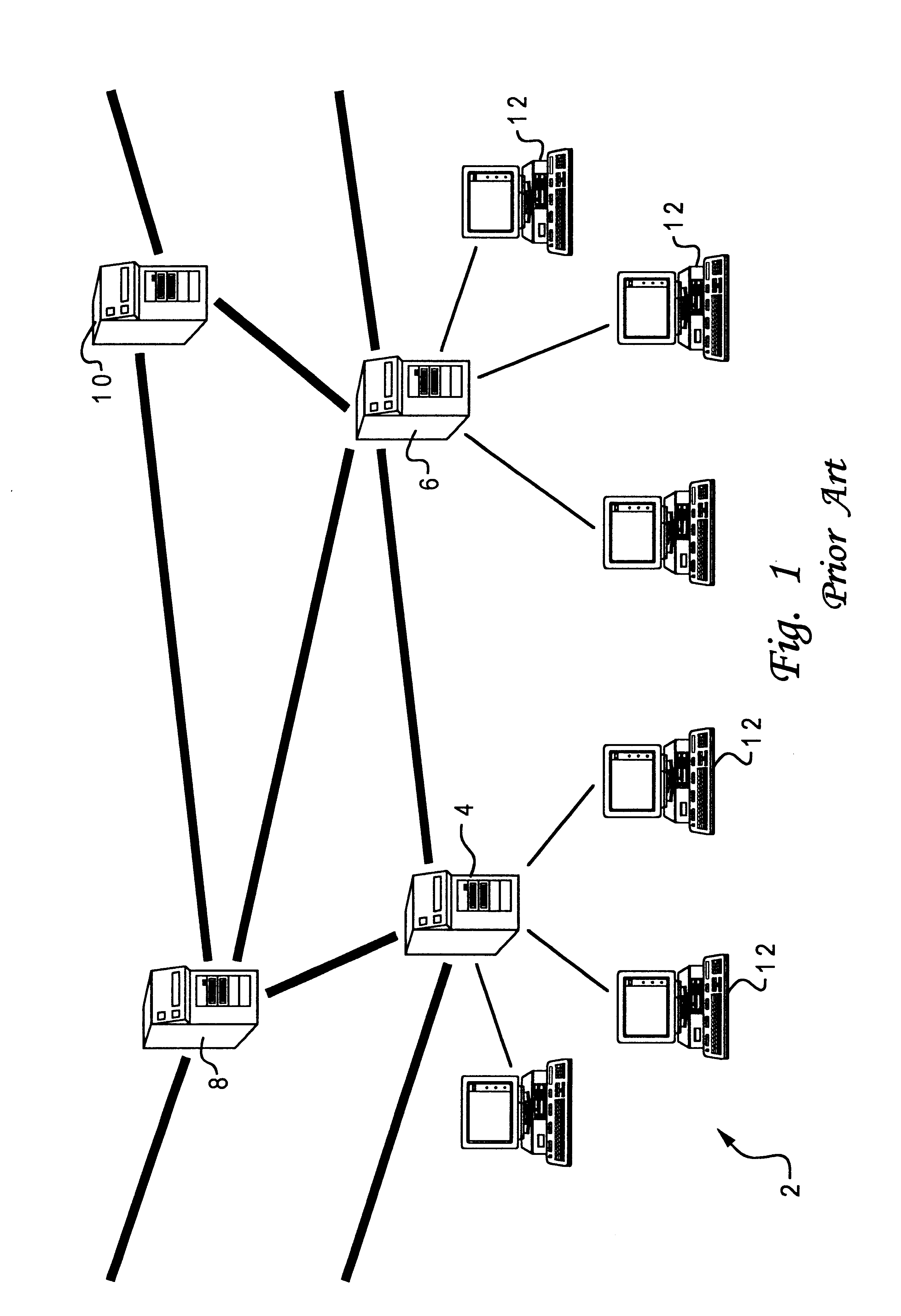

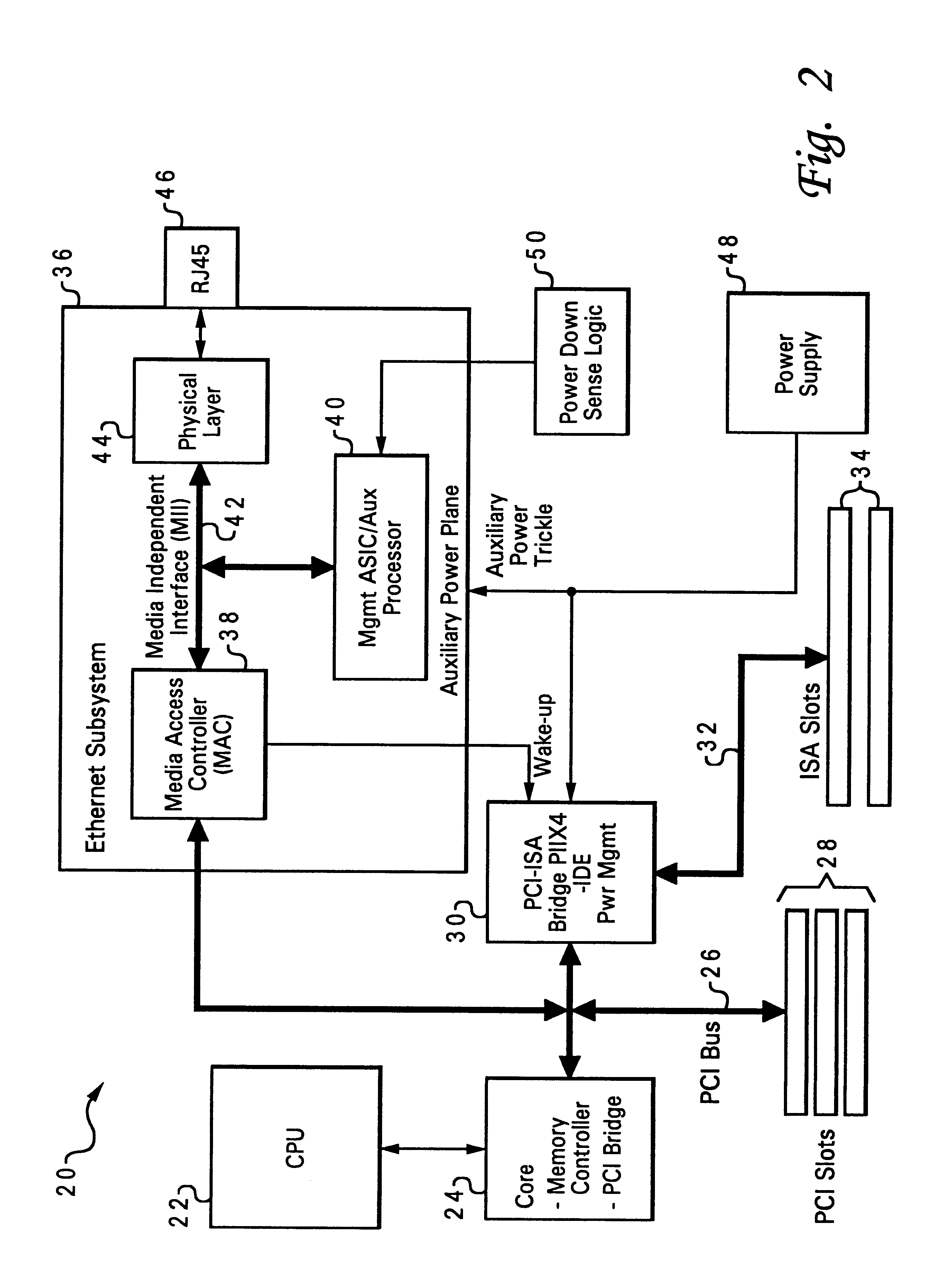

Alert mechanism for service interruption from power loss

A method of monitoring a computer system, by detecting a power interruption to the computer system, using power down sense logic, and generating an alert associated with the power interruption. When the computer system is networked, the alert is transmitted to a remote server. The power down sense logic sends a message to an auxiliary processor (which may be an application-specific integrated circuit, or ASIC), and the auxiliary processor creates a network transmission packet indicating that the computer system is losing power. The auxiliary processor may allow selection of a transmission mode such as uni-cast transmission, multi-cast transmission, or broadcast transmission. A common power supply provides a first power signal to the computer system, and a second power signal to the power down sense logic and auxiliary processor, and maintains the second power signal for a longer duration than the first power signal upon removal of a power source for the power supply, sufficient to carry out the sending of the message from the power down sense logic and the creating of the network alert.

Owner:LENOVO PC INT

Tunable microwave devices with auto-adjusting matching circuit

Inactive | US20110063042A1 | Realize automatic adjustment | Multiple-port networks | Amplifiers with coupling networks | High pressure | Digital signal

An embodiment of the present invention provides an apparatus, comprising an input port and a dynamic impedance matching network capable of determining a mismatch at the input port and dynamically changing the RF match by using at least one matching element that includes at least one voltage tunable dielectric capacitor. The matching network may be a “Pi”, a “T”, or “ladder” type network and the apparatus may further comprise at least one directional coupler capable of signal collection by sampling a portion of an incident signal, a reflected signal or both. In an embodiment of the present invention, the apparatus may also include a control and power control & logic unit (PC LU) to convert input analog signals into digital signals and sensing VSWR phase and magnitude and processing the digital signals using an algorithm to give it a voltage value and wherein the voltage values may be compared to values coming from the coupler and once compared and matched, the values may be passed to a Hi Voltage Application Specific Integrated Circuit (HV ASIC) to transfer and distribute compensatory voltages to the matching network elements. Additional embodiments are disclosed.

Owner:NXP USA INC

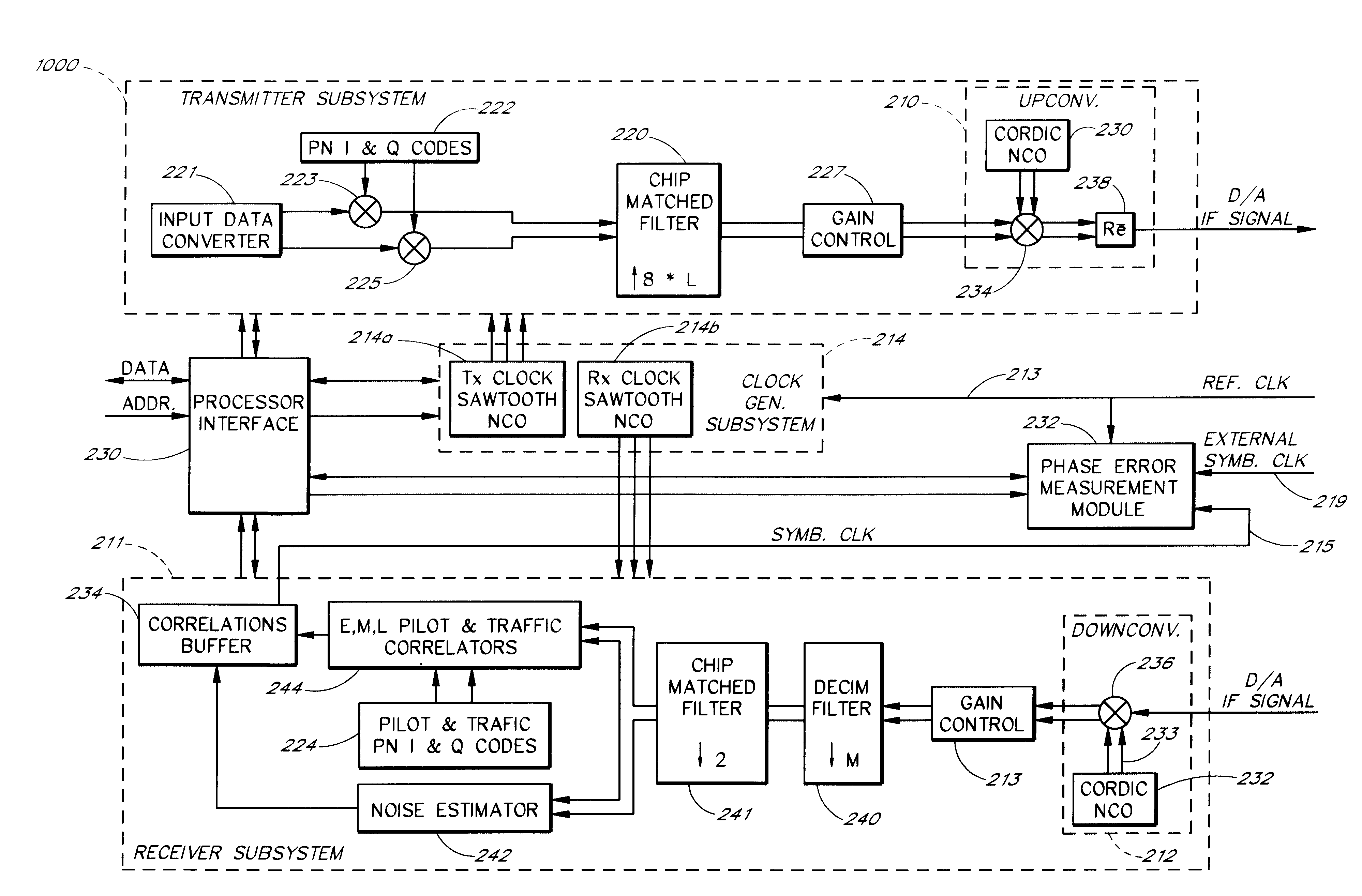

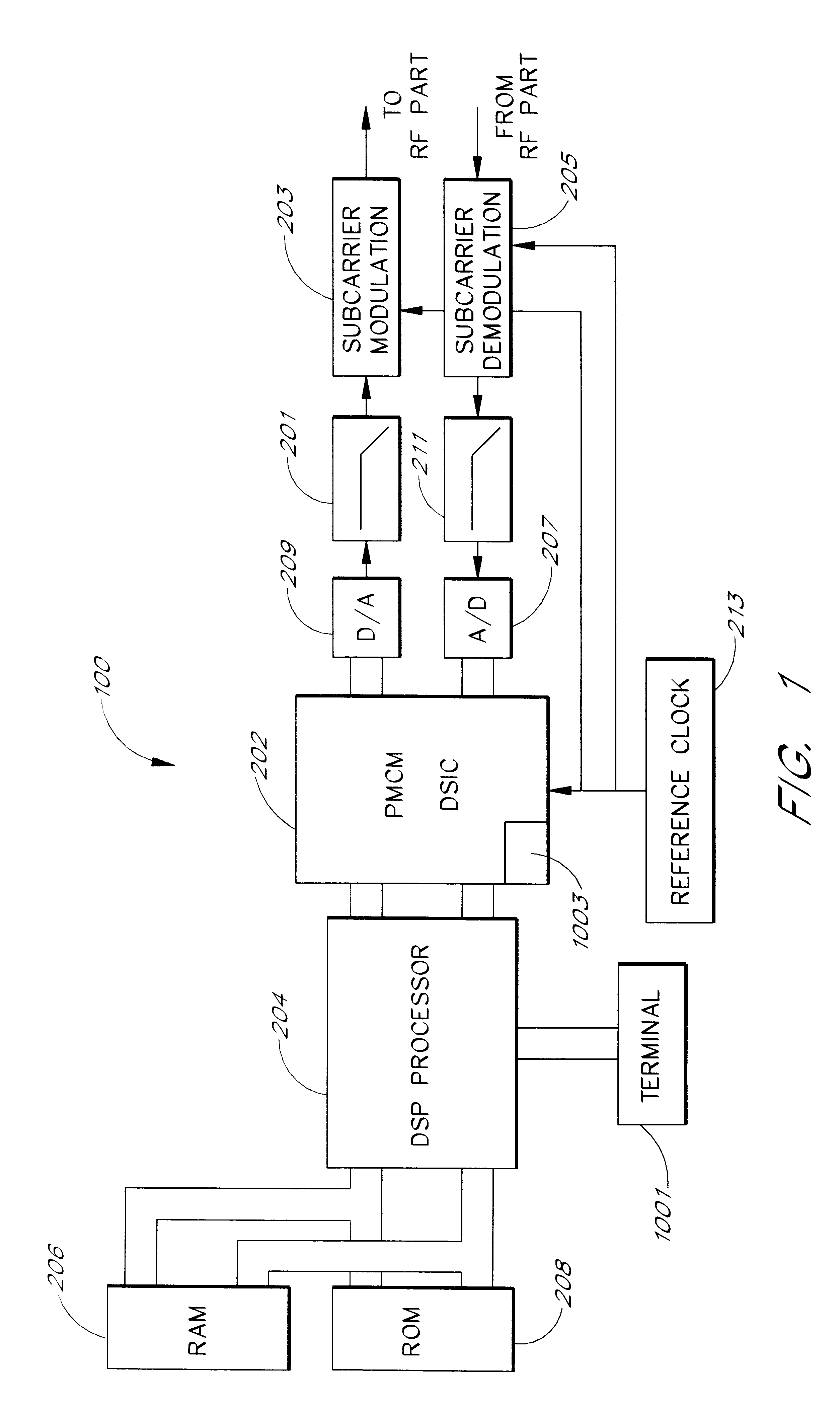

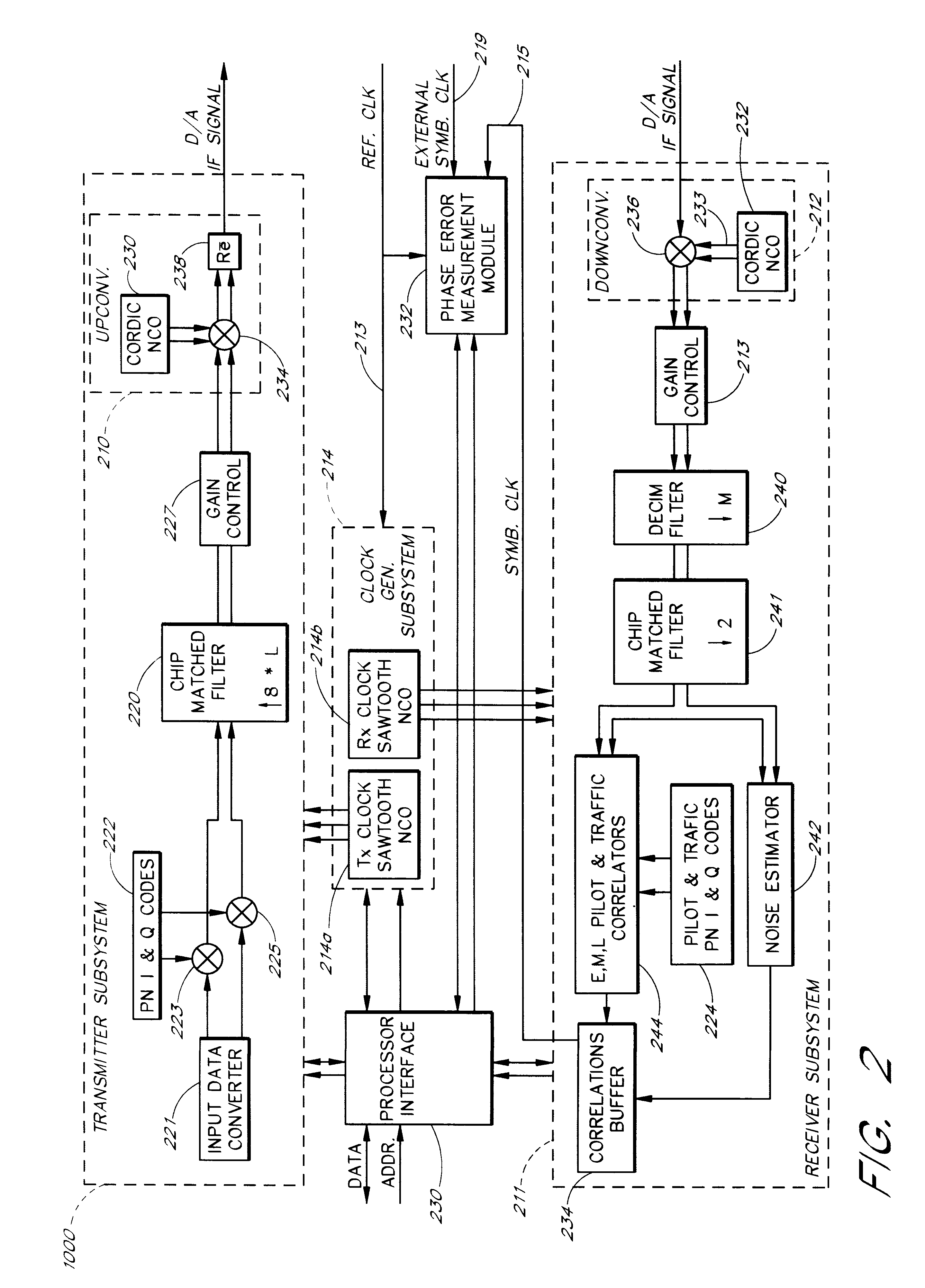

Programmable modem apparatus for transmitting and receiving digital data, design method and use method for the modem

Inactive | US6307877B1 | Cost-effective | Cost-effective customization | CAD circuit design | Requirement analysis | Digital data | Modem device

The programmable modem for digital data of the present invention provides a highly programmable, digital modem implemented in an integrated circuit which can be customized to specific applications. The programmable modem uses spread spectrum techniques and is specifically programmable to alter the parameters of the modem to improve performance. The present invention also provides a systematic method and development kit to provide rapid customization of a modem for a particular application or for rapid specification of a high-performance application specific integrated circuit modem.

Owner:KEYSIGHT TECH +1

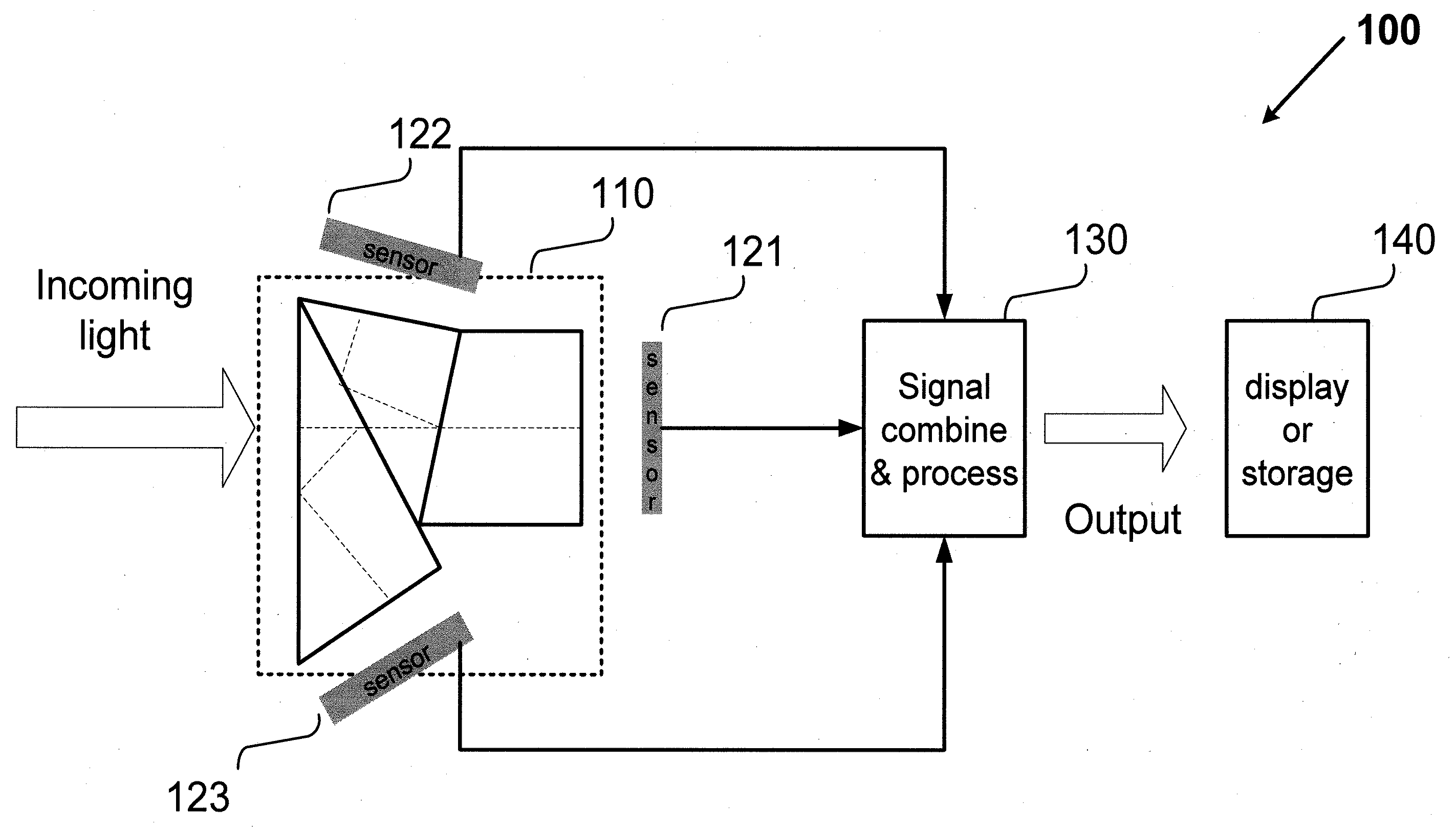

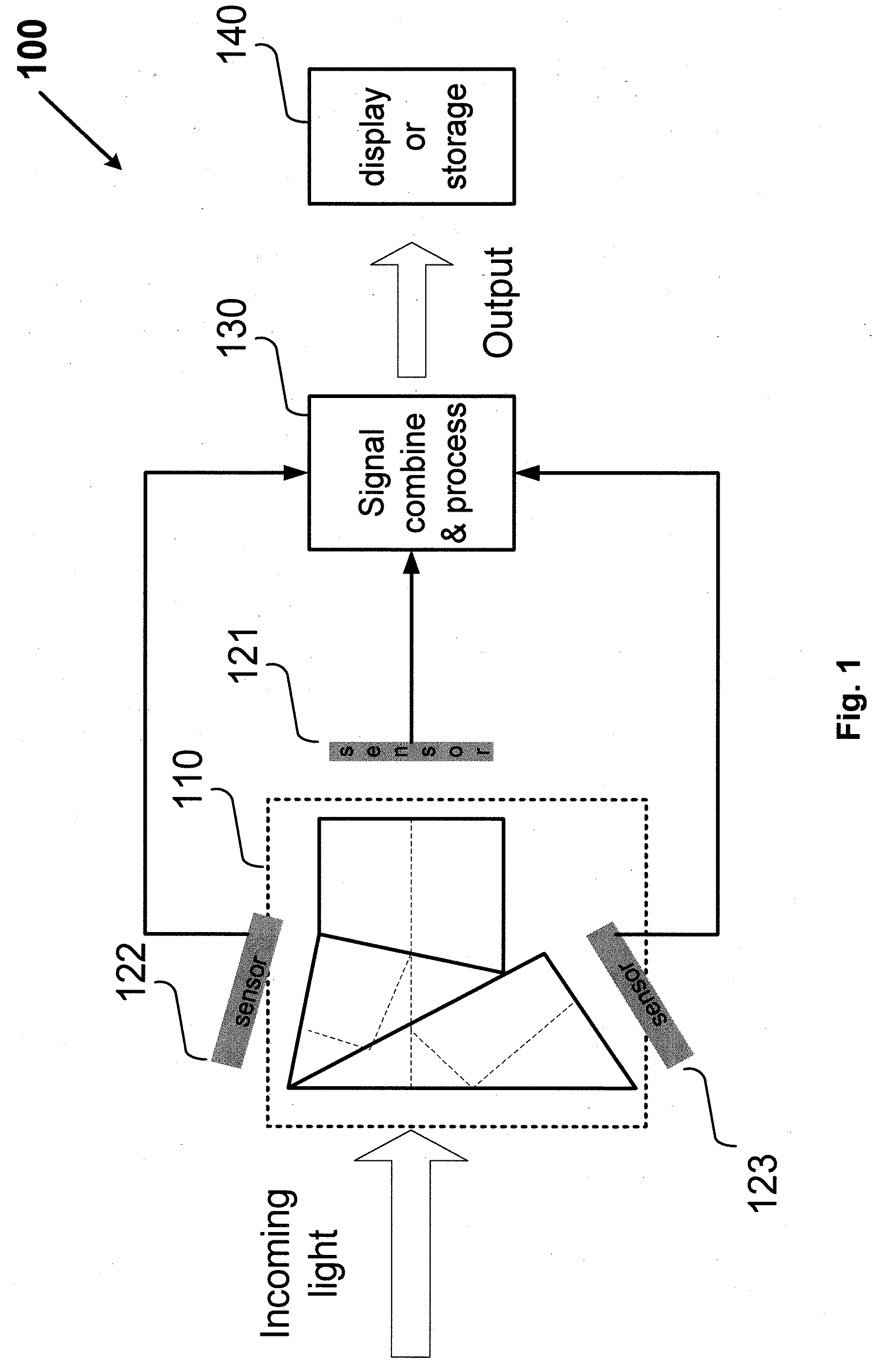

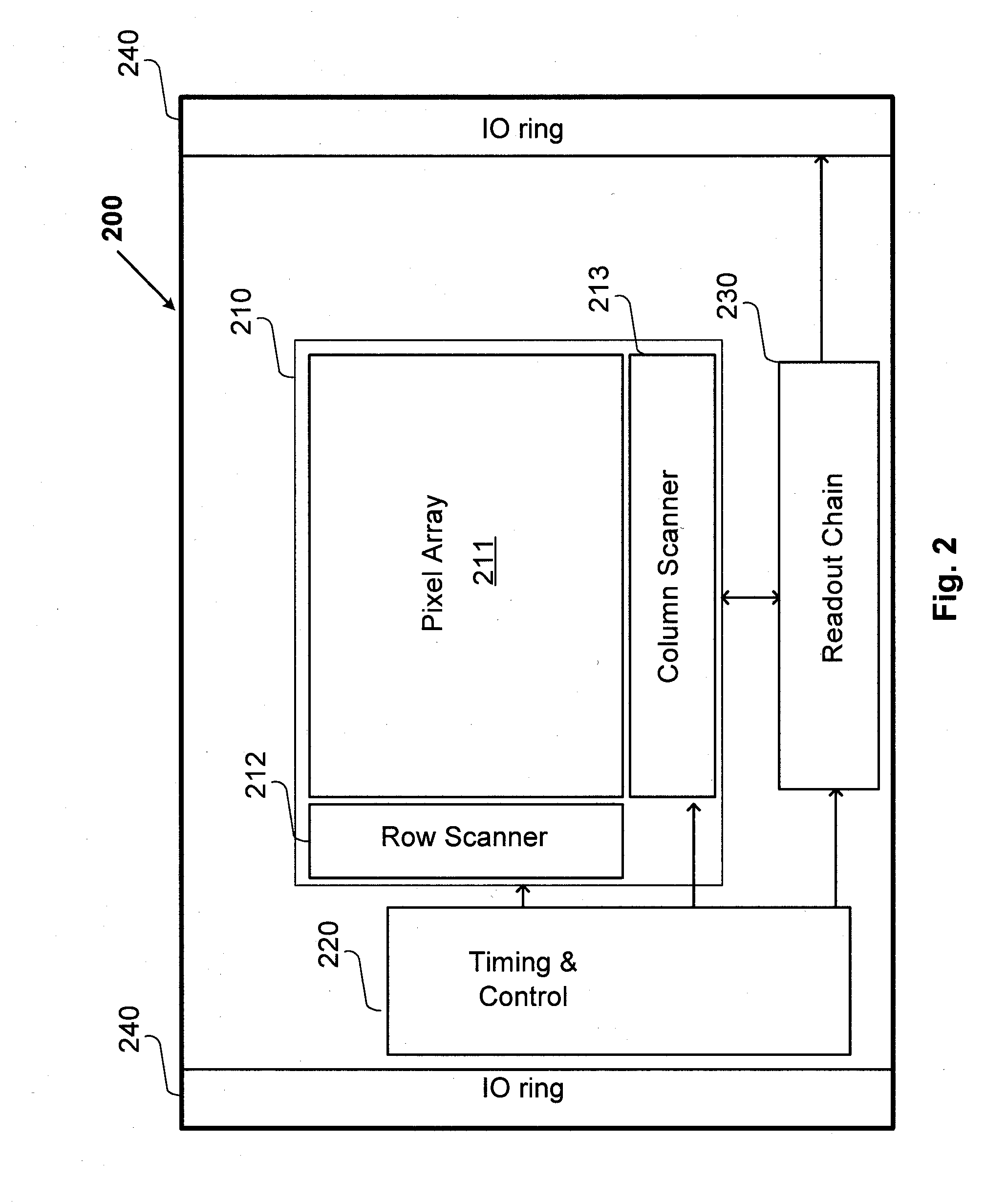

Camera system with multiple pixel arrays on a chip

Active | US20090135245A1 | Compact design | Easy to make | Television system details | Pulse modulation television signal transmission | Telecommunications link | Digital storage

A camera system uses one or more image sensor IC chips each having multiple pixel arrays on the same semiconductor substrate (i.e., “multiple pixel arrays on a chip”). In one embodiment, such a camera system includes: (a) optical components that create multiple images in close physical proximity of each other (e.g., within a few millimeters or centimeters); and (b) a single sensor substrate (“chip”) containing multiple 2-dimensional pixel arrays that are aligned to capture these multiple images, so as to convert the multiple images into electrical signals. The pixel arrays can be manufactured using a CCD or a CMOS compatible process. For manufacturing reasons, such a chip is typically two centimeters or less on a side. However, larger chips can also be made. Optional electronic components for further signal processing of the captured images may be formed either on the sensor chip (i.e., in a “system-on-a-chip” implementation), or in a separate back-end application specific integrated circuit (ASIC). In addition, digital storage components, display elements, and wired or wireless communication links may also be included in any suitable combination to allow review and further processing of the captured images.

Owner:CAPSO VISION INC

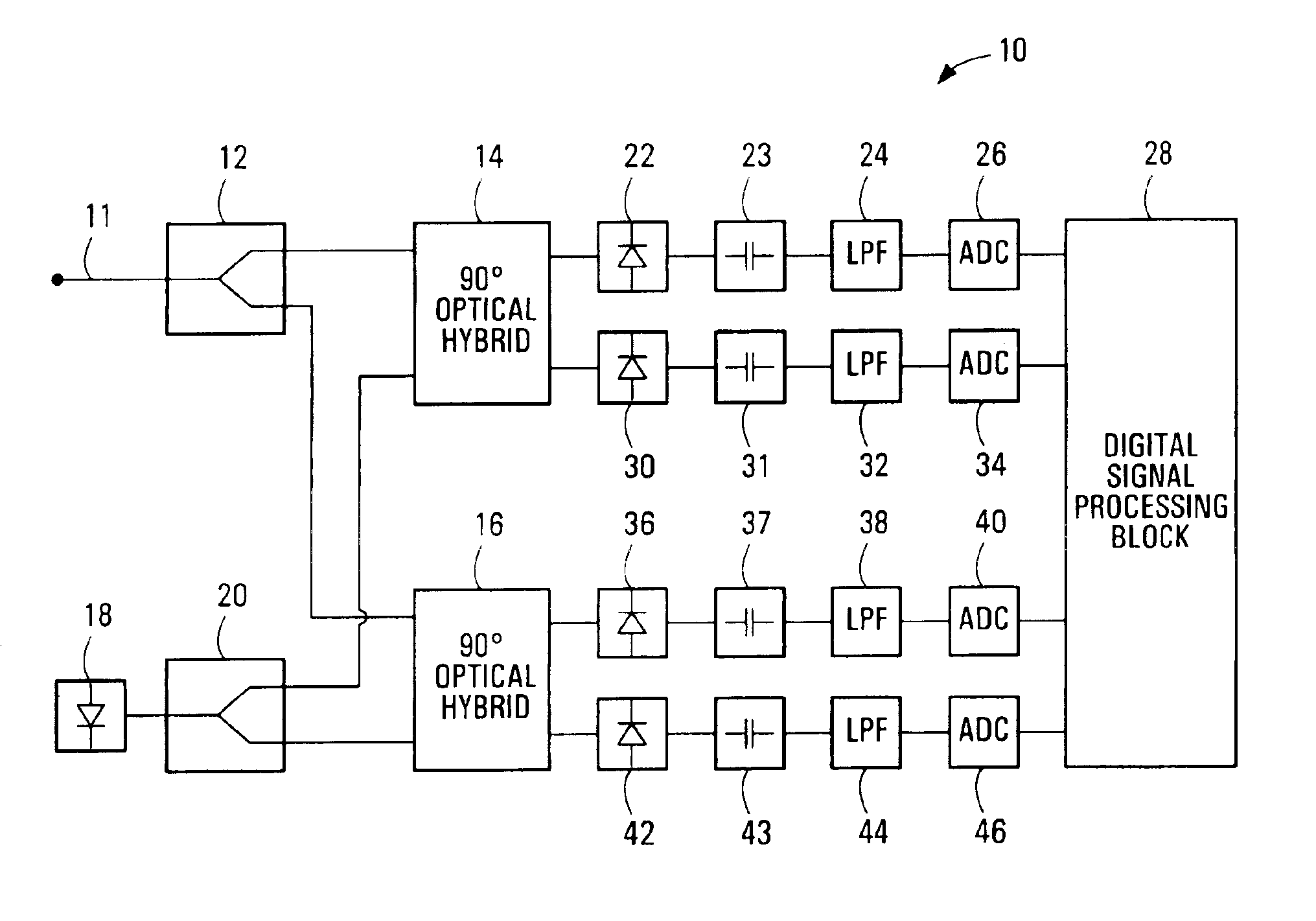

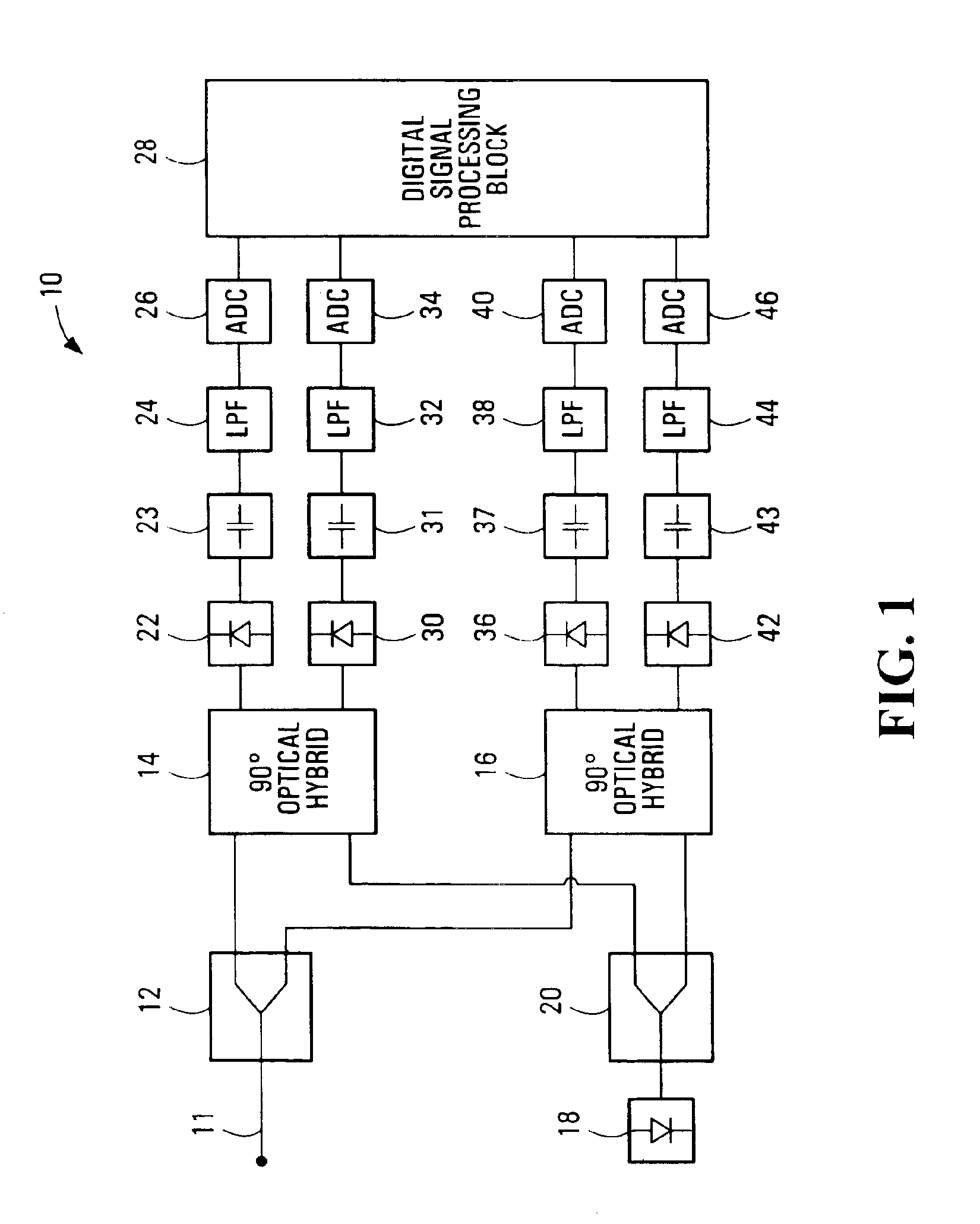

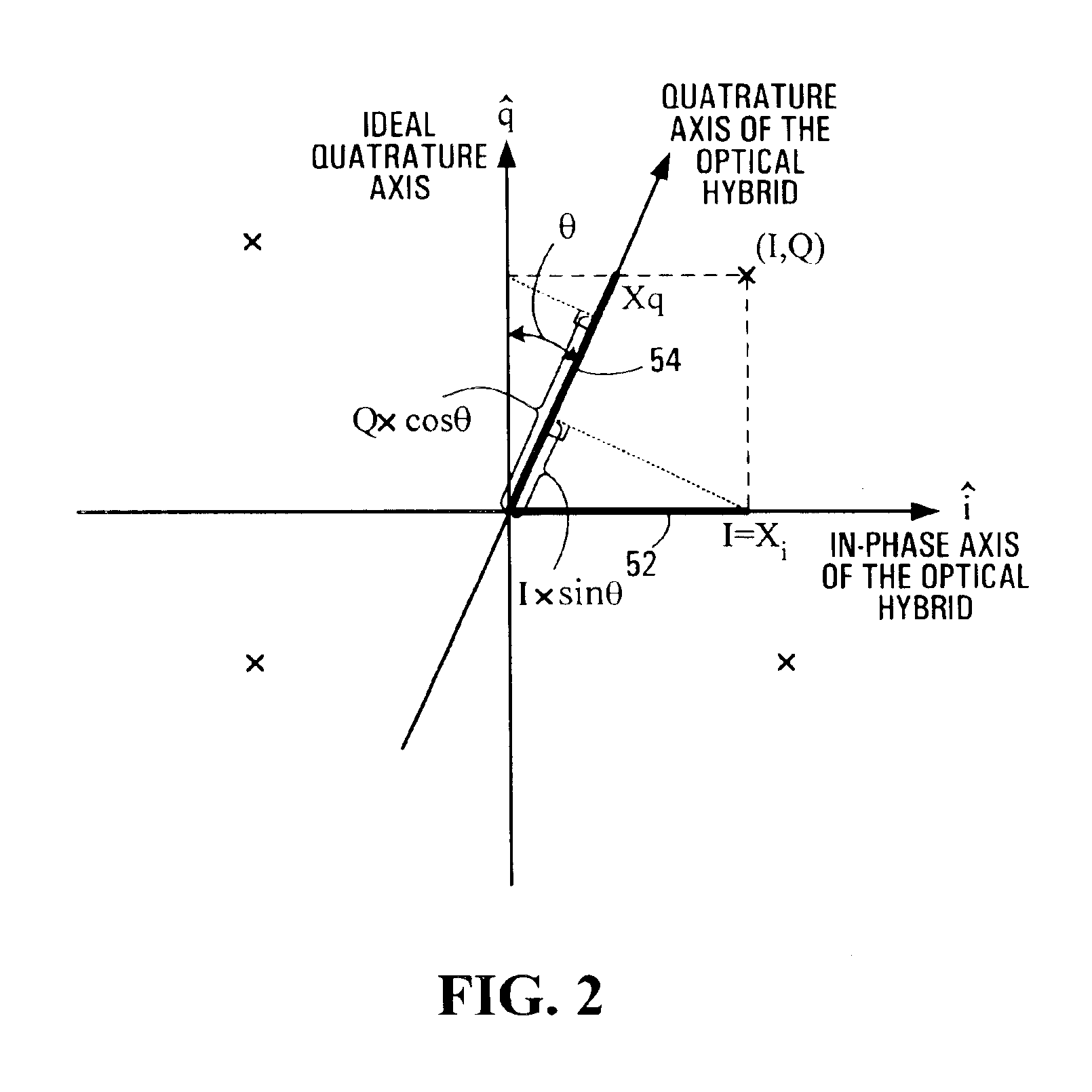

Method for quadrature phase angle correction in a coherent receiver of a dual-polarization optical transport system

Active | US6917031B1 | Material analysis by optical means | Photoelectric discharge tubes | Digital signal processing | Transport system

A method is provided for correcting a quadrature angle error that exists in the coherent receiver hardware of a dual-polarization optical transport system. The receiver hardware that causes the quadrature angle error is a 90 degree optical hybrid mixing device. The method involves generating an estimate of the quadrature angle error and compensating for the quadrature angle error by multiplying the first and second detected baseband signals by coefficients that are a function of the estimate of the quadrature angle error. The method is robust to severe channel distortion encountered within an optical fiber transmission channel as well as temperature effects and ageing of the 90 degree optical hybrid. The method is suited for a digital signal processing implementation in the coherent receiver when a modulation scheme used on a transmitted signal is quadriphase-shift keying (QPSK). In other embodiments, the method can be used to correct for quadrature angle error in modulation schemes such as binary PSK, M-ary PSK where M>4, or Quadrature Amplitude Modulation (QAM). The method can be implemented by an application-specific integrated circuit (ASIC).

Owner:CIENA
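To make "estimate the quadrature angle error, then compensate with coefficients that depend on the estimate" concrete, here is a small sketch of one common textbook approach. It is not claimed to be the method of this patent. The assumed signal model is that the in-phase branch sees cos(phi) while the quadrature branch sees sin(phi + theta), where theta is the hybrid's angle error; the function names are hypothetical.

```python
import math


def estimate_quadrature_error(i_samples, q_samples):
    """Estimate theta from the normalized correlation between I and Q.

    Under the assumed model, and for symbols that are balanced around the
    constellation (e.g. QPSK), that correlation equals sin(theta).
    """
    cross = sum(i * q for i, q in zip(i_samples, q_samples))
    p_i = sum(i * i for i in i_samples)
    p_q = sum(q * q for q in q_samples)
    ratio = cross / math.sqrt(p_i * p_q)
    return math.asin(max(-1.0, min(1.0, ratio)))


def correct_quadrature(i_samples, q_samples, theta):
    """Return the corrected Q branch: (Q - I*sin(theta)) / cos(theta)."""
    s, c = math.sin(theta), math.cos(theta)
    return [(q - i * s) / c for i, q in zip(i_samples, q_samples)]
```

The correction is a per-sample linear combination whose two coefficients, `-sin(theta)/cos(theta)` and `1/cos(theta)`, are functions of the estimate, which is the general shape the abstract describes.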

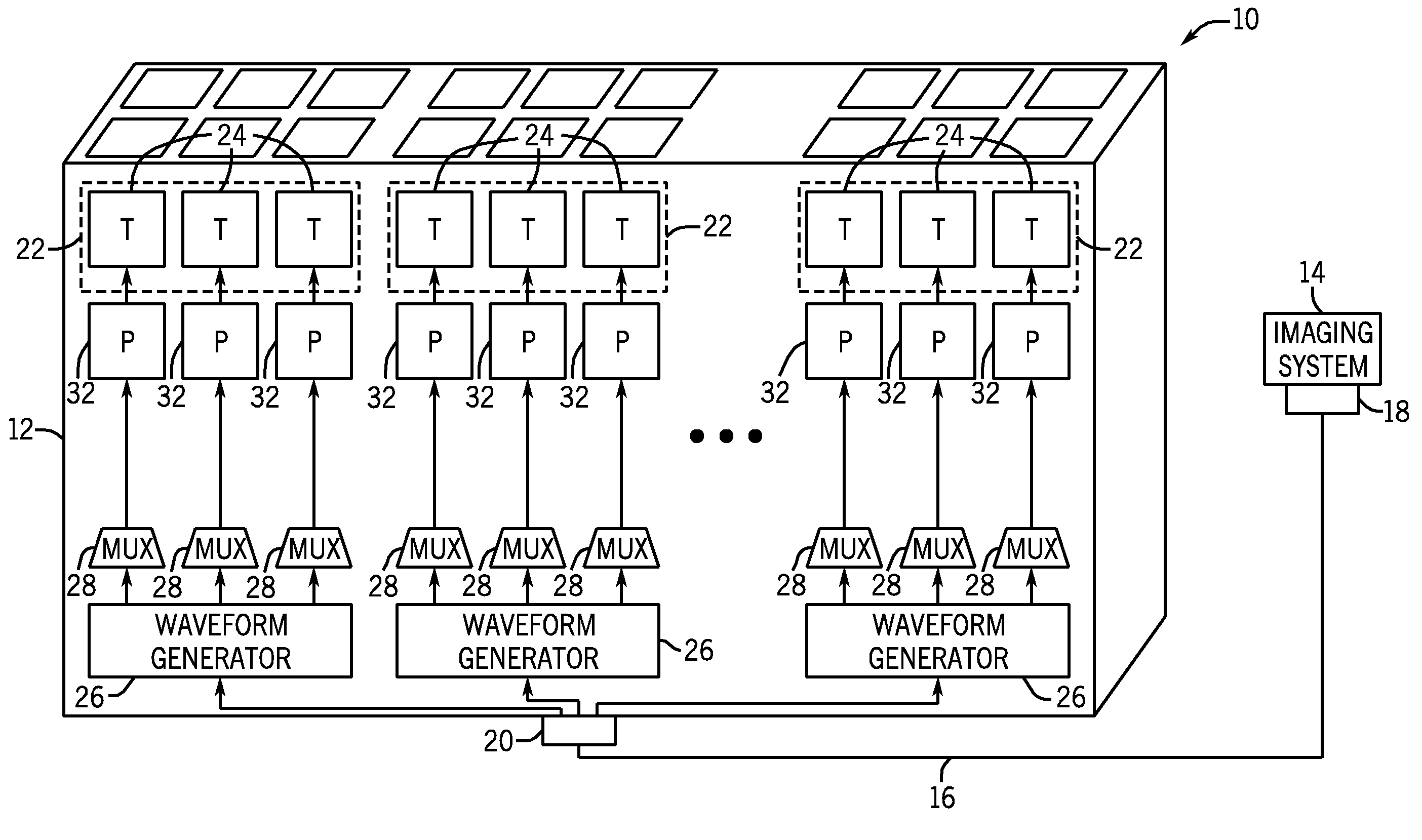

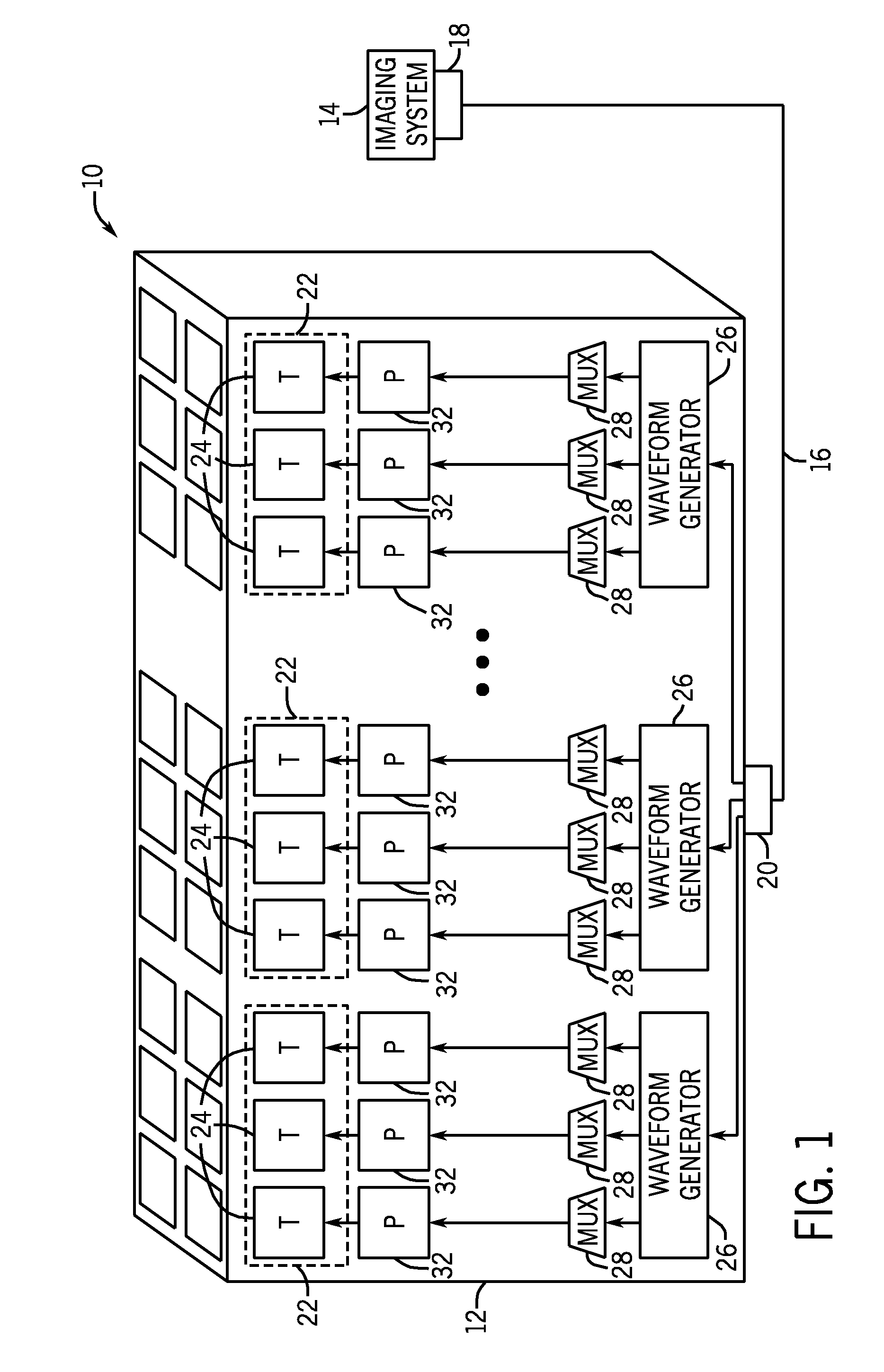

Ultrasound probe with integrated pulsers

Inactive | US20110060225A1 | Ultrasonic/sonic/infrasonic diagnostics | Mechanical vibrations separation | Sonification | Transducer

Various embodiments of an ultrasound probe for use with an ultrasound system are provided to enable local waveform generation with respect to the ultrasound probe. The ultrasound probe includes a plurality of transducer elements which are independently configured to transmit distinct waveforms. Certain embodiments include a variety of probes that house one or more waveform generators on application specific integrated circuits (ASICs).

Owner:GENERAL ELECTRIC CO

Method and apparatus for camouflaging a standard cell based integrated circuit with micro circuits and post processing

Active | US20120139582A1 | Solid-state devices | CAD circuit design | Computer architecture | Integrated circuit layout

A method and apparatus for camouflaging an application specific integrated circuit (ASIC), wherein the ASIC comprises a plurality of interconnected functional logic is disclosed. The method adds functionally inert elements to the logical description or provides alternative definitions of standard logical cells to make it difficult for reverse engineering programs to be used to discover the circuit's function. Additionally, post processing may be performed on GDS layers to provide a realistic fill of the empty space so as to resemble structural elements found in a functional circuit.

Owner:RAMBUS INC

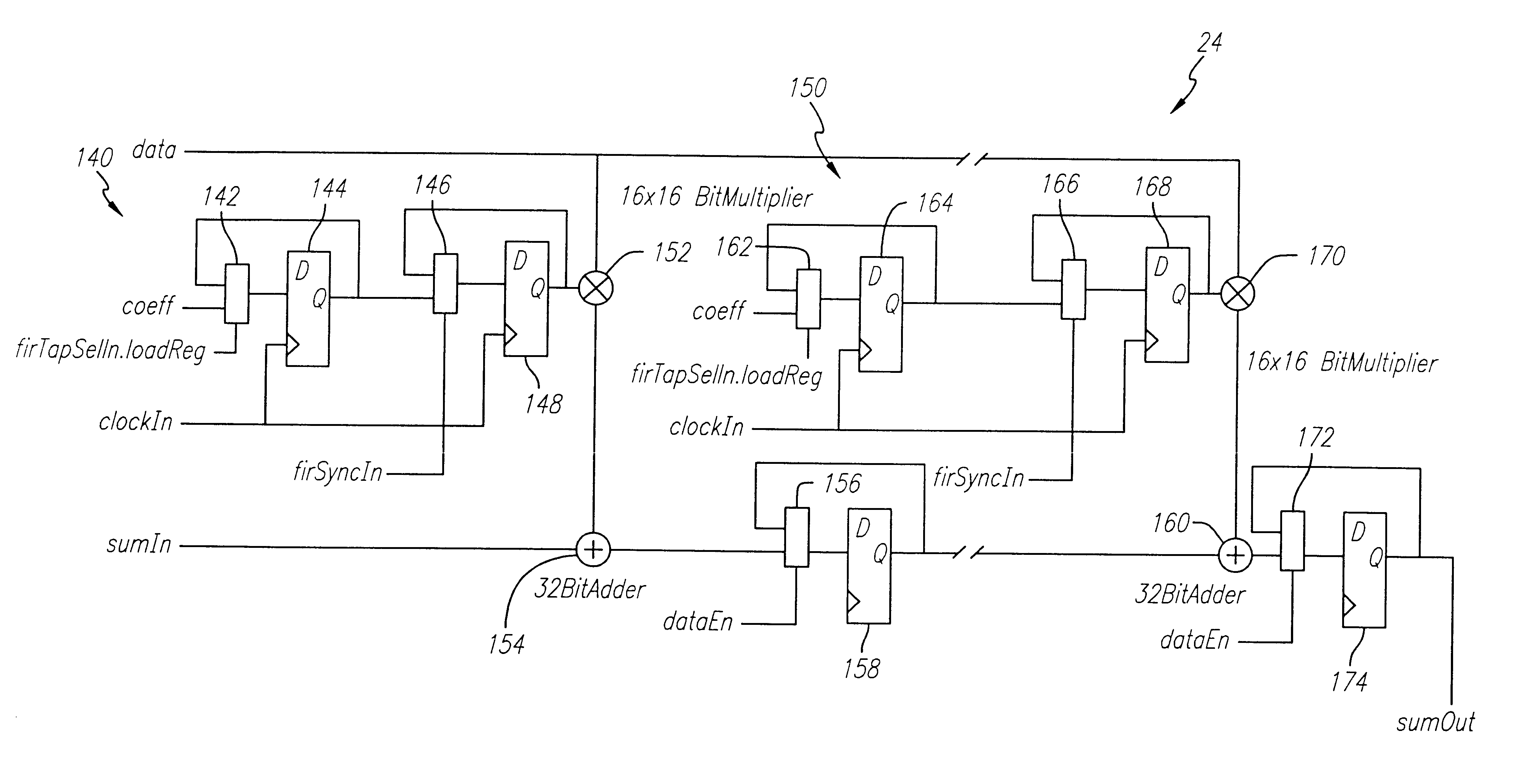

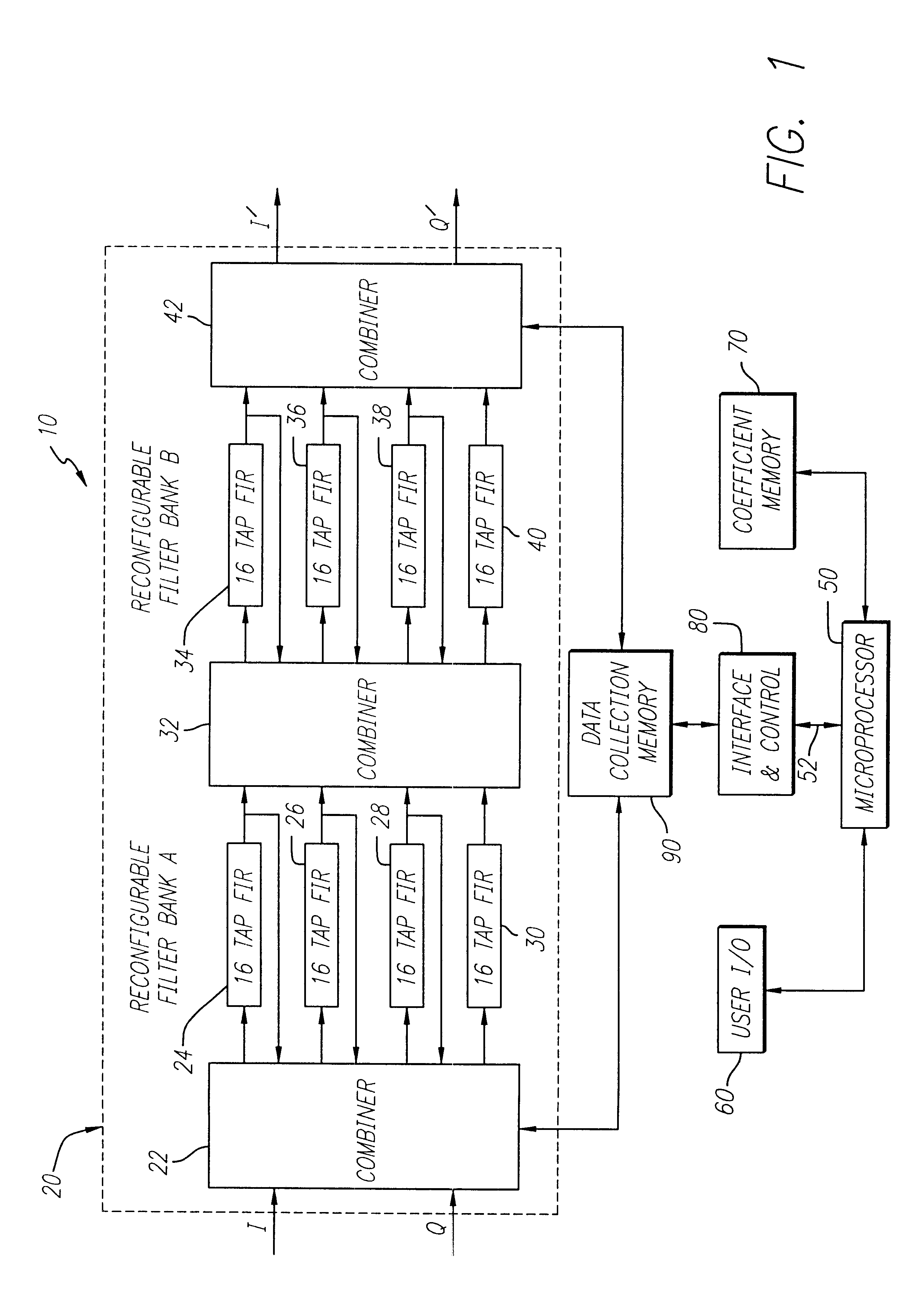

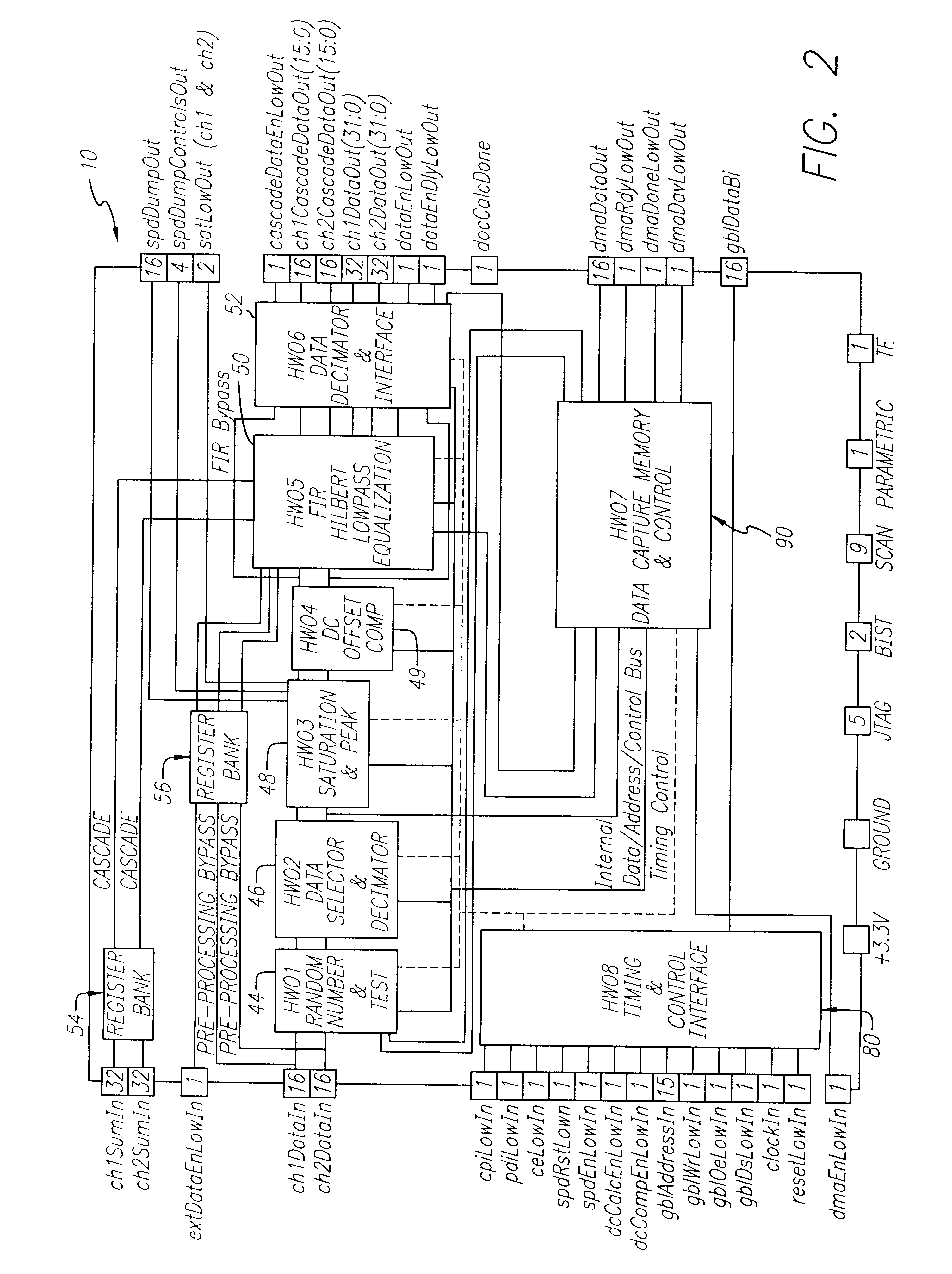

General purpose filter

Inactive | US6407694B1 | Television system details | Wave based measurement systems | General purpose | Digital signal processing

A versatile signal processor. The inventive signal processor includes a plurality of filters which are selectively interconnected to provide a variety of digital signal processing functions. In the illustrative embodiment, each filter is adapted to multiply input data by a coefficient. Further, each filter includes adders for accumulating the products. The coefficients are provided by an external processor which configures the general purpose filter to a particular function, such as a general purpose filter, a Hilbert filter, a finite impulse response filter, an equalizer, a beamforming network, a convolver, a correlator, or an application specific integrated circuit by way of example. When interconnected in accordance with the teachings provided herein, these circuits may be used to provide a digital receiver.

Owner:RAYTHEON CO
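Each filter in this abstract multiplies input data by a coefficient and accumulates the products, which is the core of a finite impulse response (FIR) filter. The sketch below shows that multiply-accumulate structure in software; it is a generic FIR, with `fir_filter` as an illustrative name, and does not model how the patented filters are interconnected.

```python
def fir_filter(samples, coefficients):
    """Direct-form FIR: y[n] = sum over k of coefficients[k] * samples[n - k].

    Samples before the start of the input are treated as zero.
    """
    output = []
    for n in range(len(samples)):
        acc = 0.0
        for k, h in enumerate(coefficients):
            if n - k >= 0:
                acc += h * samples[n - k]  # multiply, then accumulate
        output.append(acc)
    return output
```

Changing only the coefficient list changes the function performed: `[0.5, 0.5]` gives a two-tap moving average, for instance, which mirrors the abstract's point that an external processor configures the filter by supplying coefficients.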

Cryptographic ASIC including circuitry-encoded transformation function

Active | US20170206382A1 | Easy to decipher | Improve noise | Encryption apparatus with shift registers/memories | Internal/peripheral component protection | Proof-of-work system | Digital rights management

A transform-enabled integrated circuit for use in cryptographic proof-of-work systems is provided. The transform-enabled integrated circuit includes a transformation block embedded among other circuitry components within the cryptographic datapath of the transform-enabled integrated circuit. The transformation block may be configured at a time subsequent to the manufacture of the integrated circuit to embody as circuitry any one of a plurality of mathematical transformation functions, thus enabling a user to systemically modify the results of cryptographic operations performed by the integrated circuit while retaining the high performance and efficiency characteristics of application-specific integrated circuits. Embodiments of the technology disclosed herein provide a hitherto unachievable level of flexibility in the deployment of application-specific integrated circuits within proof-of-work verification systems, such as private block chain systems, public block chain systems, digital rights management, secure token and other cryptography-related fields.

Owner:BLOCKCHAIN ASICS LLC


© 2025 PatSnap. All rights reserved.