259 results about "Crossover switch" patented technology

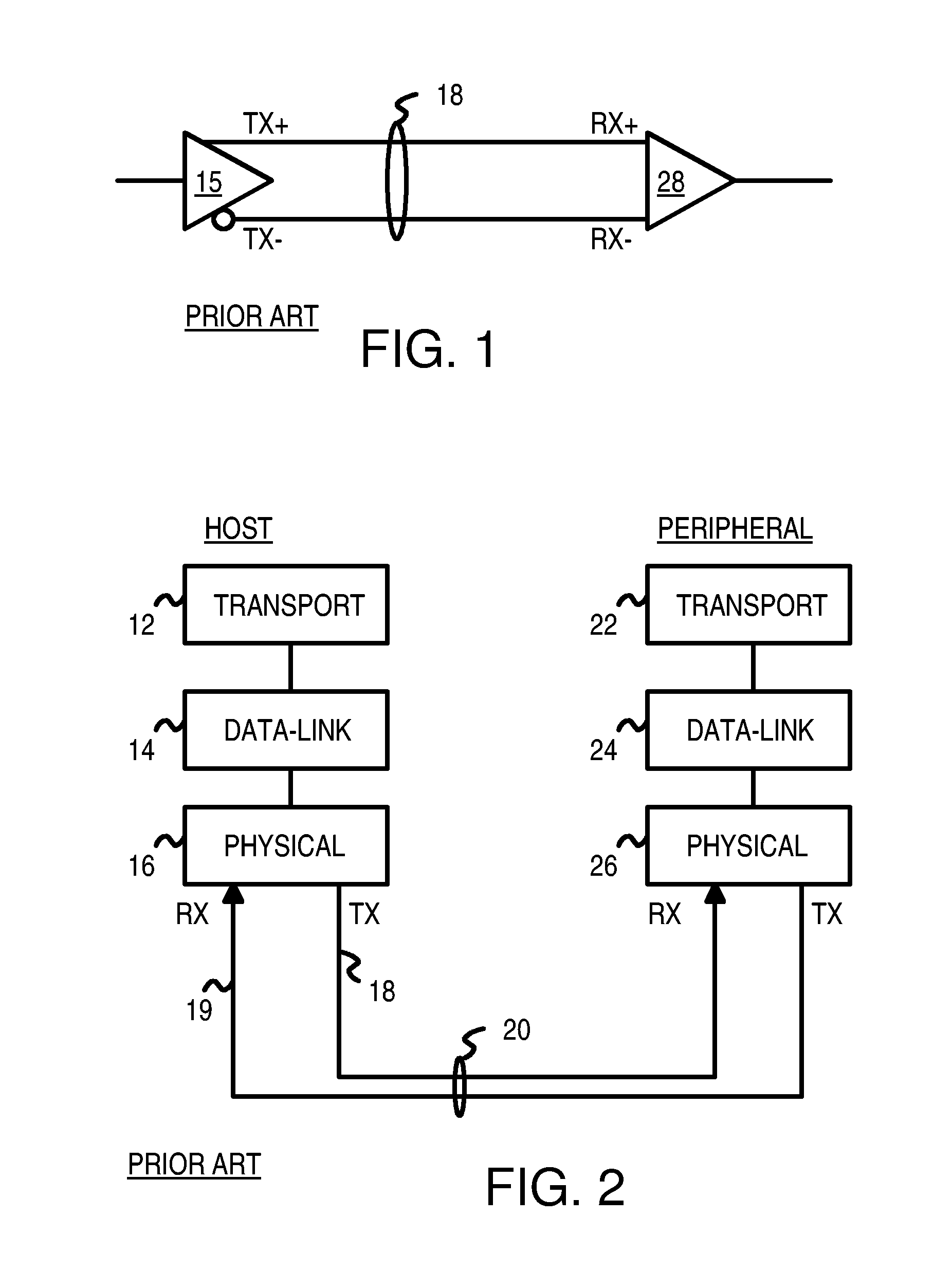

In electronics, a crossover switch (also called a crossbar switch or matrix switch) connects multiple inputs to multiple outputs through a matrix of switching elements, so that any one input path can be switched to any one or more output paths. Crossover switches come in blocking and non-blocking types.
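
As a rough illustration of the definition above, the sketch below models a small non-blocking crossbar in which each output is driven by at most one input, while one input may fan out to several outputs. The class name `Crossbar` and its methods are hypothetical and are not taken from any patent listed on this page.

```python
class Crossbar:
    """Toy model of an n_in x n_out non-blocking crossbar (illustrative only)."""

    def __init__(self, n_in, n_out):
        self.n_in = n_in
        self.n_out = n_out
        # Each output is driven by at most one input; None means unconnected.
        self.source = [None] * n_out

    def connect(self, inp, out):
        """Close the crosspoint (inp, out); return False if the output is taken."""
        if not (0 <= inp < self.n_in and 0 <= out < self.n_out):
            raise IndexError("port out of range")
        if self.source[out] is not None:
            return False
        self.source[out] = inp
        return True

    def disconnect(self, out):
        """Open whatever crosspoint currently drives this output."""
        self.source[out] = None

    def route(self, inputs):
        """Return the value seen at each output for the given input values."""
        return [None if s is None else inputs[s] for s in self.source]


xbar = Crossbar(3, 4)
xbar.connect(0, 2)
xbar.connect(0, 3)   # the same input fanned out to a second output
xbar.connect(2, 0)
outputs = xbar.route(["a", "b", "c"])
```

Storing the driving input per output, rather than a full matrix of crosspoints, makes the "one driver per output" rule hold by construction.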

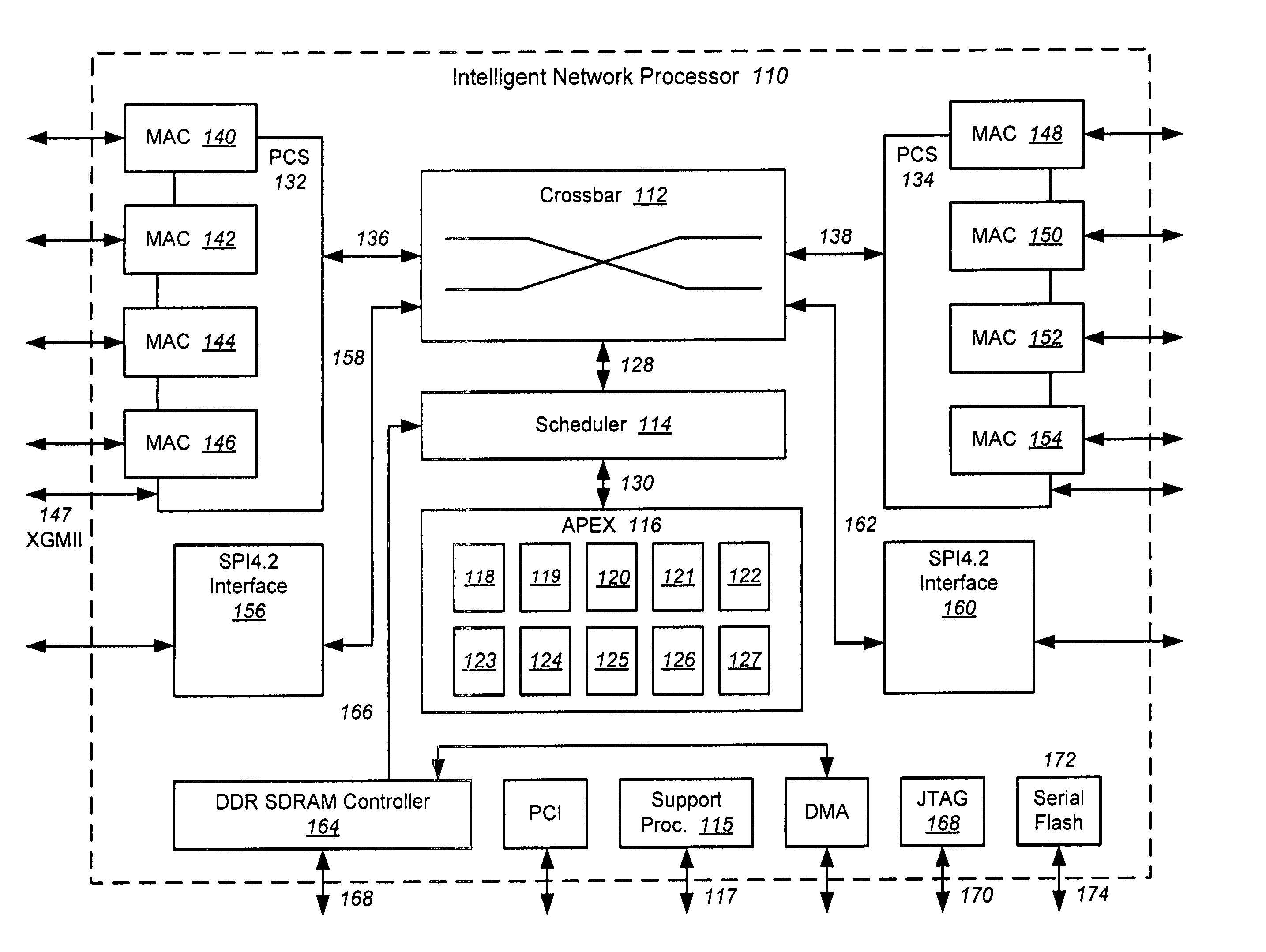

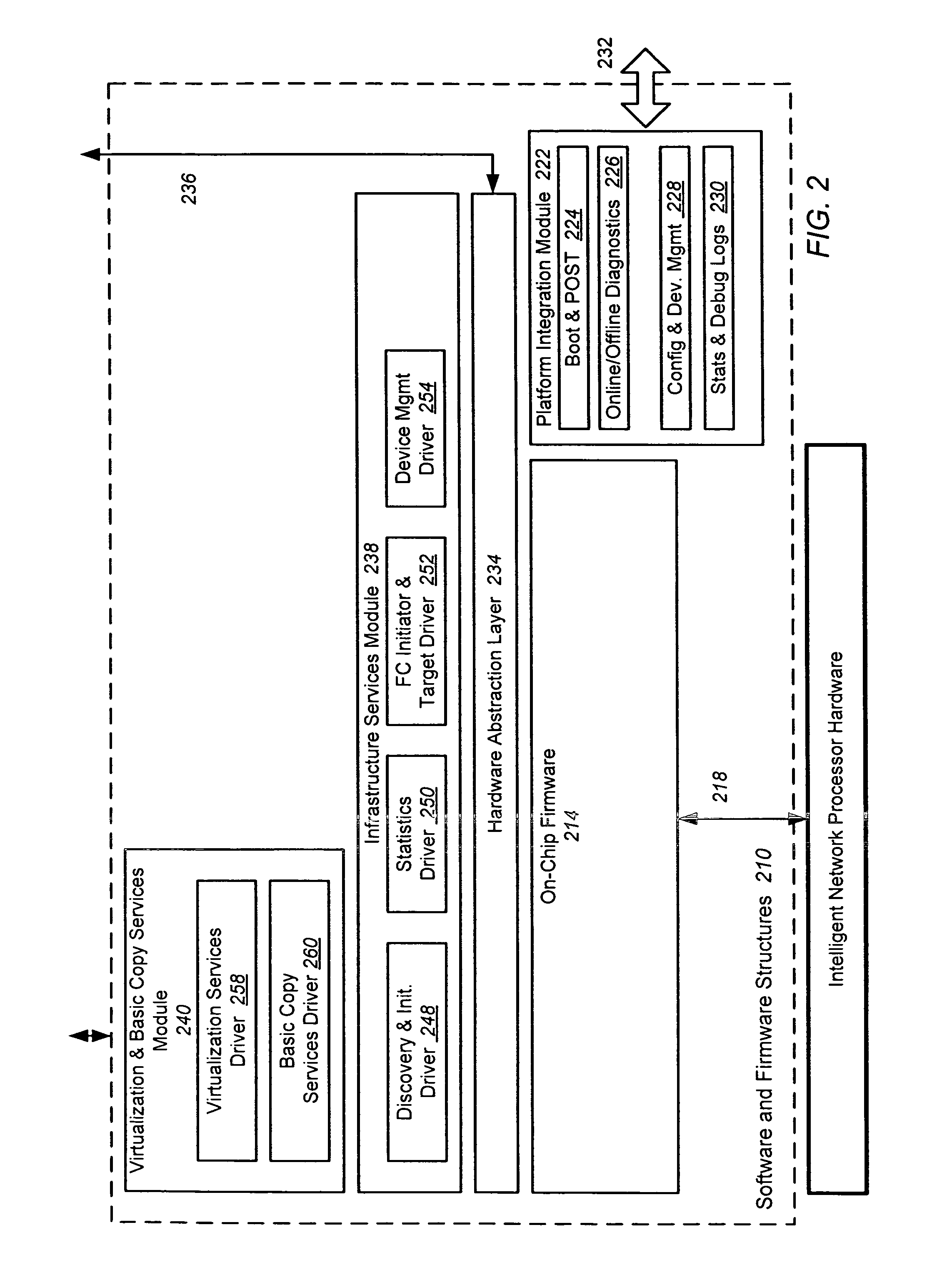

Intelligent network processor and method of using intelligent network processor

Active | US20070266179A1 | Efficient access | Improve credit | Multiple digital computer combinations | Data switching networks | Intelligent Network | PCI Express

An intelligent network processor is disclosed that provides a PCI Express (PCIe) host bus adapter with firmware-selectable hardware capabilities and firmware-enabled emulation of capabilities not supported by hardware. Support for the Fibre Channel (FC) and Gigabit Ethernet (GbE) protocols is provided through the same fabric ports, including multiple-port trunking for both protocols. On-chip protocol conversion is provided for switching and routing between FC and GbE ports. Switching using the same crossbar module is provided for both FC and GbE protocols. The crossbar module is coupled to directly access external DDR memory so that messages from FC, GbE, and PCIe interfaces may be switched directly to the DDR memory.

Owner:EMULEX COMM CORP

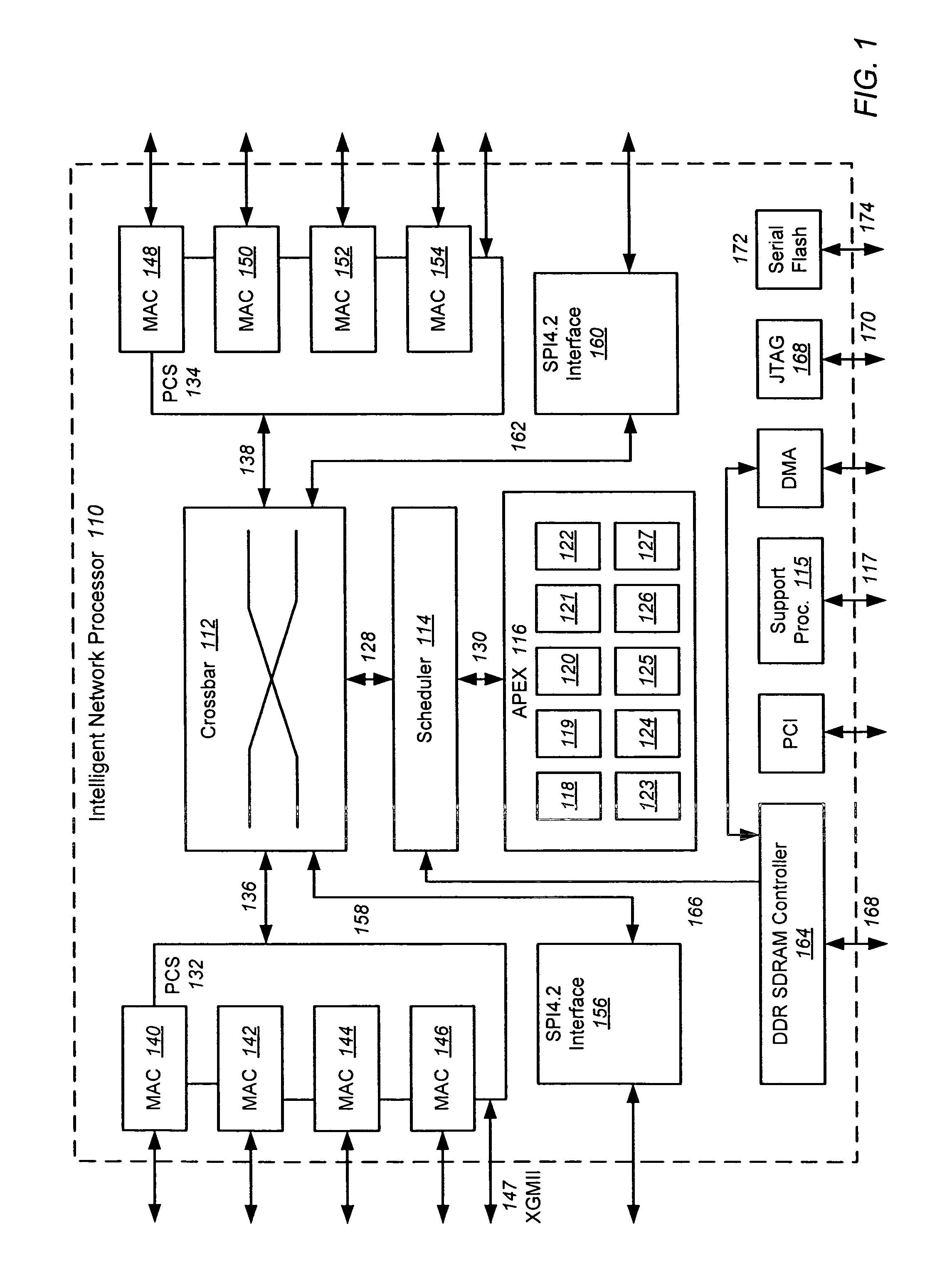

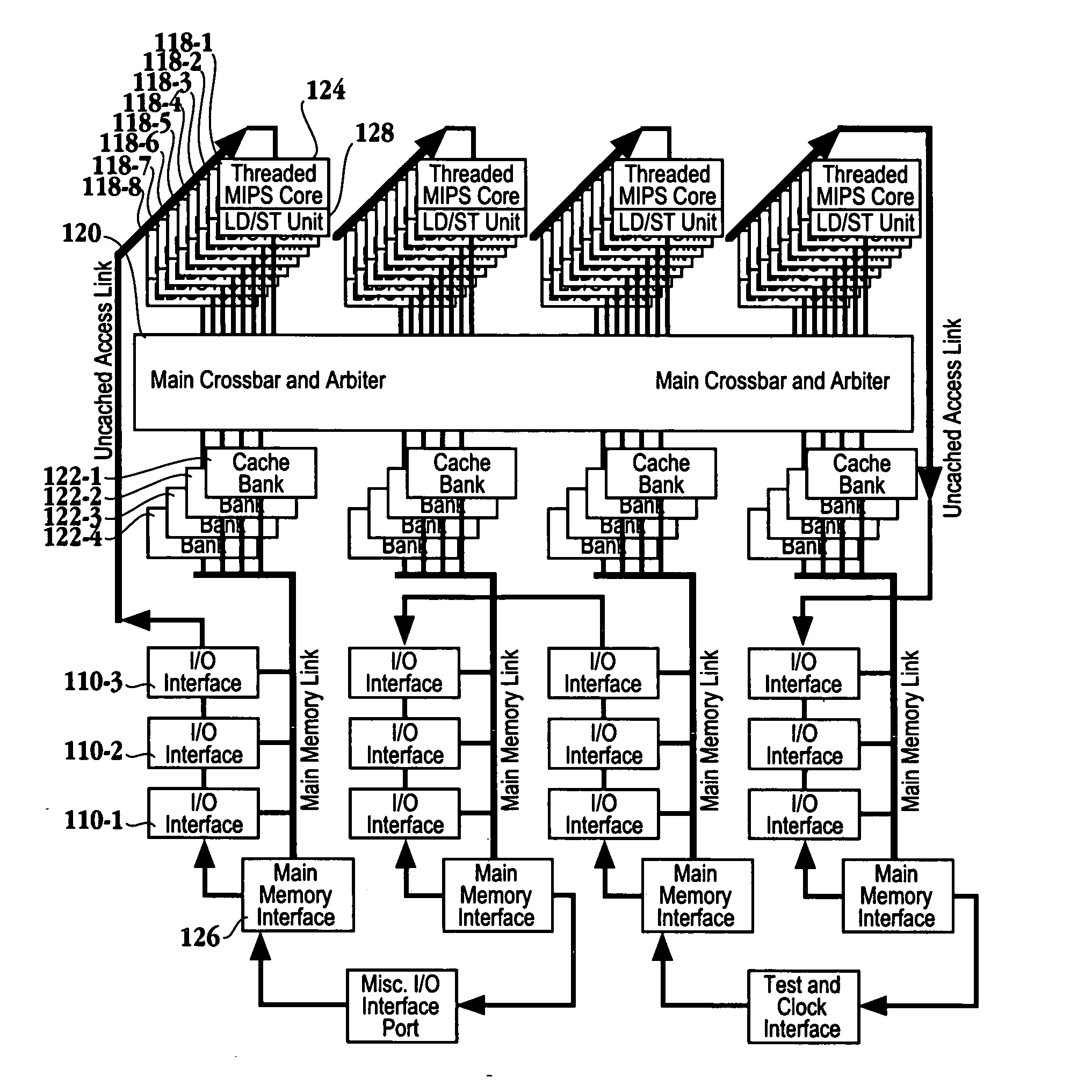

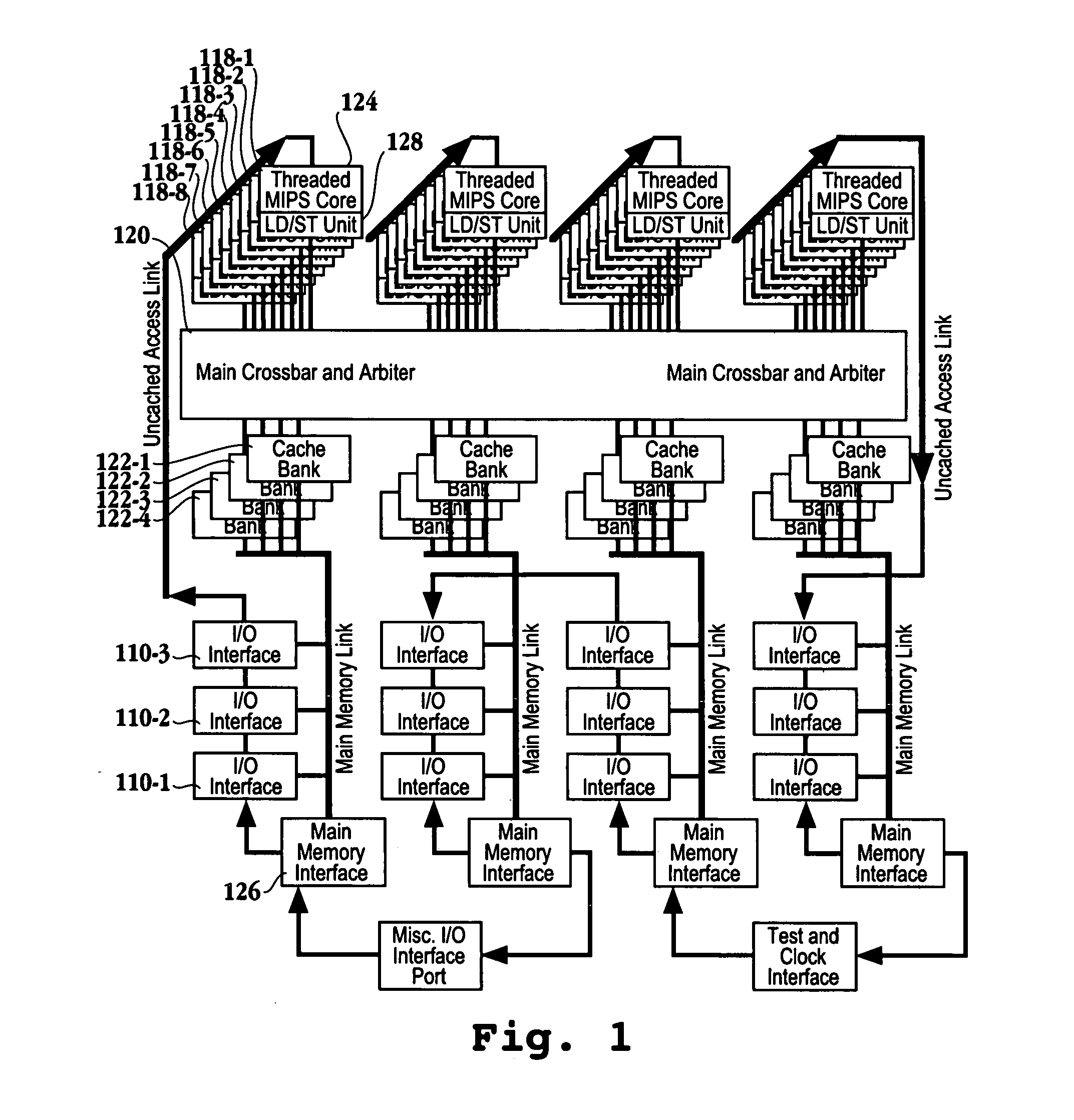

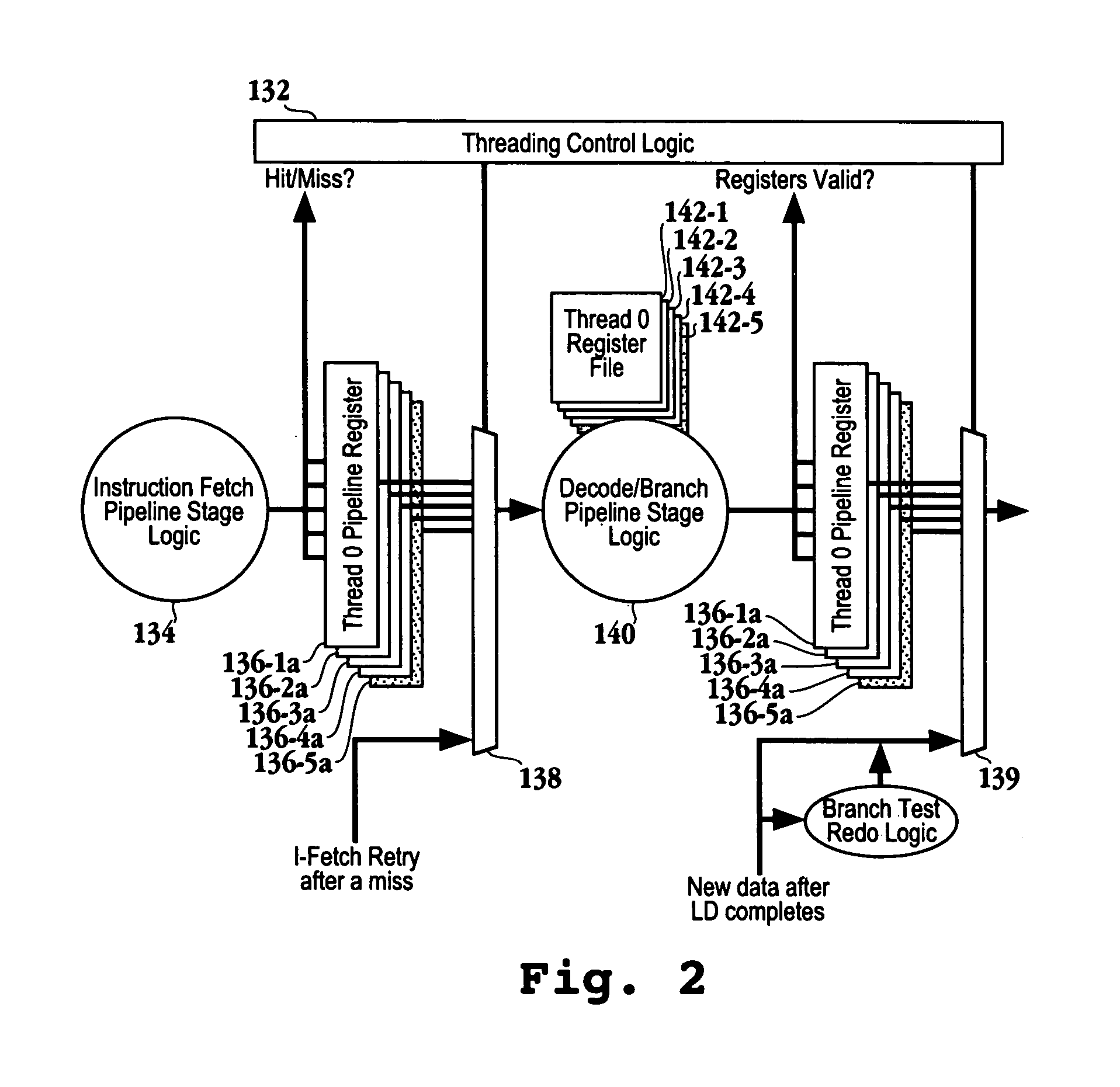

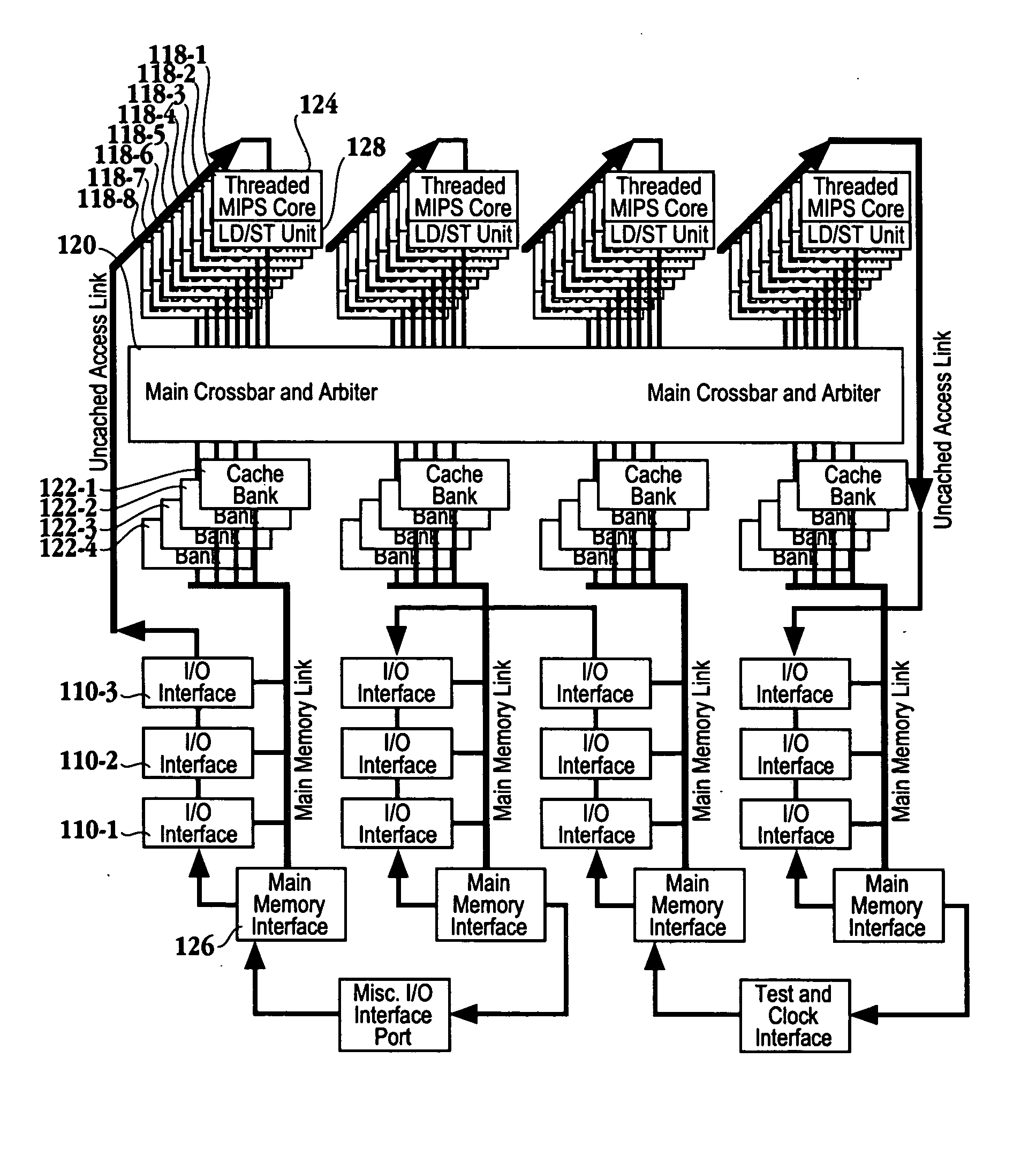

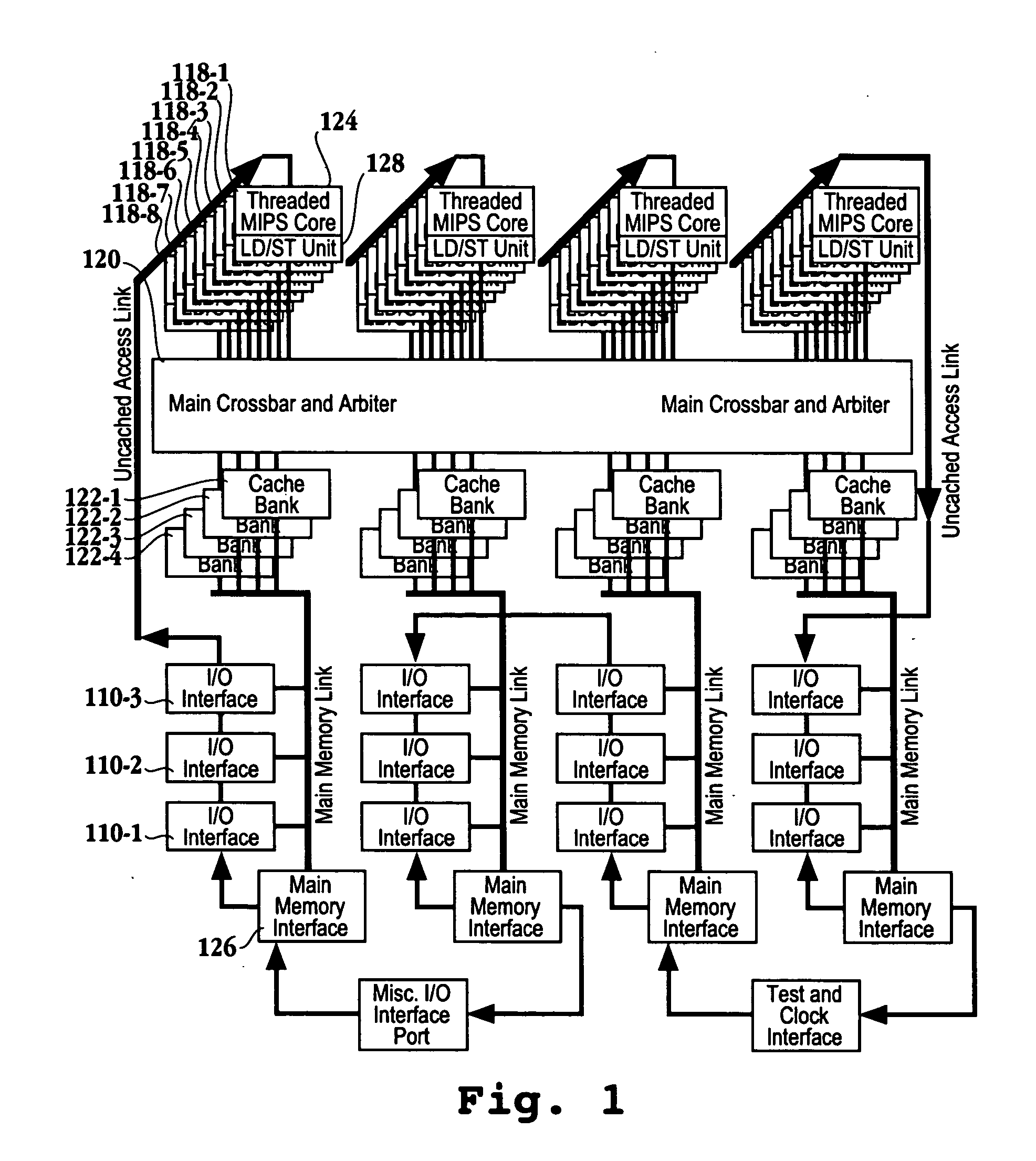

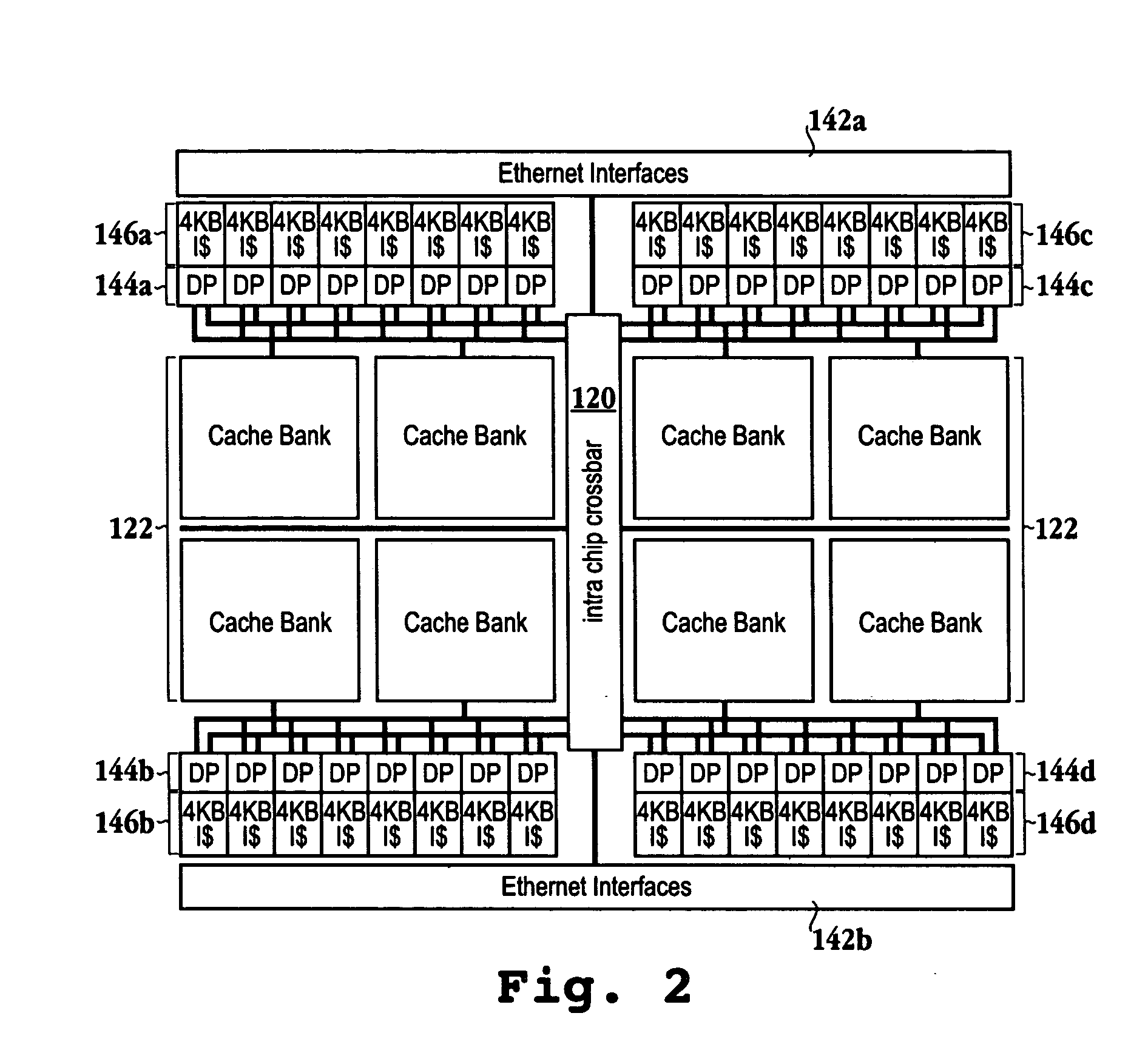

Multi-core multi-thread processor

Active | US20050044319A1 | Easy access | Register arrangements | Memory addressing/allocation/relocation | Memory interface | Parallel computing

A processor is provided. The processor includes at least two cores. The at least two cores have a first level cache memory and are multi-threaded. A crossbar is included. A plurality of cache bank memories in communication with the at least two cores through the crossbar is provided. Each of the plurality of cache bank memories communicates with a main memory interface. A plurality of input / output interface modules in communication with the main memory interface and providing a link to the at least two cores are included. The link bypasses the plurality of cache bank memories and the crossbar. Threading hardware configured to enable the at least two cores to switch from a first thread to a second thread in a manner hiding delays caused by cache accesses is included. A server and a method for determining when to switch threads in a multi-core multi-thread environment are included.

Owner:ORACLE INT CORP

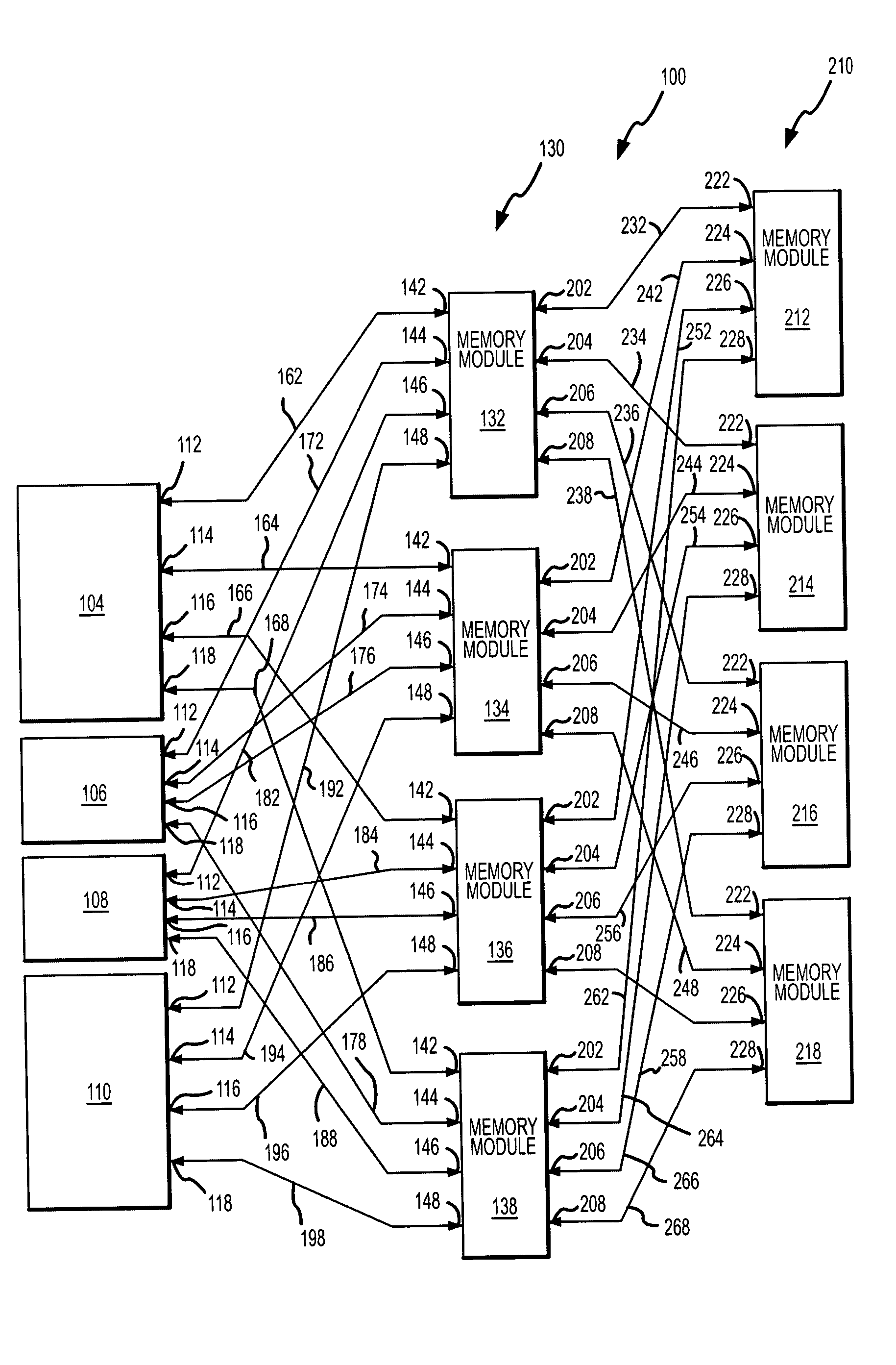

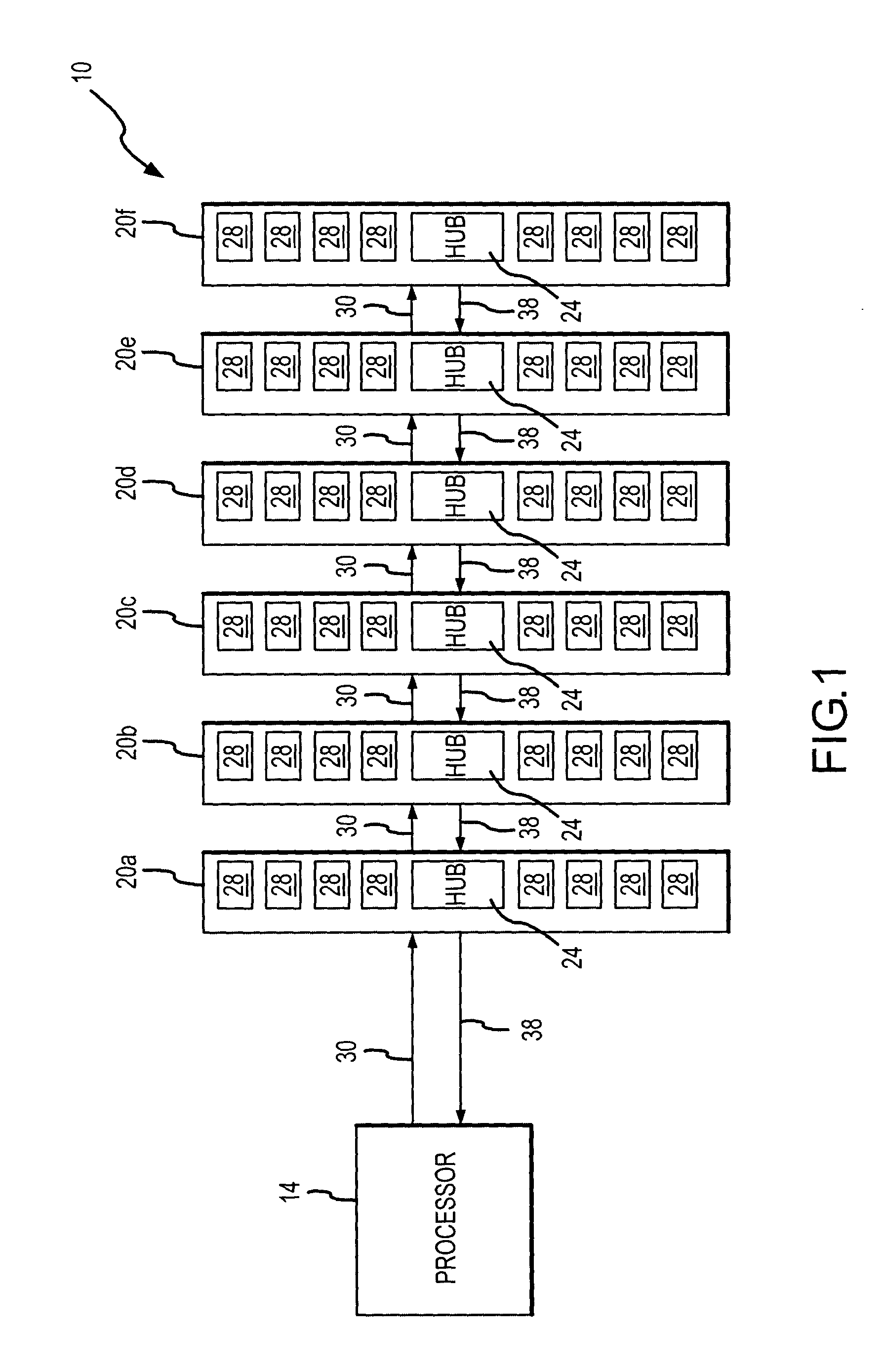

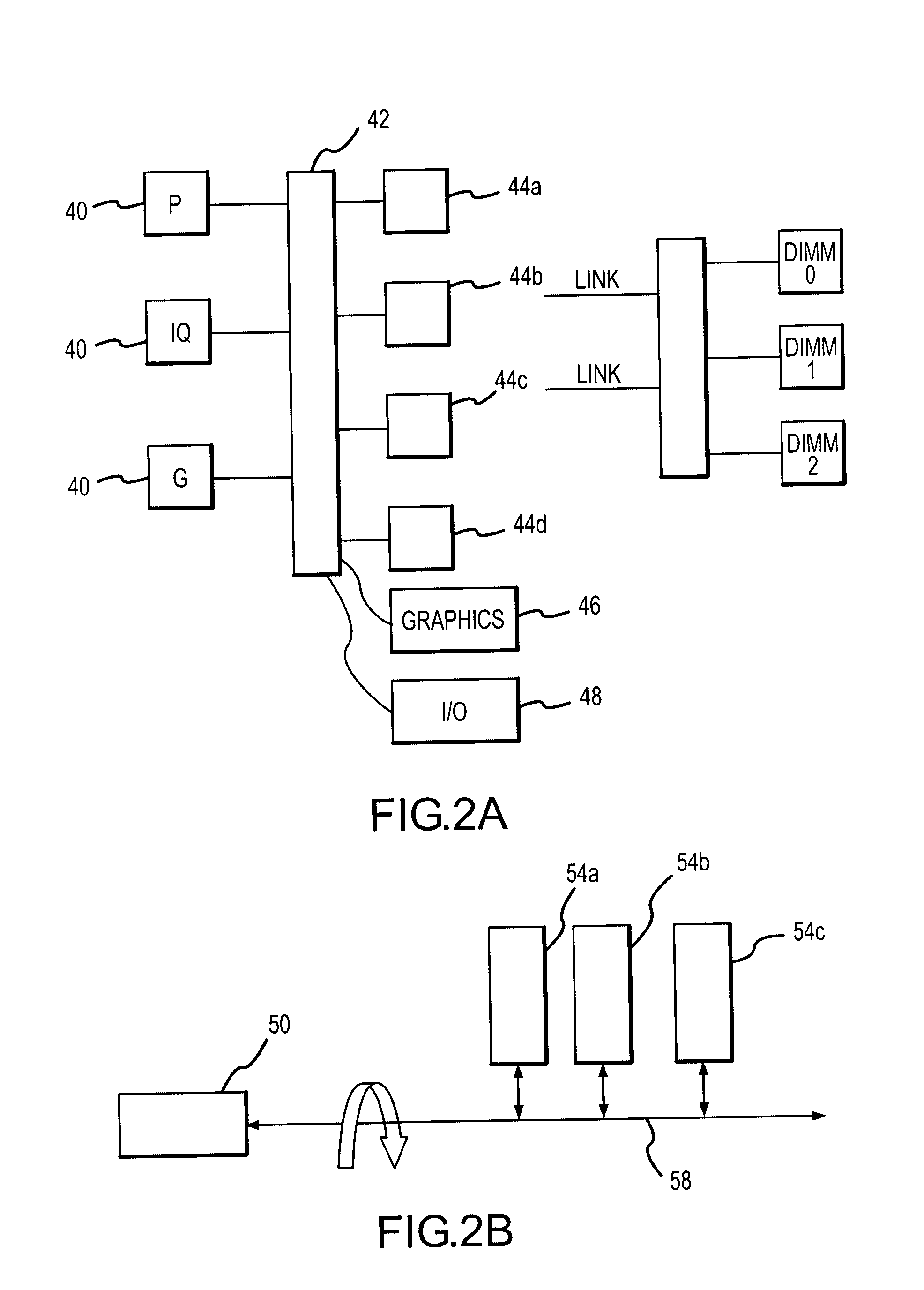

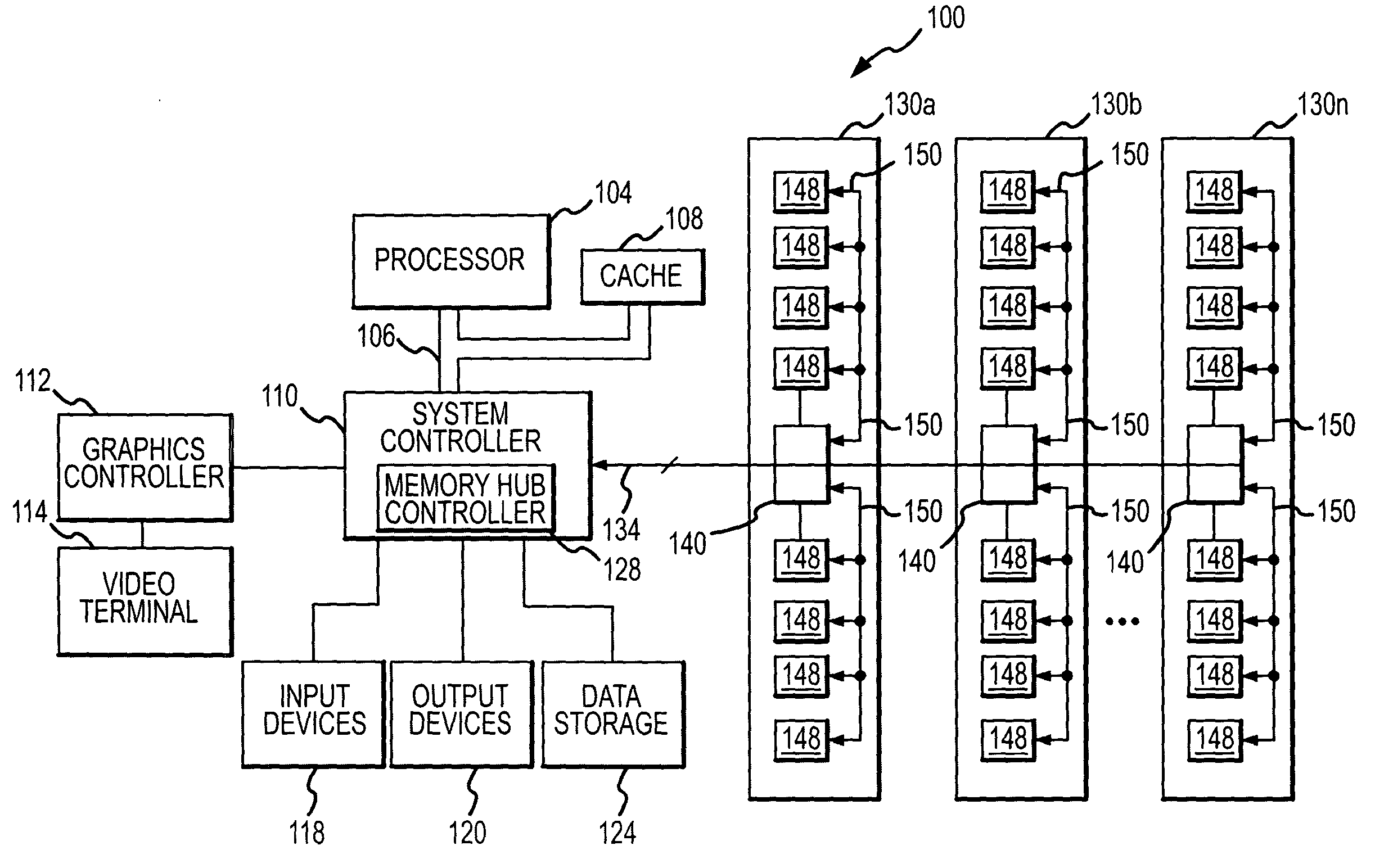

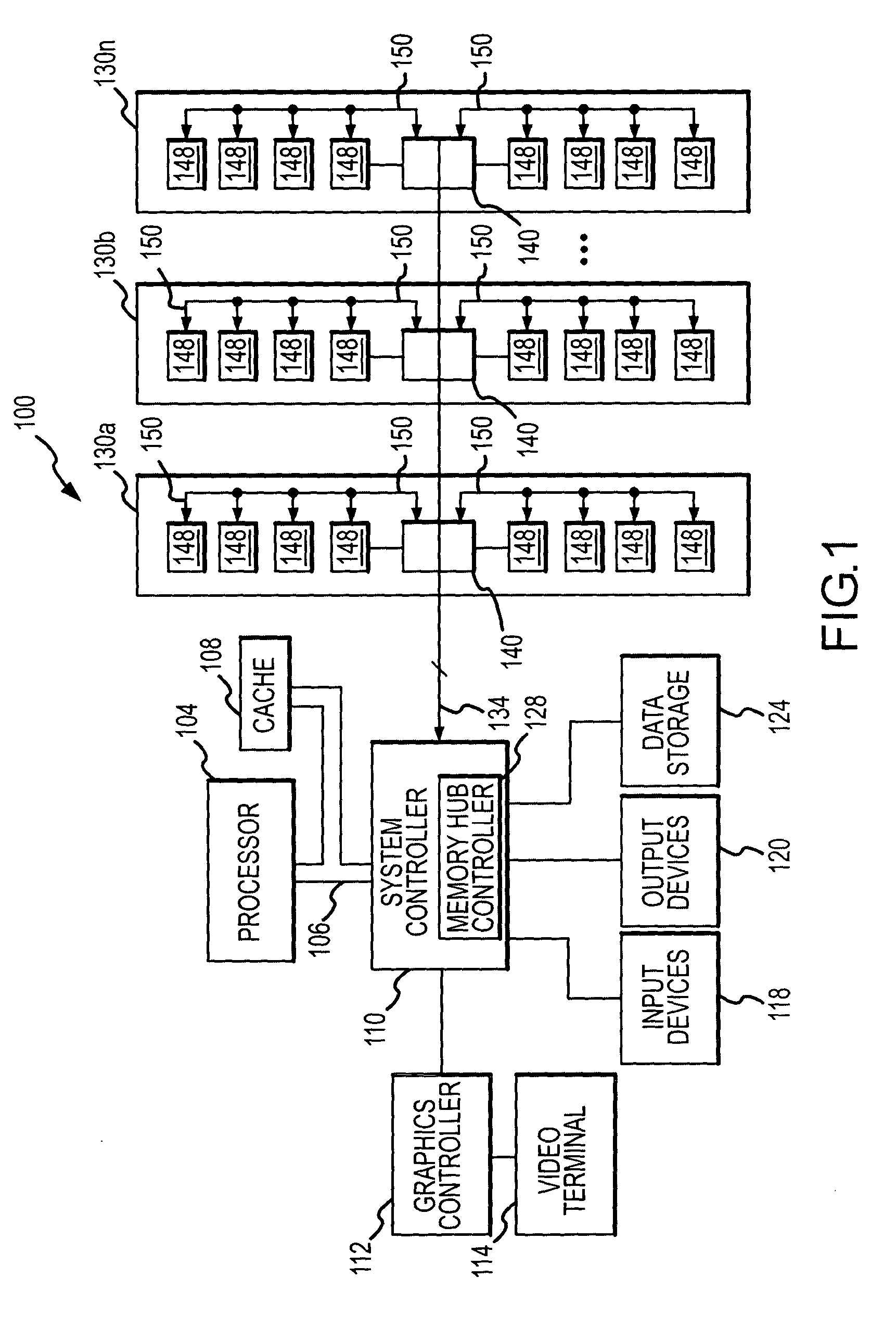

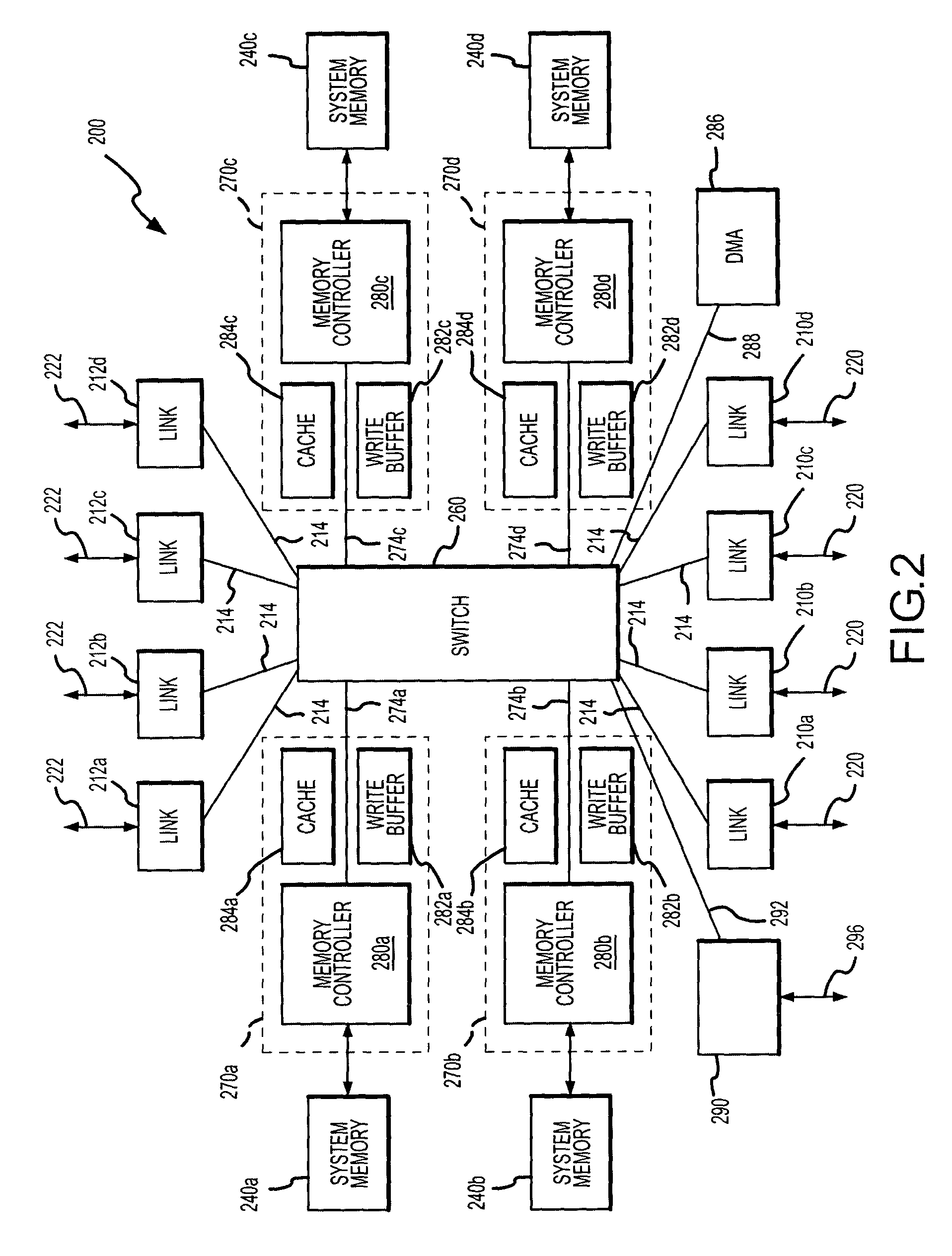

Multiple processor system and method including multiple memory hub modules

Inactive | US20050050255A1 | Low latency memory access | Flexible handling | Static storage | Memory systems | Memory controller | Crossover switch

A processor-based electronic system includes several memory modules arranged in first and second ranks. The memory modules in the first rank are directly accessed by any of several processors, and the memory modules in the second rank are accessed by the processors through the memory modules in the first rank. The data bandwidth between the processors and the memory modules in the second rank is varied by varying the number of memory modules in the first rank that are used to access the memory modules in the second rank. Each of the memory modules includes several memory devices coupled to a memory hub. The memory hub includes a memory controller coupled to each memory device, a link interface coupled to a respective processor or memory module, and a crossbar switch coupling any of the memory controllers to any of the link interfaces.

Owner:ROUND ROCK RES LLC

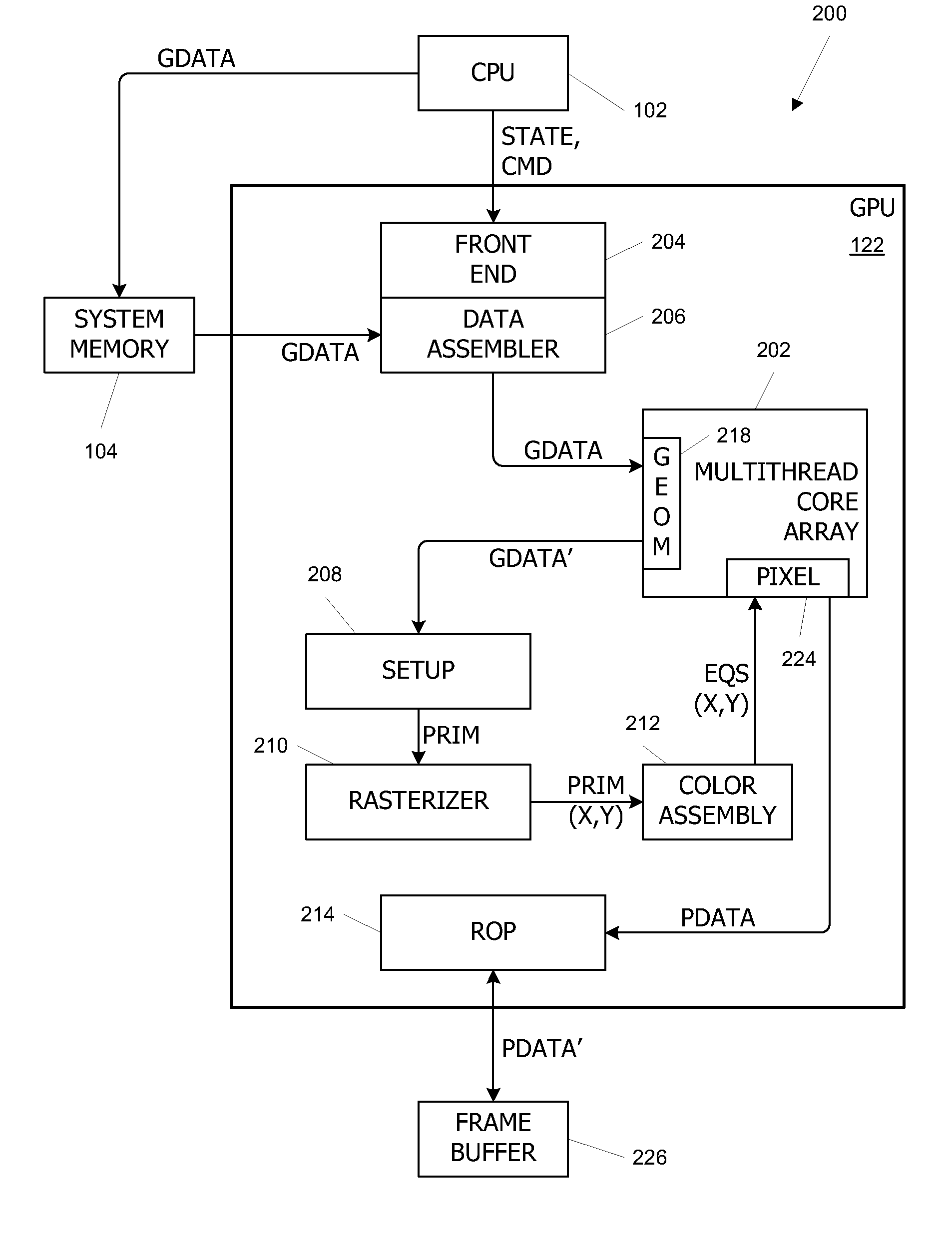

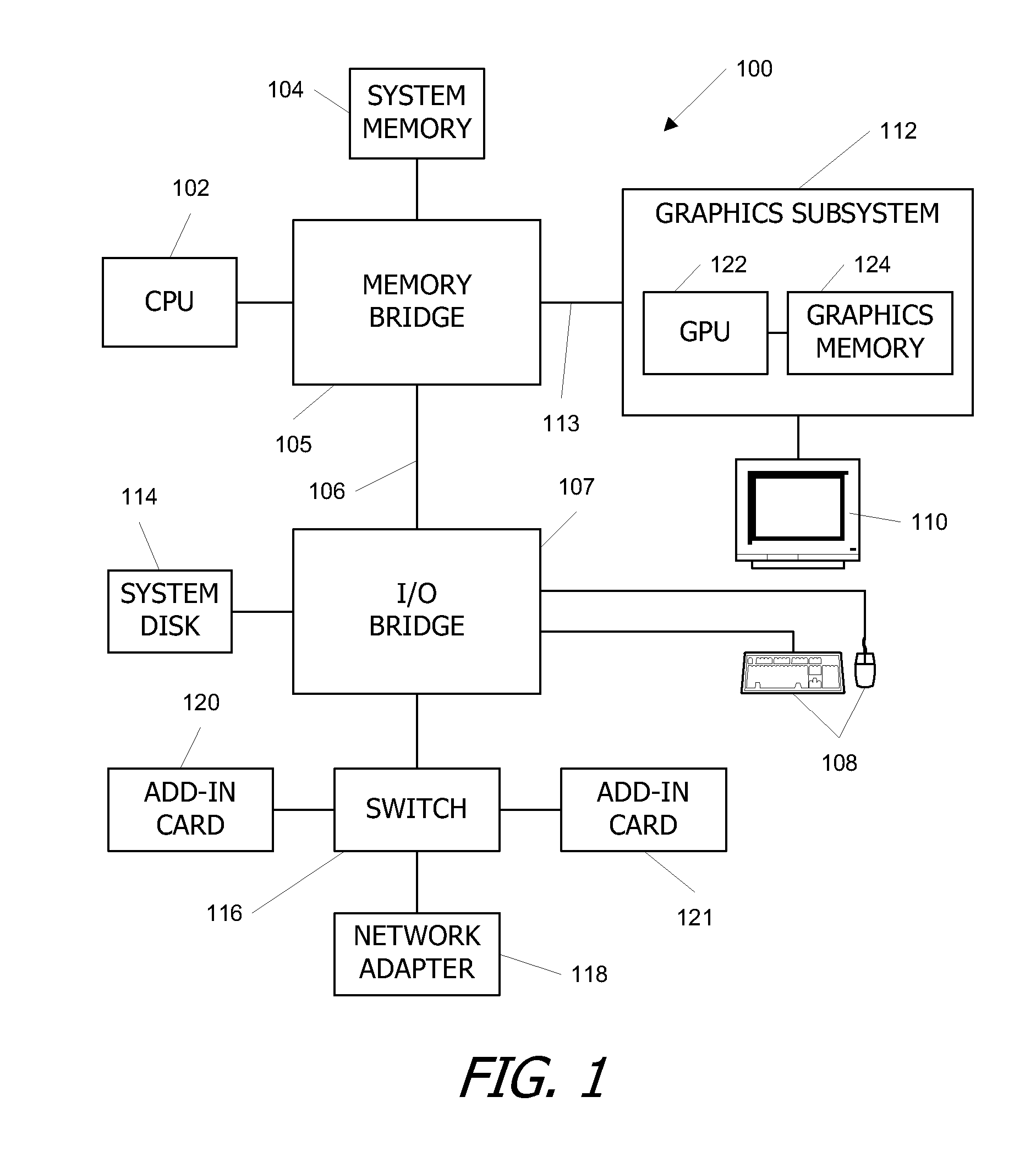

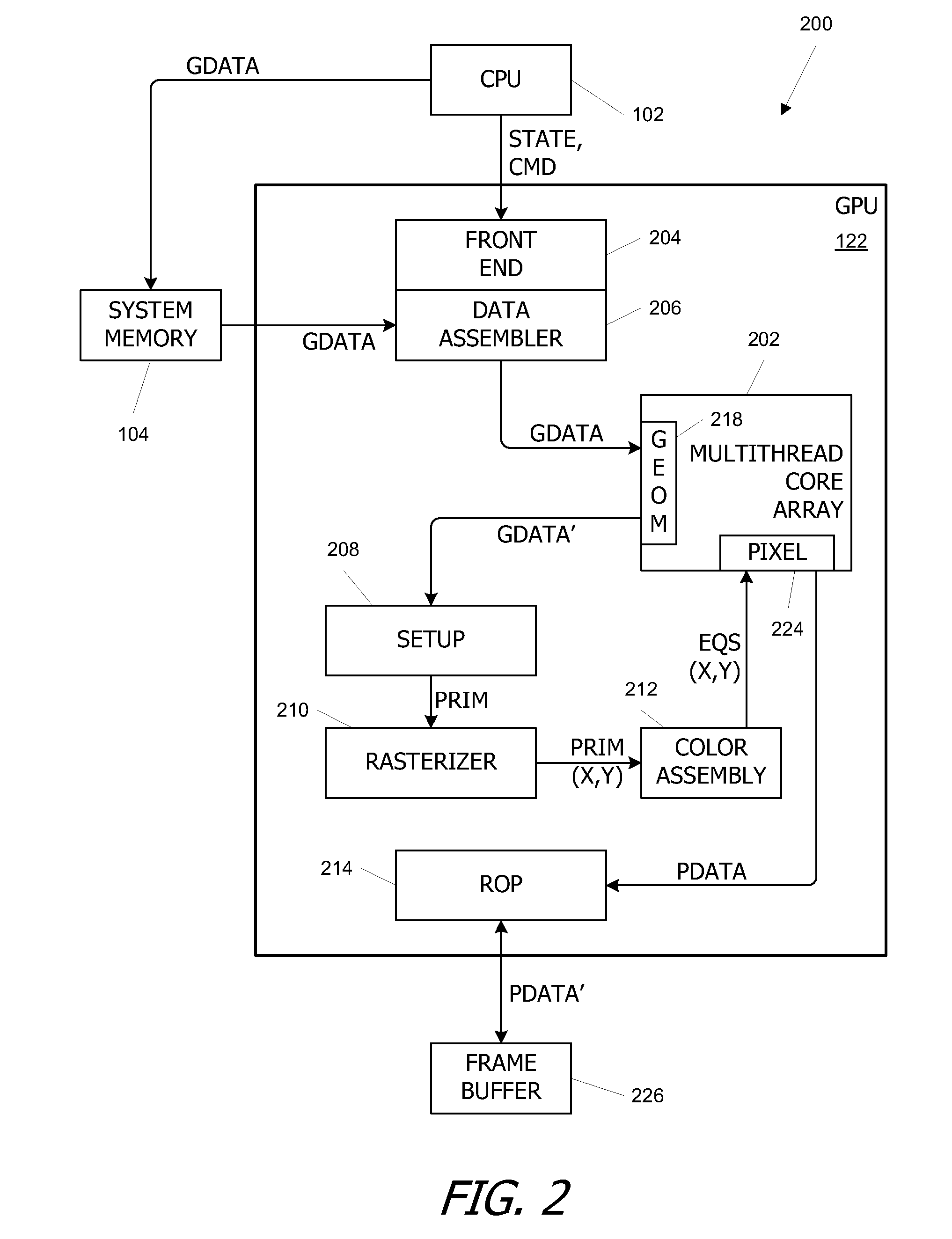

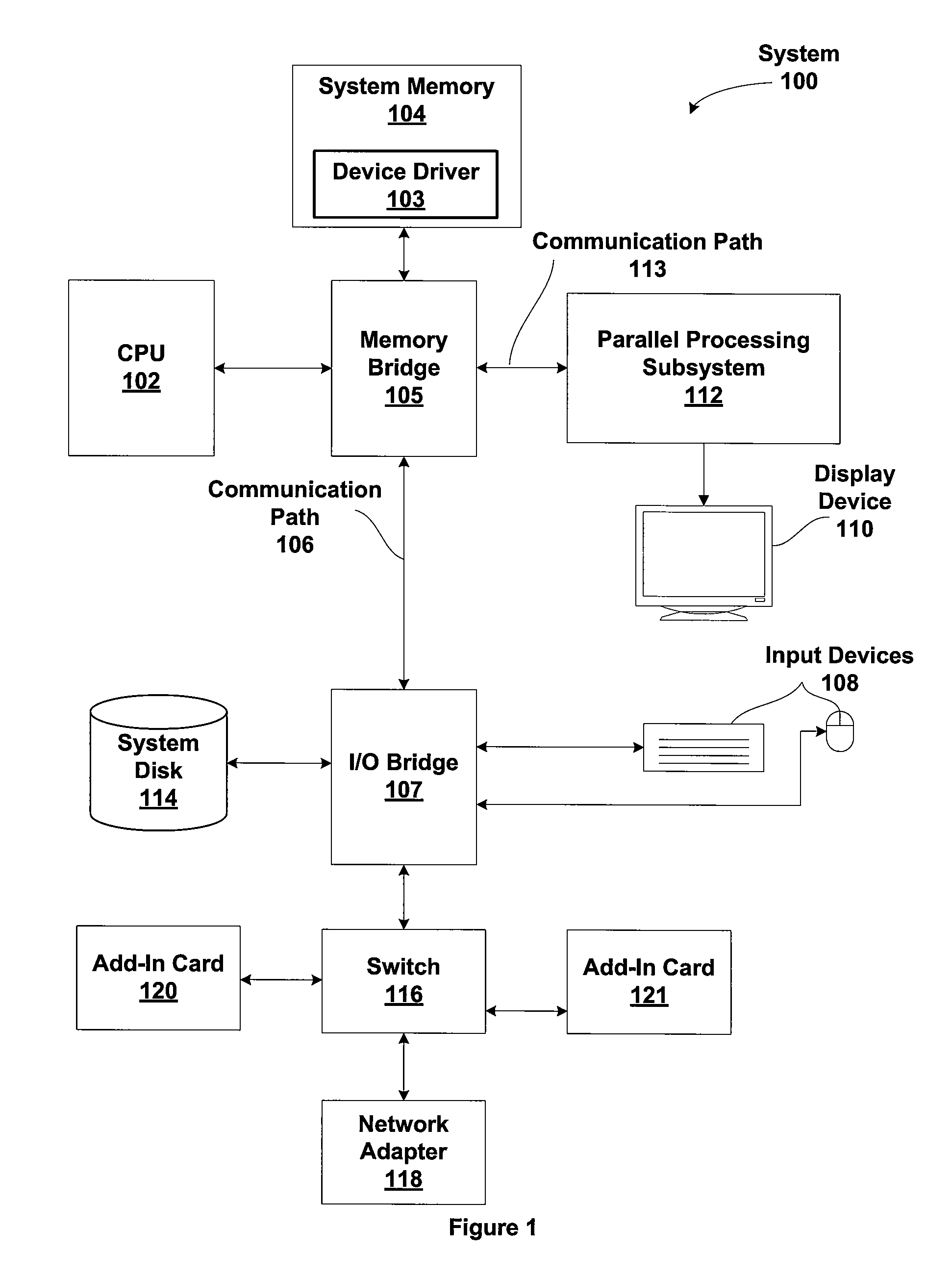

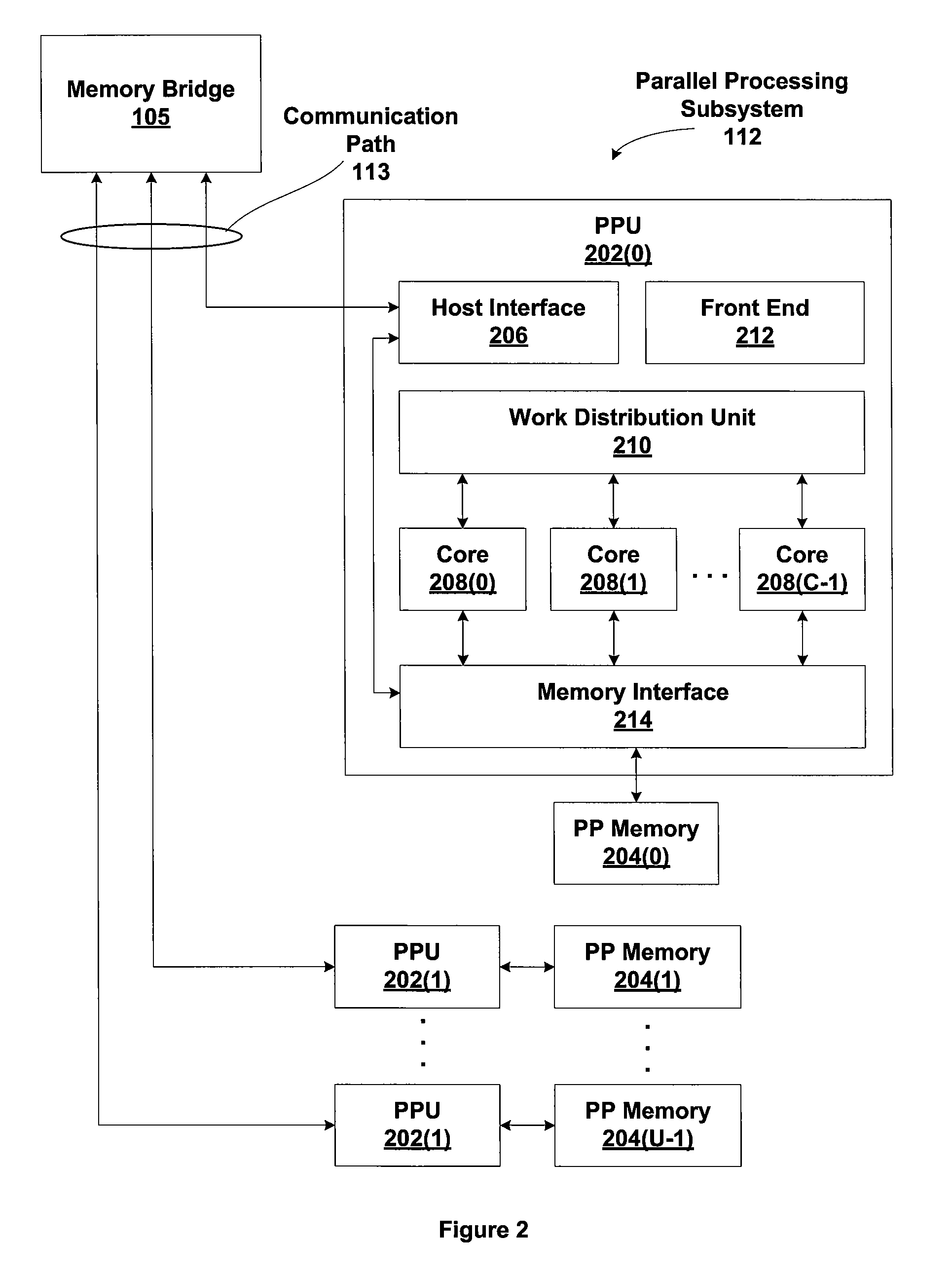

Parallel Array Architecture for a Graphics Processor

Inactive | US20070159488A1 | Improving memory locality | Improve locality | Single instruction multiple data multiprocessors | Cathode-ray tube indicators | Processing core | Parallel computing

A parallel array architecture for a graphics processor includes a multithreaded core array including a plurality of processing clusters, each processing cluster including at least one processing core operable to execute a pixel shader program that generates pixel data from coverage data; a rasterizer configured to generate coverage data for each of a plurality of pixels; and pixel distribution logic configured to deliver the coverage data from the rasterizer to one of the processing clusters in the multithreaded core array. The pixel distribution logic selects one of the processing clusters to which the coverage data for a first pixel is delivered based at least in part on a location of the first pixel within an image area. The processing clusters can be mapped directly to the frame buffer partitions without a crossbar so that pixel data is delivered directly from the processing cluster to the appropriate frame buffer partitions. Alternatively, a crossbar coupled to each of the processing clusters is configured to deliver pixel data from the processing clusters to a frame buffer having a plurality of partitions. The crossbar is configured such that pixel data generated by any one of the processing clusters is deliverable to any one of the frame buffer partitions.

Owner:NVIDIA CORP

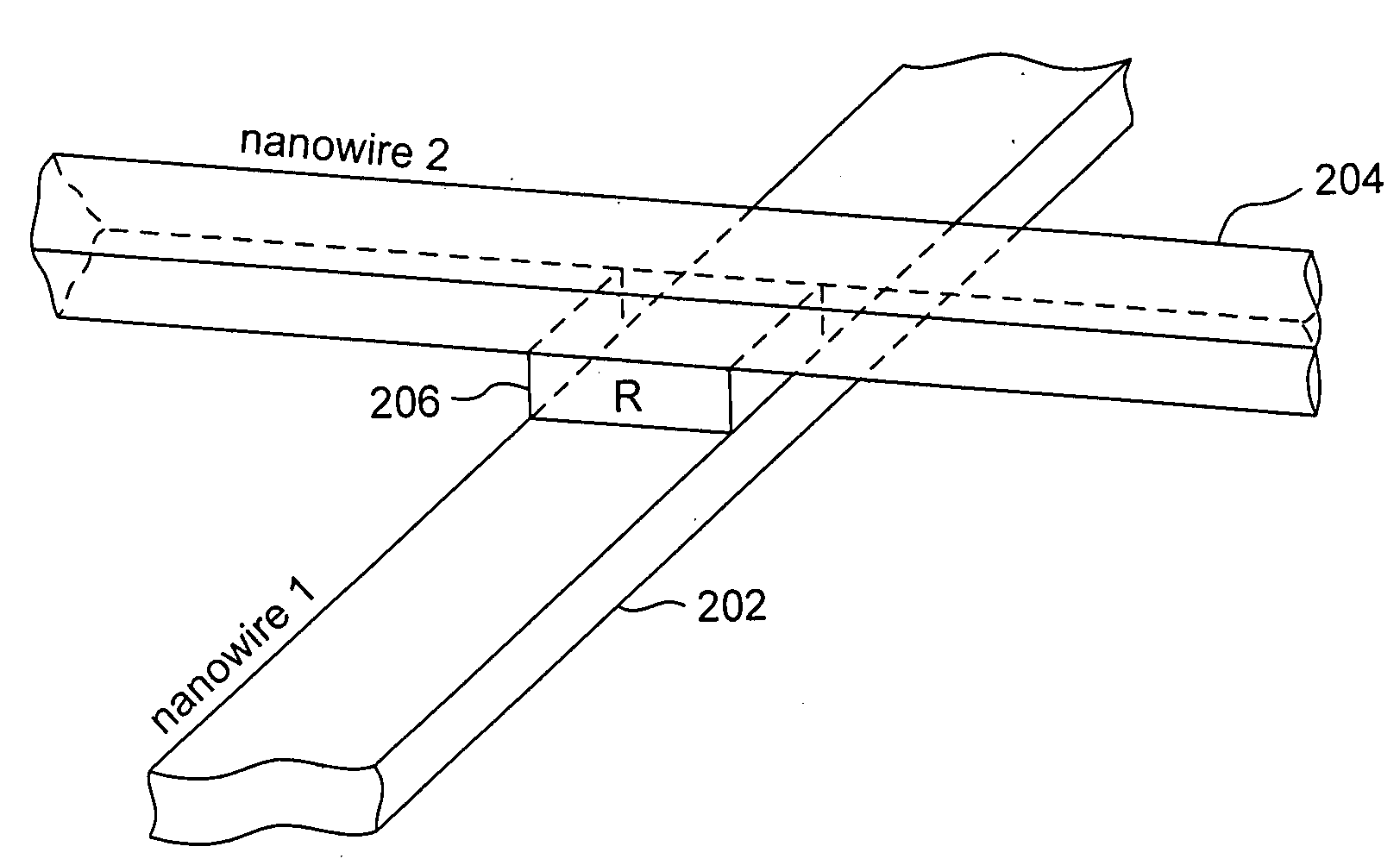

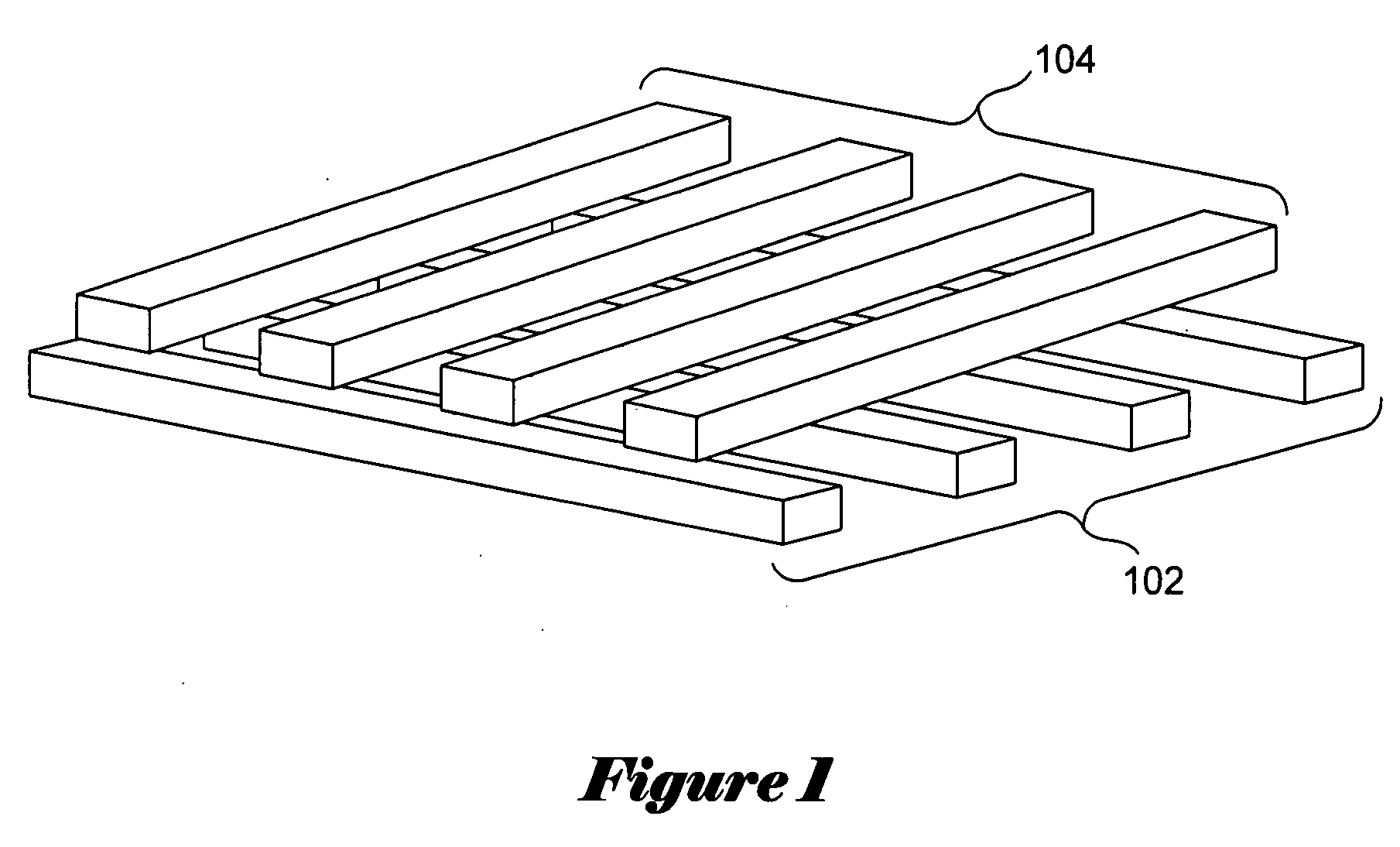

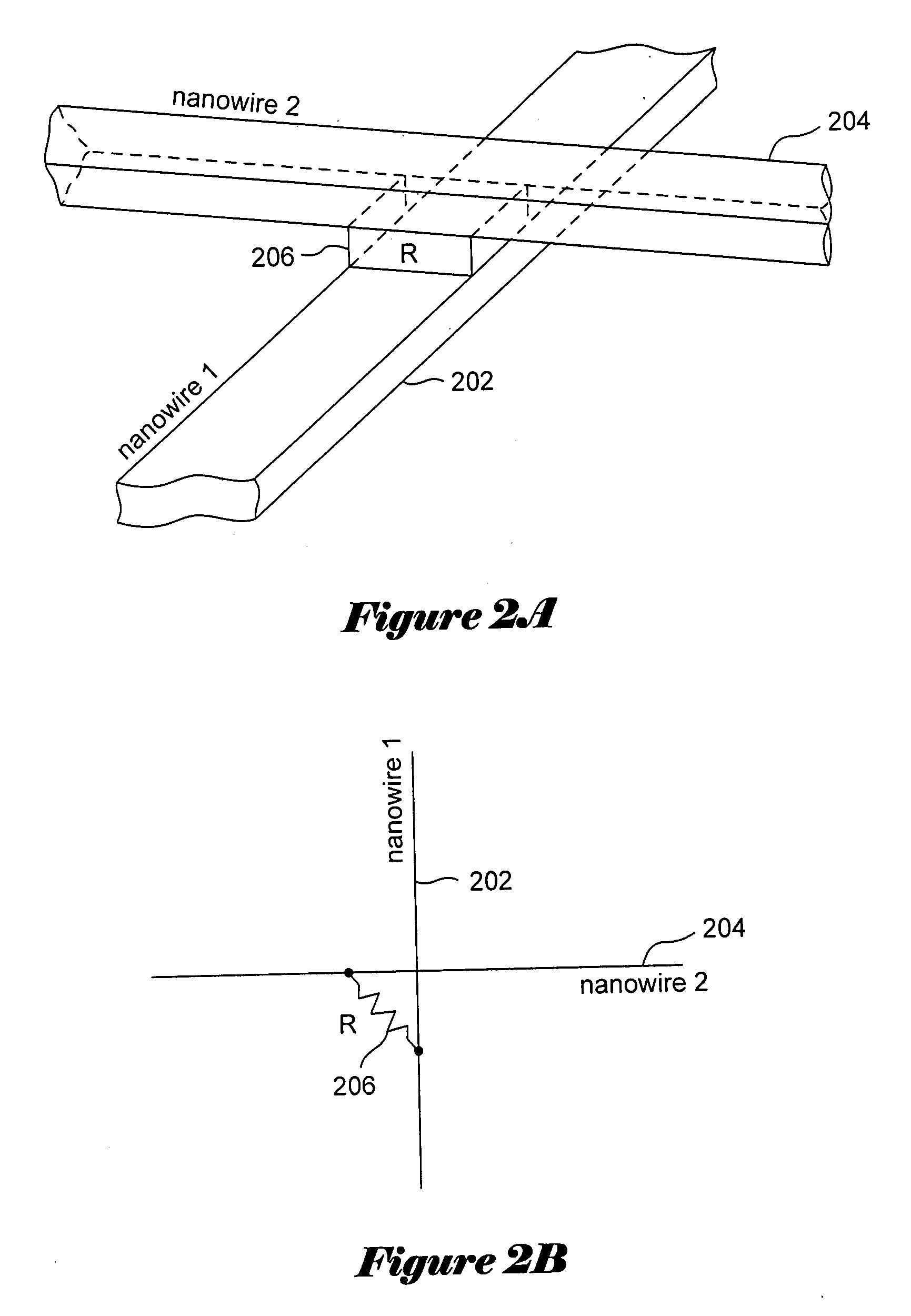

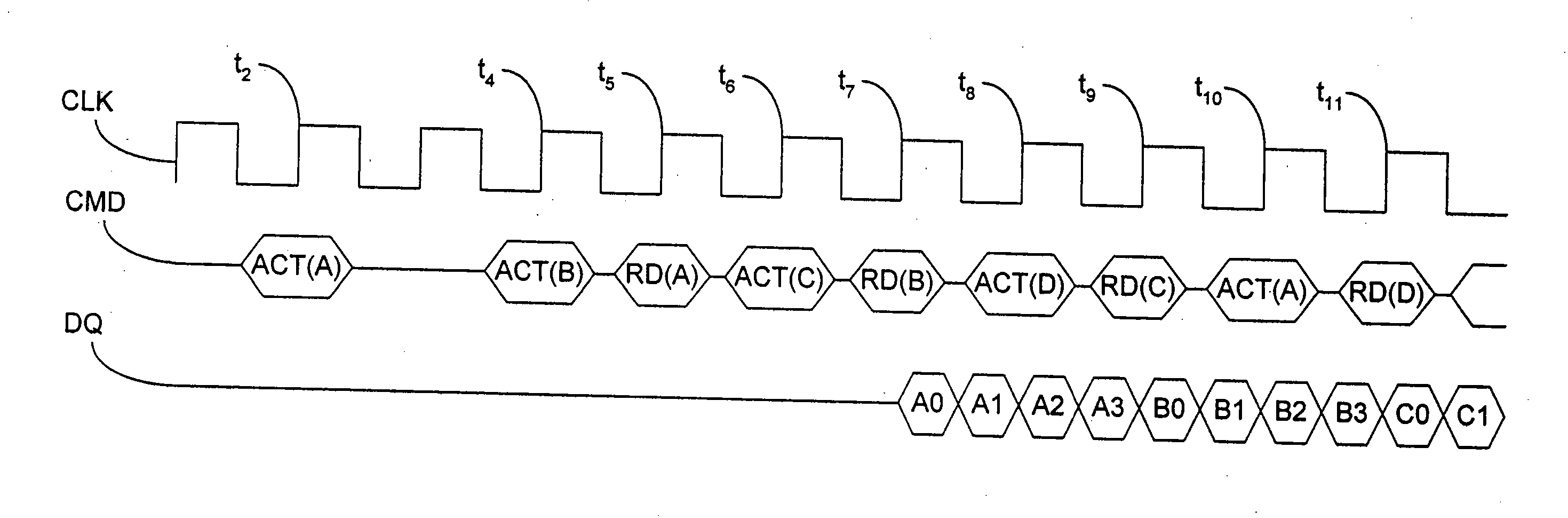

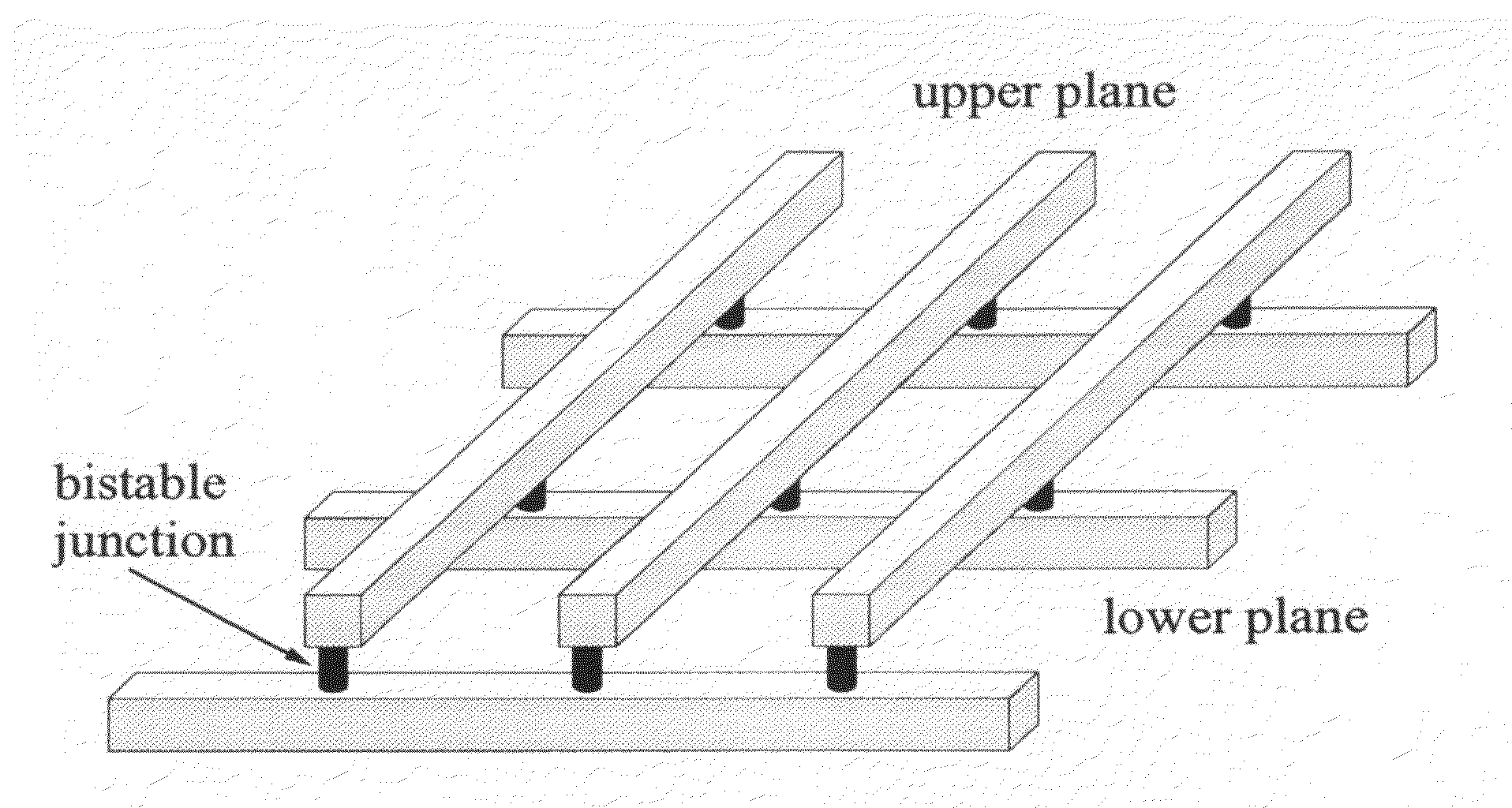

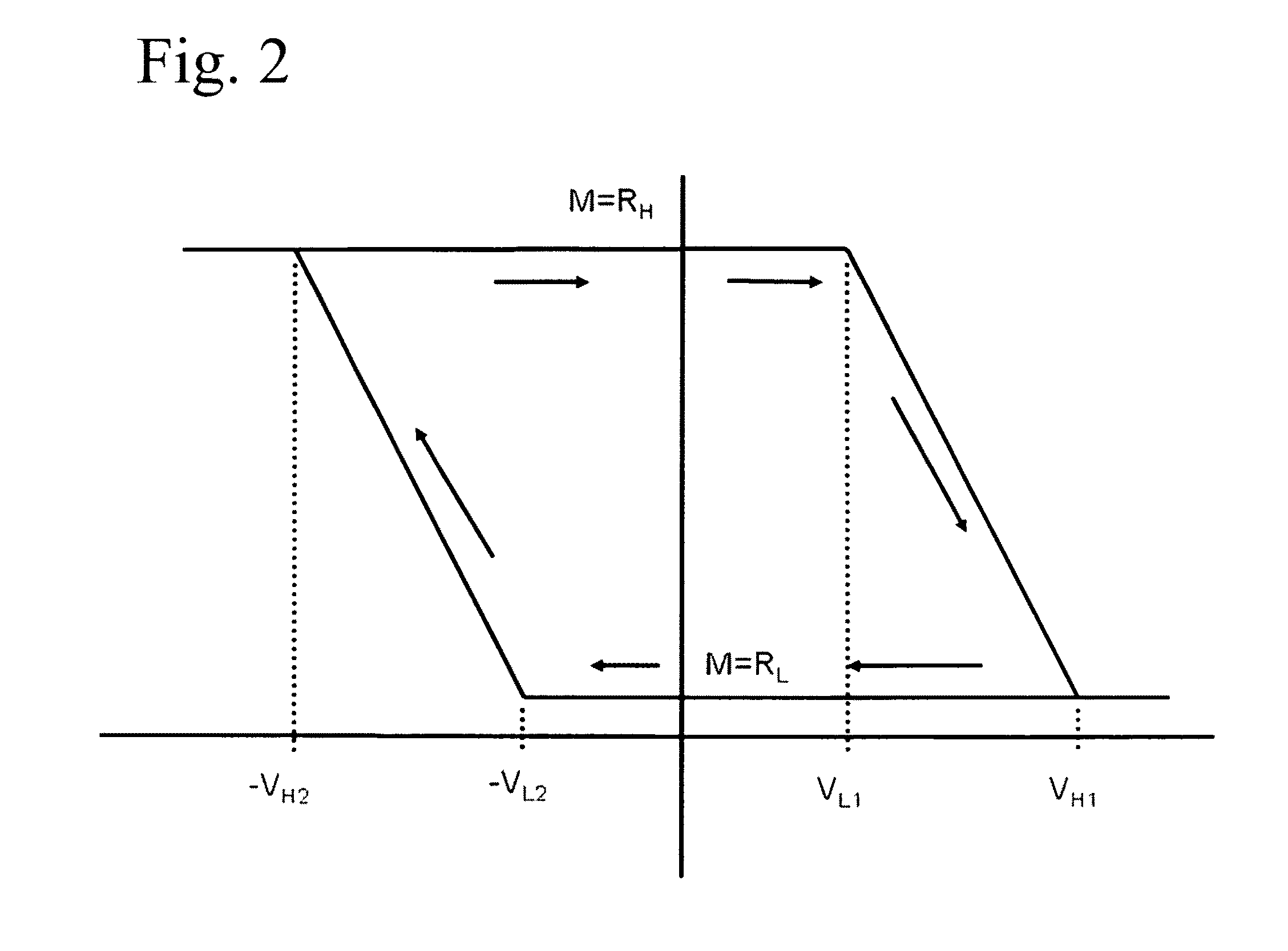

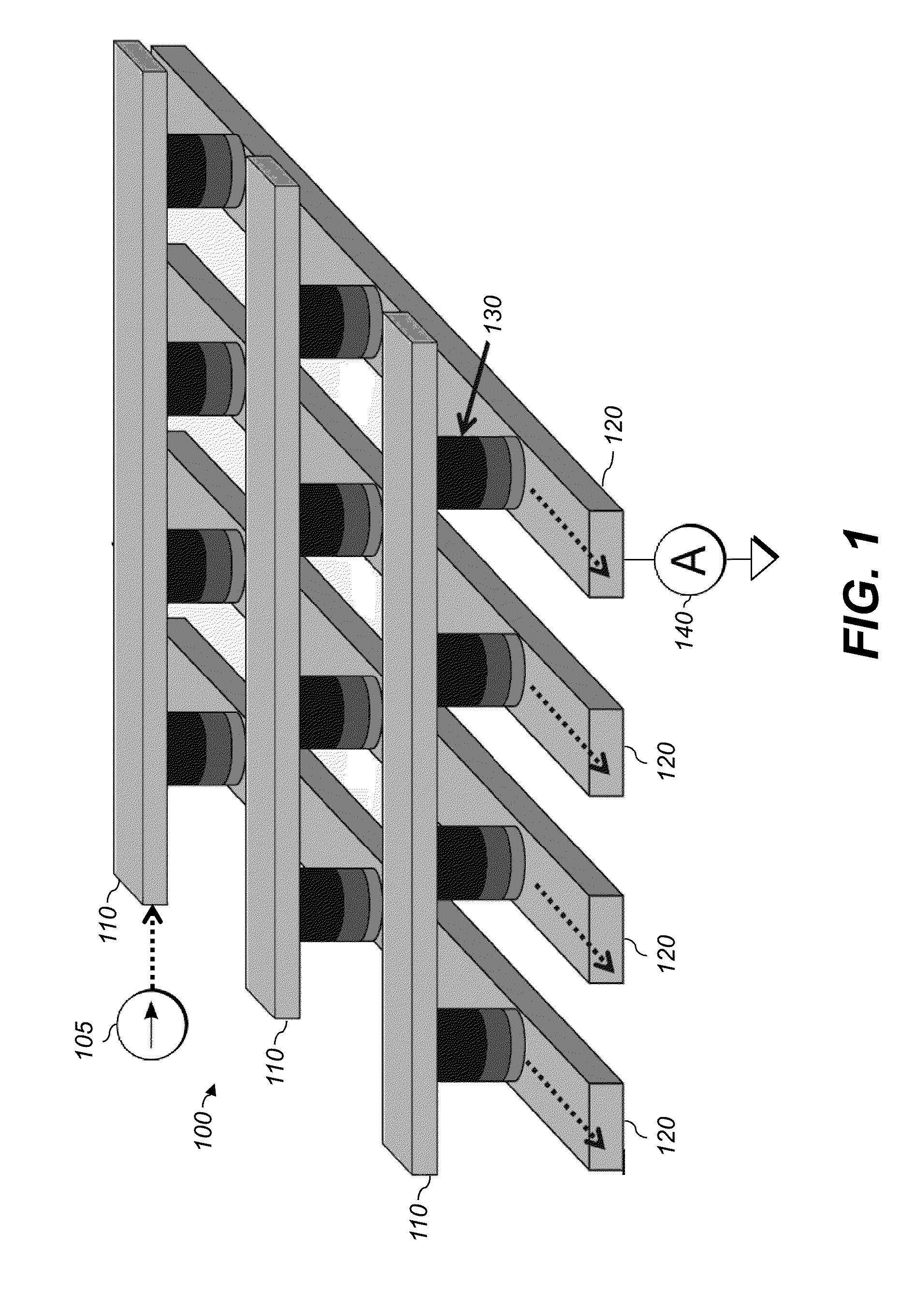

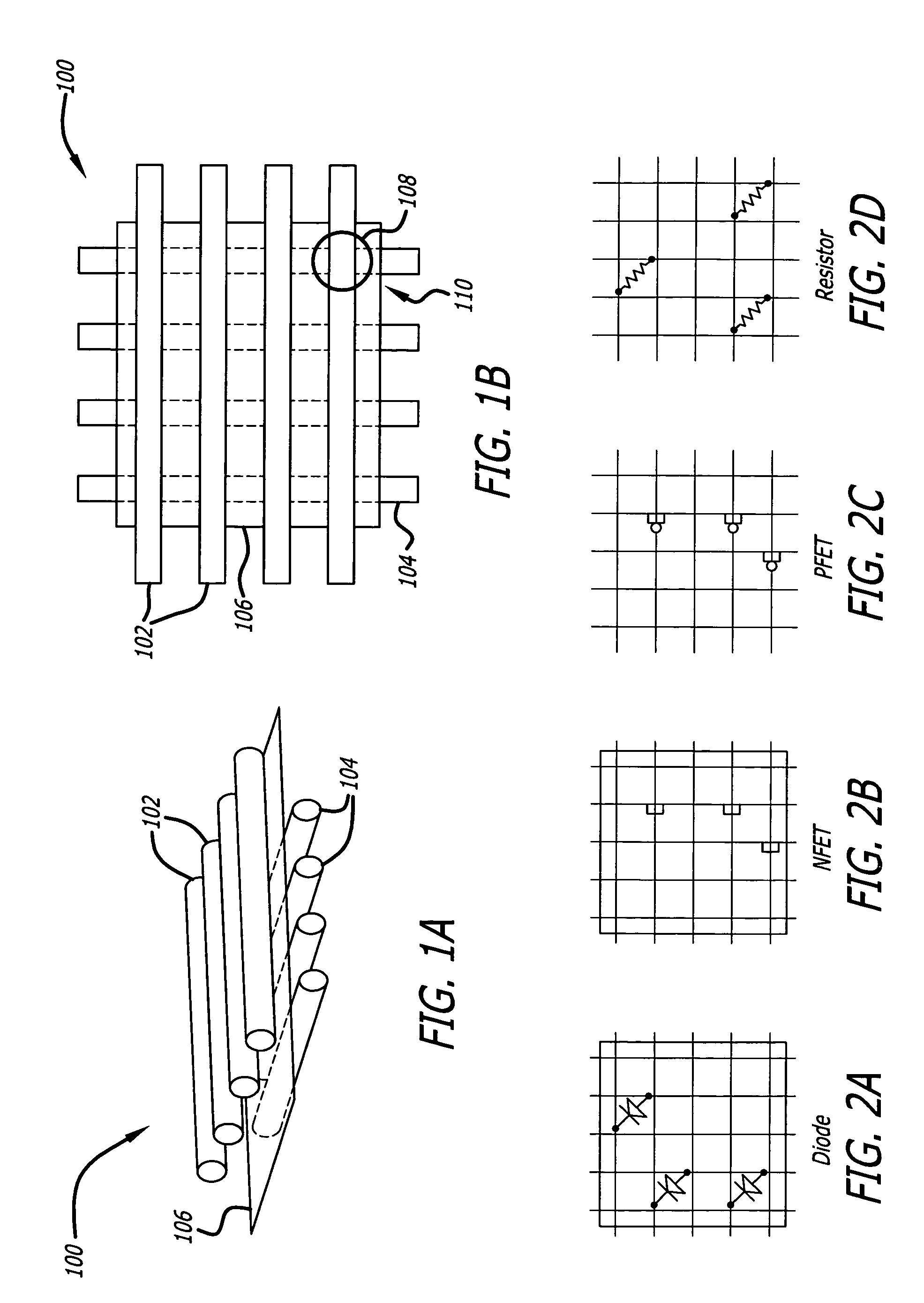

Crossbar-memory systems and methods for writing to and reading from crossbar memory junctions of crossbar-memory systems

Various embodiments of the present invention are directed to crossbar-memory systems and to methods for writing information to and reading information stored in such systems. In one embodiment of the present invention, a crossbar-memory system comprises a first layer of microscale signal lines, a second layer of microscale signal lines, a first layer of nanowires configured so that each first layer nanowire overlaps each first layer microscale signal line, and a second layer of nanowires configured so that each second layer nanowire overlaps each second layer microscale signal line and overlaps each first layer nanowire. The crossbar-memory system includes nonlinear-tunneling resistors configured to selectively connect first layer nanowires to first layer microscale signal lines and to selectively connect second layer nanowires to second layer microscale signal lines. The crossbar-memory system also includes nonlinear tunneling-hysteretic resistors configured to connect each first layer nanowire to each second layer nanowire at each crossbar intersection.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

Layered crossbar for interconnection of multiple processors and shared memories

Inactive | US20070208901A1 | Lower latency | Low scalability | Digital computer details | Memory systems | Multi processor | Interconnection

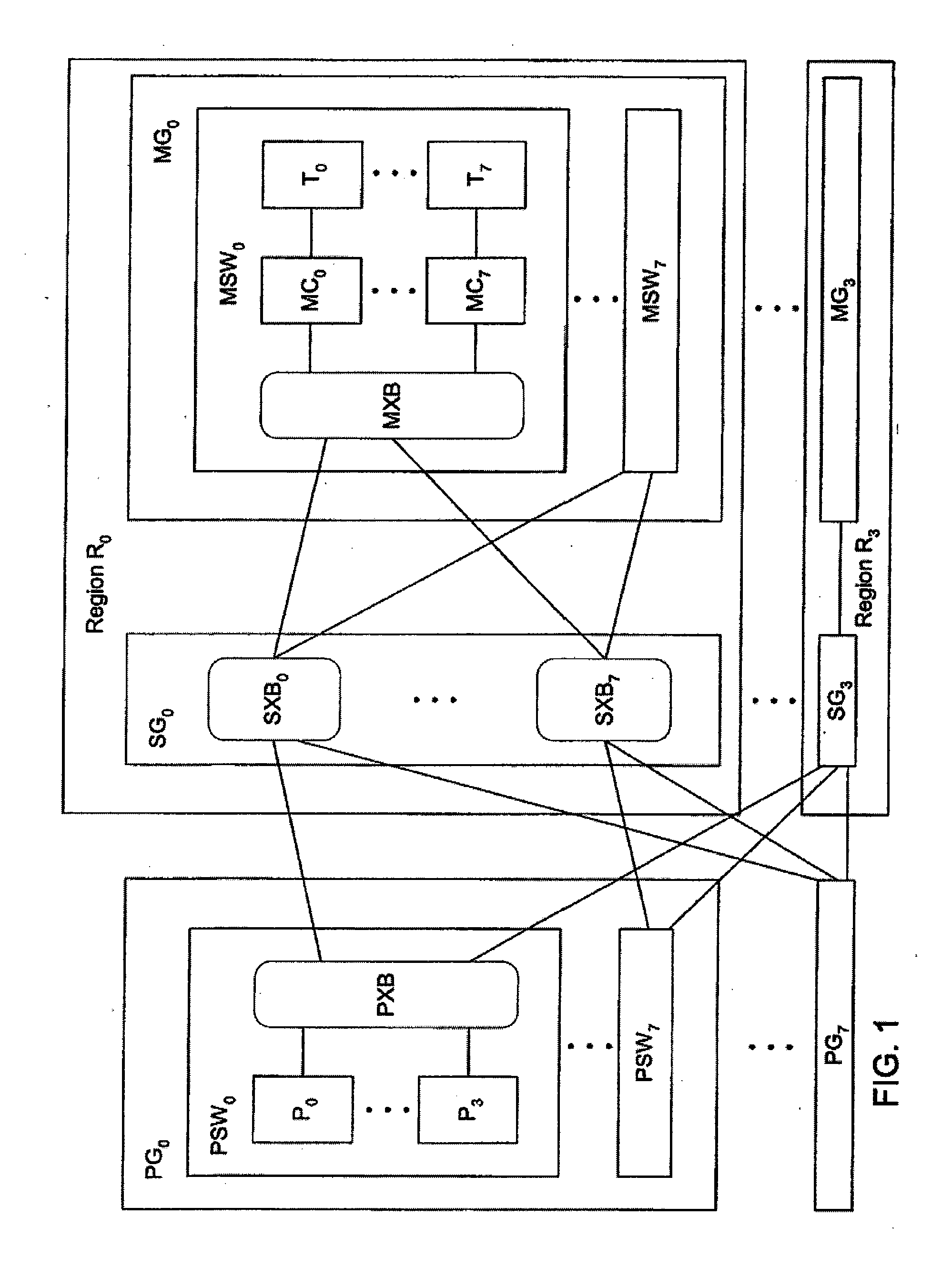

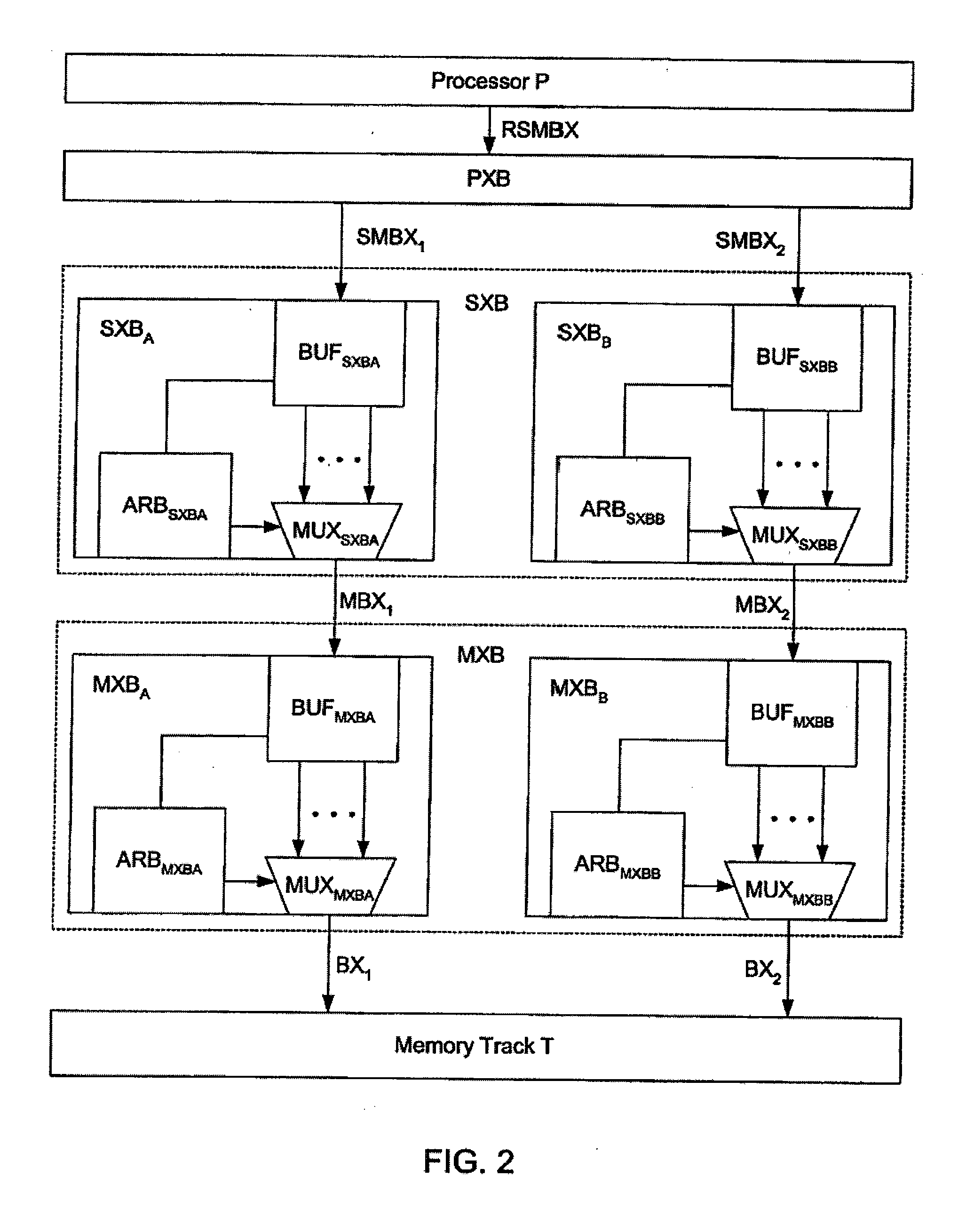

A method and apparatus includes a plurality of processor groups each having a plurality of processor switch chips each having a plurality of processors and a processor crossbar, each processor connected to the processor crossbar; a plurality of switch groups each having a plurality of switch crossbar chips each having a plurality of switch crossbars each connected to a processor crossbar in each processor group, wherein no two switch crossbars in a switch group are connected to the same processor crossbar; a plurality of memory groups having a plurality of memory switch chips each having a plurality of memory controllers and a memory crossbar, each memory controller connected to the memory crossbar, each memory crossbar in each memory group connected to all of the switch crossbars in a corresponding one of the switch groups, wherein no two memory groups are connected to the same switch group.

Owner:MIND FUSION LLC

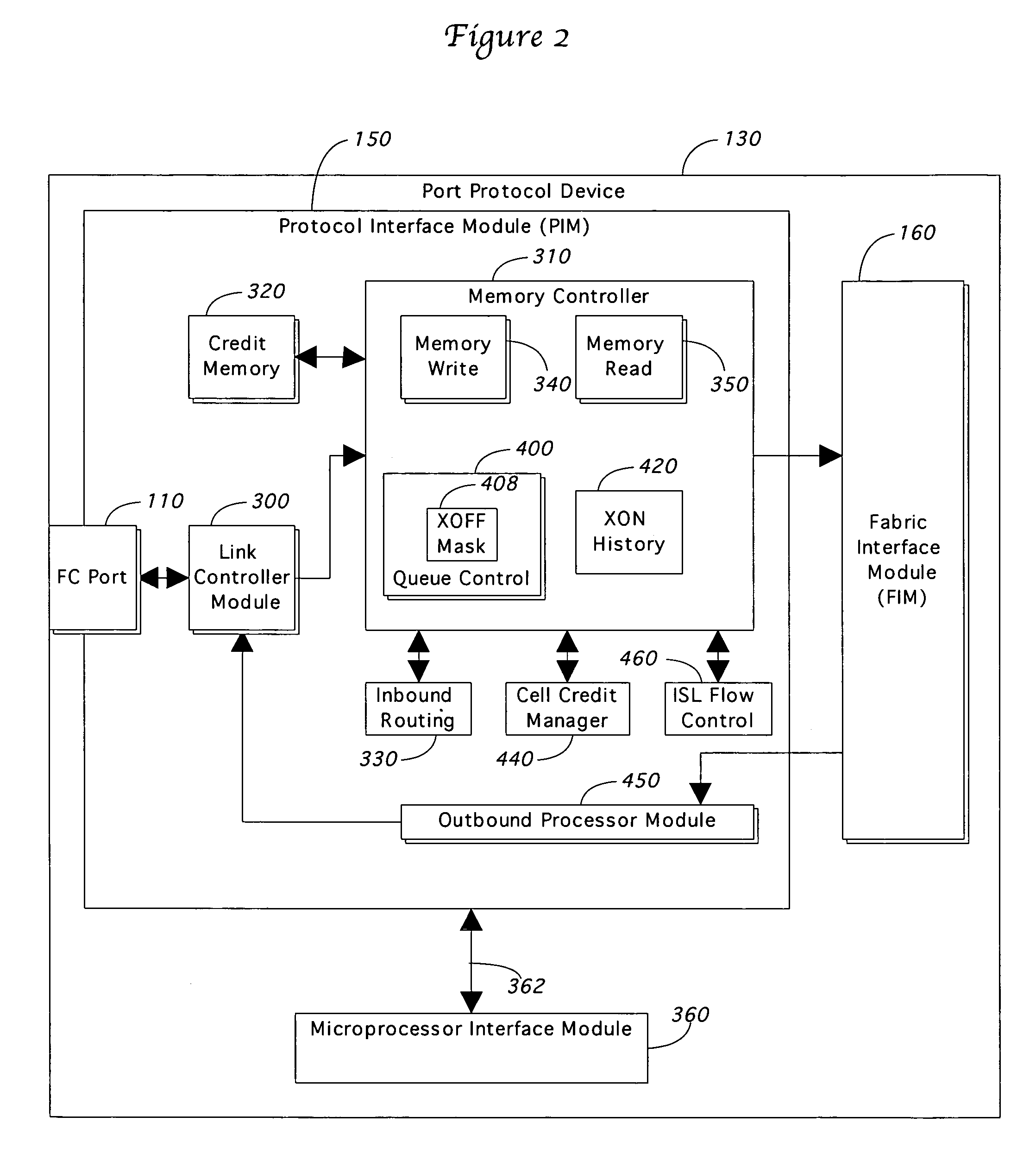

Fiber channel switch

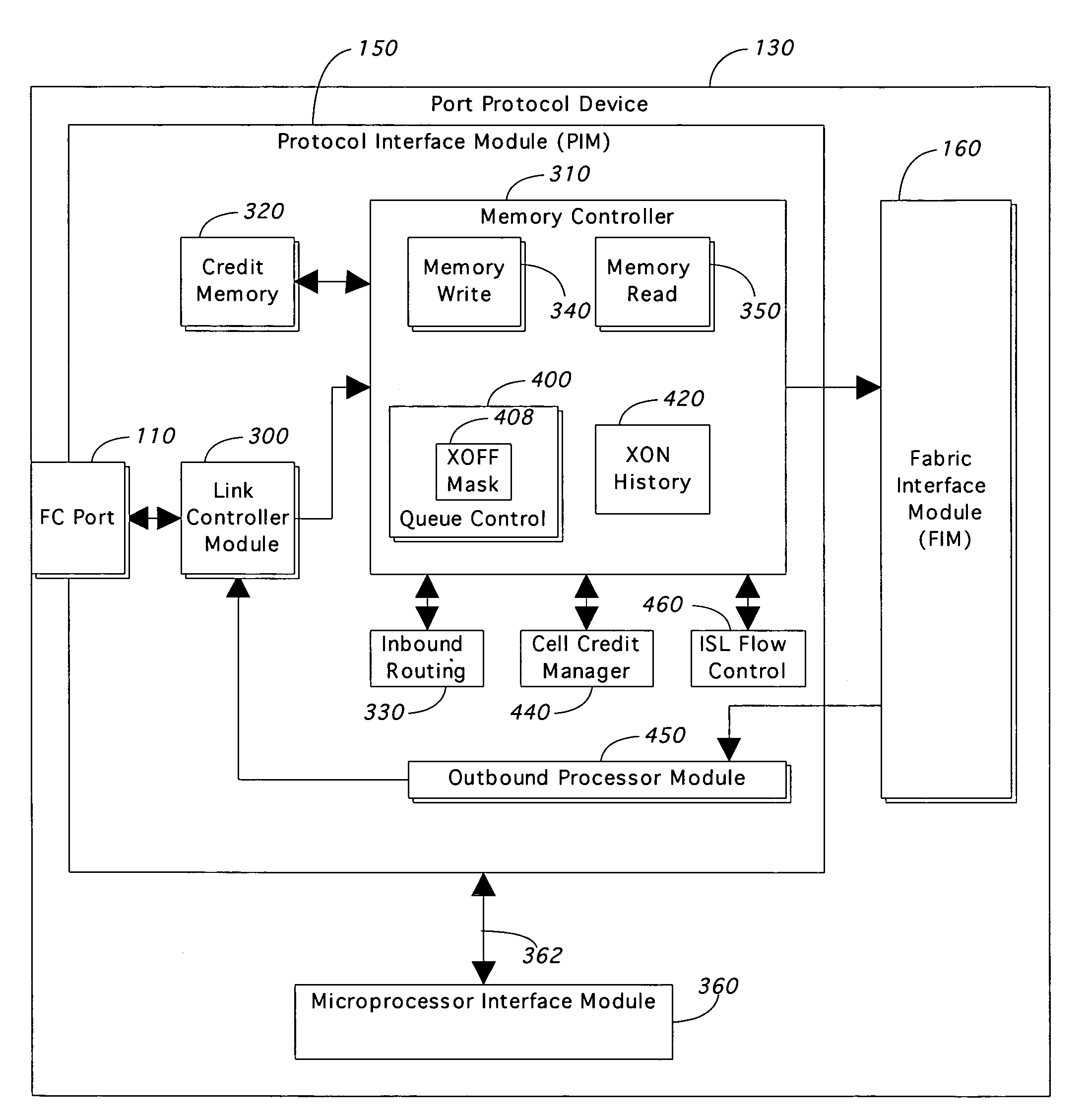

A Fiber Channel switch is presented that tracks the congestion status of destination ports in an XOFF mask at each input. A mapping is maintained between virtual channels on an ISL and the destination ports to allow changes in the XOFF mask to trigger a primitive to an upstream port that provides virtual channel flow control. The XOFF mask is also used to avoid sending frames to a congested port. Instead, these frames are stored on a single deferred queue and later processed in a manner designed to maintain frame ordering. A routing system is provided that applies multiple routing rules in parallel to perform line speed routing. The preferred switch fabric is cell based, with techniques used to manage path maintenance for variable length frames and to adapt to varying transmission rates in the system. Finally, the switch allows data and microprocessor communication to share the same crossbar network.

Owner:MCDATA SERVICES CORP +1

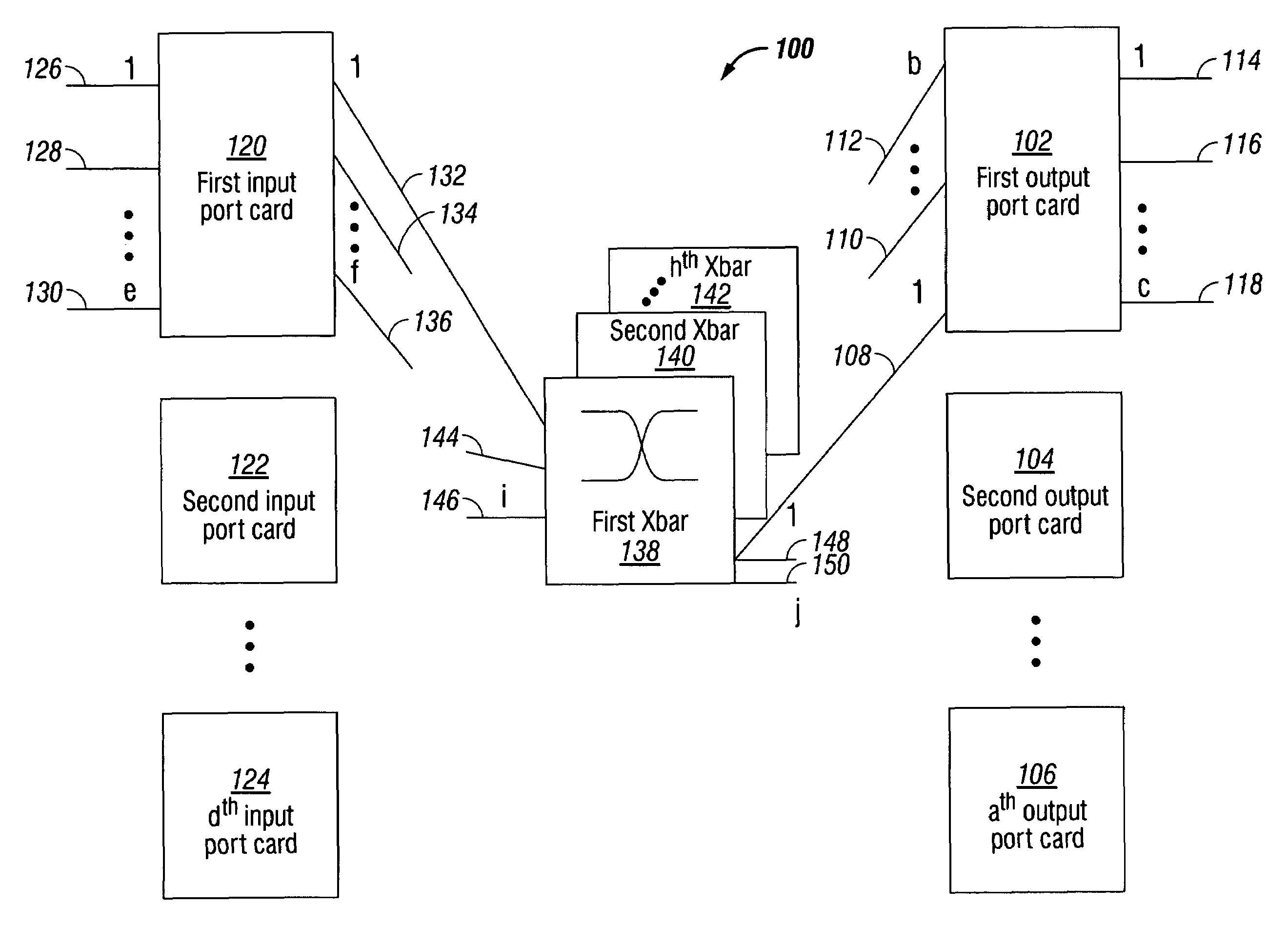

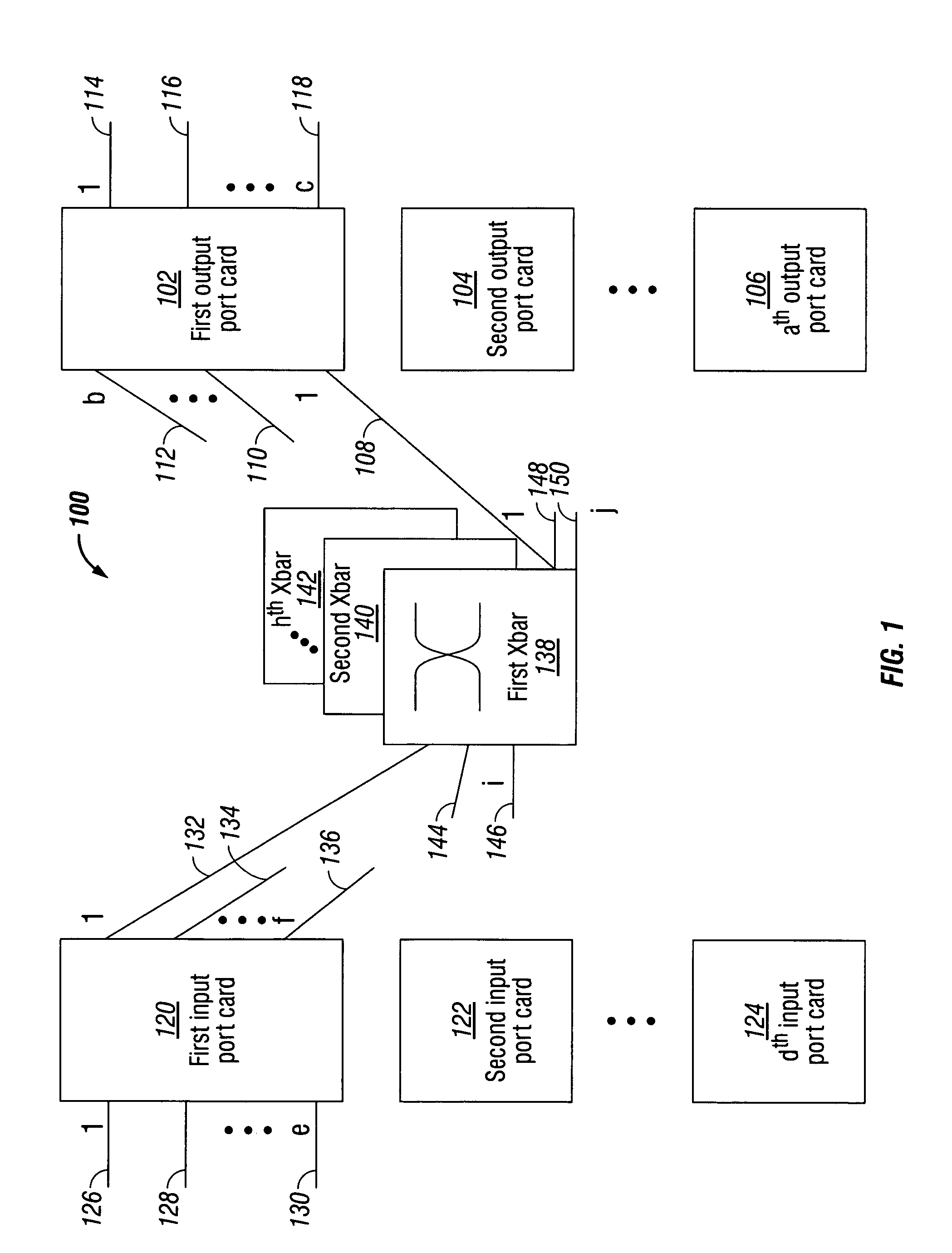

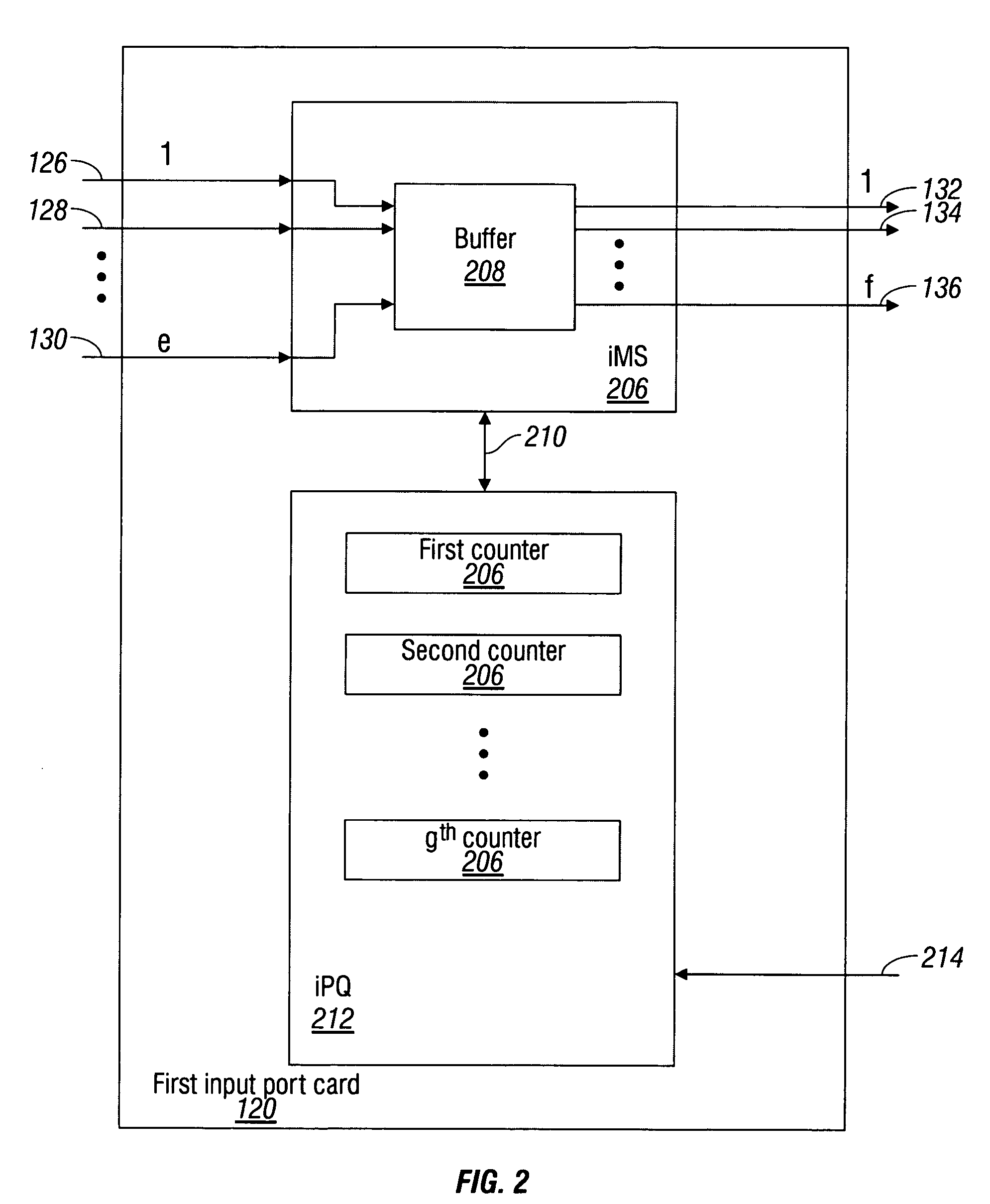

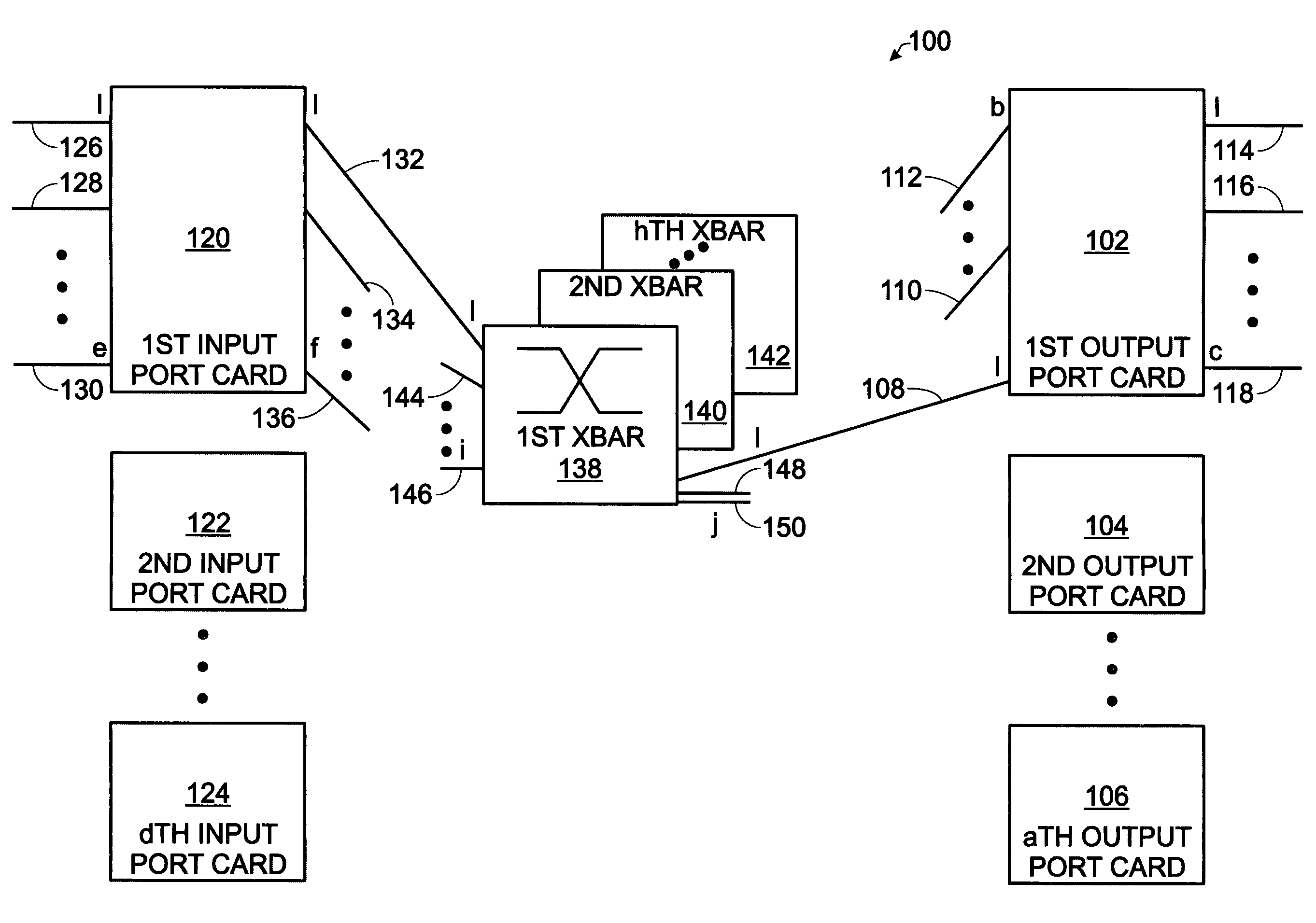

Minimum latency cut-through switch fabric

Inactive | US7230947B1 | Multiplex system selection arrangements | Data switching by path configuration | Packet communication | Cut-through switching

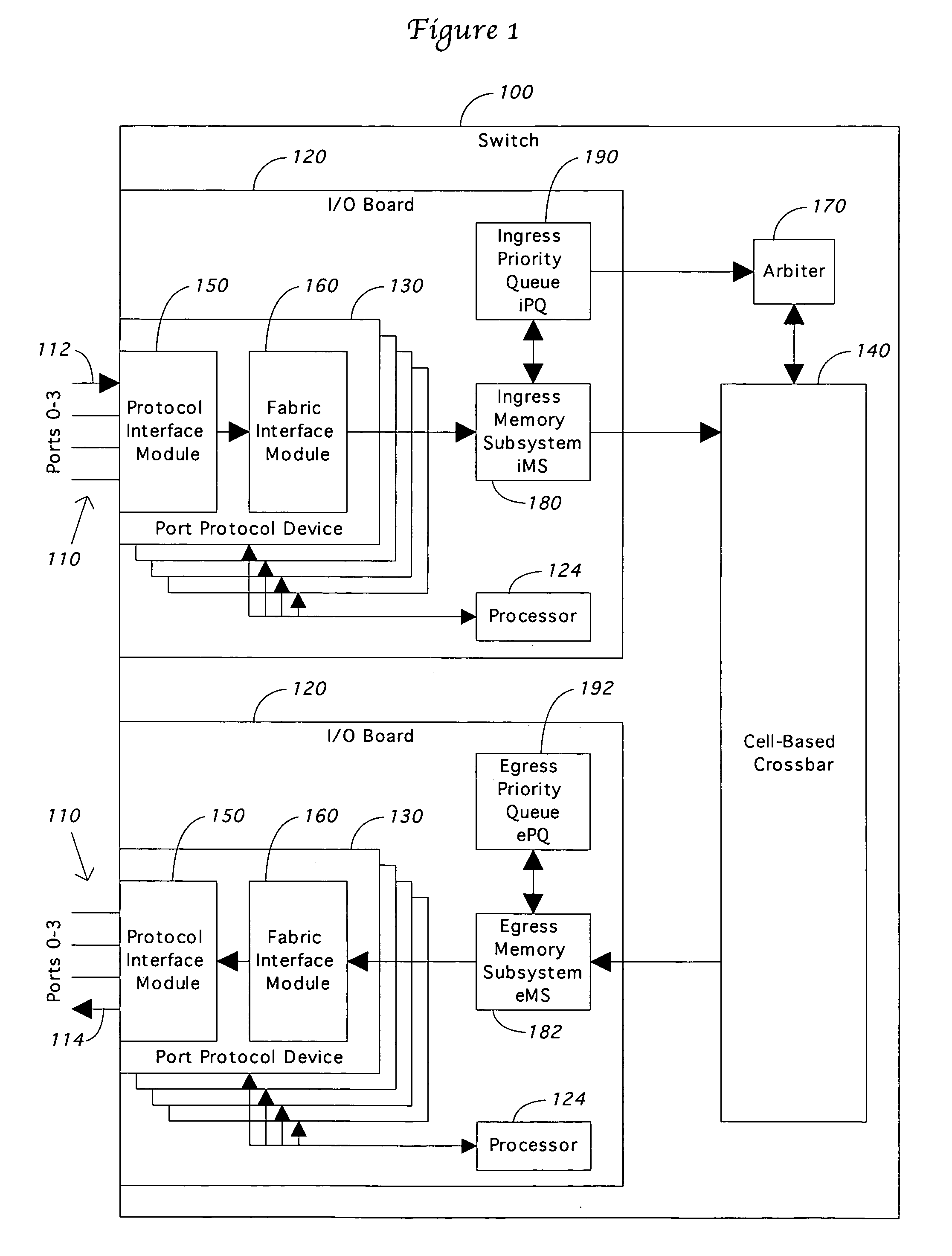

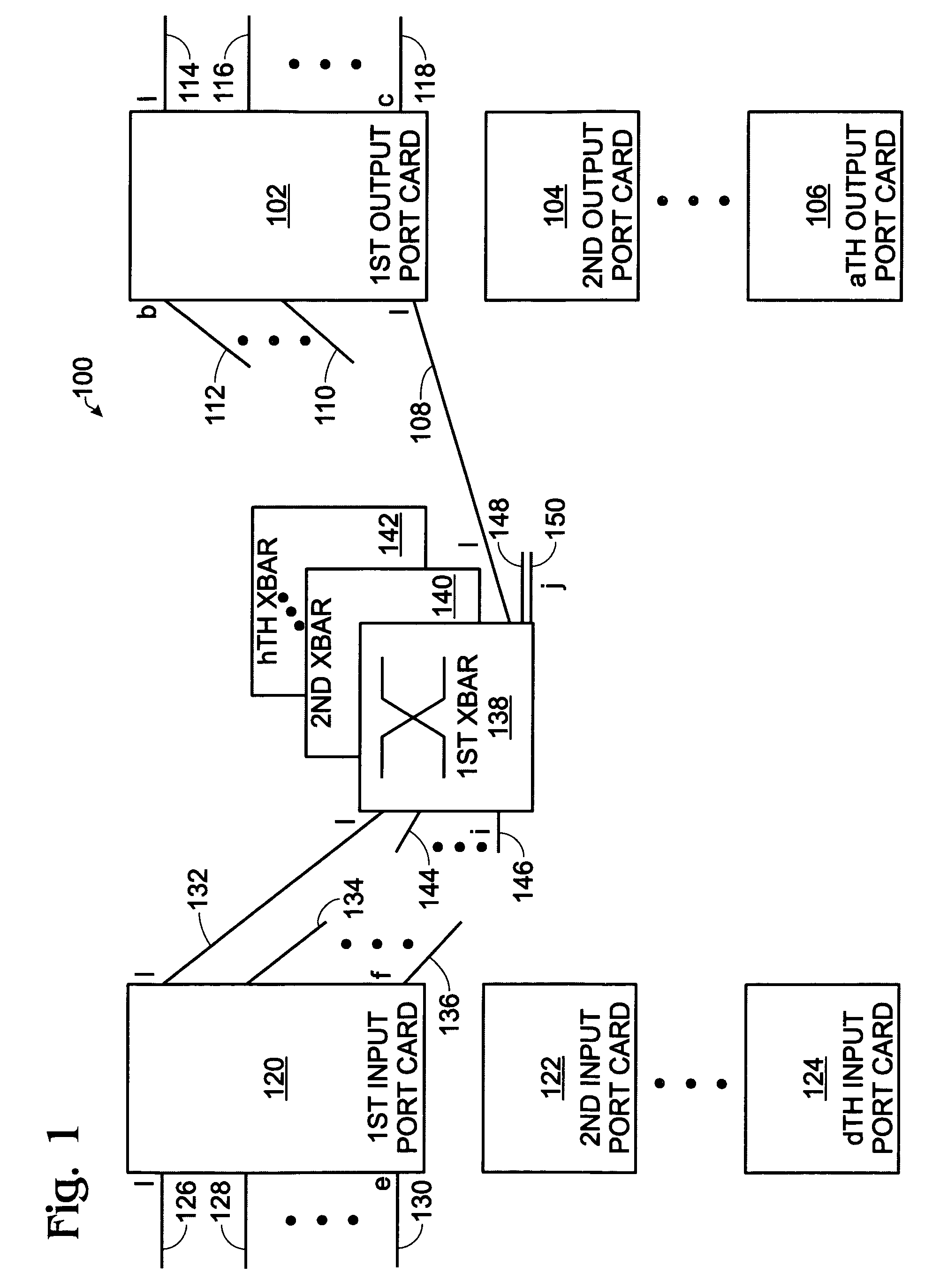

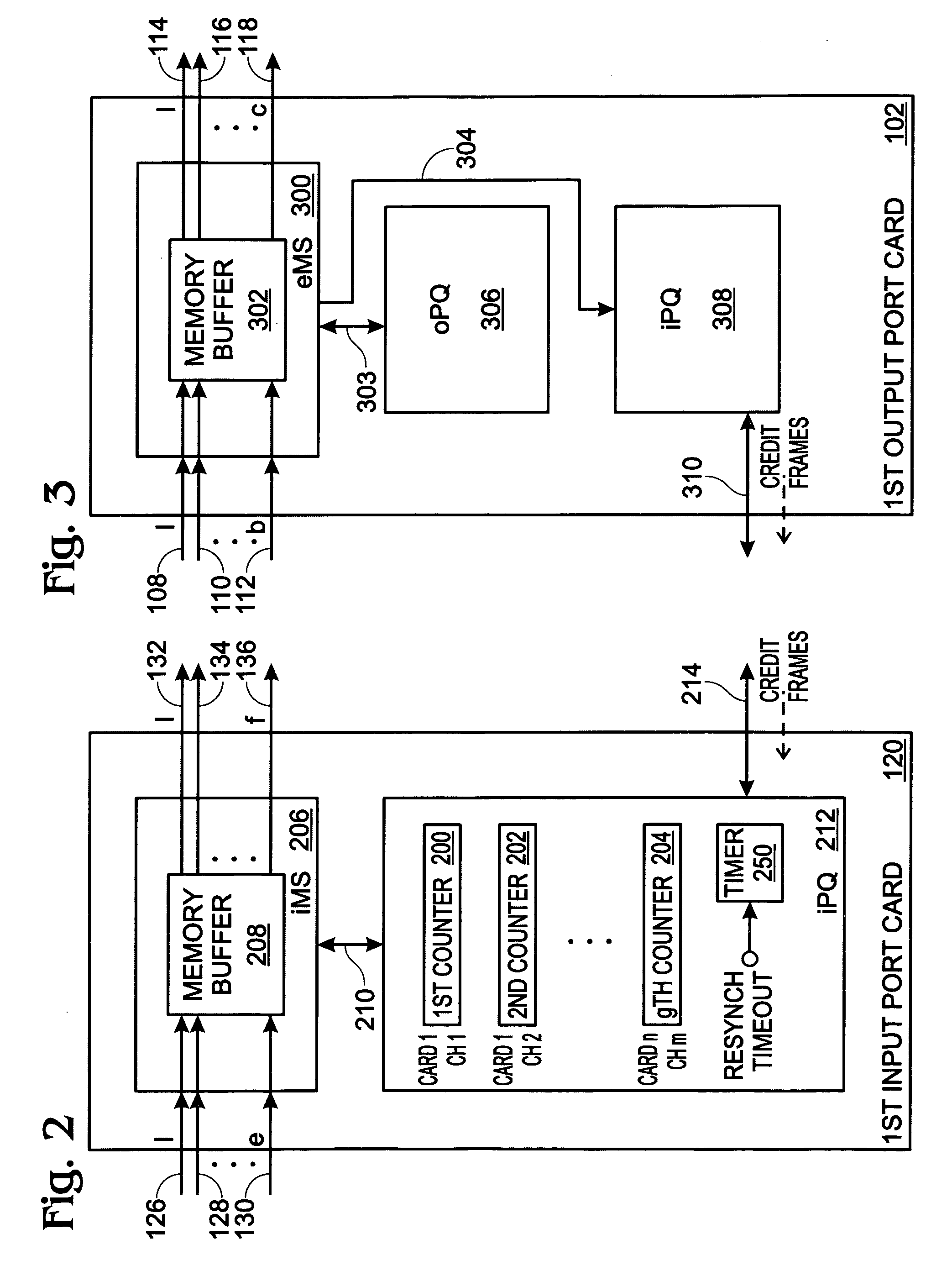

A system and method are provided for cut-through packet routing in a packet communications switch fabric. The method comprises: accepting information packets addressed to a plurality of output port card egress ports at an input port card ingress port; routing information packets between port cards on backplane data links through an intervening crossbar; maintaining a credit counter for each port card egress destination, at the input port card; decrementing the counter in response to transmitting cells in a packet from the input port card; and, incrementing the counter in response to transmitting cells from the packet at the output port card. In some aspects of the method, accepting information includes buffering the packets in an ingress memory subsystem (iMS). Routing information includes the iMS transmitting buffered packets on a selected backplane data link. Decrementing the counter includes the iMS communicating with the iPQ in response to transmitting a cell.
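
The credit-counter bookkeeping described in this abstract can be sketched roughly as follows. This is a minimal model, not the patented design: the class `CreditCounter`, its method names, and the initial credit value are all assumptions made for illustration, as is the reading that a returned credit corresponds to freed egress capacity.

```python
class CreditCounter:
    """Per-egress-destination credit counter kept at the input port card
    (hypothetical model; names and initial value are assumptions)."""

    def __init__(self, initial_credits):
        self.credits = initial_credits

    def can_send(self):
        """A cell may be sent only while at least one credit remains."""
        return self.credits > 0

    def on_ingress_cell_sent(self):
        """The input port card transmitted one cell: consume a credit."""
        if self.credits == 0:
            raise RuntimeError("no credits: cell must wait")
        self.credits -= 1

    def on_egress_cell_sent(self):
        """The output port card transmitted one cell: return a credit."""
        self.credits += 1


counter = CreditCounter(initial_credits=2)
counter.on_ingress_cell_sent()
counter.on_ingress_cell_sent()
blocked = not counter.can_send()   # both credits consumed
counter.on_egress_cell_sent()      # credit returned by the output card
```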

Owner:QUALCOMM INC

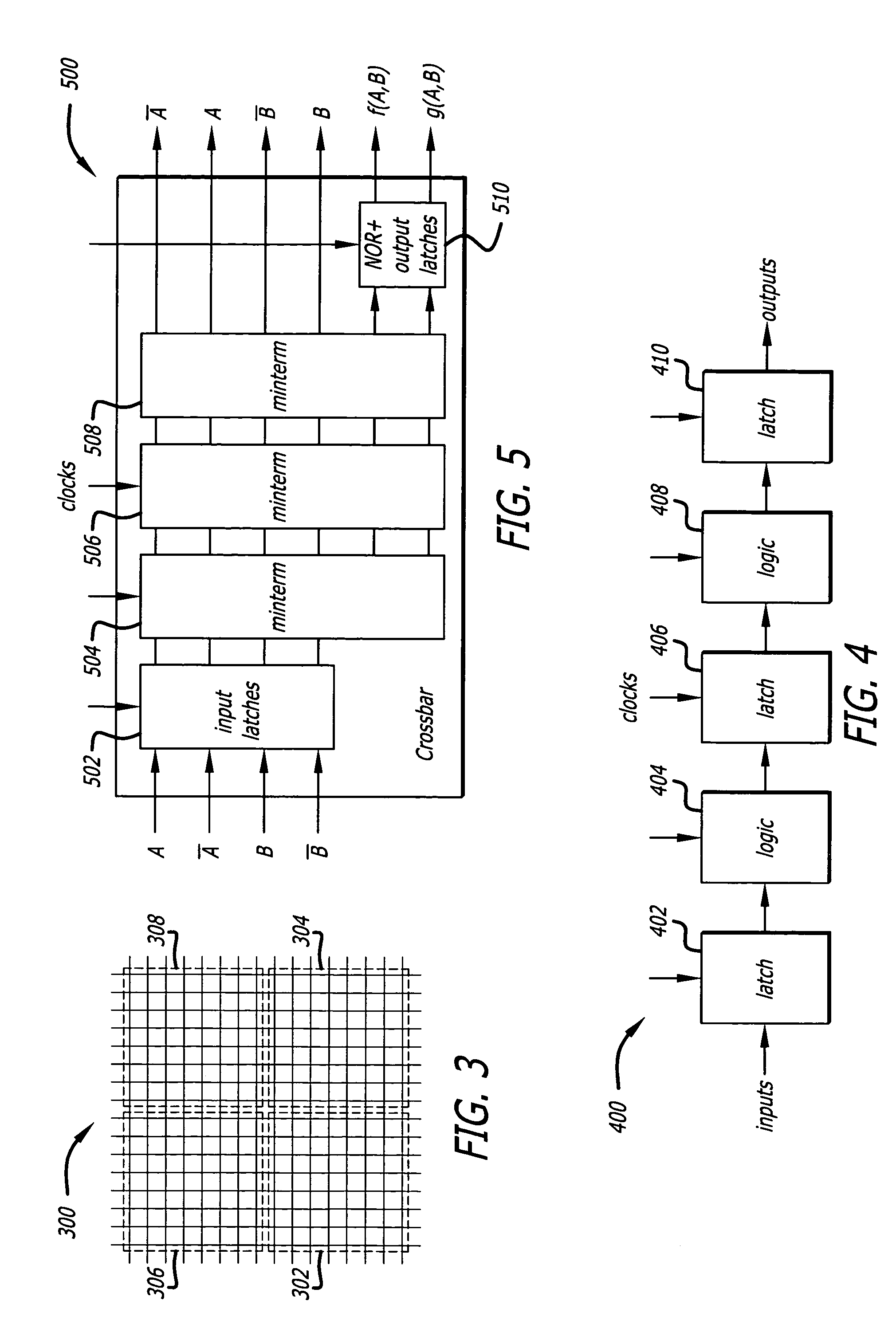

Reconfigurable logic structures

Inactive | US20070146012A1 | Logic circuits characterised by logic function | Nanoinformatics | Electronic structure | Phase-change memory

Reconfigurable electronic structures and circuits using programmable, non-volatile memory elements. The programmable, non-volatile memory elements may perform the functions of storage and/or a switch to produce components such as crossbars, multiplexers, look-up tables (LUTs) and other logic circuits used in programmable logic structures (e.g., FPGAs). The programmable, non-volatile memory elements comprise one or more structures based on Phase Change Memory, Programmable Metallization, Carbon Nano-Electromechanical (CNT-NEM), or Metal Nano-Electromechanical device technologies.

Owner:CSWITCH

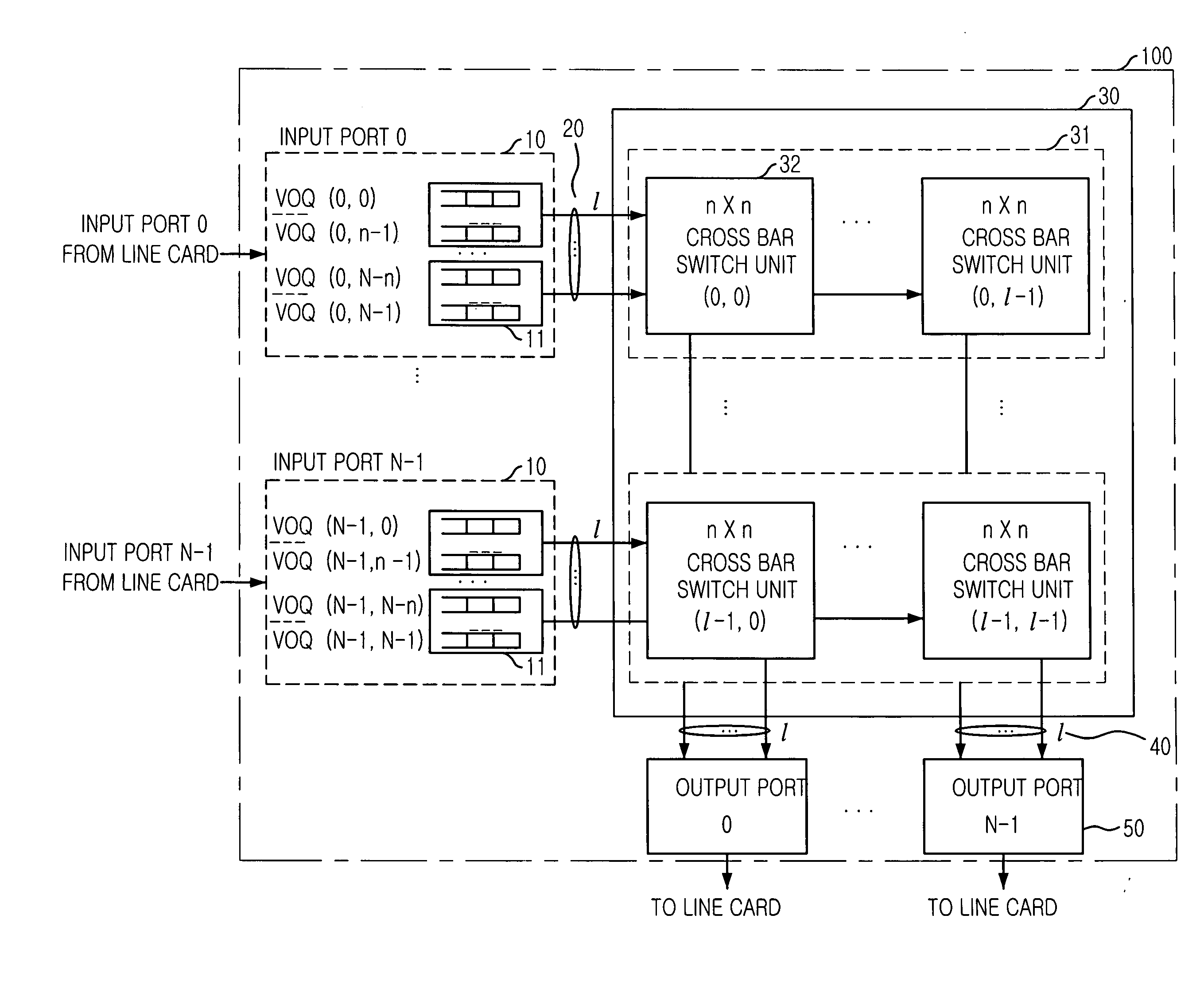

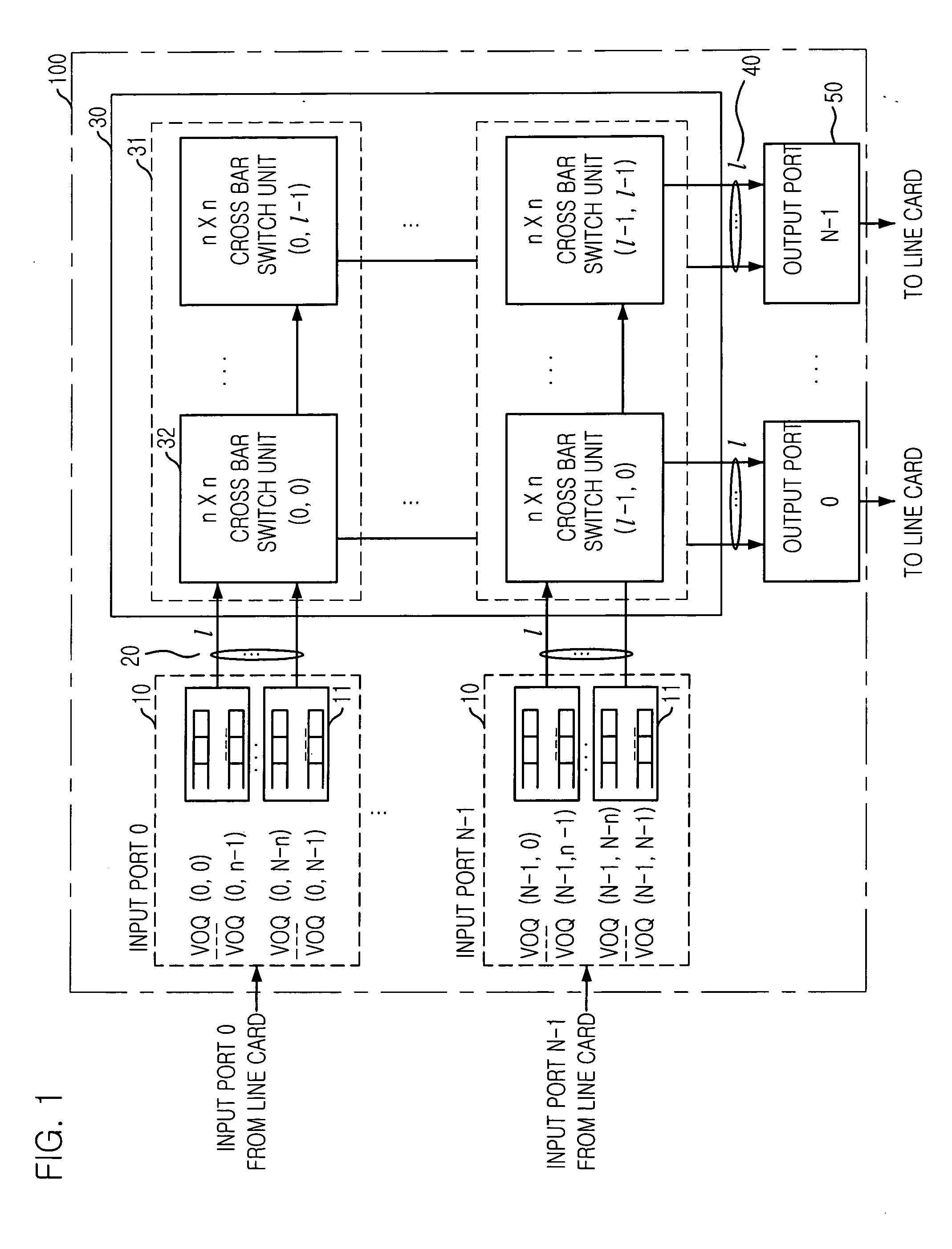

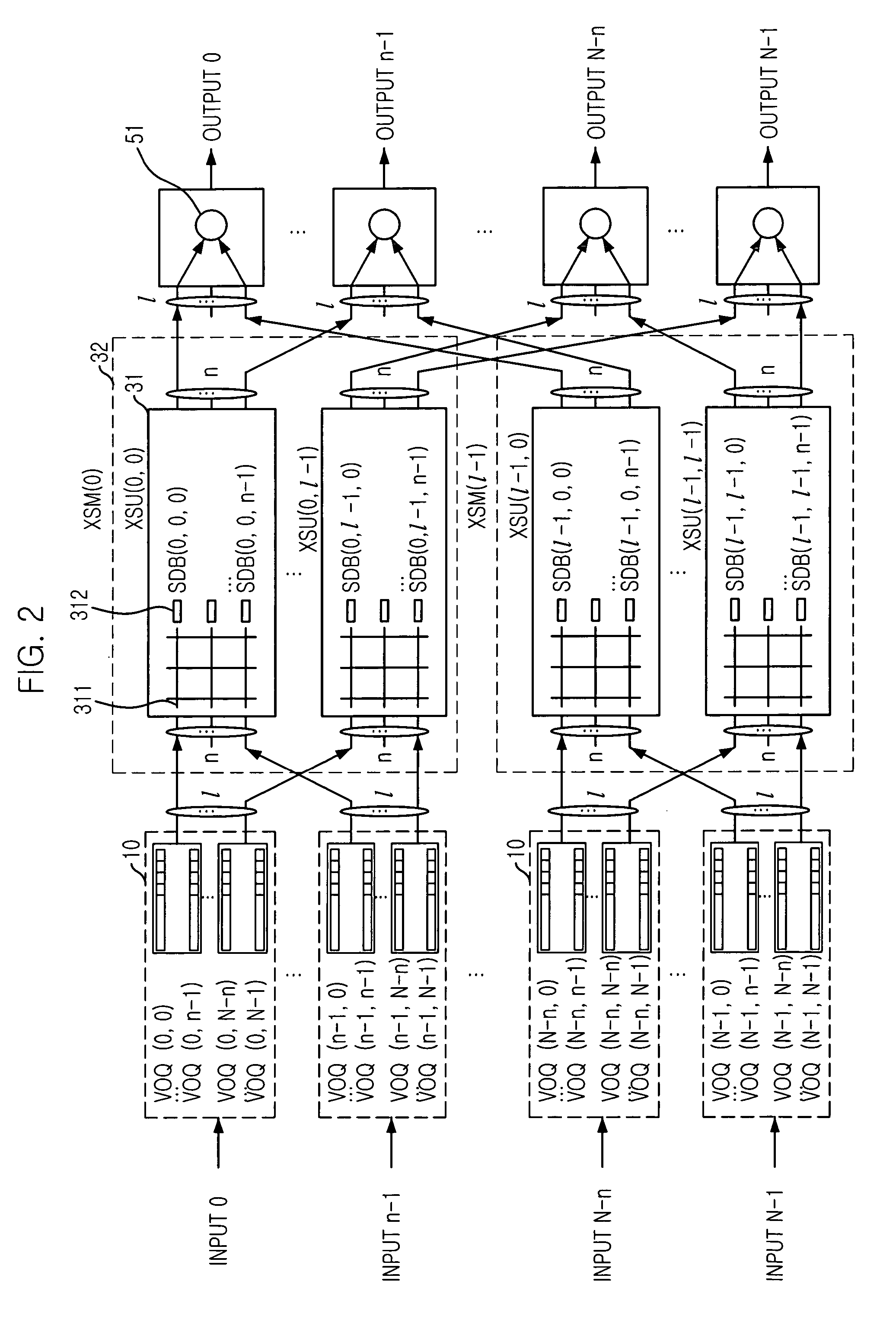

Scalable crossbar matrix switching apparatus and distributed scheduling method thereof

Inactive | US20050152352A1 | Multiplex system selection arrangements | Data switching by path configuration | Crossover switch | High velocity

A high-speed, high-capacity switching apparatus is disclosed. The apparatus includes: N input ports, each of which outputs at most l cells in a time slot, wherein each of the N input ports includes N virtual output queues (VOQs) grouped into l VOQ groups of n VOQs each; an N×N switch fabric having l² crossbar switch units (XSUs) for scheduling cells input from the N input ports using a first arbitration function based on round-robin, wherein the l VOQ groups are connected to l XSUs; and N output ports connected to l XSUs, each selecting one cell from the l XSUs in a cell time slot by scheduling cells with a second arbitration function based on backlog-weighted round-robin, which operates independently of the first arbitration function, and transferring the selected cell to its output link.
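
To make the terms in this abstract concrete, here is a simplified sketch of virtual output queues with a plain round-robin grant for one output. It does not reproduce the patent's two-stage distributed scheduling, its XSU grouping, or the backlog-weighted variant; `InputPort` and `round_robin_grant` are hypothetical names.

```python
from collections import deque


class InputPort:
    """Input port holding one virtual output queue (VOQ) per output."""

    def __init__(self, n_outputs):
        self.voq = [deque() for _ in range(n_outputs)]

    def enqueue(self, cell, output):
        self.voq[output].append(cell)


def round_robin_grant(inputs, output, pointer):
    """Pick the first input at or after `pointer` with a cell queued for `output`.

    Returns (granted_input, new_pointer), or (None, pointer) if nothing is queued.
    """
    n = len(inputs)
    for offset in range(n):
        i = (pointer + offset) % n
        if inputs[i].voq[output]:
            return i, (i + 1) % n   # advance past the winner for fairness
    return None, pointer


ports = [InputPort(n_outputs=2) for _ in range(3)]
ports[0].enqueue("A0", 1)
ports[2].enqueue("C0", 1)

first, ptr = round_robin_grant(ports, output=1, pointer=0)
ports[first].voq[1].popleft()
ports[0].enqueue("A1", 1)           # input 0 immediately has more traffic
second, ptr = round_robin_grant(ports, output=1, pointer=ptr)
```

Because the pointer moves past each winner, input 2 is served before input 0 gets a second turn, even though input 0 refilled its queue first.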

Owner:ELECTRONICS & TELECOMM RES INST

Cache crossbar arbitration

Active | US20050060457A1 | Efficient processing | Memory addressing/allocation/relocation | Concurrent instruction execution | Processing core | Parallel computing

A processor chip is provided. The processor chip includes a plurality of processing cores, where each of the processing cores is multi-threaded. The plurality of processing cores are located in a center region of the processor chip. A plurality of cache bank memories are included. A crossbar enabling communication between the plurality of processing cores and the plurality of cache bank memories is provided. The crossbar includes an arbiter configured to arbitrate multiple requests received from the plurality of processing cores with available outputs. The arbiter includes a barrel shifter configured to rotate the multiple requests for dynamic prioritization, and priority encoders associated with each of the available outputs. Each of the priority encoders has logic gates configured to disable priority encoder outputs. A method for arbitrating requests within a multi-core multi-thread processor is included.
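
The general idea of rotating a request vector and then applying a fixed priority encoder can be sketched in software as below. This is an assumed bit-level model of rotating-priority arbitration in general, not the circuit claimed in the patent; the function names and the choice of "lowest set bit wins" are illustrative.

```python
def rotate_right(bits, shift, width):
    """Barrel-shift a `width`-bit request vector right by `shift` positions."""
    shift %= width
    mask = (1 << width) - 1
    return ((bits >> shift) | (bits << (width - shift))) & mask


def priority_encode(bits):
    """Return the index of the lowest set bit, or None if no bit is set."""
    if bits == 0:
        return None
    return (bits & -bits).bit_length() - 1


def arbitrate(requests, start, width):
    """Grant the first requester at or after `start`, wrapping around."""
    rotated = rotate_right(requests, start, width)
    winner = priority_encode(rotated)
    if winner is None:
        return None
    return (winner + start) % width   # undo the rotation


# Cores 0 and 3 request; priority currently starts at core 1.
grant = arbitrate(requests=0b1001, start=1, width=4)
```

Changing `start` from cycle to cycle changes which requester the fixed encoder sees first, which is what makes the prioritization dynamic.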

Owner:ORACLE INT CORP

Memristor crossbar neural interface

Inactive | US20090163826A1 | Improve adaptability | Increase speed | Nanotech | Solid-state devices | Electrical resistance and conductance | Prosthesis

A device includes an array of electrodes configured for attachment in or on the human head interconnected to control circuitry via a programmable crossbar signal processor having reconfigurable resistance states. In various embodiments the device may be used as a controller for a video game console, a robotic prosthesis, a portable electronic device, or a motor vehicle.

Owner:MOUTTET BLAISE LAURENT

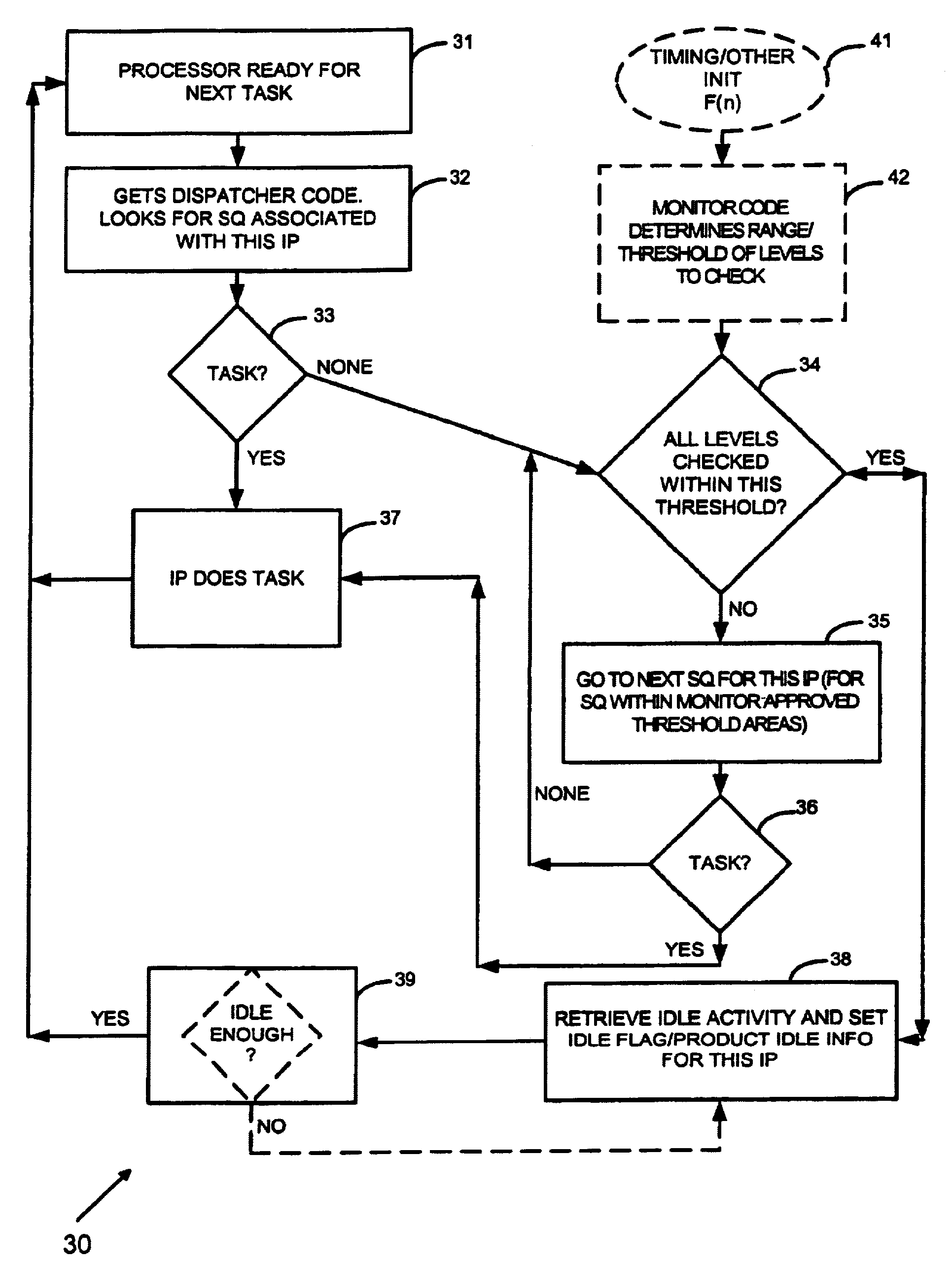

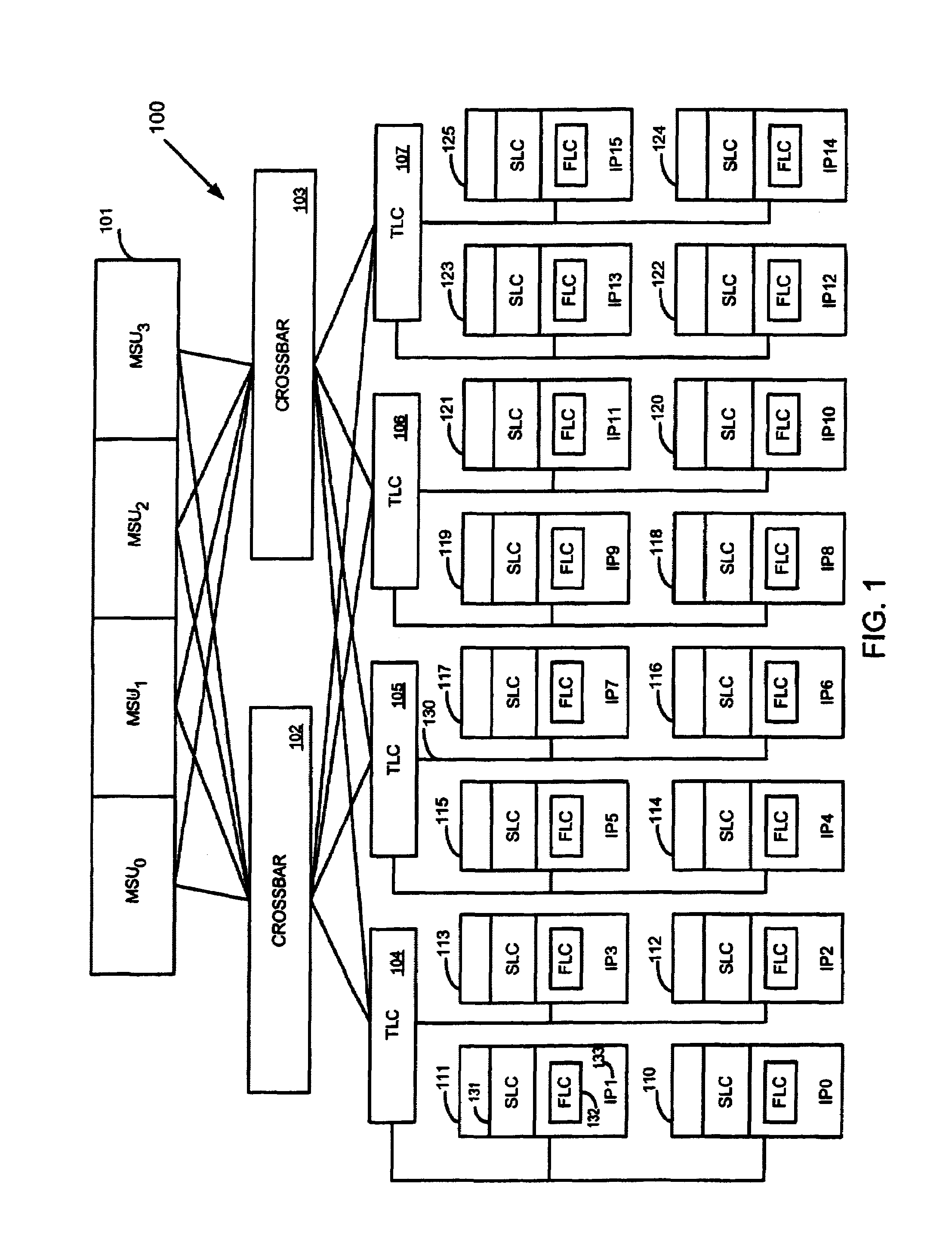

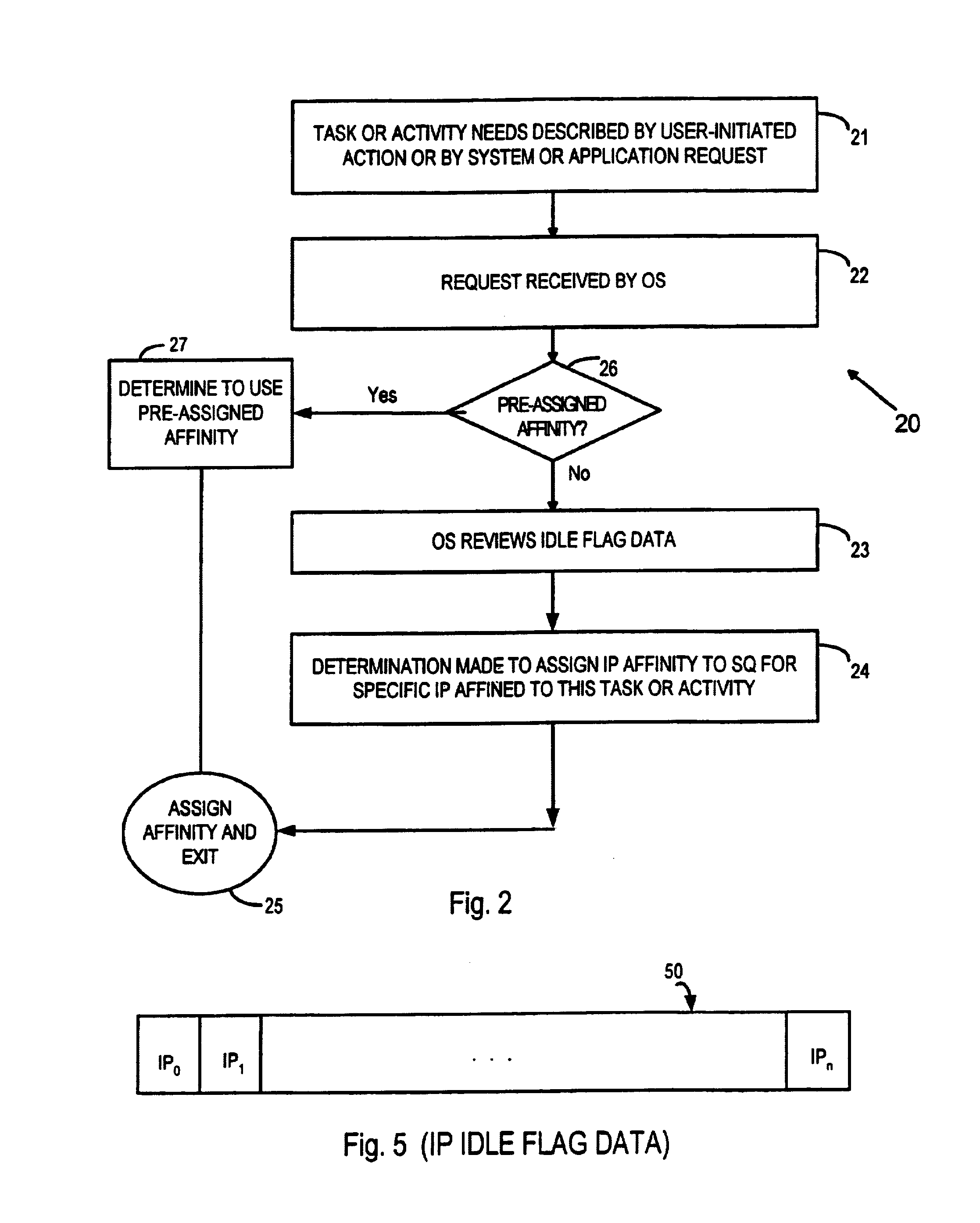

Hierarchical affinity dispatcher for task management in a multiprocessor computer system

Active | US6996822B1 | Reduce the burden on | Big advantage | Multiprogramming arrangements | Memory systems | Crossover switch | Access time

An Operating System (OS) function maps affinity to processors for each new task and except for certain circumstances where other processors are permitted to steal tasks, this affinity remains unchanged. Hierarchical load balancing is mapped through an affinity matrix (that can be expressed as a table) which is accessed by executable code available through a dispatcher to the multiplicity of instruction processors (IPs) in a multiprocessor computer system. Since the computer system has multiple layers of cache memories, connected by busses, and crossbars to the main memory, the hierarchy mapping matches the cache memories to assign tasks first to IPs most likely to share the same cache memory residue from related tasks, or at least less likely to incur a large access time cost. Each IP has its own switching queue (SQ) for primary task assignments through which the OS makes the initial affinity assignment. When an IP's SQ becomes task free, the dispatcher code has the free IP look to the SQ of other IPs in accord with the mapped hierarchy, if a threshold of idleness is reached.
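
A stripped-down sketch of the "own queue first, then steal along the hierarchy" behavior described here follows. It omits the idleness threshold and the affinity matrix lookup mentioned in the abstract; `next_task`, `queues`, and `steal_order` are hypothetical names, and the example topology (IPs 0 and 1 sharing a cache) is an assumption.

```python
from collections import deque


def next_task(ip, queues, steal_order):
    """Take a task from the IP's own switching queue, else steal from the
    queues of other IPs in the order given by the mapped hierarchy."""
    if queues[ip]:
        return queues[ip].popleft()
    for other in steal_order[ip]:
        if queues[other]:
            return queues[other].popleft()
    return None


# Assumed topology: IPs 0 and 1 share a cache, so they try each other first.
queues = {0: deque(), 1: deque(["t1"]), 2: deque(["t2"])}
steal_order = {0: [1, 2], 1: [0, 2], 2: [0, 1]}

task = next_task(0, queues, steal_order)
```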

Owner:UNISYS CORP

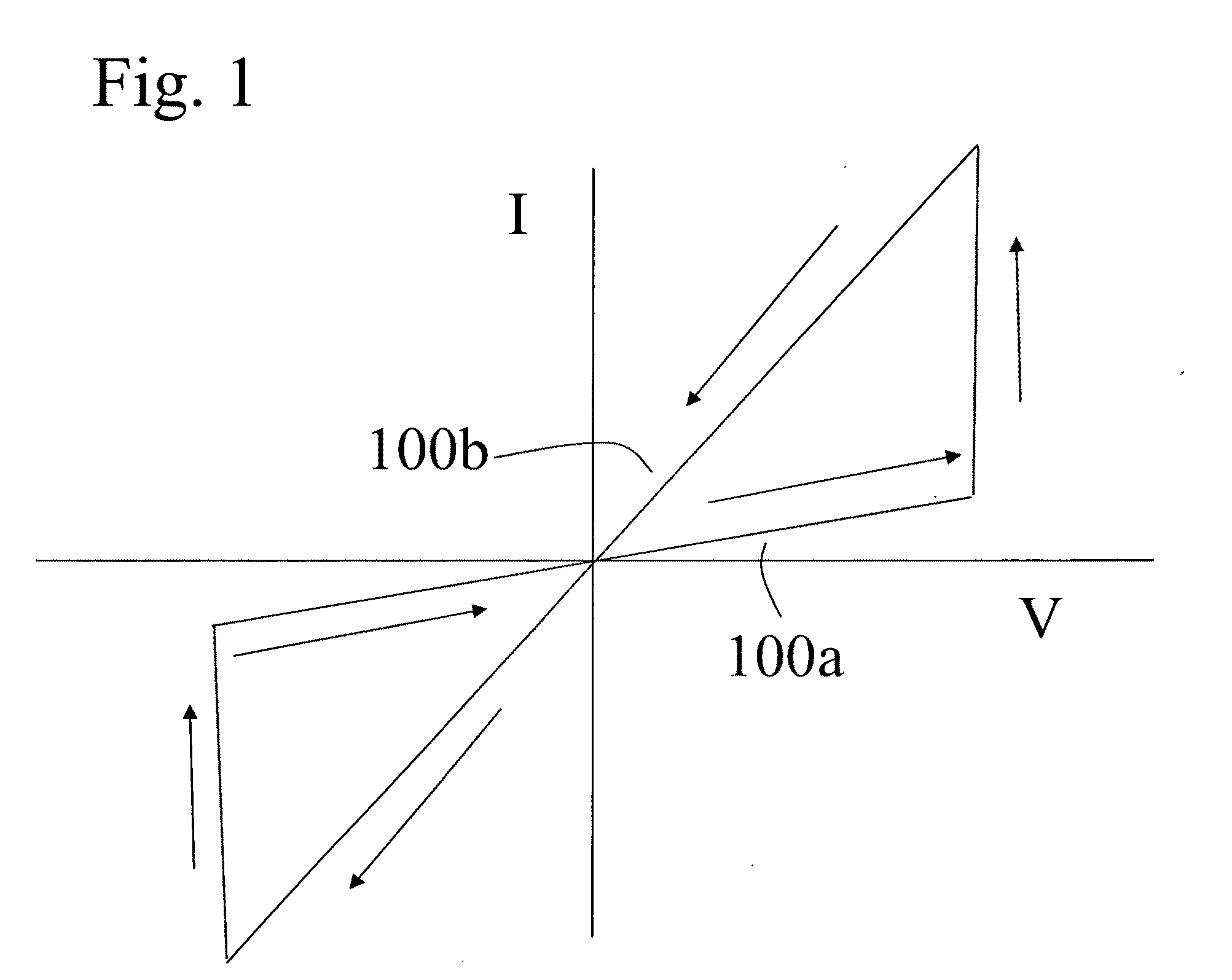

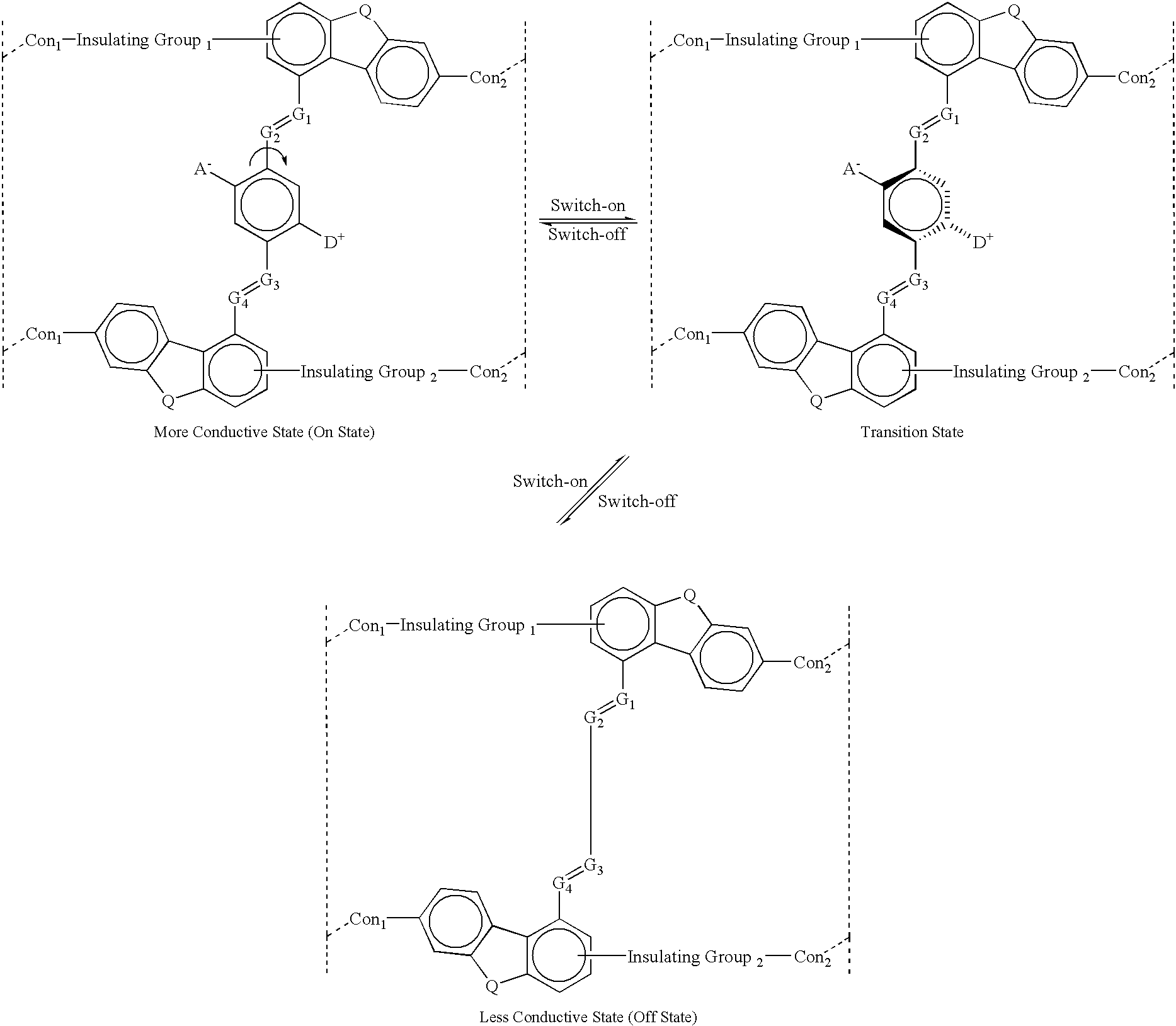

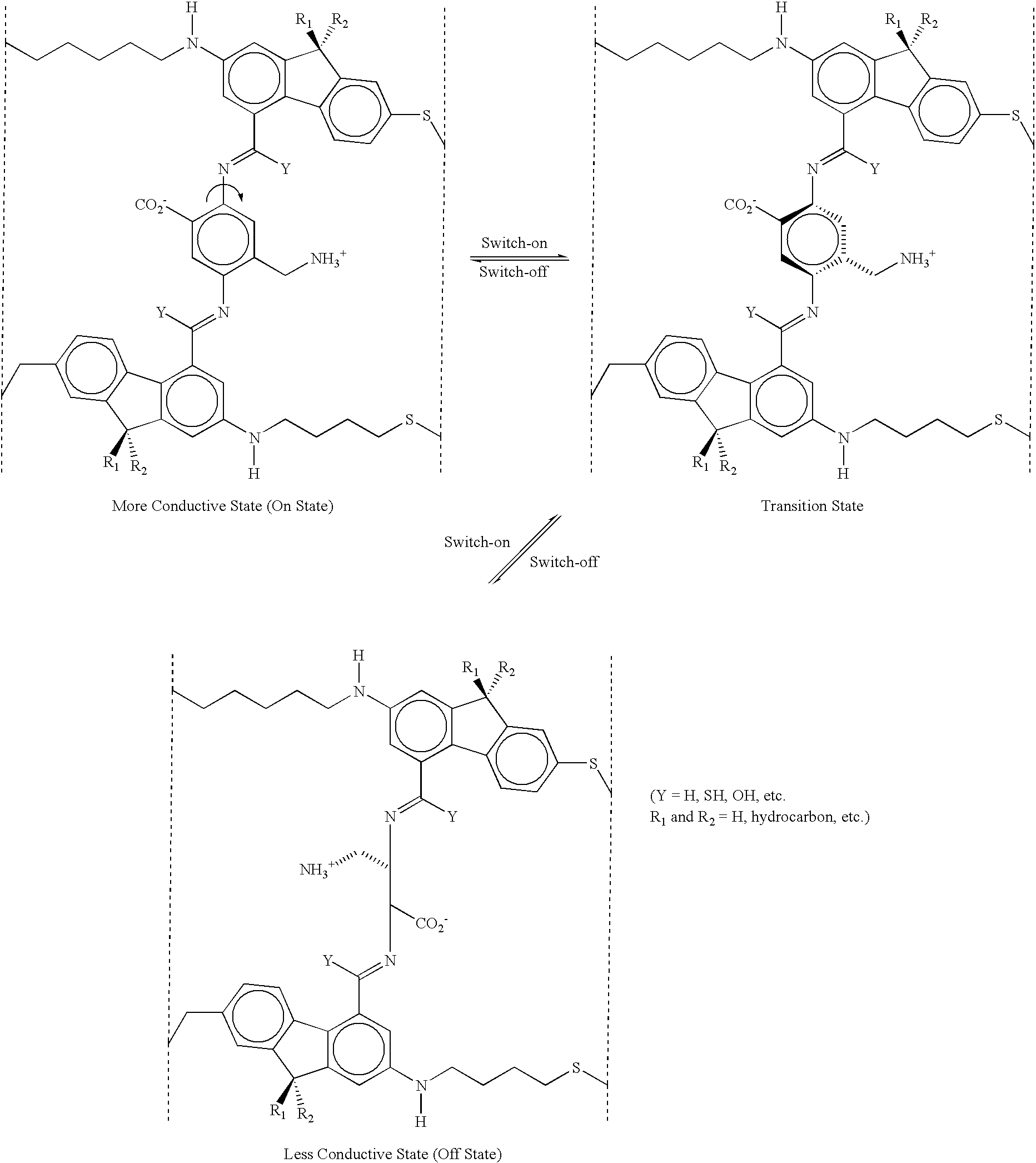

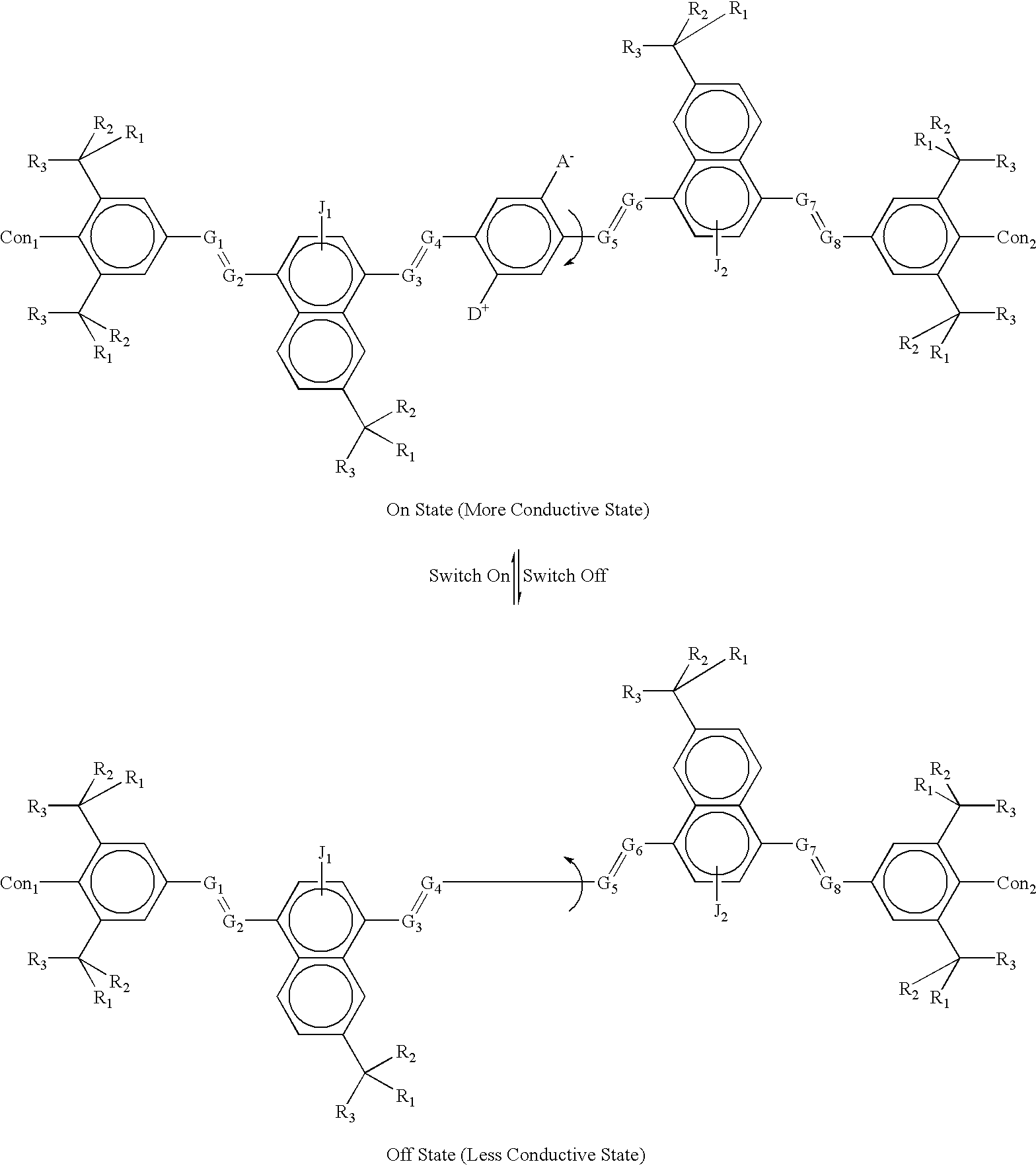

Bistable molecular mechanical devices with a middle rotating segment activated by an electric field for electronic switching, gating, and memory applications

Inactive | US6674932B1 | Fast switching time | Easy and cheap | Organic chemistry | Individual molecule manipulation | Electronic switch | Engineering

A molecular system is provided for electric field activated switches, such as a crossed-wire device or a pair of electrodes to which the molecular system is linked by linking moieties. The crossed-wire device comprises a pair of crossed wires that form a junction where one wire crosses another at an angle other than zero degrees and at least one connector species connecting the pair of crossed wires in the junction. The connector species comprises the molecular system, which has an electric field induced band gap change, and thus a change in its electrical conductivity, that occurs via a molecular conformation change, based on a rotor/stator construction of the molecular system, involving a rotating portion (rotor) connected between two stationary portions (stators). Nanometer-scale reversible electronic switches are thus provided that can be assembled easily to make cross-bar circuits, which provide memory, logic, and communication functions.

Owner:SAMSUNG ELECTRONICS CO LTD

System and method for on-board timing margin testing of memory modules

Inactive | US20050060600A1 | Increase computing speed | Electronic circuit testing | Error detection/correction | On board | Crossover switch

A memory module includes several memory devices coupled to a memory hub. The memory hub includes several link interfaces coupled to respective processors, several memory controllers coupled to respective memory devices, a cross-bar switch coupling any of the link interfaces to any of the memory controllers, a write buffer and read cache for each memory device, and a self-test module. The self-test module includes a pattern generator producing write data having a predetermined pattern, and a flip-flop having a data input receiving the write data. A clock input of the flip-flop receives an internal clock signal from a delay line that is driven by a variable-frequency clock generator. Read data are coupled from the memory devices and their pattern is compared to the write data pattern. The delay of the delay line and the frequency of the clock signal can be varied to test the speed margins of the memory devices.

Owner:ROUND ROCK RES LLC

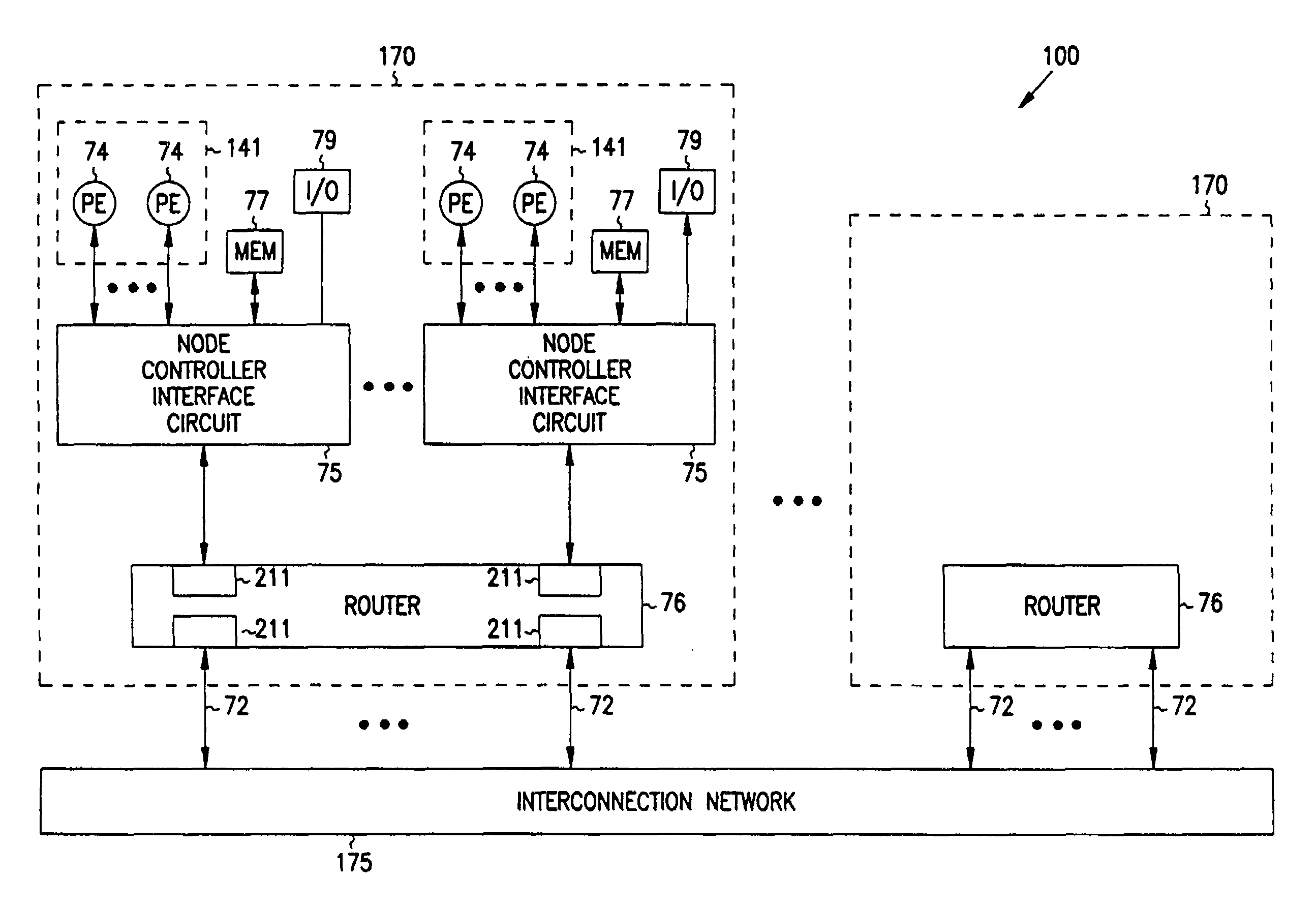

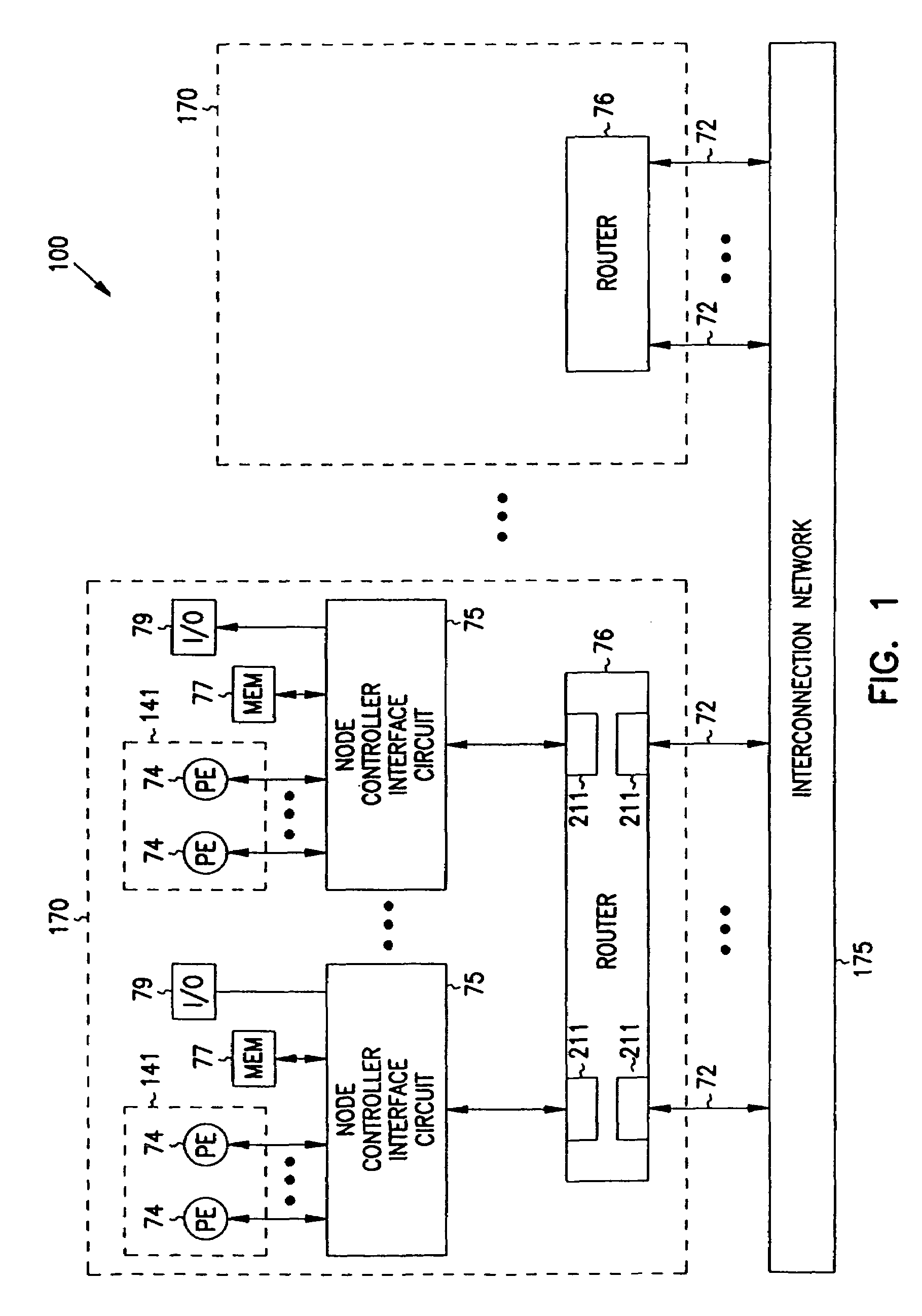

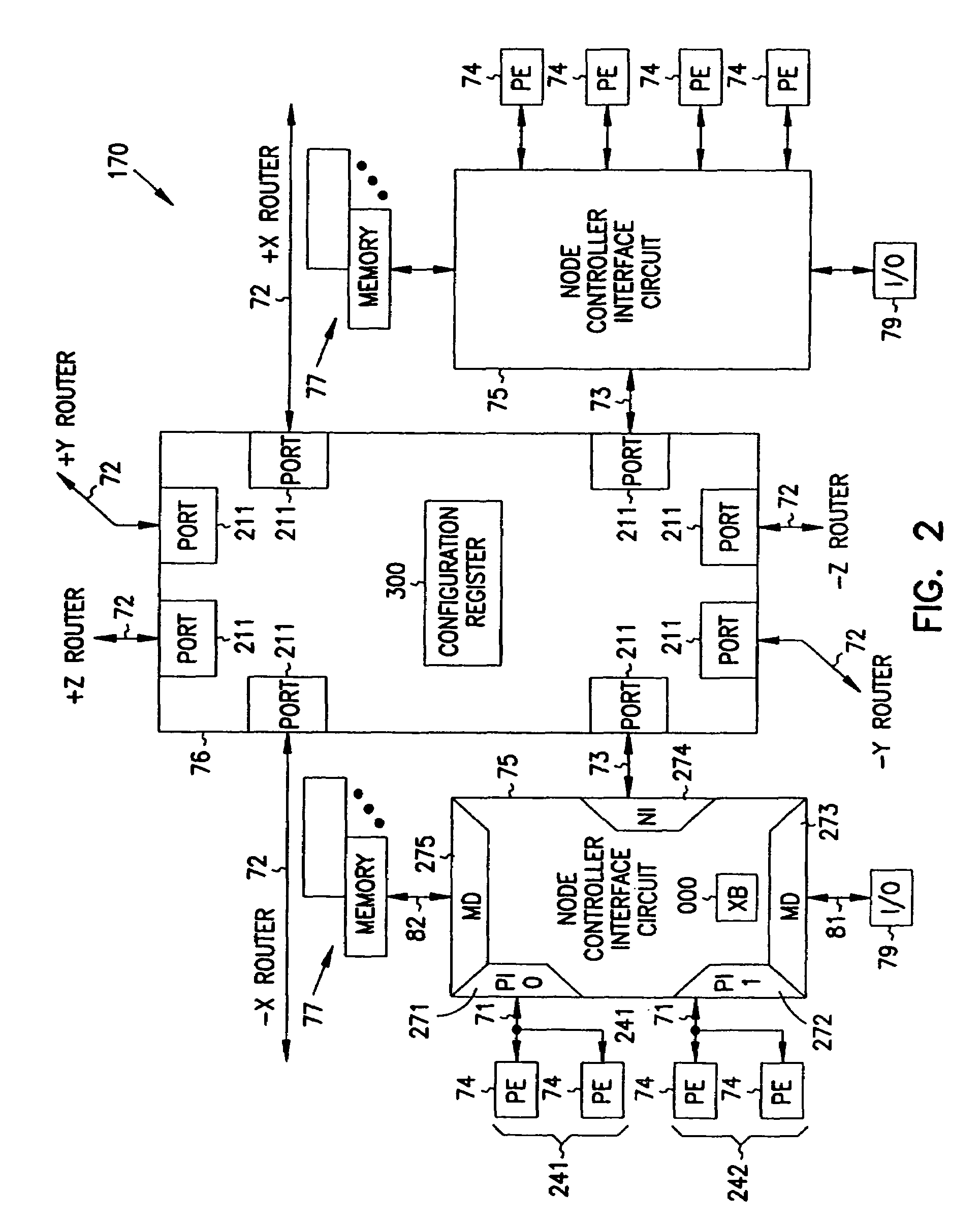

Multiprocessor node controller circuit and method

Inactive | US7406086B2 | Ease of parallel processing | Improve welfare | Multiplex system selection arrangements | Memory addressing/allocation/relocation | Memory address | Memory chip

Improved method and apparatus for parallel processing. One embodiment provides a multiprocessor computer system that includes a first and second node controller, a number of processors being connected to each node controller, a memory connected to each controller, a first input/output system connected to the first node controller, and a communications network connected between the node controllers. The first node controller includes a crossbar unit to which are connected a memory port, an input/output port, a network port, and a plurality of independent processor ports. A first and a second processor port are connected between the crossbar unit and a first subset and a second subset, respectively, of the processors. In some embodiments of the system, the first node controller is fabricated onto a single integrated-circuit chip. Optionally, the memory is packaged on pluggable memory/directory cards wherein each card includes a plurality of memory chips including a first subset dedicated to holding memory data and a second subset dedicated to holding directory data. Further, the memory port includes a memory data port including a memory data bus and a memory address bus coupled to the first subset of memory chips, and a directory data port including a directory data bus and a directory address bus coupled to the second subset of memory chips. In some such embodiments, the ratio of (memory data space) to (directory data space) on each card is set to a value that is based on a size of the multiprocessor computer system.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP +1

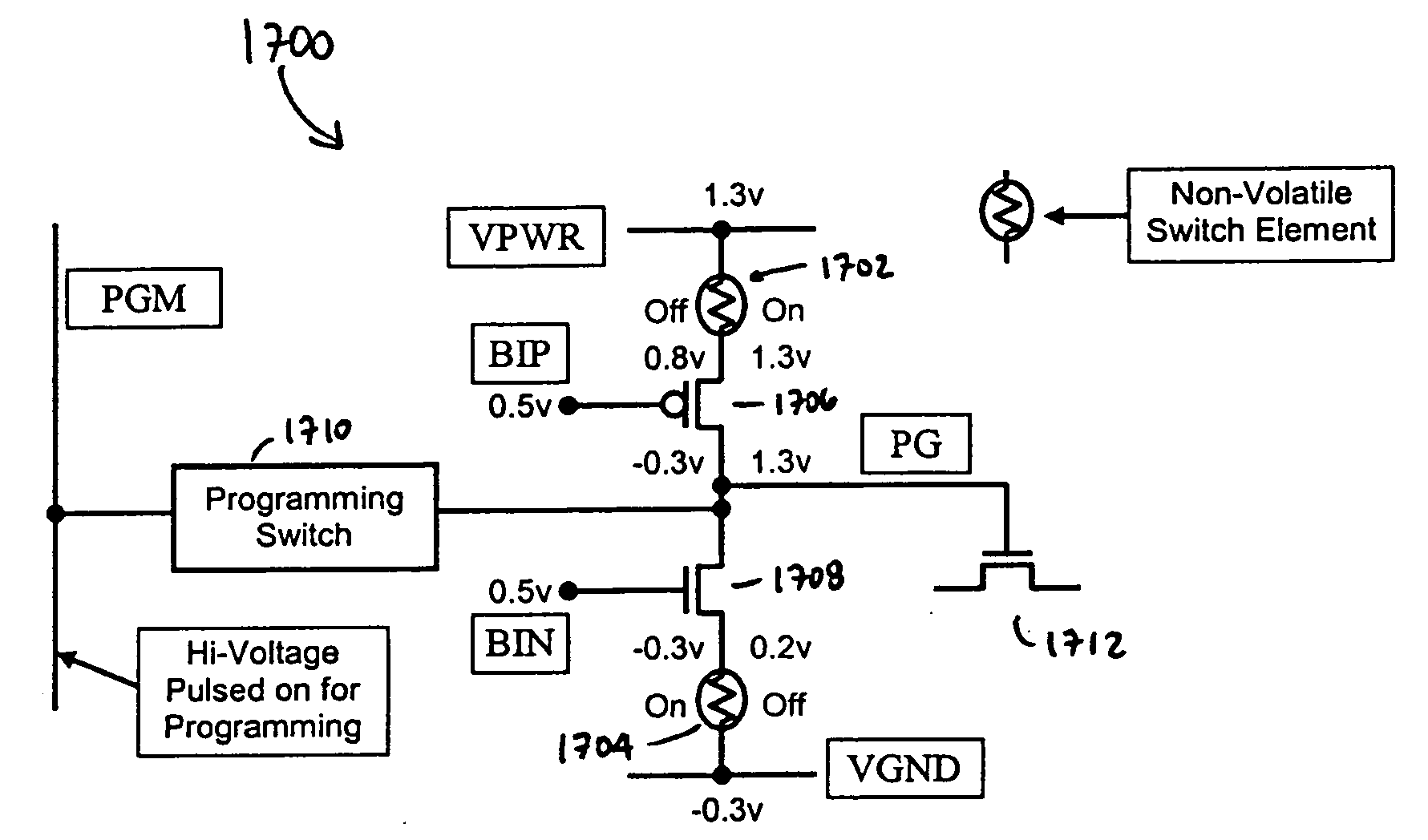

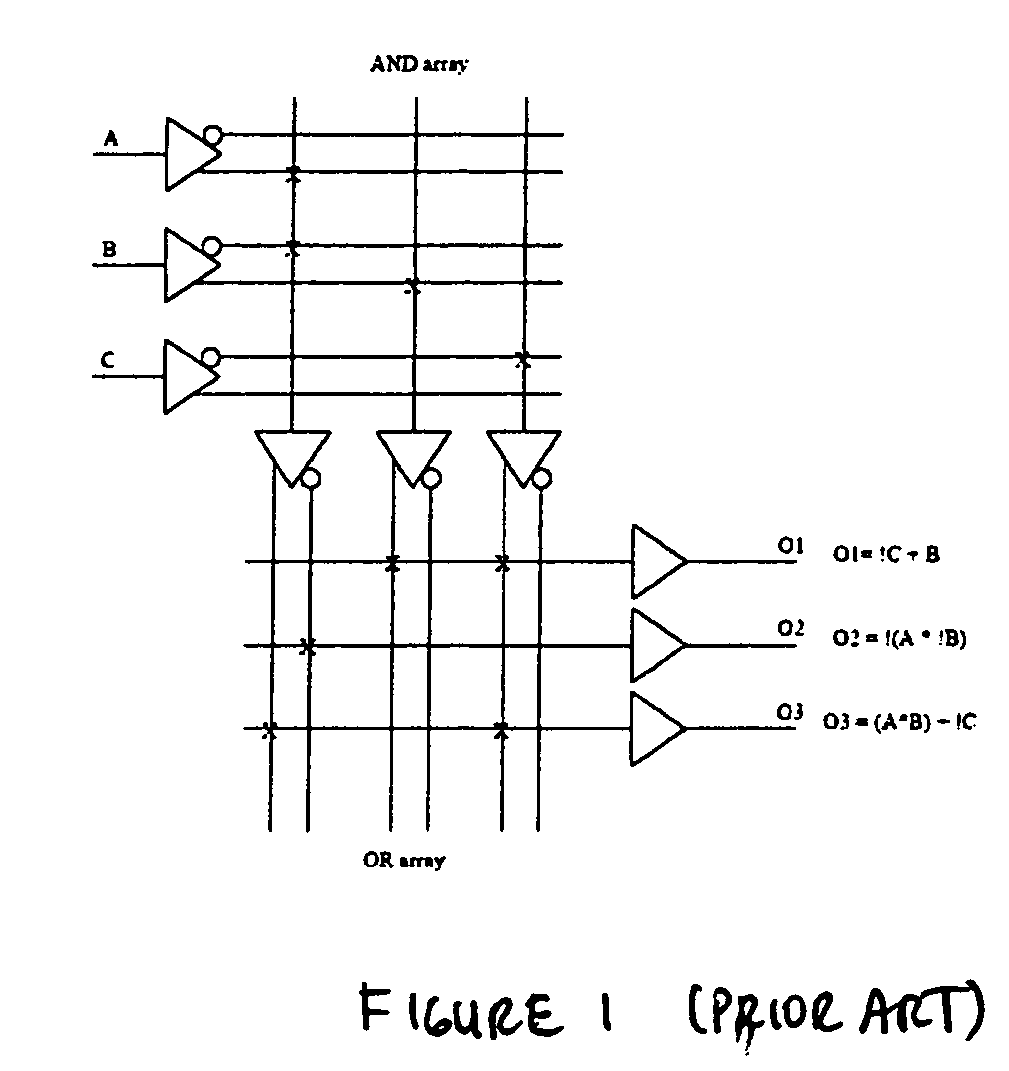

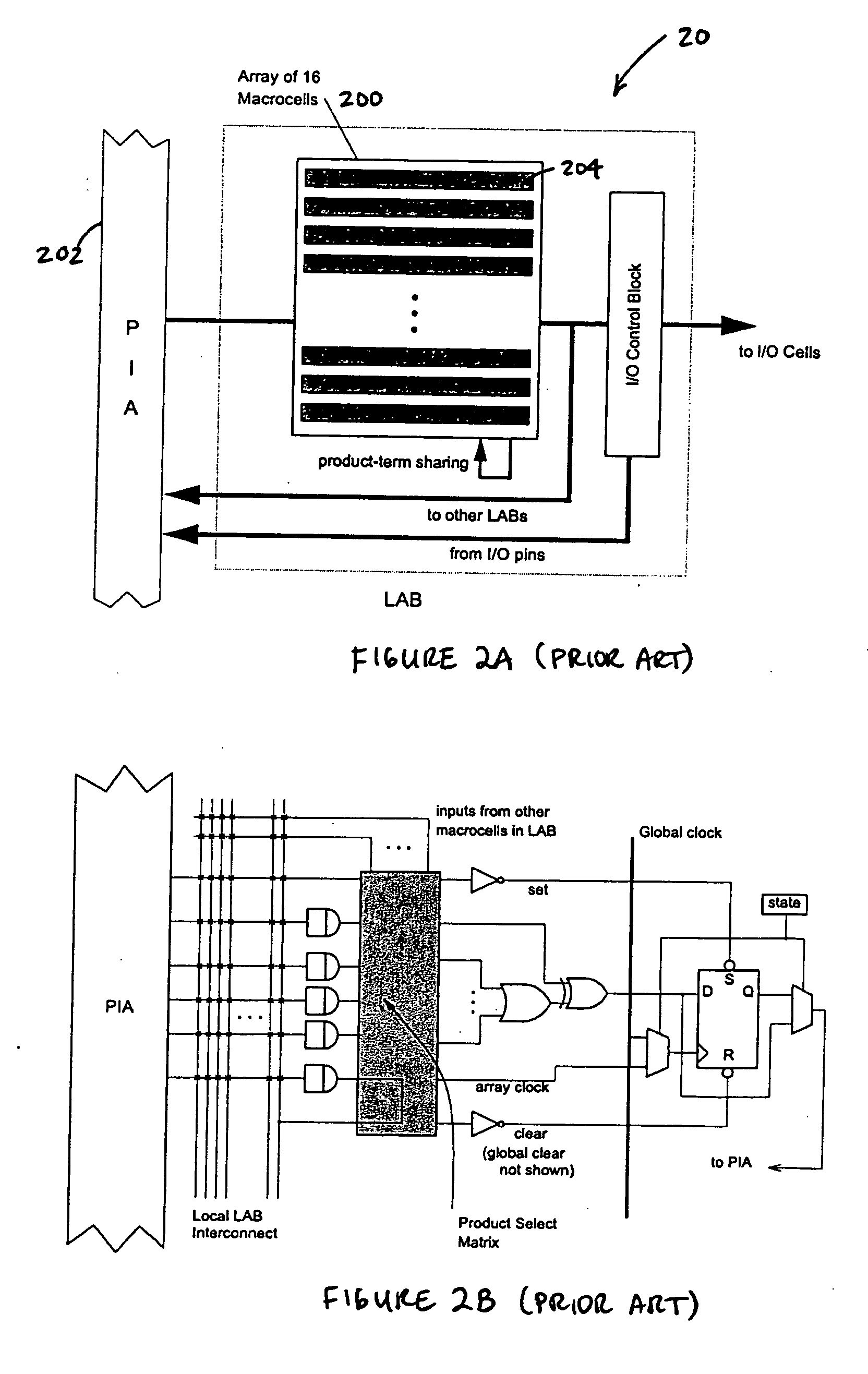

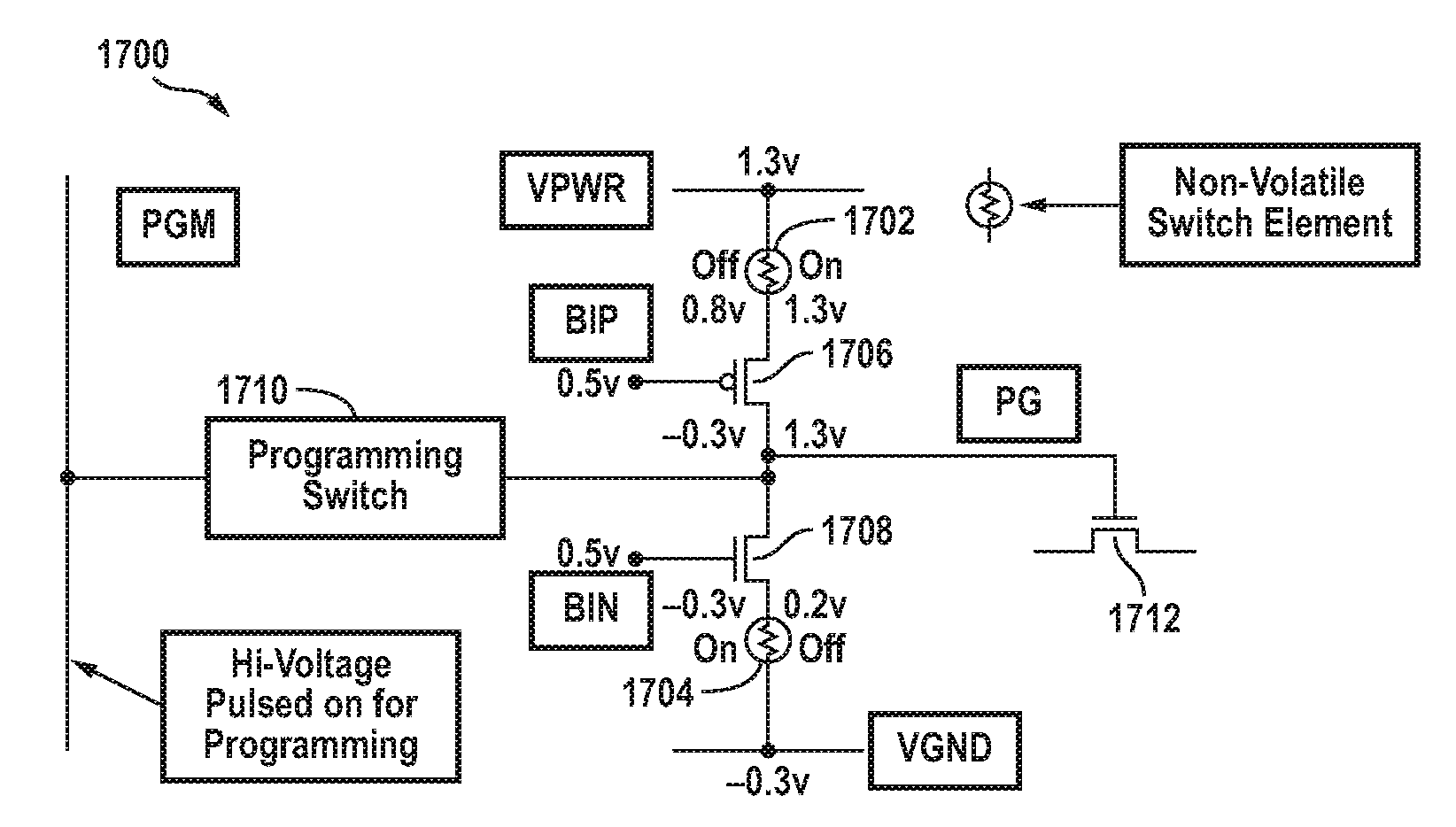

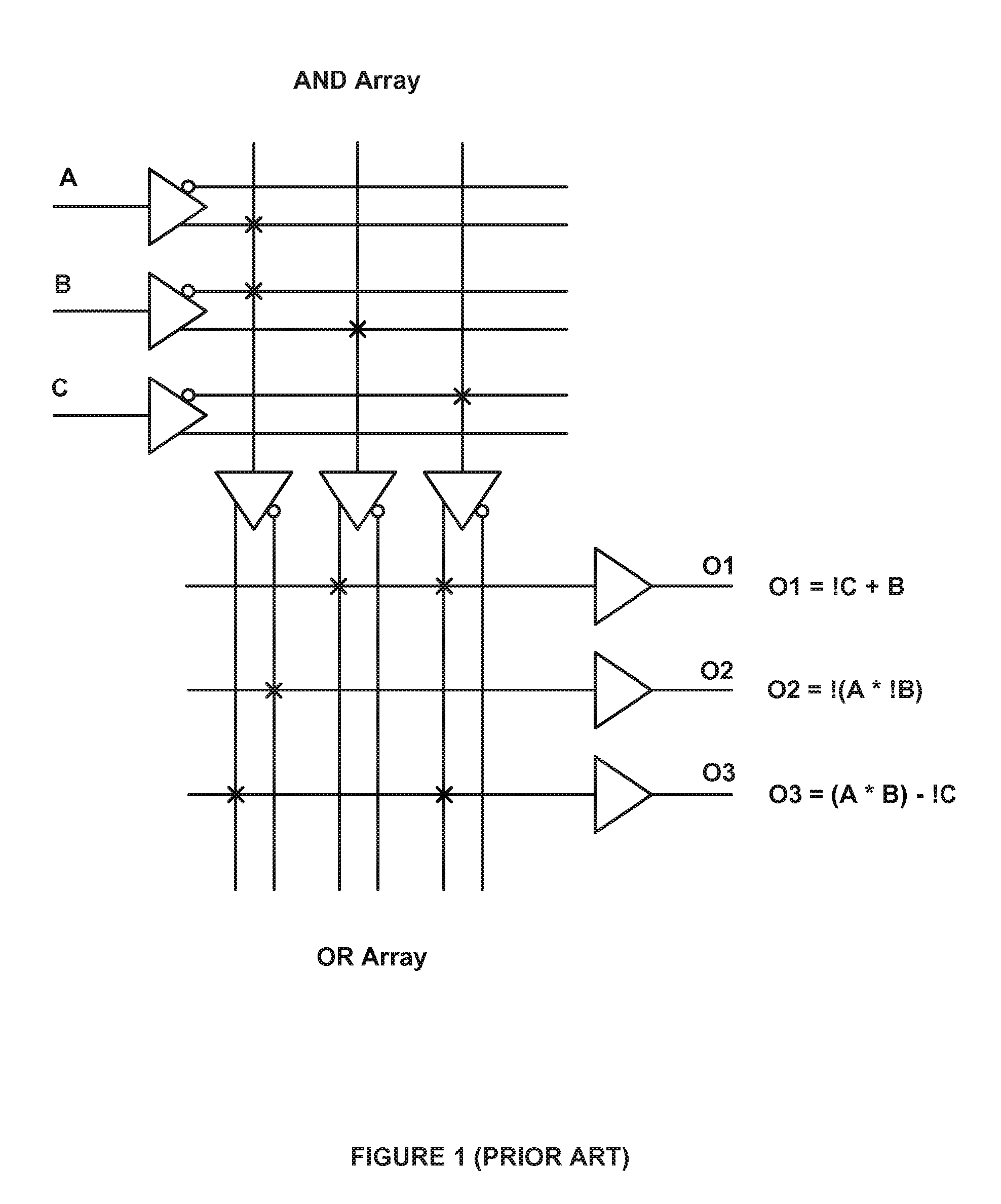

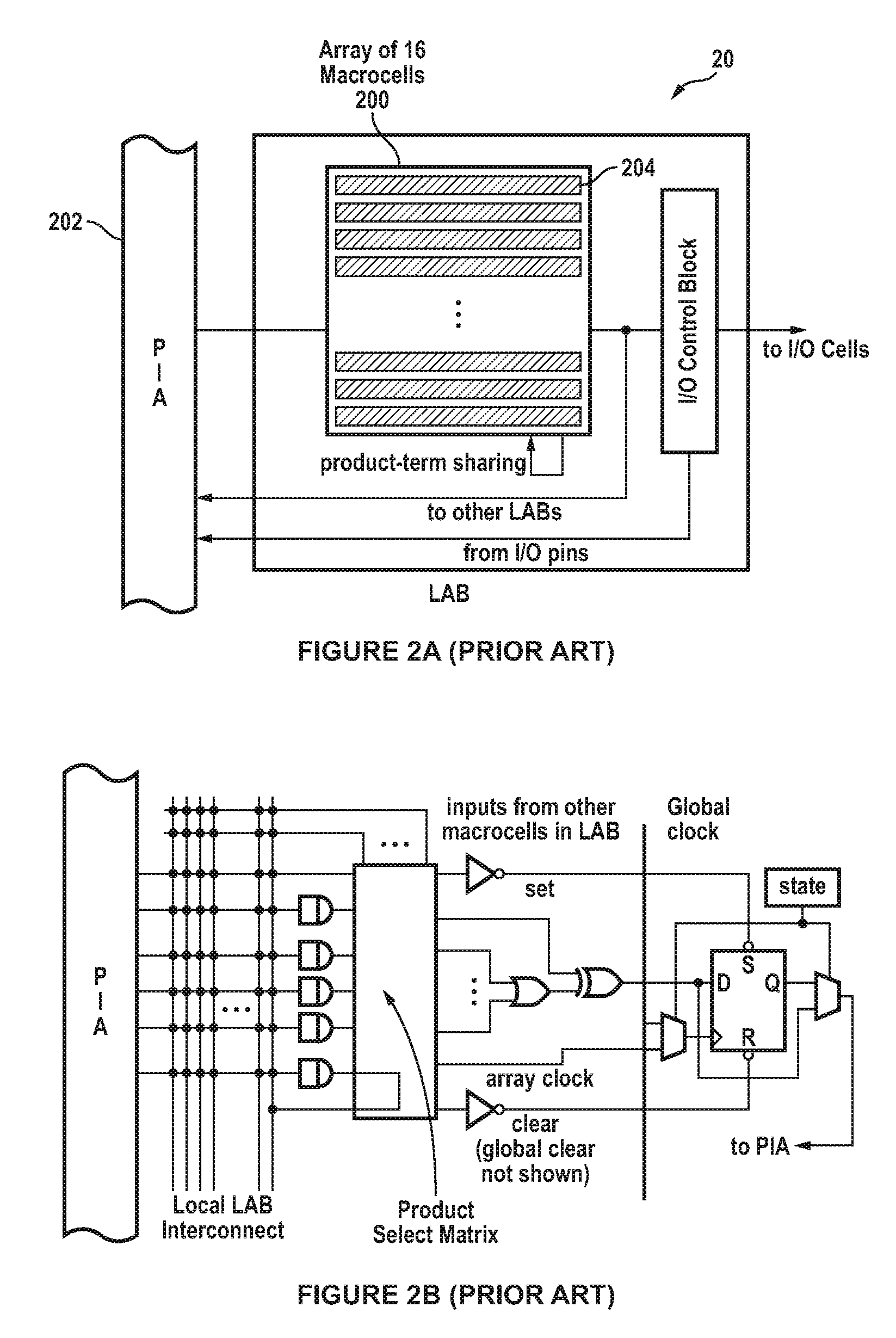

Reconfigurable logic structures

Inactive | US7511532B2 | Logic circuits characterised by logic function | Nanoinformatics | Electronic structure | Phase-change memory

Reconfigurable electronic structures and circuits using programmable, non-volatile memory elements. The programmable, non-volatile memory elements may perform the functions of storage and/or a switch to produce components such as crossbars, multiplexers, look-up tables (LUTs) and other logic circuits used in programmable logic structures (e.g., FPGAs). The programmable, non-volatile memory elements comprise one or more structures based on Phase Change Memory, Programmable Metallization, Carbon Nano-Electromechanical (CNT-NEM), or Metal Nano-Electromechanical device technologies.

Owner:CSWITCH

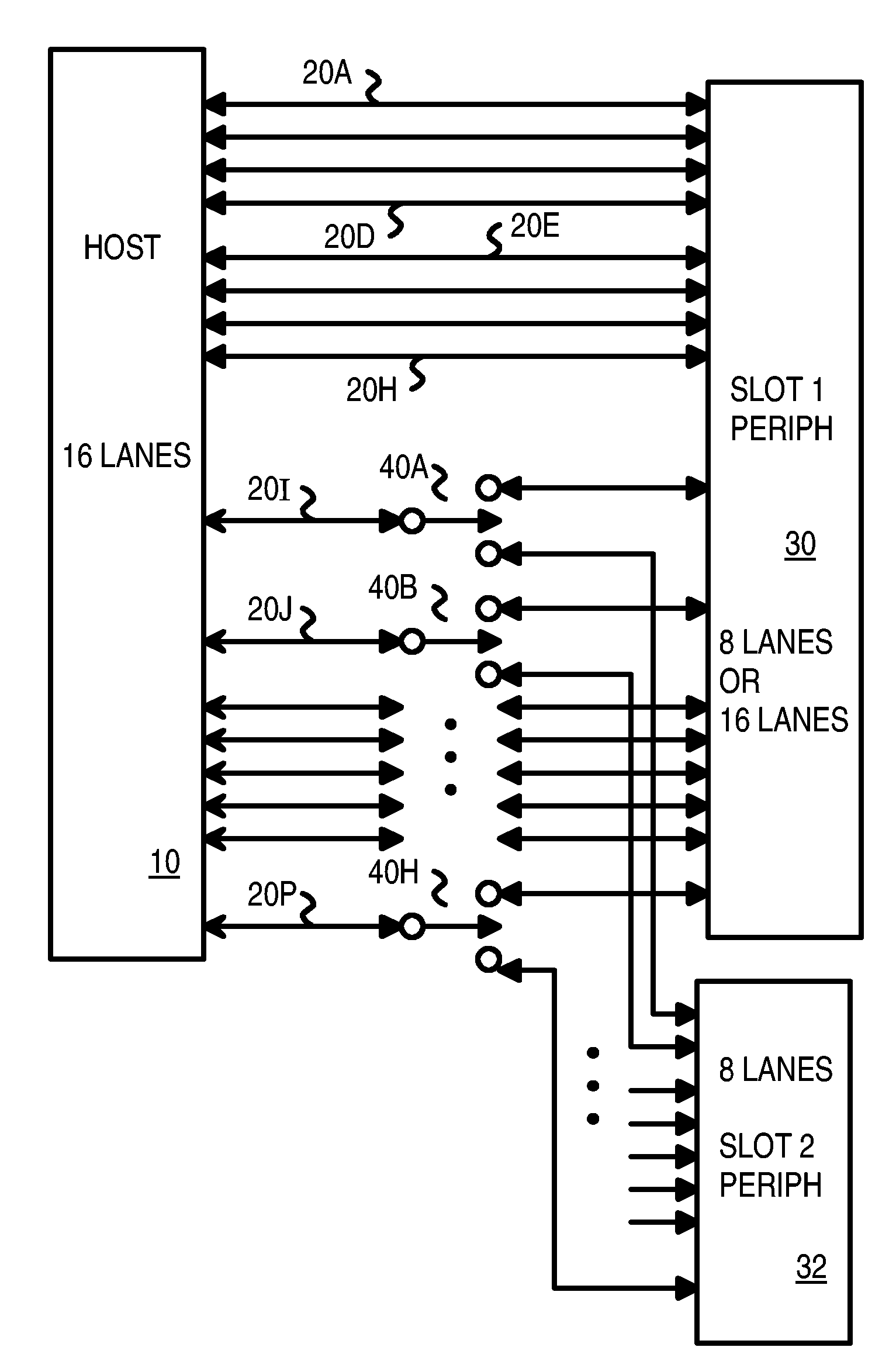

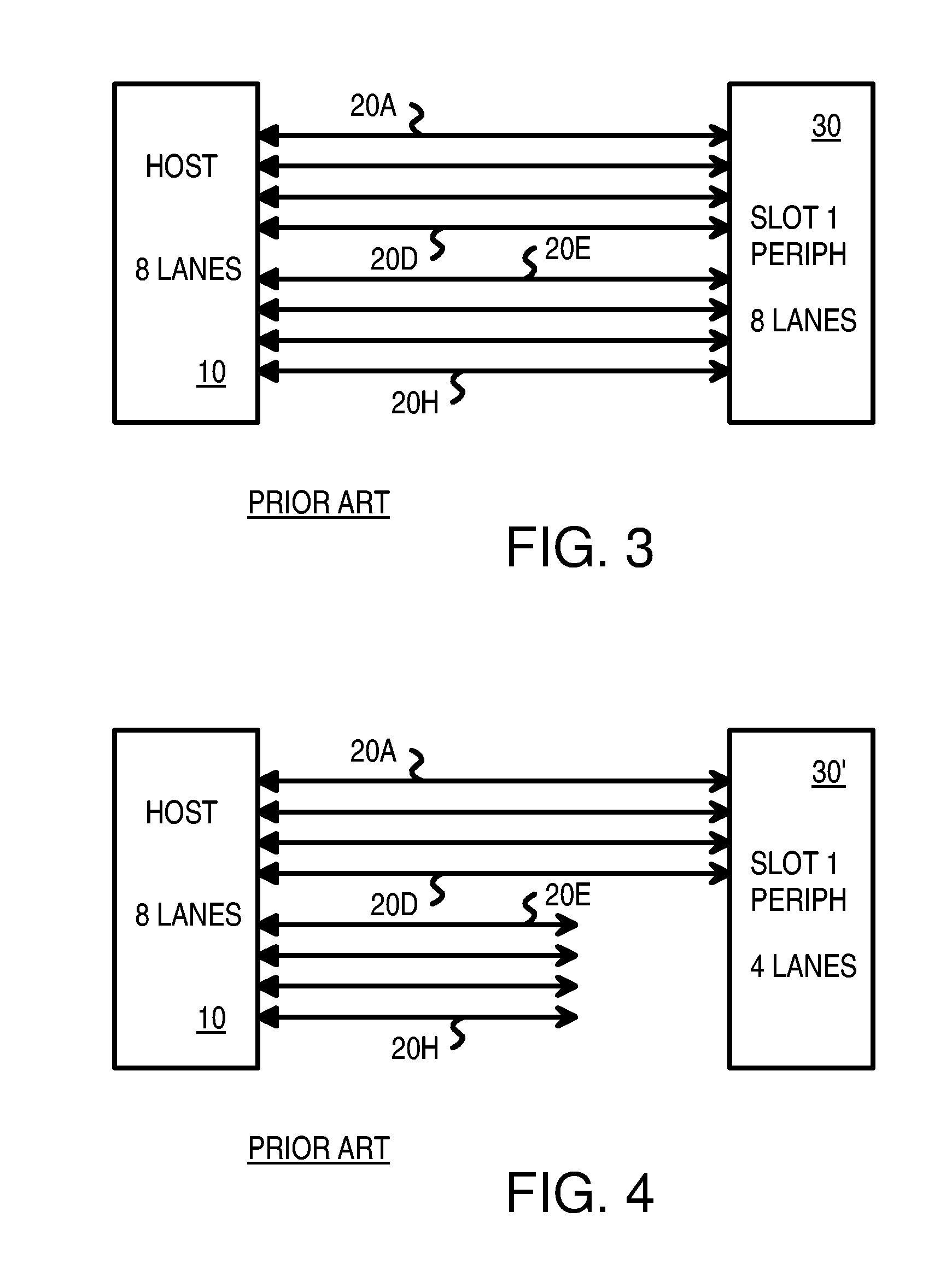

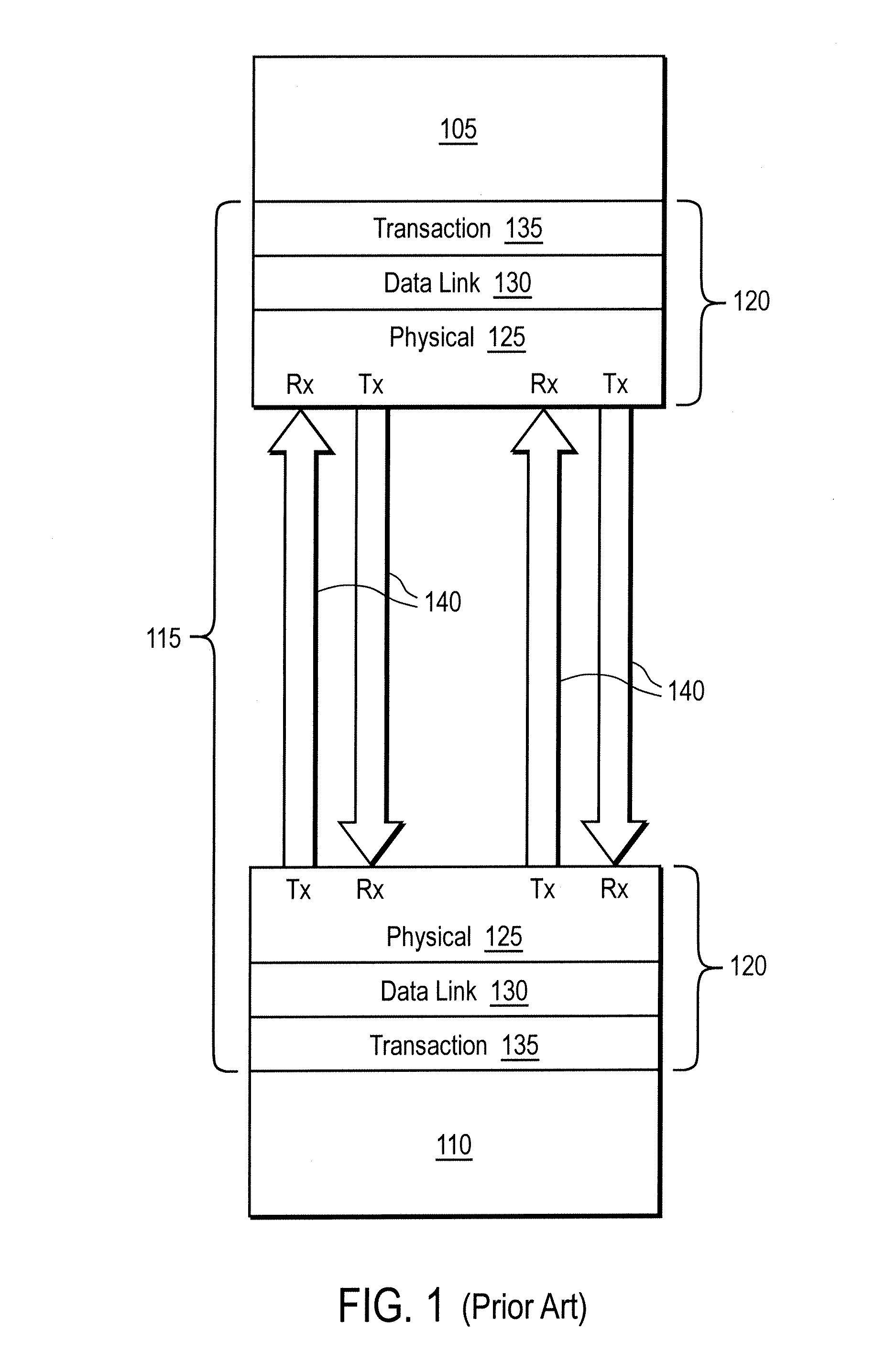

Optimized topographies for dynamic allocation of PCI express lanes using differential muxes to additional lanes to a host

Many Peripheral Component Interconnect Express (PCIE) lanes are available between a root complex host and peripherals inserted into slots. Each PCIE lane is a bi-directional serial bus, with a transmit differential pair and a receive differential pair of data lines. Some lanes are directly connected from the root complex host to each slot. Each slot is driven by a different port and a different direct physical layer on the host. Other lanes are configurable and can be driven by any port and use a configurable physical layer on the host. These configurable lanes pass through an external switch or crossbar that connects the lanes from the host to one or more of the slots. The direct-connect lanes can be the first lanes to a slot while the configurable lanes are the higher-numbered lanes.

Owner:DIODES INC

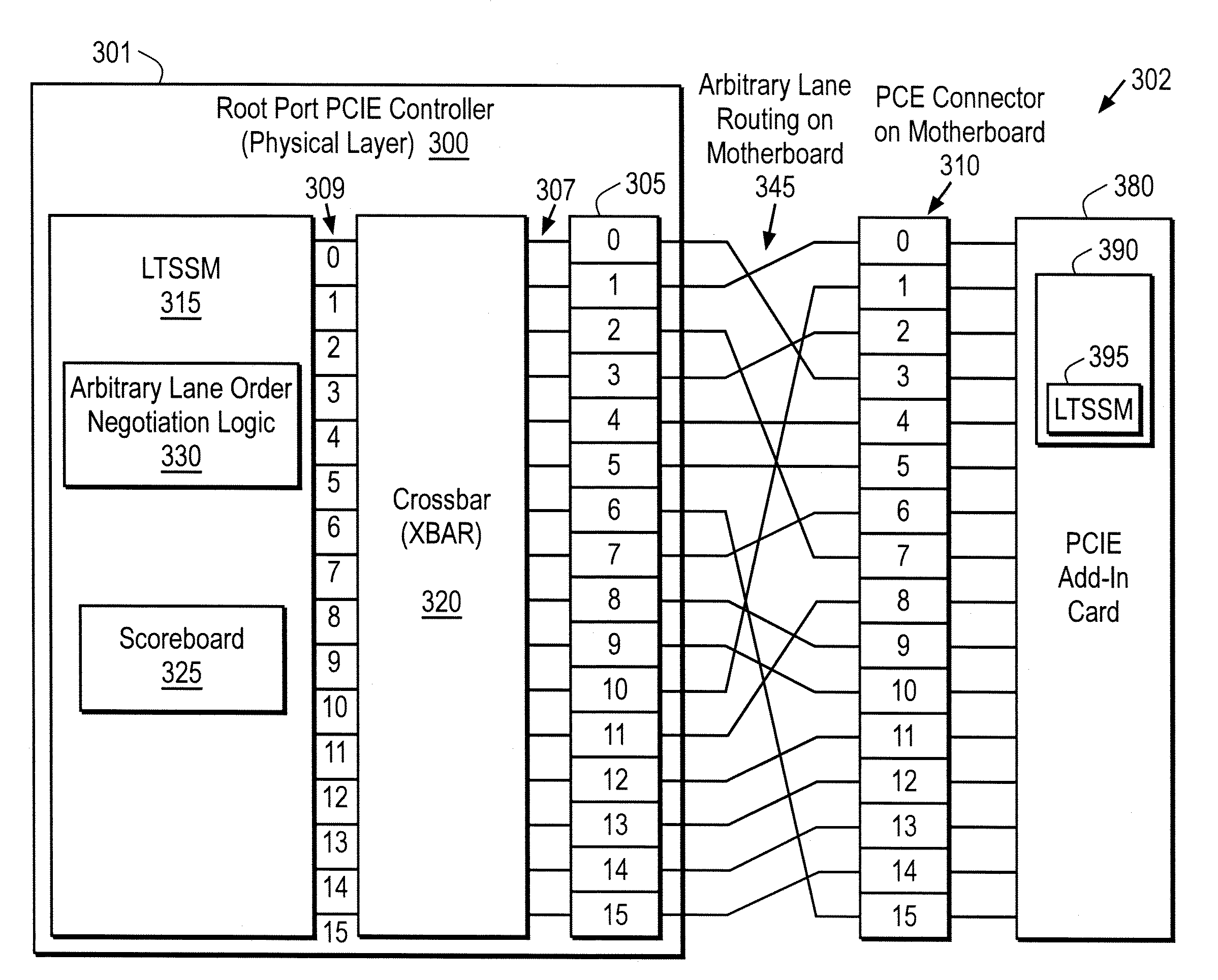

Apparatus, system, and method for swizzling of a PCIe link

Active | US7756123B1 | Great freedom | Multiplex system selection arrangements | Circuit switching systems | Computer science | Crossover switch

A Peripheral Component Interconnect Express (PCIe) controller includes a crossbar to reorder data lanes into an order compatible with PCIe negotiation rules. A full crossbar permits an arbitrary swizzling of data lanes, permitting greater flexibility in motherboard lane routing.
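
Lane swizzling through a full crossbar amounts to applying a permutation to the lanes. The sketch below shows only that idea; the `swizzle` helper and the lane labels are hypothetical, and nothing here models PCIe link negotiation itself.

```python
def swizzle(lanes, order):
    """Reorder lanes so that logical lane i takes data from physical lane order[i]."""
    if sorted(order) != list(range(len(lanes))):
        raise ValueError("order must be a permutation of the lane indices")
    return [lanes[src] for src in order]


# Assume the board routes the physical lanes in reverse; the crossbar setting
# [3, 2, 1, 0] restores the logical order.
physical = ["L3", "L2", "L1", "L0"]
logical = swizzle(physical, order=[3, 2, 1, 0])
```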

Owner:NVIDIA CORP

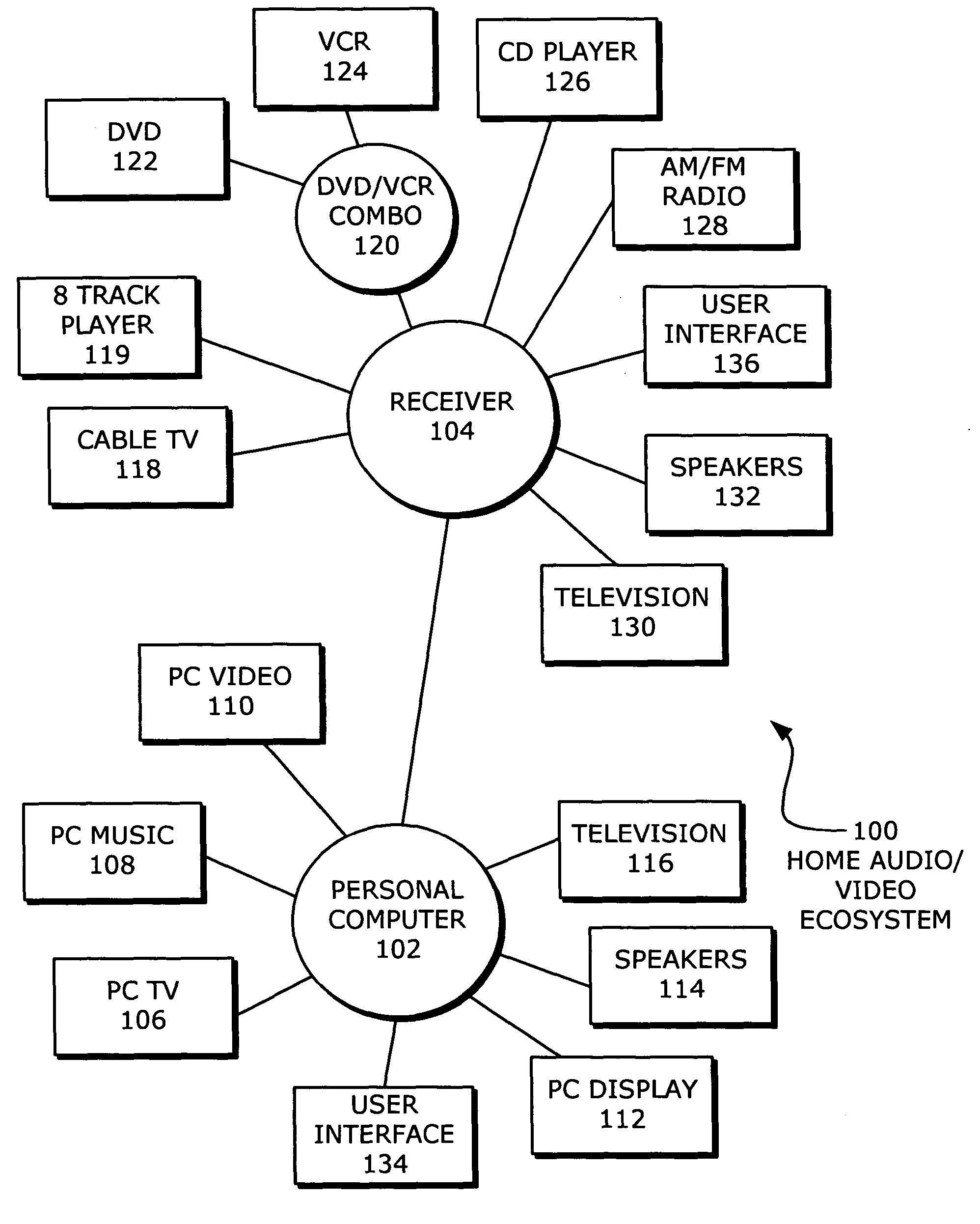

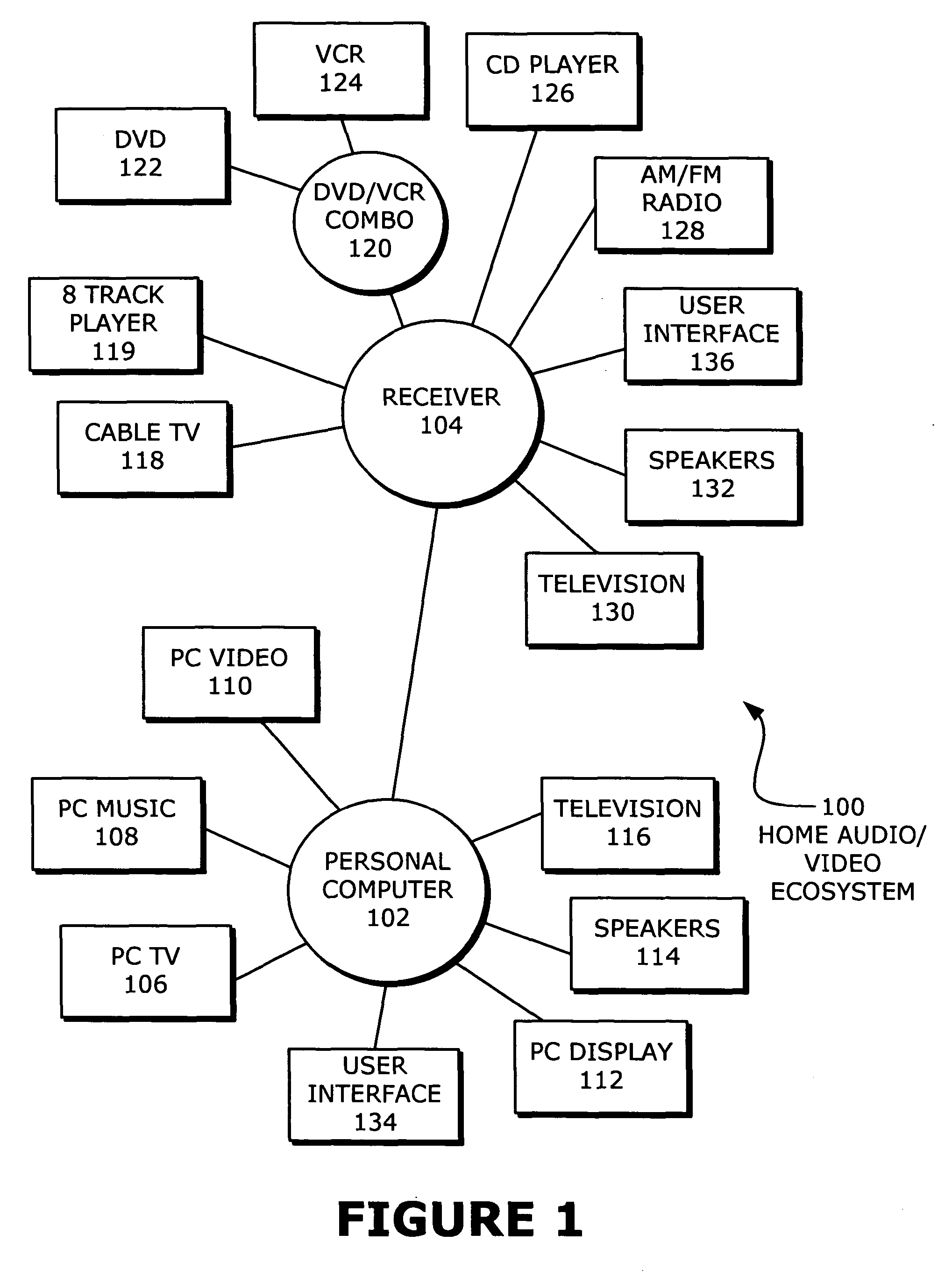

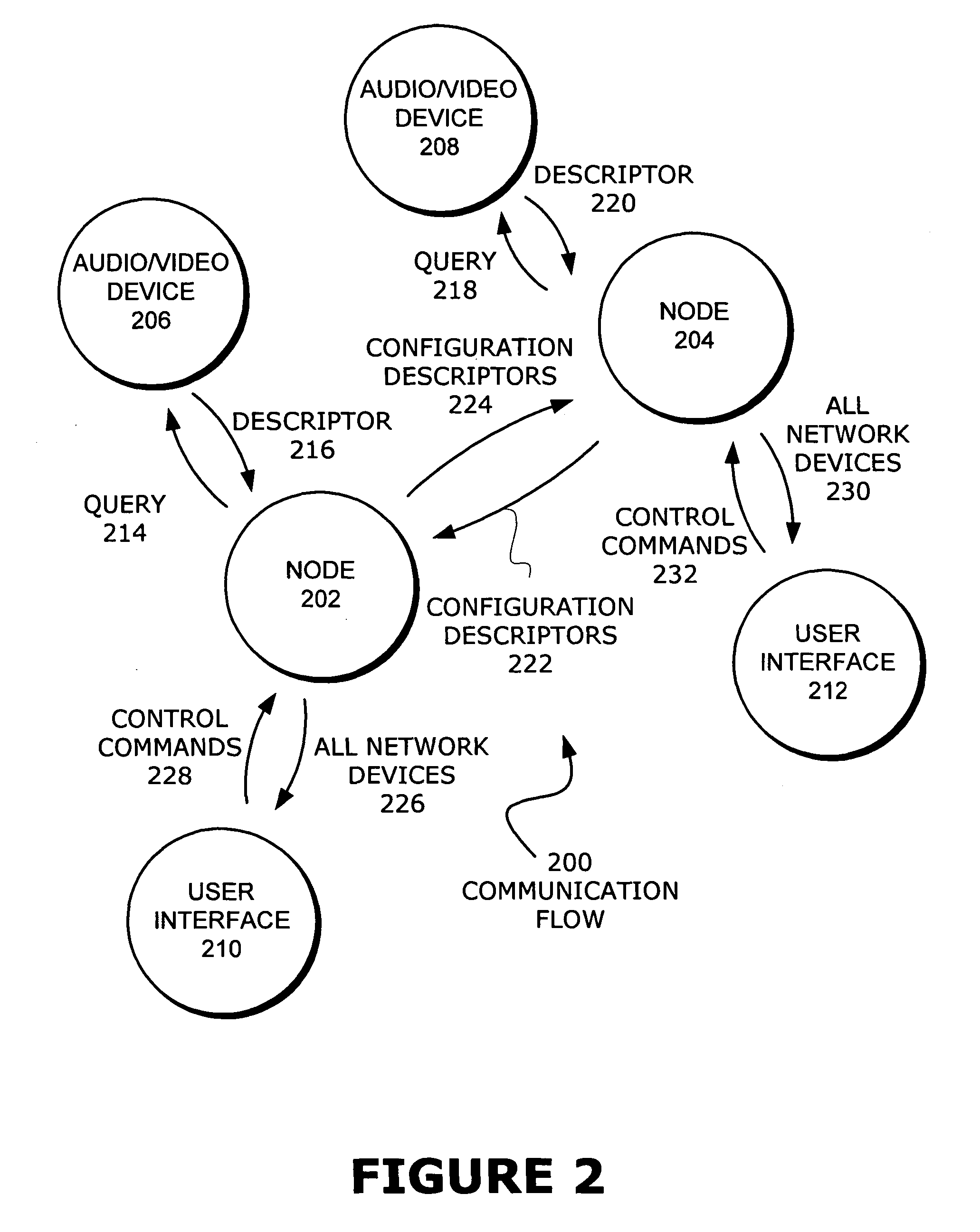

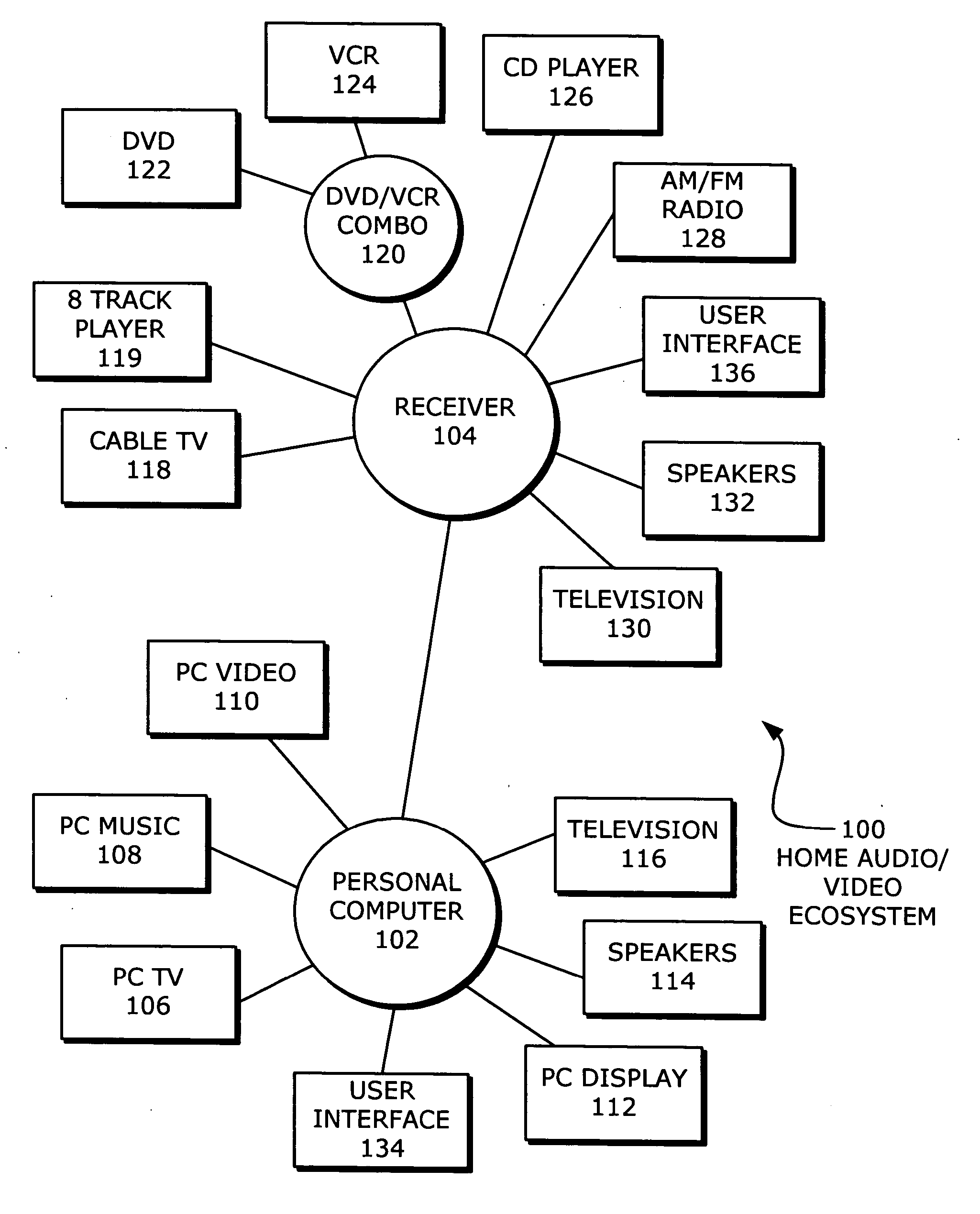

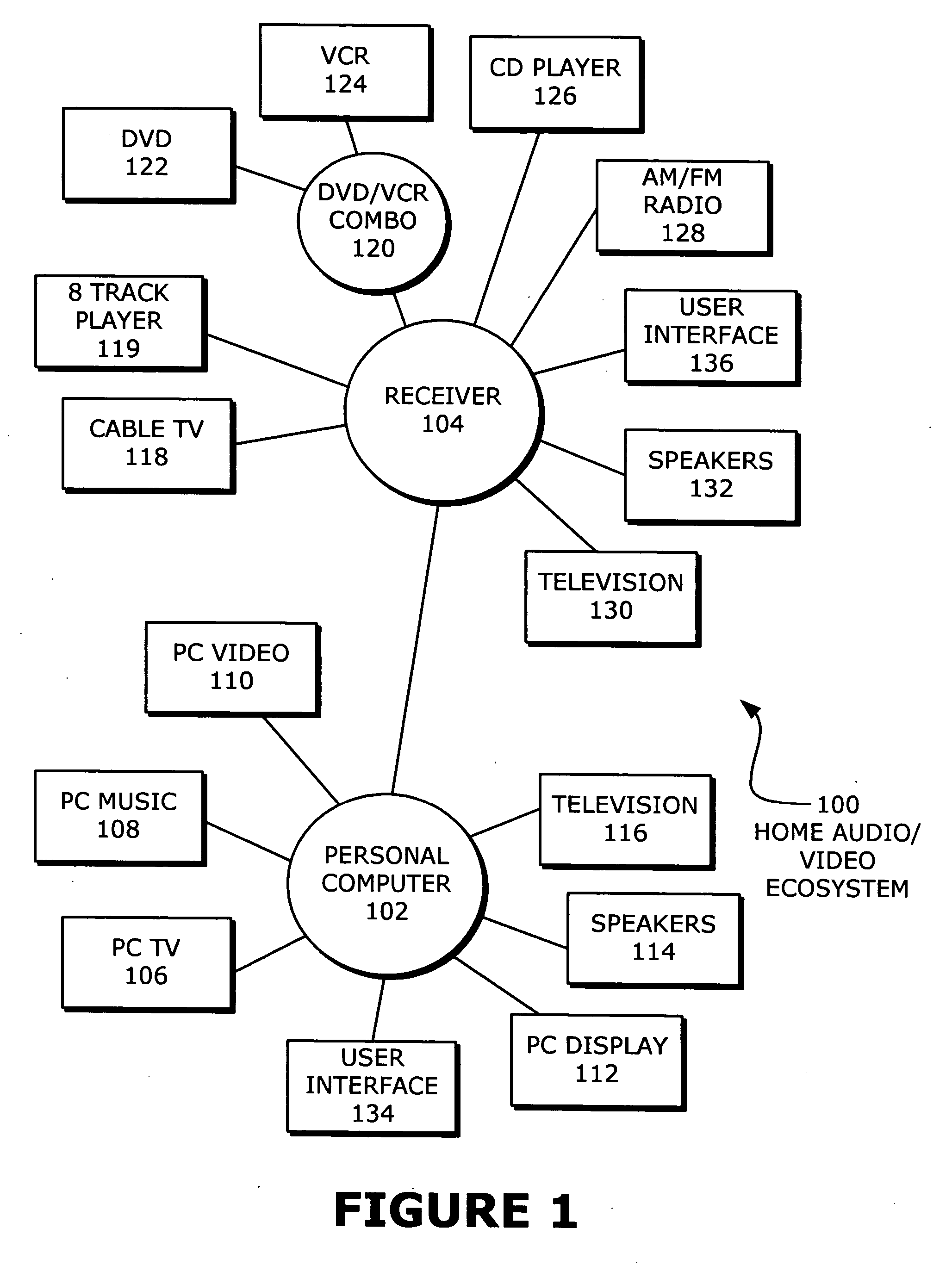

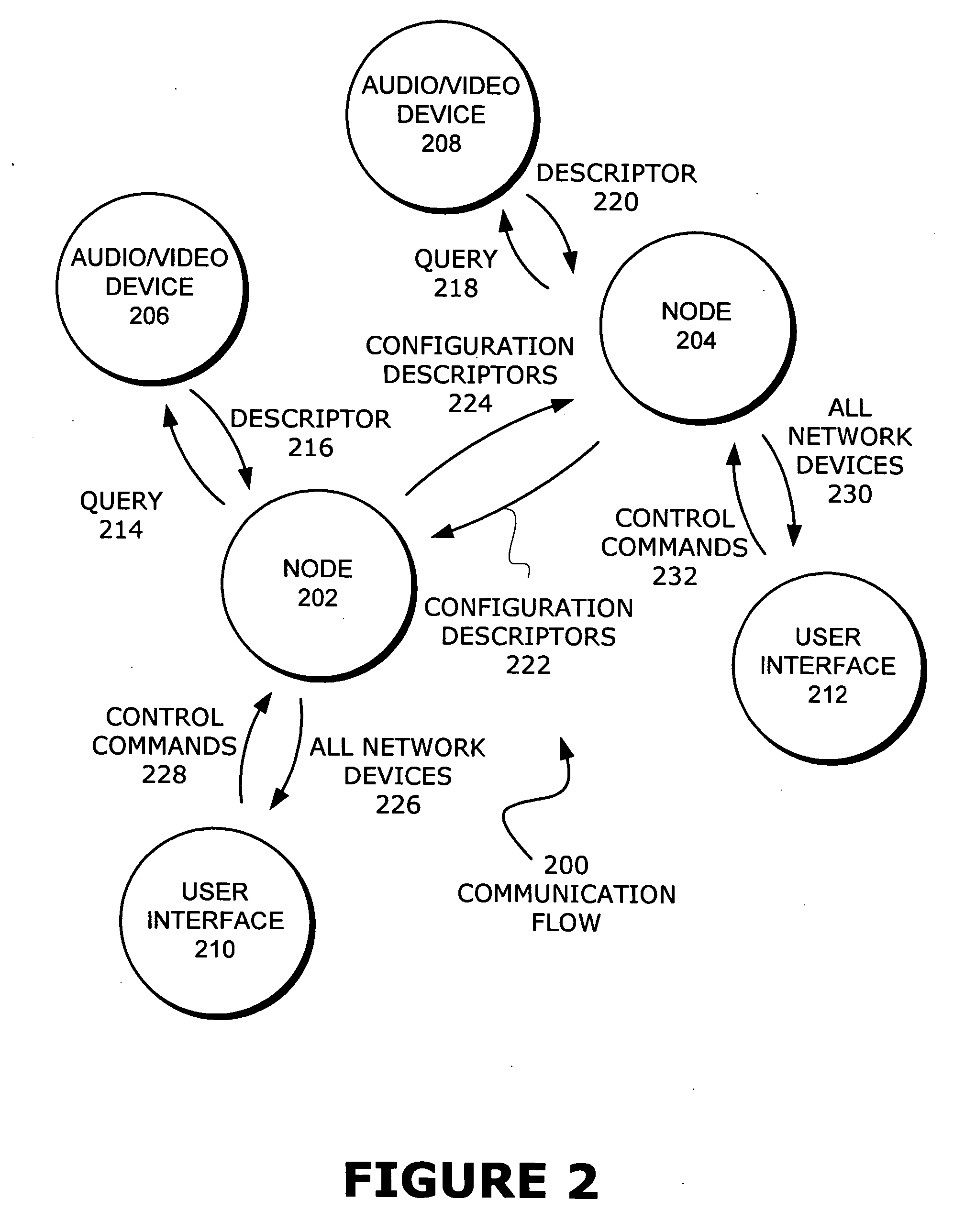

Aggregated audio/video crossbar connections

Active | US8700730B2 | Special service provision for substation | GHz frequency transmission | Computer network | Personal computer

Disparate devices in an audio / video ecosystem may be connected and managed by having multiple crossbar connections at nodes in a node and leaf network. The leaves of the network may be various audio and video devices, with receivers, personal computers, and other devices comprising the nodes, which have at least some crossbar switching ability. When the nodes are connected, some or all of the devices attached to all of the nodes may be made available at each interface for control and display. Each node may act as a crossbar, allowing a user at each interface to enjoy the services of any device, regardless of the node to which the device is attached.

Owner:MICROSOFT TECH LICENSING LLC

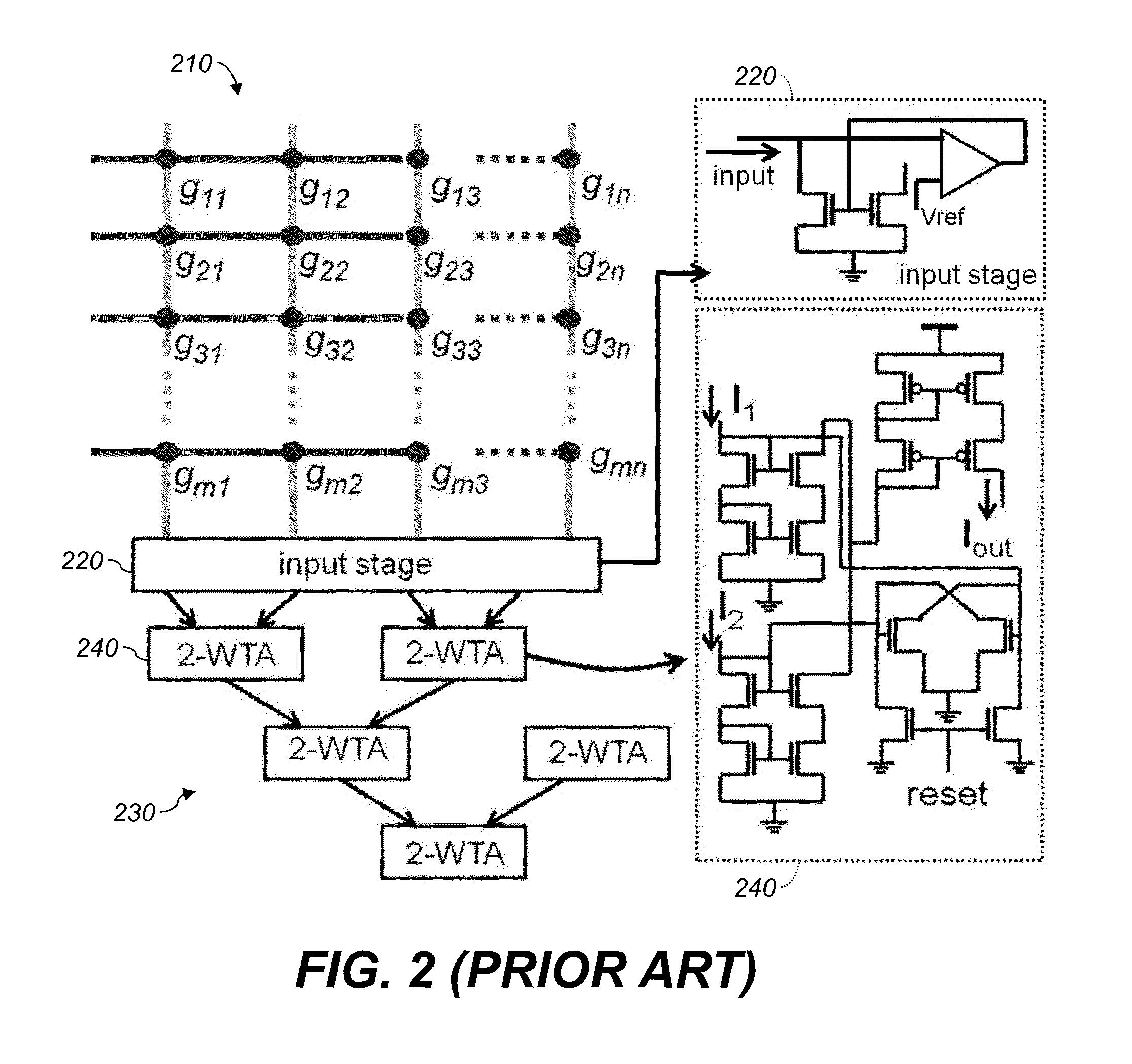

Electronic comparison systems

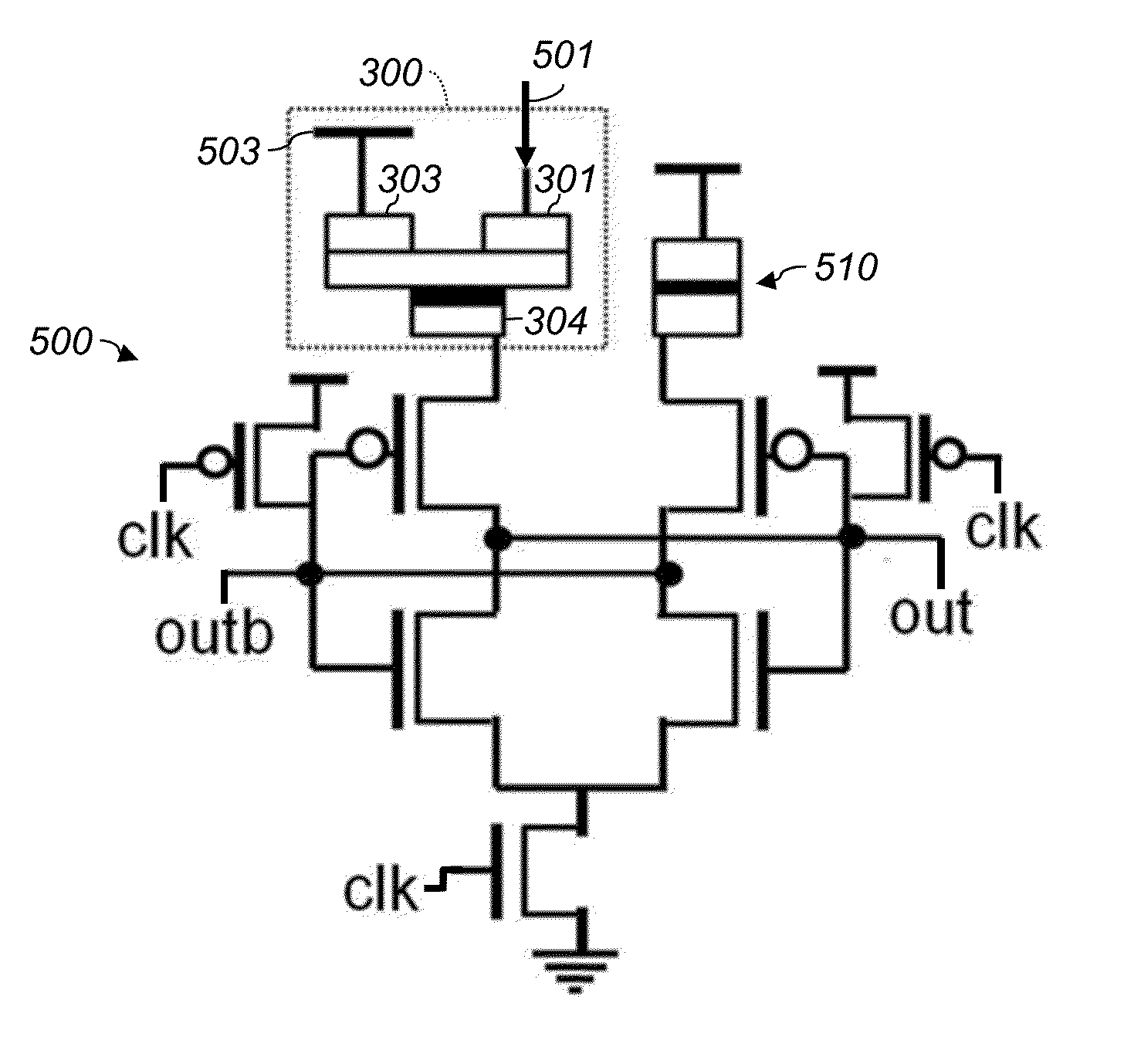

Active | US20150347896A1 | Easy to optimize | Reduce consumption | Analogue/digital conversion | Electric signal transmission systems | CMOS | Neural network system

An electronic comparison system includes input stages that successively provide bits of code words. One-shots connected to respective stages successively provide a first bit value until receiving a bit having a non-preferred value concurrently with an enable signal, and then provide a second, different bit value. An enable circuit provides the enable signal if at least one of the one-shots is providing the first bit value. A neural network system includes a crossbar with row and column electrodes and resistive memory elements at their intersections. A writing circuit stores weights in the elements. A signal source applies signals to the row electrodes. Comparators compare signals on the column electrodes to corresponding references using domain-wall neurons and store bit values in CMOS latches by comparison with a threshold.

Owner:PURDUE RES FOUND INC

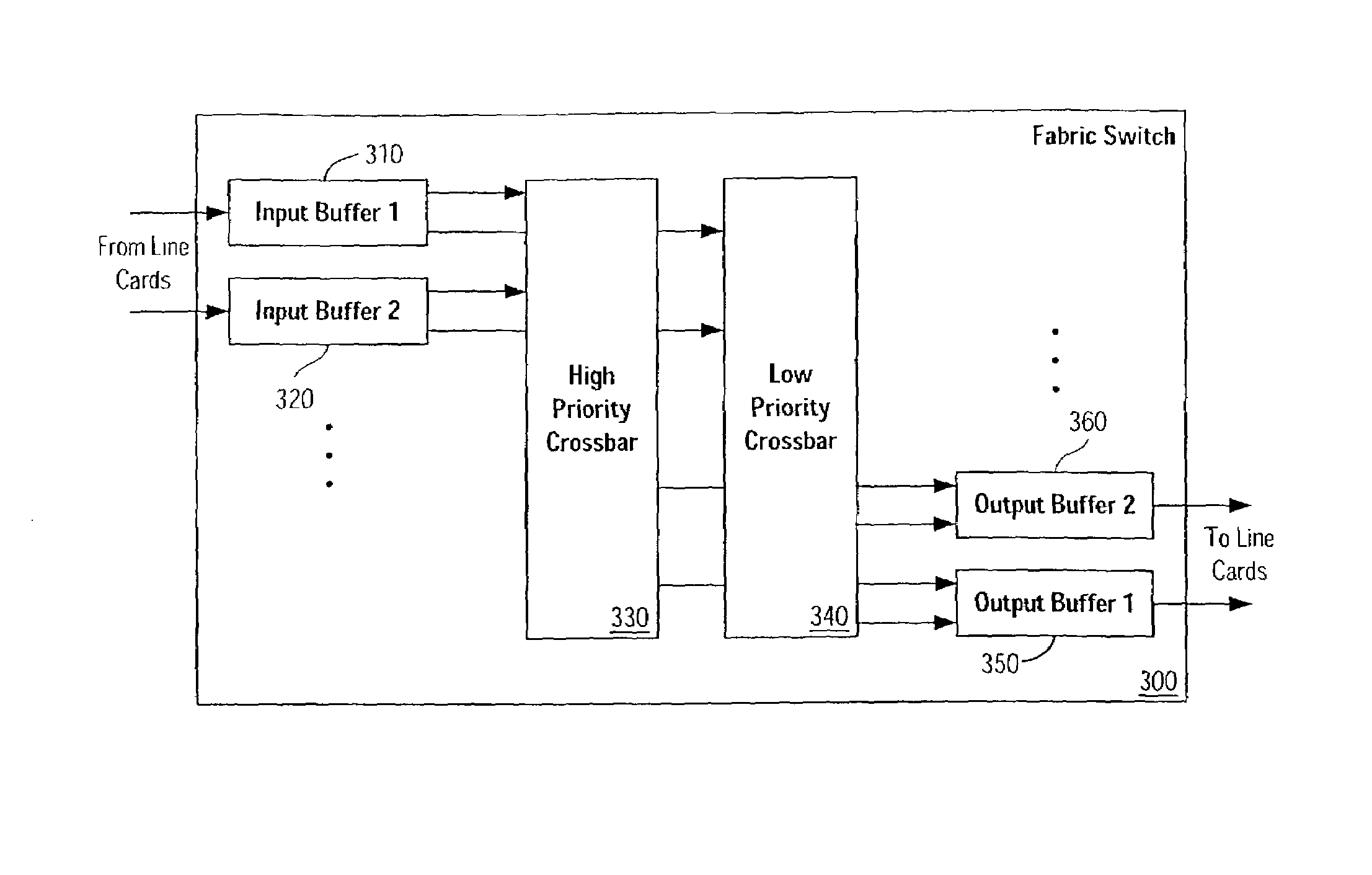

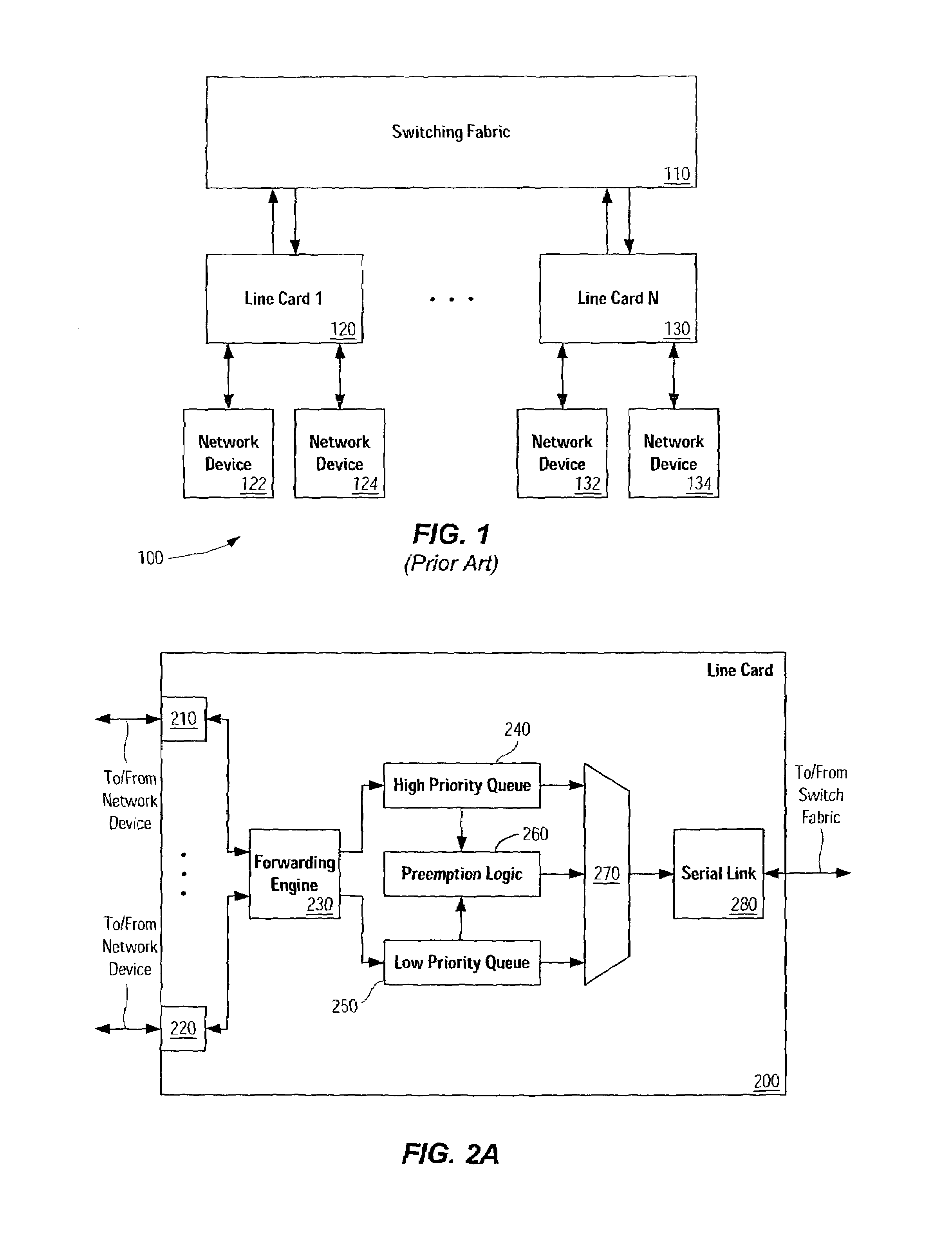

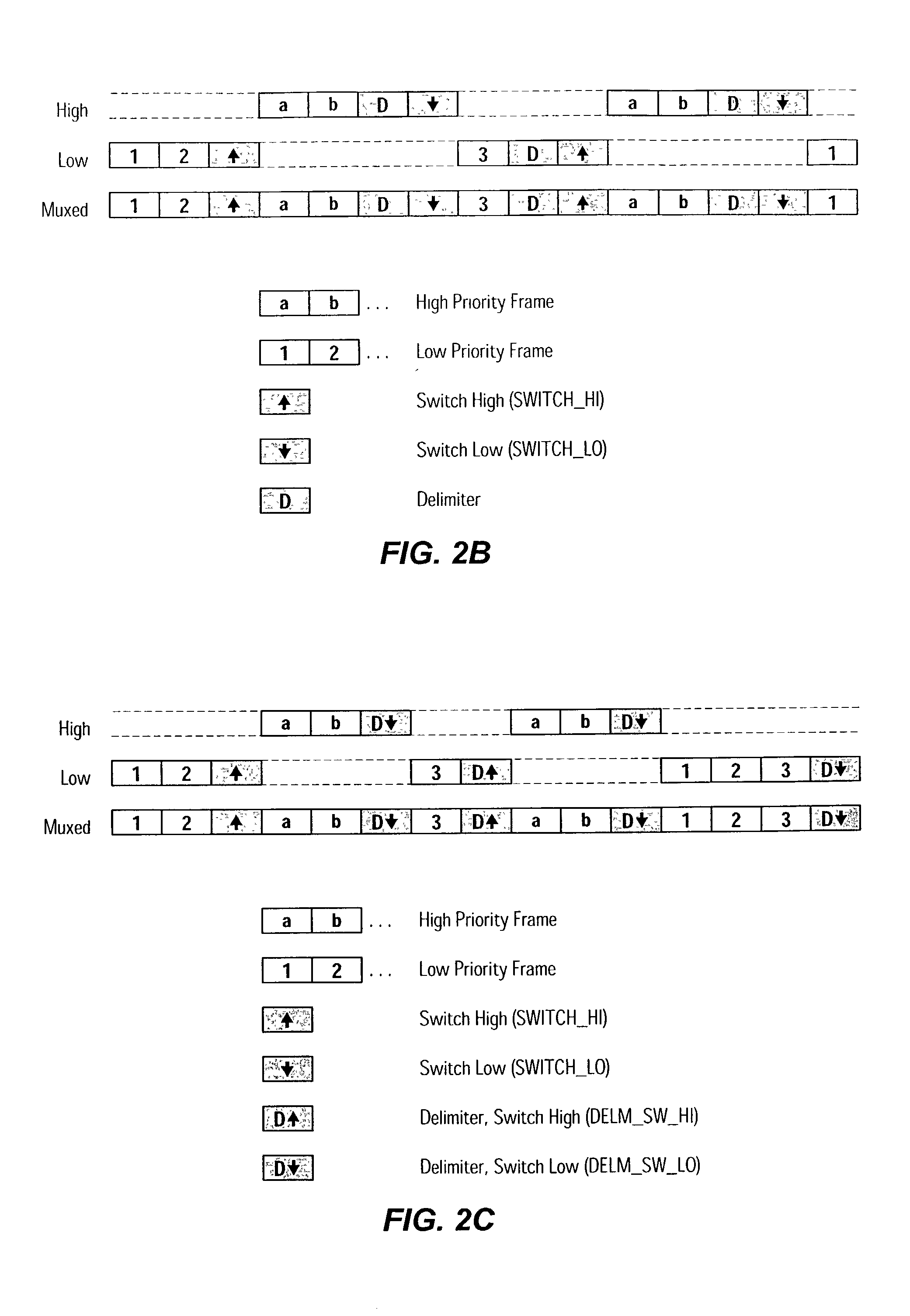

Prioritization and preemption of data frames over a switching fabric

Inactive | US7464180B1 | Digital computer details | Data switching by path configuration | Serial transfer | Data stream

Network switching and / or routing devices can use multiple priority data streams and queues to support prioritized serial transmission of data from line cards (or the like) through a fabric switch to other line cards (or the like). Preemption logic is used to insert within a data stream commands indicating a switch from one priority level data to another. Delimiter commands and combination switch / delimiter commands can also be used. Multiple crossbars are implemented in the fabric switch to support the various data stream priority levels.

Owner:CISCO TECH INC

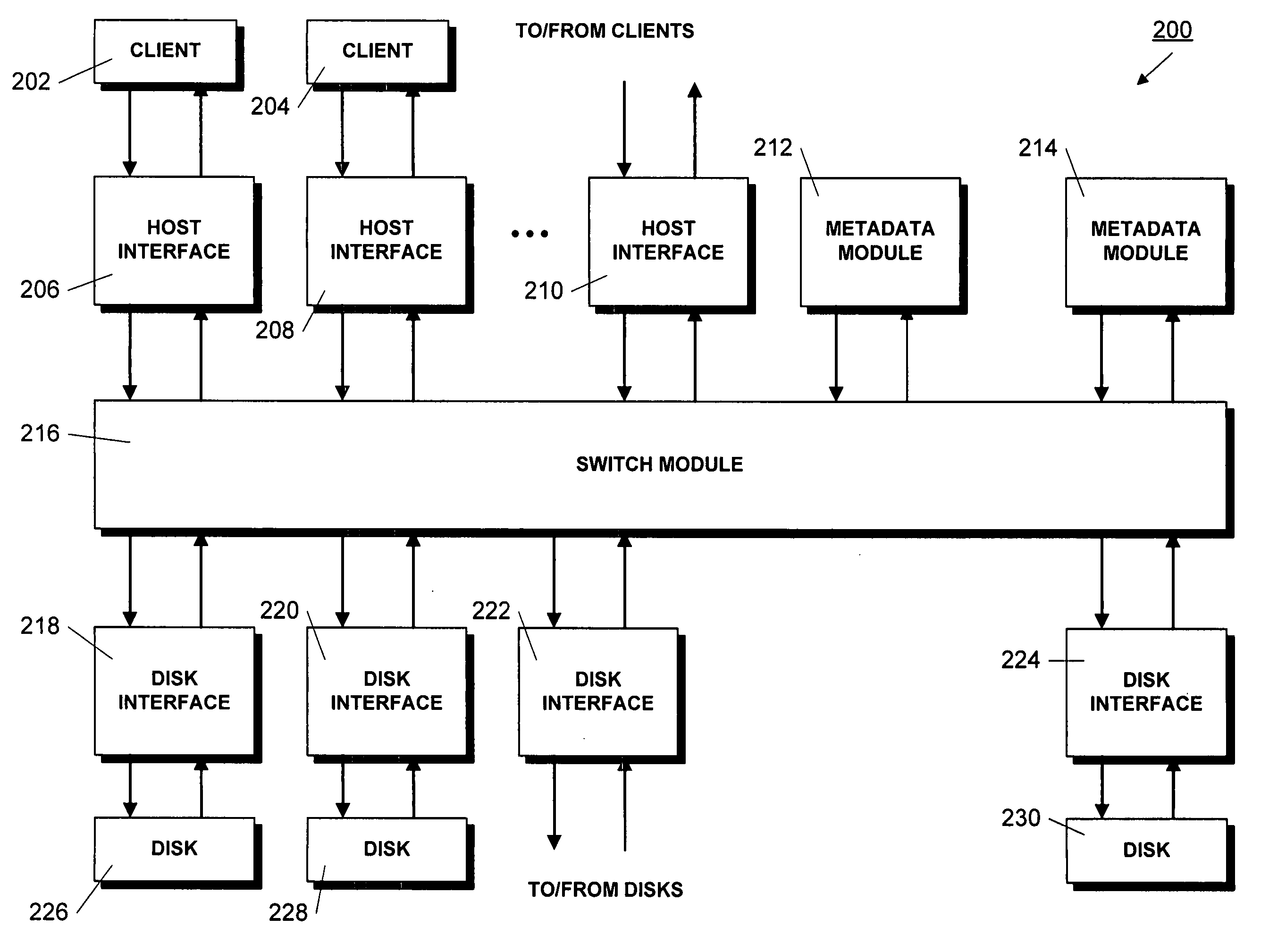

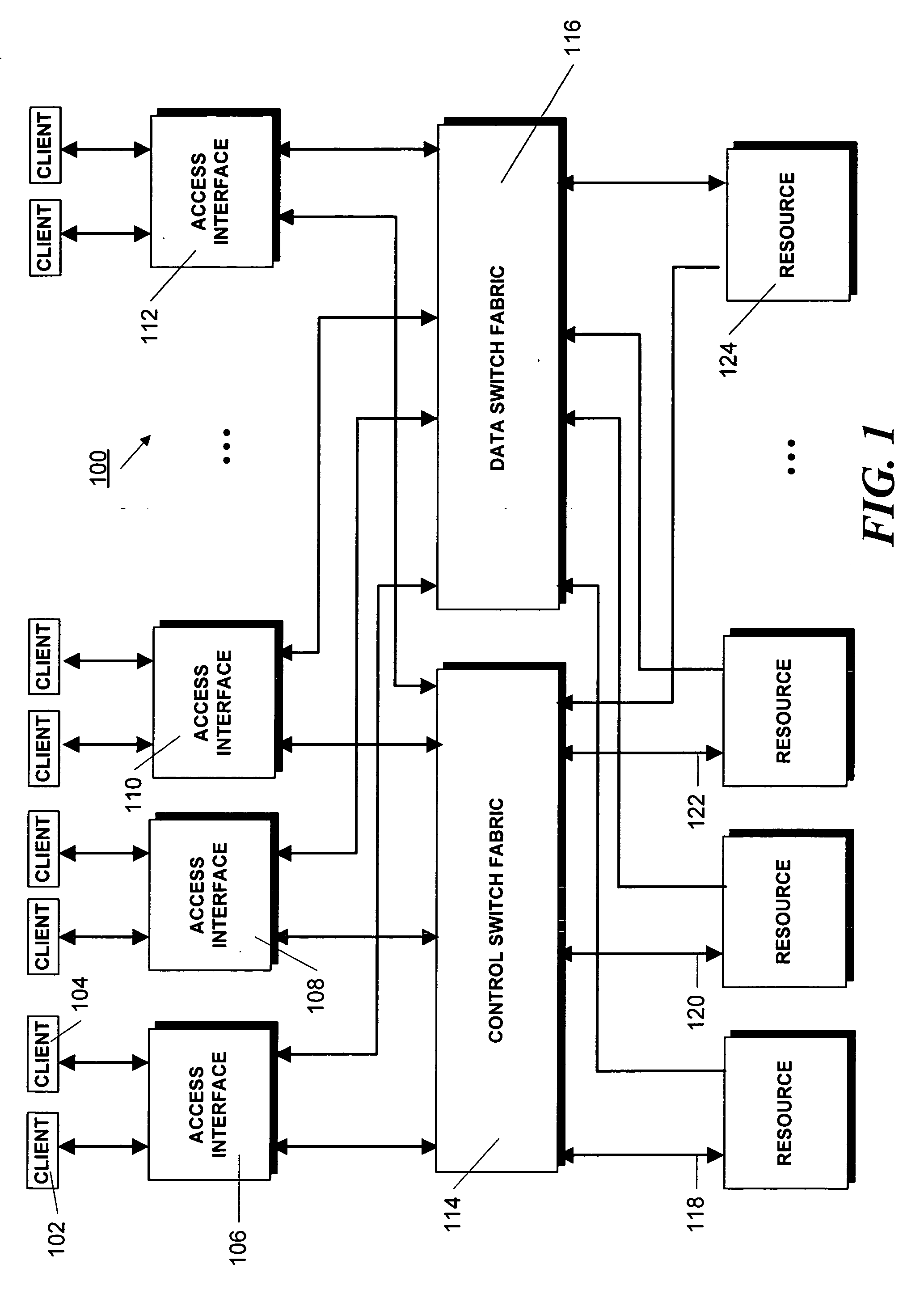

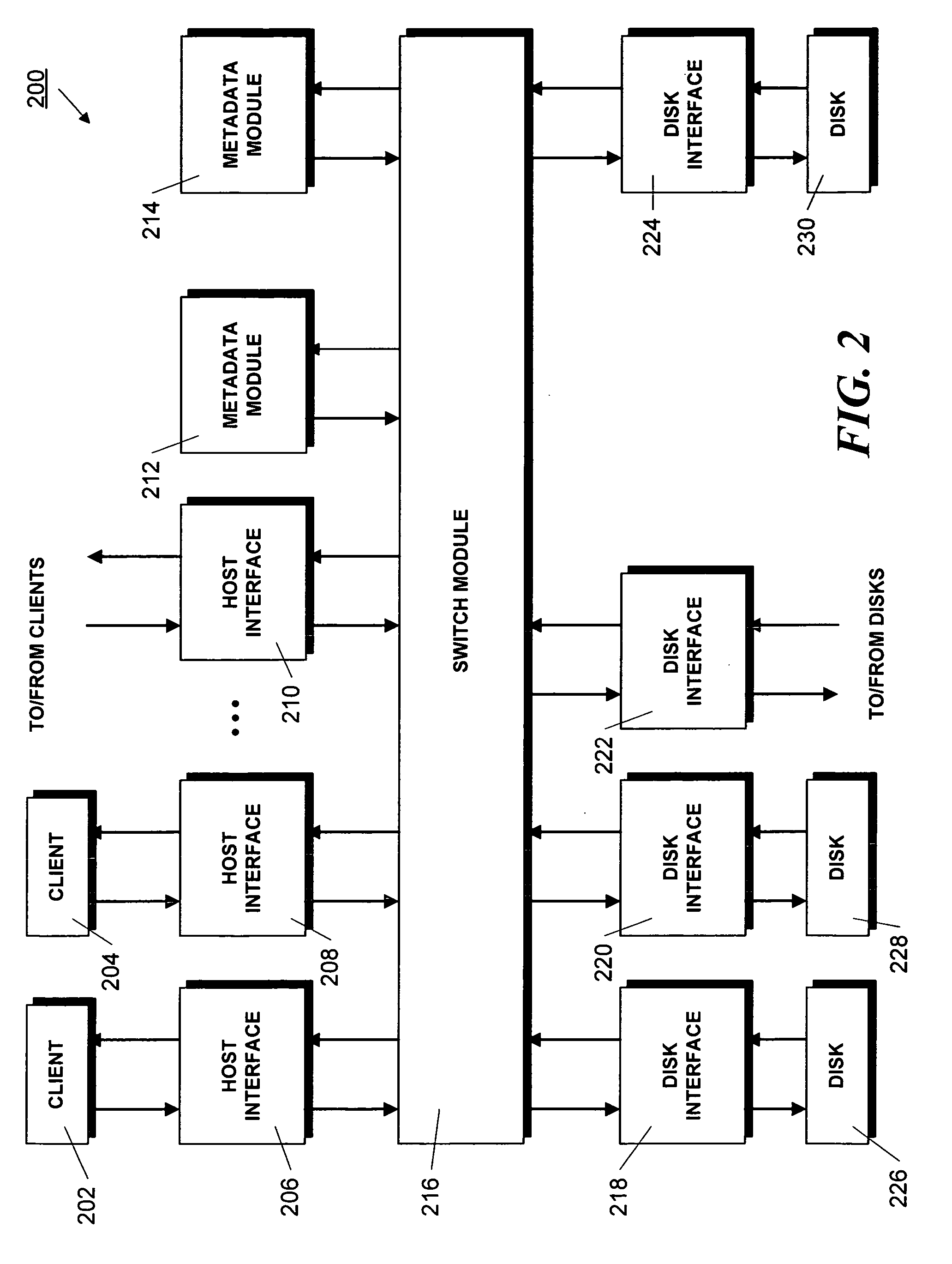

Method and apparatus for implementing high-performance, scaleable data processing and storage systems

Active | US20060161678A1 | More communication path | Eliminate the problem | Resource allocation | Multiple digital computer combinations | Resource utilization | Resource element

A data system architecture is described that allows multiple processing and storage resources to be connected to multiple clients so as 1) to distribute the clients' workload efficiently across the available resources; and 2) to enable scaleable expansion, both in terms of the number of clients and in the number of resources. The major features of the architecture are separate, modular, client and resource elements that can be added independently, a high-performance cross-bar data switch interconnecting these various elements, separate serial communication paths for controlling the cross-bar switch settings, separate communication paths for passing control information among the various elements and a resource utilization methodology that enables clients to distribute processing or storage tasks across all available resources, thereby eliminating “hot spots” resulting from uneven utilization of those resources.

Owner:EMC CORP

Aggregated audio/video crossbar connections

Active | US20070041338A1 | Special service provision for substation | Time-division multiplexing selection | Computer network | Personal computer

Disparate devices in an audio / video ecosystem may be connected and managed by having multiple crossbar connections at nodes in a node and leaf network. The leaves of the network may be various audio and video devices, with receivers, personal computers, and other devices comprising the nodes, which have at least some crossbar switching ability. When the nodes are connected, some or all of the devices attached to all of the nodes may be made available at each interface for control and display. Each node may act as a crossbar, allowing a user at each interface to enjoy the services of any device, regardless of the node to which the device is attached.

Owner:MICROSOFT TECH LICENSING LLC

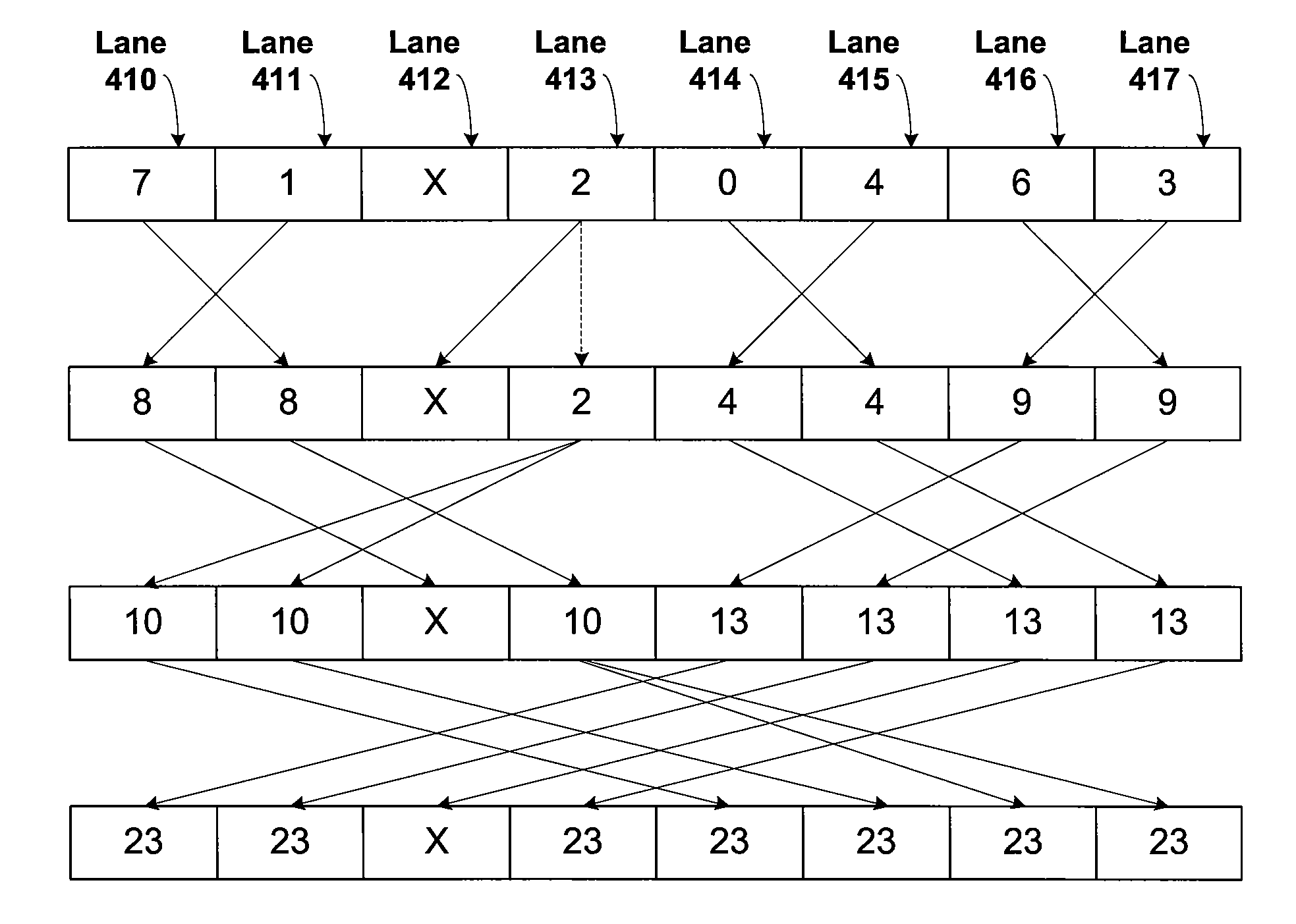

Reduction operations in a synchronous parallel thread processing system with disabled execution threads

Active | US8200940B1 | Ensure correct execution | Reduce usage | Modulated-carrier systems | General purpose stored program computer | Invalid Data | Crossover switch

A system and method for successfully performing reduction operations in a multi-threaded SIMD (single-instruction multiple-data) system while one or more threads are disabled allows for the reduction operations to be performed without a performance penalty compared with performing the same operation with all of the threads enabled. The source data for each intermediate computation of the reduction operation is remapped by a configurable crossbar as needed to avoid using invalid data from the disabled threads. The remapping function is transparent to the user and enables correct execution of order invariant reduction operations and order dependent prefix-reduction operations.

Owner:NVIDIA CORP

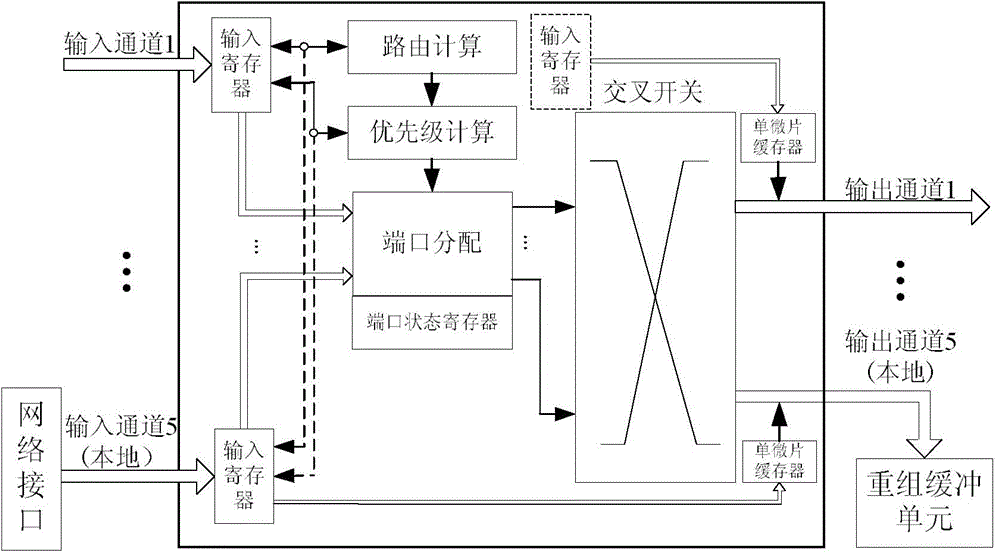

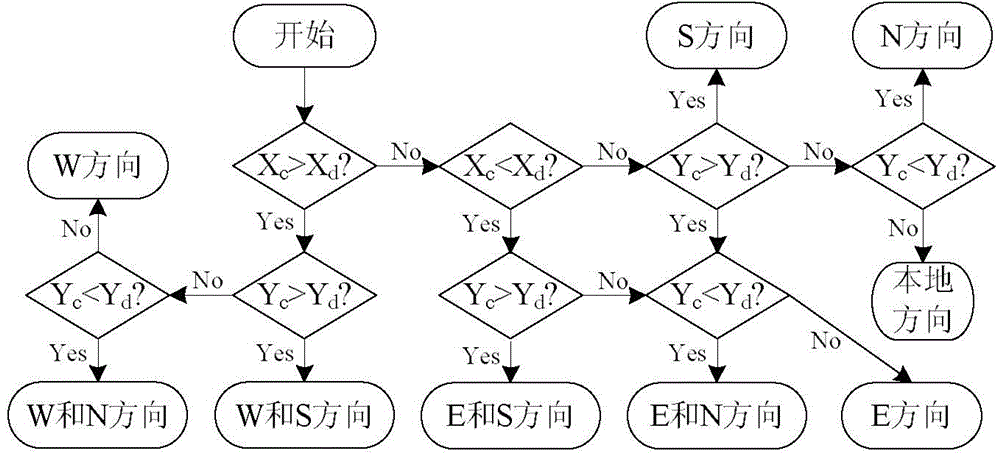

Network-on-chip router with low buffer area and routing method

The invention discloses a network-on-chip router with a low buffer area. The router comprises n input ports, n input registers, a routing computation module, a priority computation module, a port allocation module, a crossbar switch, p single-flit buffers and p output ports. When the flits with the highest and second-highest priority compete for the same valid output port, the port allocation module sends the second-highest-priority flit to the corresponding single-flit buffer according to a priority arbitration strategy; when other, lower-priority flits compete for the port, they are assigned a deflected output port; when the output port becomes idle, the second-highest-priority flit is output directly to the next routing node. The router can effectively reduce the area and power consumption of a network-on-chip while maintaining delay and throughput performance, and the router and its routing method are suitable for building high-performance systems-on-chip.

Owner:CHINESE AERONAUTICAL RADIO ELECTRONICS RES INST

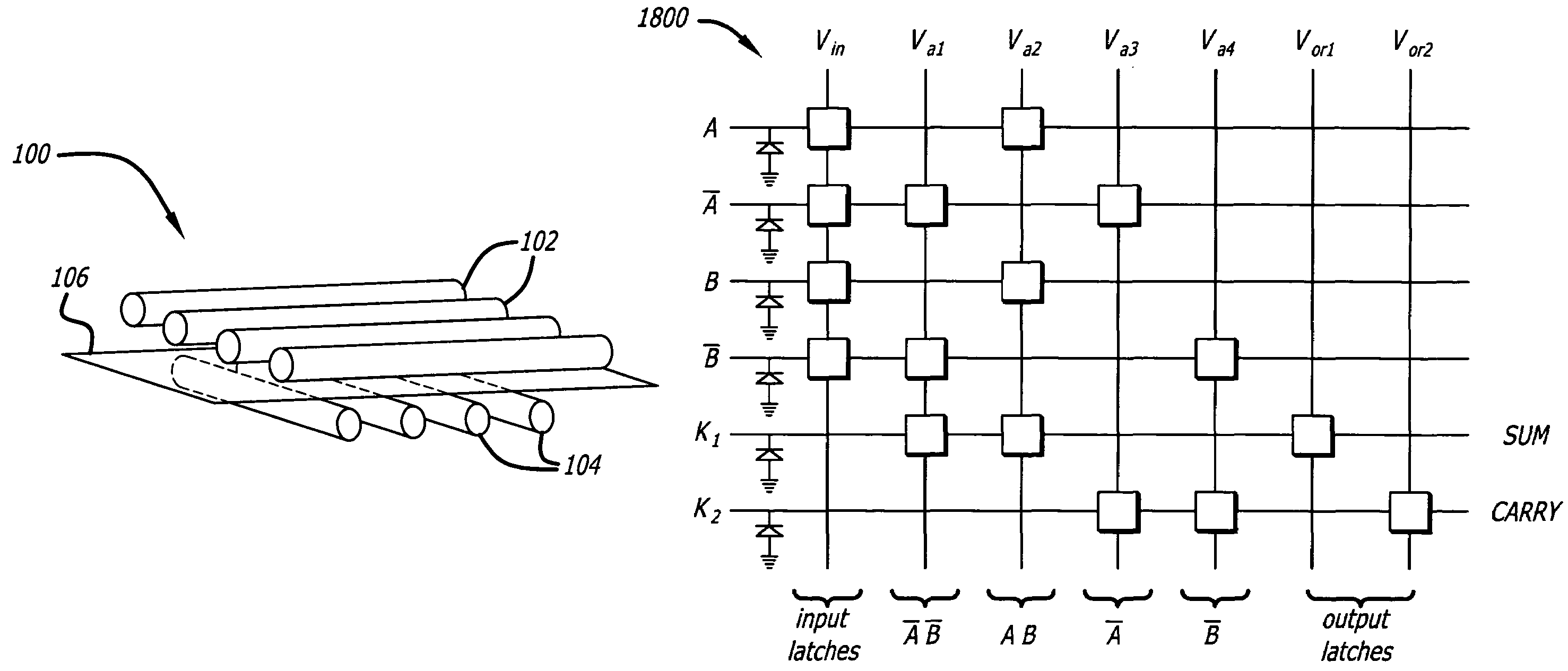

Architecture and methods for computing with reconfigurable resistor crossbars

Active | US7203789B2 | Reliability increasing modifications | Logic circuits characterised by logic function | Crossover switch | Computer science

An architecture for computing includes nanometer scale crossbar switches configured to perform a logical function in response to a sequence of pulses that encode logic values in the nanometer scale crossbar switches as impedances.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

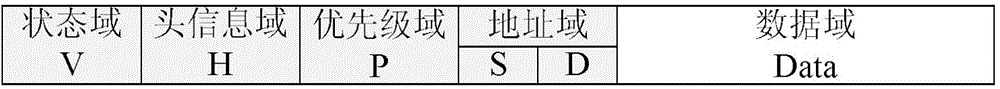

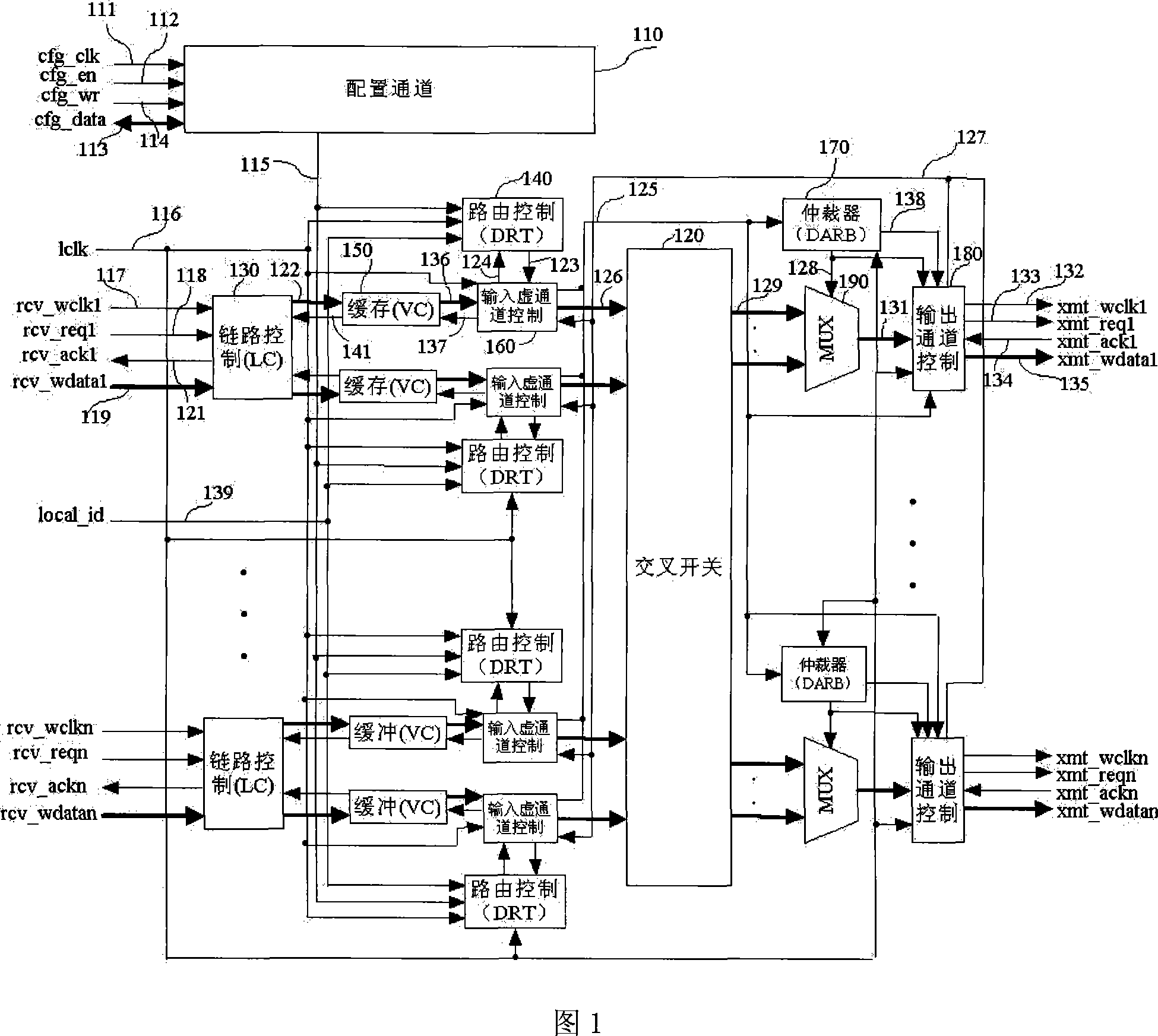

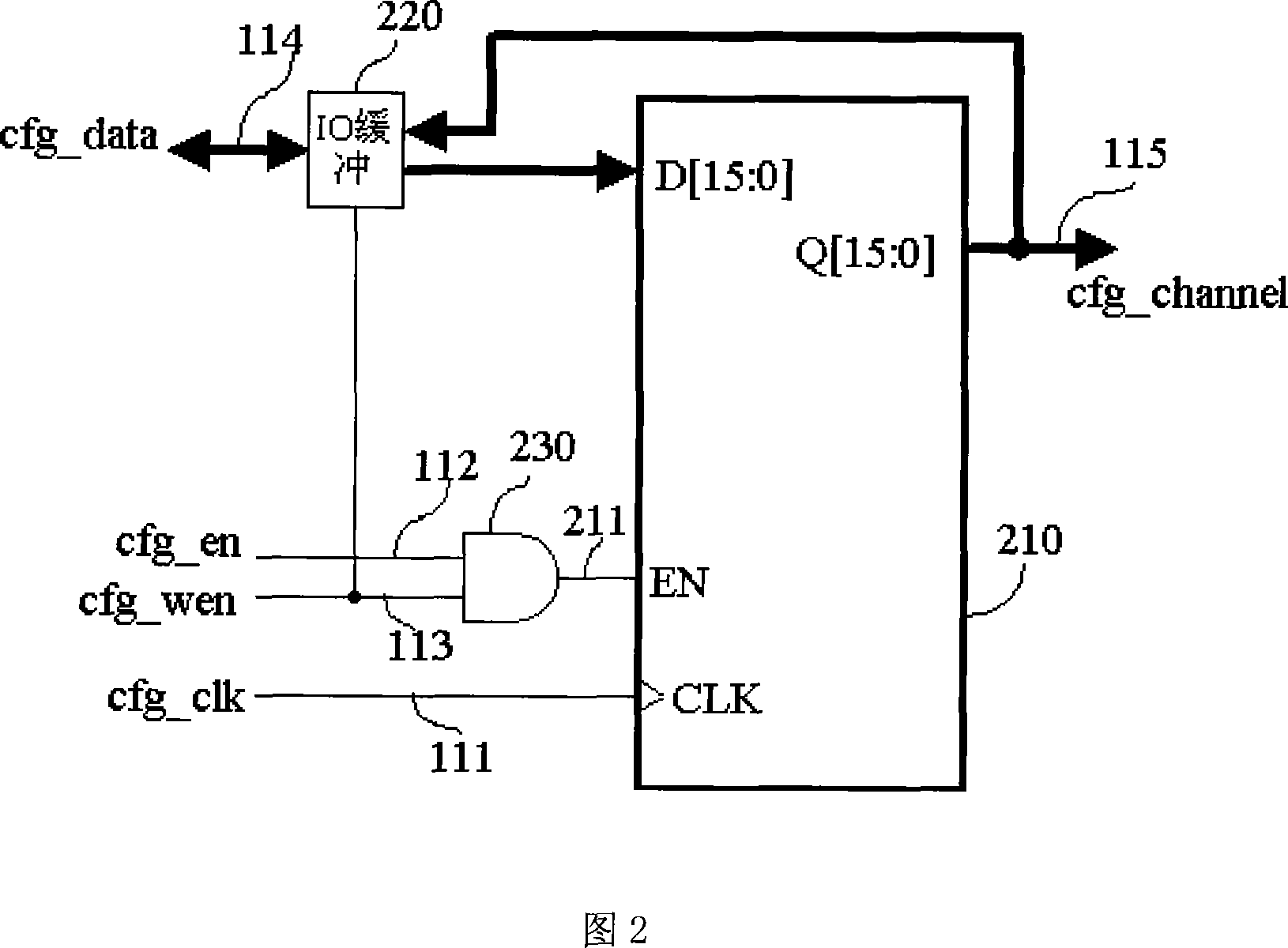

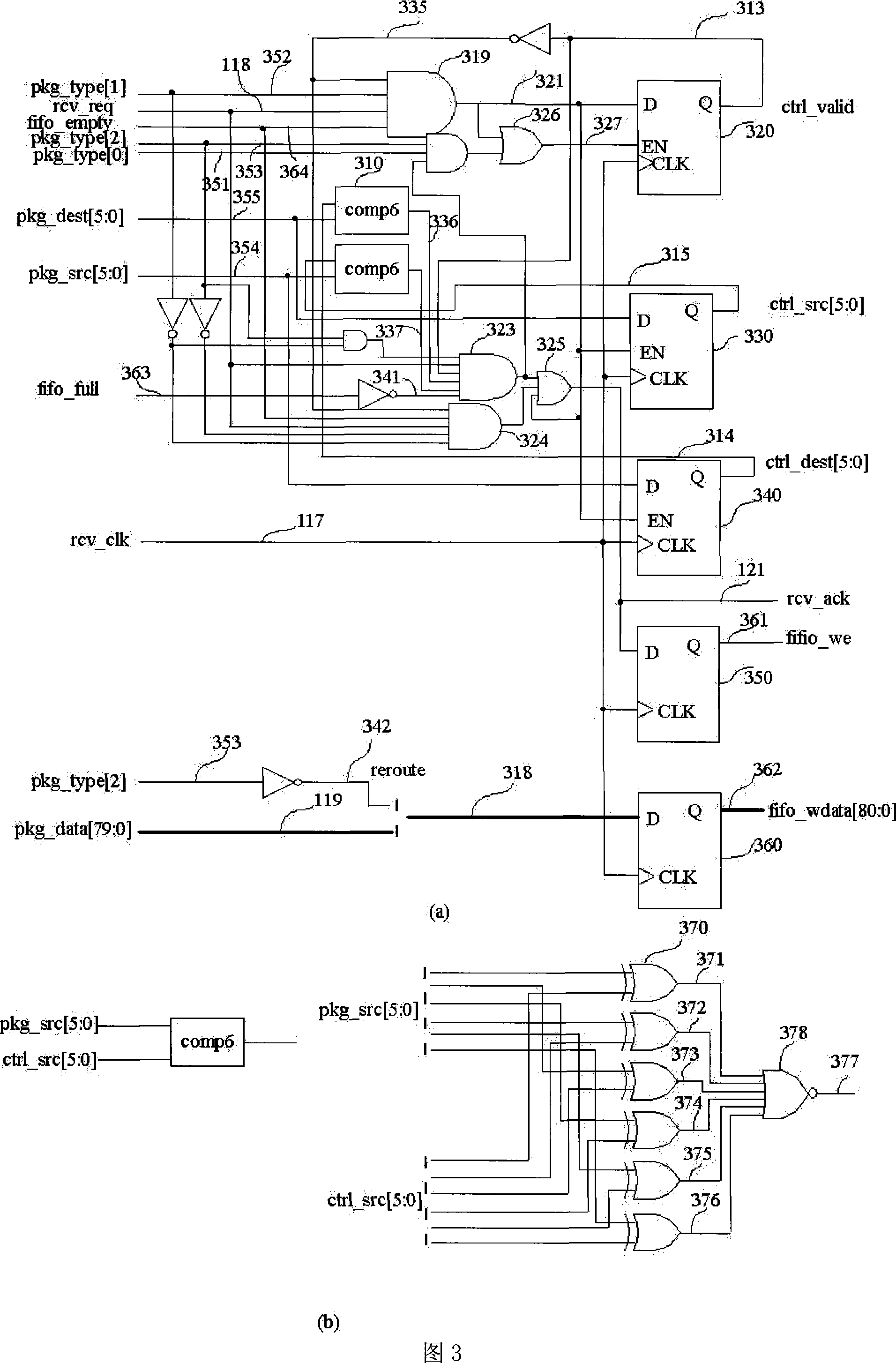

Distributed type testing on-chip network router

Inactive | CN101232456A | Simple hardware structure | Strong scalability | Digital computer details | Data switching networks | Multi processor | Transmission channel

The invention discloses a distributed, testable network-on-chip router, which comprises: a plurality of physical transmission channels, configurable in number, for transmitting physical data; a router configuration channel that is independent of the data transmission network and supports connection testing of the router; a plurality of channel link controllers that respond to input requests and allocate virtual channels; a crossbar switch that provides full connectivity between the input virtual channels and the output channels; a plurality of distributed route controllers, located at the input virtual channels, that determine the forwarding direction of flits from the flit header information in the channel; and a plurality of distributed arbiters, located at the output channels, that decide which input virtual channel owns an output channel when several input virtual channels request it. The router is suited to network-on-chip systems in multiprocessor systems-on-chip and is reliable, efficient, testable, and scalable.

Owner:ZHEJIANG UNIV

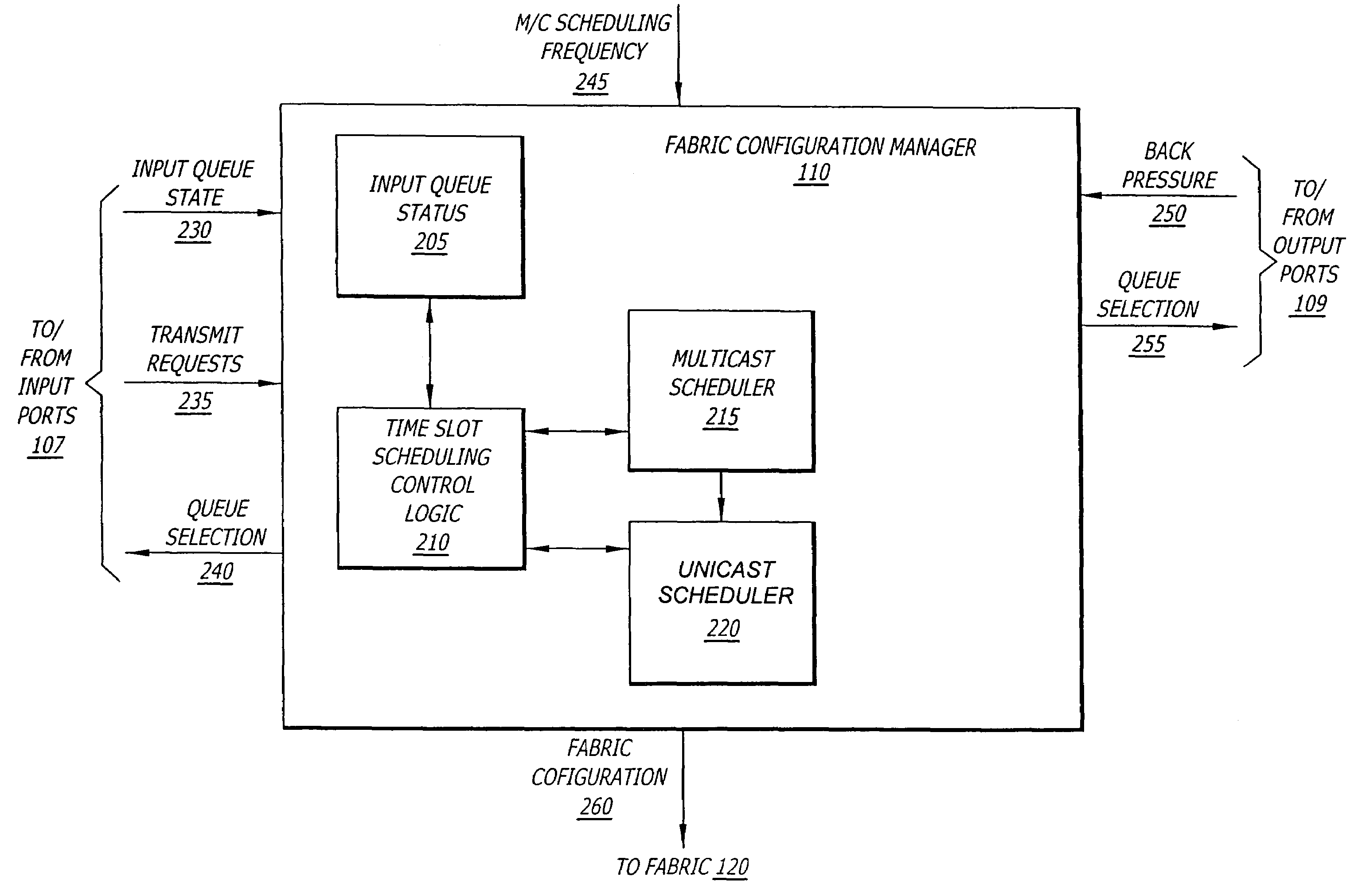

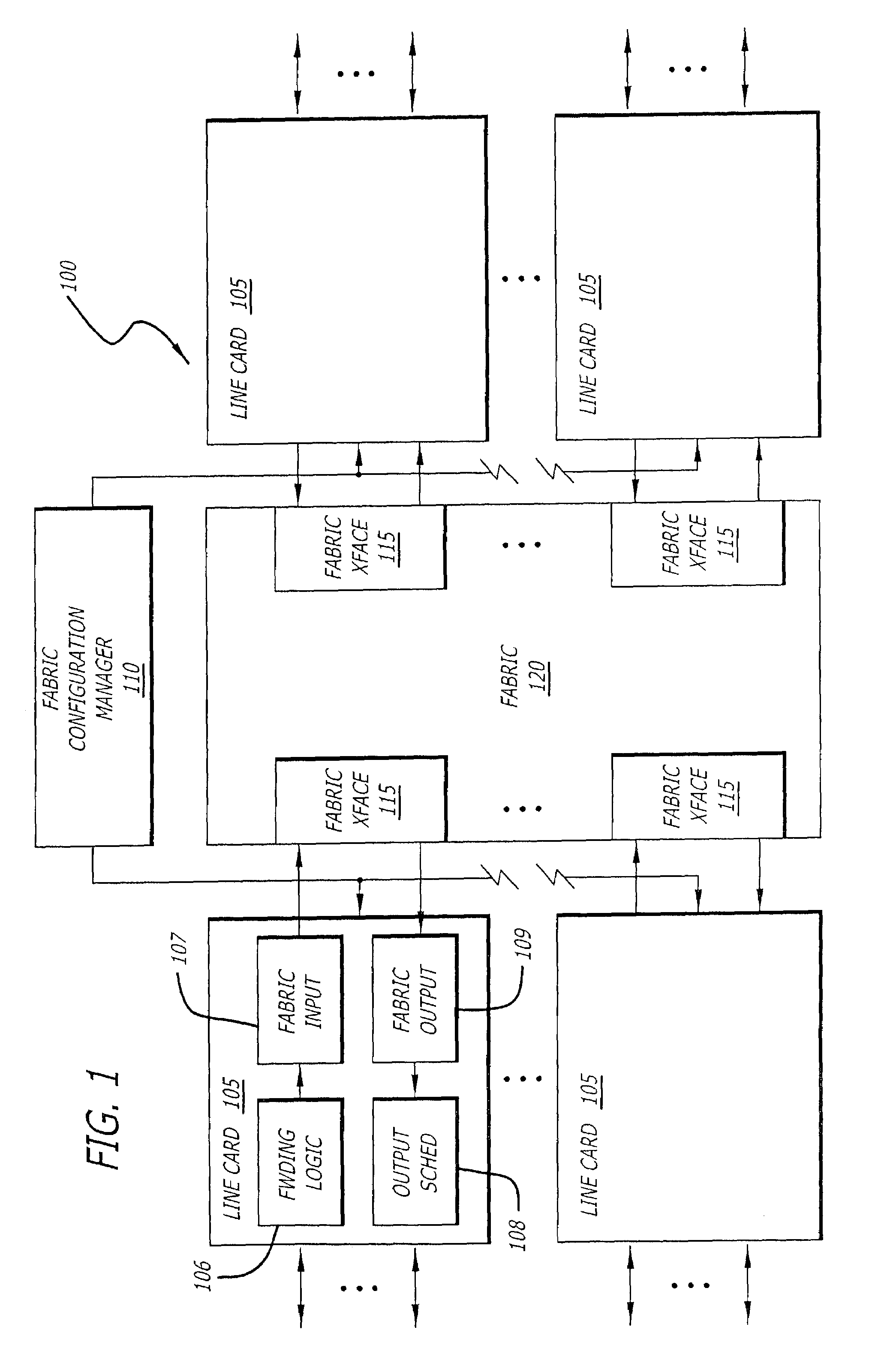

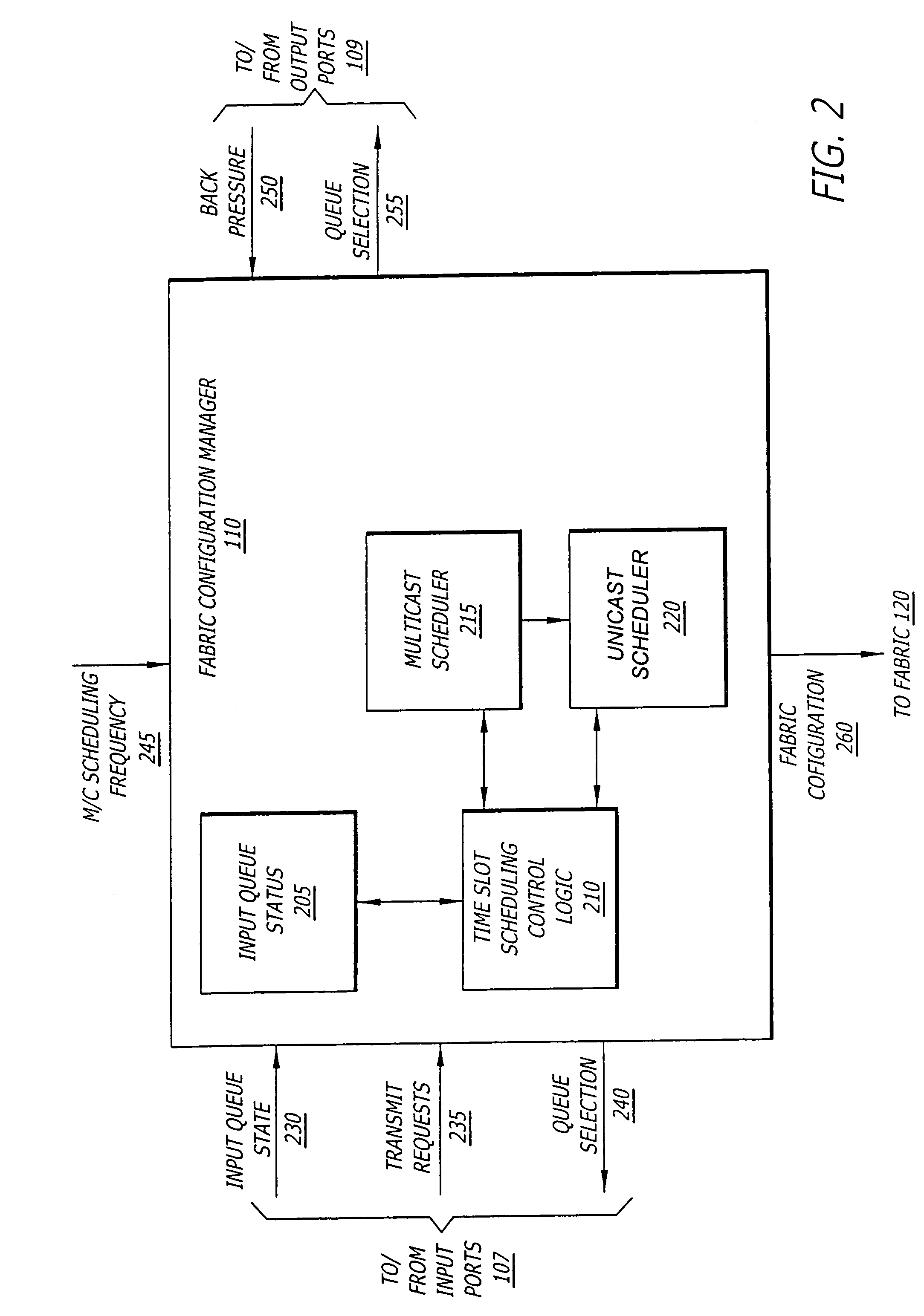

Multicast and unicast scheduling for a network device

Inactive | US7519065B2 | Special service provision for substation | Data switching by path configuration | Cell scheduling | Class of service

A method and apparatus are provided for scheduling unicast and multicast data in an input-queued network device. According to one aspect of the present invention, a combined schedule is created by pipelined staging of multicast and unicast scheduling. Multicast cells are scheduled for transmission among multiple interfaces of a crossbar by performing a multicast cell scheduling cycle for multiple classes of service that are supported by the network device. Then, unicast cells are scheduled for transmission among the interfaces at a lower priority than the previously scheduled multicast cells by performing a unicast cell scheduling cycle for the multiple classes of service using only those interfaces that remain unmatched after completion of the multicast cell scheduling cycle.
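
The staging described in this abstract, multicast first and then unicast on whatever remains unmatched, can be sketched as a simple greedy matcher. This is an illustrative simplification under stated assumptions: it ignores classes of service and pipelining, serves requests in list order, and lets a multicast cell take whichever of its requested outputs are still free. The function name `schedule` and the request formats are hypothetical.

```python
def schedule(multicast_reqs, unicast_reqs):
    """Two-stage matching: multicast first, then unicast on leftover ports.

    multicast_reqs: list of (input, set_of_outputs)
    unicast_reqs:   list of (input, output)
    Returns a dict mapping each matched output to its input.
    """
    match = {}            # output -> input
    busy_inputs = set()

    # Stage 1: multicast cells claim whichever requested outputs are still free.
    for inp, outs in multicast_reqs:
        if inp in busy_inputs:
            continue
        free = [o for o in sorted(outs) if o not in match]
        if free:
            busy_inputs.add(inp)
            for o in free:
                match[o] = inp

    # Stage 2: unicast cells may use only inputs and outputs left unmatched.
    for inp, out in unicast_reqs:
        if inp not in busy_inputs and out not in match:
            busy_inputs.add(inp)
            match[out] = inp
    return match


result = schedule(
    multicast_reqs=[(0, {0, 1})],
    unicast_reqs=[(1, 1), (2, 2), (0, 3)],
)
```

In this run the unicast request for output 1 loses to the multicast cell, and input 0 cannot also send a unicast cell because it is already matched.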

Owner:AVAYA MANAGEMENT LP

System and method for synchronizing switch fabric backplane link management credit counters

Inactive | US7304987B1 | Multiplex system selection arrangements | Data switching by path configuration | Packet communication | Structure of Management Information

A system and method are provided for resynchronizing backplane link management credit counters in a packet communications switch fabric. The method comprises: at an input port card ingress port, accepting information packets including cells and cell headers with destination information; modifying the destination information in the received cell headers; routing information packets between the input port card and output port cards on backplane data links through an intervening crossbar; at the input port card, maintaining a credit counter for each output port card channel; decrementing the counter in response to transmitting cells from the input port card; generating credits in response to transmitting cells from an output port card channel; sending the generated credits to increment the counter, using the modified destination information; and, using the generated credit flow to resynchronize the credit counter.

Owner:QUALCOMM INC
