2253 results about "Instruction analysis" patented technology

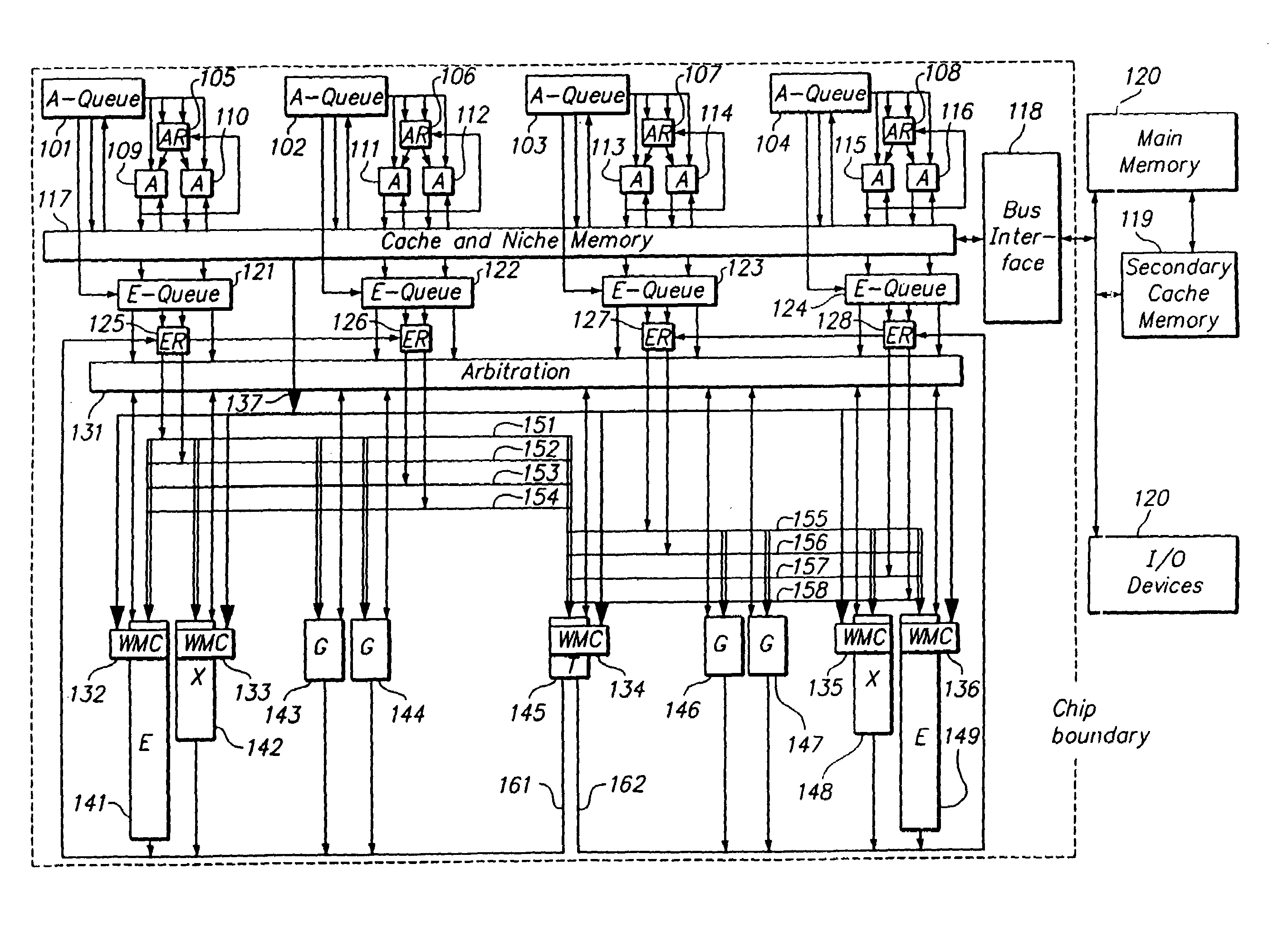

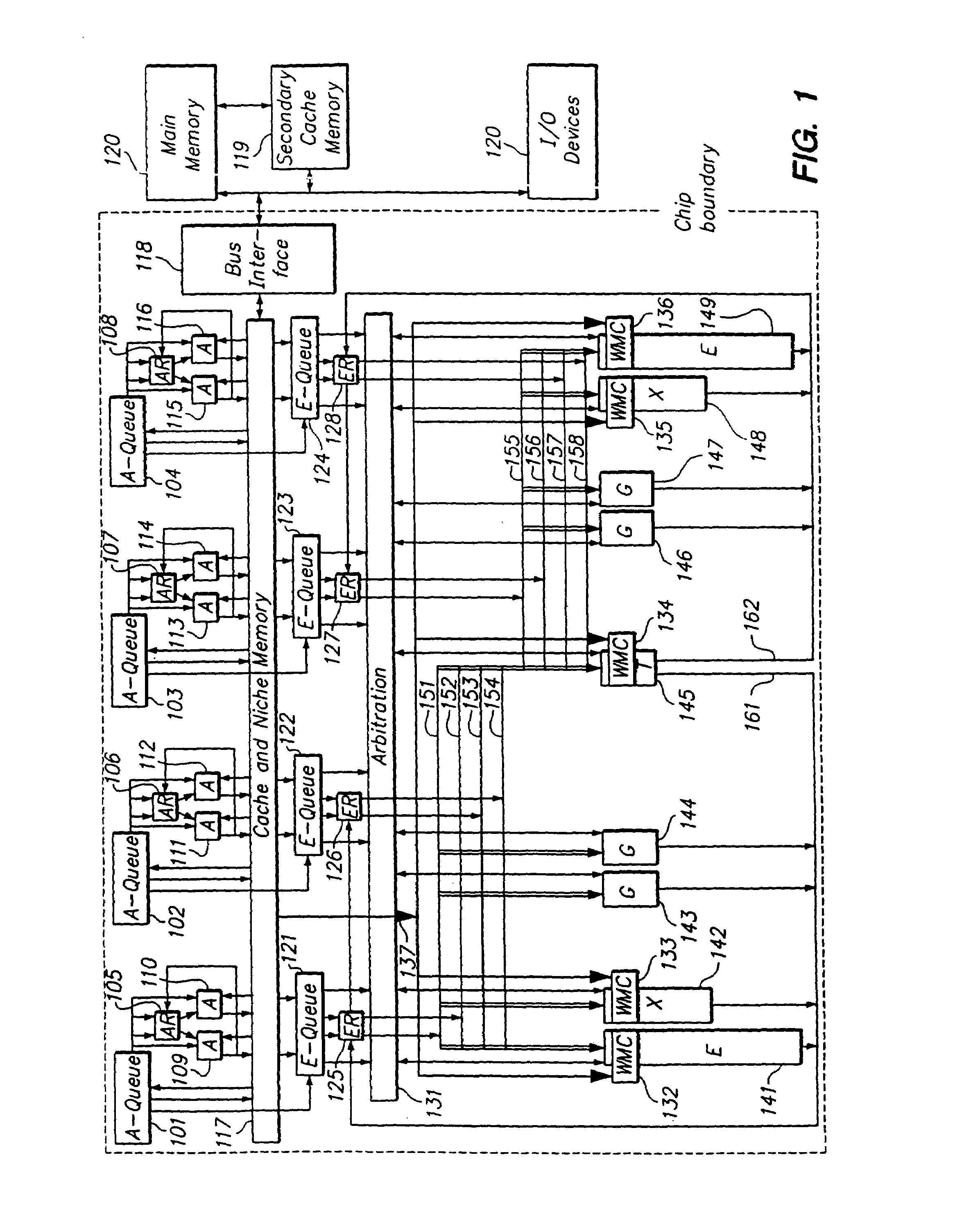

Processor-cache system and method

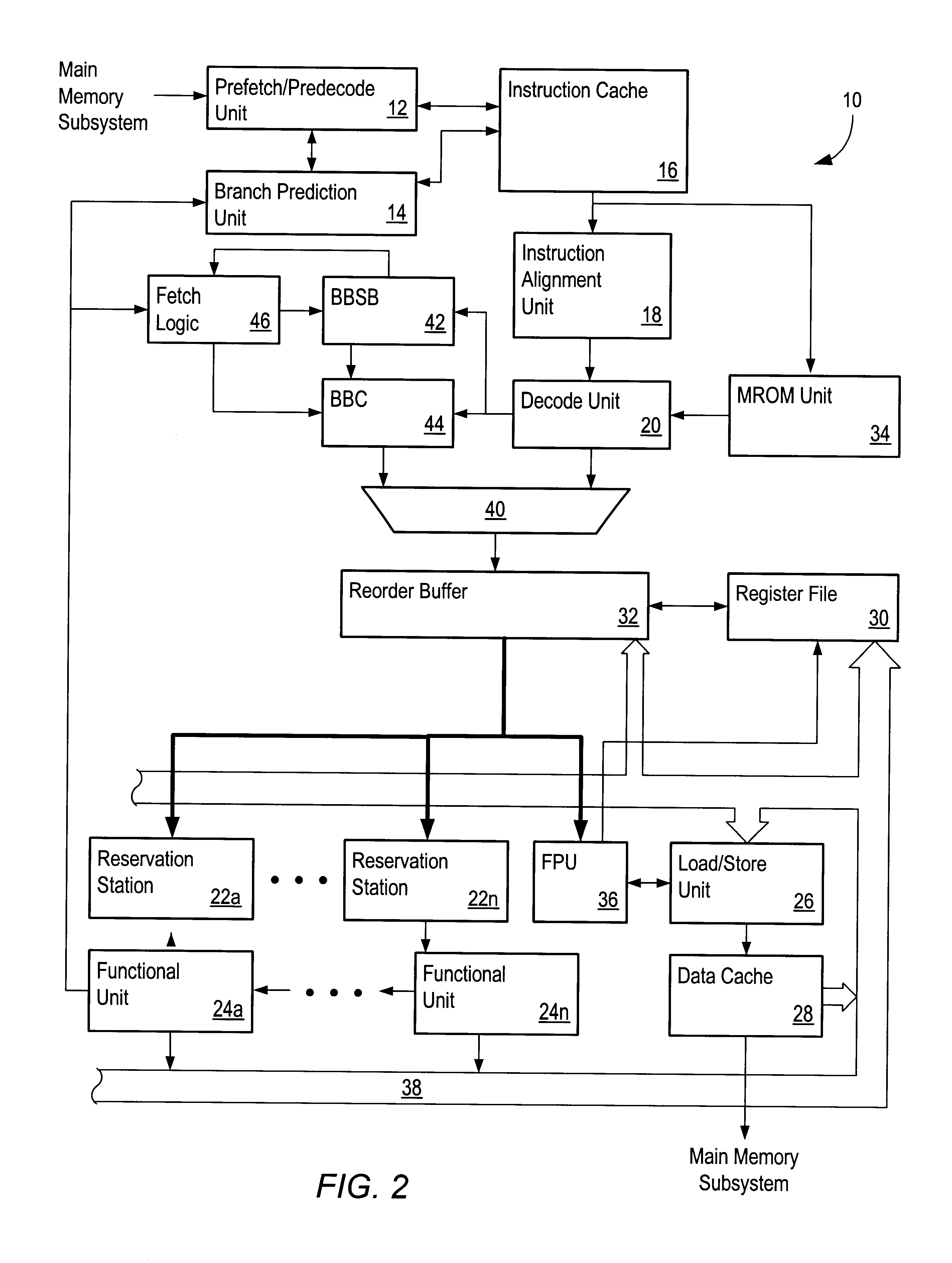

Active | US9047193B2 | Shorten the counting process | Efficient and uniform structure | Energy efficient ICT | Register arrangements | Address generation unit | Processor register

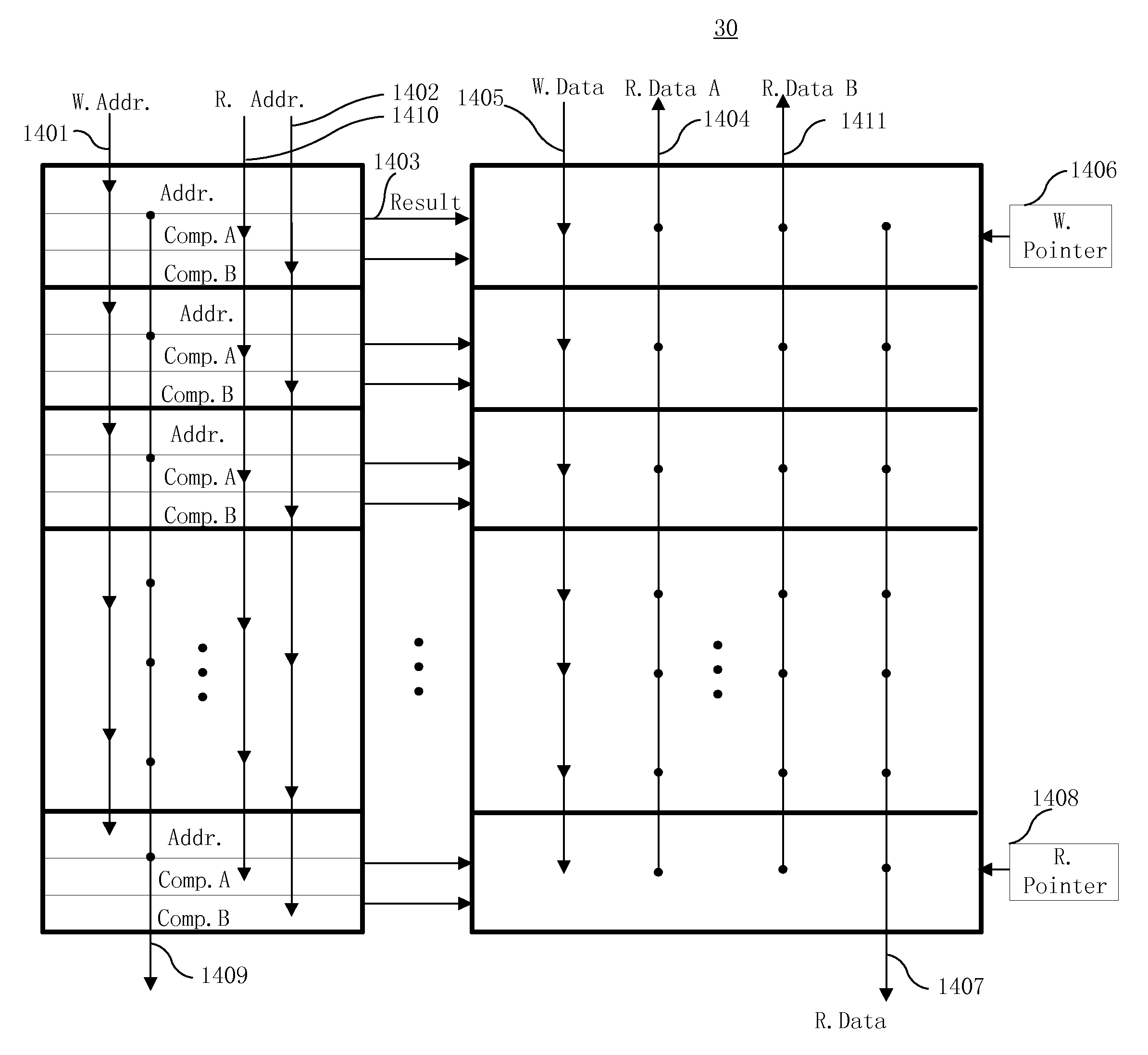

A digital system is provided. The digital system includes an execution unit, a level-zero (L0) memory, and an address generation unit. The execution unit is coupled to a data memory containing data to be used in operations of the execution unit. The L0 memory is coupled between the execution unit and the data memory and configured to receive a part of the data in the data memory. The address generation unit is configured to generate address information for addressing the L0 memory. Further, the L0 memory provides at least two operands of a single instruction from the part of the data to the execution unit directly, without loading the at least two operands into one or more registers, using the address information from the address generation unit.

Owner:SHANGHAI XINHAO MICROELECTRONICS

Microprocessors

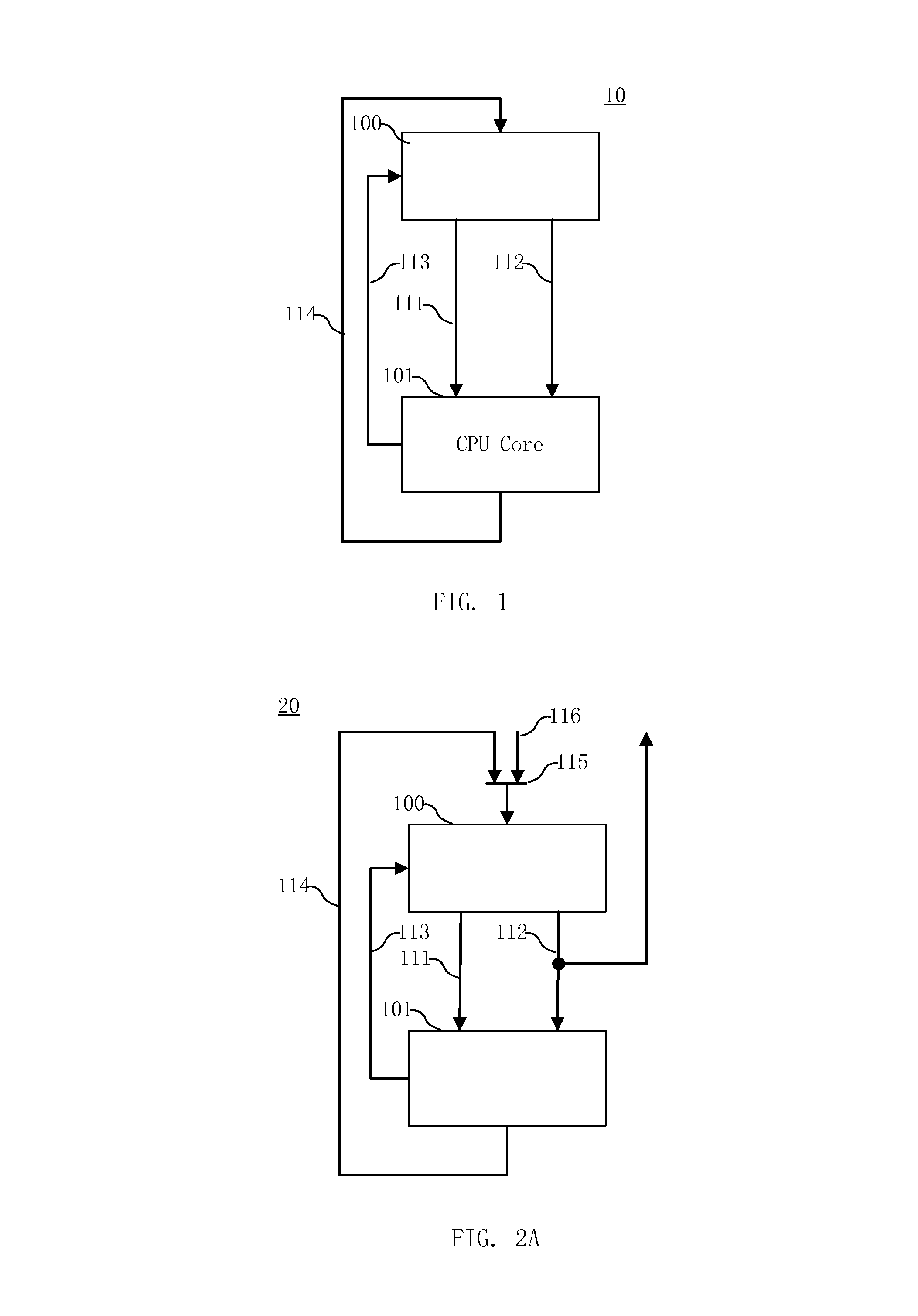

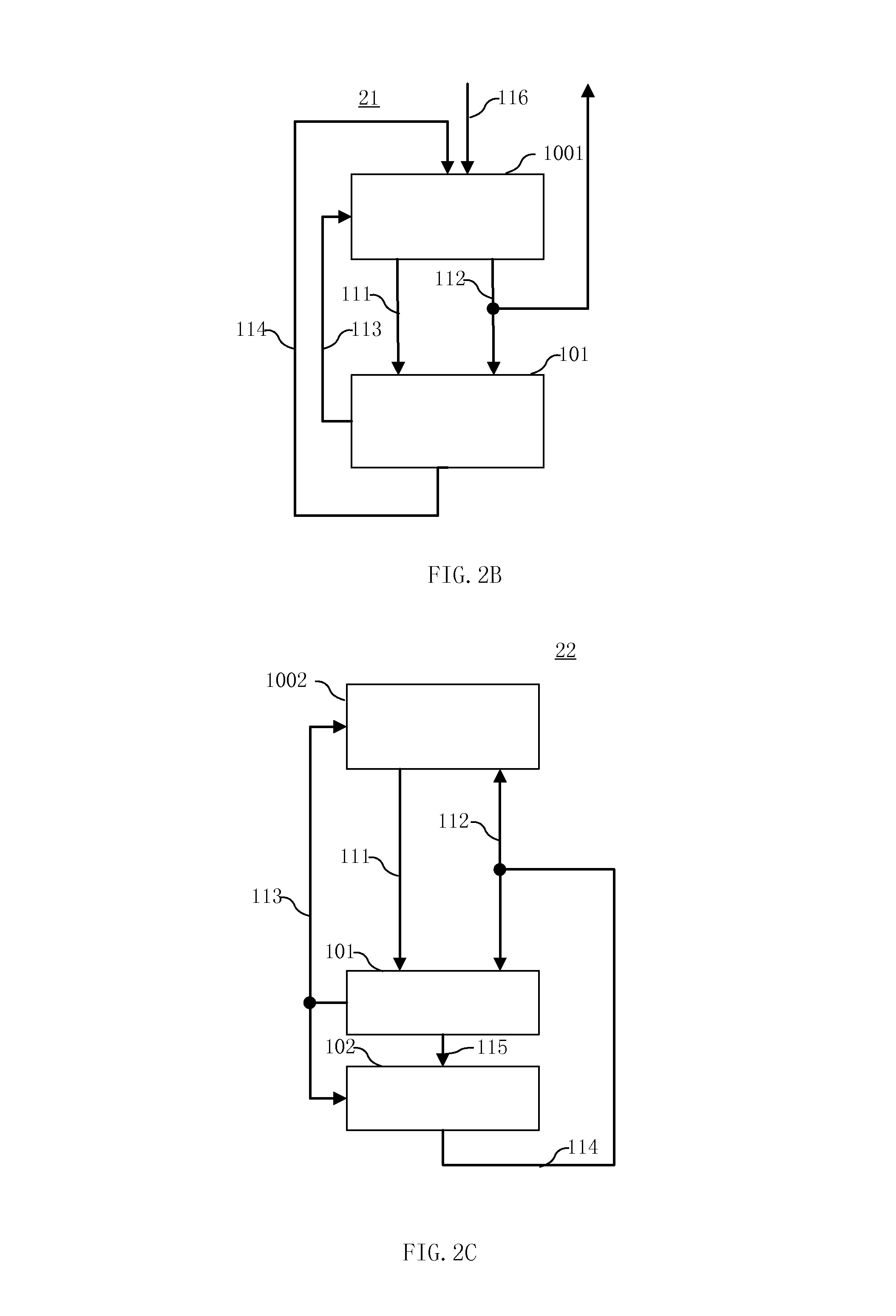

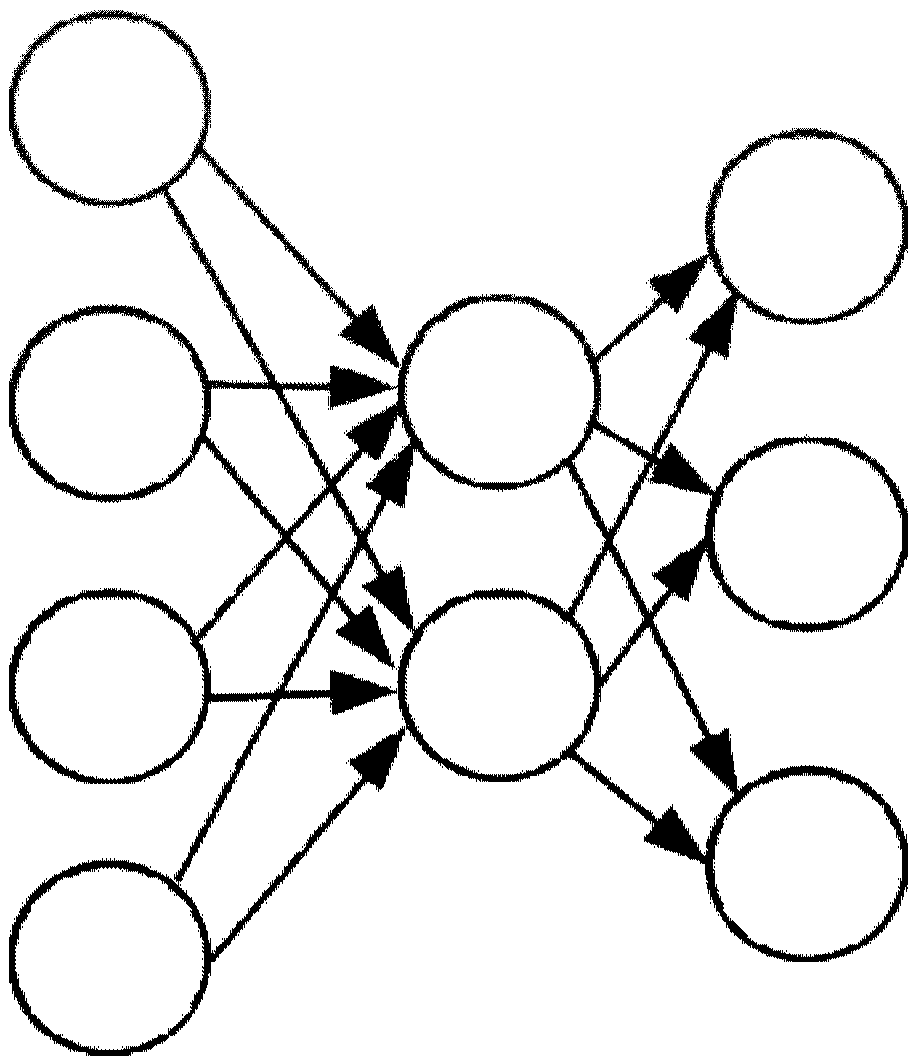

A processor (100) is provided that is a programmable fixed point digital signal processor (DSP) with variable instruction length, offering both high code density and easy programming. Architecture and instruction set are optimized for low power consumption and high efficiency execution of DSP algorithms, such as for wireless telephones, as well as pure control tasks. The processor includes an instruction buffer unit (106), a program flow control unit (108), an address / data flow unit (110), a data computation unit (112), and multiple interconnecting busses. Dual multiply-accumulate blocks improve processing performance. A memory interface unit (104) provides parallel access to data and instruction memories. The instruction buffer is operable to buffer single and compound instructions pending execution thereof. A decode mechanism is configured to decode instructions from the instruction buffer. The use of compound instructions enables effective use of the bandwidth available within the processor. A soft dual memory instruction can be compiled from separate first and second programmed memory instructions. Instructions can be conditionally executed or repeatedly executed. Bit field processing and various addressing modes, such as circular buffer addressing, further support execution of DSP algorithms. The processor includes a multistage execution pipeline with pipeline protection features. Various functional modules can be separately powered down to conserve power. The processor includes emulation and code debugging facilities with support for cache analysis.

Owner:TEXAS INSTR INC

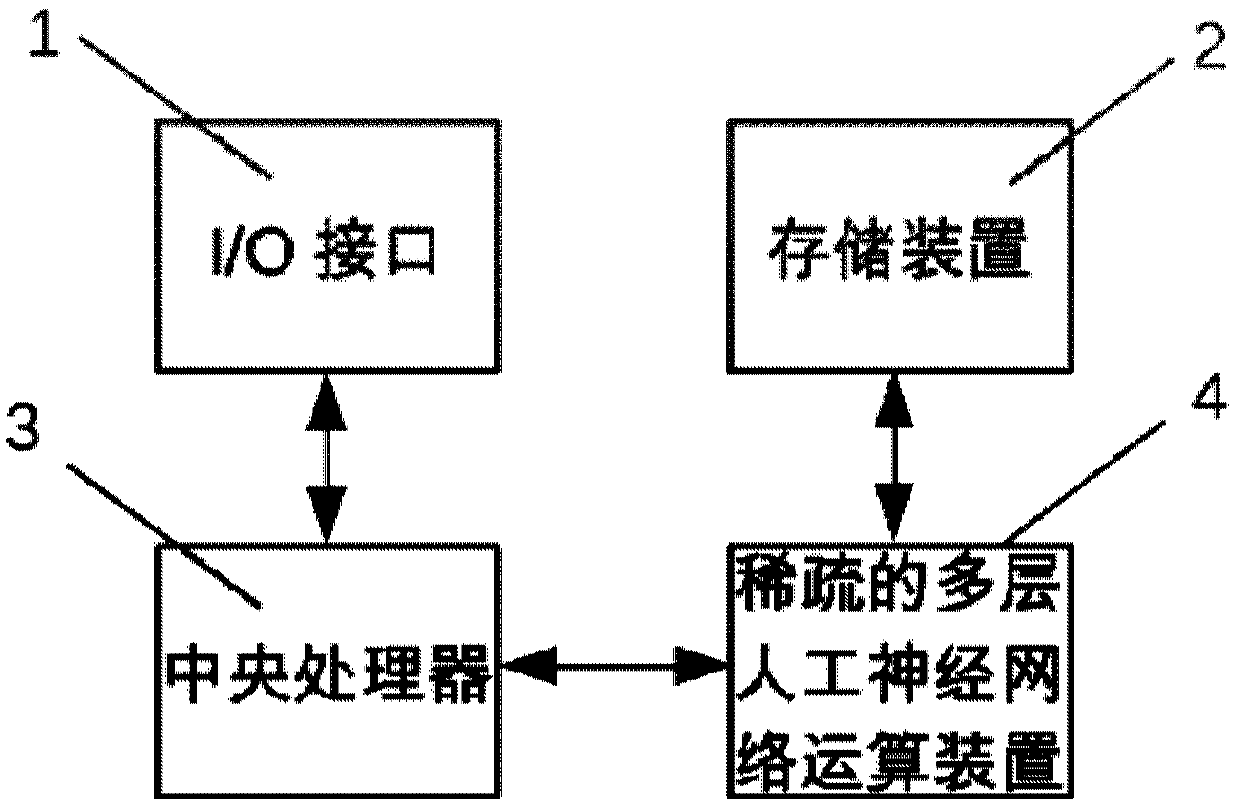

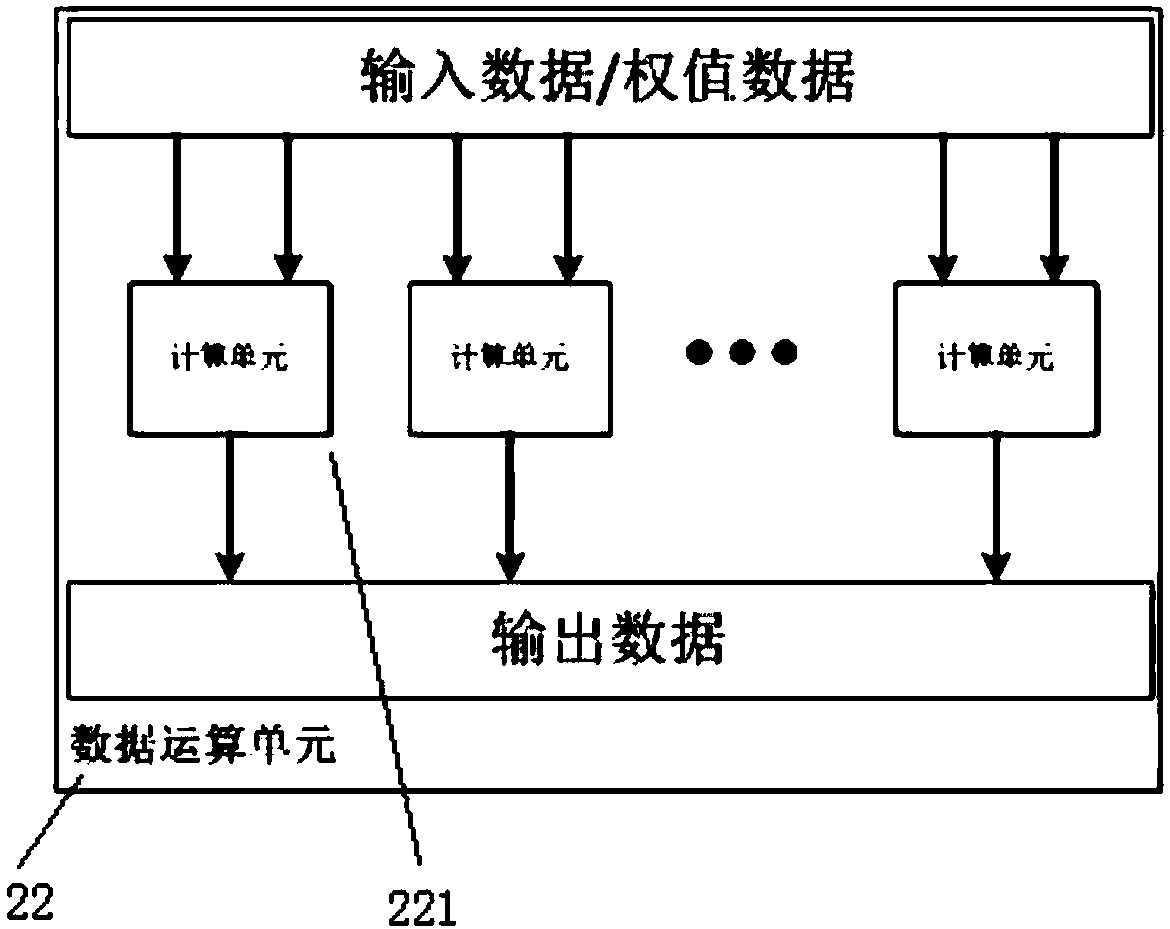

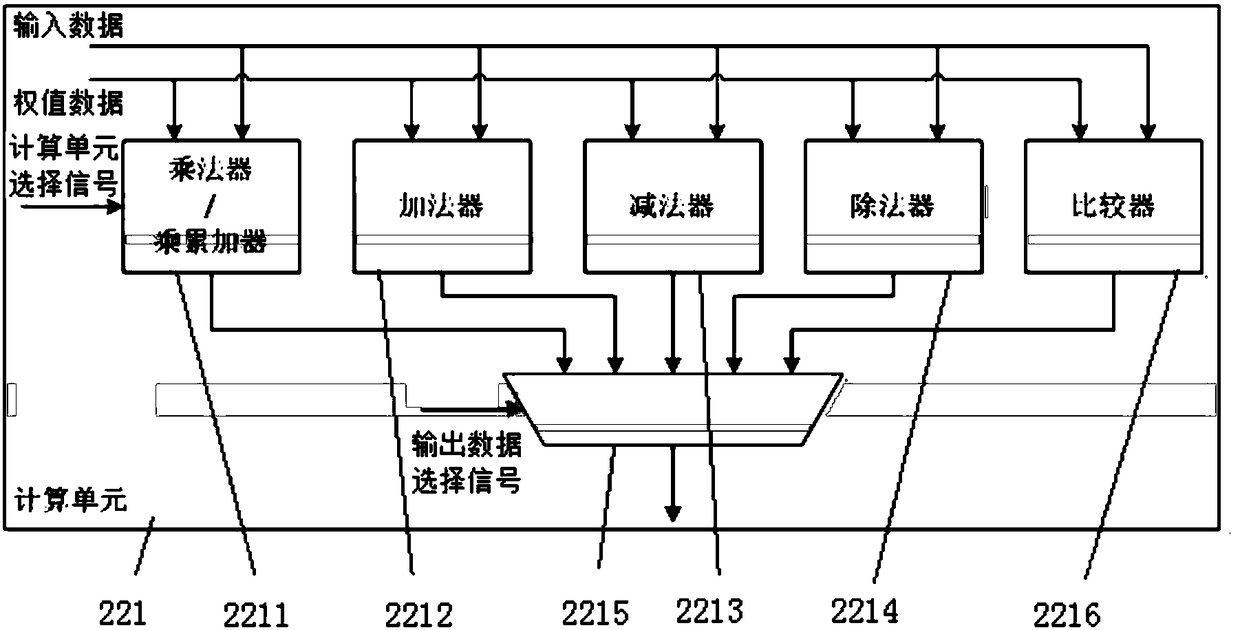

Artificial neural network calculating device and method for sparse connection

Active | CN105512723A | Solve the problem of insufficient computing performance and high front-end decoding overhead | Add support | Memory architecture accessing/allocation | Digital data processing details | Activation function | Memory bandwidth

An artificial neural network calculating device for sparse connection comprises a mapping unit that converts input data into a storage format in which input neurons and weights correspond one to one, a storage unit that stores data and instructions, and an operation unit that executes the corresponding operations on the data according to the instructions. The operation unit mainly executes three steps: in the first step, the input neurons are multiplied by the weight data; in the second step, an adder tree operation is executed, in which the weighted output neurons from the first step are added level by level through an adder tree, or a bias is added to the output neurons to obtain biased output neurons; in the third step, an activation function is applied to obtain the final output neurons. The device addresses the insufficient computing performance of CPUs and GPUs and their high front-end decoding overhead, effectively improves support for multi-layer artificial neural network algorithms, and mitigates the problem of memory bandwidth becoming a bottleneck for the performance of multi-layer artificial neural network computation and its training algorithms.

Owner:CAMBRICON TECH CO LTD
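
The three-step operation in the sparse-connection abstract above (multiply, adder tree, activation) can be illustrated with a minimal software sketch. It is not the patented hardware: the function name `sparse_neuron_output`, the `(input_index, weight)` connection format, and the choice of a sigmoid activation are all assumptions made for illustration.

```python
import math


def sparse_neuron_output(inputs, connections, bias=0.0):
    """Compute one output neuron from a sparse set of connections.

    `connections` is a hypothetical list of (input_index, weight) pairs,
    one per connection that actually exists.
    """
    # Mapping step: pair each connected input neuron with its weight.
    pairs = [(inputs[i], w) for i, w in connections]

    # Step 1: multiply input neurons by their weights.
    products = [x * w for x, w in pairs]

    # Step 2: adder tree, summing neighbours pairwise, level by level.
    level = products or [0.0]
    while len(level) > 1:
        nxt = [level[k] + level[k + 1] for k in range(0, len(level) - 1, 2)]
        if len(level) % 2:
            nxt.append(level[-1])  # odd element passes through to the next level
        level = nxt
    total = level[0] + bias

    # Step 3: activation function (sigmoid is an assumption here).
    return 1.0 / (1.0 + math.exp(-total))
```

Only the connections that exist are visited, which is the point of the one-to-one input/weight mapping: absent connections cost neither a multiply nor a memory access.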

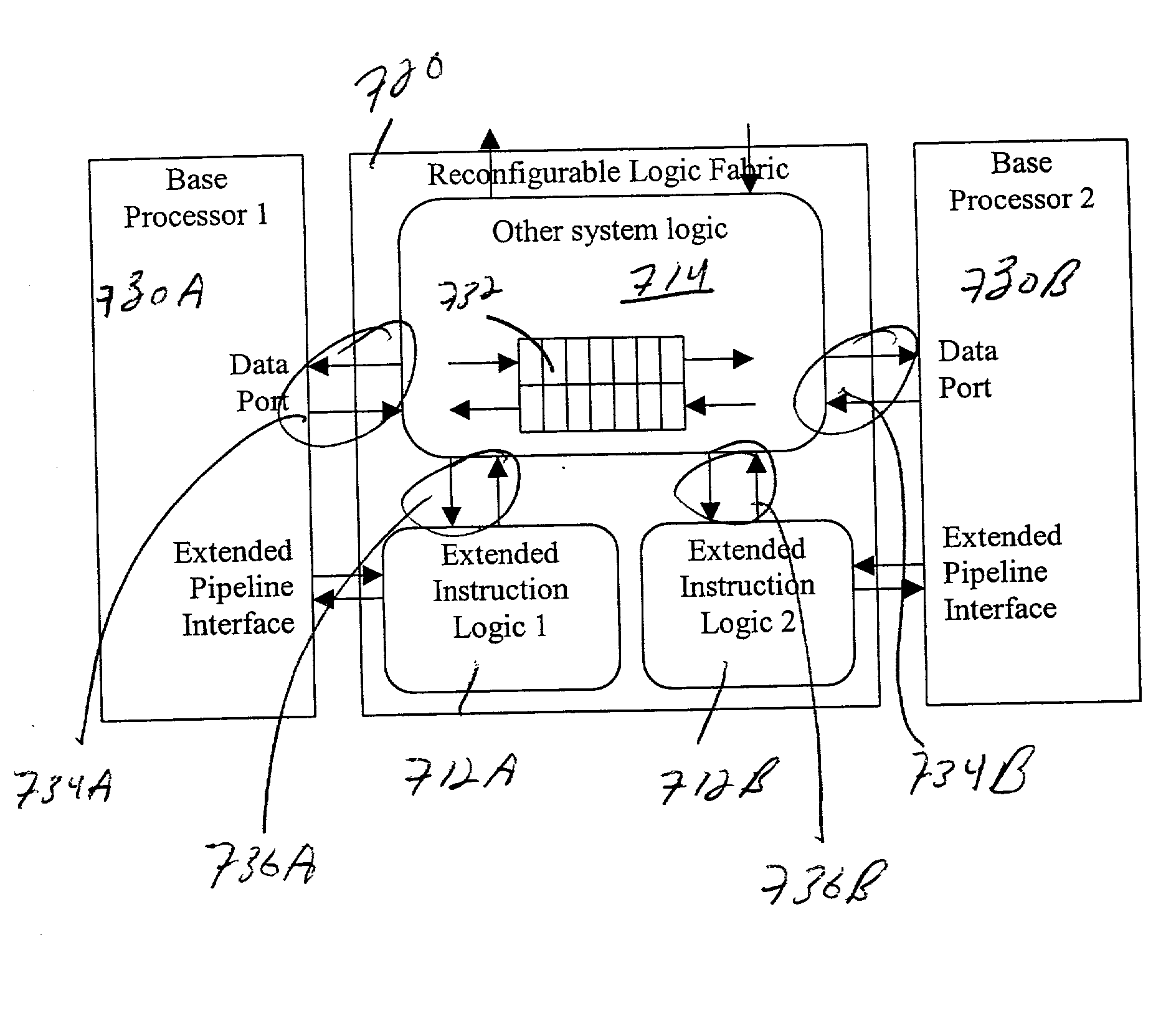

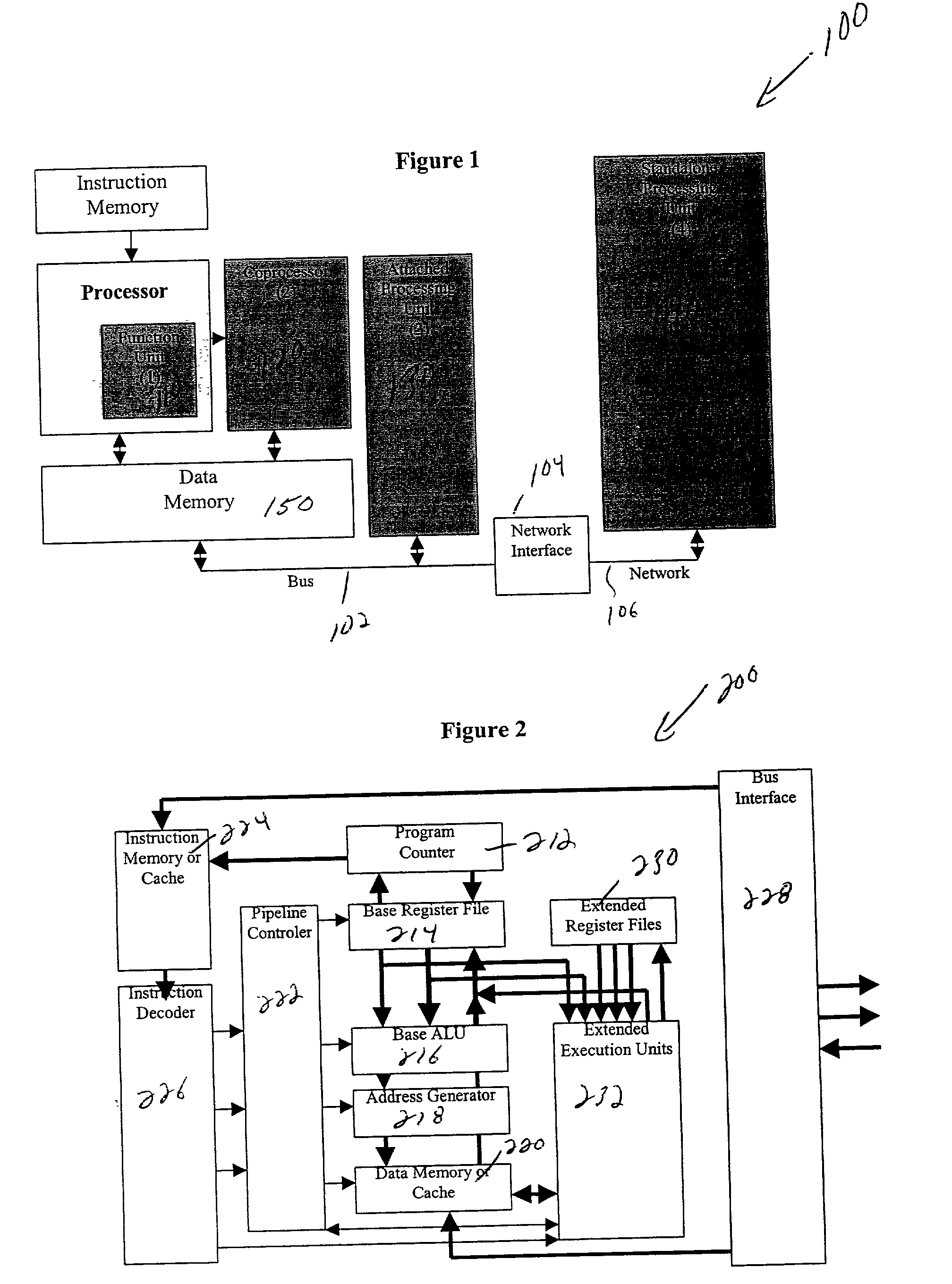

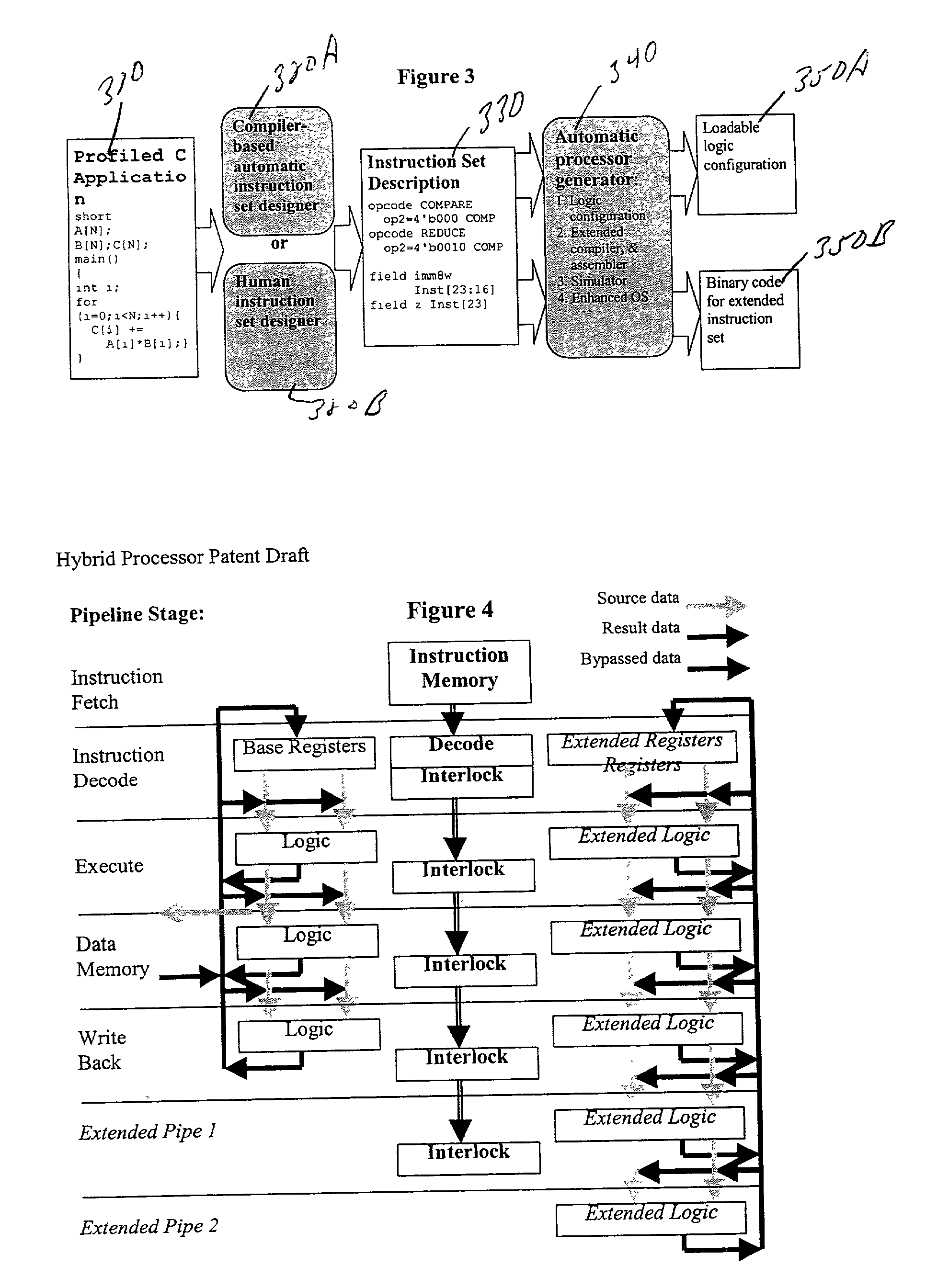

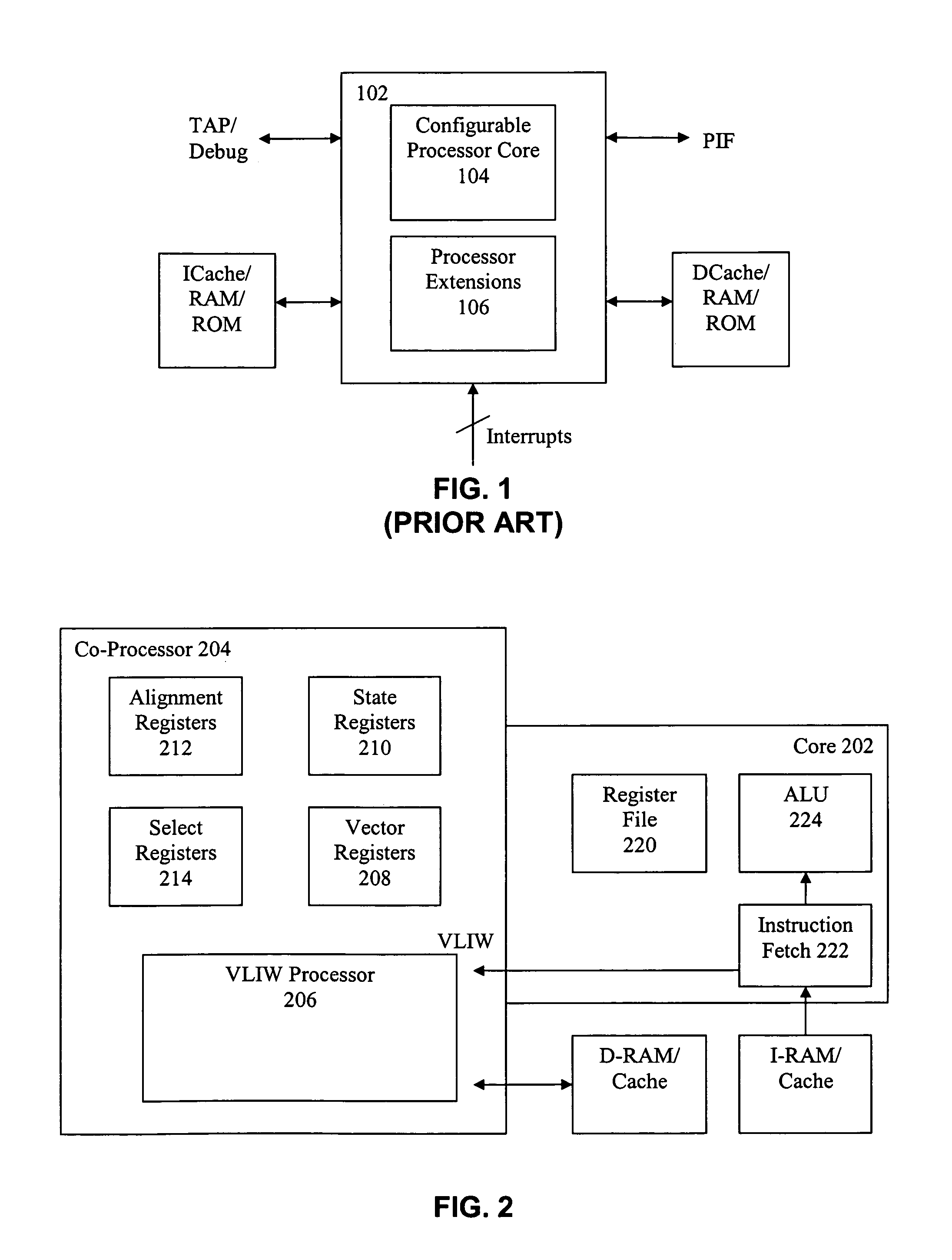

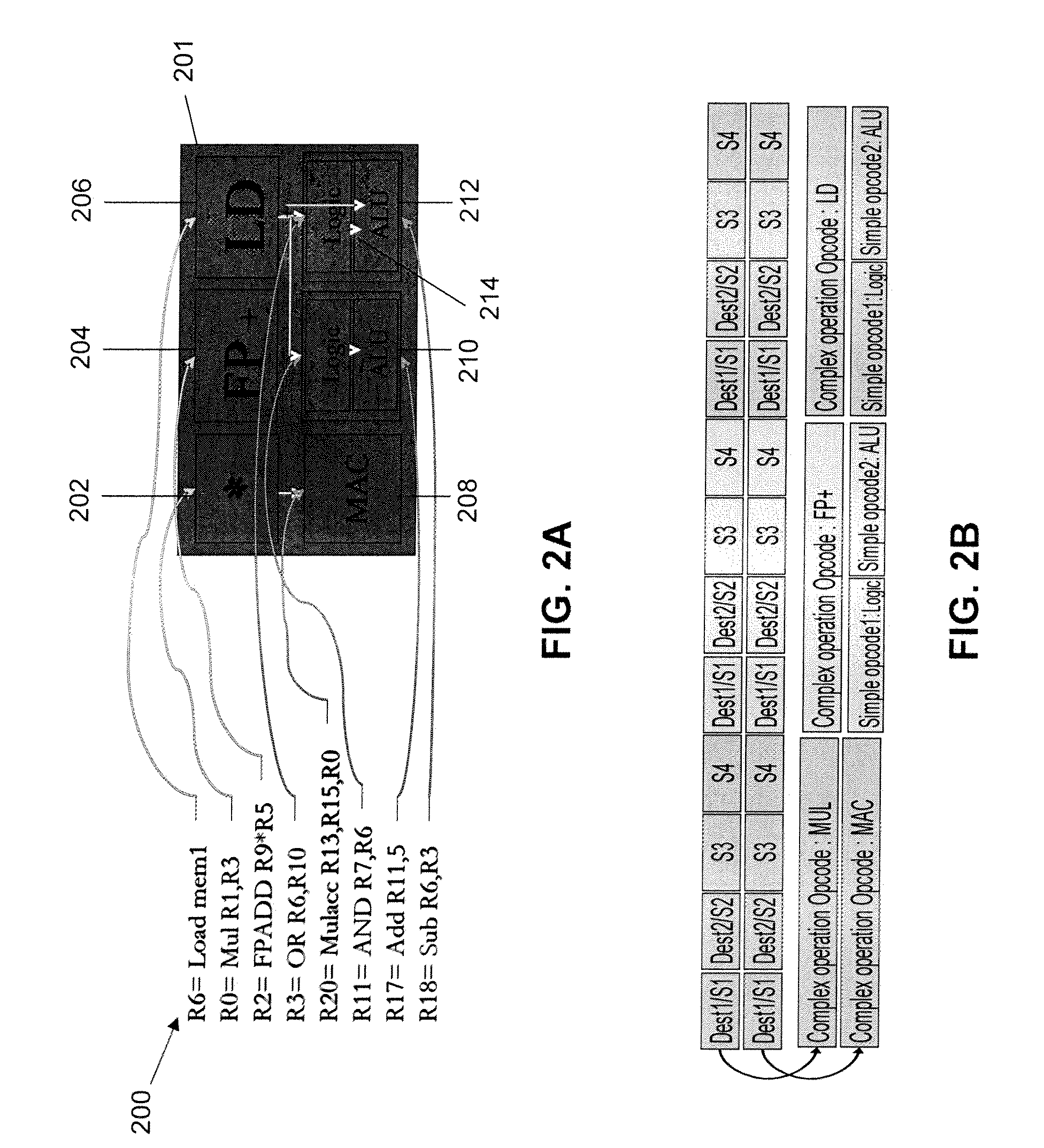

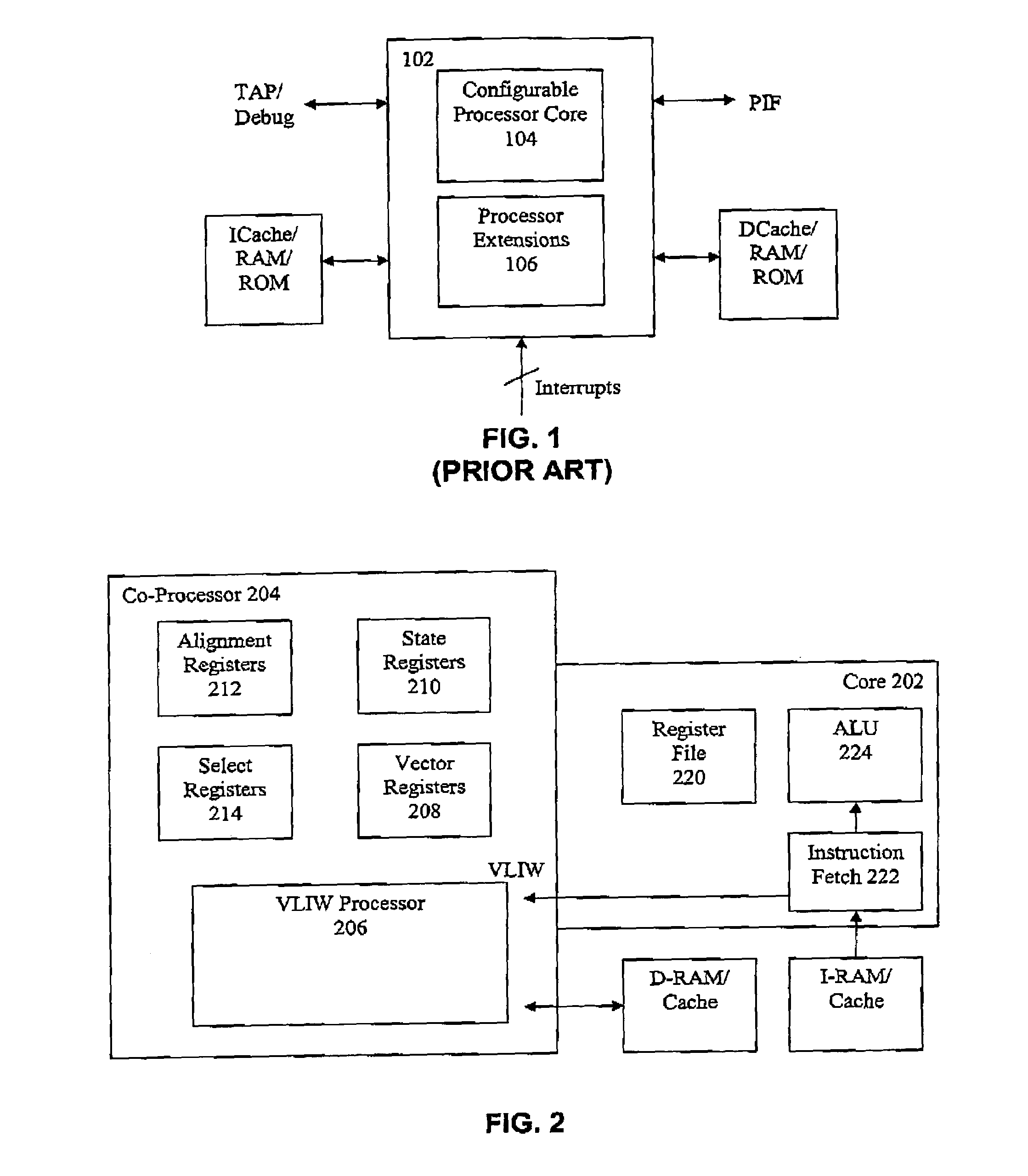

High-performance hybrid processor with configurable execution units

Inactive | US20050166038A1 | High bandwidth | Flexibility | Instruction analysis | Concurrent instruction execution | High bandwidth | Latency (engineering)

A new general method for building hybrid processors achieves higher performance in applications by allowing more powerful, tightly-coupled instruction set extensions to be implemented in reconfigurable logic. New instruction set configurations can be discovered and designed by automatic and semi-automatic methods. Improved reconfigurable execution units support deep pipelining, additional registers and register files, compound instructions with many source and destination registers, and wide data paths. New interface methods allow lower-latency, higher-bandwidth connections between hybrid processors and other logic.

Owner:TENSILICA

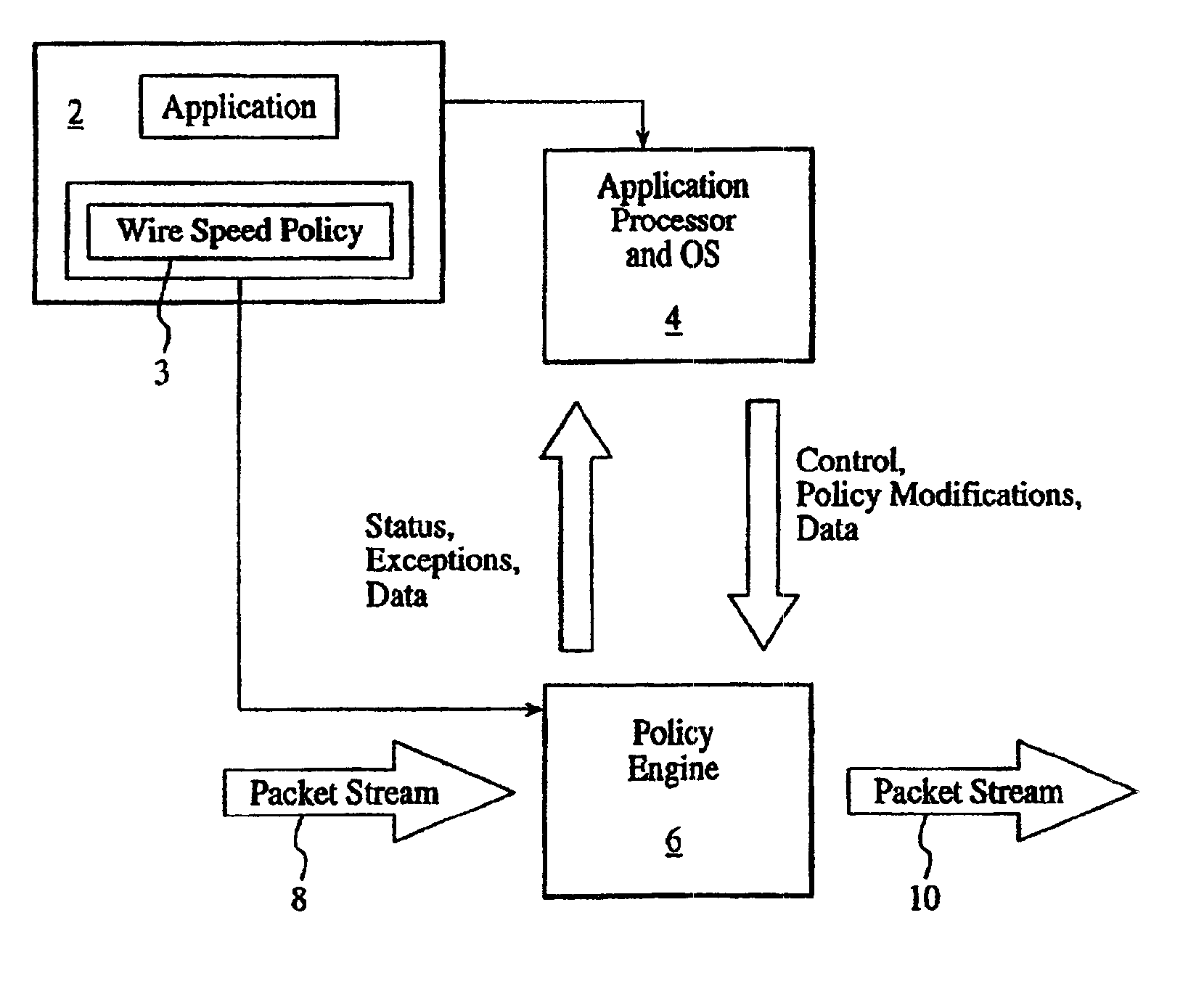

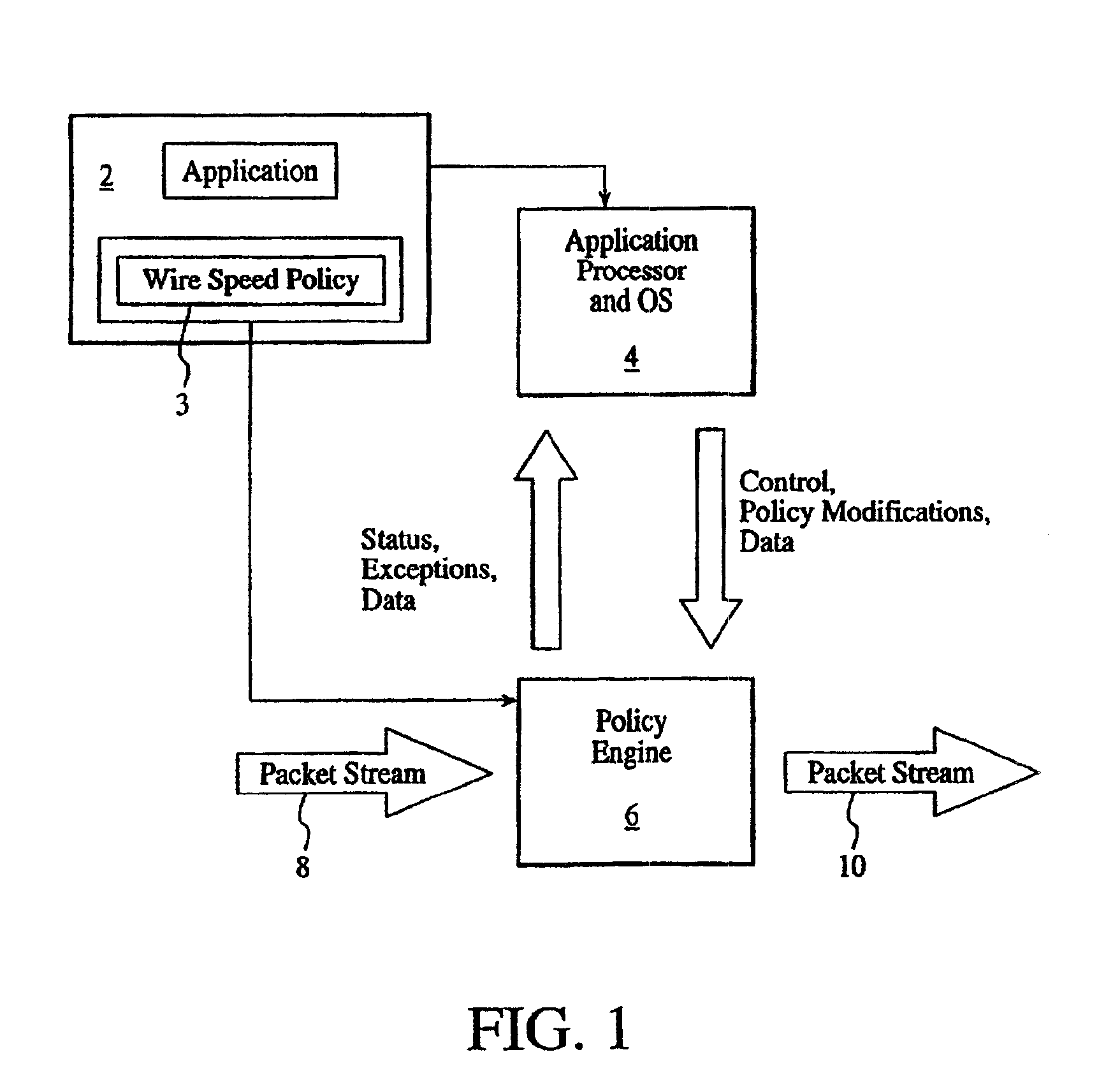

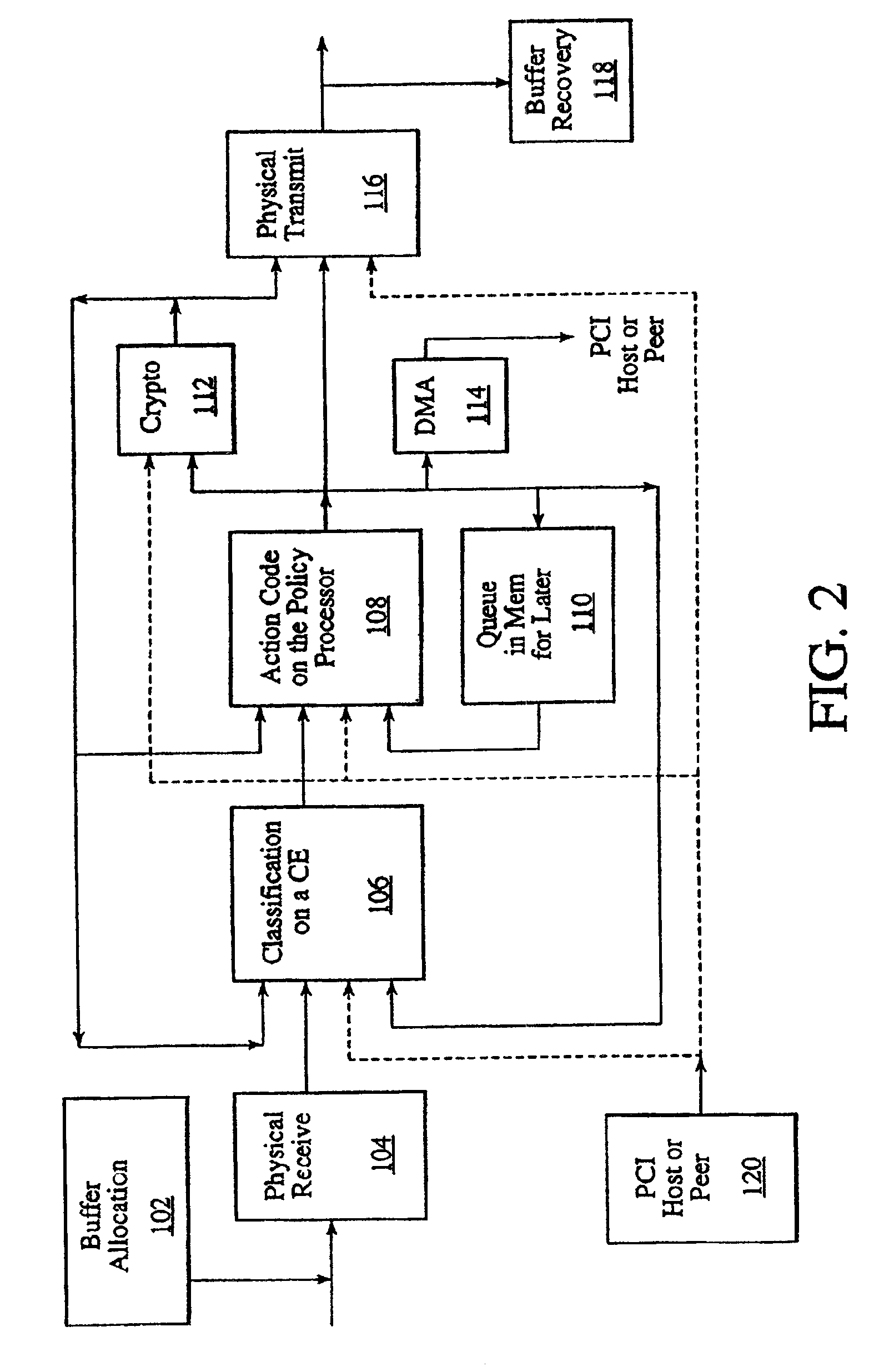

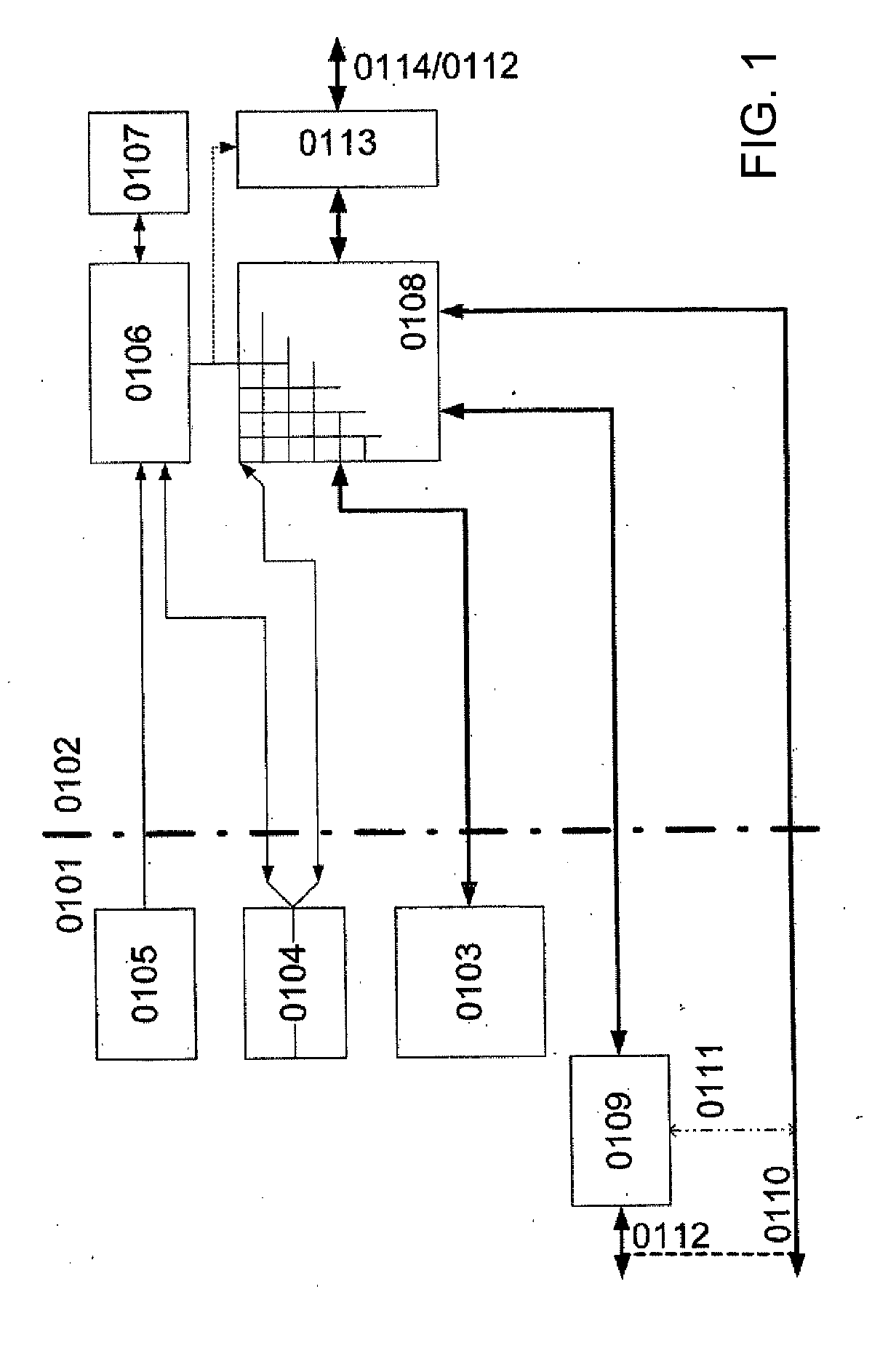

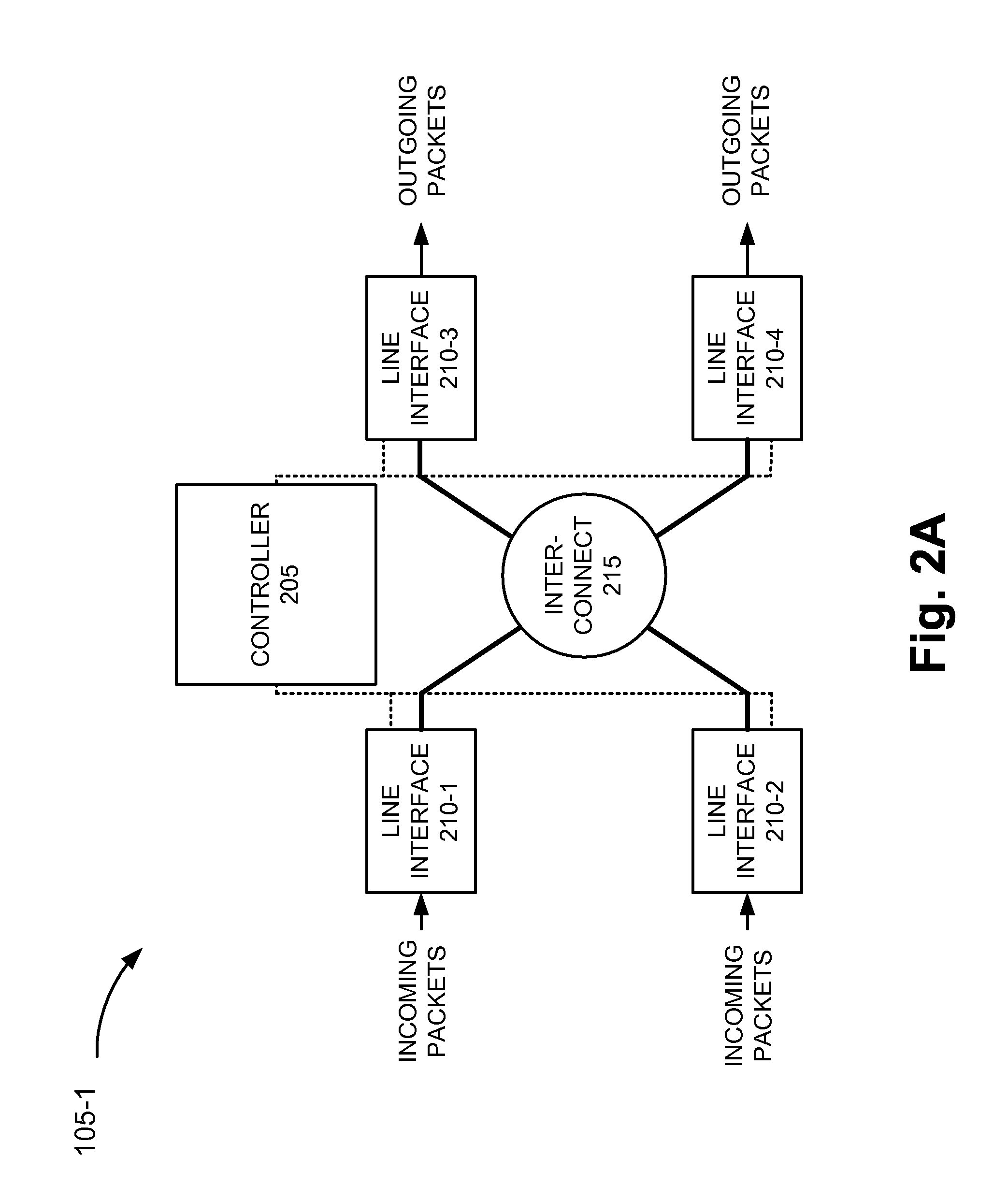

Programmable system for processing a partitioned network infrastructure

Inactive | US6859841B2 | Many times | Minimize movement | Conditional code generation | Instruction analysis | General purpose | Network interface controller

The present invention relates to a general-purpose programmable packet-processing platform for accelerating network infrastructure applications which have been structured so as to separate the stages of classification and action. Network packet classification, execution of actions upon those packets, management of buffer flow, encryption services, and management of Network Interface Controllers are accelerated through the use of a multiplicity of specialized modules. A language interface is defined for specifying both stateless and stateful classification of packets and to associate actions with classification results in order to efficiently utilize these specialized modules.

Owner:INTEL CORP

Apparatus and Method for Processing Complex Instruction Formats in a Multi-Threaded Architecture Supporting Various Context Switch Modes and Virtualization Schemes

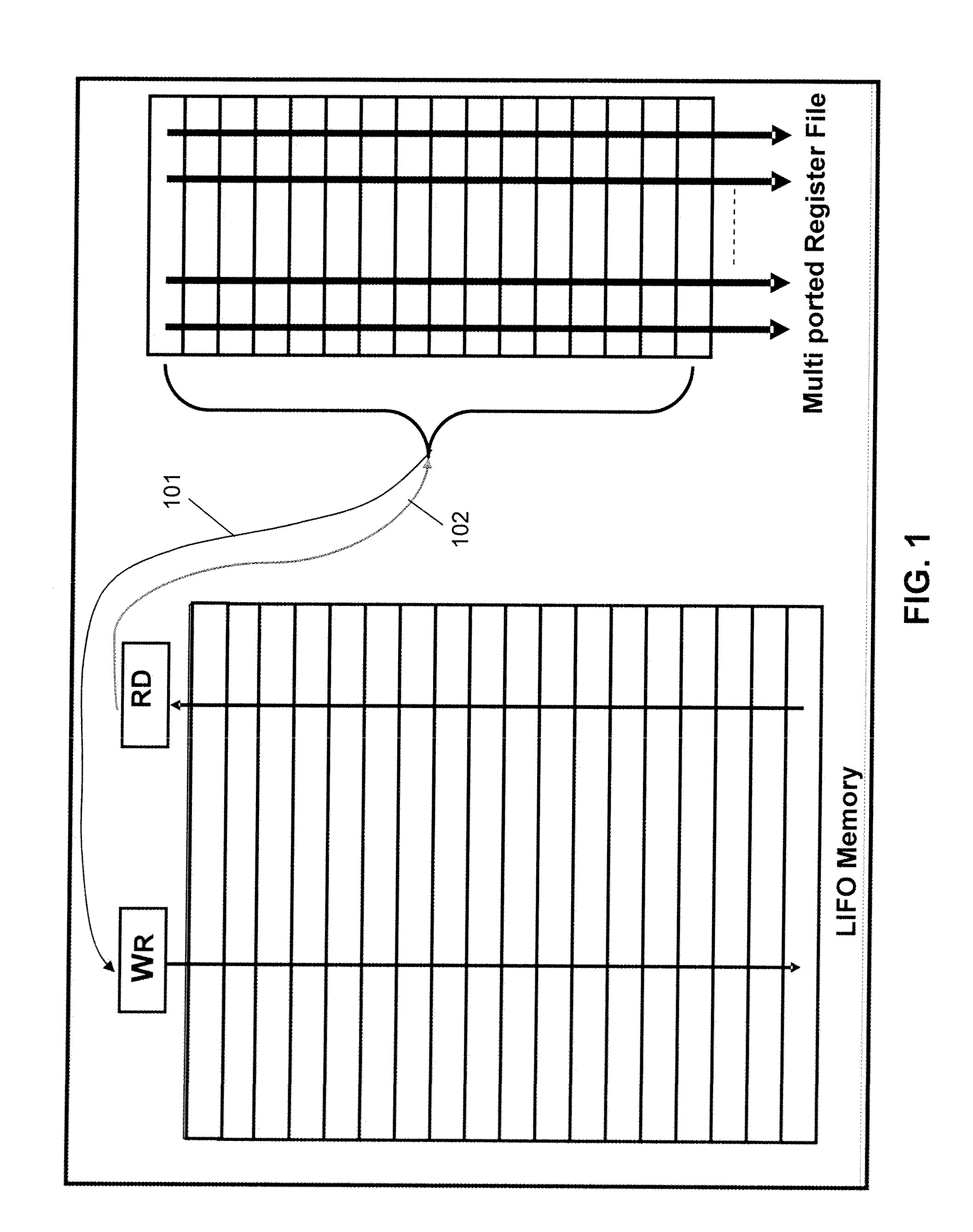

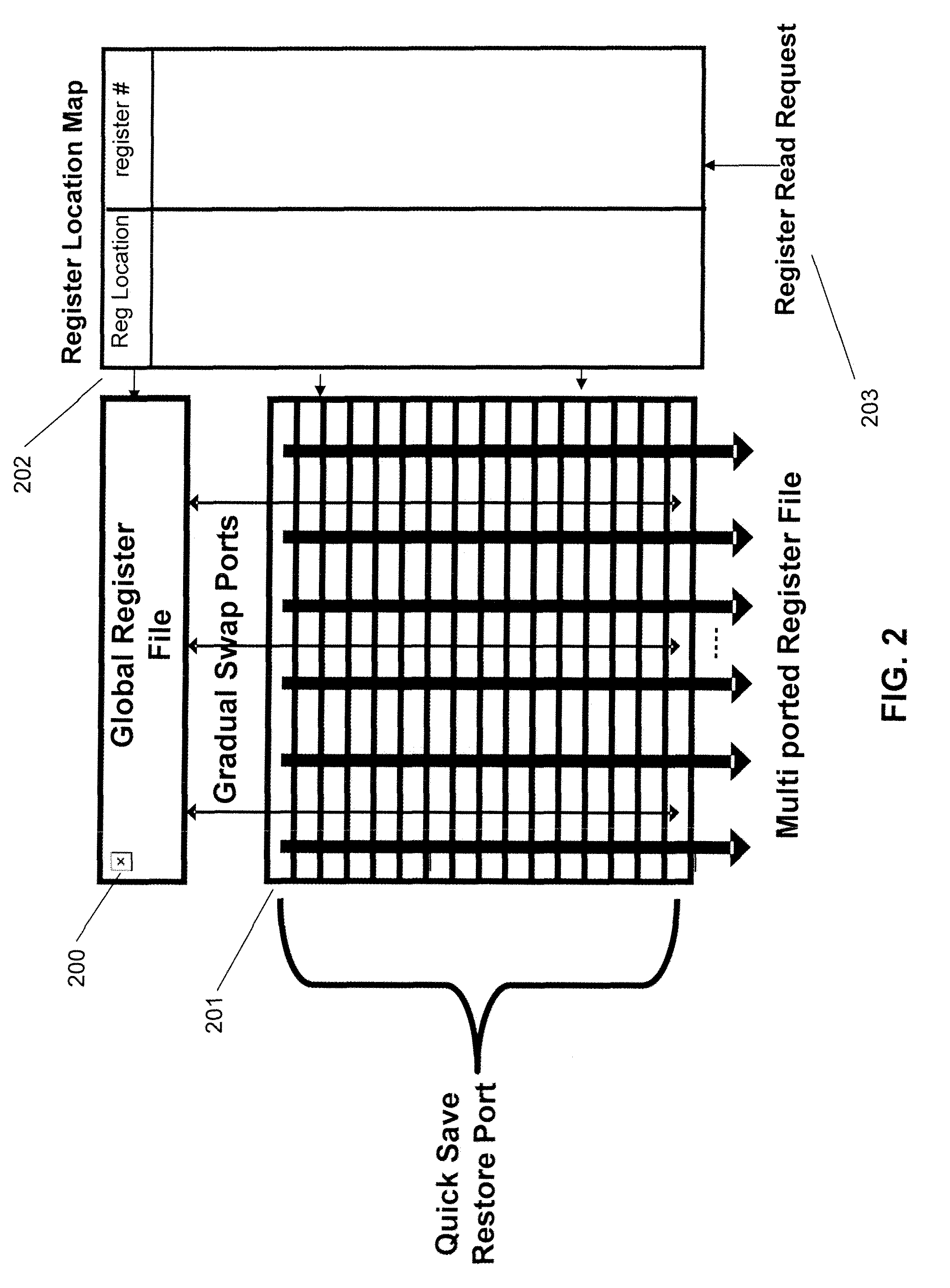

Active | US20100161948A1 | Efficient context switching | Efficient switching | Instruction analysis | Digital computer details | Virtualization | Processor register

A unified architecture for dynamic generation, execution, synchronization and parallelization of complex instruction formats includes a virtual register file, register cache and register file hierarchy. A self-generating and synchronizing dynamic and static threading architecture provides efficient context switching.

Owner:INTEL CORP

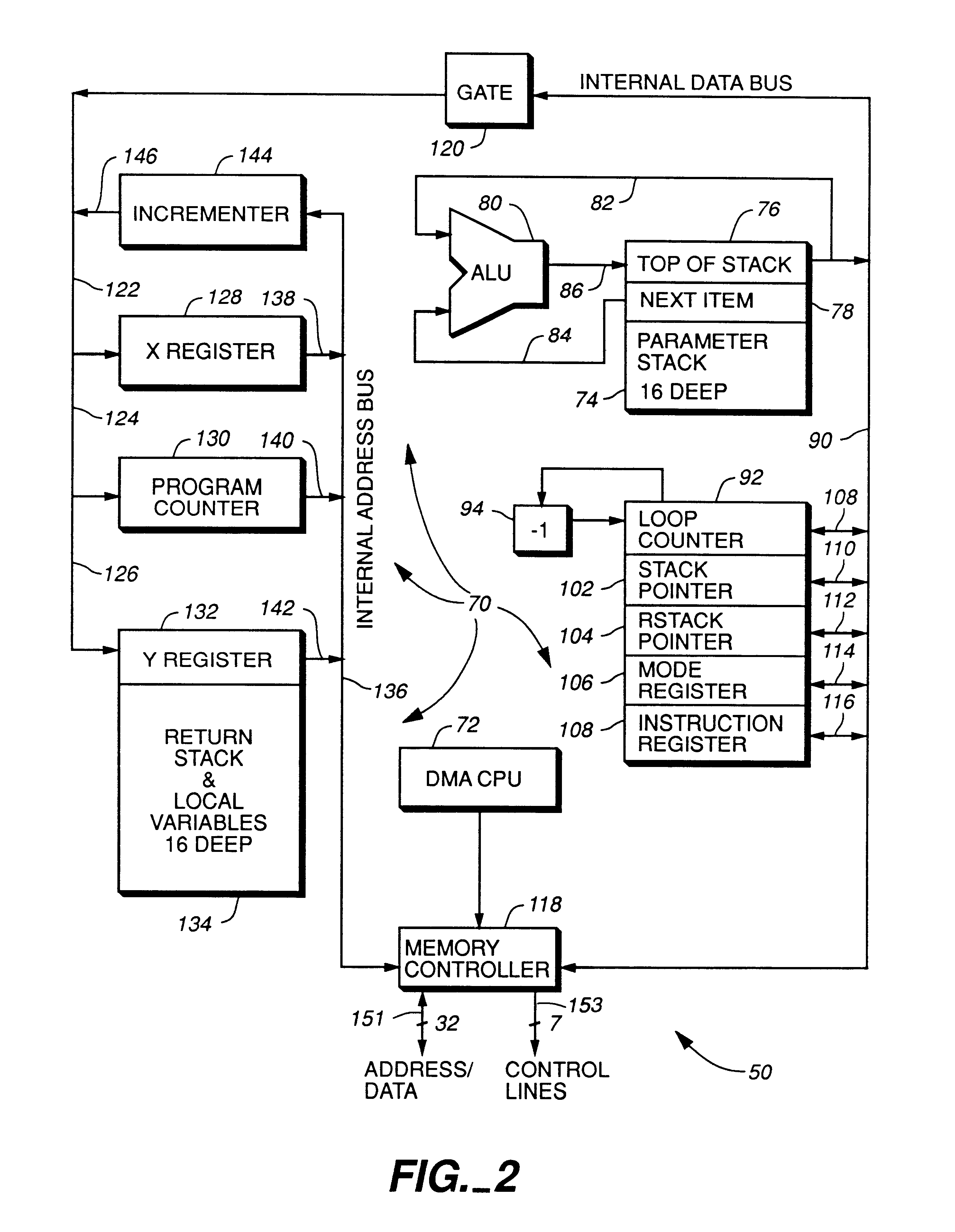

High performance microprocessor having variable speed system clock

Inactive | US6598148B1 | Improve performance | Without sacrificing microprocessor speed | Random number generators | Instruction analysis | Computer architecture | Static random-access memory

A microprocessor integrated circuit including a processing unit disposed upon an integrated circuit substrate is disclosed herein. The processing unit is designed to operate in accordance with a predefined sequence of program instructions stored within an instruction register. A memory, capable of storing information provided by the processing unit and occupying a larger area of the integrated circuit substrate than the processing unit, is also provided within the microprocessor integrated circuit. The memory may be implemented using, for example, dynamic or static random-access memory. A variable output frequency system clock, such as one generated by a ring oscillator, is also disposed on the integrated circuit substrate.

Owner:MOORE CHARLES H TTE UTD 03 21 2006 THE EQUINOX TRUST

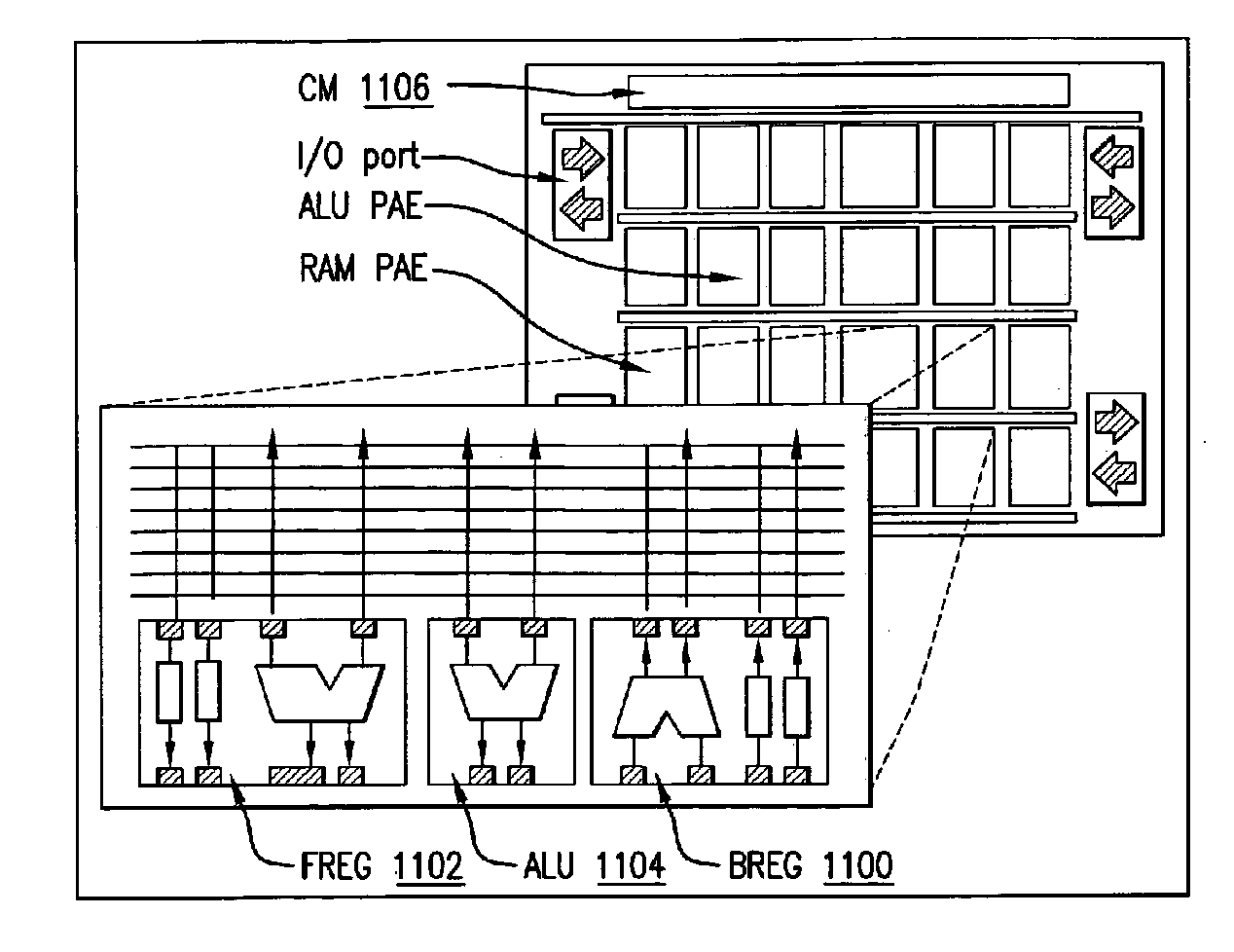

Data Processing System Having Integrated Pipelined Array Data Processor

Inactive | US20150106596A1 | Improve execution | Improve performance | Memory architecture accessing/allocation | Instruction analysis | Data processing system | Parallel computing

A data processing system having a data processing core, an integrated pipelined array data processor, and a buffer for storing a list of algorithms for processing by the pipelined array data processor.

Owner:SCIENTIA SOL MENTIS AG

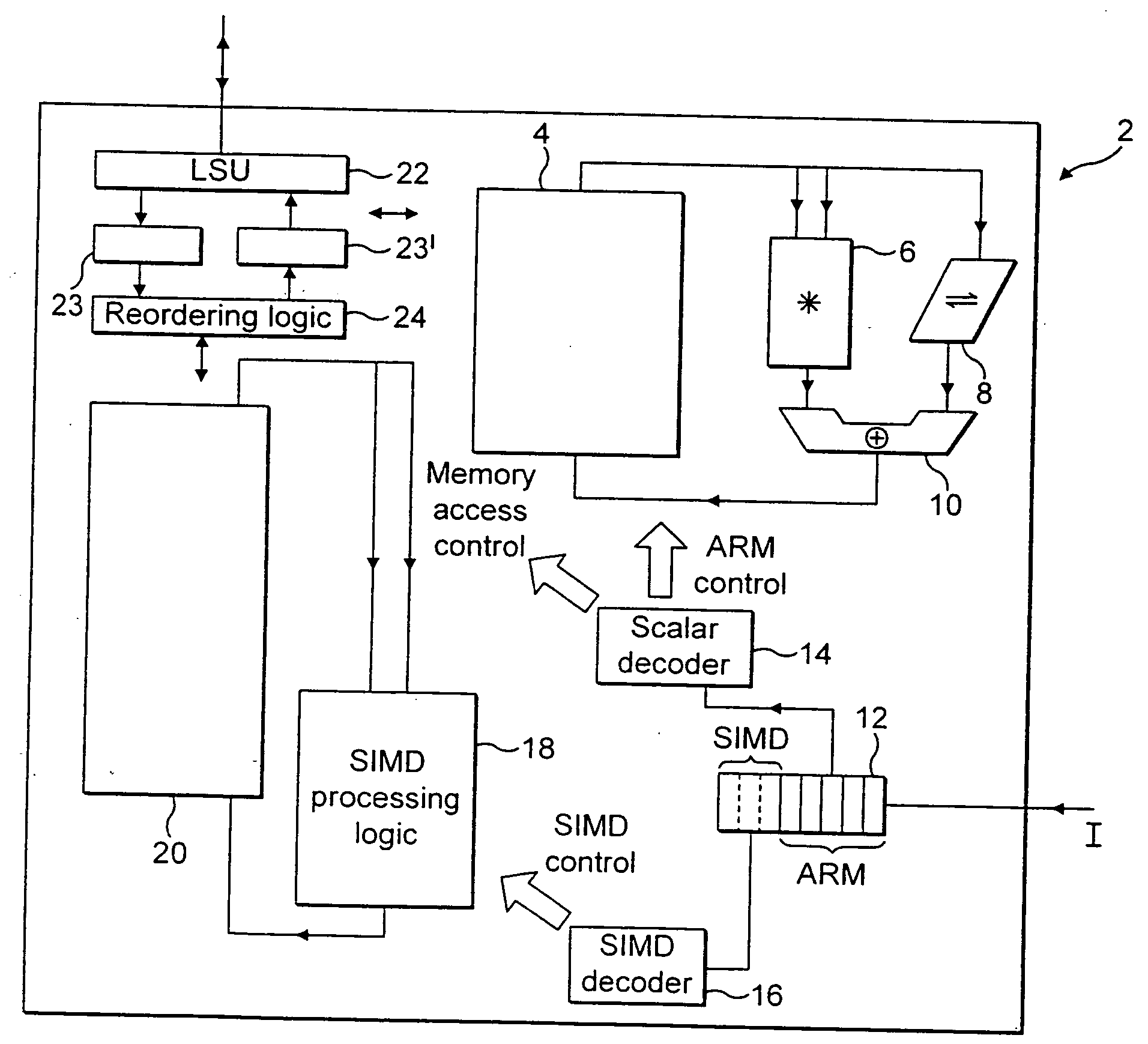

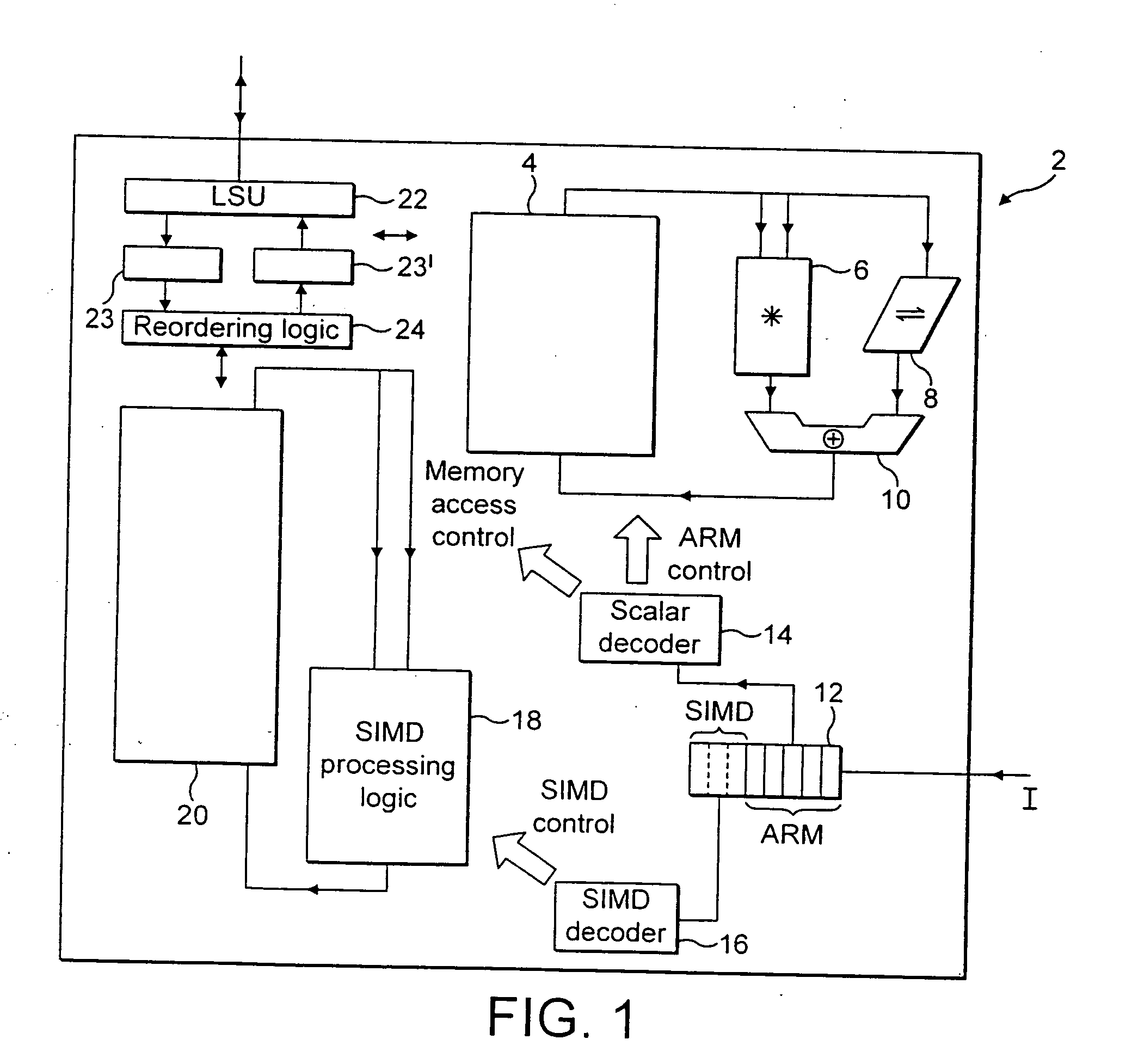

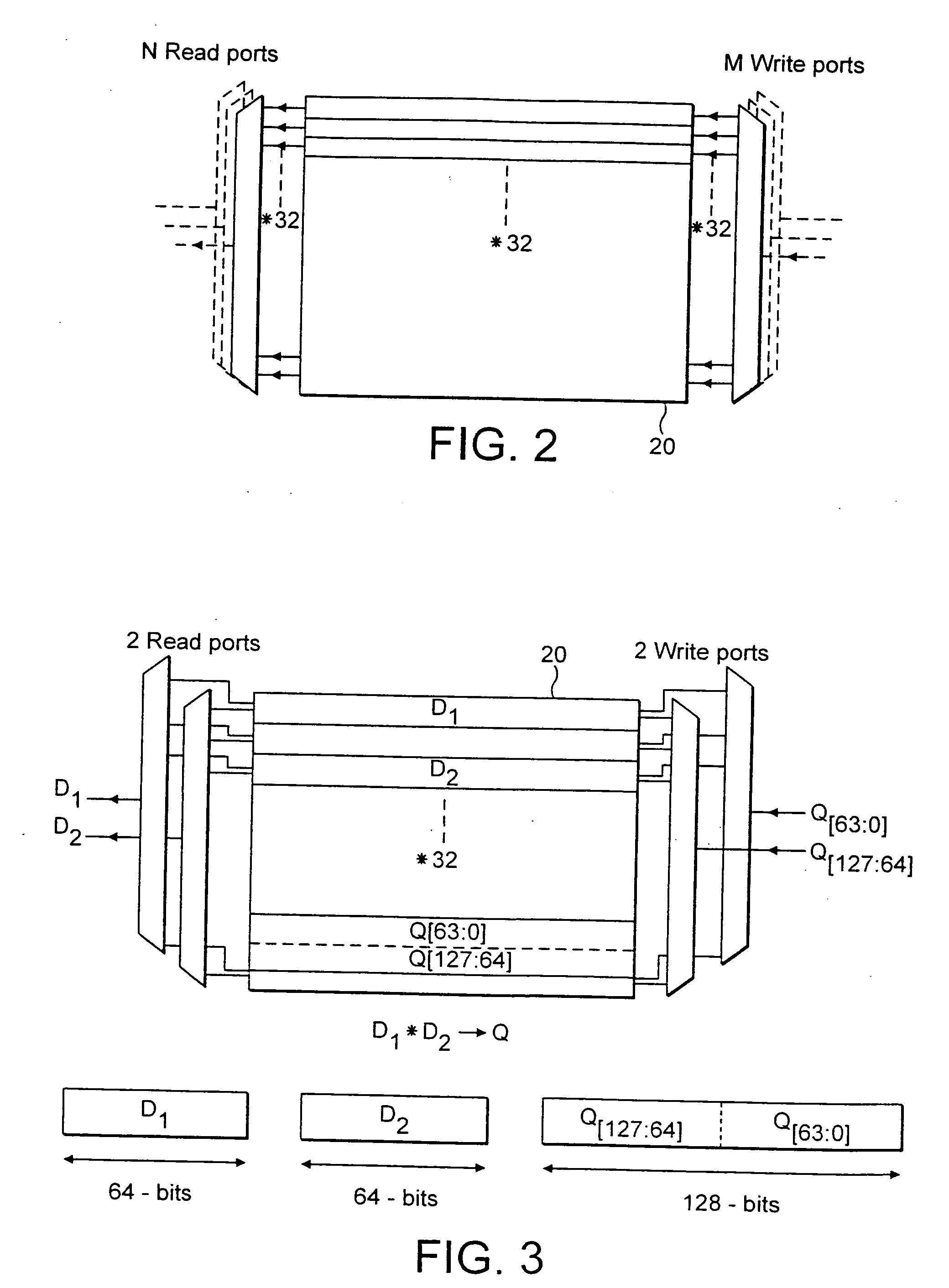

Data processing apparatus and method for performing rearrangement operations

Active | US20100106944A1 | Further flexibility | Reduce energy consumption | Digital data processing details | Instruction analysis | Control signal | Processor register

A data processing apparatus and method are provided for performing rearrangement operations. The data processing apparatus has a register data store with a plurality of registers, each register storing a plurality of data elements. Processing circuitry is responsive to control signals to perform processing operations on the data elements. An instruction decoder is responsive to at least one but no more than N rearrangement instructions, where N is an odd plural number, to generate control signals to control the processing circuitry to perform a rearrangement process at least equivalent to: obtaining as source data elements the data elements stored in N registers of said register data store as identified by the at least one re-arrangement instruction; performing a rearrangement operation to rearrange the source data elements between a regular N-way interleaved order and a de-interleaved order in order to produce a sequence of result data elements; and outputting the sequence of result data elements for storing in the register data store. This provides a particularly efficient technique for performing N-way interleave and de-interleave operations, where N is an odd number, resulting in high performance, low energy consumption, and reduced register use when compared with known prior art techniques.

Owner:ARM LTD
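
As a rough software illustration of the N-way interleave and de-interleave described in the rearrangement abstract above, the sketch below models registers as equal-length Python lists. The function names and the list-of-lists register model are assumptions; the patent concerns how an instruction decoder drives processing circuitry, which this does not attempt to reproduce.

```python
def deinterleave(registers):
    """Turn N registers holding N-way interleaved data into N de-interleaved registers."""
    n = len(registers)
    # View the N registers as one contiguous element stream.
    flat = [element for reg in registers for element in reg]
    # Output register `lane` collects every n-th element starting at `lane`.
    return [flat[lane::n] for lane in range(n)]


def interleave(registers):
    """Inverse operation: merge N de-interleaved registers back into interleaved order."""
    n = len(registers)
    width = len(registers[0])
    flat = [registers[lane][i] for i in range(width) for lane in range(n)]
    # Split the stream back into N registers of the original width.
    return [flat[r * width:(r + 1) * width] for r in range(n)]
```

With N = 3 this is the familiar case of splitting packed RGB samples into separate R, G and B registers and back again.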

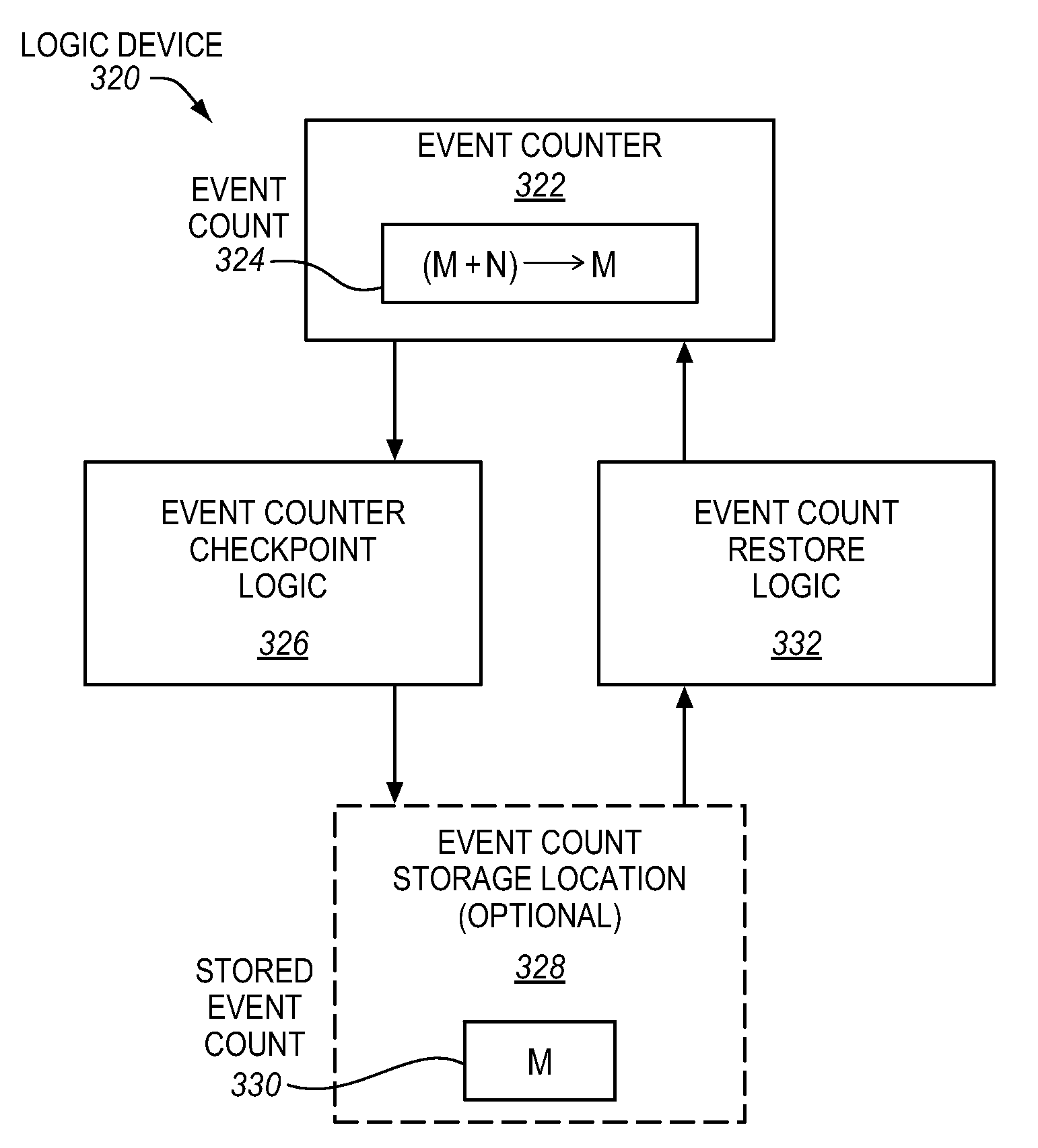

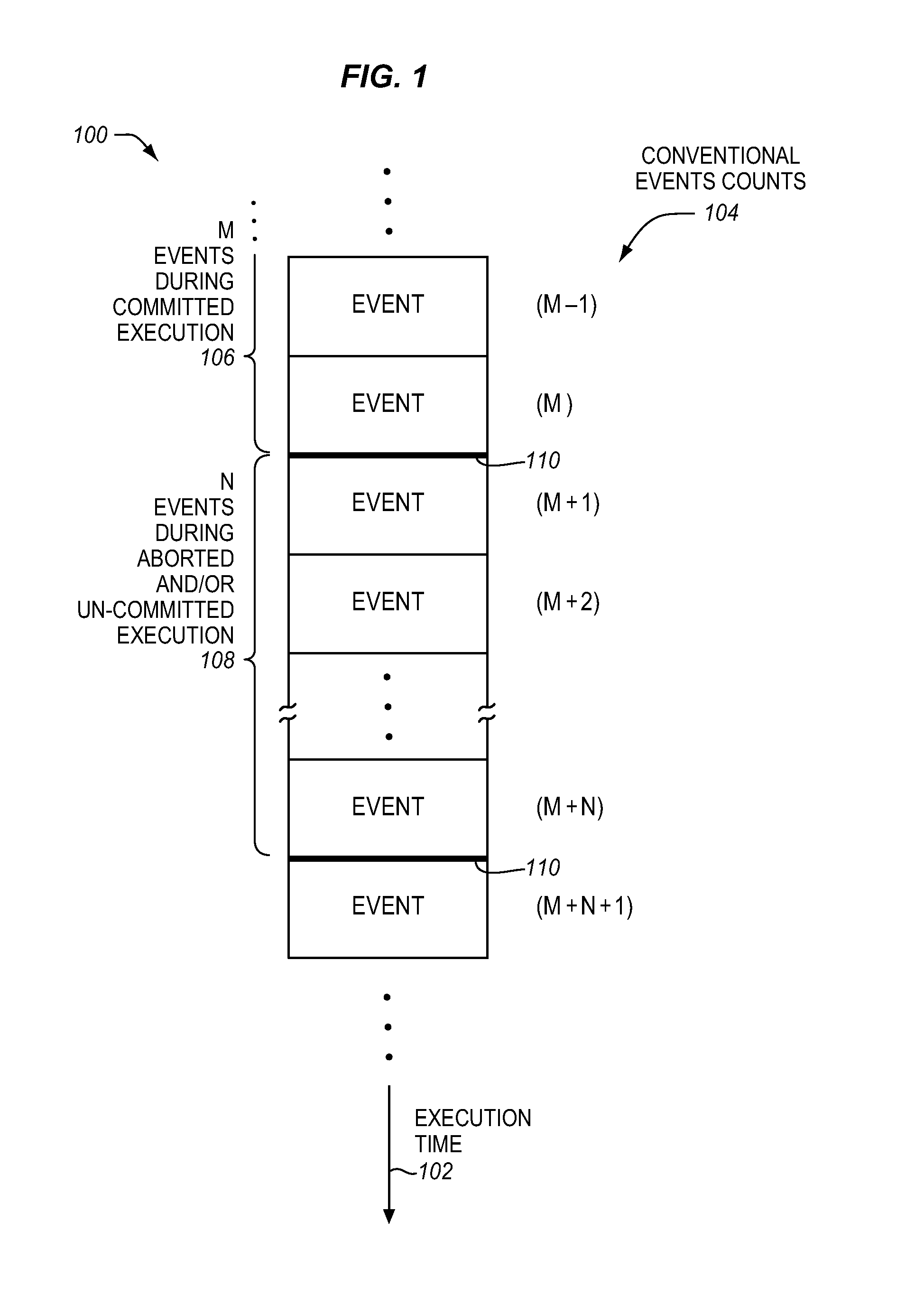

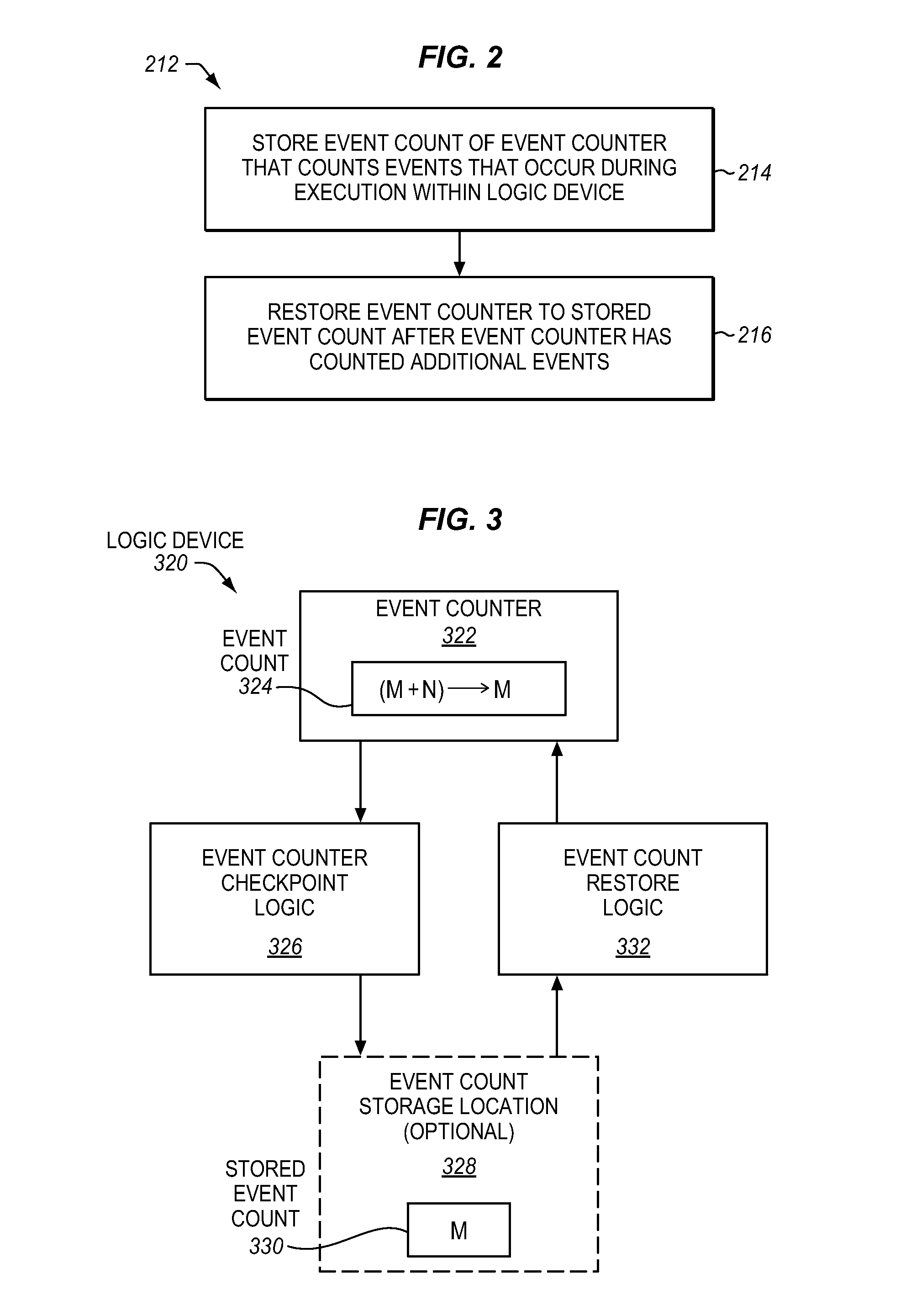

Method, apparatus, and system for speculative execution event counter checkpointing and restoring

Inactive | US20120227045A1 | Memory architecture accessing/allocation | Error detection/correction | Speculative execution | Execution cycle

An apparatus, method, and system are described herein for providing programmable control of performance / event counters. An event counter is programmable to track different events, as well as to be checkpointed when speculative code regions are encountered. When a speculative code region is aborted, the event counter can then be restored to its pre-speculation value. Moreover, the difference between the cumulative event count of committed and uncommitted execution and the count for committed execution alone represents the event count / contribution of uncommitted execution. From information on the uncommitted execution, hardware / software may be tuned to enhance future execution and avoid wasted execution cycles.

Owner:INTEL CORP
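
The checkpoint-and-restore behaviour in the event counter abstract above can be sketched in a few lines. The class `EventCounter` and its method names are hypothetical, and a real implementation would live in hardware alongside the speculation machinery; this only shows the bookkeeping.

```python
class EventCounter:
    """Toy model of an event counter that can be checkpointed around speculation."""

    def __init__(self):
        self.count = 0          # events attributed to committed execution
        self.uncommitted = 0    # events that belonged to aborted speculation
        self._checkpoint = None

    def record(self, events=1):
        self.count += events

    def begin_speculation(self):
        # Save the pre-speculation value.
        self._checkpoint = self.count

    def commit(self):
        # Speculation succeeded: keep the count, drop the checkpoint.
        self._checkpoint = None

    def abort(self):
        if self._checkpoint is None:
            raise RuntimeError("no speculative region is active")
        # Cumulative count minus committed count = contribution of uncommitted work.
        wasted = self.count - self._checkpoint
        self.uncommitted += wasted
        self.count = self._checkpoint  # restore the pre-speculation value
        self._checkpoint = None
        return wasted
```

The value returned by `abort()` is the kind of information the abstract suggests feeding back to hardware or software tuning.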

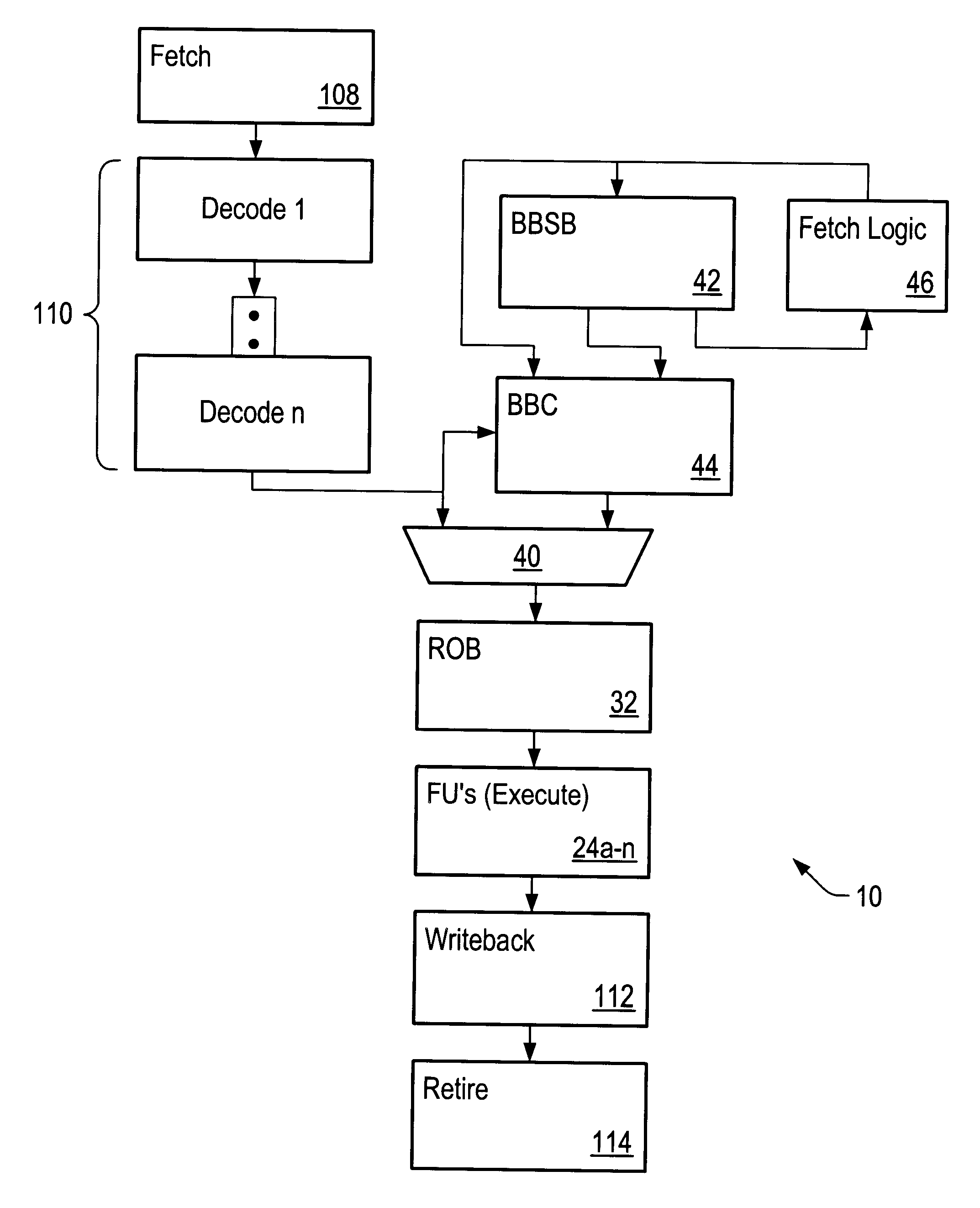

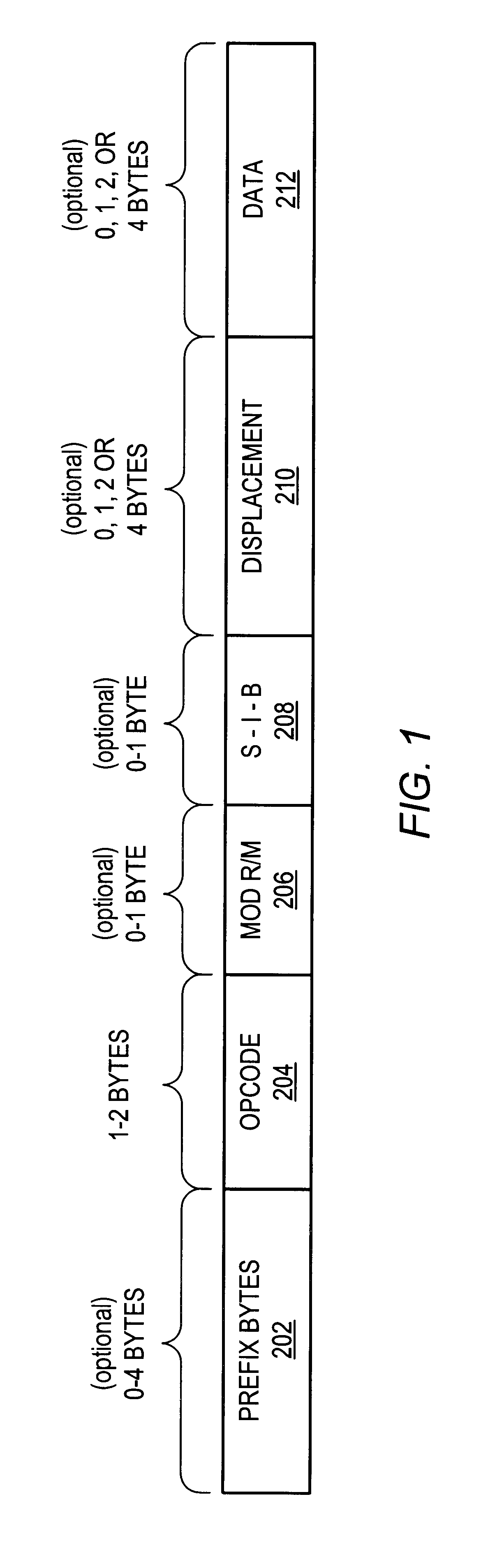

Using padded instructions in a block-oriented cache

Inactive | US6339822B1 | Memory architecture accessing/allocation | Instruction analysis | Computerized system | Variable length

A microprocessor configured to cache basic blocks of instructions is disclosed. The microprocessor may comprise decoding logic, a basic block cache, and a branch prediction unit. The decoding logic is coupled to receive and decode variable-length instructions into padded instructions that have one of a predetermined number of predetermined lengths. The decoding logic is further configured to form basic blocks of instructions from the padded and decoded instructions. Basic blocks are natural divisions in instruction streams resulting from branch instructions. The start of a basic block is a target of a branch, and the end is another branch instruction. The basic block cache is configured to store the basic blocks in a plurality of storage locations, wherein each storage location is configured to store an address tag, a link bit, and at least a portion of one basic block. The link bit indicates whether the basic block stored in said storage location extends into another storage location. The branch prediction unit has a branch prediction array storing branch prediction information corresponding to each storage location within the basic block cache. A computer system and method for operating are also disclosed.

Owner:GLOBALFOUNDRIES INC

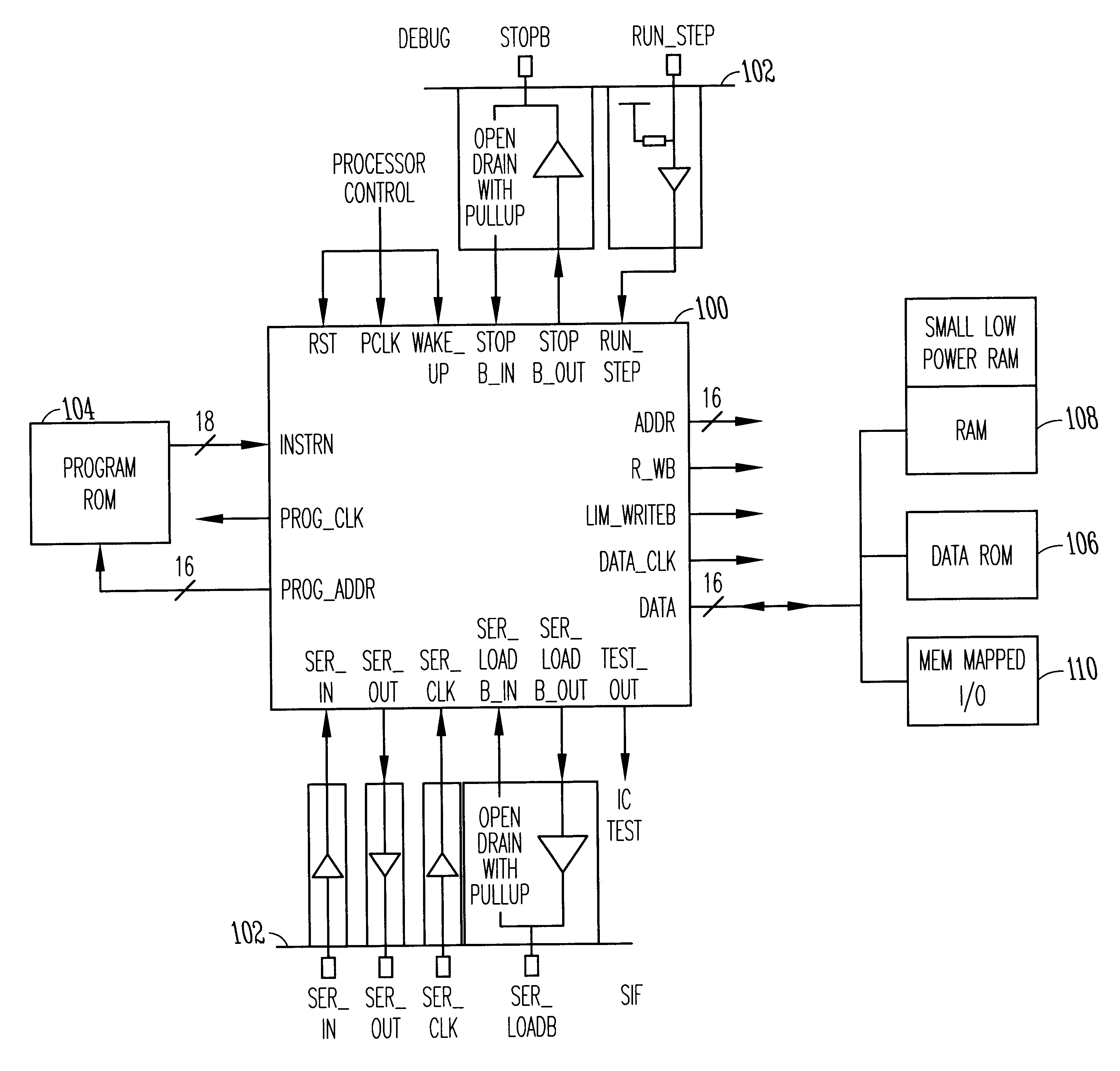

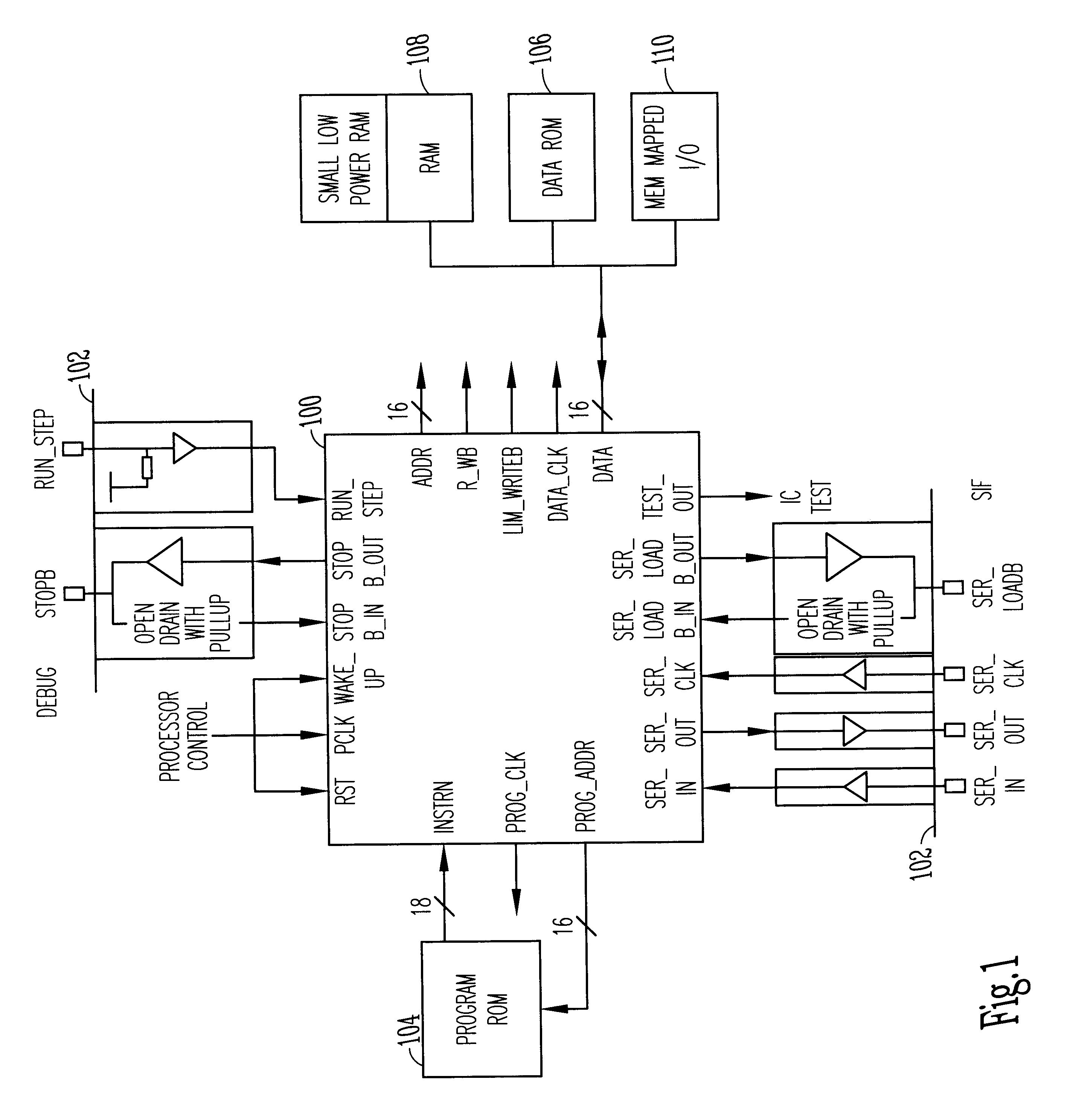

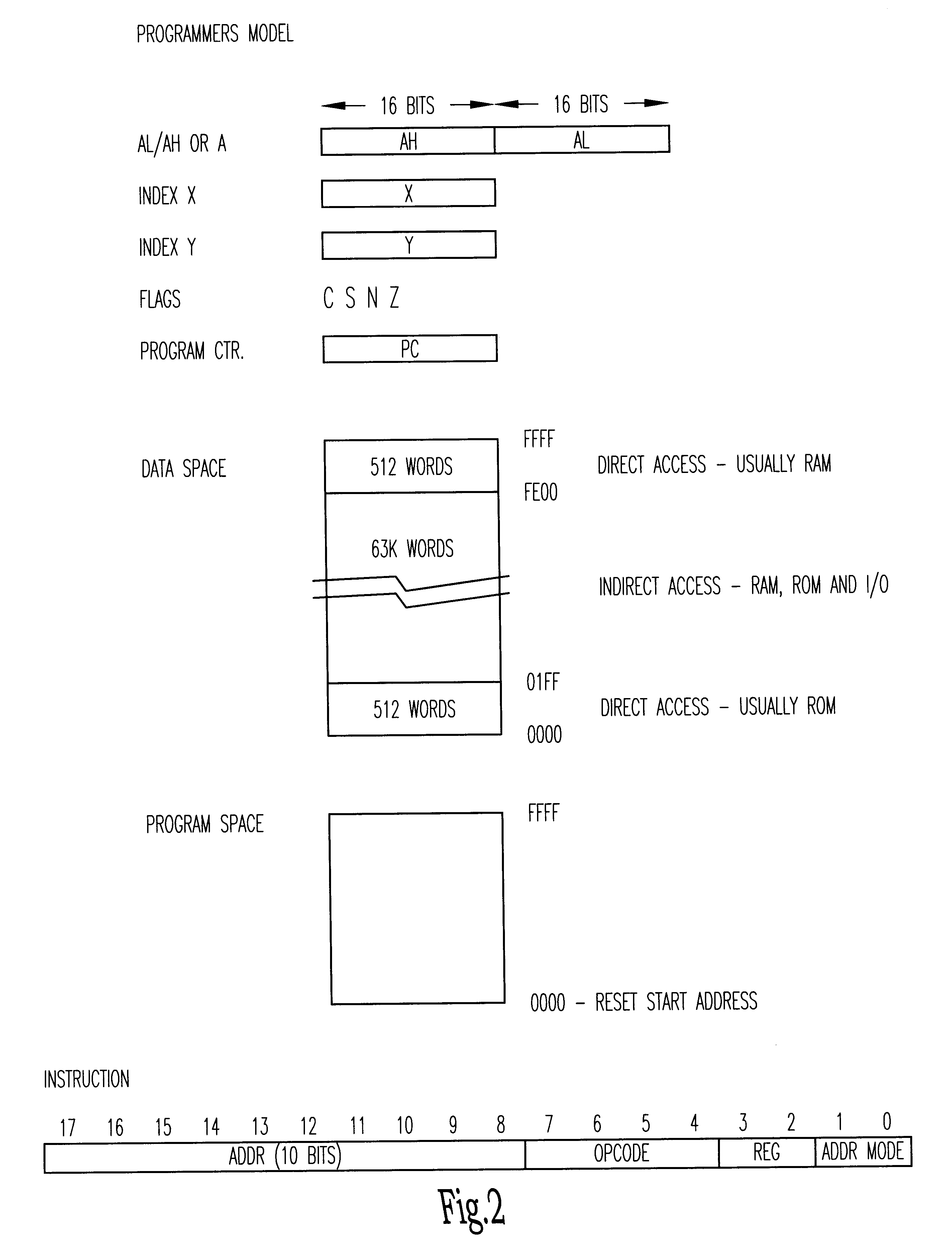

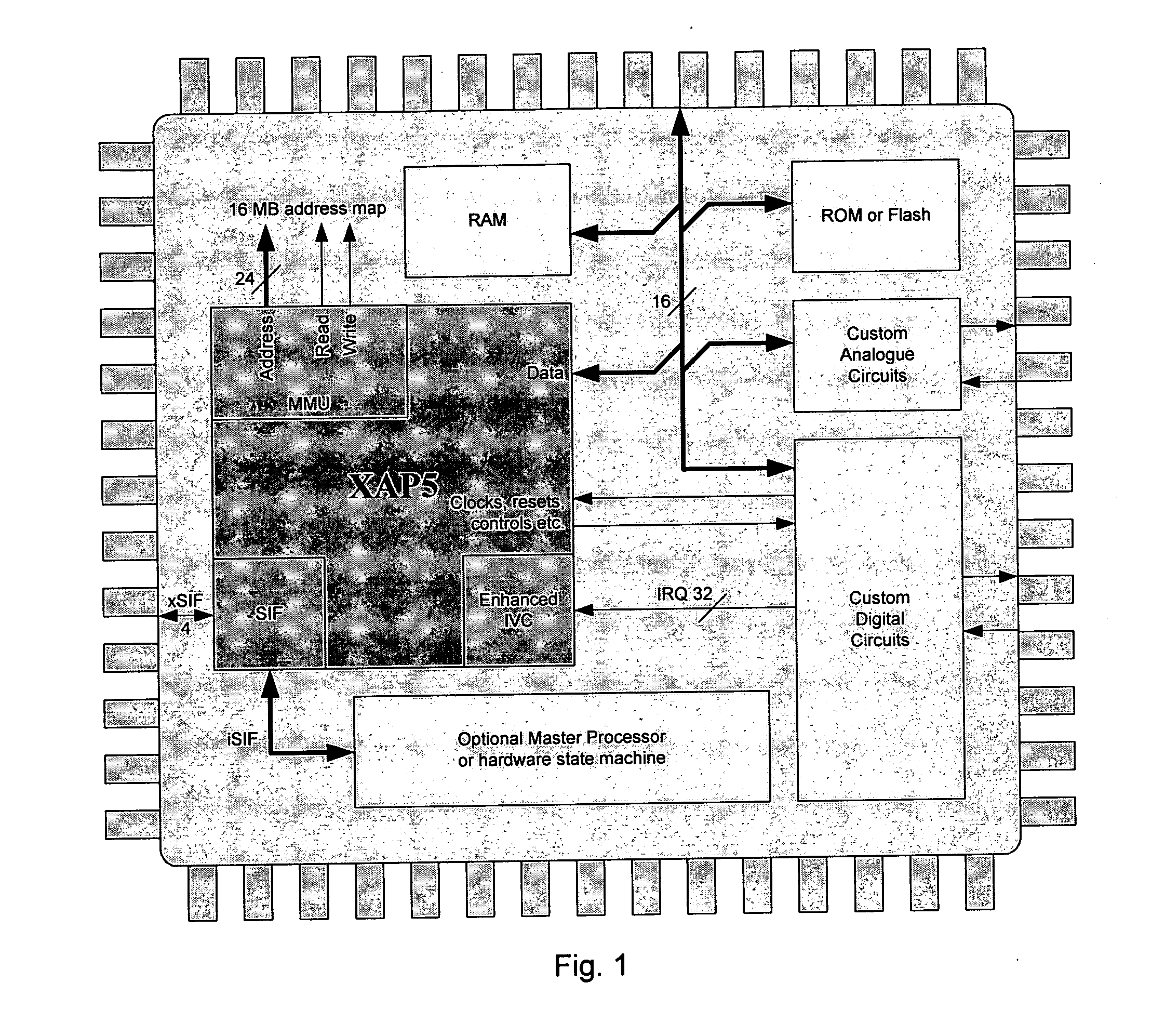

Data processing circuits and interfaces

An integrated circuit contains a microprocessor core, program memory and separate data storage, together with analogue and digital signal processing circuitry. The ALU is 16 bits wide, but a 32-bit shift unit is provided, using a pair of 16-bit registers. The processor has a fixed length instruction format, with an instruction set including multiply and divide operations which use the shift unit over several cycles. No interrupts are provided. External pins of the integrated circuit allow for single stepping and other debug operations, and a serial interface (SIF) which allows external communication of test data or working data as necessary. The serial interface has four wires (SERIN, SEROUT, SER-CLK, SERLOADB), allowing handshaking with a master apparatus, and allowing direct access to the memory space of the processor core, without specific program control. Within each processor cycle, the processor circuitry is divided into plural stages, and latches are interposed between the stages to minimize power consumption.

Owner:CAMBRIDGE CONSULTANTS LTD

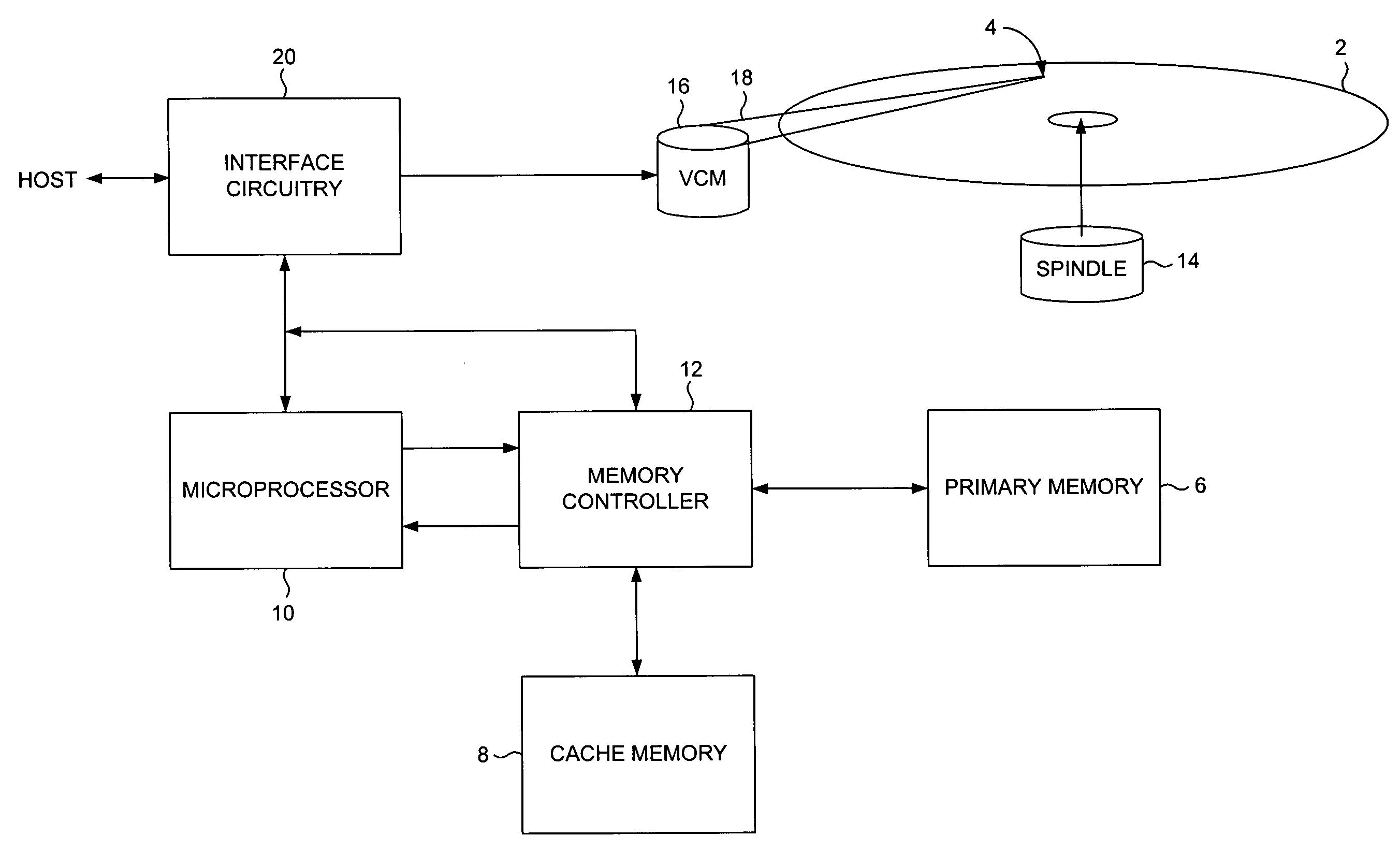

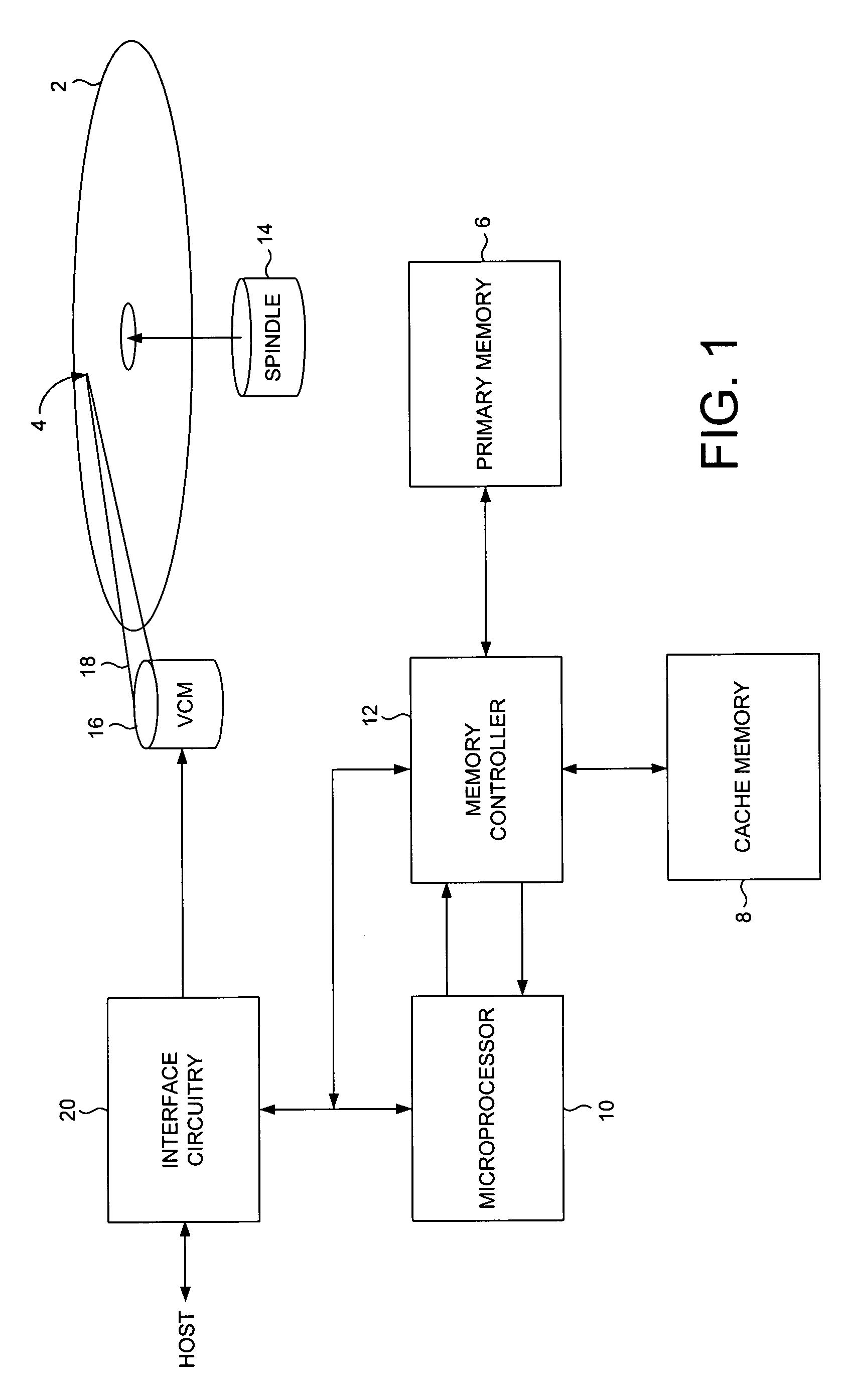

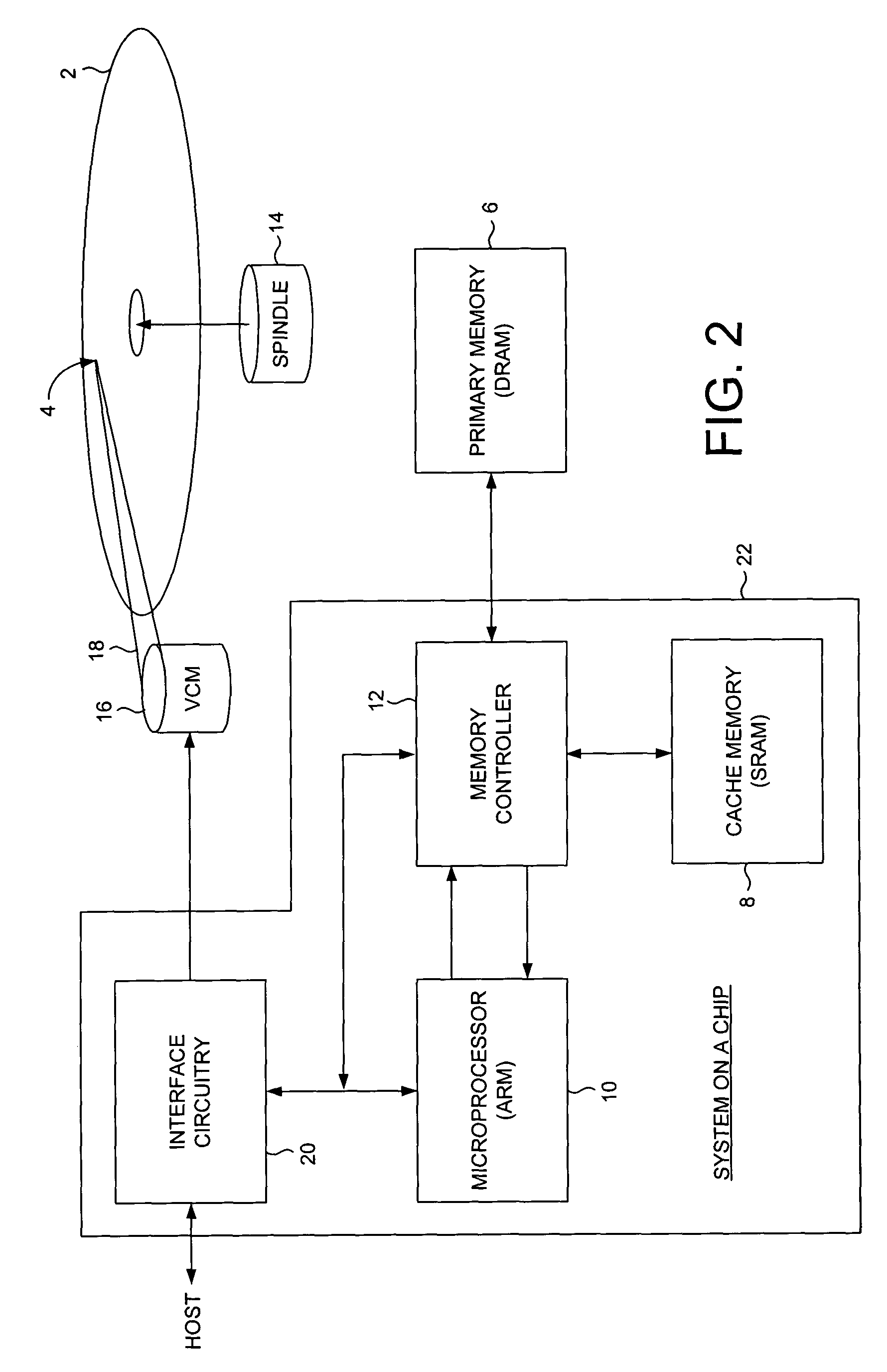

Disk drive employing enhanced instruction cache management to facilitate non-sequential immediate operands

Inactive | US7055000B1 | Instruction analysis | Memory addressing/allocation/relocation | Operand | Cache management

A disk drive is disclosed for executing a program comprising a plurality of instructions. The disk drive comprises a primary memory for storing the instructions, and a cache memory for caching the instructions. The cache management is enhanced by not re-filling the cache due to accessing a non-sequential immediate operand.

Owner:WESTERN DIGITAL TECH INC

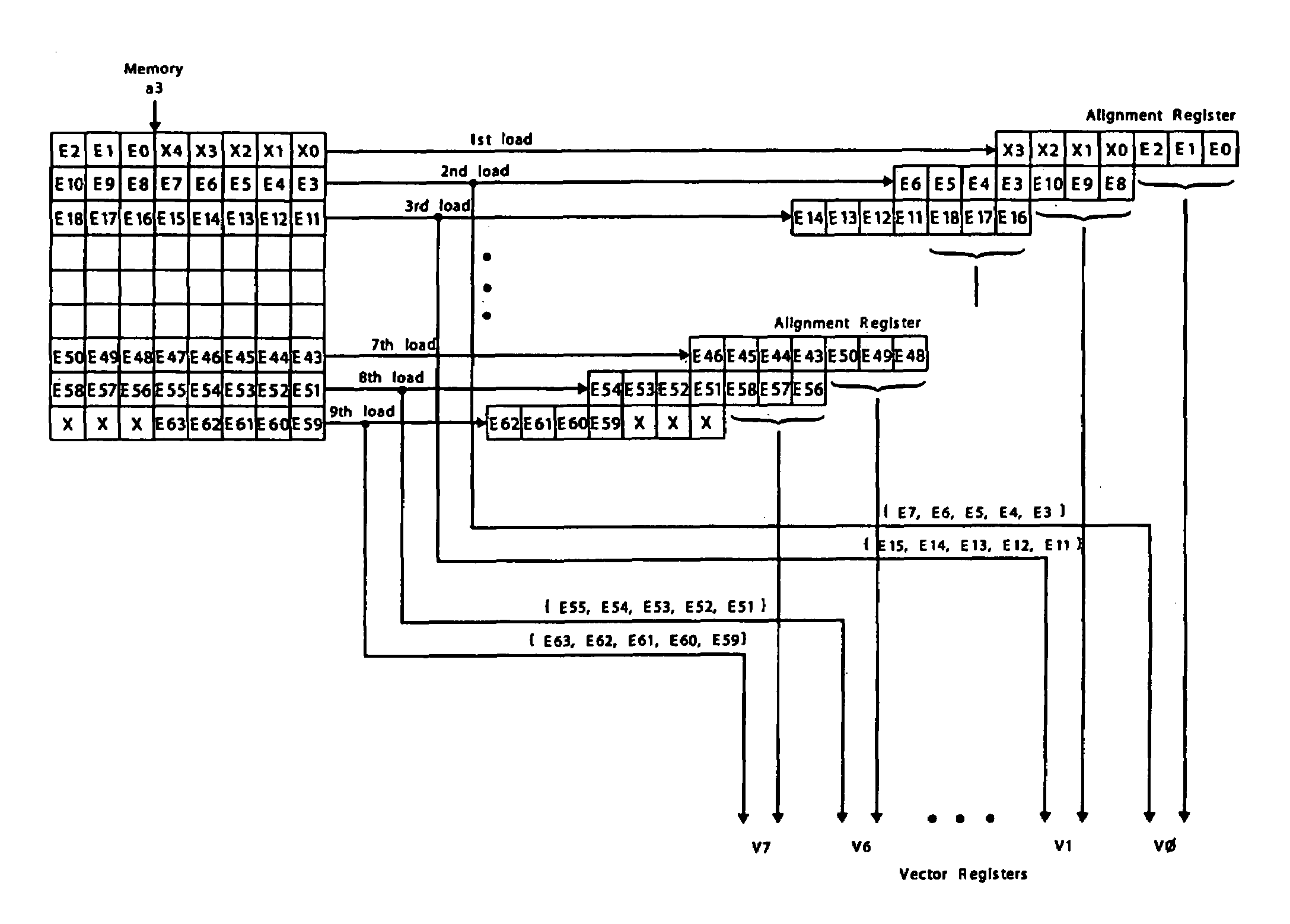

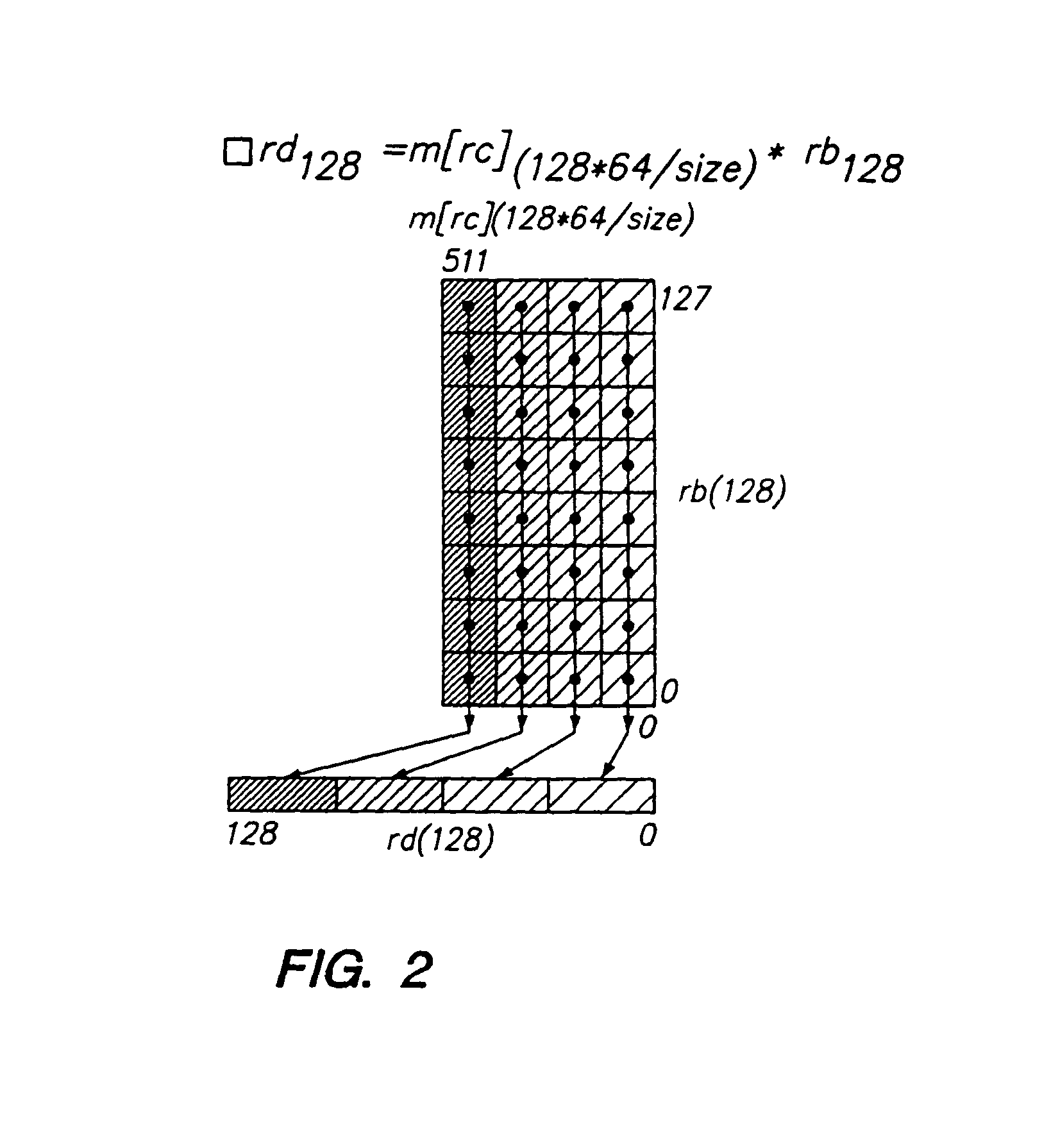

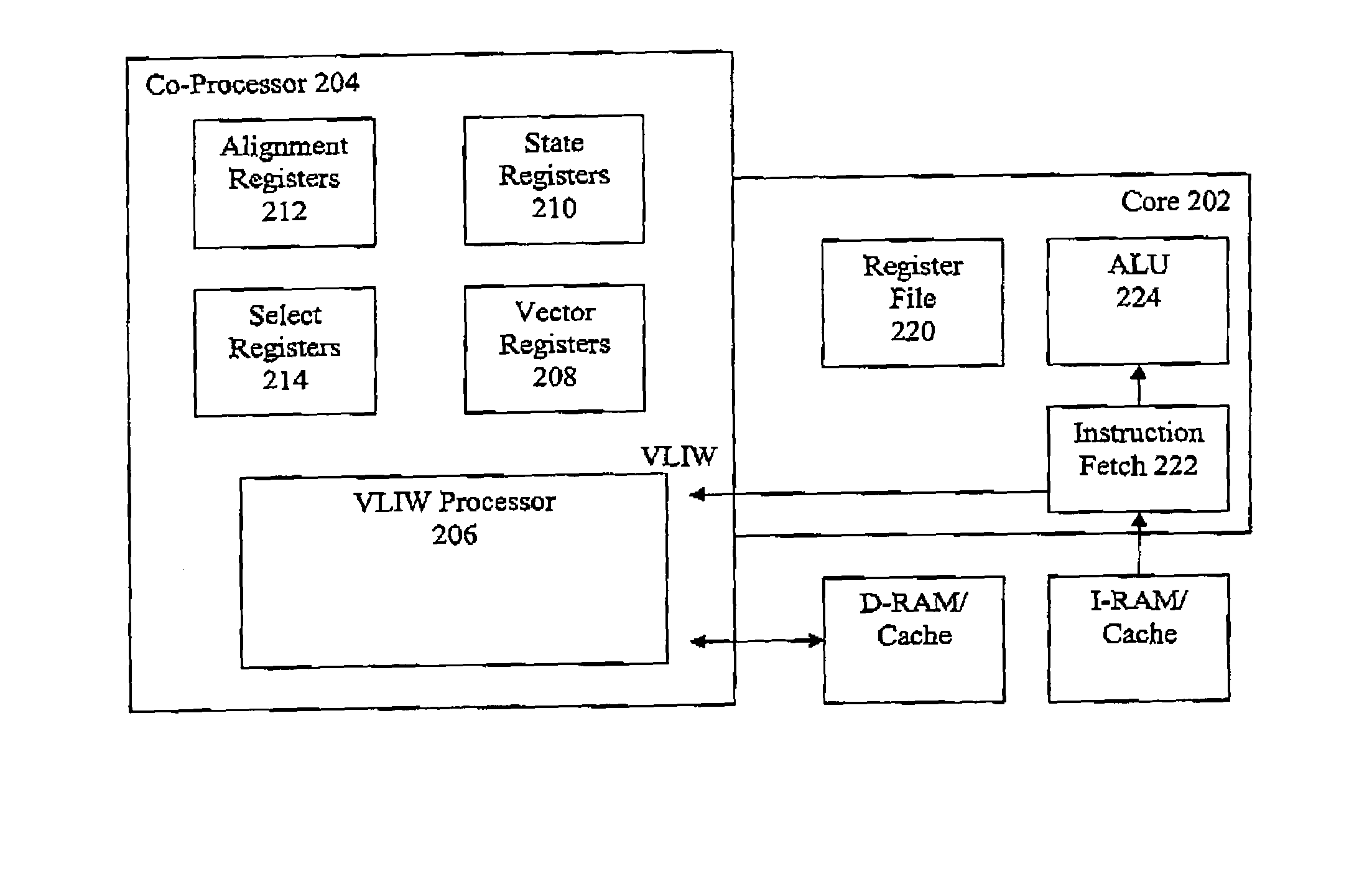

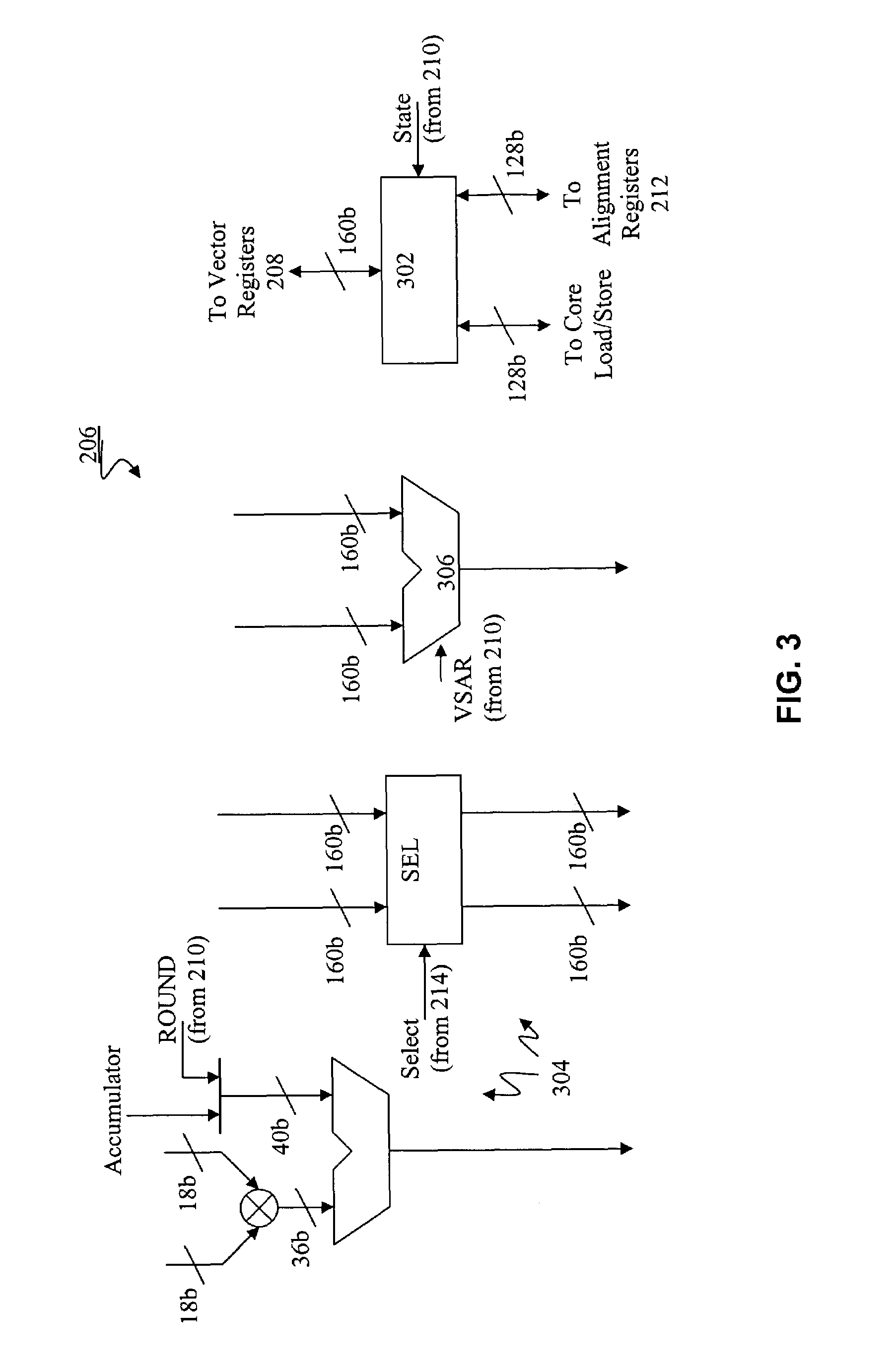

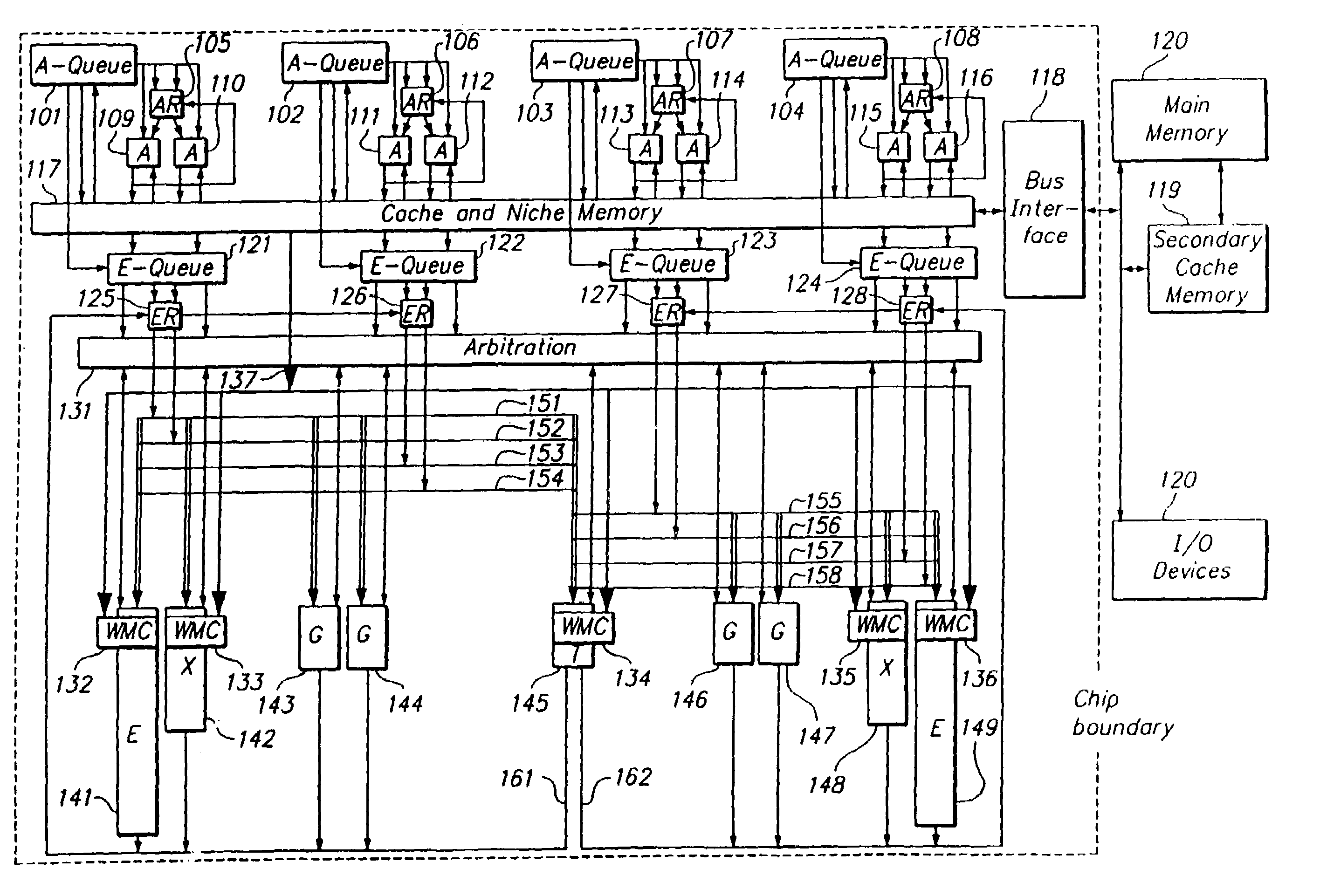

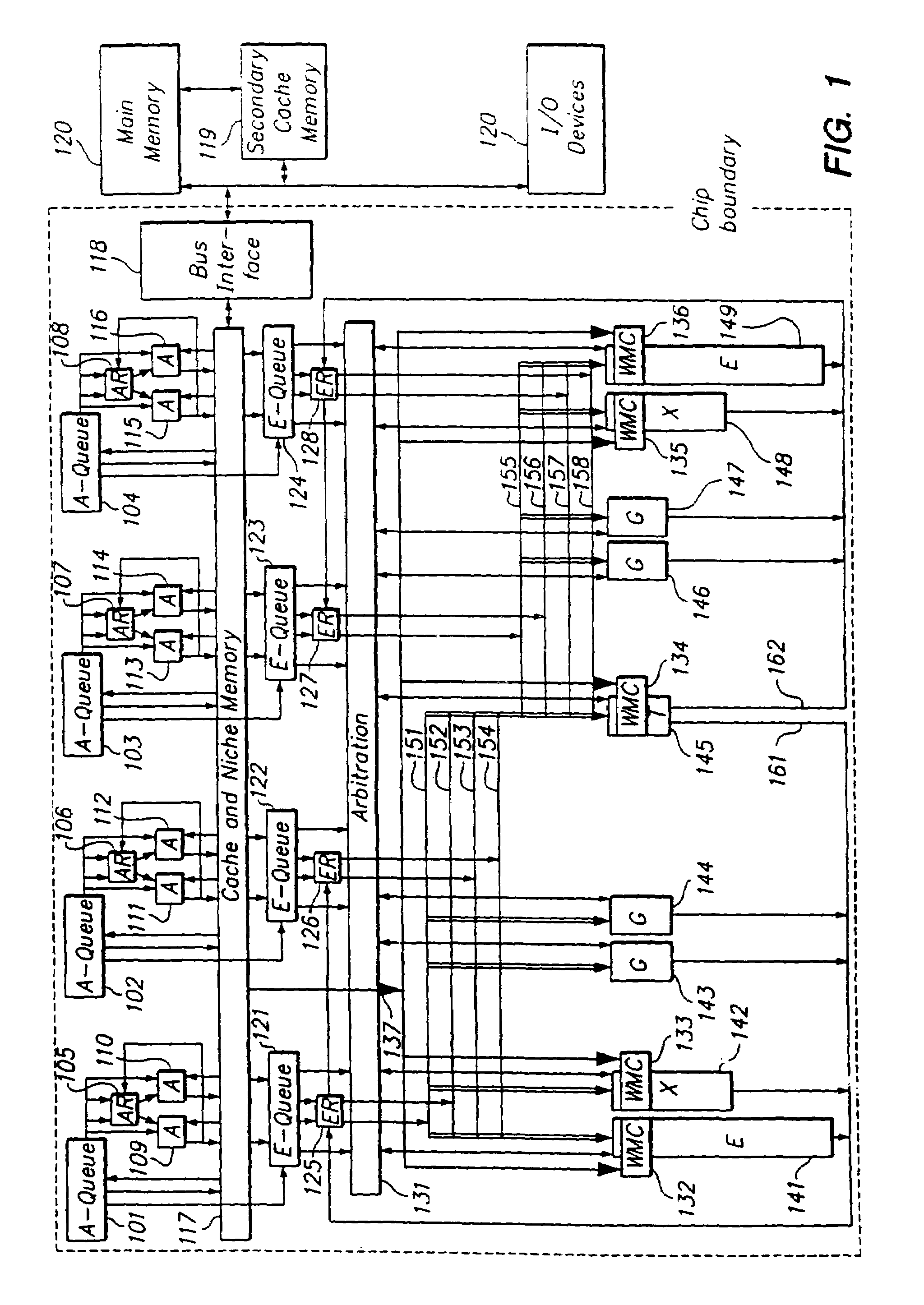

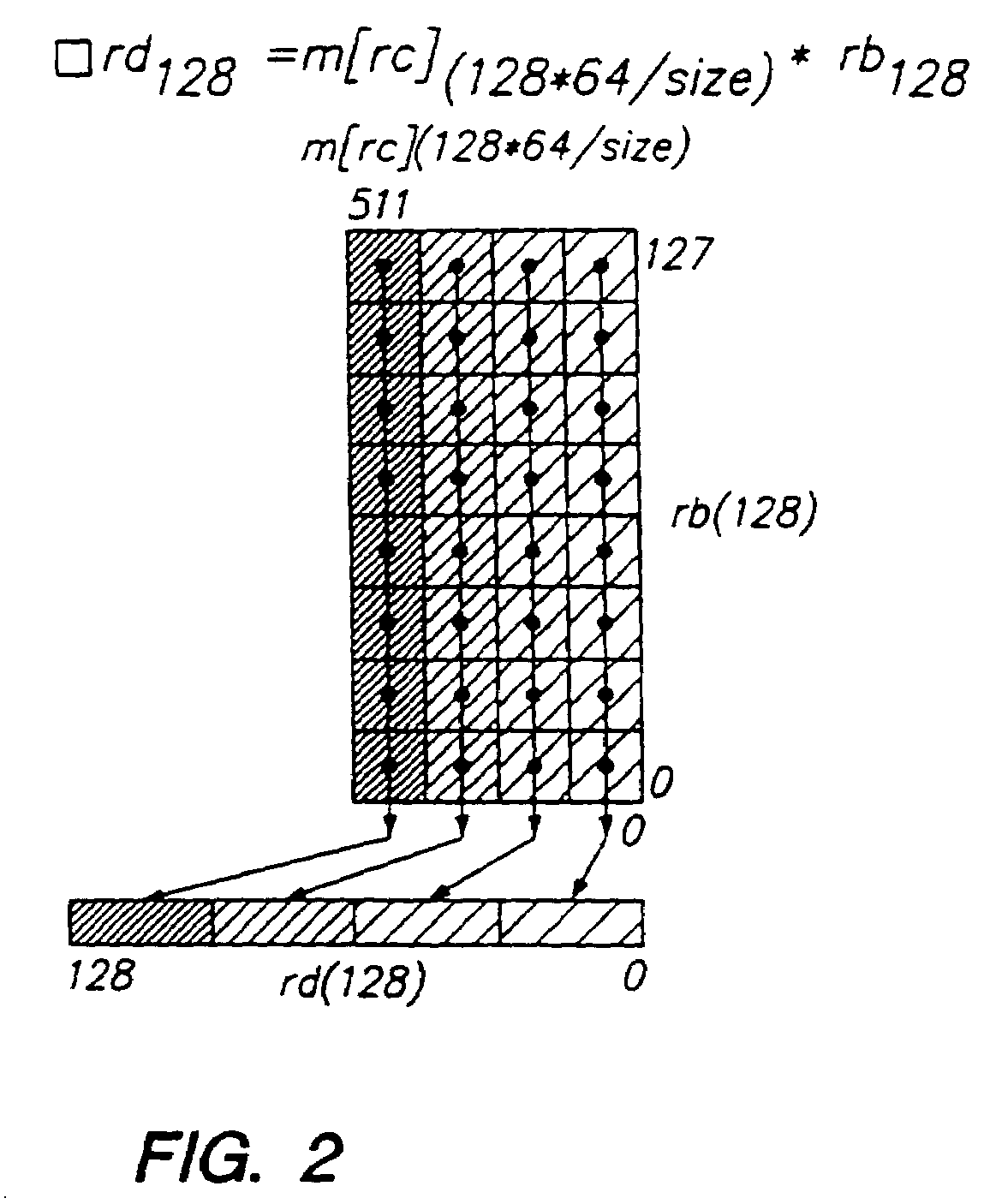

Load/store operation of memory misaligned vector data using alignment register storing realigned data portion for combining with remaining portion

Inactive | US7219212B1 | High coding density | Improve performance | Instruction analysis | General purpose stored program computer | Digital signal processing | Critical section

A processor can achieve high code density while allowing higher performance than existing architectures, particularly for Digital Signal Processing (DSP) applications. In accordance with one aspect, the processor supports three possible instruction sizes while maintaining the simplicity of programming and allowing efficient physical implementation. Most of the application code can be encoded using two sets of narrow size instructions to achieve high code density. Adding a third (and larger, i.e. VLIW) instruction size allows the architecture to encode multiple operations per instruction for the performance critical section of the code. Further, each operation of the VLIW format instruction can optionally be a SIMD operation that operates upon vector data. A scheme for the optimal utilization (highest achievable performance for the given amount of hardware) of multiply-accumulate (MAC) hardware is also provided.

Owner:TENSILICA

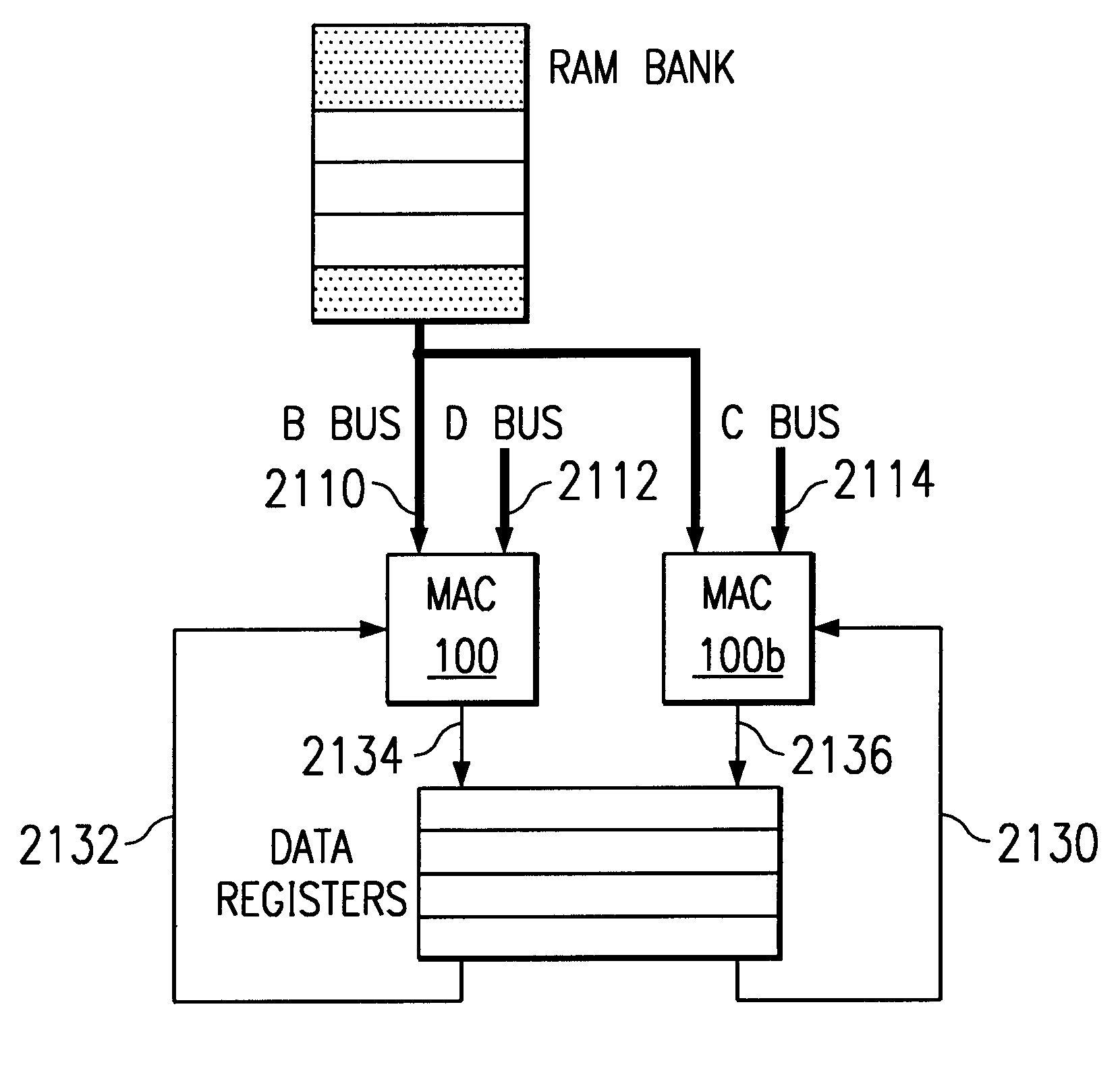

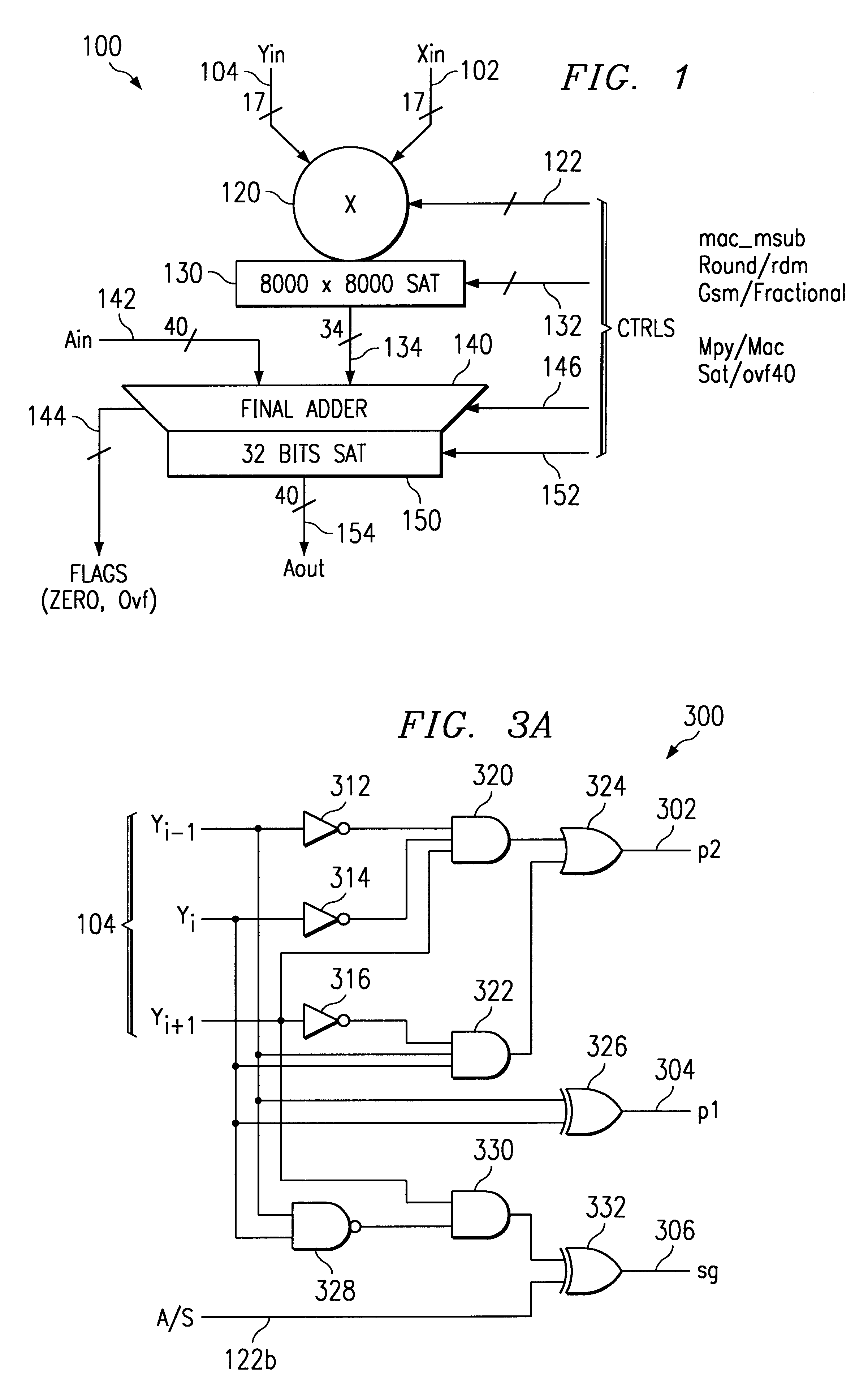

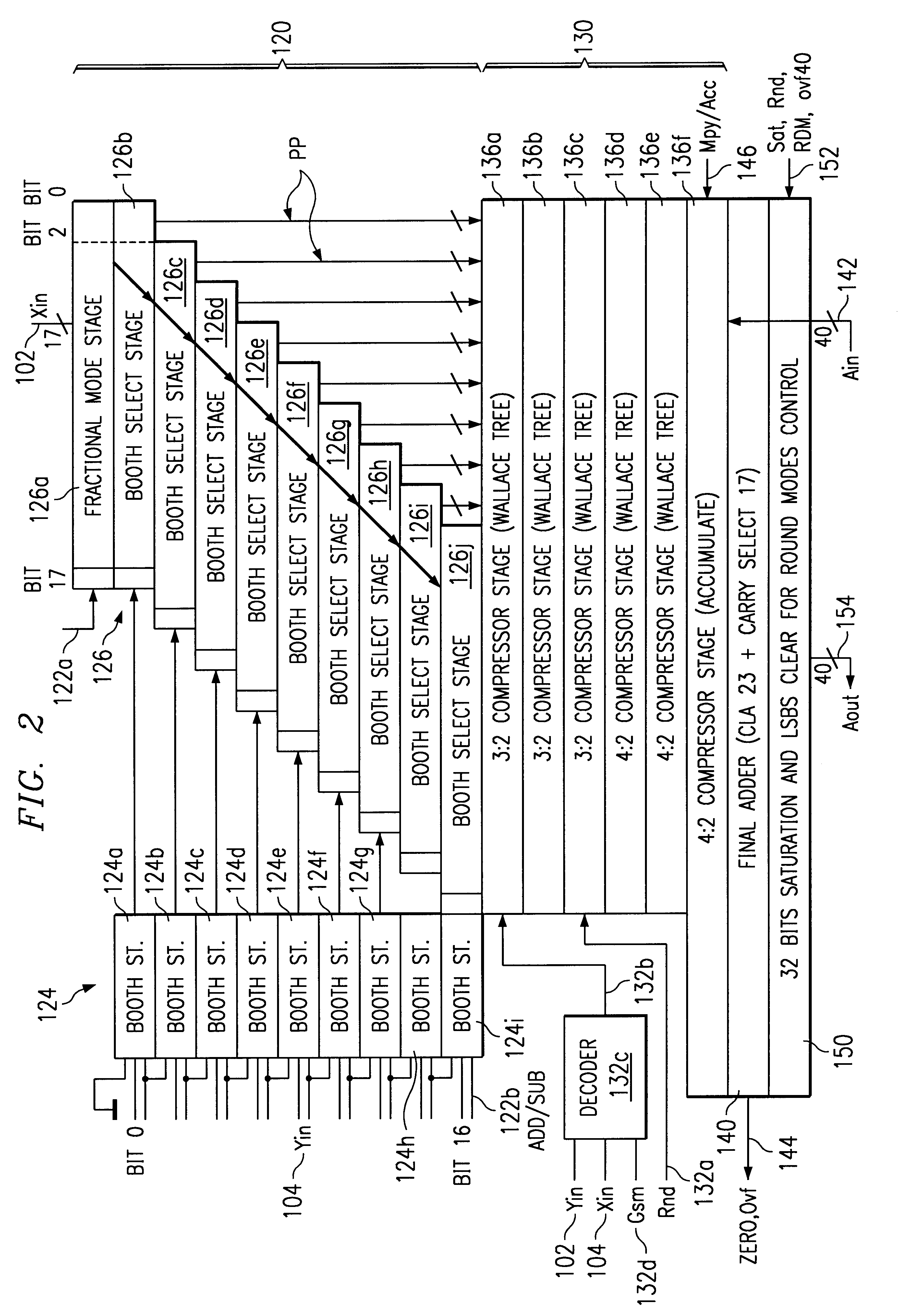

Multiplier accumulator circuits

A multiply-accumulate (MAC) unit, having a first binary operand X, a second binary operand Y, a third binary operand, Booth recode logic for generating a plurality of partial products from said first and second operands, a Wallace tree adder for reducing the partial products and for selectively arithmetically combining the reduced partial products with said third operand, a final adder for generating a final sum, and a saturation circuitry for selectively rounding or saturating said final sum is provided. A dual MAC unit is also provided.

Owner:TEXAS INSTR INC
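
The arithmetic result of the multiply-accumulate unit described above, including the optional saturation of the final sum, can be modelled as follows. This sketch deliberately ignores the Booth recoding and Wallace tree, which are hardware techniques for computing the same product faster; the function names and the 40-bit accumulator width are assumptions.

```python
def saturate(value, bits):
    """Clamp a signed integer to the range representable in `bits` bits."""
    lowest = -(1 << (bits - 1))
    highest = (1 << (bits - 1)) - 1
    return max(lowest, min(highest, value))


def mac(x, y, acc, bits=40, saturating=True):
    """Return x * y + acc, optionally saturated to a `bits`-wide accumulator."""
    total = x * y + acc
    return saturate(total, bits) if saturating else total
```

For example, `mac(3, 4, 5)` accumulates to 17, while a product that overflows the accumulator is clamped to the largest representable value instead of wrapping around.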

Programmable processor with group floating-point operations

Inactive | US7216217B2 | Reduce in quantity | Improve performance | Instruction analysis | Memory addressing/allocation/relocation | Computer architecture | Engineering

A programmable processor that comprises a general purpose processor architecture, capable of operation independent of another host processor, having a virtual memory addressing unit, an instruction path and a data path; an external interface; a cache operable to retain data communicated between the external interface and the data path; at least one register file configurable to receive and store data from the data path and to communicate the stored data to the data path; and a multi-precision execution unit coupled to the data path. The multi-precision execution unit is configurable to dynamically partition data received from the data path to account for an elemental width of the data and is capable of performing group floating-point operations on multiple operands in partitioned fields of operand registers and returning catenated results. In other embodiments the multi-precision execution unit is additionally configurable to execute group integer and / or group data handling operations.

Owner:MICROUNITY

Vector register addressing

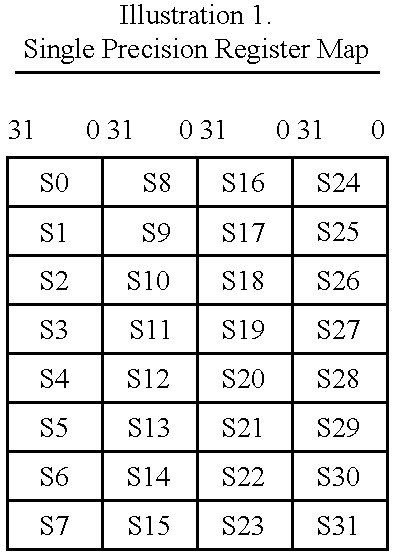

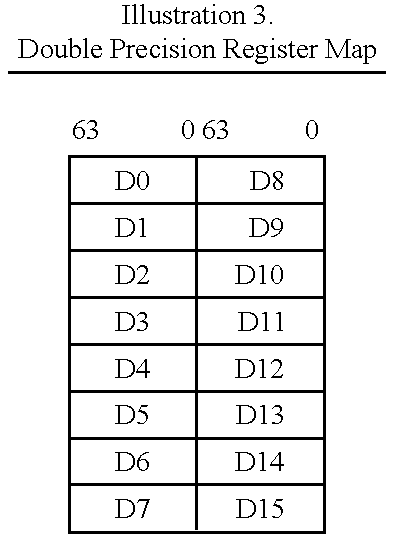

Inactive | US6332186B1 | Without complexity | Without cost | Register arrangements | Instruction analysis | Memory address | Processing Instruction

A floating point unit 26 is provided with a register bank 38 comprising 32 registers that may be used as either vector registers V or scalar registers S. Data values are transferred between memory 30 and the registers within the register bank 38 using contiguous block memory access instructions. Vector processing instructions specify a sequence of processing operations to be performed upon data values within a sequence of registers. The register address is incremented between each operation by an amount controlled by a stride value. Accordingly, the register address can be incremented by values such as 0, 1, 2 or 4 between each iteration. This provides a mechanism for retaining block memory access instructions to contiguous memory addresses whilst supporting vector matrix and / or complex operations in which the data values needed for each iteration are not adjacent to one another in the memory 30.

Owner:ARM LTD
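
The stride-controlled register addressing in the abstract above can be pictured as generating a sequence of register numbers, one per iteration of a vector instruction. In this sketch the function name is hypothetical, and wrapping modulo the 32-register bank is an assumption; the abstract does not say how wrap-around is handled.

```python
def vector_register_sequence(start, stride, length, bank_size=32):
    """List the register numbers touched by a vector operation.

    The register address advances by `stride` after each iteration.
    Wrapping modulo `bank_size` is an assumption of this sketch.
    """
    return [(start + i * stride) % bank_size for i in range(length)]
```

A stride of 0 reuses one register (a scalar operand), a stride of 1 walks contiguous registers, and a stride of 2 picks out, for instance, only the real parts of complex values that a contiguous block load placed in alternating registers.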

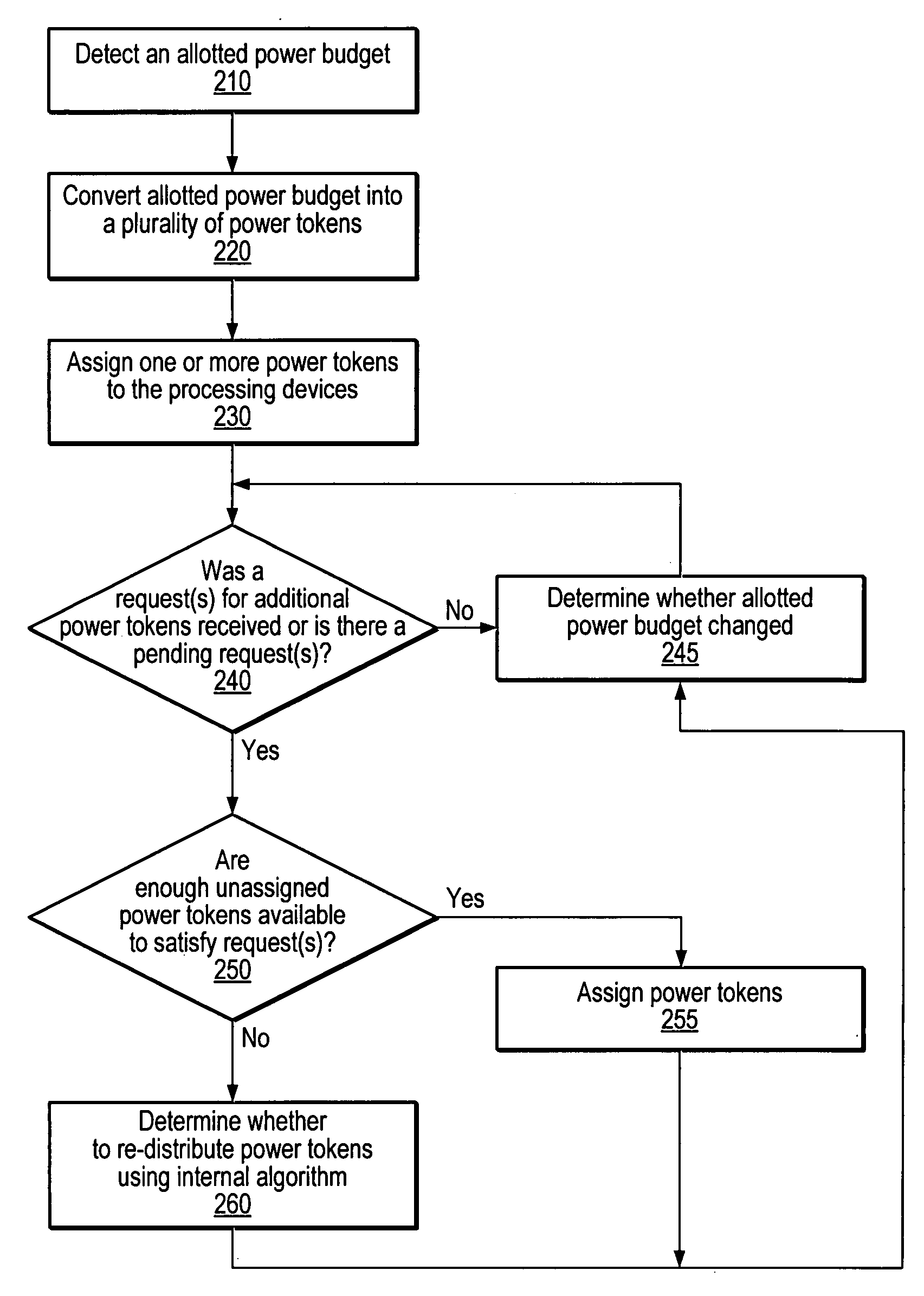

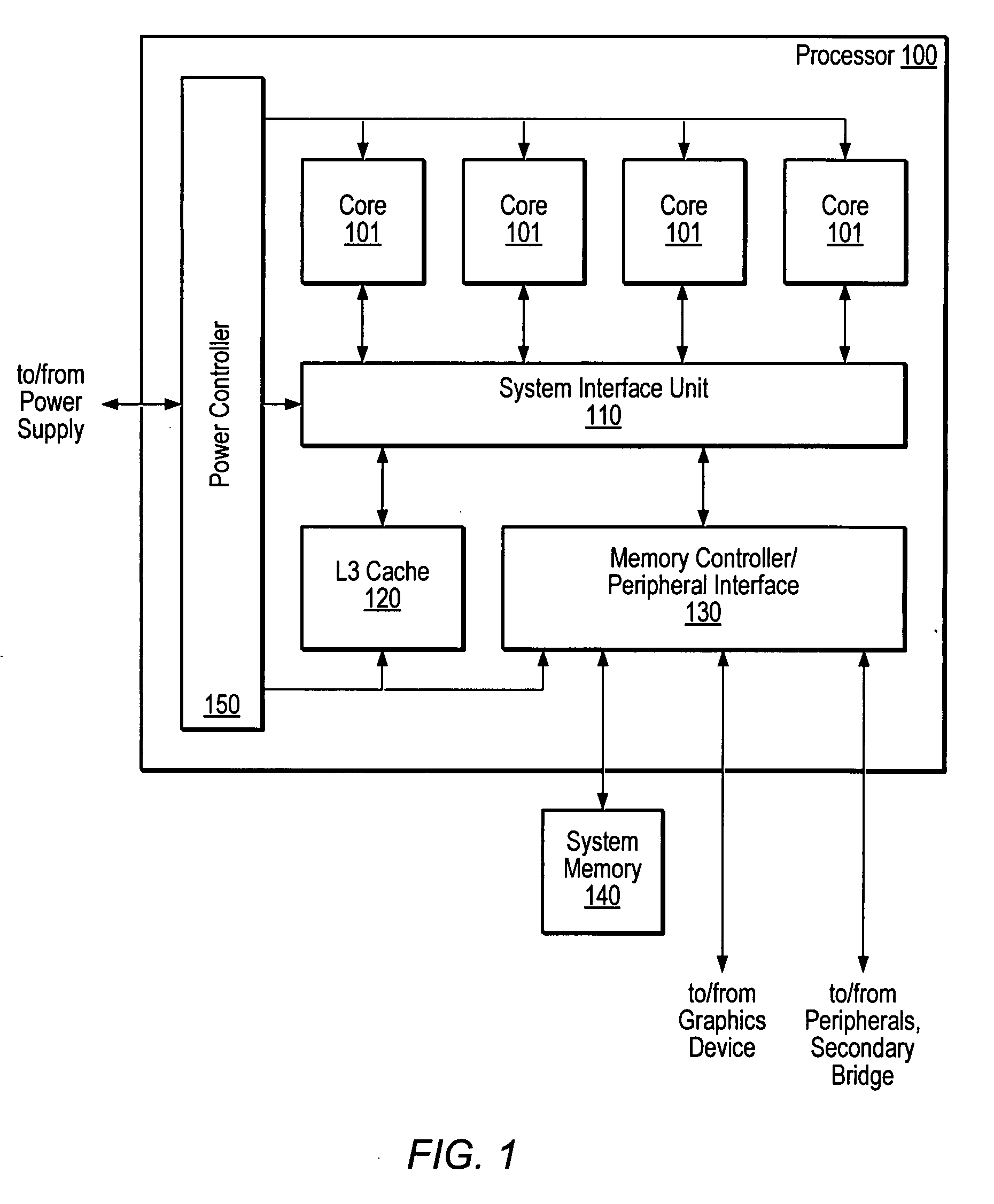

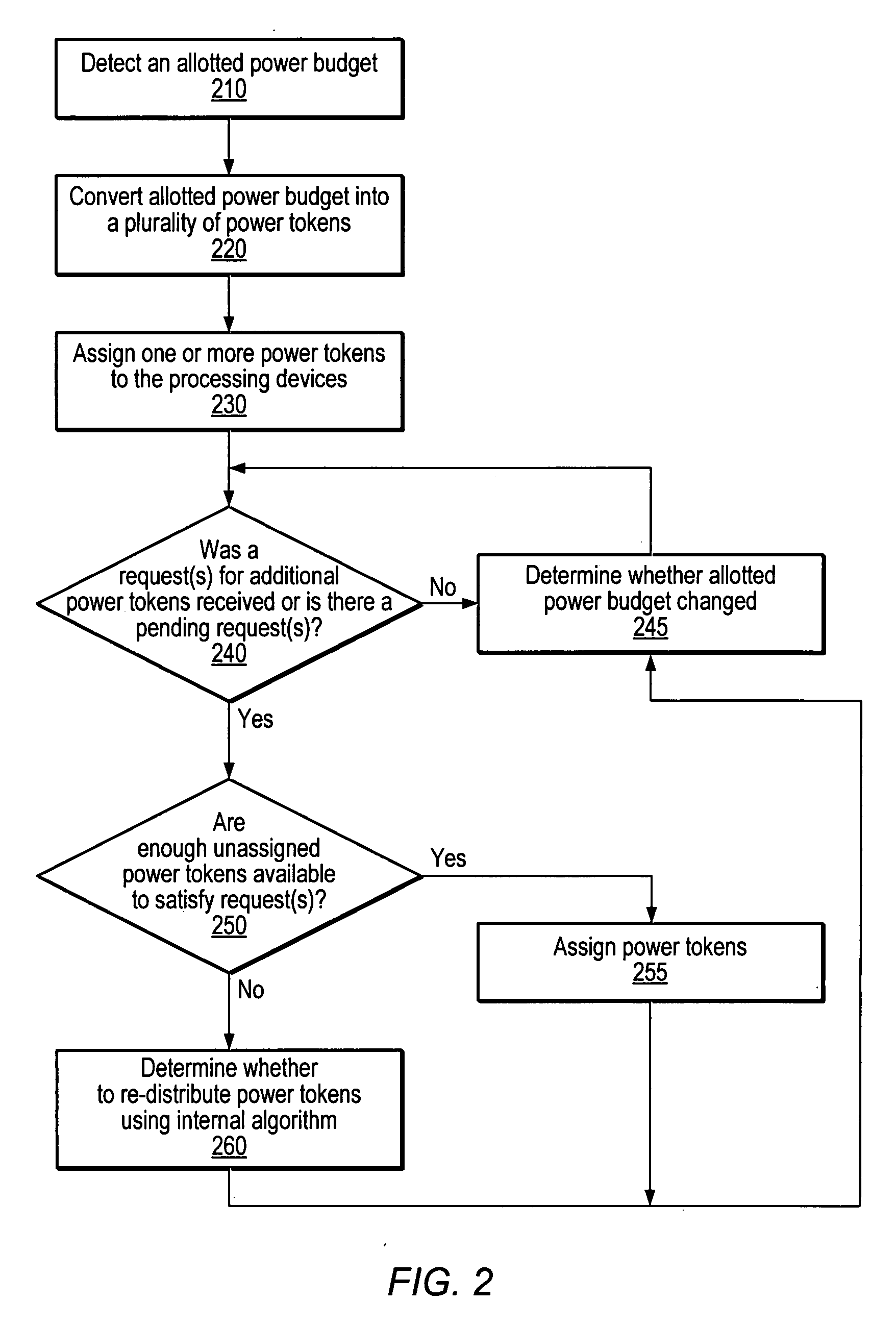

Token based power control mechanism

A token-based power control mechanism for an apparatus including a power controller and a plurality of processing devices. The power controller may detect a power budget allotted for the apparatus. The power controller may convert the allotted power budget into a plurality of power tokens, each power token being a portion of the allotted power budget. The power controller may then assign one or more of the plurality of power tokens to each of the processing devices. The assigned power tokens may determine the power allotted for each of the processing devices. The power controller may receive one or more requests from the plurality of processing devices for one or more additional power tokens. In response to receiving the requests, the power controller may determine whether to change the distribution of power tokens among the processing devices.

Owner:GLOBALFOUNDRIES US INC
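
A minimal sketch of the token scheme described above: the budget is converted into equal tokens, tokens are assigned to devices, and requests for extra tokens are served from whatever is currently unassigned. The class name, the even initial split, and the free-pool policy are all assumptions; the abstract leaves the redistribution policy open.

```python
class PowerController:
    """Toy token-based power controller (policy details are assumptions)."""

    def __init__(self, budget_watts, token_watts, devices):
        self.token_watts = token_watts
        # Convert the allotted budget into whole tokens.
        total_tokens = budget_watts // token_watts
        # Assumed policy: split evenly, keep any remainder unassigned.
        share, leftover = divmod(total_tokens, len(devices))
        self.tokens = {name: share for name in devices}
        self.free_tokens = leftover

    def power_for(self, device):
        # A device's power allotment is determined by the tokens it holds.
        return self.tokens[device] * self.token_watts

    def release(self, device, count):
        count = min(count, self.tokens[device])
        self.tokens[device] -= count
        self.free_tokens += count
        return count

    def request(self, device, count):
        # Grant as many of the requested tokens as are currently free.
        granted = min(count, self.free_tokens)
        self.free_tokens -= granted
        self.tokens[device] += granted
        return granted
```

Because tokens are only moved and never created, the sum of all device allotments can never exceed the original budget.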

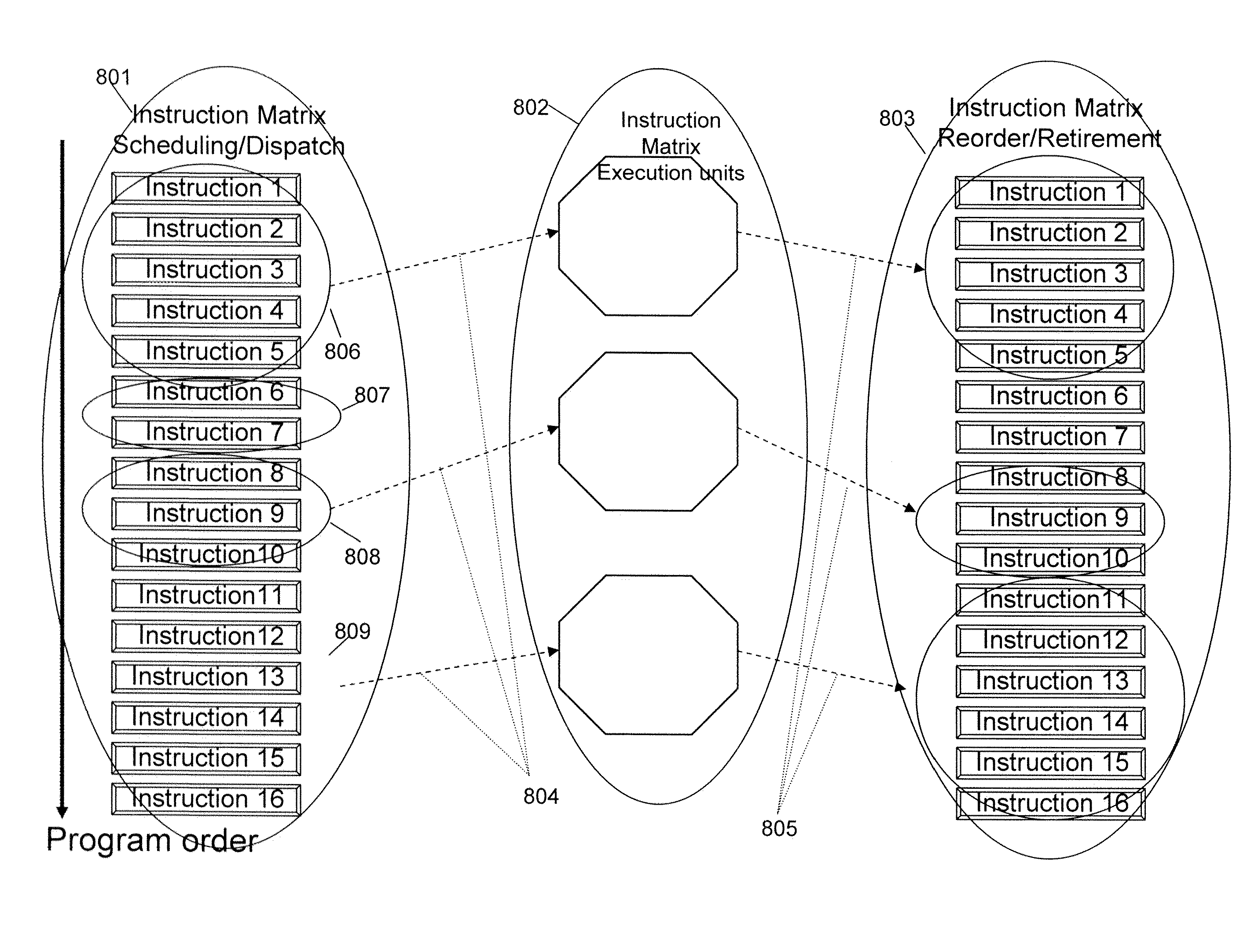

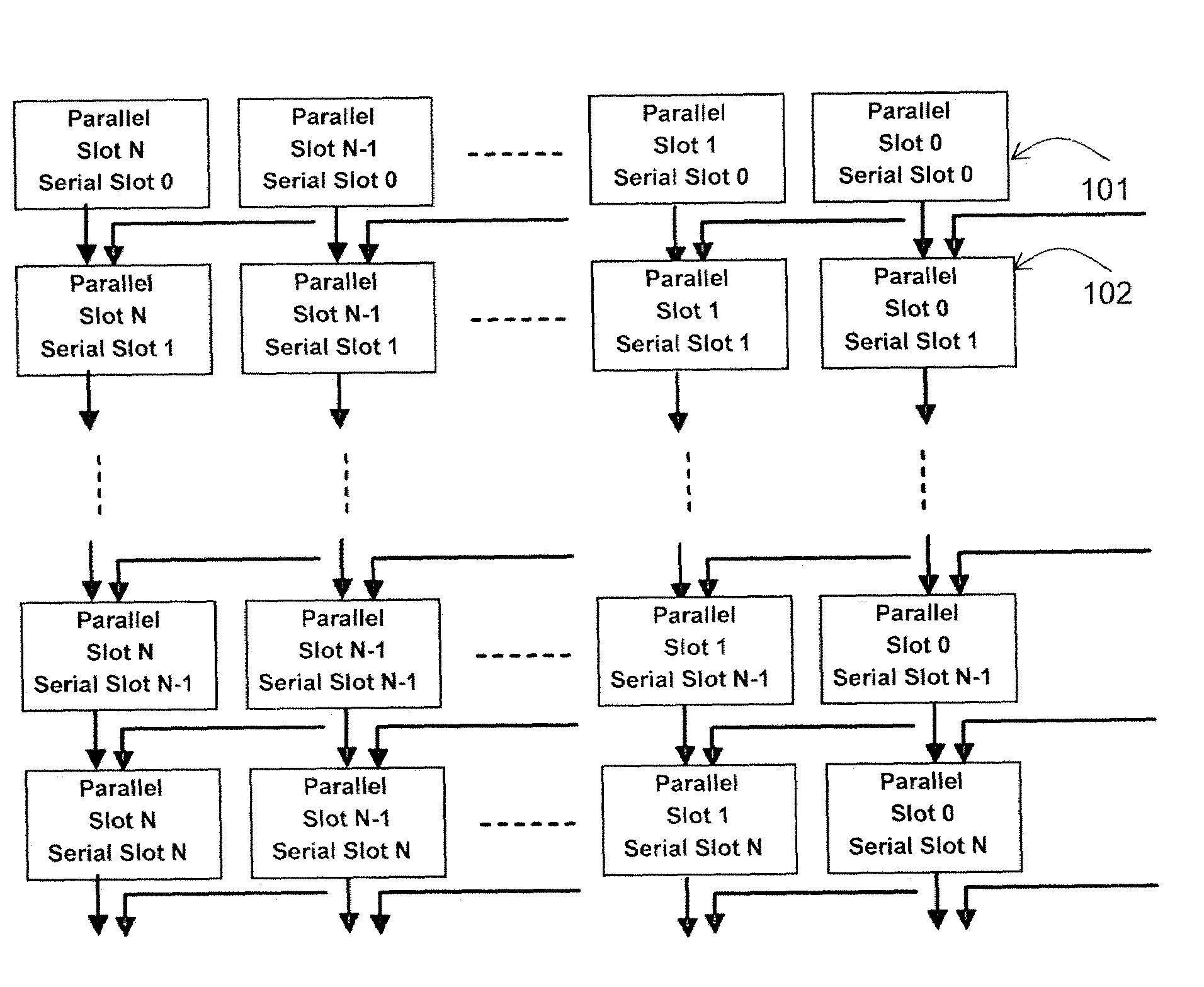

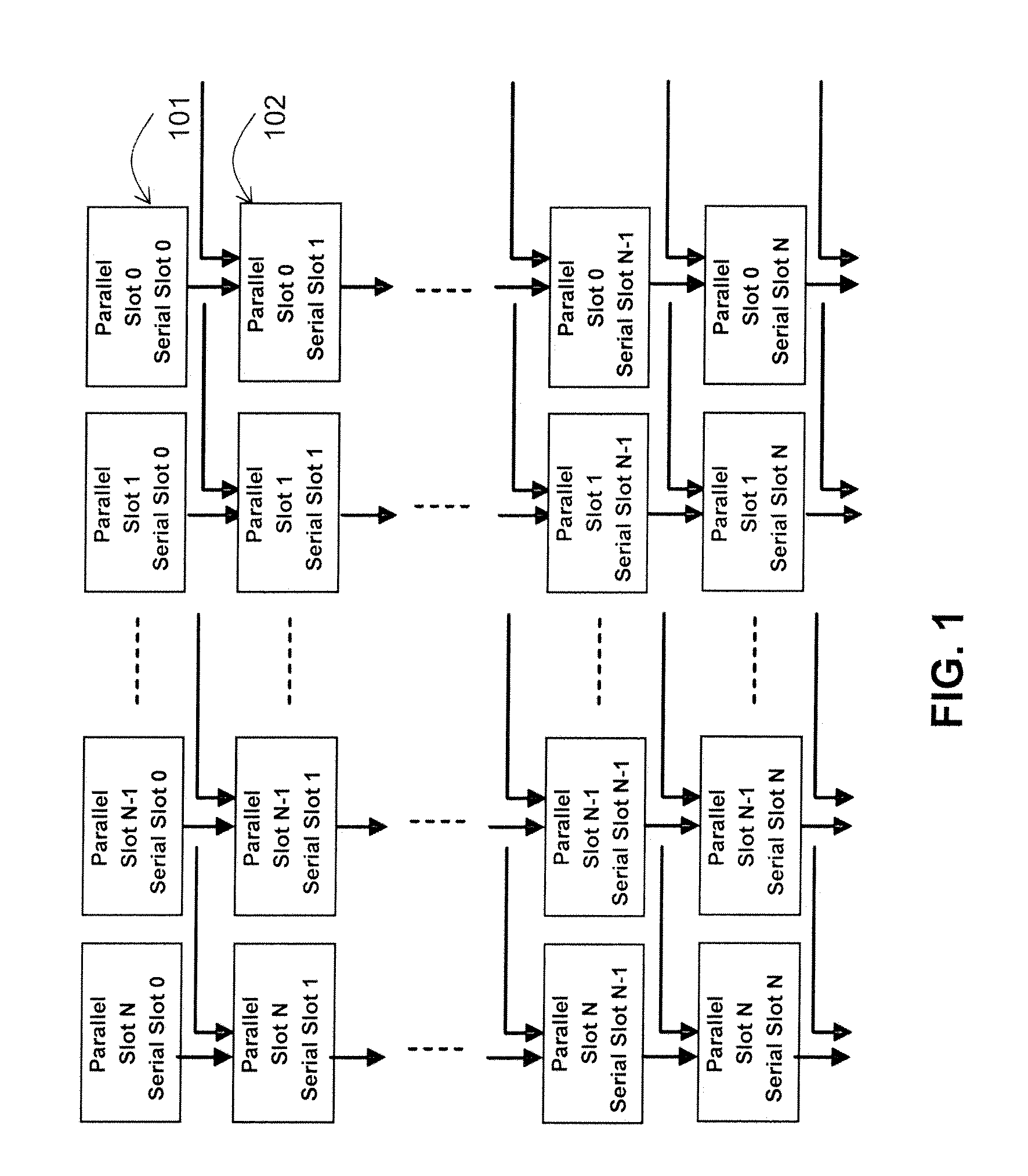

Apparatus and Method for Processing an Instruction Matrix Specifying Parallel and Dependent Operations

Active | US20090113170A1 | Single instruction multiple data multiprocessors | Register arrangements | Parallel computing | Matrix manipulation

Owner:INTEL CORP

High data density RISC processor

Inactive | US6282633B1 | High density | Increase in CPI | Instruction analysis | Digital computer details | Program instruction | Processor register

A RISC processor implements an instruction set which, in addition to optimizing a relationship between the number of instructions required for execution of a program, clock period and average number of clocks per instruction, also is designed to optimize the equation S=IS * BI, where S is the size of program instructions in bits, IS is the static number of instructions required to represent the program (not the number required by an execution) and BI is the average number of bits per instruction. Compared to conventional RISC architectures, this processor lowers both BI and IS with minimal increases in clock period and average number of clocks per instruction. The processor provides good code density in a fixed-length high-performance encoding based on RISC principles, including a general register with load / store architecture. Further, the processor implements a simple variable-length encoding that maintains high performance.

Owner:TENSILICA
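
The code size relation S = IS * BI from the abstract above is simple enough to check by hand, but a tiny helper makes the trade-off concrete. The function name and every number used with it are hypothetical illustrations, not figures from the patent.

```python
def program_size_bits(static_instruction_count, avg_bits_per_instruction):
    """S = IS * BI: static program size in bits."""
    return static_instruction_count * avg_bits_per_instruction


# Hypothetical comparison: a 32-bit fixed-length encoding versus a denser
# encoding that needs slightly fewer, shorter instructions.
baseline = program_size_bits(1000, 32)
denser = program_size_bits(950, 24)
```

Lowering either factor shrinks the program, and lowering both compounds the saving, which is the optimization the abstract claims for this instruction set.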

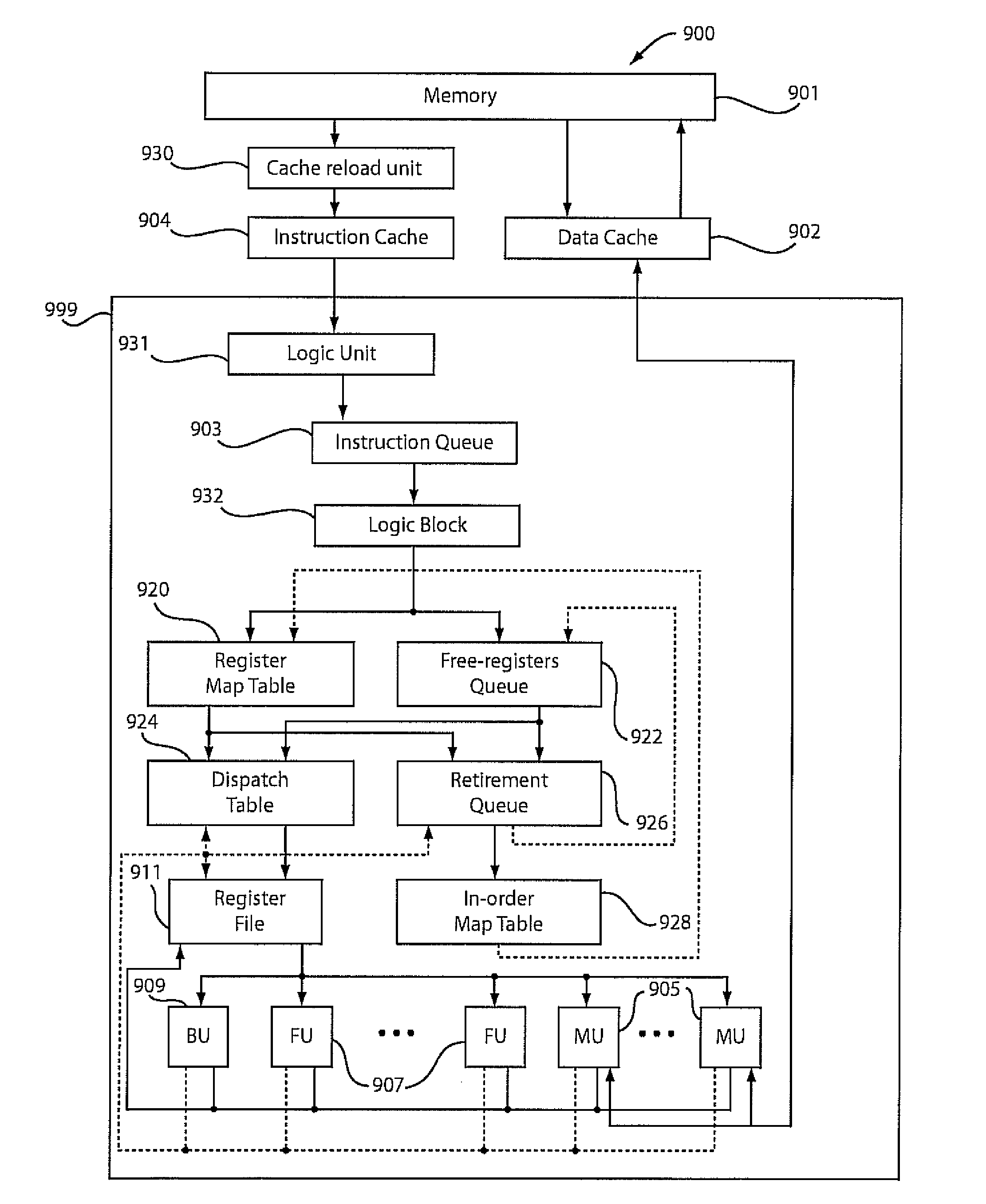

Processor with hybrid pipeline capable of operating in out-of-order and in-order modes

Inactive | US20140281402A1 | Instruction analysis | Digital computer details | Order processing | Instruction stream

A method and circuit arrangement provide support for a hybrid pipeline that dynamically switches between out-of-order and in-order modes. The hybrid pipeline may selectively execute instructions from at least one instruction stream that require the high performance capabilities provided by out-of-order processing in the out-of-order mode. The hybrid pipeline may also execute instructions that have strict power requirements in the in-order mode where the in-order mode conserves more power compared to the out-of-order mode. Each stage in the hybrid pipeline may be activated and fully functional when the hybrid pipeline is in the out-of-order mode. However, stages in the hybrid pipeline not used for the in-order mode may be deactivated and bypassed by the instructions when the hybrid pipeline dynamically switches from the out-of-order mode to the in-order mode. The deactivated stages may then be reactivated when the hybrid pipeline dynamically switches from the in-order mode to the out-of-order mode.

Owner:IBM CORP

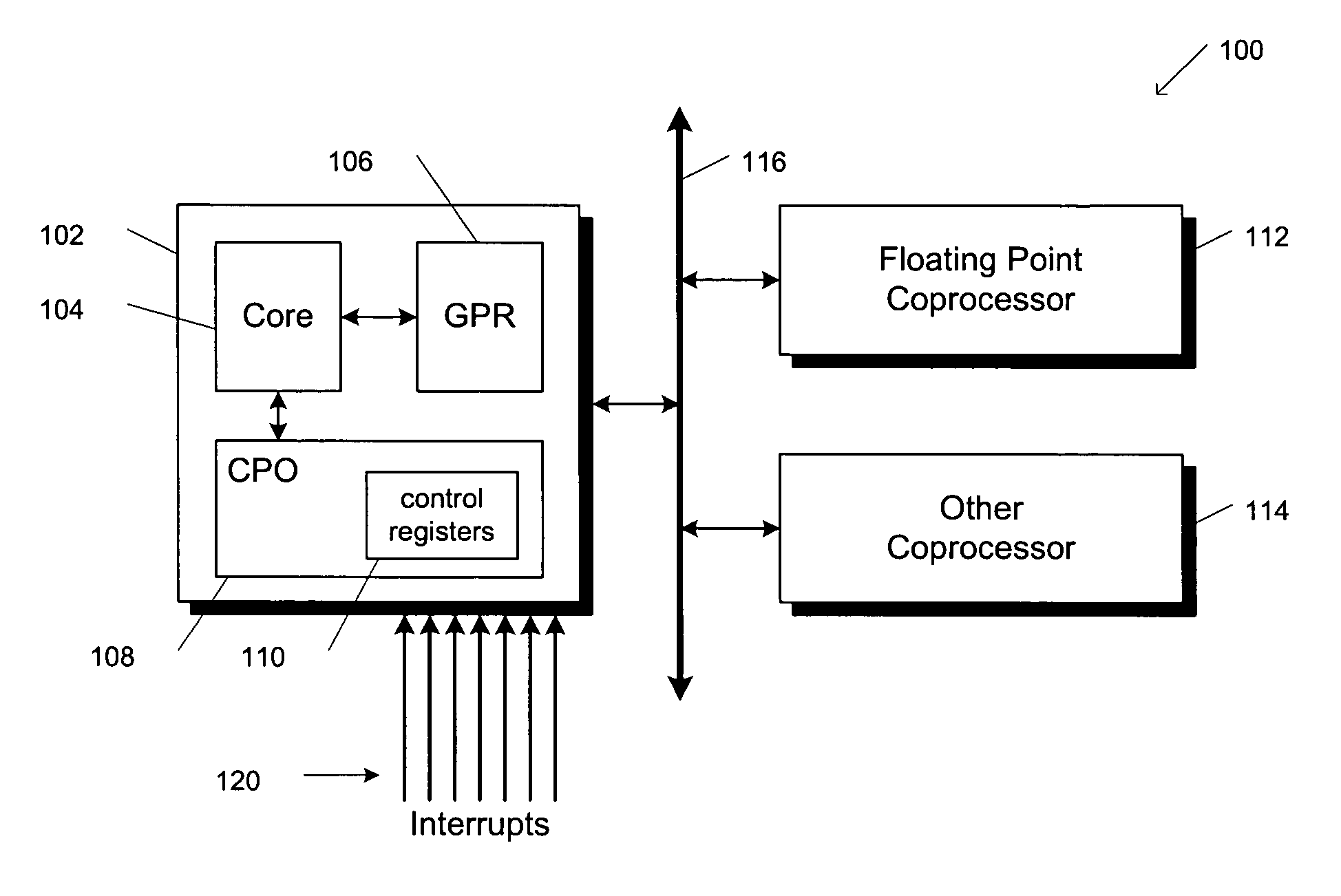

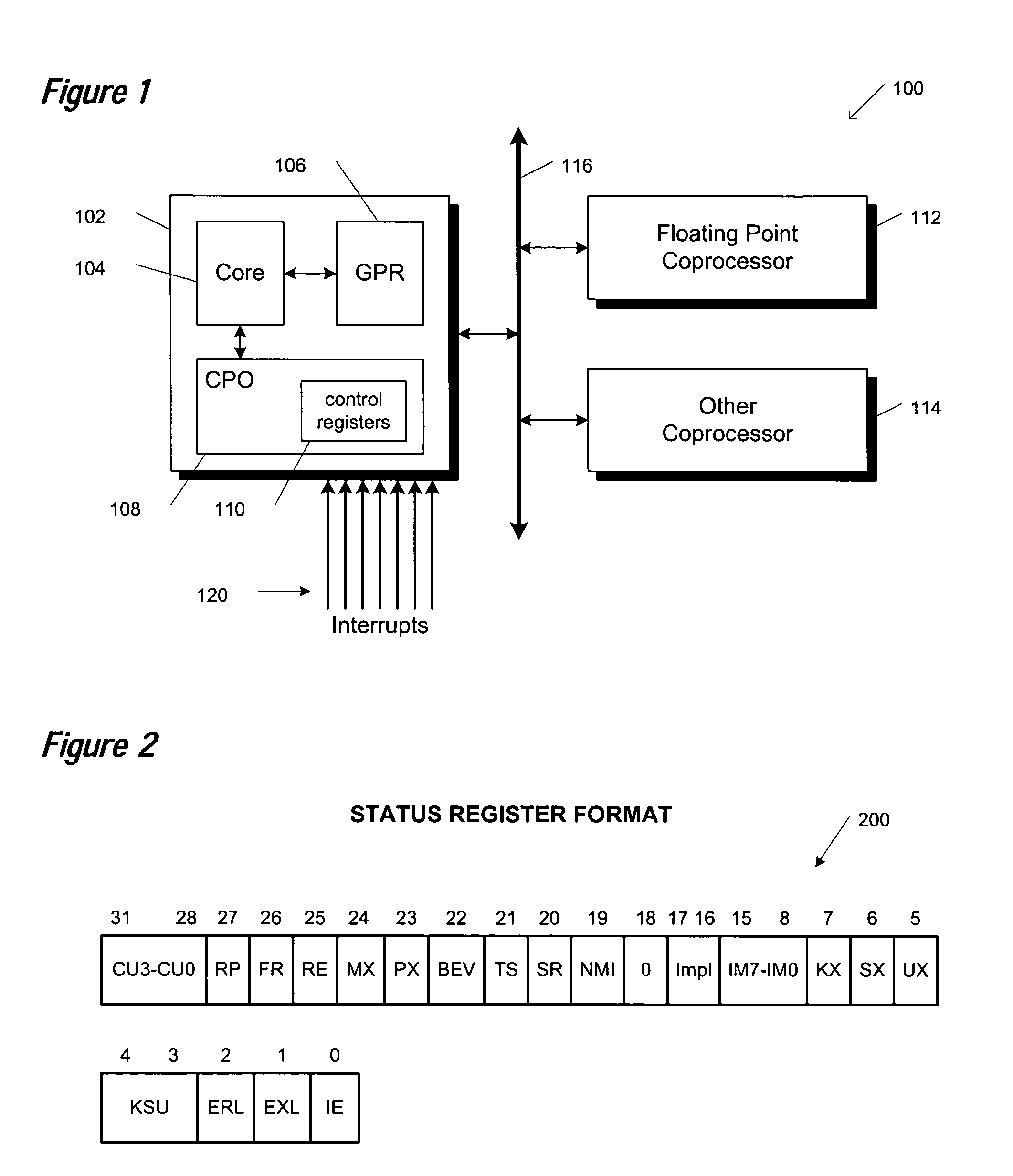

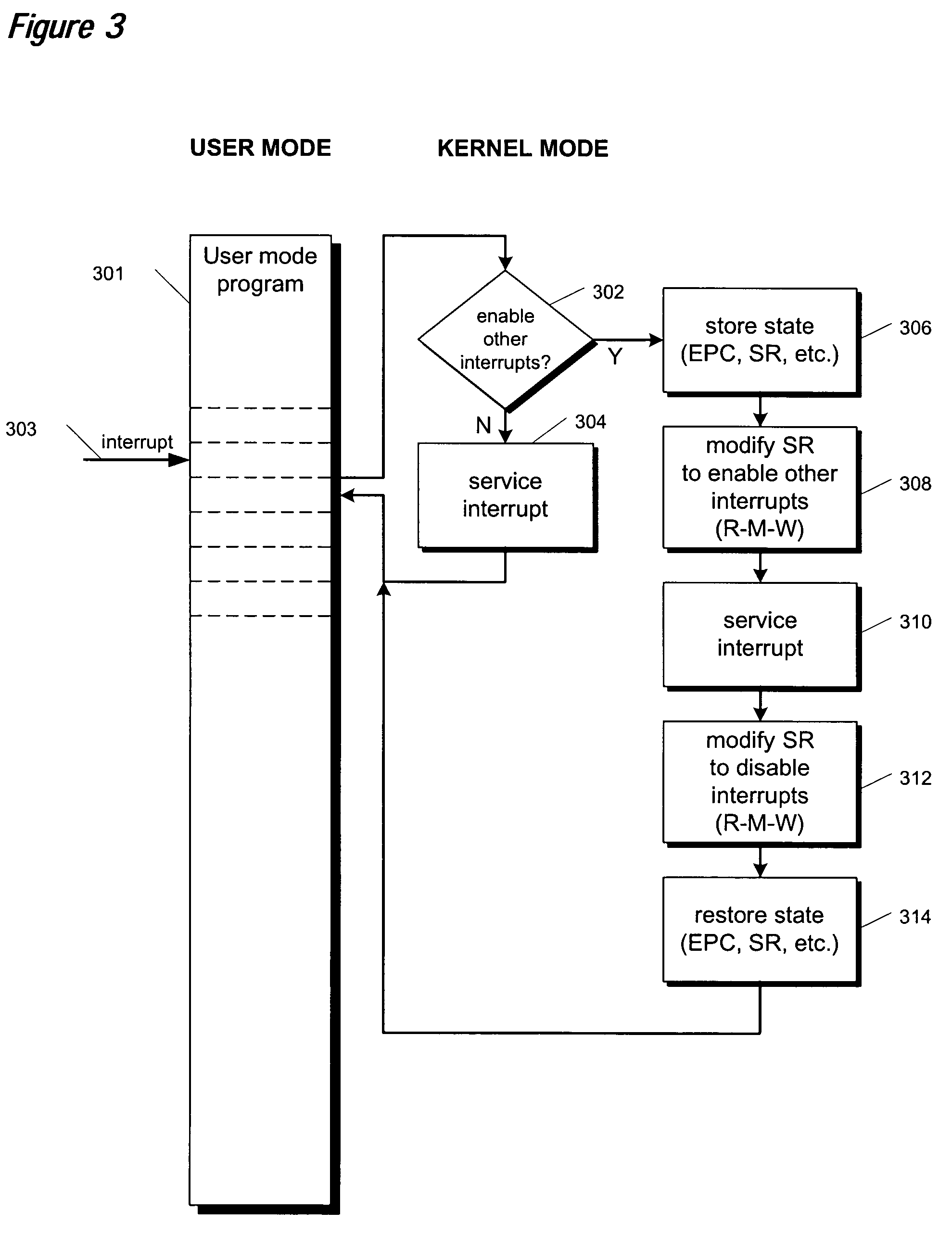

Atomic update of CP0 state

A group of bit set and bit clear instructions are provided for a microprocessor to allow atomic modification of privileged architecture control registers. The bit set and bit clear instructions include an opcode that designates to the microprocessor that the instructions are to execute in privileged (kernel) state only, and that the instructions are to communicate with privileged control registers. Two operands are provided for the instructions, the first designating which of the privileged control registers is to be modified, the second designating a general purpose register that contains a bit mask. The bit set instructions set bits in the designated control register according to bits set in the bit mask. The bit clear instructions clear bits in the designated control register according to bits set in the bit mask. By atomically modifying privileged control registers, a requirement for strict nesting of interrupt routines is eliminated.

Owner:MIPS TECH INC
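
The effect of the bit set and bit clear instructions described above can be modelled as a single masked update of a control register. The sketch below shows only the data transformation; Python cannot demonstrate the hardware atomicity that removes the interruptible read-modify-write sequence. The function names, the list-based register model, and the 32-bit register width are assumptions.

```python
WORD_MASK = 0xFFFFFFFF  # assumed 32-bit control registers


def bit_set(control_regs, gprs, control_index, gpr_index):
    """Set the control register bits selected by the mask held in a general purpose register."""
    mask = gprs[gpr_index]
    control_regs[control_index] = (control_regs[control_index] | mask) & WORD_MASK


def bit_clear(control_regs, gprs, control_index, gpr_index):
    """Clear the control register bits selected by the mask held in a general purpose register."""
    mask = gprs[gpr_index]
    control_regs[control_index] = control_regs[control_index] & ~mask & WORD_MASK
```

Each call corresponds to one instruction with two operands: which privileged control register to modify, and which general purpose register supplies the bit mask.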

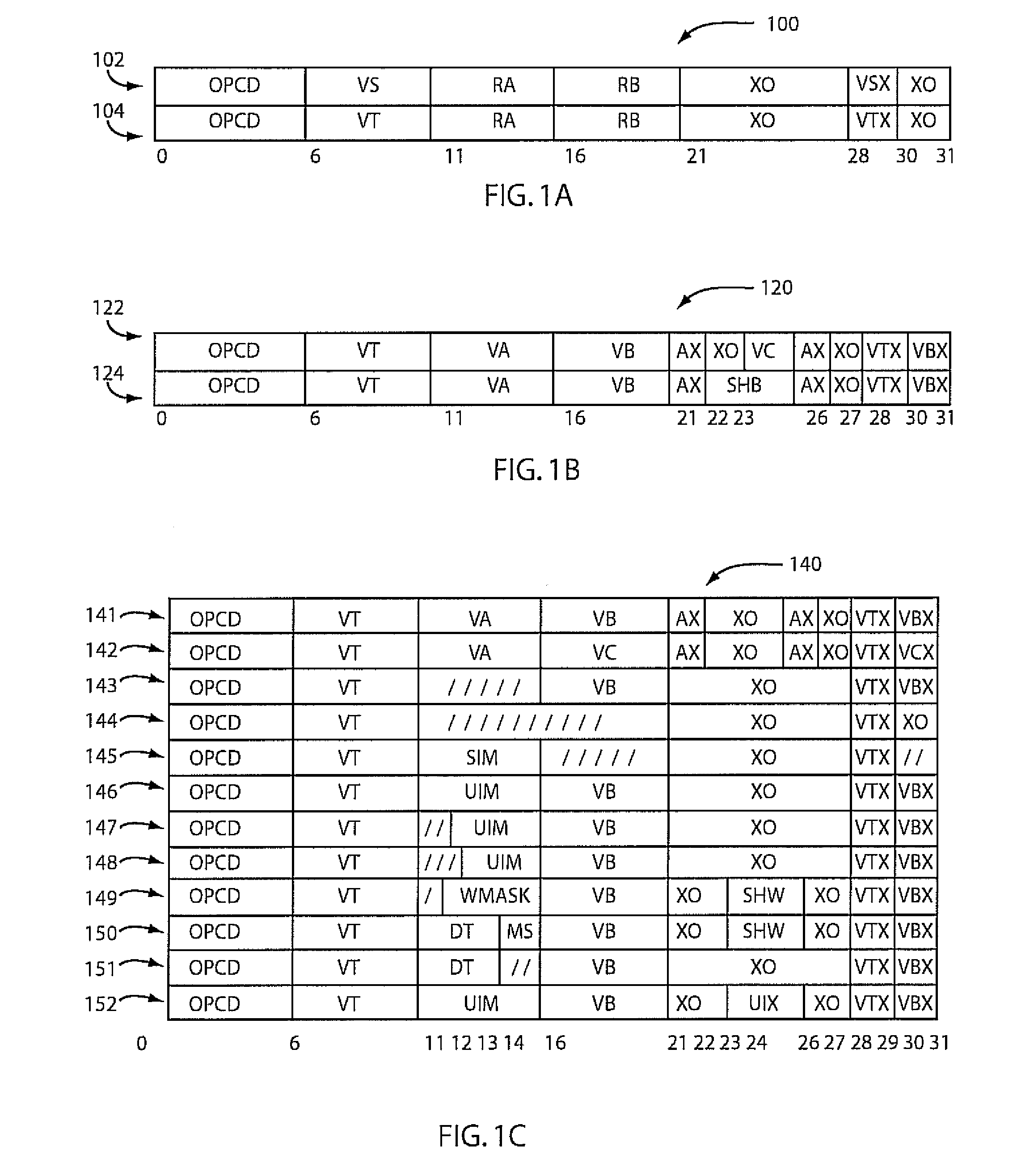

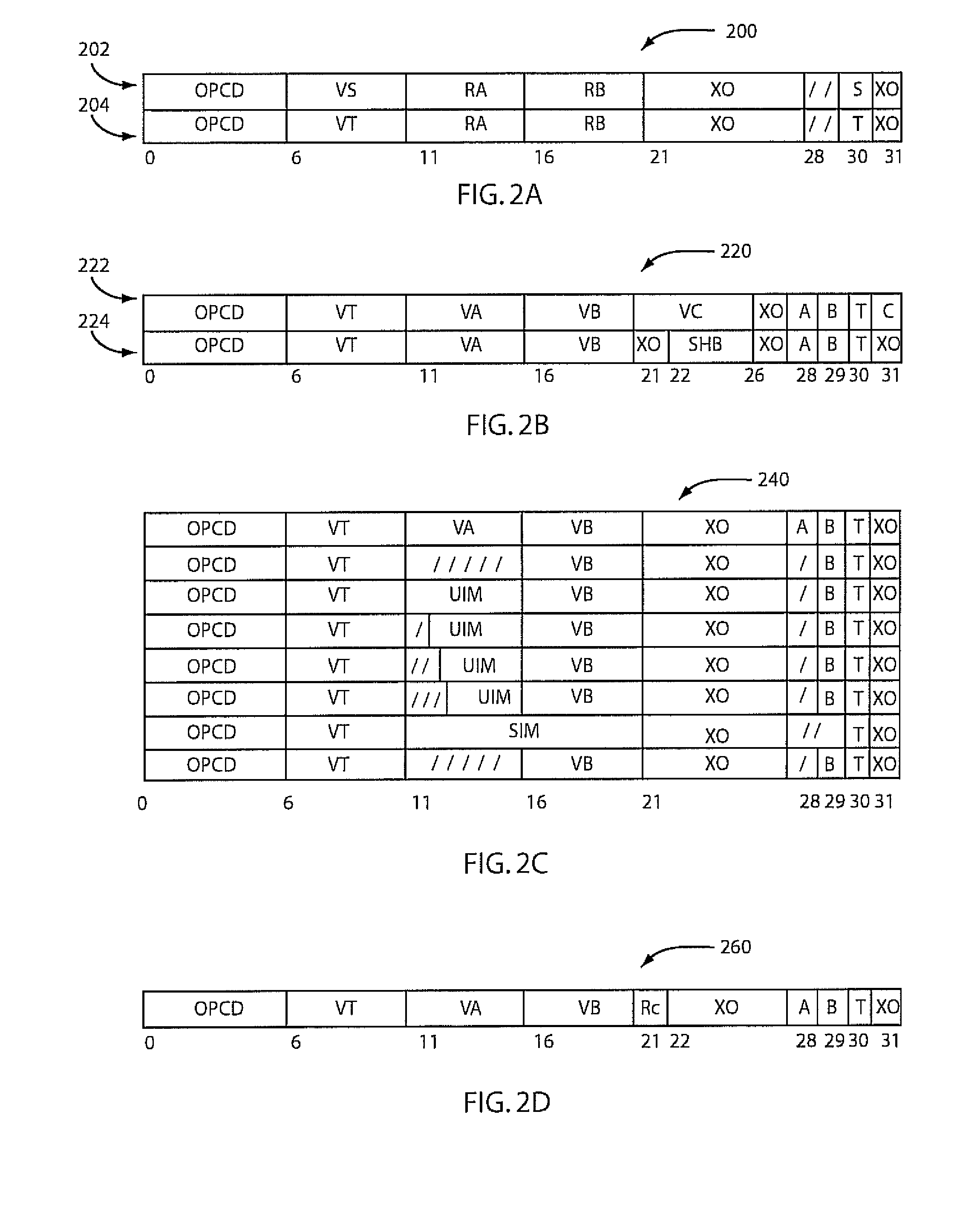

Implementing instruction set architectures with non-contiguous register file specifiers

Active | US20080189519A1 | Register arrangements | Instruction analysis | Processing Instruction | Processor register

There are provided methods and computer program products for implementing instruction set architectures with non-contiguous register file specifiers. A method for processing instruction code includes processing a fixed-width instruction of a fixed-width instruction set using a non-contiguous register specifier of a non-contiguous register specification. The fixed-width instruction includes the non-contiguous register specifier.

Owner:INT BUSINESS MACHINES CORP

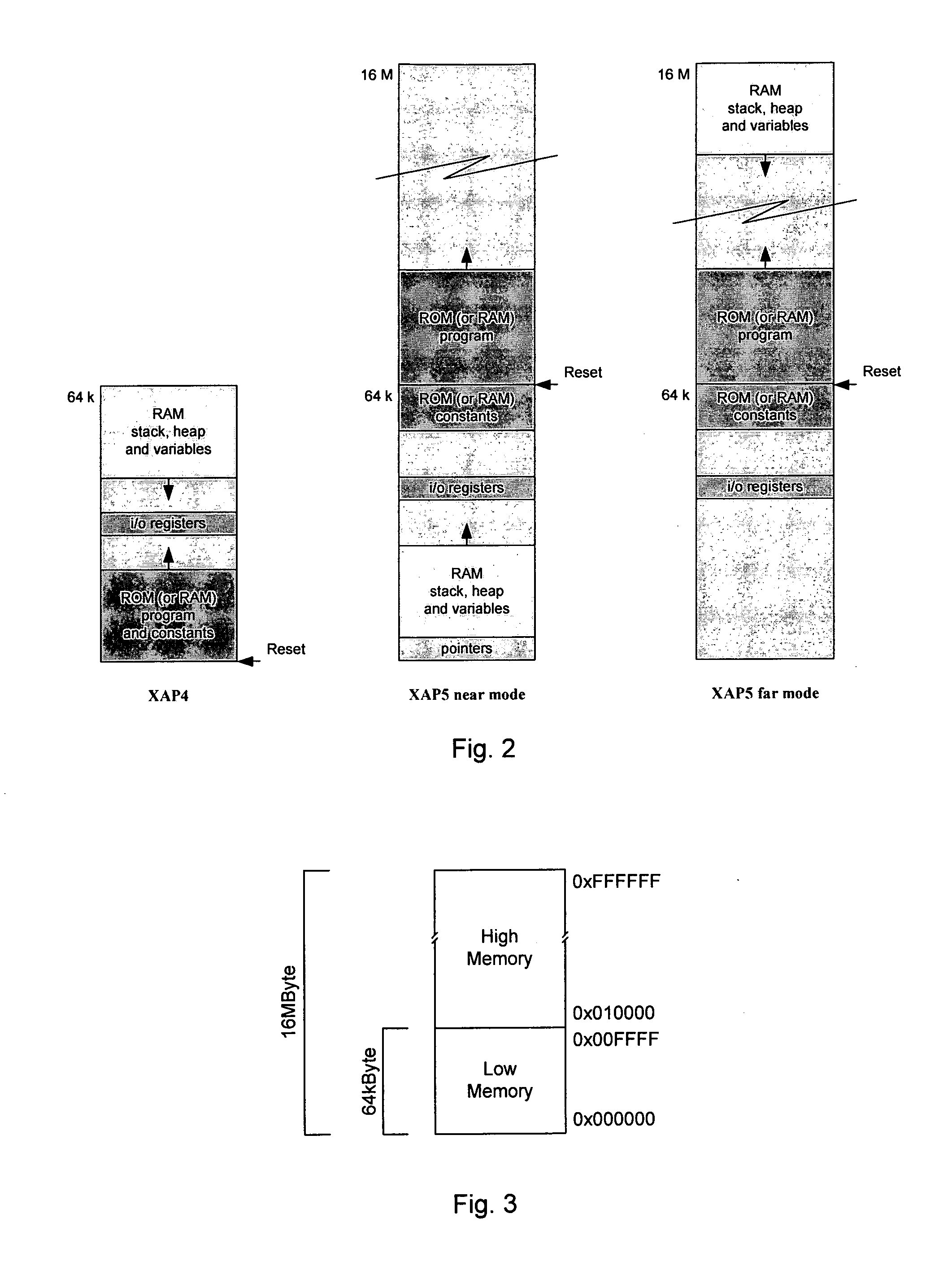

Data processing apparatus

Active | US20100293342A1 | Simple structure | Small spacing | Conditional code generation | Register arrangements | Instruction set | Content-addressable memory

Apparatus comprises a processor configured for operation under a sequence of instructions from an instruction set, wherein said processor comprises: means for conditionally inhibiting at least one type of trap, interrupt or exception (TIE) event, wherein, when operating under a sequence of instructions, said inhibition means is inaccessible by said instructions to inhibit the or each type of TIE event, without interrupting said sequence. A data processing apparatus includes a processor adapted to operate under control of program code comprising instructions selected from an instruction set, the apparatus comprising: a predefined memory space providing a predefined addressable memory for storing program code and data, a larger memory space providing a larger addressable memory, means for accessing program code and data within the predefined memory space, and means for controlling the access means so as to enable the access means to access program code located within the larger memory space.

Owner:CAMBRIDGE CONSULTANTS LTD

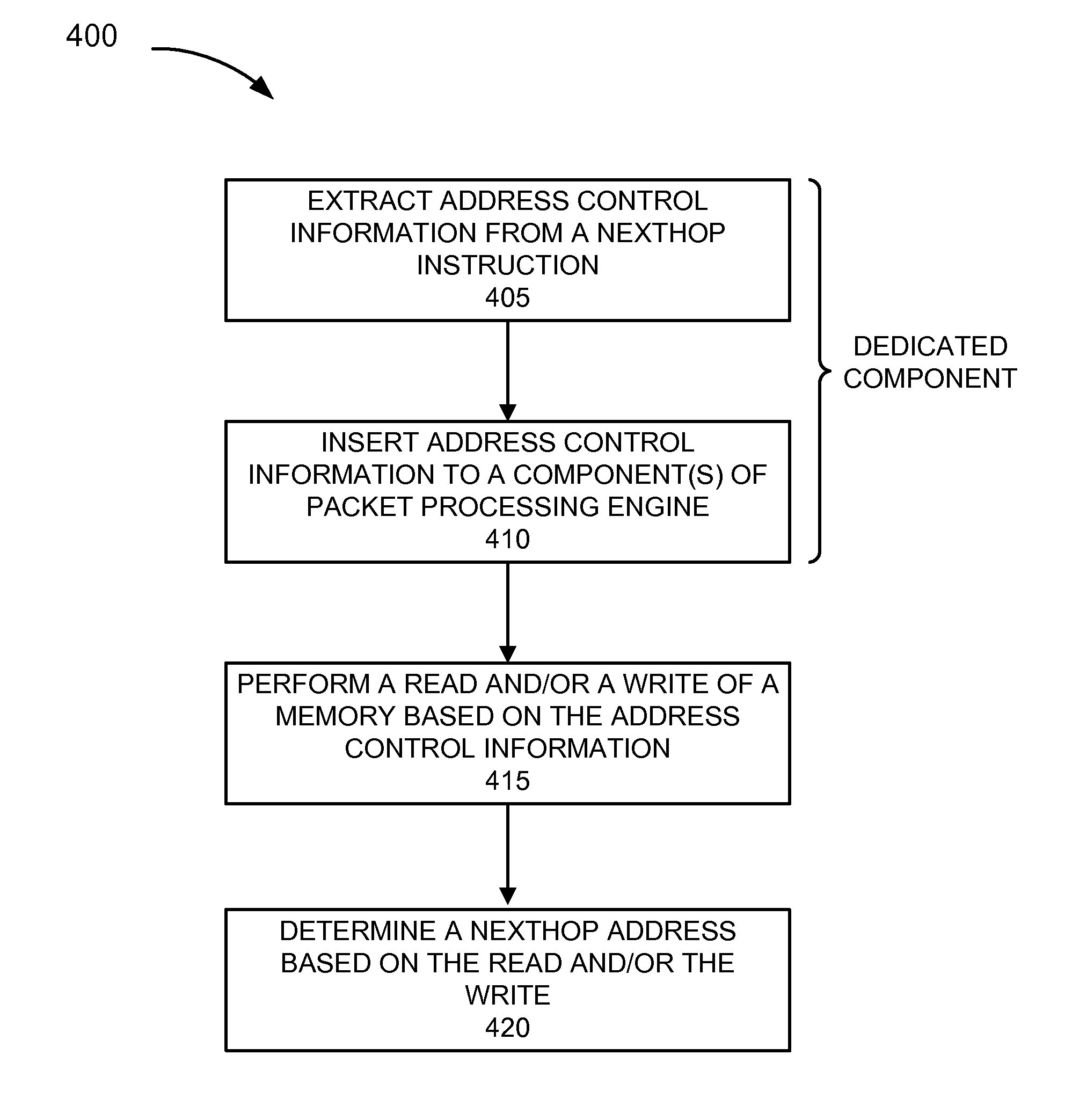

Hardware support for instruction set emulation

Active | US8069023B1 | Instruction analysis | Analogue computers for electric apparatus | Bit field | Network addressing

A method for emulating a nexthop instruction in microcode with the assistance of dedicated hardware to extract read and write addressing from the nexthop instruction instead of performing this operation in microcode. A method for emulating a nexthop instruction in microcode with the assistance of dedicated hardware to compare a nexthop read address to a special value and to indicate whether the nexthop read address matches the special value, instead of performing this operation in microcode. A method for determining a network address by performing a single extraction of bit fields of a tree instruction to allow multiple tree search processes to be performed.

Owner:JUNIPER NETWORKS INC

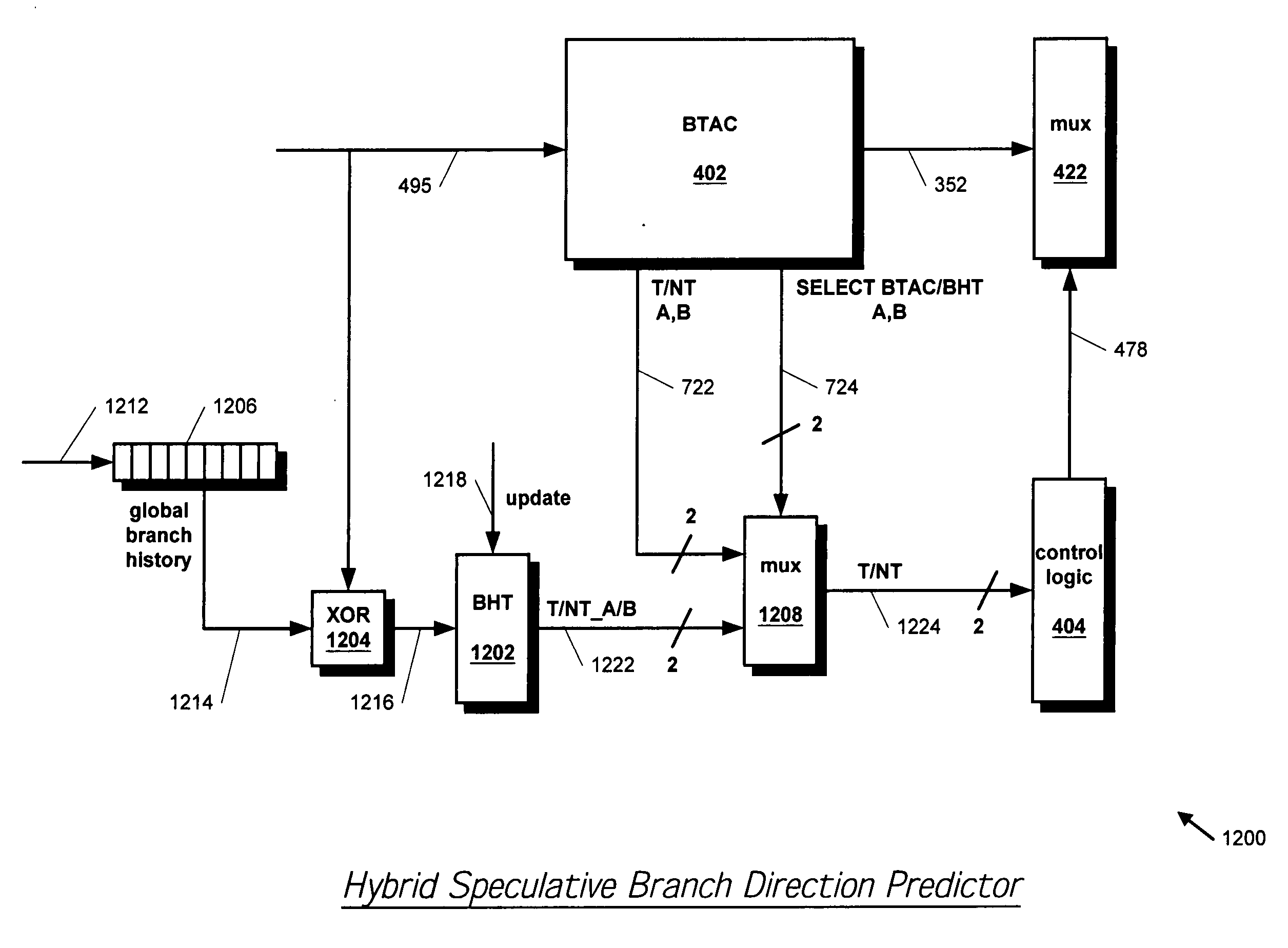

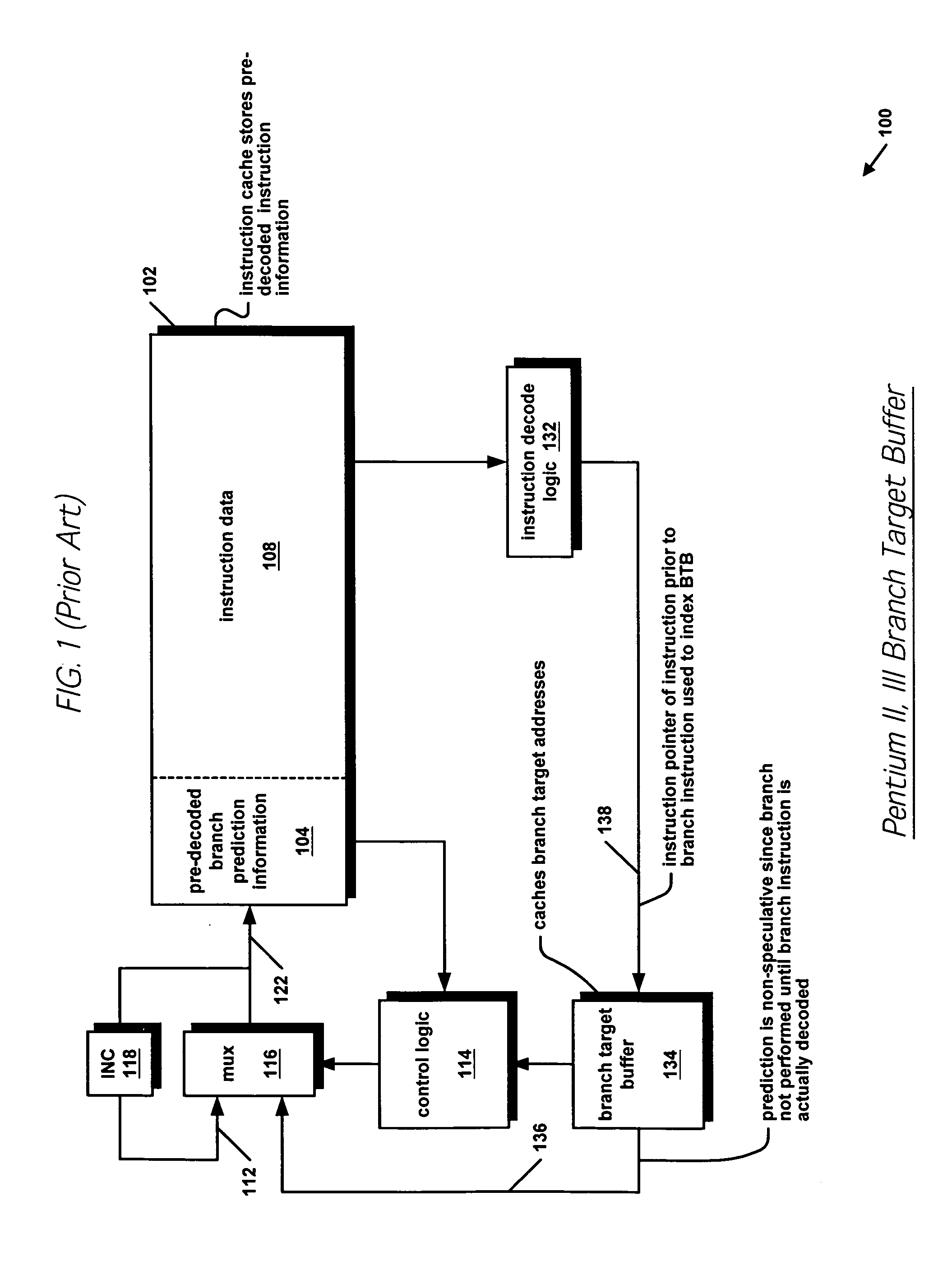

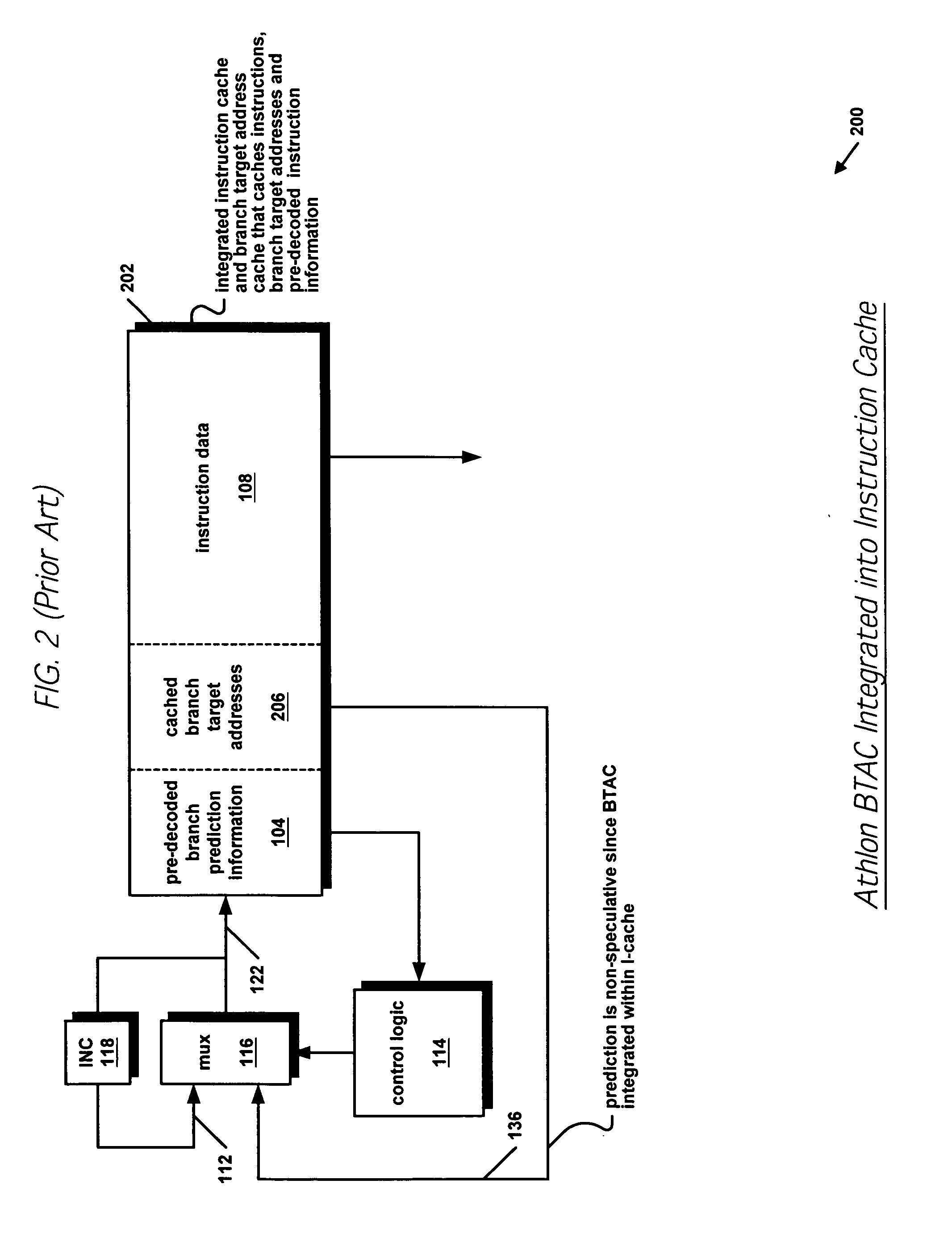

Speculative hybrid branch direction predictor

Inactive | US20050132175A1 | Improve accuracy | Reducing overall branch penalty | Instruction analysis | Digital computer details | Cache access | Parallel computing

An apparatus for speculatively predicting the direction of a branch instruction in a pipelined microprocessor in a hybrid fashion. A branch target address cache (BTAC) stores a direction prediction about executed branch instructions. The BTAC is indexed by an instruction cache fetch address. The BTAC is accessed in parallel with the instruction cache access, such that the direction prediction is provided before the actual instruction is decoded which is presumed to be a branch instruction corresponding to the direction prediction stored in the BTAC. In parallel with the BTAC access, a branch history table (BHT) is accessed to provide a second speculative direction prediction. The BHT is indexed with a gshare function of the instruction cache fetch address and a global branch history stored in a global branch history register. The BTAC also provides a selector that selects between the two speculative direction predictions.

Owner:IP FIRST
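
The hybrid prediction flow in the abstract above (a BTAC prediction, a gshare-indexed BHT prediction, and a BTAC-supplied selector) can be sketched as below. The gshare index is the usual XOR of fetch address and global history; the 2-bit saturating counters in the BHT and the `(taken, select_bht)` layout of a BTAC entry are assumptions, since the abstract does not specify them.

```python
def gshare_index(fetch_address, global_history, index_bits):
    """gshare: XOR the fetch address with the global branch history, keep the low bits."""
    mask = (1 << index_bits) - 1
    return (fetch_address ^ global_history) & mask


def predict_direction(btac_entry, bht, fetch_address, global_history, index_bits):
    """Choose between the BTAC and BHT direction predictions.

    `btac_entry` is a hypothetical (taken, select_bht) pair.
    `bht` holds 2-bit counters (0..3); a value of 2 or more means "taken".
    """
    btac_taken, select_bht = btac_entry
    counter = bht[gshare_index(fetch_address, global_history, index_bits)]
    bht_taken = counter >= 2
    # The selector stored in the BTAC picks which prediction is used.
    return bht_taken if select_bht else btac_taken
```

In hardware the two lookups happen in parallel with the instruction cache access; the sequential code here only mirrors the final selection.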

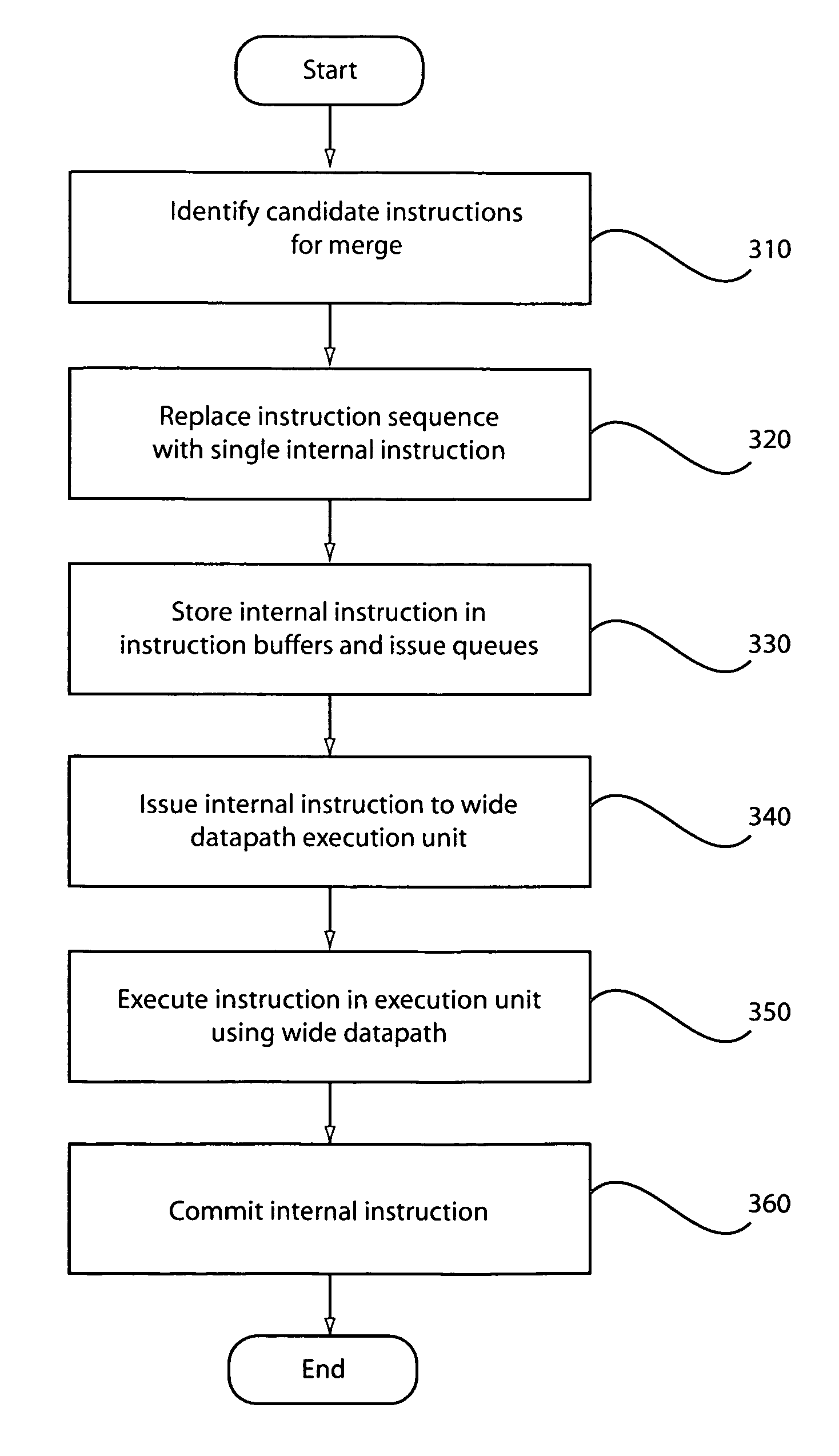

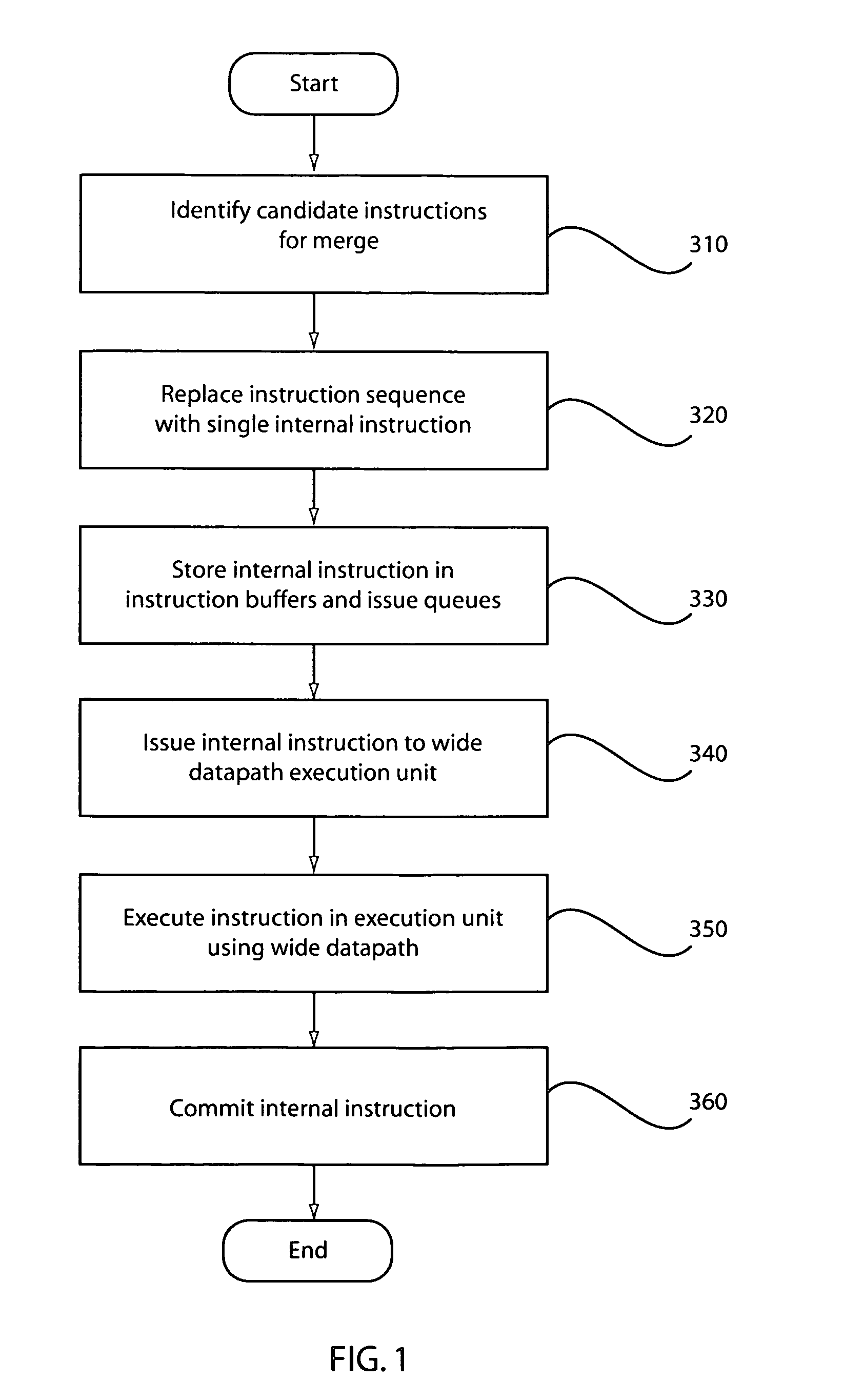

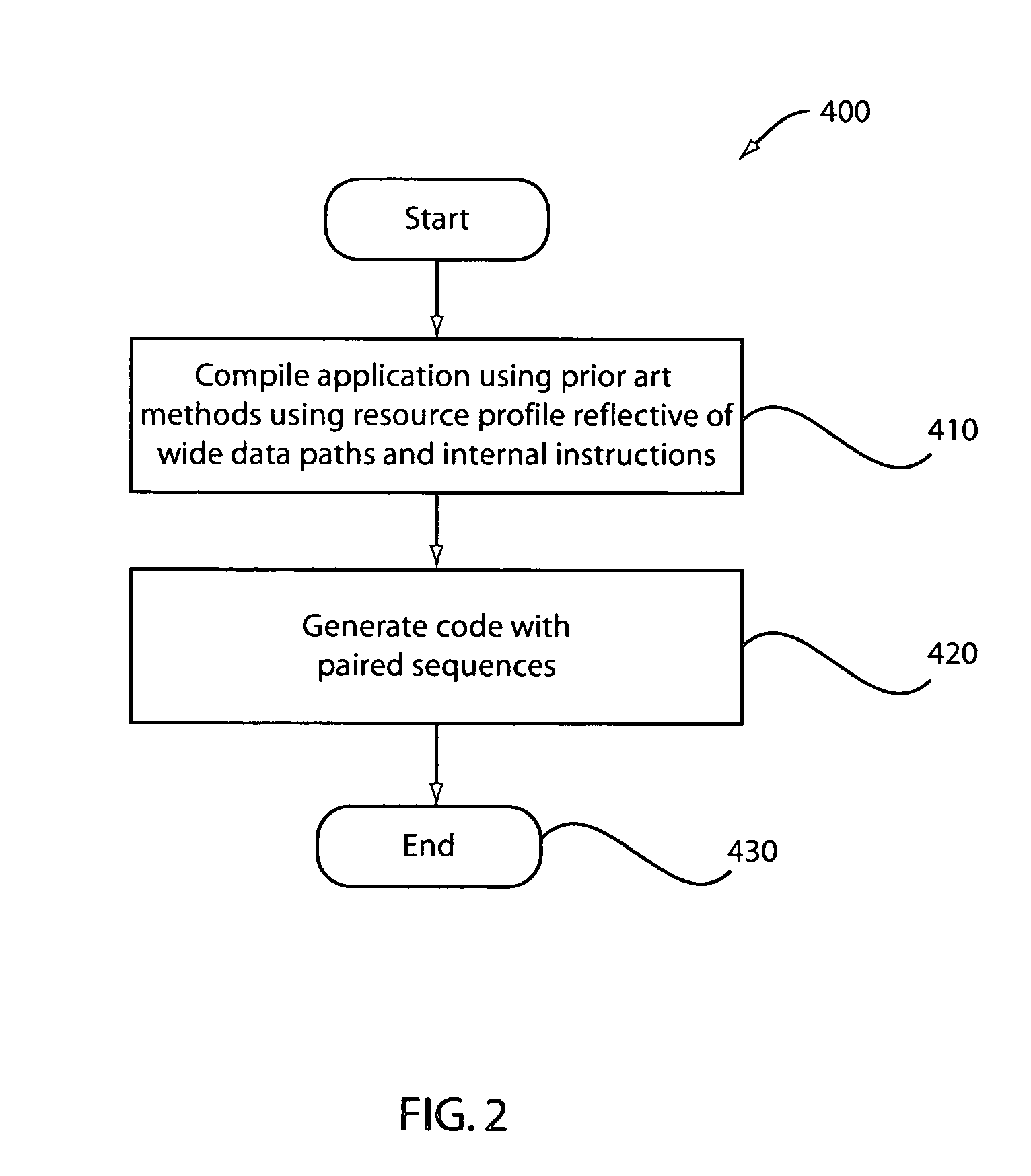

Method and apparatus for the dynamic creation of instructions utilizing a wide datapath

Active | US20070260855A1 | Maintain compatibility | Improve performance | Register arrangements | Instruction analysis | Instruction memory | Execution unit

A processing system and method includes a predecoder configured to identify instructions that are combinable. Instruction storage is configured to merge instructions that are combinable by replacing the combinable instructions with a wide data internal instruction for execution. An instruction execution unit is configured to execute the internal instruction on a wide datapath.

Owner:IBM CORP

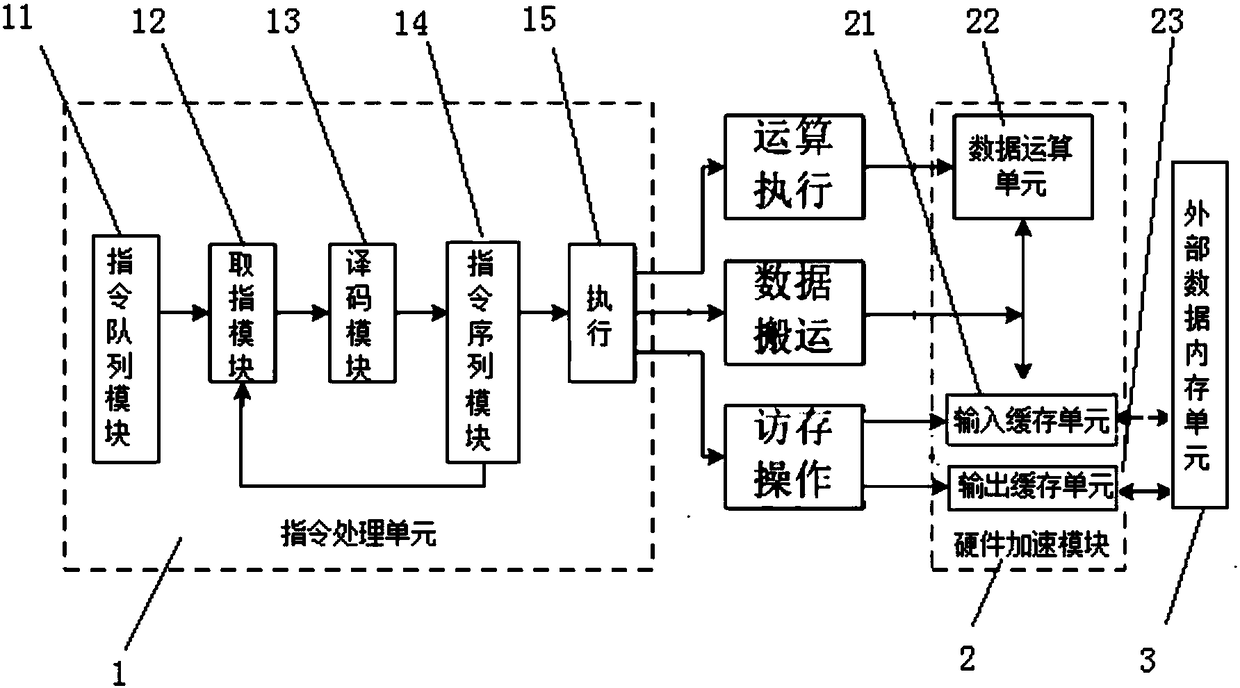

Convolutional neural network hardware acceleration device, convolution calculation method, and storage medium

Inactive | CN108197705A | Improve efficiency | Improve versatility | Instruction analysis | Physical realisation | Instruction processing unit | Data operations

The invention relates to a convolutional neural network hardware acceleration device, a convolution calculation method, and a storage medium. The device comprises an instruction processing unit, a hardware acceleration module and an external data memory unit, wherein the instruction processing unit decodes an instruction set to execute a corresponding operation so as to control the hardware acceleration module; the hardware acceleration module comprises an input caching unit, a data operation unit and an output caching unit, wherein the input caching unit executes the memory access operations of the instruction processing unit and stores data read from the external data memory unit; the data operation unit executes the computation operations of the instruction processing unit, processes the data operations of the convolutional neural network, and controls the data operation process and data flow direction according to an operation instruction set; the output caching unit executes the memory access operations of the instruction processing unit and stores calculation results that are output by the data operation unit and need to be written to the external data memory unit; and the external data memory unit stores the calculation results output by the output caching unit and transmits data to the input caching unit in response to read requests from the input caching unit.

Owner:NATIONZ TECH INC

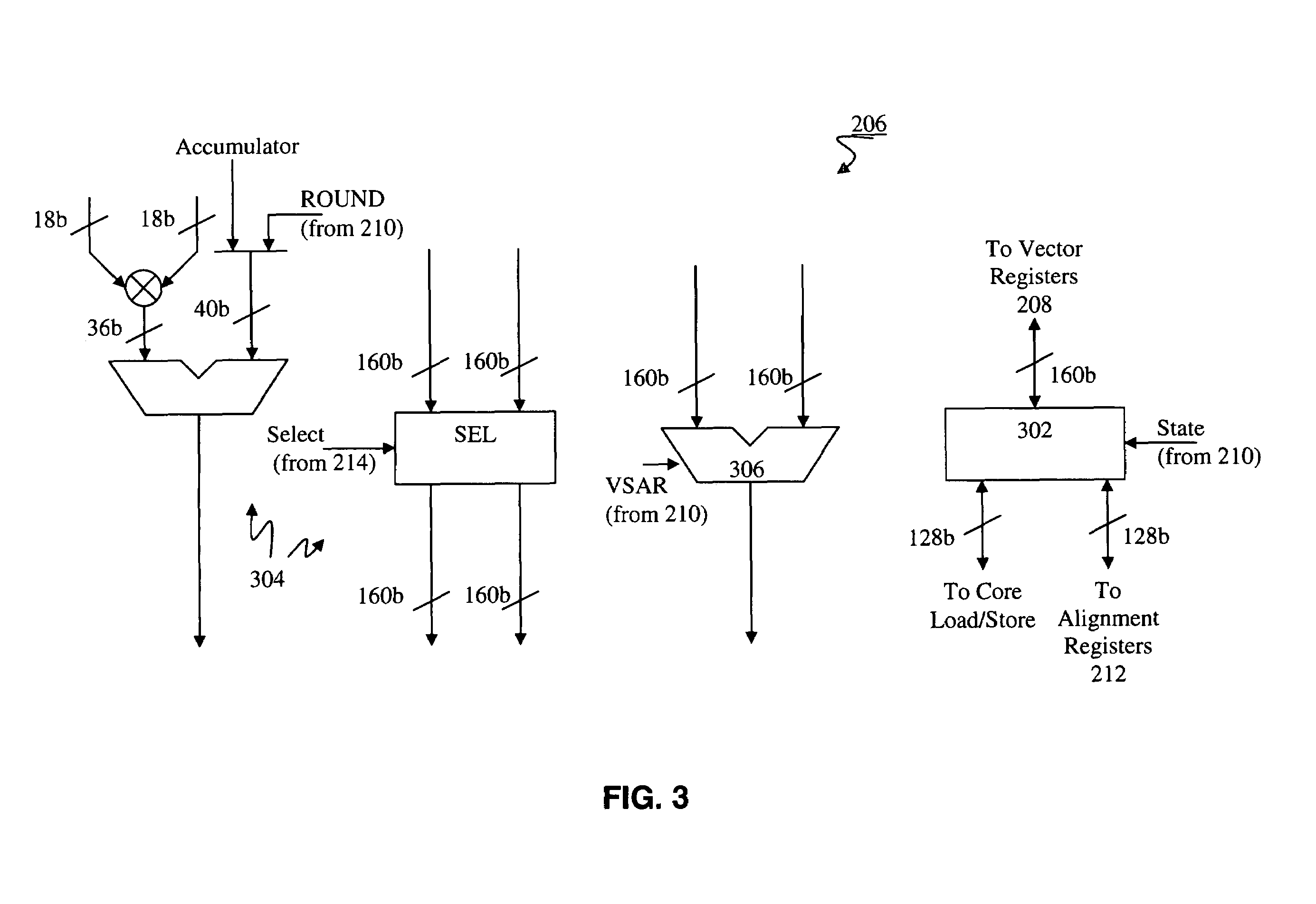

Vector co-processor for configurable and extensible processor architecture

Active | US7376812B1 | High coding density | Improve performance | Instruction analysis | General purpose stored program computer | Digital signal processing | Critical section

A processor can achieve high code density while allowing higher performance than existing architectures, particularly for Digital Signal Processing (DSP) applications. In accordance with one aspect, the processor supports three possible instruction sizes while maintaining the simplicity of programming and allowing efficient physical implementation. Most of the application code can be encoded using two sets of narrow size instructions to achieve high code density. Adding a third (and larger, i.e. VLIW) instruction size allows the architecture to encode multiple operations per instruction for the performance critical section of the code. Further, each operation of the VLIW format instruction can optionally be a SIMD operation that operates upon vector data. A scheme for the optimal utilization (highest achievable performance for the given amount of hardware) of multiply-accumulate (MAC) hardware is also provided.

Owner:TENSILICA

Programmable processor and method for partitioned group element selection operation

Inactive | US7213131B2 | Improve performance | Enhancing processor flexibility | Register arrangements | Instruction analysis | Data selection | Processor register

A programmable processor and method for improving the performance of processors by incorporating an execution unit operable to decode and execute single instructions specifying a data selection operand and a first and a second register providing a plurality of data elements, the data selection operand comprising a plurality of fields each selecting one of the plurality of data elements, the execution unit operable to provide the data element selected by each field of the data selection operand to a predetermined position in a catenated result.

Owner:MICROUNITY
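
The group element selection operation described above behaves like a general shuffle: each field of the selection operand names one element from the two source registers, and the selected elements are concatenated in field order. The sketch below models registers as Python lists; the function name and the convention that indices run through the first register and then the second are assumptions.

```python
def group_select(selector_fields, reg_a, reg_b):
    """Build a result in which position k holds the source element named by field k."""
    # The two source registers together provide the pool of data elements.
    elements = list(reg_a) + list(reg_b)
    # Each selector field picks one element for its own result position.
    return [elements[field] for field in selector_fields]
```

Because a field may name any element, including one already chosen by another field, the same operation covers permutations, broadcasts and merges of two registers.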

