Convolutional neural network hardware acceleration device, convolution calculation method, and storage medium

A convolutional neural network hardware acceleration technology in the computer field that addresses problems such as insufficient parallel computation, poorly targeted acceleration, and complex instructions.

Embodiment

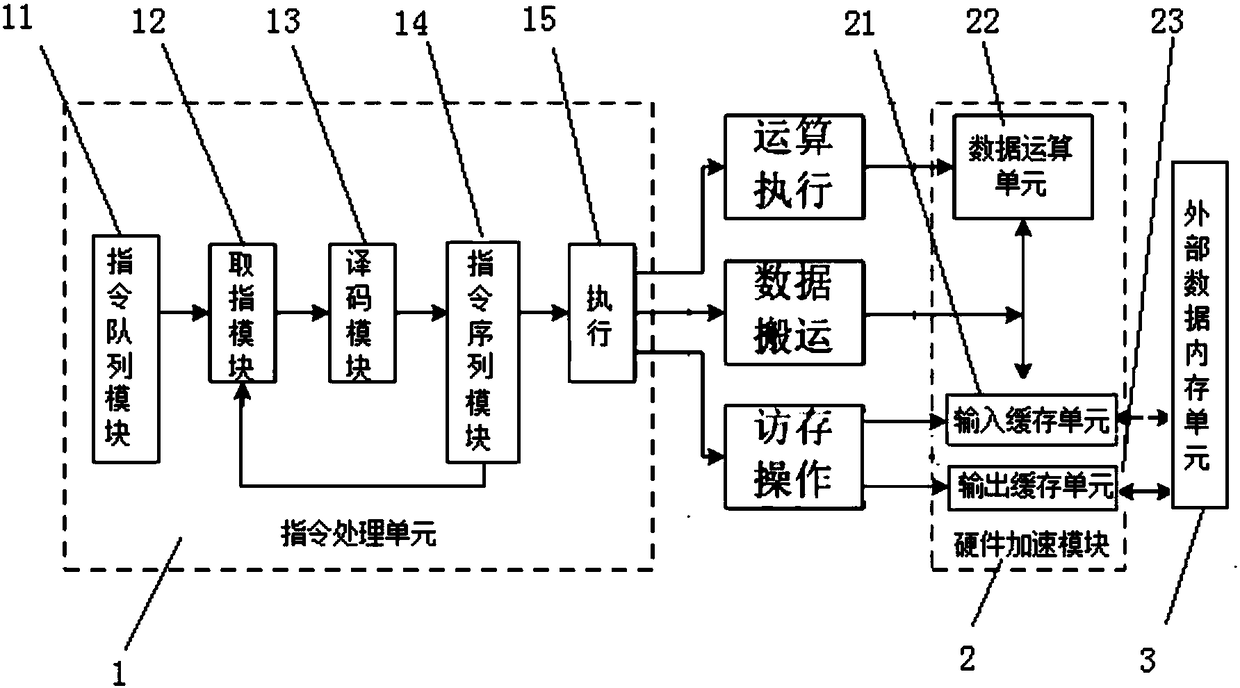

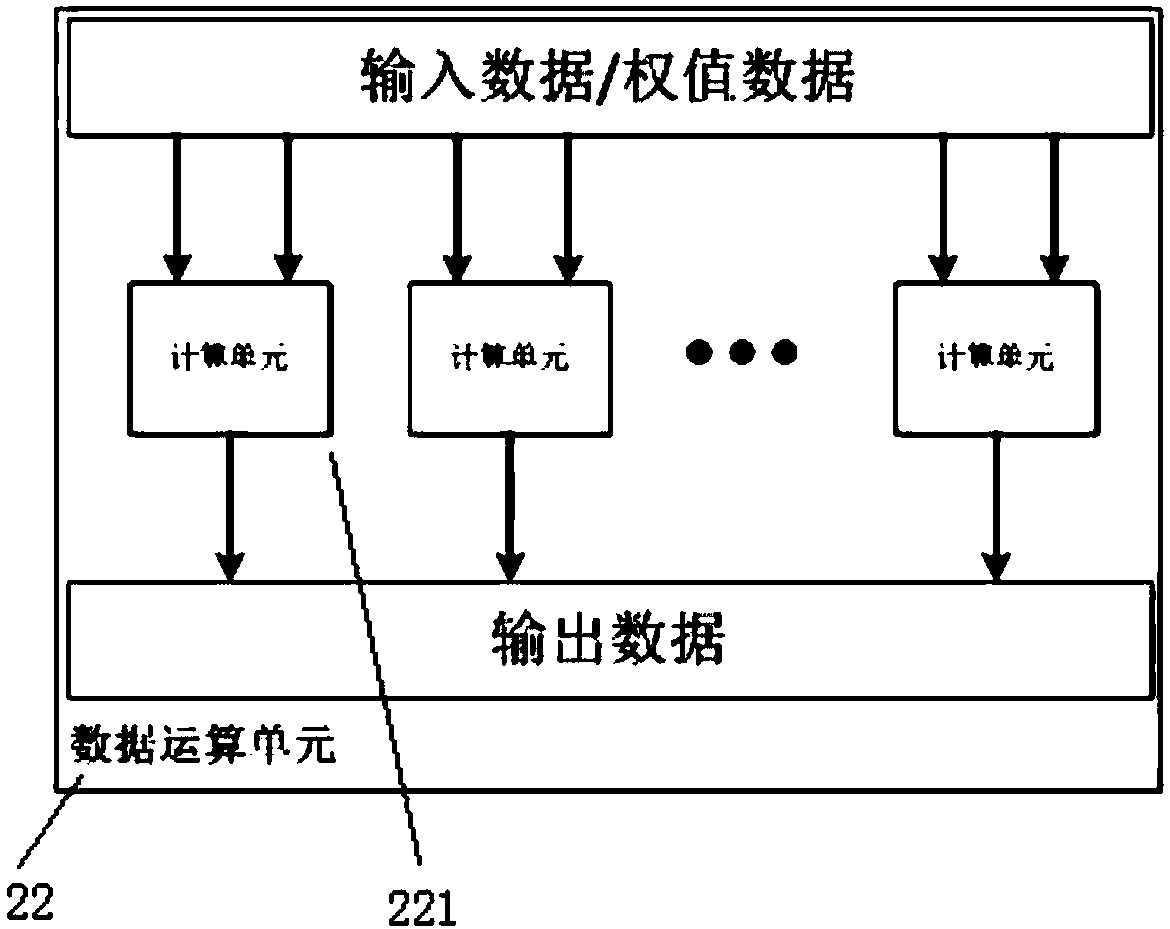

[0149] The following embodiments describe convolution calculation performed with the invention.

[0150] The convolutional neural network hardware acceleration device, convolution calculation method, and storage medium used in this embodiment are as described in the detailed description above and are not repeated here.

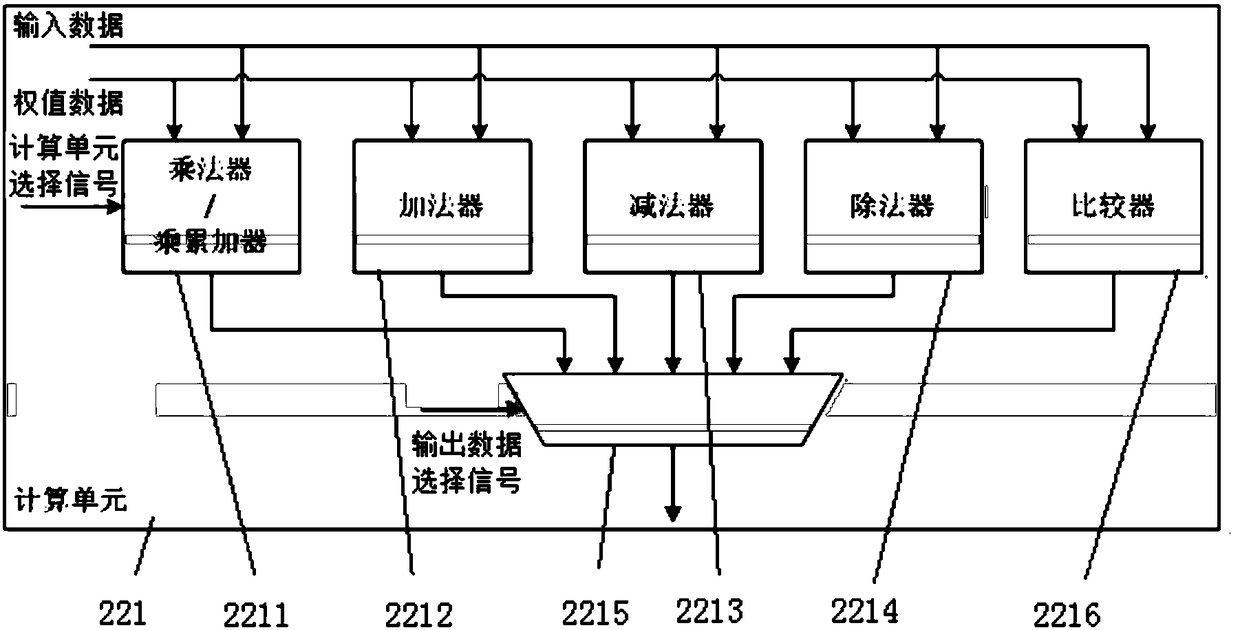

[0151] Figure 5 is a structural schematic diagram of the parallel multiplier / multiply-accumulator of this embodiment. As shown in Figure 5, the instruction processing unit 1 controls the entire data operation unit 22 to perform parallel multiplication / multiply-accumulate calculations according to the instruction decoding results, and each parallel multiplier / multiply-accumulator 2211 can be configured by instruction to perform either a multiply-accumulate or a multiply operation. The temporary register reg stores the intermediate value produced by the multiply-accumulate or multiply operation. The input ports of the parallel multiplier...
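
The behaviour described in [0151] (lanes that an instruction configures to either multiply or multiply-accumulate into a temporary register) can be illustrated with a minimal software model. This is a sketch under stated assumptions, not the patented circuit: it assumes each lane has its own temporary register, that a decoded instruction reduces to a simple mode flag, and that one lane computes one output of a 1-D convolution. The names `Mode`, `ParallelMacUnit`, `lanes`, and `conv1d_valid` are hypothetical; the text above does not specify the lane count, port widths, or instruction encoding.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Sequence


class Mode(Enum):
    """Operation selected by a (hypothetical) decoded instruction."""
    MUL = "mul"  # reg[i] = a[i] * b[i]
    MAC = "mac"  # reg[i] = reg[i] + a[i] * b[i]


@dataclass
class ParallelMacUnit:
    """Software model of parallel lanes, each with its own temporary register.

    Hypothetical illustration only; lane count and register layout are assumptions.
    """
    lanes: int
    reg: List[int] = field(default_factory=list)

    def __post_init__(self) -> None:
        # One temporary value per lane, initialised to zero.
        self.reg = [0] * self.lanes

    def step(self, mode: Mode, a: Sequence[int], b: Sequence[int]) -> List[int]:
        """Apply one multiply or multiply-accumulate to all lanes in parallel."""
        if len(a) != self.lanes or len(b) != self.lanes:
            raise ValueError("operand vectors must match the number of lanes")
        for i in range(self.lanes):
            product = a[i] * b[i]
            if mode is Mode.MUL:
                self.reg[i] = product      # multiply: overwrite the register
            else:
                self.reg[i] += product     # multiply-accumulate: add to the register
        return list(self.reg)


def conv1d_valid(x: Sequence[int], w: Sequence[int]) -> List[int]:
    """1-D 'valid' convolution (CNN-style cross-correlation), one lane per output.

    Hypothetical helper: the first kernel tap uses MUL to initialise each
    register, and every later tap uses MAC to accumulate into it.
    """
    n_out = len(x) - len(w) + 1
    if n_out < 1 or not w:
        raise ValueError("kernel must be non-empty and no longer than the input")
    unit = ParallelMacUnit(lanes=n_out)
    for k, weight in enumerate(w):
        window = [x[p + k] for p in range(n_out)]  # input sample for each lane
        mode = Mode.MUL if k == 0 else Mode.MAC
        unit.step(mode, window, [weight] * n_out)  # broadcast the weight
    return list(unit.reg)
```

For example, `conv1d_valid([1, 2, 3, 4], [1, 1])` returns `[3, 5, 7]`. In this sketch, the configurable mode means the first tap initialises the registers directly, so no separate clear step is needed between output tiles.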
