77 results about "Neural network hardware" patented technology

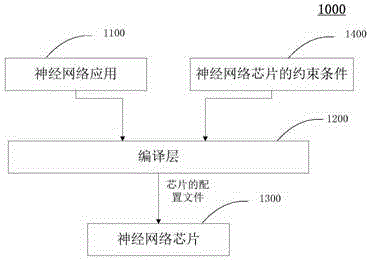

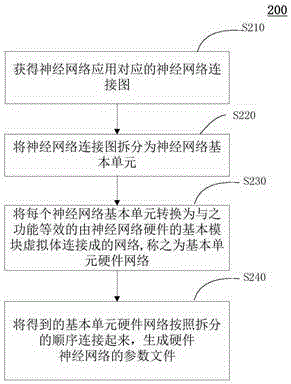

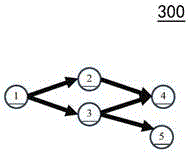

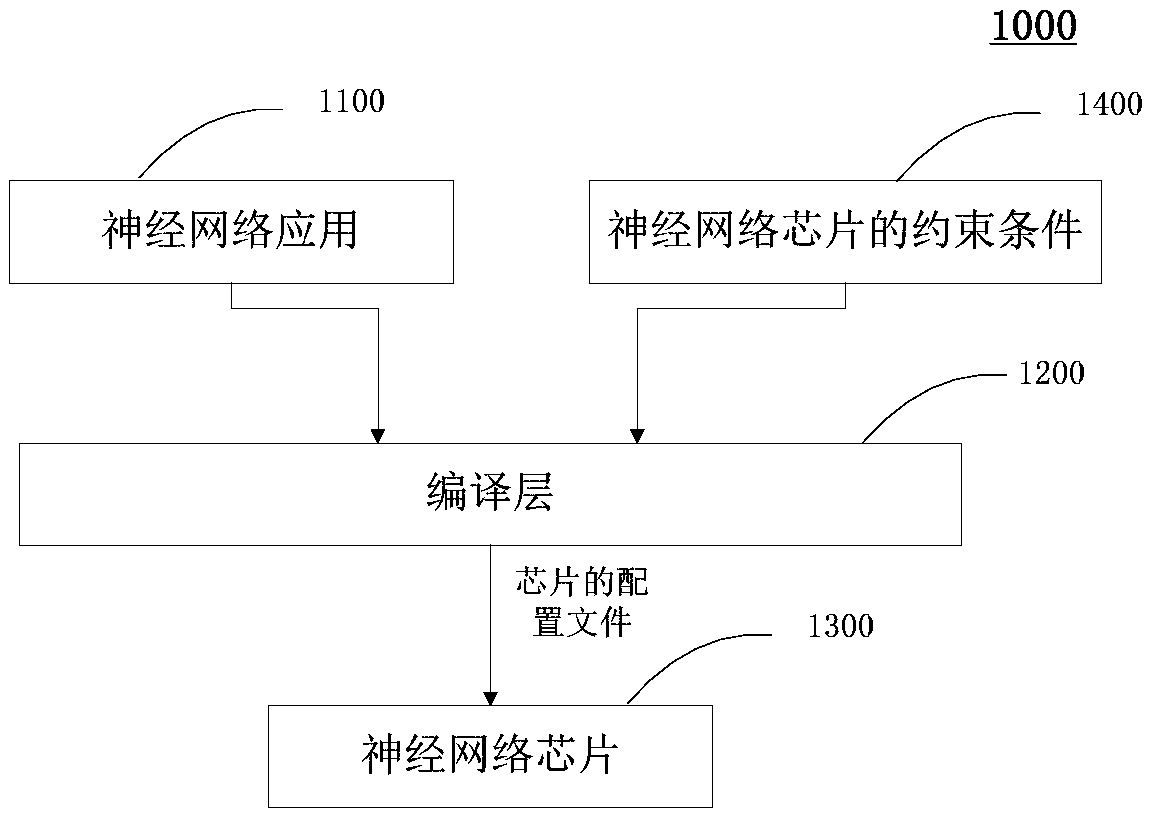

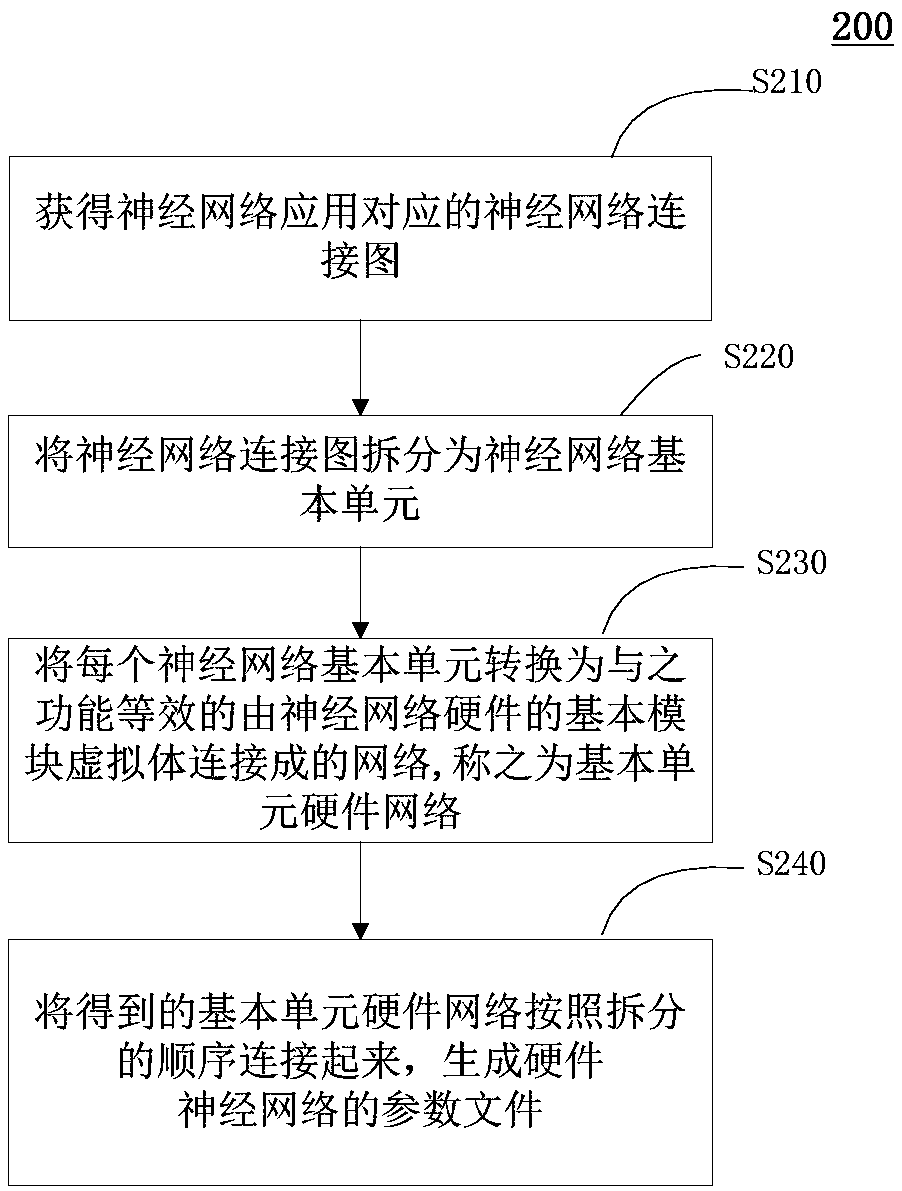

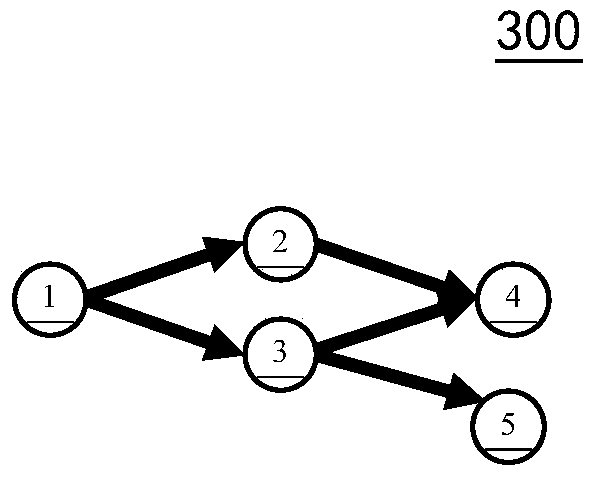

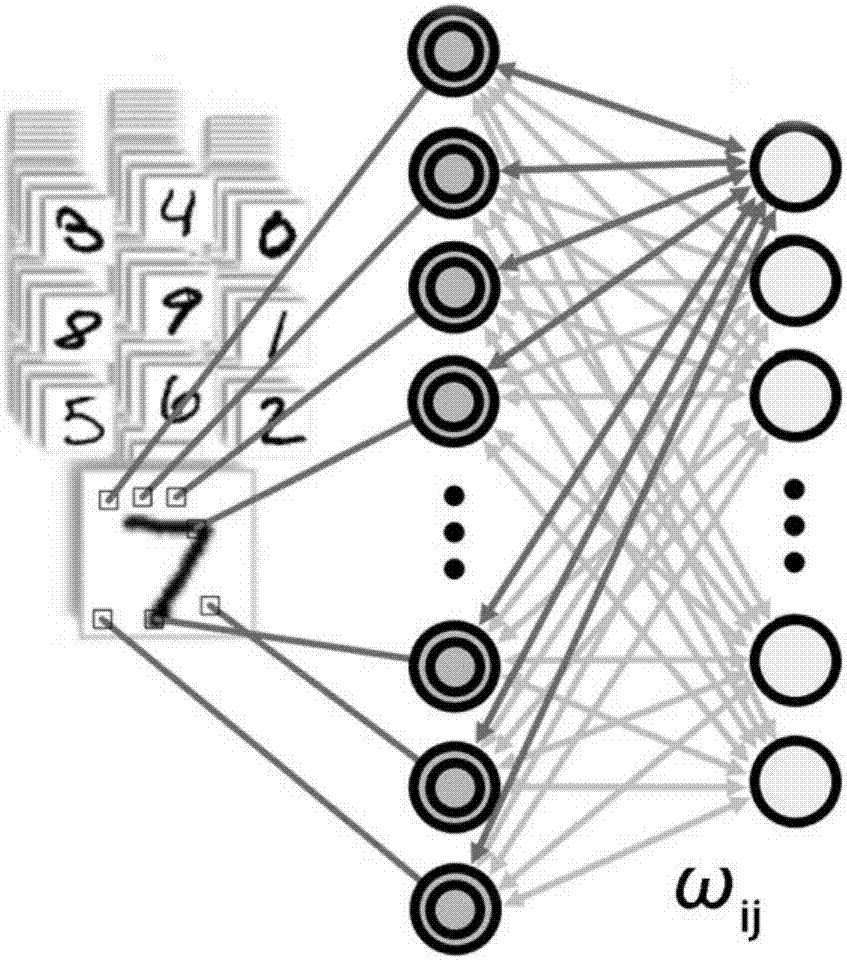

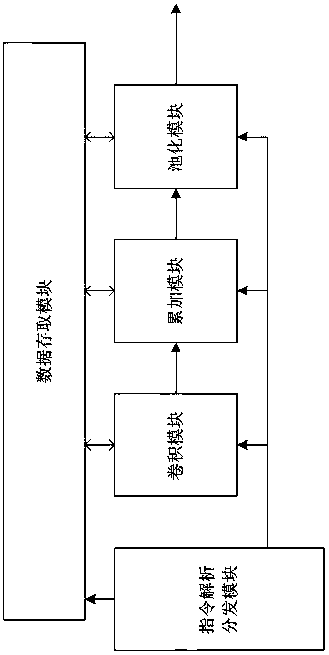

Hardware neural network conversion method, computing device, compiling method and neural network software and hardware collaboration system

Active | CN106650922A | Solve adaptation problems | Easy to operate | Physical realisation | Network connection | Neural network hardware

The invention provides a hardware neural network conversion method which converts a neural network application into a hardware neural network meeting the hardware constraint condition, a computing device, a compiling method and a neural network software and hardware collaboration system. The method comprises the steps that a neural network connection diagram corresponding to the neural network application is acquired; the neural network connection diagram is split into neural network basic units; each neural network basic unit is converted into a network which has the equivalent function with the neural network basic unit and is formed by connection of basic module virtual bodies of neural network hardware; and the obtained basic unit hardware networks are connected according to the splitting sequence so as to generate the parameter file of the hardware neural network. A brand-new neural network and quasi-brain computation software and hardware system is provided, and an intermediate compiling layer is additionally arranged between the neural network application and a neural network chip so that the problem of adaptation between the neural network application and the neural network application chip can be solved, and development of the application and the chip can also be decoupled.

Owner:TSINGHUA UNIV

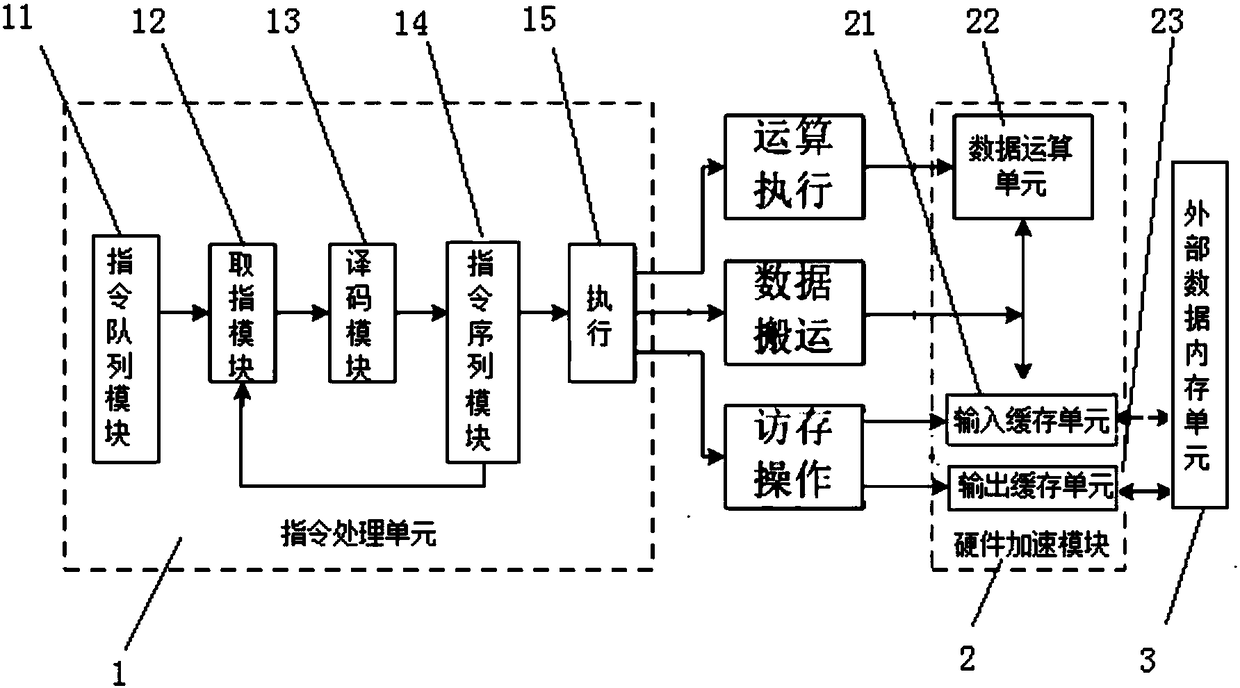

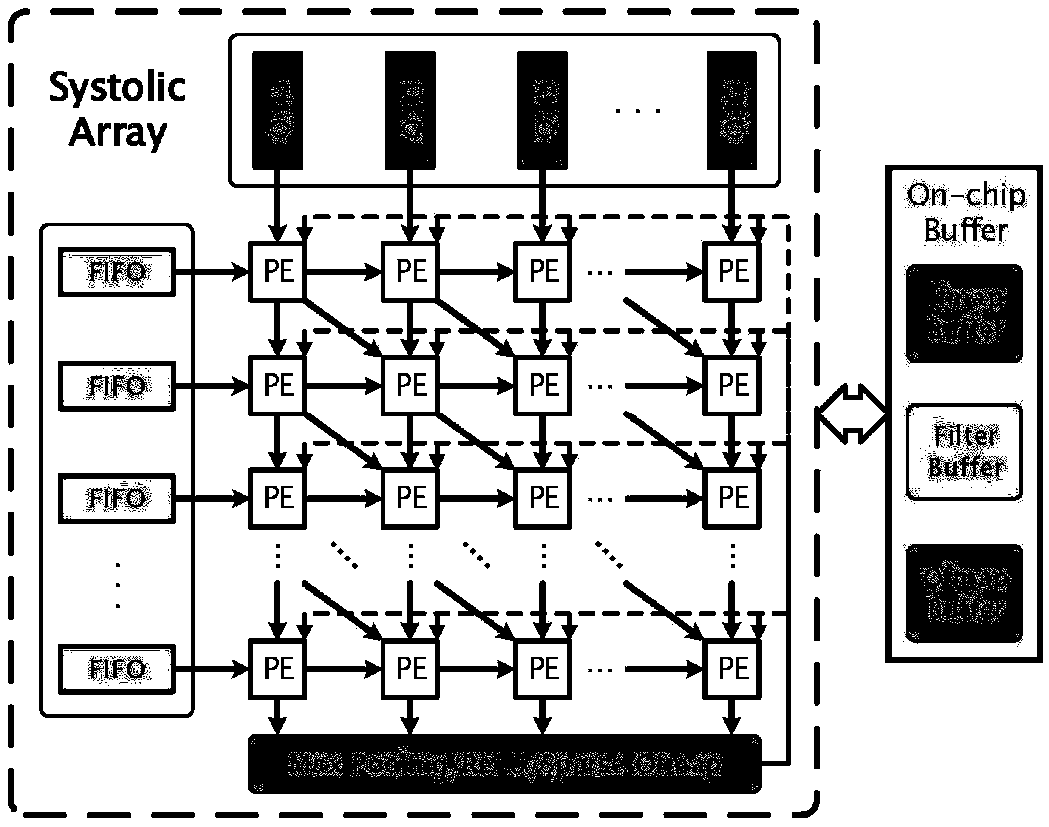

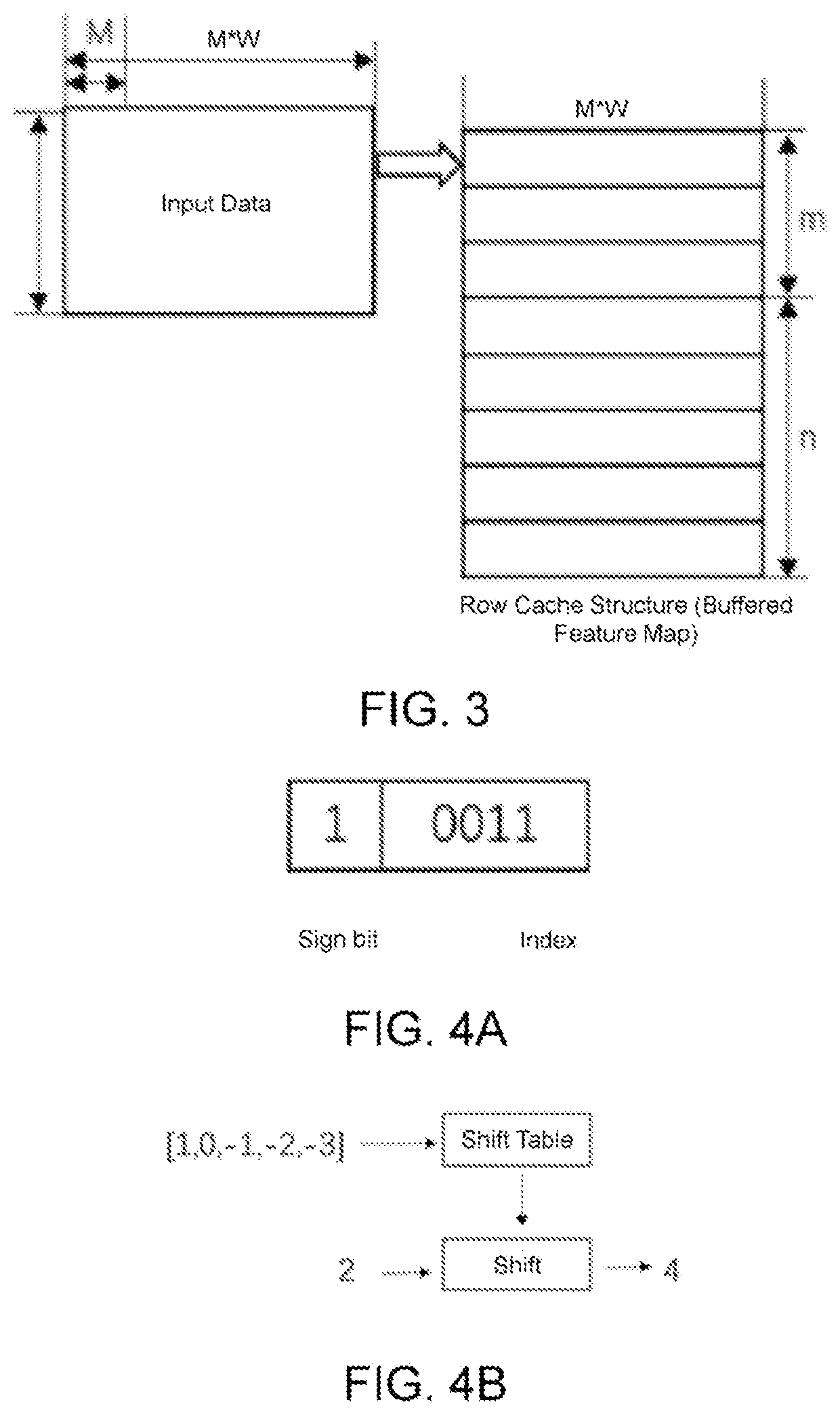

Convolutional neural network hardware acceleration device, convolution calculation method, and storage medium

Inactive | CN108197705A | Improve efficiency | Improve versatility | Instruction analysis | Physical realisation | Instruction processing unit | Data operations

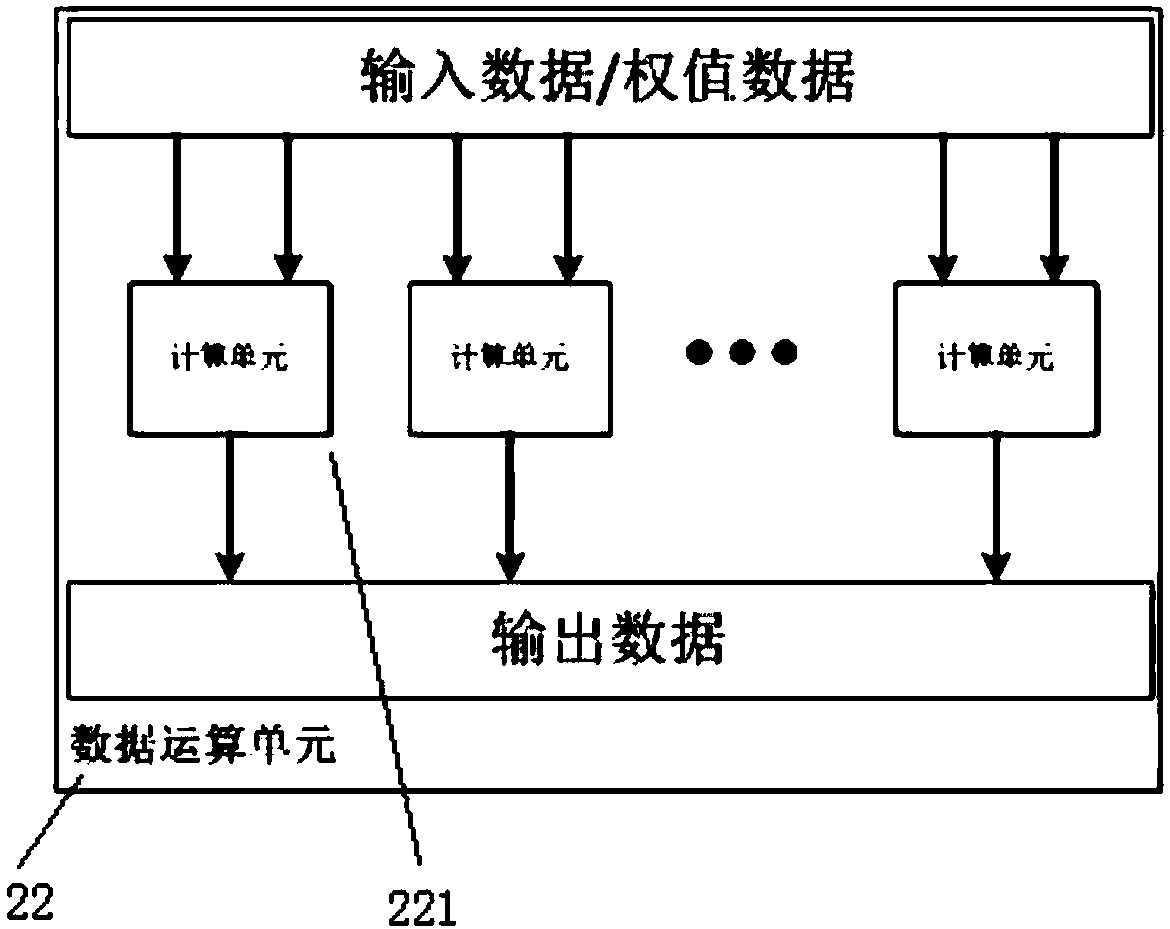

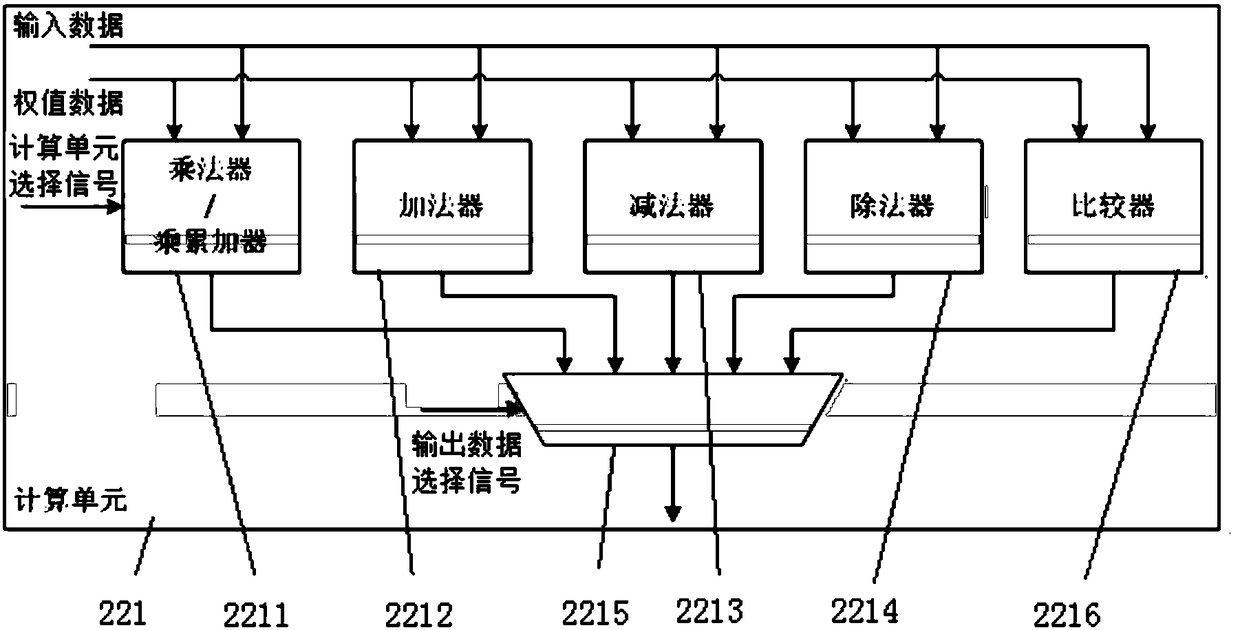

The invention relates to a convolutional neural network hardware acceleration device, a convolution calculation method, and a storage medium. The device comprises an instruction processing unit, a hardware acceleration module and an external data memory unit. The instruction processing unit decodes an instruction set and executes the corresponding operations to control the hardware acceleration module. The hardware acceleration module comprises an input caching unit, a data operation unit and an output caching unit. The input caching unit executes the memory access operations of the instruction processing unit and stores data read from the external data memory unit. The data operation unit executes the computation operations of the instruction processing unit, processes the data operations of the convolutional neural network, and controls the data operation process and the data flow direction according to an operation instruction set. The output caching unit executes the memory access operations of the instruction processing unit and stores the calculation results that are output by the data operation unit and need to be written to the external data memory unit. The external data memory unit stores the calculation results output by the output caching unit and transmits data to the input caching unit in response to reads by the input caching unit.

Owner:NATIONZ TECH INC

Neural network hardware accelerator architectures and operating method thereof

Active | US20180075344A1 | Improve performance | Improve efficiency | Computation using non-contact making devices | Digital storage | Timestamp | Neural network system

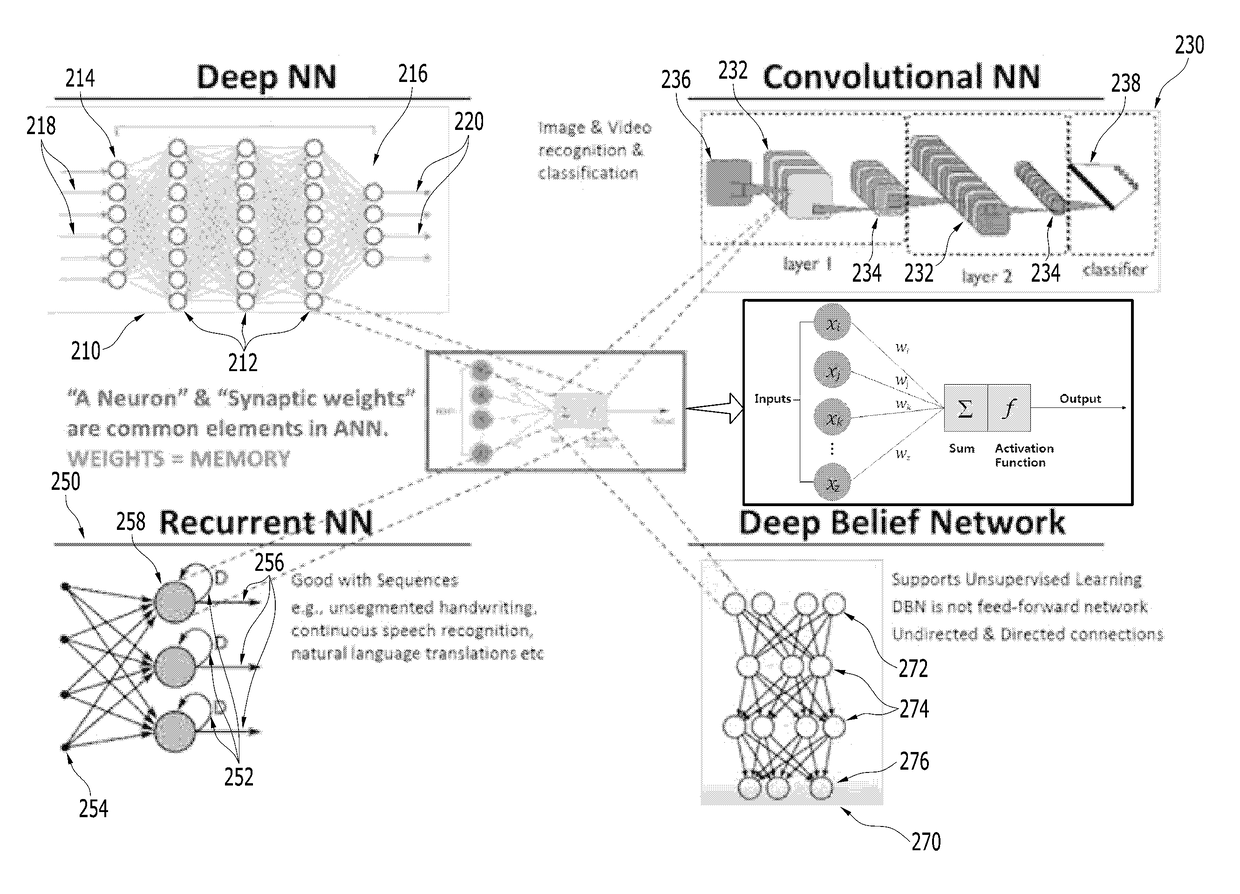

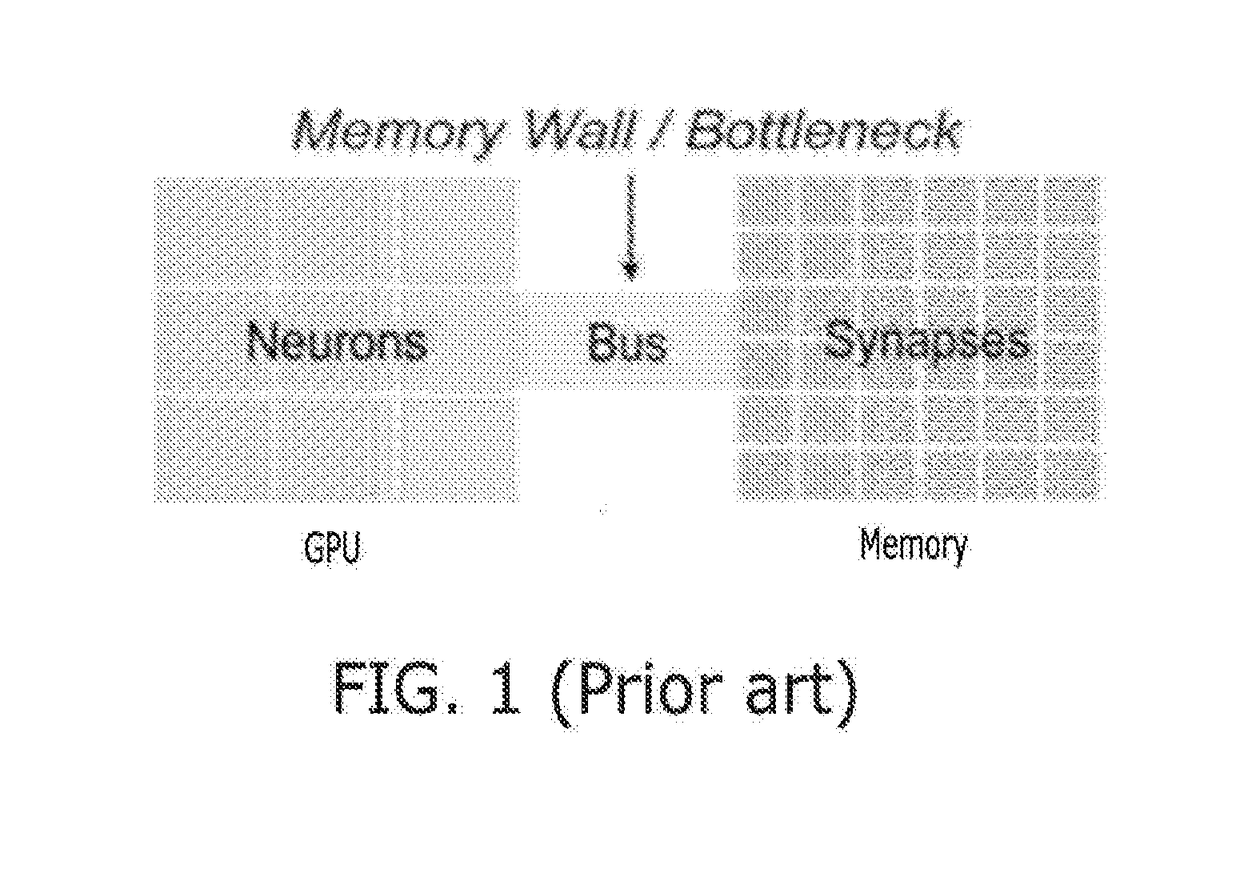

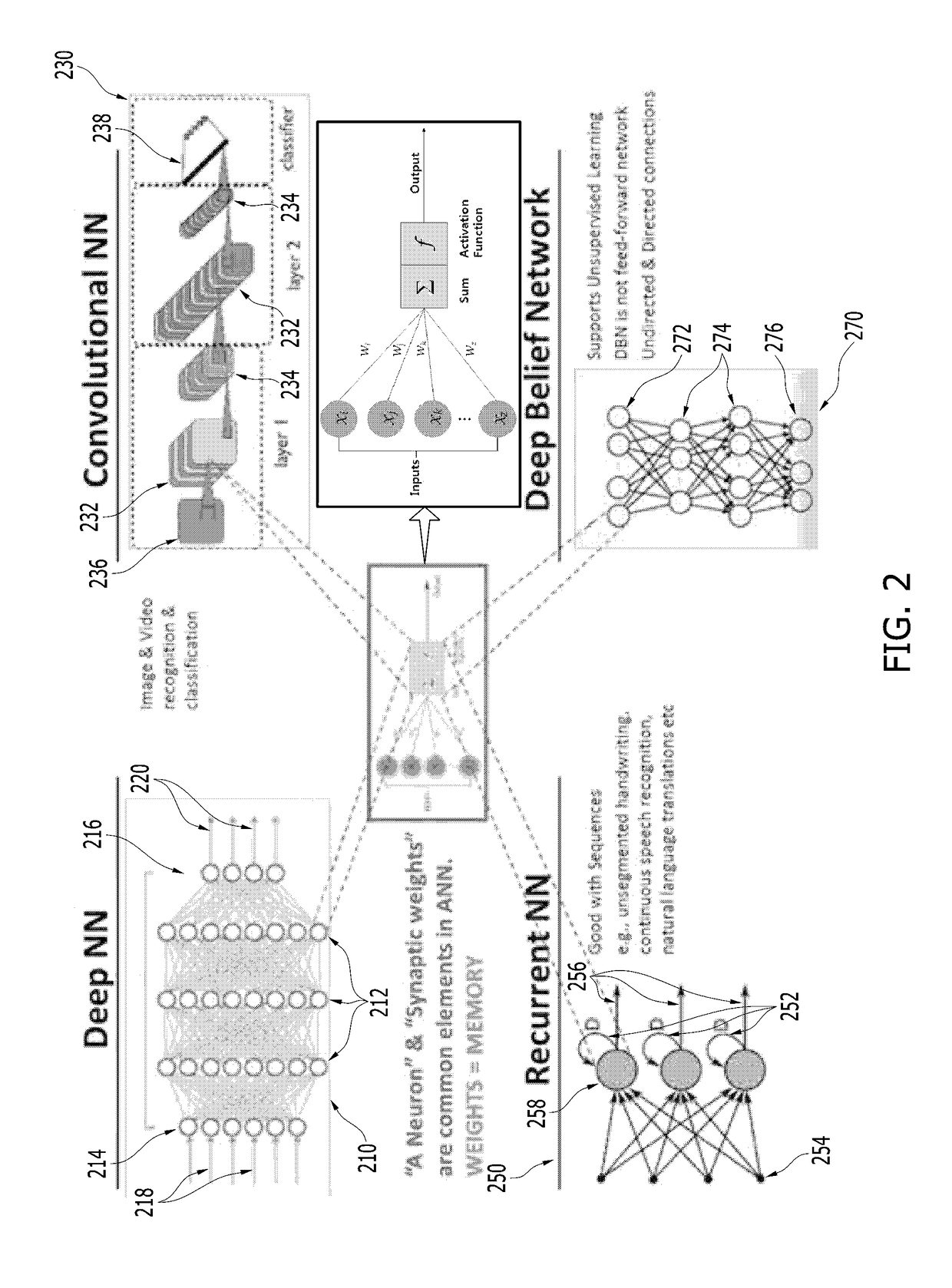

A memory-centric neural network system and operating method thereof includes: a processing unit; semiconductor memory devices coupled to the processing unit, the semiconductor memory devices contain instructions executed by the processing unit; a weight matrix constructed with rows and columns of memory cells, inputs of the memory cells of a same row are connected to one of Axons, outputs of the memory cells of a same column are connected to one of Neurons; timestamp registers registering timestamps of the Axons and the Neurons; and a lookup table containing adjusting values indexed in accordance with the timestamps, the processing unit updates the weight matrix in accordance with the adjusting values.

Owner:SK HYNIX INC
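
The lookup-table weight update described in the abstract above can be illustrated with a minimal Python sketch. This is not the patented circuit. It assumes the table is indexed by the difference between a Neuron timestamp and an Axon timestamp (the abstract only says the table is indexed in accordance with the timestamps). The function name `update_weights`, the dictionary-based table and the 0 to 15 weight range are hypothetical.

```python
def update_weights(weights, axon_ts, neuron_ts, lut, w_min=0, w_max=15):
    """Adjust a weight matrix from timestamp registers and a lookup table.

    weights[r][c] connects Axon r (row) to Neuron c (column).
    lut maps a timestamp difference to an adjusting value (assumed indexing).
    w_min and w_max are illustrative saturation bounds.
    """
    for r, t_axon in enumerate(axon_ts):
        for c, t_neuron in enumerate(neuron_ts):
            delta_t = t_neuron - t_axon
            adjust = lut.get(delta_t, 0)  # no table entry: leave the weight alone
            weights[r][c] = max(w_min, min(w_max, weights[r][c] + adjust))
    return weights


if __name__ == "__main__":
    table = {1: 2, -1: -1}  # hypothetical adjusting values
    print(update_weights([[5, 5], [5, 5]], [10, 12], [11, 11], table))
```

In this sketch a Neuron that fires one time step after an Axon strengthens the connection, and the reverse order weakens it; the actual table contents are a design choice that the abstract does not specify.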

Neural network hardware accelerator architectures and operating method thereof

Active | US20180075339A1 | Improve performance | Improve efficiency | Computation using non-contact making devices | Digital storage | Timestamp | Neural network system

A memory-centric neural network system and operating method thereof includes: a processing unit; semiconductor memory devices coupled to the processing unit, the semiconductor memory devices contain instructions executed by the processing unit; weight matrixes including a positive weight matrix and a negative weight matrix constructed with rows and columns of memory cells, inputs of the memory cells of a same row are connected to one of Axons, outputs of the memory cells of a same column are connected to one of Neurons; timestamp registers registering timestamps of the Axons and the Neurons; and a lookup table containing adjusting values indexed in accordance with the timestamps, the processing unit updates the weight matrixes in accordance with the adjusting values.

Owner:SK HYNIX INC

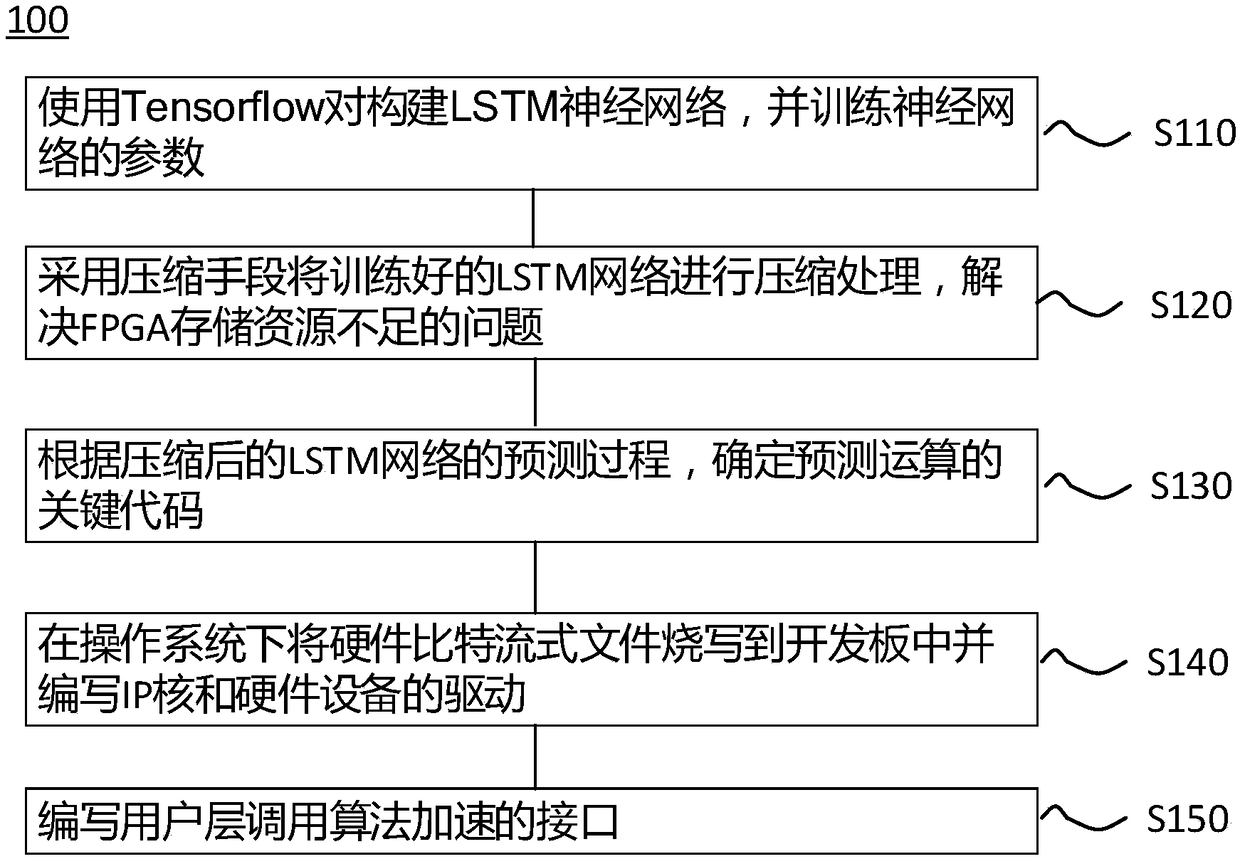

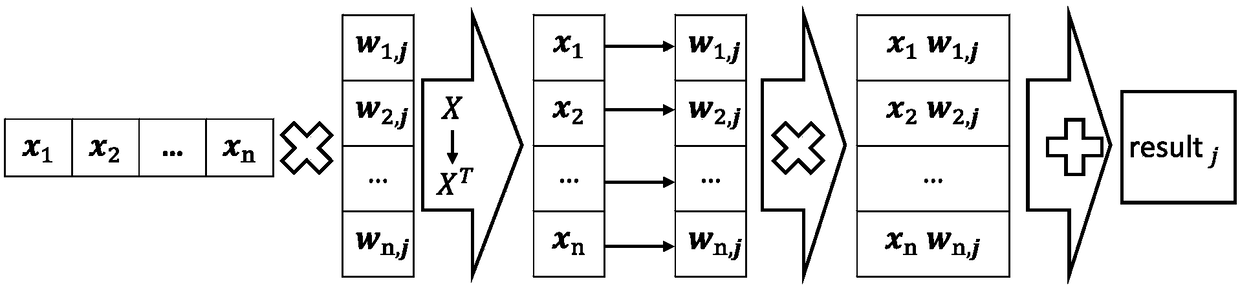

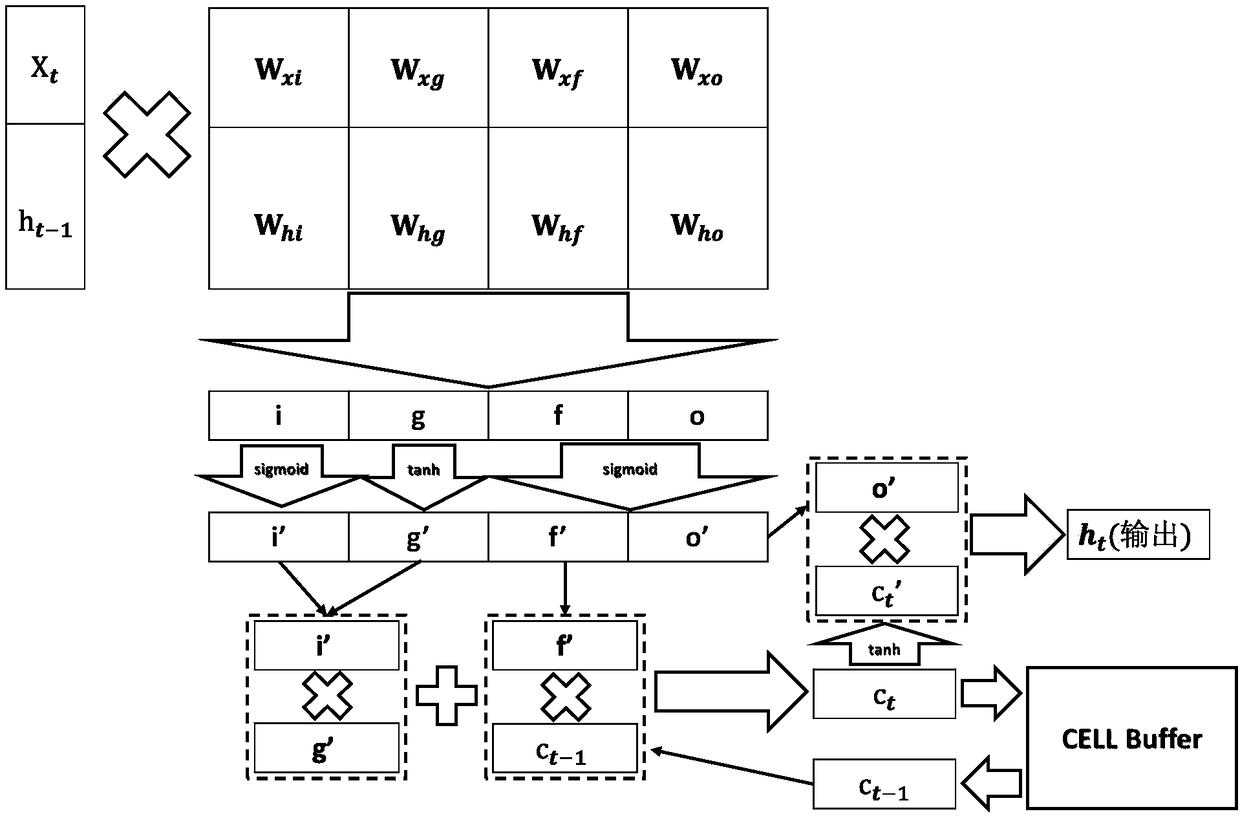

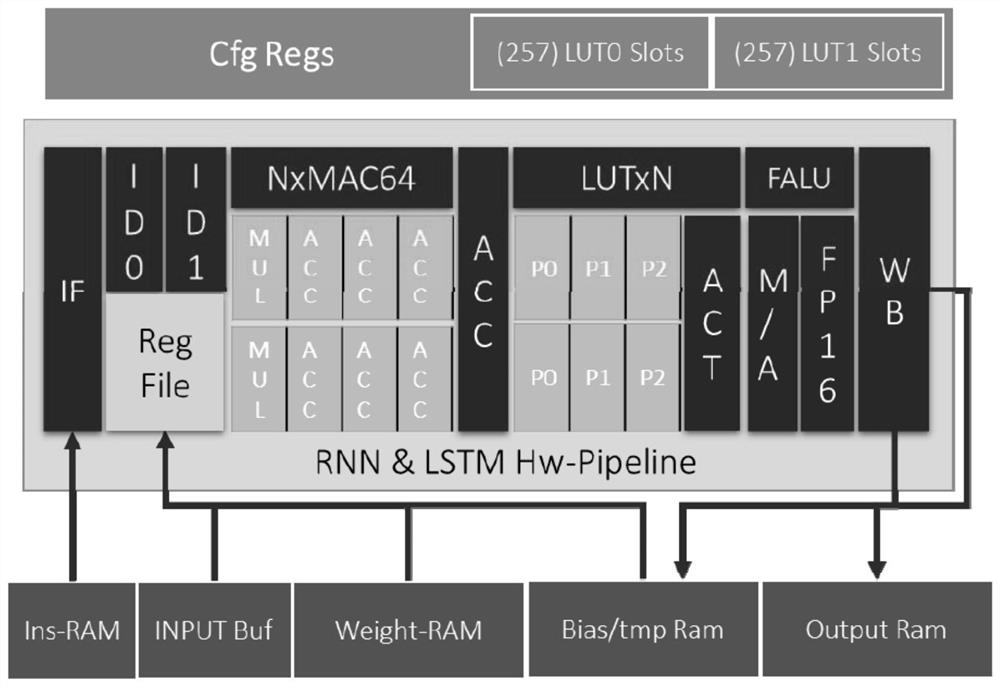

Design method of hardware accelerator based on LSTM recursive neural network algorithm on FPGA platform

Inactive | CN108090560A | Improve forecast | Improve performance | Neural architectures | Physical realisation | Neural network hardware | Low resource

The invention discloses a method for accelerating an LSTM neural network algorithm on an FPGA platform. The FPGA platform is a field-programmable gate array platform comprising a general processor, a field-programmable gate array body and a storage module. The method comprises the following steps: an LSTM neural network is constructed using TensorFlow, and the parameters of the neural network are trained; the parameters of the LSTM network are compressed to address the insufficient storage resources of the FPGA; according to the prediction process of the compressed LSTM network, the calculation part suitable for running on the field-programmable gate array platform is determined; according to the determined calculation part, a software and hardware collaborative calculation mode is determined; and according to the calculation logic resources and bandwidth of the FPGA, the number and type of IP core firmware are determined, and acceleration is carried out on the field-programmable gate array platform using hardware operation units. A hardware processing unit for accelerating the LSTM neural network can be designed quickly according to the available hardware resources, and the processing unit offers higher performance and lower power consumption than the general processor.

Owner:SUZHOU INST FOR ADVANCED STUDY USTC

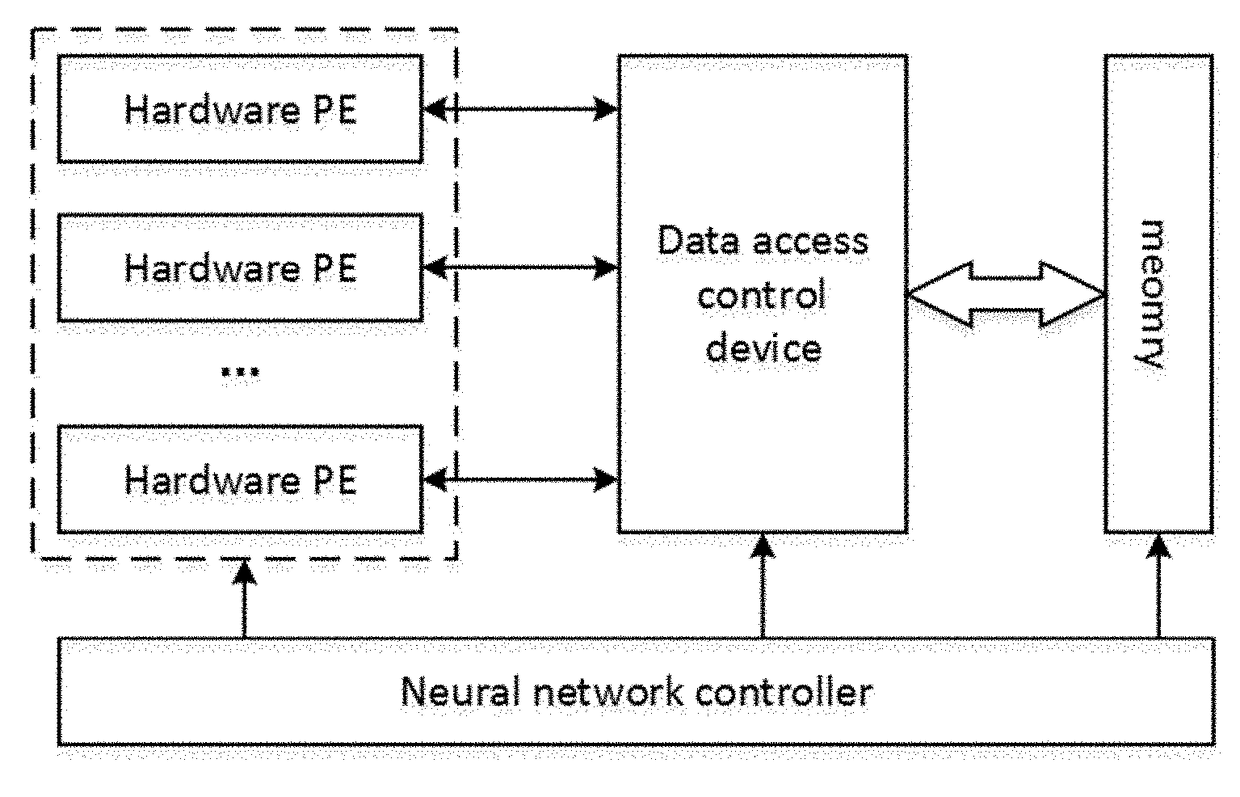

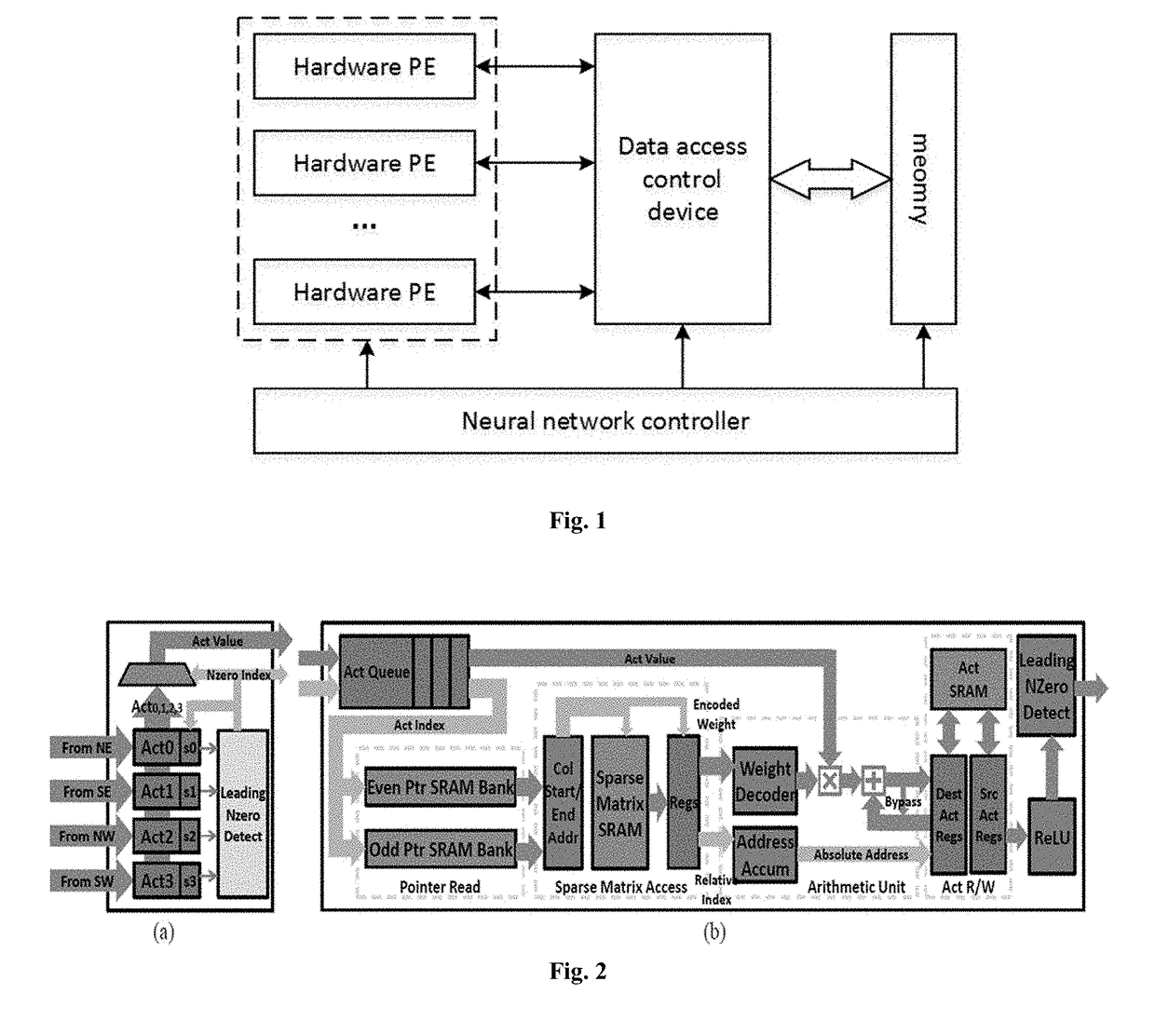

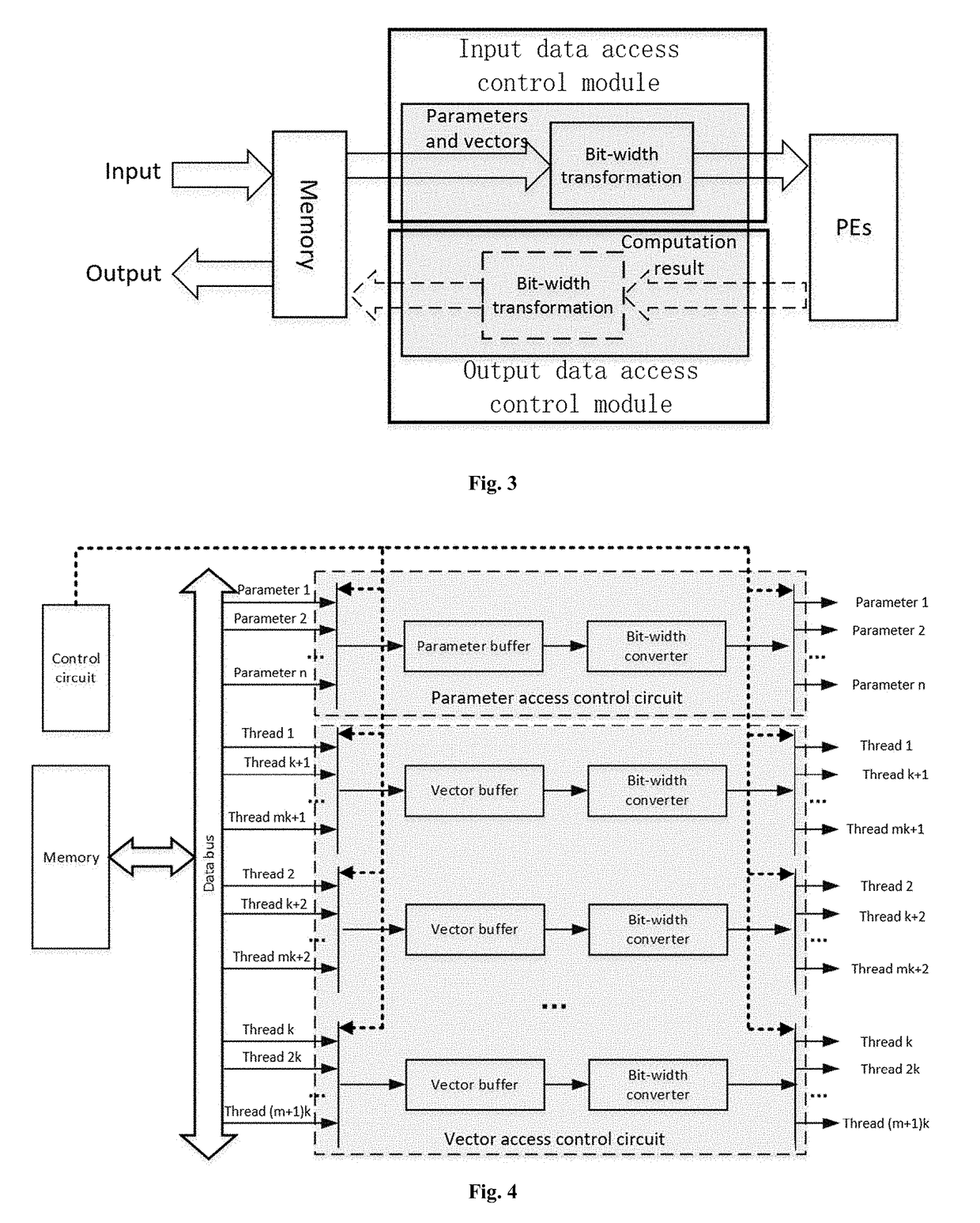

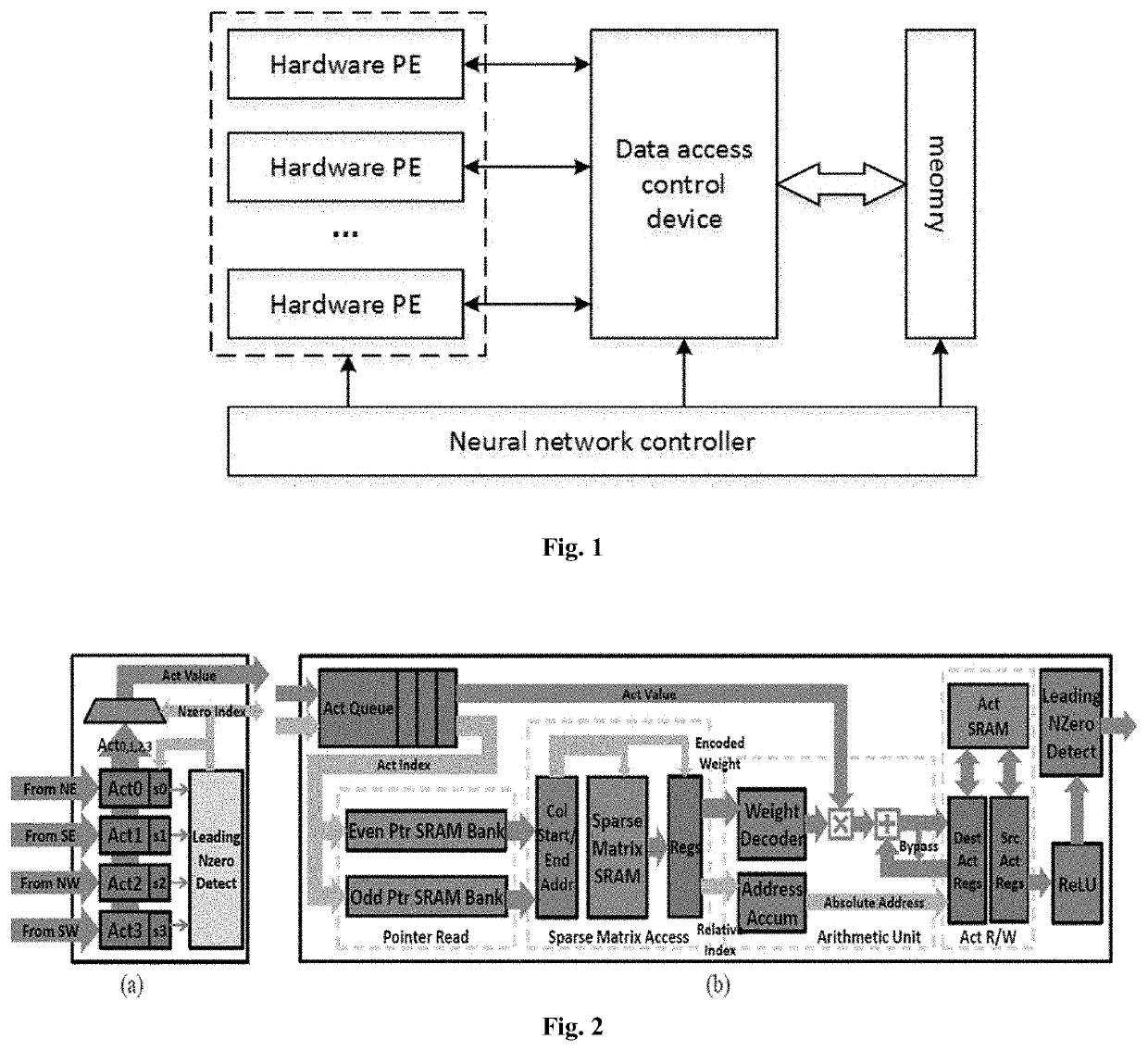

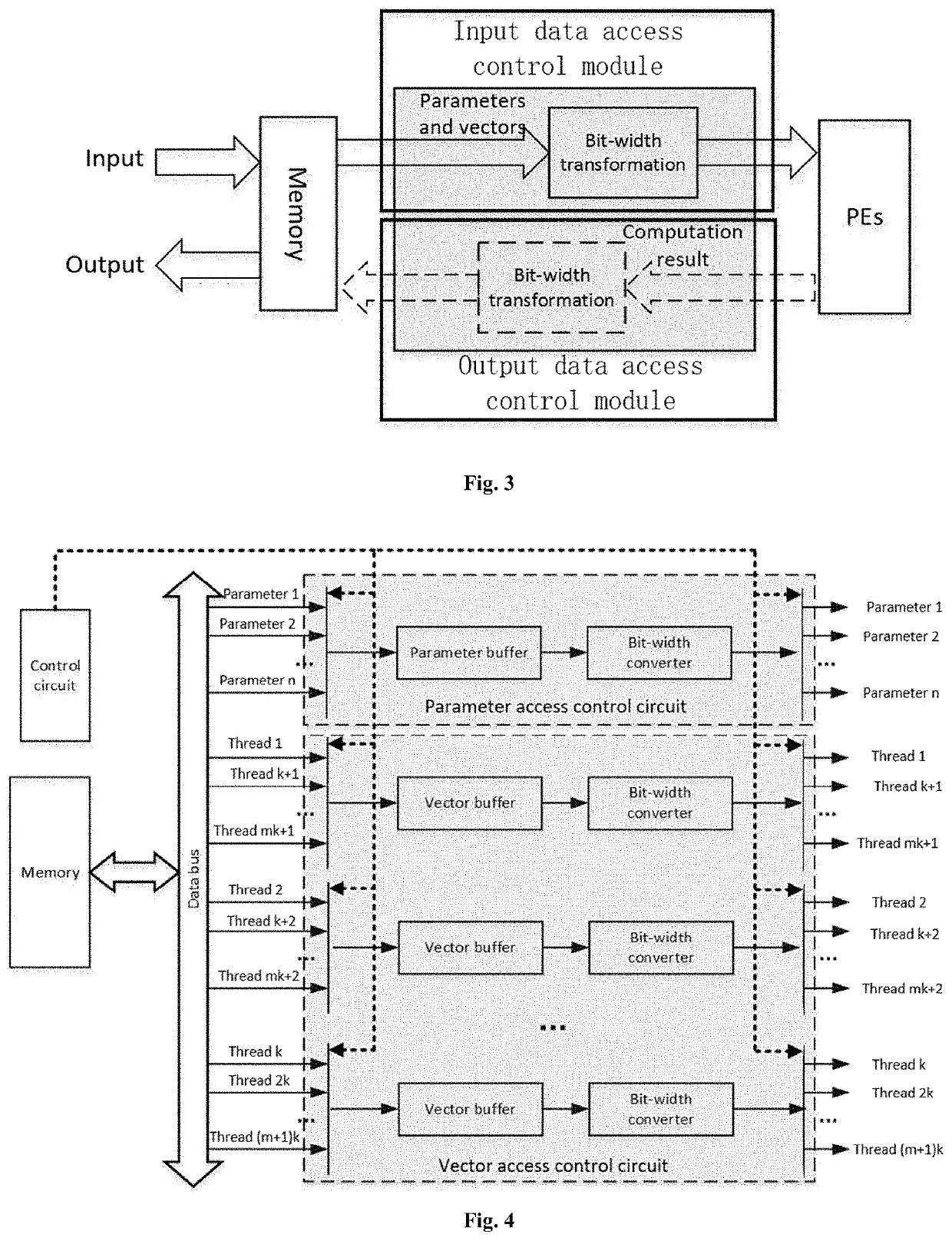

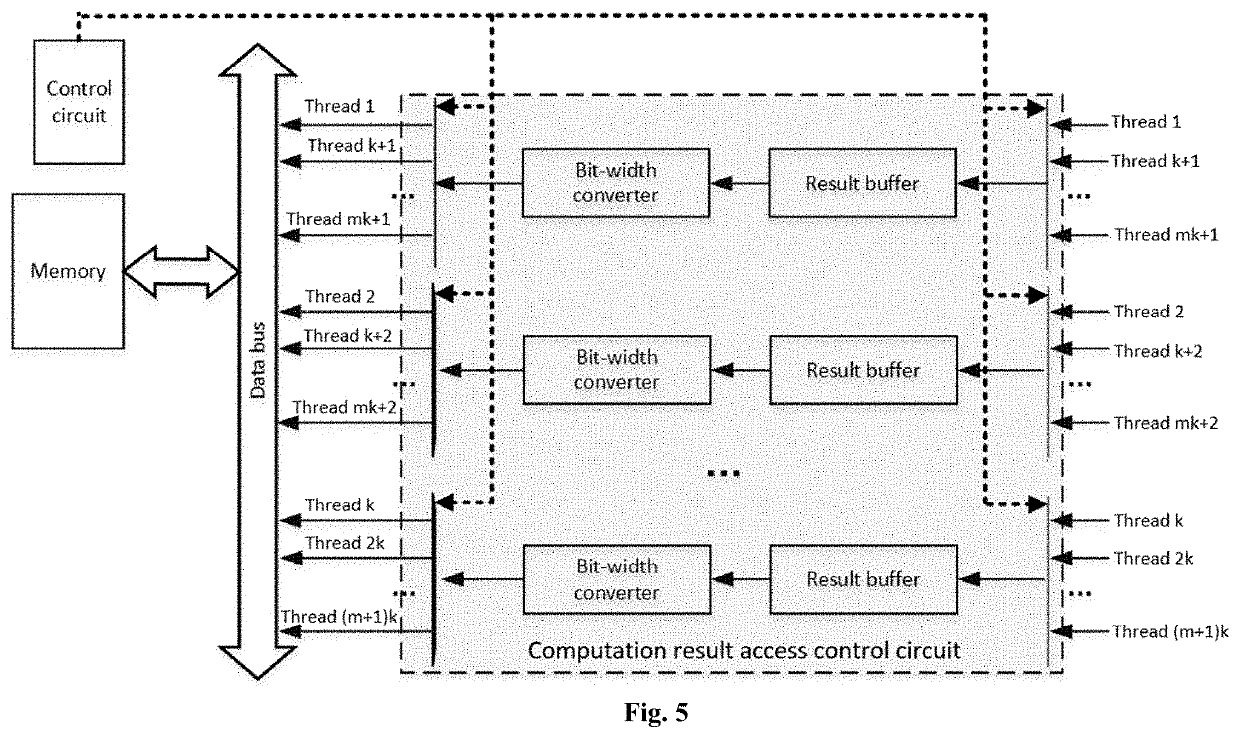

Efficient Data Access Control Device for Neural Network Hardware Acceleration System

Active | US20180046905A1 | Digital data processing details | Neural architectures | Data access control | Neural network hardware

The technical disclosure relates to artificial neural networks. In particular, it relates to how to implement efficient data access control in a neural network hardware acceleration system. Specifically, it proposes an overall design of a device that can handle data receiving, bit-width transformation and data storing. By employing the technical disclosure, a neural network hardware acceleration system can prevent the data access process from becoming the bottleneck in neural network computation.

Owner:XILINX INC
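
The bit-width transformation mentioned in the abstract above can be pictured as splitting a wide memory word into narrower words for the compute units, and packing them back for storage. The Python sketch below is only an illustration under assumed widths (64-bit in, 16-bit out); the abstract does not state the widths or the word ordering, and `split_word` and `merge_words` are hypothetical names.

```python
def split_word(word, in_bits=64, out_bits=16):
    """Split one in_bits-wide word into narrower words, lowest bits first."""
    if in_bits % out_bits:
        raise ValueError("in_bits must be a multiple of out_bits")
    mask = (1 << out_bits) - 1
    return [(word >> shift) & mask for shift in range(0, in_bits, out_bits)]


def merge_words(words, out_bits=16):
    """Inverse of split_word: pack narrow words back into one wide word."""
    mask = (1 << out_bits) - 1
    word = 0
    for i, part in enumerate(words):
        word |= (part & mask) << (i * out_bits)
    return word


if __name__ == "__main__":
    parts = split_word(0x0123456789ABCDEF)
    print([hex(p) for p in parts])
    print(hex(merge_words(parts)))
```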

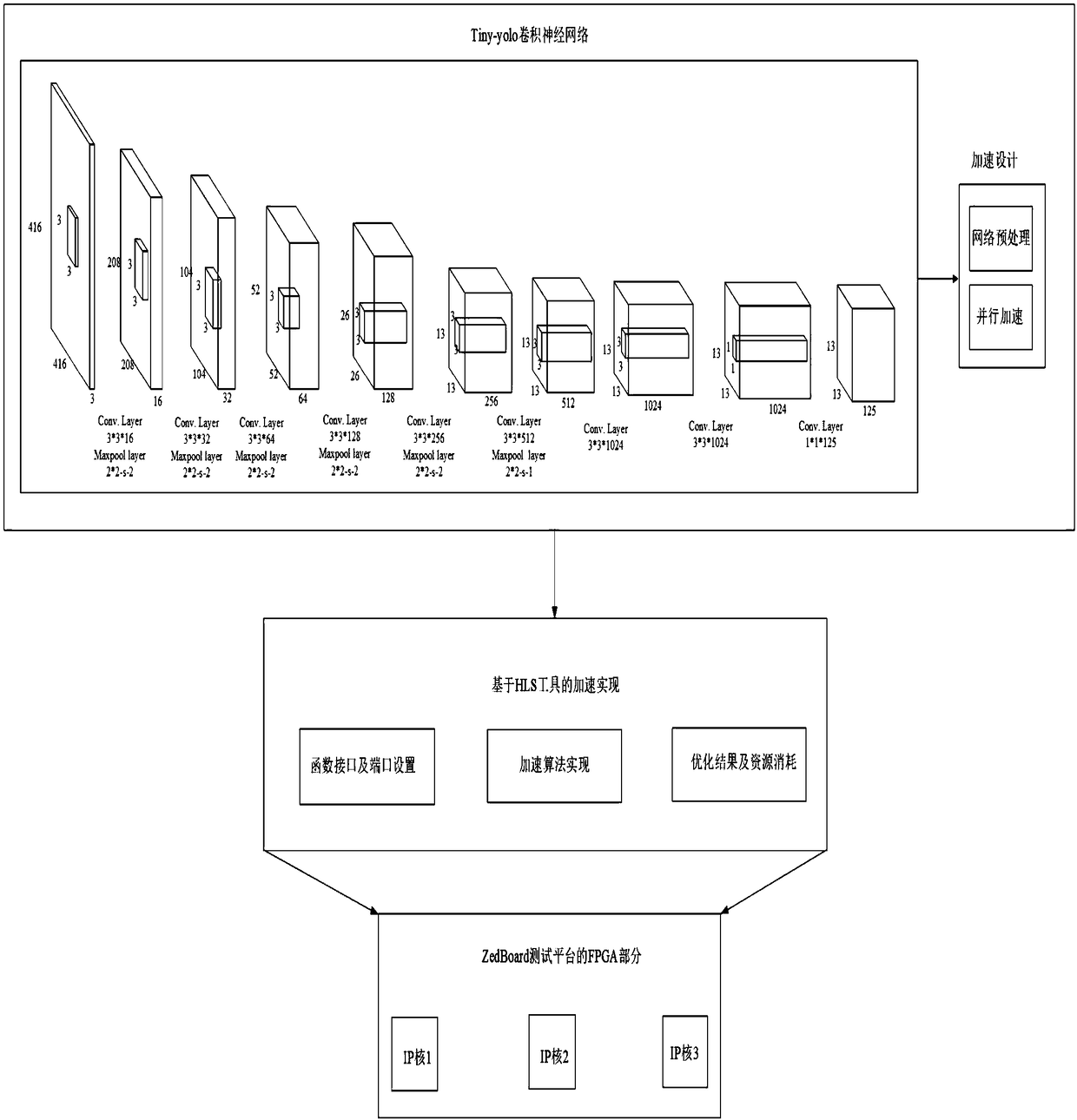

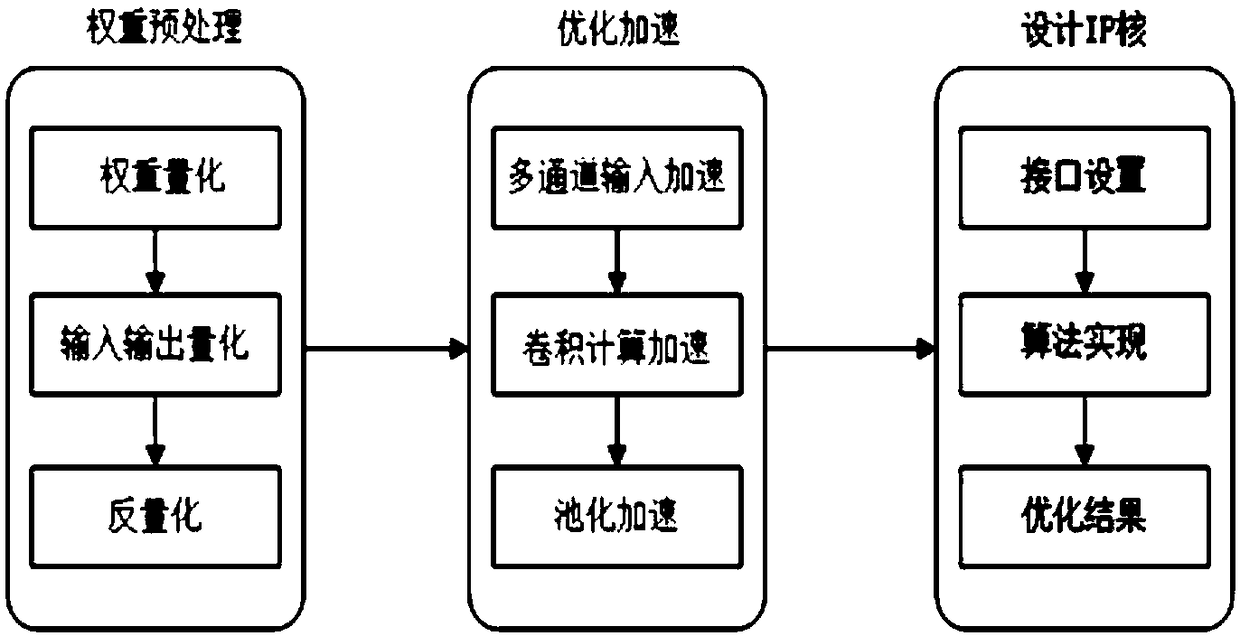

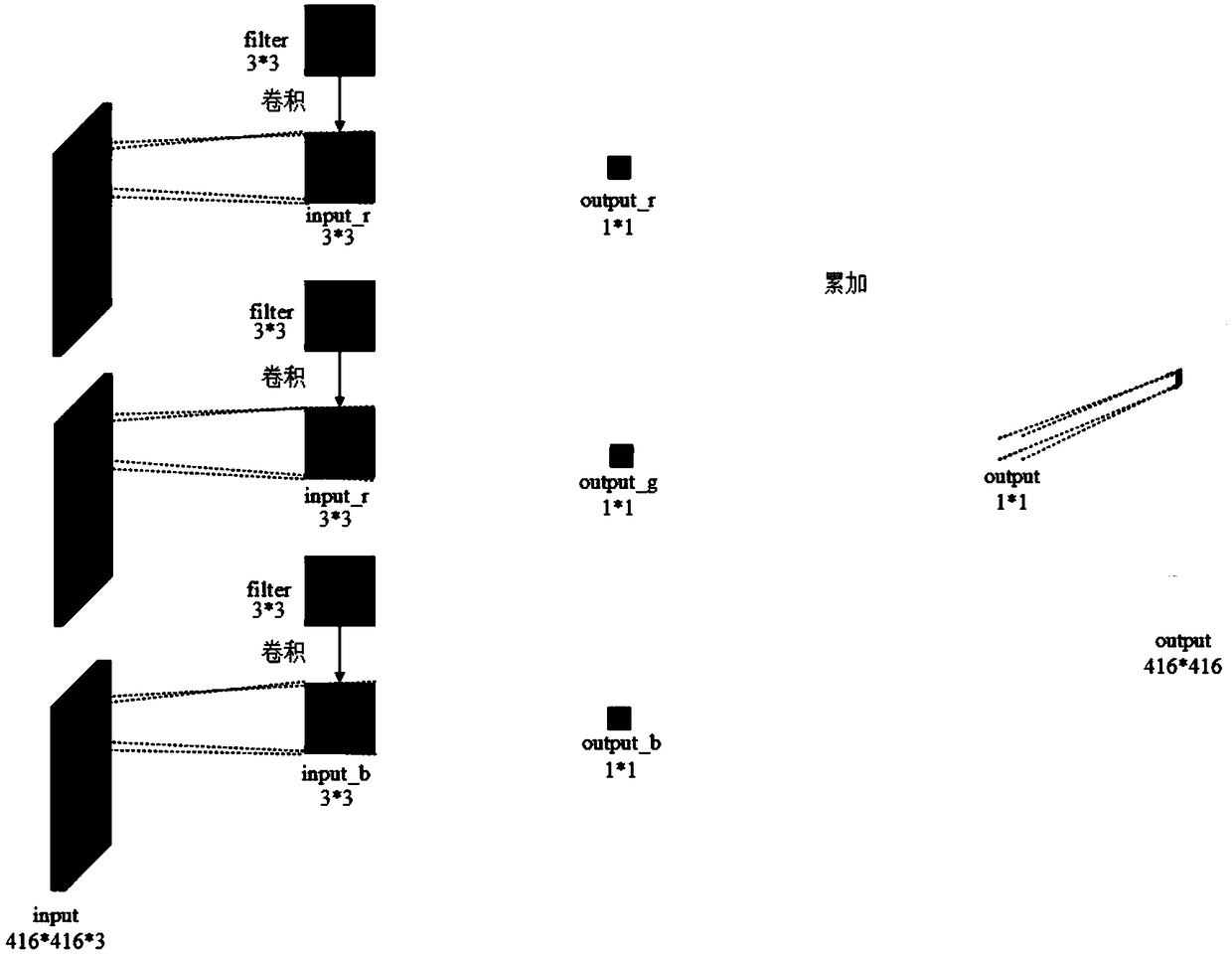

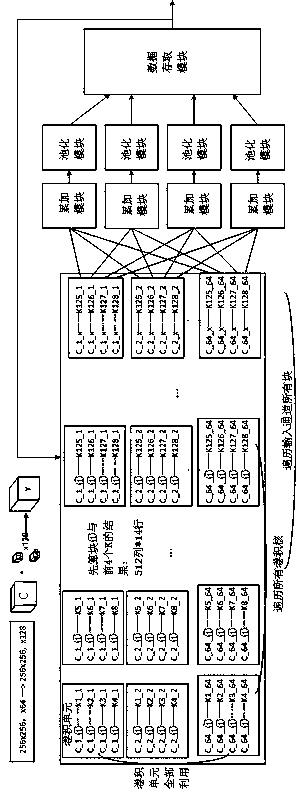

FPGA-based Tiny-yolo convolutional neural network hardware acceleration method and system

Active | CN108805274A | Save resources | Fast operation | Image memory management | Processor architectures/configuration | Neural network hardware | Parallel processing

The invention discloses an FPGA-based Tiny-yolo convolutional neural network hardware acceleration method and system. By analyzing the parallel characteristics of a Tiny-yolo network, and in combination with the parallel processing capability of hardware, the hardware acceleration is carried out on the Tiny-yolo convolutional neural network. The acceleration scheme is used for performing acceleration improvement on the Tiny-yolo network from three aspects that (1) the processing speed of the Tiny-yolo network is increased through multi-channel parallel input; (2) the convolution computing speed of the Tiny-yolo network is increased through parallel computing; and (3) the pooling process time of the Tiny-yolo network is shortened through pooling embedding. The method greatly increases the detection speed of the Tiny-yolo convolutional neural network.

Owner:CHONGQING UNIV

Hardware neural network conversion method, computing device, software-hardware cooperation system

Active | CN106650922B | Solve adaptation problems | Easy to operate | Physical realisation | Computer hardware | Nerve network

The invention provides a hardware neural network conversion method which converts a neural network application into a hardware neural network meeting the hardware constraint condition, a computing device, a compiling method and a neural network software and hardware collaboration system. The method comprises the steps that a neural network connection diagram corresponding to the neural network application is acquired; the neural network connection diagram is split into neural network basic units; each neural network basic unit is converted into a network which has the equivalent function with the neural network basic unit and is formed by connection of basic module virtual bodies of neural network hardware; and the obtained basic unit hardware networks are connected according to the splitting sequence so as to generate the parameter file of the hardware neural network. A brand-new neural network and quasi-brain computation software and hardware system is provided, and an intermediate compiling layer is additionally arranged between the neural network application and a neural network chip so that the problem of adaptation between the neural network application and the neural network application chip can be solved, and development of the application and the chip can also be decoupled.

Owner:TSINGHUA UNIV

Hardware circuit of recursive neural network of LS-SVM classification and returning study and implementing method

Inactive | CN101308551A | Eliminate the non-linear part | Increase training speed | Biological neural network models | Character and pattern recognition | Nerve network | Algorithm

The invention discloses an LS-SVM classification and regression study recursive neural network hardware circuit and a realization method. The method combines the LS-SVM method with the recursive neural network to deduce a dynamic equation and a topological structure describing the neural network, and further establishes a hardware circuit for realizing the recursive neural network, so that the hardware circuit is used to realize the least squares support vector machine algorithm. Compared with the existing network, the LS-SVM classification and regression study recursive neural network of the invention eliminates the non-linear part of the network, so the neural network structure is simplified and the SVM training speed is greatly improved. Meanwhile, the LS-SVM study neural network provided by the invention can realize both classification and regression with a nearly unchanged topological structure.

Owner:XIAN UNIV OF TECH

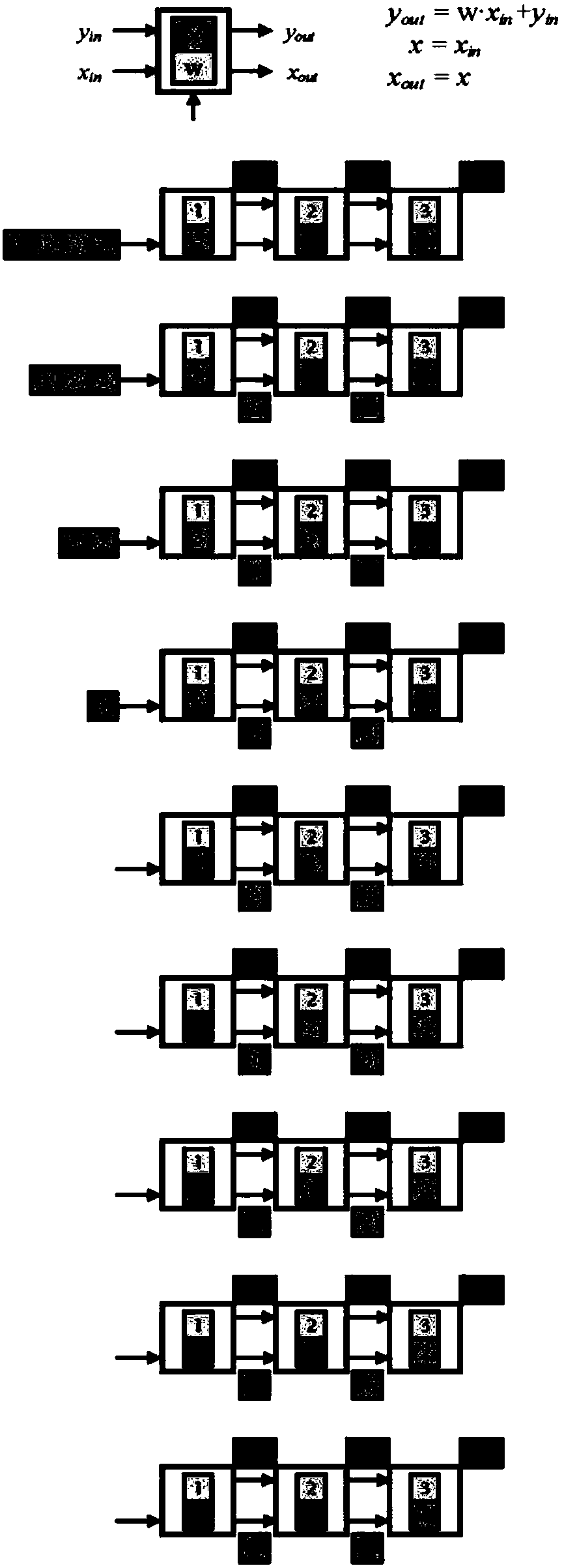

Low-bit efficient deep convolutional neural network hardware acceleration design method based on logarithm quantization, and module and system

Active | CN108491926A | Achieve acceleration | Accelerated design method is simple | Neural architectures | Neural learning methods | Nerve network | Process module

The present invention discloses a low-bit efficient deep convolutional neural network hardware acceleration design method based on logarithm quantization. The method comprises the following steps: S1: implementing low-bit, high-precision non-uniform fixed-point quantization in the logarithmic domain, and using multiple quantization codebooks to quantize the full-precision pre-trained neural network model; and S2: controlling the qualified range by introducing an offset shift parameter and, in the case of extremely low-bit non-uniform quantization, using an algorithm that adaptively searches for the optimal quantization strategy to compensate for the quantization error. The present invention also discloses one-dimensional and two-dimensional pulsation array processing modules and a system using the method. According to the technical scheme of the present invention, hardware complexity and power consumption can be effectively reduced.

Owner:SOUTHEAST UNIV

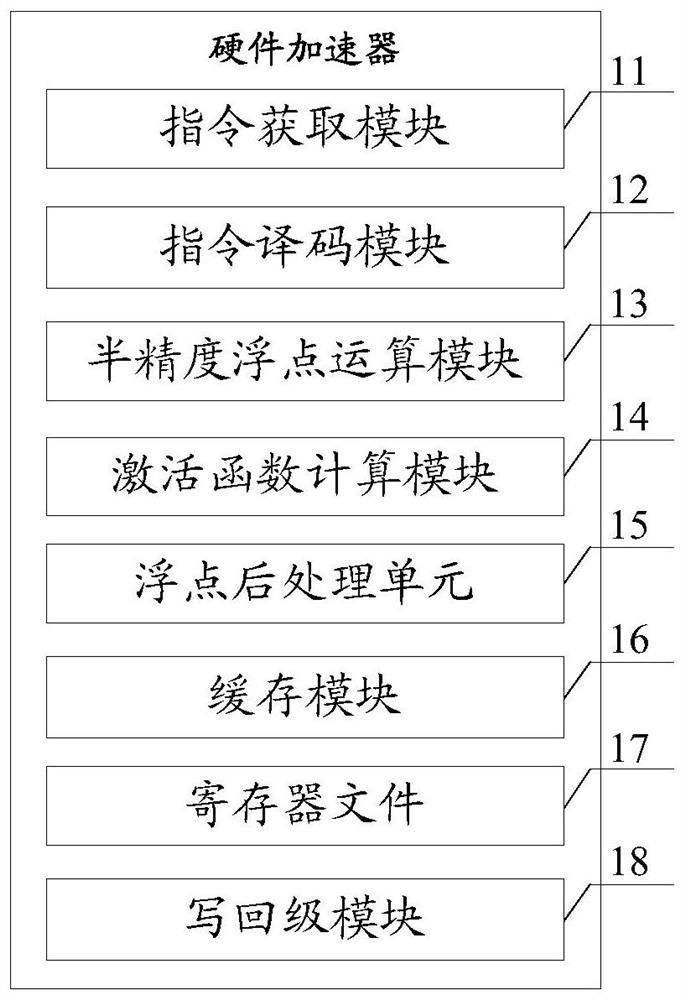

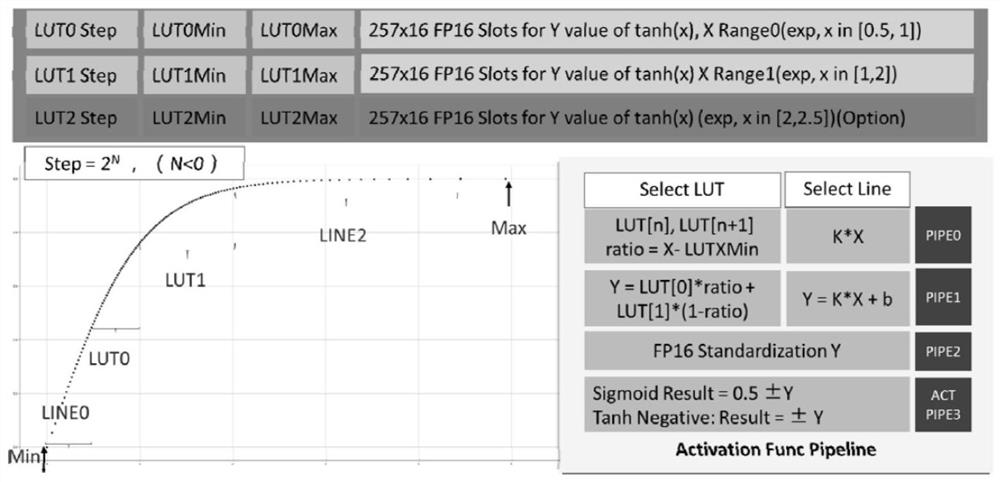

Neural network hardware accelerator

Pending | CN111915003A | Comply with the operation characteristics | Avoid intervention | Register arrangements | Instruction analysis | Activation function | Neural network hardware

The invention discloses a neural network hardware accelerator. The pipeline (assembly line) architecture of the hardware accelerator comprises: an instruction obtaining module used for acquiring instructions; an instruction decoding module used for performing instruction decoding; a half-precision floating point operation module used for carrying out one-dimensional vector operations; an activation function calculation module used for calculating an activation function by table lookup; a floating point post-processing unit used for performing floating point operations on the data output by the activation function; and a caching module used for caching intermediate data while the neural network algorithm runs. Register files, which are distributed along the pipeline and located on the same level as the instruction decoding module, temporarily store the related instructions, data and addresses while the neural network algorithm runs. The hardware resource utilization rate of the accelerator when running an RNN neural network algorithm can be greatly improved, which in turn improves the power efficiency per unit of computation in unit time when the RNN algorithm runs on the hardware accelerator.

Owner:SHENZHEN DAPU MICROELECTRONICS CO LTD
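
The table-lookup activation function mentioned in the abstract above can be sketched in Python as follows. This is a software illustration only, assuming a sigmoid function, a 256-entry table over the range -8 to 8, and nearest-entry lookup; none of these choices come from the patent, and `build_sigmoid_table` and `lut_sigmoid` are hypothetical names.

```python
import math


def build_sigmoid_table(lo=-8.0, hi=8.0, entries=256):
    """Precompute sigmoid at evenly spaced points in [lo, hi]."""
    step = (hi - lo) / (entries - 1)
    return [1.0 / (1.0 + math.exp(-(lo + i * step))) for i in range(entries)]


def lut_sigmoid(x, table, lo=-8.0, hi=8.0):
    """Nearest-entry lookup; inputs outside [lo, hi] saturate to the end entries."""
    x = max(lo, min(hi, x))
    index = round((x - lo) / (hi - lo) * (len(table) - 1))
    return table[index]


if __name__ == "__main__":
    table = build_sigmoid_table()
    for value in (-100.0, -1.0, 0.0, 1.0, 100.0):
        exact = 1.0 / (1.0 + math.exp(-max(-8.0, min(8.0, value))))
        print(value, lut_sigmoid(value, table), exact)
```

The trade-off is table size against approximation error: a larger table, or interpolation between neighboring entries, reduces the error at the cost of more memory or logic.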

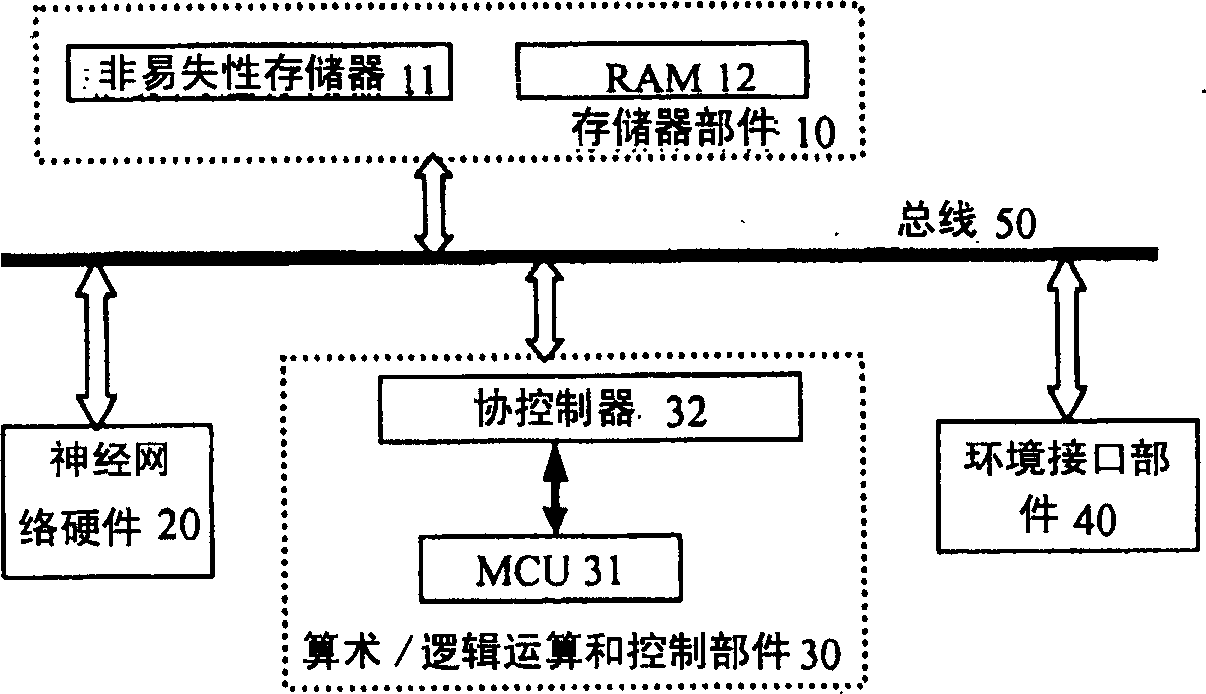

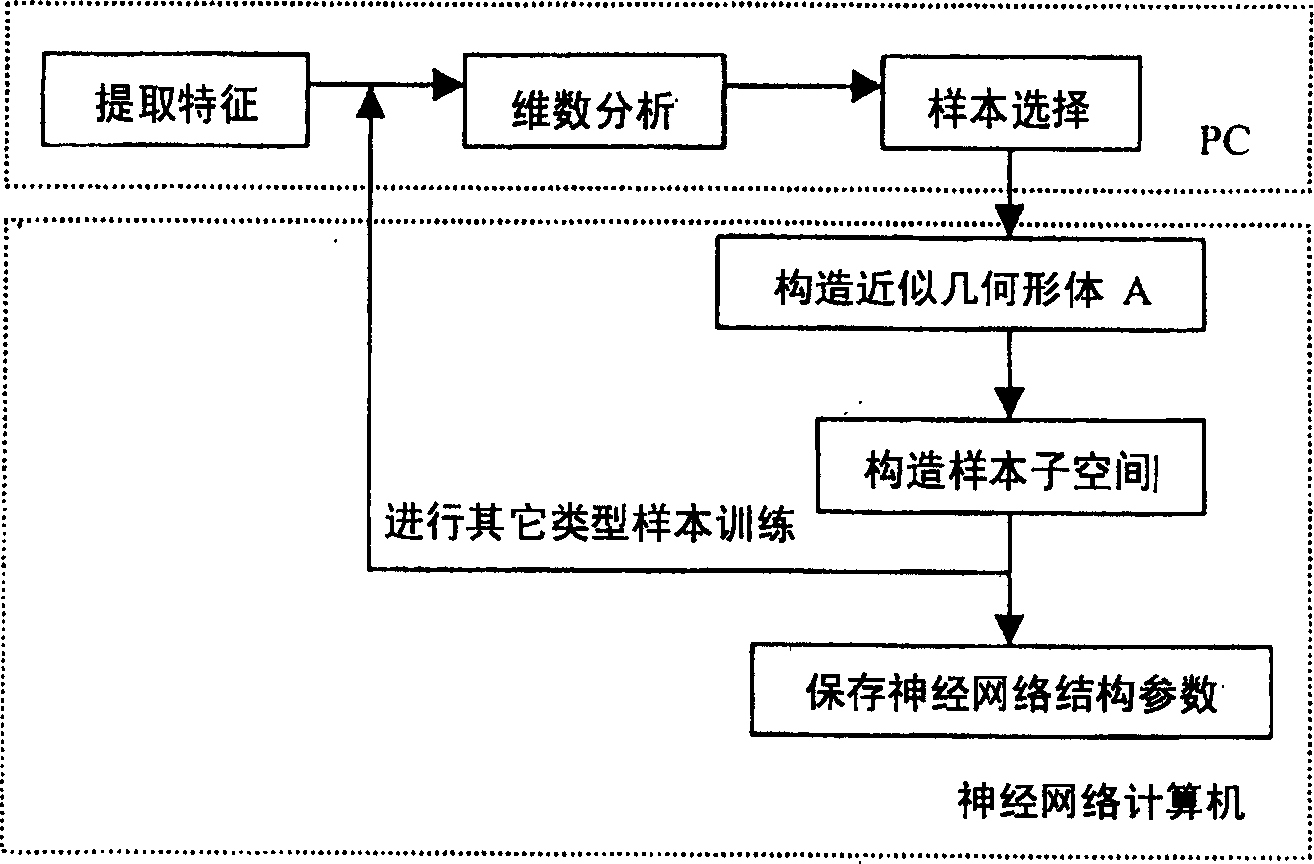

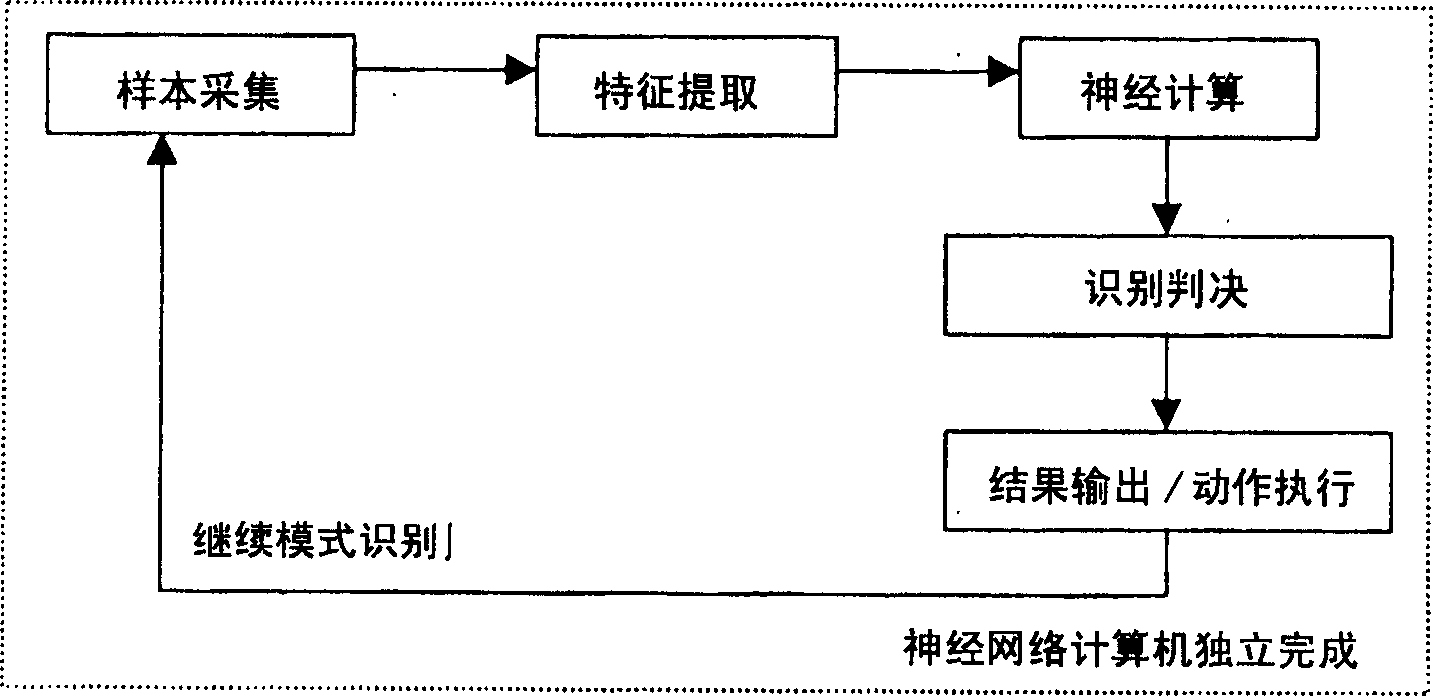

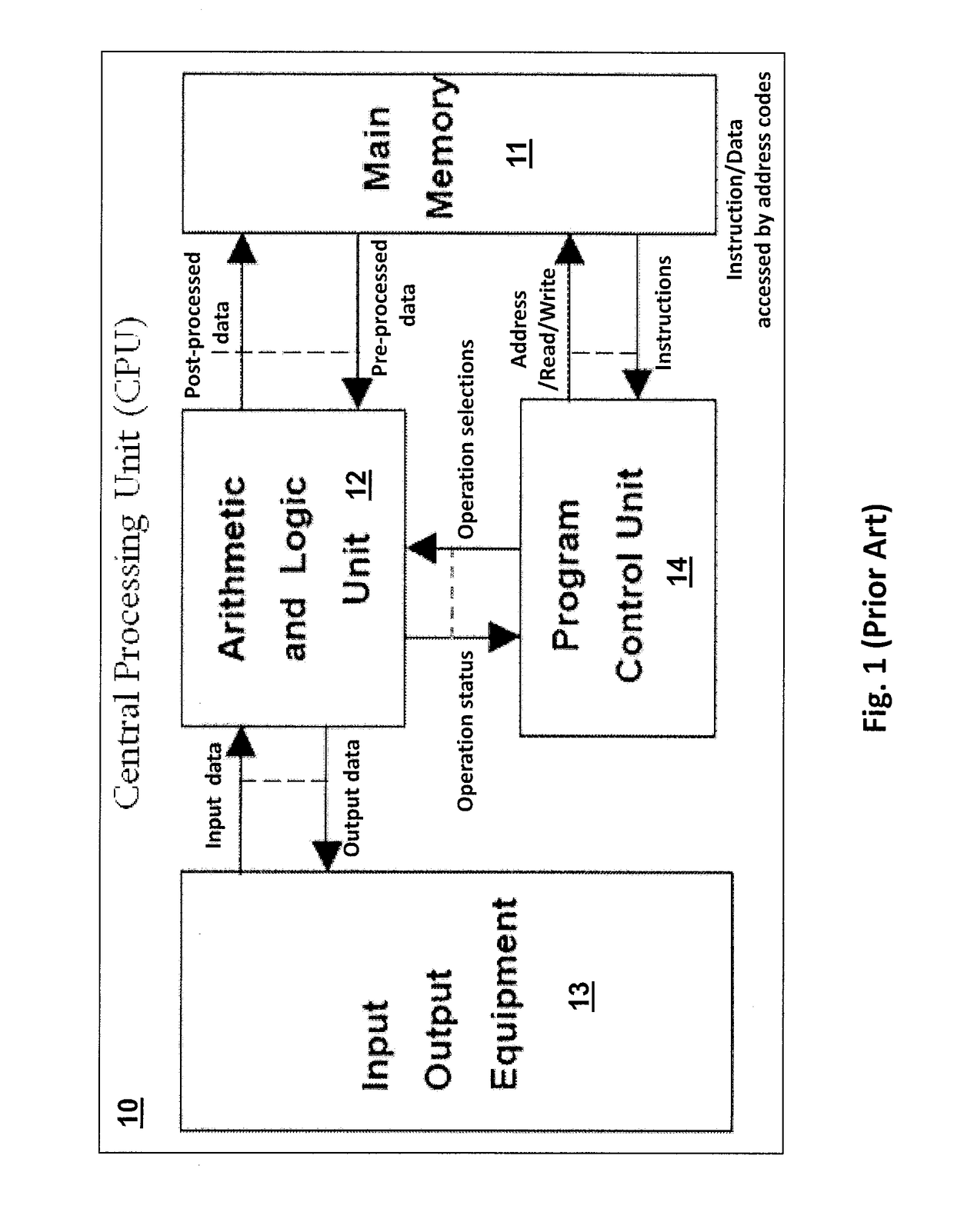

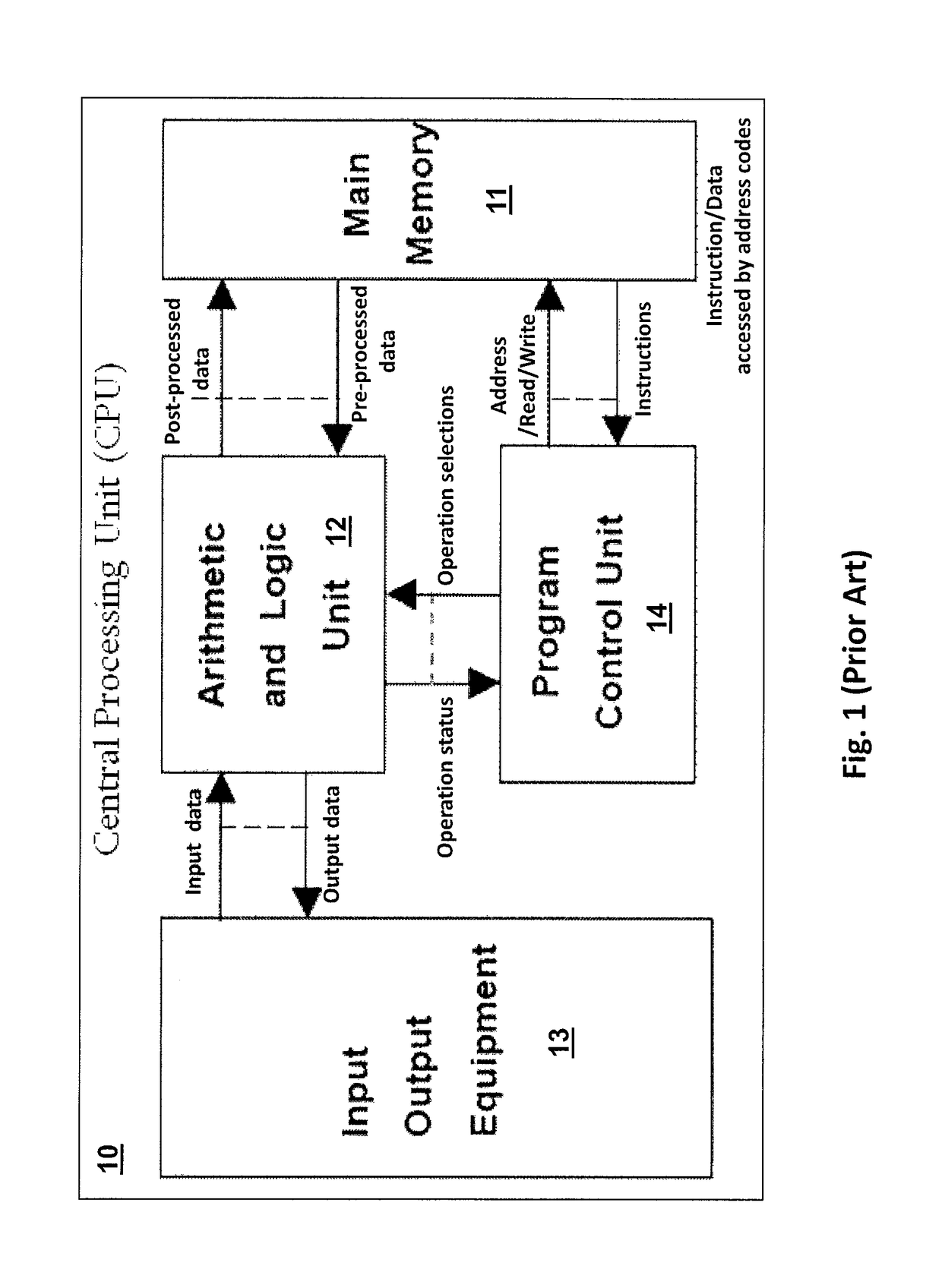

Special purpose neural net computer system for pattern recognition and application method

Active | CN1700250A | Improve sample recognition rate | High cost performance | Biological neural network models | Character and pattern recognition | Nerve network | Control system

The invention relates to a special-purpose neural net computer system for pattern recognition and its application method. The system comprises: a bus; a storage part that provides data storage space; an arithmetic/logical calculation and control part that performs arithmetic/logical calculation and controls the operation and data exchange of the other parts through a bus control system; a neural net hardware part that, on the orders of the arithmetic/logical calculation and control part, receives data from the storage part over the bus, performs arithmetic/logical calculation, and stores the results in the storage part; and an environment interface part that, on the orders of the arithmetic/logical calculation and control part, obtains information or expresses system operation results by voice or other modes.

Owner:INST OF SEMICONDUCTORS - CHINESE ACAD OF SCI

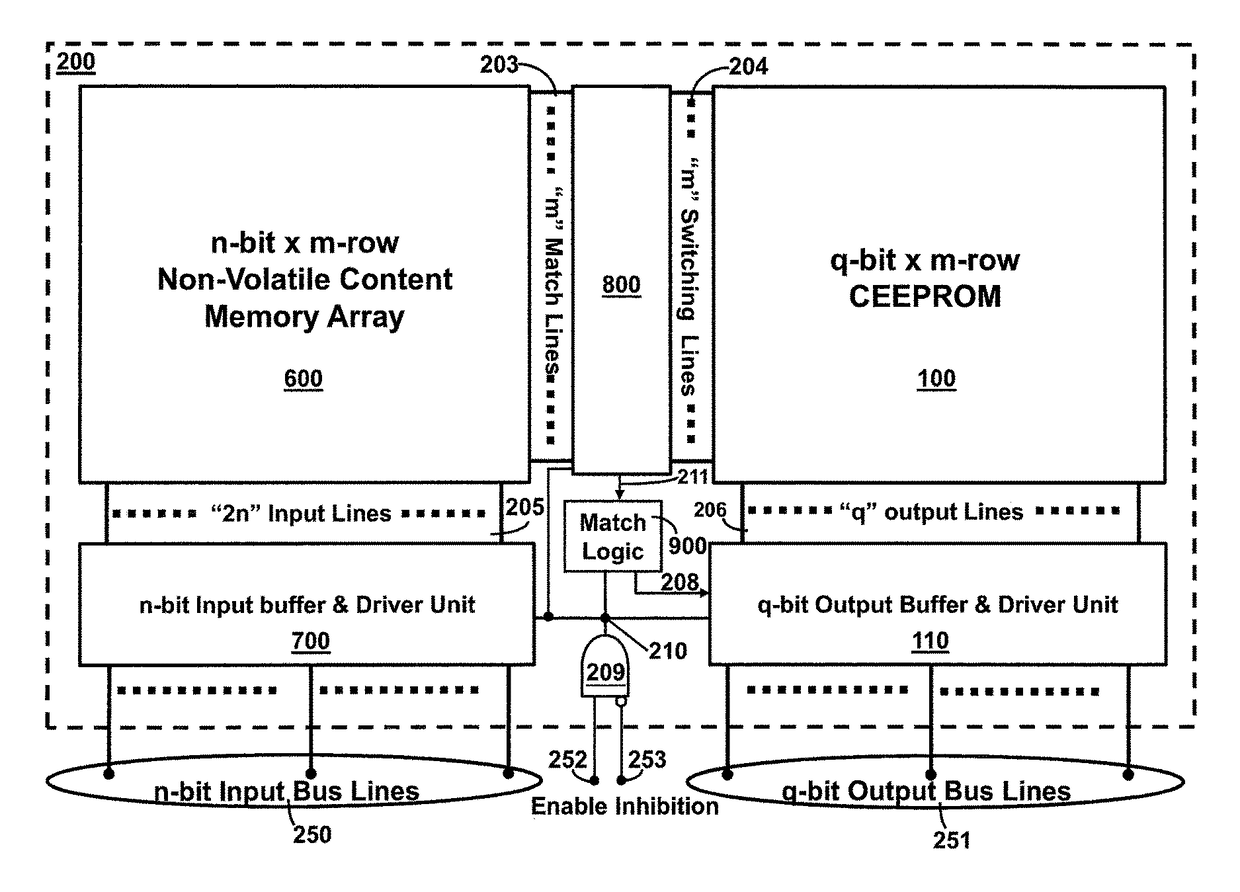

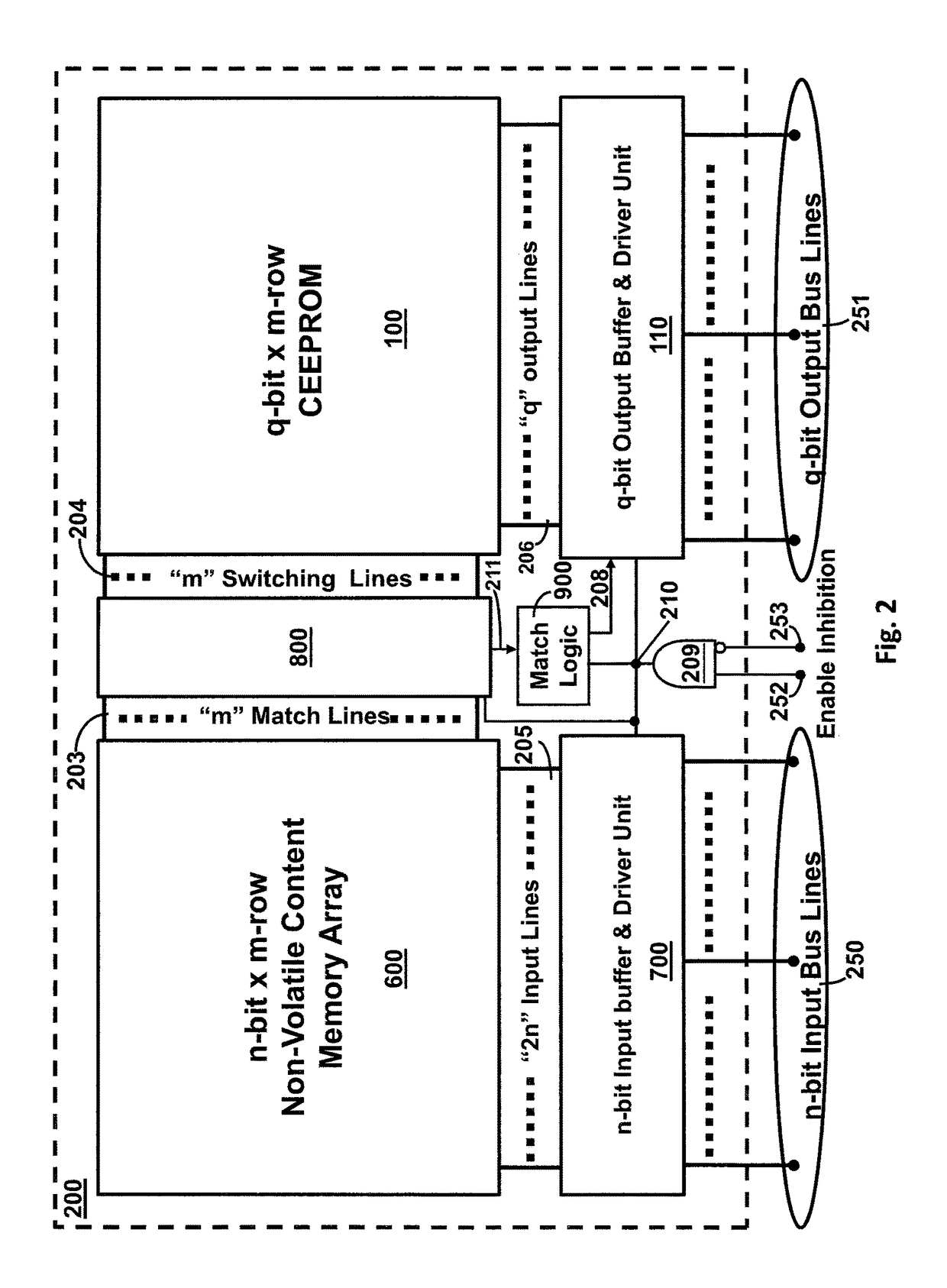

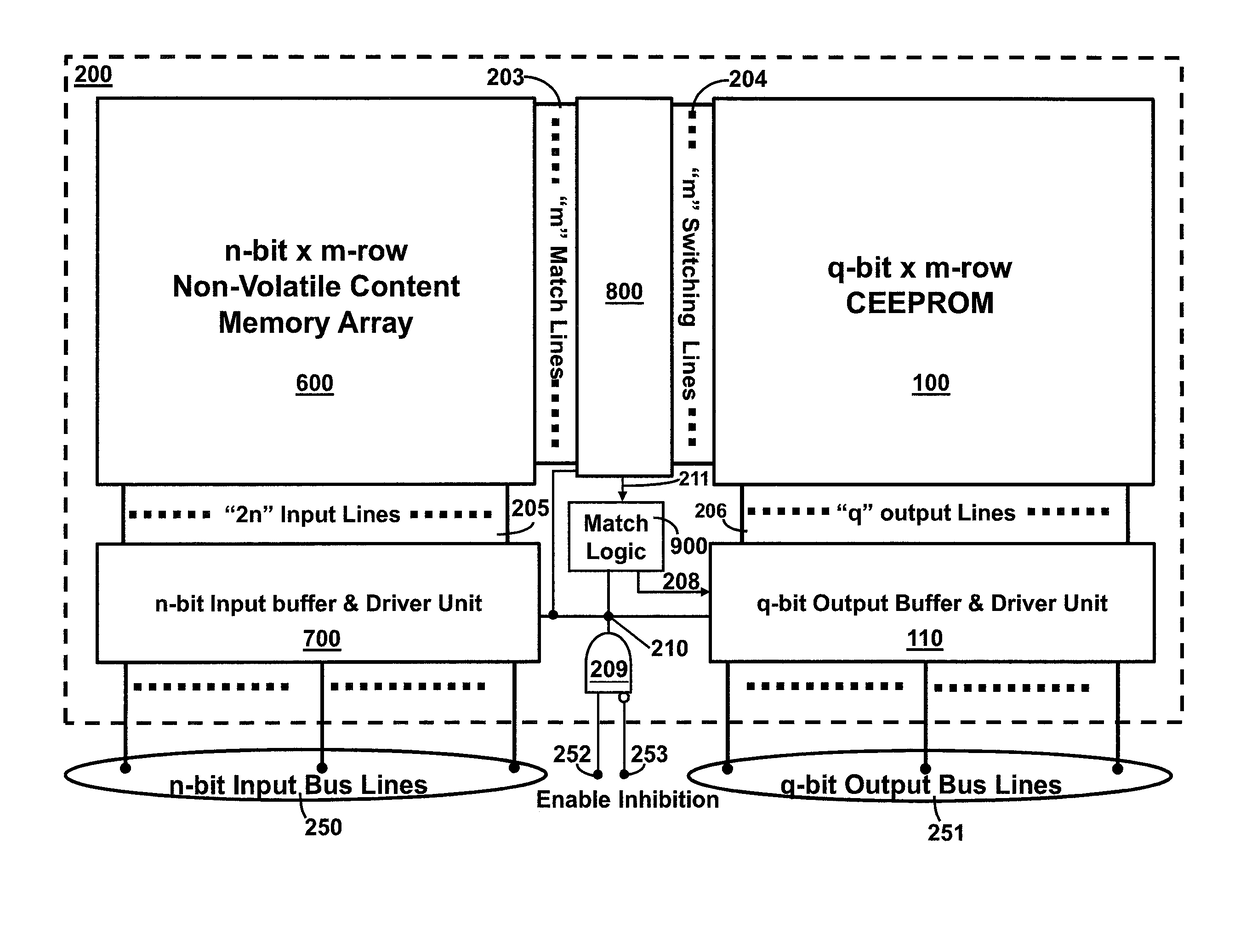

Digital perceptron

Active | US20170256296A1 | Improve processing efficiency | Memory addressing/allocation/relocation | Digital storage | Neural network hardware | Parallel processing

Owner:FLASHSILICON

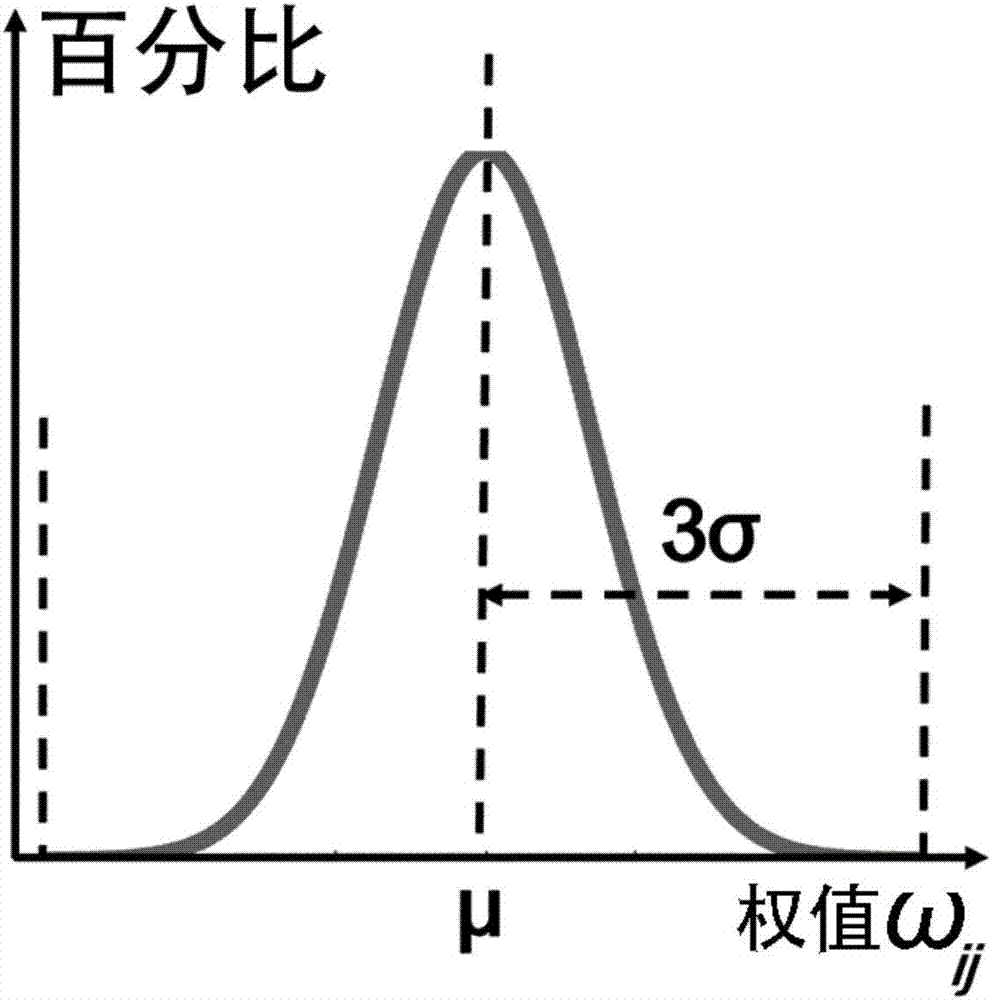

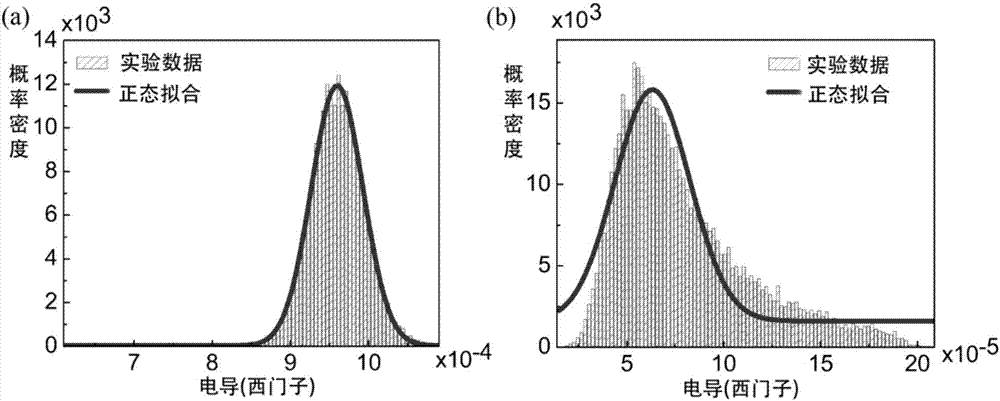

Memory resistor neural network training method based on fuzzy Boltzmann machine

Inactive | CN107133668A | Improve robustness | Universal | Neural architectures | Physical realisation | Restricted Boltzmann machine | Neural network learning

The invention discloses a memory resistor neural network training method based on a fuzzy Boltzmann machine, and the method comprises the steps: employing a fuzzification processing method, changing the connection intensity / weight value in a limited Boltzmann machine network to a fuzzy number from a definite number, and obtaining a fuzzy weight value; substituting the fuzzy weight value into a limited Boltzmann machine, and obtaining a fuzzy limited Boltzmann machine network which is more suitable for the description of the characteristics of a memory resistor device, wherein the network training process is the updating of the fuzzy weight value, thereby obtaining a trained memory resistor neural network. The method avoids the impact on the network precision and stability from the fluctuation of a device when a memory resistor is taken as a synapse unit in neural network hardware, can improve the robustness of the neural network learning, is universal, and can serve as a universal method for building a neural form system with the inherent random fluctuation for a processing device.

Owner:PEKING UNIV

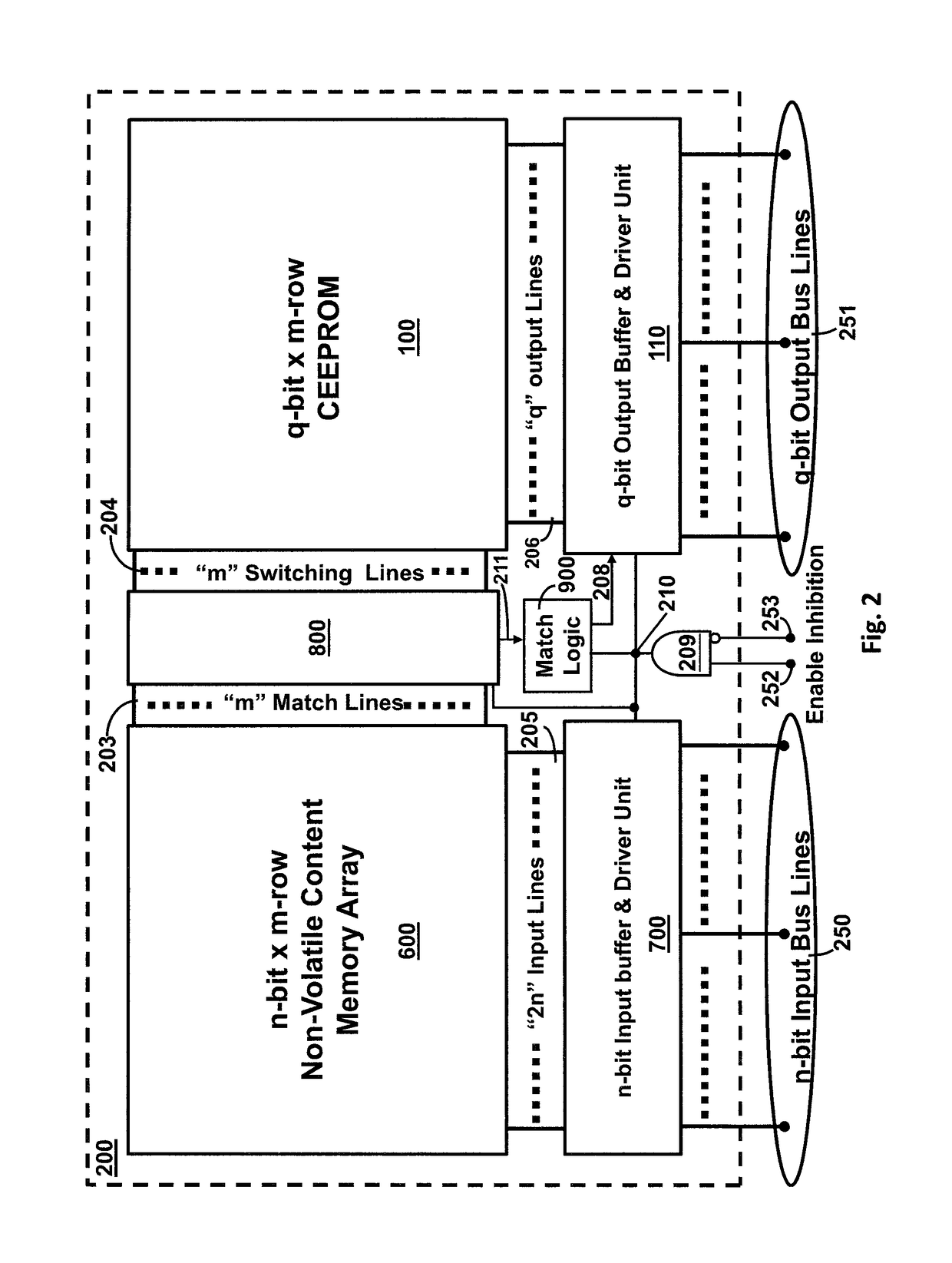

Digital perceptron

Active | US9754668B1 | Improve processing efficiency | Memory addressing/allocation/relocation | Digital storage | Neural network hardware | Parallel processing

In view of the neural network information parallel processing, a digital perceptron device analogous to the built-in neural network hardware systems for parallel processing digital signals directly by the processor's memory content and memory perception in one feed-forward step is disclosed. The digital perceptron device of the invention applies the configurable content and perceptive non-volatile memory arrays as the memory processor hardware. The input digital signals are then broadcast into the non-volatile content memory array for a match to output the digital signals from the perceptive non-volatile memory array as the content-perceptive digital perceptron device.

Owner:FLASHSILICON

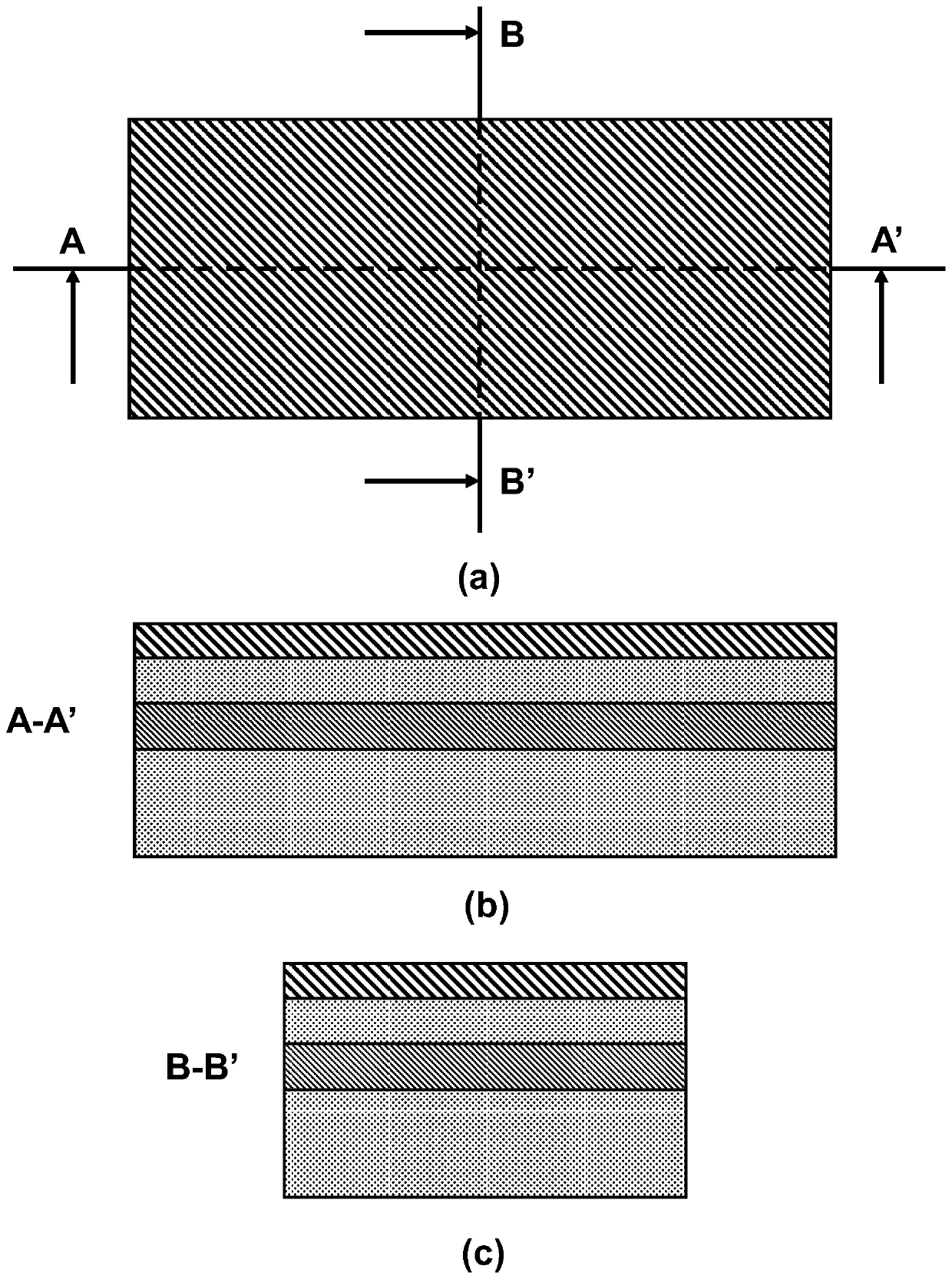

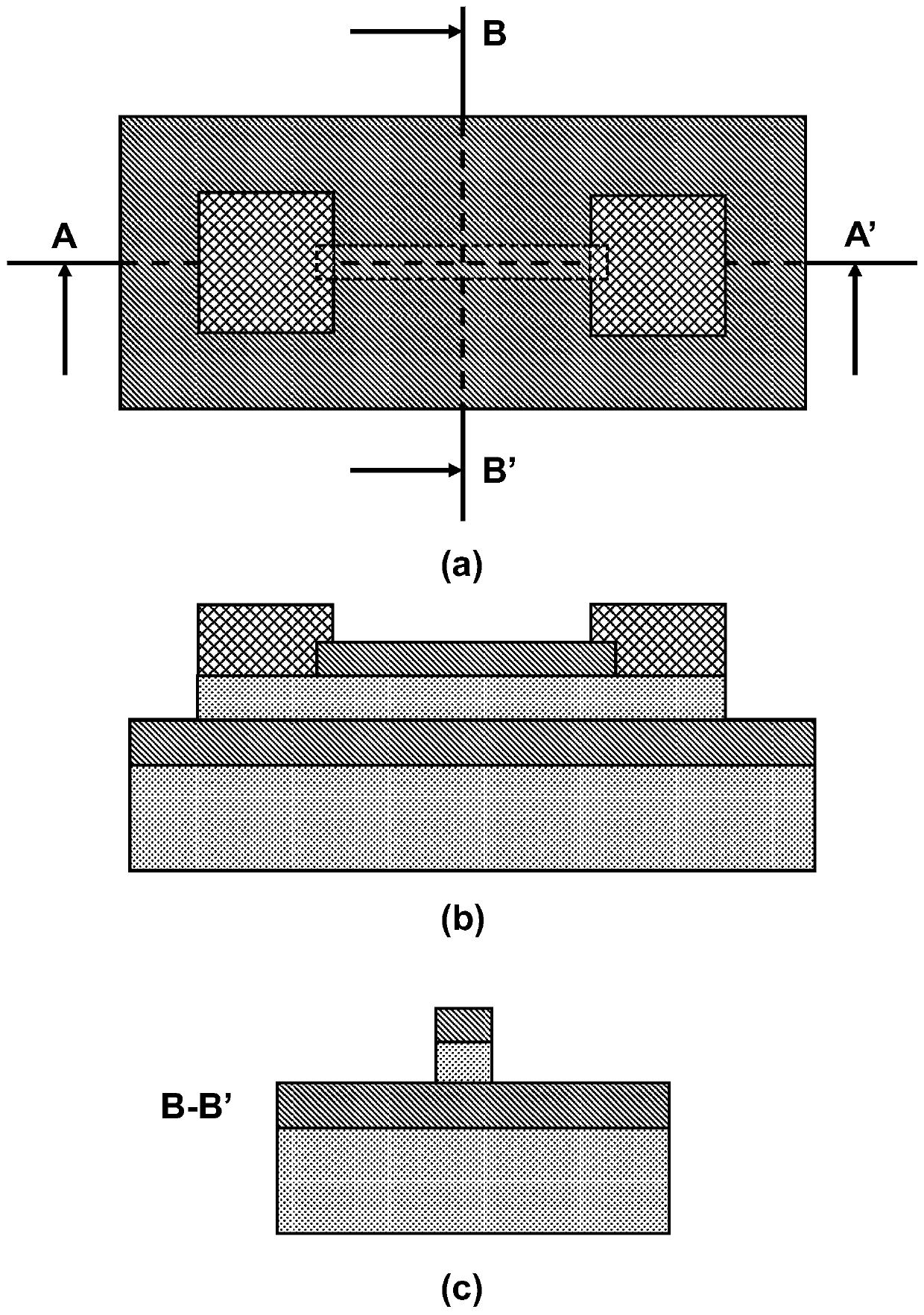

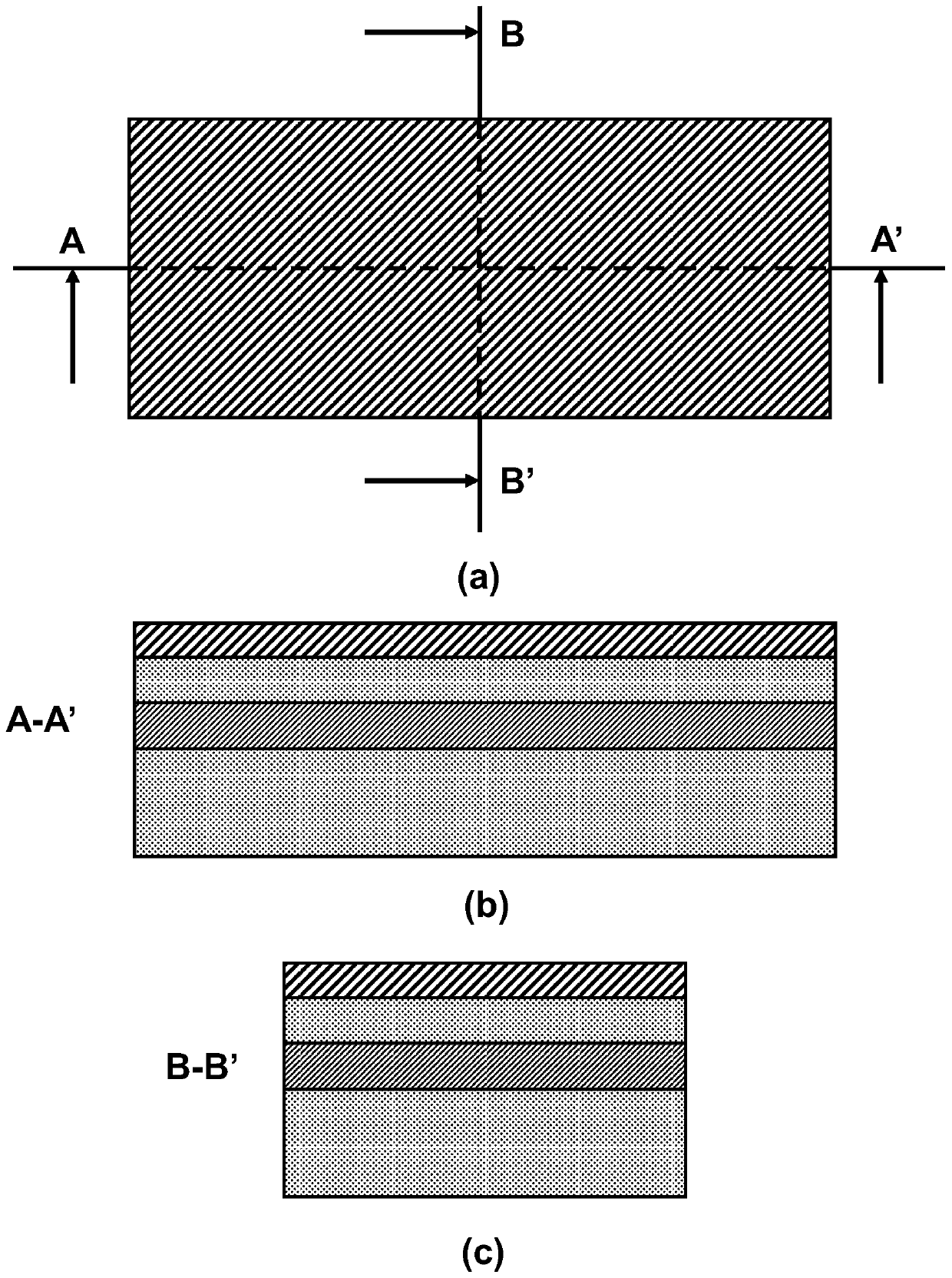

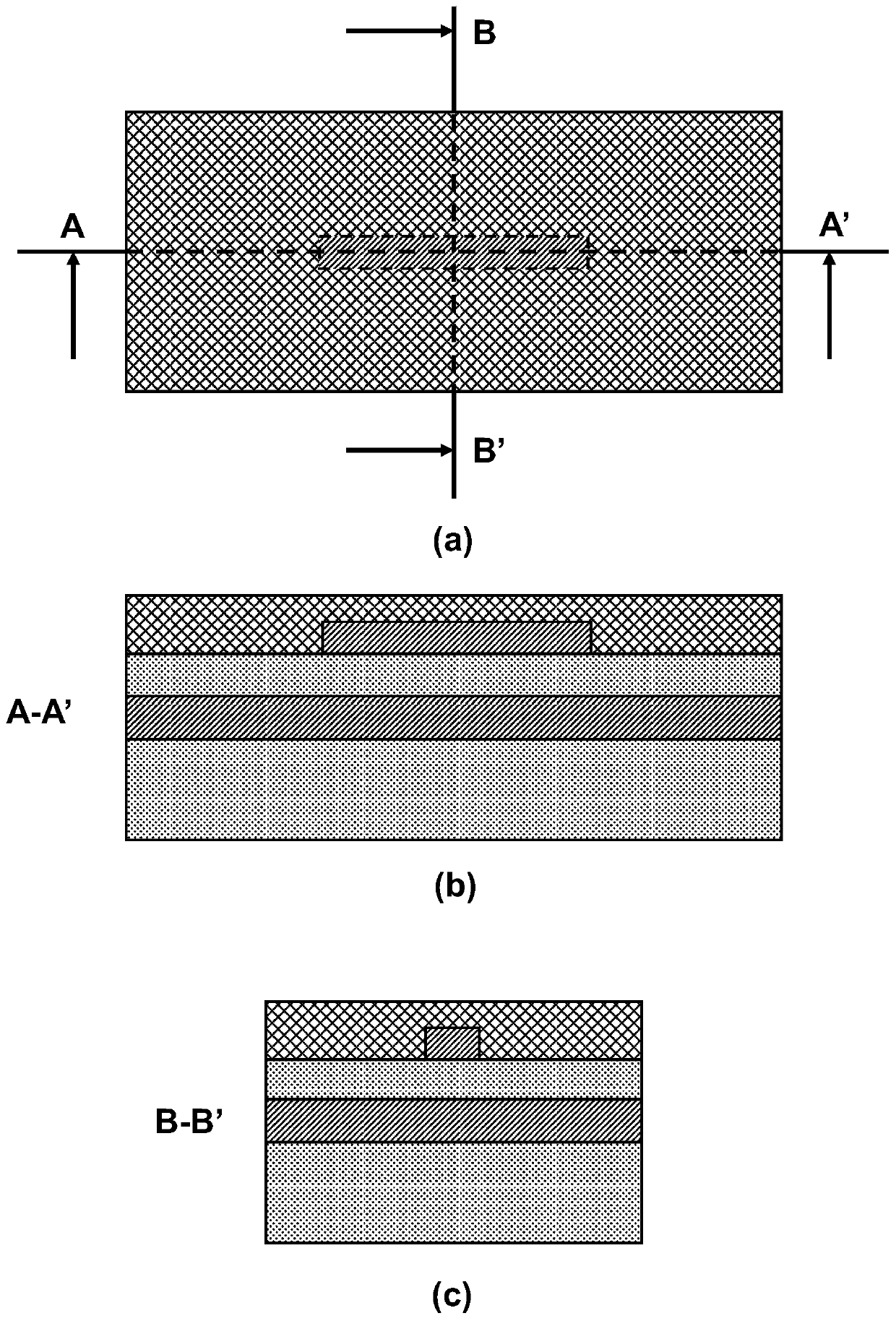

Low-voltage multifunctional charge trapping synaptic transistor and preparation method thereof

Active | CN111564499A | Enhanced interfacial electric field | Conducive to tunneling | Semiconductor/solid-state device manufacturing | Semiconductor devices | Nanowire | Neural network hardware

The invention discloses a low-voltage multifunctional charge trapping type synaptic transistor and a preparation method thereof, and belongs to the field of synaptic devices oriented to neural network hardware application. According to the invention, a silicon nitride and hafnium oxide double-capture-layer structure is adopted to simultaneously realize short-long-time synaptic plasticity on a single device, so that the functions of the synaptic device are enriched; a three-gate nanowire structure is beneficial to enhancing an interface electric field, so that the FN tunneling width at the interface is reduced, the tunneling probability at the interface is enhanced, and the operation voltage and the device power consumption are reduced; and the device has complete CMOS material and process compatibility, and due to the excellent device characteristics, the device has potential to be applied to a future large-scale neural network computing system.

Owner:PEKING UNIV

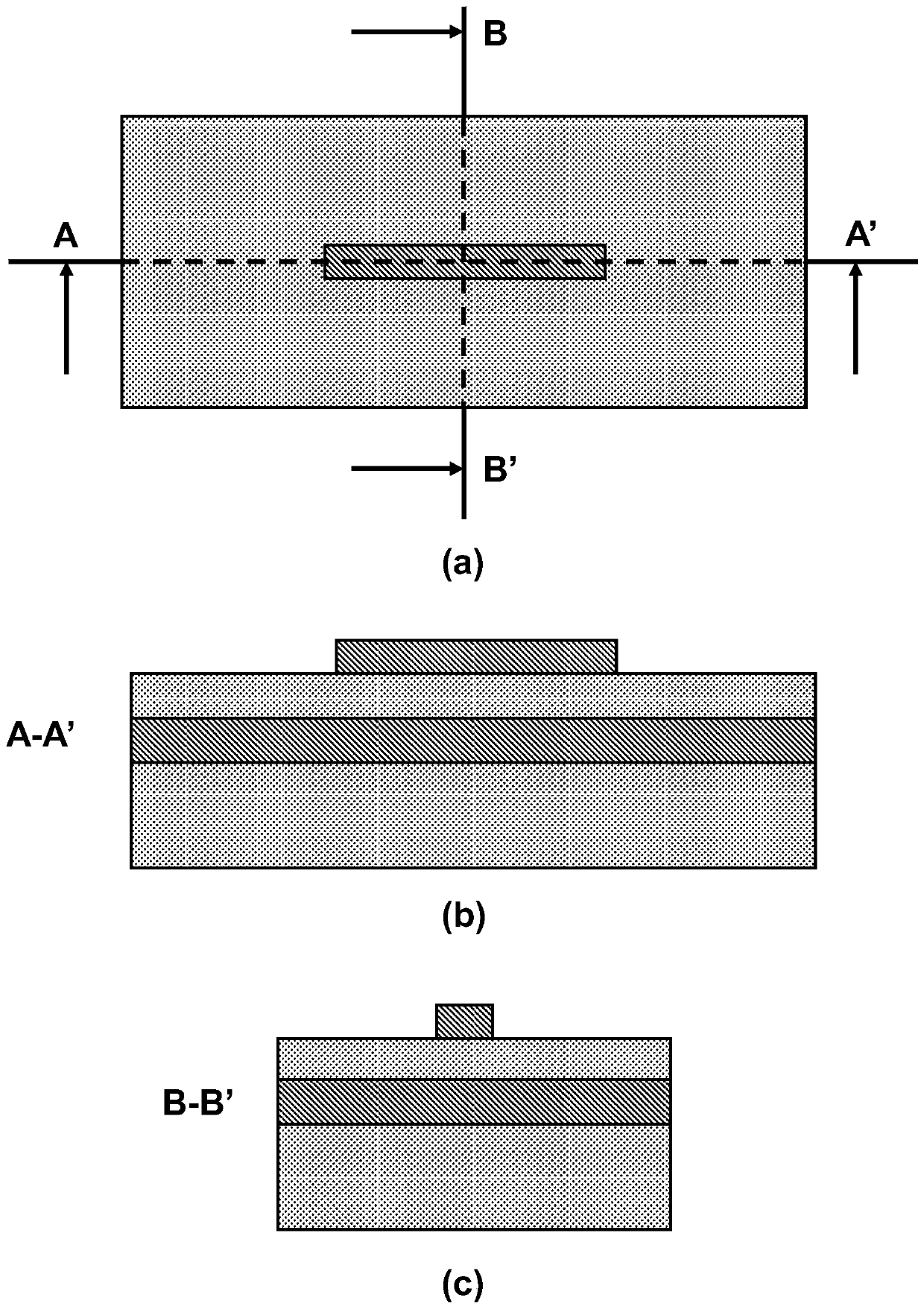

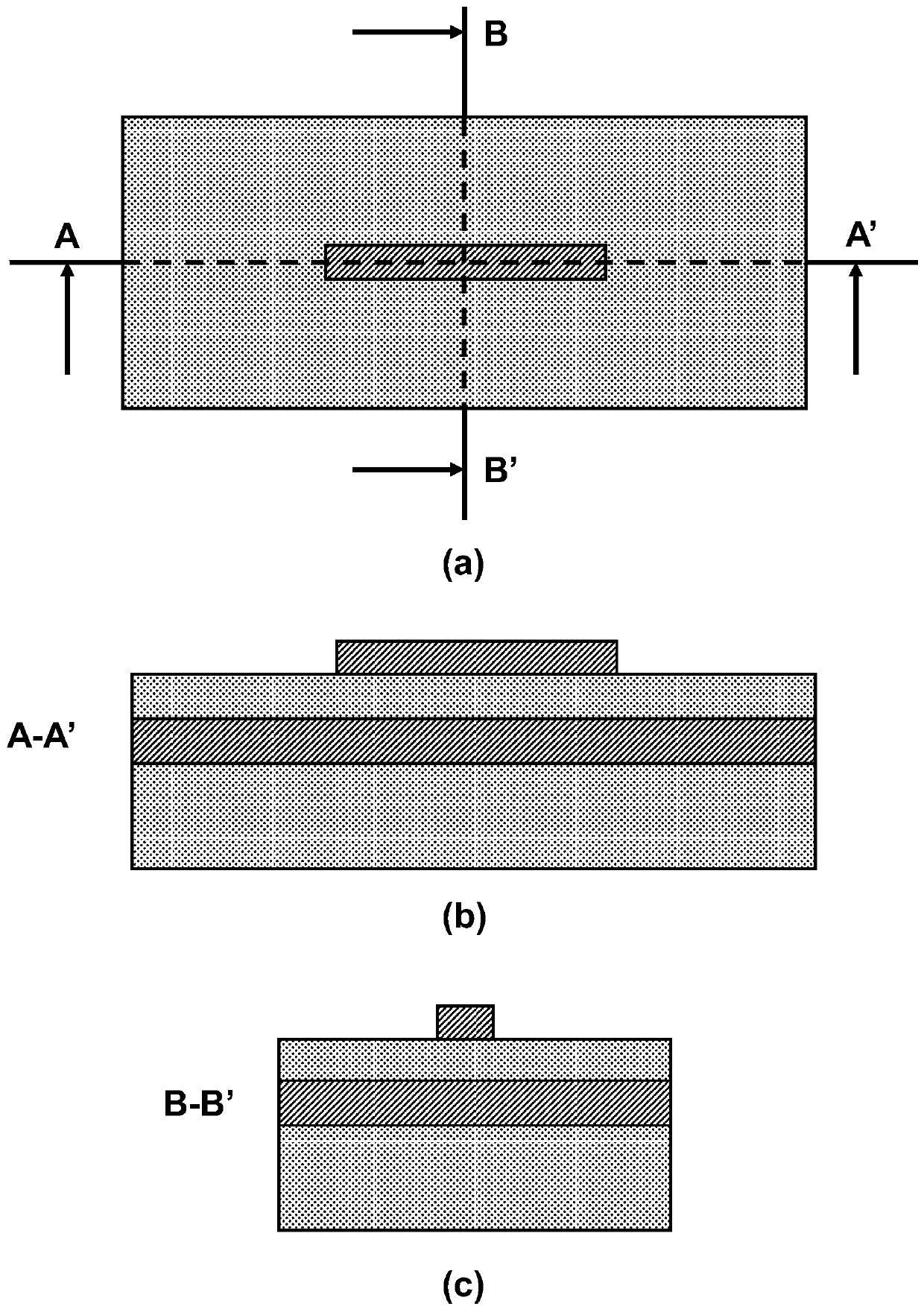

Nanowire ion grid control synaptic transistor and preparation method thereof

Active | CN111564489A | Improve energy efficiency | Good backend integration features | Semiconductor devices | CMOS | Nanowire

The invention discloses a nanowire ion grid control synaptic transistor and a preparation method thereof, and belongs to the field of synaptic devices oriented to neural network hardware application. According to the invention, the advantages of good one-dimensional transport characteristic of fence nanowires and low operating voltage in an ion grid control double-electric-layer system are combined, and compared with the existing planar large-size synaptic transistor based on a two-dimensional material or an organic material, the nanowire ion grid control synaptic transistor of the invention can achieve low power consumption and small area overhead; and due to the excellent device consistency and CMOS rear-end integration characteristic, the nanowire ion grid control synaptic transistor has the potential to be applied to a future large-scale neuromorphic calculation circuit.

Owner:PEKING UNIV

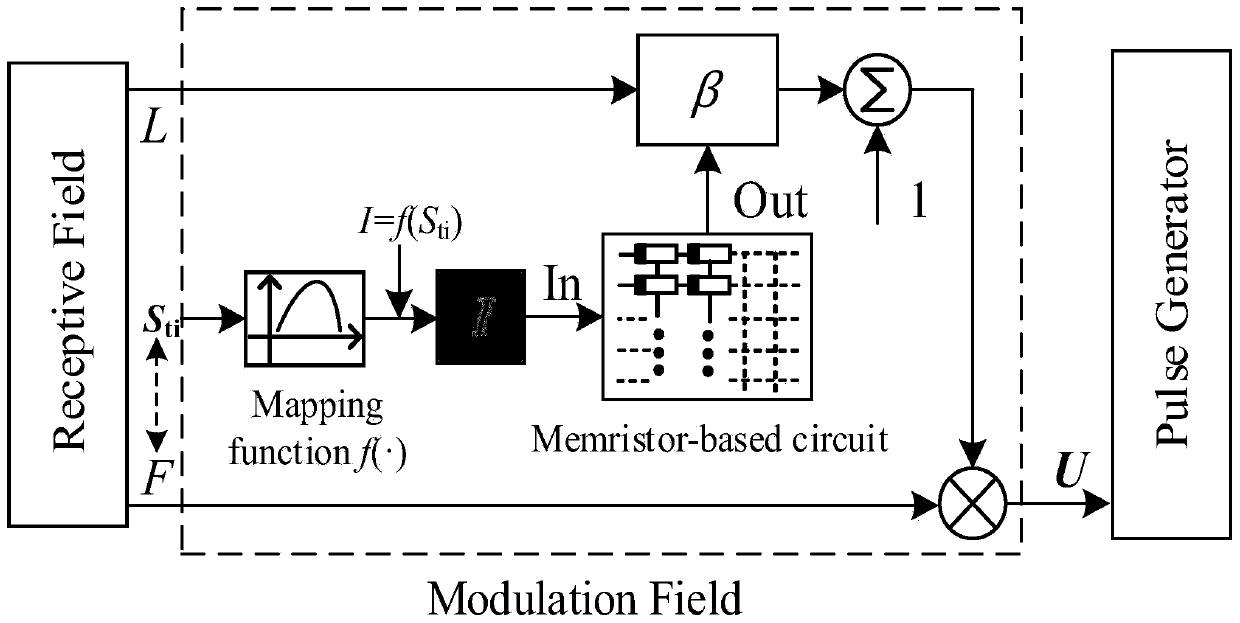

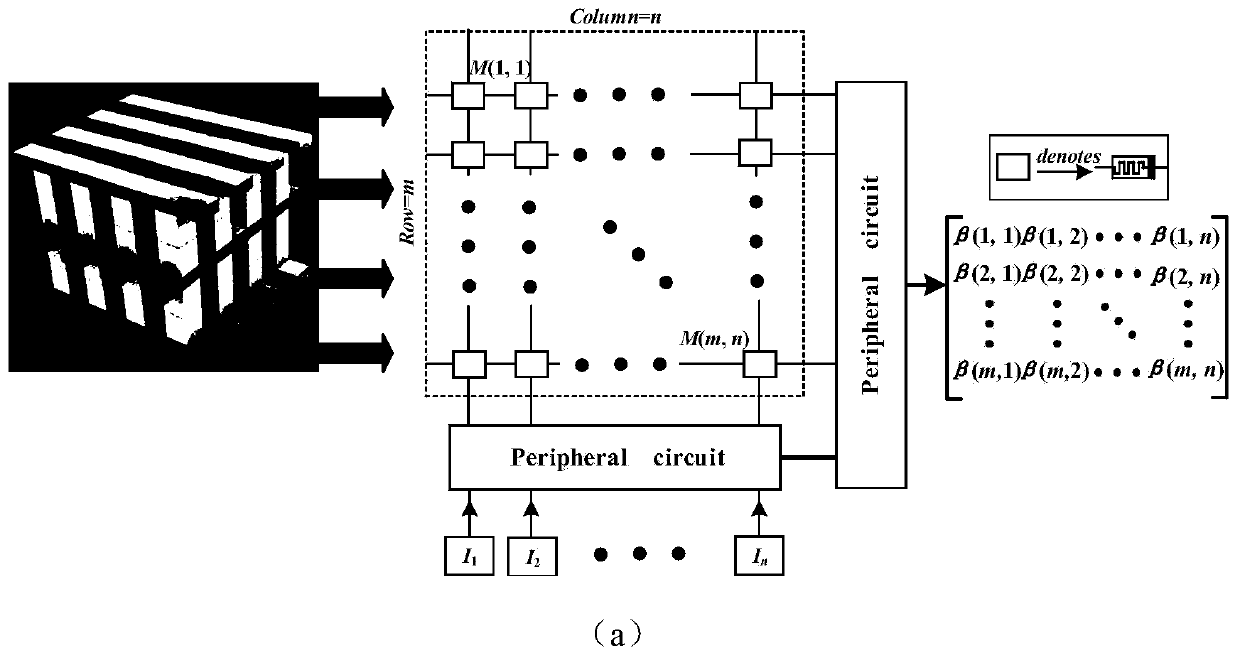

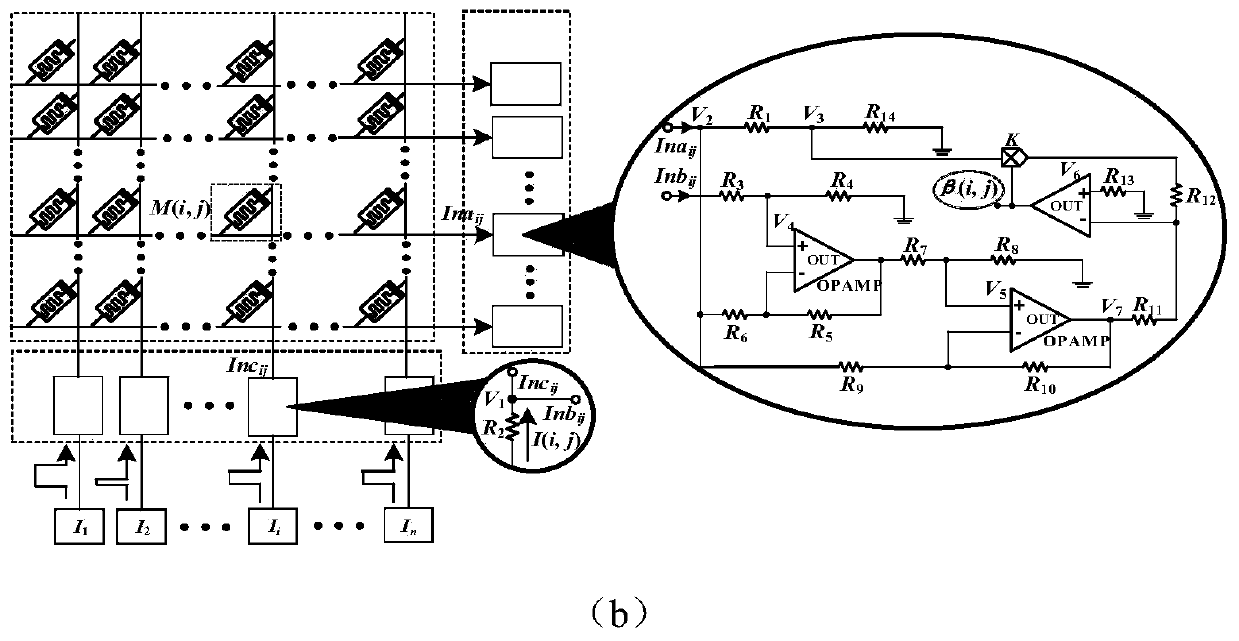

Multi-focus image fusion method based on memristor pulse coupling neural network

Inactive | CN111161203A | Improve network performance | Promote comprehensive hardware | Image enhancement | Image analysis | Algorithm | Neural network hardware

The invention discloses a multi-focus image fusion method based on a memristor pulse coupling neural network. In an existing pulse coupled neural network (PCNN), the self-adaptive change method of the connection coefficient is based entirely on computer simulation, so the timeliness of a PCNN model during operation may be low; meanwhile, the self-adaptive change equation of the parameters (connection coefficients) is set entirely by hand, so the feasibility of parameter self-adaptive change in actual operation cannot be guaranteed. The method comprises the following steps: designing a self-adaptive memristor PCNN model based on a memristor cross array compact circuit structure; designing a flexible and universal mapping function; and applying the self-adaptive memristor PCNN model to multi-focus image fusion, acquiring a better multi-focus image fusion result by further improving the network structure (single channel to multiple channels) of the multi-focus image fusion model. The method not only provides a brand-new solution for the inherent parameter estimation problems in numerous parameter-controlled neural network models, but also helps promote hardware implementation of the neural network.

Owner:STATE GRID BEIJING ELECTRIC POWER +2

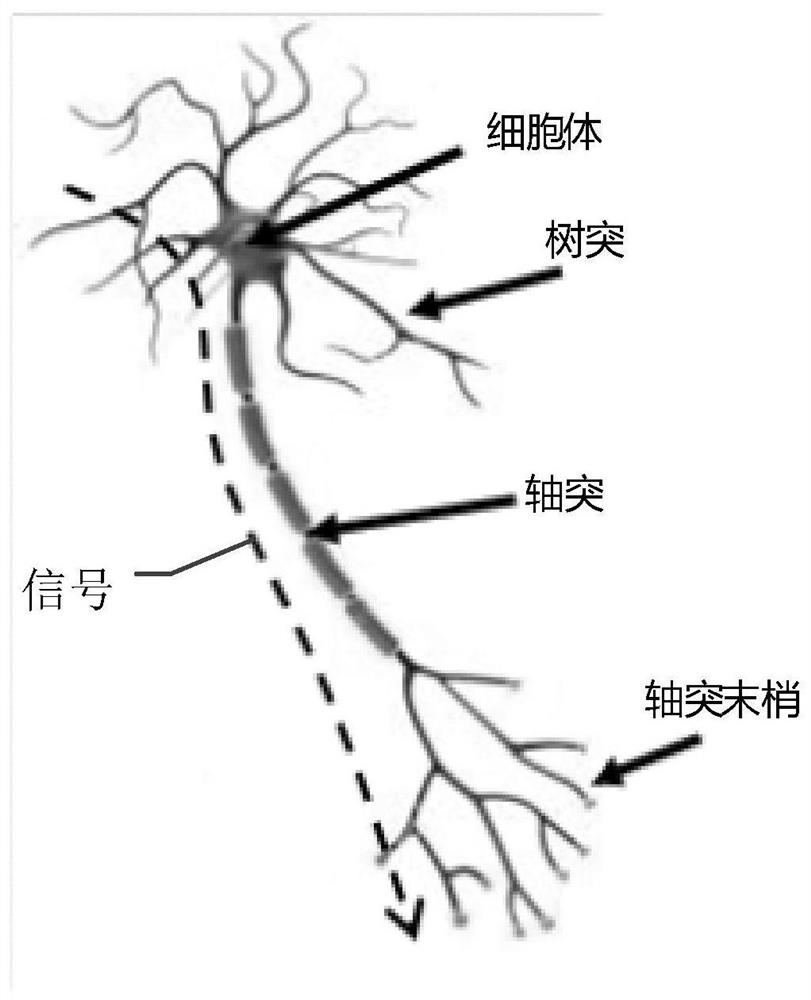

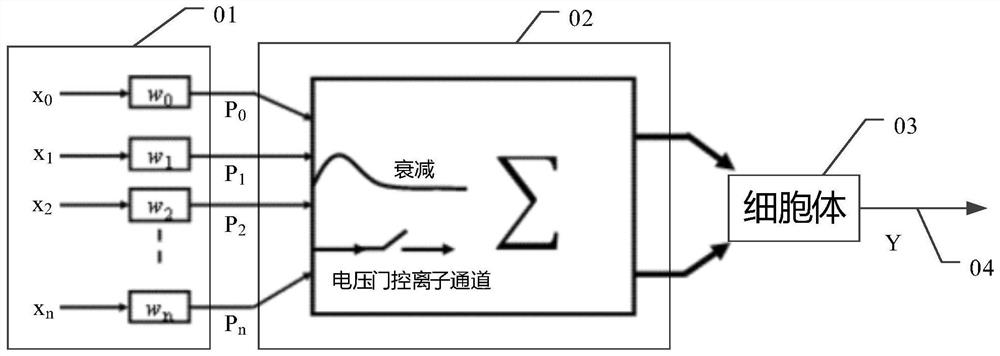

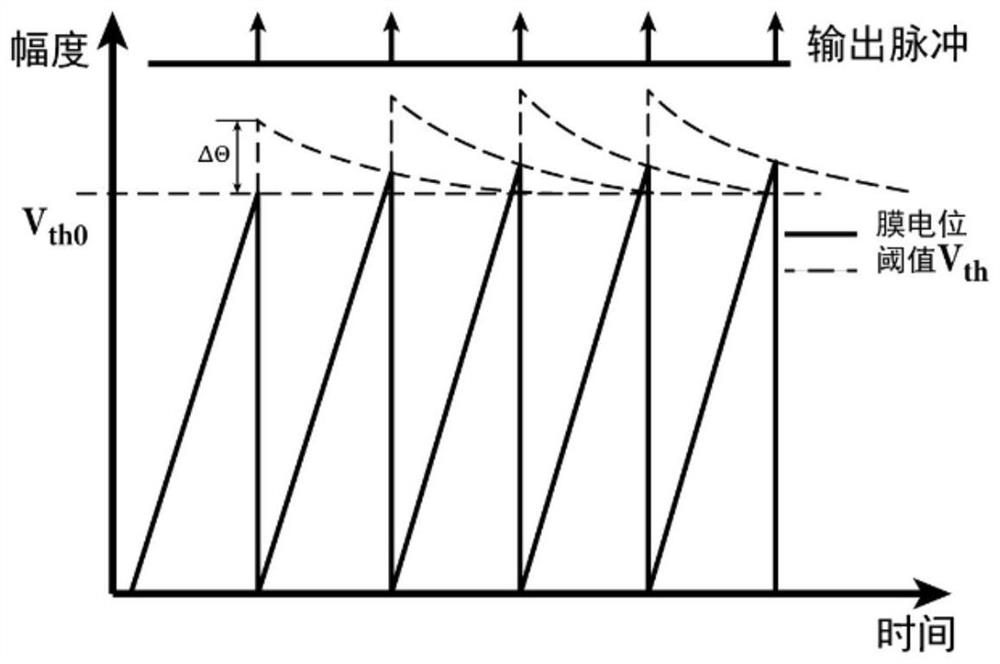

Neuron analog circuit, driving method thereof and neural network device

Pending | CN111967589A | Raise the threshold voltage | Neural architectures | Physical realisation | Network activity | Neural network hardware

The invention discloses a neuron analog circuit, a driving method thereof and a neural network device. The neuron analog circuit comprises an integrating circuit and a threshold adjusting circuit, and the integrating circuit is configured to change the voltage of the first node in one direction in response to the excitation signal; the threshold adjusting circuit comprises a first memristor, a second memristor and a monostable circuit, wherein the output end and the input end of the monostable circuit are connected with a second node and a third node respectively; the first end of the first memristor is controlled by the voltage of the first node, and the second end of the first memristor is connected with the third node; the first end of the second memristor is controlled by the voltage of the second node, and the second memristor is configured to change the resistance value of the second memristor according to the voltage difference between the first end and the second end of the second memristor. The neuron simulation circuit enables excitability of neurons to be adaptively adjusted according to network activities, and is suitable for integration of a large-scale spiking neural network hardware system.

Owner:TSINGHUA UNIV

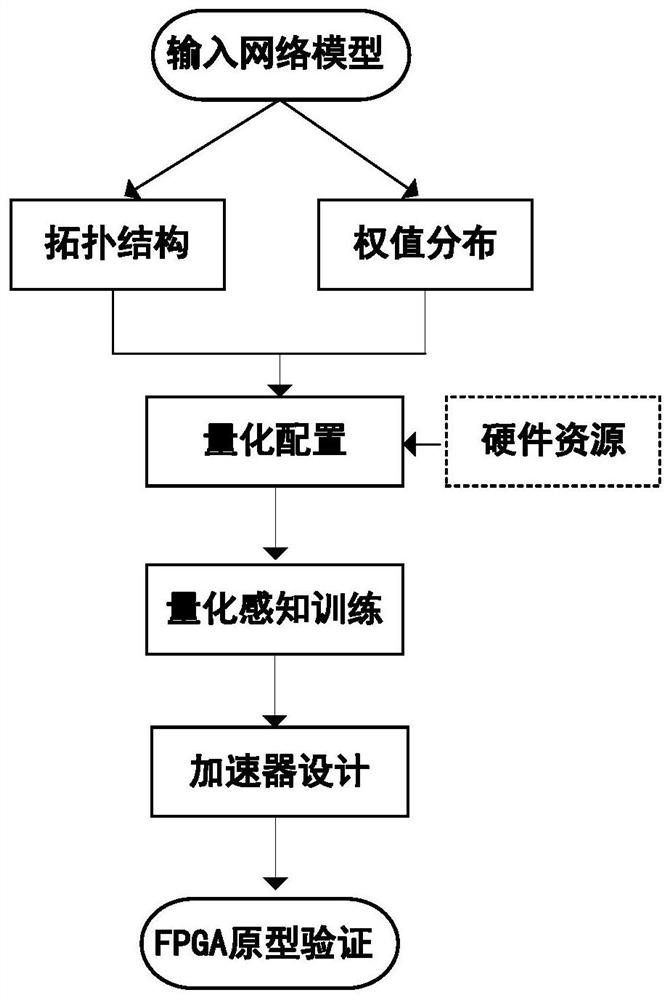

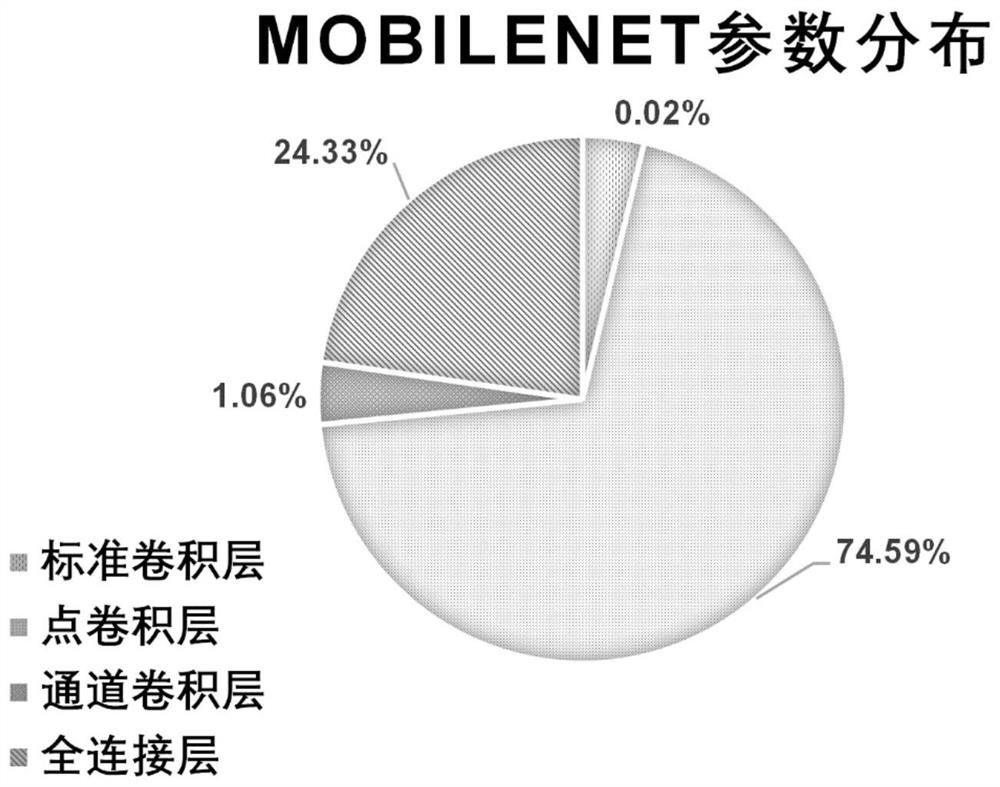

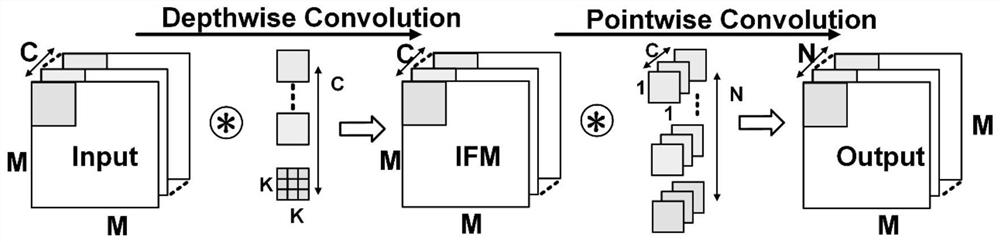

Lightweight neural network hardware accelerator based on depth separable convolution

Active | CN113033794A | Reduce the inventory of outbound visits | Suitable for applications with limited power consumption | Neural architectures | Physical realisation | Activation function | Multiplexer

The invention discloses a lightweight neural network hardware accelerator based on depth separable convolution. The lightweight neural network hardware accelerator comprises an A-path K * K channel convolution processing unit parallel array, an A-path 1 * 1 point convolution processing unit parallel array, and an on-chip memory used for buffering the convolutional neural network and the input and output feature maps. The convolutional neural network is a lightweight neural network obtained by compressing the neural network MobileNet using a quantization-aware (quantitative perception) training method. The A-path K * K channel convolution processing unit parallel array and the multi-path 1 * 1 point convolution processing unit parallel array are deployed in a pixel-level pipeline (assembly line). Each K * K channel convolution processing unit comprises a multiplier, a summator and an activation function calculation unit, and each 1 * 1 point convolution processing unit comprises a multiplexer, a two-stage adder tree and an accumulator. According to the invention, the problem of high-energy-consumption off-chip memory access generated in the reasoning process of the accelerator in the prior art is solved, resources are saved, and the processing performance is improved.

Owner:CHONGQING UNIV
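
For readers unfamiliar with depth separable (depthwise separable) convolution, which the accelerator above is built around, the following Python sketch shows the two stages in software: a K * K channel (depthwise) convolution followed by a 1 * 1 point (pointwise) convolution. It is a plain reference computation, not the accelerator's dataflow. It assumes stride 1 and no padding, and it omits the activation function and quantization mentioned in the abstract; all function names are hypothetical.

```python
def depthwise_conv(fmap, kernels):
    """Apply one K x K kernel per input channel (no padding, stride 1)."""
    out = []
    for channel, kernel in zip(fmap, kernels):
        k = len(kernel)
        h, w = len(channel), len(channel[0])
        out.append([
            [
                sum(channel[i + di][j + dj] * kernel[di][dj]
                    for di in range(k) for dj in range(k))
                for j in range(w - k + 1)
            ]
            for i in range(h - k + 1)
        ])
    return out


def pointwise_conv(fmap, weights):
    """1 x 1 convolution: weights[m][c] maps input channel c to output channel m."""
    h, w = len(fmap[0]), len(fmap[0][0])
    return [
        [[sum(wc * fmap[c][i][j] for c, wc in enumerate(row)) for j in range(w)]
         for i in range(h)]
        for row in weights
    ]


def separable_conv(fmap, kernels, weights):
    """Depthwise stage followed by pointwise stage."""
    return pointwise_conv(depthwise_conv(fmap, kernels), weights)


if __name__ == "__main__":
    ones = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
    ramp = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    box = [[1, 1], [1, 1]]
    print(separable_conv([ones, ramp], [box, box], [[1, 1], [2, 0]]))
```

Splitting the convolution this way needs C * K * K + M * C weights for C input and M output channels instead of M * C * K * K for a standard convolution, which is why it suits designs with limited on-chip memory.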

Convolutional neural network hardware module deployment method

Inactive | CN108416438A | Efficient and rapid conversion deployment | Efficient deployment | Neural architectures | Physical realisation | Neural network hardware | Hardware modules

The invention discloses a convolutional neural network hardware module deployment method, and relates to the field of convolutional neural network implementation. The method comprises the steps that an upper layer compiler of a convolutional neural network is used to perform simulation comparison on the implementation form of the convolutional neural network according to a target hardware resource and a data volume of a convolutional neural network model; the requirements of the hardware resource and convolutional neural network speed are weighed to determine a deployment parameter of each hardware module of the convolutional neural network; the number of each hardware module is divided, and the connection mode among hardware modules is determined, so that the deployment of the convolutional neural network hardware modules is achieved.

Owner:JINAN INSPUR HIGH TECH TECH DEV CO LTD

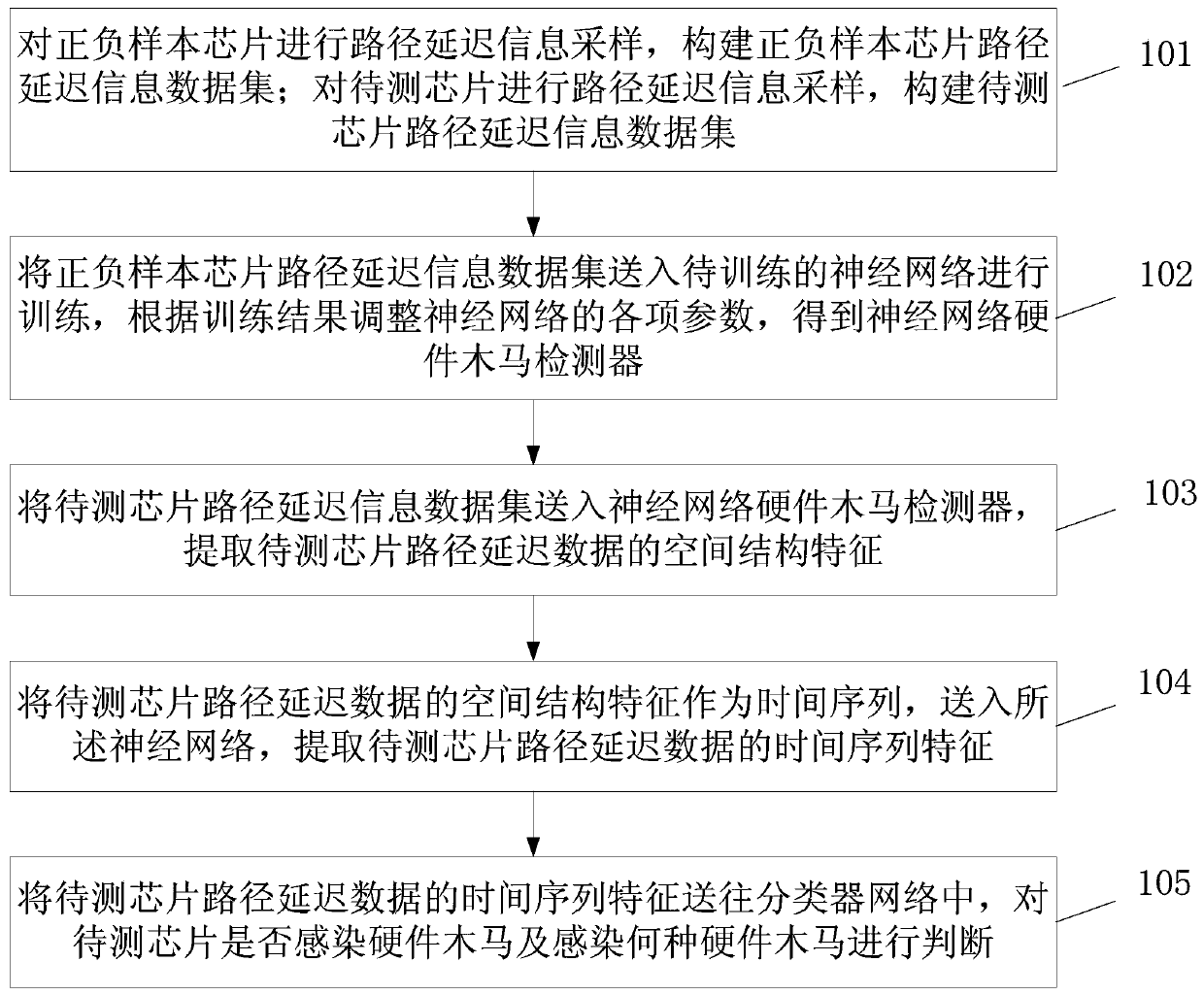

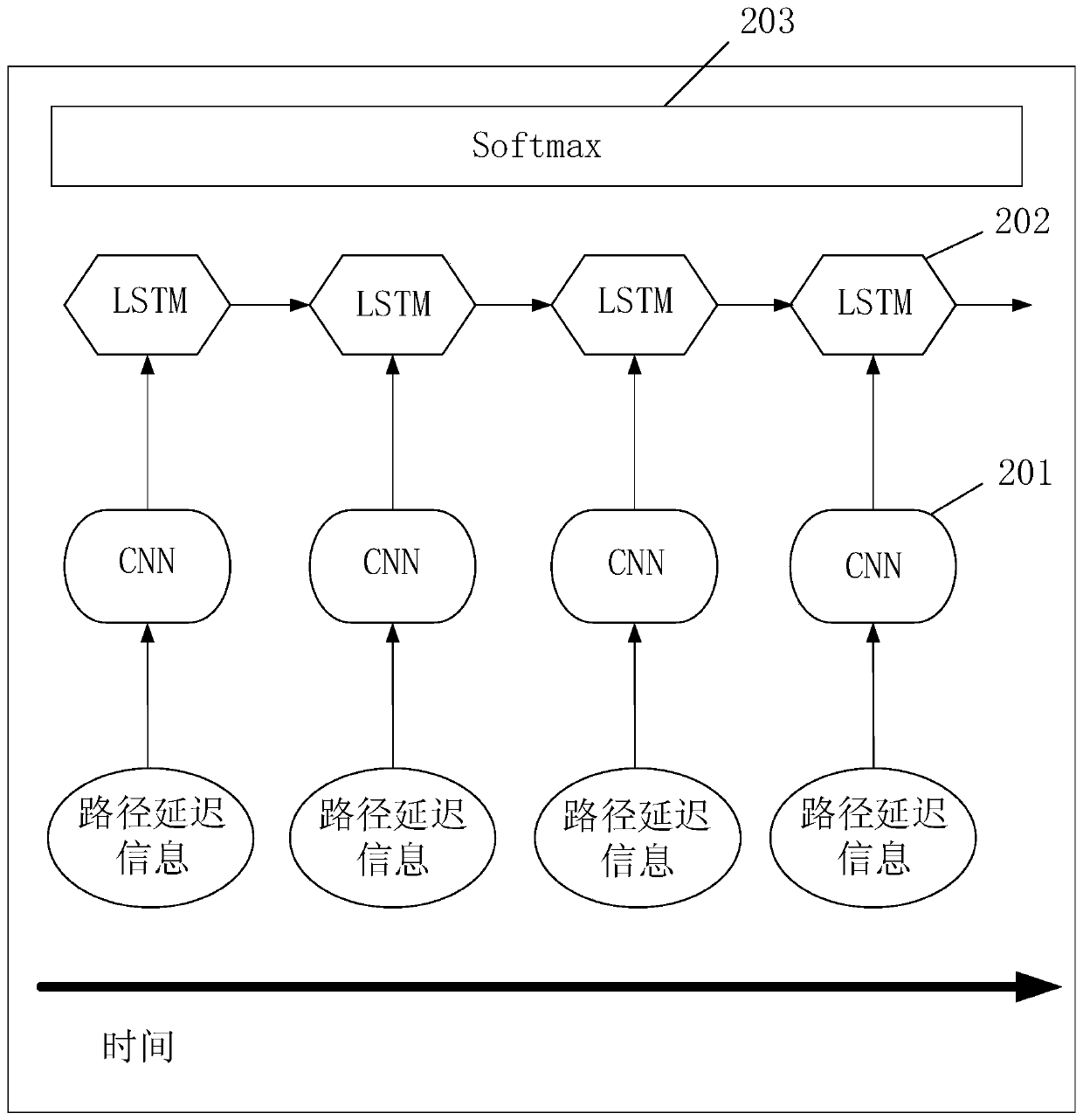

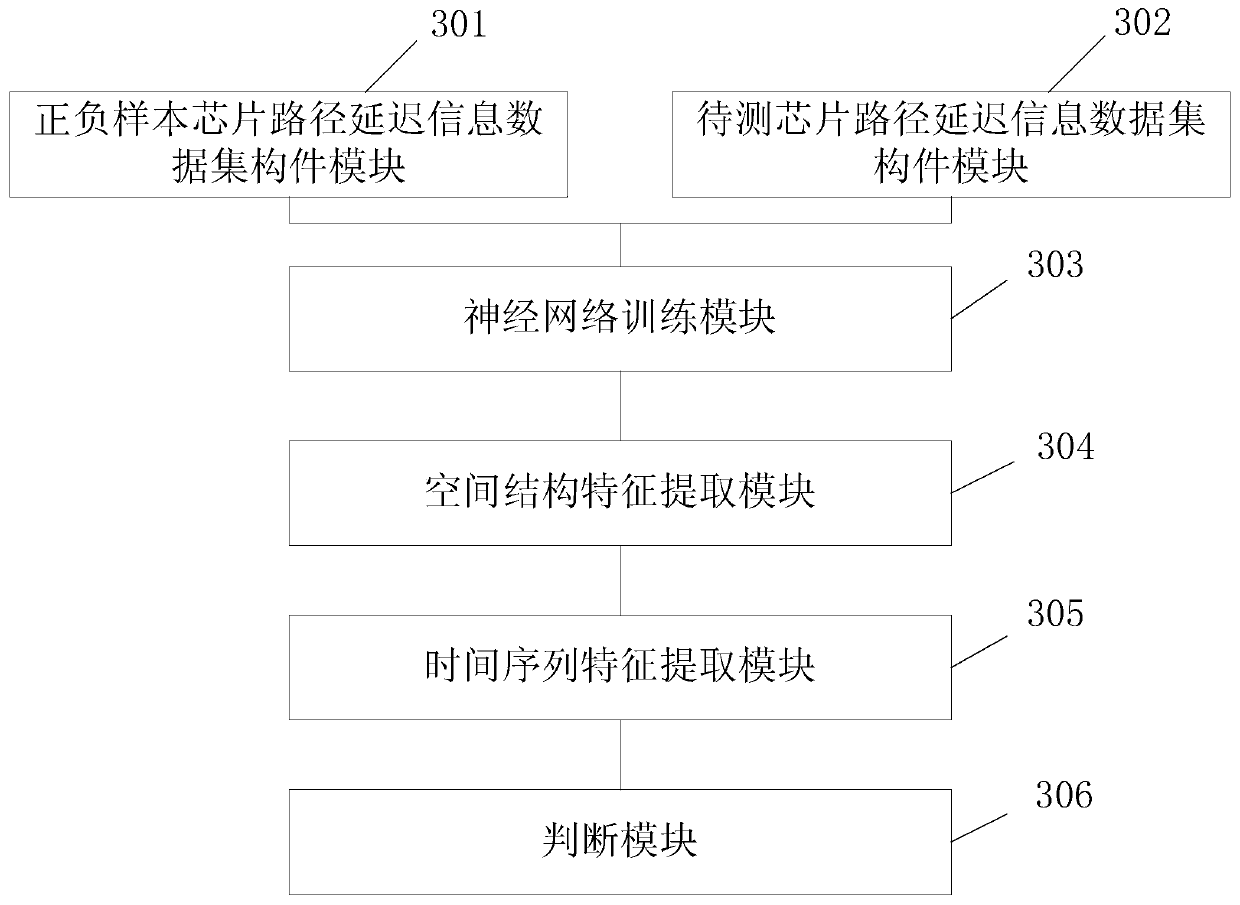

Hardware Trojan horse detection method and device

Active | CN110059504A | Improve detection efficiency | Improve detection accuracy | Internal/peripheral component protection | Nerve network | Data set

The invention provides a hardware Trojan horse detection method and device. The method comprises the following steps: sampling path delay information of positive and negative sample chips, and constructing a path delay information data set of the positive and negative sample chips; performing path delay information sampling on the chip to be tested, and constructing a path delay information data set of the chip to be tested; sending the positive and negative sample chip path delay information data set into a to-be-trained neural network for training to obtain a neural network hardware Trojan horse detector; sending the path delay information data set of the chip to be tested into the neural network hardware Trojan detector, and extracting spatial structure characteristics of the path delay data of the chip to be tested; taking the spatial structure characteristics of the path delay data of the chip to be tested as a time sequence, sending the time sequence into a neural network, and extracting the time sequence characteristics of the path delay data of the chip to be tested; and sending the time sequence characteristics of the path delay data of the chip to be tested to a classifier network, and judging whether the chip to be tested is infected with the hardware Trojan horse or not and which hardware Trojan horse is infected.

Owner:XIDIAN UNIV

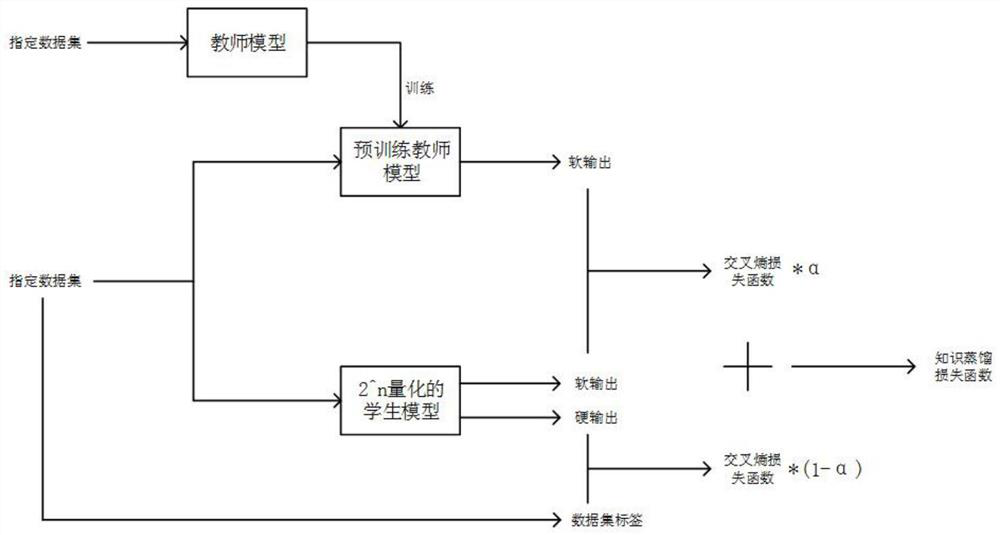

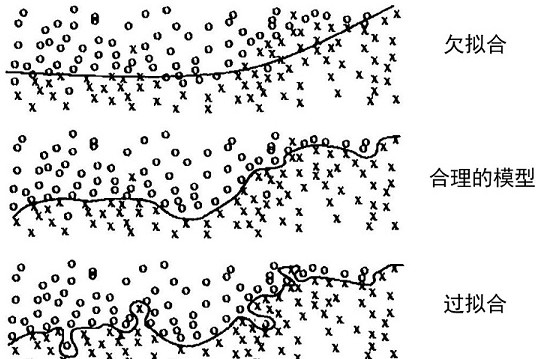

2-exponential power deep neural network quantification method based on knowledge distillation training

Pending | CN111985523A | Reduce precision loss | Improve computing efficiency | Character and pattern recognition | Neural learning methods | Neural network hardware | Engineering

The invention relates to the technical field of neural networks and discloses a 2-exponential power (power-of-2) deep neural network quantization method based on knowledge distillation training. The method uses a teacher model and a student model whose weights are quantized to powers of 2. The teacher model is a network model with more parameters and higher precision, and the student model is generally a network model with fewer parameters and lower precision than the teacher model. Quantizing the neural network weight values to powers of 2 reduces the error relative to the full-precision weight values, which effectively reduces the precision loss of the trained network relative to the unquantized network. Moreover, multiplication by a power-of-2 weight can be completed by a shift (displacement), so the method has clear calculation advantages when deployed on hardware equipment and can improve the calculation efficiency of neural network hardware. Training the neural network model with the knowledge distillation algorithm can effectively improve the accuracy of the quantized network.

Owner:HEFEI UNIV OF TECH
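
The claim in the abstract above that multiplication by a power-of-2 weight reduces to a shift can be shown with a short Python sketch. It is a minimal illustration, not the patented training method: it rounds each weight to the nearest power of 2 in the log domain and ignores knowledge distillation entirely. The exponent bounds `min_exp` and `max_exp` and both function names are hypothetical.

```python
import math


def quantize_pow2(w, min_exp=-7, max_exp=0):
    """Round a weight to the nearest signed power of two (in the log domain).

    Returns (sign, exponent); sign is 0 for a zero weight.
    min_exp and max_exp are illustrative bounds, not taken from the patent.
    """
    if w == 0:
        return 0, min_exp
    sign = 1 if w > 0 else -1
    exp = round(math.log2(abs(w)))
    exp = max(min_exp, min(max_exp, exp))
    return sign, exp


def shift_multiply(x, sign, exp):
    """Multiply integer activation x by sign * 2**exp using only shifts."""
    if sign == 0:
        return 0
    # A right shift floors the result, as an arithmetic shift would in hardware.
    shifted = x << exp if exp >= 0 else x >> -exp
    return sign * shifted


if __name__ == "__main__":
    for weight in (0.3, -0.5, 1.0, 0.001):
        sign, exp = quantize_pow2(weight)
        print(weight, (sign, exp), shift_multiply(64, sign, exp))
```

Because each quantized weight is stored as a sign and a small exponent, a multiplier can be replaced by a shifter, which is the hardware advantage the abstract refers to.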

Efficient data access control device for neural network hardware acceleration system

Active | US10936941B2 | Digital data processing details | Neural architectures | Data access control | Neural network hardware

The technical disclosure relates to artificial neural networks. In particular, it relates to how to implement efficient data access control in a neural network hardware acceleration system. Specifically, it proposes an overall design of a device that can handle data receiving, bit-width transformation and data storing. By employing the technical disclosure, a neural network hardware acceleration system can prevent the data access process from becoming the bottleneck in neural network computation.

Owner:XILINX INC

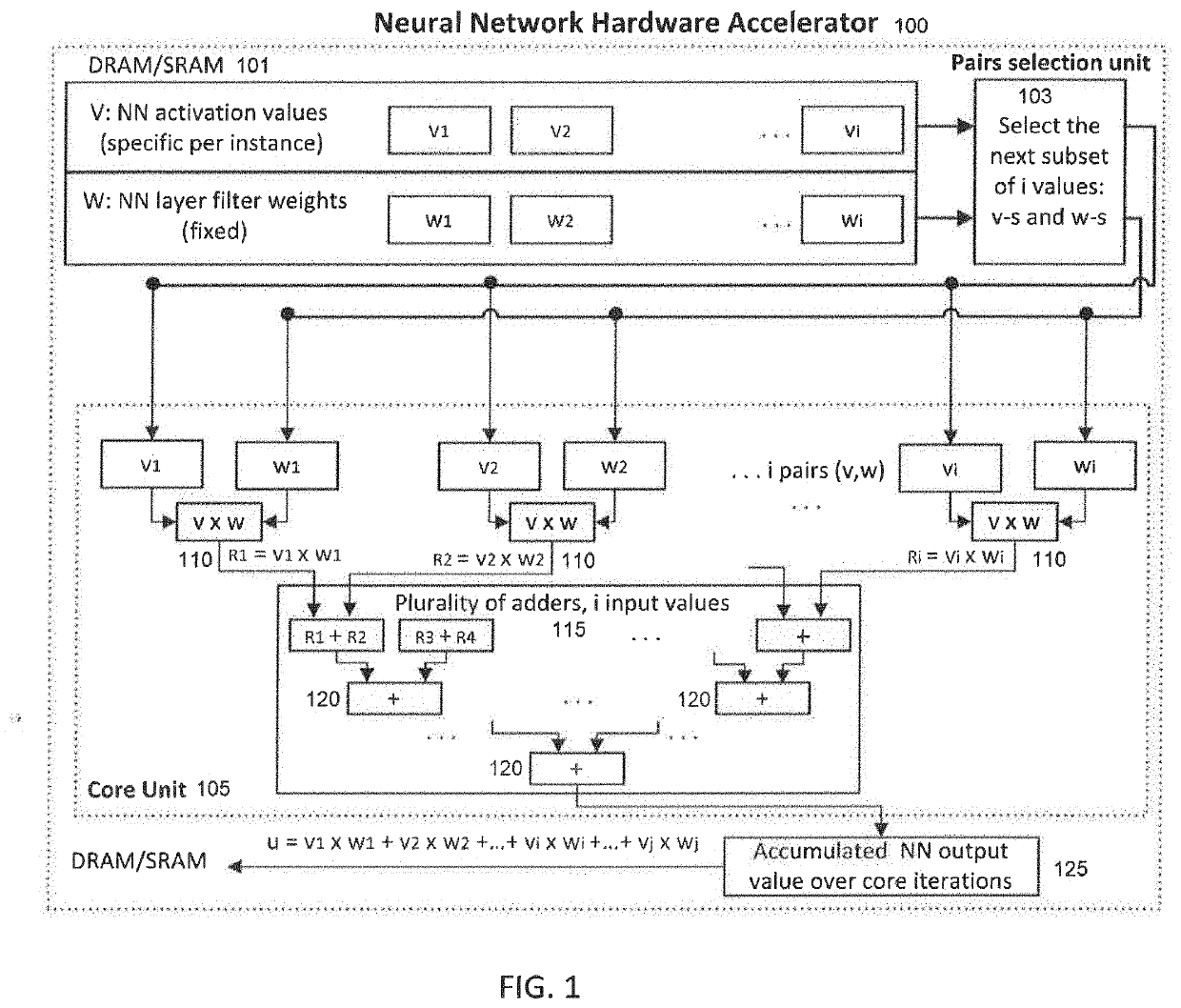

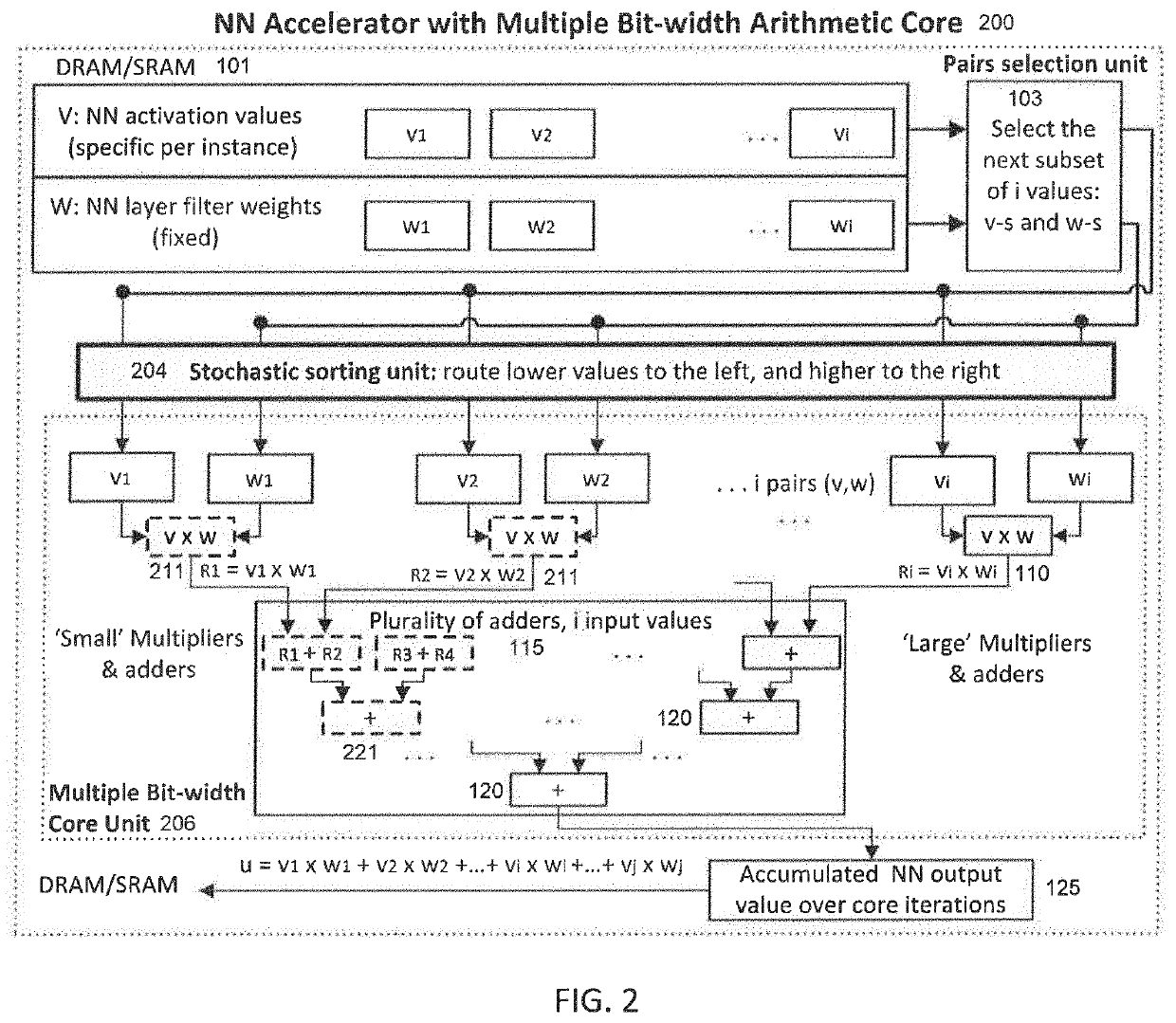

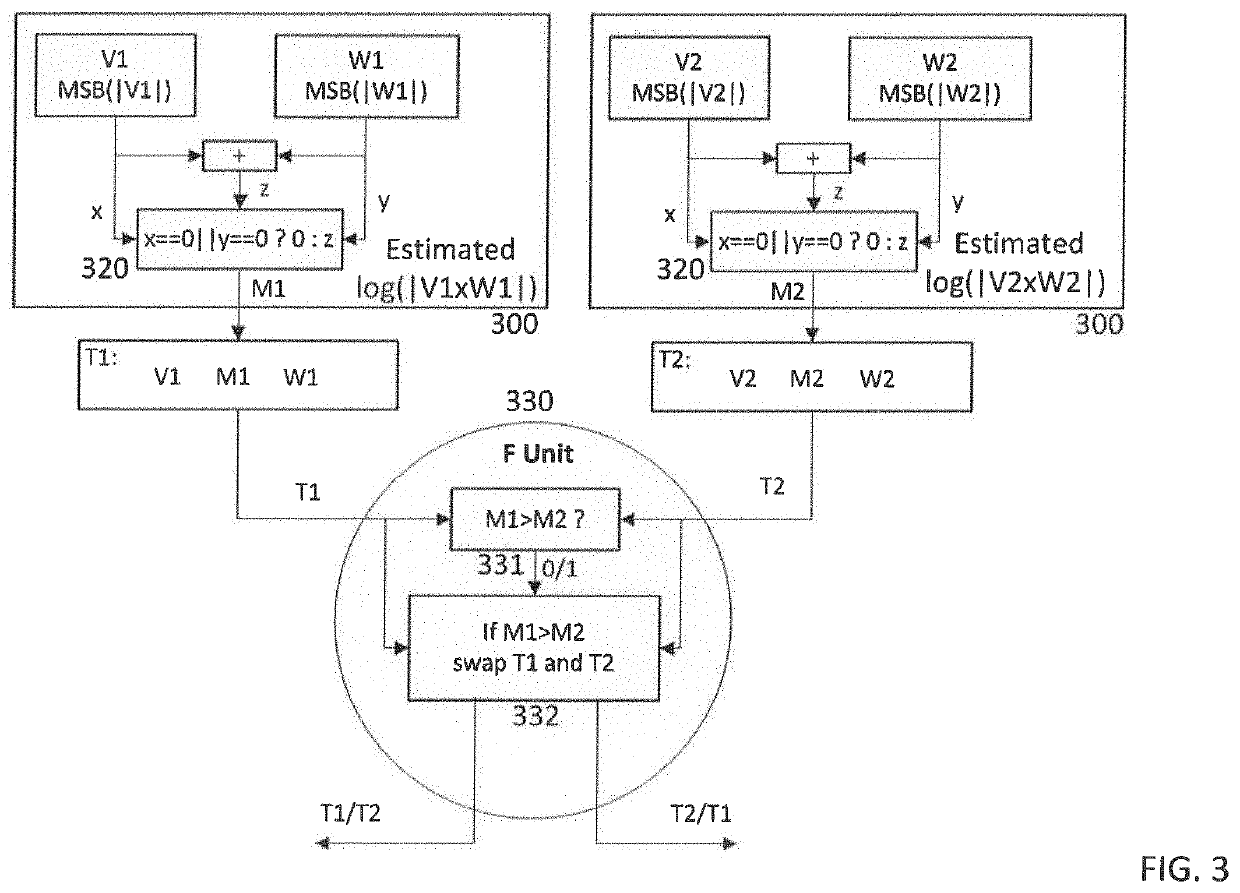

Neural network hardware acceleration with stochastic adaptive resource allocation

Active | US20190378001A1 | Accurate multiplication | Consuming less power | Digital data processing details | Program control | Binary multiplier | Algorithm

A digital circuit for accelerating computations of an artificial neural network model includes a pairs selection unit that selects different subsets of pairs of input vector values and corresponding weight vector values to be processed simultaneously at each time step; a sorting unit that simultaneously processes a vector of input-weight pairs wherein pair values whose estimated product is small are routed with a high probability to small multipliers, and pair values whose estimated product is greater are routed with a high probability to large multipliers that support larger input and output values; and a core unit that includes a plurality of multiplier units and a plurality of adder units that accumulate output results of the plurality of multiplier units into one or more output values that are stored back into the memory, where the plurality of multiplier units include the small multipliers and the large multipliers.

Owner:SAMSUNG ELECTRONICS CO LTD
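
The routing idea in the abstract above can be sketched in software as follows. This is only an illustration under stated assumptions: the product-size estimate (sum of operand bit lengths), the noise-perturbed sort used to make routing probabilistic, and all names are hypothetical, and the sketch does not model the reduced precision of the small multipliers.

```python
import random

def estimate_size(x, w):
    """Cheap estimate of the product magnitude: sum of the operand bit lengths."""
    return abs(x).bit_length() + abs(w).bit_length()

def route_pairs(pairs, n_small, rng, noise=1.0):
    """Split input-weight pairs between small and large multipliers.

    Each estimate is perturbed by uniform noise before sorting, so a pair with a
    small estimated product goes to a small multiplier with high probability
    rather than with certainty.
    """
    keyed = sorted(
        pairs,
        key=lambda p: estimate_size(p[0], p[1]) + rng.uniform(-noise, noise),
    )
    return keyed[:n_small], keyed[n_small:]

def accumulate(small, large):
    """Adder stage: sum the outputs of both groups of multipliers."""
    return sum(x * w for x, w in small) + sum(x * w for x, w in large)

pairs = [(1, 1), (100, 100), (2, 3), (50, 60)]
small, large = route_pairs(pairs, n_small=2, rng=random.Random(0))
total = accumulate(small, large)
```

With estimates this far apart, the noise does not change which pairs are routed to the small multipliers; it only matters for pairs whose estimates are close.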

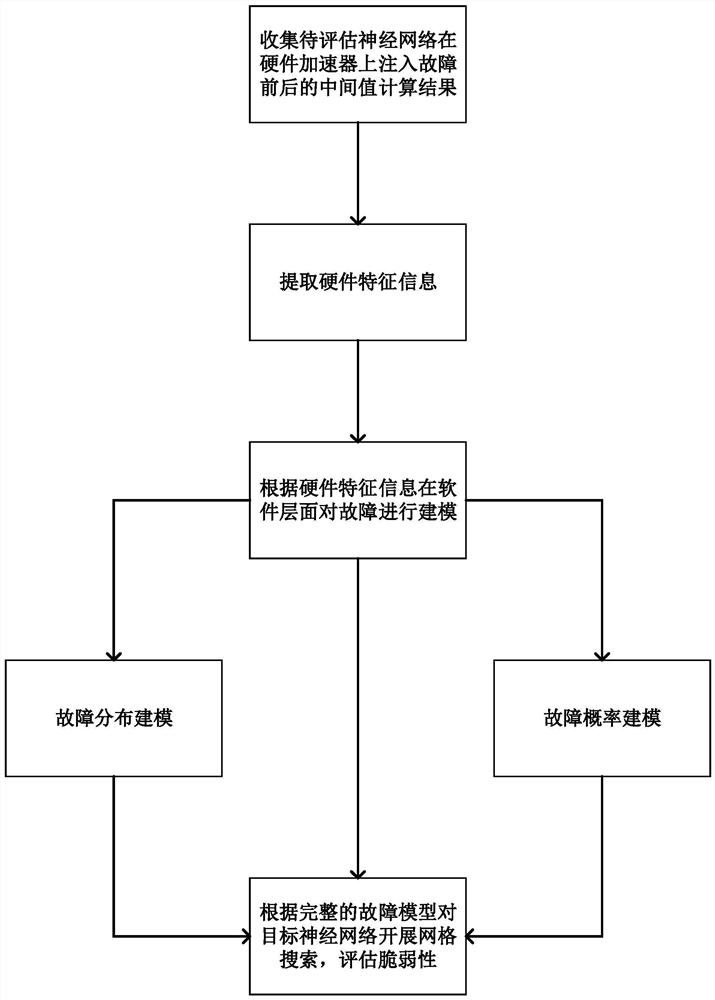

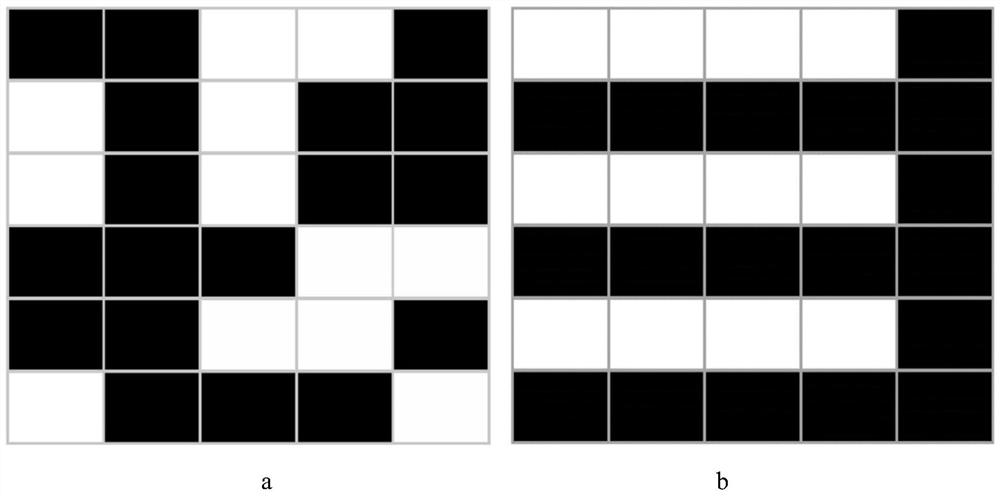

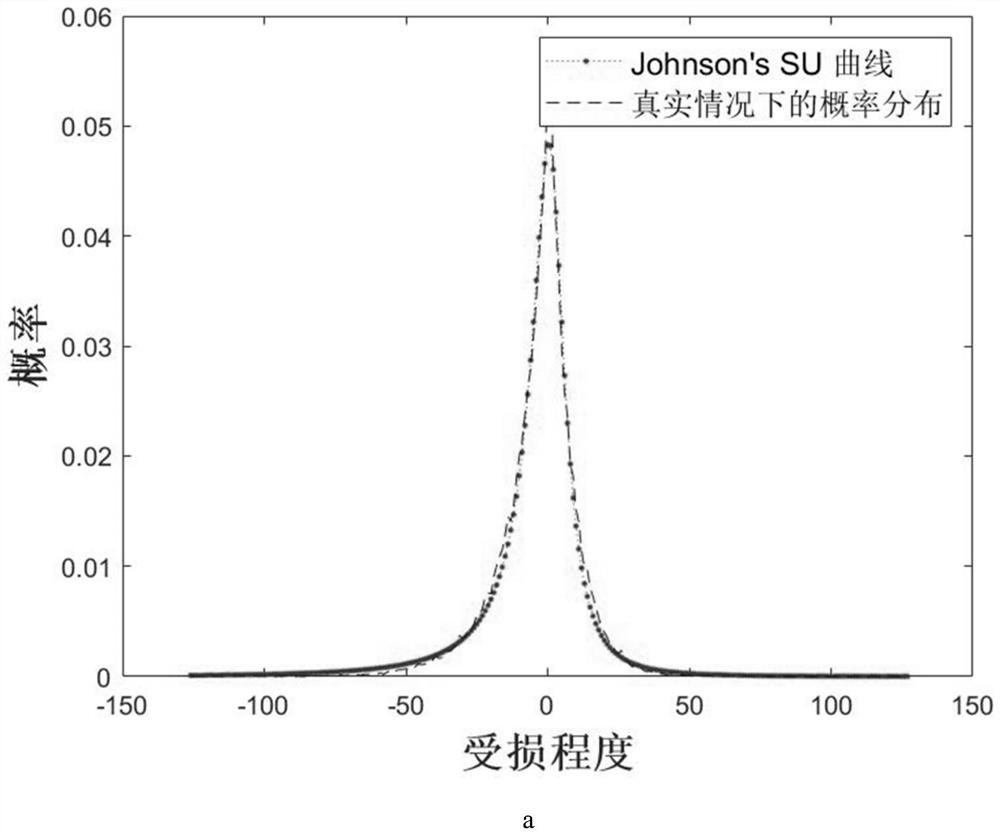

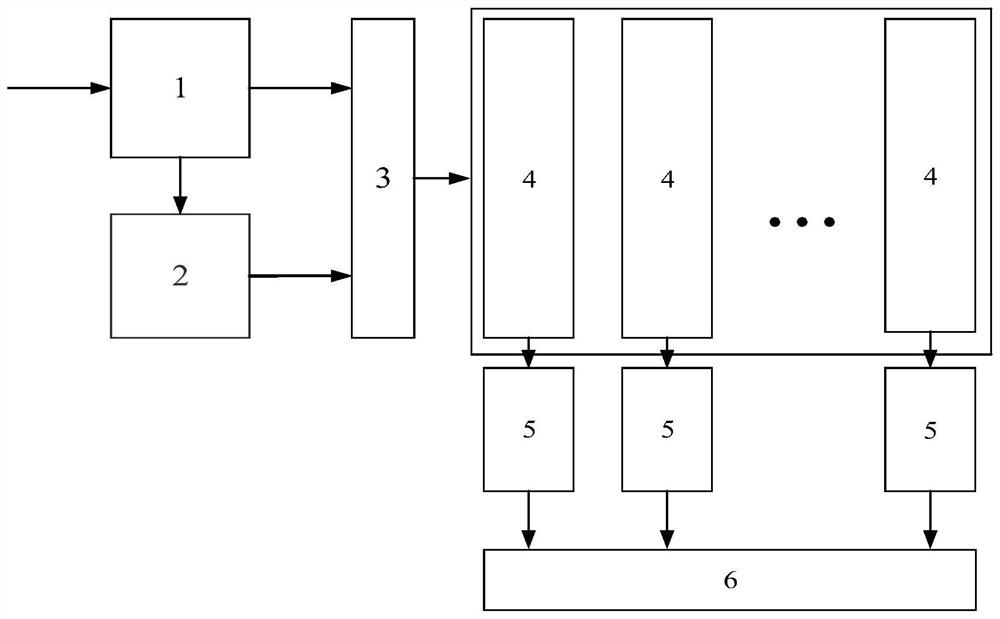

Neural network accelerator fault vulnerability assessment method based on hardware feature information

Pending | CN114547966A | Ensure safety | Fine-grained | Character and pattern recognition | Design optimisation/simulation | Computer hardware | Algorithm

The invention discloses a neural network accelerator fault vulnerability assessment method based on hardware feature information, which comprises the following steps: extracting hardware information features of a neural network operating on a hardware accelerator, the information features comprising features of the neural network in normal operation and information features of the neural network in fault attack; and modeling fault attack by using the extracted information features, predicting the influence of the fault on an actual neural network hardware accelerator through a fault distribution simulation and fault probability simulation method, and judging the vulnerability of the neural network when the neural network is faced with the fault attack through an interlayer search method. According to the method, an existing hardware fault vulnerability assessment framework is improved, and the accuracy of fault simulation is improved through a software and hardware integrated verification method while the fine granularity is optimized. The improved method has a good effect in evaluating common hardware fault attacks, and has a certain application value in the field of hardware fault evaluation.

Owner:ZHEJIANG UNIV
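
Fault-probability simulation of the kind mentioned in the abstract above is often illustrated with random bit flips in stored weights. The sketch below is one such illustration, not the patented method: the int8 weight format, the independent per-bit fault probability, and the deviation metric are all assumptions.

```python
import random

def flip_bit_int8(value, bit):
    """Flip one bit of a signed 8-bit value and return the signed result."""
    raw = (value & 0xFF) ^ (1 << bit)
    return raw - 256 if raw >= 128 else raw

def inject_faults(weights, fault_prob, rng):
    """Flip each bit of each int8 weight independently with probability fault_prob."""
    faulty = []
    for w in weights:
        for bit in range(8):
            if rng.random() < fault_prob:
                w = flip_bit_int8(w, bit)
        faulty.append(w)
    return faulty

def mean_output_deviation(weights, inputs, fault_prob, trials, rng):
    """Average absolute change of a dot product under random bit-flip faults."""
    clean = sum(w * x for w, x in zip(weights, inputs))
    total = 0
    for _ in range(trials):
        faulty = inject_faults(weights, fault_prob, rng)
        total += abs(sum(w * x for w, x in zip(faulty, inputs)) - clean)
    return total / trials

deviation = mean_output_deviation([5, -3, 7], [1, 2, 3], 0.01, 1000, random.Random(0))
```

Running the same measurement layer by layer would give a rough ranking of which layers are most affected by faults, in the spirit of the interlayer search the abstract describes.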

Full-digital pulse neural network hardware system and method based on STDP rule

Active | CN113408714A | Increase flexibility | Improve reusability | Neural architectures | Energy efficient computing | Computer hardware | Synapse

The invention discloses a fully digital spiking neural network hardware system and method based on the STDP rule, which combine the STDP rule from neurobiology with the parallel data processing of digital circuits and can efficiently complete training and recognition tasks on image data sets in the field of image processing. The spiking neural network system comprises an input layer neuron module, a plasticity learning module, a data line control module, a synapse array module, an output layer neuron module and an experiment report module. The output end of the output layer neuron module is connected with the input end of the data line control module and the input end of the plasticity learning module, the output end of the plasticity learning module is connected with the input end of the data line control module, and the output end of the data line control module is connected with the input end of the synapse array module. The output end of the synapse array module is connected with the input end of the output layer neuron module, and the output end of the output layer neuron module is connected with the input end of the experiment report module.

Owner:HANGZHOU DIANZI UNIV
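
For readers unfamiliar with STDP (spike-timing-dependent plasticity), the sketch below shows a simplified pair-based update with integer weights, of the kind a digital plasticity module might apply. The rectangular timing window, the fixed step and the 8-bit weight range are assumptions for this example and are not taken from the patent.

```python
def stdp_update(weight, t_pre, t_post, window=16, step=1, w_min=0, w_max=255):
    """Simplified pair-based STDP with a rectangular timing window.

    If the presynaptic spike precedes the postsynaptic spike within the window,
    the synapse is strengthened; if it follows, the synapse is weakened.
    Spike times are integer time steps; the weight saturates at its bounds.
    """
    dt = t_post - t_pre
    if 0 < dt <= window:
        weight += step      # pre before post: potentiation
    elif -window <= dt < 0:
        weight -= step      # post before pre: depression
    return max(w_min, min(w_max, weight))

w = 100
w = stdp_update(w, t_pre=5, t_post=8)   # causal pairing strengthens the synapse
w = stdp_update(w, t_pre=9, t_post=6)   # anti-causal pairing weakens it again
```

Integer steps and saturation keep the update to an adder and two comparators, which is one reason such simplified rules are common in digital designs.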

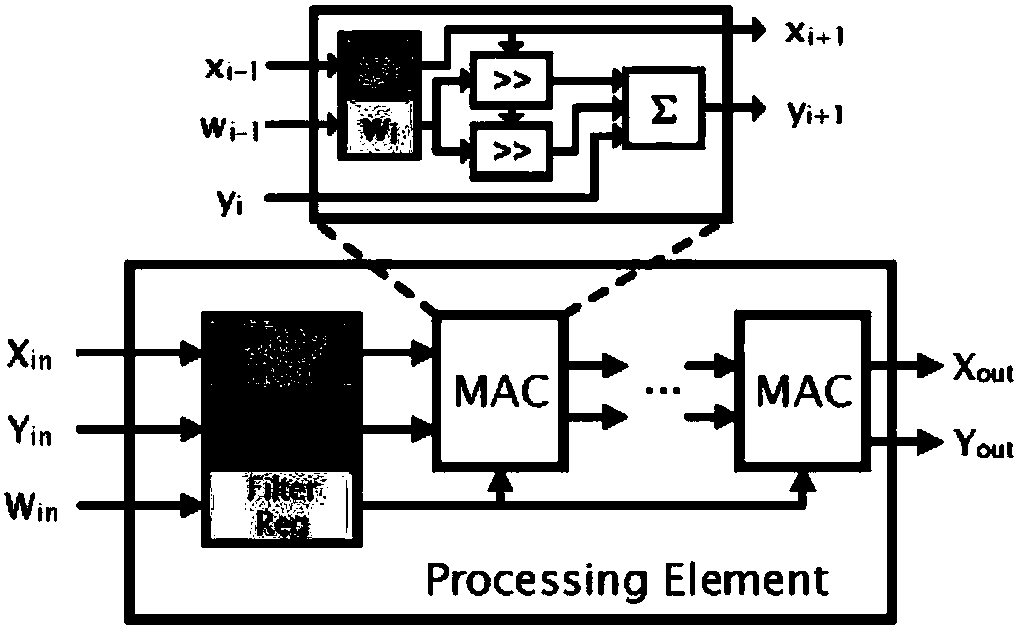

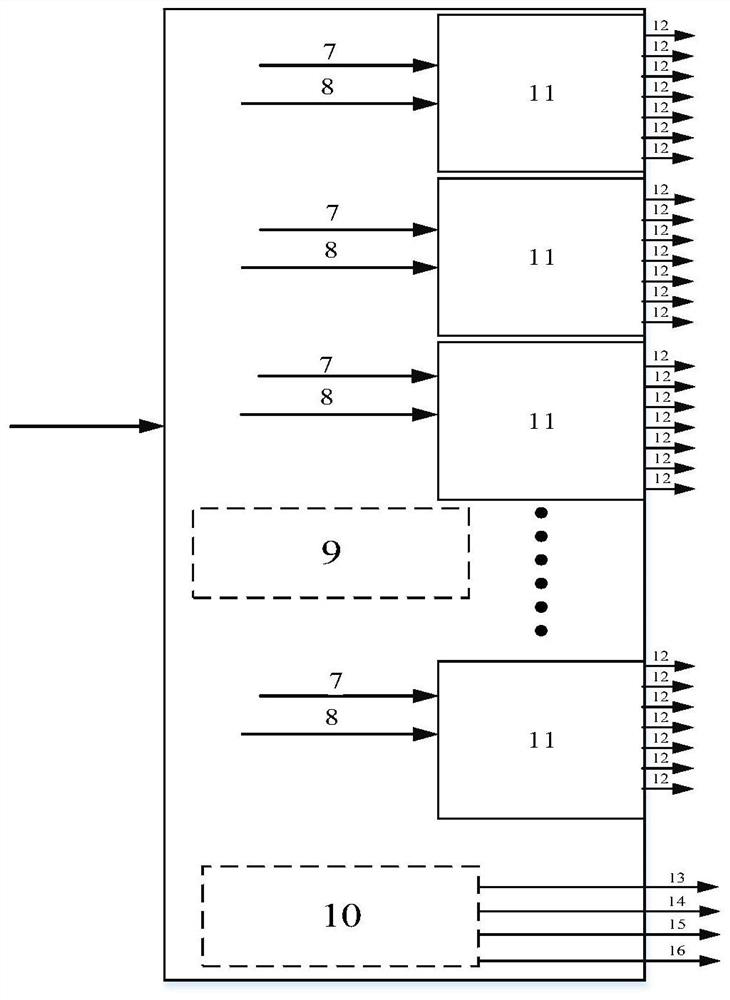

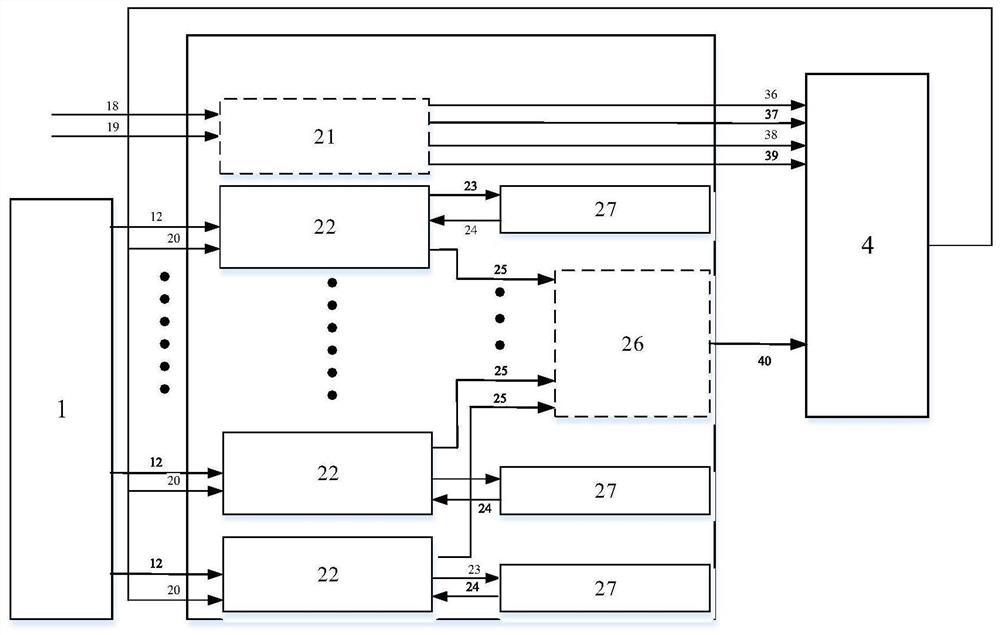

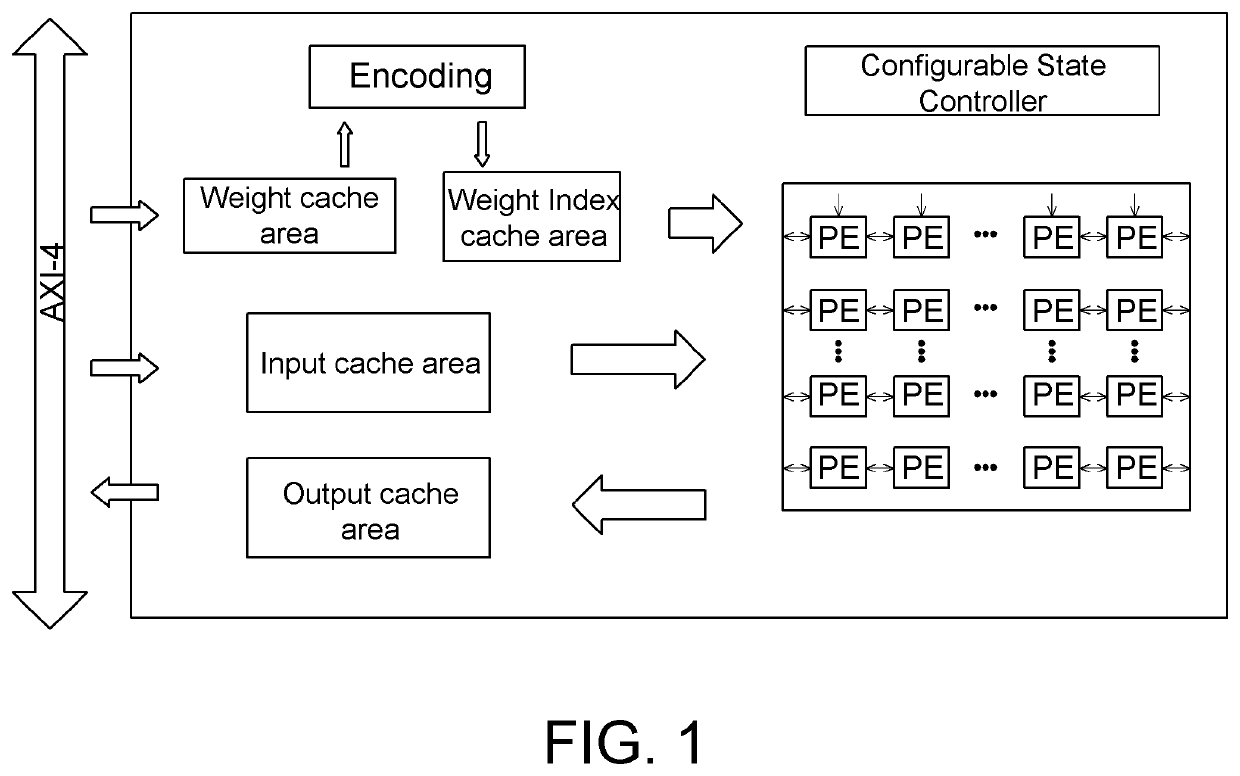

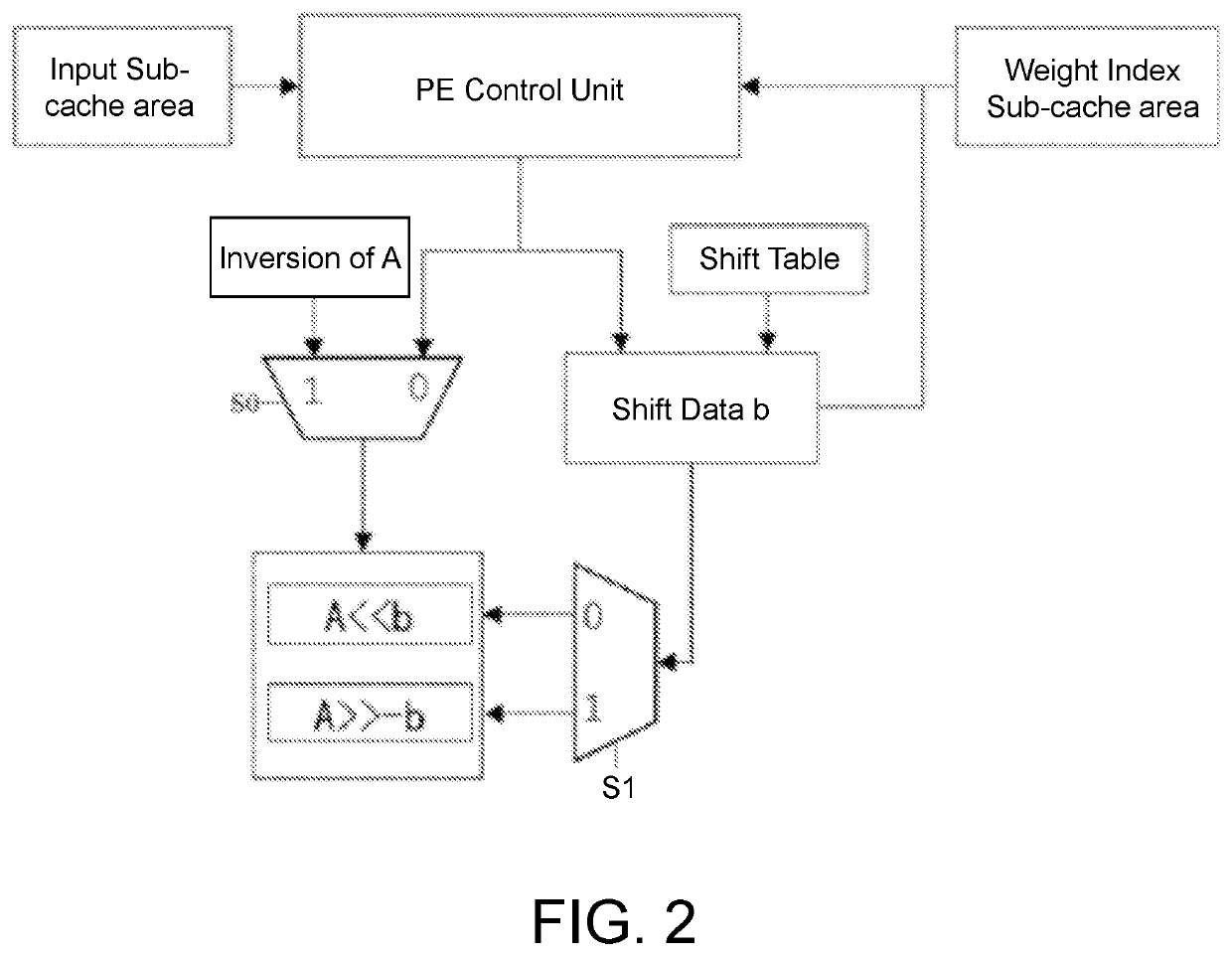

Deep neural network hardware accelerator based on power exponential quantization

Pending | US20210357736A1 | Lower requirement | Improve computing efficiency | Resource allocation | Digital data processing details | Parallel computing | Neural network hardware

A deep neural network hardware accelerator comprises: an AXI-4 bus interface, an input cache area, an output cache area, a weight cache area, a weight index cache area, an encoding module, a configurable state controller module, and a PE array. The input cache area and the output cache area are designed as a line cache structure. The encoding module encodes the weights according to an ordered quantization set, which stores the possible absolute values of all weights after quantization. During computation, each PE unit reads data from the input cache area and the weight index cache area, performs a shift calculation, and sends the result to the output cache area. The accelerator uses shift operations to replace floating point multiplication operations, reducing the requirements for computing resources, storage resources, and communication bandwidth, and increasing the calculation efficiency of the accelerator.

Owner:SOUTHEAST UNIV
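
The encode-then-shift flow described in the abstract above can be sketched as follows. The contents of the quantization set, the shift table and the function names are hypothetical; the sketch assumes non-negative integer activations and picks the nearest set entry in the linear domain.

```python
# Hypothetical ordered quantization set: possible absolute weight values,
# all powers of two, with the right-shift amount that realizes each one.
QUANT_SET = [0.125, 0.25, 0.5, 1.0]
SHIFT_TABLE = [3, 2, 1, 0]

def encode_weight(w):
    """Encode a weight as (is_negative, index of the nearest QUANT_SET entry)."""
    idx = min(range(len(QUANT_SET)), key=lambda i: abs(QUANT_SET[i] - abs(w)))
    return (w < 0), idx

def pe_mac(inputs, encoded_weights):
    """Processing-element sketch: multiply-accumulate using shifts only."""
    acc = 0
    for x, (negative, idx) in zip(inputs, encoded_weights):
        term = x >> SHIFT_TABLE[idx]   # x * QUANT_SET[idx] for non-negative x
        acc += -term if negative else term
    return acc

codes = [encode_weight(w) for w in (0.3, -0.9)]
result = pe_mac([64, 32], codes)   # 64 * 0.25 - 32 * 1.0
```

Storing only a sign and a small index per weight, rather than the weight itself, is what lets the weight index cache stay narrow.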

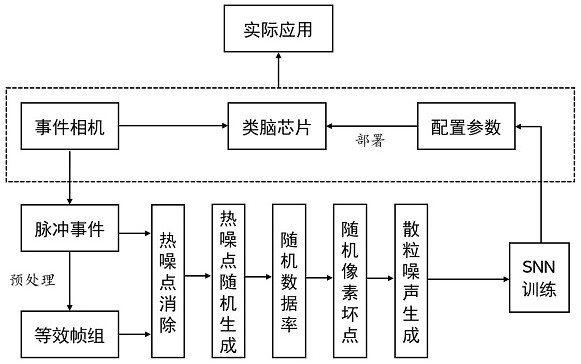

Pulse neural network training method, storage medium, chip and electronic product

Active | CN114418073A | Avoid mismatch | Solve application adaptation problems | Image enhancement | Neural architectures | Algorithm | Spiking neural network

The invention relates to a spiking neural network training method, a storage medium, a chip and an electronic product. To overcome the prior-art problem that algorithms and hardware are difficult to match because of device mismatch, and to let a trained network adapt well to the hardware characteristics of different sensors, various event-based and rate-based augmentations are applied to the training data, including random thermal noise generation, shot noise simulation, adaptive data rate adjustment and random firmware necrosis. Training is then carried out on the augmented data to obtain the configuration parameters that give the spiking neural network its best prediction performance. The method efficiently and uniformly solves the application adaptation problem that arises when different sensors or different environments are connected to spiking neural network hardware, so that chip performance is more stable and effective and inference results are more consistent. The method is suitable for the field of brain-inspired chips, in particular spiking neural network training.

Owner:SHENZHEN SYNSENSE TECH CO LTD
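
A minimal sketch of event-stream augmentation in this spirit is shown below. Events are assumed to be `(t, x, y, polarity)` tuples; the uniform spurious events stand in for sensor noise, random thinning stands in for data rate adjustment, and silencing random pixels is only one possible reading of the last augmentation named in the abstract. None of these functions comes from the patent.

```python
import random

def add_noise_events(events, n_noise, width, height, t_max, rng):
    """Add n_noise spurious events at uniformly random times and pixels."""
    noise = [
        (rng.uniform(0, t_max), rng.randrange(width), rng.randrange(height), rng.choice((0, 1)))
        for _ in range(n_noise)
    ]
    return sorted(events + noise)   # keep the stream ordered by timestamp

def thin_events(events, keep_prob, rng):
    """Lower the event rate by keeping each event with probability keep_prob."""
    return [e for e in events if rng.random() < keep_prob]

def silence_pixels(events, dead_fraction, width, height, rng):
    """Drop all events from a random subset of pixels (assumed dead)."""
    pixels = [(x, y) for x in range(width) for y in range(height)]
    dead = set(rng.sample(pixels, int(dead_fraction * len(pixels))))
    return [e for e in events if (e[1], e[2]) not in dead]

rng = random.Random(0)
events = [(0.1, 0, 0, 1), (0.2, 1, 1, 0), (0.3, 2, 2, 1)]
augmented = add_noise_events(events, 5, width=4, height=4, t_max=1.0, rng=rng)
augmented = thin_events(augmented, 0.9, rng)
augmented = silence_pixels(augmented, 0.1, 4, 4, rng)
```

Applying a fresh random draw of these transforms to every training sample exposes the network to a range of sensor conditions rather than one fixed device.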

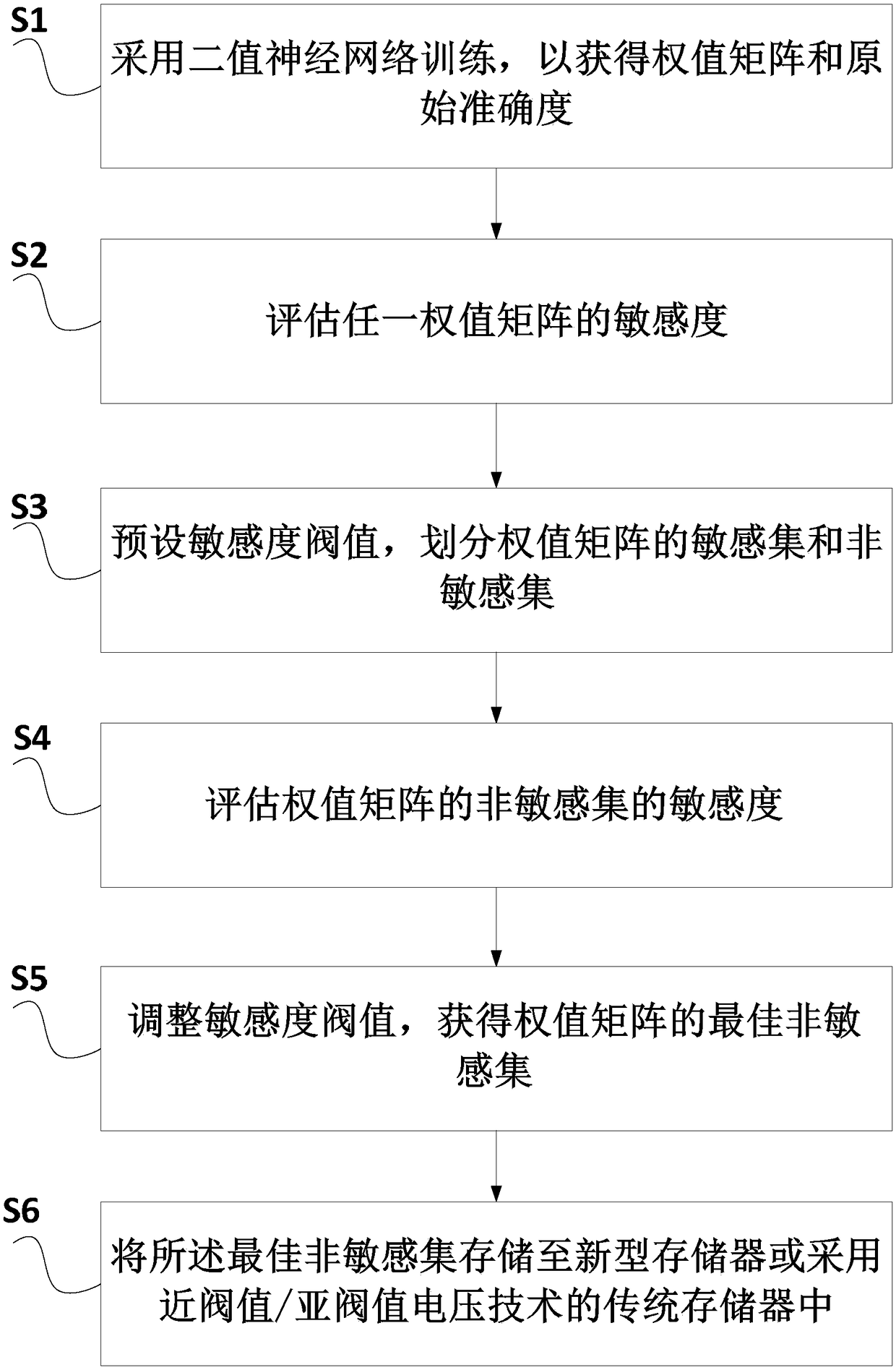

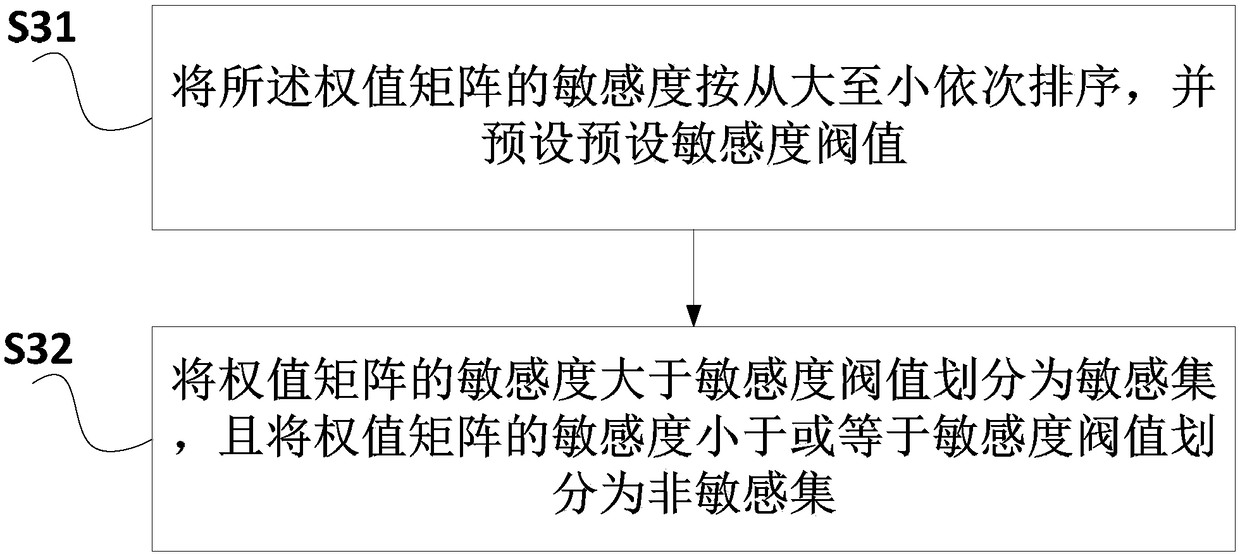

Binary neural network compression method based on weight sensitivity

Active | CN109212960A | Guaranteed recognition accuracy | Good non sensitive set | Adaptive control | Sub threshold | Neural network hardware

The invention discloses a binary neural network compression method based on weight sensitivity. The method comprises the following steps: training a binary neural network to obtain the weight matrices and the original accuracy; assessing the sensitivity of each weight matrix; presetting a sensitivity threshold and dividing the weight matrices into a sensitive set and a non-sensitive set; assessing the sensitivity of the non-sensitive set; adjusting the sensitivity threshold to obtain the best non-sensitive set, whose sensitivity equals a preset maximum accuracy loss; and storing the best non-sensitive set in a novel memory or in a conventional memory that uses near-threshold / sub-threshold voltage technology. The method has the advantages of low power consumption, high recognition rate, good universality and low cost, and has broad market prospects in the field of hardware compression technologies.

Owner:周军
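
The threshold-adjustment step in the abstract above can be sketched as a simple sweep. This is an illustration only: the per-matrix sensitivity scores, the `set_loss` callback and the stopping rule (largest set whose loss does not exceed the limit, assuming loss grows as the set grows) are assumptions, whereas the abstract speaks of a set whose sensitivity equals the preset maximum.

```python
def best_non_sensitive_set(sensitivity, set_loss, max_loss):
    """Raise the sensitivity threshold step by step and keep the largest
    non-sensitive set whose measured accuracy loss stays within max_loss.

    sensitivity: dict mapping weight-matrix name to its sensitivity score
    set_loss:    hypothetical callback returning the accuracy loss observed
                 when the given matrices are stored in unreliable memory
    """
    best = []
    for threshold in sorted(set(sensitivity.values())):
        candidate = sorted(n for n, s in sensitivity.items() if s <= threshold)
        if set_loss(candidate) > max_loss:
            break               # assumes loss only grows as the set grows
        best = candidate
    return best

# Toy example in which the loss of a set is the sum of its members' scores.
scores = {"fc1": 0.1, "fc2": 0.5, "conv1": 2.0}
chosen = best_non_sensitive_set(scores, lambda names: sum(scores[n] for n in names), 1.0)
```

In practice `set_loss` would rerun inference with errors injected into the candidate matrices, which is why the sweep stops as soon as the limit is exceeded.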


© 2025 PatSnap. All rights reserved.