FPGA-based Tiny-yolo convolutional neural network hardware acceleration method and system

The invention relates to convolutional neural networks and hardware acceleration technology, and is applied in the field of convolutional neural network hardware acceleration. It addresses problems such as large data transmission overhead, reduced network processing speed, and floating-point data occupying the storage resources of the hardware platform.

Embodiment Construction

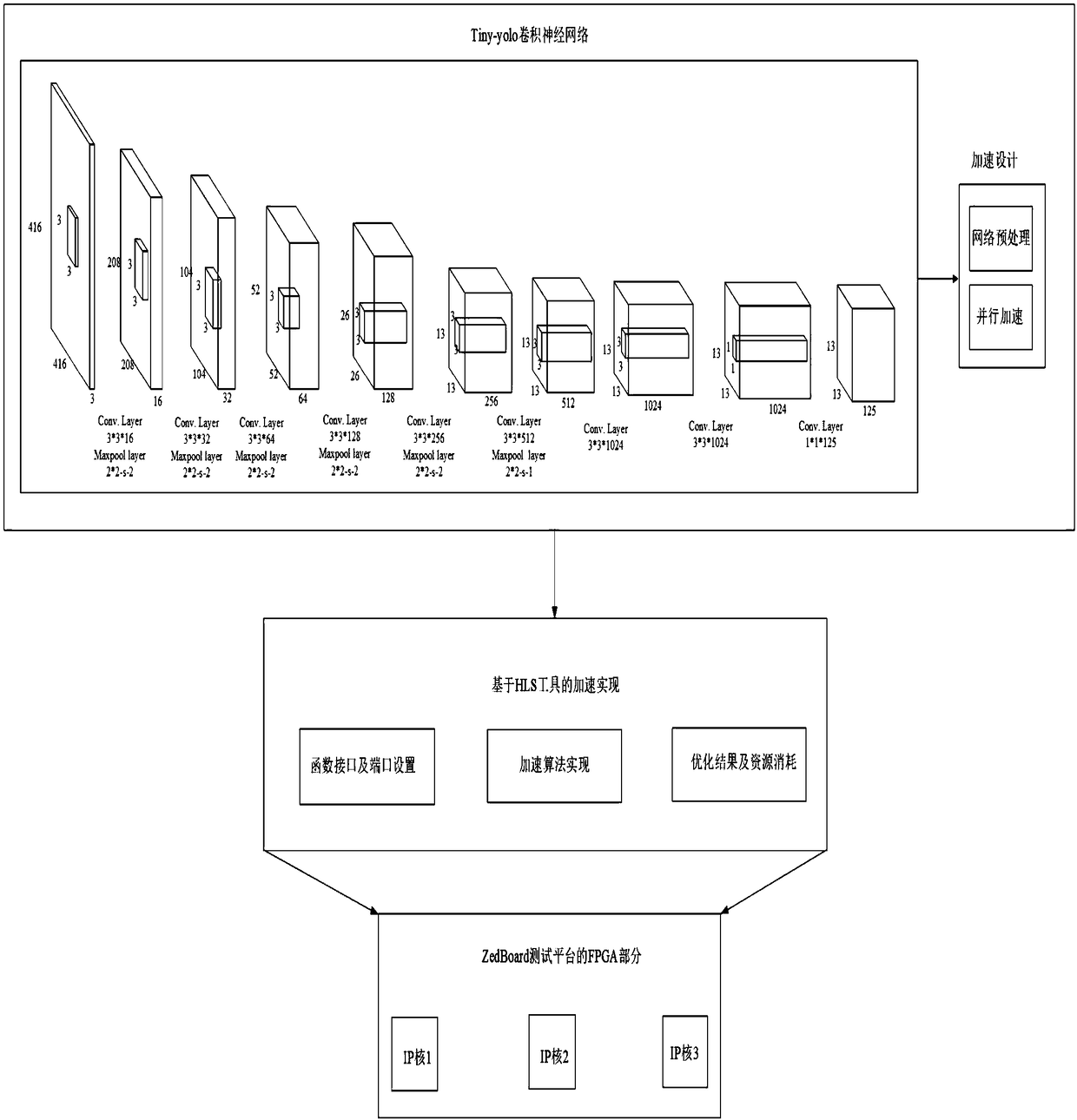

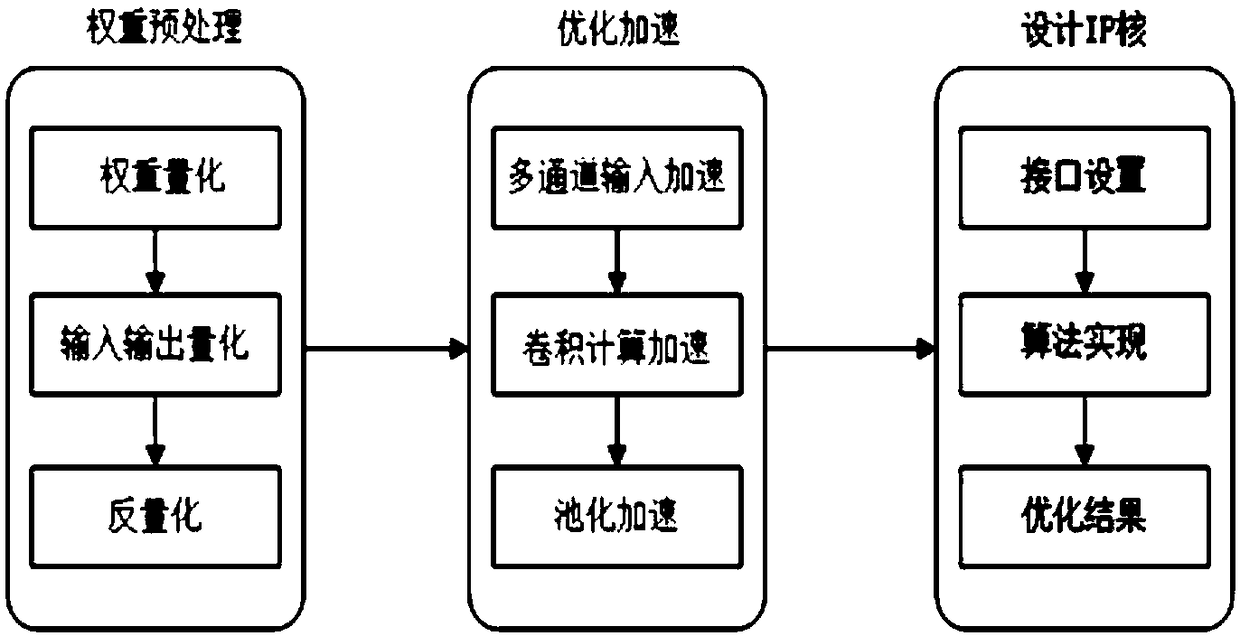

[0059] The present invention analyzes the parallelism of the Tiny-yolo network and combines it with the parallel processing capability of the hardware (ZedBoard) to accelerate the Tiny-yolo convolutional neural network in three respects: input mode, convolution calculation, and pooling embedding. The HLS tool is used to design the corresponding IP core on the FPGA to implement the acceleration algorithm, and the acceleration of the Tiny-yolo convolutional neural network is tested on the ZedBoard, which has an FPGA+ARM dual architecture.
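The "pooling embedding" aspect can be illustrated with a small software reference model. The sketch below is only one possible reading of the term, not the patented IP core: it assumes that max pooling is folded into the convolution loop, so that only the pooled value of each window is written out and the full-resolution convolution map is never stored. The function name `conv_with_embedded_maxpool`, the single-channel input, and the 2x2 pooling window with stride 2 are assumptions made for illustration.

```python
def conv_with_embedded_maxpool(image, kernel, pool=2):
    """Valid 2-D convolution followed by max pooling, fused into one pass.

    image:  H x W feature map (list of lists), single channel (assumption)
    kernel: K x K weights (list of lists)
    pool:   pooling window size and stride (assumed equal)
    """
    k = len(kernel)
    conv_h = len(image) - k + 1   # height of the convolution output
    conv_w = len(image[0]) - k + 1  # width of the convolution output

    def conv_at(y, x):
        # One convolution output value at position (y, x).
        return sum(image[y + i][x + j] * kernel[i][j]
                   for i in range(k) for j in range(k))

    pooled = []
    for py in range(0, conv_h - pool + 1, pool):
        row = []
        for px in range(0, conv_w - pool + 1, pool):
            # Compute the convolution values of one pooling window and keep
            # only their maximum; the intermediate values are discarded.
            row.append(max(conv_at(py + dy, px + dx)
                           for dy in range(pool) for dx in range(pool)))
        pooled.append(row)
    return pooled
```

In a hardware design the same idea would avoid a round trip of the unpooled feature map through memory, which is consistent with the data-transmission overhead the invention aims to reduce; how the actual IP core achieves this is not shown here.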

[0060] Referring to Figure 1 to Figure 6, an FPGA-based Tiny-yolo convolutional neural network hardware acceleration method comprises the following steps:

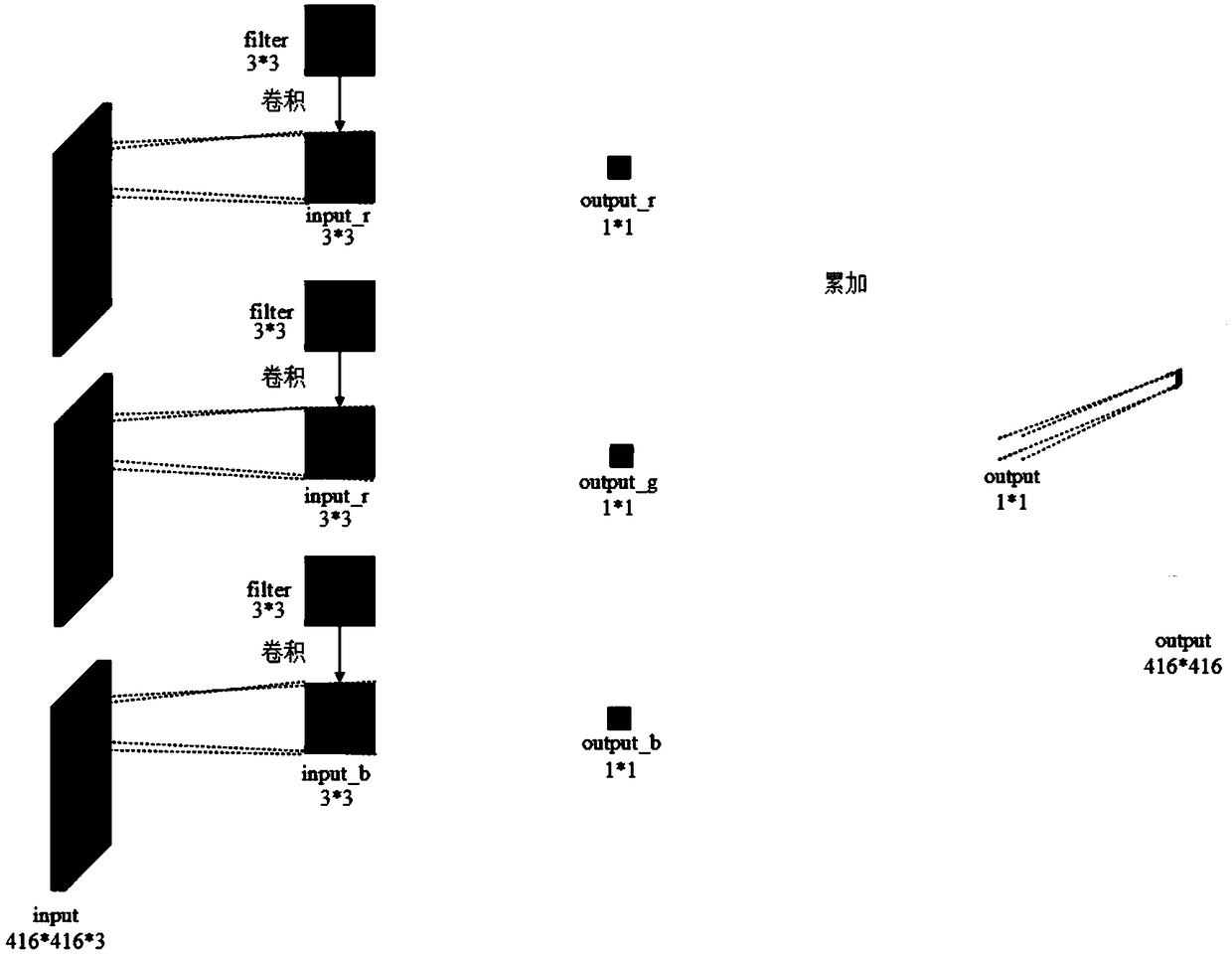

[0061] Based on the ZedBoard hardware resources, the convolutional layers conv1-8 of the Tiny-yolo convolutional neural network in this embodiment adopt a dual-channel input mode. On other hardware platforms, because the available resources differ, the number of channels input at the same time can b...
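The dual-channel input mode can be sketched as a software reference model. This is a minimal illustration under stated assumptions, not the HLS implementation: the function name `conv2d_dual_channel`, the list-of-lists data layout, and the single output channel are hypothetical, and the sequential inner loop over a channel pair only stands in for what the hardware would do in parallel.

```python
def conv2d_dual_channel(inputs, weights):
    """Valid 2-D convolution for one output channel, two input channels per step.

    inputs:  list of C feature maps, each H x W (list of lists)
    weights: list of C kernels, each K x K (list of lists)
    """
    channels = len(inputs)
    height, width = len(inputs[0]), len(inputs[0][0])
    k = len(weights[0])
    out_h, out_w = height - k + 1, width - k + 1
    out = [[0.0] * out_w for _ in range(out_h)]

    # Step over the input channels two at a time. In hardware, the two
    # channels of a pair would be fetched and multiplied in parallel.
    for c in range(0, channels, 2):
        pair = range(c, min(c + 2, channels))  # also handles an odd channel count
        for y in range(out_h):
            for x in range(out_w):
                acc = 0.0
                for ch in pair:
                    for i in range(k):
                        for j in range(k):
                            acc += inputs[ch][y + i][x + j] * weights[ch][i][j]
                # Add the pair's partial sum to the running output value.
                out[y][x] += acc
    return out
```

Changing the step of the outer loop from 2 to another value models a platform that reads a different number of channels at once; the result is unchanged, only the number of passes over the output map differs.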
