93 results about "Binary neural network" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

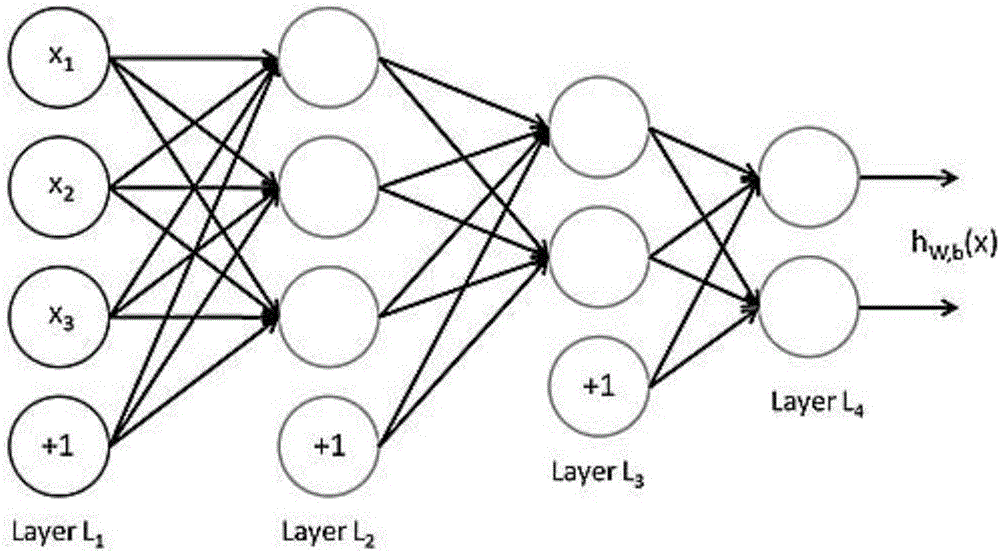

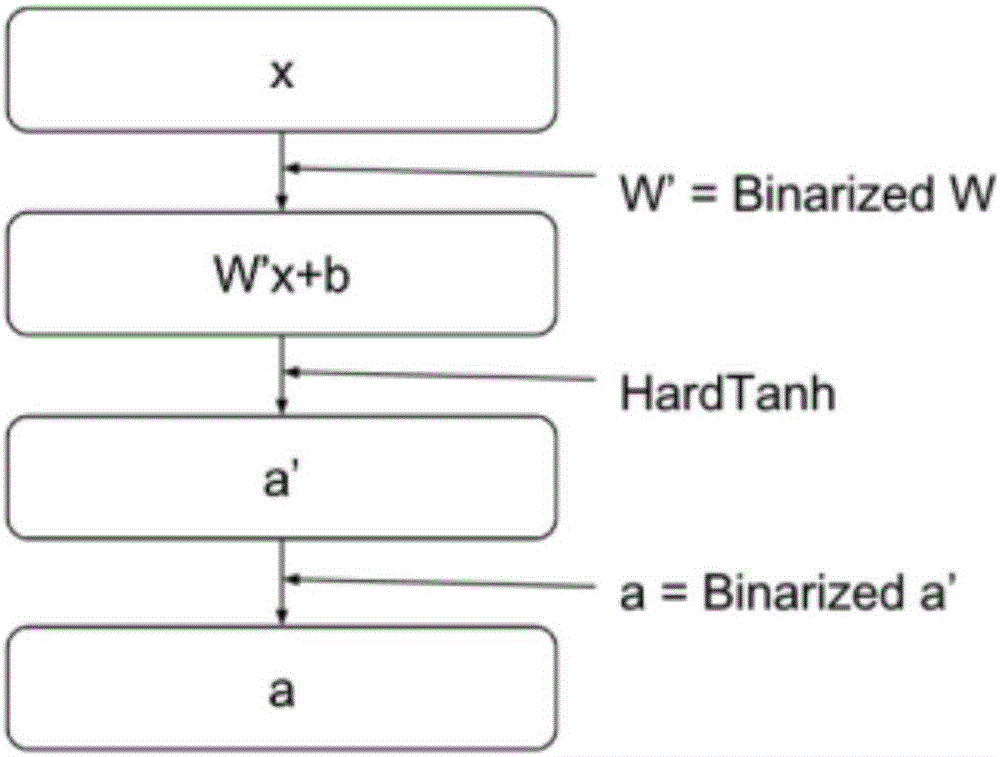

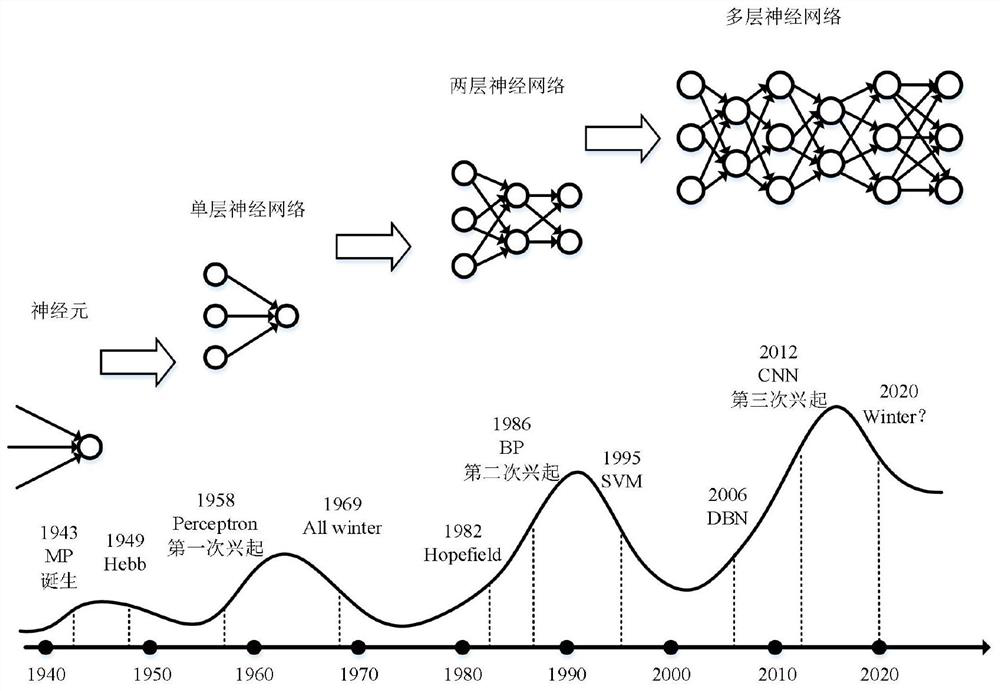

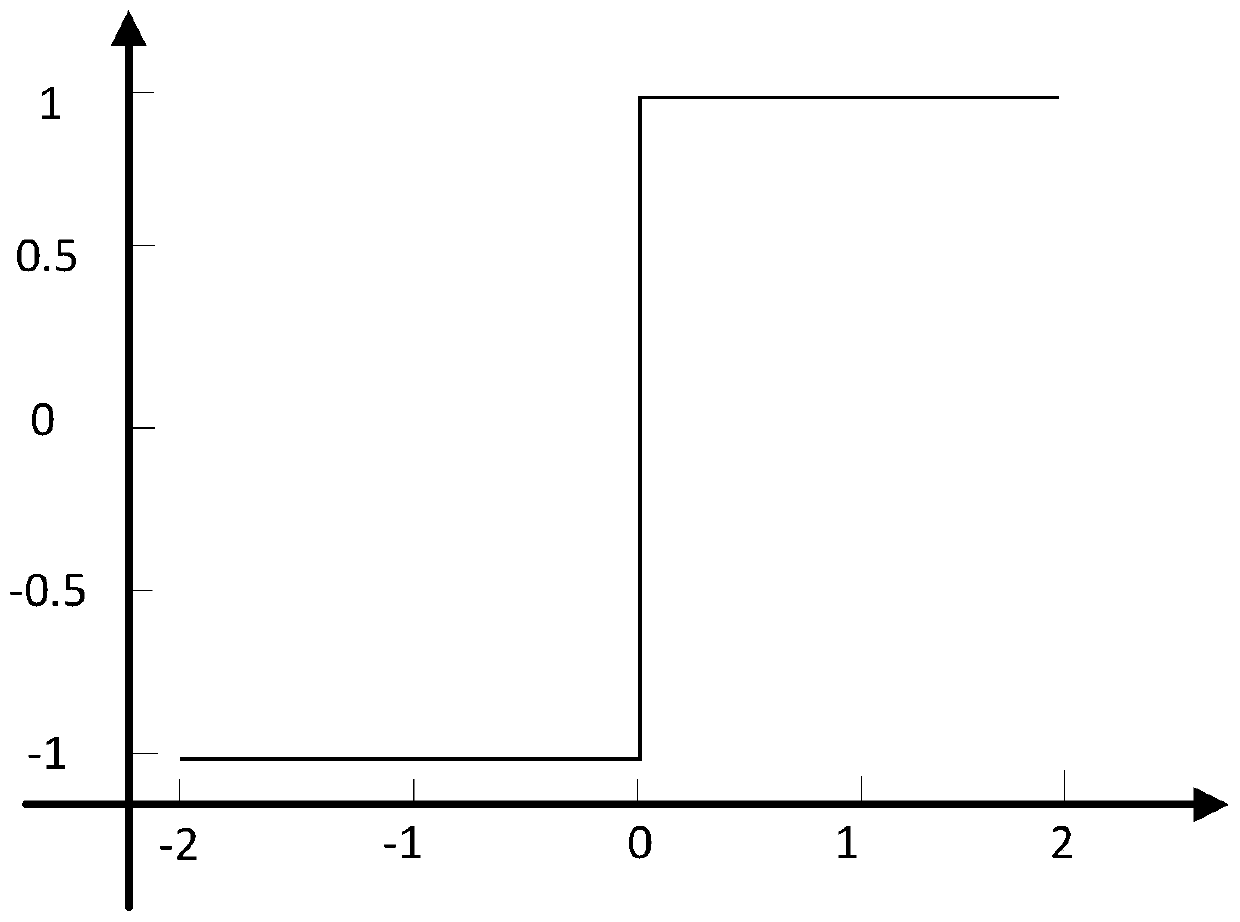

Binary neural networks are networks whose weights and activations are binary at run time. During training, these binarized weights and activations are used to compute gradients, but the gradients and the underlying real-valued ("latent") weights are stored in full precision. This procedure makes it possible to train the network effectively, and the resulting binary model can run on systems with fewer resources.
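
A minimal sketch of this training scheme, assuming a single binary linear unit, a squared-error loss, and the common straight-through estimator (STE) for the gradient of the sign function; none of these specifics come from the patents listed below, and all function names are hypothetical. Only `forward` uses binarized values, while `train_step` updates the full-precision latent weights.

```python
from typing import List


def sign(x: float) -> float:
    """Binarize to +1.0 / -1.0 (zero maps to +1.0, a common convention)."""
    return 1.0 if x >= 0 else -1.0


def forward(latent_w: List[float], x: List[float]) -> float:
    # Run-time path: only binarized weights and binarized inputs are used.
    return sum(sign(w) * sign(v) for w, v in zip(latent_w, x))


def train_step(latent_w: List[float], x: List[float], target: float,
               lr: float = 0.1) -> List[float]:
    """One SGD step on loss = (y - target)**2 using the straight-through estimator."""
    y = forward(latent_w, x)
    grad_y = 2.0 * (y - target)
    updated = []
    for w, v in zip(latent_w, x):
        # STE: pretend d sign(w)/dw == 1 for |w| <= 1 and 0 outside that range.
        grad_w = grad_y * sign(v) if abs(w) <= 1.0 else 0.0
        w = w - lr * grad_w                      # update the full-precision latent weight
        updated.append(max(-1.0, min(1.0, w)))   # clip so the STE region stays reachable
    return updated
```

For example, one step of `train_step([0.3, -0.2, 0.1], [1.0, 1.0, -1.0], target=3.0)` flips the signs of the second and third latent weights, so the binarized output moves from -1.0 to 3.0 even though the stored weights remain real-valued.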

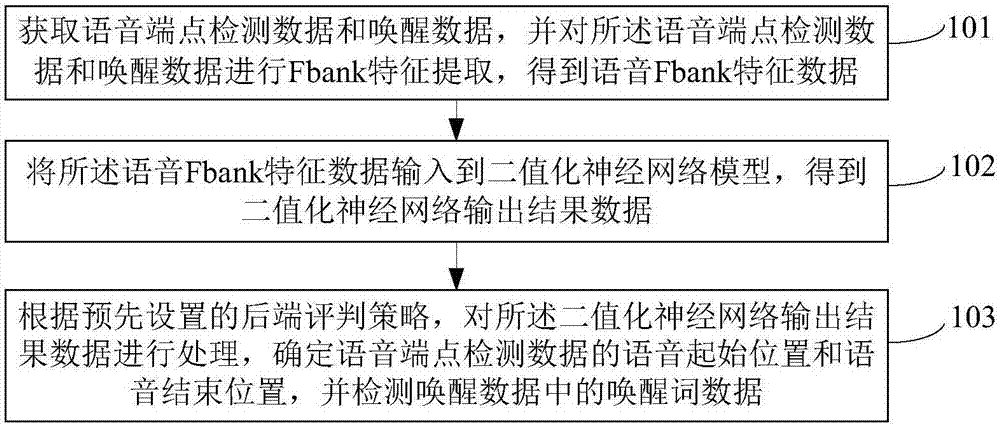

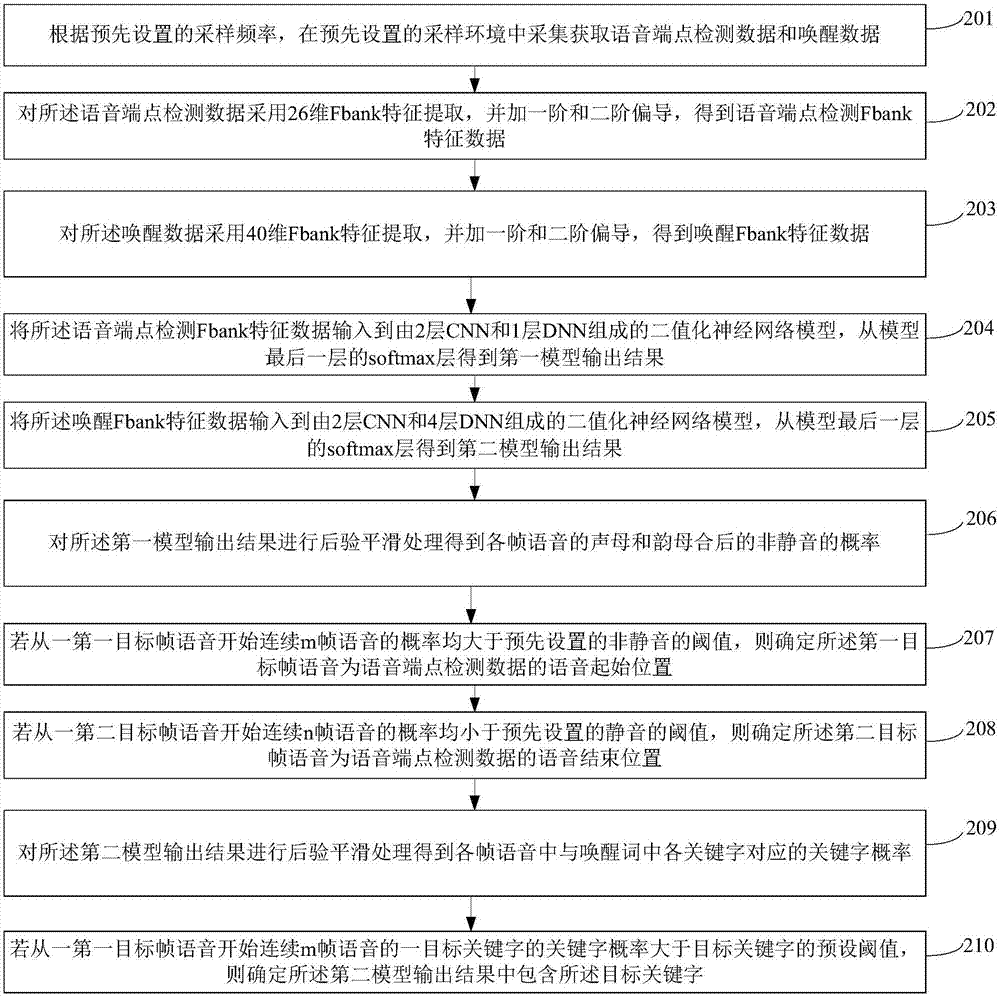

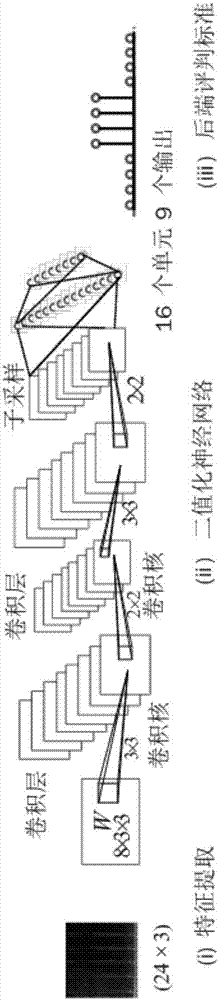

Voice activity detection and wake-up method and device

Status: Active | CN108010515A | Tags: Lower latency, Reduce power consumption, Speech recognition, Feature extraction, Network output

The invention provides a voice activity detection and wake-up method and device, and relates to the technical field of machine-learning-based speech recognition. The method includes the steps of acquiring voice activity detection data and wake-up data, and performing Fbank feature extraction on them to obtain voice Fbank feature data; inputting the voice Fbank feature data to a binary neural network model to obtain binarized neural network output data; and processing that output data according to a preset back-end evaluation strategy to determine the voice start and end positions in the voice activity detection data and to detect wake-up words in the wake-up data. The system framework can be applied to voice activity detection and voice wake-up at the same time, and provides accurate, fast, low-latency, low-power voice activity detection and voice wake-up with a small model.

Owner:TSINGHUA UNIV
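
The abstract does not disclose its "preset back-end evaluation strategy". A minimal sketch of one common approach, assuming the binary network emits one speech posterior per frame: smooth the posteriors with a trailing moving average and report the first and last frames above a threshold. The function names, `window`, and `threshold` are hypothetical.

```python
from typing import Optional, Sequence, Tuple


def smooth(posteriors: Sequence[float], window: int) -> list:
    """Trailing moving average over at most `window` frames."""
    out = []
    for i in range(len(posteriors)):
        seg = posteriors[max(0, i - window + 1): i + 1]
        out.append(sum(seg) / len(seg))
    return out


def speech_endpoints(posteriors: Sequence[float], threshold: float = 0.5,
                     window: int = 3) -> Optional[Tuple[int, int]]:
    """Return (start_frame, end_frame) of detected speech, or None if no frame is active."""
    smoothed = smooth(posteriors, window)
    active = [i for i, p in enumerate(smoothed) if p >= threshold]
    if not active:
        return None
    return active[0], active[-1]
```

With posteriors `[0.1, 0.2, 0.9, 0.8, 0.95, 0.1, 0.05]`, `window=1` gives `(2, 4)`, while `window=3` gives `(3, 5)`: the trailing average suppresses isolated spikes at the cost of a small delay.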

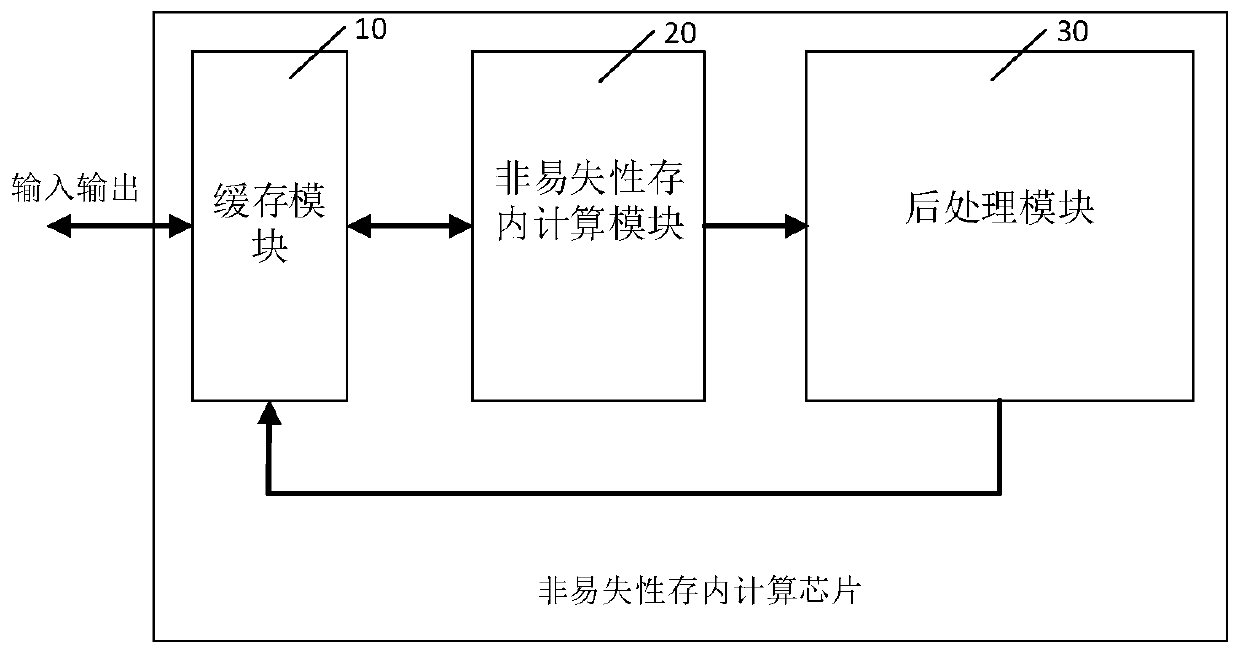

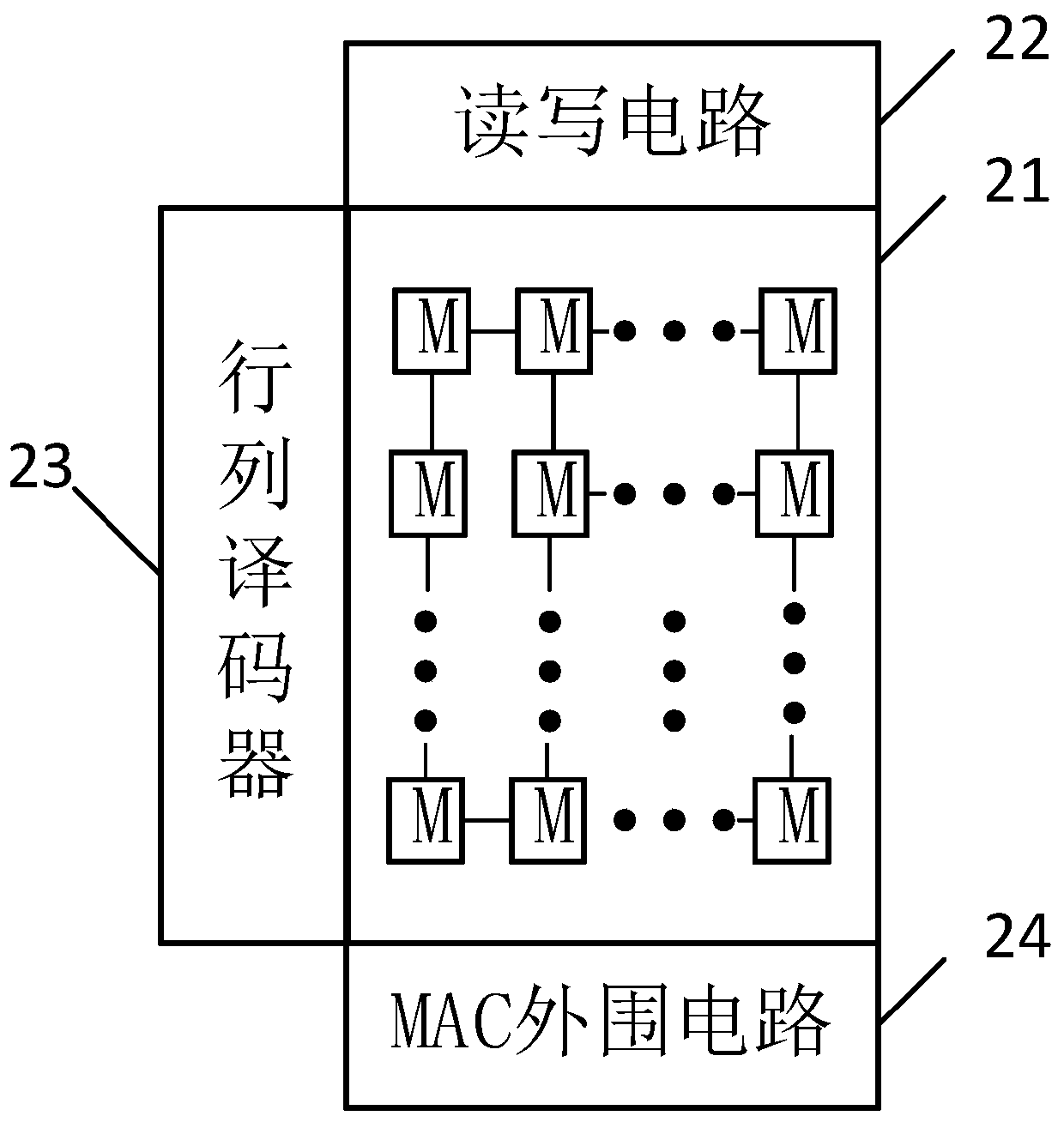

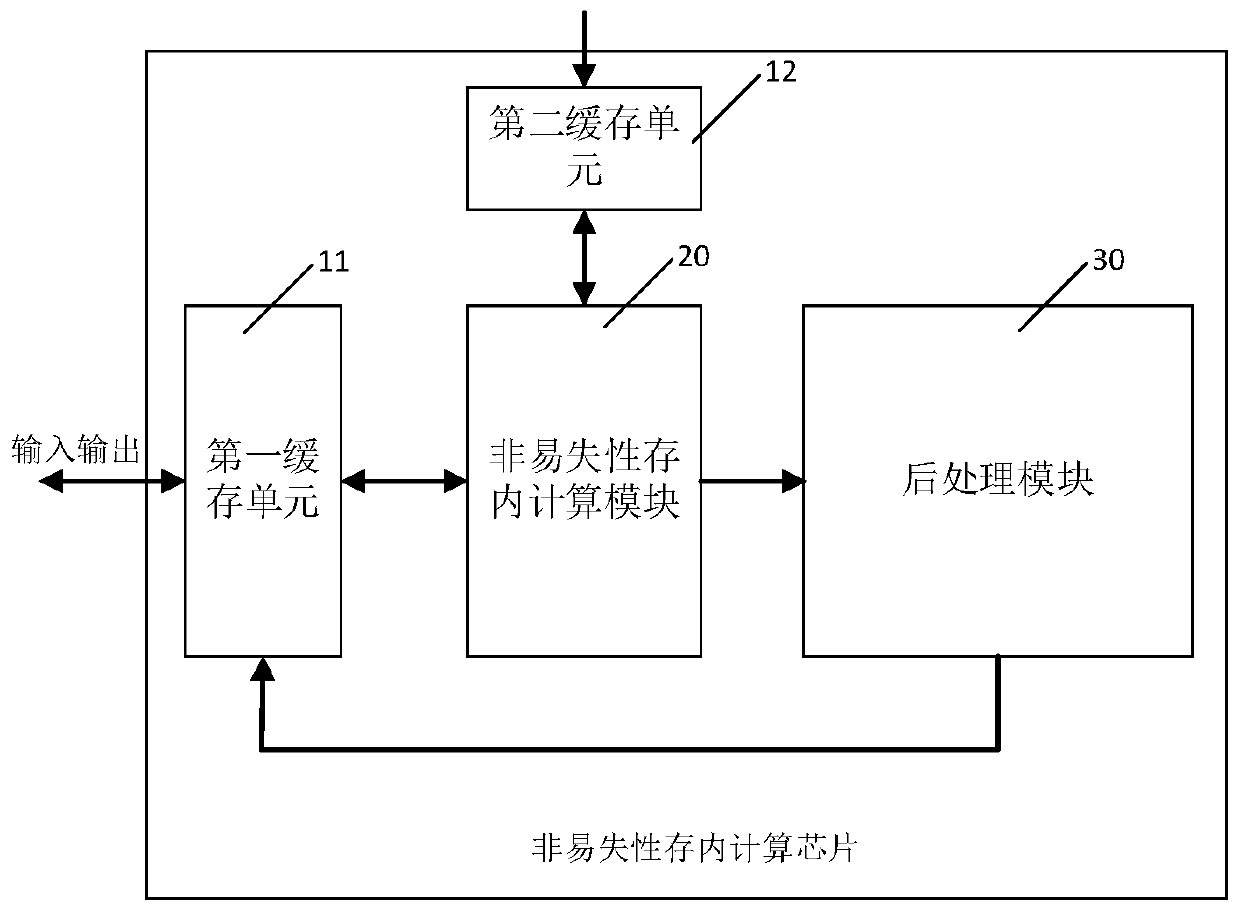

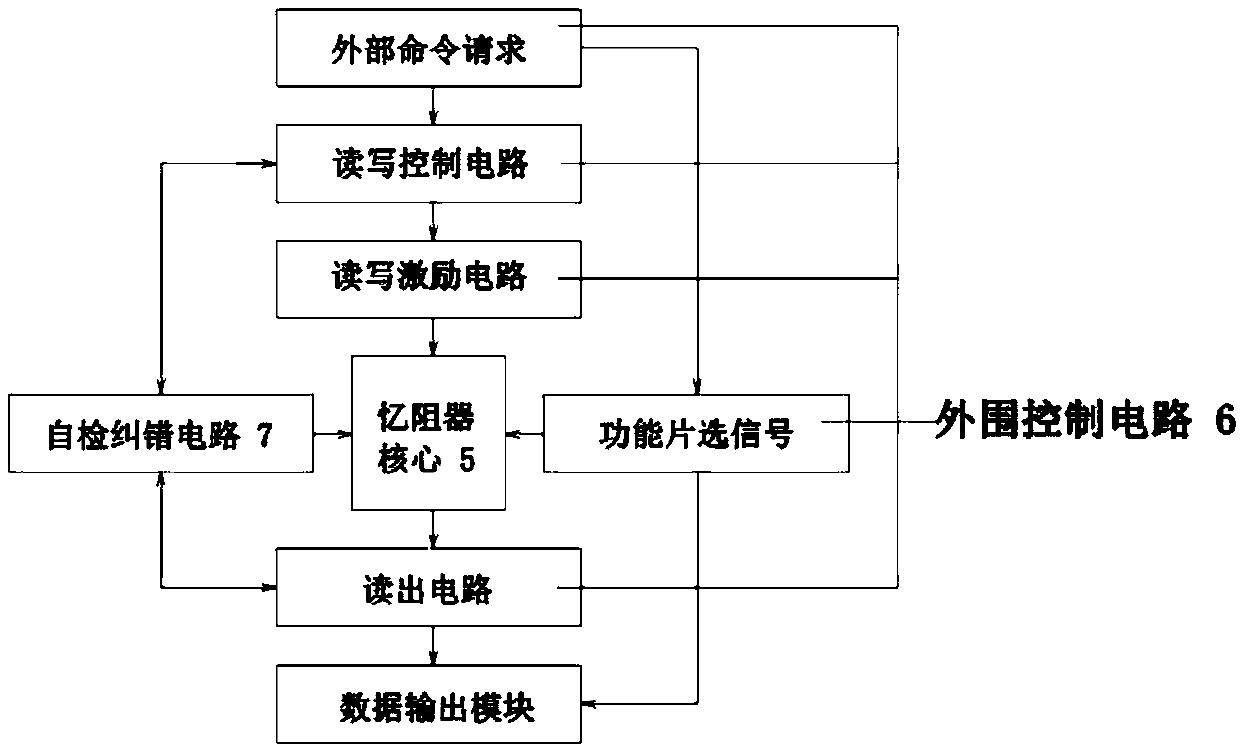

Nonvolatile in-memory computing chip and operation control method thereof

Status: Active | CN110597555A | Tags: Reduce power consumption, Reduce latency, Digital storage, Physical realisation, Multiply-accumulate operation, Time delays

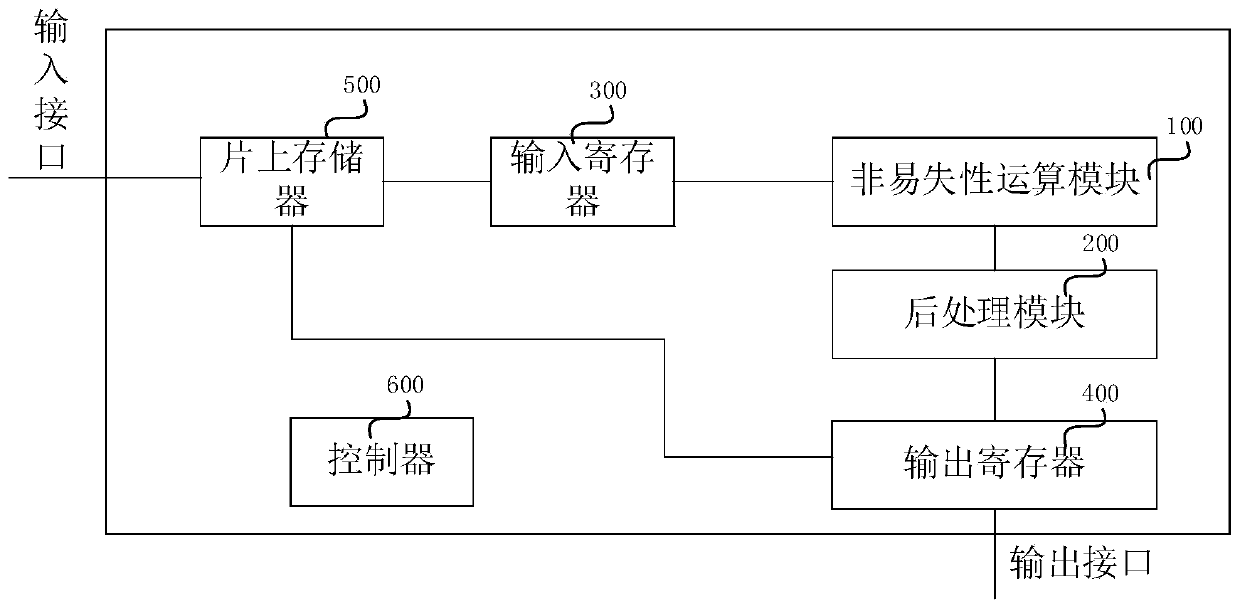

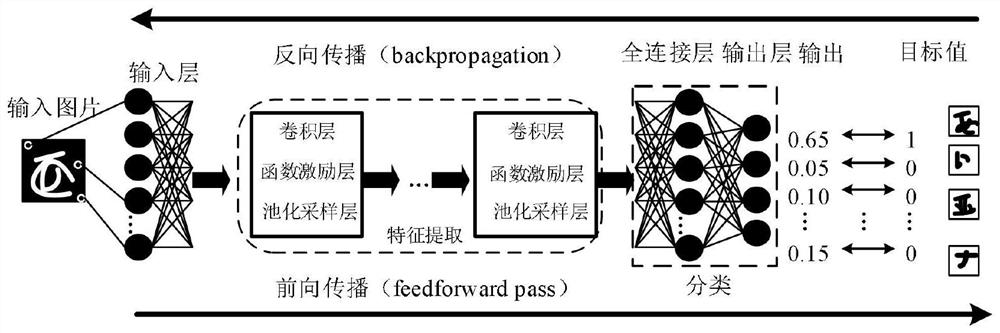

The invention provides a nonvolatile in-memory computing chip and an operation control method thereof. The chip comprises a cache module for caching data; a nonvolatile in-memory computing module, connected to the cache module, that executes operations on the data sent by the cache module; and a post-processing module, connected to the nonvolatile in-memory computing module, that post-processes its operation results. The nonvolatile in-memory computing module comprises a nonvolatile memory cell array, a row and column decoder connected to the array, and a read-write circuit connected to the array. Combined with the operation control method, the chip performs multiply-accumulate operations and binary neural network operations using computing-in-memory technology, so no data needs to be transferred between a memory and a processor, which reduces power consumption and latency.

Owner:BEIHANG UNIV

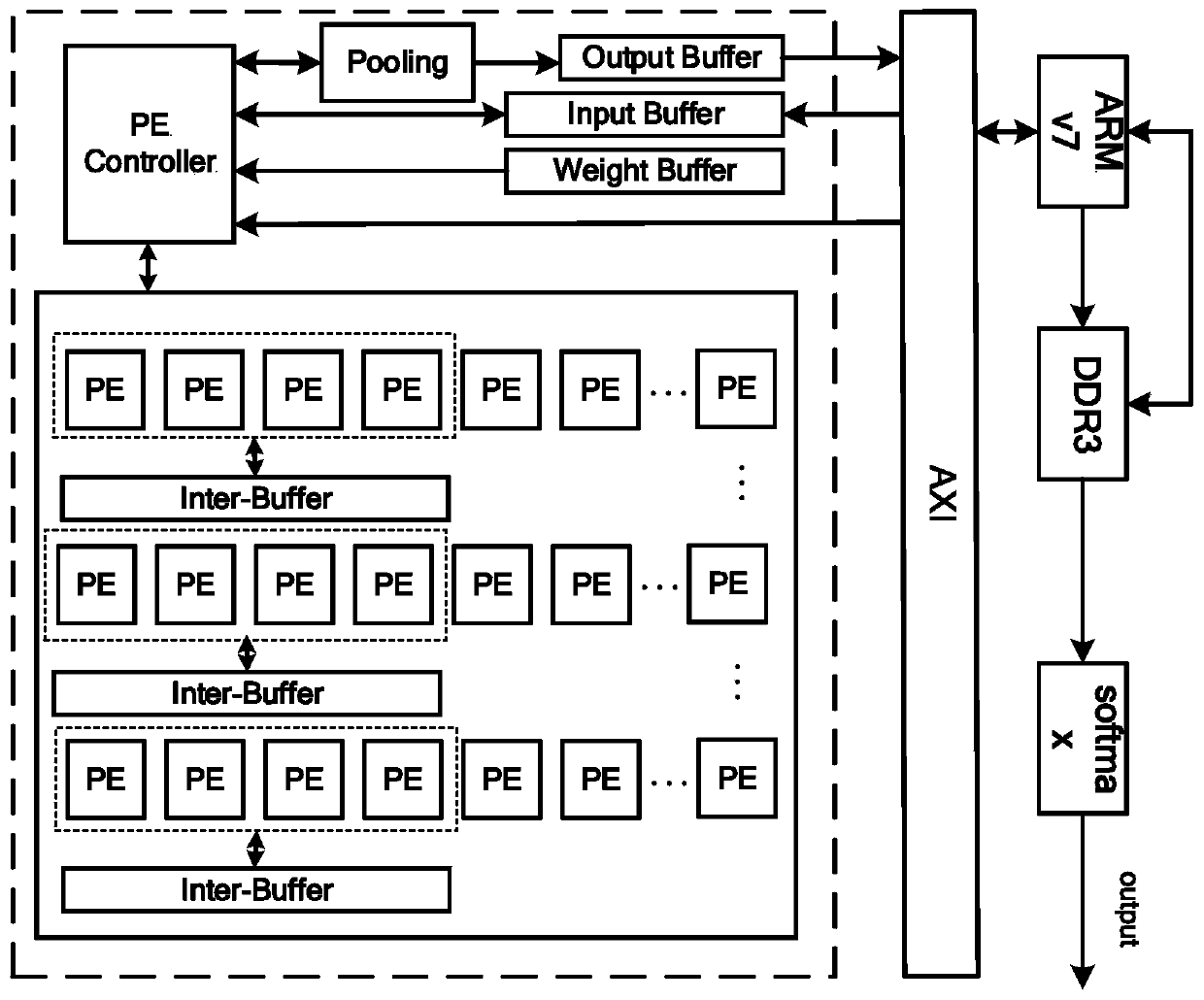

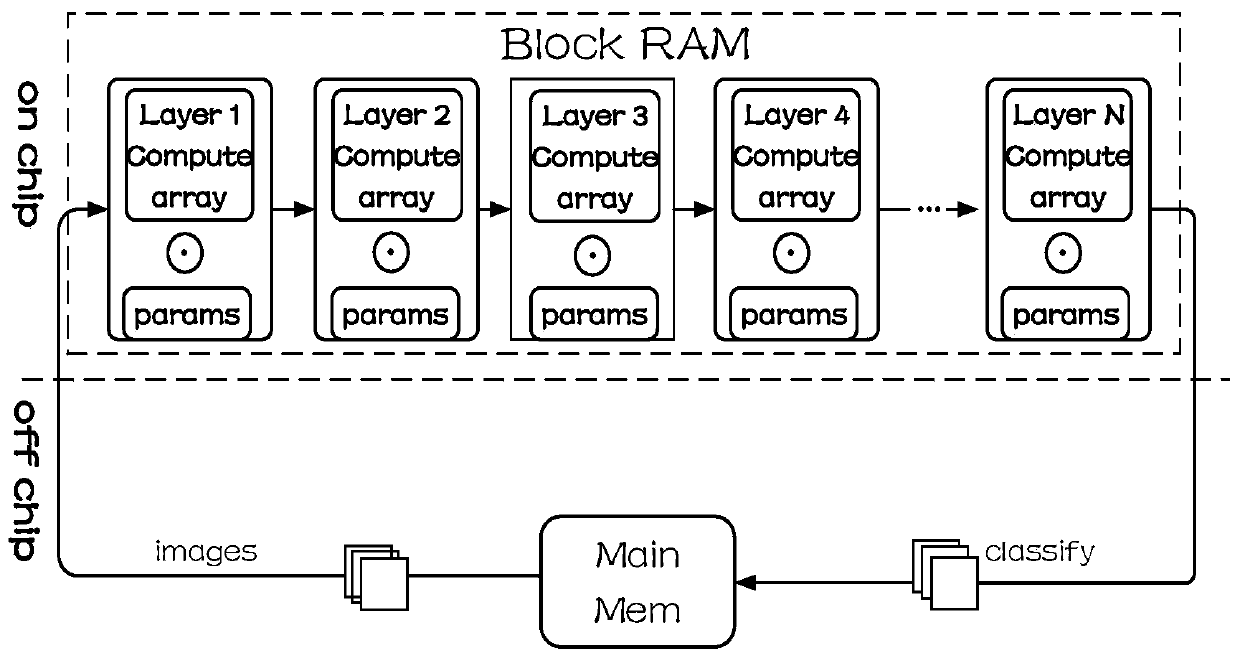

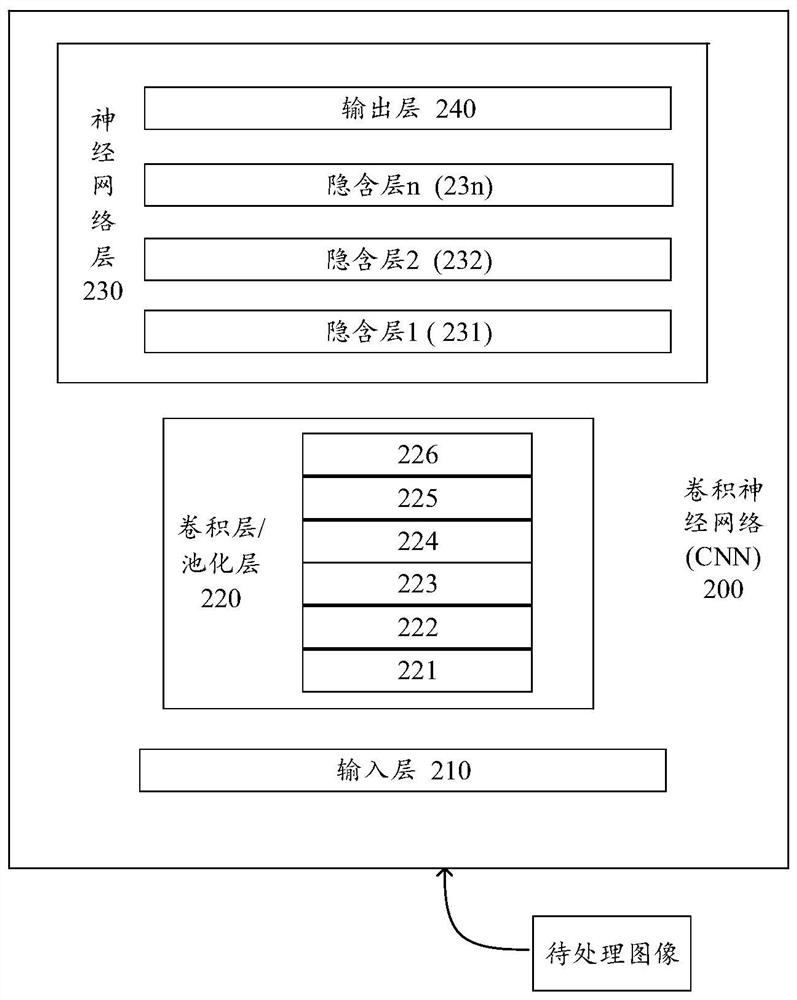

Binary neural network acceleration method and system based on FPGA

Status: Active | CN110458279A | Tags: Reduce communication costs, Detection speed, Neural architectures, Energy efficient computing, Algorithm, Network structure

The invention discloses an FPGA-based binary neural network acceleration system. It uses a convolution kernel parameter acquisition module, a binarized convolutional neural network structure, and a cache module, all implemented on an FPGA; the cache module is the FPGA's on-chip memory. Each module obtains the input feature map of the picture to be processed, obtains a convolution calculation logic rule, and performs the corresponding binarized convolution. The FPGA traverses the convolution calculations of multiple threads according to this logic rule to obtain the output feature map of the image. Because the overall architecture keeps the computation of every layer of the binary neural network entirely in on-chip memory, without relying on transfers between off-chip and on-chip memory, the communication cost between memories is reduced, computational efficiency is greatly improved, and detection on the image being analyzed is faster.

Owner:武汉魅瞳科技有限公司

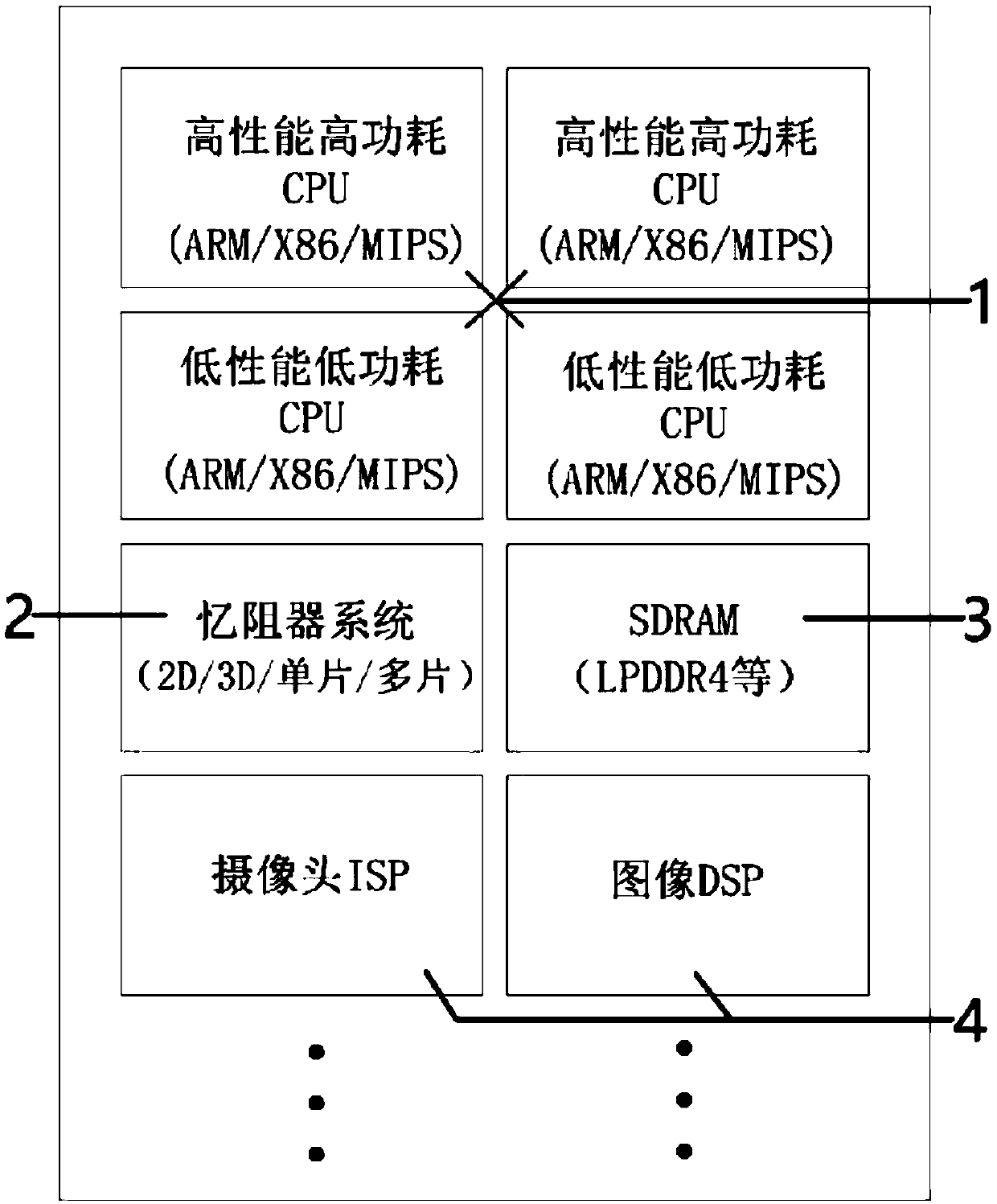

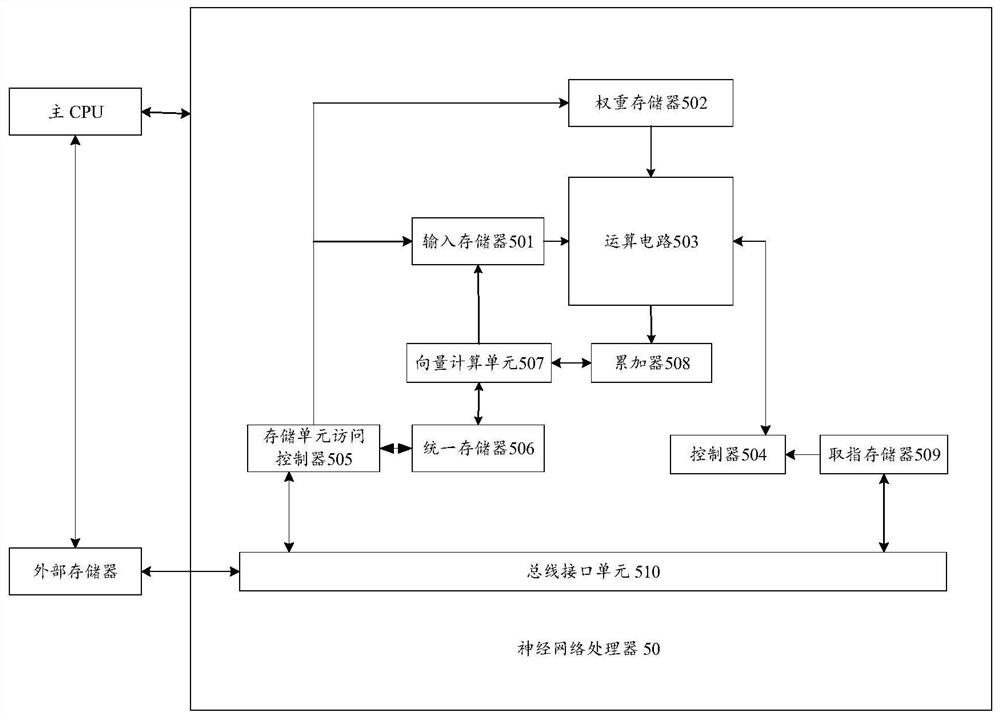

Neural network chip of a binary memristor

Status: Active | CN109657787A | Tags: Improve computing efficiency, Increase computing speed, Physical realisation, Data stream, Electronic information

The invention relates to the technical field of computers and electronic information, and in particular to a neural network chip based on binary memristors. The invention exploits the switching ratio of the memristor, that is, the existence of distinct high-resistance and low-resistance states. Memristors made of different materials are combined with a binary neural network to perform both storage and computation, and are further combined with a central processing unit to improve the computational efficiency and speed of the neural network. Besides executing neural network algorithms, the chip can use the binary neural network architecture for field programming, similar to an FPGA. The method comprises: inputting a specially encoded data stream; performing in-field learning with a binarized neural network method by comparing the output for that data stream; stopping learning when the accuracy reaches 100%; and then having the network execute the corresponding function.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

Arrhythmia detection method for electrocardiogram data using a binary neural network

Status: Active | CN110379506A | Tags: Improve generalization ability, Improve trainability, Medical data mining, Character and pattern recognition, Distillation, Algorithm

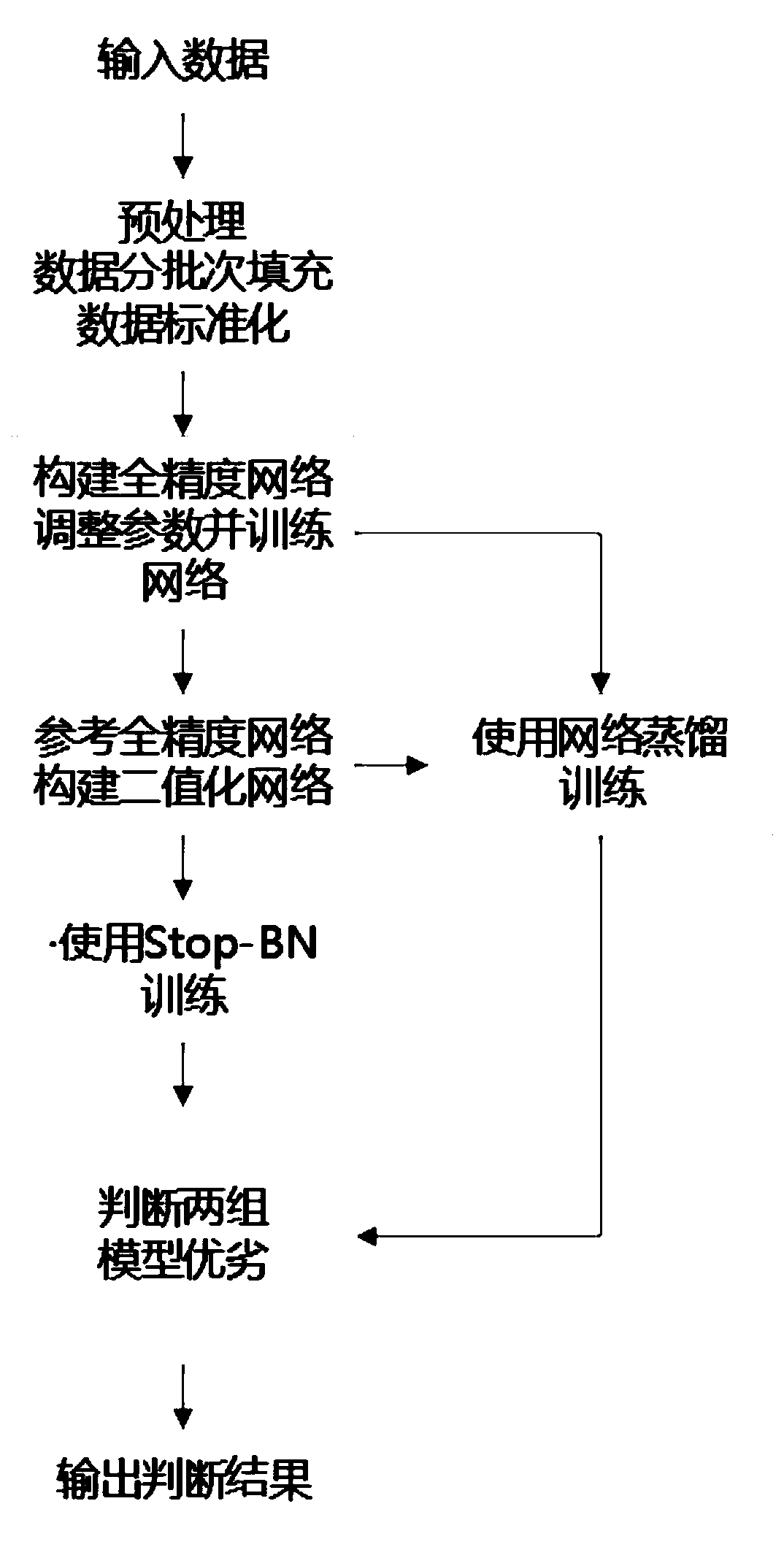

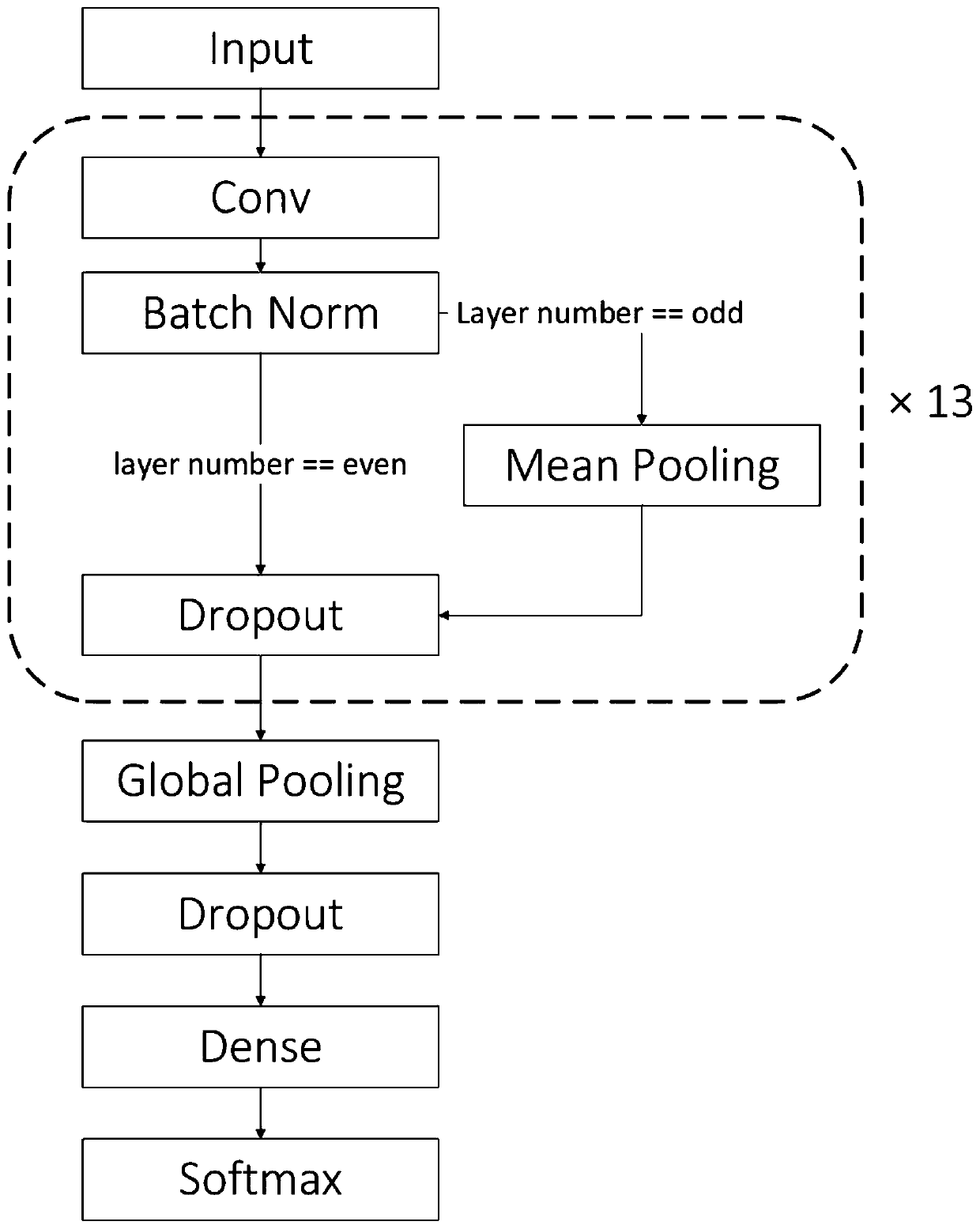

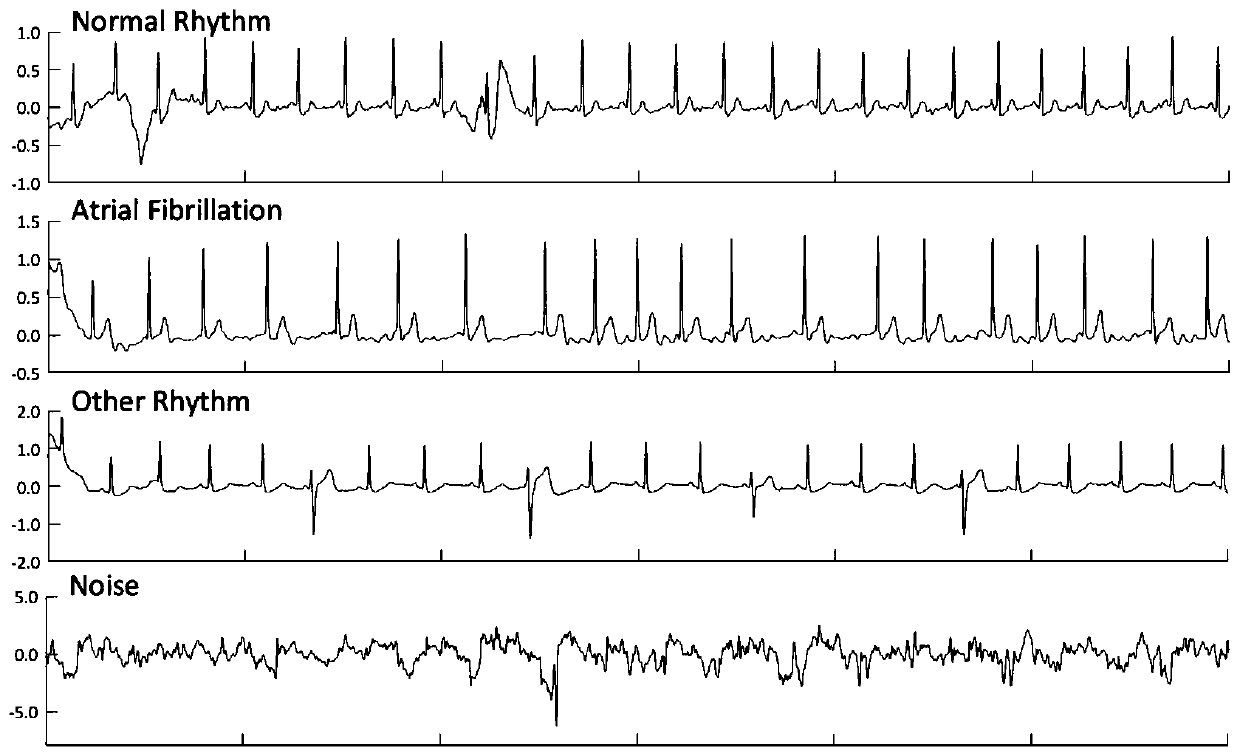

The invention discloses an arrhythmia detection method for electrocardiogram data using a binary neural network. The method first acquires training data and preprocesses it. A full-precision convolutional network model is built and trained, and its parameters are tuned to achieve good performance. A binary network model is then built with reference to the full-precision model, trained on the data, and fine-tuned, using a Stop-BN training method to improve the training result. Finally, the trained full-precision model serves as the teacher and the untrained binary model as the student, and the student is trained by distillation from the teacher, which gives better results than training the binary network directly. The method discriminates atrial fibrillation and can effectively reduce runtime memory and computation time, and the trained network reduces the accuracy loss caused by binarization.

Owner:HANGZHOU DIANZI UNIV
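
The abstract does not give the distillation objective. A minimal sketch of the widely used Hinton-style knowledge-distillation loss, assumed here for illustration: the student matches the teacher's temperature-softened outputs (KL divergence scaled by T²) plus an ordinary cross-entropy on the true label. `T` and `alpha` are hypothetical hyperparameters.

```python
import math
from typing import Sequence


def softmax(logits: Sequence[float], T: float = 1.0) -> list:
    m = max(logits)                                  # subtract max for numerical stability
    exps = [math.exp((z - m) / T) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: Sequence[float], teacher_logits: Sequence[float],
                      label: int, T: float = 4.0, alpha: float = 0.5) -> float:
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * CE(student, label)."""
    p_teacher = softmax(teacher_logits, T)
    p_student = softmax(student_logits, T)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    ce = -math.log(softmax(student_logits)[label])
    return alpha * T * T * kl + (1.0 - alpha) * ce
```

The T² factor keeps the gradient magnitude of the soft-target term roughly independent of the temperature, which is why it usually appears alongside the softened softmax.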

Chip for realizing binary neural network based on nonvolatile in-memory calculation and method

The invention provides a chip and a method for implementing a binary neural network based on nonvolatile in-memory computing. The chip comprises a nonvolatile operation module that performs matrix multiply-add operations on a first binary data packet it receives and a second binary data packet pre-stored in it. During inference, the weights of a binary neural network are generally fixed, whereas the input features of each layer generally change with the application. The weights are therefore pre-stored in the nonvolatile operation module as the second binary data packet, and the input features of the binary neural network are loaded into the module, so that the matrix multiply-add operations are performed inside the nonvolatile operation module, avoiding the power consumption and latency caused by data movement.

Owner:BEIHANG UNIV

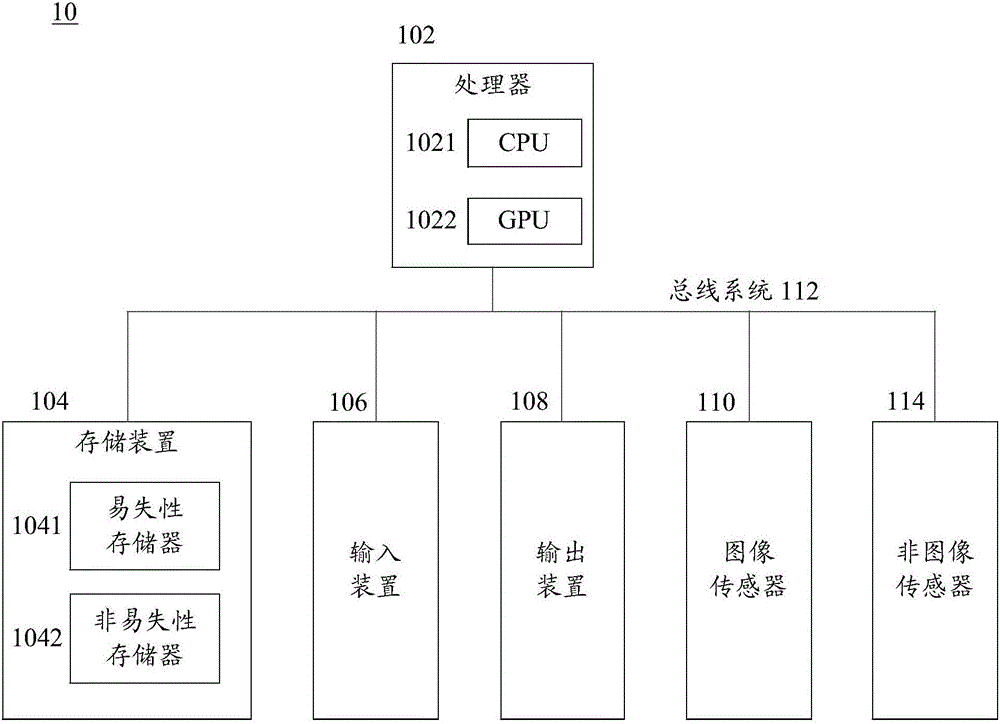

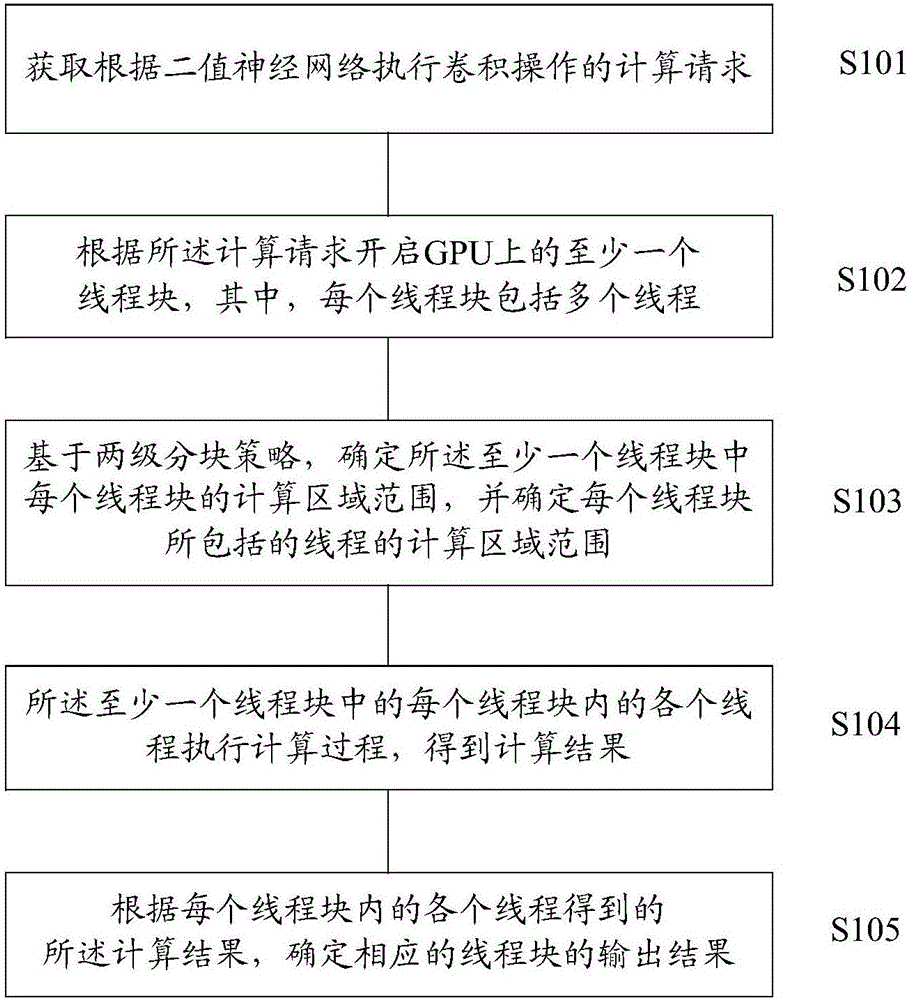

GPU-based method and device for calculating binary neural network convolution

Status: Active | CN106779057A | Tags: Realize convolution calculation, Calculation speed, Concurrent instruction execution, Physical realisation, Internal memory, Parallel computing

Embodiments of the invention provide a GPU-based method and device for calculating binary neural network convolution. The method comprises the following steps of obtaining a calculation request for executing convolution operation according to a binary neural network; starting at least one thread block on a GPU according to the calculation request, wherein each thread block comprises a plurality of threads; determining a calculation area range of each thread block on the basis of a two-stage blocking strategy, and determining calculation area ranges of the threads included by each thread block; executing a calculation process by each thread in each thread block of the at least one thread block so as to obtain calculation results; and determining output results of corresponding thread blocks according to the calculation result of each thread in each thread block. According to the method, the two-stage blocking strategy on the basis of the GPU thread block is designed, and the access characteristics of the GPU are fully utilized, so that binary neural network convolution calculation can be realized on GPU equipment, the calculation speed is enhanced and the internal memory consumption is decreased.

Owner:BEIJING KUANGSHI TECH +1
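
The abstract does not specify tile sizes or per-thread work. A minimal CPU-side sketch of a two-stage blocking of a binary matrix product, assuming rows and columns are packed into Python ints (bit 1 for +1, bit 0 for -1) and each output element is an XNOR-popcount dot product: the outer loops play the role of thread blocks and the inner loops the role of threads. `block` and `sub` are hypothetical tile sizes; on a GPU these loops would map to CUDA's `blockIdx` and `threadIdx`.

```python
from typing import List


def binary_dot(a: int, b: int, n: int) -> int:
    """Dot product of two +1/-1 vectors of length n packed as bits (1 -> +1, 0 -> -1)."""
    return n - 2 * bin(a ^ b).count("1")


def blocked_binary_matmul(rows: List[int], cols: List[int], n: int,
                          block: int = 4, sub: int = 2) -> List[List[int]]:
    M, N = len(rows), len(cols)
    out = [[0] * N for _ in range(M)]
    # Stage 1: split the M x N output into block x block tiles (one per thread block).
    for bi in range(0, M, block):
        for bj in range(0, N, block):
            row_end, col_end = min(bi + block, M), min(bj + block, N)
            # Stage 2: split each tile into sub x sub pieces (one per thread).
            for ti in range(bi, row_end, sub):
                for tj in range(bj, col_end, sub):
                    for i in range(ti, min(ti + sub, row_end)):
                        for j in range(tj, min(tj + sub, col_end)):
                            out[i][j] = binary_dot(rows[i], cols[j], n)
    return out
```

The blocking does not change the result; it only changes which "worker" computes which region, so the output always matches a naive element-by-element loop.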

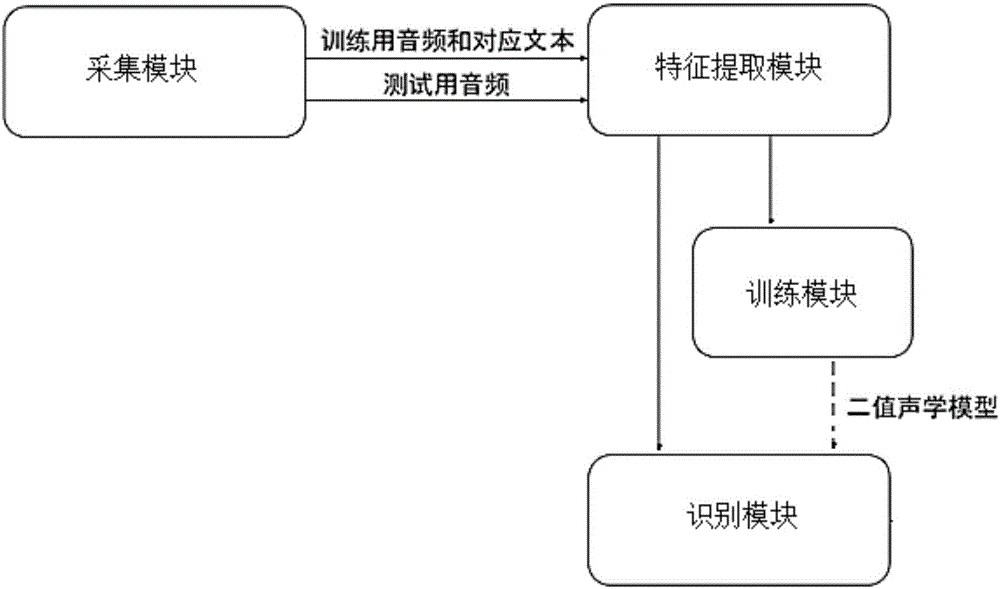

Speech recognition system based on acoustic model of binary neural network

The invention provides a speech recognition system based on an acoustic model built with a binary neural network. The observation probability distribution of a hidden Markov model is modeled with the binary neural network, which is trained on extracted speech features to obtain the acoustic model. Binary values are used at run time instead of conventional 32-bit floating-point numbers, which greatly reduces the model's storage and memory usage. Because the network is binary, hardware instructions can be fully exploited to accelerate computation. Whereas conventional models must run on servers equipped with multiple GPUs, this model can run on the CPU of a mobile device. Model training is also accelerated by the binary neural network, which greatly shortens training time.

Owner:AISPEECH CO LTD

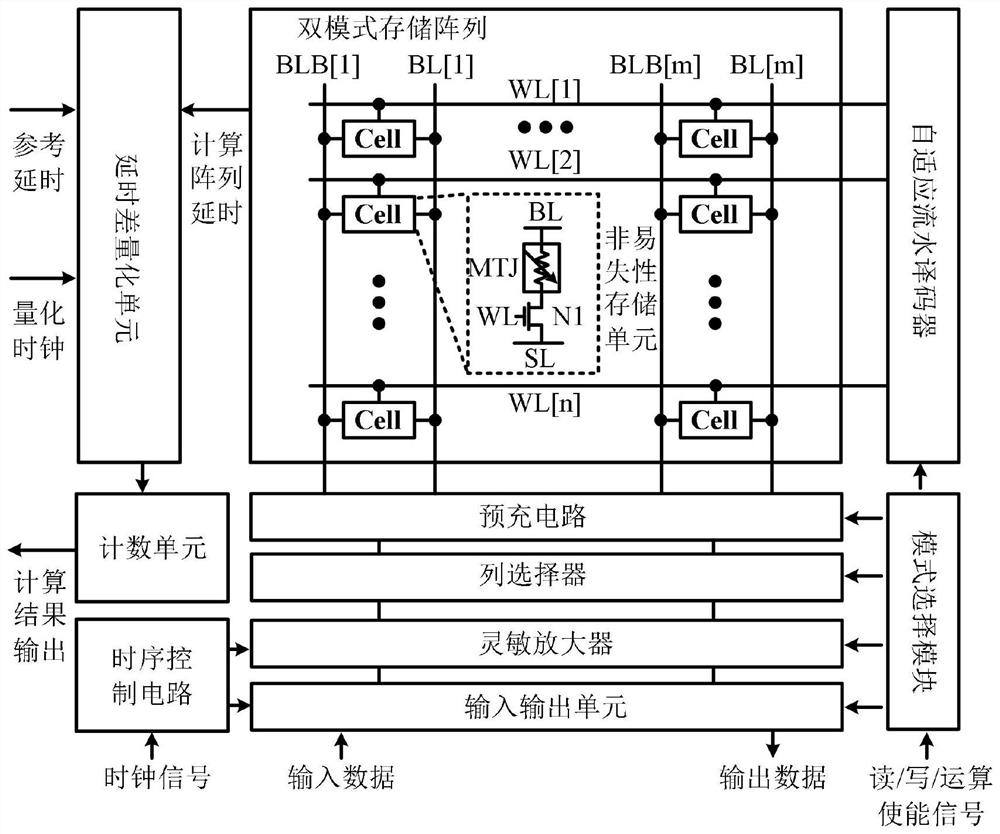

Time domain in-memory computing array structure based on magnetic random access memory

Status: Active | CN112581996A | Tags: Reduce power consumption, Reduce memory access power consumption, Digital storage, Physical realisation, Time domain, Computer architecture

The invention discloses a time-domain in-memory computing array structure based on magnetic random access memory (MRAM), in the field of integrated circuit design. The structure comprises a dual-mode storage array, an adaptive pipelined decoder, a precharge circuit, a column selector, a sense amplifier, an input/output unit, a delay-difference quantization unit, a counting unit, a timing control circuit, and a mode selection module. It supports a standard read-write mode and an in-memory computing mode. In the standard read-write mode, data in the storage array can be read and written; in the in-memory computing mode, the structure performs the multiply-accumulate operations of binary neural network computation. The multiply-accumulate is completed while the data are read, and the delay quantization unit is integrated with the storage array to reduce memory-access energy. Compared with conventional von Neumann neural network accelerators, network energy efficiency is effectively improved.

Owner:SOUTHEAST UNIV

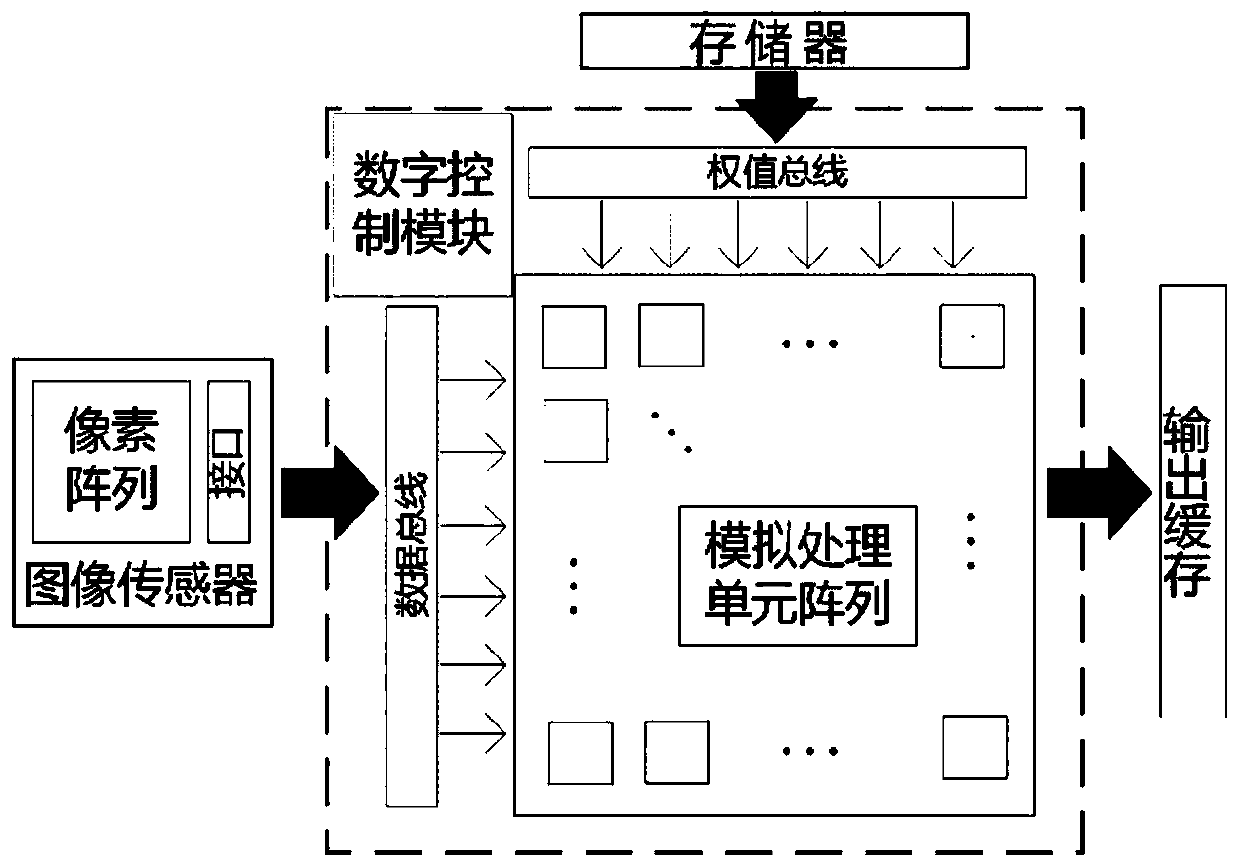

Near-sensor visual perception processing chip and Internet of Things sensing device

Status: Active | CN110288510A | Tags: Avoiding Analog-to-Digital Conversion Overhead, Improve energy efficiency, Processor architectures/configuration, Electric digital data processing, The Internet, Visual perception

The embodiment of the invention provides a near-sensor visual perception processing chip and an Internet of Things sensing device, wherein the chip comprises a control unit and an analog processing unit, the control unit is used for inputting the binarized analog data into the analog processing unit, the binarized analog data is composed of an analog voltage signal acquired by a sensor and a weight signal corresponding to the analog voltage signal, and the analog processing unit is used for processing the received binary analog data to obtain a current value corresponding to the analog voltage signal. The sensing device comprises a CMOS image sensor, the chip and a communication module. According to the near-sensor visual perception processing chip and the Internet of Things sensing device provided by the embodiment of the present invention, the analog processing unit adopting a binary neural network algorithm is placed behind the CMOS image sensor and in front of the ADC, so that the chip can directly process the analog voltage signals, the huge energy consumption during the analog-to-digital conversion is avoided, and the energy efficiency is effectively improved.

Owner:TSINGHUA UNIV

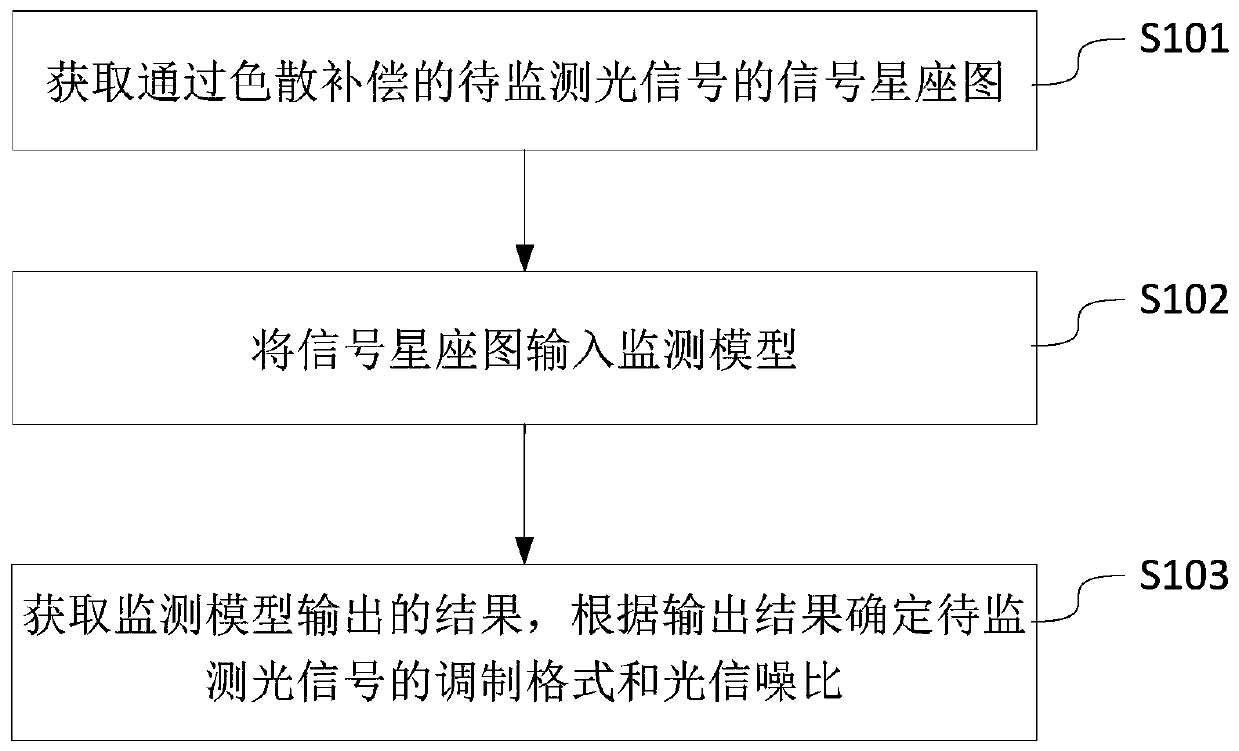

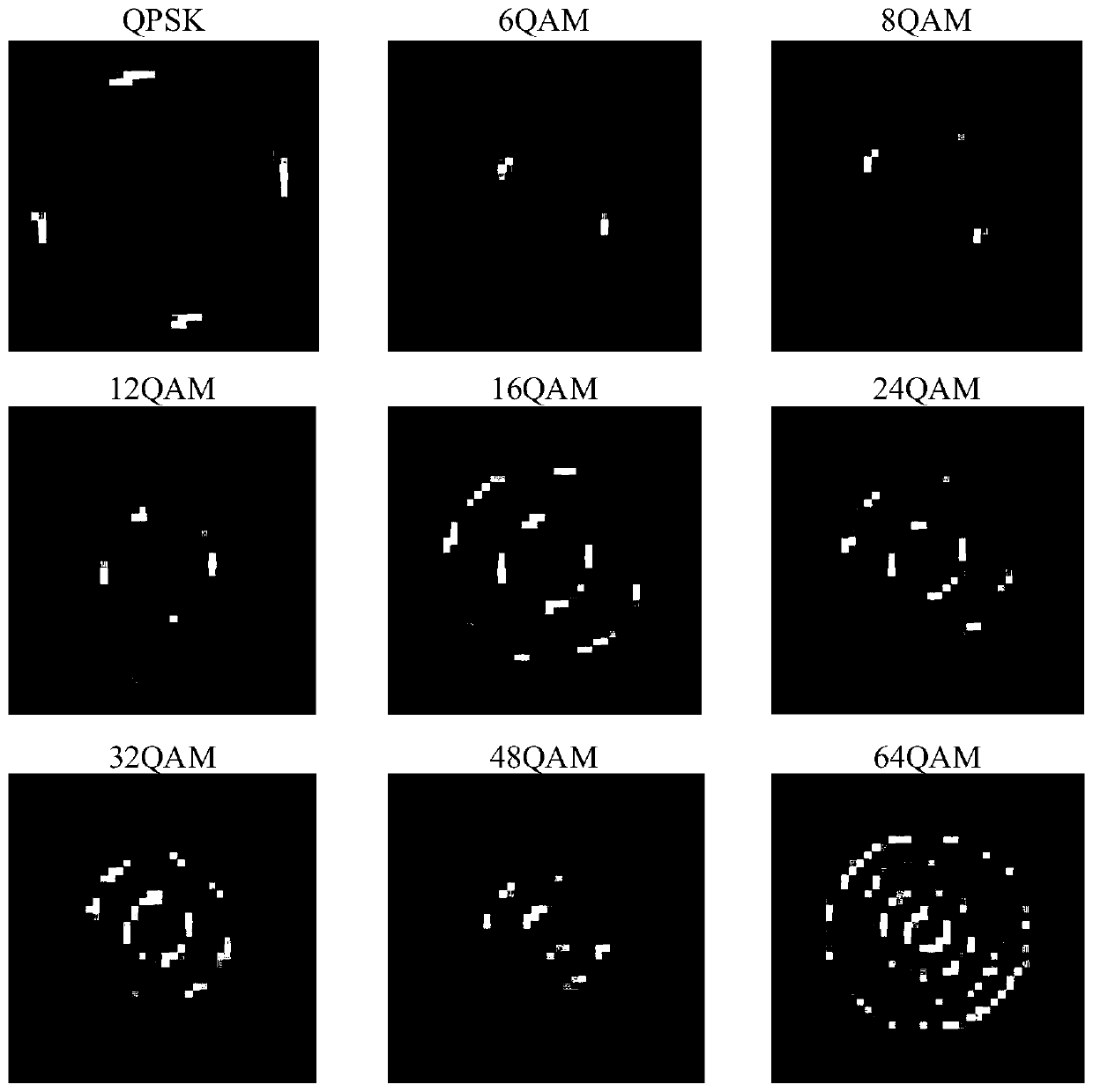

Optical performance monitoring method and device, electronic equipment and medium

Status: Inactive | CN110324080A | Tags: Reduce complexity, Reduce computational complexity, Electromagnetic transmission, Optical signal to noise ratio, Optical performance monitoring

The embodiment of the invention provides an optical performance monitoring method and device, electronic equipment, and a medium, in the technical field of optical fiber communication. The method comprises: acquiring a signal constellation diagram of the optical signal to be monitored after dispersion compensation; inputting the constellation diagram into a monitoring model, which is trained on a binary neural network with a training set comprising constellation diagrams of training optical signals together with their modulation formats and optical signal-to-noise ratios (OSNR); acquiring the output of the monitoring model; and determining the modulation format and OSNR of the monitored optical signal from that output.

Owner:BEIJING UNIV OF POSTS & TELECOMM

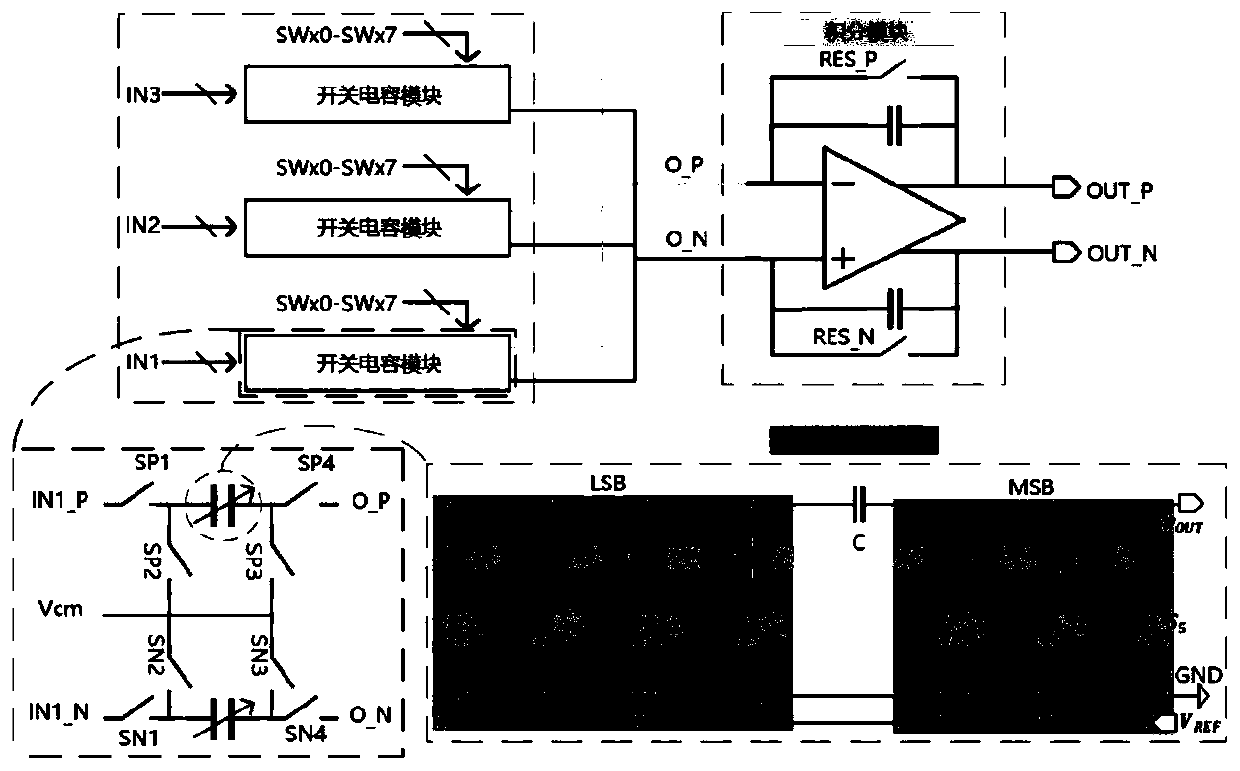

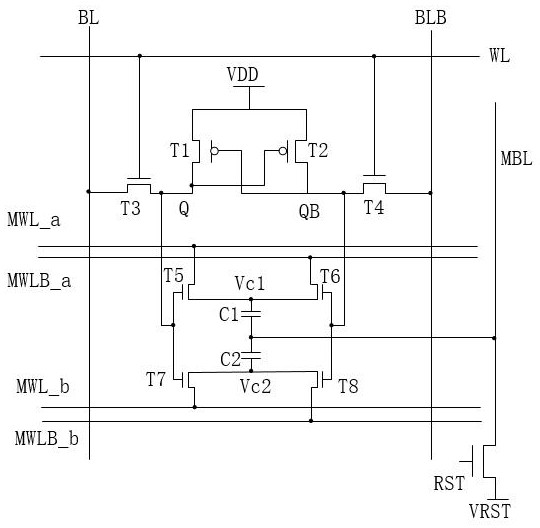

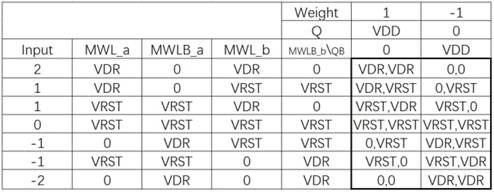

Bit cell applied to in-memory computation and memory computation array device

The invention provides a bit cell for in-memory computation. The bit cell comprises a four-transistor (4T) storage cell and a peripheral storage circuit; the weight output of the 4T storage cell is connected to its weight input, and its inverted-weight output is connected to its inverted-weight input. Using the 4T storage cell in place of a six-transistor (6T) cell in the storage array simplifies the array structure. The peripheral storage circuit performs accumulation, completing the binary neural network accumulation through analog mixed-signal capacitive-coupling computation and supporting five-level input activations, which improves computational accuracy. In addition, no quiescent current flows during computation, which reduces power consumption, and the capacitive-coupling mechanism is more stable. The compute-in-memory array structure is thus simplified, power consumption is reduced, and computational efficiency and precision are improved.

Owner:中科南京智能技术研究院

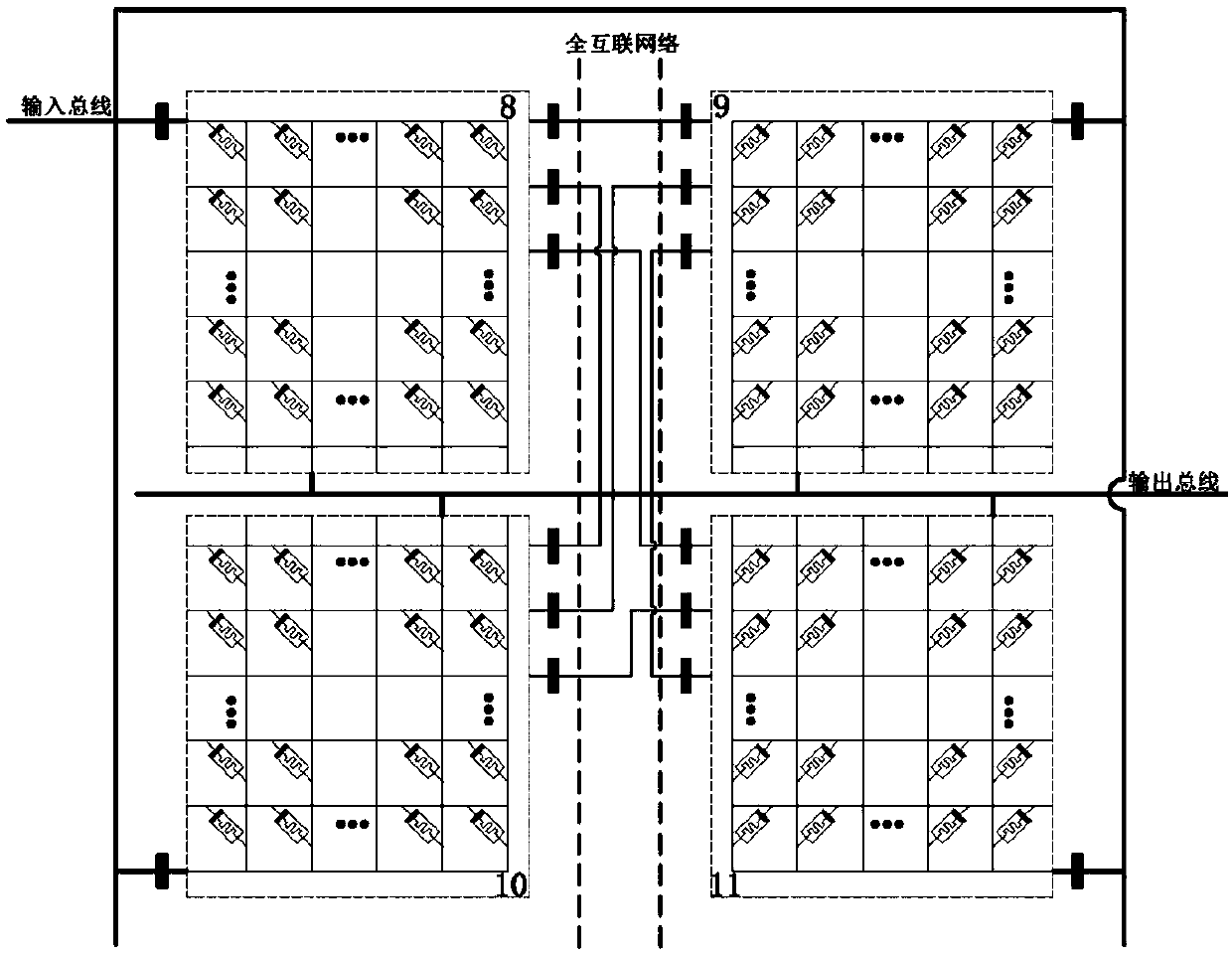

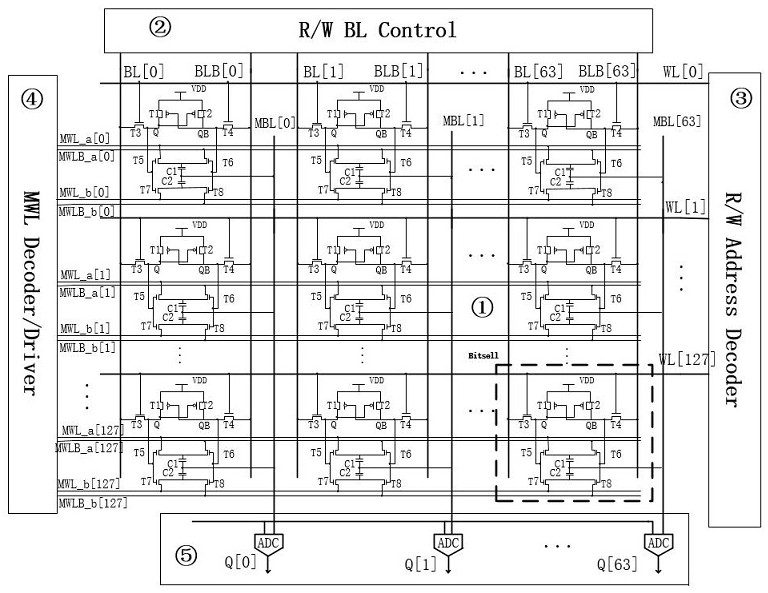

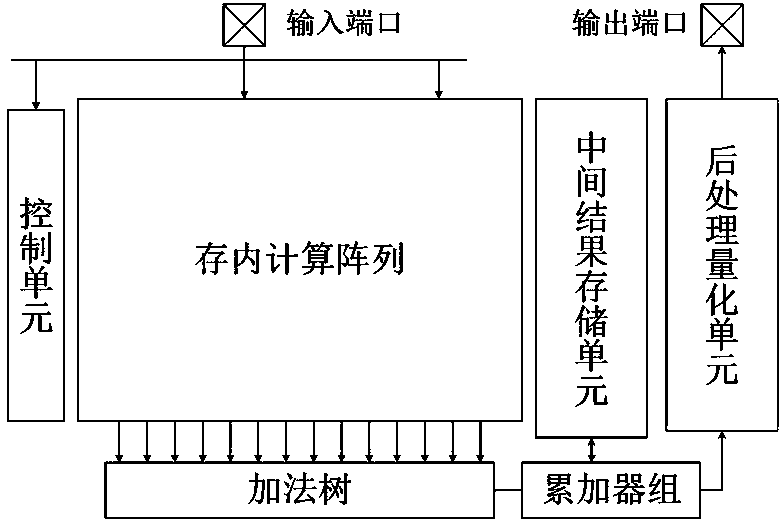

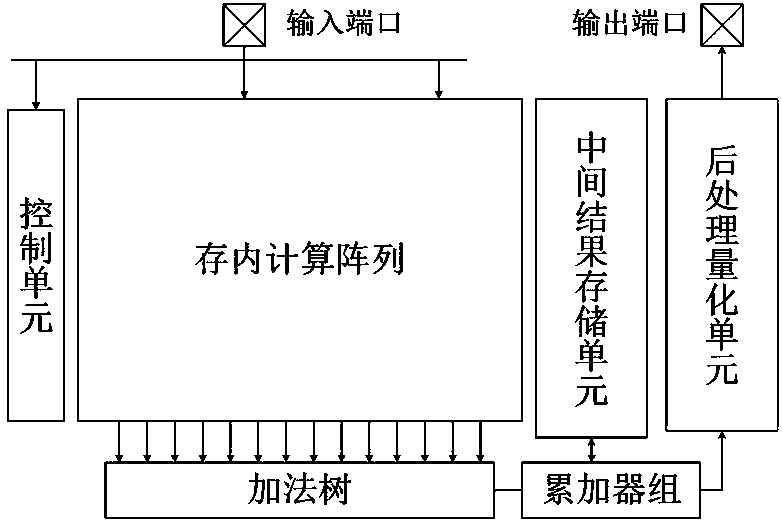

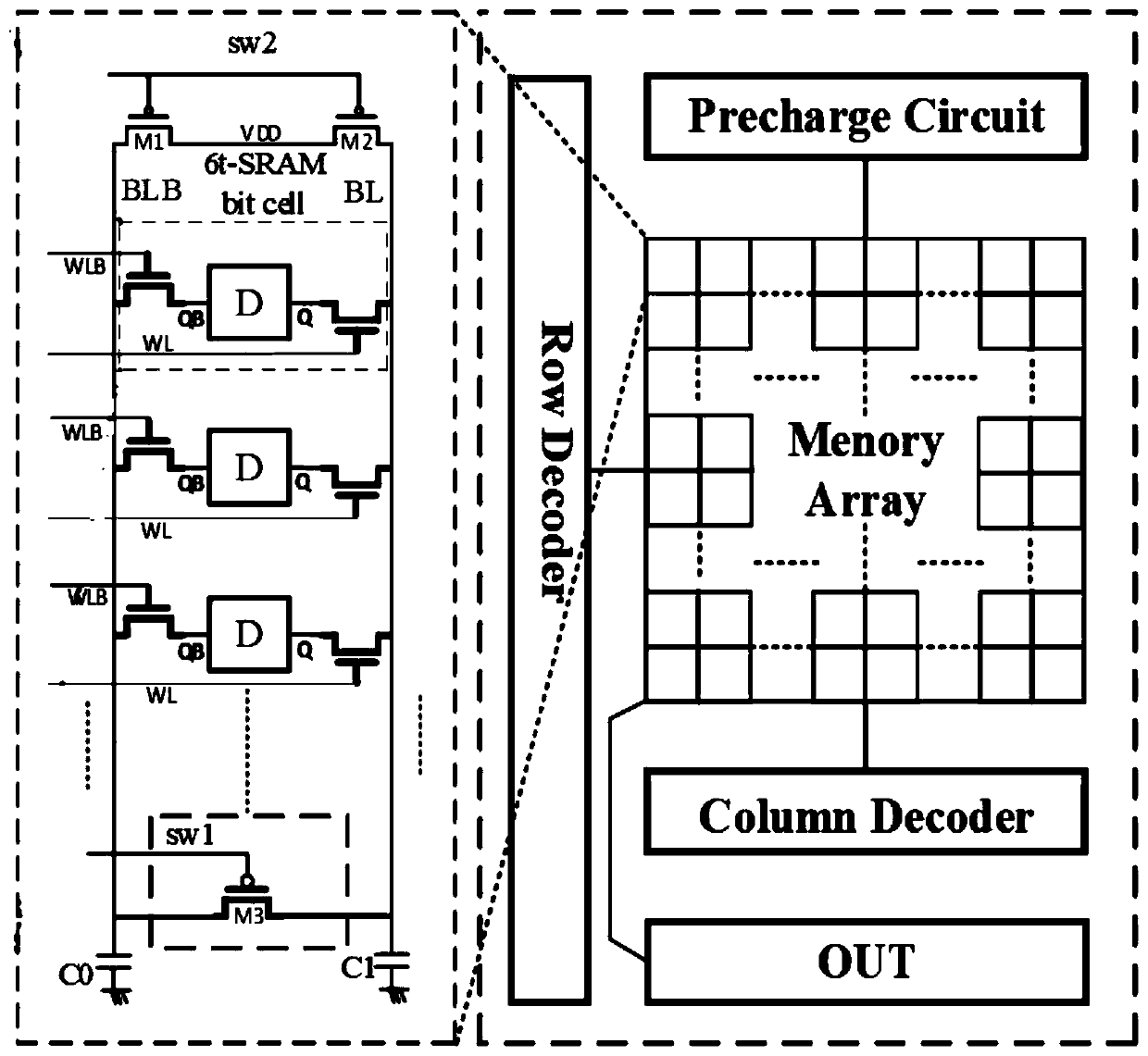

In-memory computing device suitable for binary convolutional neural network computing

Status: Active | CN111126579A | Tags: Frequent data exchange, Reduce power consumption, Digital data processing details, Physical realisation, Multi input, Static random-access memory

The invention belongs to the technical field of integrated circuits, and relates to an in-memory computing device for binary convolutional neural networks. The device comprises: an in-memory computing array based on static random access memory (SRAM) that performs XOR operations between vectors; a multi-input adder tree that accumulates the XOR results across input channels; a storage unit that temporarily stores intermediate results; an accumulator group that updates the intermediate results; a post-processing quantization unit that quantizes the high-precision accumulated result into a one-bit output feature value; and a control unit that controls the computation and the data flow. The device performs the XOR operations of the binary neural network where the input data are stored, avoiding frequent data exchange between storage and compute units, which increases computation speed and reduces chip power consumption.

Owner:FUDAN UNIV
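
The data flow described above (XOR in the array, adder-tree accumulation across channels, then one-bit quantization) can be sketched as follows, assuming the usual encoding of +1 as bit 1 and -1 as bit 0, under which a ±1 dot product of length n equals n minus twice the number of XOR mismatches. The function names and the threshold in `binary_output` are hypothetical stand-ins for the hardware units.

```python
from typing import Iterable, Sequence, Tuple


def pack(vec: Sequence[int]) -> int:
    """Pack a +1/-1 vector into an int; bit i is 1 when vec[i] == +1."""
    bits = 0
    for i, v in enumerate(vec):
        if v == 1:
            bits |= 1 << i
    return bits


def xor_dot(a_bits: int, b_bits: int, n: int) -> int:
    mismatches = bin(a_bits ^ b_bits).count("1")   # the XOR the memory array performs
    return n - 2 * mismatches


def binary_output(channels: Iterable[Tuple[int, int]], n: int, threshold: int = 0) -> int:
    # Adder tree: sum the per-channel XOR results into a high-precision accumulator.
    acc = sum(xor_dot(x, w, n) for x, w in channels)
    # Post-processing quantization: reduce the accumulator to a one-bit feature.
    return 1 if acc >= threshold else 0
```

For `a = [1, -1, 1, 1]` and `b = [1, 1, -1, 1]`, `xor_dot(pack(a), pack(b), 4)` returns 0, the same value as the ordinary dot product.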

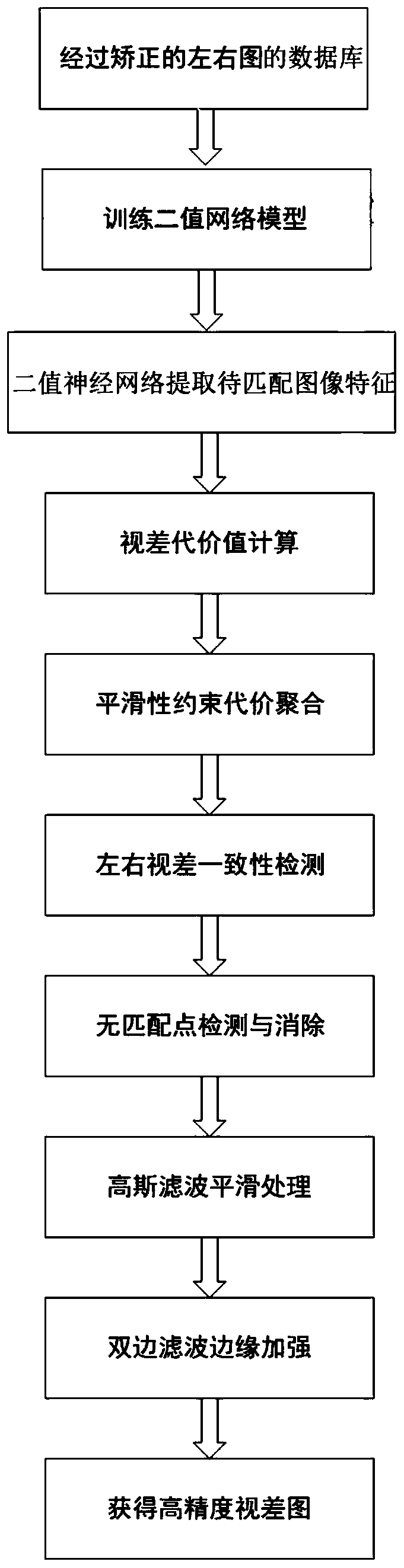

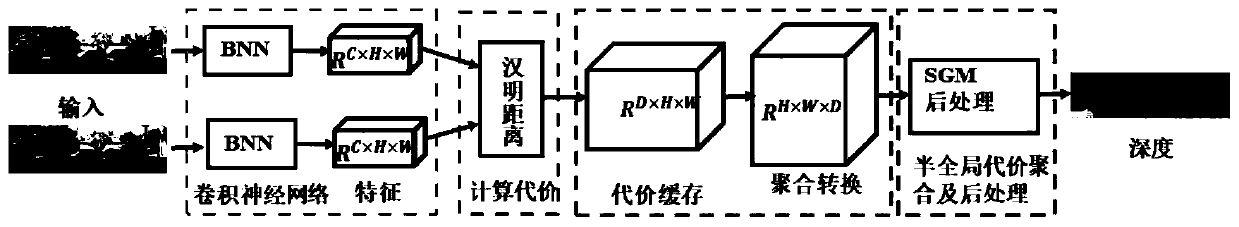

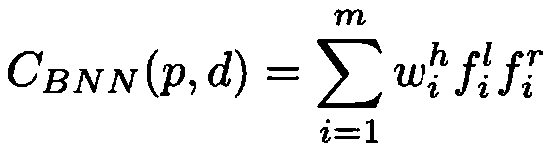

Binocular stereo vision matching method based on neural network and operation framework thereof

Status: Inactive | CN110738241A | Tags: High precision, Fast operation, Character and pattern recognition, Neural architectures, Binocular stereo, Feature description

The invention relates to a binocular stereo vision matching method based on a neural network, and its computing framework. The matching method comprises the following steps: 1, build the neural network computing framework, construct a binary neural network, and train it; 2, initialize the neural network computing framework; 3, input the left and right images into the binary neural network for image feature extraction, obtaining a binary sequence as the feature descriptor of each image pixel. By replacing a convolutional neural network with a binary neural network for image feature extraction, and by designing a training scheme and a computing framework dedicated to the binary neural network, binocular stereo matching achieves higher precision while running faster.

Owner:SUN YAT SEN UNIV

Balanced binarization neural network quantization method and system

Status: Pending | CN110472725A | Tags: Improve classification performance, Neural architectures, Neural learning methods, Entropy maximization, Quantification methods

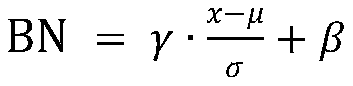

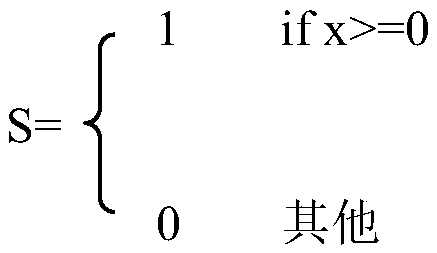

The invention discloses a balanced binarization neural network quantization method and system. The method comprises: S1, applying balanced standardized binarization to the weights of the neural network to obtain binarized weights; S2, applying balanced binarization to the activations of the neural network to obtain binarized activations; and S3, executing S1 and S2 on the convolutional layers during iterative training to produce a balanced binary neural network. Using balanced, standardized binarized weights and balanced binarized activations lets the network, by minimizing its loss function during training, maximize the information entropy of the activations and minimize the quantization loss of weights and activations, thereby reducing quantization loss and improving the classification performance of the binarized network.

Owner:BEIHANG UNIV
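
The abstract does not give the exact formulas. A minimal sketch of why balancing helps, under one plausible reading of "balanced standardized binarization": subtract the mean (and divide by the standard deviation) before taking the sign. Centering makes roughly half of the binary values +1 and half -1, which maximizes the entropy of the binarized values. The function names are hypothetical.

```python
import math
from typing import List, Sequence


def balanced_binarize(values: Sequence[float]) -> List[int]:
    """Standardize (zero mean, unit std), then take the sign."""
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values)) or 1.0
    # Dividing by std does not change any sign; it matters only if the
    # standardized values are also used elsewhere, e.g. for scaling.
    return [1 if (v - mean) / std >= 0 else -1 for v in values]


def binary_entropy(bits: Sequence[int]) -> float:
    """Entropy in bits of the +1/-1 distribution of `bits`."""
    p = sum(1 for b in bits if b == 1) / len(bits)
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log2(p) + (1 - p) * math.log2(1 - p))
```

For weights `[0.5, 0.6, 0.7, 0.8]`, a plain sign gives all +1 (entropy 0 bits), whereas the balanced version gives `[-1, -1, 1, 1]` (entropy 1 bit), so the binary values carry more information.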

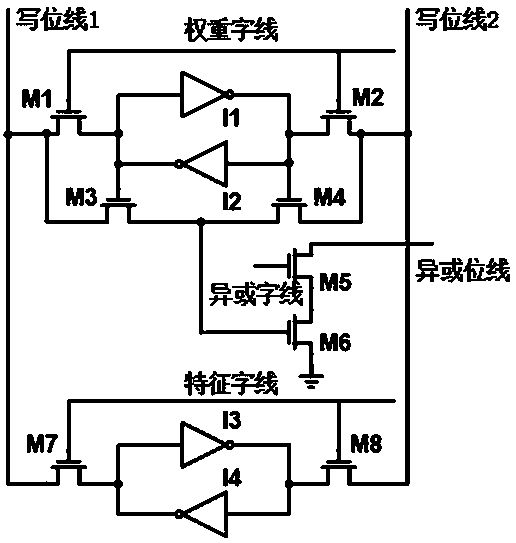

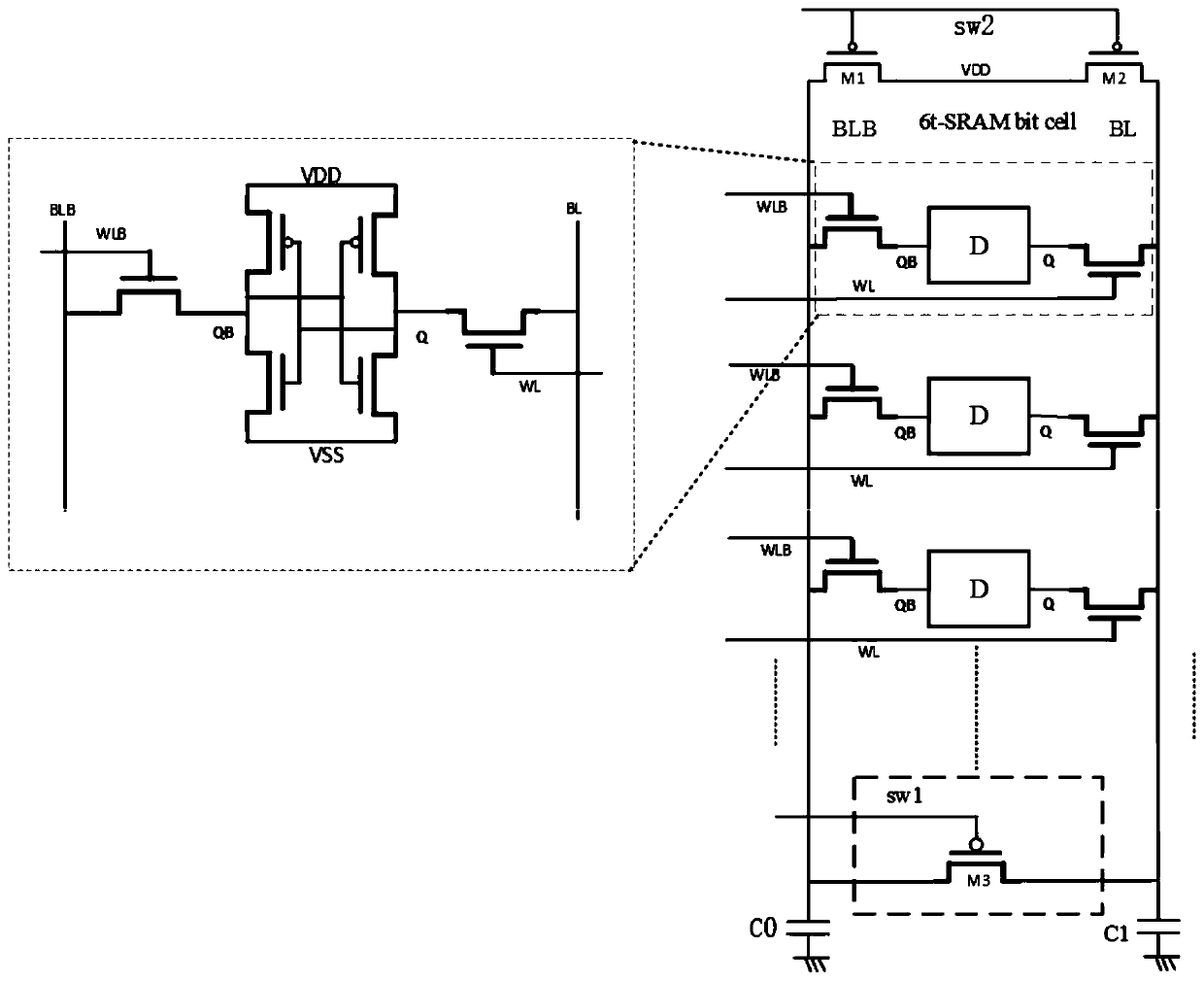

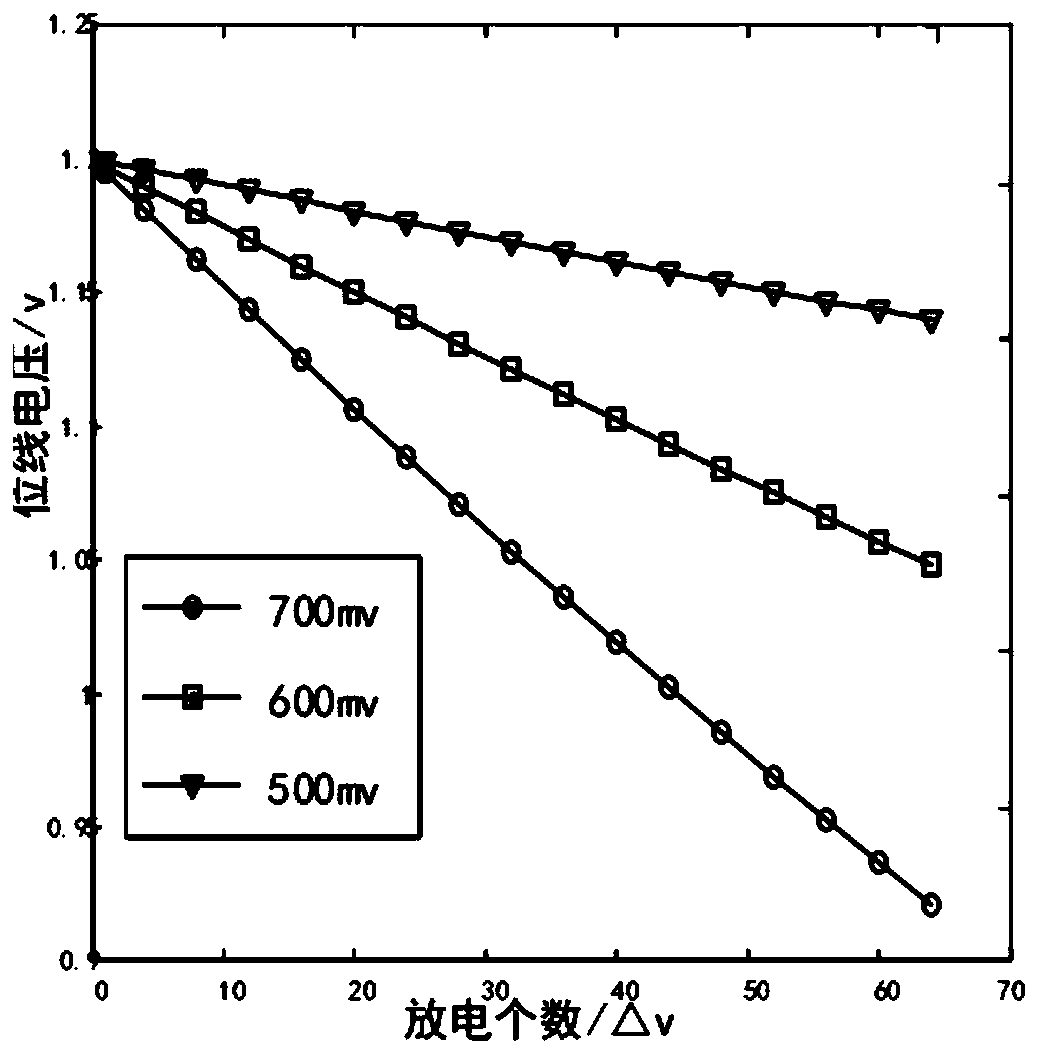

Double-word-line 6T SRAM cell circuit for binary neural network

Status: Active | CN110941185A | Tags: Reduce the amount of calculation, Reduce circuit complexity, Adaptive control, Bit line, Nerve network

The invention discloses a double-word-line 6T SRAM cell circuit for a binary neural network. PMOS transistors M1 and M2 are precharge transistors: their sources are connected to the supply Vdd, the drain of M1 is connected to bit line BLB, the drain of M2 is connected to bit line BL, and the gates of M1 and M2 are both connected to control terminal sw2. PMOS transistor M3 is a voltage-equalization transistor shared by a column of 6T SRAM cells; its source and drain are connected to bit lines BLB and BL, respectively, to equalize the voltages on the two bit lines, and its gate is connected to control terminal sw1. Capacitors C0 and C1 are the parasitic capacitances of bit lines BLB and BL. This circuit structure reduces area and power consumption and improves linearity; it also combines analog-domain and digital-domain operations, reducing the amount of analog-domain computation and the circuit complexity.

Owner:ANHUI UNIVERSITY

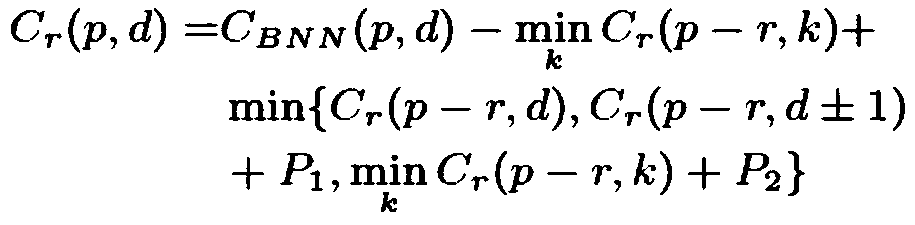

Binary neural network stereo vision matching method realized based on FPGA

Status: Active | CN111553296A | Tags: Reduce consumption, Reduced to bit operations, Processor architectures/configuration, Neural architectures, Parallax, Feature vector

The invention relates to an FPGA-based binary neural network stereo vision matching method. The method comprises the following steps: 1, obtain periodic input streams of pixels from a binocular pair of grayscale images; 2, extract an image block around each pixel; 3, input the image blocks from step 2 into a binary neural network with preset weights and parameters to obtain binary feature vectors; 4, compute the cost between feature vectors within the maximum search disparity (parallax) to obtain the matching cost; 5, feed the matching cost into semi-global cost aggregation to obtain the aggregated cost; 6, select the position with the minimum aggregated cost as the disparity; and 7, perform a consistency check and disparity refinement on the selected disparity to obtain a disparity map, outputting the disparity values of the pixels one by one, period by period. Binarization effectively reduces the network's compute and storage requirements, so a high-precision stereo matching network can be deployed on an FPGA.

Owner:SUN YAT SEN UNIV
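
Steps 4 and 6 can be illustrated with a minimal sketch, assuming each pixel's binary feature vector is packed into an integer and the matching cost is the Hamming distance. It skips semi-global aggregation (step 5) and the consistency check (step 7) and simply picks the minimum raw cost (winner-take-all). The function names are hypothetical.

```python
from typing import List, Optional


def matching_costs(left_row: List[int], right_row: List[int], x: int,
                   max_disp: int) -> List[Optional[int]]:
    """Hamming cost between left pixel x and right pixel x - d for d = 0..max_disp."""
    costs: List[Optional[int]] = []
    for d in range(max_disp + 1):
        if x - d < 0:
            costs.append(None)                       # candidate falls outside the image
        else:
            costs.append(bin(left_row[x] ^ right_row[x - d]).count("1"))
    return costs


def wta_disparity(costs: List[Optional[int]]) -> int:
    """Winner-take-all: the disparity with the smallest valid cost (ties -> smaller d)."""
    valid = [(c, d) for d, c in enumerate(costs) if c is not None]
    return min(valid)[1]
```

Because the cost is just XOR plus a bit count, it maps directly onto FPGA logic, which is the motivation for binarizing the feature vectors in the first place.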

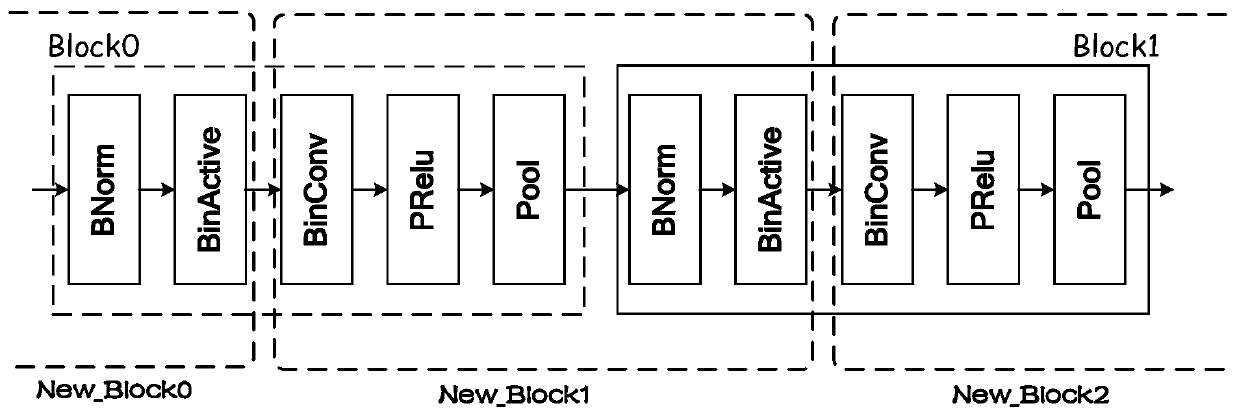

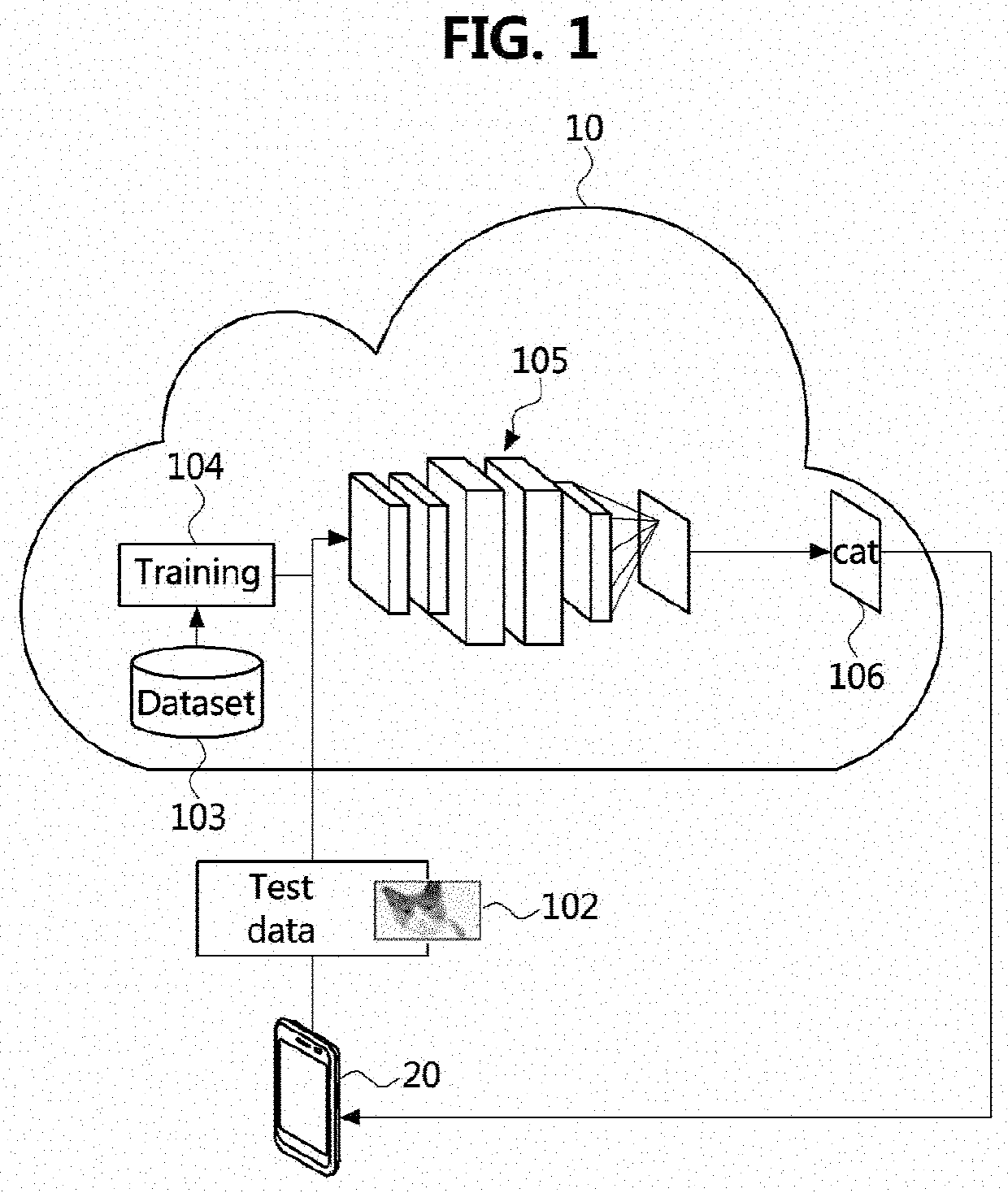

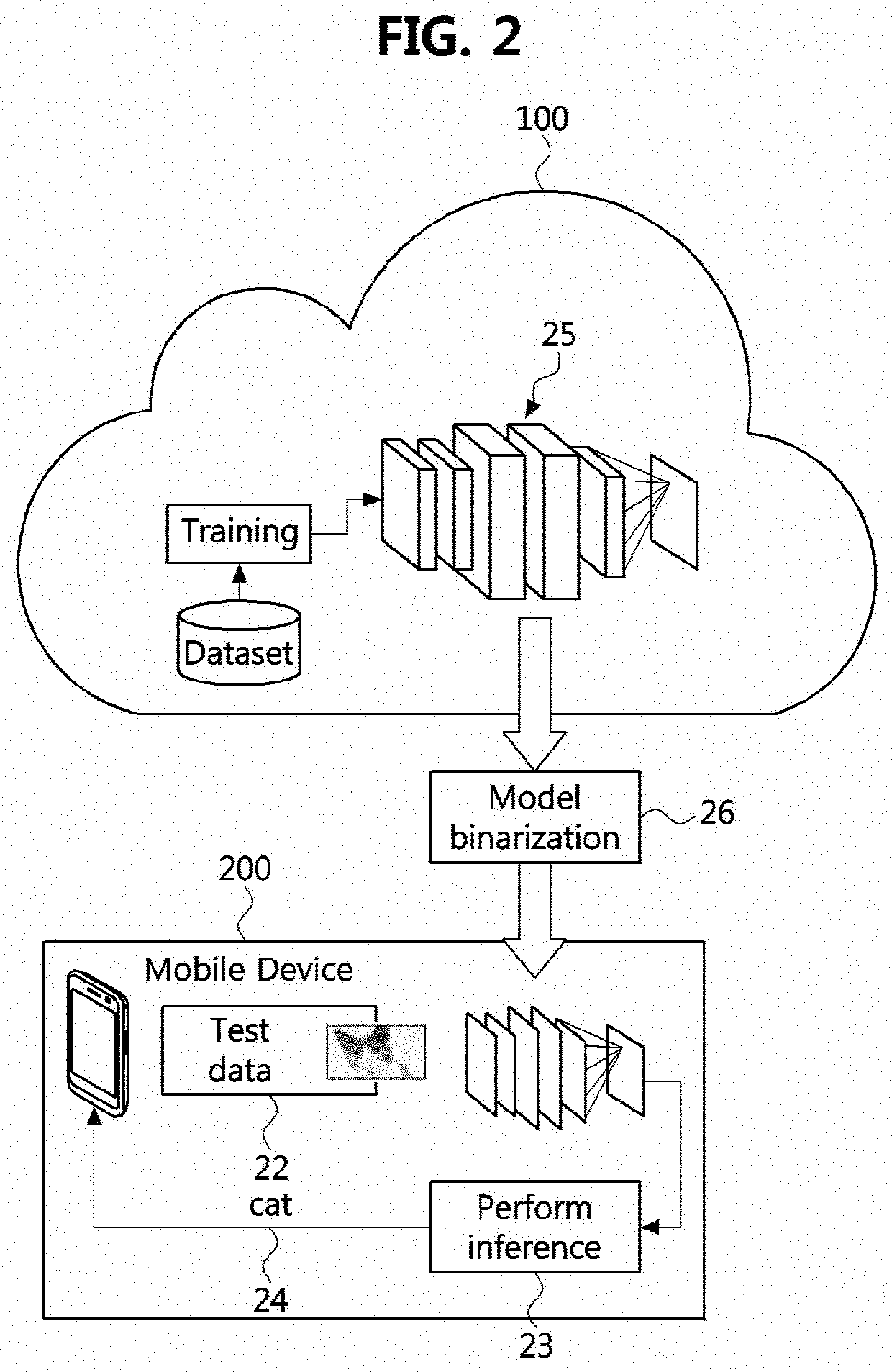

Method and apparatus for re-configuring neural network

Disclosed are a method and apparatus for generating an ultra-light binary neural network which may be used by an edge device, such as a mobile terminal. A method of re-configuring a neural network includes obtaining a neural network model on which training for inference has been completed, generating a neural network model having a structure identical with the neural network model on which the training has been completed, performing sequential binarization on an input layer and filter of the generated neural network model for each layer, and storing the binarized neural network model. The method may further include providing the binarized neural network model to a mobile terminal.

Owner:ELECTRONICS & TELECOMM RES INST

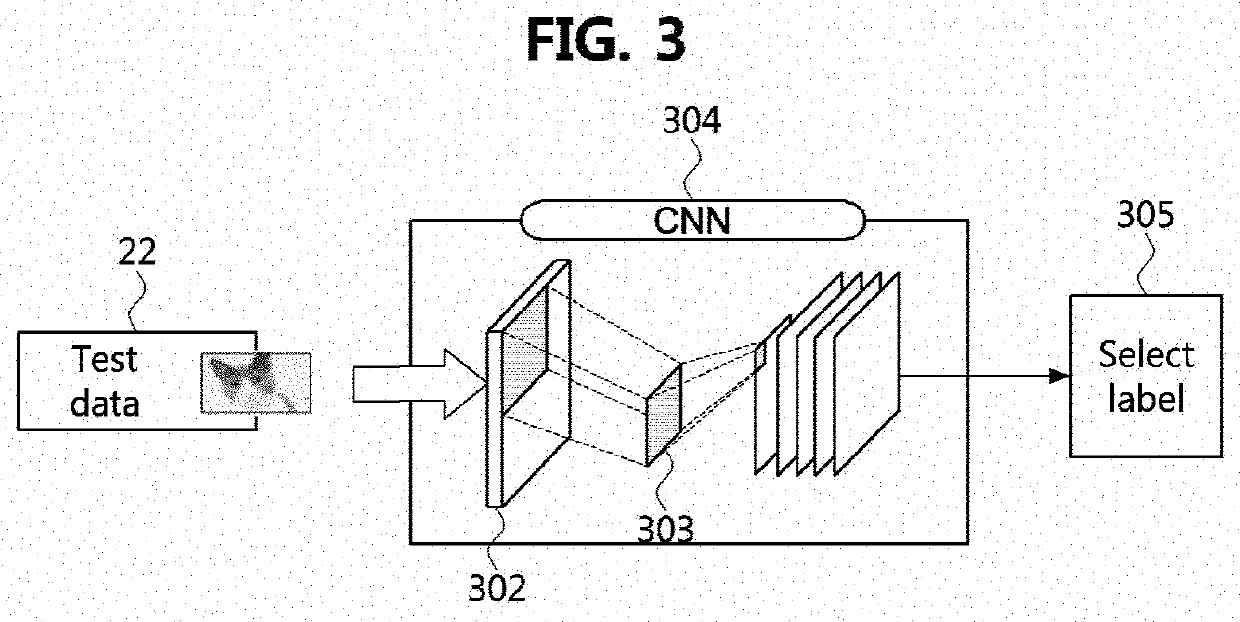

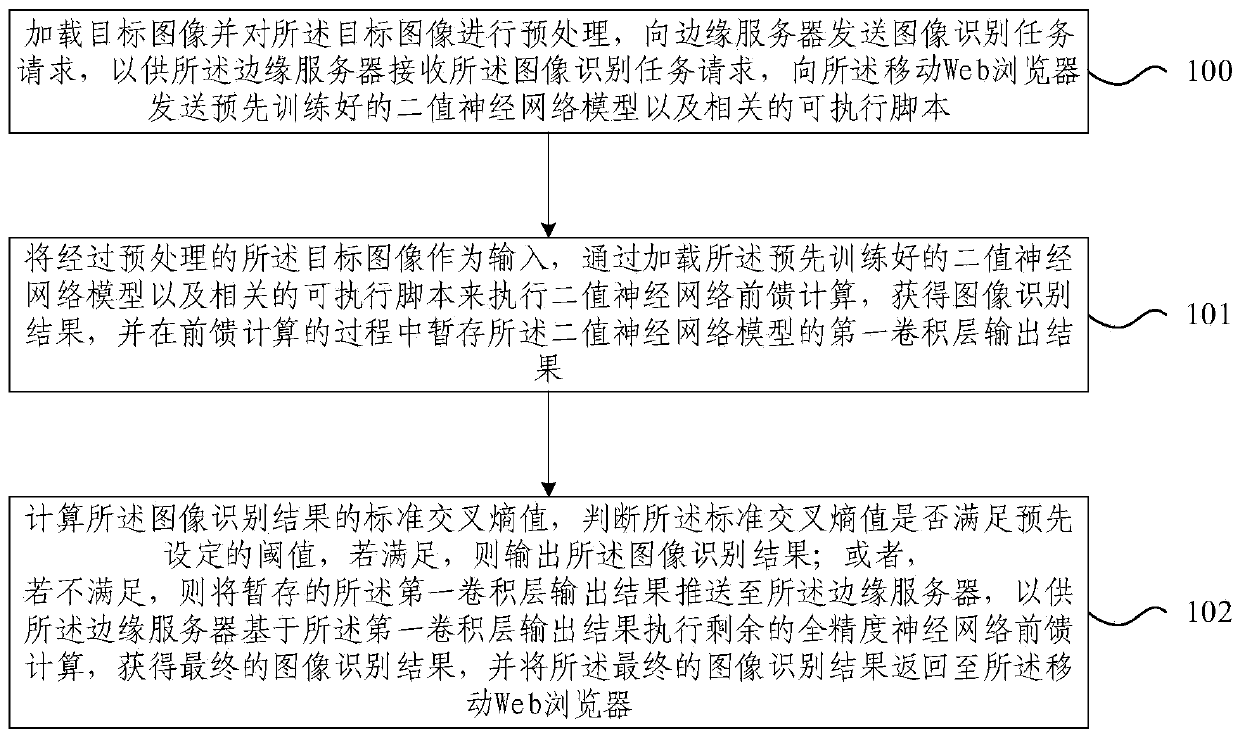

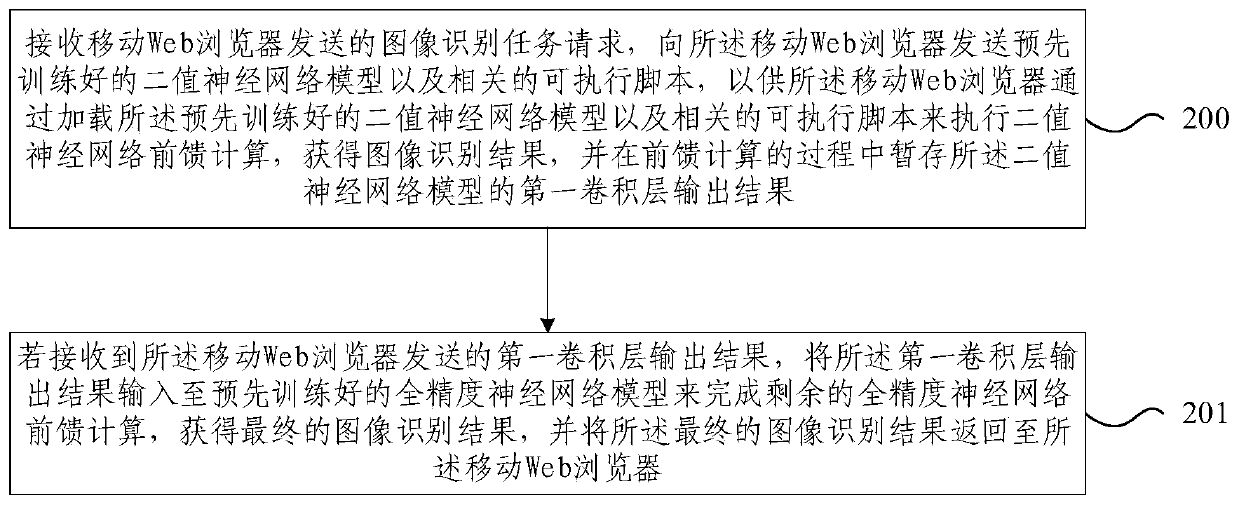

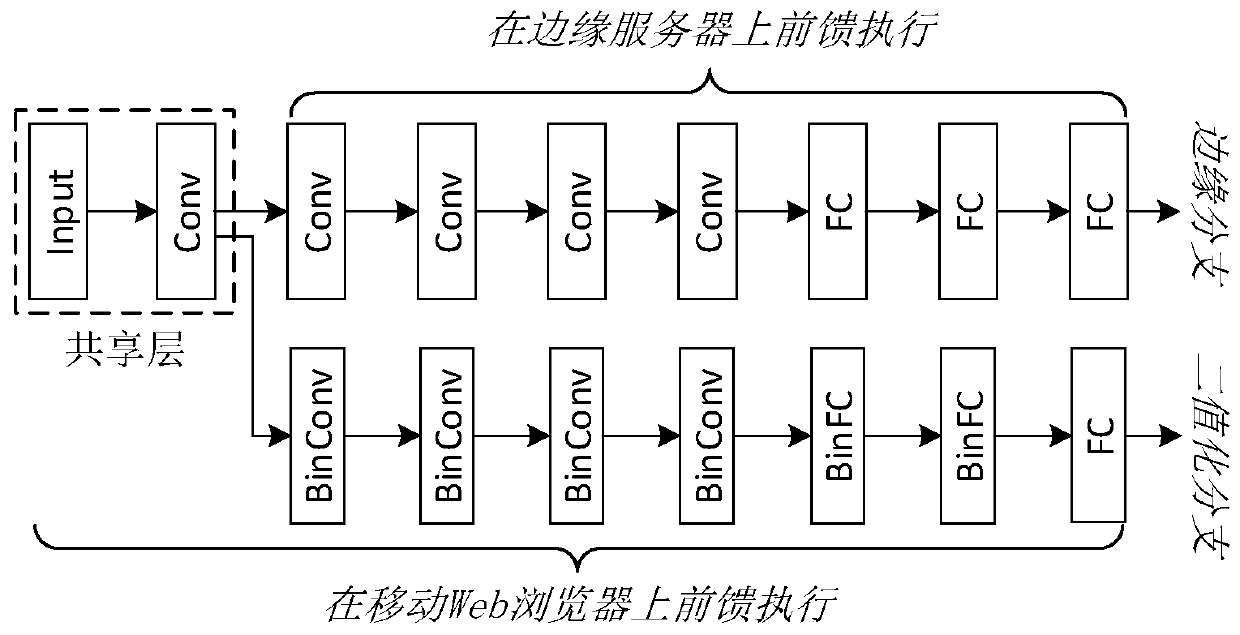

Binary-neural-network-based lightweight Web AR identification method and system

Status: Active | CN110232338A | Tags: Accelerated Feedforward Inference, Reduce size, Neural architectures, Energy efficient computing, Algorithm, Energy expenditure

The embodiment of the invention provides a lightweight Web AR recognition method and system based on a binary neural network. The method comprises: loading a target image in a mobile Web browser, preprocessing the image, and sending a target recognition task request to an edge server; receiving a binary neural network model and related executable scripts returned by the edge server; performing the binary neural network feedforward computation to obtain an image recognition result, temporarily storing the output of the shared layer, and judging whether the cross entropy of the recognition result meets a preset threshold; and, if not, sending the stored shared-layer output to the edge server for feedforward inference. Introducing the binary neural network accelerates network inference and reduces image recognition latency and device energy consumption; the computing resources of the mobile terminal are fully used, which effectively relieves the computing load on the edge server and provides a real-time solution for Web AR applications.

Owner:江西金虎保险设备集团有限公司
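
The local-versus-offload decision can be sketched as follows, assuming the browser-side binary network outputs a probability vector and using its Shannon entropy as the uncertainty score compared against the preset threshold. The abstract says "cross entropy" without defining it, so this choice is an assumption, and `decide` and the threshold value are hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple


def prediction_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def decide(probs: Sequence[float], threshold: float) -> Tuple[str, Optional[int]]:
    """Keep confident results on the device; otherwise signal an offload."""
    if prediction_entropy(probs) <= threshold:
        best = max(range(len(probs)), key=lambda i: probs[i])
        return "local", best
    # The caller would send the cached shared-layer output to the edge server.
    return "offload", None
```

A peaked distribution such as `[0.97, 0.01, 0.01, 0.01]` (entropy about 0.17 nats) stays local with a threshold of 0.5, while a uniform distribution over four classes (entropy ln 4, about 1.39 nats) is offloaded.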

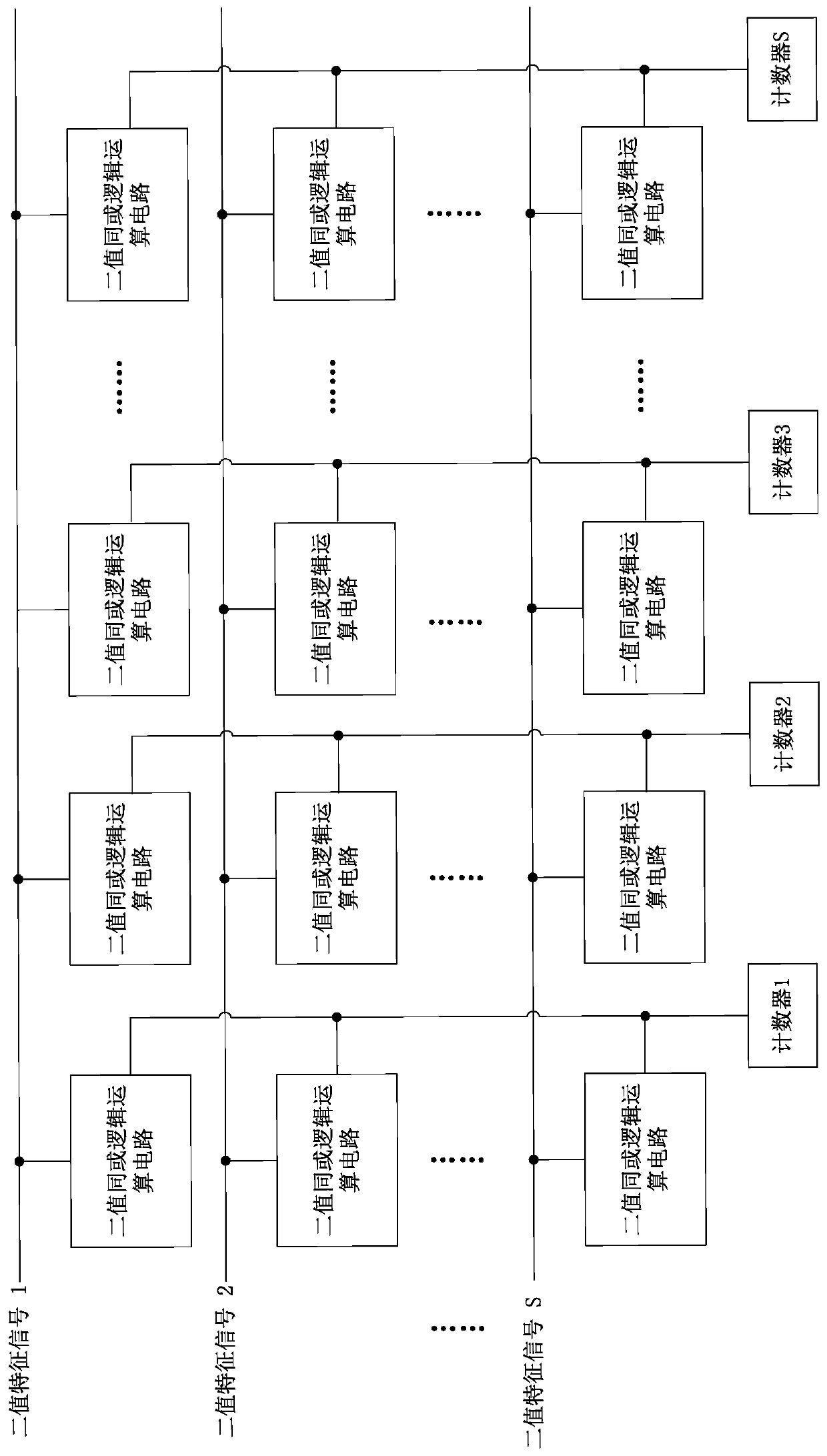

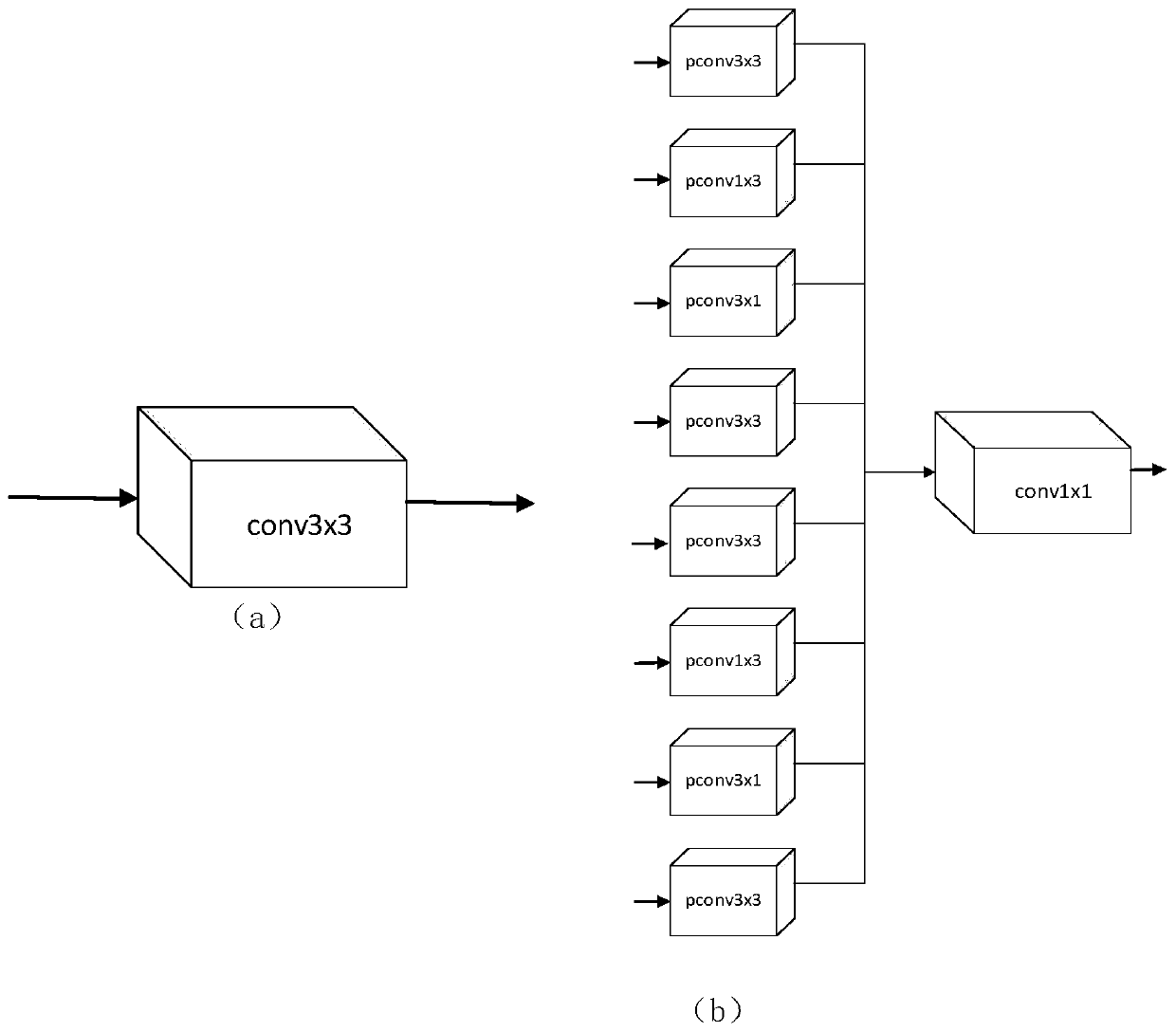

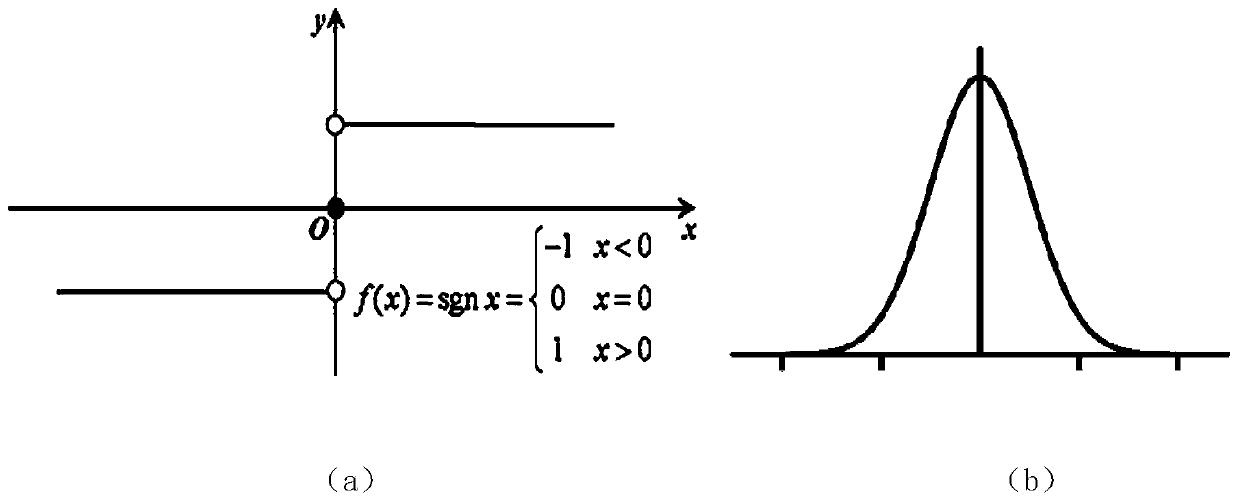

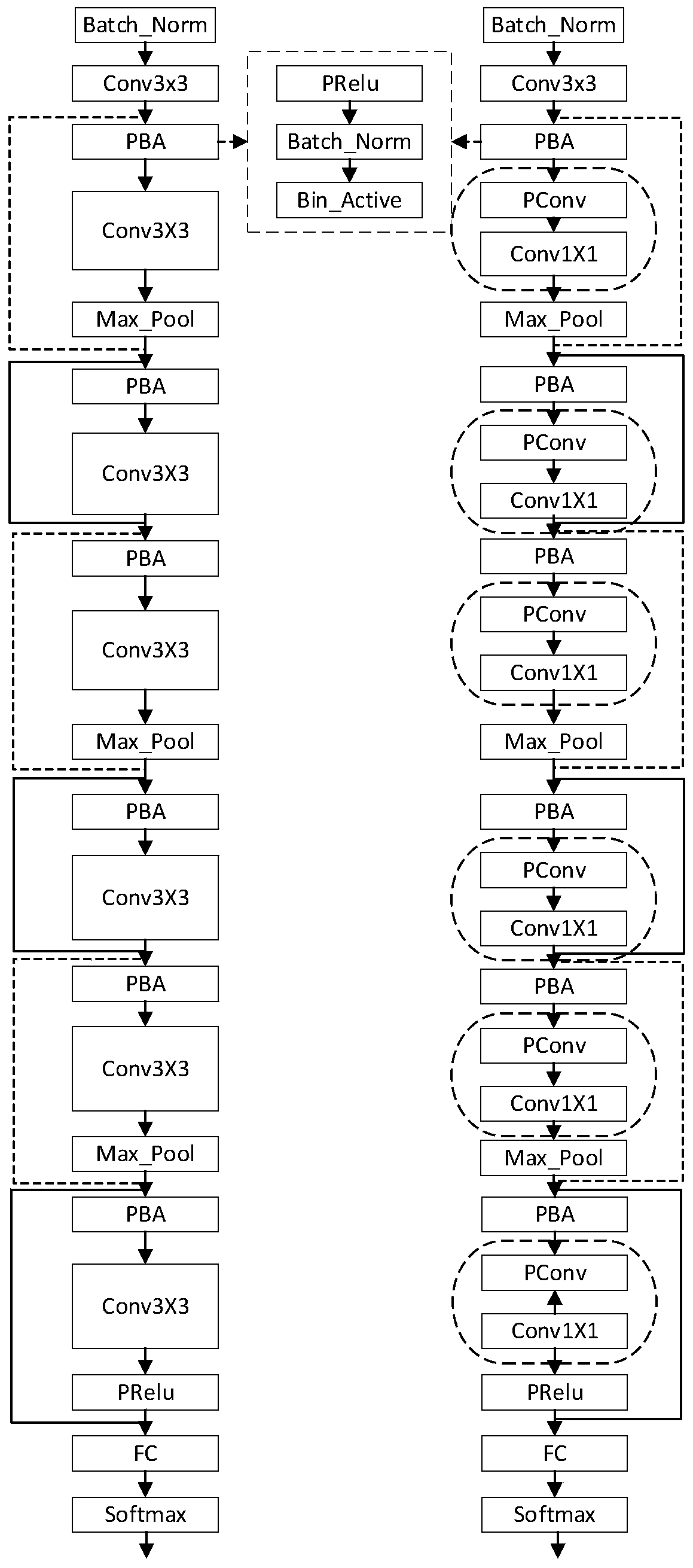

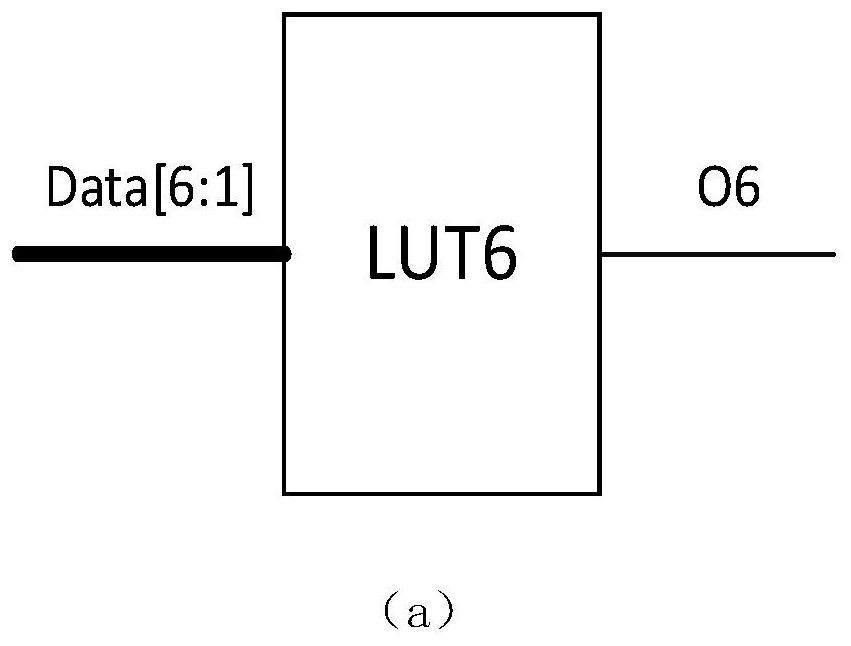

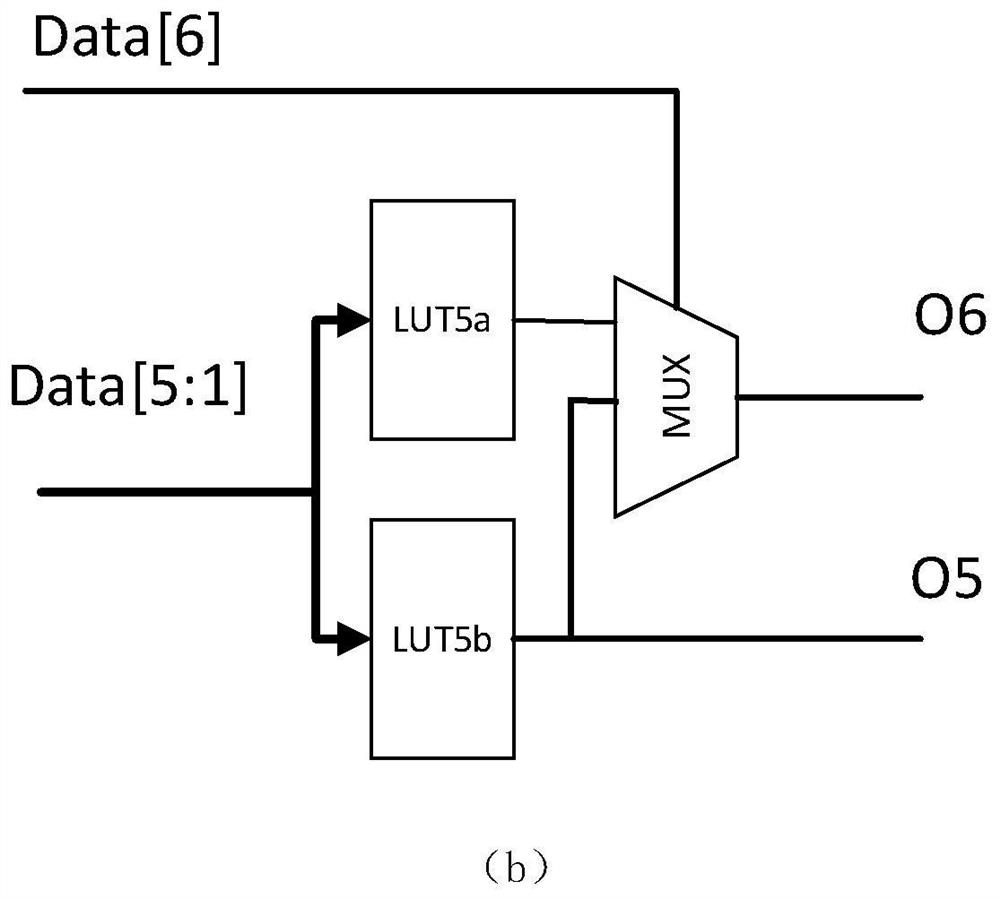

Double-layer XNOR (same-or) binary neural network compression method based on lookup-table computation

Status: Active | CN109993279A | Tags: Reduce in quantity, Reduce computational complexity, Neural architectures, Physical realisation, Algorithm, Resource utilization

The invention discloses a double-layer XNOR (same-or) binary neural network compression method based on lookup-table computation. The compression uses a double-layer convolution structure, and the algorithm comprises: first, applying a nonlinear activation, batch normalization, and binary activation to the input feature map, then splitting it into groups that undergo a first-layer convolution with different kernel sizes to obtain the first-layer output; then applying a 1 × 1 second-layer convolution to the first-layer output to obtain the output feature map. In the hardware implementation, for the improved double-layer convolution, the conventional sequential computation of the two layers is replaced by a three-input XOR operation that computes both layers in parallel; all double-layer convolution operations are computed with lookup tables, which increases hardware resource utilization. The method is an algorithm-hardware co-designed compression scheme that combines efficient full-precision neural network design techniques with lookup-table computation; it achieves good structural compression and also reduces logic resource consumption in hardware.

Owner:SOUTHEAST UNIV
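
The identity behind replacing two sequential same-or stages with one three-input XOR is that two cascaded XNOR gates compute the same Boolean function as a single three-input XOR, so an eight-entry lookup table can evaluate it in one step. The abstract does not describe how convolutions are mapped onto this identity; the sketch below only verifies the gate-level identity and builds the corresponding LUT (`LUT3` and `lut_xor3` are hypothetical names).

```python
from itertools import product


def xnor(a: int, b: int) -> int:
    return 1 - (a ^ b)


# Eight-entry lookup table indexed by the 3-bit input (a, b, c) -> a XOR b XOR c.
LUT3 = [((idx >> 2) & 1) ^ ((idx >> 1) & 1) ^ (idx & 1) for idx in range(8)]


def lut_xor3(a: int, b: int, c: int) -> int:
    return LUT3[(a << 2) | (b << 1) | c]


# Exhaustively check that XNOR(XNOR(a, b), c) == a XOR b XOR c for all inputs.
for a, b, c in product((0, 1), repeat=3):
    assert xnor(xnor(a, b), c) == lut_xor3(a, b, c)
```

Since NOT applied twice cancels, XNOR(XNOR(a, b), c) reduces to a XOR b XOR c, which is why one LUT lookup can replace two sequential gate stages.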

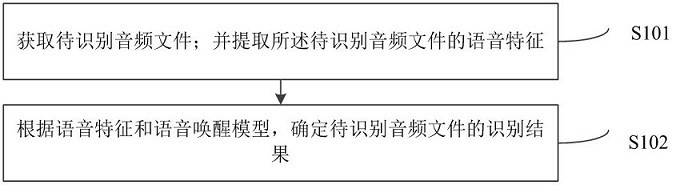

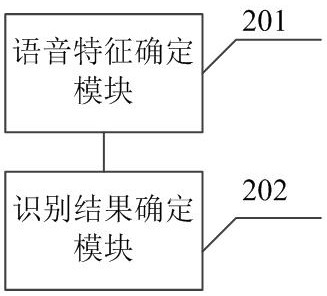

Binary neural network voice wake-up method and system

Status: Active | CN113409773A | Tags: Simple structure, Reduce the difficulty of deployment, Speech recognition, Neural architectures, Algorithm, Engineering

The invention relates to a binary neural network voice wake-up method and system. The method comprises: acquiring an audio file to be recognized; extracting its voice features; and determining the recognition result from the voice features and a voice wake-up model, where the voice wake-up model is built from a trained binarized depthwise separable convolutional neural network. The wake-up model recognizes as follows: the first convolutional layer quantizes its input; a convolution is computed from the quantized voice features, the layer's binary-quantized weights, and the layer's correction factor, and the layer's bias is added to the result; the output of the first convolutional layer becomes the input of the second; the second layer then takes the role of the first, and the process returns to the quantization step until a recognition result is output. The invention reduces power consumption while maintaining recognition accuracy.

Owner:中科南京智能技术研究院
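
The per-layer computation described above can be sketched as follows, under stated assumptions: inputs are quantized to signed 8-bit integers with a fixed scale, weights are binarized by sign with the correction factor taken as the mean absolute weight (an XNOR-Net-style choice, not confirmed by the abstract), and the layer is a plain 1-D convolution rather than a depthwise separable one. All names are hypothetical.

```python
from typing import List, Sequence, Tuple


def quantize(x: Sequence[float], scale: float, bits: int = 8) -> List[int]:
    """Symmetric uniform quantization to signed integers (clipped to the bit width)."""
    qmax = 2 ** (bits - 1) - 1
    return [max(-qmax - 1, min(qmax, round(v / scale))) for v in x]


def binarize(w: Sequence[float]) -> Tuple[List[int], float]:
    """Sign weights plus a per-layer correction factor alpha = mean(|w|)."""
    alpha = sum(abs(v) for v in w) / len(w)
    return [1 if v >= 0 else -1 for v in w], alpha


def binary_conv1d(x: Sequence[float], w: Sequence[float], scale: float,
                  bias: float) -> List[float]:
    xq = quantize(x, scale)
    wb, alpha = binarize(w)
    k = len(wb)
    out = []
    for i in range(len(xq) - k + 1):
        acc = sum(xq[i + j] * wb[j] for j in range(k))   # integer adds/subtracts only
        out.append(alpha * scale * acc + bias)           # rescale, then add the bias
    return out
```

For example, `binary_conv1d([0.5, 1.0, -0.5, 0.25], [0.5, -0.5], scale=0.25, bias=1.0)` quantizes the input to `[2, 4, -2, 1]`, accumulates `[-2, 6, -3]` with weights `[1, -1]`, and returns `[0.75, 1.75, 0.625]`. The inner loop needs no multiplications by weights, only additions and subtractions, which is where the power saving comes from.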

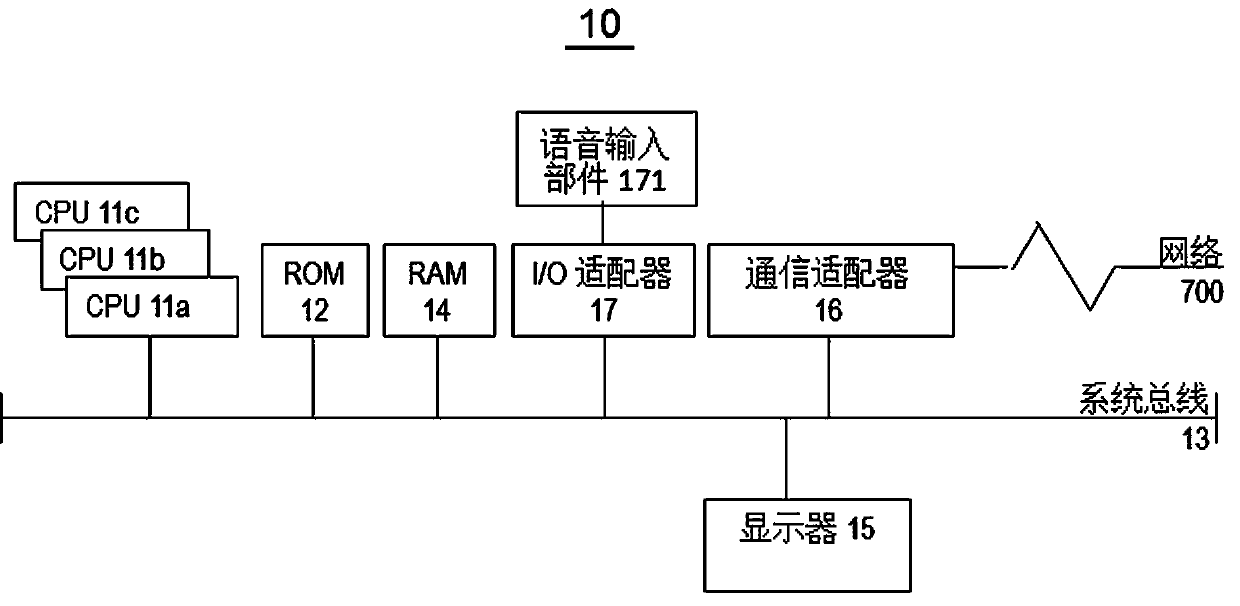

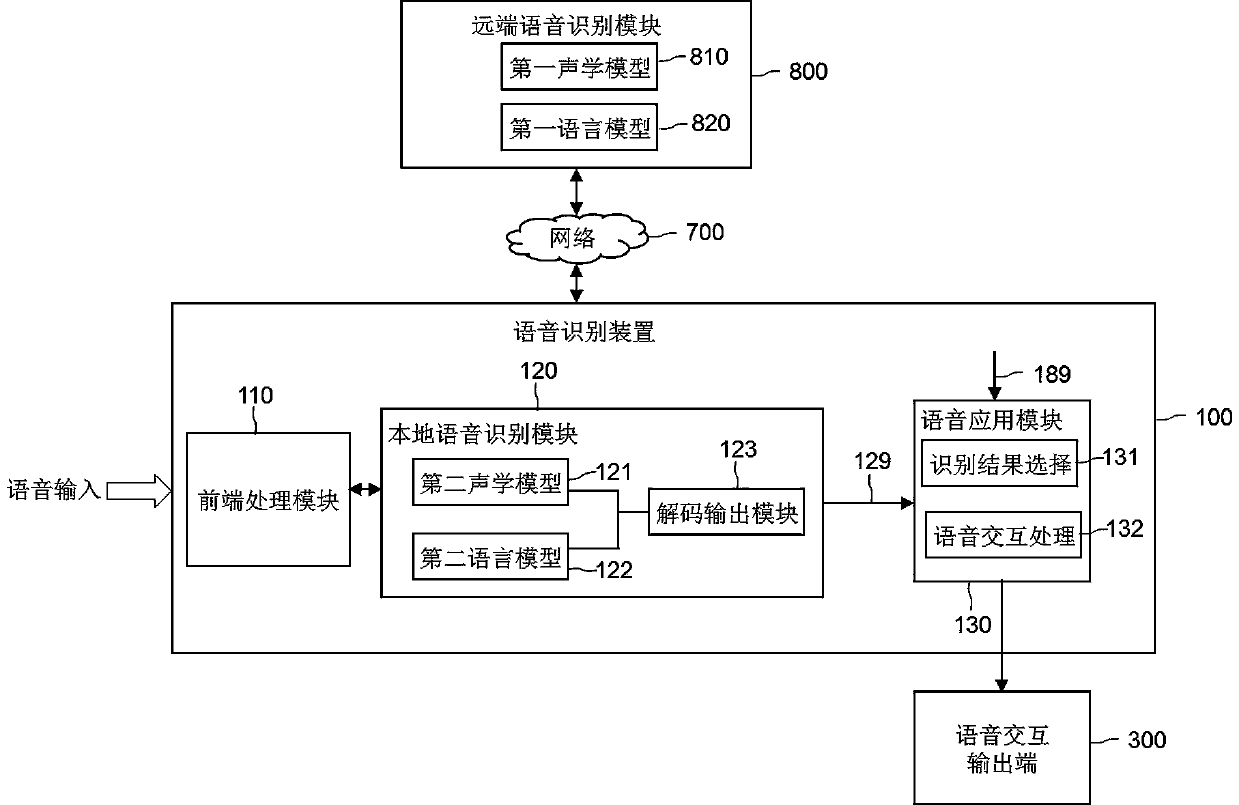

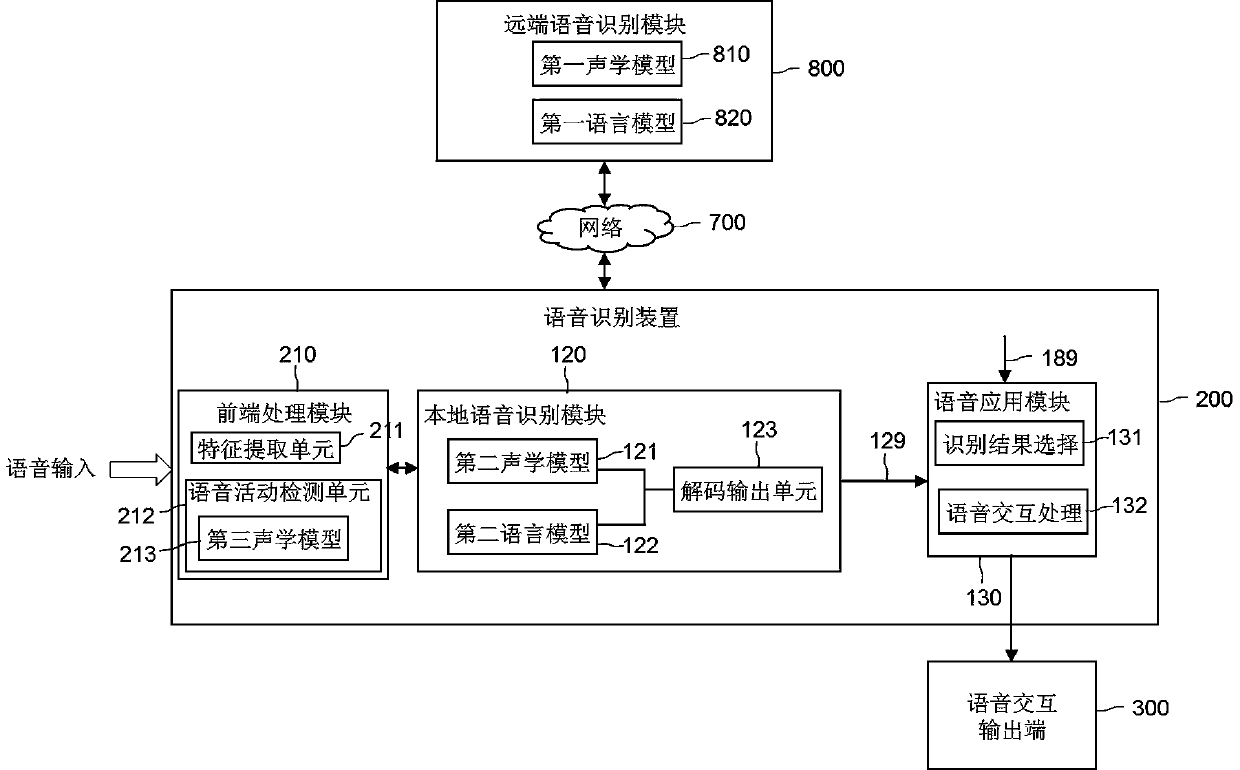

Voice recognition device and method and voice interaction system and method

The invention relates to a voice recognition device and method and a voice interaction system and method. The voice recognition device can receive voice input from a user and can receive, from a remote voice recognition module, a first voice recognition result that the remote module outputs after processing the voice input online. The voice recognition device also comprises a local voice recognition module with a second acoustic model built on a binary neural network algorithm; the local module processes voice features extracted from the voice input, at least through the second acoustic model, to output a second voice recognition result. Recognition is timely and accurate, is little affected by network connection conditions, and provides a good user experience.

Owner:NIO ANHUI HLDG CO LTD

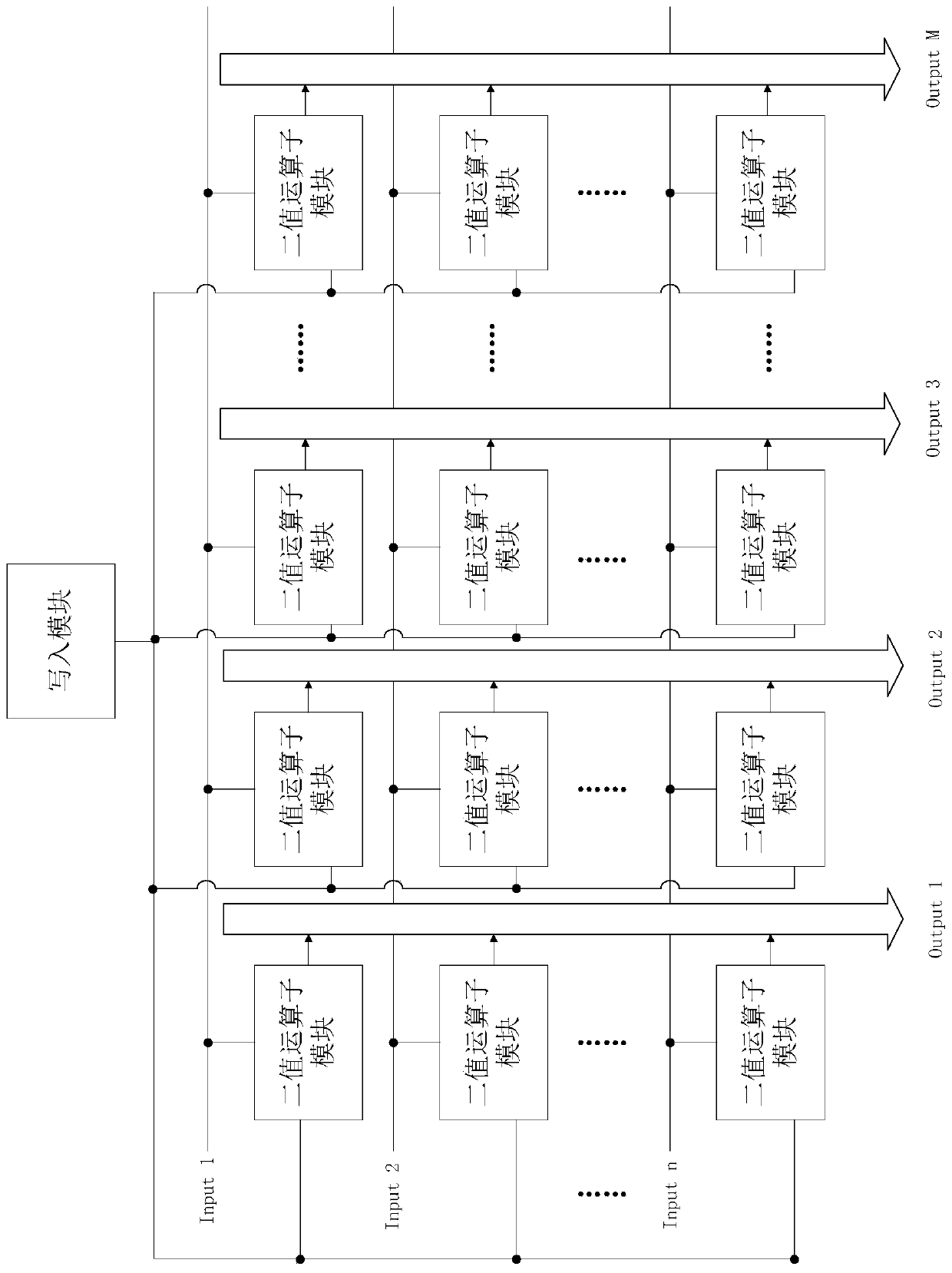

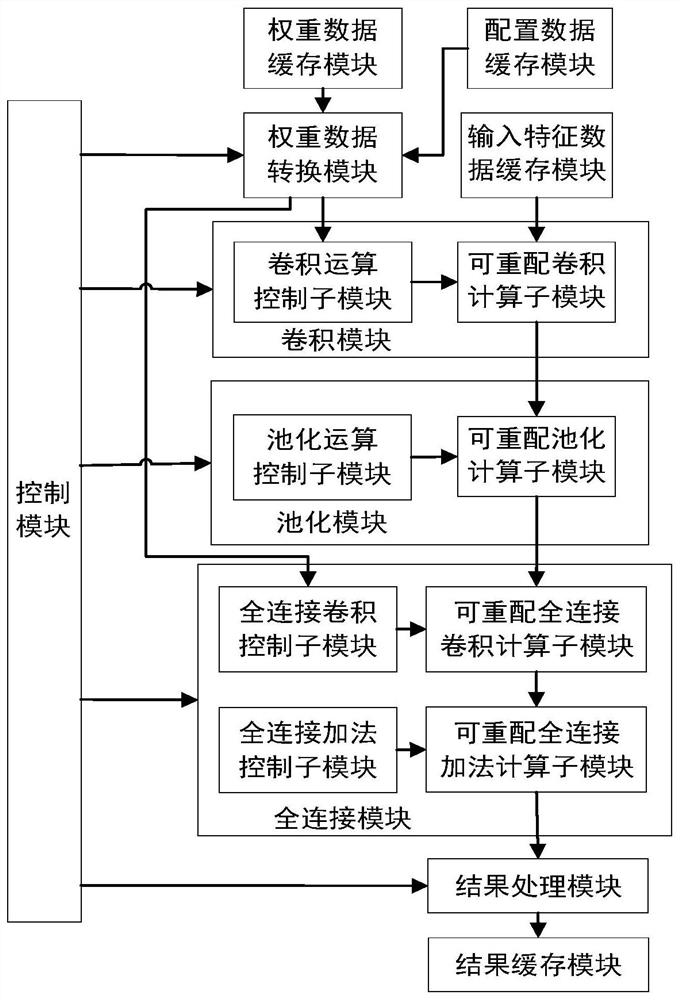

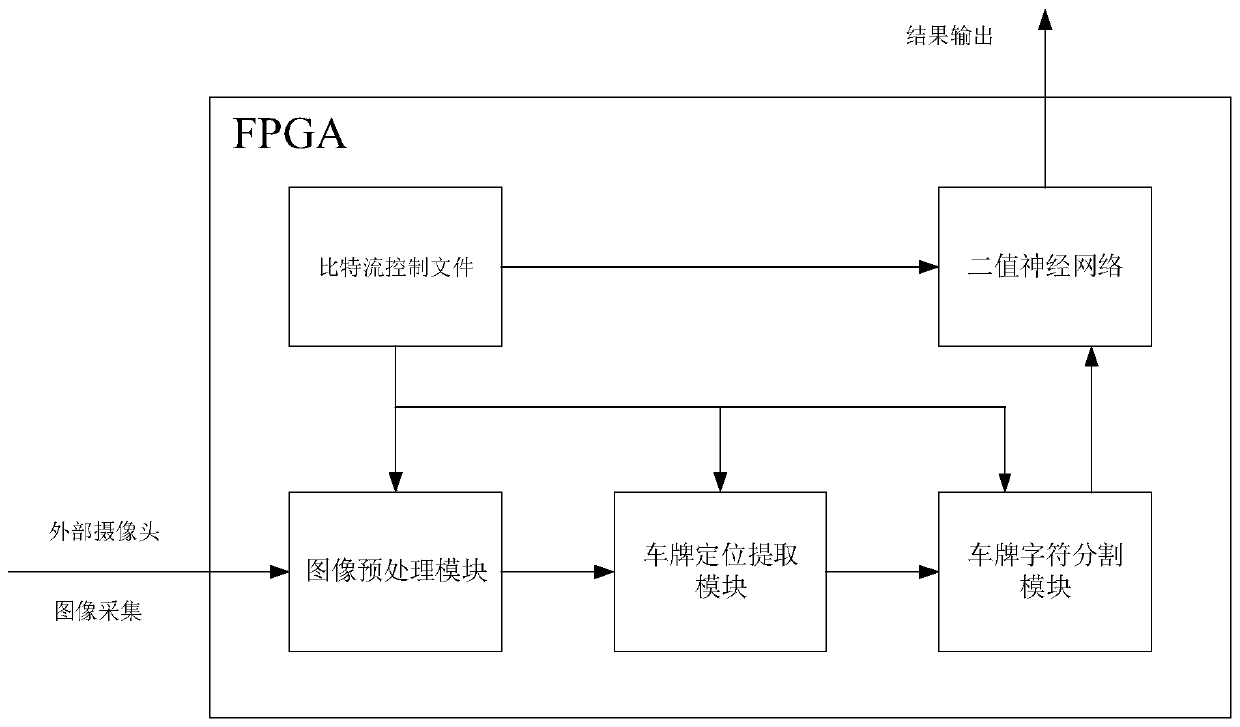

FPGA-based binary neural network acceleration system

Status: Pending | CN111931925A | Tags: With convolution calculation function, Solve the technical problem that the calculation speed is easily limited by serial calculation, Neural architectures, Architecture with single central processing unit, Circuit design, Neural network nn

The invention provides an FPGA-based binary neural network acceleration system in the technical field of integrated circuit design. It addresses two problems in the prior art: computation speed is easily limited by serial computation, and the long critical path of convolution operations consumes more resources. The acceleration system comprises a weight data cache module, an input feature data cache module, a configuration data cache module, a weight data conversion module, a convolution module, a pooling module, a fully connected module, a result processing module, a result cache module, and a control module, all implemented on an FPGA. The system can be applied to scenarios such as fast object detection in embedded environments.

Owner:XIDIAN UNIV
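The abstract of CN111931925A does not describe the convolution datapath itself. A common way BNN hardware replaces multiply-accumulate is an XNOR followed by a popcount, since for two length-n vectors of ±1 values the dot product equals 2·(number of matching signs) − n. The Python sketch below models that arithmetic in software only; the bit encoding (bit 1 for +1, bit 0 for −1) and the names `pack_bits`, `xnor_popcount_dot` and `binary_conv1d` are illustrative assumptions, not taken from the patent.

```python
def pack_bits(values):
    """Pack a list of +1/-1 values into an int bit mask (bit i = element i).

    Assumed encoding: bit 1 represents +1, bit 0 represents -1.
    """
    mask = 0
    for i, v in enumerate(values):
        if v not in (-1, 1):
            raise ValueError("binary values must be -1 or +1")
        if v == 1:
            mask |= 1 << i
    return mask


def xnor_popcount_dot(a_bits, w_bits, n):
    """Dot product of two length-n {-1,+1} vectors stored as bit masks."""
    all_ones = (1 << n) - 1
    matches = ~(a_bits ^ w_bits) & all_ones  # XNOR: 1 where the signs agree
    p = bin(matches).count("1")              # popcount
    return 2 * p - n                         # agreements minus disagreements


def binary_conv1d(activations, weights):
    """Valid-mode 1-D convolution (cross-correlation) of {-1,+1} sequences."""
    k = len(weights)
    w_bits = pack_bits(weights)              # weights converted to bits once
    out = []
    for start in range(len(activations) - k + 1):
        a_bits = pack_bits(activations[start:start + k])
        out.append(xnor_popcount_dot(a_bits, w_bits, k))
    return out


result = binary_conv1d([1, -1, 1, 1, -1], [1, 1, -1])  # [-1, -1, 3]
```

On an FPGA the XNOR and popcount map to LUTs and adder trees that can run in parallel across a window, which is one way to avoid the serial multiply-accumulate bottleneck the abstract mentions.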

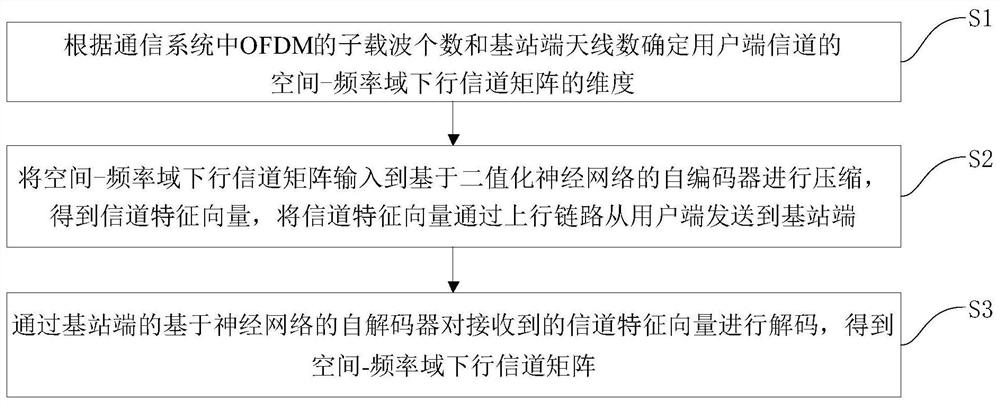

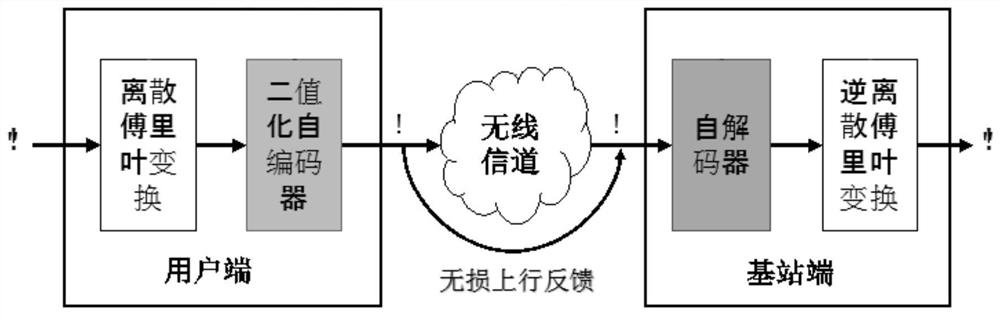

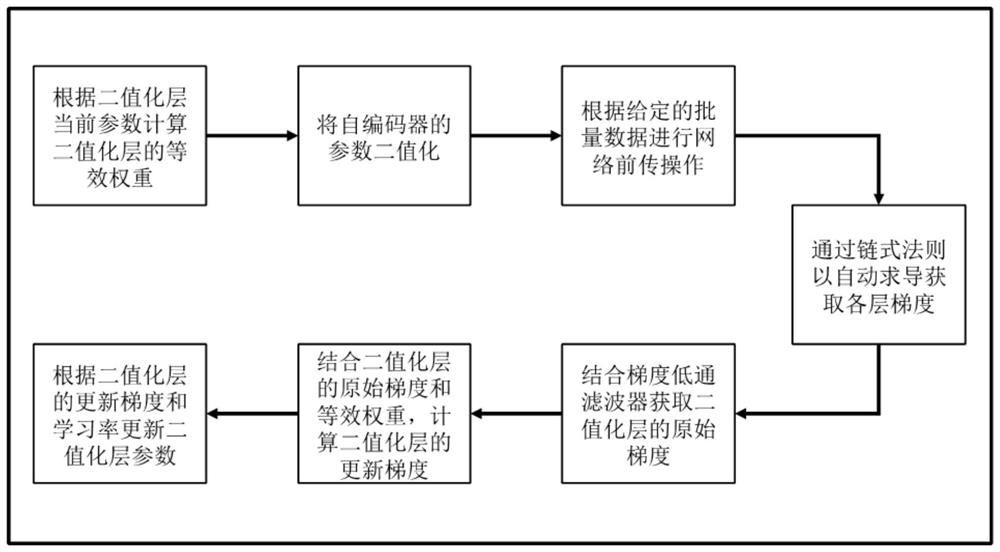

Efficient MIMO channel feedback method based on binary neural network and device

Active | CN113098805A | Facilitate network deployment | Baseband system details | Radio transmission | Algorithm | Carrier signal

The invention discloses an efficient MIMO channel feedback method and device based on a binary neural network. The method comprises the following steps: determining the dimensions of the user-side space-frequency domain downlink channel matrix from the number of OFDM subcarriers in the communication system and the number of base station antennas; compressing the space-frequency domain downlink channel matrix with an auto-encoder based on a binary neural network to obtain a channel feature vector, and sending the channel feature vector from the user side to the base station side over the uplink; and decoding the received channel feature vector at the base station with a neural-network-based decoder to recover the space-frequency domain downlink channel matrix. The scheme allows a low-overhead auto-encoder to be deployed on the resource-limited user side, yielding a more practical channel compression feedback scheme.

Owner:TSINGHUA UNIV
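To make the user-side step of CN113098805A concrete, the sketch below builds a channel matrix whose shape follows from the subcarrier and antenna counts, splits it into real and imaginary parts, and compresses it with a single encoder layer whose weights are binarized to ±1. The one-layer structure, the per-output scaling factor (borrowed from XNOR-Net style BNNs), the 32 subcarrier / 8 antenna / 32-value sizes and all names here are assumptions for illustration; training of the latent weights and the base-station decoder are omitted.

```python
import random


def channel_matrix_shape(num_subcarriers, num_bs_antennas):
    """Space-frequency downlink channel matrix shape: subcarriers x antennas."""
    return (num_subcarriers, num_bs_antennas)


def flatten_complex(h):
    """Flatten a complex matrix into a real vector: real parts, then imaginary parts."""
    re = [z.real for row in h for z in row]
    im = [z.imag for row in h for z in row]
    return re + im


def sign(x):
    return 1.0 if x >= 0 else -1.0


class BinaryEncoder:
    """Hypothetical one-layer encoder whose weights are binarized to +/-1."""

    def __init__(self, in_dim, code_dim, seed=0):
        rng = random.Random(seed)
        # Real-valued latent weights; training would update these (omitted).
        self.latent = [[rng.gauss(0.0, 1.0) for _ in range(in_dim)]
                       for _ in range(code_dim)]
        # Per-output scale = mean |w|, an XNOR-Net-style assumption.
        self.alpha = [sum(abs(w) for w in row) / in_dim for row in self.latent]

    def encode(self, x):
        """Compress a real input vector into a code_dim feature vector."""
        return [a * sum(sign(w) * xi for w, xi in zip(row, x))
                for a, row in zip(self.alpha, self.latent)]


# Usage: 32 subcarriers, 8 base station antennas, 32-value feedback vector.
nc, nt = channel_matrix_shape(32, 8)
rng = random.Random(1)
h = [[complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(nt)]
     for _ in range(nc)]
x = flatten_complex(h)                    # length 2 * 32 * 8 = 512
enc = BinaryEncoder(in_dim=len(x), code_dim=32)
codeword = enc.encode(x)                  # length 32, a 16:1 compression
```

Only the encoder is binarized because it runs on the resource-limited user side; the base station can afford a full-precision decoder, which matches the split described in the abstract.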

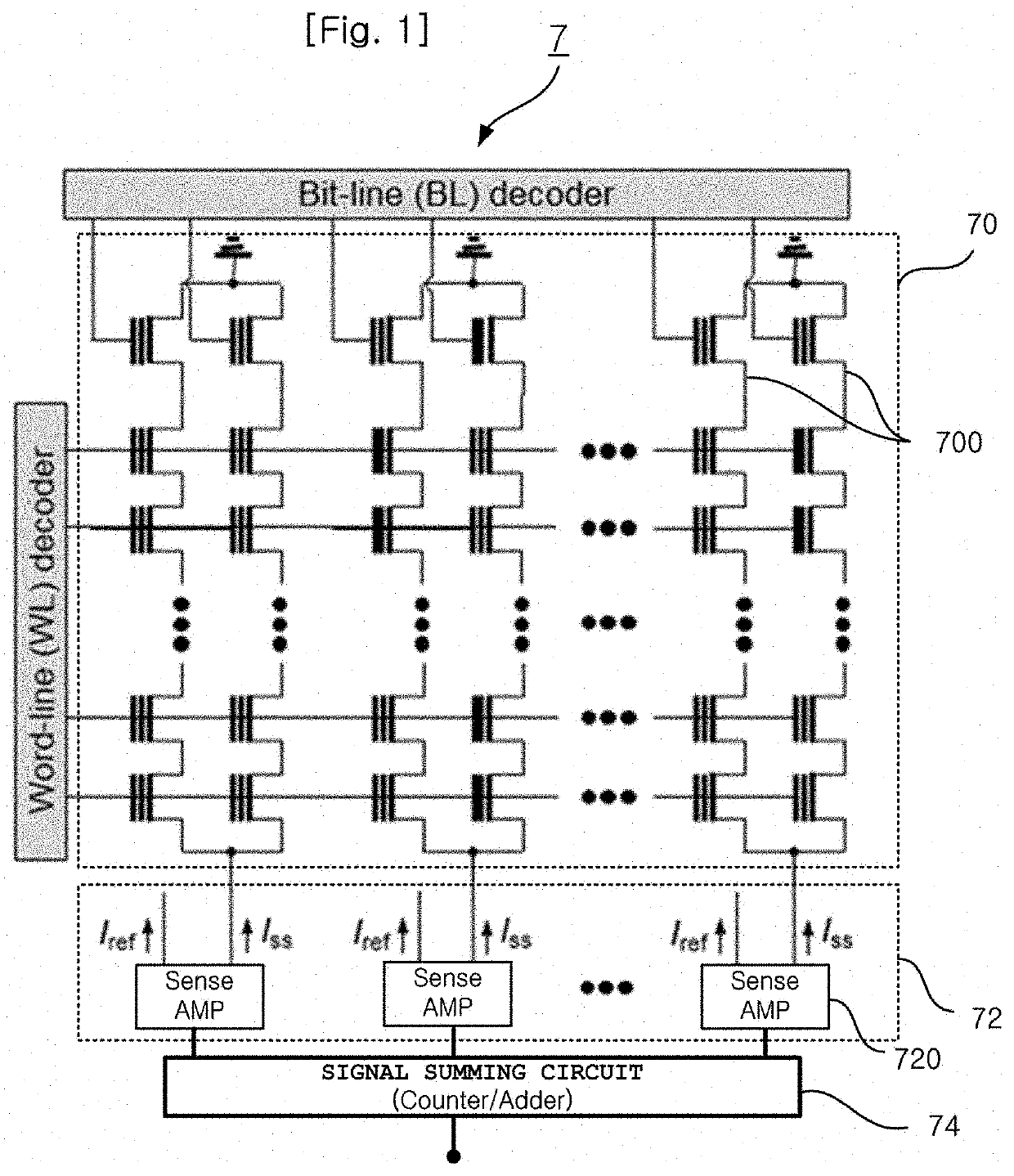

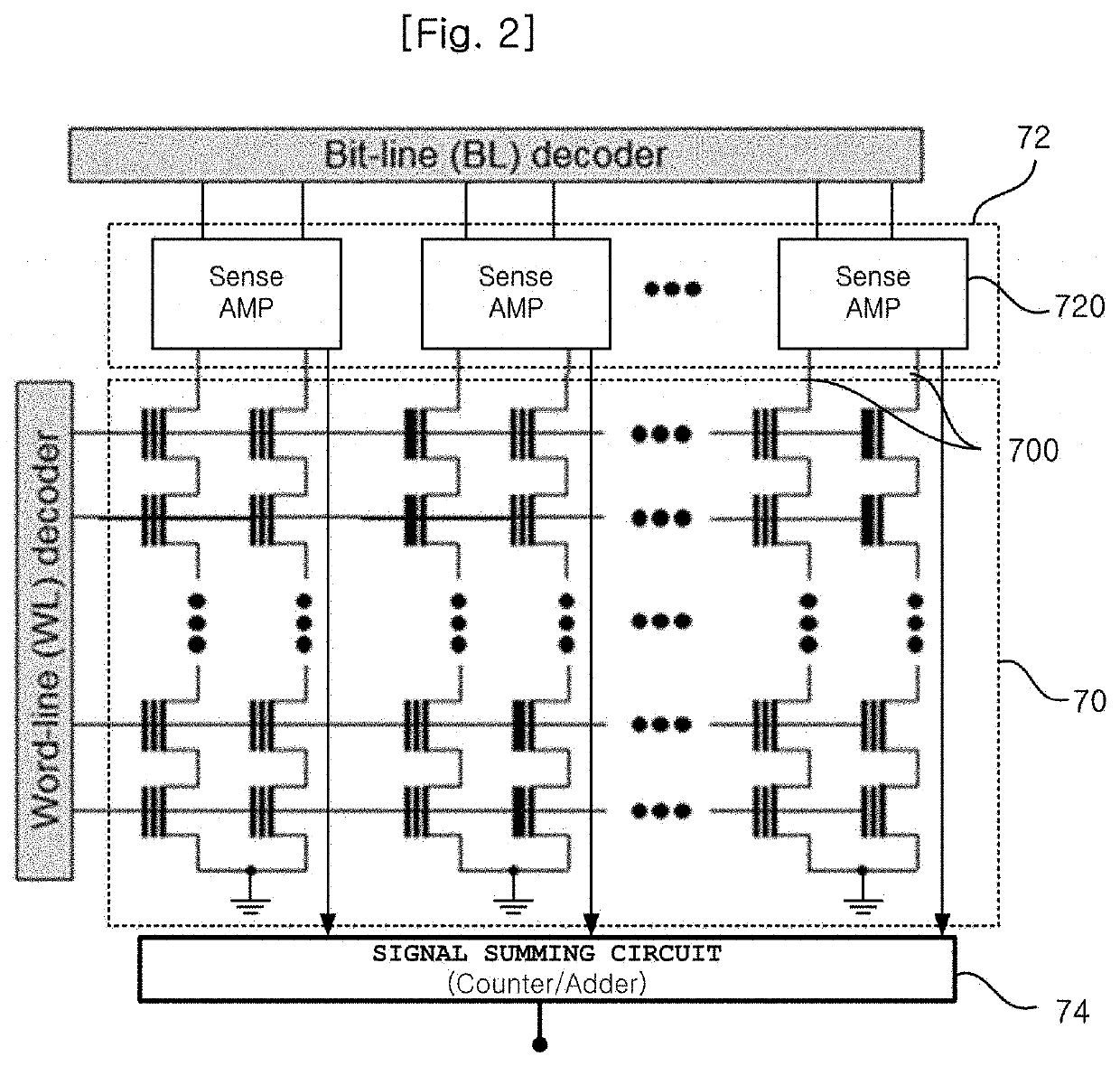

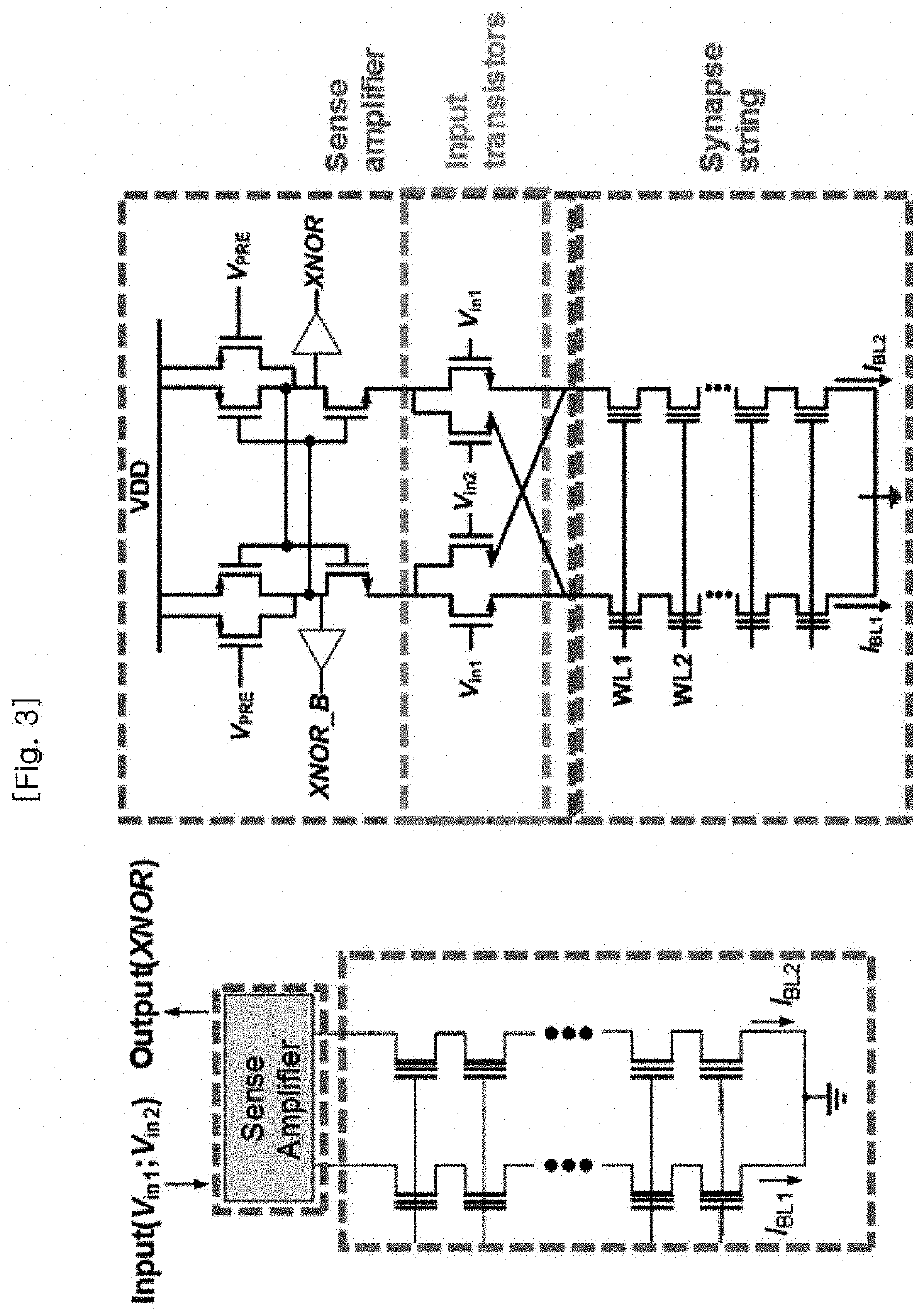

Neural network with synapse string array

Active | US20210166108A1 | Highly integrated | Increased durability | Read-only memories | Digital storage | Memory cell | Computer science

Provided is a binary neural network including a synapse string array in which multiple synapse strings are sequentially connected. Each synapse string includes first and second cell strings, each including memory cell devices connected in series, and switching devices connected to the first ends of the first and second cell strings. The memory cell devices of the first and second cell strings are in one-to-one correspondence with each other, and each pair of corresponding memory cell devices has one-side terminals electrically connected to each other to constitute one synapse morphic device. The plurality of such pairs formed by the first and second cell strings of each synapse string therefore constitute a plurality of synapse morphic devices. The synapse morphic devices of each synapse string are electrically connected to the synapse morphic devices of the other synapse strings.

Owner:SEOUL NAT UNIV R&DB FOUND

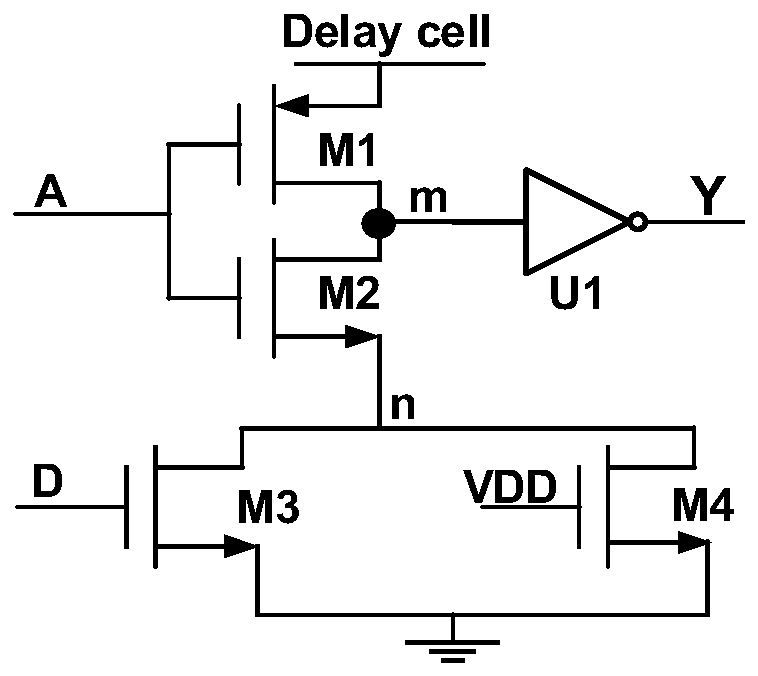

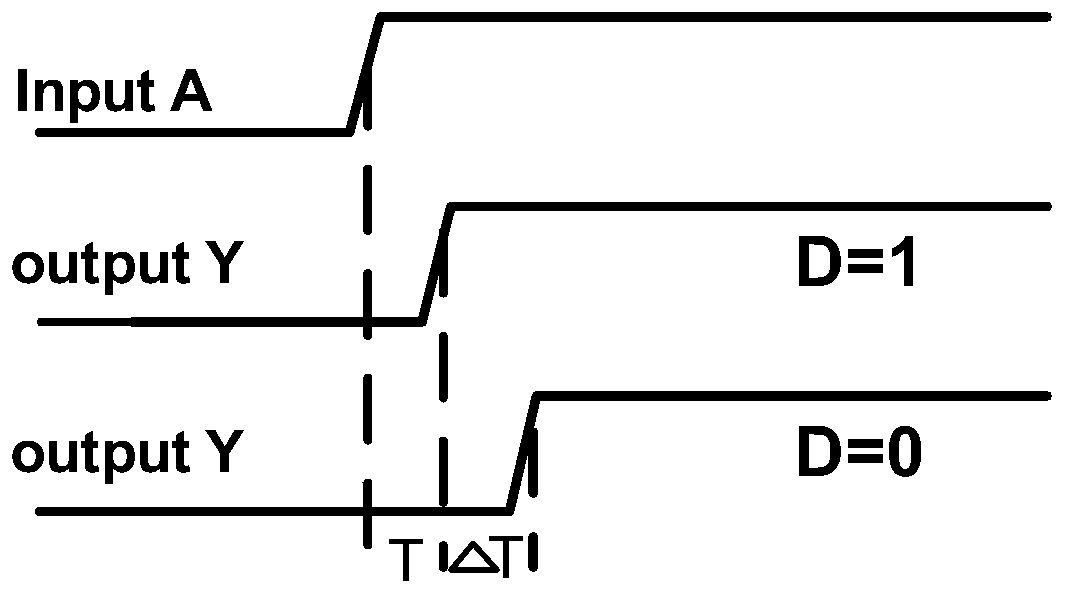

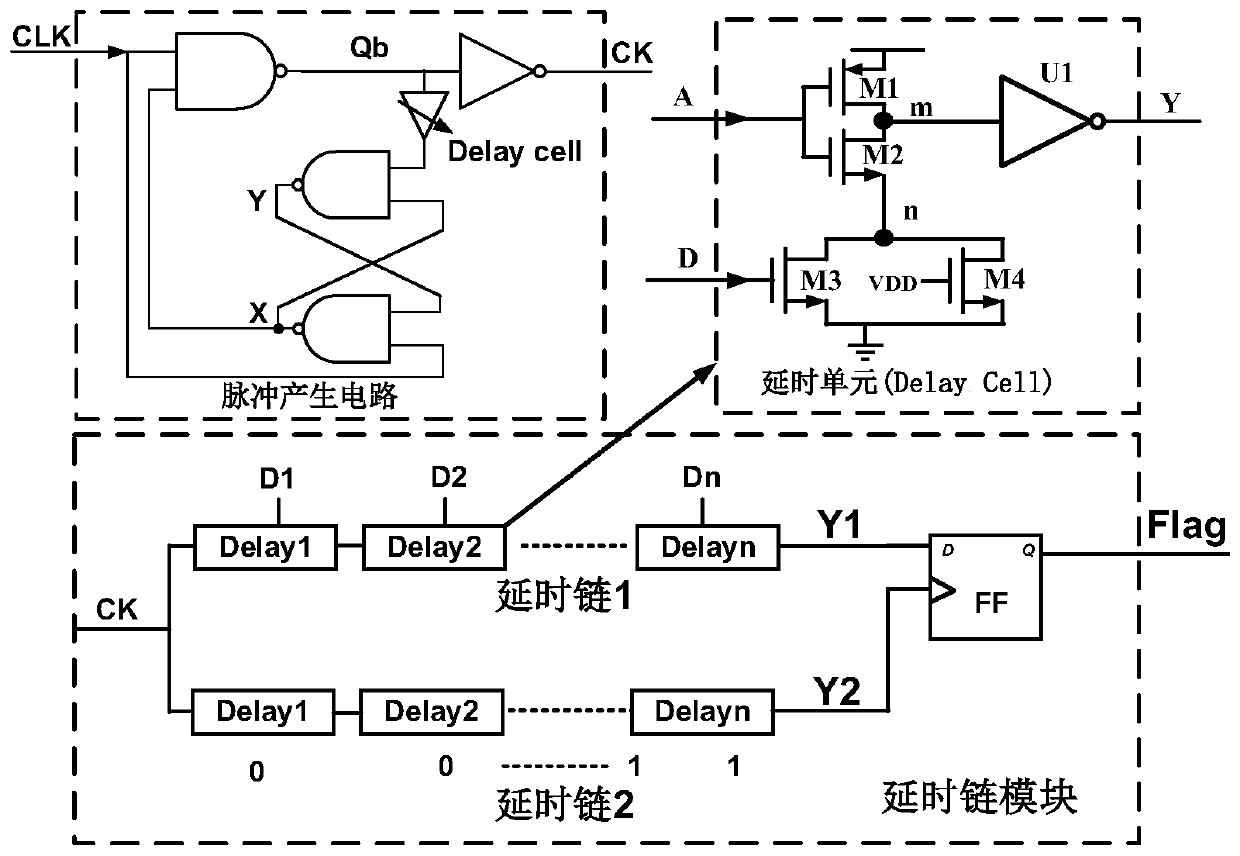

Binary neural network accumulator circuit based on analog delay chain

Active | CN110428048A | Reduce power consumption | Reduce overhead | Neural architectures | Physical realisation | Simulation based | Engineering

The invention discloses a binary neural network accumulator circuit based on an analog delay chain, belonging to the technical field of basic electronic circuits. The circuit comprises a delay chain module with two delay chains and a pulse generating circuit. Each analog delay chain consists of a plurality of analog delay units connected in series, each unit is built from six metal oxide semiconductor (MOS) transistors, and logic '0' and '1' are distinguished by their delay. The invention replaces the accumulation performed in traditional digital circuit design with an analog computation method. The accumulator operates stably over a wide voltage range and is simple to implement; it effectively reduces the power consumption of binary neural network accumulation and can greatly improve the energy efficiency of neural network circuits.

Owner:SOUTHEAST UNIV
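The abstract of CN110428048A states only that '0' and '1' are distinguished by delay and that there are two chains. One plausible behavioral reading, sketched below under explicit assumptions, is a race: a pulse is launched into both chains at once, each unit adds a shorter delay when its input is '1' than when it is '0', and whichever pulse arrives first indicates which chain holds more ones. With equal-length chains the total delay is n·d_zero − p·(d_zero − d_one), so the earlier arrival belongs to the chain with the larger popcount. The unit delays, the function names and the use of a reference chain loaded with a threshold are all assumptions, not the patented circuit.

```python
def chain_delay(bits, d_one=1.0, d_zero=2.0):
    """Total propagation delay of a pulse through a chain of delay units.

    Assumption: a unit driven by '1' is faster (d_one) than one driven by '0'.
    """
    return sum(d_one if b else d_zero for b in bits)


def race_compare(data_bits, ref_bits, d_one=1.0, d_zero=2.0):
    """Return 1 if the pulse through the data chain arrives first, else 0.

    For equal-length chains an earlier arrival means popcount(data) >
    popcount(ref), which is the comparison a BNN neuron needs before its
    sign activation. Ties return 0.
    """
    if len(data_bits) != len(ref_bits):
        raise ValueError("chains must have equal length")
    t_data = chain_delay(data_bits, d_one, d_zero)
    t_ref = chain_delay(ref_bits, d_one, d_zero)
    return 1 if t_data < t_ref else 0


# Usage: XNOR results of one neuron compared with a threshold of 3 ones.
xnor_bits = [1, 0, 1, 1, 0, 1]
threshold = 3
ref_bits = [1] * threshold + [0] * (len(xnor_bits) - threshold)
fires = race_compare(xnor_bits, ref_bits)   # popcount 4 > 3, so 1
```

In this model no digital adder is needed: the accumulation is implicit in the summed delay, which is the kind of saving the abstract attributes to the analog approach.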

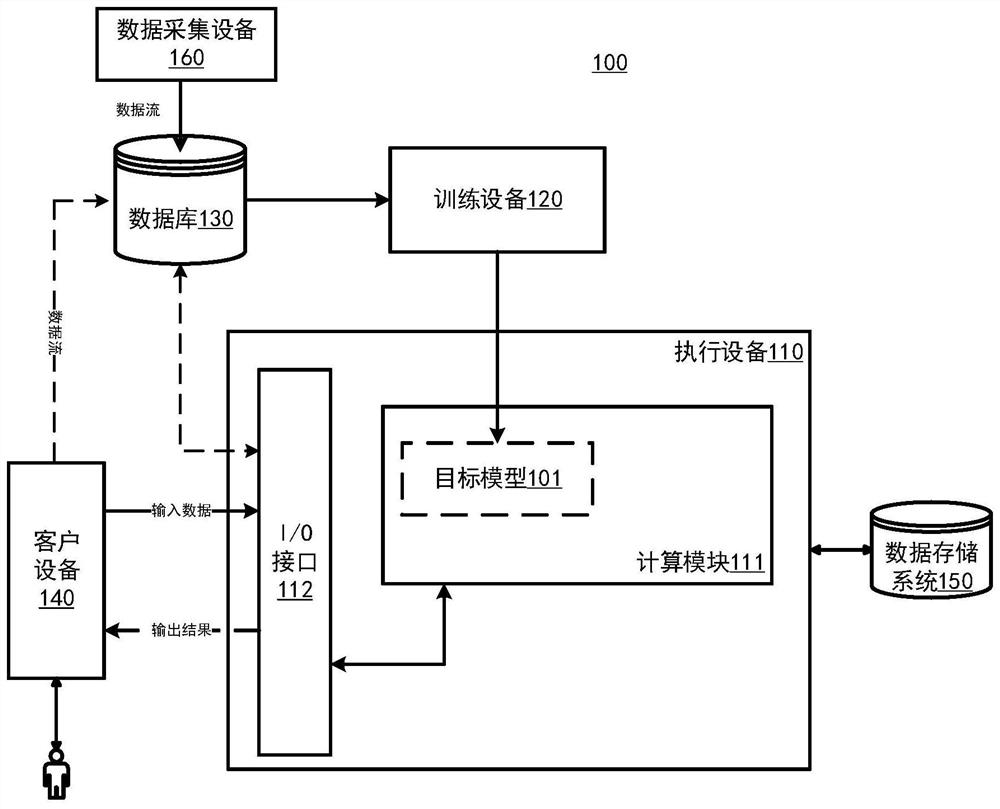

Binary neural network model training method, image processing method and device

Active | CN113191489A | Neural architectures | Energy efficient computing | Pattern recognition | Imaging processing

The invention relates to image processing in computer vision, a field of artificial intelligence, and discloses a binary neural network model training method and device and an image processing method and device. The training method comprises the following steps: S1, determining a knowledge distillation framework, in which the teacher network is a trained neural network model and the student network is an initial binary neural network model M_0; S2, training the binary neural network model M_j with the (j+1)-th batch of images and a target loss function to obtain a binary neural network model M_{j+1}, where the target loss function includes an angle loss term describing the difference between the angle formed by the feature matrix and the weight matrix in the teacher network and the angle formed by the feature matrix and the weight matrix in the student network; S3, if a preset condition is met, taking M_{j+1} as the target binary neural network model; otherwise, setting j = j + 1 and repeating step S2. According to the embodiments of the invention, the prediction accuracy of the binary neural network model can be improved.

Owner:HUAWEI TECH CO LTD
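The abstract of CN113191489A does not define exactly how "the angle between the feature matrix and the weight matrix" is computed. The sketch below assumes one reading: the angle between every feature row and every weight row (as in a fully connected layer), with the loss being the mean squared difference between the teacher's and the student's angle matrices, and the student's weights binarized by sign. It also assumes teacher and student layers have matching shapes. The function names are hypothetical, and the other terms of the target loss function (for example the task loss) are omitted.

```python
import math


def _norm(v):
    return math.sqrt(sum(x * x for x in v))


def angle_matrix(features, weights):
    """Angle (radians) between every feature row and every weight row."""
    angles = []
    for f in features:
        row = []
        for w in weights:
            denom = _norm(f) * _norm(w) + 1e-12      # avoid division by zero
            cos = sum(a * b for a, b in zip(f, w)) / denom
            row.append(math.acos(max(-1.0, min(1.0, cos))))  # clamp rounding
        angles.append(row)
    return angles


def binarize(weights):
    """Sign binarization of a real weight matrix to +/-1 (student network)."""
    return [[1.0 if w >= 0 else -1.0 for w in row] for row in weights]


def angle_loss(t_feat, t_weight, s_feat, s_weight):
    """Mean squared difference between teacher and student angle matrices."""
    ta = angle_matrix(t_feat, t_weight)
    sa = angle_matrix(s_feat, s_weight)
    diffs = [(a - b) ** 2 for ra, rb in zip(ta, sa) for a, b in zip(ra, rb)]
    return sum(diffs) / len(diffs)


# Usage: same input features, full-precision teacher vs binarized student.
teacher_w = [[0.3, -0.7], [0.9, 0.1]]
feats = [[1.0, 0.0], [0.5, 0.5]]
loss = angle_loss(feats, teacher_w, feats, binarize(teacher_w))
```

Matching angles rather than raw outputs is scale-invariant, which suits a binarized student whose weights have a very different magnitude from the teacher's; this motivation is an interpretation, not a statement from the patent.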

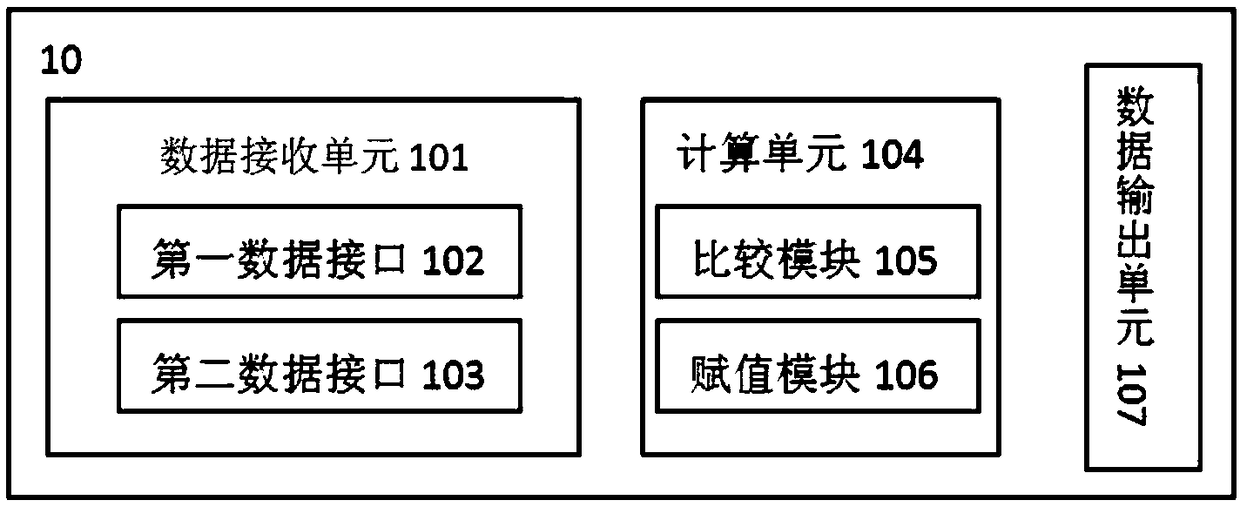

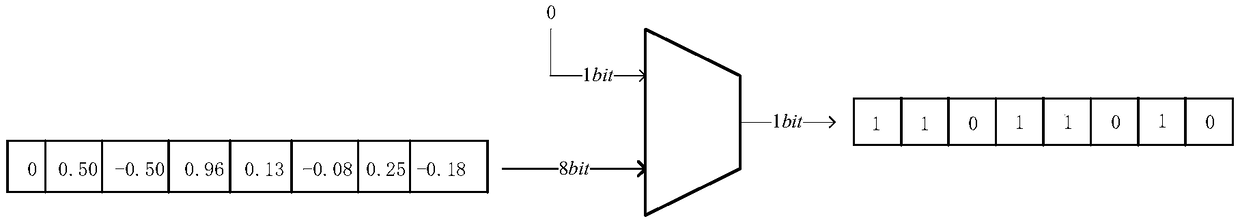

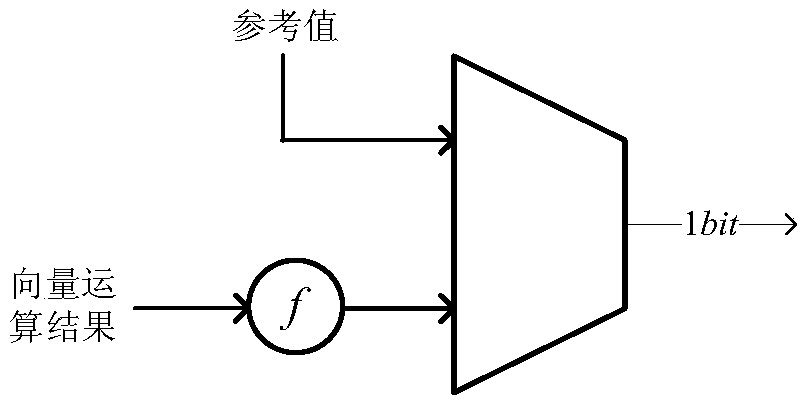

Binarization device, method and application oriented to binary neural network

Active | CN109308517A | Binarization is universally applicable | Flexible operation | Neural architectures | Neural learning methods | Pattern recognition | Algorithm

The invention relates to a binarization device oriented to binary neural networks, comprising: a data receiving unit for receiving non-binary input data to be binarized and preset binarization parameters of the neural network, where the non-binary input data are neuron data and/or weight data; a binarization calculation unit for performing binarization calculation on the input data; and a data output unit for outputting the binarization result obtained by the binarization calculation unit.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
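The three units of CN109308517A can be mirrored in a small software model. The sketch assumes that the "preset binarization parameters" are a threshold and the two output levels, which lets the same device produce ±1 weights or {0, 1} activations; the class and method names are hypothetical and the patent's actual parameter set may differ.

```python
class BinarizationDevice:
    """Hypothetical software model of the receive / binarize / output units."""

    def __init__(self, threshold=0.0, high=1, low=-1):
        # Preset binarization parameters (assumed: a threshold and two levels).
        self.threshold = threshold
        self.high = high
        self.low = low
        self._data = []
        self._result = []

    def receive(self, data):
        """Data receiving unit: buffer non-binary neuron or weight data."""
        self._data = list(data)

    def compute(self):
        """Binarization calculation unit: compare each value with the threshold."""
        self._result = [self.high if x >= self.threshold else self.low
                        for x in self._data]

    def output(self):
        """Data output unit: return the binarized result."""
        return list(self._result)


# Weights to +/-1 with the default threshold of 0.
w_dev = BinarizationDevice()
w_dev.receive([0.7, -0.2, 0.0, -1.5])
w_dev.compute()
binary_weights = w_dev.output()          # [1, -1, 1, -1]

# Activations to {0, 1} with a different preset threshold.
a_dev = BinarizationDevice(threshold=0.5, high=1, low=0)
a_dev.receive([0.2, 0.9, 0.5])
a_dev.compute()
binary_acts = a_dev.output()             # [0, 1, 1]
```

Keeping the threshold and levels as parameters, rather than hard-coding a sign function, is one way to read the abstract's claim that the binarization is universally applicable to both neuron and weight data.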

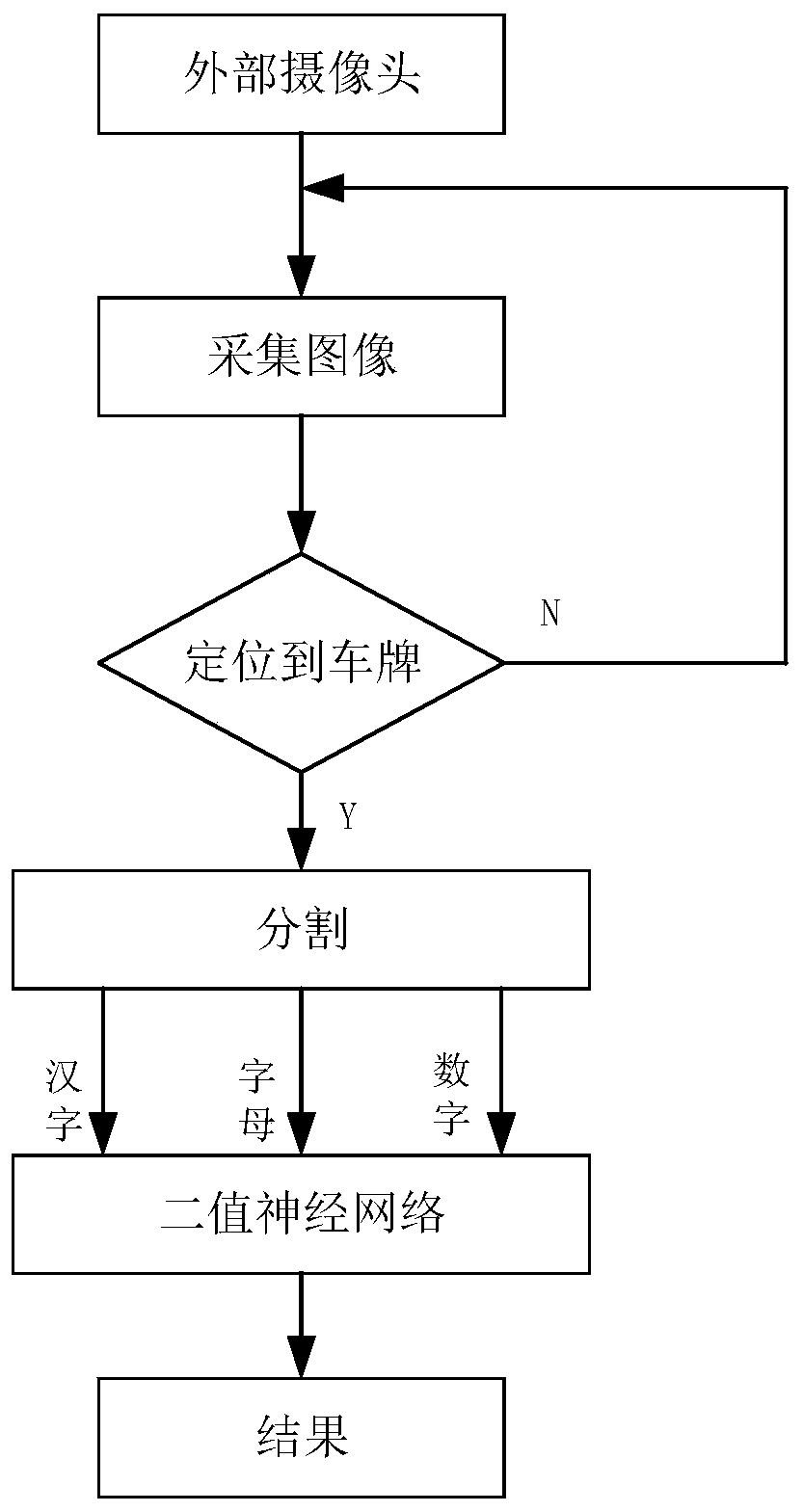

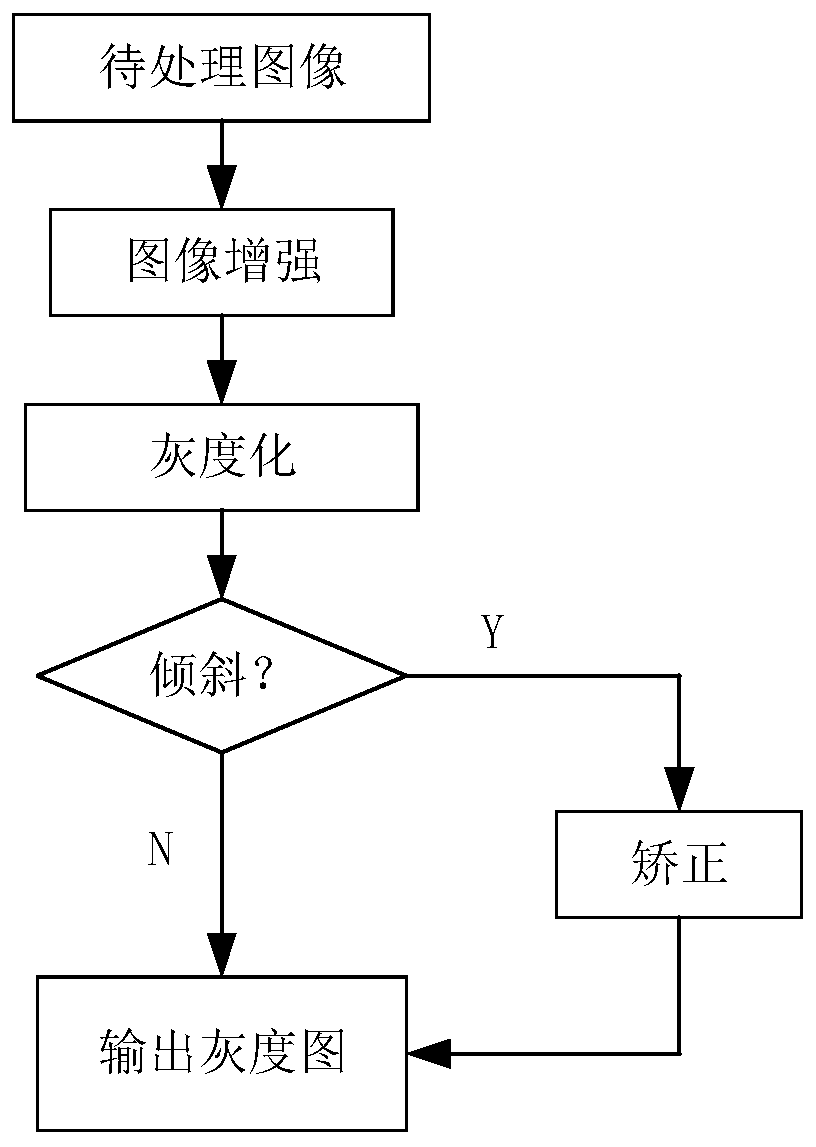

Binary neural network license plate recognition method and system based on FPGA

Inactive | CN110751150A | Improve extraction efficiency | Improve operational efficiency | Character recognition | Pattern recognition | Engineering

The invention relates to an FPGA-based binary neural network license plate recognition method and system. The method comprises the following steps: 1, refining the input image through an image preprocessing module to obtain a grayscale map; 2, further processing the grayscale map with a license plate positioning and extraction module to locate and extract the license plate; 3, segmenting the characters of the extracted license plate with a license plate character segmentation module, and binarizing the segmented characters into image blocks of fixed size; 4, training a binary neural network in the binary neural network module, and using the trained binary neural network model to recognize the fixed-size image blocks and output the result. The matching system comprises an image preprocessing module, a license plate positioning and extraction module, a license plate character segmentation module and a binary neural network module, and is implemented on an FPGA platform. By combining the neural network with FPGA hardware and exploiting the strengths of both, the invention achieves high efficiency and low power consumption while maintaining license plate recognition accuracy.

Owner:SHANGHAI UNIV OF ENG SCI
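Step 3 of CN110751150A turns each segmented character into a fixed-size binary image block before it reaches the binary neural network. The sketch below shows one simple way to do that on a grayscale character given as a list of rows: nearest-neighbour resizing to a fixed size, then thresholding at the block's mean intensity. The 20×20 default size, the mean threshold and the assumption that characters are brighter than the background are illustrative choices, not details from the patent.

```python
def resize_nearest(img, out_h, out_w):
    """Nearest-neighbour resize of a 2-D list of pixel values."""
    in_h, in_w = len(img), len(img[0])
    return [[img[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)]


def binarize_block(img, out_h=20, out_w=20, threshold=None):
    """Resize a segmented character to a fixed size and binarize it to {0, 1}.

    Assumptions: character strokes are brighter than the background, and the
    default threshold is the block's mean intensity.
    """
    block = resize_nearest(img, out_h, out_w)
    if threshold is None:
        pixels = [p for row in block for p in row]
        threshold = sum(pixels) / len(pixels)
    return [[1 if p > threshold else 0 for p in row] for row in block]


# Usage: a tiny 2x2 "character" scaled to a 4x4 binary block.
char = [[0, 255],
        [255, 0]]
block = binarize_block(char, 4, 4)
```

A fixed input size keeps the FPGA datapath static, since every character block then has the same number of bits to feed into the network.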

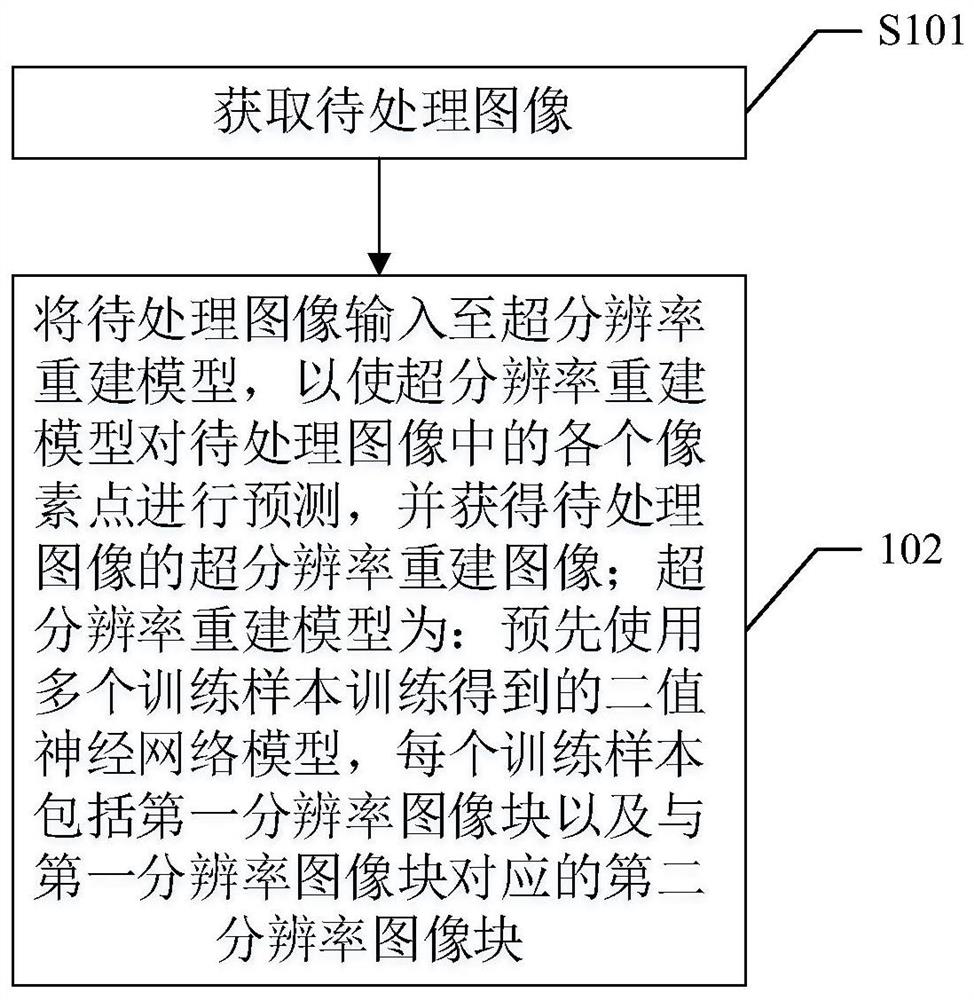

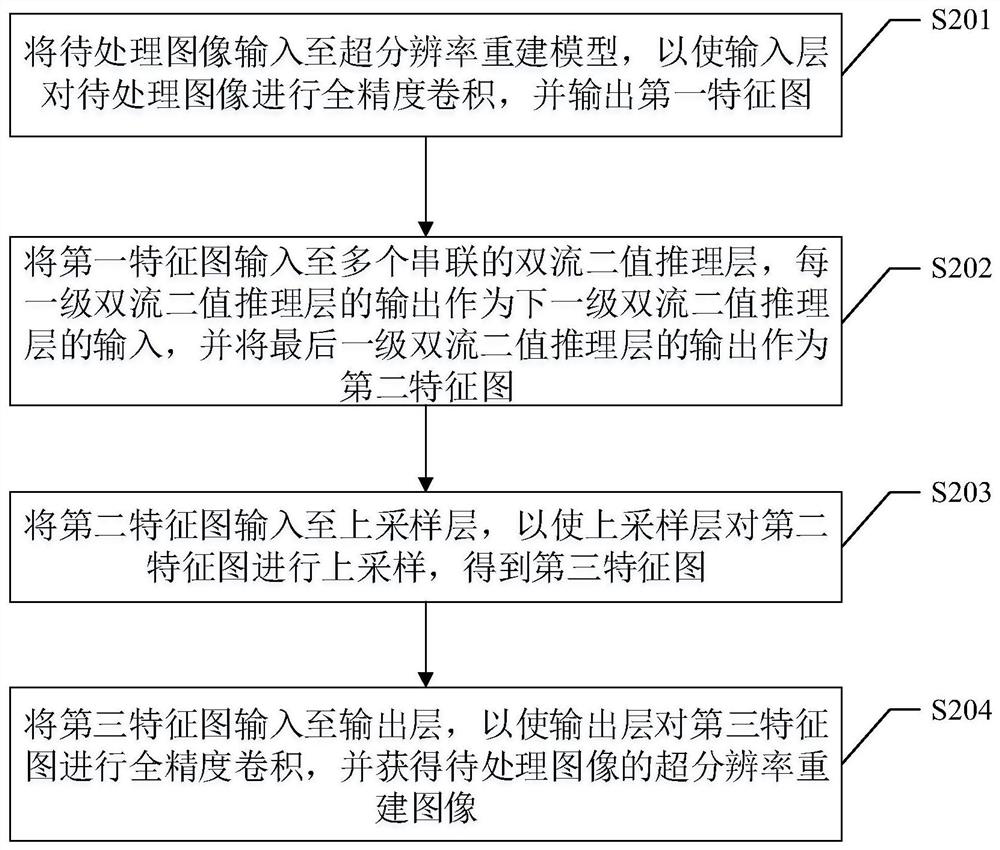

Image super-resolution reconstruction method and device, electronic equipment and storage medium

Pending | CN113222813A | Improve performance | Quality assurance | Geometric image transformation | Neural architectures | Image resolution | Network structure

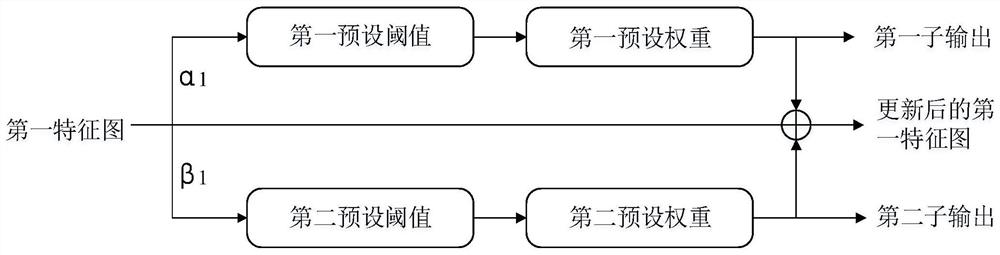

The invention discloses an image super-resolution reconstruction method and apparatus, an electronic device and a storage medium. The method comprises obtaining an image to be processed and inputting it into a super-resolution reconstruction model, which predicts each pixel of the image to produce a super-resolution reconstructed image. The super-resolution reconstruction model is a binary neural network model trained in advance on a plurality of training samples, each comprising an image block at a first resolution and the corresponding image block at a second resolution, the second resolution being higher than the first. The dual-stream binary inference layer in the model improves binary quantization precision through a quantization threshold and increases the model's information capacity through its dual-stream network structure, which can significantly improve the model's performance; at the same time, reconstruction speed is increased without sacrificing reconstruction accuracy.

Owner:XIDIAN UNIV
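The abstract of CN113222813A says each training sample pairs a first-resolution image block with the corresponding higher, second-resolution block, but not how the pairs are produced. A common approach is to start from a high-resolution block and downsample it; the sketch below assumes simple block averaging by an integer scale factor (bicubic downsampling is another frequent choice). The function names and the scale of 2 are hypothetical.

```python
def downsample_average(hr, scale):
    """Produce a lower-resolution block by averaging scale x scale tiles."""
    h, w = len(hr), len(hr[0])
    if h % scale or w % scale:
        raise ValueError("block size must be divisible by scale")
    lr = []
    for r in range(0, h, scale):
        row = []
        for c in range(0, w, scale):
            tile = [hr[r + i][c + j] for i in range(scale) for j in range(scale)]
            row.append(sum(tile) / len(tile))
        lr.append(row)
    return lr


def make_training_pair(hr_block, scale=2):
    """Return (first-resolution block, second-resolution block)."""
    return downsample_average(hr_block, scale), hr_block


# Usage: a 4x4 high-resolution block becomes a 2x2 low-resolution input.
hr = [[0, 2, 4, 6],
      [2, 4, 6, 8],
      [1, 1, 1, 1],
      [3, 3, 3, 3]]
lr, target = make_training_pair(hr, scale=2)
```

The model is then trained to map `lr` back to `target`; because the low-resolution input is derived from the target, every training pair is aligned pixel for pixel by construction.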

© 2025 PatSnap. All rights reserved.