In-memory computing device suitable for binary convolutional neural network computing

A binary convolutional neural network computing device technology, applied in the field of integrated circuits. It addresses reduced computing speed and wasted power consumption, and it increases computing speed, avoids data exchange, and reduces chip power consumption.

Embodiment Construction

[0025] The present invention is described in further detail below with reference to embodiments and drawings. The invention should not be construed as limited to the embodiments set forth herein.

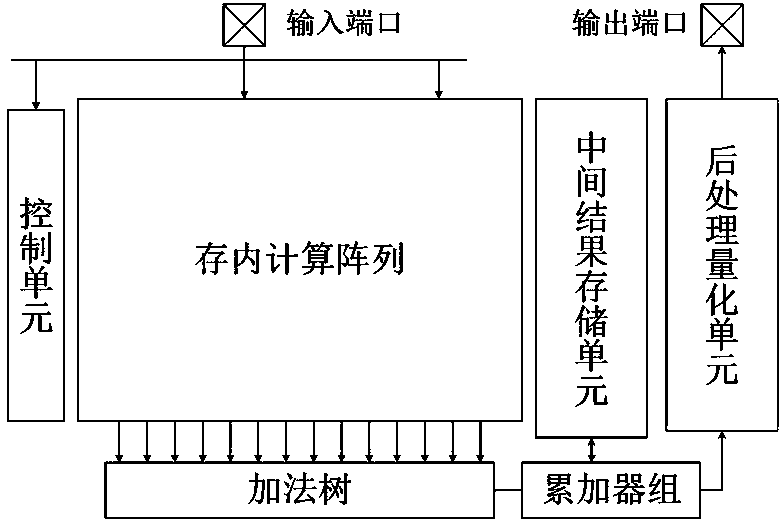

[0026] This embodiment is an in-memory computing device suitable for binary convolutional neural network computing. Figure 1 is a block diagram of its top-level circuit modules.

[0027] The device includes the following units:

- a 256×128 in-memory computing array
- a 128-input adder tree
- a static random-access memory (SRAM) that stores intermediate results, together with a corresponding accumulator group that updates them
- a post-processing quantization unit
- a control unit
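The following Python sketch models two of the listed units. The 128-input adder tree is shown as a pairwise reduction. The accumulator group is shown as read-modify-write updates to a list that stands in for the intermediate-result SRAM. The text names these units but does not describe their internal organization. The binary-tree structure, the `AccumulatorGroup` class and the sign-based `quantize_sign` step are therefore illustrative assumptions, not the patented circuit.

```python
from typing import List, Tuple


def adder_tree(bits: List[int]) -> Tuple[int, int]:
    """Sum a power-of-two number of 1-bit inputs by pairwise reduction.

    Returns (total, levels); for 128 inputs the tree has log2(128) = 7 levels.
    """
    n = len(bits)
    if n == 0 or (n & (n - 1)) != 0:
        raise ValueError("this sketch assumes a power-of-two input width")
    level = list(bits)
    levels = 0
    while len(level) > 1:
        # each adder at this level combines two neighbouring partial sums
        level = [level[i] + level[i + 1] for i in range(0, len(level), 2)]
        levels += 1
    return level[0], levels


class AccumulatorGroup:
    """Hypothetical model: one accumulator per output position, with a plain
    list standing in for the SRAM that holds the intermediate results."""

    def __init__(self, num_outputs: int) -> None:
        self.sram = [0] * num_outputs  # intermediate results, initially zero

    def update(self, index: int, partial_sum: int) -> None:
        # read the stored intermediate result, add the new partial sum, write back
        self.sram[index] += partial_sum


def quantize_sign(value: int) -> int:
    """Hypothetical post-processing step: binarize a result to +1 / -1 by sign.
    The source does not specify the actual quantization function."""
    return 1 if value >= 0 else -1


if __name__ == "__main__":
    total, depth = adder_tree([1, 0] * 64)  # 128 one-bit inputs, 64 of them set
    acc = AccumulatorGroup(num_outputs=4)
    acc.update(0, total)   # first partial sum for output 0
    acc.update(0, -20)     # a later partial sum for the same output
    print(total, depth, acc.sram, quantize_sign(acc.sram[0]))
```

For 128 inputs the reduction takes seven levels. A hardware adder tree might instead use compressors or a different fan-in per level. The sketch only shows the arithmetic the unit performs.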

[0028] Each row of the in-memory computing array can store either weights or input feature map data for 128 input channels. The control unit uses the weight address and the input feature map address to select the two corresponding rows. It then performs an exclusive-OR (XOR) operation on those rows.
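The following minimal Python sketch shows the row-pair XOR. It assumes the common binary-network encoding in which bit 1 stands for +1 and bit 0 stands for -1. Under that assumption, counting the ones in the XOR result gives the number of mismatched positions m, and an adder tree can do that counting. Matching positions contribute +1 and mismatched positions contribute -1, so the ±1 dot product over N channels is N - 2m. The function names and row addresses are hypothetical. The source paragraph on the XOR output is truncated, so how the device actually uses the XOR count is not confirmed here.

```python
import random
from typing import List

COLS = 128  # each row holds 128 input channels, one bit per channel


def xor_rows(row_a: List[int], row_b: List[int]) -> List[int]:
    """Bitwise XOR of two selected rows, column by column."""
    return [a ^ b for a, b in zip(row_a, row_b)]


def binary_dot(weight_row: List[int], input_row: List[int]) -> int:
    """Dot product of the +1/-1 vectors encoded by two bit rows.

    Assumed encoding: bit 1 -> +1, bit 0 -> -1. Differing bits (XOR = 1)
    contribute -1 and matching bits contribute +1, so dot = N - 2 * popcount(XOR).
    """
    mismatches = sum(xor_rows(weight_row, input_row))  # the count an adder tree produces
    return len(weight_row) - 2 * mismatches


def reference_dot(weight_row: List[int], input_row: List[int]) -> int:
    """Direct +1/-1 dot product, used only to check the XOR identity."""
    def to_pm1(bit: int) -> int:
        return 1 if bit else -1
    return sum(to_pm1(w) * to_pm1(x) for w, x in zip(weight_row, input_row))


if __name__ == "__main__":
    rng = random.Random(0)
    # a 256-row array of random bits standing in for stored weights and inputs
    array = [[rng.randint(0, 1) for _ in range(COLS)] for _ in range(256)]
    w_addr, x_addr = 3, 200  # hypothetical row addresses chosen by the control unit
    print(binary_dot(array[w_addr], array[x_addr]) == reference_dot(array[w_addr], array[x_addr]))
```

The identity lets a single XOR plus a ones count replace the 128 signed multiplications of a ±1 dot product. This is why XOR-and-count datapaths are common in binary network accelerators.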

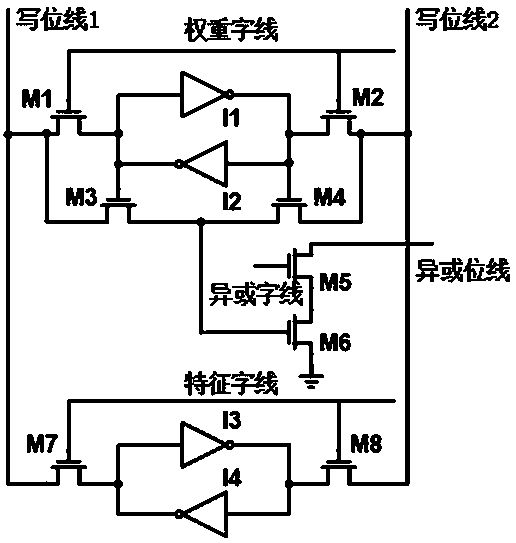

[0029] The exclusive OR output r...
