Double-layer same-or binary neural network compression method based on lookup table calculation

A binary neural network compression technology, applied in the field of digital image processing, that addresses the high power consumption and heavy logic-resource consumption of the network structure, and reduces both the number of parameters and the computational complexity.
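The parameter saving can be made concrete with a weight count. The sketch below compares one ordinary k×k convolution with a grouped first layer that uses mixed kernel sizes, followed by a 1×1 second layer. The channel counts, the four-group split and the 3×3/5×5 kernel sizes are hypothetical, because the excerpt does not give them. The function names are illustrative only.

```python
from typing import Sequence, Tuple


def standard_conv_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of one ordinary k x k convolution (bias ignored)."""
    return c_in * c_out * k * k


def double_layer_params(groups: Sequence[Tuple[int, int, int]], c_out: int) -> int:
    """Grouped first layer given as (in_ch, out_ch, k) per group, plus a 1x1 second layer."""
    first = sum(ci * co * k * k for ci, co, k in groups)
    c_mid = sum(co for _, co, _ in groups)   # channels produced by the first layer
    return first + c_mid * c_out             # 1x1 layer: one weight per (c_mid, c_out) pair


# Hypothetical split: 64 channels in four groups of 16, two groups 3x3 and two groups 5x5.
groups = [(16, 16, 3), (16, 16, 3), (16, 16, 5), (16, 16, 5)]
print(standard_conv_params(64, 64, 3))   # 36864
print(double_layer_params(groups, 64))   # 21504 (17408 in the first layer + 4096 in the 1x1 layer)
```

Under these assumed sizes the two-layer structure needs about 58% of the weights of a single 3×3 layer, while half of its groups see a 5×5 neighbourhood. With binary weights each parameter occupies one bit, so the 21,504 weights fit in 2,688 bytes. The ratio the patented method actually achieves depends on sizes the excerpt does not state.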


Embodiment Construction

[0025] The technical solution of the present invention will be further described below in conjunction with the accompanying drawings.

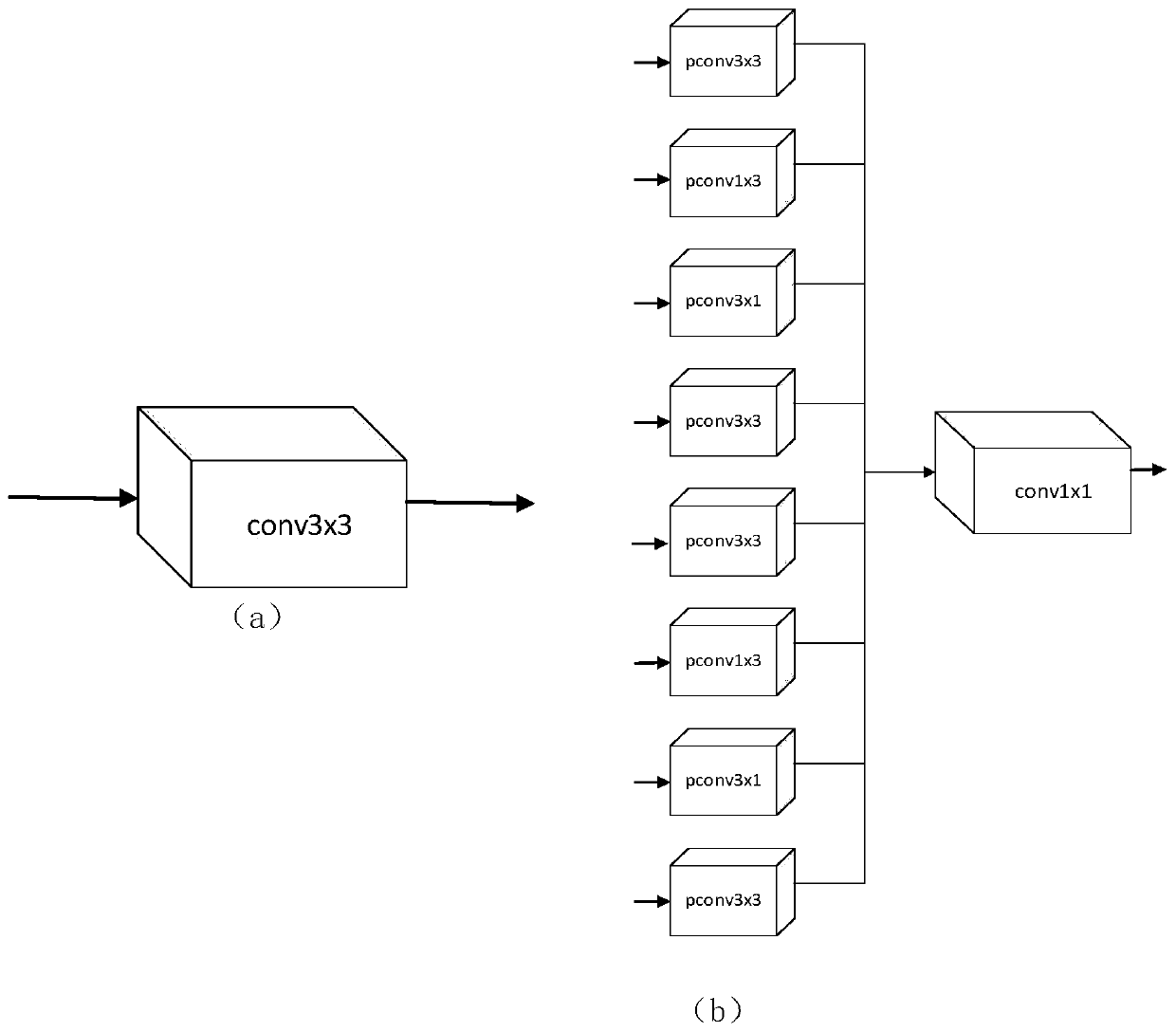

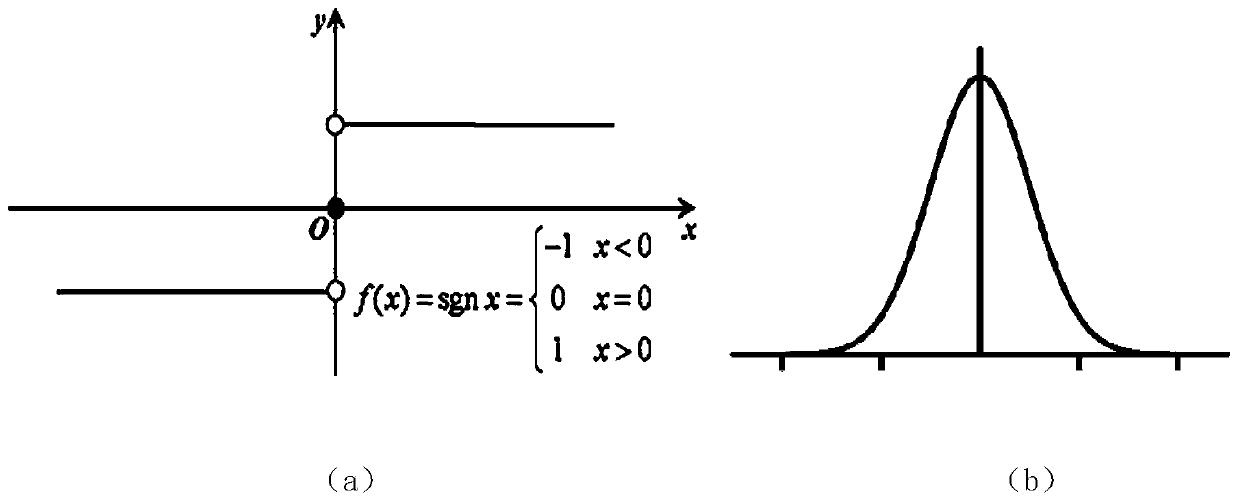

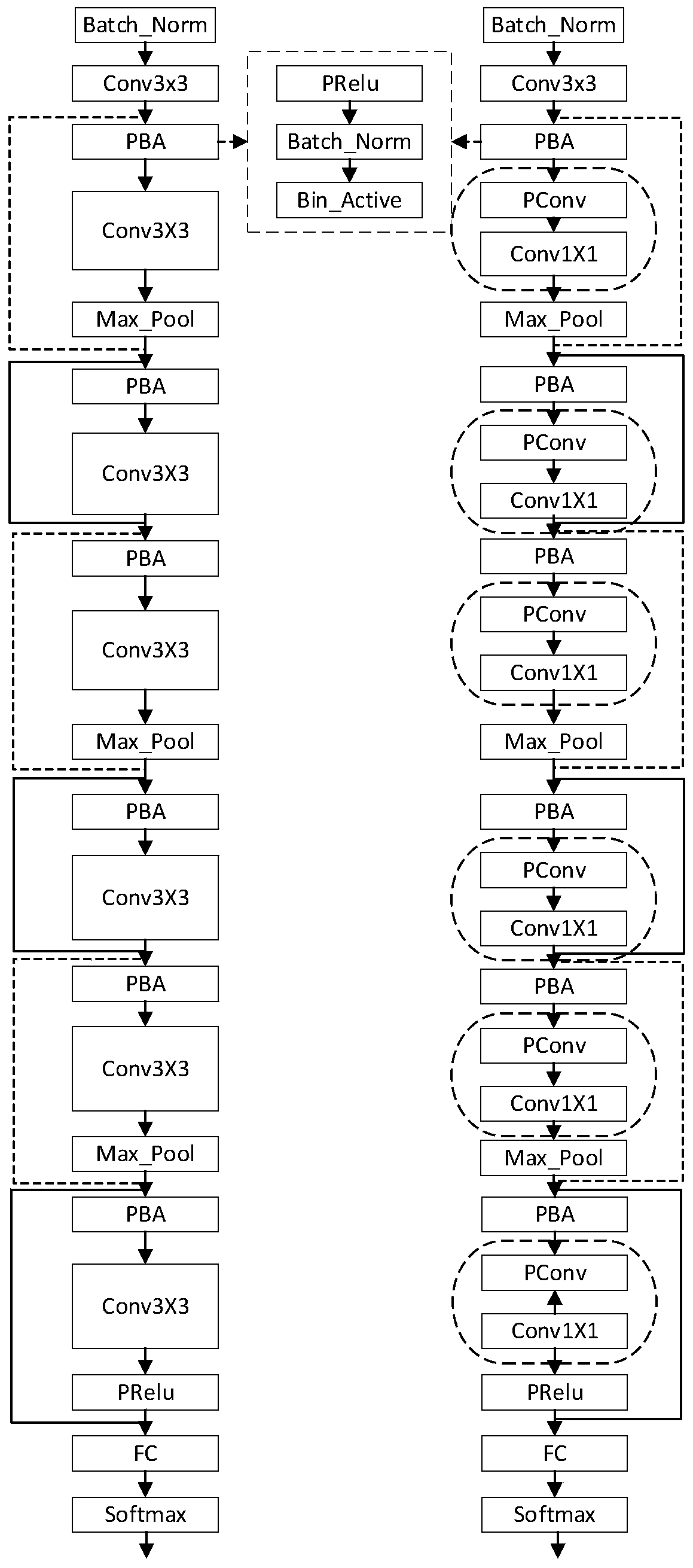

[0026] A double-layer same-or (XNOR) binary neural network compression method based on lookup table calculation. The compression is performed by a two-layer convolution structure, and the algorithm comprises the following steps: first, the input feature map undergoes nonlinear activation, batch normalization and binary activation; next, the first-layer convolution is performed group by group, with different convolution kernel sizes in different groups, to obtain the first-layer output; finally, a second-layer convolution with a 1×1 kernel is applied to the first-layer output to obtain the output feature map.
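The steps in [0026] can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the patented implementation. ReLU stands in for the unspecified nonlinear activation. Binary values are ±1. Borders are padded with +1 so that every group keeps the input's spatial size. The second layer is assumed to use ±1 weights on the integer first-layer outputs. All function names are hypothetical. The first layer computes each ±1 dot product as `2 * matches - n`, where `matches` counts positions where input and weight agree (an XNOR), which is the operation that gives the "same-or" network its name.

```python
import math
from typing import List, Sequence, Tuple

Channel = List[List[int]]   # one H x W map
FeatureMap = List[Channel]  # C channels
Kernel = List[Channel]      # weights of ONE output channel: C_in x k x k, values +1/-1


def preprocess(x, gamma, beta, mean, var, eps=1e-5) -> FeatureMap:
    """Nonlinear activation (ReLU, assumed) -> batch normalization -> sign activation."""
    out = []
    for c, ch in enumerate(x):
        scale = gamma[c] / math.sqrt(var[c] + eps)
        out.append([[1 if scale * (max(v, 0.0) - mean[c]) + beta[c] >= 0 else -1
                     for v in row] for row in ch])
    return out


def binary_conv_same(x: FeatureMap, kernels: Sequence[Kernel], pad_value: int = 1) -> FeatureMap:
    """Same-size convolution of +1/-1 inputs with +1/-1 kernels via XNOR and popcount."""
    h, w = len(x[0]), len(x[0][0])
    out = []
    for kernel in kernels:
        k = len(kernel[0])          # square, odd kernel size
        r = k // 2
        o = [[0] * w for _ in range(h)]
        for y in range(h):
            for z in range(w):
                matches, n = 0, 0
                for ci, ch in enumerate(x):
                    for ky in range(k):
                        for kx in range(k):
                            yy, zz = y + ky - r, z + kx - r
                            a = ch[yy][zz] if 0 <= yy < h and 0 <= zz < w else pad_value
                            matches += int(a == kernel[ci][ky][kx])  # XNOR of two +1/-1 values
                            n += 1
                o[y][z] = 2 * matches - n   # equals the +1/-1 dot product
        out.append(o)
    return out


def first_layer(x: FeatureMap, groups: Sequence[Tuple[int, Sequence[Kernel]]]) -> FeatureMap:
    """Grouped layer: each group gets its own slice of input channels and its own kernel size."""
    out, start = [], 0
    for n_in, kernels in groups:
        out += binary_conv_same(x[start:start + n_in], kernels)
        start += n_in
    return out


def second_layer(y: FeatureMap, weights_1x1: Sequence[Sequence[int]]) -> FeatureMap:
    """1x1 convolution with +1/-1 weights: only additions and subtractions per pixel."""
    h, w = len(y[0]), len(y[0][0])
    return [[[sum(wo[c] * y[c][i][j] for c in range(len(y))) for j in range(w)]
             for i in range(h)] for wo in weights_1x1]


x = [[[0.9, 0.9], [0.9, 0.9]], [[0.9, 0.9], [0.9, 0.9]]]
xb = preprocess(x, gamma=[1, 1], beta=[0, 0], mean=[0.5, 0.5], var=[1, 1])
k3 = [[[1] * 3 for _ in range(3)]]   # one kernel: 1 input channel, 3x3
k5 = [[[1] * 5 for _ in range(5)]]   # one kernel: 1 input channel, 5x5
y = first_layer(xb, [(1, [k3]), (1, [k5])])
print(second_layer(y, [[1, -1]]))    # [[[-16, -16], [-16, -16]]]
```

In the usage example every binarized input and weight is +1, so the 3×3 group outputs 9 and the 5×5 group outputs 25 at every pixel, and the 1×1 weights (+1, -1) combine them into -16.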

[0027] The hardware implementation comprises the following steps:

[0028] (1) After the hardware performs the nonlinear activation, batch normalization and binary activation processes, the convolution module of the first la...
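The excerpt breaks off before describing how the convolution module uses lookup tables. One common way a binary dot product maps onto lookup tables is sketched below as an assumption, not as the patented circuit. Because trained weights are fixed, the ±1 dot product over k activation bits is a fixed function of those bits, so its value for all 2^k bit patterns can be precomputed into a table. Longer windows are split into k-wide chunks whose lookups are summed. The chunk width k = 6 (matching the 6-input LUTs found in many FPGA families), the encoding (bit 1 for +1) and all function names are hypothetical.

```python
from typing import List, Sequence


def pack(values: Sequence[int]) -> int:
    """Encode a +1/-1 vector as bits: bit i is 1 when values[i] == +1."""
    return sum(1 << i for i, v in enumerate(values) if v == 1)


def build_lut(weight_bits: int, k: int) -> List[int]:
    """Table of the +1/-1 dot product for every k-bit activation pattern a."""
    mask = (1 << k) - 1
    # XNOR marks positions where activation and weight agree; each agreement
    # contributes +1 and each disagreement -1, so dot = 2 * matches - k.
    return [2 * bin(~(a ^ weight_bits) & mask).count("1") - k for a in range(1 << k)]


def compile_weights(weights: Sequence[int], k: int = 6) -> List[List[int]]:
    """Precompute one table per k-wide chunk of a fixed +1/-1 weight vector."""
    return [build_lut(pack(weights[s:s + k]), len(weights[s:s + k]))
            for s in range(0, len(weights), k)]


def lut_dot(activations: Sequence[int], tables: List[List[int]], k: int = 6) -> int:
    """Evaluate the dot product with table lookups and additions only."""
    return sum(t[pack(activations[i * k:(i + 1) * k])] for i, t in enumerate(tables))


w = [1, -1, 1, 1, -1, -1, 1, -1, 1]   # a flattened 3x3 binary kernel (example values)
a = [1, 1, -1, 1, -1, 1, -1, -1, 1]   # the matching 3x3 activation window
tables = compile_weights(w)           # 2^6 + 2^3 = 72 entries, fixed after training
print(lut_dot(a, tables))             # 1, same as sum(x * y for x, y in zip(a, w))
```

The design trades multipliers for memory: each table depends only on the weights, so in hardware it could be fixed at configuration time, and inference reduces to indexing with activation bits and adding the partial sums.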
