Patented technology: 59 results for "Multiply–accumulate operation"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

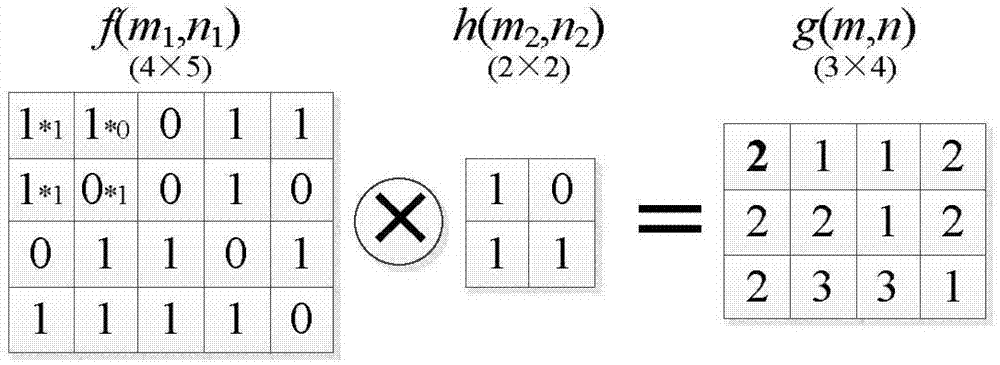

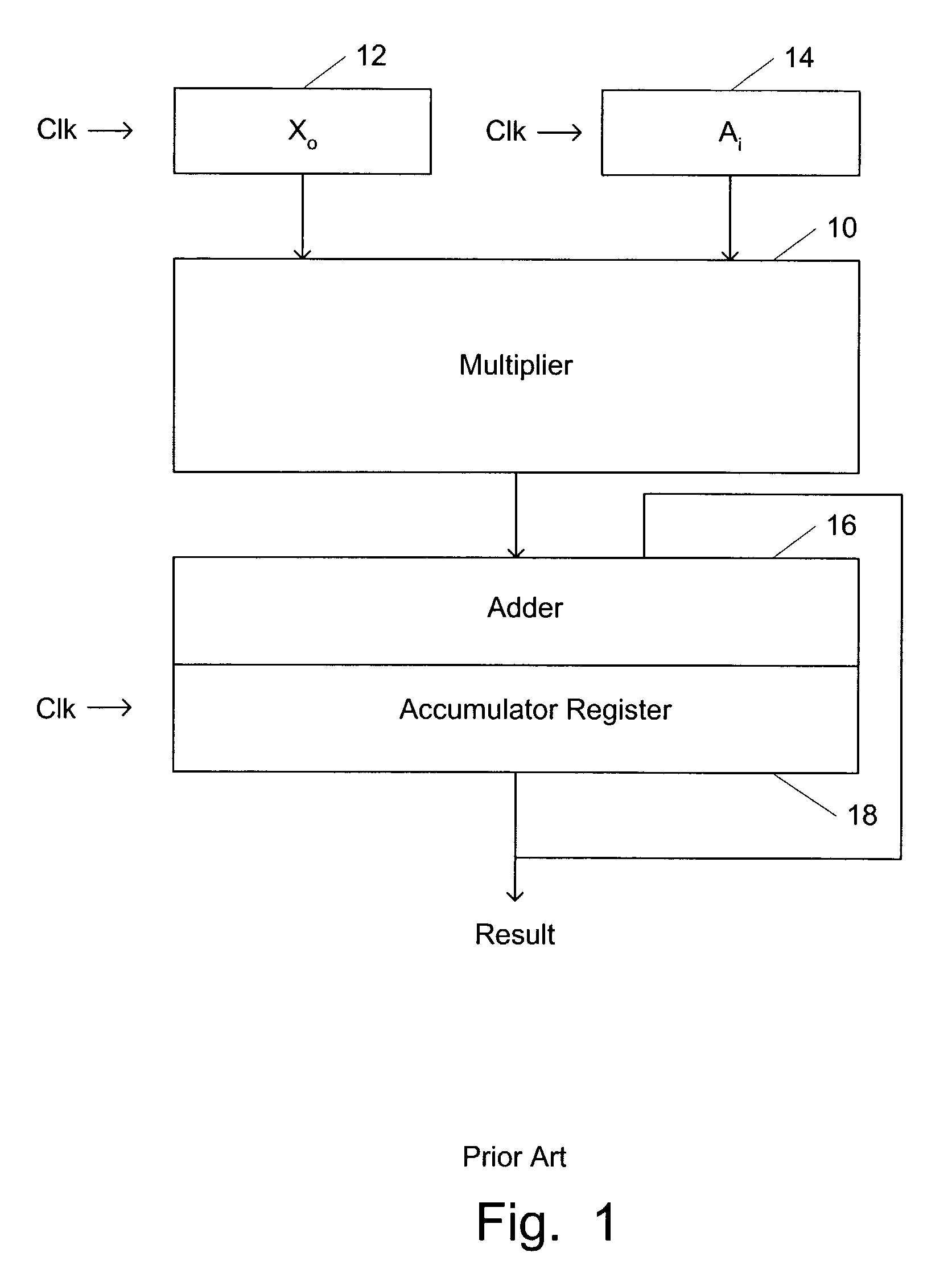

In computing, especially digital signal processing, the multiply–accumulate operation is a common step that computes the product of two numbers and adds that product to an accumulator. The hardware unit that performs the operation is known as a multiplier–accumulator (MAC, or MAC unit); the operation itself is also often called a MAC or a MAC operation. The MAC operation modifies an accumulator $a$: $a \leftarrow a + (b \times c)$. When done with floating-point numbers, it might be performed with two roundings (typical in many DSPs) or with a single rounding; the single-rounding form is known as a fused multiply–add (FMA).
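
The difference between the two rounding behaviors shows up in a few lines of Python. This is a minimal sketch, assuming IEEE 754 binary64 (Python `float`) as the number format; the function names are illustrative, and the single-rounded result is obtained with exact `fractions.Fraction` arithmetic rather than a hardware FMA instruction.

```python
from fractions import Fraction

def mac_two_roundings(a: float, b: float, c: float) -> float:
    # b * c is rounded to binary64, then the sum is rounded again.
    return a + b * c

def mac_single_rounding(a: float, b: float, c: float) -> float:
    # Compute a + b*c exactly with rationals, then round once to binary64.
    exact = Fraction(a) + Fraction(b) * Fraction(c)
    return float(exact)

b = c = 1.0 + 2.0**-30   # exact product is 1 + 2**-29 + 2**-60
a = -1.0
print(mac_two_roundings(a, b, c))    # the 2**-60 term is lost when the product is rounded
print(mac_single_rounding(a, b, c))  # the 2**-60 term survives the single rounding
```

The chained version returns exactly 2^-29, while the single-rounded version keeps the extra 2^-60, which is why FMA-based and two-rounding MAC units can give different results for the same inputs.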

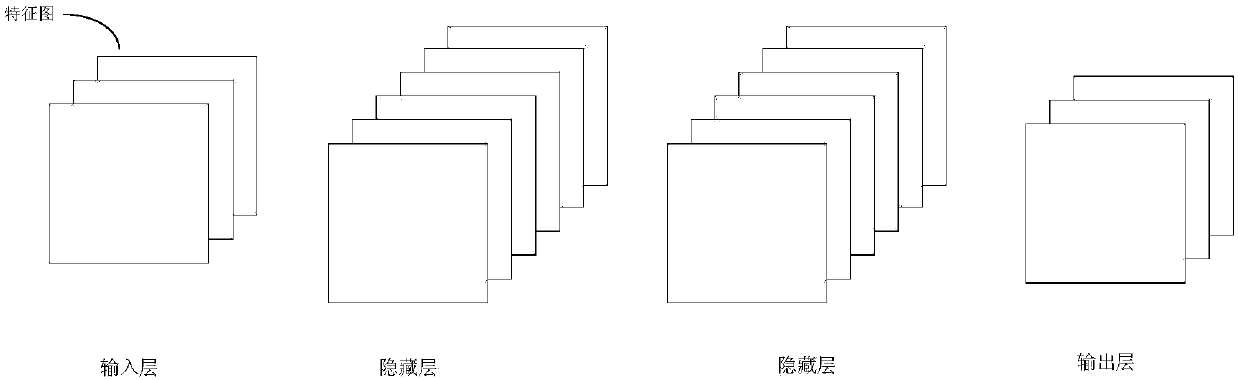

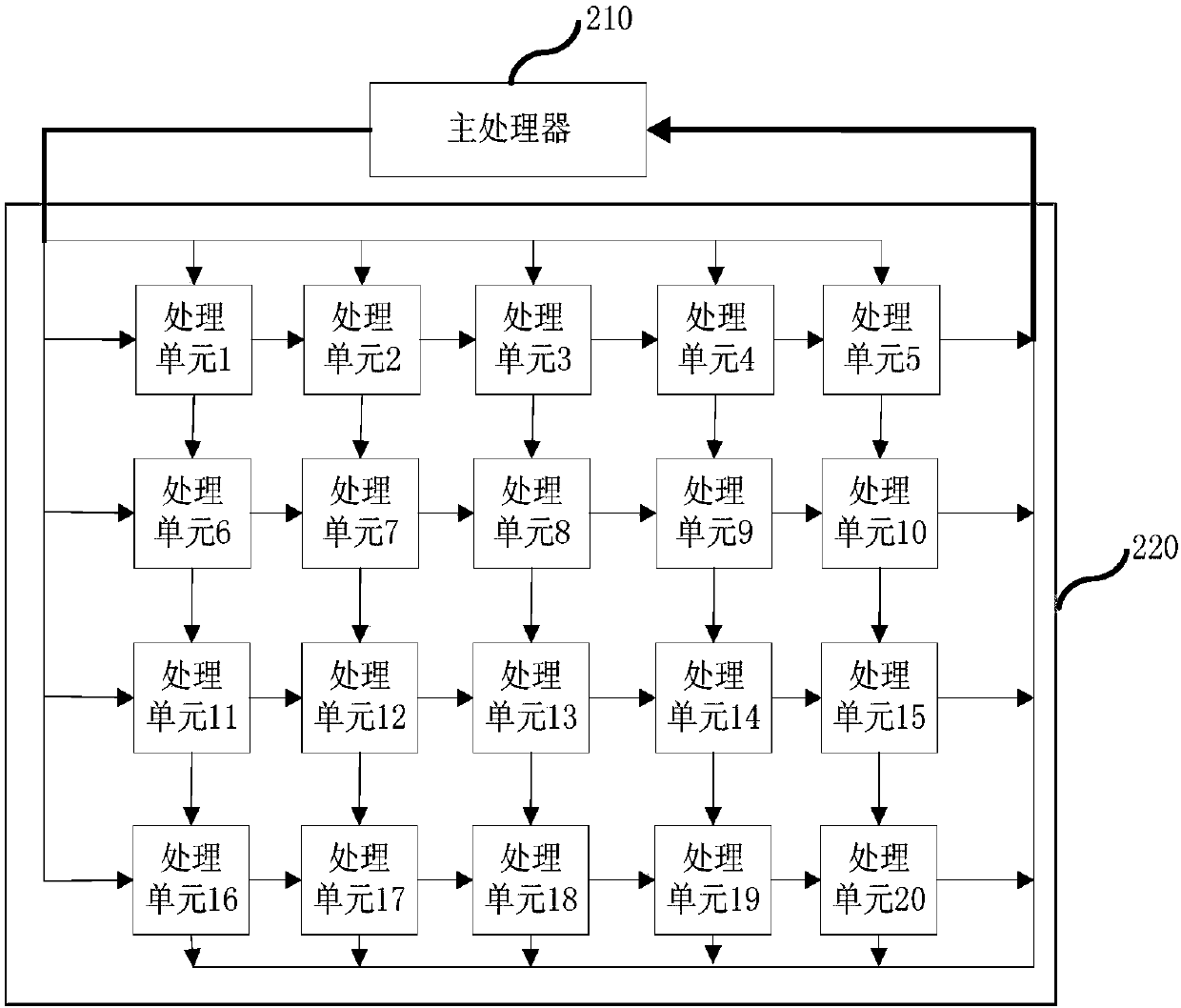

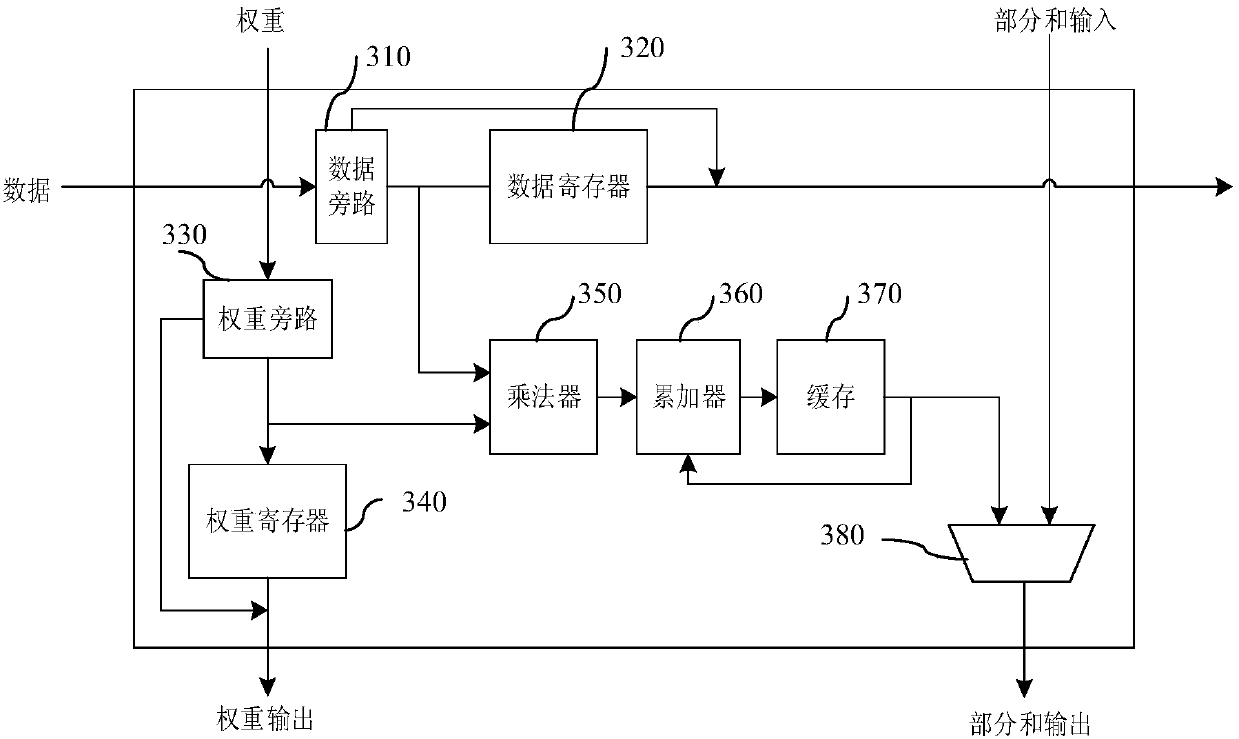

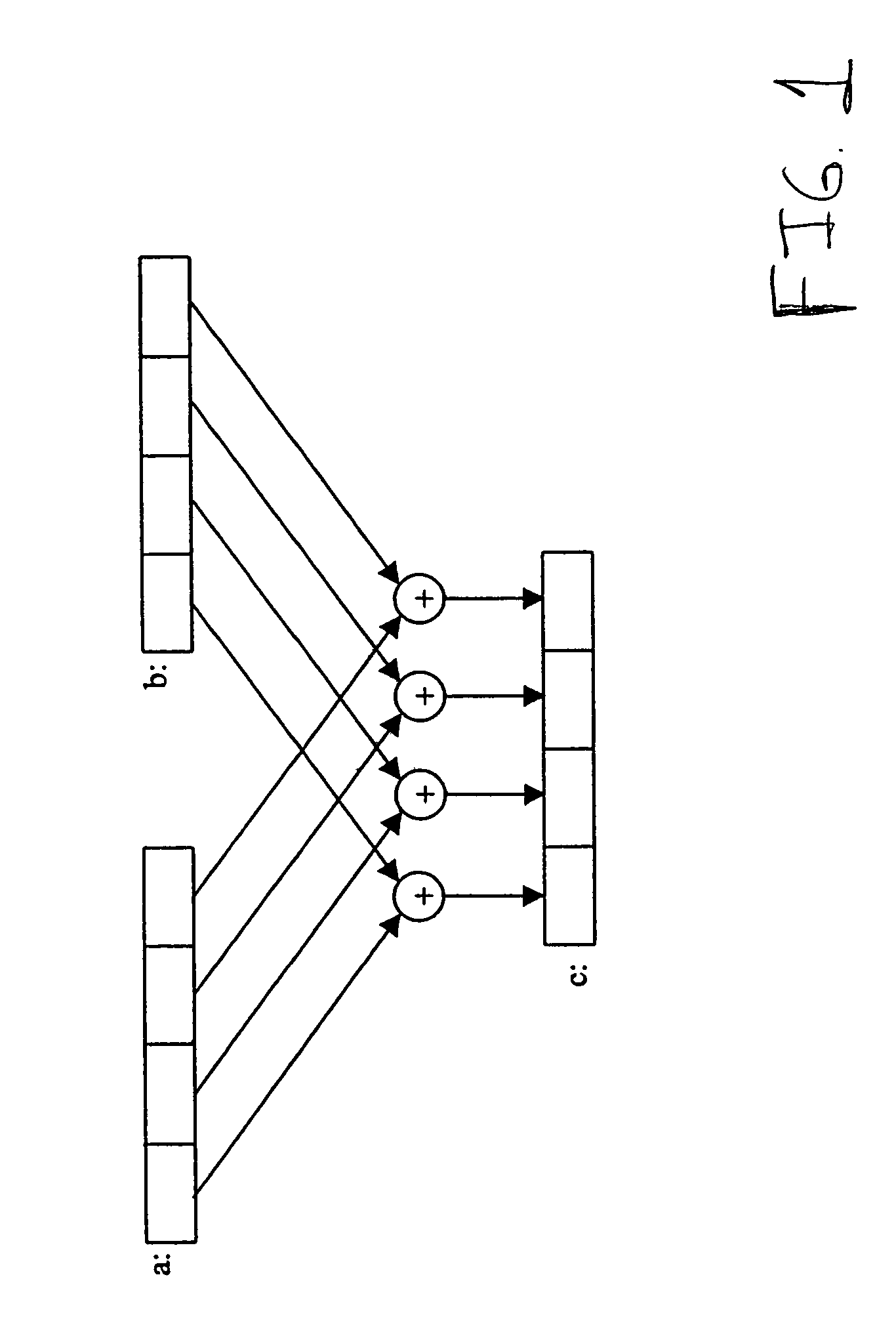

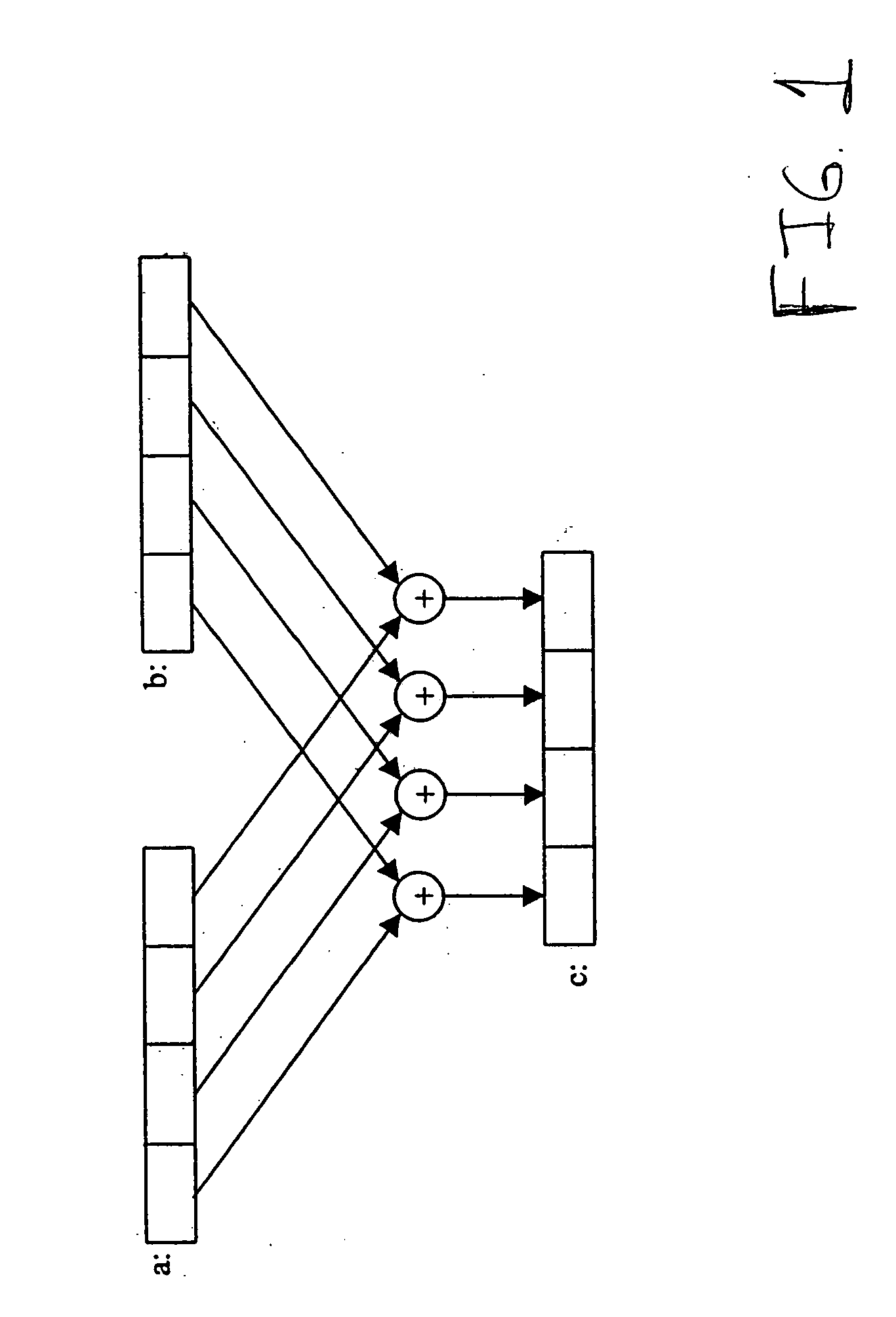

Computing array based neural network processor

Inactive · CN107918794A · Tags: Improve computing efficiency, Reduce bandwidth requirements, Physical realisation, Neural learning methods, Nerve network, Multiply–accumulate operation

The present invention provides a neural network processor comprising at least one computing unit and a control unit. Each computing unit consists of a host processor and a computing array in which a plurality of processing units are organized into a two-dimensional matrix of rows and columns. The host processor controls the loading of neuron data and weight values into the computing array; each processing unit performs a multiply-accumulate operation on the neuron data and weight values it receives and passes the neuron data and weight values in different directions to the next-level processing unit. The control unit controls the computing unit to carry out the relevant computations of the neural network. The computing device provided by the invention can accelerate neural network computation and reduce the bandwidth requirements of the computation process.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
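
The processing-unit array described above behaves like a systolic array: each unit multiplies the two values it receives, adds the product to a local accumulator, and forwards the neuron value to the right and the weight value downward. The following is a minimal cycle-by-cycle sketch, assuming an output-stationary dataflow in which row `i` of the neuron matrix `A` enters from the left and column `j` of the weight matrix `B` enters from the top, each delayed by its row or column index. The abstract does not specify the dataflow, so the function `systolic_matmul` and this schedule are illustrative only.

```python
def systolic_matmul(A, B):
    """Simulate an n x m grid of MAC processing units computing A @ B."""
    n, k, m = len(A), len(B), len(B[0])
    acc = [[0] * m for _ in range(n)]       # one accumulator per processing unit
    a_out = [[None] * m for _ in range(n)]  # neuron value each unit forwards to the right
    b_out = [[None] * m for _ in range(n)]  # weight value each unit forwards downward
    for t in range(n + m + k - 2):          # enough cycles to drain the skewed inputs
        a_next = [[None] * m for _ in range(n)]
        b_next = [[None] * m for _ in range(n)]
        for i in range(n):
            for j in range(m):
                # Left edge reads row i of A delayed by i cycles; others read the left neighbour.
                if j == 0:
                    s = t - i
                    a = A[i][s] if 0 <= s < k else None
                else:
                    a = a_out[i][j - 1]
                # Top edge reads column j of B delayed by j cycles; others read the unit above.
                if i == 0:
                    s = t - j
                    b = B[s][j] if 0 <= s < k else None
                else:
                    b = b_out[i - 1][j]
                if a is not None and b is not None:
                    acc[i][j] += a * b      # the multiply-accumulate step
                a_next[i][j], b_next[i][j] = a, b
        a_out, b_out = a_next, b_next       # all units update their registers together
    return acc

print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

Because operands move only between neighbouring units, each value is read from memory once and reused along a whole row or column, which is one way such an array can reduce bandwidth requirements.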

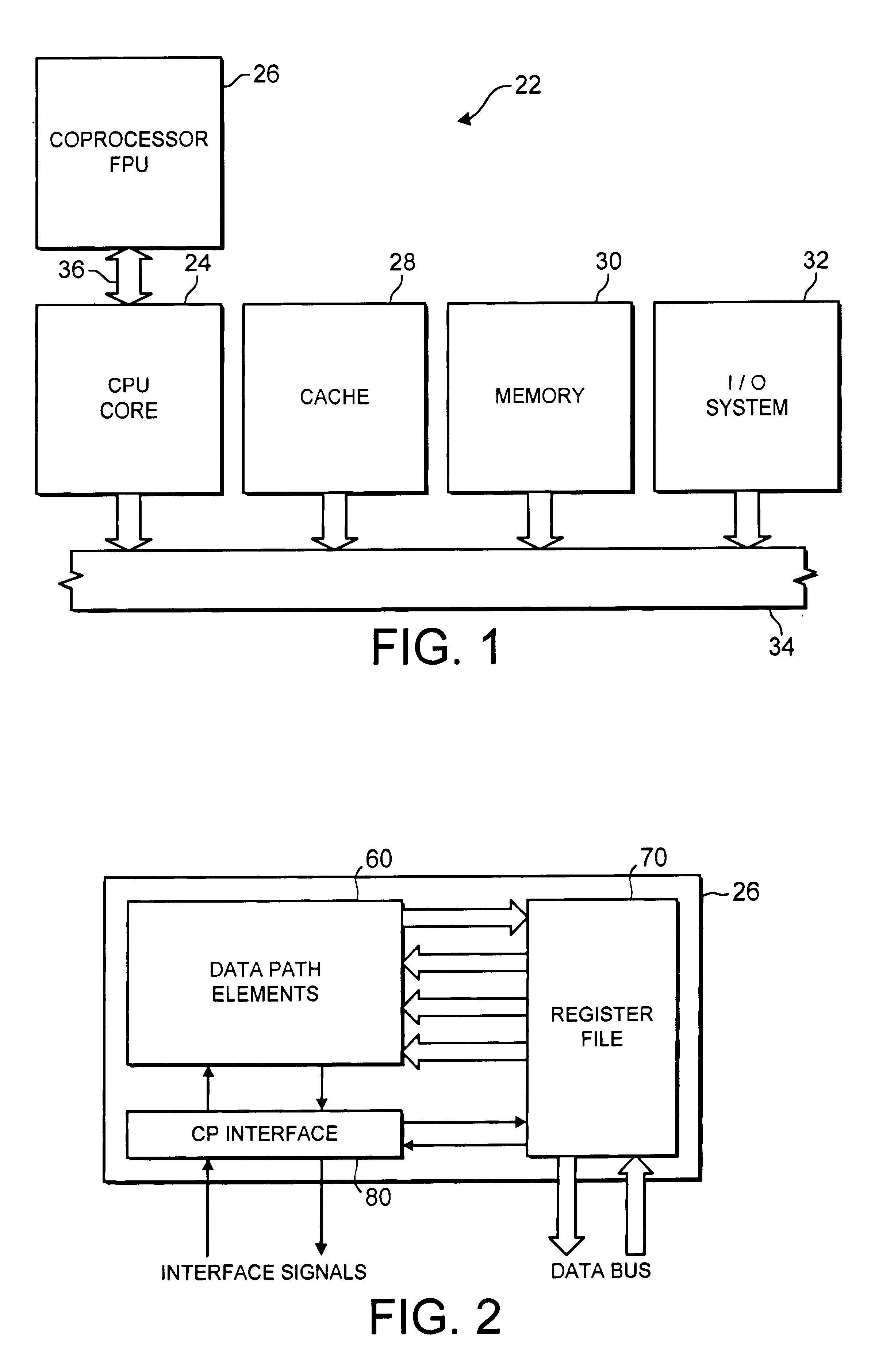

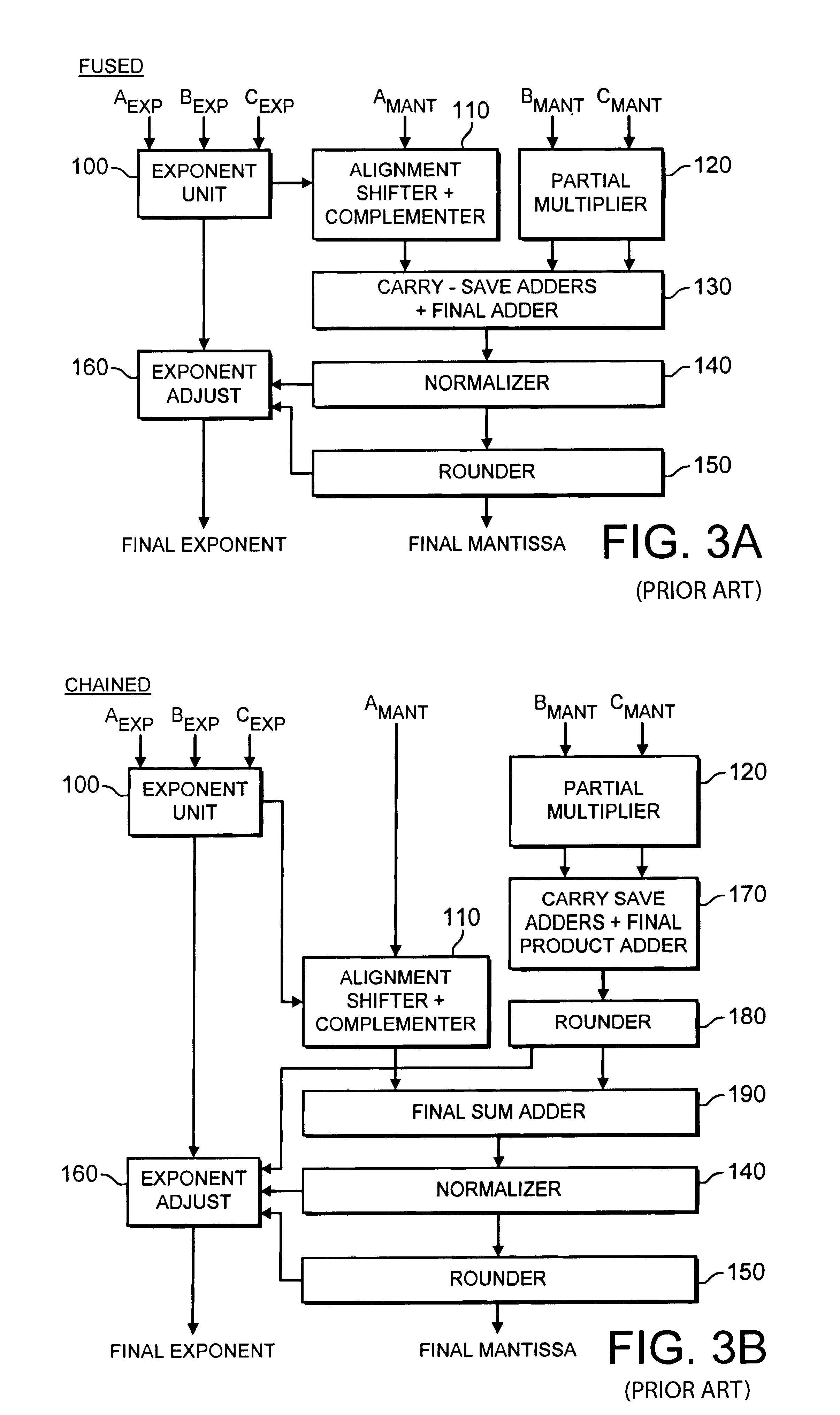

Data processing apparatus and method for applying floating-point operations to first, second and third operands

Inactive · US6542916B1 · Tags: Computation using non-contact making devices, Complex mathematical operations, Binary multiplier, Multiply–accumulate operation

A data processing apparatus and method are provided for applying a floating-point multiply-accumulate operation to first, second and third operands. The apparatus comprises a multiplier for multiplying the second and third operands and applying rounding to produce a rounded multiplication result, and an adder for adding the rounded multiplication result to the first operand to generate a final result and for applying rounding to generate a rounded final result. Further, control logic is provided which is responsive to a first single instruction to control the multiplier and adder to cause the rounded final result generated by the adder to be equivalent to the subtraction of the rounded multiplication result from the first operand. In preferred embodiments, the control logic is also responsive to a further single instruction to control the multiplier and adder to cause the rounded final result generated by the adder to be equivalent to the subtraction of the rounded multiplication result from the negated first operand. By this approach, multiply-accumulate logic can be arranged to provide fast execution of a first single instruction to generate a result equivalent to the subtraction of the rounded multiplication result from the first operand, or a second single instruction to generate a result equivalent to the subtraction of the rounded multiplication result from the negated first operand, whilst producing results which are compliant with the IEEE 754-1985 standard.

Owner:ARM LTD
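
The two single instructions above can be pictured as one multiply-accumulate datapath whose product and accumulator inputs can each be negated before the final addition. The sketch below is illustrative: the function name `chained_mac` and its flags are hypothetical, and Python's binary64 `float` stands in for the IEEE 754-1985 formats. Because negation is exact, the result is still produced with two roundings (one after the multiply, one after the add), as the abstract describes.

```python
def chained_mac(a: float, b: float, c: float,
                negate_product: bool = False,
                negate_accumulator: bool = False) -> float:
    """Two-rounding multiply-accumulate with optional negation (hypothetical interface)."""
    product = b * c                   # first rounding: the multiplier result
    if negate_product:
        product = -product            # exact: only the sign changes
    acc = -a if negate_accumulator else a
    return acc + product              # second rounding: the adder result

print(chained_mac(2.0, 3.0, 4.0))                         # a + b*c  -> 14.0
print(chained_mac(2.0, 3.0, 4.0, negate_product=True))    # a - b*c  -> -10.0
print(chained_mac(2.0, 3.0, 4.0, True, True))             # -a - b*c -> -14.0
```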

Multiply-accumulate instruction which adds or subtracts based on a predicate value

A data processing apparatus and method for performing multiply-accumulate operations is provided. The data processing apparatus includes data processing circuitry responsive to control signals to perform data processing operations on at least one input data element. Instruction decoder circuitry is responsive to a predicated multiply-accumulate instruction specifying as input operands a first input data element, a second input data element, and a predicate value, to generate control signals to control the data processing circuitry to perform a multiply-accumulate operation by: multiplying said first input data element and said second input data element to produce a multiplication data element; if the predicate value has a first value, producing a result accumulate data element by adding the multiplication data element to an initial accumulate data element; and if the predicate value has a second value, producing the result accumulate data element by subtracting the multiplication data element from the initial accumulate data element.

Owner:ARM LTD
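
A predicated multiply-accumulate lets a single instruction cover both multiply-add and multiply-subtract, which is convenient for sequences such as the real part of a complex product, $a_r b_r - a_i b_i$. The sketch below assumes that a true predicate selects addition and a false one selects subtraction; the abstract only says "a first value" and "a second value", so this encoding and the function name are hypothetical.

```python
def predicated_mac(acc: int, x: int, y: int, predicate: bool) -> int:
    """Add x*y to acc when predicate is true, subtract it otherwise (hypothetical encoding)."""
    product = x * y
    return acc + product if predicate else acc - product

# (3 + 4j) * (5 + 2j) with the same instruction for every step:
ar, ai, br, bi = 3, 4, 5, 2
re = predicated_mac(predicated_mac(0, ar, br, True), ai, bi, False)  # ar*br - ai*bi
im = predicated_mac(predicated_mac(0, ar, bi, True), ai, br, True)   # ar*bi + ai*br
print(re, im)  # 7 26
```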

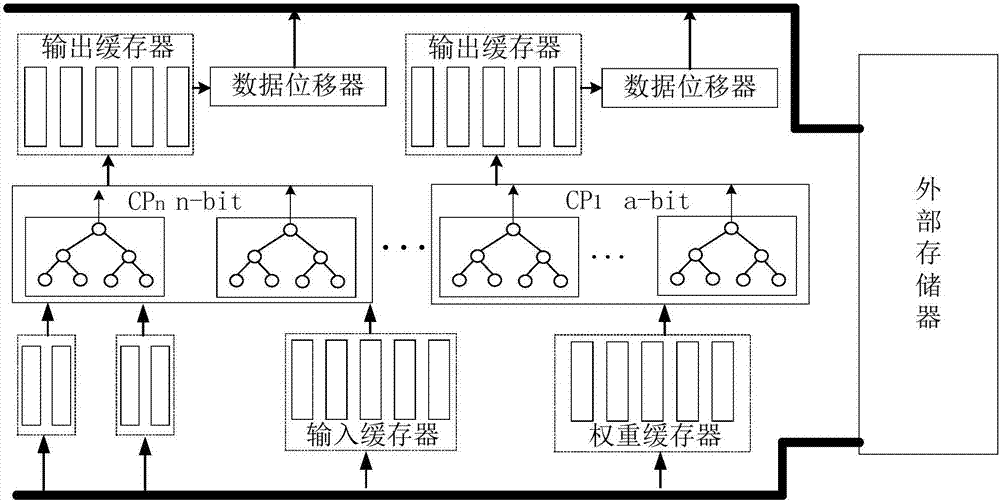

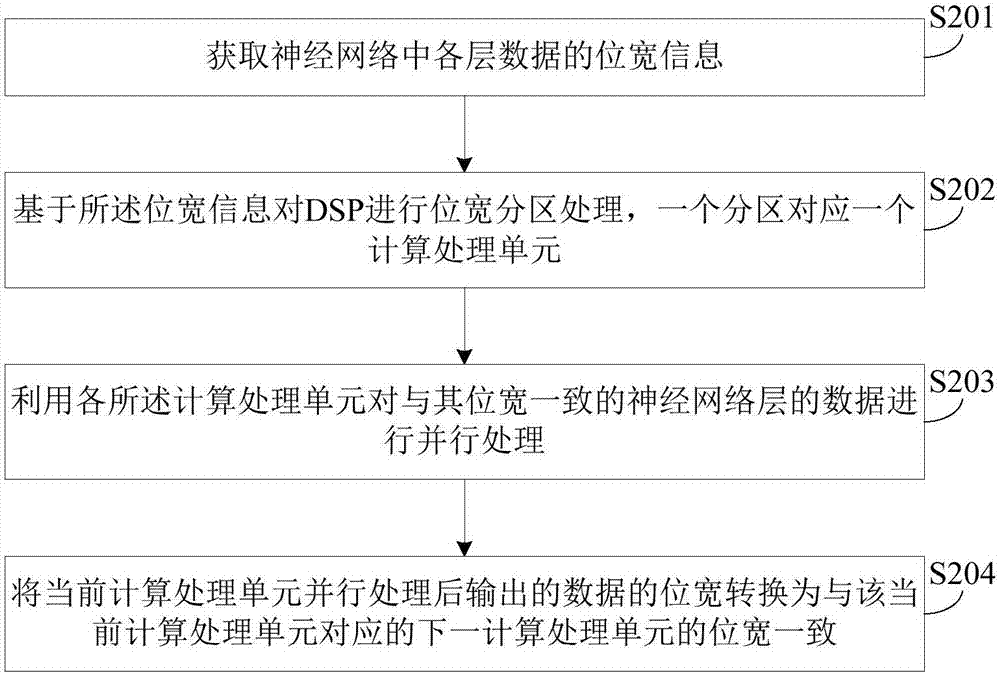

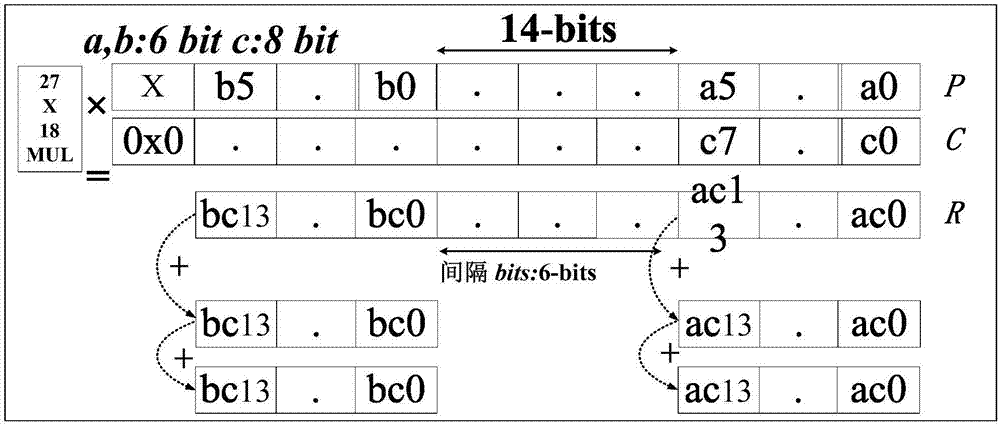

Neural network accelerator for bit width partitioning and implementation method of neural network accelerator

Active · CN107451659A · Tags: Increase profit, Improve resource usage efficiency, Physical realisation, External storage, Nerve network

The present invention provides a neural network accelerator based on bit-width partitioning and an implementation method for it. The accelerator includes a plurality of computing and processing units (CPs) with different bit widths, input buffers, weight buffers, output buffers, data shifters and an off-chip memory. Each computing and processing unit obtains data from its corresponding input buffer and weight buffer and processes, in parallel, the data of a neural network layer whose bit width matches its own. The data shifters convert the bit width of the data output by the current computing and processing unit to the bit width of the next computing and processing unit. The off-chip memory stores both unprocessed data and data already processed by the computing and processing units. With this accelerator and implementation method, multiply-accumulate operations can be performed on multiple short-bit-width data values, increasing DSP utilization; and because computing and processing units with different bit widths compute the layers of the neural network in parallel, the computing throughput of the accelerator is improved.

Owner:TSINGHUA UNIV
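
One widely used way to run several short-bit-width multiply-accumulate operations on a single wide multiplier is to pack two operands into one input word, separated by enough guard bits that the two partial results cannot overlap. The sketch below illustrates that general idea only; the abstract does not say the accelerator packs operands this way. It assumes unsigned 8-bit data, a hypothetical 20-bit lane spacing (`LANE_BITS`), and at most 16 accumulations: each 8×8-bit product is below 2^16, and 16 of them stay below 2^20. Python integers are unbounded, so the fixed multiplier width of a real DSP block is not modelled.

```python
LANE_BITS = 20                      # hypothetical spacing between the two packed lanes
LANE_MASK = (1 << LANE_BITS) - 1

def packed_dual_mac(xs, w_low, w_high):
    """Compute sum(x*w_low) and sum(x*w_high) with one multiply per step.

    Assumes unsigned 8-bit inputs and at most 16 terms, so the low
    lane's running sum can never carry into the high lane.
    """
    assert len(xs) <= 16
    acc = 0
    for x, wl, wh in zip(xs, w_low, w_high):
        assert 0 <= x < 256 and 0 <= wl < 256 and 0 <= wh < 256
        packed_w = wl | (wh << LANE_BITS)   # two weights in one wide operand
        acc += x * packed_w                 # one wide multiply-accumulate
    return acc & LANE_MASK, acc >> LANE_BITS

print(packed_dual_mac([1, 2, 3], [4, 5, 6], [7, 8, 9]))  # (32, 50)
```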

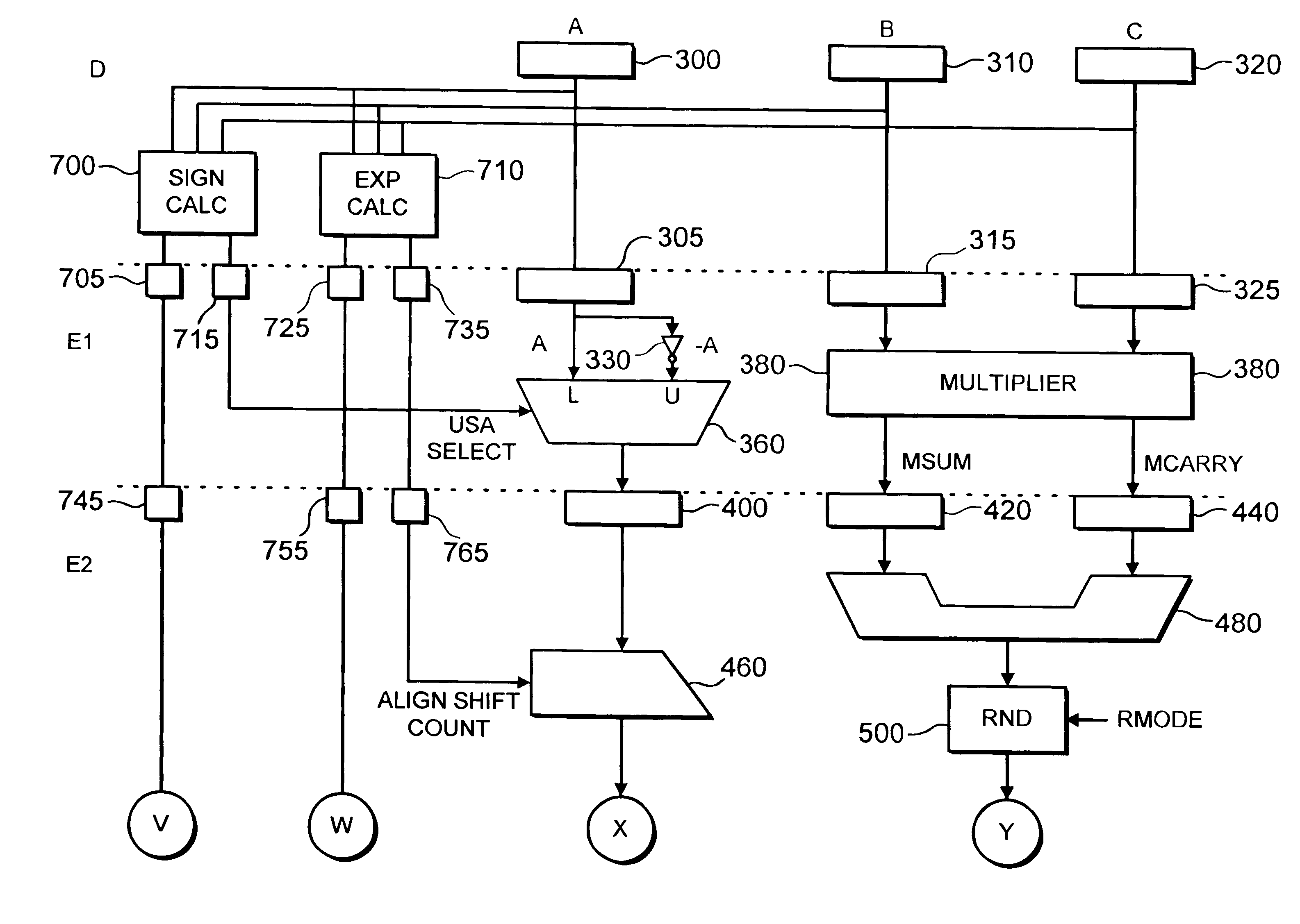

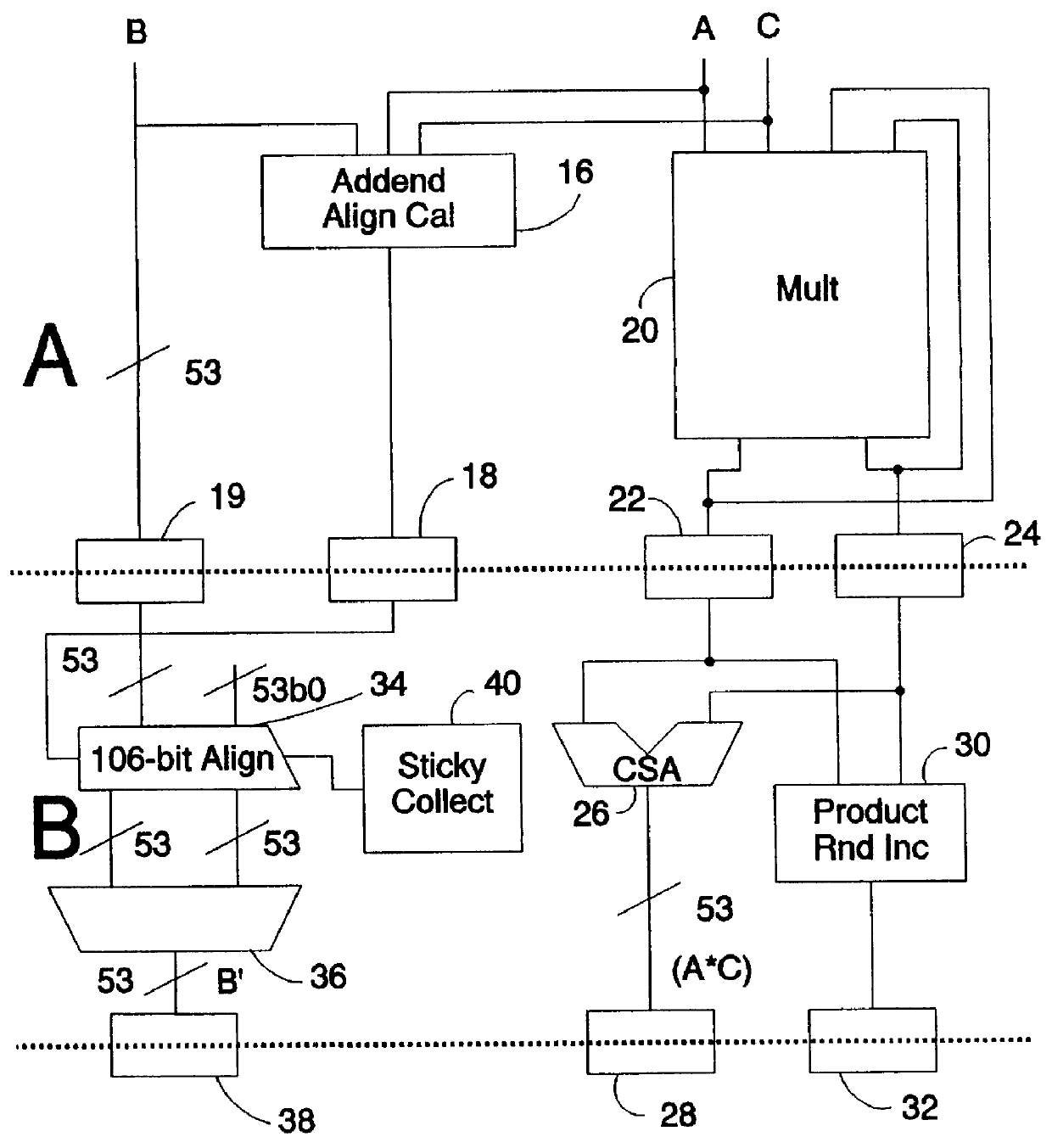

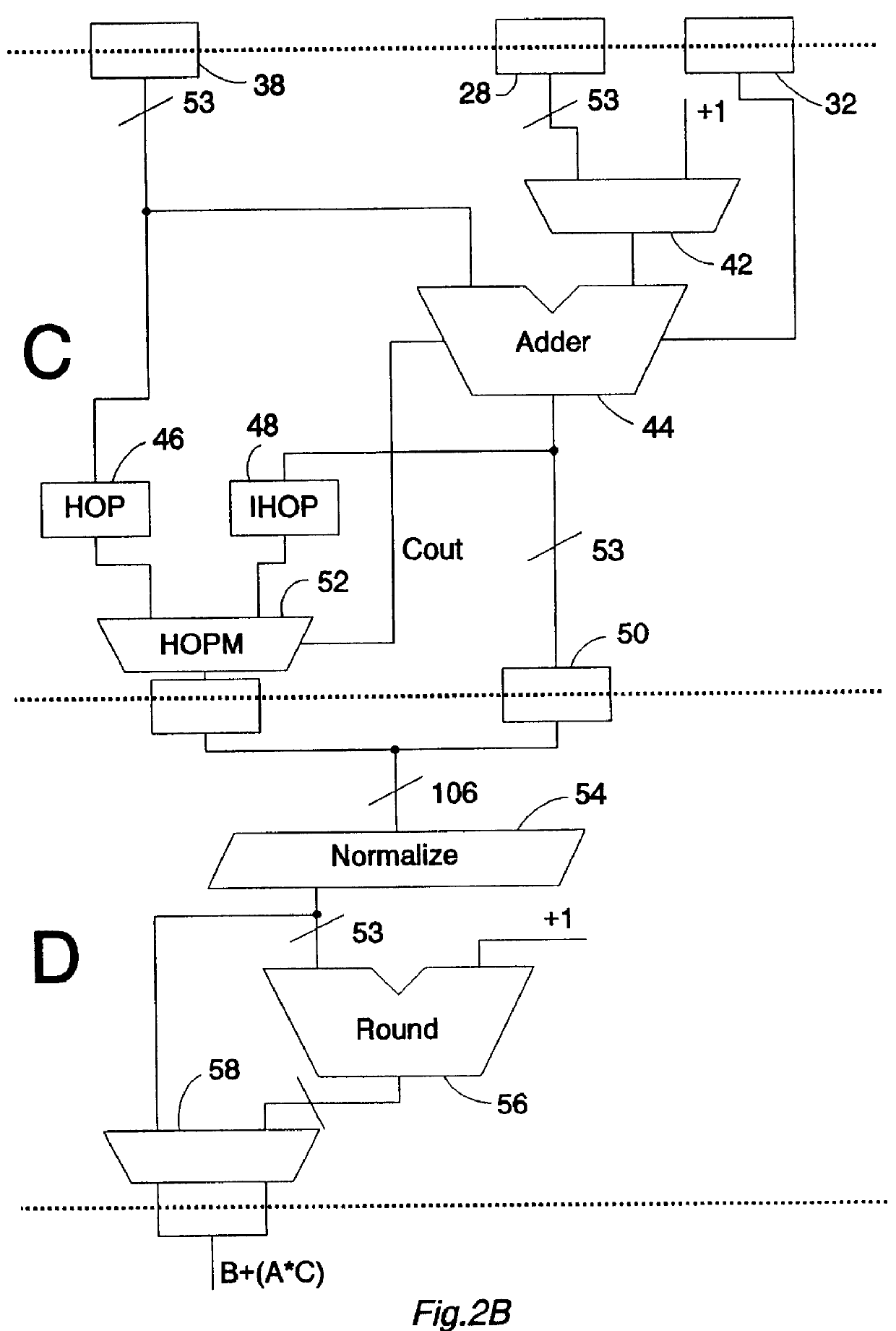

Floating point multiply-accumulate unit

Inactive · US6115729A · Tags: Low cost, Reduce circuit size, Computation using non-contact making devices, Binary multiplier, Multiply–accumulate operation

A floating point unit 10 provides a multiply-accumulate operation to determine a result B+(A*C). The multiplier 20 takes several processing cycles to determine the product (A*C). Whilst the multiplier 20 and its subsequent carry-save-adder 26 operate, an aligned value B' of the addend B is generated by an alignment-shifter 34. The aligned-addend B' may only partially overlap with the product (A*C) to which it is to be added using an adder 44. Any high-order-portion HOP of the aligned-addend B' that does not overlap with the product (A*C) must be subsequently concatenated with the output of the adder 44 that sums the product (A*C) with the overlapping portion of the aligned-addend B'. If the sum performed by the adder 44 generates a carry then it is an incremented version IHOP of the high-order-portion that should be concatenated with the output of the adder 44. This incremented-high-order-portion is generated by the adder 44 during otherwise idle processing cycles present due to the multiplier 20 operating over multiple cycles.

Owner:ARM LTD
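
The incremented-high-order-portion scheme resembles a carry-select adder: both the high-order portion HOP and its increment IHOP are ready before the low-order sum finishes, and the carry out of adder 44 only selects between them. The integer sketch below, with hypothetical names and widths, shows why concatenating the selected portion with the low-order sum gives the full addition; it ignores signs, rounding and normalisation.

```python
def add_with_precomputed_increment(aligned_addend: int, product: int, width: int) -> int:
    """Add a wide aligned addend to a product that fits in `width` bits (non-negative ints)."""
    assert 0 <= product < (1 << width) and aligned_addend >= 0
    mask = (1 << width) - 1
    hop = aligned_addend >> width                # addend bits above the product (HOP)
    ihop = hop + 1                               # IHOP, prepared while the multiplier is busy
    low_sum = (aligned_addend & mask) + product  # the adder only handles the overlap
    carry = low_sum >> width                     # 0 or 1
    high = ihop if carry else hop                # the carry just selects HOP or IHOP
    return (high << width) | (low_sum & mask)    # concatenate high and low parts

print(add_with_precomputed_increment(0b10111111, 0b0001, 4))  # 192
```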

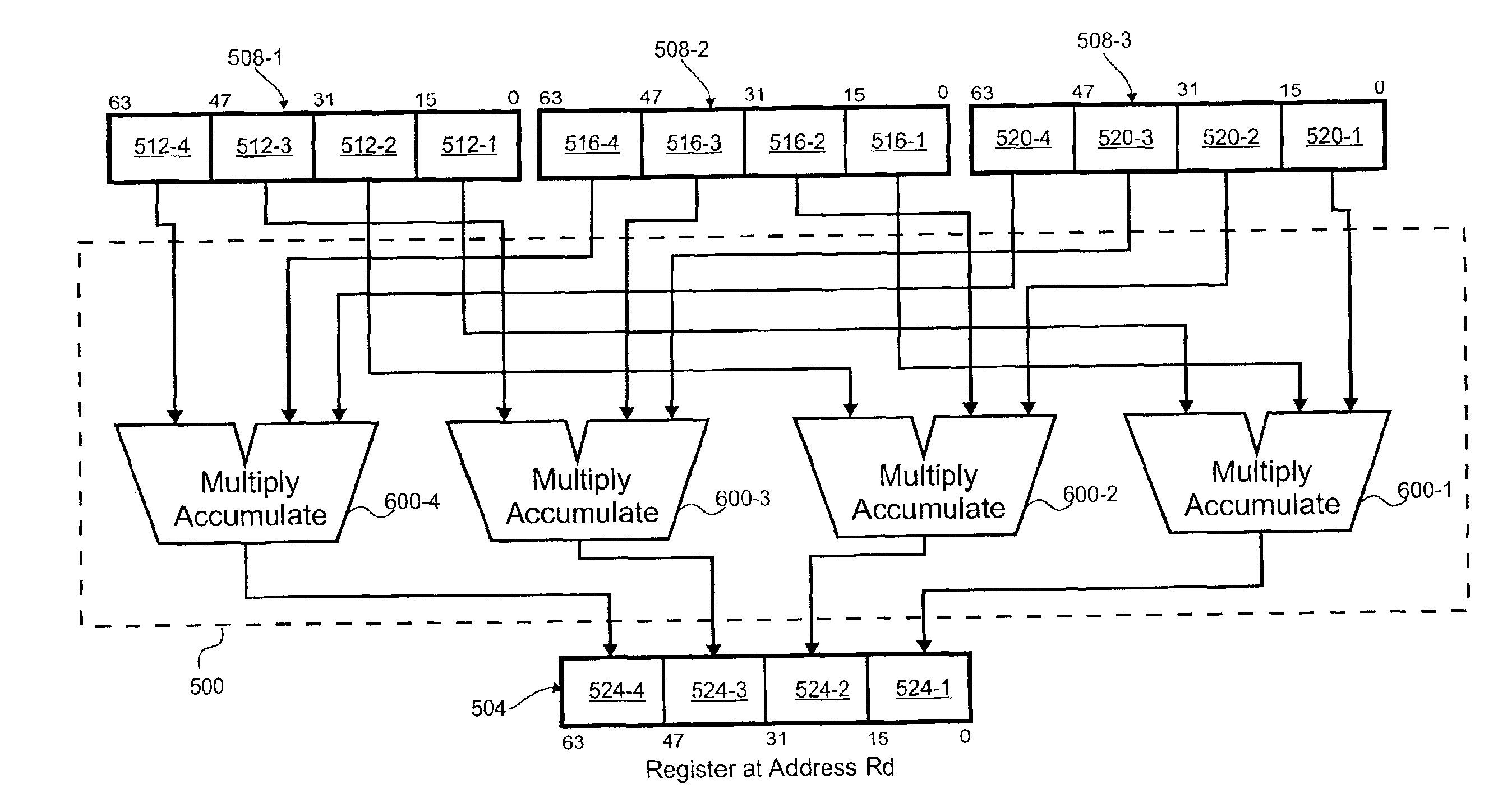

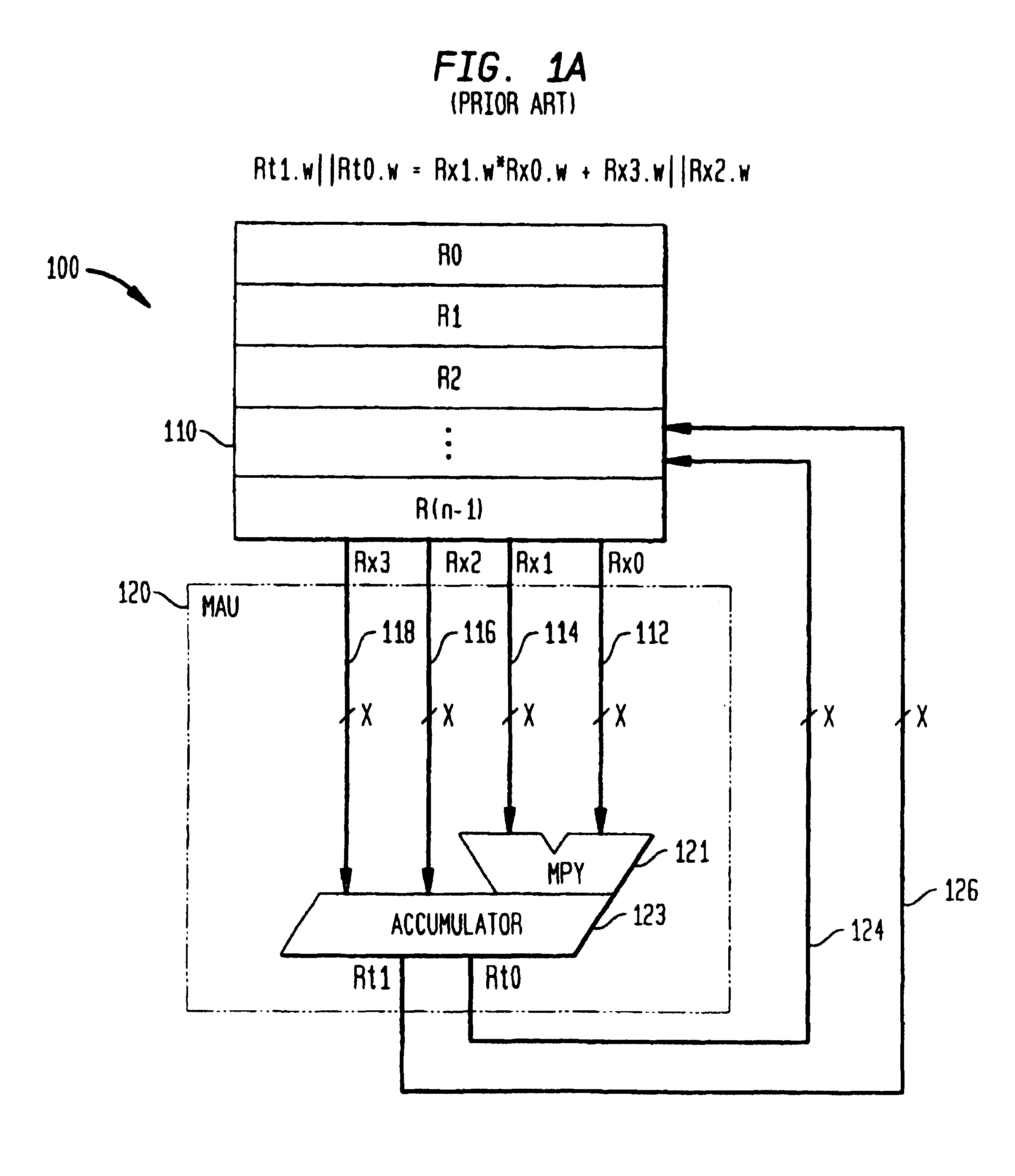

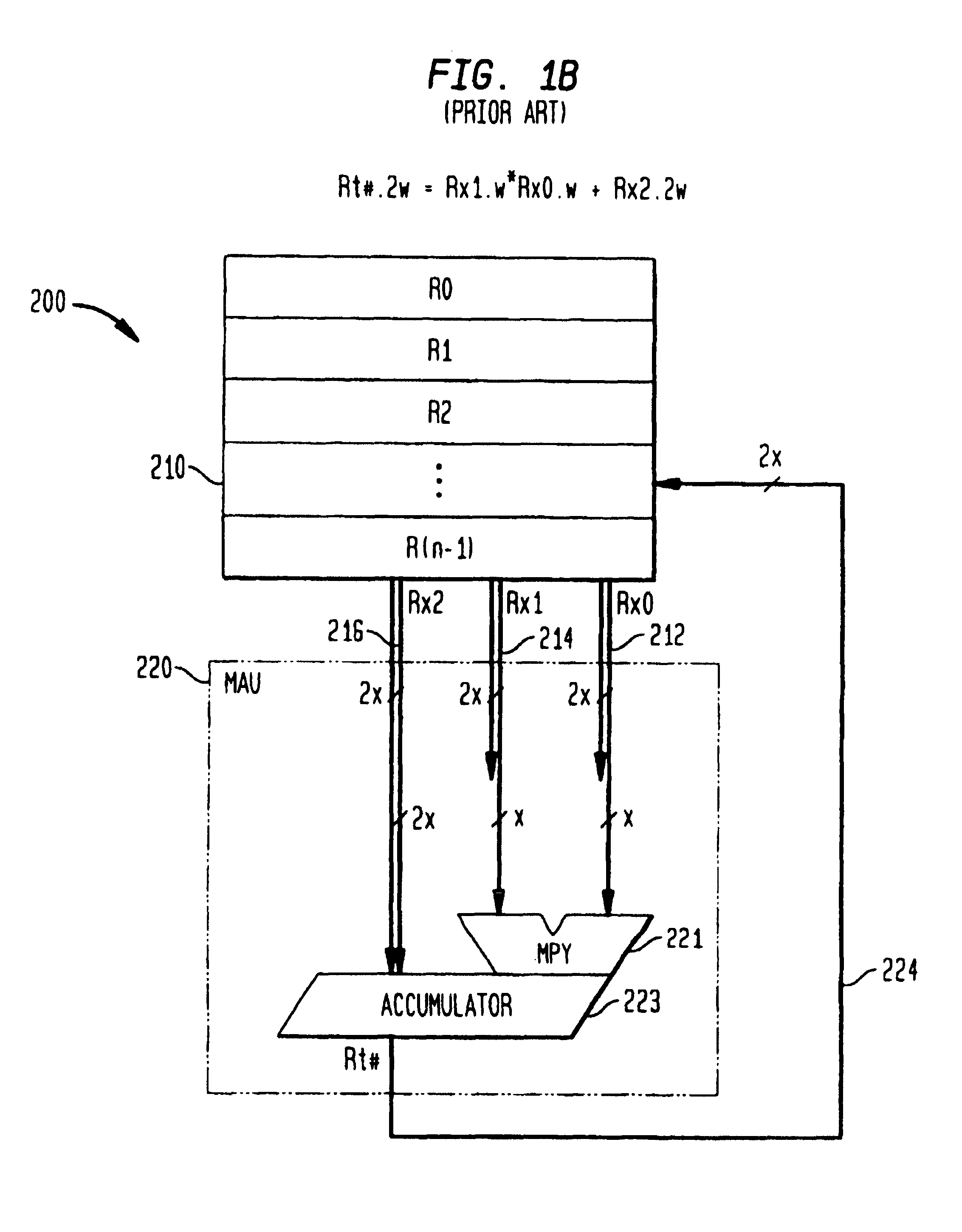

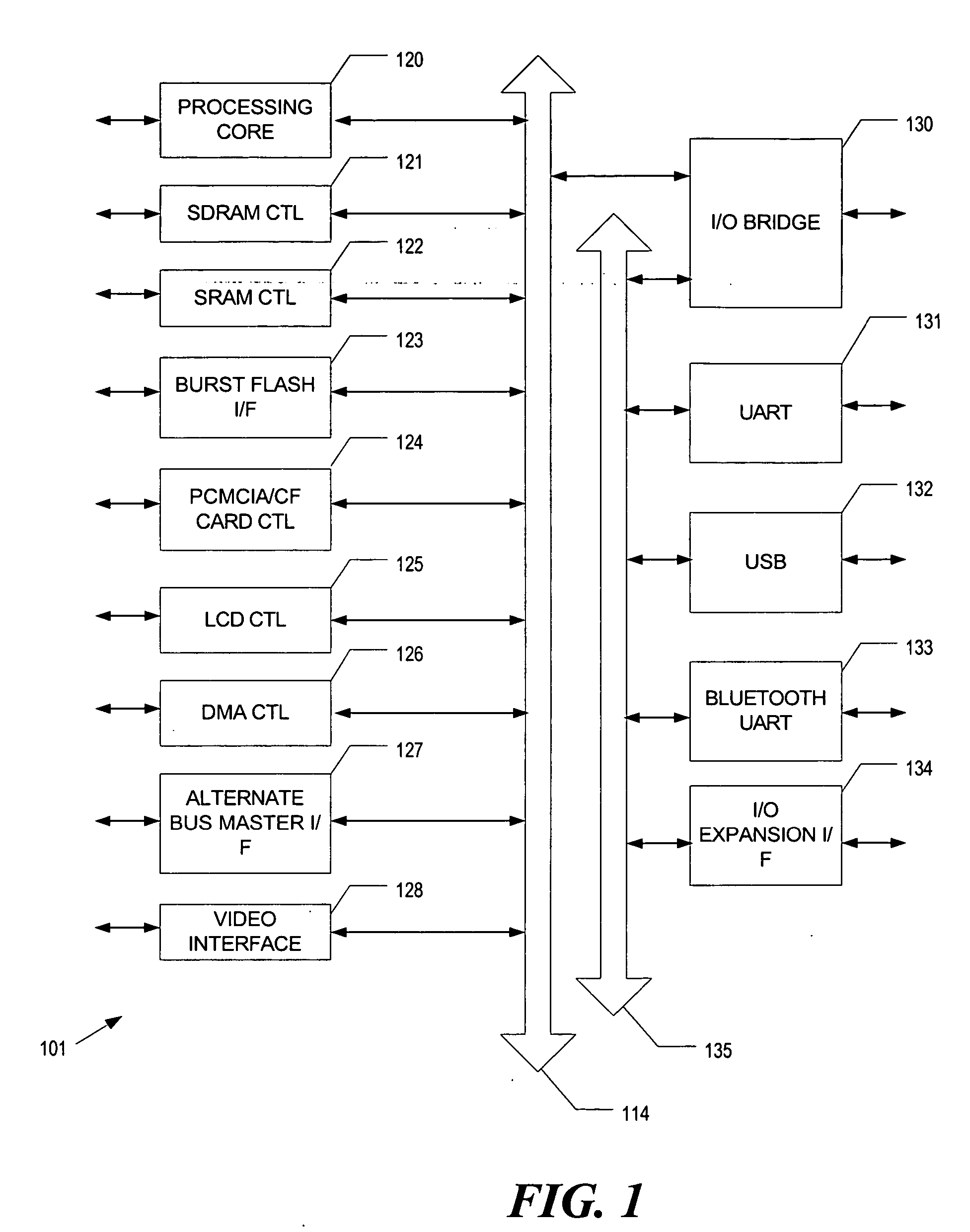

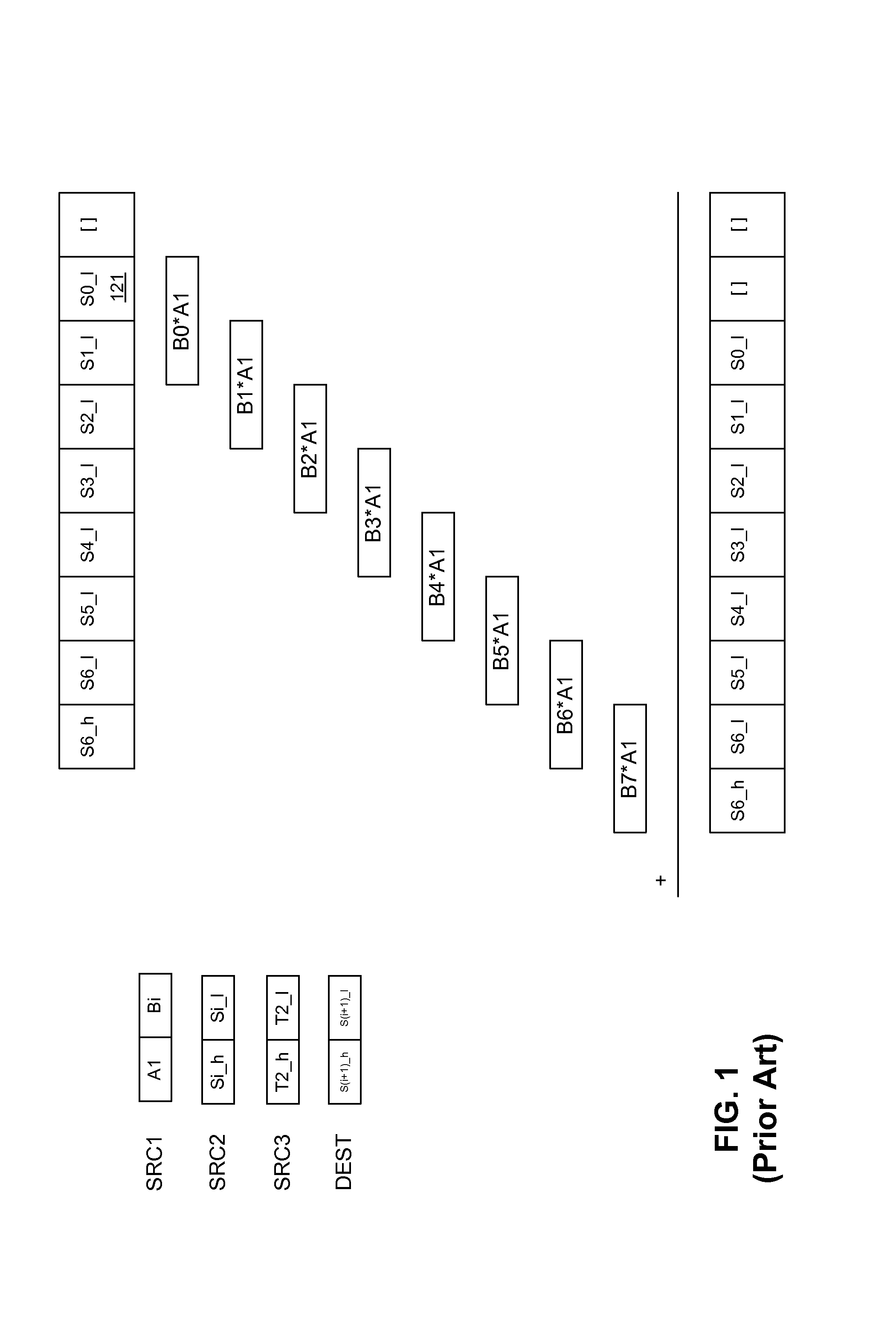

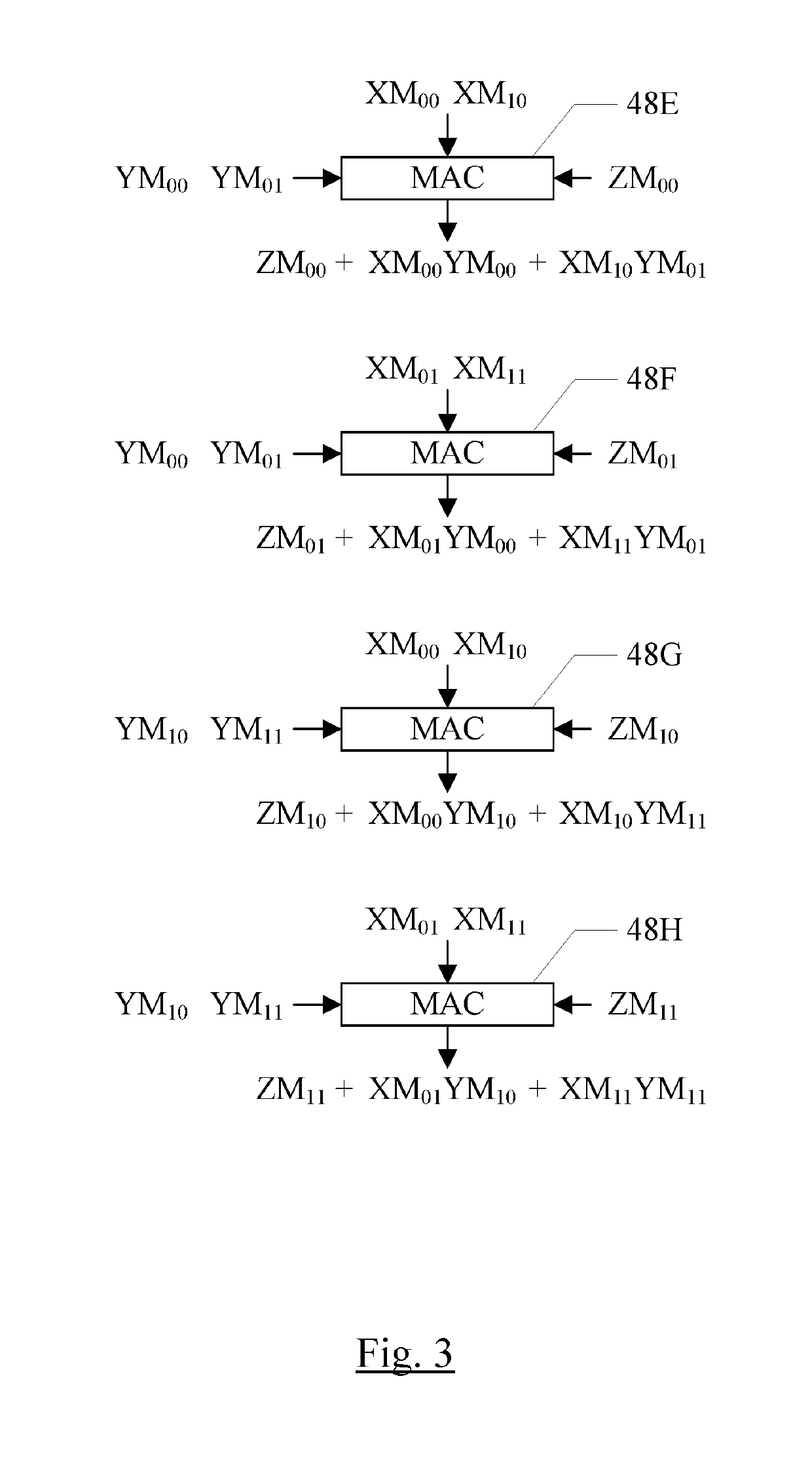

Methods and apparatus for performing parallel integer multiply accumulate operations

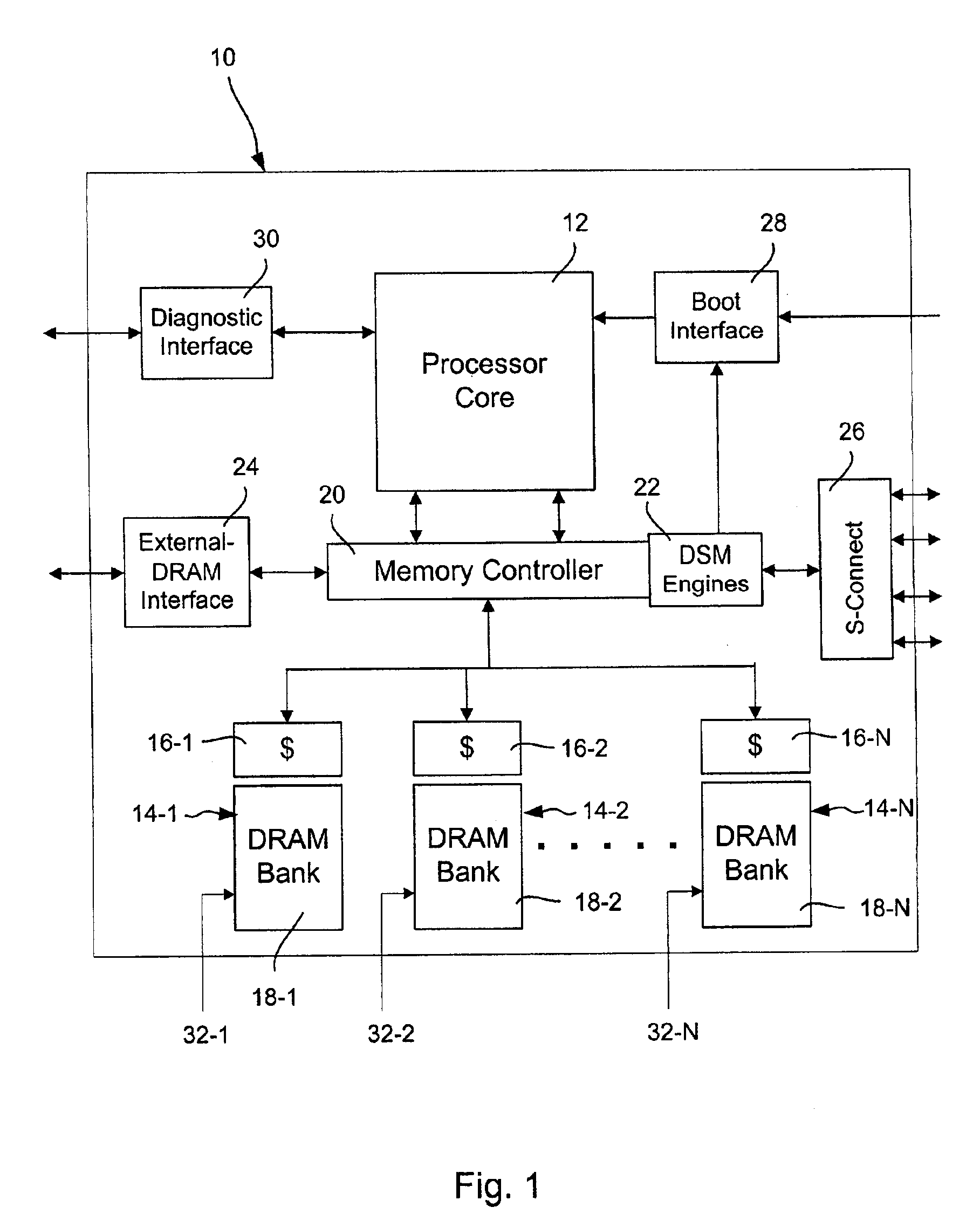

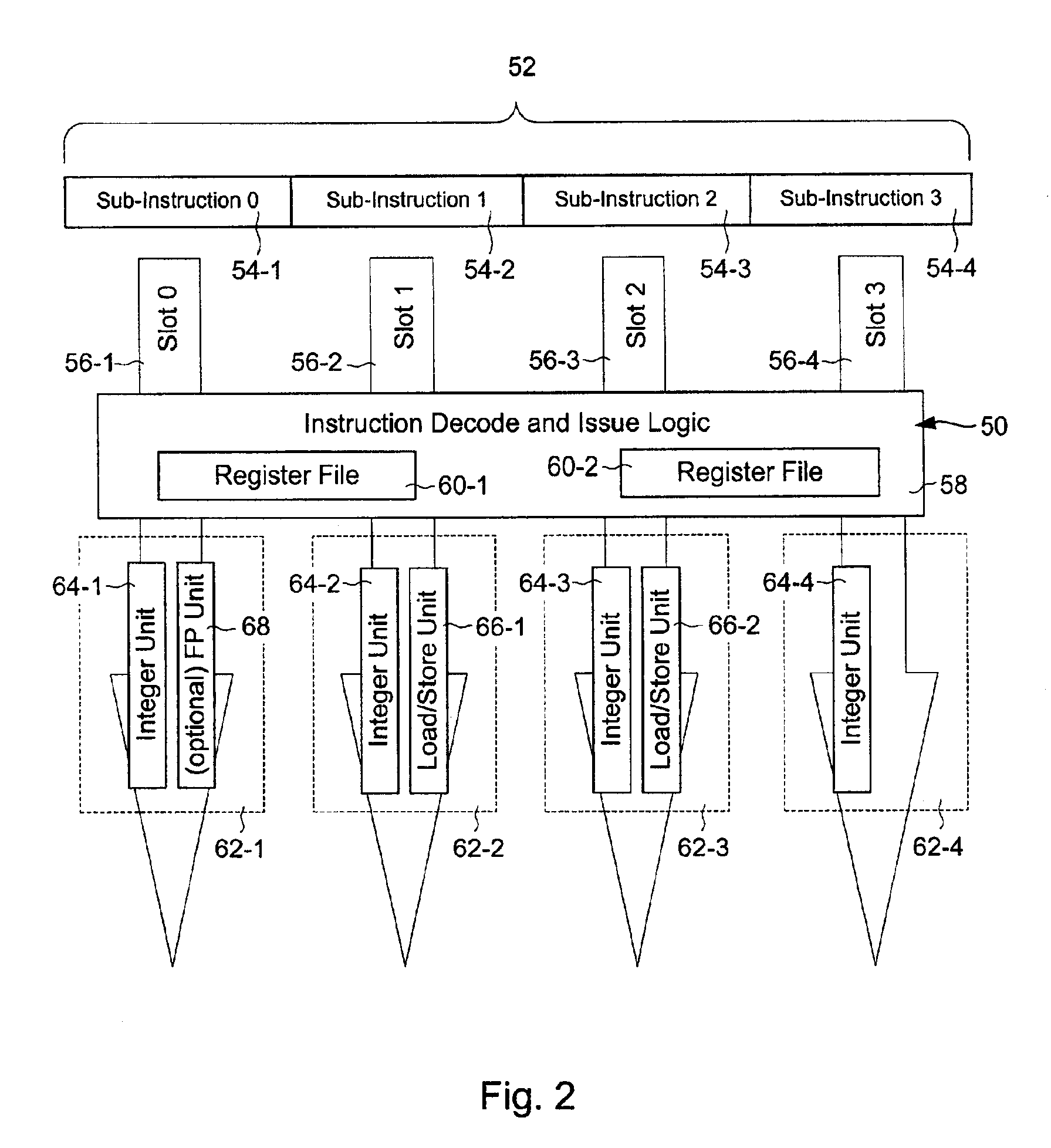

Active · US7013321B2 · Tags: Computation using denominational number representation, Processing core, Processor register

According to the invention, a processing core that executes a parallel multiply accumulate operation is disclosed. The processing core includes first, second and third input operand registers, a number of functional blocks, and an output operand register. The first, second and third input operand registers hold a number of first, second and third input operands, respectively. Each functional block performs a multiply accumulate operation. The output operand register holds a number of output operands, each of which is related to one of the first input operands, one of the second input operands and one of the third input operands.

Owner:ORACLE INT CORP
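
Each functional block above combines one element from each of the three input registers into one output element. The sketch below models that with 64-bit registers holding four 16-bit unsigned lanes and computes `out[i] = (a[i] * b[i] + c[i]) mod 2**16` per lane. The lane width, the wrap-around (rather than saturating) arithmetic, and the helper names are assumptions for illustration, not details taken from the patent.

```python
LANES, LANE_BITS = 4, 16
LANE_MASK = (1 << LANE_BITS) - 1

def unpack(reg: int) -> list:
    """Split a 64-bit register value into its 16-bit lanes (lane 0 = least significant)."""
    return [(reg >> (i * LANE_BITS)) & LANE_MASK for i in range(LANES)]

def pack(lanes) -> int:
    """Combine 16-bit lane values into one 64-bit register value."""
    reg = 0
    for i, v in enumerate(lanes):
        reg |= (v & LANE_MASK) << (i * LANE_BITS)
    return reg

def parallel_mac(reg_a: int, reg_b: int, reg_c: int) -> int:
    """One functional block per lane: multiply a and b, add c, wrap to 16 bits."""
    return pack((a * b + c) & LANE_MASK
                for a, b, c in zip(unpack(reg_a), unpack(reg_b), unpack(reg_c)))

result = parallel_mac(pack([1, 2, 3, 4]), pack([5, 6, 7, 8]), pack([10, 20, 30, 40]))
print(unpack(result))  # [15, 32, 51, 72]
```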

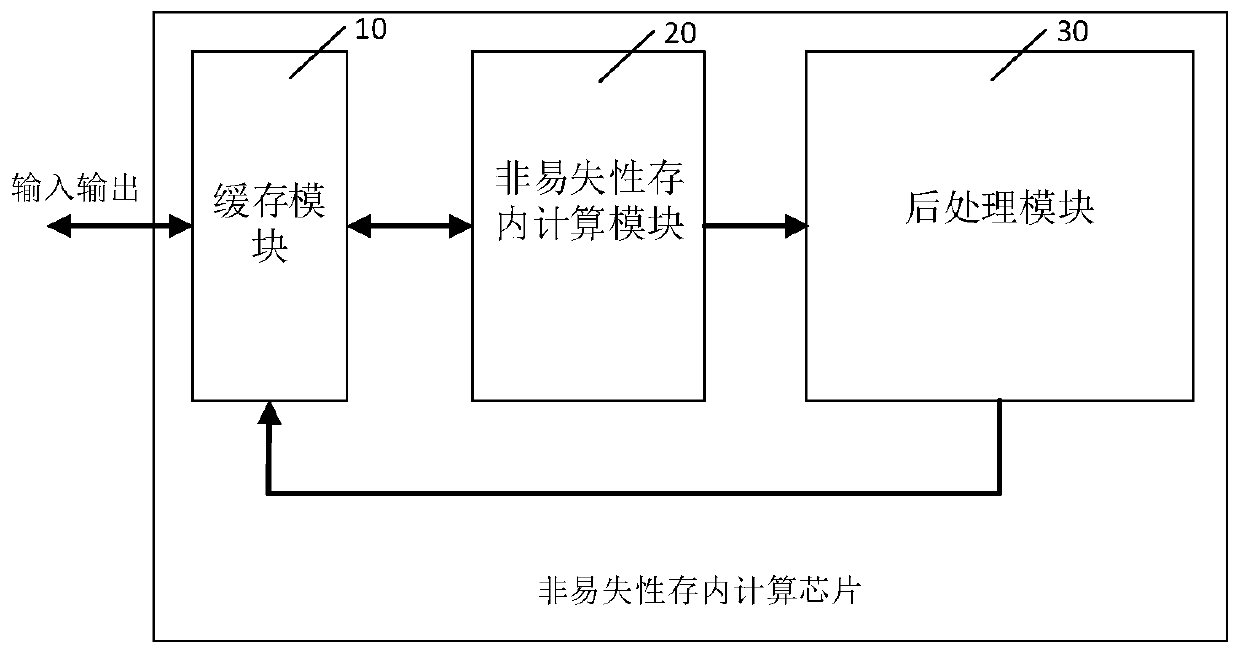

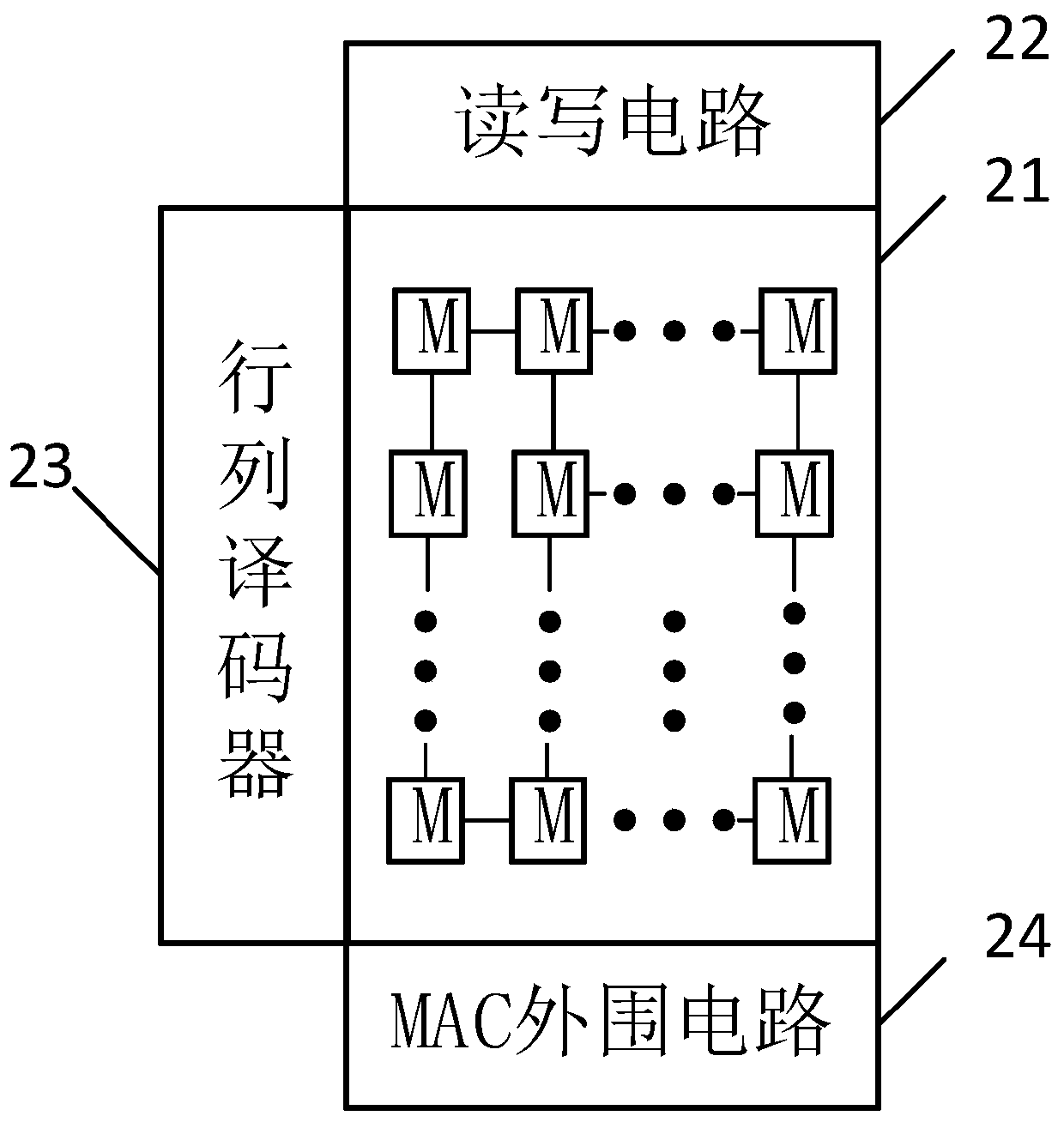

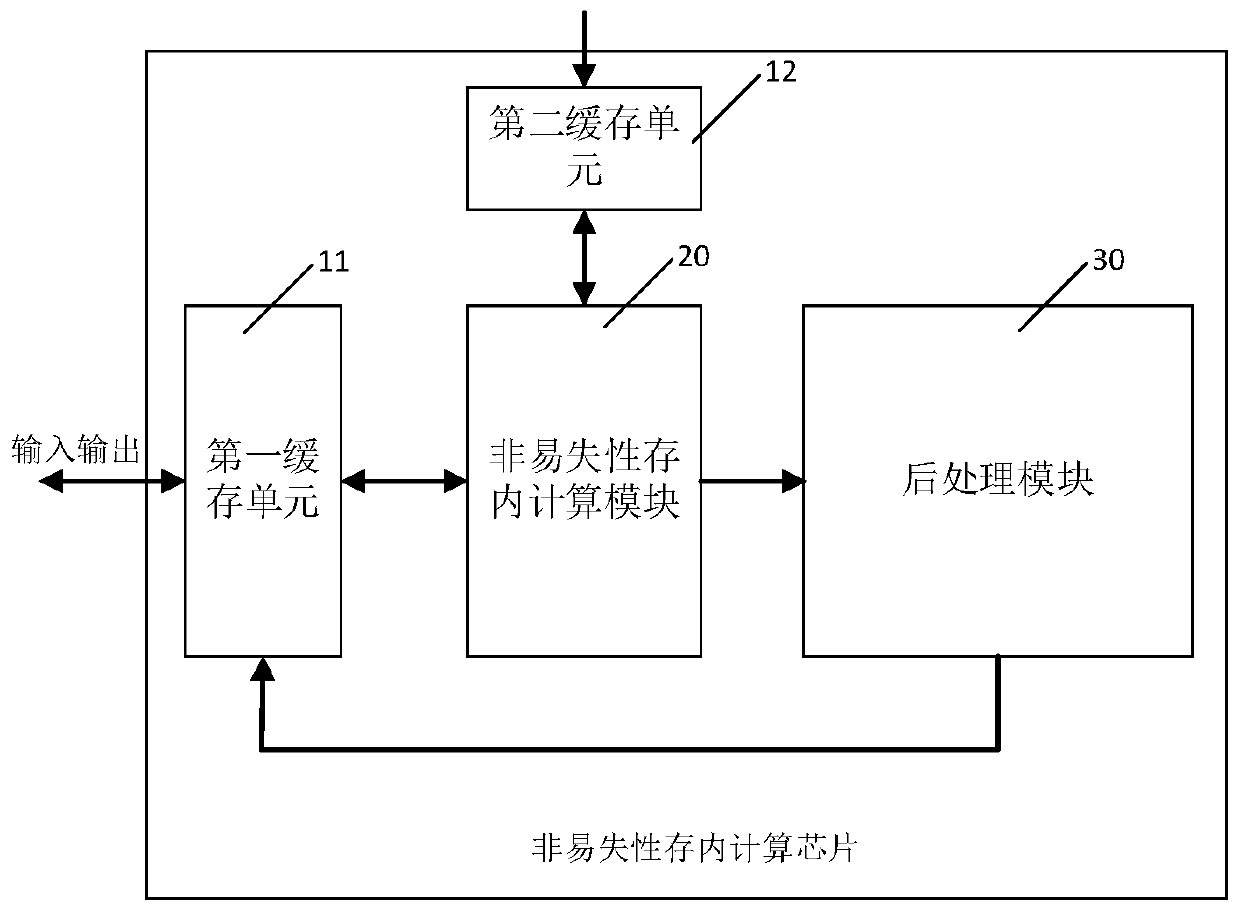

Nonvolatile in-memory computing chip and operation control method thereof

Active · CN110597555A · Tags: Reduce power consumption, Reduce latency, Digital storage, Physical realisation, Multiply–accumulate operation, Time delays

The invention provides a nonvolatile in-memory computing chip and an operation control method for it. The chip comprises a cache module for caching data; a nonvolatile in-memory computing module, connected to the cache module, that performs operations on the data sent by the cache module; and a post-processing module, connected to the nonvolatile in-memory computing module, that post-processes its operation results. The nonvolatile in-memory computing module comprises a nonvolatile memory cell array, a row and column decoder connected to the array, and a read-write circuit connected to the array. Used together with the operation control method, the chip performs multiply-accumulate operations and binary neural network operations using an integrated storage-and-computation technique, so no data need to be transferred between a memory and a processor, which reduces power consumption and latency.

Owner:BEIHANG UNIV
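
Binary neural network layers suit this kind of chip because, with weights and activations restricted to +1 and -1 and encoded as bits (1 for +1, 0 for -1), a multiply-accumulate over n elements reduces to an XNOR followed by a population count: the dot product equals `2 * popcount(XNOR(x, w)) - n`. The sketch below shows that identity; the bit encoding and function names are assumptions, and it says nothing about how the nonvolatile array itself computes the count.

```python
def binary_dot(x_bits: int, w_bits: int, n: int) -> int:
    """Dot product of two length-n {+1, -1} vectors packed as bits (1 -> +1, 0 -> -1)."""
    mask = (1 << n) - 1
    agree = ~(x_bits ^ w_bits) & mask   # XNOR: 1 where the signs match
    matches = bin(agree).count("1")     # popcount
    return 2 * matches - n              # matches contribute +1, mismatches -1

def to_bits(v) -> int:
    """Pack a list of +1/-1 values, element i at bit i."""
    return sum(1 << i for i, s in enumerate(v) if s == 1)

x, w = [1, -1, 1, 1], [1, 1, -1, 1]
print(binary_dot(to_bits(x), to_bits(w), 4))  # 0, same as sum(a*b for a, b in zip(x, w))
```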

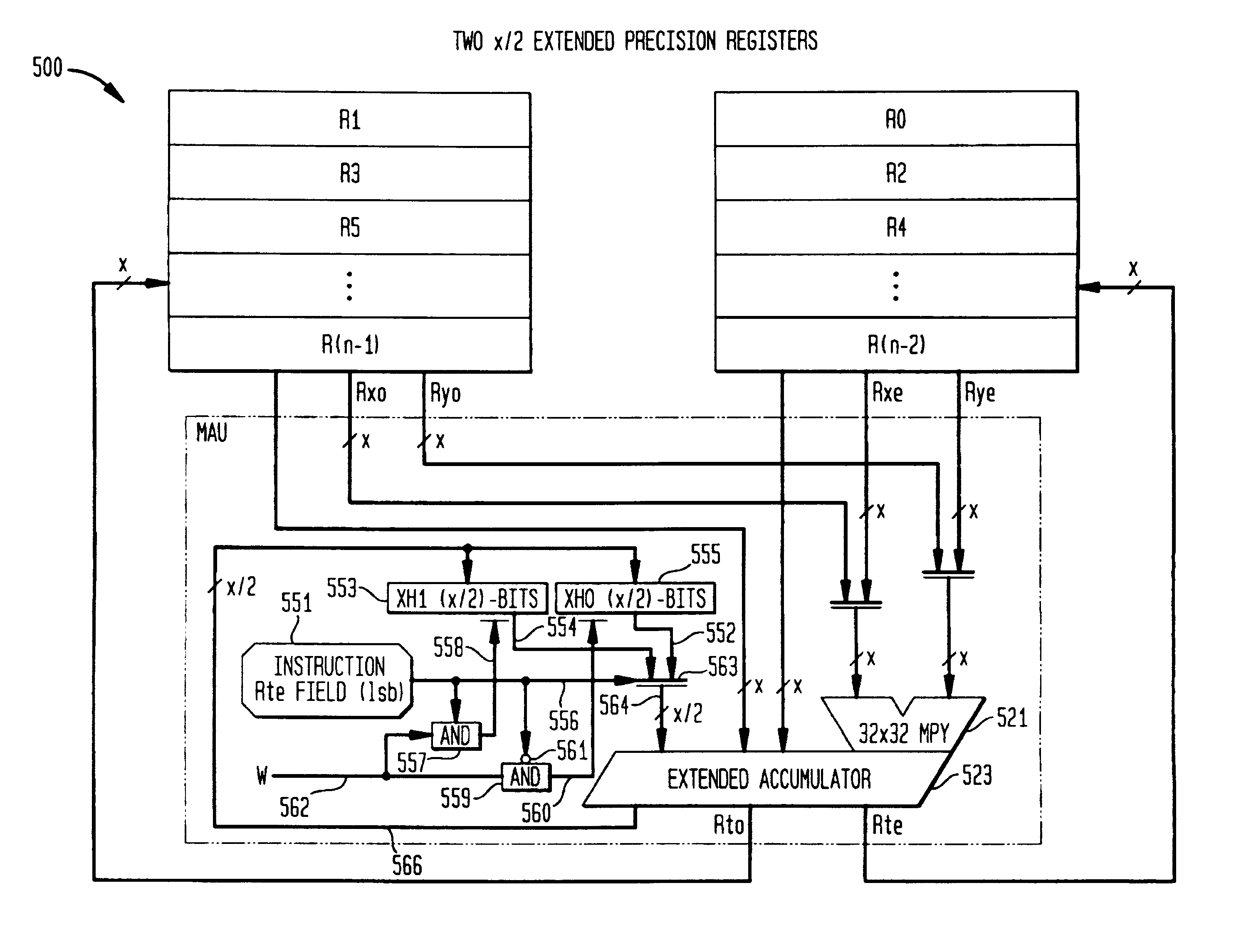

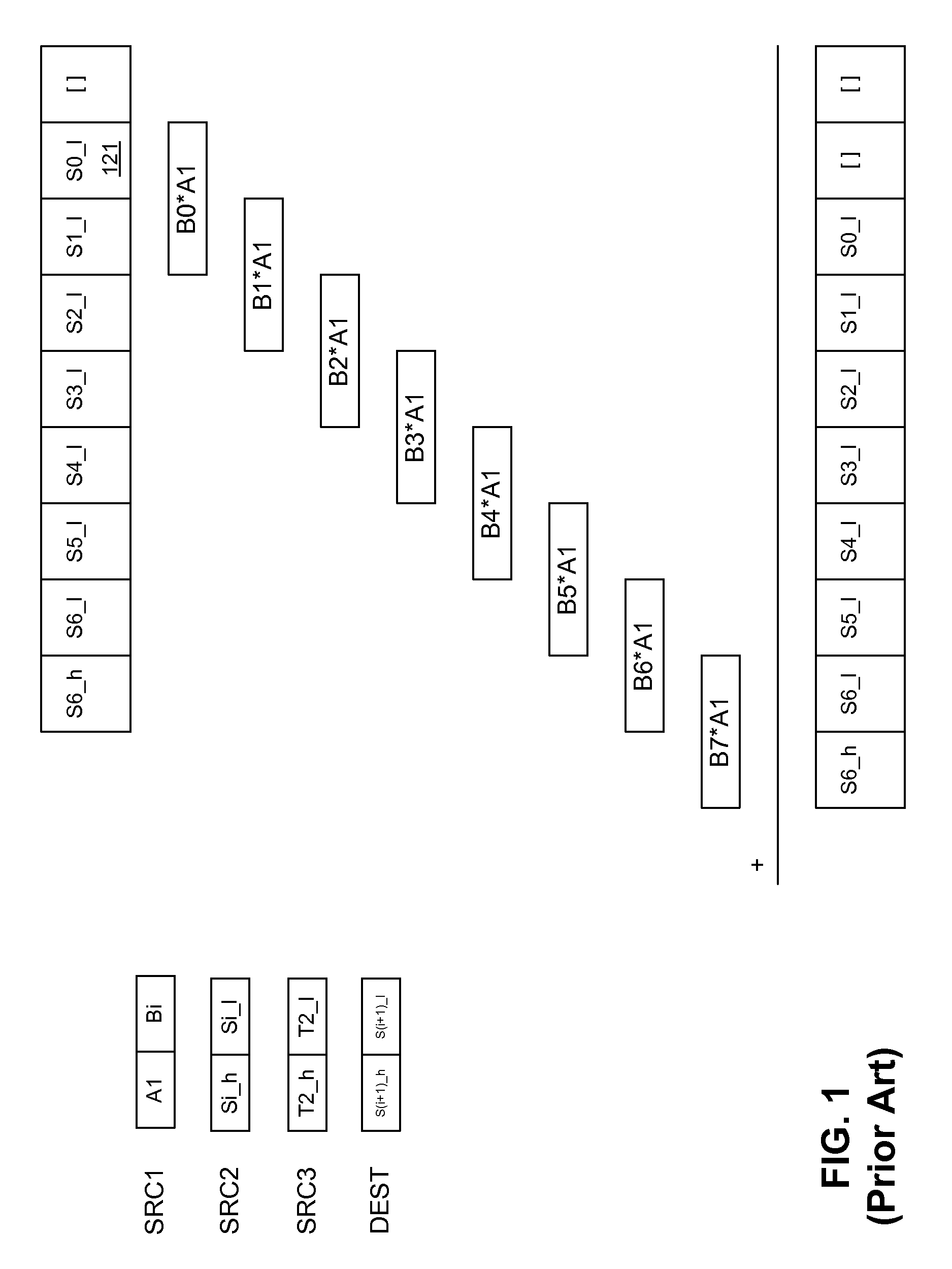

Methods and apparatus for dynamic instruction controlled reconfigurable register file with extended precision

Inactive · USRE40883E1 · Tags: Extended precision data width, Small size, Register arrangements, Instruction analysis, Processor register, Multiply–accumulate operation

A reconfigurable register file is described that is integrated into an instruction set architecture capable of extended-precision operations as well as parallel operation on lower-precision data. The register file is composed of two separate files, each containing half as many registers as the original. The halves are designated even or odd according to the register addresses they contain. Single-width and double-width operands are optimally supported without increasing the register file size or the number of register file ports. Separate extended registers are also employed to provide extended precision for operations such as multiply-accumulate operations.

Owner:ALTERA CORP
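
Extended accumulator registers matter for multiply-accumulate because a sum of products grows faster than its operands. The sketch below assumes signed 16-bit operands and compares a 32-bit accumulator with a 40-bit one (8 guard bits, a common DSP arrangement; the widths are illustrative, not taken from the patent). After four maximal products the 32-bit accumulator wraps around while the extended one stays exact.

```python
def wrap_signed(value: int, bits: int) -> int:
    """Reduce value to a two's-complement integer of the given width."""
    value &= (1 << bits) - 1
    return value - (1 << bits) if value >> (bits - 1) else value

def mac_sum(xs, ys, acc_bits: int) -> int:
    """Accumulate x*y products in a register that is acc_bits wide."""
    acc = 0
    for x, y in zip(xs, ys):
        acc = wrap_signed(acc + x * y, acc_bits)
    return acc

xs = ys = [32767] * 4          # four maximal positive 16-bit products
print(mac_sum(xs, ys, 32))     # -262140: the 32-bit accumulator has wrapped
print(mac_sum(xs, ys, 40))     # 4294705156: exact with 8 guard bits
```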

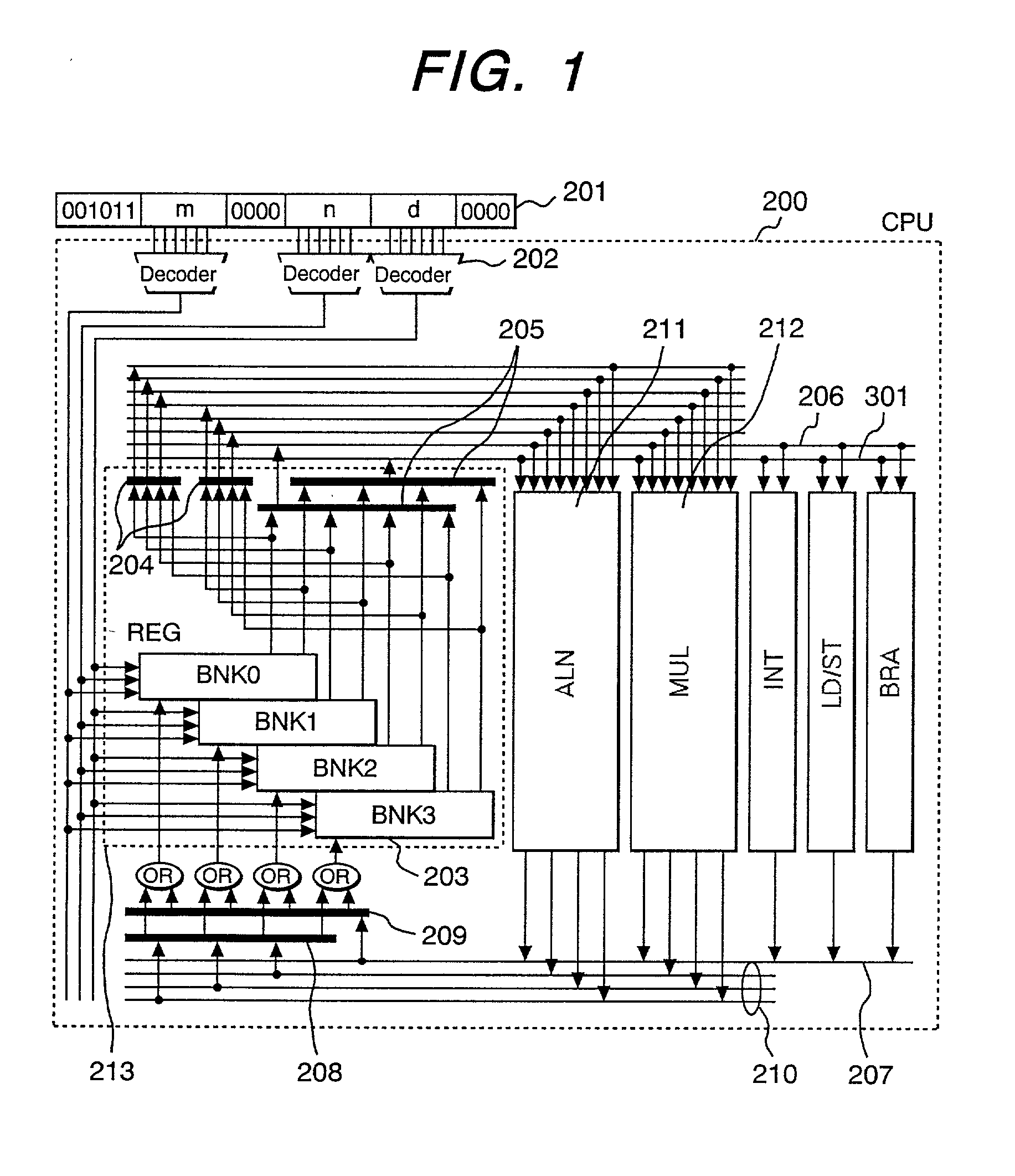

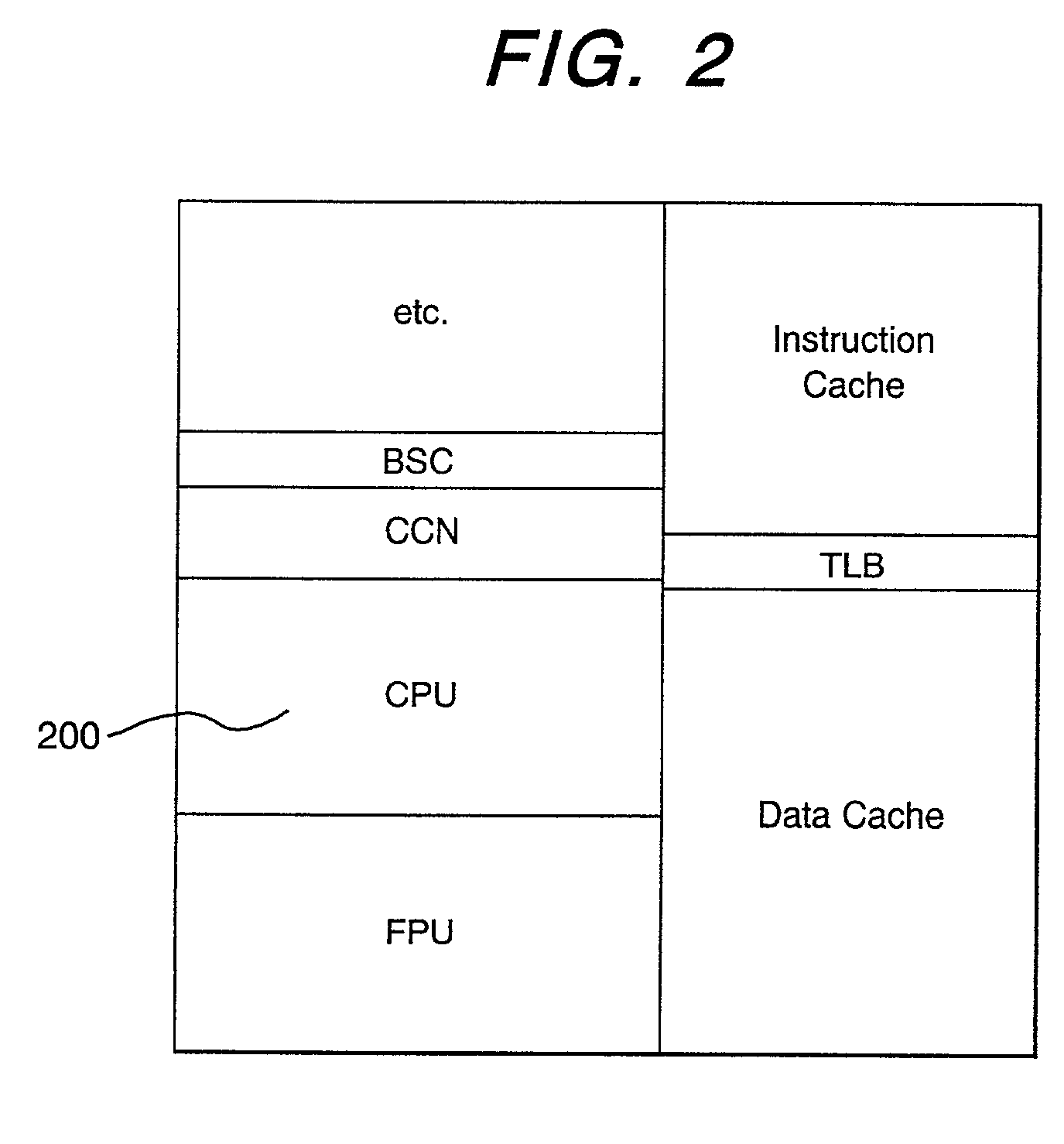

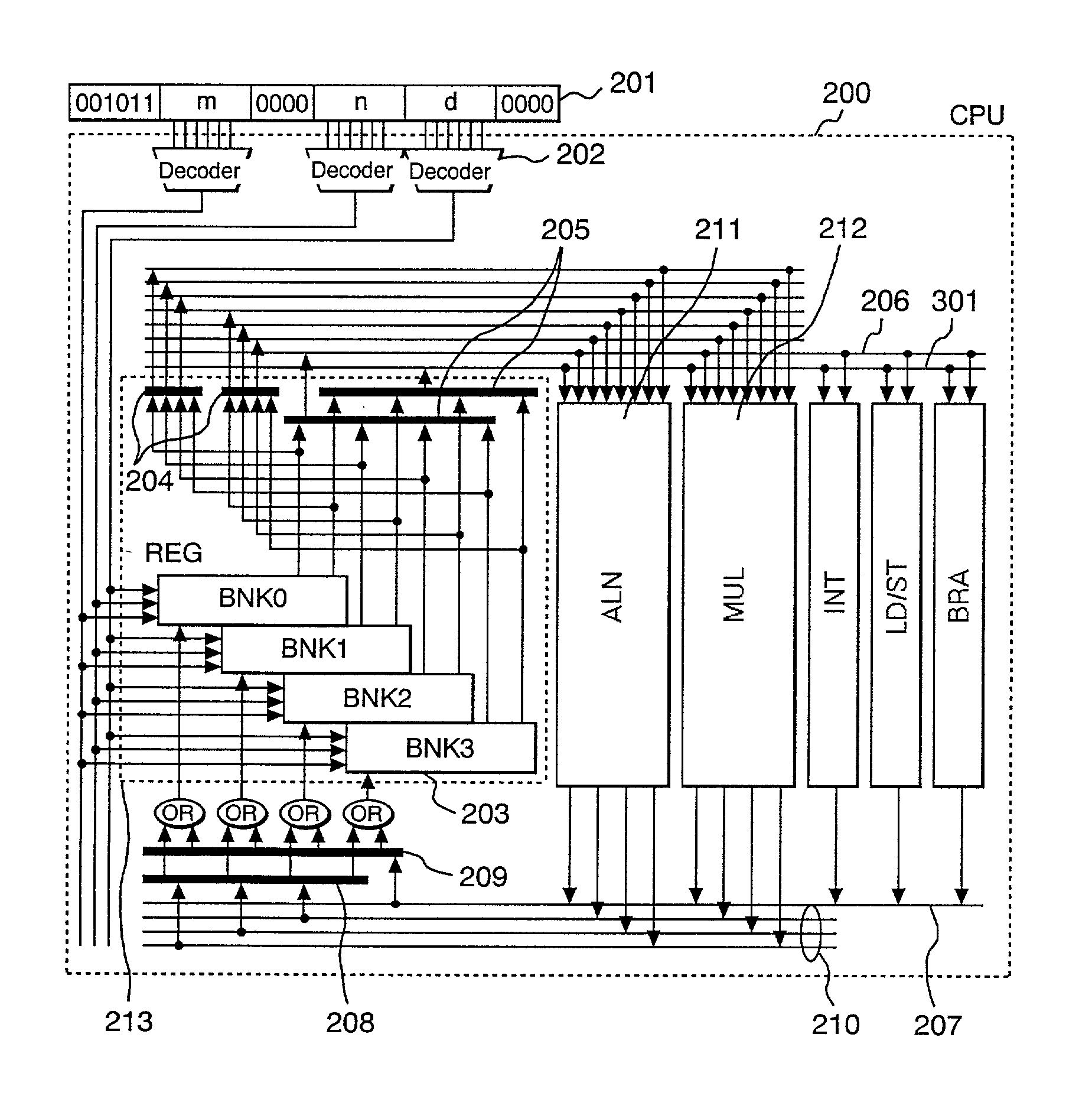

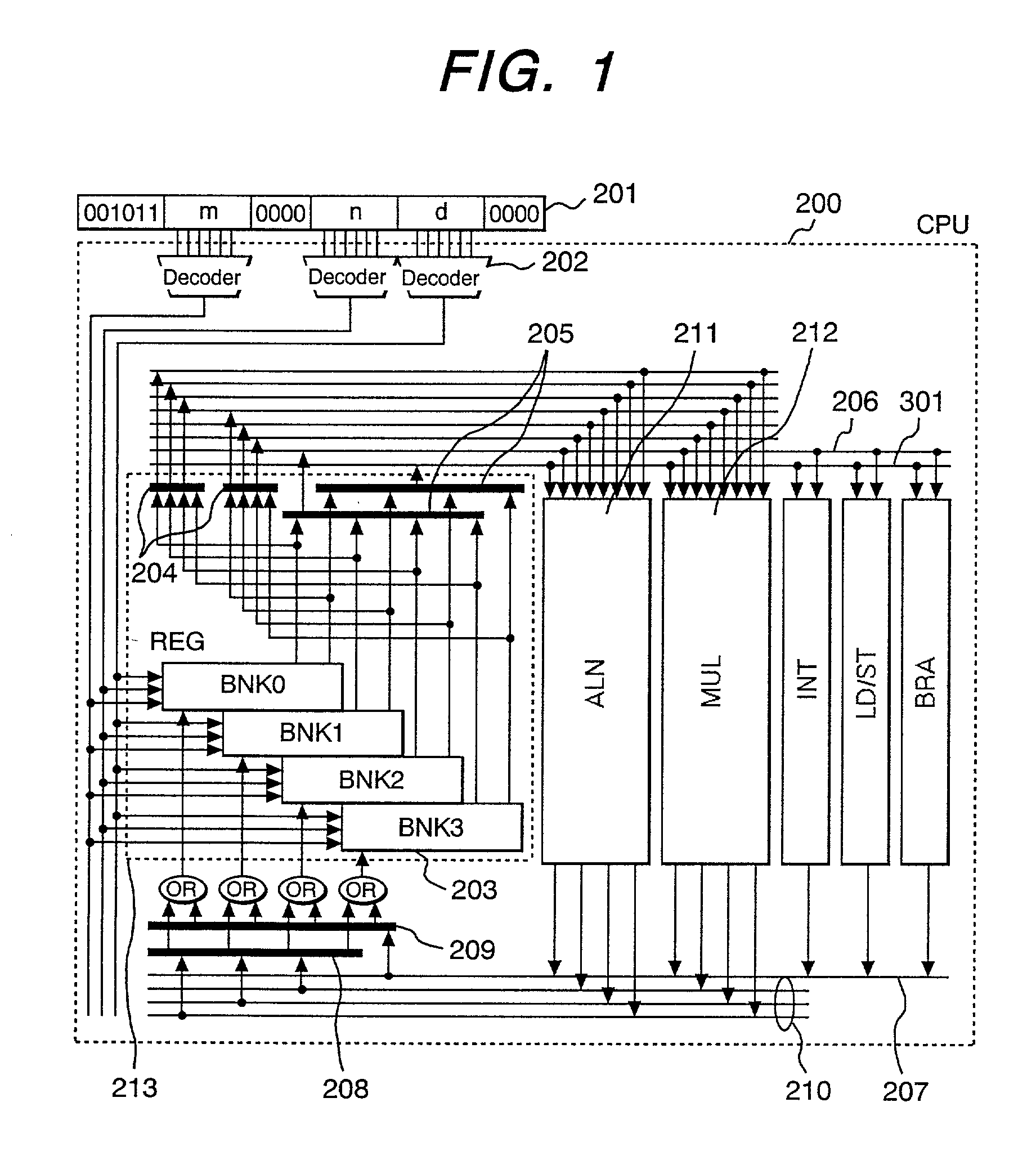

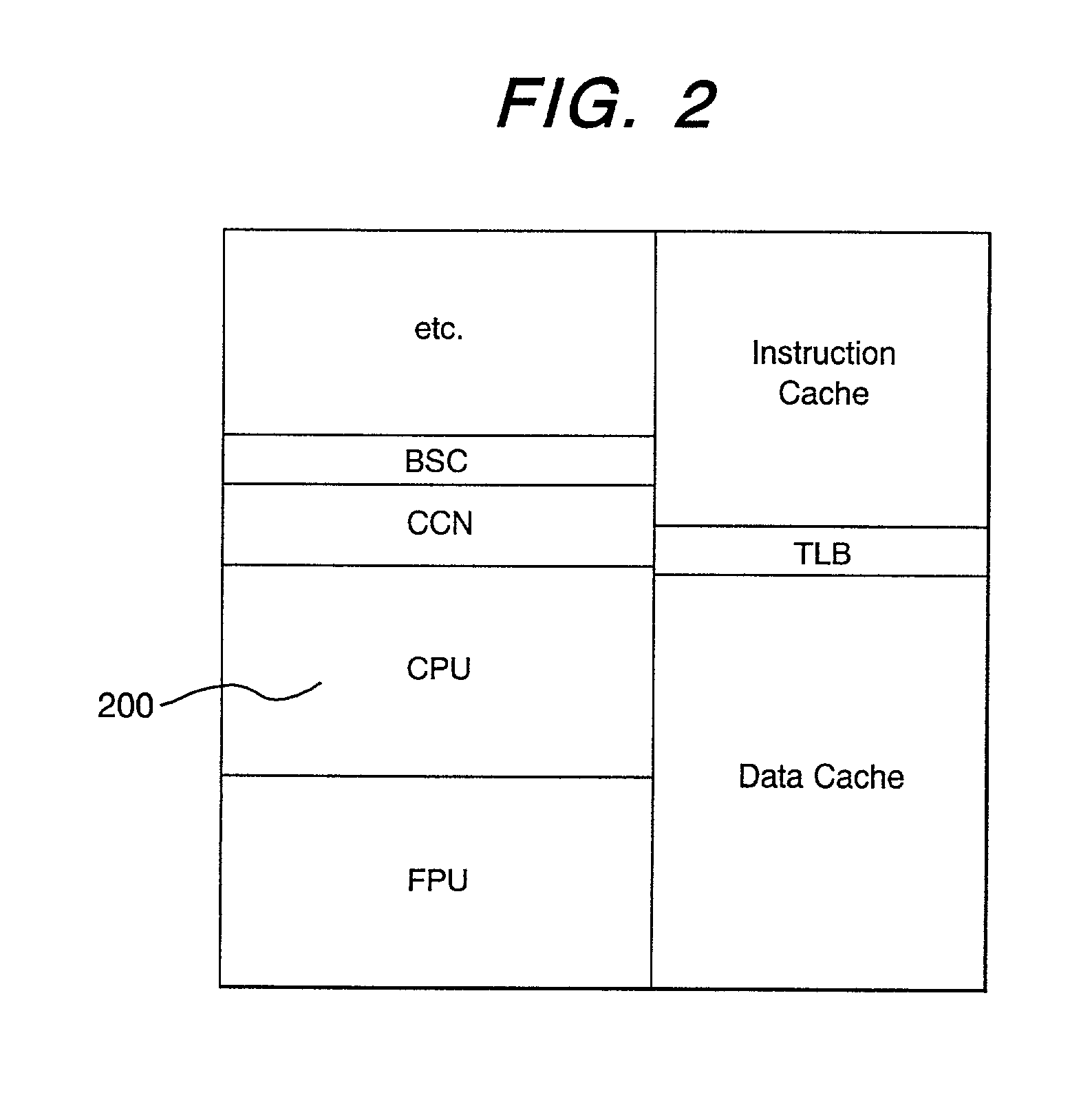

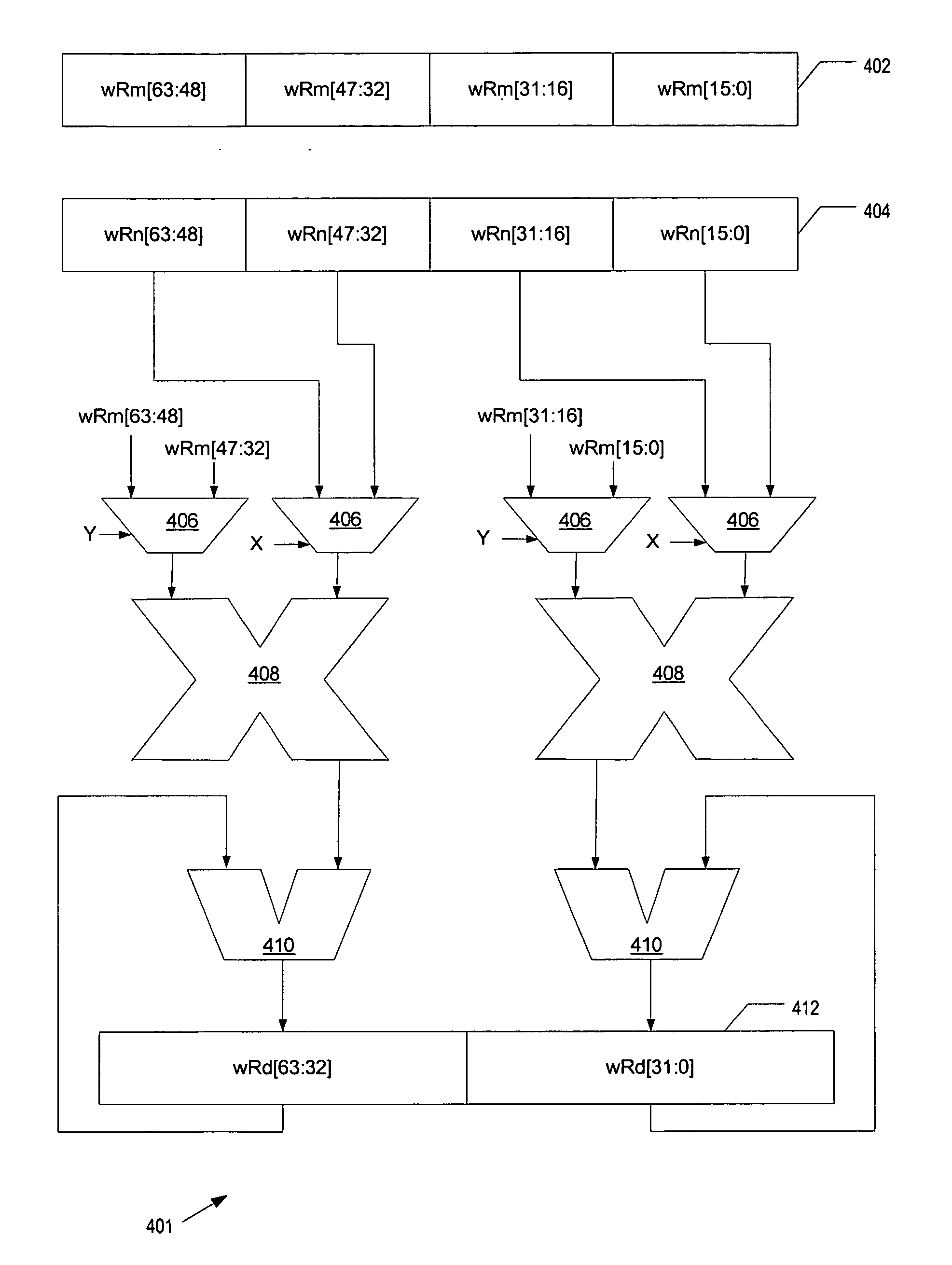

SIMD operation system capable of designating plural registers

Inactive · US20020026570A1 · Tags: Maximize the effect, Maintain accuracy, Register arrangements, Concurrent instruction execution, Operational system, Network packet

To alleviate factors, such as in-register data alignment, that limit the effectiveness of SIMD operation in a high-speed SIMD processor, the register file is divided into four banks and a single operand can designate a plurality of registers, so that four registers can be accessed simultaneously. A large amount of data can thus be supplied to a data alignment operation pipe 211, and data alignment can be carried out at high speed. In addition, new data pack, data unpack and data permutation instructions are defined so that the large volume of supplied data can be aligned efficiently. These features also make it possible to define a multiply accumulate instruction that maximizes SIMD parallelism.

Owner:RENESAS ELECTRONICS CORP

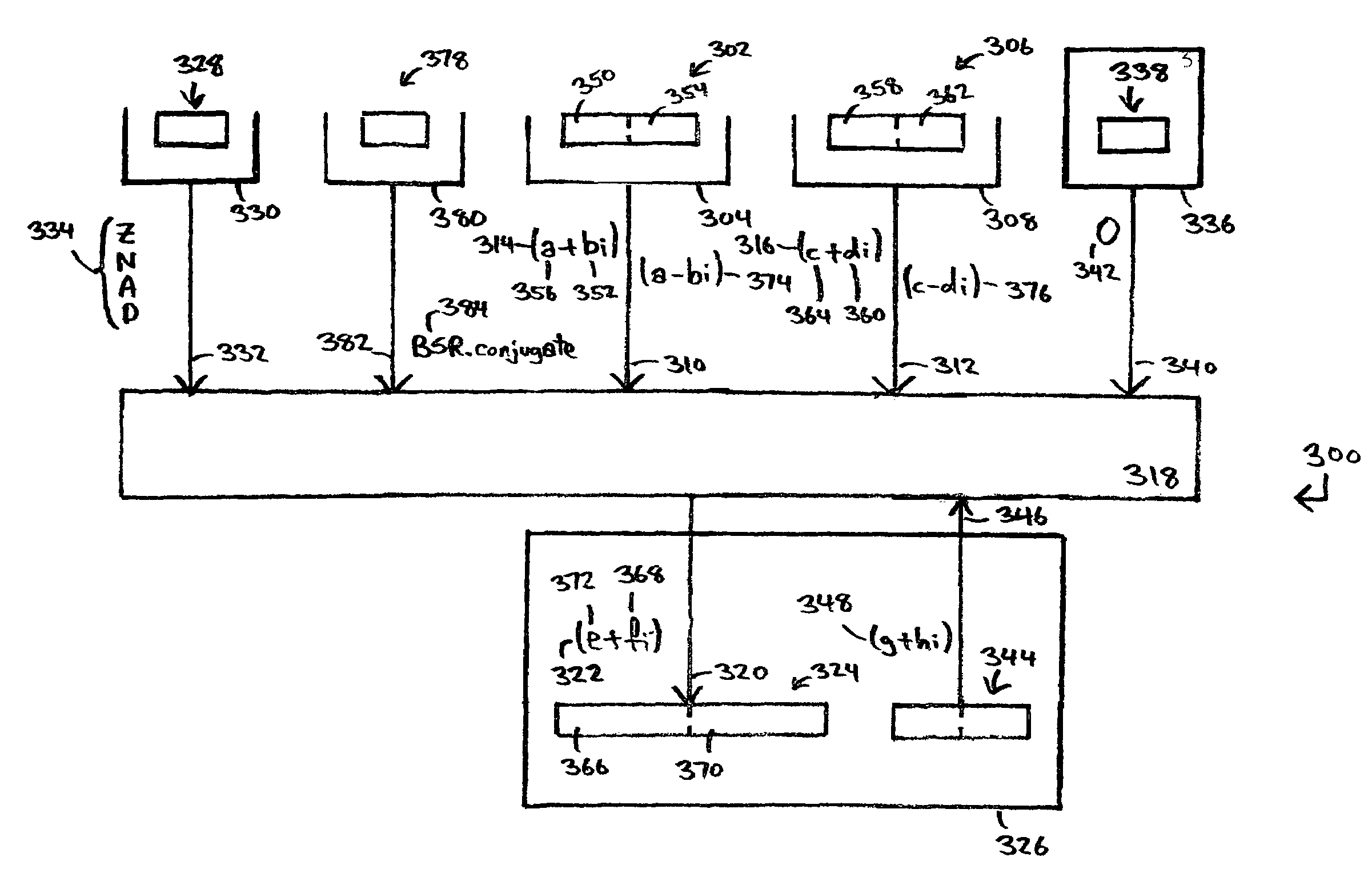

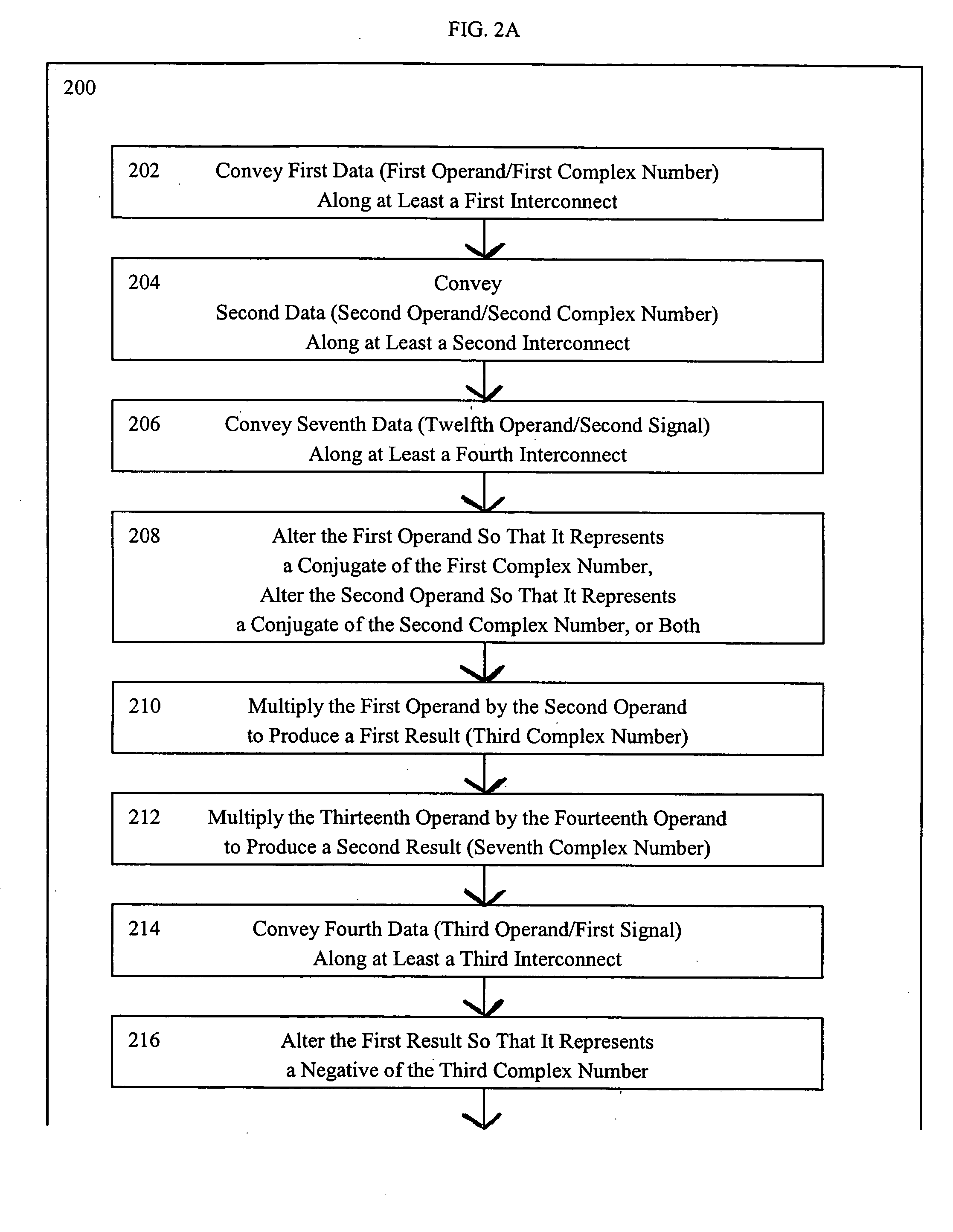

Systems for performing multiply-accumulate operations on operands representing complex numbers

Inactive · US7546330B2 · Tags: Computation using non-contact making devices, Processor register, Multiply–accumulate operation

A method for multiplying, at an execution unit of a processor, two complex numbers such that the real part and the imaginary part of the product can be stored in the same register of the processor. First data is conveyed along at least a first interconnect of the processor. The first data has a first operand. The first operand represents a first complex number. Second data is conveyed along at least a second interconnect of the processor. The second data has a second operand. The second operand represents a second complex number. The first operand is multiplied at the execution unit by the second operand to produce a first result. The first result represents a third complex number. Third data is stored at a first register of the processor. The third data has the first result. The first result has at least the product of the multiplying.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
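
Keeping the real and imaginary parts in the same register means the execution unit reads one operand per complex number and writes one result. The sketch below assumes a 64-bit register holding a signed 32-bit real part in the low half and a signed 32-bit imaginary part in the high half, with wrap-around arithmetic; this layout and the helper names are illustrative assumptions, not the patent's format.

```python
HALF = 32
HALF_MASK = (1 << HALF) - 1

def to_signed(v: int) -> int:
    """Interpret a 32-bit field as two's complement."""
    return v - (1 << HALF) if v >> (HALF - 1) else v

def pack_complex(re: int, im: int) -> int:
    """Real part in the low half, imaginary part in the high half."""
    return (re & HALF_MASK) | ((im & HALF_MASK) << HALF)

def unpack_complex(reg: int):
    return to_signed(reg & HALF_MASK), to_signed((reg >> HALF) & HALF_MASK)

def complex_mac(acc: int, x: int, y: int) -> int:
    """acc + x*y on packed complex registers."""
    ar, ai = unpack_complex(acc)
    xr, xi = unpack_complex(x)
    yr, yi = unpack_complex(y)
    re = ar + xr * yr - xi * yi
    im = ai + xr * yi + xi * yr
    return pack_complex(re, im)

print(unpack_complex(complex_mac(0, pack_complex(1, 2), pack_complex(3, 4))))  # (-5, 10)
```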

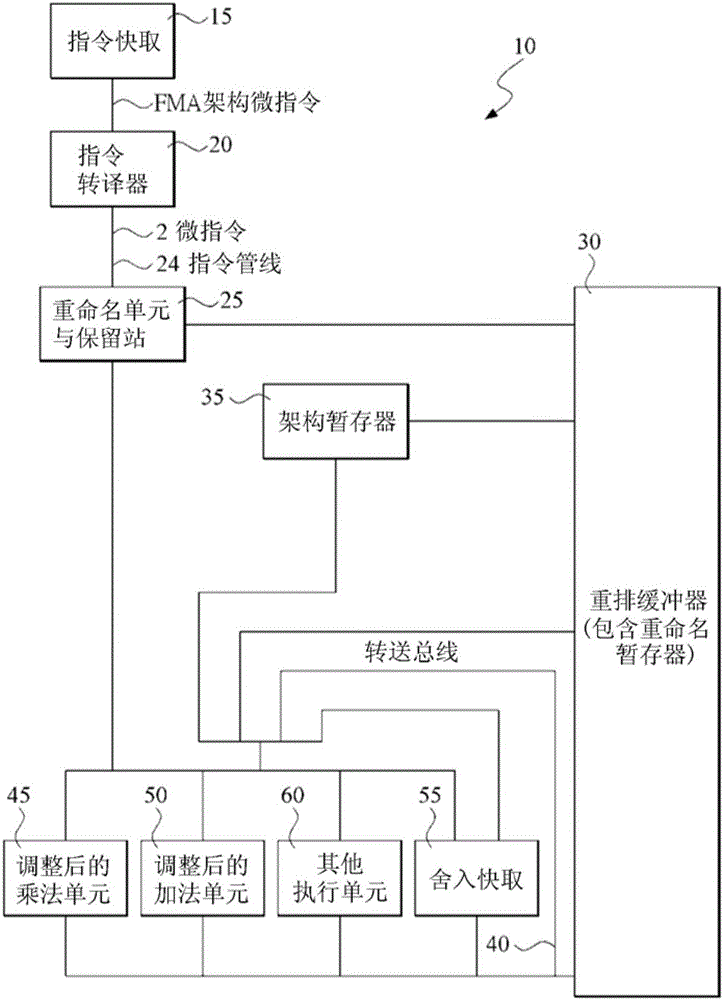

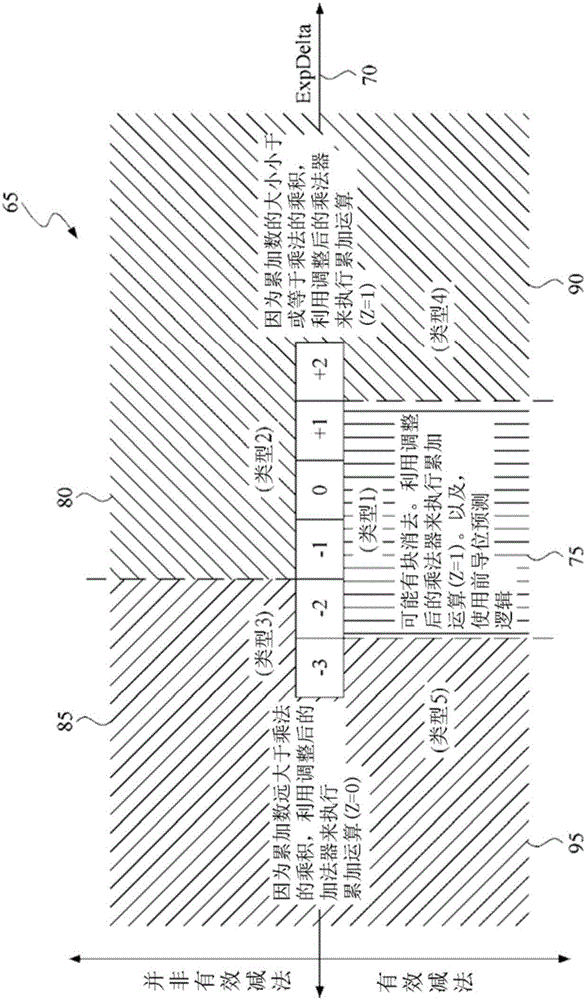

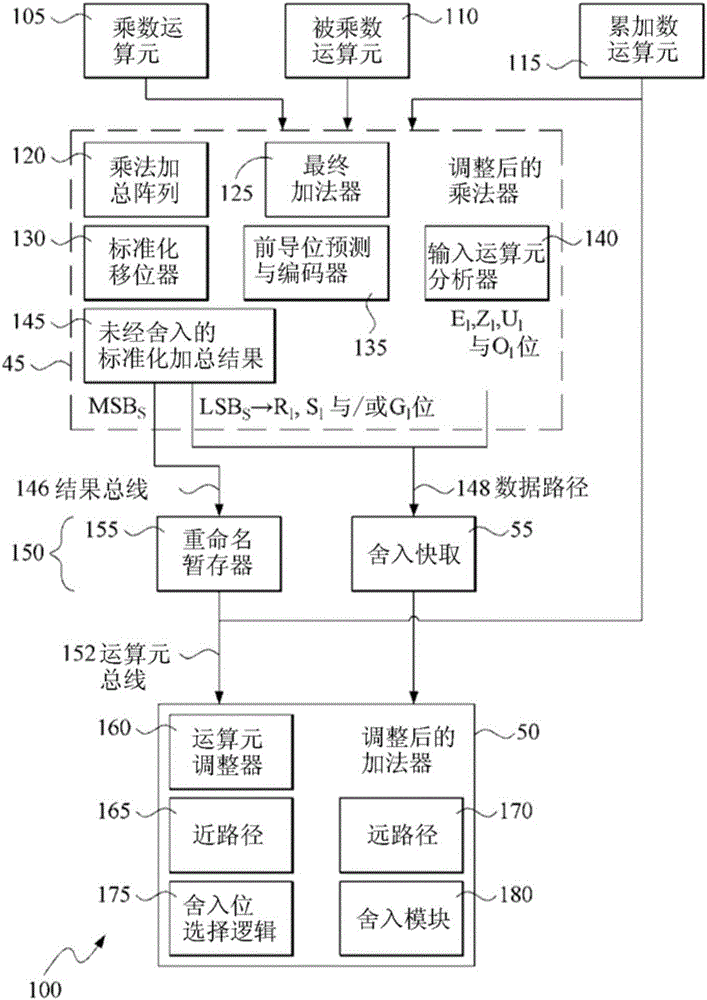

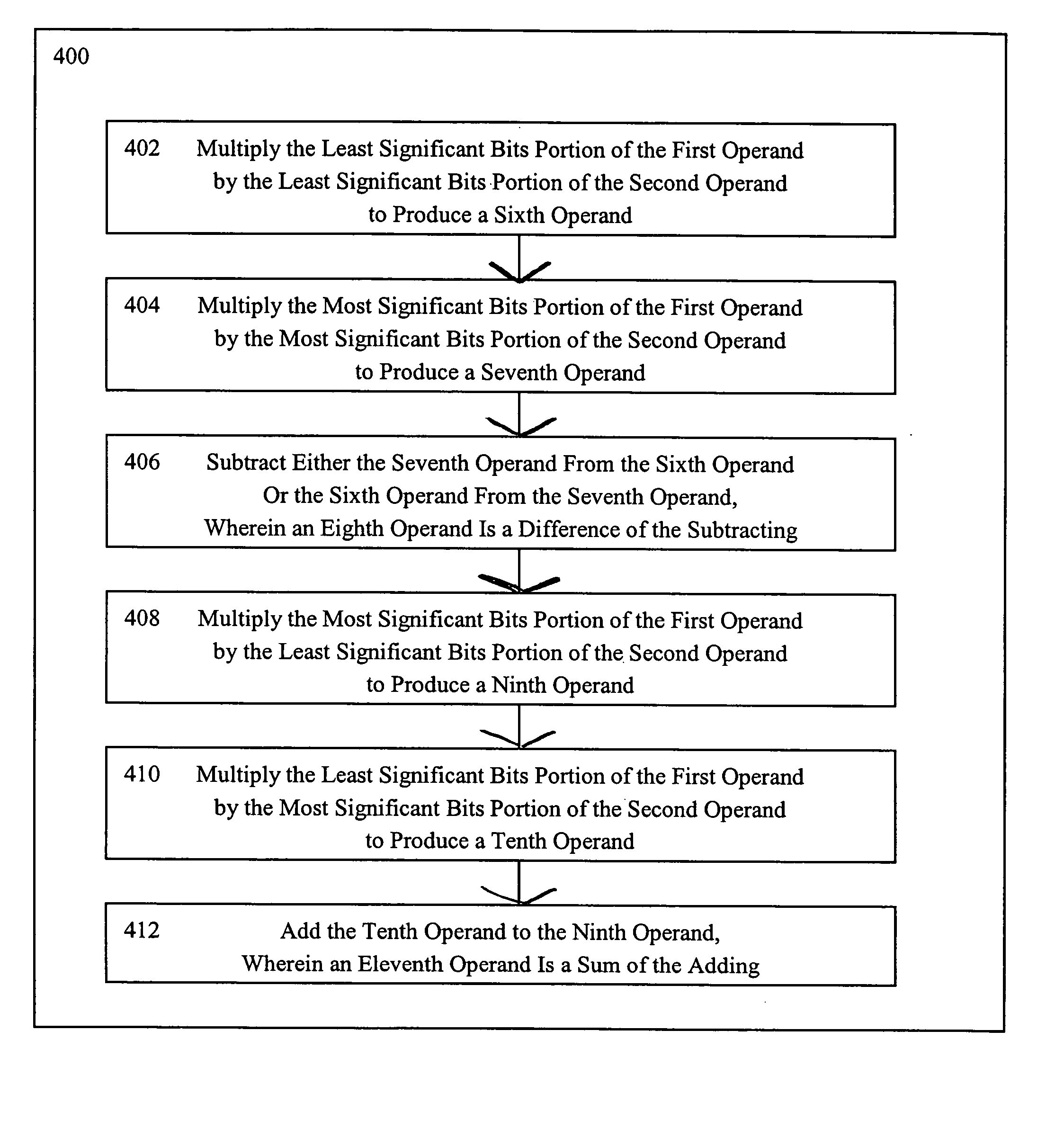

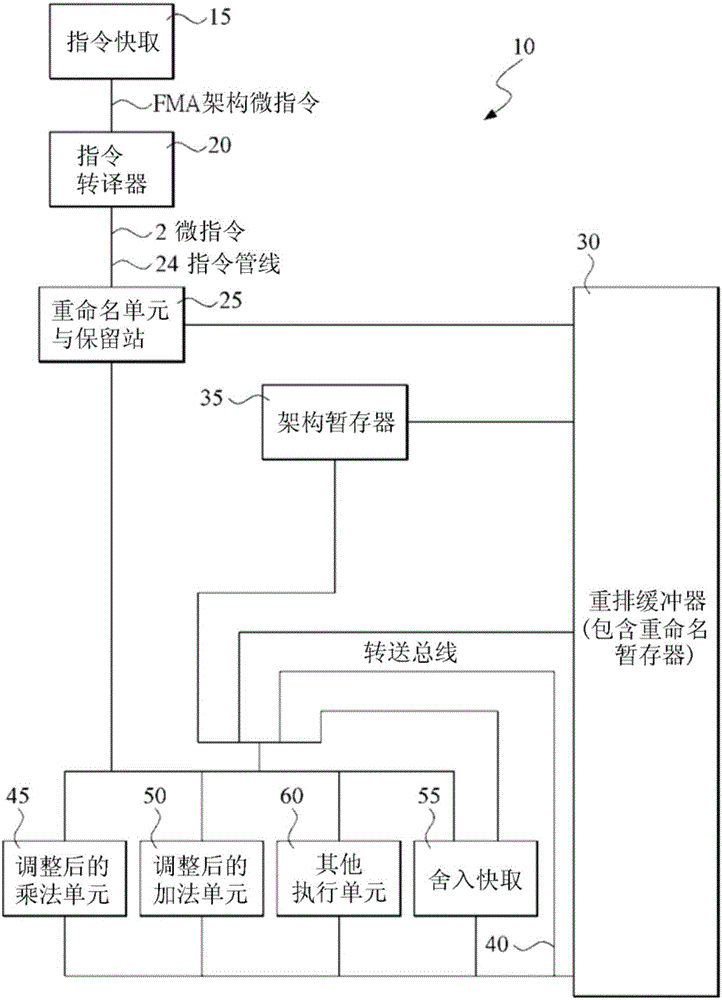

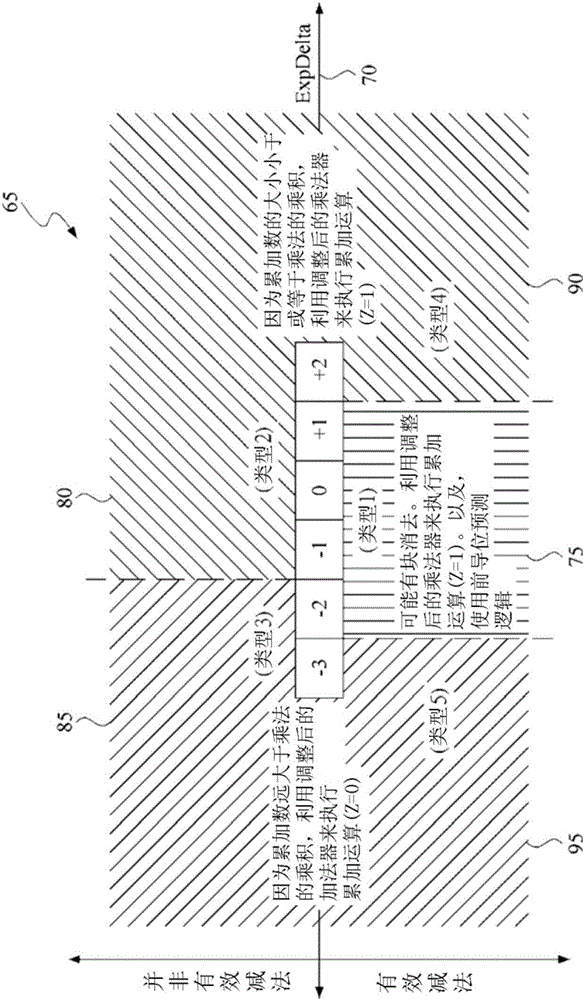

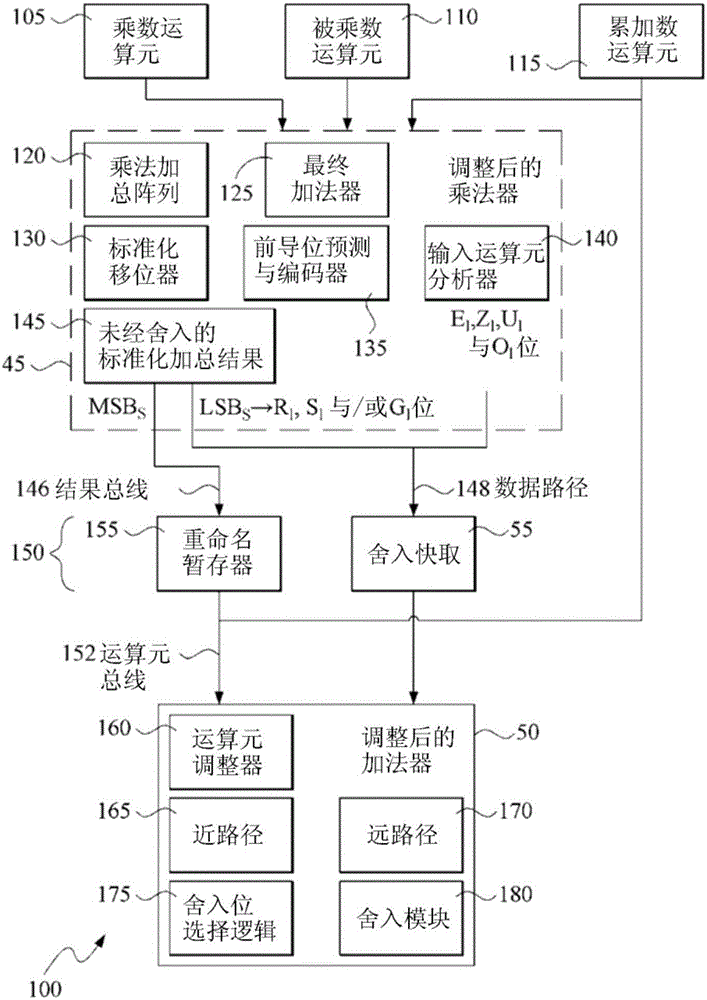

Split-path fused multiply-accumulate operation using first and second sub-operations

Active · CN105849690A · Tags: Reduce cumulative power consumption, Round cache avoidance, Digital data processing details, Instruction analysis, Multiply–accumulate operation, Parallel computing

A microprocessor executes a fused multiply-accumulate operation of the form ±A × B ± C by dividing the operation into first and second sub-operations. The first sub-operation selectively accumulates the partial products of A and B with or without C, and generates an intermediate result vector and a plurality of calculation control indicators. The calculation control indicators indicate how subsequent calculations that generate a final result from the intermediate result vector should proceed. The intermediate result vector, combined with the calculation control indicators, provides sufficient information to generate a result indistinguishable from an infinitely precise calculation of the compound arithmetic operation whose result is reduced in significance (rounded) to a target data size.

Owner:上海兆芯集成电路股份有限公司 (Shanghai Zhaoxin Semiconductor Co., Ltd.)
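
The key property of the split is that nothing is rounded between the two sub-operations. The sketch below mimics that with exact rational arithmetic: the first function returns an unrounded intermediate plus one control flag recording whether C has already been accumulated, and the second finishes the sum and rounds once. The names, the single flag, and the use of `fractions.Fraction` in place of an intermediate result vector are assumptions for illustration; the patent's calculation control indicators carry more information. Subtracting C is expressed here by passing `-c`.

```python
from fractions import Fraction

def fma_first_suboperation(a: float, b: float, c: float, include_c: bool,
                           negate_product: bool = False):
    """Exact (unrounded) product of a and b, optionally with c already accumulated."""
    intermediate = Fraction(a) * Fraction(b)
    if negate_product:
        intermediate = -intermediate
    if include_c:
        intermediate += Fraction(c)
    return intermediate, include_c      # control flag: was C accumulated yet?

def fma_second_suboperation(intermediate: Fraction, c_done: bool, c: float) -> float:
    """Accumulate C if still pending, then round once to binary64."""
    if not c_done:
        intermediate += Fraction(c)
    return float(intermediate)

a = b = 1.0 + 2.0**-30
for early in (True, False):
    mid, done = fma_first_suboperation(a, b, -1.0, include_c=early)
    print(fma_second_suboperation(mid, done, -1.0))  # same single-rounded result both ways
```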

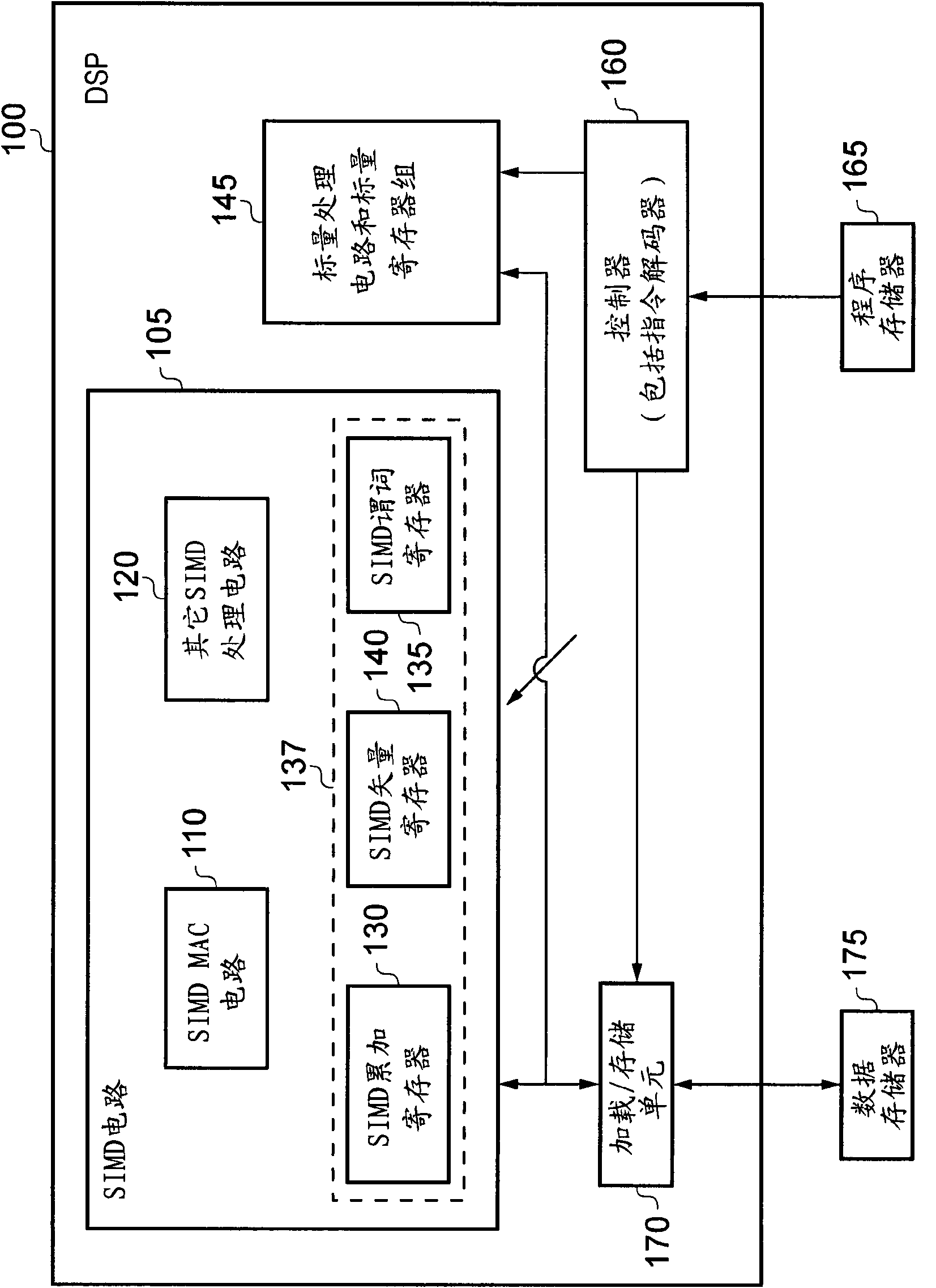

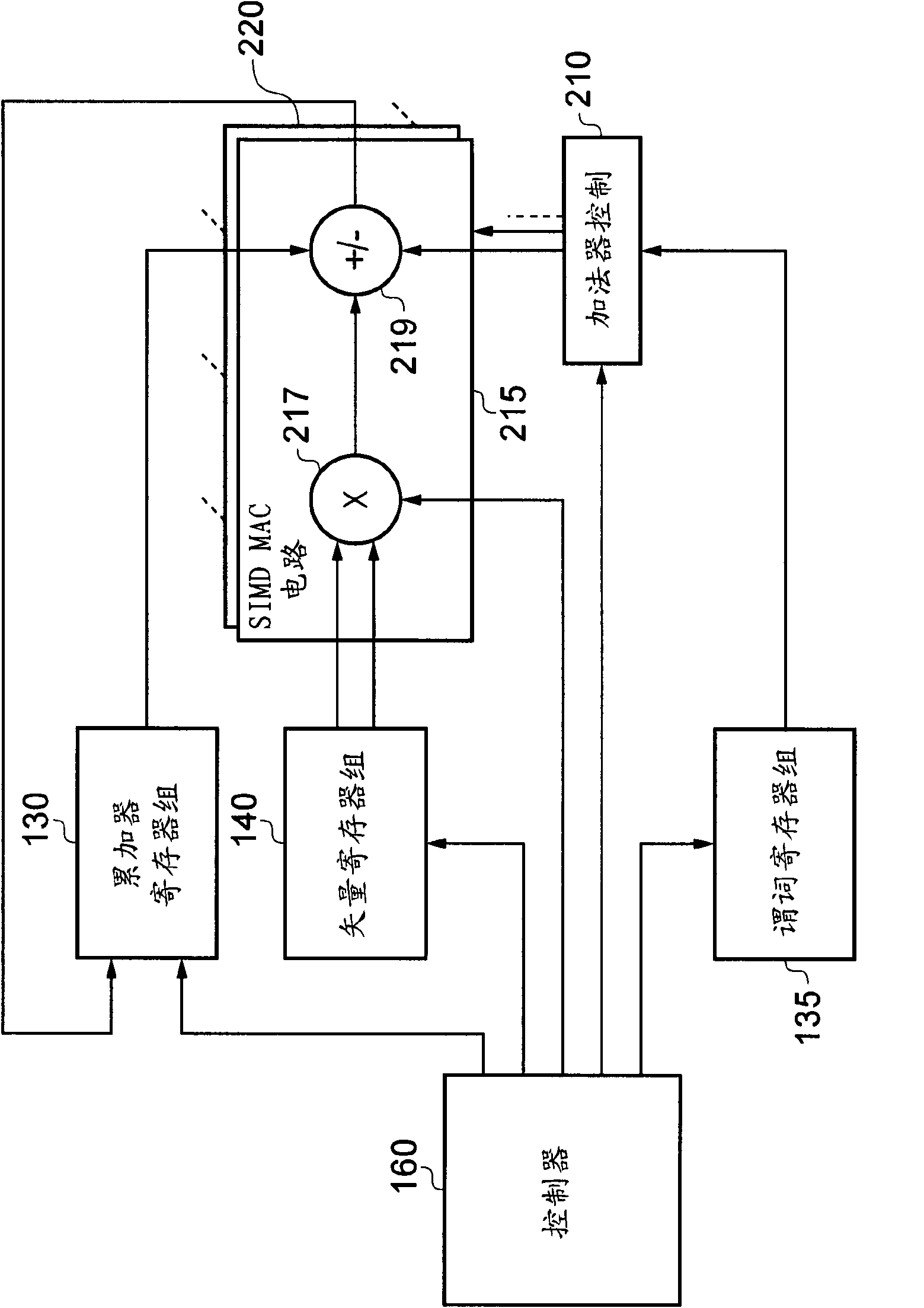

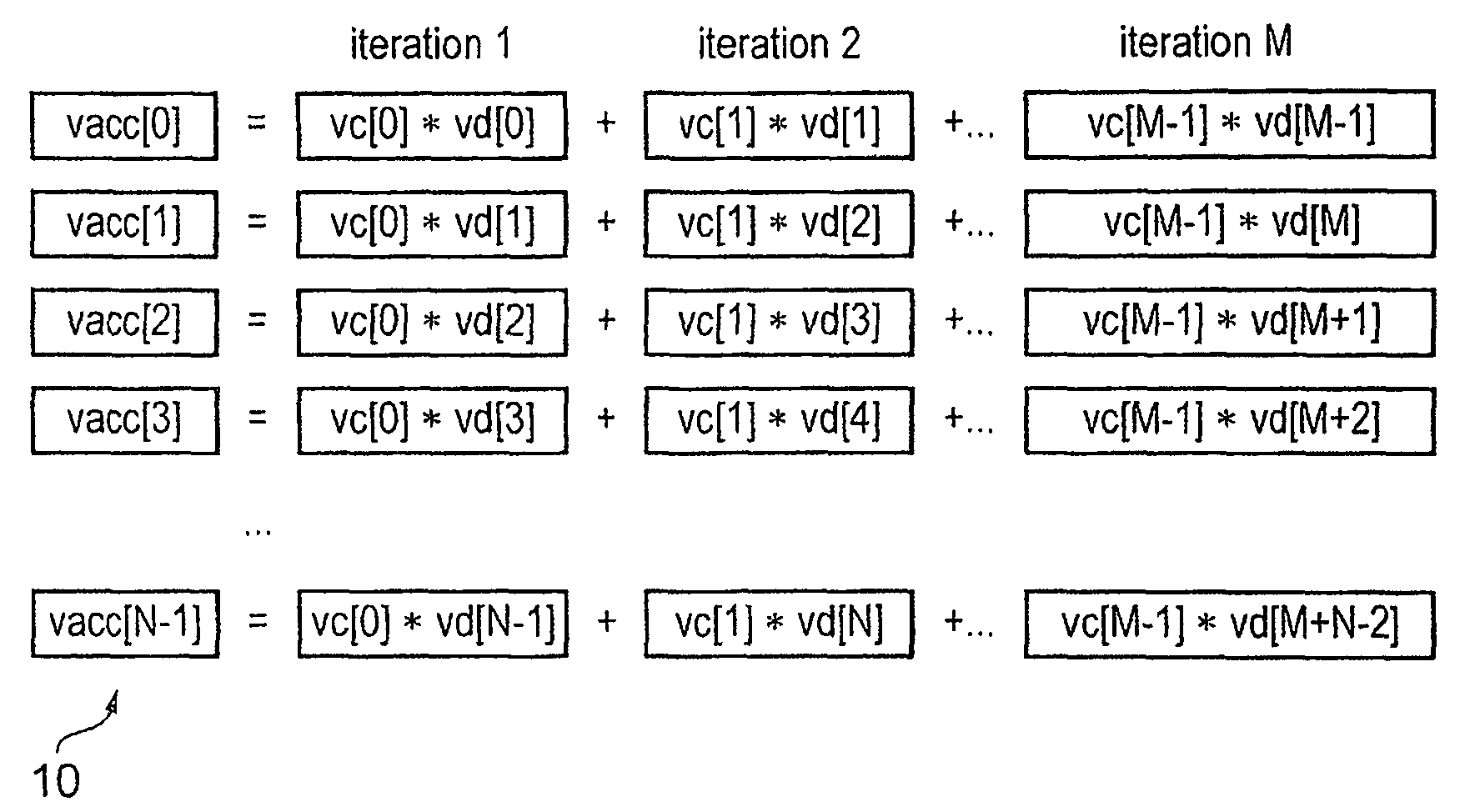

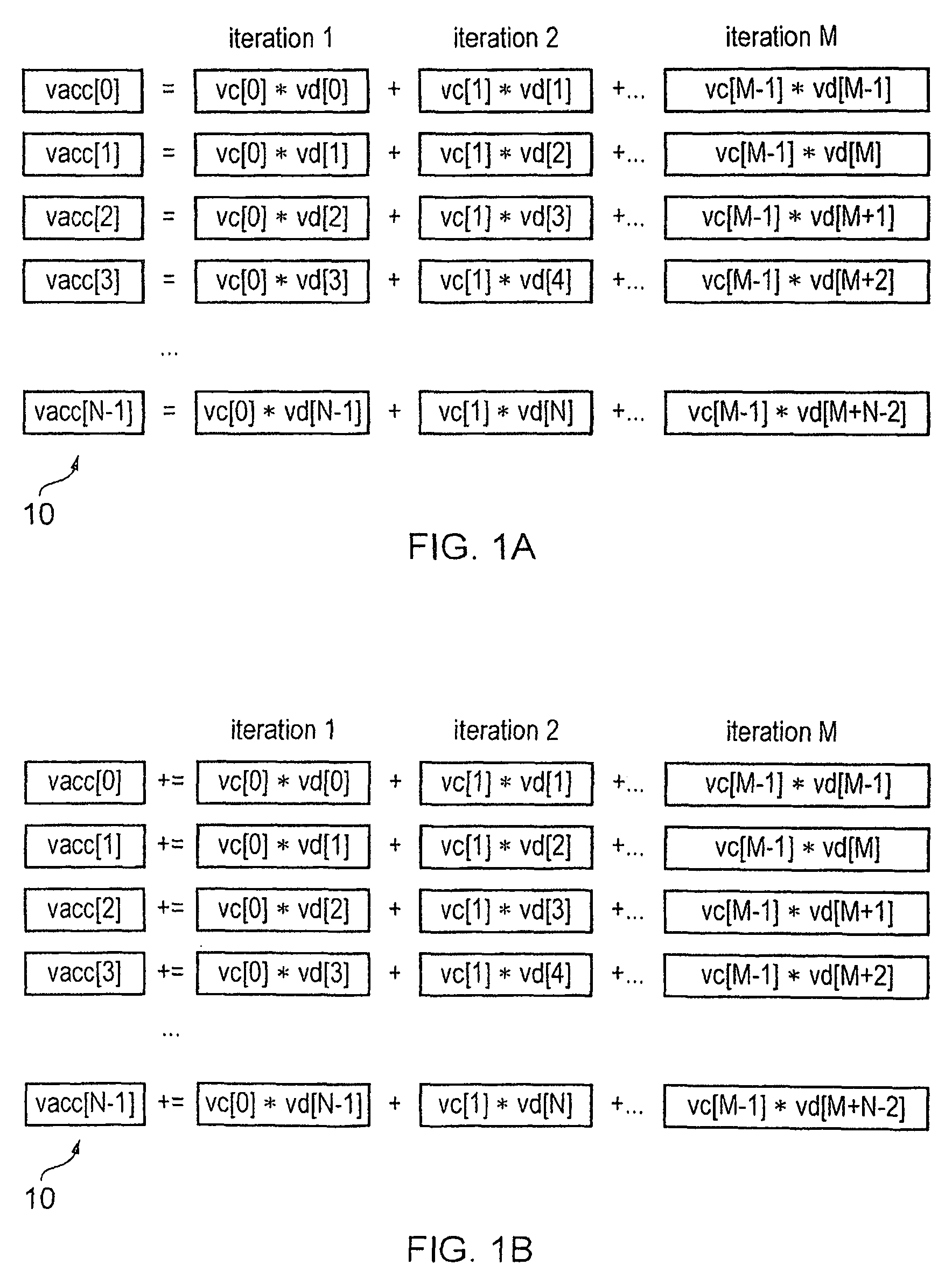

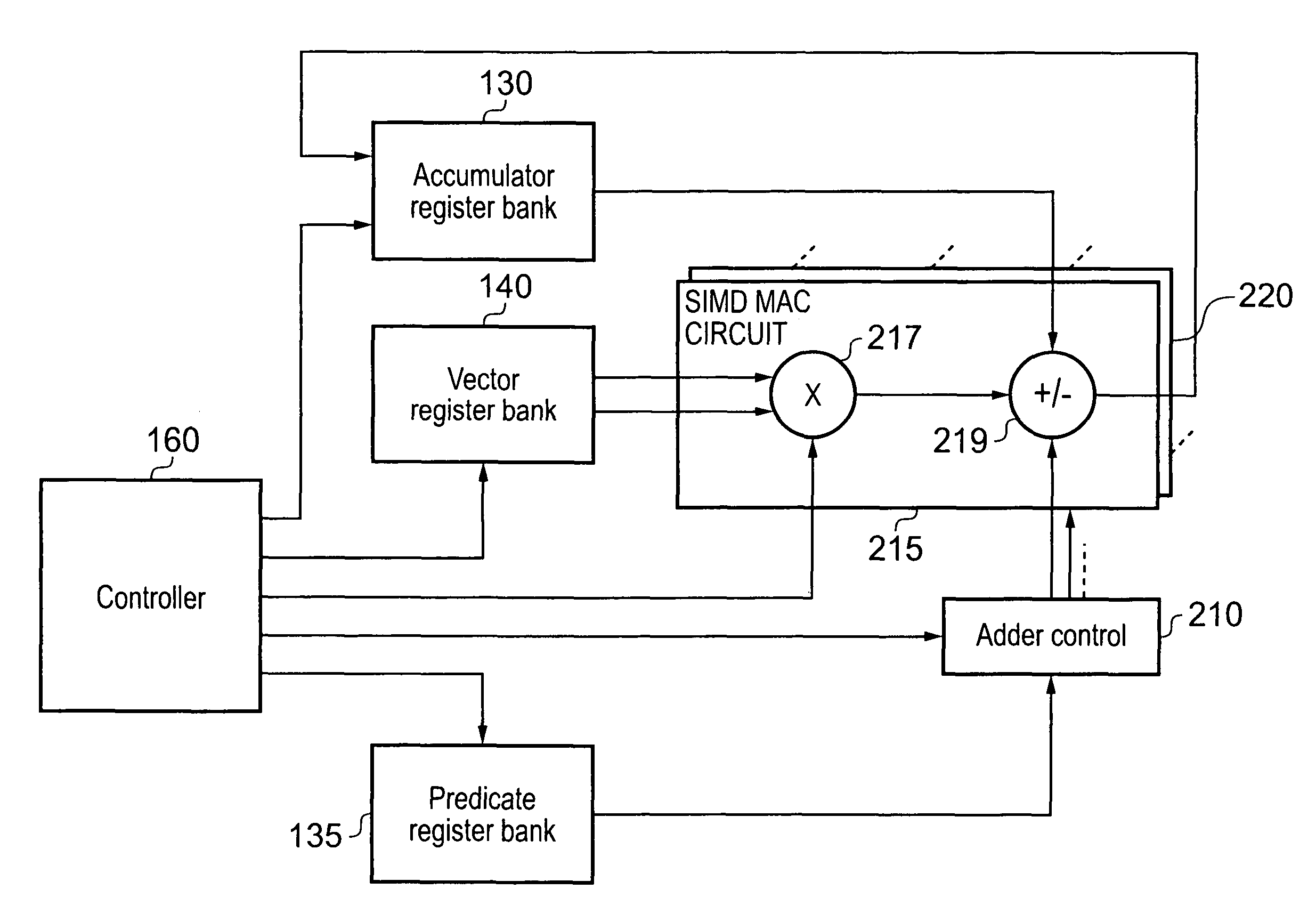

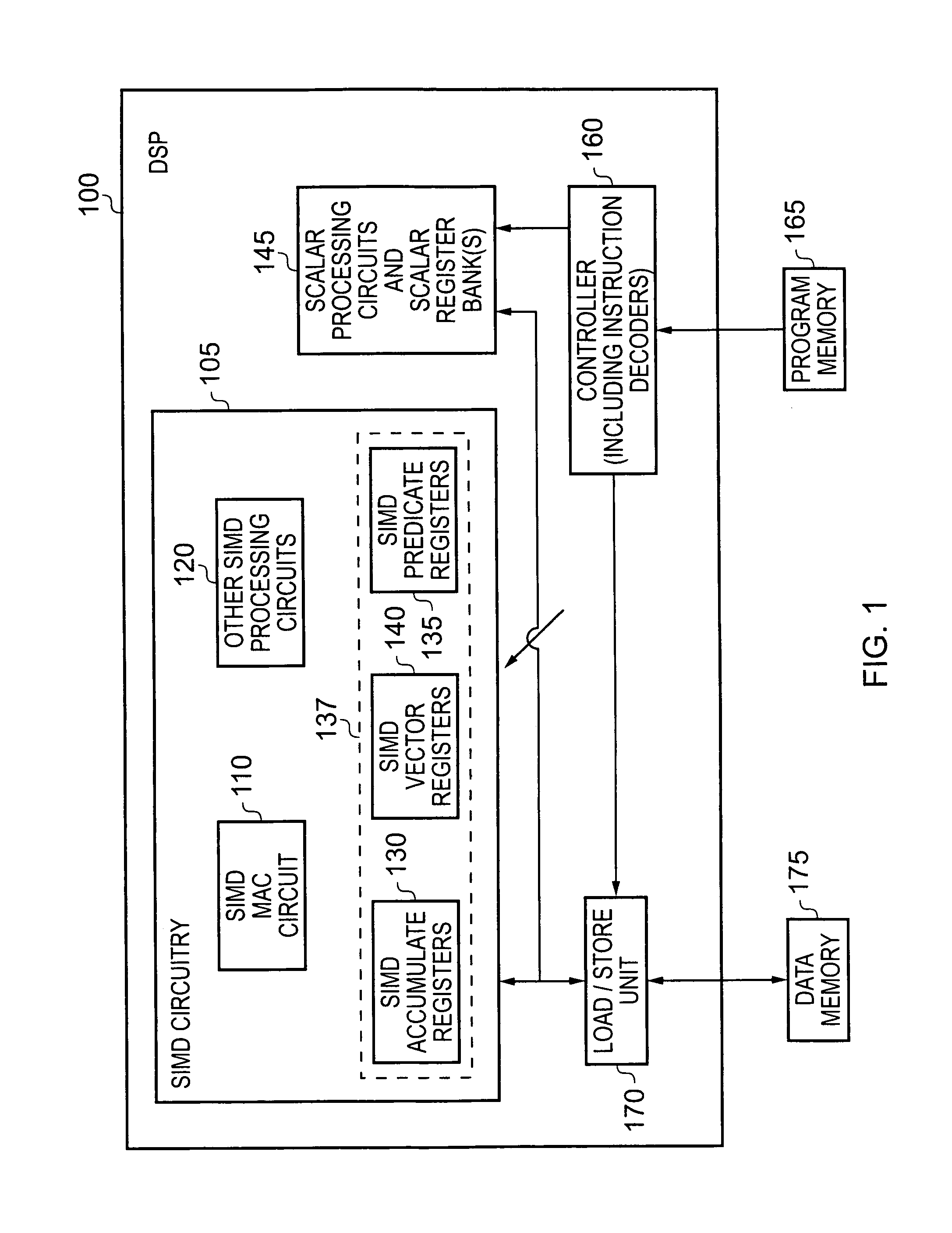

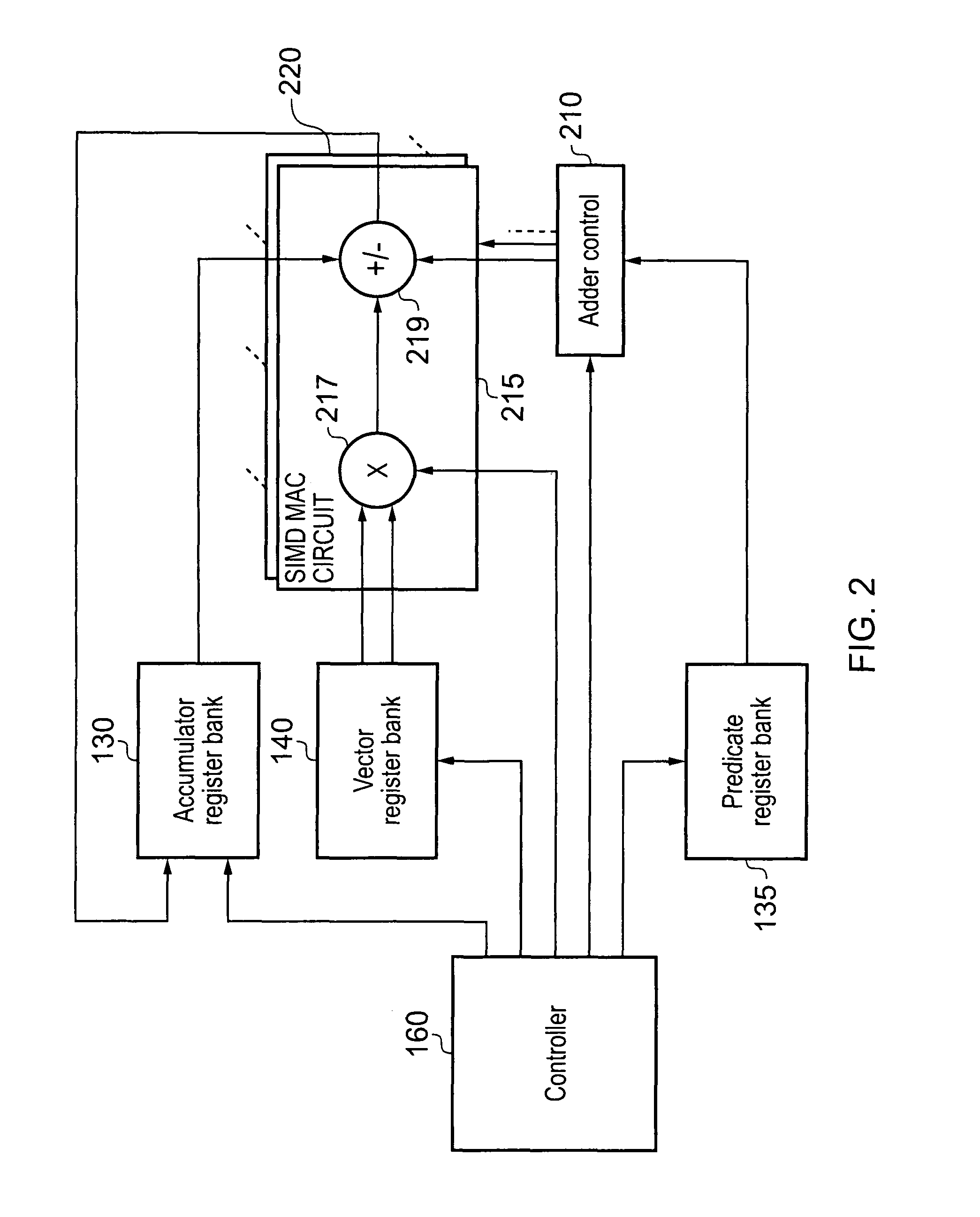

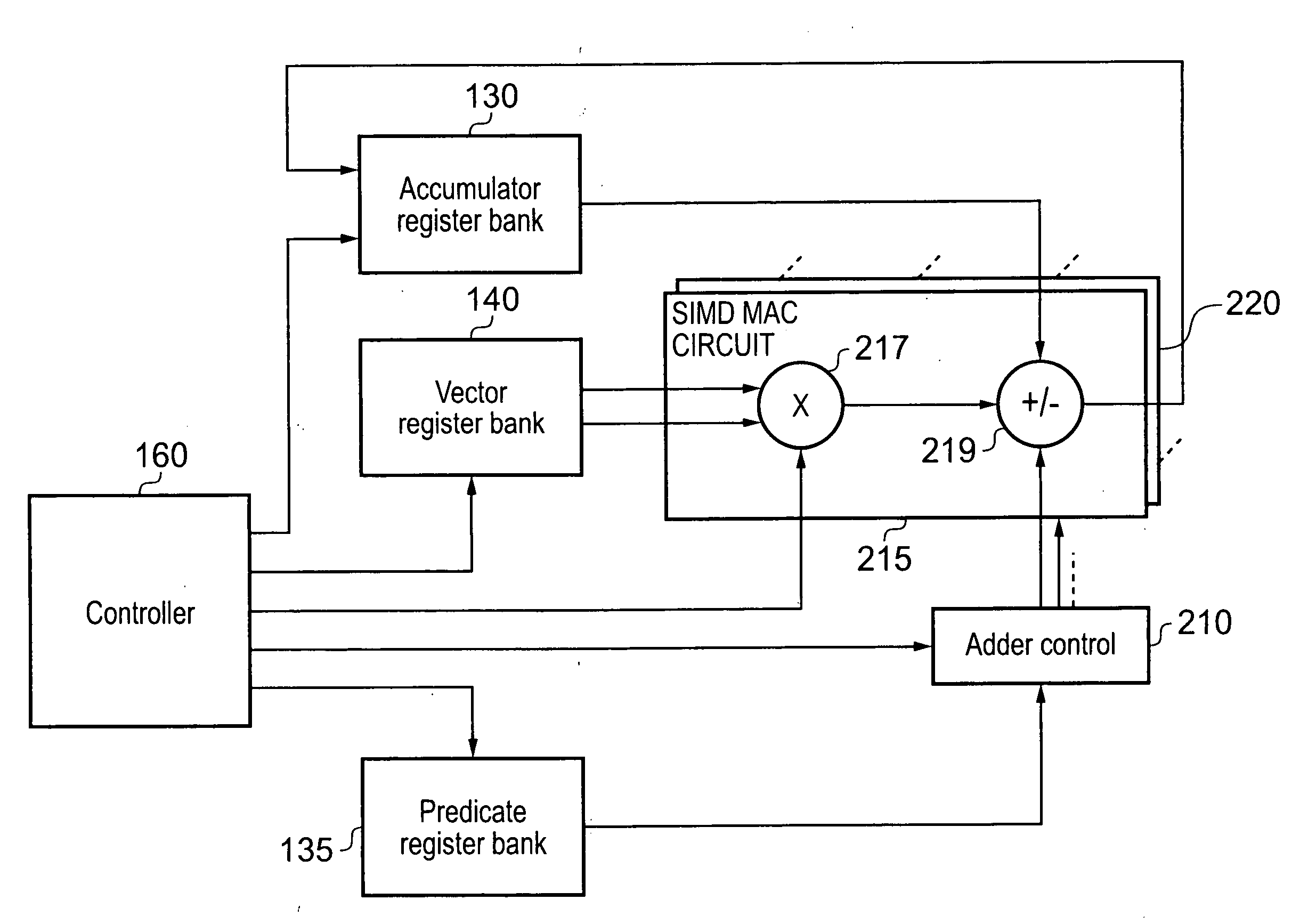

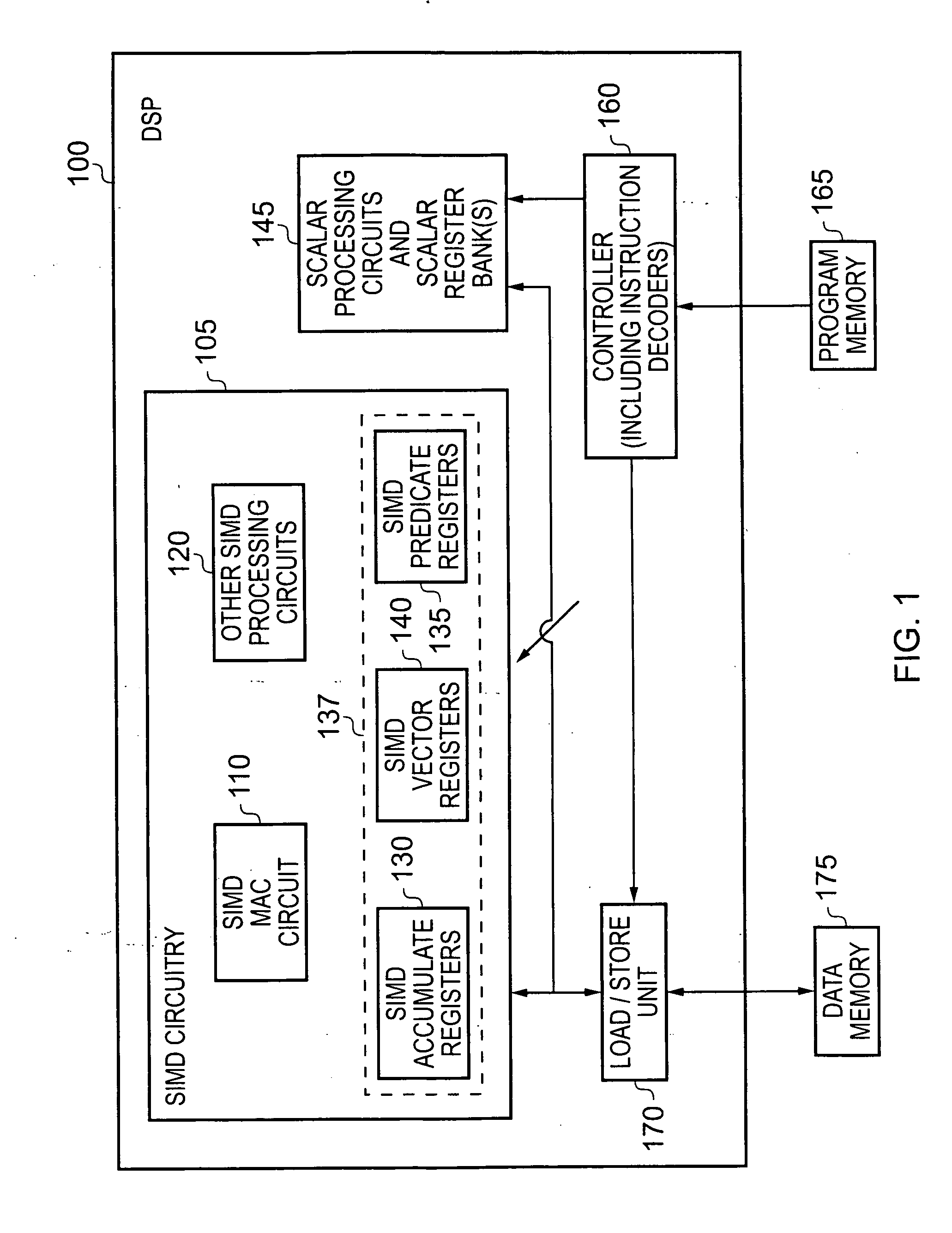

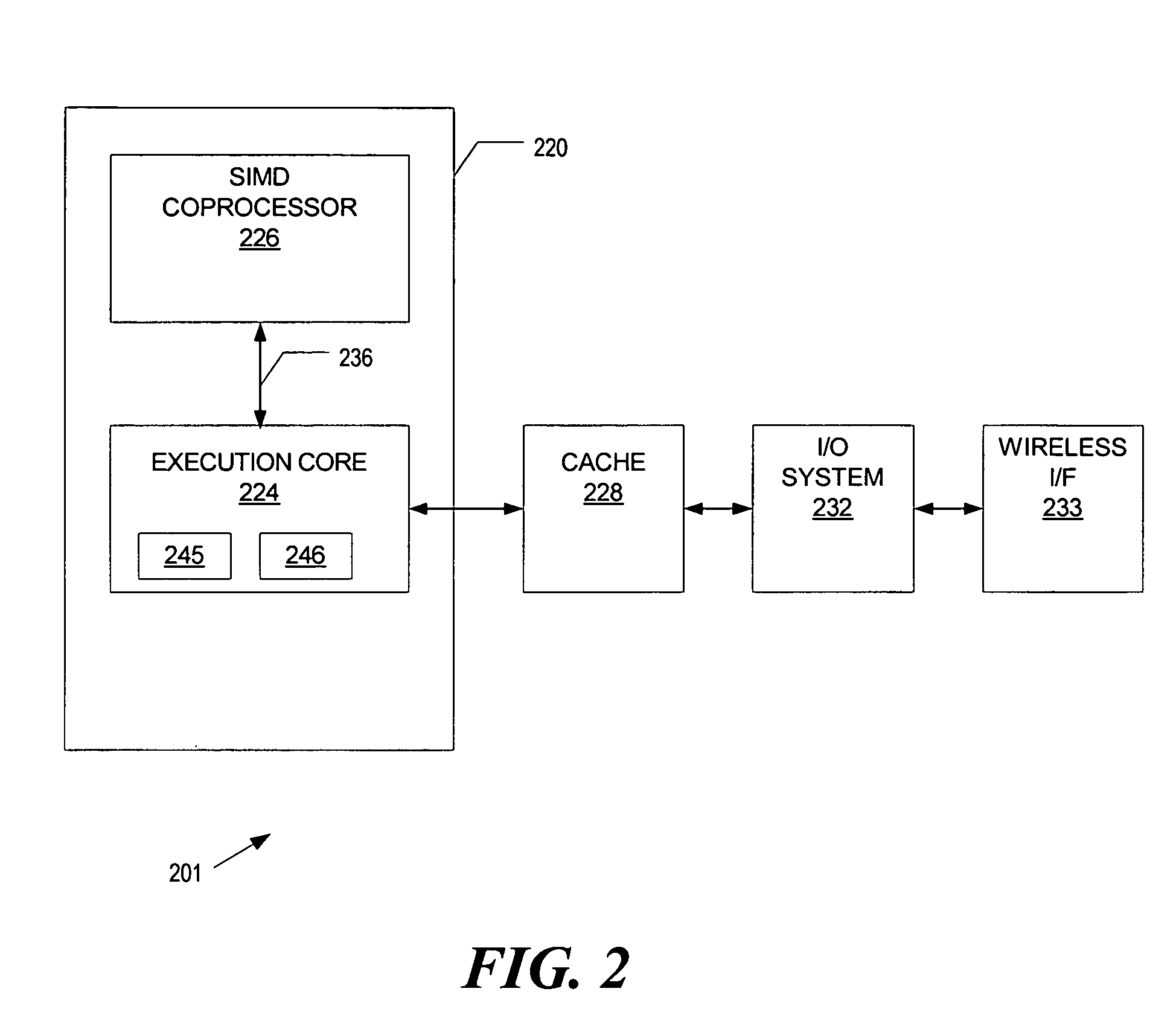

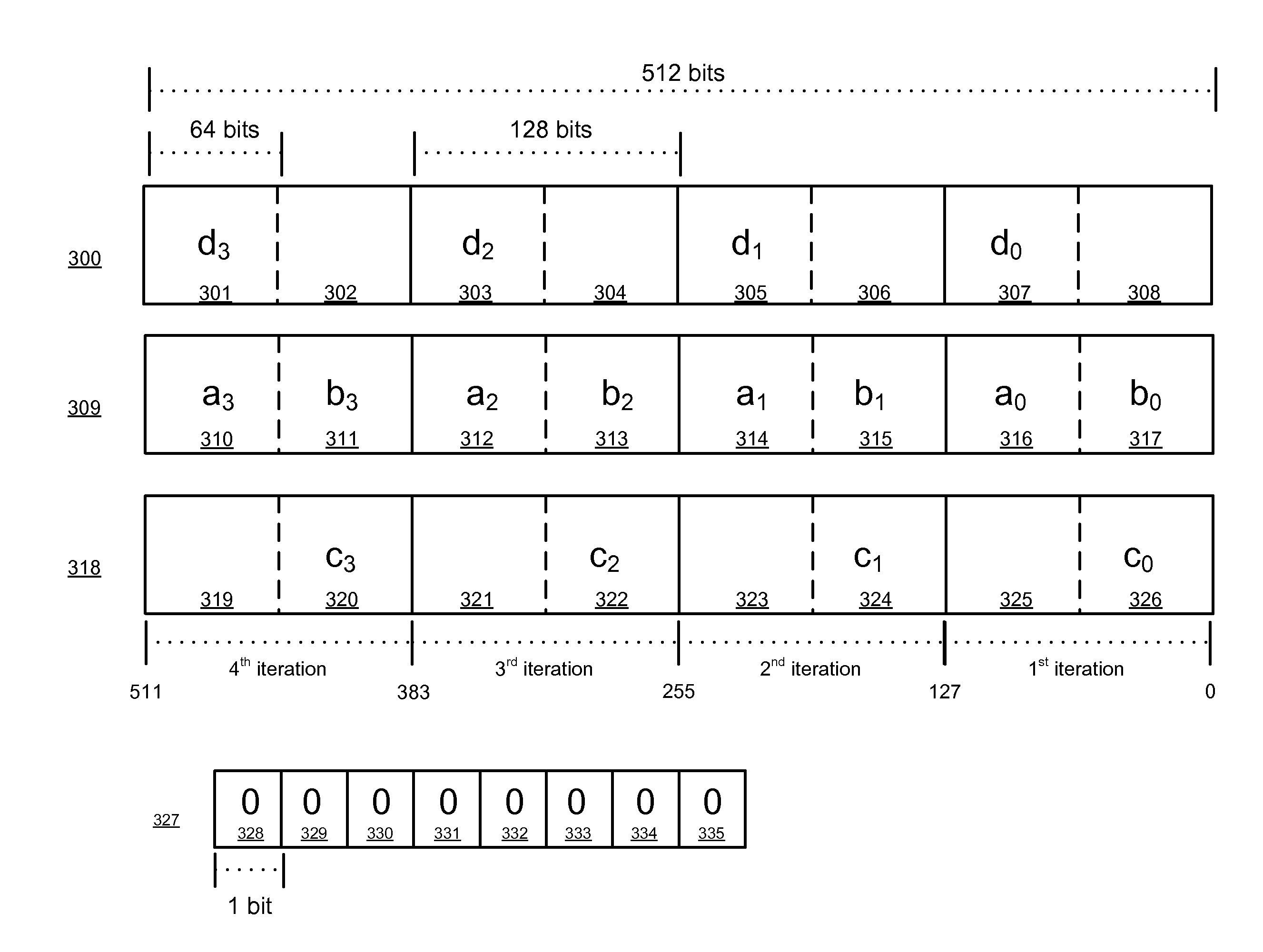

Apparatus and method for performing SIMD multiply-accumulate operations

Active · US8443170B2 · Tags: Reduce energy consumption, Improve energy consumption, Associative processors, Next instruction address formation, Program instruction, Control signal

An apparatus and method for performing SIMD multiply-accumulate operations includes SIMD data processing circuitry responsive to control signals to perform data processing operations in parallel on multiple data elements. Instruction decoder circuitry is coupled to the SIMD data processing circuitry and is responsive to program instructions to generate the required control signals. The instruction decoder circuitry is responsive to a single instruction (referred to herein as a repeating multiply-accumulate instruction) having as input operands a first vector of input data elements, a second vector of coefficient data elements, and a scalar value indicative of a plurality of iterations required, to generate control signals to control the SIMD processing circuitry. In response to those control signals, the SIMD data processing circuitry performs the plurality of iterations of a multiply-accumulate process, each iteration involving performance of N multiply-accumulate operations in parallel in order to produce N multiply-accumulate data elements. For each iteration, the SIMD data processing circuitry determines N input data elements from said first vector and a single coefficient data element from the second vector to be multiplied with each of the N input data elements. The N multiply-accumulate data elements produced in a final iteration of the multiply-accumulate process are then used to produce N multiply-accumulate results. This provides a particularly energy-efficient mechanism for performing SIMD multiply-accumulate operations, such as those required for FIR filter processes.

Owner:U-BLOX
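
The repeating instruction above can be modelled as a loop over iterations in which one coefficient is broadcast to N lanes. The sketch assumes that iteration `k` takes the N input elements starting at position `k` of the first vector (a sliding window) together with coefficient `k` of the second vector, which yields N outputs of an FIR filter in correlation form, `y[i] = sum_k h[k] * x[i + k]`. The function name and that selection rule are assumptions; the abstract does not say how the N inputs are chosen.

```python
def repeating_mac(inputs, coeffs, iterations: int, n_lanes: int):
    """N parallel accumulators; each iteration broadcasts one coefficient to all lanes."""
    assert len(inputs) >= iterations - 1 + n_lanes and len(coeffs) >= iterations
    acc = [0] * n_lanes
    for k in range(iterations):          # the scalar operand sets the iteration count
        h = coeffs[k]                    # single coefficient for this iteration
        window = inputs[k:k + n_lanes]   # N input elements for this iteration
        for lane in range(n_lanes):      # done in parallel by the SIMD hardware
            acc[lane] += window[lane] * h
    return acc

print(repeating_mac([1, 2, 3, 4, 5, 6], [1, 0, -1], iterations=3, n_lanes=4))  # [-2, -2, -2, -2]
```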

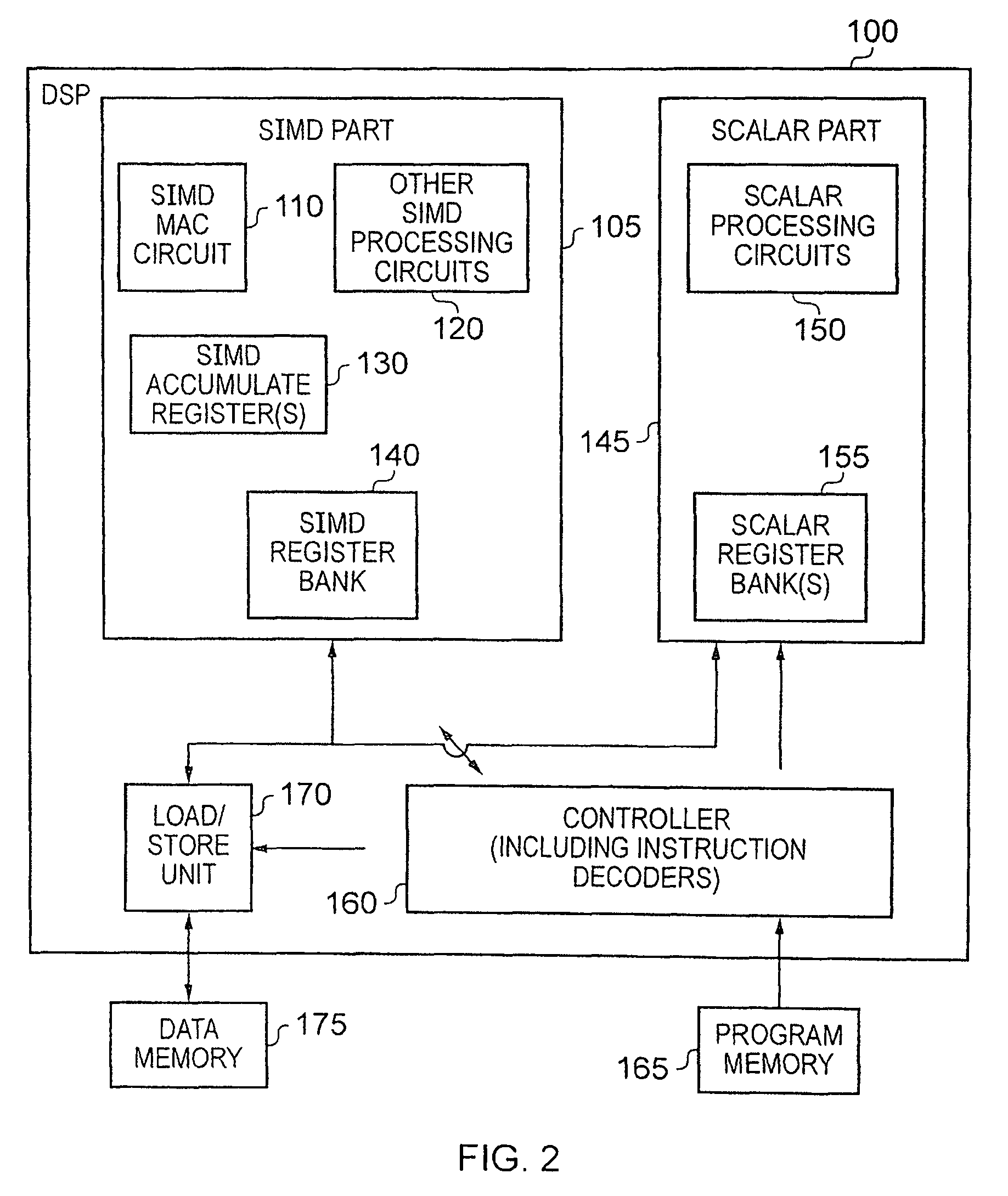

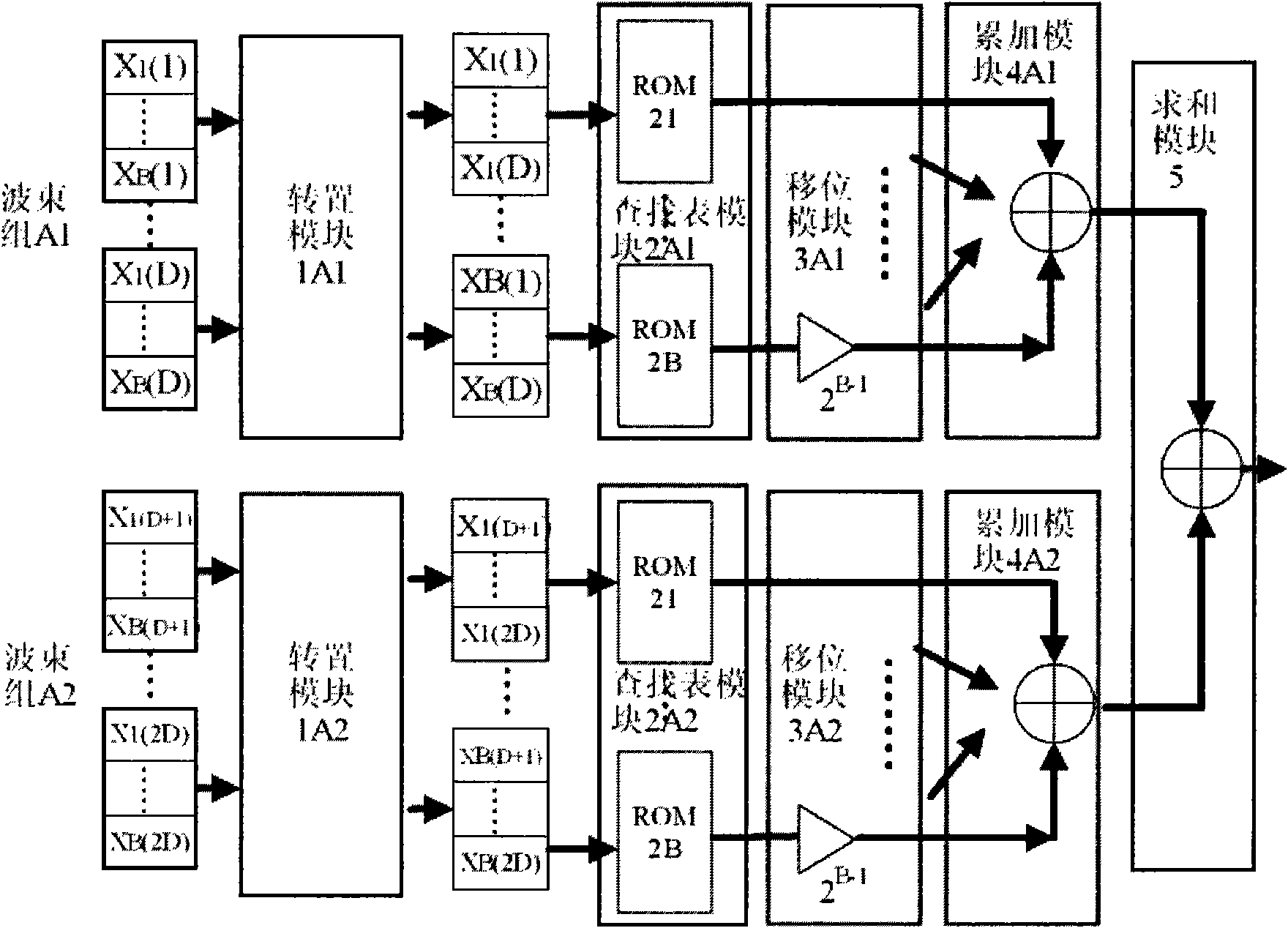

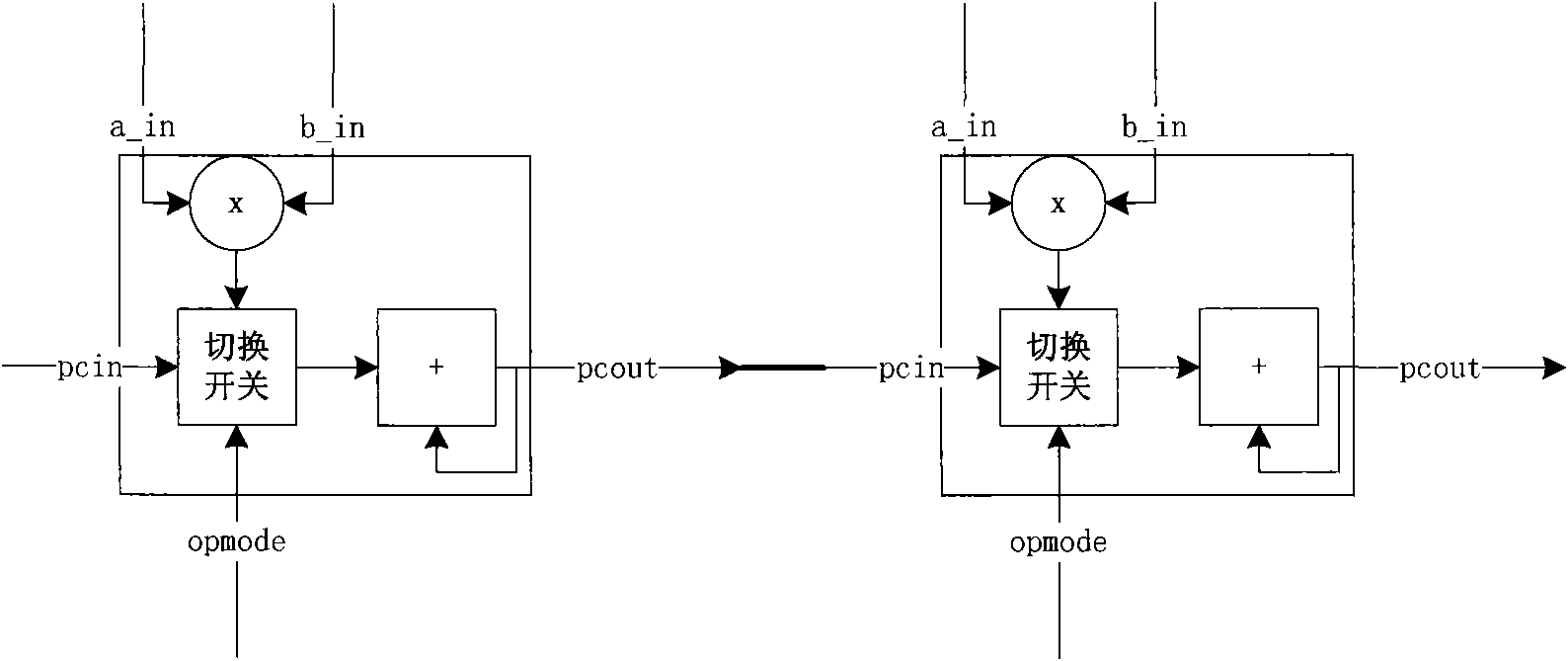

Distributed type digital beam formation network and digital beam formation processing method

Inactive · CN101931449A · Tags: Reduce demand, Reduce difficulty, Spatial transmit diversity, Multiply–accumulate operation, Field-programmable gate array

The invention discloses a distributed digital beamforming network that performs parallel multiply-accumulate operations using memory resources in place of hardware multipliers, based on distributed arithmetic. It is especially suitable for hardware architectures rich in memory resources, such as FPGAs (Field-Programmable Gate Arrays), and can also be implemented by configuring flash memory or similar devices. The invention therefore greatly reduces hardware resource requirements and hardware design difficulty, and processes complex large-scale beamforming at lower cost.

Owner:SHANGHAI INST OF MICROSYSTEM & INFORMATION TECH CHINESE ACAD OF SCI
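
Distributed arithmetic replaces the multipliers in a fixed-coefficient sum of products with a lookup table. The table is indexed by one bit taken from each input, holds the sum of the coefficients selected by those bits, and is read once per input bit position, with the results shifted and added. The sketch below assumes signed two's-complement inputs of a fixed width `bits`, so the most significant bit position is subtracted rather than added; the function names are illustrative, and this is a generic distributed-arithmetic sketch, not the patent's beamforming network.

```python
def build_lut(coeffs):
    """Entry `addr` holds the sum of the coefficients whose bit is set in addr."""
    return [sum(c for i, c in enumerate(coeffs) if (addr >> i) & 1)
            for addr in range(1 << len(coeffs))]

def da_dot(coeffs, xs, bits: int) -> int:
    """sum(c * x) for signed `bits`-wide inputs, using table lookups instead of multiplies."""
    lut = build_lut(coeffs)                 # stored in memory (e.g. FPGA RAM) in hardware
    acc = 0
    for b in range(bits):                   # one lookup per input bit position
        addr = 0
        for i, x in enumerate(xs):
            addr |= ((x >> b) & 1) << i     # gather bit b of every input
        term = lut[addr] * (1 << b)         # shift by the bit weight
        acc += -term if b == bits - 1 else term  # sign bit has negative weight
    return acc

print(da_dot([3, -1, 2], [5, -4, 7], bits=8))  # 33
```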

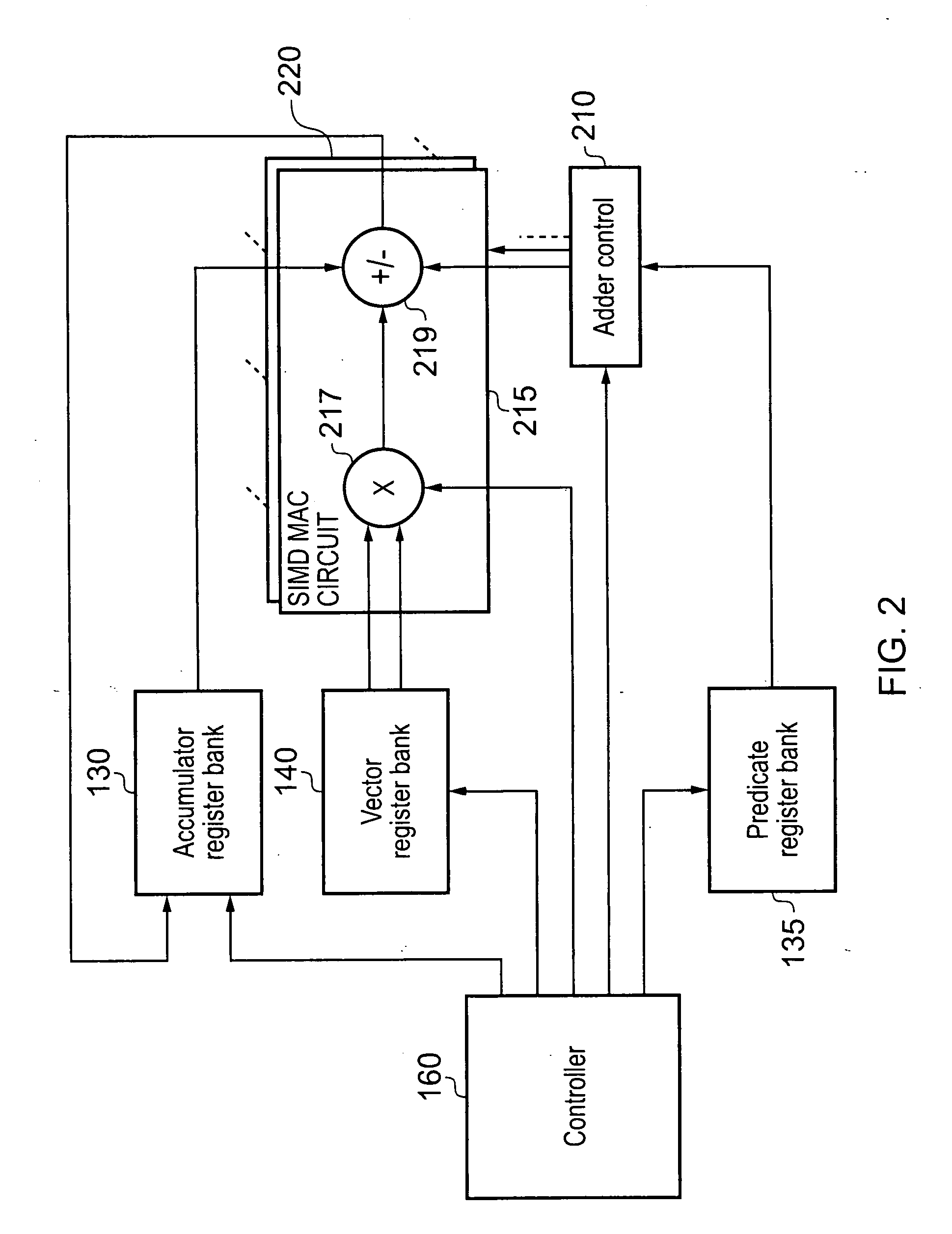

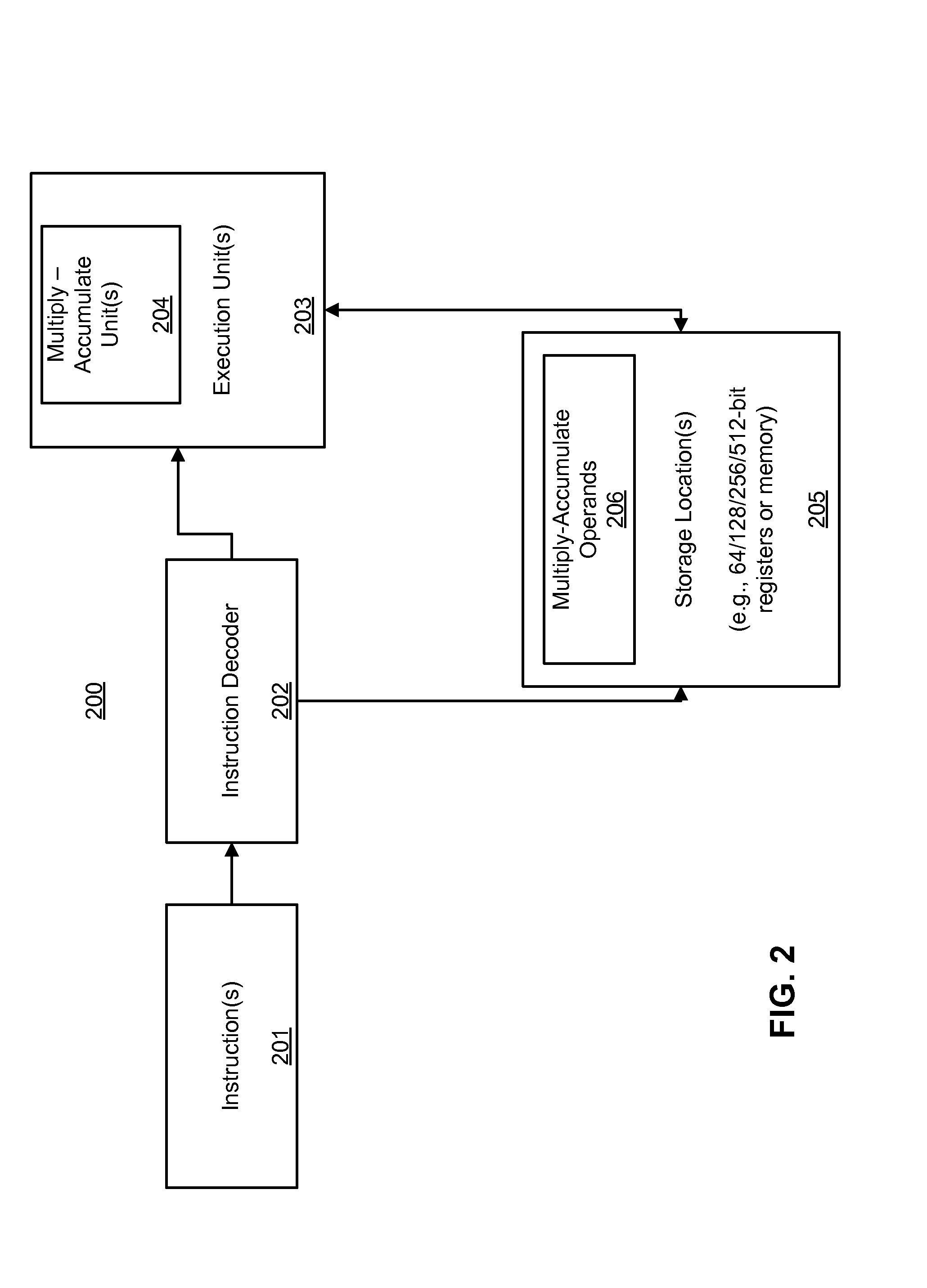

Apparatus and method for performing multiply-accumulate operations

Active · US8595280B2 · Tags: Reduce the amount required, Easy to use, Digital computer details, Program control, Control signal, Multiply–accumulate operation

Owner:ARM LTD

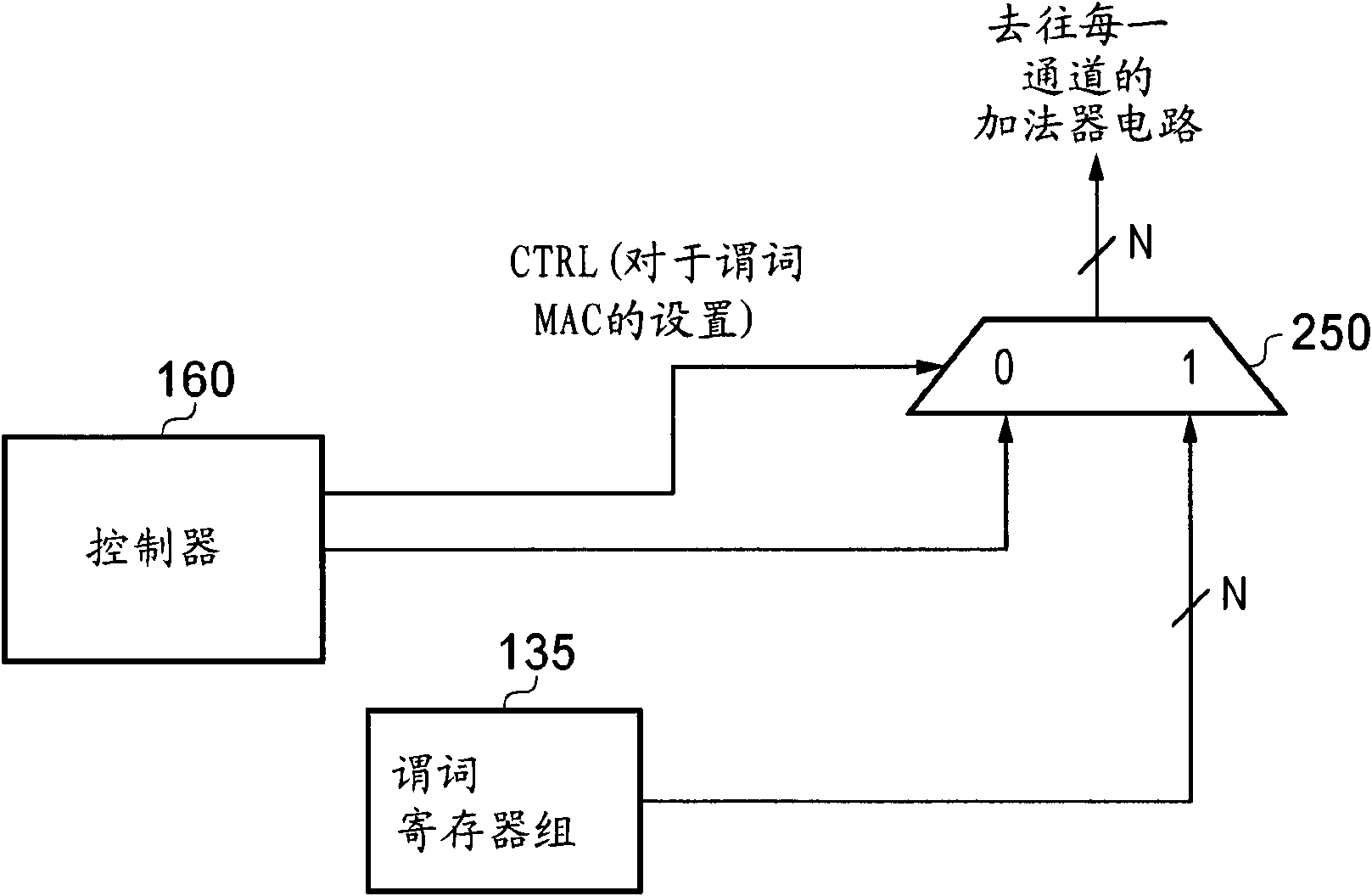

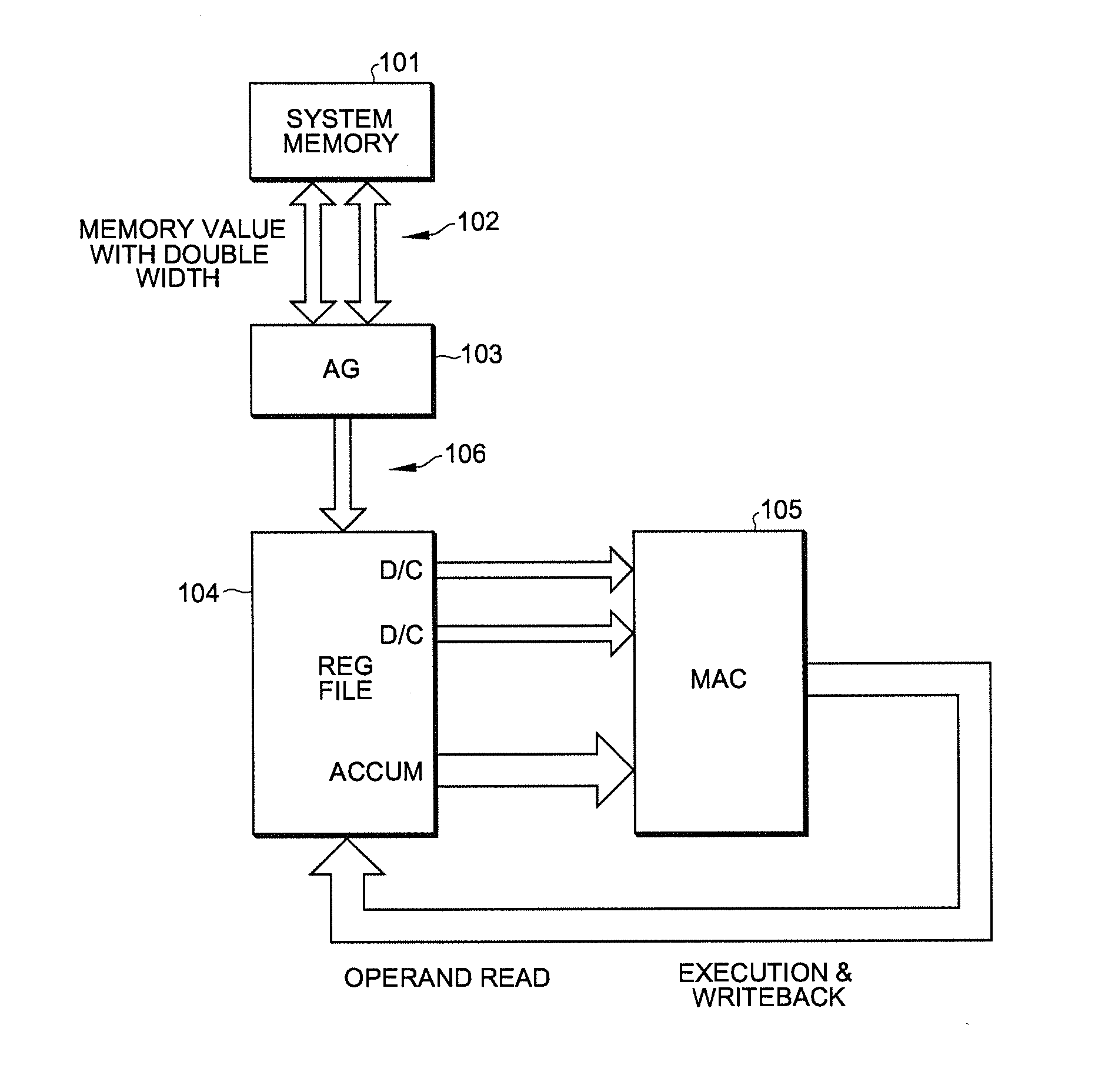

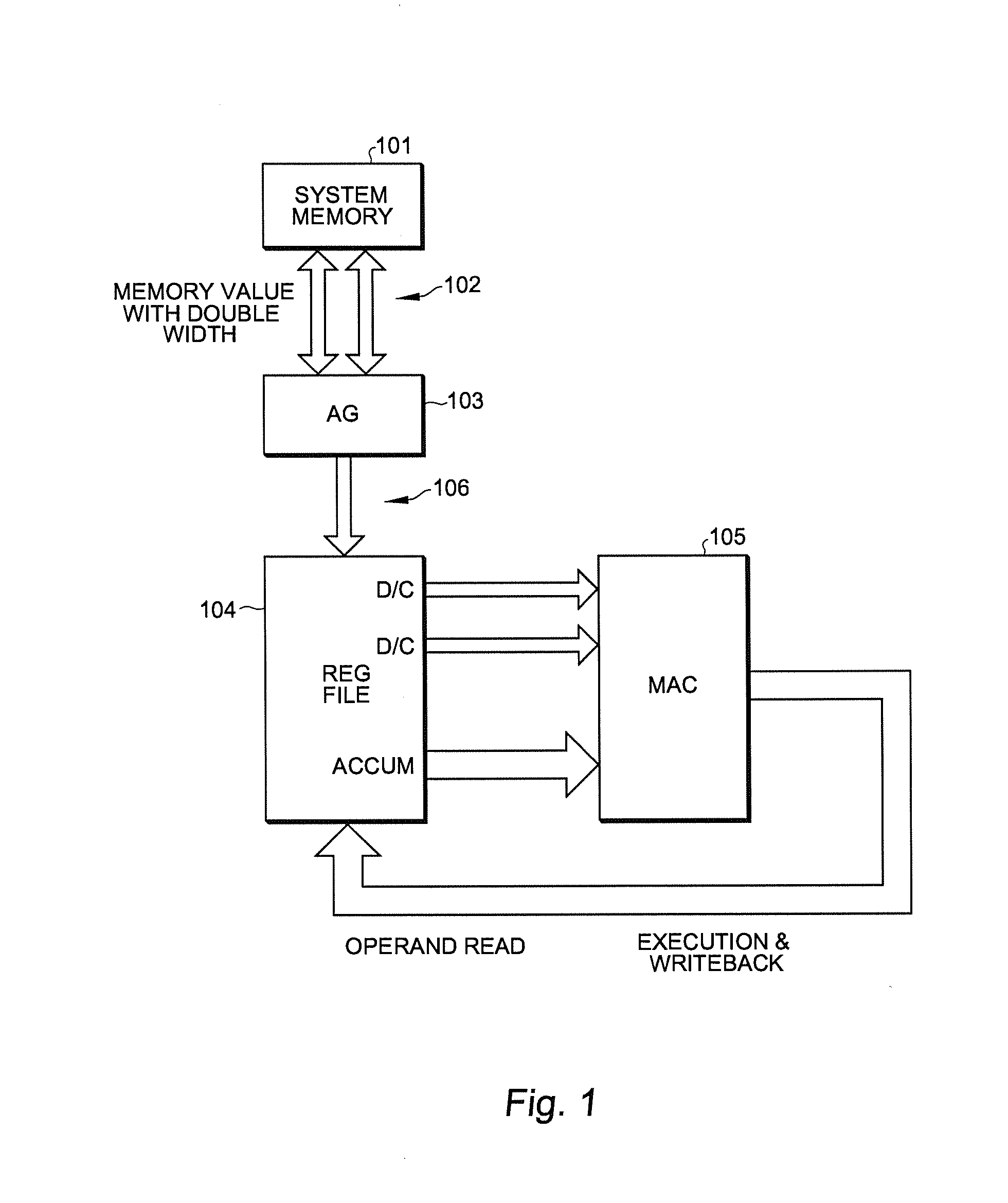

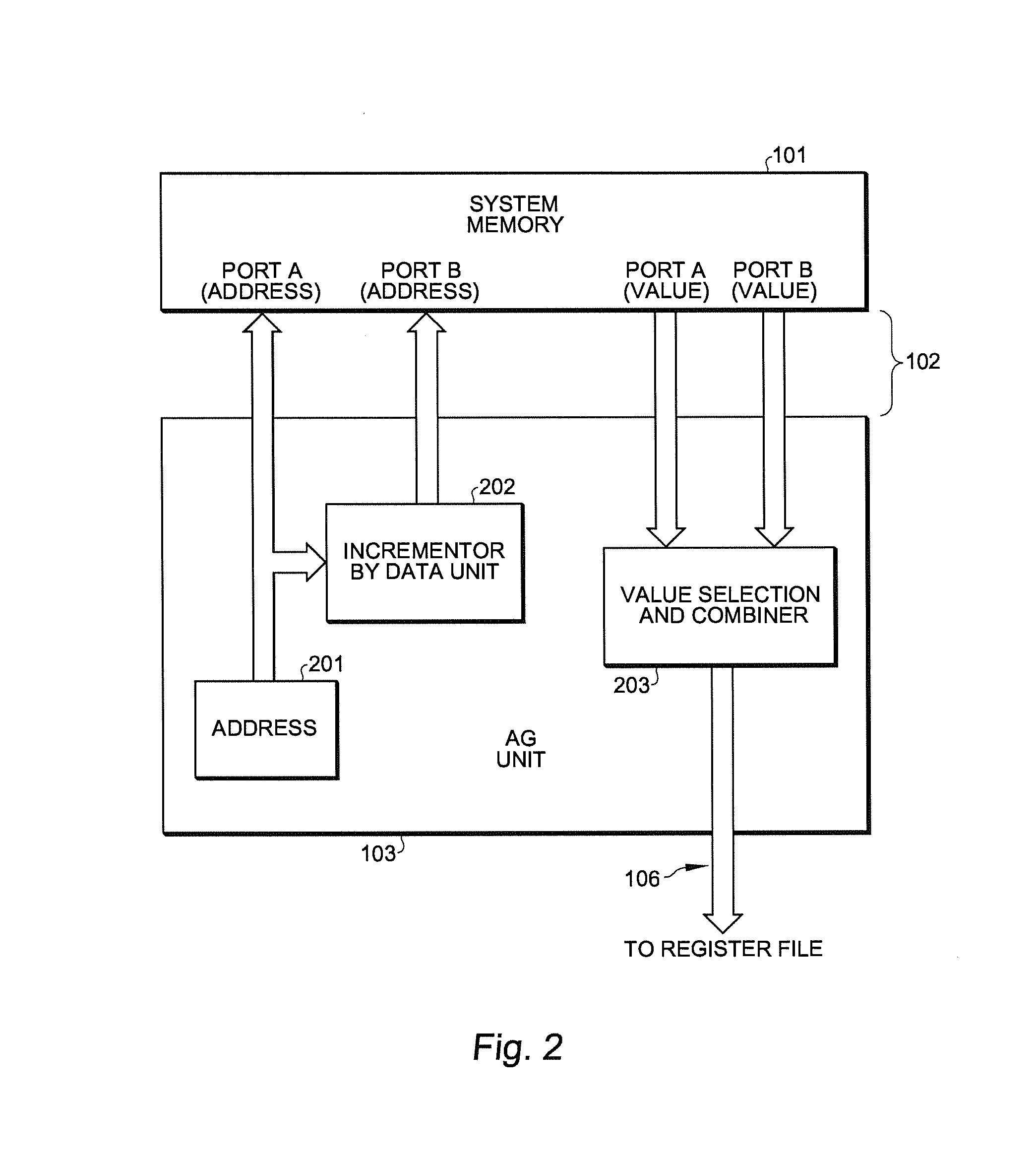

Modified balanced throughput data-path architecture for special correlation applications

Active · US20140019727A1 · Tags: Eliminate need, Digital computer details, Specific program execution arrangements, Digital signal processing, Address generator

An apparatus and method for a modified, balanced-throughput data-path architecture are provided for efficiently implementing the digital signal processing algorithms of filtering, convolution and correlation in computer hardware, in which both the data and coefficient buffers can be implemented as sliding windows. This architecture uses a multiplexer and a data-path branch from the Address Generator unit to the multiply-accumulate execution unit. By selecting between the Address Generator-to-execution-unit data path and the register-to-execution-unit data path, it overcomes the unbalanced throughput and multiply-accumulate bubble cycles caused by misaligned addressing of coefficients. The modified balanced-throughput data-path architecture can achieve a high multiply-accumulate operation rate per cycle when implementing digital signal processing algorithms.

Owner:STMICROELECTRONICS BEIJING R&D +1

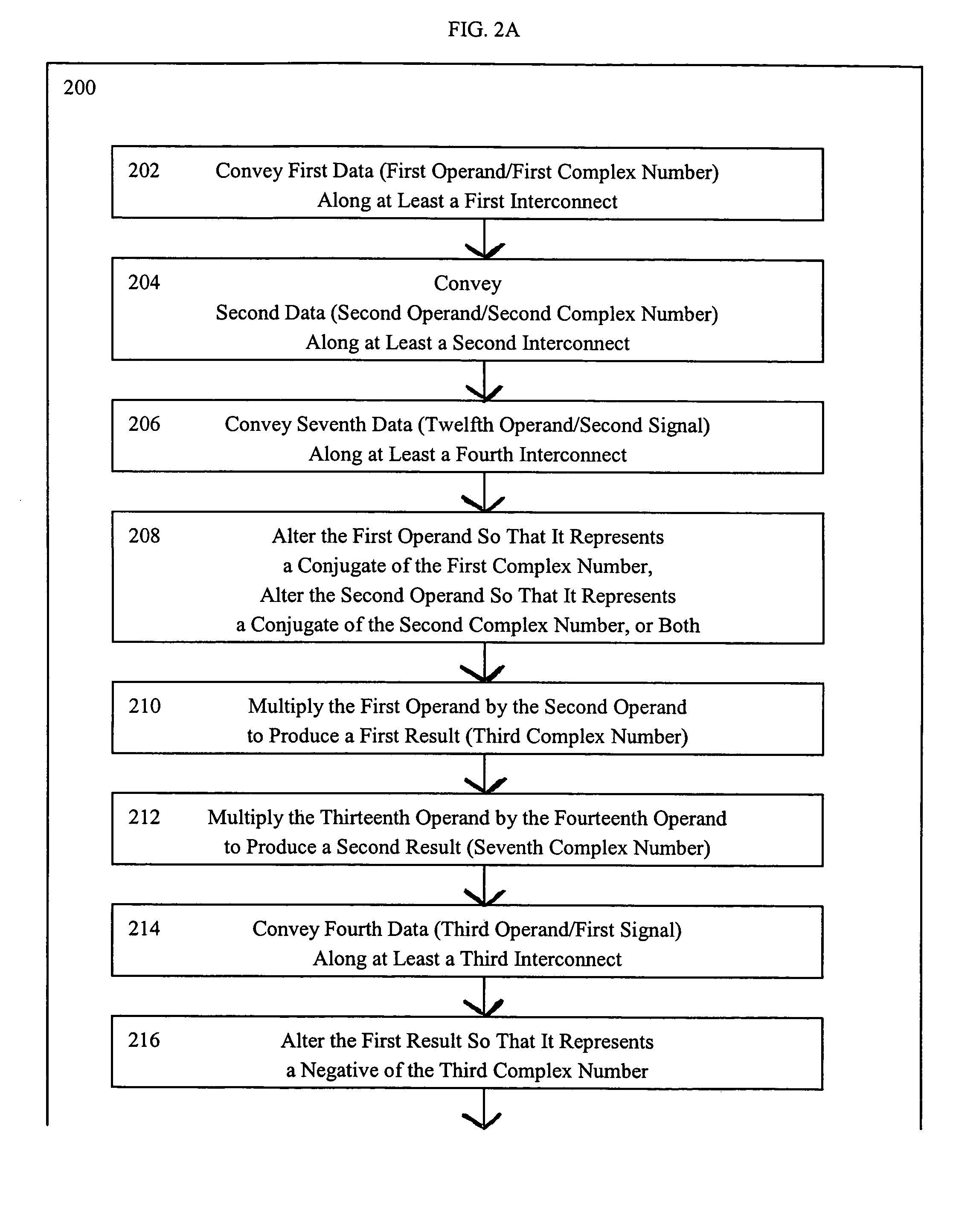

Methods for performing multiply-accumulate operations on operands representing complex numbers

Inactive · US20050071415A1 · Tags: Computation using non-contact making devices, Multiply–accumulate operation, Operand

A method for multiplying, at an execution unit of a processor, two complex numbers such that the real part and the imaginary part of the product can be stored in the same register of the processor. First data is conveyed along at least a first interconnect of the processor. The first data has a first operand. The first operand represents a first complex number. Second data is conveyed along at least a second interconnect of the processor. The second data has a second operand. The second operand represents a second complex number. The first operand is multiplied at the execution unit by the second operand to produce a first result. The first result represents a third complex number. Third data is stored at a first register of the processor. The third data has the first result. The first result has at least the product of the multiplying.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

SIMD operation system capable of designating plural registers via one register designating field

Inactive · US7043627B2 · Tags: Maximize the effect, Maintain accuracy, Register arrangements, Concurrent instruction execution, Network packet, Processor register

To alleviate factors, such as in-register data alignment, that limit the effectiveness of SIMD operation in a high-speed SIMD processor, the register file is divided into four banks and a single operand can designate a plurality of registers, so that four registers can be accessed simultaneously. A large amount of data can thus be supplied to a data alignment operation pipe 211, and data alignment can be carried out at high speed. In addition, new data pack, data unpack and data permutation instructions are defined so that the large volume of supplied data can be aligned efficiently. These features also make it possible to define a multiply accumulate instruction that maximizes SIMD parallelism.

Owner:RENESAS ELECTRONICS CORP

Apparatus and method for performing multiply-accumulate operations

Active · US20110106871A1 · Tags: Reduce the amount required, Easy to use, Computation using non-contact making devices, Digital computer details, Control signal, Multiply–accumulate operation

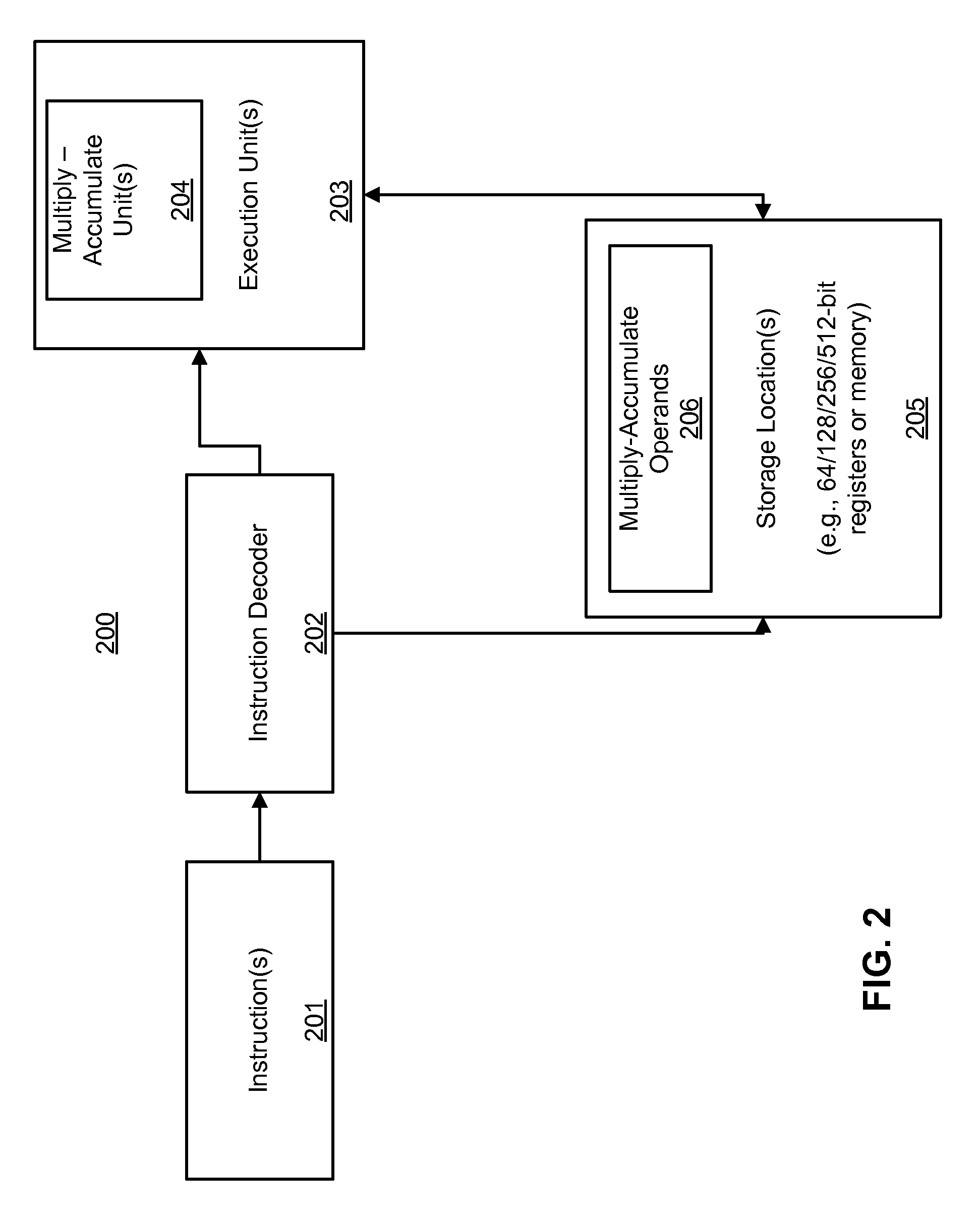

A data processing apparatus and method for performing multiply-accumulate operations is provided. The data processing apparatus includes data processing circuitry responsive to control signals to perform data processing operations on at least one input data element. Instruction decoder circuitry is responsive to a predicated multiply-accumulate instruction specifying as input operands a first input data element, a second input data element, and a predicate value, to generate control signals to control the data processing circuitry to perform a multiply-accumulate operation by: multiplying said first input data element and said second input data element to produce a multiplication data element; if the predicate value has a first value, producing a result accumulate data element by adding the multiplication data element to an initial accumulate data element; and if the predicate value has a second value, producing the result accumulate data element by subtracting the multiplication data element from the initial accumulate data element. Such an approach provides a particularly efficient mechanism for performing complex sequences of multiply-add and multiply-subtract operations, facilitating improvements in performance, energy consumption and code density when compared with known prior art techniques.

Owner:ARM LTD

Dual-multiply-accumulator operation optimized for even and odd multisample calculations

Active · US20050235025A1 · Tags: Complex mathematical operations, Computation using denominational number representation, Multiply–accumulate operation, Computer science

Owner:MARVELL ASIA PTE LTD

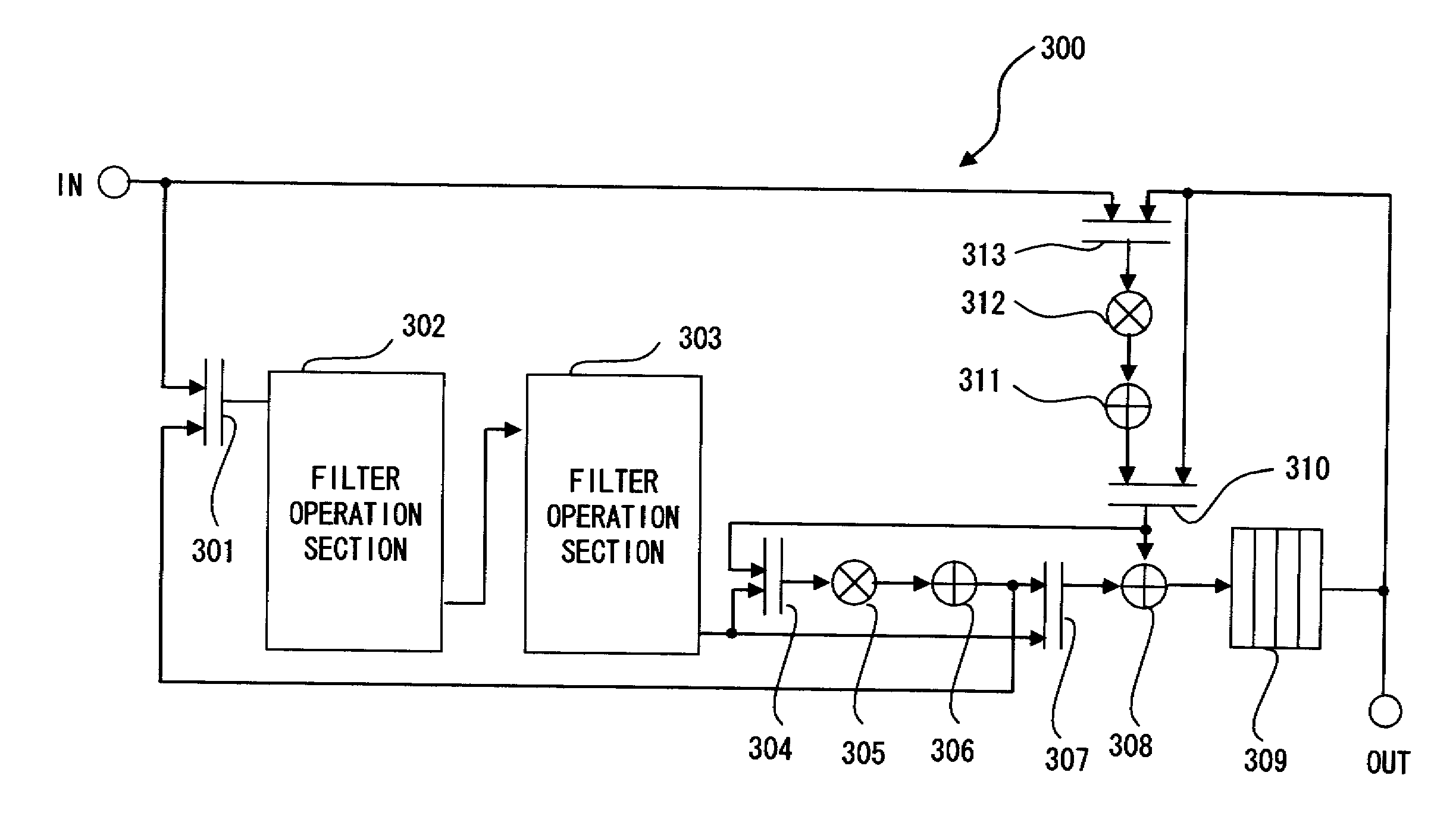

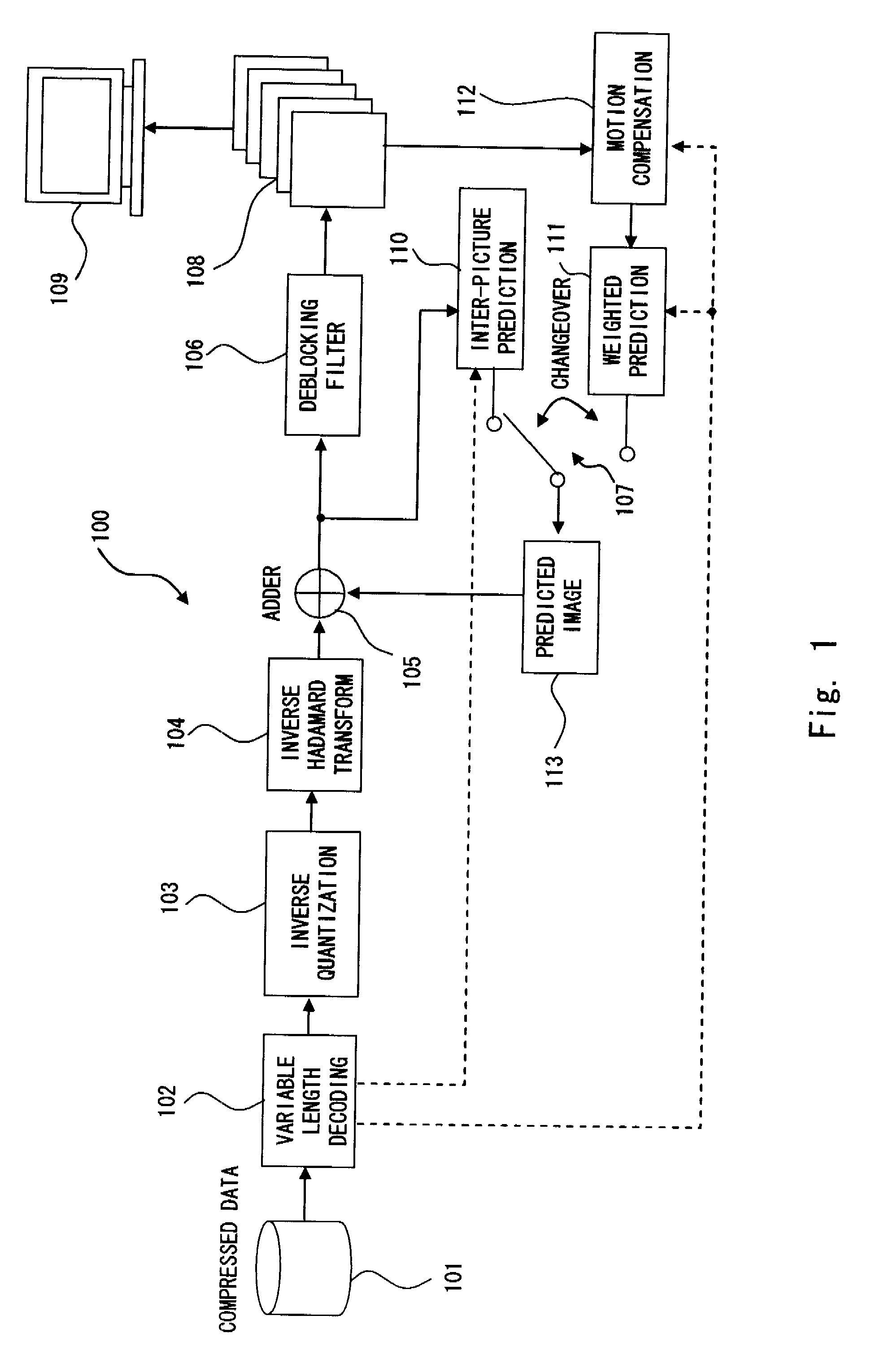

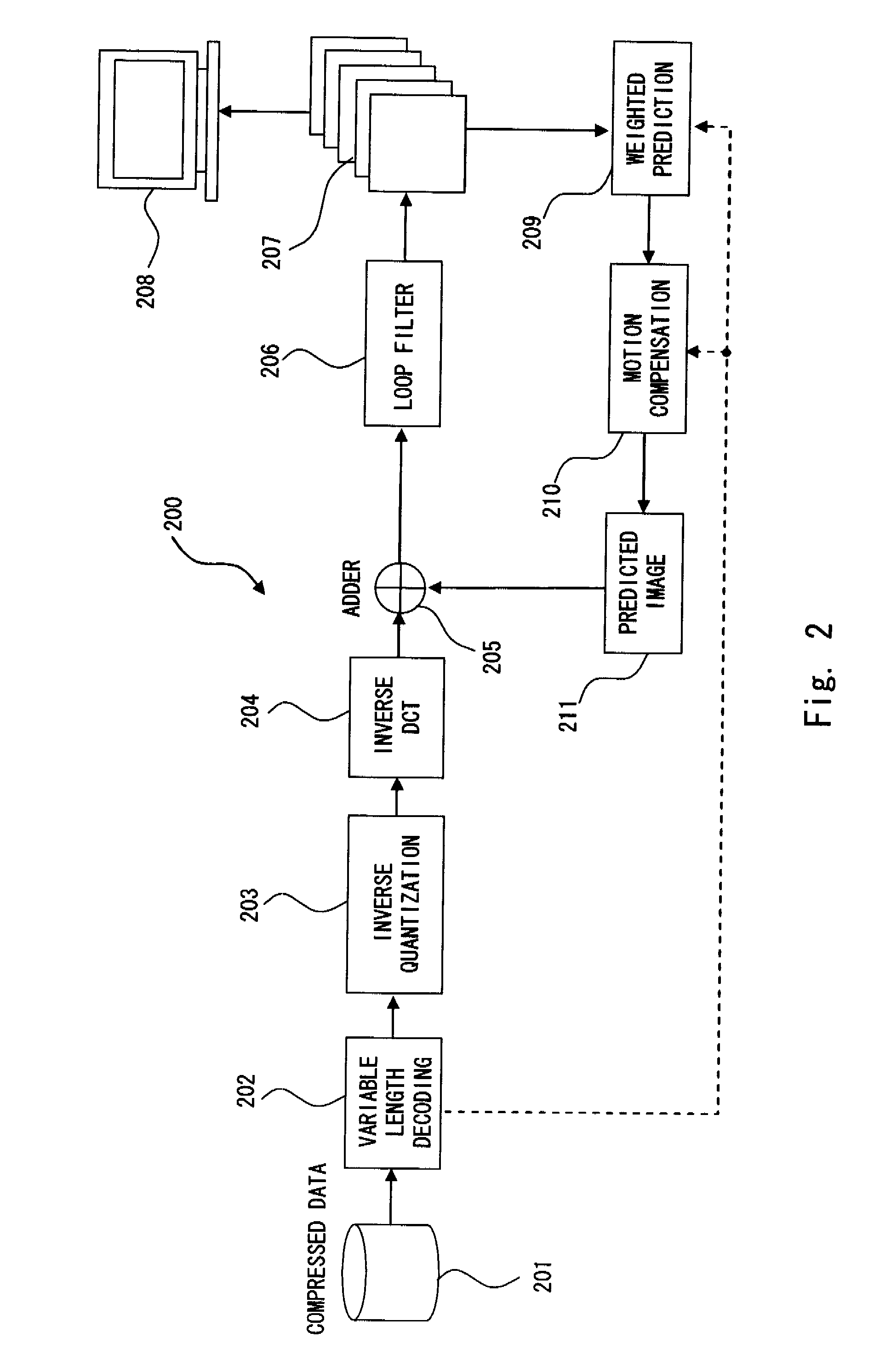

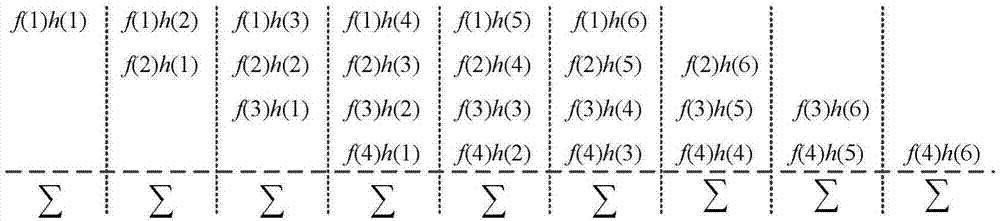

Filter operation unit and motion-compensating device

Active · US20090094303A1 · Tags: Small calculated value, Reduce circuit size, Computations using contact-making devices, Digital technique network, Array data structure, Binary multiplier

A filter operation unit performs a multiply-accumulate operation on input data and a filter coefficient group containing a plurality of coefficients, using Booth's algorithm. The filter operation unit includes at least two filter multiplier units, which multiply the input data by the difference between adjacent filter coefficients in a filter coefficient group to obtain multiplication results, and an adder that adds the multiplication results of adjacent multiplier units. Each filter multiplier unit includes a partial product generation unit that repeatedly generates partial products according to Booth's algorithm, and an adder that cumulatively adds the partial products generated by the partial product generation unit.

Owner:RENESAS ELECTRONICS CORP +1
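
Radix-4 (modified) Booth recoding, one common form of Booth's algorithm, rewrites a signed multiplier as digits in {-2, -1, 0, 1, 2}, so an n-bit multiply needs only n/2 partial products, each a shifted and possibly negated copy of the multiplicand. The sketch below assumes signed multipliers that fit in an even number of bits and sums the partial products in a loop, standing in for the cumulative adder; it does not model the patent's use of differences between adjacent coefficients. Function names are illustrative.

```python
def booth_radix4_digits(y: int, bits: int = 16):
    """Recode a signed `bits`-wide multiplier into radix-4 Booth digits (least significant first)."""
    assert bits % 2 == 0 and -(1 << (bits - 1)) <= y < (1 << (bits - 1))
    digits, prev = [], 0                 # prev is bit (i - 1), initially the appended 0
    for i in range(0, bits, 2):
        b0 = (y >> i) & 1
        b1 = (y >> (i + 1)) & 1
        digits.append(-2 * b1 + b0 + prev)
        prev = b1
    return digits

def booth_multiply(x: int, y: int, bits: int = 16) -> int:
    """Sum the Booth partial products d * x * 4**i."""
    acc = 0
    for i, d in enumerate(booth_radix4_digits(y, bits)):
        acc += (d * x) << (2 * i)        # one partial product per digit
    return acc

def filter_mac(samples, coeffs, bits: int = 16) -> int:
    """Multiply-accumulate of input samples with Booth-recoded coefficients."""
    return sum(booth_multiply(x, h, bits) for x, h in zip(samples, coeffs))

print(booth_radix4_digits(5, 4), booth_multiply(7, -3))  # [1, 1] -21
```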

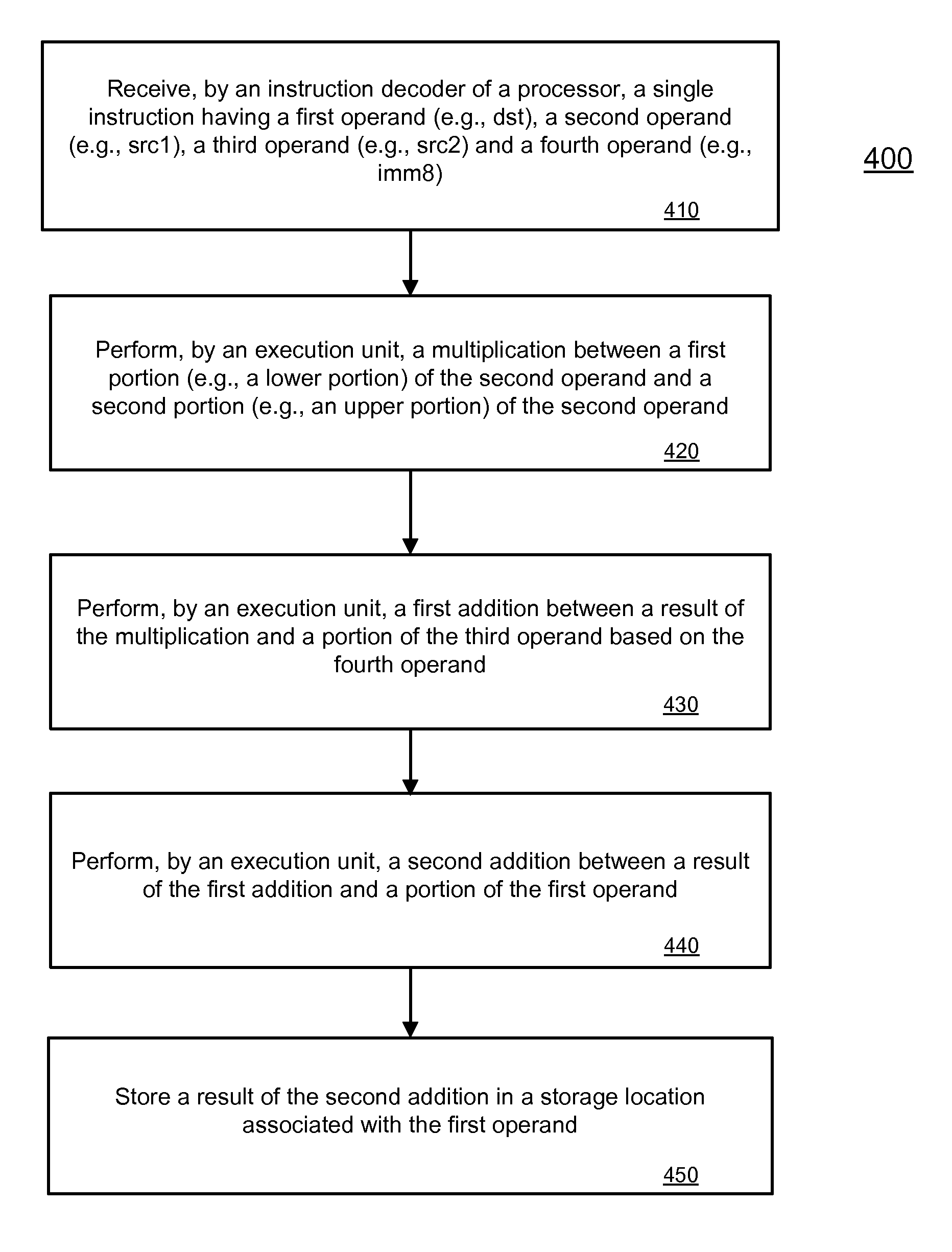

Method and apparatus to process 4-operand SIMD integer multiply-accumulate instruction

Active · US20140082328A1 · Tags: Digital data processing details, Instruction analysis, Multiply–accumulate operation, Execution unit

According to one embodiment, a processor includes an instruction decoder to receive an instruction to process a multiply-accumulate operation, the instruction having a first operand, a second operand, a third operand, and a fourth operand. The first operand is to specify a first storage location to store an accumulated value; the second operand is to specify a second storage location to store a first value and a second value; and the third operand is to specify a third storage location to store a third value. The processor further includes an execution unit coupled to the instruction decoder to perform the multiply-accumulate operation to multiply the first value with the second value to generate a multiply result and to accumulate the multiply result and at least a portion of a third value to an accumulated value based on the fourth operand.

Owner:TAHOE RES LTD

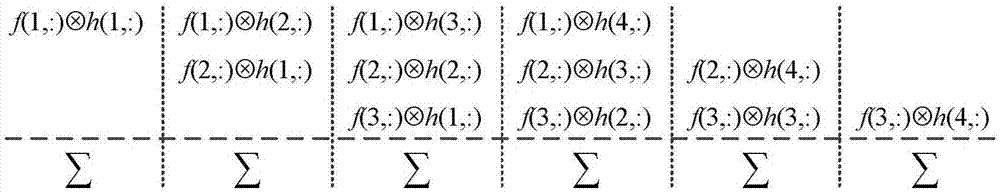

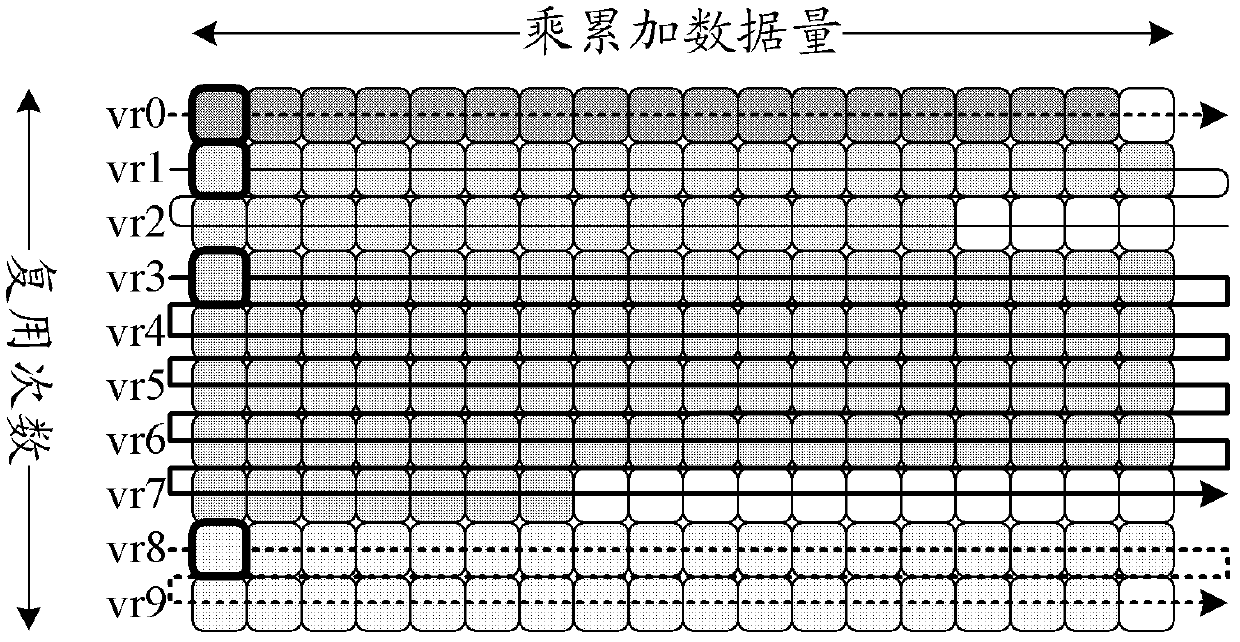

Reconfigurable computation structure meeting requirement for arbitrary-dimension convolution and computation scheduling method and device

Active · CN107491416A · Tags: Realize computing, Easing Computational Efficiency Balance, Complex mathematical operations, Multiplexing, Multiply–accumulate operation

The invention relates to a reconfigurable computation structure that supports convolution of arbitrary dimensions, together with a computation scheduling method and device. The reconfigurable computation structure comprises an interface controller and a reconfigurable computation module. The reconfigurable computation module comprises at least one multiply-accumulate computation processing array, and each array comprises multiple multiply-accumulate operation processing units. Each processing unit has its own internal bus; the processing units are connected in pairs through the internal buses and then connected to a control bus. Through the control bus, the interface controller schedules and manages the connection pattern between the multiply-accumulate operation processing units and their time-division multiplexing frequency. For convolution of arbitrary dimensions, the structure performs the computation by rapidly reconfiguring processing units with different computation functions, which makes variable-dimension convolution more flexible, fully exploits the parallelism and pipelining of the computation, and greatly improves convolution efficiency.

Owner:THE PLA INFORMATION ENG UNIV +1

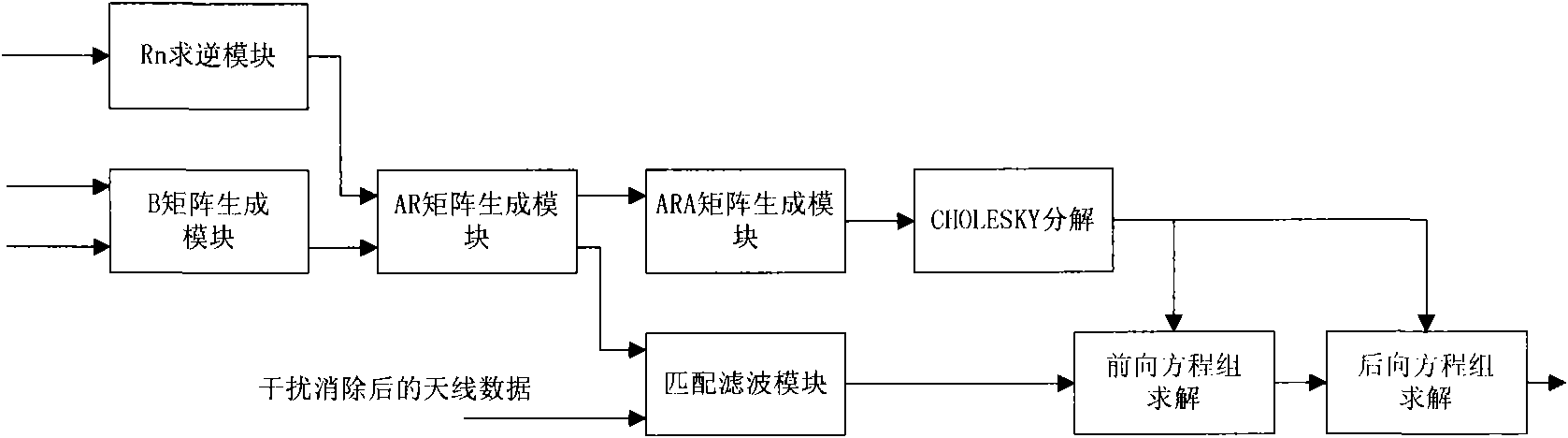

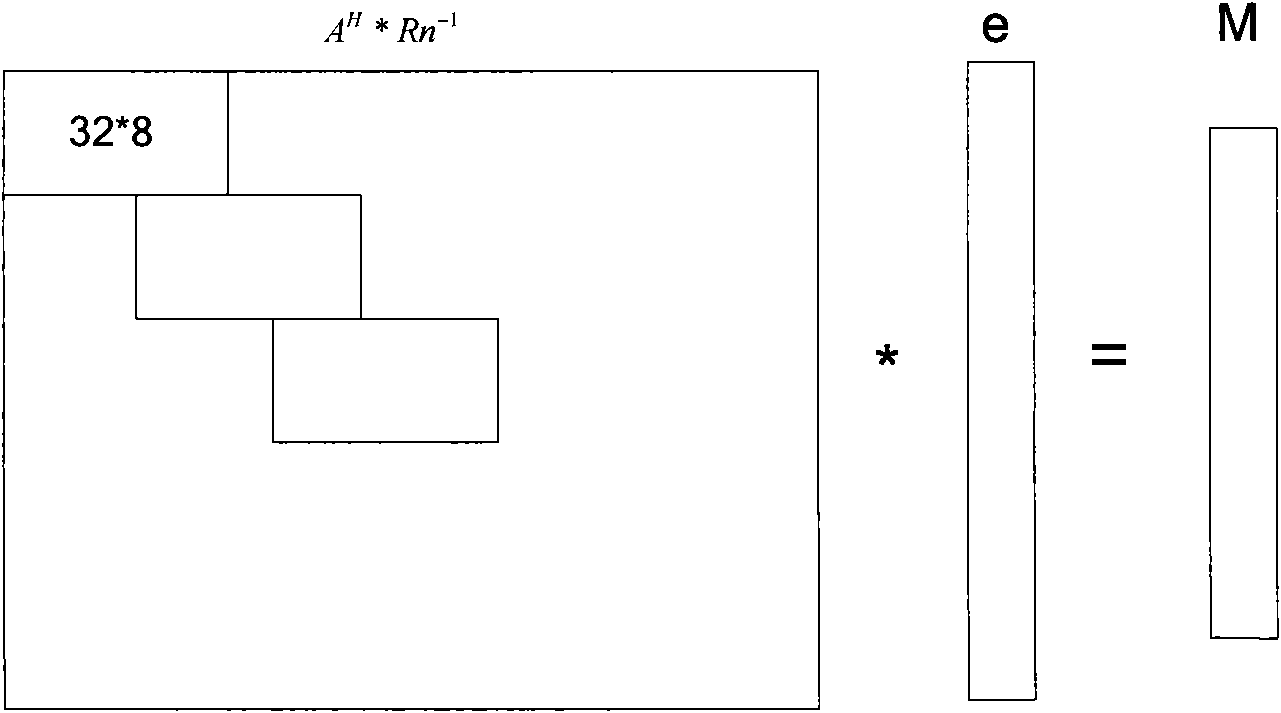

Method and device for matched filtering in combined detection

Active · CN101527919A · Tags: Transmission monitoring, Wireless communication, Computer architecture, Multiply–accumulate operation

The invention discloses a method and a device for matched filtering in combined detection. When the matched filtering device calculates the matched filtering data of each symbol of each virtual spread spectrum unit, the complex operation between the matched filtering matrix data and the antenna data is decomposed into four real operations performed in parallel on four groups of operation subunits, with the number of subunits in each group equal to the number of antennas. To calculate the matched filtering data of one symbol, each operation subunit in a group first completes the real multiply-accumulate operation assigned to that group, which is decomposed from the complex multiply-accumulate operations for its antenna; the group then accumulates the results of all its operation subunits; finally, two of the four accumulated sums are added and the other two are subtracted to obtain the real and imaginary parts of the matched filtering data of the symbol. The device uses a general-purpose circuit with a simple structure and low cost, providing a hardware implementation of the matched filtering operation.

Owner:SANECHIPS TECH CO LTD
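
Splitting a complex sum of products into four real multiply-accumulate streams follows from $(a_r + j a_i)(b_r + j b_i) = (a_r b_r - a_i b_i) + j(a_r b_i + a_i b_r)$. The sketch below keeps the four real accumulators separate, as the four groups of operation subunits do, and combines them only at the end. It is illustrative: the function name is hypothetical, and, following the abstract, no complex conjugation is applied to either operand.

```python
def complex_sum_of_products(a, b):
    """Sum a[k]*b[k] over complex pairs using four independent real accumulators."""
    rr = ii = ri = ir = 0.0
    for x, y in zip(a, b):
        rr += x.real * y.real   # group 1
        ii += x.imag * y.imag   # group 2
        ri += x.real * y.imag   # group 3
        ir += x.imag * y.real   # group 4
    return complex(rr - ii, ri + ir)   # subtract one pair of sums, add the other

print(complex_sum_of_products([1 + 2j, 3 - 1j], [2 - 1j, 1 + 1j]))  # (8+5j)
```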

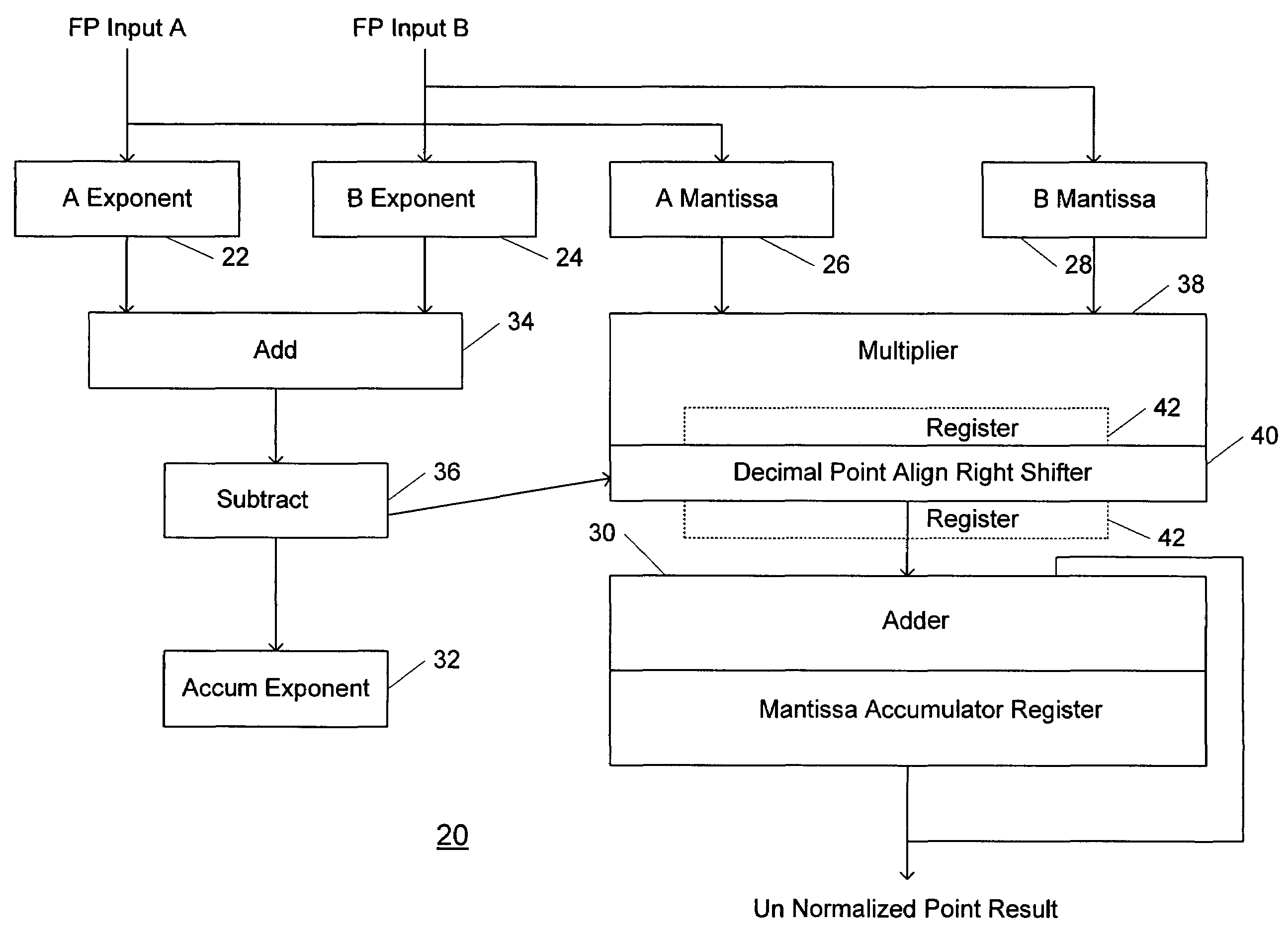

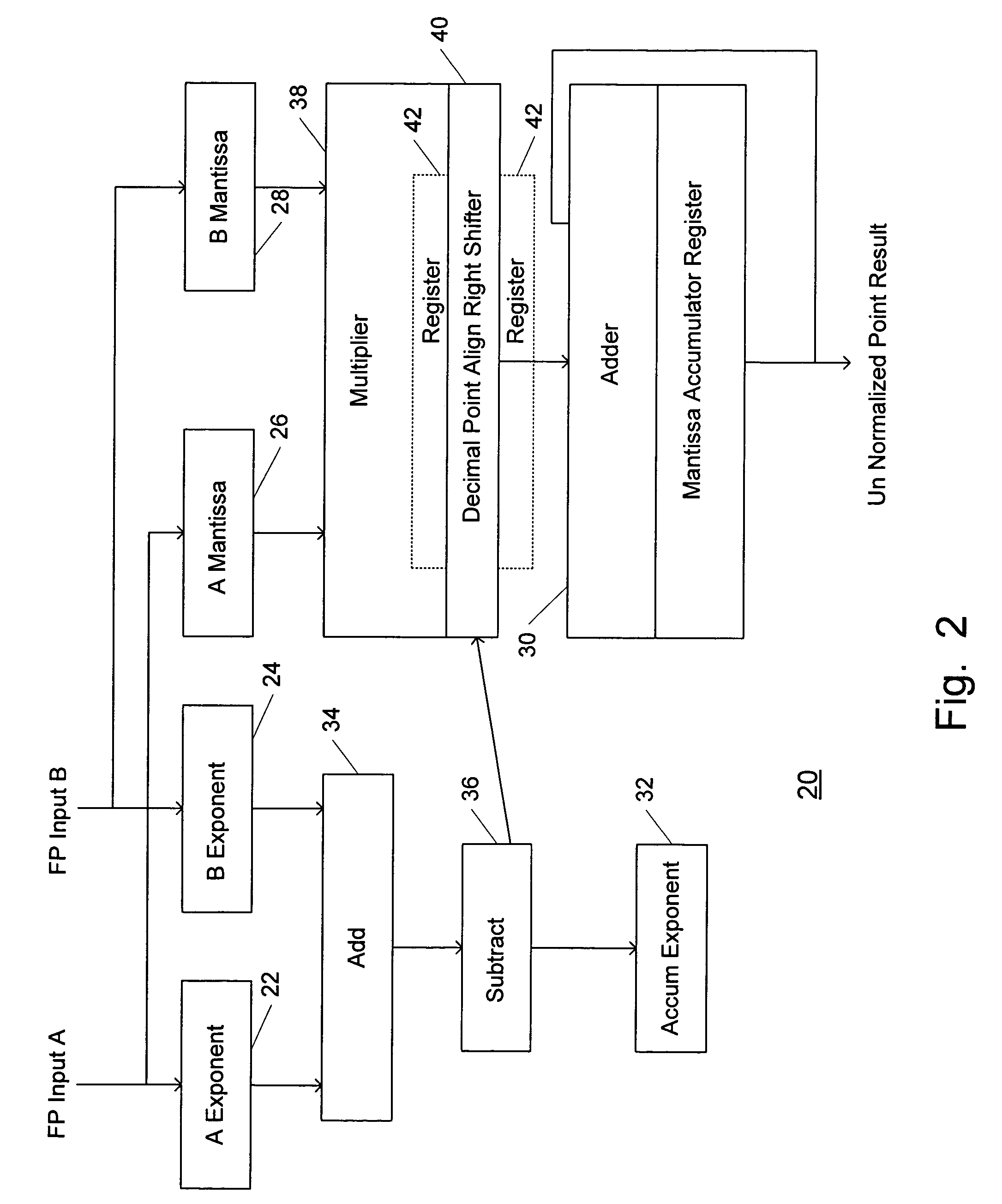

Method and system for a floating point multiply-accumulator

Inactive · US7225216B1 · Tags: Computation using non-contact making devices, Multiply–accumulate operation, Engineering

Aspects for performing a multiply-accumulate operation on floating point numbers in a single clock cycle are described. These aspects include mantissa logic for combining a mantissa portion of floating point inputs and exponent logic coupled to the mantissa logic. The exponent logic adjusts the combination of an exponent portion of the floating point inputs by a predetermined value to produce a shift amount and allows pipeline stages in the mantissa logic, wherein an unnormalized floating point result is produced from the mantissa logic on each clock cycle.

Owner:NVIDIA CORP

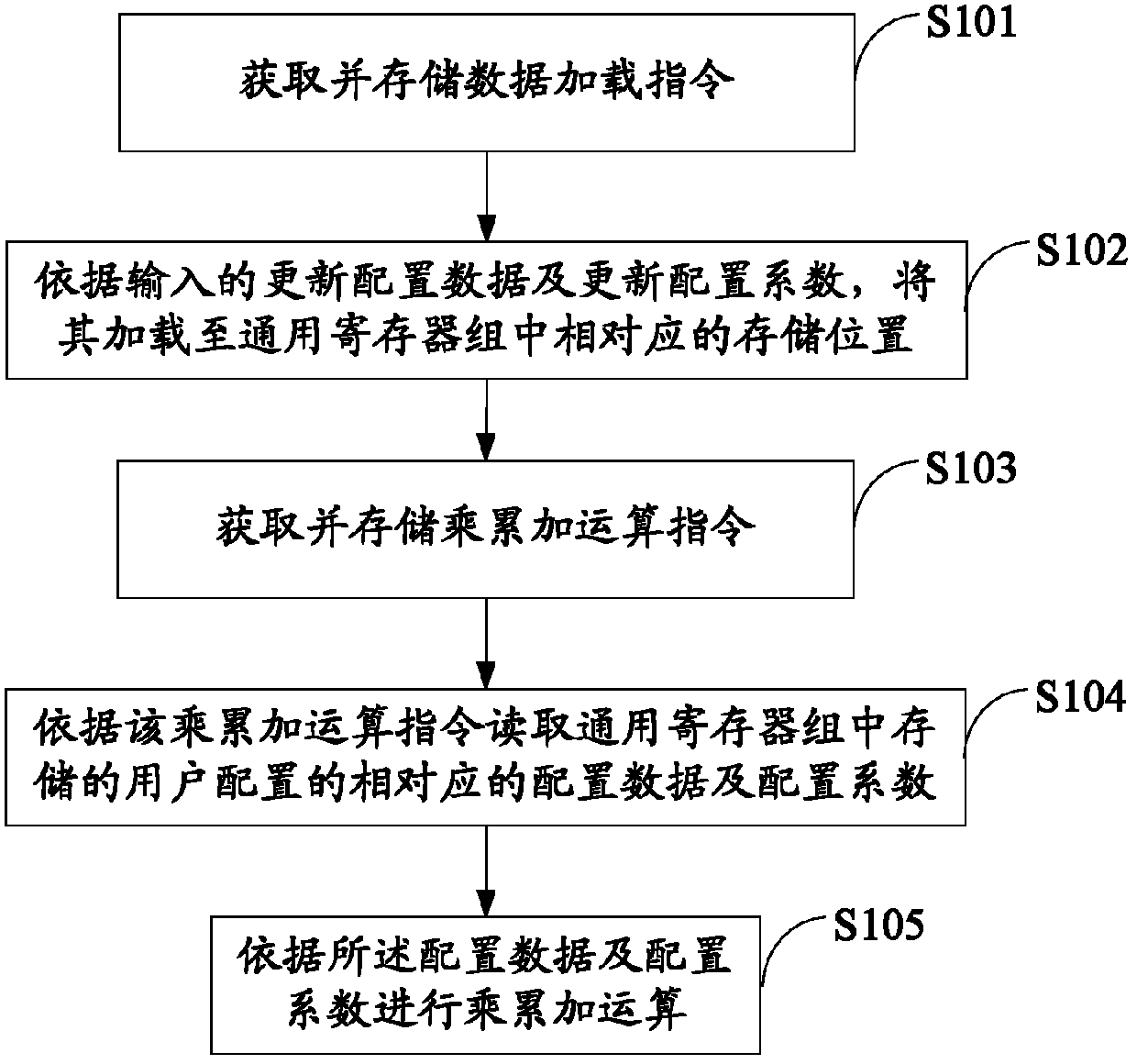

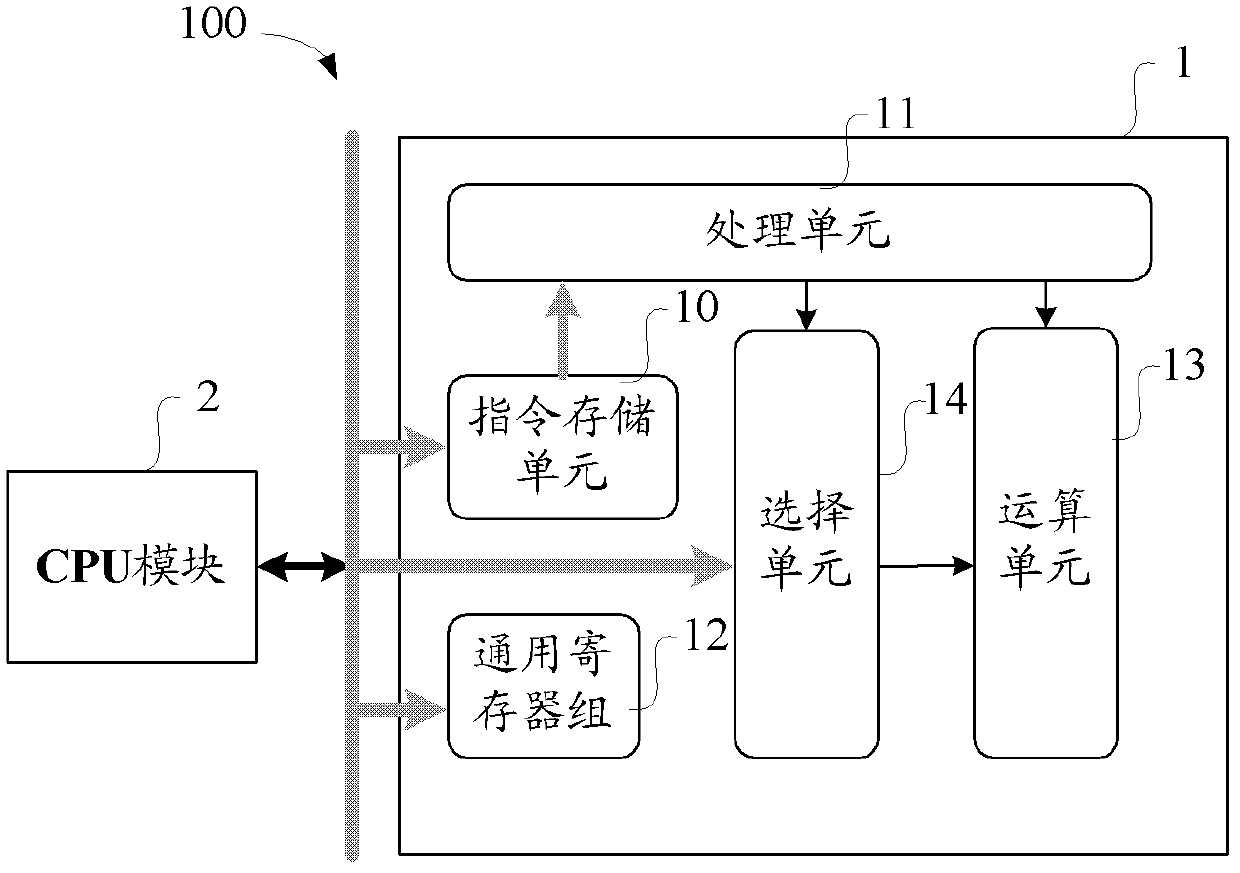

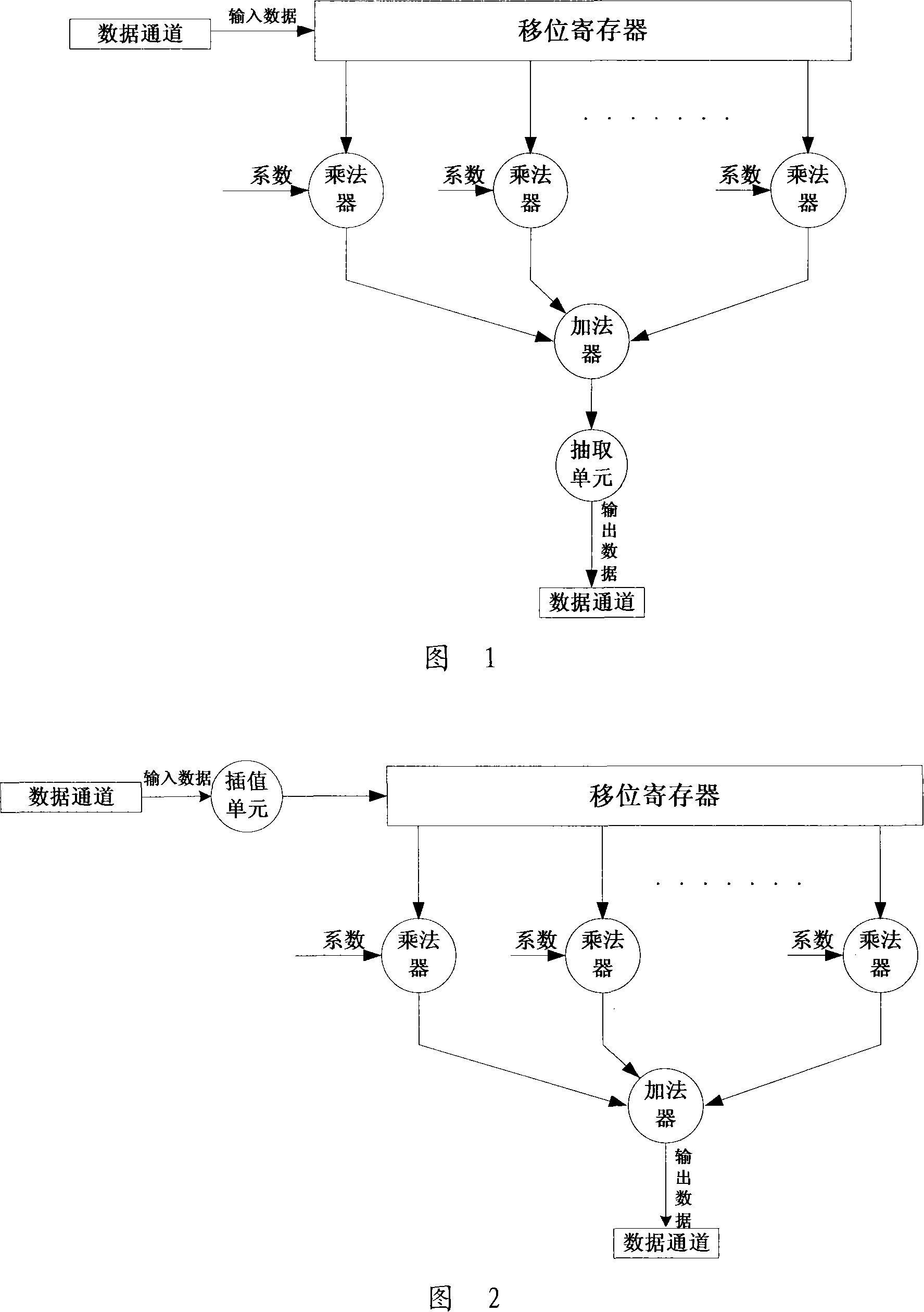

Programmable digital filtering implementation method, apparatus, baseband chip and terminal thereof

Inactive · CN103378820A · Tags: Flexible programming, Any multiply-accumulate operation, Digital technique network, General purpose, Multiply–accumulate operation

The invention discloses a programmable digital filtering implementation method and apparatus, and a baseband chip and terminal using them. The method comprises: acquiring and storing a multiply-accumulate operation instruction; reading, according to that instruction, the corresponding user-configured data and coefficients stored in a general-purpose register block; and performing a multiply-accumulate operation based on the configuration data and configuration coefficients. The digital filtering device, which uses a microprocessor architecture, can perform any multiply-accumulate operation. In multi-mode mobile terminal baseband processing in particular, a single set of filter resources replaces the separate filter resources of the different modes, greatly reducing device area. Different types of filters can be programmed flexibly, and with software-radio processing, system resources can be saved and energy consumption reduced to some extent.

Owner:SANECHIPS TECH CO LTD
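The following is a minimal Python sketch of a single programmable MAC resource. It assumes a direct-form FIR filter with integer samples. The function names `mac` and `fir_filter` are hypothetical, and the patent's instruction format and register-block layout are not modelled.

```python
from typing import List, Sequence


def mac(acc: int, x: int, h: int) -> int:
    """One multiply-accumulate step: acc <- acc + x * h."""
    return acc + x * h


def fir_filter(samples: Sequence[int], coeffs: Sequence[int]) -> List[int]:
    """Direct-form FIR filter built only from MAC steps.

    The coefficient list plays the role of the configurable coefficients:
    passing a different list reprograms the same routine into a different filter.
    Samples before the start of the input are treated as zero.
    """
    out = []
    for n in range(len(samples)):
        acc = 0
        for k, h in enumerate(coeffs):
            if n - k >= 0:  # skip taps that reach before the first sample
                acc = mac(acc, samples[n - k], h)
        out.append(acc)
    return out
```

Reprogramming the filter only requires a different coefficient list:

- `[1, 1]` gives a two-tap moving sum.
- `[1, -1]` gives a first difference.

In both cases the same MAC routine is reused.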

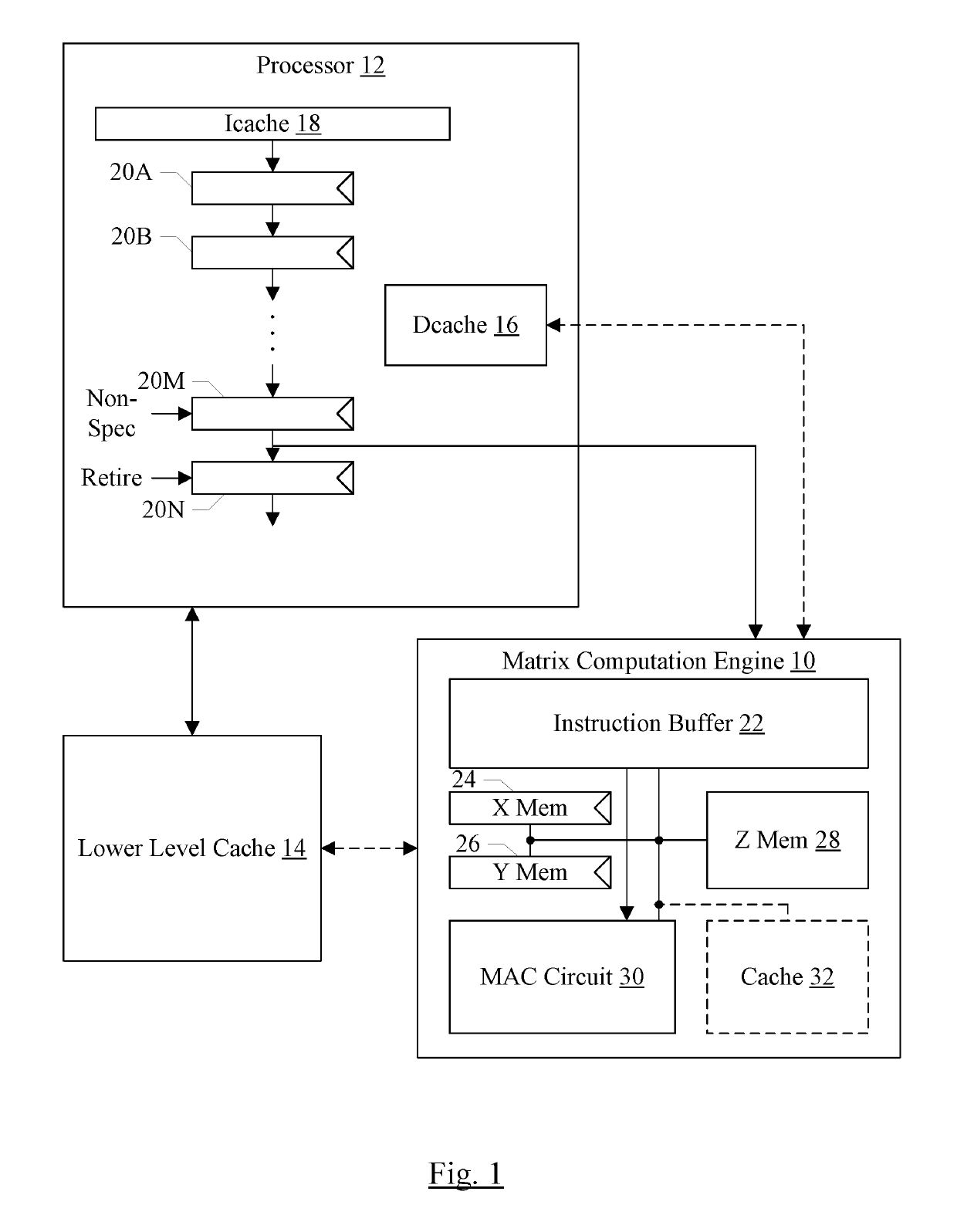

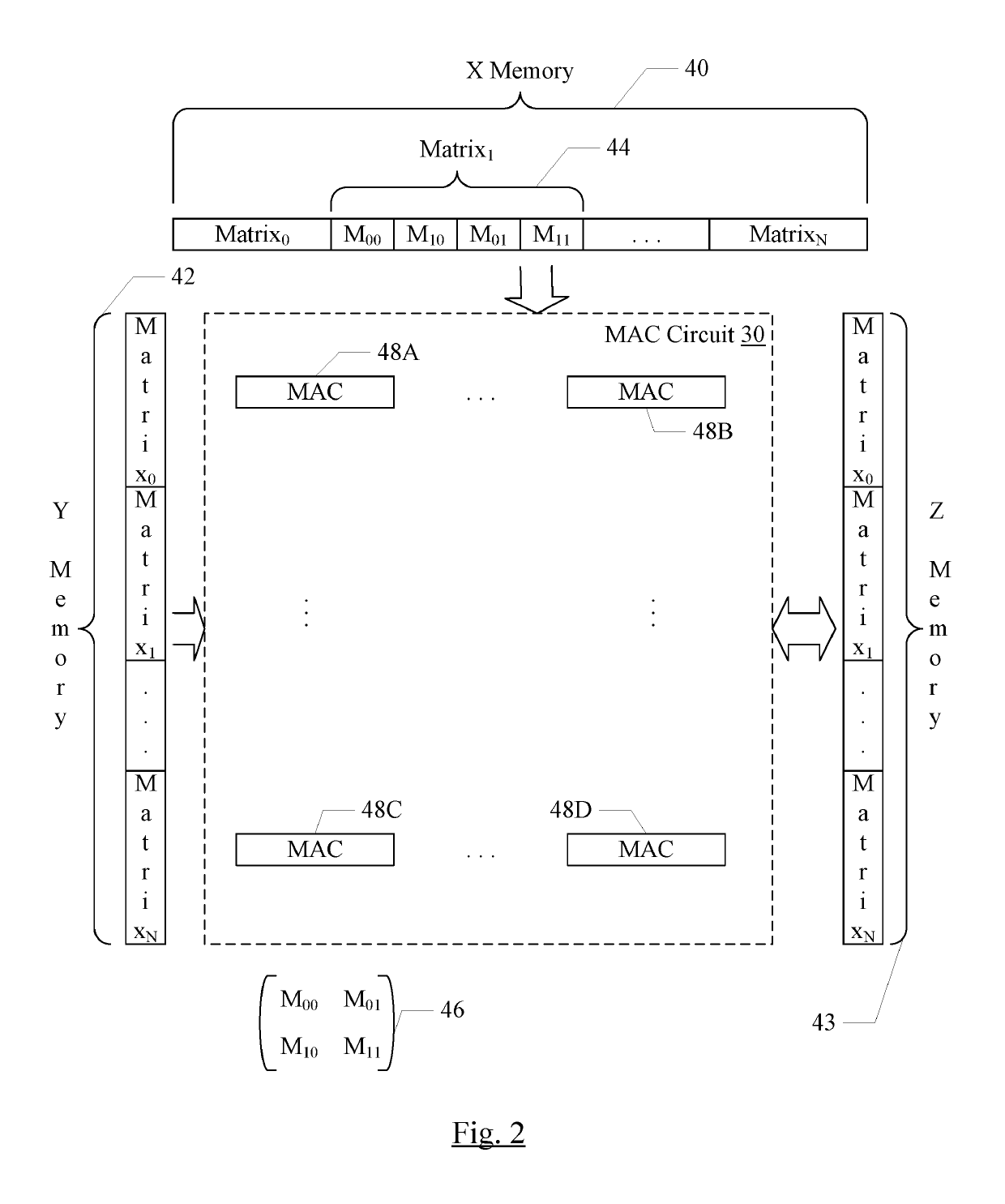

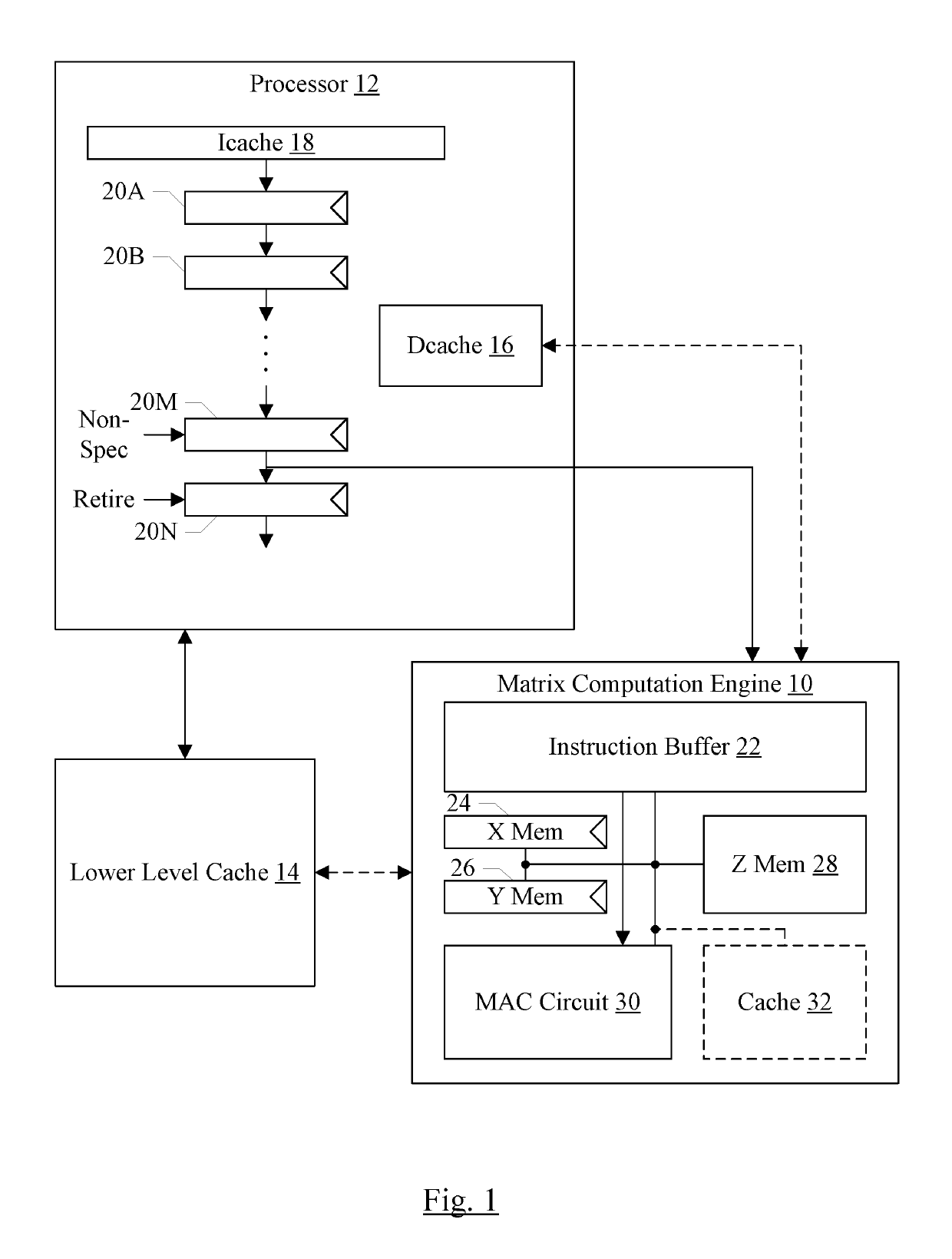

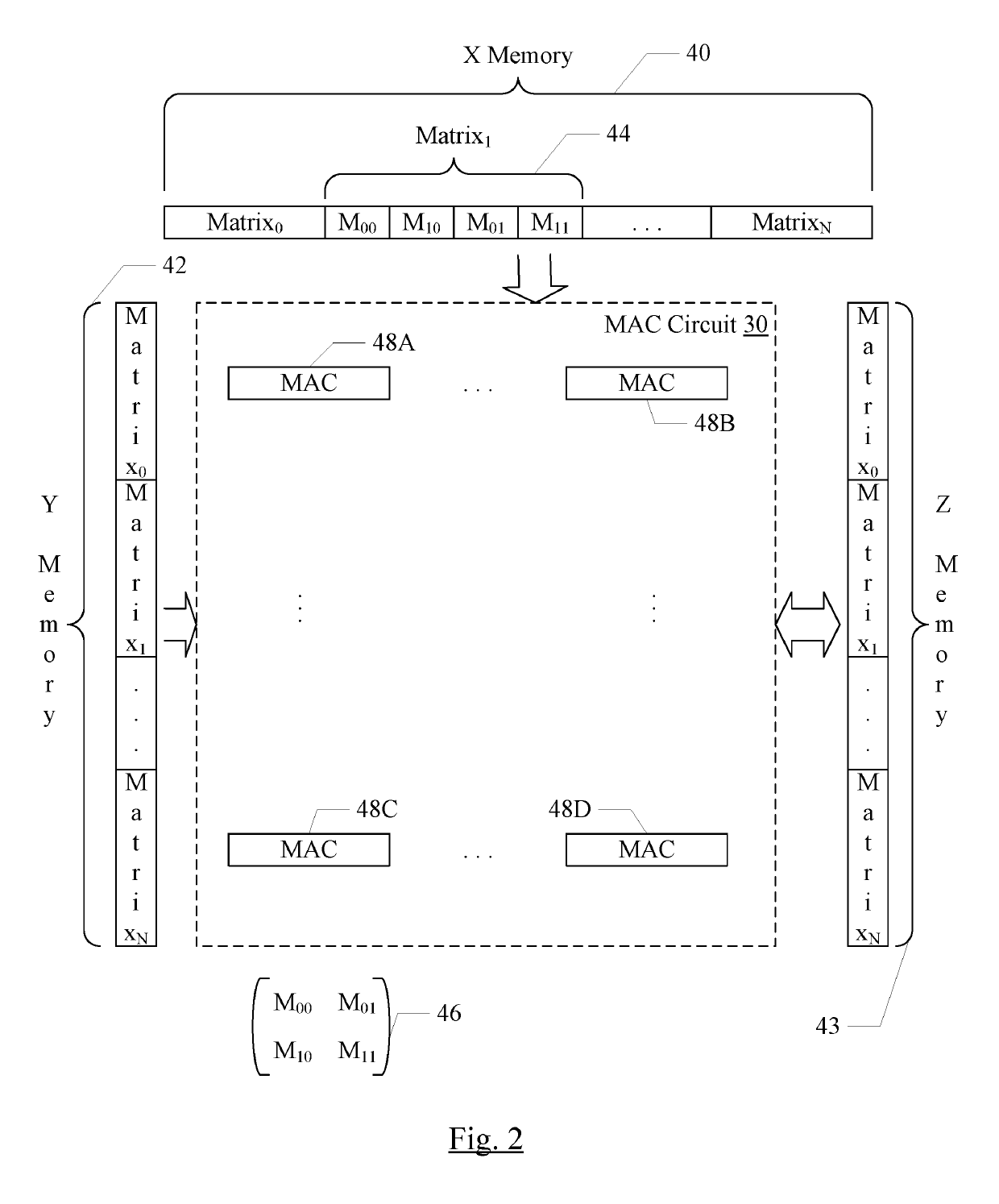

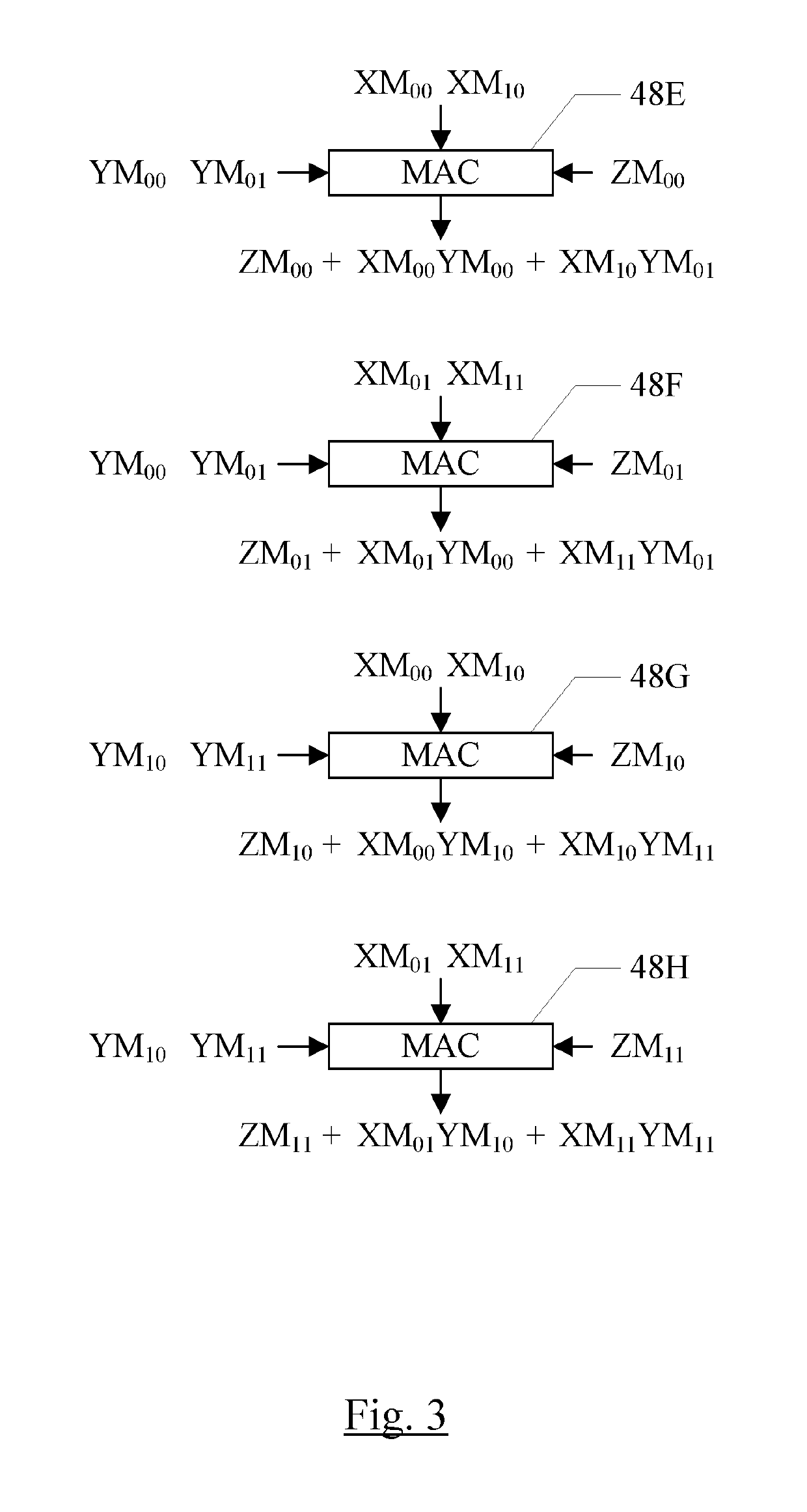

Matrix computation engine

Active · US10346163B2 · Improve performance · Increase power · Digital data processing details · Concurrent instruction execution · Multiply–accumulate operation · Parallel computing

In an embodiment, a matrix computation engine is configured to perform matrix computations (e.g. matrix multiplications). The matrix computation engine may perform numerous matrix computations in parallel, in an embodiment. More particularly, the matrix computation engine may be configured to perform numerous multiplication operations in parallel on input matrix elements, generating resulting matrix elements. In an embodiment, the matrix computation engine may be configured to accumulate results in a result memory, performing multiply-accumulate operations for each matrix element of each matrix.

Owner:APPLE INC
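Here is a short sequential Python sketch of "accumulating results in a result memory". It performs one multiply-accumulate per (i, j, k) triple into an existing result matrix. The engine in the abstract performs the multiplications in parallel, whereas these loops are serial. Each (i, j) element is independent, though, which is what allows hardware to compute them concurrently. The function name and list-of-lists layout are assumptions for illustration.

```python
from typing import List

Matrix = List[List[int]]


def matmul_accumulate(result: Matrix, a: Matrix, b: Matrix) -> Matrix:
    """Accumulate a @ b into result in place (result += a @ b).

    result: m x n, stands in for the "result memory"
    a: m x p, b: p x n
    """
    m, p = len(a), len(b)
    n = len(b[0]) if p else 0
    if (any(len(row) != p for row in a) or any(len(row) != n for row in b)
            or len(result) != m or any(len(row) != n for row in result)):
        raise ValueError("incompatible matrix shapes")
    for i in range(m):
        for j in range(n):
            acc = result[i][j]  # start from what is already in the result memory
            for k in range(p):
                acc += a[i][k] * b[k][j]  # one multiply-accumulate
            result[i][j] = acc
    return result
```

Because the result is accumulated rather than overwritten, calling the function twice with the same inputs doubles every element. This is useful when a large product is built from several partial products.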

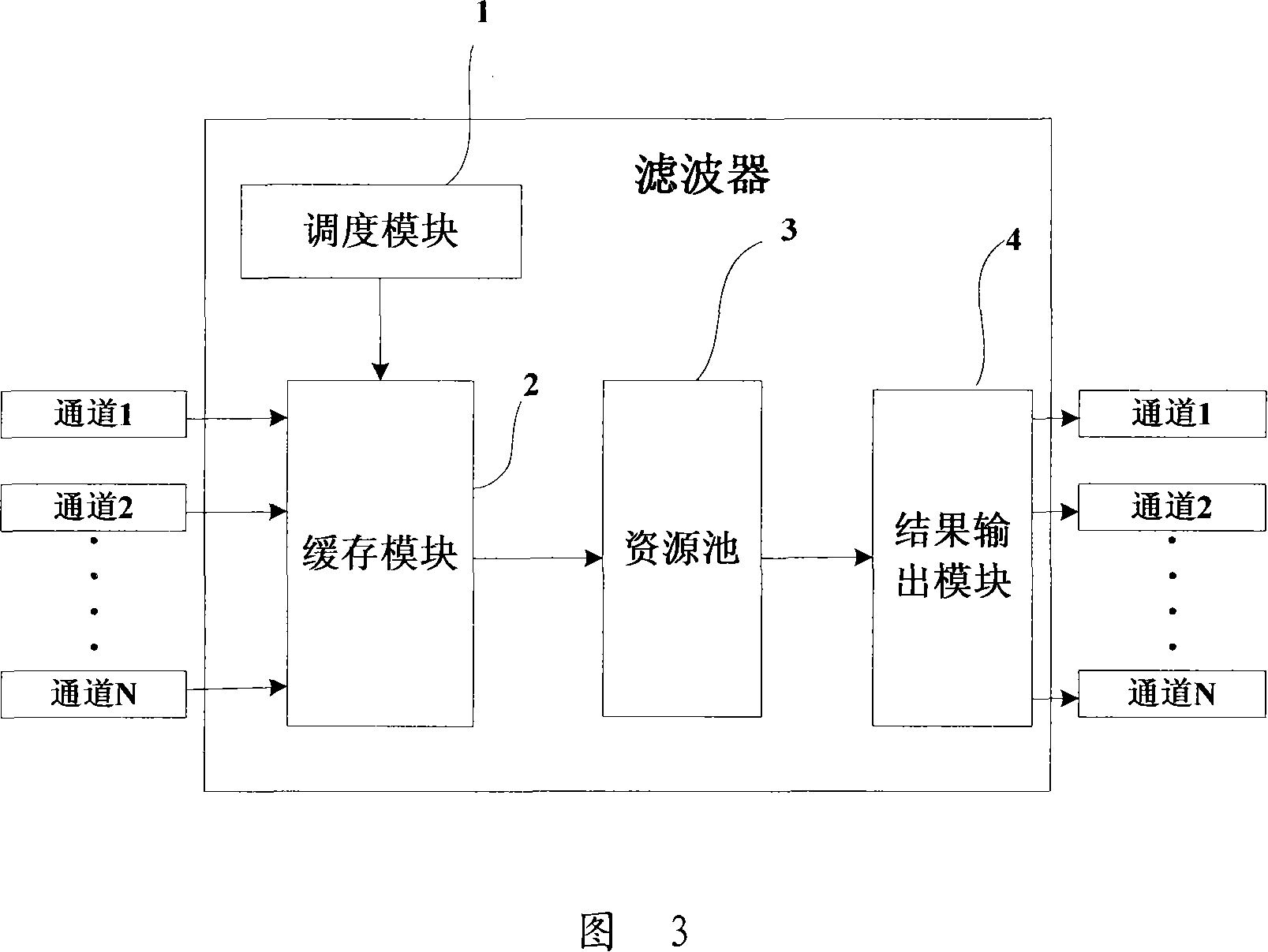

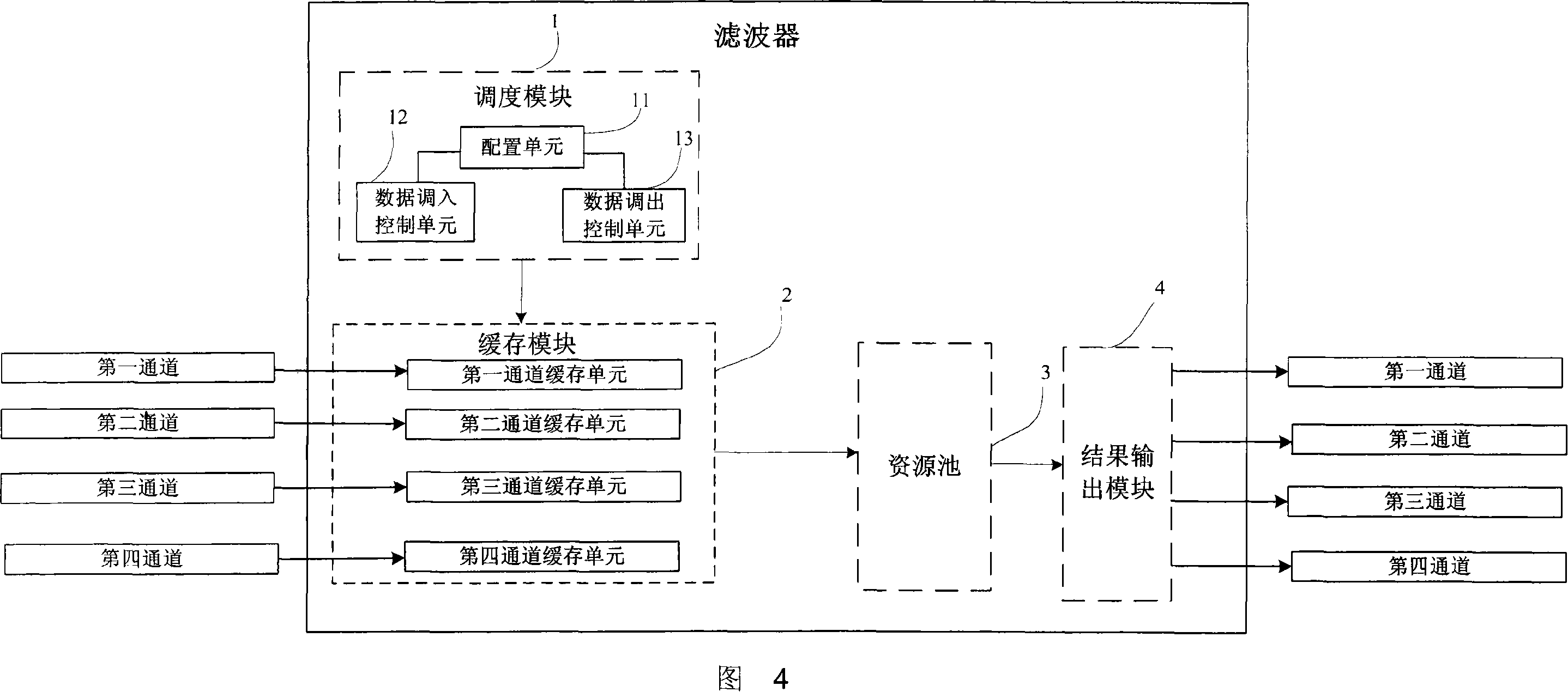

Filter and its filtering method

Active · CN101072019A · Realize requirements · To meet the needs of filtering · Adaptive network · Resource pool · Multiply–accumulate operation

The filter includes at least the following parts:

- a scheduler module, which configures the filtering parameter group of the filter and schedules data according to that parameter group;
- a buffer memory module, which receives input data or outputs data as scheduled by the scheduler module;
- a resource pool, which outputs data to the buffer memory module and performs multiply-accumulate operations with the filter coefficients configured by the scheduler module;
- a result output module, which outputs the results computed by the resource pool.

The invention also discloses a filtering method. The disclosed filter and filtering method support variable-bandwidth filtering across multiple channels and multiple operating modes. This lets them meet the filtering performance required by different protocols.

Owner:HUAWEI TECH CO LTD
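The Python sketch below shows how several channels might time-share one MAC routine, each with its own parameter group. It makes these assumptions:

- A parameter group is simply a coefficient list.
- Different tap counts stand in for different bandwidths.
- The "scheduler" processes (channel, sample) jobs in arrival order.
- The buffer memory and result output modules are folded into per-channel delay lines and return values.

`SharedFilterBank`, `mac`, `push`, and `run` are hypothetical names, not the patent's design.

```python
from collections import deque
from typing import Dict, Iterable, List, Sequence, Tuple


def mac(acc: int, x: int, h: int) -> int:
    """Shared multiply-accumulate resource: acc <- acc + x * h."""
    return acc + x * h


class SharedFilterBank:
    """Several channels time-share one MAC routine.

    Each channel has its own parameter group (here just a coefficient list);
    channels with different tap counts stand in for different bandwidths.
    """

    def __init__(self, param_groups: Dict[str, Sequence[int]]):
        self.coeffs = {ch: list(h) for ch, h in param_groups.items()}
        # Per-channel delay line, newest sample first; deque(maxlen=...) drops the oldest.
        self.history = {ch: deque([0] * len(h), maxlen=len(h))
                        for ch, h in self.coeffs.items()}

    def push(self, channel: str, sample: int) -> int:
        """Feed one sample to a channel and return that channel's filter output."""
        hist = self.history[channel]
        hist.appendleft(sample)
        acc = 0
        for x, h in zip(hist, self.coeffs[channel]):
            acc = mac(acc, x, h)
        return acc

    def run(self, jobs: Iterable[Tuple[str, int]]) -> List[Tuple[str, int]]:
        """Scheduler: process (channel, sample) jobs in arrival order."""
        return [(ch, self.push(ch, x)) for ch, x in jobs]
```

Here a two-tap channel and a one-tap channel share the same `mac` function. Adding a channel or changing its bandwidth only changes the parameter groups, not the MAC code.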

Method in a microprocessor

Active · CN106126189A · Reduce cumulative power consumption · Round cache avoidance · Digital data processing details · Instruction analysis · Multiply–accumulate operation · Parallel computing

According to a method in a microprocessor, the microprocessor prepares a fused multiply-accumulate operation of the form ±A*B±C for execution. It does so by issuing first and second multiply-accumulate microinstructions to one or more instruction execution units to complete the fused multiply-accumulate operation. The first multiply-accumulate microinstruction causes an unrounded nonredundant result vector to be generated from a first accumulation of either (a) the partial products of A and B or (b) C with the partial products of A and B. If the first accumulation did not include C, the second multiply-accumulate microinstruction causes a second accumulation of C with the unrounded nonredundant result vector. The second multiply-accumulate microinstruction also causes a final rounded result to be generated from the unrounded nonredundant result vector. This final rounded result is the complete result of the fused multiply-accumulate operation.

Owner:VIA ALLIANCE SEMICON CO LTD
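Keeping the intermediate result unrounded means the fused operation rounds only once. The Python sketch below illustrates this under the following assumptions:

- Values are IEEE 754 double-precision floats.
- `fractions.Fraction` stands in for the unrounded nonredundant result vector.
- The work is split across two hypothetical micro-operations, with C added only in the second. This is one of the two variants the abstract allows.

It is a numerical model, not VIA's microarchitecture.

```python
from fractions import Fraction


def fma_microop1(a: float, b: float) -> Fraction:
    """First micro-op (sketch): exact, unrounded product of A and B."""
    return Fraction(a) * Fraction(b)  # Fraction(float) is exact


def fma_microop2(unrounded: Fraction, c: float) -> float:
    """Second micro-op (sketch): accumulate C, then round once to a double."""
    return float(unrounded + Fraction(c))  # float(Fraction) rounds correctly


def fused_mac(a: float, b: float, c: float) -> float:
    """A*B + C with a single rounding."""
    return fma_microop2(fma_microop1(a, b), c)


def unfused_mac(a: float, b: float, c: float) -> float:
    """A*B + C with two roundings: the product is rounded, then the sum."""
    return a * b + c
```

Take x = 1 + 2^-30 and C = -(1 + 2^-29). The exact product x * x is 1 + 2^-29 + 2^-60. Rounding that product to a double drops the 2^-60 term, so the two-rounding version returns 0.0. The fused version returns 2^-60. Signs for ±A*B±C can be handled by negating the operands before the call.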

Matrix Computation Engine

Active · US20190129719A1 · Improve performance · Increase power · Digital data processing details · Concurrent instruction execution · Multiply–accumulate operation · Parallel computing

In an embodiment, a matrix computation engine is configured to perform matrix computations (e.g. matrix multiplications). The matrix computation engine may perform numerous matrix computations in parallel, in an embodiment. More particularly, the matrix computation engine may be configured to perform numerous multiplication operations in parallel on input matrix elements, generating resulting matrix elements. In an embodiment, the matrix computation engine may be configured to accumulate results in a result memory, performing multiply-accumulate operations for each matrix element of each matrix.

Owner:APPLE INC

Method and apparatus to process 4-operand SIMD integer multiply-accumulate instruction

Active · US9292297B2 · Digital data processing details · Instruction analysis · Multiply–accumulate operation · Execution unit

According to one embodiment, a processor includes an instruction decoder to receive an instruction to process a multiply-accumulate operation, the instruction having a first operand, a second operand, a third operand, and a fourth operand. The first operand is to specify a first storage location to store an accumulated value; the second operand is to specify a second storage location to store a first value and a second value; and the third operand is to specify a third storage location to store a third value. The processor further includes an execution unit coupled to the instruction decoder to perform the multiply-accumulate operation to multiply the first value with the second value to generate a multiply result and to accumulate the multiply result and at least a portion of a third value to an accumulated value based on the fourth operand.

Owner:TAHOE RES LTD
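The abstract leaves the instruction encoding open, so the following Python sketch is only one possible reading. It assumes:

- the accumulator has packed signed 32-bit lanes;
- the second operand holds the first and second values as two lane vectors;
- the fourth operand is a hypothetical immediate that selects the lower or upper half of the third operand's lanes.

All names and the lane layout are assumptions, not the patented instruction format.

```python
from typing import List, Sequence, Tuple

LANE_BITS = 32  # assumed accumulator lane width


def wrap_lane(v: int) -> int:
    """Wrap an integer to a signed LANE_BITS-bit lane (two's complement)."""
    v &= (1 << LANE_BITS) - 1
    return v - (1 << LANE_BITS) if v >> (LANE_BITS - 1) else v


def simd_mac4(acc: Sequence[int],
              src2: Tuple[Sequence[int], Sequence[int]],
              src3: Sequence[int],
              imm: int) -> List[int]:
    """Hypothetical 4-operand SIMD integer multiply-accumulate.

    acc  -- operand 1: accumulator lanes (the destination)
    src2 -- operand 2: (first values, second values), one element per lane
    src3 -- operand 3: third values, twice as many lanes as acc
    imm  -- operand 4: 0 selects the lower half of src3, 1 the upper half
    """
    lanes = len(acc)
    firsts, seconds = src2
    if (imm not in (0, 1) or len(src3) != 2 * lanes
            or len(firsts) != lanes or len(seconds) != lanes):
        raise ValueError("unsupported operand shape or selector")
    portion = src3[imm * lanes:(imm + 1) * lanes]  # the selected portion of the third value
    # Per lane: accumulator + first * second + selected third value, wrapped to the lane width
    return [wrap_lane(a + f * s + t) for a, f, s, t in zip(acc, firsts, seconds, portion)]
```

This sketch returns a new list of accumulator lanes rather than writing the first operand in place. Overflow in a lane wraps around, as integer SIMD lanes typically do.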

© 2025 PatSnap. All rights reserved.