114 results for "SIMD processing" patented technology

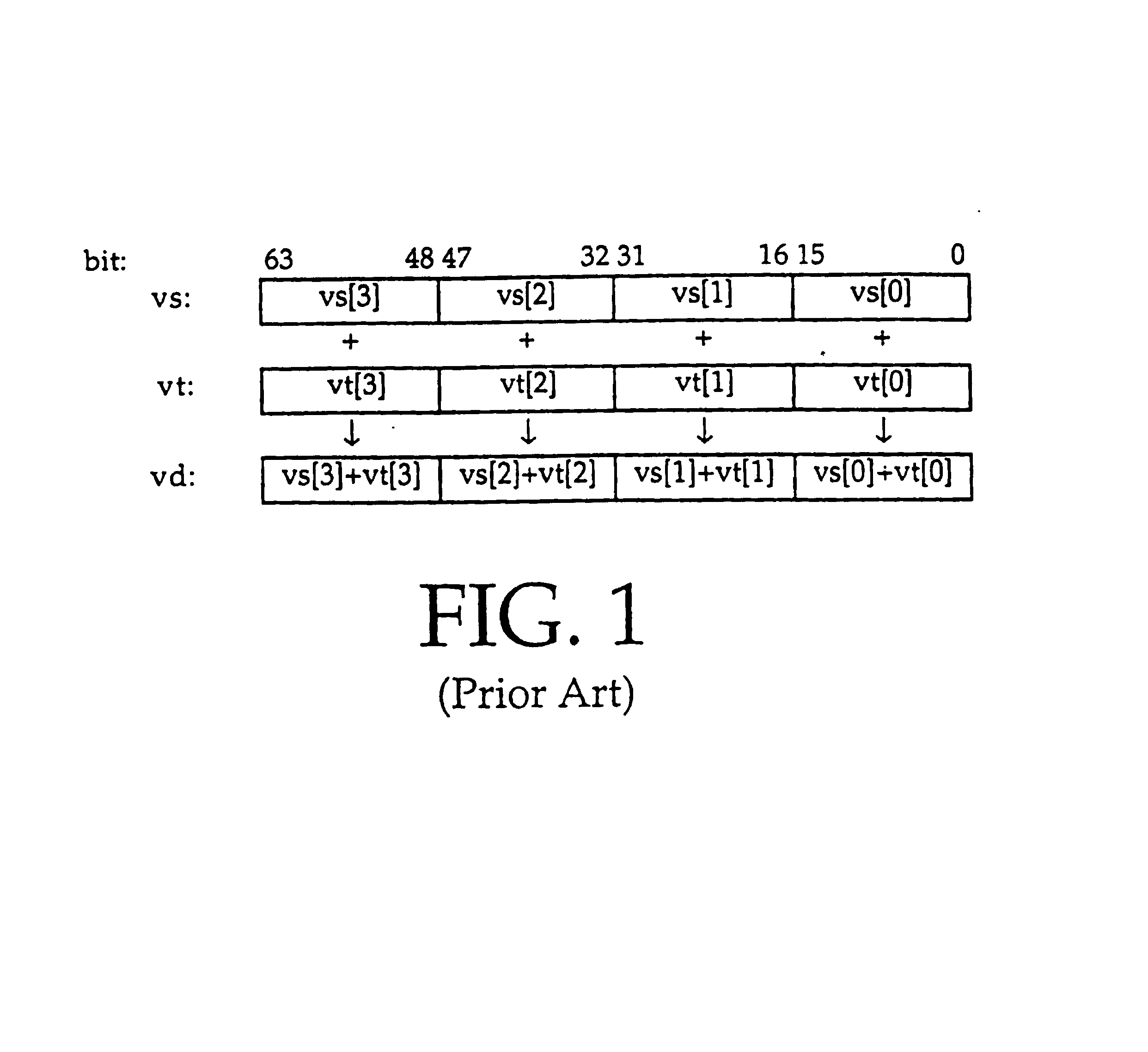

Definition. SIMD (single instruction, multiple data) processing applies a single instruction to multiple data elements at once. It is well suited to multimedia processing and is therefore implemented in contemporary processors.

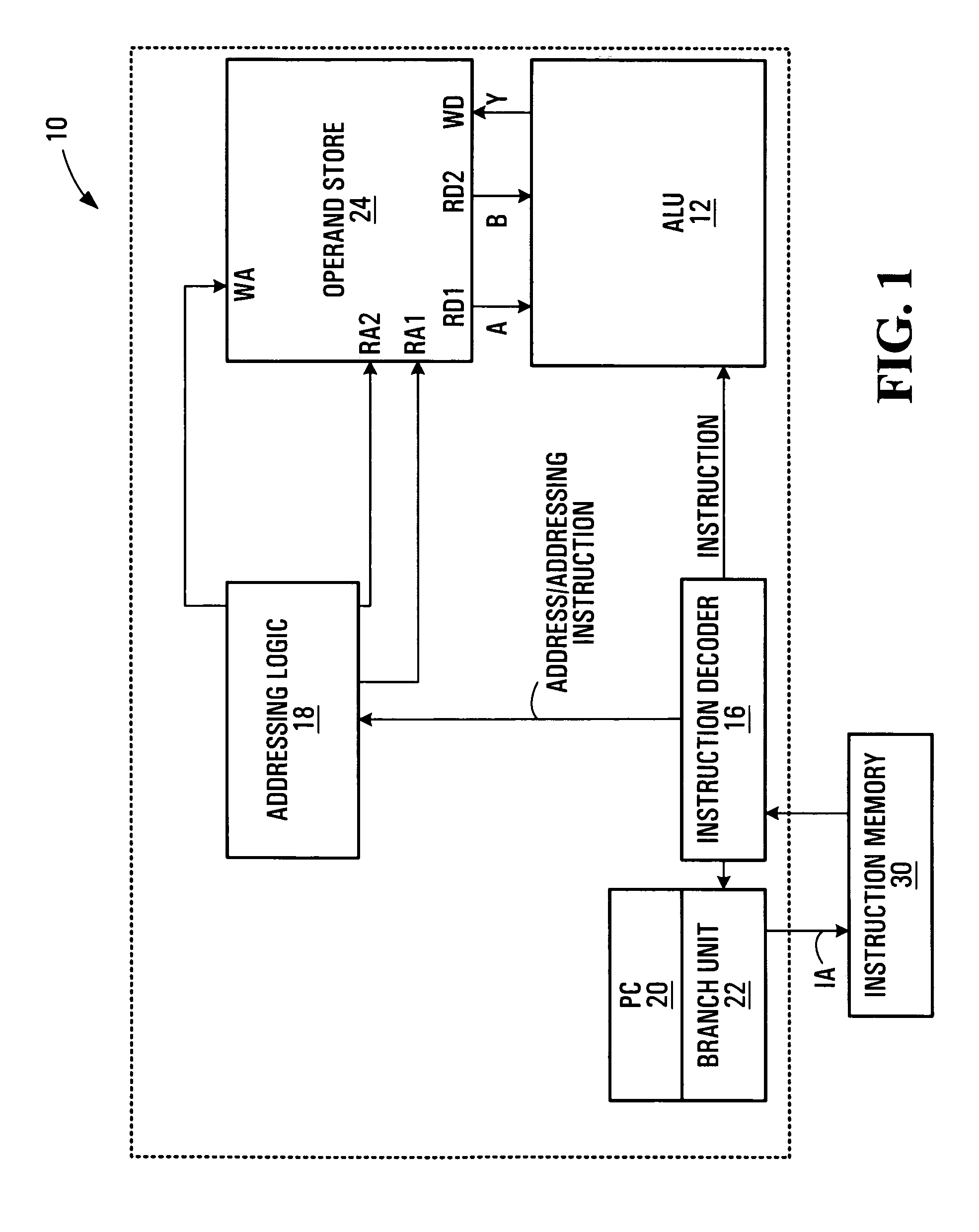

SIMD processor and addressing method

Inactive · US20060047937A1 · Without unduly consuming processor resources · Memory addressing/allocation/relocation · Micro-instruction address formation · Memory address · Processor register

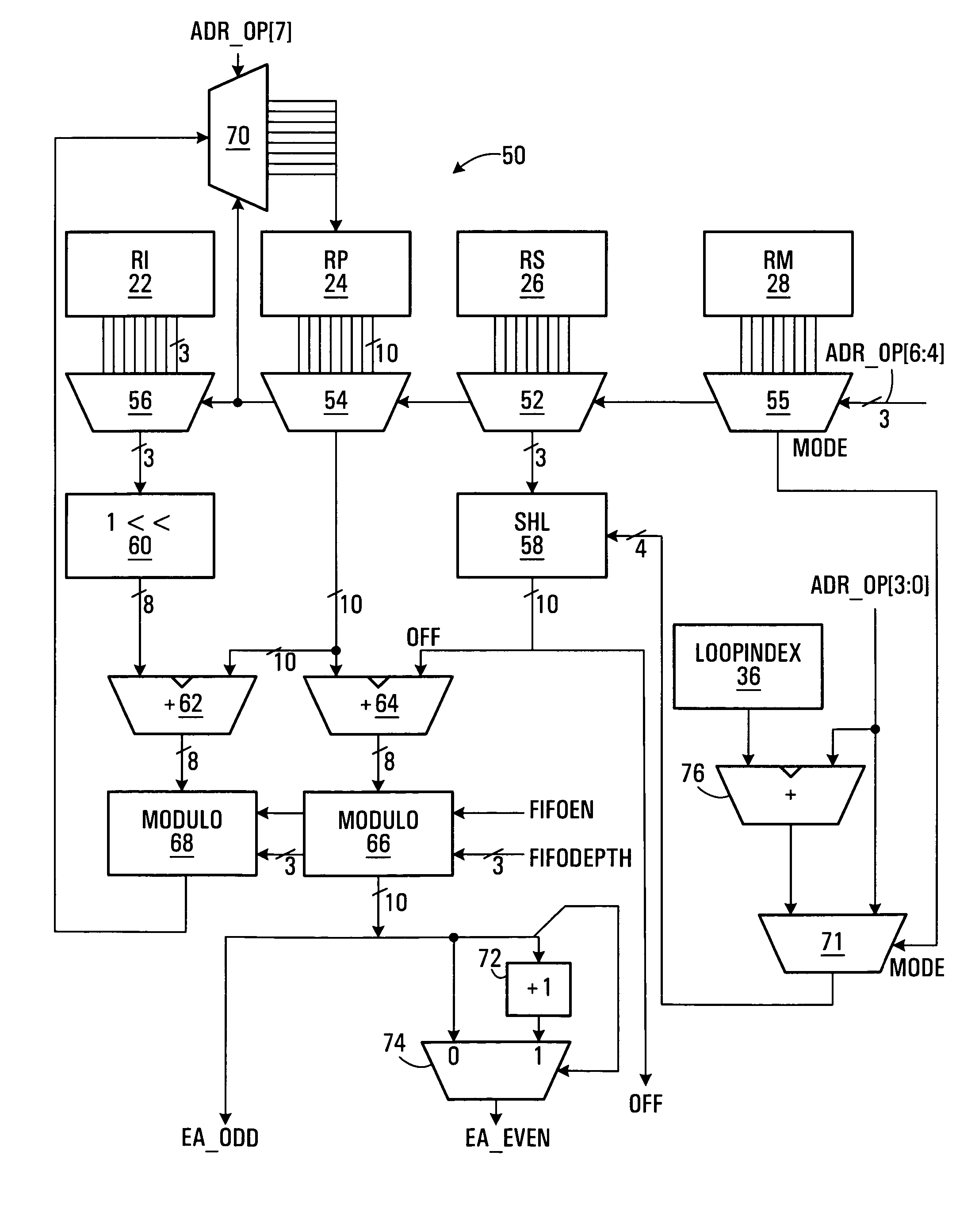

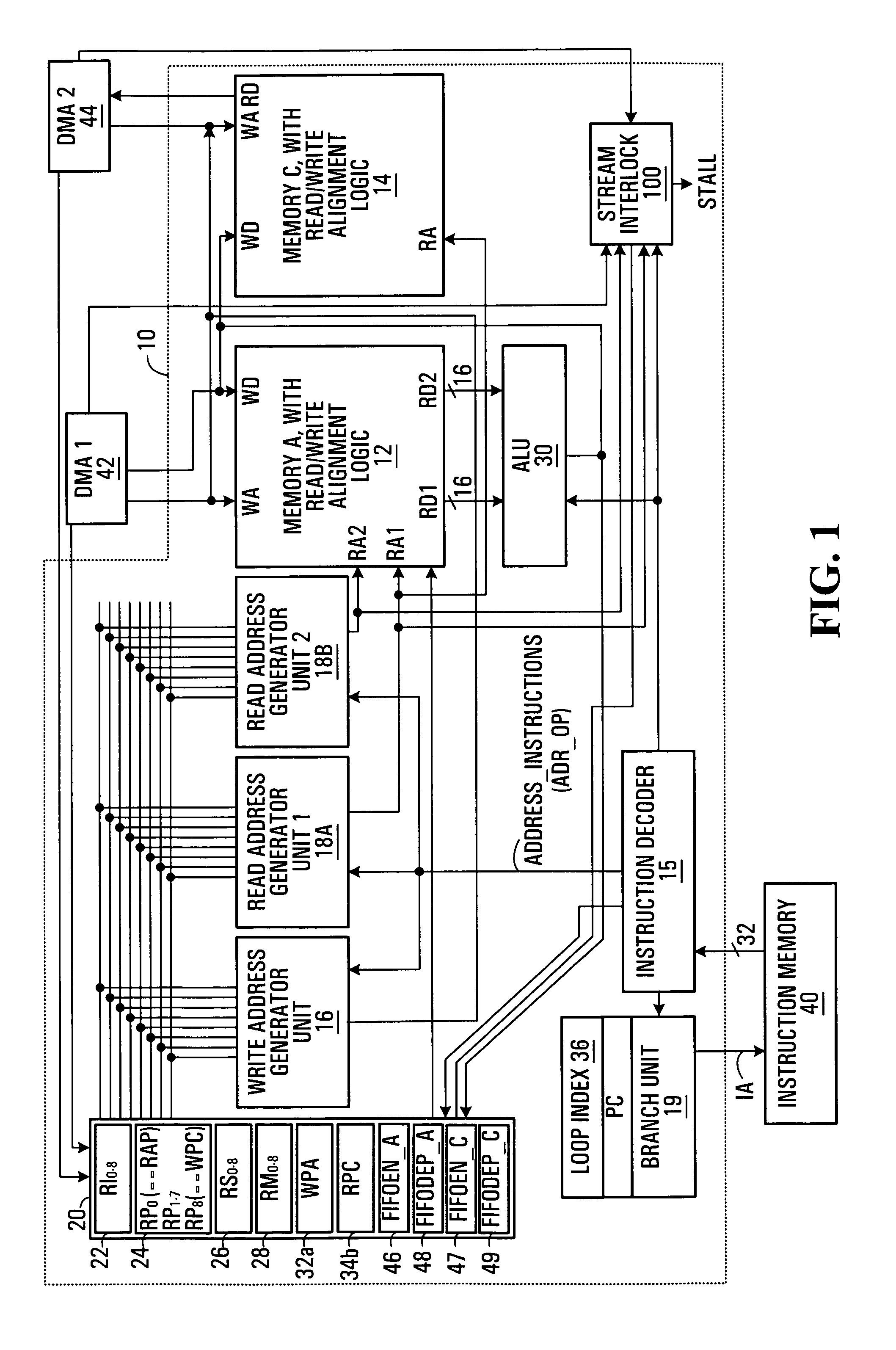

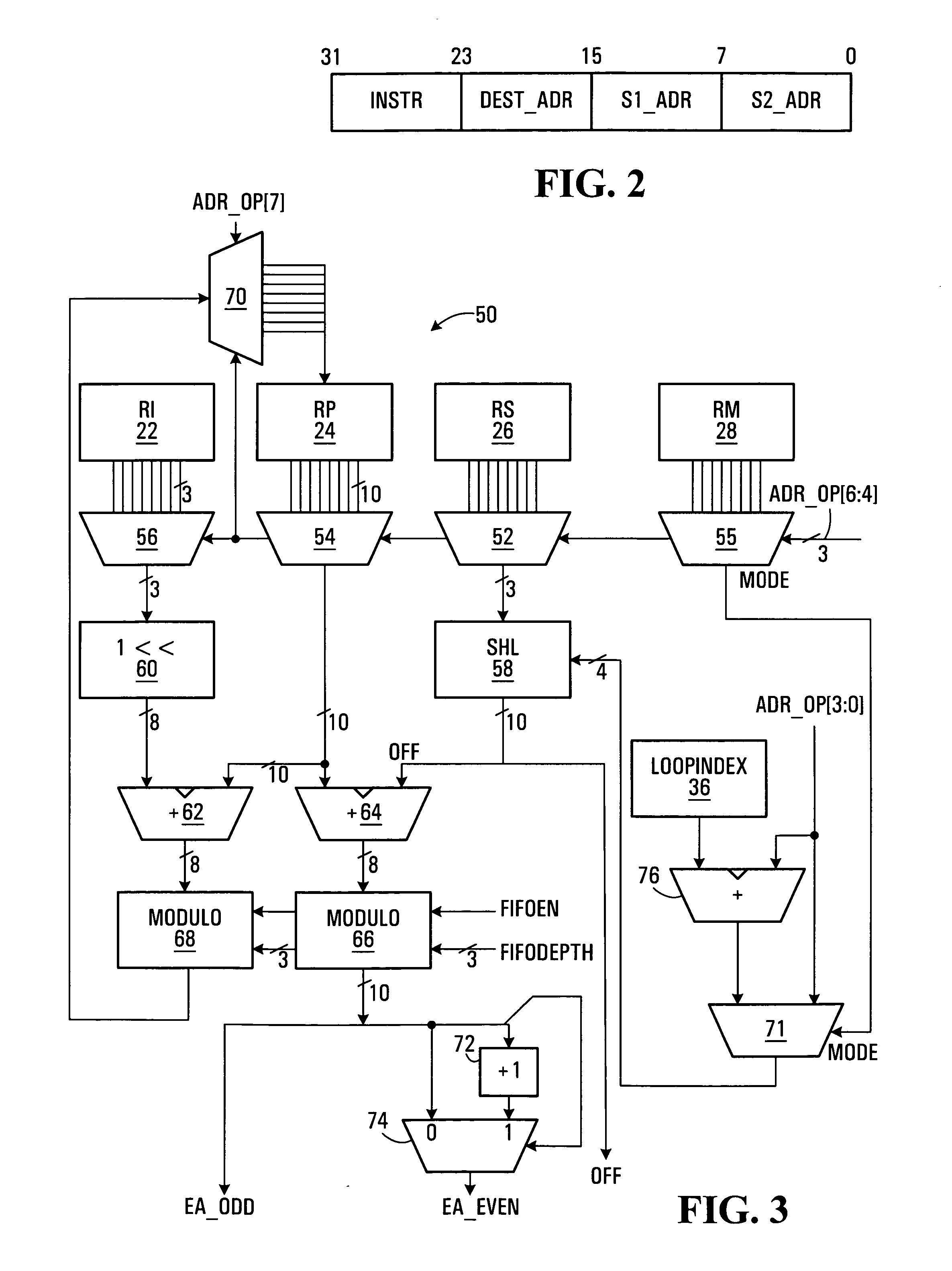

A single instruction, multiple data (SIMD) processor including a plurality of addressing register sets, used to flexibly calculate effective operand source and destination memory addresses is disclosed. Two or more address generators calculate effective addresses using the register sets. Each register set includes a pointer register, and a scale register. An address generator forms effective addresses from a selected register set's pointer register and scale register; and an offset. For example, the effective memory address may be formed by multiplying the scale value by an offset value and summing the pointer and the scale value multiplied by the offset value.
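The address formation in the abstract's example can be sketched in a few lines. This is only an illustration: the class name `AddressRegisterSet`, the function `effective_address`, and the sample values are hypothetical and do not come from the patent.

```python
from dataclasses import dataclass


@dataclass
class AddressRegisterSet:
    pointer: int  # base address held in the pointer register
    scale: int    # stride held in the scale register


def effective_address(regs: AddressRegisterSet, offset: int) -> int:
    """Form pointer + scale * offset, as in the abstract's example."""
    return regs.pointer + regs.scale * offset


# Hypothetical register set: base 0x1000, 4-byte elements.
src = AddressRegisterSet(pointer=0x1000, scale=4)
print(hex(effective_address(src, 3)))  # 0x100c
```

With several register sets, each address generator would simply be handed a different `AddressRegisterSet` instance.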

Owner:AVAGO TECH INT SALES PTE LTD

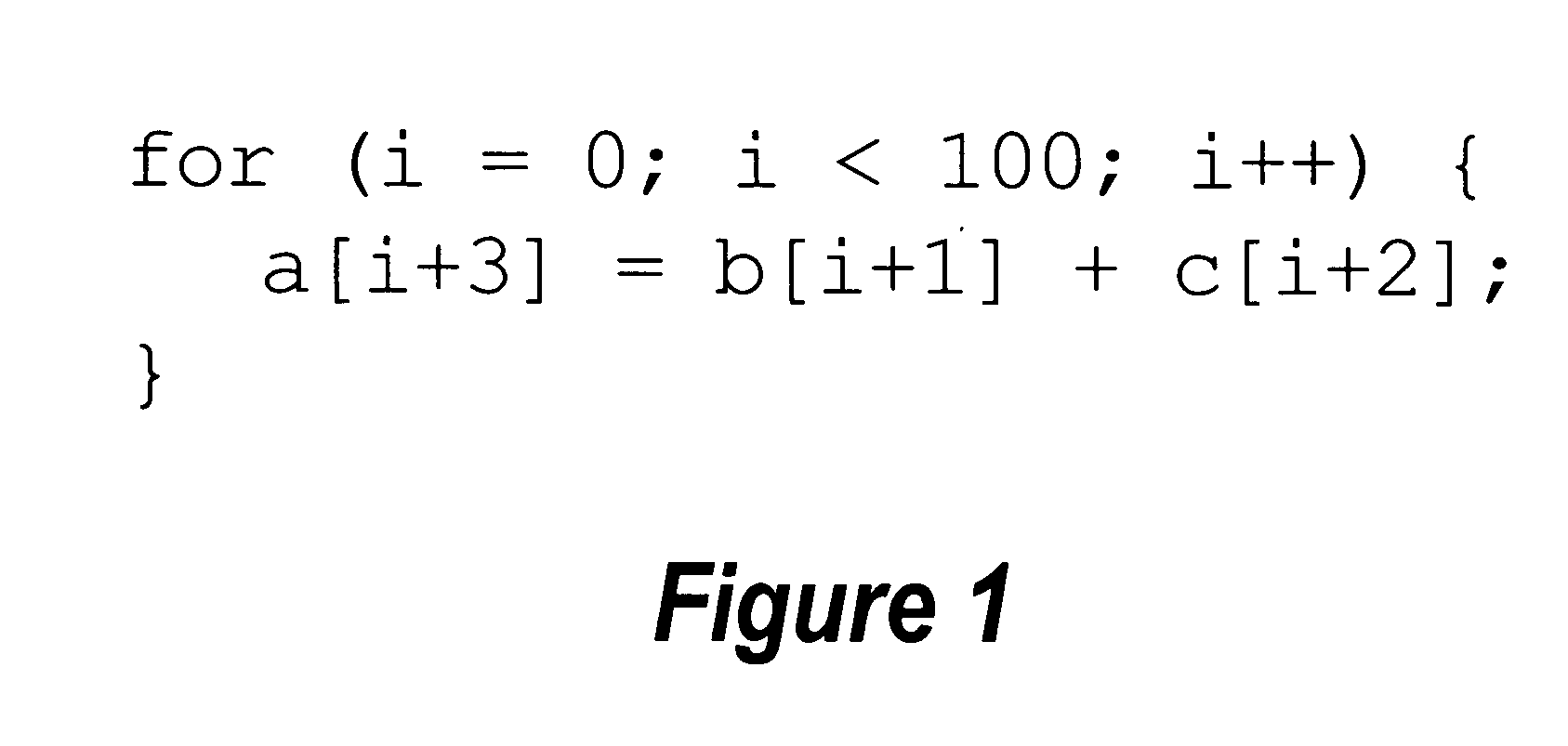

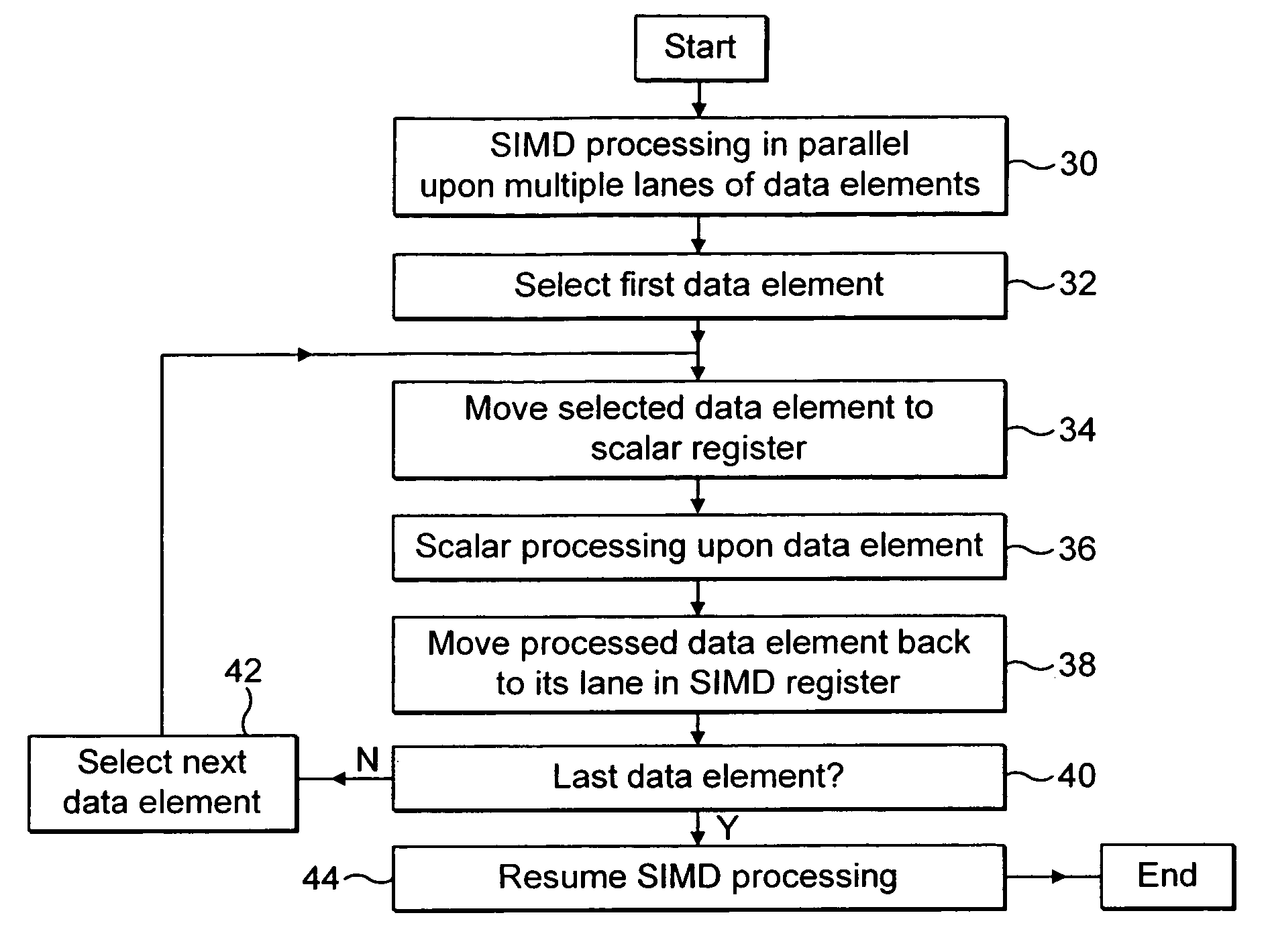

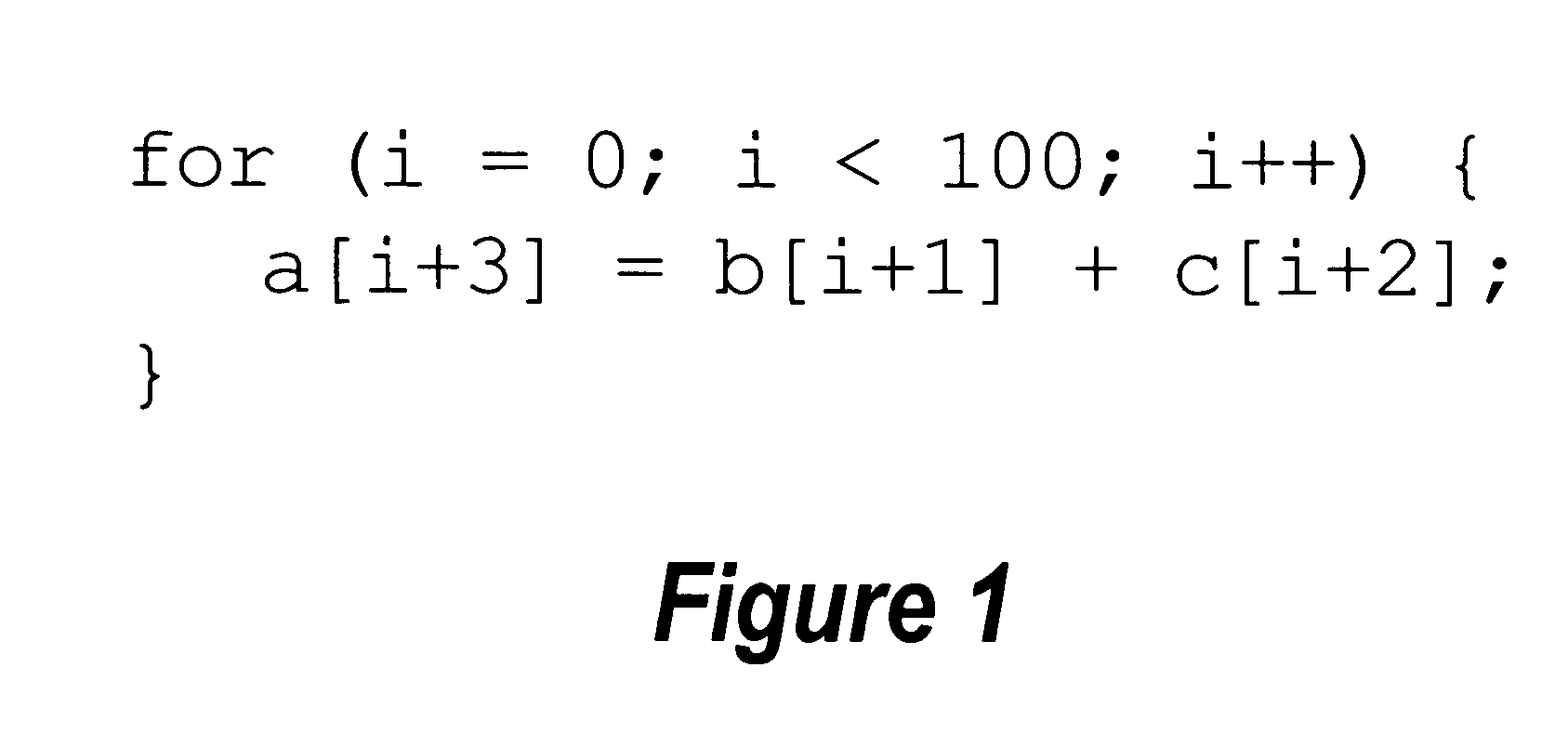

Framework for generating mixed-mode operations in loop-level simdization

Inactive · US20050273769A1 · Software engineering · Specific program execution arrangements · Program instruction · Parallel computing

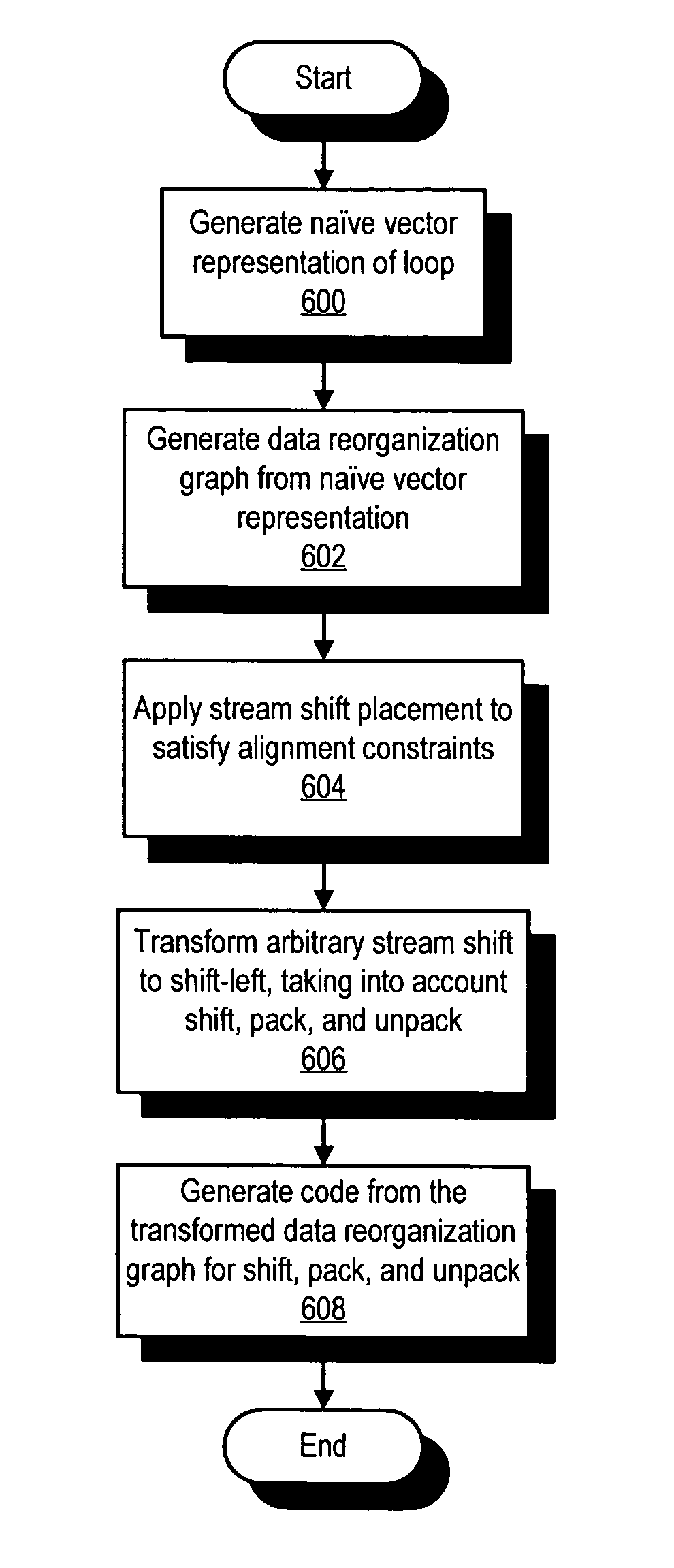

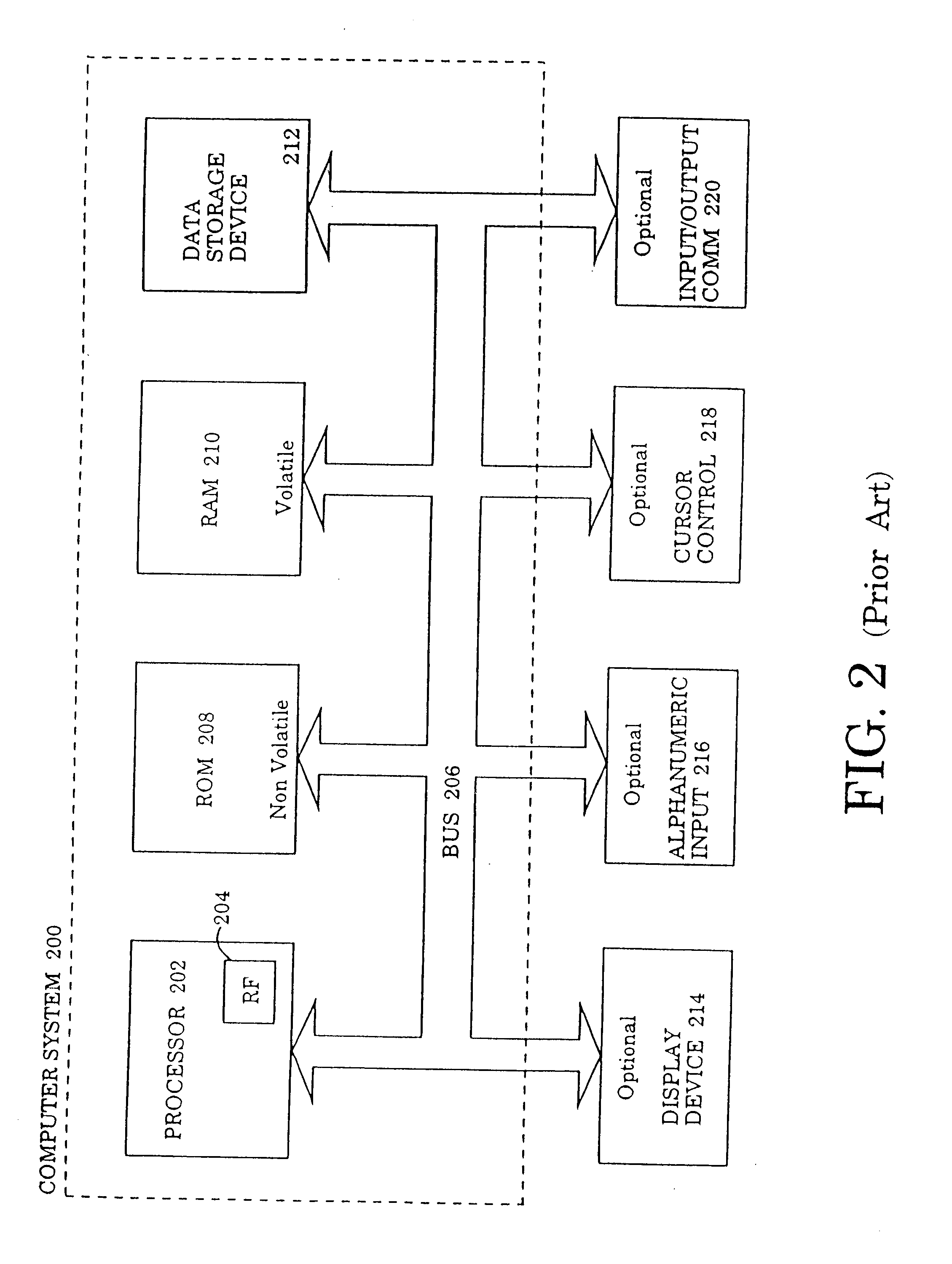

A method, computer program product, and information handling system for generating mixed-mode operations in the compilation of program code for processors having vector or SIMD processing units is disclosed. In a preferred embodiment of the present invention, program instructions making up the body of a loop are abstracted into virtual vector instructions. These virtual vector instructions are treated, for initial code optimization purposes, as vector instructions (i.e., instructions written for the vector unit). The virtual vector instructions are eventually expanded into native code for the target processor, at which time a determination is made for each virtual vector instruction as to whether to expand the virtual vector instruction into native vector instructions, into native scalar instructions, into calls to pre-defined library functions, or into a combination of these. A cost model is used to determine the optimal choice of expansion based on hardware / software constraints, performance costs / benefits, and other criteria.

Owner:IBM CORP

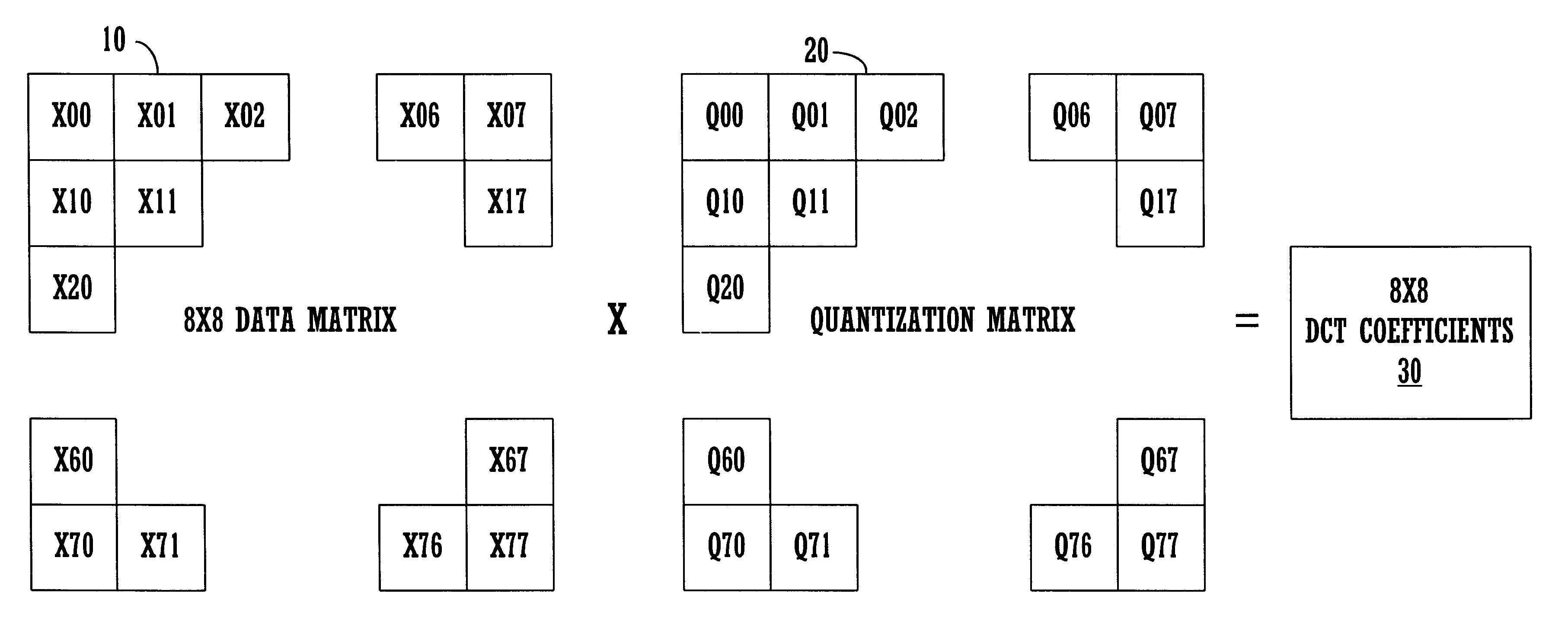

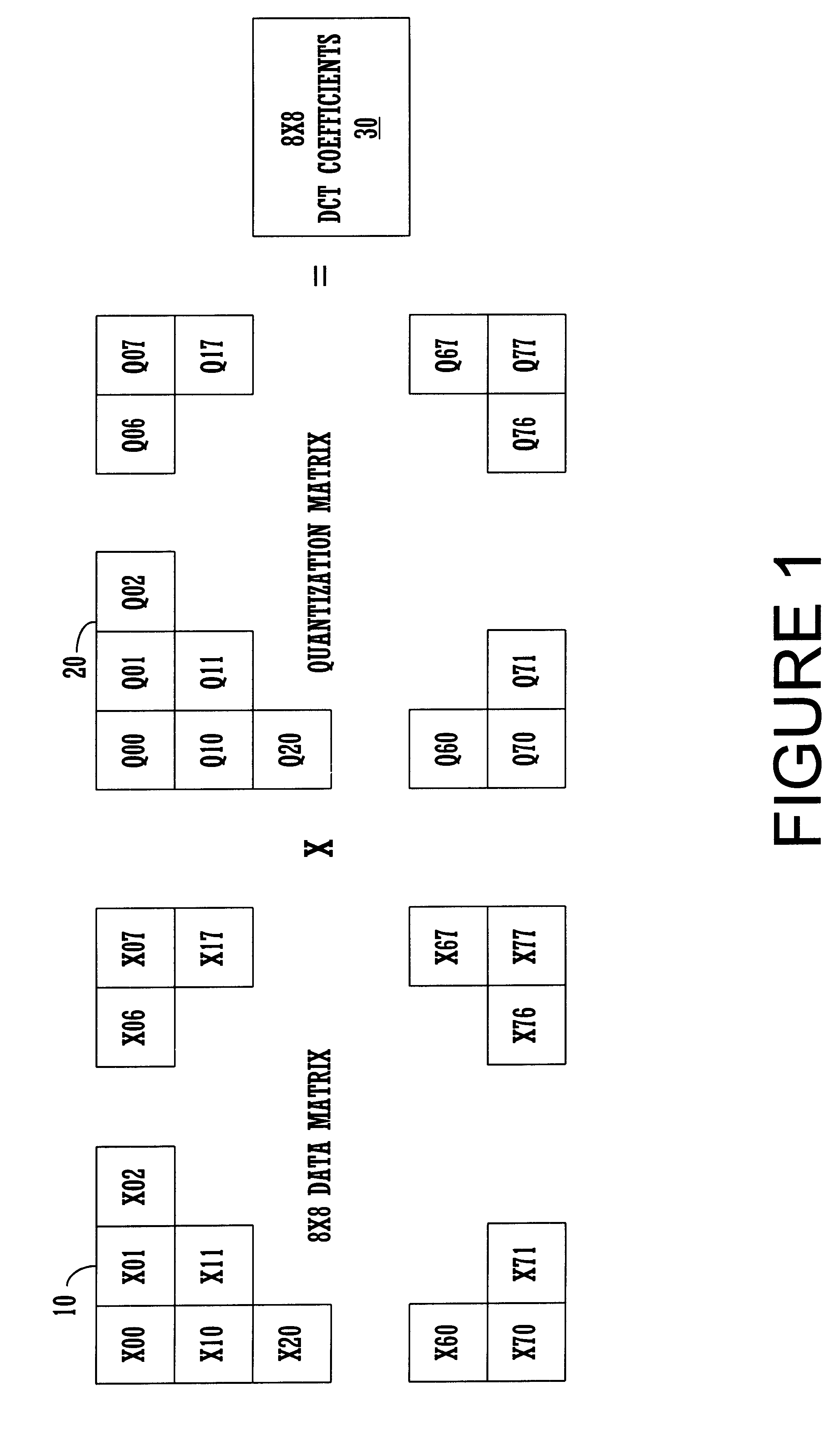

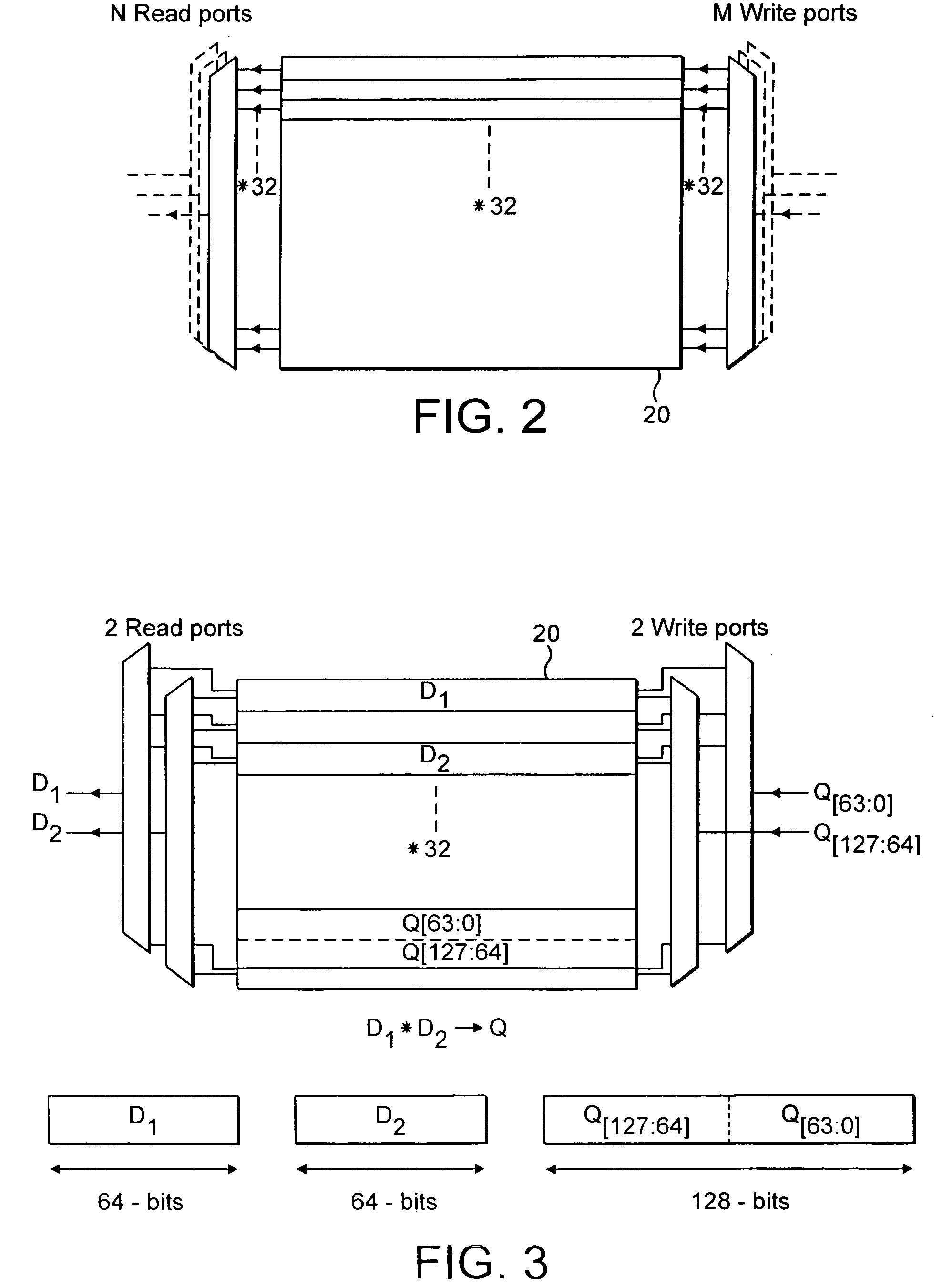

Efficient de-quantization in a digital video decoding process using a dynamic quantization matrix for parallel computations

Inactive · US6507614B1 · Efficient production · Picture reproducers using cathode ray tubes · Picture reproducers with optical-mechanical scanning · Digital video · Array data structure

An efficient digital video (DV) decoder process that utilizes a specially constructed quantization matrix allowing an inverse quantization subprocess to perform parallel computations, e.g., using SIMD processing, to efficiently produce a matrix of DCT coefficients. The present invention utilizes a first look-up table (for 8x8 DCT) which produces a 15-valued quantization scale based on class number information and a QNO number for an 8x8 data block ("data matrix") from an input encoded digital bit stream to be decoded. The 8x8 data block is produced from a deframing and variable length decoding subprocess. An individual 8-valued segment of the 15-value output array is multiplied by an individual 8-valued segment, e.g., "a row," of the 8x8 data matrix to produce an individual row of the 8x8 matrix of DCT coefficients ("DCT matrix"). The above eight multiplications can be performed in parallel using a SIMD architecture to simultaneously generate a row of eight DCT coefficients. In this way, eight passes through the 8x8 block are used to produce the entire 8x8 DCT matrix, in one embodiment consuming only 33 instructions per 8x8 block. After each pass, the 15-valued output array is shifted by one value position for proper alignment with its associated row of the data matrix. The DCT matrix is then processed by an inverse discrete cosine transform subprocess that generates decoded display data. A second lookup table can be used for 2x4x8 DCT processing.
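The row-by-row multiplication with a sliding 8-value window can be sketched as follows. This is a minimal sketch under assumptions: the names `dequantize_block` and `scale15` are hypothetical, the window is assumed to advance by one position toward higher indices after each pass, and the sample values are not real DV quantization tables.

```python
def dequantize_block(data, scale15):
    """Multiply each row of an 8x8 block by an 8-value window of a 15-value array.

    data:    8x8 list of decoded coefficients (the "data matrix").
    scale15: the 15-value quantization scale array from the look-up table.
    """
    assert len(data) == 8 and all(len(row) == 8 for row in data)
    assert len(scale15) == 15
    dct = []
    for r in range(8):
        # Assumed shift direction: the window moves one position per pass.
        segment = scale15[r:r + 8]
        # Eight multiplications that a SIMD unit could issue as one instruction.
        dct.append([d * q for d, q in zip(data[r], segment)])
    return dct


ones = [[1] * 8 for _ in range(8)]
result = dequantize_block(ones, list(range(1, 16)))
print(result[0])  # [1, 2, 3, 4, 5, 6, 7, 8]
print(result[7])  # [8, 9, 10, 11, 12, 13, 14, 15]
```

Eight passes cover the whole block, and 8 + 7 = 15 values are exactly enough for the last row's window.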

Owner:SONY ELECTRONICS INC +1

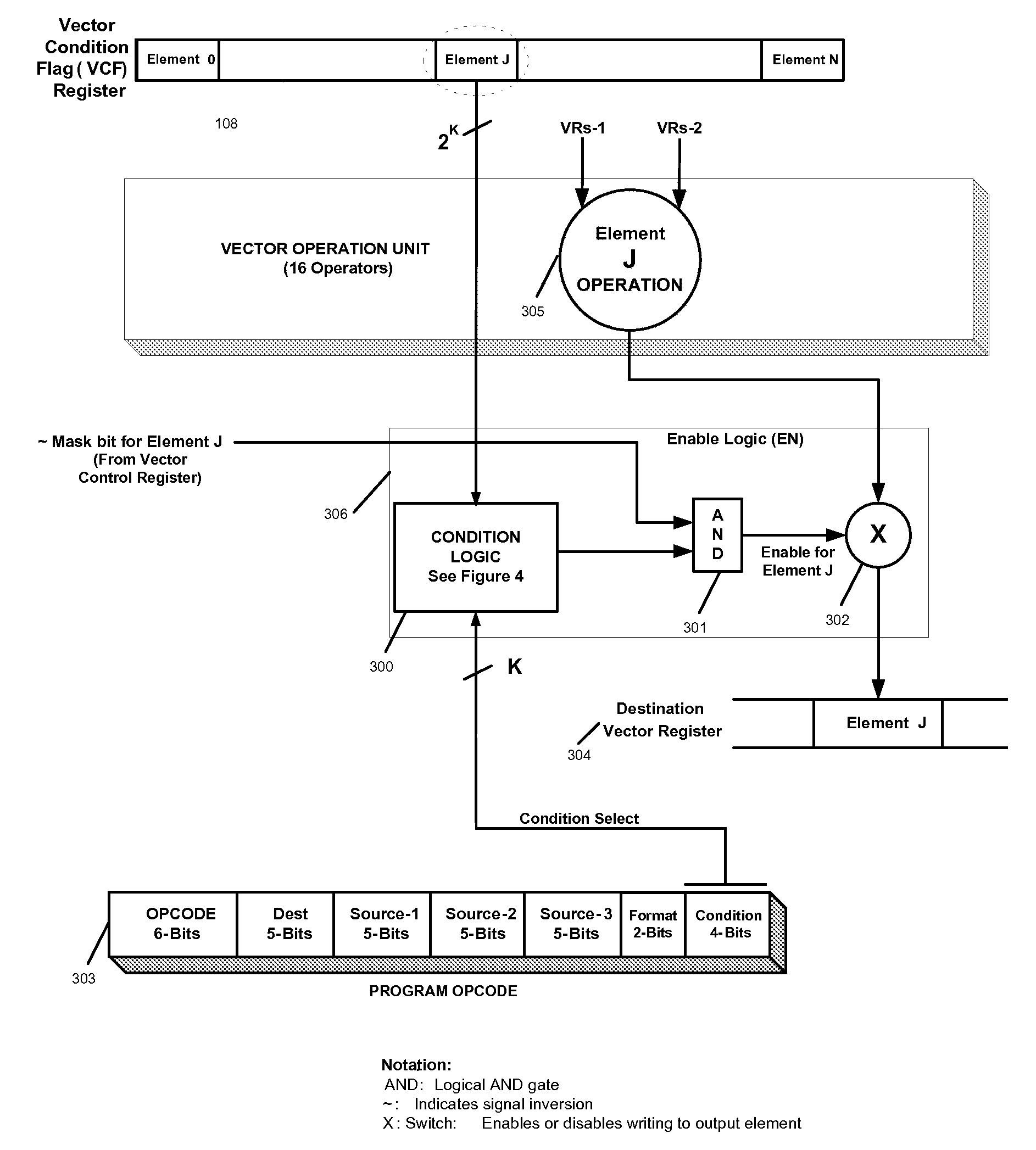

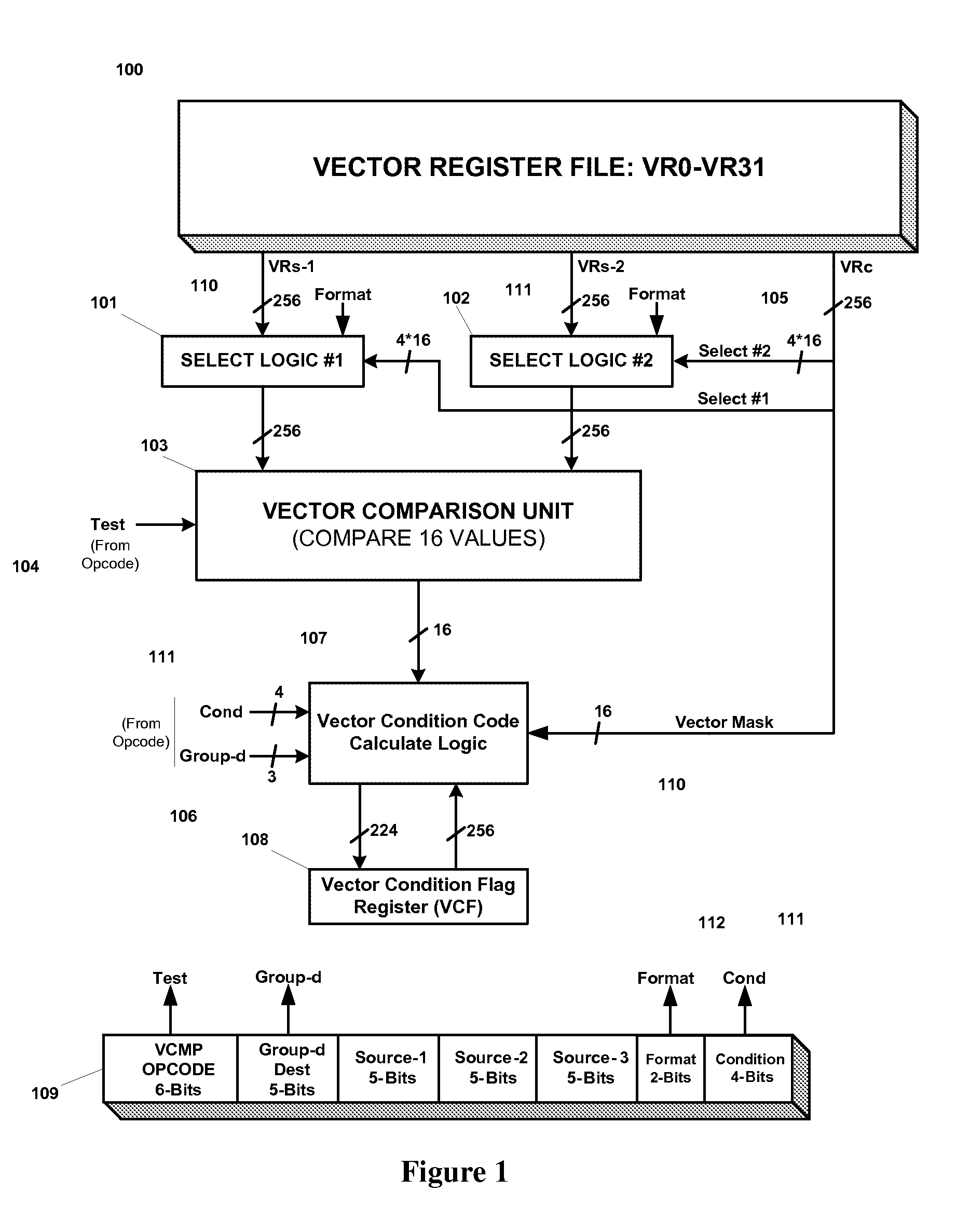

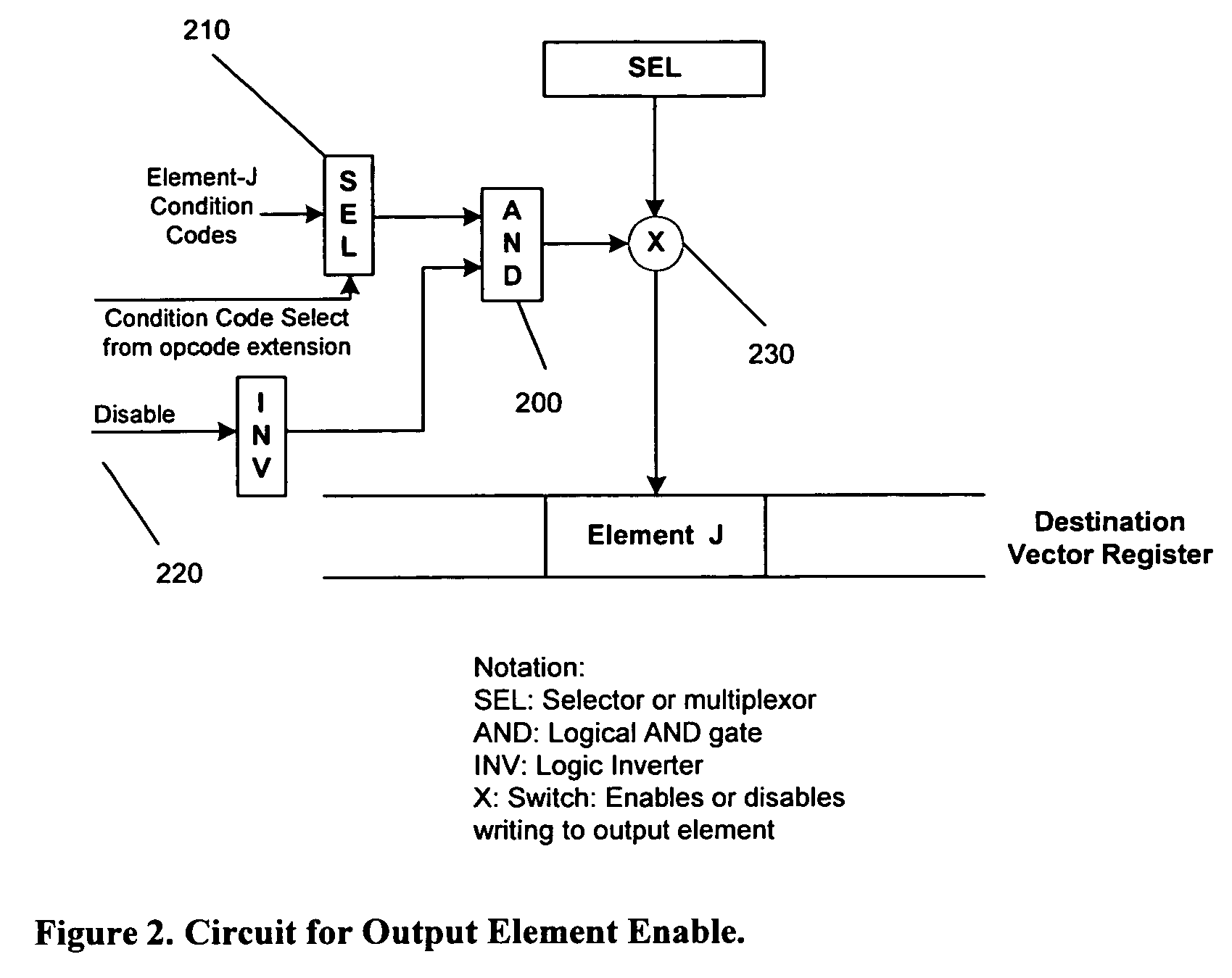

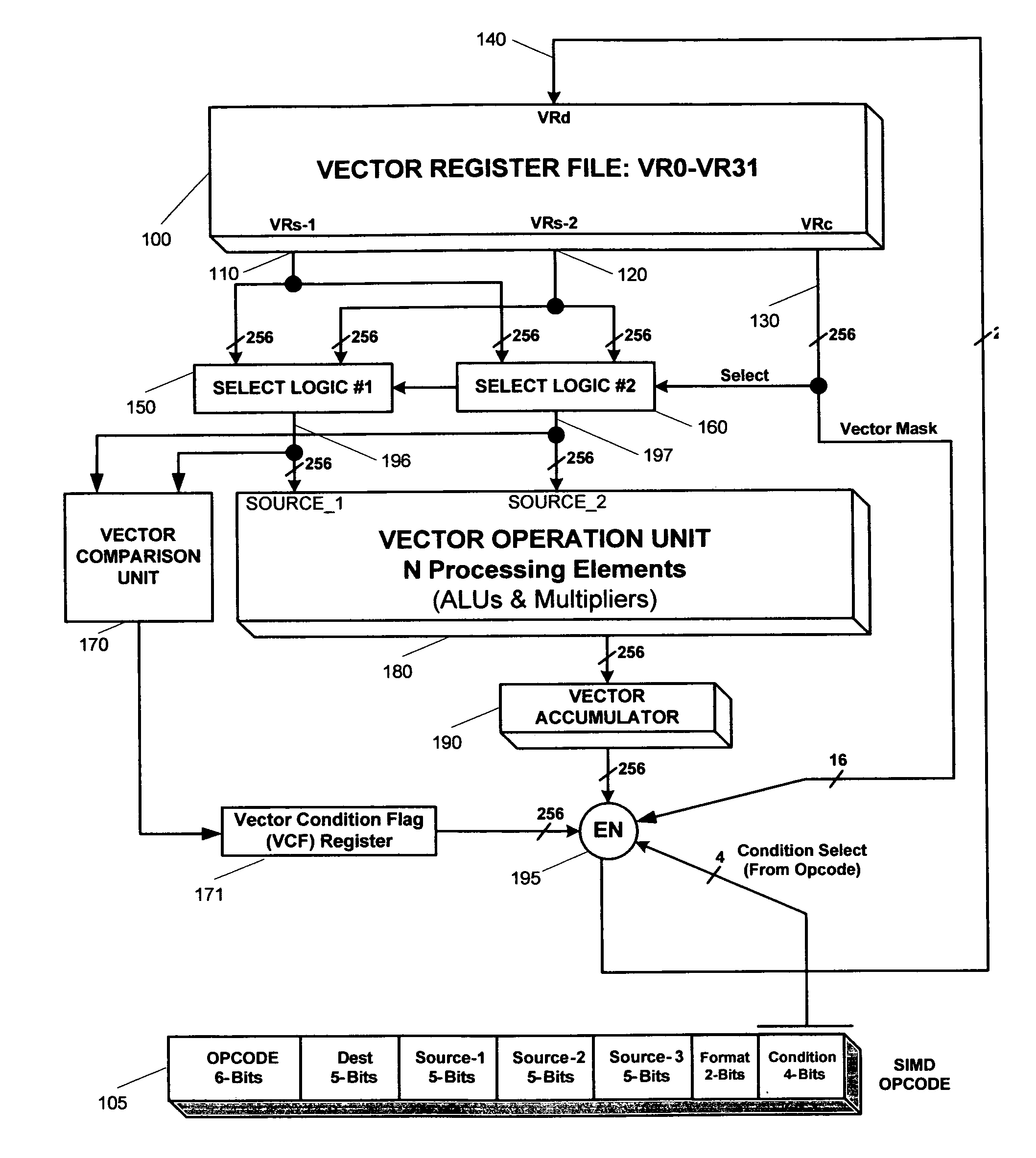

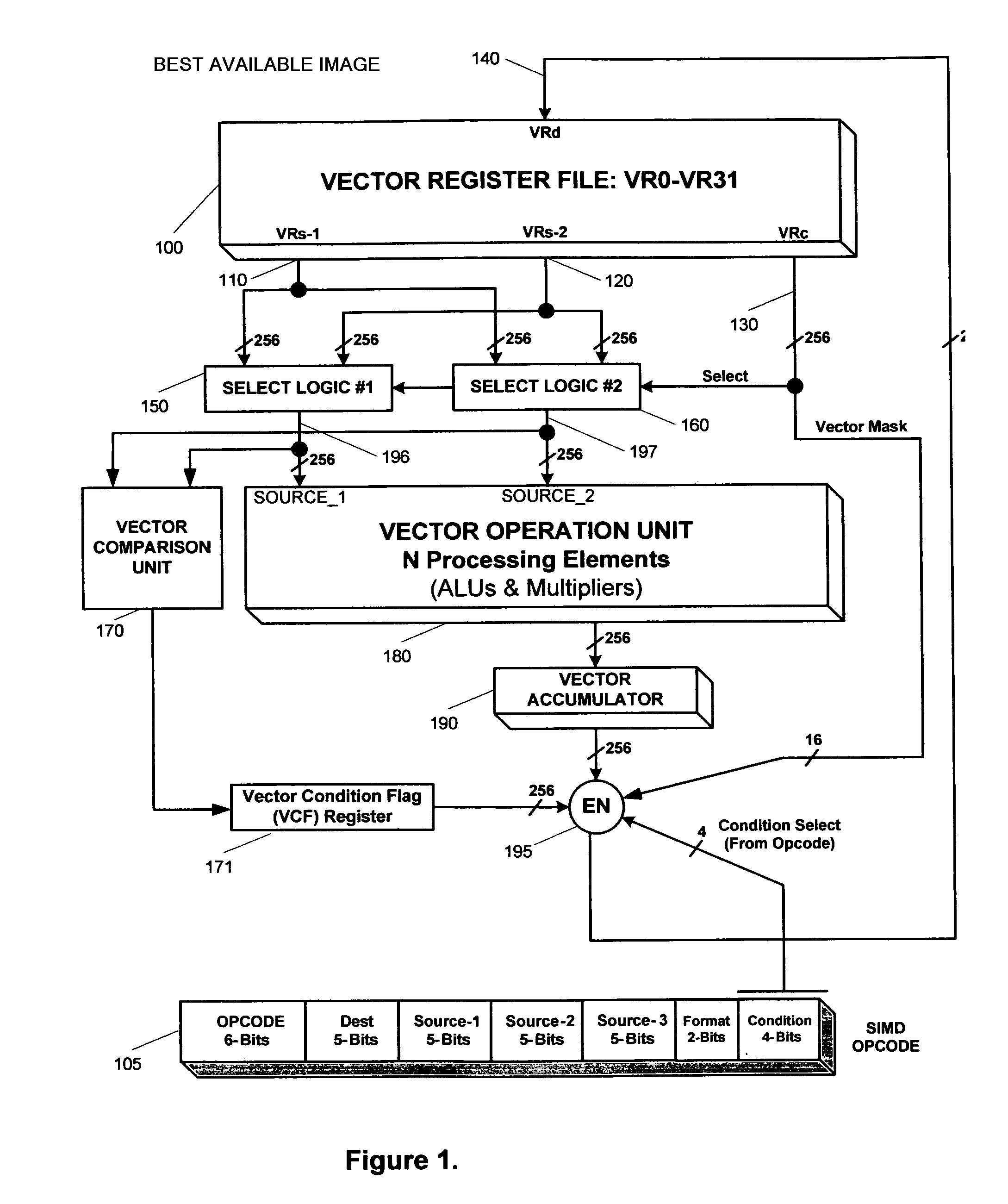

Efficient handling of vector high-level language conditional constructs in a SIMD processor

Inactive · US7793084B1 · Minimize the number · Low cost · Conditional code generation · Digital computer details · Vector element · SIMD processing

The present invention provides an efficient method to implement nested if-then-else conditional statements in a SIMD processor, which requires only one vector compare instruction for both if and else parts of the conditional construct. No stack and stack-handling instructions are needed for vector condition codes. Two condition code flag bits representing if and else parts of testing per element provide for nesting of multiple if-then-else. All SIMD instructions are conditional including the vector compare instruction, and this provides a method for aggregating multiple conditions in nested if-then-else statements. M full levels of if-then-else nesting requires (2M−1) nodes or vector test instructions and 2M+1 condition code flags per vector element. Also, capability to compare any element of first source vector register with any element of second source vector is provided.
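A small software model may help make the per-element flag idea concrete. It is a sketch only: `vector_compare_gt`, `masked`, and the `enable` parameter are hypothetical names, and plain Python lists stand in for vector registers and condition-code flags.

```python
def vector_compare_gt(a, b, enable=None):
    """One compare produces both an 'if' flag and an 'else' flag per element.

    `enable` models the compare itself being conditional, which is what
    lets an inner compare be restricted to elements of an outer branch.
    """
    if enable is None:
        enable = [True] * len(a)
    if_flags = [e and x > y for e, x, y in zip(enable, a, b)]
    else_flags = [e and not (x > y) for e, x, y in zip(enable, a, b)]
    return if_flags, else_flags


def masked(op, dest, a, b, flags):
    """Apply op element-wise only where the flag is set; keep dest elsewhere."""
    return [op(x, y) if f else d for d, x, y, f in zip(dest, a, b, flags)]


# out = a - b if a > b else b - a, using a single compare for both branches.
a = [5, 1, 9, 3]
b = [2, 4, 9, 8]
if_f, else_f = vector_compare_gt(a, b)
out = [0] * 4
out = masked(lambda x, y: x - y, out, a, b, if_f)
out = masked(lambda x, y: y - x, out, a, b, else_f)
print(out)  # [3, 3, 0, 5]
```

A nested condition would call `vector_compare_gt` again with `enable=if_f` (or `enable=else_f`), so only elements in the enclosing branch can set inner flags.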

Owner:CUE BIOTECH

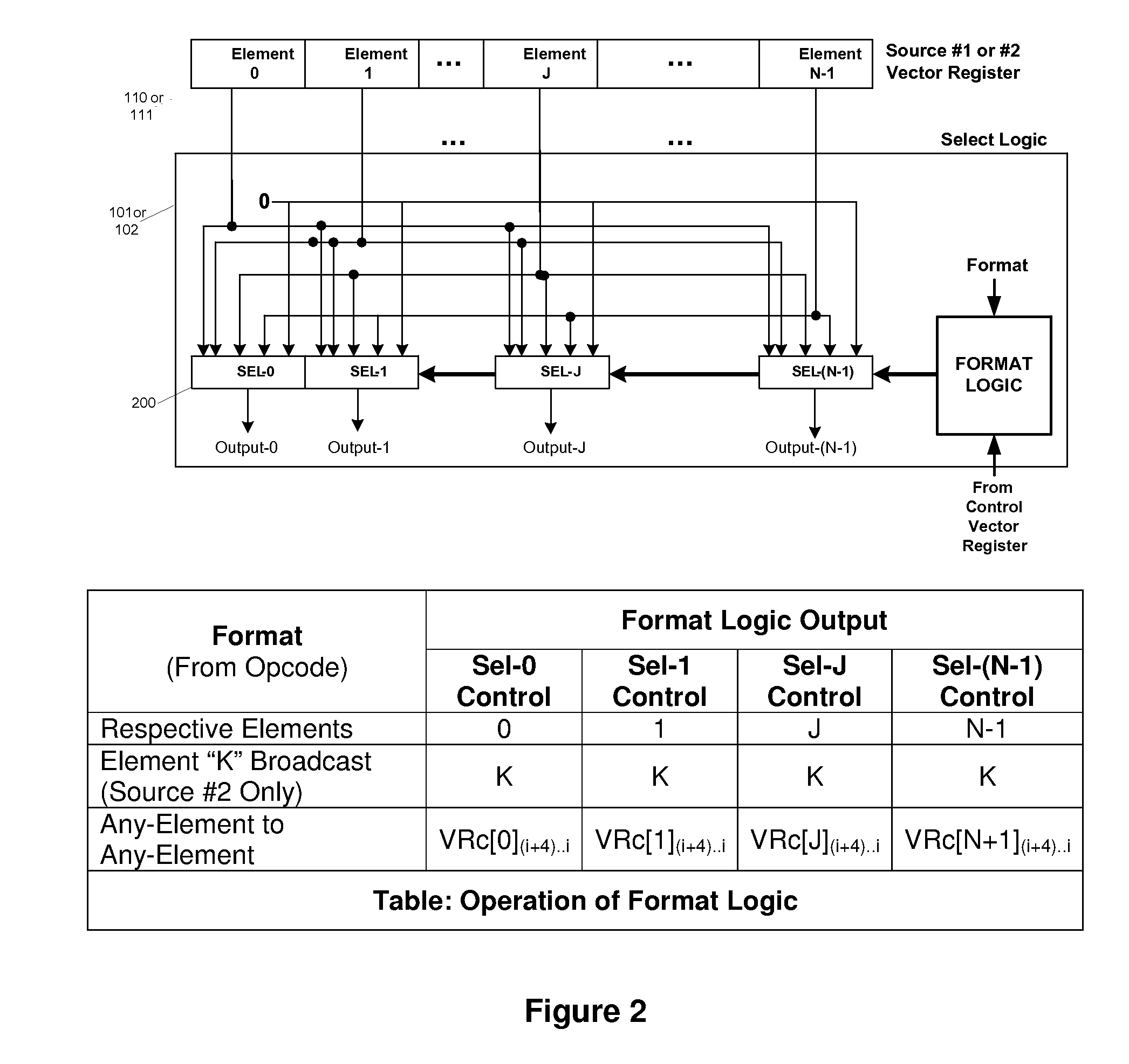

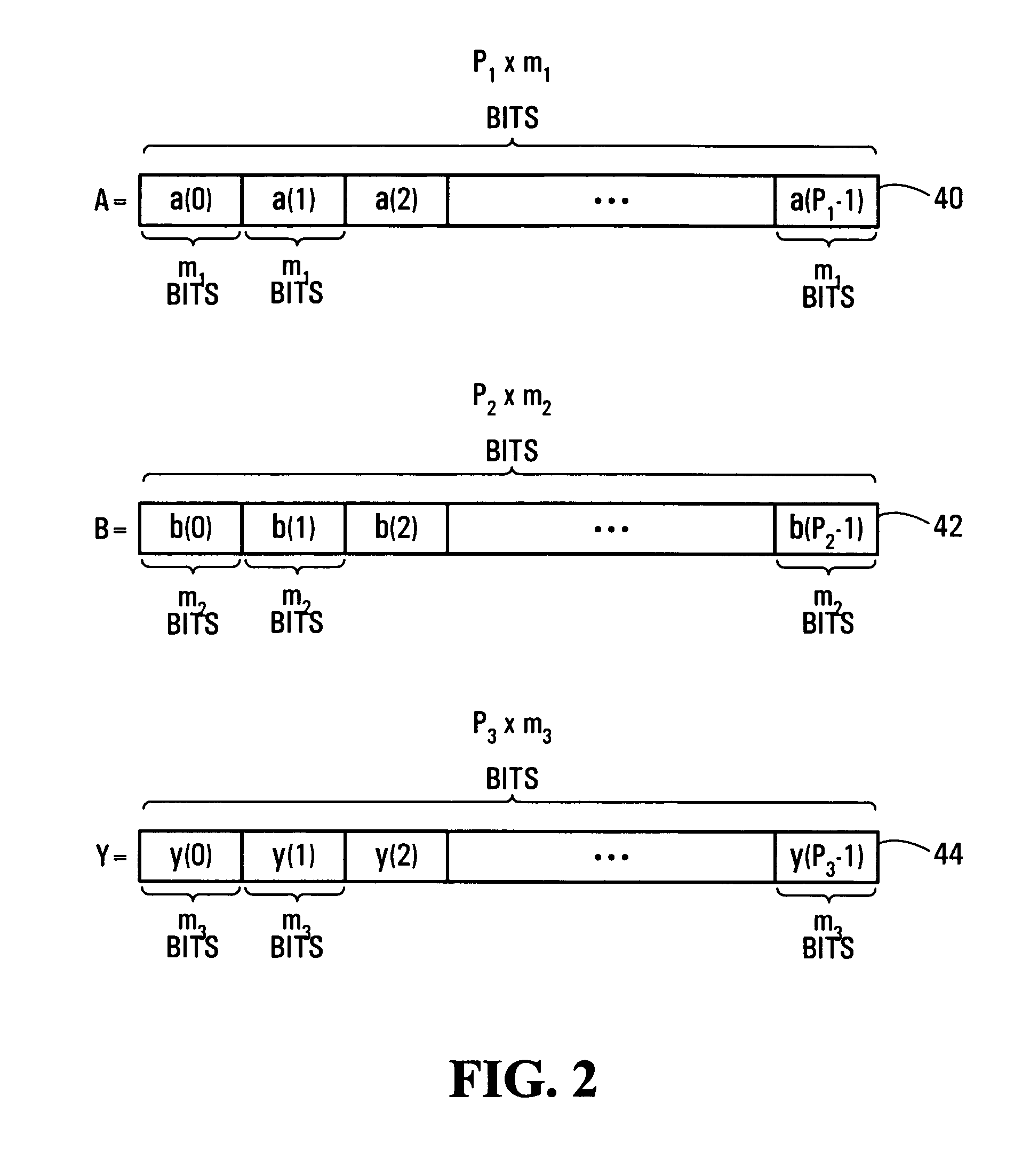

Alignment and ordering of vector elements for single instruction multiple data processing

Inactive · US7197625B1 · Specific program execution arrangements · Memory systems · Processor register · Vector element

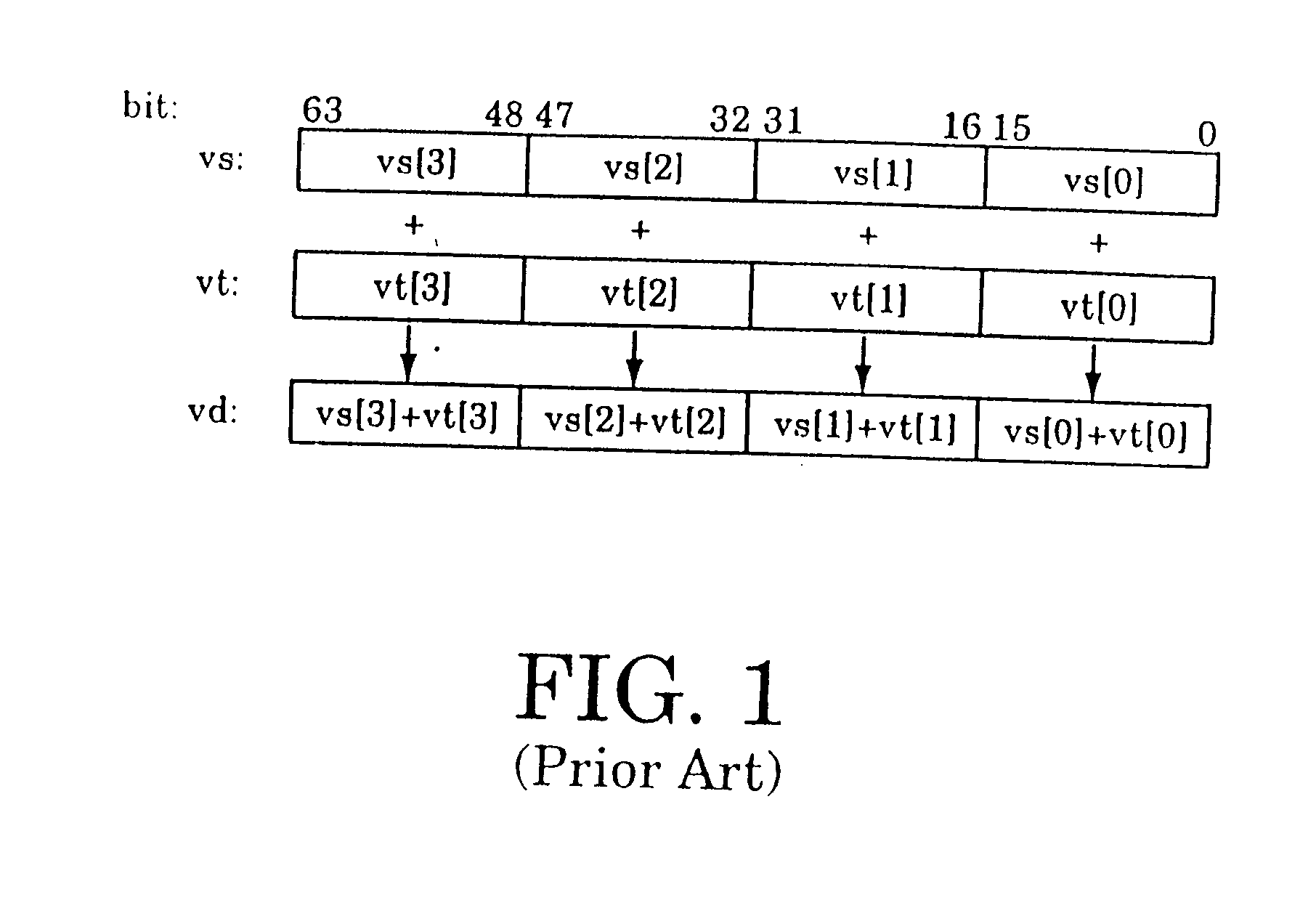

The present invention provides alignment and ordering of vector elements for SIMD processing. In the alignment of vector elements for SIMD processing, one vector is loaded from a memory unit into a first register and another vector is loaded from the memory unit into a second register. The first vector contains a first byte of an aligned vector to be generated. Then, a starting byte specifying the first byte of an aligned vector is determined. Next, a vector is extracted from the first register and the second register beginning from the first bit in the first byte of the first register continuing through the bits in the second register. Finally, the extracted vector is replicated into a third register such that the third register contains a plurality of elements aligned for SIMD processing. In the ordering of vector elements for SIMD processing, a first vector is loaded from a memory unit into a first register and a second vector is loaded from the memory unit into a second register. Then, a subset of elements are selected from the first register and the second register. The elements from the subset are then replicated into the elements in the third register in a particular order suitable for subsequent SIMD vector processing.
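Both operations can be modeled on byte strings. The sketch below assumes 16-byte registers; `extract_aligned` and `order_elements` are hypothetical names, not instruction names from the patent.

```python
def extract_aligned(reg1: bytes, reg2: bytes, start: int) -> bytes:
    """Extract one register's worth of bytes beginning at byte `start` of reg1
    and continuing into reg2."""
    width = len(reg1)
    assert len(reg2) == width and 0 <= start < width
    return (reg1 + reg2)[start:start + width]


def order_elements(reg1, reg2, selection):
    """Copy selected elements of the two source registers into a third
    register in the order given by `selection` (indices into reg1 + reg2)."""
    combined = list(reg1) + list(reg2)
    return [combined[i] for i in selection]


reg1 = bytes(range(0, 16))
reg2 = bytes(range(16, 32))
aligned = extract_aligned(reg1, reg2, 5)
print(list(aligned))  # bytes 5 through 20

# Interleave the first two elements of each register.
print(order_elements([10, 11, 12, 13], [20, 21, 22, 23], [0, 4, 1, 5]))
# [10, 20, 11, 21]
```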

Owner:ARM FINANCE OVERSEAS LTD

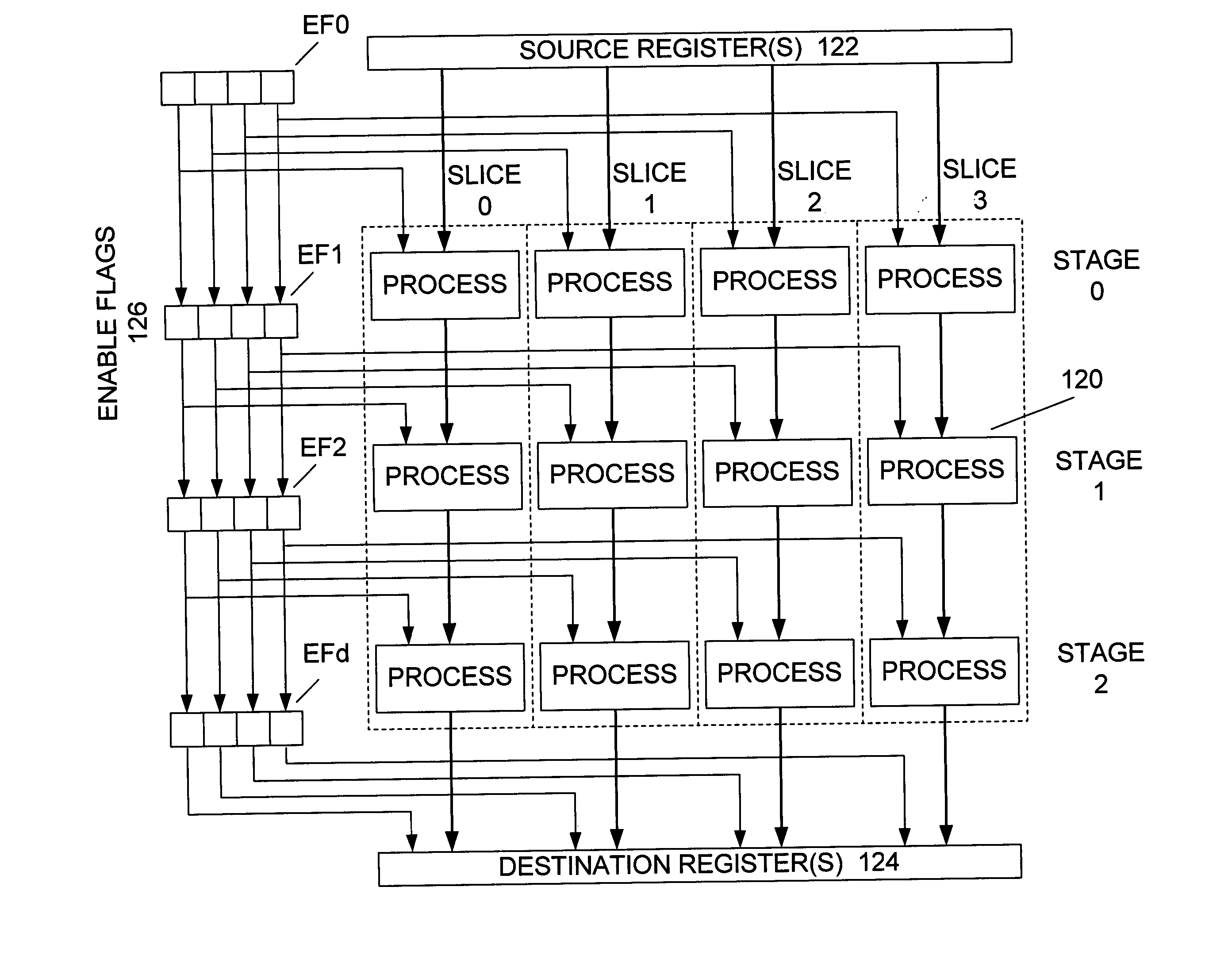

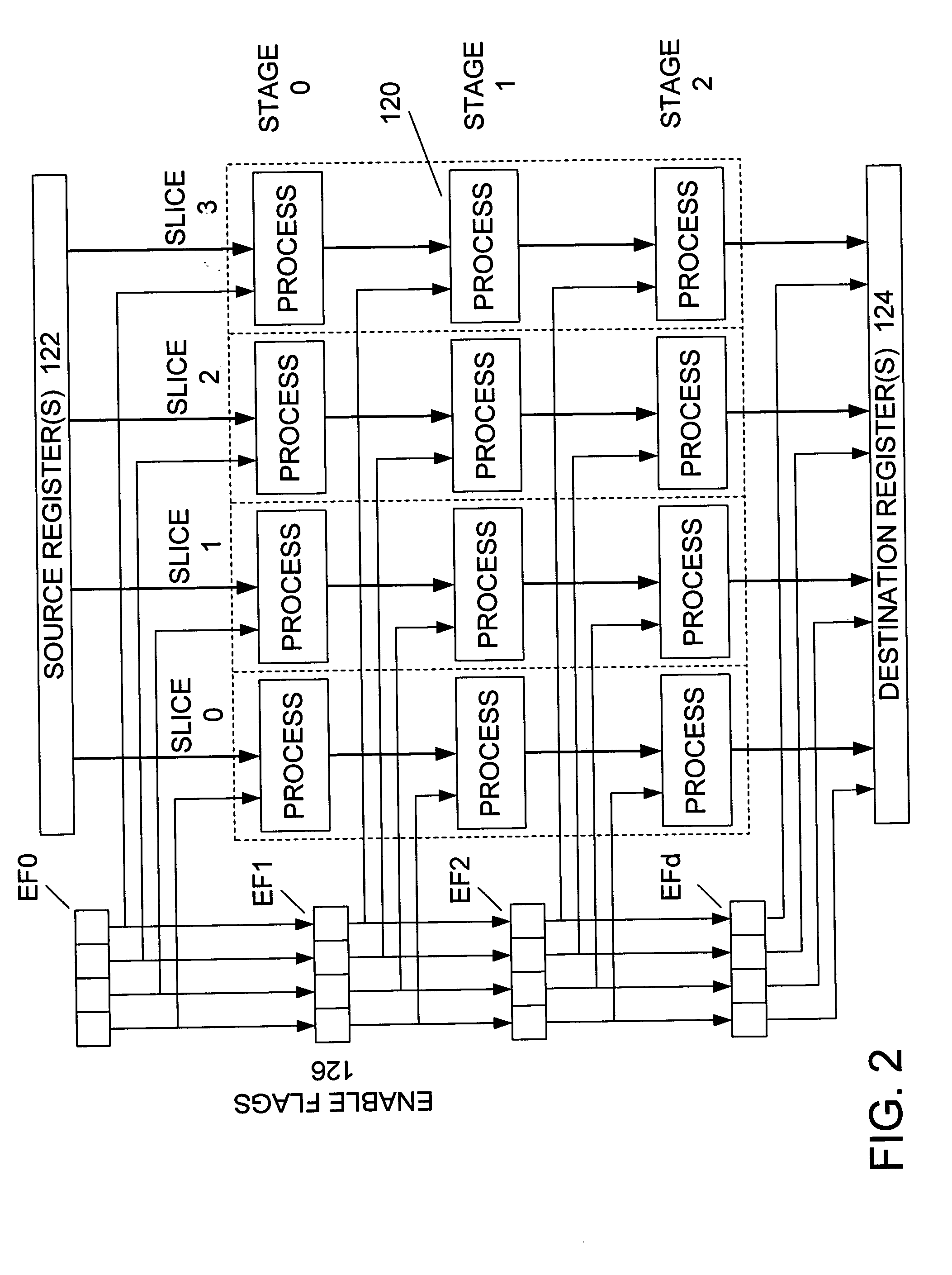

Method and apparatus for enable/disable control of SIMD processor slices

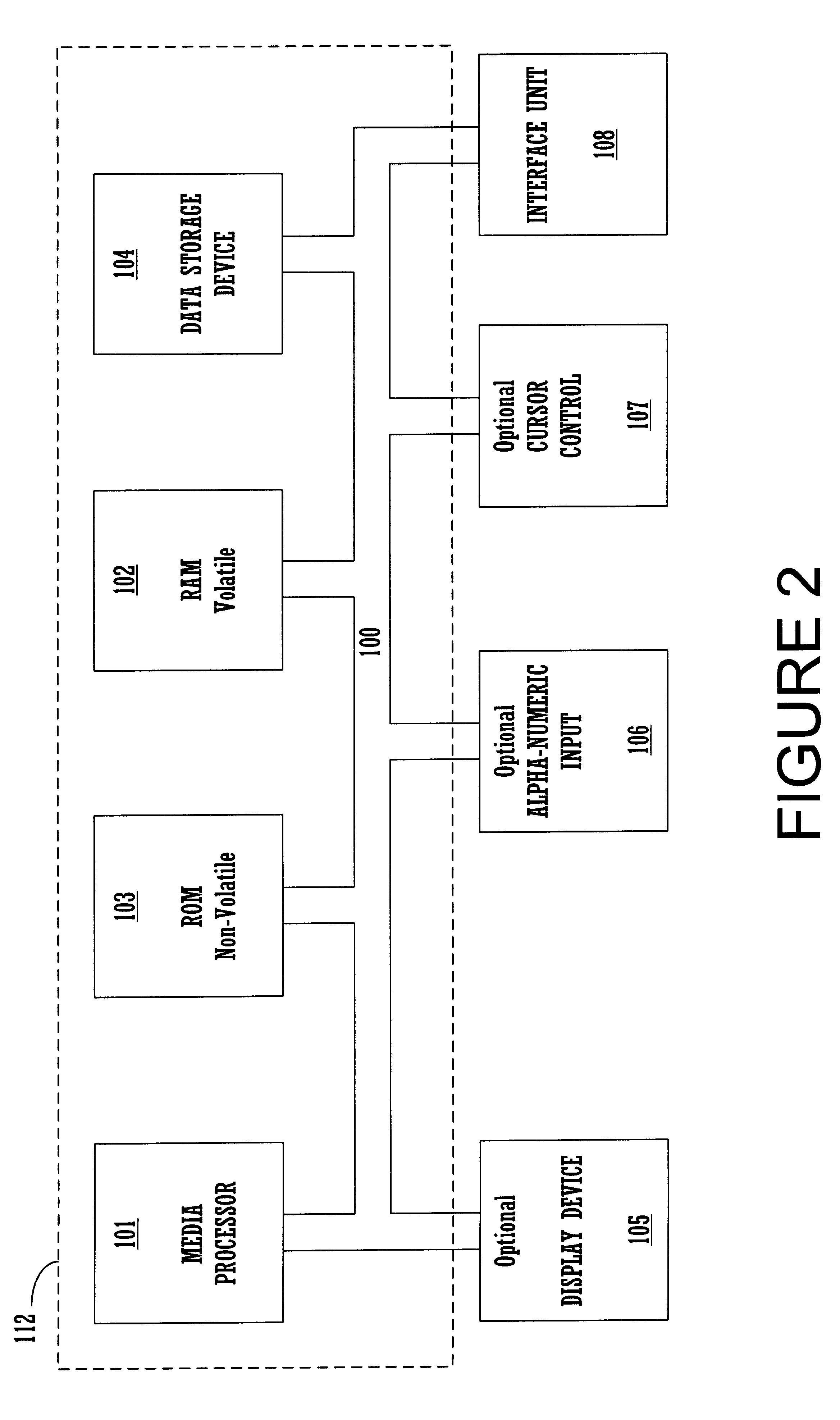

Active · US20060155964A1 · Avoid writing · Energy efficient ICT · Digital data processing details · PathPing · Computer architecture

Methods and apparatus provide for disabling at least some data path processing circuits of a SIMD processing pipeline, in which the processing circuits are organized into a matrix of slices and stages, in response to one or more enable flags during a given cycle.

Owner:SONY COMPUTER ENTERTAINMENT INC

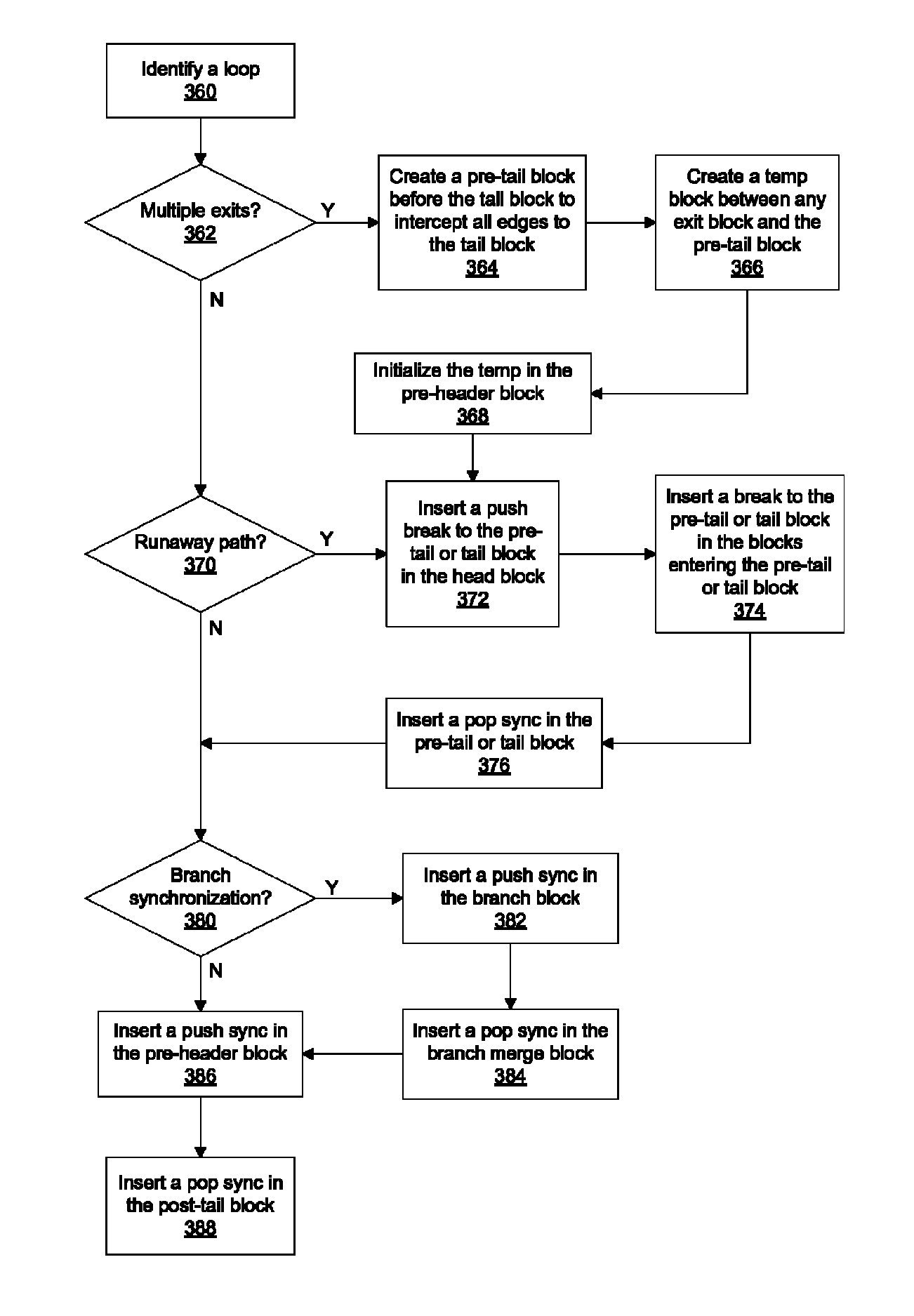

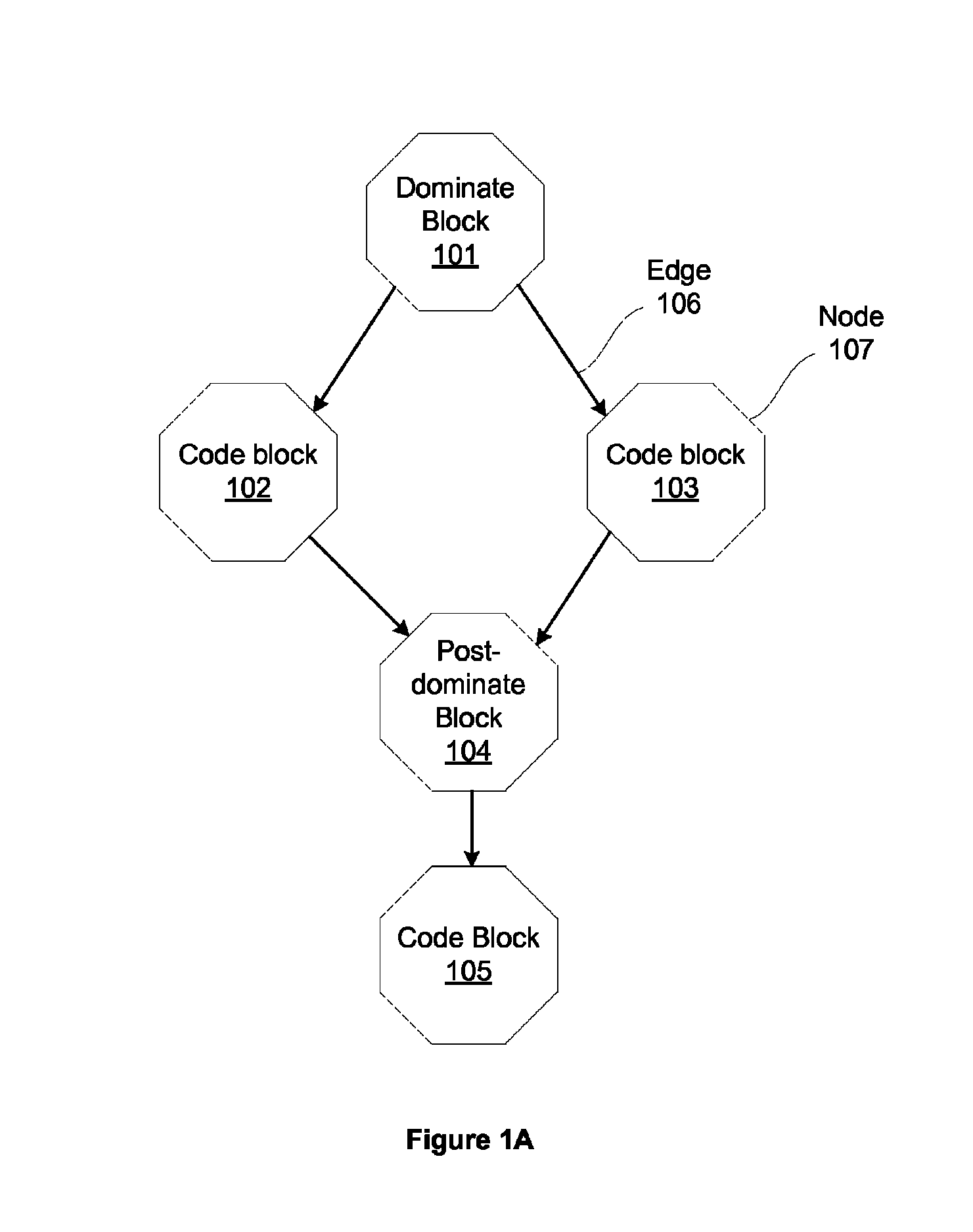

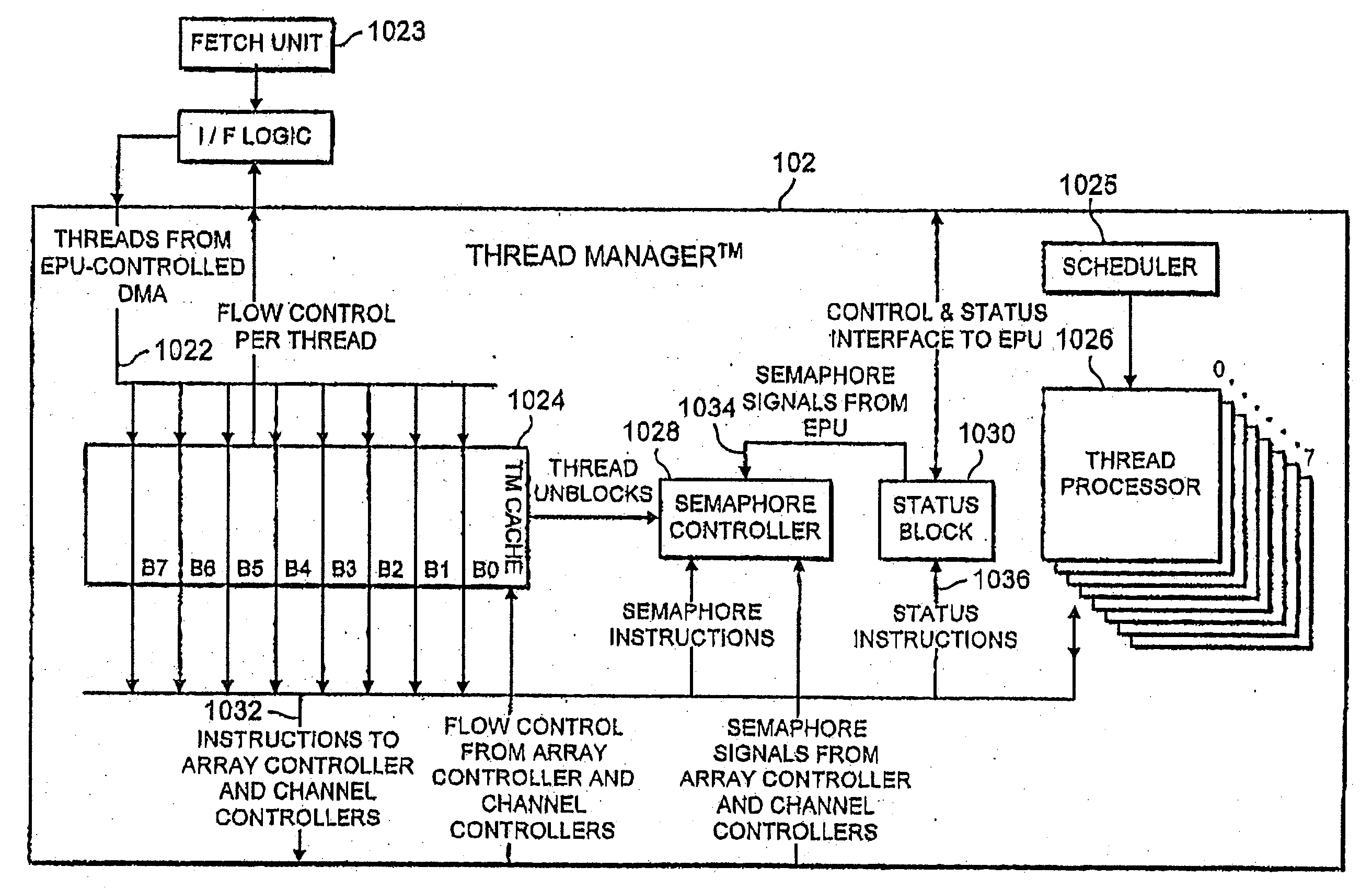

Insertion of multithreaded execution synchronization points in a software program

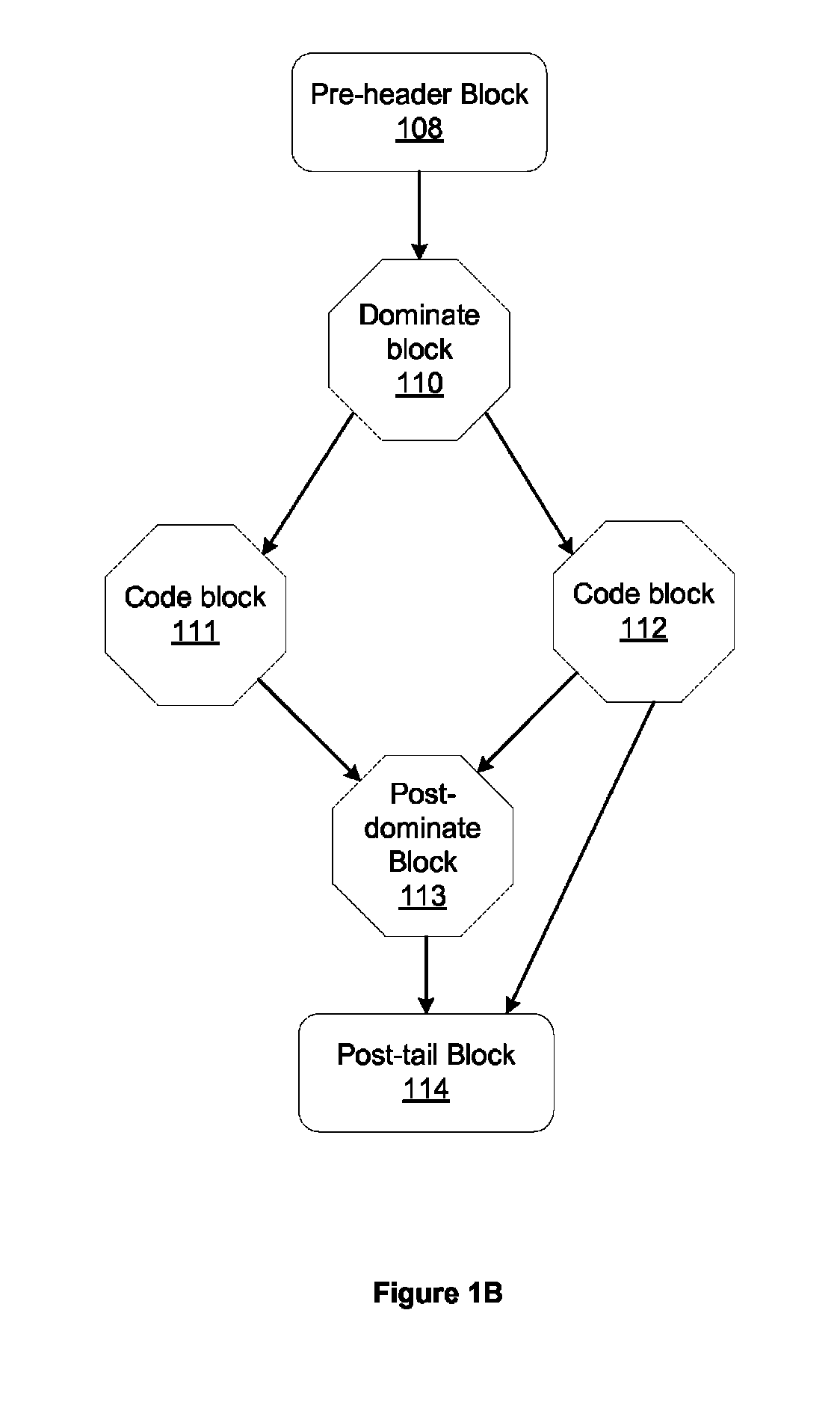

Active · US8381203B1 · Good benefit · Improve performance · Software engineering · Multiprogramming arrangements · Graphics · MIMD

A compiler is configured to determine a set of points in a flow graph for a software program where multithreaded execution synchronization points are inserted to synchronize divergent threads for SIMD processing. MIMD execution of divergent threads is allowed and execution of the divergent threads proceeds until a synchronization point is reached. When all of the threads reach the synchronization point, synchronous execution resumes. The synchronization points are needed to ensure proper execution of certain instructions that require synchronous execution as defined in some graphics APIs, and are also used where synchronous execution improves performance on a SIMD architecture.

Owner:NVIDIA CORP

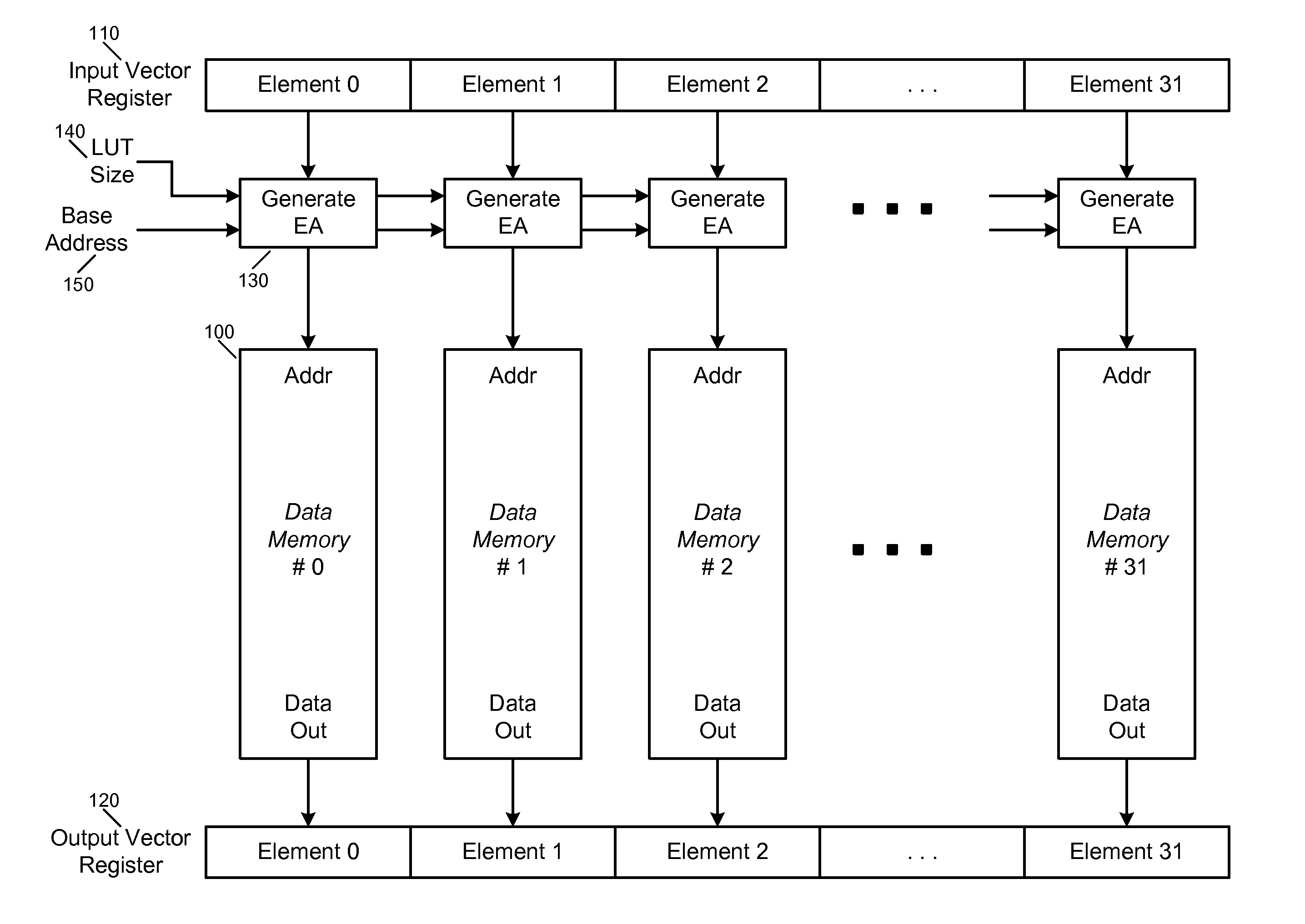

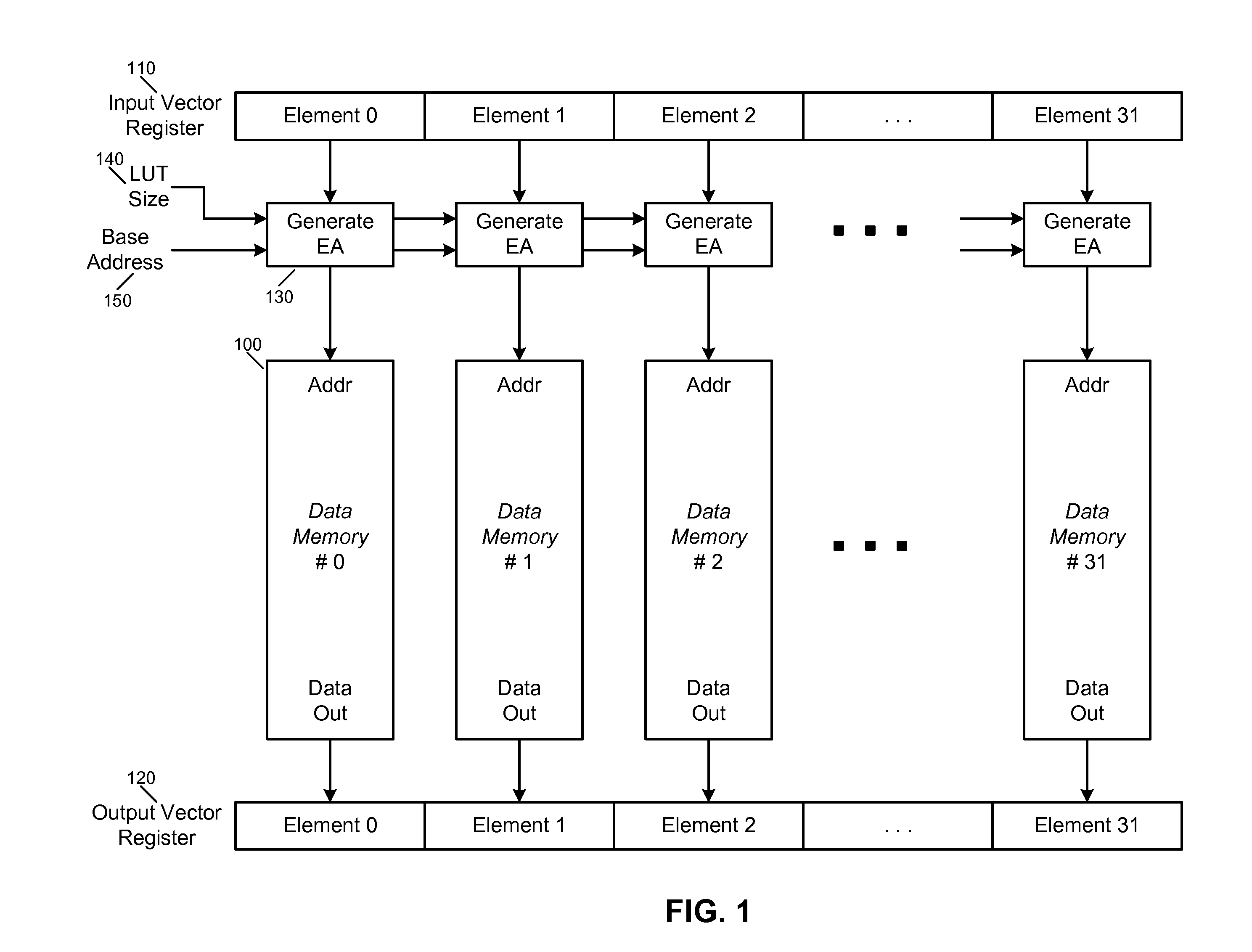

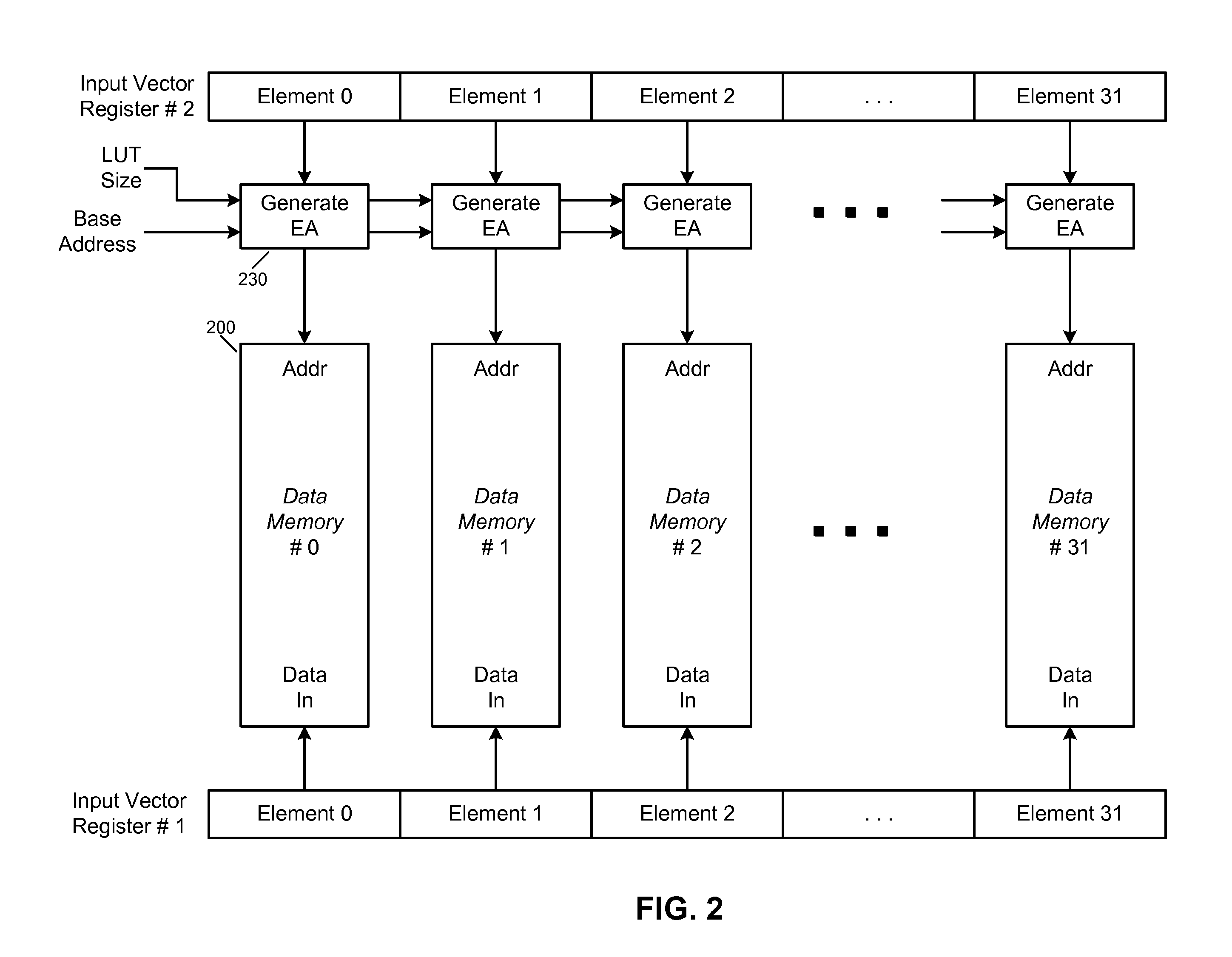

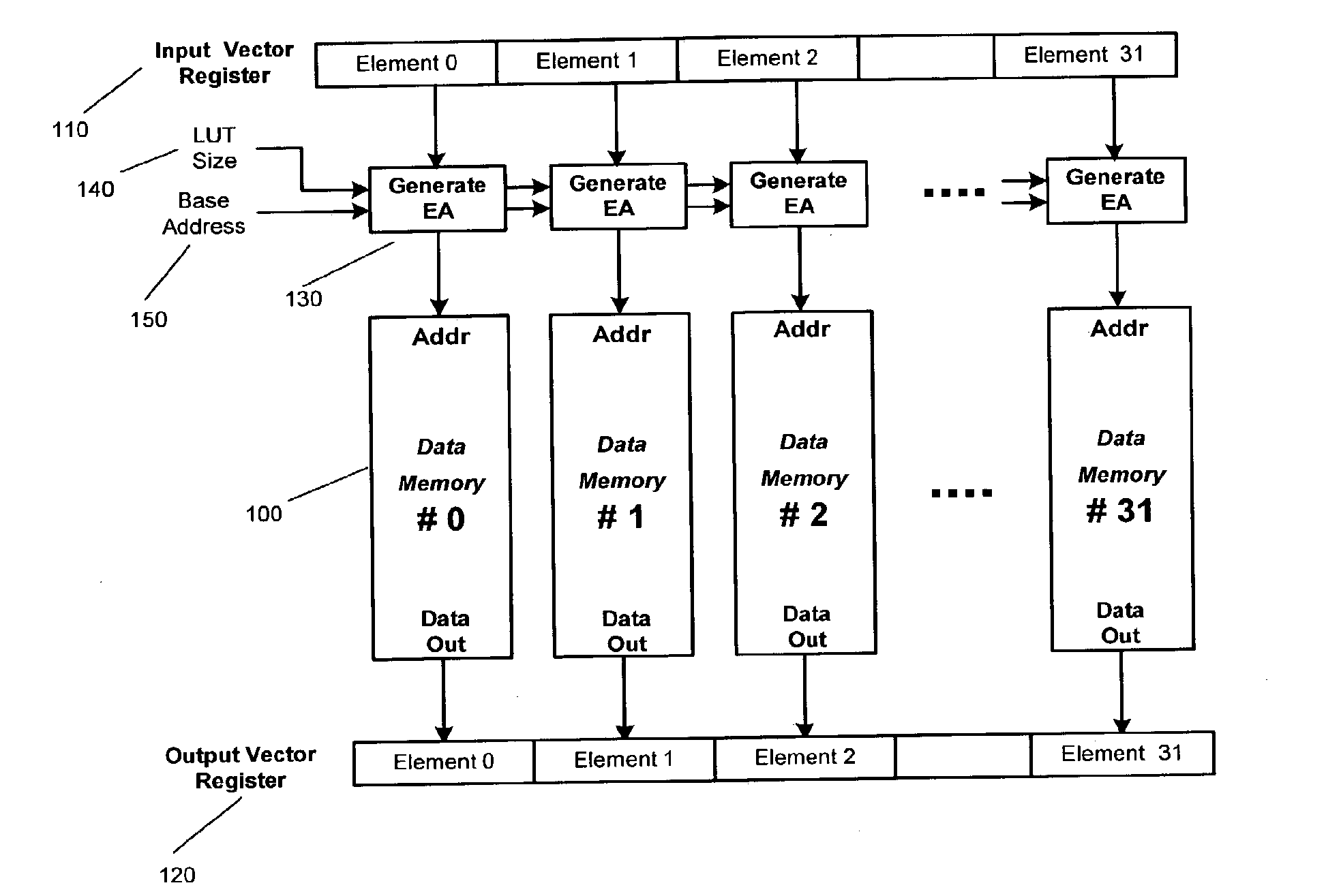

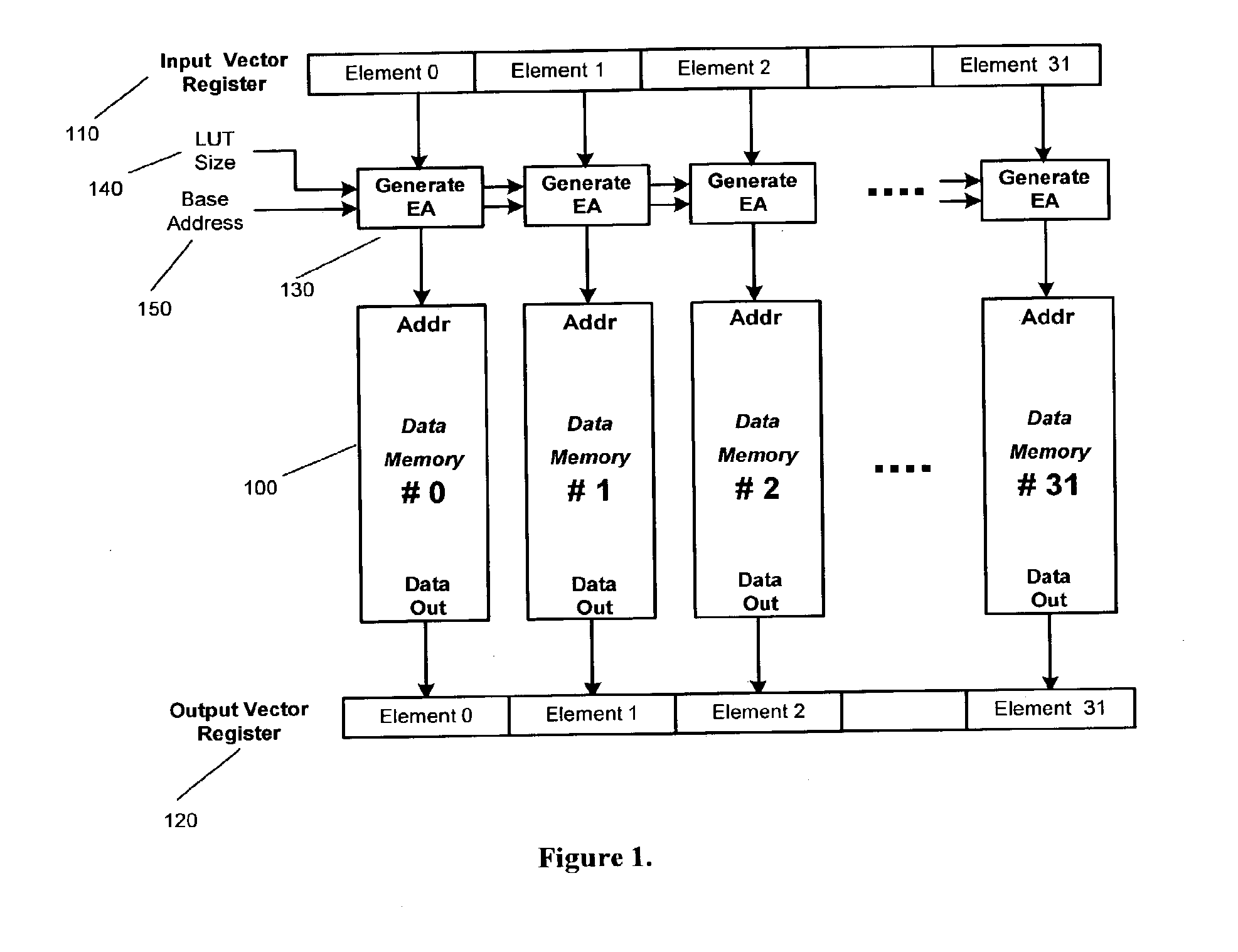

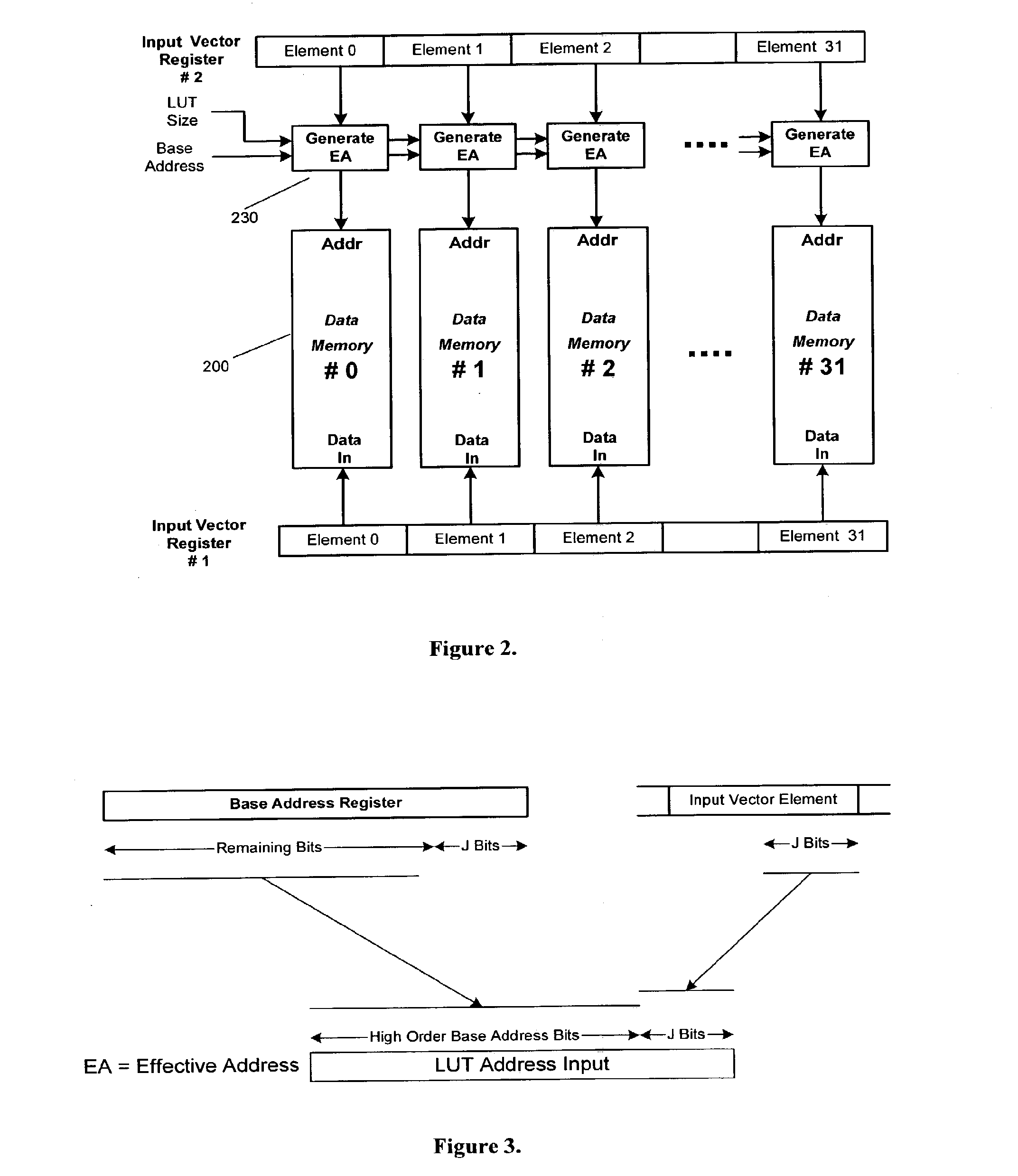

Method for performing random read access to a block of data using parallel lut read instruction in vector processors

Inactive · US20160124651A1 · Solve excessive overhead · Memory architecture accessing/allocation · Input/output to record carriers · Processor register · SIMD processor

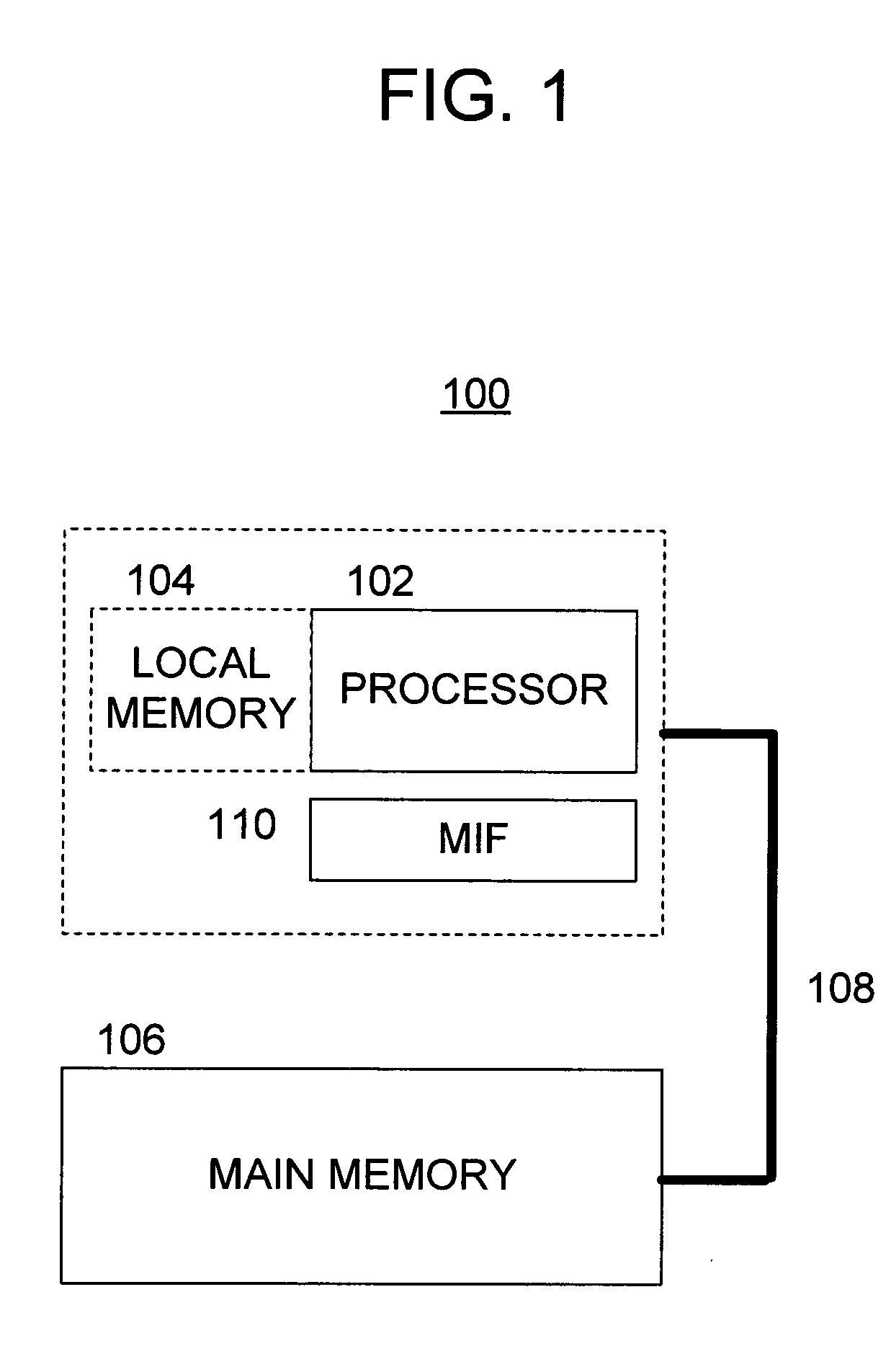

This invention deals with the problem of parallelizing random read access within a reasonably sized block of data for a vector SIMD processor. The invention sets up plural parallel look up tables, moves data from main memory to each plural parallel look up table and then employs a look up table read instruction to simultaneously move data from each parallel look up table to a corresponding part of a vector destination register. This enables data processing by vector single instruction multiple data (SIMD) operations. This vector destination register load can be repeated if the tables store more used data. New data can be loaded into the original tables if appropriate. A level one memory is preferably partitioned as part data cache and part directly addressable memory. The look up table memory is stored in the directly addressable memory.
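The idea of one look-up table per vector lane can be sketched as below. It assumes, for illustration, that every table receives a full copy of the data block; `load_tables` and `lut_read` are hypothetical names.

```python
def load_tables(block, lanes):
    """Copy the data block from 'main memory' into one look-up table per lane.
    Assumption: each table holds a full copy of the block."""
    return [list(block) for _ in range(lanes)]


def lut_read(tables, indices):
    """Model of the parallel read: lane k reads tables[k] at indices[k],
    filling lane k of the vector destination register."""
    return [table[i] for table, i in zip(tables, indices)]


block = [10, 20, 30, 40]
tables = load_tables(block, lanes=4)
print(lut_read(tables, [3, 0, 2, 2]))  # [40, 10, 30, 30]
```

Because each lane owns its table, two lanes asking for the same index (as lanes 2 and 3 do here) do not contend for a single memory port.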

Owner:TEXAS INSTR INC

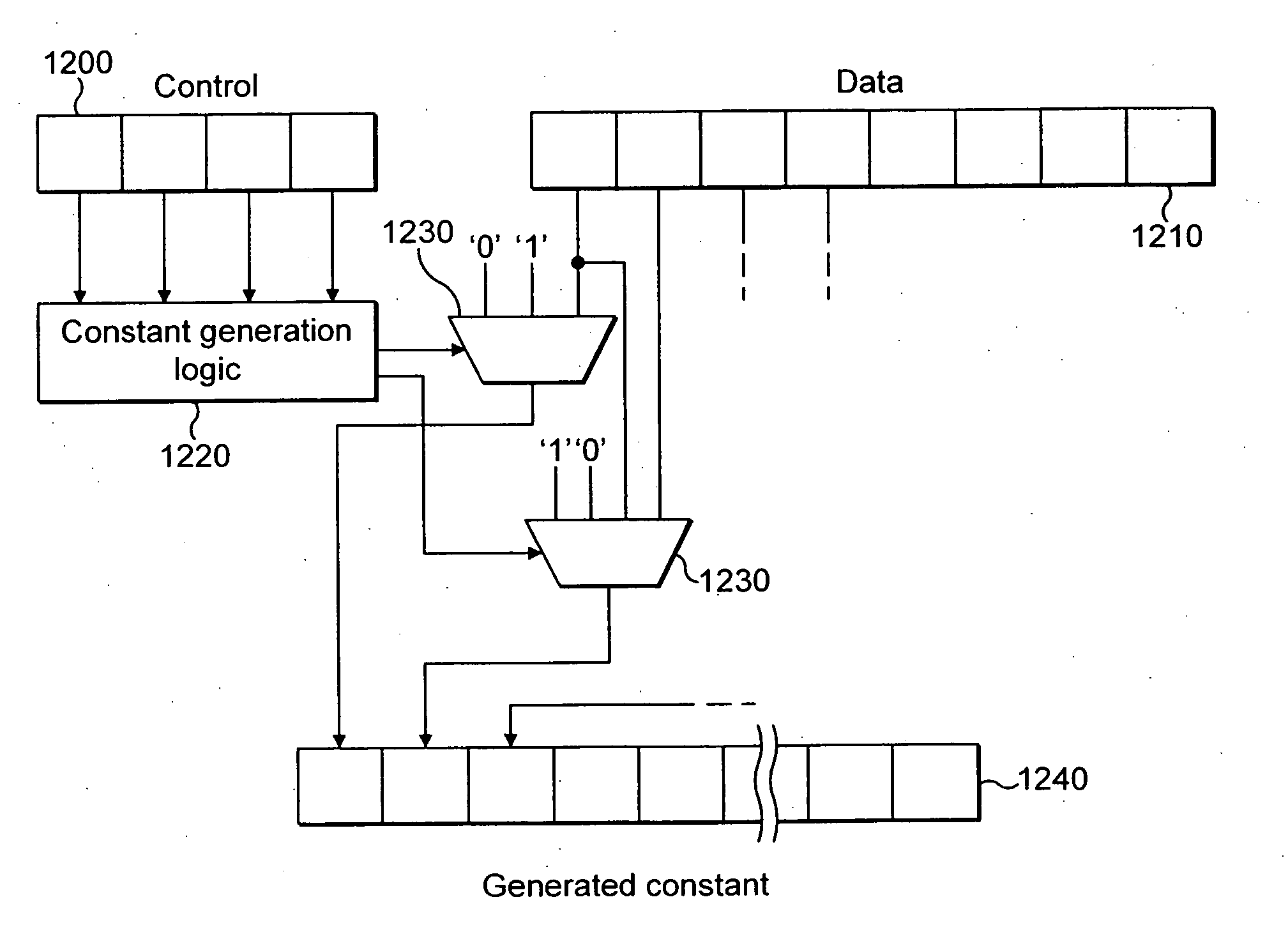

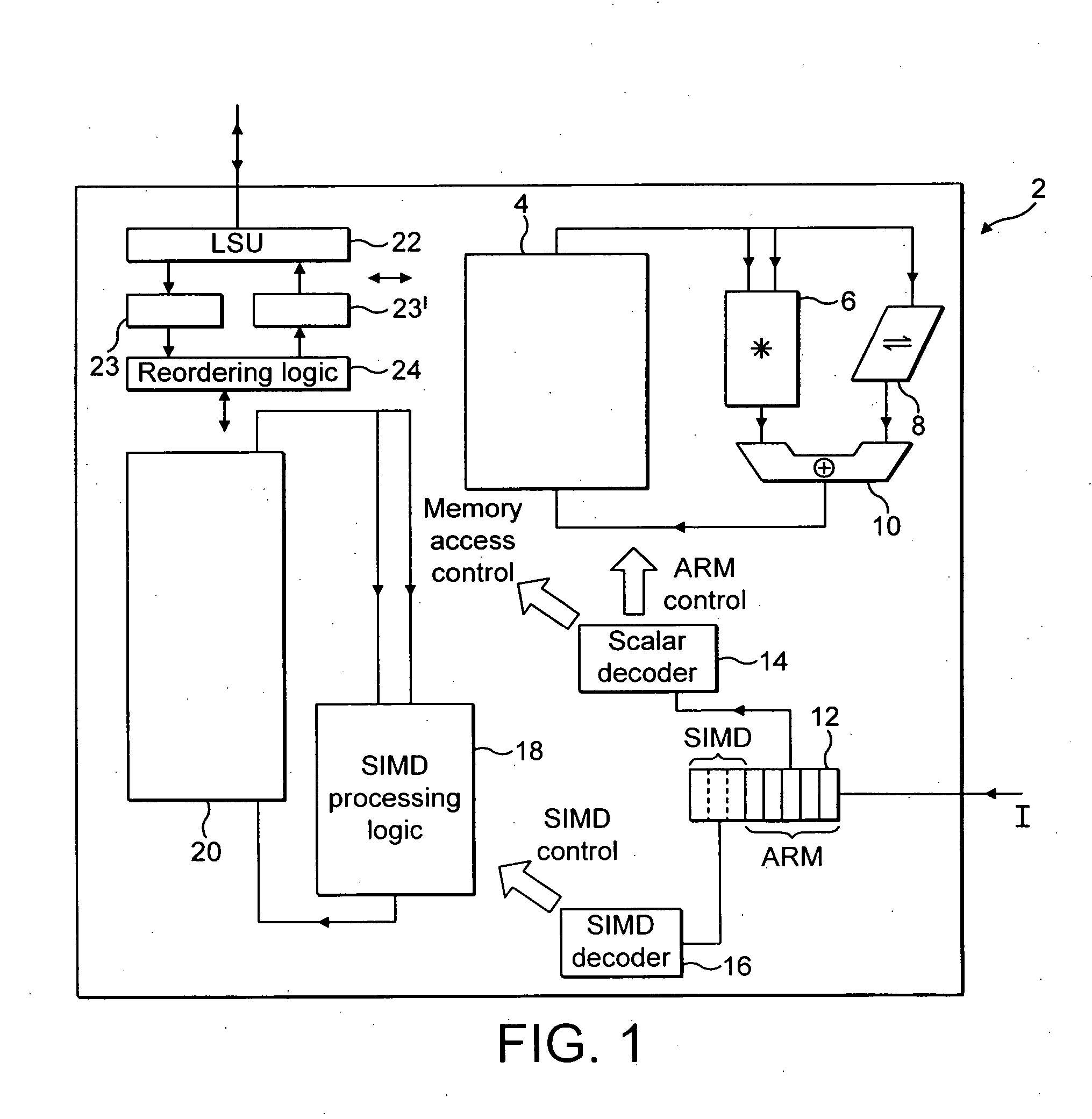

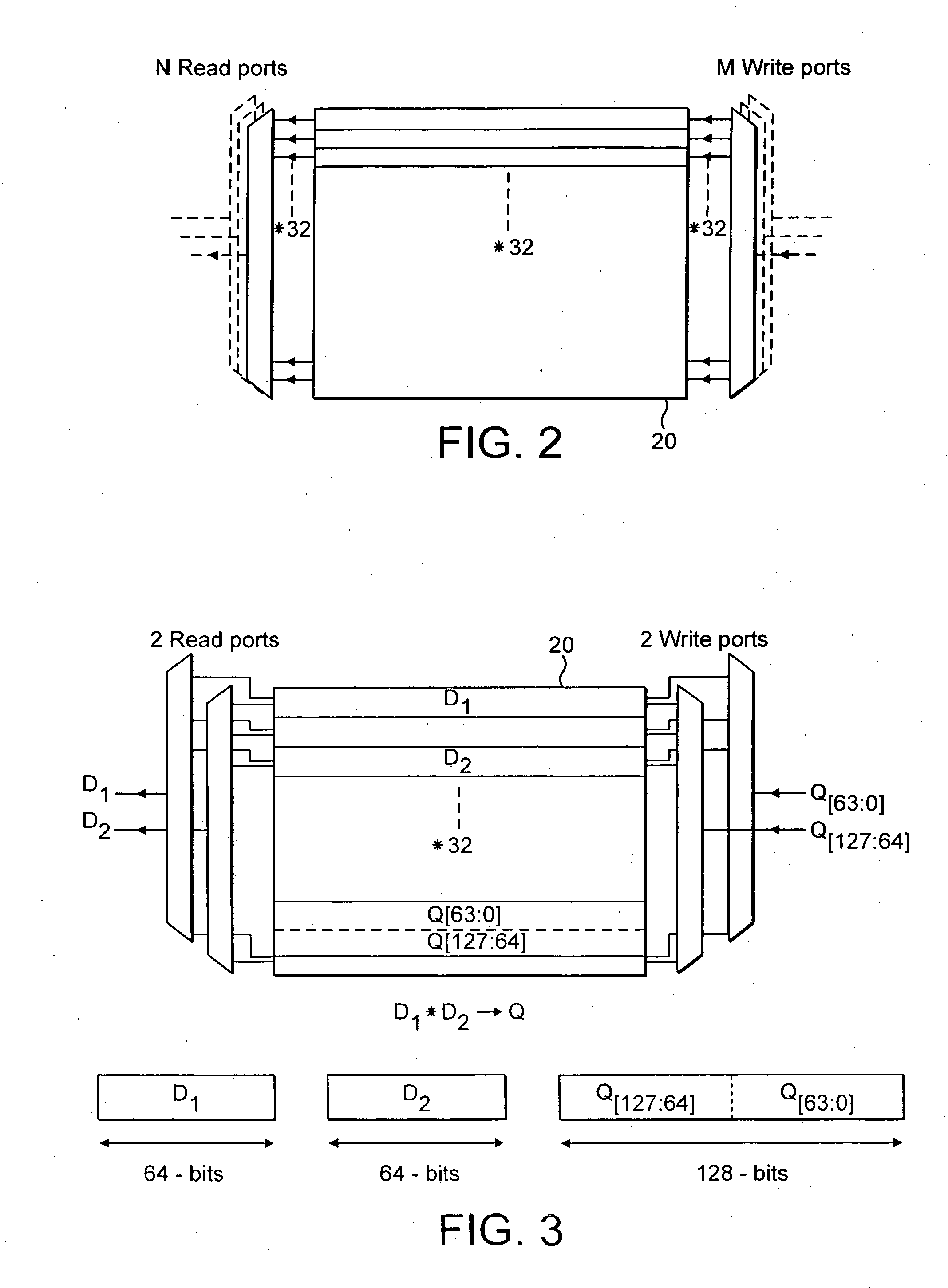

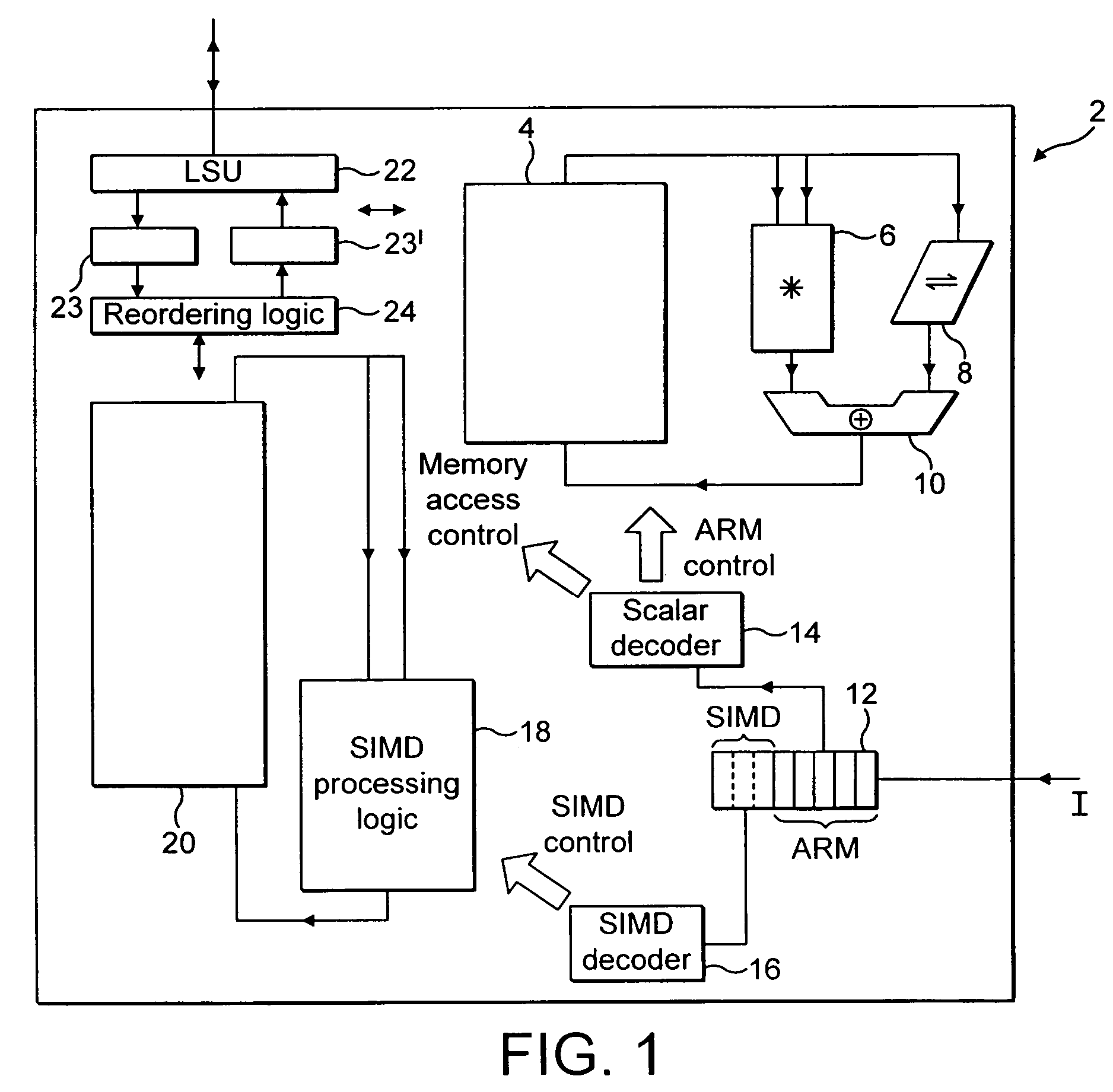

Constant generation in SIMD processing

A data processing apparatus (2) comprising: a register data store operable to store data elements; an instruction decoder (14, 16) operable to decode an instruction with generated constant, said instruction having a data value associated therewith; a data processor (18) operable to perform data processing operations within parallel processing lanes on at least one source operand in response to a data processing instruction decoded by said instruction decoder (16); and said data processor being operable in response to said decoded instruction with generated constant and associated data value to expand at least a data portion (1210) of said associated data value, said expansion being performed in response to said instruction with generated constant and depending on a selected function, to generate a constant (1240), said generated constant (1240) forming one of said at least one source operands.

Owner:ARM LTD

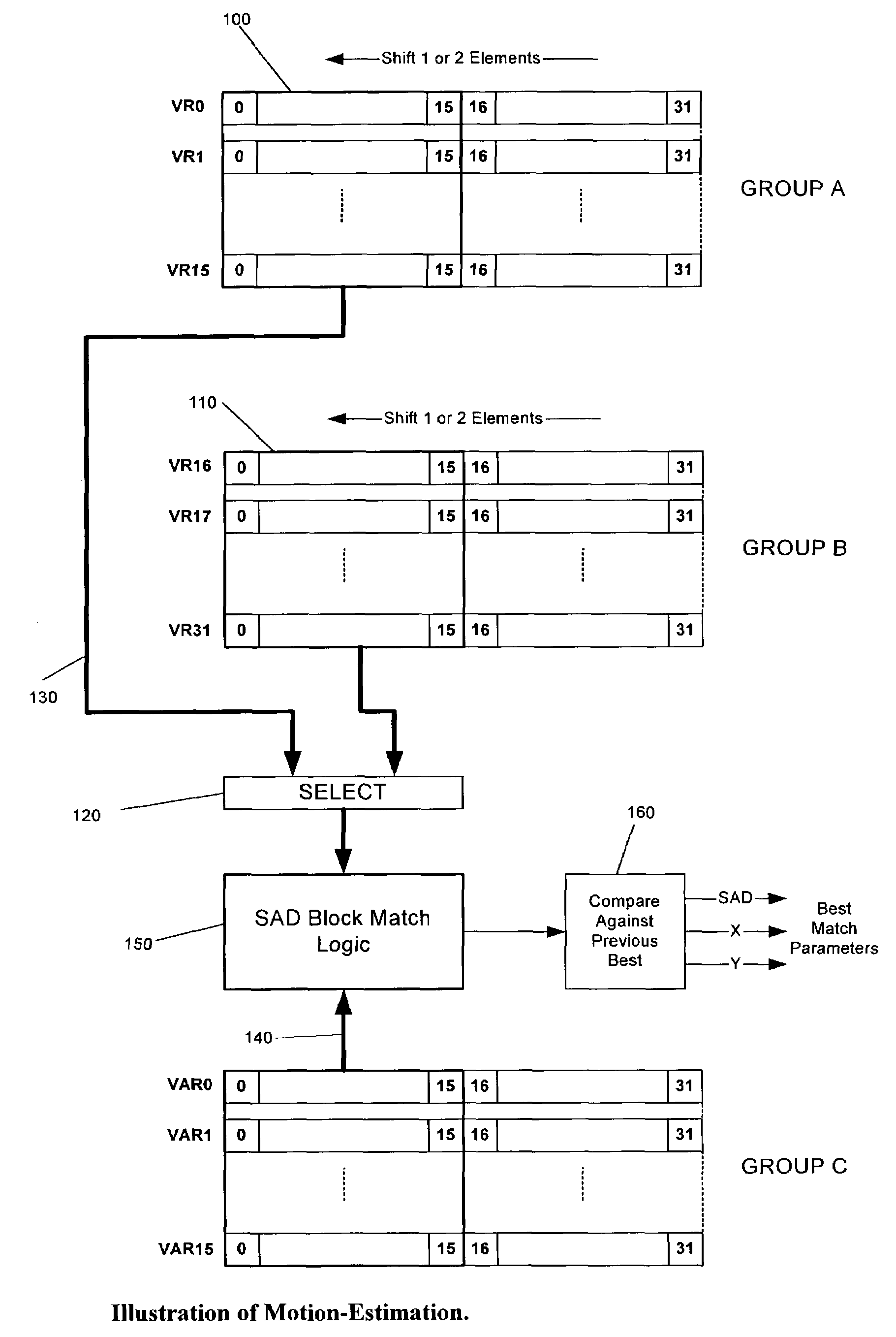

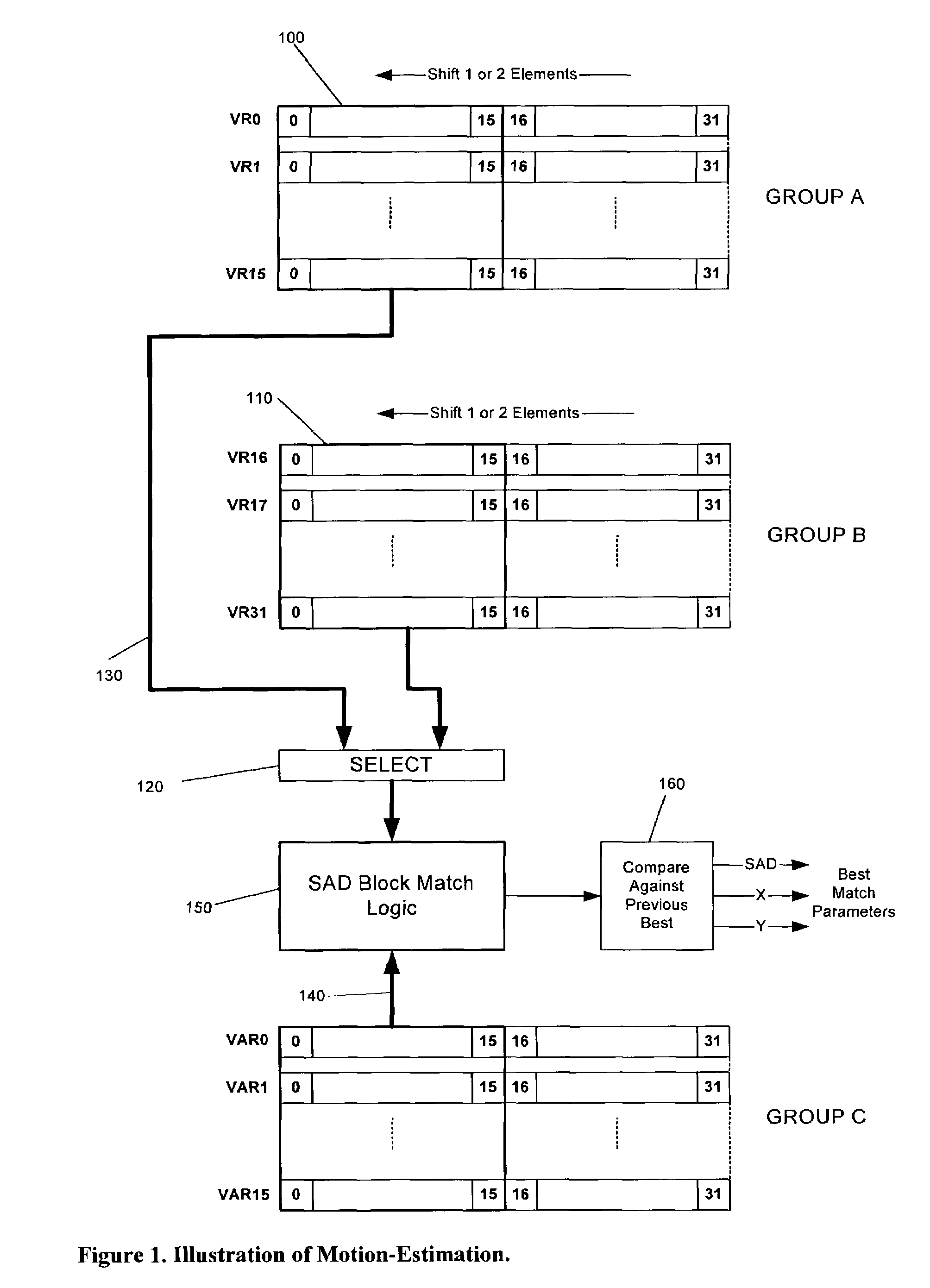

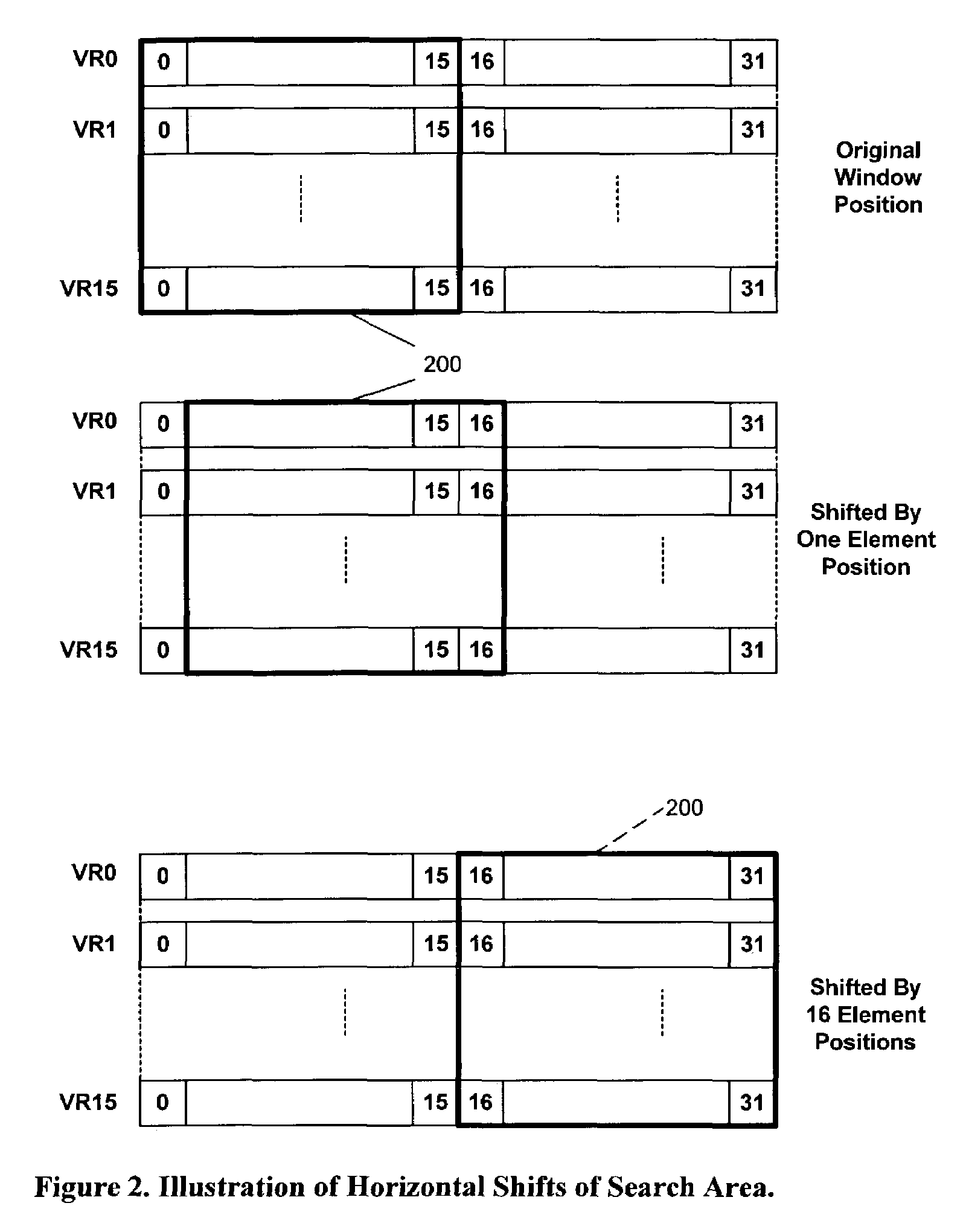

Method for programmable motion estimation in a SIMD processor

The present invention provides a 16×16 sliding window using a vector register file with zero overhead for horizontal or vertical shifts to incorporate motion estimation into a SIMD vector processor architecture. The SIMD processor's vector load mechanism, a vector register file capable of shifting elements, and 16×16 parallel SAD calculation hardware and instruction are used. Vertical shifts of all sixteen vector registers occur in a ripple-through fashion when the end vector register is loaded. The parallel SAD calculation hardware can calculate one 16-by-16 block match per clock cycle in a pipelined fashion. In addition, hardware for best-match SAD value comparisons and for maintaining their pixel location reduces the software overhead. Block matching for areas smaller than 16 by 16 is supported using a mask register to mask selected elements, thereby reducing the search area to any block size less than 16 by 16.
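As a software reference for what the SAD hardware computes, the following sketch performs an exhaustive block search and tracks the best match and its location. The names `sad` and `best_match`, the `size` parameter, and the tiny test frame are illustrative assumptions; the patent describes dedicated hardware, not this loop.

```python
def sad(block_a, block_b, mask=None):
    """Sum of absolute differences; `mask` (same shape) disables elements,
    which models matching blocks smaller than the full window."""
    total = 0
    for r, (row_a, row_b) in enumerate(zip(block_a, block_b)):
        for c, (p, q) in enumerate(zip(row_a, row_b)):
            if mask is None or mask[r][c]:
                total += abs(p - q)
    return total


def best_match(ref, frame, size=16):
    """Slide a size x size window over `frame`; return (best SAD, x, y)."""
    best = None
    for y in range(len(frame) - size + 1):
        for x in range(len(frame[0]) - size + 1):
            window = [row[x:x + size] for row in frame[y:y + size]]
            score = sad(ref, window)
            if best is None or score < best[0]:
                best = (score, x, y)
    return best


# Tiny 4x4 frame with a 2x2 reference block taken from position x=1, y=2.
frame = [[4 * y + x for x in range(4)] for y in range(4)]
ref = [[9, 10], [13, 14]]
print(best_match(ref, frame, size=2))  # (0, 1, 2)
```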

Owner:MIMAR TIBET

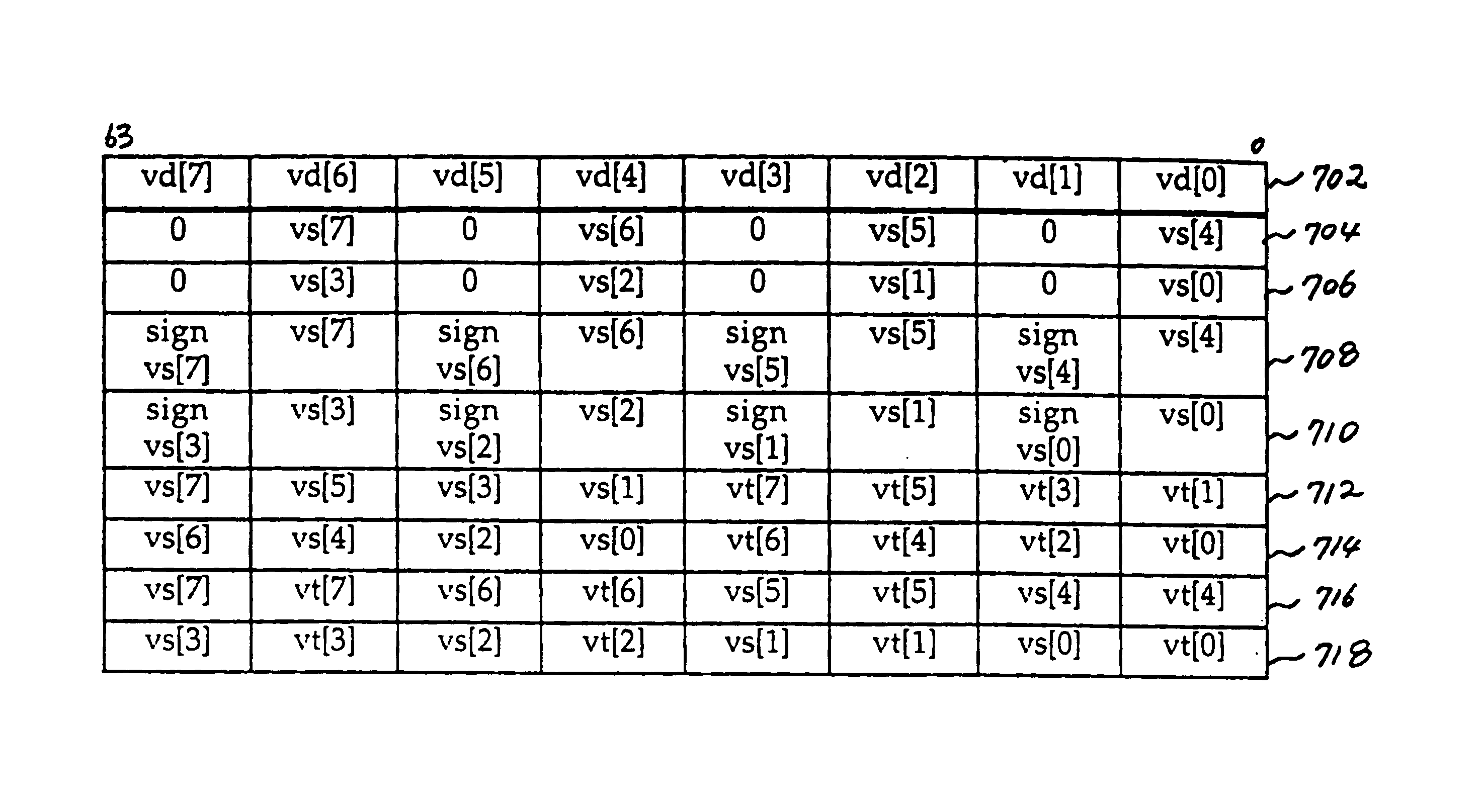

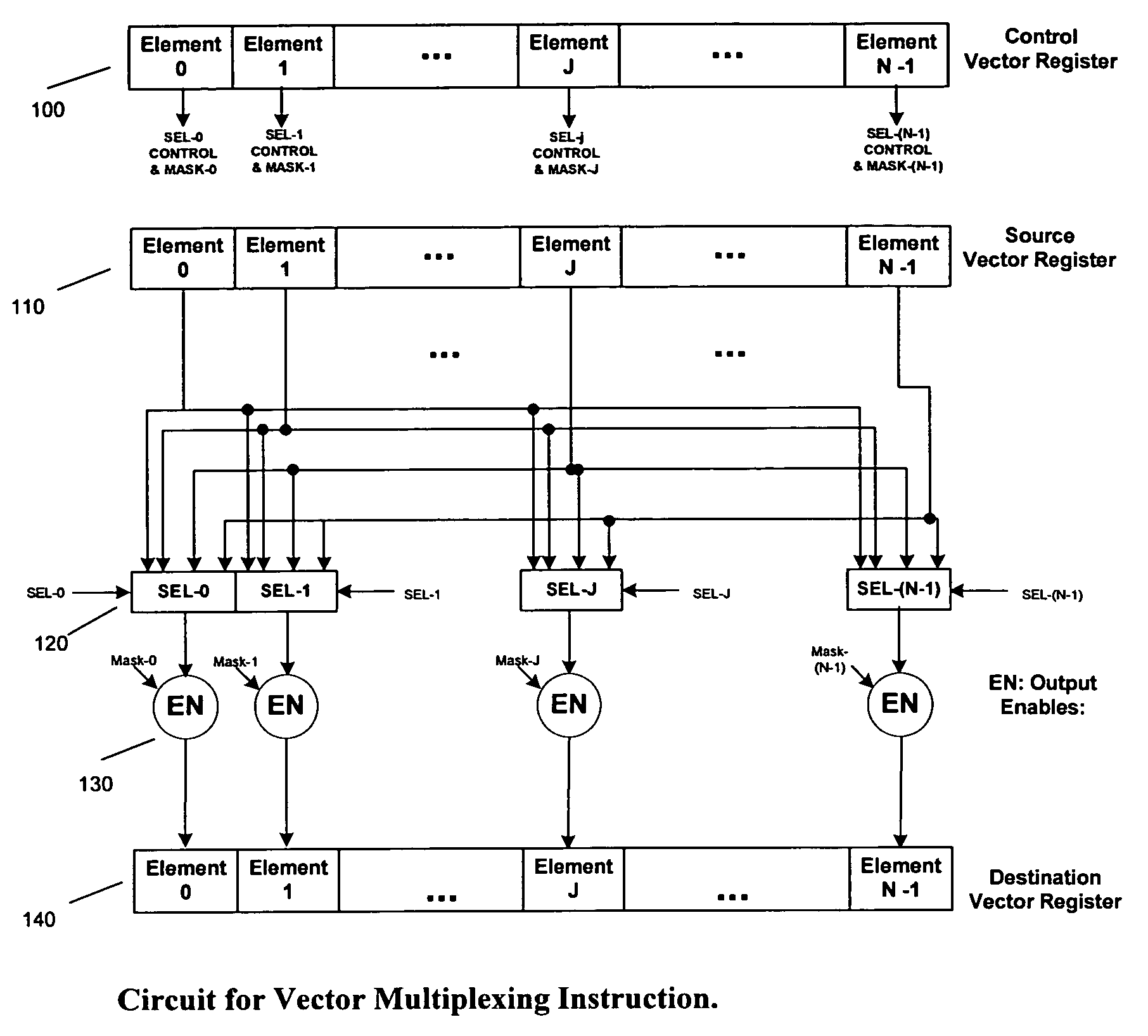

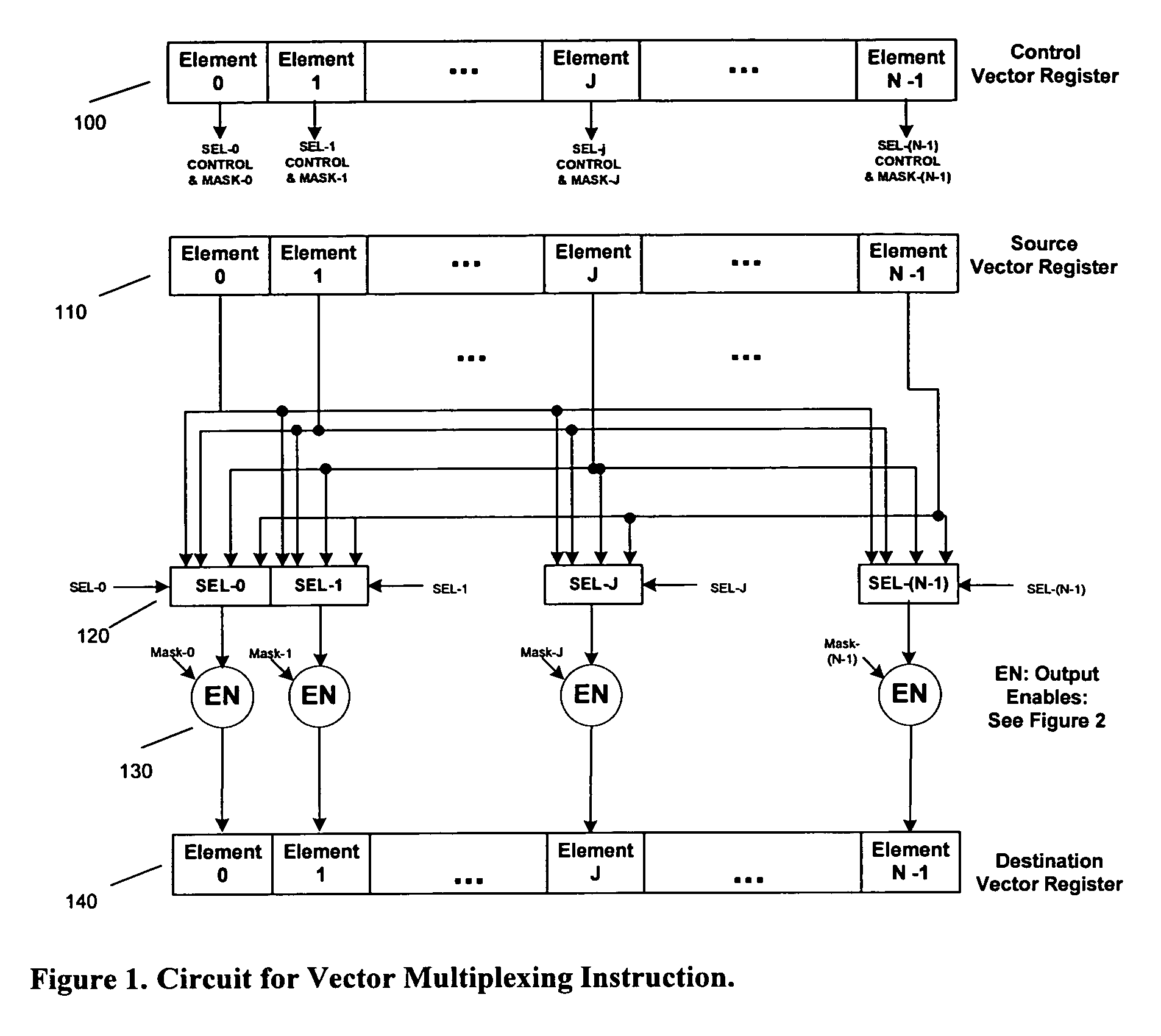

Fast and flexible scan conversion and matrix transpose in a SIMD processor

Inactive · US6963341B1 · Efficient and flexible · Quick implementation · Program control · Architecture with single central processing unit · Present method · Scan conversion

The present invention provides efficient ways to implement scan conversion and matrix transpose operations using vector multiplex operations in a SIMD processor. The present method provides a very fast and flexible way to implement different scan conversions, such as zigzag conversion, and matrix transpose for 2×2, 4×4, 8×8 blocks commonly used by all video compression and decompression algorithms.
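Both scan conversion and transpose amount to reading source elements through an index vector. The sketch below models a vector multiplex as a plain gather; `vector_mux`, `transpose_indices`, and `zigzag_indices` are hypothetical helper names, and the zigzag order follows the conventional JPEG-style traversal, which is an assumption about the intended scan.

```python
def vector_mux(source, indices):
    """Each output element selects one source element by index."""
    return [source[i] for i in indices]


def transpose_indices(n):
    """Index vector that transposes an n x n block stored row-major."""
    return [c * n + r for r in range(n) for c in range(n)]


def zigzag_indices(n):
    """Index vector for a conventional zigzag scan of an n x n block."""
    cells = [(r, c) for r in range(n) for c in range(n)]
    # Walk anti-diagonals; odd diagonals go down, even diagonals go up.
    cells.sort(key=lambda rc: (rc[0] + rc[1],
                               rc[0] if (rc[0] + rc[1]) % 2 else -rc[0]))
    return [r * n + c for r, c in cells]


block = list(range(16))  # 4x4 block, row-major
print(vector_mux(block, transpose_indices(4))[:4])  # [0, 4, 8, 12]
print(zigzag_indices(4))
# [0, 1, 4, 8, 5, 2, 3, 6, 9, 12, 13, 10, 7, 11, 14, 15]
```

Since the index vectors depend only on the block size, they can be computed once and reused for every block.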

Owner:MIMAR TIBET

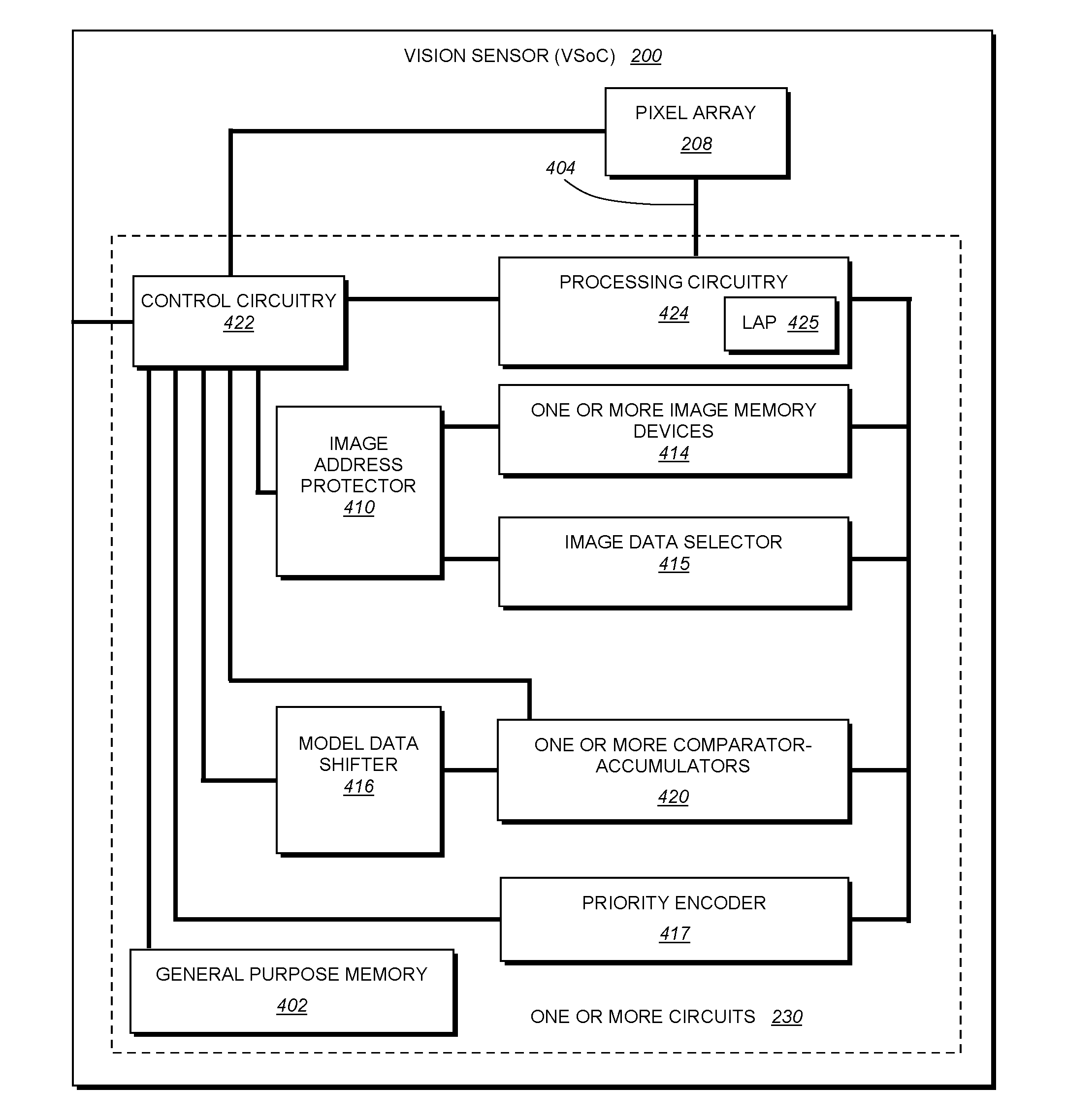

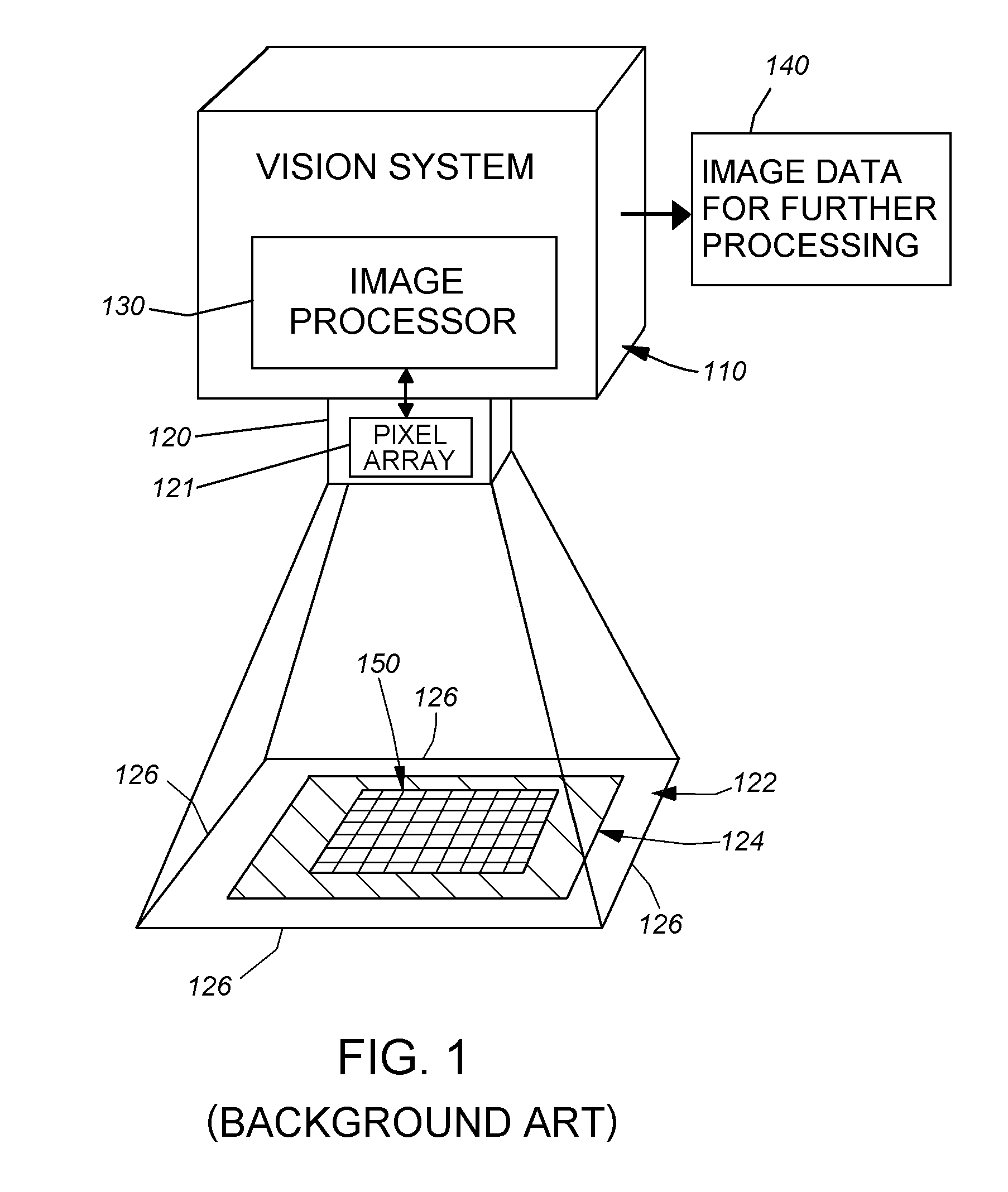

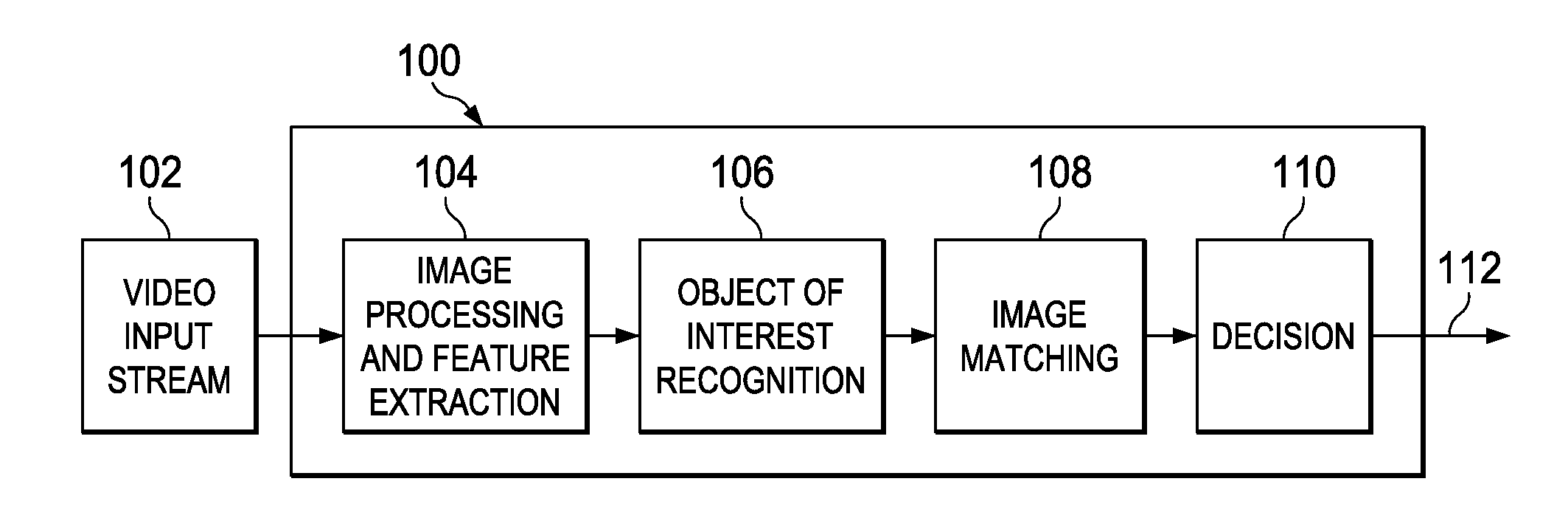

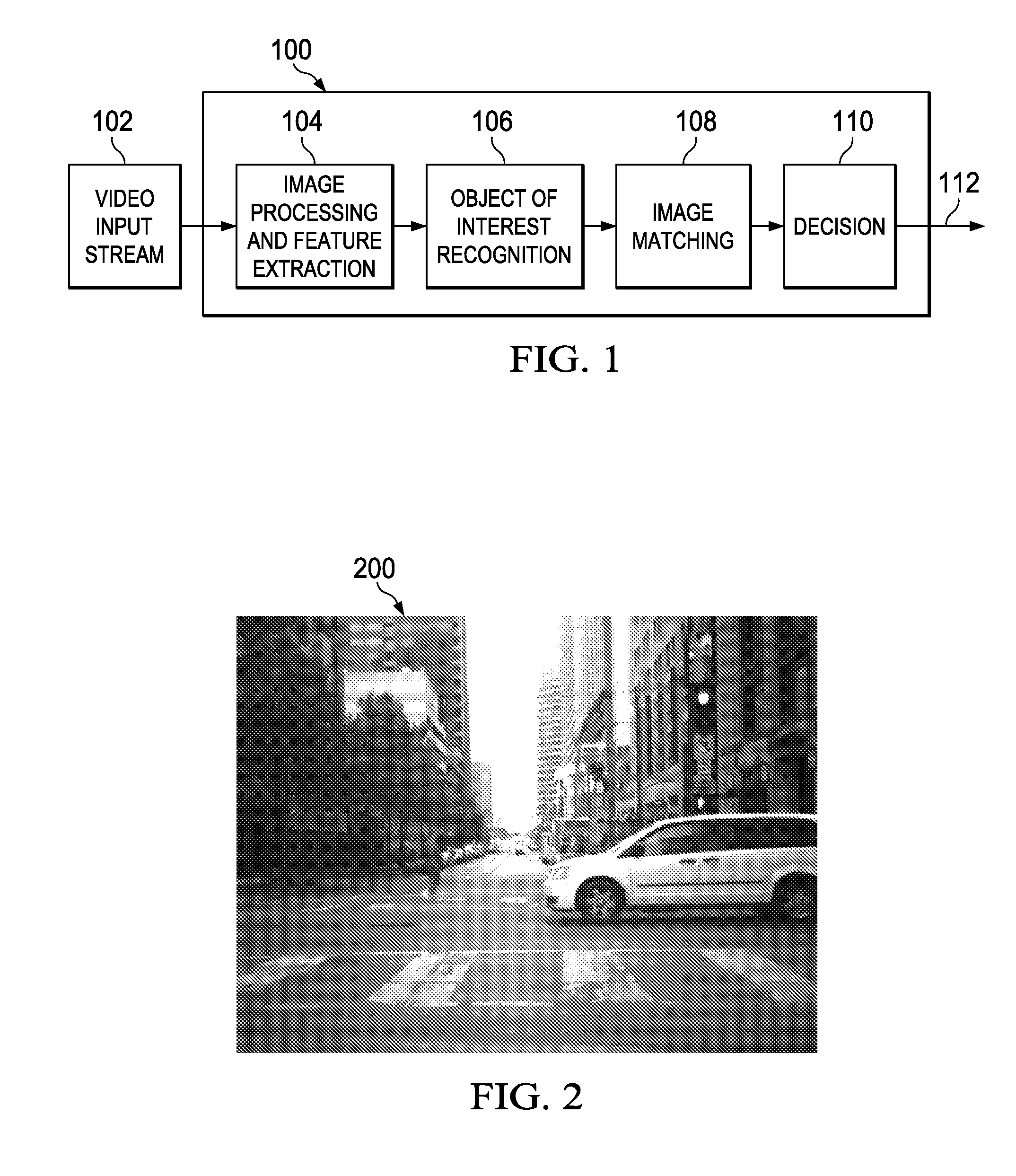

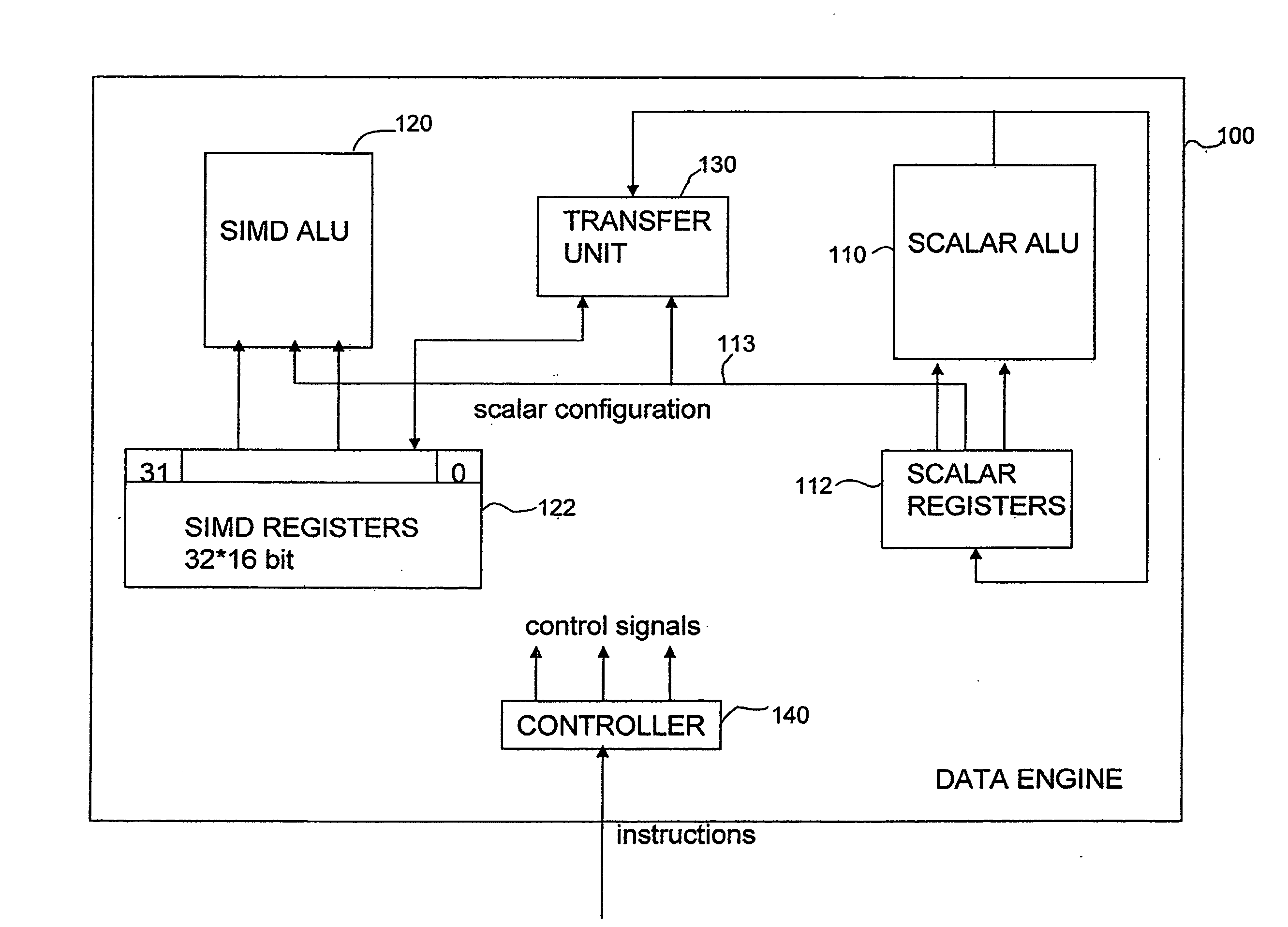

System and method for processing image data relative to a focus of attention within the overall image

Inactive · US20110211726A1 · Process time saving · Save data storage overhead · Television system details · Program control using wired connections · Sensor array · Digital data

This invention provides a system and method for processing discrete image data within an overall set of acquired image data based upon a focus of attention within that image. The result of such processing is to operate upon a more limited subset of the overall image data to generate output values required by the vision system process. Such output value can be a decoded ID or other alphanumeric data. The system and method is performed in a vision system having two processor groups, along with a data memory that is smaller in capacity than the amount of image data to be read out from the sensor array. The first processor group is a plurality of SIMD processors and at least one general purpose processor, co-located on the same die with the data memory. A data reduction function operates within the same clock cycle as data-readout from the sensor to generate a reduced data set that is stored in the on-die data memory. At least a portion of the overall, unreduced image data is concurrently (in the same clock cycle) transferred to the second processor while the first processor transmits at least one region indicator with respect to the reduced data set to the second processor. The region indicator represents at least one focus of attention for the second processor to operate upon.

Owner:COGNEX CORP

Method and apparatus for constant generation in SIMD processing

A data processing apparatus (2) comprising: a register data store operable to store data elements; an instruction decoder (14, 16) operable to decode an instruction with generated constant, said instruction having a data value associated therewith; a data processor (18) operable to perform data processing operations within parallel processing lanes on at least one source operand in response to a data processing instruction decoded by said instruction decoder (16); and said data processor being operable in response to said decoded instruction with generated constant and associated data value to expand at least a data portion (1210) of said associated data value, said expansion being performed in response to said instruction with generated constant and depending on a selected function, to generate a constant (1240), said generated constant (1240) forming one of said at least one source operands.

Owner:ARM LTD

Determining Median Value of an Array on Vector SIMD Architectures

A method for determining a median value of an array of pixels in a vision system may be performed in an efficient manner using the parallel computing capabilities of a SIMD processing engine. Each column of an array may be sorted in ascending (descending) order to form a first sorted array. Each row of the first sorted array may be sorted in ascending (descending) order to form a second sorted array. A pixel may be selected as the median value from a diagonal portion of the second sorted array, wherein the diagonal portion bisects a lower value region and a higher value region of the second sorted array.
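For a 3×3 window the column-then-row sorting scheme can be checked in a few lines. This sketch is limited to 3×3, and the final step (taking the middle of the three anti-diagonal values) is an assumption drawn from the well-known 3×3 median technique rather than from the abstract; `median3x3` is a hypothetical name.

```python
def median3x3(window):
    """Median of a 3x3 window via column sort, row sort, and the diagonal."""
    # Sort each column in ascending order (first sorted array).
    cols = [sorted(col) for col in zip(*window)]
    # Transpose back to rows and sort each row (second sorted array).
    rows = [sorted(row) for row in zip(*cols)]
    # The anti-diagonal separates the low-value and high-value regions.
    diagonal = [rows[0][2], rows[1][1], rows[2][0]]
    return sorted(diagonal)[1]


print(median3x3([[9, 1, 5],
                 [3, 7, 2],
                 [8, 4, 6]]))  # 5
```

On a SIMD engine the column sorts and row sorts for many neighboring windows would be done with lane-wise min/max operations instead of `sorted`.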

Owner:TEXAS INSTR INC

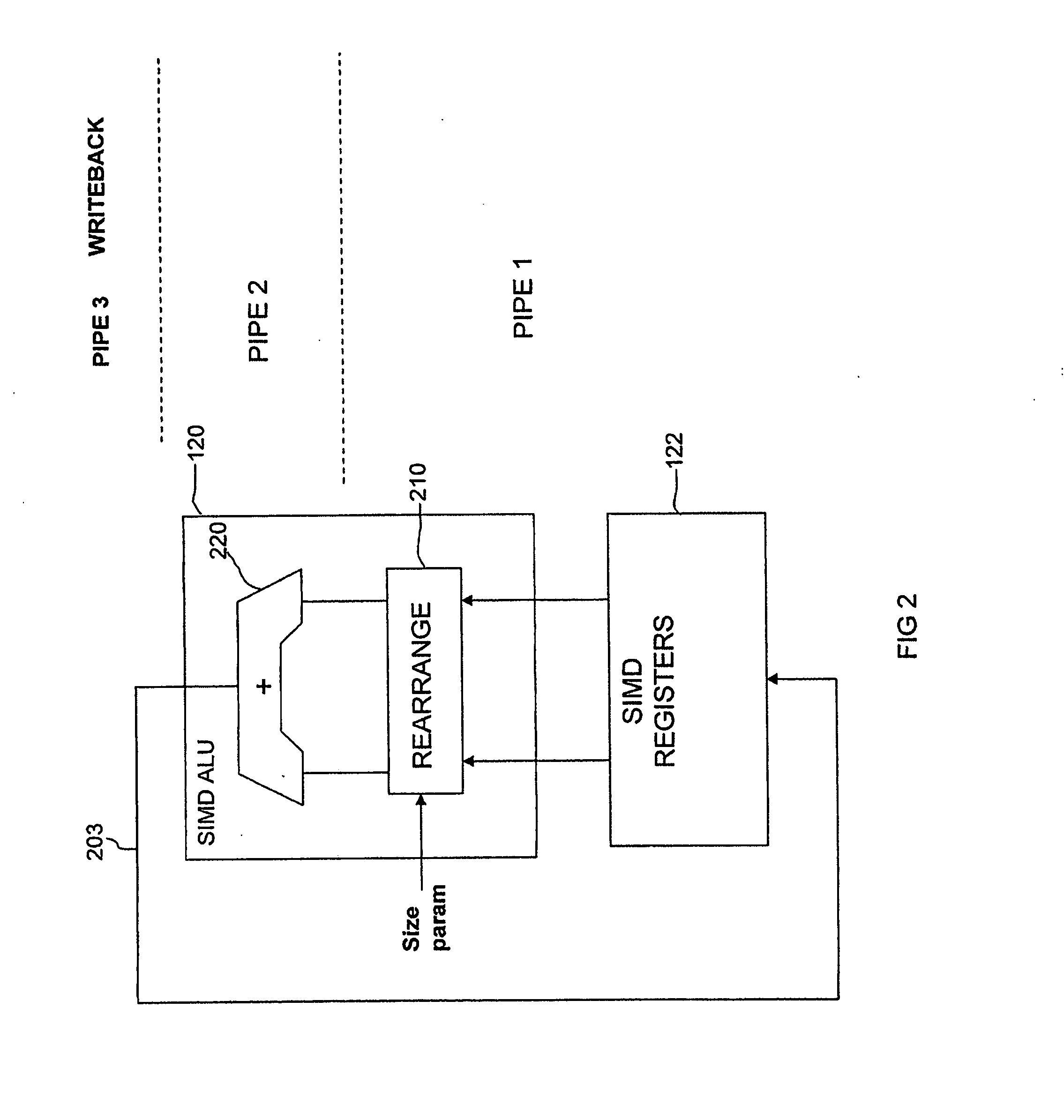

Apparatus and method for performing rearrangement and arithmetic operations on data

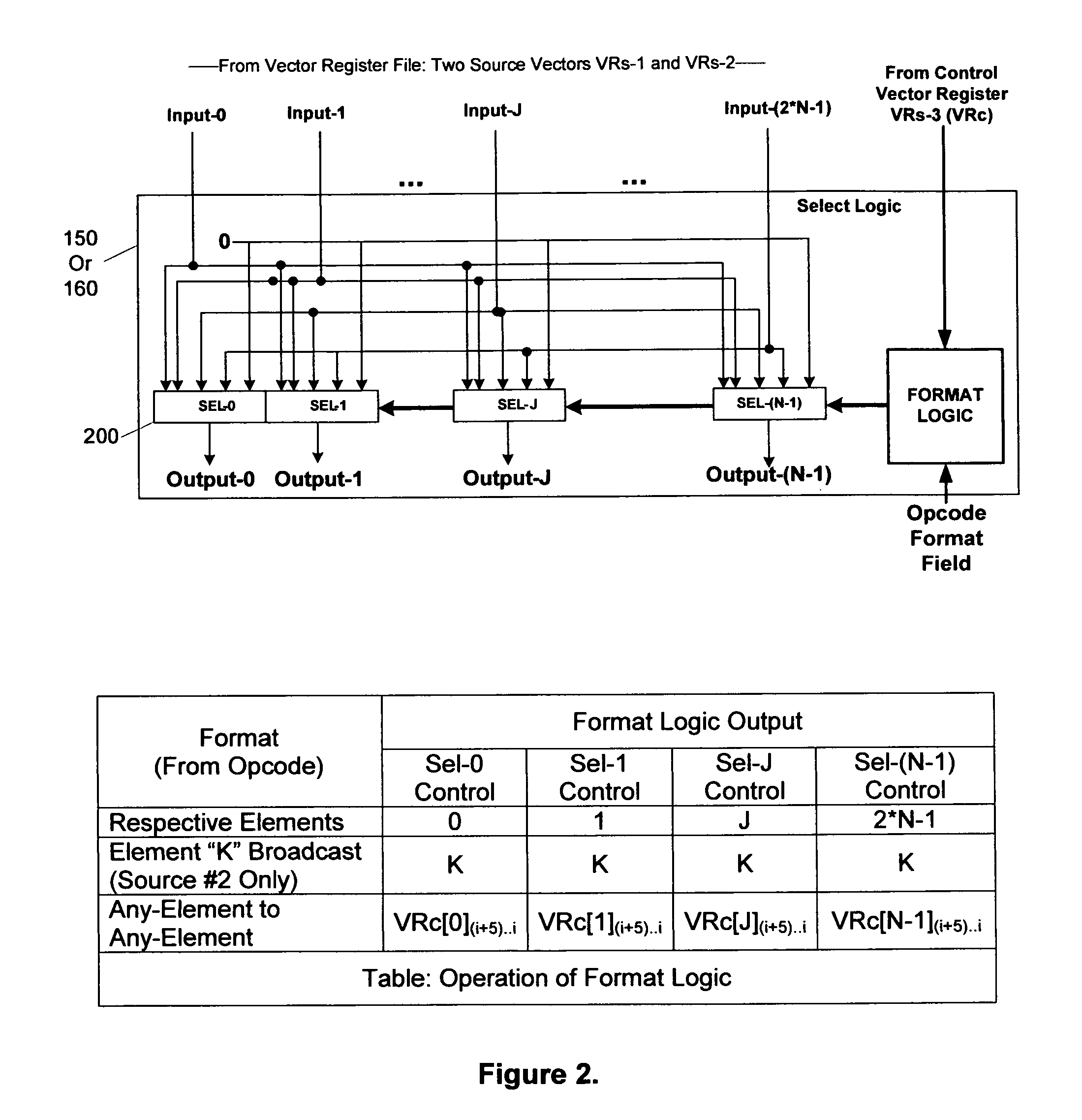

Active · US20080140750A1 · Flexible configuration · Facilitates rearrangement operation · Register arrangements · Computation using non-contact making devices · Program instruction · Data element

An apparatus and method are provided for performing rearrangement operations and arithmetic operations on data. The data processing apparatus has processing circuitry for performing SIMD processing operations and scalar processing operations, a register bank for storing data and control circuitry responsive to program instructions to control the processing circuitry to perform data processing operations. The control circuitry is arranged to be responsive to a combined rearrangement arithmetic instruction to control the processing circuitry to perform a rearrangement operation and at least one SIMD arithmetic operation on a plurality of data elements stored in the register bank. The rearrangement operation is configurable by a size parameter derived at least in part from the register bank. The size parameter provides an indication of a number of data elements forming a rearrangement element for the purposes of the rearrangement operation. The associated method involves controlling processing circuitry to perform a rearrangement operation and at least one SIMD arithmetic operation in response to a combined rearrangement arithmetic instruction and providing the scalar logic size parameter to configure the rearrangement operation. A computer program product is also provided comprising at least one combined rearrangement arithmetic instruction.

Owner:ARM LTD

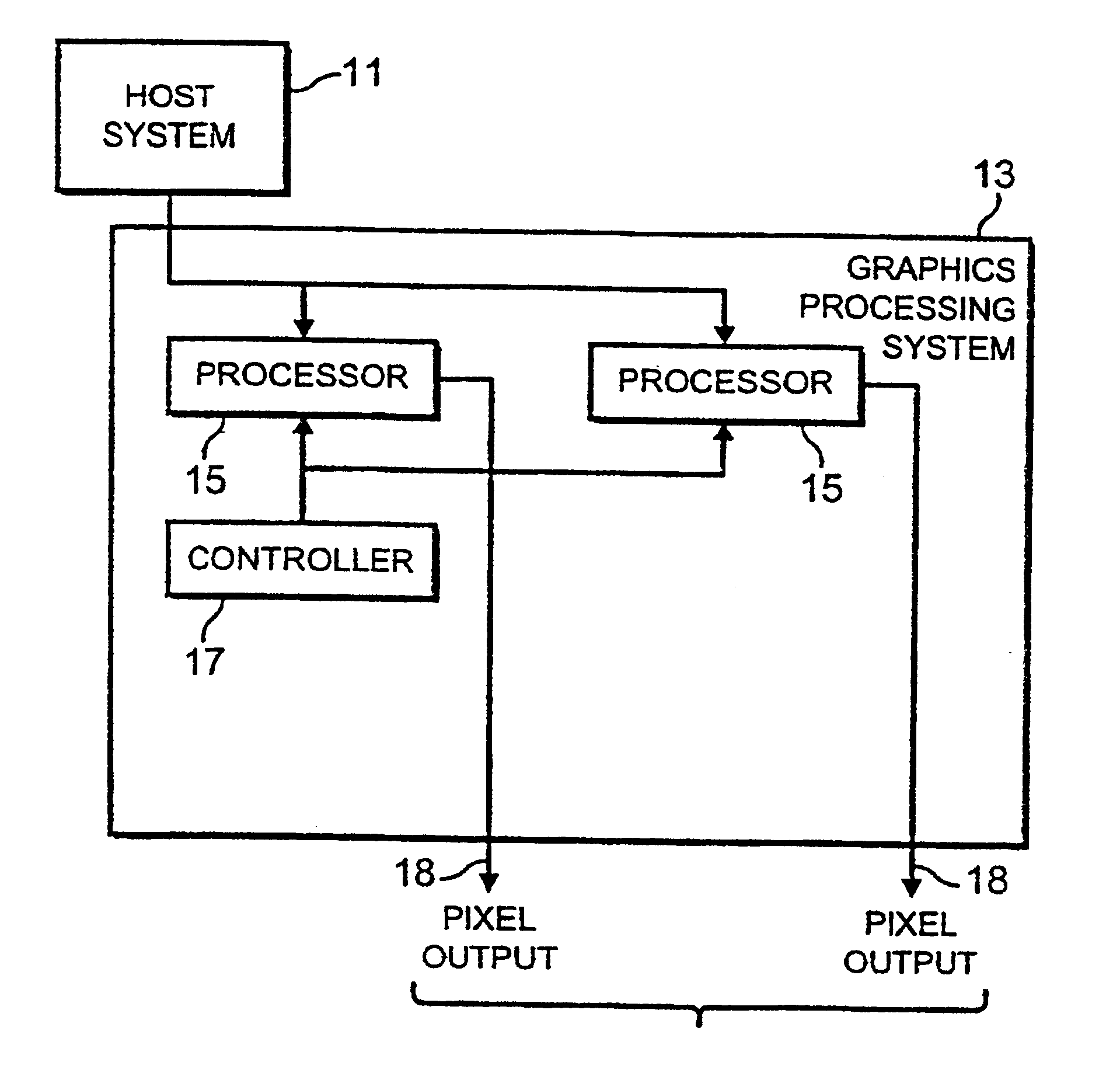

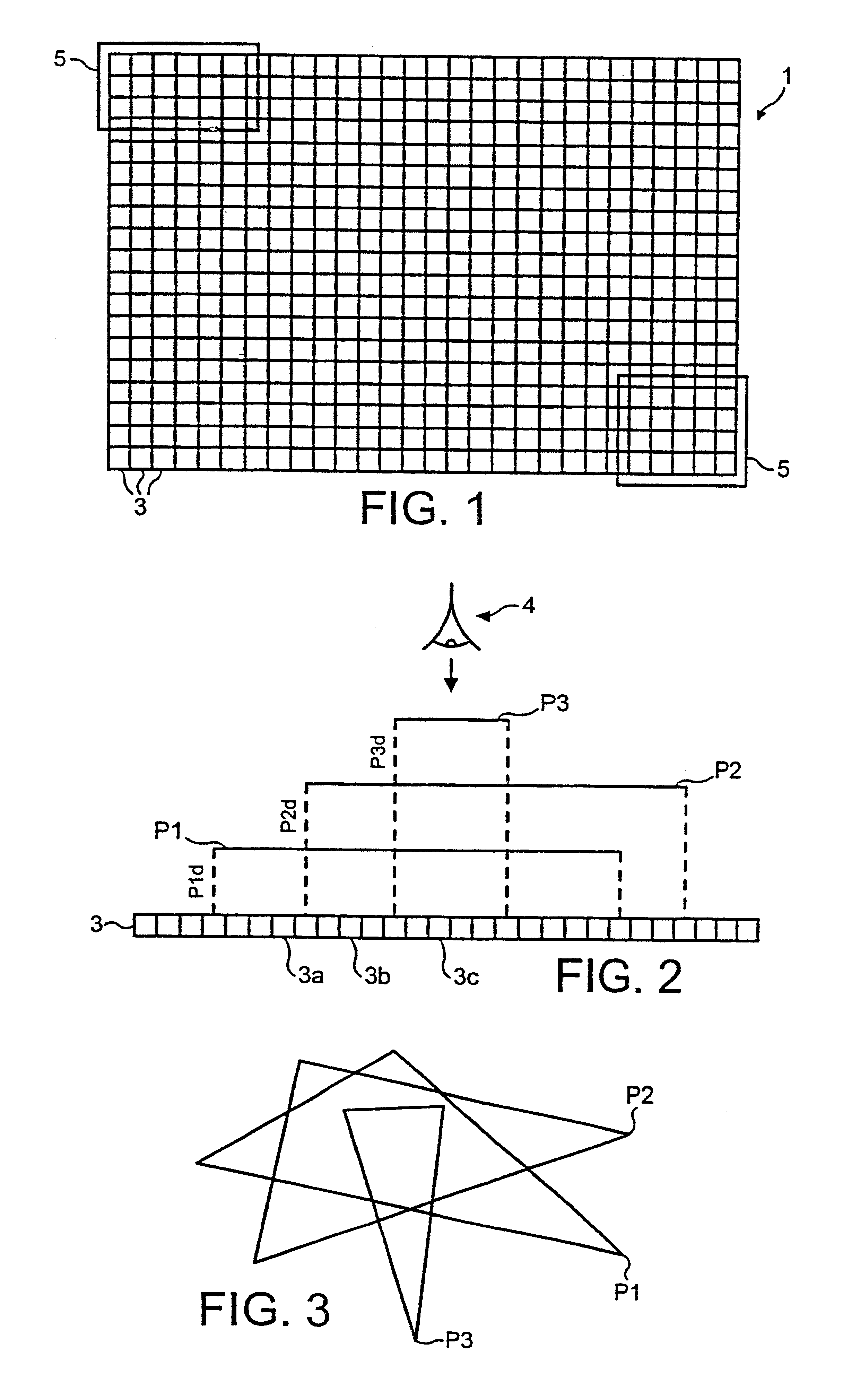

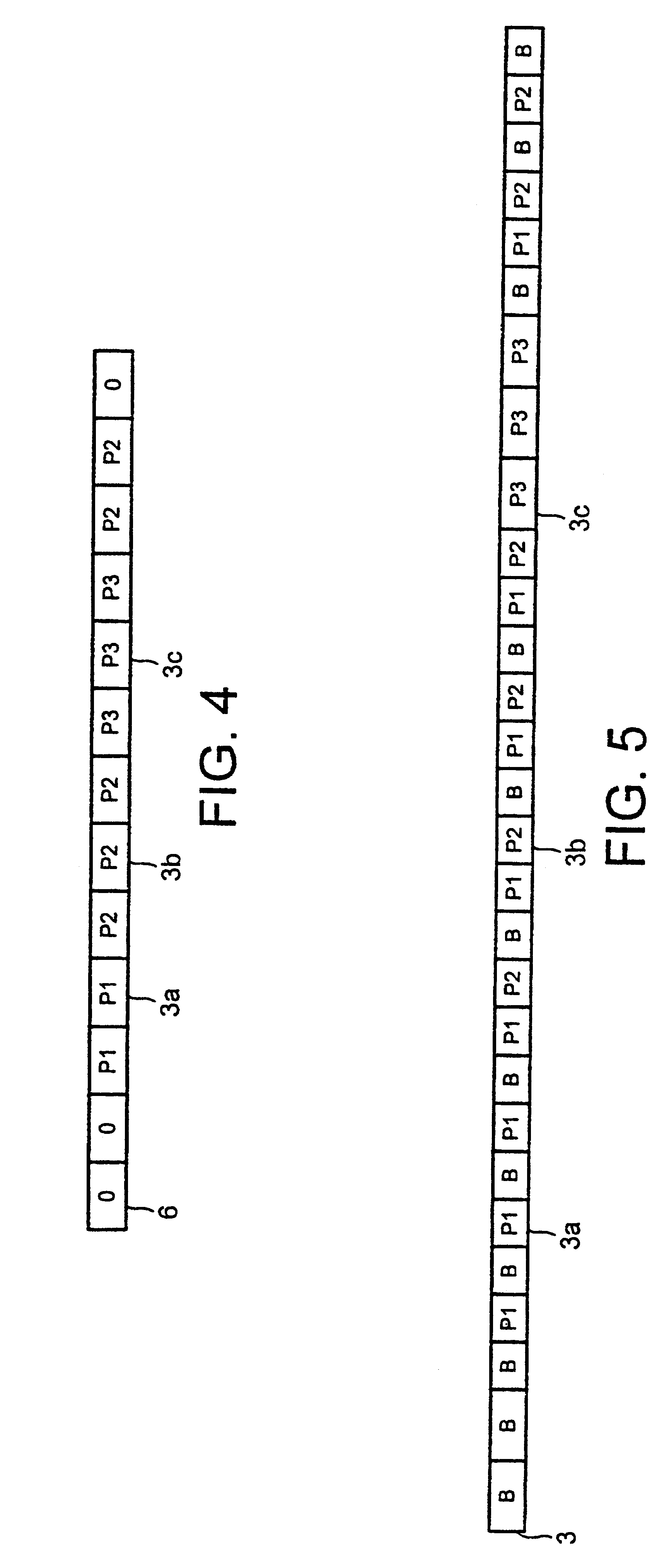

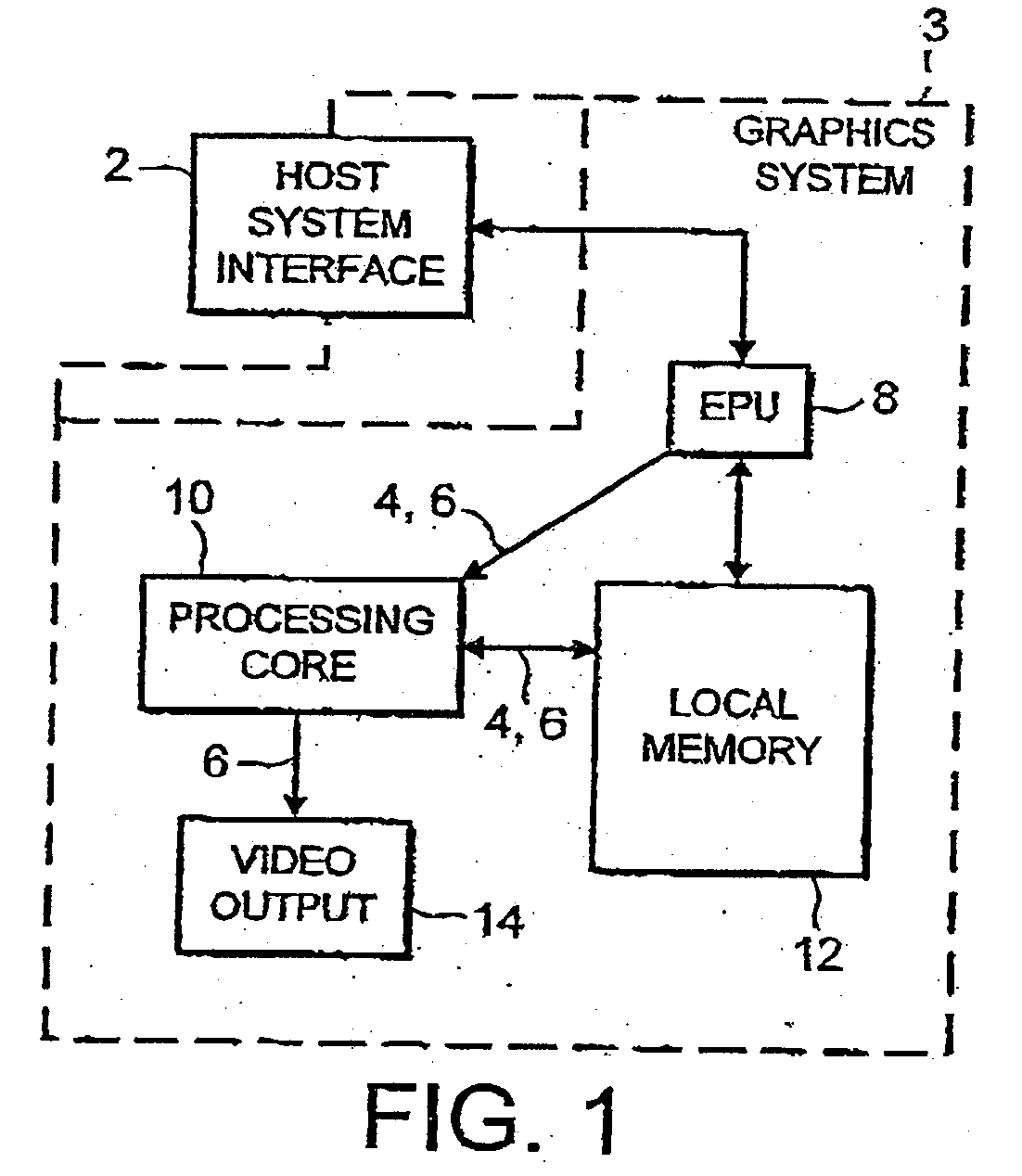

Method and apparatus for SIMD processing using multiple queues

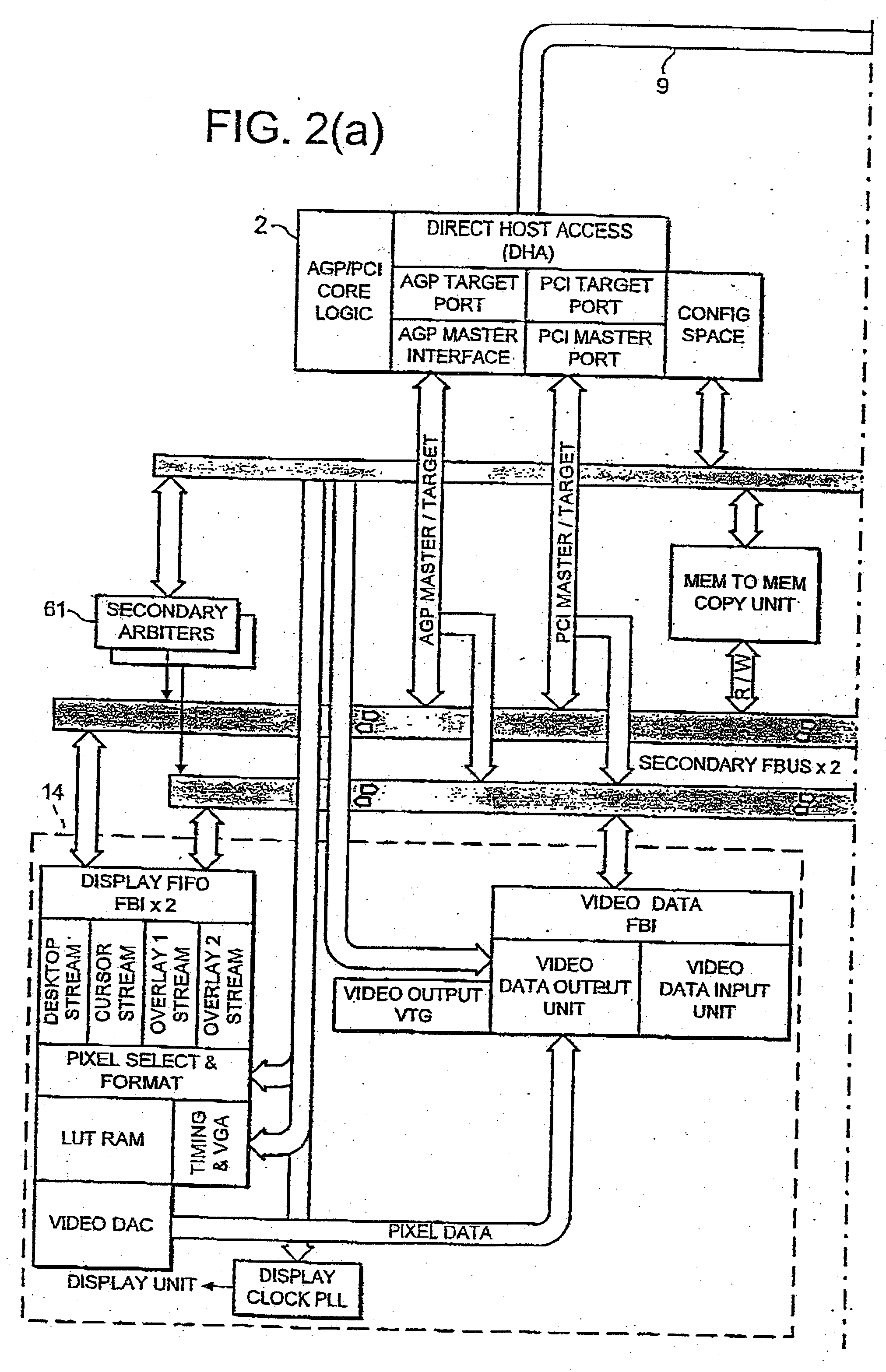

Inactive · US6898692B1 · Operational speed enhancement · General purpose stored program computer · Graphics · Multilevel queue

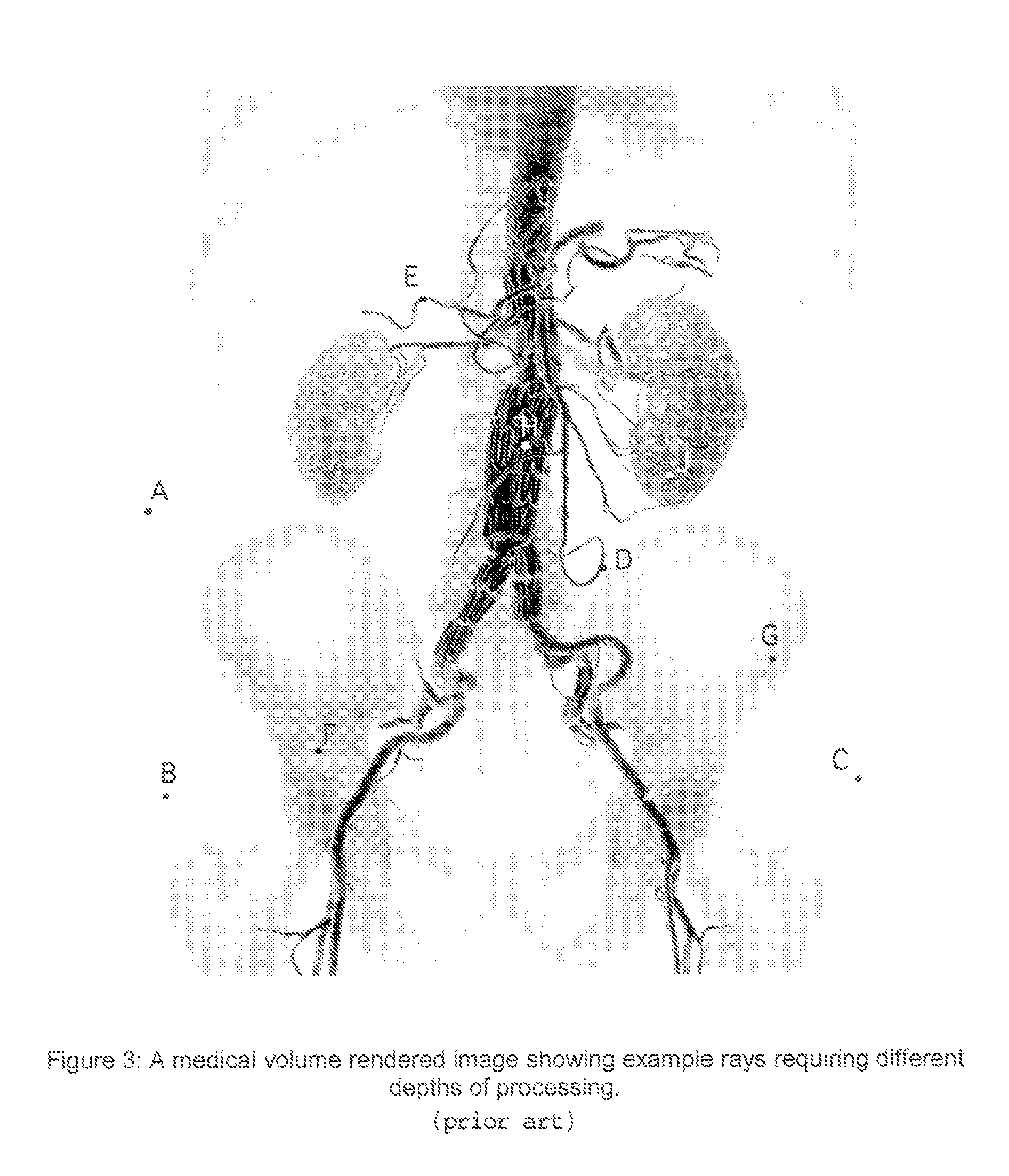

A method of processing data relating to graphical primitives to be displayed on a display device using region-based SIMD multiprocessor architecture, has the shading and blending operations deferred until rasterization of the available graphical primitive data is completed. For example, the method may comprise the steps of: a) defining a data queue having a predetermined number of locations therein; b) receiving fragment information belonging to an image to be displayed by the pixel; c) determining whether the fragment information belongs to an opaque image or to a blended image; d) if the fragment information relates to a blended image, storing the fragment information on the next available location in the queue; e) if the fragment information relates to an opaque image, clearing the locations of the queue and storing the fragment information in the first location in the queue; f) repeating steps b) to e) for new fragment information until fragment information is stored in all the locations in the data queue or until no further fragment information is available; and g) processing in turn fragment information stored in the locations of the queue to produce respective pixel display values.
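Steps a) to f) can be sketched as a small queue routine. The fragment representation (a dict with an `"opaque"` key) and the name `collect_fragments` are assumptions for illustration; step g), producing pixel values from the queued fragments, is left out because the abstract does not specify the blend.

```python
def collect_fragments(fragments, capacity):
    """Queue fragments for one pixel, following steps a) to f)."""
    queue = []                      # a) queue with `capacity` locations
    for frag in fragments:          # b) receive fragment information
        if frag["opaque"]:          # c) opaque or blended?
            queue = [frag]          # e) opaque: clear queue, store in first location
        else:
            queue.append(frag)      # d) blended: store in next free location
        if len(queue) == capacity:  # f) stop when every location is in use
            break
    return queue


frags = [
    {"name": "A", "opaque": False},
    {"name": "B", "opaque": True},
    {"name": "C", "opaque": False},
]
print([f["name"] for f in collect_fragments(frags, capacity=4)])  # ['B', 'C']
```

Clearing the queue on an opaque fragment discards fragments that can no longer affect the pixel, so shading work is only spent on what remains.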

Owner:RAMBUS INC

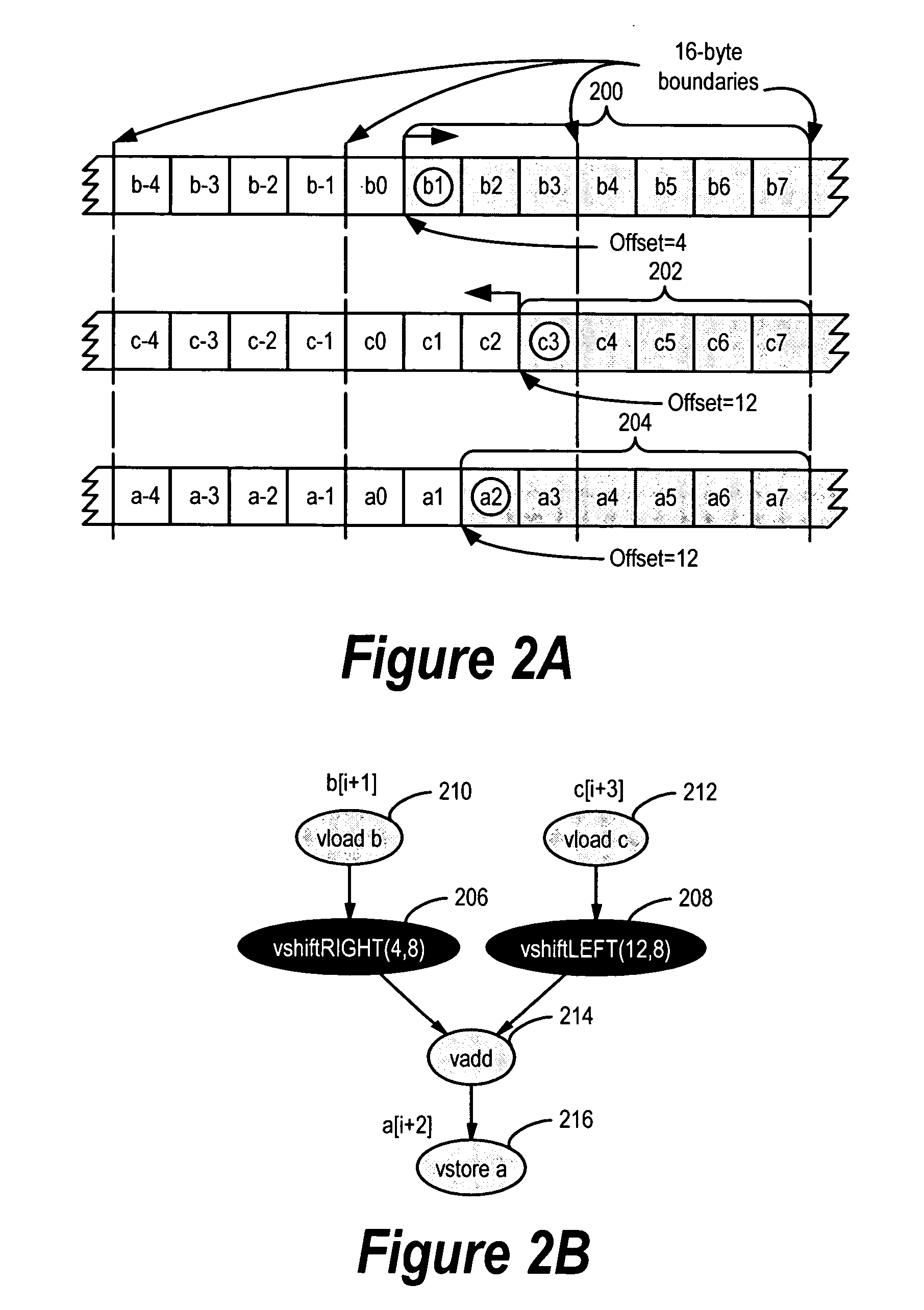

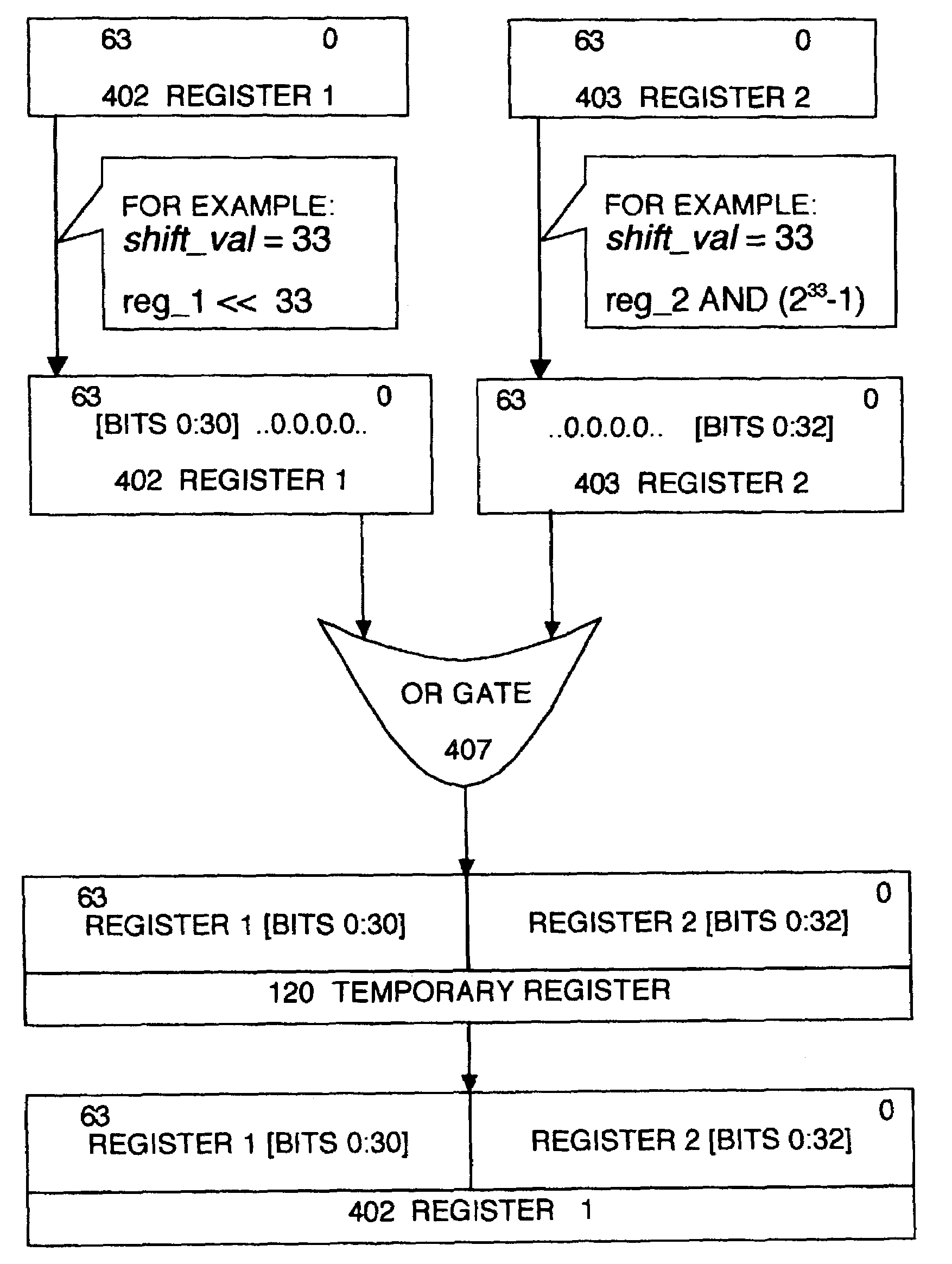

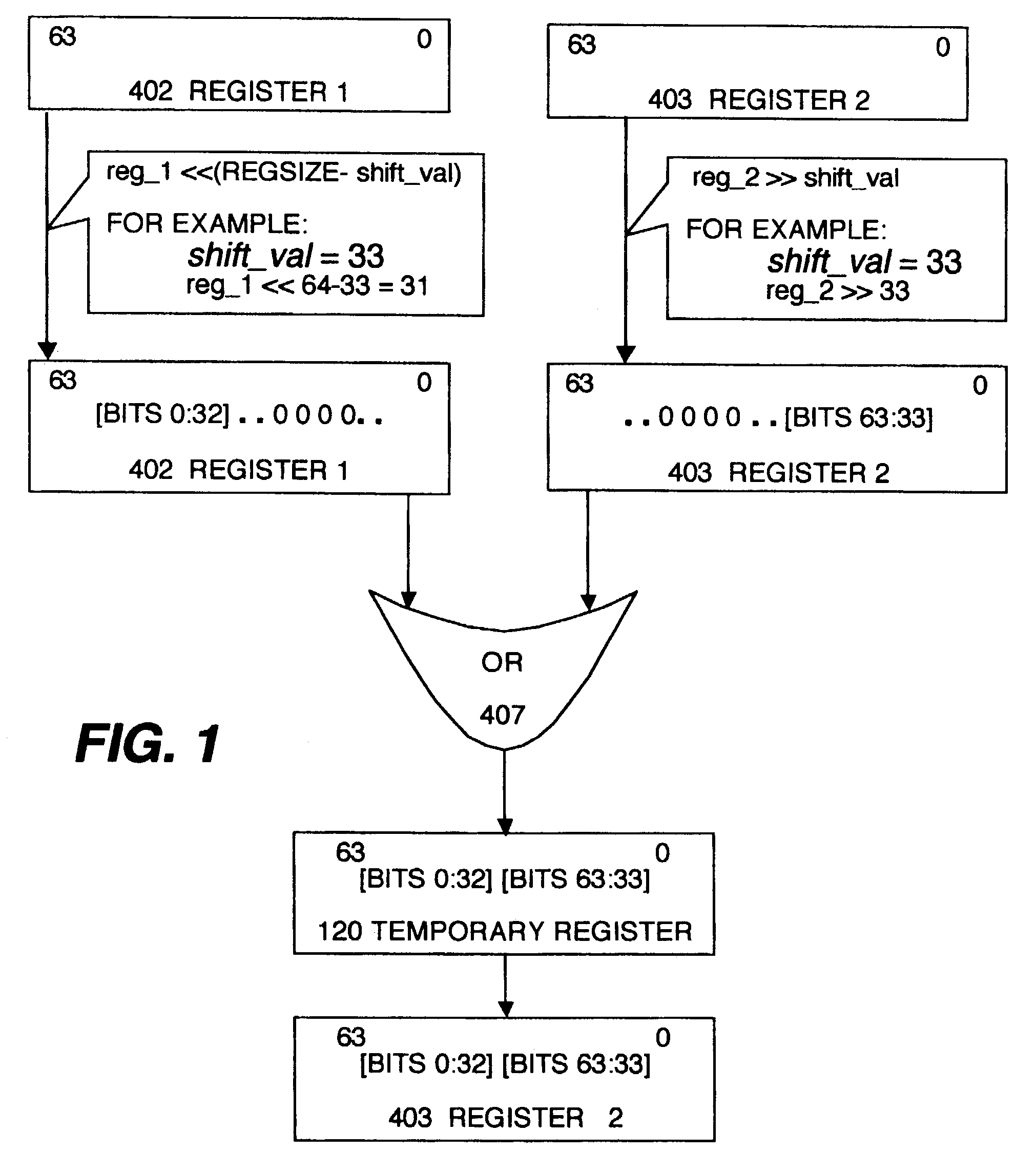

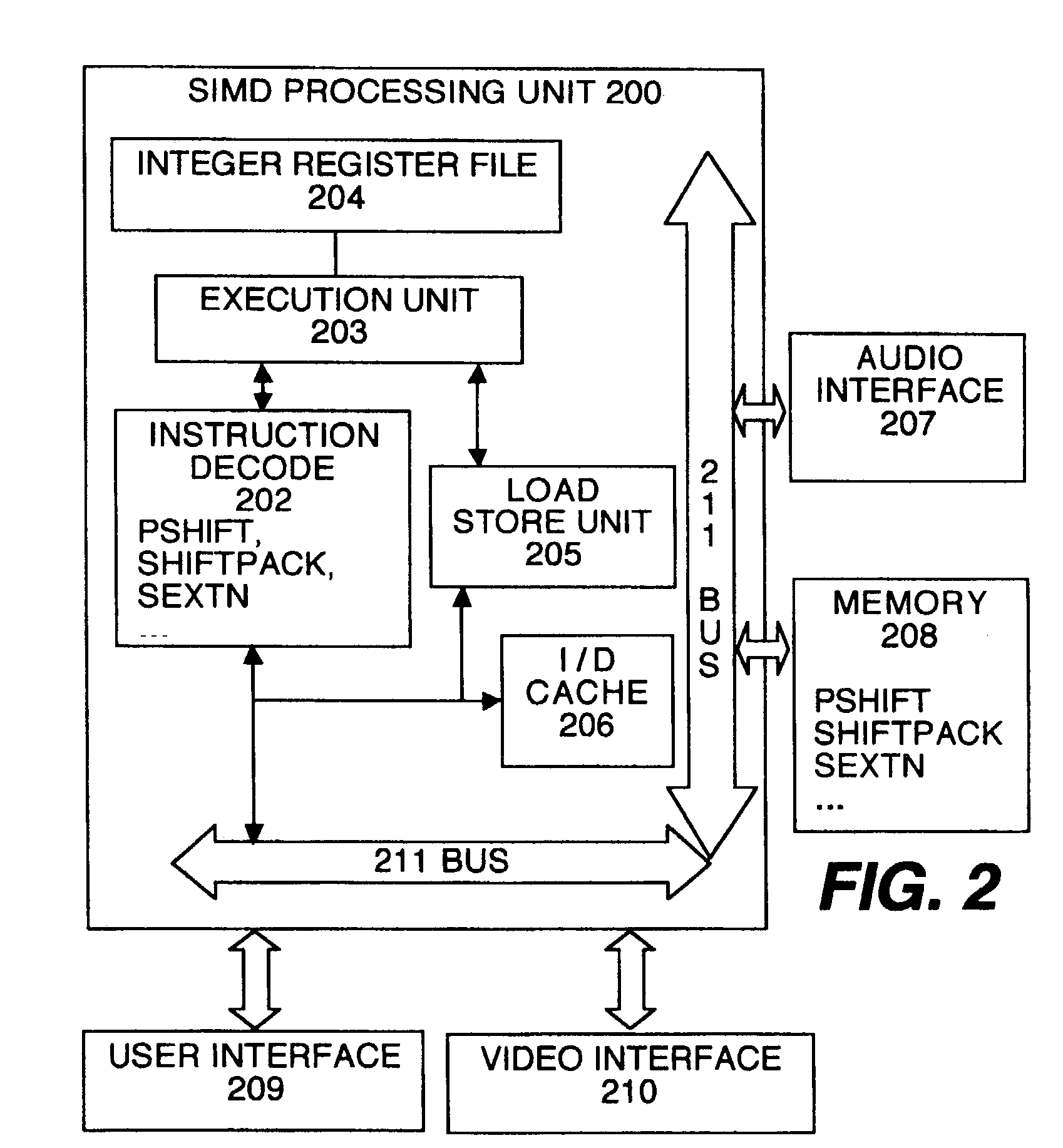

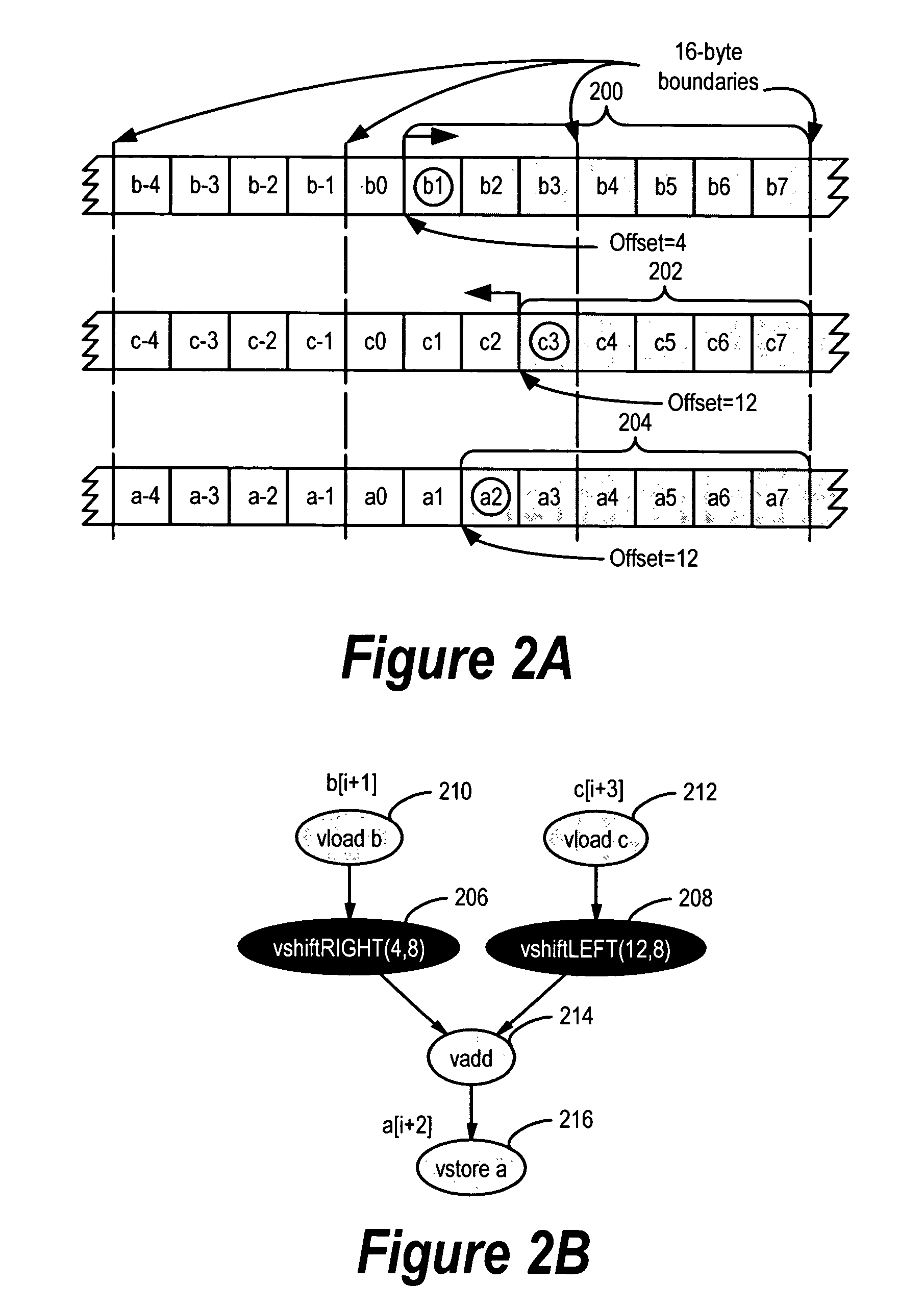

Method and apparatus for data alignment and parsing in SIMD computer architecture

Inactive · US7275147B2 · Reduce in quantity · Increase speed · General purpose stored program computer · Specific program execution arrangements · Processor register · SIMD processor

Execution of a single stand-alone instruction manipulates two n bit strings of data to pack data or align the data. Decoding of the single instruction identifies two registers of n bits each and a shift value, preferably as parameters of the instruction. A first and a second subset of data of less than n bits are selected, by logical shifting, from the two registers, respectively, based solely upon the shift value. Then, the subsets are concatenated, preferably by a logical OR, to obtain an output of n bits. The output may be aligned data or packed data, particularly useful for performing a single operation on multiple sets of the data through parallel processing with a SIMD processor.
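The shift-and-OR combination can be modeled with integers standing in for n-bit registers. The function name `align`, the default width, and the choice of which register supplies the upper part are assumptions made for this sketch.

```python
def align(reg_a: int, reg_b: int, shift: int, n: int = 64) -> int:
    """Combine two n-bit registers into one n-bit result using only `shift`.

    The low (n - shift) bits of reg_a are shifted up, the top `shift` bits
    of reg_b are shifted down, and the two parts are joined with a logical OR.
    """
    assert 0 < shift < n
    mask = (1 << n) - 1
    upper = (reg_a << shift) & mask        # logical shift left, truncated to n bits
    lower = (reg_b & mask) >> (n - shift)  # logical shift right
    return upper | lower


# 16-bit example: drop the top nibble of 0x1234 and append the top nibble of 0xABCD.
print(hex(align(0x1234, 0xABCD, shift=4, n=16)))  # 0x234a
```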

Owner:HITACHI LTD

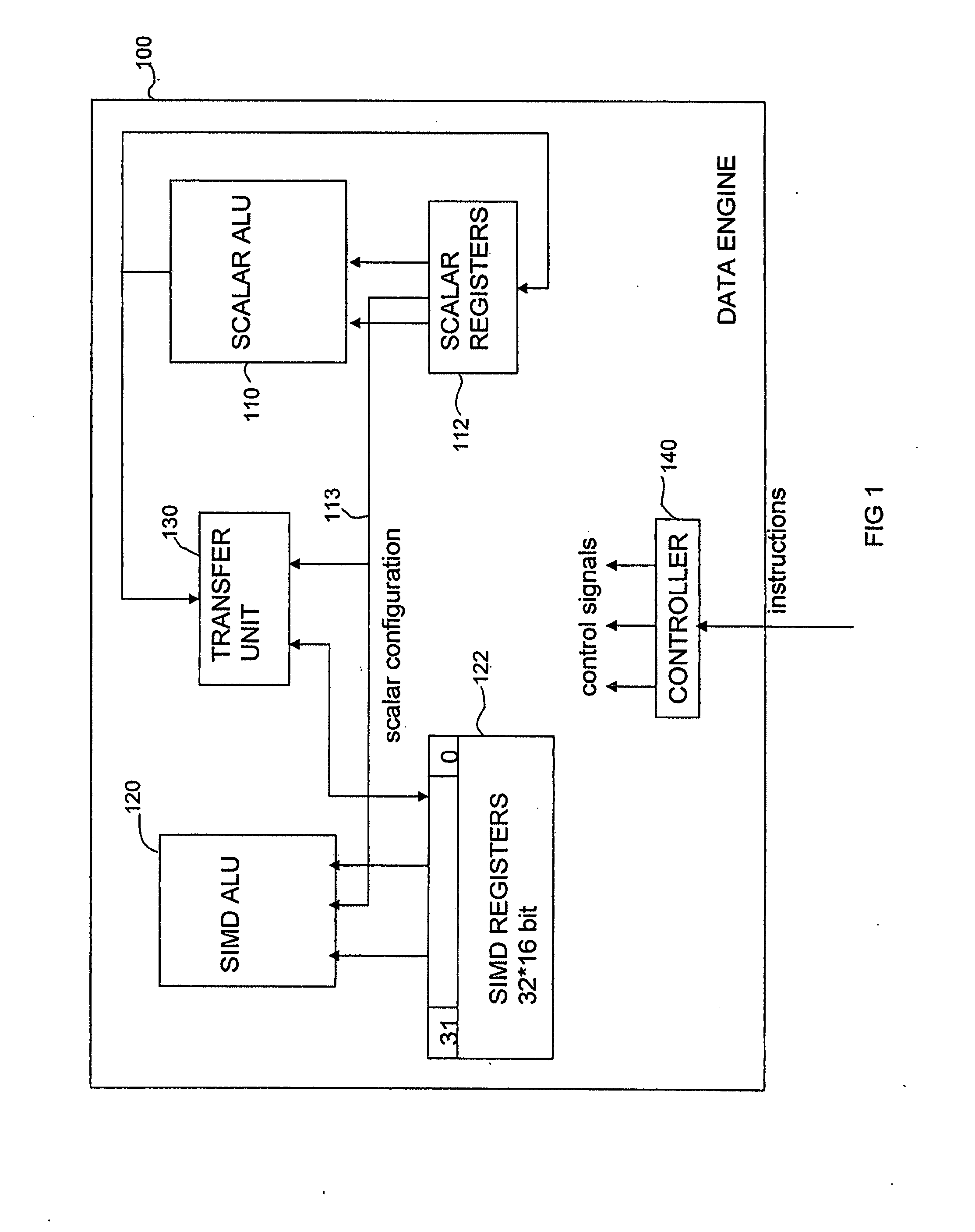

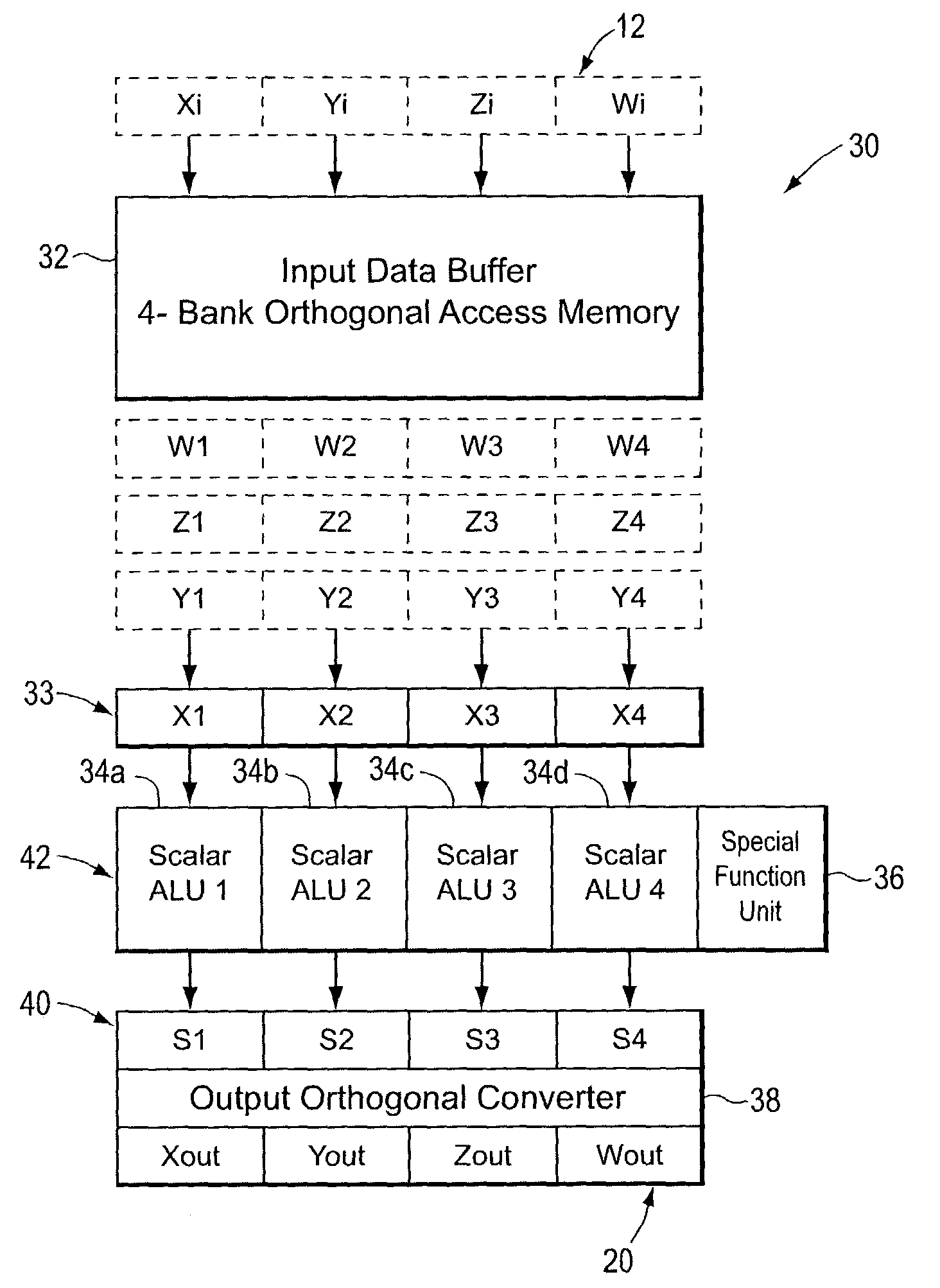

SIMD processor with scalar arithmetic logic units

Active · US7146486B1 · Shorten the time · Avoid conflict · Concurrent instruction execution · Architecture with single central processing unit · Arithmetic logic unit · Scalar processor

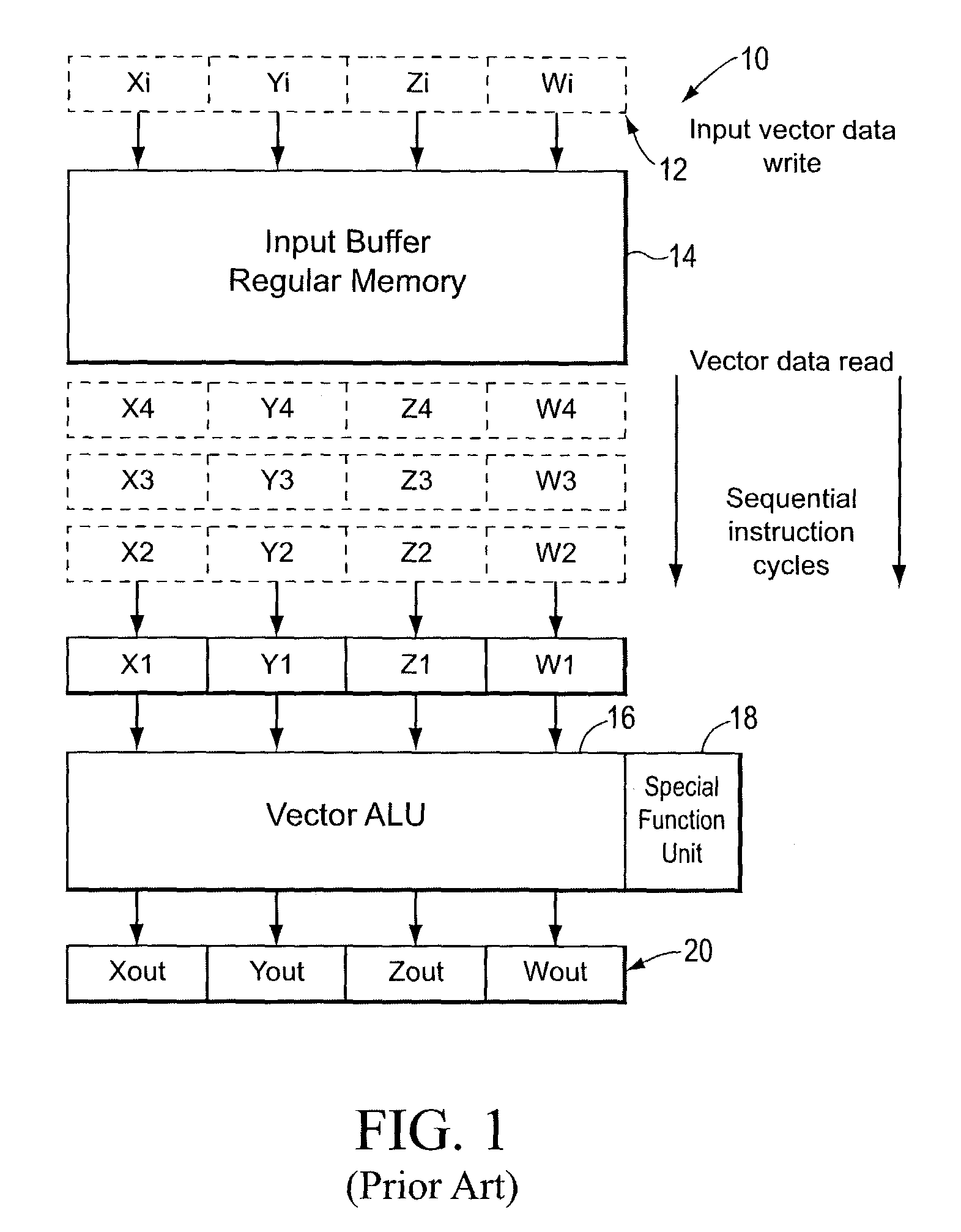

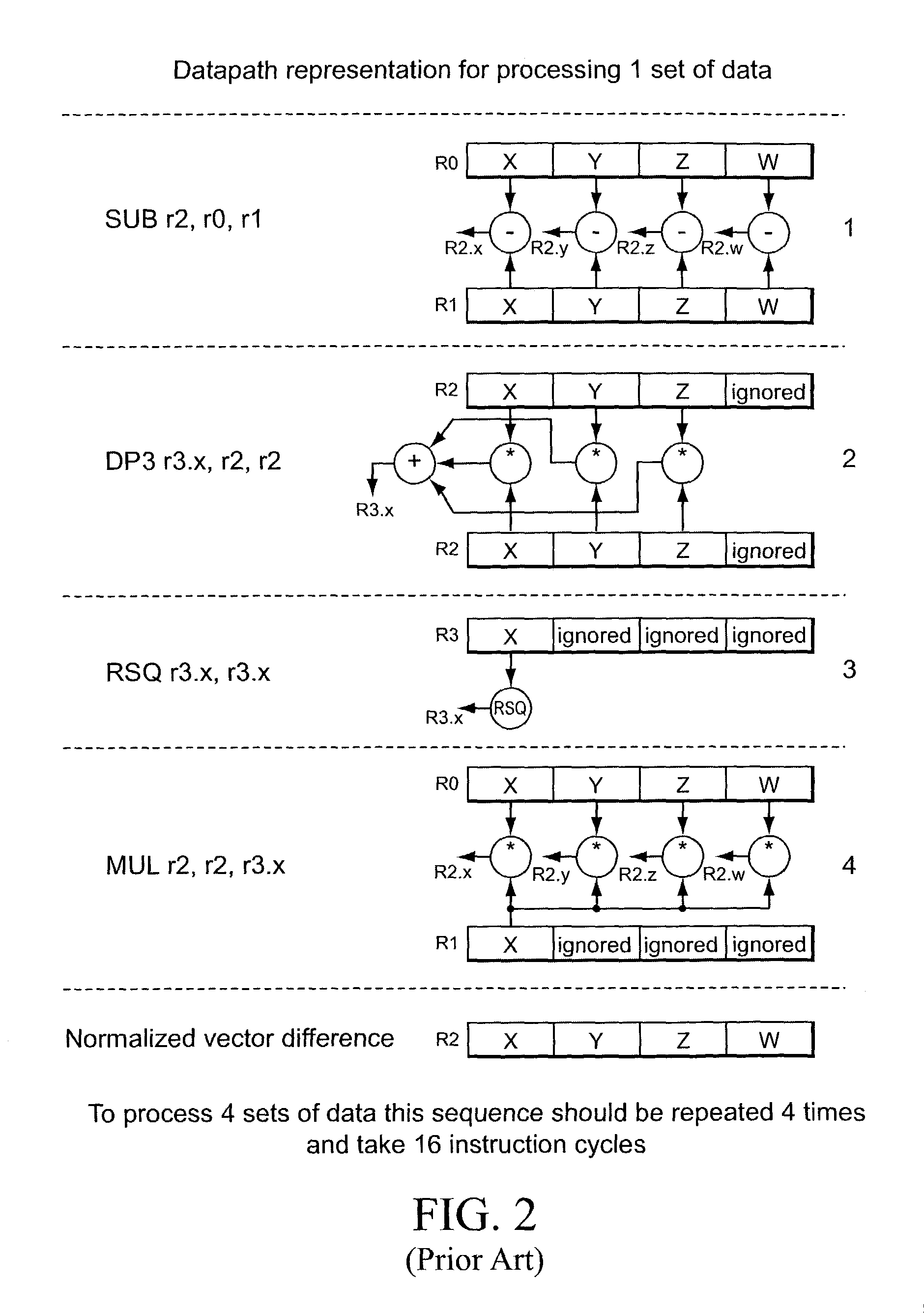

A scalar processor that includes a plurality of scalar arithmetic logic units and a special function unit. Each scalar unit performs, in a different time interval, the same operation on a different data item, where each different time interval is one of a plurality of successive, adjacent time intervals. Each unit provides an output data item in the time interval in which the unit performs the operation and provides a processed data item in the last of the successive, adjacent time intervals. The special function unit provides a special function computation for the output data item of a selected one of the scalar units, in the time interval in which the selected scalar unit performs the operation, so as to avoid a conflict in use among the scalar units. A vector processing unit includes an input data buffer, the scalar processor, and an output orthogonal converter.

Owner:S3 GRAPHICS

Method for efficient and parallel color space conversion in a programmable processor

Inactive · US20110072236A1 · Easy to operate · Effective coloring · Instruction analysis · Program control using wired connections · Parallel computing · SIMD processor

The present invention relates to an efficient implementation of color space conversion in a SIMD processor as part of converting output of video decompression to interface to a display unit.

Owner:MIMAR TIBET

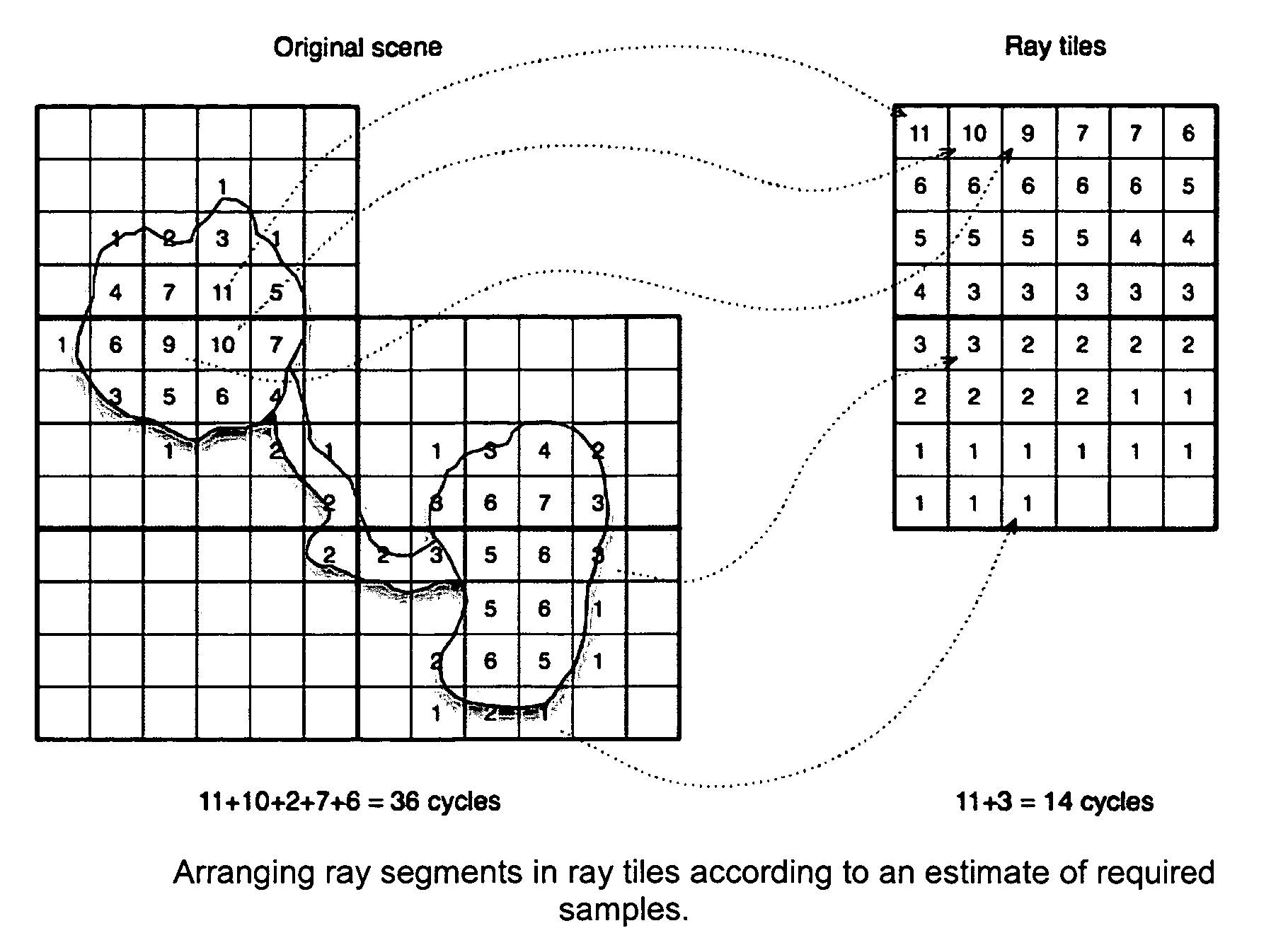

Histogram generation with vector operations in SIMD and VLIW processor by consolidating LUTs storing parallel update incremented count values for vector data elements

Inactive · US7506135B1 · Program control · Architecture with multiple processing units · Scalar processor · SIMD processor

The present invention provides histogram calculation for images and video applications using a SIMD and VLIW processor with vector Look-Up Table (LUT) operations. This provides a speed-up of histogram calculation by a factor of N over a scalar processor, where the SIMD processor can perform N LUT operations per instruction. The histogram operation is partitioned into a vector LUT operation, followed by a vector increment, a vector LUT update, and finally a reduction of the vector histogram components. The present invention could be used for intensity, RGBA, YUV, and other types of multi-component images.
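The four phases named in the abstract (LUT read, increment, LUT update, reduction) can be sketched as follows. The sketch assumes one private count table per lane so that lanes never collide on a bin; that layout, and the name `vector_histogram`, are illustrative assumptions.

```python
def vector_histogram(pixels, bins, lanes):
    """Histogram via per-lane count tables, processed `lanes` pixels at a time."""
    tables = [[0] * bins for _ in range(lanes)]  # assumed: one LUT per lane
    for start in range(0, len(pixels), lanes):
        vec = pixels[start:start + lanes]
        # Vector LUT read: each lane fetches its current count.
        counts = [tables[lane][v] for lane, v in enumerate(vec)]
        # Vector increment.
        counts = [c + 1 for c in counts]
        # Vector LUT update: write the counts back.
        for lane, (v, c) in enumerate(zip(vec, counts)):
            tables[lane][v] = c
    # Reduction: sum the per-lane partial histograms.
    return [sum(table[b] for table in tables) for b in range(bins)]


print(vector_histogram([0, 1, 1, 3, 3, 3, 2], bins=4, lanes=4))  # [1, 2, 1, 3]
```

The private tables matter when two lanes see the same pixel value in one step: with a shared table the second write would overwrite the first and one count would be lost.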

Owner:MIMAR TIBET

Framework for generating mixed-mode operations in loop-level simdization

Inactive · US8549501B2 · Software engineering · Specific program execution arrangements · Program instruction · Parallel computing

Generating mixed-mode operations in the compilation of program code for processors having vector or SIMD processing units is disclosed. In a preferred embodiment of the present invention, program instructions making up the body of a loop are abstracted into virtual vector instructions. These virtual vector instructions are treated, for initial code optimization purposes, as vector instructions (i.e., instructions written for the vector unit). The virtual vector instructions are eventually expanded into native code for the target processor, at which time a determination is made for each virtual vector instruction as to whether to expand the virtual vector instruction into native vector instructions, into native scalar instructions, into calls to pre-defined library functions, or into a combination of these. A cost model is used to determine the optimal choice of expansion based on hardware / software constraints, performance costs / benefits, and other criteria.

Owner:INT BUSINESS MASCH CORP

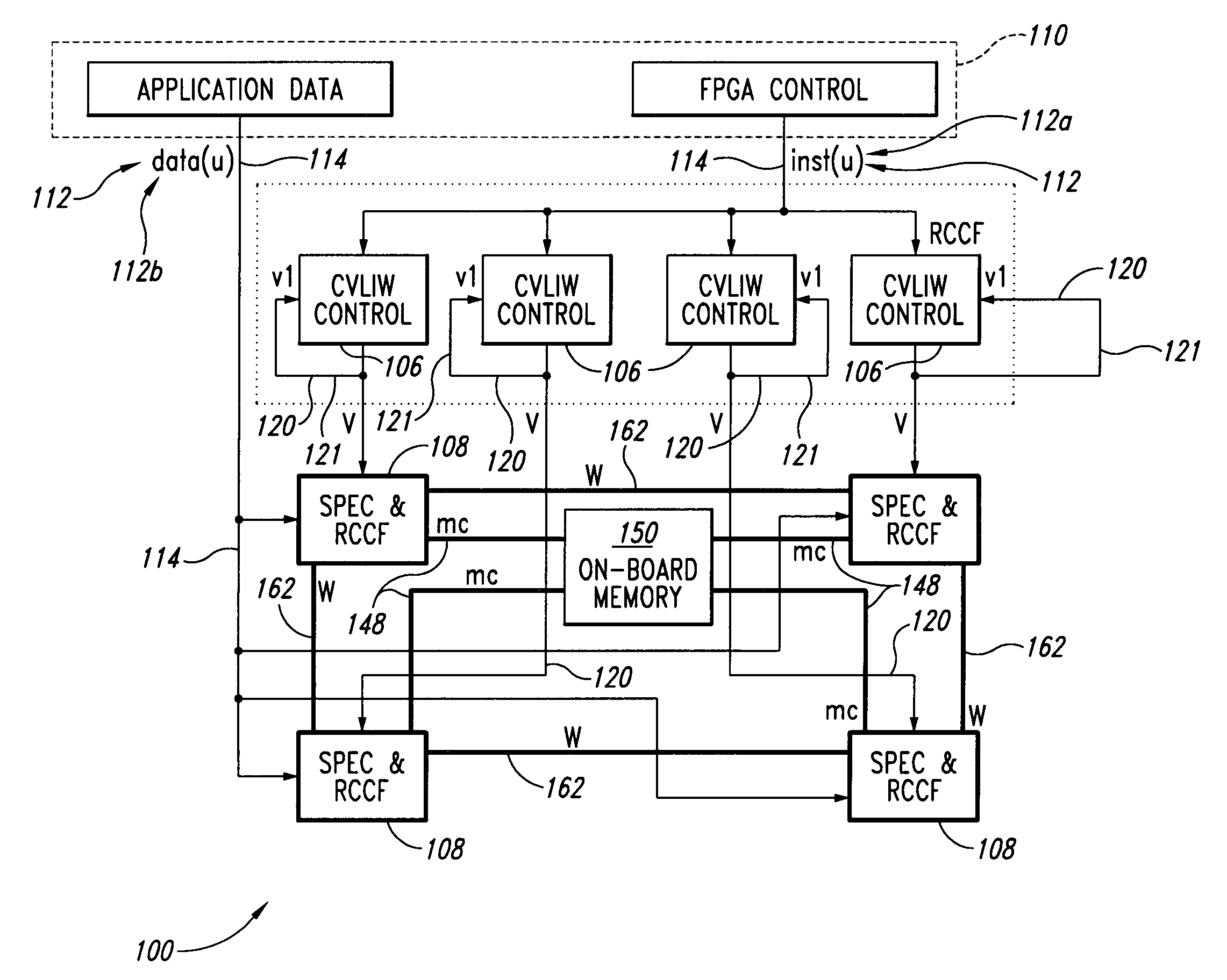

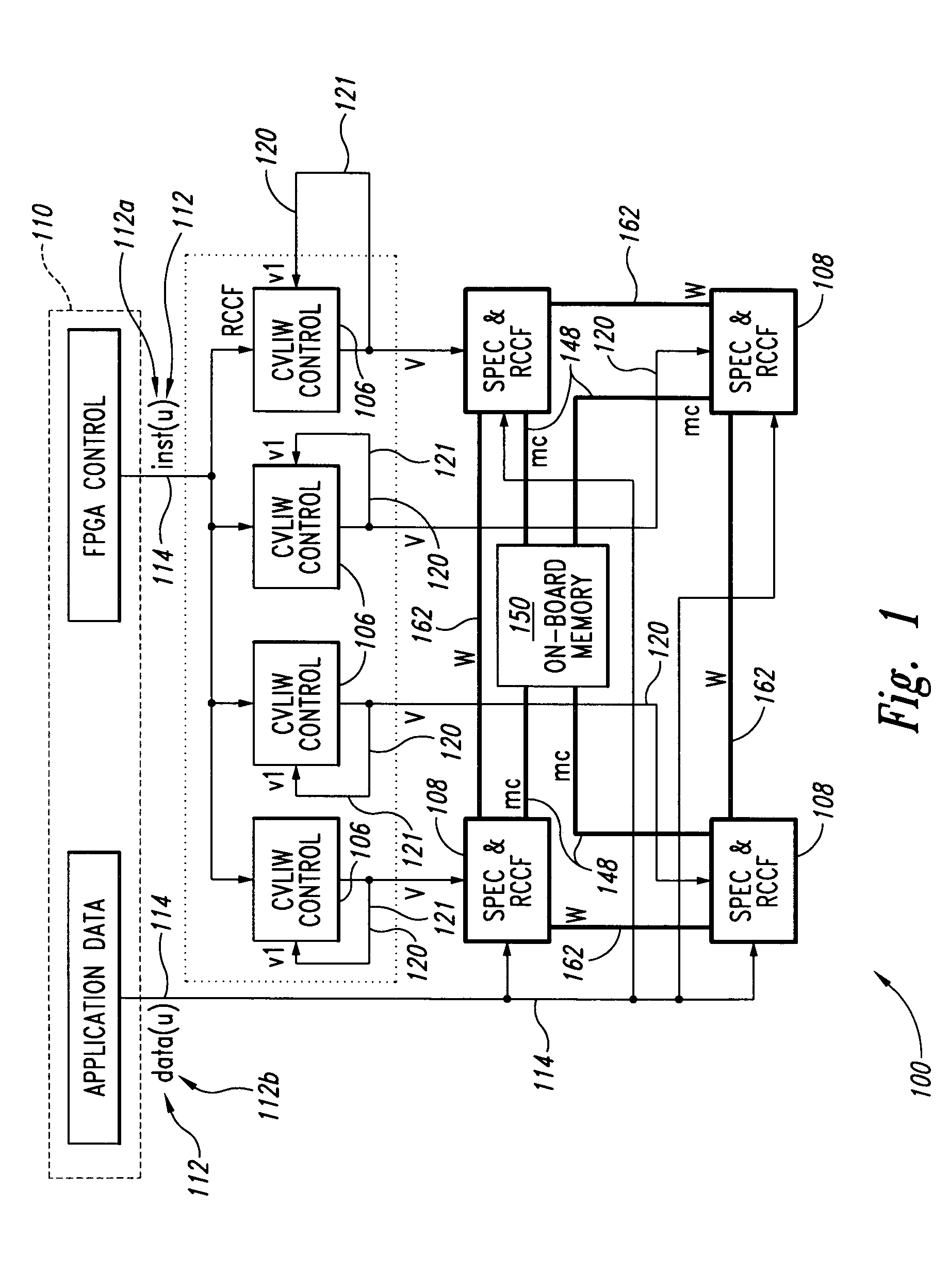

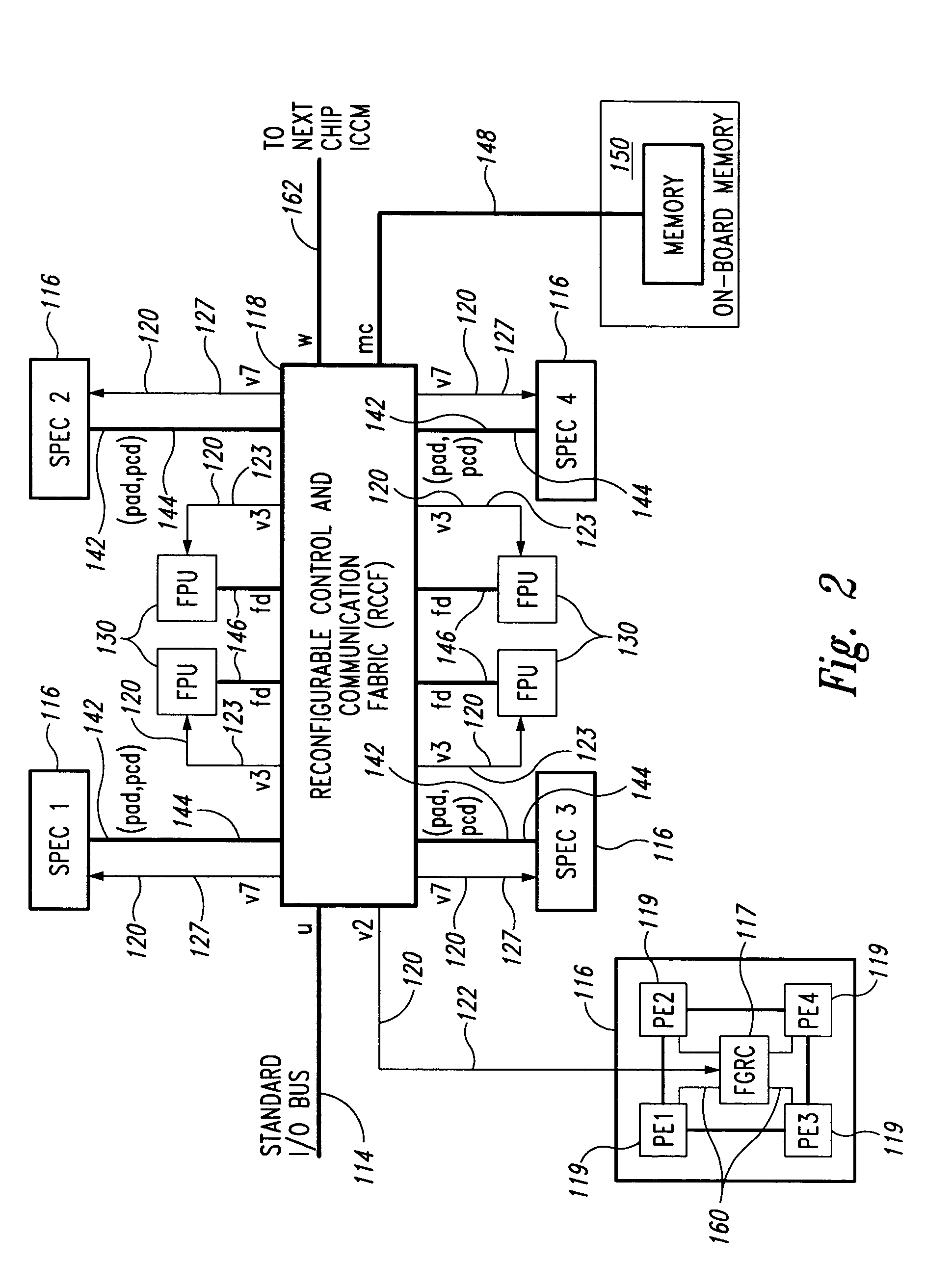

Super-reconfigurable fabric architecture (SURFA): a multi-FPGA parallel processing architecture for COTS hybrid computing framework

Inactive · US7299339B2 · Single instruction multiple data multiprocessors · Memory addressing/allocation/relocation · Bus interface · Processing element

A field programmable gate array includes a virtual bus interface that receives a control word from a host processor over a standard I / O bus. A configurable very long instruction word (VLIW) controller receives the control word via virtual bus interface signals mapped from the virtual bus interface. A reconfigurable communication and control fabric controls the data paths and programming modes of single instruction-multiple data (SIMD) processing element cells. The configurable VLIW controller has an interface with the reconfigurable communication and control fabric. SIMD processing element cells are controlled by the configurable VLIW controller through the reconfigurable communication and control fabric via the interface.

Owner:THE BOEING CO

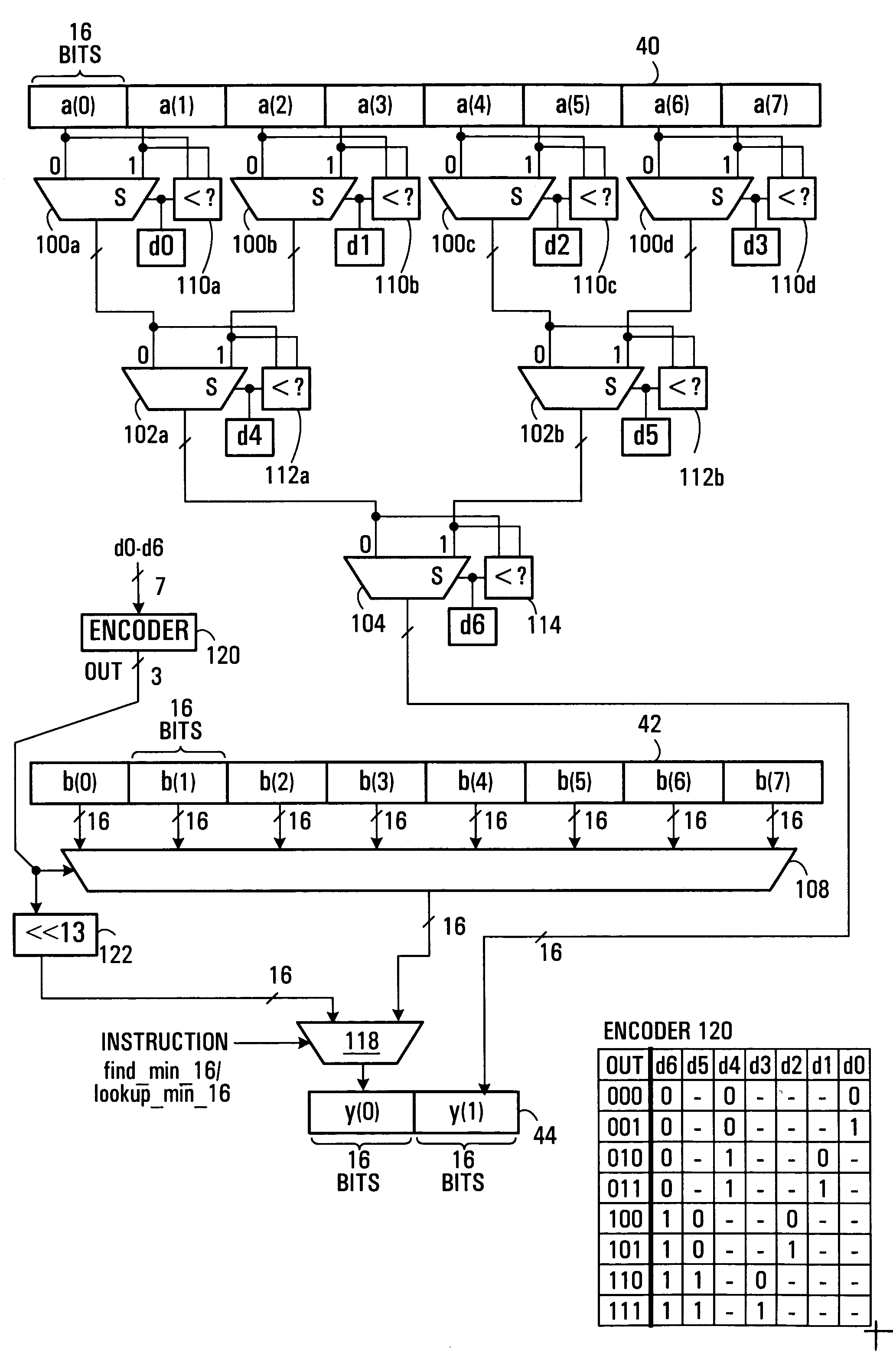

SIMD processor executing min/max instructions

Active · US20060095739A1 · Computation using non-contact making devices · General purpose stored program computer · Parallel computing · SIMD processor

A SIMD processor responds to a single min / max instruction to find the minimum or maximum valued data unit in an array of data units. The determined minimum / maximum value and an associated index value thereto may be output. Alternatively, the value of a data unit in another array may be output at a corresponding location. A further single instruction executable by the SIMD processor, may be applied to results obtained using such a single min / max instruction, to allow such instructions to operate on two dimensional arrays.
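The behaviour described here can be modeled in software as a minimum search that also reports an index, followed by a second pass over the per-row results for two-dimensional arrays. `min_with_index` and `min_2d` are hypothetical names, and only the minimum case is shown.

```python
def min_with_index(values):
    """Return (minimum value, index of its first occurrence)."""
    index = min(range(len(values)), key=values.__getitem__)
    return values[index], index


def min_2d(array):
    """Minimum of a 2D array as (value, row, column)."""
    # First instruction, applied once per row.
    row_results = [min_with_index(row) for row in array]
    # Further instruction, applied to the per-row results.
    value, row = min_with_index([v for v, _ in row_results])
    return value, row, row_results[row][1]


values = [4, 2, 7, 2]
value, index = min_with_index(values)
print(value, index)  # 2 1

# The index can also select the corresponding element of another array.
labels = ["a", "b", "c", "d"]
print(labels[index])  # b

print(min_2d([[5, 3, 8], [7, 1, 9]]))  # (1, 1, 1)
```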

Owner:AVAGO TECH INT SALES PTE LTD

Method and system for parallel histogram calculation in a simd and vliw processor

Inactive · US20090276606A1 · Program control using stored programs · General purpose stored program computer · Scalar processor · Parallel computing

The present invention provides histogram calculation for images and video applications using a SIMD and VLIW processor with vector Look-Up Table (LUT) operations. This provides a speed-up of histogram calculation by a factor of N over a scalar processor, where the SIMD processor can perform N LUT operations per instruction. The histogram operation is partitioned into a vector LUT operation, followed by a vector increment, a vector LUT update, and finally a reduction of the vector histogram components. The present invention could be used for intensity, RGBA, YUV, and other types of multi-component images.

Owner:MIMAR TIBET

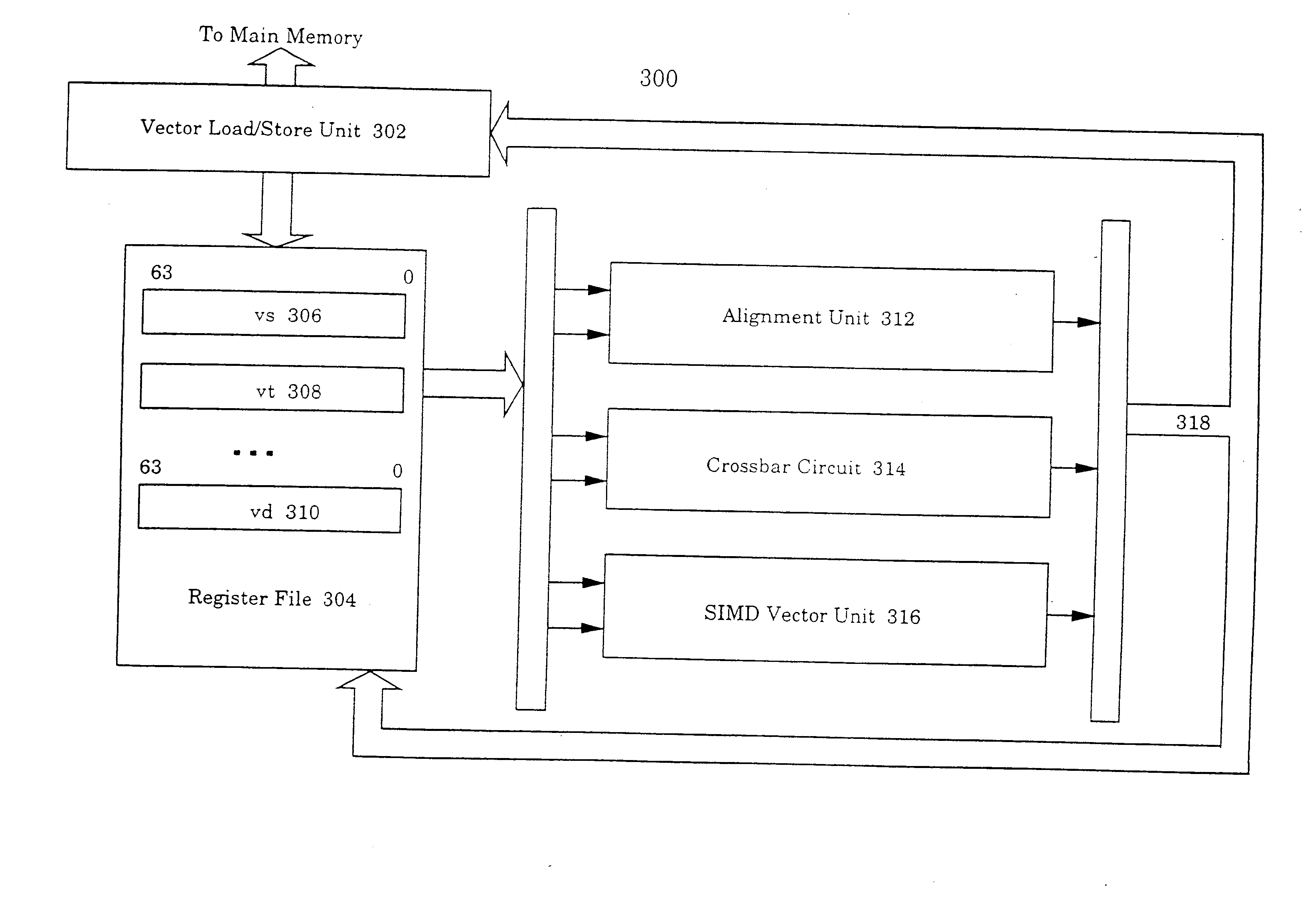

Volume rendering apparatus and process

Owner:TOSHIBA MEDICAL VISUALIZATION SYST EURO

Alignment and ordering of vector elements for single instruction multiple data processing

Inactive · US20070250683A1 · General purpose stored program computer · Specific program execution arrangements · Vector element · Data treatment

The present invention provides alignment and ordering of vector elements for SIMD processing. In the alignment of vector elements for SIMD processing, one vector is loaded from a memory unit into a first register and another vector is loaded from the memory unit into a second register. The first vector contains a first byte of an aligned vector to be generated. Then, a starting byte specifying the first byte of an aligned vector is determined. Next, a vector is extracted from the first register and the second register beginning from the first bit in the first byte of the first register continuing through the bits in the second register. Finally, the extracted vector is replicated into a third register such that the third register contains a plurality of elements aligned for SIMD processing. In the ordering of vector elements for SIMD processing, a first vector is loaded from a memory unit into a first register and a second vector is loaded from the memory unit into a second register. Then, a subset of elements are selected from the first register and the second register. The elements from the subset are then replicated into the elements in the third register in a particular order suitable for subsequent SIMD vector processing.

Owner:ARM FINANCE OVERSEAS LTD

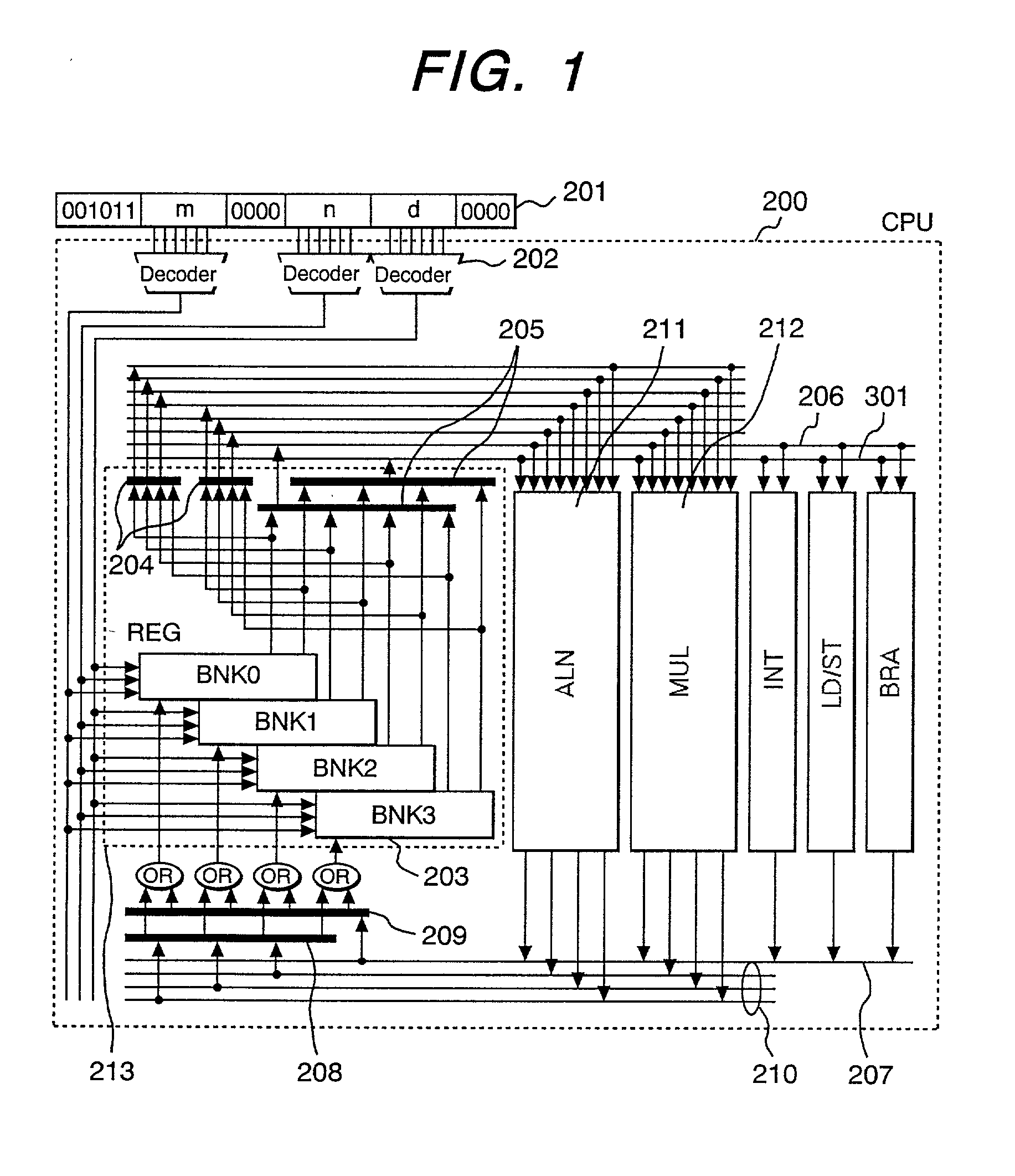

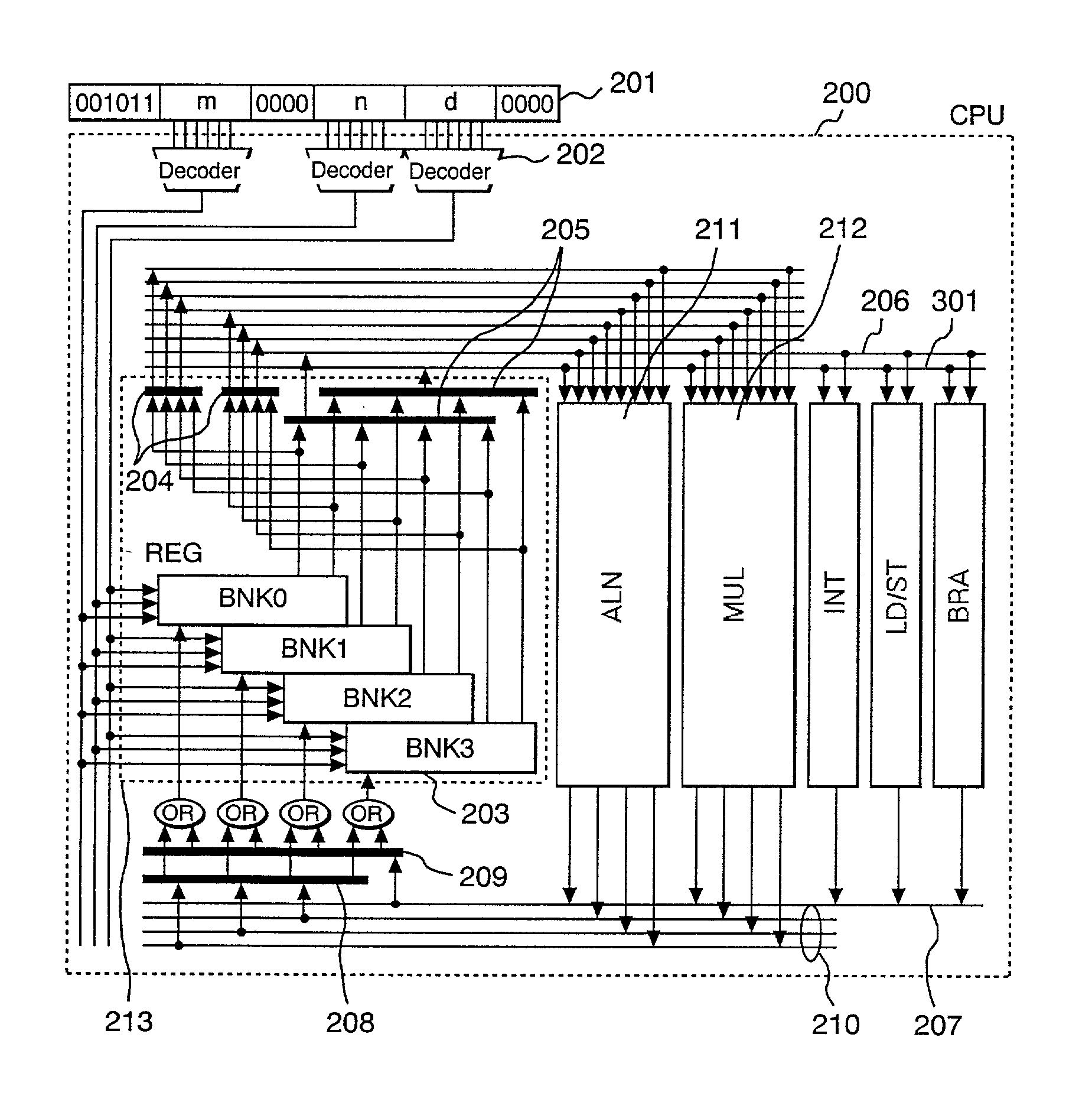

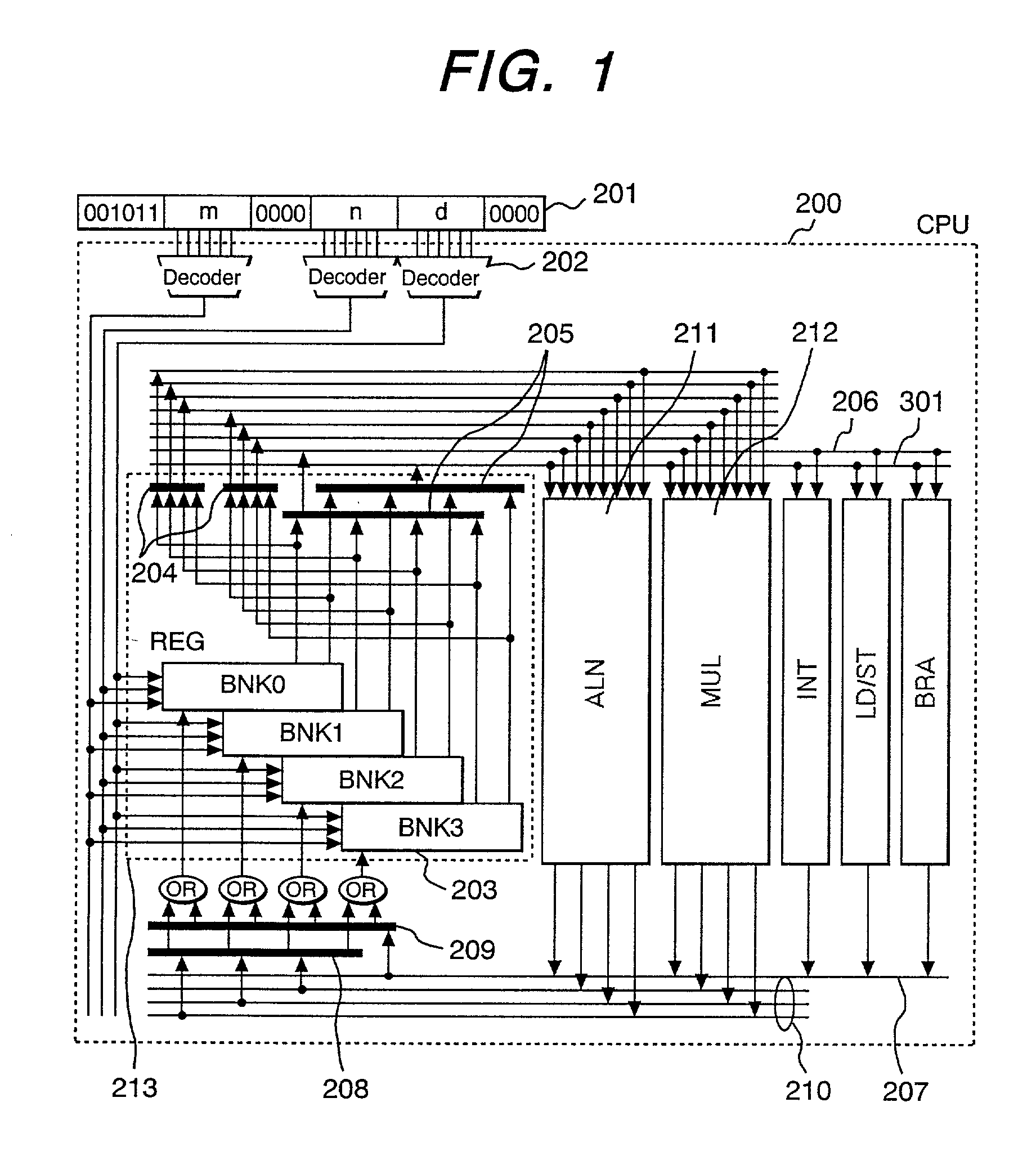

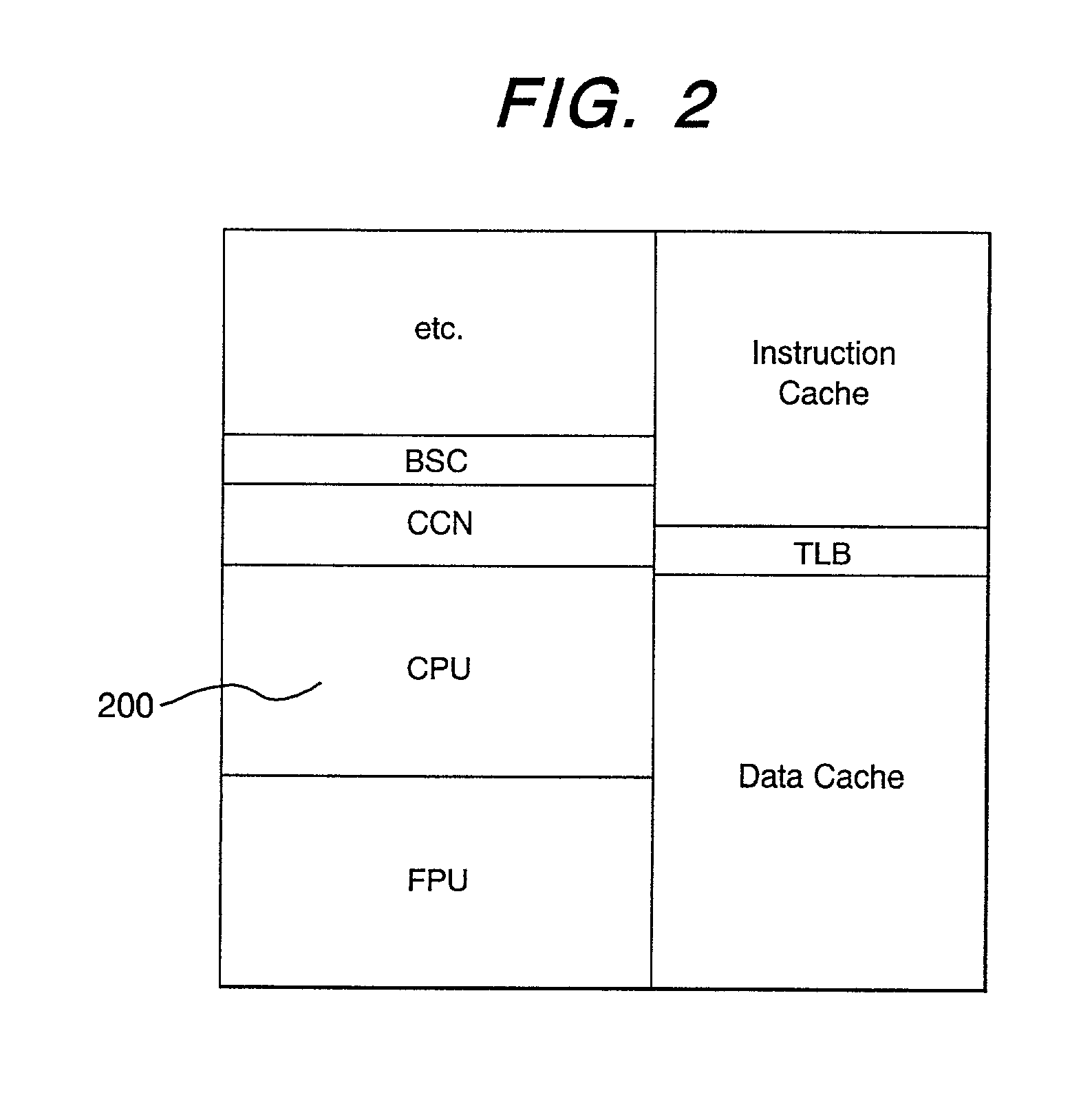

SIMD operation system capable of designating plural registers

Inactive · US20020026570A1 · Maximize the effect · Maintain accuracy · Register arrangements · Concurrent instruction execution · Operational system · Network packet

To alleviate factors that limit the benefit of SIMD operation in a high-speed SIMD processor, such as in-register data alignment, the register file is divided into four banks and a single operand can designate a plurality of registers. This allows four registers to be accessed simultaneously, so that a large amount of data can be supplied to a data alignment operation pipe 211 and data alignment can be carried out at high speed. Further, newly defined data pack, data unpack, and data permutation instructions allow the large amount of supplied data to be aligned efficiently. These characteristics also make it possible to define a multiply-accumulate instruction that maximizes the parallelism of SIMD.

Owner:RENESAS ELECTRONICS CORP

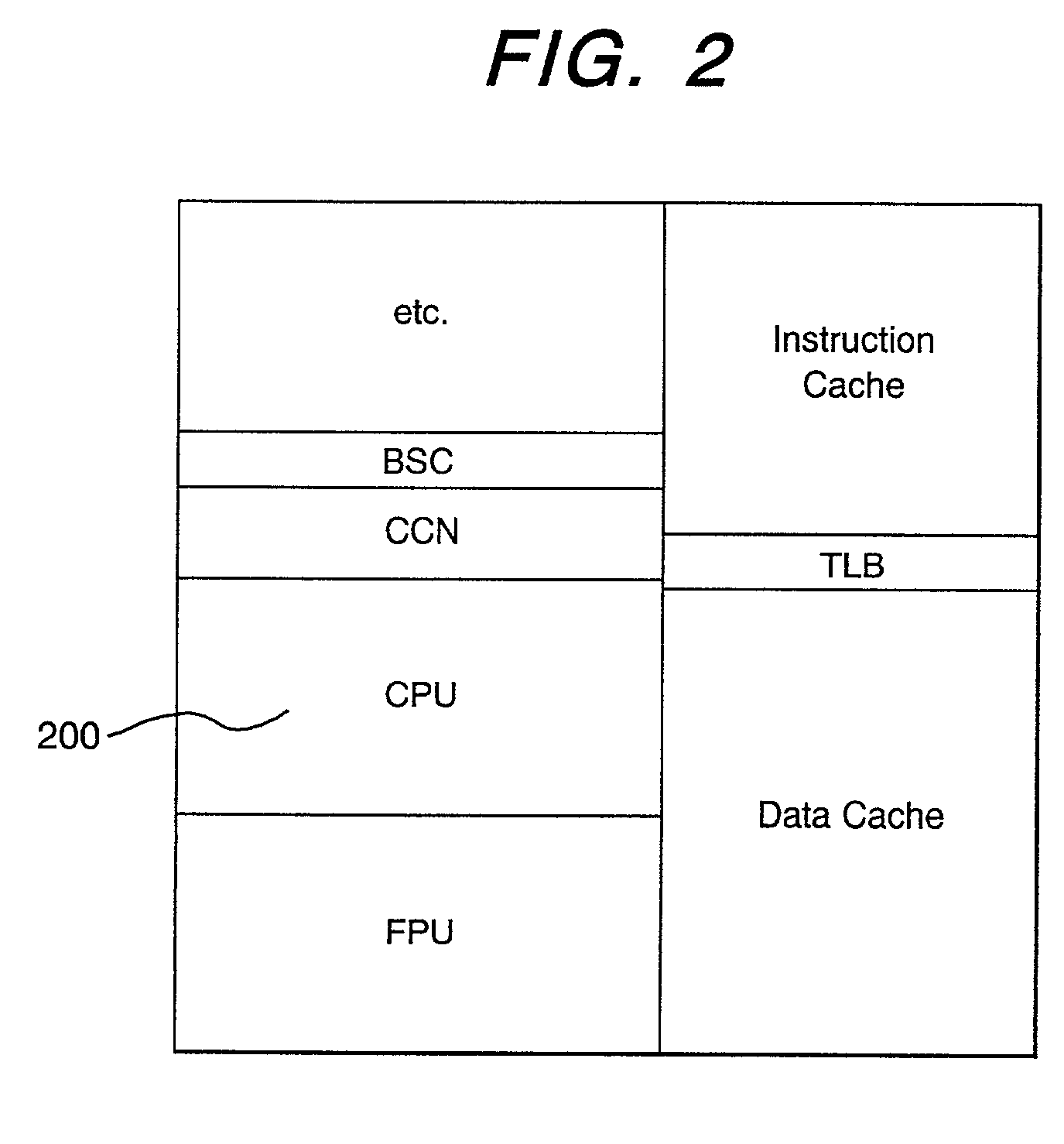

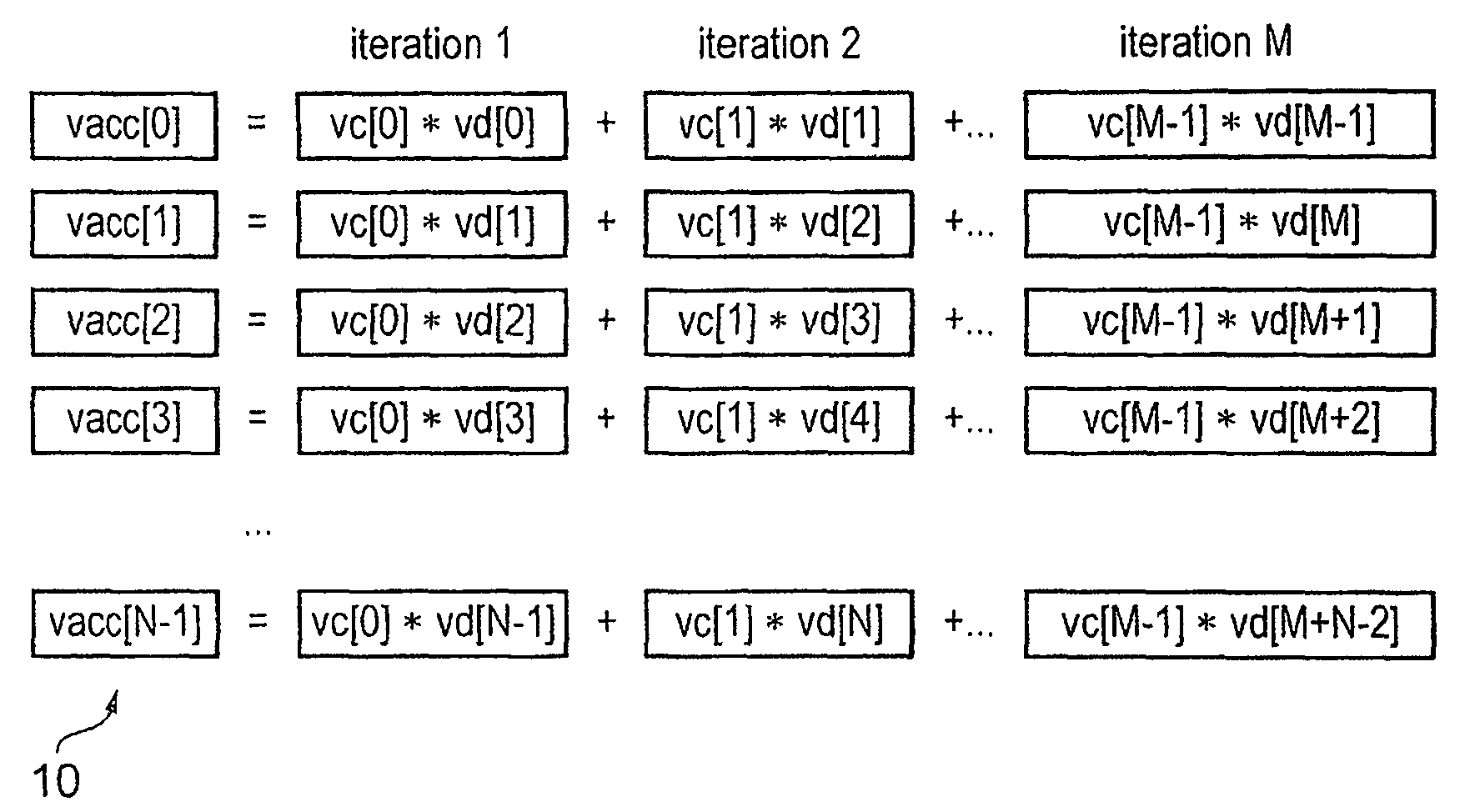

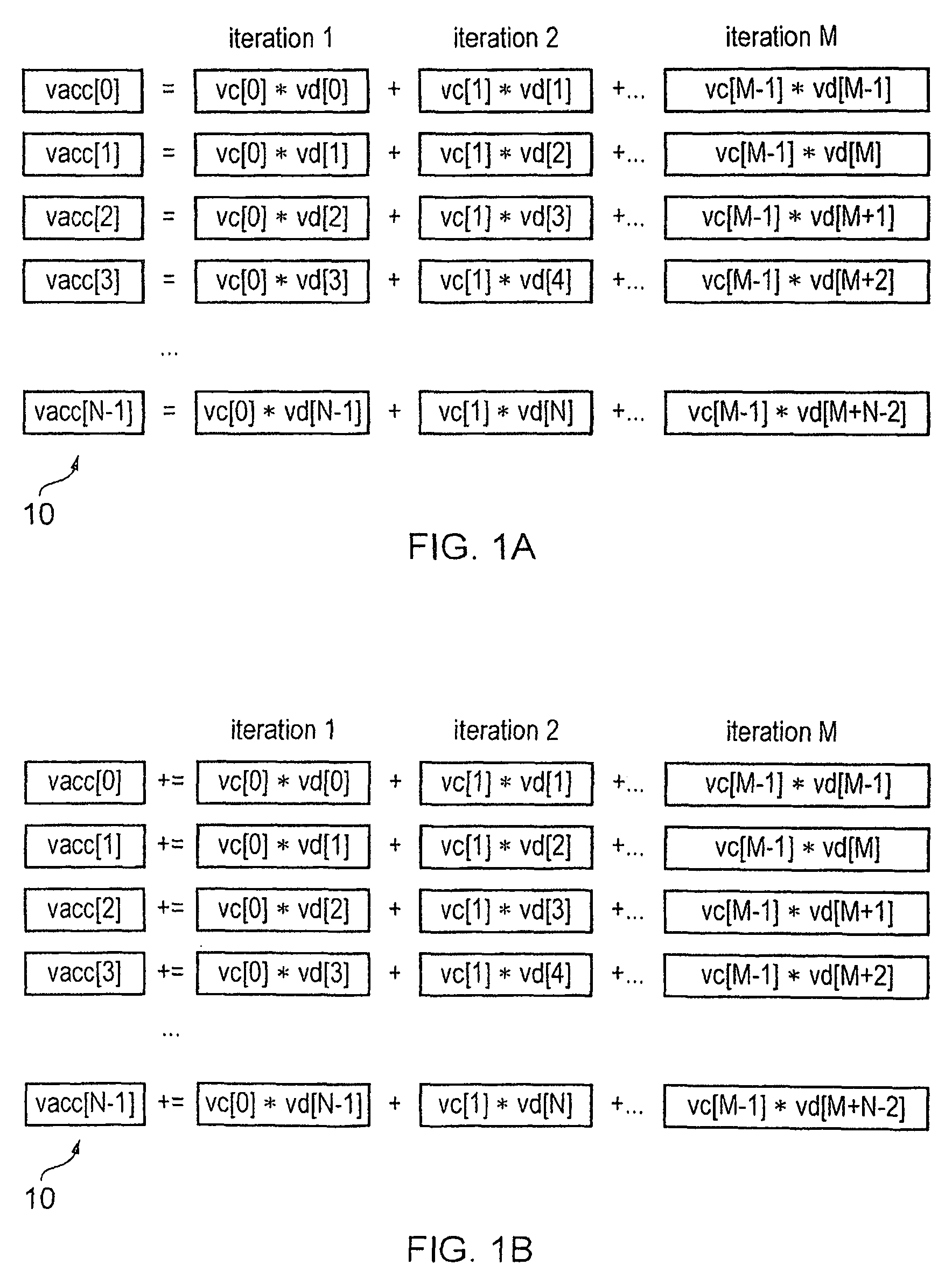

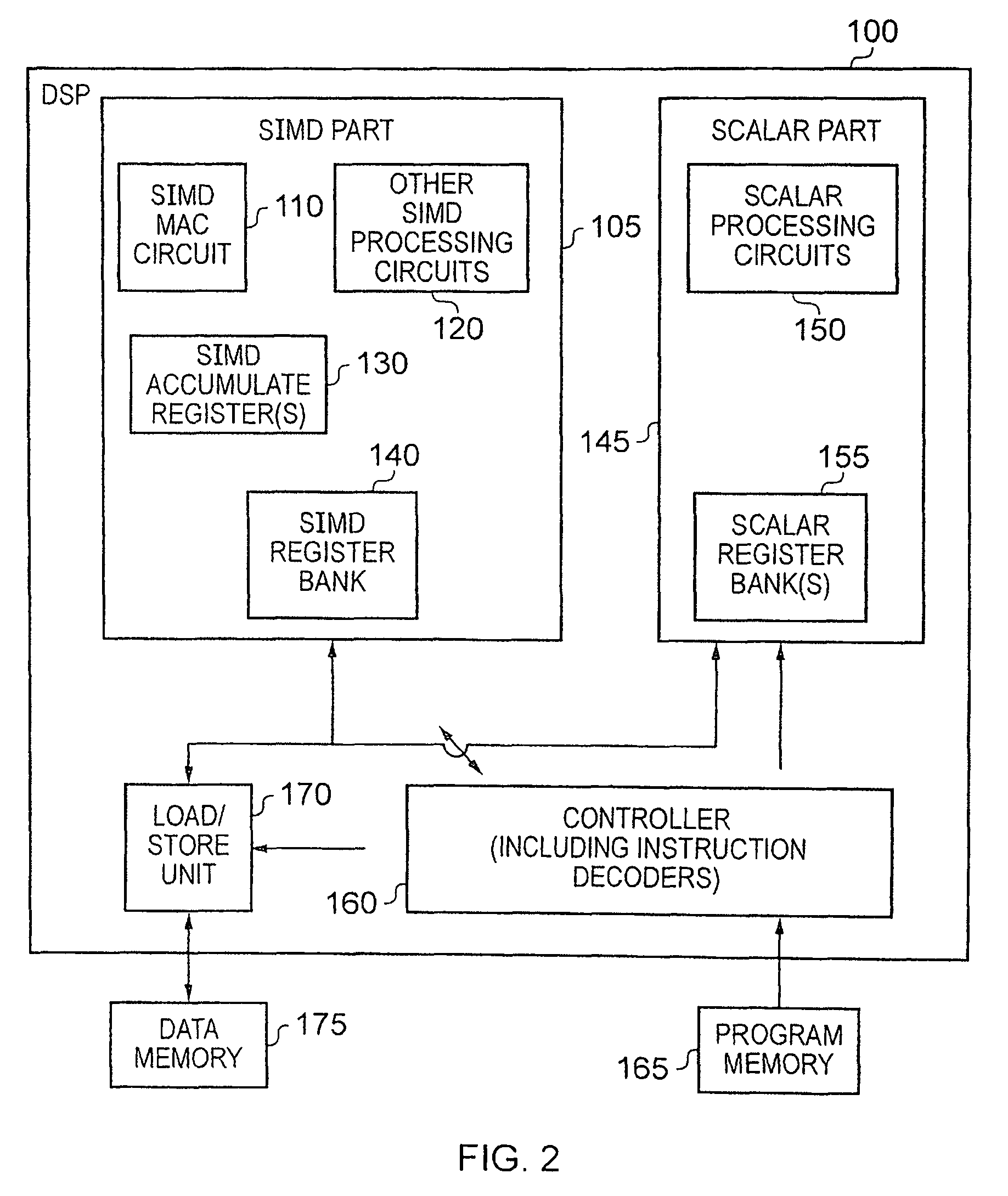

Apparatus and method for performing SIMD multiply-accumulate operations

Active · US8443170B2 · Reduce energy consumption · Improve energy consumption · Associative processors · Next instruction address formation · Program instruction · Control signal

An apparatus and method for performing SIMD multiply-accumulate operations includes SIMD data processing circuitry responsive to control signals to perform data processing operations in parallel on multiple data elements. Instruction decoder circuitry is coupled to the SIMD data processing circuitry and is responsive to program instructions to generate the required control signals. The instruction decoder circuitry is responsive to a single instruction (referred to herein as a repeating multiply-accumulate instruction) having as input operands a first vector of input data elements, a second vector of coefficient data elements, and a scalar value indicative of a plurality of iterations required, to generate control signals to control the SIMD processing circuitry. In response to those control signals, the SIMD data processing circuitry performs the plurality of iterations of a multiply-accumulate process, each iteration involving performance of N multiply-accumulate operations in parallel in order to produce N multiply-accumulate data elements. For each iteration, the SIMD data processing circuitry determines N input data elements from said first vector and a single coefficient data element from the second vector to be multiplied with each of the N input data elements. The N multiply-accumulate data elements produced in a final iteration of the multiply-accumulate process are then used to produce N multiply-accumulate results. This mechanism provides a particularly energy efficient mechanism for performing SIMD multiply-accumulate operations, as for example are required for FIR filter processes.
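The iteration structure of the repeating multiply-accumulate instruction can be sketched as below. The sketch assumes that iteration i takes the N input elements starting at position i (a sliding window, as an FIR filter would need) and coefficient i; that selection rule and the name `repeating_mac` are assumptions, since the abstract only says that N inputs and one coefficient are determined per iteration.

```python
def repeating_mac(inputs, coeffs, iterations, n):
    """Model of one repeating multiply-accumulate instruction.

    inputs:     first vector (input data elements)
    coeffs:     second vector (coefficient data elements)
    iterations: scalar operand giving the number of iterations
    n:          number of parallel lanes
    """
    assert len(inputs) >= iterations + n - 1 and len(coeffs) >= iterations
    acc = [0] * n
    for i in range(iterations):
        window = inputs[i:i + n]  # assumed: N inputs starting at position i
        c = coeffs[i]             # one coefficient shared by all N lanes
        acc = [a + x * c for a, x in zip(acc, window)]  # N MACs in parallel
    return acc


# Three-tap filter over six samples, four outputs at once.
print(repeating_mac([1, 2, 3, 4, 5, 6], [1, 10, 100], iterations=3, n=4))
# [321, 432, 543, 654]
```

Under this assumption lane j ends up holding the sum over i of `coeffs[i] * inputs[j + i]`, which is one FIR output sample per lane.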

Owner:U-BLOX

Parallel data processing apparatus

Inactive · US20080040575A1 · Single instruction multiple data multiprocessors · Error detection/correction · Scheduling instructions · Processing element

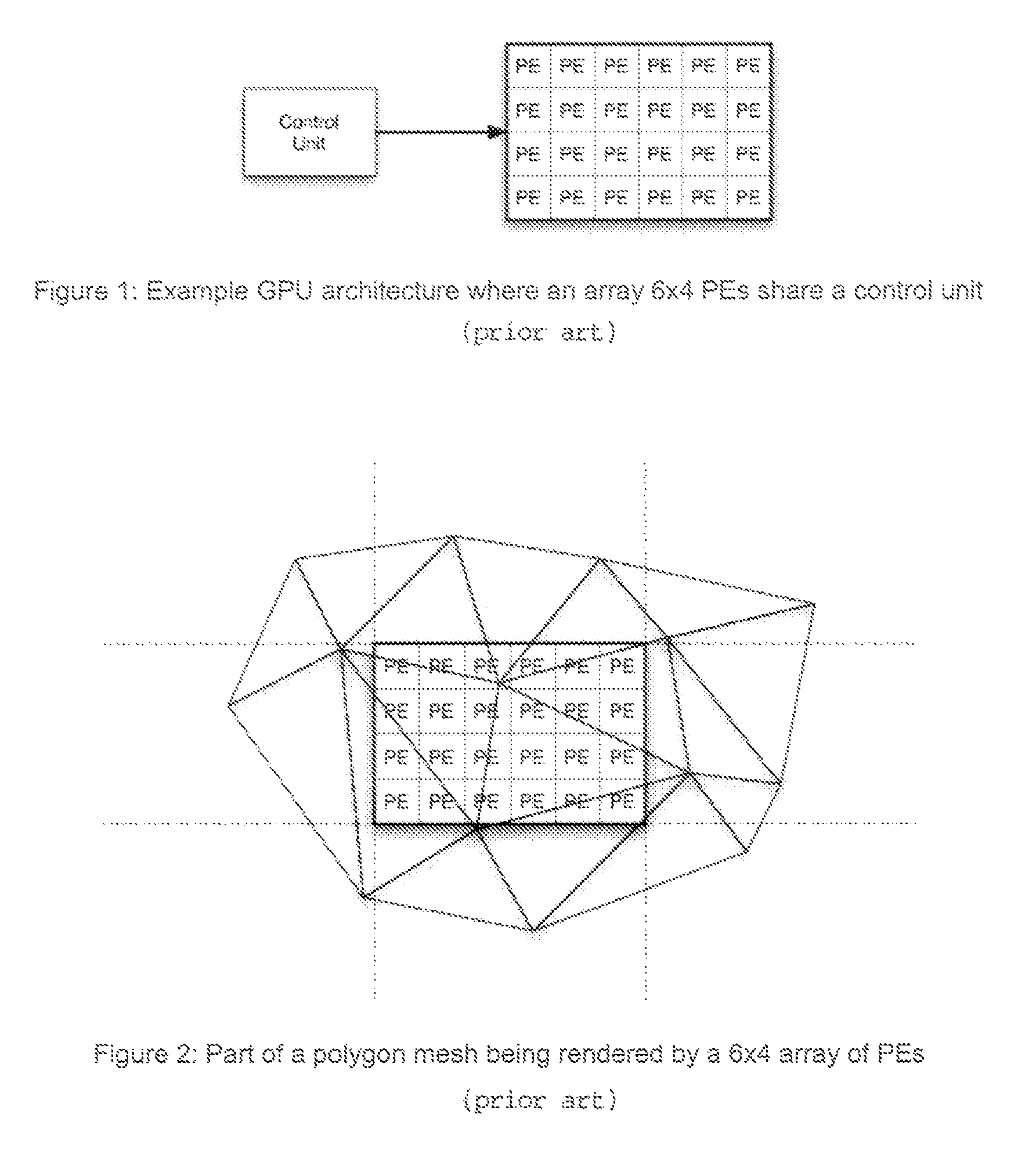

A method of scheduling instruction streams in a SIMD (single instruction multiple data) array of processing elements in which the processing elements are arranged in a plurality of SIMD processing blocks, comprises determining which instruction stream has priority at a particular moment in time, and transferring that determined instruction stream to the SIMD array.

Owner:RAMBUS INC

SIMD operation system capable of designating plural registers via one register designating field

Inactive · US7043627B2 · Maximize the effect · Maintain accuracy · Register arrangements · Concurrent instruction execution · Network packet · Processor register

To alleviate factors that limit the benefit of SIMD operation in a high-speed SIMD processor, such as in-register data alignment, the register file is divided into four banks and a single operand can designate a plurality of registers. This allows four registers to be accessed simultaneously, so that a large amount of data can be supplied to a data alignment operation pipe 211 and data alignment can be carried out at high speed. Further, newly defined data pack, data unpack, and data permutation instructions allow the large amount of supplied data to be aligned efficiently. These characteristics also make it possible to define a multiply-accumulate instruction that maximizes the parallelism of SIMD.

Owner:RENESAS ELECTRONICS CORP
