Method for performing random read access to a block of data using parallel LUT read instruction in vector processors

A vector processor and random read technique, applied in the field of digital data processing, addresses three problems: vector load instructions are insufficient for performing parallel random reads, the performance of the affected algorithm drops drastically, and random read access within a block of data is difficult to parallelize.
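The access pattern at issue can be sketched in a few lines. The following is only a behavioural model under stated assumptions, not the patented instruction: the function names and the `num_lanes` parameter are hypothetical, and the lane grouping merely models several table reads being issued together. It shows that a lane-grouped lookup returns the same values as the one-load-per-index loop that the summary describes as hard to parallelize.

```python
from typing import List, Sequence


def scalar_random_read(block: Sequence[int], indices: Sequence[int]) -> List[int]:
    """Read block[i] for each index, one load at a time (serial baseline)."""
    out = []
    for i in indices:
        out.append(block[i])
    return out


def lane_grouped_lut_read(
    block: Sequence[int], indices: Sequence[int], num_lanes: int = 4
) -> List[int]:
    """Model of a parallel table read: indices are consumed num_lanes at a time.

    Each group stands in for one instruction issue; num_lanes is an
    illustrative assumption, not a value taken from the patent.
    """
    out = []
    for start in range(0, len(indices), num_lanes):
        lane_indices = indices[start:start + num_lanes]
        # In hardware these reads would happen together; here they are
        # evaluated one by one, so only the result is modelled, not timing.
        out.extend(block[i] for i in lane_indices)
    return out


block = [10, 20, 30, 40, 50, 60, 70, 80]
indices = [7, 0, 3, 3, 5]
serial_values = scalar_random_read(block, indices)
grouped_values = lane_grouped_lut_read(block, indices, num_lanes=4)
```

Both calls yield the same list, so the grouping changes only how many reads are issued per step, not the result.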

Embodiment Construction

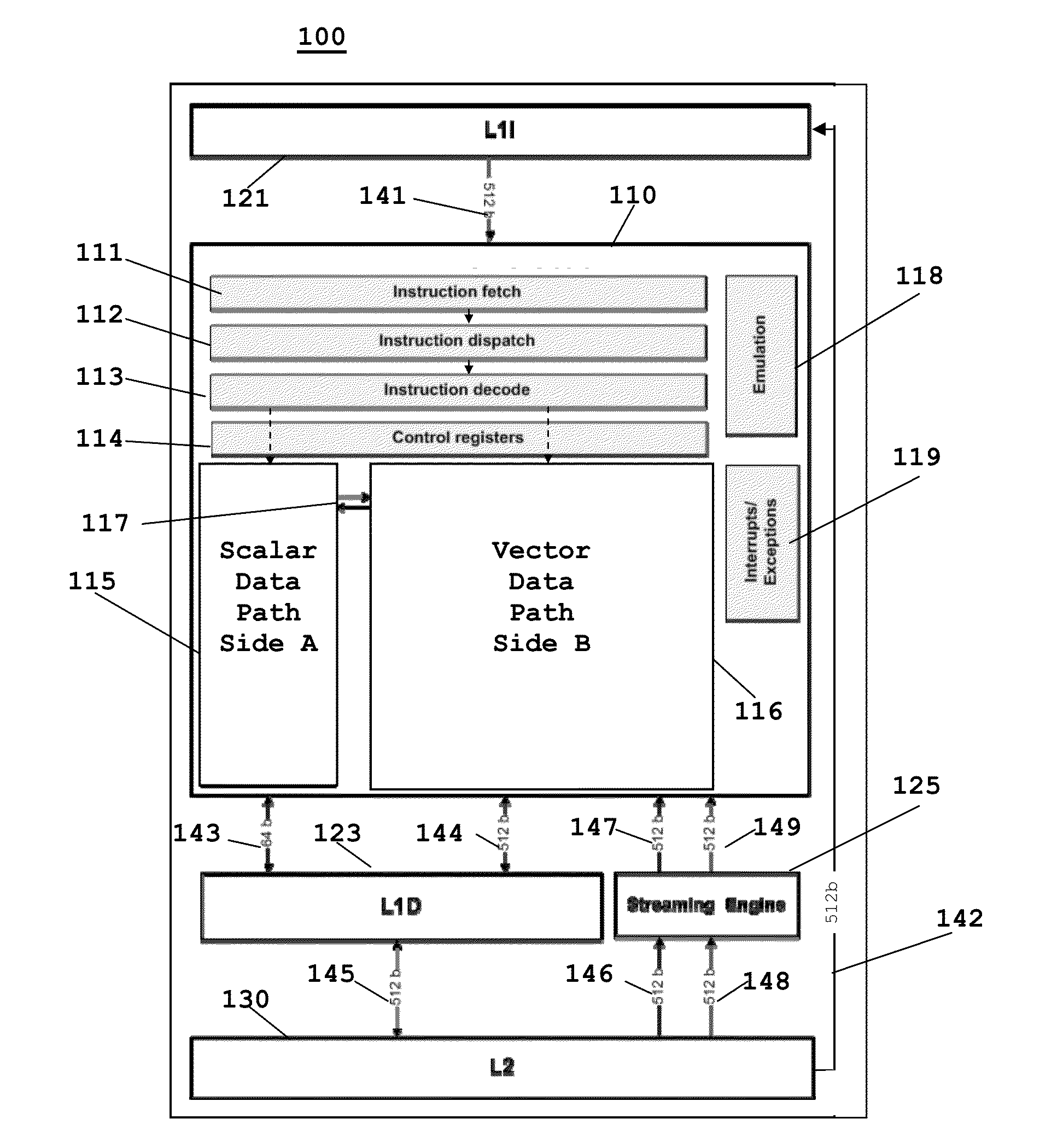

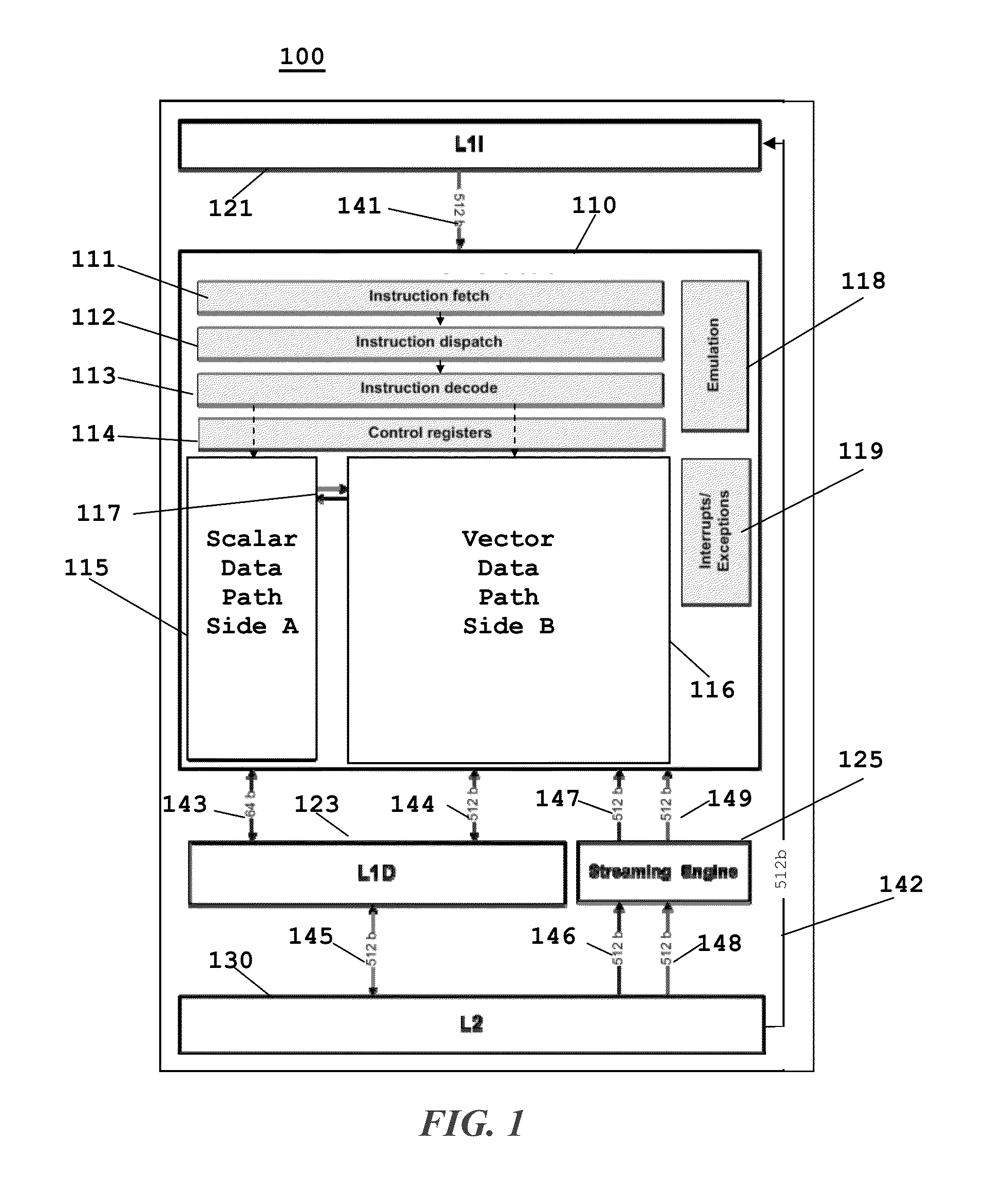

[0038] FIG. 1 illustrates a dual scalar/vector datapath processor according to a preferred embodiment of this invention. Processor 100 includes separate level one instruction cache (L1I) 121 and level one data cache (L1D) 123. Processor 100 includes a level two combined instruction/data cache (L2) 130 that holds both instructions and data. FIG. 1 illustrates connection between level one instruction cache 121 and level two combined instruction/data cache 130 (bus 142). FIG. 1 illustrates connection between level one data cache 123 and level two combined instruction/data cache 130 (bus 145). In the preferred embodiment of processor 100 level two combined instruction/data cache 130 stores both instructions to back up level one instruction cache 121 and data to back up level one data cache 123. In the preferred embodiment level two combined instruction/data cache 130 is further connected to higher level cache and/or main memory in a manner not illustrated in FIG. 1. In the preferred embo...
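The backing relationship between the caches described above can be modelled in a few lines. This is a minimal sketch under loud assumptions: the class names, the dictionary-based storage, and the fill-on-miss behaviour are illustrative choices, and nothing here reflects line sizes, eviction, or bus widths, none of which are stated in the paragraph. It shows only that L1I and L1D are separate, that both fall back to a shared L2, and that L2 falls back to memory.

```python
class MainMemory:
    """Stand-in for the higher-level cache and/or main memory behind L2."""

    name = "memory"

    def __init__(self, contents):
        self.contents = dict(contents)

    def read(self, addr):
        return self.contents[addr], self.name


class Cache:
    """A cache level that serves hits locally and forwards misses to its backing level."""

    def __init__(self, name, backing):
        self.name = name
        self.backing = backing
        self.lines = {}  # addr -> value; unbounded, so no eviction is modelled

    def read(self, addr):
        if addr in self.lines:
            return self.lines[addr], self.name
        value, source = self.backing.read(addr)
        self.lines[addr] = value  # fill on miss (an assumed policy)
        return value, source


memory = MainMemory({0x100: "instruction word", 0x200: 42})
l2 = Cache("L2", memory)    # combined instruction/data cache 130
l1i = Cache("L1I", l2)      # level one instruction cache 121
l1d = Cache("L1D", l2)      # level one data cache 123
```

In this model, a first read through `l1d` is served from memory and fills both L1D and L2; a later read of the same address through `l1i` is then served from the shared L2.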
