274 results about "Vector processor" patented technology

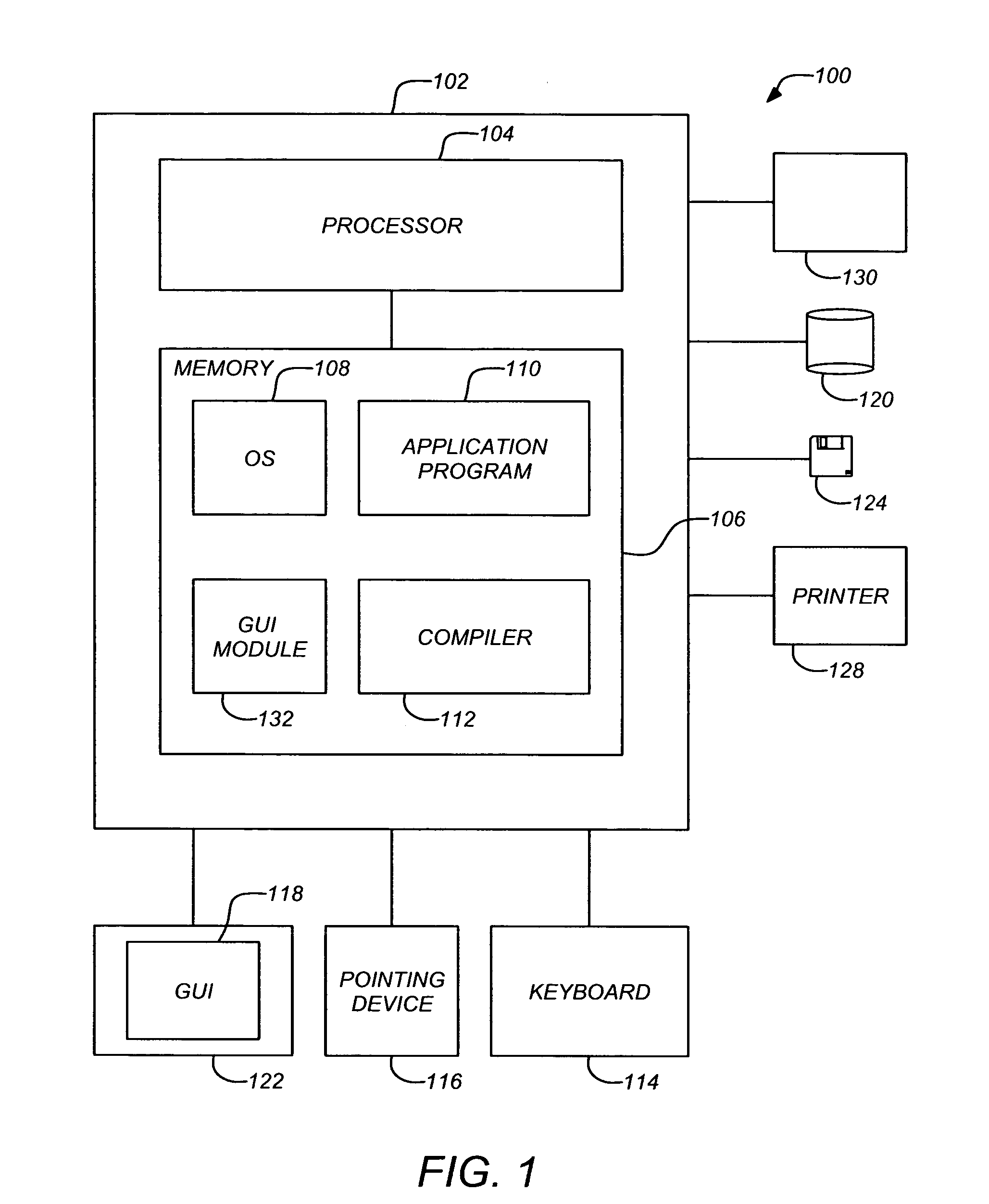

In computing, a vector processor or array processor is a central processing unit (CPU) whose instruction set includes instructions that operate on one-dimensional arrays of data called vectors, in contrast to scalar processors, whose instructions operate on single data items. Vector processors can greatly improve performance on certain workloads, notably numerical simulation and similar tasks. Vector machines appeared in the early 1970s and dominated supercomputer design from the 1970s into the 1990s, notably in the various Cray platforms. The rapid fall in the price-to-performance ratio of conventional microprocessor designs led to the vector supercomputer's demise in the later 1990s.
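The contrast between scalar and vector instructions can be illustrated with a small software model. This is only a sketch: `scalar_add`, `vector_add`, and the vector length `vlen` are hypothetical names chosen for illustration, and a plain Python loop stands in for what real hardware would do in a single instruction.

```python
def scalar_add(a, b):
    """Scalar style: each loop iteration models one add instruction on one pair."""
    out = []
    for i in range(len(a)):
        out.append(a[i] + b[i])
    return out


def vector_add(a, b, vlen=4):
    """Vector style: each loop iteration models one instruction on up to vlen pairs.

    Returns the result and the number of modeled vector instructions.
    vlen is an assumed hardware vector length, not taken from any real machine.
    """
    out = []
    instructions = 0
    for start in range(0, len(a), vlen):
        chunk_a = a[start:start + vlen]
        chunk_b = b[start:start + vlen]
        out.extend(x + y for x, y in zip(chunk_a, chunk_b))
        instructions += 1
    return out, instructions
```

With ten elements and `vlen=4`, the vector version issues three modeled instructions where the scalar version issues ten.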

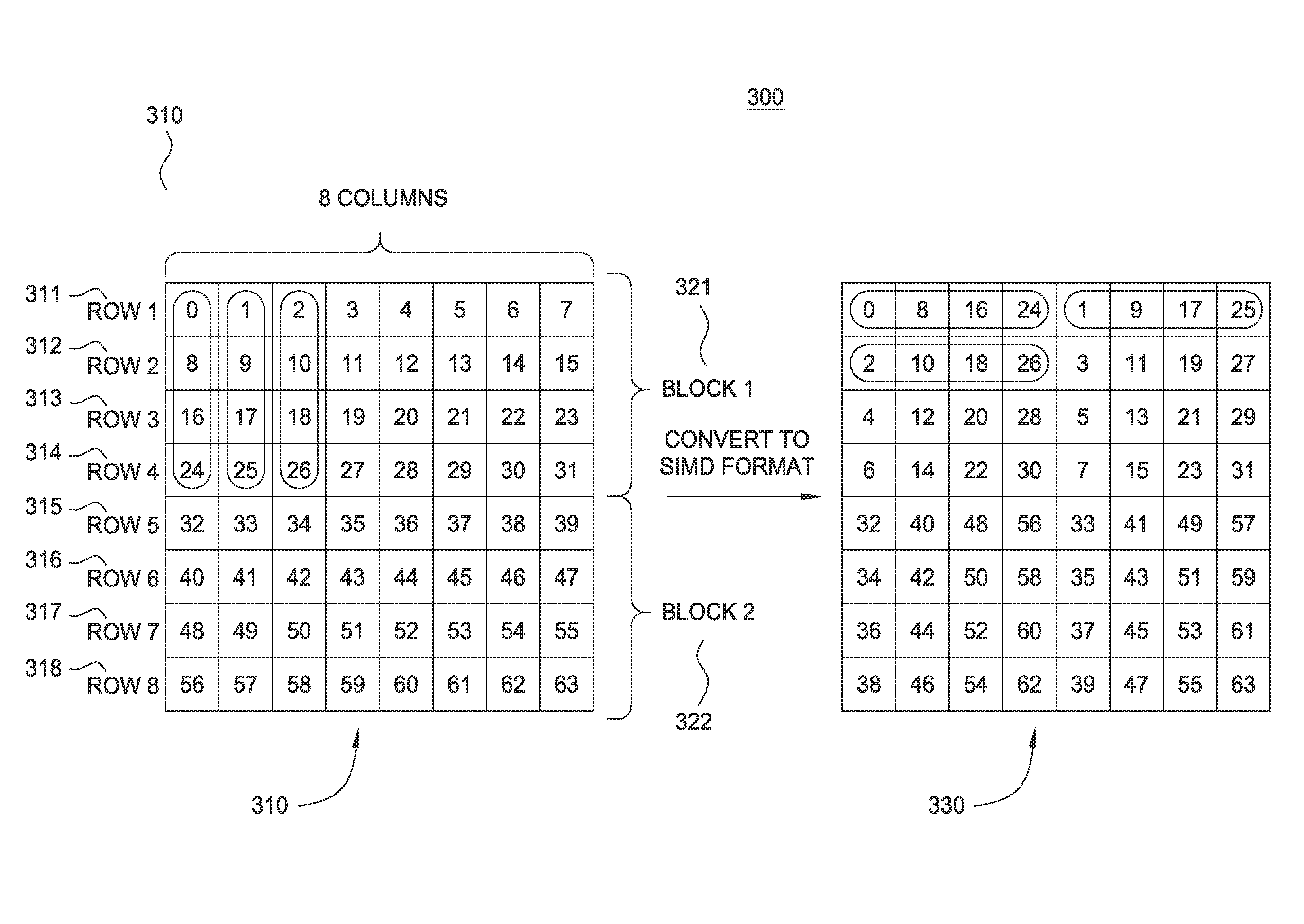

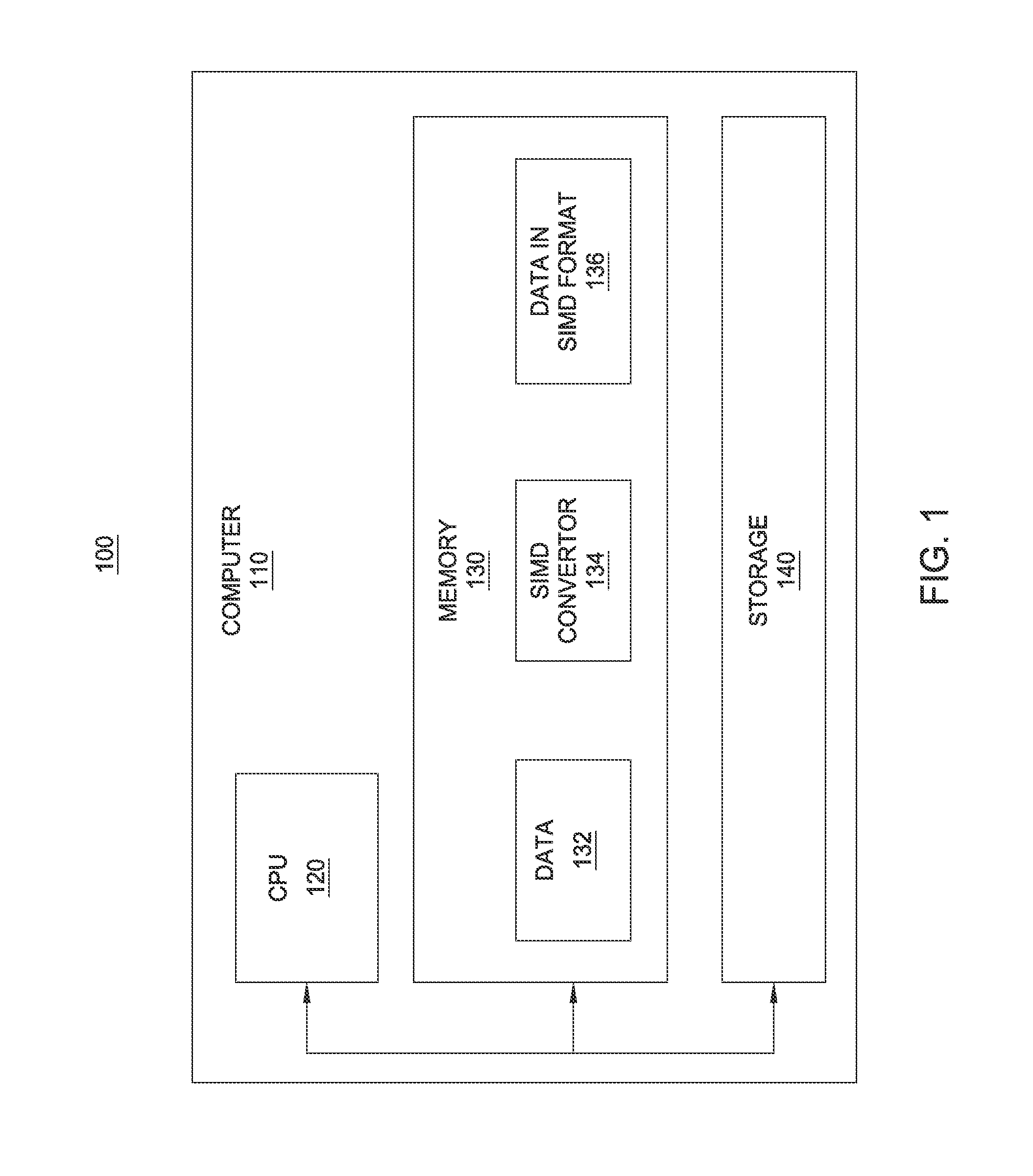

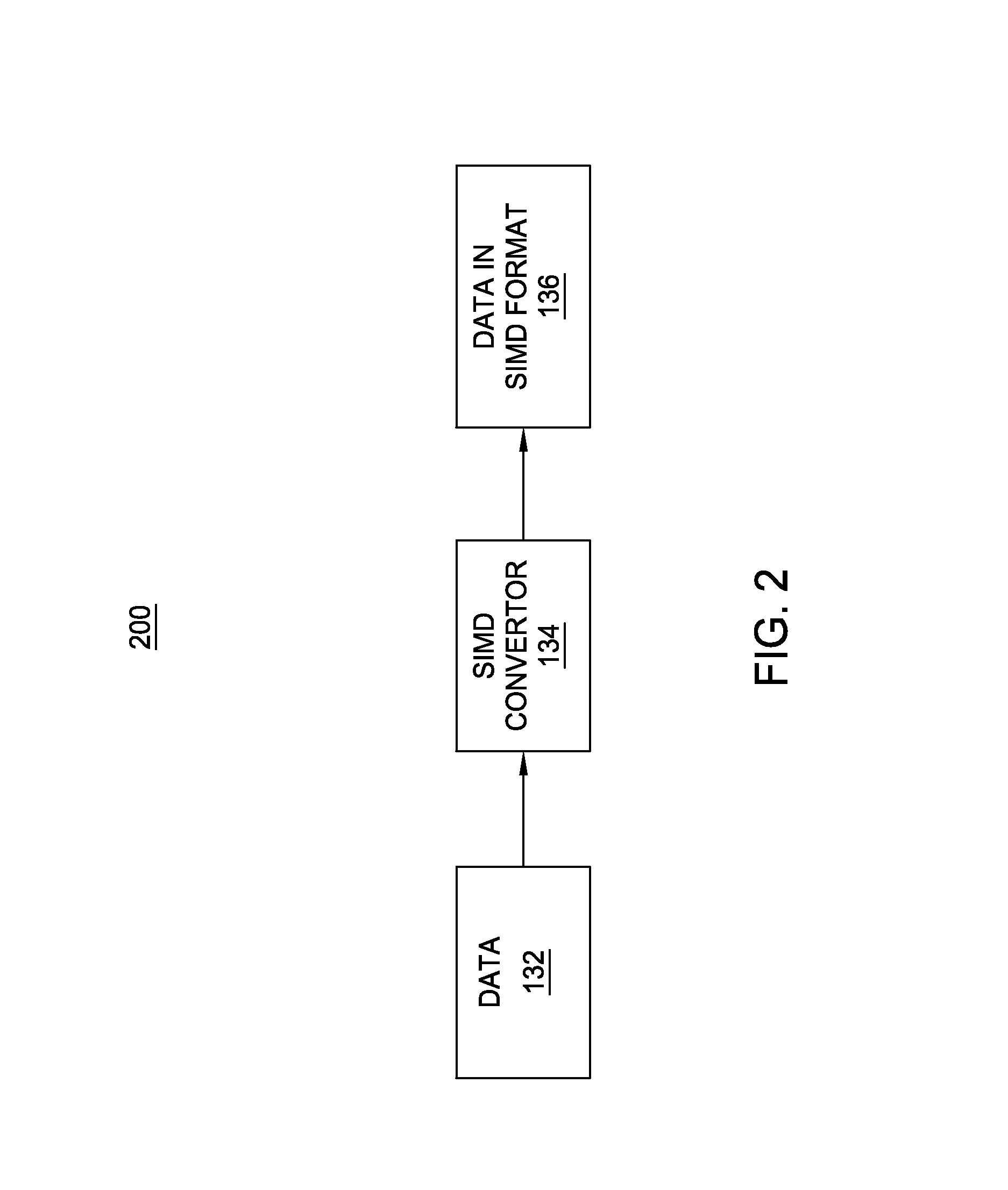

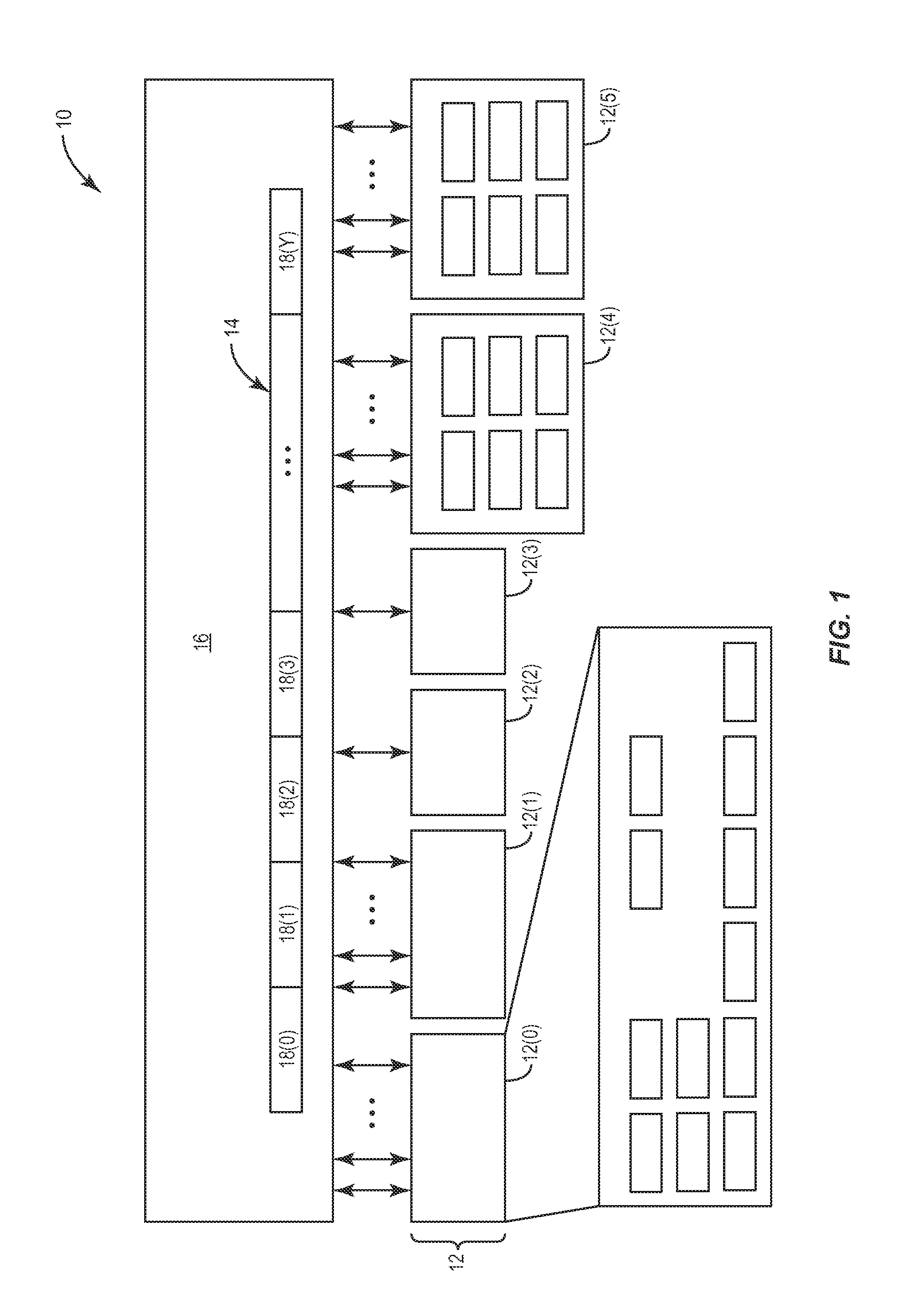

Processing array data on SIMD multi-core processor architectures

Inactive | US8484276B2 | Program control using stored programs | General purpose stored program computer | Fast Fourier transform | Fourier transform on finite groups

Owner:INT BUSINESS MASCH CORP

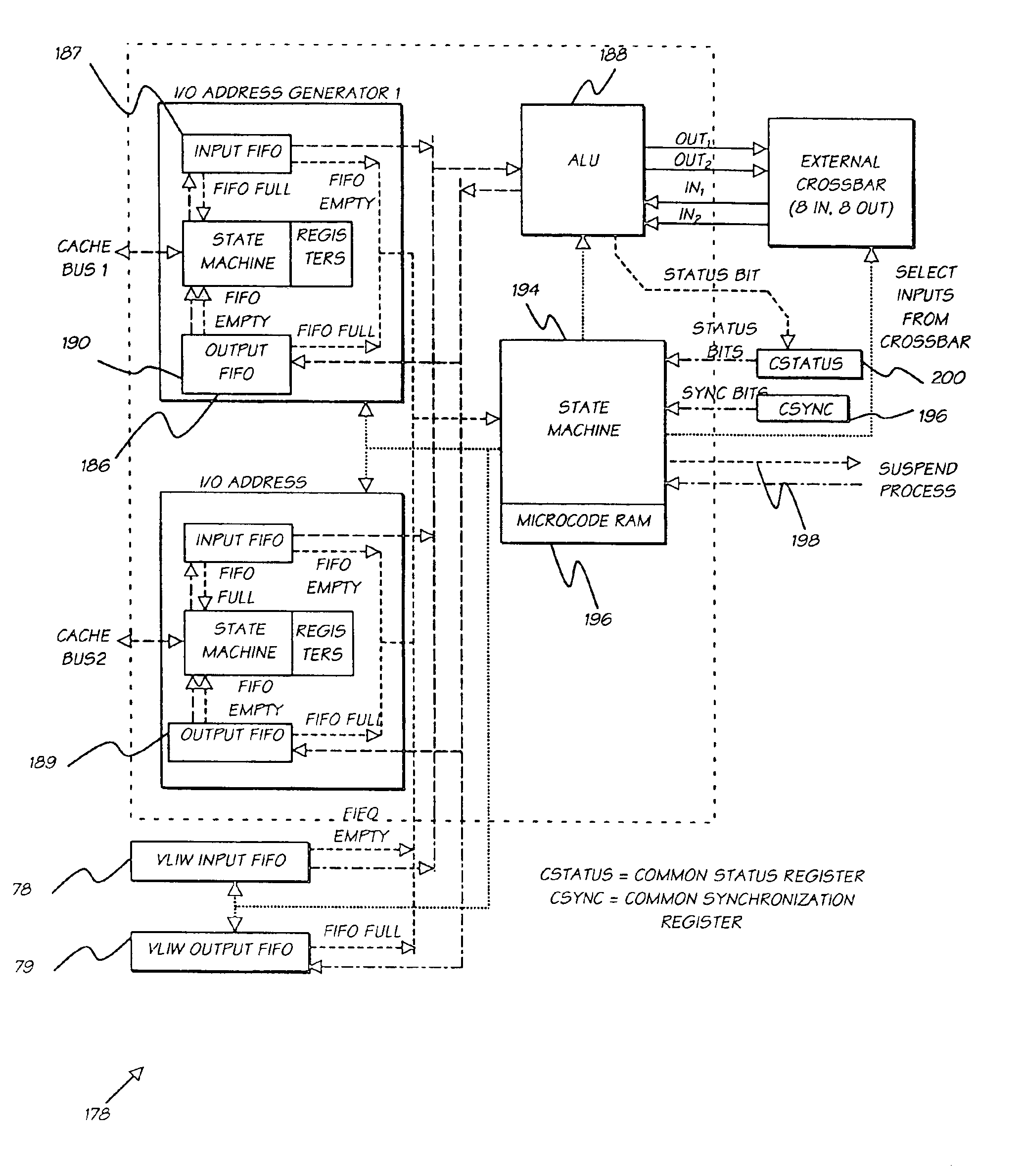

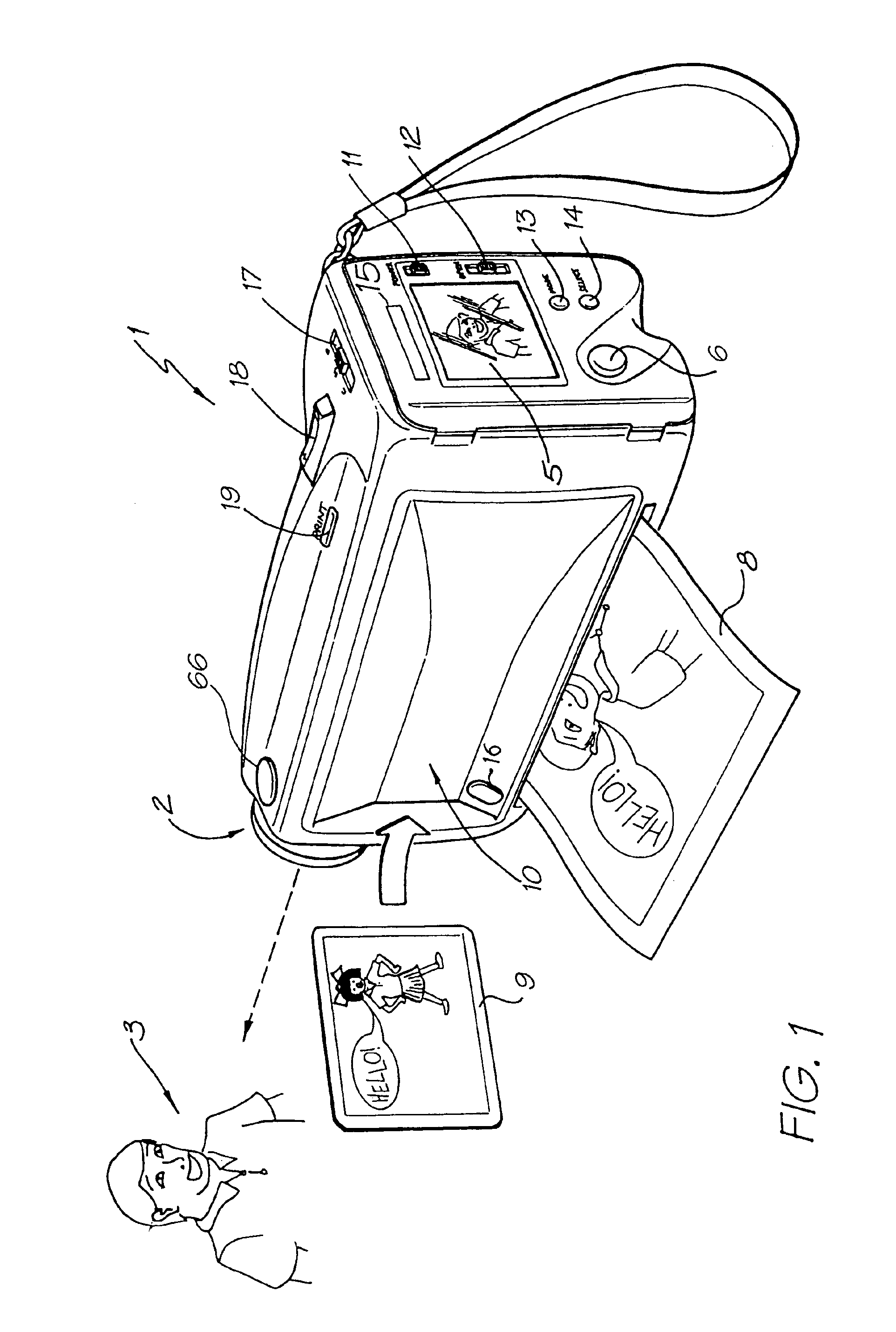

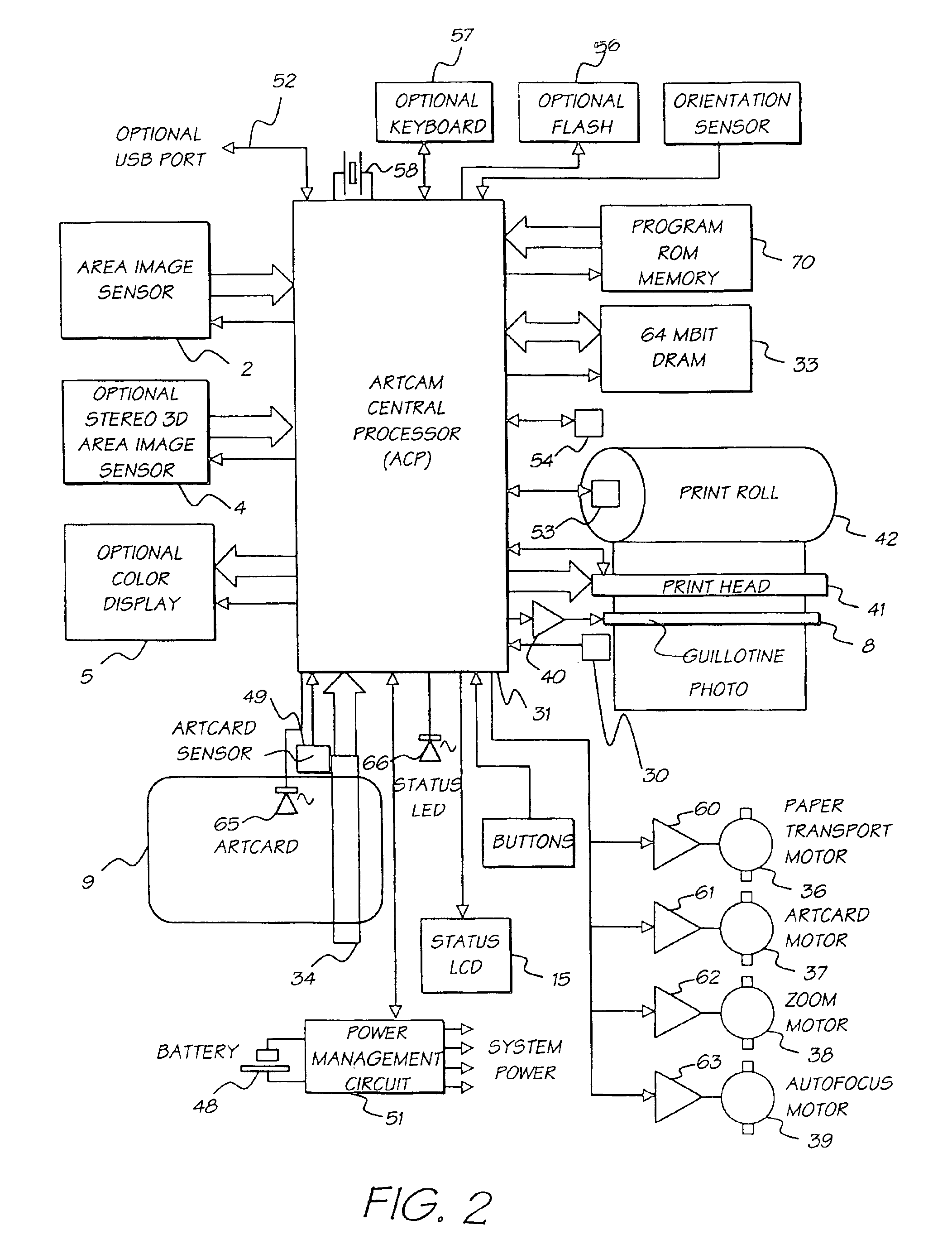

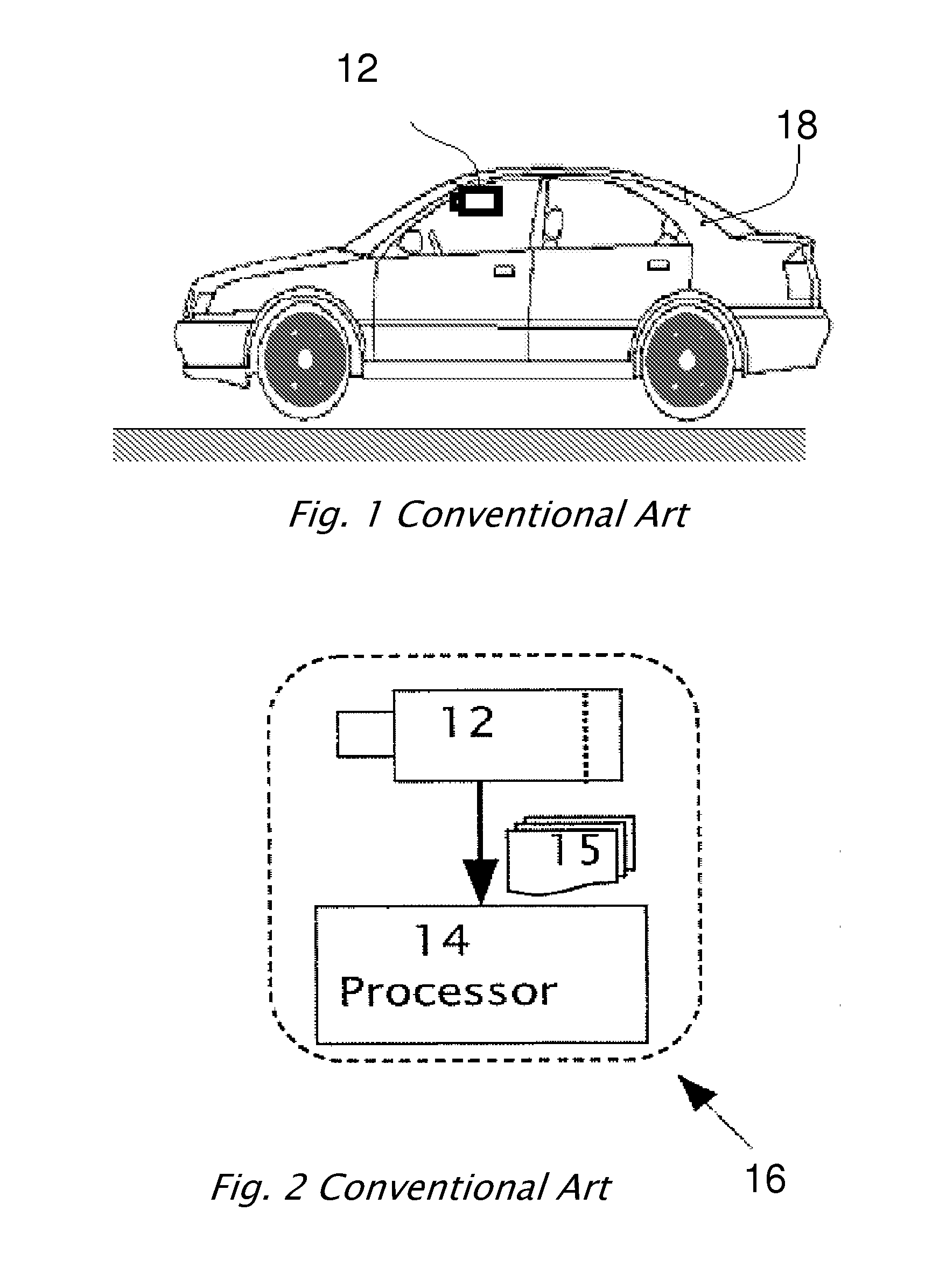

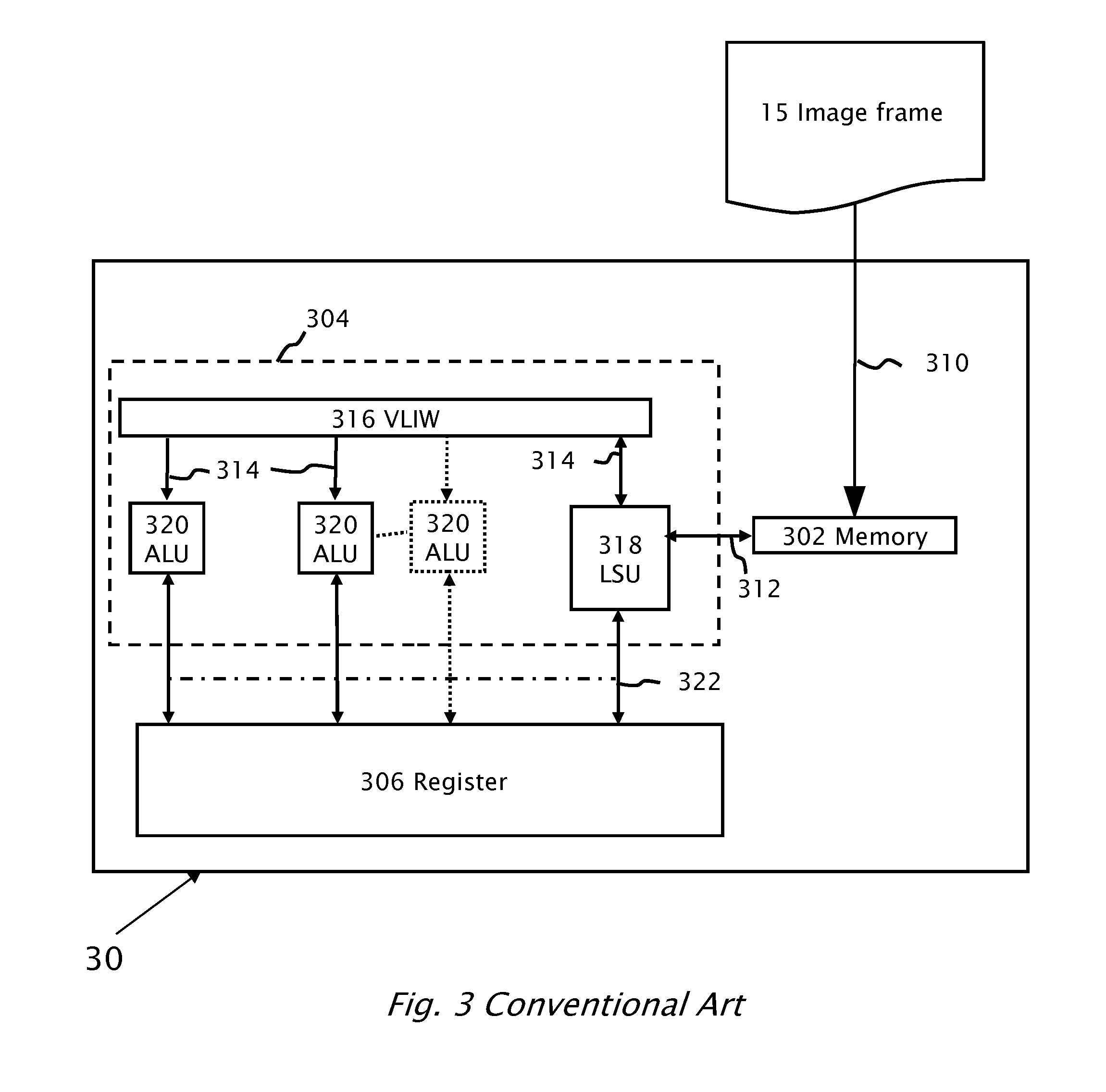

Digital camera system containing a VLIW vector processor

Inactive | US6879341B1 | Material nanotechnology | Television system details | Crossbar switch | Arithmetic logic unit

A digital camera has a sensor for sensing an image, a processor for modifying the sensed image in accordance with instructions input into the camera, and an output for outputting the modified image, where the processor includes a series of processing elements arranged around a central crossbar switch. The processing elements include an Arithmetic Logic Unit (ALU) acting under the control of a writeable microcode store and an internal input and output FIFO for storing pixel data to be processed by the processing elements. The processor is interconnected to a read and write FIFO for reading and writing pixel data of images to the processor. Each of the processing elements can be arranged in a ring, and each element is also separately connected to its nearest neighbors. The ALU receives a series of inputs interconnected via an internal crossbar switch to a series of core processing units within the ALU and includes a number of internal registers for the storage of temporary data. The core processing units can include at least one of a multiplier, an adder and a barrel shifter. The processing elements are further connected to a common data bus for the transfer of pixel data to the processing elements, and the data bus is interconnected to a data cache which acts as an intermediate cache between the processing elements and a memory store for storing the images.

Owner:GOOGLE LLC

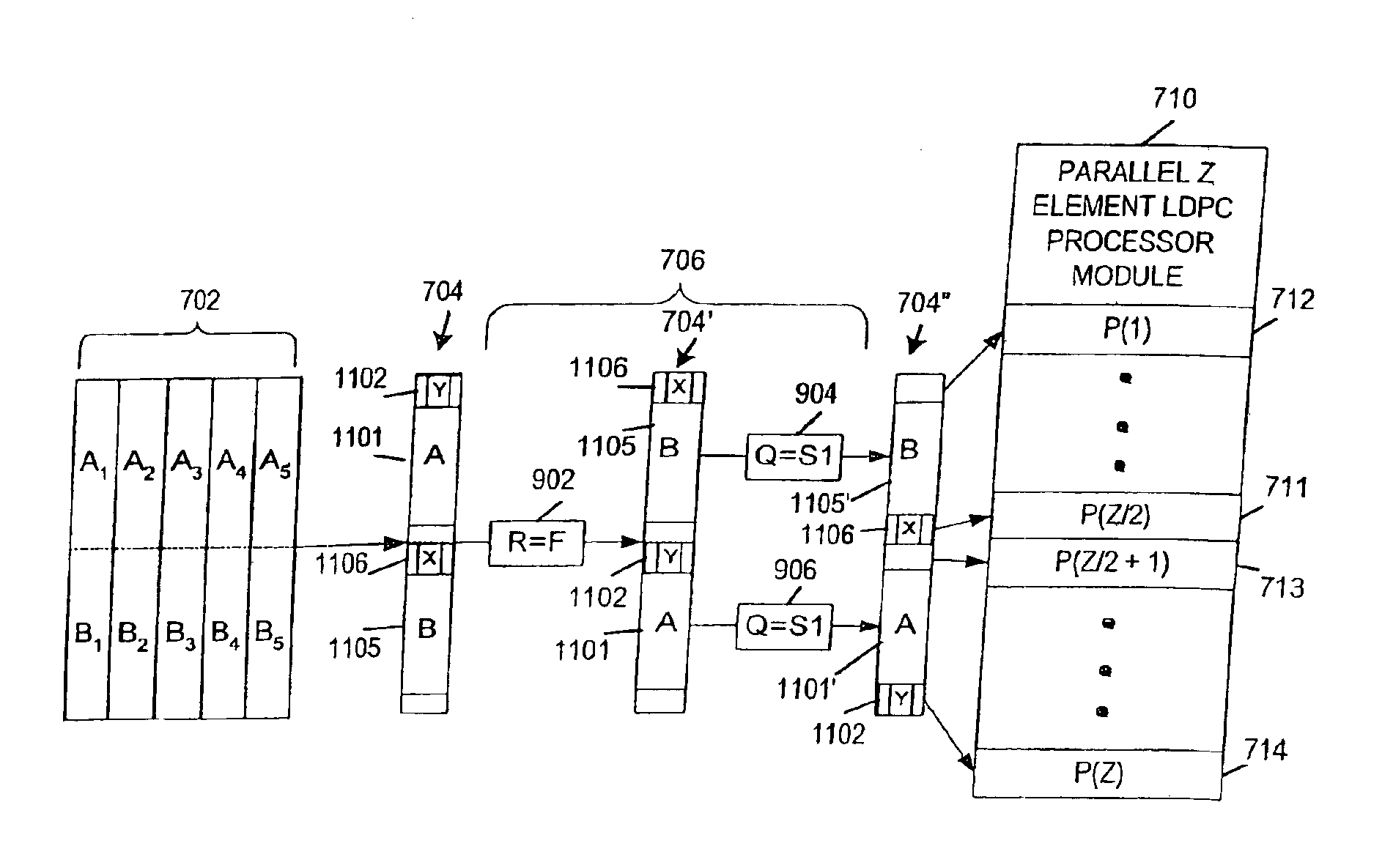

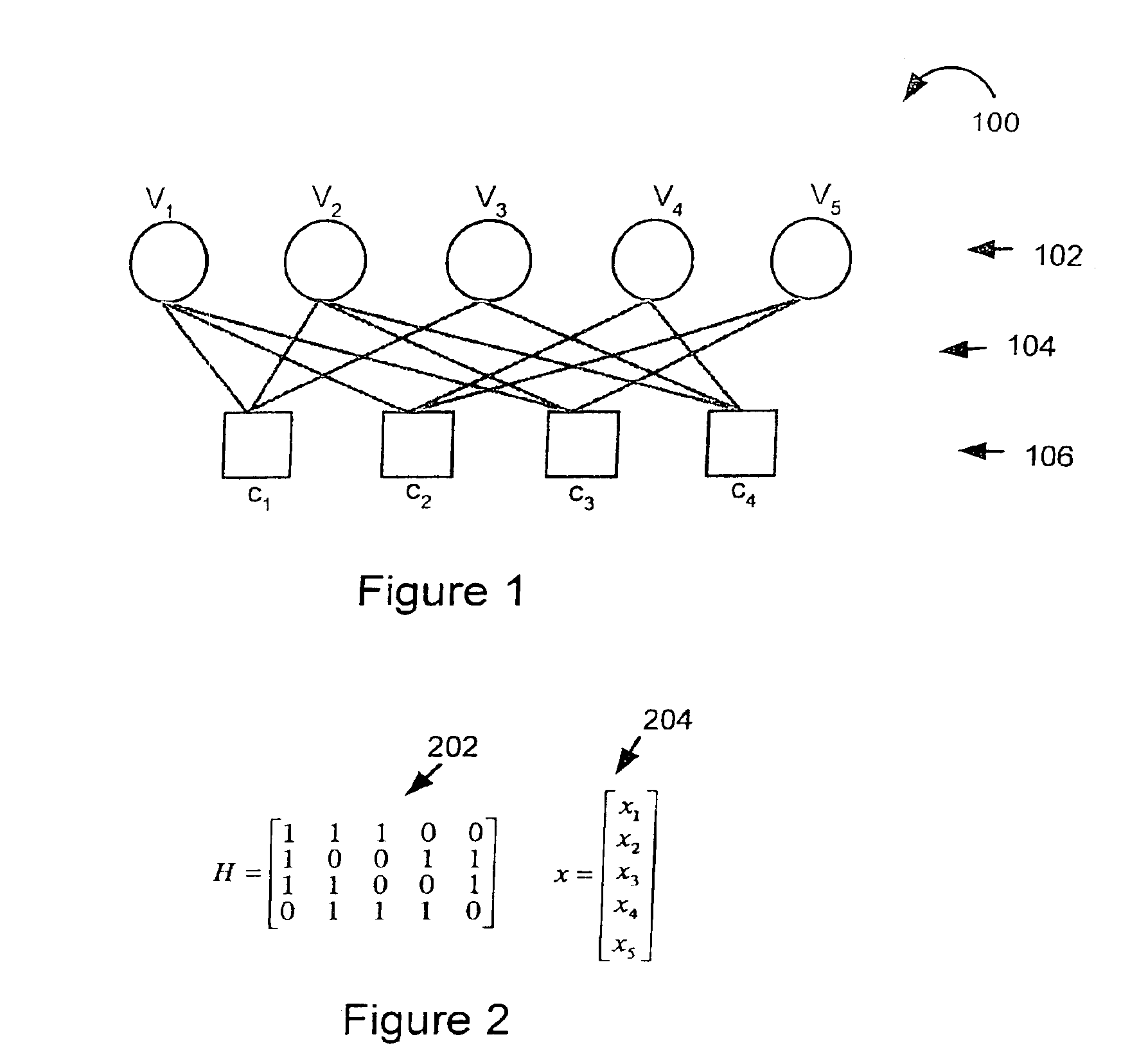

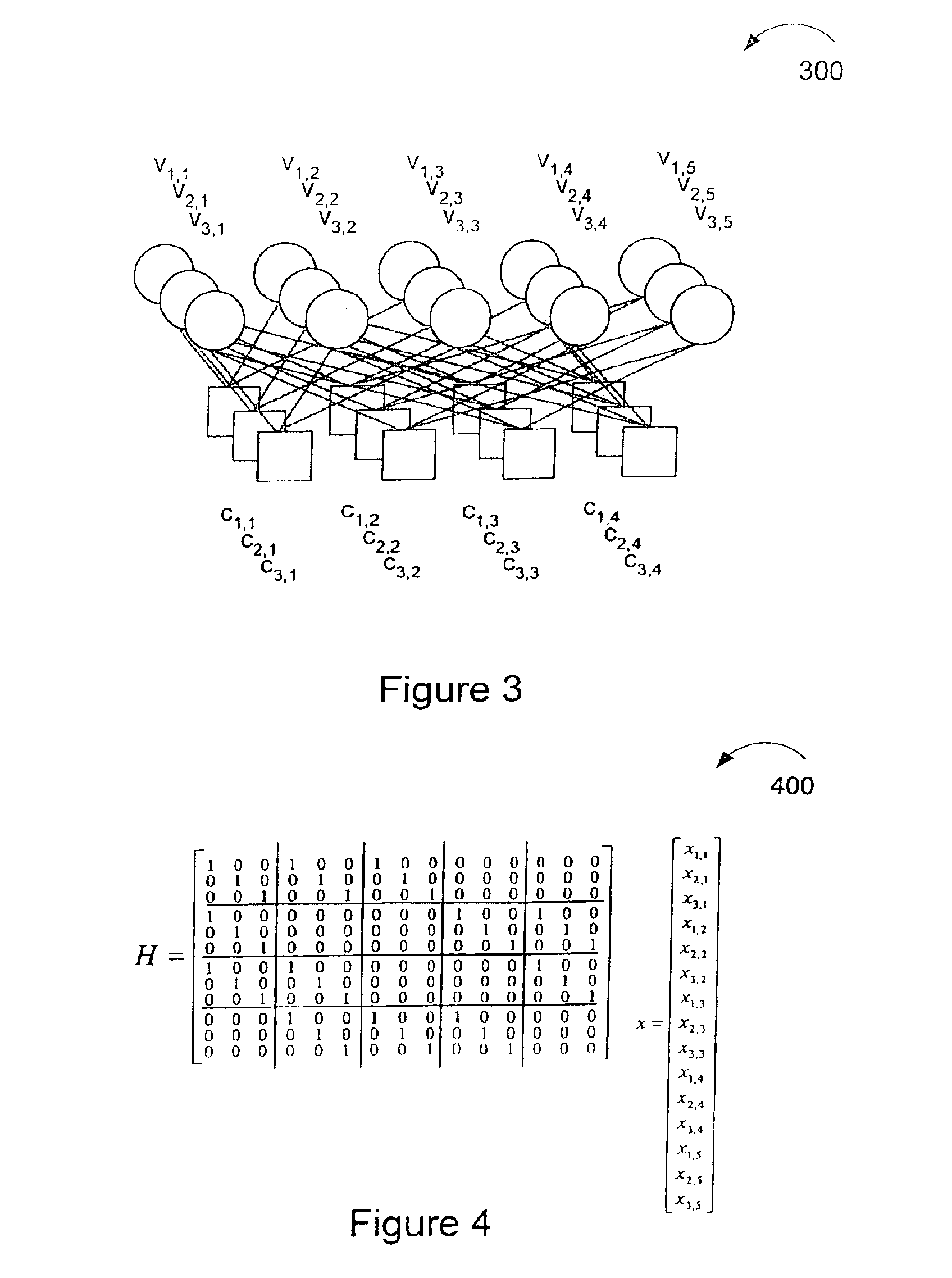

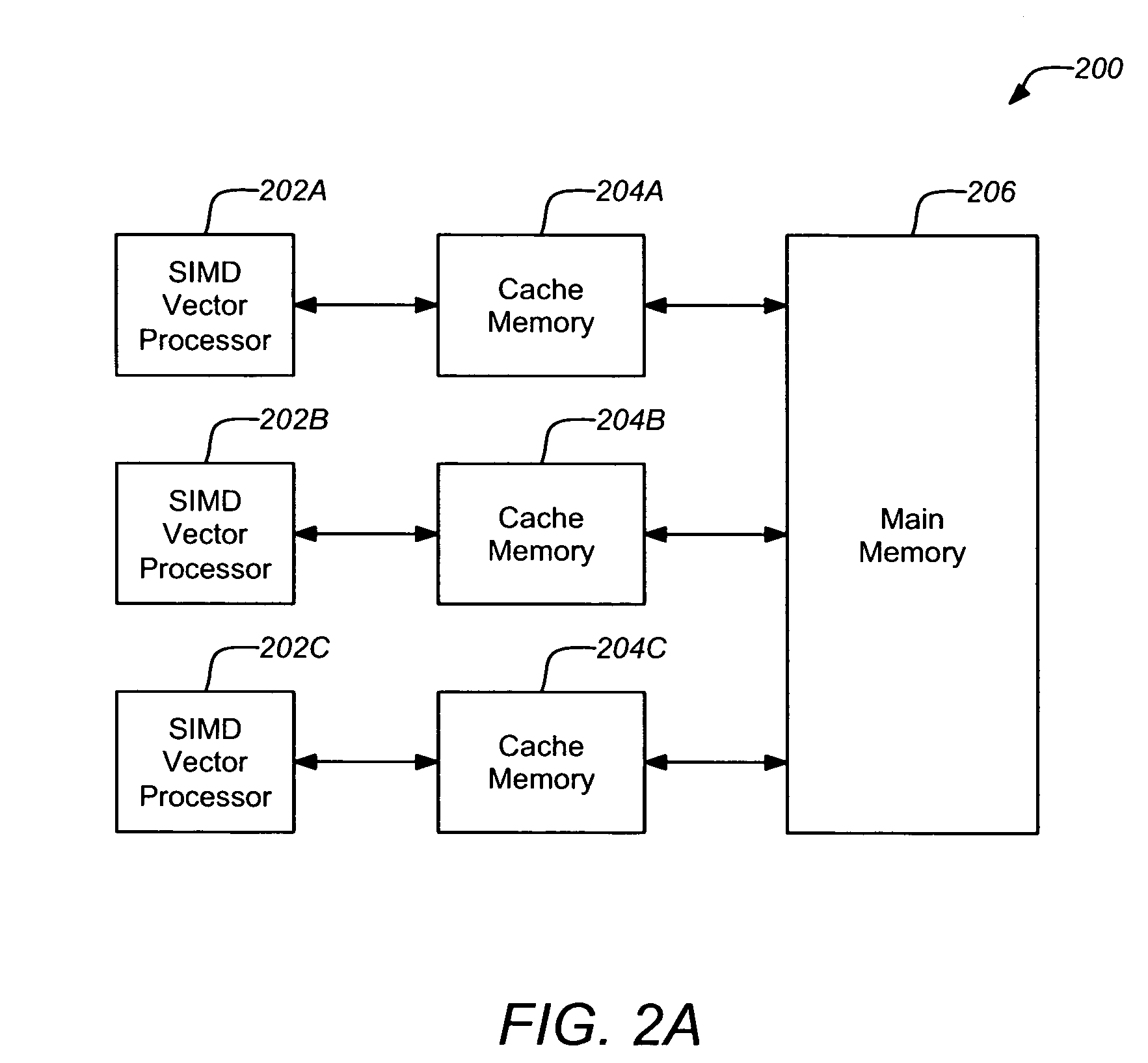

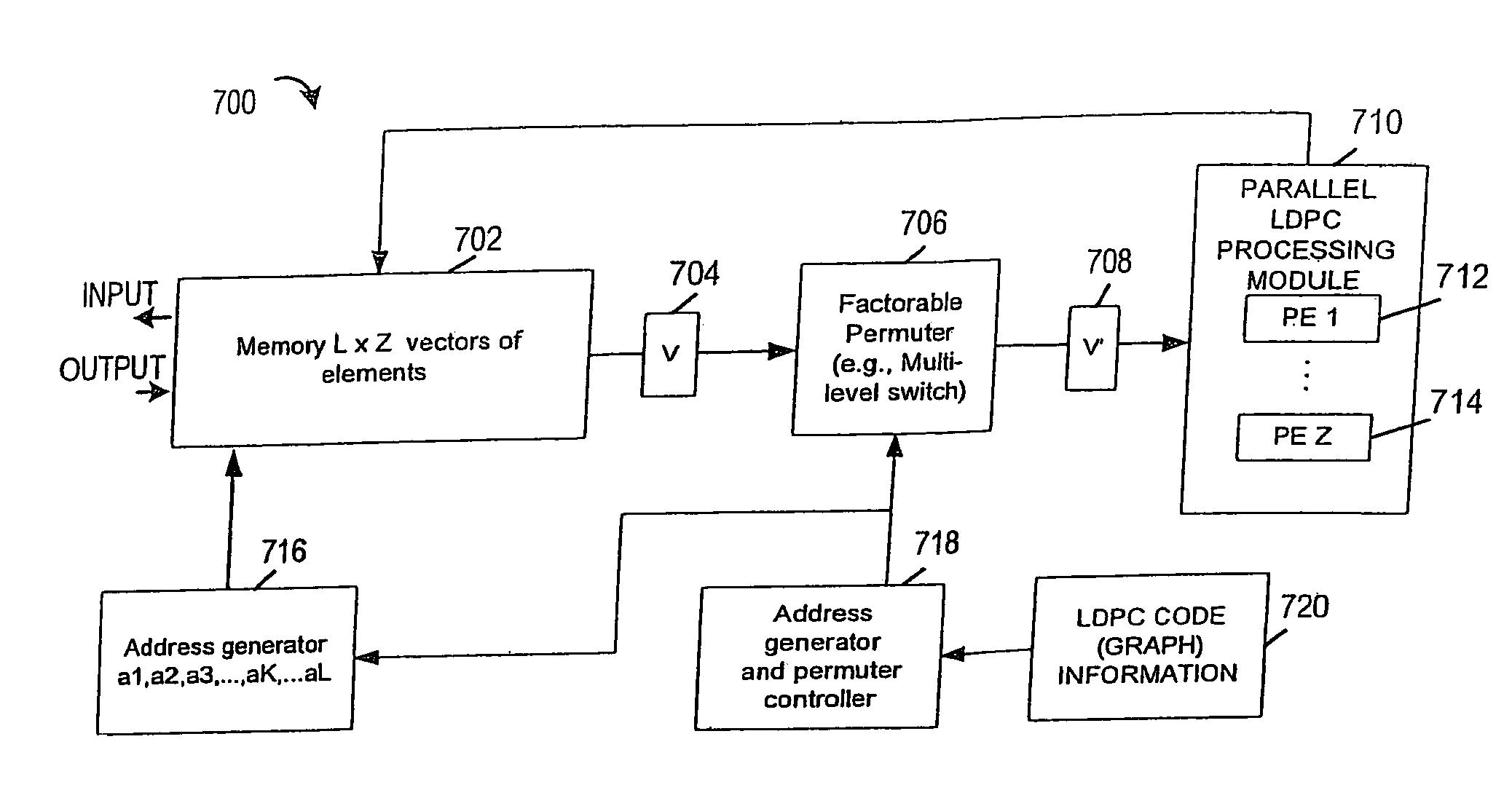

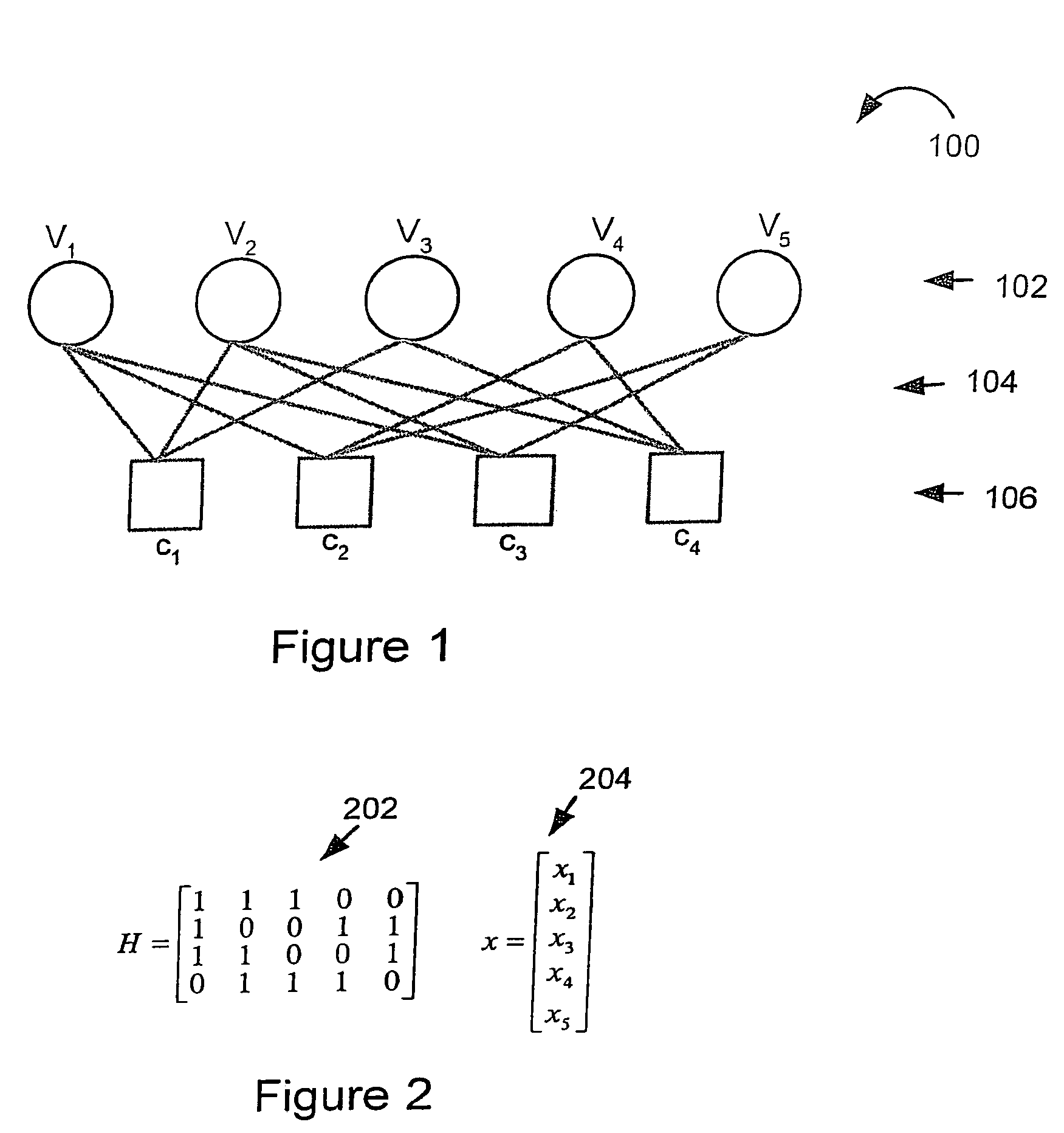

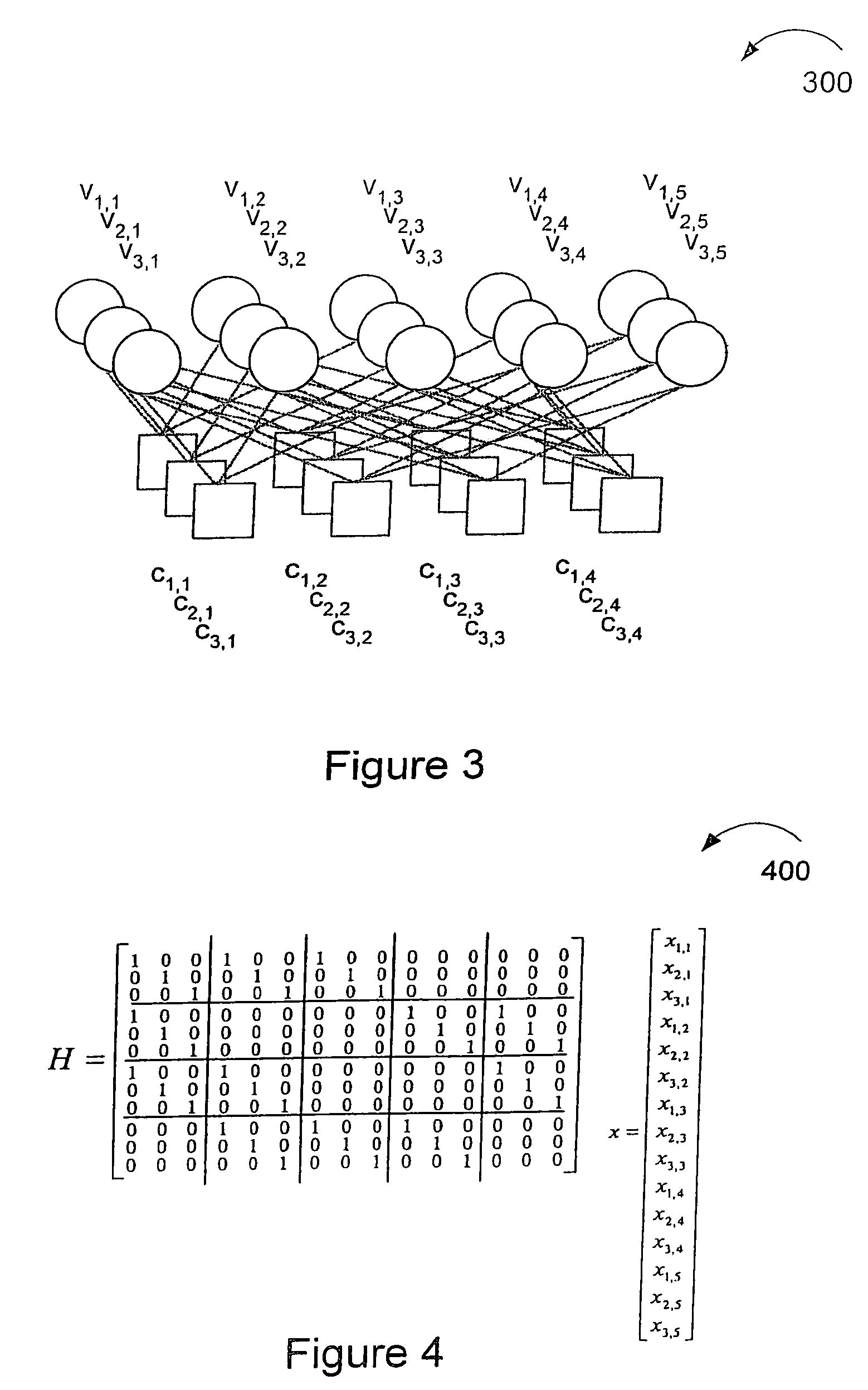

Method and apparatus for performing low-density parity-check (LDPC) code operations using a multi-level permutation

Active | US6957375B2 | Easy to implement | Error correction/detection using multiple parity bits | Code conversion | Graphics | Communications system

Methods and apparatus of the present invention can be used to implement a communications system wherein different devices using the same LDPC code can be implemented using different levels of parallelism. The use of a novel class of LDPC codes makes such differences in parallelism possible. Use of a factorable permuter in various embodiments of the invention makes LDPC devices with different levels of parallelism in the encoder and decoder relatively easy to implement when using the codes in the class of LDPC codes discussed herein. The factorable permuter may be implemented as a controllable multi-stage switching device which performs none, one, or multiple sequential reordering operations on a Z element vector passed between memory and a Z element vector processor, with the switching on individual vectors being controlled in accordance with the graph structure of the code being implemented.

Owner:QUALCOMM INC
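The idea of a multi-stage permuter that applies none, one, or several reorderings to a Z element vector can be sketched as follows. The abstract does not say what the stages are, so this sketch assumes two cyclic-rotation stages (one inside fixed-size groups, one across groups); `rotate`, `factorable_permute`, `group_size`, `inner_shift`, and `outer_shift` are all hypothetical names.

```python
def rotate(seq, k):
    """Cyclically rotate a list left by k positions."""
    k %= len(seq)
    return seq[k:] + seq[:k]


def factorable_permute(vec, group_size, inner_shift=None, outer_shift=None):
    """Apply zero, one, or two reordering stages to a Z element vector.

    Assumed stages (not taken from the patent):
      stage 1 rotates the elements inside each group of group_size elements,
      stage 2 rotates the order of the groups themselves.
    A stage is skipped when its shift is None.
    """
    if len(vec) % group_size != 0:
        raise ValueError("vector length must be a multiple of group_size")
    groups = [vec[i:i + group_size] for i in range(0, len(vec), group_size)]
    if inner_shift is not None:
        groups = [rotate(g, inner_shift) for g in groups]
    if outer_shift is not None:
        groups = rotate(groups, outer_shift)
    return [x for g in groups for x in g]
```

Passing both shifts as `None` leaves the vector untouched, which corresponds to the "none" case in the abstract.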

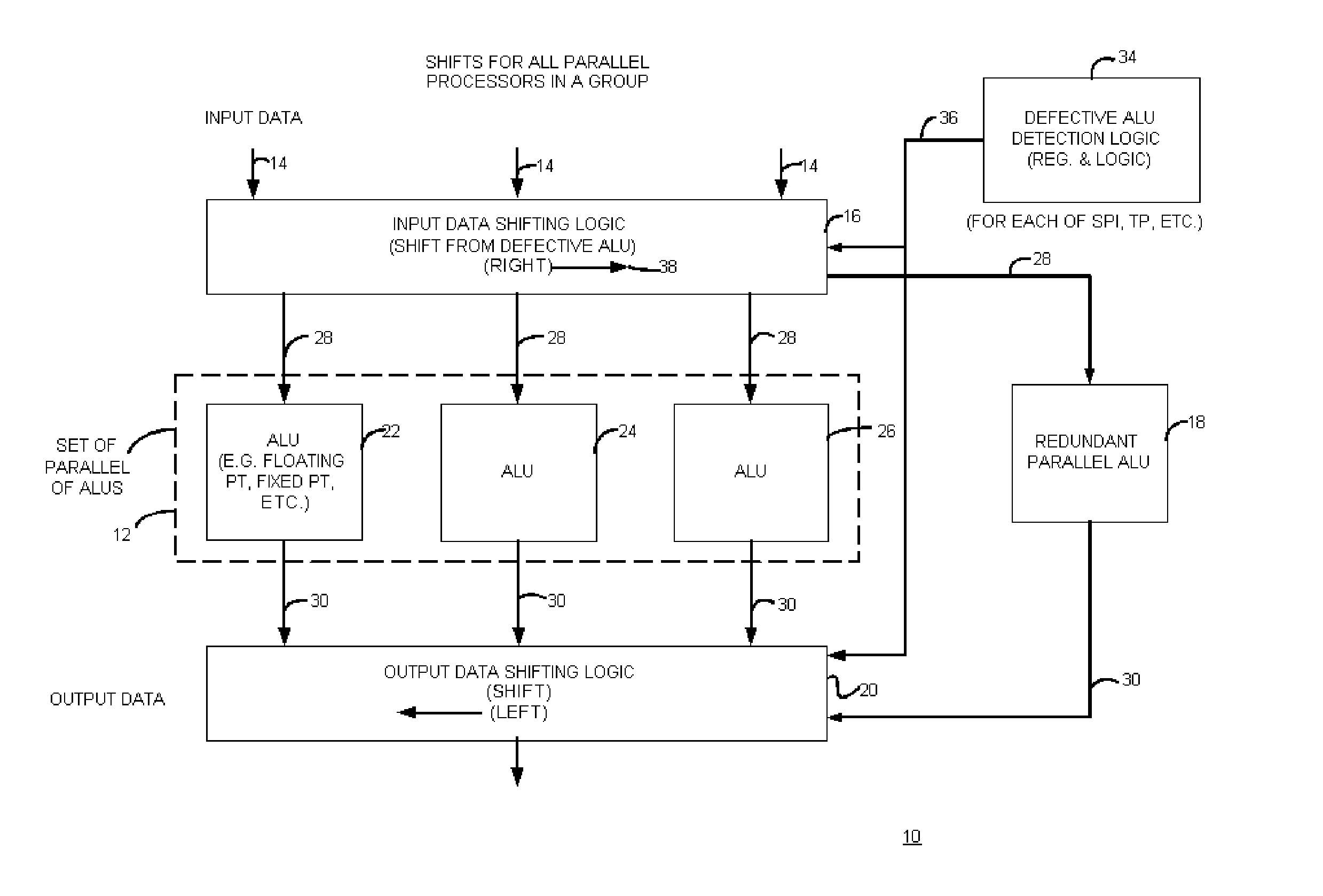

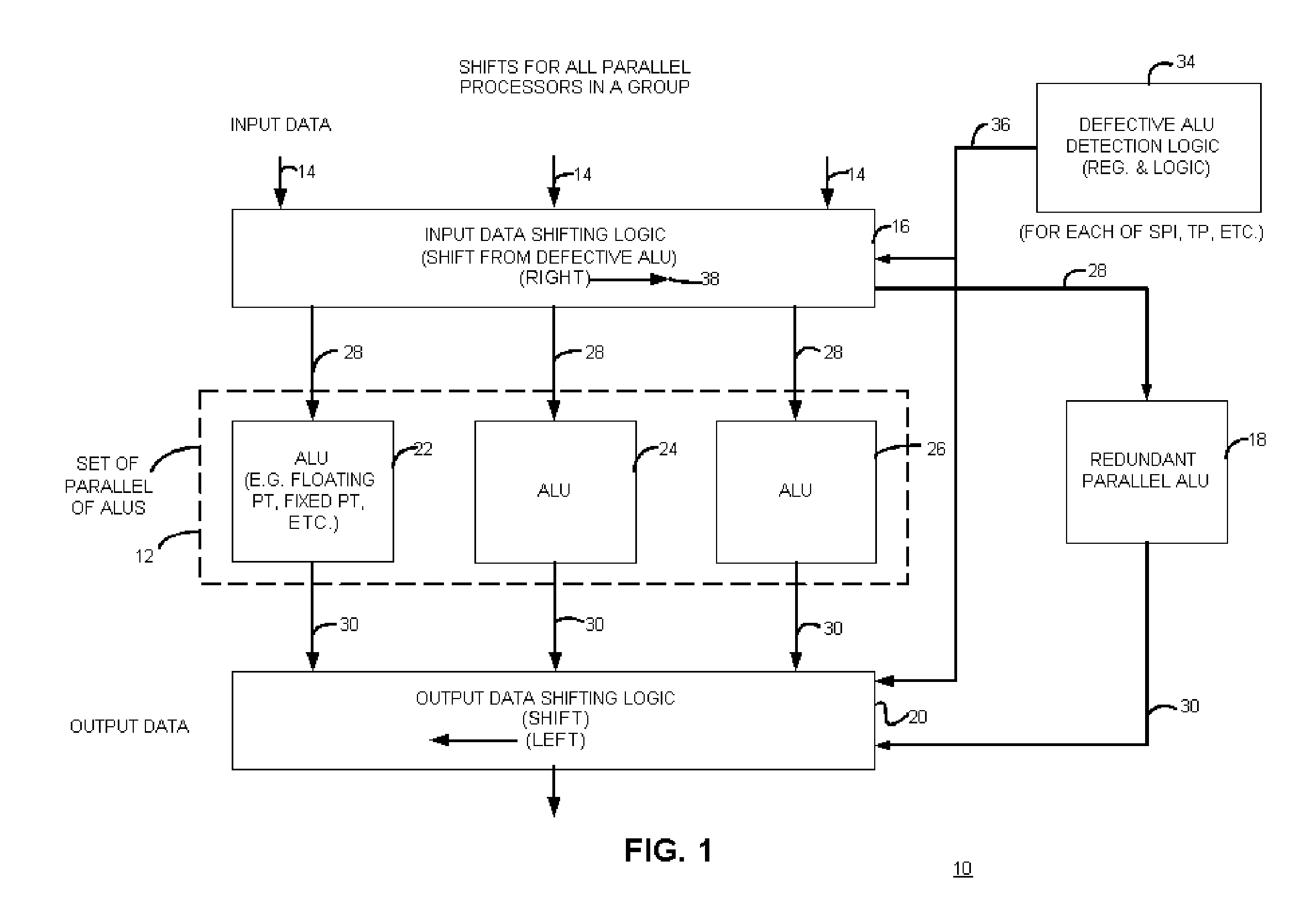

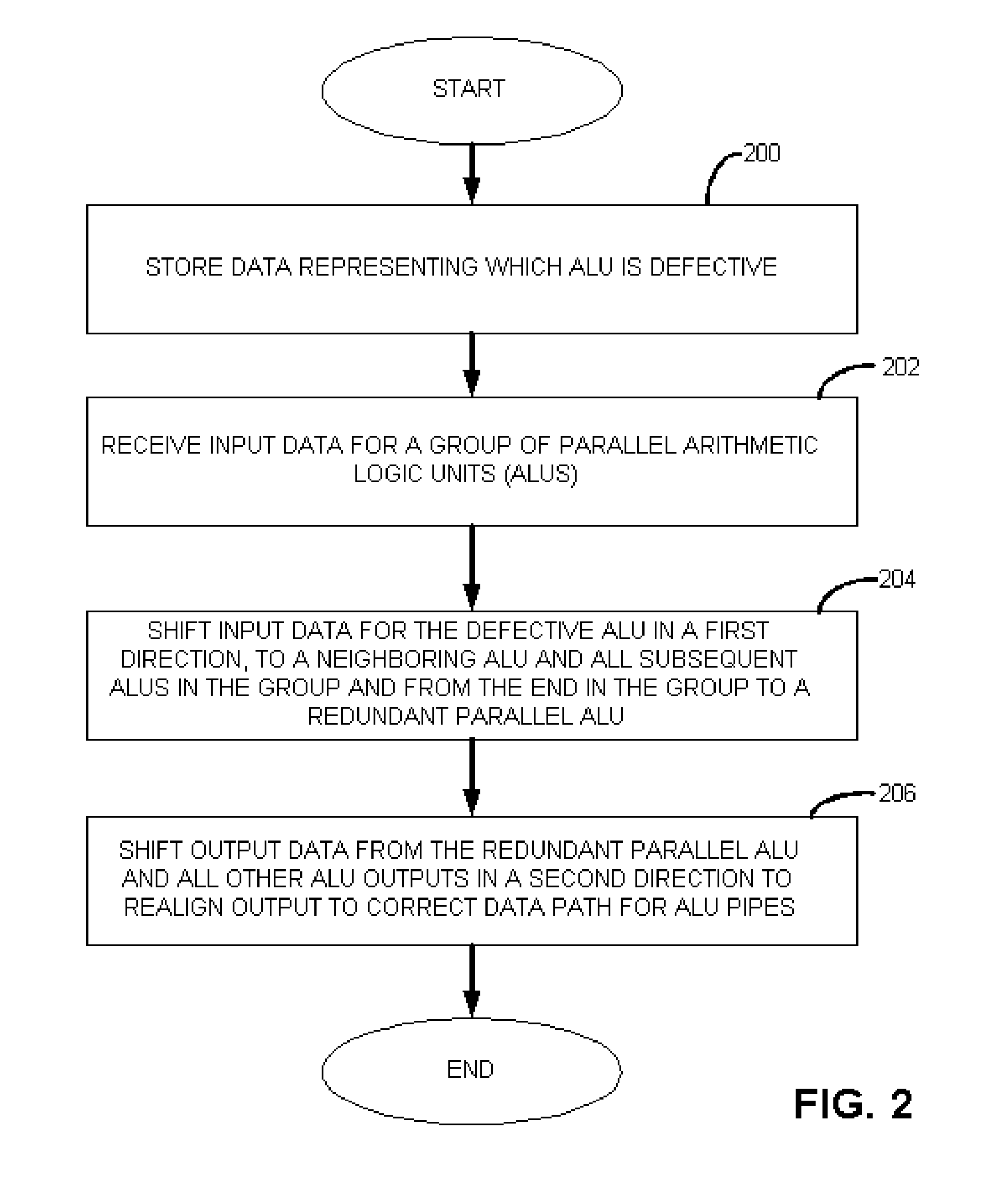

Graphics processing logic with variable arithmetic logic unit control and method therefor

Briefly, graphics data processing logic includes a plurality of parallel arithmetic logic units (ALUs), such as floating point processors or any other suitable logic, that operate as a vector processor on at least one of pixel data and vertex data (or both) and a programmable storage element that contains data representing which of the plurality of arithmetic logic units are not to receive data for processing. The graphics data processing logic also includes parallel ALU data packing logic that is operatively coupled to the plurality of arithmetic logic processing units and to the programmable storage element to pack data only for the plurality of arithmetic logic units identified by the data in the programmable storage element as being enabled.

Owner:ATI TECH INC
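The packing step described in this abstract can be modeled in a few lines. This is a sketch under stated assumptions: the programmable storage element is represented as a bitmask `disabled_mask` in which bit i set means ALU i must not receive data, and `pack_for_enabled_alus` and `num_alus` are hypothetical names.

```python
def pack_for_enabled_alus(data, disabled_mask, num_alus=4):
    """Distribute data items across ALU slots, skipping disabled ALUs.

    Returns a list of rows, one per issue step. Each row has num_alus entries;
    an entry is None where the ALU is disabled or no data remains.
    """
    enabled = [i for i in range(num_alus) if not (disabled_mask >> i) & 1]
    if not enabled:
        raise ValueError("at least one ALU must be enabled")
    rows = []
    for start in range(0, len(data), len(enabled)):
        row = [None] * num_alus
        for alu, item in zip(enabled, data[start:start + len(enabled)]):
            row[alu] = item
        rows.append(row)
    return rows
```

For example, with four ALUs and ALU 2 disabled, five data items are packed into two rows and slot 2 stays empty in both.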

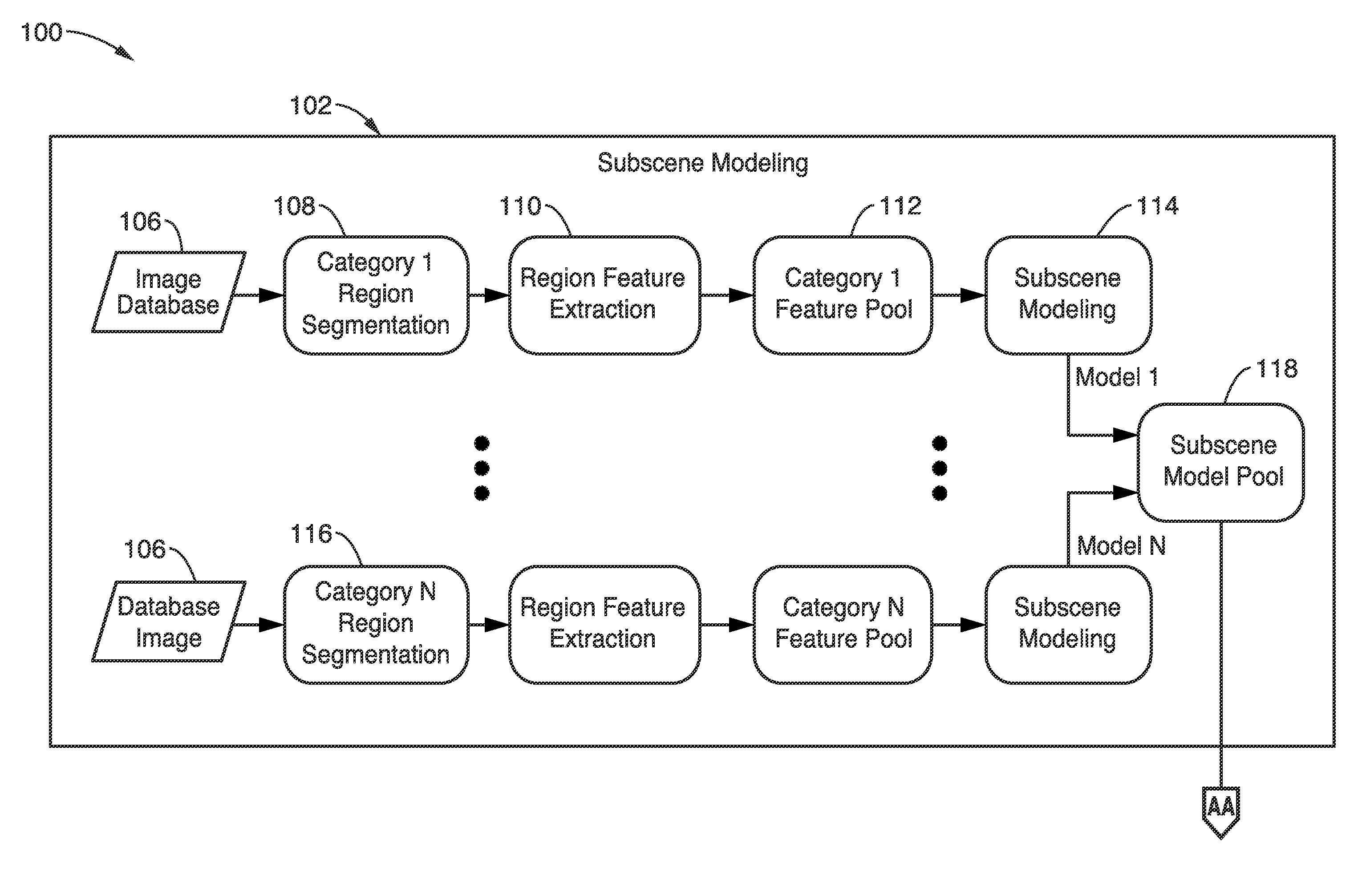

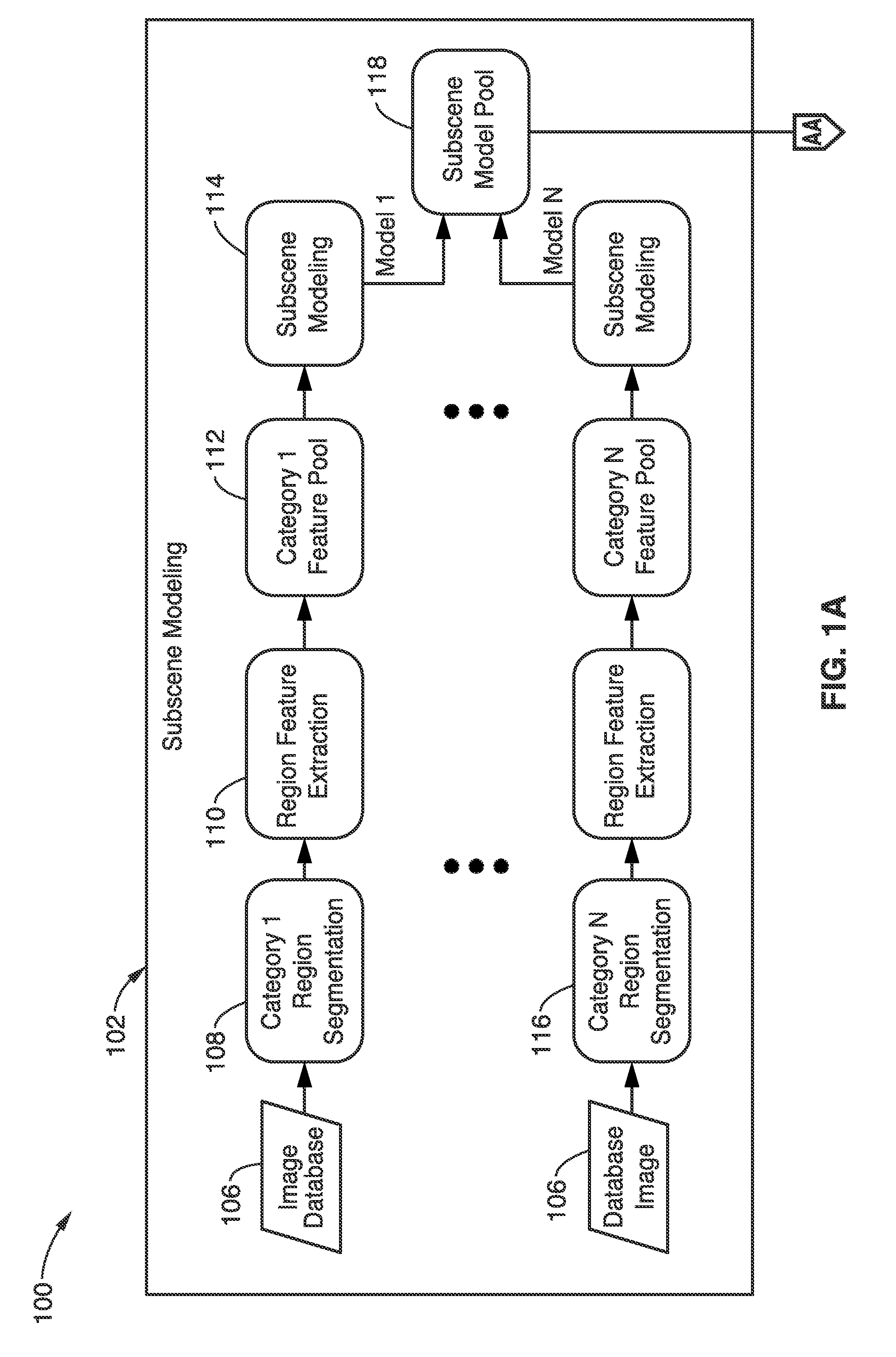

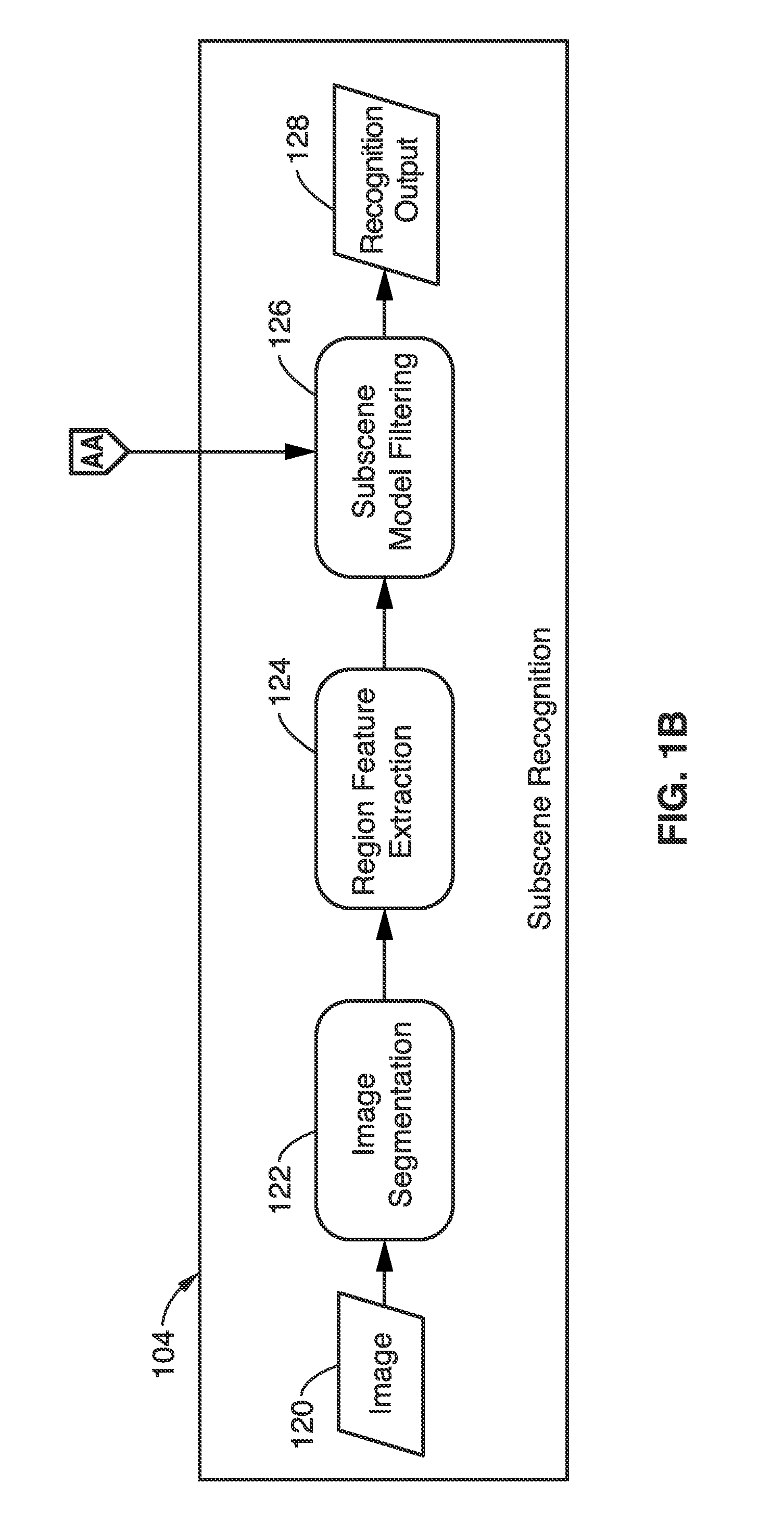

Region description and modeling for image subscene recognition

A method and apparatus are described here that categorize images by extracting regions and describing the regions with a 16-dimensional subscene feature vector, which is a concatenation of color, texture, and spatial feature vectors. By comparing the spatial feature vectors in images with similarly-obtained feature vectors in a Gaussian mixture based model pool (obtained in a subscene modeling phase), the images may be categorized (in a subscene recognition phase) with probabilities relating to each region or subscene. Higher probabilities are likelier correlations. The device may be a single or multiple core CPU, or a parallelized vector processor for characterizing many images. The images may be photographs, videos, or video stills, without restriction. When used in real time, the method may be used for visual searching or sorting.

Owner:SONY CORP

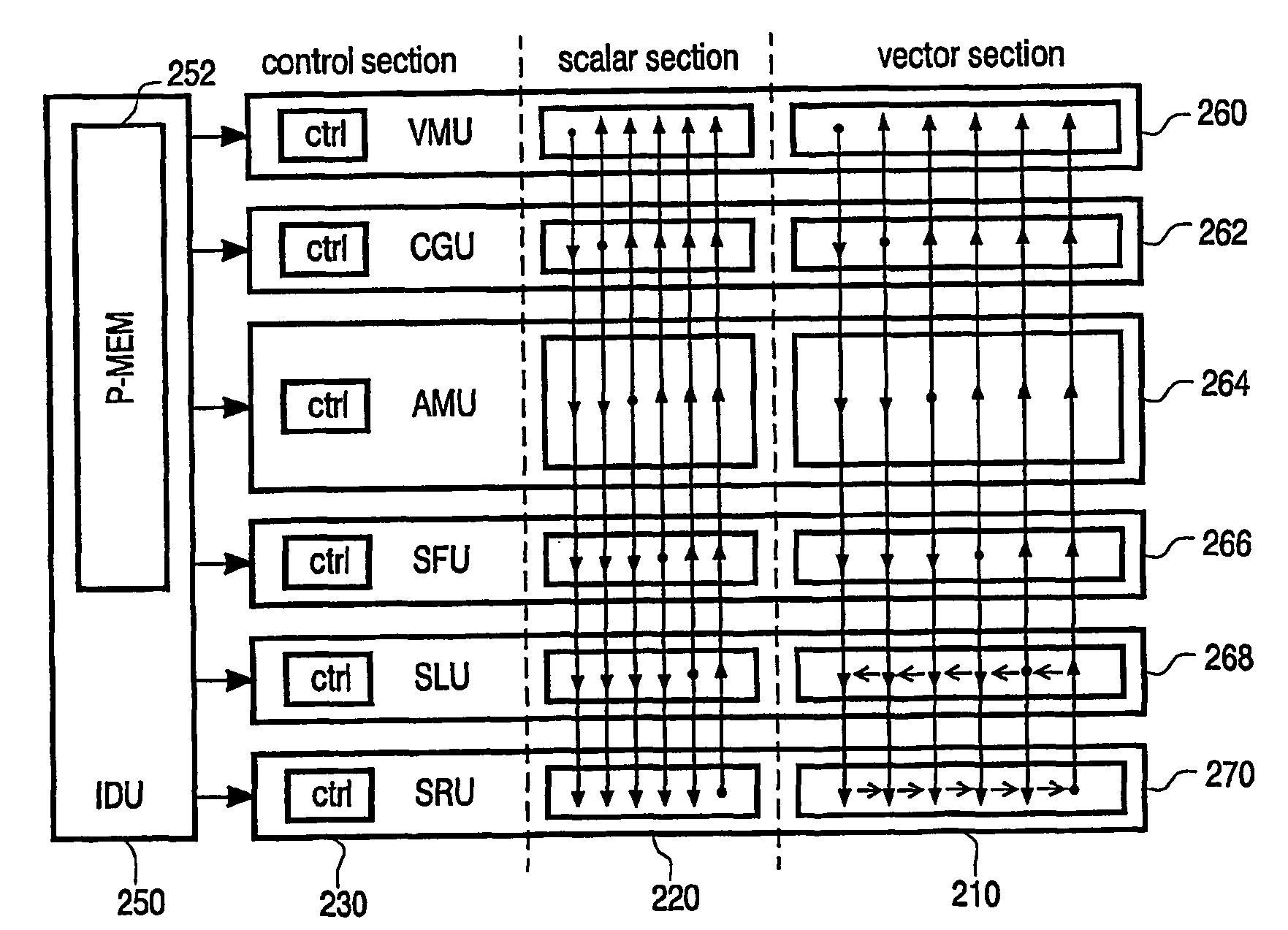

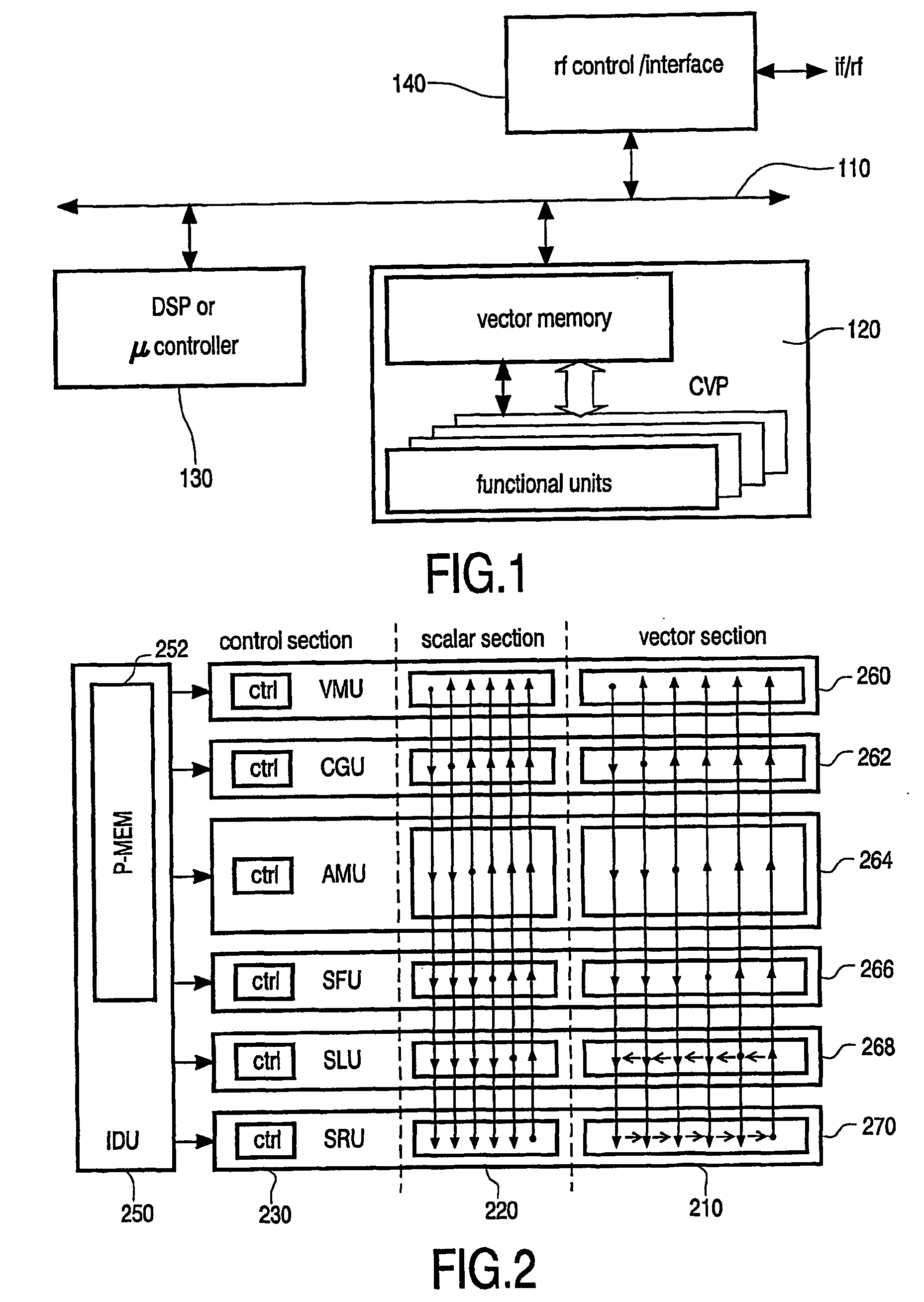

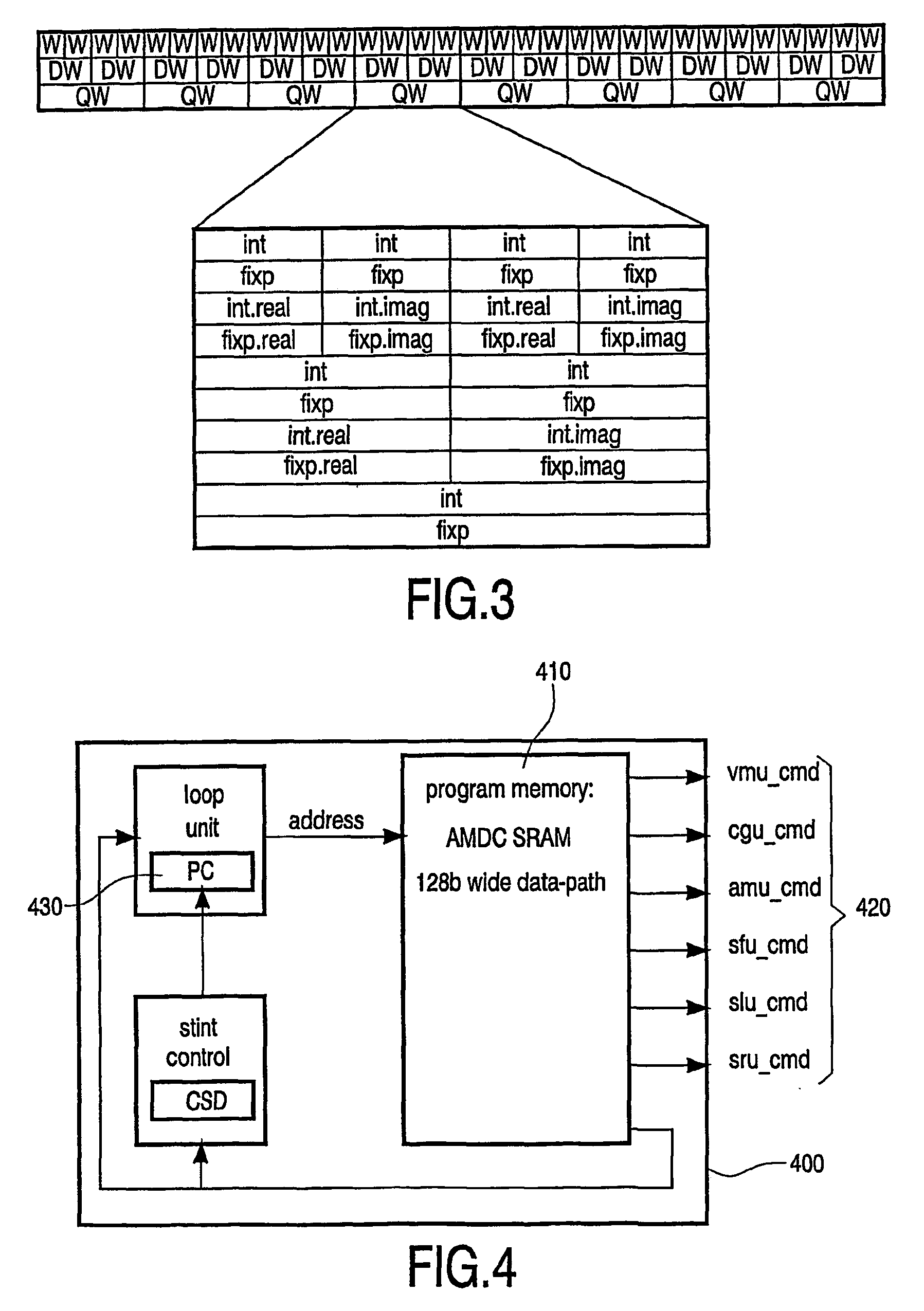

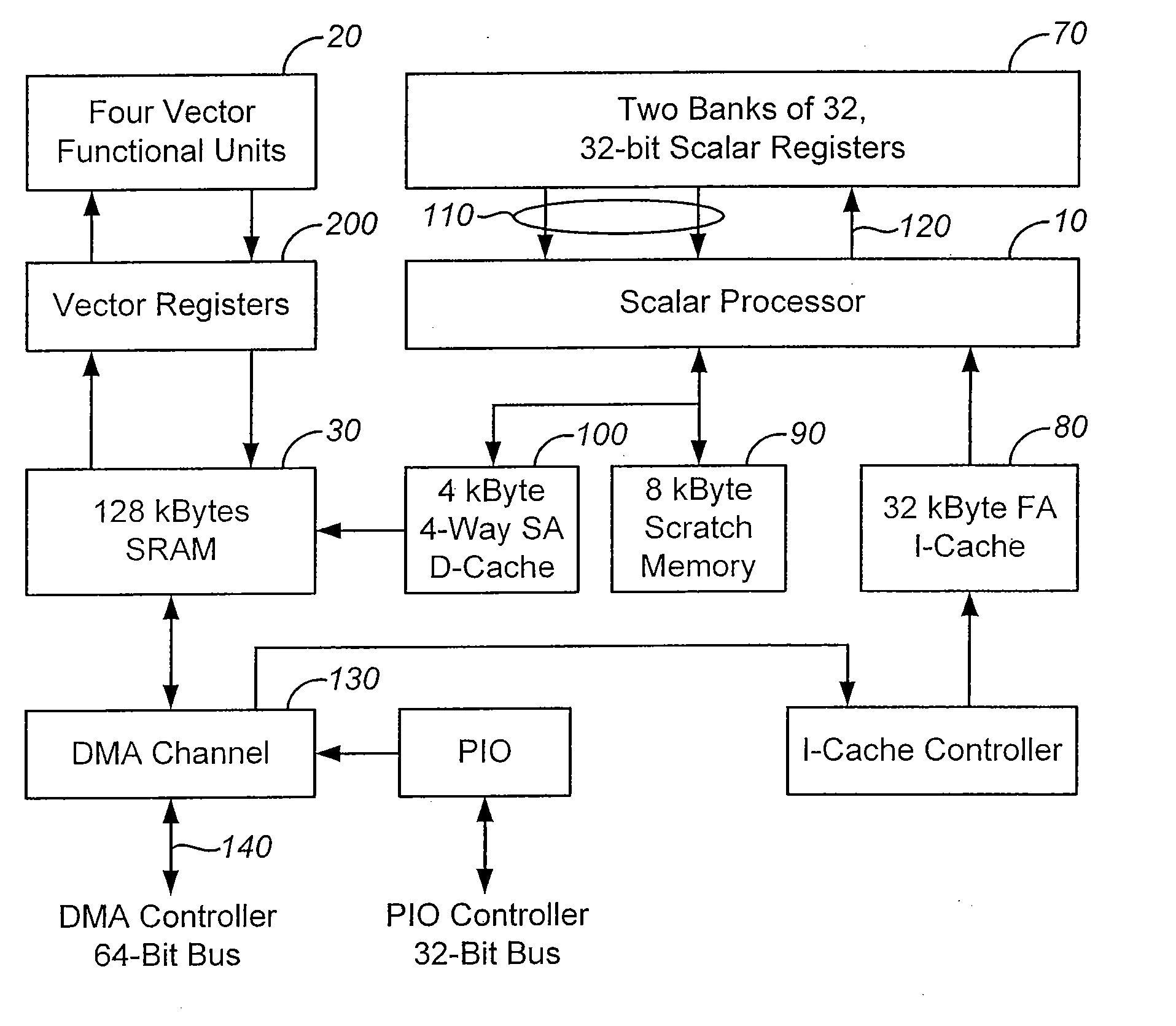

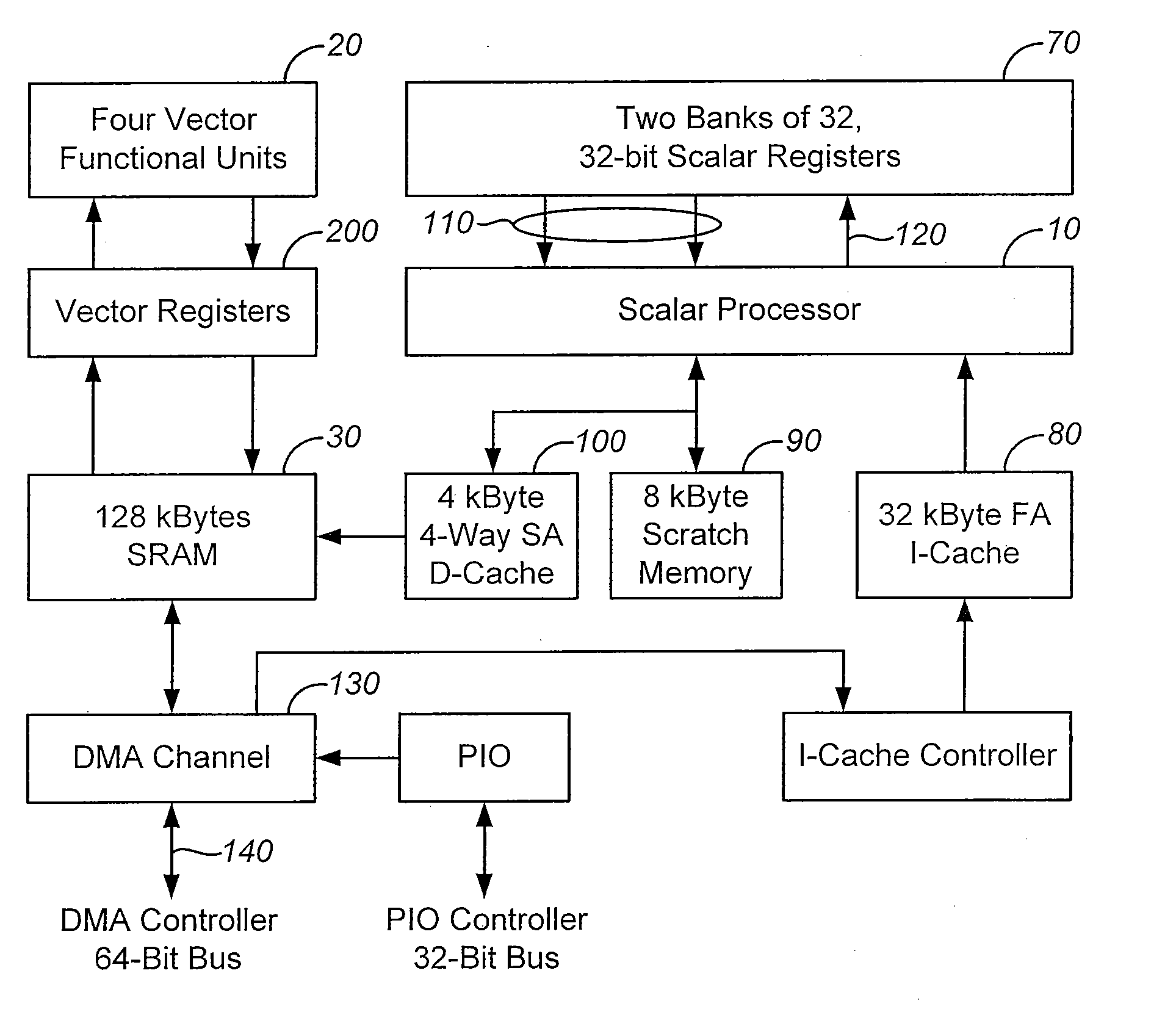

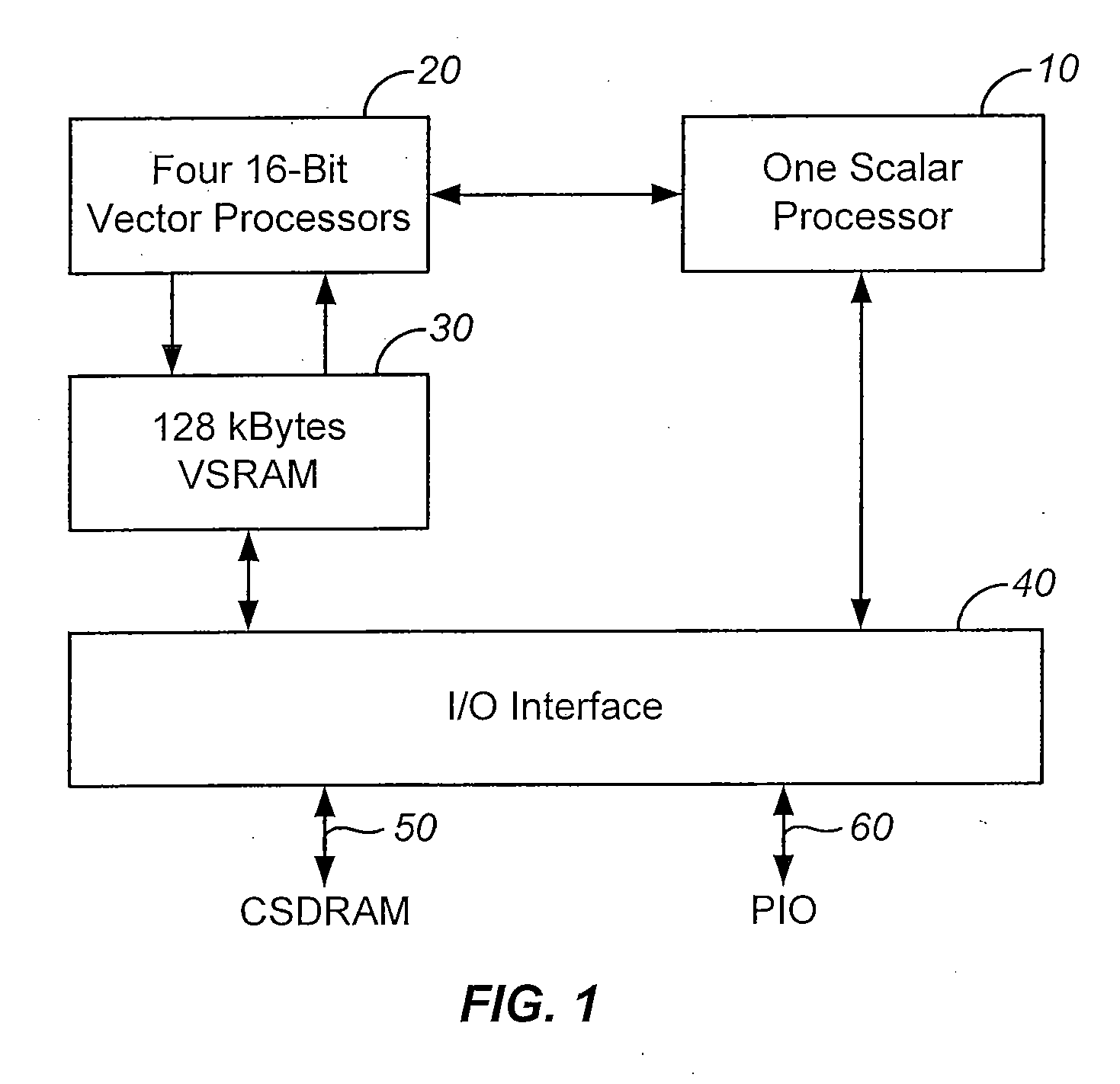

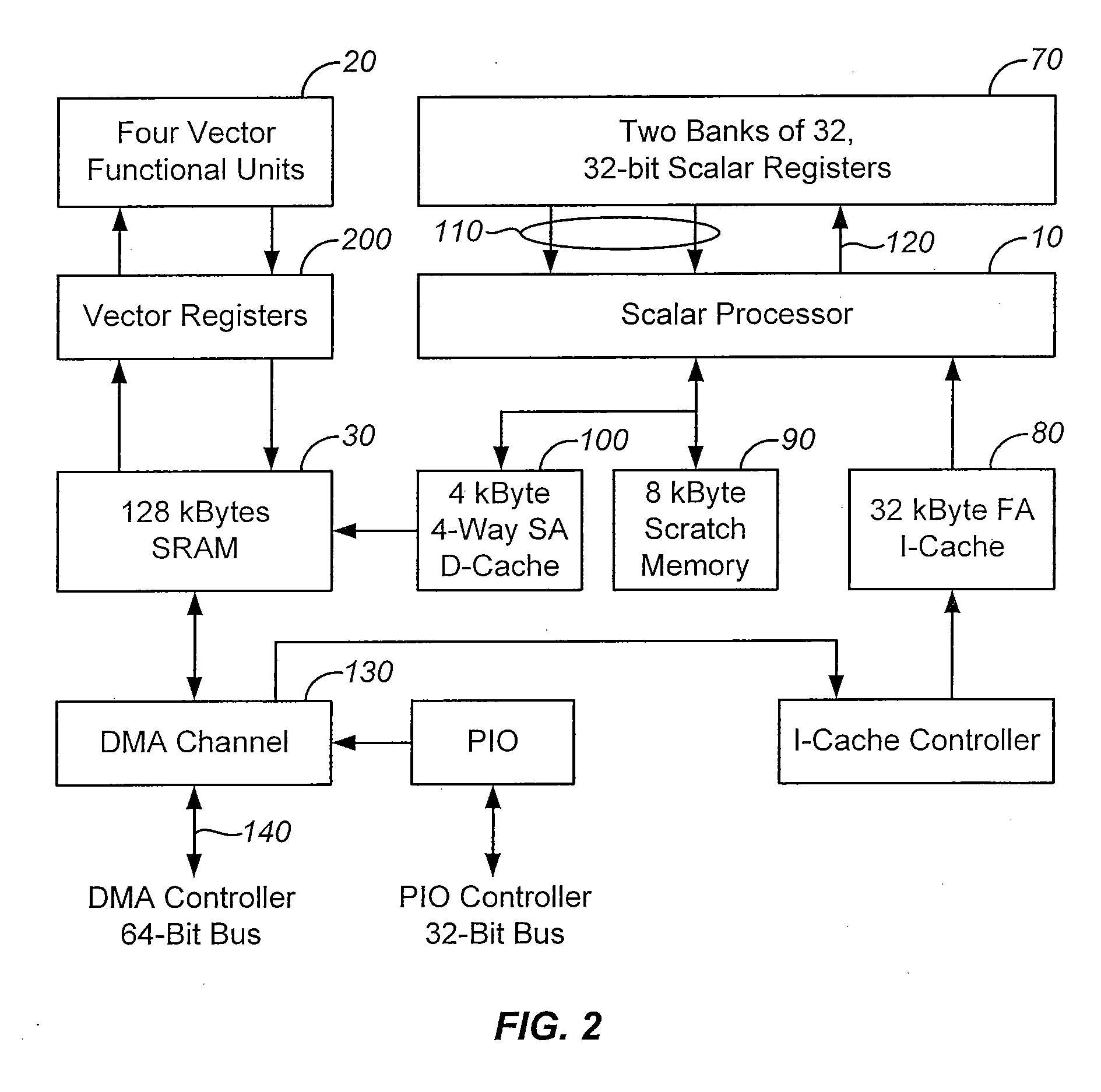

Scalar/vector processor

Active | US20050240644A1 | Improve performance | Easy programming | Concurrent instruction execution | Architecture with single central processing unit | Vector processor

A scalar/vector processor includes a plurality of functional units (252, 260, 262, 264, 266, 268, 270). At least one of the functional units includes a vector section (210) for operating on at least one vector and a scalar section (220) for operating on at least one scalar. The vector section and scalar section of the functional unit co-operate by the scalar section being arranged to provide and/or consume at least one scalar required by and/or supplied by the vector section of the functional unit.

Owner:TELEFON AB LM ERICSSON (PUBL)

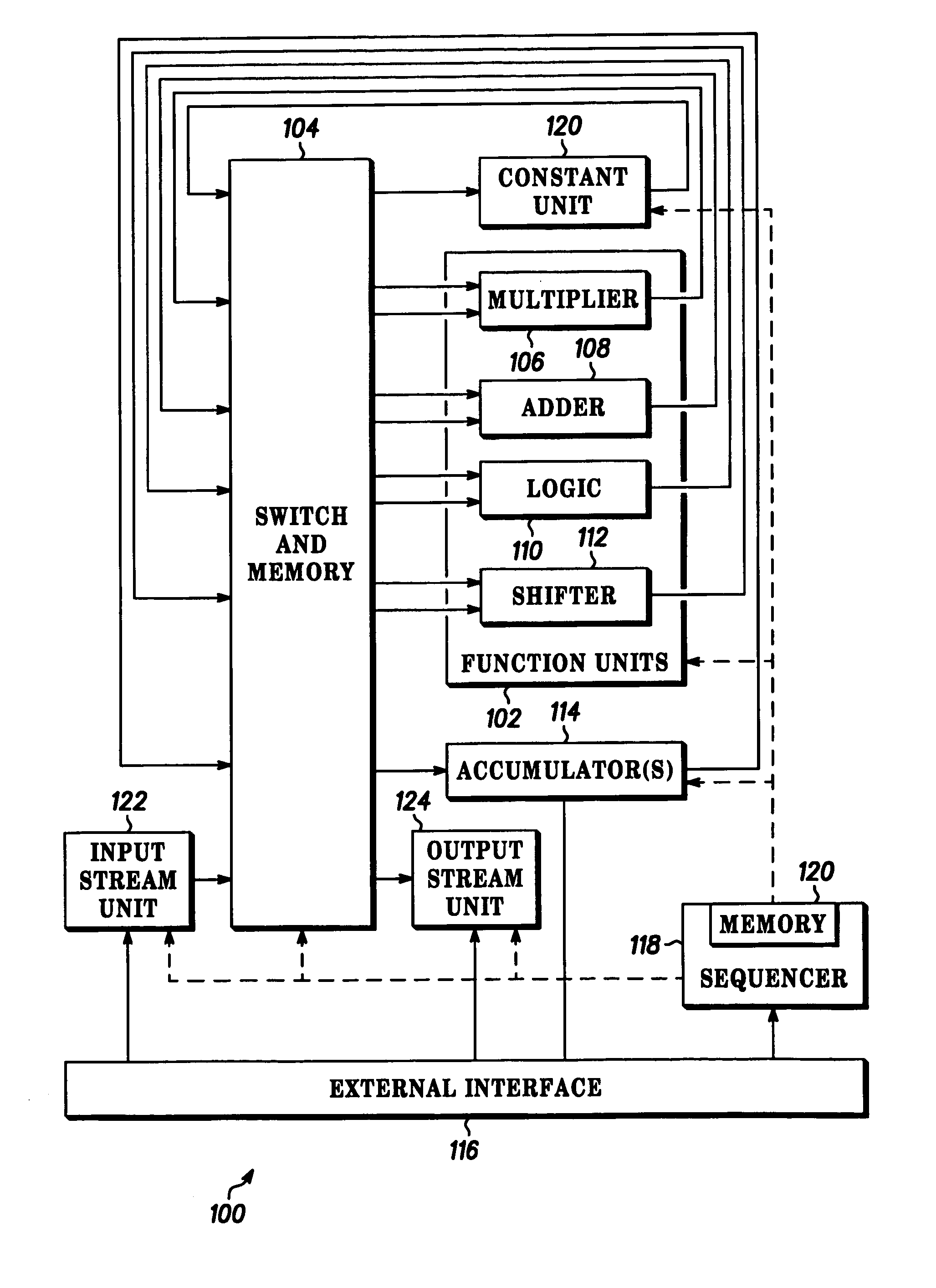

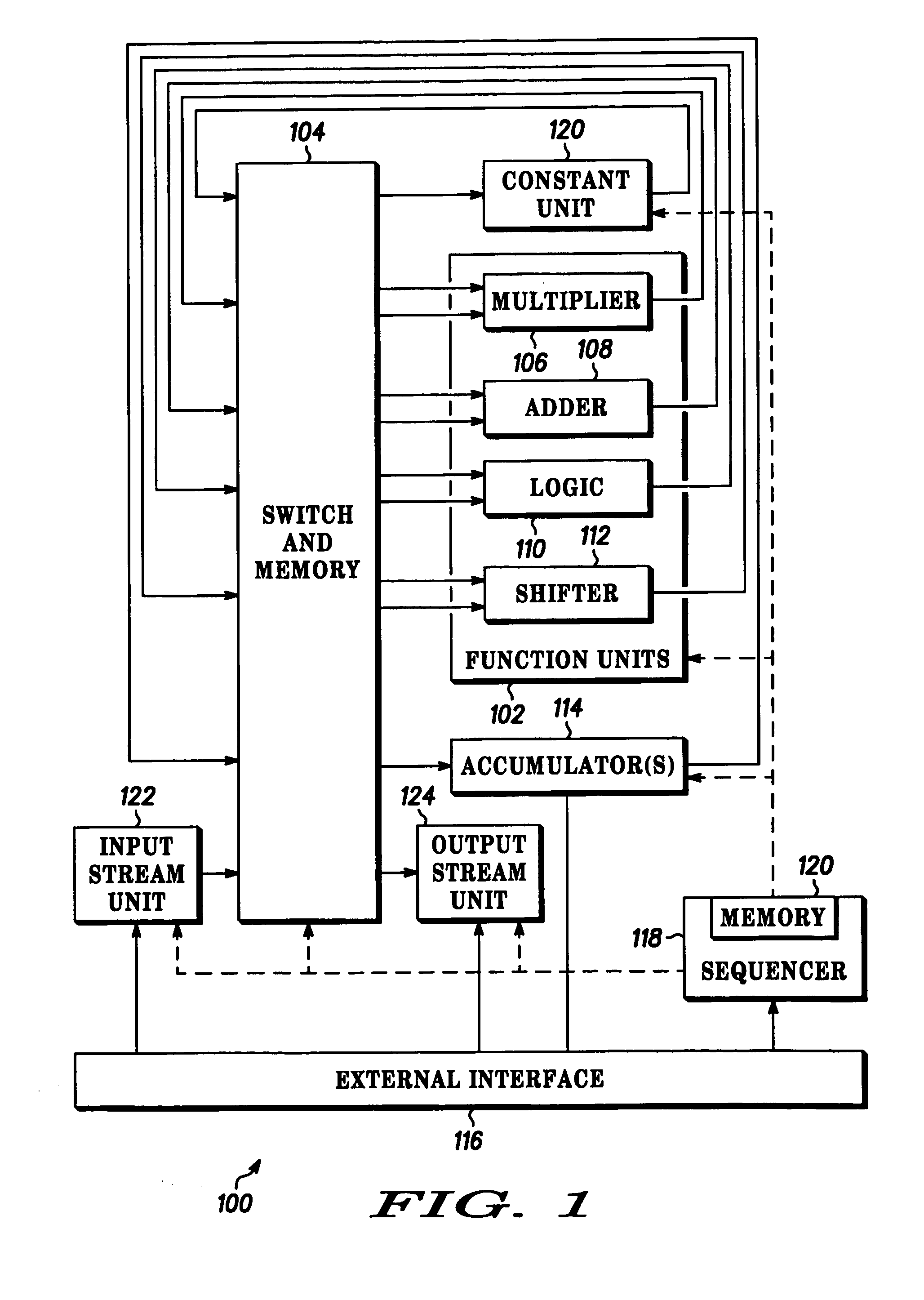

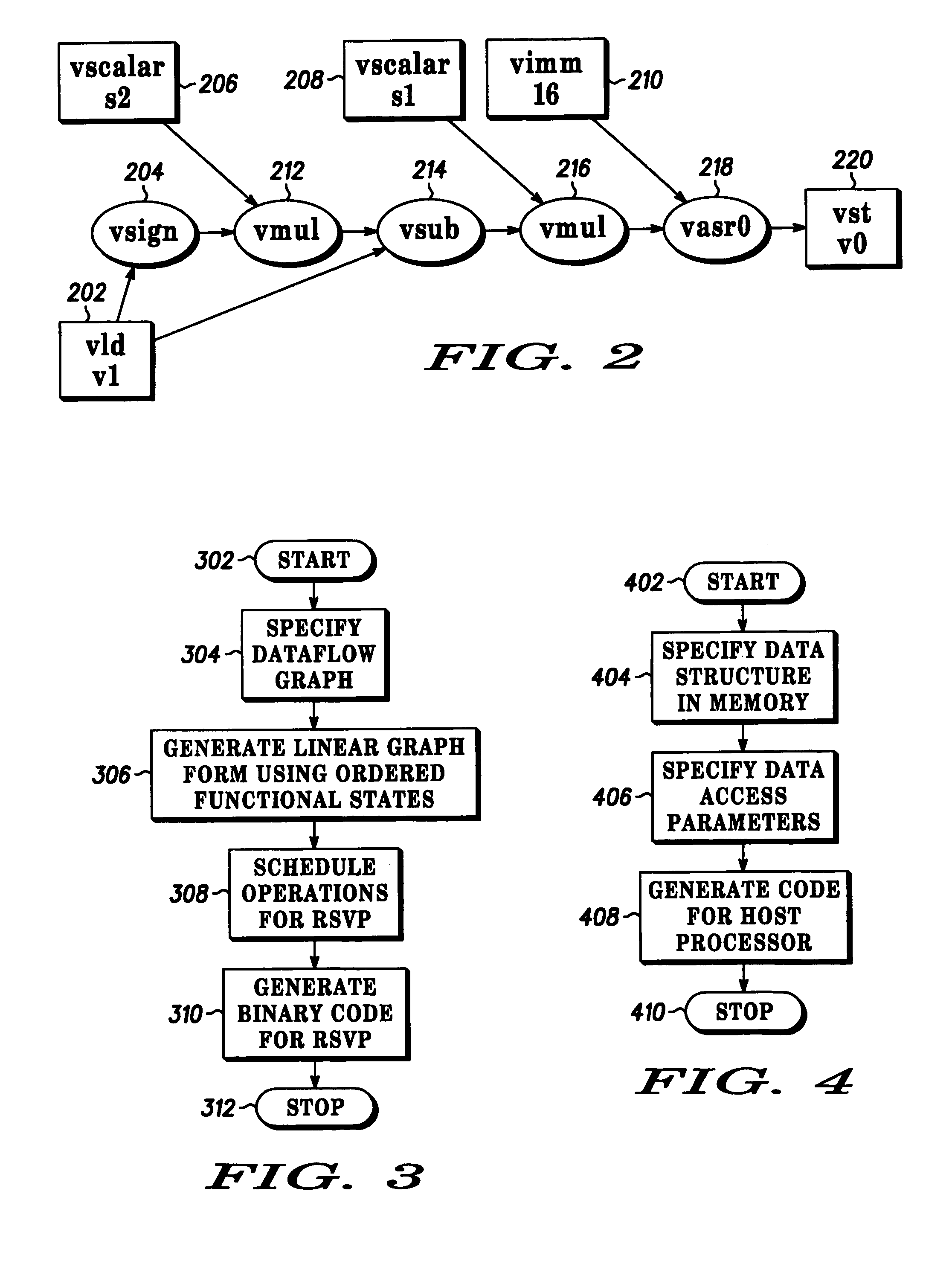

Streaming vector processor with reconfigurable interconnection switch

Inactive | US7159099B2 | Concurrent instruction execution | Architecture with single central processing unit | As Directed | Interconnection

A re-configurable, streaming vector processor (100) is provided which includes a number of function units (102), each having one or more inputs for receiving data values and an output for providing a data value, a re-configurable interconnection switch (104) and a micro-sequencer (118). The re-configurable interconnection switch (104) includes one or more links, each link operable to couple an output of a function unit (102) to an input of a function unit (102) as directed by the micro-sequencer (118). The vector processor may also include one or more input-stream units (122) for retrieving data from memory. Each input-stream unit is directed by a host processor and has a defined interface (116) to the host processor. The vector processor also includes one or more output-stream units (124) for writing data to memory or to the host processor. The defined interface of the input-stream and output-stream units forms a first part of the programming model. The instructions stored in a memory, in the sequence that direct the re-configurable interconnection switch, form a second part of the programming model.

Owner:MOTOROLA INC

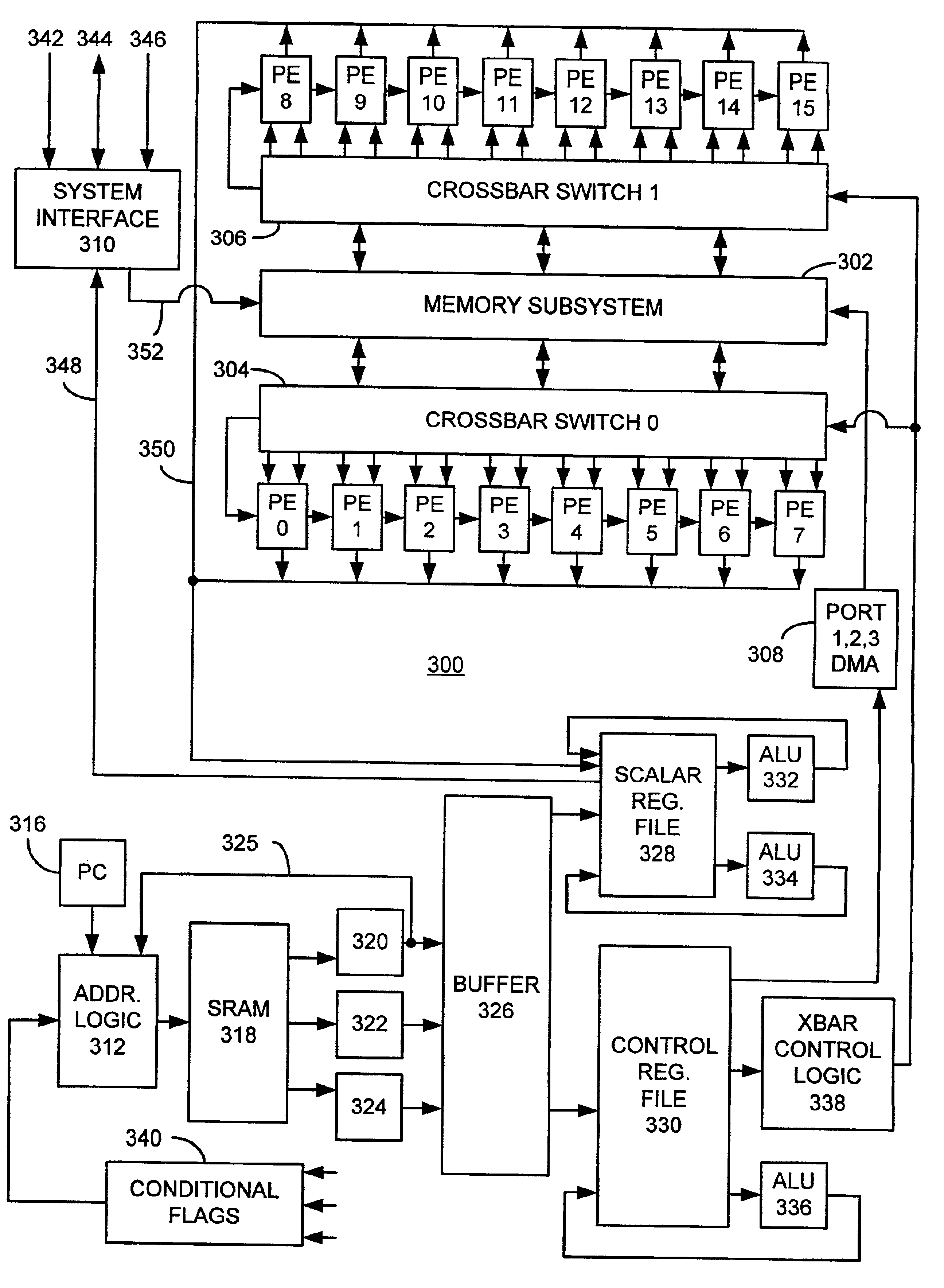

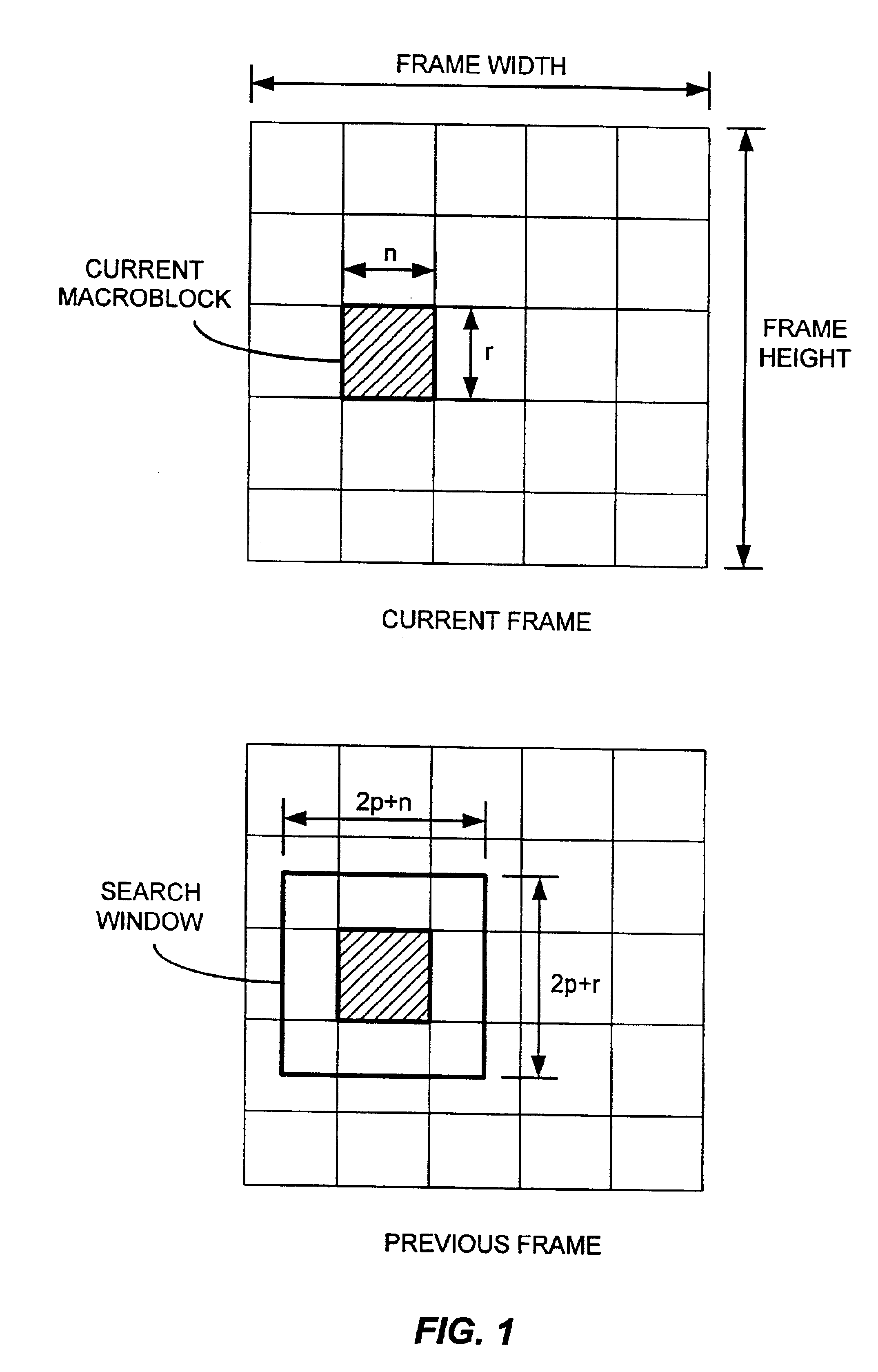

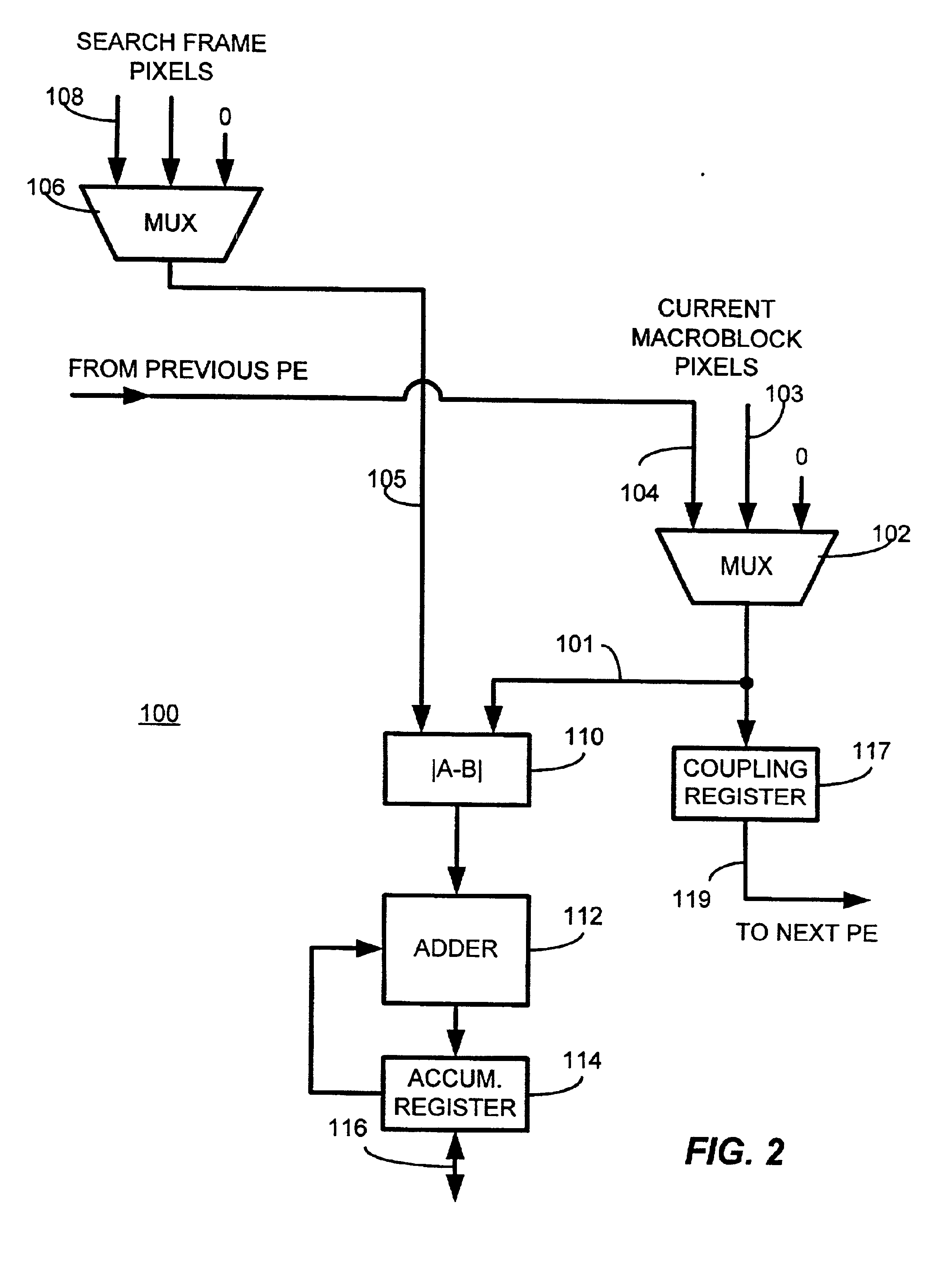

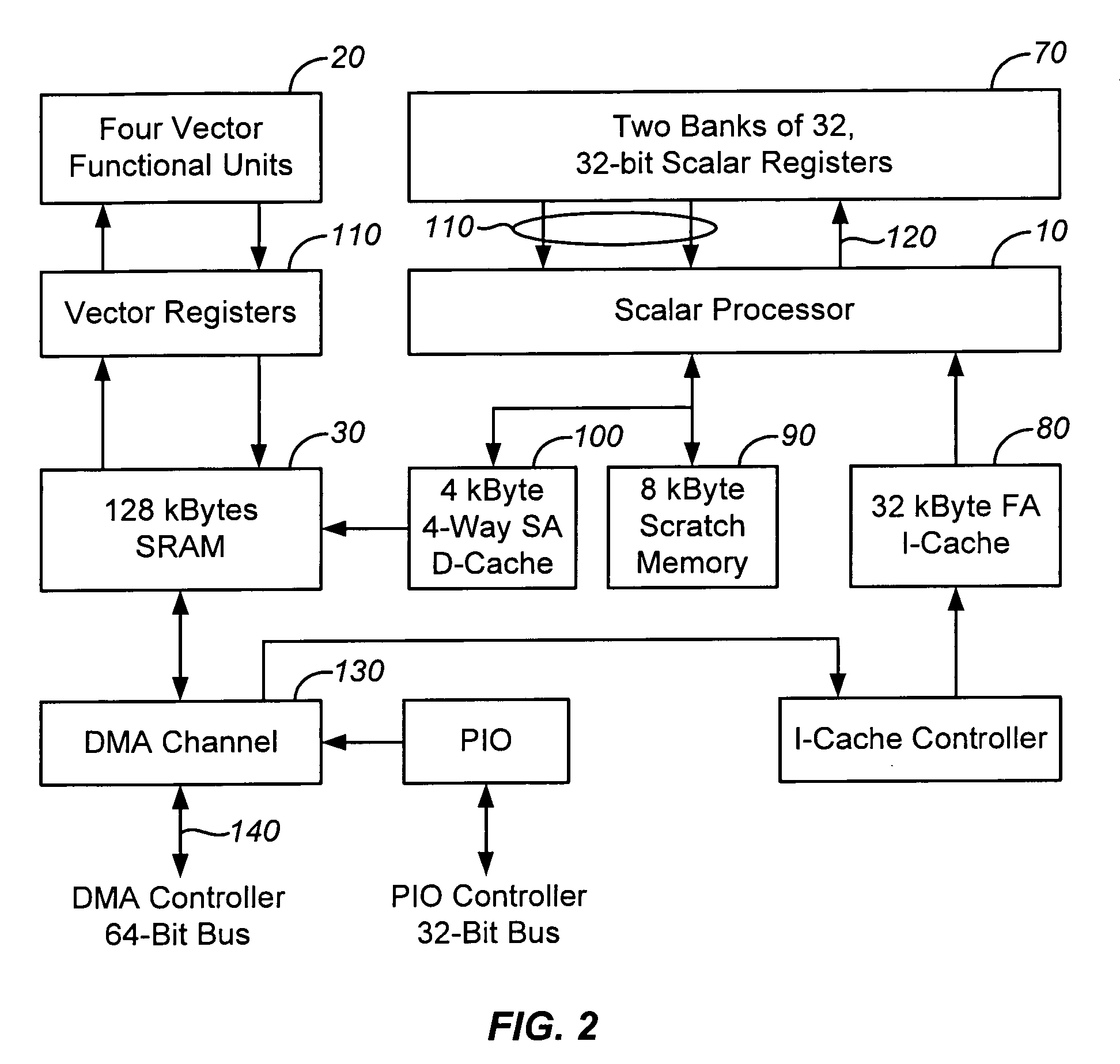

Programmable motion estimation module with vector array unit

Inactive | US6868123B2 | Reduce power consumption | Television system details | Image enhancement | Digital video | Microcontroller

A programmable motion estimation module for processing pixel values from a sequence of digital video images. The module includes a programmable microcontroller, scalar and control register files, arithmetic logic units, a direct memory access unit and a vector array processor. The vector array processor includes a series of processing elements, a memory subsystem for storing pixel values and a crossbar switch for distributing pixel values from the memory subsystem to the processing elements. The module provides a flexible platform that can be programmed to implement a variety of different Motion Estimation (ME) algorithms using an associated Instruction Set Architecture without the need to modify the hardware.

Owner:GOOGLE TECH HLDG LLC

Vector processor with special purpose registers and high speed memory access

Inactive | US20060259737A1 | Limited instruction width | Great instruction width | Conditional code generation | Register arrangements | Vector processor | High speed memory

A vector processor includes a set of vector registers for storing data to be used in the execution of instructions and a vector functional unit coupled to the vector registers for executing instructions. The functional unit executes instructions using operation codes provided to it which operation codes include a field referencing a special register. The special register contains information about the length and starting point for each vector instruction. The processor includes a high speed memory access system to facilitate faster operation.

Owner:MEADLOCK JAMES W MEAD
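A special register that holds the length and starting point for a vector instruction can be modeled roughly as below. This is a sketch only: the register file layout, the dictionary form of the special register, and the name `vadd_with_special_register` are assumptions, not details from the patent.

```python
def vadd_with_special_register(vregs, dst, src1, src2, special):
    """Add two vector registers element-wise over the window named by `special`.

    special["start"] is the first element index and special["length"] is the
    number of elements to process; elements outside the window are untouched.
    """
    start = special["start"]
    length = special["length"]
    for i in range(start, start + length):
        vregs[dst][i] = vregs[src1][i] + vregs[src2][i]
    return vregs[dst]
```

Because the window lives in a register rather than in the instruction word, the same operation code can act on different sub-ranges of a vector, which is consistent with the "limited instruction width" goal listed for this patent.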

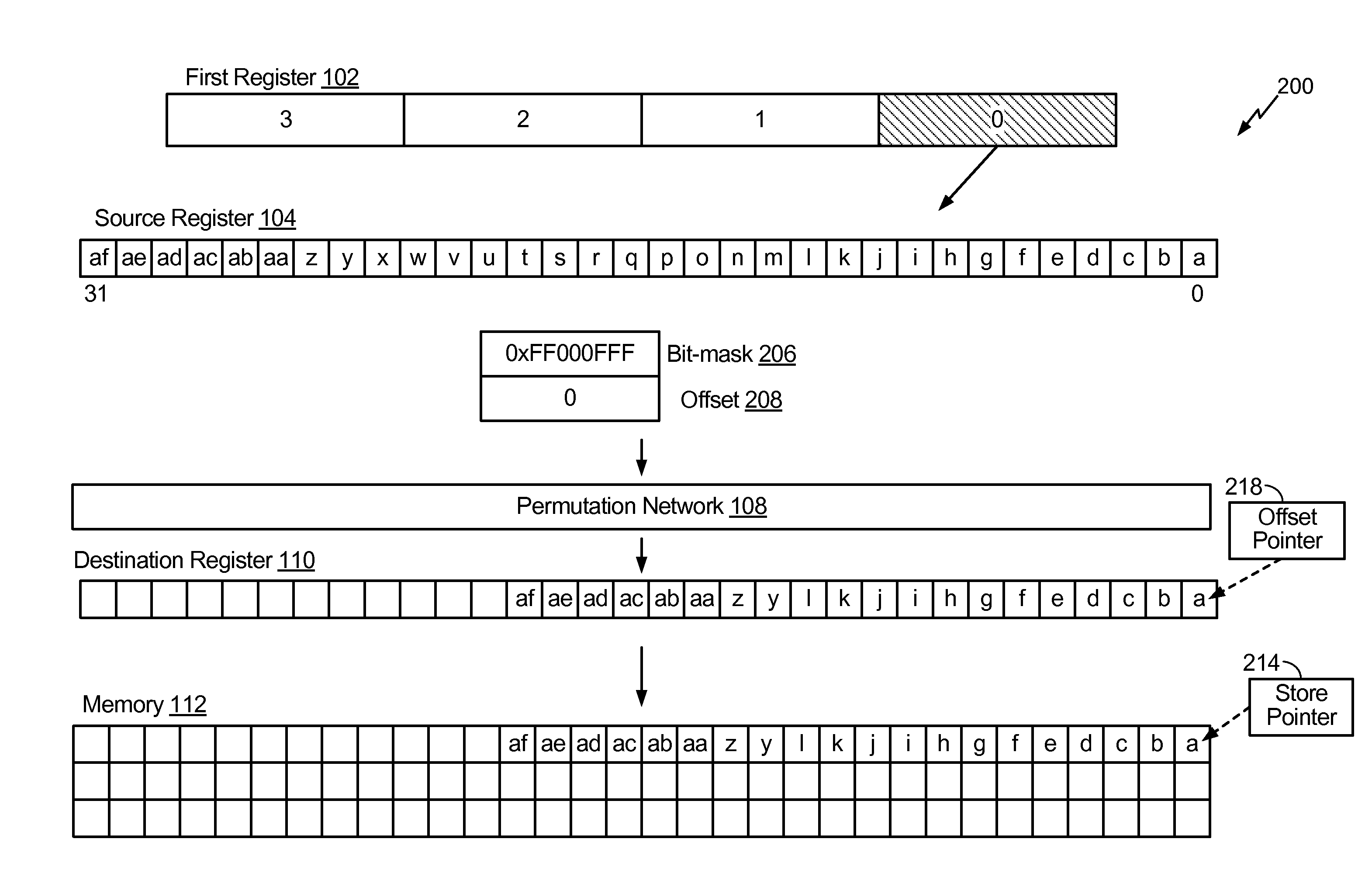

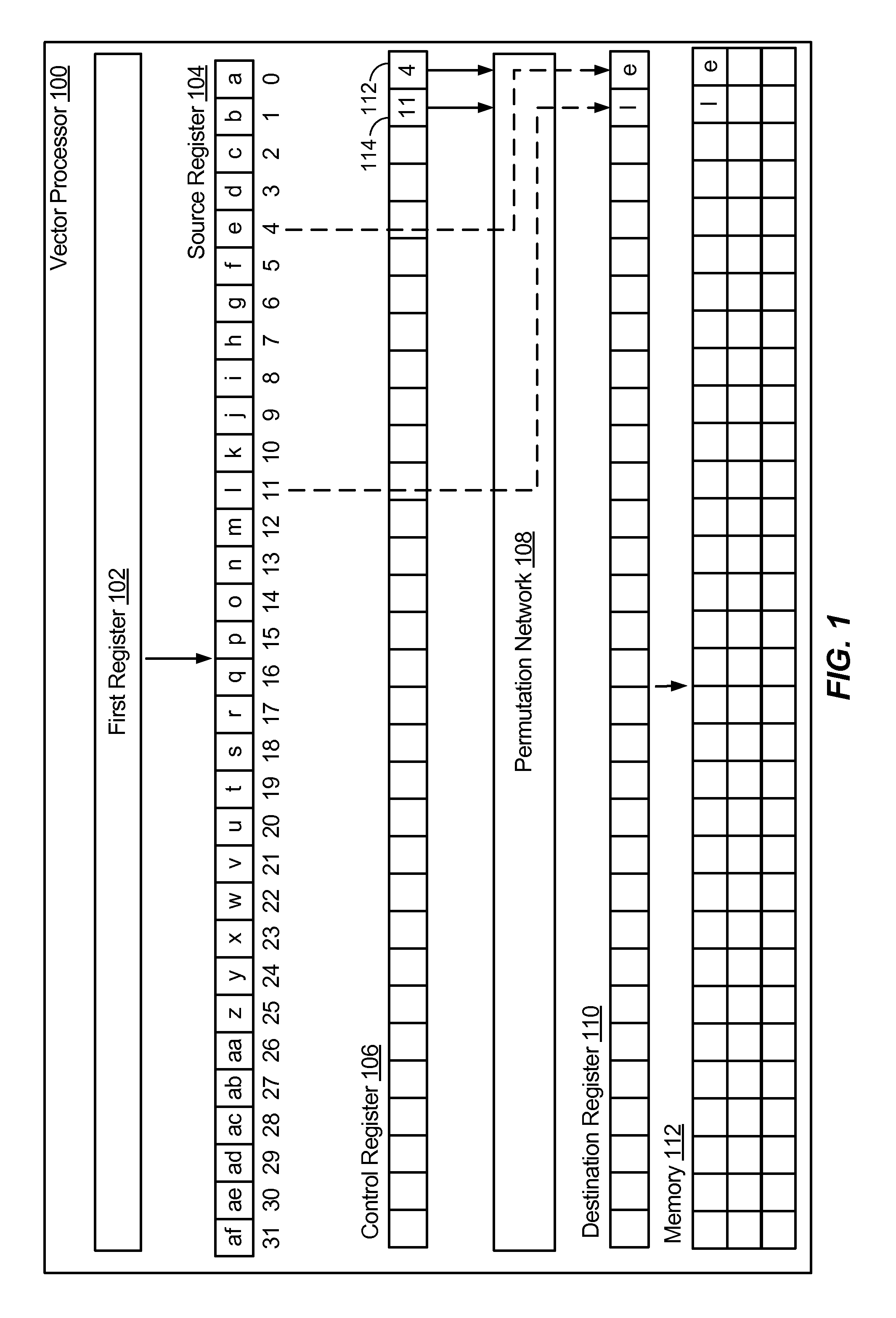

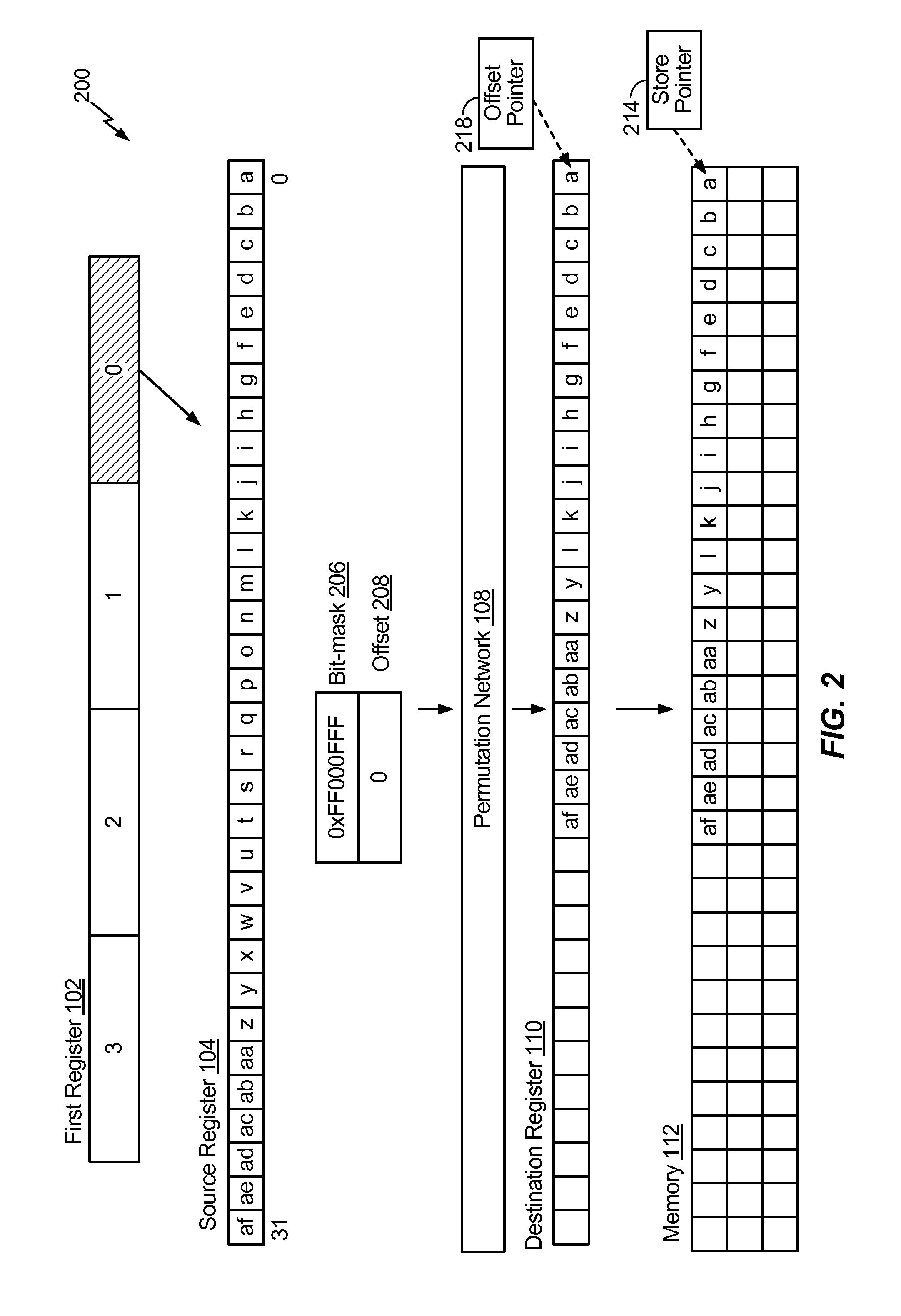

Systems and methods of data extraction in a vector processor

Active | US20140059323A1 | General purpose stored program computer | Program control | Processor register | Data element

Systems and methods of data extraction in a vector processor are disclosed. In a particular embodiment, a method of data extraction in a vector processor includes copying at least one data element to a source register of a permutation network. The method includes reordering multiple data elements of the source register, populating a destination register of the permutation network with the reordered data elements, and copying the reordered data elements from the destination register to a memory.

Owner:QUALCOMM INC
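The extraction steps in this abstract (copy to a source register, reorder, populate a destination register, copy to memory) can be sketched as a small function. The index list `indices`, the flat list `memory`, and the name `extract_with_permutation` are hypothetical; the actual permutation network control is not described in the abstract.

```python
def extract_with_permutation(data, indices, memory, mem_offset):
    """Model the extraction flow with Python lists standing in for registers.

    indices[i] names the source-register element that lands in
    destination-register slot i (an assumed control format).
    """
    source_reg = list(data)                          # copy elements to the source register
    dest_reg = [source_reg[i] for i in indices]      # reorder and populate the destination register
    memory[mem_offset:mem_offset + len(dest_reg)] = dest_reg  # copy reordered elements to memory
    return dest_reg
```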

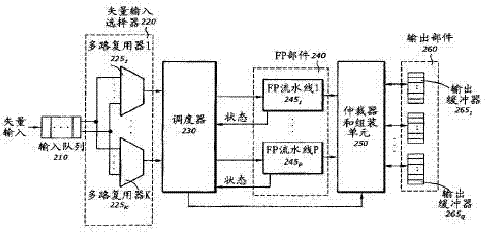

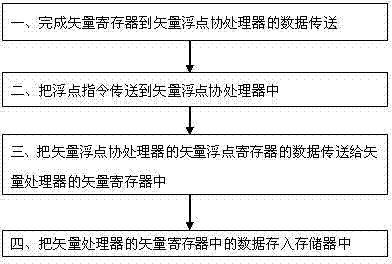

Vector floating-point computing device and method based on vector computing

Inactive | CN102262525A | Digital data processing details | Concurrent instruction execution | Hardware structure | Coprocessor

The invention discloses a vector-operation-based floating-point operation device with a novel hardware structure. It comprises a vector processor, a storage device, a vector floating-point coprocessor and a vector floating-point coprocessor storage device, wherein the bus interface between the vector processor and the vector floating-point coprocessor can adopt a general coprocessor bus structure. By adopting the coprocessor, all floating-point operations remain supported while floating-point operation speed is improved and design complexity is reduced.

Owner:孙瑞玮

Method for performing random read access to a block of data using parallel LUT read instruction in vector processors

Inactive | US20160124651A1 | Solve excessive overhead | Memory architecture accessing/allocation | Input/output to record carriers | Processor register | SIMD processor

This invention deals with the problem of parallelizing random read access within a reasonably sized block of data for a vector SIMD processor. The invention sets up plural parallel look up tables, moves data from main memory to each plural parallel look up table and then employs a look up table read instruction to simultaneously move data from each parallel look up table to a corresponding part of a vector destination register. This enables data processing by vector single instruction multiple data (SIMD) operations. This vector destination register load can be repeated if the tables store more used data. New data can be loaded into the original tables if appropriate. A level one memory is preferably partitioned as part data cache and part directly addressable memory. The look up table memory is stored in the directly addressable memory.

Owner:TEXAS INSTR INC
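A parallel look up table read can be modeled as one table per vector lane, each indexed independently in the same step. This sketch assumes every table holds a copy of the same data block, which the abstract suggests but does not state outright; `setup_parallel_tables` and `lut_read` are hypothetical names.

```python
def setup_parallel_tables(block, num_tables):
    """Create num_tables independent copies of the data block, one per lane."""
    return [list(block) for _ in range(num_tables)]


def lut_read(tables, indices):
    """Model one LUT read instruction.

    Lane i reads tables[i][indices[i]]; the returned list stands in for the
    vector destination register.
    """
    if len(indices) != len(tables):
        raise ValueError("one index is required per table")
    return [table[i] for table, i in zip(tables, indices)]
```

Since each lane has its own table, the lanes can use unrelated indices without contending for a single memory port in this model.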

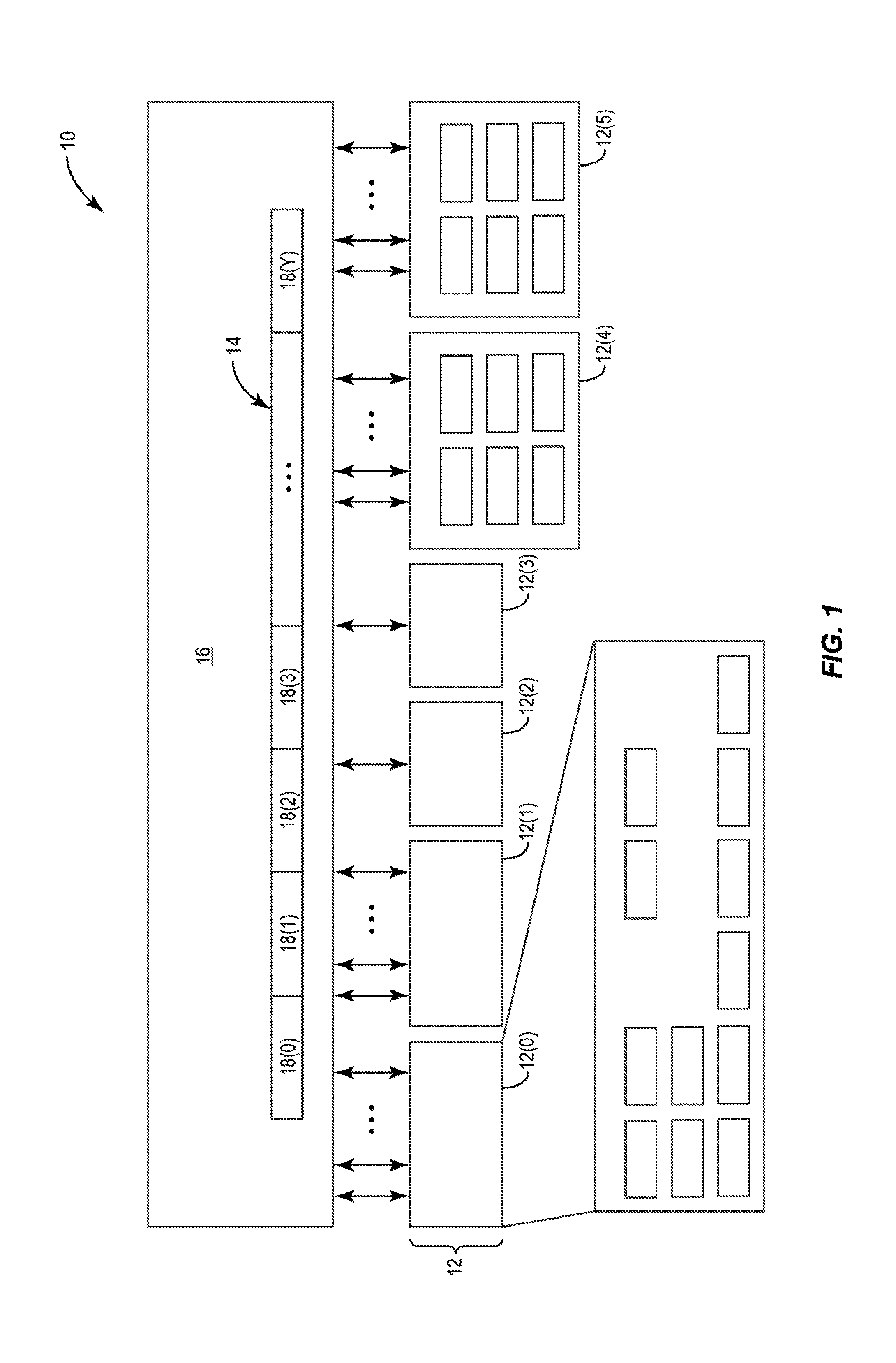

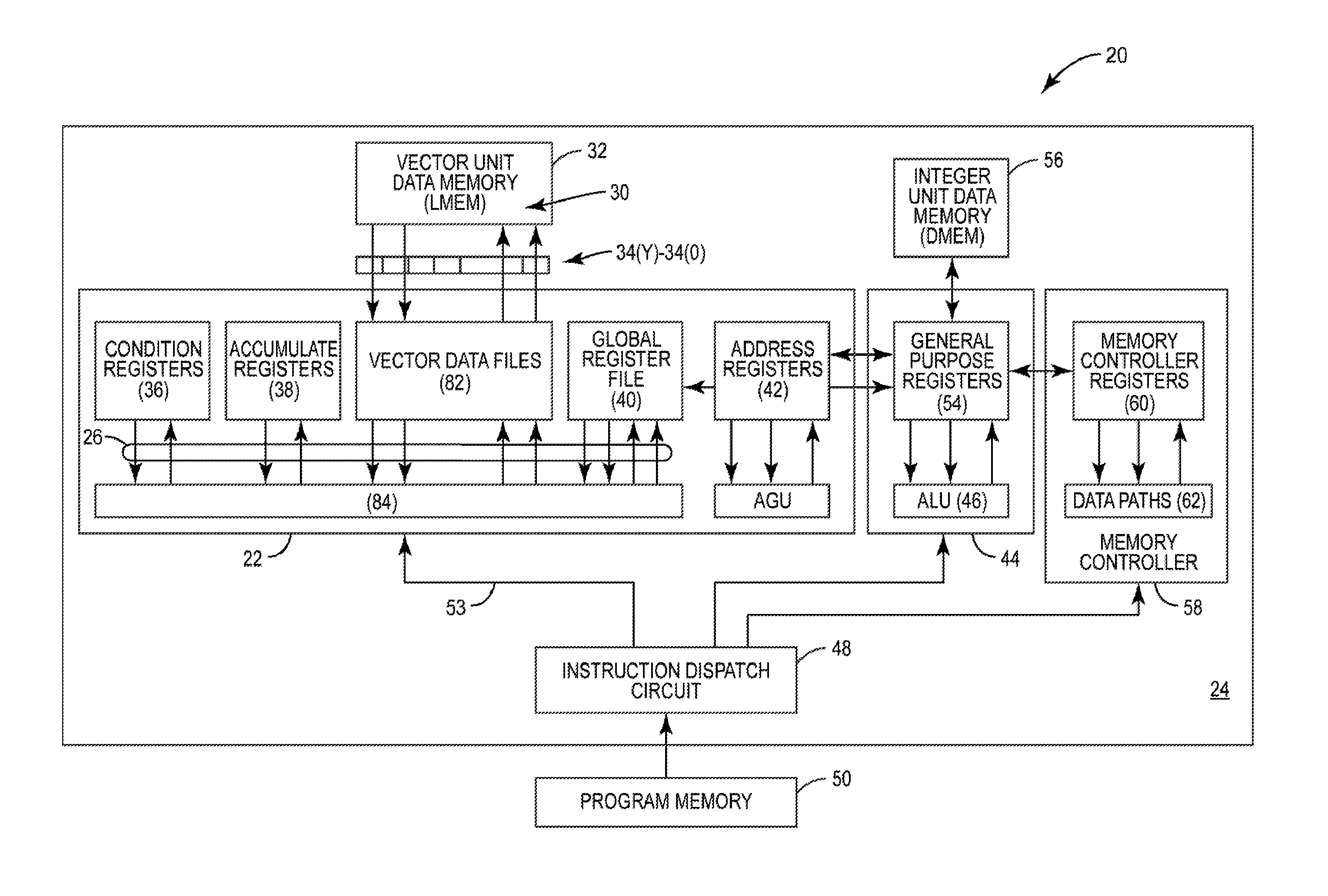

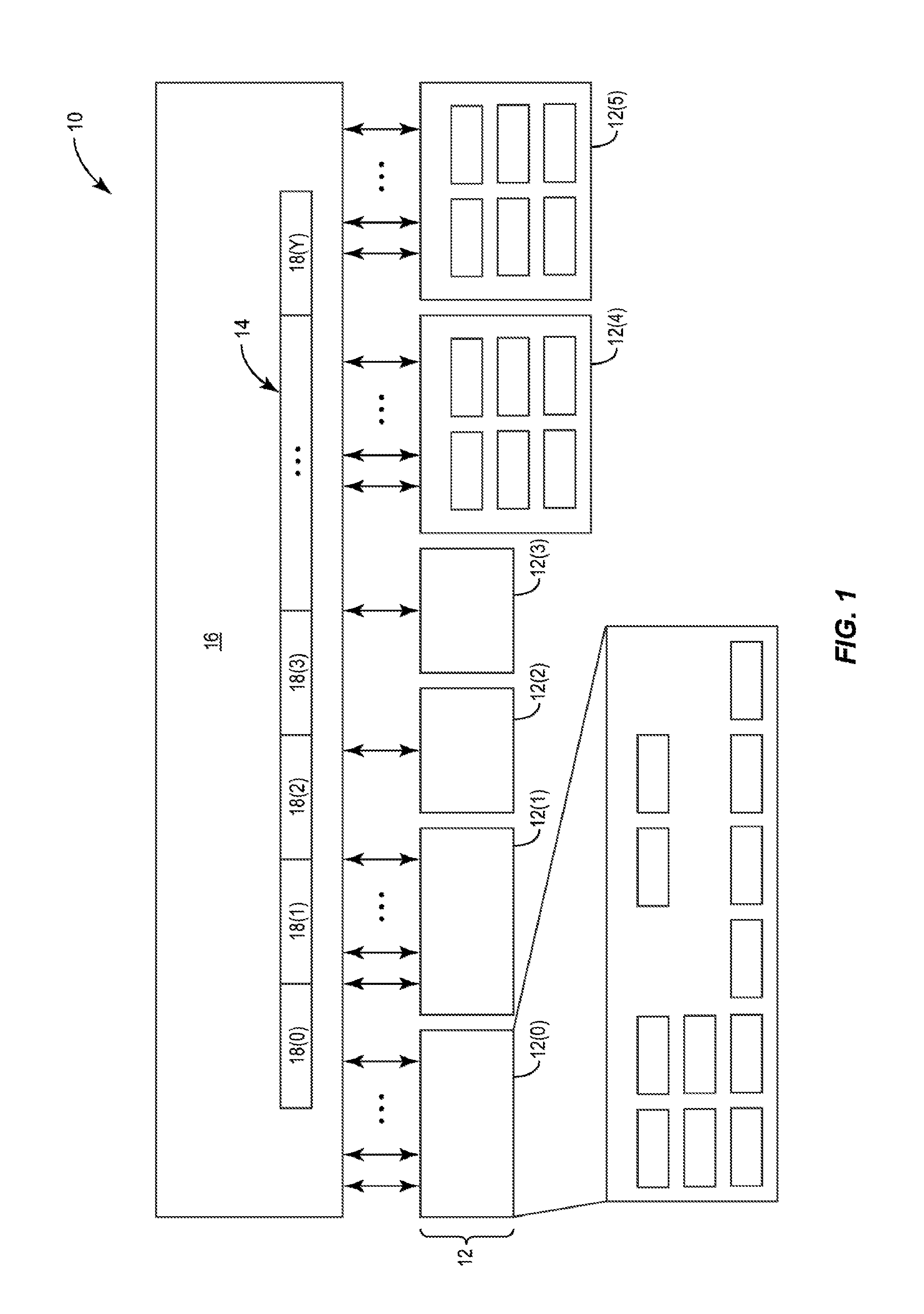

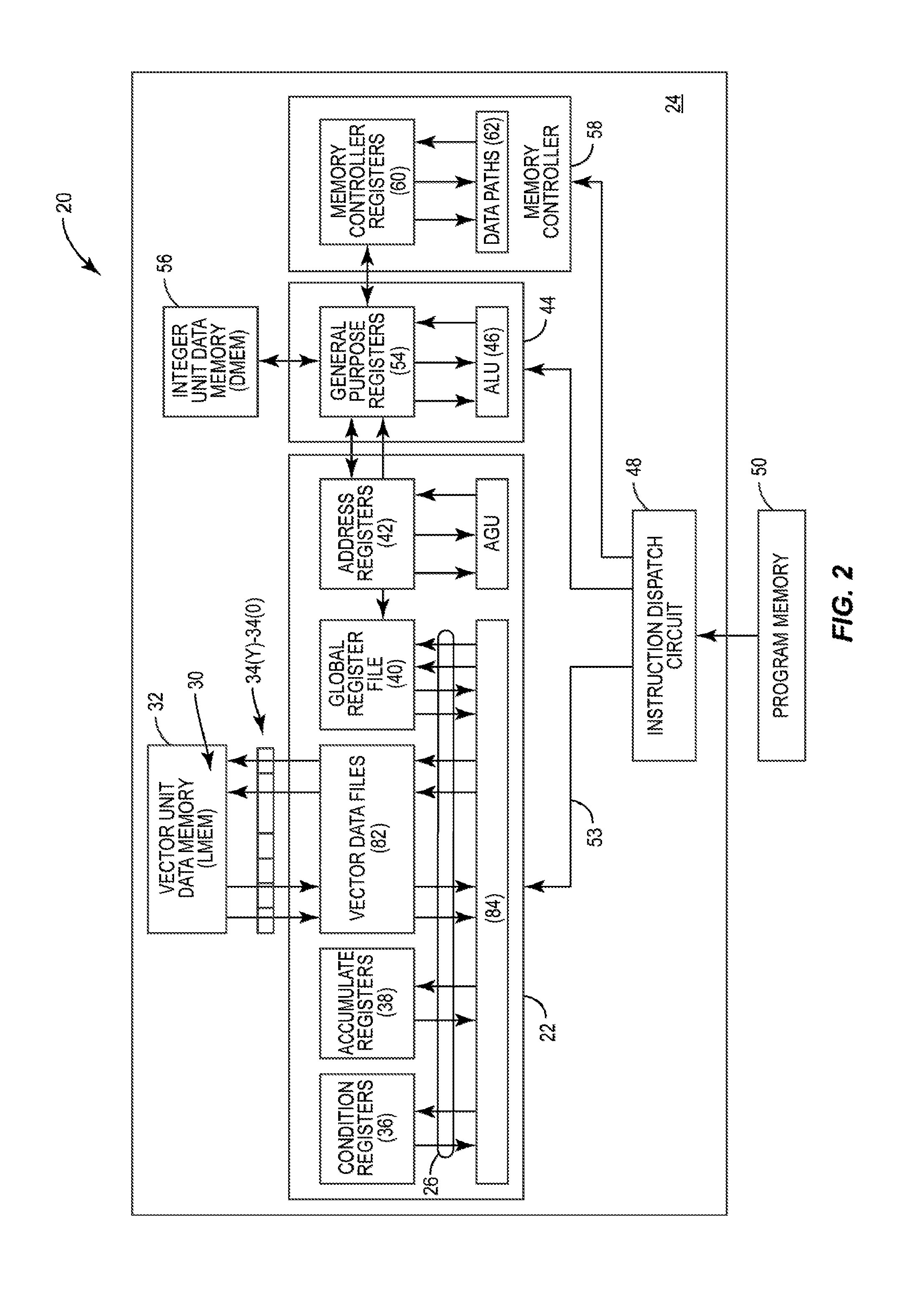

Vector processing engines having programmable data path configurations for providing multi-mode radix-2x butterfly vector processing circuits, and related vector processors, systems, and methods

Active | US20140280420A1 | Save extra space | Fast processing efficiency | Digital data processing details | Digital computer details | Fourier transform on finite groups | Datapath

Vector processing engines (VPEs) having programmable data path configurations for providing multi-mode Radix-2X butterfly vector processing circuits. Related vector processors, systems, and methods are also disclosed. The VPEs disclosed herein include a plurality of vector processing stages each having vector processing blocks that have programmable data path configurations for performing Radix-2X butterfly vector operations to perform Fast Fourier Transform (FFT) vector processing operations efficiently. The data path configurations of the vector processing blocks can be programmed to provide different types of Radix-2X butterfly vector operations as well as other arithmetic logic vector operations. As a result, fewer VPEs can provide desired Radix-2X butterfly vector operations and other types of arithmetic logic vector operations in a vector processor, thus saving area in the vector processor while still retaining vector processing advantages of fewer register writes and faster vector instruction execution times over scalar processing engines.

Owner:QUALCOMM INC

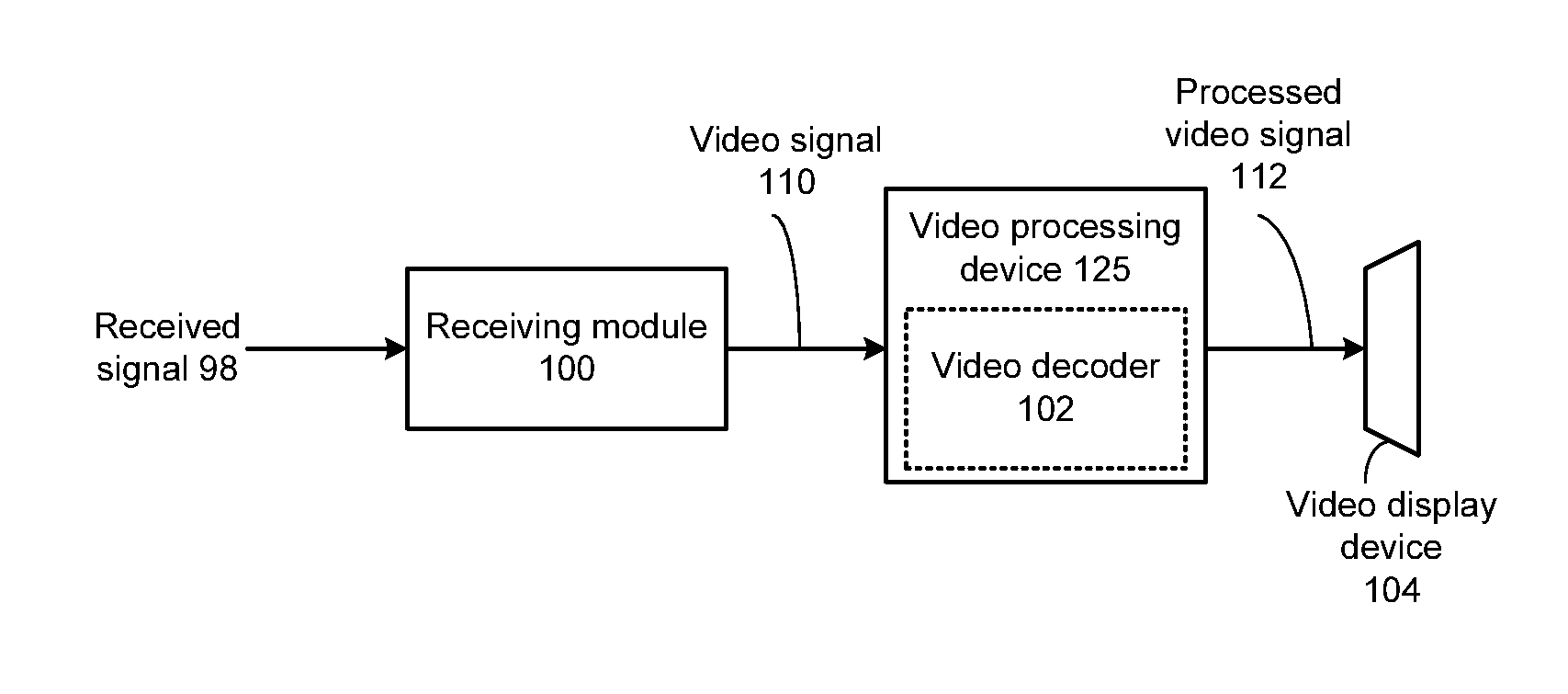

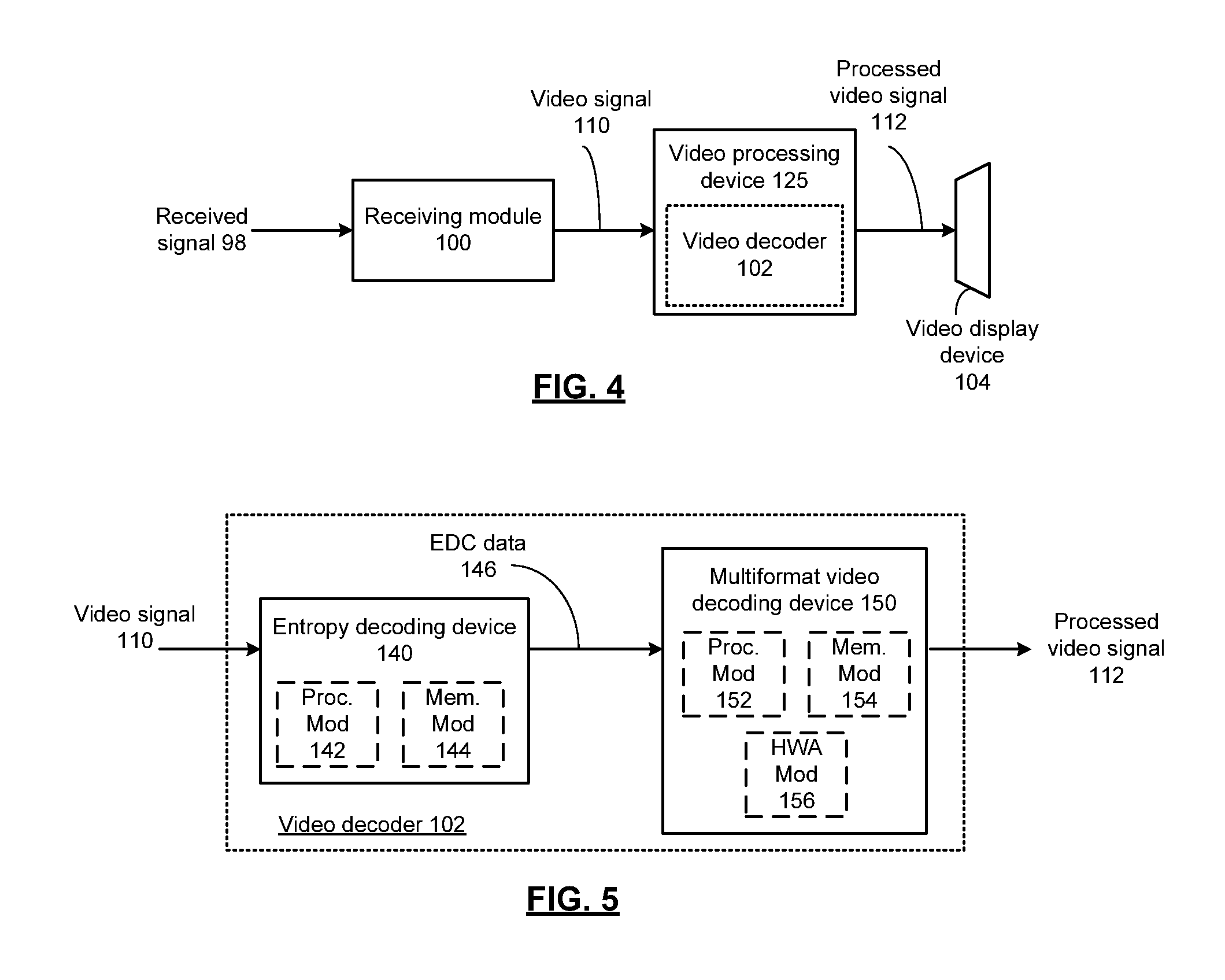

Video decoder with multi-format vector processor and methods for use therewith

Active | US20120314774A1 | Color television with pulse code modulation | Color television with bandwidth reduction | Matrix multiplier | Computer science

A multi-format video decoder includes an entropy decoding device that generates entropy decoded (EDC) data from an encoded video signal. A multi-format video decoding device includes a memory module that stores a plurality of operational instructions including at least one matrix multiply instruction that includes matrix input configuration data. A plurality of vector processor units generate a decoded video signal from the EDC data, wherein at least one of the plurality of vector processors includes a matrix multiplier that generates output data based on a multiplication of first input data and second input data in accordance with the matrix input configuration data, wherein the matrix input configuration data indicates the dimensionality of the first input data and the second input data.

Owner:VIXS SYSTEMS INC

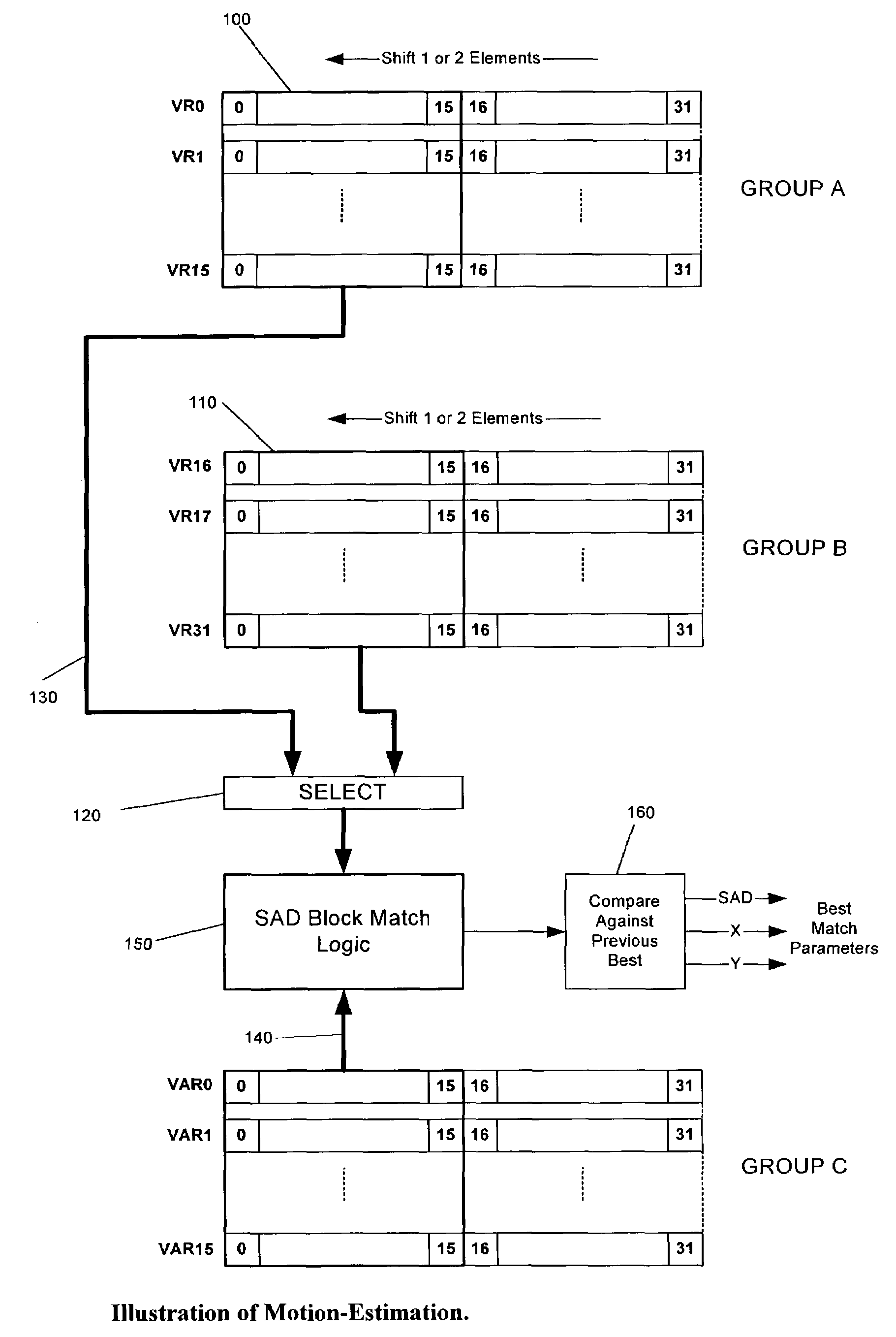

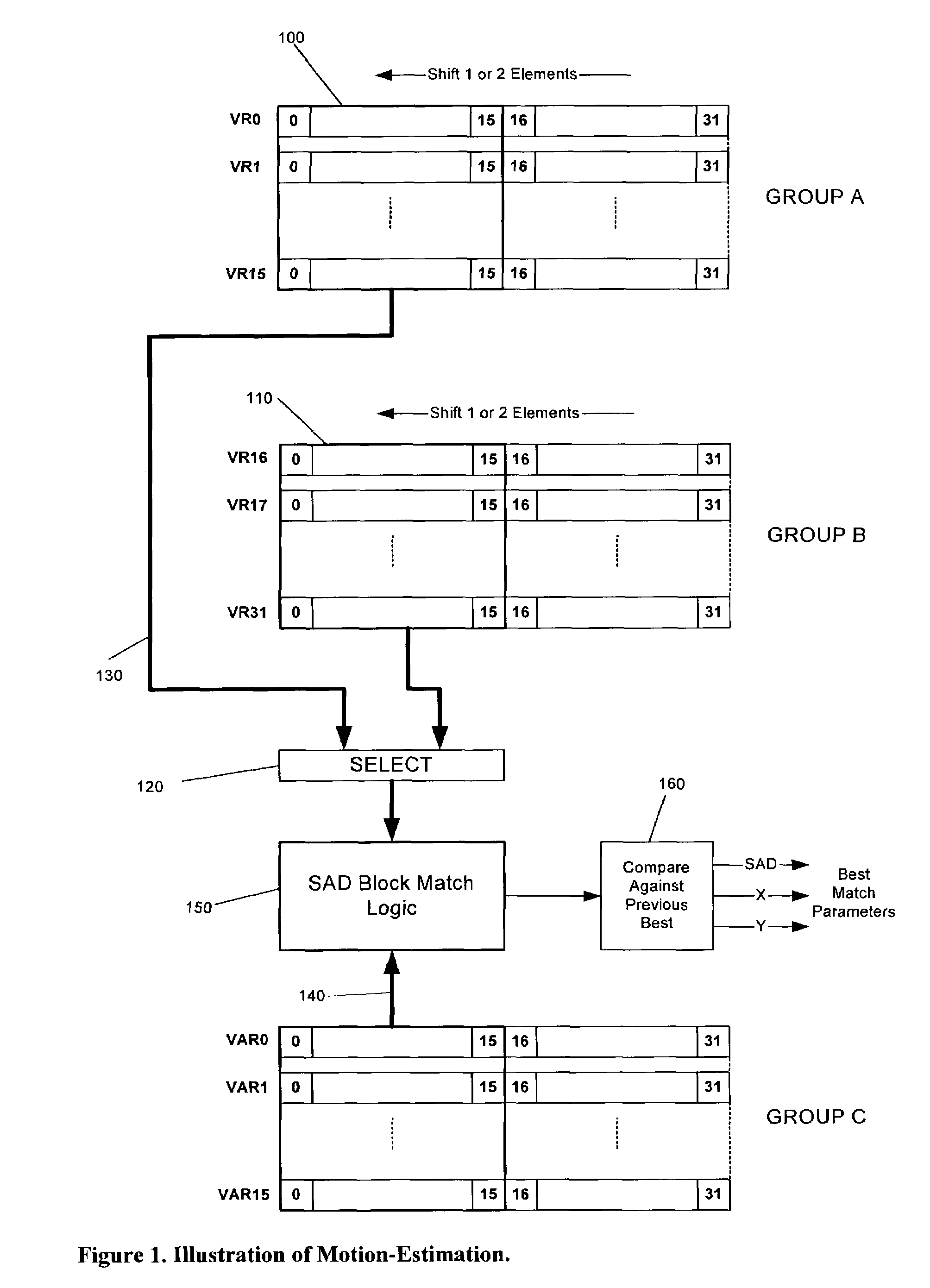

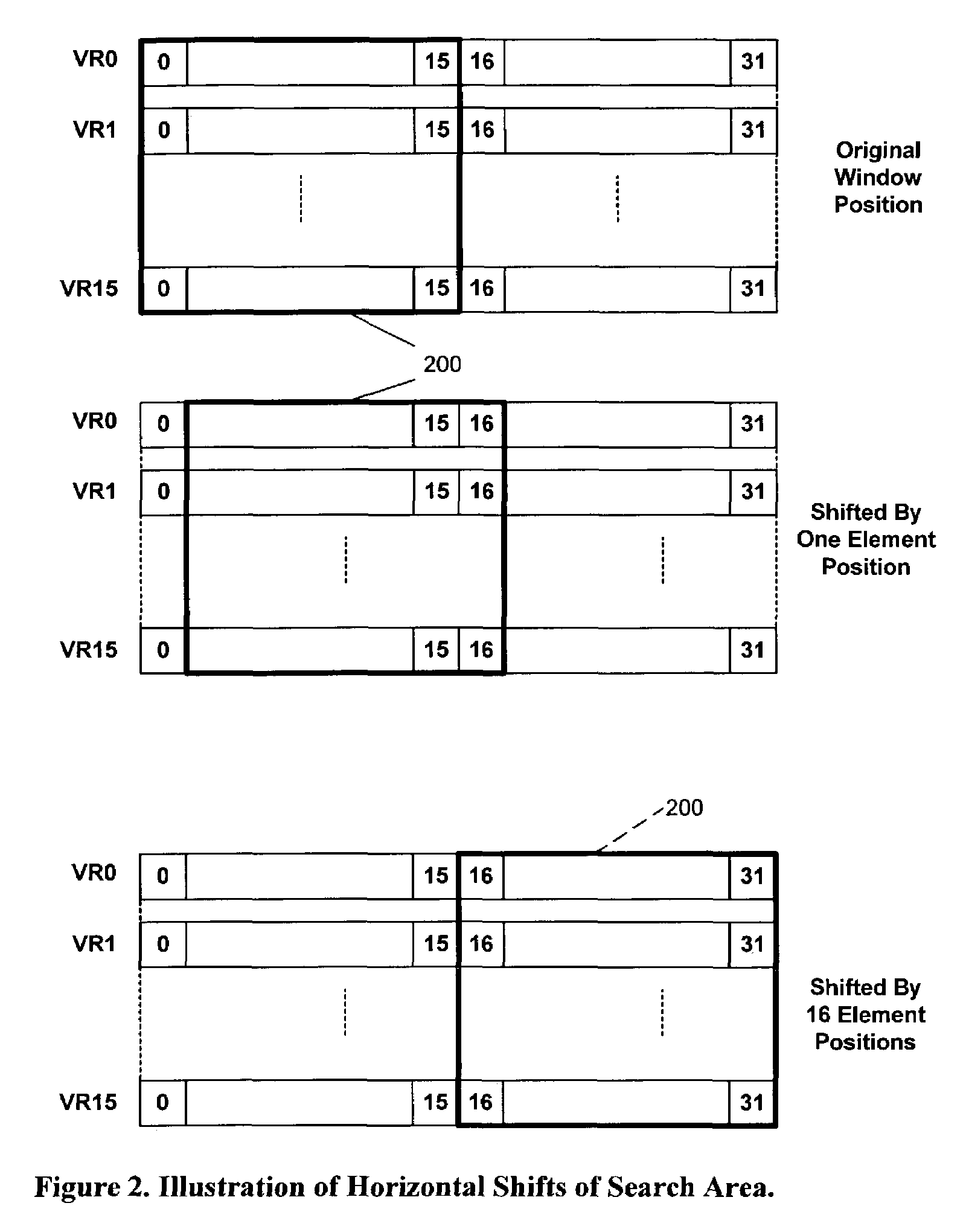

Method for programmable motion estimation in a SIMD processor

The present invention provides a 16×16 sliding window using a vector register file with zero overhead for horizontal or vertical shifts to incorporate motion estimation into a SIMD vector processor architecture. The SIMD processor's vector load mechanism, a vector register file with element-shifting capability, and 16×16 parallel sum of absolute differences (SAD) calculation hardware and instruction are used. Vertical shifts of all sixteen vector registers occur in a ripple-through fashion when the end vector register is loaded. The parallel SAD calculation hardware can calculate one 16-by-16 block match per clock cycle in a pipelined fashion. In addition, hardware for best-match SAD value comparisons and for maintaining their pixel locations reduces the software overhead. Block matching for block areas smaller than 16 by 16 is supported using a mask register to mask selected elements, thereby reducing the search area to any block size less than 16 by 16.

Owner:MIMAR TIBET
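The block-matching computation that this hardware accelerates can be written out in plain Python. This is a software sketch of SAD-based matching with an optional element mask, not a model of the register-file shifting; `sad`, `best_match`, and the mask layout are hypothetical.

```python
def sad(block_a, block_b, mask=None):
    """Sum of absolute differences between two equally sized pixel blocks.

    mask, if given, has the same shape; positions with a falsy mask value are
    skipped, which models matching on areas smaller than the full block.
    """
    total = 0
    for r, (row_a, row_b) in enumerate(zip(block_a, block_b)):
        for c, (pa, pb) in enumerate(zip(row_a, row_b)):
            if mask is None or mask[r][c]:
                total += abs(pa - pb)
    return total


def best_match(ref_block, frame, size):
    """Slide a size-by-size window over frame and keep the lowest SAD.

    Returns (best_sad, (row, col)) for the top-left corner of the best window.
    """
    best = None
    for y in range(len(frame) - size + 1):
        for x in range(len(frame[0]) - size + 1):
            candidate = [row[x:x + size] for row in frame[y:y + size]]
            score = sad(ref_block, candidate)
            if best is None or score < best[0]:
                best = (score, (y, x))
    return best
```

The patent describes the inner SAD for a 16-by-16 block completing once per clock cycle in hardware; the nested loops here only show what is being computed.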

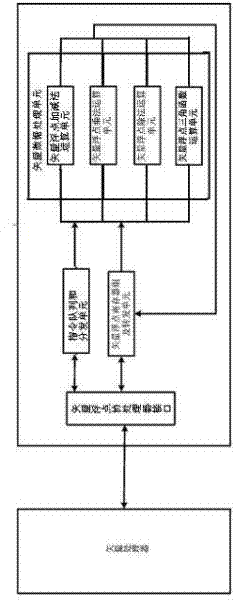

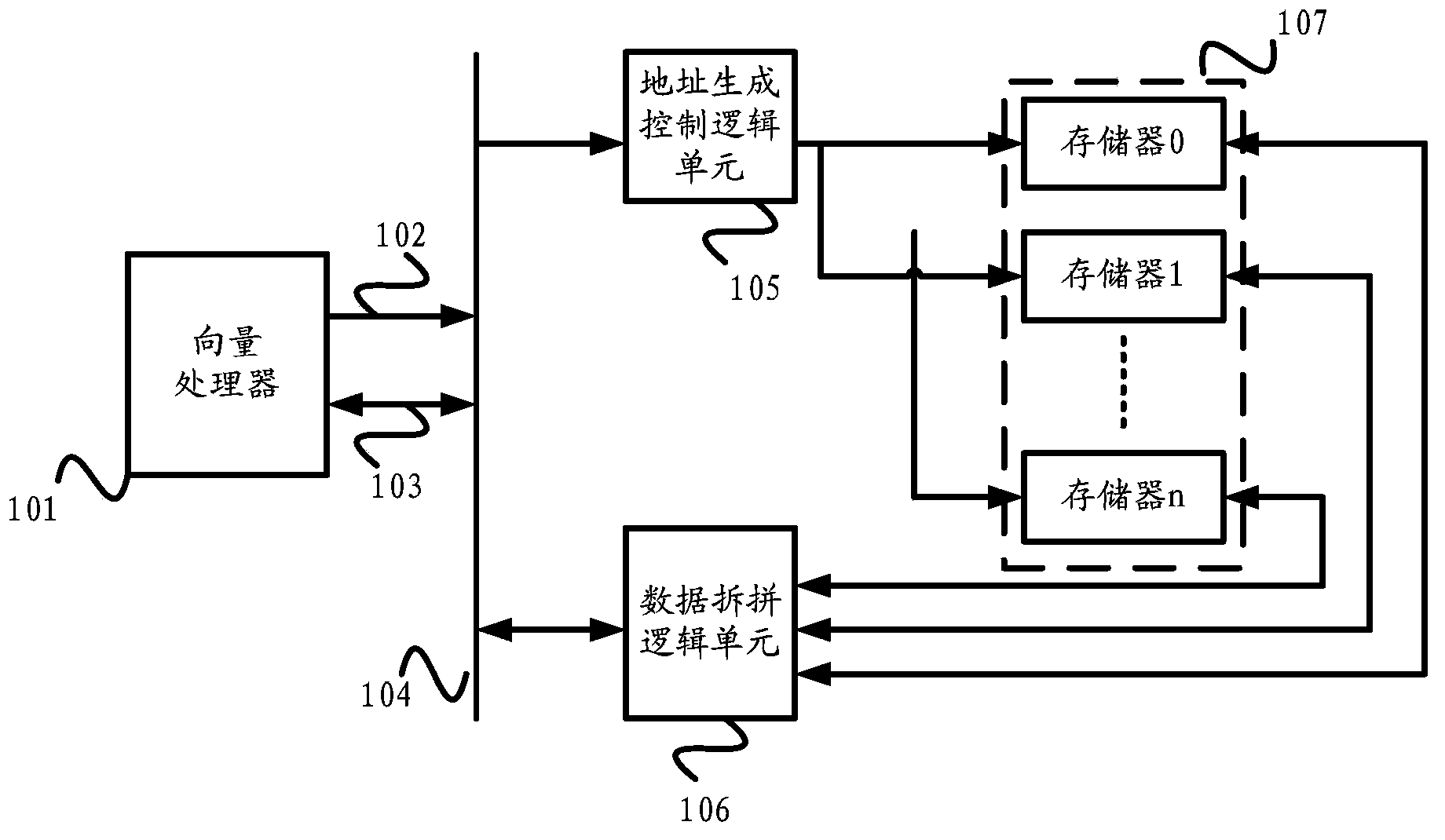

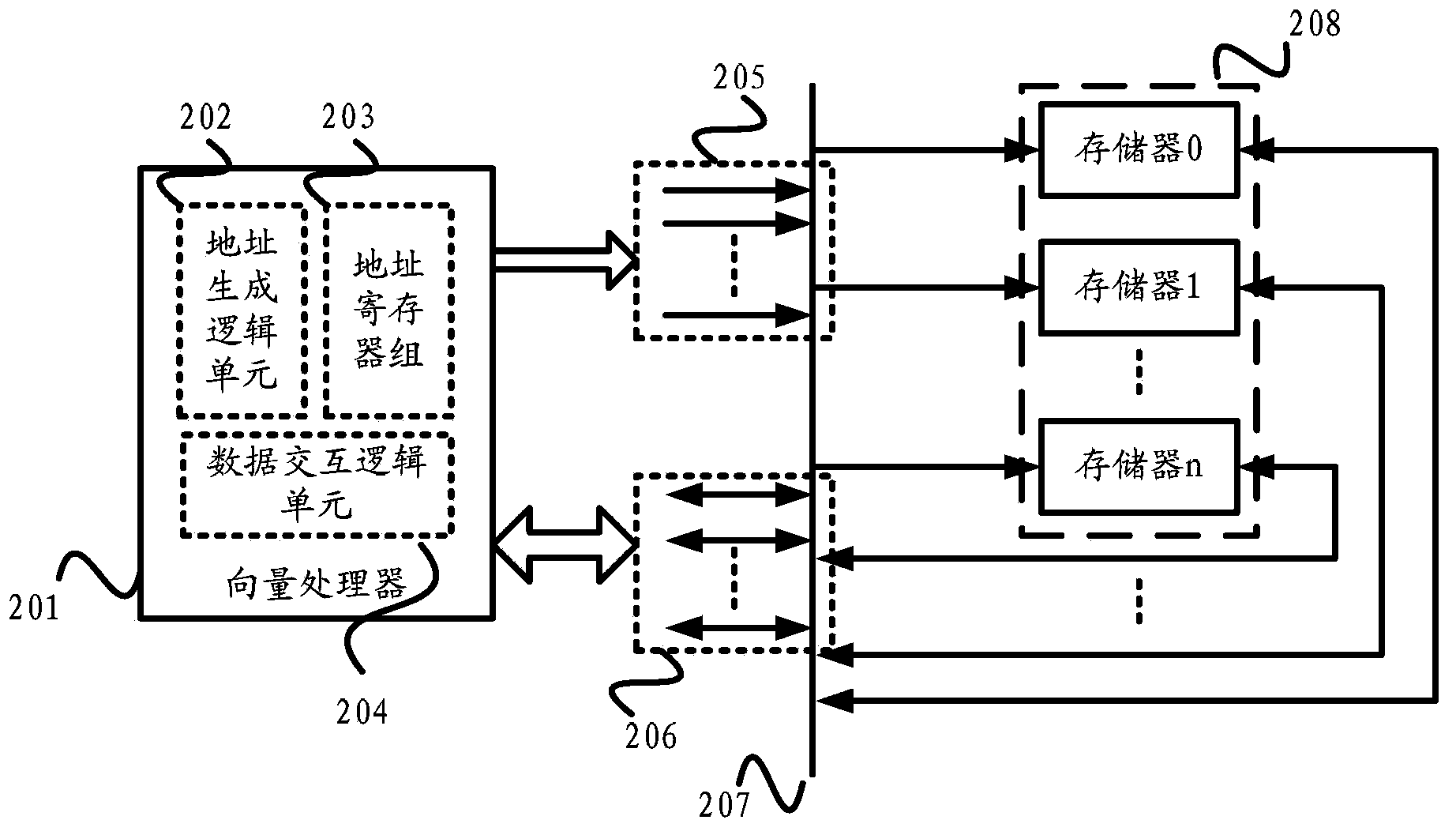

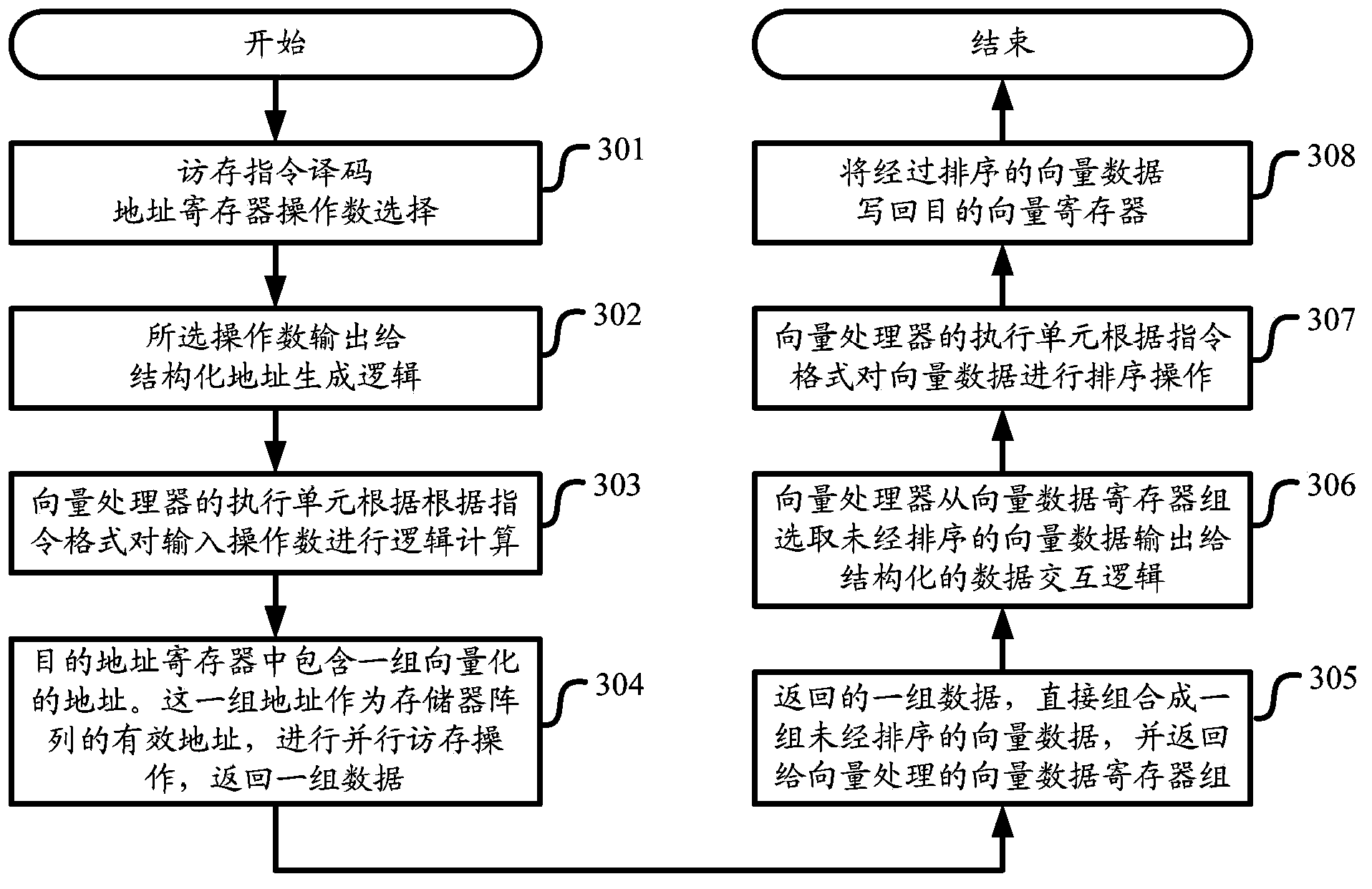

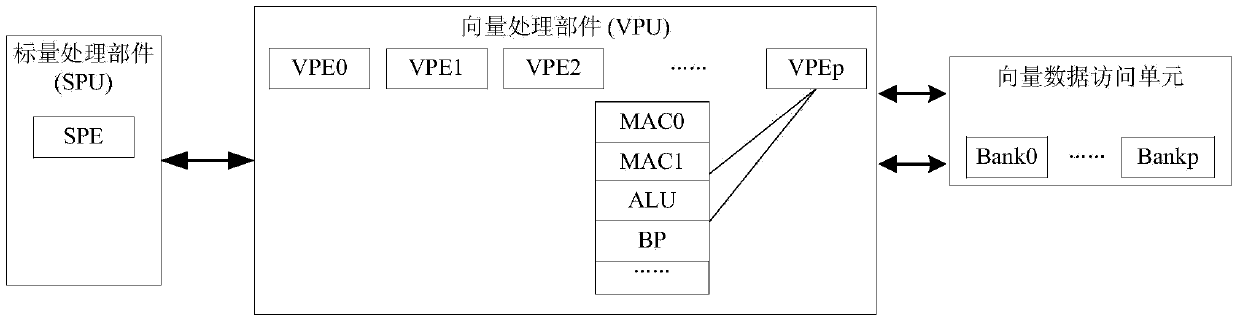

Vector processor and vector data access and interaction method thereof

Active | CN103699360A | Improve efficiency | Processing speed | Machine execution arrangements | Arithmetic logic unit | Logic cell

The invention discloses a vector processor and a vector data access and interaction method thereof. The vector processor comprises an address register set, an address generation logic unit and a data interaction logic unit, wherein the address register set is used for storing an operand and vectorized address data and transmitting the vectorized address data to a memory set through an address bus interface, and the vectorized address data are used for accessing the memory set; the address generation logic unit is used for calculating the vectorized address data according to the operand through an arithmetic logic unit and storing the vectorized address data in the address register set; the data interaction logic unit is used for carrying out splitting / splicing operation on vector data, which are accessed from the memory set through the address bus interface, through the arithmetic logic unit. According to the vector processor and the vector data access and interaction method thereof, the efficient arithmetic logic unit of the vector processor can be utilized sufficiently, so that the data processing speed is high and the efficiency is high.

Owner:北京中科晶上科技股份有限公司

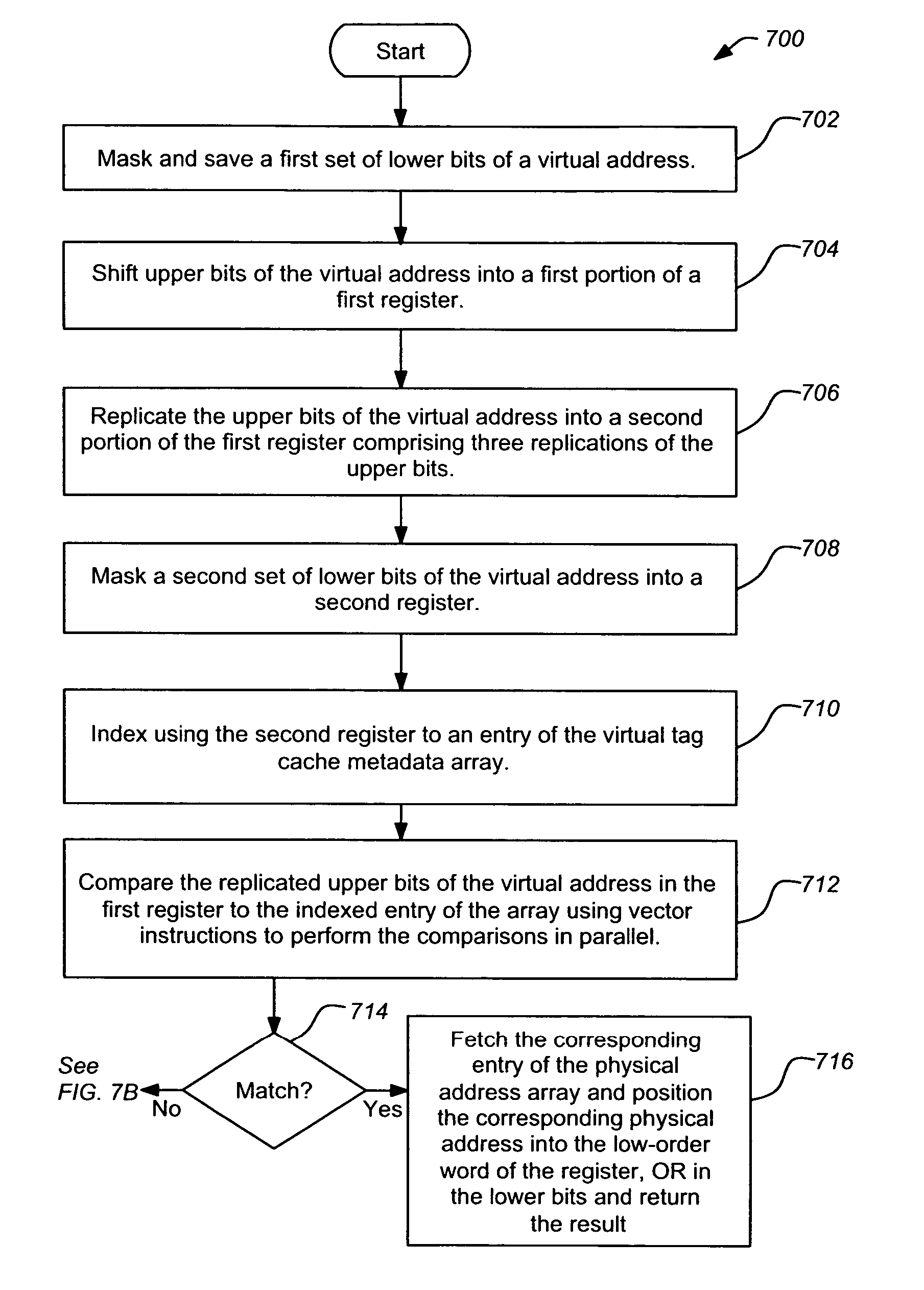

Using vector processors to accelerate cache lookups

Inactive | US20060288151A1 | Memory addressing/allocation/relocation | Micro-instruction address formation | Memory address | Physical address

Typical embodiments of the present invention maintain the cache metadata in arrays, and use vector instructions to process the array elements in parallel. The cache metadata comprises virtual tags corresponding to main memory addresses and physical addresses corresponding to cache memory addresses. The virtual tags and physical addresses may be interleaved in a single array in the cache memory. Alternately, virtual tags and physical addresses may be maintained in corresponding separate arrays. A roving pointer may be used to identify the next block to be ejected from the cache memory.

Owner:IBM CORP
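A cache lookup over metadata arrays, with a roving pointer for replacement, might look like the sketch below. It uses the separate-arrays layout mentioned in the abstract; the class name `VectorizedCache`, its methods, and the choice to advance the roving pointer by one slot per insertion are assumptions. The list comprehension in `lookup` stands in for a single vector compare across all tags.

```python
class VectorizedCache:
    """Toy cache whose metadata lives in two parallel arrays."""

    def __init__(self, num_blocks):
        self.tags = [None] * num_blocks        # virtual tags (main memory side)
        self.addrs = list(range(num_blocks))   # physical addresses (cache side)
        self.rover = 0                         # roving pointer: next block to eject

    def lookup(self, tag):
        """Compare tag against every stored tag at once (modeled vector compare)."""
        hits = [t == tag for t in self.tags]
        return self.addrs[hits.index(True)] if True in hits else None

    def insert(self, tag):
        """Place tag in the slot named by the roving pointer, ejecting its occupant."""
        slot = self.rover
        self.tags[slot] = tag
        self.rover = (self.rover + 1) % len(self.tags)
        return self.addrs[slot]
```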

Method and apparatus for performing low-density parity-check (LDPC) code operations using a multi-level permutation

Inactive | US7237171B2 | Easy to implement | Error correction/detection using multiple parity bits | Code conversion | Graphics | Communications system

Methods and apparatus of the present invention can be used to implement a communications system wherein different devices using the same LDPC code can be implemented using different levels of parallelism. The use of a novel class of LDPC codes makes such differences in parallelism possible. Use of a factorable permuter in various embodiments of the invention makes LDPC devices with different levels of parallelism in the encoder and decoder relatively easy to implement when using the codes in the class of LDPC codes discussed herein. The factorable permuter may be implemented as a controllable multi-stage switching device which performs none, one, or multiple sequential reordering operations on a Z element vector passed between memory and a Z element vector processor, with the switching on individual vectors being controlled in accordance with the graph structure of the code being implemented.

Owner:QUALCOMM INC

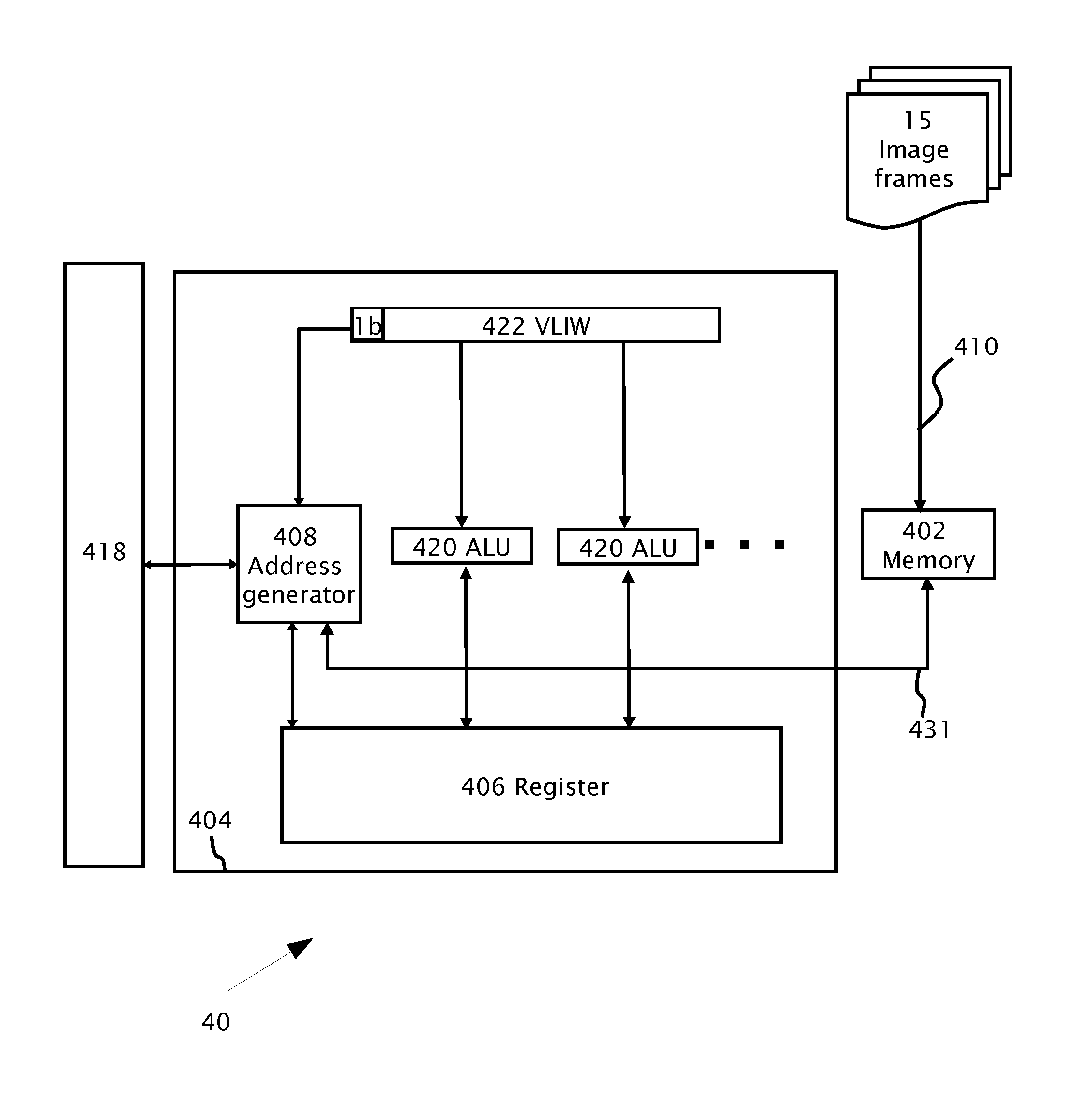

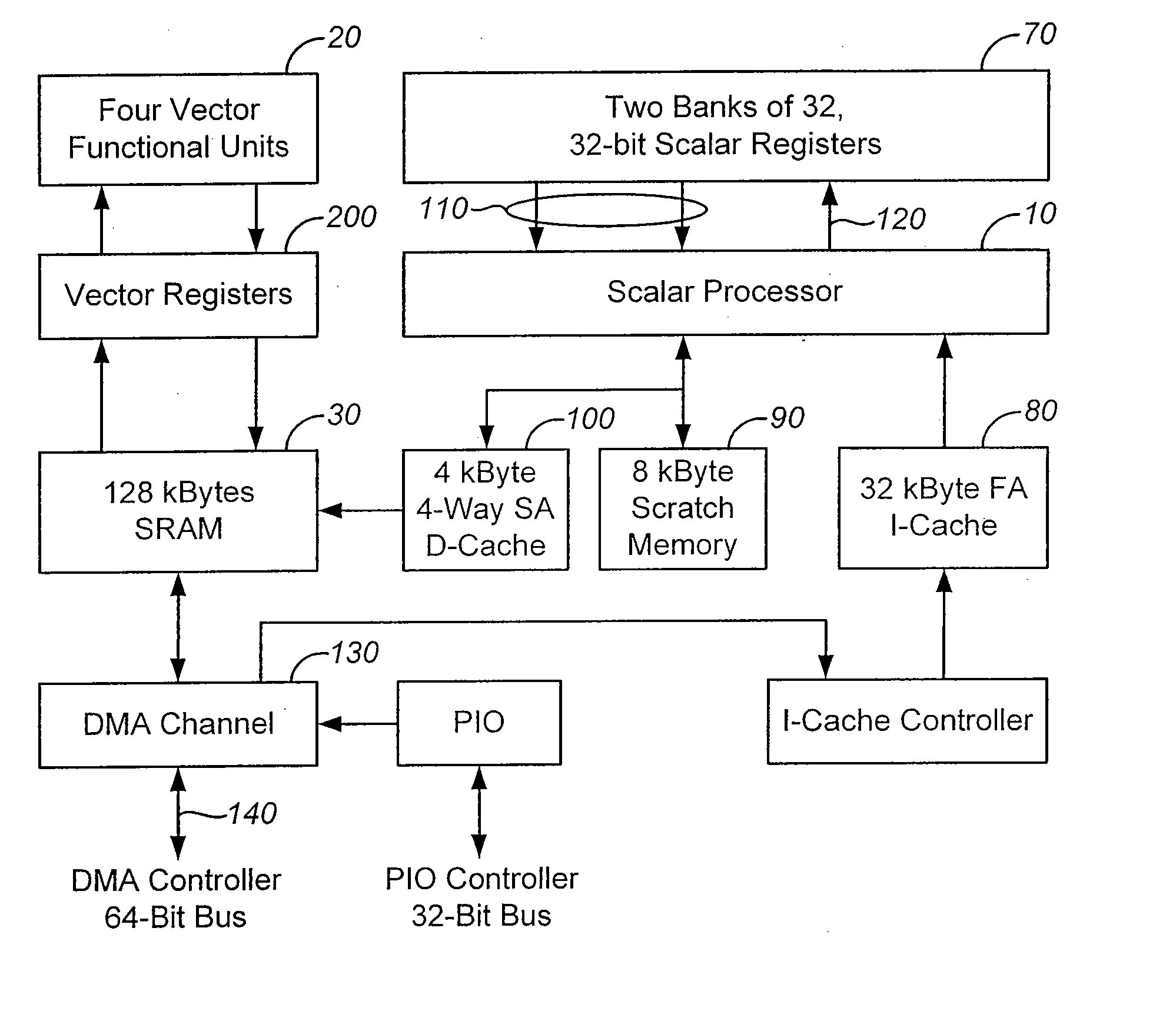

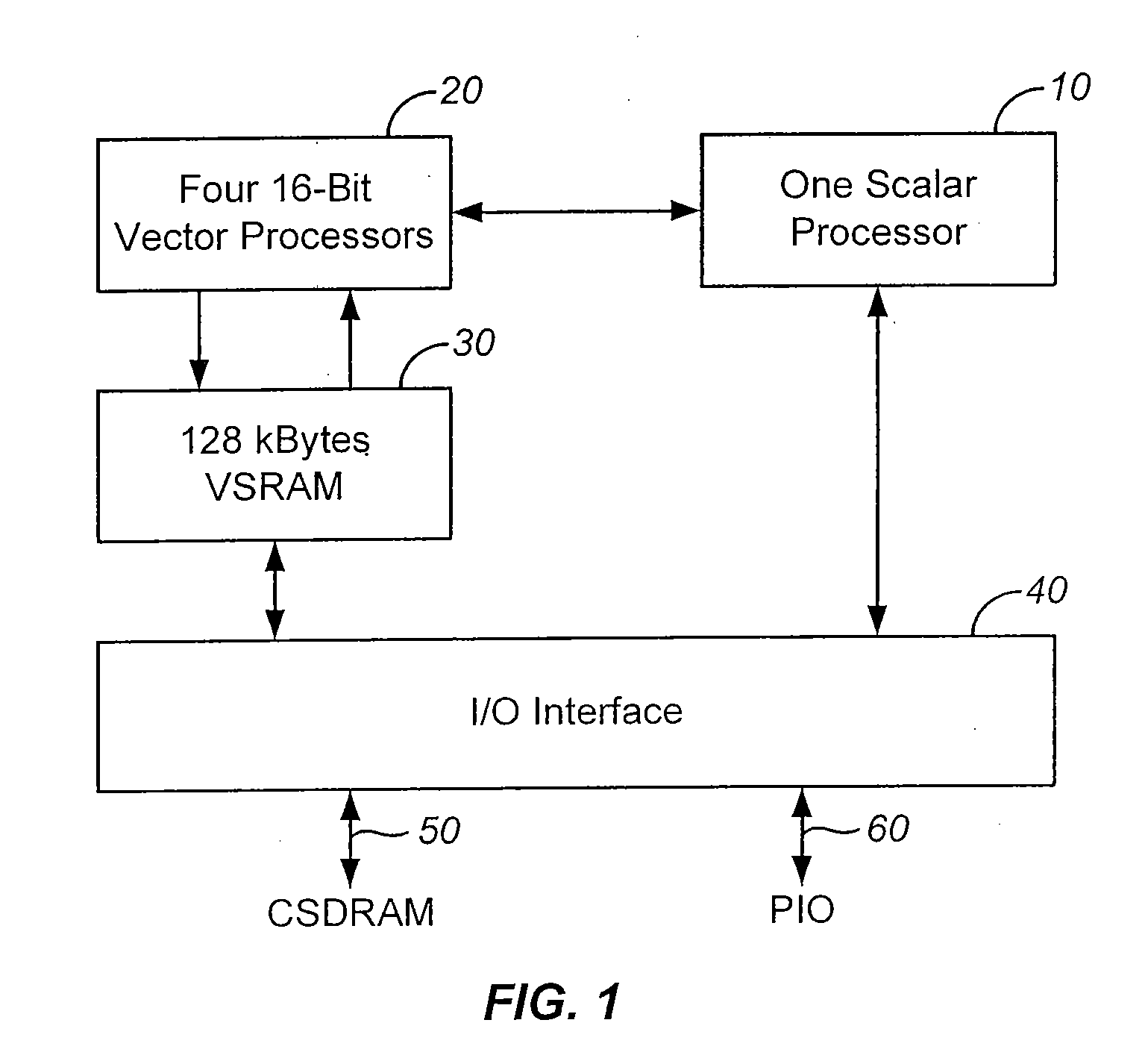

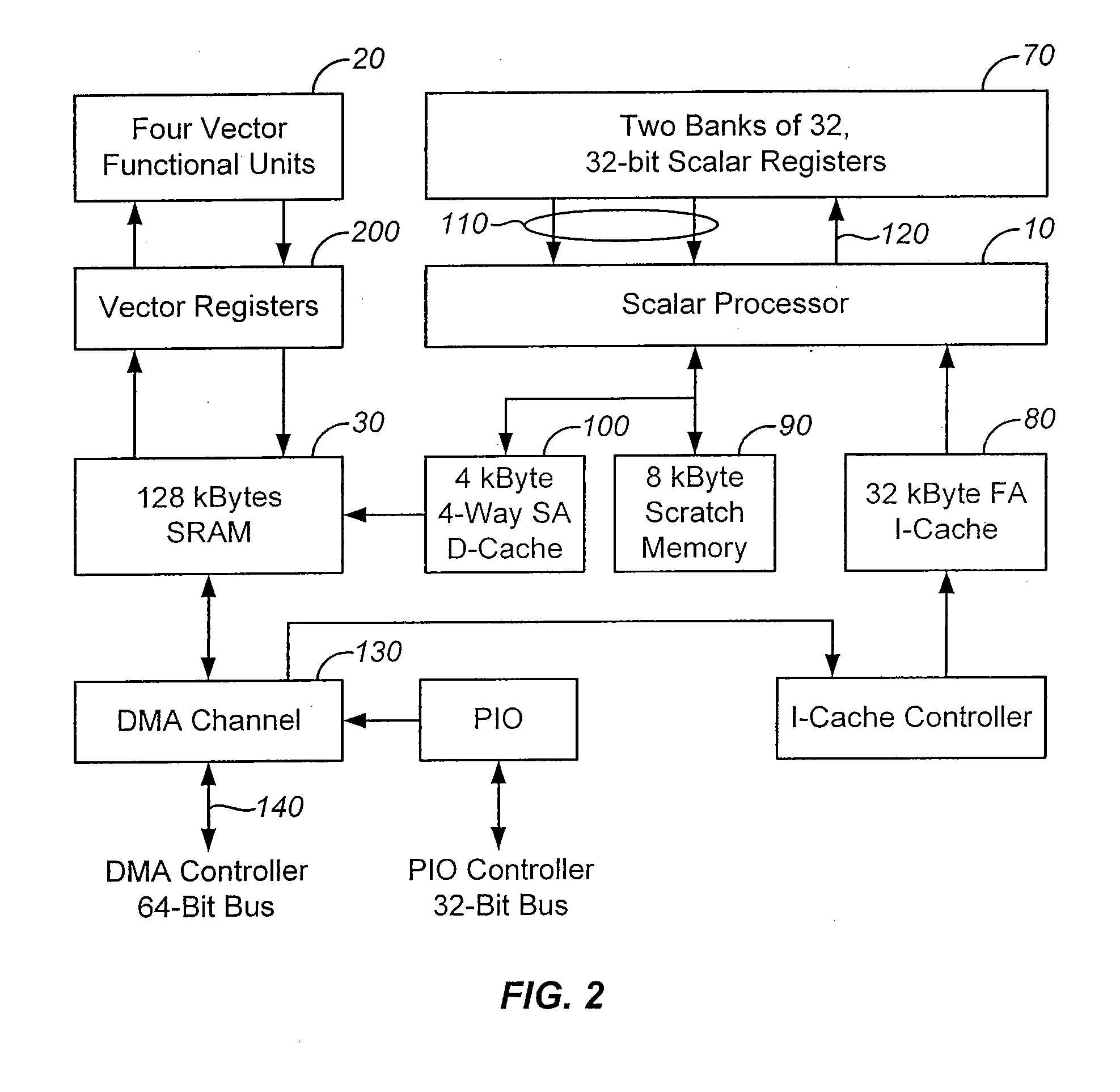

Image Processing Address Generator

Active | US20110307684A1 | Program control using wired connections | General purpose stored program computer | Imaging processing | Address generator

An image processing system including a vector processor and a memory adapted for attaching to the vector processor. The memory is adapted to store multiple image frames. The vector processor includes an address generator operatively attached to the memory to access the memory. The address generator is adapted for calculating addresses of the memory over the multiple image frames. The addresses may be calculated over the image frames based upon an image parameter. The image parameter may specify which of the image frames are processed simultaneously. A scalar processor may be attached to the vector processor. The scalar processor provides the image parameter(s) to the address generator for address calculation over the multiple image frames. An input register may be attached to the vector processor. The input register may be adapted to receive a very long instruction word (VLIW) instruction. The VLIW instruction may be configured to transfer only: (i) parameters for image processing calculations over the image frames by the ALU units and (ii) a single bit to the address generator.

Owner:MOBILEYE VISION TECH LTD

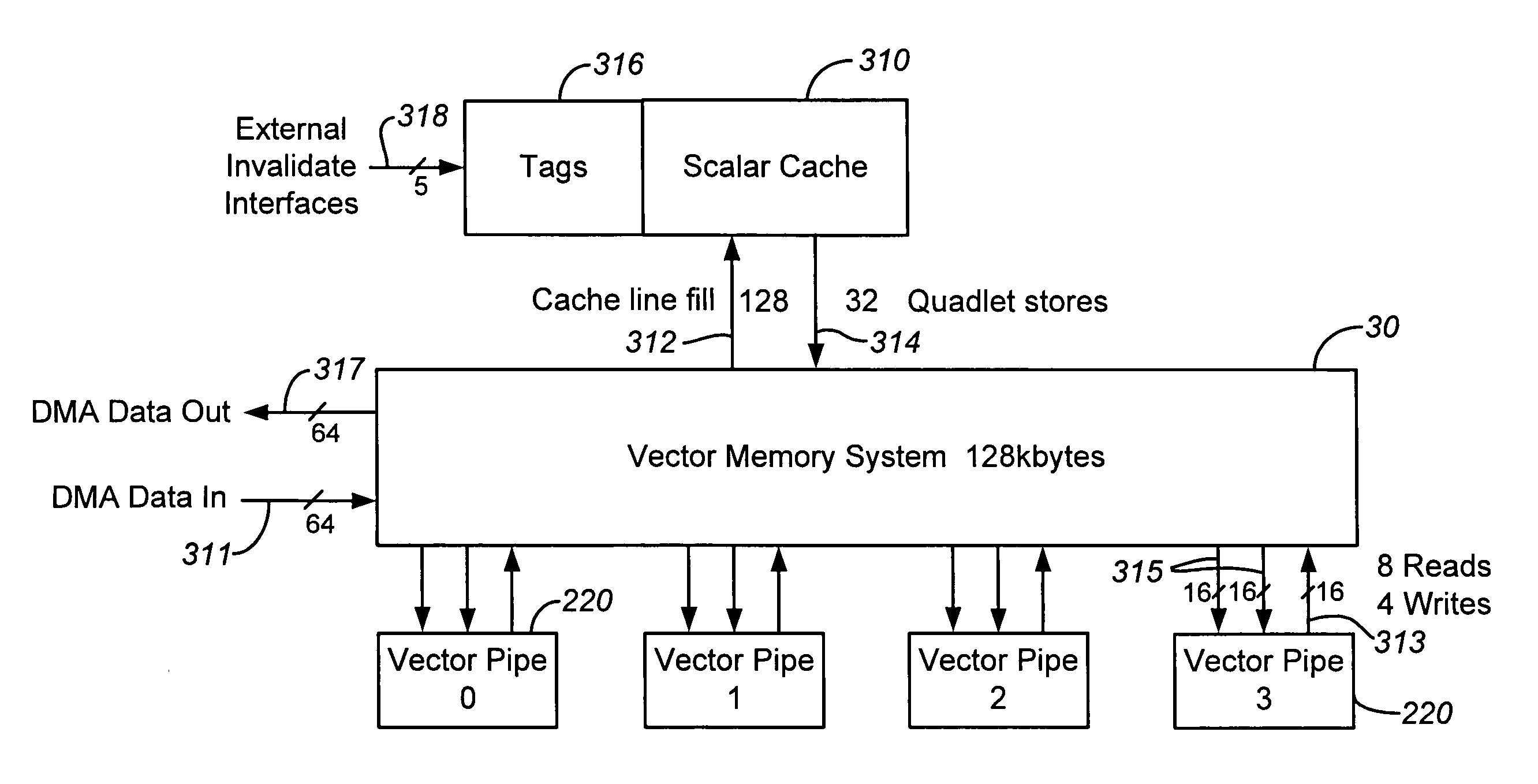

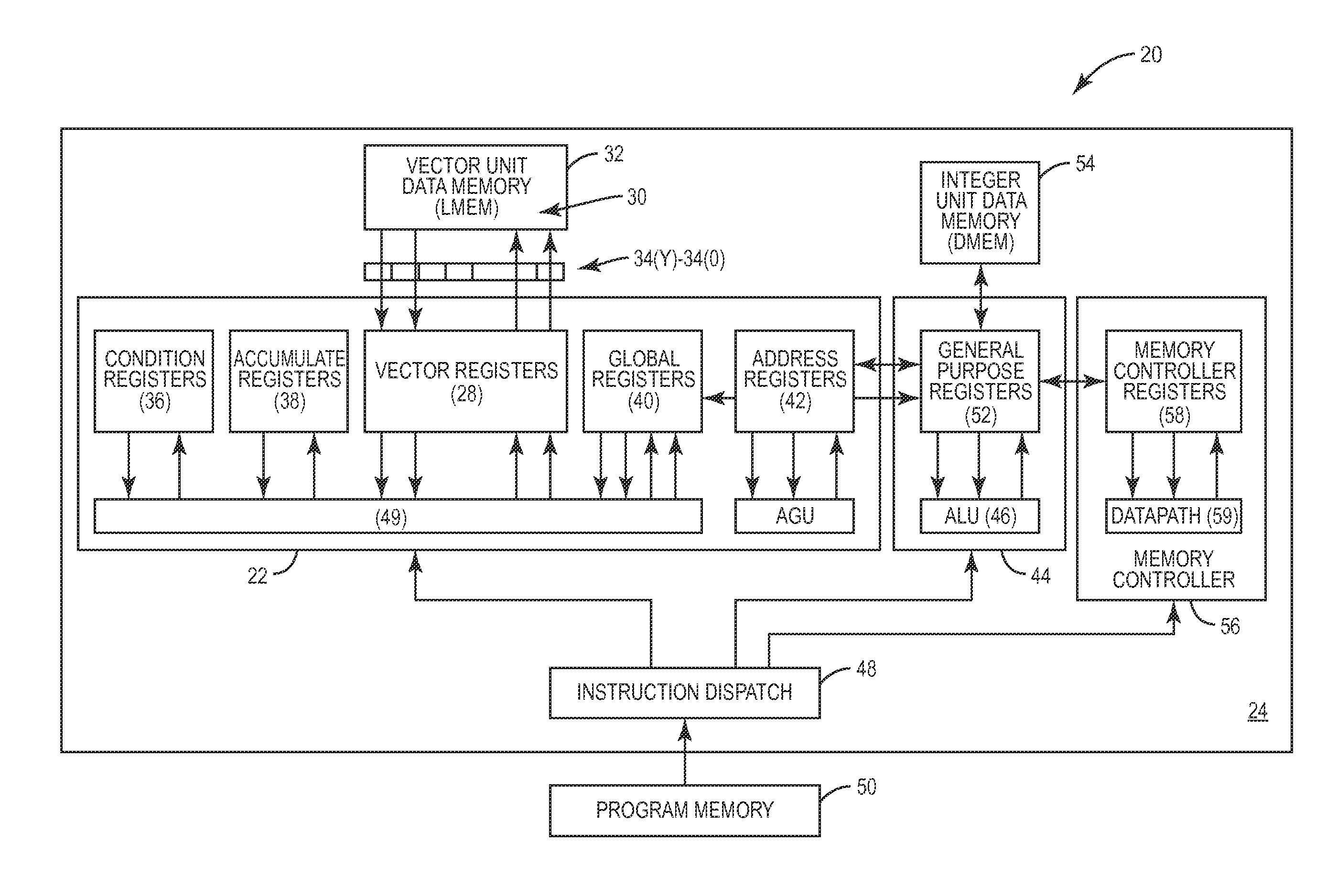

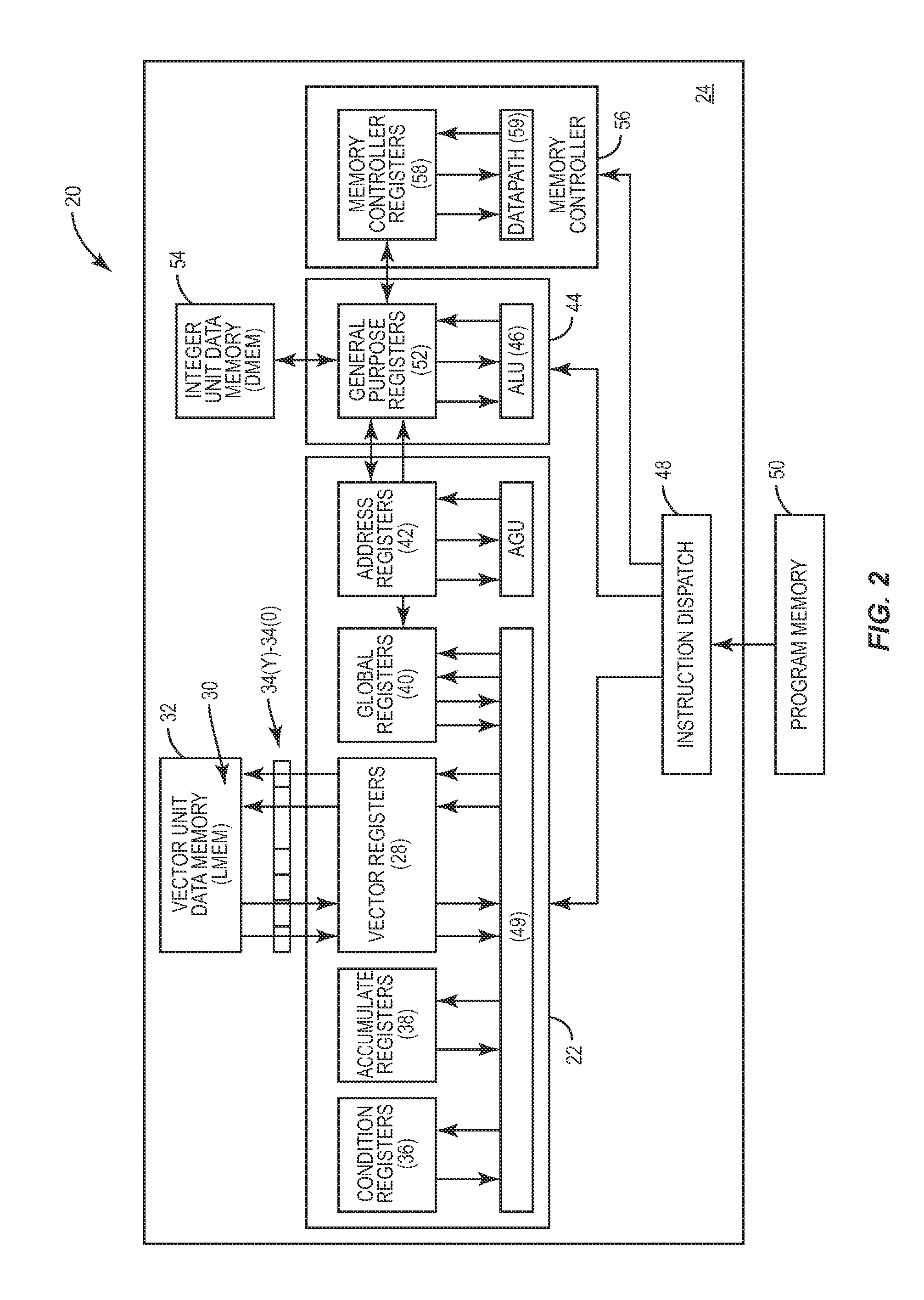

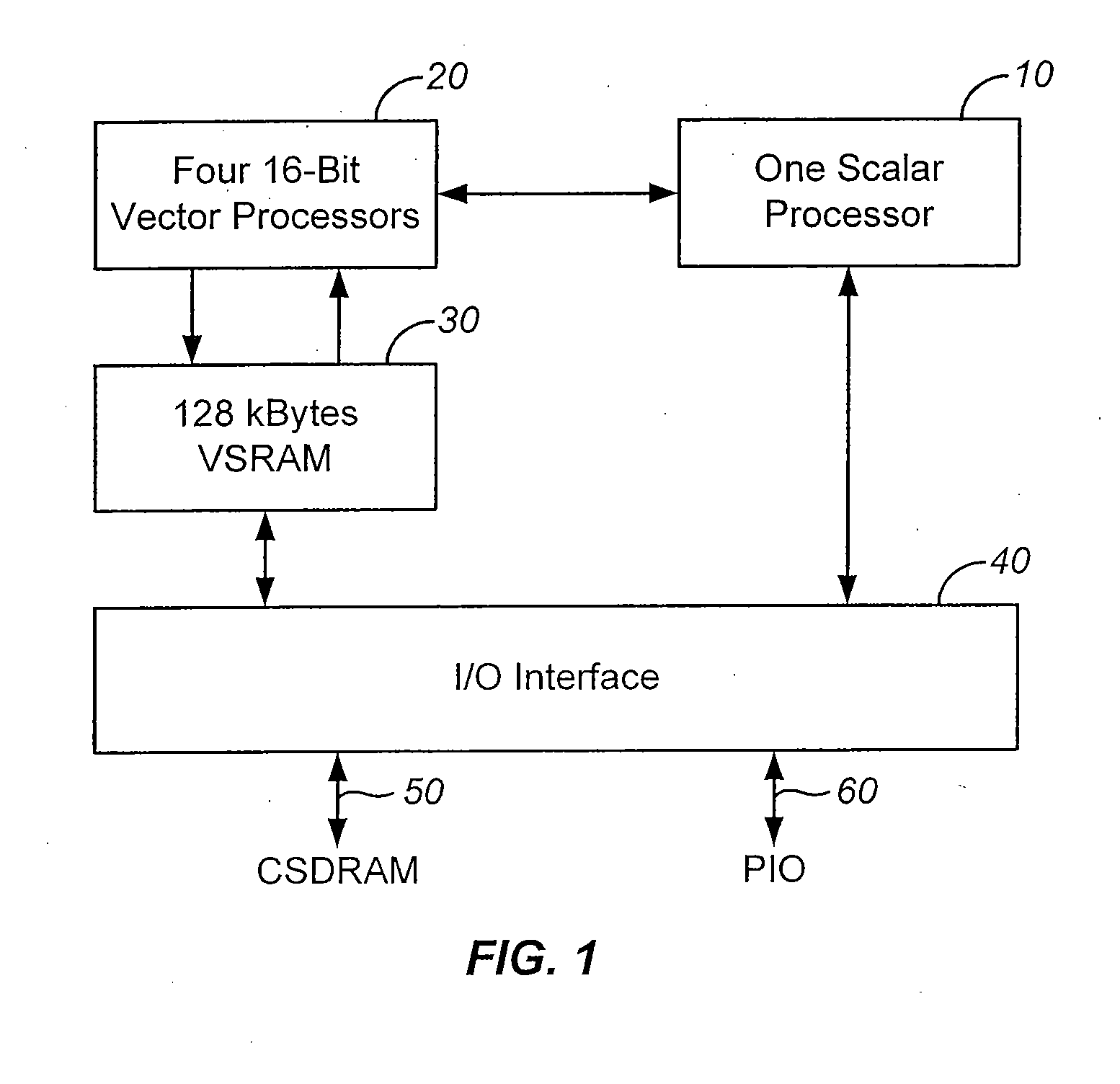

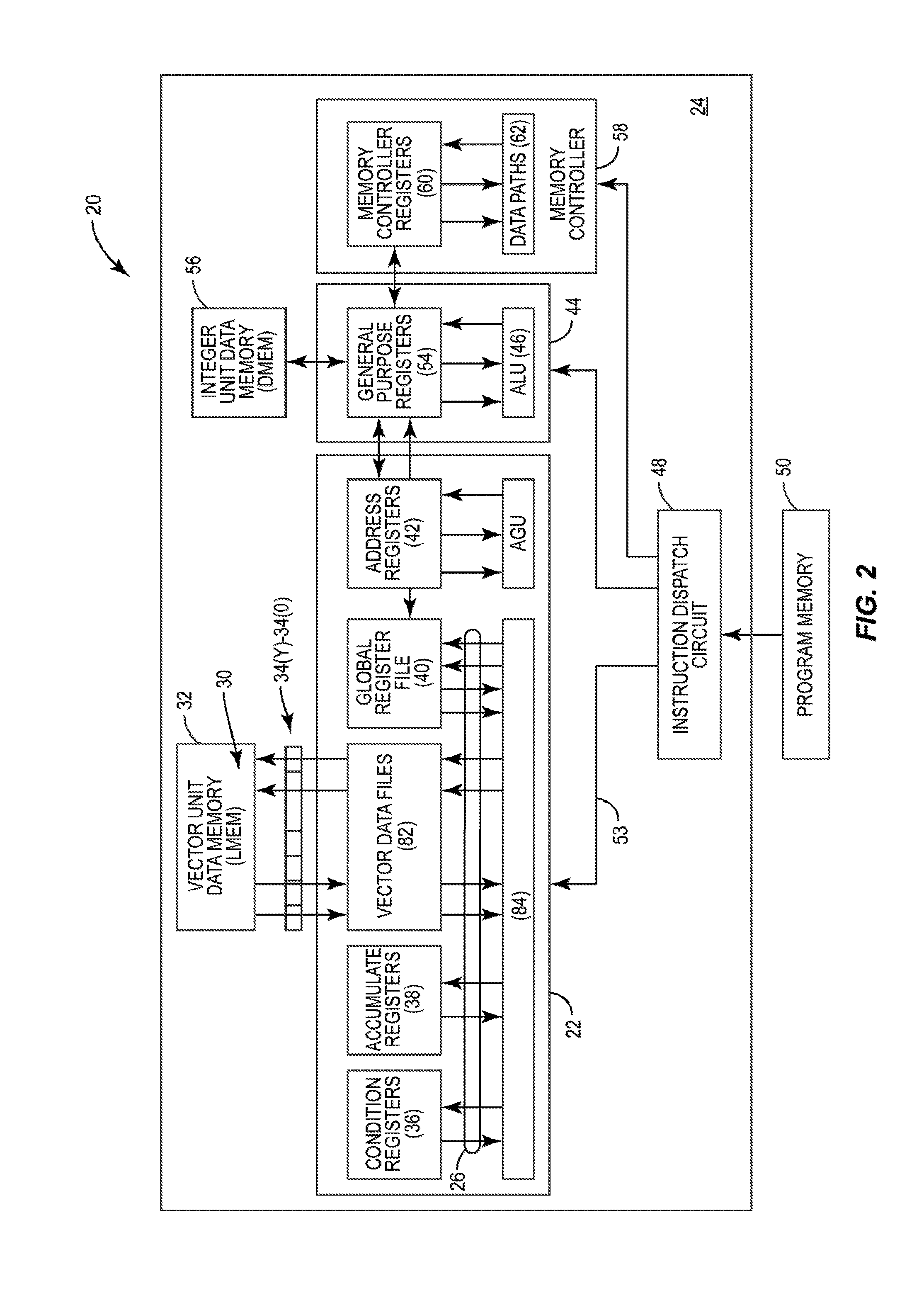

Memory architecture for vector processor

Inactive | US20080059758A1 | Limited instruction width | Great instruction width | Conditional code generation | Register arrangements | Rapid processing | Term memory

A vector processor includes a set of vector registers for storing data to be used in the execution of instructions and a vector functional unit coupled to the vector registers for executing instructions. The functional unit executes instructions using operation codes provided to it which operation codes include a field referencing a special register. The special register contains information about the length and starting point for each vector instruction. A series of new instructions to enable rapid handling of image pixel data are provided.

Owner:MEADLOCK JAMES W MEAD

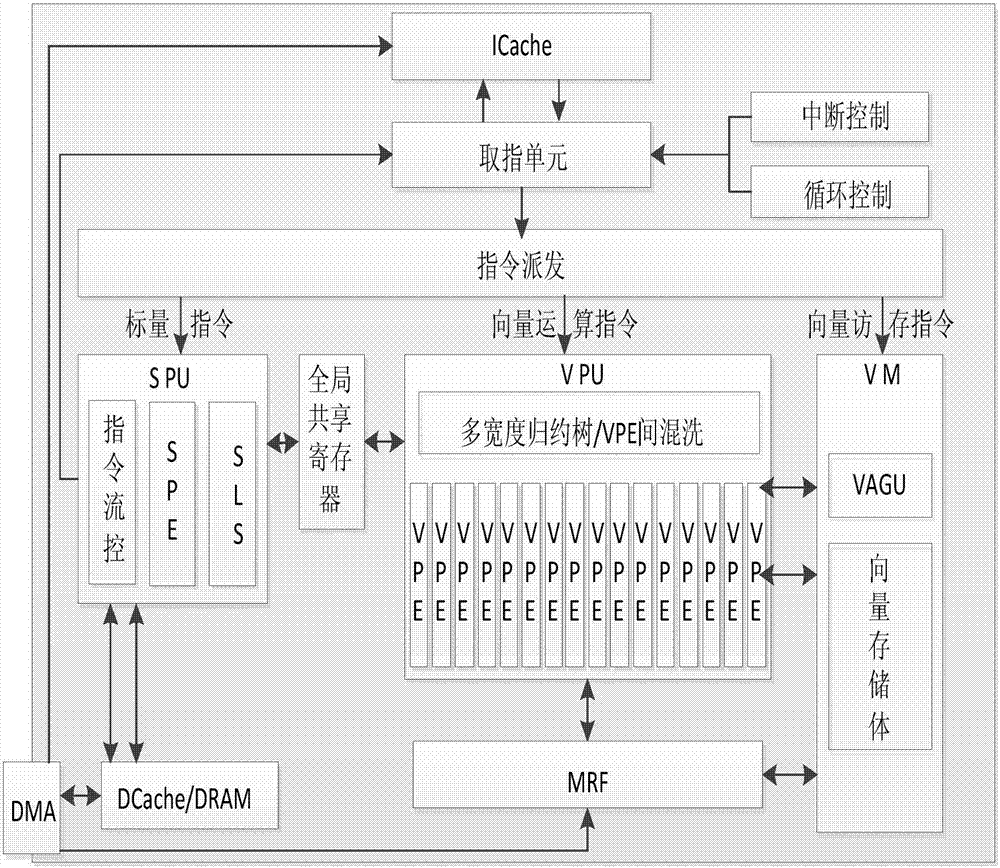

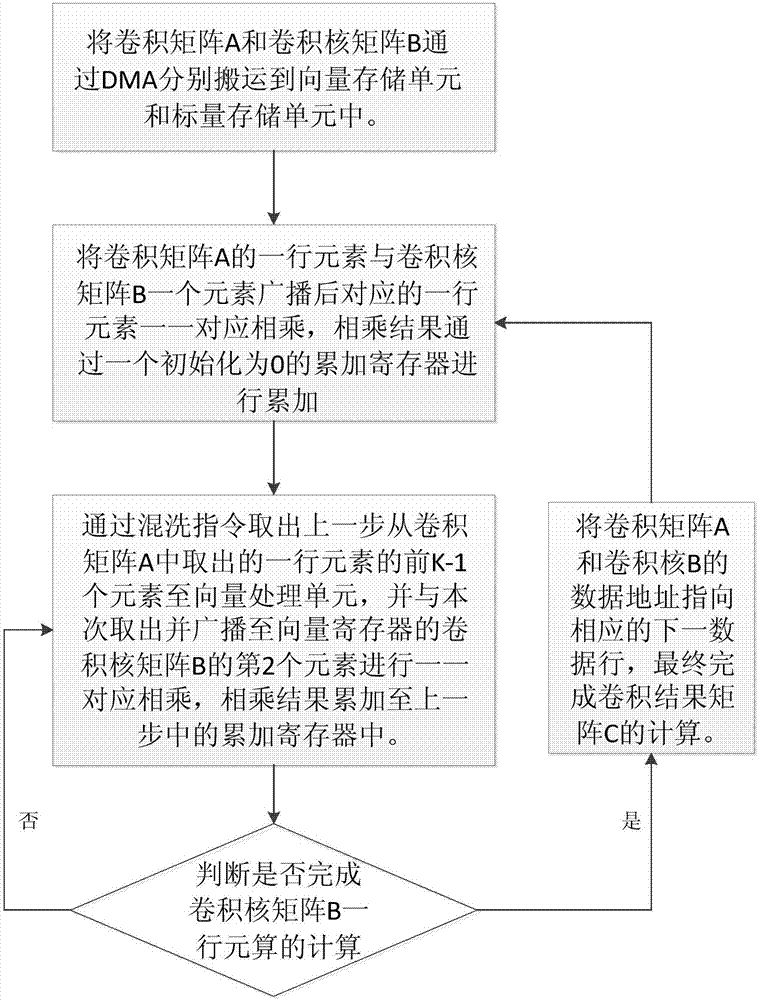

Vector processor-oriented vectorization realization method for two-dimensional matrix convolution

Active | CN106970896A | Improve computing efficiency | Reduce memory access | Complex mathematical operations | Euclidean vector | Convolution

Owner:NAT UNIV OF DEFENSE TECH

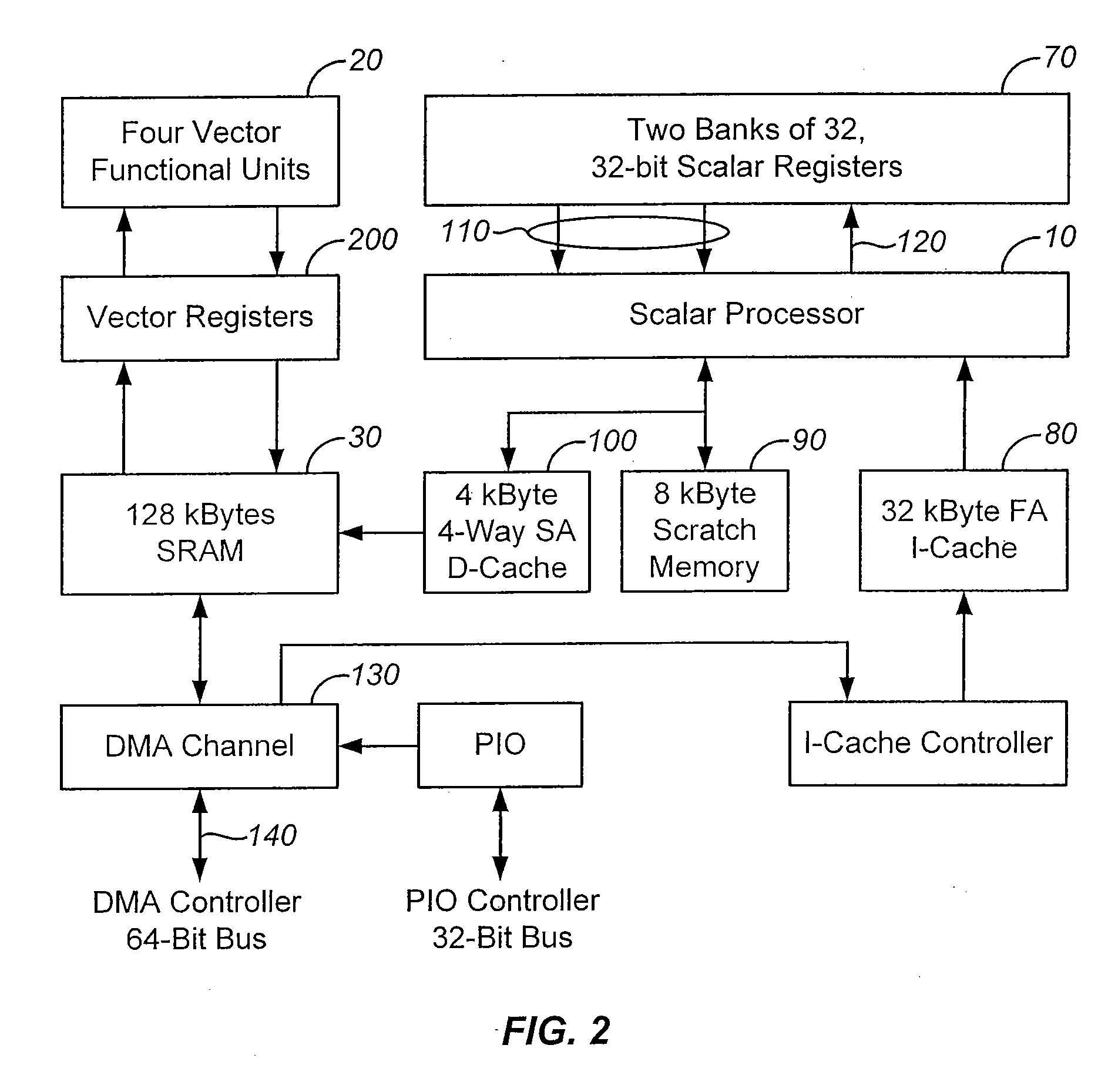

Vector Processor Architecture

Inactive | US20080059759A1 | Limited instruction width | Great instruction width | Conditional code generation | Register arrangements | Rapid processing | Information provision

A vector processor includes a set of vector registers for storing data to be used in the execution of instructions and a vector functional unit coupled to the vector registers for executing instructions. The functional unit executes instructions using operation codes provided to it which operation codes include a field referencing a special register. The special register contains information about the length and starting point for each vector instruction. A series of new instructions to enable rapid handling of image pixel data are provided.

Owner:MEADLOCK JAMES W MEAD

Vector processing engines (VPEs) employing format conversion circuitry in data flow paths between vector data memory and execution units to provide in-flight format-converting of input vector data to execution units for vector processing operations, and related vector processor systems and methods

Active | US20150143086A1 | Reduce power consumption | Data efficient | Digital computer details | Handling data according to predetermined rules | Data stream | Execution unit

Vector processing engines (VPEs) employing format conversion circuitry in data flow paths between vector data memory and execution units to provide in-flight format-converting of input vector data to execution units for vector processing operations are disclosed. Related vector processor systems and methods are also disclosed. Format conversion circuitry is provided in data flow paths between vector data memory and execution units in the VPE. The format conversion circuitry is configured to convert input vector data sample sets fetched from vector data memory in-flight while the input vector data sample sets are being provided over the data flow paths to the execution units to be processed. In this manner, format conversion of the input vector data sample sets does not require pre-processing, storage, and re-fetching from vector data memory, thereby reducing power consumption and not limiting efficiency of the data flow paths by format conversion pre-processing delays.

Owner:QUALCOMM INC

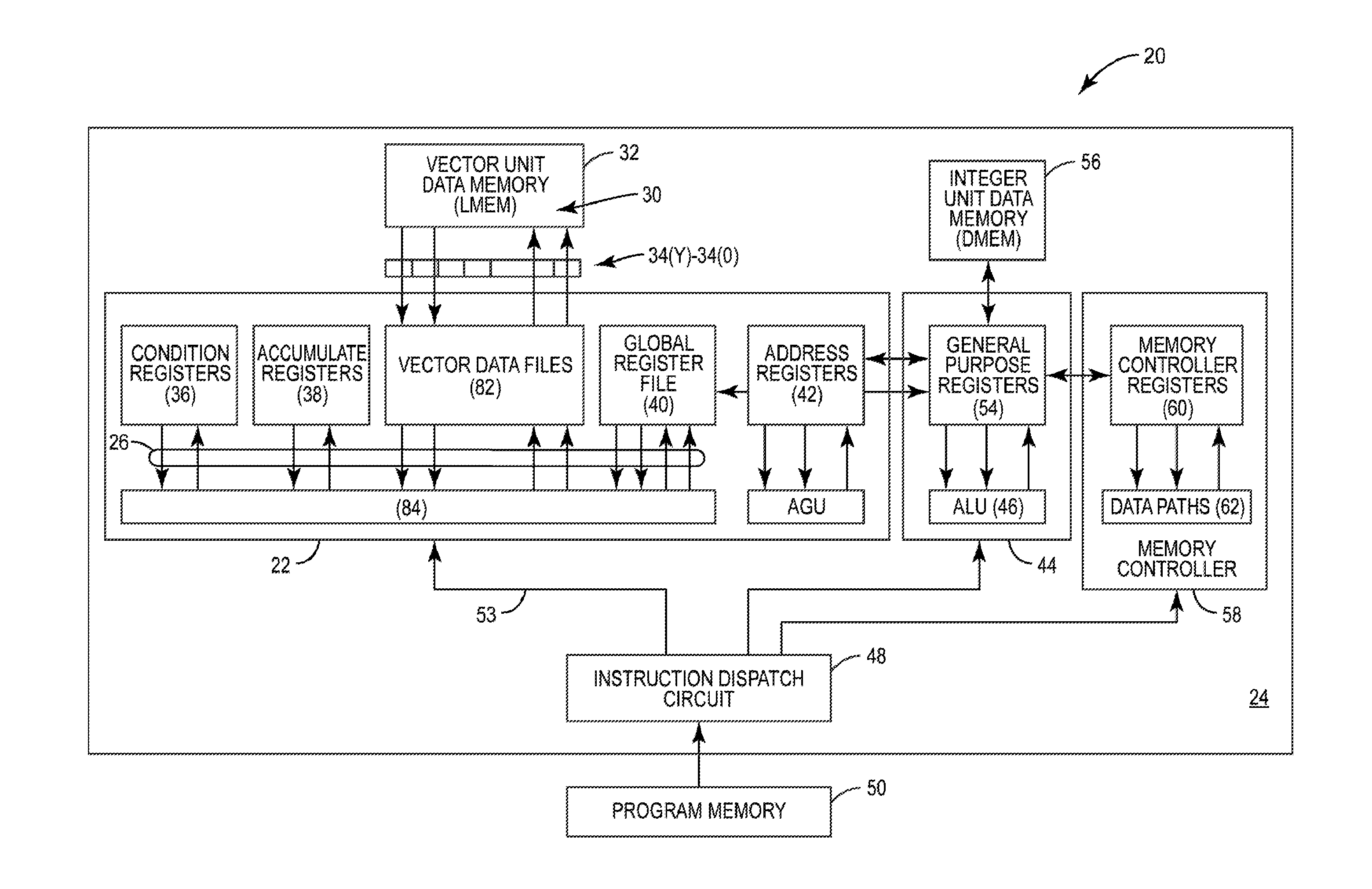

Instructions for Vector Processor

Inactive | US20080059760A1 | Limited instruction width | Great instruction width | Conditional code generation | Register arrangements | Rapid processing | Information provision

A vector processor includes a set of vector registers for storing data to be used in the execution of instructions and a vector functional unit coupled to the vector registers for executing instructions. The functional unit executes instructions using operation codes provided to it which operation codes include a field referencing a special register. The special register contains information about the length and starting point for each vector instruction. A series of new instructions to enable rapid handling of image pixel data are provided.

Owner:MEADLOCK JAMES W MEAD

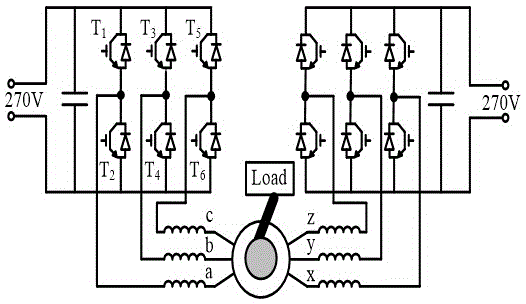

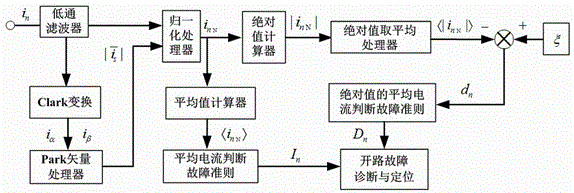

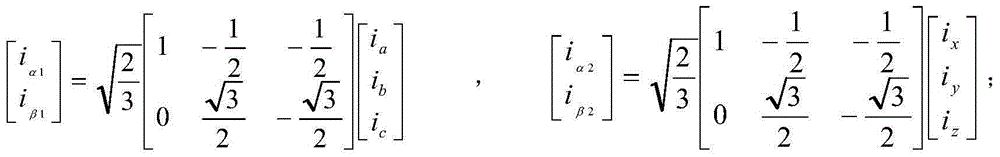

Open-circuit fault diagnosis method for drive system of double-winding fault-tolerant permanent-magnet motor

Active | CN105158627A | Improve reliability | Avoid misjudgment | Electrical testing | Phase currents | Low-pass filter

The invention discloses an open-circuit fault diagnosis method for the drive system of a double-winding fault-tolerant permanent-magnet motor. According to the method, the collected phase currents of the motor are processed in turn by a low-pass filter, a Clarke converter, a Park vector processor, a normalization processor, an average value calculator, an absolute value calculator and an absolute value averaging processor, so as to obtain the average value of the normalized phase current of the motor and the average value of the absolute value of the normalized phase current of the motor. Fault diagnostic variables for the system are constructed from these two averages, so that an open-circuit fault of the drive system of the double-winding fault-tolerant permanent-magnet motor can be detected and located in real time. The method requires no extra current sensor, so it is simple, feasible and highly reliable. Because the average value of the normalized current and the average value of the absolute value of the normalized current are used together, incorrect diagnoses caused by sudden load changes and other factors in conventional diagnosis can be avoided. The diagnosis time is also greatly reduced.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS

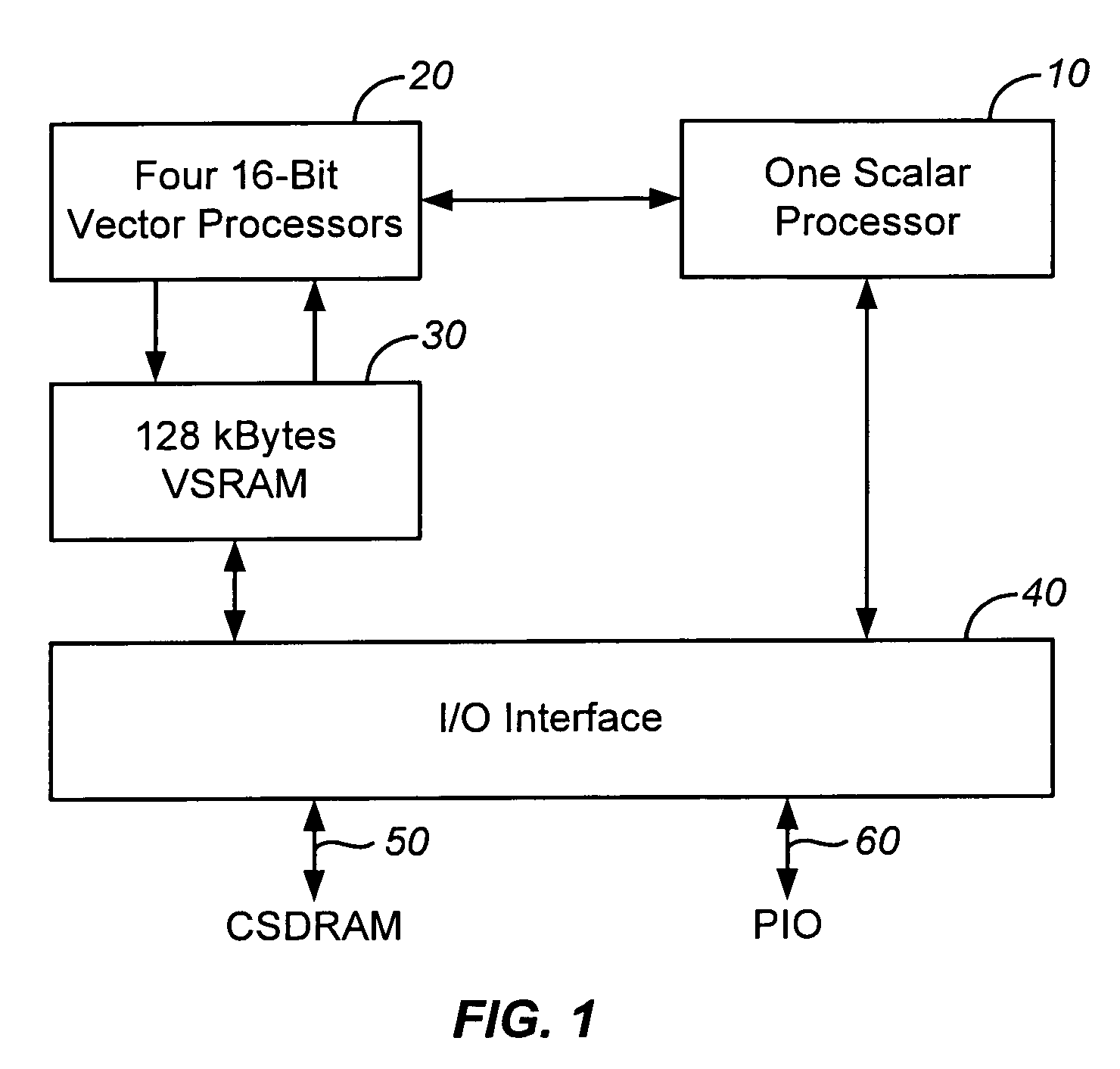

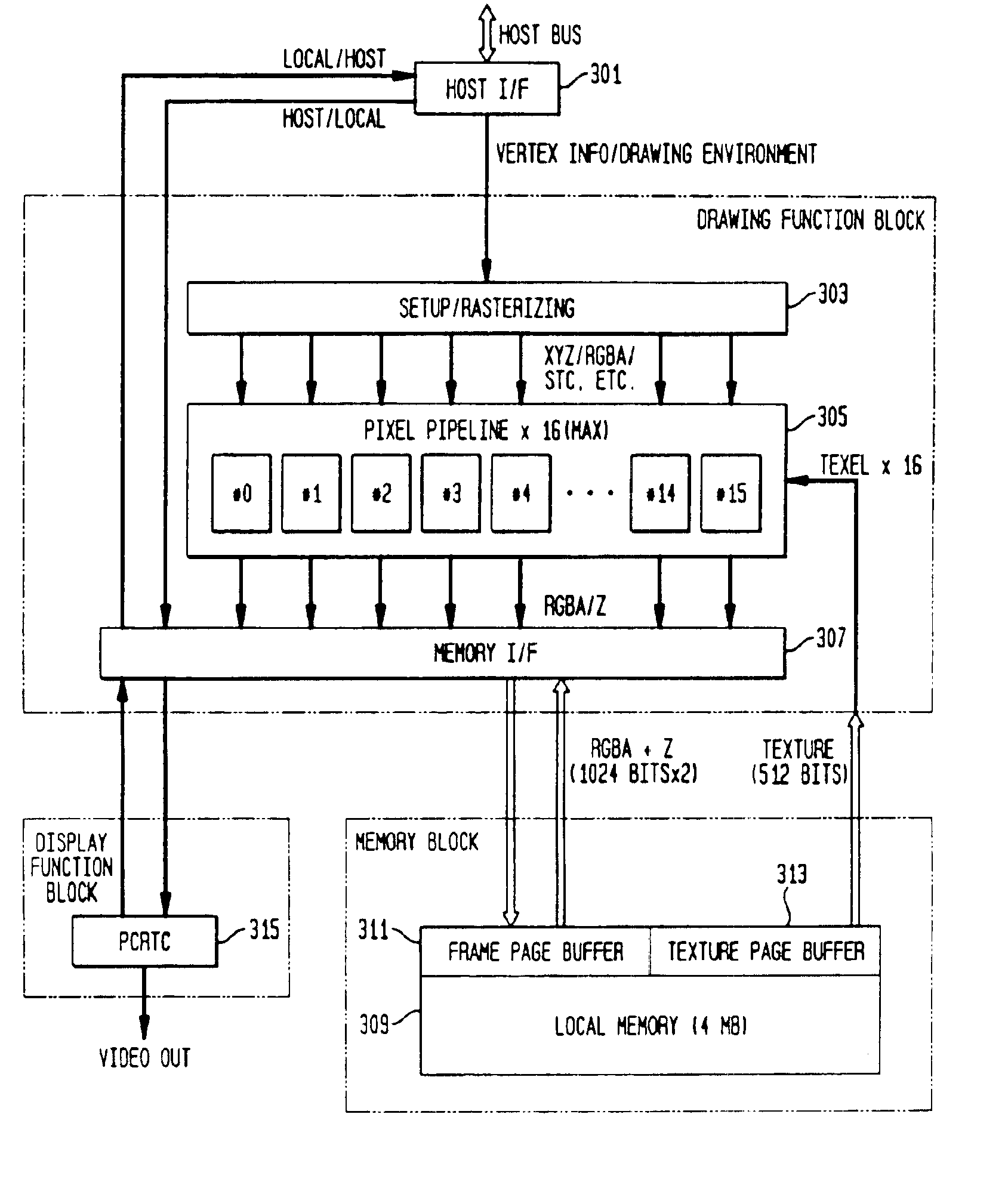

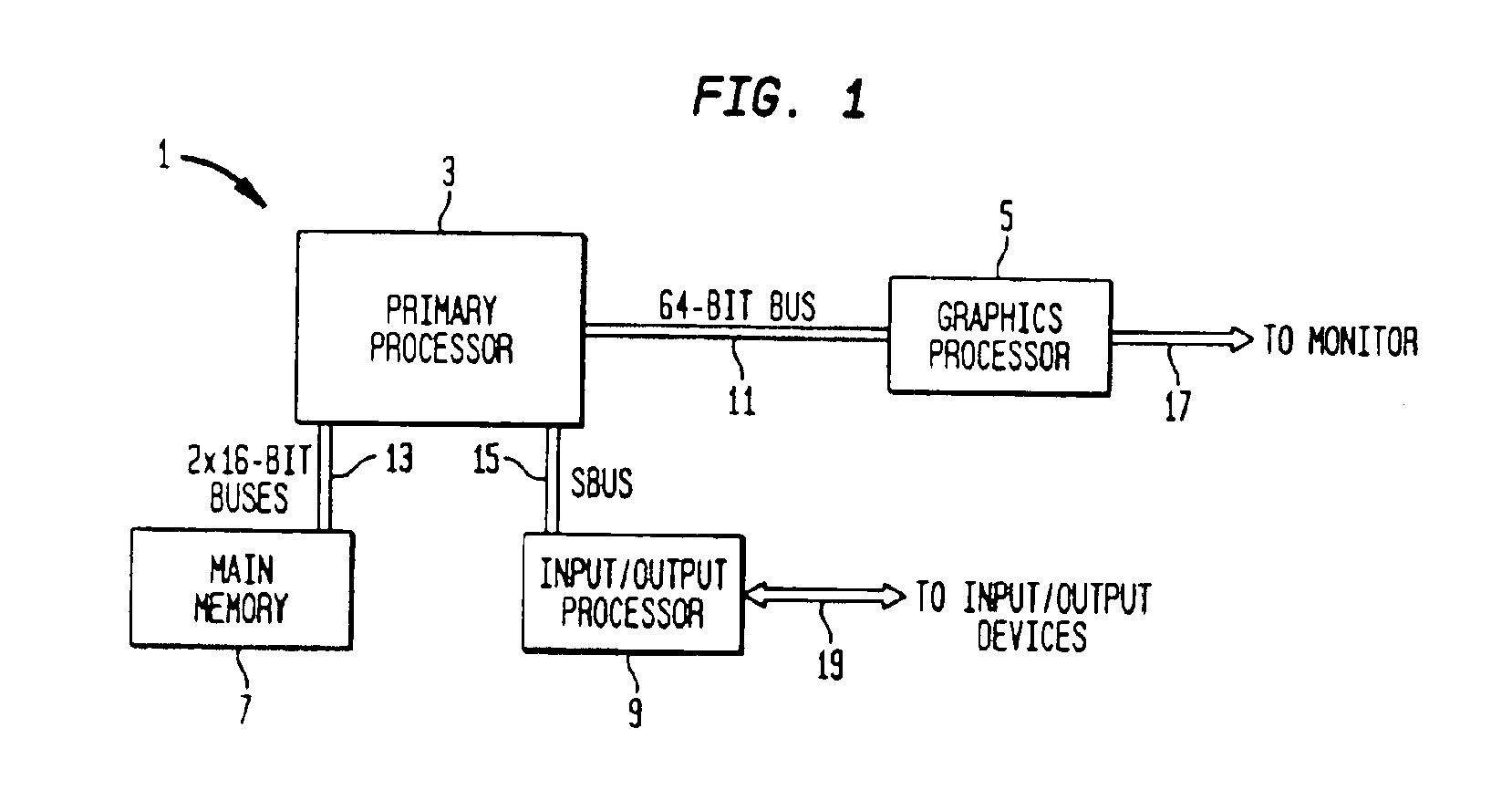

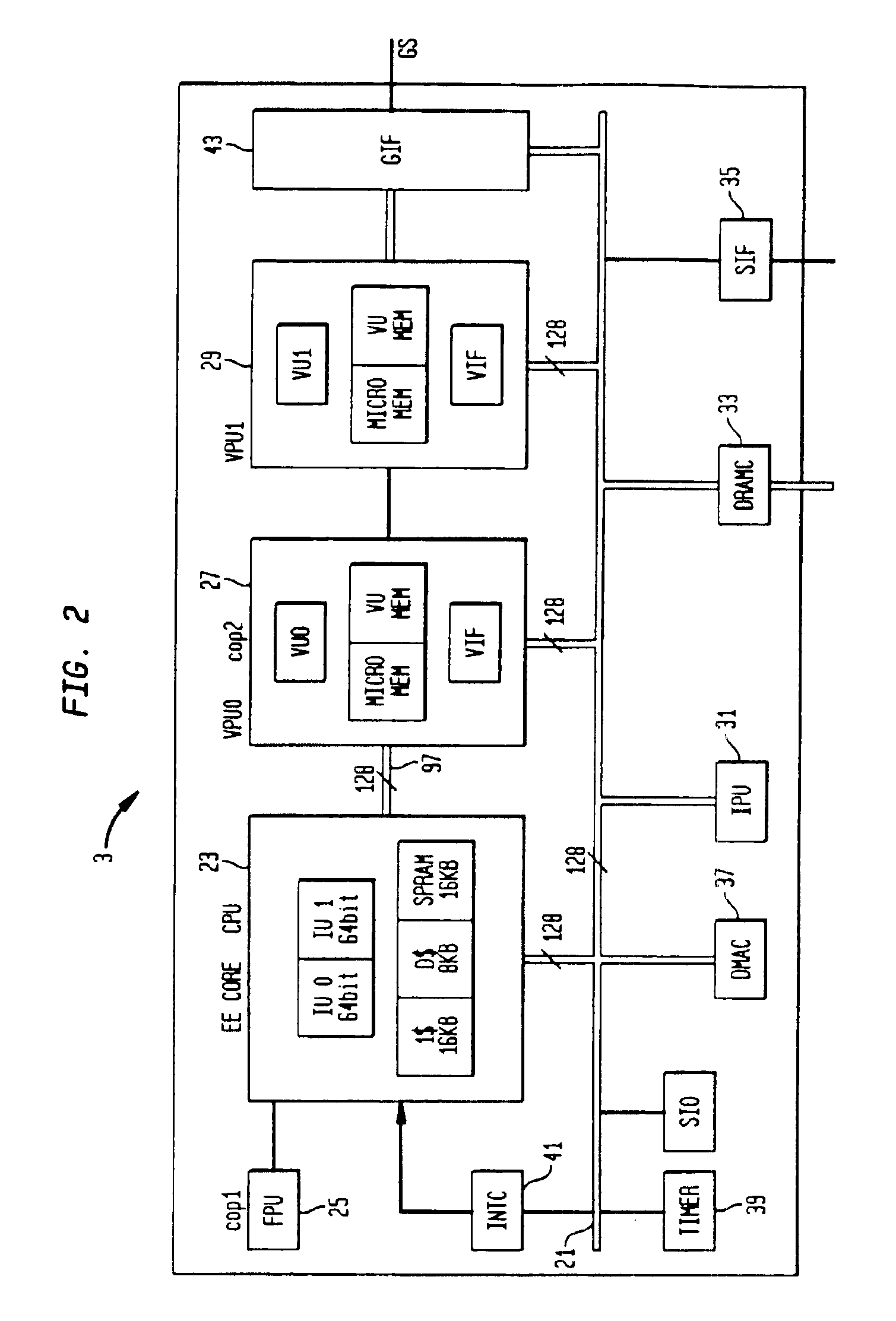

Game system with graphics processor

Inactive | US6891544B2 | Reduce data volume | Hide main memory latency | Indoor games | Digital computer details | Fill rate | Data stream

The present invention relates to the architecture and use of a computer system optimized for the efficient modeling of graphics. The computer system has a primary processor and a graphics processor. The primary processor has two vector processor units within it, one of which is closely connected to the central processor unit. Simultaneously performing complex modeling calculations on the first vector processor and CPU, and geometry transformation calculations on the second vector processor, allows for efficient modeling of graphics. Furthermore, the graphics processor is optimized to rapidly switch between data flows from the two vector processors. In addition, the graphics processor is able to render many pixels simultaneously, and has a local memory on the graphics processor chip that acts as a frame buffer, texture buffer, and z buffer. This allows a high fill rate to the frame buffer.

Owner:SONY COMPUTER ENTERTAINMENT INC

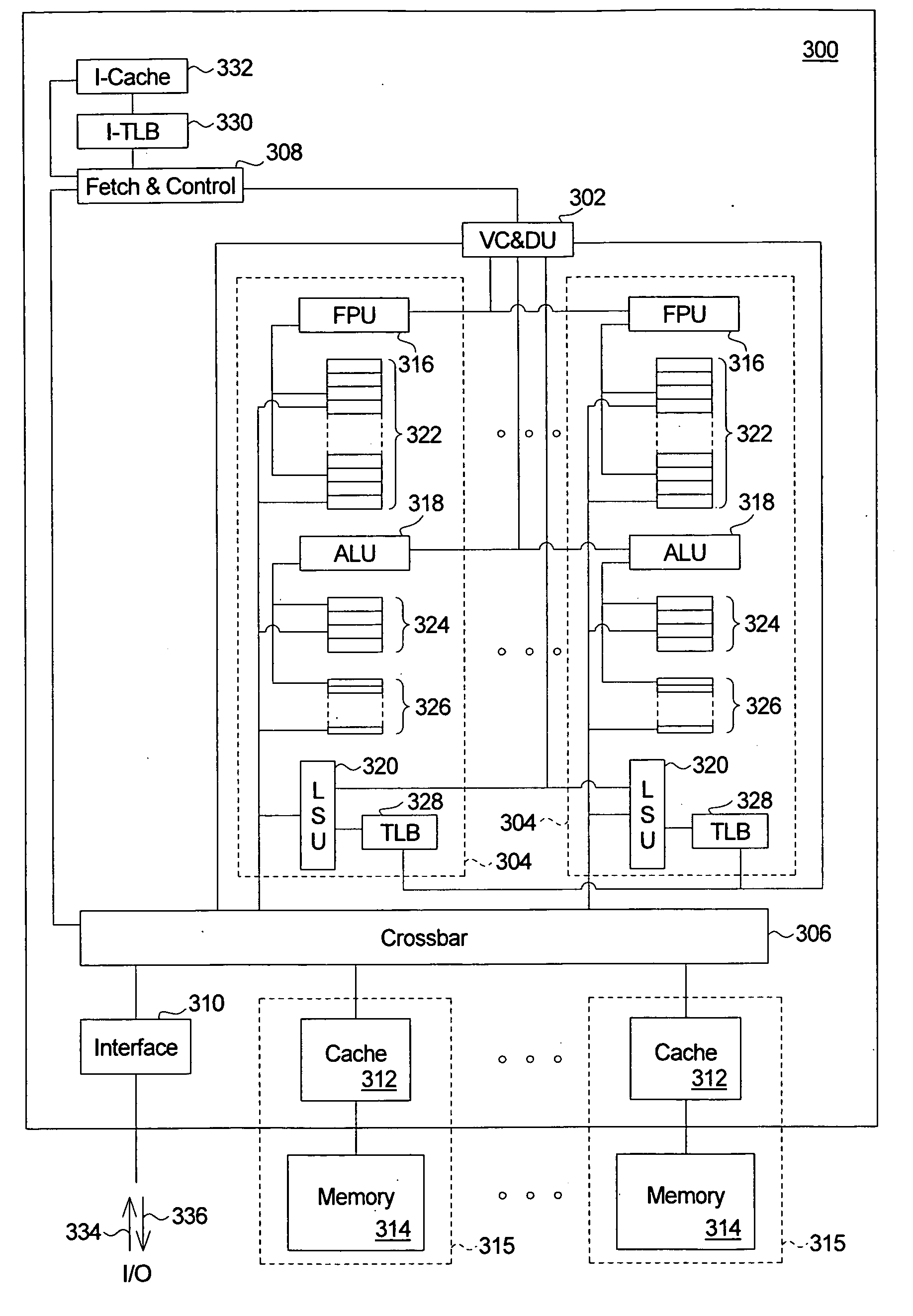

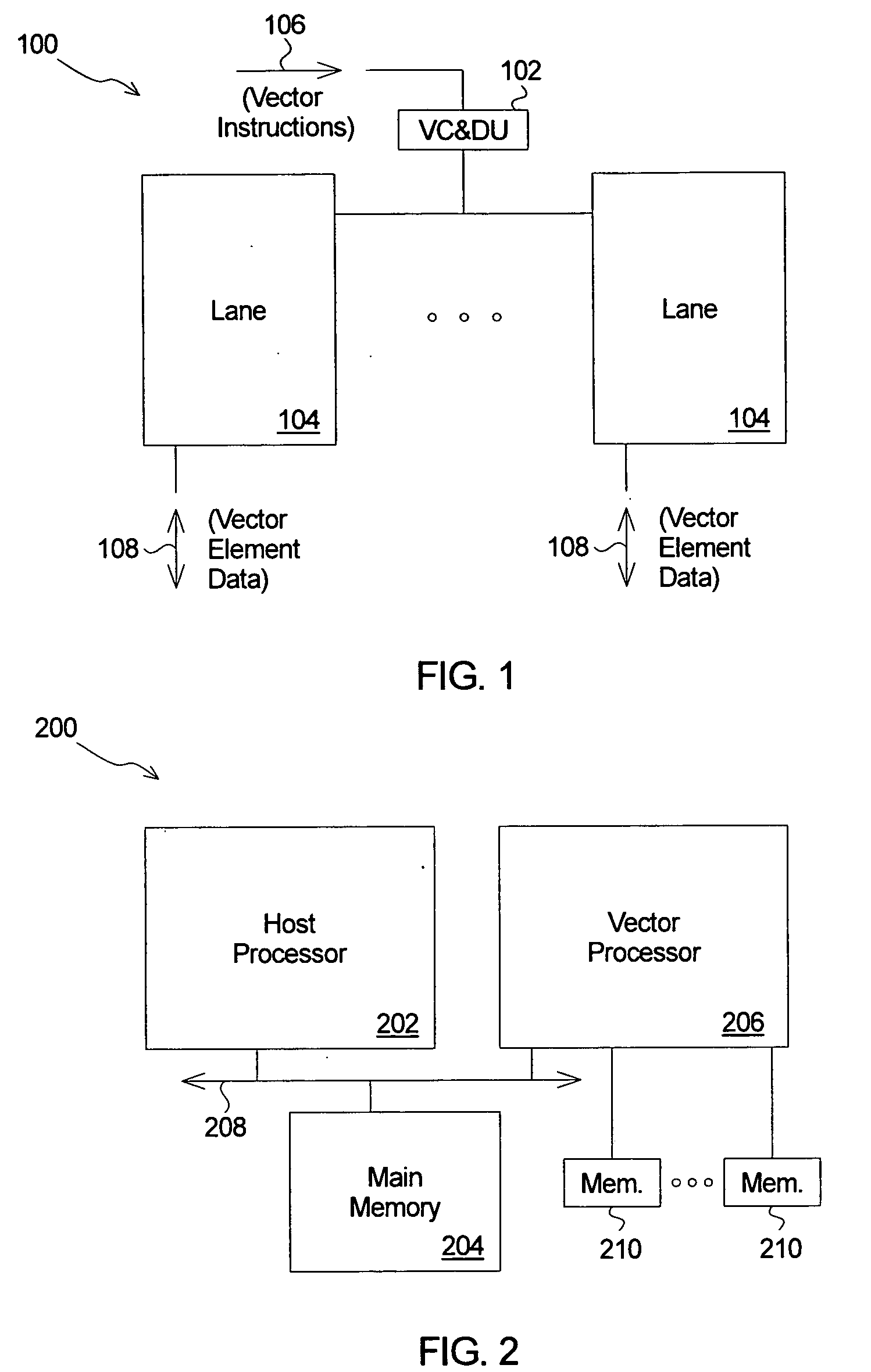

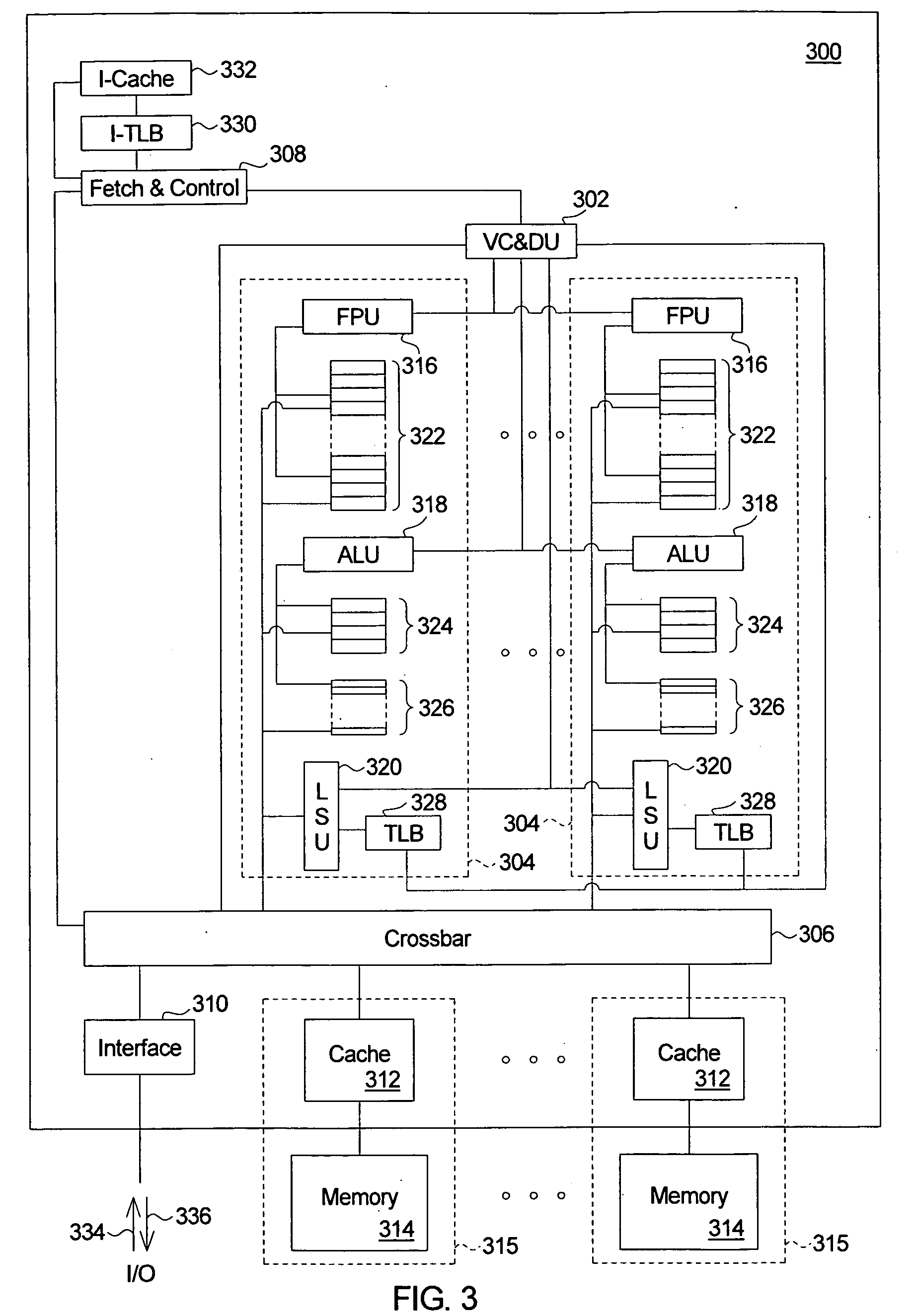

Vector processor and system for vector processing

An embodiment of a vector processor includes a vector control and distribution unit and lanes. In operation, the vector control and distribution unit receives vector instructions, decomposes the vector instructions into vector element operations, and forwards the vector element operations for execution. Each lane proceeds to execute vector element operations independently of other lanes. An embodiment of a vector processing system includes a host processor, a main memory, and a vector processor. In operation, the host processor forwards vector instructions and vector data to the vector processor for processing. The vector control and distribution unit decomposes the vector instructions into vector element operations and forwards the vector element operations to the lanes. Each lane proceeds to execute vector element operations that the lane receives on a portion of the vector data independent of execution of instructions executing in other lanes.

Owner:HEWLETT PACKARD DEV CO LP
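The split between a control and distribution unit and independent lanes can be sketched as two functions. The round-robin assignment of elements to lanes, the tuple format of an element operation, and the names `decompose`, `execute`, and `OPS` are assumptions made for illustration; the abstract does not specify how elements are distributed.

```python
import operator

# Hypothetical opcode table for the sketch.
OPS = {"add": operator.add, "mul": operator.mul}


def decompose(op, a, b, num_lanes):
    """Control and distribution unit: split one vector instruction into
    per-element operations and queue them on lanes (round-robin, assumed)."""
    lanes = [[] for _ in range(num_lanes)]
    for i, (x, y) in enumerate(zip(a, b)):
        lanes[i % num_lanes].append((i, op, x, y))
    return lanes


def execute(op, a, b, num_lanes):
    """Run every lane's queue. Each lane only touches its own elements, so the
    lanes could run in any order or concurrently without coordination."""
    result = [None] * len(a)
    for queue in decompose(op, a, b, num_lanes):
        for i, name, x, y in queue:
            result[i] = OPS[name](x, y)
    return result
```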

Vector processing engines (VPEs) employing reordering circuitry in data flow paths between execution units and vector data memory to provide in-flight reordering of output vector data stored to vector data memory, and related vector processor systems and methods

Active | US20150143085A1 | Process control | Digital computer details | Handling data according to predetermined rules | Data stream | Execution unit

Vector processing engines (VPEs) employing reordering circuitry in data flow paths between execution units and vector data memory to provide in-flight reordering of output vector data stored to vector data memory are disclosed. Related vector processor systems and methods are also disclosed. Reordering circuitry is provided in data flow paths between execution units and vector data memory in the VPE. The reordering circuitry is configured to reorder output vector data sample sets from execution units as a result of performing vector processing operations in-flight while the output vector data sample sets are being provided over the data flow paths from the execution units to the vector data memory to be stored. In this manner, the output vector data sample sets are stored in the reordered format in the vector data memory without requiring additional post-processing steps, which may delay subsequent vector processing operations to be performed in the execution units.

Owner:QUALCOMM INC

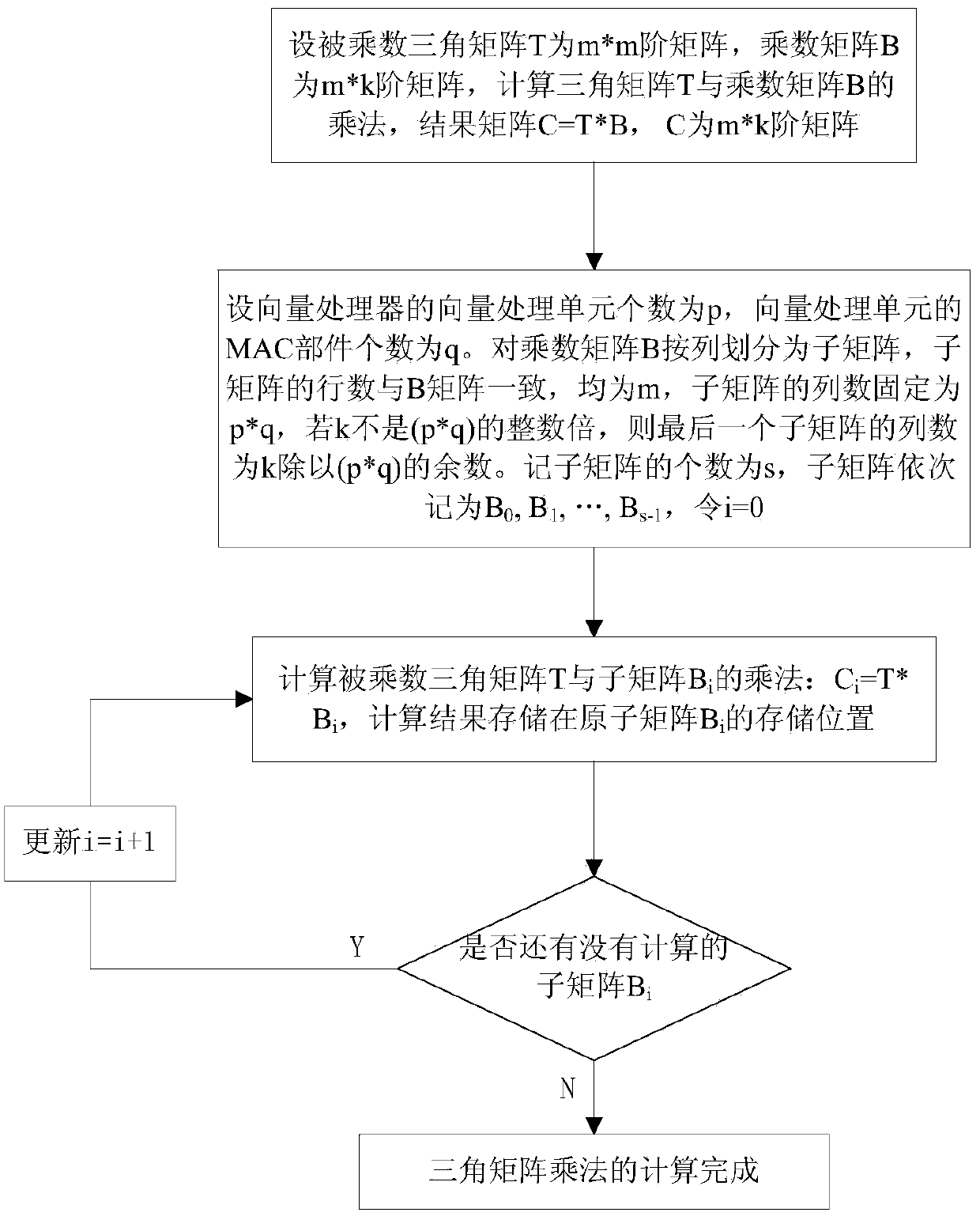

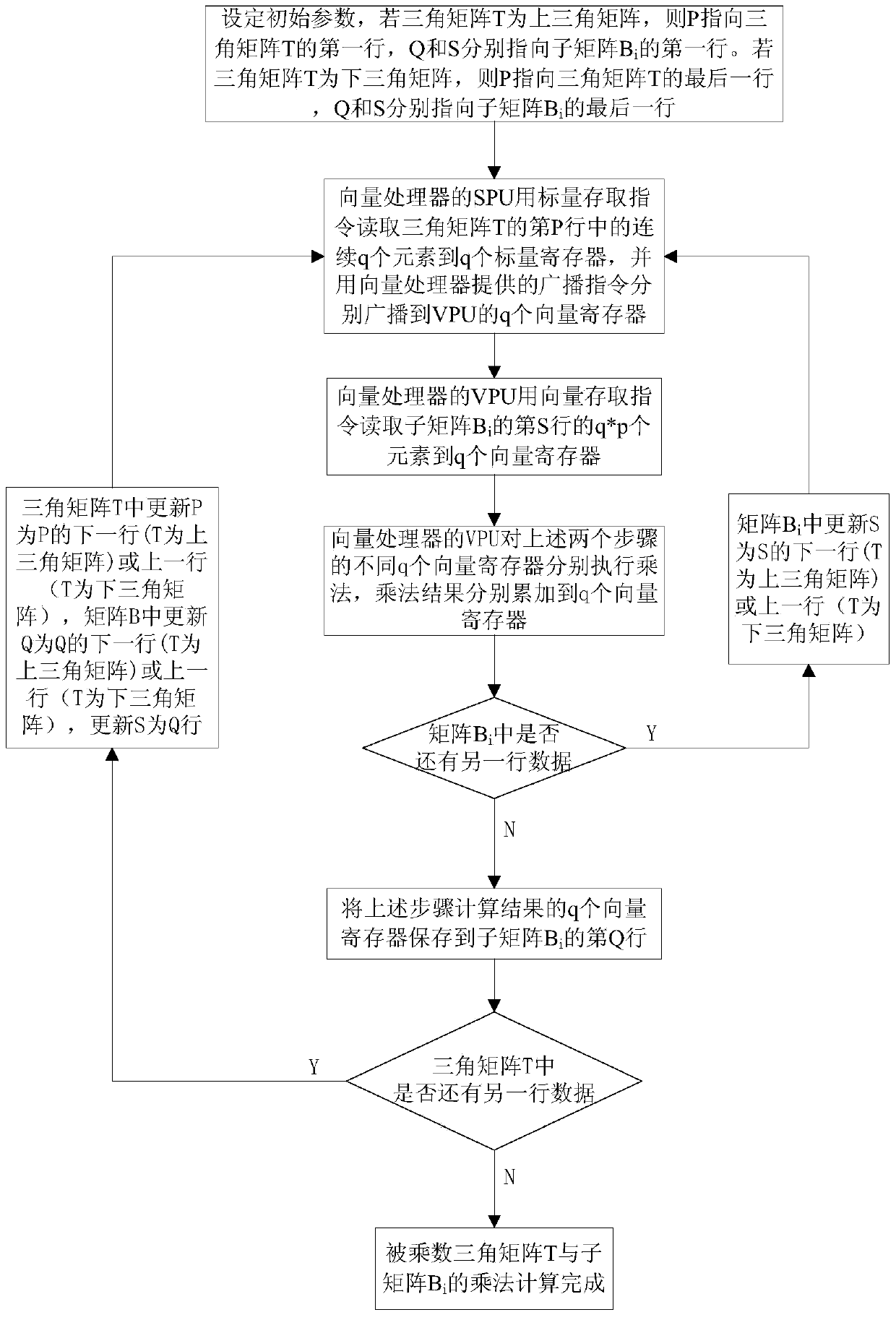

Triangular matrix multiplication vectorization method of vector processor

Active | CN103440121A | Small amount of calculation | Realize in-situ computing | Concurrent instruction execution | Vector processor | Triangular matrix

The invention discloses a triangular matrix multiplication vectorization method of a vector processor. The method comprises the following steps: (1) the triangular matrix elements of the multiplicand triangular matrix T are stored contiguously by row; (2) the multiplier matrix B is divided by row into a plurality of sub-matrixes Bi according to the number of vector processing units of the vector processor and the number of MAC units in each vector processing unit; (3) the sub-matrixes Bi are multiplied by the multiplicand triangular matrix T in sequence, and each result is stored in the storage positions of the original sub-matrix Bi; (4) the sub-matrixes Bi of the multiplier matrix are traversed and it is judged whether any sub-matrix Bi has not yet been multiplied by the multiplicand triangular matrix; if so, i is updated according to the formula i=i+1 and the process repeats from step (3), and if not, step (5) is executed; (5) triangular matrix multiplication is accomplished. The method has the advantages that the principle is simple, operation is easy and convenient, and the calculation efficiency of the vector processor can be fully exploited.

Owner:NAT UNIV OF DEFENSE TECH
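The steps in this abstract can be sketched in plain Python under several assumptions that the abstract leaves open: the product is taken as B × T, T is upper triangular, and its non-zero elements are packed row by row. `packed_index`, `tri_multiply_in_place`, and `rows_per_block` (standing in for the block size derived from the number of processing units and MAC units) are hypothetical names.

```python
def packed_index(k, j, n):
    """Position of T[k][j] (with k <= j) in row-wise packed upper-triangular storage."""
    return k * n - k * (k - 1) // 2 + (j - k)


def tri_multiply_in_place(B, T_packed, n, rows_per_block):
    """Overwrite B with B x T, one row block Bi at a time.

    Within a row, column j of the result needs only B[r][0..j], so computing
    columns from right to left never reads a value that was already
    overwritten. That is what allows the result to replace Bi in place.
    """
    for start in range(0, len(B), rows_per_block):        # steps (2) and (4): walk the blocks Bi
        for row in B[start:start + rows_per_block]:        # step (3): multiply Bi by T
            for j in range(n - 1, -1, -1):
                row[j] = sum(row[k] * T_packed[packed_index(k, j, n)]
                             for k in range(j + 1))
    return B
```

For a 2-by-2 upper-triangular T with rows (1, 2) and (0, 3), the packed form is `[1, 2, 3]`. Skipping the zero half of T is what reduces the amount of calculation compared with a general matrix product.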

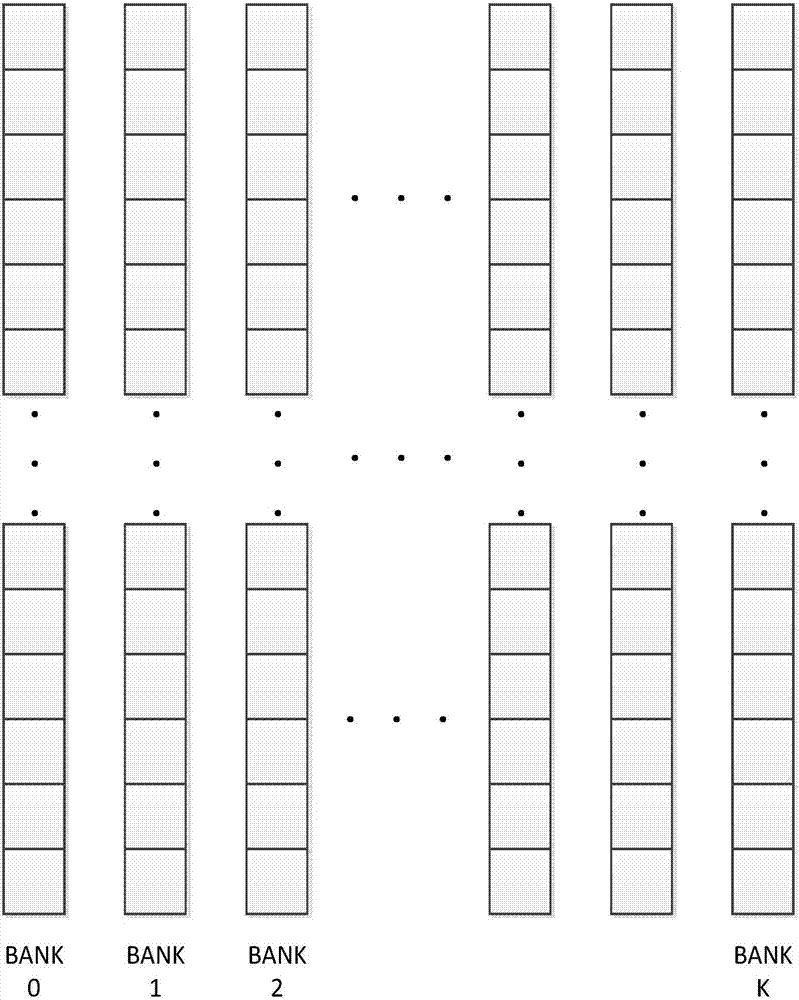

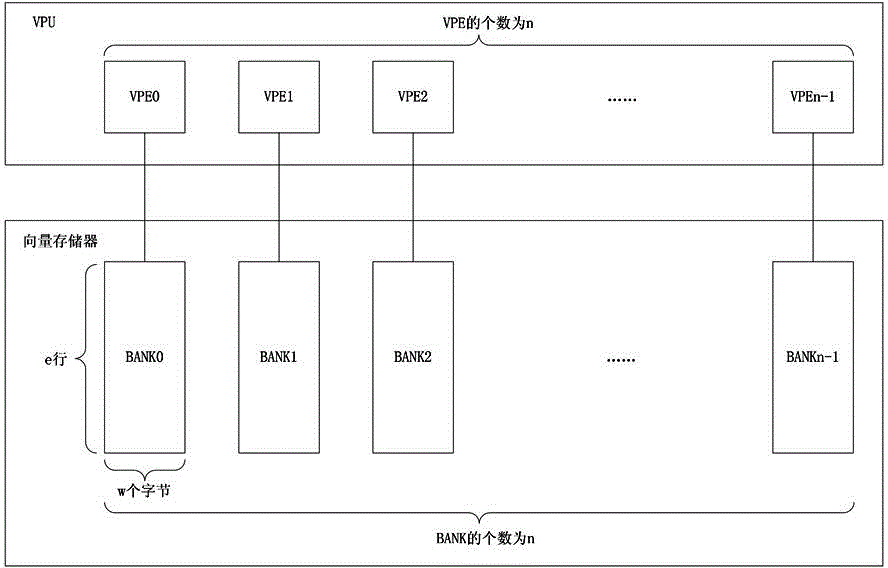

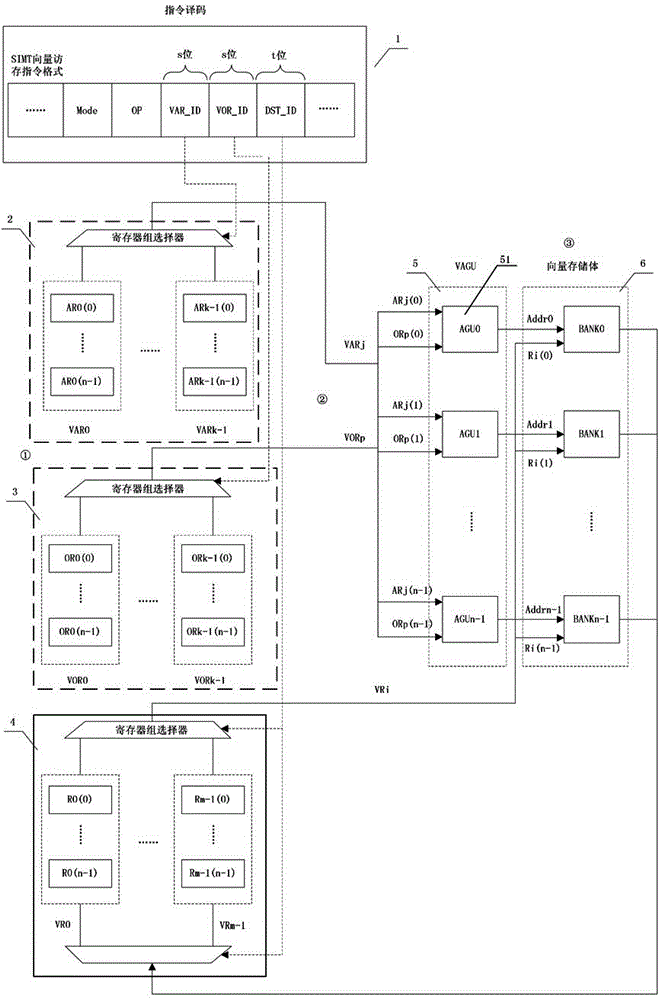

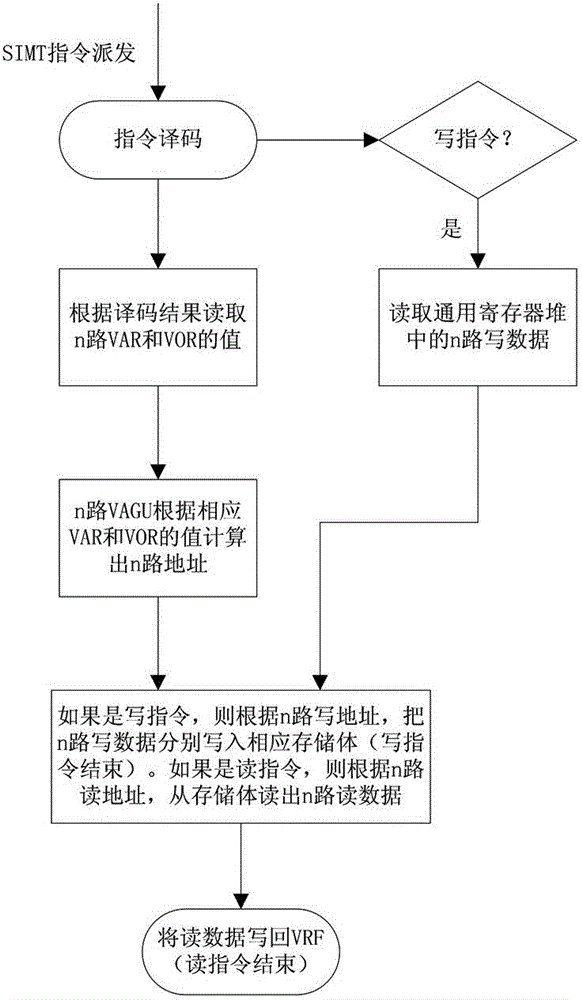

Vector access and storage device supporting SIMT in vector processor and control method

Active | CN104699465A | Increase flexibility | Improve parallelism | Resource allocation | Concurrent instruction execution | Processor register | Memory bank

The invention discloses a vector access and storage device supporting SIMT in a vector processor and a control method. The device comprises a base address vector register unit, an offset vector register unit and a vector address calculation unit; each of the base address vector register unit and the offset vector register unit comprises a plurality of groups of vector registers; each group of vector registers is composed of a plurality of vector registers; the vector address calculation unit comprises a plurality of address calculation subunits; each address calculation subunit is connected with each memory bank in the vector processor correspondingly one to one; each of the base address and the offset address of each thread is obtained by use of one group of the vector registers, and then the base address and the offset address are output to the address calculation subunits for calculation, and the obtained access and storage address of each thread is output to the corresponding memory bank; the method is the control method of the vector access and storage device. The vector access and storage device has the advantages of high vector access and storage flexibility, high parallel access and storage efficiency and low power consumption, and is capable of supporting SIMT thread level parallelism.

Owner:NAT UNIV OF DEFENSE TECH
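The per-thread address calculation described here can be modeled as one add per thread, with each result sent to that thread's memory bank. This sketch assumes thread i maps to address calculation subunit i and memory bank i, and that addresses are local to each bank; `simt_addresses` and `simt_load` are hypothetical names.

```python
def simt_addresses(base_reg, offset_reg):
    """Each address calculation subunit adds one thread's base and offset."""
    return [base + offset for base, offset in zip(base_reg, offset_reg)]


def simt_load(banks, base_reg, offset_reg):
    """Send each thread's address to its own bank and collect the loaded values."""
    addrs = simt_addresses(base_reg, offset_reg)
    return [bank[addr] for bank, addr in zip(banks, addrs)]
```

Because every thread supplies its own base and offset, the threads can access unrelated locations in the same step, which is the flexibility the abstract attributes to SIMT support.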
