89 results about how to "Reduce memory access" patented technology

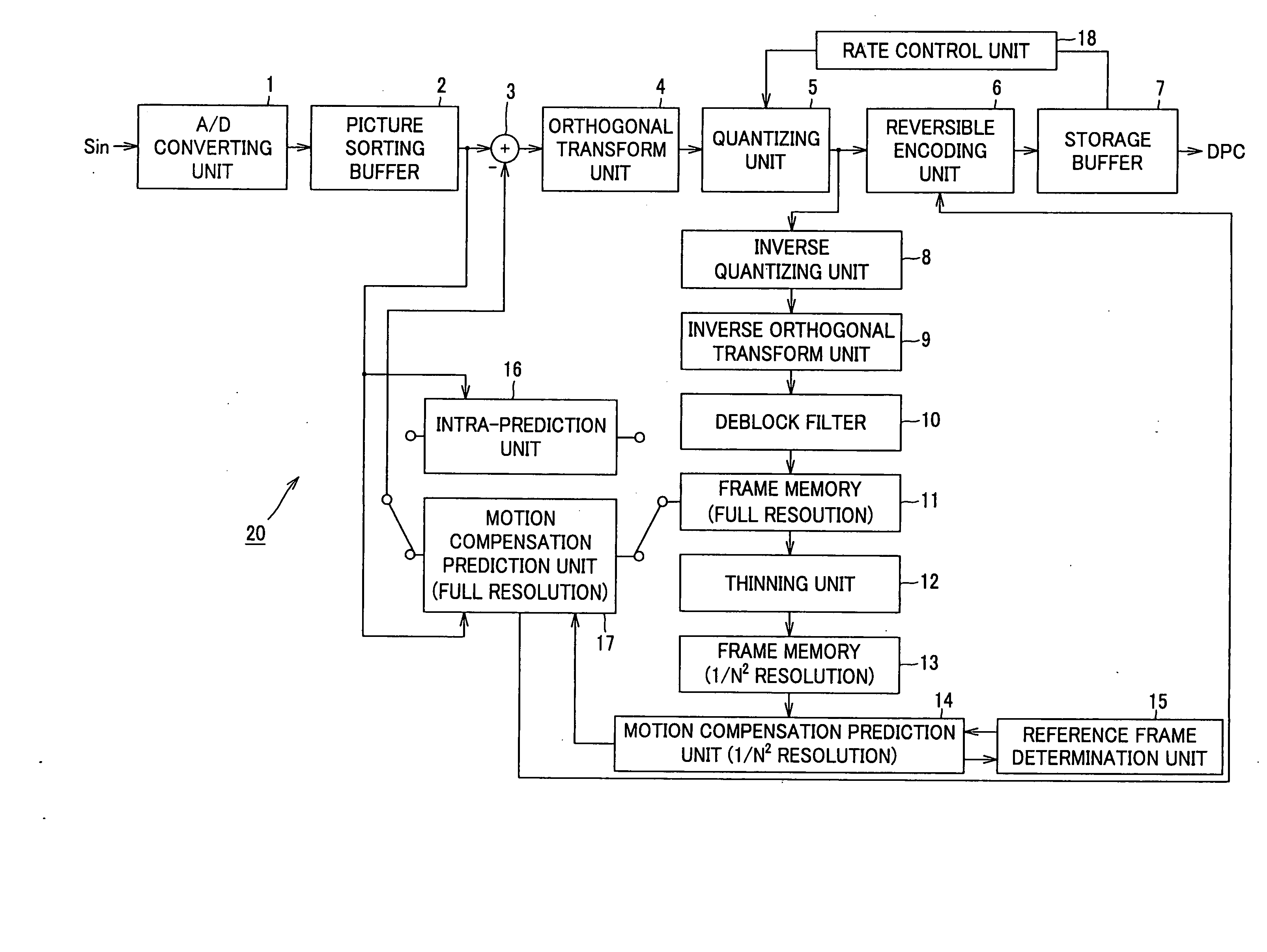

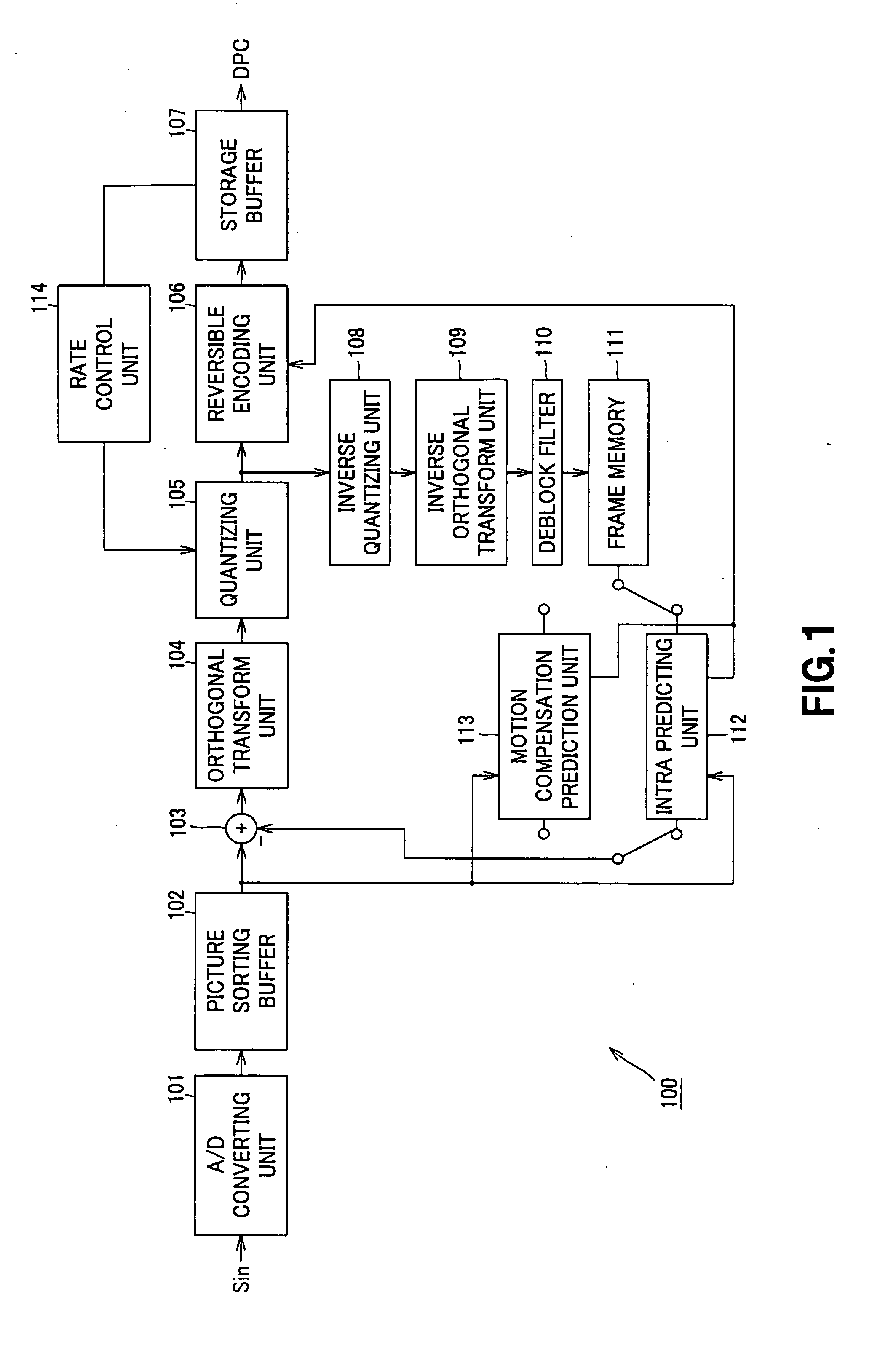

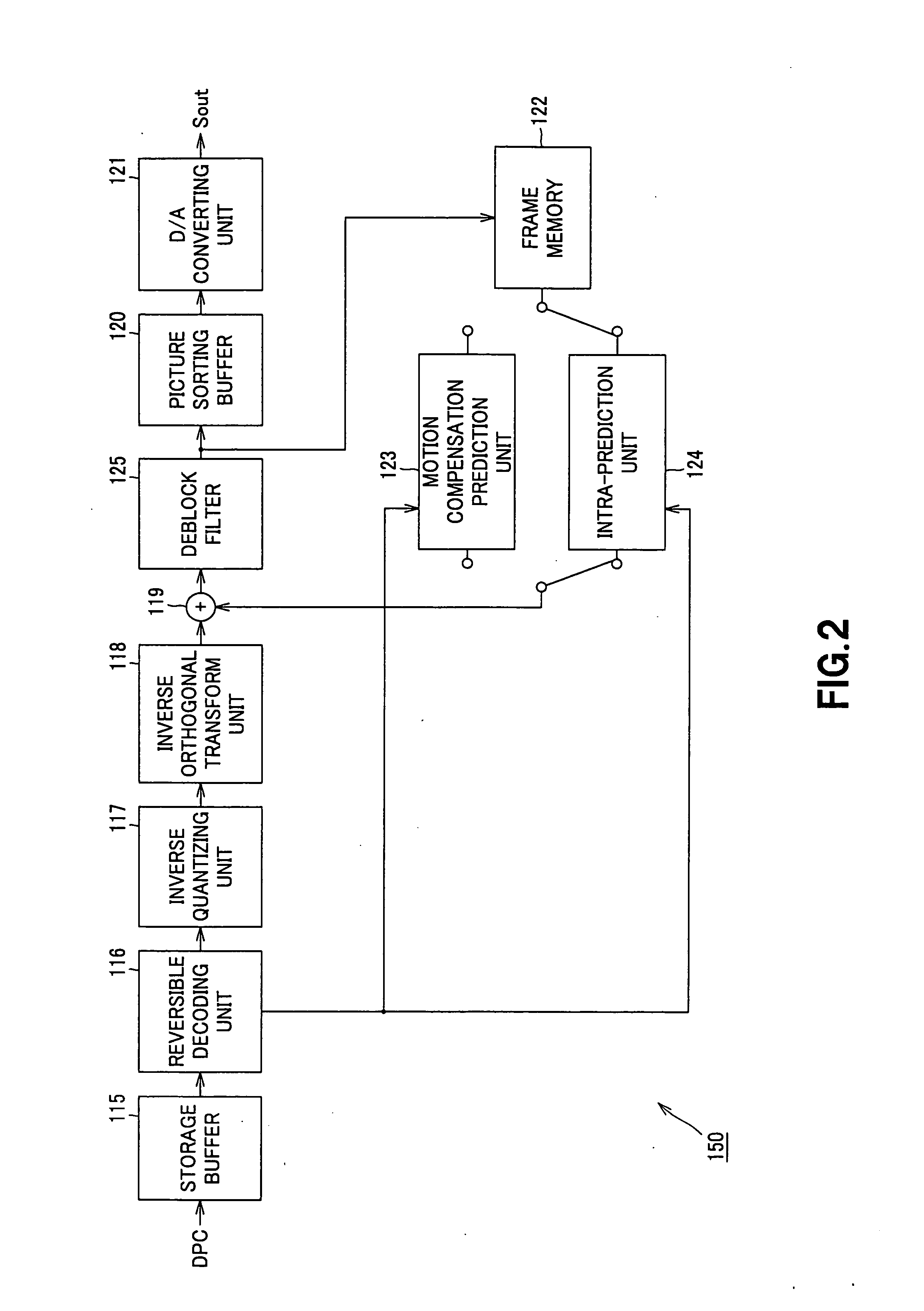

Motion Compensation Prediction Method and Motion Compensation Prediction Apparatus

Inactive | US20080037642A1 | Increase speed | Reduce memory access | Color television with pulse code modulation | Color television with bandwidth reduction | Contraction factor | Image resolution

When a motion vector is searched by hierarchical search, designating any one of the reference images of plural frames for each motion compensation block (the blocks being obtained by dividing an object frame image to be processed among successive frame images), a thinning unit (12) thins the pixels of the motion compensation block having the largest pixel size, taken as the uppermost layer, to generate a contracted image of a lower layer having a predetermined contraction factor; a reference frame determination unit (15) determines a contracted reference image on the contracted image; a motion compensation prediction unit (1/N² resolution) (15) searches for a motion vector using the contracted image thus generated; and, for the image before contraction, a motion compensation prediction unit (full resolution) (17) searches for a motion vector and performs motion compensation prediction within a predetermined search range designated by the motion vector found by the motion compensation prediction unit (1/N² resolution) (15).

Owner:SONY CORP
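The coarse-to-fine search described in this abstract can be illustrated with a minimal sketch. It assumes a single reference frame, a thinning factor `n`, and a sum-of-absolute-differences cost; the function names and default radii are hypothetical and are not taken from the patent.

```python
def thin(img, n):
    """Keep every n-th pixel in both directions (1/n^2 of the pixels)."""
    return [row[::n] for row in img[::n]]


def sad(cur, ref, bx, by, dx, dy, size):
    """Sum of absolute differences between a block of cur and a displaced block of ref."""
    return sum(abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
               for y in range(size) for x in range(size))


def window_search(cur, ref, bx, by, size, center, radius):
    """Exhaustive search over a square window around center; returns the best (dx, dy)."""
    height, width = len(ref), len(ref[0])
    best_cost, best_mv = None, None
    for dy in range(center[1] - radius, center[1] + radius + 1):
        for dx in range(center[0] - radius, center[0] + radius + 1):
            x0, y0 = bx + dx, by + dy
            if x0 < 0 or y0 < 0 or x0 + size > width or y0 + size > height:
                continue  # candidate block would fall outside the reference image
            cost = sad(cur, ref, bx, by, dx, dy, size)
            if best_cost is None or cost < best_cost:
                best_cost, best_mv = cost, (dx, dy)
    return best_mv


def hierarchical_search(cur, ref, bx, by, size, n=2, coarse_radius=4, fine_radius=1):
    """Search on thinned images first, then refine at full resolution."""
    coarse = window_search(thin(cur, n), thin(ref, n),
                           bx // n, by // n, size // n, (0, 0), coarse_radius)
    # The coarse vector, scaled back up, designates the small full-resolution range.
    return window_search(cur, ref, bx, by, size,
                         (coarse[0] * n, coarse[1] * n), fine_radius)
```

In this sketch the full-resolution stage only evaluates a 3x3 window, so most pixel reads happen on the thinned images, which is where the reduction in memory access would come from.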

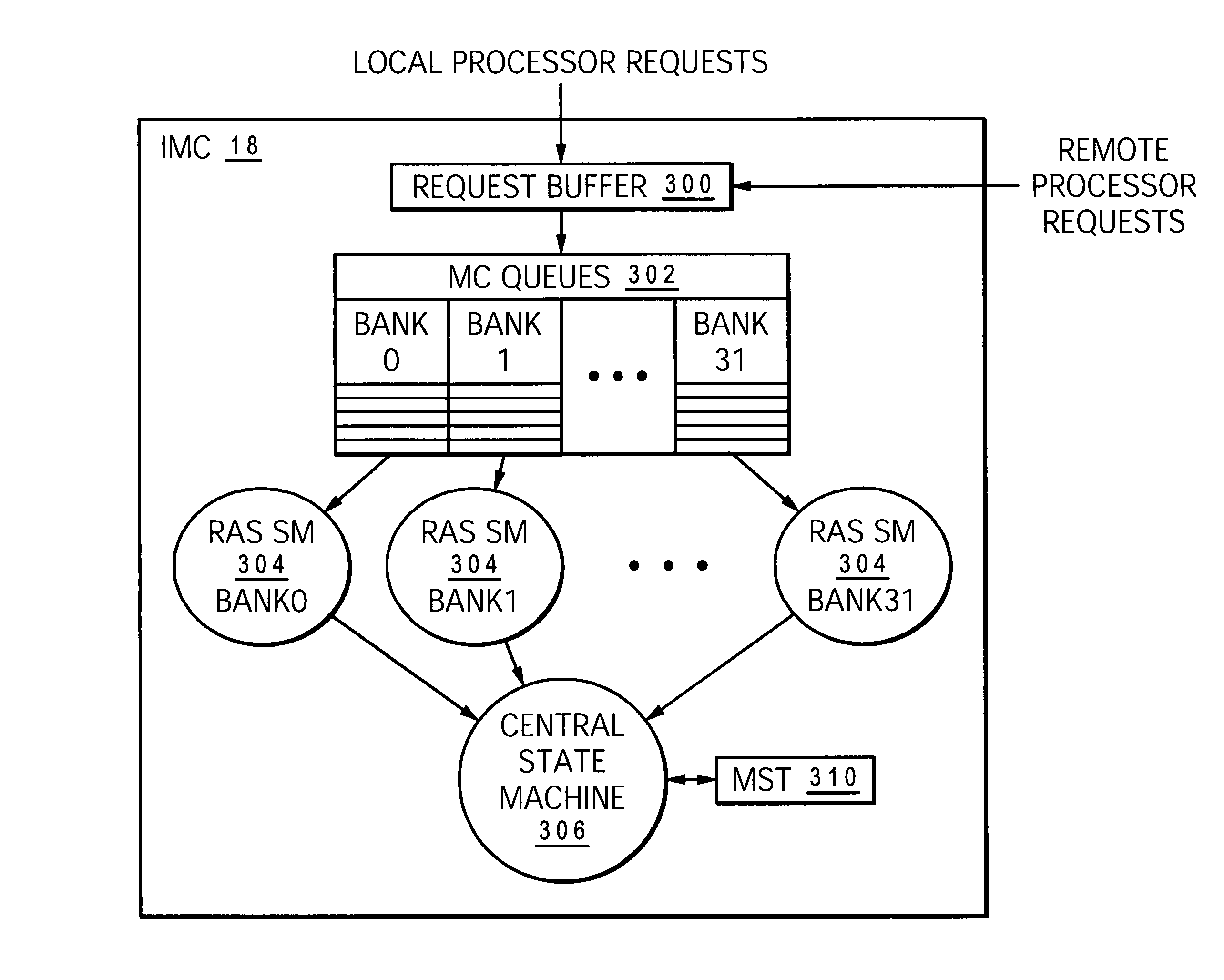

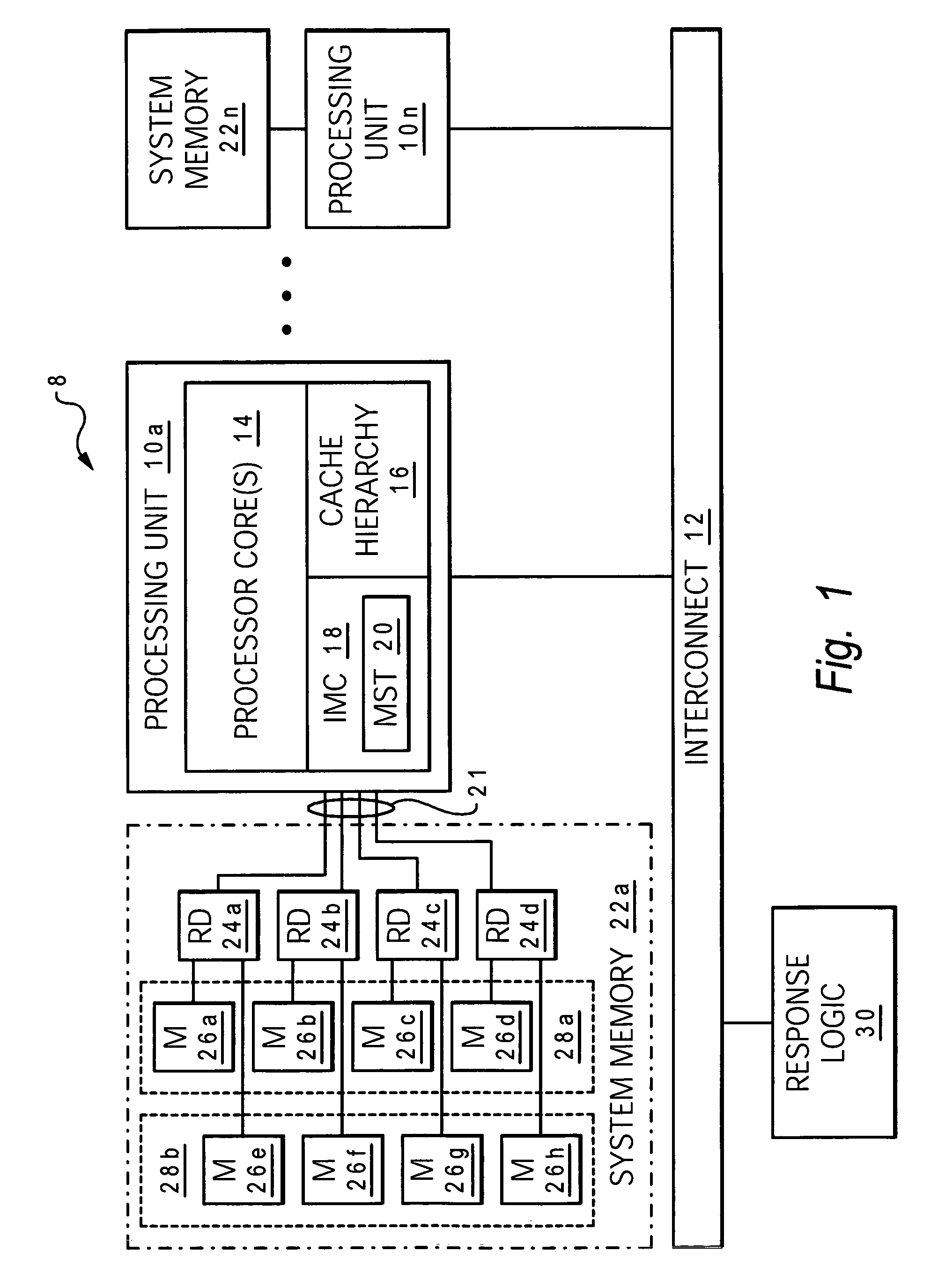

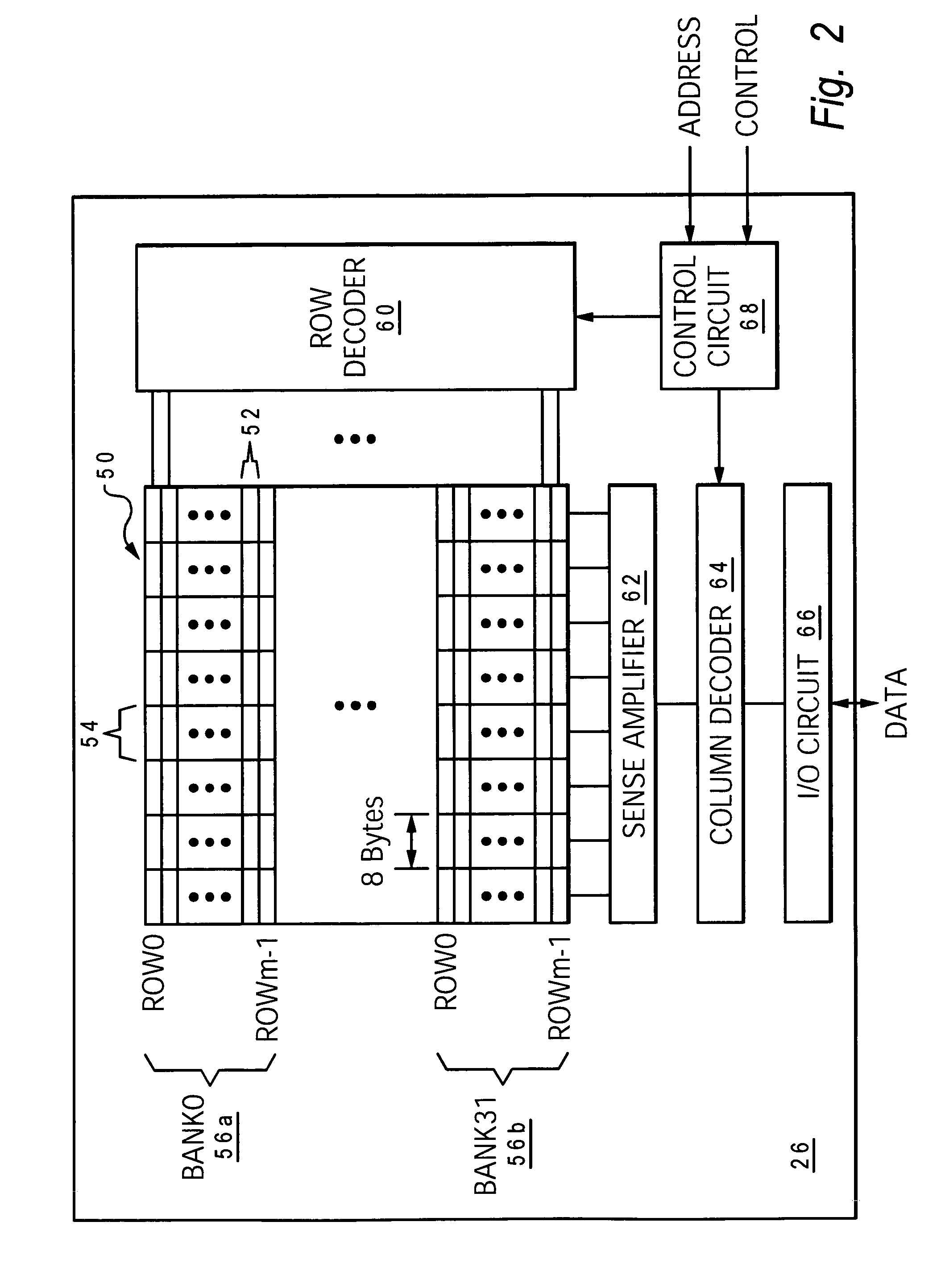

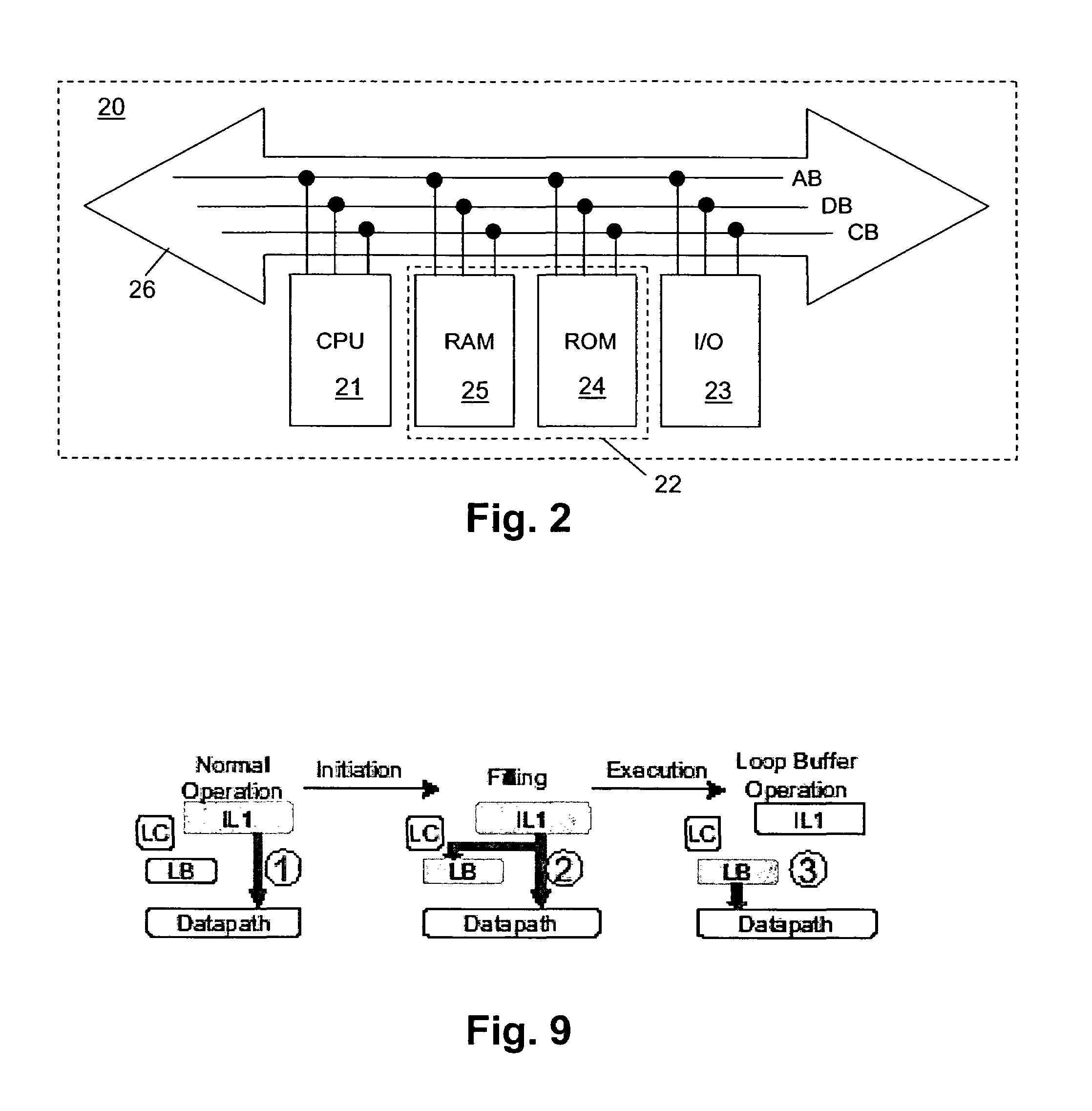

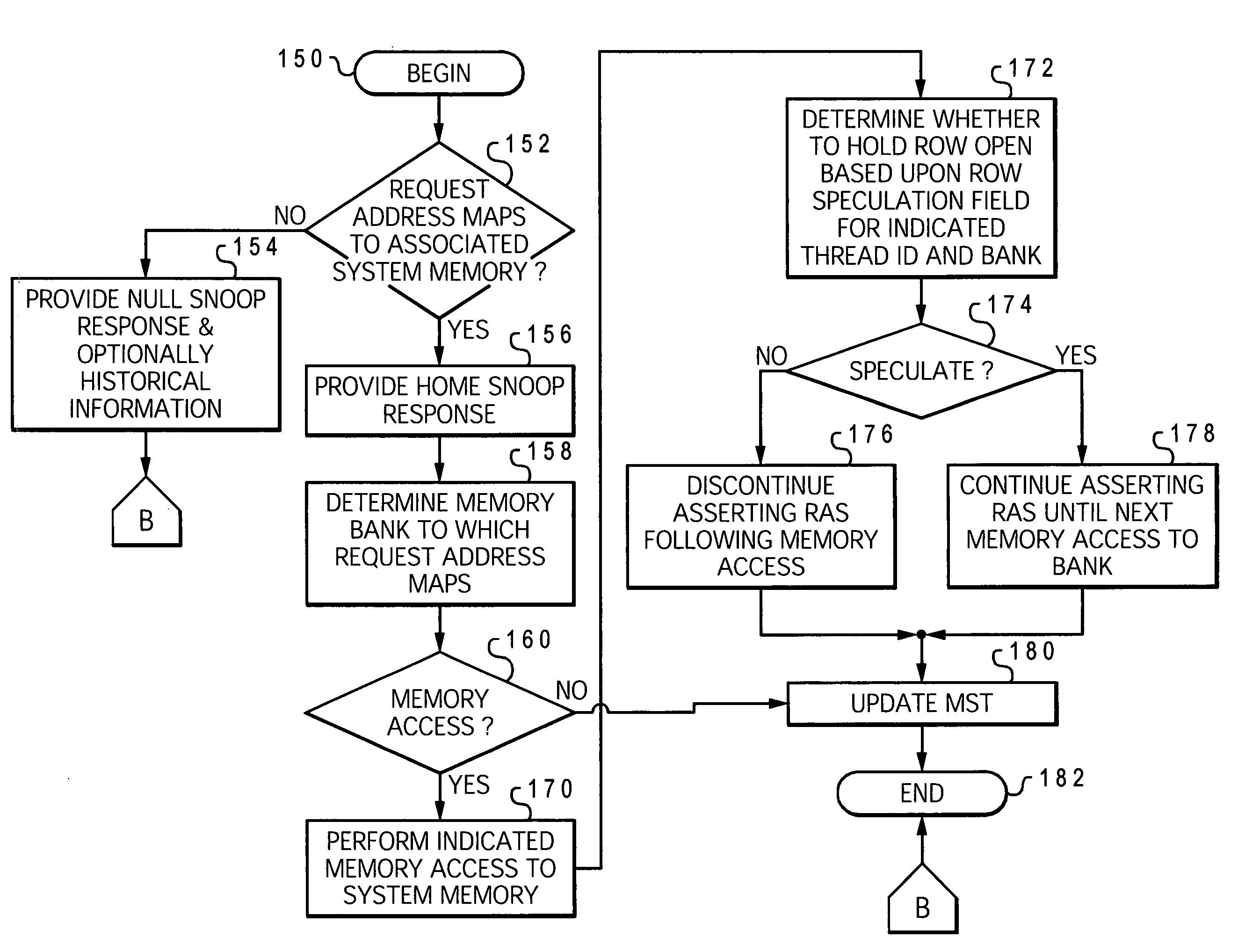

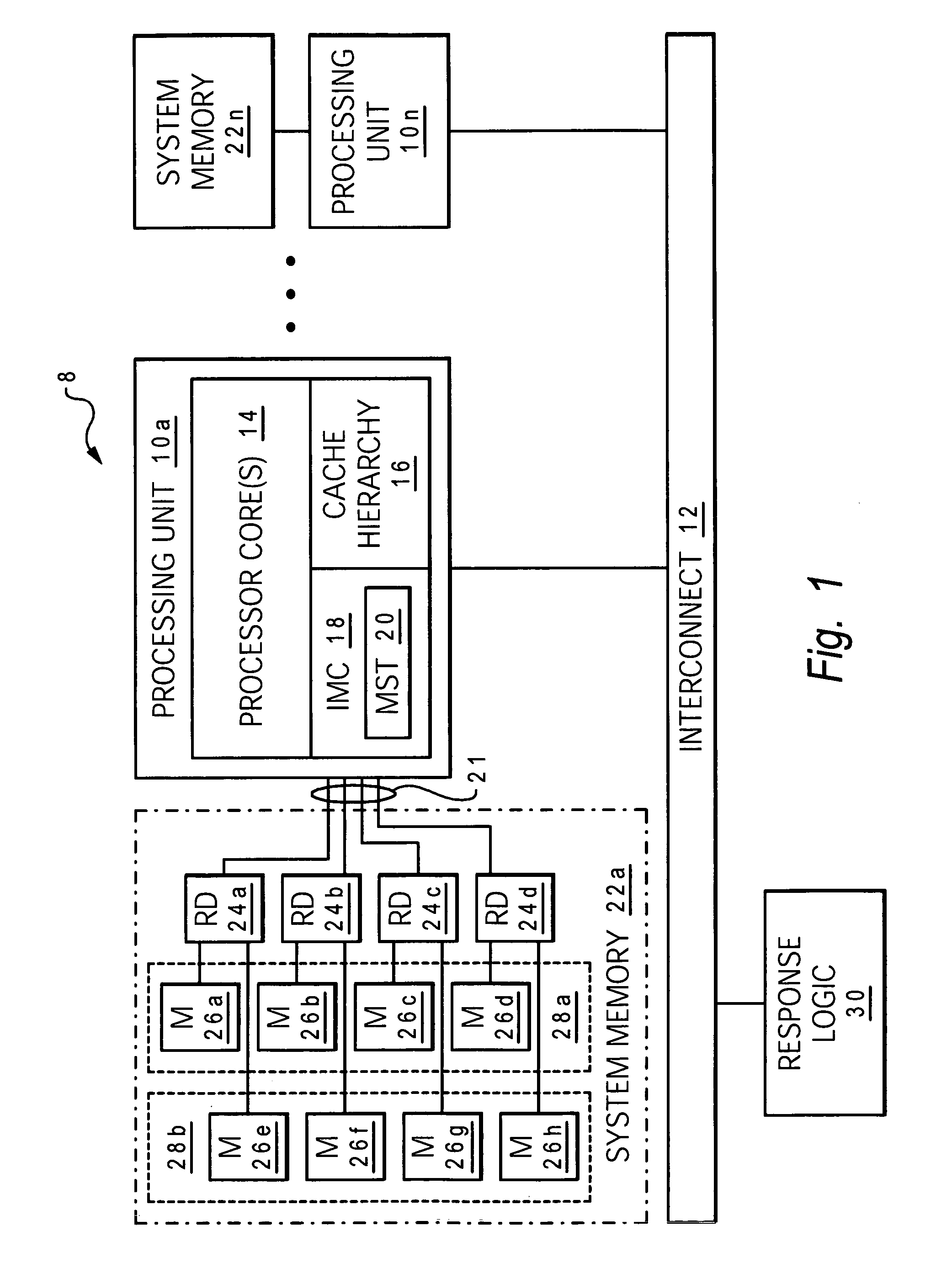

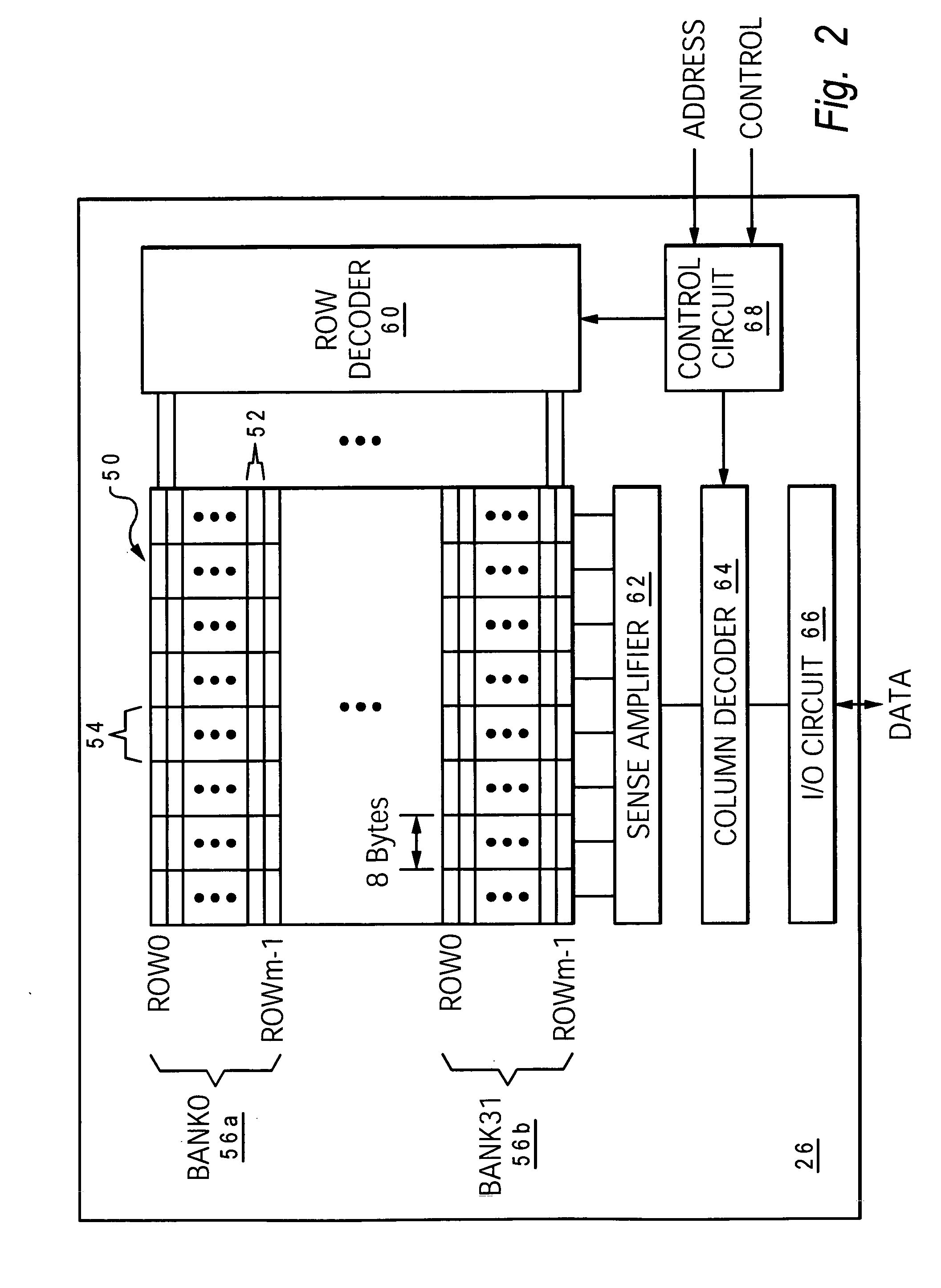

Method and system for supplier-based memory speculation in a memory subsystem of a data processing system

Inactive | US7130967B2 | Improvement in average memory access latency | Significant comprehensive benefits | Memory addressing/allocation/relocation | Concurrent instruction execution | Data processing system | Processing core

A data processing system includes one or more processing cores, a system memory having multiple rows of data storage, and a memory controller that controls access to the system memory and performs supplier-based memory speculation. The memory controller includes a memory speculation table that stores historical information regarding prior memory accesses. In response to a memory access request, the memory controller directs an access to a selected row in the system memory to service the memory access request. The memory controller speculatively directs that the selected row will continue to be energized following the access based upon the historical information in the memory speculation table, so that access latency of an immediately subsequent memory access is reduced.

Owner:IBM CORP
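A rough way to picture the speculation described above is a controller that keeps a small history per bank and uses it to decide whether a row stays open after an access. The sketch below is only an illustration under assumptions: the 2-bit saturating counter, the per-bank table, and the relative latency values are hypothetical and not taken from the patent.

```python
class SpeculativeRowController:
    # Hypothetical relative latencies: open-row hit, closed bank, wrong row open.
    HIT, CLOSED, CONFLICT = 1, 2, 3

    def __init__(self, banks):
        self.open_row = [None] * banks   # row currently kept energized per bank
        self.last_row = [None] * banks   # previous row accessed per bank
        self.counter = [2] * banks       # 2-bit history; >= 2 means "keep row open"

    def access(self, bank, row):
        """Service one access and return its (assumed) latency."""
        current = self.open_row[bank]
        if current == row:
            latency = self.HIT
        elif current is None:
            latency = self.CLOSED
        else:
            latency = self.CONFLICT

        # Update the history: did this access reuse the previously accessed row?
        if self.last_row[bank] is not None:
            if row == self.last_row[bank]:
                self.counter[bank] = min(3, self.counter[bank] + 1)
            else:
                self.counter[bank] = max(0, self.counter[bank] - 1)
        self.last_row[bank] = row

        # Speculate: leave the row open only if history suggests it will be reused.
        self.open_row[bank] = row if self.counter[bank] >= 2 else None
        return latency
```

With repeated accesses to one row the controller keeps the row open and later accesses hit; with alternating rows it learns to close the row, avoiding the more expensive conflict case.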

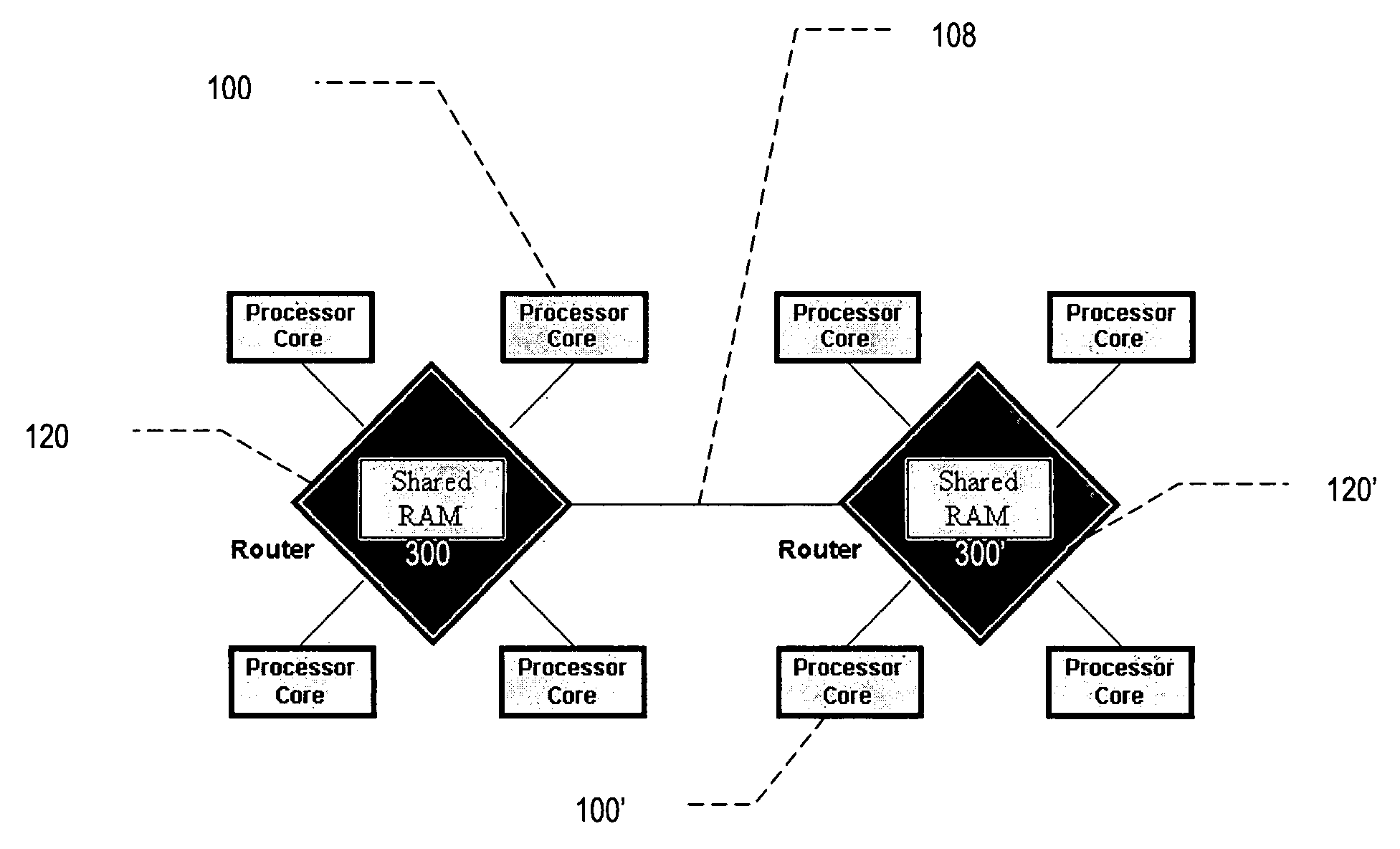

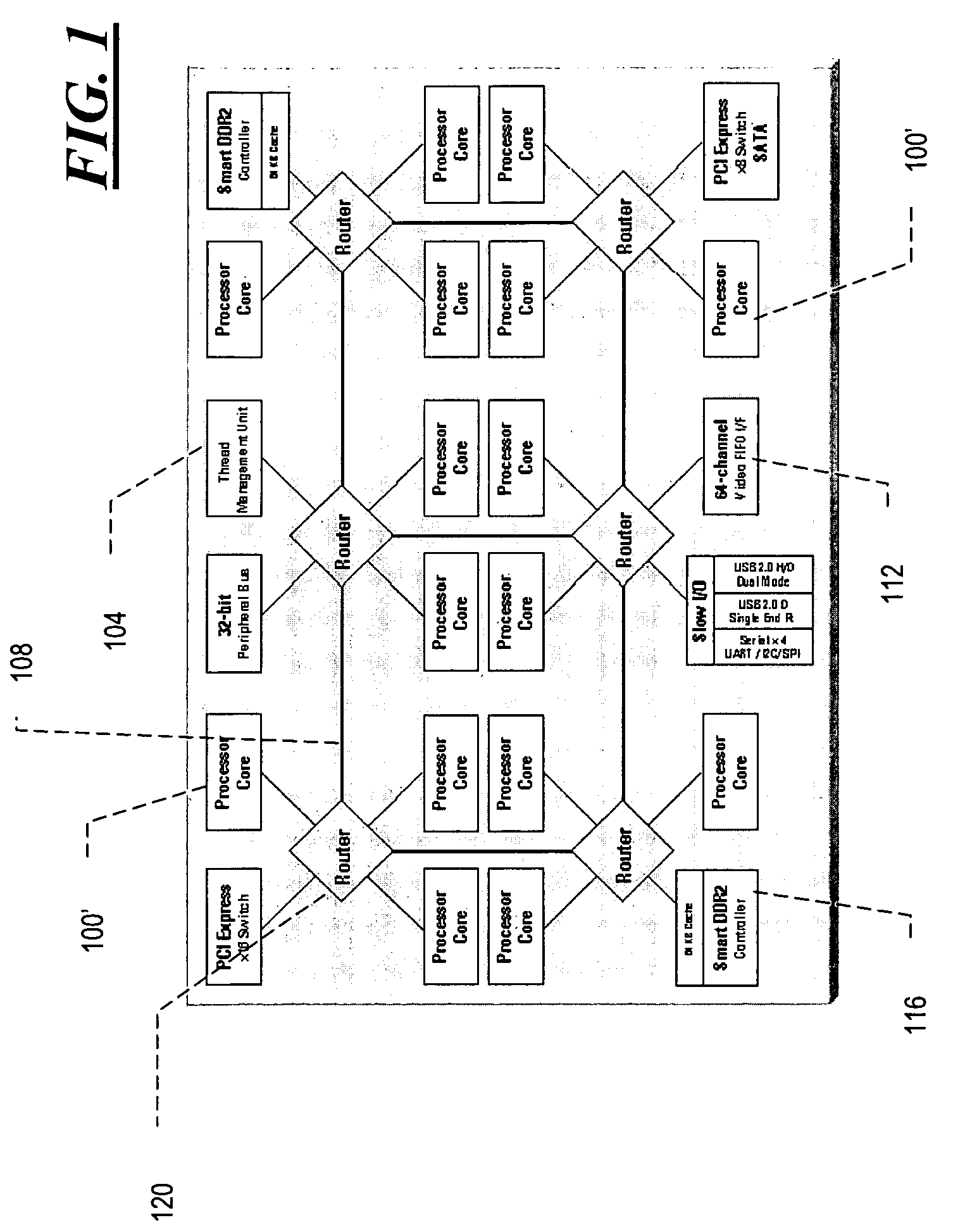

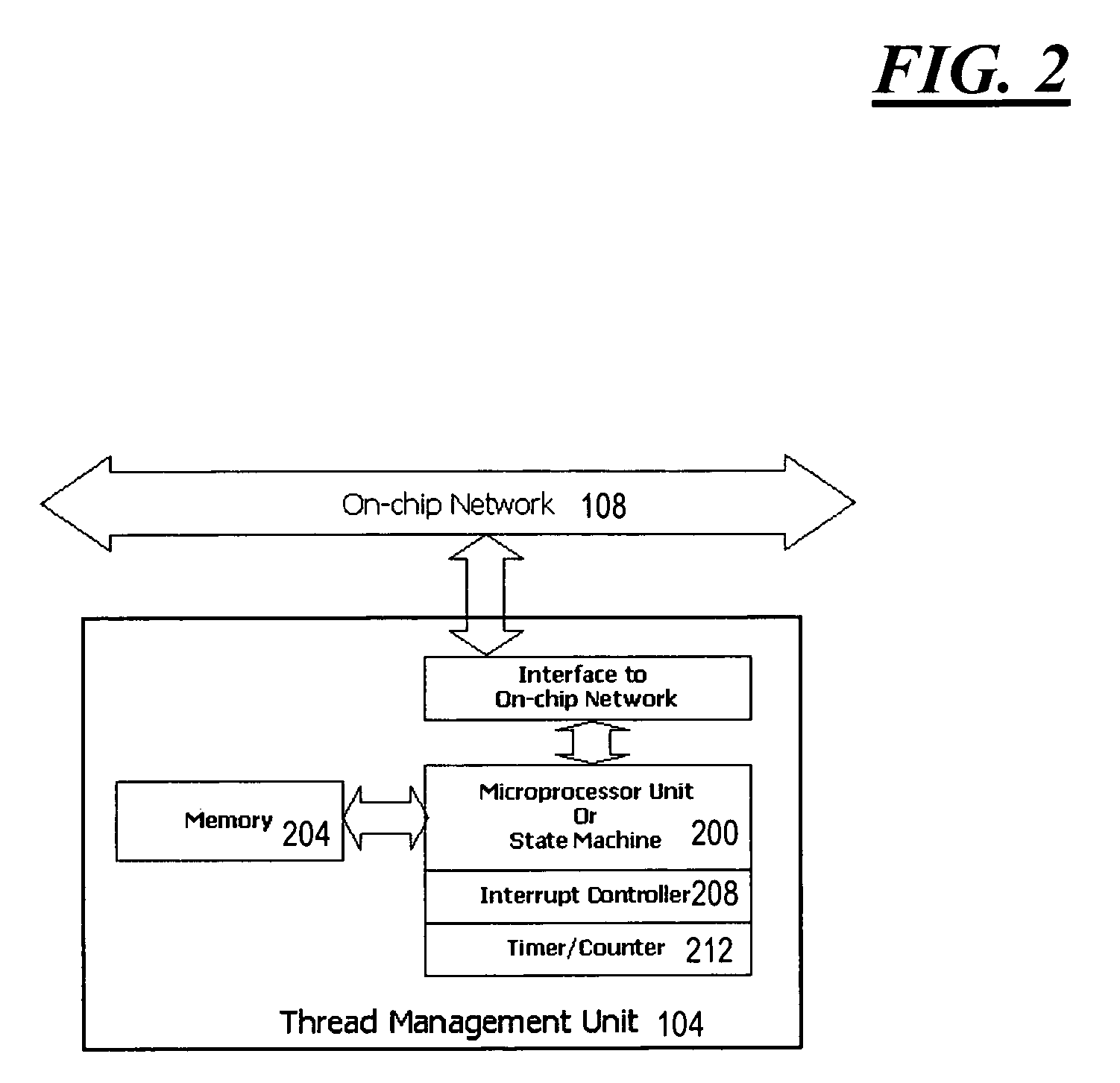

Shared memory for multi-core processors

Inactive | US20080307422A1 | Low latency access | Reduced interconnect traffic | Energy efficient ICT | Program control using wired connections | Multi-core processor | Control logic

A shared memory for multi-core processors. Network components configured for operation in a multi-core processor include an integrated memory that is suitable for, e.g., use as a shared on-chip memory. The network component also includes control logic that allows access to the memory from more than one processor core. Typical network components provided in various embodiments of the present invention include routers and switches.

Owner:BOSTON CIRCUITS

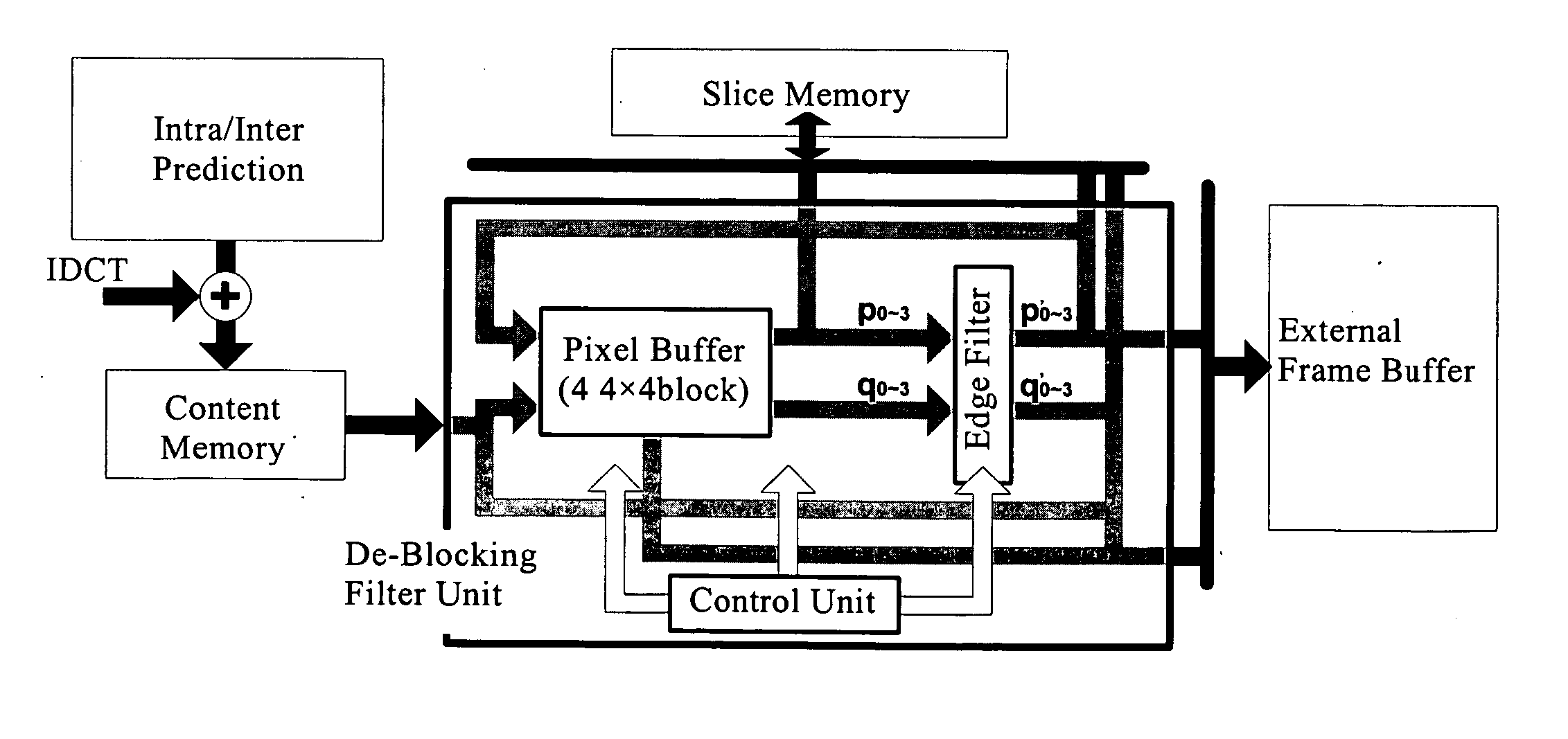

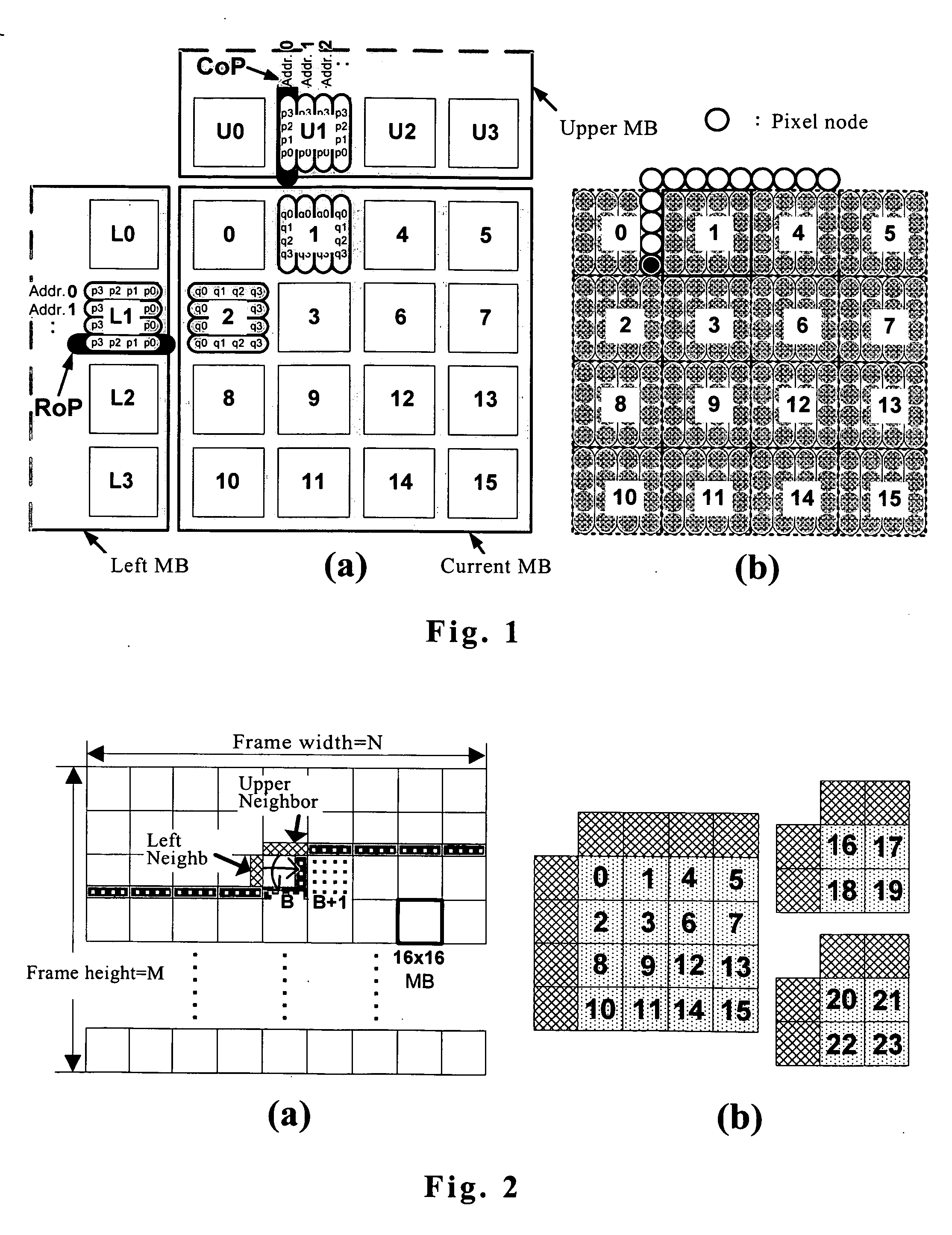

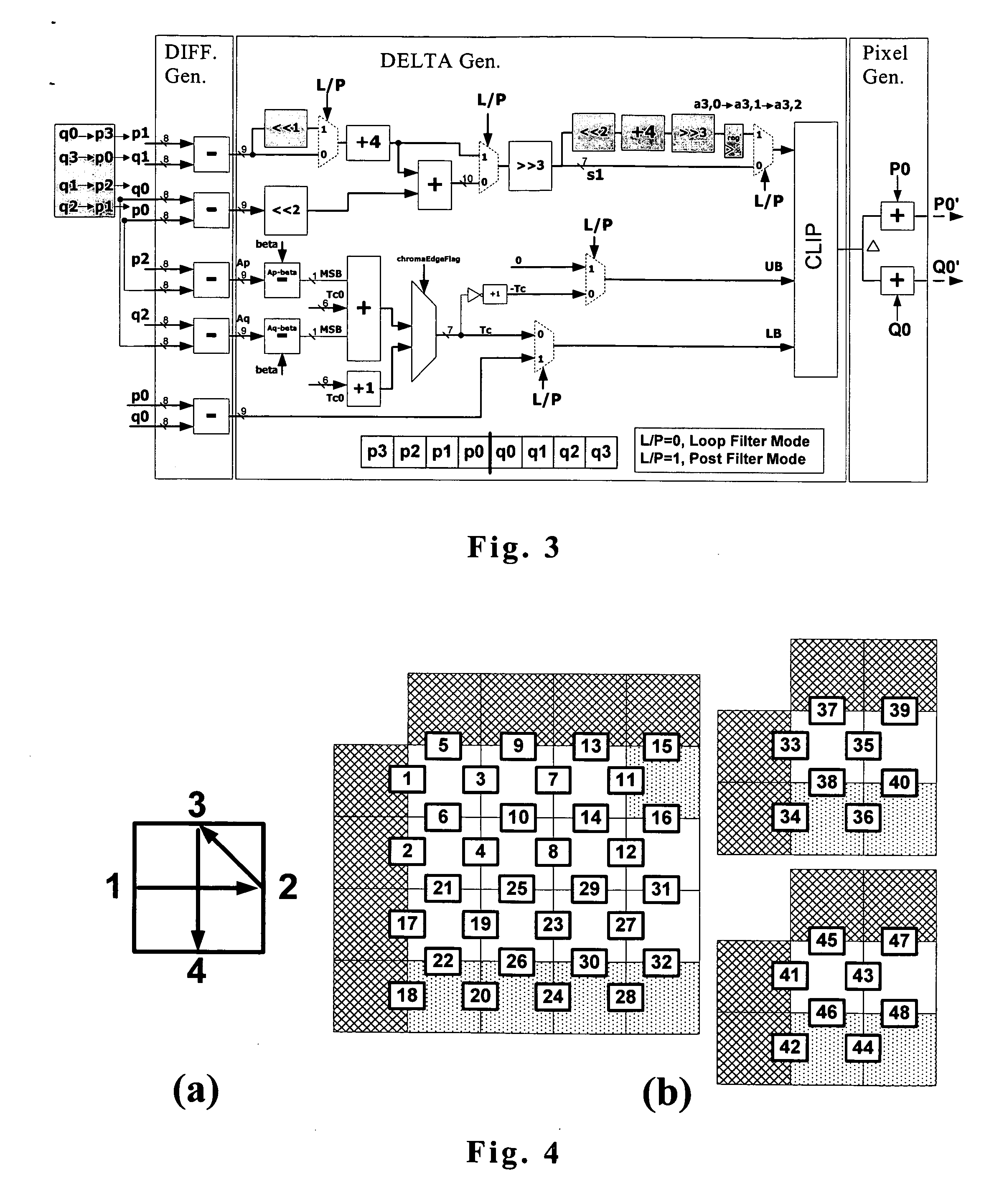

Dual-mode high throughput de-blocking filter

Inactive | US20060262990A1 | Easy to integrate | Reduce memory access | Character and pattern recognition | Digital video signal modification | Loop filter | Round complexity

This invention provides the unique and high-throughput architecture for multiple video standards. Particularly, we propose a novel scheme to integrate the standard in-loop filter and the informative post-loop filter. Due to the non-standardization of post filter, it provides high freedom to develop a certain suitable algorithm for the integration with loop-filter. We modify the post filter algorithm to make a compromise between hardware integration complexity and performance loss. Further, we propose a hybrid scheduling to reduce the processing cycles and improve the system throughput. The main idea is that we use four pixel buffers to keep the intermediate pixel value and perform the horizontal and vertical filtering process in one hybrid scheduling flow. In our approach, we reduce processing cycles, and the synthesized gate counts are very small. Meanwhile, the synthesized results also indicate lower cost for hardware.

Owner:NAT CHIAO TUNG UNIV

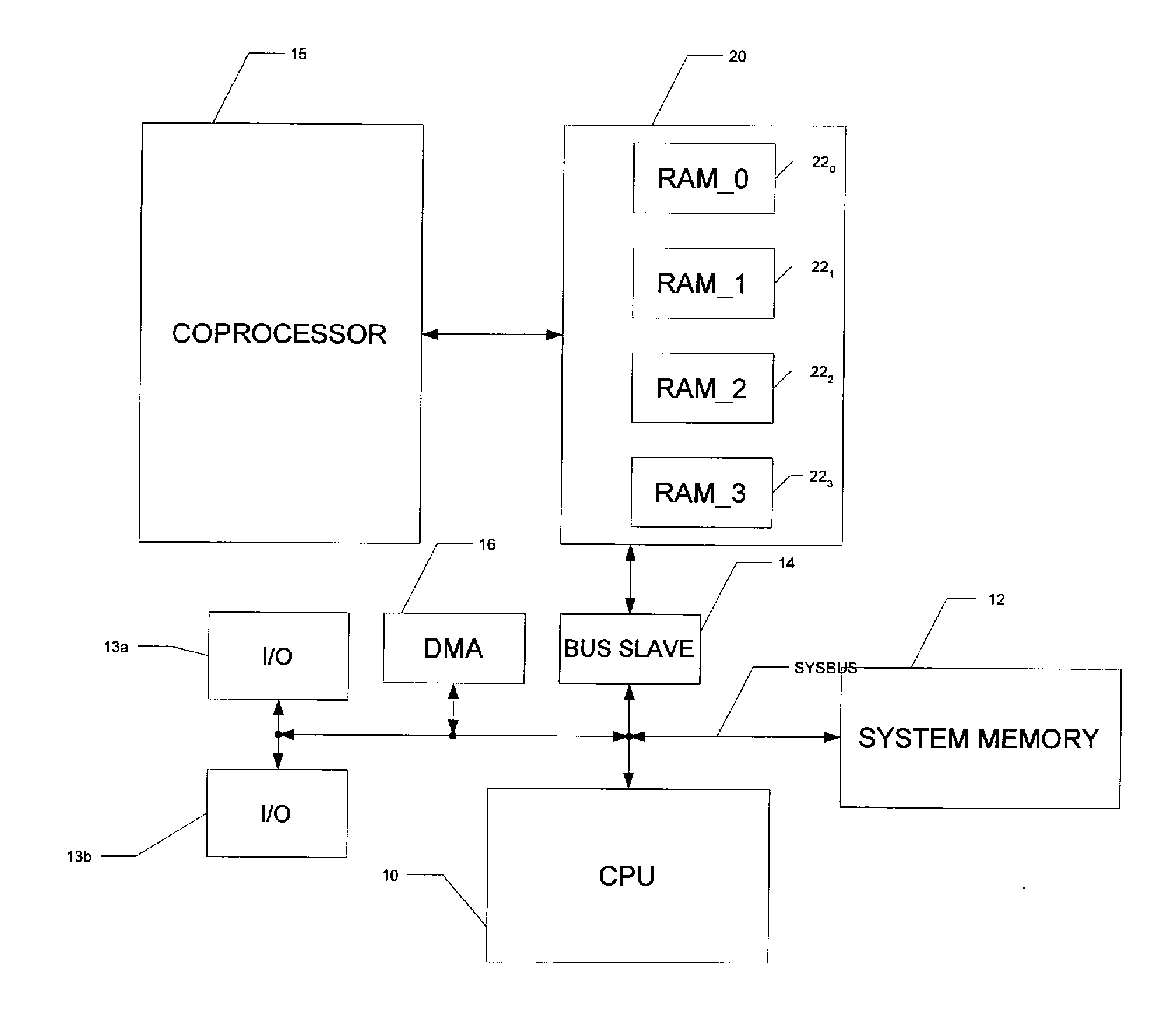

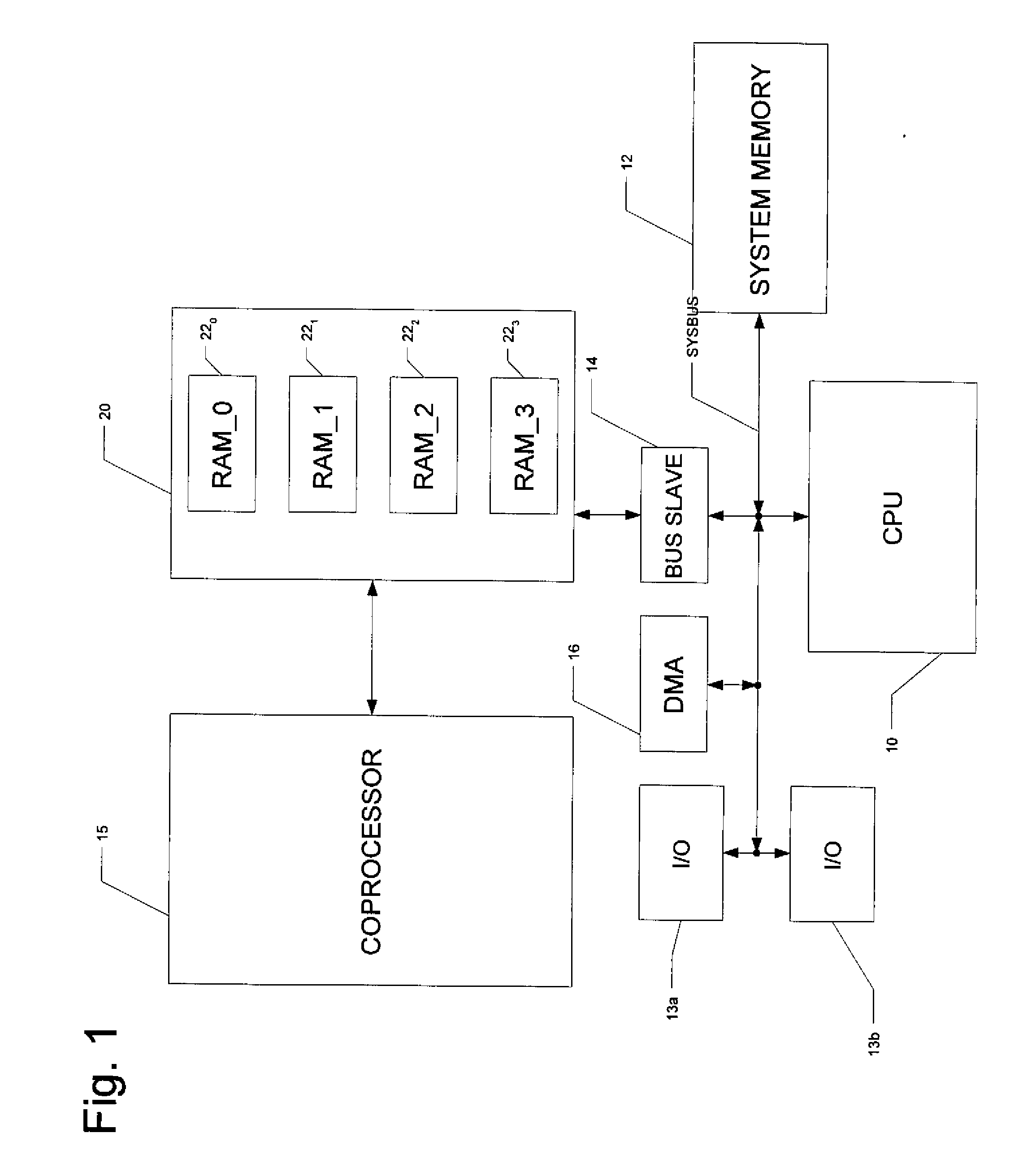

Low-Power Co-Processor Architecture

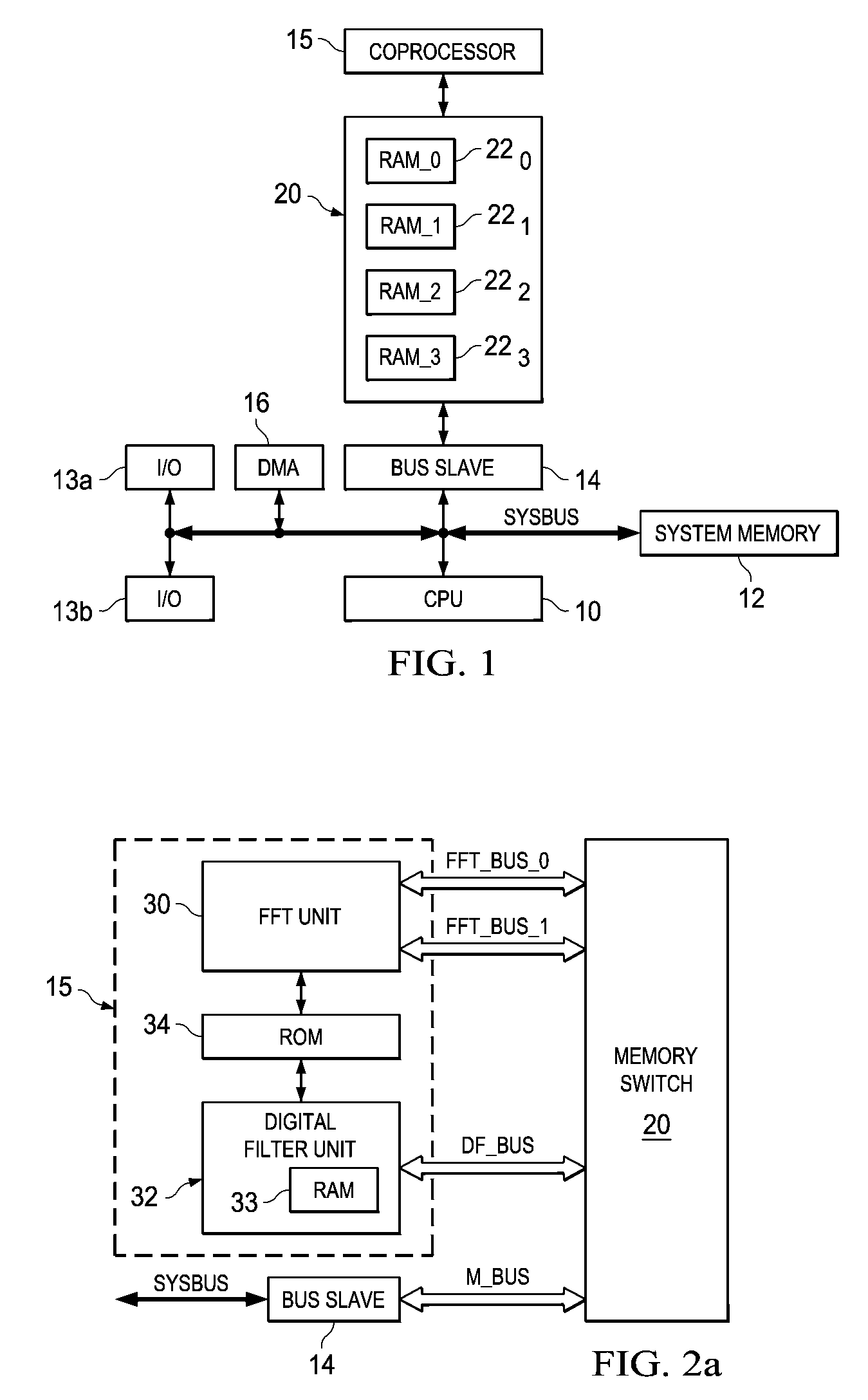

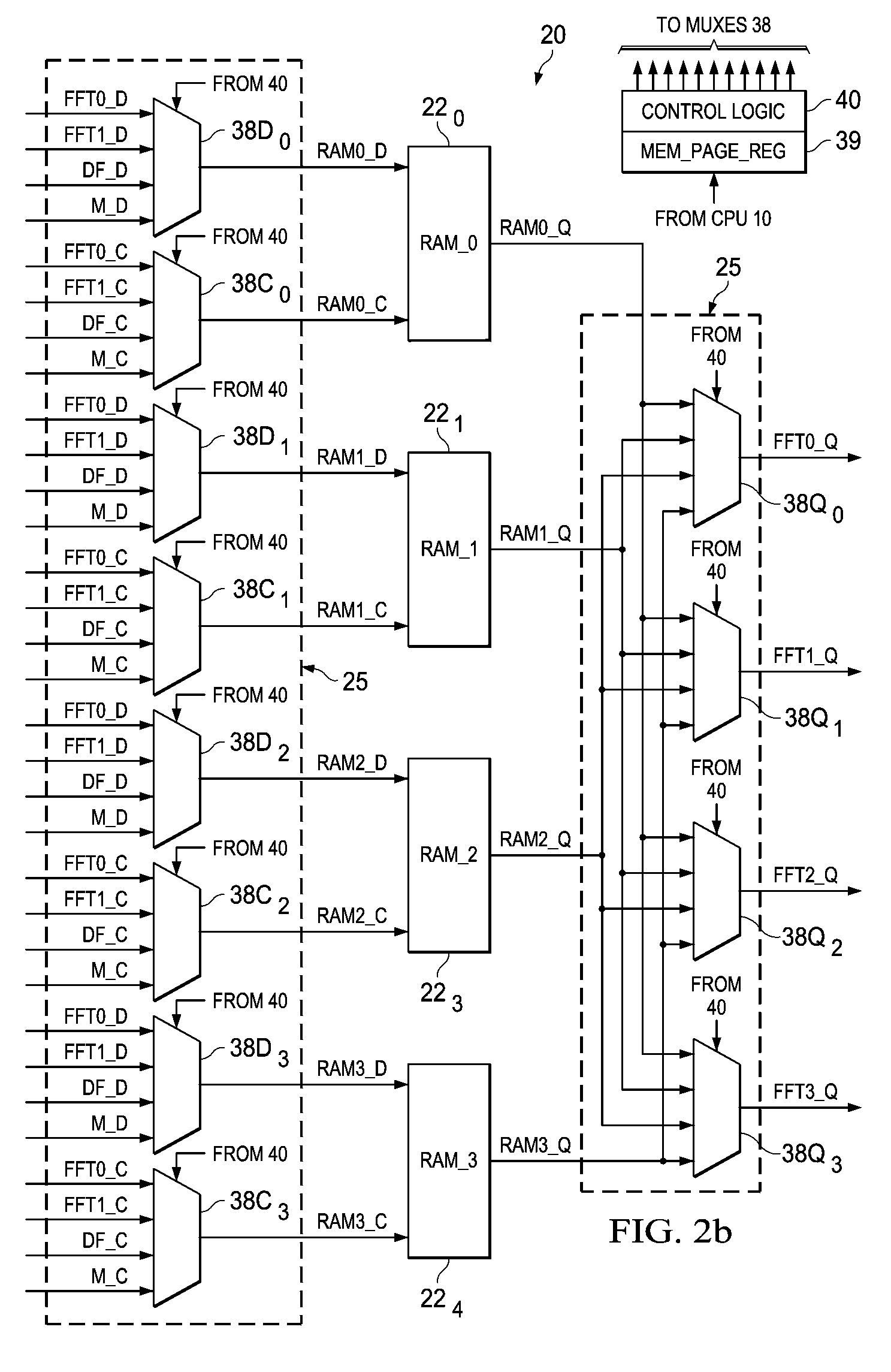

Active | US20070113048A1 | Reduce memory access | Energy efficient ICT | General purpose stored program computer | Coprocessor | Multiplexer

A system architecture including a co-processor and a memory switch resource is disclosed. The memory switch includes multiple memory blocks and switch circuitry for selectably coupling processing units of the co-processor, and also a bus slave circuit coupled to a system bus of the system, to selected ones of the memory blocks. The memory switch may be constructed as an array of multiplexers, controlled by control logic of the memory switch in response to the contents of a control register. The various processing units of the co-processor are each able to directly access one of the memory blocks, as controlled by the switch circuitry. Following processing of a block of data by one of the processing units, the memory switch associates the memory blocks with other functional units, thus moving data from one functional unit to another without requiring reading and rewriting of the data.

Owner:TEXAS INSTR INC
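The idea of handing data between processing units by re-coupling memory blocks, rather than copying, can be sketched in software as follows. This is an analogy only: the class, the dictionary standing in for the control register, and the unit names `fft` and `filter` are assumptions made for illustration.

```python
class MemorySwitch:
    """Software analogy of a switch that couples units to memory blocks."""

    def __init__(self, num_blocks, block_size):
        self.blocks = [[0] * block_size for _ in range(num_blocks)]
        self.control = {}  # stands in for the control register: unit -> block index

    def couple(self, mapping):
        """Rewrite the control register; each block may serve only one unit."""
        if len(set(mapping.values())) != len(mapping):
            raise ValueError("two units cannot be coupled to the same block")
        self.control = dict(mapping)

    def block_for(self, unit):
        """Return the block the given unit is currently coupled to."""
        return self.blocks[self.control[unit]]


switch = MemorySwitch(num_blocks=2, block_size=4)
switch.couple({"fft": 0, "filter": 1})
switch.block_for("fft")[0] = 42          # the FFT unit writes its result in place
switch.couple({"fft": 1, "filter": 0})   # swap ownership instead of copying
handed_over = switch.block_for("filter")[0]
```

After the second `couple` call the filter unit sees the FFT output without any element having been read and rewritten.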

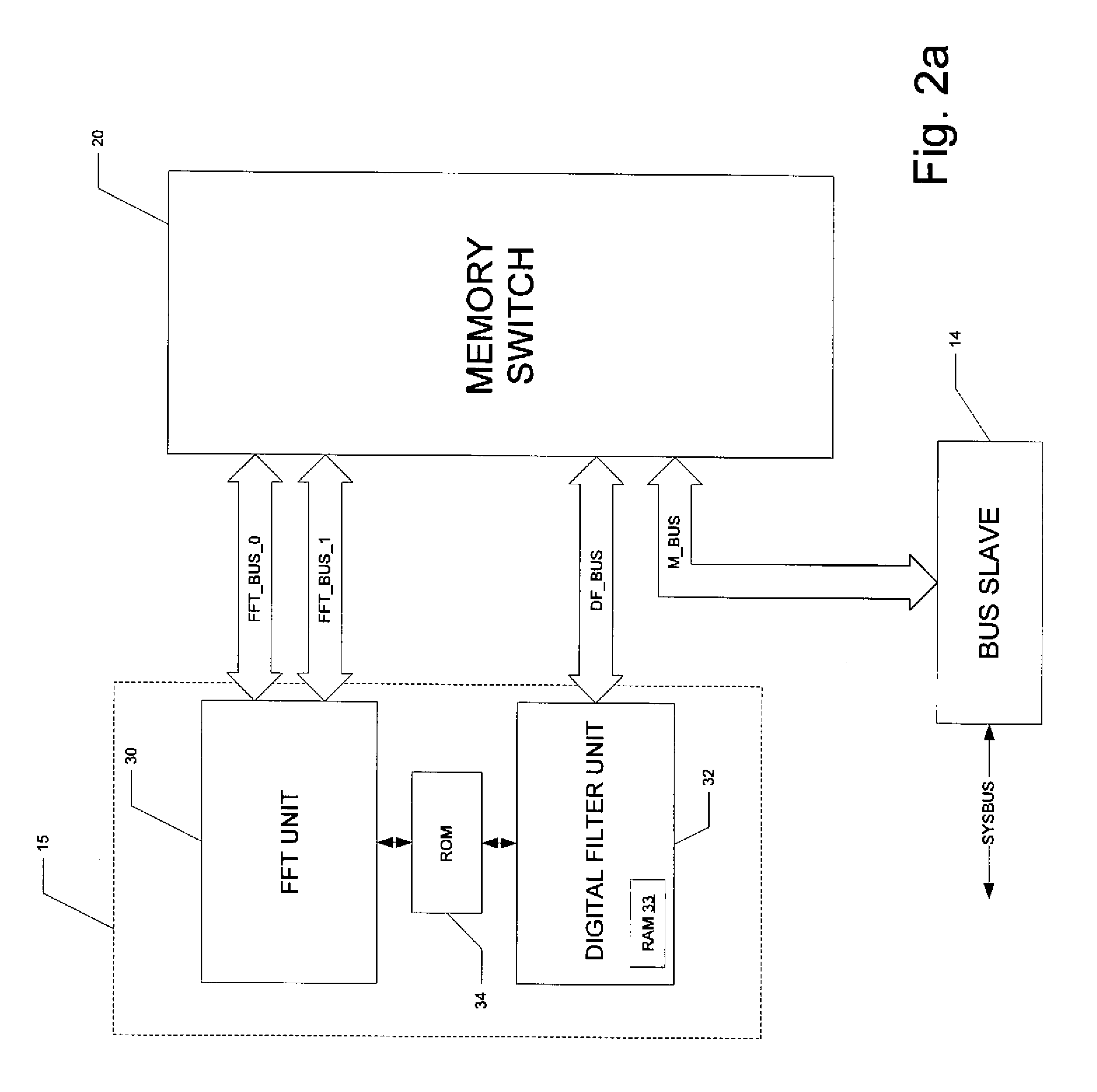

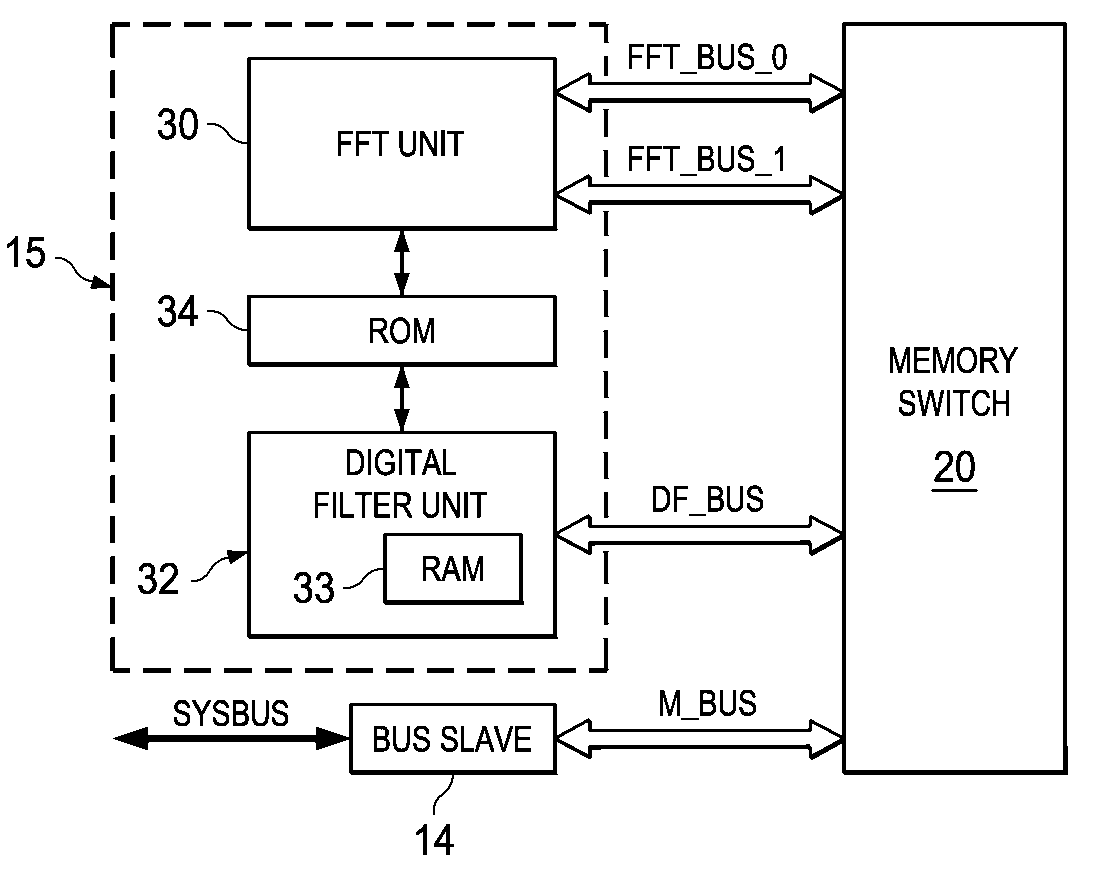

Pipelined access by FFT and filter units in co-processor and system bus slave to memory blocks via switch coupling based on control register content

Active | US7587577B2 | Reduce memory access | Energy efficient ICT | General purpose stored program computer | Multiplexer | Coupling

A system architecture including a co-processor and a memory switch resource is disclosed. The memory switch includes multiple memory blocks and switch circuitry for selectably coupling processing units of the co-processor, and also a bus slave circuit coupled to a system bus of the system, to selected ones of the memory blocks. The memory switch may be constructed as an array of multiplexers, controlled by control logic of the memory switch in response to the contents of a control register. The various processing units of the co-processor are each able to directly access one of the memory blocks, as controlled by the switch circuitry. Following processing of a block of data by one of the processing units, the memory switch associates the memory blocks with other functional units, thus moving data from one functional unit to another without requiring reading and rewriting of the data.

Owner:TEXAS INSTR INC

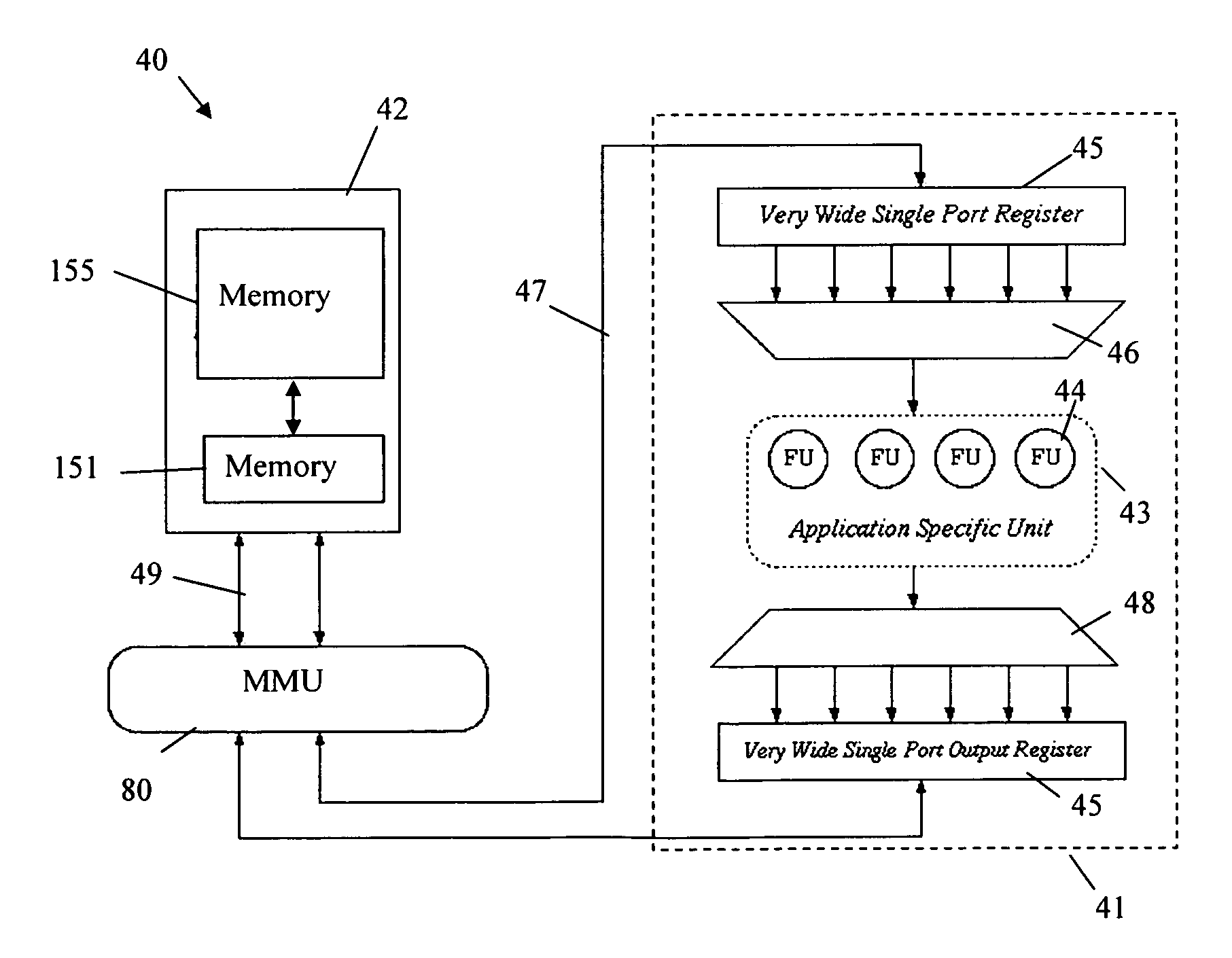

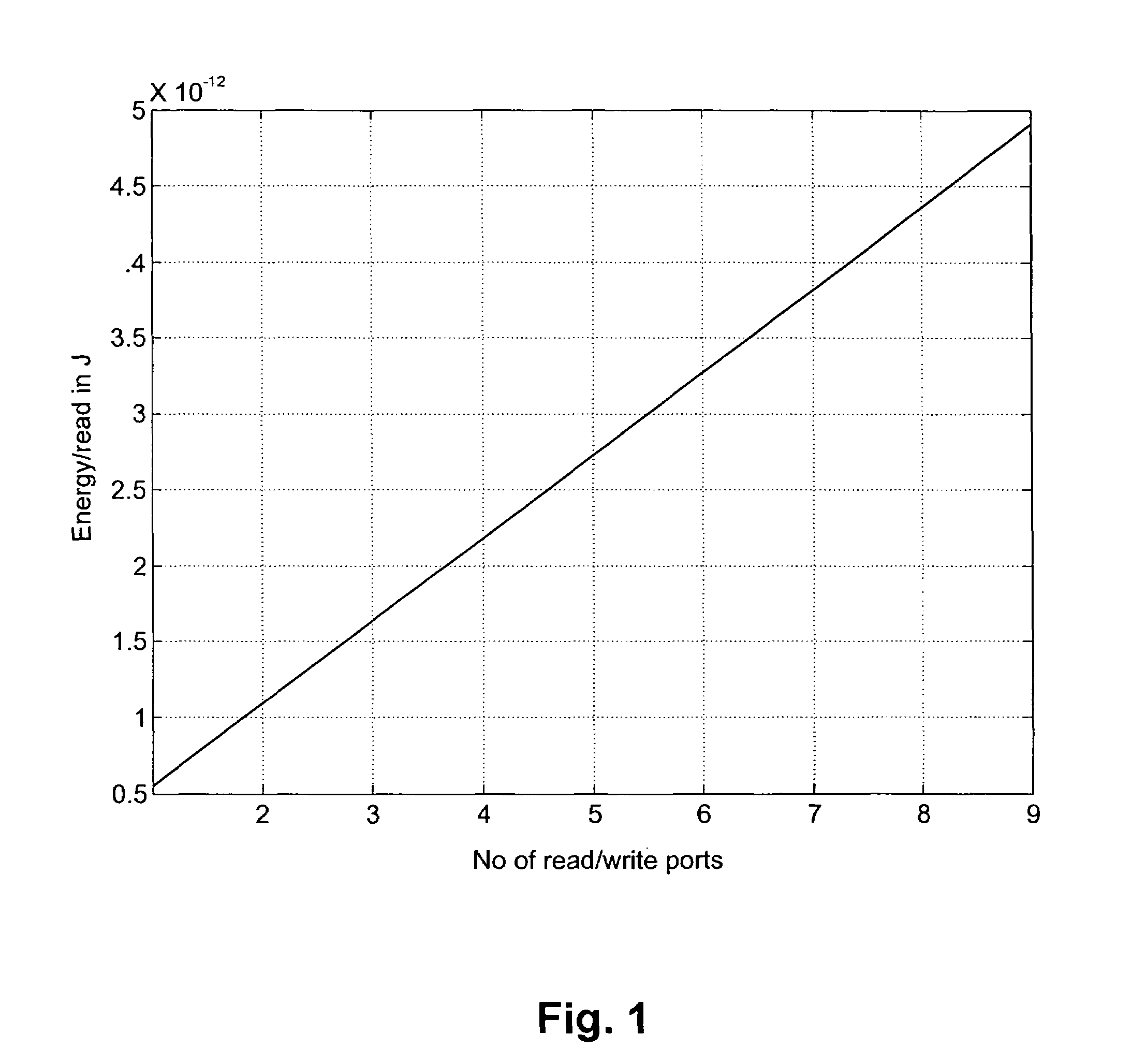

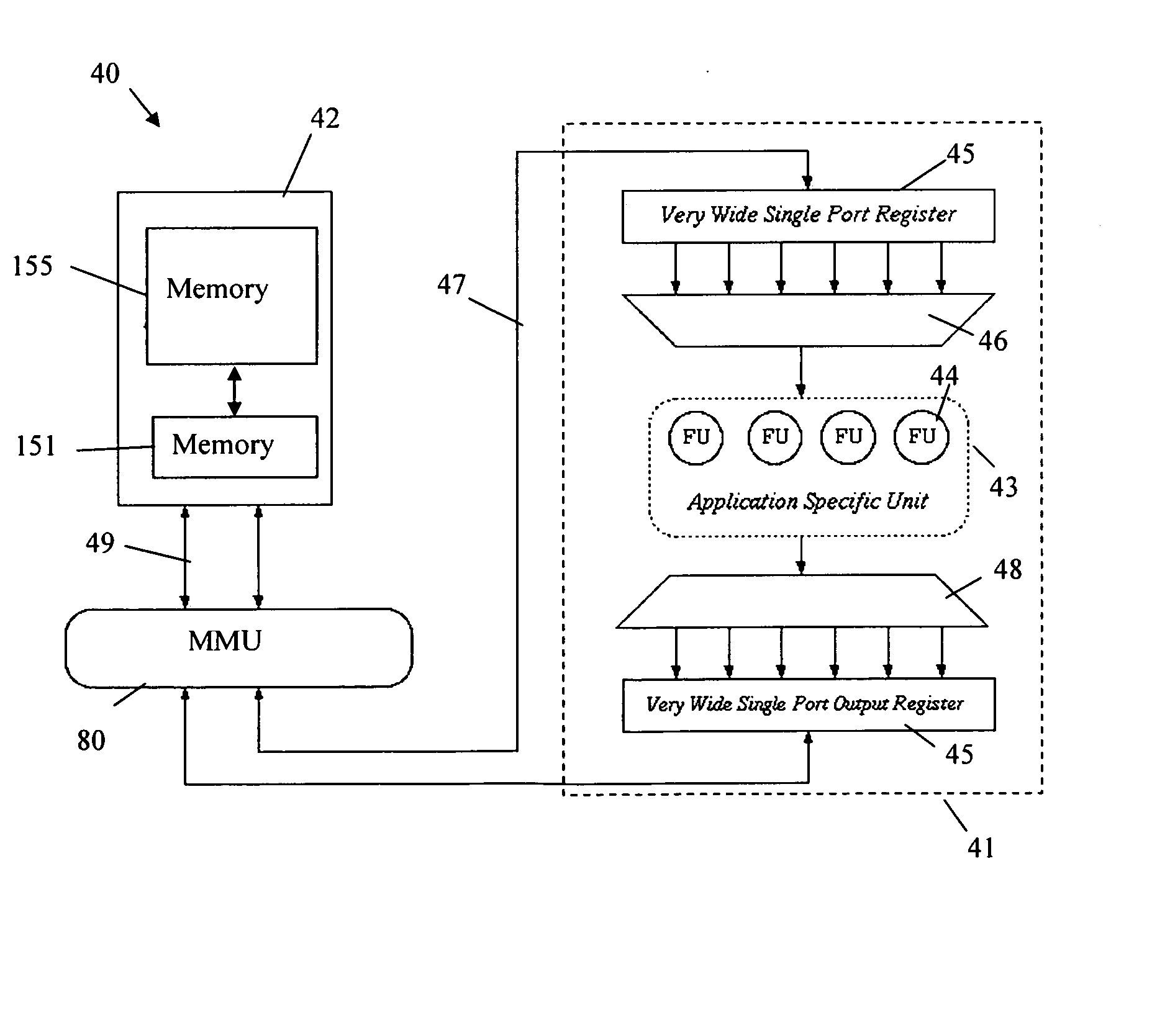

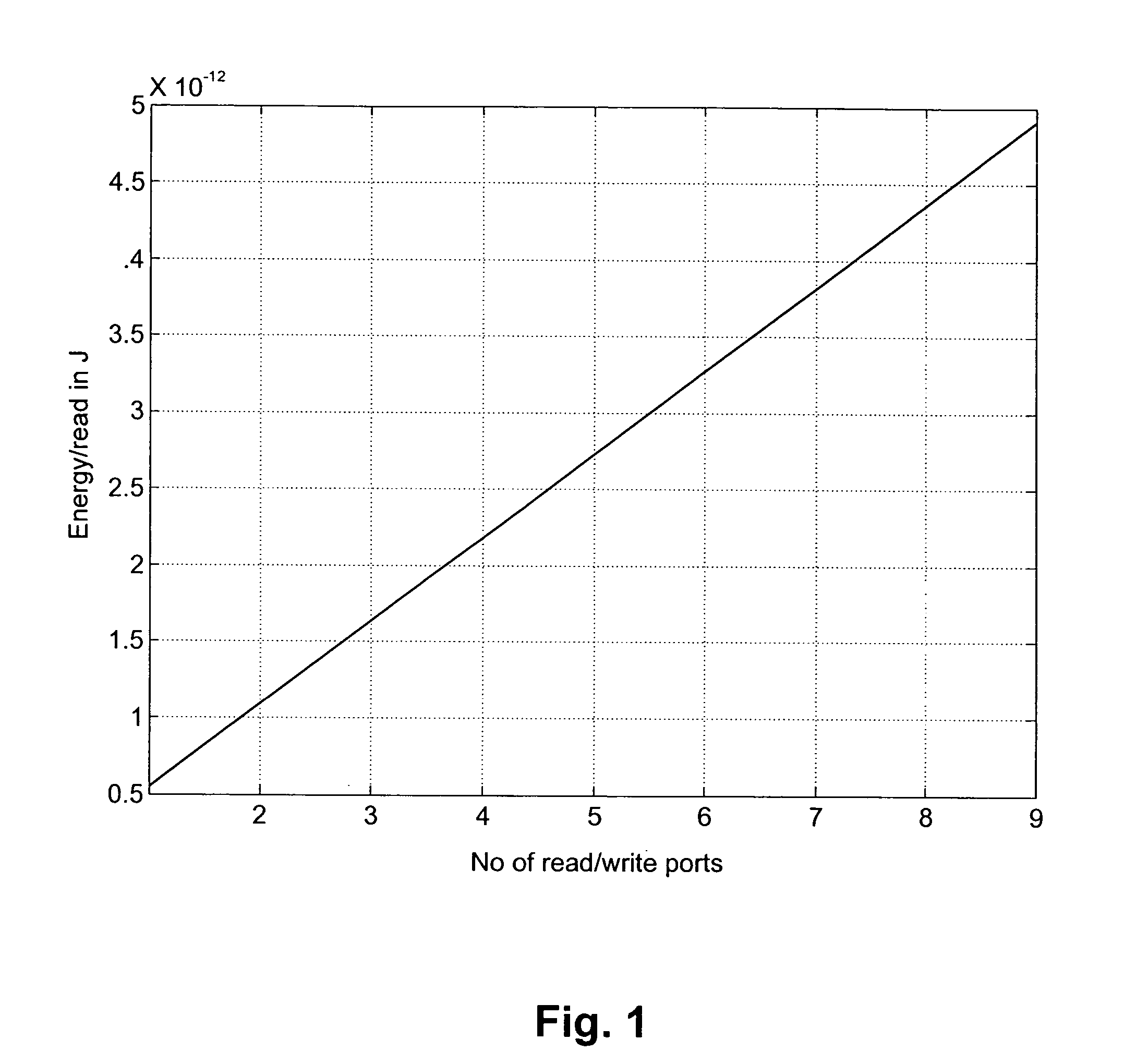

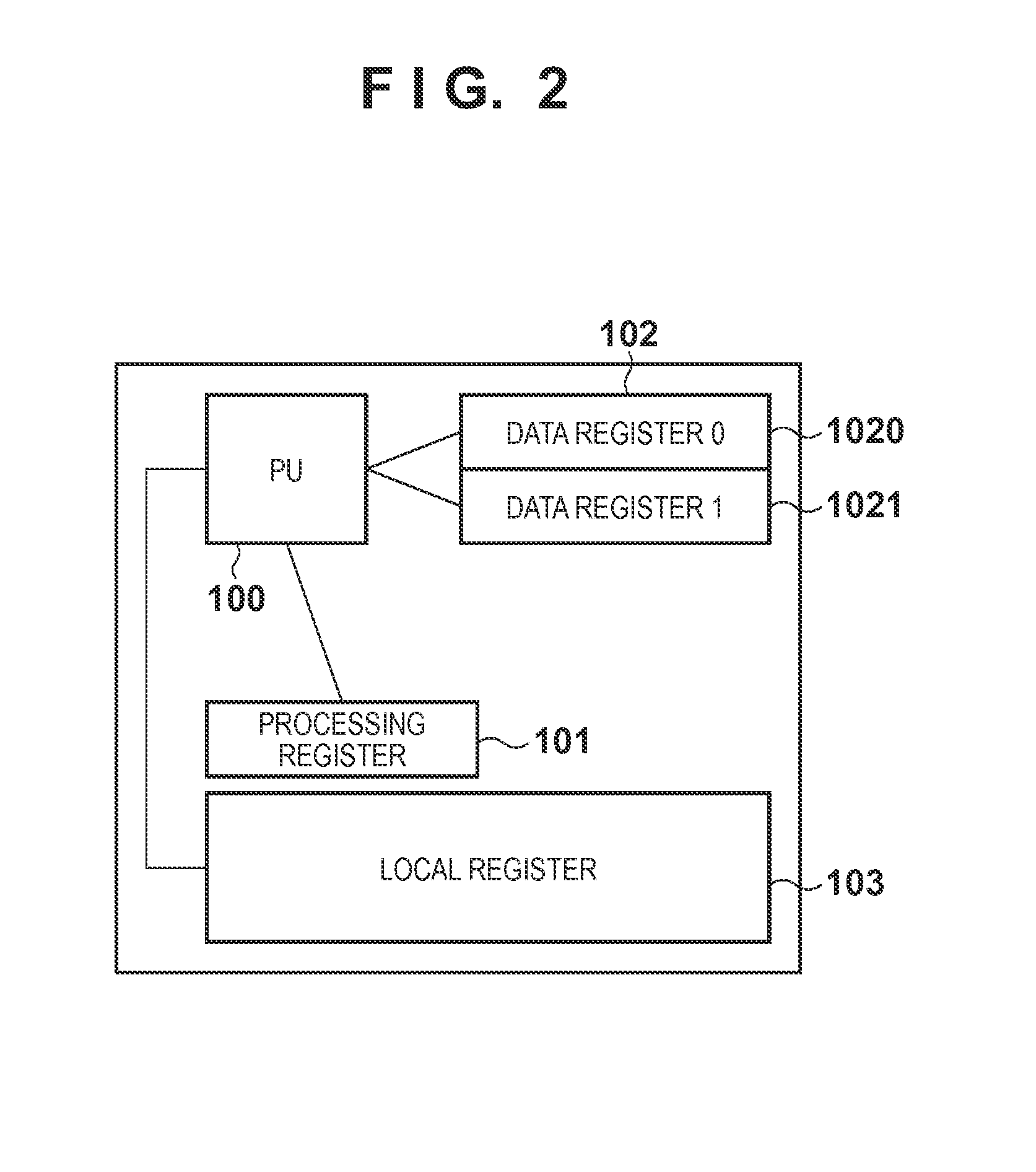

Ultra low power ASIP architecture

Active | US7694084B2 | Reduce power consumption | Reduce memory access | Register arrangements | Digital computer details | Microcomputer | Parallel computing

A microcomputer architecture comprises a microprocessor unit and a first memory unit, the microprocessor unit comprising a functional unit and at least one data register, the functional unit and the at least one data register being linked to a data bus internal to the microprocessor unit. The data register is a wide register comprising a plurality of second memory units which are capable to each contain one word. The wide register is adapted so that the second memory units are simultaneously accessible by the first memory unit, and so that at least part of the second memory units are separately accessible by the functional unit.

Owner:INTERUNIVERSITAIR MICRO ELECTRONICS CENT (IMEC VZW)

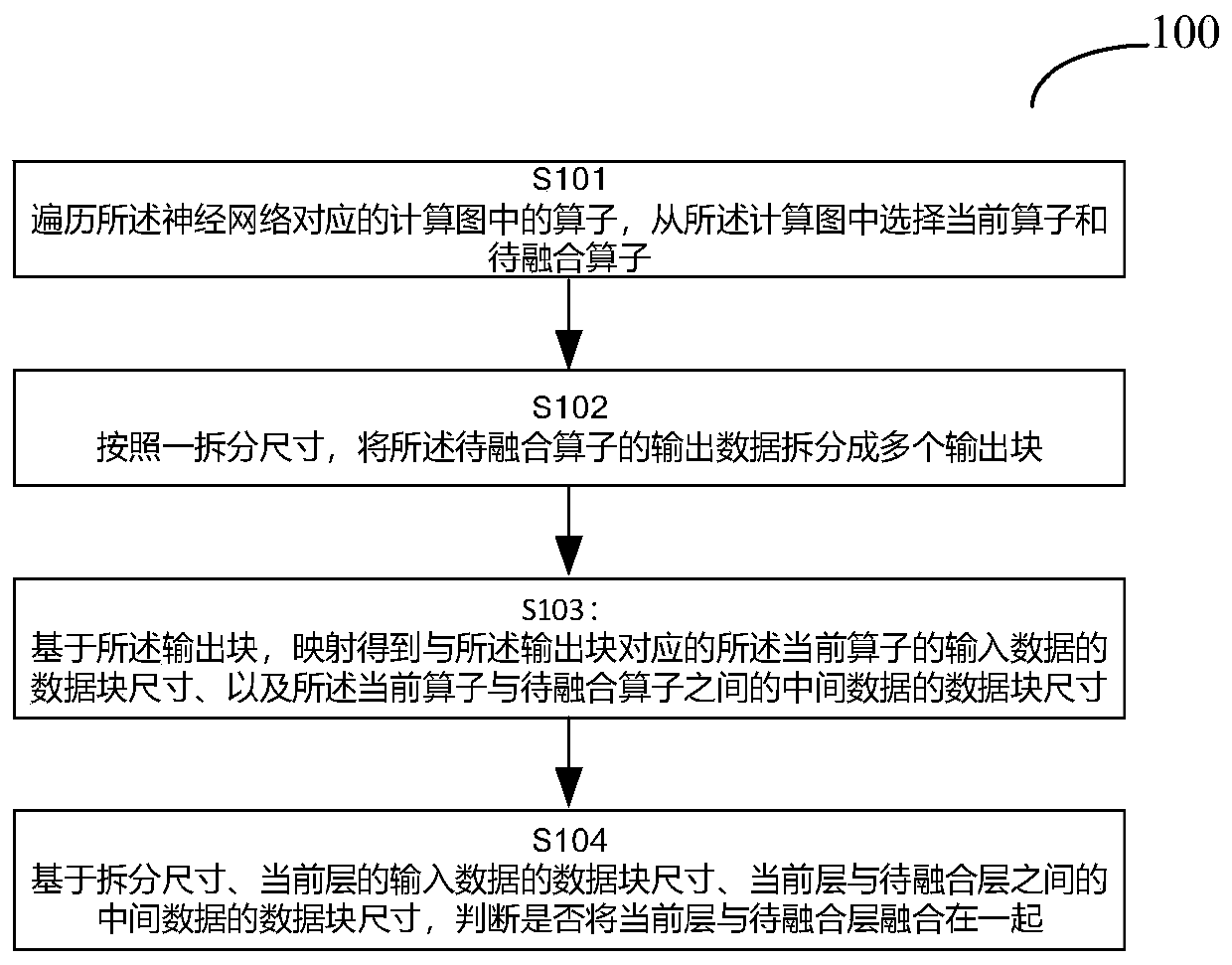

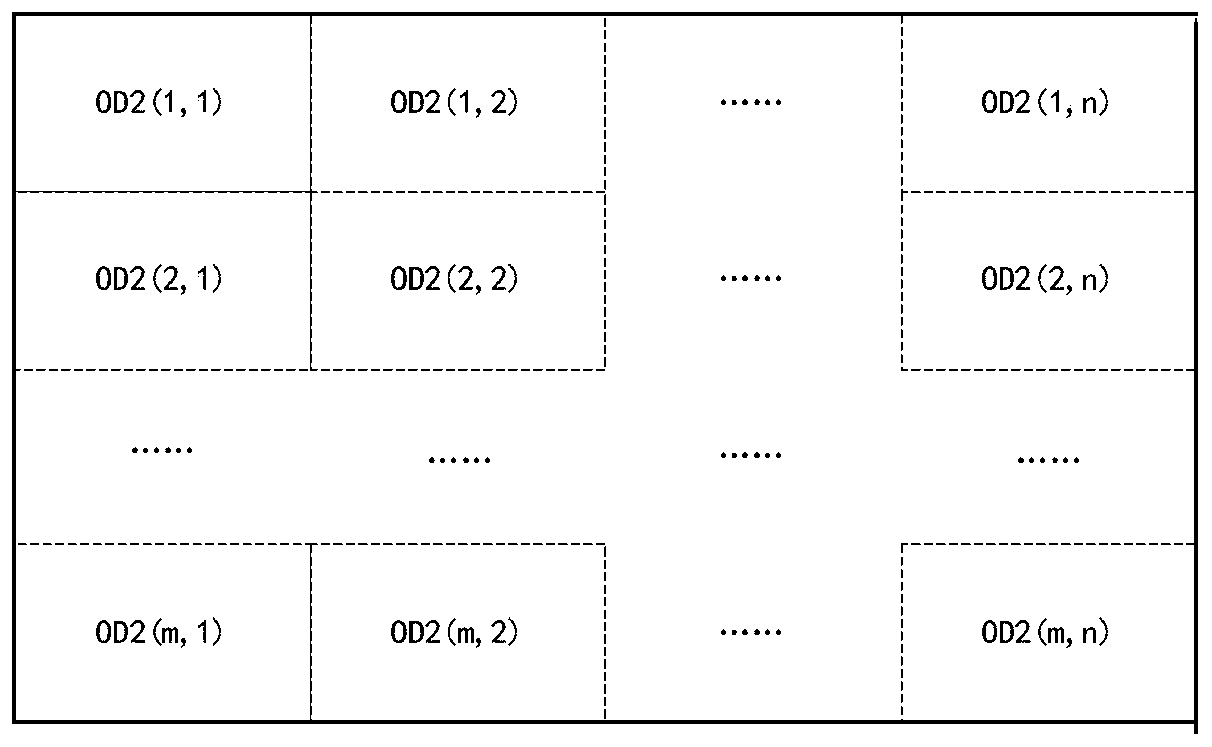

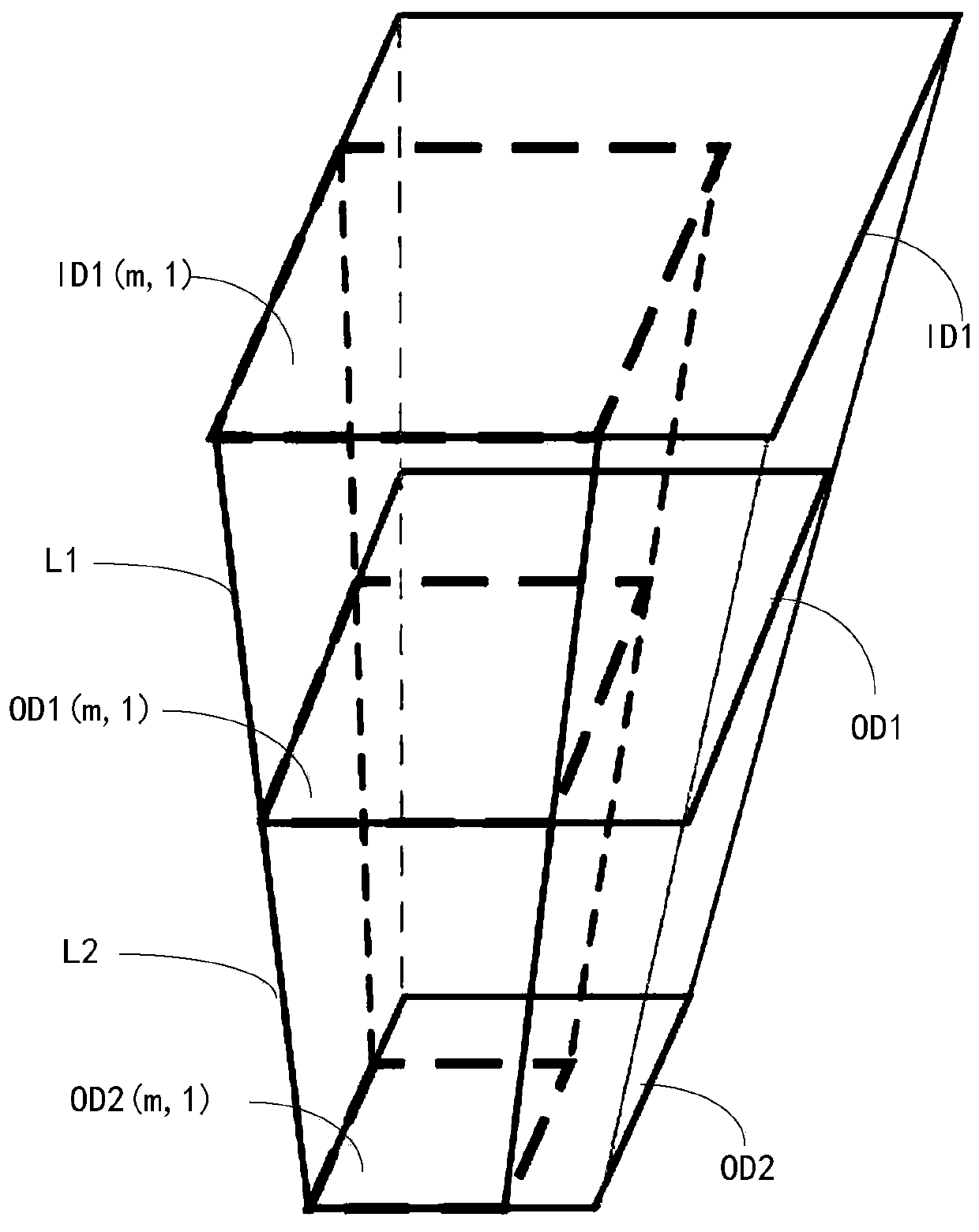

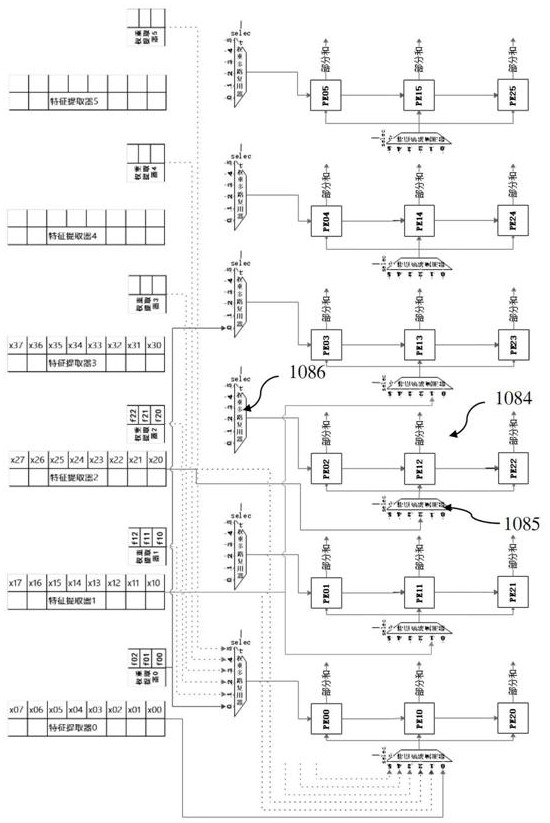

Operator fusion method for neural network and related product thereof

Active | CN110490309A | Reduce memory access | Shorten the time | Neural architectures | Physical realisation | Nerve network | Algorithm

The invention relates to an operator fusion method for a neural network and a related product, and the method comprises the steps: traversing operators in a calculation graph corresponding to the neural network, and selecting a current operator and a to-be-fused operator from the calculation graph; splitting the output data of the operator to be fused into a plurality of output blocks according to a splitting size; based on the output block, mapping to obtain a data block size of input data of the current operator corresponding to the output block and a data block size of intermediate data between the current operator and an operator to be fused; and based on the splitting size, the data block size of the input data of the current operator and the data block size of the intermediate data between the current operator and the operator to be fused, judging whether the current operator and the operator to be fused are fused together or not.

Owner:CAMBRICON TECH CO LTD
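The size mapping in this abstract can be made concrete with a small sketch. It assumes both operators are one-dimensional convolution-like operators described by a `(kernel, stride)` pair, and that the fusion decision is a simple capacity check against on-chip memory; the abstract does not state the actual criterion, so the `capacity` test and all names here are hypothetical.

```python
def input_extent(out_len, kernel, stride=1):
    """Number of input elements needed to produce out_len outputs (no padding)."""
    return (out_len - 1) * stride + kernel


def can_fuse(split, current_op, fused_op, capacity):
    """Map an output block back through both operators and check an assumed budget.

    current_op and fused_op are (kernel, stride) pairs; the current operator
    feeds the operator to be fused.
    """
    intermediate = input_extent(split, *fused_op)        # data between the two operators
    inputs = input_extent(intermediate, *current_op)     # input block of the current operator
    fits = inputs + intermediate + split <= capacity     # assumed criterion, not from the patent
    return fits, inputs, intermediate
```

For example, an output block of 8 elements through a `(3, 1)` operator needs 10 intermediate elements, which in turn need 21 inputs from a `(3, 2)` operator, so the fused tile touches 39 elements in total.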

Ultra low power ASIP architecture

Active | US20060212685A1 | Reduce power consumption | Reduce memory access | Register arrangements | Digital computer details | Microcomputer | Parallel computing

A microcomputer architecture comprises a microprocessor unit and a first memory unit, the microprocessor unit comprising a functional unit and at least one data register, the functional unit and the at least one data register being linked to a data bus internal to the microprocessor unit. The data register is a wide register comprising a plurality of second memory units which are capable to each contain one word. The wide register is adapted so that the second memory units are simultaneously accessible by the first memory unit, and so that at least part of the second memory units are separately accessible by the functional unit.

Owner:INTERUNIVERSITAIR MICRO ELECTRONICS CENT (IMEC VZW)

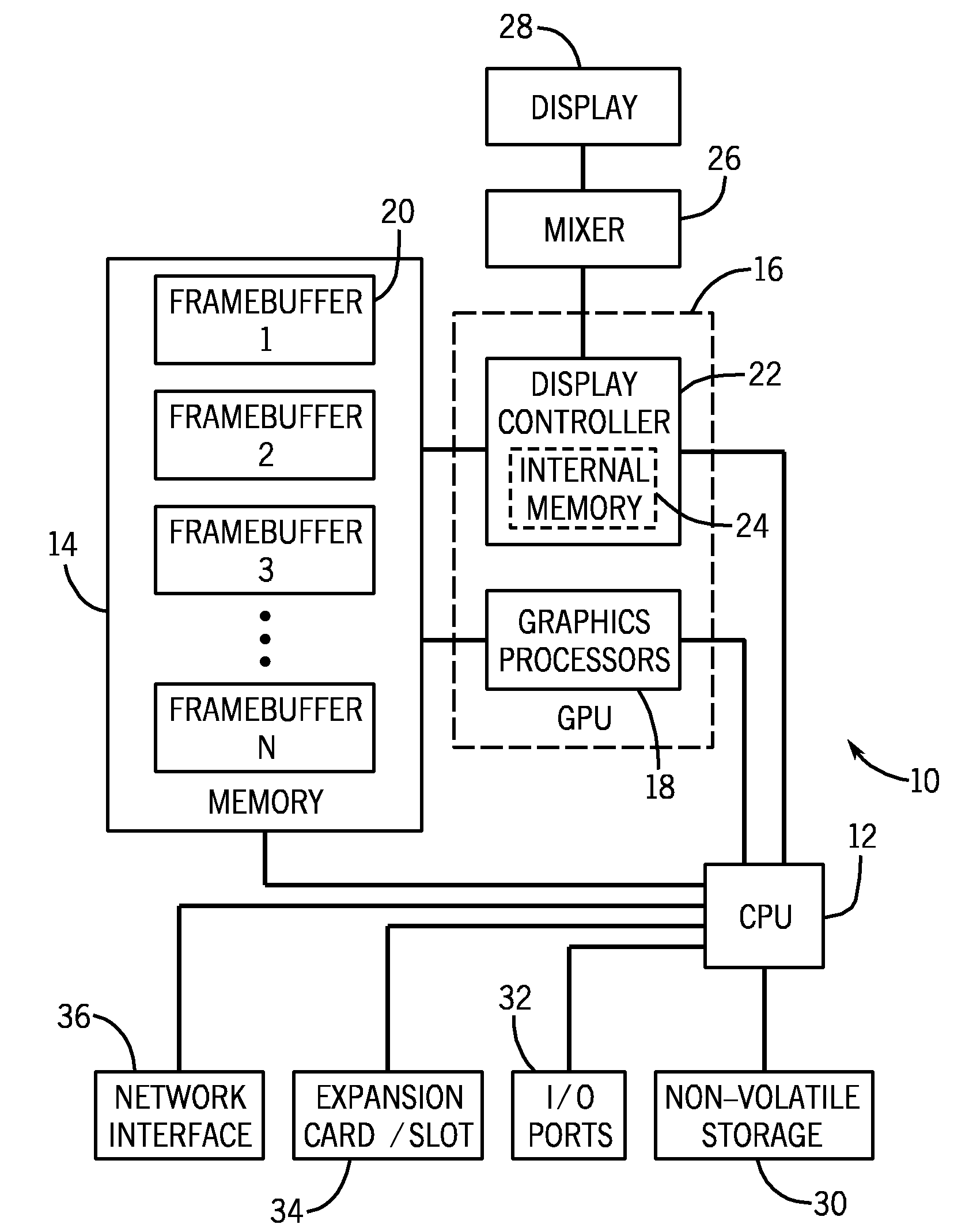

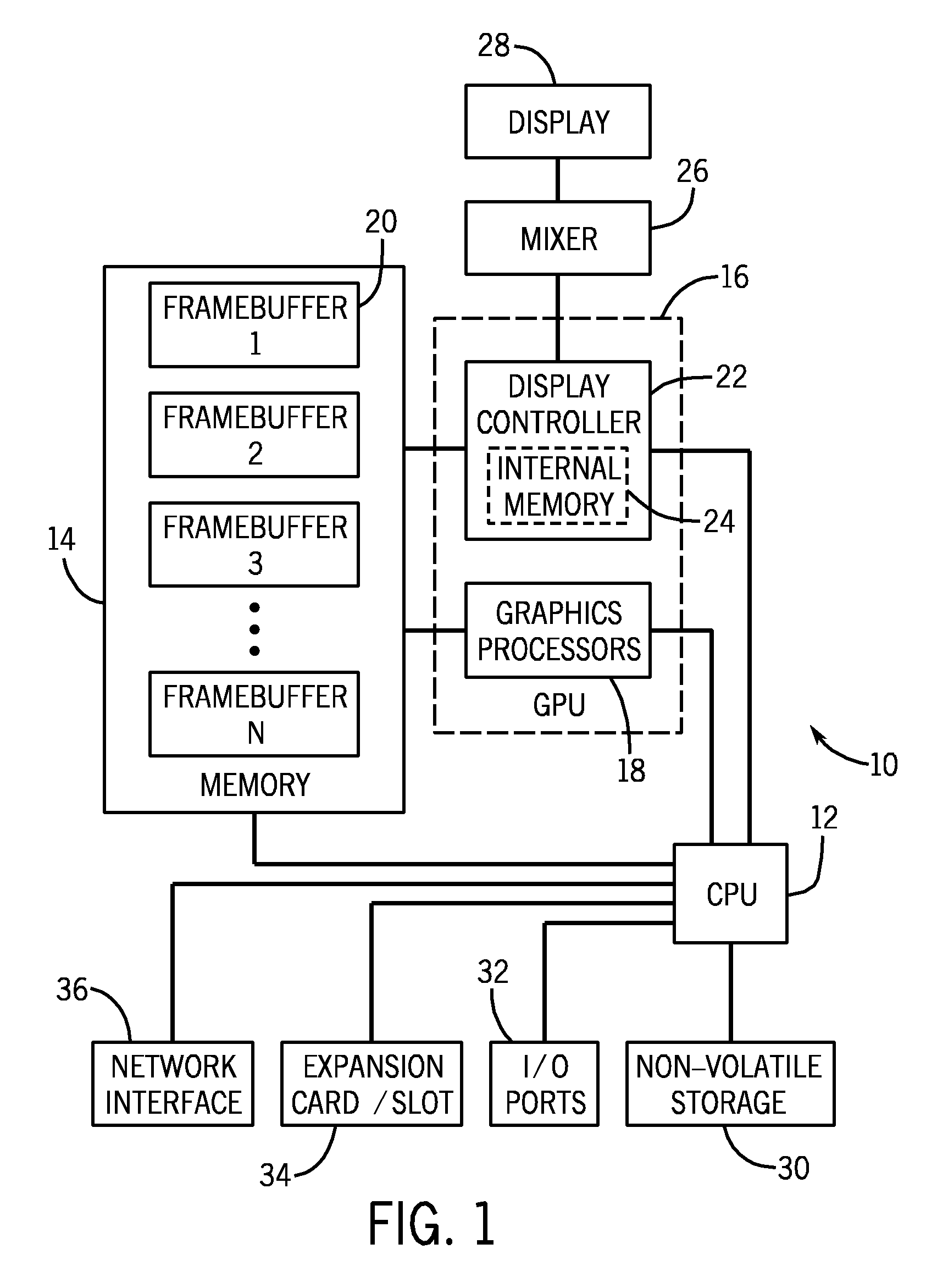

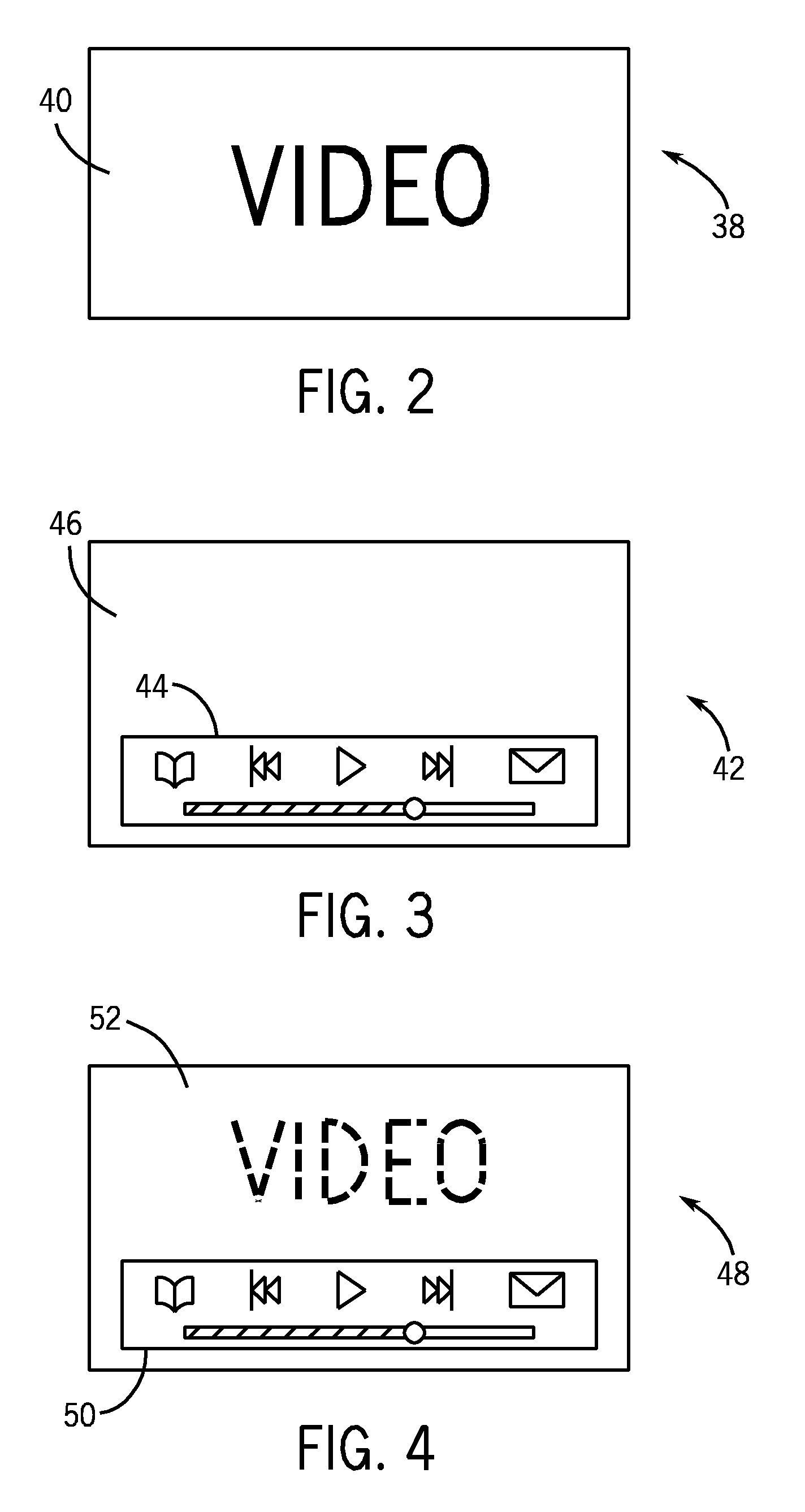

Method for reducing framebuffer memory accesses

Inactive | US20090201306A1 | Reduce memory access | Cathode-ray tube indicators | Computer hardware | Computer science

Owner:APPLE INC

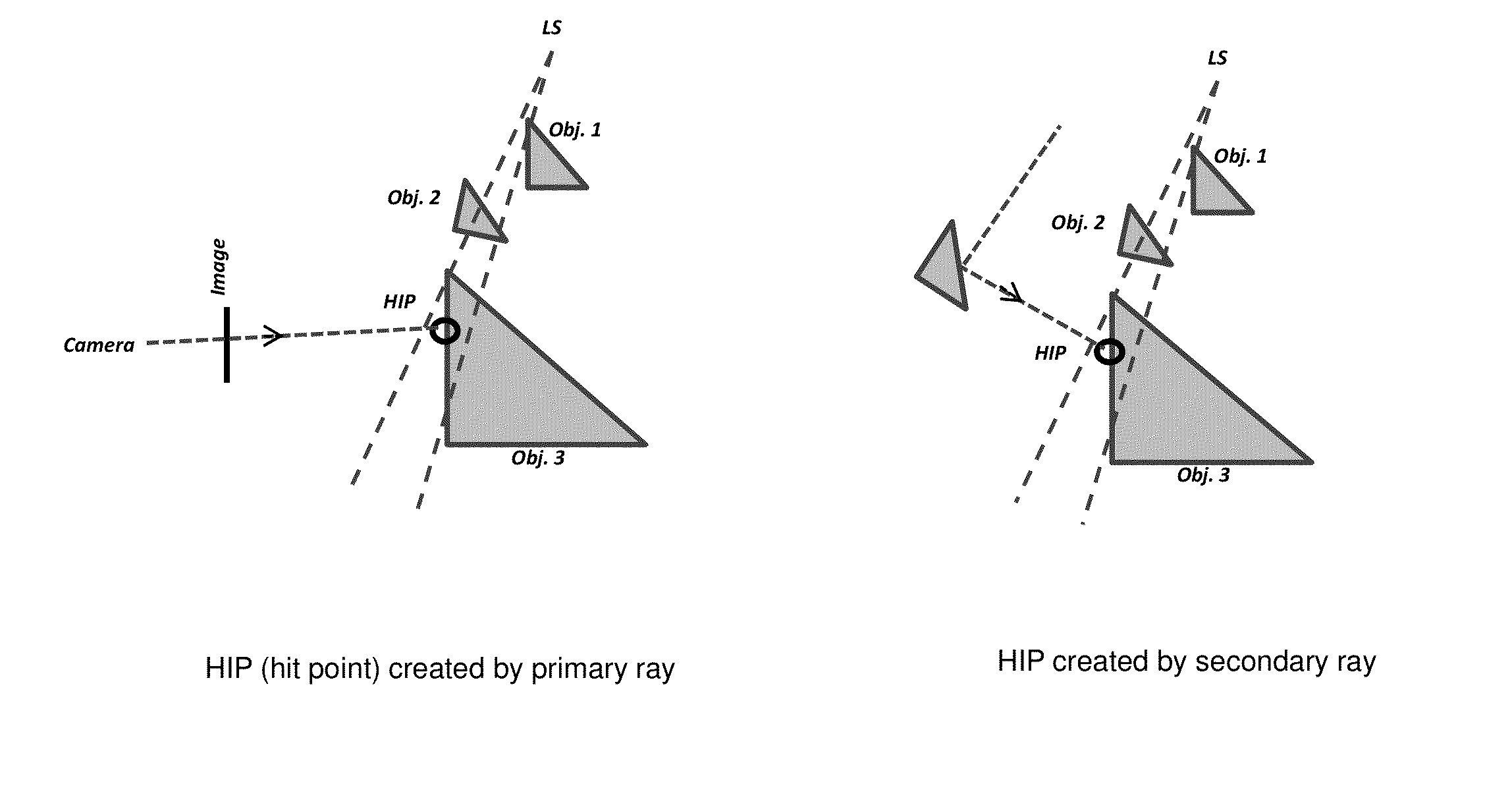

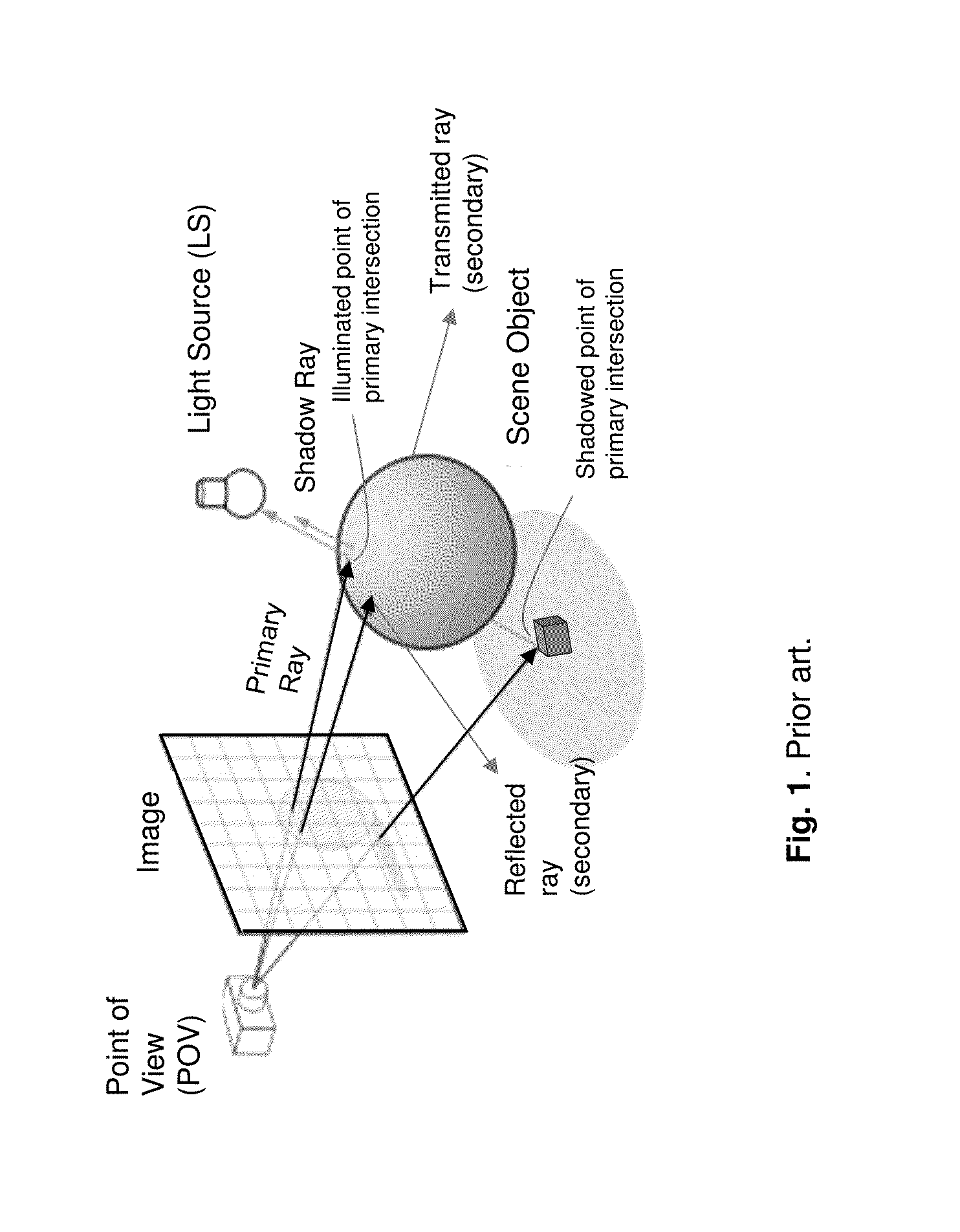

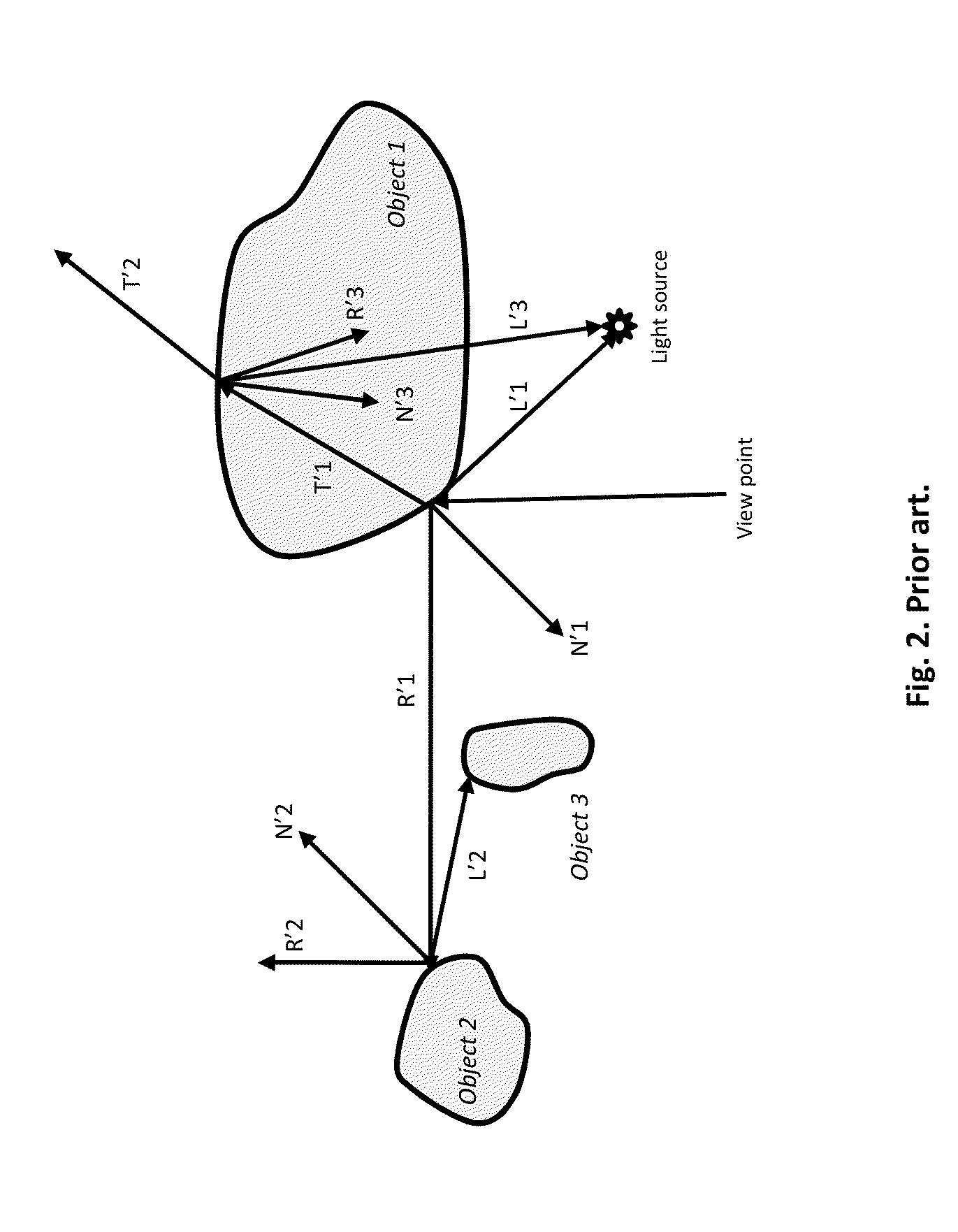

Method and System for a Separated Shadowing in Ray Tracing

Inactive | US20150254889A1 | Reduce process | Good for load balancing | Details involving 3D image data | Digital computer details | Shadowings | Computer science

The present disclosure describes a new ray tracing shadowing method. The method is unique as it separates the shadowing from the tracing stages of primary and secondary rays. It provides high data locality, reduced amount of intersection tests, no traversals and no reconstruction of complex acceleration structures, as well as improved load balancing based on actual processing load.

Owner:ADSHIR LTD

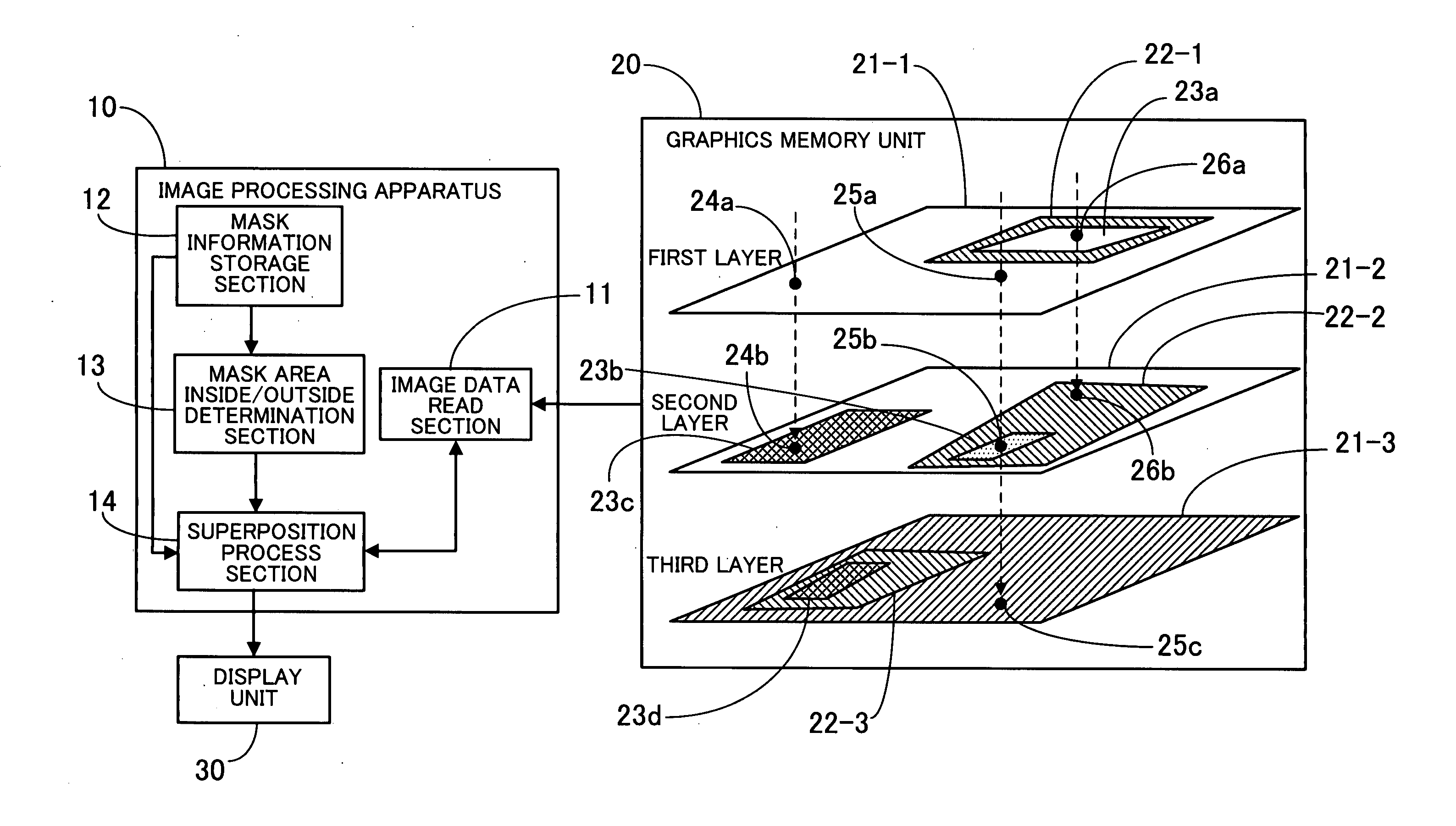

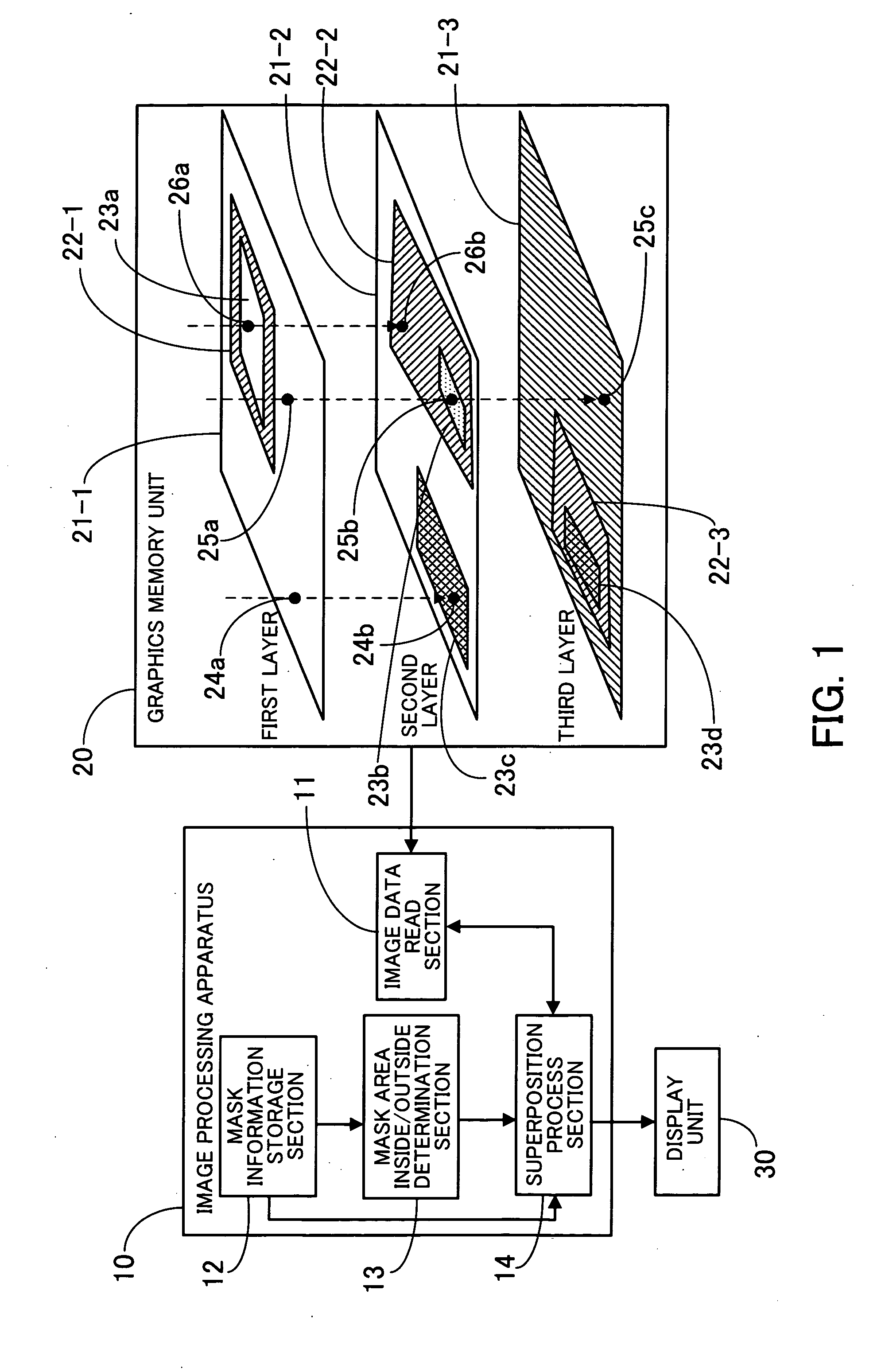

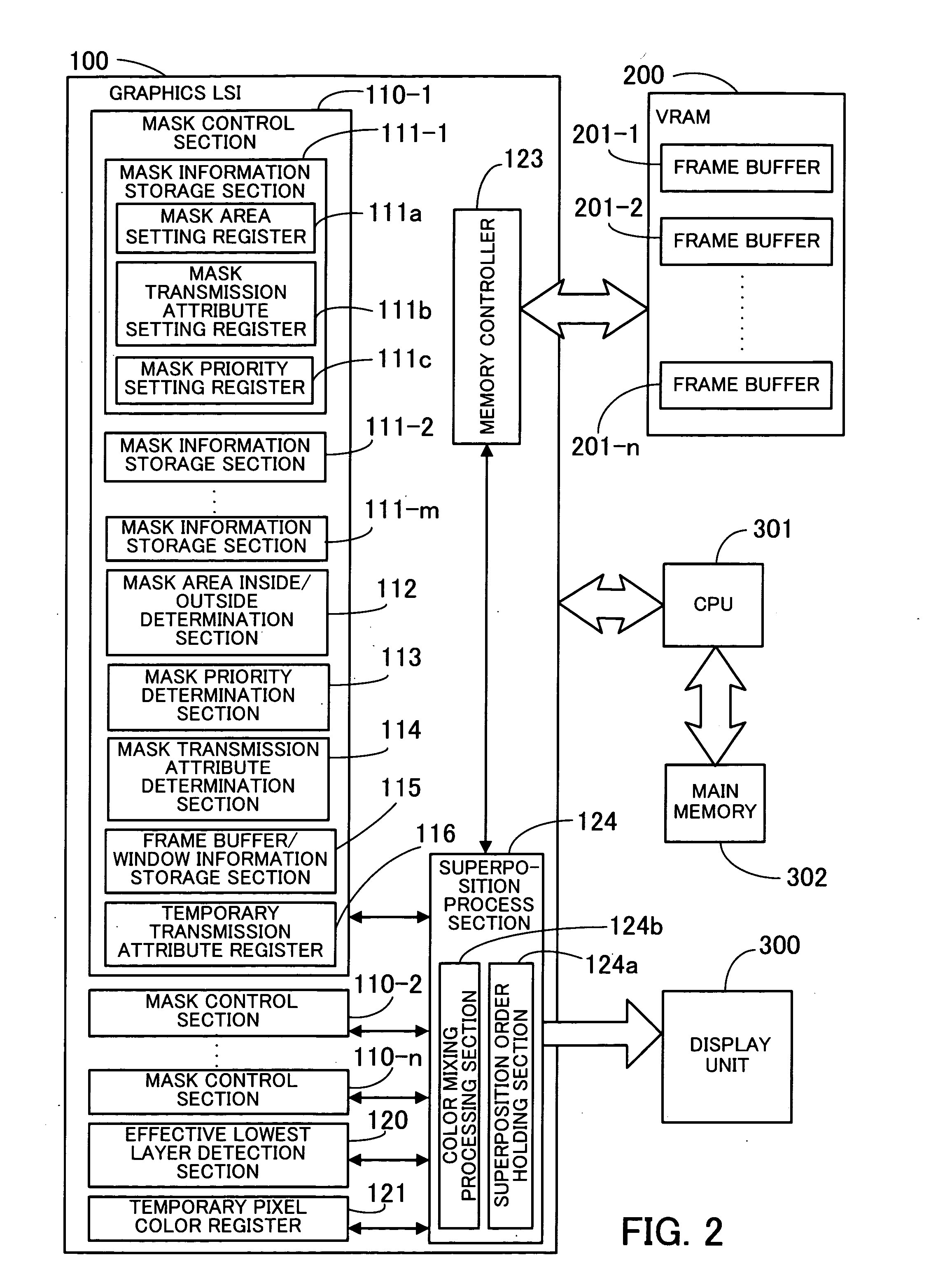

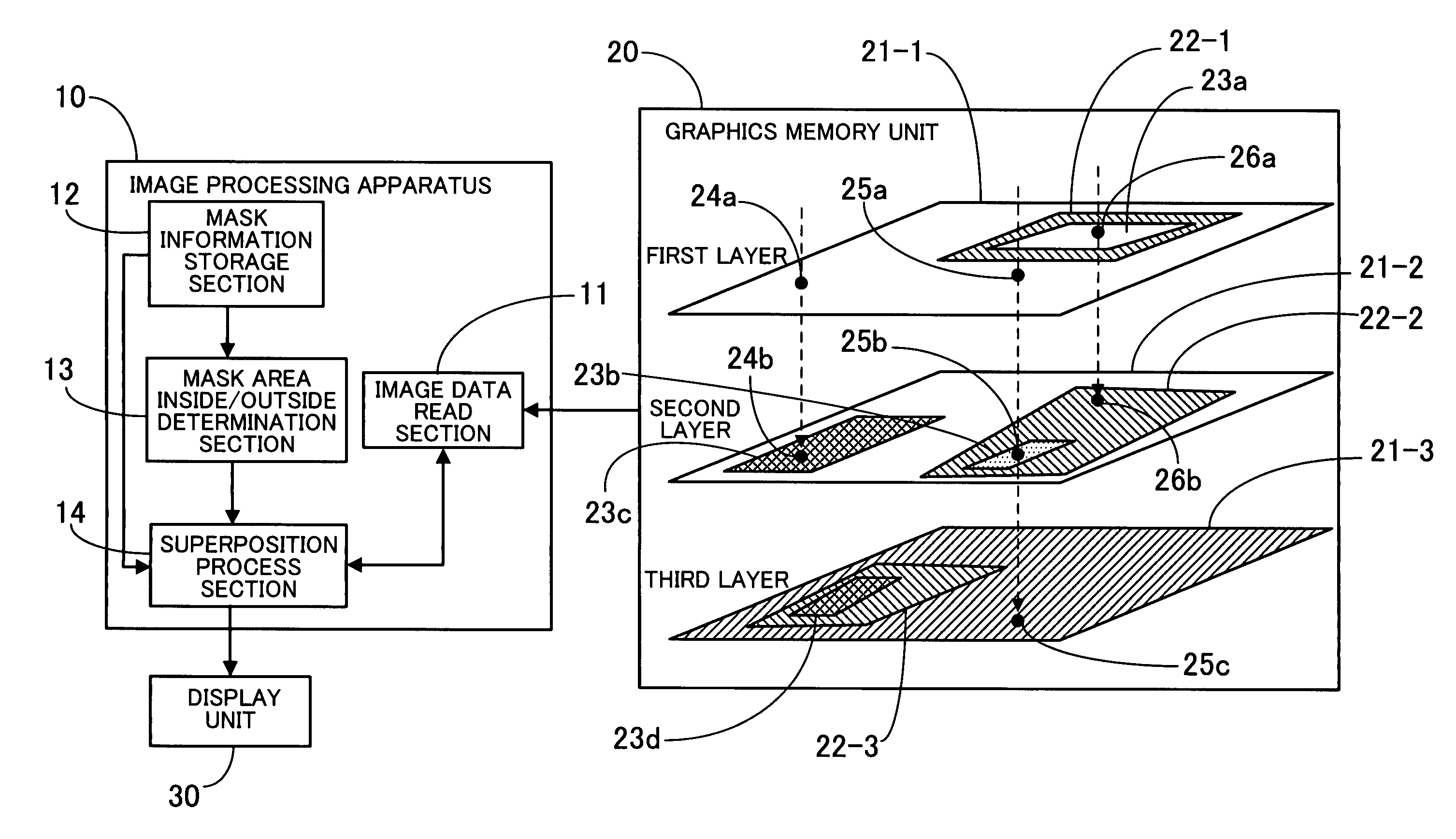

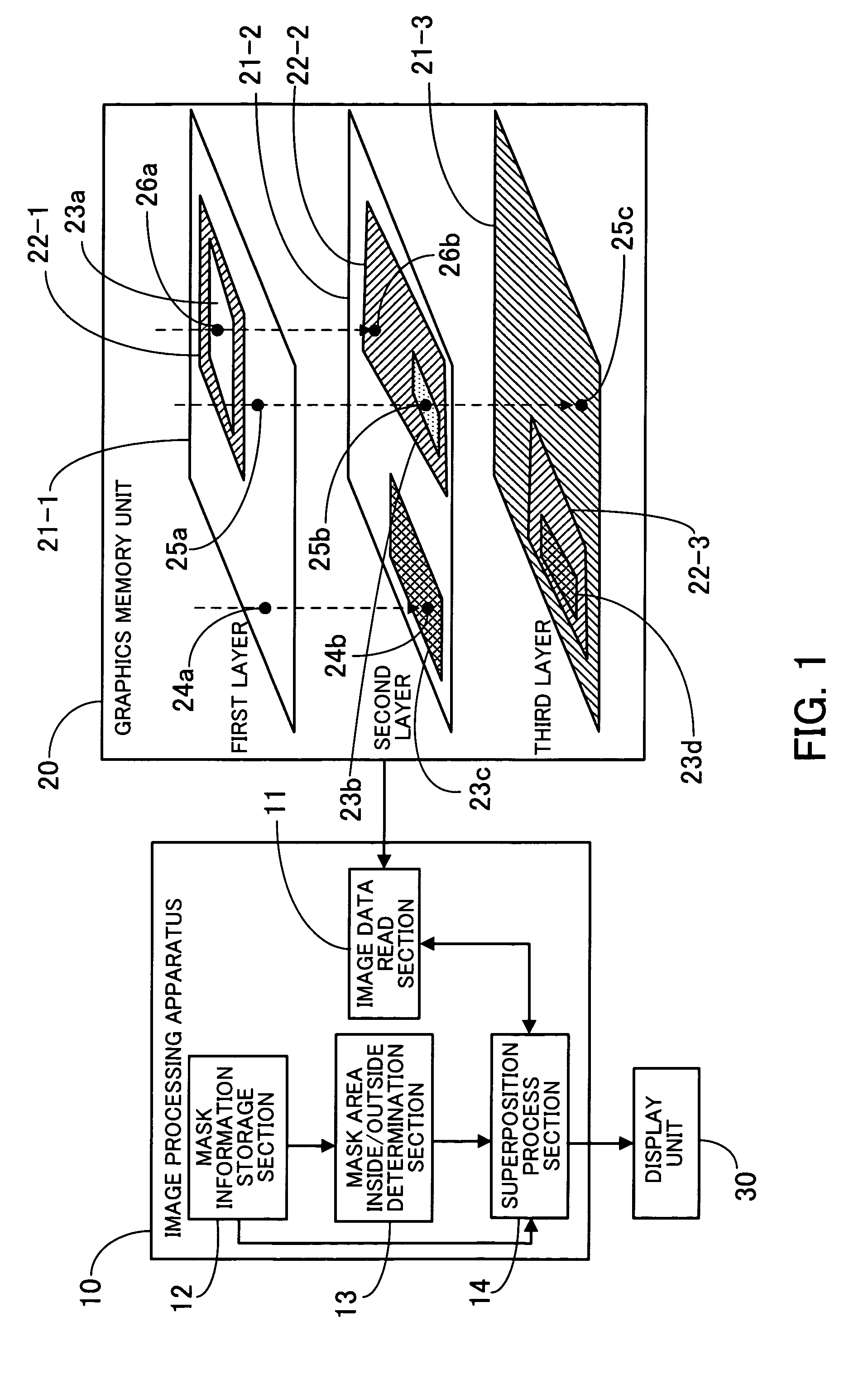

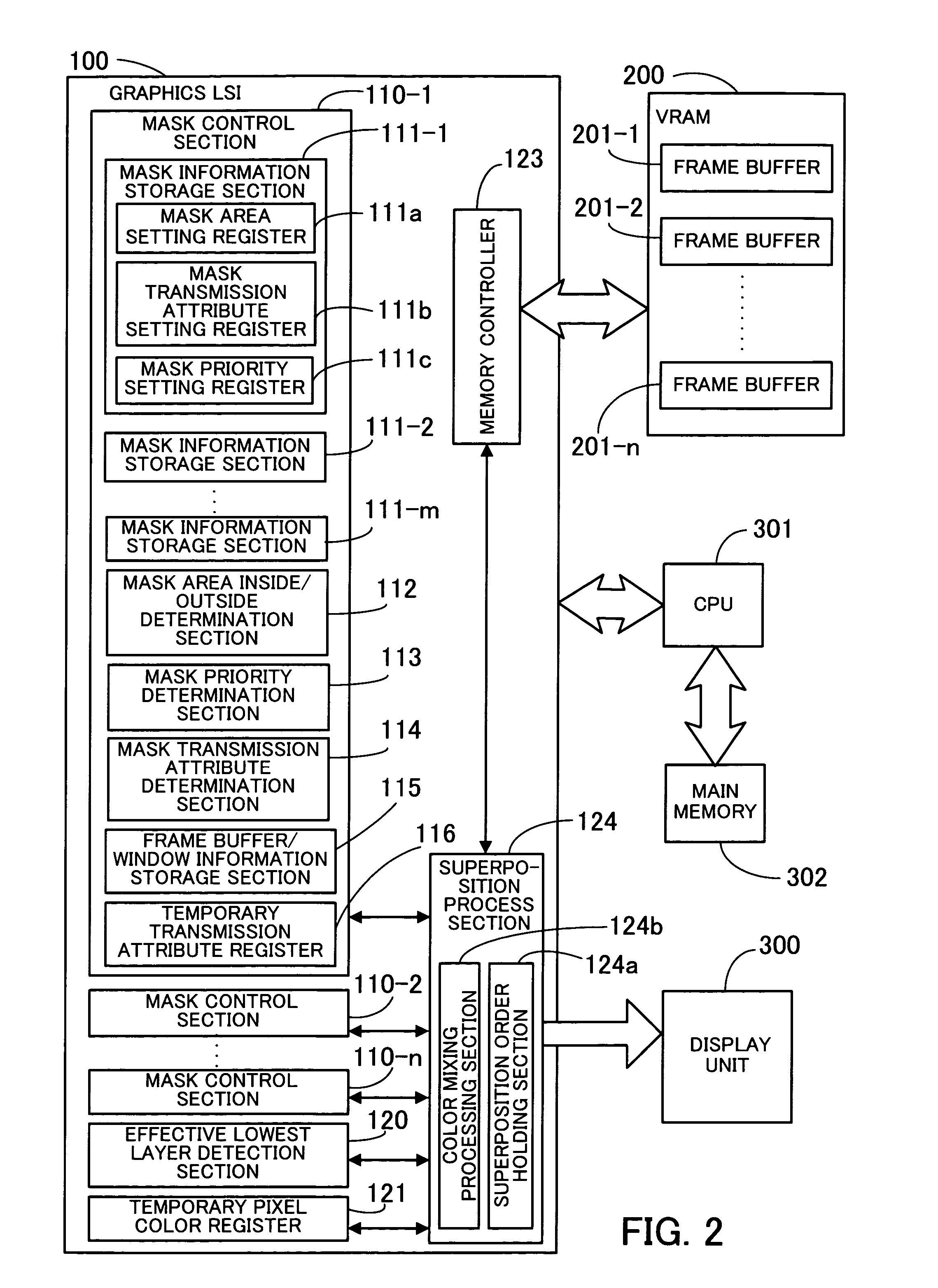

Image processing apparatus and graphics memory unit

Active | US20070009182A1 | Reduce memory access | Character and pattern recognition | Cathode-ray tube indicators | Framebuffer | Computer hardware

An image processing apparatus and graphics memory unit which reduces useless memory access to a graphics memory unit. When an image data read section reads image data from frame buffers or windows, a mask area inside / outside determination section determines by reference to mask information stored in a mask information storage section whether image data which is being scanned is in a memory access mask area. If the image data which is being scanned is in the memory access mask area, then a superposition process section performs a superposition process according to a transmission attribute assigned to the memory access mask area regardless of transmission attributes assigned to the frame buffers or the windows.

Owner:SOCIONEXT INC

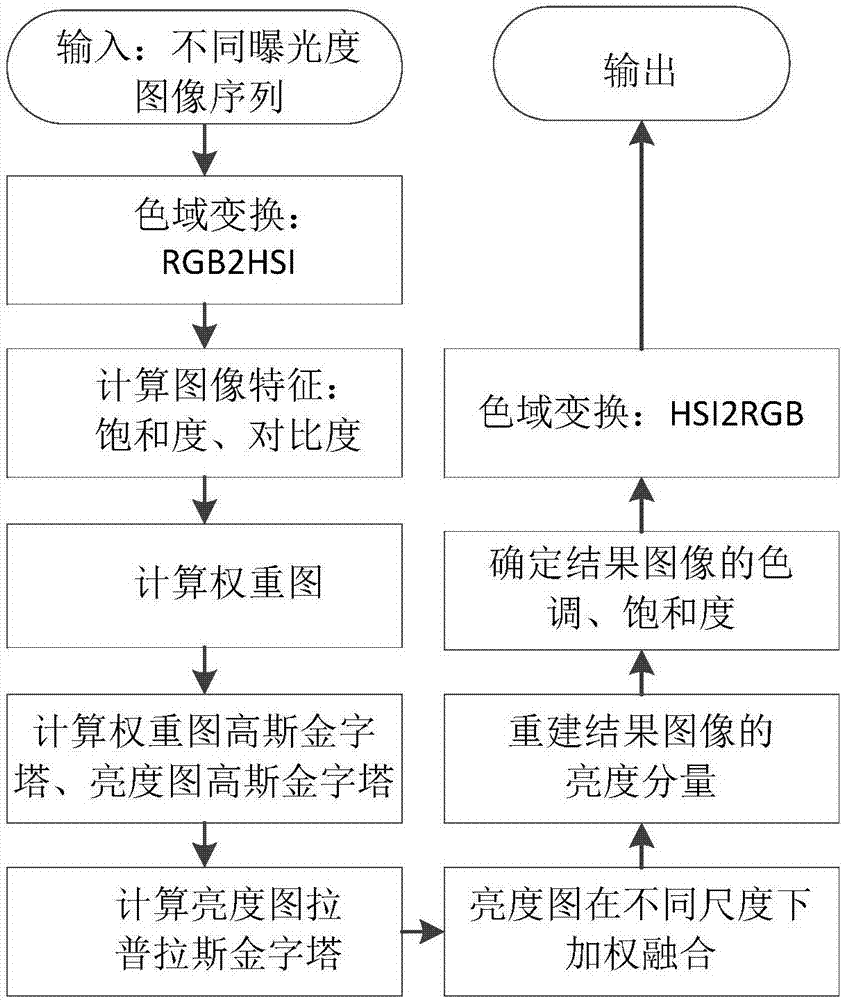

Direct multi-exposure fusion parallel acceleration method based on OpenCL

Active | CN107292804A | Increase workload | Reduce running time | Image enhancement | Image data processing details | Floating point | Running time

The invention discloses a direct multi-exposure fusion parallel acceleration method based on OpenCL. The method uses a CPU+GPU heterogeneous parallel framework and the strong floating-point capability of the GPU and, in the OpenCL development environment, fuses kernels, caches the Gaussian kernel in constant memory, caches reused data in local memory, and increases the workload of each work item, thereby shortening memory access and computation time and achieving faster multi-exposure fusion. Compared with a conventional serial processing method, the method reaches a maximum speed-up ratio of 11.19, effectively reducing the running time of the multi-exposure fusion algorithm and supporting its further application; in addition, the method noticeably improves the spatial frequency and average gradient, effectively addressing the loss of scene detail caused by the insufficient dynamic range of imaging and display equipment.

Owner:XIDIAN UNIV

Method and system for supplier-based memory speculation in a memory subsystem of a data processing system

Inactive | US20050132147A1 | Improvement in average memory access latency | Reduce apparent memory access latency | Memory addressing/allocation/relocation | Concurrent instruction execution | Memory controller | Handling system

A data processing system includes one or more processing cores, a system memory having multiple rows of data storage, and a memory controller that controls access to the system memory and performs supplier-based memory speculation. The memory controller includes a memory speculation table that stores historical information regarding prior memory accesses. In response to a memory access request, the memory controller directs an access to a selected row in the system memory to service the memory access request. The memory controller speculatively directs that the selected row will continue to be energized following the access based upon the historical information in the memory speculation table, so that access latency of an immediately subsequent memory access is reduced.

Owner:IBM CORP

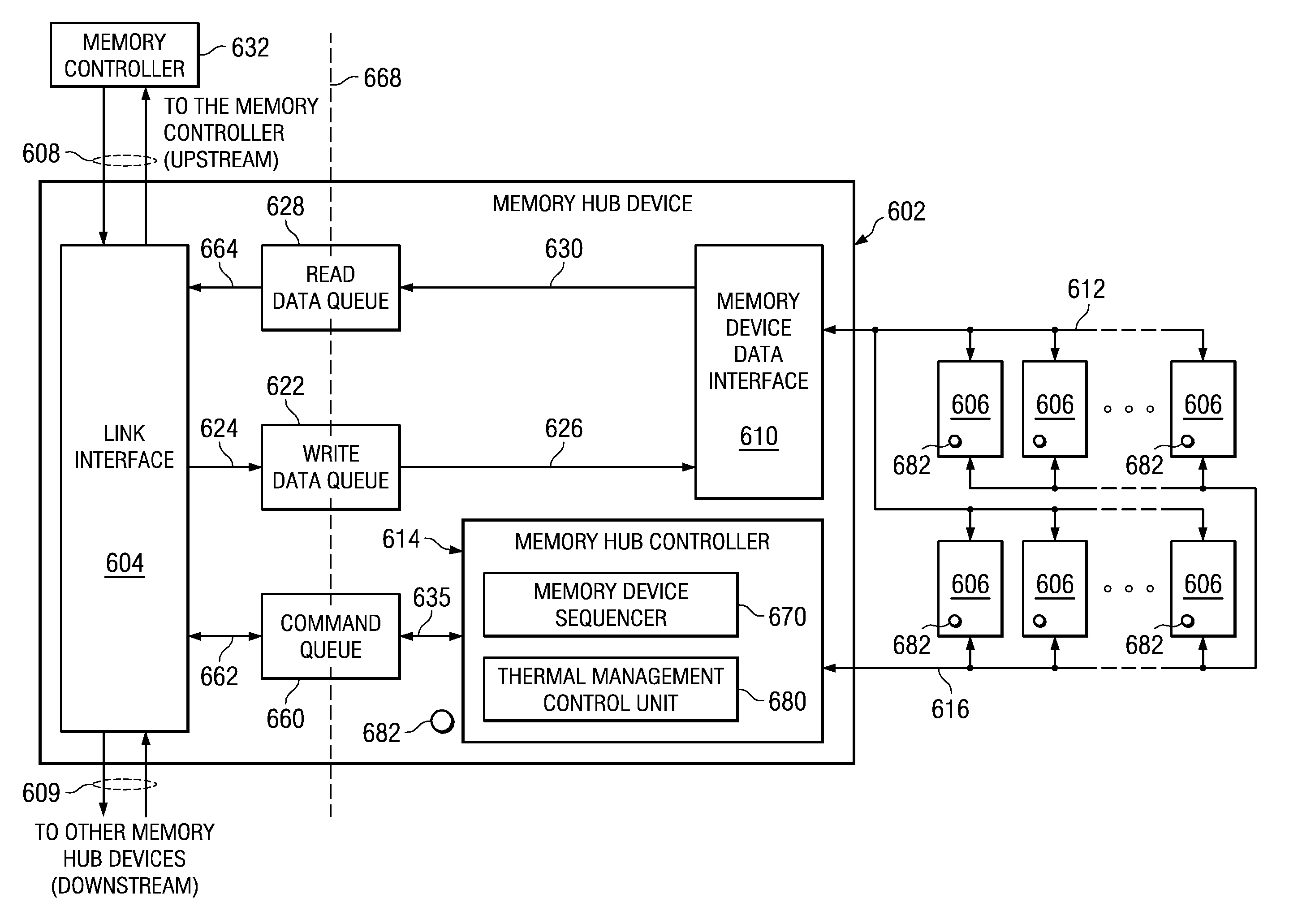

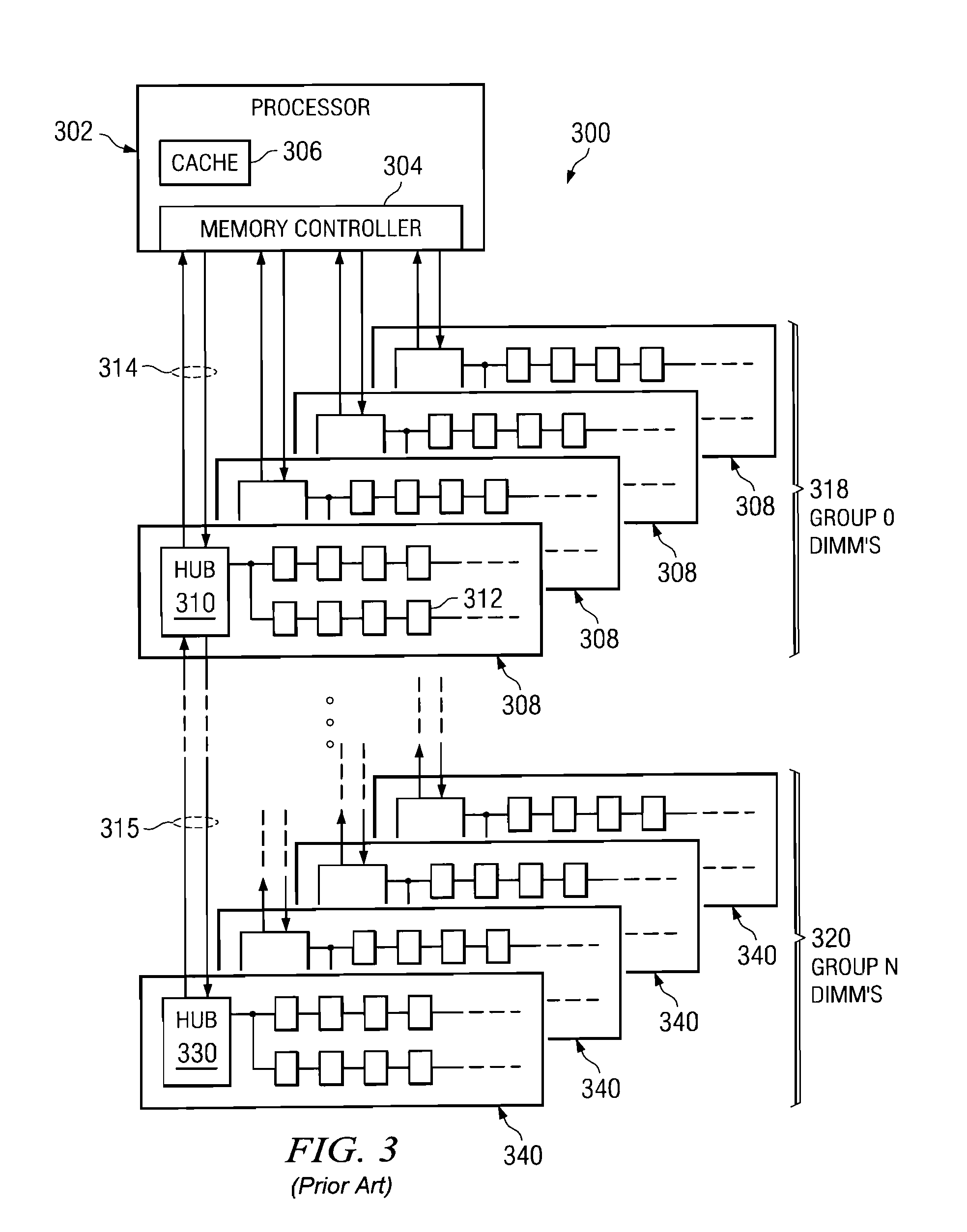

System to enable a memory hub device to manage thermal conditions at a memory device level transparent to a memory controller

Inactive | US7930470B2 | High bandwidth | Reduce operating frequency | Energy efficient ICT | Digital data processing details | Parallel computing | Device Monitor

A memory system is provided that manages thermal conditions at a memory device level transparent to a memory controller. The memory system comprises a memory hub device integrated in a memory module, a set of memory devices coupled to the memory hub device, and a first set of thermal sensors integrated in the set of memory devices. A thermal management control unit integrated in the memory hub device monitors a temperature of the set of memory devices sensed by the first set of thermal sensors. The memory hub device reduces a memory access rate to the set of memory devices in response to a predetermined thermal threshold being exceeded, thereby reducing power used by the set of memory devices, which in turn decreases the temperature of the set of memory devices.

Owner:IBM CORP

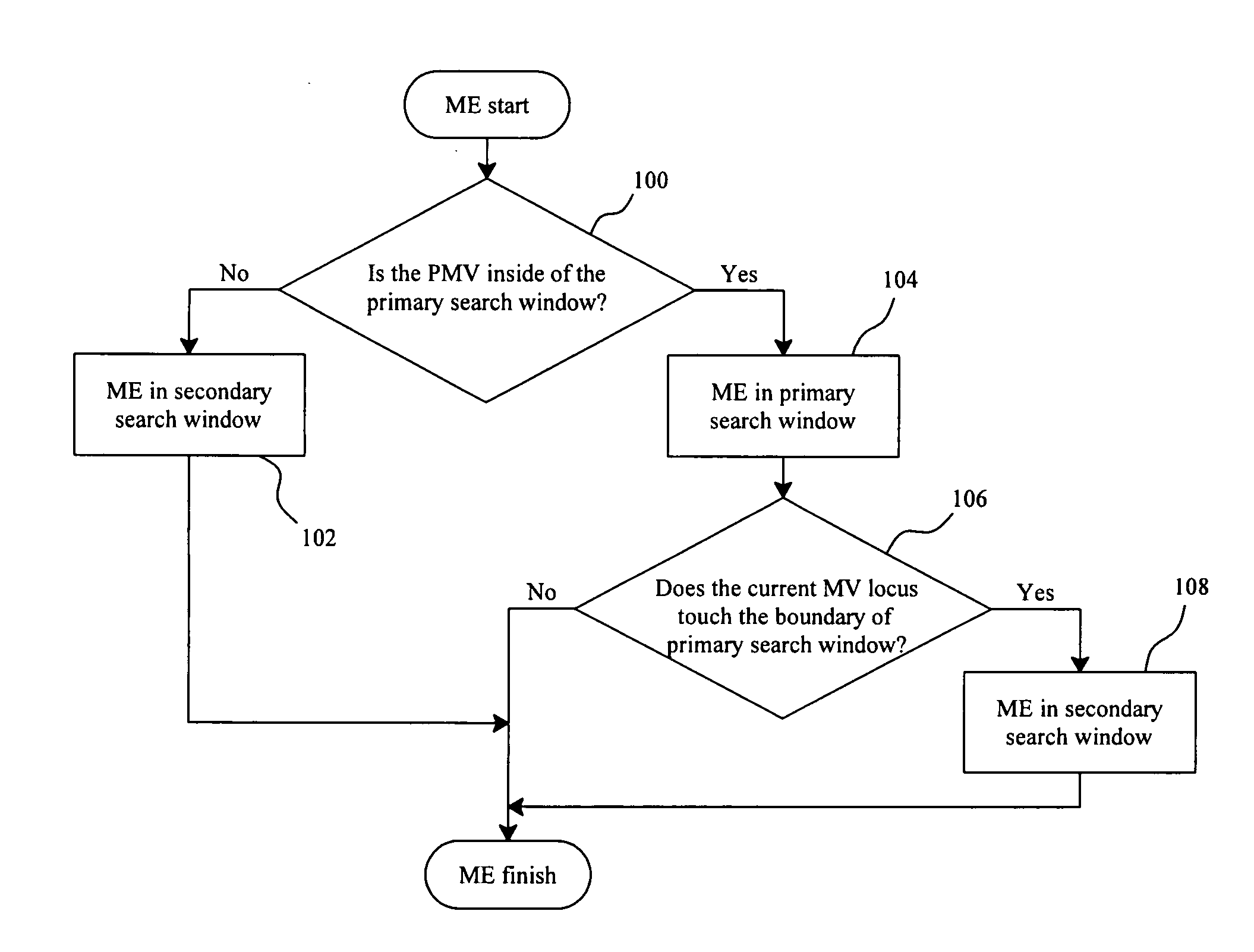

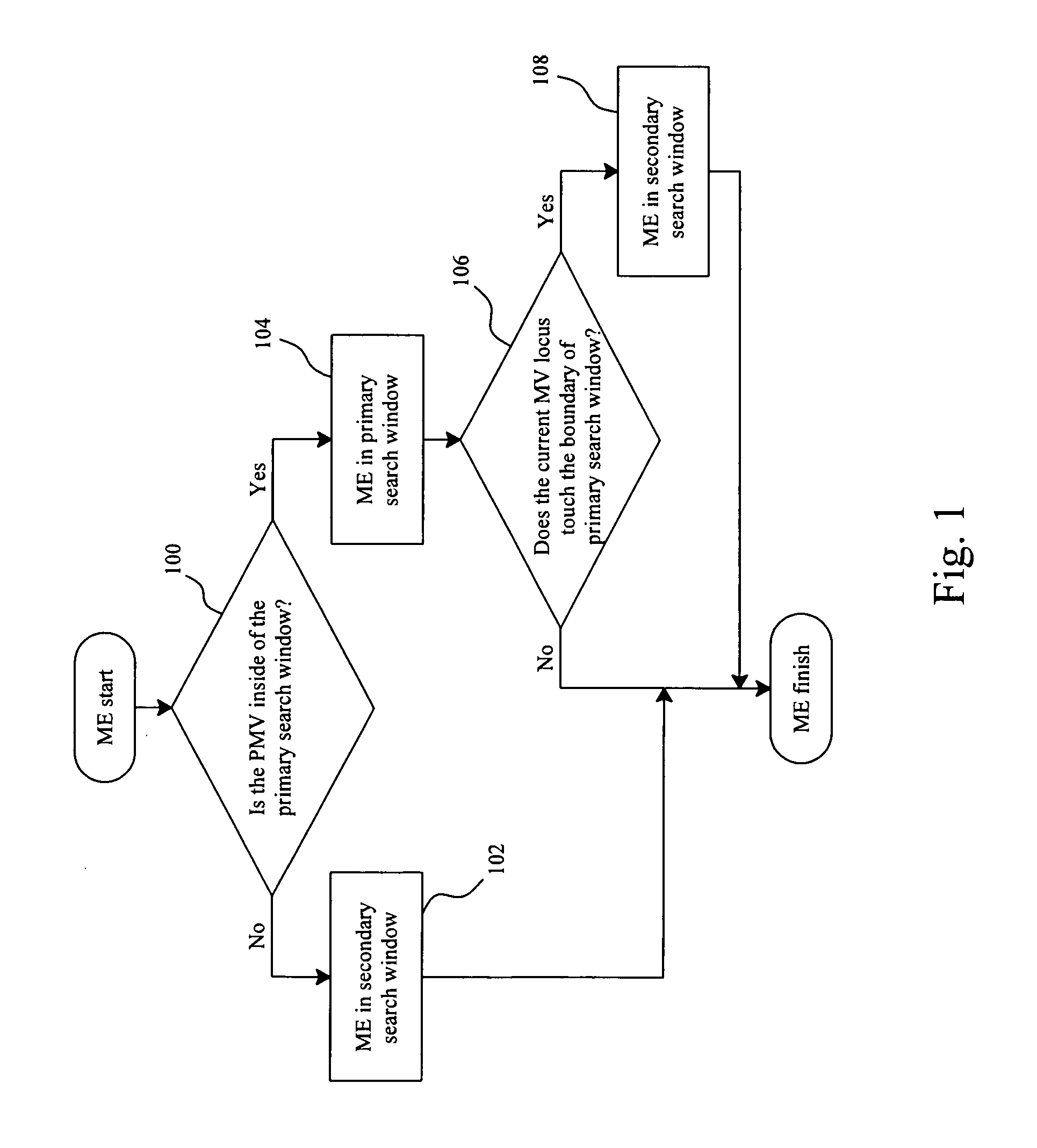

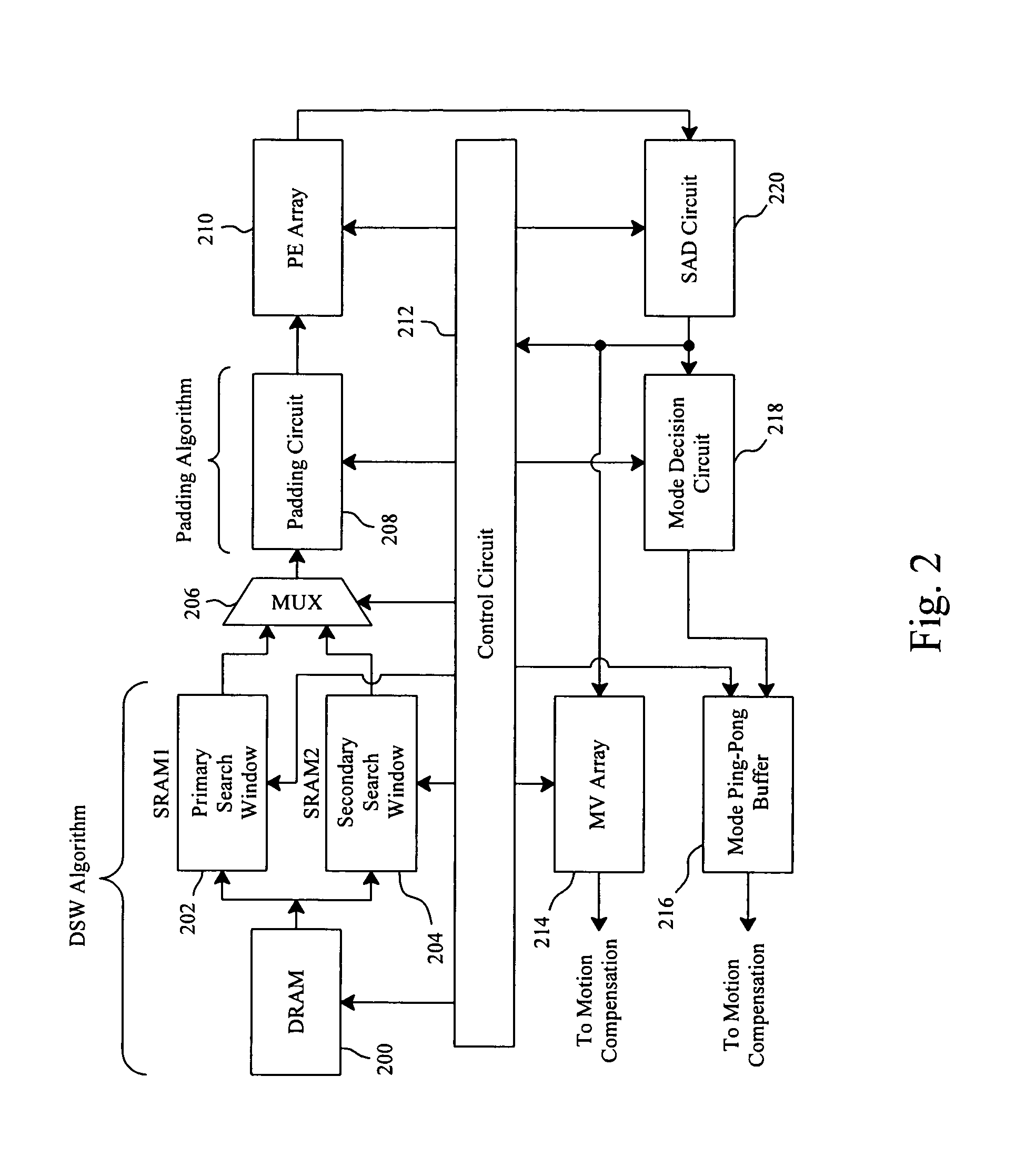

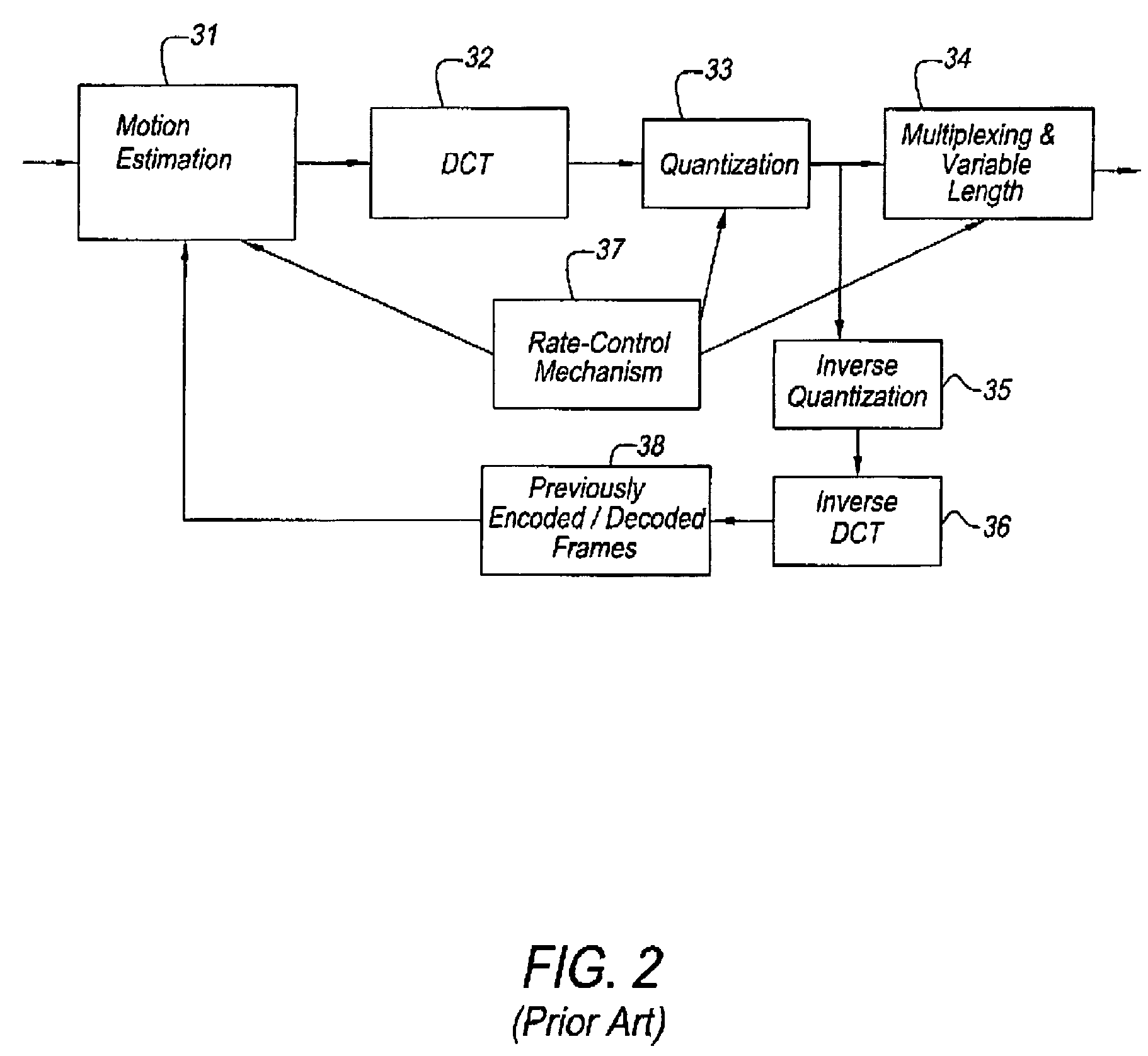

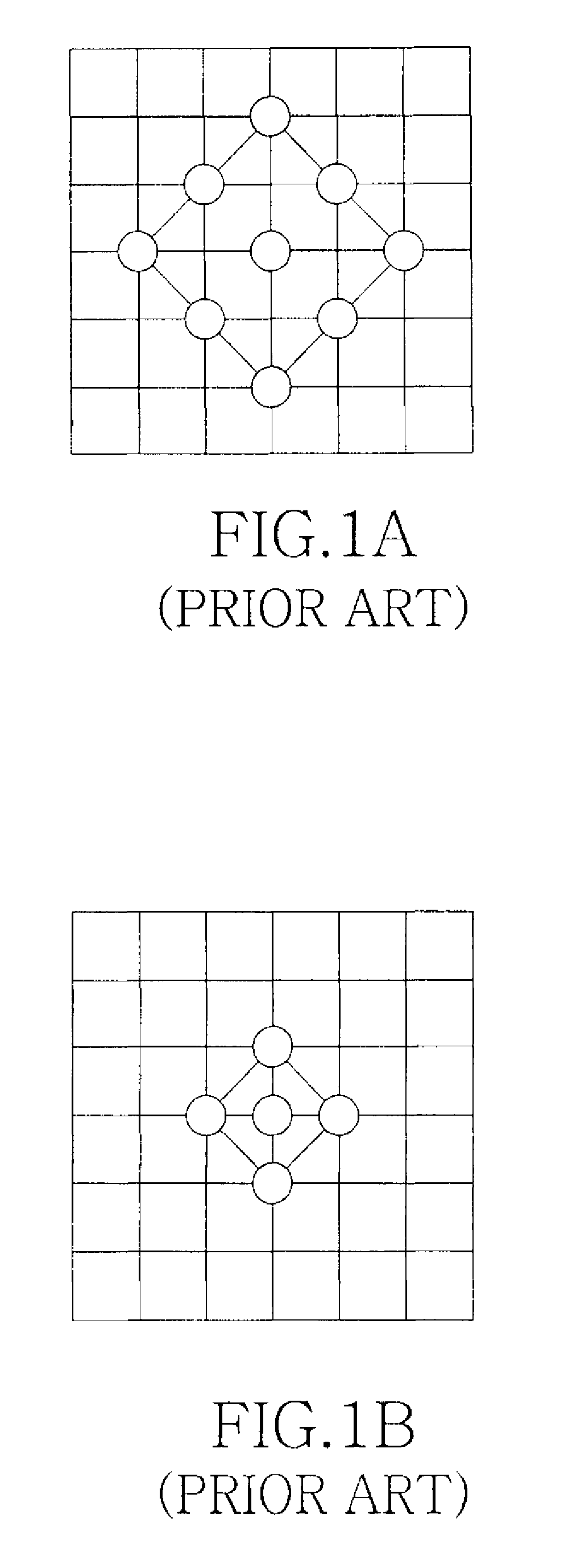

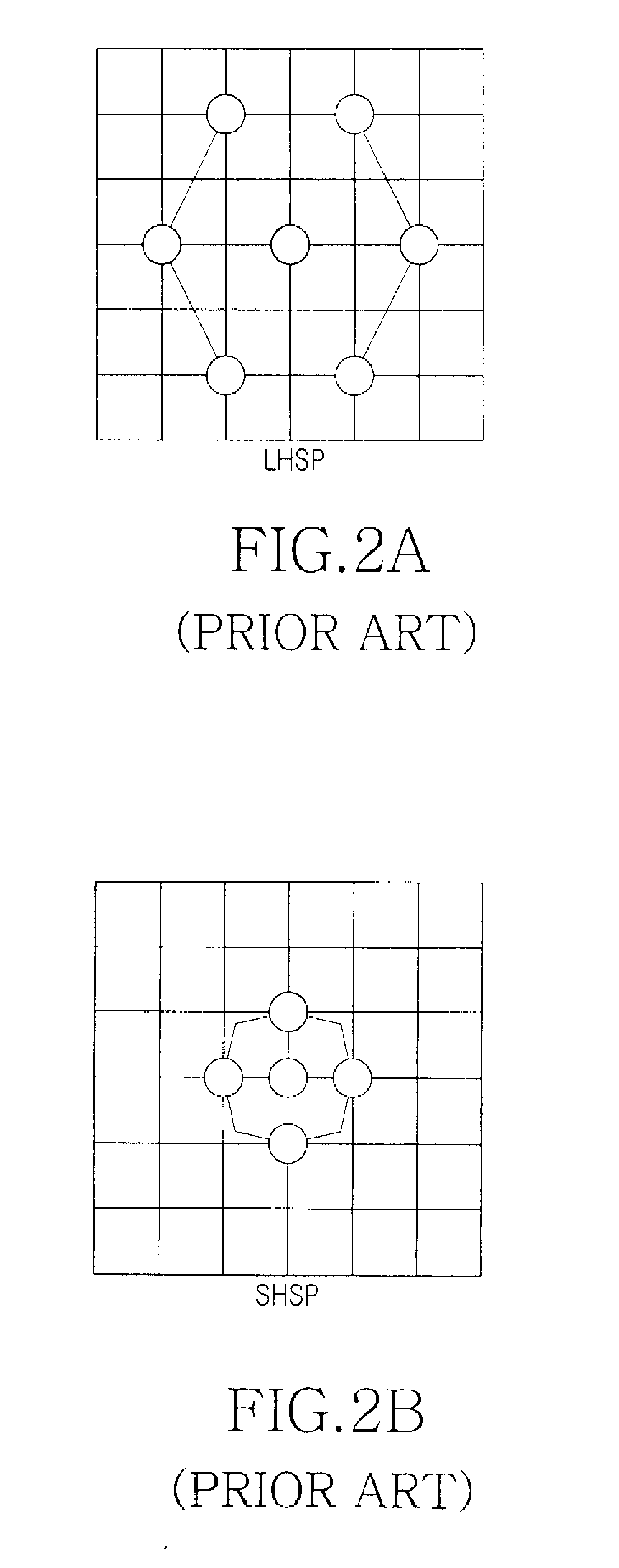

Motion estimation with dual search windows for high resolution video coding

Inactive | US20080212679A1 | Small on-chip memory | High data reusability | Color television with pulse code modulation | Color television with bandwidth reduction | External storage | Motion vector

A memory-efficient motion estimation technique for high-resolution video coding is proposed. The main objective is to reduce external memory access, especially when local memory resources are limited. Reducing memory access in turn saves power. The reduction relies on a center-biased algorithm, which performs the motion vector search with the minimum search data. To account for data reusability, the proposed dual-search-window approach uses a secondary window as an option when the search requires it, which lightens the loading of search windows and hence reduces the required external memory bandwidth without significant quality degradation.

Owner:AVISONIC TECH

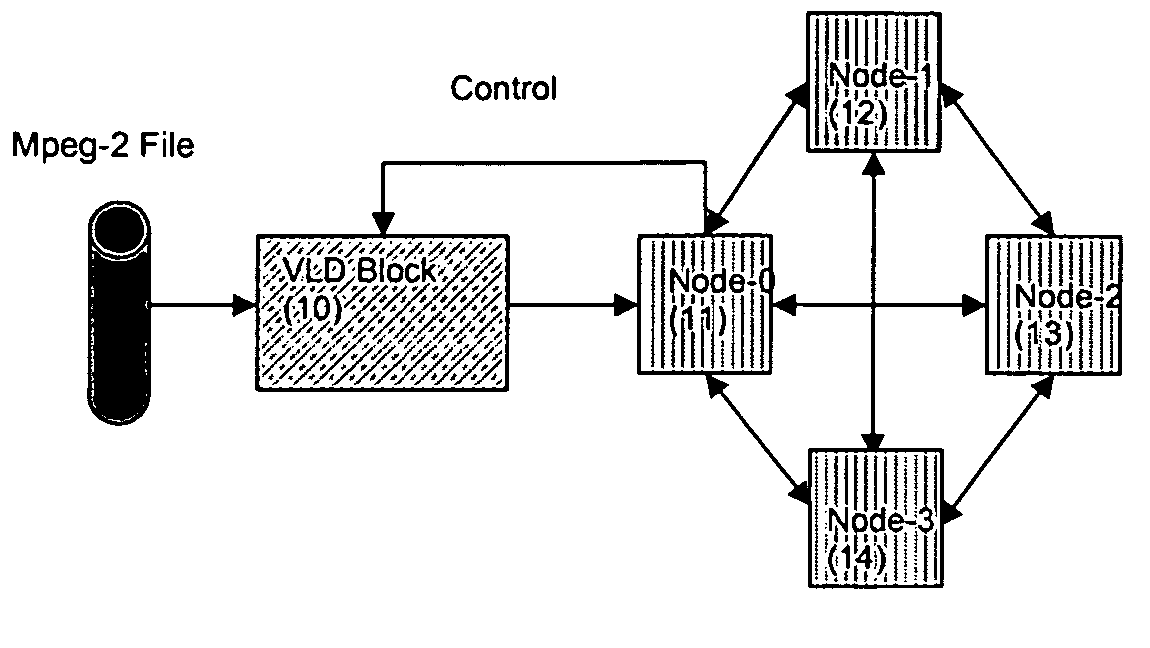

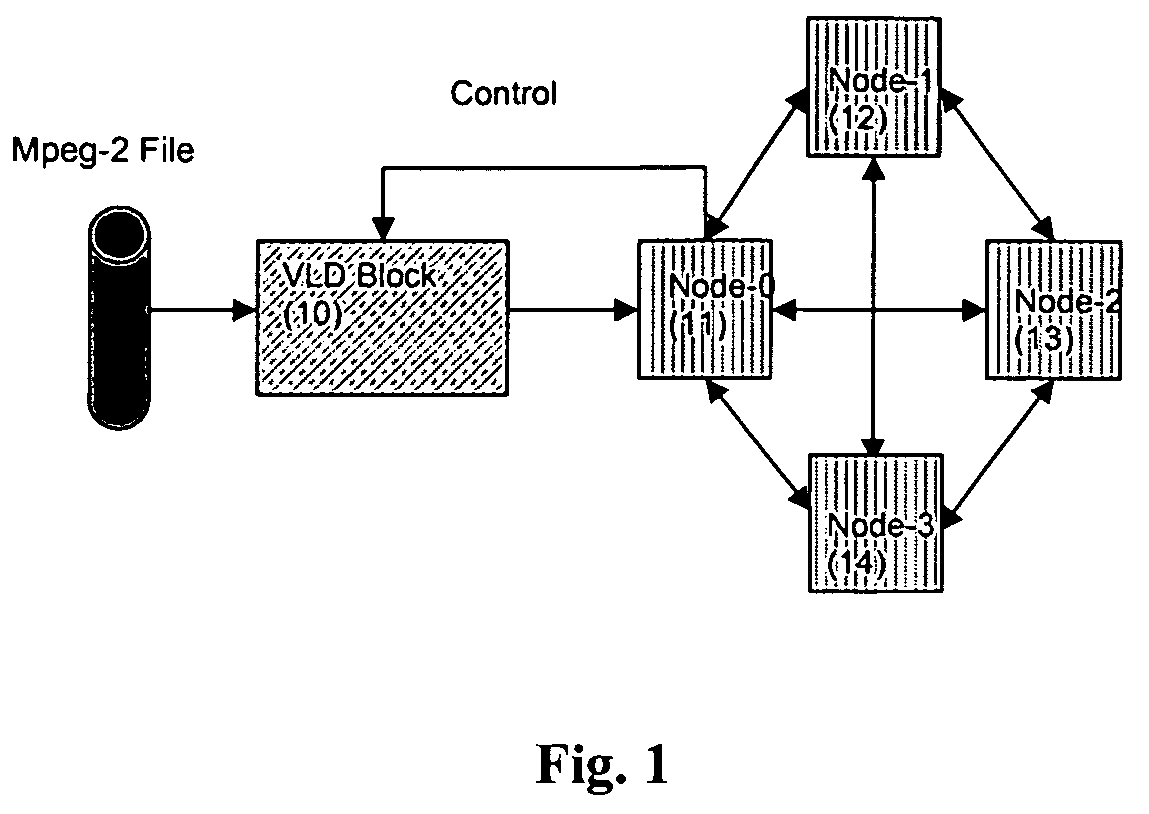

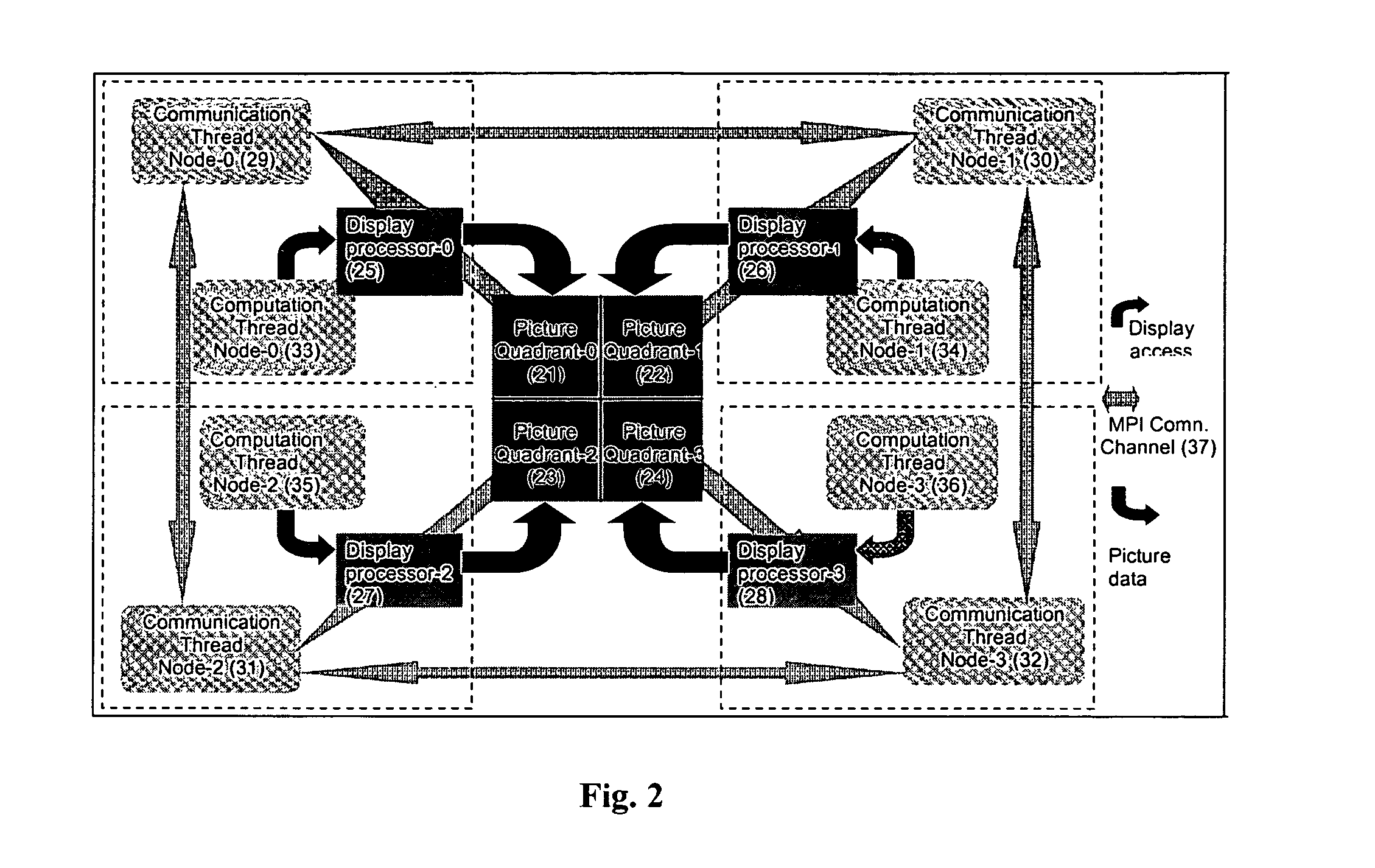

Macro-block level parallel video decoder

Active | US7983342B2 | Conducive to load | Less data dependency | Color television with pulse code modulation | Color television with bandwidth reduction | Variable length | Parallel processing

Owner:STMICROELECTRONICS PVT LTD

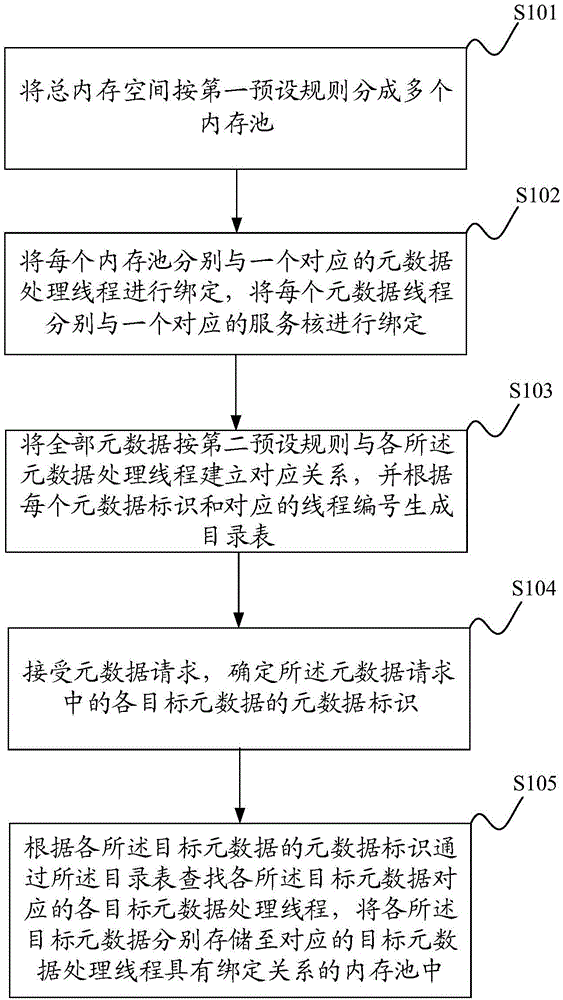

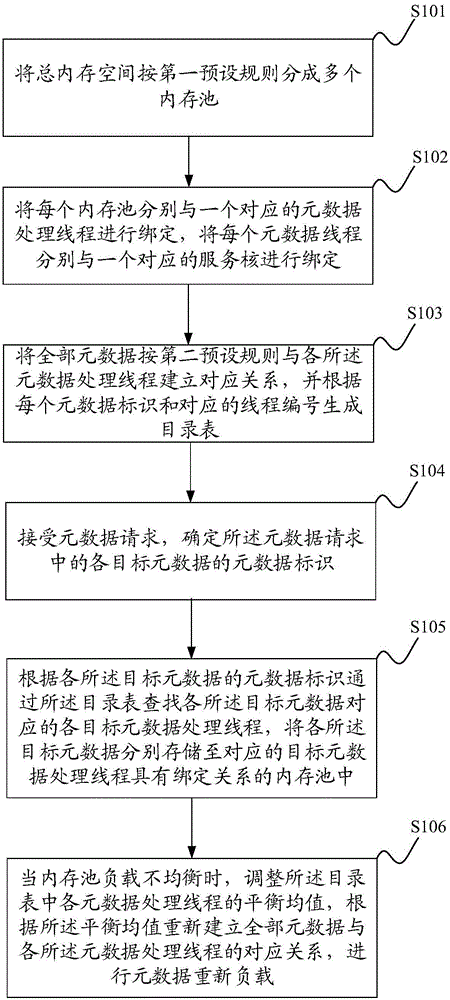

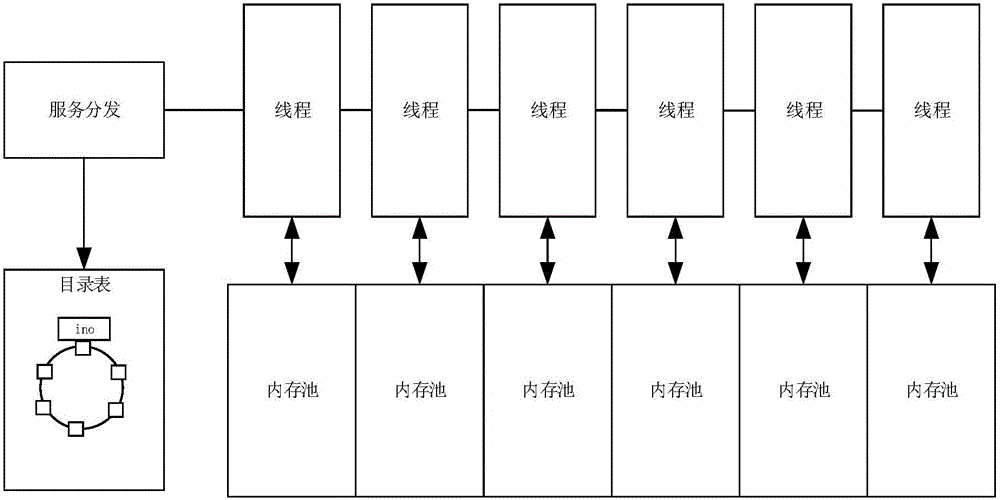

File request processing method and system

Active | CN105094992A | Improve caching capacity | Improve hit | Resource allocation | Special data processing applications | Metadata | Memory pool

The invention discloses a file request processing method and system. A total memory space is divided into multiple memory pools according to a first preset rule, each memory pool is bound to one corresponding metadata processing thread, each metadata processing thread is bound to one corresponding service core, correspondence relations between all metadata and all the metadata processing threads are established according to a second preset rule, a directory table is generated according to each metadata identifier and the corresponding thread number, a metadata request is accepted, metadata identifiers of all target metadata in the metadata request are determined, target metadata processing threads corresponding to the target metadata are searched through the directory table according to the metadata identifiers of the target metadata, all the target metadata are stored to the memory pools bound to the corresponding target metadata processing threads respectively, and multi-thread cache performance and memory access performance are improved.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND
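The directory-table routing described above might look roughly like the sketch below. The abstract leaves both preset rules unspecified, so the modulo rule used to assign metadata to threads, the dictionary-based pools, and the class name are assumptions for illustration only.

```python
class MetadataDirectory:
    """Route each metadata item to the memory pool of its owning thread."""

    def __init__(self, num_threads):
        self.num_threads = num_threads
        # One memory pool per metadata processing thread (modelled as a dict).
        self.pools = [dict() for _ in range(num_threads)]
        self.directory = {}  # directory table: metadata identifier -> thread number

    def register(self, meta_id):
        # Assumed stand-in for the unspecified "second preset rule".
        self.directory[meta_id] = meta_id % self.num_threads

    def store(self, meta_id, value):
        """Look up the owning thread and store the item in that thread's pool."""
        thread = self.directory[meta_id]
        self.pools[thread][meta_id] = value
        return thread
```

Because a given identifier always resolves to the same thread and pool, a thread pinned to one core keeps working on memory it already owns, which is the cache and memory access benefit the abstract claims.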

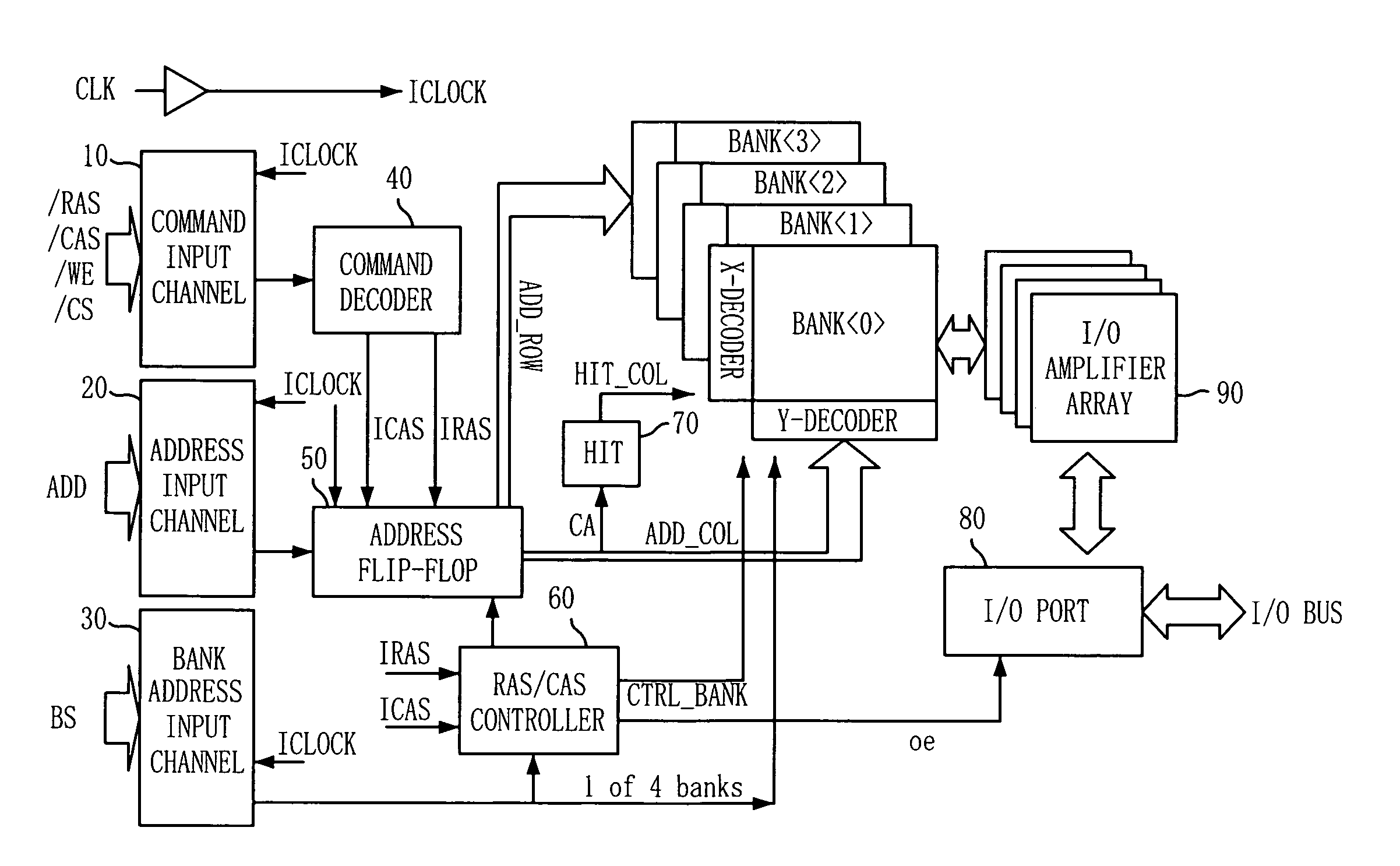

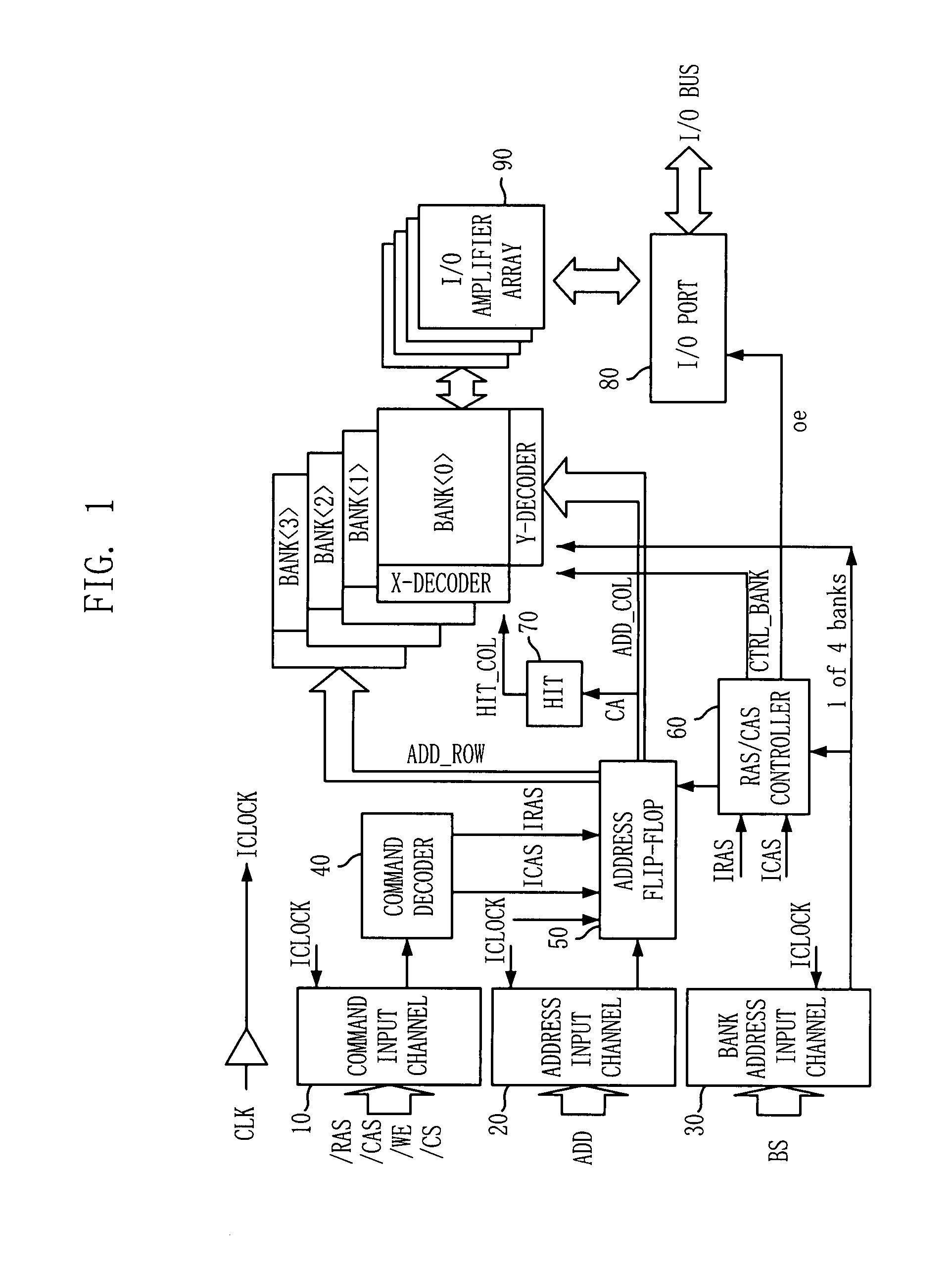

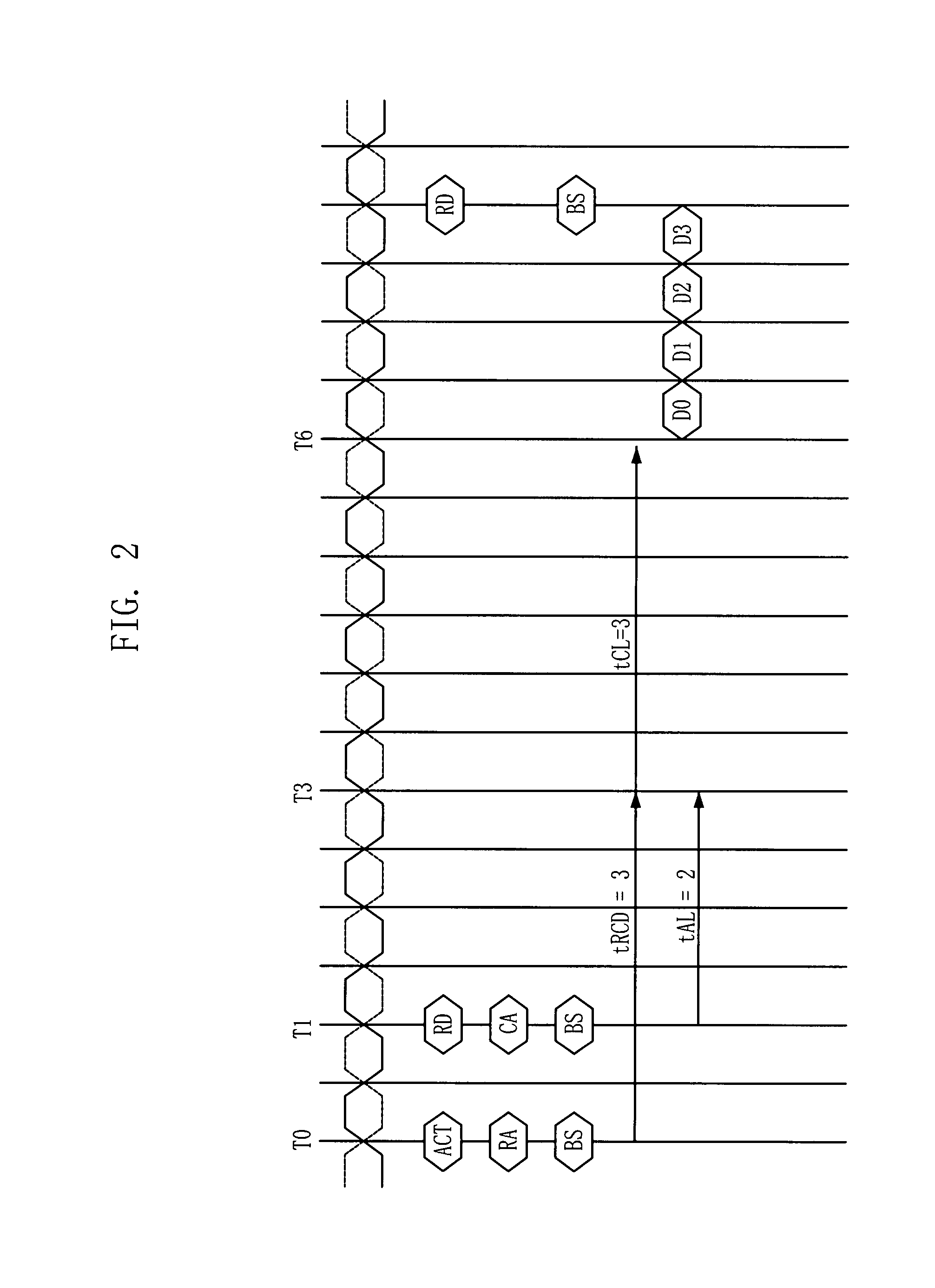

Low-power DRAM and method for driving the same

Active | US20080094933A1 | Reduce power consumption | Reduce memory access | Digital storage | Computer engineering | Dynamic random-access memory

A dynamic random access memory includes: an address latch configured to latch a row address in response to a row address strobe (RAS) signal and latch a column address in response to a column address strobe (CAS) signal; a row decoder configured to decode the row address; an enabler configured to decode a part of most significant bits (MSB) of the column address to locally enable a part of one page area corresponding to the row address; and a column decoder configured to decode the column address.

Owner:SK HYNIX INC

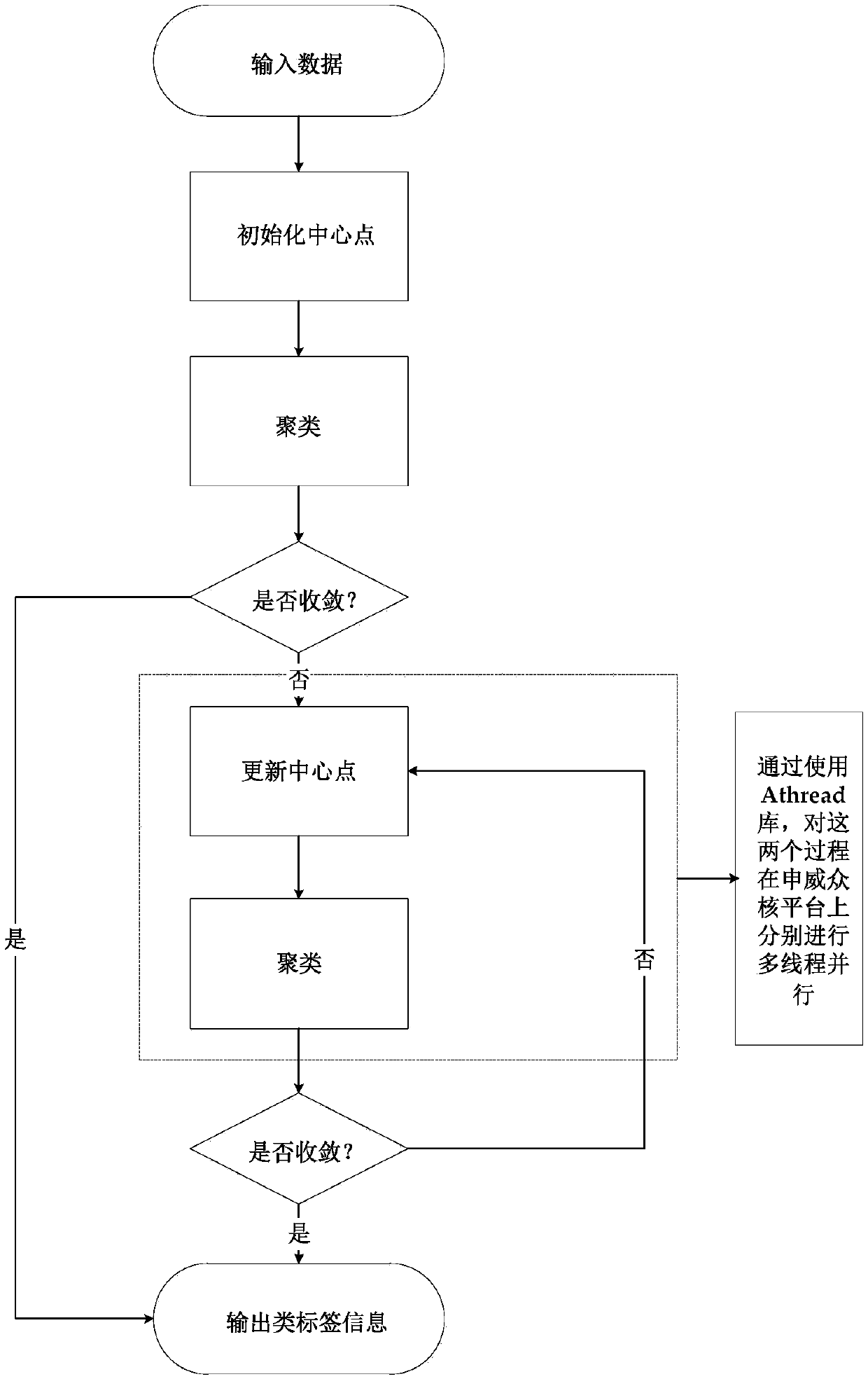

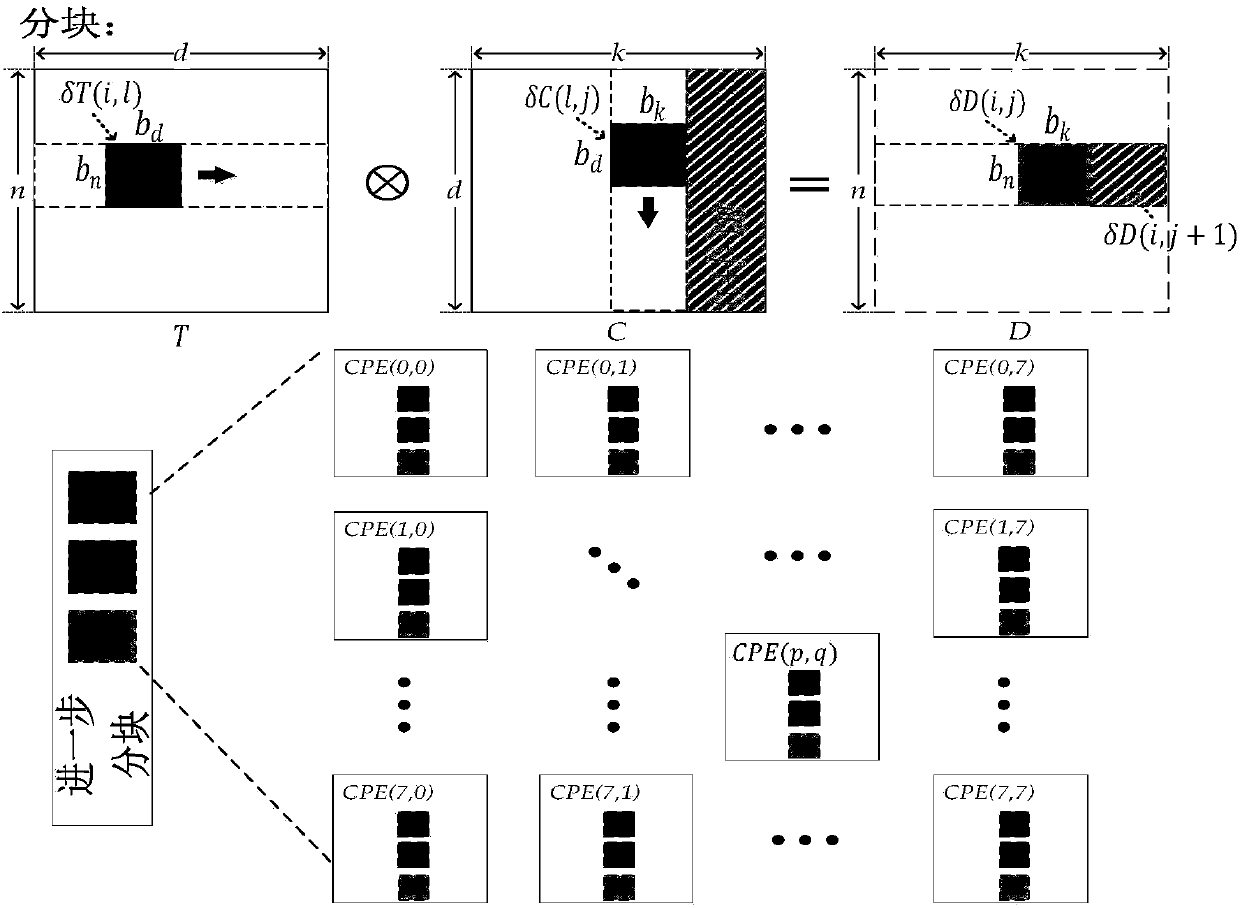

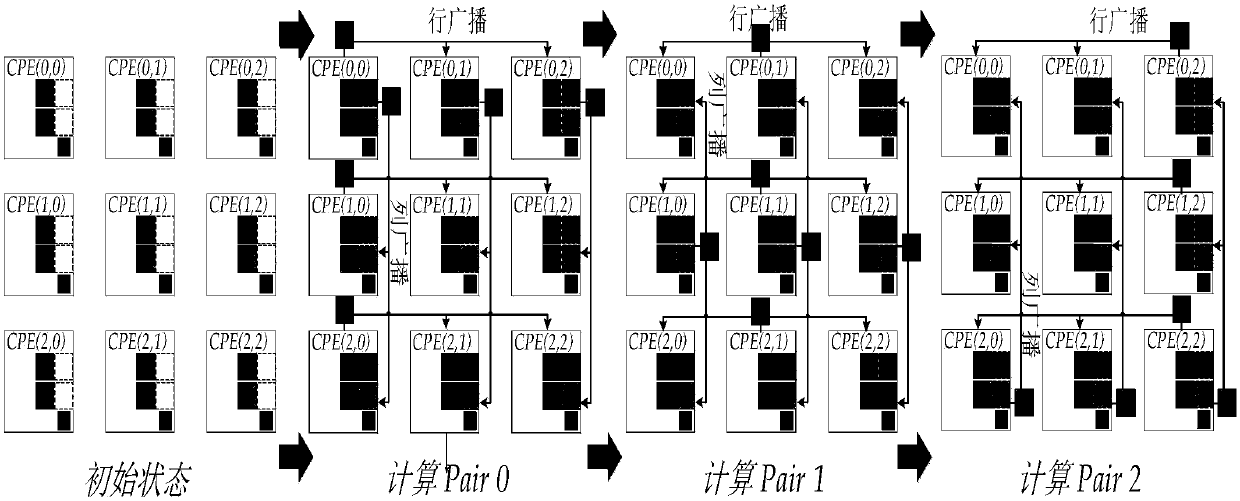

High-performance parallel implementation method of K-means algorithm on domestic Sunway 26010 multi-core processor

Active | CN108509270A | Reduce storage | Reduce memory access | Program initiation/switching | Resource allocation | Data set | Algorithm

The invention provides a high-performance parallel implementation method of the K-means algorithm on the domestic Sunway 26010 multi-core processor. Based on the Sunway 26010 platform, for the clustering stage the invention designs a calculation framework that fuses block distance matrix calculation with a reduction operation; the framework adopts a three-layer blocking strategy for task partitioning, together with a collaborative inter-core data sharing scheme and a cluster label reduction method based on a register communication mechanism, and applies double buffering and instruction reordering optimizations. For the center point update stage, the invention designs a dynamically scheduled task partitioning mode. In tests on real data sets, the method achieves floating-point performance of up to 348.1 GFlops, which is 47%-84% of the theoretical maximum performance, and a speedup over the non-fused calculation mode of up to 1.7x and 1.3x on average.

Owner:INST OF SOFTWARE - CHINESE ACAD OF SCI

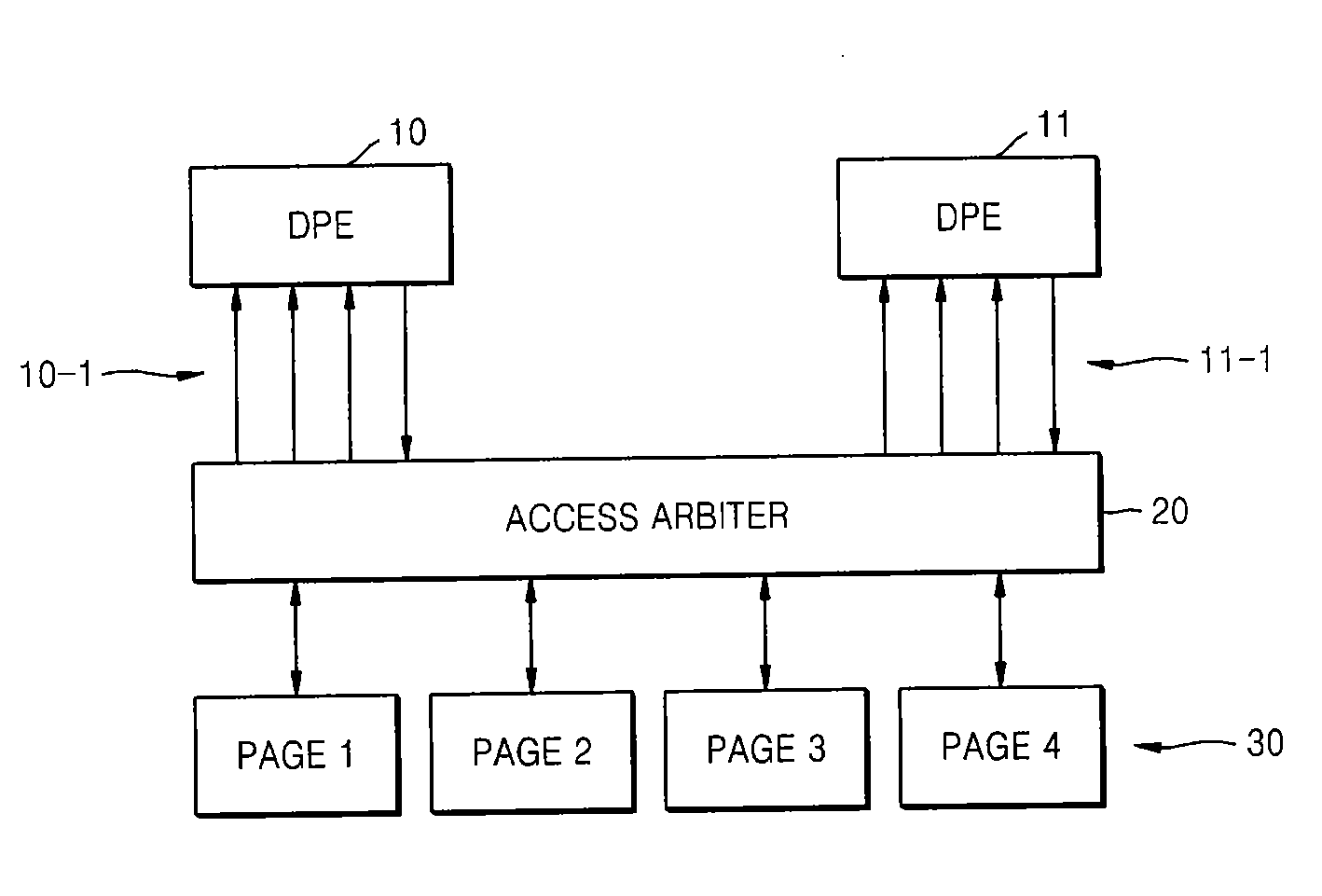

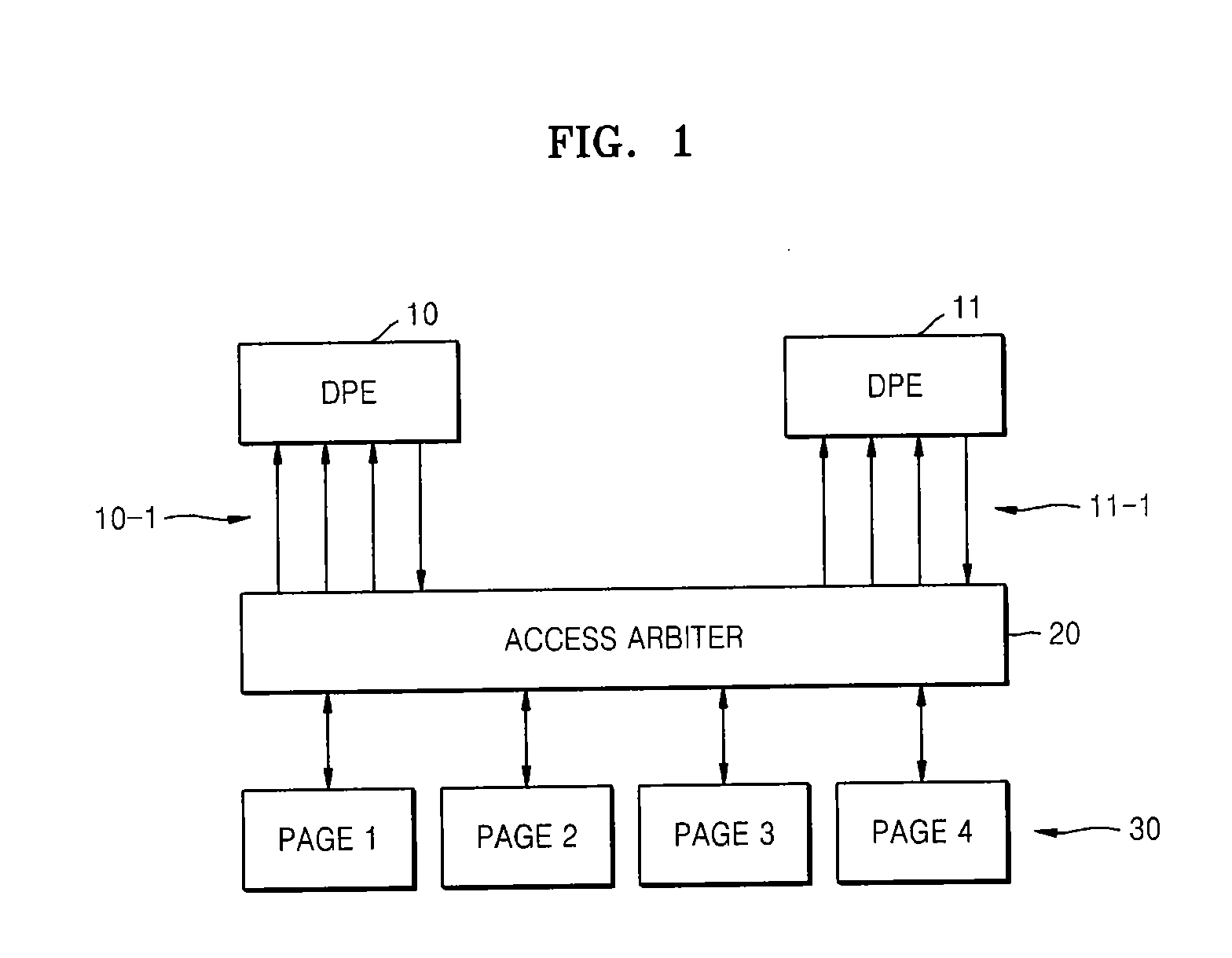

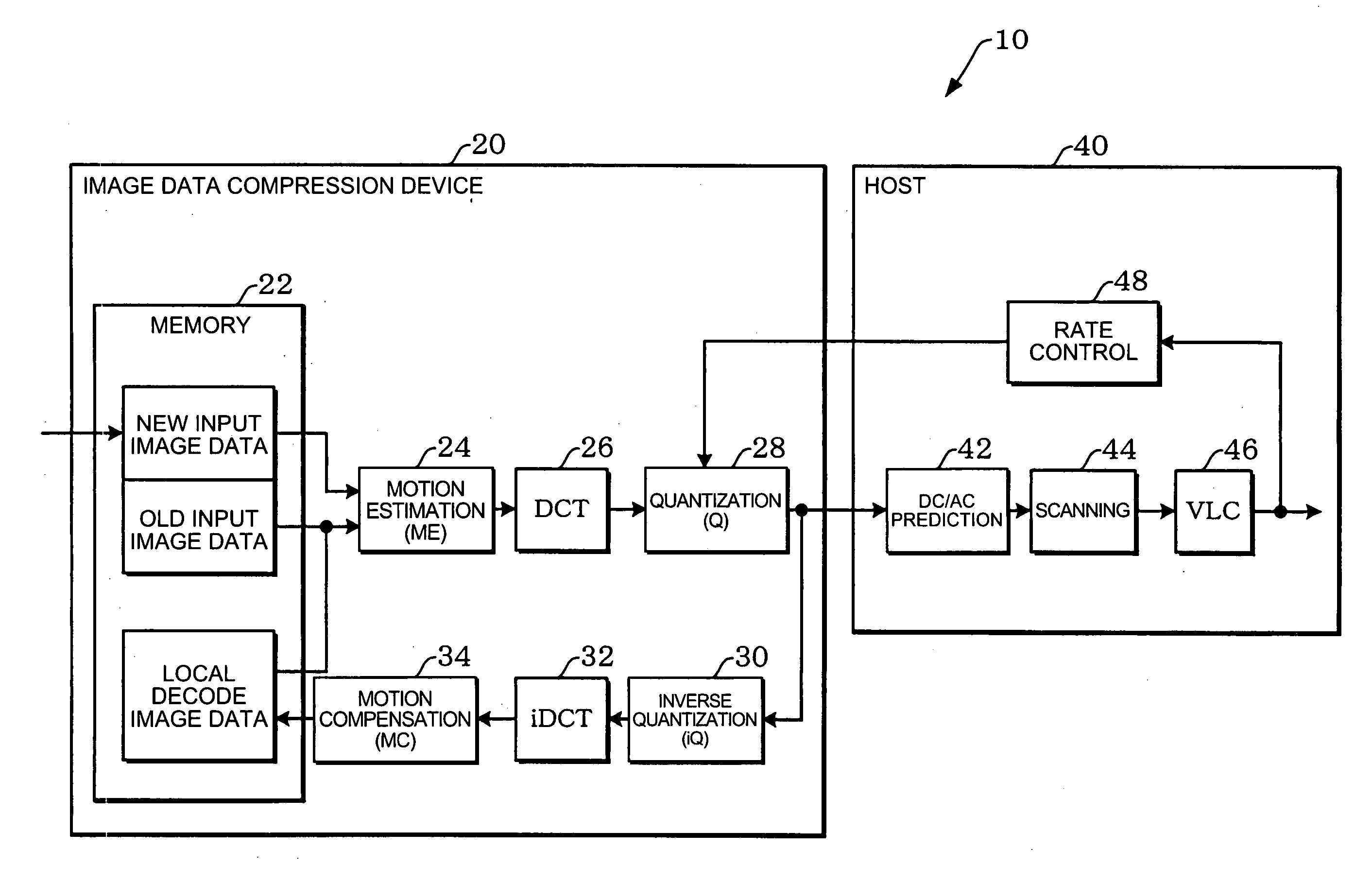

Apparatus and method for reducing memory access conflict

Active | US20090150644A1 | Minimize memory access | Reduce memory access | Memory addressing/allocation/relocation | Static storage | Data processing | Subpage

Provided are an apparatus and a method of reducing memory access conflict. An apparatus for reducing memory access conflict when a plurality of data processing elements perform simultaneous access to a memory including a plurality of pages, each of which includes a plurality of subpages, the apparatus comprising: an access arbiter mapping a subpage division address corresponding to least significant bits of a memory access address received from each of the data processing elements to another address having a same number of bits as the subpage division address in order for data to be output from each of the subpages in a corresponding page at a time of the simultaneous access; and a selector, prepared for each of the pages, selecting to output one of the data output from the subpages using the mapped results.

Owner:ELECTRONICS & TELECOMM RES INST
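To see why remapping the low address bits can remove conflicts, consider the sketch below. It does not reproduce the patent's access arbiter; it swaps in a common XOR-based interleaving as a stand-in for "mapping the subpage division address to another address with the same number of bits", and all function names are hypothetical.

```python
def subpage_plain(addr, bits):
    """Select the subpage directly from the least significant bits."""
    return addr & ((1 << bits) - 1)


def subpage_xor(addr, bits):
    """Remap the low bits by XOR-ing them with the next-higher bits (same bit width)."""
    mask = (1 << bits) - 1
    return (addr ^ (addr >> bits)) & mask


def conflicts(addrs, mapper, bits):
    """Count simultaneous accesses that collide on an already-used subpage."""
    used = [mapper(a, bits) for a in addrs]
    return len(used) - len(set(used))


stride_accesses = [0, 4, 8, 12]  # four processing elements, stride equal to subpage count
plain = conflicts(stride_accesses, subpage_plain, bits=2)
remapped = conflicts(stride_accesses, subpage_xor, bits=2)
```

With four subpages, the stride-4 accesses all land on subpage 0 under the plain mapping, while the remapped addresses spread across subpages 0 to 3 and can be served in one cycle.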

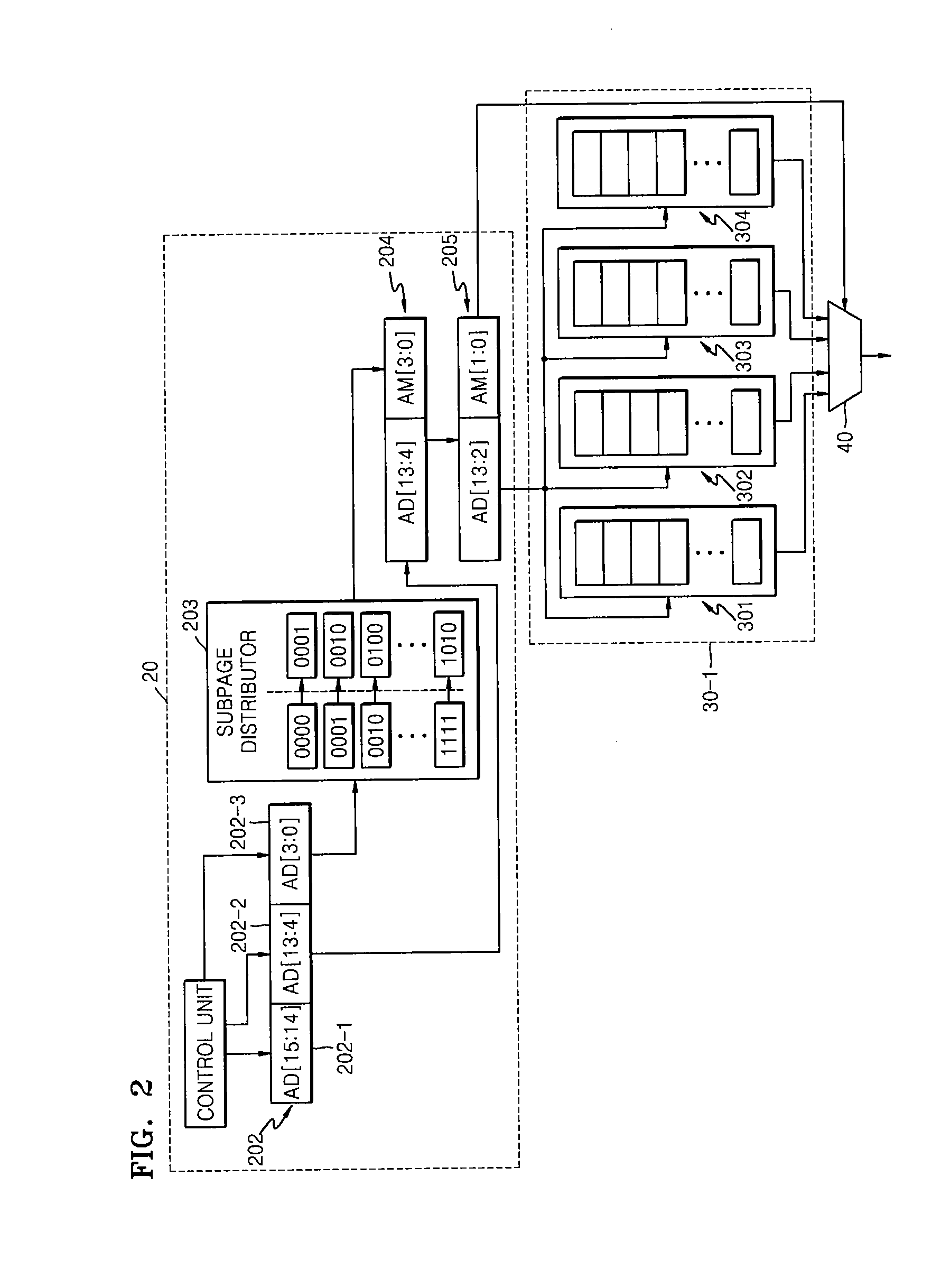

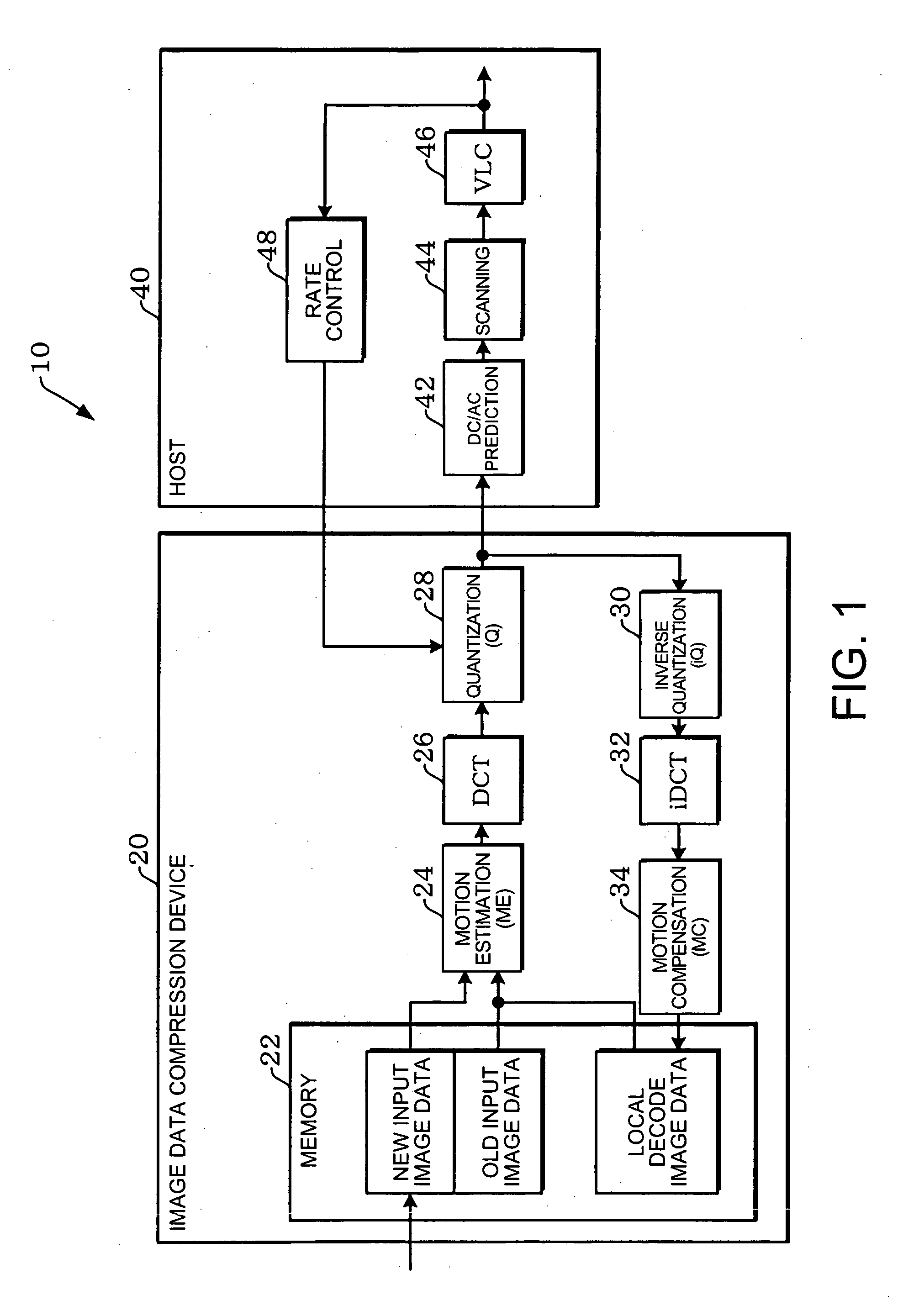

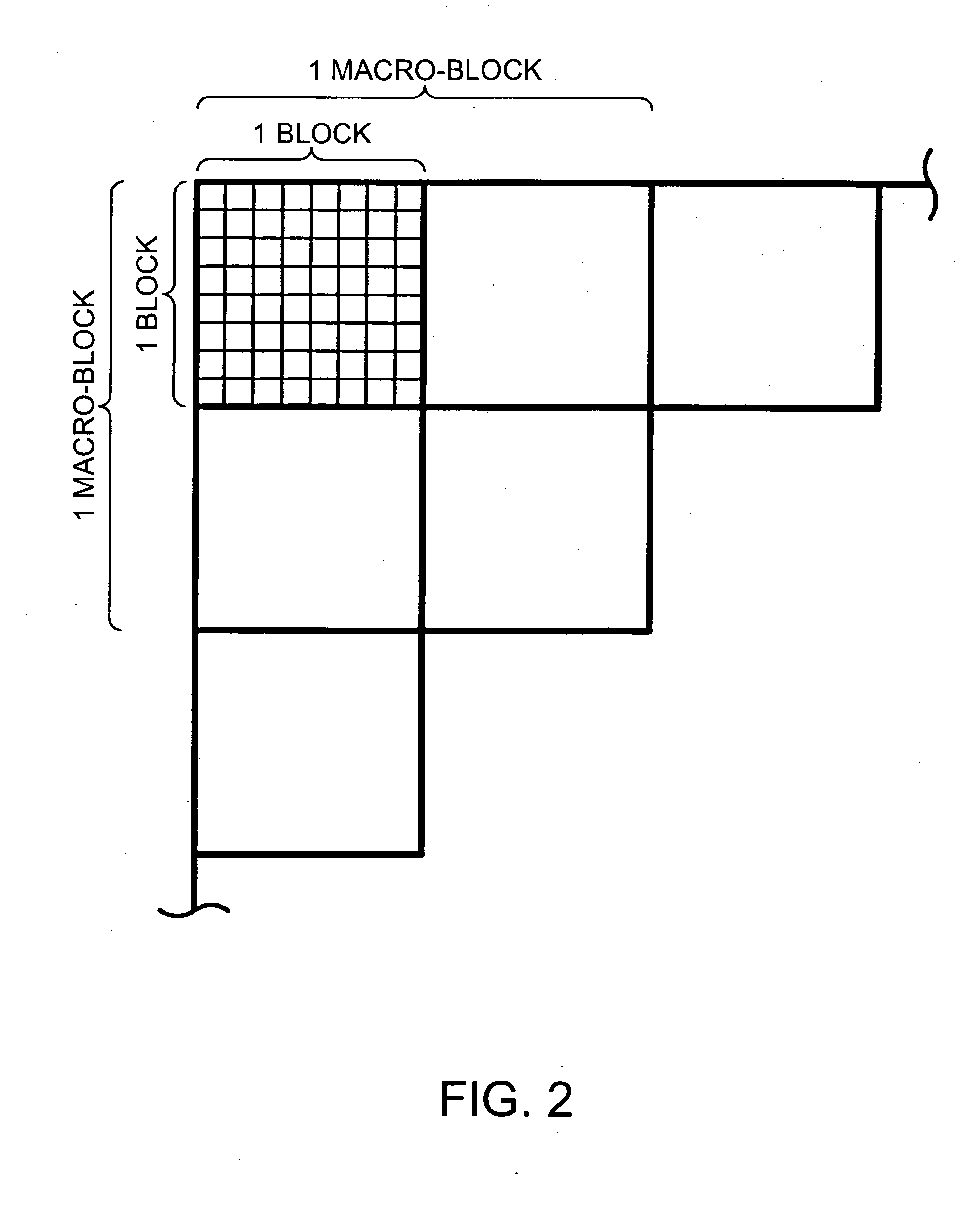

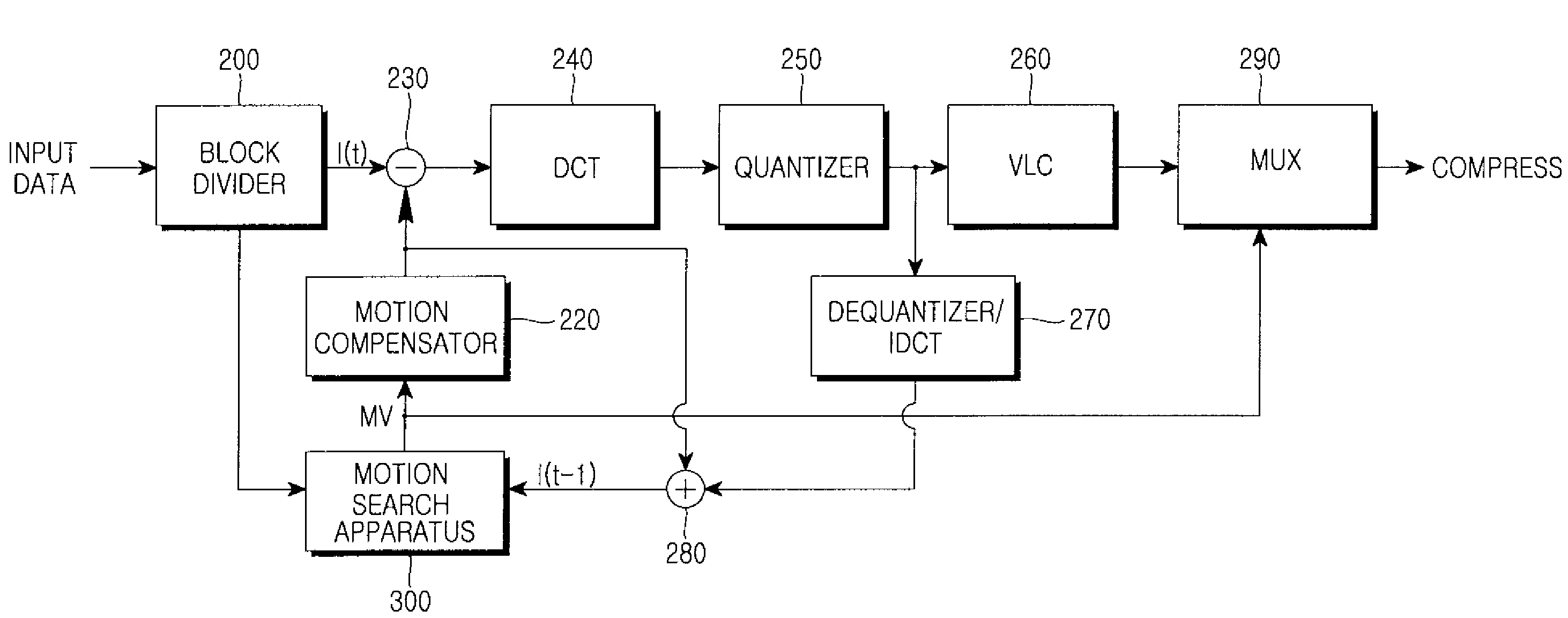

Method for calculating image difference, apparatus thereof, motion estimation device and image data compression device

Inactive | US20050276492A1 | Speed up | Reduce memory access | Image analysis | Code conversion | Data compression | Motion estimation

A method is provided for calculating an image difference between a present image and a reference image that is older than the present image by each predetermined area.

Owner:SEIKO EPSON CORP

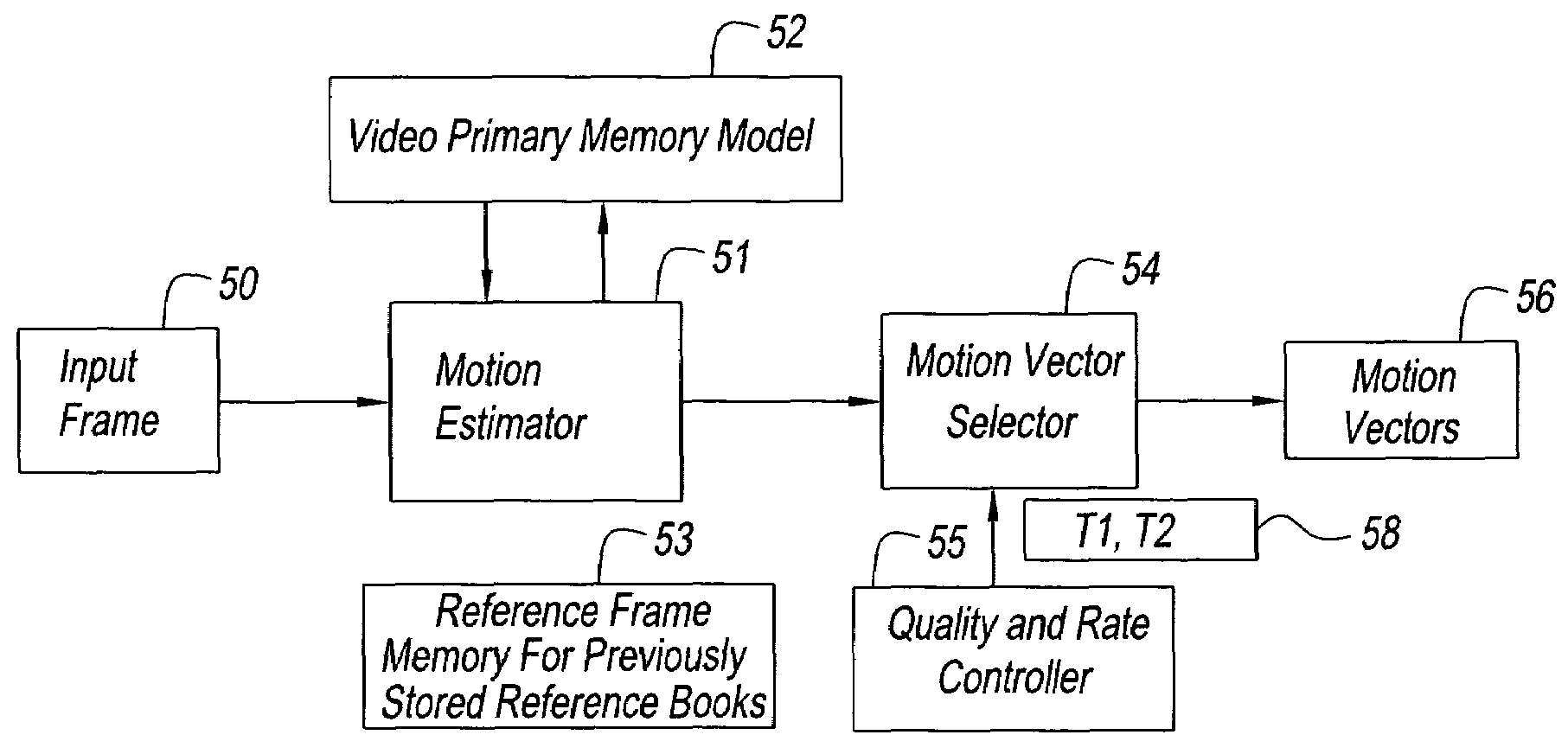

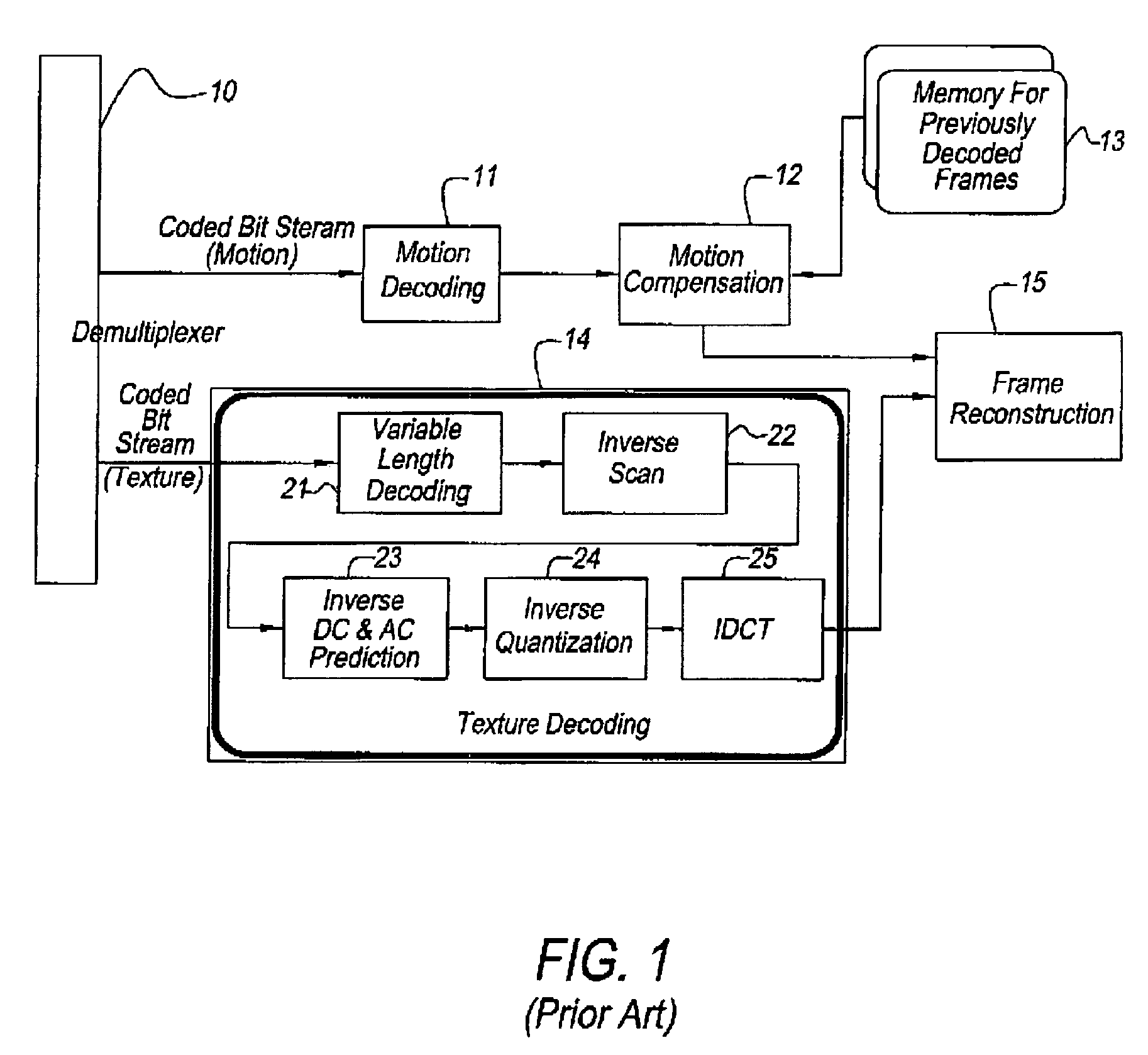

Power-aware on-chip memory management for video coding algorithms

Active | US7715479B2 | Reduce traffic | Reduce traffic problems | Color television with pulse code modulation | Color television with bandwidth reduction | Data stream | Video encoding

A decoding power aware encoding method for generating a predictively encoded data stream, in which predictions, that result in a reduction in the amount of data transferred from the secondary memory to primary memory during the decoding process, are favored, said method for favoring certain predictions comprising: a model for transfer of data from secondary memory to primary memory in the decoding process; a scheme for weighting the relative merits of favoring a certain prediction and the associated loss in compression gain; and based on said weighting scheme, choosing a particular prediction from the candidates allowed by the compression scheme.

Owner:TWITTER INC

Image processing apparatus and graphics memory unit

Active | US8619092B2 | Reduce memory access | Cathode-ray tube indicators | Digital output to display device | Imaging processing | Imaging data

An image processing apparatus and graphics memory unit which reduces useless memory access to a graphics memory unit. When an image data read section reads image data from frame buffers or windows, a mask area inside / outside determination section determines by reference to mask information stored in a mask information storage section whether image data which is being scanned is in a memory access mask area. If the image data which is being scanned is in the memory access mask area, then a superposition process section performs a superposition process according to a transmission attribute assigned to the memory access mask area regardless of transmission attributes assigned to the frame buffers or the windows.

Owner:SOCIONEXT INC

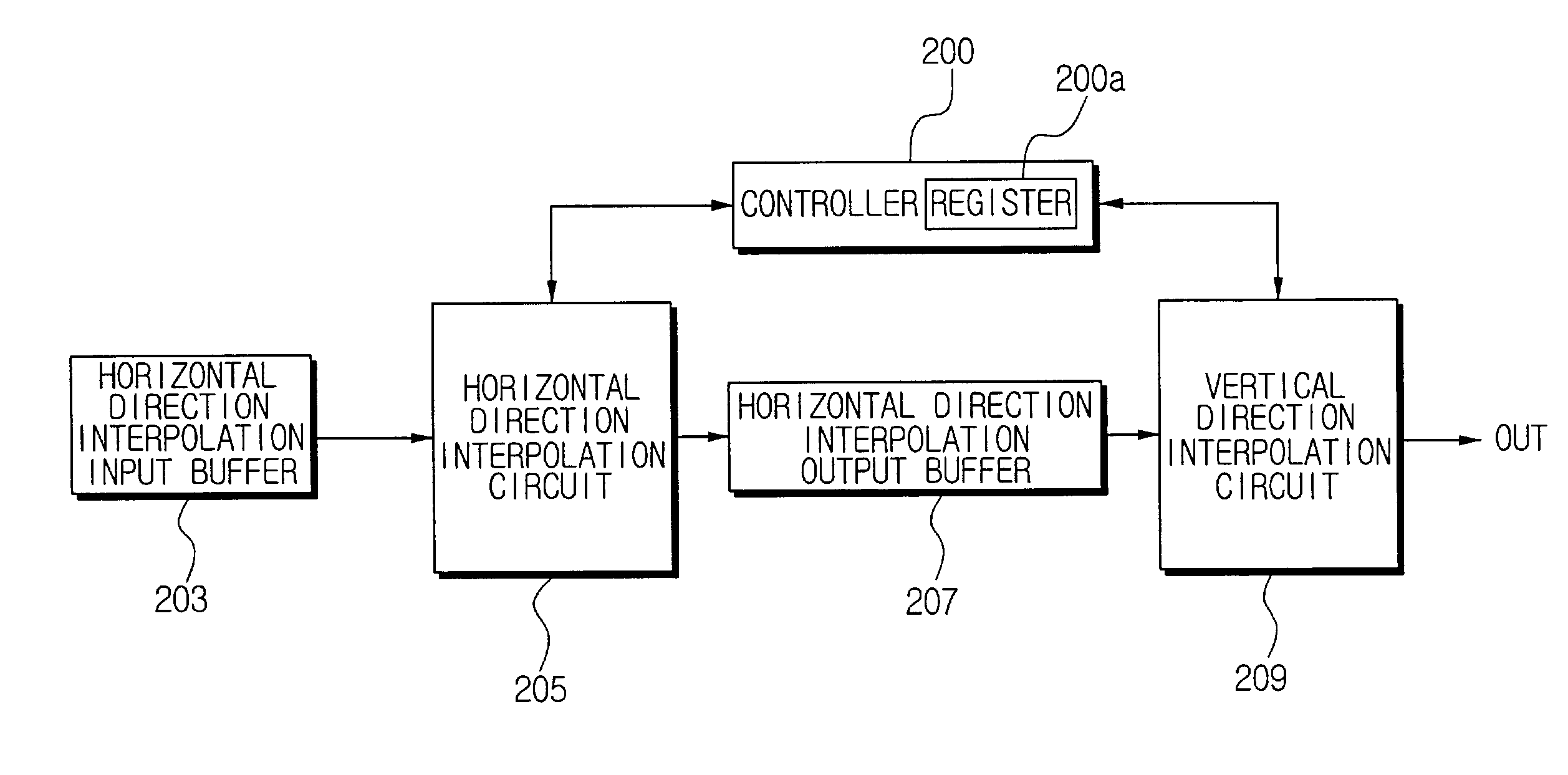

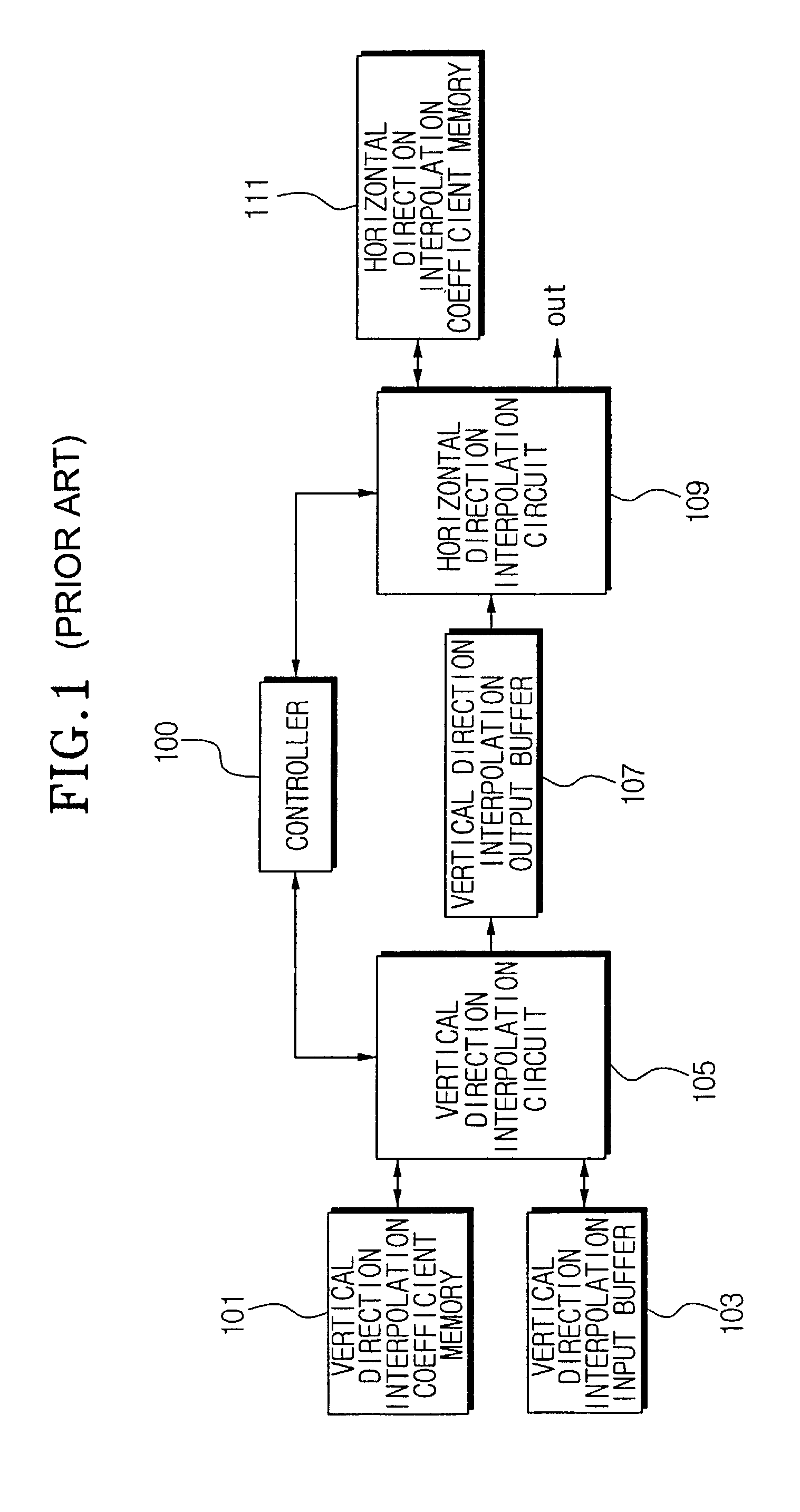

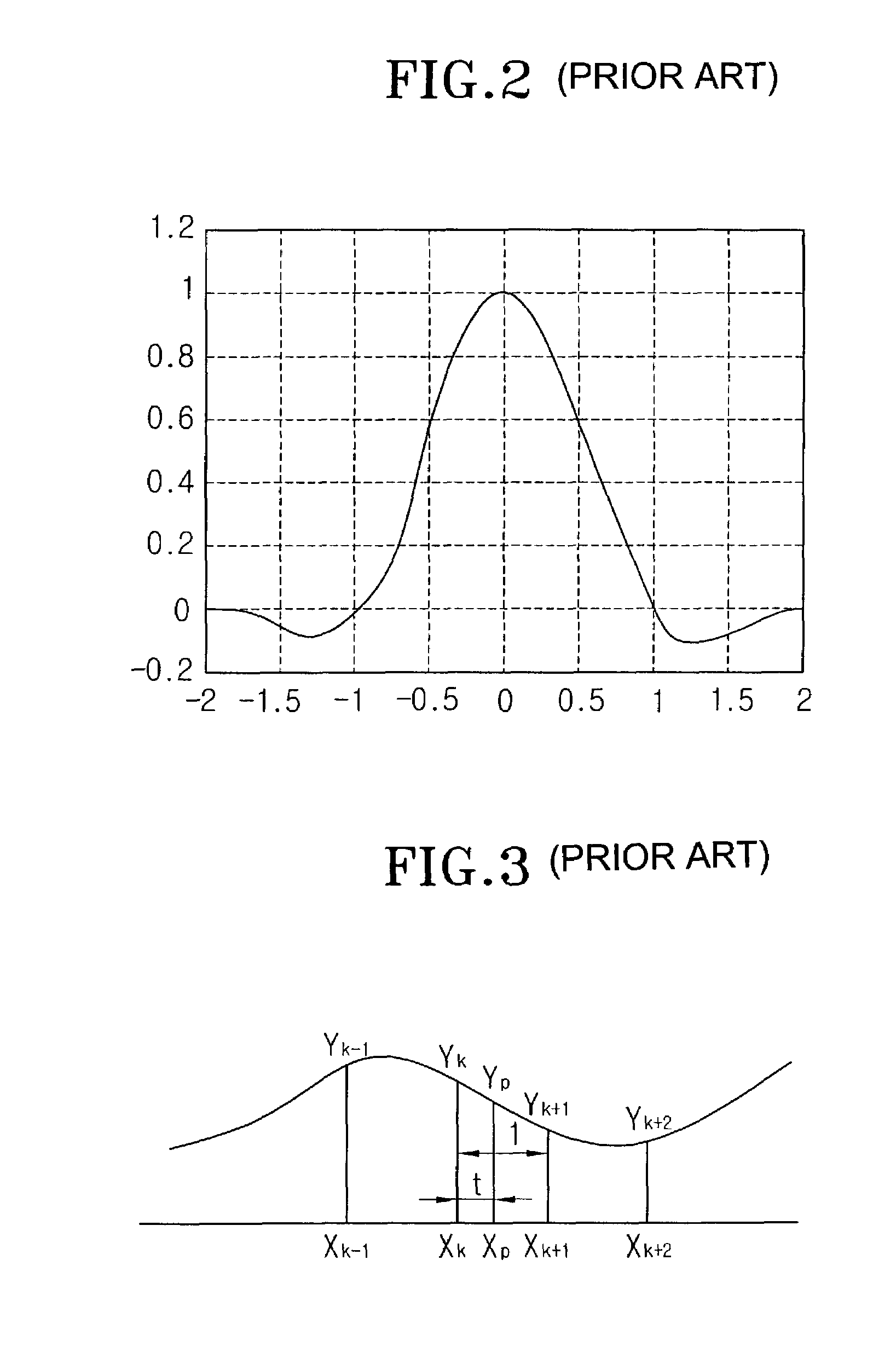

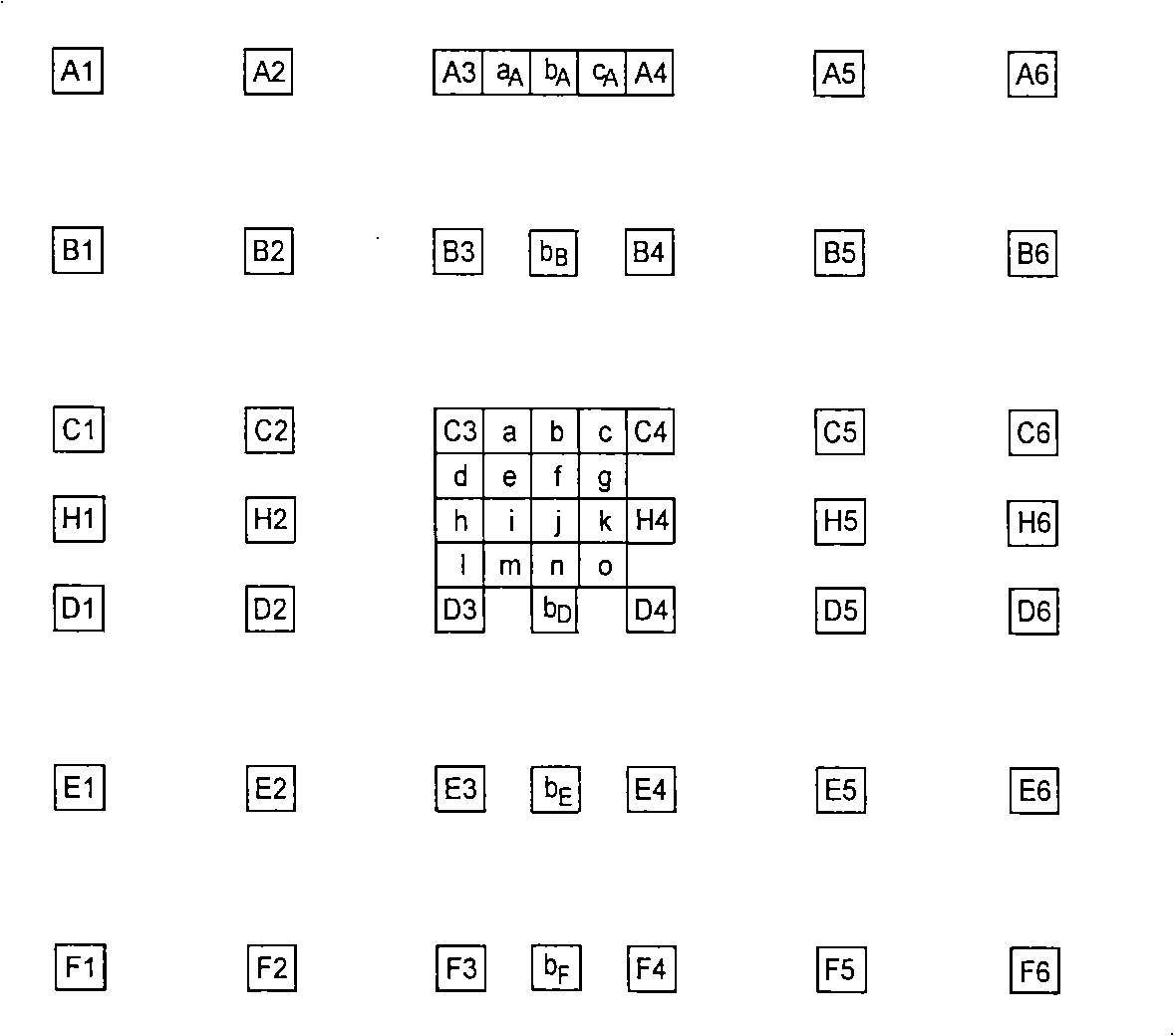

Apparatus for processing digital image and method therefor

Inactive | US7110620B2 | Reduce processing time | Shorten access time | Television system details | Color signal processing circuits | Processor register | Computer vision

An apparatus which processes a digital image and a method therefor which can reduce an error when calculating an output value obtained by interpolating pixel values of the input digital image. The apparatus includes an interpolation processing unit which interpolates an input digital image, and a controller which measures an interpolation interval of pixel values of the digital image, calculates a coefficient by substituting the interpolation interval for a coefficient equation stored in a register, and calculates an interpolation node for the digital image by substituting the coefficient and an output pixel position value of the digital image for an interpolation node calculation equation. The controller controls the interpolation processing unit so as to interpolate the digital image to the interpolation node. As a result, it is possible to reduce an error between an interpolated output pixel position value and an output pixel position value for the pixel values of the input digital image.

Owner:SAMSUNG ELECTRONICS CO LTD

Method for filtering interpolation

Inactive | CN101350925A | Reduce memory access | Reduce computational complexity | Television systems | Digital video signal modification | Computer architecture | Access time

The invention relates to an interpolation filtering method in the technical field of video compression. Two groups of sub-pixel interpolation coefficients are parsed from the bitstream at the decoder and used in the interpolation filters for data blocks of different patterns. The invention reduces the number of decoder memory accesses and simplifies the decoder to enhance coding performance.

Owner:TSINGHUA UNIV

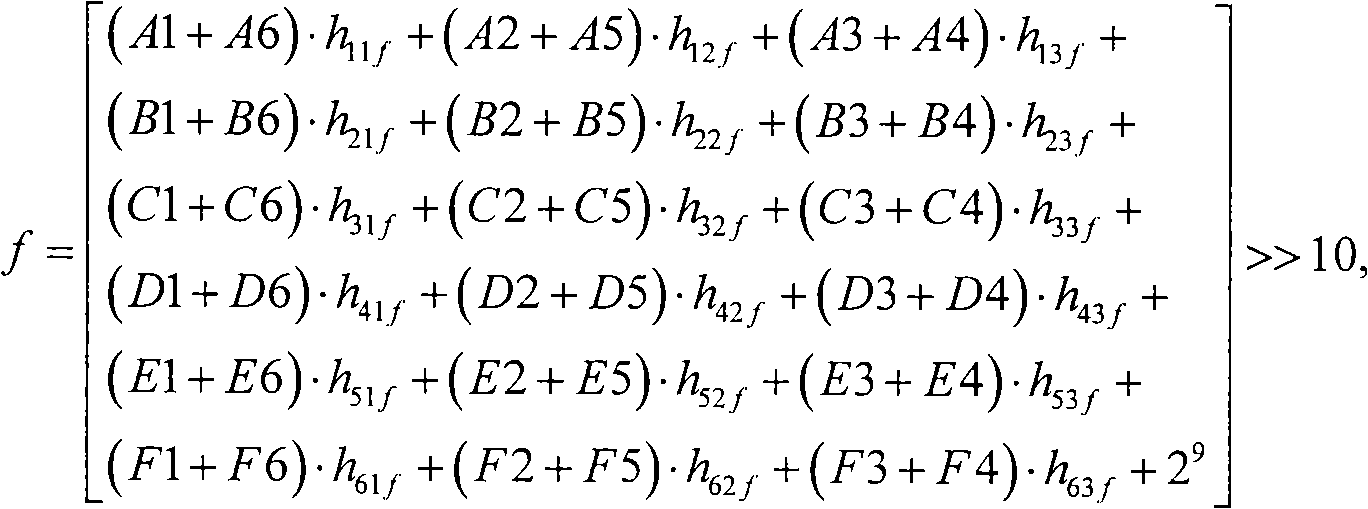

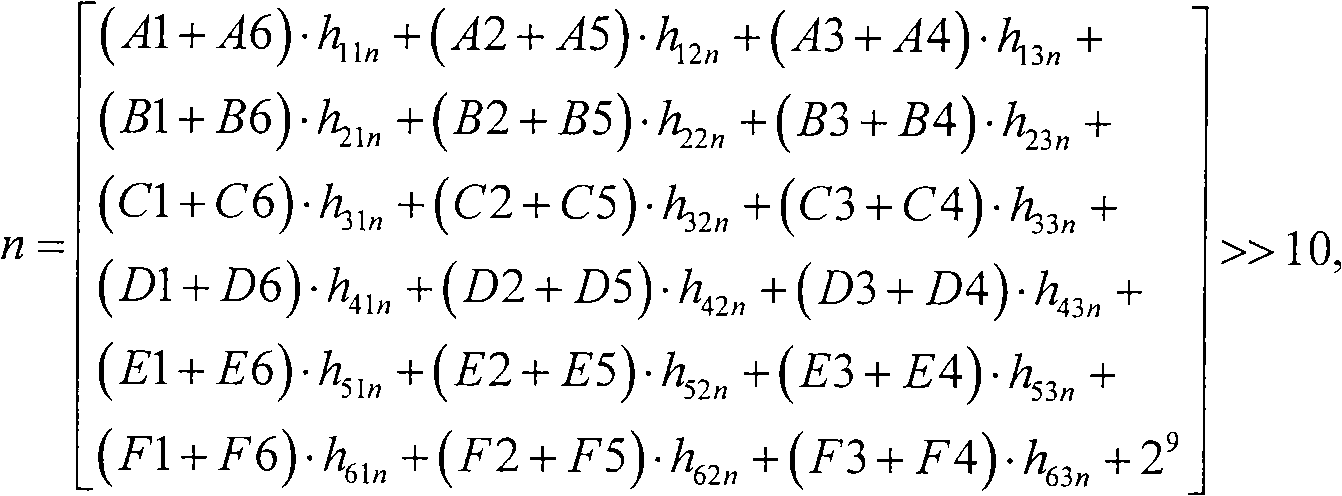

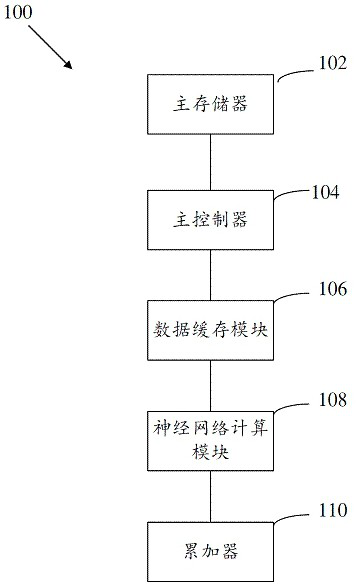

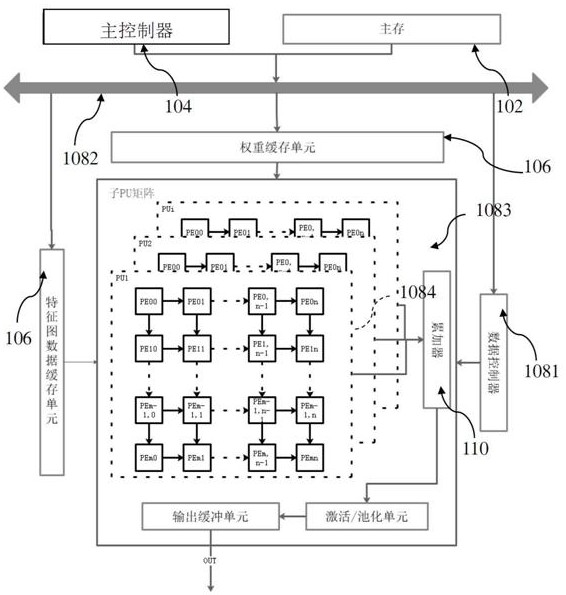

Neural network acceleration device and method and communication equipment

Pending | CN113807509A | Improve energy efficiency of access | Reduce cache requirements | Neural architectures | Physical realisation | Data stream | Engineering

The invention provides a neural network acceleration device and method and communication equipment, and belongs to the field of data processing, and the method specifically comprises the steps that a main memory receives and stores feature map data and weight data of a to-be-processed image; a main controller generates configuration information and an operation instruction according to the structure parameters of the neural network; a data caching module comprises a feature data caching unit for caching feature line data extracted from the feature map data and a convolution kernel caching unit for caching convolution kernel data extracted from the weight data; a data controller adjusts a data path according to the configuration information and the instruction information and controls the data flow extracted by a data extractor to be loaded to a corresponding neural network calculation unit, the neural network calculation unit at least completes convolution operation of one convolution kernel and feature map data and completes accumulation of multiple convolution results in at least one period, and therefore, circuit reconstruction and data reuse are realized; and an accumulator accumulates the convolution results and outputs output feature map data corresponding to a convolution kernel.

Owner:绍兴埃瓦科技有限公司 +1

Motion search method and apparatus for minimizing off-chip memory access

Inactive | US20080181310A1 | Improve search speed | Reduce memory access | Color television with pulse code modulation | Color television with bandwidth reduction | Direct memory access | Motion vector

A motion search method and apparatus for minimizing an off-chip memory access to reduce cycles for predicting a motion vector. A sum of absolute differences (SAD) calculation processing is performed, while an off-chip memory is accessed using Direct Memory Access (DMA). A position of a current macro block is determined if a frame is input and the length of a horizontal search line is set according to adjacent motion vectors and predetermined external parameters. The SADs are calculated by calculating medians of the adjacent motion vectors as a predictive motion vector, setting a vertical direction value of the predictive motion vector as an initial search line, and sequentially searching at least one horizontal search line, and determining a position of a minimum SAD from the at least one horizontal search line. The minimum SAD position is selected as a final motion vector.

Owner:SAMSUNG ELECTRONICS CO LTD +1
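The search order in this abstract (median predictor, then horizontal search lines starting at the predicted vertical offset) can be sketched as below. The sketch omits the DMA transfers and the adaptive line length, and the line order `(0, 1, -1)`, the fixed `half_len`, and all function names are assumptions rather than details from the patent.

```python
from statistics import median


def predict_mv(neighbors):
    """Component-wise median of the adjacent blocks' motion vectors."""
    return (median(v[0] for v in neighbors), median(v[1] for v in neighbors))


def sad_at(cur, ref, bx, by, dx, dy, size):
    """Sum of absolute differences for one candidate displacement."""
    return sum(abs(cur[by + y][bx + x] - ref[by + dy + y][bx + dx + x])
               for y in range(size) for x in range(size))


def line_search(cur, ref, bx, by, size, neighbors, half_len=4, lines=(0, 1, -1)):
    """Scan horizontal search lines around the predicted vector; return the min-SAD vector."""
    height, width = len(ref), len(ref[0])
    px, py = predict_mv(neighbors)
    best = None
    for offset in lines:                      # the initial line is the predicted vertical value
        dy = py + offset
        for dx in range(px - half_len, px + half_len + 1):
            x0, y0 = bx + dx, by + dy
            if x0 < 0 or y0 < 0 or x0 + size > width or y0 + size > height:
                continue                      # skip candidates outside the reference frame
            cost = sad_at(cur, ref, bx, by, dx, dy, size)
            if best is None or cost < best[0]:
                best = (cost, (dx, dy))
    return best[1]
```

Because each search line is a contiguous run of reference rows, a line can in principle be fetched from off-chip memory in one burst while the previous line is being evaluated, which is the overlap the abstract attributes to DMA.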

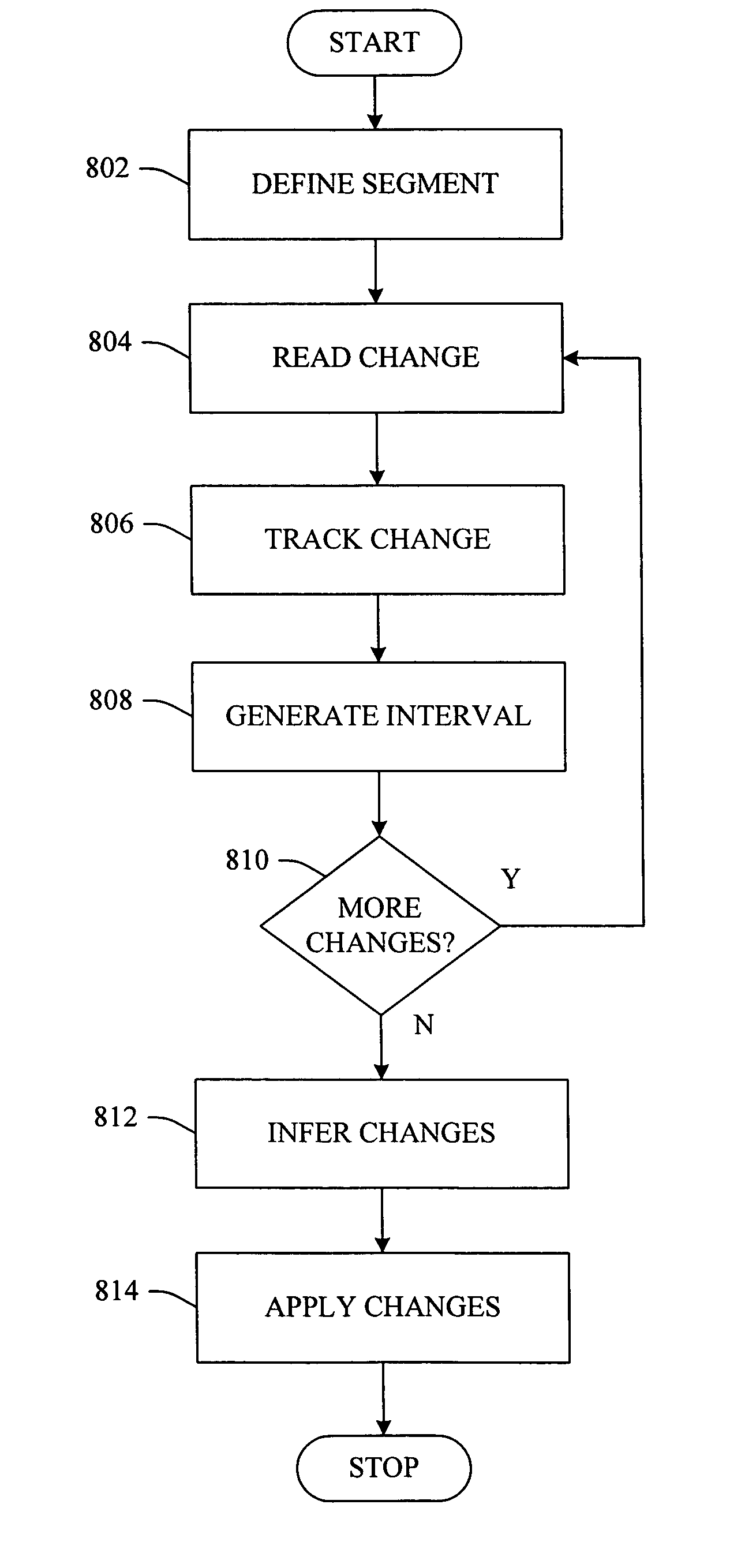

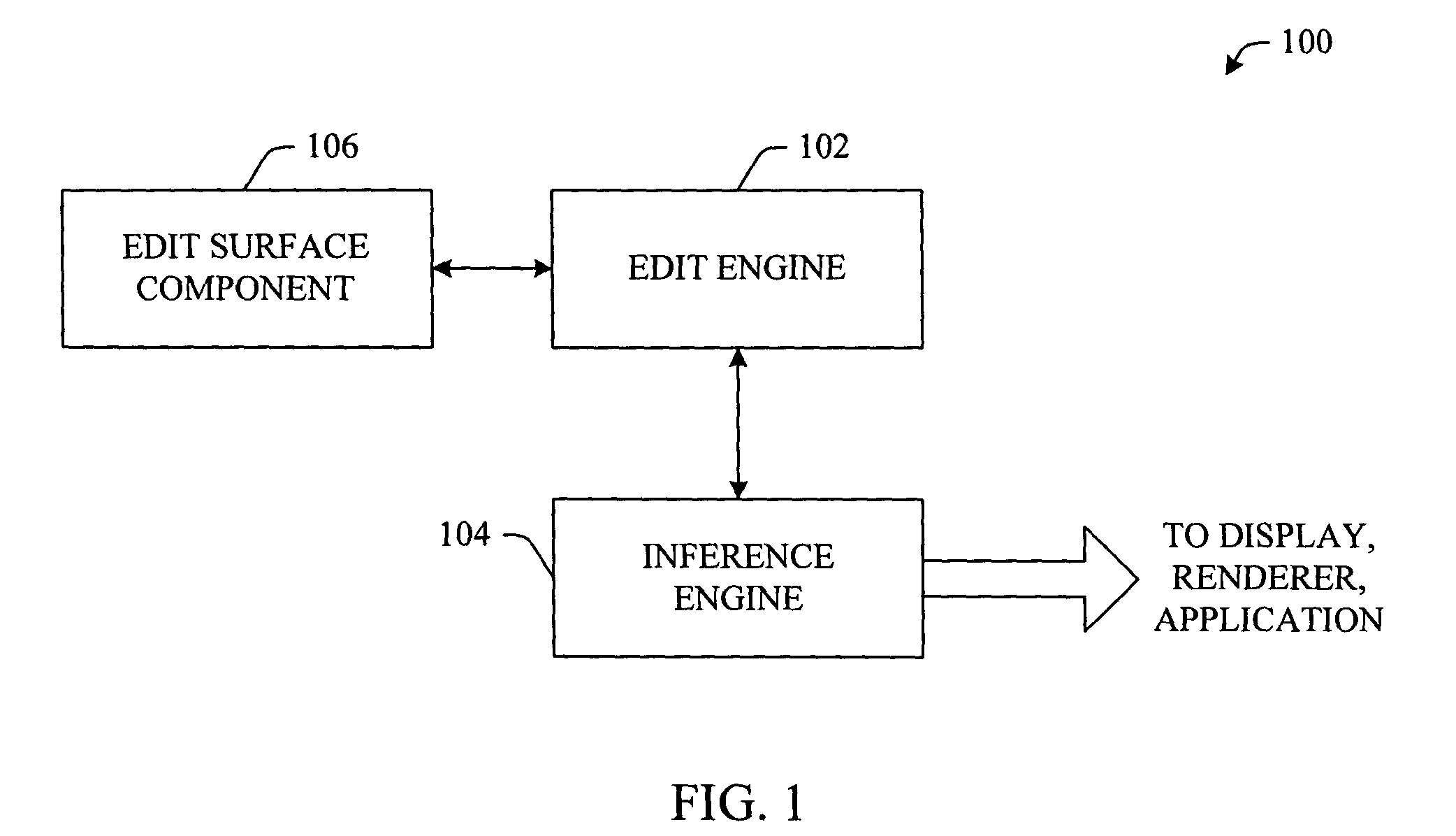

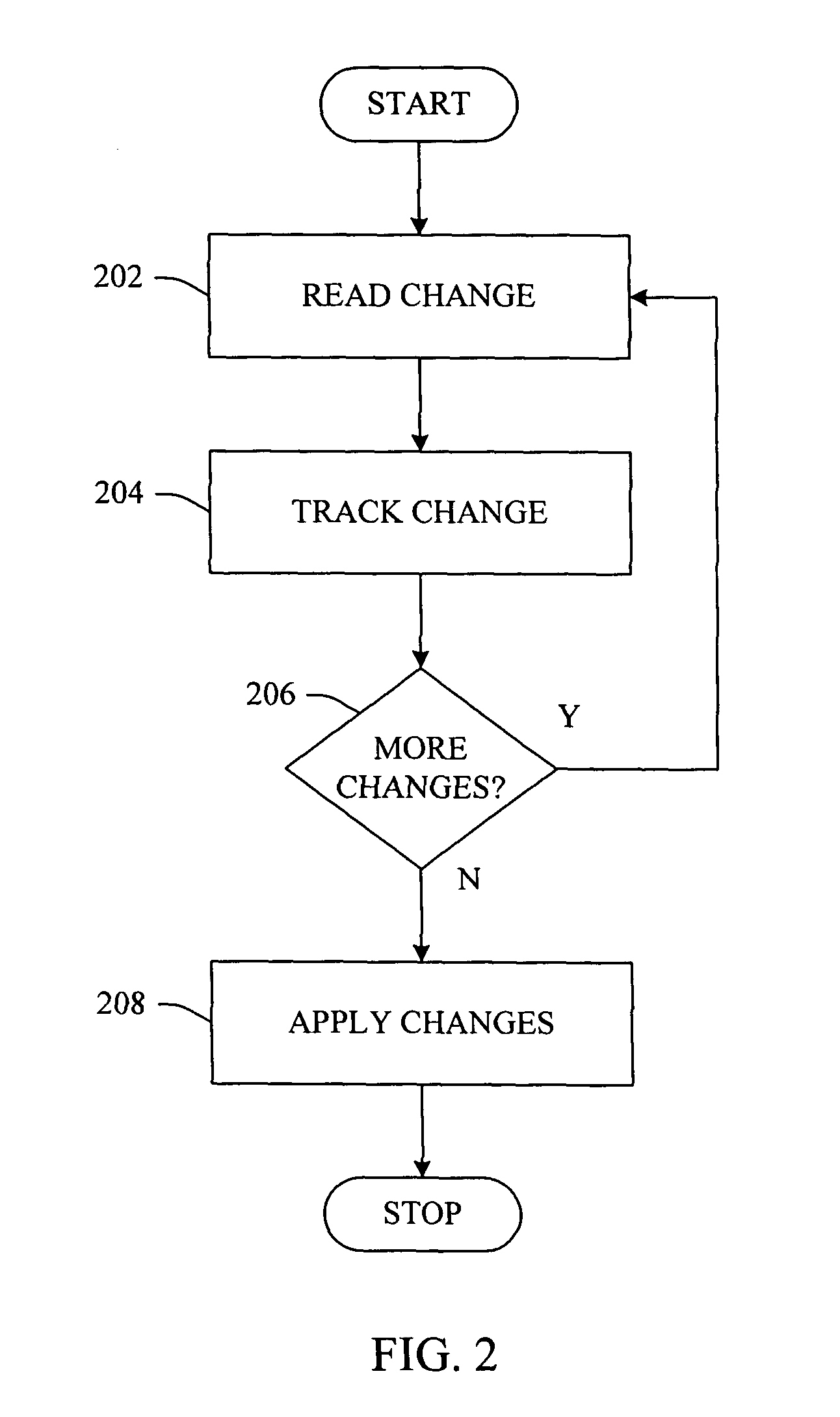

Memory optimizing for re-ordering user edits

Inactive | US8010894B2 | Improve performance | Reduce memory access | Natural language data processing | Special data processing applications | Re sequencing | Chronological time

The subject invention can track and apply user edits to a source document as a sequence of changes. The changes can be applied in a document or spatial order irrespective of temporal factors. The invention can maintain intervals that represent user operations (e.g., insertions, deletions, zero-net-length changes). As well, the invention can infer a location in the original document that corresponds to a particular operation. In accordance therewith, the invention can arrange temporally sequenced user document modifications into an order consistent with the layout of the document file encoding. This functionality of mapping re-sequenced changes into the original document data representation is one novel feature of the invention. The invention can enable portions of the source document to be loaded into memory on an as-needed basis, whereby changes relevant to the instant portion can be made.

Owner:MICROSOFT TECH LICENSING LLC

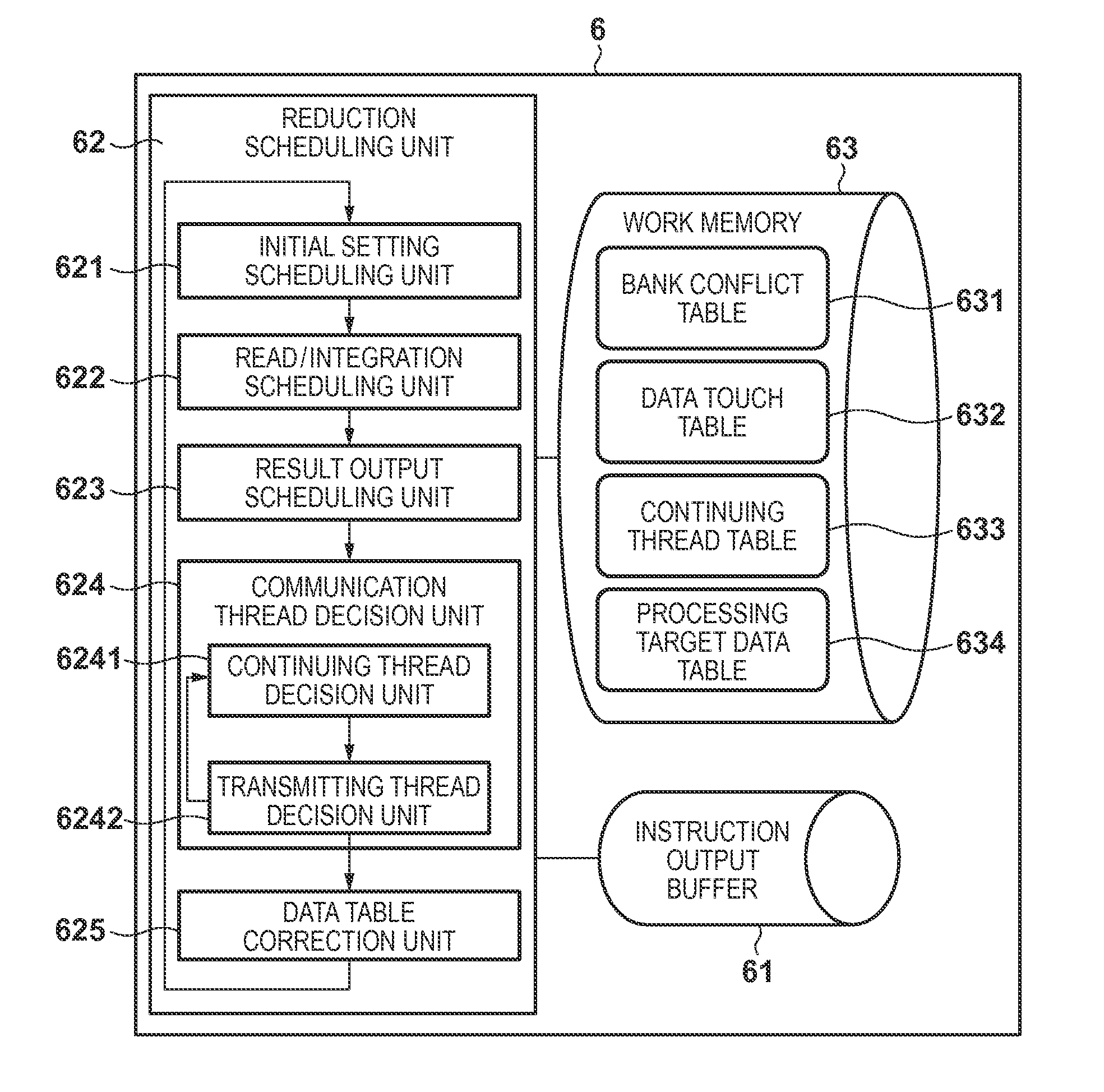

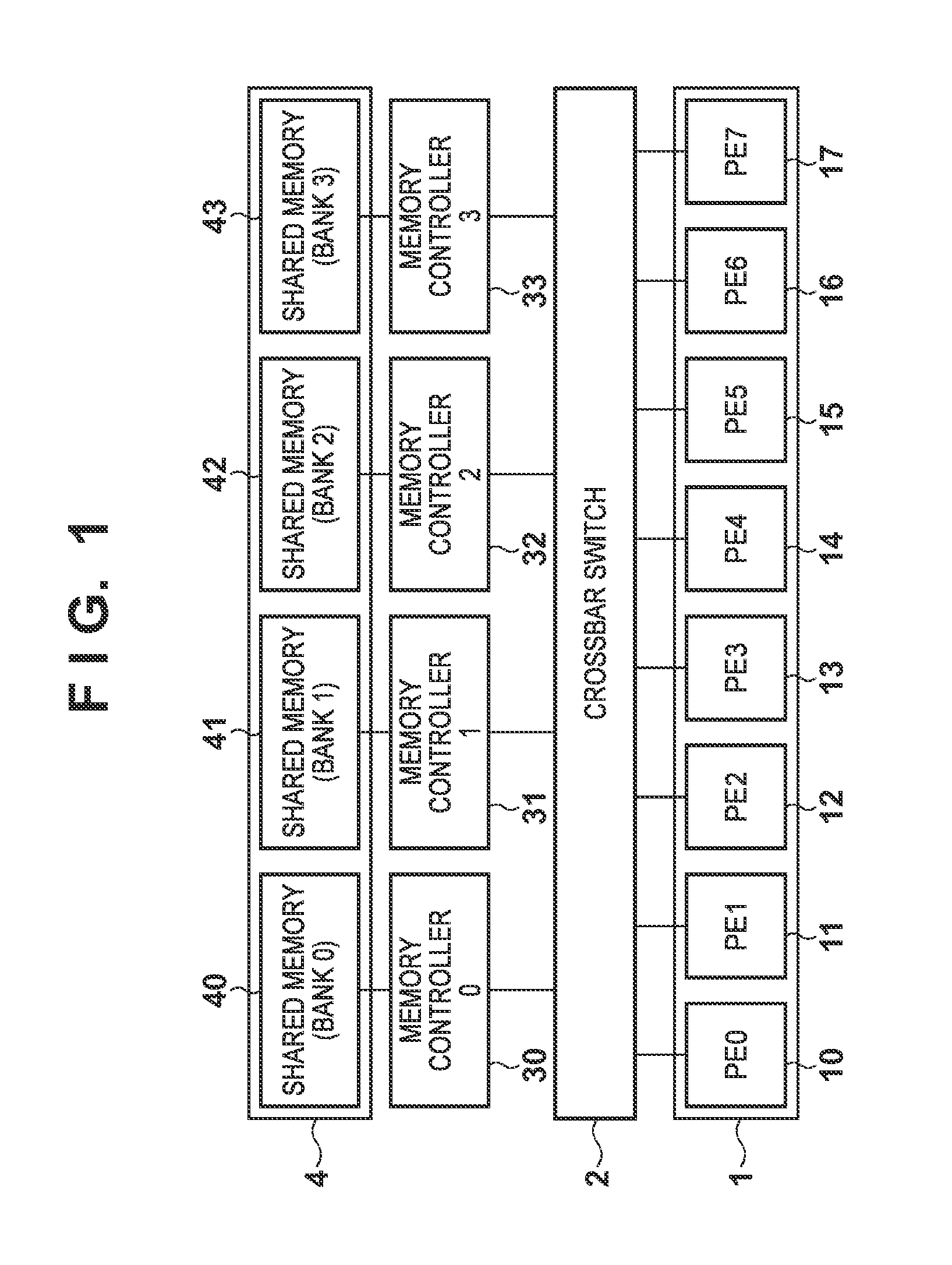

Information processing apparatus, information processing method, and storage medium

Active | US20130145373A1 | Increase computing speed | Reducing memory access conflict | Program initiation/switching | Memory systems | Information processing | Memory bank

There is provided an information processing apparatus for controlling execution of a plurality of threads which run on a plurality of calculation cores connected to a memory including a plurality of banks. A first selection unit is configured to select, out of the threads which process a data group of interest, a thread as a continuing thread which receives data from another thread, wherein the number of accesses to a bank associated with the selected thread is less than a predetermined count. A second selection unit is configured to select, out of the threads which process the data group of interest, a thread as a transmitting thread which transmits data to the continuing thread.

Owner:CANON KK

© 2025 PatSnap. All rights reserved.