Patents

Literature

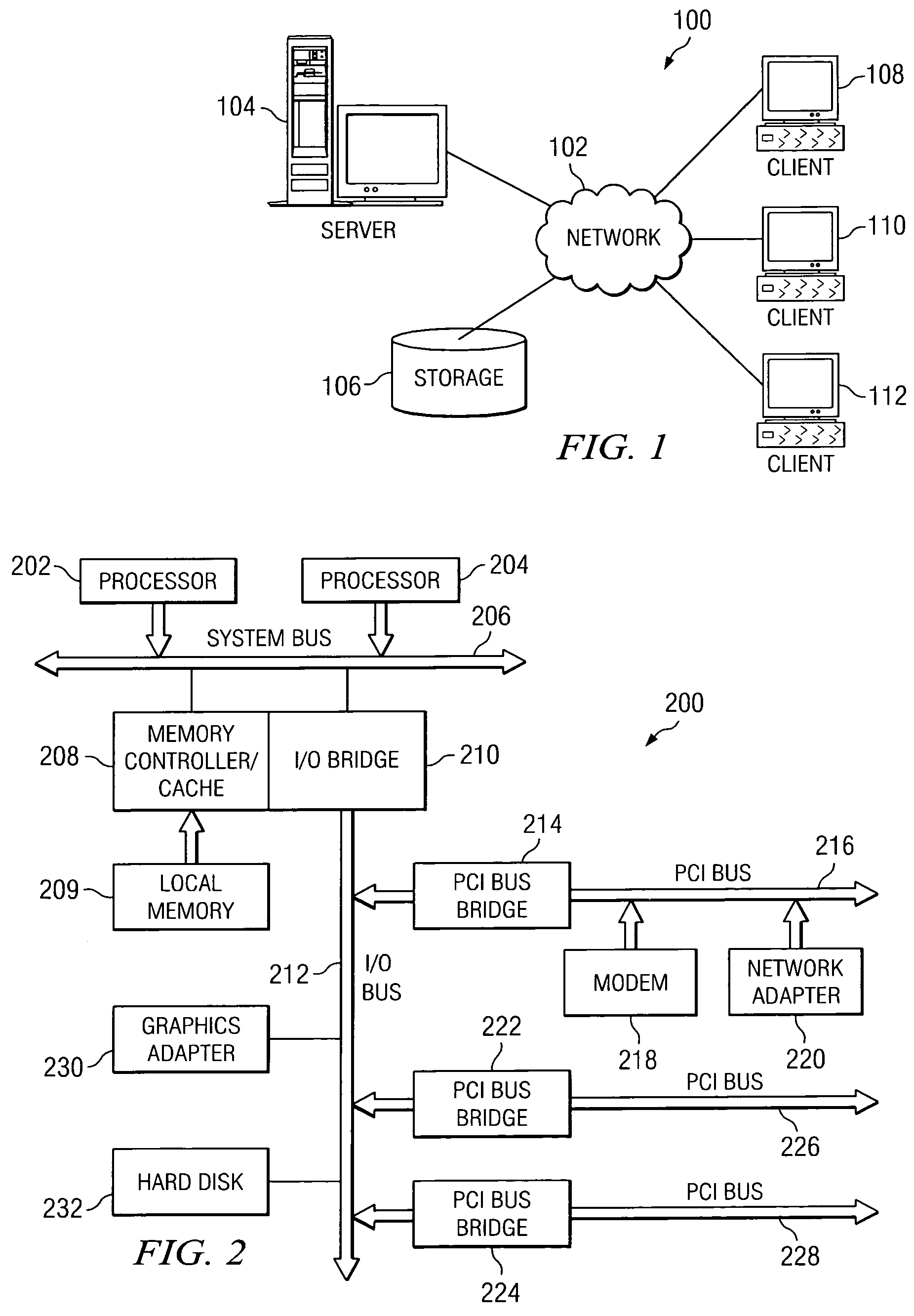

125 results about how to "Improve caching capacity" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

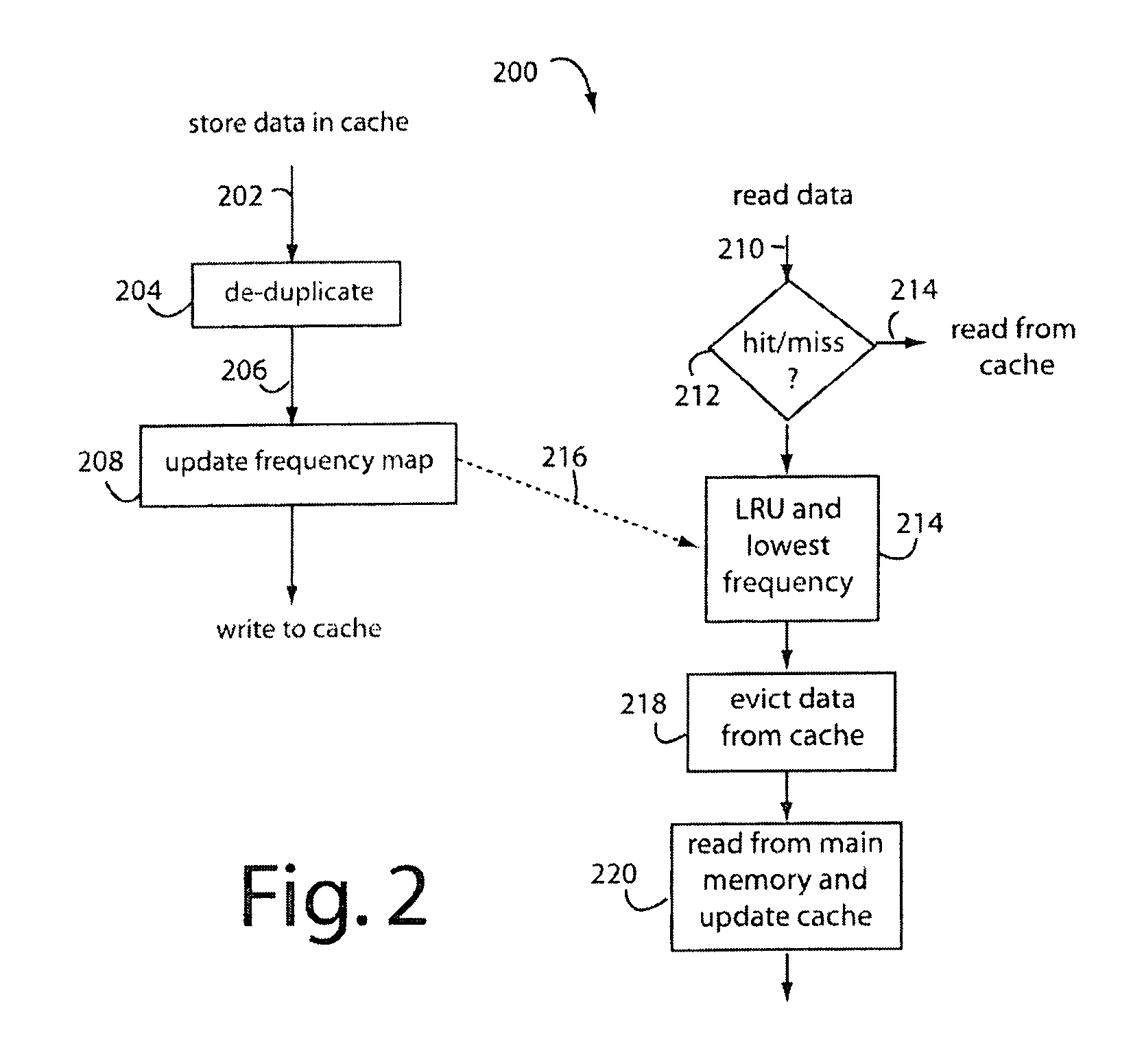

Data block frequency map dependent caching

Inactive | US20090204765A1 | Improve caching capacity | Improve system performance | Memory systems | Parallel computing | Cache algorithms

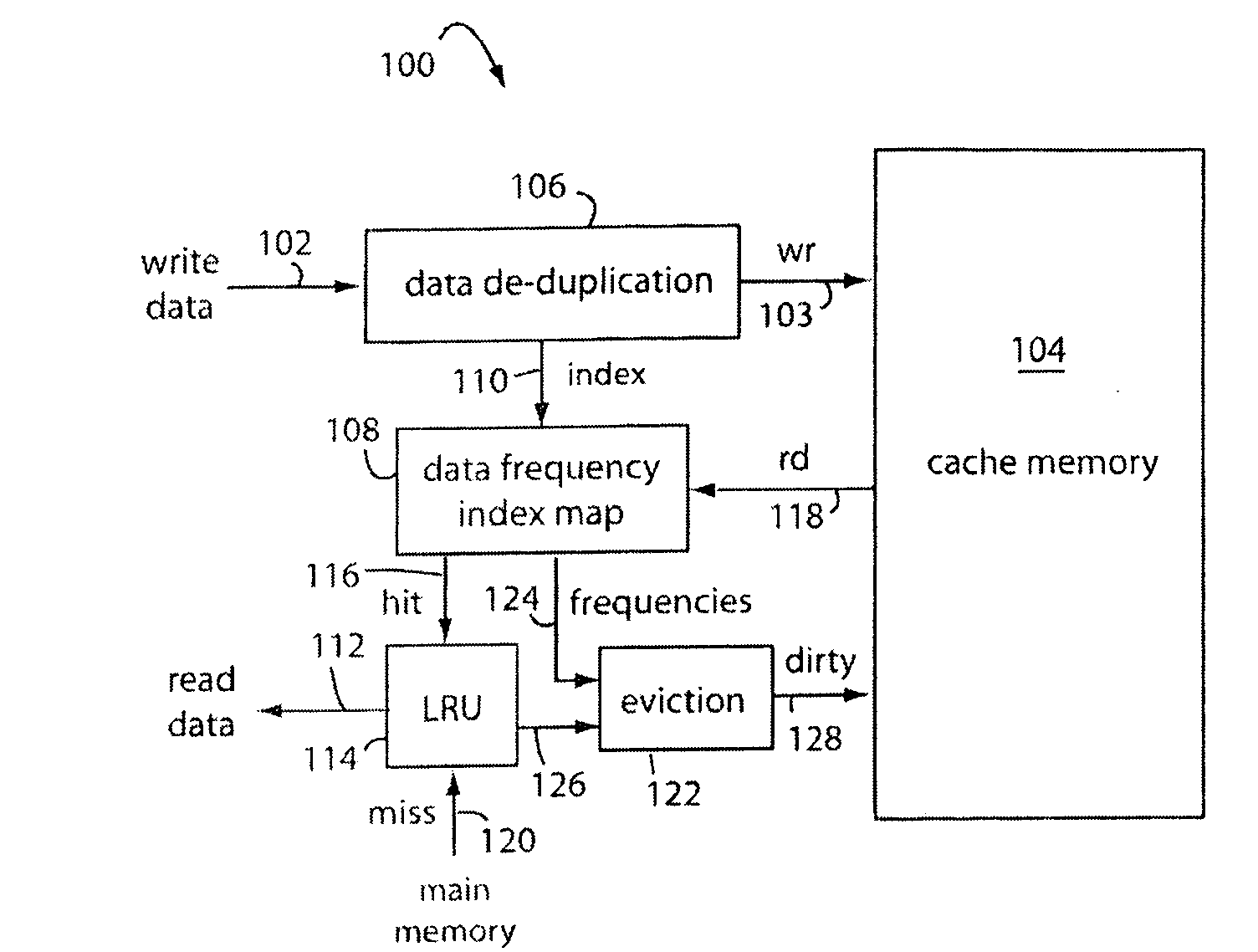

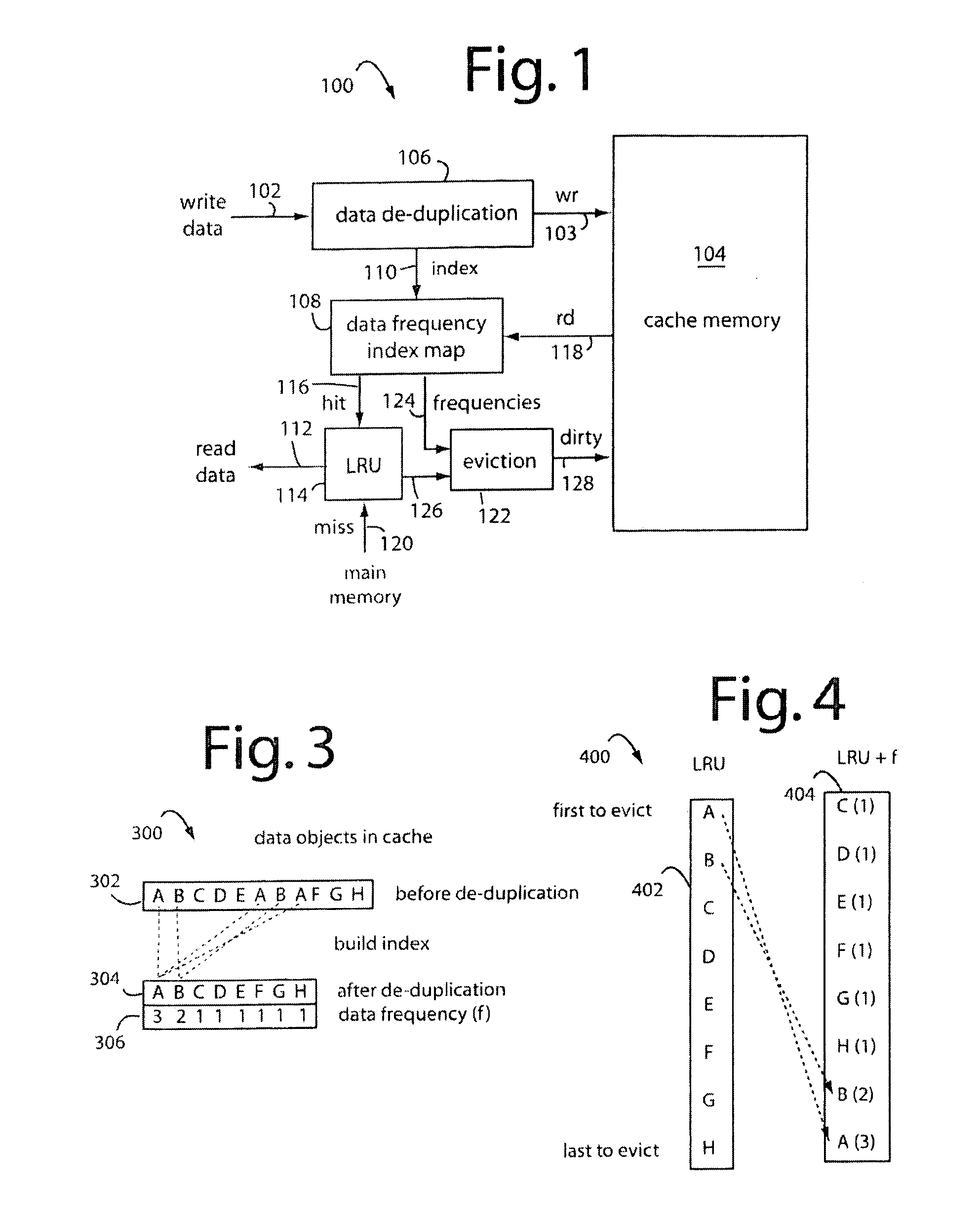

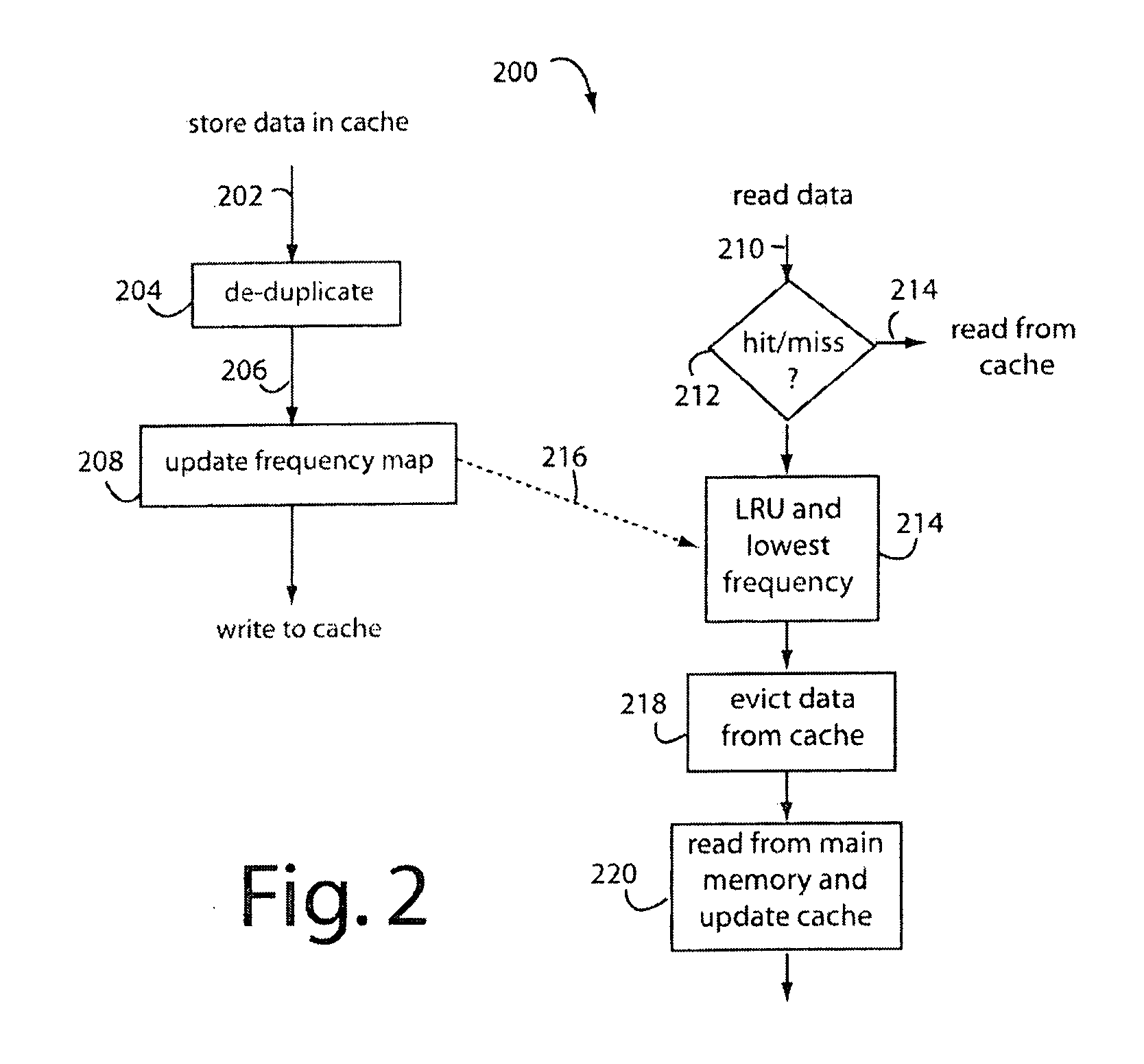

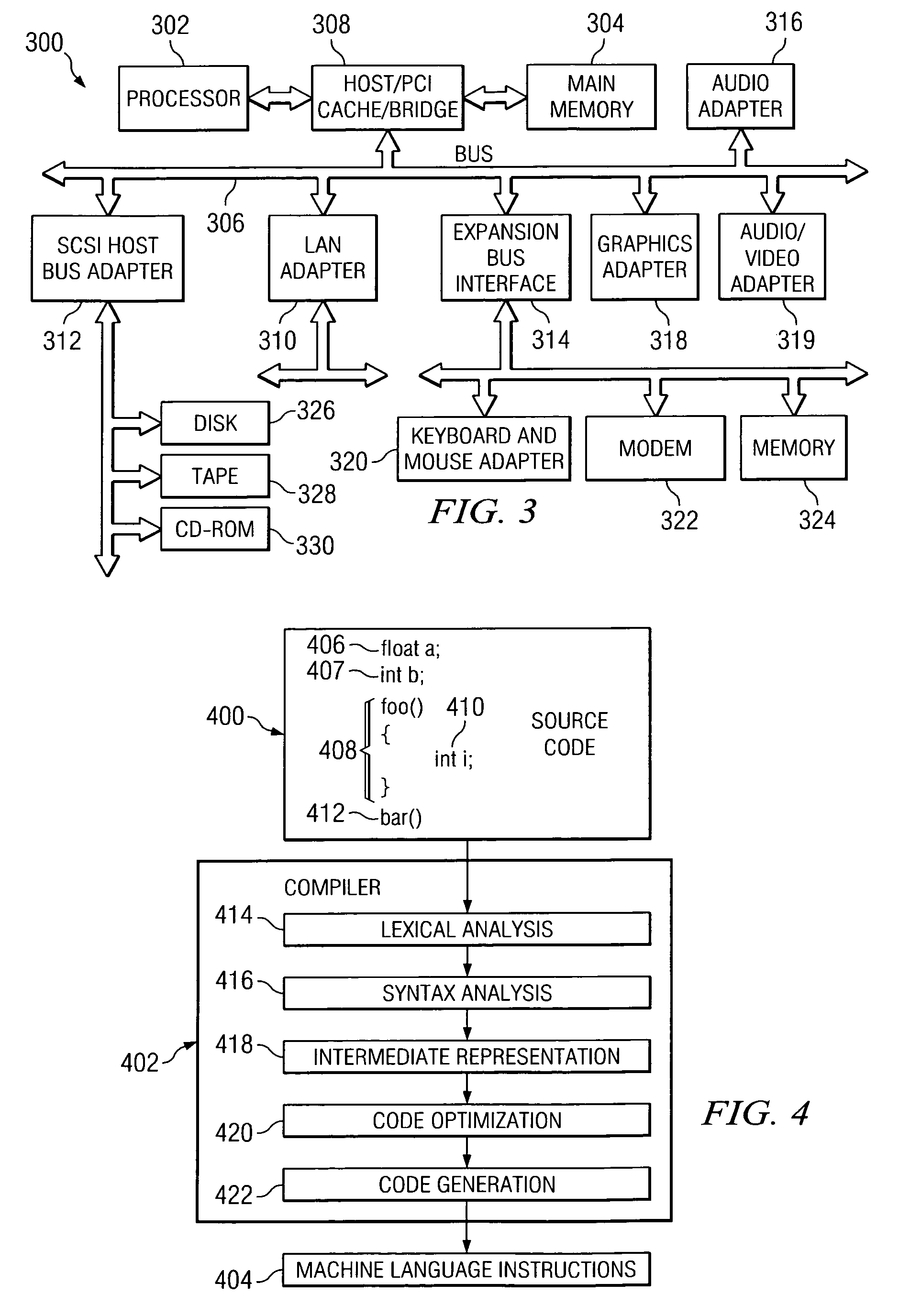

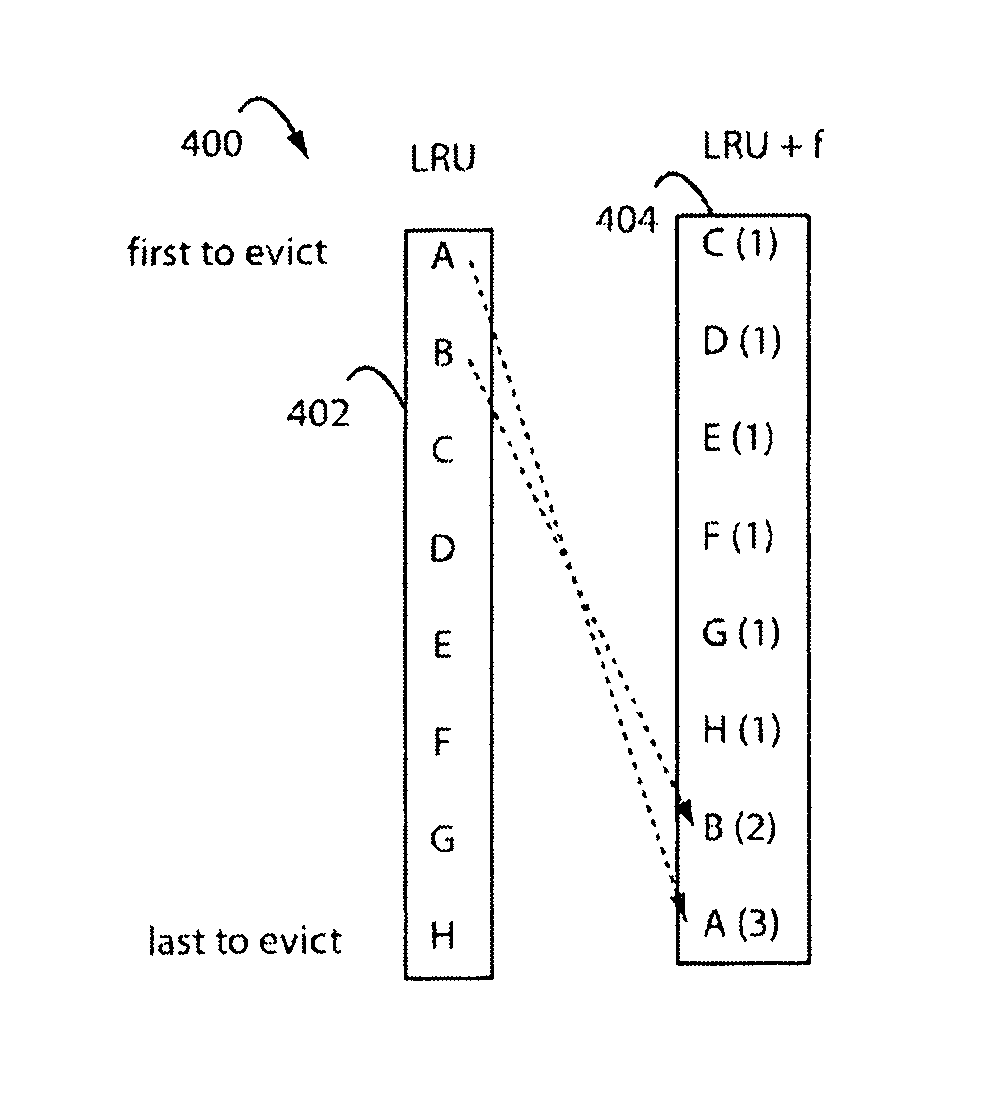

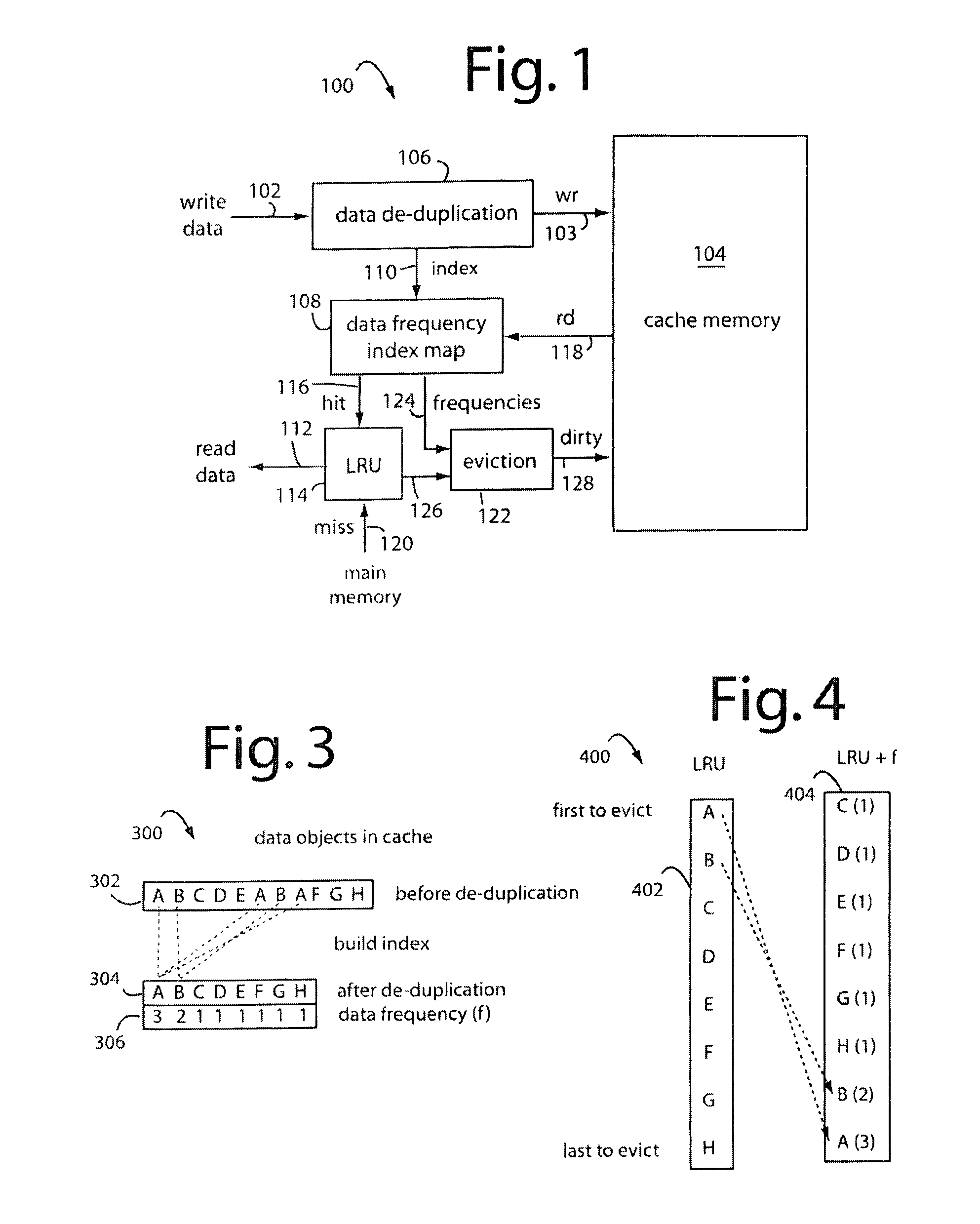

A method for increasing the performance and utilization of cache memory by combining the data block frequency map generated by data de-duplication mechanism and page prefetching and eviction algorithms like Least Recently Used (LRU) policy. The data block frequency map provides weight directly proportional to the frequency count of the block in the dataset. This weight is used to influence the caching algorithms like LRU. Data blocks that have lesser frequency count in the dataset are evicted before those with higher frequencies, even though they may not have been the topmost blocks for page eviction by caching algorithms. The method effectively combines the weight of the block in the frequency map and its eviction status by caching algorithms like LRU to get an improved performance and utilization of the cache memory.

Owner:IBM CORP
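
The abstract above describes biasing LRU eviction with a per-block frequency weight. The following is only a minimal sketch of that idea, not the patented implementation: the class name `FrequencyWeightedLRU`, the `window` parameter (how many least-recently-used blocks are compared), and the tie-breaking rule are all assumptions made for illustration.

```python
from collections import OrderedDict


class FrequencyWeightedLRU:
    """LRU cache whose eviction choice is biased by a block frequency map."""

    def __init__(self, capacity, frequency_map, window=4):
        self.capacity = capacity
        self.frequency_map = frequency_map  # block id -> count in the dataset
        self.window = window                # assumed: LRU candidates to compare
        self.blocks = OrderedDict()         # least recently used entry first

    def get(self, block_id):
        if block_id not in self.blocks:
            return None
        self.blocks.move_to_end(block_id)   # mark as most recently used
        return self.blocks[block_id]

    def put(self, block_id, data):
        if block_id in self.blocks:
            self.blocks.move_to_end(block_id)
        elif len(self.blocks) >= self.capacity:
            self._evict()
        self.blocks[block_id] = data

    def _evict(self):
        # Among the `window` least recently used blocks, evict the one with
        # the lowest dataset frequency; ties go to the older block.
        candidates = list(self.blocks)[: self.window]
        victim = min(candidates, key=lambda b: self.frequency_map.get(b, 0))
        del self.blocks[victim]
        return victim
```

With `window=1` this degenerates to plain LRU; a larger window gives the frequency map more influence over which block leaves the cache.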

Deferring and combining write barriers for a garbage-collected heap

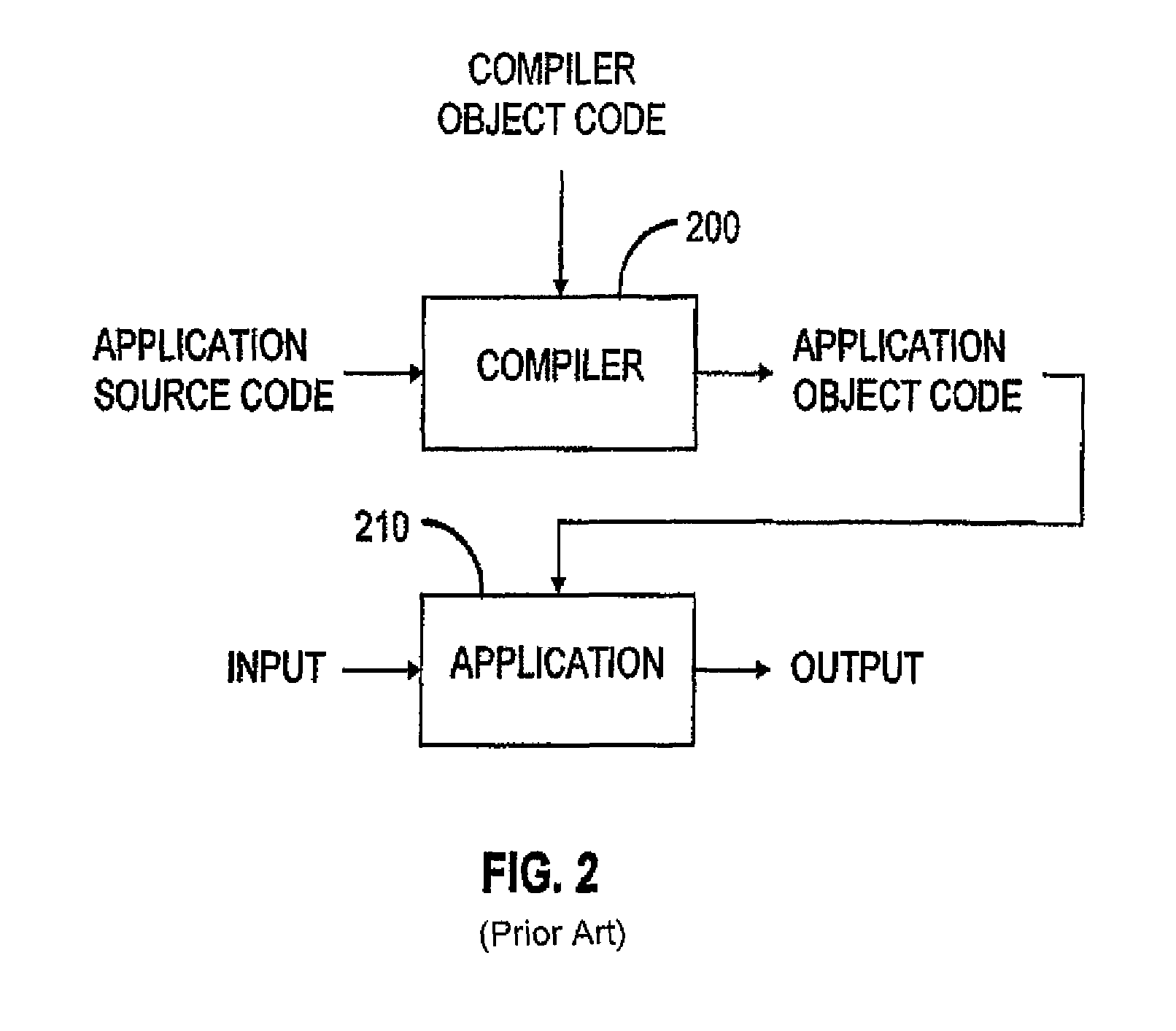

Active | US7404182B1 | Reduce number | Minimize amount | Data processing applications | Special data processing applications | Write barrier | Object code

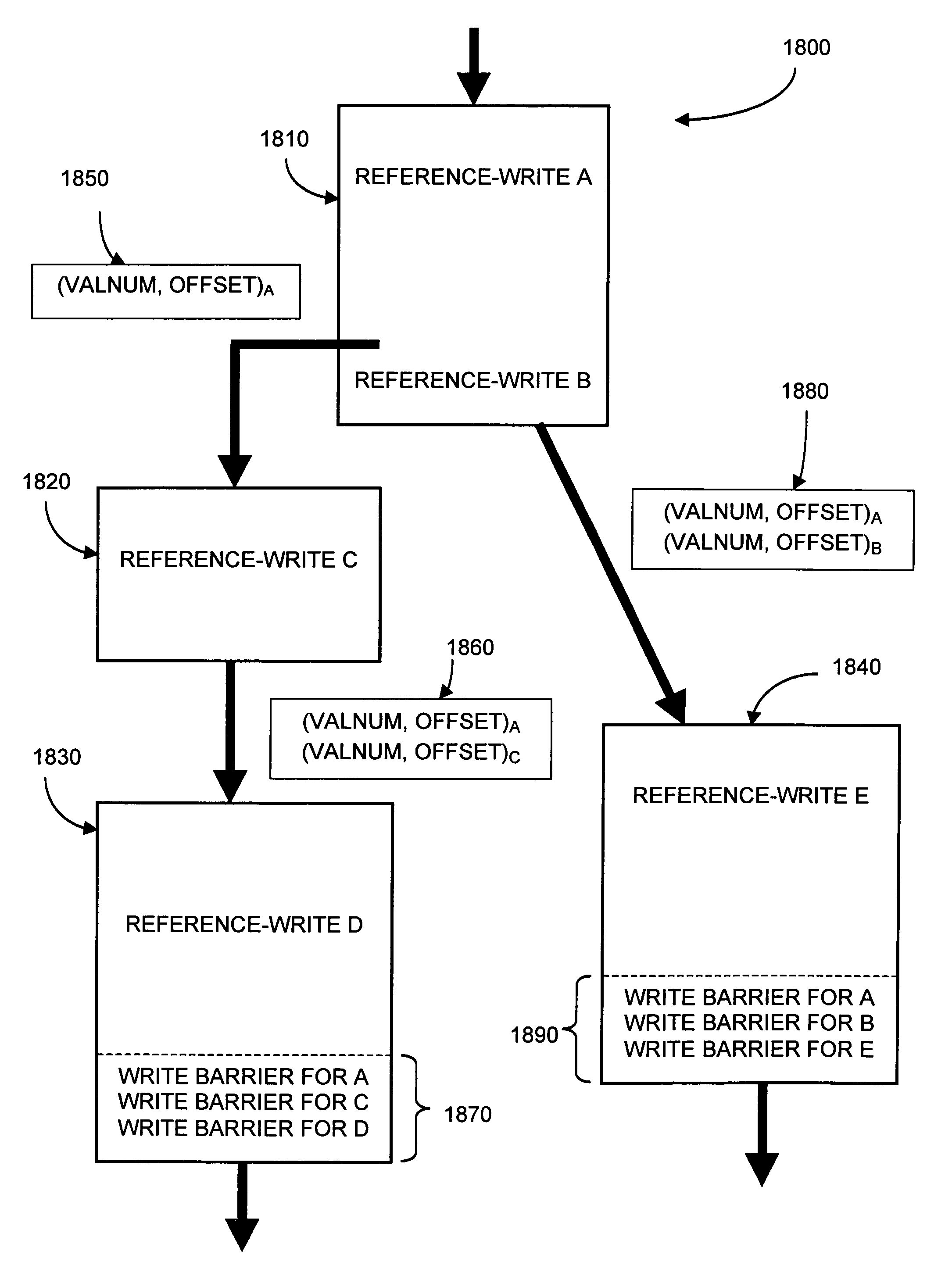

The present invention provides a technique for reducing the number of write barriers without compromising garbage collector performance or correctness. To that end, a compiler defers emitting write barriers until it reaches a subsequent instruction in the mutator code. At this point, the compiler may elide repeated or unnecessary write-barrier code so as to emit only those write barriers that provide useful information to the garbage collector. By eliminating write-barrier code in this manner, the amount of write-barrier overhead in the mutator can be minimized, consequently enabling the mutator to execute faster and more efficiently. Further, collocating write barriers after the predetermined instruction also enables the compiler to generate object code having better cache performance and more efficient use of guard code than is possible using conventional write-barrier implementations.

Owner:ORACLE INT CORP

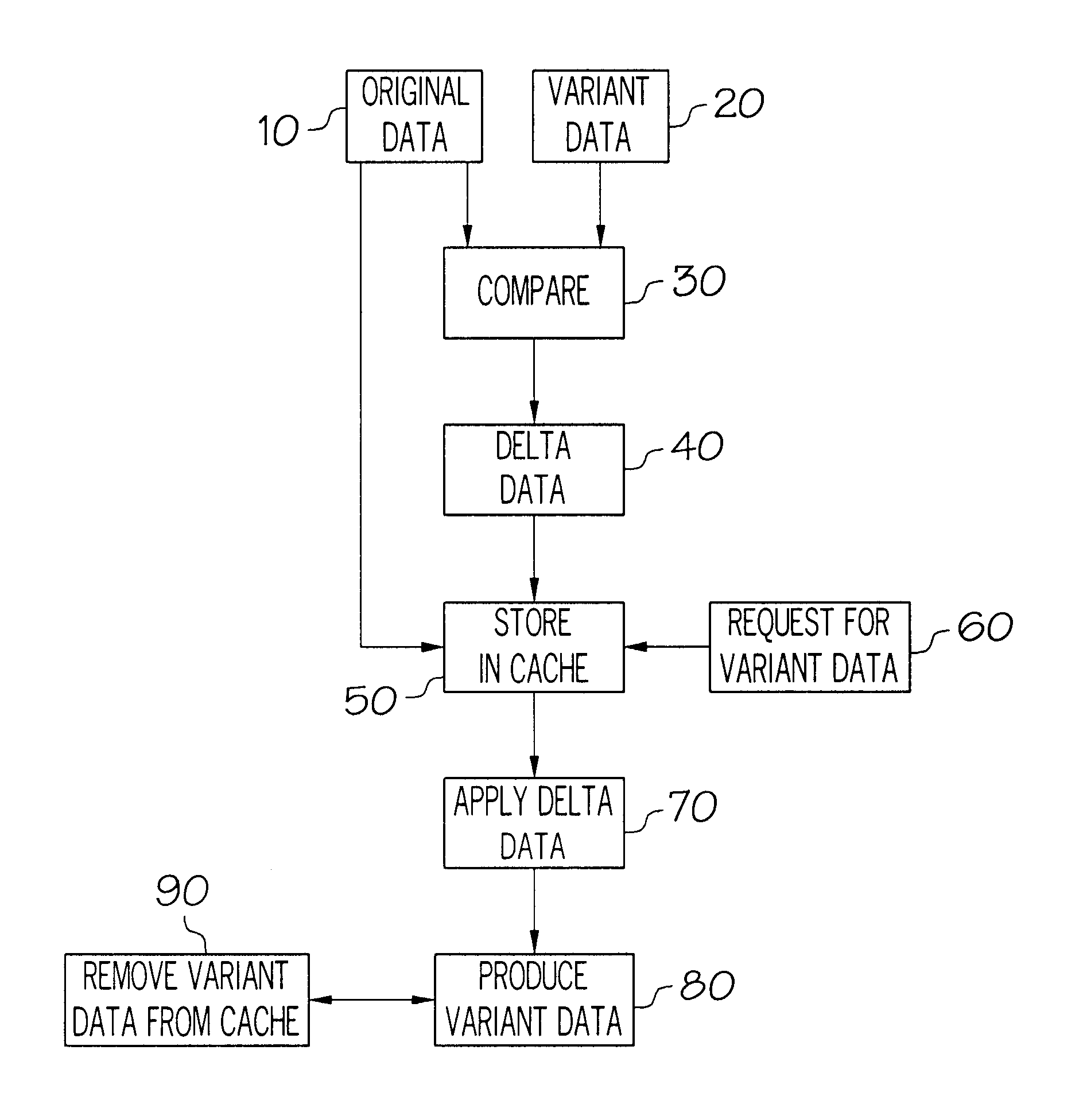

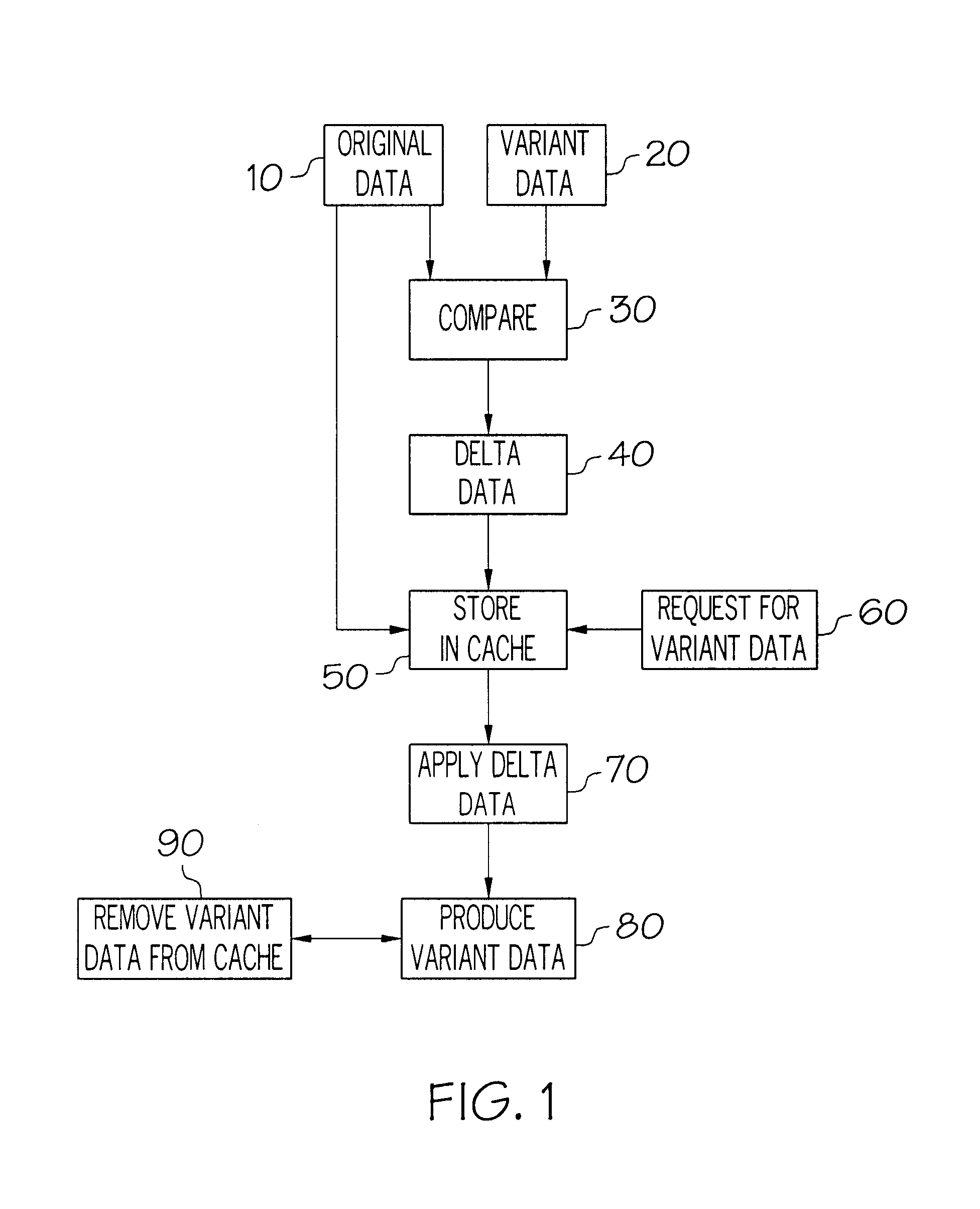

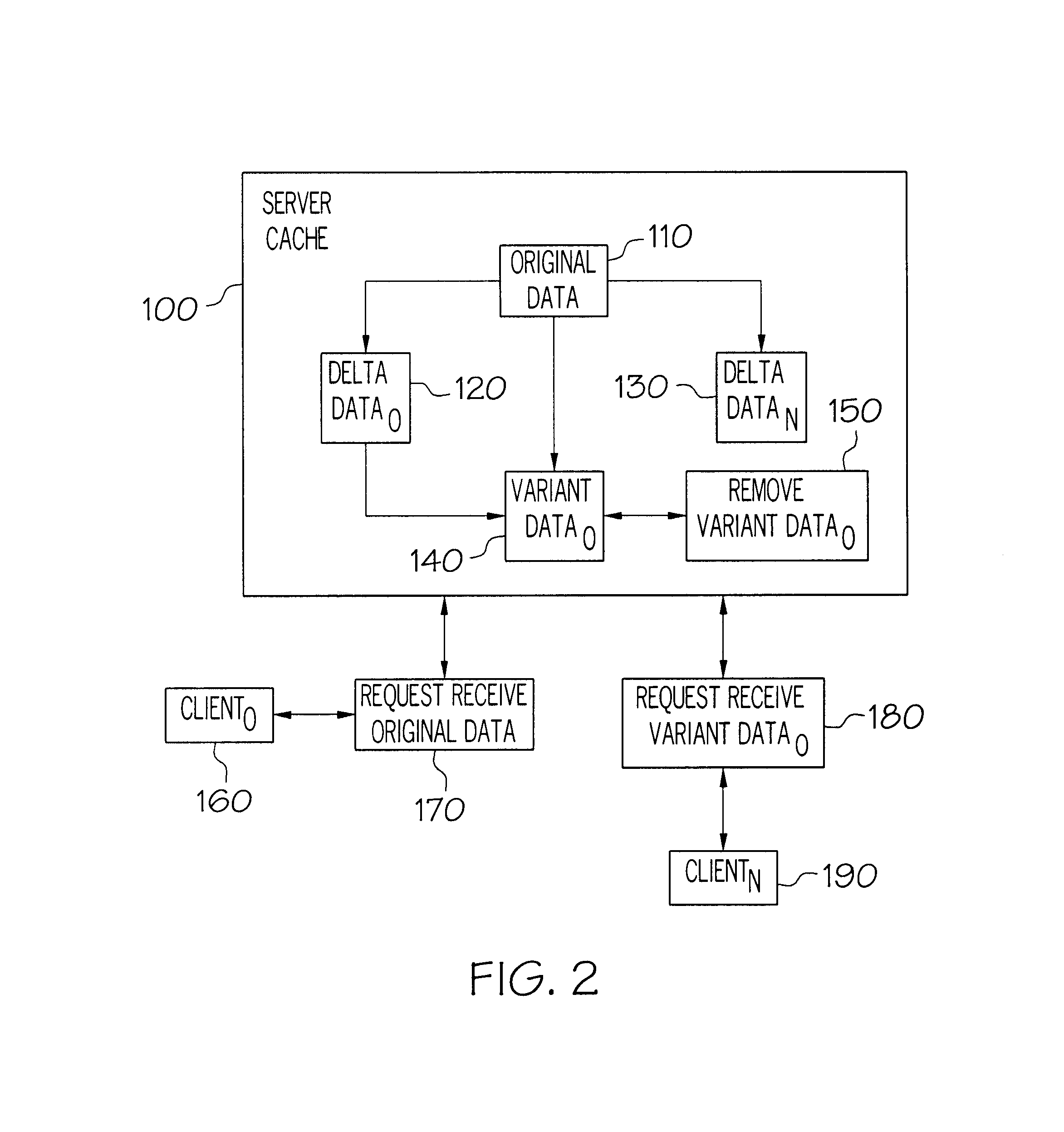

Methods for increasing cache capacity utilizing delta data

Inactive | US6850964B1 | Improve caching capacity | Increase capacity | Digital data information retrieval | Data processing applications | Original data | Parallel computing

Methods of increasing cache capacity are provided. A method of increasing cache capacity is provided wherein original data are received in a cache with access to one or more variants of the original data being required, but the variants do not reside in the cache. Access is provided to the variants by using one or more delta data operable to be applied against the original data to produce the variants. Moreover, a method of reducing cache storage requirements is provided wherein original data and variants of the original data are identified and located within the cache. Further, delta data are produced by noting one or more differences between the original data and the variants, with the variants being purged from the cache. Furthermore, a method of producing derivatives from an original data is provided wherein the original data and variants of the original data are identified. Delta data are produced by recording the difference between each variant and the original data, with the delta data and the original data being retained in the cache.

Owner:MICRO FOCUS SOFTWARE INC
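
To make the delta idea concrete, here is a small sketch in which a cache keeps the original data plus a compact delta and rebuilds a variant on demand. It is an illustration under assumptions, not the method claimed in the patent: the helper names `make_delta` and `apply_delta` are hypothetical, and Python's standard `difflib.SequenceMatcher` stands in for whatever differencing scheme a real system would use.

```python
from difflib import SequenceMatcher


def make_delta(original, variant):
    """Record only the edits needed to turn `original` into `variant`."""
    matcher = SequenceMatcher(None, original, variant, autojunk=False)
    # Keep (start, end, replacement) for every non-matching region.
    return [
        (i1, i2, variant[j1:j2])
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]


def apply_delta(original, delta):
    """Rebuild a variant from the cached original and its delta."""
    pieces, pos = [], 0
    for start, end, replacement in delta:
        pieces.append(original[pos:start])  # unchanged text before the edit
        pieces.append(replacement)          # text that differs in the variant
        pos = end
    pieces.append(original[pos:])
    return "".join(pieces)
```

Only the original and the (usually much smaller) delta need to stay in the cache; the variant itself can be purged and regenerated with `apply_delta` when it is requested.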

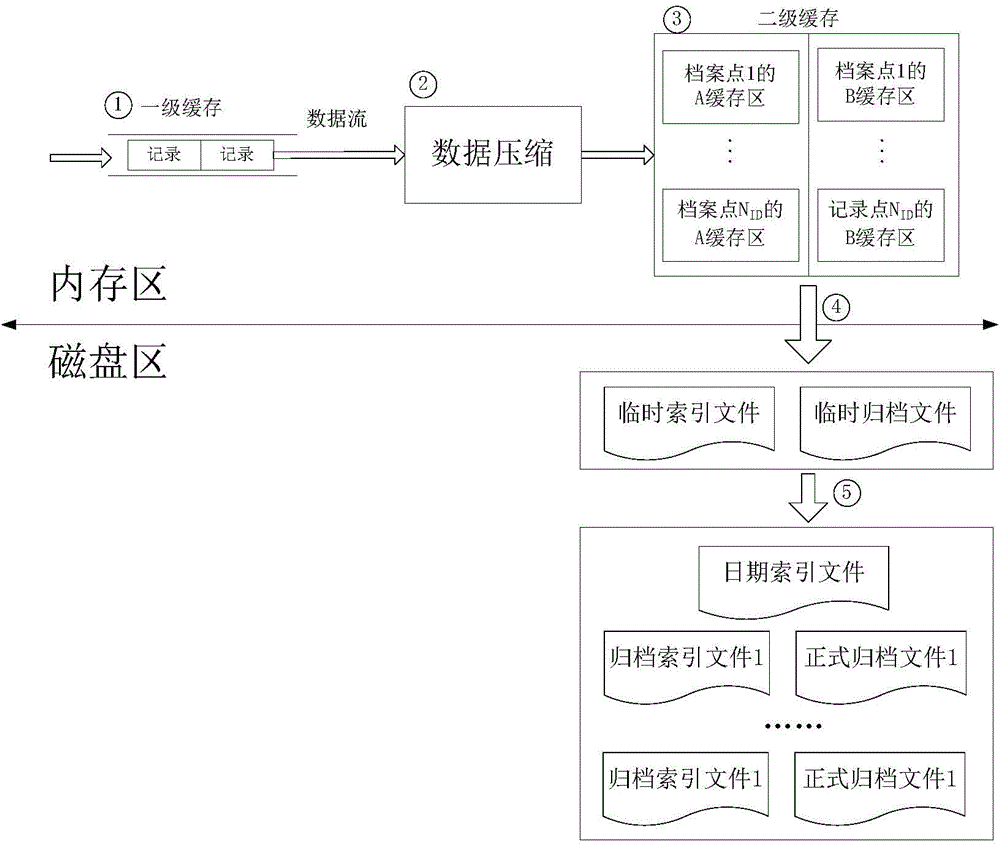

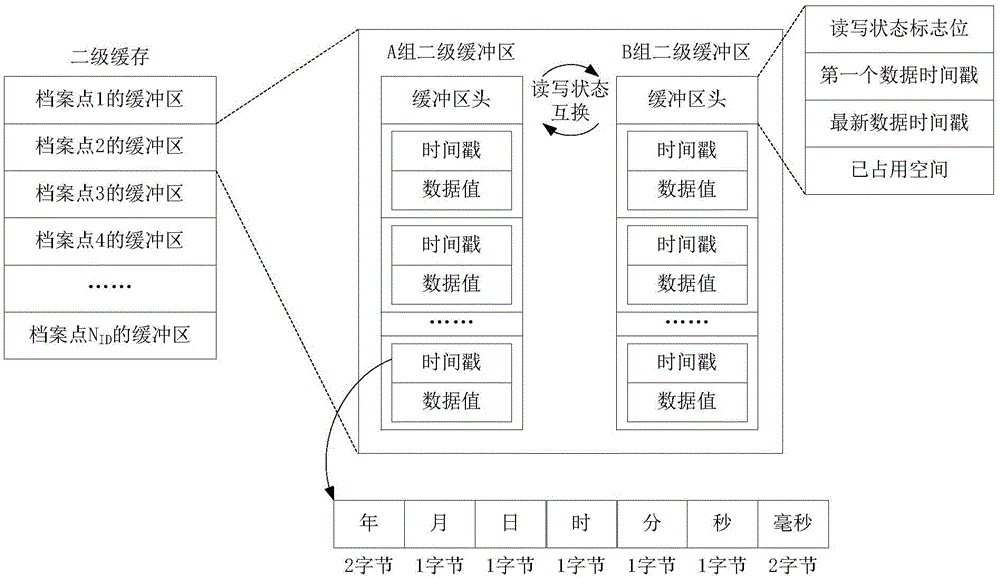

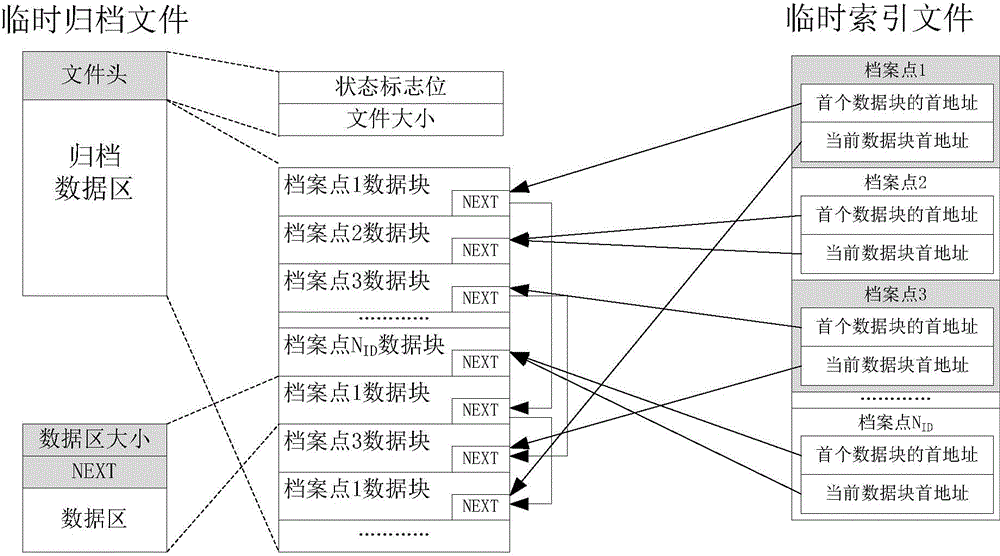

Historical data storage and indexing method

Active | CN104090987A | Improve caching capacity | Avoid loss | Special data processing applications | Database indexing | Data access | Data store

The invention discloses a historical data storage and indexing method in the field of real-time historical databases. Based on the characteristics of industrial historical data storage formats, it provides a simple, efficient storage method and an efficient indexing mechanism. On the one hand, the storage method reduces disk I/O as far as possible by compressing and caching historical data and by optimizing the data archiving and sorting process. On the other hand, it spreads the disk I/O load of archiving and sorting evenly across all time points, which ensures that the current storage requirements for historical data are met while keeping the storage dynamically extensible. The indexing mechanism gives fast access to historical data, and when the database is dynamically extended, the index files are modified dynamically so that data access to the system is not affected.

Owner:HUAZHONG UNIV OF SCI & TECH

Method and system for improving performance and scalability of applications that utilize a flow-based-programming methodology

Inactive | US20050289505A1 | Improve caching capacity | Eliminating idle file memory | Program initiation/switching | Specific program execution arrangements | Flow-based programming | Auxiliary memory

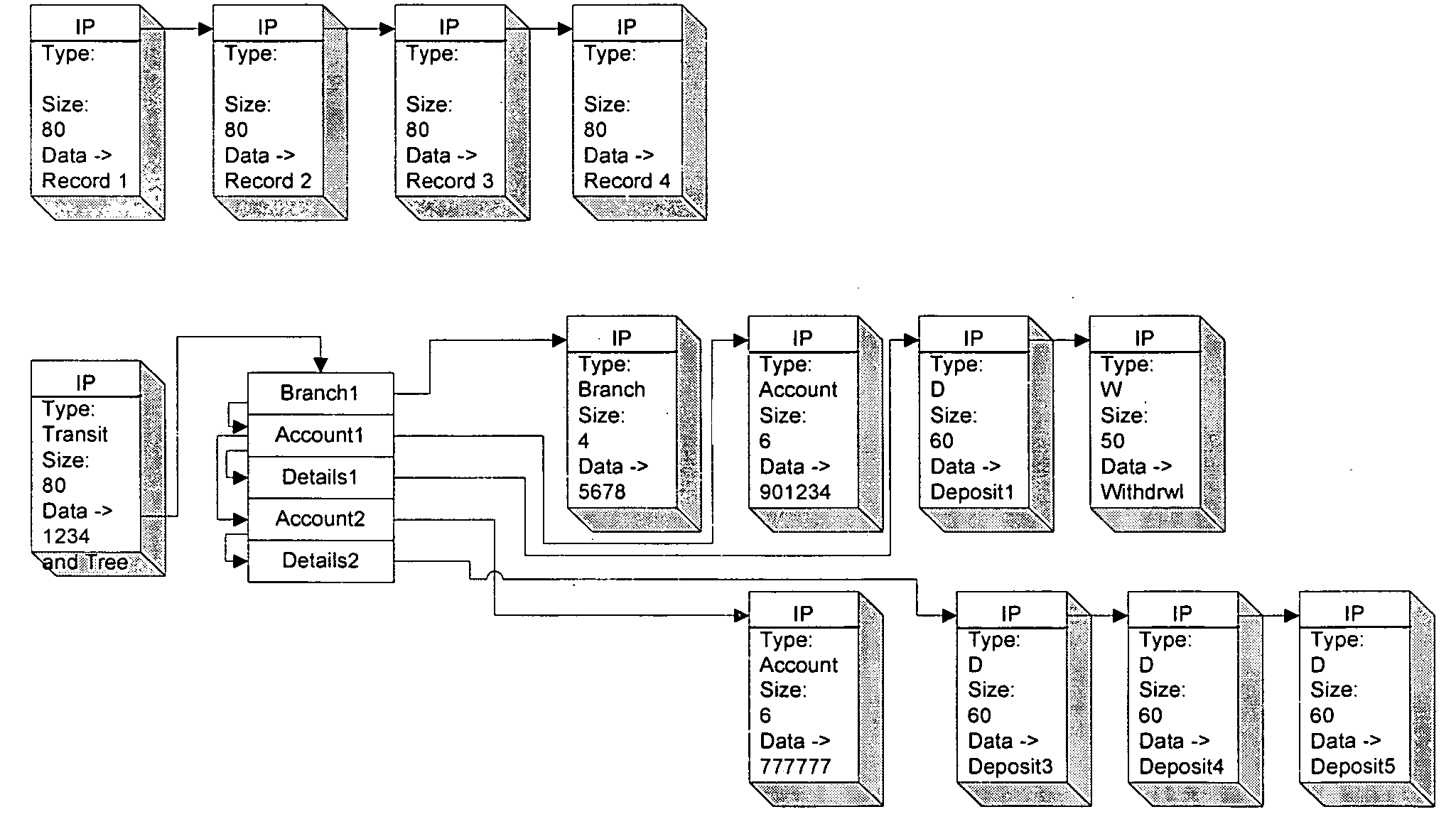

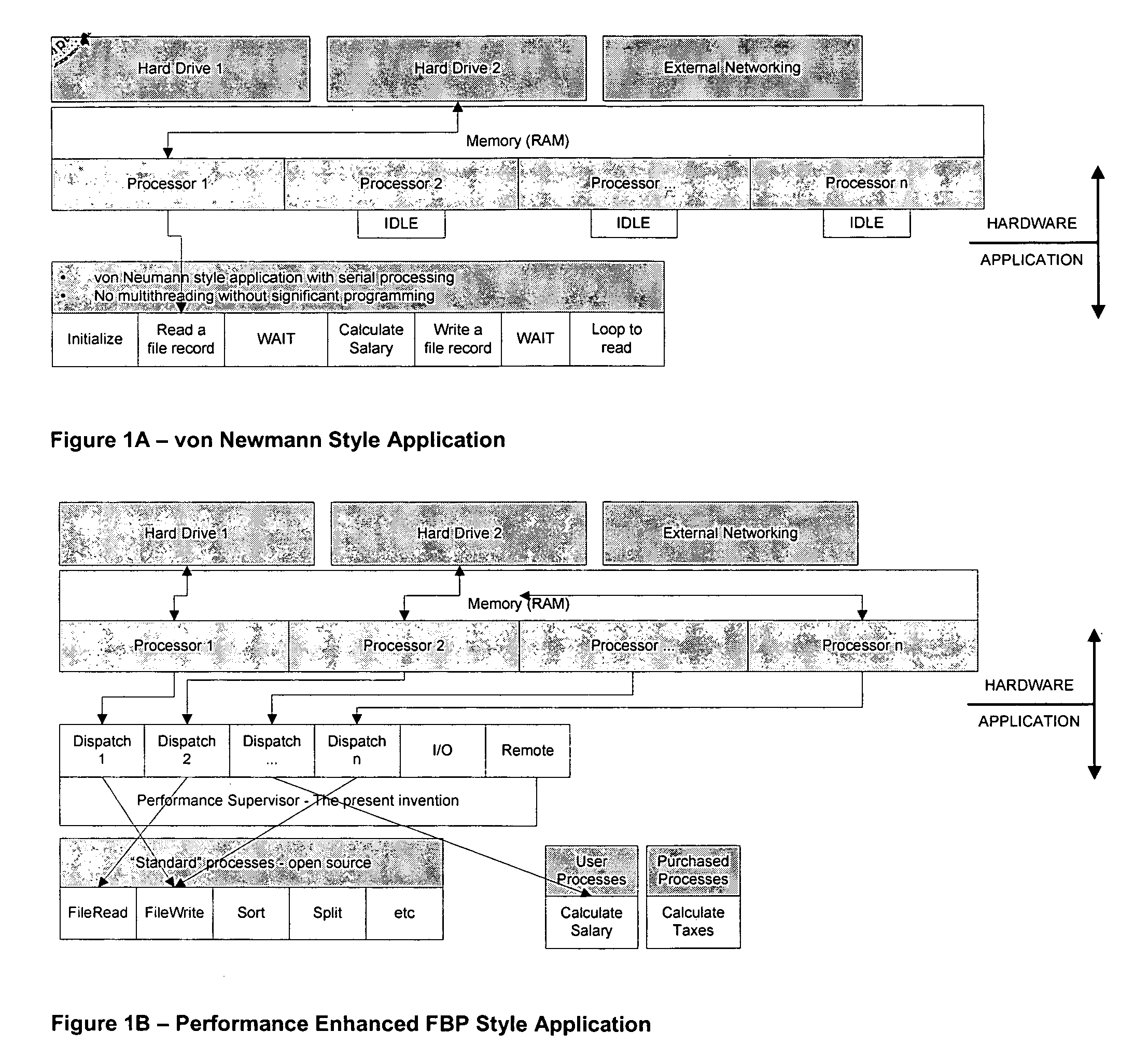

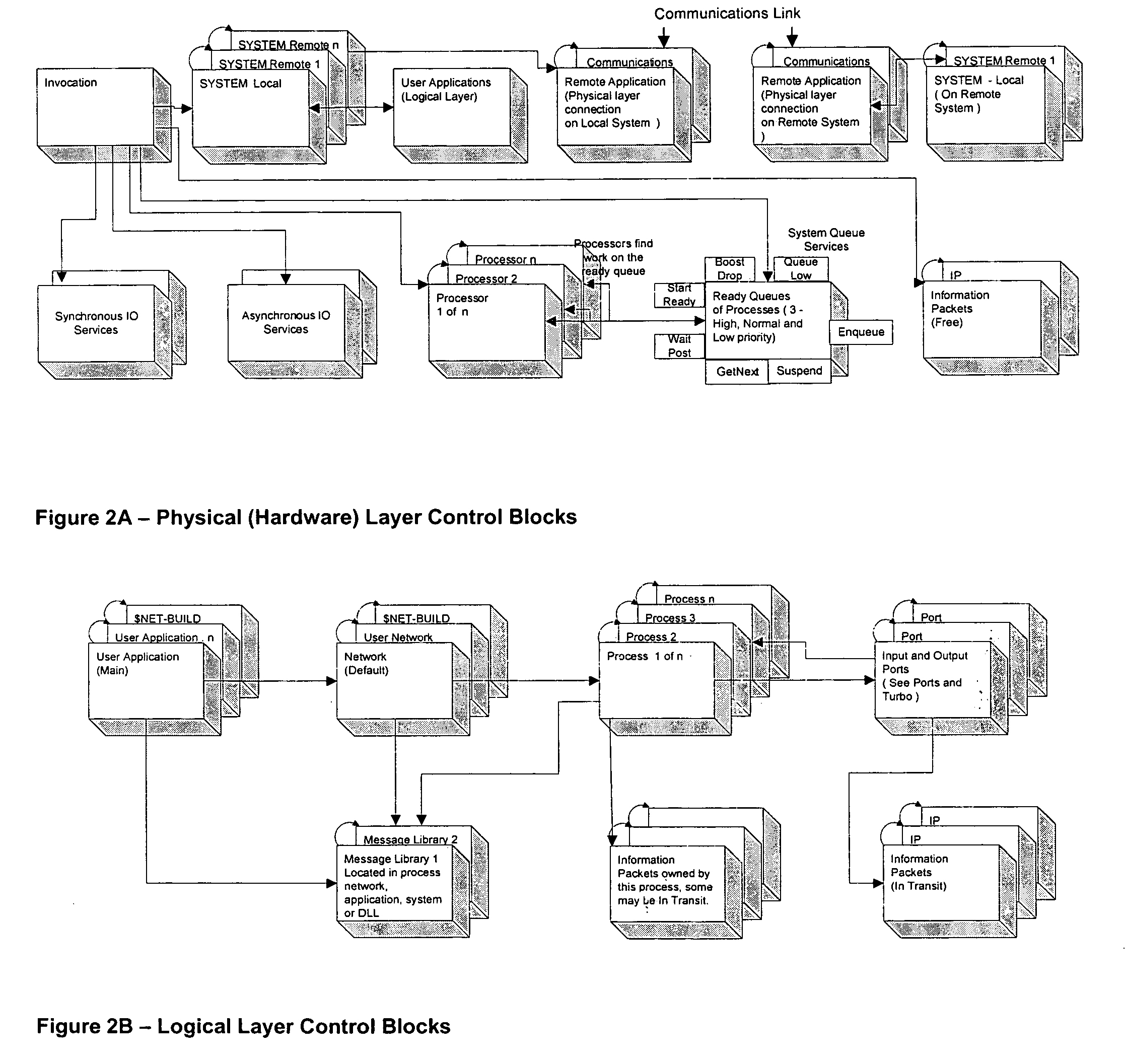

A method, system, apparatus, and computer program product is presented for improving the execution performance of flow-based-program (FBP) programs and improving the execution performance further on systems with additional processing resources (scalability). A FBP supervisor is inserted as the initial executable program, which program will interrogate the features of the operating system upon which it is executing including but not limited to number of processors, memory capacity, auxiliary memory capacity (paging dataset size), and networking capabilities. The supervisor will create an optimum number of processing environments (e.g. threads in a Windows environment) to service the user FBP application. The supervisor will further expose other services to the FBP application which improve the concurrent execution of the work granules (processes) within that FBP application. The supervisor further improves the generation and logging of messages through structured message libraries which are extended to the application programmer. The overall supervisor design maximizes concurrency, eliminates unnecessary work, and offers services so a process should suspend rather than block.

Owner:WILLIAMS STANLEY NORMAN

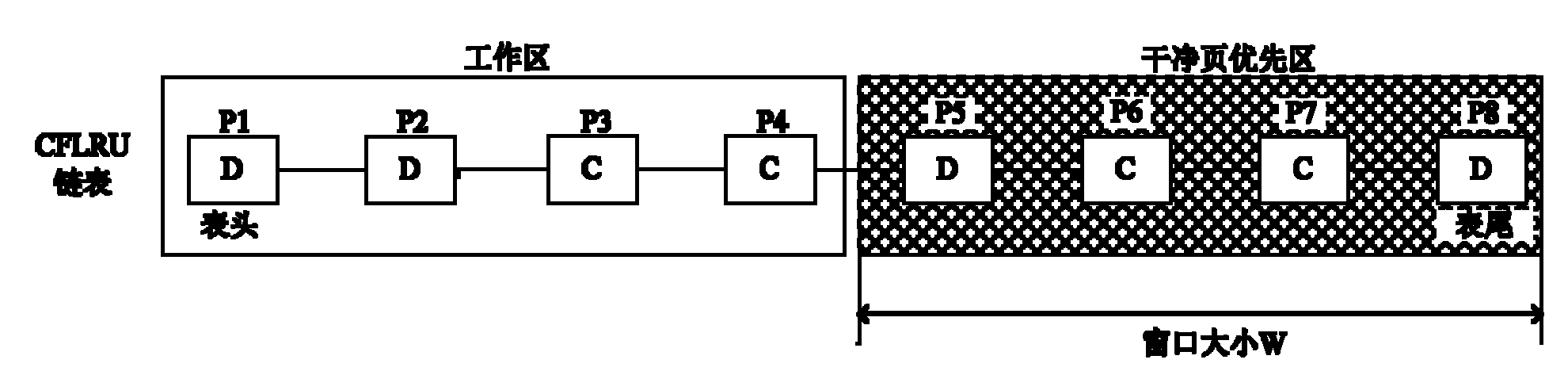

Data page caching method for file system of solid-state hard disc

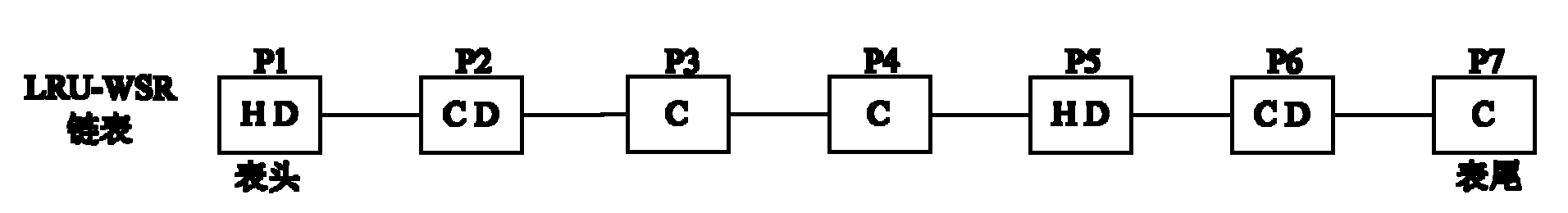

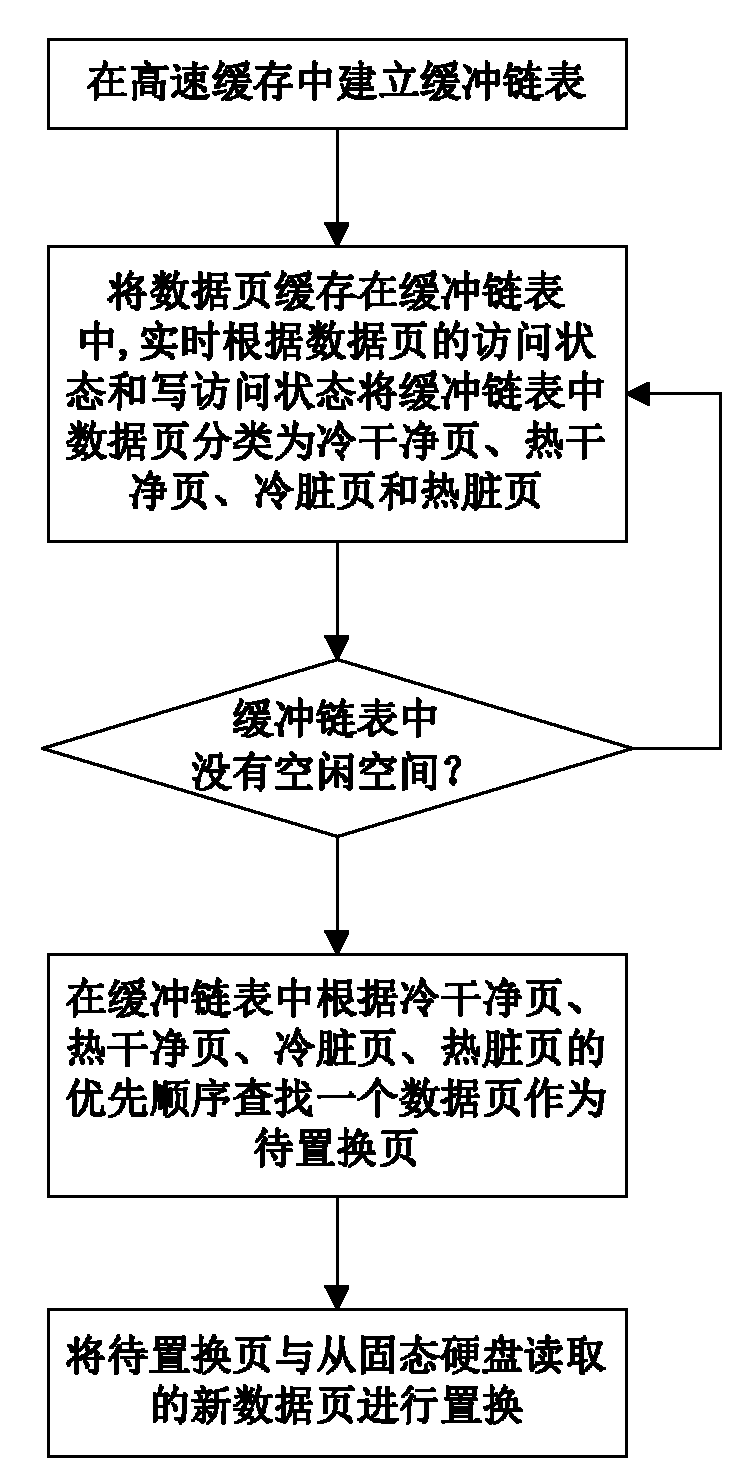

Active | CN102156753A | Reduce overhead | Improve hit rate | Special data processing applications | Dirty page | External storage

The invention discloses a data page caching method for a file system of a solid-state disk, comprising the following steps: (1) establishing a buffer linked list for caching data pages in a high-speed cache; (2) caching the data pages read from the solid-state disk in the buffer linked list for access, and classifying the pages in the list in real time into cold clean pages, hot clean pages, cold dirty pages and hot dirty pages according to their access states and write access states; (3) when the buffer linked list has no free space, searching it for a page to be replaced in the priority order cold clean, hot clean, cold dirty, hot dirty, and replacing that page with the new data page read from the solid-state disk. The method makes full use of the characteristics of the solid-state disk, relieves the performance bottleneck of external storage, and improves the storage processing performance of the system. It also offers good I/O (input/output) performance, low replacement cost for cached pages, low overhead and a high hit rate.

Owner:NAT UNIV OF DEFENSE TECH
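
The replacement priority in step (3) can be sketched as a simple ordering over two flags. This is an illustrative sketch only: the `Page` fields and the function names are assumptions, and how a page becomes "hot" (for example, a second access) is not specified in the abstract.

```python
from dataclasses import dataclass


@dataclass
class Page:
    page_id: int
    hot: bool = False    # assumed meaning: accessed again since it was loaded
    dirty: bool = False  # modified since it was loaded


def replacement_rank(page):
    # Lower rank is replaced first:
    # cold clean < hot clean < cold dirty < hot dirty.
    return (page.dirty, page.hot)


def choose_victim(buffer_list):
    """Return the first page in the highest-priority replacement class."""
    return min(buffer_list, key=replacement_rank)
```

Replacing clean pages first avoids write-backs to the solid-state disk, which is presumably why dirty pages sit at the end of the priority order.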

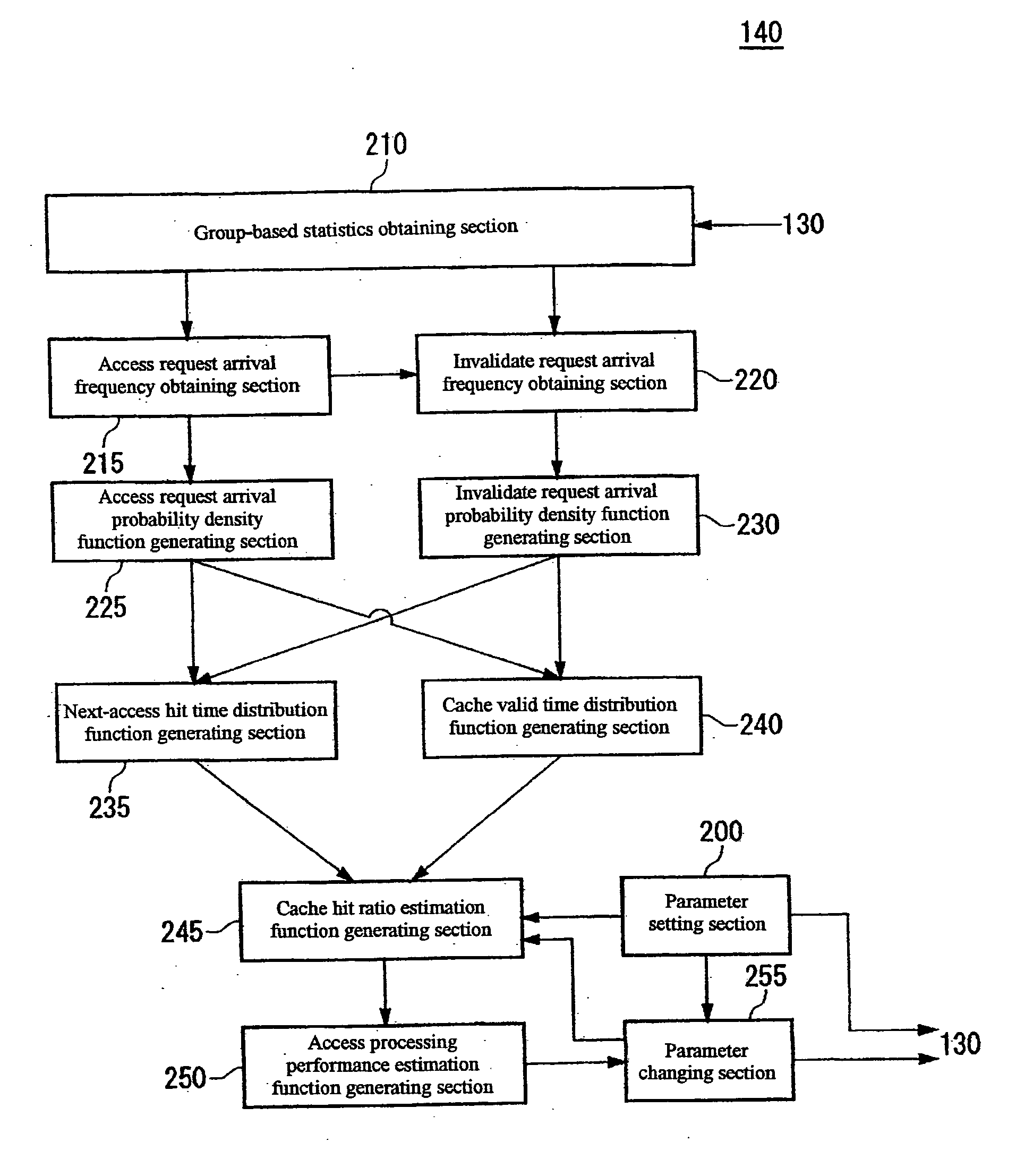

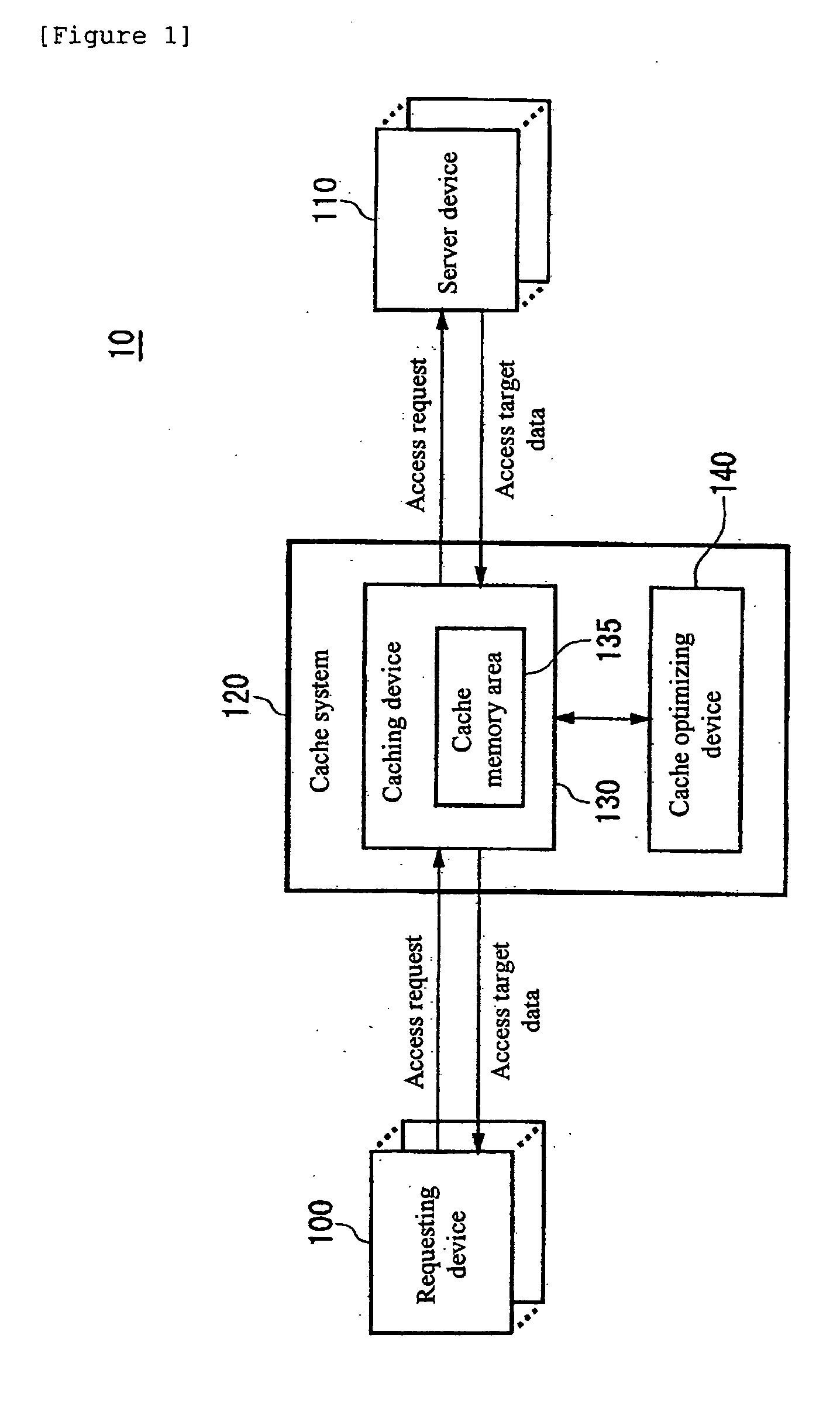

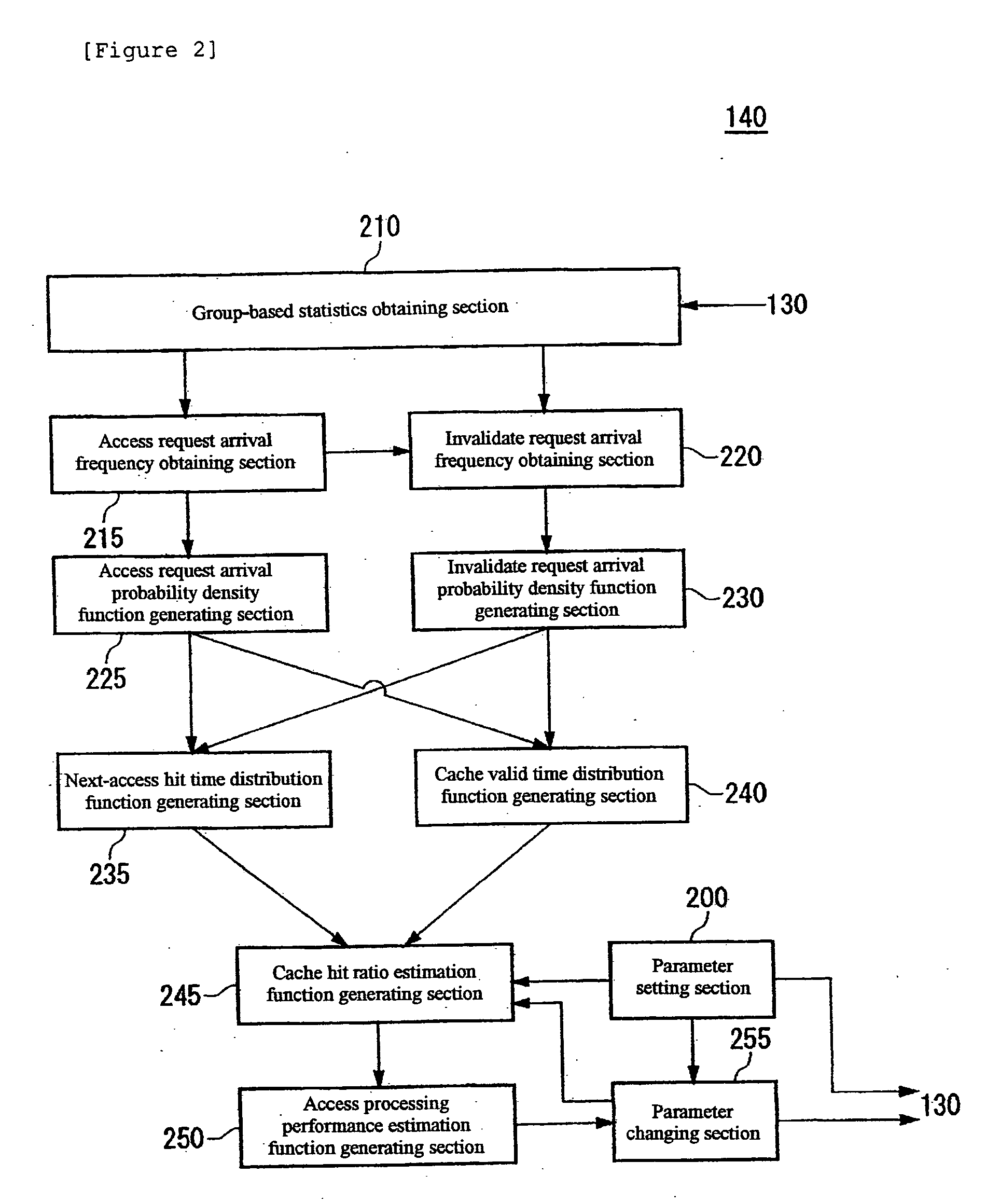

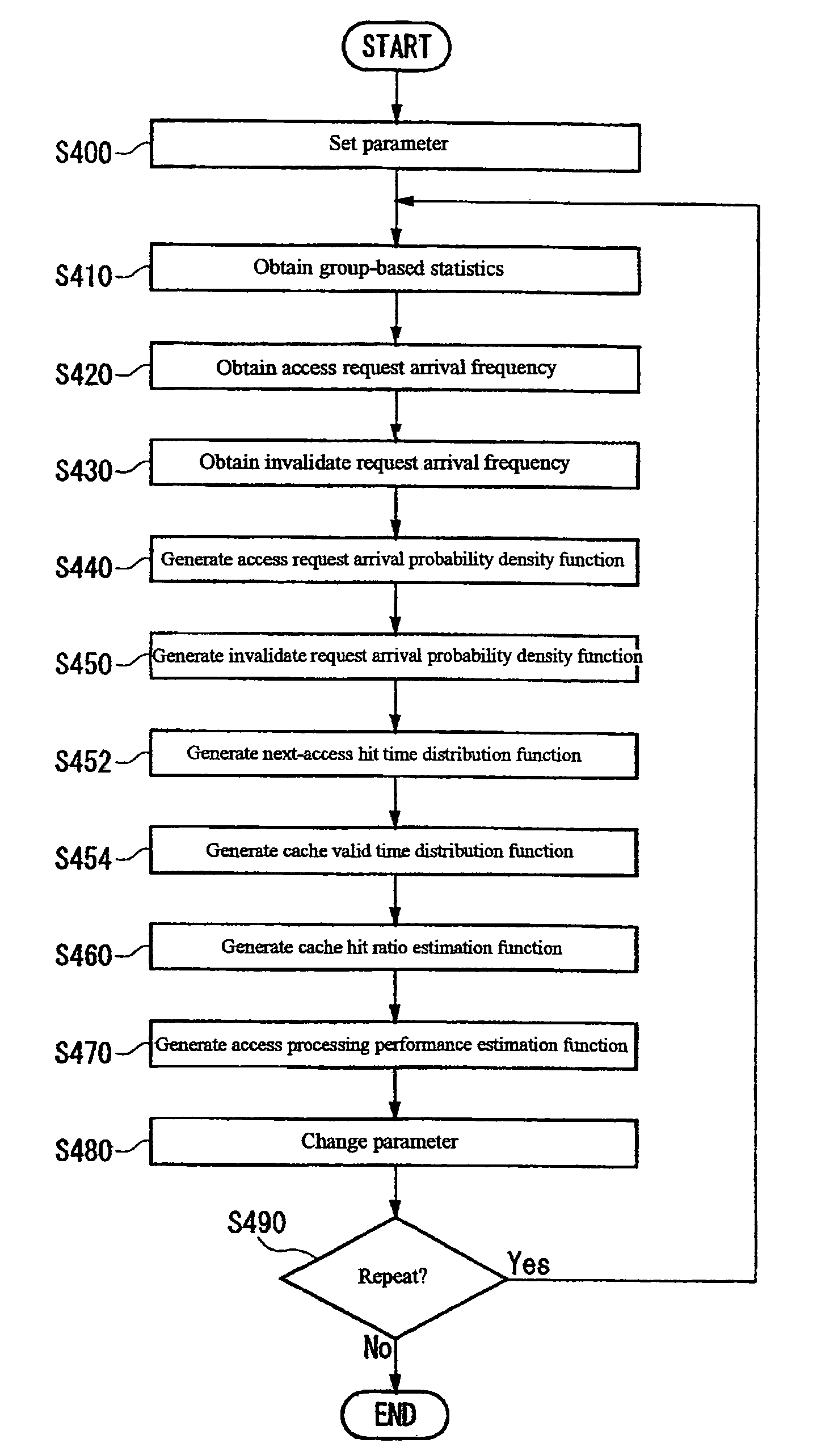

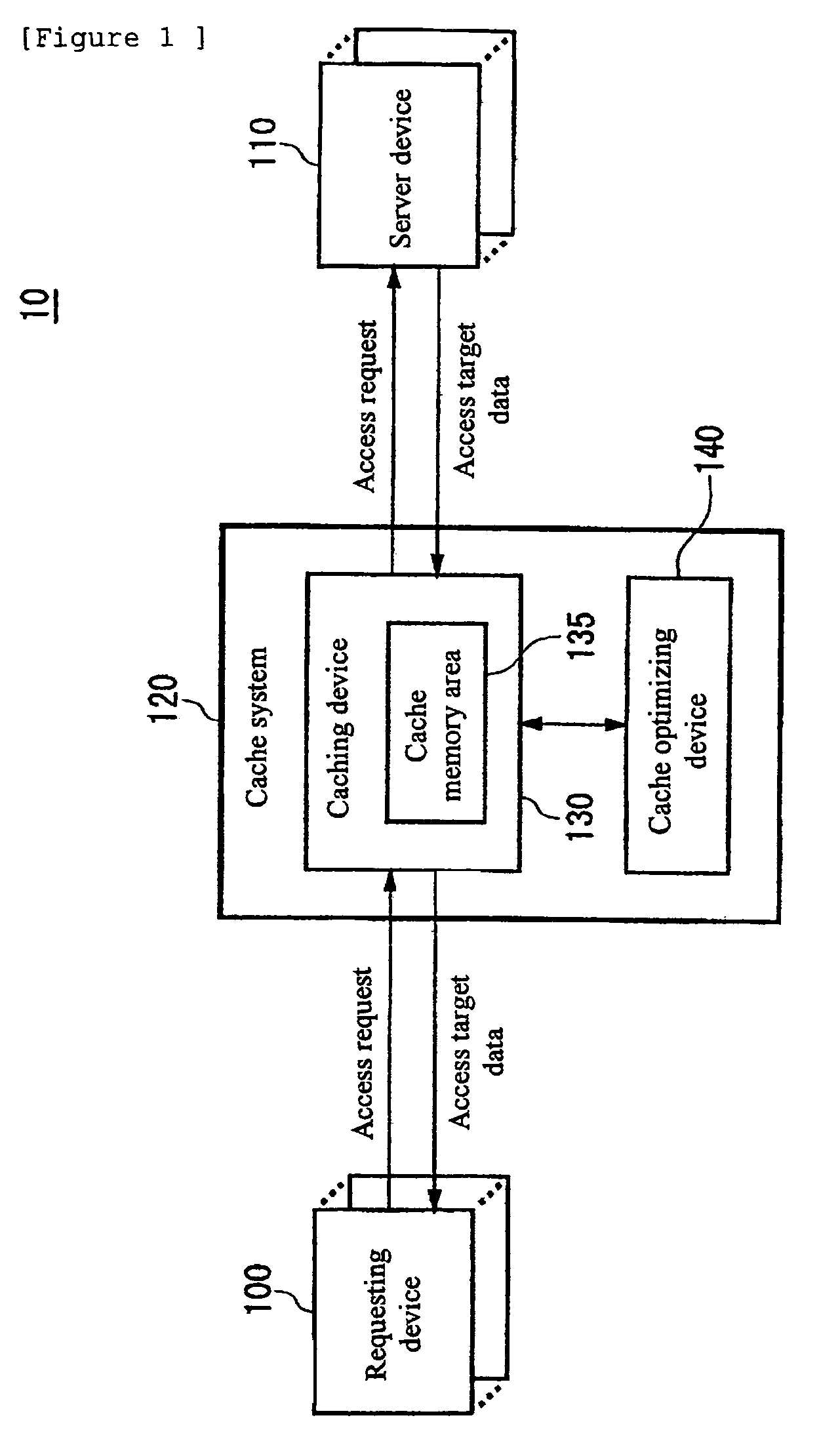

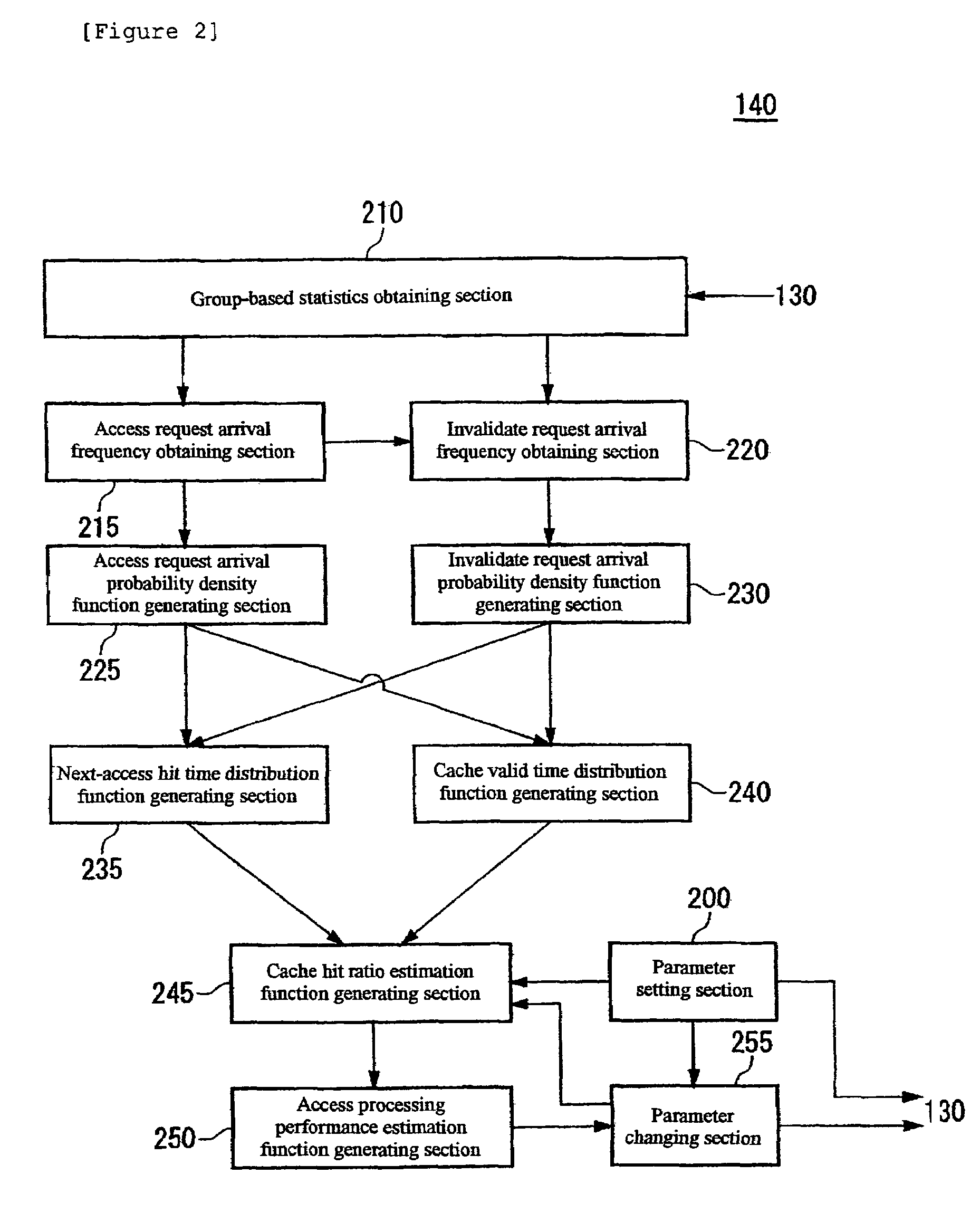

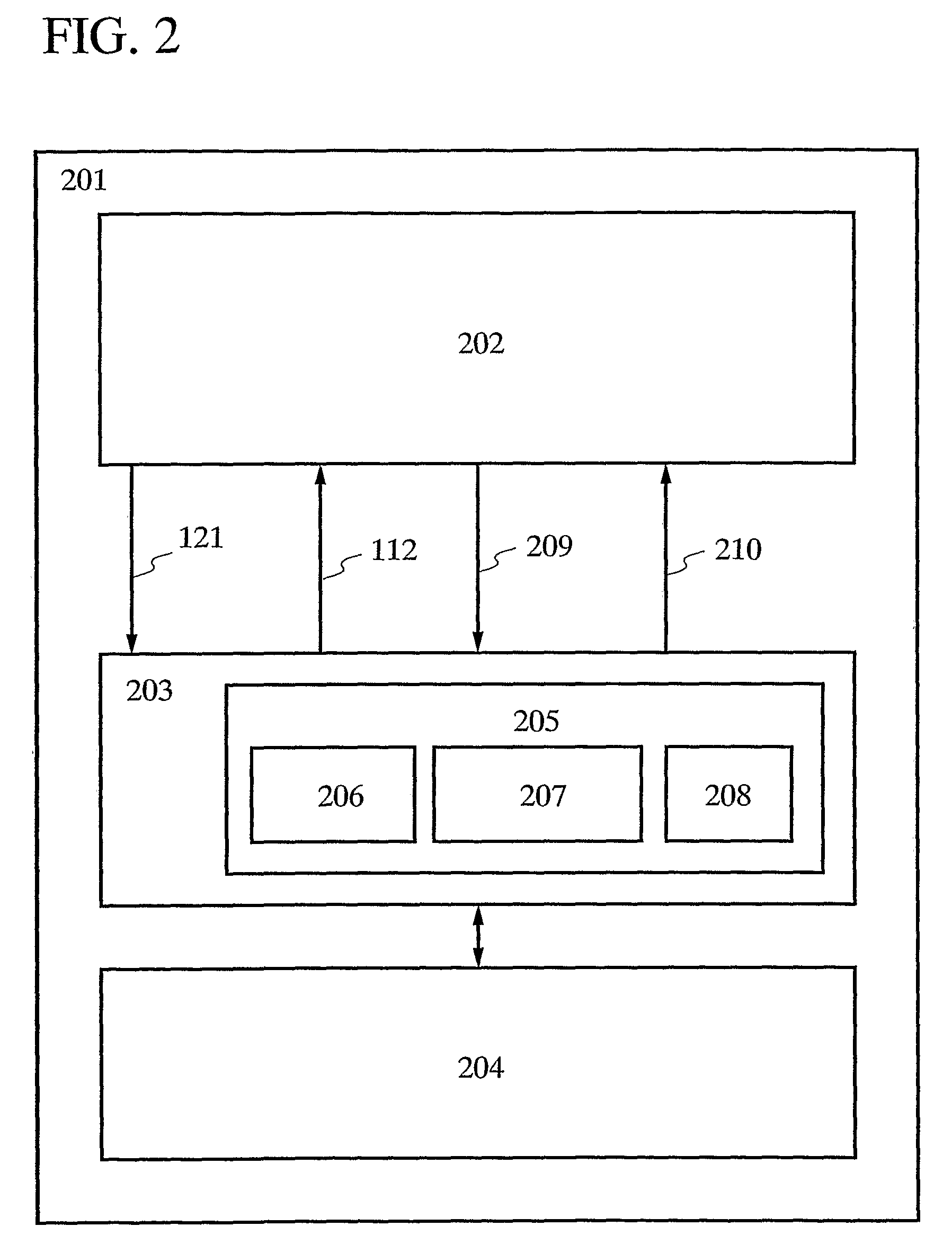

Cache hit ratio estimating apparatus, cache hit ratio estimating method, program, and recording medium

Inactive | US20050268037A1 | Improve accuracy | Easy to process | Input/output to record carriers | Error detection/correction | Cache access | Normal density

Determining a cache hit ratio of a caching device analytically and precisely. There is provided a cache hit ratio estimating apparatus for estimating the cache hit ratio of a caching device, caching access target data accessed by a requesting device, including: an access request arrival frequency obtaining section for obtaining an average arrival frequency measured for access requests for each of the access target data; an access request arrival probability density function generating section for generating an access request arrival probability density function which is a probability density function of arrival time intervals of access requests for each of the access target data on the basis of the average arrival frequency of access requests for the access target data; and a cache hit ratio estimation function generating section for generating an estimation function for the cache hit ratio for each of the access target data on the basis of the access request arrival probability density function for the plurality of the access target data.

Owner:GOOGLE LLC

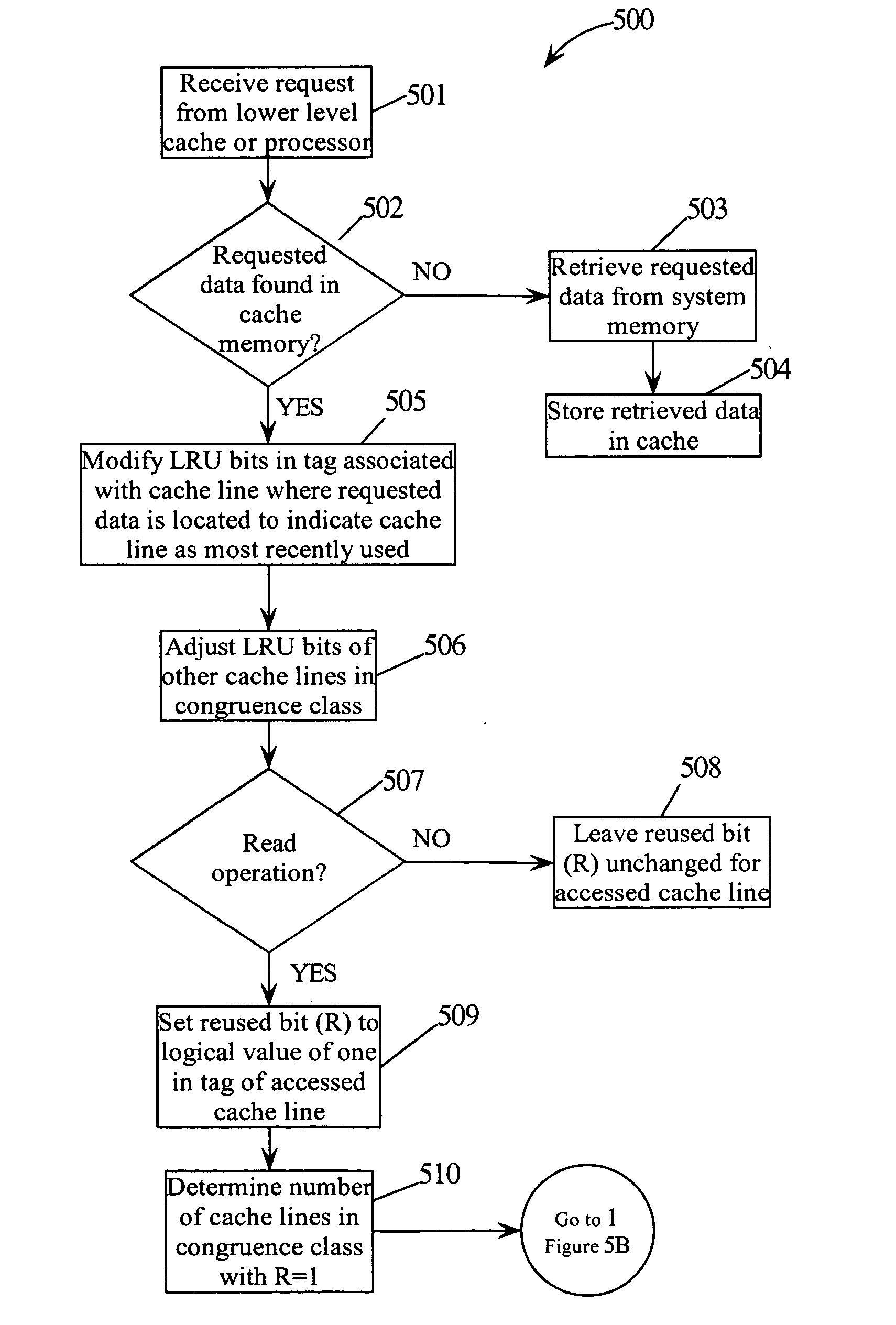

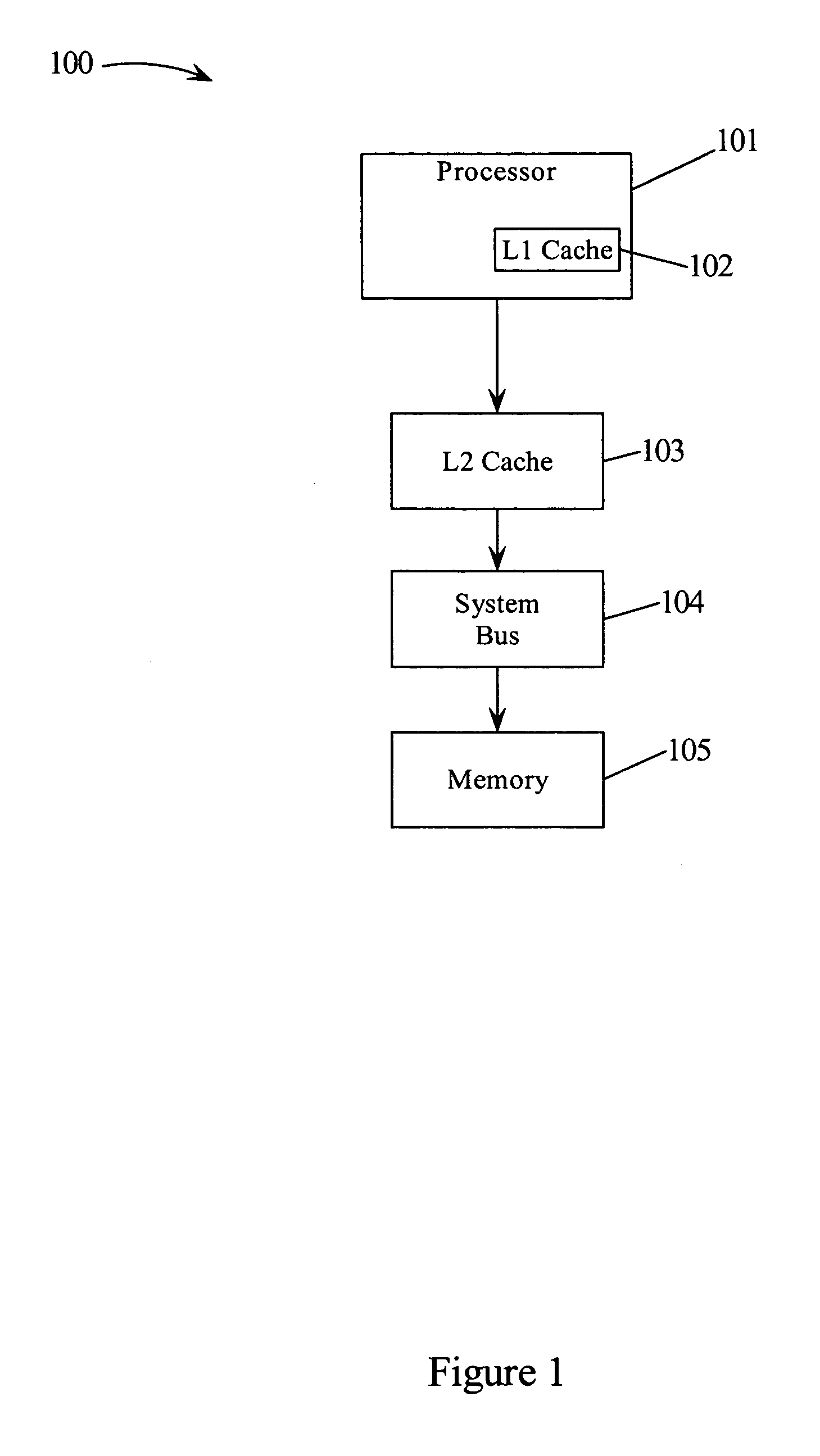

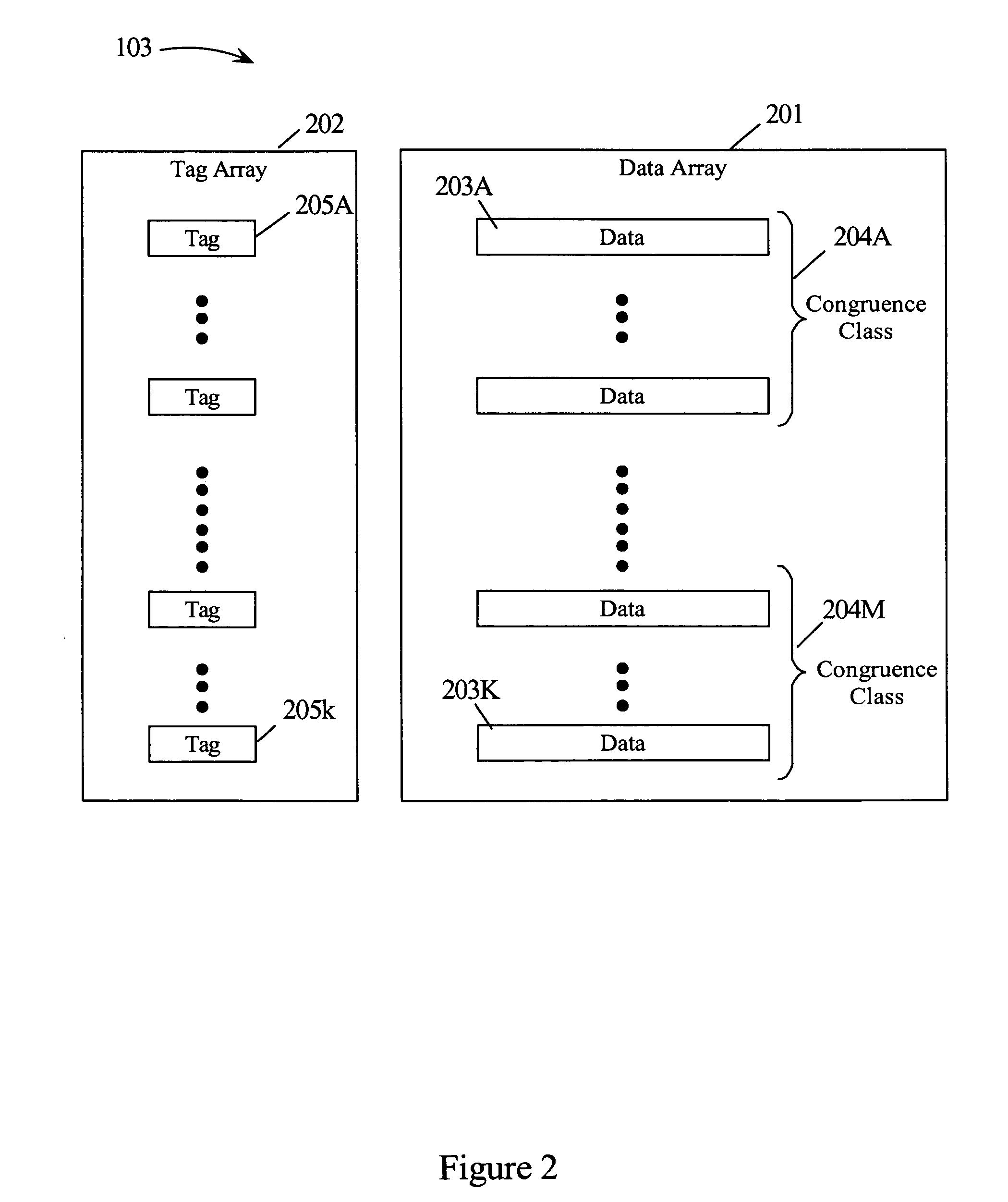

Performance of a cache by detecting cache lines that have been reused

Active | US20060224830A1 | Improve performance | Cache hit may be improved | Memory systems | Micro-instruction address formation | Parallel computing | Trace Cache

A method and system for improving the performance of a cache. The cache may include an array of tag entries where each tag entry includes an additional bit (“reused bit”) used to indicate whether its associated cache line has been reused, i.e., has been requested or referenced by the processor. By tracking whether a cache line has been reused, data (cache line) that may not be reused may be replaced with the new incoming cache line prior to replacing data (cache line) that may be reused. By replacing data in the cache memory that might not be reused prior to replacing data that might be reused, the cache hit may be improved thereby improving performance.

Owner:META PLATFORMS INC
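
A software model of the "reused bit" might look like the sketch below. It models a single cache set only, and the class name `ReusedBitSet`, the oldest-first ordering, and the fallback when every line has been reused are assumptions for illustration rather than details taken from the patent.

```python
class ReusedBitSet:
    """One cache set whose lines carry a 'reused' bit next to the tag."""

    def __init__(self, ways):
        self.ways = ways
        self.lines = []  # each entry is [tag, reused_bit], oldest first

    def access(self, tag):
        """Return True on a hit, False on a miss (the line is then filled)."""
        for line in self.lines:
            if line[0] == tag:
                line[1] = True  # a hit means the line has been reused
                return True
        if len(self.lines) >= self.ways:
            self._replace()
        self.lines.append([tag, False])
        return False

    def _replace(self):
        # Prefer the oldest line that was never reused; if every line has
        # been reused, fall back to the oldest line (an assumption).
        for i, line in enumerate(self.lines):
            if not line[1]:
                del self.lines[i]
                return
        del self.lines[0]
```

In a two-way set, a line that has already been hit once survives the arrival of a newer line that has not yet been reused, which is the behaviour the abstract describes.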

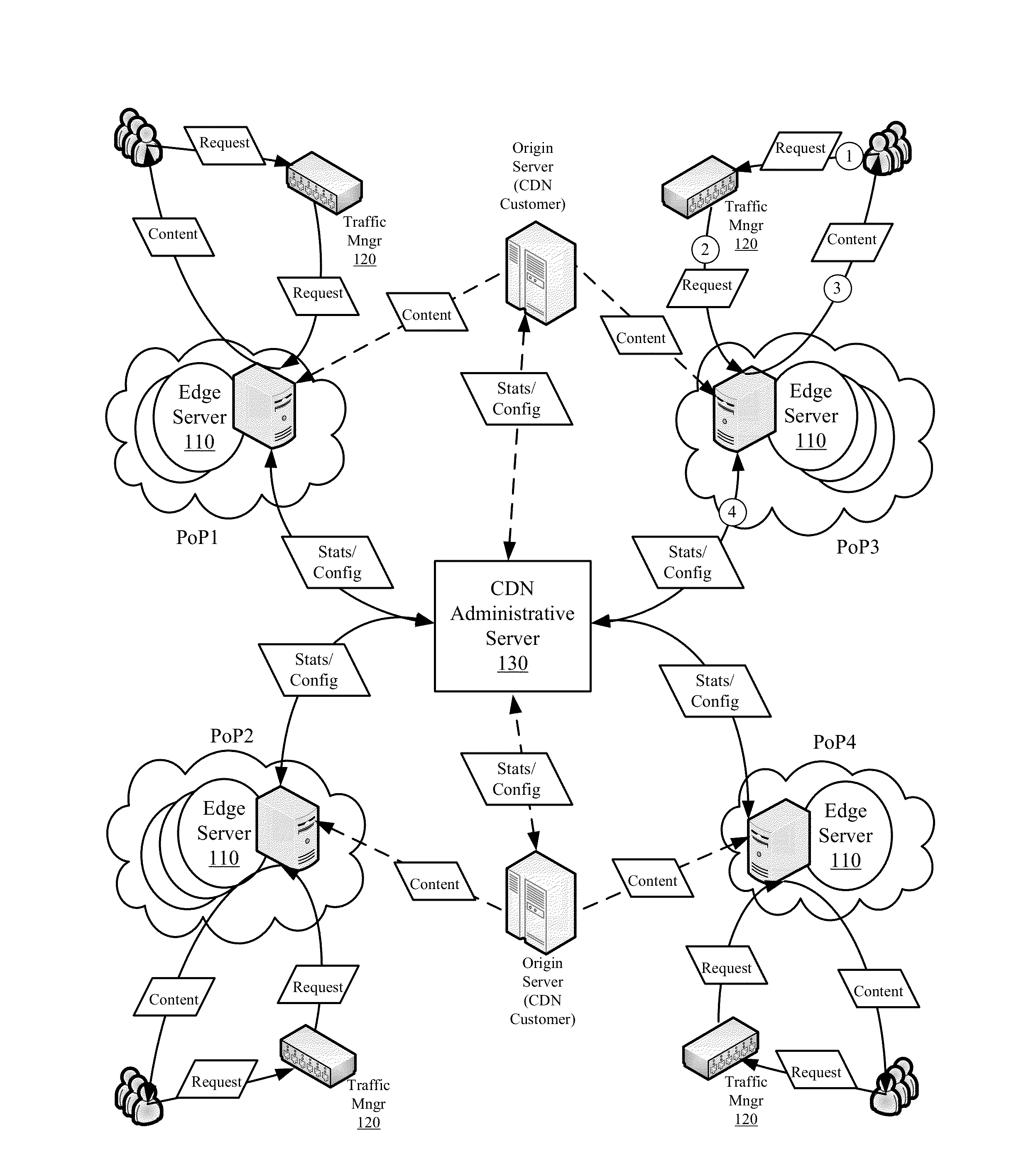

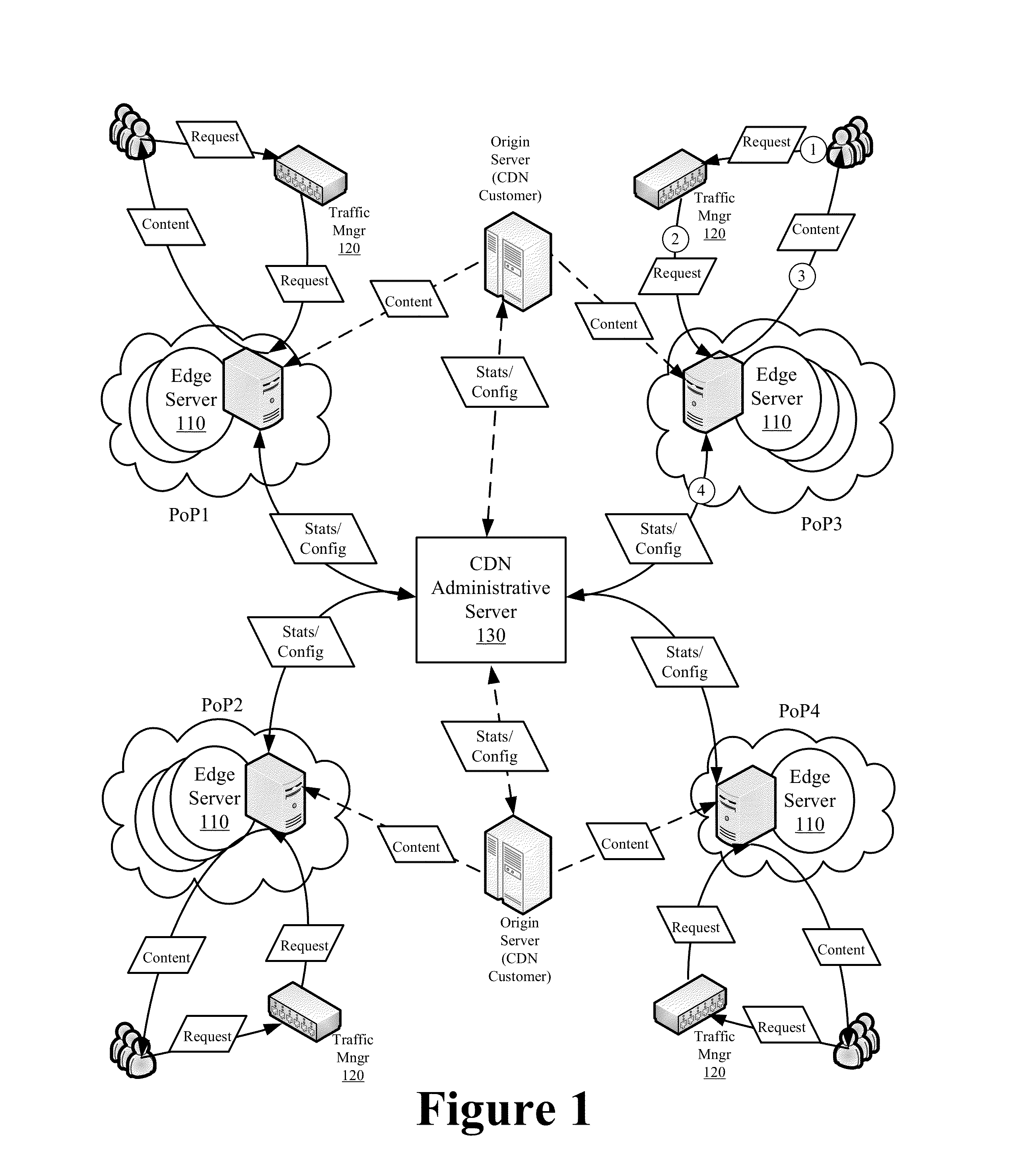

Efficient Cache Validation and Content Retrieval in a Content Delivery Network

Active | US20140095804A1 | Enhanced content delivery | Increase content | Error detection/correction | Memory addressing/allocation/relocation | Content retrieval | Checksum

Some embodiments provide systems and methods for validating cached content based on changes in the content instead of an expiration interval. One method involves caching content and a first checksum in response to a first request for that content. The caching produces a cached instance of the content representative of a form of the content at the time of caching. The first checksum identifies the cached instance. In response to receiving a second request for the content, the method submits a request for a second checksum representing a current instance of the content and a request for the current instance. Upon receiving the second checksum, the method serves the cached instance of the content when the first checksum matches the second checksum and serves the current instance of the content upon completion of the transfer of the current instance when the first checksum does not match the second checksum.

Owner:EDGIO INC
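
The checksum comparison at the heart of this abstract can be sketched as follows. This is a simplified, single-process illustration: the `origin` dictionary stands in for the origin server, SHA-256 is an assumed checksum, and the parallel request for the current instance is collapsed into a direct lookup.

```python
import hashlib


def checksum(content: bytes) -> str:
    return hashlib.sha256(content).hexdigest()


class ChecksumValidatingCache:
    """Serve cached content while its checksum still matches the origin's."""

    def __init__(self, origin):
        self.origin = origin  # hypothetical stand-in: url -> current bytes
        self.cache = {}       # url -> (cached content, first checksum)

    def get(self, url):
        current = self.origin[url]          # "current instance" at the origin
        if url not in self.cache:
            self.cache[url] = (current, checksum(current))
            return current, "miss"
        cached, first_checksum = self.cache[url]
        if checksum(current) == first_checksum:
            return cached, "validated"      # content unchanged: serve cache
        self.cache[url] = (current, checksum(current))
        return current, "refreshed"         # content changed: serve current
```

Validity here depends only on whether the content changed, not on an expiration interval, which matches the stated goal of the abstract.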

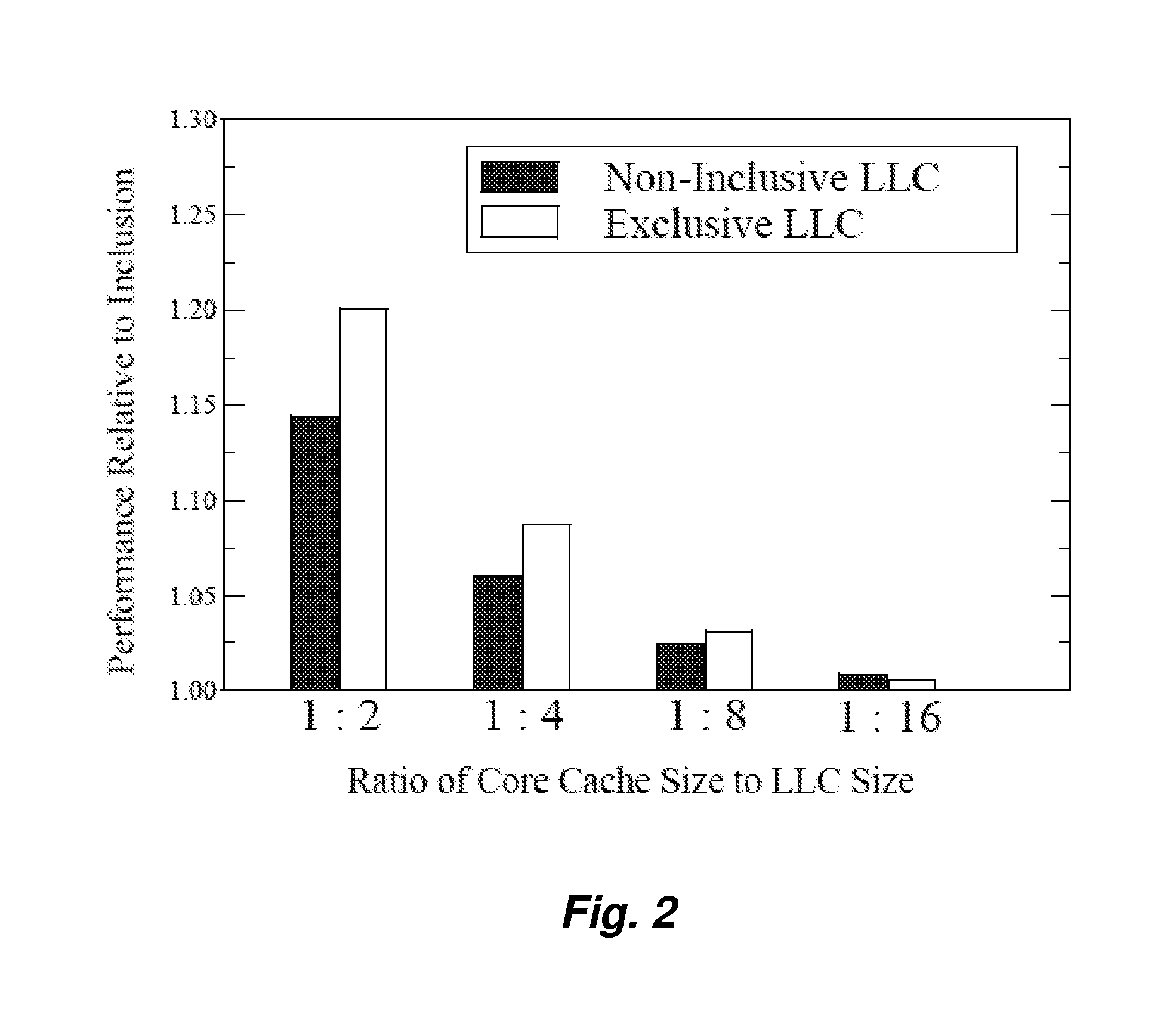

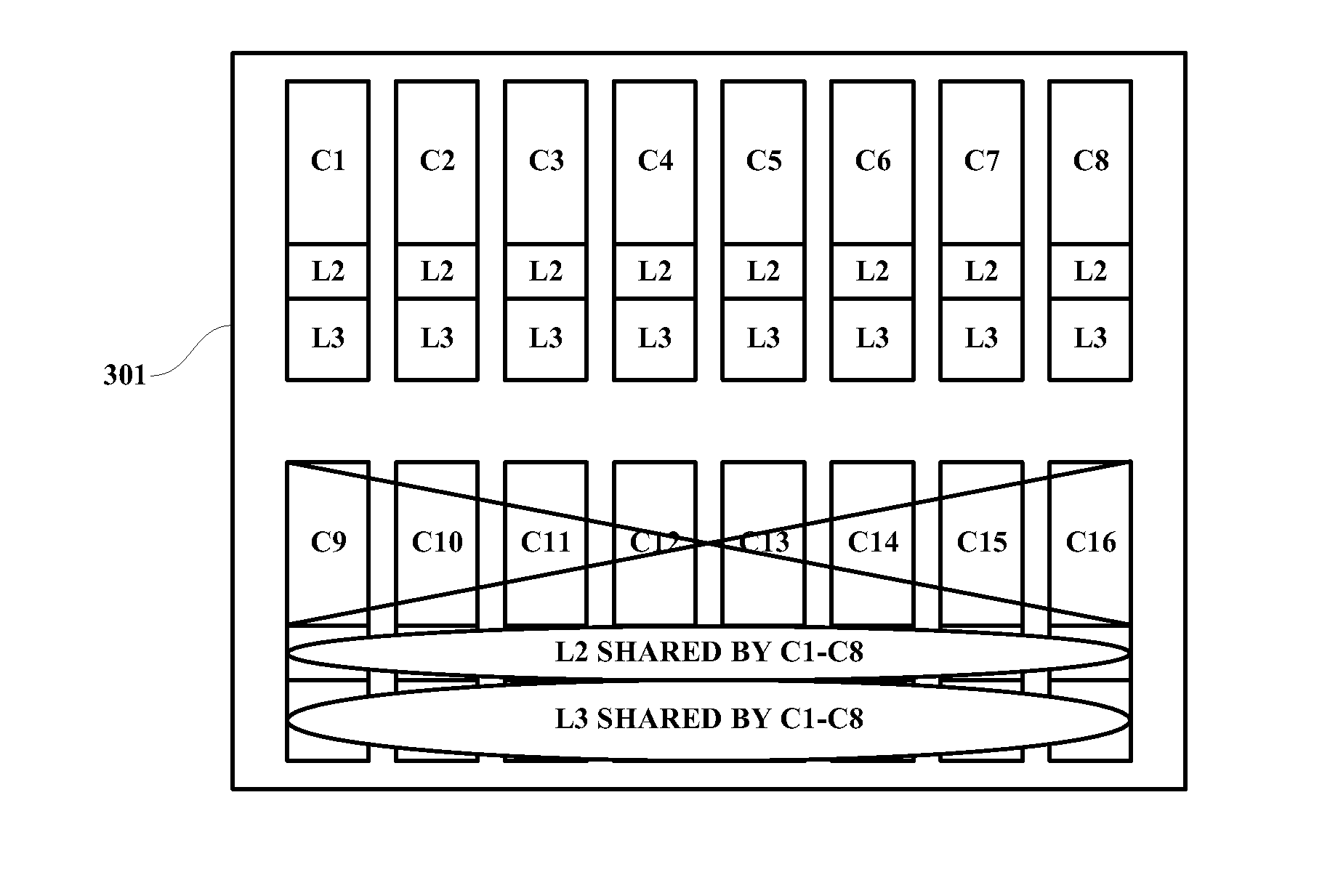

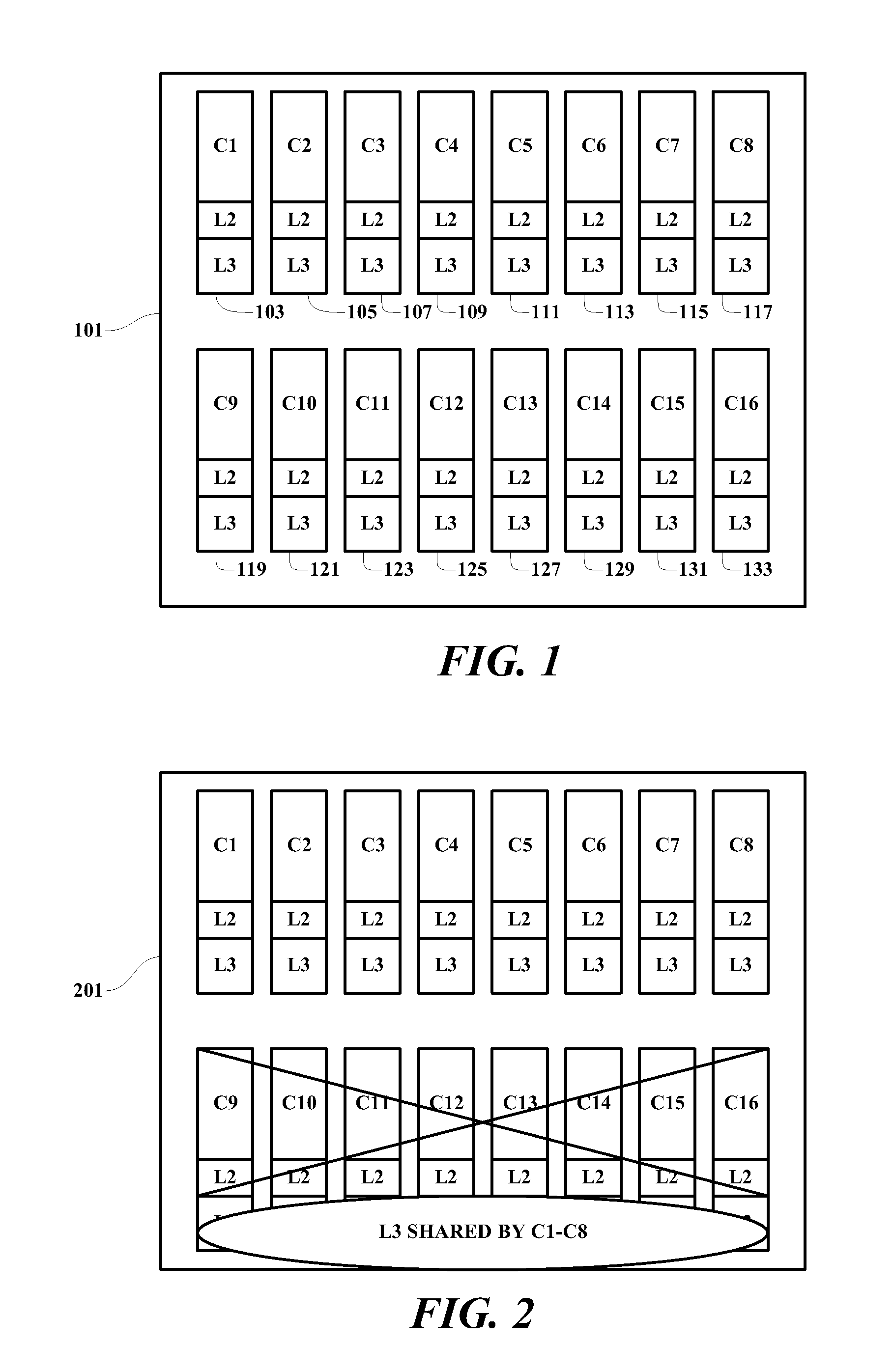

Adaptive Spill-Receive Mechanism for Lateral Caches

Inactive | US20100030970A1 | Improve caching capacity | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data processing system | Parallel computing

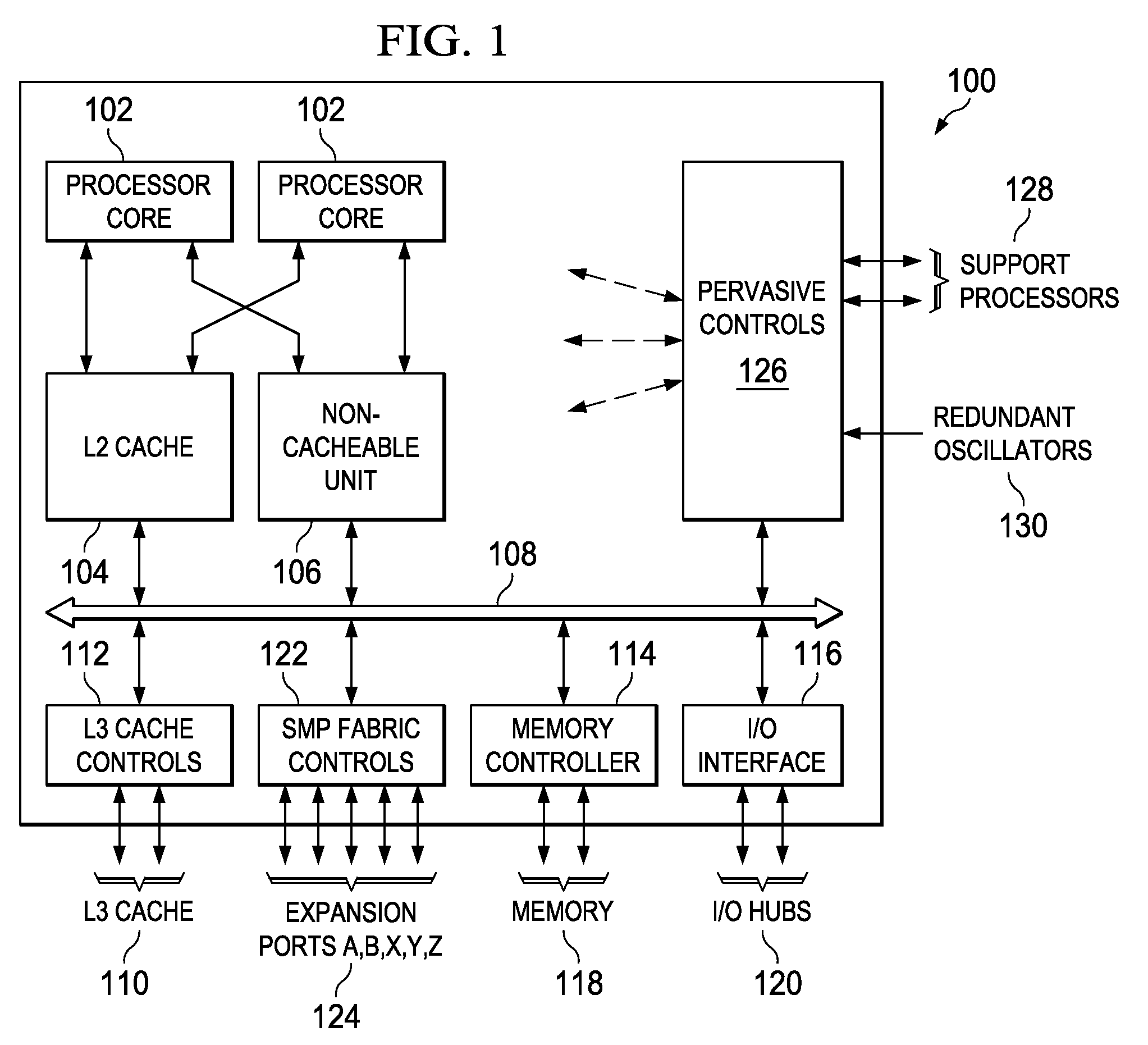

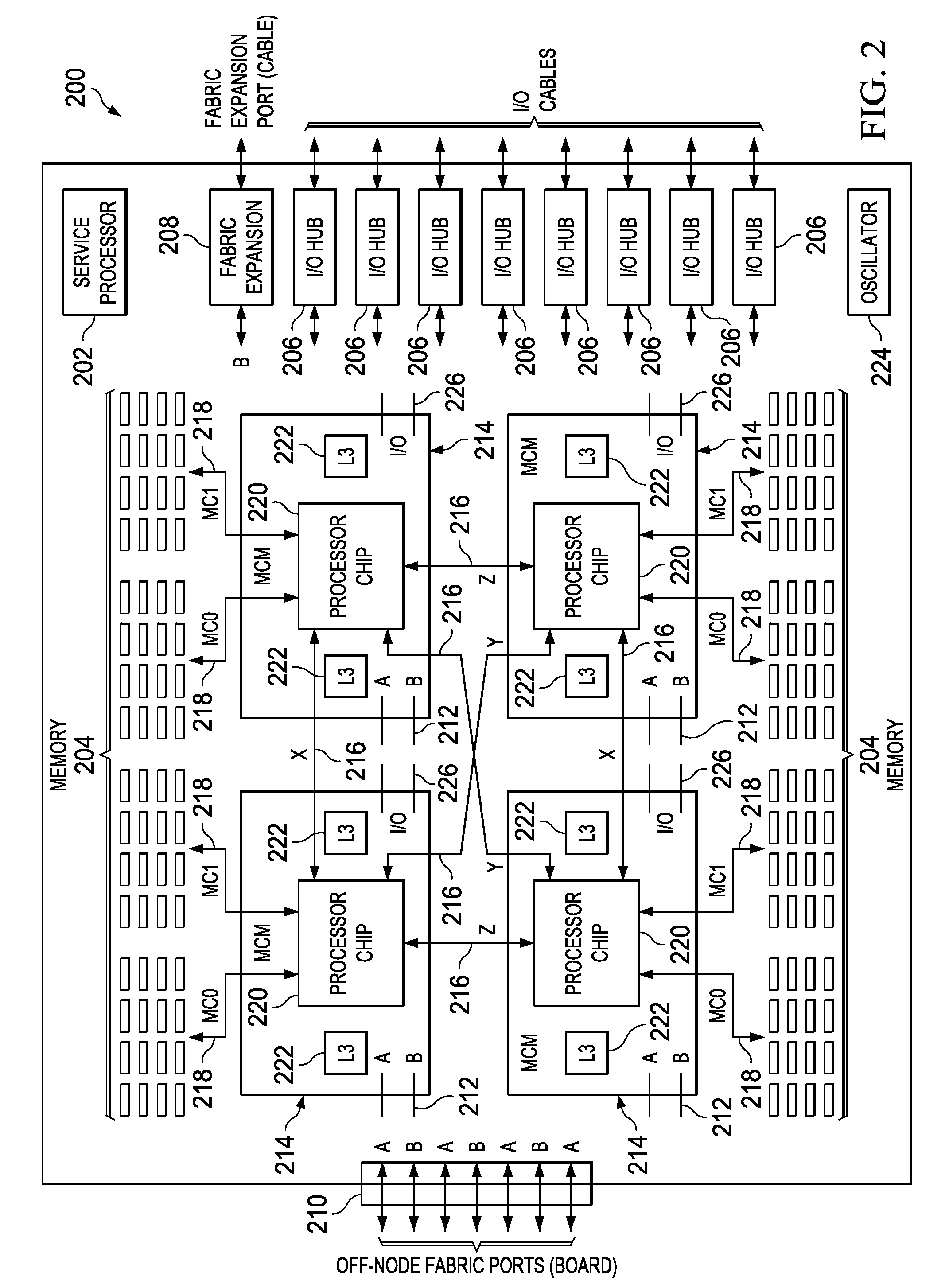

Improving cache performance in a data processing system is provided. A cache controller monitors a counter associated with a cache. The cache controller determines whether the counter indicates that a plurality of non-dedicated cache sets within the cache should operate as spill cache sets or receive cache sets. The cache controller sets the plurality of non-dedicated cache sets to spill an evicted cache line to an associated cache set in another cache in the event of a cache miss in response to an indication that the plurality of non-dedicated cache sets should operate as the spill cache sets. The cache controller sets the plurality of non-dedicated cache sets to receive an evicted cache line from another cache set in the event of the cache miss in response to an indication that the plurality of non-dedicated cache sets should operate as the receive cache sets.

Owner:IBM CORP

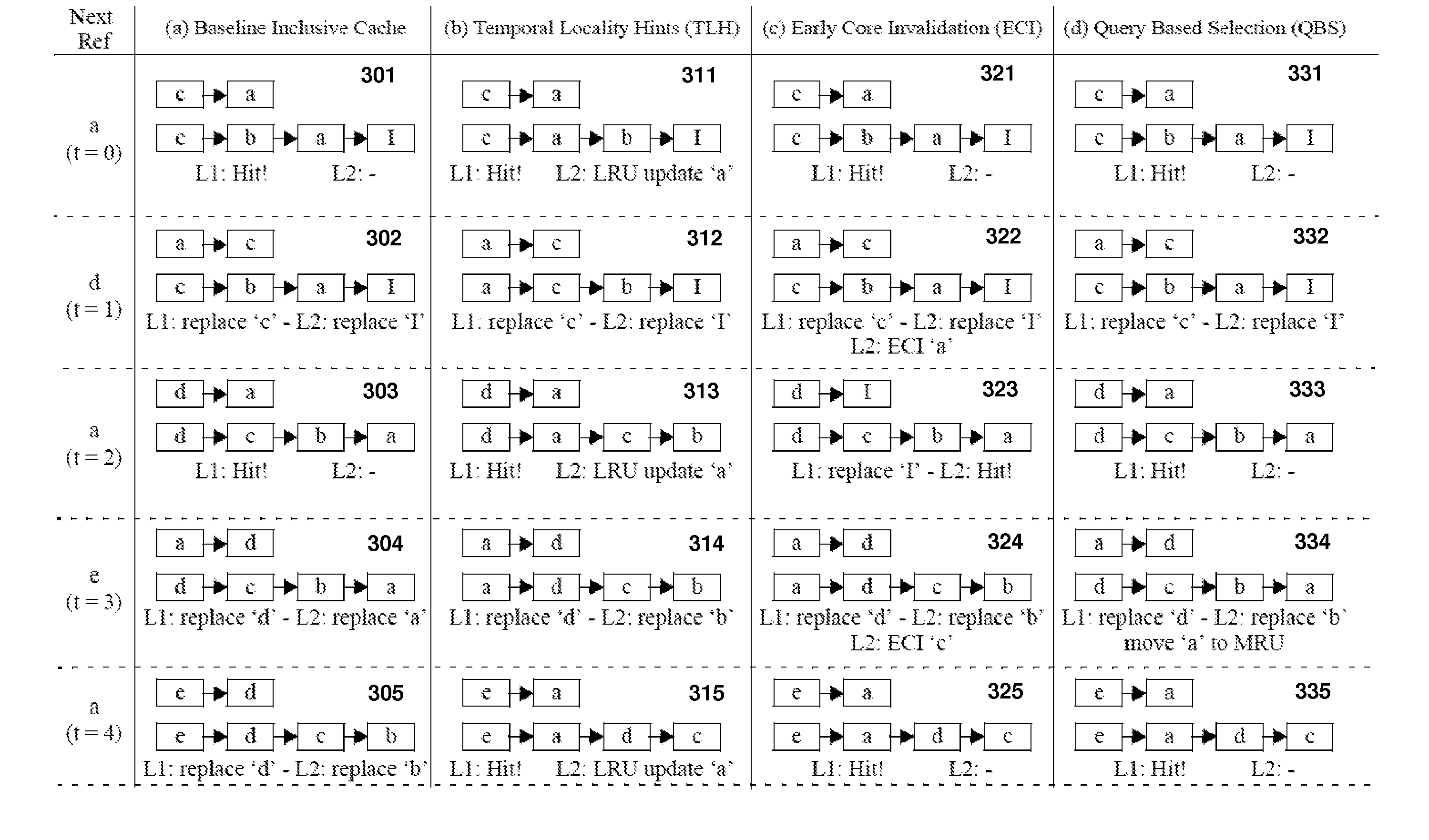

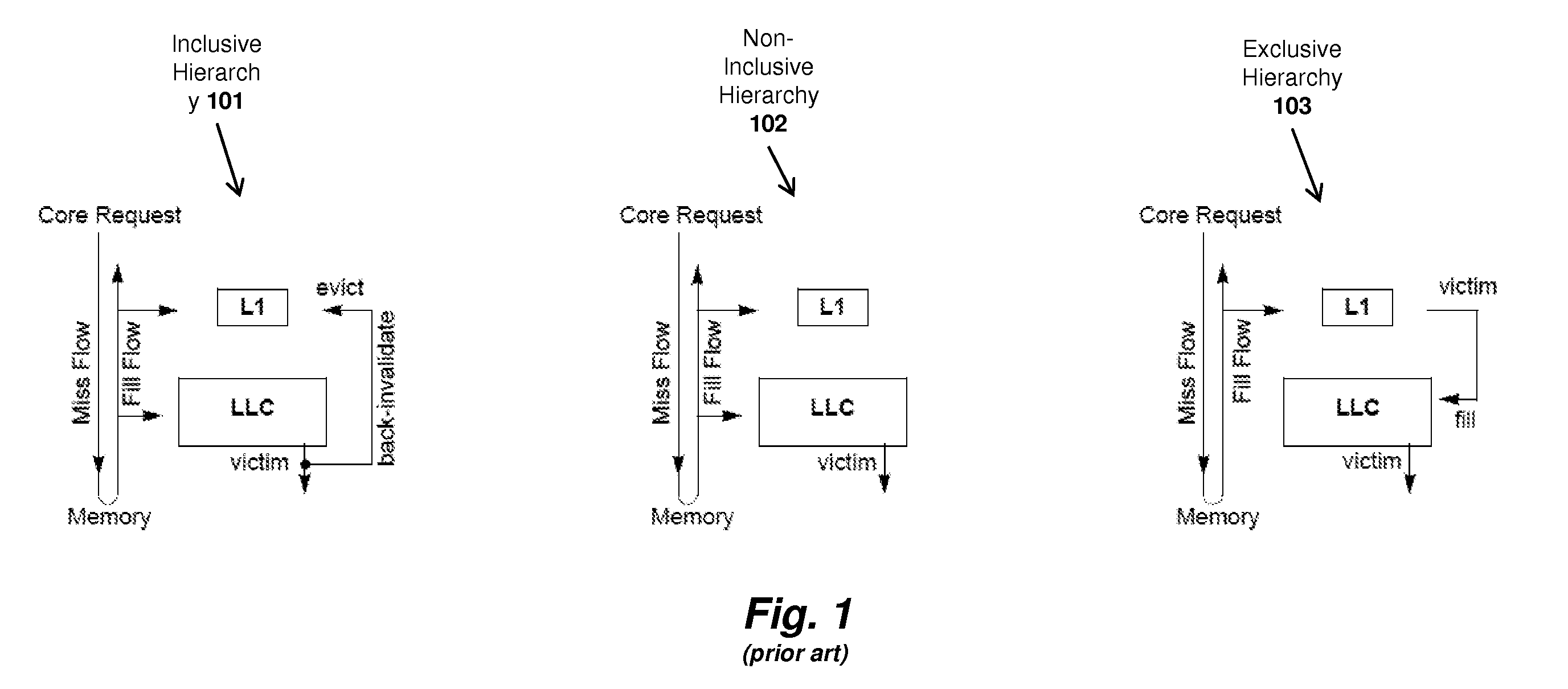

Method and apparatus for achieving non-inclusive cache performance with inclusive caches

Inactive | US20120159073A1 | Simplify cache coherence | Improve fluency | Memory addressing/allocation/relocation | Cache hierarchy | Parallel computing

An apparatus and method for improving cache performance in a computer system having a multi-level cache hierarchy. For example, one embodiment of a method comprises: selecting a first line in a cache at level N for potential eviction; querying a cache at level M in the hierarchy to determine whether the first cache line is resident in the cache at level M, wherein M<N; in response to receiving an indication that the first cache line is not resident at level M, then evicting the first cache line from the cache at level N; in response to receiving an indication that the first cache line is resident at level M, then retaining the first cache line and choosing a second cache line for potential eviction.

Owner:INTEL CORP
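
The victim-selection loop in this embodiment can be sketched in a few lines. The function name `select_eviction_line` and the fallback used when every candidate is resident at level M are assumptions; the abstract only describes retaining the first line and choosing a second one.

```python
def select_eviction_line(level_n_candidates, level_m_resident):
    """Pick a level-N line to evict, skipping lines resident at level M (M < N).

    `level_n_candidates` is the ordered list of lines the replacement policy
    would consider; `level_m_resident` is the set of lines held at level M.
    """
    for line in level_n_candidates:
        if line not in level_m_resident:
            return line  # not resident closer to the core: safe to evict
    # Assumed fallback: every candidate is resident at level M, so evict the
    # policy's first choice anyway.
    return level_n_candidates[0]
```

Skipping lines that are still held at level M avoids evicting hot data from the inclusive level-N cache, which would otherwise force it out of level M as well.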

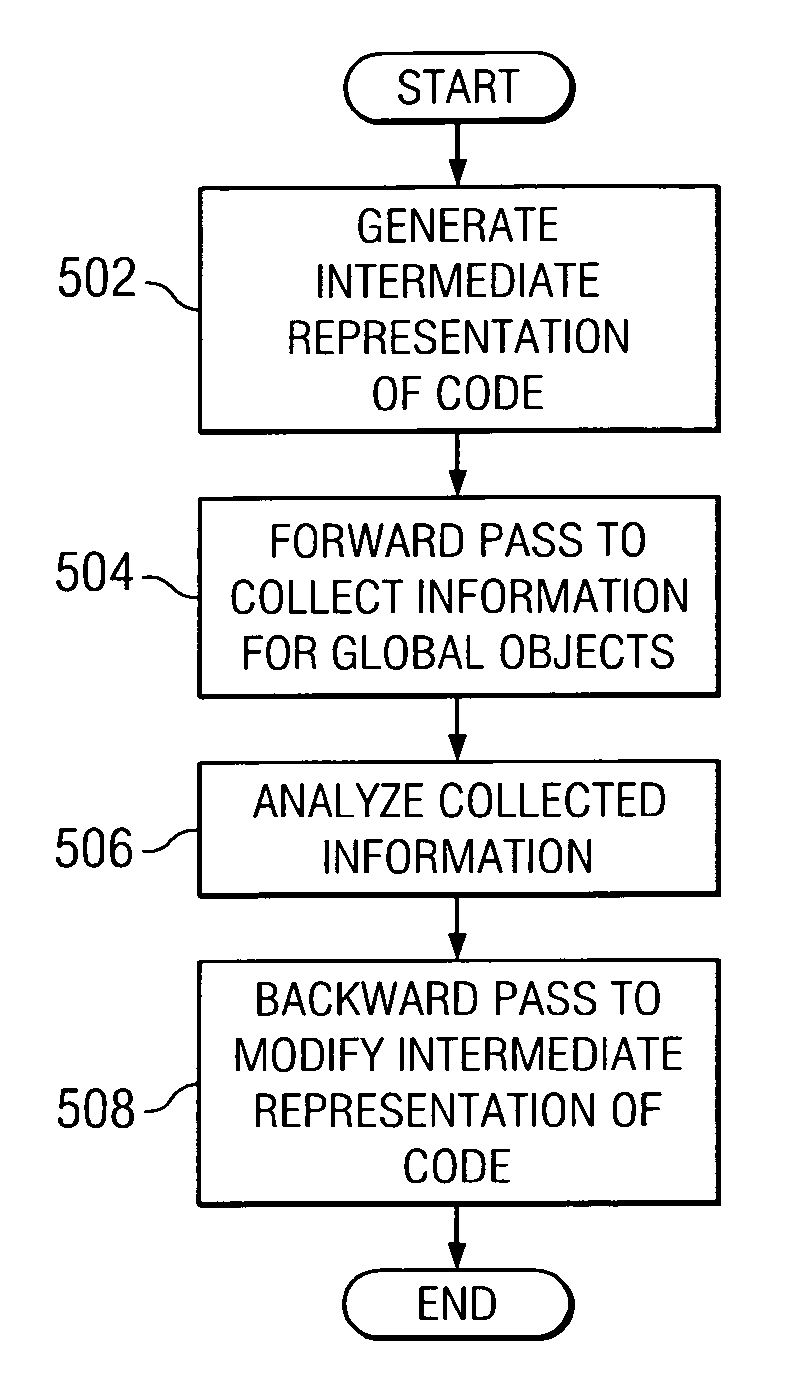

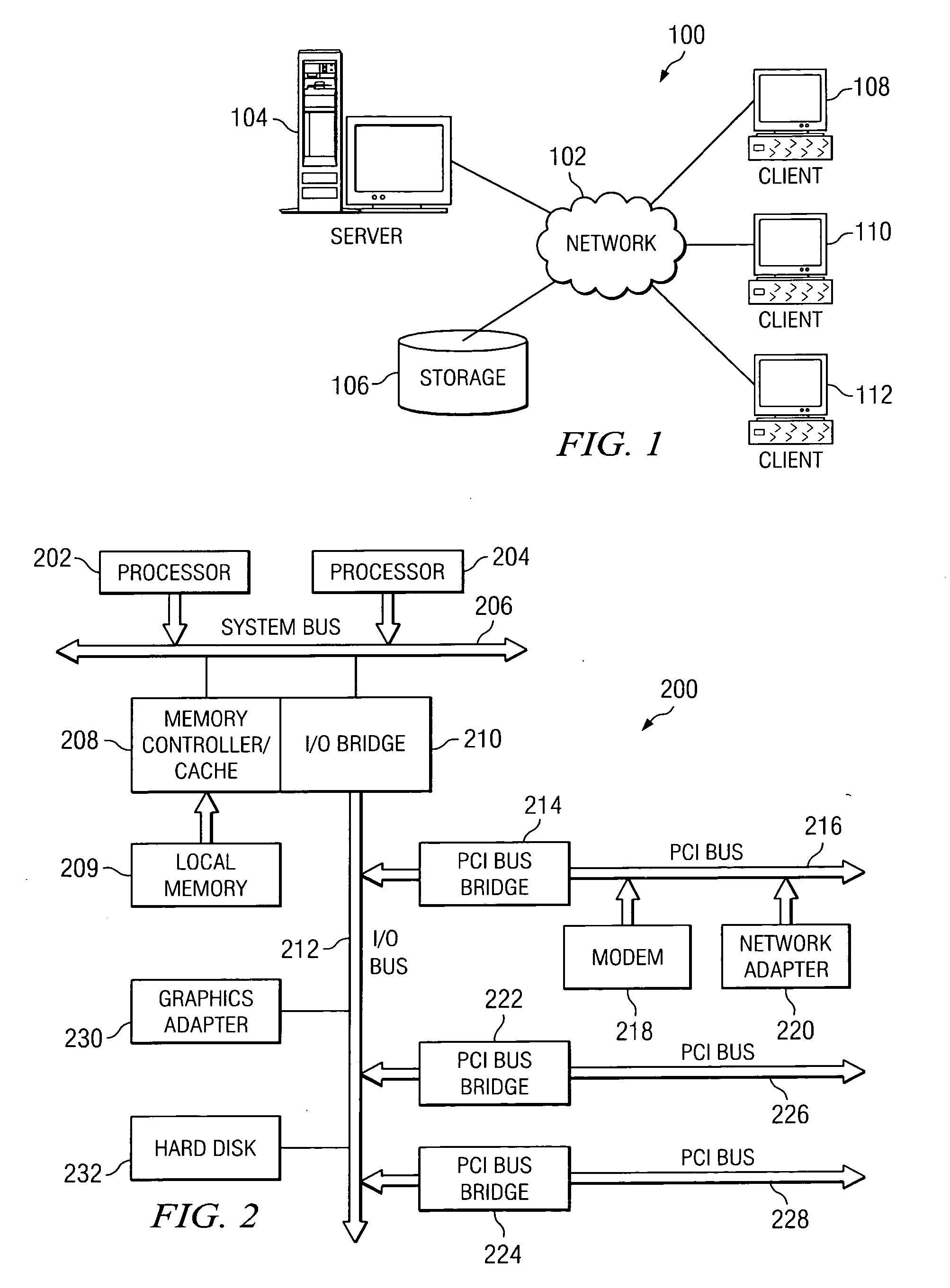

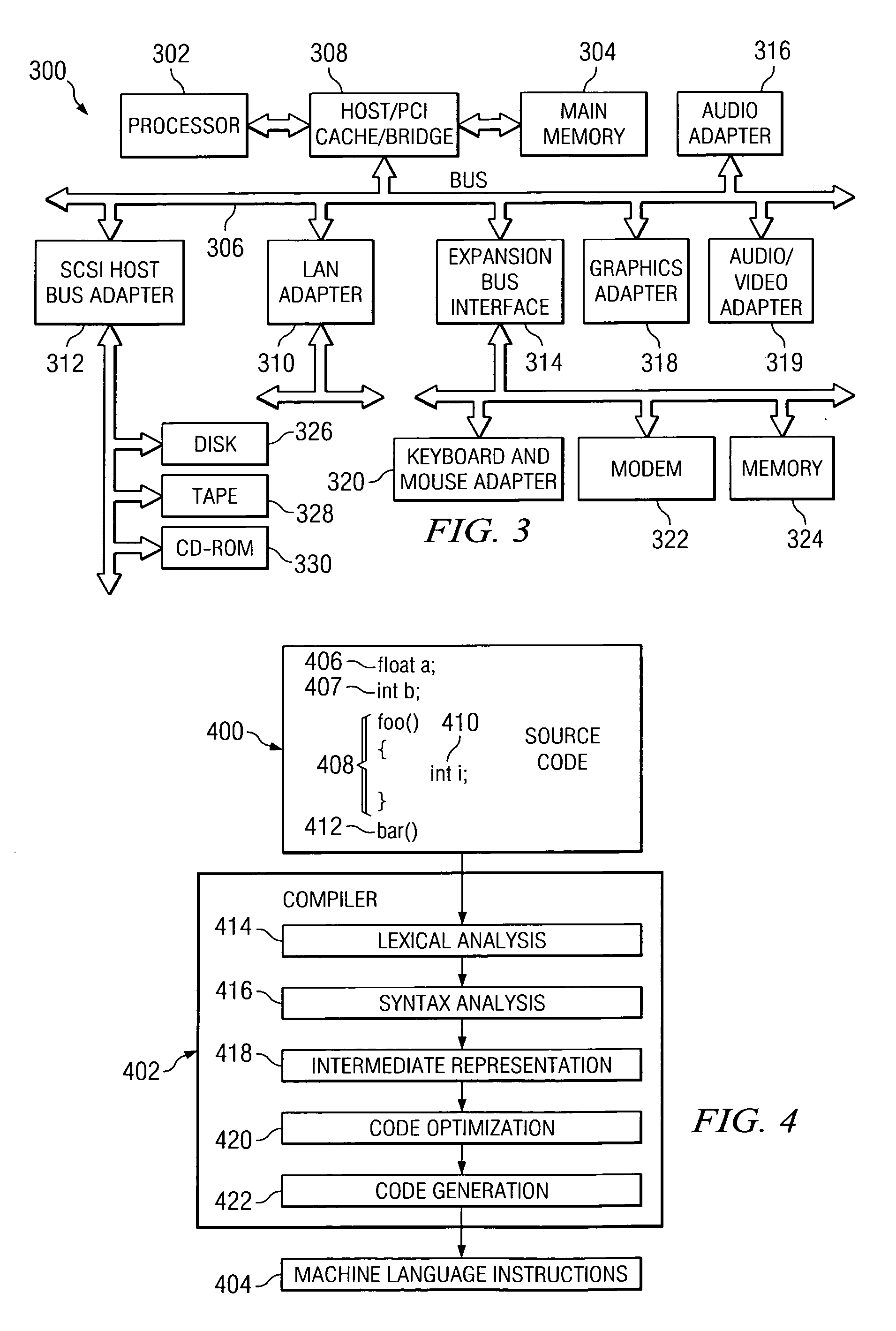

Method and apparatus for improving data cache performance using inter-procedural strength reduction of global objects

Inactive | US20060048103A1 | Improving data cache performance | Improve caching capacity | Software engineering | Specific program execution arrangements | Parallel computing | Forward pass

Inter-procedural strength reduction is provided by a mechanism of the present invention to improve data cache performance. During a forward pass, the present invention collects information of global variables and analyzes the usage pattern of global objects to select candidate computations for optimization. During a backward pass, the present invention remaps global objects into smaller size new global objects and generates more cache efficient code by replacing candidate computations with indirect or indexed reference of smaller global objects and inserting store operations to the new global objects for each computation that references the candidate global objects.

Owner:IBM CORP

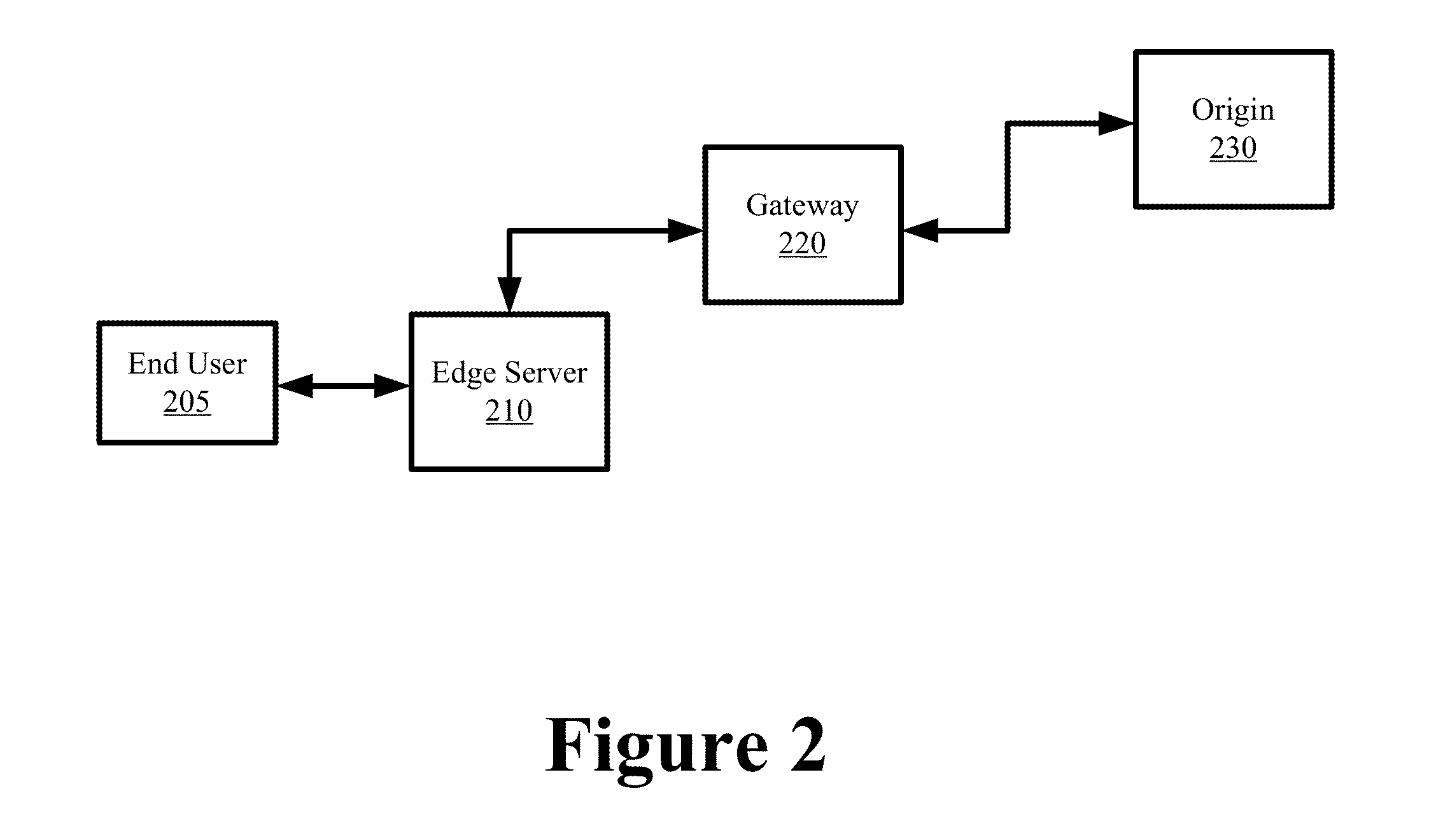

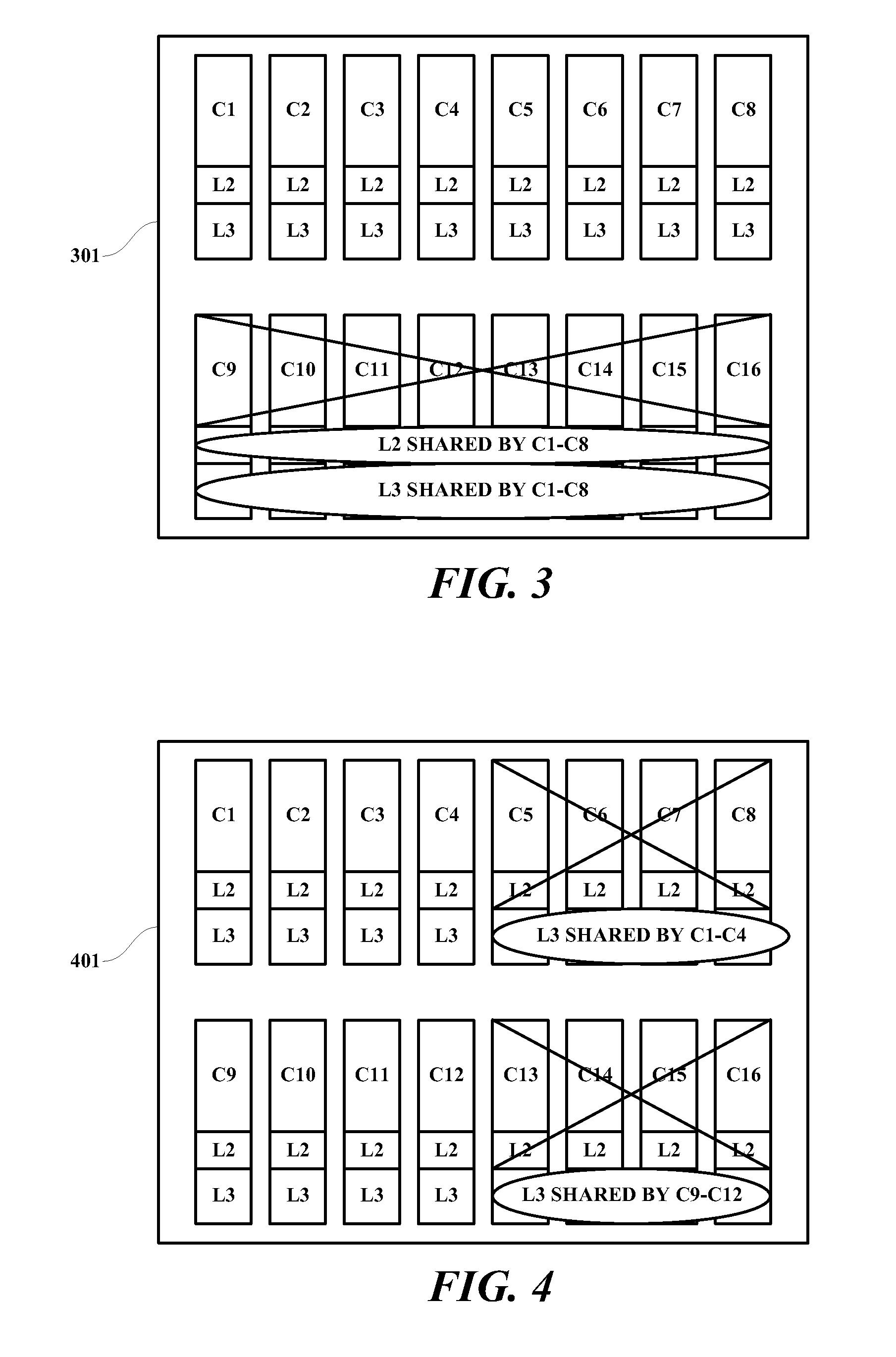

Extended Cache Capacity

Active | US20110107031A1 | Improve caching capacity | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Parallel computing | Cache capacity

A method, programmed medium and system are provided for enabling a core's cache capacity to be increased by using the caches of the disabled or non-enabled cores on the same chip. Caches of disabled or non-enabled cores on a chip are made accessible to store cachelines for those chip cores that have been enabled, thereby extending cache capacity of enabled cores.

Owner:IBM CORP

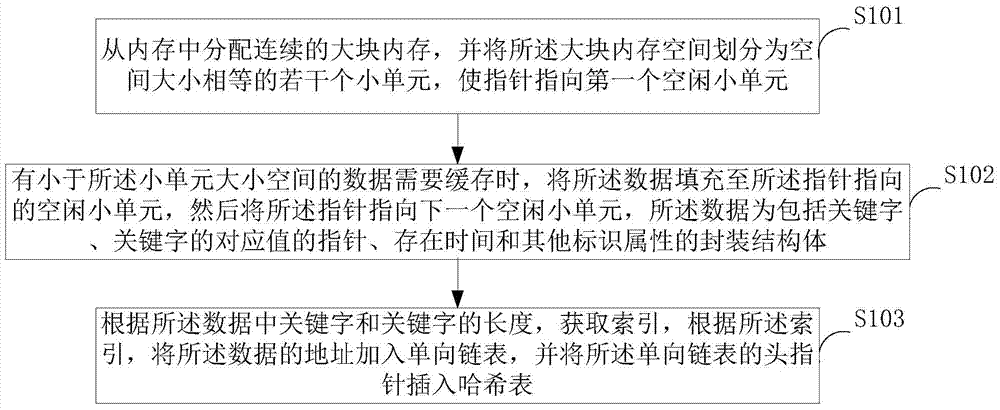

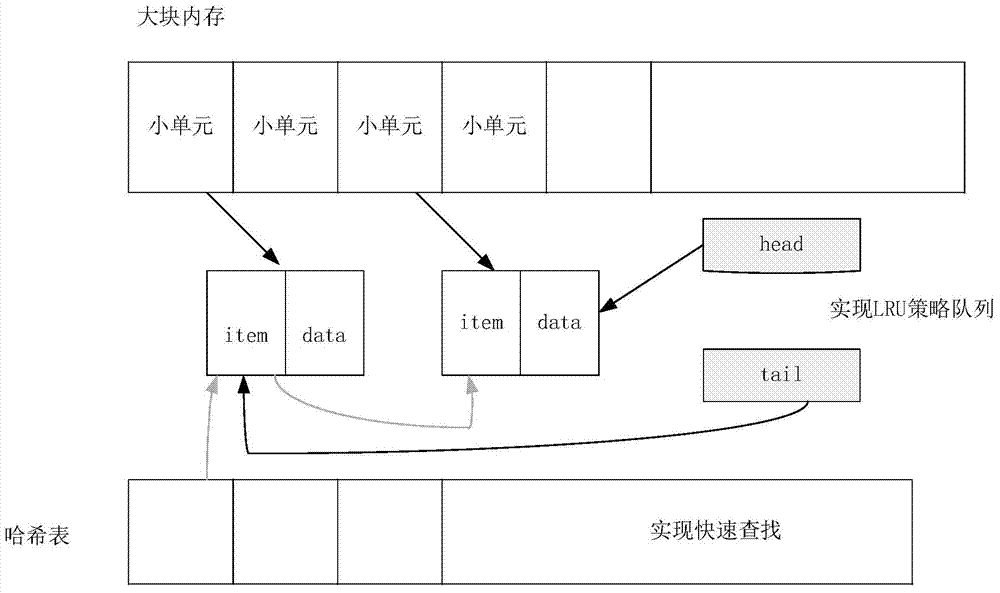

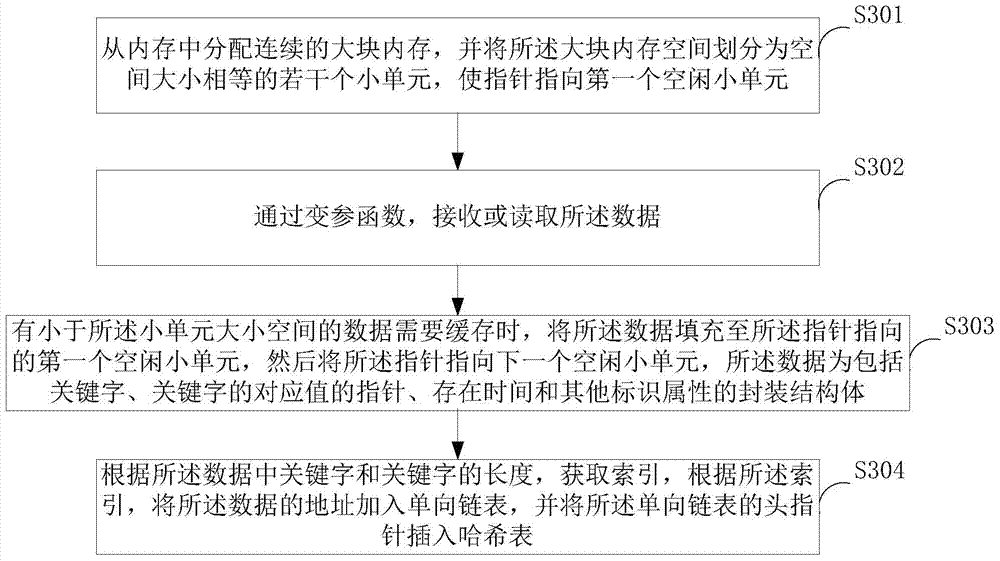

Local data cache management method and device

Active | CN103678172A | Quick access to data | Improve cache performance | Memory addressing/allocation/relocation | Hash table | Small unit

The invention belongs to the technical field of computer cache management and provides a local data cache management method and device. In the method, a large contiguous block of memory is allocated and divided into a plurality of small units of equal size, and a pointer is set to the first idle unit. When data smaller than the unit size needs to be cached, the idle unit the pointer refers to is filled with the data, and the pointer is then moved to the next idle unit. An index is computed from the key in the data and the length of the key, the address of the data is added to a singly linked list selected by that index, and the head node of the singly linked list is inserted into a hash table. The method and device allocate contiguous memory in the manner of Memcached, use fixed-size small units as the smallest cache units, and use the hash table to store the linked lists of data addresses, so that data can be stored and retrieved conveniently, flexibly and rapidly, with high caching performance.

Owner:TCL CORPORATION
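
A toy model of this layout is sketched below, assuming a `bytearray` as the contiguous block and Python lists in place of singly linked lists. The class name `SlabCache`, the toy index function, and the lack of update or eviction handling are all simplifications made for illustration.

```python
class SlabCache:
    """Fixed-size units carved from one contiguous block, indexed by a hash table."""

    def __init__(self, unit_size=64, unit_count=4, buckets=8):
        self.unit_size = unit_size
        self.unit_count = unit_count
        self.memory = bytearray(unit_size * unit_count)  # contiguous block
        self.next_free = 0                               # first idle unit
        # Each bucket holds a chain of (key, start offset, length) records.
        self.buckets = [[] for _ in range(buckets)]

    def _index(self, key: bytes) -> int:
        # Toy index built from the key bytes and the key length (assumption).
        return (sum(key) + len(key)) % len(self.buckets)

    def put(self, key: bytes, value: bytes) -> bool:
        if len(value) > self.unit_size or self.next_free >= self.unit_count:
            return False  # value too large for a unit, or no idle unit left
        start = self.next_free * self.unit_size
        self.memory[start:start + len(value)] = value    # fill the idle unit
        self.buckets[self._index(key)].append((key, start, len(value)))
        self.next_free += 1                              # advance the pointer
        return True

    def get(self, key: bytes):
        for stored_key, start, length in self.buckets[self._index(key)]:
            if stored_key == key:
                return bytes(self.memory[start:start + length])
        return None
```

Because every unit has the same size, allocation is a pointer bump and lookups touch only one bucket chain, which is where the claimed speed would come from.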

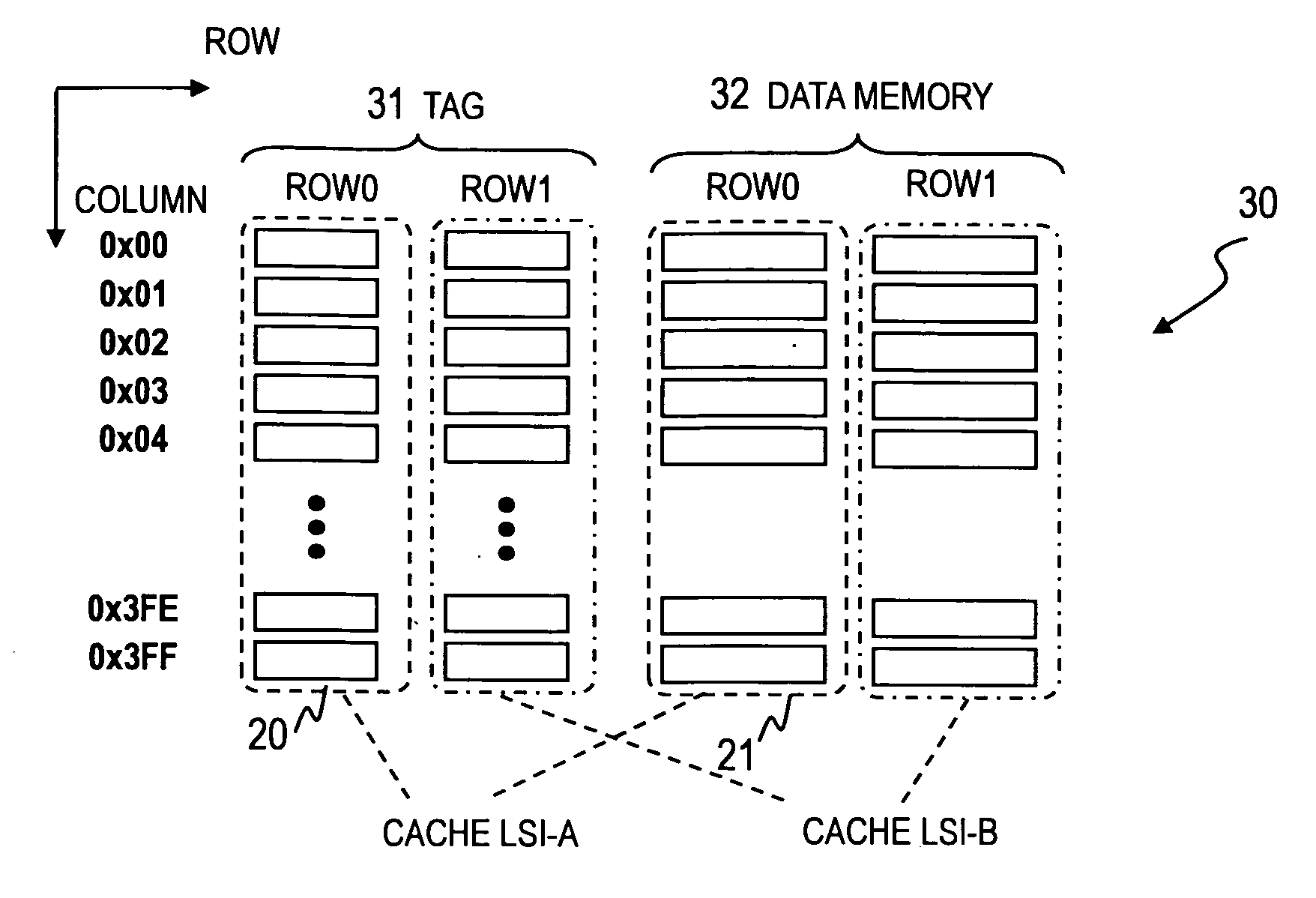

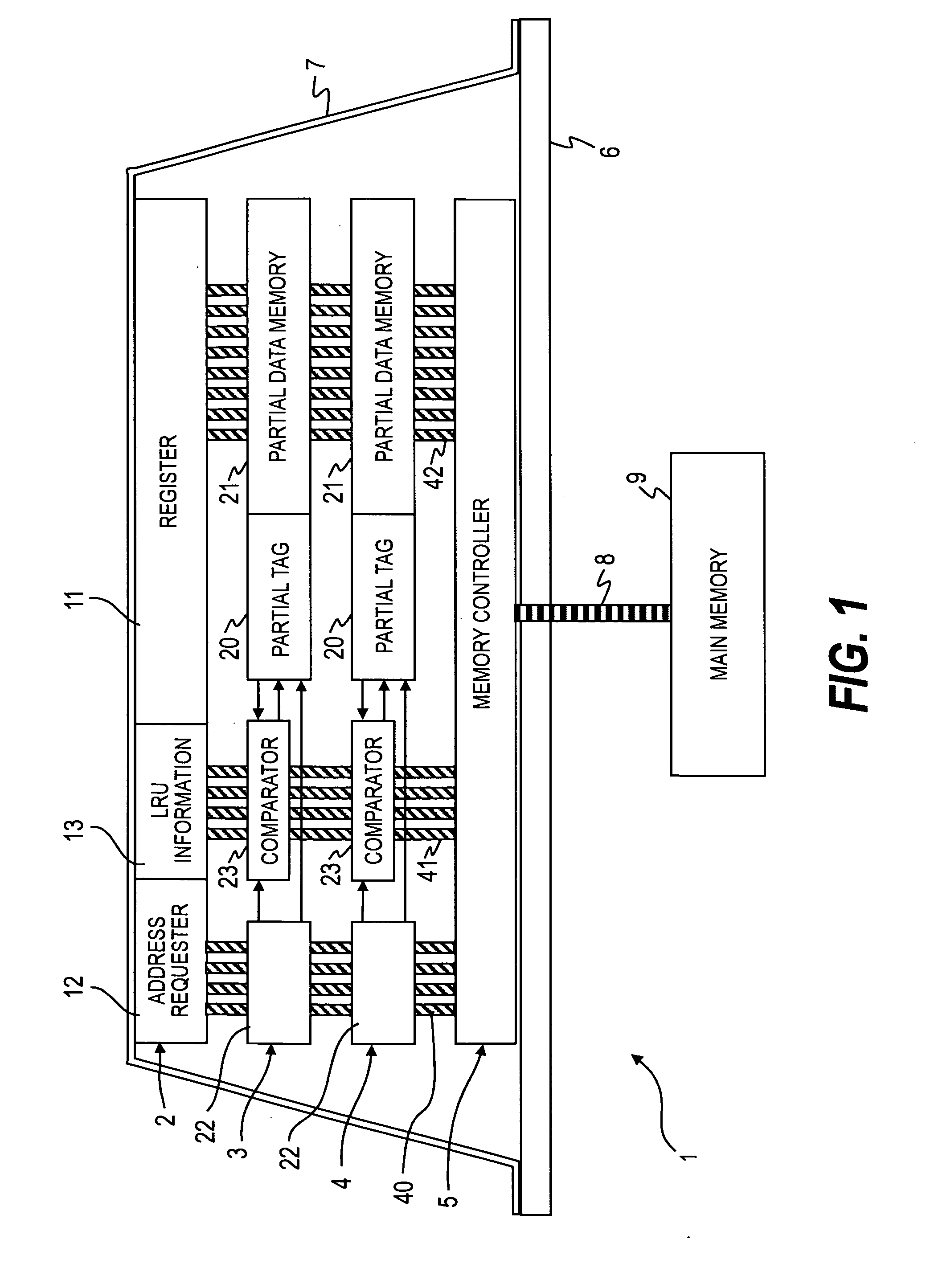

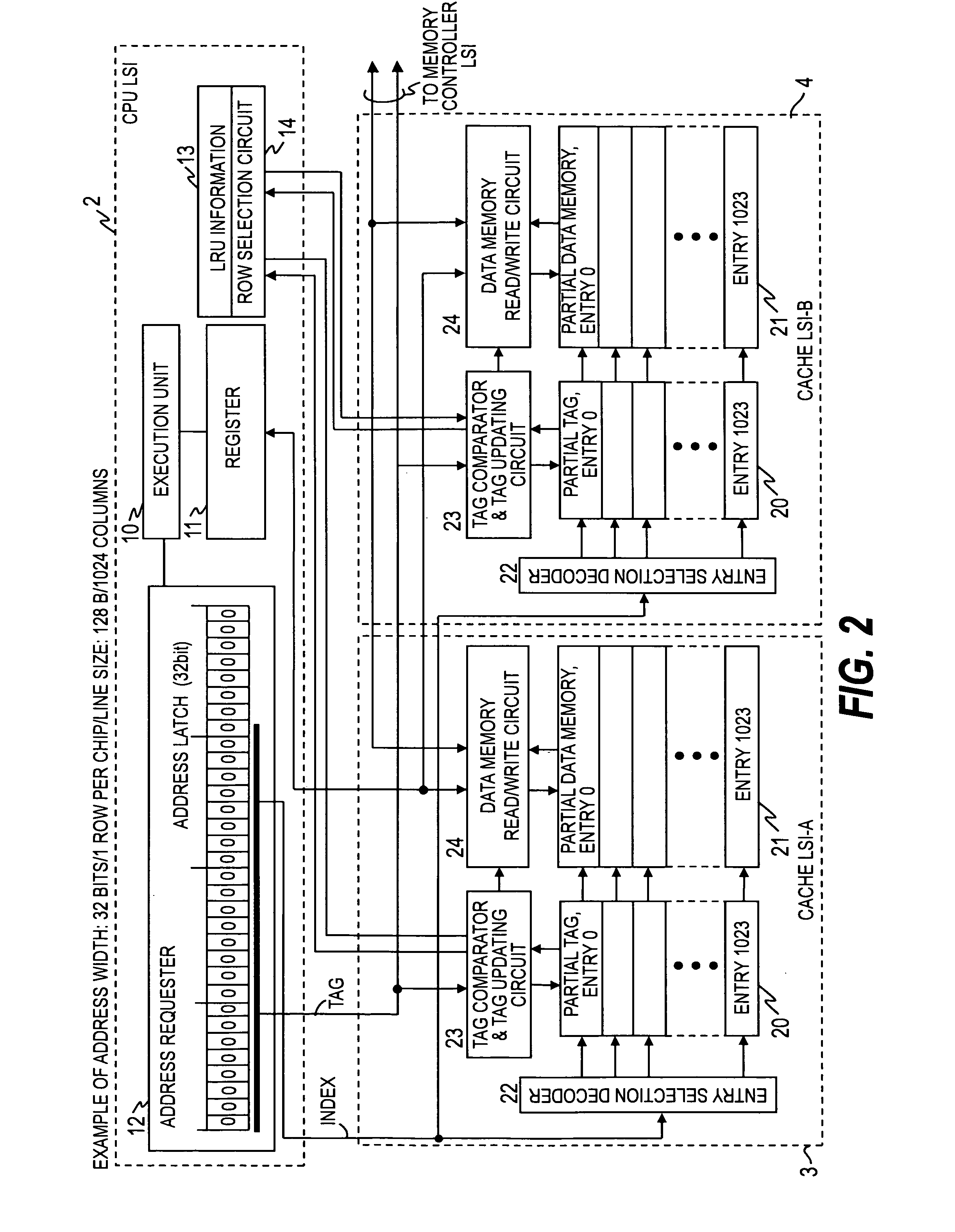

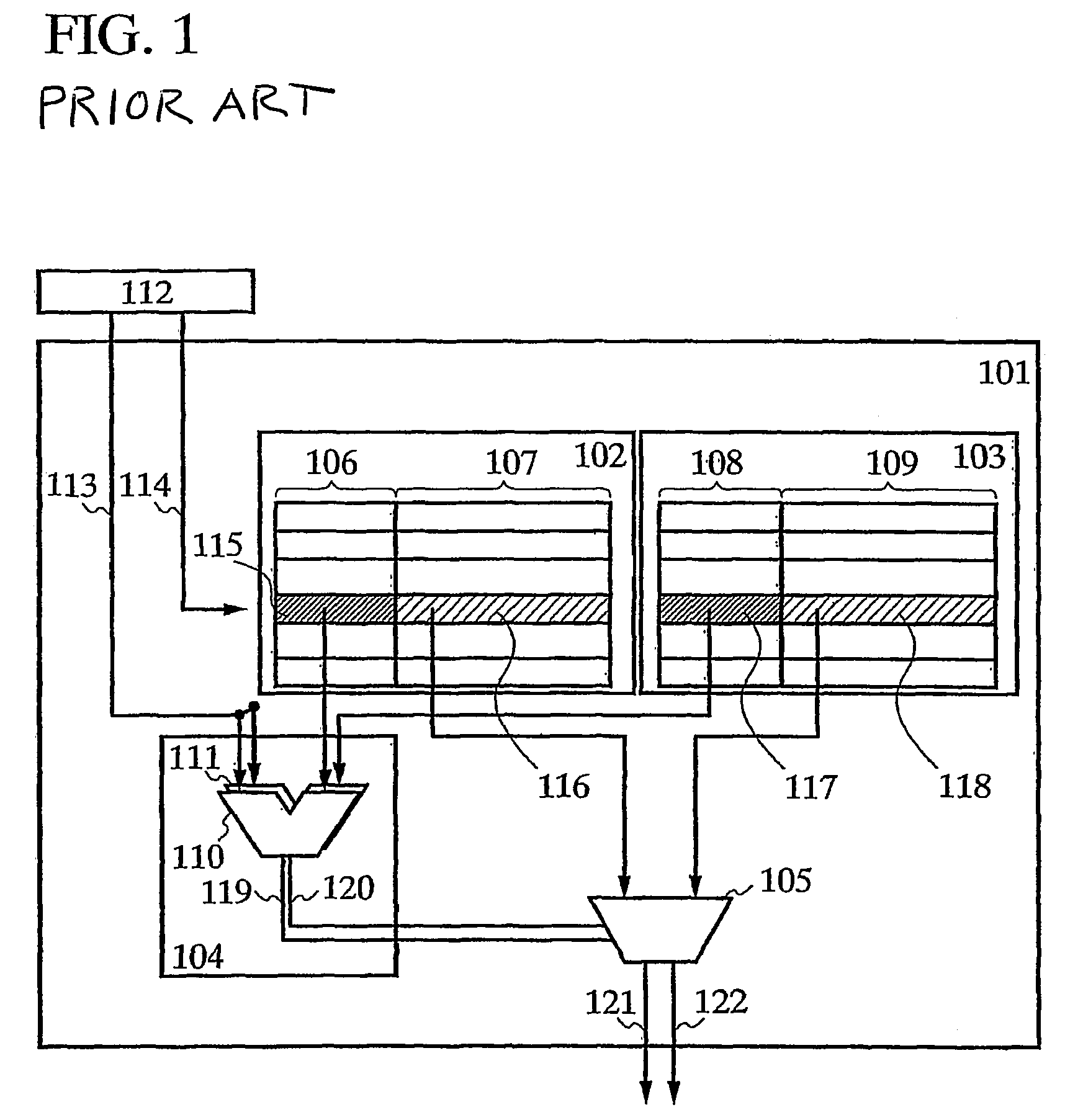

Processor having a cache memory which is comprised of a plurality of large scale integration

Inactive | US20090172288A1 | Simple circuit structure | Easy to process | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Information transmission | Parallel computing

To provide an easy way to constitute a processor from a plurality of LSIs, the processor includes: a first LSI containing a processor; a second LSI having a cache memory; and information transmission paths connecting the first LSI to a plurality of the second LSIs, in which the first LSI contains an address information issuing unit which broadcasts, to the second LSIs, via the information transmission paths, address information of data, the second LSI includes: a partial address information storing unit which stores a part of address information; a partial data storing unit which stores data that is associated with the address information; and a comparison unit which compares the address information broadcast with the address information stored in the partial address information storing unit to judge whether a cache hit occurs, and the comparison units of the plurality of the second LSIs are connected to the information transmission paths.

Owner:HITACHI LTD

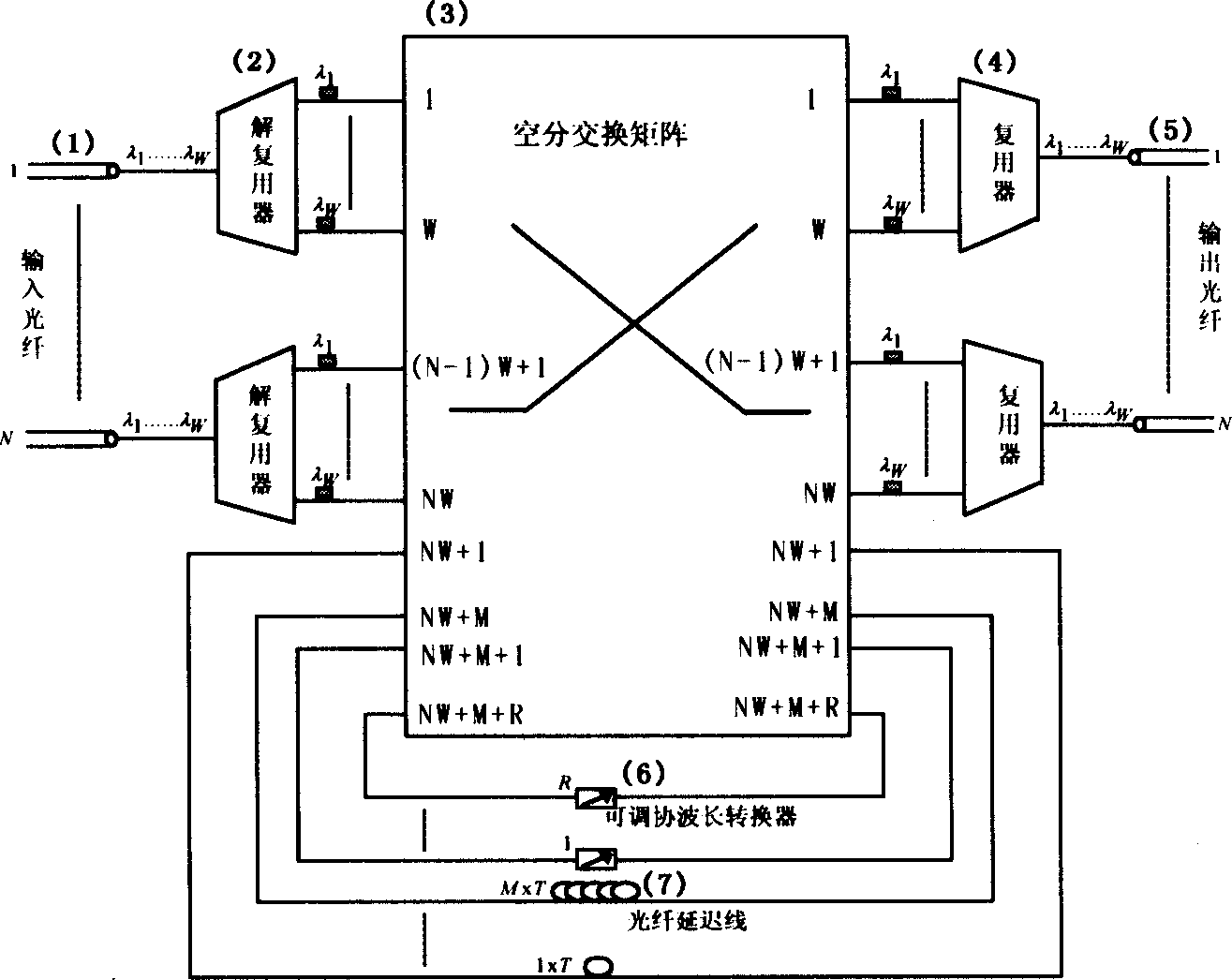

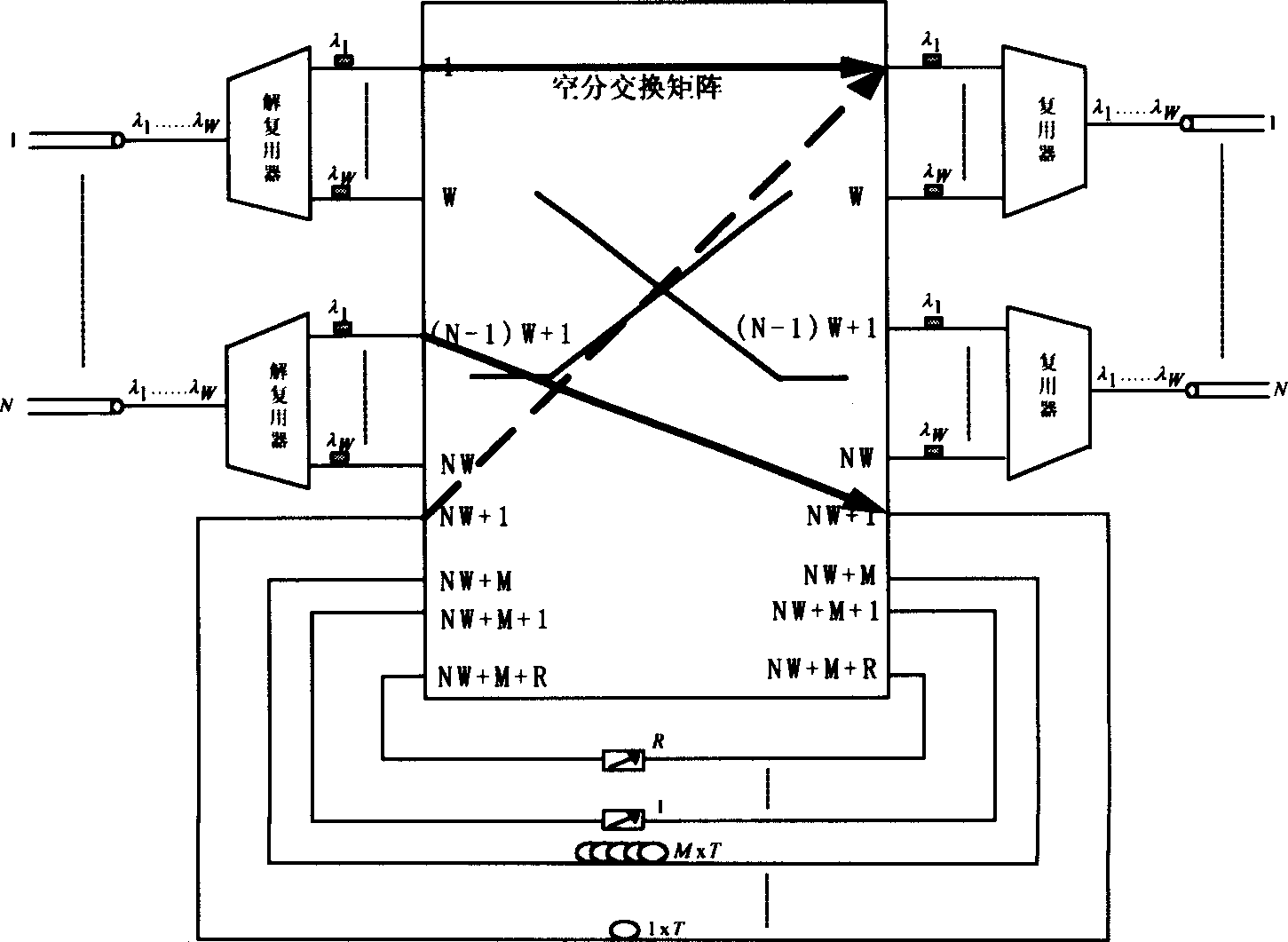

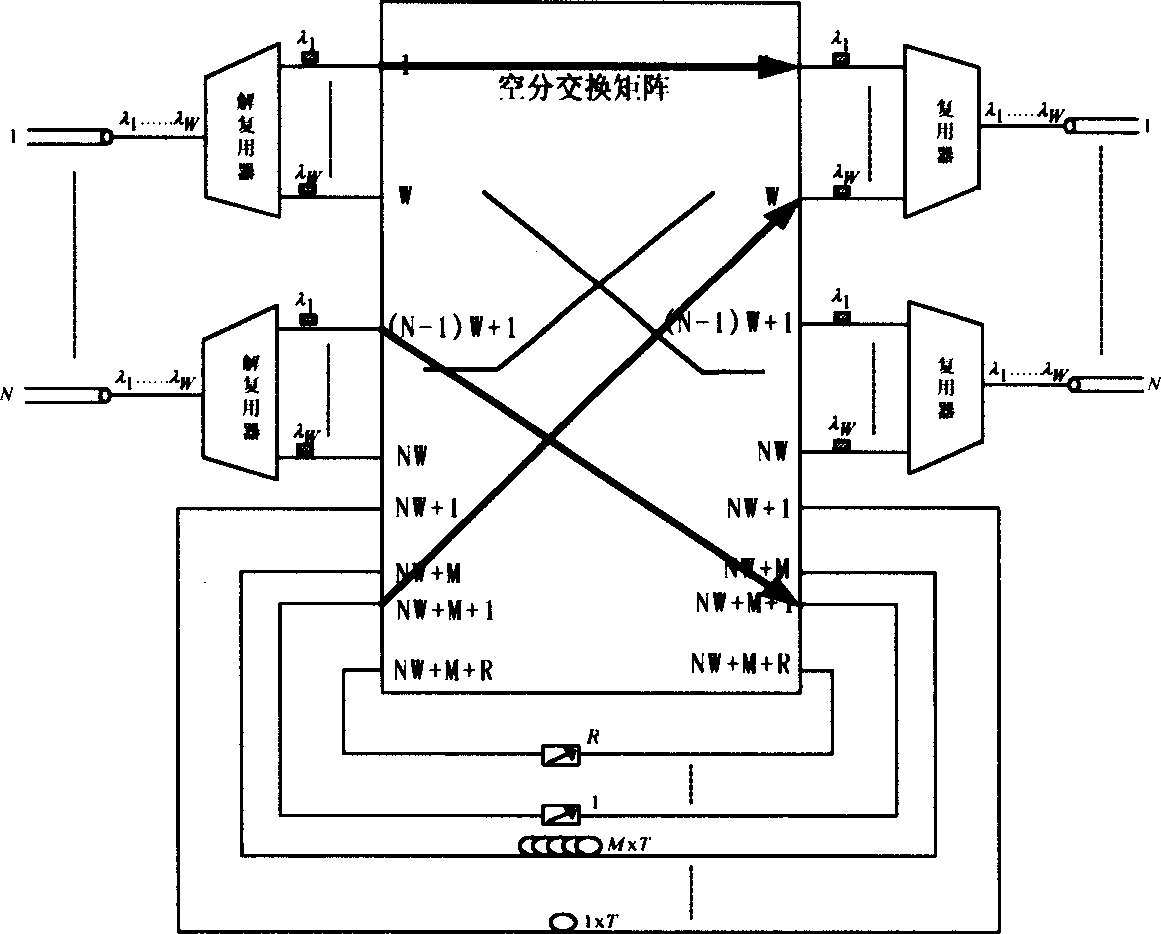

Full optical packet switching node structure for supporting burst or non-burst businesses

Inactive | CN1472969A | Reduce the number | Reduce volume | Multiplex system selection arrangements | Wavelength-division multiplex systems | Full wave | Multiplexer

An all-optical packet switching node structure that supports burst and non-burst traffic. It is a symmetric structure consisting of N 1×W wavelength demultiplexers, N W×1 wavelength multiplexers, one (WN+R+M)×(WN+R+M) non-blocking all-optical switching matrix module, R full-range wavelength converters and M optical fiber delay lines, where N is the number of input/output optical fiber ports and W is the number of wavelength channels that can be carried on each port. The M delay lines form a degenerate structure in which the shortest and the longest delay line provide packets with buffering times of T and MT, respectively.

Owner:SHANGHAI JIAO TONG UNIV

Cache Replacement Using Active Cache Line Counters

Active | US20120324172A1 | Improve performance | Improve caching capacity | Memory addressing/allocation/relocation | Parallel computing | Computer science

Owner:BEIJING XIAOMI MOBILE SOFTWARE CO LTD

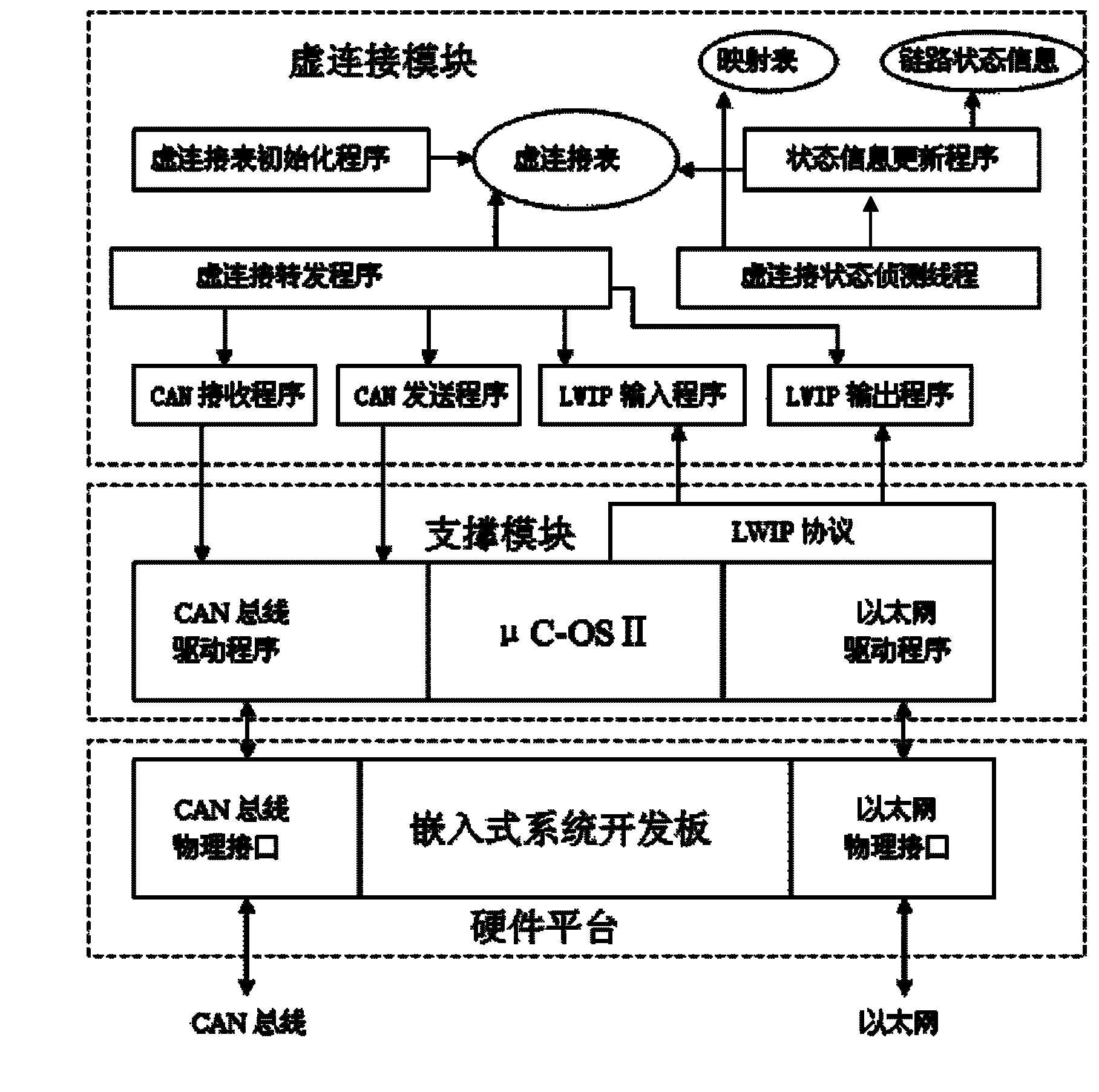

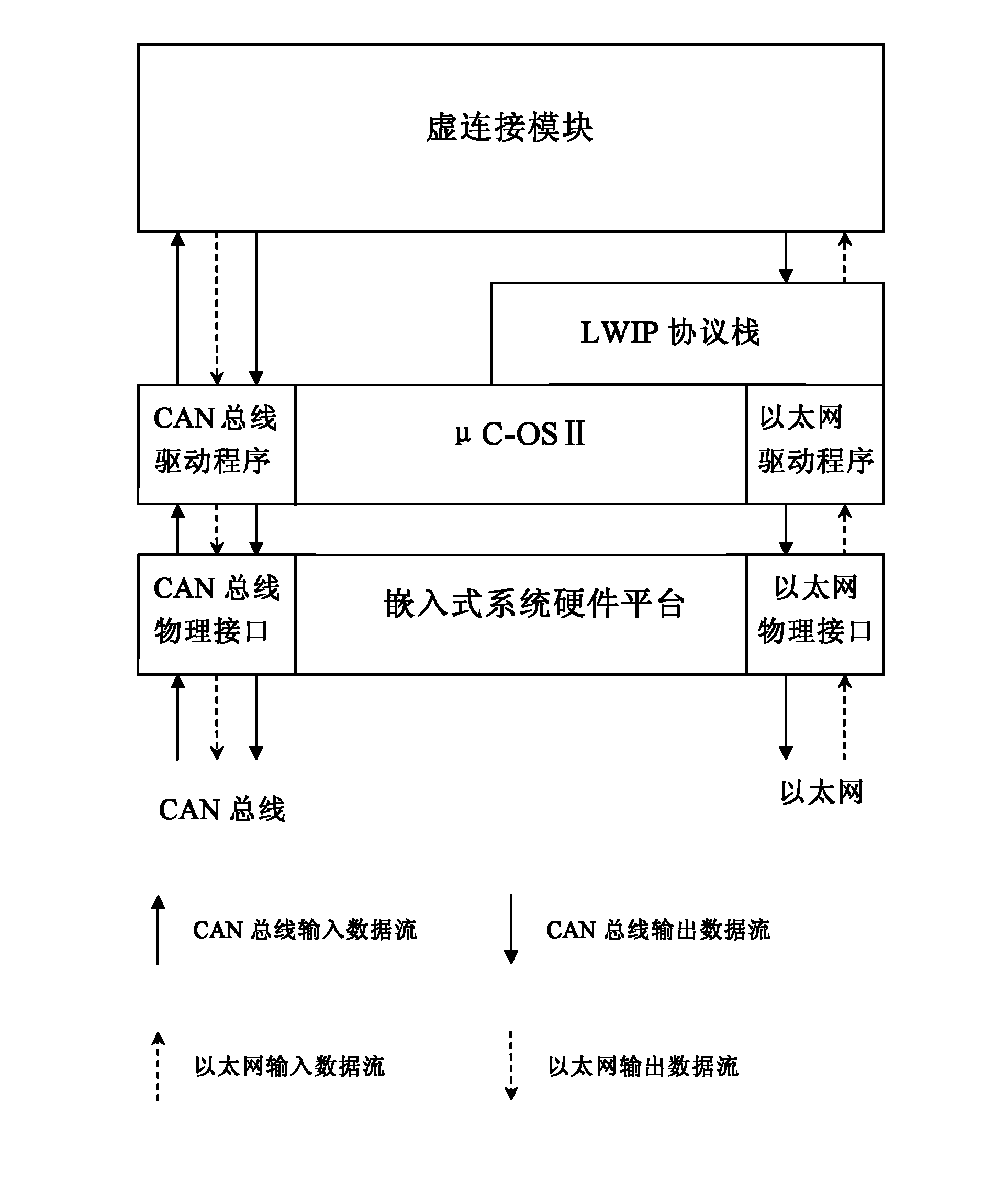

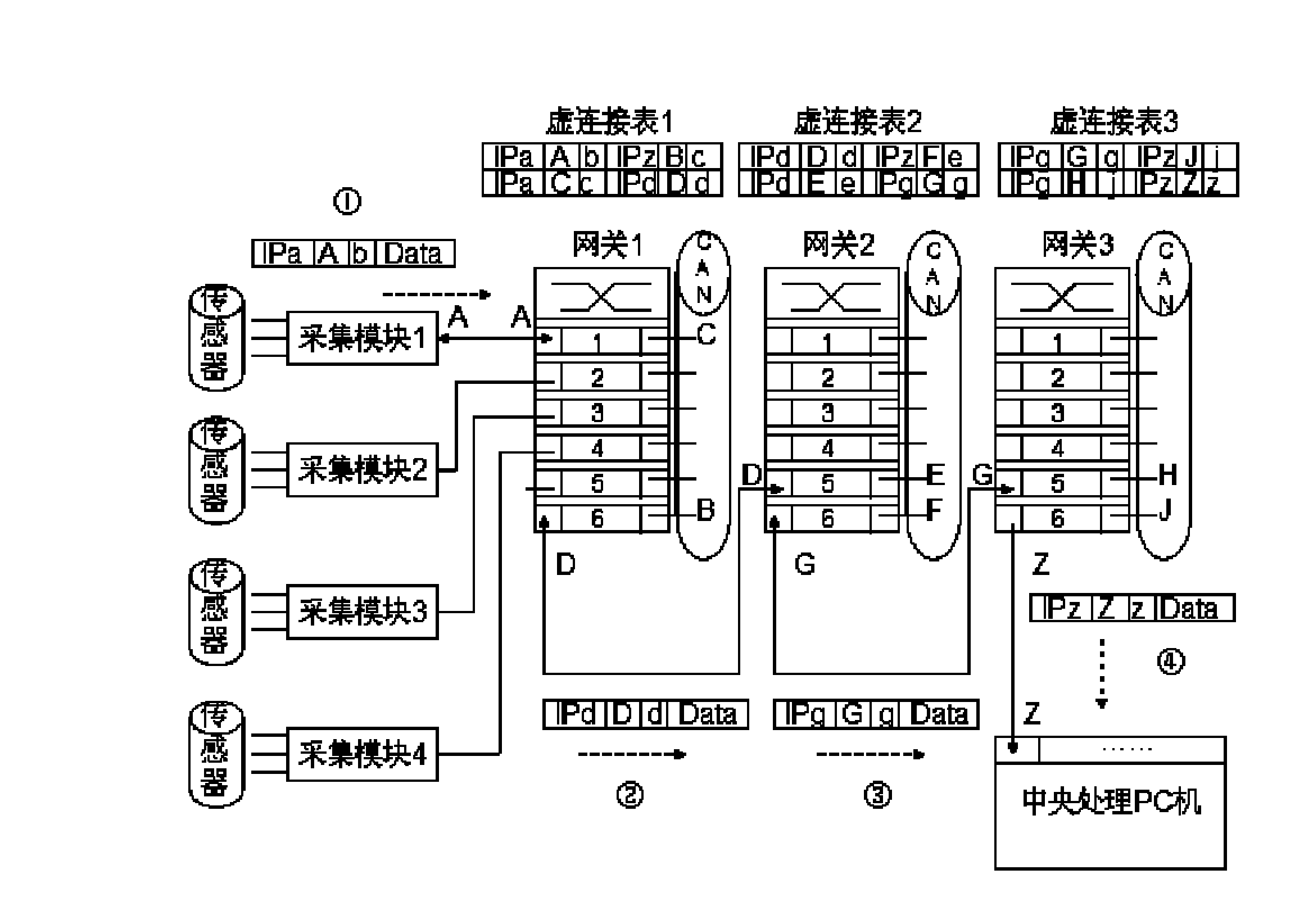

Virtual connection supporting real-time embedded gateway based on controller area network (CAN) bus and Ethernet

Inactive | CN102158435A | Improve real-time performance | Solve the bottleneck problem that the real-time reliability cannot be improved | Network connections | Extensibility | Area network

The invention discloses a real-time embedded gateway that supports virtual connections, based on a controller area network (CAN) bus and Ethernet. The gateway comprises hardware, a supporting module and a virtual connection module. Several embedded development boards, each with both CAN bus and Ethernet interfaces, are connected over the CAN bus to form a cluster system that supports the virtual connection service. The supporting module consists of a driver, the μC/OS-II operating system and the lightweight IP (lwIP) protocol stack. The virtual connection module comprises a virtual connection protocol sub-module and a virtual connection state sensing sub-module, and performs the actual forwarding and maintenance of virtual connections. The gateway offers flexible configuration, high adaptability, high extensibility and low energy consumption, and is suited to relatively large-scale data acquisition and transmission systems.

Owner:深圳市千方航实科技有限公司

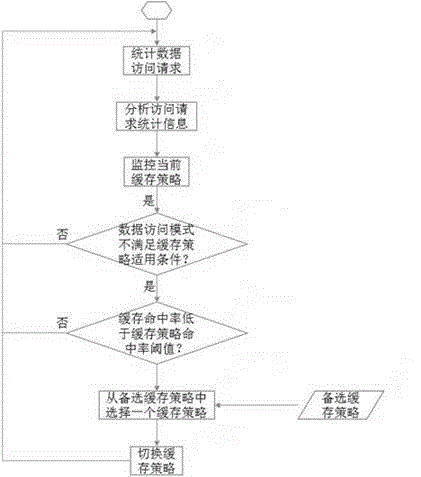

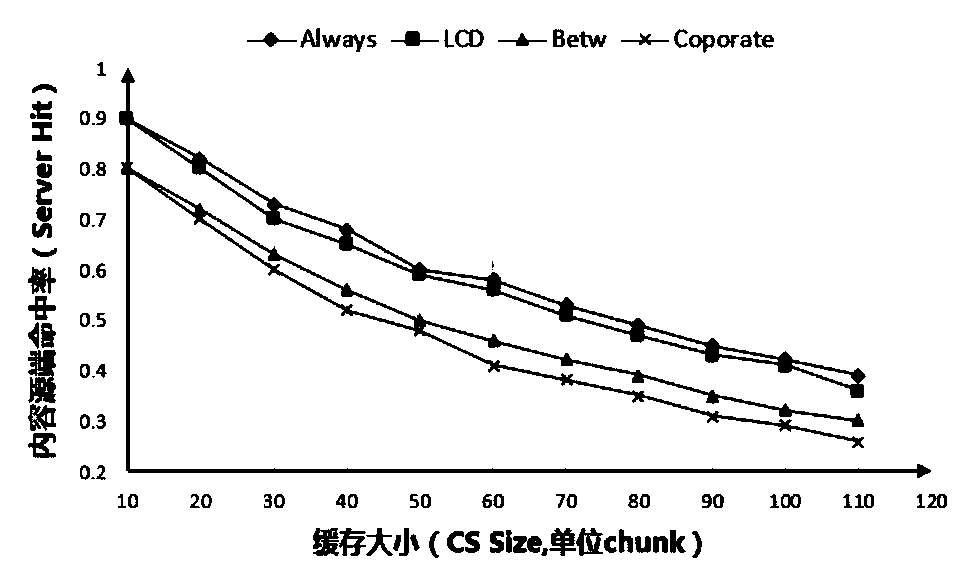

Storage system caching strategy self-adaptive method

Active | CN104572502A | Improve performance | Solve the inability to adapt to complex and changing business needs | Memory addressing/allocation/relocation | Statistical analysis | Data access

The invention relates to a self-adaptive caching strategy method for storage systems. The method performs statistical analysis on the data access requests of a storage system to obtain a data access pattern, and automatically selects a suitable caching strategy according to that pattern. It addresses two problems: a single caching strategy cannot adapt to complex and changing business requirements, and changes to the caching strategy have to be made manually. The storage system can thus automatically select the caching strategy best suited to the current data access pattern as the actual data access characteristics change, which improves the cache hit ratio, reduces cache pollution, lowers caching overhead and thereby improves storage system performance.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

Cache hit ratio estimating apparatus, cache hit ratio estimating method, program, and recording medium

Inactive | US7318124B2 | Improve caching capacity | Estimations for access processing performance | Input/output to record carriers | Error detection/correction | Cache access | Normal density

Determining a cache hit ratio of a caching device analytically and precisely. There is provided a cache hit ratio estimating apparatus for estimating the cache hit ratio of a caching device, caching access target data accessed by a requesting device, including: an access request arrival frequency obtaining section for obtaining an average arrival frequency measured for access requests for each of the access target data; an access request arrival probability density function generating section for generating an access request arrival probability density function which is a probability density function of arrival time intervals of access requests for each of the access target data on the basis of the average arrival frequency of access requests for the access target data; and a cache hit ratio estimation function generating section for generating an estimation function for the cache hit ratio for each of the access target data on the basis of the access request arrival probability density function for the plurality of the access target data.

Owner:GOOGLE LLC

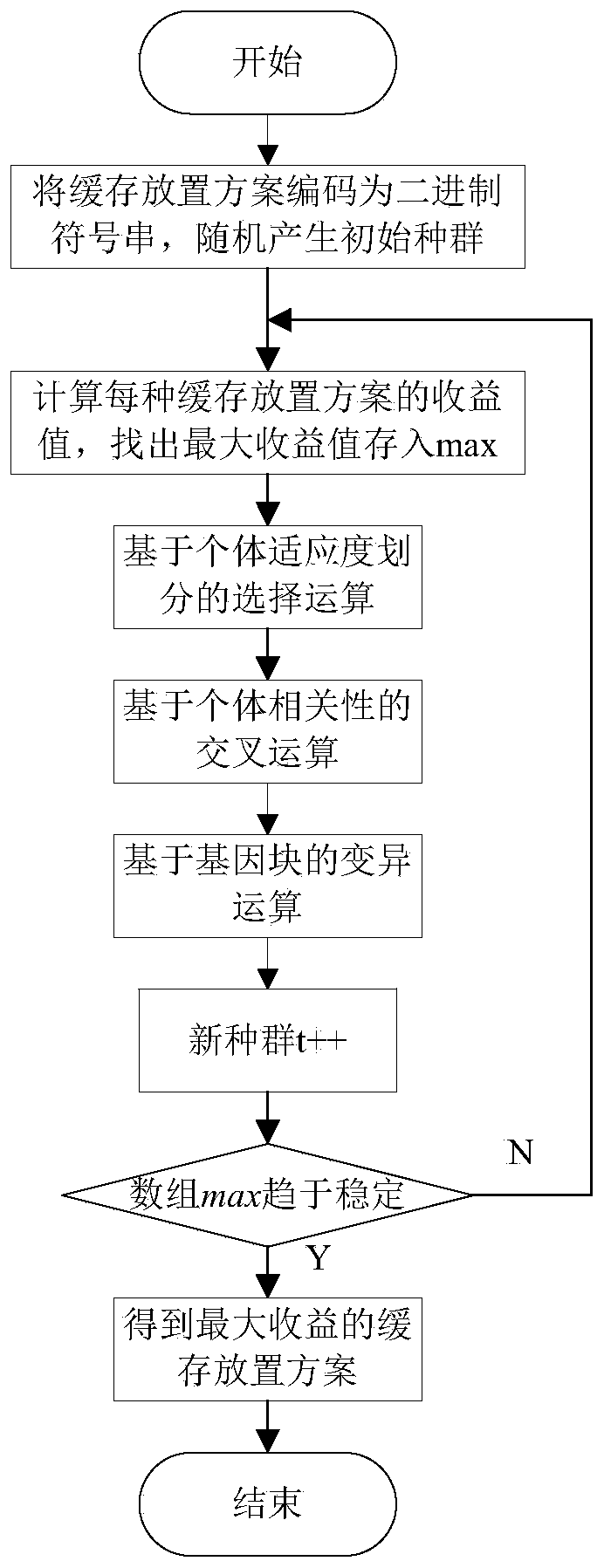

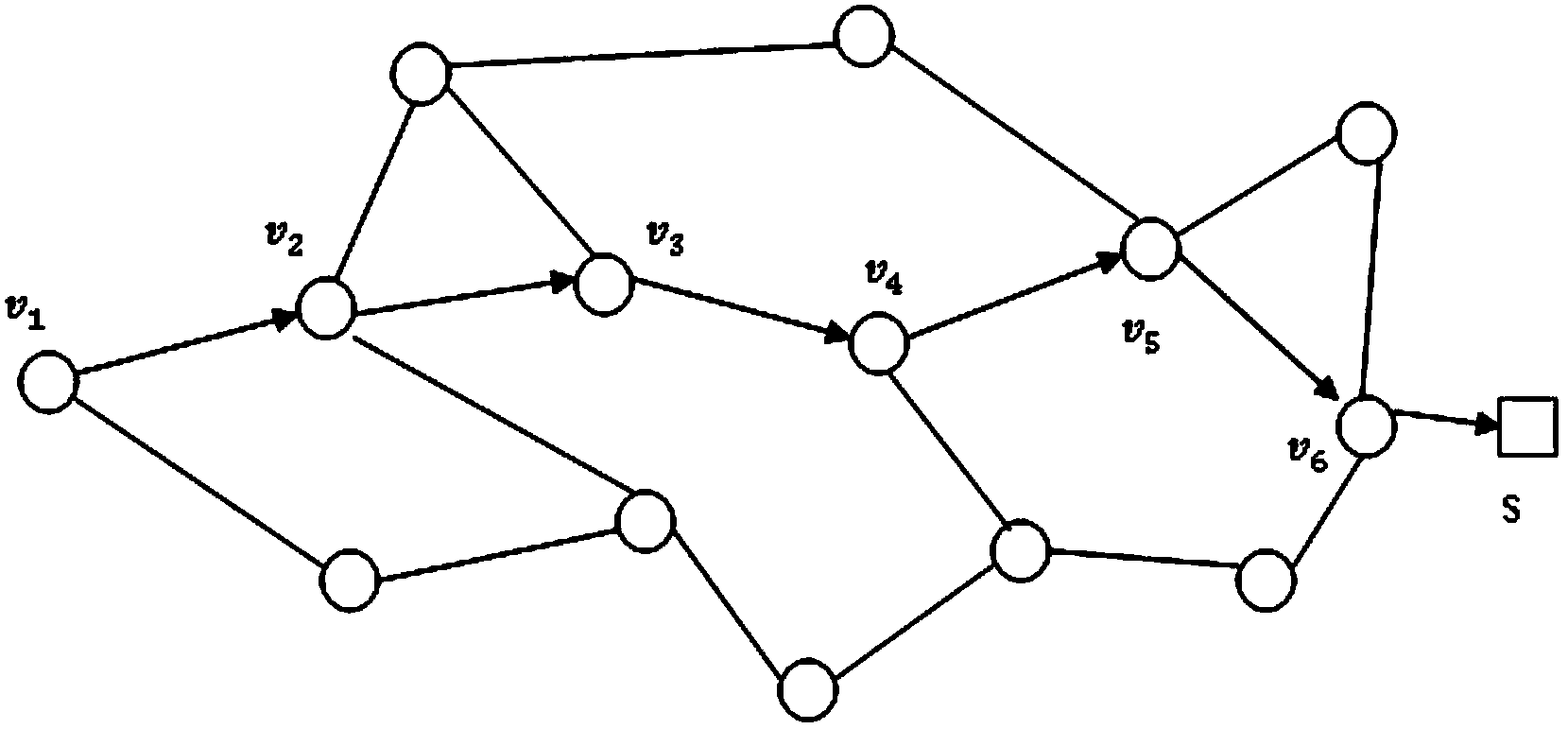

Method oriented to prediction-based optimal cache placement in content central network

Active | CN104166630A | Reduce access latency | Reduce redundancy | Input/output to record carriers | Memory addressing/allocation/relocation | Array data structure | Data diversity

The invention belongs to the technical field of networks, and particularly relates to a method oriented to prediction-based optimal cache placement in a content central network. The method can be used for data cache in the content central network. The method includes the steps that cache placement schemes are encoded into binary symbol strings, 1 stands for cached objects, 0 stands for non-cached objects, and an initial population is generated randomly; the profit value of each cache placement scheme is calculated, and the maximum profit value is found and stored in an array max; selection operation based on individual fitness division is conducted; crossover operation based on individual correlation is conducted; variation operation based on gene blocks is conducted; a new population, namely, a new cache placement scheme is generated; whether the array max tends to be stable or not is judged, and if the array max is stable, maximum profit cache placement is acquired. The method has the advantages that user access delay is effectively reduced, the content duplicate request rate and the network content redundancy are reduced, network data diversity is enhanced, the cache performance of the whole network is remarkably improved, and higher cache efficiency is achieved.

Owner:HARBIN ENG UNIV

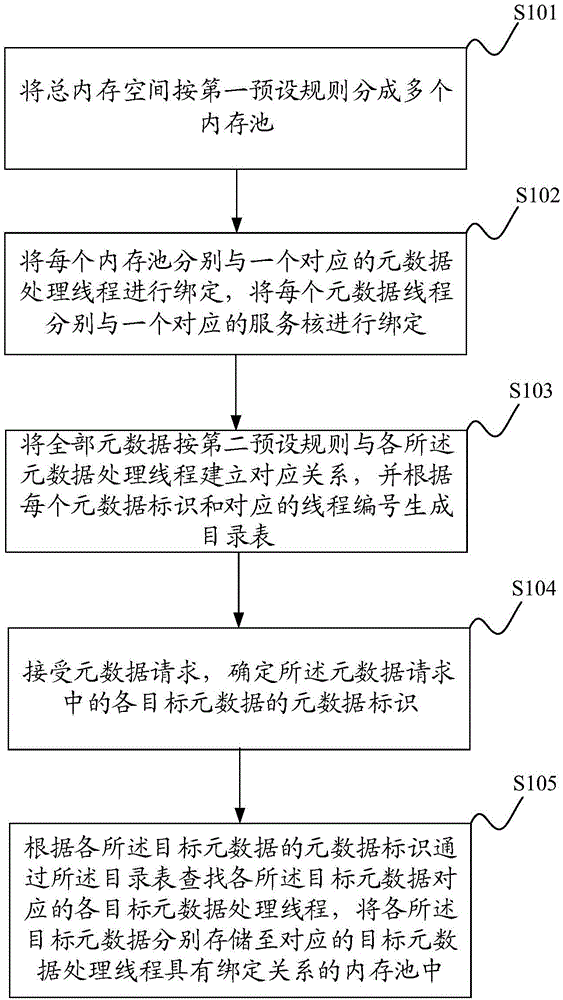

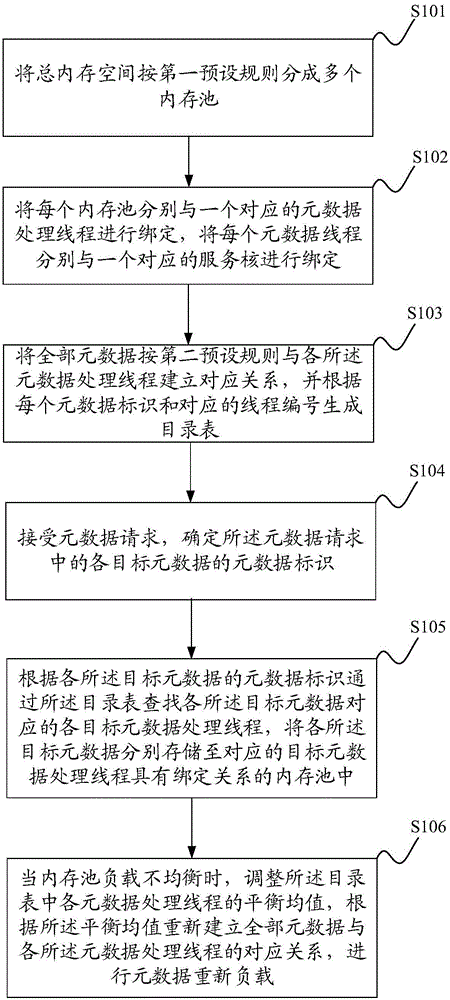

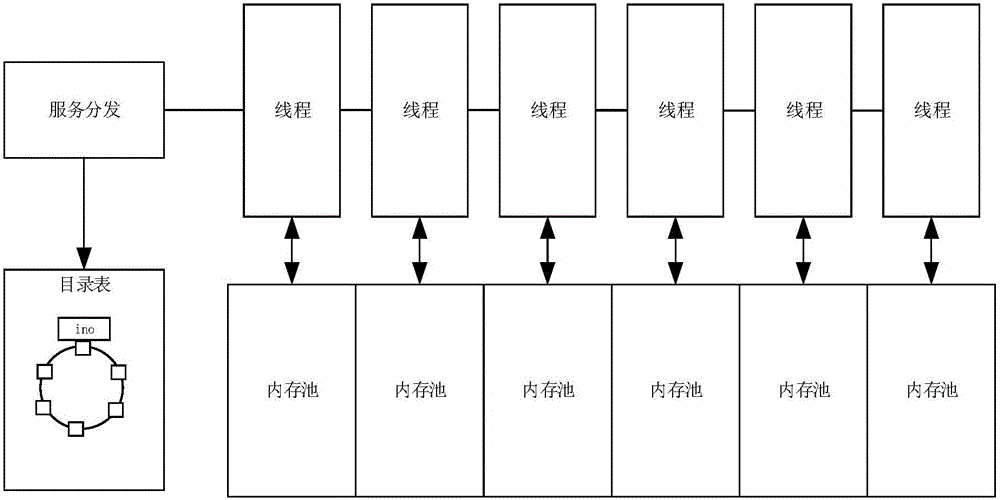

File request processing method and system

Active | CN105094992A | Improve caching capacity | Improve hit | Resource allocation | Special data processing applications | Metadata | Memory pool

The invention discloses a file request processing method and system. The total memory space is divided into multiple memory pools according to a first preset rule. Each memory pool is bound to one corresponding metadata processing thread, and each metadata processing thread is bound to one corresponding service core. Correspondences between all metadata and all metadata processing threads are established according to a second preset rule, and a directory table is generated from each metadata identifier and the corresponding thread number. When a metadata request is accepted, the metadata identifiers of all target metadata in the request are determined, and the target metadata processing thread for each piece of target metadata is looked up in the directory table by its identifier. Each piece of target metadata is then stored in the memory pool bound to its target metadata processing thread, which improves multi-thread cache performance and memory access performance.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

Access trace locality analysis-based shared buffer optimization method in multi-core environment

Inactive | CN104572501A | Improve caching capacity | Cutting costs | Resource allocation | Memory addressing/allocation/relocation | Data migration | Distribution method

The invention provides an access trace locality analysis-based shared buffer optimization method in a multi-core environment. The method relates to a technology for improving program buffer performance in the multi-core environment and reducing cache interference between tasks. An access trace sequence of a task to be operated is pre-analyzed through a locality analysis method, the stage information is marked, and dynamic distribution and adjustment are performed in the actual execution process according to the stage change of the task. A buffer distribution method is adopted in the distribution and adjustment process; the execution of other tasks cannot be affected according to distribution and adjustment of each task, and the data migration is greatly reduced. The buffer optimization method has the advantages of obvious optimization effect, flexibility in use and small extra cost.

Owner:凯习(北京)信息科技有限公司

Method and apparatus for improving data cache performance using inter-procedural strength reduction of global objects

Inactive | US7555748B2 | Improve caching capacity | Software engineering | Specific program execution arrangements | Parallel computing | Forward pass

Inter-procedural strength reduction is provided by a mechanism of the present invention to improve data cache performance. During a forward pass, the present invention collects information of global variables and analyzes the usage pattern of global objects to select candidate computations for optimization. During a backward pass, the present invention remaps global objects into smaller size new global objects and generates more cache efficient code by replacing candidate computations with indirect or indexed reference of smaller global objects and inserting store operations to the new global objects for each computation that references the candidate global objects.

Owner:INT BUSINESS MASCH CORP

Data block frequency map dependent caching

Inactive | US8271736B2 | Improve system performance | Improve caching capacity | Memory systems | Parallel computing | Cache algorithms

A method for increasing the performance and utilization of cache memory by combining the data block frequency map generated by data de-duplication mechanism and page prefetching and eviction algorithms like Least Recently Used (LRU) policy. The data block frequency map provides weight directly proportional to the frequency count of the block in the dataset. This weight is used to influence the caching algorithms like LRU. Data blocks that have lesser frequency count in the dataset are evicted before those with higher frequencies, even though they may not have been the topmost blocks for page eviction by caching algorithms. The method effectively combines the weight of the block in the frequency map and its eviction status by caching algorithms like LRU to get an improved performance and utilization of the cache memory.

Owner:IBM CORP

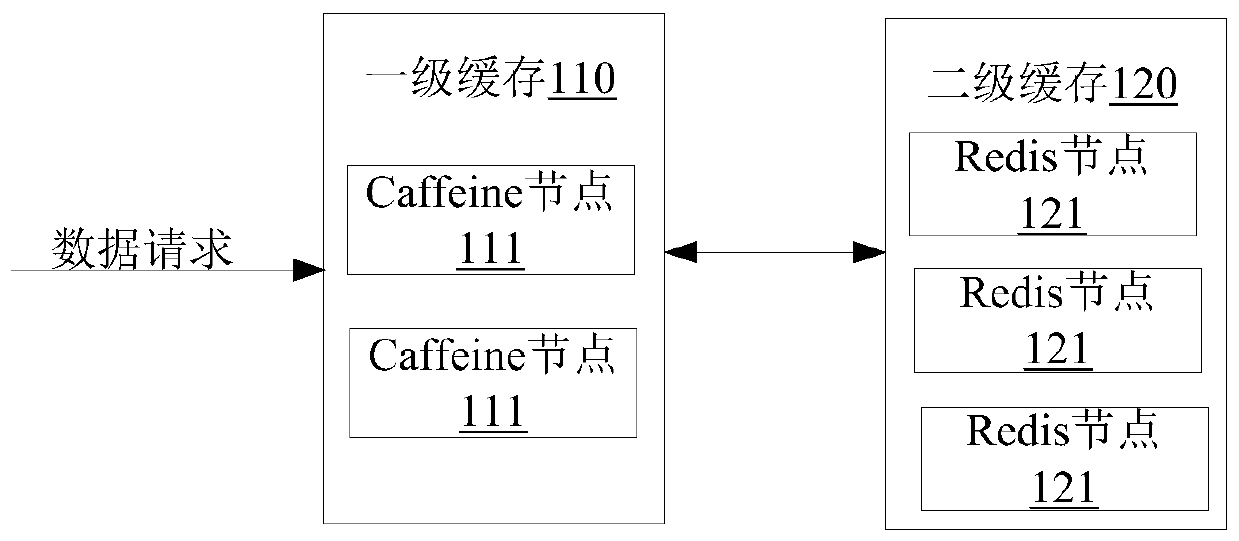

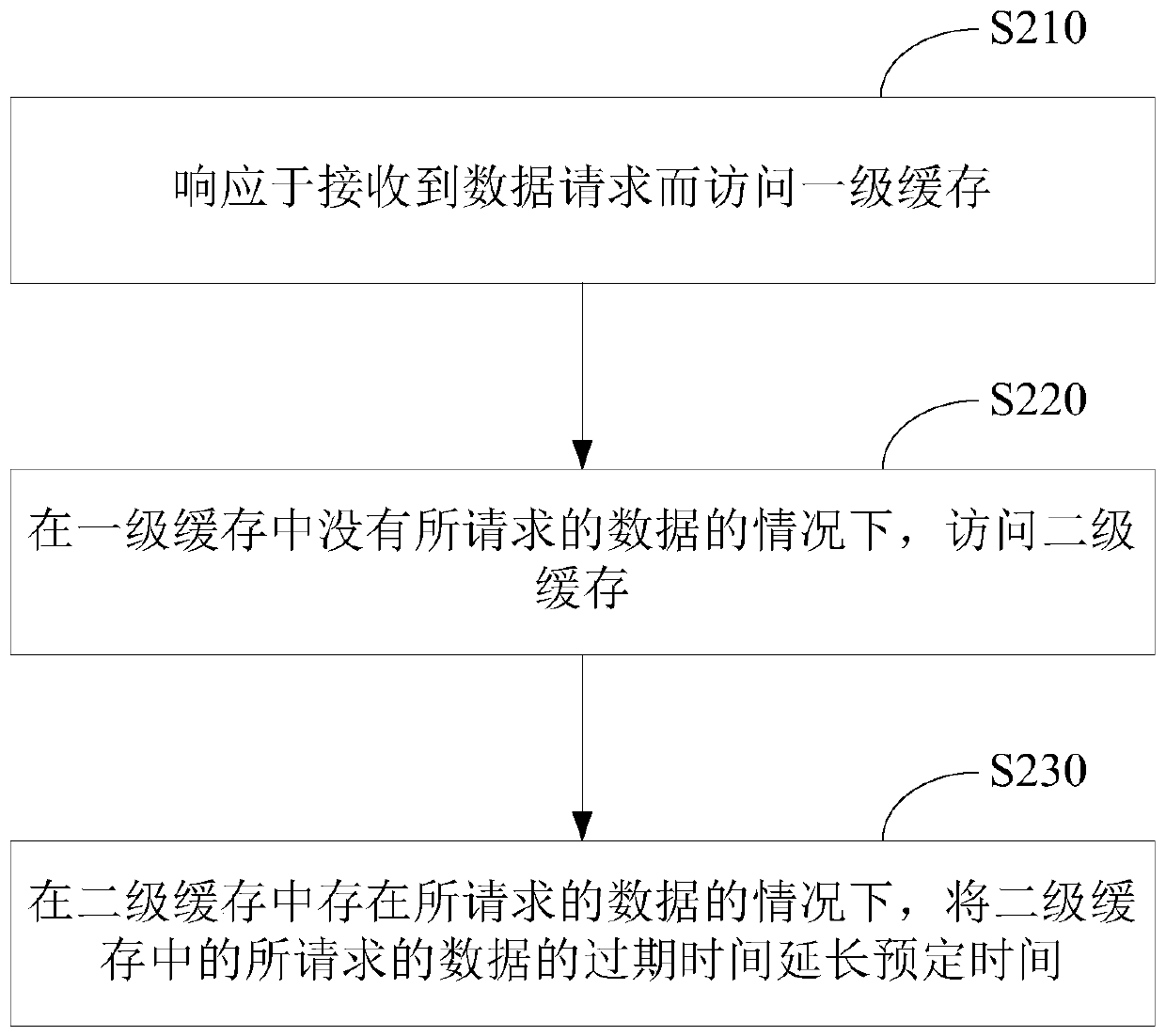

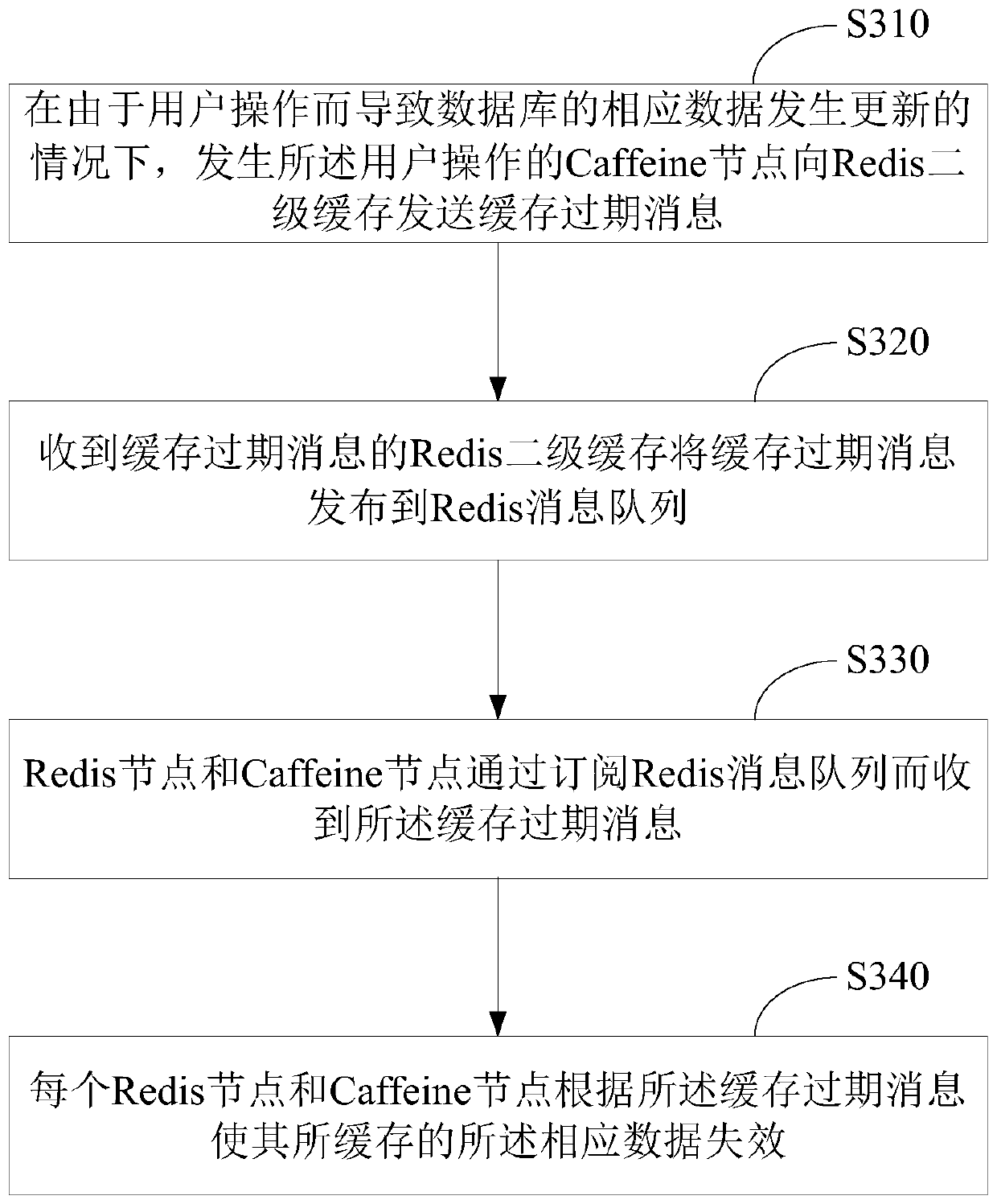

Multilevel cache system, access control method and device thereof and storage medium

Pending | CN110069419A | Improve caching efficiency | Improve caching capacity | Transmission | Memory systems | Expiration Time | Access control matrix

The invention relates to the technical field of cloud storage, in particular to a multi-level caching system and an access control method and device thereof and a storage medium. The method comprises the following steps: accessing a first-level cache in response to receiving a data request; under the condition that the requested data does not exist in the first-level cache, accessing the second-level cache; if the requested data exists in the second-level cache, extending the expiration time of the requested data in the second-level cache by a predetermined time. Through the embodiments of the invention, an optimized distributed storage technology can be provided, the cache efficiency is improved, and the cache capacity expansion cost is reduced.

Owner:CHINA PING AN LIFE INSURANCE CO LTD
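
The access flow in this abstract (check the first level, fall back to the second level, extend the expiration time on a second-level hit) might be sketched as below. The class `TwoLevelCache`, the `put_l2` helper, the injectable `clock`, and the default extension of 60 seconds are hypothetical choices for illustration.

```python
import time


class TwoLevelCache:
    """First-level lookup, then second-level lookup with TTL extension on hit."""

    def __init__(self, extension=60.0, clock=time.monotonic):
        self.l1 = {}                # key -> value
        self.l2 = {}                # key -> [value, expires_at]
        self.extension = extension  # the "predetermined time" (assumed seconds)
        self.clock = clock

    def put_l2(self, key, value, ttl):
        self.l2[key] = [value, self.clock() + ttl]

    def get(self, key):
        if key in self.l1:
            return self.l1[key]
        entry = self.l2.get(key)
        if entry is None:
            return None
        if entry[1] <= self.clock():
            del self.l2[key]        # already expired: treat as a miss
            return None
        entry[1] += self.extension  # second-level hit: extend the expiration
        return entry[0]
```

Extending the expiration only on hits keeps frequently requested data in the second level longer without growing the cache for data nobody asks for.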

Surface resource view hash for coherent cache operations in texture processing hardware

Active | US20150089151A1 | Lack texture | Fetches from system memory are thereby reduced | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Parallel computing | Hash table

Owner:NVIDIA CORP

Selectively powering down tag or data memories in a cache based on overall cache hit rate and per set tag hit rate

Inactive | US7818502B2 | Easy to handle | Reduce power consumption | Memory architecture accessing/allocation | Energy efficient ICT | Parallel computing | Data memory

A CPU incorporating a cache memory is provided, in which a high processing speed and low power consumption are realized at the same time. A CPU incorporating an associative cache memory including a plurality of sets is provided, which includes a means for observing a cache memory area which does not contribute to improving processing performance of the CPU in accordance with an operating condition, and changing such a cache memory area to a resting state dynamically. By employing such a structure, a high-performance and low-power consumption CPU can be provided.

Owner:SEMICON ENERGY LAB CO LTD

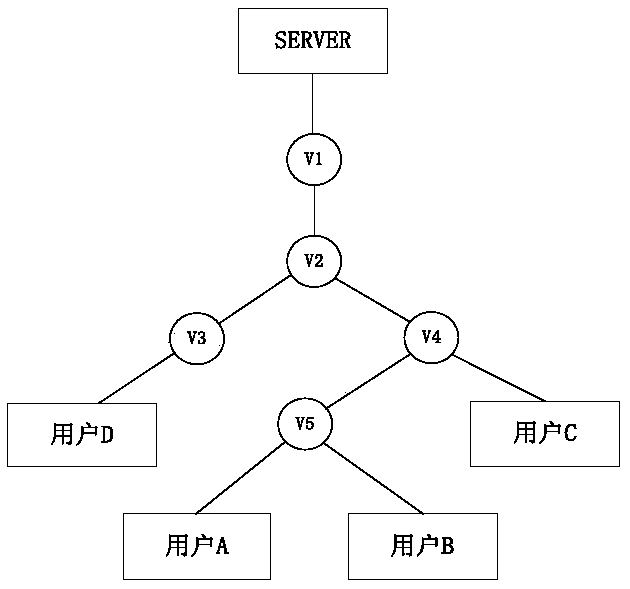

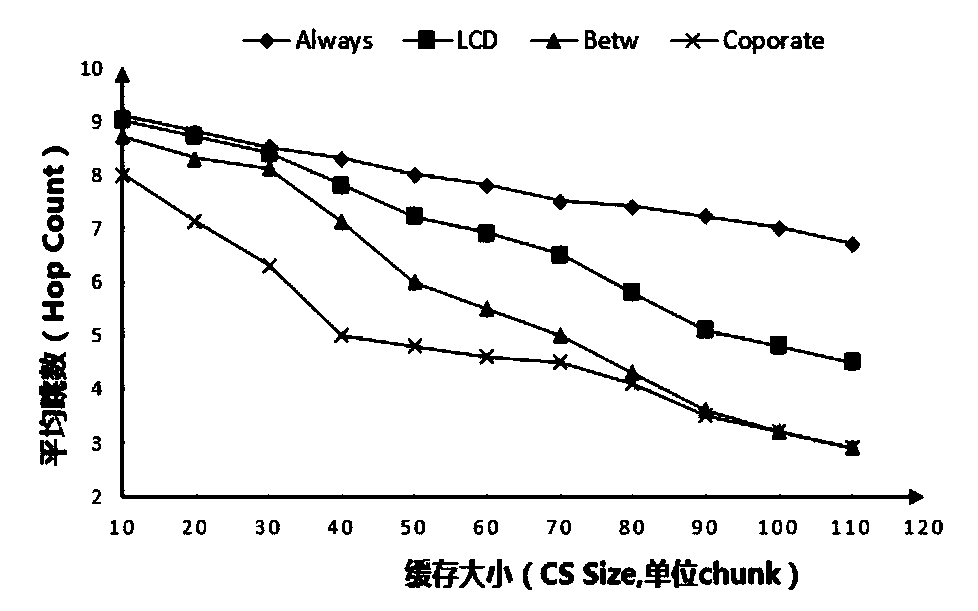

CCN caching method based on content popularity and node importance

Active | CN108366089A | Solve the problem of frequent cache replacement | Reduce redundancy | Transmission | Ranking | Cache hit rate

The invention discloses a CCN caching method based on content popularity and node importance. In addition to ranking content popularity, the method considers the centrality of each node: when requested content is returned along the original path, it is cached on the node with the highest centrality. Once that node's cache is full, a content popularity ranking table is generated in the node; the popularity of newly arrived content is then compared with the maximum and minimum popularity in the node to decide whether the new content is cached there. Simulation results show that the scheme improves the routing node cache hit ratio and reduces the average hop count for acquiring content.

Owner:NANJING UNIV OF POSTS & TELECOMM

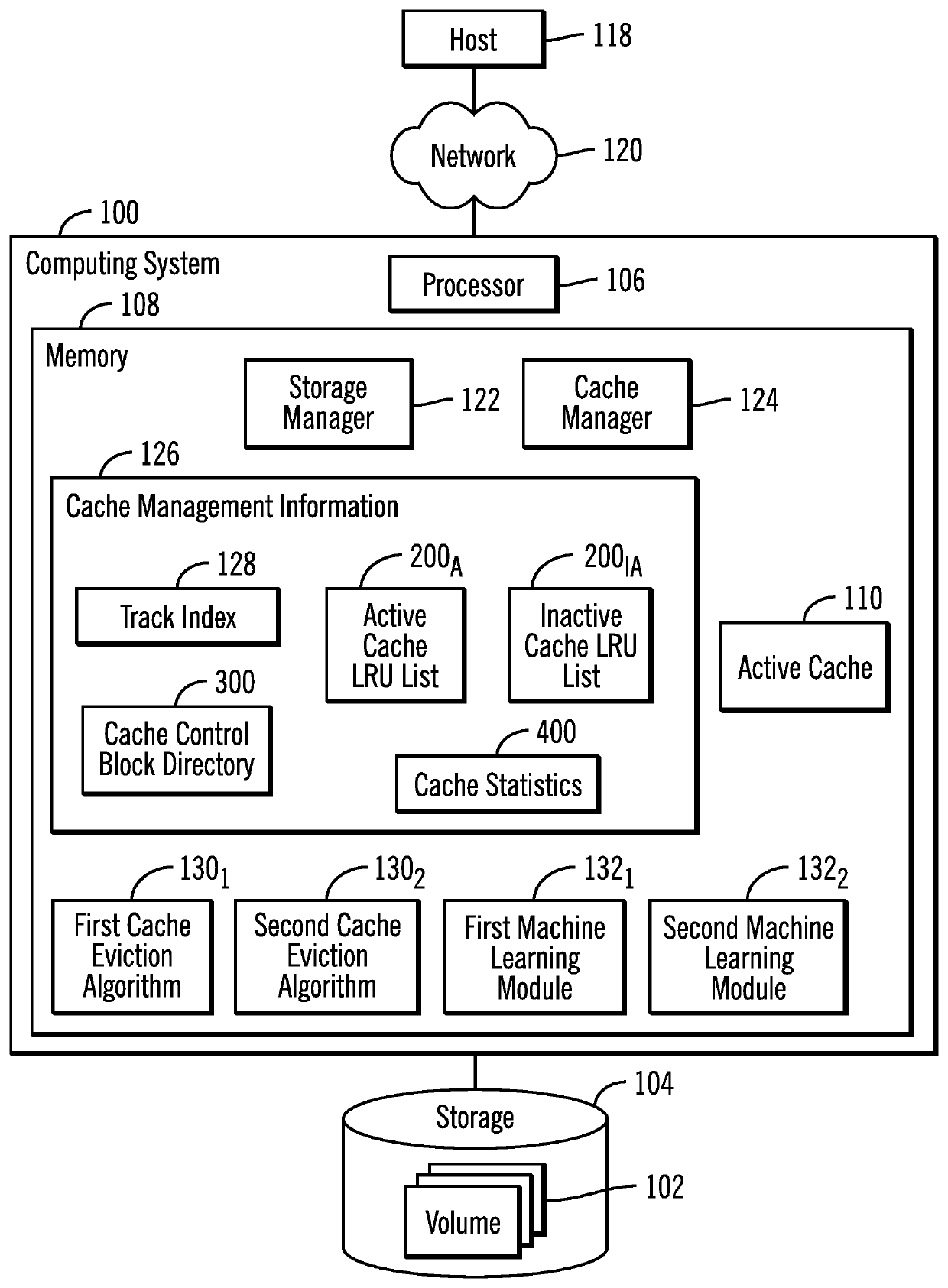

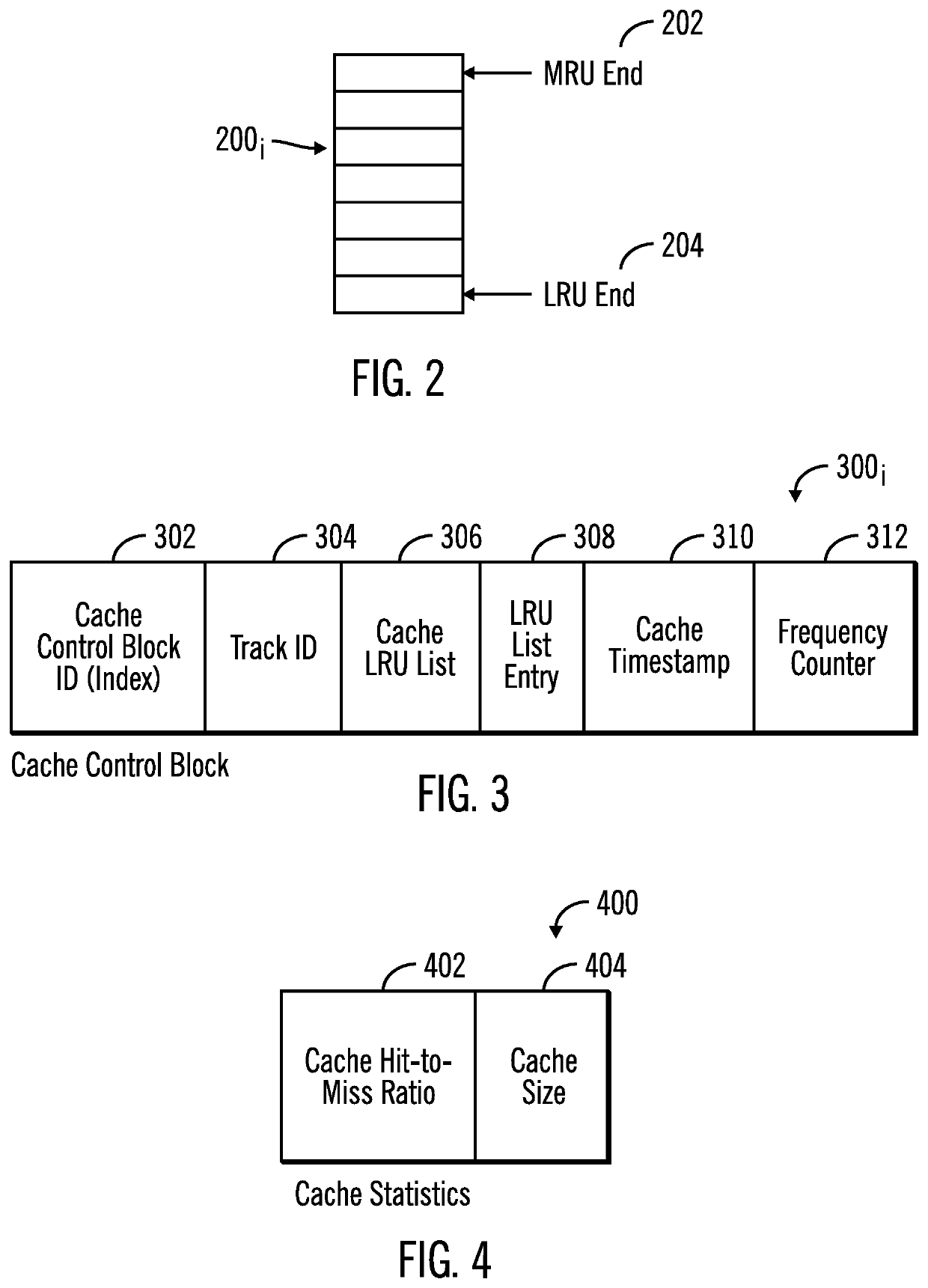

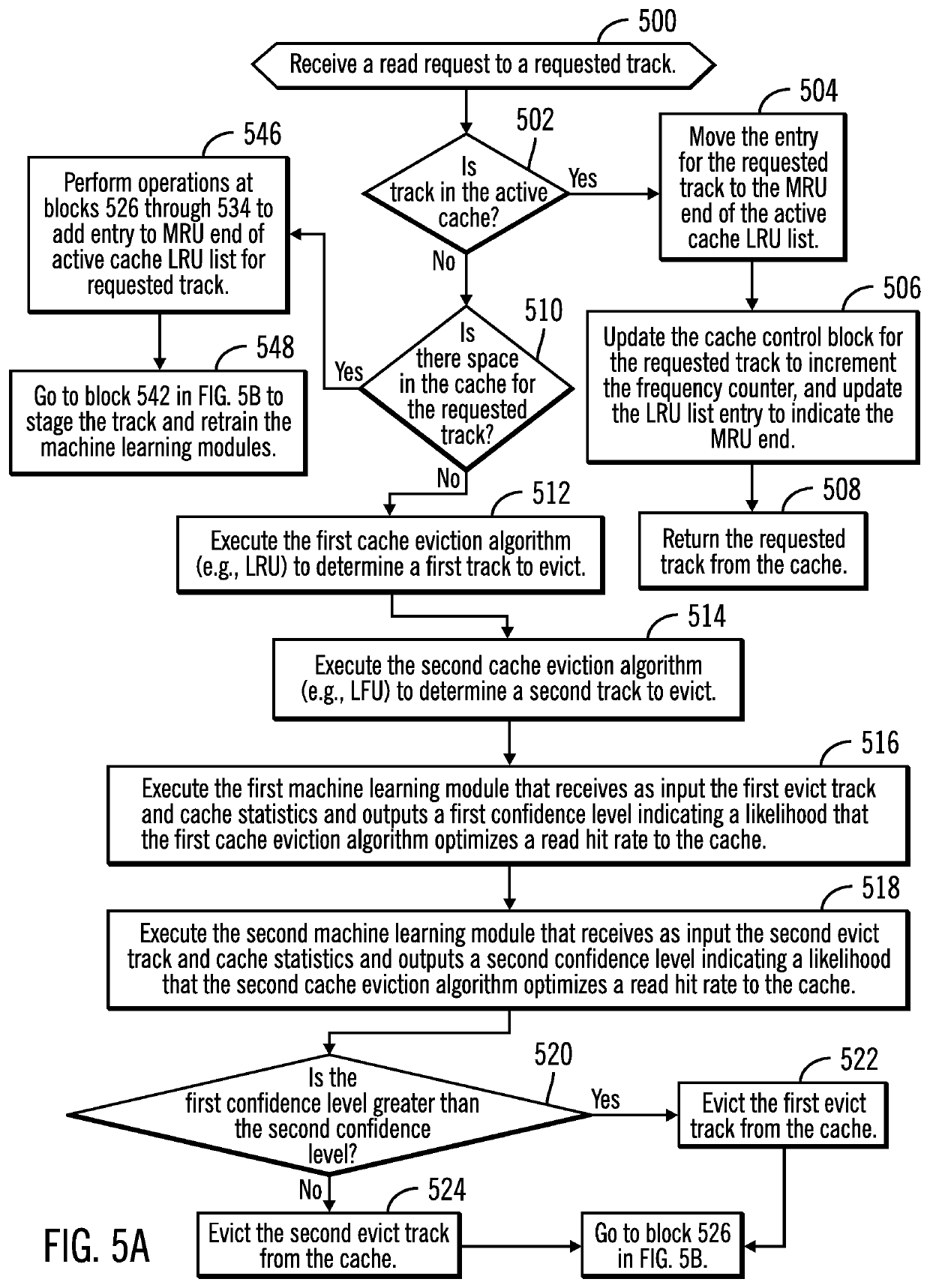

Selecting one of multiple cache eviction algorithms to use to evict a track from the cache by training a machine learning module

Active | US20190354489A1 | Improve hit rate | Reduce the possibility | Memory architecture accessing/allocation | Neural architectures | Parallel computing | Computer program

Provided are a computer program product, system, and method for using a machine learning module to select one of multiple cache eviction algorithms to use to evict a track from the cache. A first cache eviction algorithm determines tracks to evict from the cache. A second cache eviction algorithm determines tracks to evict from the cache, wherein the first and second cache eviction algorithms use different eviction schemes. At least one machine learning module is executed to produce output indicating one of the first cache eviction algorithm and the second cache eviction algorithm to use to select a track to evict from the cache. A track is evicted that is selected by one of the first and second cache eviction algorithms indicated in the output from the at least one machine learning module.

Owner:IBM CORP

© 2025 PatSnap. All rights reserved.