163 results about "Dirty page" patented technology

Dirty pages are pages whose data has been modified in memory but not yet committed to the hard drive. (Note that this is not about dirty reads; a dirty page is simply data that is in memory but has not yet been written to the hard drive.)

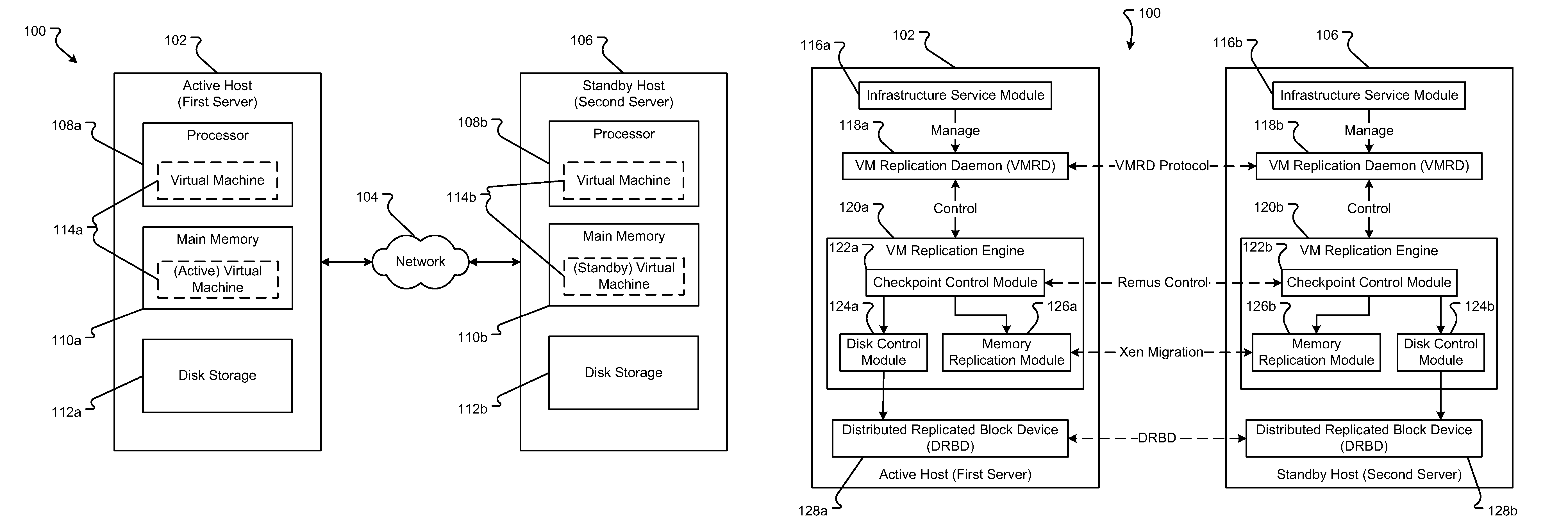

Method and Apparatus for Efficient Memory Replication for High Availability (HA) Protection of a Virtual Machine (VM)

Active · US20120084782A1 · Prevents buffer overflow · Small buffer · Memory architecture accessing/allocation · Error detection/correction · Dirty page · Buffer overflow

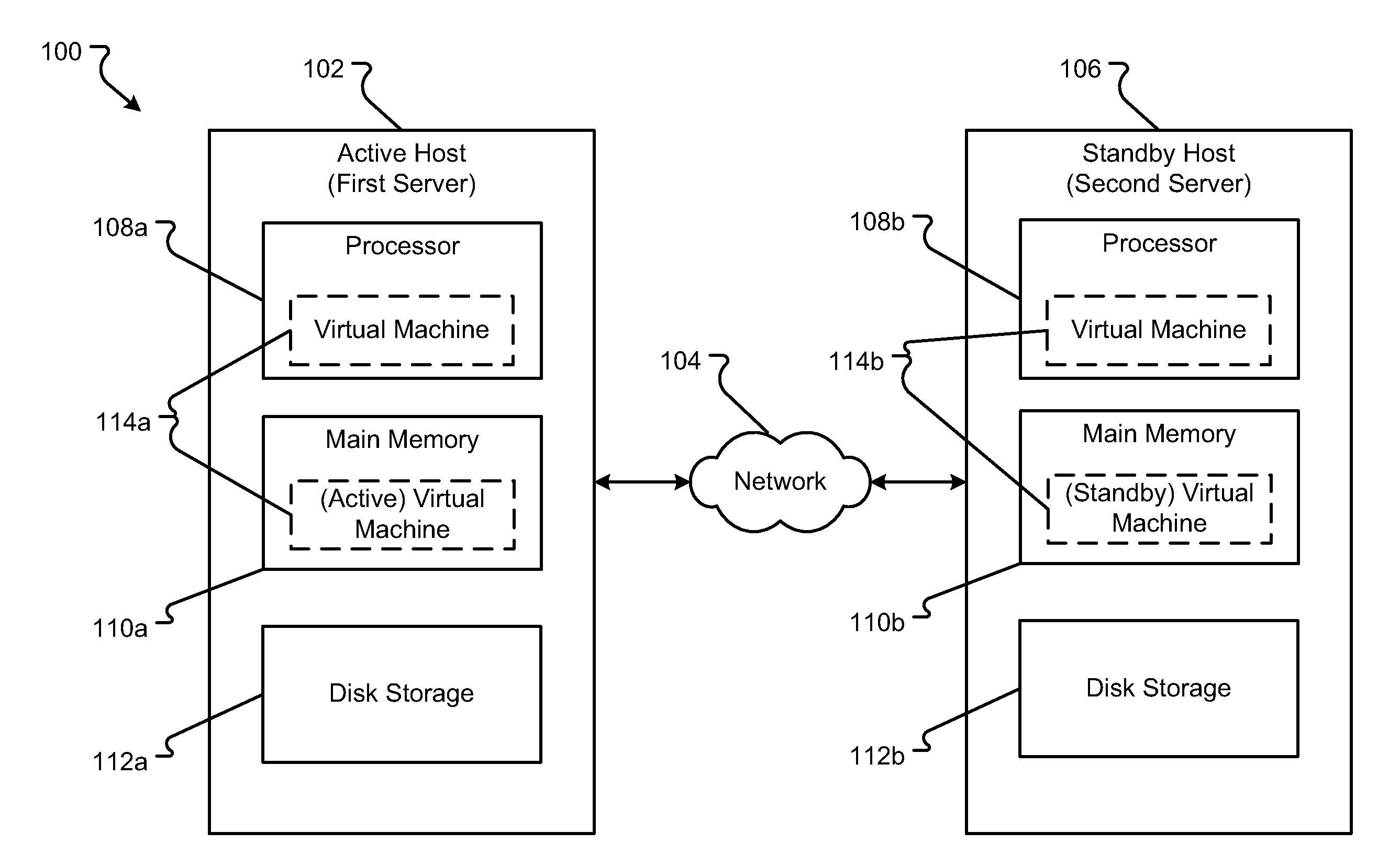

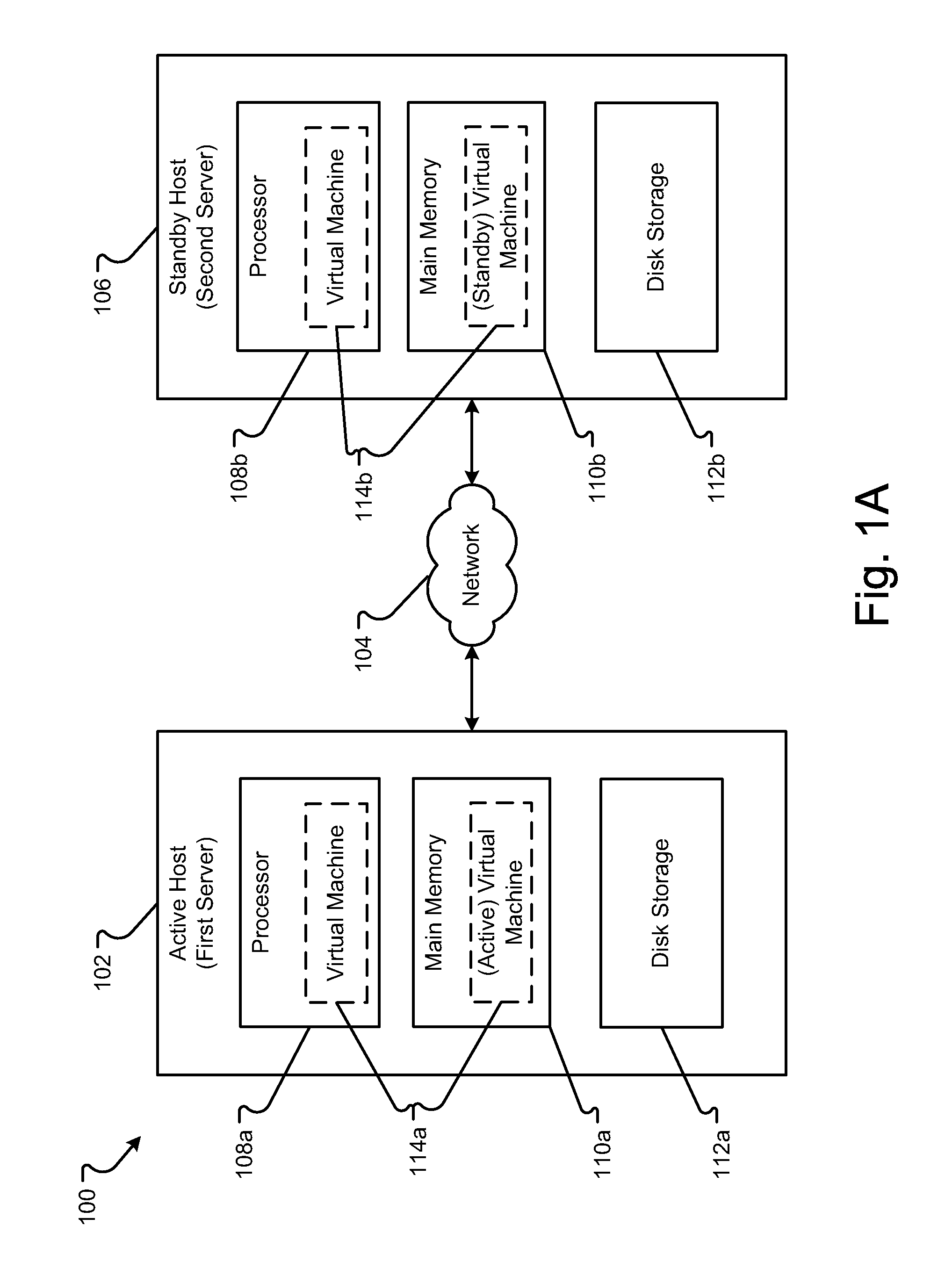

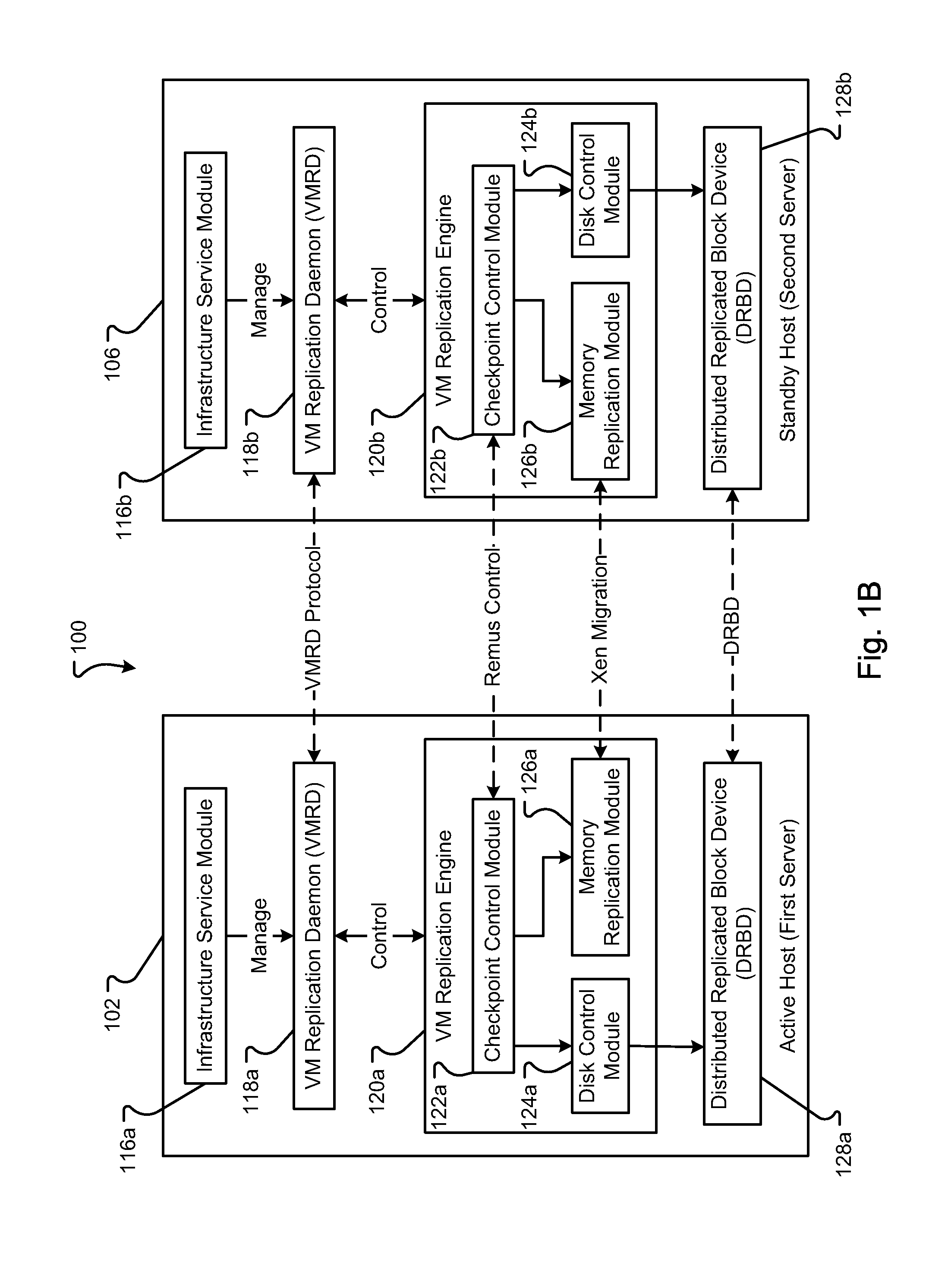

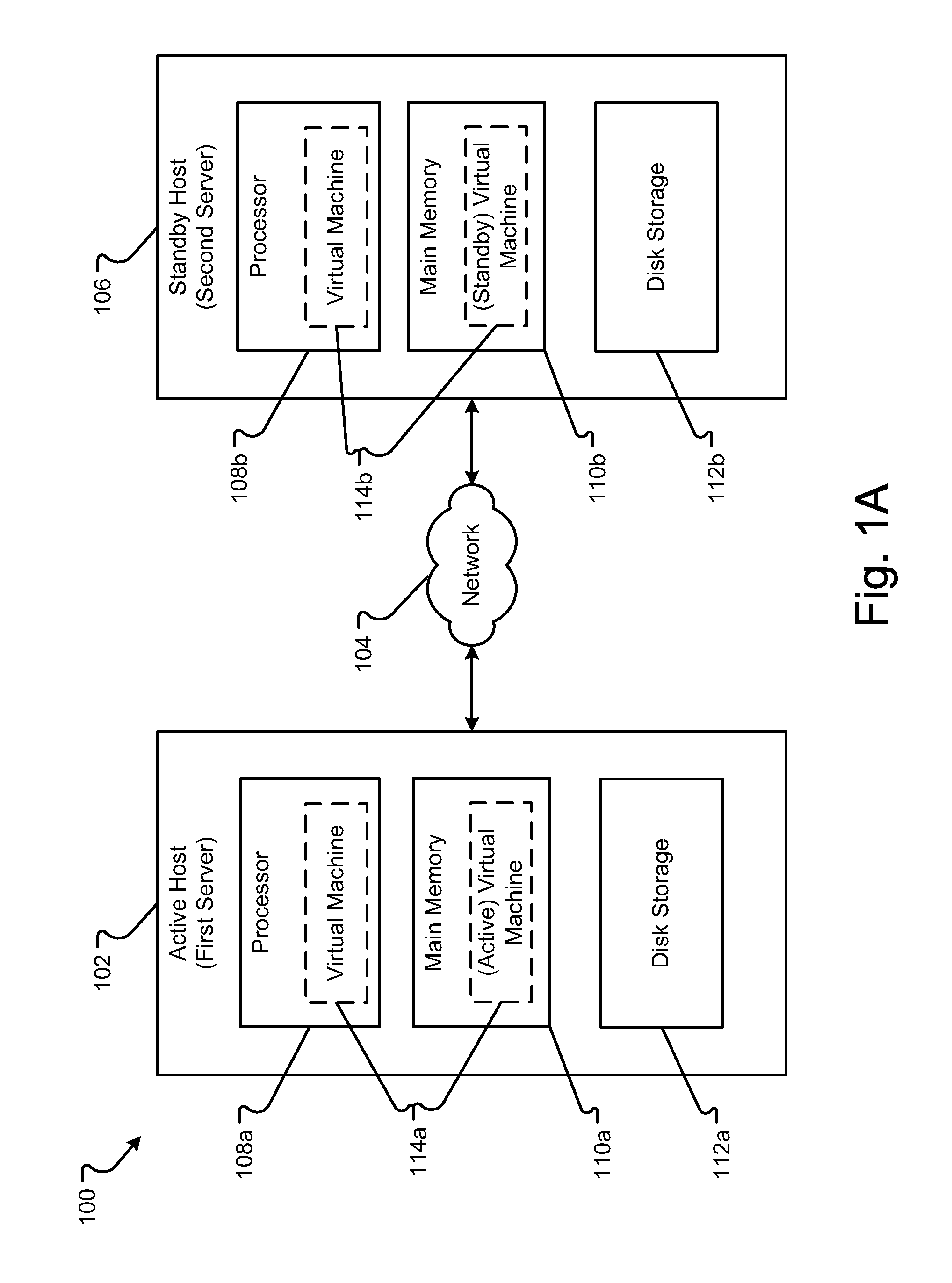

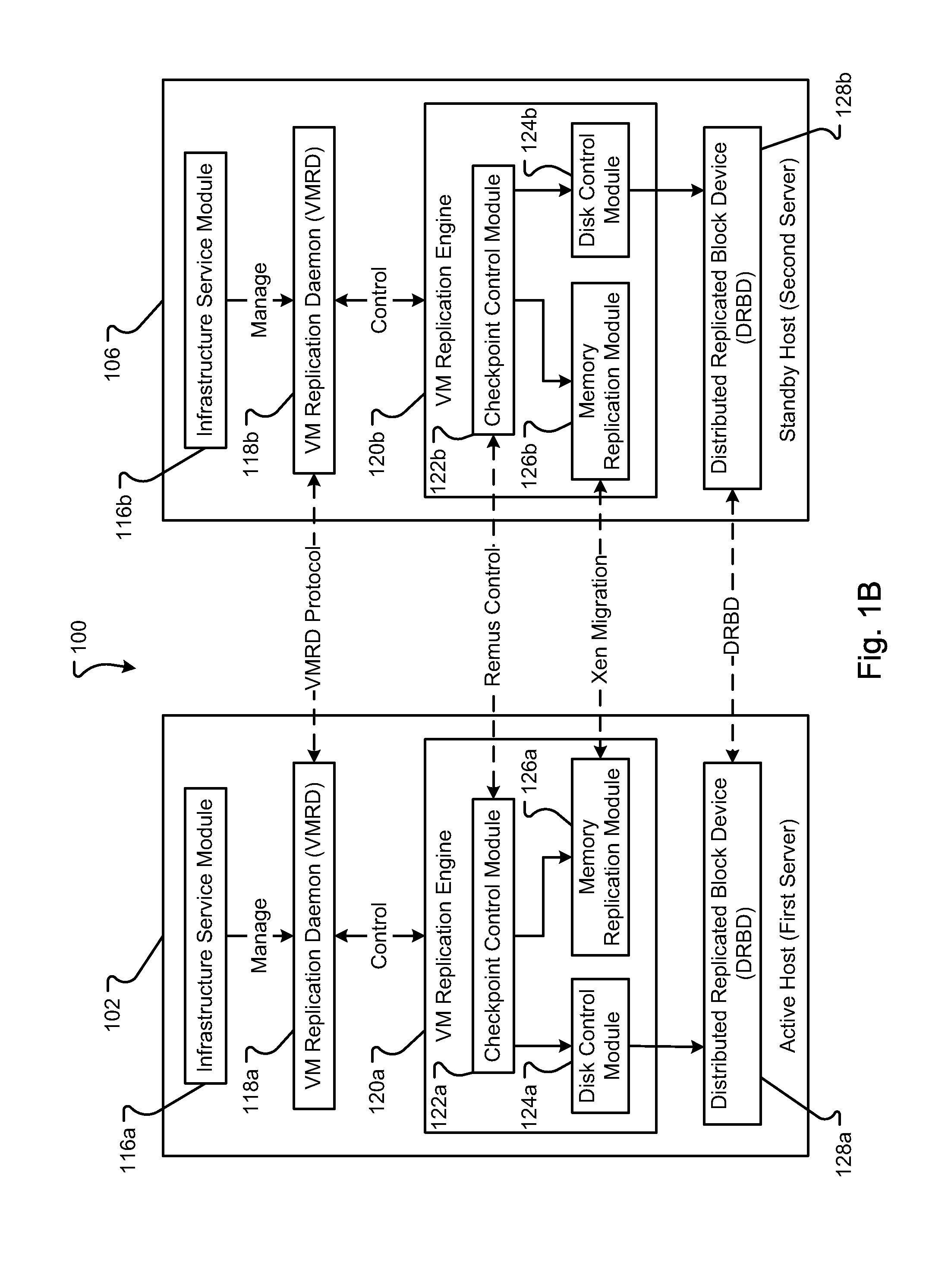

High availability (HA) protection is provided for an executing virtual machine. At a checkpoint in the HA process, the active server suspends the virtual machine; and the active server copies dirty memory pages to a buffer. During the suspension of the virtual machine on the active host server, dirty memory pages are copied to a ring buffer. A copy process copies the dirty pages to a first location in the buffer. At a predetermined benchmark or threshold, a transmission process can begin. The transmission process can read data out of the buffer at a second location to send to the standby host. Both the copy and transmission processes can operate substantially simultaneously on the ring buffer. As such, the ring buffer cannot overflow because the transmission process continues to empty the ring buffer as the copy process continues. This arrangement allows for smaller buffers and prevents buffer overflows.

Owner:AVAYA INC
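
The copy and transmission processes sharing one ring buffer can be sketched as a bounded producer/consumer queue. This is a minimal illustration, not the patented implementation: the `RingBuffer` class, the `replicate` helper and the default capacity are hypothetical names and values, the transmitter starts immediately rather than at a threshold, and the copier is assumed to block while the ring is full.

```python
import threading


class RingBuffer:
    """Fixed-size ring shared by a copier (writer) and a transmitter (reader)."""

    def __init__(self, capacity):
        self._slots = [None] * capacity
        self._capacity = capacity
        self._head = 0   # next slot the transmitter reads (the "second location")
        self._tail = 0   # next slot the copier writes (the "first location")
        self._count = 0
        self._cond = threading.Condition()
        self.peak = 0    # highest occupancy observed, for illustration only

    def put(self, page):
        with self._cond:
            # Assumption: the copier waits instead of overwriting unsent pages.
            while self._count == self._capacity:
                self._cond.wait()
            self._slots[self._tail] = page
            self._tail = (self._tail + 1) % self._capacity
            self._count += 1
            self.peak = max(self.peak, self._count)
            self._cond.notify_all()

    def get(self):
        with self._cond:
            while self._count == 0:
                self._cond.wait()
            page = self._slots[self._head]
            self._head = (self._head + 1) % self._capacity
            self._count -= 1
            self._cond.notify_all()
            return page


def replicate(dirty_pages, capacity=4):
    """Copy dirty pages into the ring while a second thread drains it."""
    ring = RingBuffer(capacity)
    received = []

    def transmit():
        # Stand-in for sending each page to the standby host.
        for _ in range(len(dirty_pages)):
            received.append(ring.get())

    sender = threading.Thread(target=transmit)
    sender.start()
    for page in dirty_pages:
        ring.put(page)
    sender.join()
    return received, ring.peak
```

Because the reader drains the ring while the writer fills it, a ring much smaller than the total number of dirty pages is enough; `ring.peak` never exceeds the chosen capacity.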

Method and apparatus for facilitating process migration

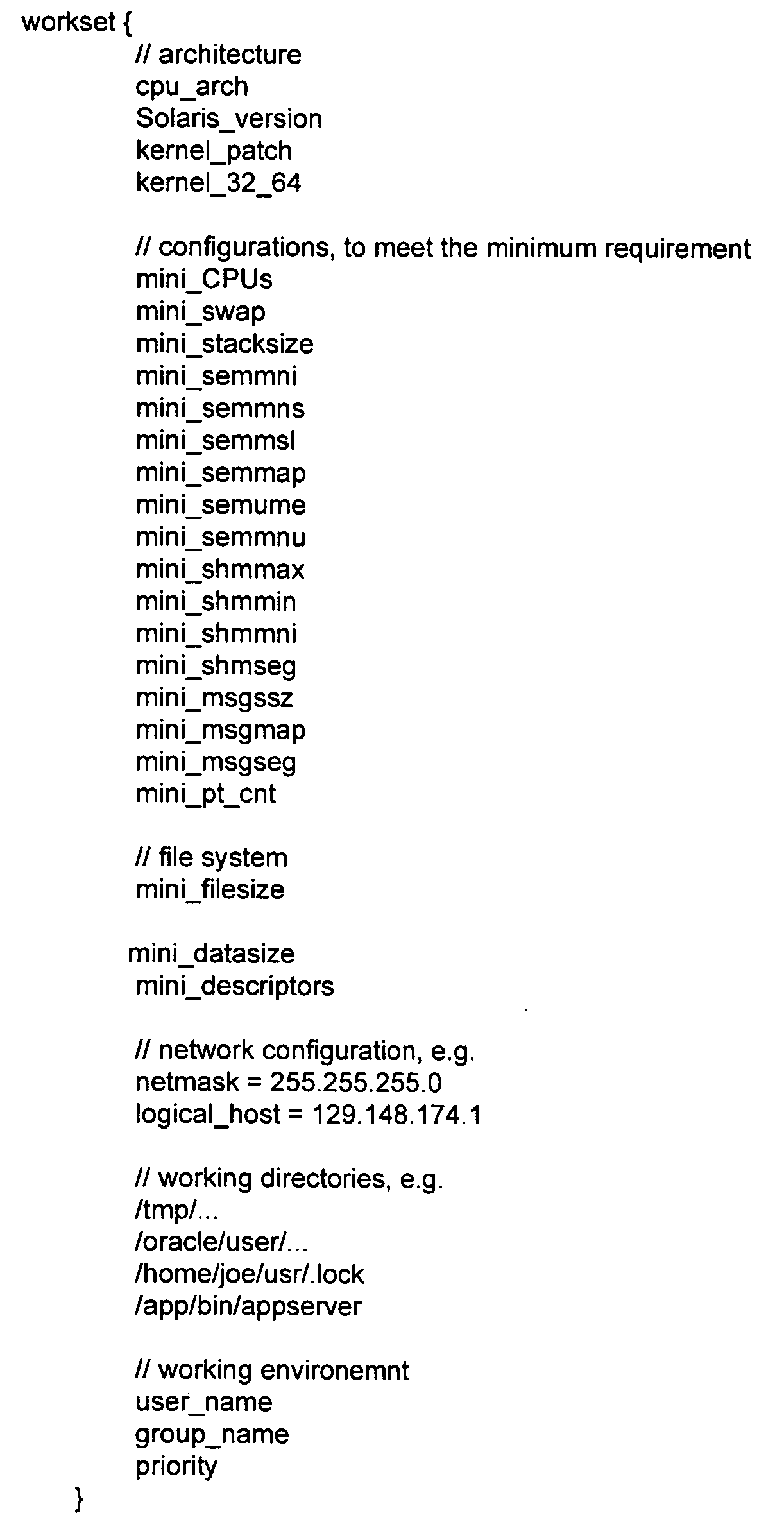

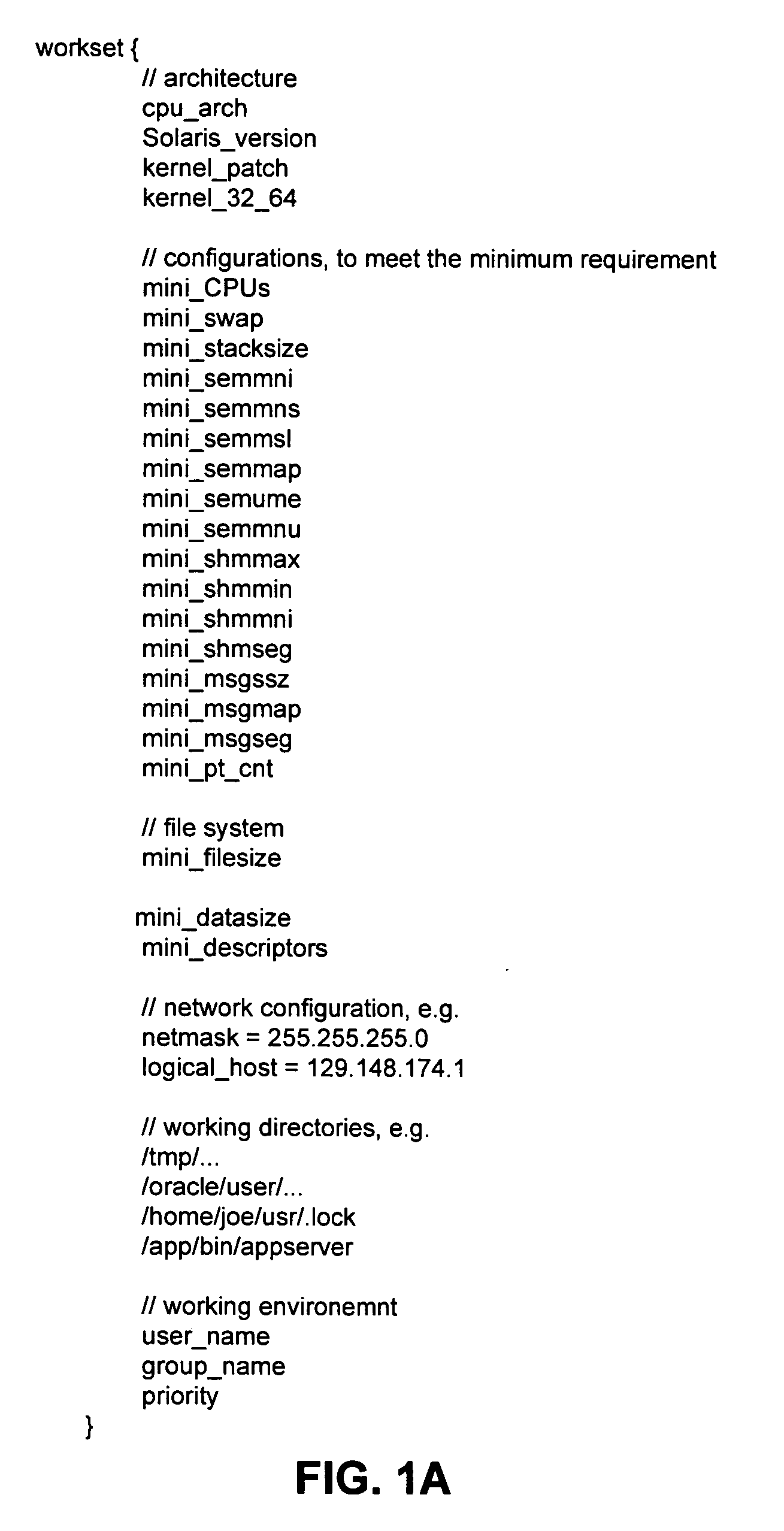

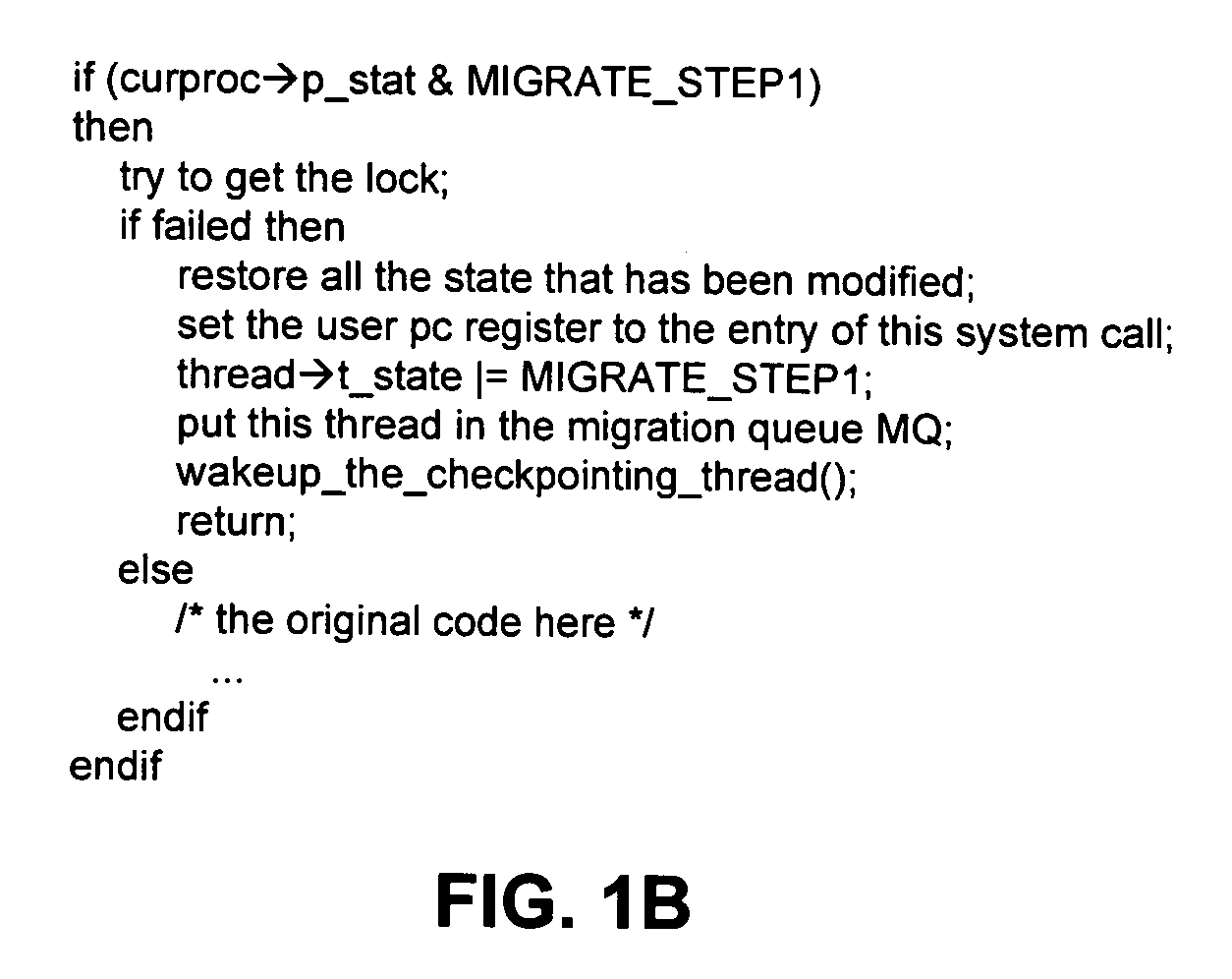

A system migrates a process from a source computer system to a target computer system. During operation, the system generates a checkpoint for the process on the source computer system, wherein the checkpoint includes a kernel state for the process. Next, the system swaps out dirty pages of a user context for the process to a storage device which is accessible by both the source computer system and the target computer system and transfers the checkpoint to the target computer system. The system then loads the kernel state contained in the checkpoint into a skeleton process on the target computer system. Next, the system swaps in portions of the user context for the process from the storage device to the target computer system and resumes execution of the process on the target computer system.

Owner:ORACLE INT CORP

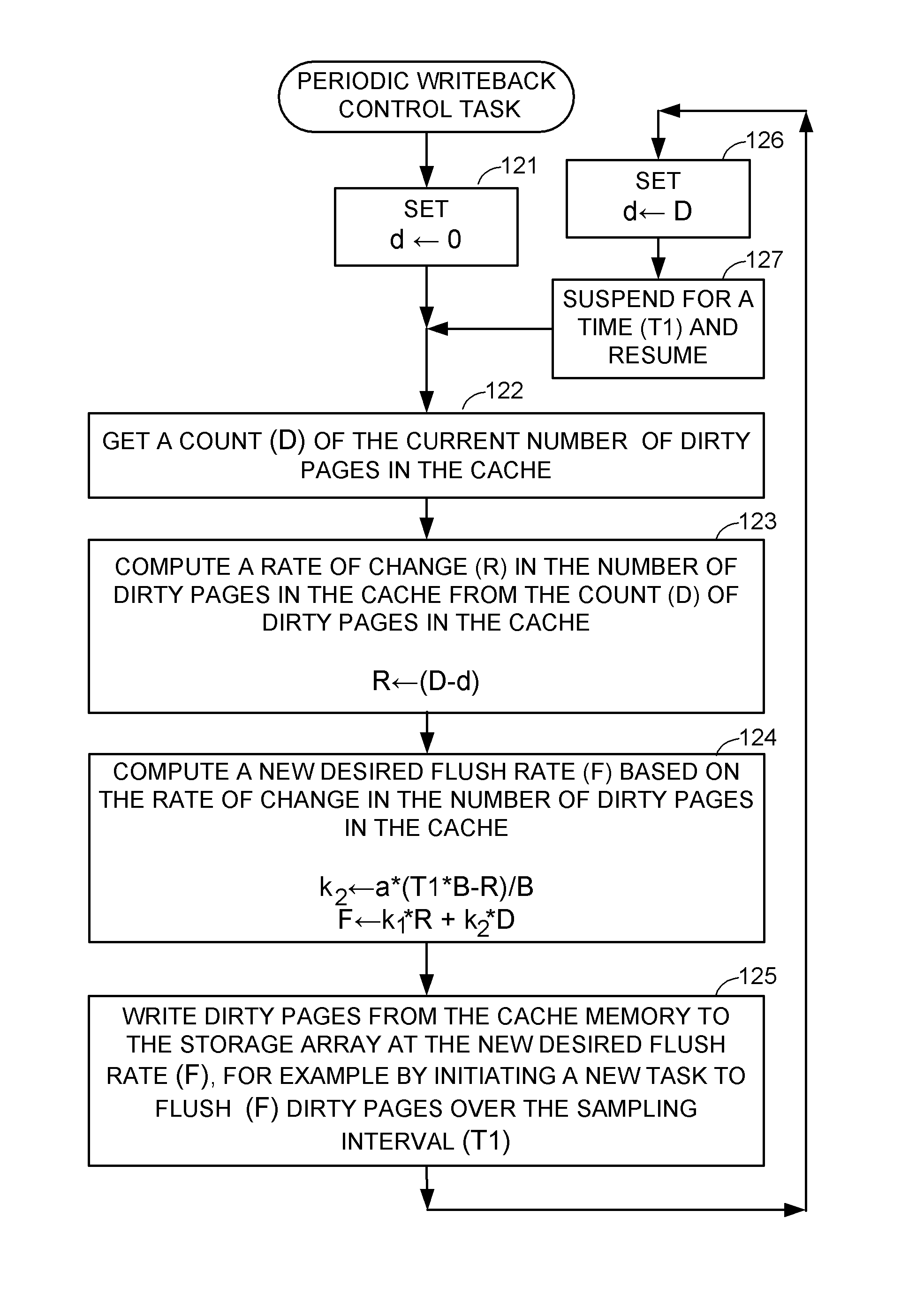

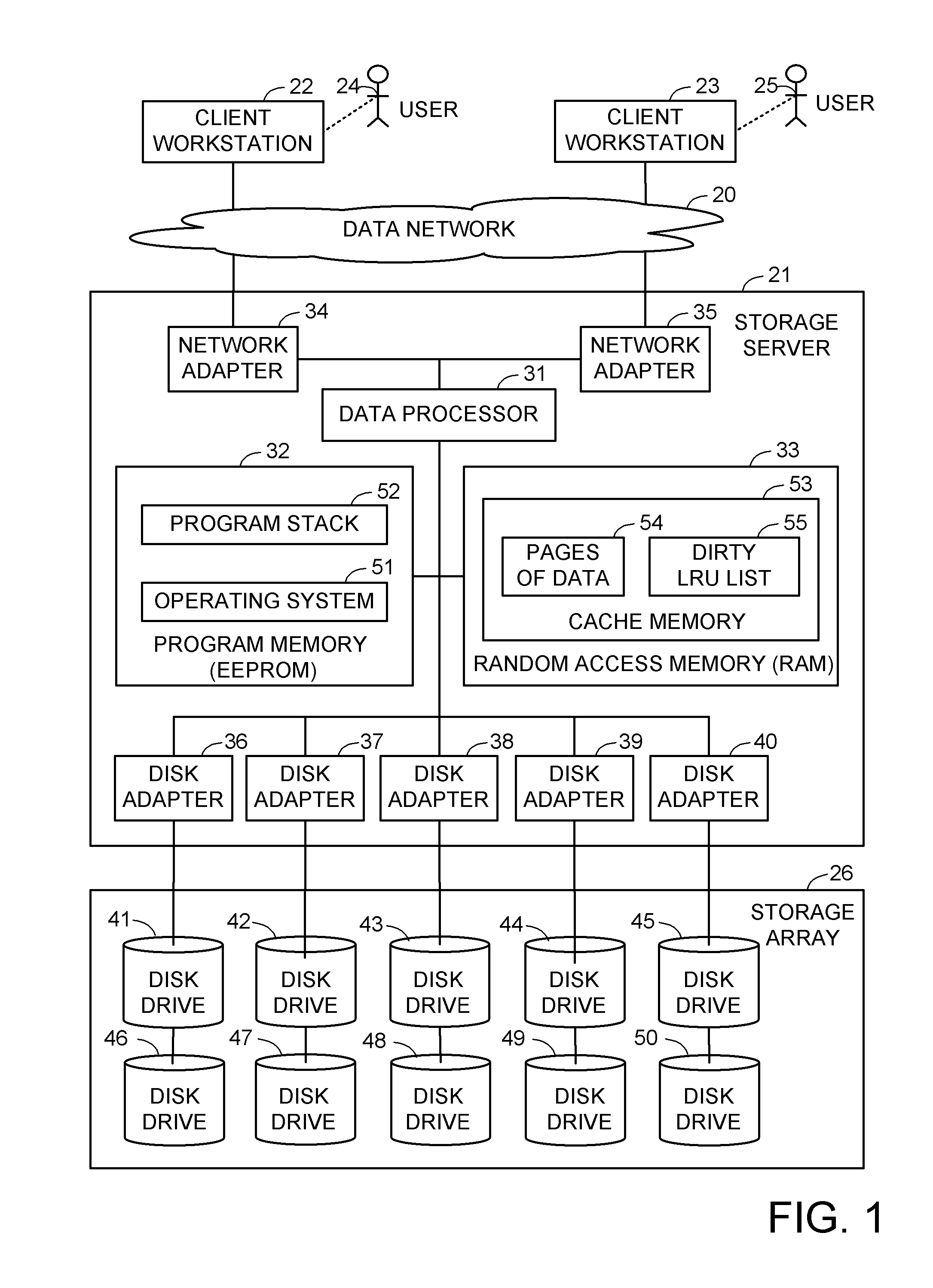

Rate proportional cache write-back in a storage server

Active · US8402226B1 · Good cache writeback performance · Minimal interference · Memory systems · Input/output processes for data processing · Page count · High rate

Based on a count of the number of dirty pages in a cache memory, the dirty pages are written from the cache memory to a storage array at a rate having a component proportional to the rate of change in the number of dirty pages in the cache memory. For example, a desired flush rate is computed by adding a first term to a second term. The first term is proportional to the rate of change in the number of dirty pages in the cache memory, and the second term is proportional to the number of dirty pages in the cache memory. The rate component has a smoothing effect on incoming I/O bursts and permits cache flushing to occur at a higher rate closer to the maximum storage array throughput without a significant detrimental impact on client application performance.

Owner:EMC IP HLDG CO LLC
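
The two-term flush rate described in the abstract can be written down directly. The sketch below is only an illustration: the function name, the gain values `k_rate` and `k_count`, and the clamping to a non-negative rate and an optional `max_rate` are assumptions that the abstract does not specify.

```python
def desired_flush_rate(dirty_now, dirty_prev, dt,
                       k_rate=1.0, k_count=0.5, max_rate=None):
    """Return a flush rate in pages per second.

    First term: proportional to the rate of change of the dirty page count.
    Second term: proportional to the dirty page count itself.
    """
    rate_of_change = (dirty_now - dirty_prev) / dt
    rate = k_rate * rate_of_change + k_count * dirty_now
    # Assumption: a negative result simply means "do not flush right now".
    rate = max(rate, 0.0)
    if max_rate is not None:
        # Assumption: cap at the storage array's maximum throughput.
        rate = min(rate, max_rate)
    return rate
```

For instance, with 1000 dirty pages now, 800 two seconds ago and the default gains, the first term contributes 100 pages/s and the second 500 pages/s.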

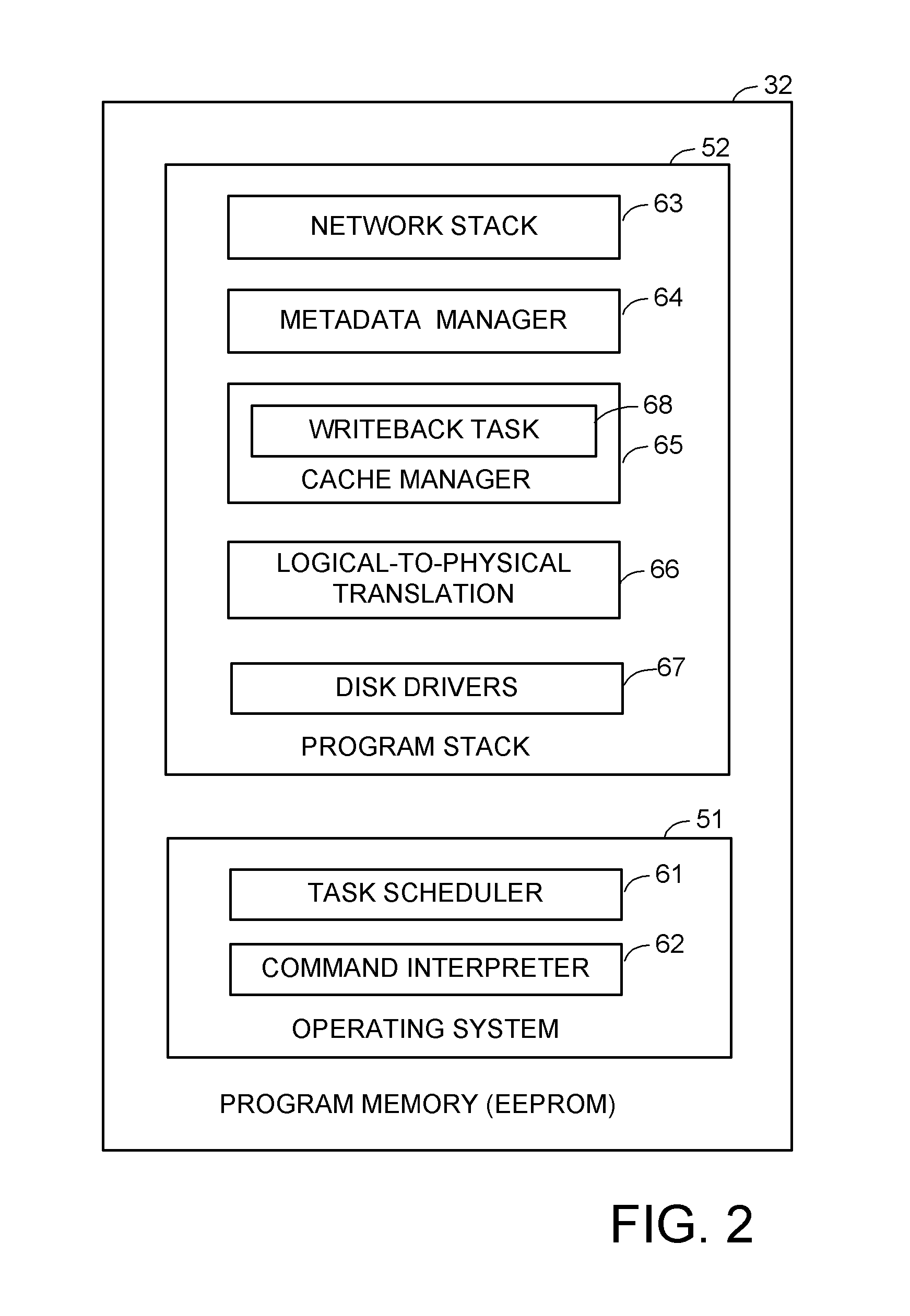

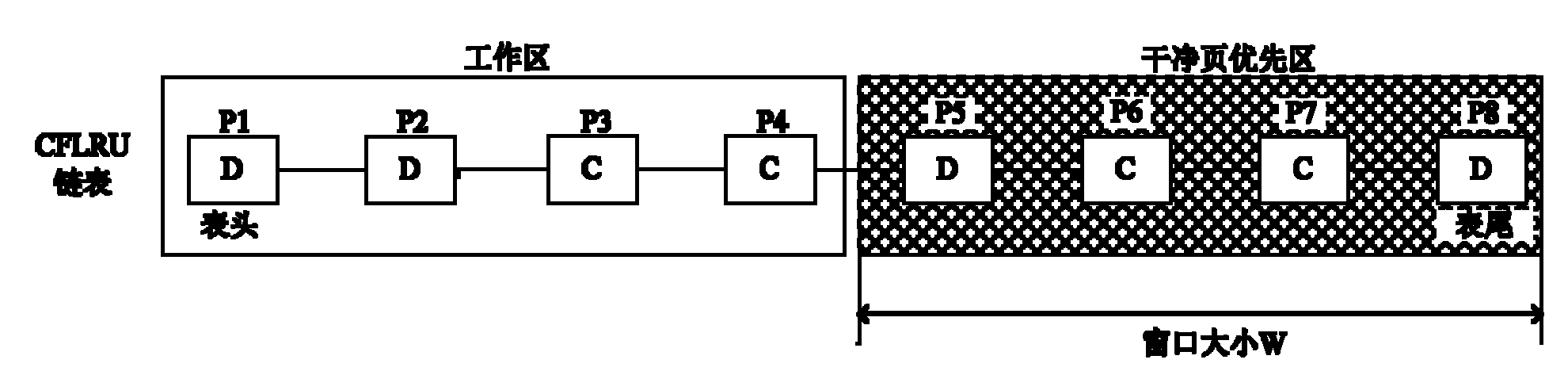

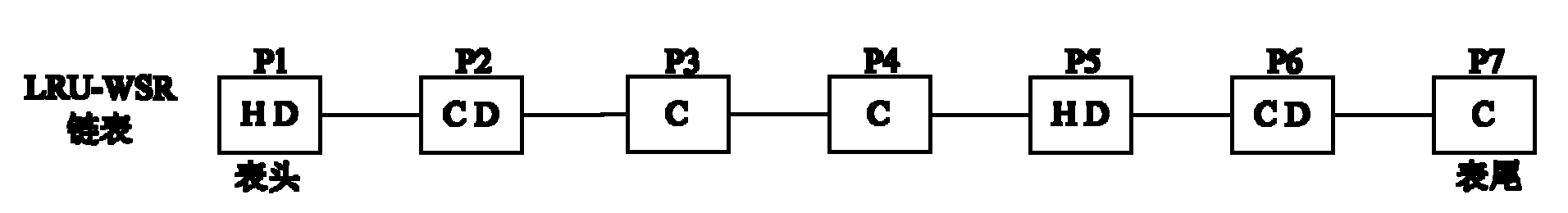

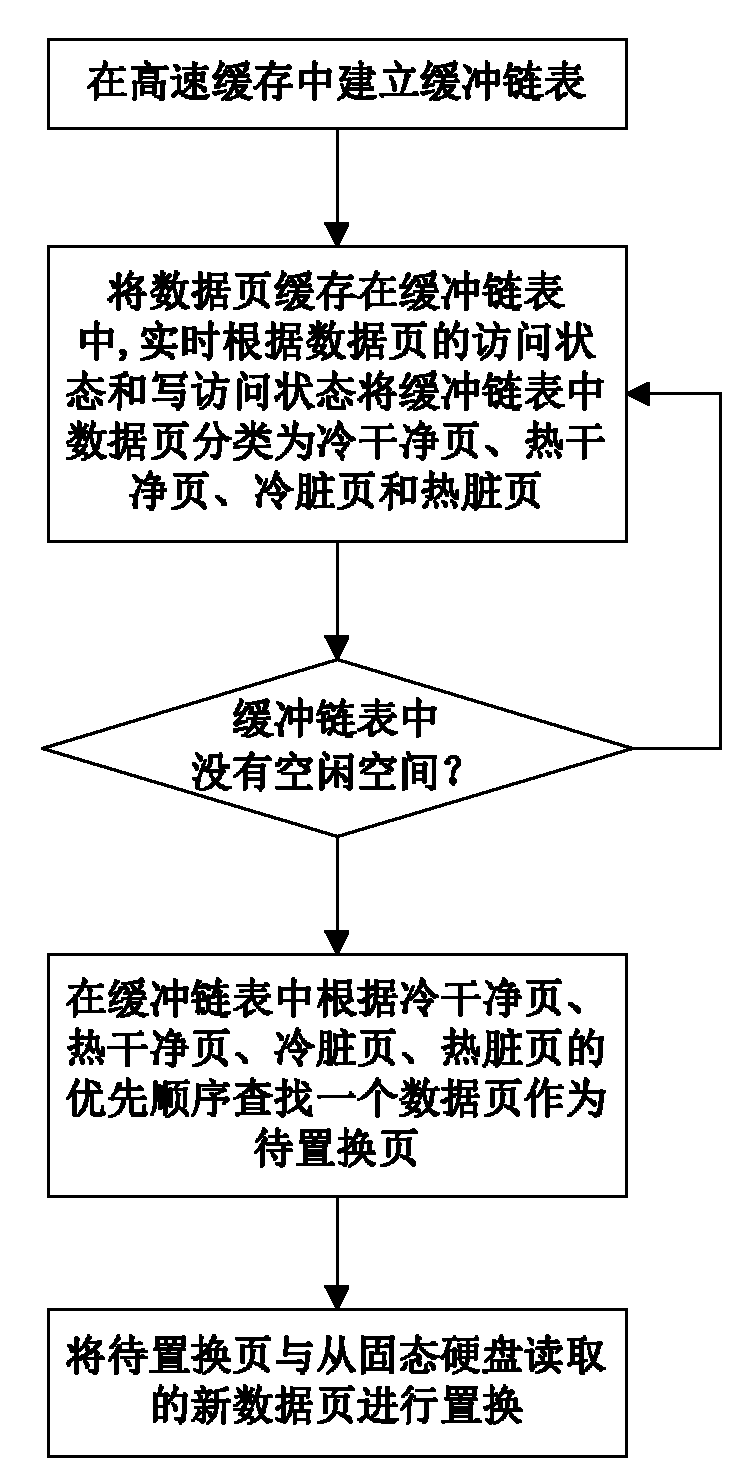

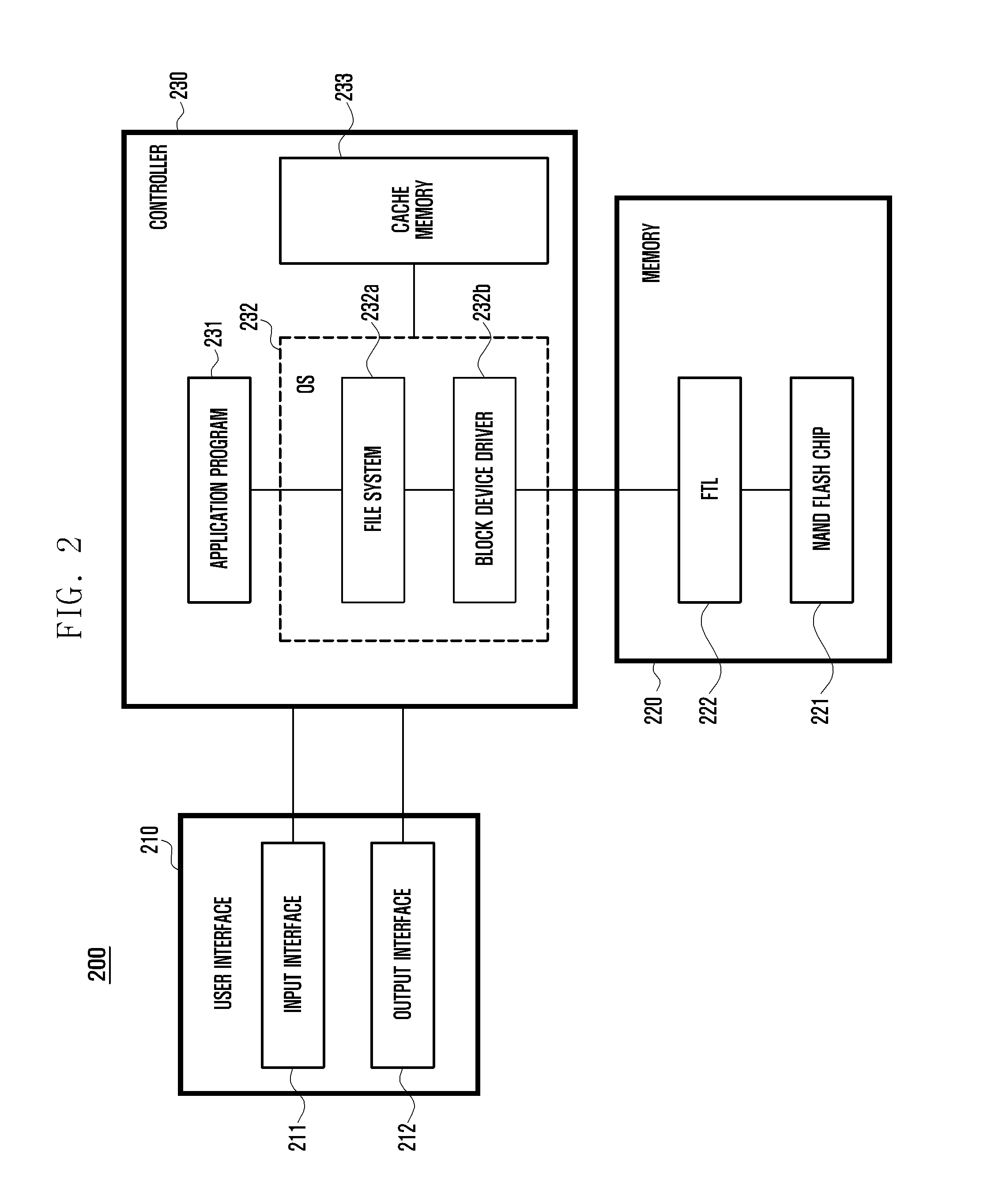

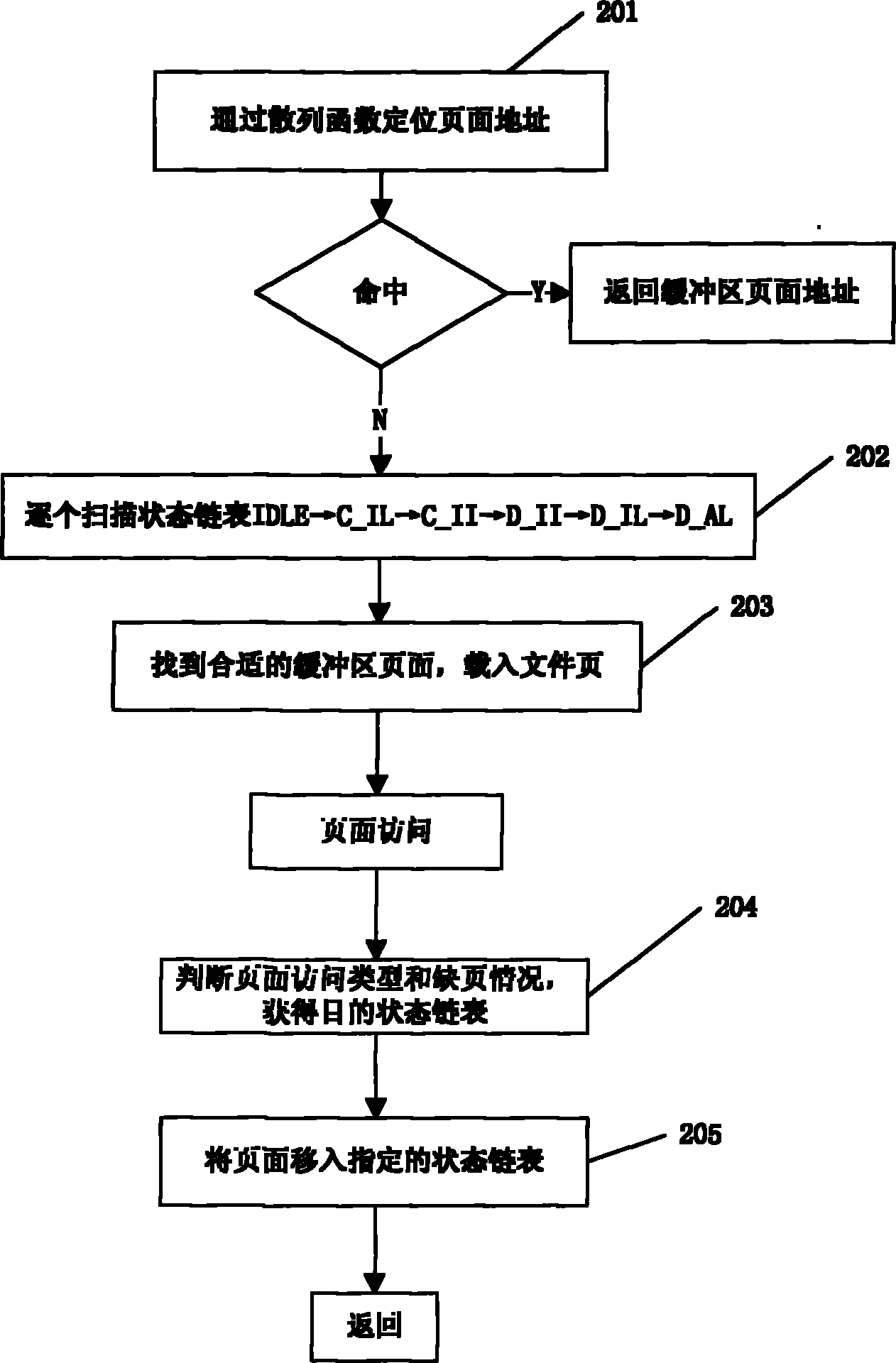

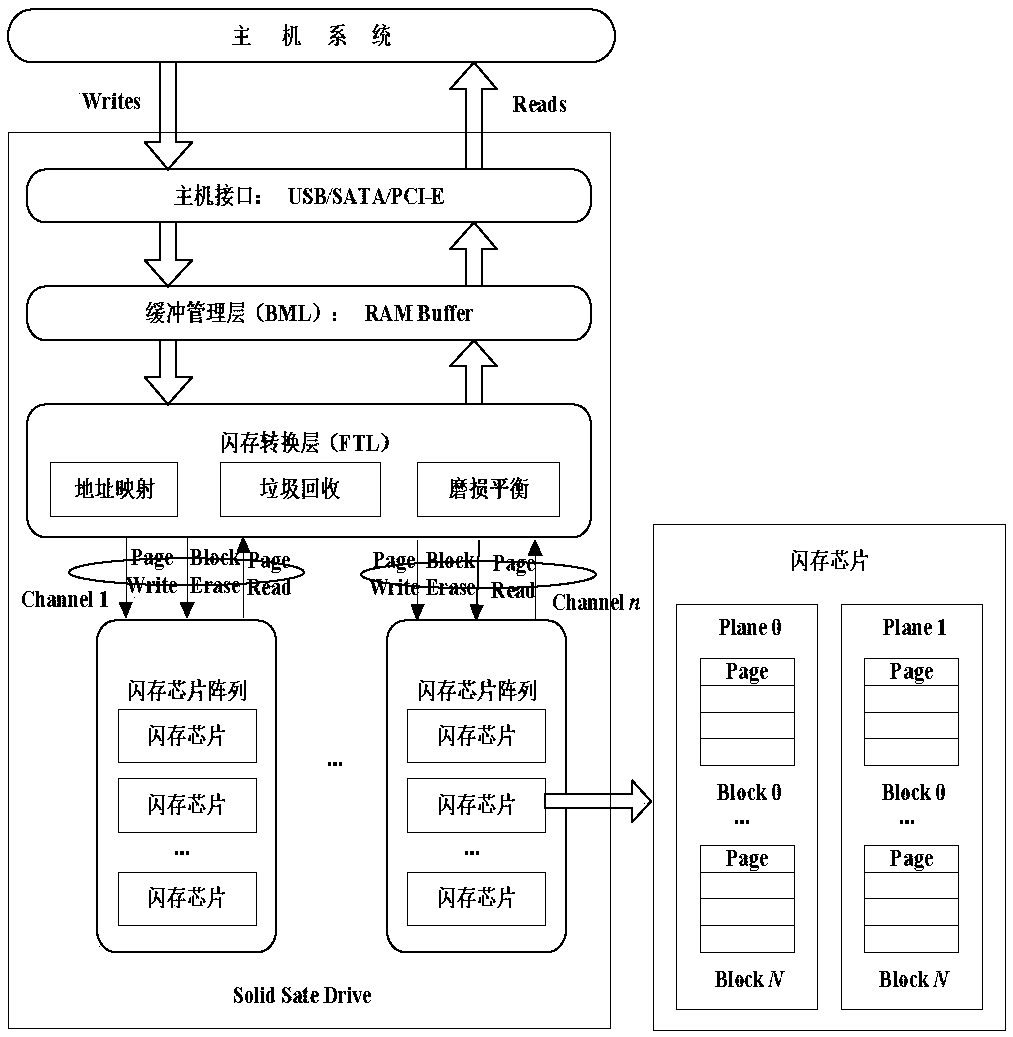

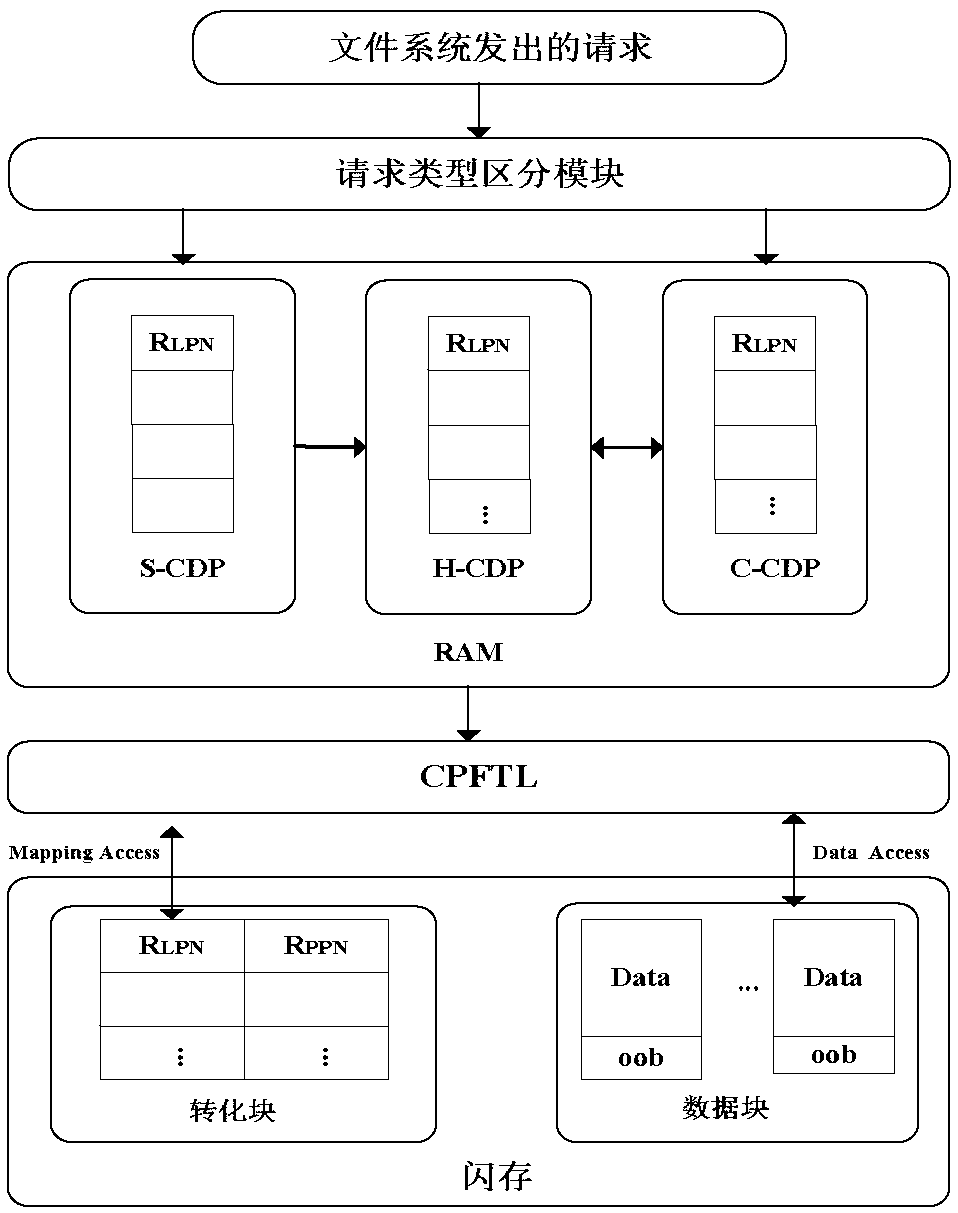

Data page caching method for file system of solid-state hard disc

Active · CN102156753A · Reduce overhead · Improve hit rate · Special data processing applications · Dirty page · External storage

The invention discloses a data page caching method for a file system of a solid-state hard disc, comprising the following steps: (1) establishing a buffer linked list for caching data pages in a high-speed cache; (2) caching the data pages read from the solid-state hard disc in the buffer linked list for access, and classifying the data pages in the buffer linked list in real time into cold clean pages, hot clean pages, cold dirty pages and hot dirty pages according to their access states and write access states; (3) when no free space exists in the buffer linked list, searching the buffer linked list for a page to be replaced in the priority order of cold clean pages, hot clean pages, cold dirty pages and hot dirty pages, and replacing that page with the new data page read from the solid-state hard disc. The invention makes full use of the characteristics of the solid-state hard disc, relieves the performance bottleneck of external storage, and improves the storage processing performance of the system; moreover, the method offers good I/O (input/output) performance, low replacement cost for cached pages, low overhead and a high hit rate.

Owner:NAT UNIV OF DEFENSE TECH
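
The replacement priority in step (3) can be sketched as a ranking over the four page classes. The `Page` type, the boolean `hot` and `dirty` fields and the function names are hypothetical; how a page becomes hot is not covered here, and ties are broken by list order as a simplifying assumption.

```python
from dataclasses import dataclass


@dataclass
class Page:
    page_id: int
    hot: bool = False     # recently or frequently accessed
    dirty: bool = False   # modified since it was read from the SSD


def eviction_rank(page):
    """Lower rank is evicted first.

    0 = cold clean, 1 = hot clean, 2 = cold dirty, 3 = hot dirty.
    """
    return (2 if page.dirty else 0) + (1 if page.hot else 0)


def choose_victim(buffer_list):
    """Pick the page to replace when the buffer list has no free space."""
    # min() returns the first page with the lowest rank, so earlier
    # entries in the list win ties.
    return min(buffer_list, key=eviction_rank)
```

Evicting clean pages first avoids a write to the SSD, which is the costlier operation on flash; dirty pages are only written back when no clean candidate remains.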

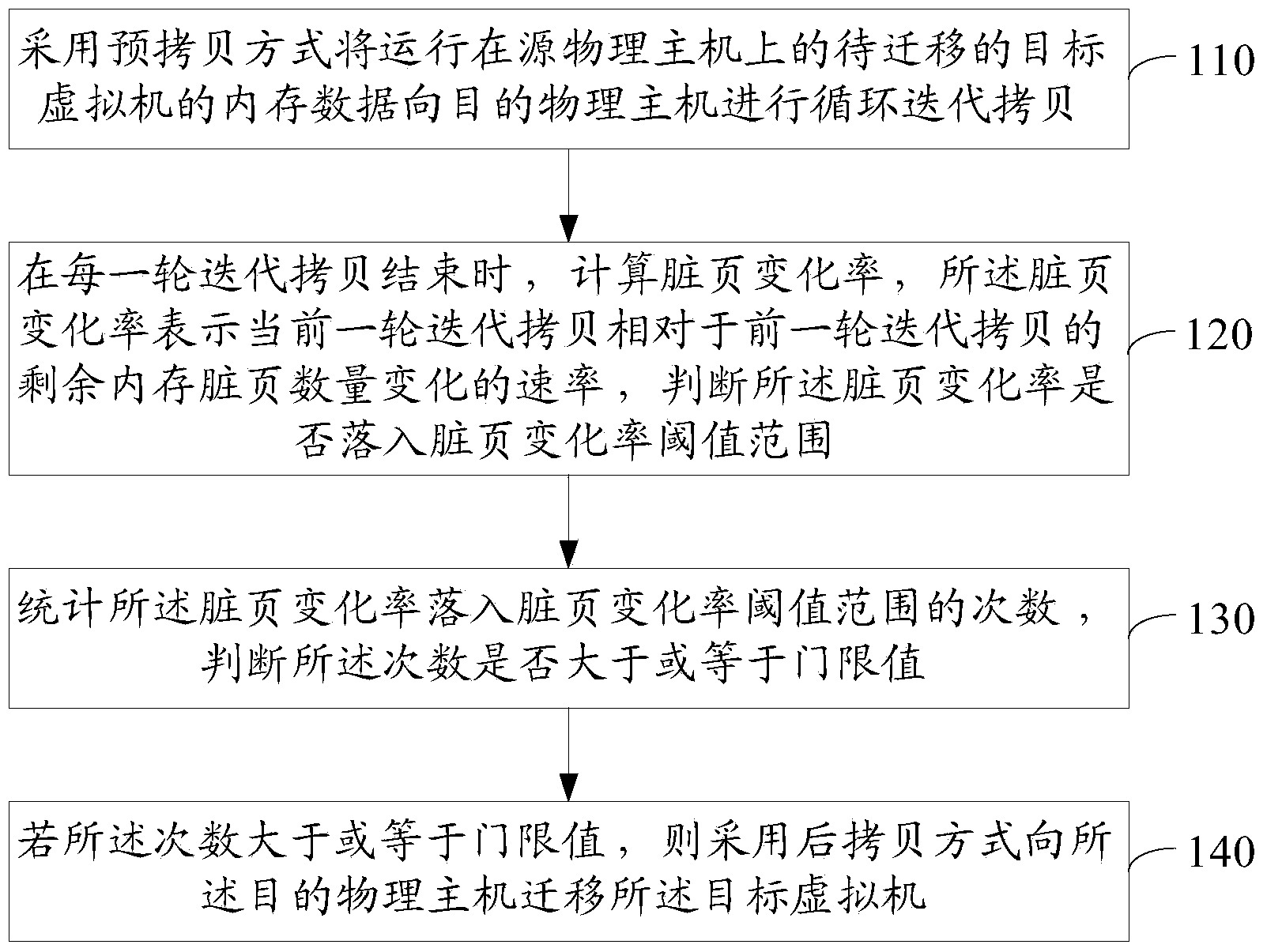

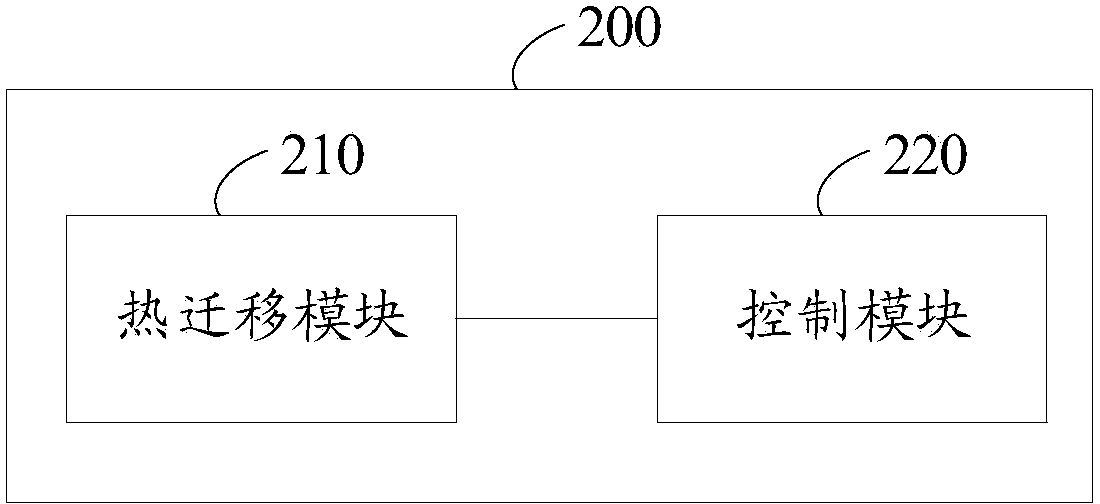

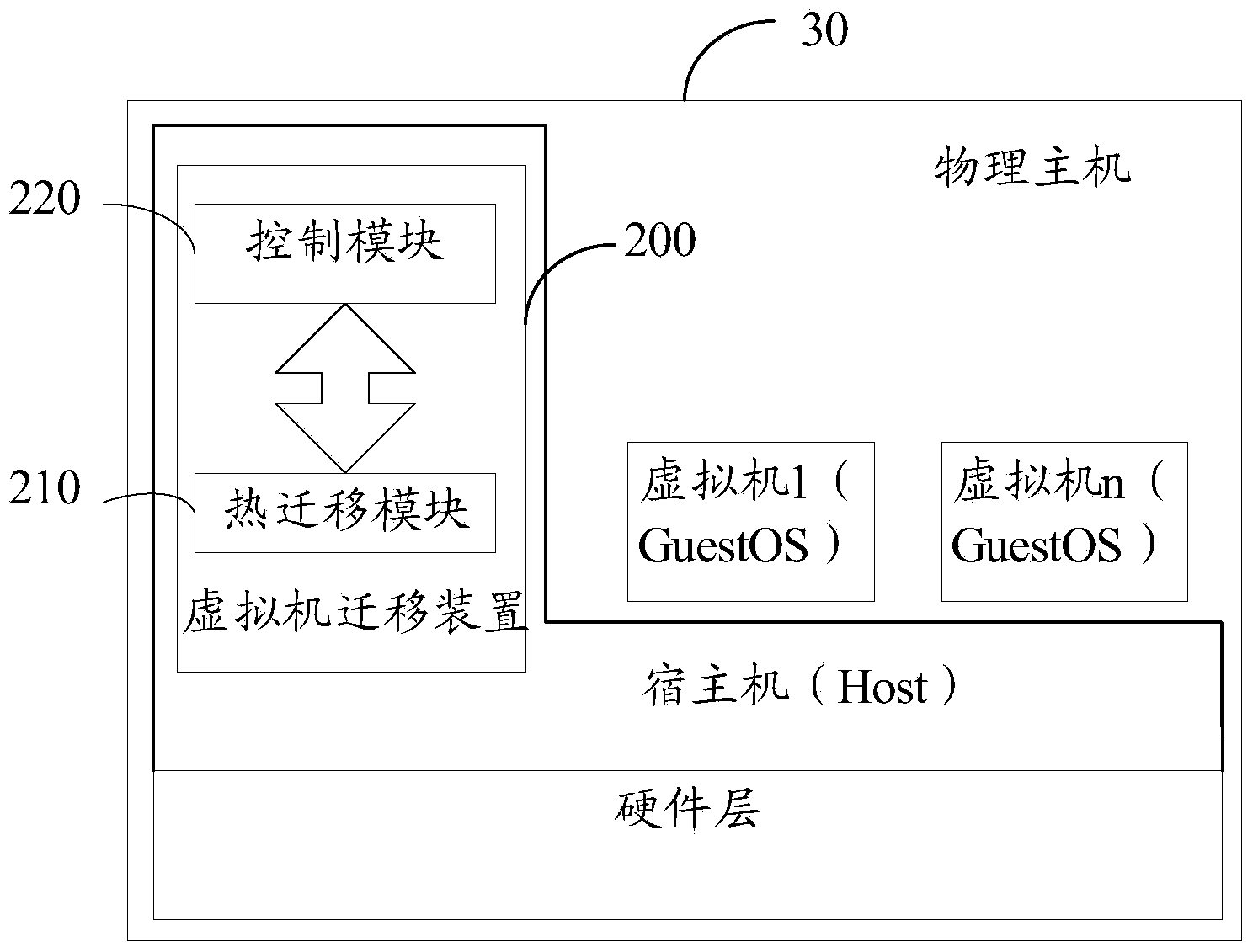

Migrating method and device for virtual machine, as well as physical host

Active · CN103955399A · Improve migration efficiency · Easy to use · Program initiation/switching · Software simulation/interpretation/emulation · Internal memory · Dirty page

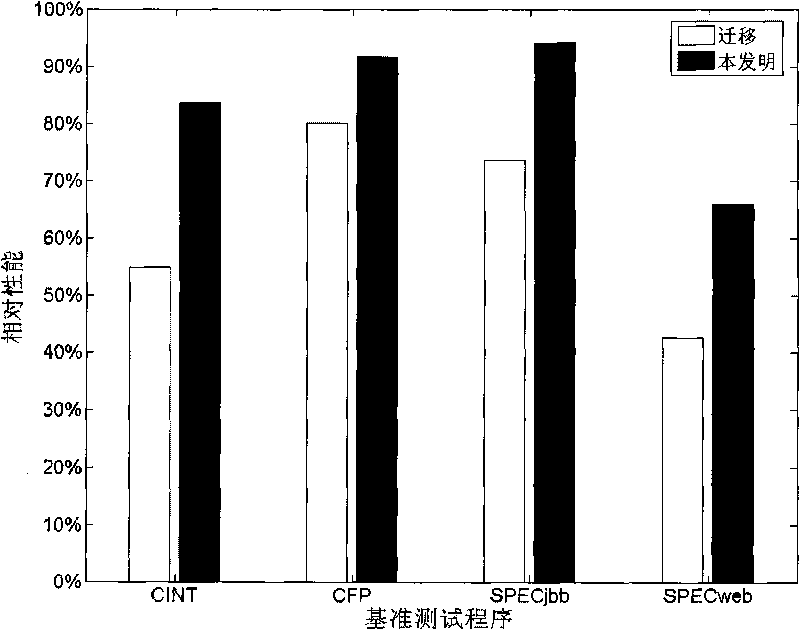

The invention discloses a migrating method and device for a virtual machine, which partly solve the problem that the timing of the switch between pre-copy and post-copy in a hybrid-copy live migration method is hard to determine. In some applicable embodiments of the invention, the virtual machine migration method comprises the following steps: first, copying the memory data of a target virtual machine to be migrated from a source physical host to a target physical host in iterative rounds using a pre-copy method; second, after each round of iterative copying ends, calculating the dirty page change rate, which represents the rate of change in the number of remaining memory dirty pages in the current round of iterative copying relative to the previous round; third, judging whether the dirty page change rate is within a dirty page change rate threshold range; fourth, counting the number of times the dirty page change rate falls within the threshold range and judging whether that count is greater than or equal to a threshold value; finally, if the count is greater than or equal to the threshold value, adopting the post-copy method to migrate the target virtual machine to the target physical host.

Owner:常州横塘科技产业有限公司
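
The switch decision between pre-copy and post-copy can be sketched as a counter over per-round change rates. This is an illustrative reading of the abstract, not the patented method: the function name is hypothetical, and the change rate is assumed to be `(current - previous) / previous`.

```python
def round_to_switch_to_postcopy(remaining_dirty, low, high, min_hits):
    """Return the index of the pre-copy round after which to switch, or None.

    remaining_dirty[i] is the number of dirty pages left after round i.
    """
    hits = 0
    for i in range(1, len(remaining_dirty)):
        prev, cur = remaining_dirty[i - 1], remaining_dirty[i]
        if prev == 0:
            continue  # nothing was left to copy; no meaningful rate
        change_rate = (cur - prev) / prev
        if low <= change_rate <= high:
            hits += 1  # the dirty page count has barely moved this round
        if hits >= min_hits:
            return i
    return None
```

A change rate that stays inside a narrow band for several rounds suggests that further pre-copy rounds are no longer shrinking the dirty set, which is the point at which switching to post-copy becomes attractive.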

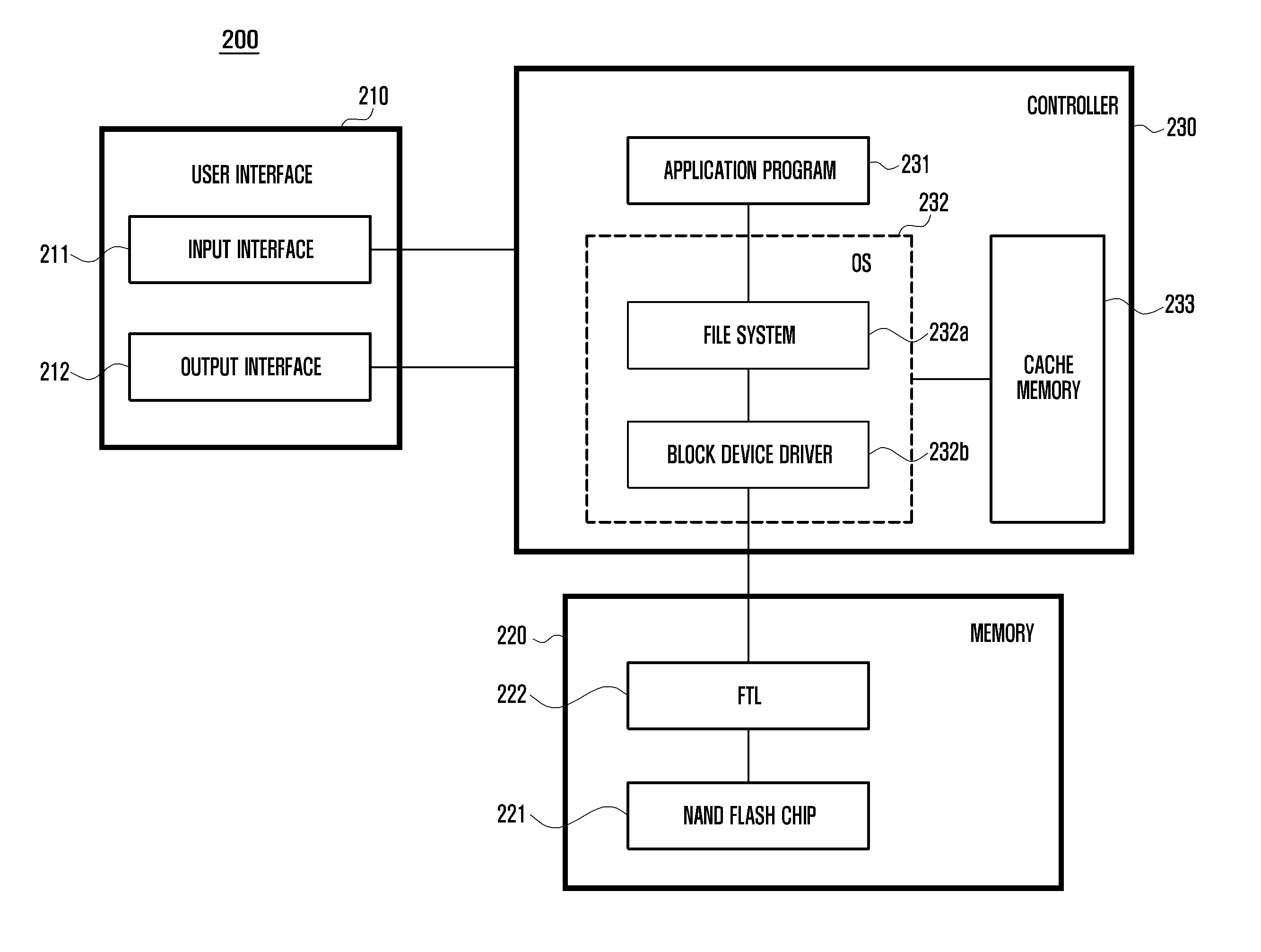

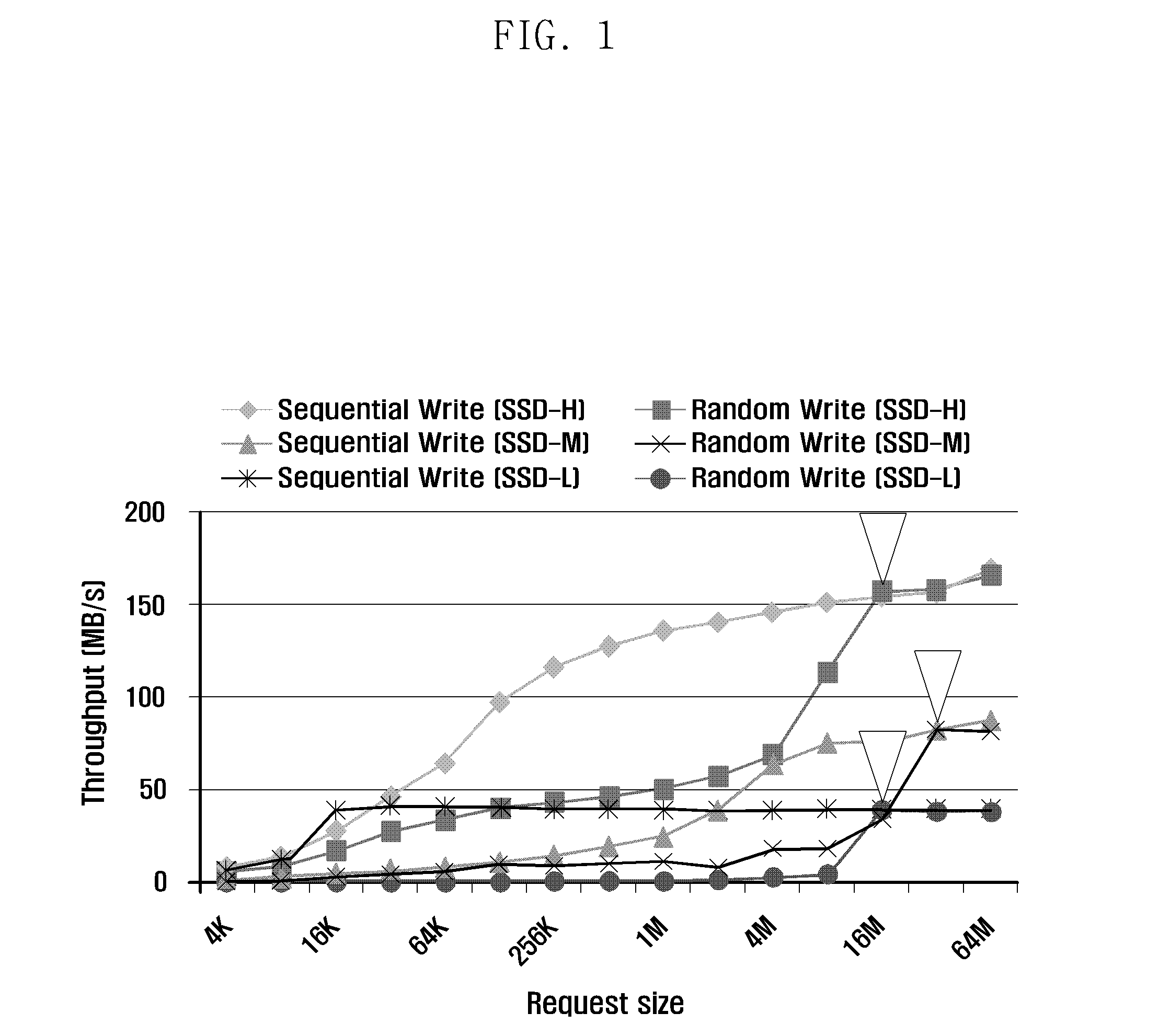

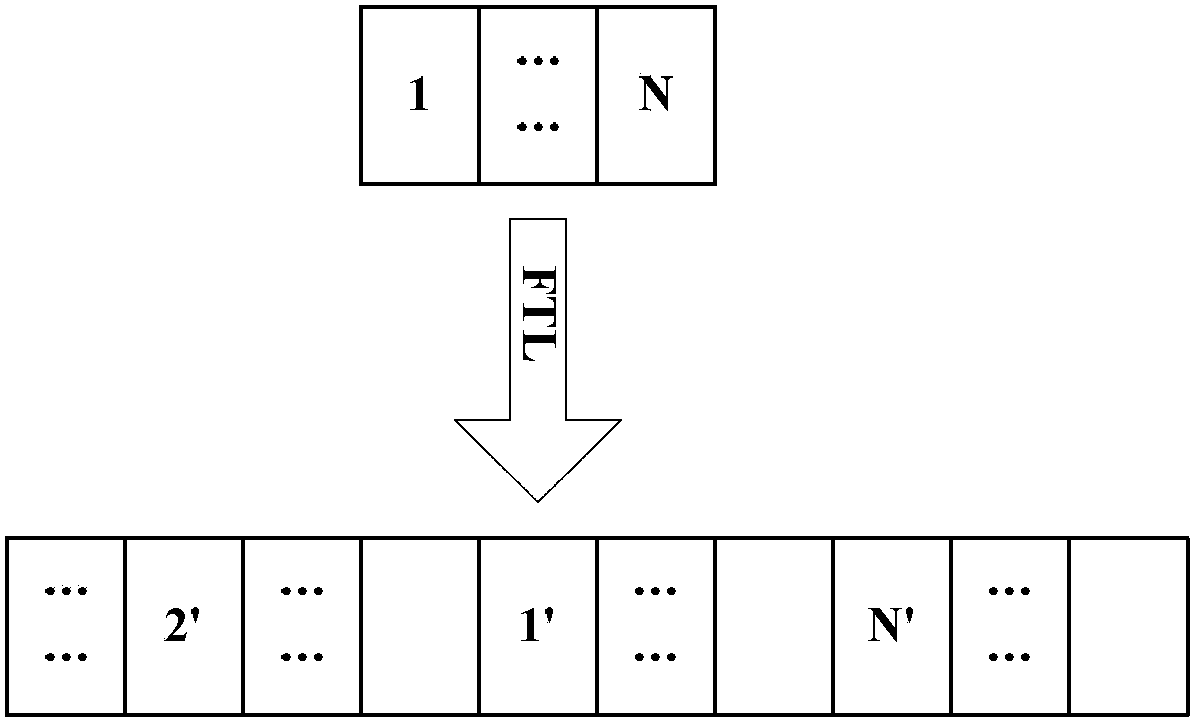

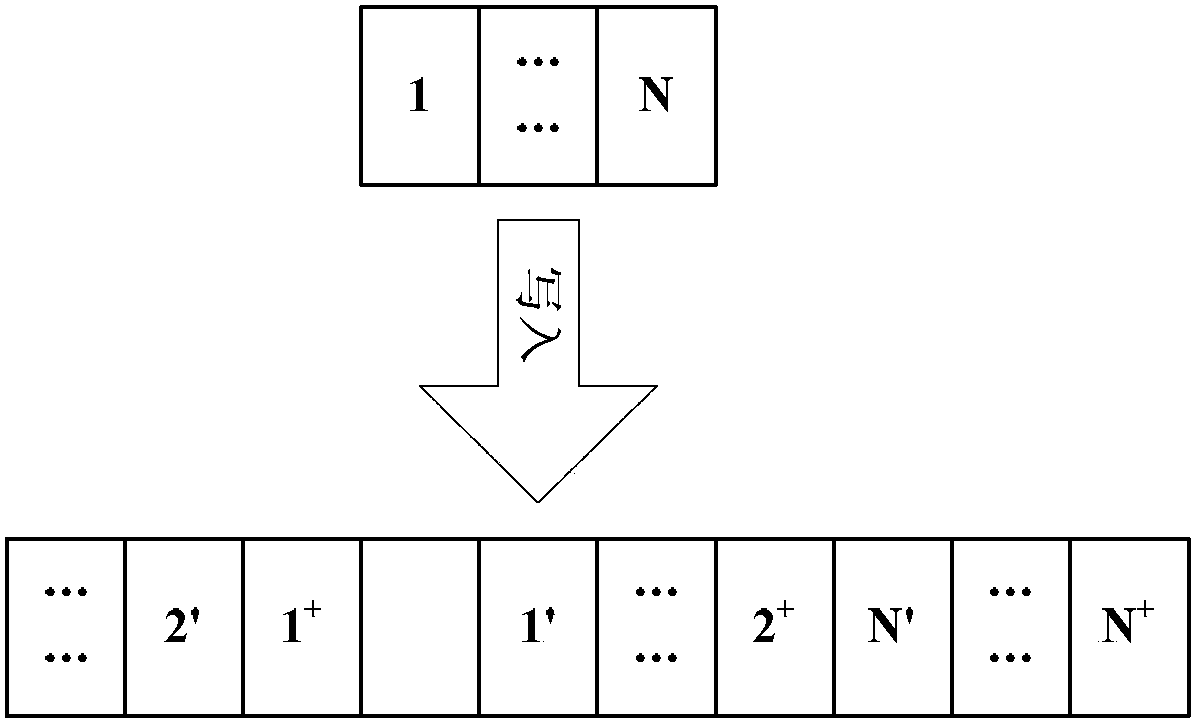

Method and apparatus for controlling writing data in storage unit based on NAND flash memory

Inactive · US20140013032A1 · Improve performance · Input/output to record carriers · Memory addressing/allocation/relocation · Data control · Dirty page

A method and apparatus for controlling writing of data in a storage unit based on a NAND flash memory are provided. The method includes determining reference values for classifying dirty pages to be written in the storage unit into a plurality of groups; calculating, with respect to each of the dirty pages, a hotness indicating a possibility of a change of data; classifying the dirty pages into the groups corresponding to reference values most similar to the calculated hotness; determining whether sizes of the groups are greater than a size of a segment, where the segment is a unit for performing a write request in the storage unit; and requesting a write operation for each segment with respect to groups having a size at least equal to the size of the segment to the storage unit.

Owner:SAMSUNG ELECTRONICS CO LTD +1
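
The grouping and segment-sized write requests can be sketched as follows. The hotness values are taken as given inputs, since the abstract does not say how they are computed; the function names and the choice to leave partial groups unwritten until they fill a segment are assumptions.

```python
def group_by_hotness(hotness_by_page, reference_values):
    """Assign each dirty page to the group whose reference value is nearest."""
    groups = {ref: [] for ref in reference_values}
    for page_id, hotness in hotness_by_page.items():
        nearest = min(reference_values, key=lambda ref: abs(ref - hotness))
        groups[nearest].append(page_id)
    return groups


def plan_segment_writes(groups, segment_size):
    """Split each group into full segments; keep the remainder for later."""
    requests, leftover = [], {}
    for ref, pages in groups.items():
        full = len(pages) - len(pages) % segment_size
        for i in range(0, full, segment_size):
            requests.append(pages[i:i + segment_size])
        # Assumption: pages that do not fill a segment stay in the group.
        leftover[ref] = pages[full:]
    return requests, leftover
```

Writing pages of similar hotness into the same segment means they tend to be invalidated together, which should reduce the copying that garbage collection has to do on NAND flash.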

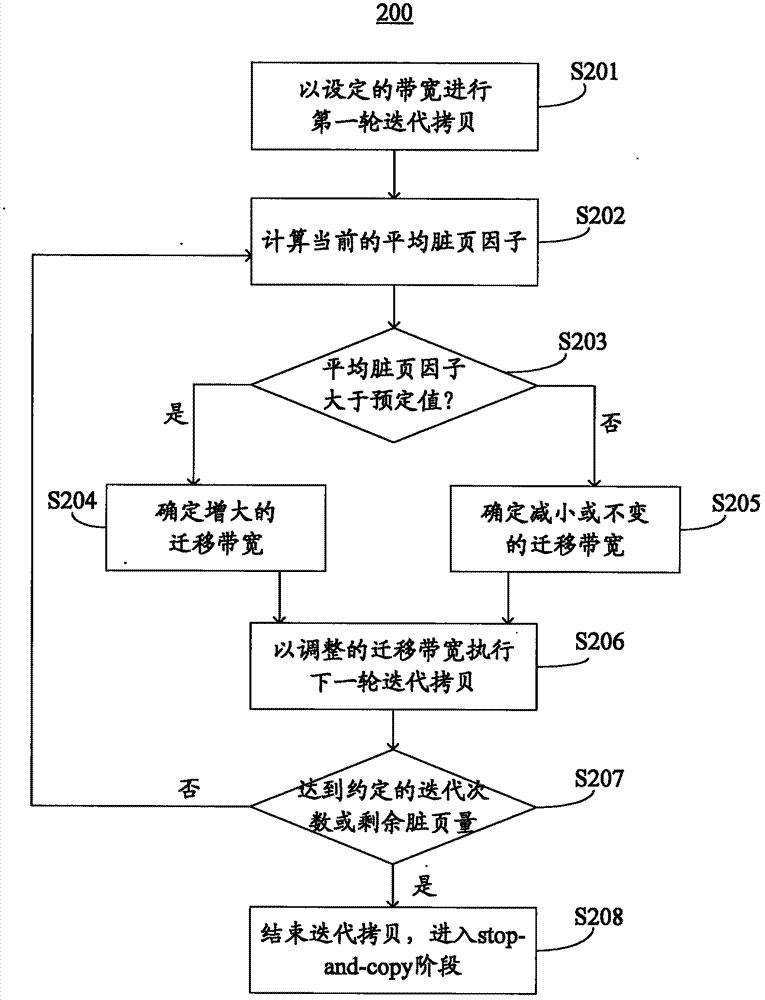

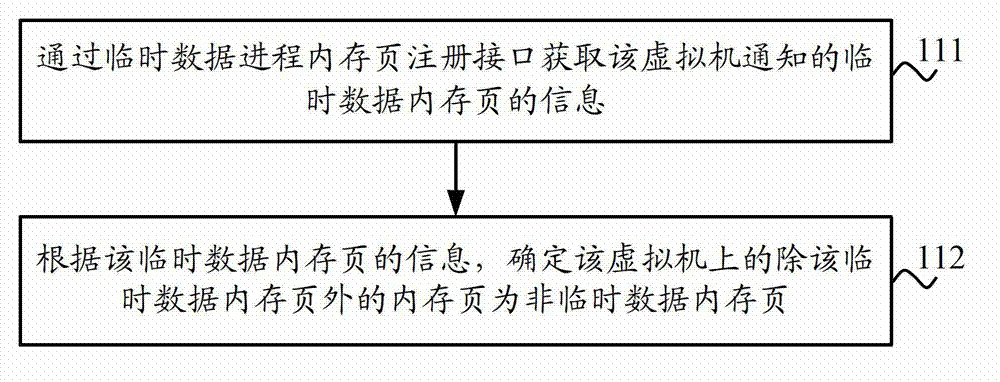

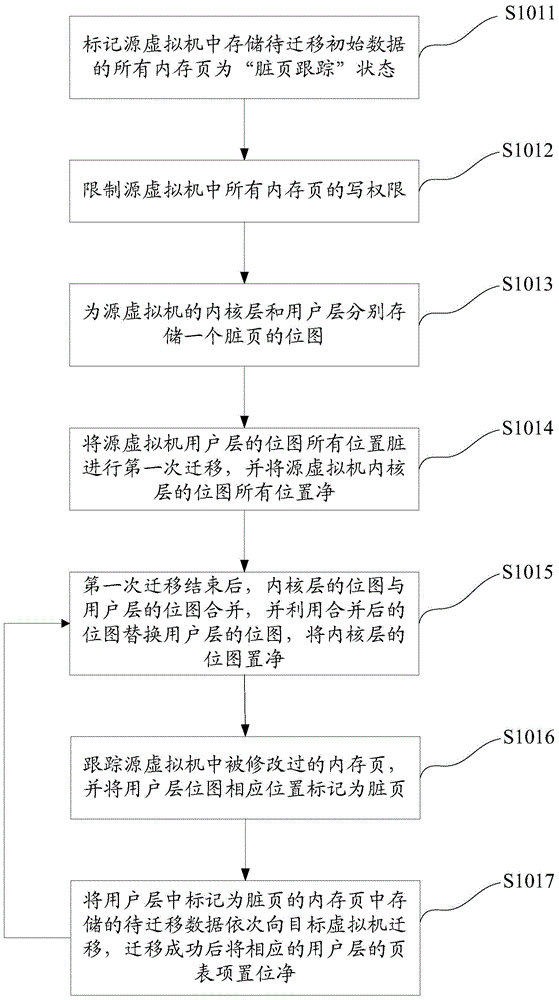

Memory pre-copying method in virtual machine migration, device executing memory pre-copying method and system

Active · CN103365704A · Reduce downtime · Small total migration time · Multiprogramming arrangements · Software simulation/interpretation/emulation · Dirty page · Copying

Disclosed are a memory pre-copying method in virtual machine migration, a virtual machine migration monitor executing the memory pre-copying method and a system comprising the virtual machine migration monitor. The memory pre-copying method includes: calculating a current average dirty page factor after every turn of iterative copying is finished, wherein the current average dirty page factor is an average value of dirty page factors of all turns of iterative copying already finished, and a dirty page factor of every turn of iterative copying is a ratio between a dirty page generating speed of the current turn and a migration bandwidth of the same turn; comparing the calculated average dirty page factor with a preset value; and adaptively adjusting the migration bandwidth for executing the next turn of iterative copying according to the comparison result.

Owner:CHINA MOBILE COMM GRP CO LTD
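
The average dirty page factor and the bandwidth adjustment can be sketched as below. The abstract only says the bandwidth is adjusted according to the comparison result, so the direction of the adjustment, the multiplicative `step` and the `max_bandwidth` cap are all assumptions, as are the function names.

```python
def dirty_page_factor(dirty_rate, bandwidth):
    """Ratio of dirty page generation speed to migration bandwidth for one turn."""
    return dirty_rate / bandwidth


def average_dirty_page_factor(rounds):
    """Average the factor over all finished turns; rounds is [(rate, bandwidth), ...]."""
    factors = [dirty_page_factor(rate, bw) for rate, bw in rounds]
    return sum(factors) / len(factors)


def next_bandwidth(rounds, preset, step=1.25, max_bandwidth=float("inf")):
    """Pick the bandwidth for the next turn of iterative copying."""
    current = rounds[-1][1]
    if average_dirty_page_factor(rounds) > preset:
        # Assumption: pages are being dirtied too fast relative to the
        # transfer rate, so raise the bandwidth, up to a cap.
        return min(current * step, max_bandwidth)
    return current
```

A factor near or above 1 means pages are dirtied about as fast as they are sent, so pre-copy would not converge without more bandwidth.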

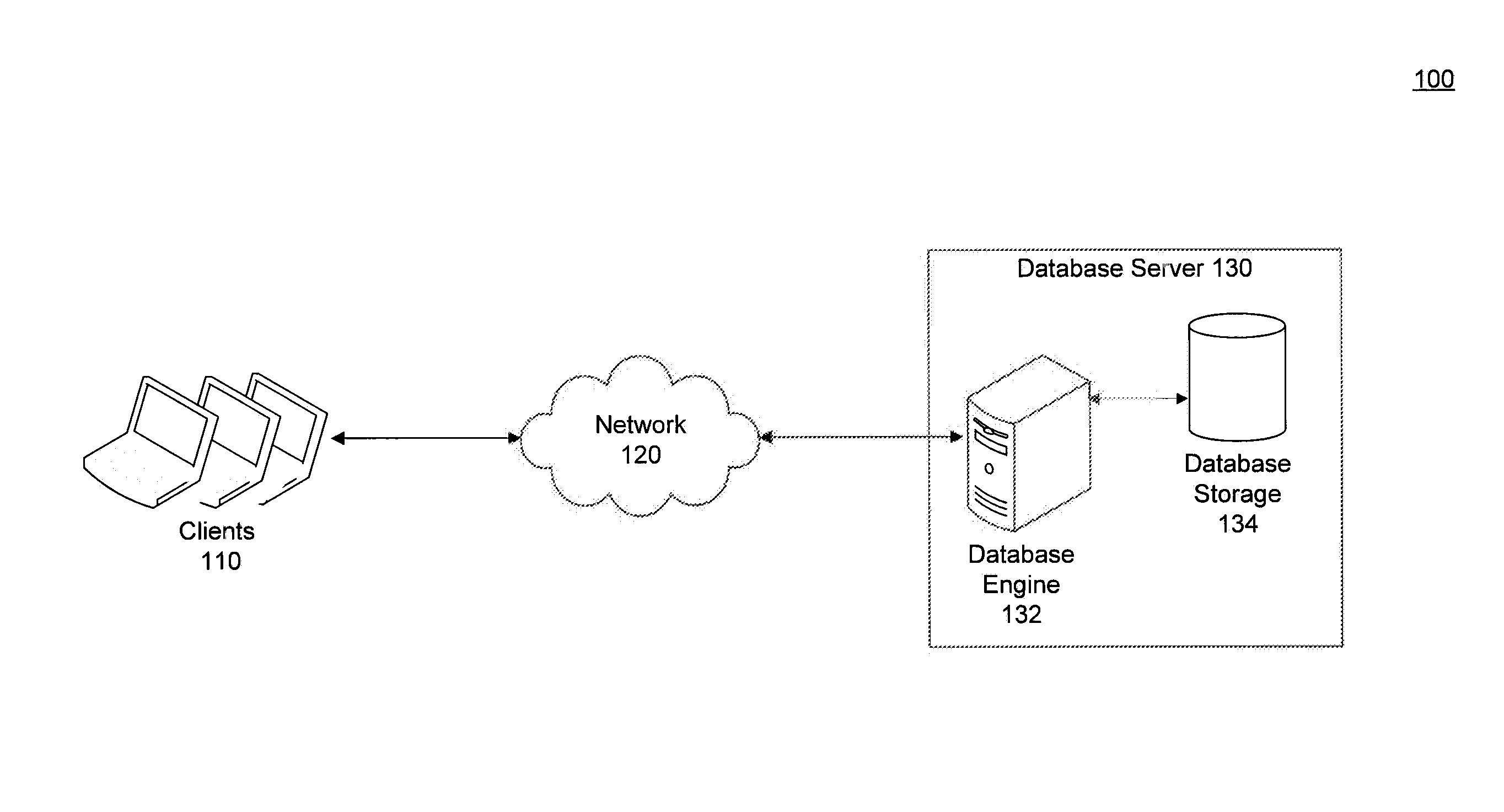

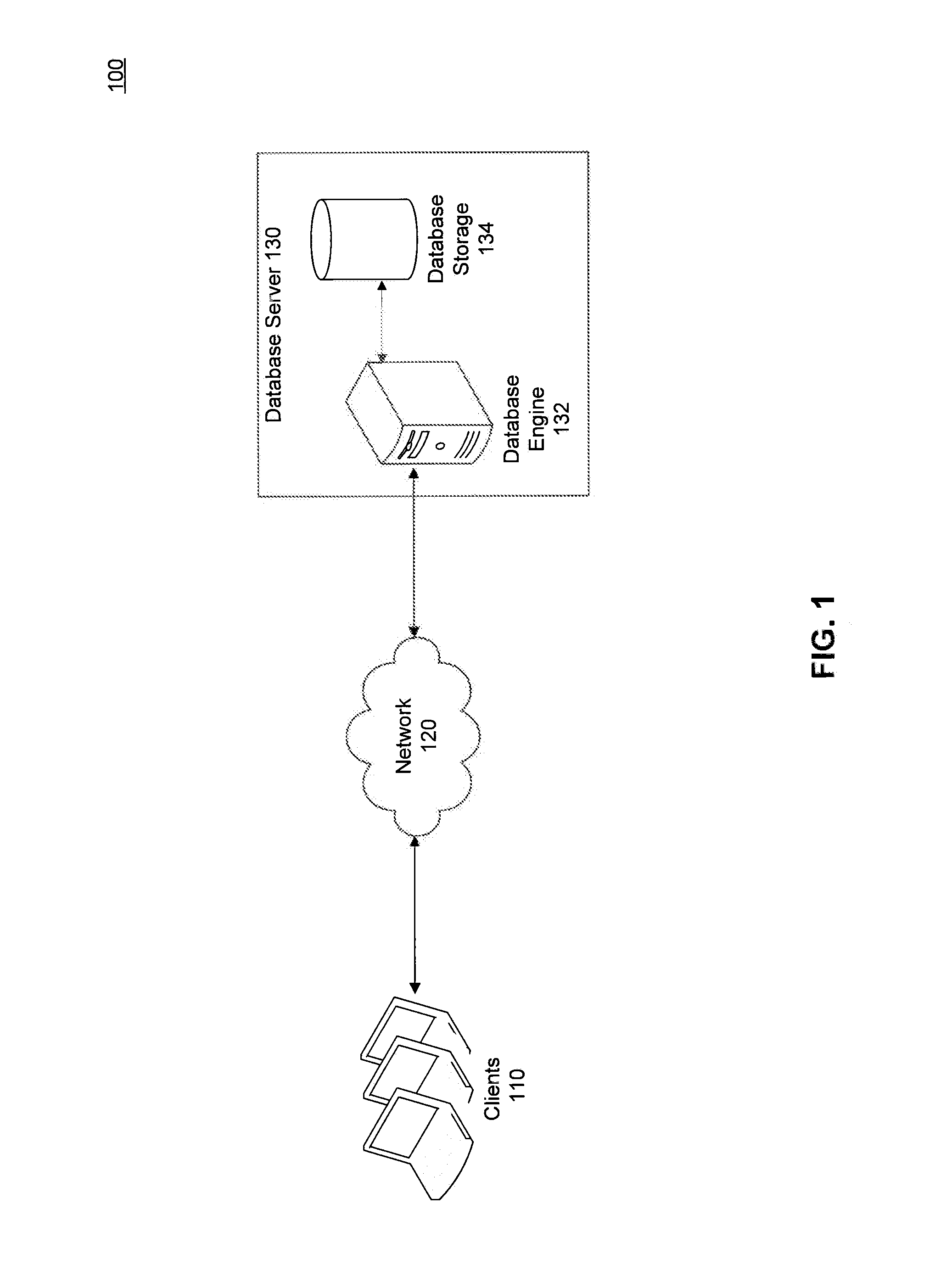

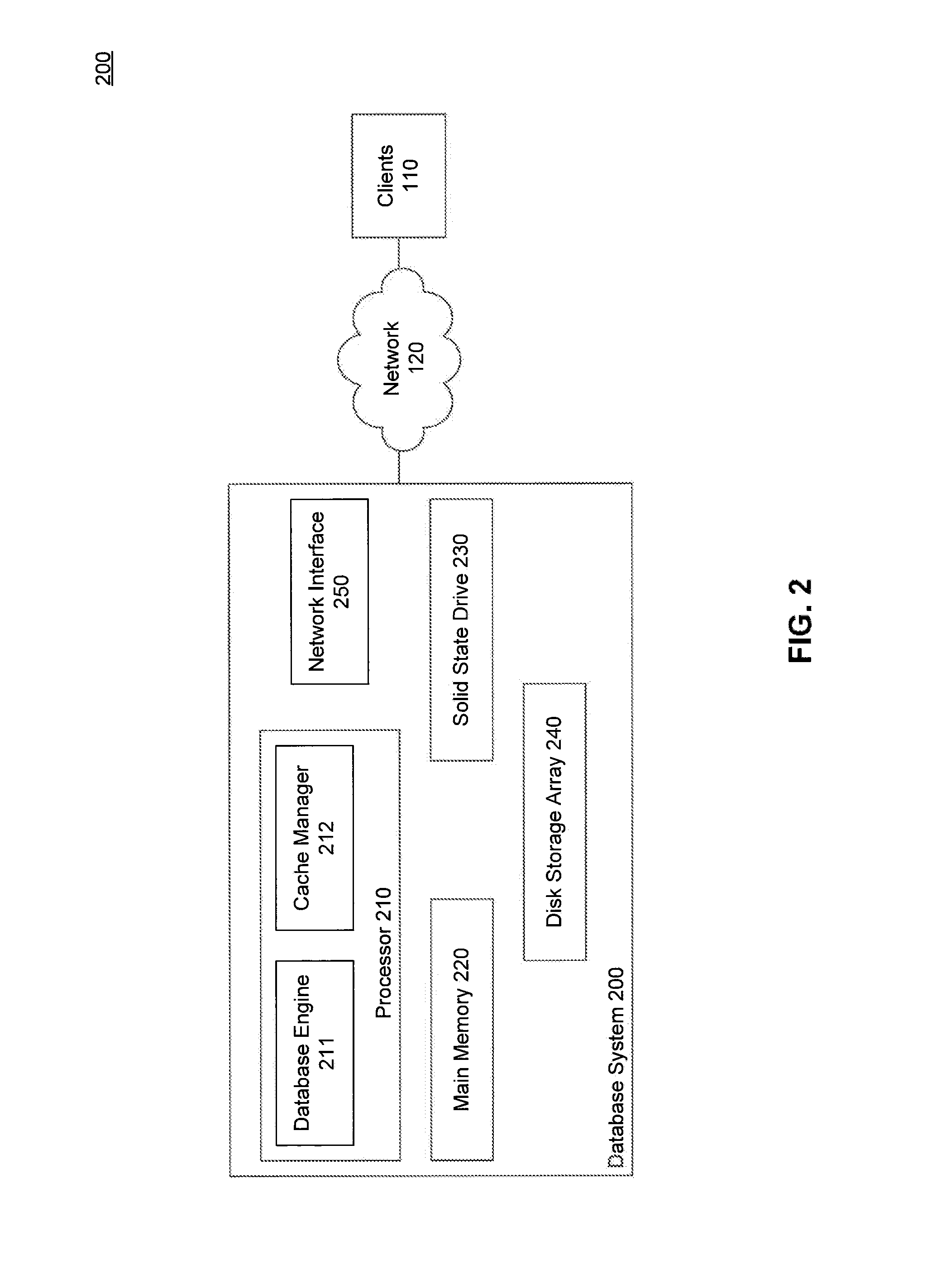

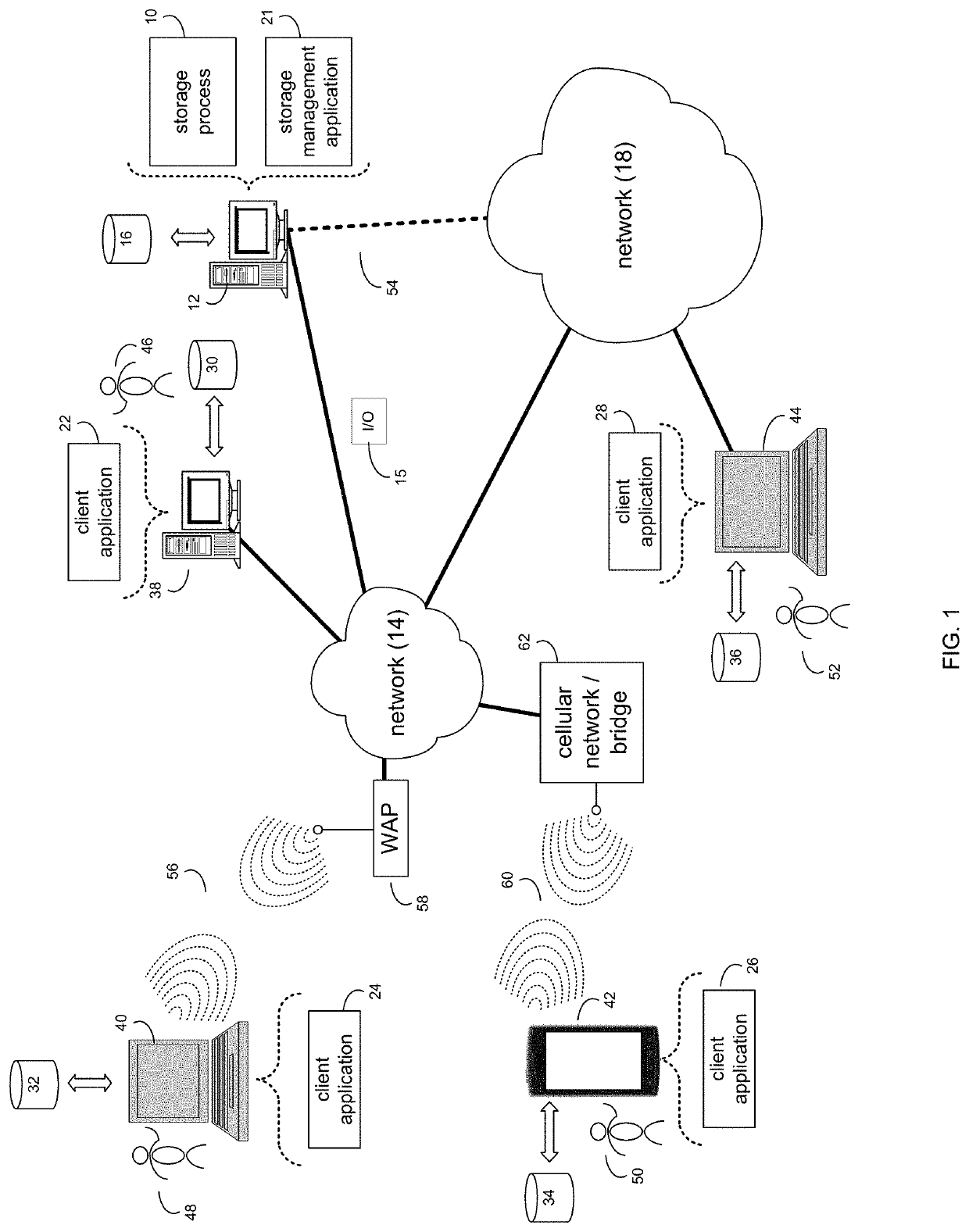

Solid State Drives as a Persistent Cache for Database Systems

Active · US20140019688A1 · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Hard disc drive · Dirty page

Disclosed herein are systems, methods, and computer readable storage media for a database system using solid state drives as a second level cache. A database system includes random access memory configured to operate as a first level cache, solid state disk drives configured to operate as a persistent second level cache, and hard disk drives configured to operate as disk storage. The database system also includes a cache manager configured to receive a request for a data page and determine whether the data page is in cache or disk storage. If the data page is on disk, or in the second level cache, it is copied to the first level cache. If copying the data page results in an eviction, the evicted data page is copied to the second level cache. At checkpoint, dirty pages stored in the second level cache are flushed in place in the second level cache.

Owner:IANYWHERE SOLUTIONS
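
The lookup and eviction path of the cache manager can be sketched with two dictionaries standing in for RAM and SSD. This is a toy model: the `TwoLevelCache` class is hypothetical, LRU is assumed as the first-level eviction policy, the second level is unbounded, and checkpoint flushing is left out.

```python
from collections import OrderedDict


class TwoLevelCache:
    """RAM as first-level cache, SSD as second-level cache, HDD as disk storage."""

    def __init__(self, l1_capacity, disk):
        self.l1 = OrderedDict()   # RAM, kept in least-recently-used order
        self.l2 = {}              # SSD stand-in, unbounded in this sketch
        self.disk = disk          # HDD stand-in: page_id -> data
        self.l1_capacity = l1_capacity

    def get(self, page_id):
        if page_id in self.l1:
            self.l1.move_to_end(page_id)
            return self.l1[page_id]
        # Page is in the second-level cache or on disk: copy it to level one.
        data = self.l2[page_id] if page_id in self.l2 else self.disk[page_id]
        self.l1[page_id] = data
        if len(self.l1) > self.l1_capacity:
            # The copy caused an eviction: move the victim to the second level.
            victim_id, victim = self.l1.popitem(last=False)
            self.l2[victim_id] = victim
        return data
```

Pages evicted from RAM land on the SSD, so a later miss in RAM can often be served from flash rather than from the slower hard disk.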

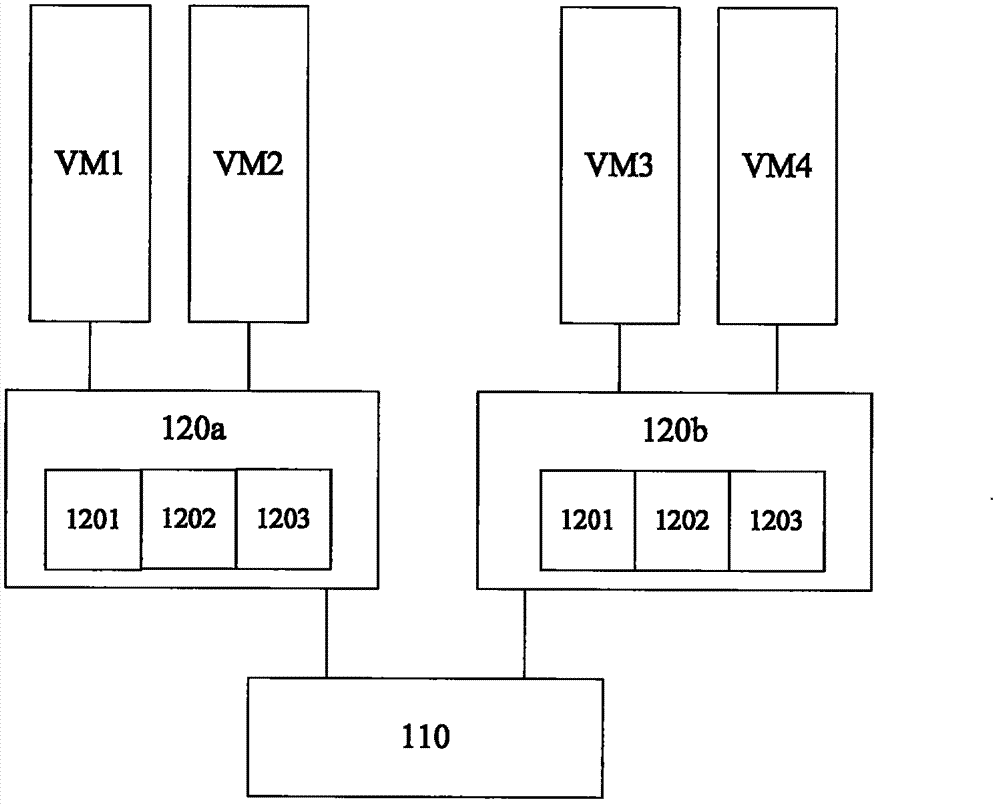

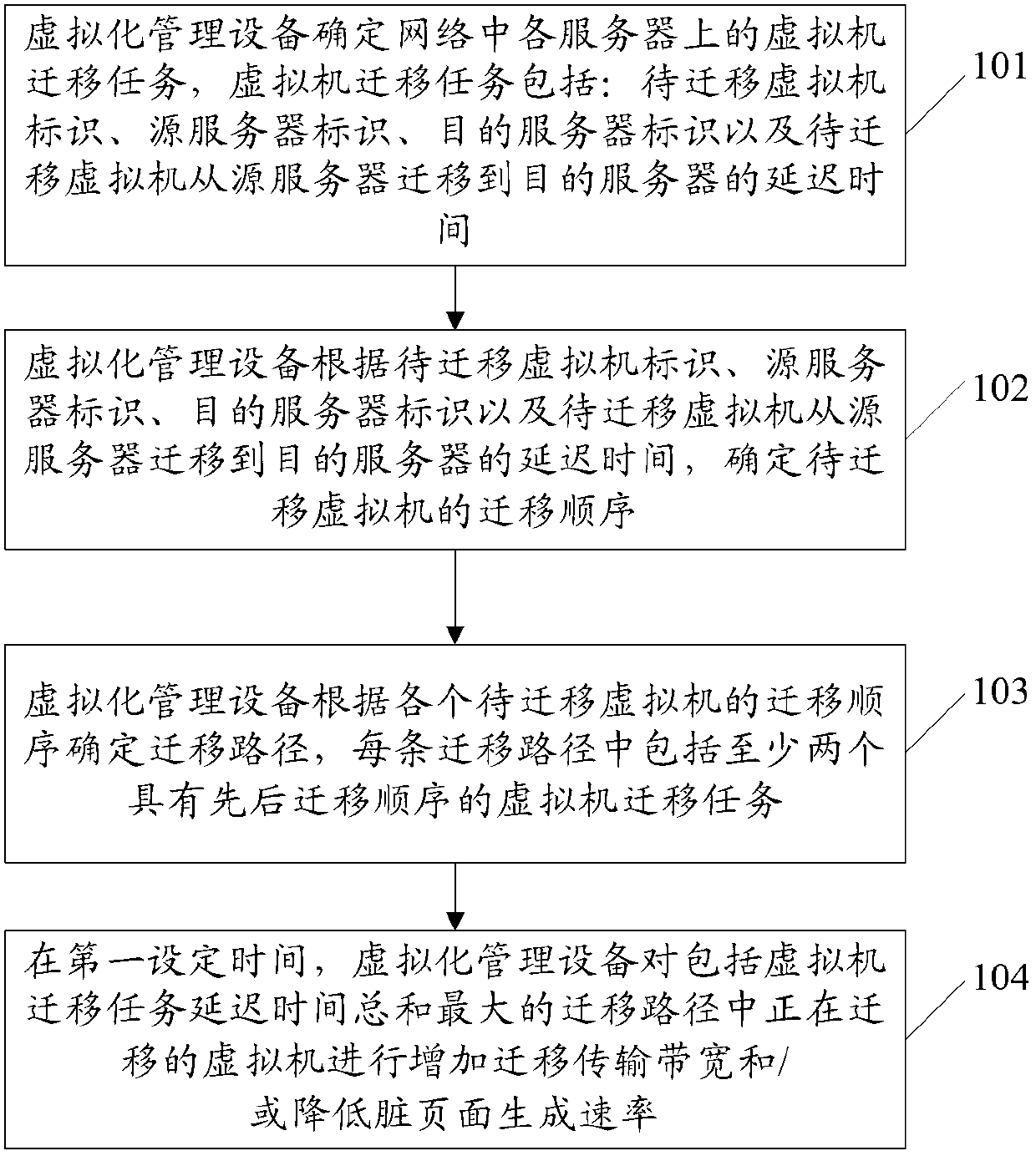

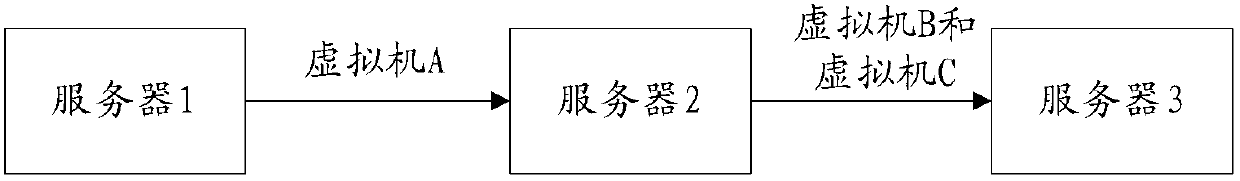

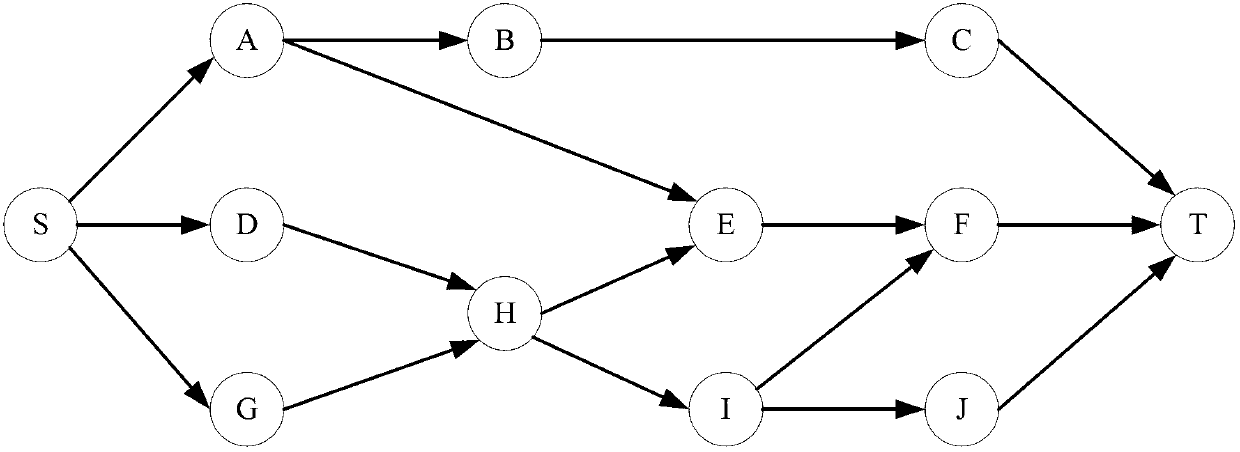

Virtual machine migration method and device

Inactive · CN103218260A · Reduce latency · Reduce dispatch time · Program initiation/switching · Software simulation/interpretation/emulation · Dirty page · Speed reduction

The invention provides a virtual machine migration method and device. The method comprises the following steps: a virtual machine migration task on each server in a network is determined, where the task comprises an identifier of the virtual machine to be migrated, an identifier of the source server, an identifier of the target server, and the delay time for migrating the virtual machine from the source server to the target server; the migration sequence of the virtual machines to be migrated is determined according to these identifiers and delay times; the migration paths are determined according to the migration sequence of each virtual machine to be migrated, where each migration path comprises at least two virtual machine migration tasks with successive migration sequences; and, at a first set time, the migration transmission bandwidth is increased and/or the dirty page generation speed is reduced for the virtual machines being migrated in the migration path with the largest sum of virtual machine migration task delay times.

Owner:CHINA UNITED NETWORK COMM GRP CO LTD

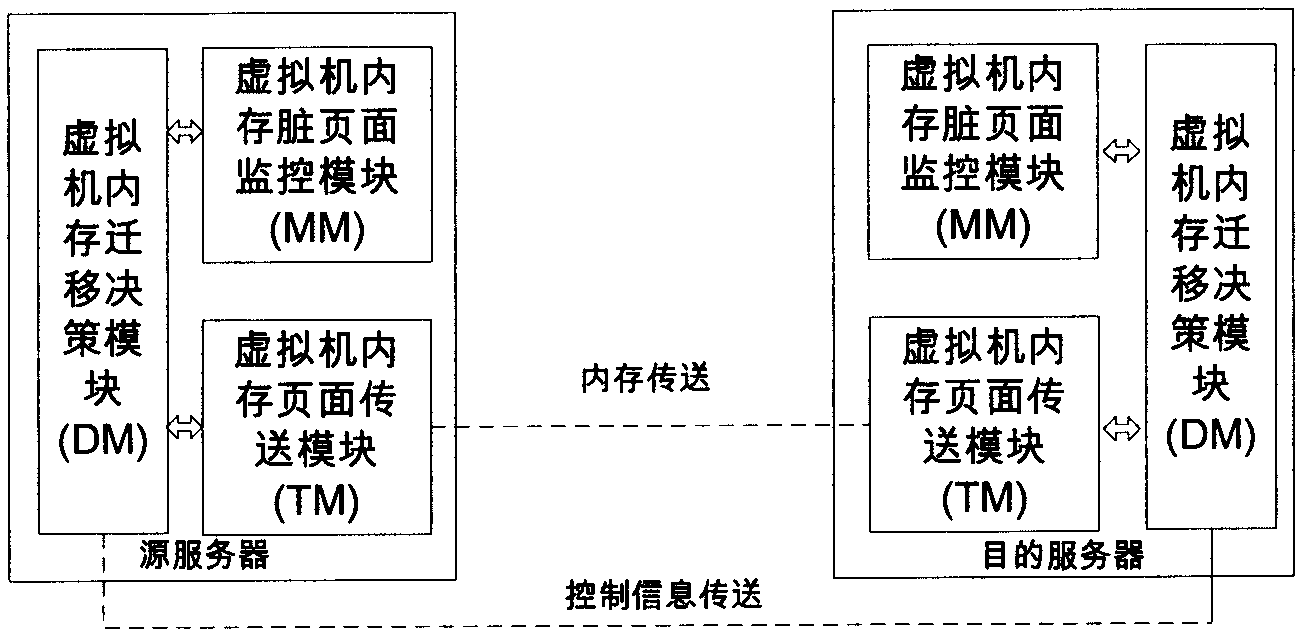

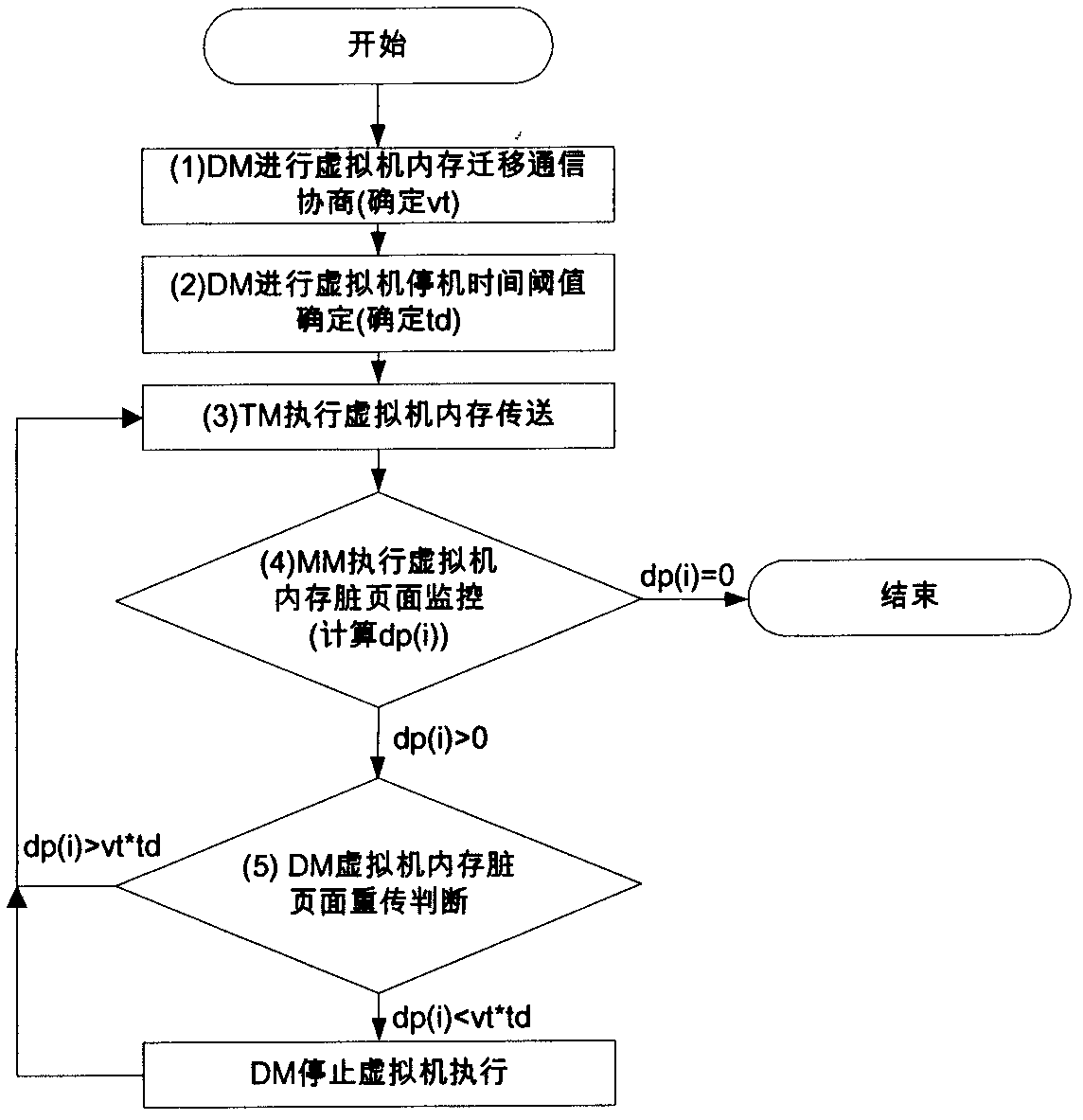

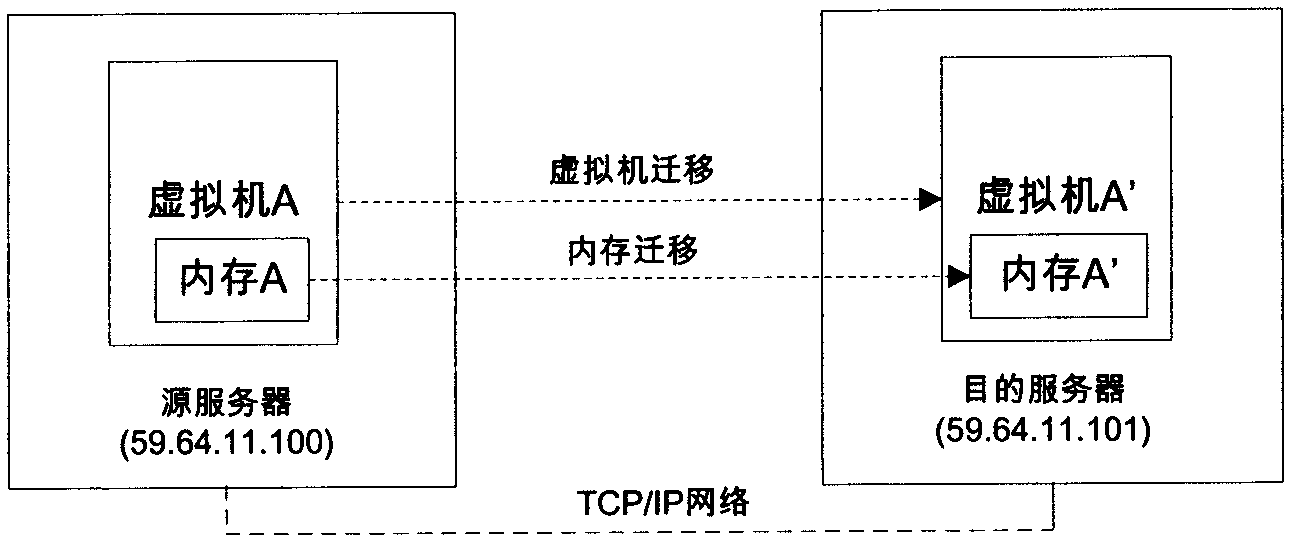

A virtual machine internal storage migration method based on down time threshold

Inactive · CN102662723A · Memory addressing/allocation/relocation · Software simulation/interpretation/emulation · Dirty page · Decision-making

The present invention relates to a virtual machine internal storage migration method based on down time threshold, consisting of a virtual machine internal storage page transmit module, a virtual machine internal storage dirty page monitoring module, and a virtual machine internal storage migration decision-making module. The method realizes the migration of virtual machine internal storage from an original server to a targeted server through negotiation of page migration transmission speed, calculation of down time threshold and disposition of two core points, and may realize virtual machine on-line migration in a true sense in a retransmission process of a dirty page.

Owner:BEIJING UNIV OF POSTS & TELECOMM

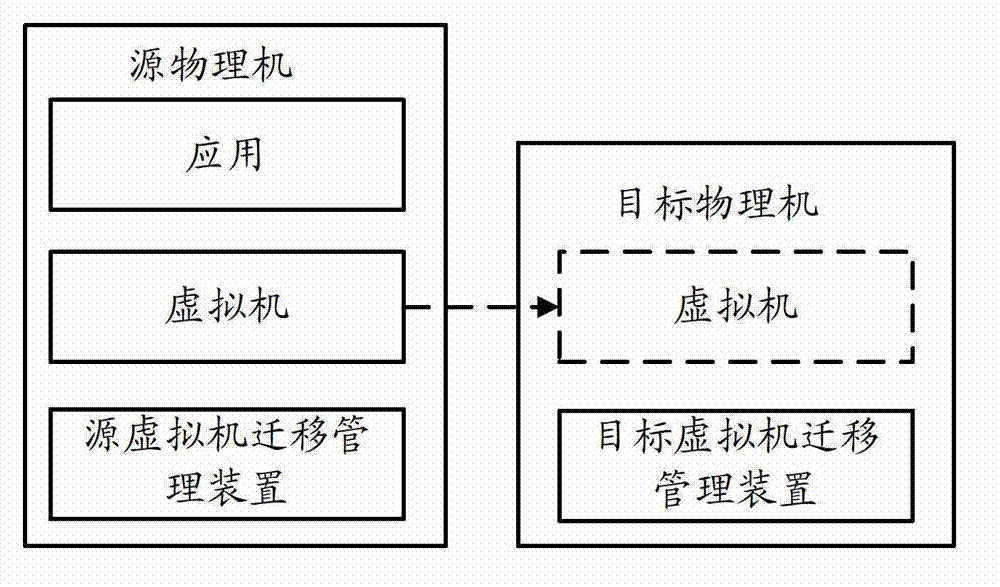

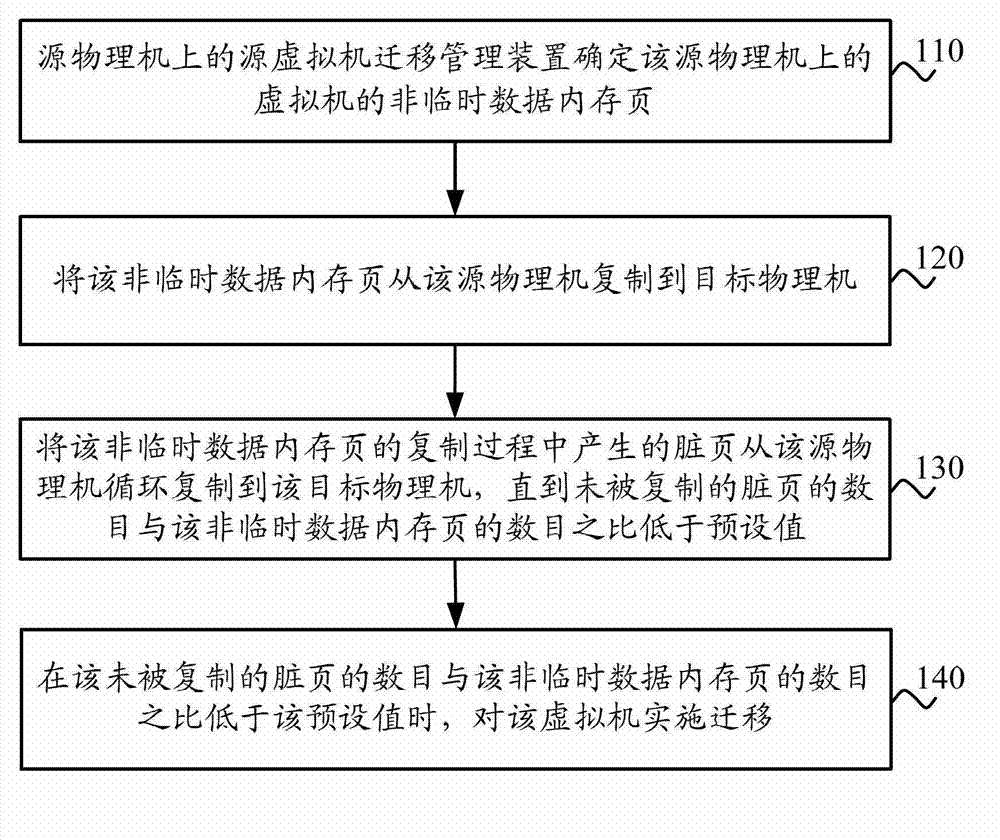

Method, device and system for achieving thermal migration of virtual machine

Active · CN103049308A · Short time · Reduce waste · Memory addressing/allocation/relocation · Software simulation/interpretation/emulation · Process systems · Dirty page

The invention discloses a method, a device and a system for achieving thermal migration of a virtual machine. The method comprises that a source virtual machine management device on a source physical machine determines non-temporary data memory pages of the virtual machine on the source physical machine; the non-temporary data memory pages are copied to a target physical machine from the source physical machine; dirty pages produced in a non-temporary data memory page copying process are copied to the target physical machine from the source physical machine till the ratio of the number of the non-copied dirty pages to the number of the non-temporary data memory pages is lower than a preset value; and migration is performed on the virtual machine when the ratio of the number of the non-copied dirty pages to the number of the non-temporary data memory pages is lower than the preset value. According to the method, processes and memory pages in a multi-process system are classified, and temporary data memory pages are not copied in a dirty page circulatory copying process, so that waste of a system central processing unit (CPU) and the network bandwidth is reduced, and the user experience is improved.

Owner:四川华鲲振宇智能科技有限责任公司

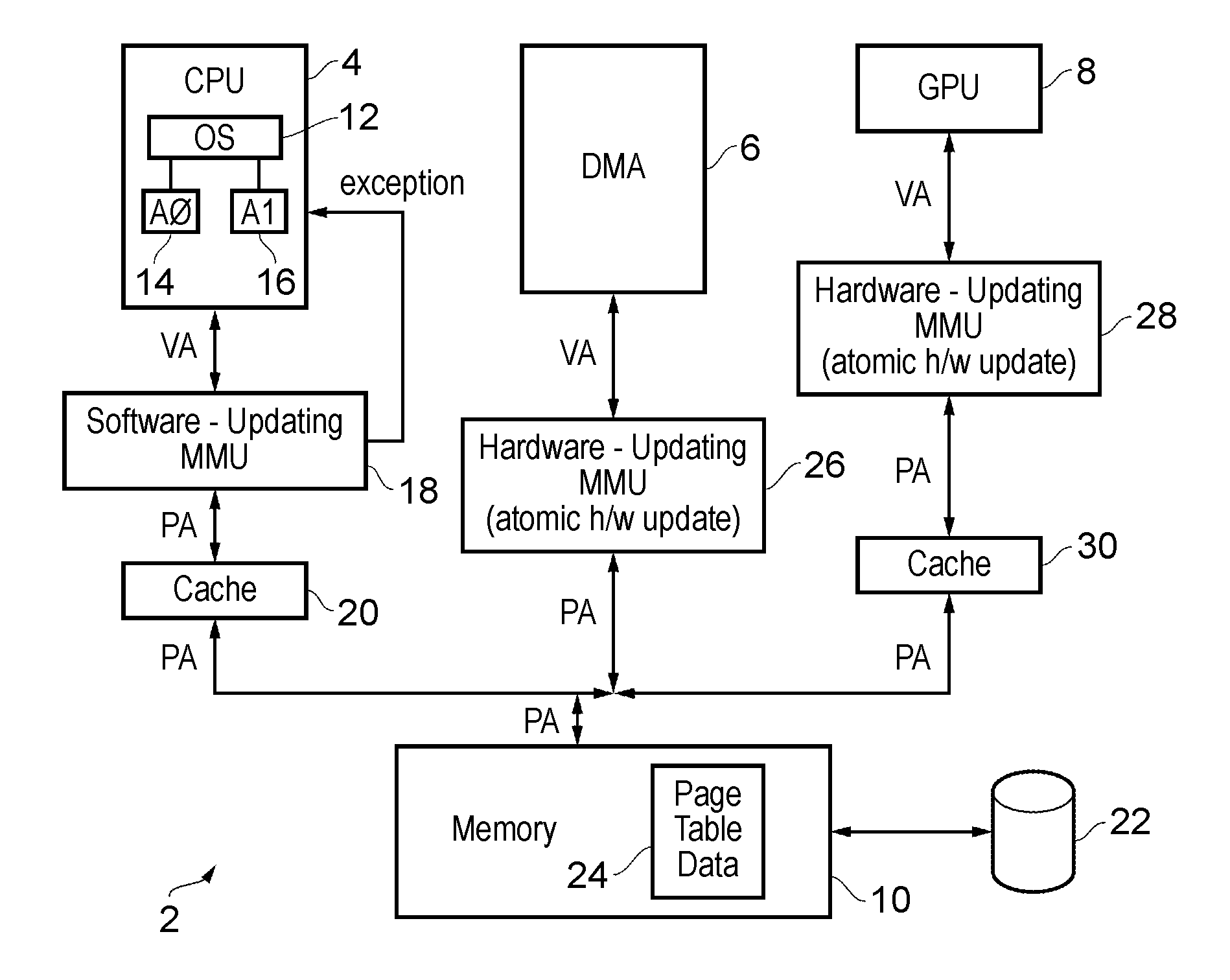

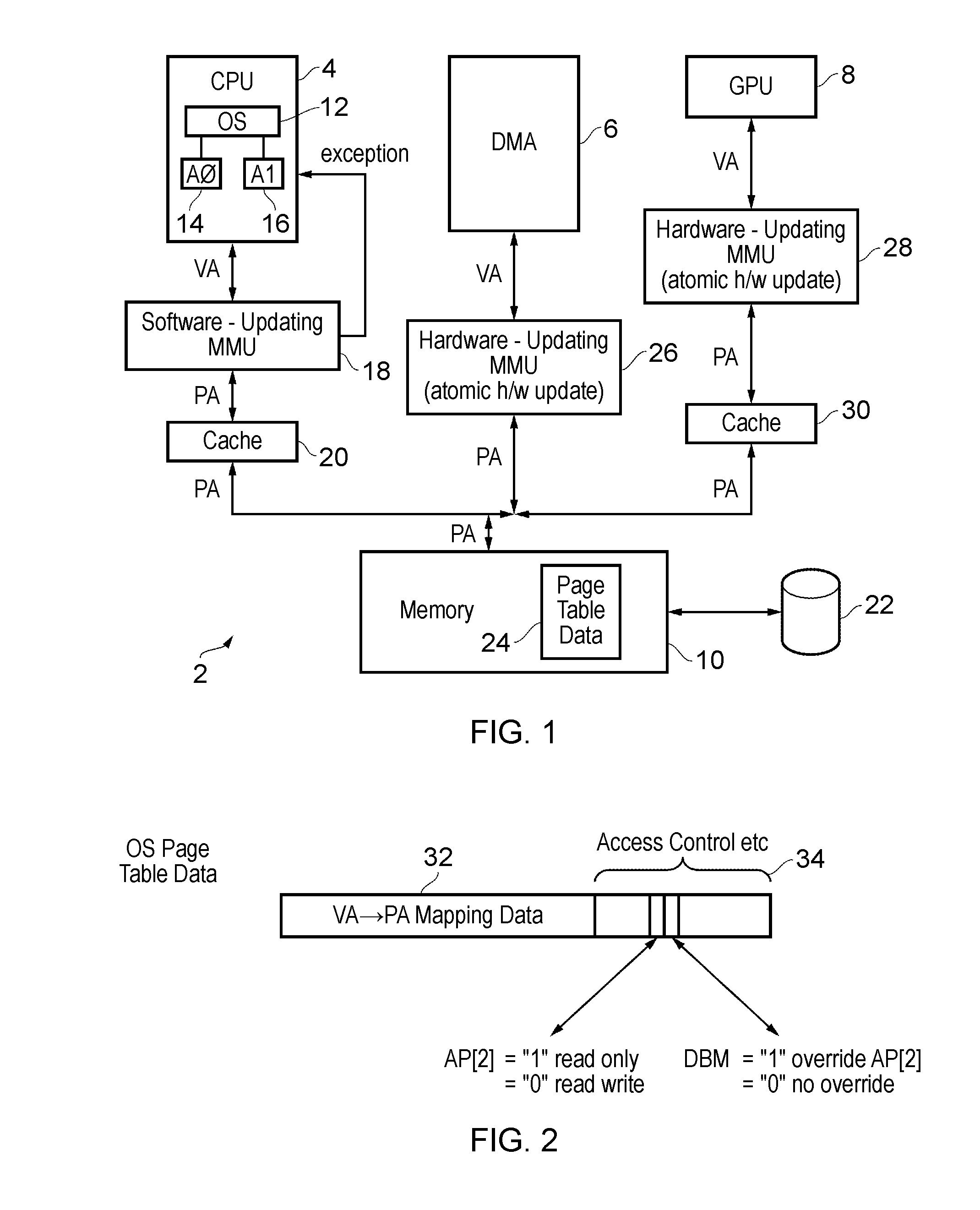

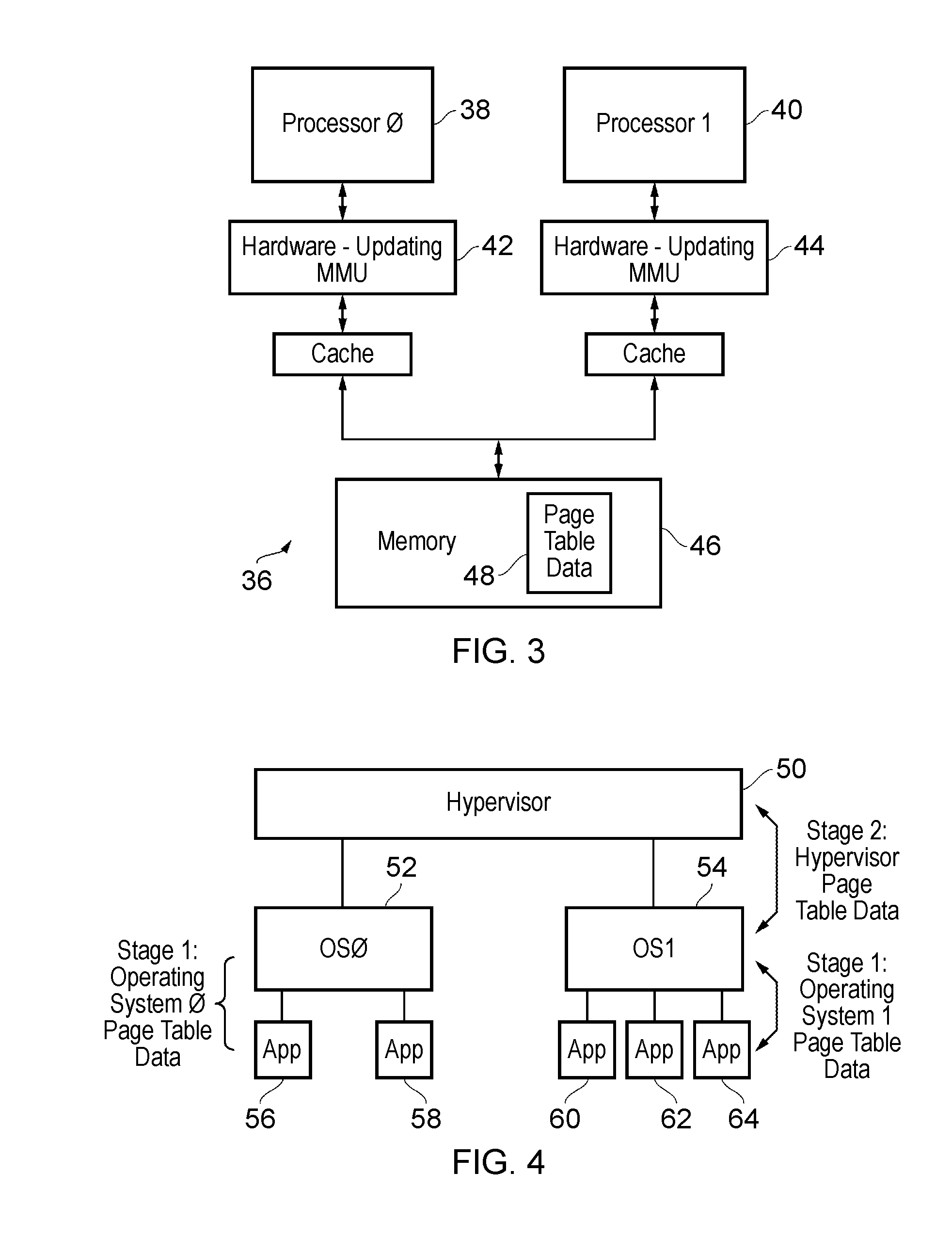

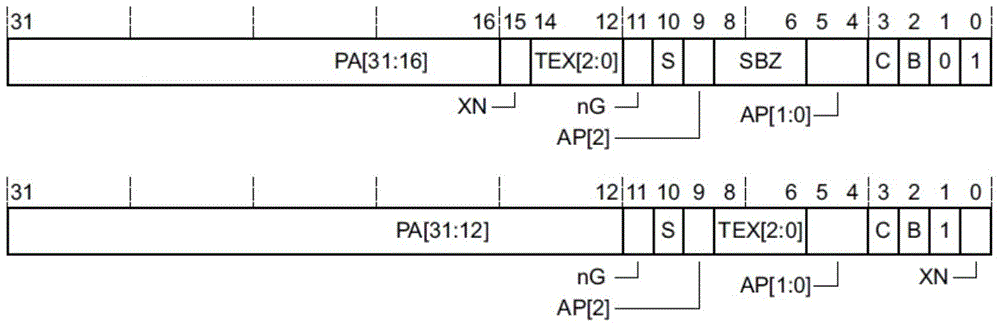

Page table management

Active · US20140337585A1 · Improve software compatibility · Memory architecture accessing/allocation · Unauthorized memory use protection · Dirty bit · Memory address

Page table data for each page within a memory address space includes a write permission flag and a dirty-bit-modifier flag. The write permission flag is initialised to a value indicating that write access is not permitted. When a write access occurs, then the dirty-bit-modifier flag indicates whether or not the action of the write permission flag may be overridden. If the action of the write permission flag may be overridden, then the write access is permitted and the write permission flag is changed to indicate that write access is thereafter permitted. A page for which the write permission flag indicates that writes are permitted is a dirty page.

Owner:ARM LTD
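
The interaction of the two flags can be modelled in a few lines. This is a software toy, not a description of any hardware: the `PageTableEntry` type and the function names are hypothetical, and a refused write simply returns `False` where real hardware would raise a fault.

```python
from dataclasses import dataclass


@dataclass
class PageTableEntry:
    write_permitted: bool = False      # initialised to "write not permitted"
    dirty_bit_modifier: bool = False   # may the write-permission flag be overridden?


def handle_write(entry):
    """Return True if the write goes ahead, False if it would fault."""
    if entry.write_permitted:
        return True
    if entry.dirty_bit_modifier:
        # Override allowed: permit this write and all later ones.
        entry.write_permitted = True
        return True
    return False


def is_dirty(entry):
    """A page whose write-permission flag allows writes counts as dirty."""
    return entry.write_permitted
```

The write-permission flag therefore doubles as the dirty bit: it only flips on the first write to an overridable page, so no separate dirty bit is needed in the entry.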

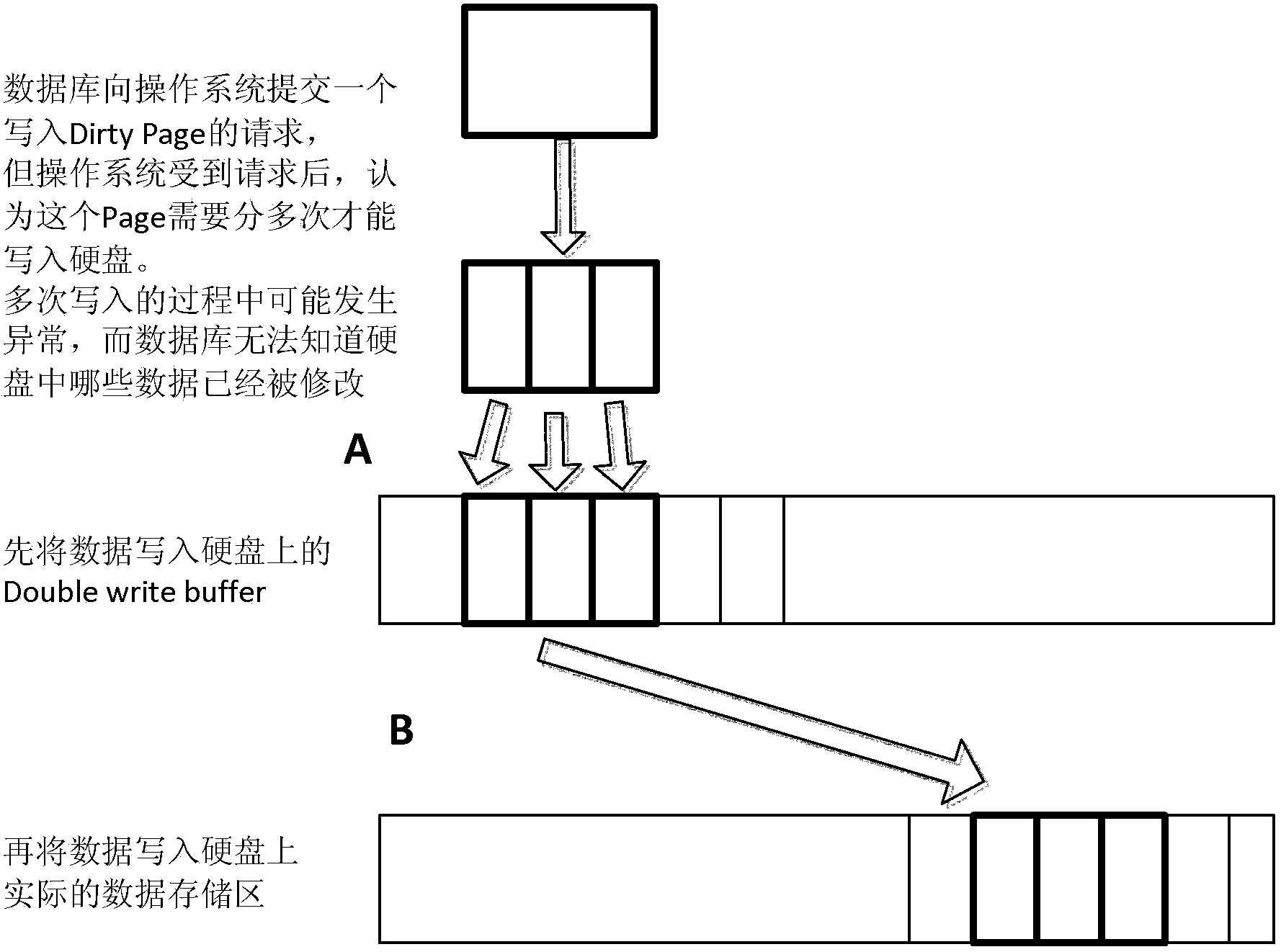

Method and system for writing database into SSD

Active · CN103514095A · Improve performance · Reduce copy I/O · Memory addressing/allocation/relocation · Special data processing applications · Dirty page · Database

The invention discloses a method and system for writing a database into an SSD. The method includes the following steps: (1) if the write-in of a dirty page cannot be completed in a single I/O, that is, the write operation is not atomic, the database notifies the SSD that the dirty page will be written, and the SSD allocates storage pages, allocates tentative address mapping tables at the same time, and records the mapping relations; (2) when an exception occurs, the FTL address mapping tables are updated according to the states of the tentative address mapping tables, and the write operations to the SSD are completed.

Owner:RAMAXEL TECH SHENZHEN

Method and apparatus for efficient memory replication for high availability (HA) protection of a virtual machine (VM)

Active · US8413145B2 · Prevents buffer overflows · Avoid buffering · Memory architecture accessing/allocation · Error detection/correction · Dirty page · Transfer procedure

High availability (HA) protection is provided for an executing virtual machine. At a checkpoint in the HA process, the active server suspends the virtual machine; and the active server copies dirty memory pages to a buffer. During the suspension of the virtual machine on the active host server, dirty memory pages are copied to a ring buffer. A copy process copies the dirty pages to a first location in the buffer. At a predetermined benchmark or threshold, a transmission process can begin. The transmission process can read data out of the buffer at a second location to send to the standby host. Both the copy and transmission processes can operate substantially simultaneously on the ring buffer. As such, the ring buffer cannot overflow because the transmission process continues to empty the ring buffer as the copy process continues. This arrangement allows for smaller buffers and prevents buffer overflows.

Owner:AVAYA INC

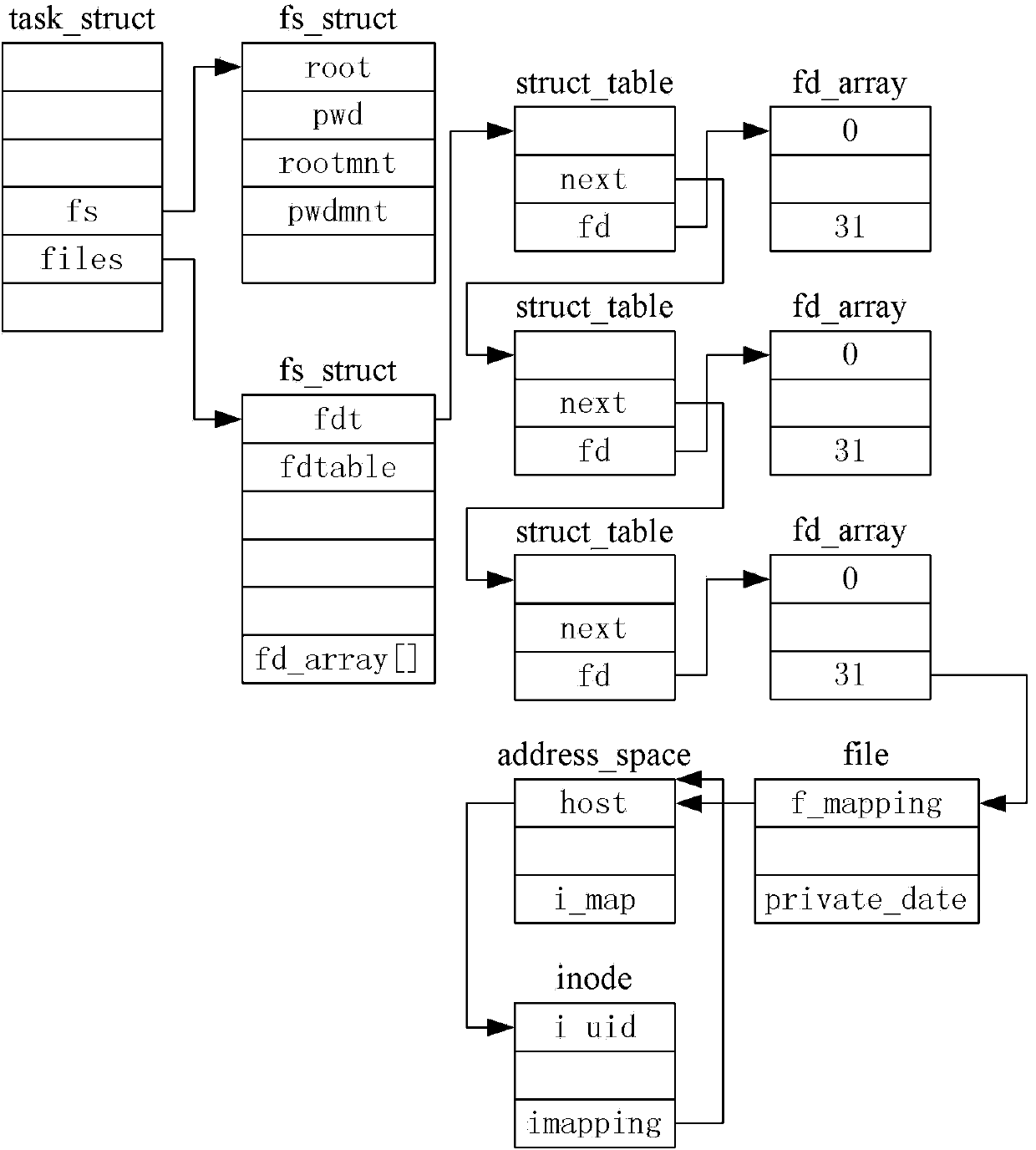

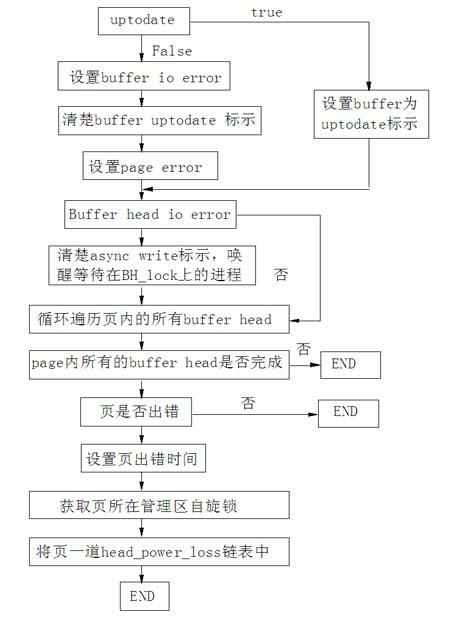

Method and device for eliminating sensitive data of Linux system memory

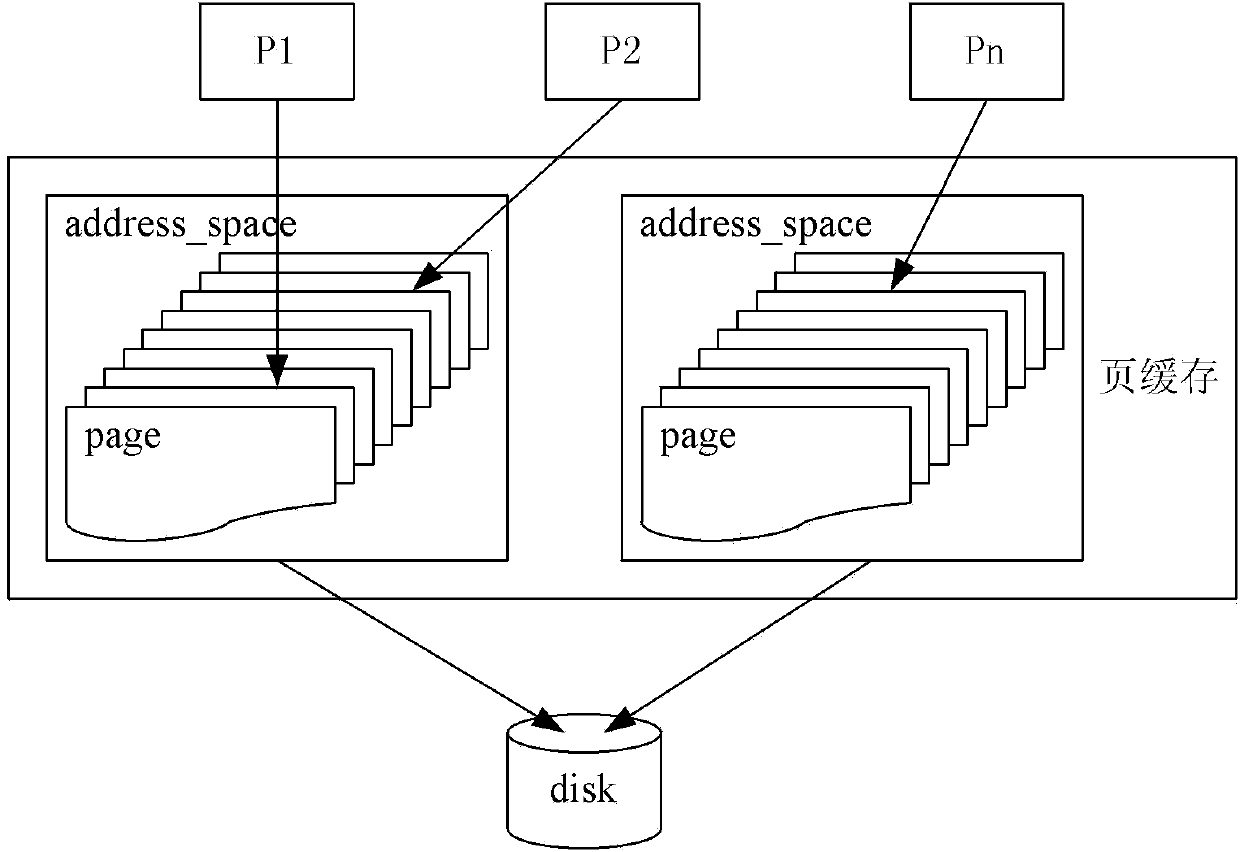

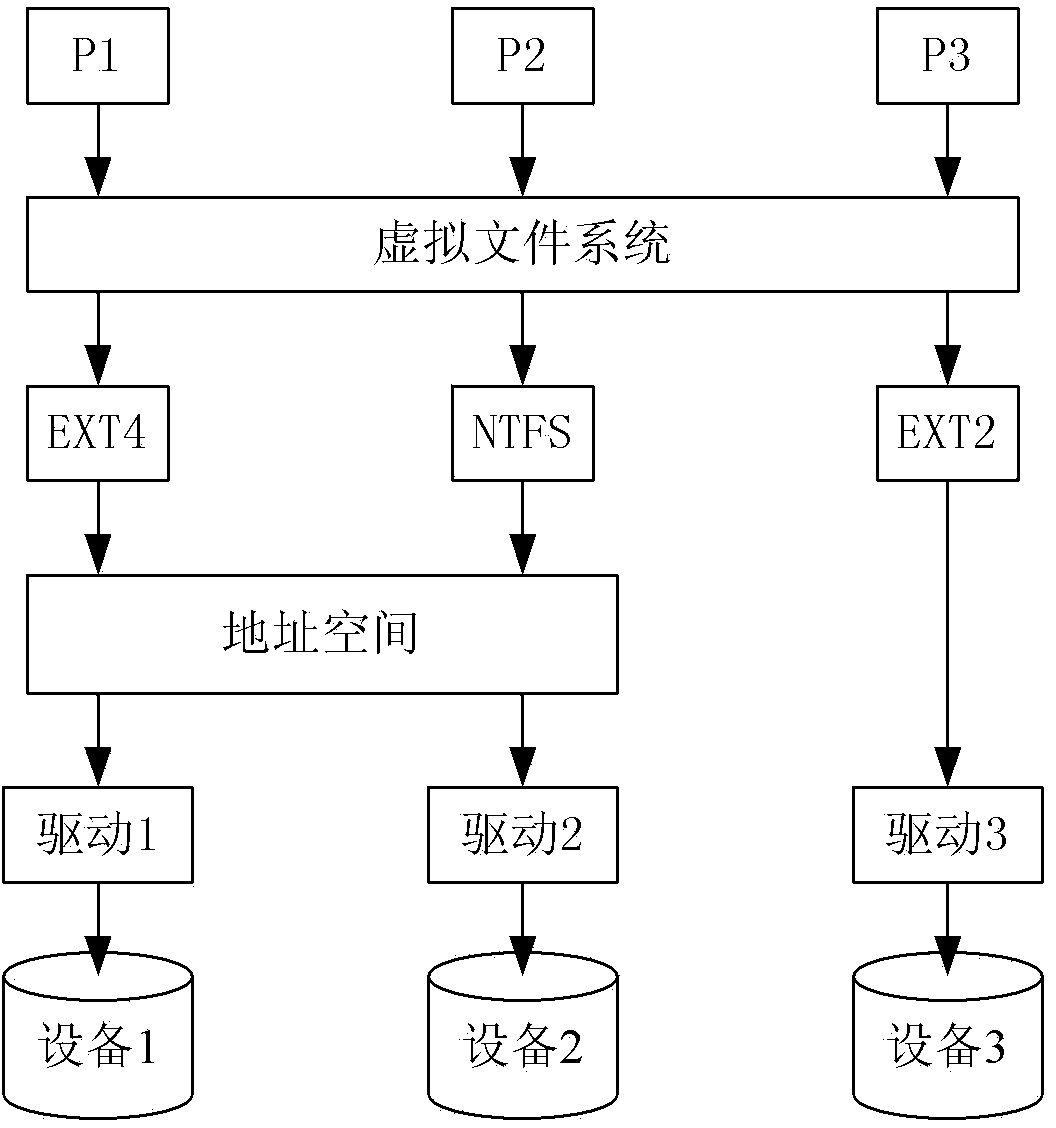

The invention provides a method and device for eliminating sensitive data from Linux system memory. In the method, when a process invokes the close system call to close a file, or exit_files is called to close an unclosed file because the process is exiting, the file is traced through its struct file to the corresponding struct address_space; if a dirty page is found in the inode structure corresponding to the file being closed, the vfs_fsync function is called so that the file's dirty pages are written back to disk. The radix tree in the located address space structure is then traversed, and all pages in the radix tree are deleted, cleared and released to the free area. In addition, a read-write linked list is established in each process, and the start address and data length in the device cache are recorded whenever data of a device file is read or written; when a read or write system call exits, the read-write linked list is traversed, and for each node the data in the address range from the recorded address to the address plus the length is cleared.

Owner:INST OF INFORMATION ENG CHINESE ACAD OF SCI

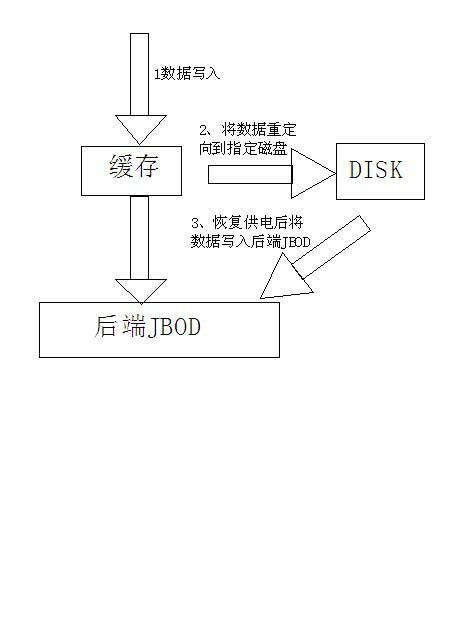

Method using software for power fail safeguard of caches in disk array

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

High-efficiency dirty page acquiring method

Inactive · CN101706736A · Improve performance · Improve the efficiency of execution · Memory addressing/allocation/relocation · Software simulation/interpretation/emulation · Virtualization · Dirty page

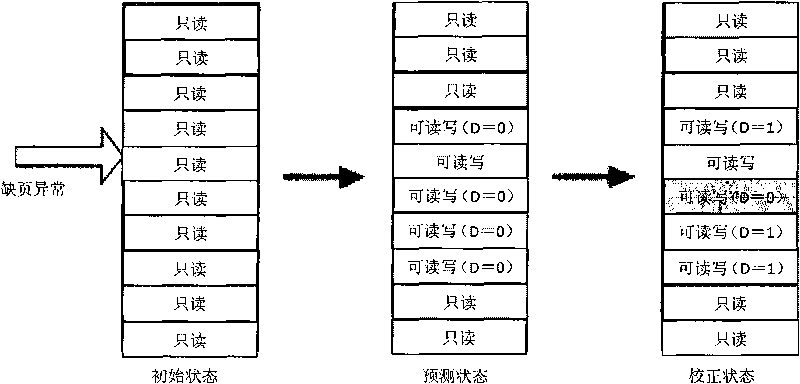

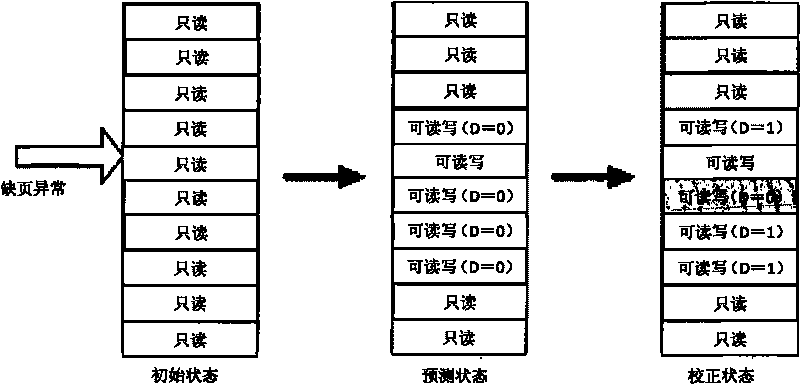

The invention discloses a high-efficiency dirty page acquiring method in the technical field of virtualization. The method comprises the following steps: 1) a virtual machine manager maintains an n-bit flag for each first-level shadow page table; 2) before an execution cycle, the virtual machine manager sets all page table entries of the first-level shadow page table to read-only and sets the corresponding flag to 0; 3) during the execution cycle, the virtual machine manager records the corresponding page as a dirty page according to the captured page fault information, sets the shadow page table entry to writable, and sets the corresponding flag bit to 1; 4) when the execution cycle ends, the virtual machine manager records all dirty pages of the main virtual machine, traverses the shadow page table entry sections whose flag bit is 1, and sets all writable shadow page table entries back to read-only; and 5) the virtual machine manager resumes execution of the main virtual machine, and steps 2) to 4) are repeated to start a new execution cycle. The method can greatly improve the performance of the main virtual machine.

Owner:PEKING UNIV
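
The write-protect cycle above can be simulated in user space. This is a simplified sketch under stated assumptions: the `ShadowPageTracker` class is hypothetical, each page table gets a single flag rather than an n-bit flag, and a page fault is modelled as a check on a `writable` list.

```python
class ShadowPageTracker:
    """Toy model of write-protect dirty tracking with one flag per page table."""

    def __init__(self, num_pages, entries_per_table=4):
        self.entries_per_table = entries_per_table
        self.writable = [False] * num_pages   # every entry starts read-only
        num_tables = (num_pages + entries_per_table - 1) // entries_per_table
        self.table_flag = [0] * num_tables
        self.dirty = set()
        self.entries_scanned = 0              # bookkeeping for illustration

    def guest_write(self, page):
        if not self.writable[page]:
            # Simulated page fault: record the page, then make it writable
            # so further writes in this cycle do not fault again.
            self.dirty.add(page)
            self.writable[page] = True
            self.table_flag[page // self.entries_per_table] = 1

    def end_cycle(self):
        """Return this cycle's dirty pages and re-protect only flagged tables."""
        dirty_pages = sorted(self.dirty)
        for table, flag in enumerate(self.table_flag):
            if not flag:
                continue  # no entry in this table was made writable
            start = table * self.entries_per_table
            stop = min(start + self.entries_per_table, len(self.writable))
            for page in range(start, stop):
                self.writable[page] = False
                self.entries_scanned += 1
            self.table_flag[table] = 0
        self.dirty.clear()
        return dirty_pages
```

The saving comes from `end_cycle`: only tables whose flag was set are walked, so the cost of re-protecting entries scales with the number of tables that were touched rather than with the total amount of guest memory.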

Solid-state disk page-level cache area management method

Active · CN108762664A · Improve read and write performance · Improve cache hit ratio · Input/output to record carriers · Dirty page · Solid-state drive

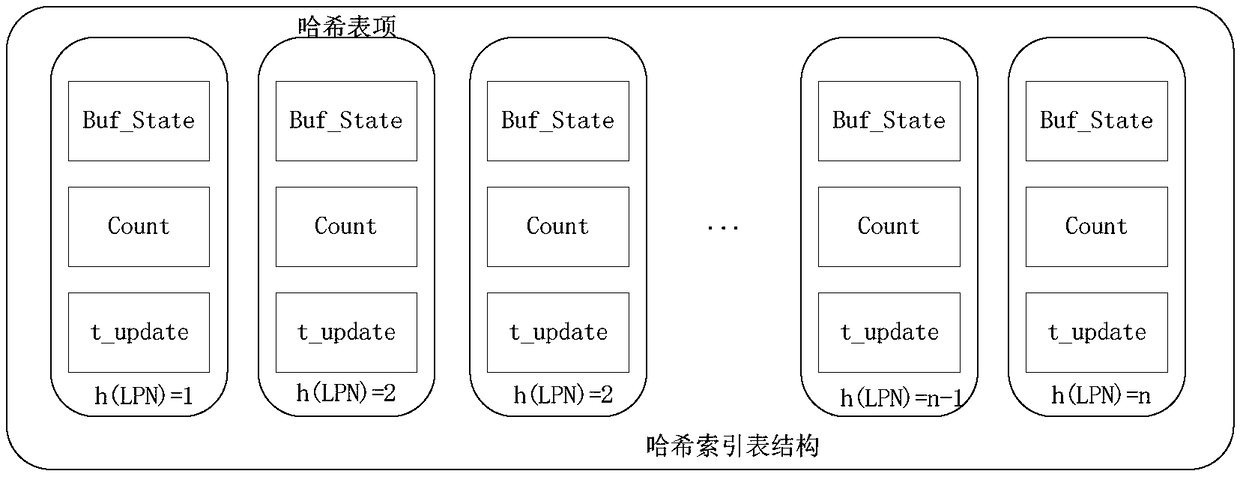

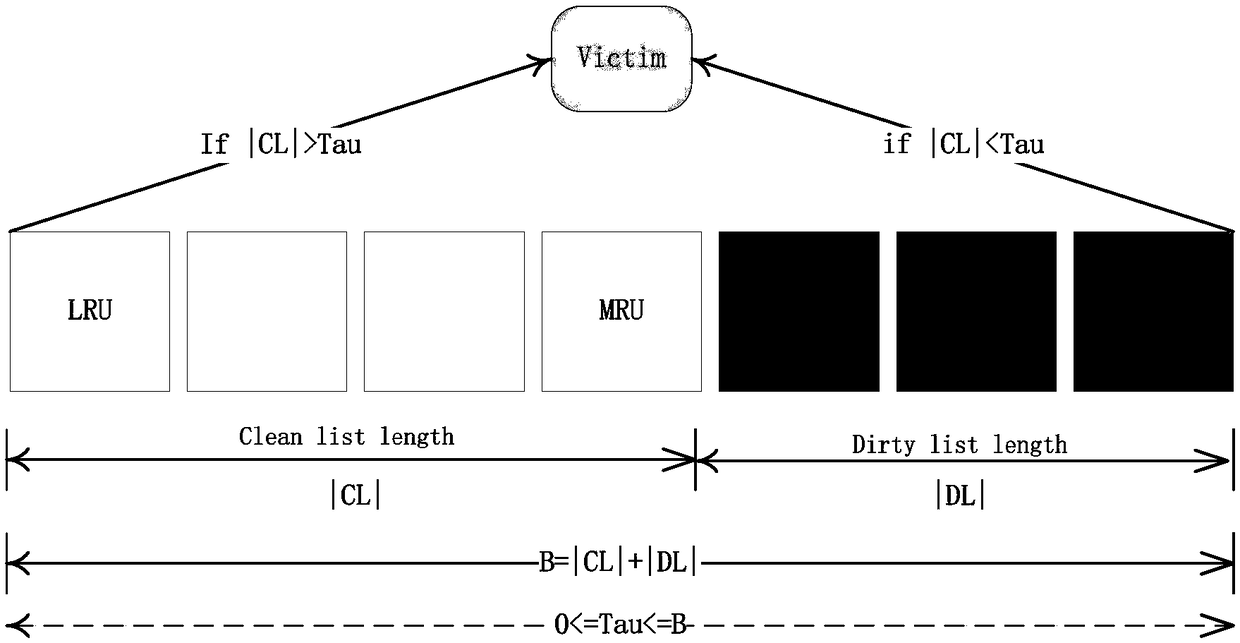

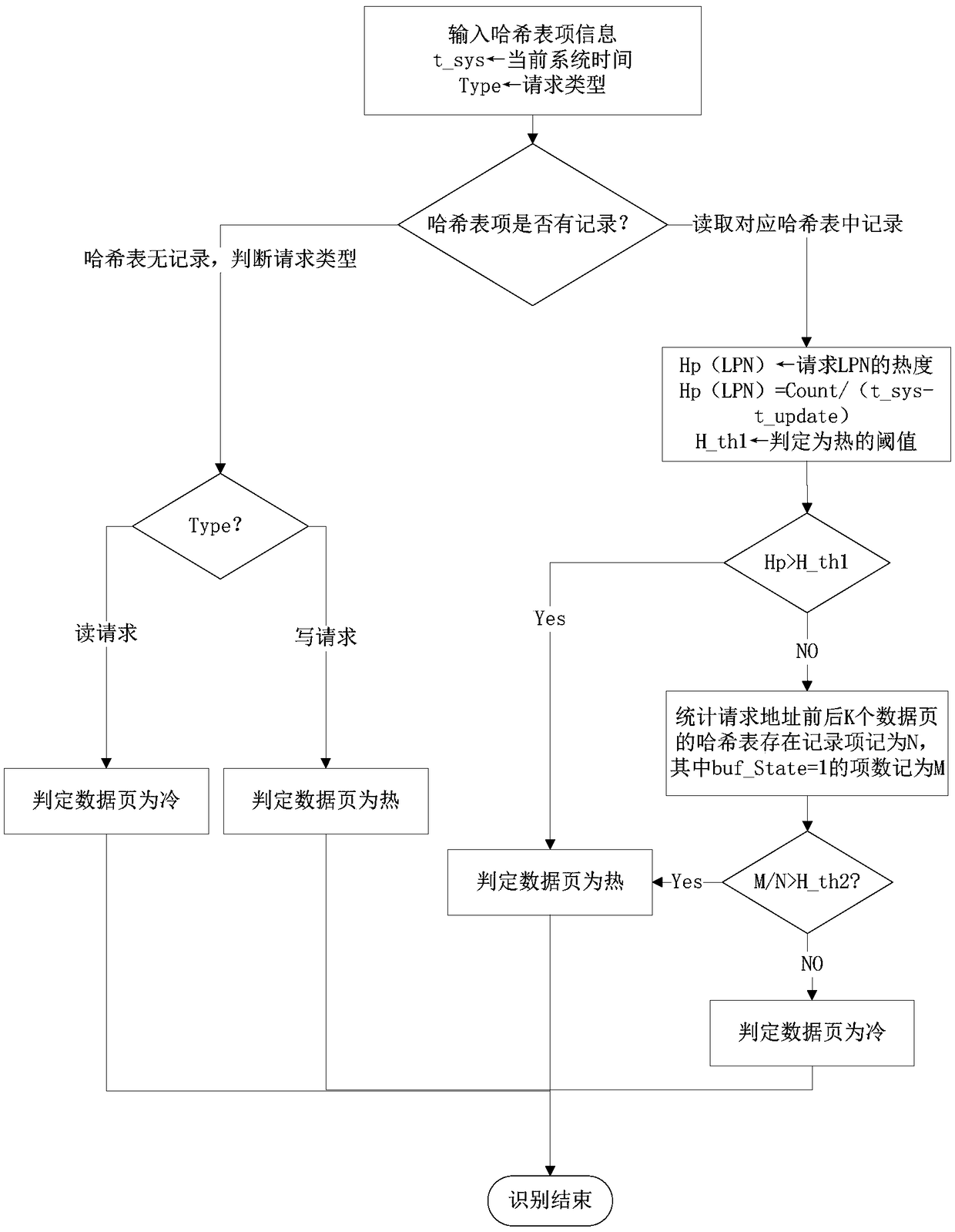

The invention provides a solid-state disk page-level cache area management method. The method comprises the following steps of: dividing a solid-state disk page-level cache area into three parts: a hash index table cache area, a dirty page cache area and a clean page cache area, wherein the hash index table cache area is used for recording historical features of access of different data pages, the dirty page cache area is used for caching hot dirty pages, and the clean page cache area is used for caching hot clean pages; carrying out hot data recognition on a request data page by utilizing historical access feature information of a corresponding request on a hash table by adoption of a hot data recognition mechanism, and loading the recognized hot data page into a buffer area by combining spatial local features of an access request; and finally, dynamically selecting proper data pages from a clean page cache queue and a dirty page cache queue to carry out replacement by synthesizing current reading/writing request access features and practical bottom reading/writing cost by adoption of a self-adaptive replacement mechanism when a data page replacement operation can be carried out in the buffer area. The method has favorable practicability and market prospect.

Owner:HANGZHOU DIANZI UNIV

Method and device for data persistence processing and data base system

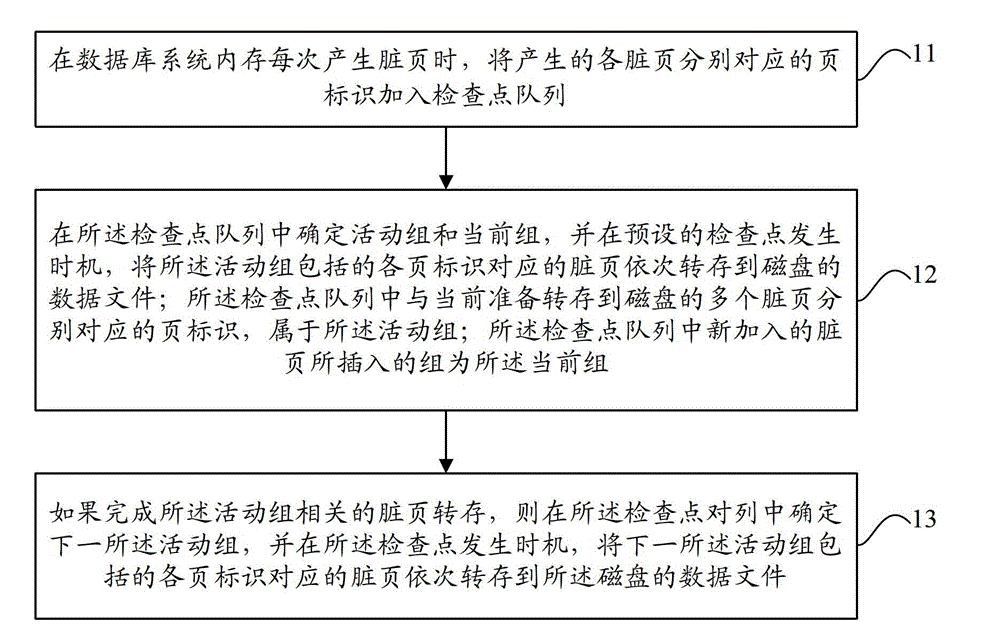

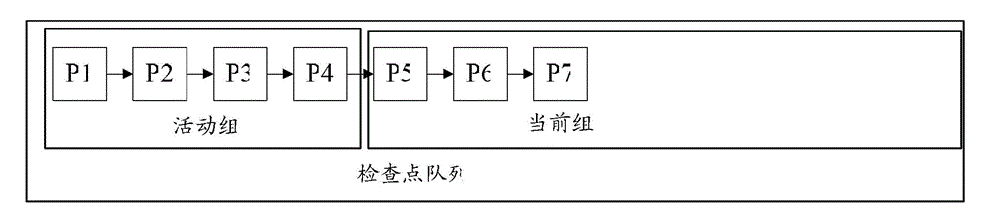

Active · CN102750317A · Improve transfer efficiency · Error detection/correction · Special data processing applications · Data mining · Dirty page

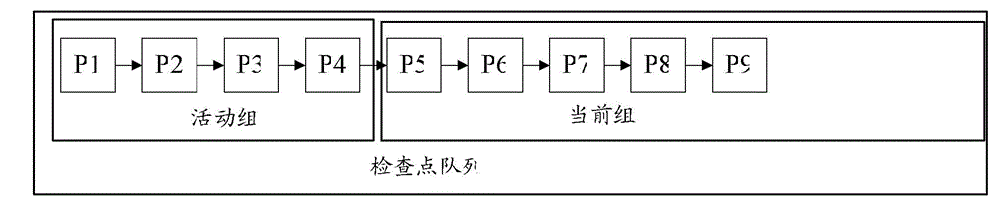

The invention discloses a method and a device for data persistence processing and a database system. The method includes: each time the memory of the database system generates dirty pages, adding the page identifiers corresponding to the generated dirty pages to a checkpoint queue; determining an active set and a current set in the checkpoint queue, and, when a preset checkpoint occurs, sequentially flushing to disk the dirty pages corresponding to each page identifier in the active set, where the active set consists of the page identifiers in the checkpoint queue corresponding to the dirty pages about to be flushed to disk, and the current set is the set of page identifiers newly inserted into the checkpoint queue; and, once the dirty pages of the active set have been flushed, determining the next active set in the checkpoint queue and sequentially flushing to disk the dirty pages corresponding to each page identifier in that set. The method, device and database system improve the efficiency of dirty page flushing while keeping its influence on normal business operation small.

Owner:HUAWEI CLOUD COMPUTING TECH CO LTD

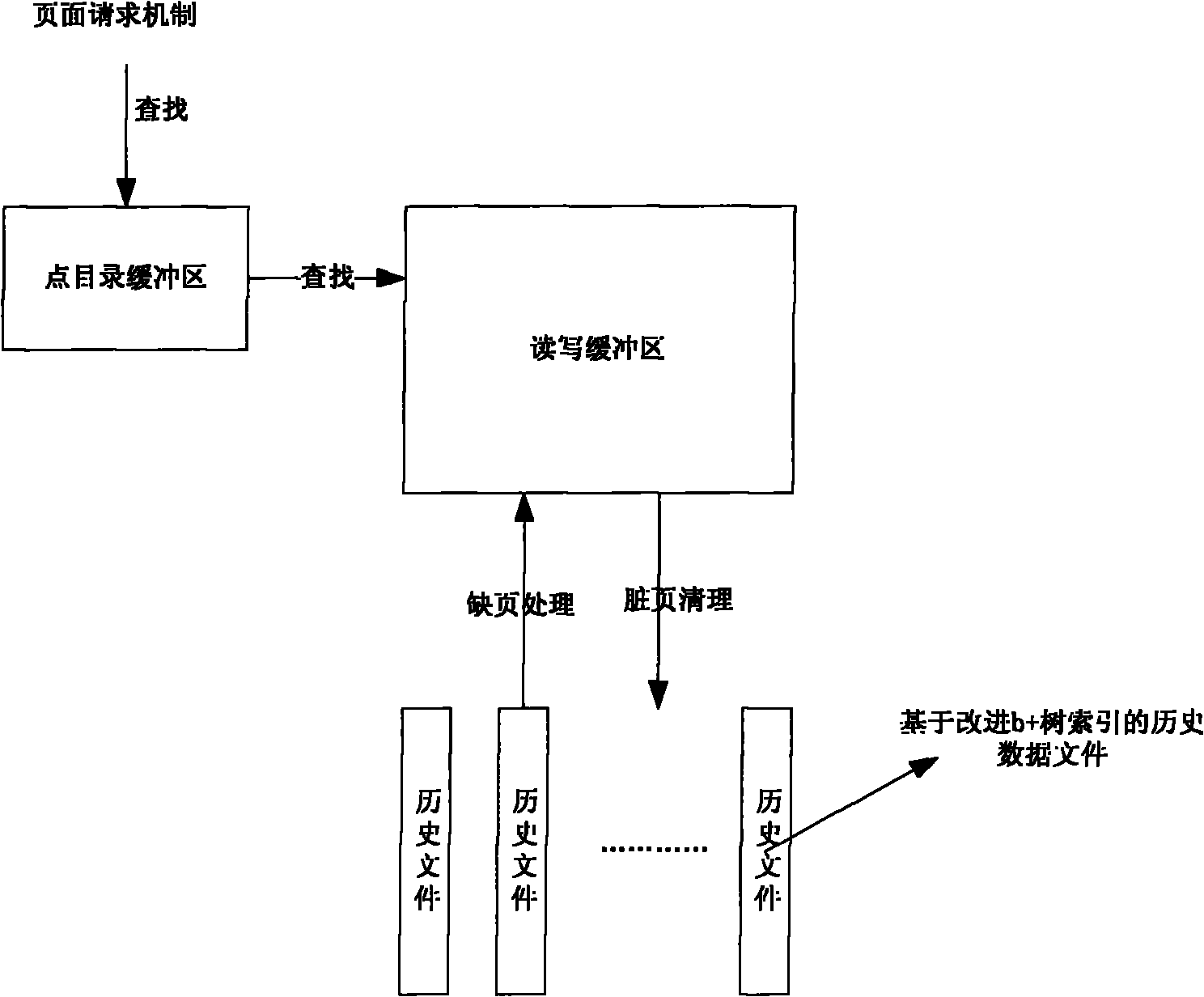

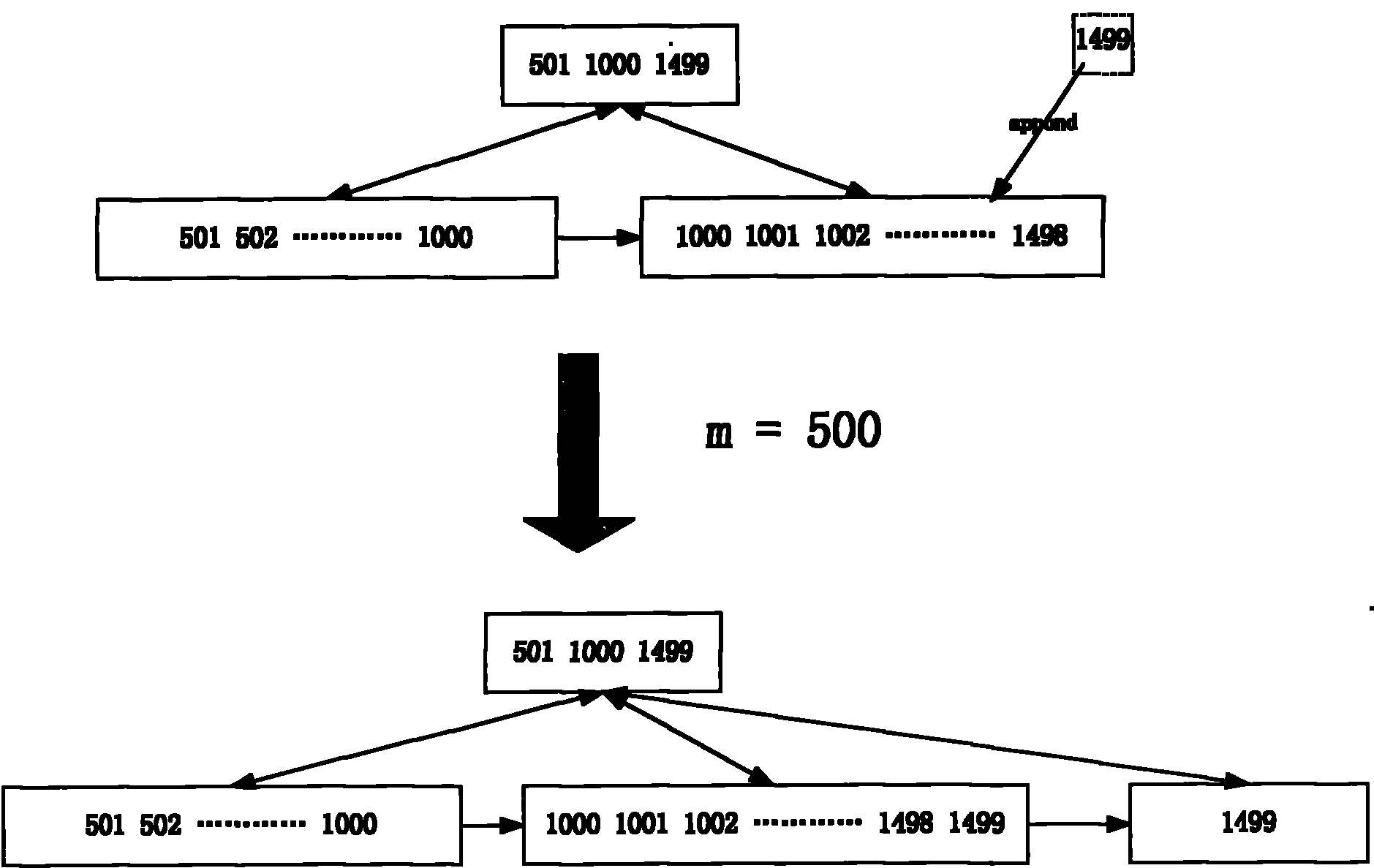

Real-time database history data organizational management method

Inactive · CN102654863A · Improve data insertion · Improved Splitting Mechanism · Special data processing applications · Write buffer · Dirty page

The invention discloses a real-time database history data organizational management method. The method mainly comprises: real-time database history buffer area management and real-time database history file indexing modes. Through the history data organizational management method disclosed by the invention, a memory space with a designated size is allocated to the history data buffer area in advance, and the buffer area reading/writing management is performed in the memory space in a unified manner; the history data can be quickly written through history file management and the improved mode of indexing the history data by use of a B+ tree in combination with proper buffer policy, page request mode and dirty page cleaning mode; and a history data index is established at relatively low cost at the time of writing so that the search for history data is very efficient. Meanwhile, by adopting a history data buffer area with a fixed size and a management mode of unifying reading/writing and buffering, the history data management model is greatly simplified, and the stability of the whole real-time database history data management module is improved.

Owner:华北计算机系统工程研究所

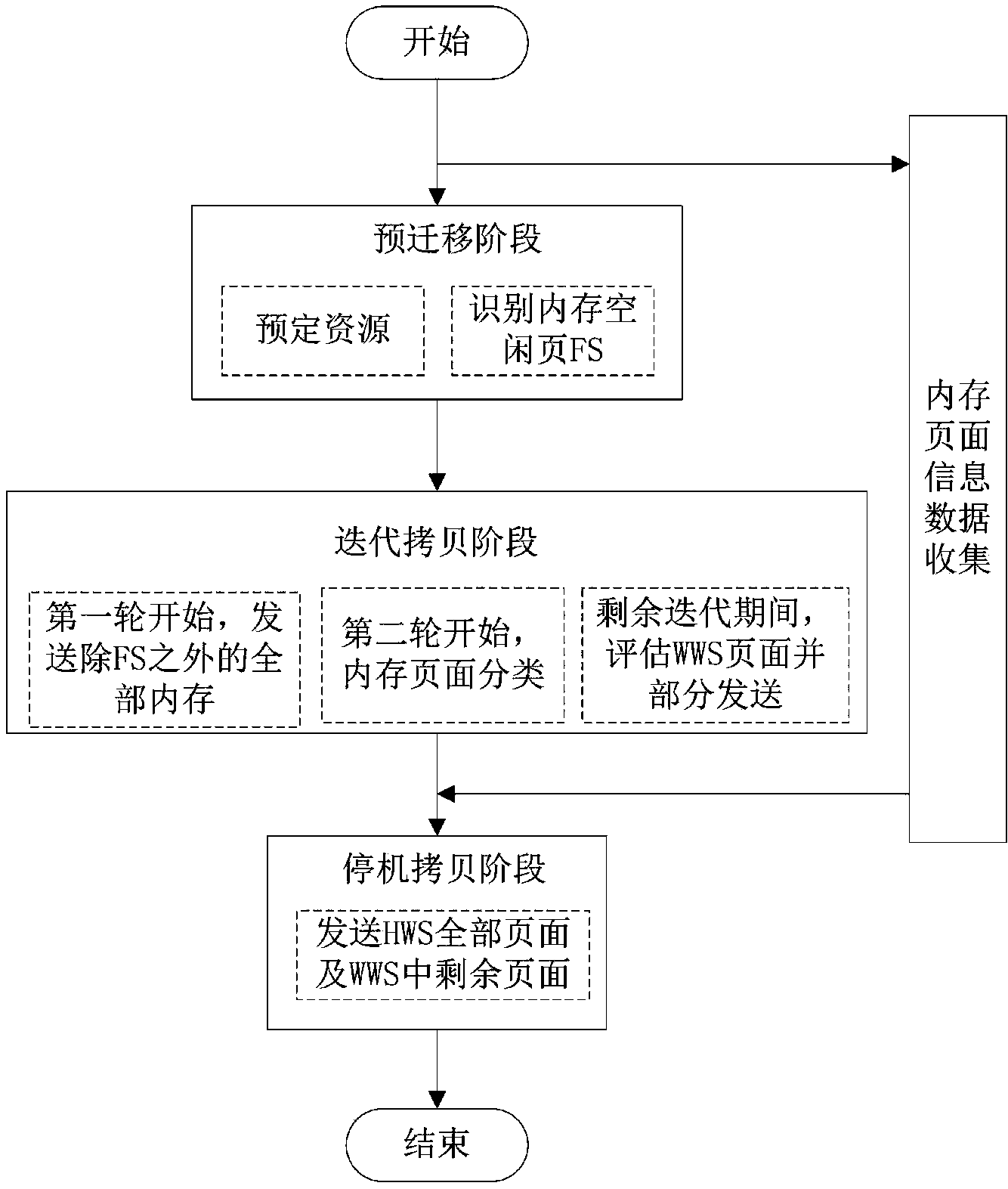

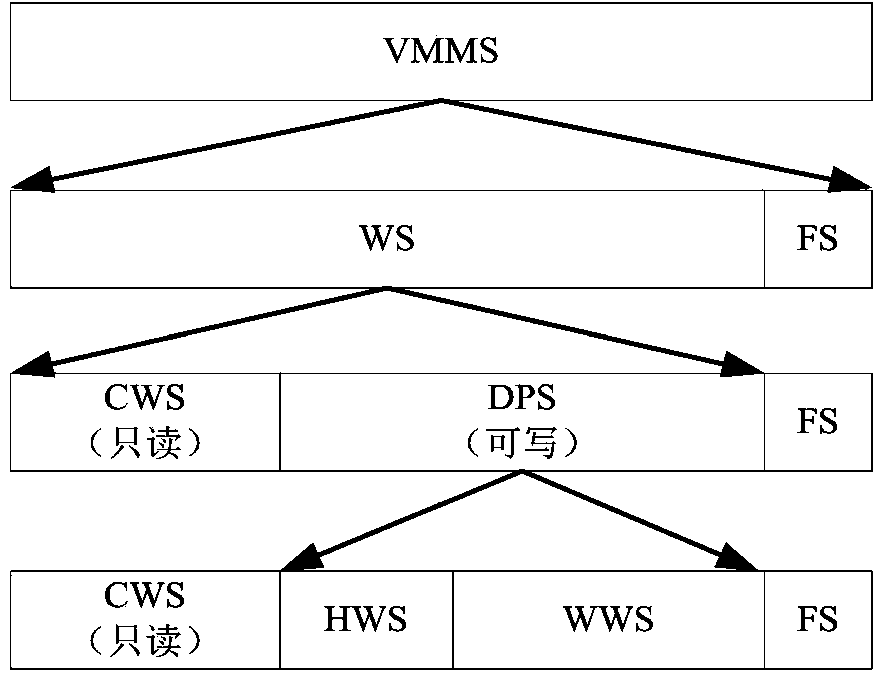

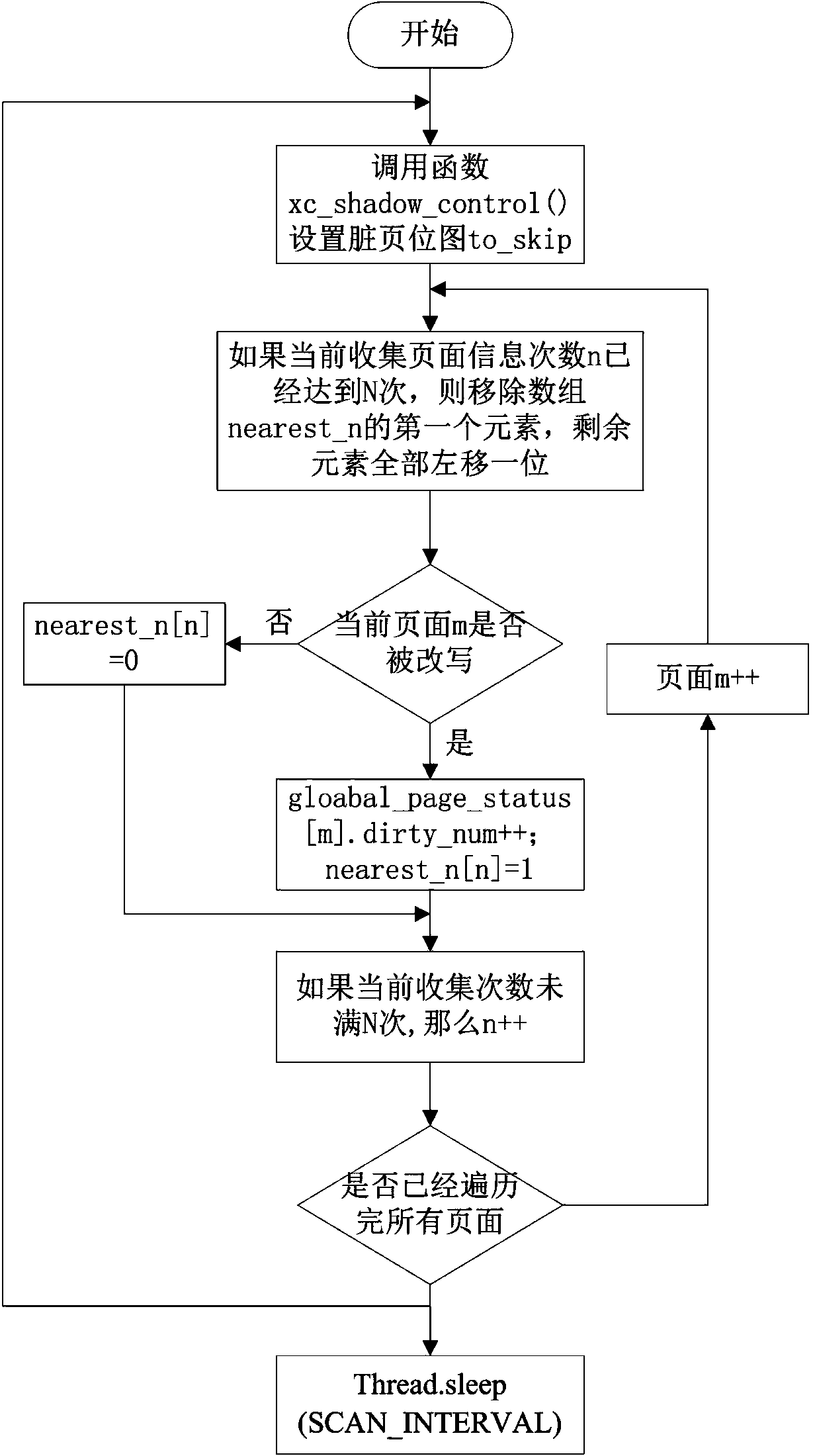

Memory state migration method applicable to dynamic migration of virtual machine

Active · CN104268003A · Avoid retransmission · Resource allocation · Software simulation/interpretation/emulation · Dirty page · Copying

The invention provides a memory state migration method applicable to dynamic migration of a virtual machine. The memory state migration method includes the steps of regularly collecting historical data on memory dirty pages in a source host at a fixed time interval, and recording the rewriting times and the observation values of the latest N times for each page; recognizing free memory pages of the source host and transmitting migration requests to a selected target host; iteratively copying; and stopping copying. The memory state migration method offers high copying efficiency, short total migration time, short halting time and small migration data flows.

Owner:NANJING UNIV OF SCI & TECH

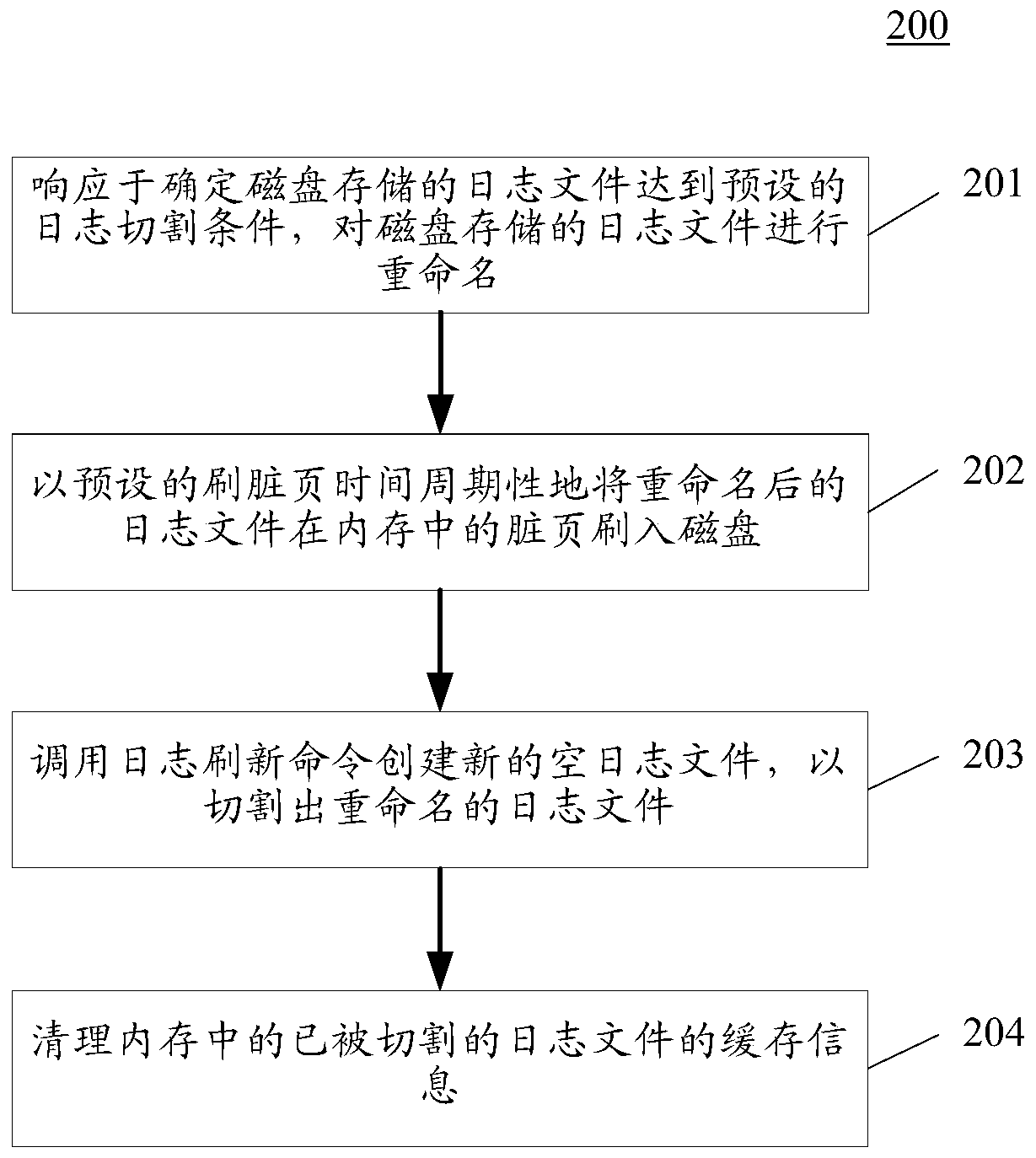

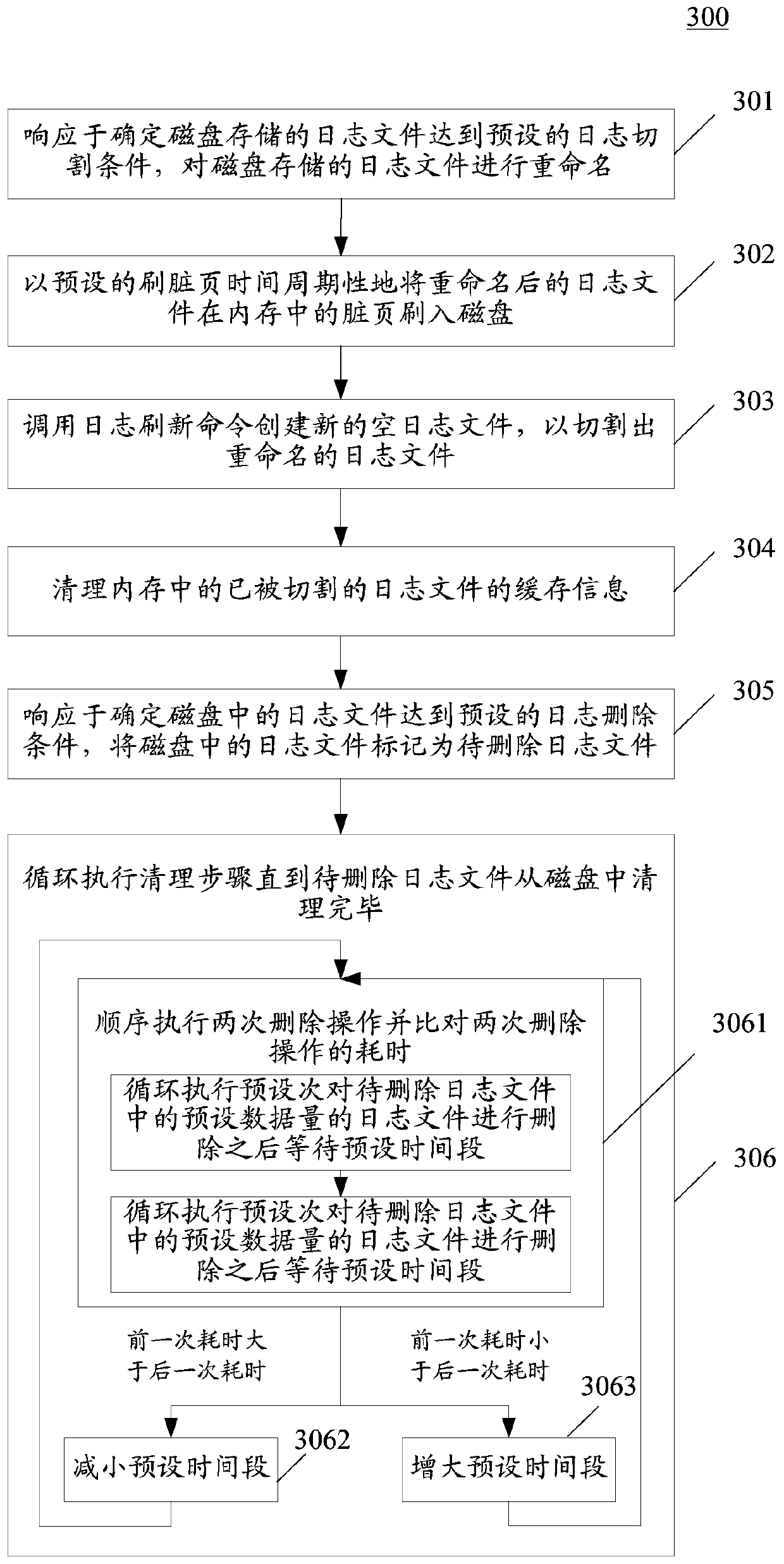

Log processing method and device for database

Active · CN109960686A · Reduce blocking effect · Save operating time · File system functions · File system types · Dirty page · Electronic equipment

The embodiment of the invention discloses a log processing method and device for a database, electronic equipment and a computer readable medium. A specific embodiment of the method comprises the steps of: renaming a log file stored on a disk in response to determining that the log file reaches a preset log cutting condition; periodically flushing the dirty pages of the renamed log file from memory to the disk at a preset dirty page flushing time; calling the log refreshing command to create a new empty log file so as to cut out the renamed log file; and clearing the cache information of the cut log file in memory. According to the embodiment, the blocking influence of the log lock on database read-write requests during log cutting can be reduced.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

Flush strategy for using DRAM as cache media system and method

Active · US10810123B1 · Memory architecture accessing/allocation · Memory systems · Dirty page · Parallel computing

A method, computer program product, and computer system for receiving, by a computing device, an I/O request. The I/O request may be processed as a write miss I/O. One or more dirty pages associated with the write miss I/O may be placed into a tree according to a key. It may be determined whether one of a first event and a second event occurs. A data flush may be triggered for the tree when the first event occurs, and the data flush may be triggered for the tree when the second event occurs.

Owner:EMC IP HLDG CO LLC

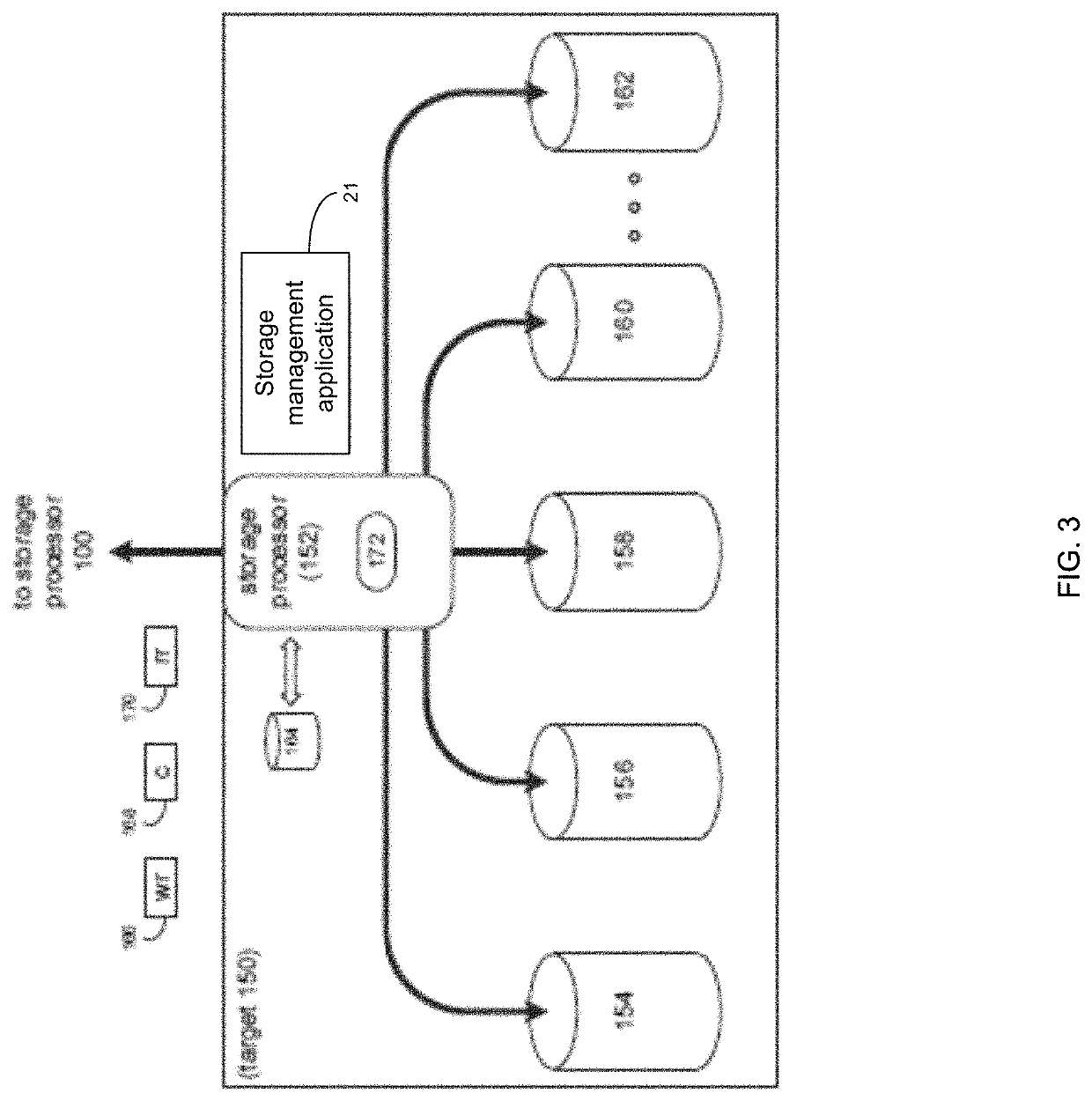

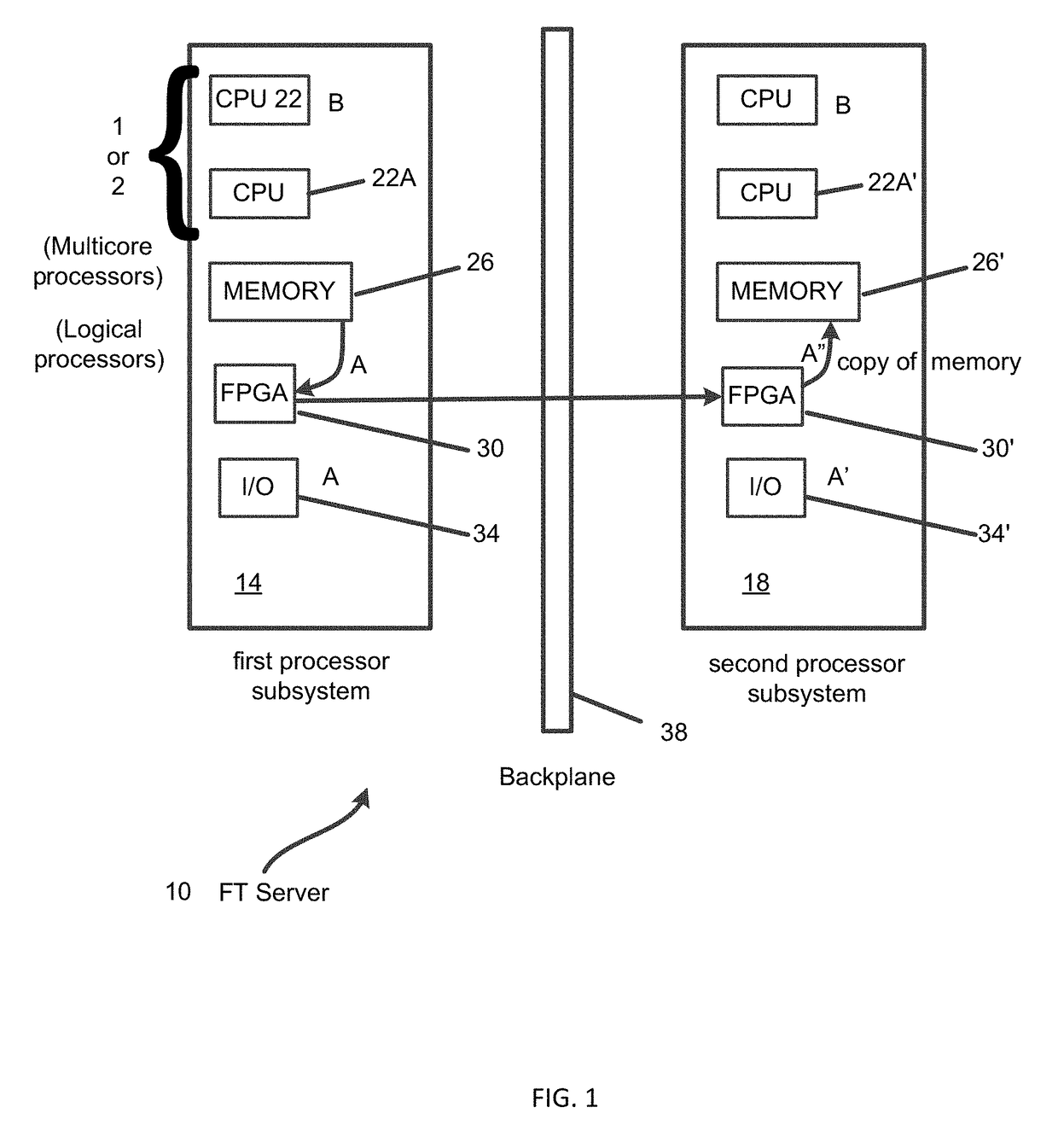

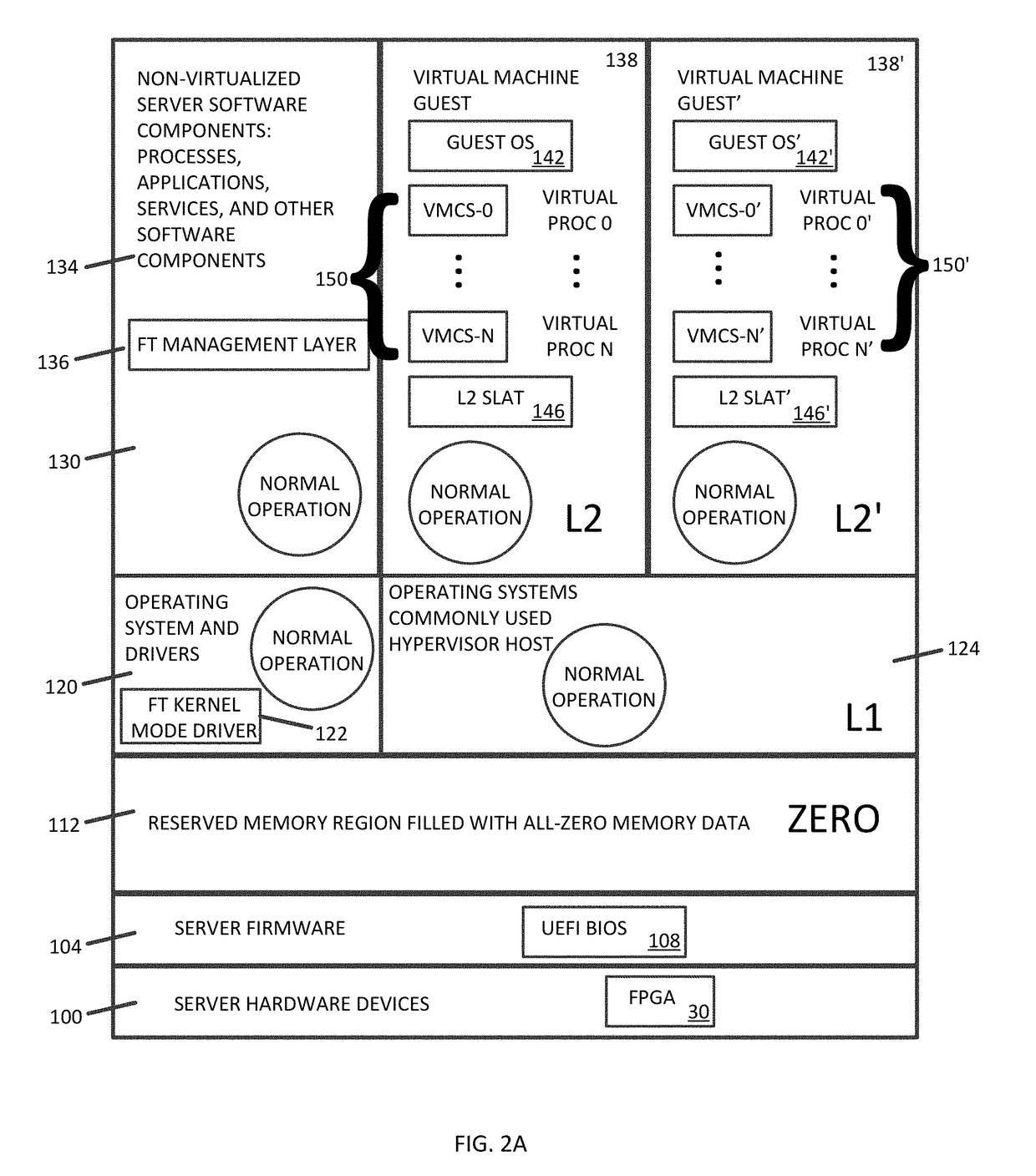

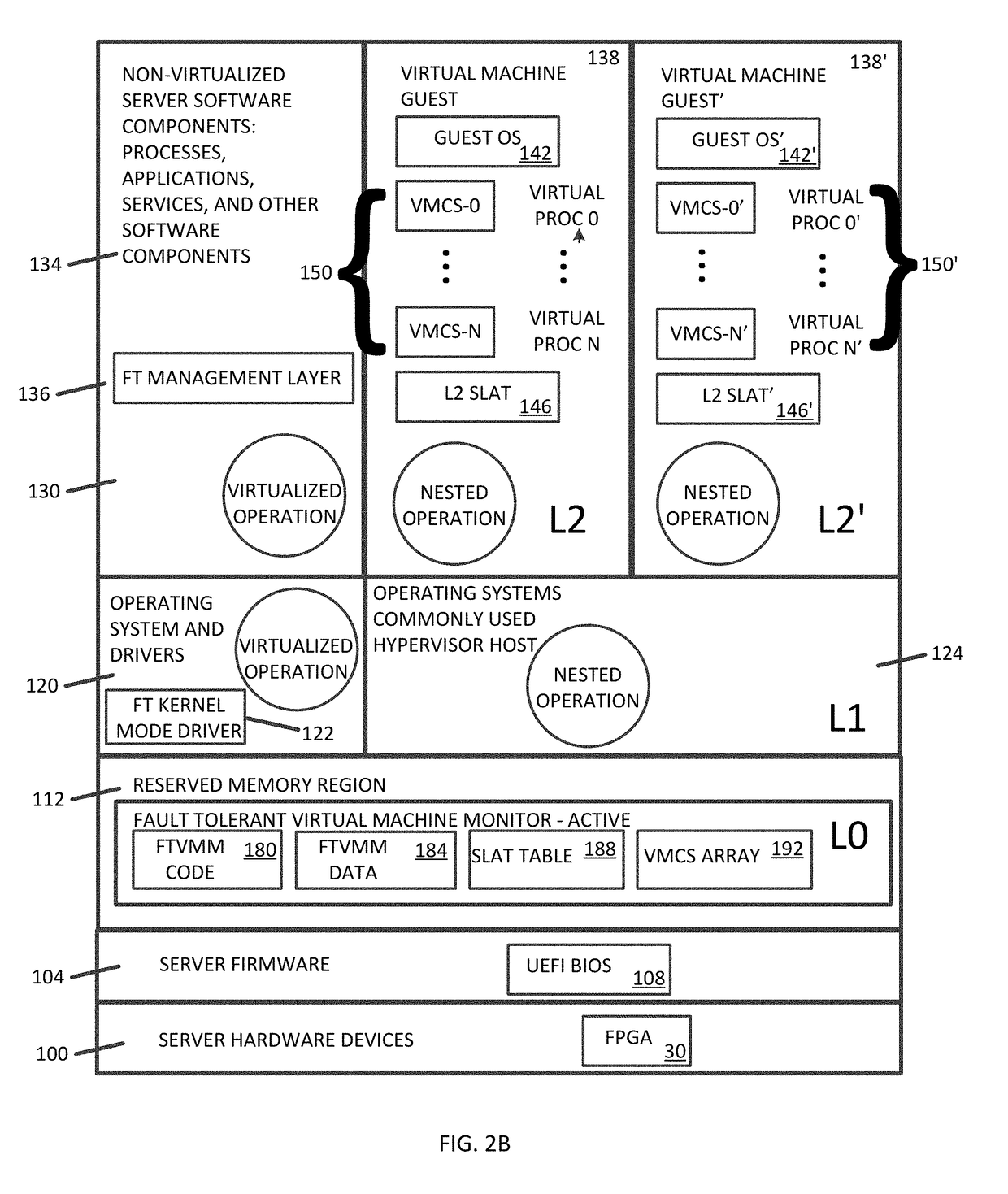

Method for Dirty-Page Tracking and Full Memory Mirroring Redundancy in a Fault-Tolerant Server

A method of transferring memory from an active to a standby memory in an FT Server system. The method includes the steps of: reserving a portion of memory using BIOS; loading and initializing an FT Kernel Mode Driver; loading and initializing an FT Virtual Machine Manager (FTVMM) including the Second Level Address Translation table SLAT into the reserved memory. In another embodiment, the method includes tracking memory accesses using the FTVMM's SLAT in Reserved Memory and tracking “L2” Guest memory accesses by tracking the current Guest's SLAT and intercepting the Hypervisor's writes to the SLAT. In yet another embodiment, the method includes entering Brownout by collecting the D-Bits; invalidating the processor's cached SLAT translation entries, and copying the dirtied pages from the active memory to memory in the second Subsystem. In one embodiment, the method includes entering Blackout and moving the final dirty pages from active to the mirror memory.

Owner:STRATUS TECH IRELAND LTD

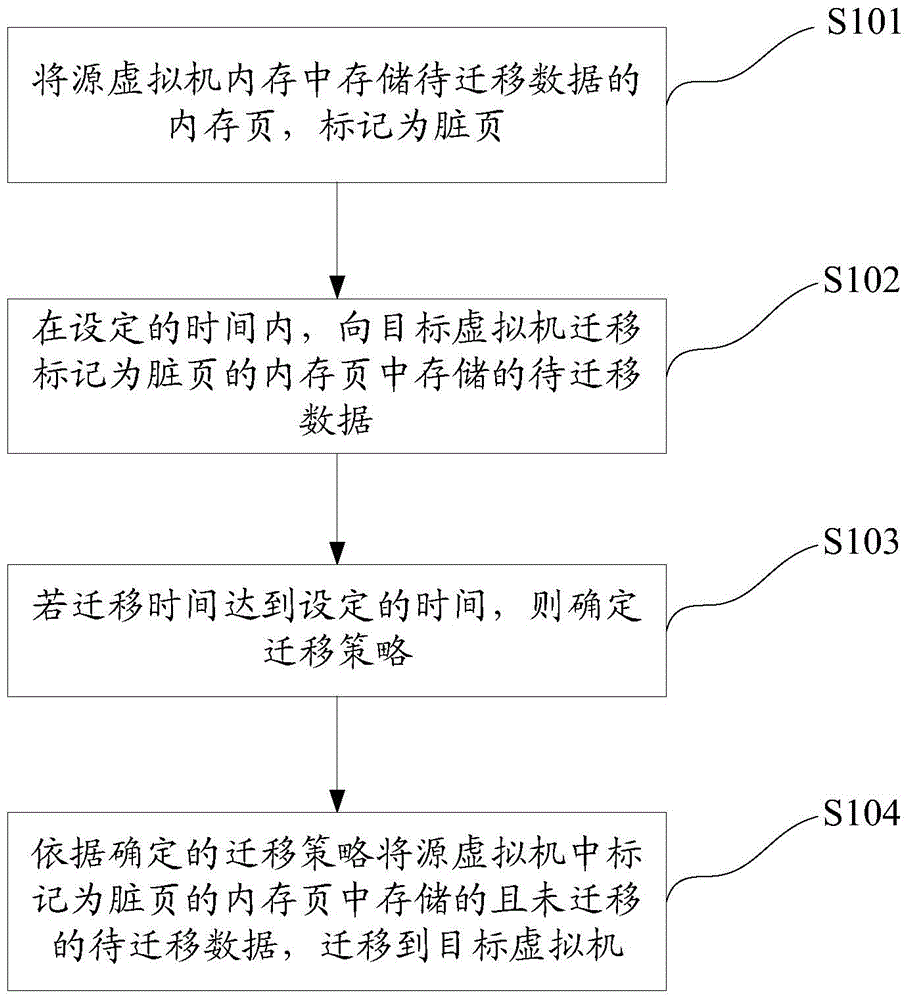

Virtual machine migration method and device

The invention discloses a virtual machine migration method and device. The method comprises the following steps: identifying the memory pages in a source virtual machine's memory that hold to-be-migrated data as dirty pages; migrating the to-be-migrated data stored in the memory pages identified as dirty pages to a target virtual machine within a set time period; when the migration time reaches the set time period, determining a migration strategy for migrating the not-yet-migrated data stored in the memory pages identified as dirty pages in the source virtual machine; and migrating that not-yet-migrated data from the source virtual machine to the target virtual machine according to the migration strategy. The virtual machine migration method can adapt to network environments and is suitable for an ARM platform.

Owner:HUAWEI TECH CO LTD +1

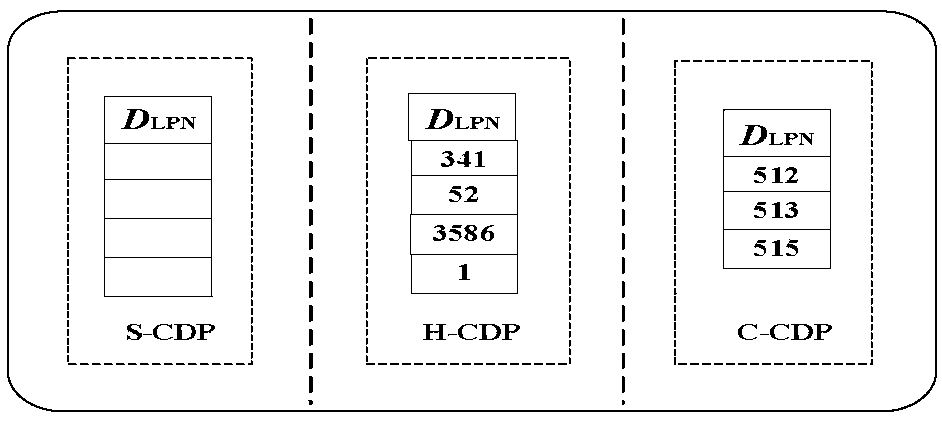

Page-level buffer improvement method based on classification strategy

Inactive · CN107590084A · Improve practicality · Good application prospect · Memory systems · Dirty page · Computer module

The invention discloses a page-level buffer improvement method based on a classification strategy. The method involves a request type distinguishing module, a hot data page storage area module, a cold data page storage area module and a continuous data page storage area module. The method comprises the following steps: firstly, dividing the data page cache into the hot data page storage area module, the cold data page storage area module and the continuous data page storage area module, which are used respectively to load the data pages of frequently accessed requests, the data pages of requests with lower access frequency, and the data pages of requests with high spatial locality; secondly, filling the continuous data page storage area module by prefetching multiple continuous data pages; and finally, when the data page cache is full, preferentially replacing least recently used (LRU) clean pages in the cold data page storage area module, and, if the cold data page storage area module has no clean pages, replacing dirty pages. Compared with a page-level LRU algorithm, the page-level buffer improvement method disclosed by the invention can improve the response performance for continuous loads and effectively reduce the read and write overheads of flash memories.

Owner:ZHEJIANG WANLI UNIV
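
The replacement step of this abstract can be illustrated with a short sketch. It assumes that dirty pages are evicted only when the cold region holds no clean page, which is our reading of the abstract; `pick_victim` and the `cold_region` layout are hypothetical and not taken from the patent.

```python
from collections import OrderedDict


def pick_victim(cold_region):
    """Choose a page to evict from the cold region.

    `cold_region` maps page number -> dirty flag and is ordered from least
    to most recently used (an assumed layout for this sketch).
    """
    # Prefer the least recently used clean page: evicting it needs no
    # write-back to flash.
    for page_no, is_dirty in cold_region.items():
        if not is_dirty:
            return page_no
    # No clean page is left, so fall back to the least recently used dirty page.
    return next(iter(cold_region), None)


cold = OrderedDict([(7, True), (3, False), (9, False)])
victim = pick_victim(cold)  # page 3: the oldest clean page, although page 7 is older
```

Skipping older dirty pages in favour of a clean one trades a little recency accuracy for fewer flash writes, which is where the abstract's claimed overhead reduction would come from.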

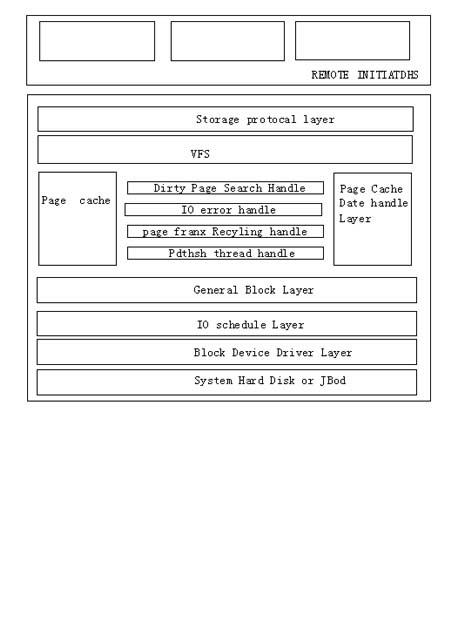

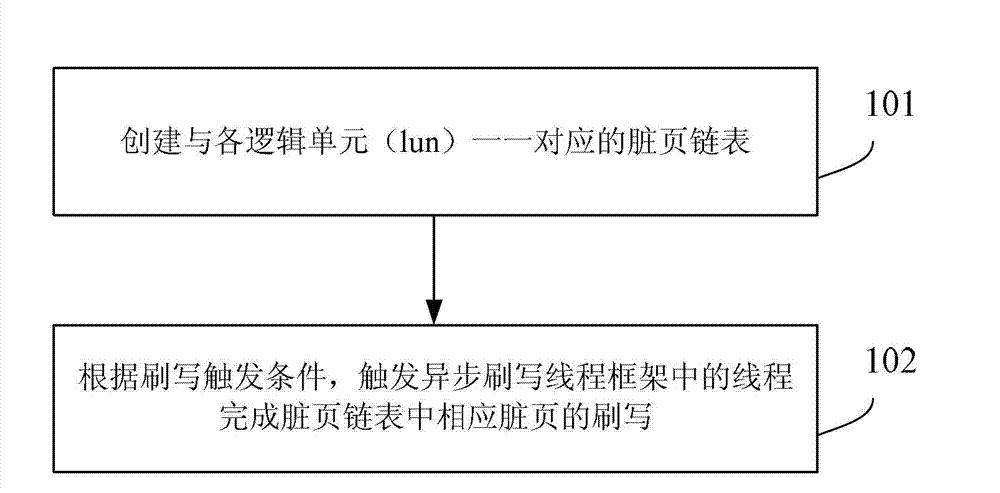

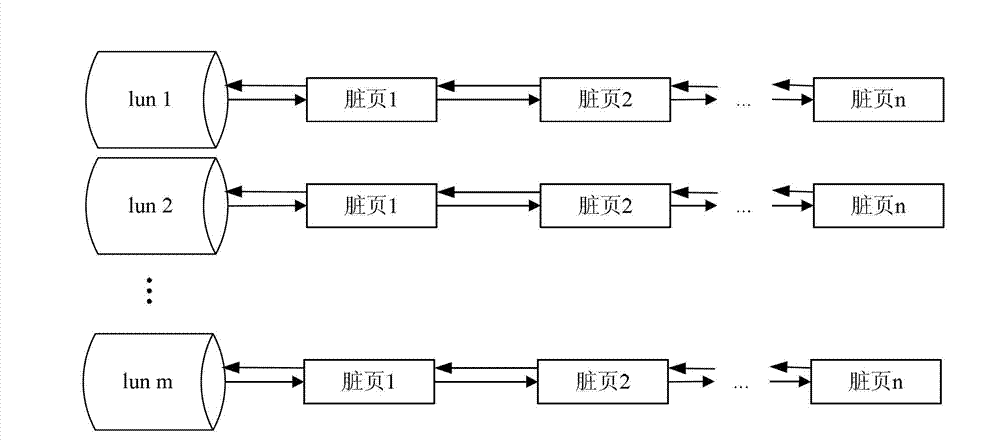

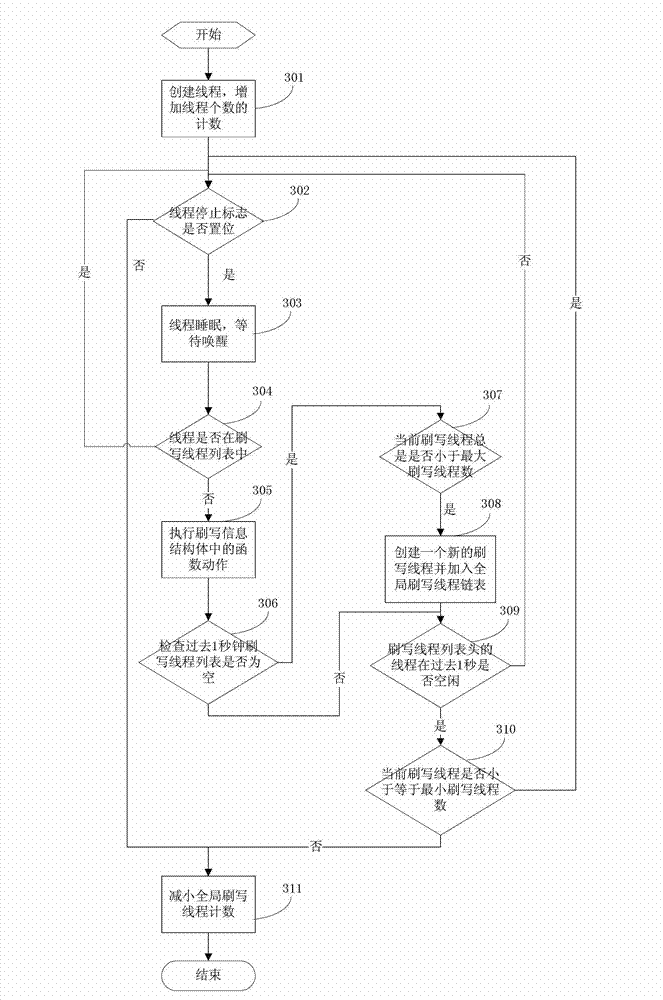

Method and device for flushing data

Active | CN103049396A | Write quickly | Increase usage | Input/output to record carriers | Memory addressing/allocation/relocation | Dirty page | Cache page

The invention provides a method and a device for flushing data. The method comprises triggering threads in an asynchronous flush thread framework to flush the corresponding dirty pages in a dirty page linked list, wherein the dirty pages are cache pages that hold saved data. With this method and device, cached data can be written to disk quickly and cache utilization is improved, which in turn improves read-write efficiency.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND
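
As a rough illustration of flushing a dirty page list with worker threads, the sketch below drains a shared queue from several threads. The abstract does not describe how its thread framework is built, so `flush_dirty_pages`, the `write_page` callback and the worker count are all assumptions made for this example.

```python
import queue
import threading


def flush_dirty_pages(dirty_pages, write_page, workers=2):
    """Flush (page_no, data) pairs using a small pool of flush threads.

    `write_page(page_no, data)` is a caller-supplied stand-in for the
    actual disk write.
    """
    pending = queue.Queue()
    for item in dirty_pages:
        pending.put(item)

    def worker():
        # Each thread keeps taking pages until the list is drained.
        while True:
            try:
                page_no, data = pending.get_nowait()
            except queue.Empty:
                return
            write_page(page_no, data)

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # this sketch waits for completion; a real flusher need not
```

For example, `flush_dirty_pages([(1, b"a"), (2, b"b")], disk.__setitem__)` with `disk = {}` leaves both pages in `disk`.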

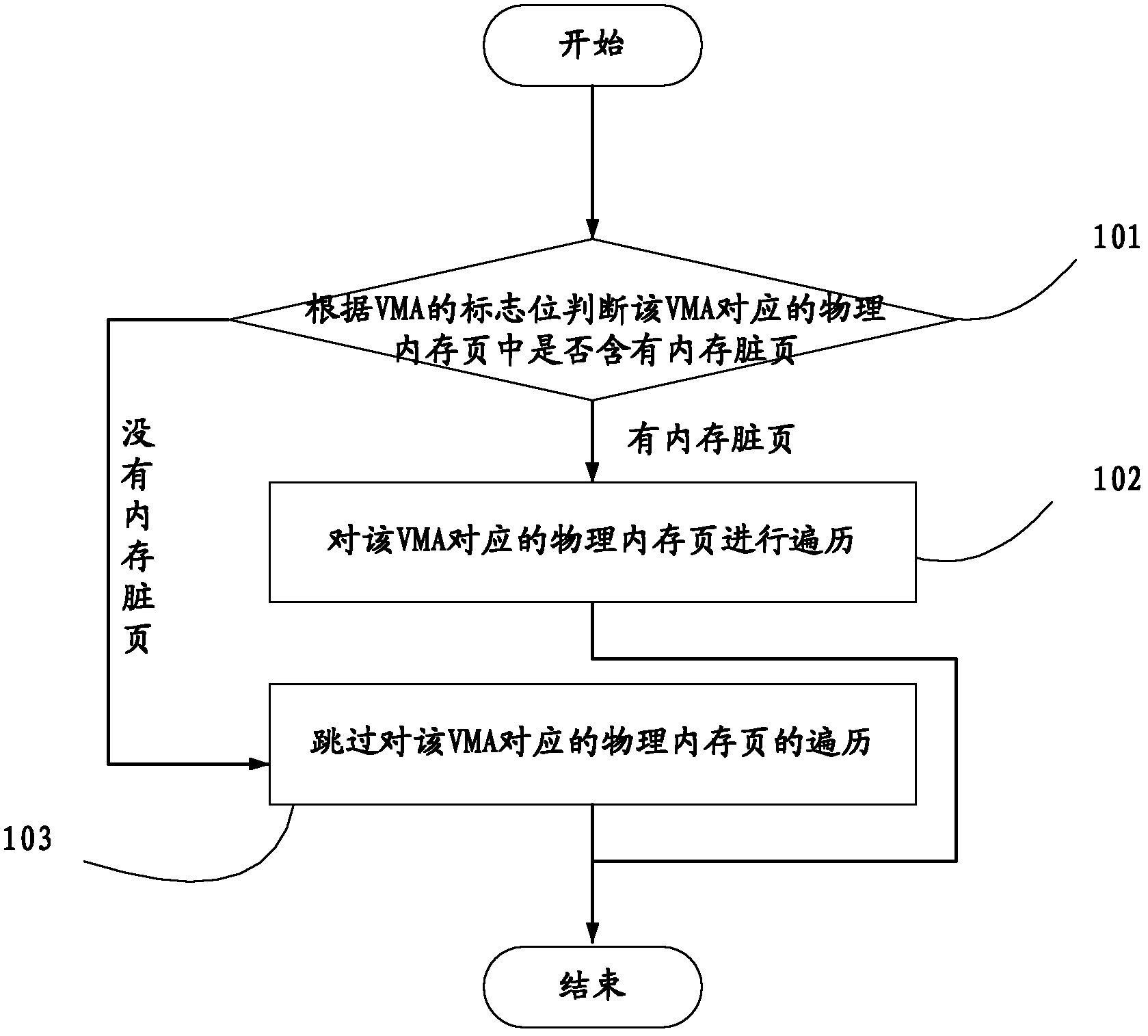

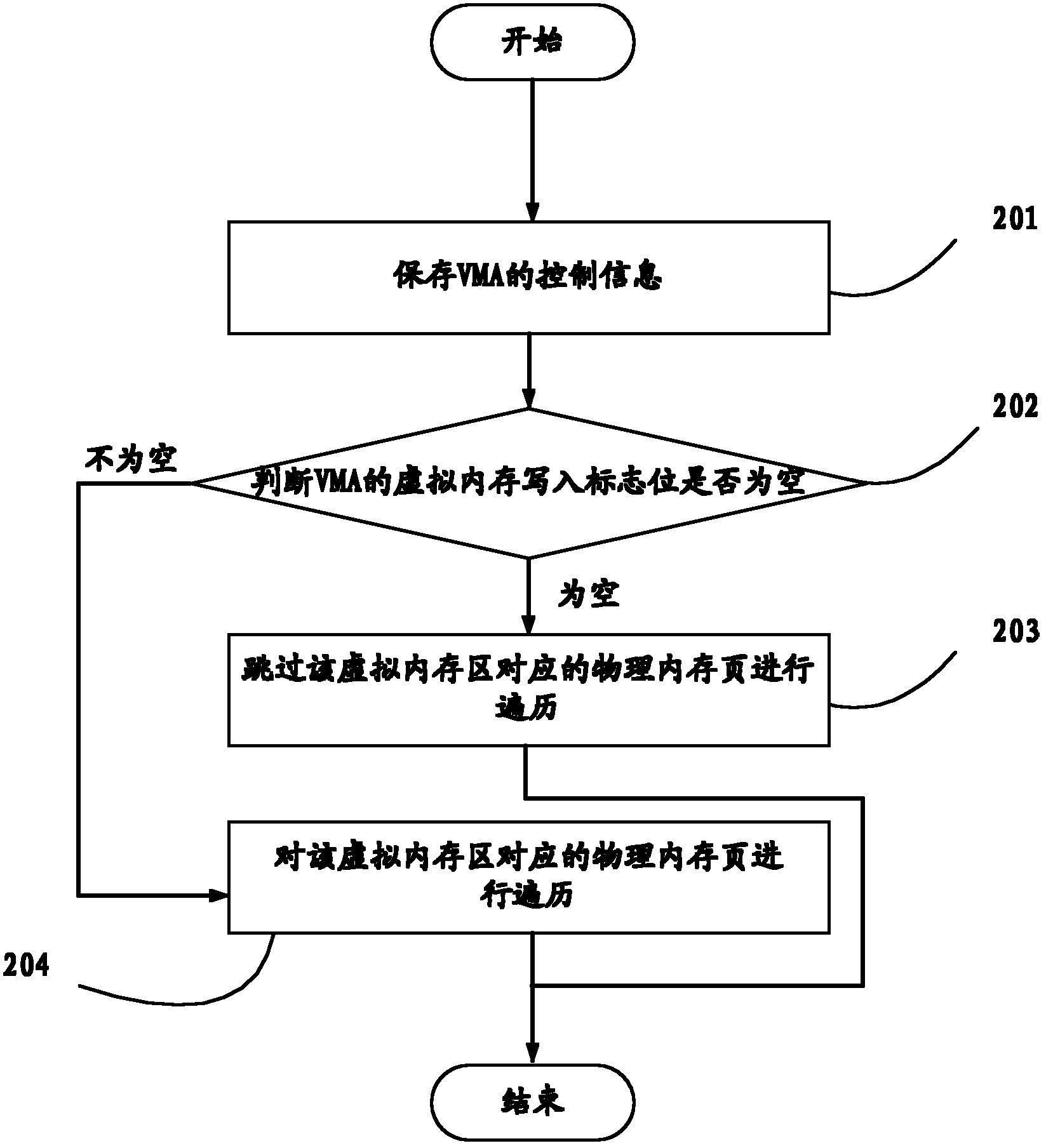

Data backup method, server and hot backup system

Inactive | CN102662799A | Improve processing efficiency | Shorten the time | Redundant operation error correction | Virtual memory | Dirty page

The invention discloses a data backup method, a server and a hot backup system, and relates to the computer field. They are intended to shorten hot backup time and improve the efficiency of the hot backup system. The method includes: when backing up a virtual memory area of a process address space, after the control information of the virtual memory area has been backed up, determining from a flag of the virtual memory area whether the physical memory pages corresponding to it include dirty pages; if they do, traversing those physical memory pages, that is, locating the dirty pages among them and storing their contents; if they do not, skipping the traversal of those physical memory pages. The method, server and system are mainly applied to backing up the data of application processes in a hot backup server.

Owner:HUAWEI TECH CO LTD
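
The skip-on-flag idea in this abstract can be sketched as below. The data layout is invented for illustration: `VMArea.has_dirty` plays the role of the area-level flag, and `Page`, `VMArea` and `backup_areas` are hypothetical names rather than anything defined in the patent.

```python
from dataclasses import dataclass


@dataclass
class Page:
    data: bytes
    dirty: bool = False


@dataclass
class VMArea:
    start: int               # start address of the virtual memory area
    pages: list              # physical pages backing the area
    has_dirty: bool = False  # assumed area-level flag: True if any page is dirty


def backup_areas(areas):
    """Return {(area start, page index): contents} for every dirty page."""
    saved = {}
    for area in areas:
        # The area's control information would be backed up here (omitted).
        if not area.has_dirty:
            continue  # flag says no dirty pages: skip the page walk entirely
        for idx, page in enumerate(area.pages):
            if page.dirty:
                saved[(area.start, idx)] = page.data
    return saved
```

The saving comes from the `continue`: areas whose flag is clear cost one check instead of a full walk over their pages.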

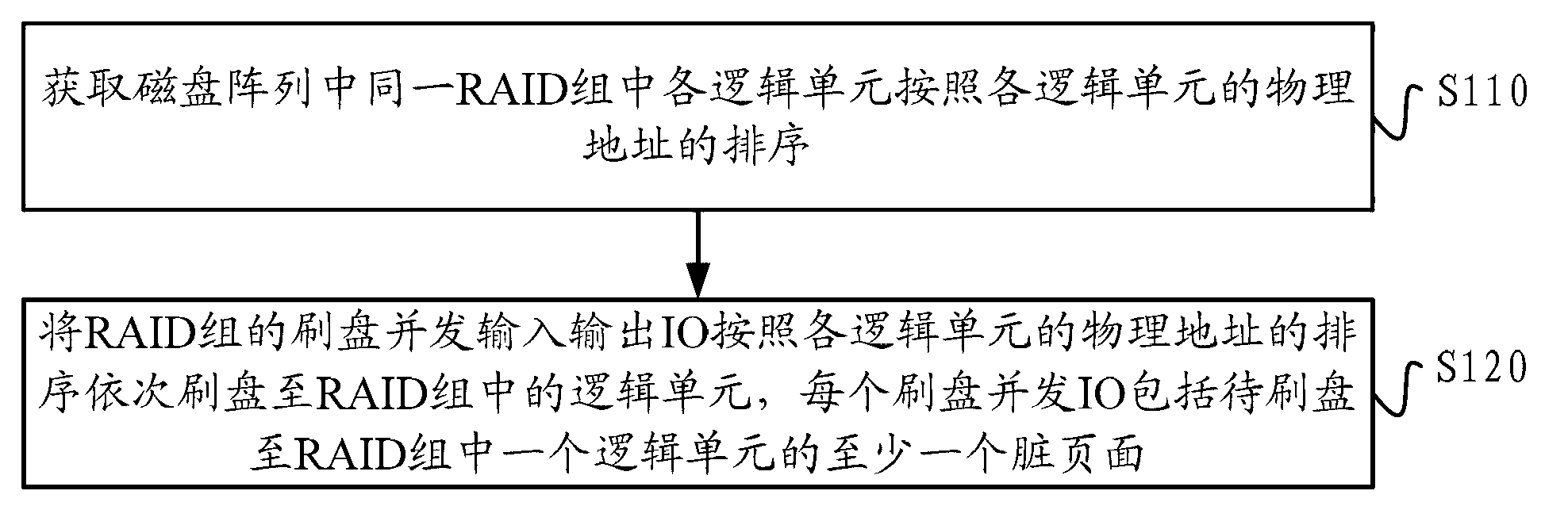

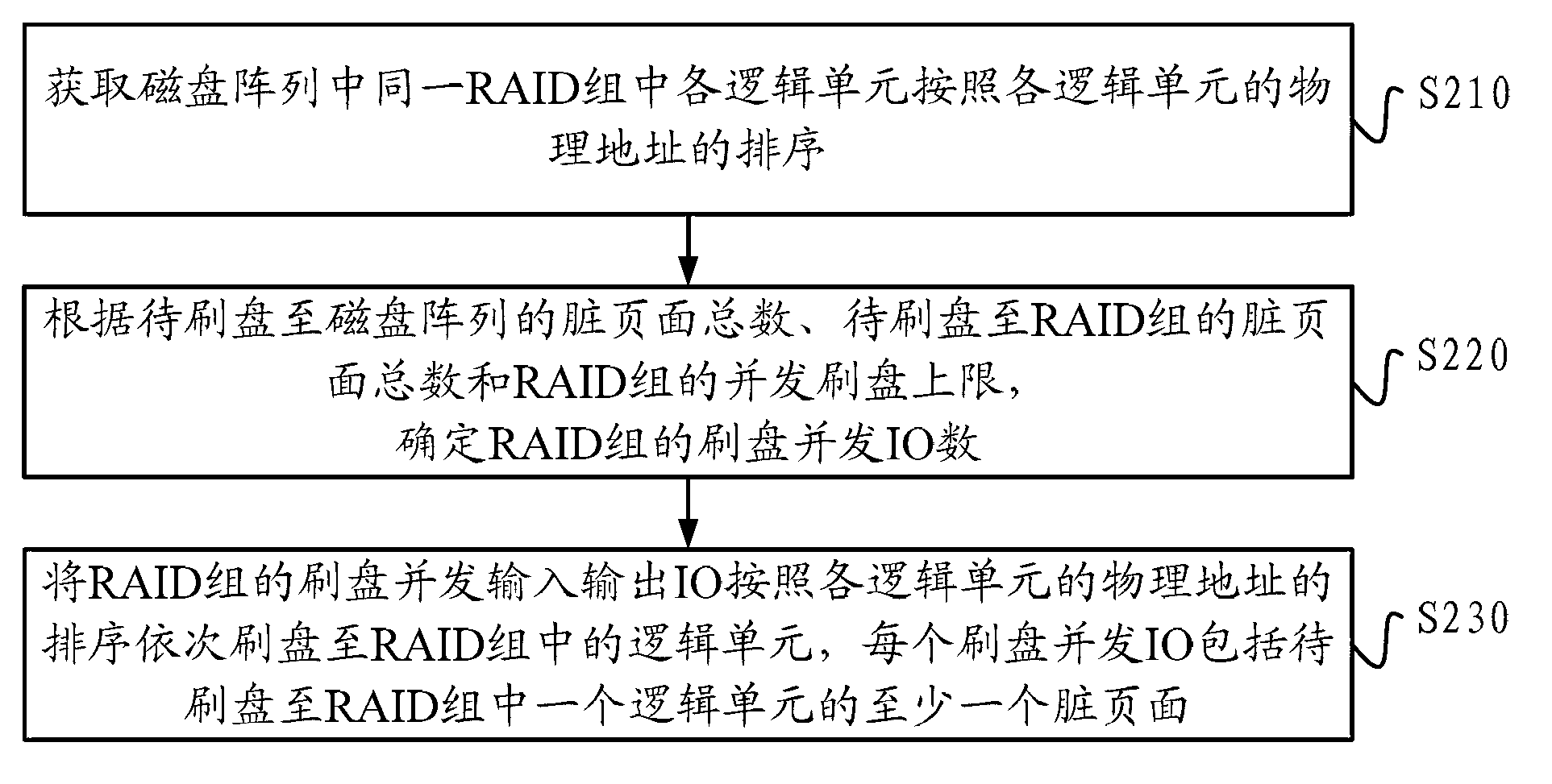

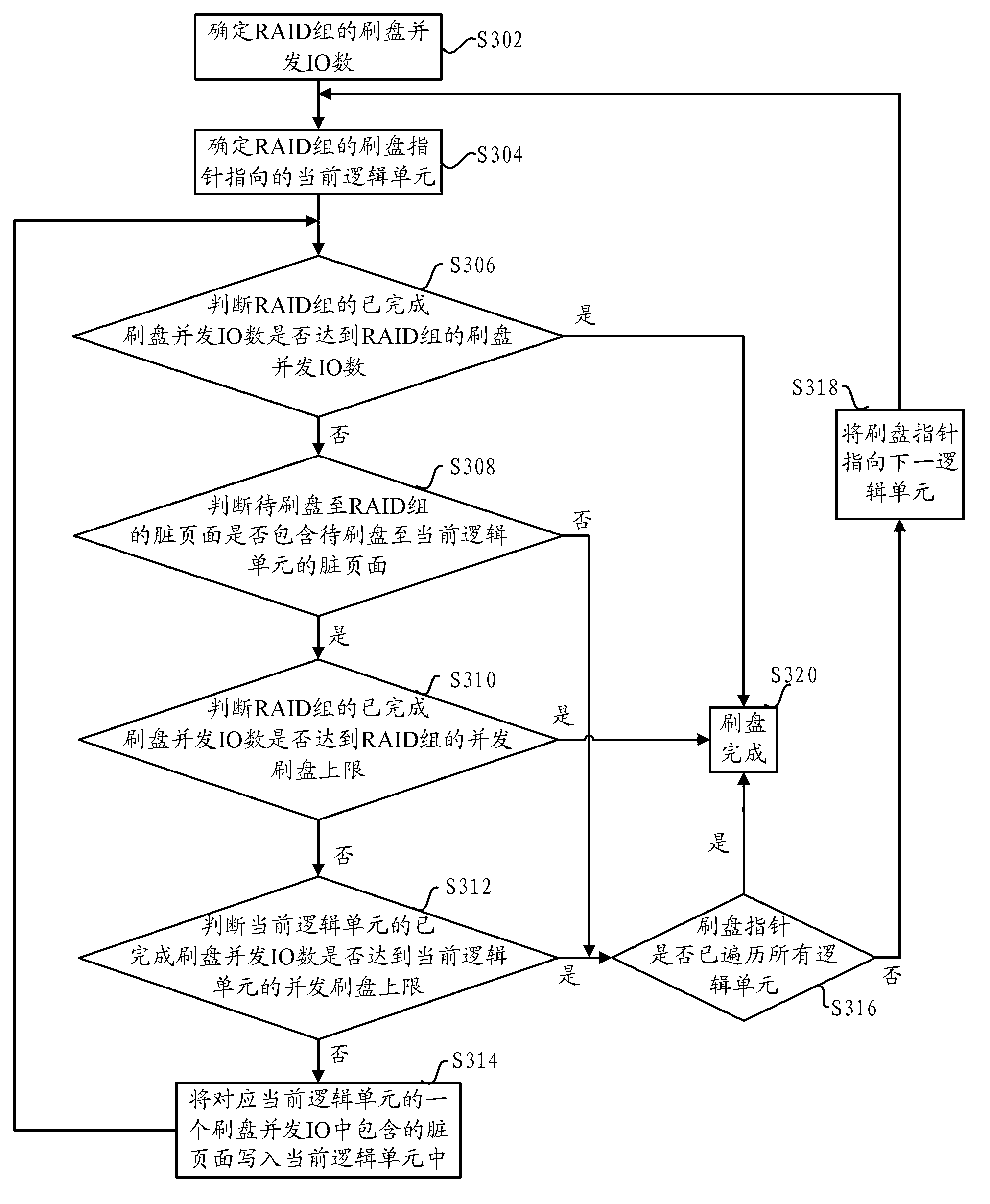

Disk writing method for disk arrays and disk writing device for disk arrays

Active | CN103229136A | Improve brushing efficiency | Improve throughput | Input/output to record carriers | Memory systems | RAID | Dirty page

The embodiment of the invention provides a disk writing method and a disk writing device for disk arrays. The method includes: obtaining the ordering, by physical address, of the logic units within the same RAID (redundant array of independent disks) group of a disk array; and writing the RAID group's concurrent write I/Os to its logic units in that physical-address order, wherein each concurrent write I/O includes at least one dirty page to be written to one logic unit of the RAID group. By scheduling the logic units of a single RAID group together and writing to disk in physical-address order, the method and device reduce the time lost to the disk arm seeking back and forth; by controlling each RAID group separately, they avoid interference between different RAID groups. As a result, the write efficiency and throughput of the disk array are improved.

Owner:XFUSION DIGITAL TECH CO LTD
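
The ordering described in this abstract amounts to grouping dirty pages by RAID group and then sorting each group's logic units by physical address. The sketch below shows that step under assumed inputs: `plan_flush`, the `lun_info` mapping and the tuple layouts are hypothetical and chosen only to keep the example small.

```python
from collections import defaultdict


def plan_flush(dirty_pages, lun_info):
    """Build a per-RAID-group write plan ordered by physical address.

    dirty_pages: list of (lun_id, page) pairs waiting to be written.
    lun_info:    lun_id -> (raid_group, physical_address) of that logic unit.
    Returns {raid_group: [(lun_id, [pages...]), ...]} with the logic units of
    each group sorted by ascending physical address.
    """
    by_group = defaultdict(lambda: defaultdict(list))
    for lun_id, page in dirty_pages:
        group, _ = lun_info[lun_id]
        by_group[group][lun_id].append(page)

    plan = {}
    for group, luns in by_group.items():
        ordered = sorted(luns, key=lambda lun_id: lun_info[lun_id][1])
        plan[group] = [(lun_id, luns[lun_id]) for lun_id in ordered]
    return plan
```

Each group's list can then be issued independently, so a slow group does not hold up the others, while writes inside a group sweep the disk in one direction.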

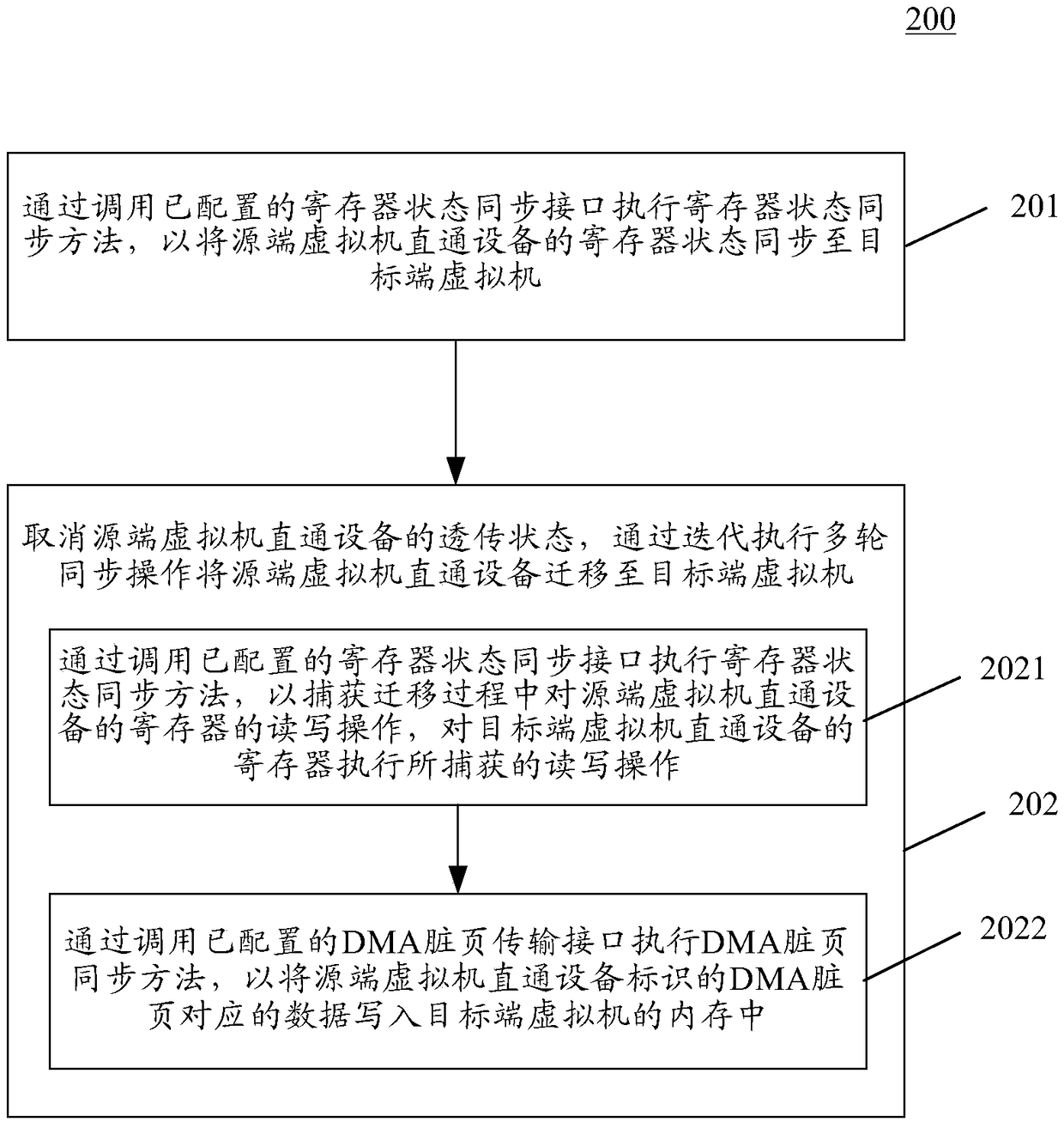

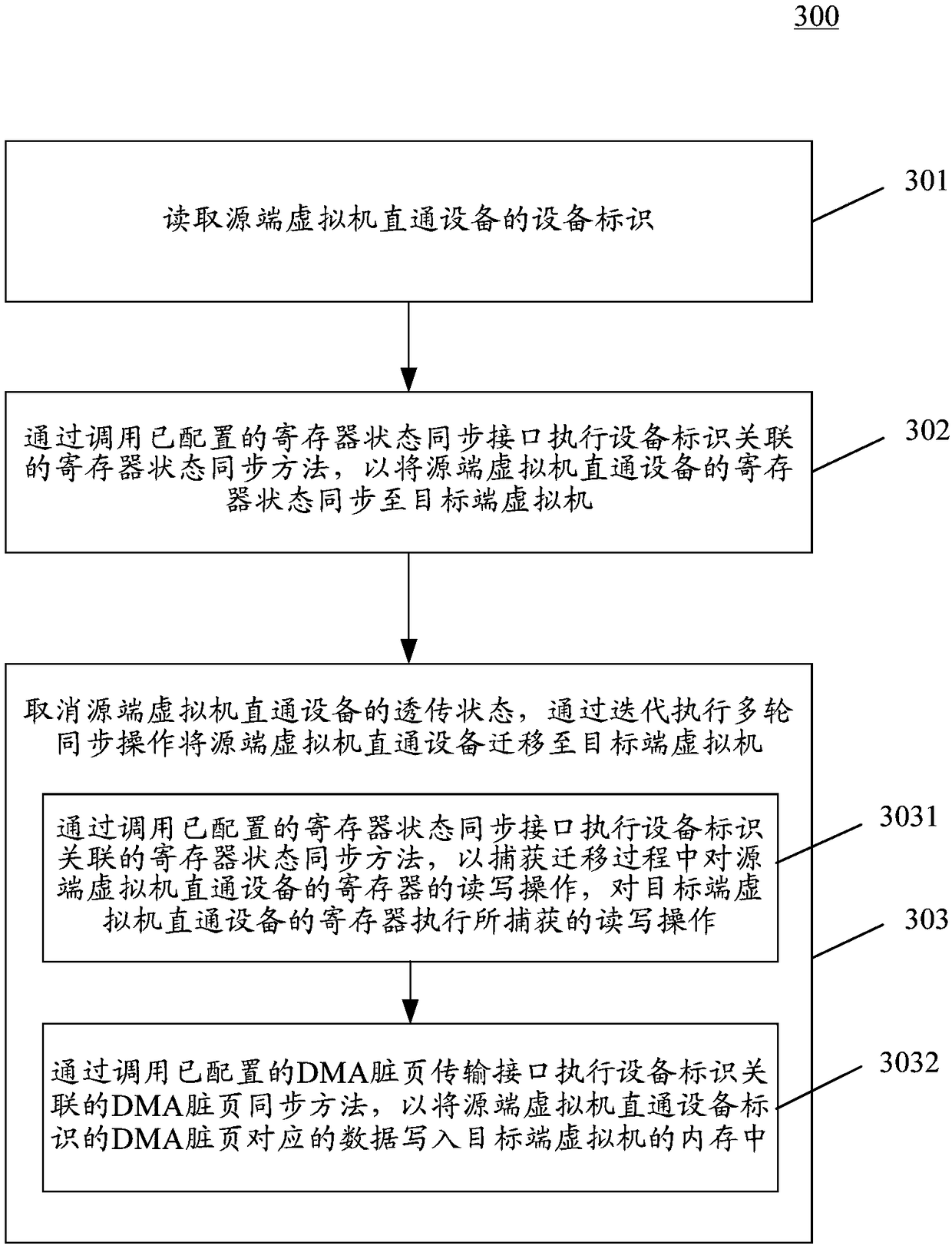

Thermal migration method and device for virtual machine direct connection equipment

Active | CN108874506A | Realization of hot migration | Software simulation/interpretation/emulation | Dirty page | Processor register

The embodiment of the invention discloses a thermal migration method and device for virtual machine direct connection equipment. One specific embodiment of the method comprises the following steps: executing a register state synchronization method by calling a register state synchronization interface, so as to synchronize the register state of the source end virtual machine direct connection equipment to a target end virtual machine; cancelling the transparent transfer state of the source end virtual machine direct connection equipment; and iteratively executing the following synchronization operations over multiple rounds to migrate the source end virtual machine direct connection equipment to the target end virtual machine: executing the register state synchronization method by calling the register state synchronization interface, capturing read-write operations on a register of the source end equipment during migration, and executing the captured operations on a register of the target end equipment; and executing a DMA dirty page synchronization method by calling a DMA dirty page transmission interface, so as to write the data corresponding to the DMA dirty pages of the source end equipment into the memory of the target end virtual machine. The embodiment can realize the thermal migration of direct connection equipment without changing the kernel of the virtual machine.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

