Patented technology related to "Page cache": 281 results

In computing, a page cache, sometimes also called a disk cache, is a transparent cache for pages originating from a secondary storage device such as a hard disk drive (HDD) or a solid-state drive (SSD). The operating system keeps the page cache in otherwise unused portions of main memory (RAM), which speeds up access to the contents of cached pages and improves overall performance. Page caches are implemented in kernels that use paging memory management, and they are mostly transparent to applications.
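To make the mechanism concrete, here is a minimal Python sketch of a page cache sitting in front of a slow backing store. It assumes a 4 KiB page size, least-recently-used (LRU) eviction and write-back of dirty pages; real kernels use more elaborate policies, and the names `PageCache`, `read` and `write` are illustrative only.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed page size in bytes


class PageCache:
    """LRU page cache with write-back, in front of a byte-addressable backing store."""

    def __init__(self, backing: bytearray, capacity_pages: int) -> None:
        self.backing = backing            # stands in for the HDD/SSD
        self.capacity = capacity_pages    # pages that fit in otherwise unused RAM
        self.pages = OrderedDict()        # page number -> cached copy, LRU first
        self.dirty = set()                # pages modified in RAM, not yet written back
        self.hits = 0
        self.misses = 0

    def _get_page(self, page_no: int) -> bytearray:
        if page_no in self.pages:
            self.hits += 1
            self.pages.move_to_end(page_no)   # mark as most recently used
        else:
            self.misses += 1
            if len(self.pages) >= self.capacity:
                self._evict()
            start = page_no * PAGE_SIZE
            self.pages[page_no] = bytearray(self.backing[start:start + PAGE_SIZE])
        return self.pages[page_no]

    def _evict(self) -> None:
        victim, data = self.pages.popitem(last=False)  # least recently used page
        if victim in self.dirty:                        # write back before dropping it
            start = victim * PAGE_SIZE
            self.backing[start:start + len(data)] = data
            self.dirty.discard(victim)

    def read(self, offset: int) -> int:
        return self._get_page(offset // PAGE_SIZE)[offset % PAGE_SIZE]

    def write(self, offset: int, value: int) -> None:
        page_no = offset // PAGE_SIZE
        self._get_page(page_no)[offset % PAGE_SIZE] = value
        self.dirty.add(page_no)
```

Reading two bytes from the same page costs one miss and one hit, and a modified page reaches the backing store only when it is evicted, which is why writes to a page cache are fast but need an explicit flush for durability.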

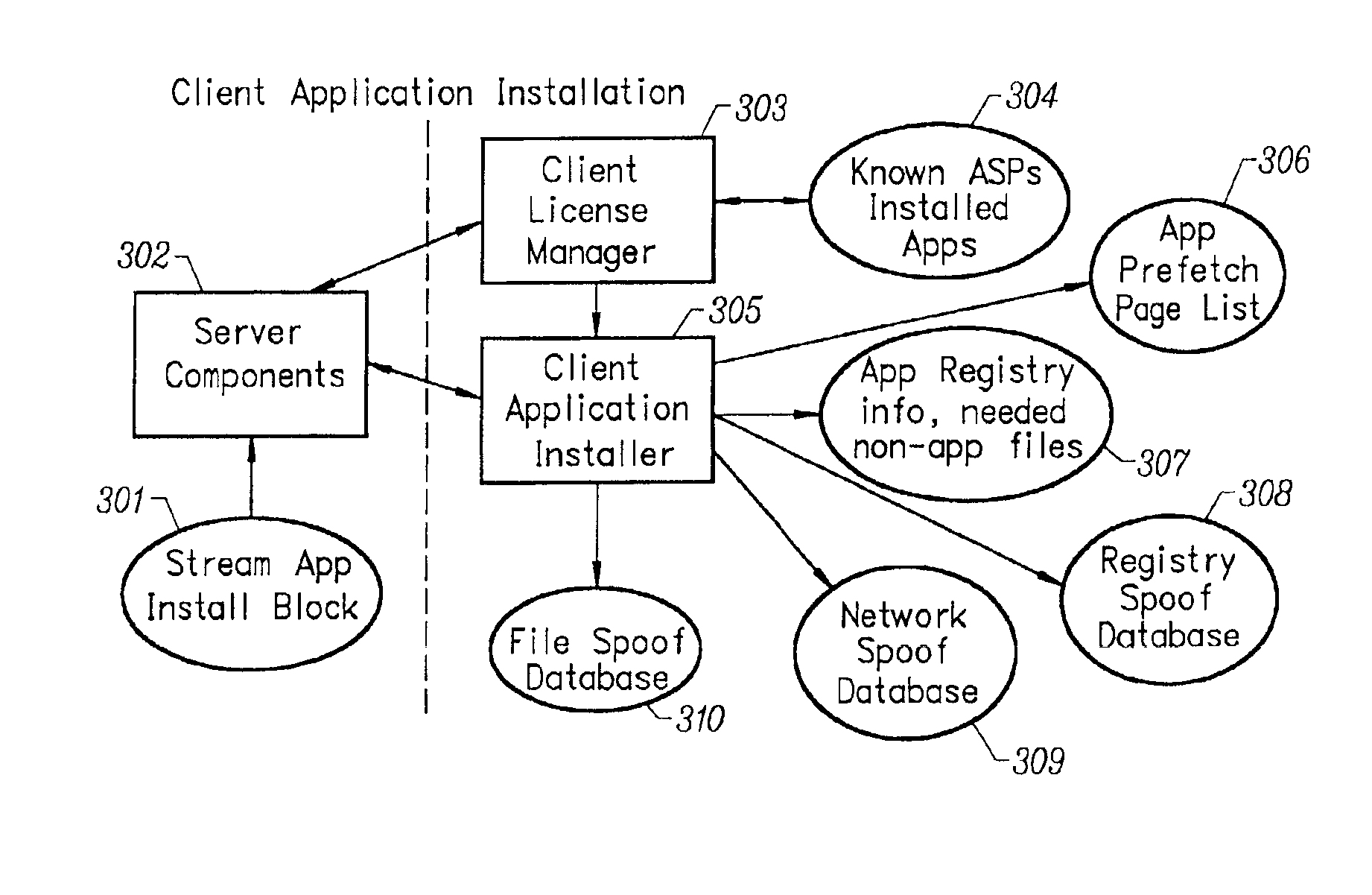

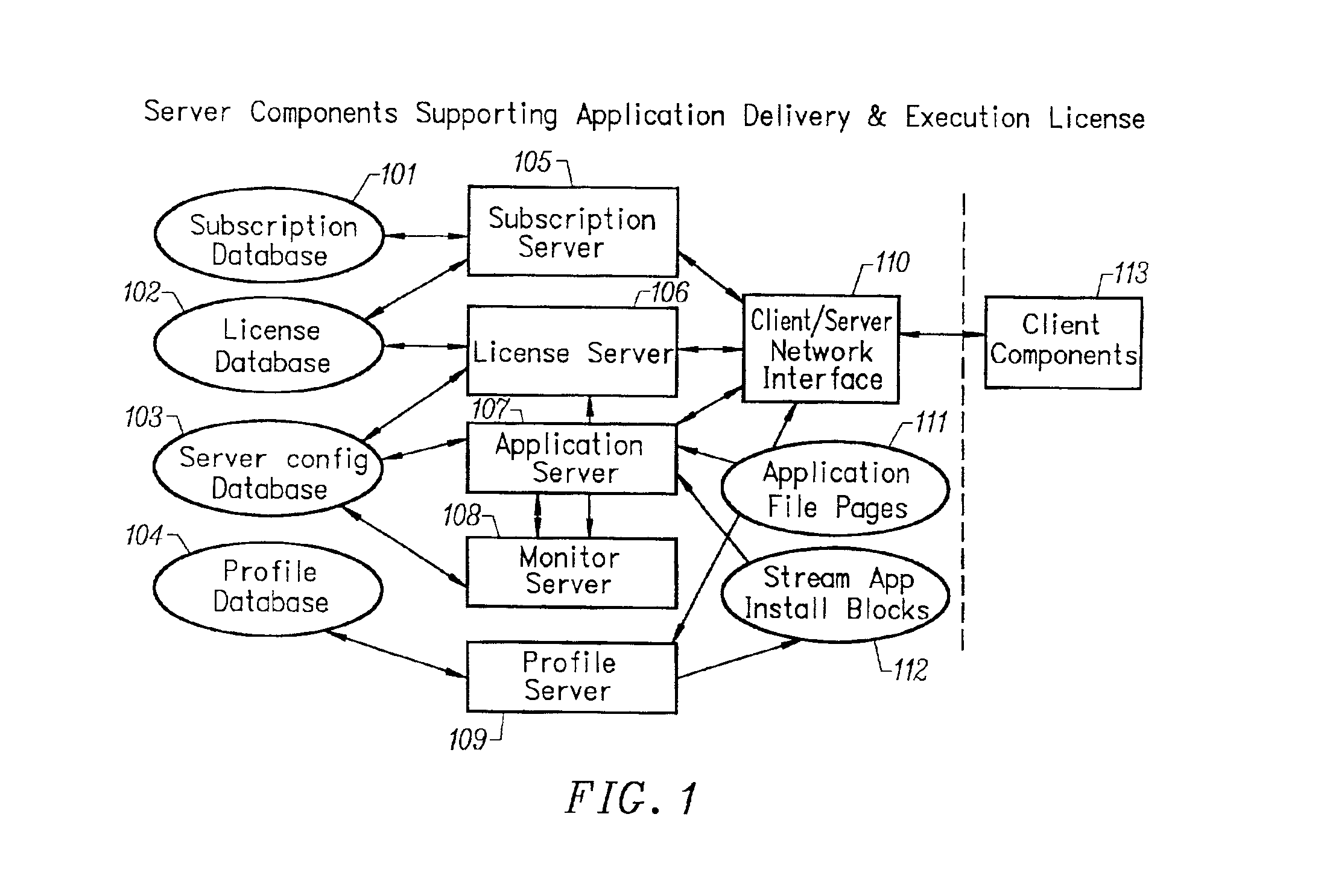

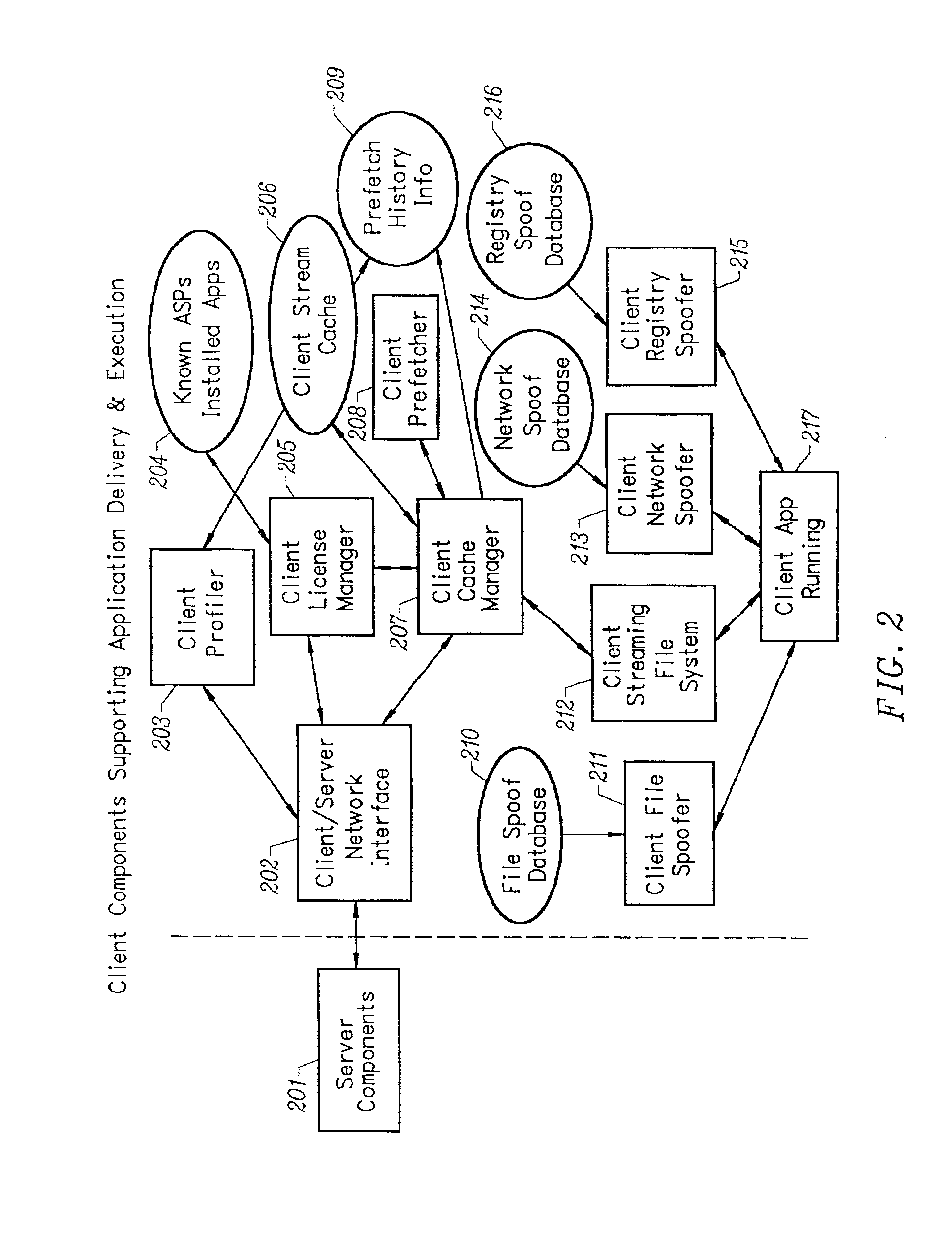

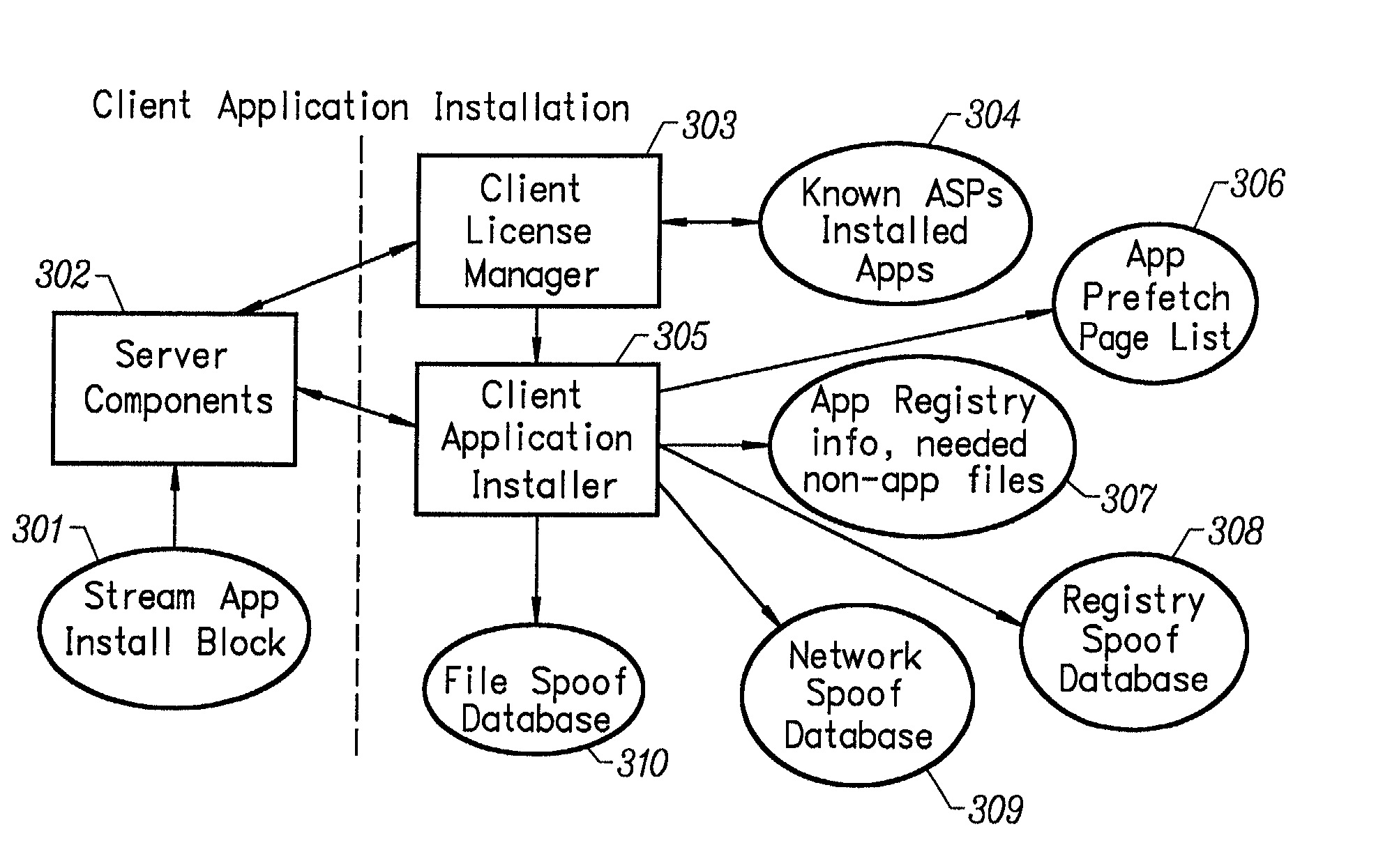

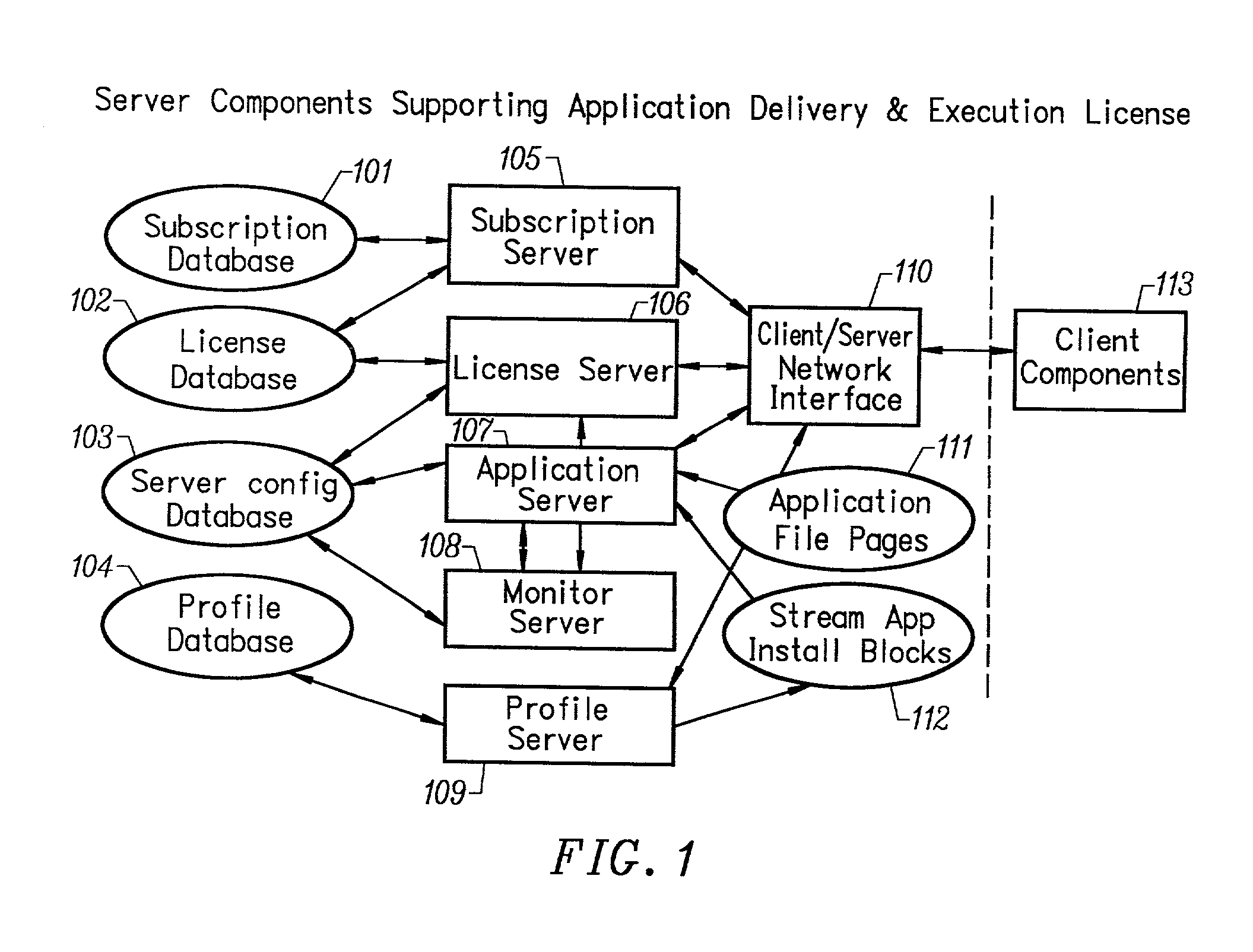

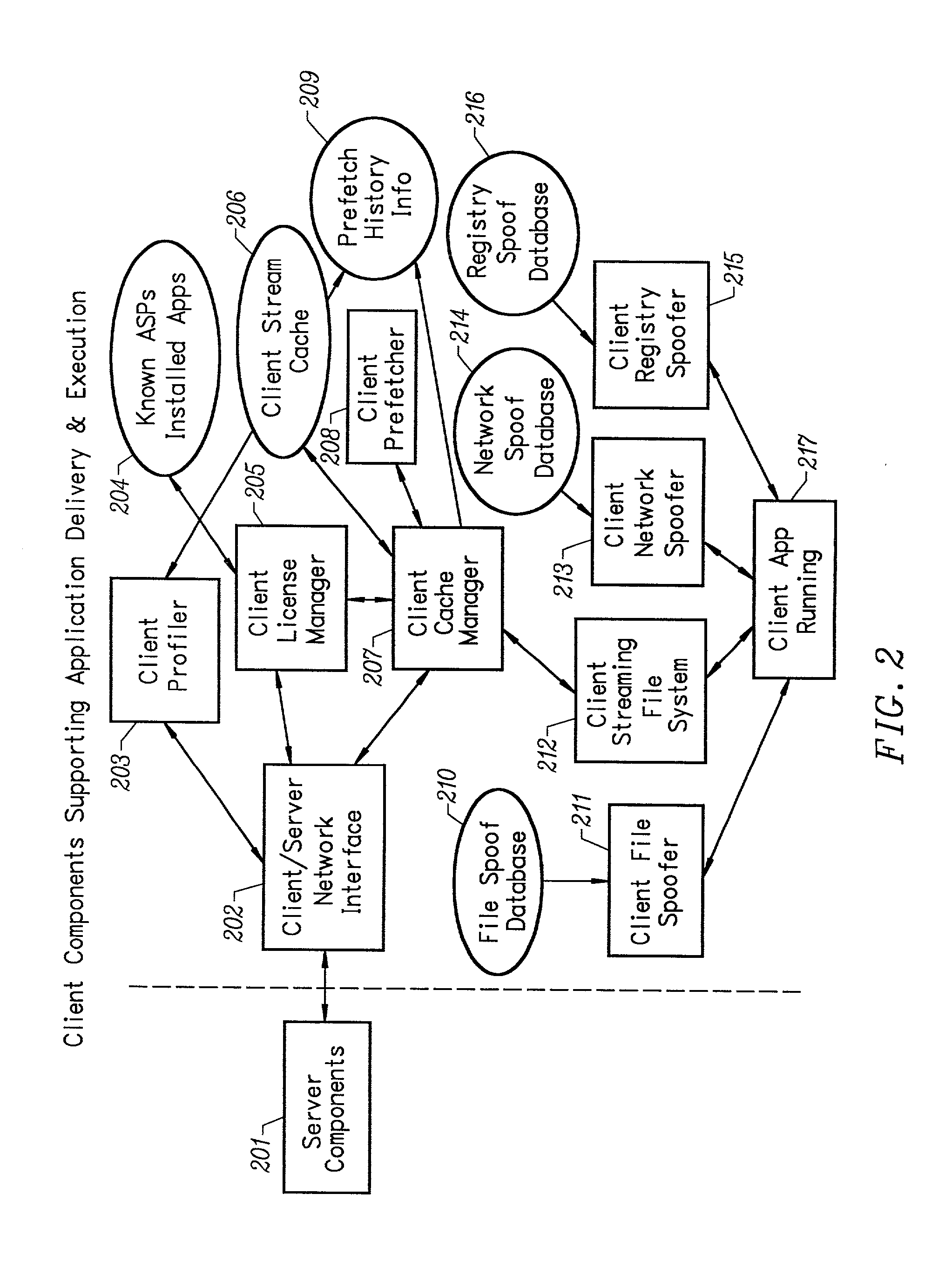

Client installation and execution system for streamed applications

Status: Inactive | Publication: US6918113B2 | Tags: Multiple digital computer combinations; Program loading/initiating; Registry data; File system

Owner: NUMECENT HLDG +1

Client installation and execution system for streamed applications

Status: Inactive | Publication: US20020157089A1 | Tags: Multiple digital computer combinations; Program loading/initiating; Registry data; File system

Owner: NUMECENT HLDG +1

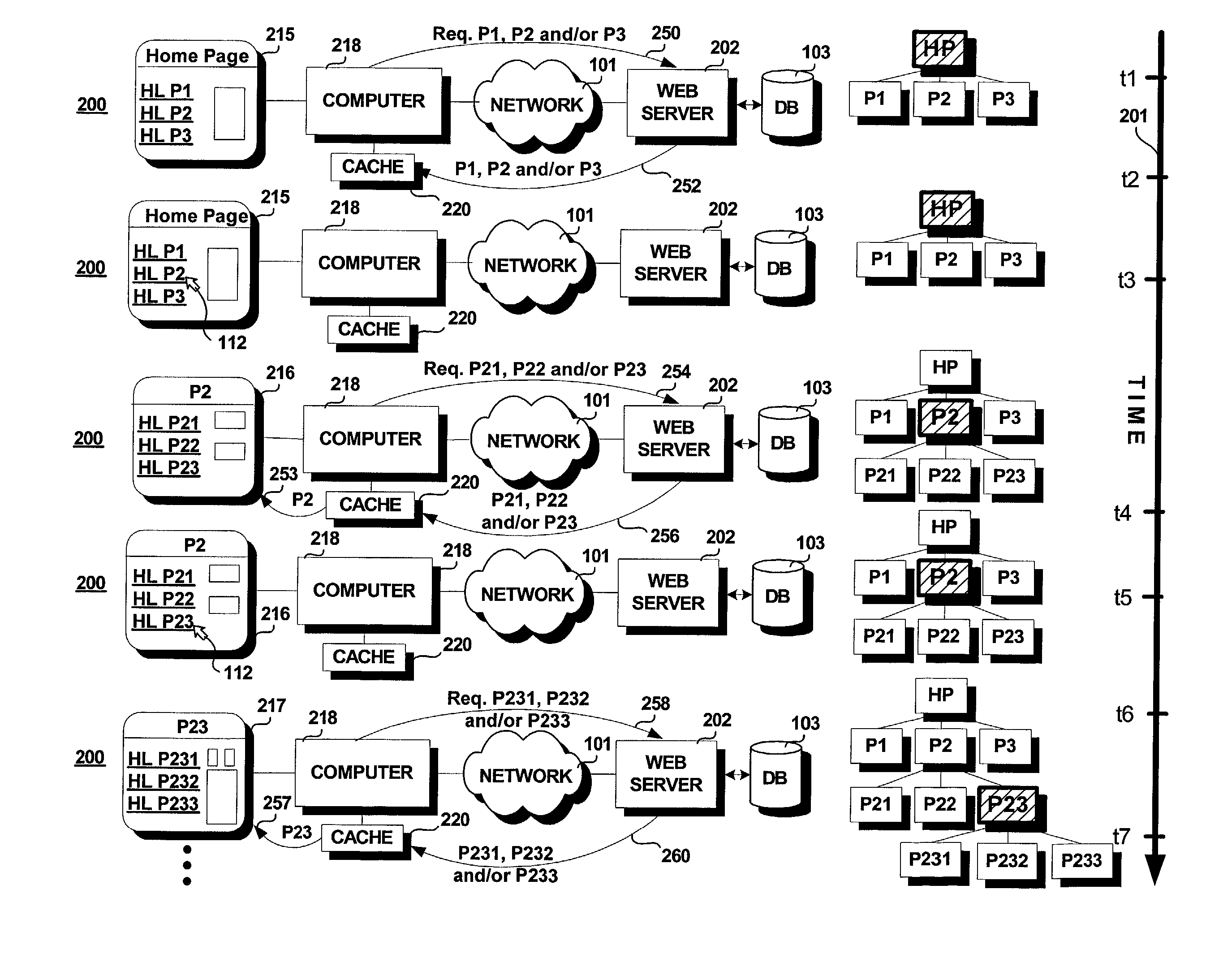

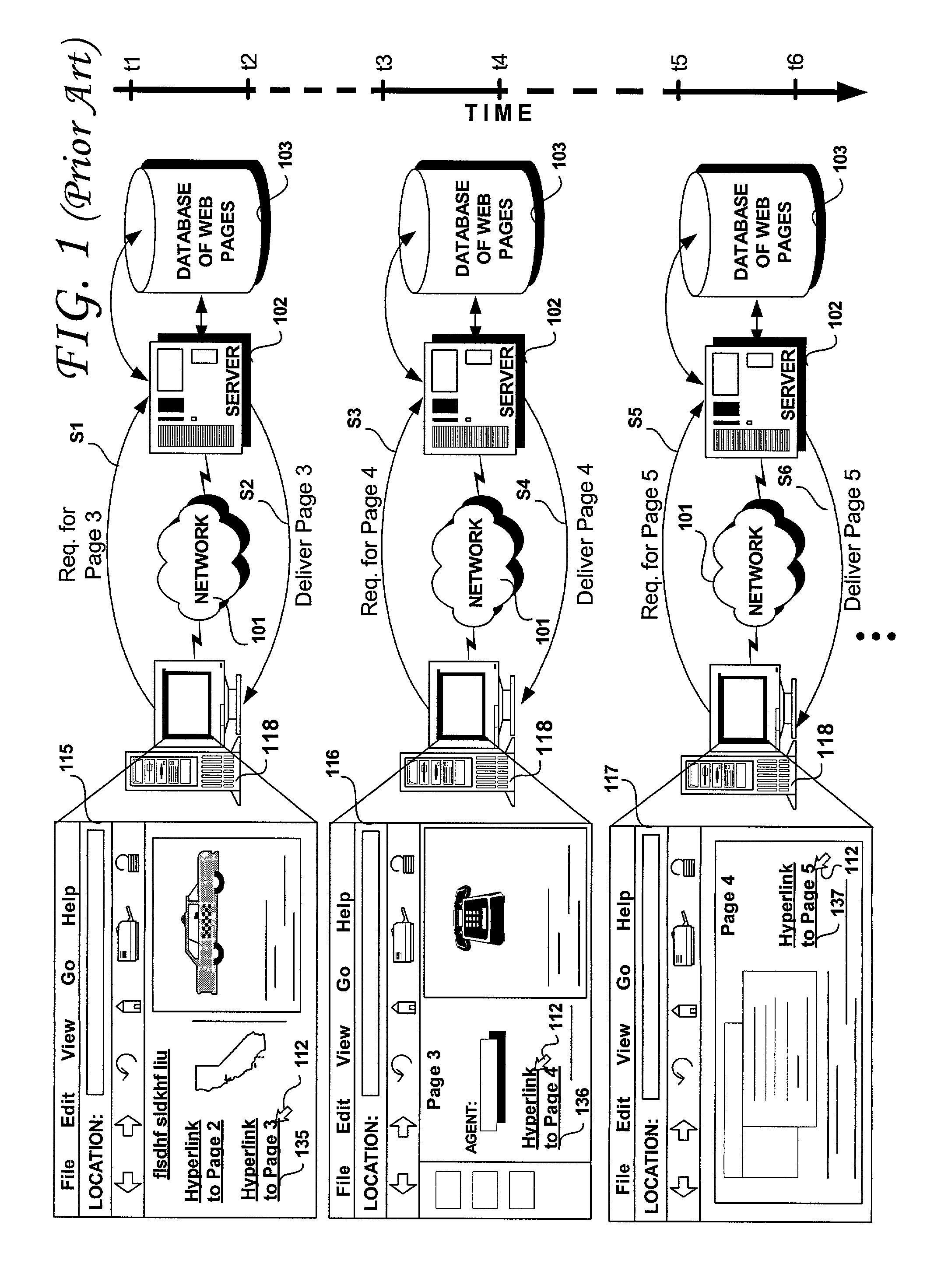

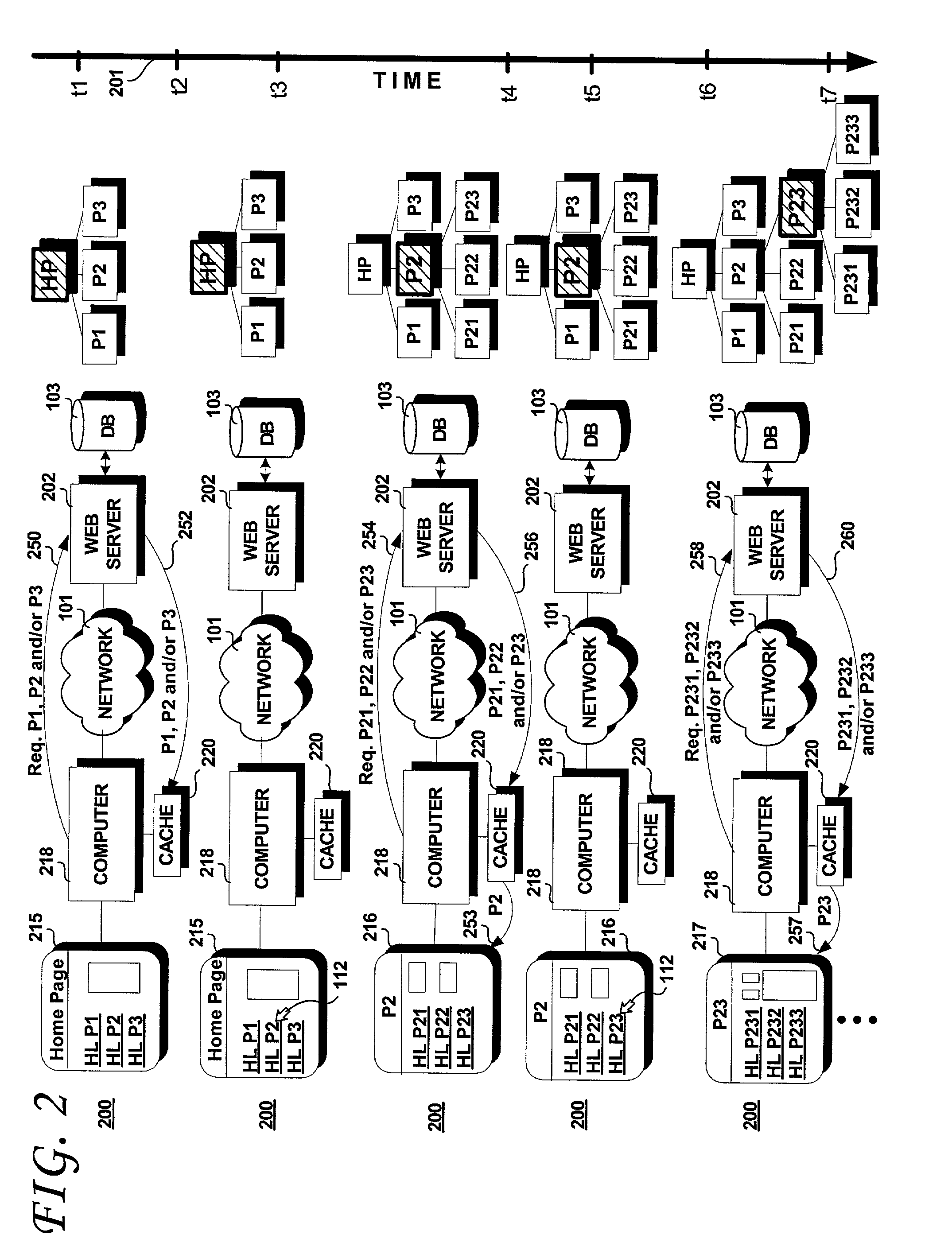

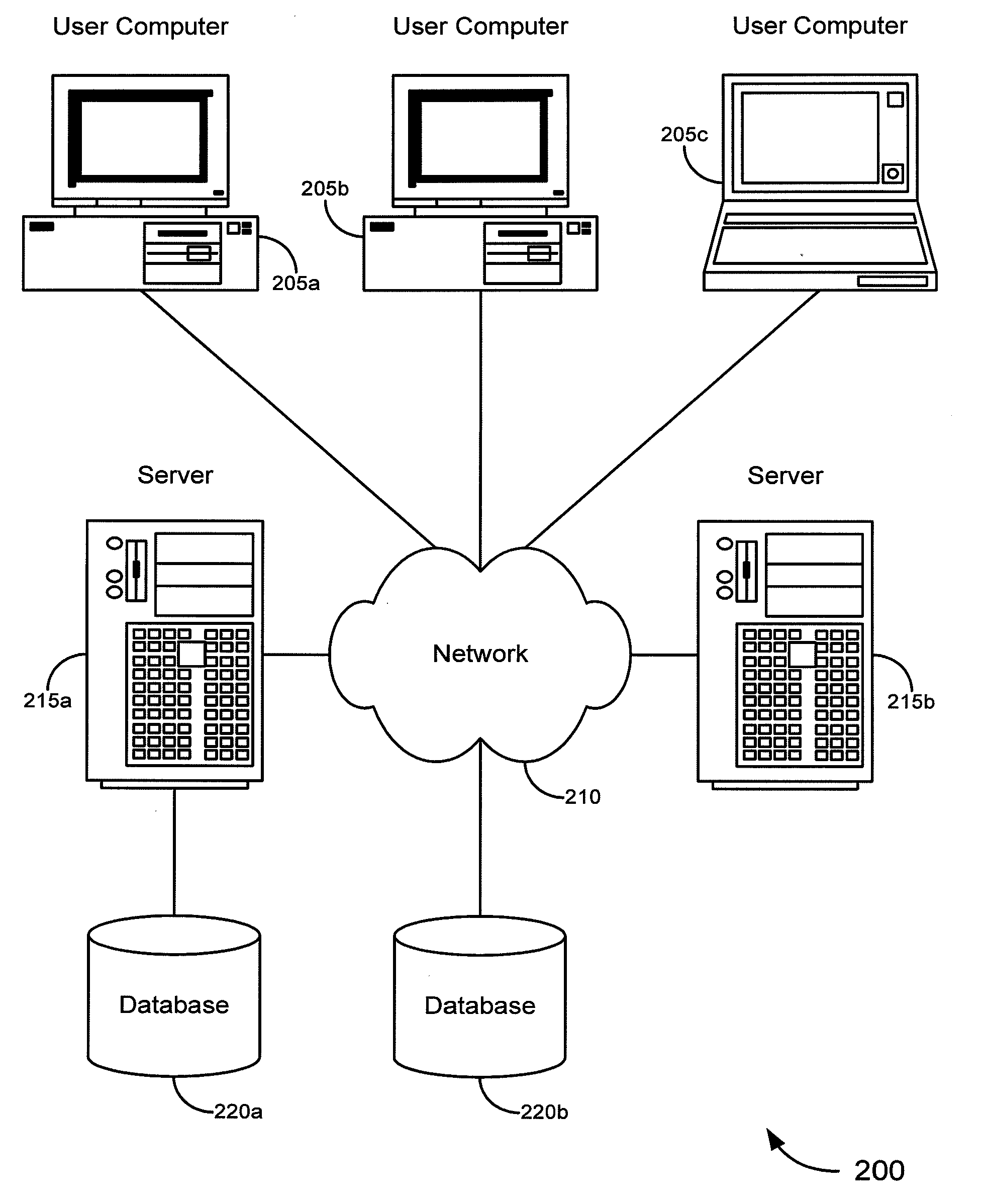

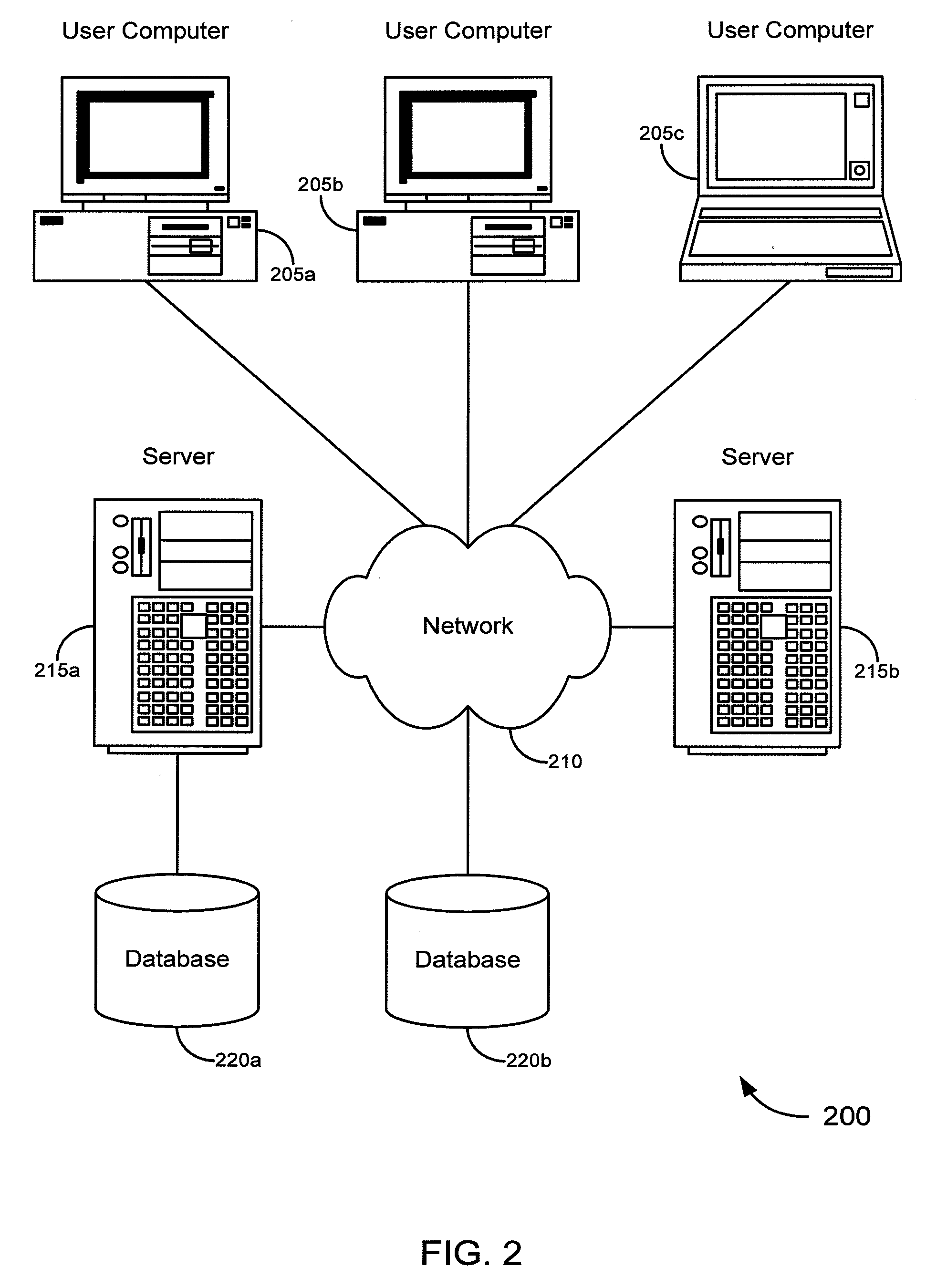

Methods and systems for preemptive and predictive page caching for improved site navigation

Status: Inactive | Publication: US20030088580A1 | Tags: Digital data information retrieval; Multiple digital computer combinations; Web site; Remote computer

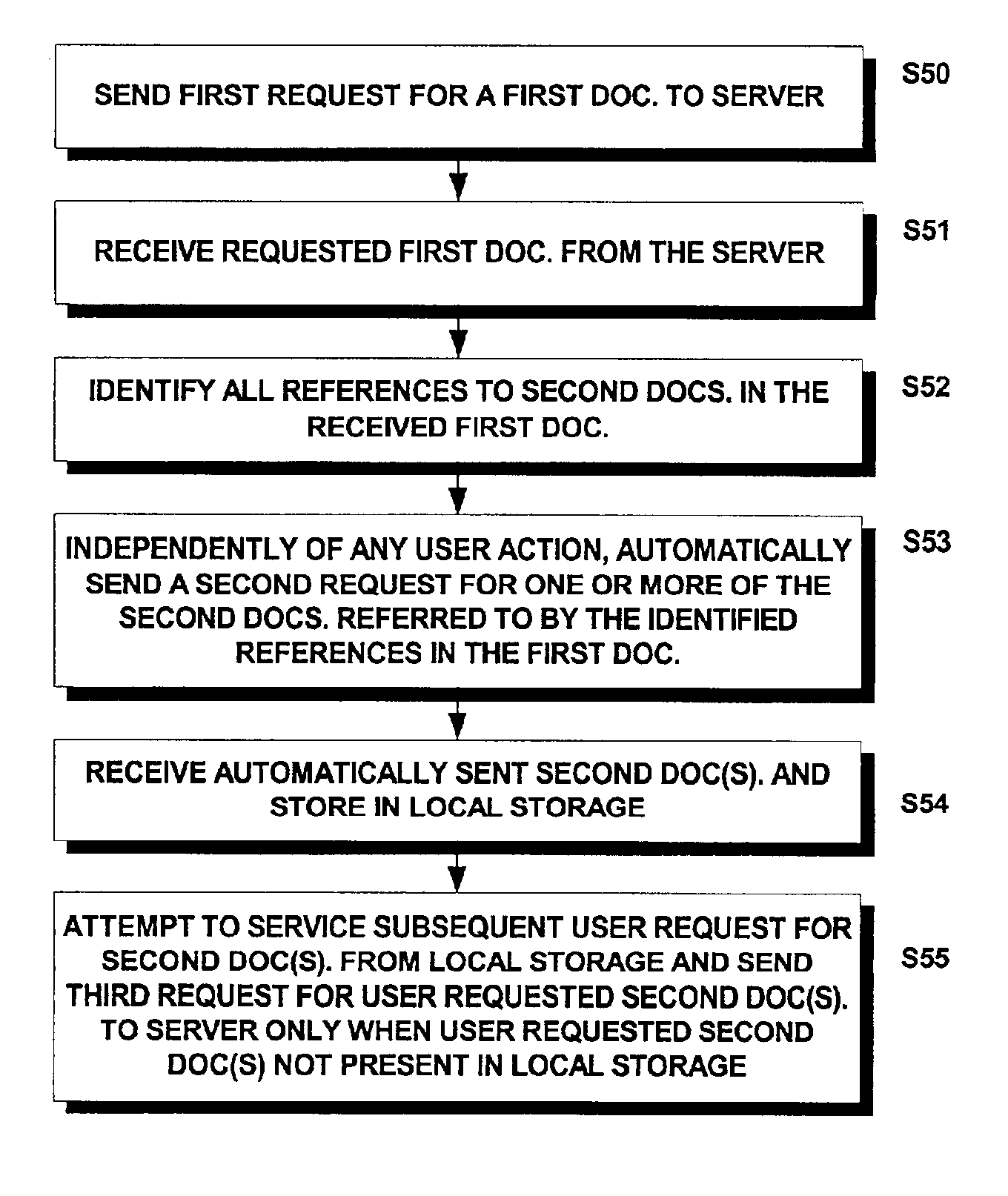

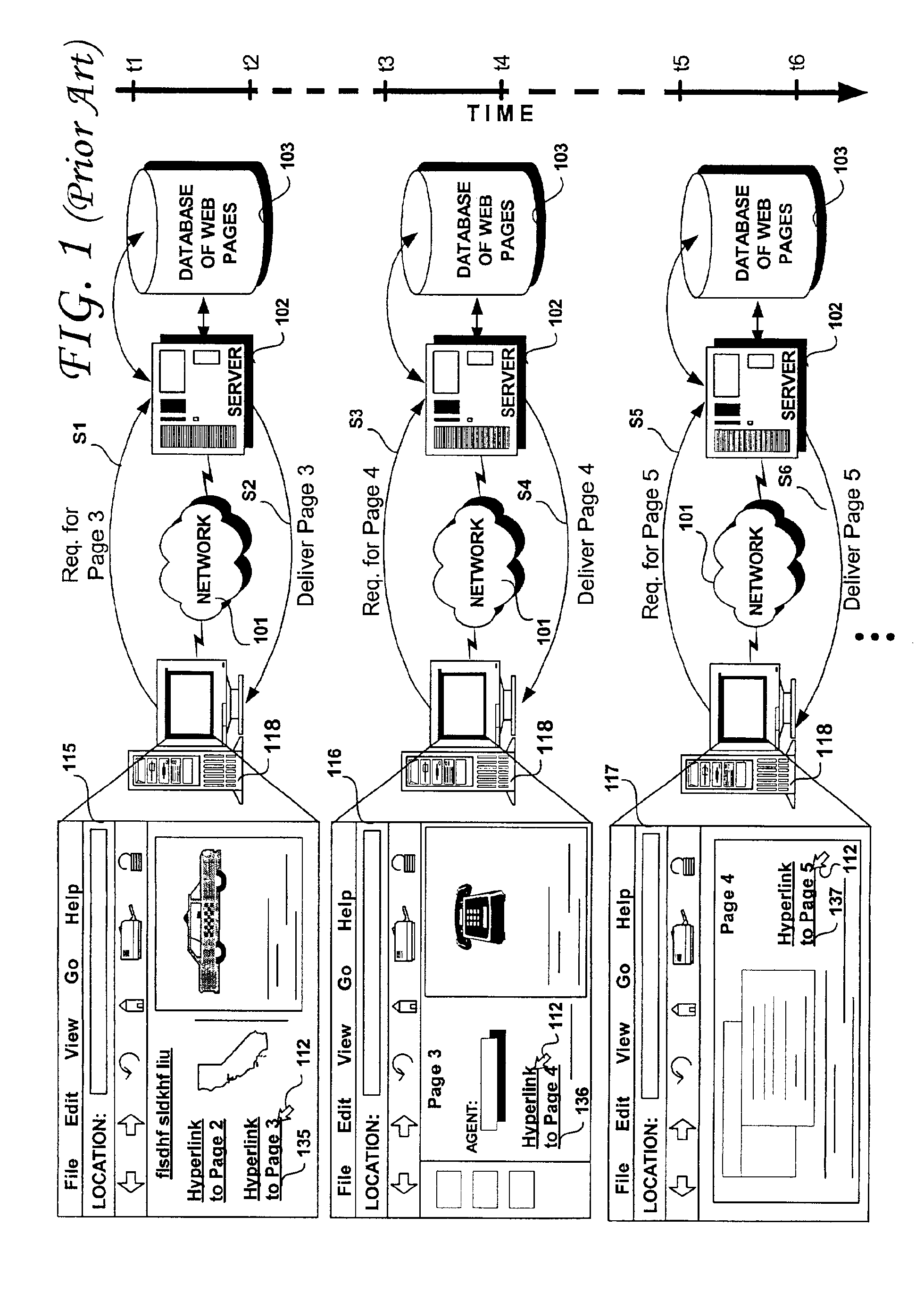

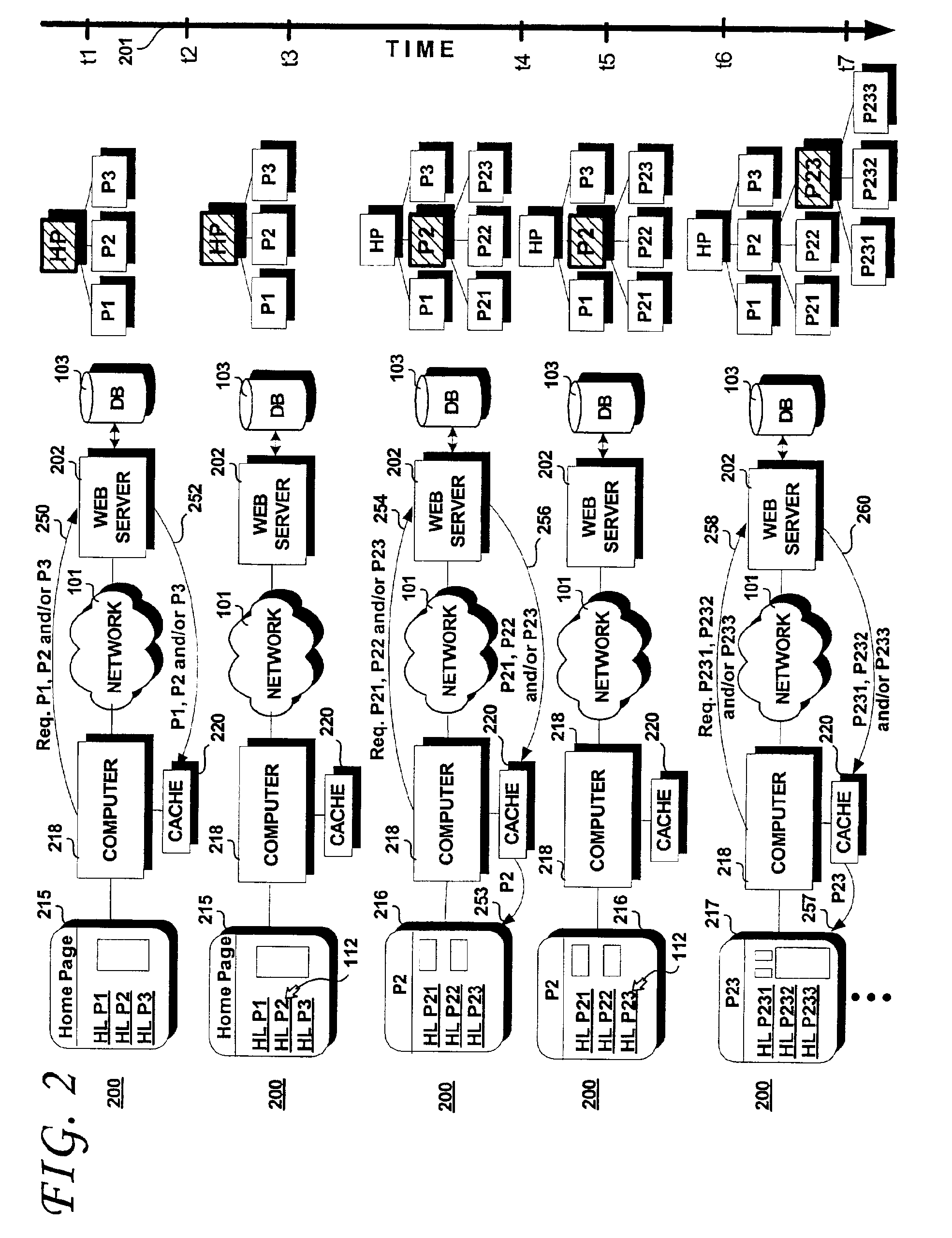

A method for a first computer to request documents from a second computer includes steps of sending a first request for a first document to the second computer responsive to a first user action, receiving the first document sent by the second computer responsive to the first request; identifying all references to second documents in the received first document; independently of any user action, automatically sending a second request for at least one of the second documents referred to by the identified references; receiving the second document(s) requested by the second request and storing the received second document(s) in a storage that is local to the first computer, and responsive to a user request for one or more of the second documents, attempting first to service the user request from the local storage and sending a third request to the second computer for second document(s) only when the second document(s) is not stored in the local storage. A method of servicing a request for access to a Web site by a remote computer may include a receiving step to receive the request for access to the Web site; a first sending step to send a first page of the accessed Web site to the remote computer responsive to the request, and independently of any subsequent request for a second page of the Web site originating from the remote computer, preemptively carrying out a second sending step to send the remote computer at least one selected second page based upon a prediction of a subsequent request by the remote computer and / or a history of second pages previously accessed by the remote computer.

Owner: ORACLE INT CORP
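The client-side method described above (identify references in a fetched document, prefetch them without waiting for the user, and serve later requests from local storage before contacting the server) can be sketched in Python as follows. This is a minimal illustration under stated assumptions: `fetch` is a hypothetical callable that performs the real request, the "references" are taken to be `<a href>` links, and `max_prefetch` is an invented limit; none of these names come from the patent.

```python
from html.parser import HTMLParser
from typing import Callable, Dict, List


class LinkExtractor(HTMLParser):
    """Collects href targets of <a> tags (assumed to be the 'references to second documents')."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


class PrefetchingClient:
    def __init__(self, fetch: Callable[[str], str], max_prefetch: int = 5) -> None:
        self.fetch = fetch                  # hypothetical: performs the network request
        self.max_prefetch = max_prefetch    # hypothetical cap on speculative requests
        self.local: Dict[str, str] = {}     # storage local to the first computer
        self.remote_requests = 0

    def _remote(self, url: str) -> str:
        self.remote_requests += 1
        return self.fetch(url)

    def open(self, url: str) -> str:
        """Service a user request: local storage first, remote request only on a miss."""
        doc = self.local.get(url)
        if doc is None:
            doc = self._remote(url)
            self.local[url] = doc
        self._prefetch_links(doc)
        return doc

    def _prefetch_links(self, doc: str) -> None:
        parser = LinkExtractor()
        parser.feed(doc)
        for link in parser.links[: self.max_prefetch]:
            if link not in self.local:      # fetched independently of any user action
                self.local[link] = self._remote(link)
```

Opening a page with two links costs three remote requests up front; following either link afterwards costs none, which is the latency the method trades extra bandwidth for.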

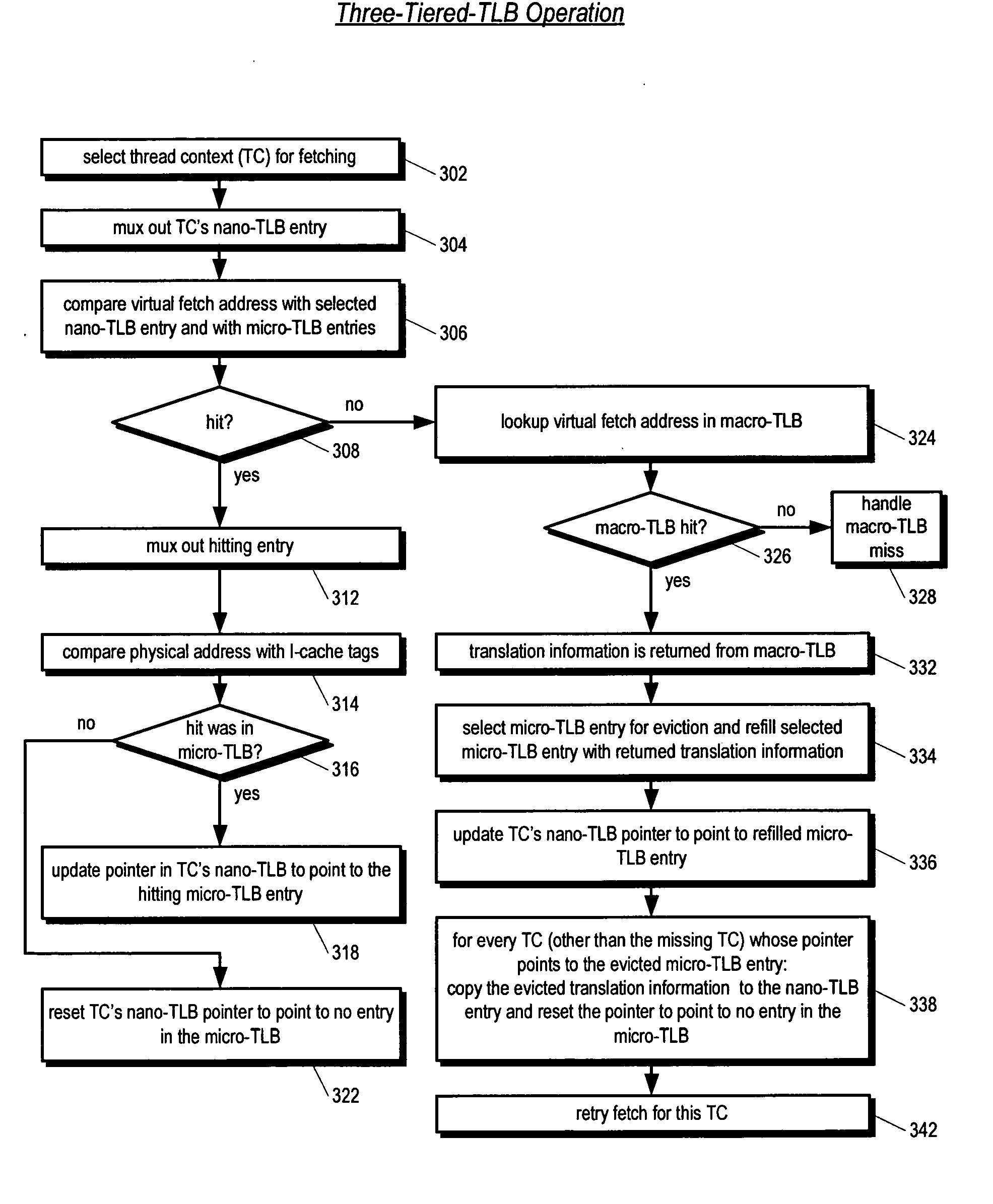

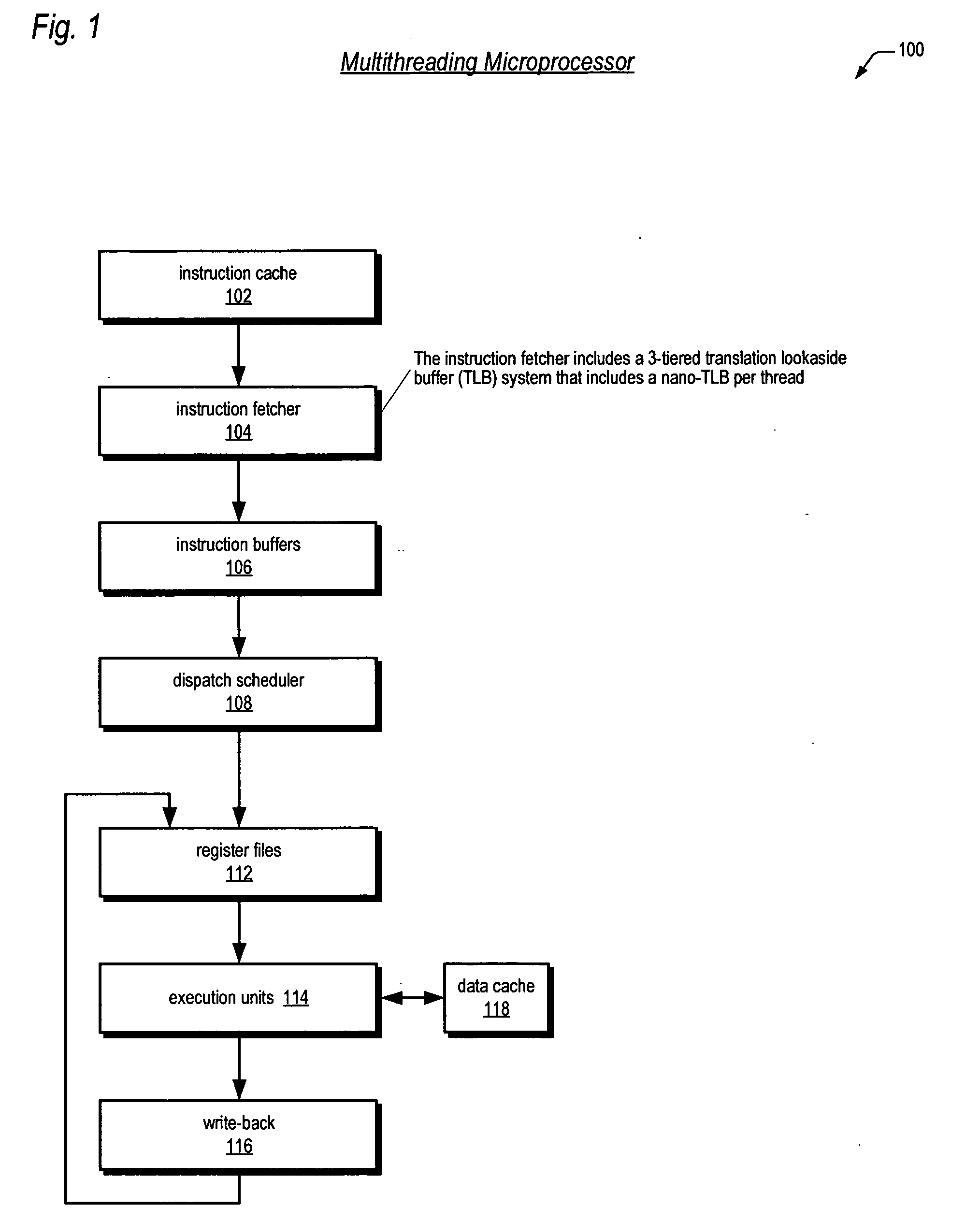

Three-tiered translation lookaside buffer hierarchy in a multithreading microprocessor

Status: Active | Publication: US20060206686A1 | Tags: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Translation lookaside buffer

A three-tiered TLB architecture in a multithreading processor that concurrently executes multiple instruction threads is provided. A macro-TLB caches address translation information for memory pages for all the threads. A micro-TLB caches the translation information for a subset of the memory pages cached in the macro-TLB. A respective nano-TLB for each of the threads caches translation information only for the respective thread. The nano-TLBs also include replacement information to indicate which entries in the nano-TLB / micro-TLB hold recently used translation information for the respective thread. Based on the replacement information, recently used information is copied to the nano-TLB if evicted from the micro-TLB.

Owner: MIPS TECH INC
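The lookup order and the copy-on-eviction rule from the abstract can be modelled in Python roughly as follows. This is a behavioural sketch, not the patented hardware design: the table sizes, LRU replacement, the `page_table` dictionary standing in for a page-table walk, and the per-thread `recent` queue used as "replacement information" are all assumptions, and inclusion between the micro-TLB and macro-TLB is not enforced.

```python
from collections import OrderedDict, deque
from typing import Dict, Optional, Tuple


class LRUTable:
    """Small fully associative translation table with LRU replacement."""

    def __init__(self, size: int) -> None:
        self.size = size
        self.entries = OrderedDict()  # virtual page number -> physical frame number

    def lookup(self, vpn: int) -> Optional[int]:
        if vpn in self.entries:
            self.entries.move_to_end(vpn)
            return self.entries[vpn]
        return None

    def insert(self, vpn: int, pfn: int) -> Optional[Tuple[int, int]]:
        """Insert a translation; return the evicted (vpn, pfn) pair, if any."""
        evicted = None
        if vpn not in self.entries and len(self.entries) >= self.size:
            evicted = self.entries.popitem(last=False)
        self.entries[vpn] = pfn
        self.entries.move_to_end(vpn)
        return evicted


class ThreeTierTLB:
    def __init__(self, threads: int, page_table: Dict[int, int],
                 nano_size: int = 2, micro_size: int = 4, macro_size: int = 16) -> None:
        self.macro = LRUTable(macro_size)                          # shared by all threads
        self.micro = LRUTable(micro_size)                          # shared subset
        self.nano = [LRUTable(nano_size) for _ in range(threads)]  # one per thread
        # Assumed replacement information: each thread's recently used pages.
        self.recent = [deque(maxlen=nano_size) for _ in range(threads)]
        self.page_table = page_table  # stands in for a page-table walk

    def translate(self, thread: int, vpn: int) -> Tuple[int, str]:
        if vpn in self.recent[thread]:
            self.recent[thread].remove(vpn)
        self.recent[thread].append(vpn)
        pfn = self.nano[thread].lookup(vpn)
        if pfn is not None:
            return pfn, "nano"
        pfn, level = self.micro.lookup(vpn), "micro"
        if pfn is None:
            pfn, level = self.macro.lookup(vpn), "macro"
            if pfn is None:
                pfn, level = self.page_table[vpn], "walk"
                self.macro.insert(vpn, pfn)
            self._fill_micro(vpn, pfn)
        return pfn, level

    def _fill_micro(self, vpn: int, pfn: int) -> None:
        evicted = self.micro.insert(vpn, pfn)
        if evicted is not None:
            ev_vpn, ev_pfn = evicted
            for t, recent in enumerate(self.recent):
                if ev_vpn in recent:  # still hot for thread t: keep it in its nano-TLB
                    self.nano[t].insert(ev_vpn, ev_pfn)
```

When another thread's misses push a translation out of the shared micro-TLB, the thread that was using it still finds it in its own nano-TLB on the next access.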

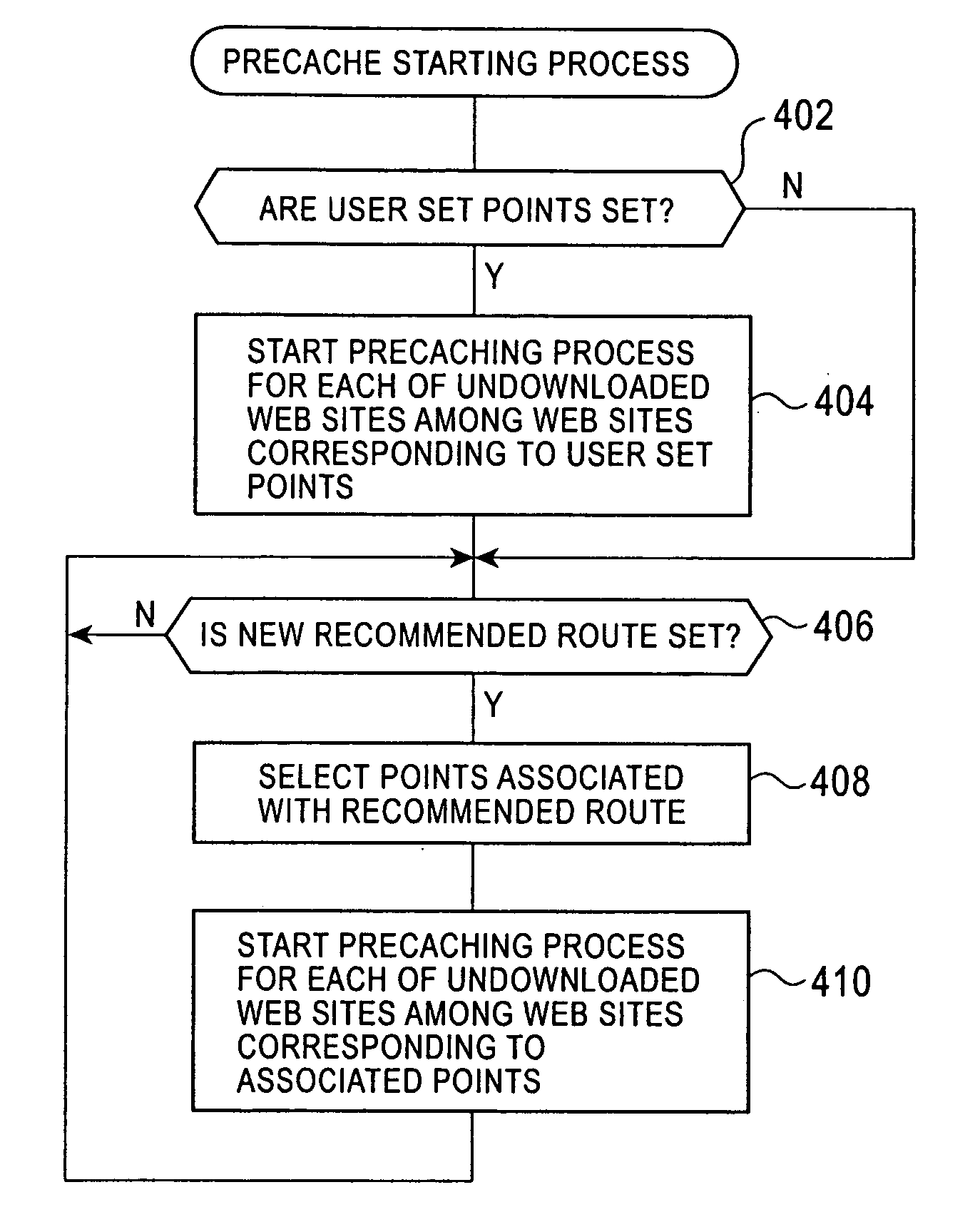

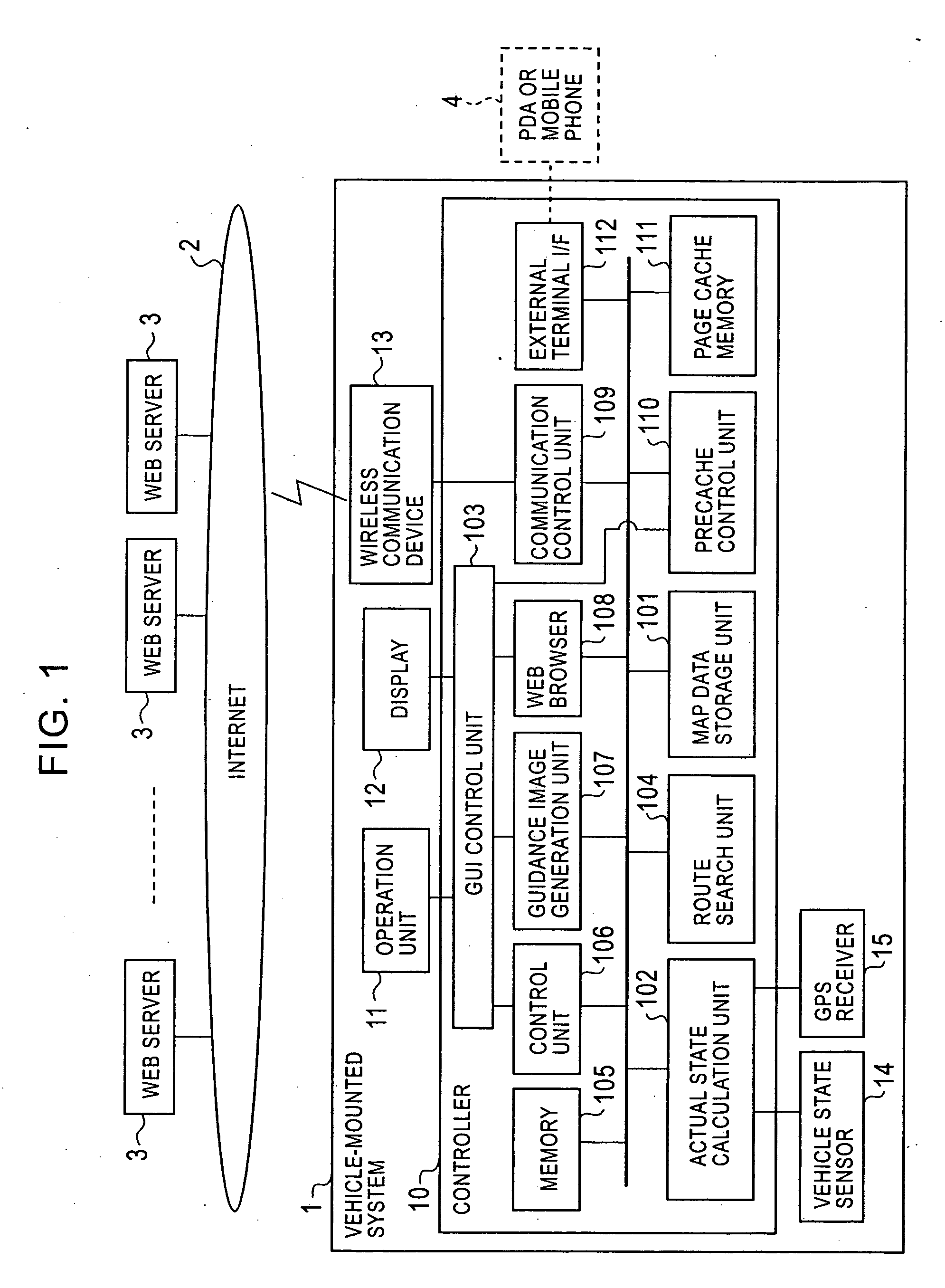

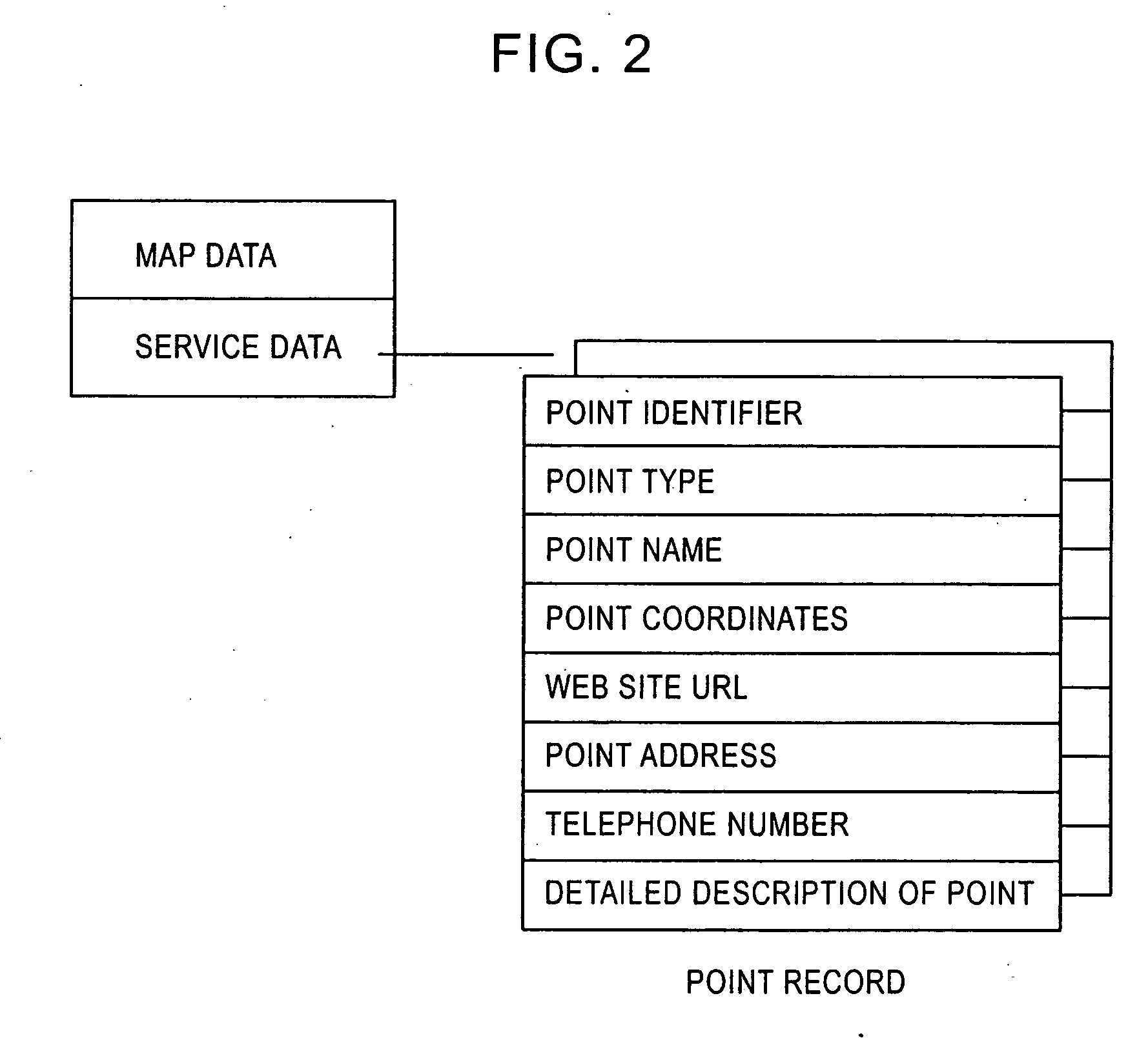

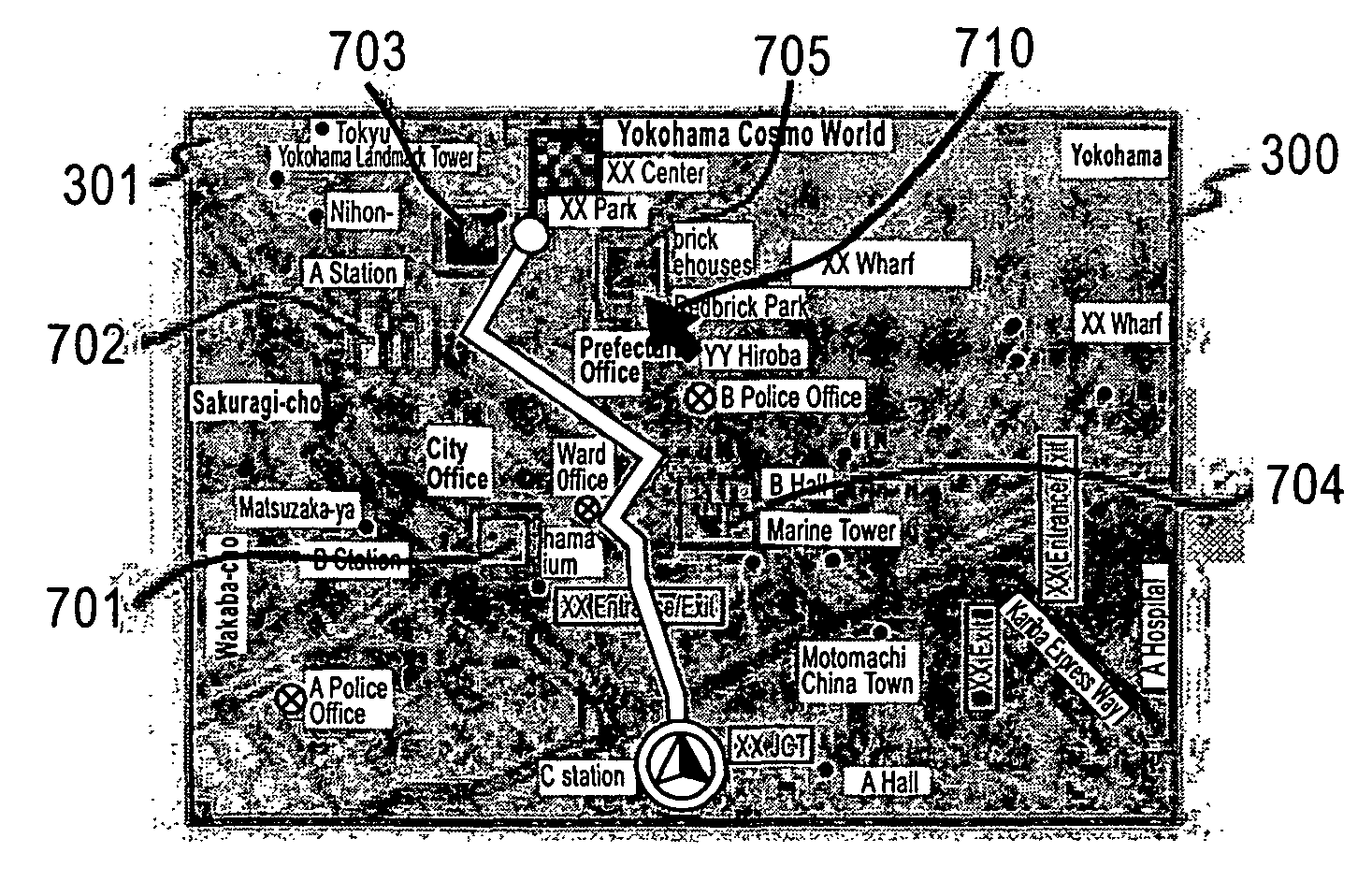

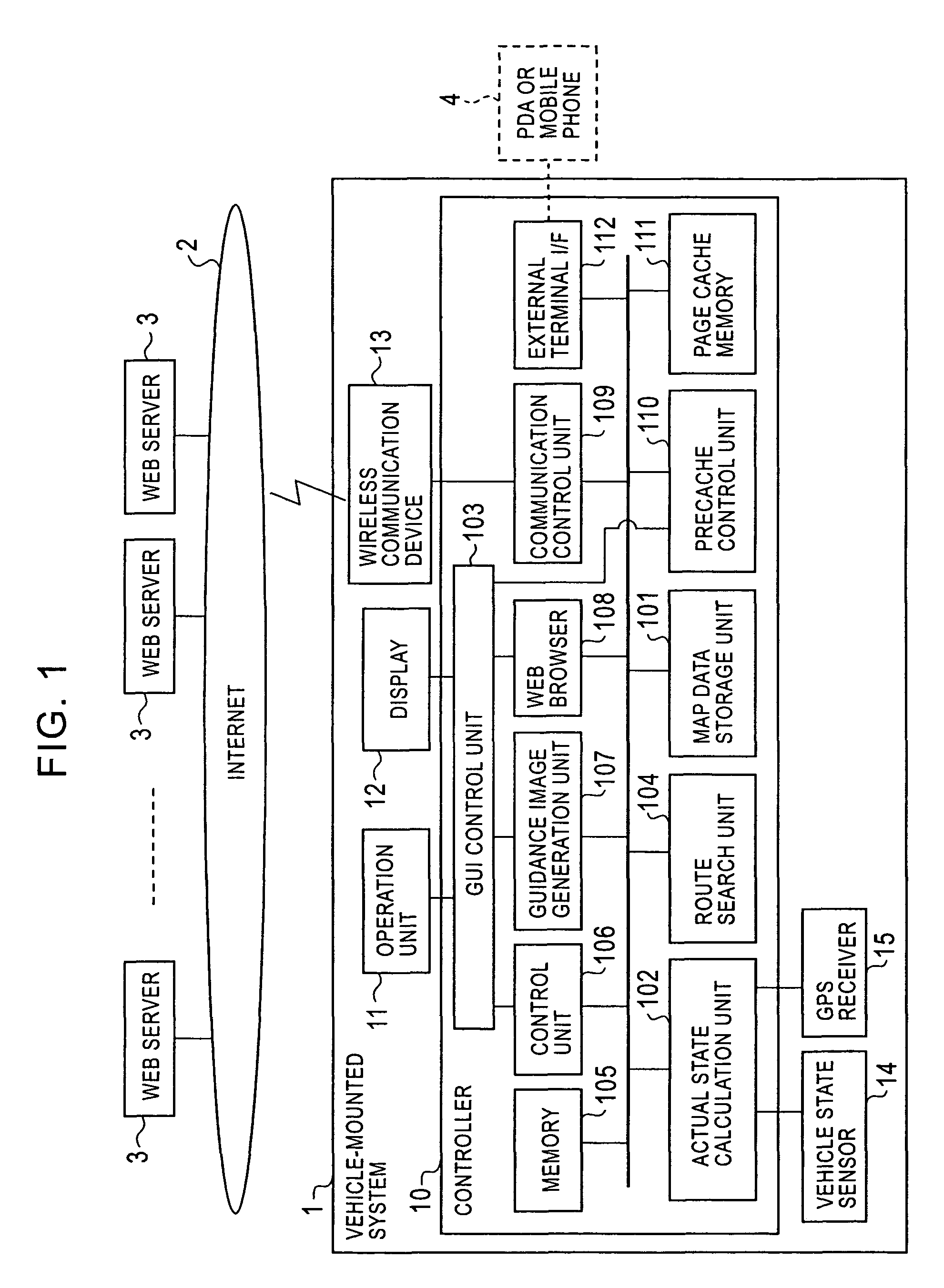

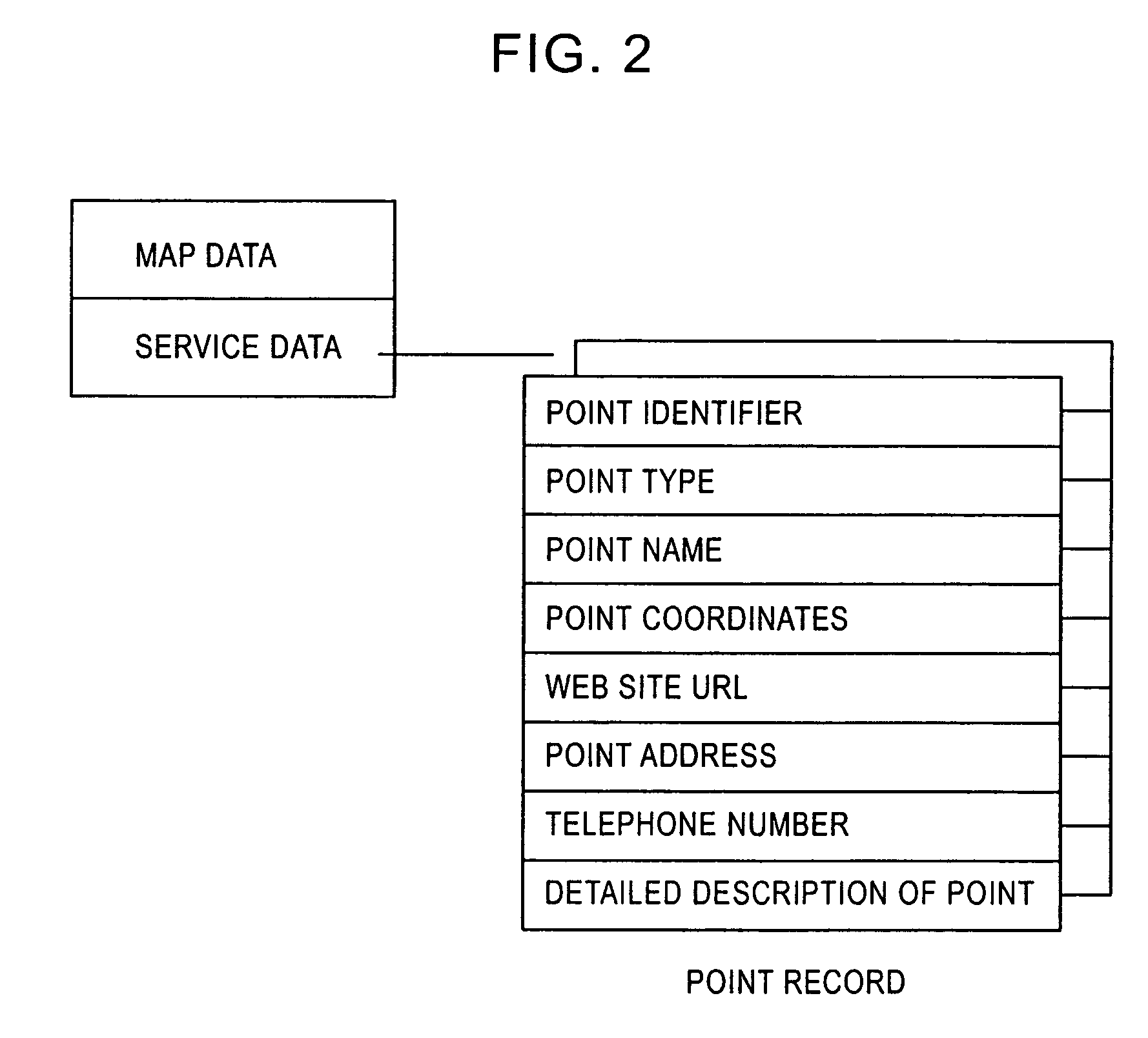

Vehicle-mounted apparatus

Status: Active | Publication: US20060129636A1 | Tags: Easy selection; Easy to use; Instruments for road network navigation; Digital data information retrieval; Web site; Web browser

The present invention provides a vehicle-mounted apparatus whereby a user can easily select and use a Web site in accordance with a point associated with information provided by the Web site and a description of the information. According to the present invention, a precache control unit automatically downloads at least one Web page of a Web site associated with each point matching a predetermined condition to a page cache memory and displays site icons at respective points corresponding to the downloaded Web sites on a map image. In addition, the precache control unit displays the predetermined type of extracted information by analyzing the description of the Web page of each Web site in an information window that pops up from the corresponding site icon. When the user selects one of the site icons, a Web browser displays the Web page of the Web site corresponding to the selected site icon.

Owner: ALPINE ELECTRONICS INC

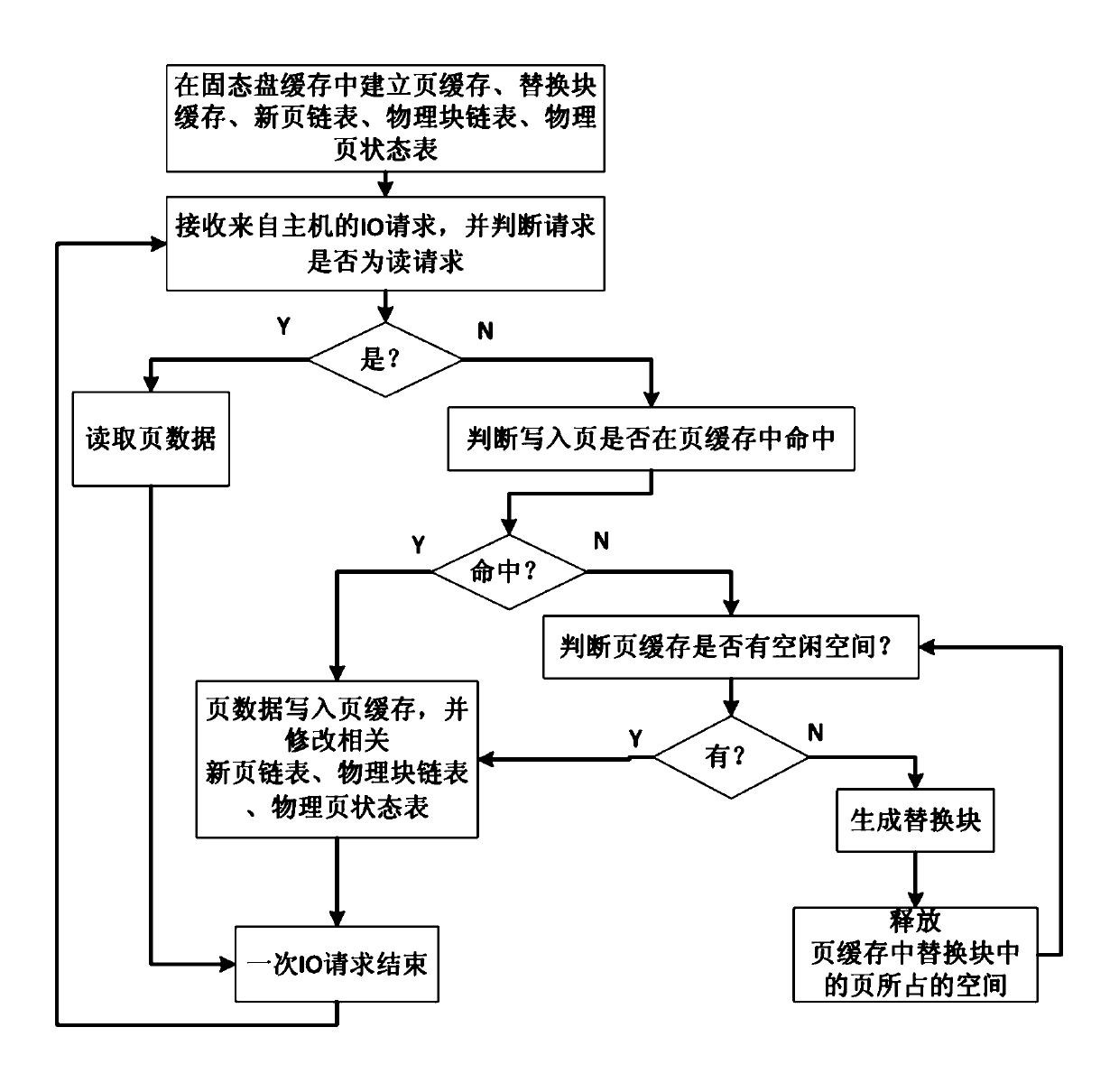

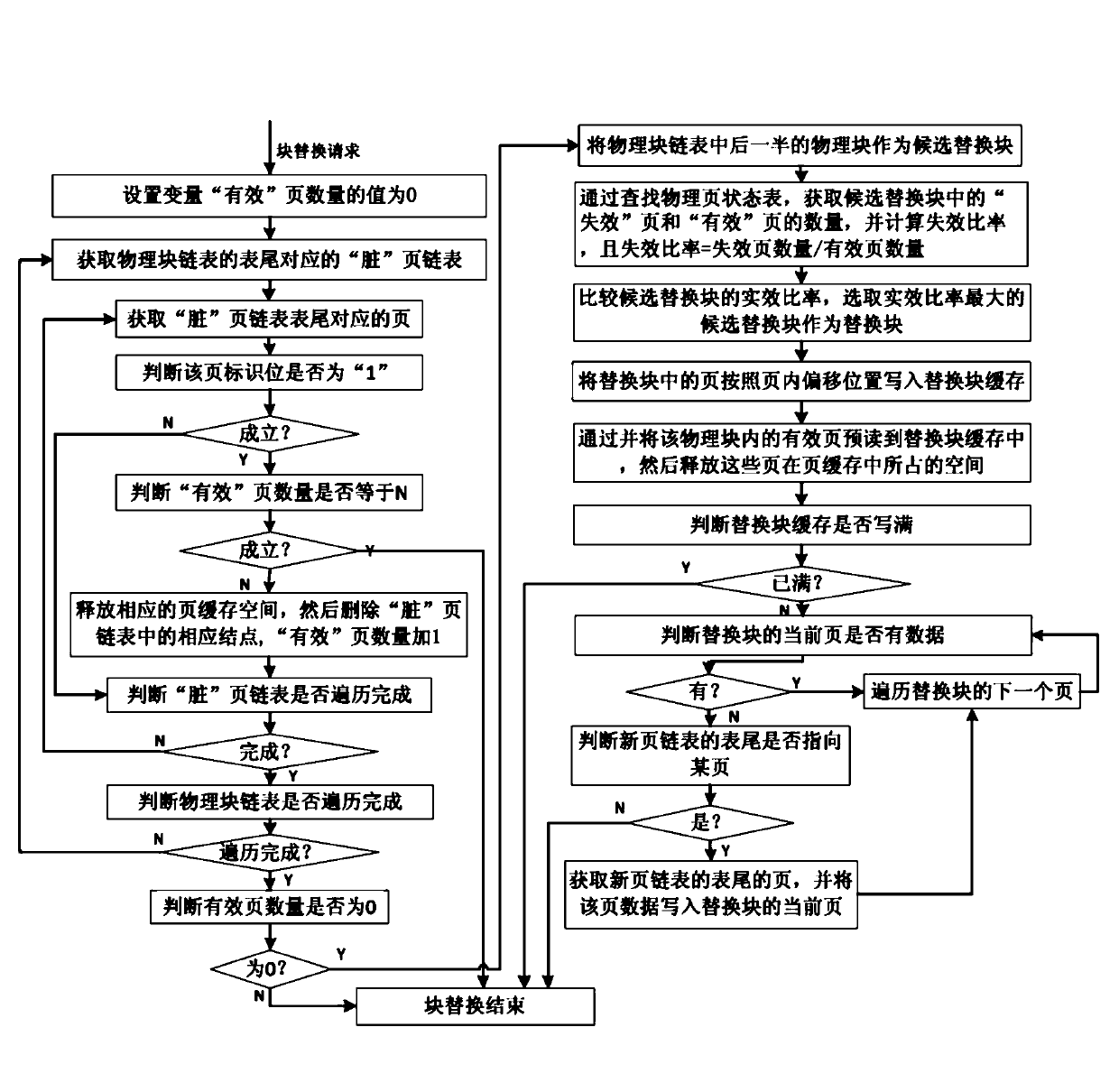

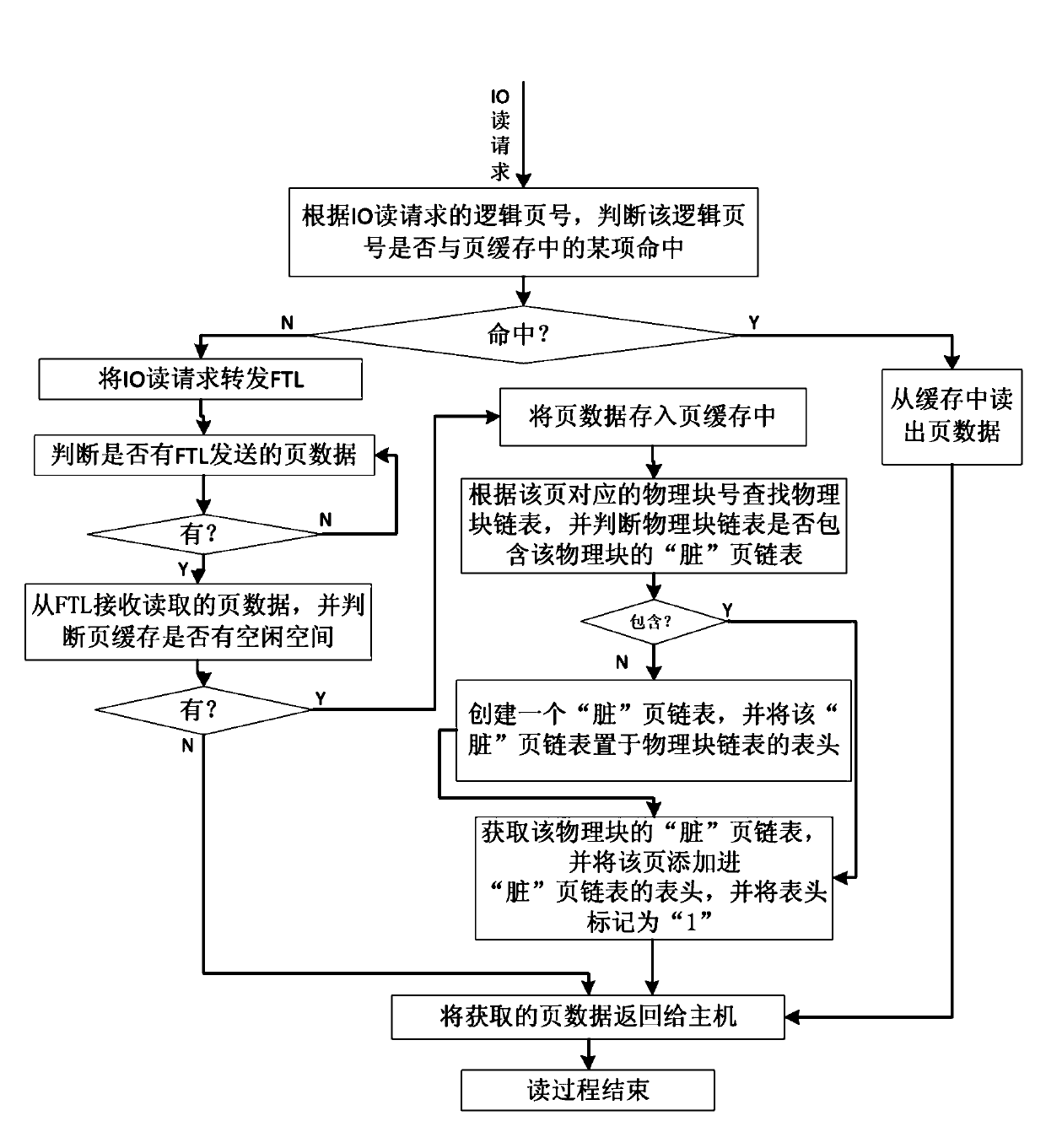

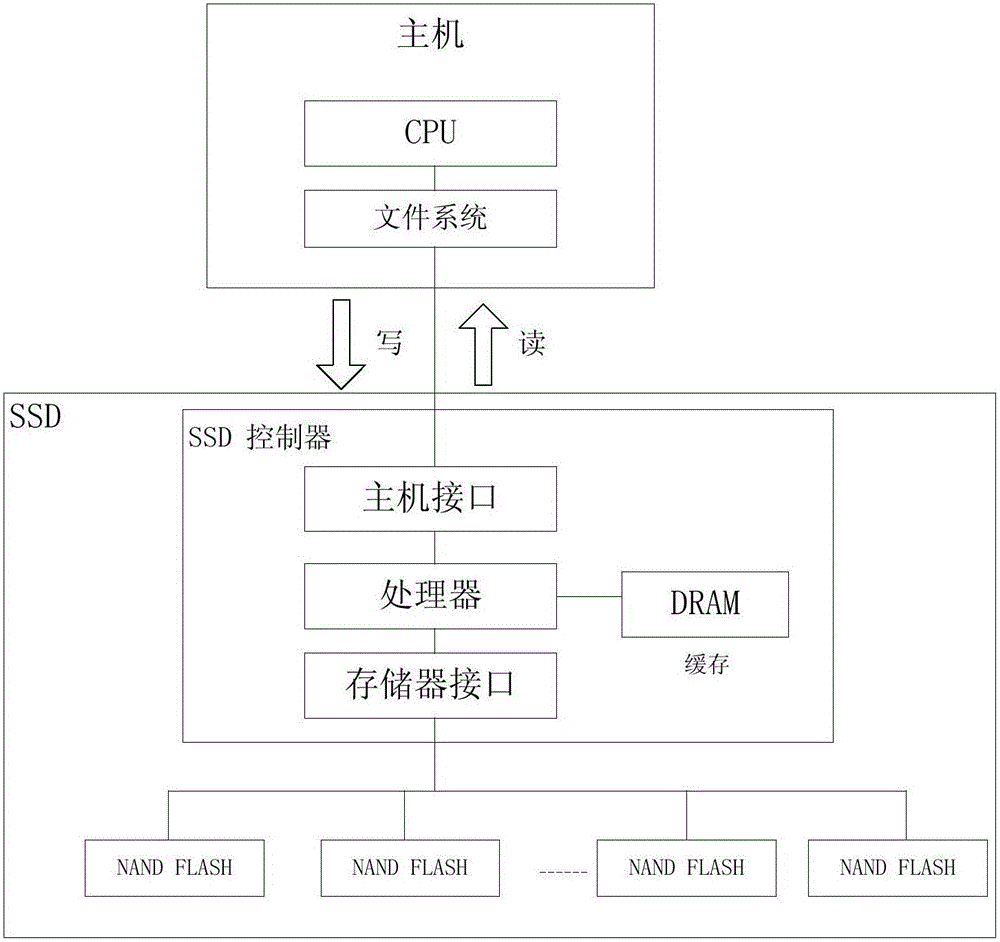

Cache management method for solid-state disc

Status: Active | Publication: CN103136121A | Tags: Improve hit chance; Improve read and write speed; Memory addressing/allocation/relocation; Dirty data; Cache management

The invention discloses a cache management method for a solid-state disk. The method is implemented in the following steps. First, a page cache, a replace-block module, a new-page linked list, a physical block linked list and a physical page state list are established. Then, input/output (IO) requests from the host are received and executed through the page cache. When a write request misses in the page cache and the page cache has no free space, the solid-state disk's block replacement process is executed: space occupied by "effective" pages in the page cache is released first; once the number of "effective" pages in the page cache reaches zero, the candidate block with the largest failure ratio in the rear half of the physical block linked list is selected as the replace block, and the replace-block cache is used to carry out the replacement write. The method makes effective use of limited cache space and increases the cache hit rate. It also makes each block written to the flash medium contain as many dirty data pages and as few effective data pages as possible, which reduces erase operations, page copy operations, and the subsequent garbage collection caused by dirty data pages. The method is easy to implement.

Owner: Hunan Great Wall Galaxy Technology Co., Ltd. (湖南长城银河科技有限公司)
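One step of the method above, choosing the replace block, can be sketched in Python. This assumes that "failure ratio" means the fraction of invalid (superseded) pages in a block and that the physical block list is already kept in the order the method maintains; `PhysicalBlock` and `select_replace_block` are hypothetical names, and the rest of the method (page cache, new-page list, write path) is omitted.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PhysicalBlock:
    block_id: int
    pages_per_block: int
    invalid_pages: int  # pages whose data has been superseded (assumed meaning of "failed")

    @property
    def failure_ratio(self) -> float:
        return self.invalid_pages / self.pages_per_block


def select_replace_block(block_list: List[PhysicalBlock]) -> PhysicalBlock:
    """Pick the candidate with the largest failure ratio from the rear half of the list."""
    if not block_list:
        raise ValueError("no candidate blocks")
    rear_half = block_list[len(block_list) // 2:]
    return max(rear_half, key=lambda block: block.failure_ratio)
```

Restricting the search to the rear half means a block with many invalid pages near the front of the list is not chosen, even if its ratio is the highest overall.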

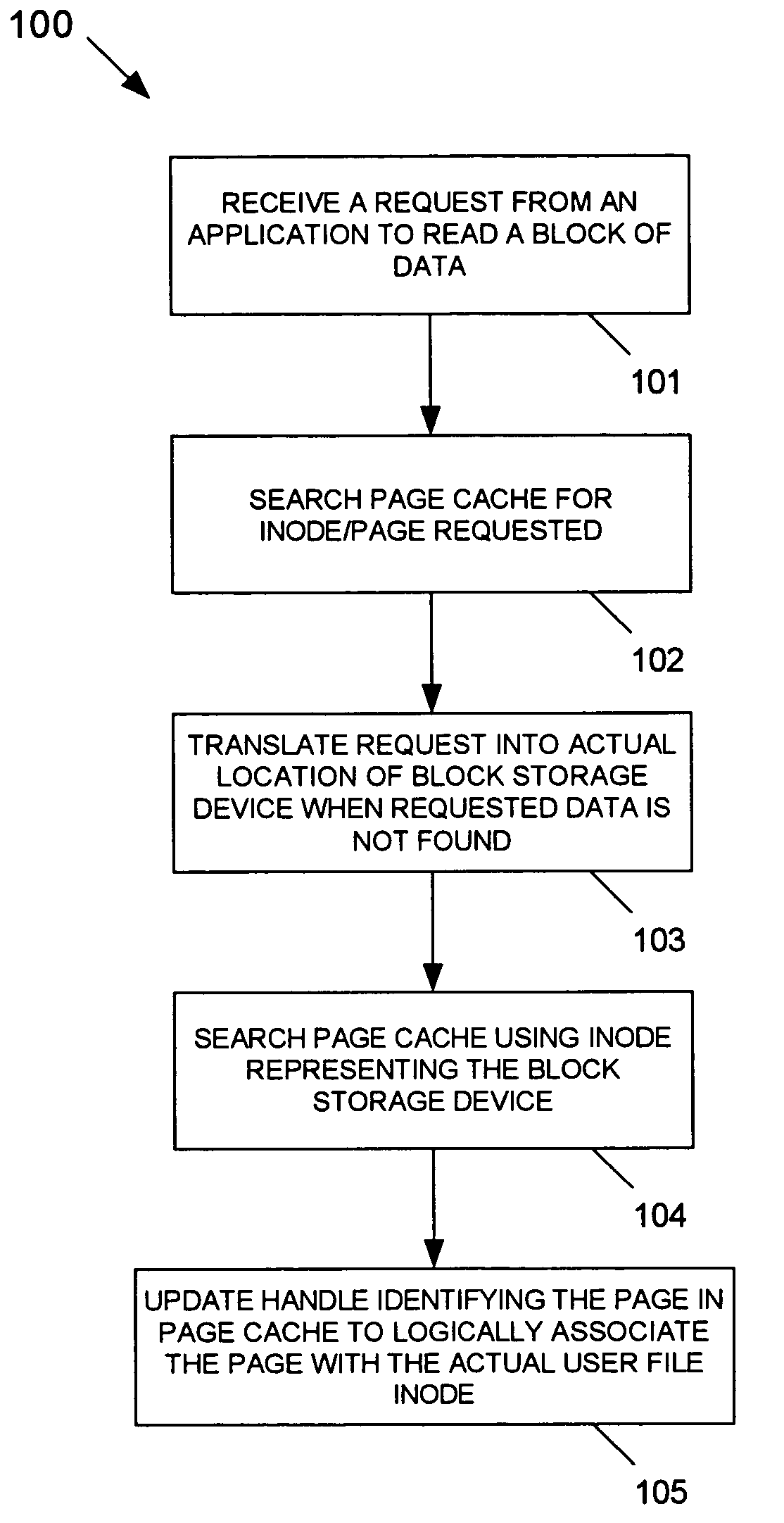

Multi-level page cache for enhanced file system performance via read ahead

Status: Inactive | Publication: US7203815B2 | Tags: Improve efficiency; Memory architecture accessing/allocation; Memory systems; Inode; Least recently frequently used

Owner: INT BUSINESS MASCH CORP

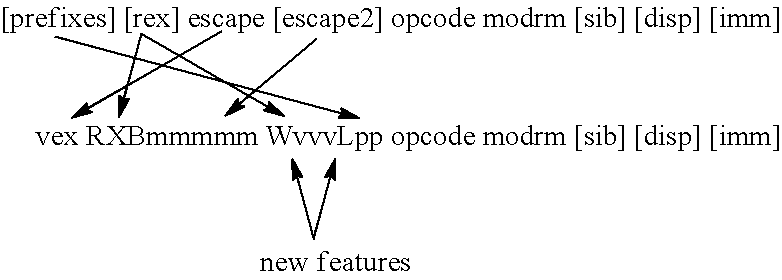

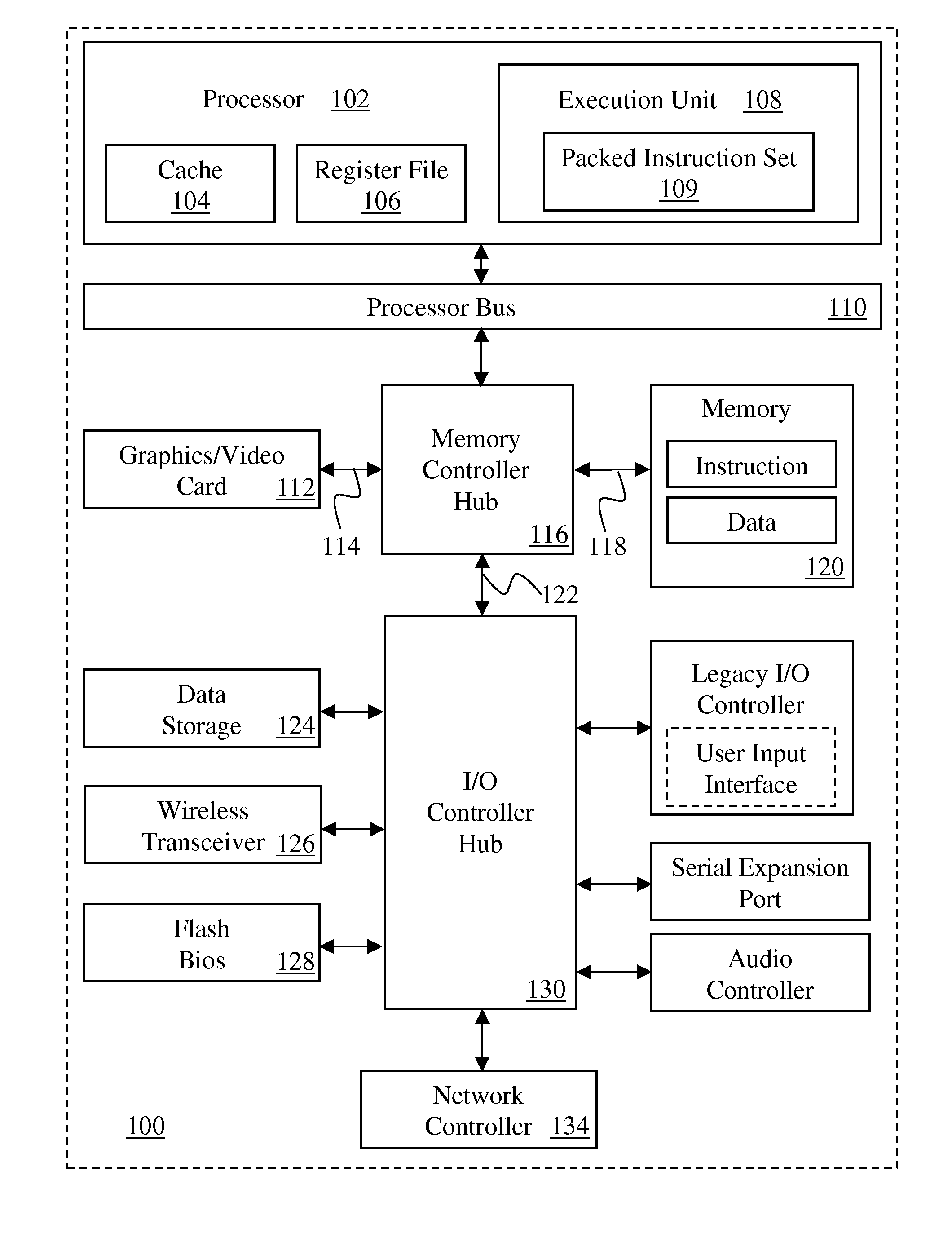

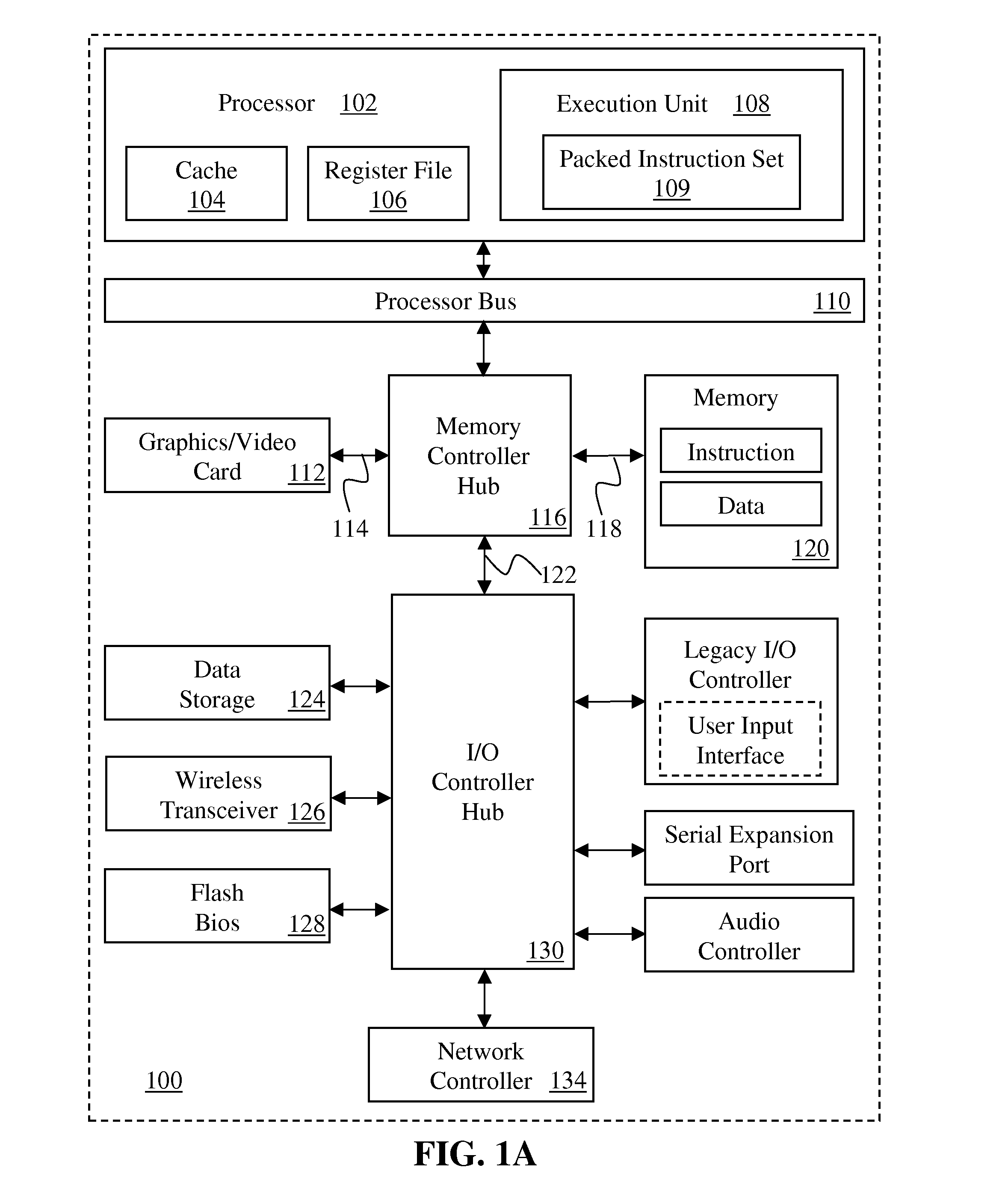

Instructions and logic to provide advanced paging capabilities for secure enclave page caches

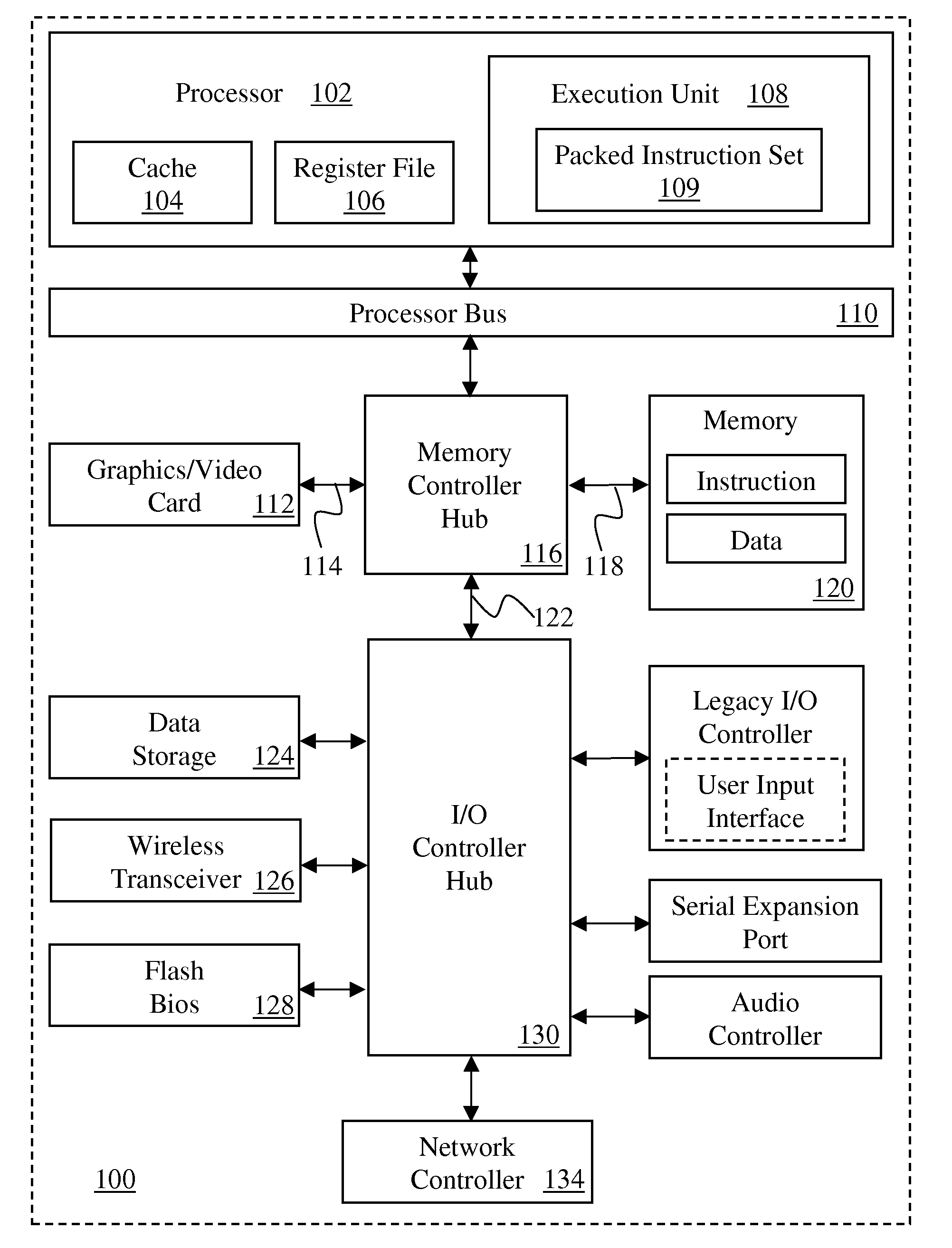

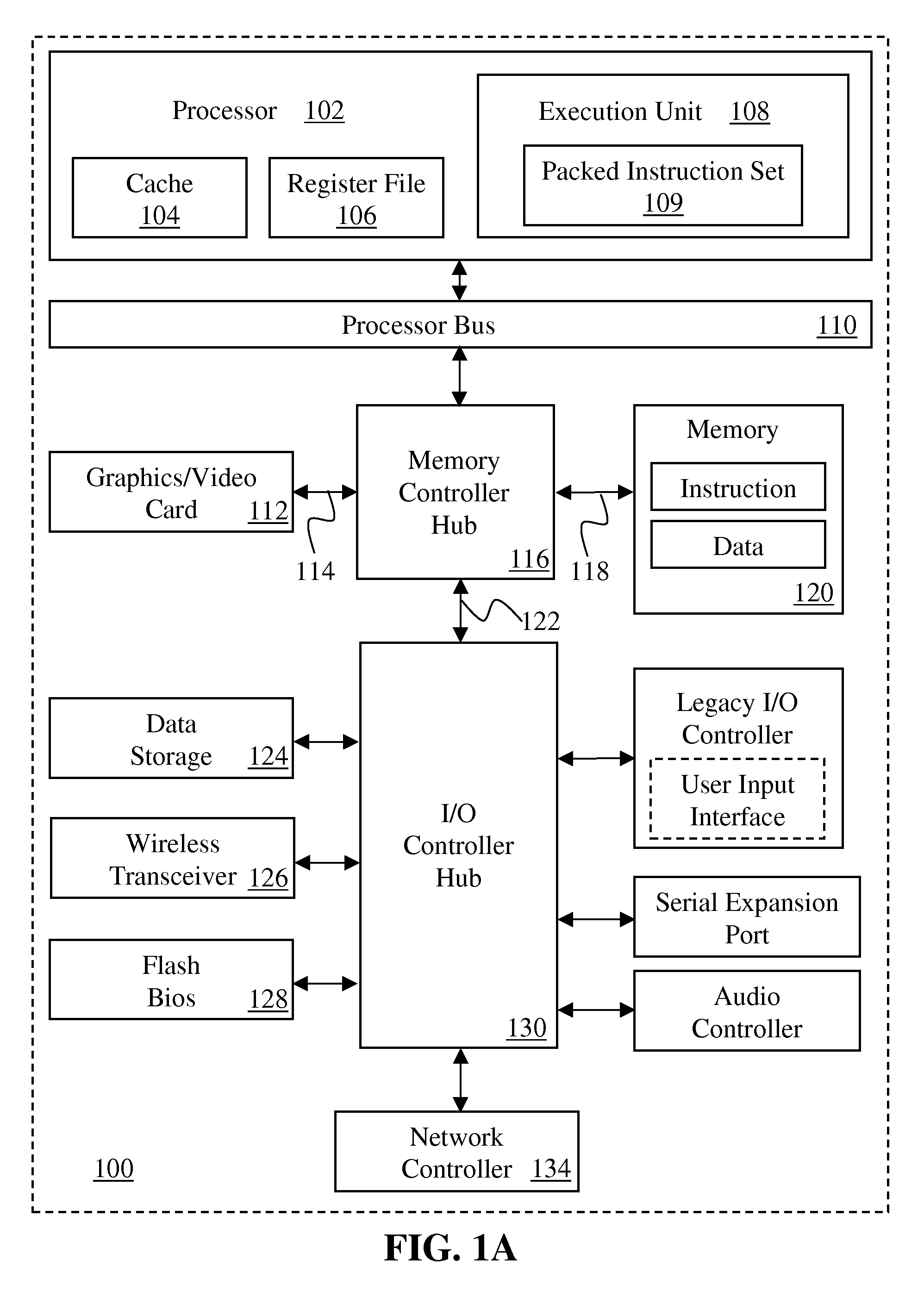

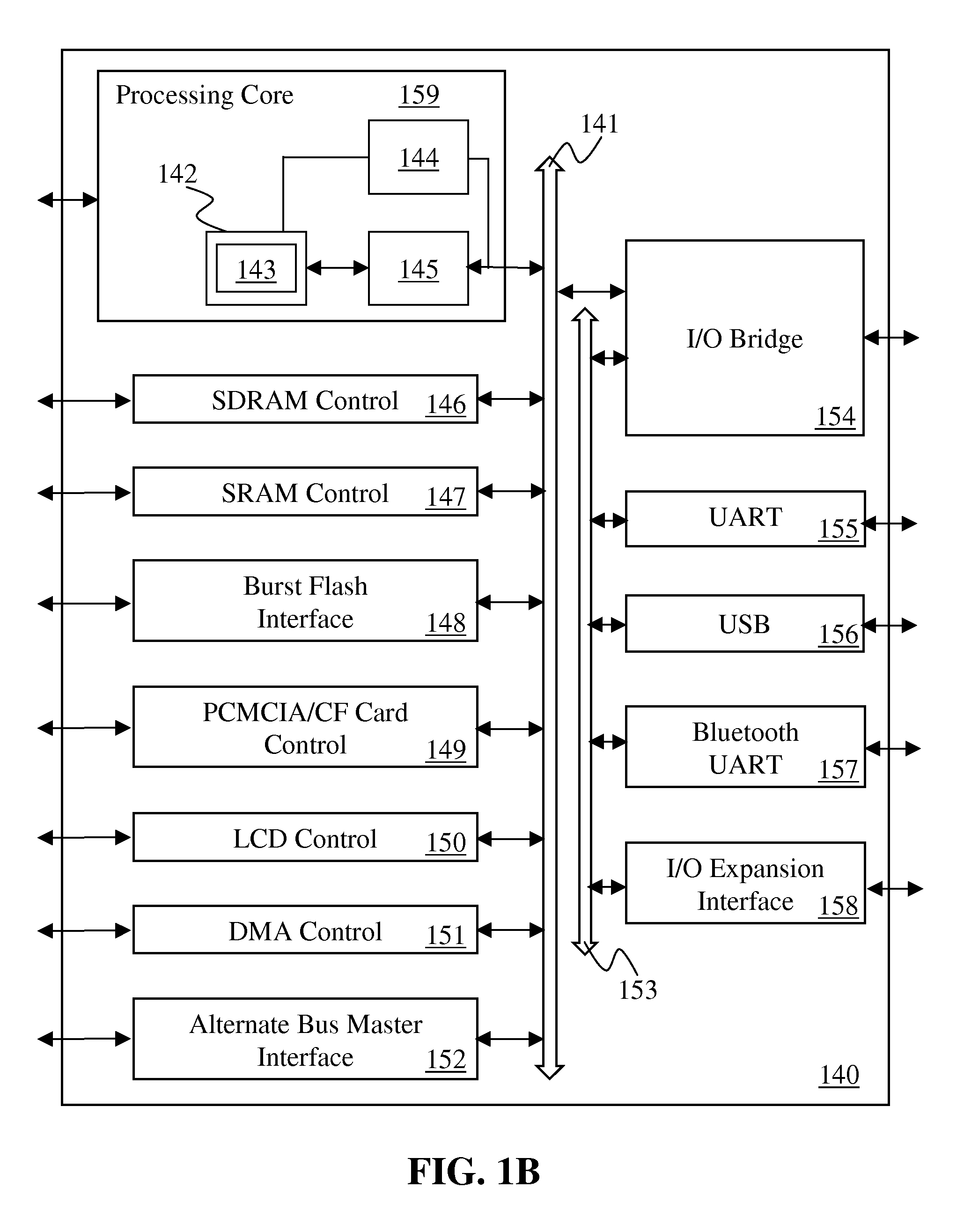

Status: Inactive | Publication: US20140297962A1 | Tags: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Hardware thread; Processing core

Instructions and logic provide advanced paging capabilities for secure enclave page caches. Embodiments include multiple hardware threads or processing cores, a cache to store secure data for a shared page address allocated to a secure enclave accessible by the hardware threads. A decode stage decodes a first instruction specifying said shared page address as an operand, and execution units mark an entry corresponding to an enclave page cache mapping for the shared page address to block creation of a new translation for either of said first or second hardware threads to access the shared page. A second instruction is decoded for execution, the second instruction specifying said secure enclave as an operand, and execution units record hardware threads currently accessing secure data in the enclave page cache corresponding to the secure enclave, and decrement the recorded number of hardware threads when any of the hardware threads exits the secure enclave.

Owner: INTEL CORP

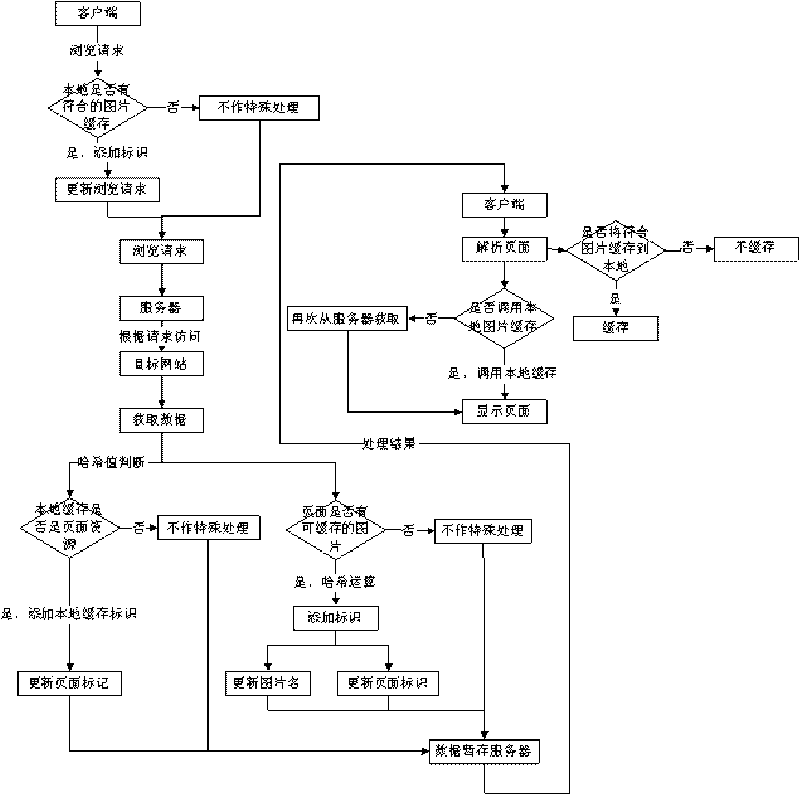

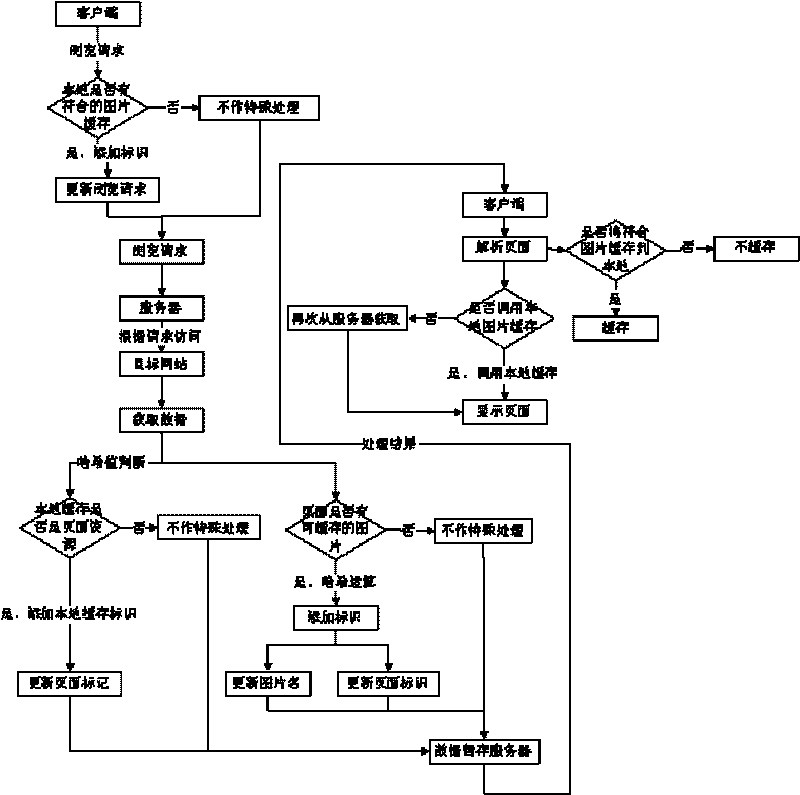

Page cache method for mobile communication equipment terminal

Status: Active | Publication: CN101741986A | Tags: Reduce the burden on; High speed; Substation equipment; Special data processing applications; Traffic capacity; Computer terminal

The invention relates to page browsing on mobile communication terminals, and in particular to a page cache method for a mobile communication terminal. The method comprises the following steps: (11) the mobile terminal submits an access request to a transfer server; (12) the transfer server determines, from the request content, whether the requested data is cached; (13) the transfer server acquires the data from the target website, determines the corresponding cache label, and returns the cache label to the mobile terminal; (14) the mobile terminal checks, using the cache label, whether the data is cached; and (15) the mobile terminal requests the data from the transfer server by its cache label, and the transfer server retrieves the cached data and returns it to the mobile terminal. With this method, pages browsed on the mobile terminal through the transfer server load faster and consume less data traffic.

Owner: ALIBABA (CHINA) CO LTD

Instructions and logic to interrupt and resume paging in a secure enclave page cache

Status: Active | Publication: US20150378941A1 | Tags: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Data store; Operating system

Instructions and logic interrupt and resume paging in secure enclaves. In embodiments, instructions that specify page addresses allocated to a secure enclave are decoded for execution by a processor. The processor includes an enclave page cache that stores secure data in a first cache line and in a last cache line for a page corresponding to the page address. A page state is read from the first or last cache line for the page when an entry in an enclave page cache mapping for the page indicates that only a partial page is stored in the enclave page cache. The entry for a partial page may be set, and a new page state may be recorded in the first cache line when writing back, or in the last cache line when loading the page, if the instruction's execution is interrupted. Thus the write-back or load can be resumed.

Owner: INTEL CORP

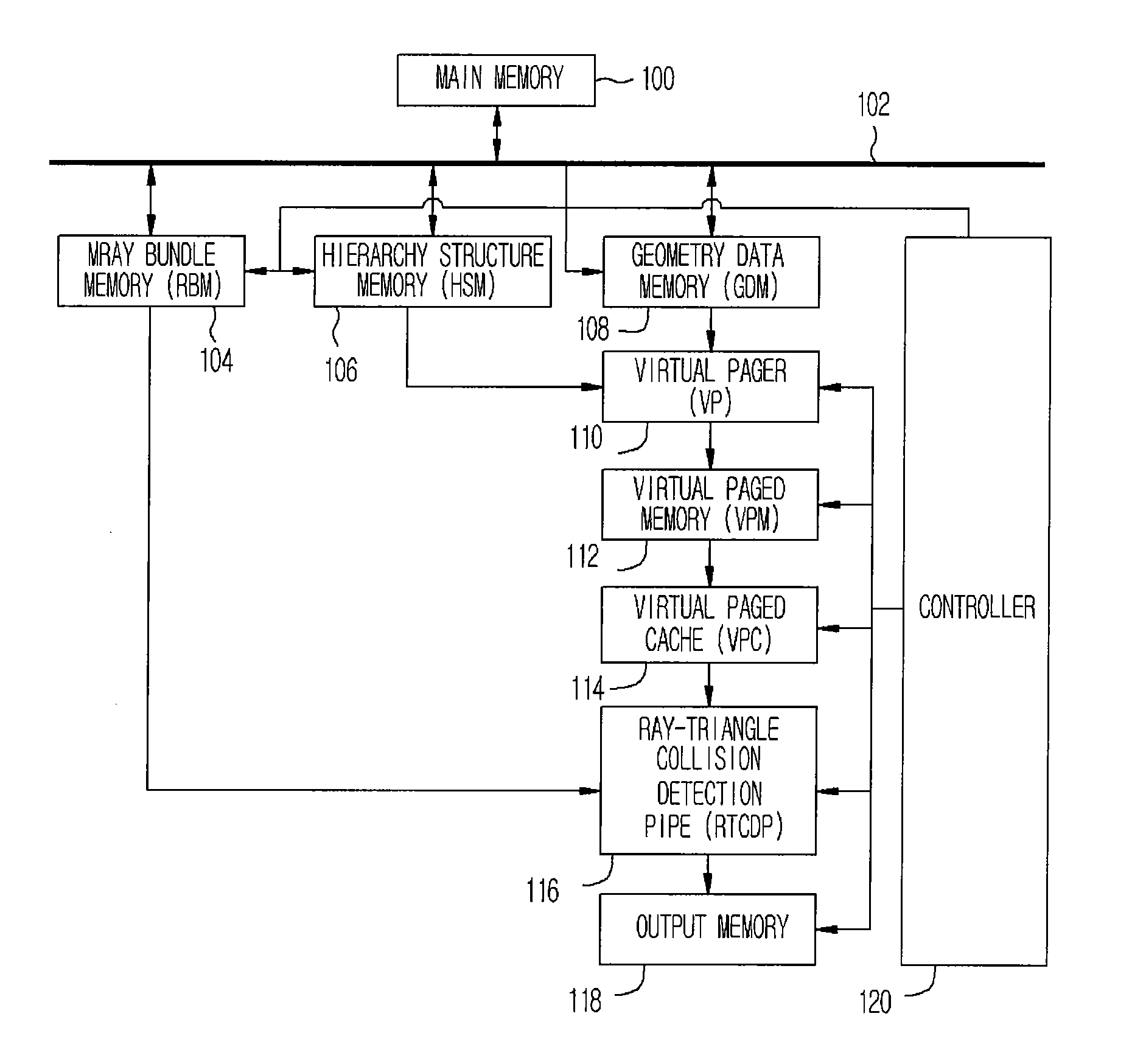

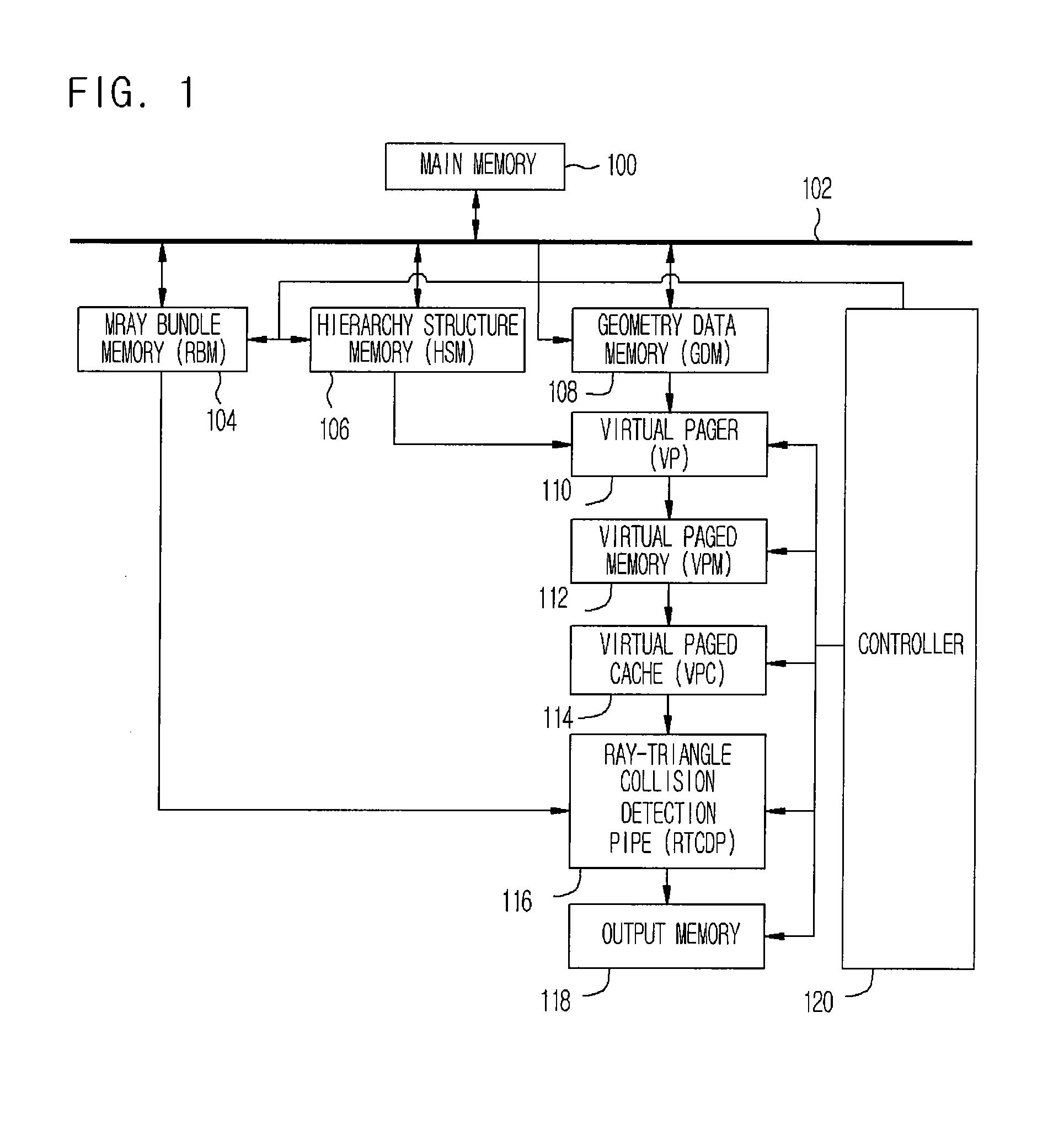

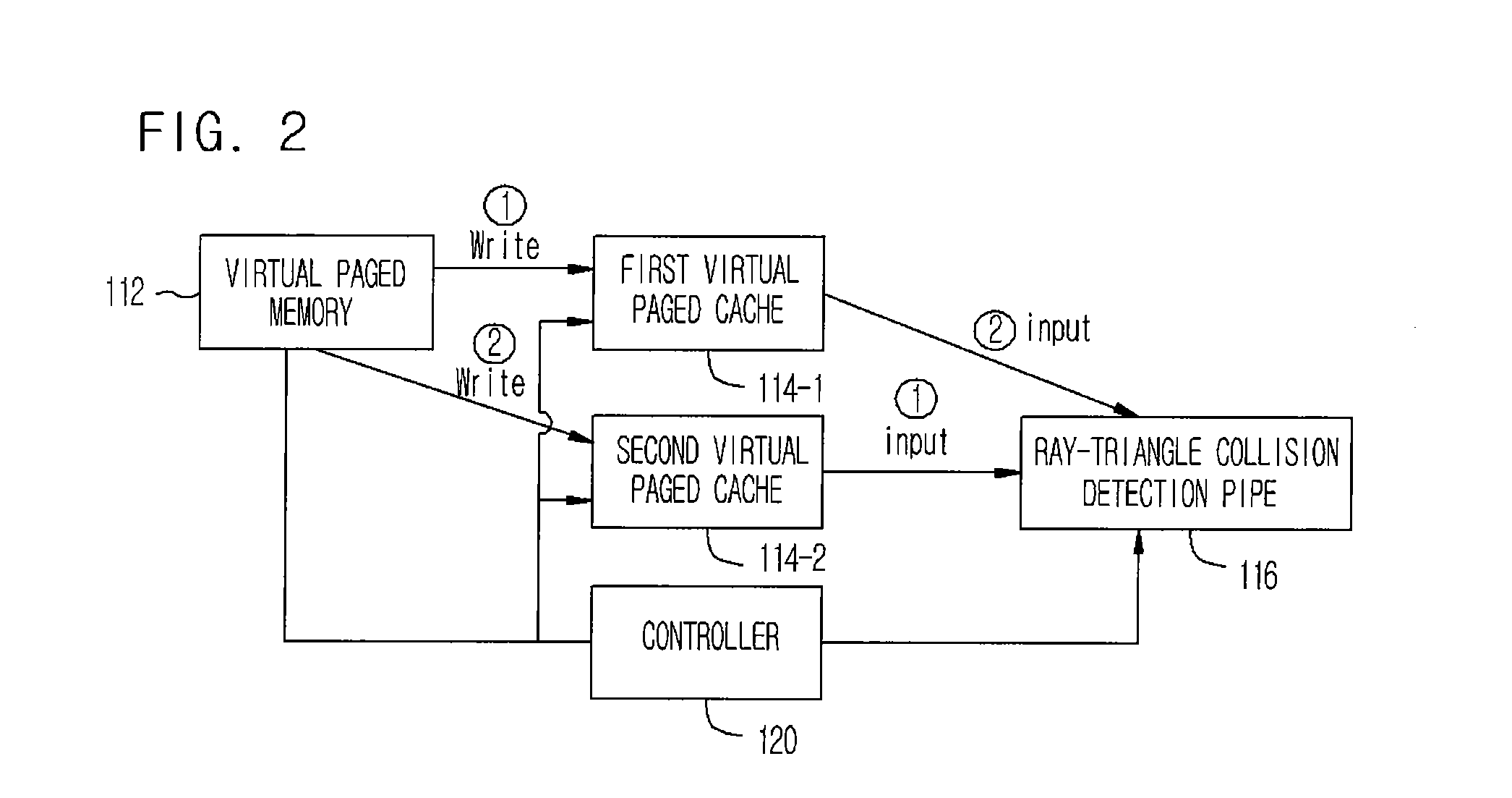

Apparatus and method of ray-triangle collision detection for ray-tracing

Status: Inactive | Publication: US20080129734A1 | Tags: Data input efficiently; Efficient input; Drawing from basic elements; Processor architectures/configuration; Pager; Computer graphics (images)

Provided are an apparatus and method for detecting ray-triangle collisions for ray tracing. The apparatus includes: a ray bundle memory for storing ray bundles; a geometry data memory for storing geometry triangle data; a hierarchy structure memory for storing space subdivision and bounding volume hierarchy structure information; a virtual pager that receives the geometry triangle data, the space subdivision and bounding volume hierarchy structure information, and the bounding hierarchical structure information, and rearranges the geometry triangle data by final end nodes; a virtual paged memory that receives the rearranged data, forms page memories, and stores triangle data by page; a virtual page cache that processes the page data in a pipelined manner and stores in advance a page memory for collision detection; a ray-triangle collision detection pipe that detects ray-triangle collisions based on the page memory and the ray bundle; and an output memory for storing the ray-triangle collision detection results.

Owner: ELECTRONICS & TELECOMM RES INST

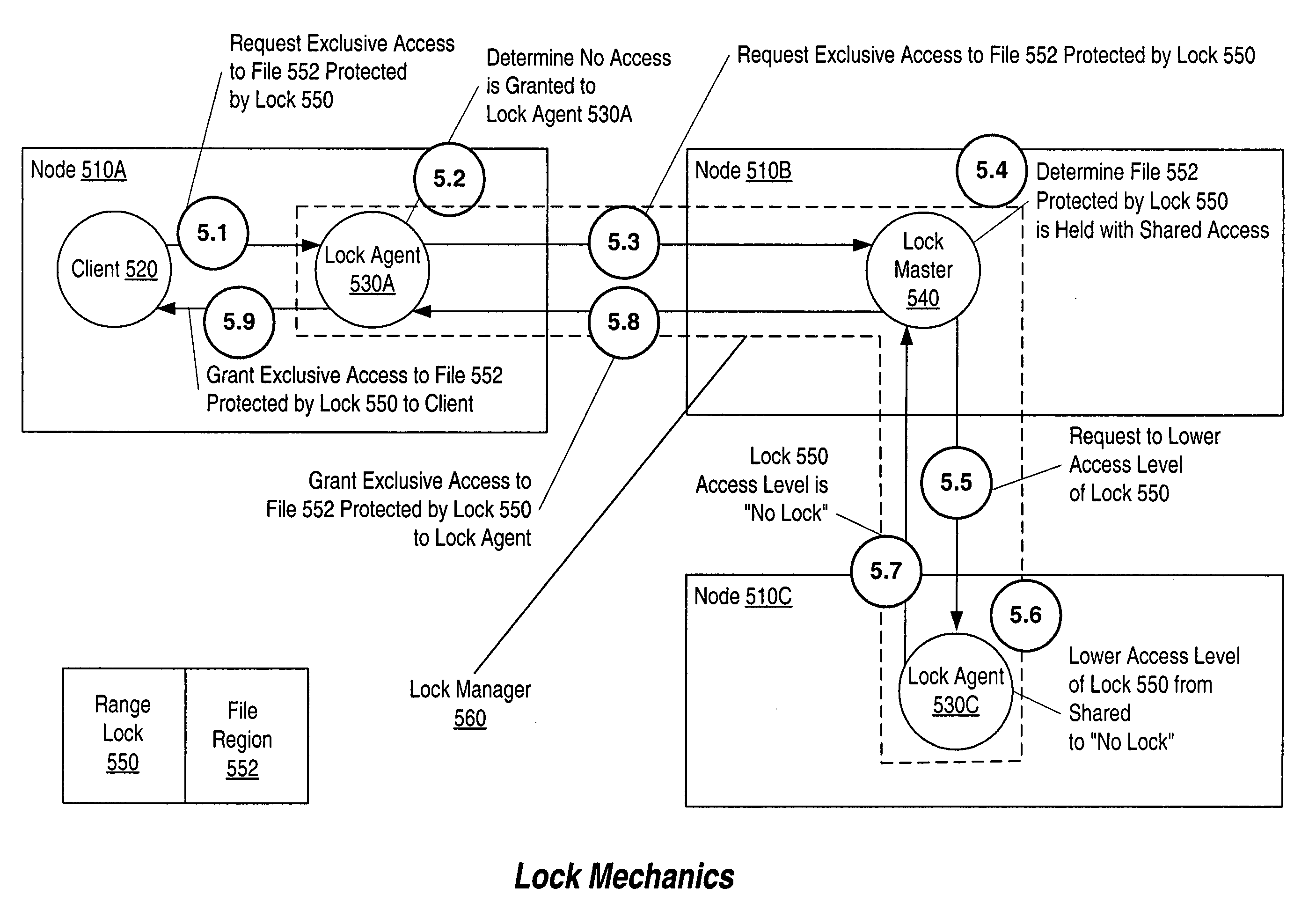

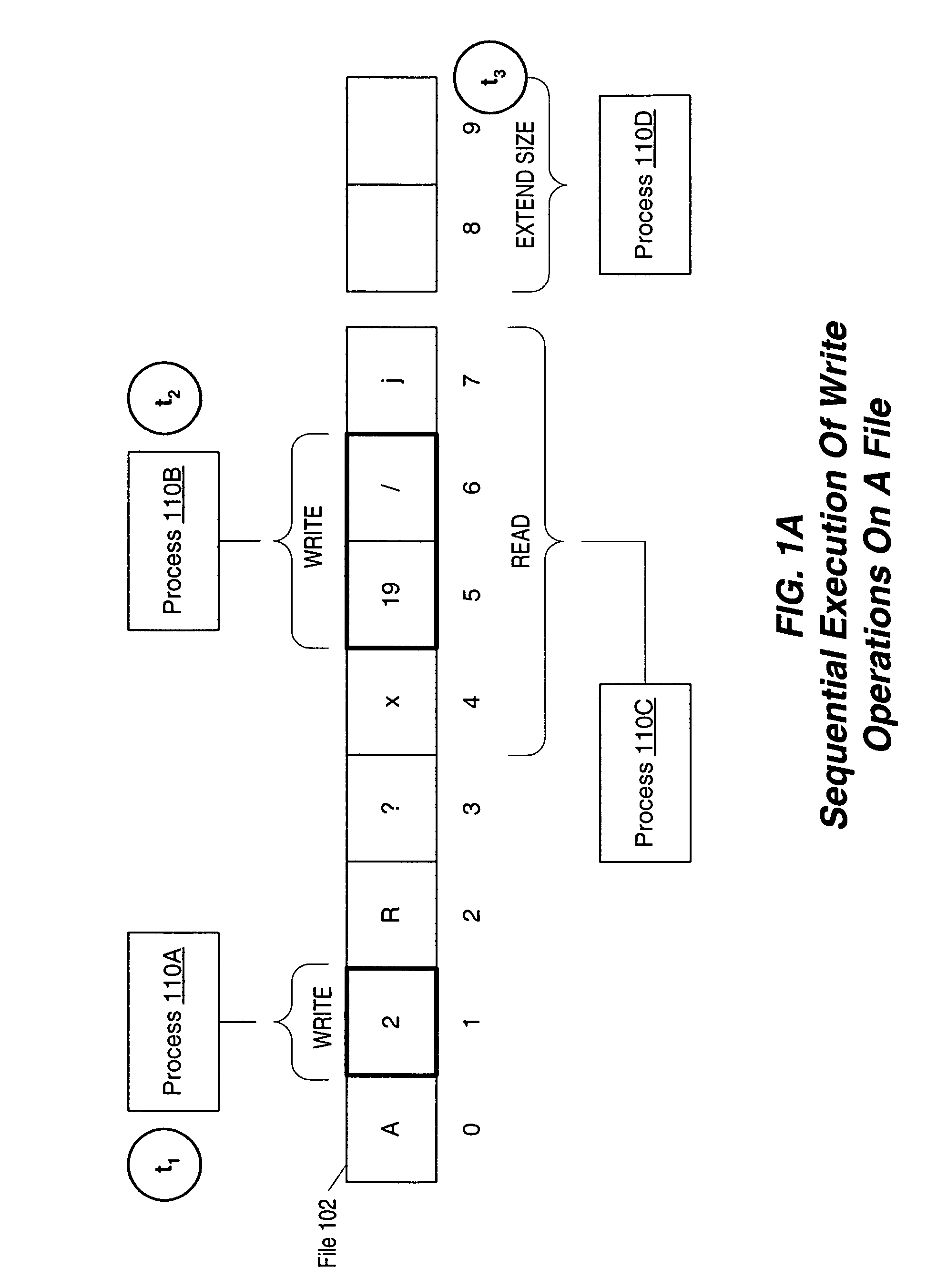

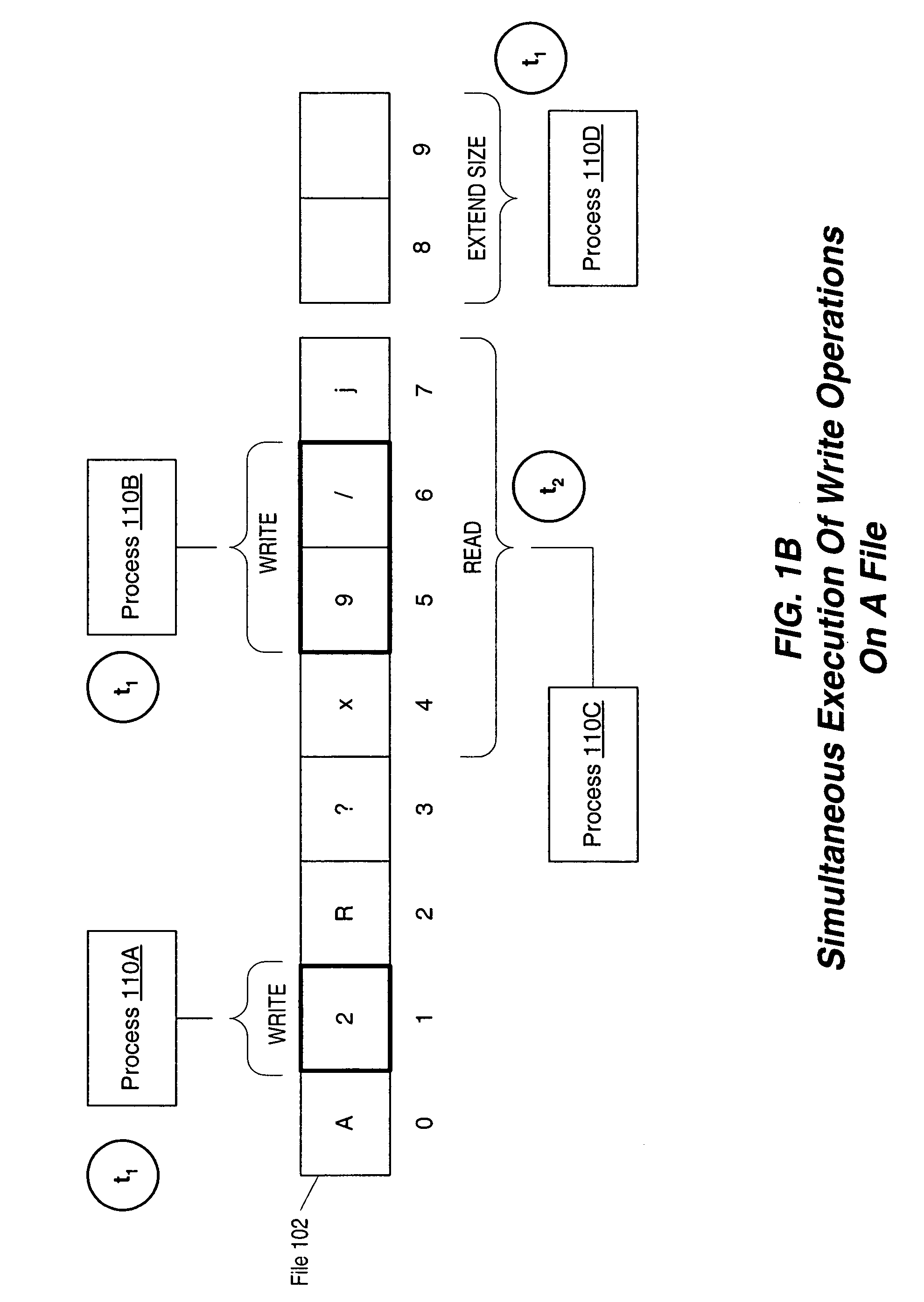

Page cache management for a shared file

Status: Active | Publication: US7831642B1 | Tags: Efficient coordination; Effective flushing; Special data processing applications; Memory systems; Computerized system; Operating system

A method, system, computer system, and computer-readable medium to efficiently coordinate caching operations between nodes operating on the same file while allowing different regions of the file to be written concurrently. More than one program can concurrently read and write to the same file. Pages of data from the file are proactively and selectively cached and flushed on different nodes. In one embodiment, range locks are used to effectively flush and invalidate only those pages that are accessed on another node.

Owner: VERITAS TECH
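The core idea above (flush and invalidate only the pages of the range another node is about to access, leaving the rest of each node's cache intact) can be sketched in Python as follows. This is a simplified single-process model with assumed names (`NodeCache`, `RangeCoordinator`) and an assumed 4 KiB page size; real range locks also track holders, modes and conflicts, which are omitted here.

```python
PAGE_SIZE = 4096  # assumed page size


class NodeCache:
    """Per-node page cache over a file that several nodes share."""

    def __init__(self, name: str, shared_file: bytearray) -> None:
        self.name = name
        self.file = shared_file  # stands in for the shared file on common storage
        self.pages = {}          # page number -> cached copy
        self.dirty = set()

    def _load(self, page_no: int) -> bytearray:
        if page_no not in self.pages:
            start = page_no * PAGE_SIZE
            self.pages[page_no] = bytearray(self.file[start:start + PAGE_SIZE])
        return self.pages[page_no]

    def read(self, offset: int) -> int:
        return self._load(offset // PAGE_SIZE)[offset % PAGE_SIZE]

    def write(self, offset: int, value: int) -> None:
        self._load(offset // PAGE_SIZE)[offset % PAGE_SIZE] = value
        self.dirty.add(offset // PAGE_SIZE)

    def flush_and_invalidate(self, start: int, end: int) -> None:
        """Write back and drop only the cached pages overlapping [start, end)."""
        first, last = start // PAGE_SIZE, (end - 1) // PAGE_SIZE
        for page_no in [p for p in self.pages if first <= p <= last]:
            if page_no in self.dirty:
                s = page_no * PAGE_SIZE
                self.file[s:s + len(self.pages[page_no])] = self.pages[page_no]
                self.dirty.discard(page_no)
            del self.pages[page_no]


class RangeCoordinator:
    """Before a node works on a byte range, every other node gives up just that range."""

    def __init__(self, nodes) -> None:
        self.nodes = list(nodes)

    def acquire(self, node: NodeCache, start: int, end: int) -> None:
        for other in self.nodes:
            if other is not node:
                other.flush_and_invalidate(start, end)
```

Because only the requested range is flushed, node A keeps its dirty page elsewhere in the file cached while node B reads the first page.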

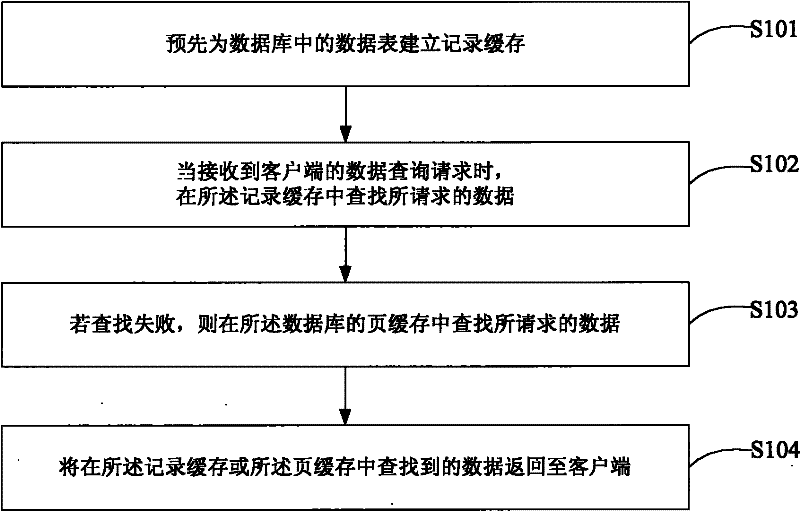

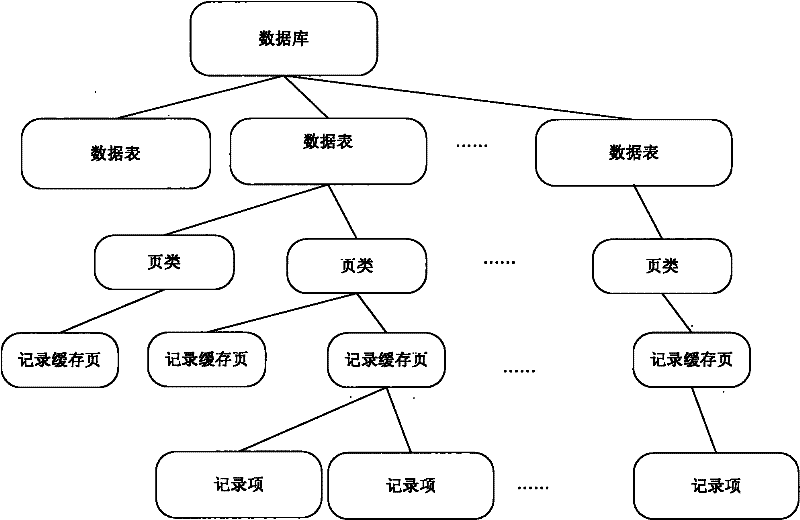

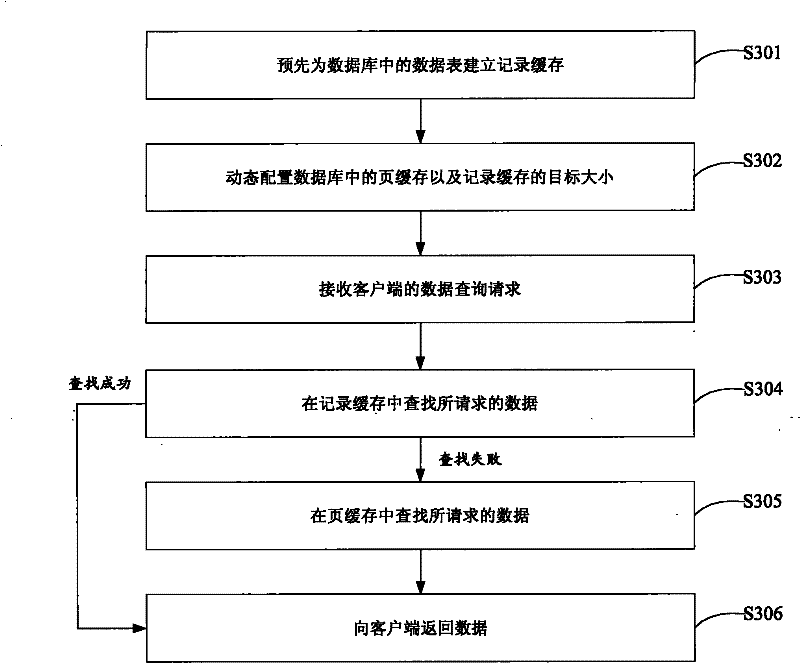

Database cache management method and database server

Status: Active | Publication: CN102331986A | Tags: Increase profit; Reduce update frequency; Special data processing applications; Database server; Database caching

The embodiment of the invention discloses a database cache management method and a database server. The method comprises the following steps: establishing in advance a record cache for a data table in the database, where the record cache reads and writes data in units of data rows; upon receiving a data query request from a client, searching the record cache for the requested data; if that search fails, searching the database's page cache for the requested data; and returning the data found in the record cache or the page cache to the client. With this scheme, the same database server contains two caches, one of which, the record cache, reads and writes data row by row. When only a small amount of hot data changes, only the record cache needs to be updated, which increases the cache utilization of the database server and reduces the cache update frequency.

Owner: TAOBAO CHINA SOFTWARE
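The lookup order described above (record cache first, then the page cache) can be sketched in Python as follows. The rows-per-page grouping, the promotion of a found row into the record cache, and the class name `TwoLevelDbCache` are assumptions for illustration; the abstract does not say how either cache is filled.

```python
from typing import Dict, Optional

ROWS_PER_PAGE = 4  # assumed: rows are stored in pages of this many rows


class TwoLevelDbCache:
    def __init__(self, table: Dict[int, str]) -> None:
        self.table = table                                # stands in for rows on disk
        self.record_cache: Dict[int, str] = {}            # row-granular cache for hot rows
        self.page_cache: Dict[int, Dict[int, str]] = {}   # page-granular cache
        self.stats = {"record": 0, "page": 0, "disk": 0}

    def query(self, row_id: int) -> Optional[str]:
        # 1. Record cache: a single row, no page needs to be touched.
        if row_id in self.record_cache:
            self.stats["record"] += 1
            return self.record_cache[row_id]
        # 2. Page cache: the whole page containing the row.
        page_no = row_id // ROWS_PER_PAGE
        page = self.page_cache.get(page_no)
        if page is not None:
            self.stats["page"] += 1
        else:
            # 3. Miss in both: load the page from storage into the page cache.
            self.stats["disk"] += 1
            first = page_no * ROWS_PER_PAGE
            page = {r: self.table[r] for r in range(first, first + ROWS_PER_PAGE) if r in self.table}
            self.page_cache[page_no] = page
        value = page.get(row_id)
        if value is not None:
            self.record_cache[row_id] = value  # assumed: promote the row found
        return value
```

A second query for a neighbouring row is answered from the cached page, and a repeated query for the same row is answered from the record cache.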

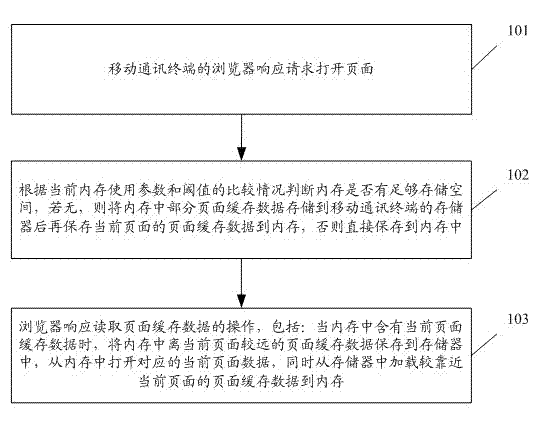

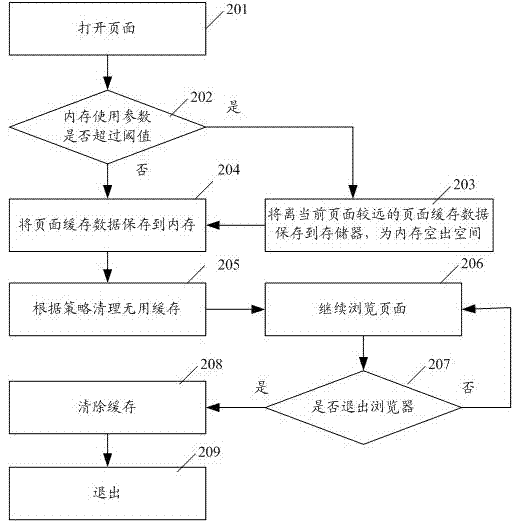

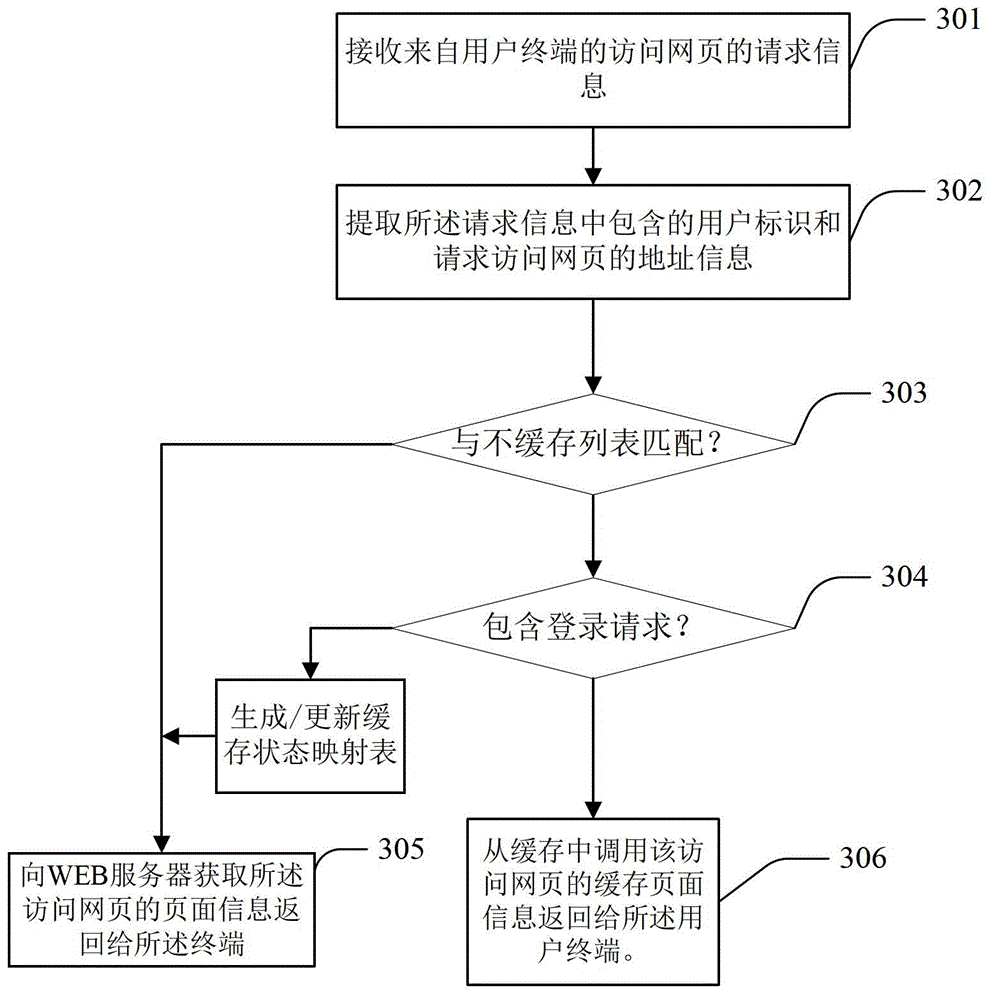

Webpage page caching management method and system

Status: Active | Publication: CN102368258A | Tags: Reduce occupancy; Reduce usage; Special data processing applications; Computer network; The Internet

The invention discloses a webpage page caching management method and system. When the browser of a mobile communication terminal opens a page in response to a request, it compares the current memory usage parameter with a threshold to judge whether memory has enough space. If not, it moves part of the page caching data in memory to the terminal's storage and then stores the page caching data of the current page in memory; otherwise, it stores the page caching data directly in memory. When the browser responds to an operation that reads page caching data and the current page's caching data is in memory, it moves the page caching data in memory that is farther from the current page into storage, opens the corresponding current page data in memory, and at the same time loads the page caching data in storage that is closer to the current page into memory. With this method and system, page caching is managed better while browsing the Internet on a mobile communication terminal, and users get a more convenient browsing experience.

Owner: ALIBABA (CHINA) CO LTD
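A minimal Python sketch of the memory-threshold spill policy described above, under assumed details: the threshold is a page count (`memory_limit`), "distance" is measured in history positions, and storage is a dictionary. For brevity, switching back only reloads the target page rather than also prefetching its neighbours; the class name is hypothetical.

```python
from typing import Dict


class BrowserPageCache:
    """Keeps page caching data nearest the current history position in memory."""

    def __init__(self, memory_limit: int) -> None:
        if memory_limit < 1:
            raise ValueError("memory_limit must be at least 1")
        self.memory_limit = memory_limit      # assumed threshold: pages allowed in memory
        self.memory: Dict[int, bytes] = {}    # history index -> page caching data
        self.storage: Dict[int, bytes] = {}   # stands in for the terminal's flash storage
        self.current = -1
        self.opened = 0

    def _make_room(self) -> None:
        # Move the entries farthest from the current page out to storage.
        while len(self.memory) >= self.memory_limit:
            farthest = max(self.memory, key=lambda i: abs(i - self.current))
            self.storage[farthest] = self.memory.pop(farthest)

    def open_page(self, data: bytes) -> int:
        self.current = self.opened
        self.opened += 1
        self._make_room()
        self.memory[self.current] = data
        return self.current

    def go_to(self, index: int) -> bytes:
        """Switch to an earlier page, pulling it back from storage if needed."""
        self.current = index
        if index not in self.memory:
            self._make_room()
            self.memory[index] = self.storage.pop(index)
        return self.memory[index]
```

Going back to the first page evicts the page now farthest from it, so memory always holds the neighbourhood of whatever the user is viewing.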

Methods and systems for preemptive and predictive page caching for improved site navigation

Status: Inactive | Publication: US6871218B2 | Tags: Efficient delivery; Efficient use of; Digital data information retrieval; Multiple digital computer combinations; Web site; Remote computer

A method for a first computer to request documents from a second computer includes steps of sending a first request for a first document to the second computer responsive to a first user action, receiving the first document sent by the second computer responsive to the first request; identifying all references to second documents in the received first document; independently of any user action, automatically sending a second request for at least one of the second documents referred to by the identified references; receiving the second document(s) requested by the second request and storing the received second document(s) in a storage that is local to the first computer, and responsive to a user request for one or more of the second documents, attempting first to service the user request from the local storage and sending a third request to the second computer for second document(s) only when the second document(s) is not stored in the local storage. A method of servicing a request for access to a Web site by a remote computer may include a receiving step to receive the request for access to the Web site; a first sending step to send a first page of the accessed Web site to the remote computer responsive to the request, and independently of any subsequent request for a second page of the Web site originating from the remote computer, preemptively carrying out a second sending step to send the remote computer at least one selected second page based upon a prediction of a subsequent request by the remote computer and / or a history of second pages previously accessed by the remote computer.

Owner: ORACLE INT CORP

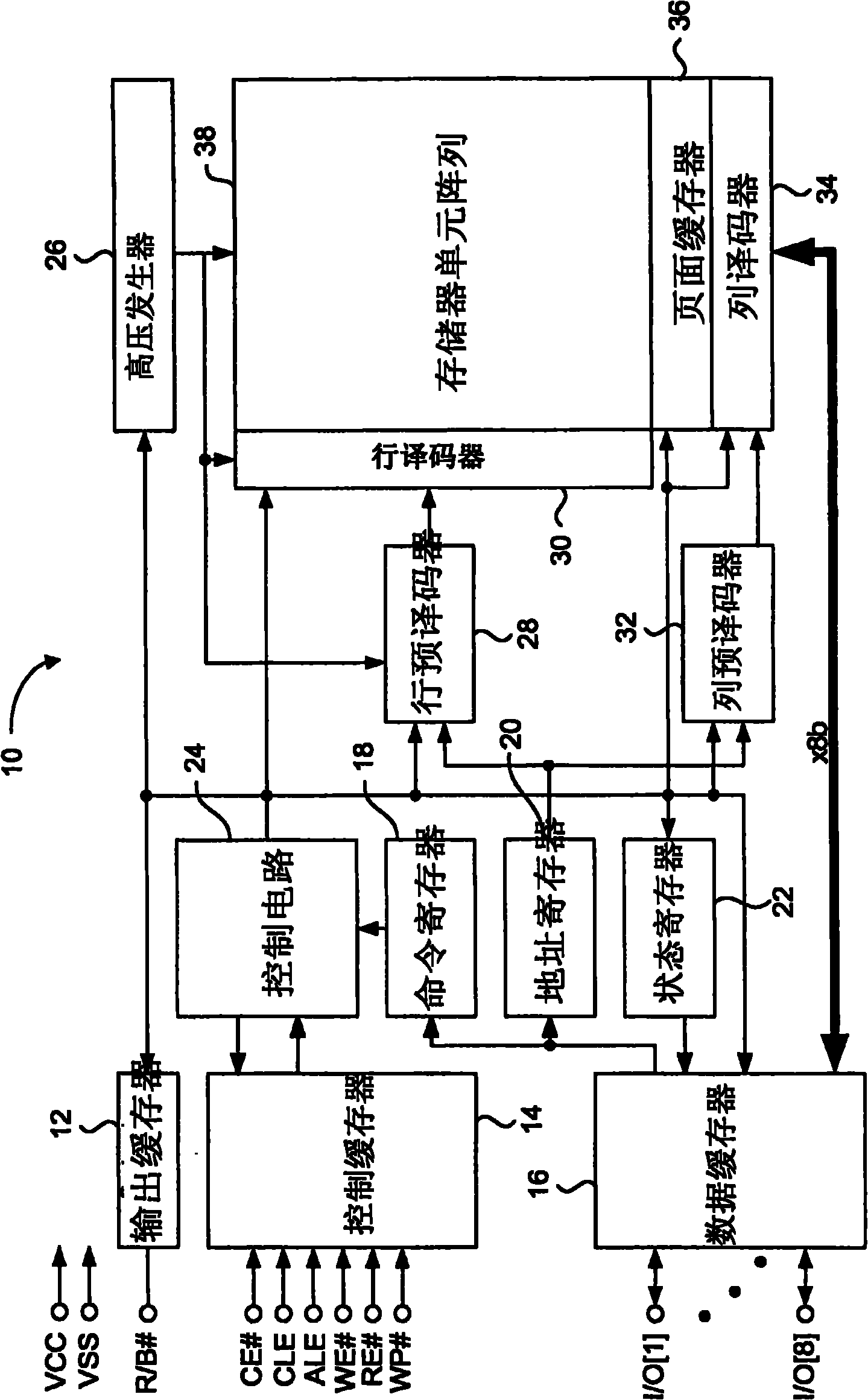

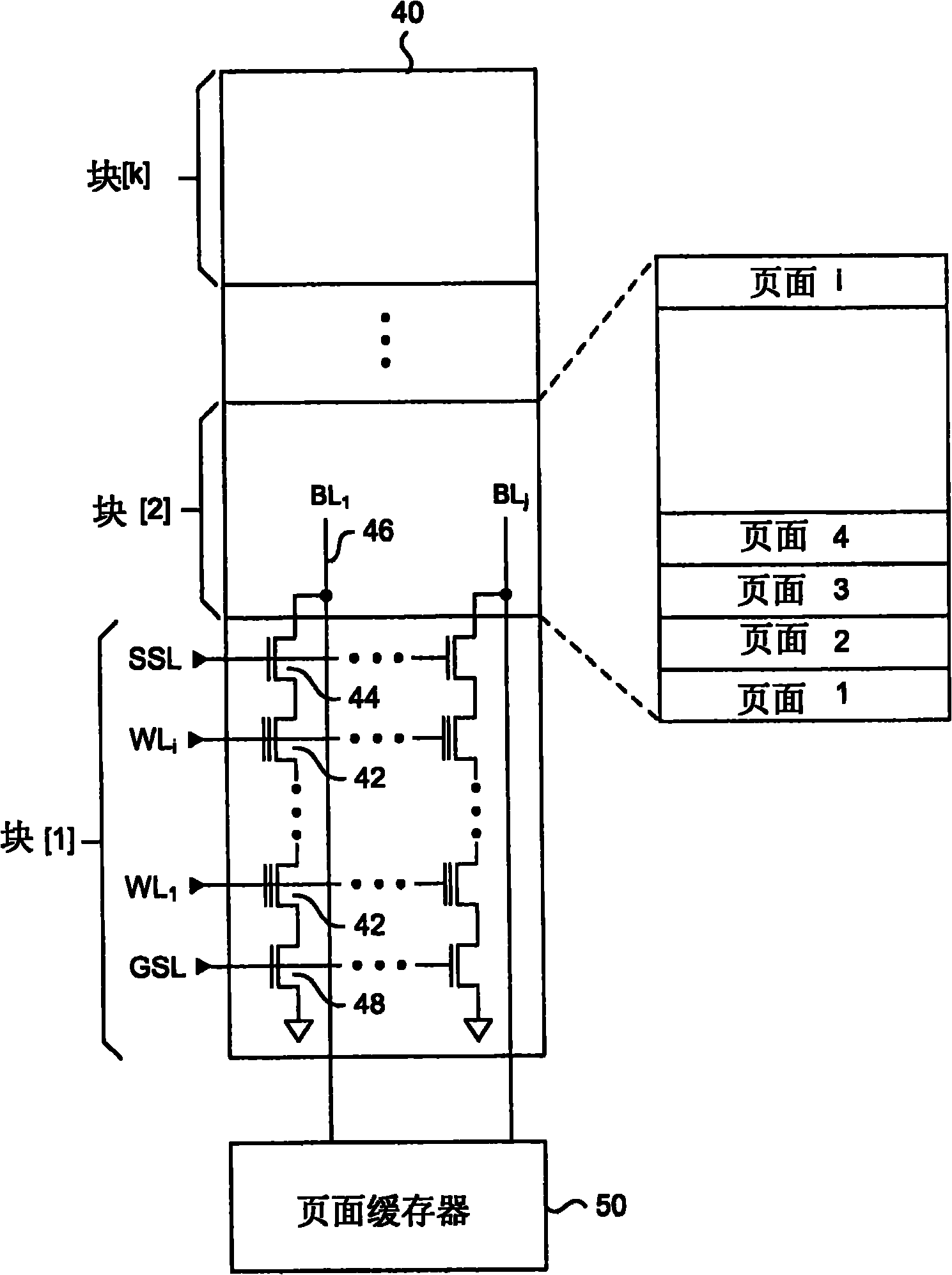

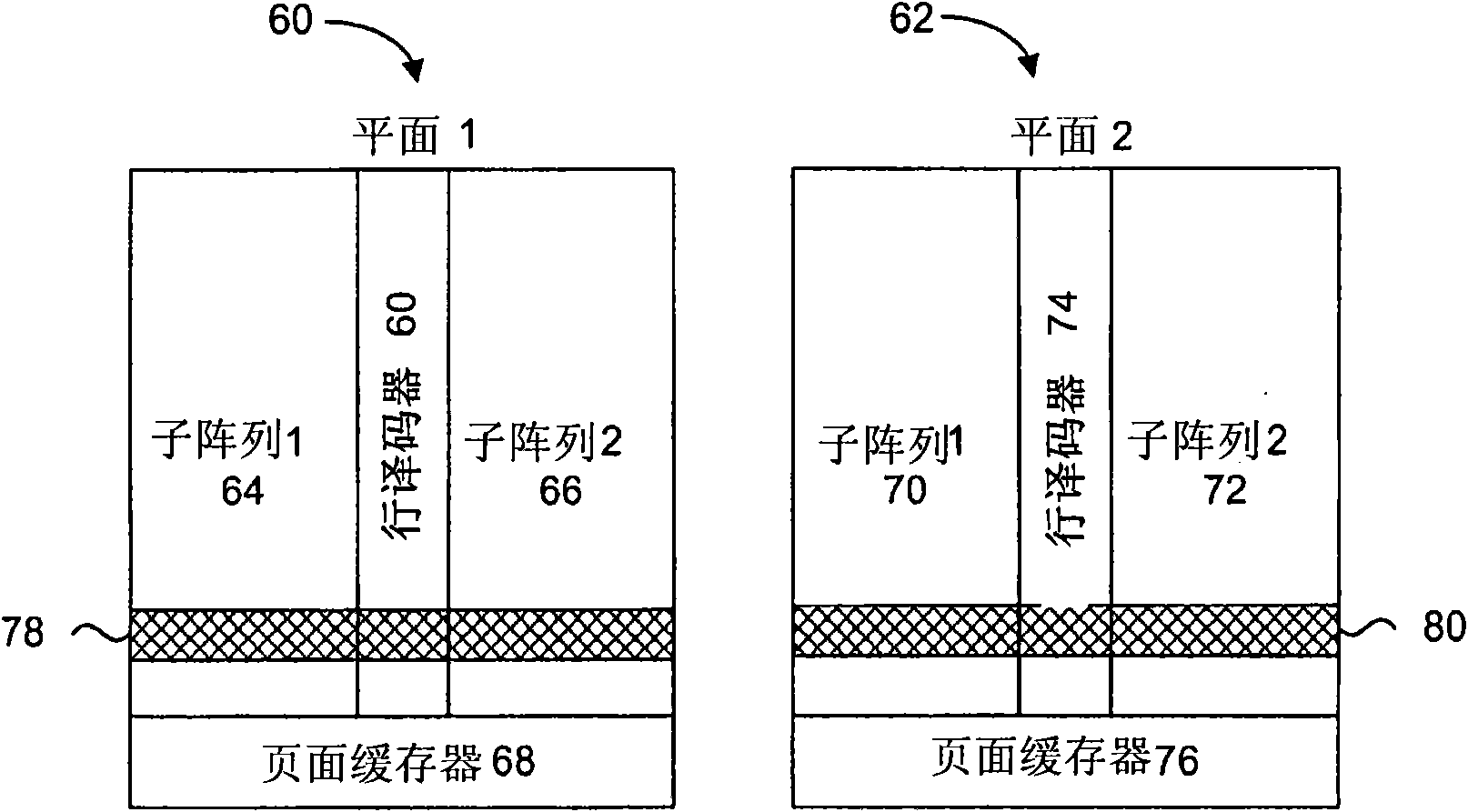

Non-volatile memory device having configurable page size

A flash memory device has at least one bank, and each bank has an independently configurable page size. Each bank includes at least two memory planes with corresponding page buffers, and any number and combination of the memory planes can be selectively accessed at the same time in response to configuration data and address data. The configuration data can be loaded into the memory device at power-up for a static page configuration of the bank, or received with each command to allow dynamic page configuration of the bank. Selectively adjusting the page size of a memory bank correspondingly adjusts its block size.

Owner: CONVERSANT INTPROP MANAGEMENT INC

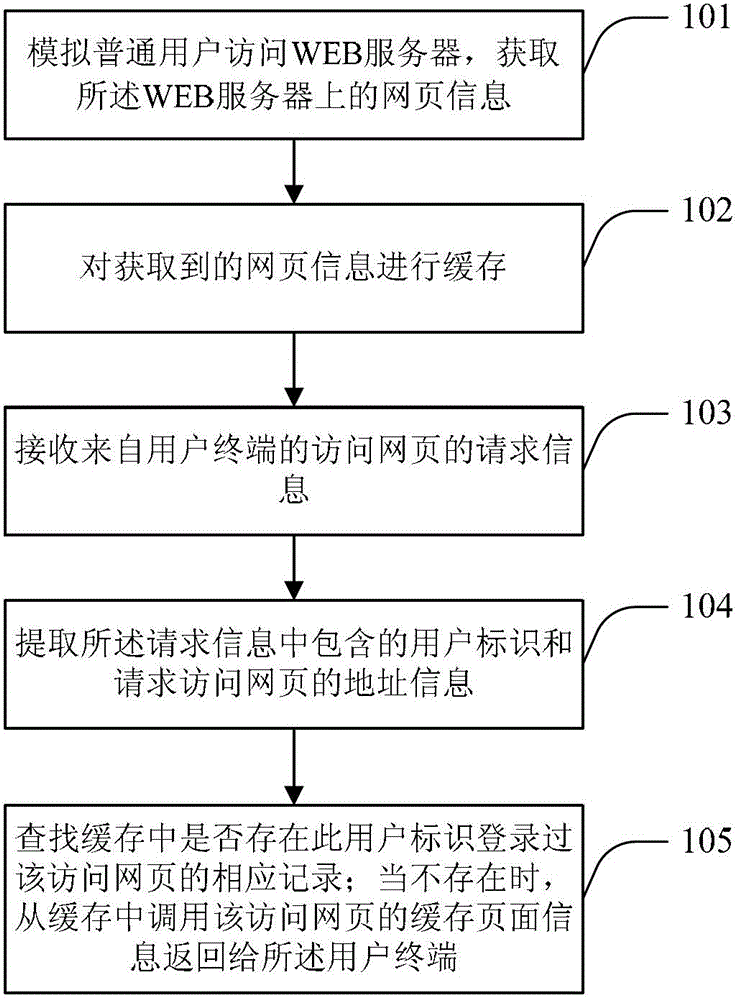

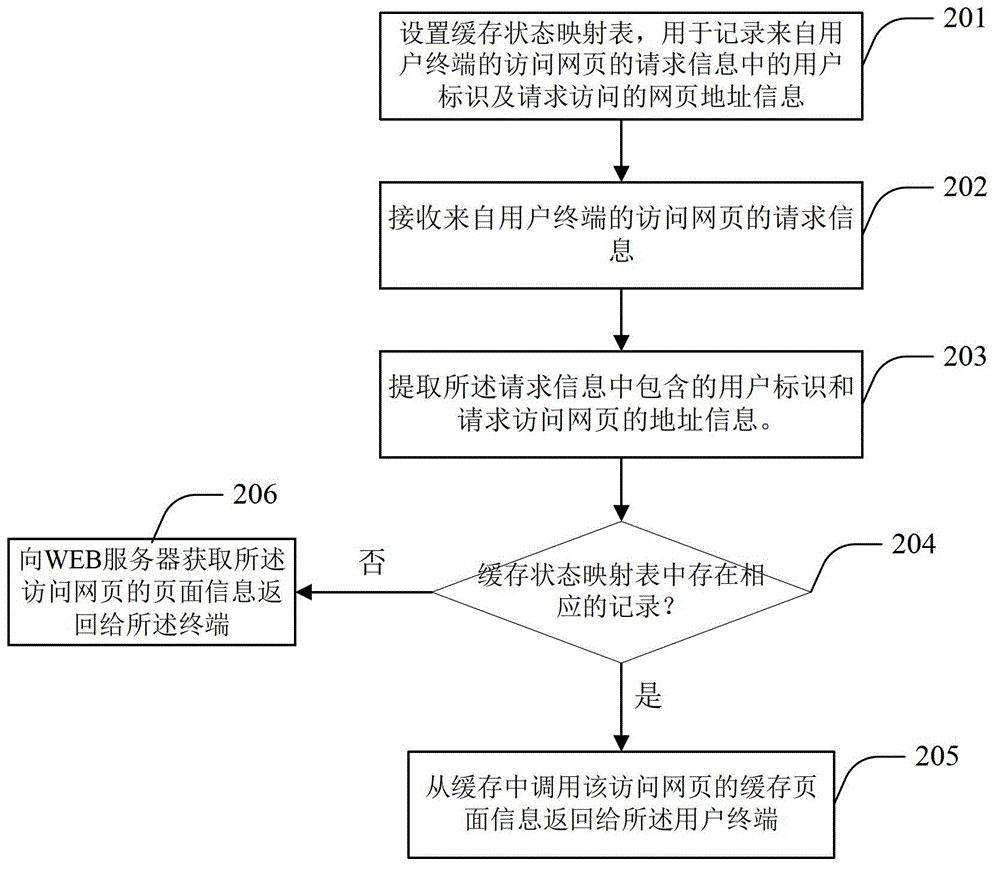

Network access method and server based on cache

Status: Active | Publication: CN102868719A | Tags: Improve performance; Improve hit rate; Digital data information retrieval; Transmission; Access method; Web service

The invention provides a cache-based network access method and server. The method comprises the following steps: simulating an ordinary user to access a WEB server and obtain web page information from it; caching the obtained web page information; receiving, from a user terminal, request information for accessing a web page; extracting the user identification and the address of the requested web page from the request information; searching the cache for a record indicating that this user identification has logged in to the requested web page; and, when no such record exists, retrieving the cached page information of the requested page from the cache and returning it to the user terminal. Because the server returns a cached page for a network request only when the requester has never logged in to that web page, leakage of users' sensitive information is prevented, the hit ratio of the page cache is increased, and the performance of the proxy server in responding to network access requests is improved.

Owner: 360 TECH GRP CO LTD
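The decision rule above (serve the page fetched as an ordinary, logged-out user unless this user has a login record for that page) can be sketched in Python as follows. `fetch_anonymous`, `forward` and the login-record set are hypothetical stand-ins; how the server detects logins in the first place is not shown.

```python
from typing import Callable, Dict, Set, Tuple


class LoginAwareCachingProxy:
    def __init__(self, fetch_anonymous: Callable[[str], str]) -> None:
        self.fetch_anonymous = fetch_anonymous      # hypothetical: fetch as a logged-out user
        self.page_cache: Dict[str, str] = {}
        self.logins: Set[Tuple[str, str]] = set()   # (user id, page address) login records

    def record_login(self, user_id: str, url: str) -> None:
        self.logins.add((user_id, url))

    def warm(self, url: str) -> None:
        """Simulate an ordinary user visiting the page and cache the result."""
        self.page_cache[url] = self.fetch_anonymous(url)

    def handle(self, user_id: str, url: str, forward: Callable[[str], str]) -> str:
        if (user_id, url) in self.logins:
            return forward(url)  # never serve shared cached content to a logged-in user
        if url not in self.page_cache:
            self.warm(url)
        return self.page_cache[url]
```

Since every cached page was produced without credentials, serving it to users who have not logged in cannot expose another user's personal content.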

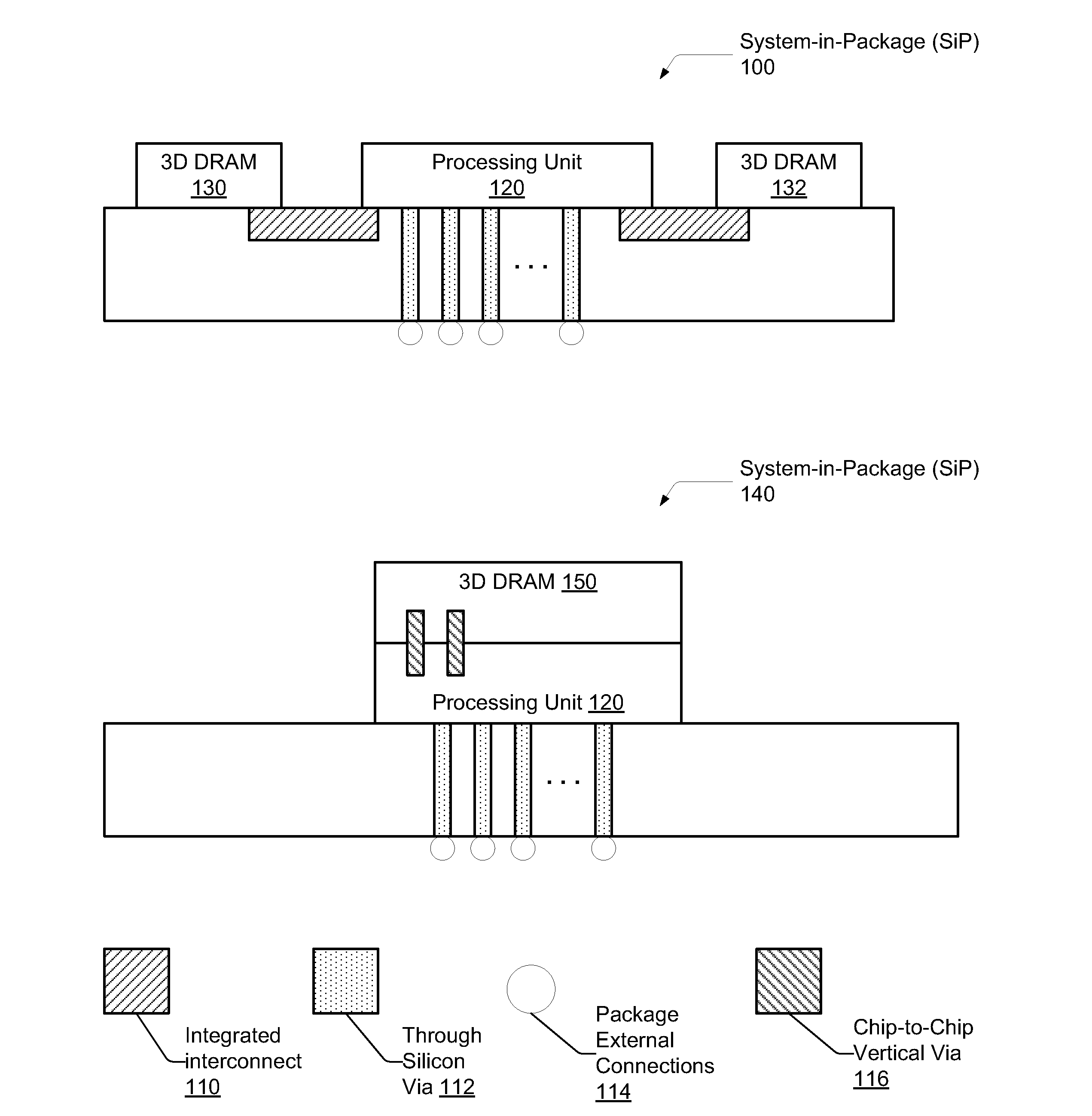

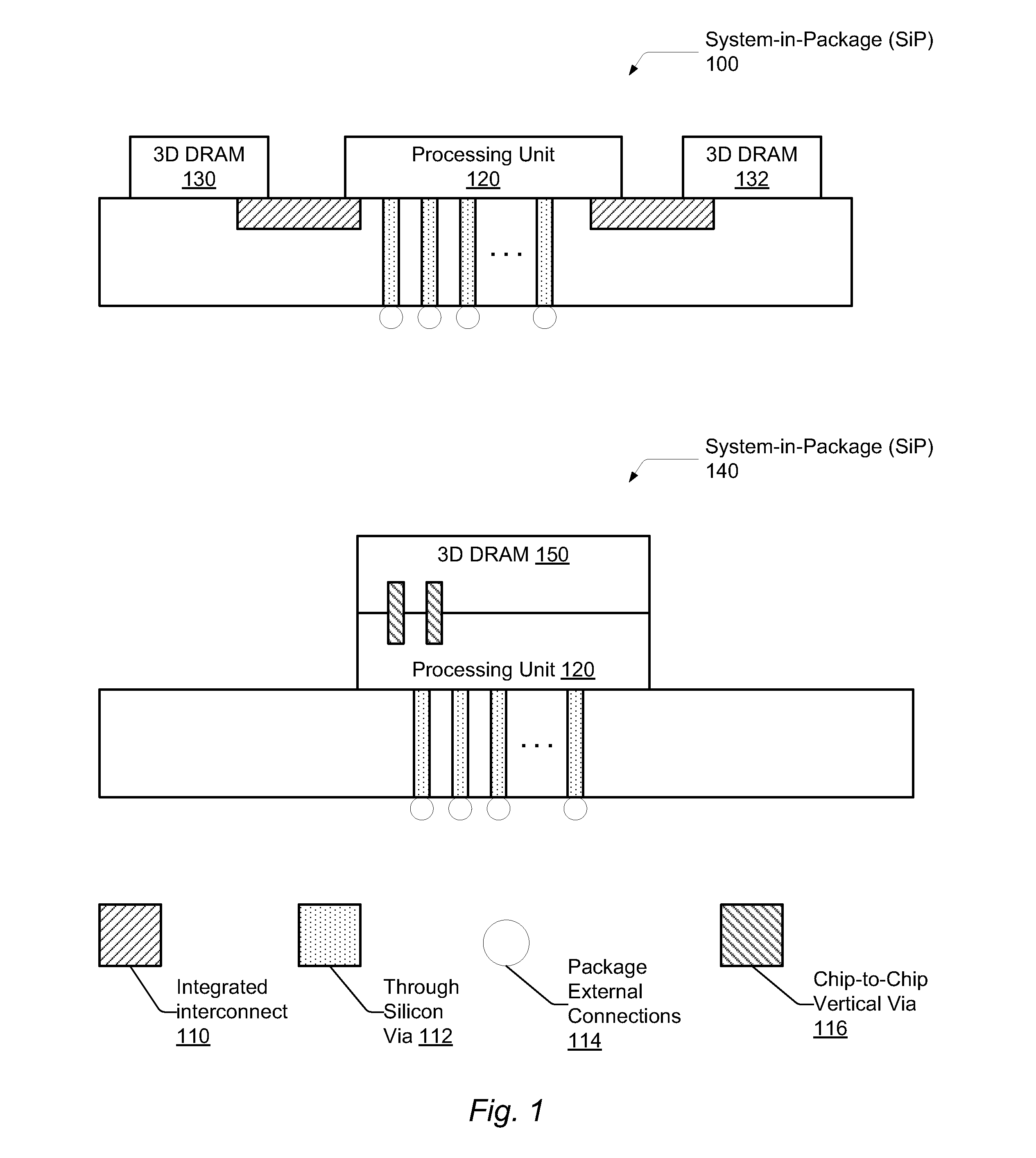

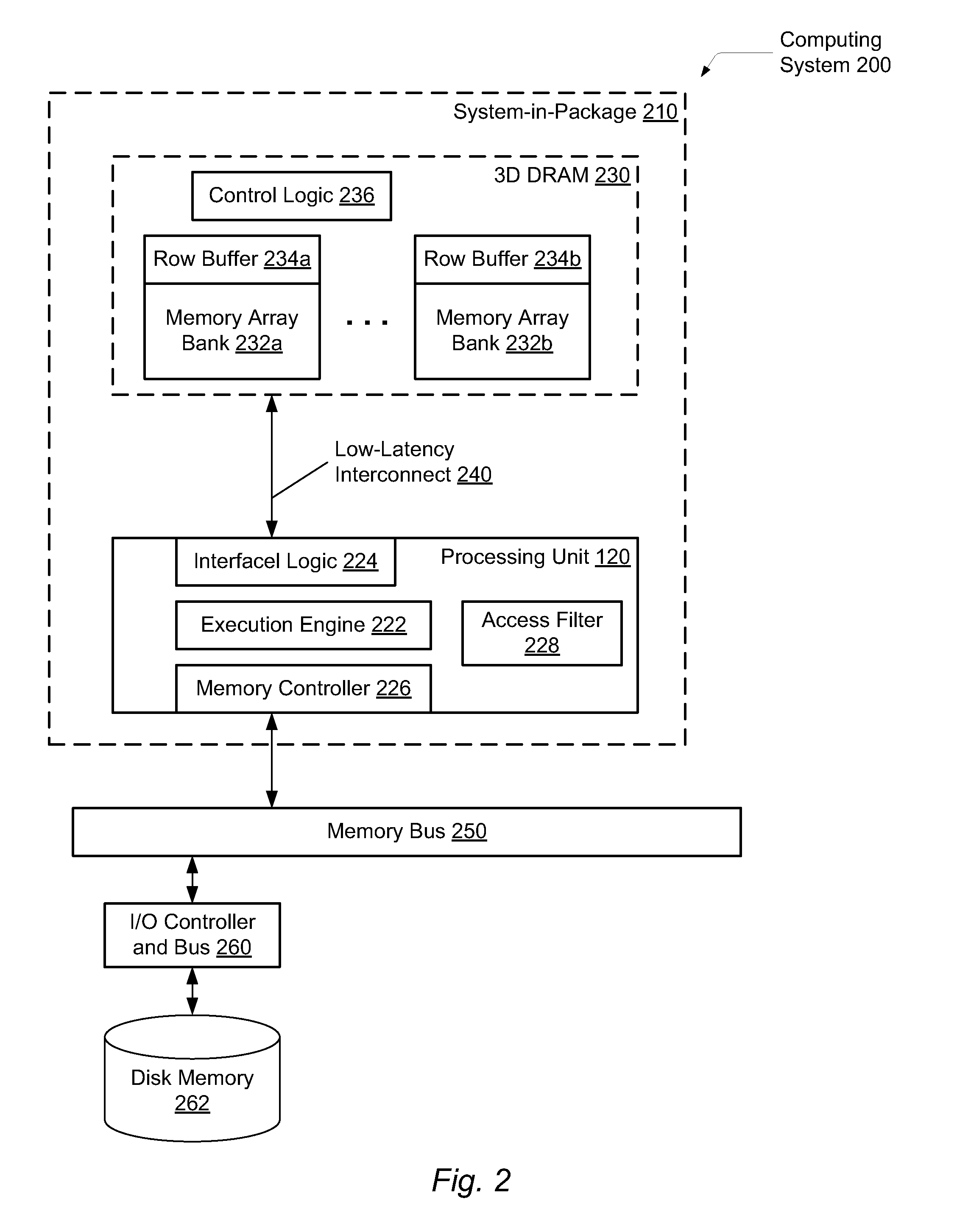

Hardware filter for tracking block presence in large caches

Status: Active | Publication: US20130138894A1 | Tags: Efficiently determined; Energy efficient ICT; Memory addressing/allocation/relocation; Parallel computing; Computing systems

A system and method for efficiently determining whether a requested memory location is in a large row-based memory of a computing system. A computing system includes a processing unit that generates memory requests on a first chip and a cache (LLC) on a second chip connected to the first chip. The processing unit includes an access filter that determines whether to access the cache. The cache is fabricated on top of the processing unit. The processing unit determines whether to access the access filter for a given memory request. The processing unit accesses the access filter to determine whether given data associated with a given memory request is stored within the cache. In response to determining the access filter indicates the given data is not stored within the cache, the processing unit generates a memory request to send to off-package memory.

Owner: ADVANCED MICRO DEVICES INC

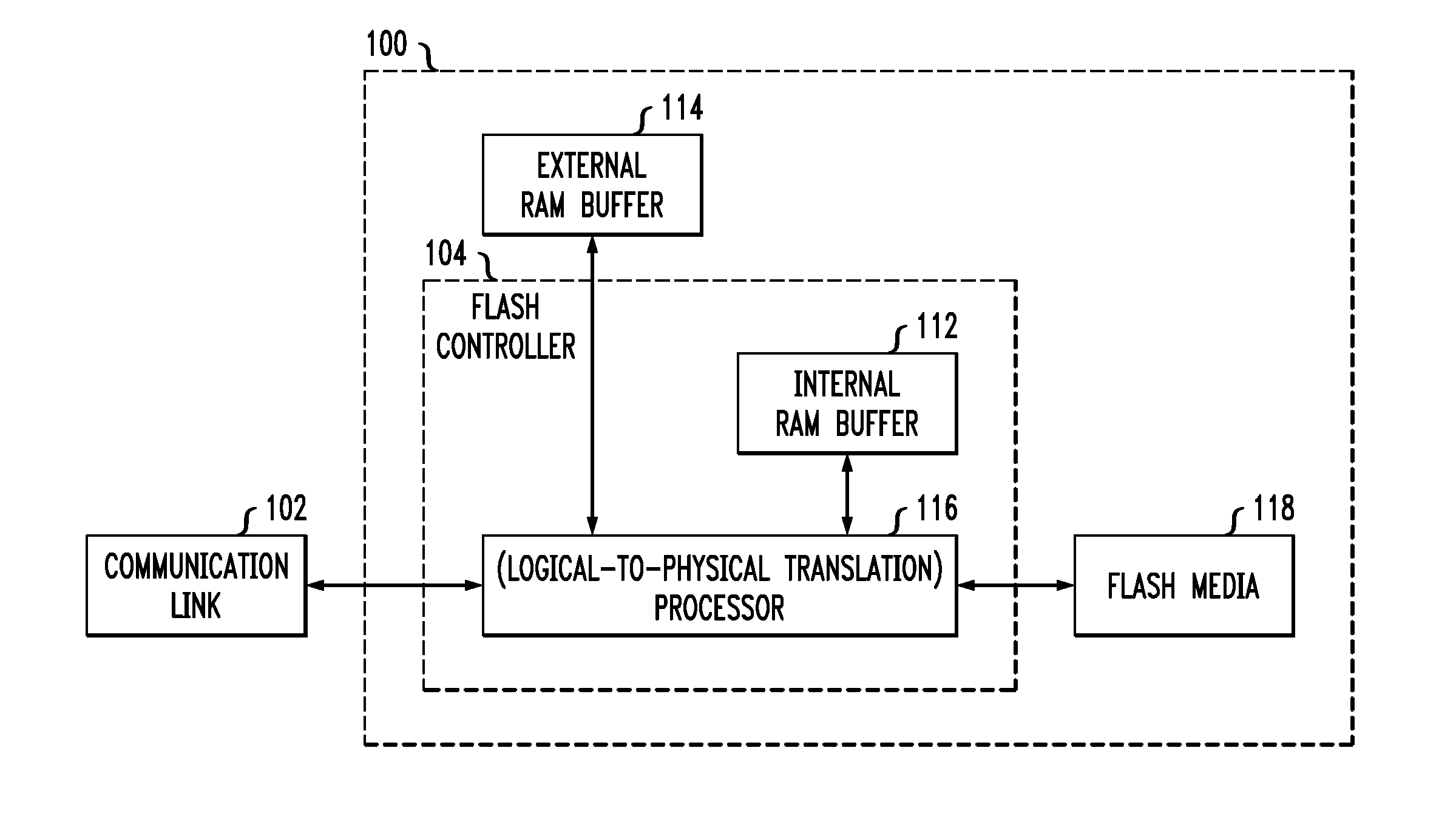

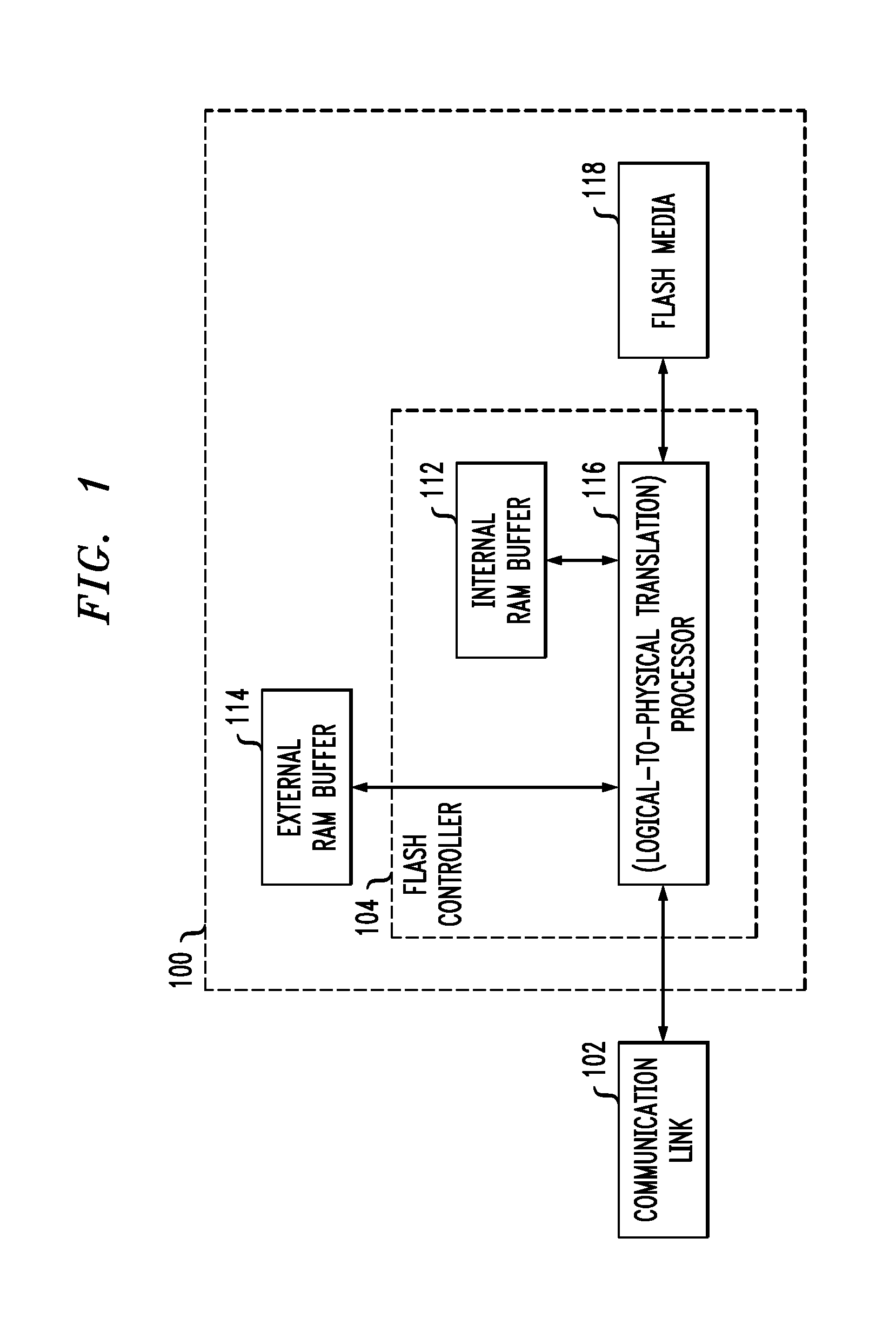

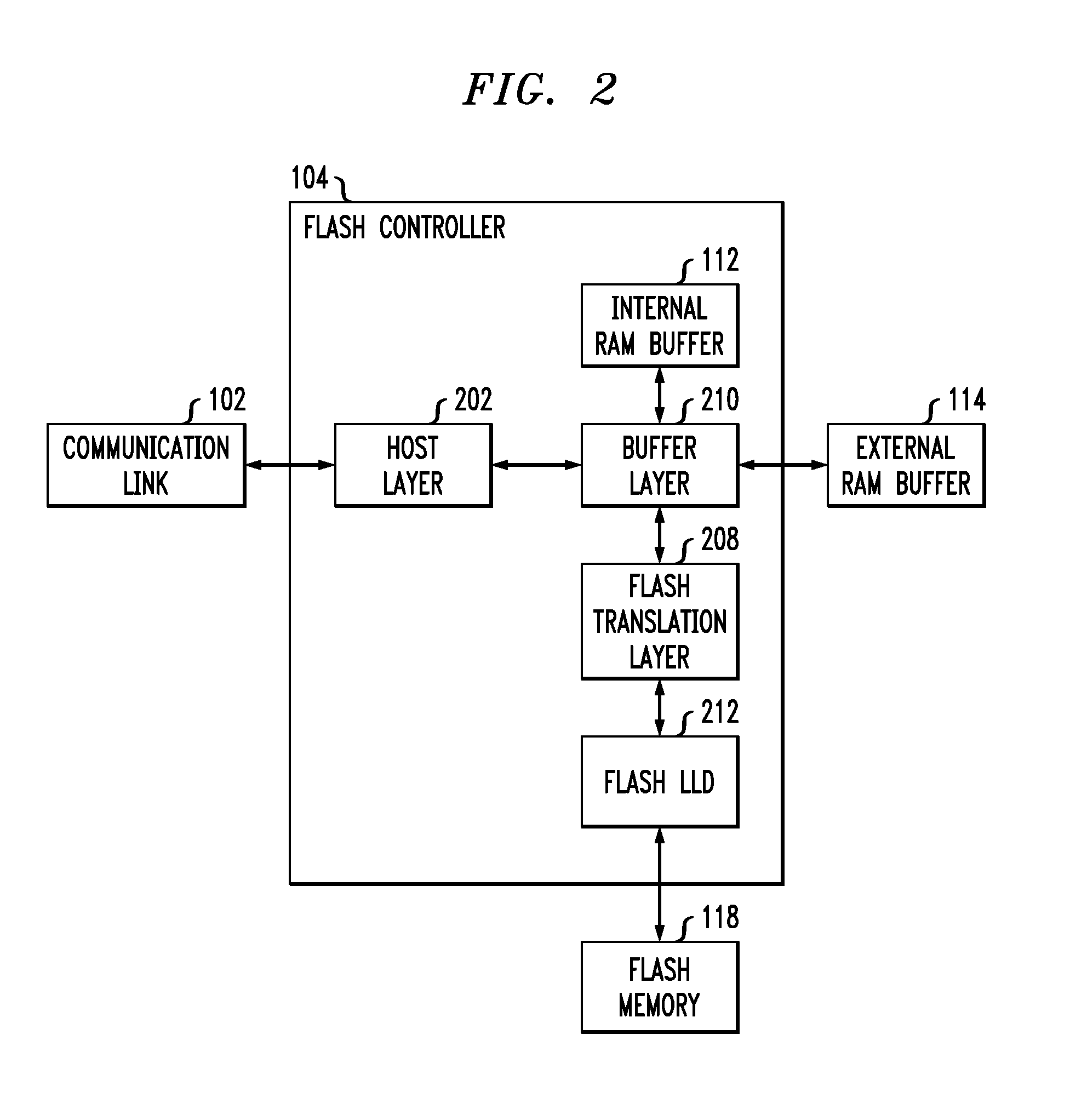

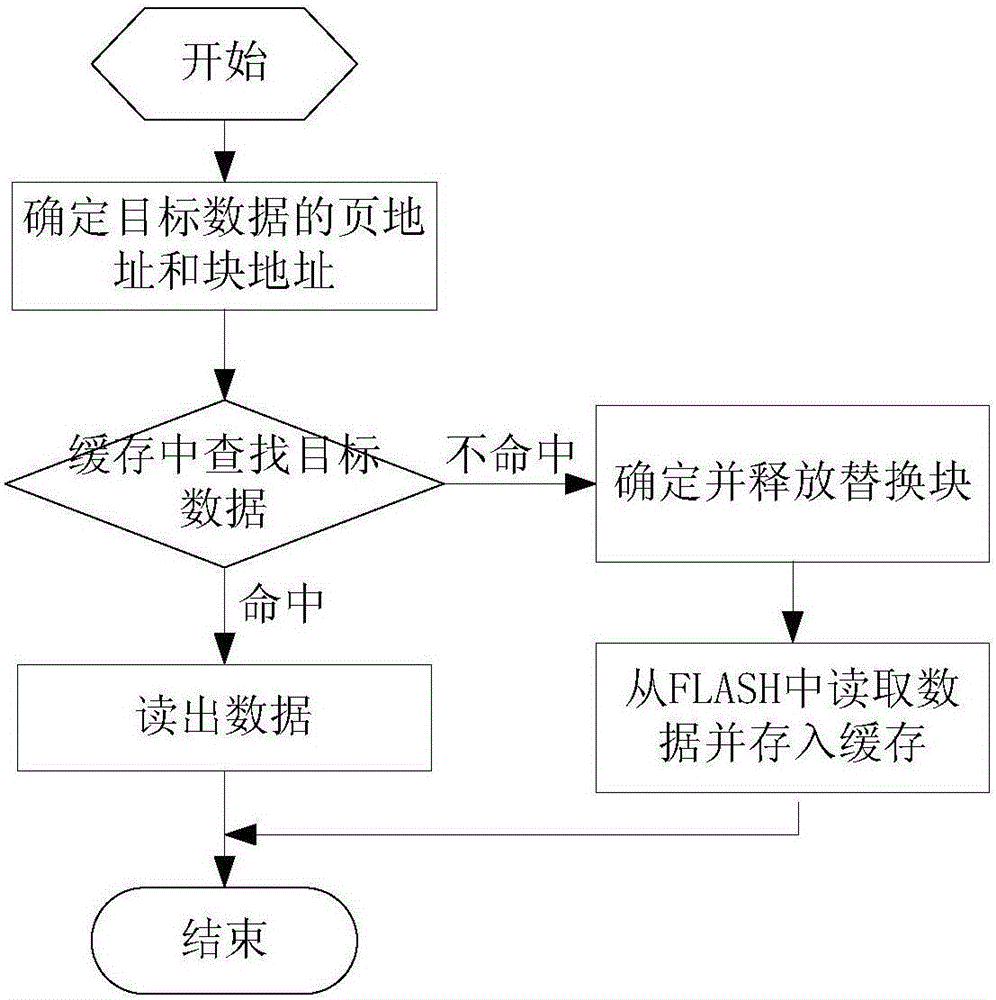

Accessing logical-to-physical address translation data for solid state disks

Status: Active | Publication: US20110072198A1 | Tags: Minimize the number; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Physical address; Database

Described embodiments provide a media controller for a storage device having sectors, the sectors organized into blocks and superblocks. The media controller stores, on the storage device, logical-to-physical address translation data in N summary pages, where N corresponds to the number of superblocks of the storage device. A buffer layer module of the media controller initializes a summary page cache in a buffer. The summary page cache has space for M summary page entries, where M is less than or equal to N. For operations that access a summary page, the media controller searches the summary page cache for the summary page. If the summary page is stored in the summary page cache, the buffer layer module retrieves the summary page from the summary page cache. Otherwise, the buffer layer module retrieves the summary page from the storage device and stores the retrieved summary page to the summary page cache.

Owner: AVAGO TECH INT SALES PTE LTD
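The summary page cache lookup can be sketched in Python as follows, assuming each summary page is a dictionary from logical block address to physical address and that the cache evicts the least recently used entry when all M slots are full (the abstract does not name an eviction policy). The class name and parameters are hypothetical.

```python
from collections import OrderedDict
from typing import Dict, List


class SummaryPageCache:
    """Caches up to M of the N per-superblock summary pages (logical-to-physical maps)."""

    def __init__(self, summary_pages: List[Dict[int, int]], lbas_per_superblock: int, m: int) -> None:
        if not 0 < m <= len(summary_pages):
            raise ValueError("need 0 < M <= N")
        self.device = summary_pages        # the N summary pages stored on the device
        self.lbas_per_superblock = lbas_per_superblock
        self.m = m
        self.cache = OrderedDict()         # superblock index -> summary page, LRU first
        self.device_reads = 0

    def get_summary_page(self, superblock: int) -> Dict[int, int]:
        if superblock in self.cache:
            self.cache.move_to_end(superblock)
            return self.cache[superblock]
        self.device_reads += 1             # miss: read the summary page from the device
        page = self.device[superblock]
        if len(self.cache) >= self.m:
            self.cache.popitem(last=False)  # assumed LRU eviction
        self.cache[superblock] = page
        return page

    def translate(self, lba: int) -> int:
        return self.get_summary_page(lba // self.lbas_per_superblock)[lba]
```

With M = 2, alternating between two superblocks costs no device reads after warm-up, while touching a third superblock forces an eviction and a read.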

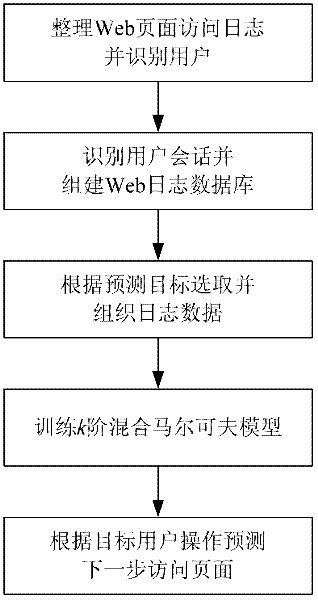

A Web Page Access Prediction Method Based on K-Order Mixed Markov Model

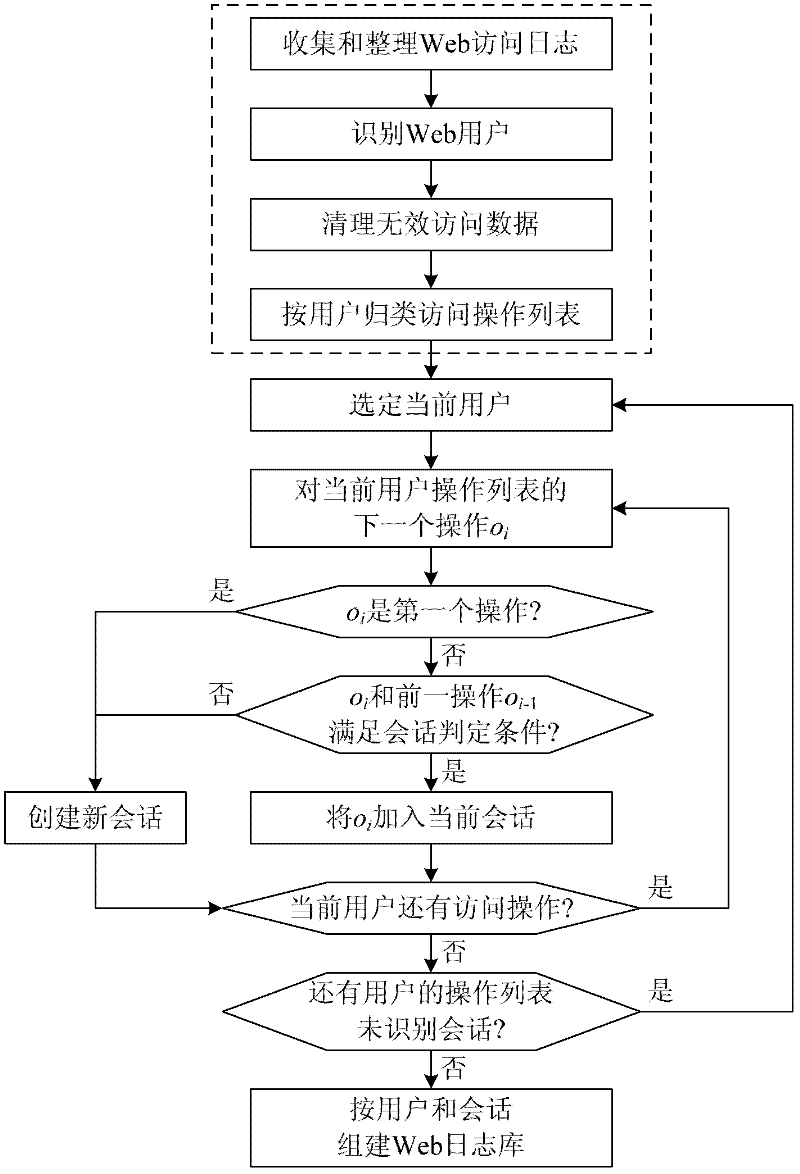

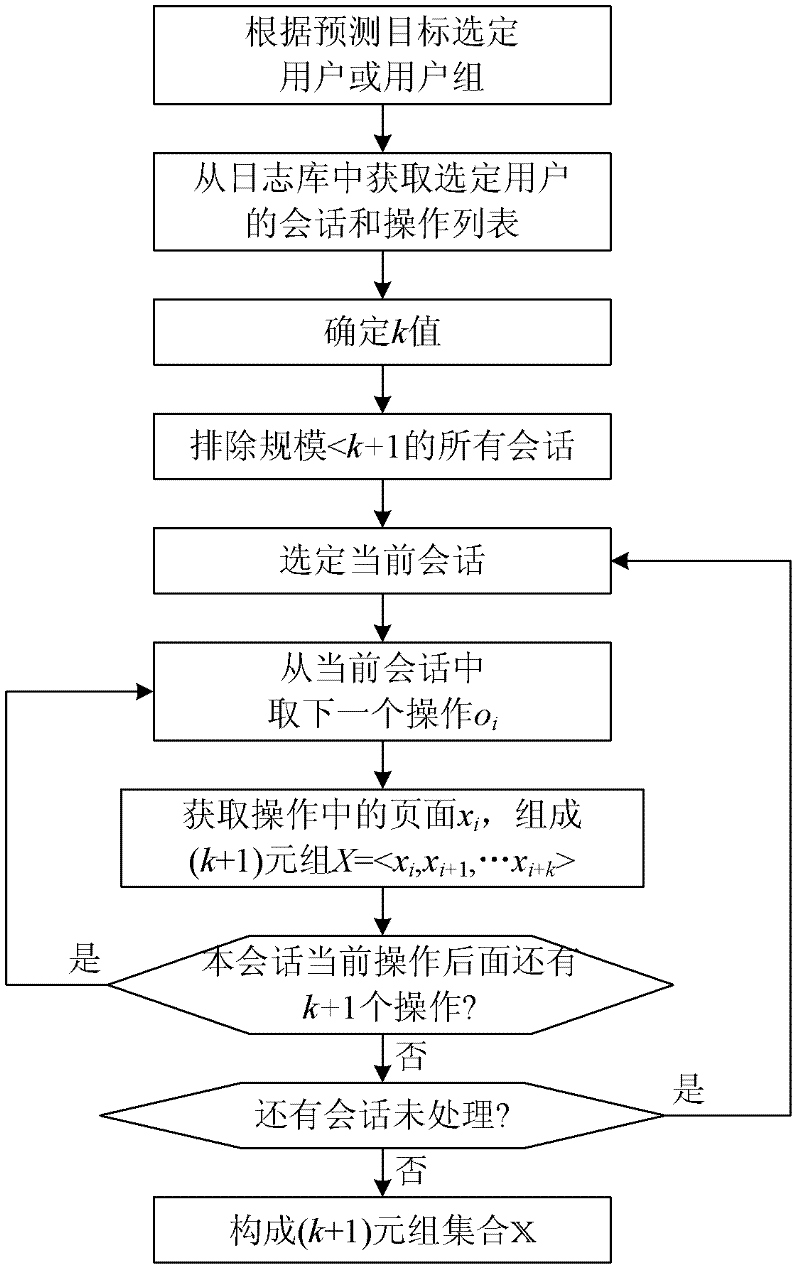

Status: Inactive | Publication: CN102262661A | Tags: Special data processing applications; Expectation–maximization algorithm; Predictive methods

The invention discloses a Web page access prediction method based on a k-order hybrid Markov model. The method comprises the following steps: first, collecting and processing Web server access log data, identifying clients and users, and eliminating insignificant access data; then identifying user sessions to construct a Web log database; selecting log data from the database according to the prediction target and organizing it into (k+1)-tuples per session, which are used to train the k-order hybrid Markov model; learning and calibrating the model's parameter set with the expectation-maximization algorithm; and identifying sessions from the target users' page access operations and applying the model to predict the Web pages the users will access next. The method can be used to recommend pages that users need to access, reducing page access delay and improving the user experience. In addition, from the Web server's perspective, it can help improve the organizational structure of Web pages, guide the ranking of search engine results, and improve the page cache mechanism, thereby enhancing the quality of service.

Owner: NANJING UNIV
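The patent trains a k-order hybrid (mixture) Markov model with expectation-maximization. As a much simpler stand-in, the Python sketch below shows a plain k-order Markov predictor estimated by counting the same kind of (k+1)-tuples per session; it is not the patented model, and the function names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional, Sequence, Tuple

Model = Dict[Tuple[str, ...], Counter]


def train_k_order(sessions: List[Sequence[str]], k: int) -> Model:
    """Count (k+1)-tuples per session: k consecutive pages followed by the next page."""
    model: Model = defaultdict(Counter)
    for session in sessions:
        for i in range(len(session) - k):
            context = tuple(session[i:i + k])
            model[context][session[i + k]] += 1
    return model


def predict_next(model: Model, recent_pages: Sequence[str], k: int) -> Optional[str]:
    """Return the most frequent successor of the last k pages, or None if unseen."""
    context = tuple(recent_pages[-k:])
    if len(context) < k or context not in model:
        return None
    return model[context].most_common(1)[0][0]
```

A server could prefetch the predicted page into its page cache; a mixture model like the patent's additionally blends several such components, with weights learned by EM.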

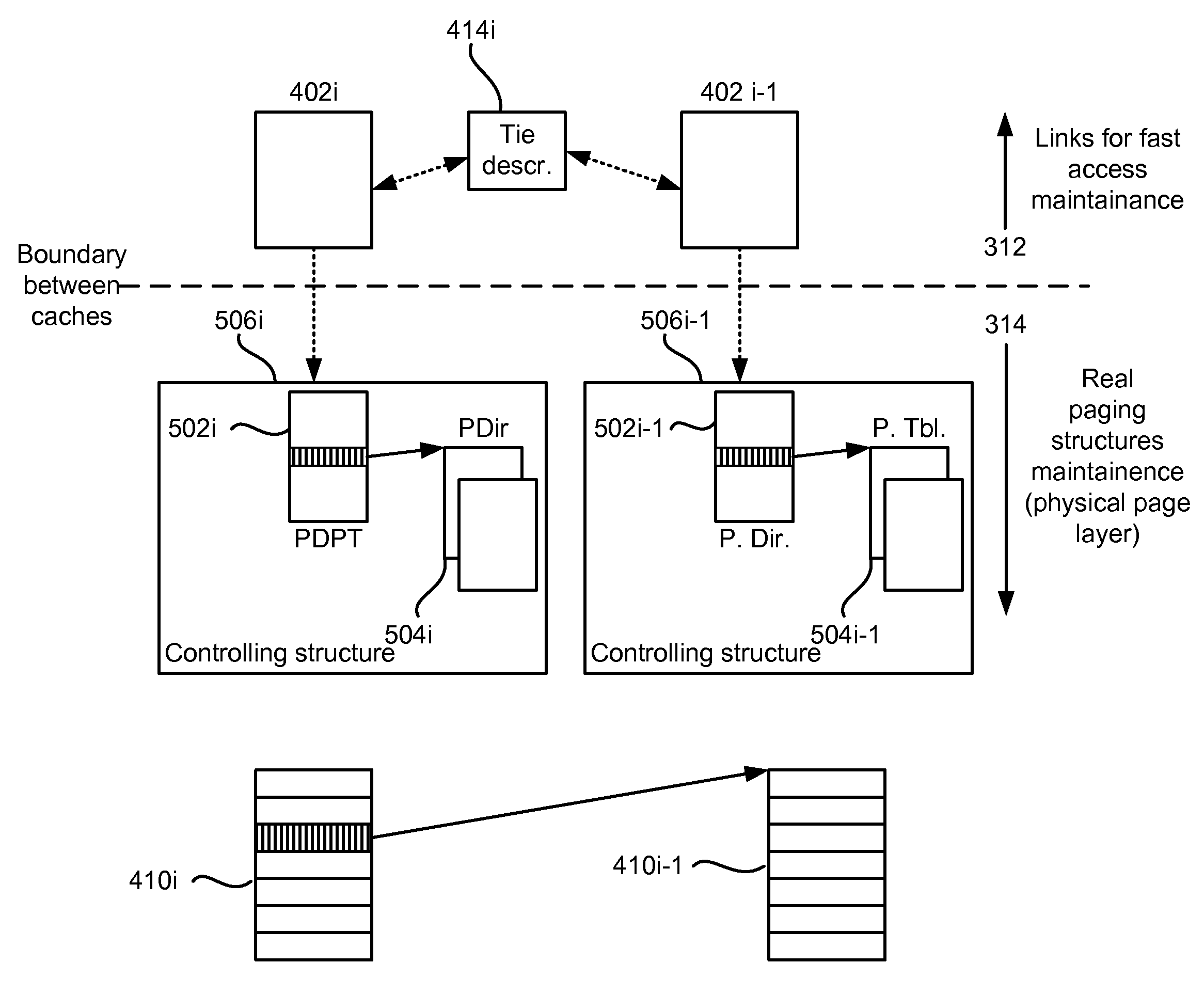

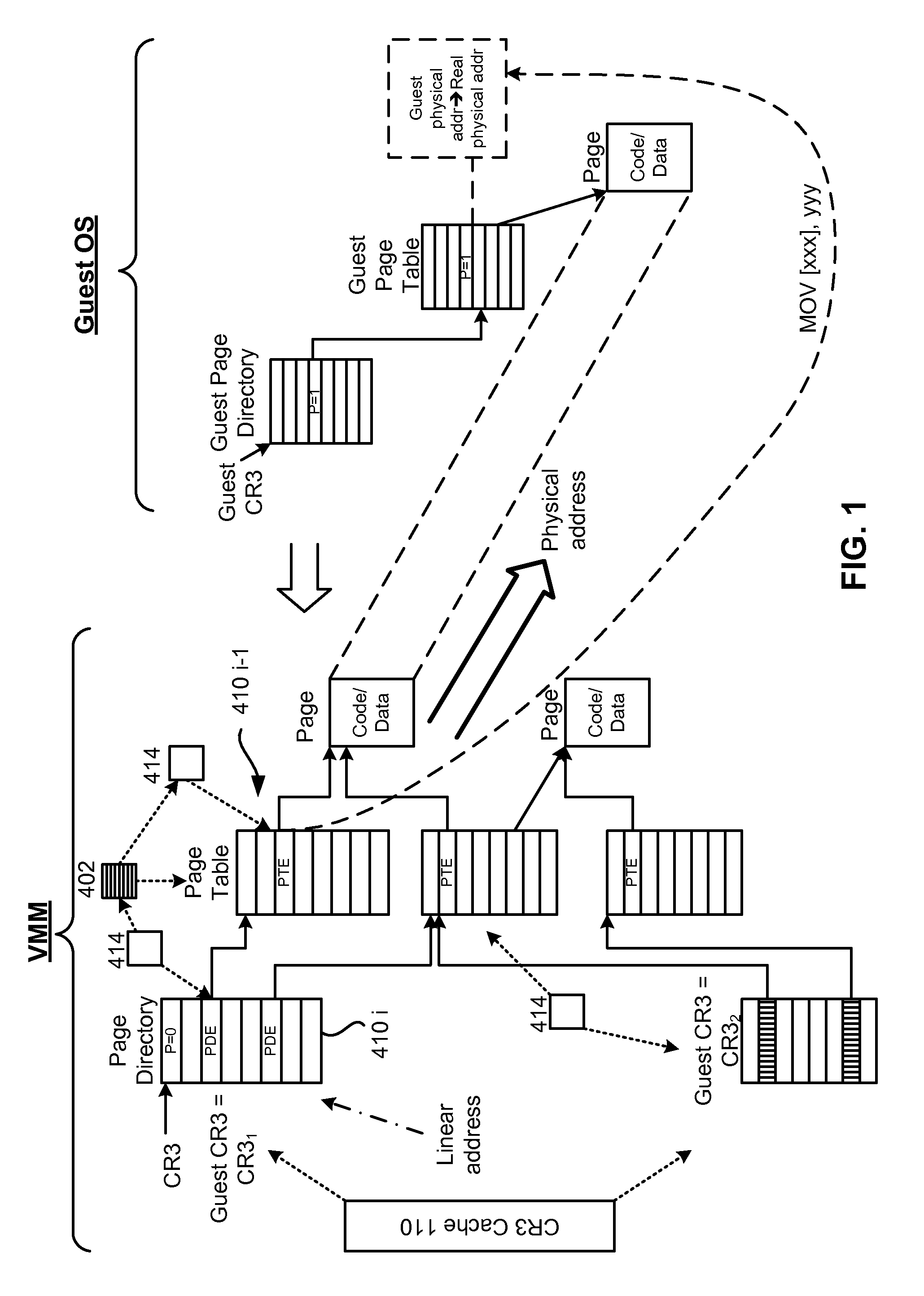

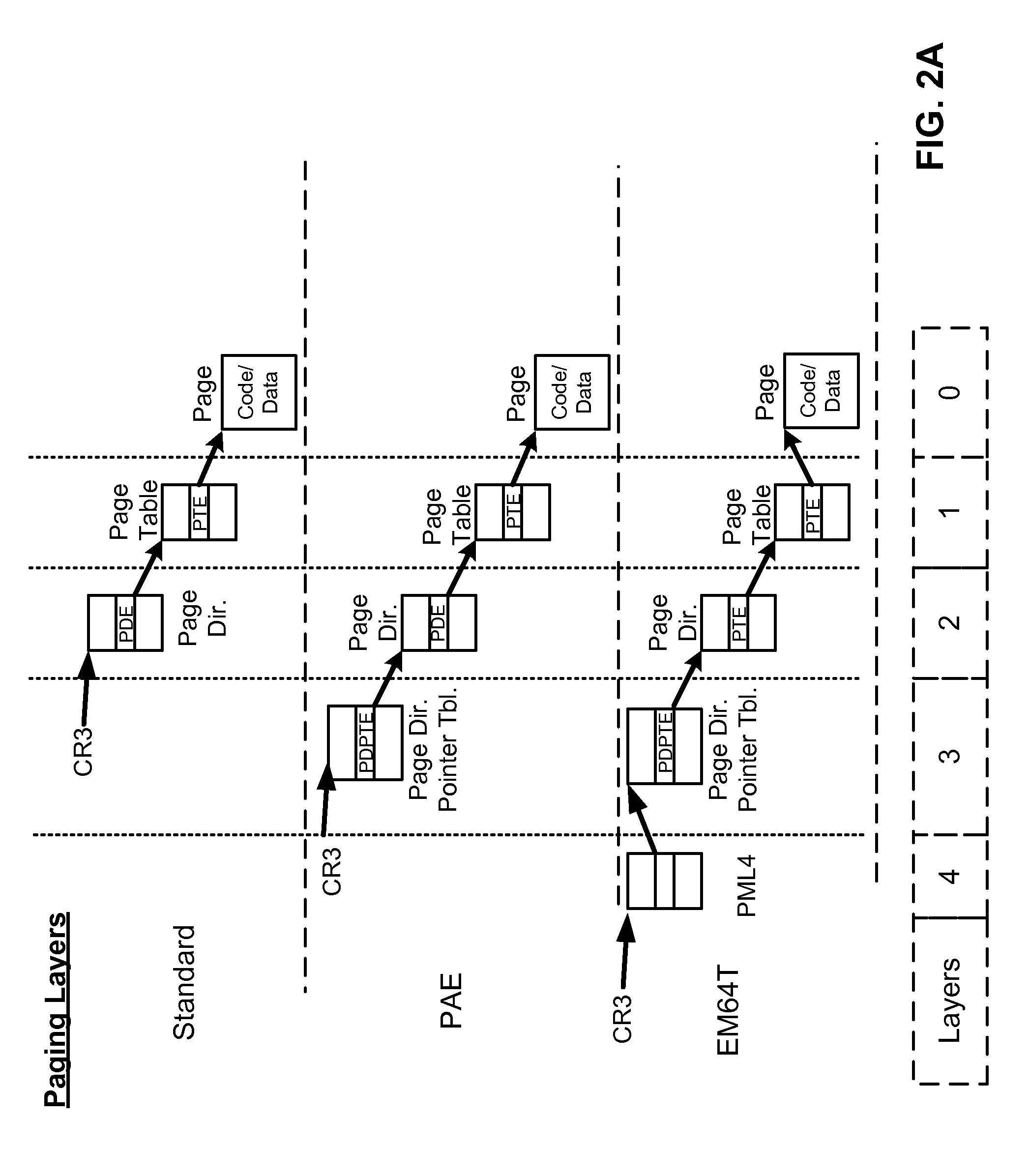

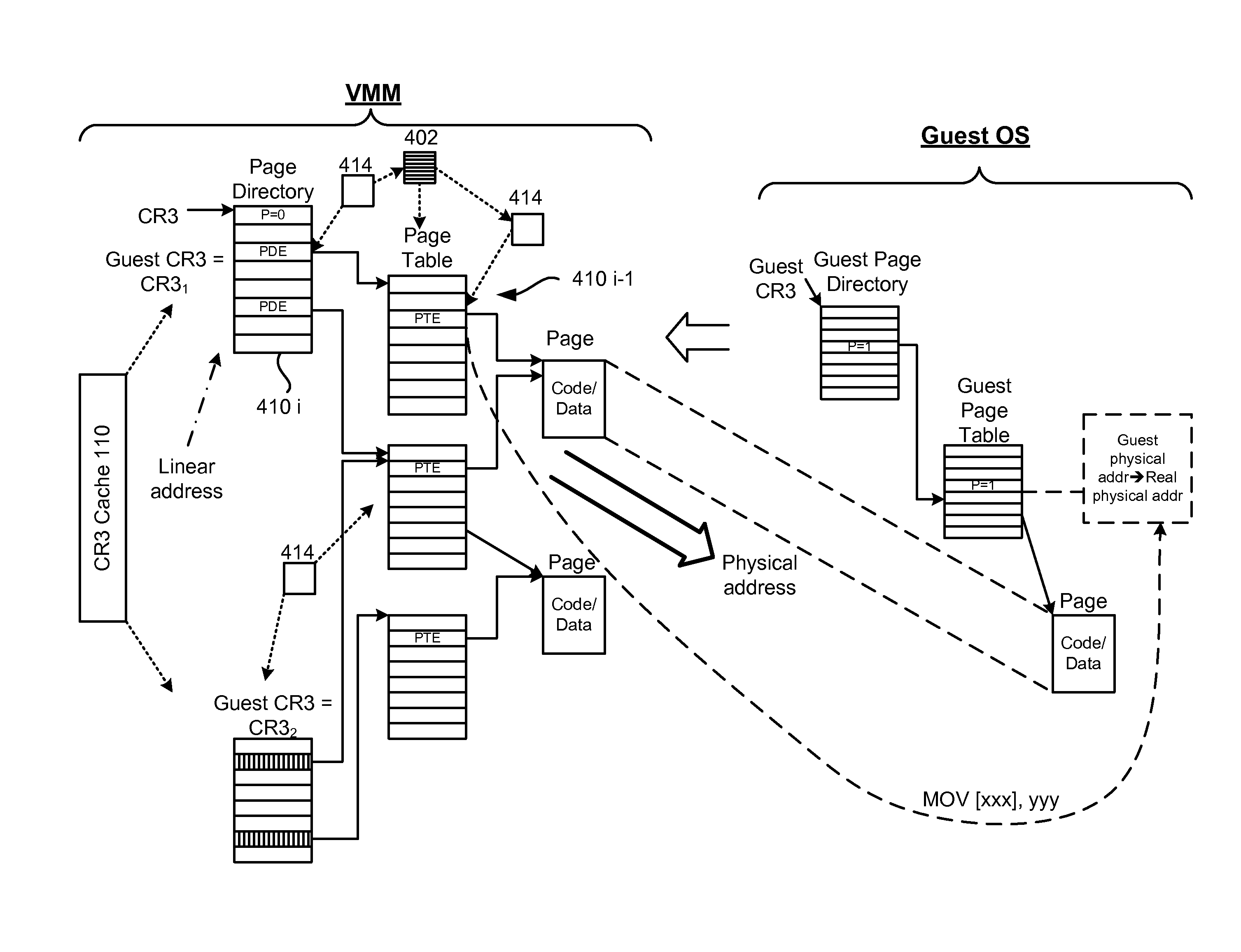

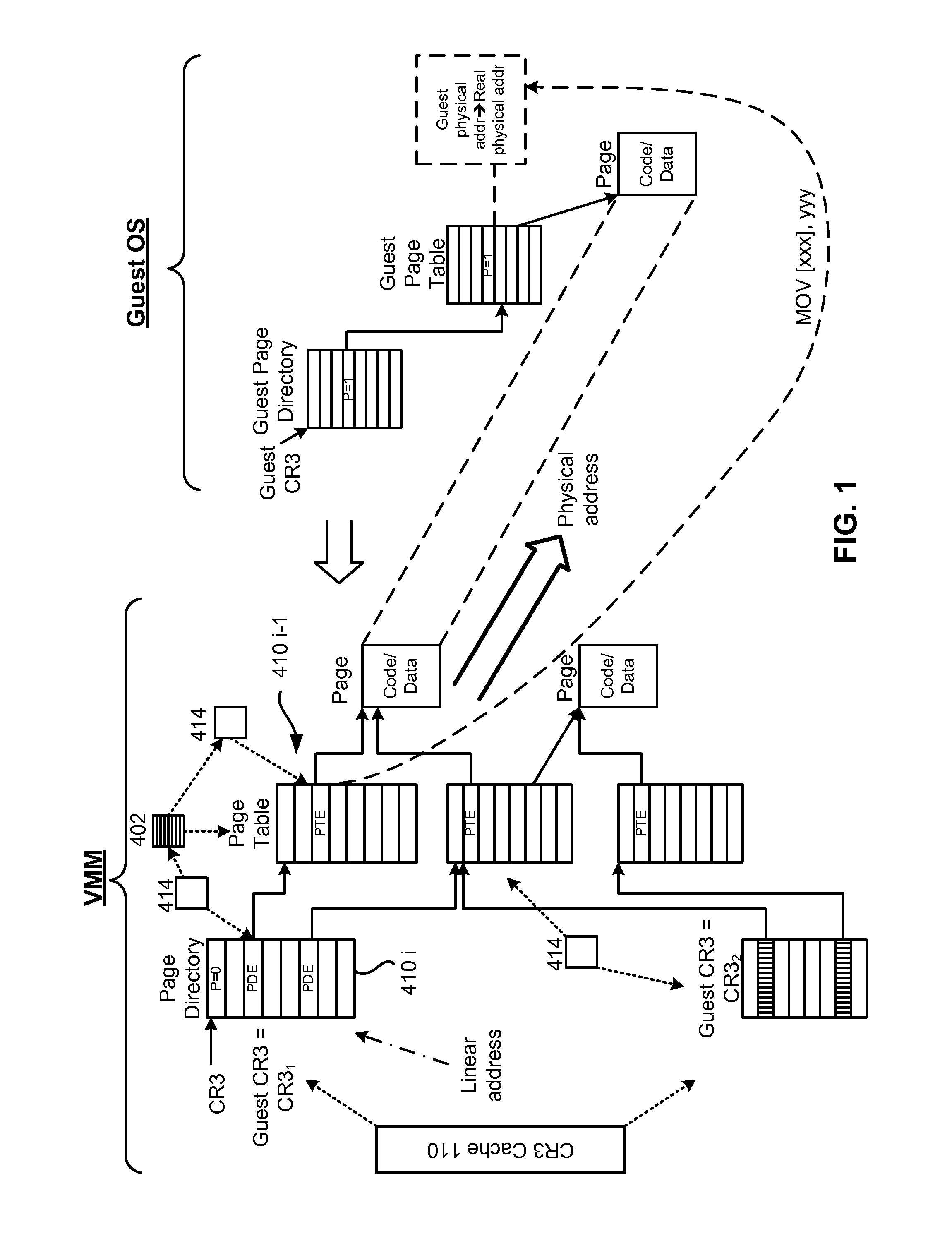

Paging cache optimization for virtual machine

Status: Active | Publication: US7596677B1 | Tags: Memory addressing/allocation/relocation; Computer security arrangements; Virtualization; Cache optimization

A system, method and computer program product for virtualizing a processor include a virtualization system running on a computer system and controlling memory paging through hardware support for maintaining real paging structures. A Virtual Machine (VM) runs guest code and has at least one set of guest paging structures that correspond to guest physical pages in guest virtualized linear address space. At least some of the guest paging structures are mapped to the real paging structures. For each guest physical page that is mapped to the real paging structures, paging means handle a connection structure between the guest physical page and a real physical address of the guest physical page. A cache of connection structures represents cached paths to the real paging structures. Each path is described by guest paging structure descriptors and by tie descriptors. Each path includes a plurality of nodes connected by the tie descriptors. Each guest paging structure descriptor is in a node of at least one path. Each guest paging structure points either to other guest paging structures or to guest physical pages. Each guest paging structure descriptor represents guest paging structure information for mapping guest physical pages to the real paging structures.

Owner: PARALLELS INT GMBH

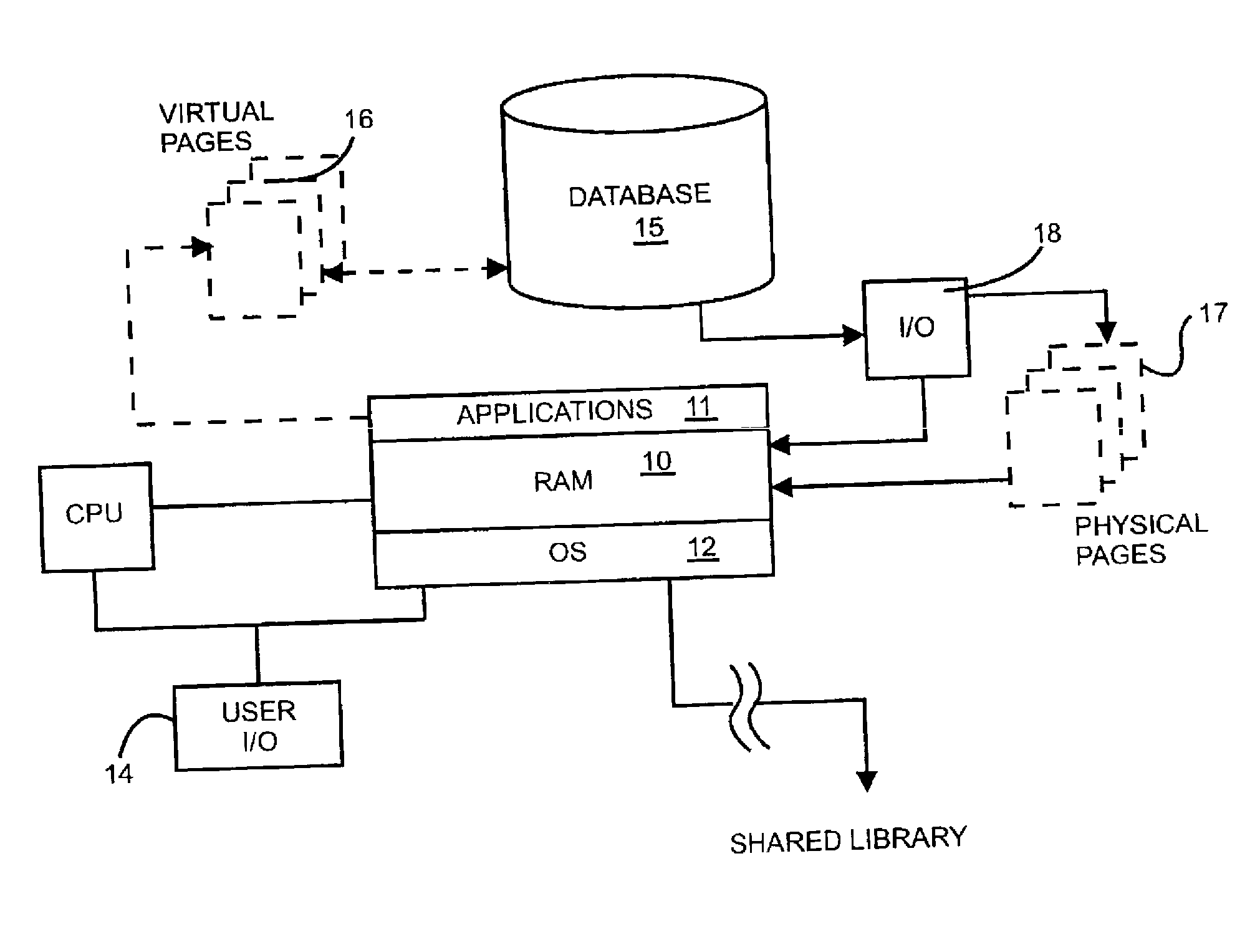

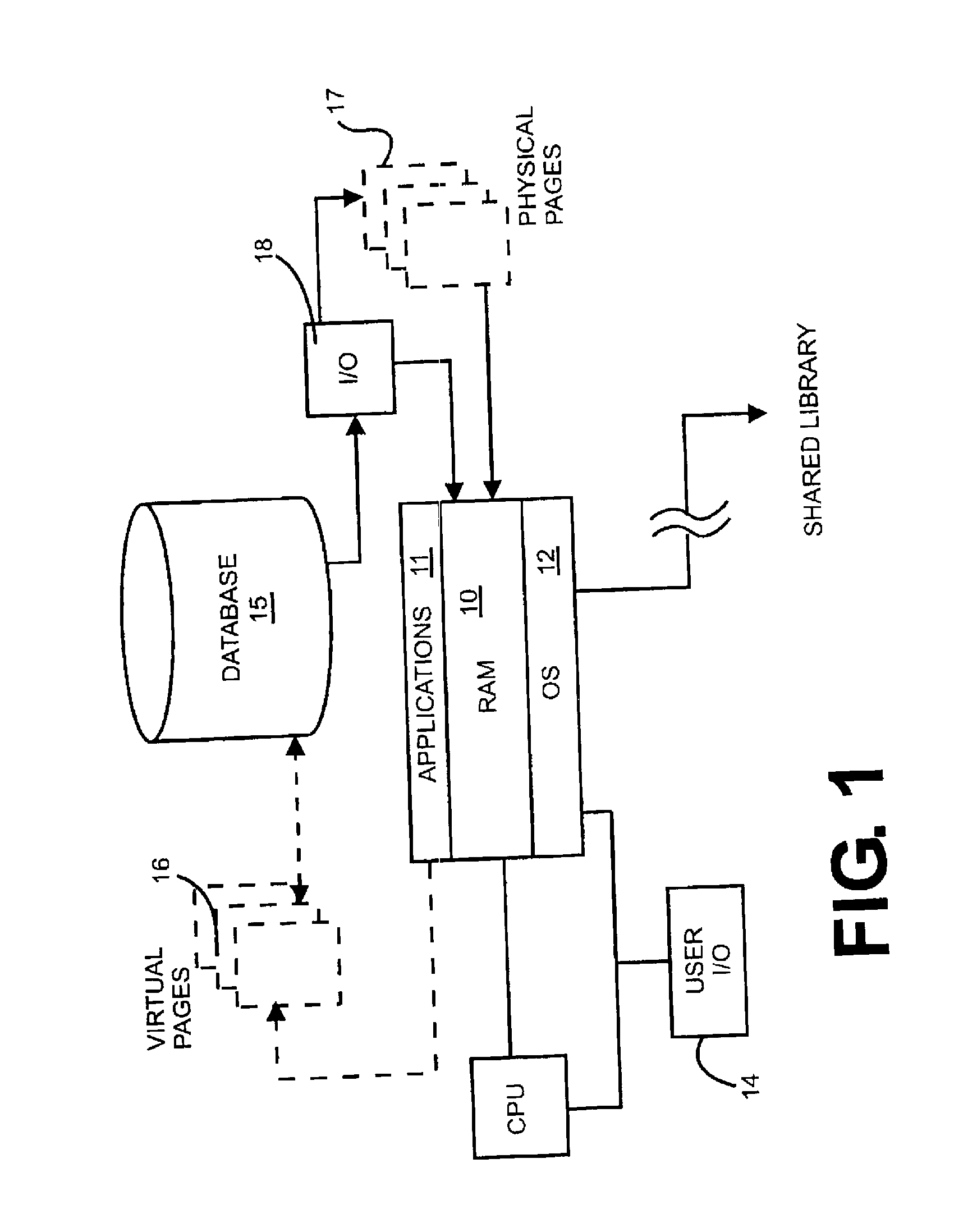

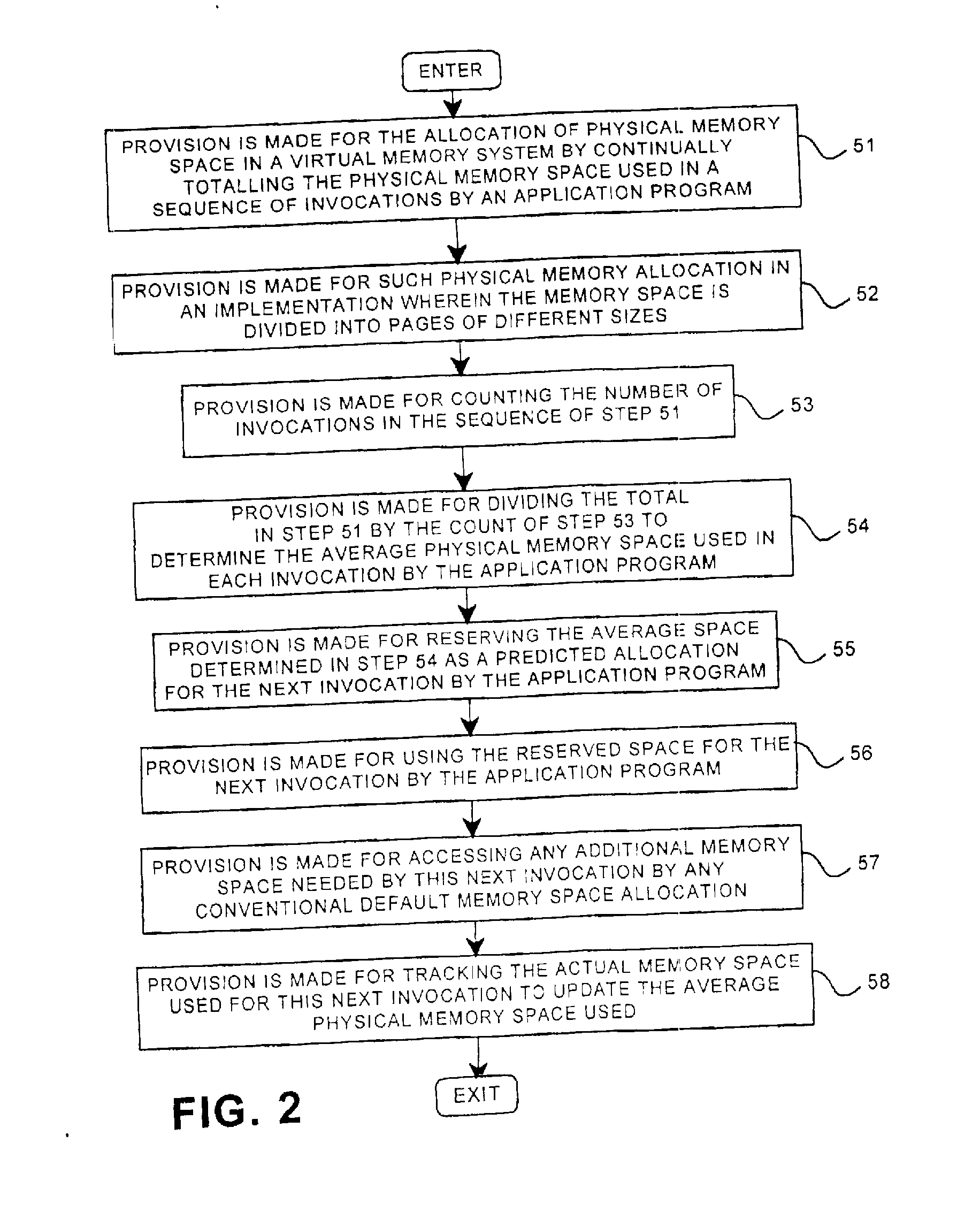

Predictive Page Allocation for Virtual Memory System

Status: Inactive | Publication: US20110153978A1 | Tags: Configuration high; Memory addressing/allocation/relocation; Micro-instruction address formation; Virtual memory; Physical space

A virtual memory method for allocating physical memory space required by an application by tracking the page space used in each of a sequence of invocations by an application requesting memory space; keeping count of the number of said invocations; and determining the average page space used for each of said invocations from the count and previous average. Then, this average page space is recorded as a predicted allocation for the next invocation. This recorded average space is used for the next invocation. If there is any additional page space required by said next invocation, this additional page space may be accessed through any conventional default page space allocation.

Owner: IBM CORP
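The running average in the abstract can be maintained from just the count and the previous average: avg_n = avg_(n-1) + (x_n - avg_(n-1)) / n, where x_n is the page space used by the n-th invocation. A minimal Python sketch, assuming the prediction is rounded up to whole pages and that any shortfall is satisfied by a separate default allocator (represented here only by the returned `extra` count); `PredictiveAllocator` is a hypothetical name.

```python
import math
from typing import Tuple


class PredictiveAllocator:
    """Predicts each invocation's page need from the running average of past invocations."""

    def __init__(self) -> None:
        self.count = 0             # number of invocations seen so far
        self.average_pages = 0.0   # running average of pages used per invocation

    def predicted_pages(self) -> int:
        return math.ceil(self.average_pages)  # assumed: round the prediction up

    def allocate(self, pages_needed: int) -> Tuple[int, int]:
        """Return (pages pre-allocated from the prediction, extra pages from the default path)."""
        predicted = self.predicted_pages()
        extra = max(0, pages_needed - predicted)
        # Update the average using only the count and the previous average.
        self.count += 1
        self.average_pages += (pages_needed - self.average_pages) / self.count
        return predicted, extra
```

For invocations needing 10, 14 and 11 pages, the predictions are 0, 10 and 12 pages, so only the first two need extra pages from the default allocator.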

Vehicle-mounted apparatus

Status: Active | Publication: US7873468B2 | Tags: Easy selection; Easy to use; Instruments for road network navigation; Digital data information retrieval; Web site; Web browser

The present invention provides a vehicle-mounted apparatus whereby a user can easily select and use a Web site in accordance with a point associated with information provided by the Web site and a description of the information. According to the present invention, a precache control unit automatically downloads at least one Web page of a Web site associated with each point matching a predetermined condition to a page cache memory and displays site icons at respective points corresponding to the downloaded Web sites on a map image. In addition, the precache control unit displays the predetermined type of extracted information by analyzing the description of the Web page of each Web site in an information window that pops up from the corresponding site icon. When the user selects one of the site icons, a Web browser displays the Web page of the Web site corresponding to the selected site icon.

Owner: ALPINE ELECTRONICS INC

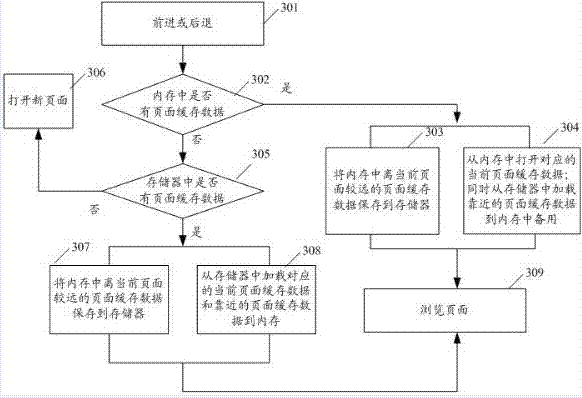

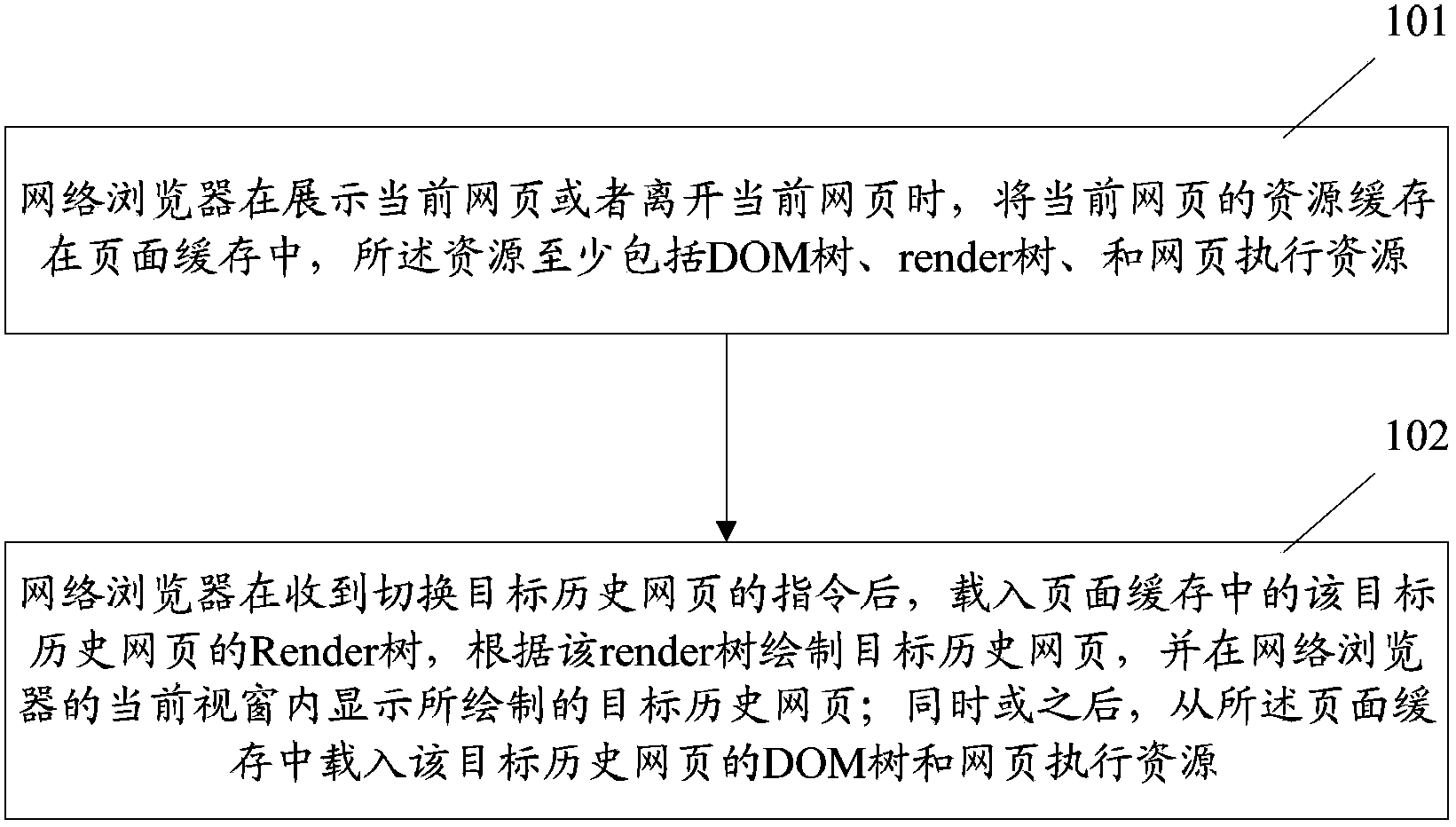

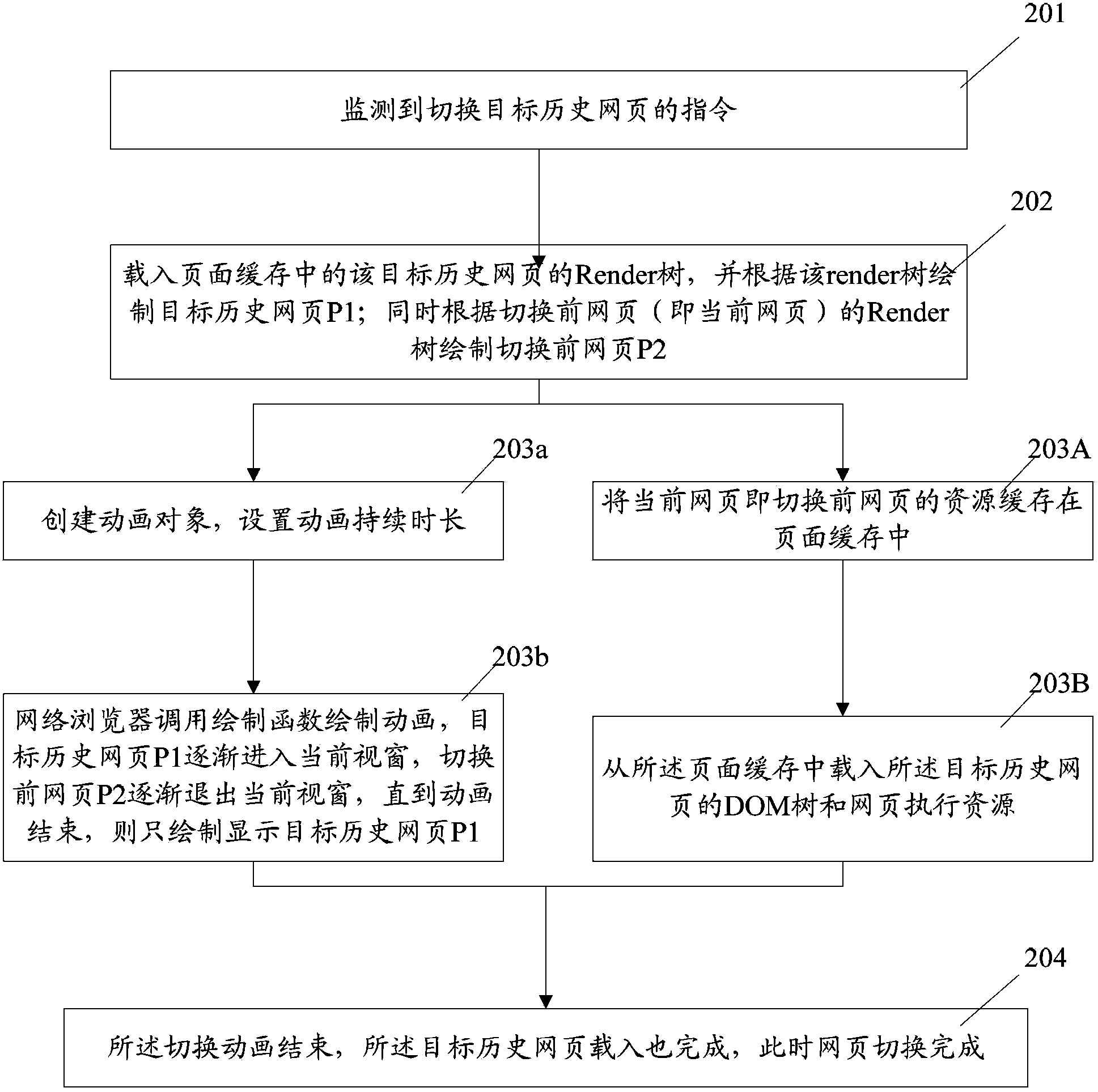

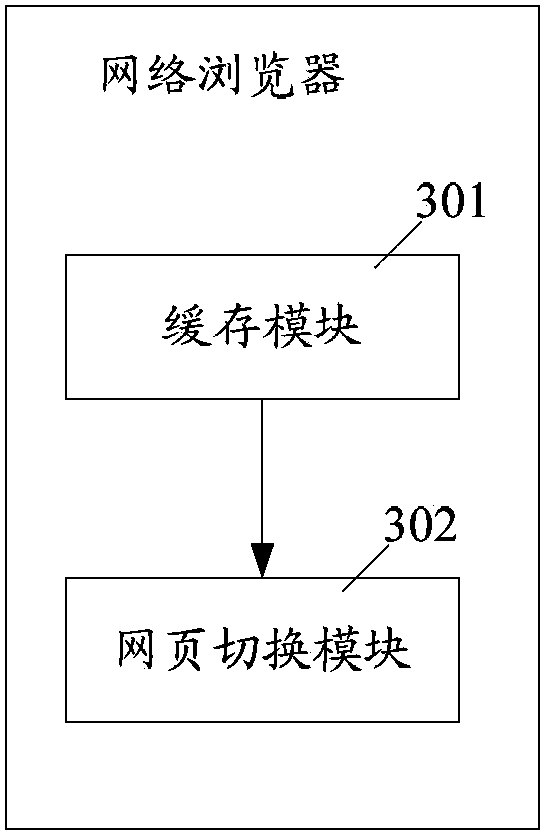

Method for web browser to switch over historical webpages and web browser

Status: Active | Publication: CN103514179A | Tags: Improved cache management; Simple way; Special data processing applications; Web browser; Web page

The invention discloses a method for a web browser to switch between historical webpages, and a corresponding web browser. The web browser comprises a caching module and a webpage switchover module. The caching module caches the resources of the current webpage in a page cache when the web browser displays or exits that webpage; the cached resources include at least the DOM tree, the Render tree and the webpage execution resources. After the web browser receives an instruction to switch to a target historical webpage, the webpage switchover module loads the target historical webpage's Render tree from the page cache, draws the target historical webpage according to the Render tree, and displays it in the browser's current window. At the same time or afterwards, the DOM tree and webpage execution resources of the target historical webpage are loaded from the page cache. The method and browser increase the speed at which the web browser switches between historical webpages.

Owner: TENCENT TECH (SHENZHEN) CO LTD

Optimization of paging cache protection in virtual environment

Status: Active | Publication: US8438363B1 | Tags: Software simulation/interpretation/emulation; Memory systems; Virtualization; Parallel computing

A system, method and computer program product for virtualizing a processor include a virtualization system running on a computer system and controlling memory paging through hardware support for maintaining real paging structures. A Virtual Machine (VM) runs guest code and has at least one set of guest paging structures that correspond to guest physical pages in guest virtualized linear address space. At least some of the guest paging structures are mapped to the real paging structures. A cache of connection structures represents cached paths to the real paging structures. The mapped paging tables are protected using the RW bit. A paging cache is validated according to TLB resets. Non-active paging tree tables can also be protected when they are activated. Tracking of access (A) bits and dirty (D) bits is implemented along with synchronization of A and D bits in guest physical pages.

Owner: PARALLELS INT GMBH

Instructions and logic to suspend/resume migration of enclaves in a secure enclave page cache

Status: Active | Publication: US20170185533A1 | Tags: Memory architecture accessing/allocation; Multiple keys/algorithms usage; External storage; Management process

Instructions and logic support suspending and resuming migration of enclaves in a secure enclave page cache (EPC). An EPC stores a secure domain control structure (SDCS) in storage accessible by an enclave for a management process, and by a domain of enclaves. A second processor checks if a corresponding version array (VA) page is bound to the SDCS, and if so: increments a version counter in the SDCS for the page, performs an authenticated encryption of the page from the EPC using the version counter in the SDCS, and writes the encrypted page to external memory. A second processor checks if a corresponding VA page is bound to a second SDCS of the second processor, and if so: performs an authenticated decryption of the page using a version counter in the second SDCS, and loads the decrypted page to the EPC in the second processor if authentication passes.

Owner:INTEL CORP
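
The role of the per-page version counter can be illustrated with a minimal sketch. It assumes a hypothetical `Sdcs` class holding a key and one counter per page, uses HMAC-SHA256 from the Python standard library in place of the authenticated encryption the abstract describes (encryption itself is omitted), and assumes the counter state is carried over to the destination SDCS during migration. Because the tag binds each evicted page to its current version, an older copy of the page fails authentication when reloaded.

```python
import hashlib
import hmac
from dataclasses import dataclass, field


@dataclass
class Sdcs:
    """Hypothetical stand-in for a secure domain control structure."""
    key: bytes
    versions: dict = field(default_factory=dict)  # page id -> version counter


def _tag(key: bytes, page_id: int, version: int, data: bytes) -> bytes:
    # The MAC covers the page id, its version and its contents.
    msg = page_id.to_bytes(8, "little") + version.to_bytes(8, "little") + data
    return hmac.new(key, msg, hashlib.sha256).digest()


def evict_page(sdcs: Sdcs, page_id: int, page: bytes) -> tuple:
    """Increment the page's version counter, then authenticate the page with it."""
    sdcs.versions[page_id] = sdcs.versions.get(page_id, 0) + 1
    # Encryption is omitted in this sketch; only the authentication tag is produced.
    return page, _tag(sdcs.key, page_id, sdcs.versions[page_id], page)


def load_page(sdcs: Sdcs, page_id: int, blob: bytes, tag: bytes) -> bytes:
    """Accept the page only if its tag matches the version recorded in this SDCS."""
    expected = _tag(sdcs.key, page_id, sdcs.versions.get(page_id, 0), blob)
    if not hmac.compare_digest(expected, tag):
        raise ValueError("authentication failed: stale or tampered page")
    return blob
```

For instance, if page 7 is evicted twice and the counters are copied to the destination `Sdcs`, loading the second blob succeeds while loading the first raises `ValueError`.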

Methods and Systems for Implementing Transcendent Page Caching

This disclosure describes, generally, methods and systems for implementing transcendent page caching. The method includes establishing a plurality of virtual machines on a physical machine. Each of the virtual machines includes a private cache, and a portion of each private cache is used to create a shared cache maintained by a hypervisor. The method further includes delaying the removal of at least one stored memory page, storing that page in the shared cache, and having one of the virtual machines request the page from the shared cache. Further, the method includes determining that the requested page is stored in the shared cache and transferring it to the requesting virtual machine.

Owner:ORACLE INT CORP
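
A shared, hypervisor-maintained cache of this kind can be sketched as a small LRU store keyed by VM and page. This is a hypothetical illustration: `SharedPageCache`, its single `capacity` (standing in for the portions donated by the private caches), and the exclusive `get` that removes a page once it is transferred back are assumptions, not details from the patent.

```python
from collections import OrderedDict


class SharedPageCache:
    """Hypothetical hypervisor-side cache pooled from the VMs' private caches."""

    def __init__(self, capacity: int):
        self.capacity = capacity          # total pages donated by all private caches
        self._pages = OrderedDict()       # (vm_id, page_id) -> bytes, oldest first

    def put(self, vm_id, page_id, data: bytes) -> None:
        """Keep a page the VM would otherwise drop (its removal is delayed)."""
        key = (vm_id, page_id)
        self._pages[key] = data
        self._pages.move_to_end(key)
        while len(self._pages) > self.capacity:
            self._pages.popitem(last=False)  # discard the least recently stored page

    def get(self, vm_id, page_id):
        """Transfer a cached page back to the requesting VM, or return None on a miss."""
        return self._pages.pop((vm_id, page_id), None)
```

Removing the page on `get` keeps a single copy in the system; a non-exclusive variant would leave it cached as well.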

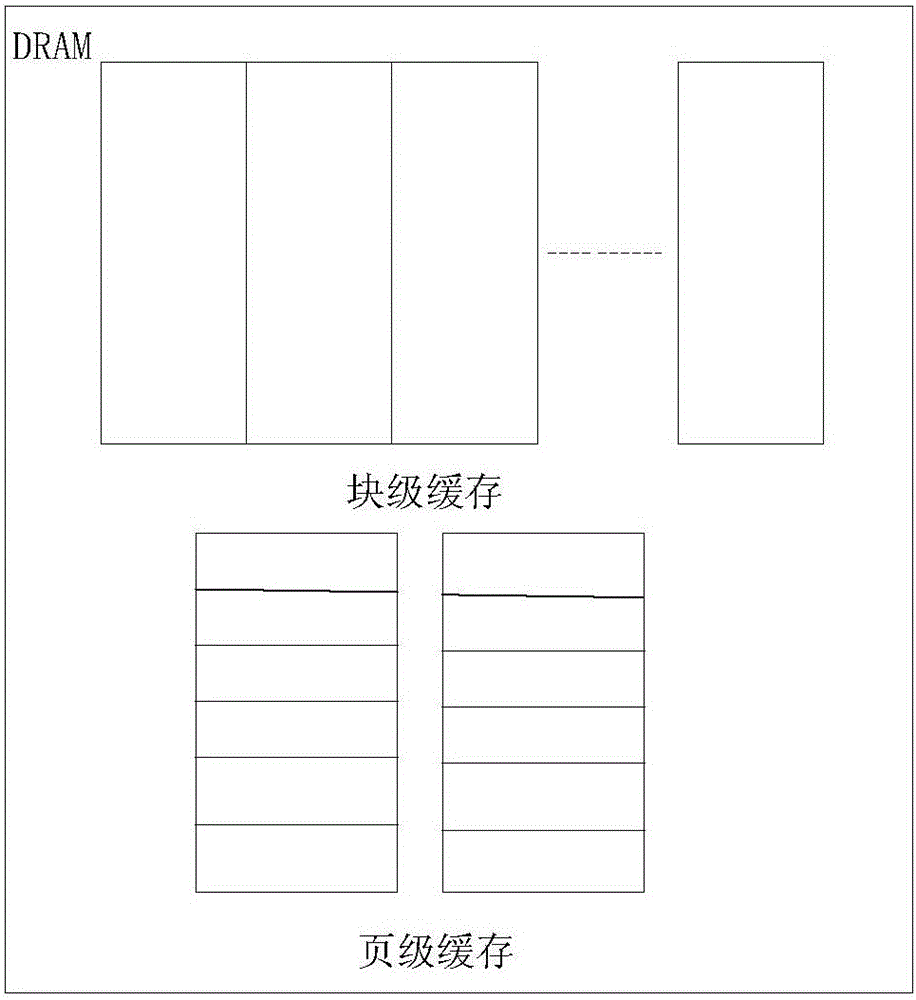

Data cache method used in NAND FLASH

Active | CN105930282A | Improve hit rate, Improve space utilization, Memory systems, Parallel computing, Block level

The invention provides a data cache method used in NAND flash. The method comprises the following steps: first, the cache region is divided into a block-level cache and a page-level cache; then, when data is read or written, if the data already exists in the block-level cache or the page-level cache, the read or write is completed directly; if it exists in neither, the data is read from the flash, or free space in the cache region is allocated to the data and the data is written to the flash; if there is no free space, a replacement block is selected by a replacement algorithm and the data in that block is written to the flash; finally, the replacement block is released, the new data is written in, and the caching operation is complete. By combining a block-level cache with a page-level cache, the method increases the cache hit ratio for random read and write access, provides an efficient replacement algorithm, reduces the size of the cache mapping table, and increases the space utilization of the cache region; the method has good practical value.

Owner:BEIJING MXTRONICS CORP +1
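
The read/write flow above can be sketched as a two-level write-back cache. All of the following are hypothetical assumptions rather than details from the patent: a dict stands in for the NAND device, LRU serves as the replacement algorithm (the abstract does not name one), a caller-supplied `sequential` flag selects block-level rather than page-level caching, and a block holds four pages.

```python
from collections import OrderedDict
from typing import Optional

PAGES_PER_BLOCK = 4  # hypothetical NAND geometry


class HybridFlashCache:
    """Hypothetical block-level + page-level write-back cache in front of NAND flash.

    Entries are [dirty, payload] lists kept in LRU order (oldest first).
    """

    def __init__(self, flash: dict, block_slots: int, page_slots: int):
        self.flash = flash                # page number -> bytes; stands in for NAND
        self.blocks = OrderedDict()       # block number -> [dirty, {page: bytes}]
        self.pages = OrderedDict()        # page number -> [dirty, bytes]
        self.block_slots = block_slots
        self.page_slots = page_slots

    def _flush(self, pages: dict) -> None:
        # Write a victim's data back to flash before its slot is released.
        for p, value in pages.items():
            self.flash[p] = value

    def access(self, page: int, data: Optional[bytes] = None, sequential: bool = False) -> bytes:
        """Read (data is None) or write one page and return its cached contents."""
        blk = page // PAGES_PER_BLOCK
        if blk in self.blocks:                          # block-level hit
            self.blocks.move_to_end(blk)
            entry = self.blocks[blk]
            if data is not None:
                entry[1][page], entry[0] = data, True
            return entry[1][page]
        if page in self.pages:                          # page-level hit
            self.pages.move_to_end(page)
            entry = self.pages[page]
            if data is not None:
                entry[:] = [True, data]
            return entry[1]
        # Miss: free a slot if needed (LRU victim, written back if dirty), then fill it.
        if sequential:
            if len(self.blocks) >= self.block_slots:
                _, (dirty, victim) = self.blocks.popitem(last=False)
                if dirty:
                    self._flush(victim)
            pages, dirty = {}, False
            for p in range(blk * PAGES_PER_BLOCK, (blk + 1) * PAGES_PER_BLOCK):
                if p in self.pages:                     # absorb page-level copies
                    d, value = self.pages.pop(p)
                    pages[p], dirty = value, dirty or d
                else:
                    pages[p] = self.flash.get(p, b"")
            self.blocks[blk] = [dirty, pages]
        else:
            if len(self.pages) >= self.page_slots:
                victim, (dirty, value) = self.pages.popitem(last=False)
                if dirty:
                    self._flush({victim: value})
            self.pages[page] = [False, self.flash.get(page, b"")]
        return self.access(page, data)                  # guaranteed hit now
```

Caching a whole block trades slot granularity for fewer mapping entries, which is the space saving the abstract attributes to the block-level tier.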

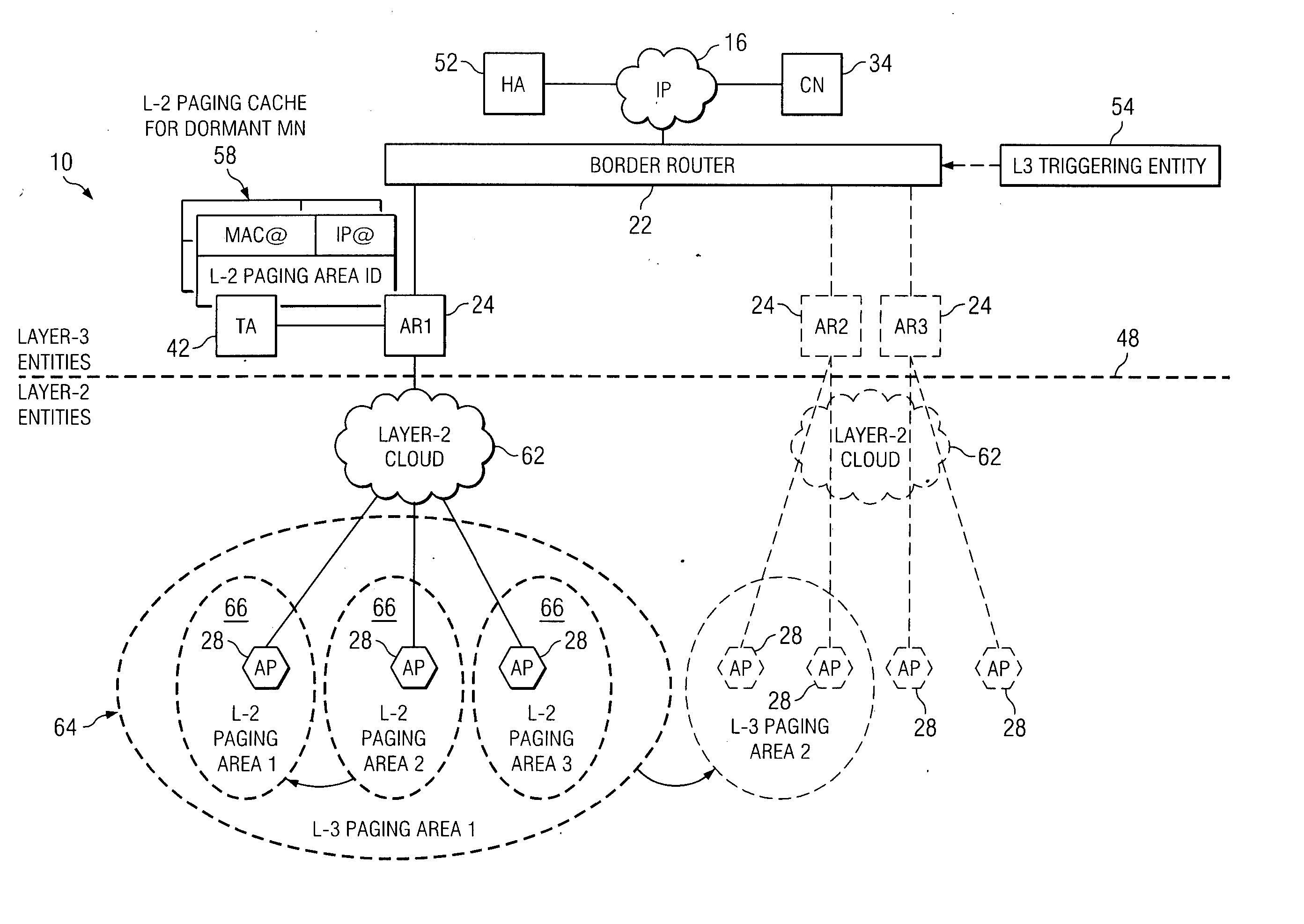

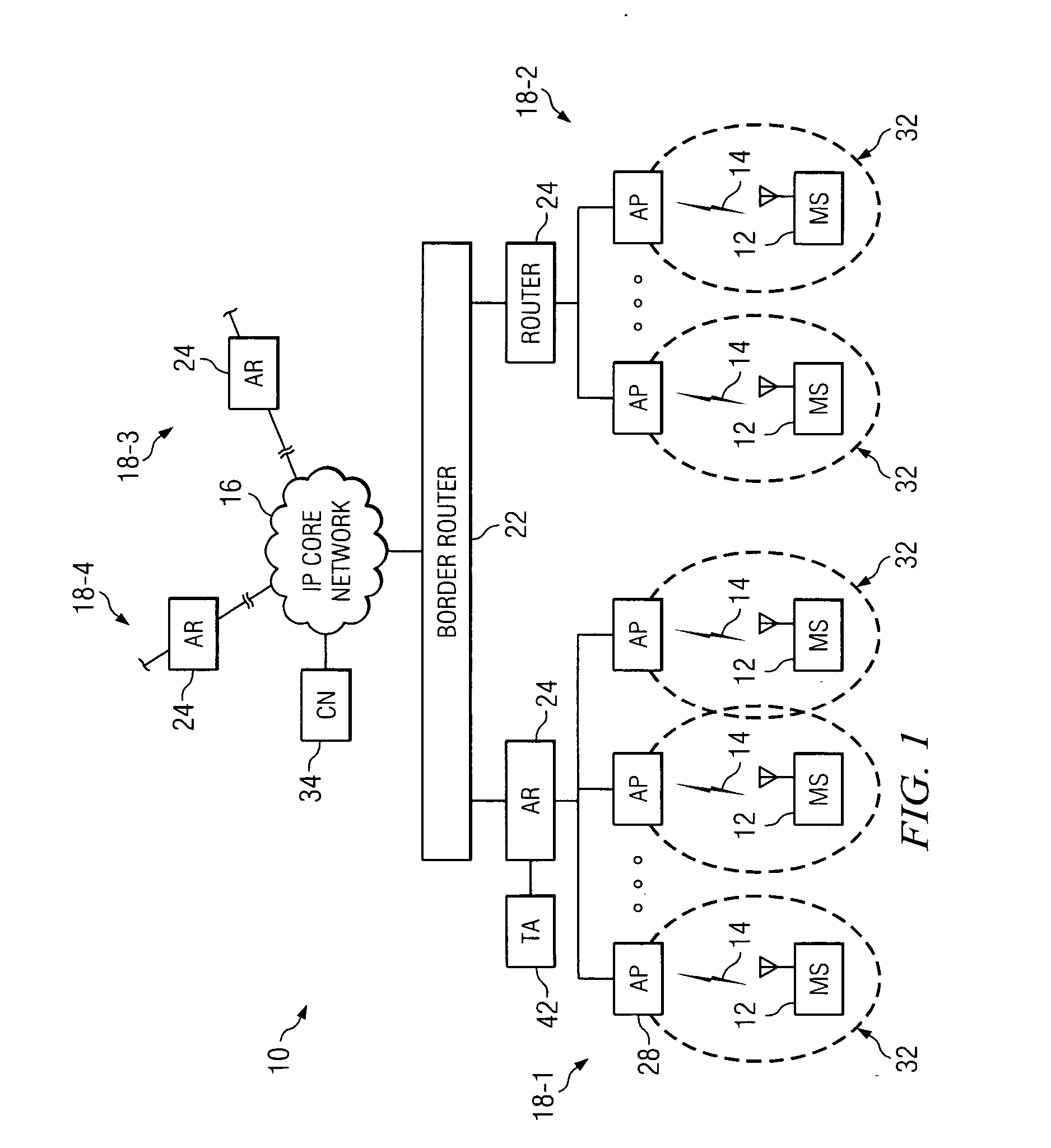

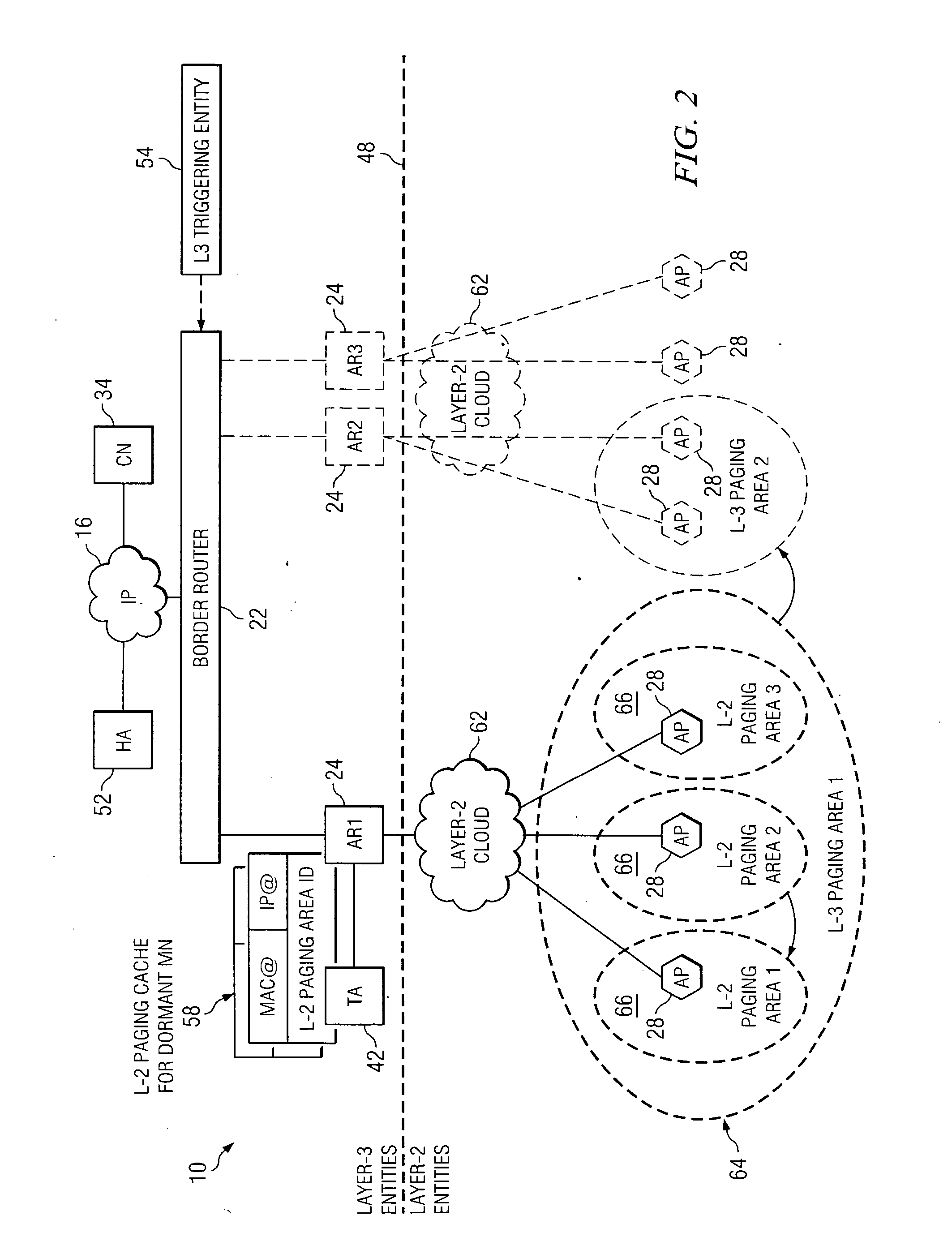

Apparatus, and an associated method, for performing link layer paging of a mobile station operable in a radio communication system

Inactive | US20050036510A1 | Lower latency, Improve mobile efficiency, Time-division multiplex, Data switching by path configuration, Communications system, Mobile station

Apparatus, and an associated method, for paging a mobile station operable in a WLAN. Logical layer-2 paging is provided pursuant to a tracking agent protocol. A tracking agent tracks the location of the mobile station at the logical layer-2 level. A paging cache is maintained that identifies the logical layer-2 paging area with which the mobile station was most recently associated. When a communication session is initiated, a page is broadcast throughout the logical layer-2 paging area in which the mobile station is indicated to be positioned.

Owner:ALCATEL LUCENT SAS
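
The paging cache reduces to a mapping from each mobile station to its most recent layer-2 paging area. In this minimal sketch, `PagingCache`, the access-point identifiers, and the fallback of paging every area for an unknown station are hypothetical.

```python
class PagingCache:
    """Hypothetical tracking-agent paging cache: station -> latest layer-2 paging area."""

    def __init__(self, areas: dict):
        self.areas = areas          # paging area id -> list of access point ids
        self.last_area = {}         # mobile station id -> paging area id

    def on_association(self, station: str, access_point: str) -> None:
        """Record the paging area that contains the access point the station joined."""
        for area, aps in self.areas.items():
            if access_point in aps:
                self.last_area[station] = area
                return
        raise KeyError(f"unknown access point {access_point!r}")

    def page(self, station: str) -> list:
        """Access points that broadcast the page when a session is initiated."""
        area = self.last_area.get(station)
        if area is None:            # no cached area: fall back to paging everywhere
            return sorted(ap for aps in self.areas.values() for ap in aps)
        return list(self.areas[area])
```

Paging only the cached area keeps the broadcast confined to a few access points instead of the whole WLAN.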

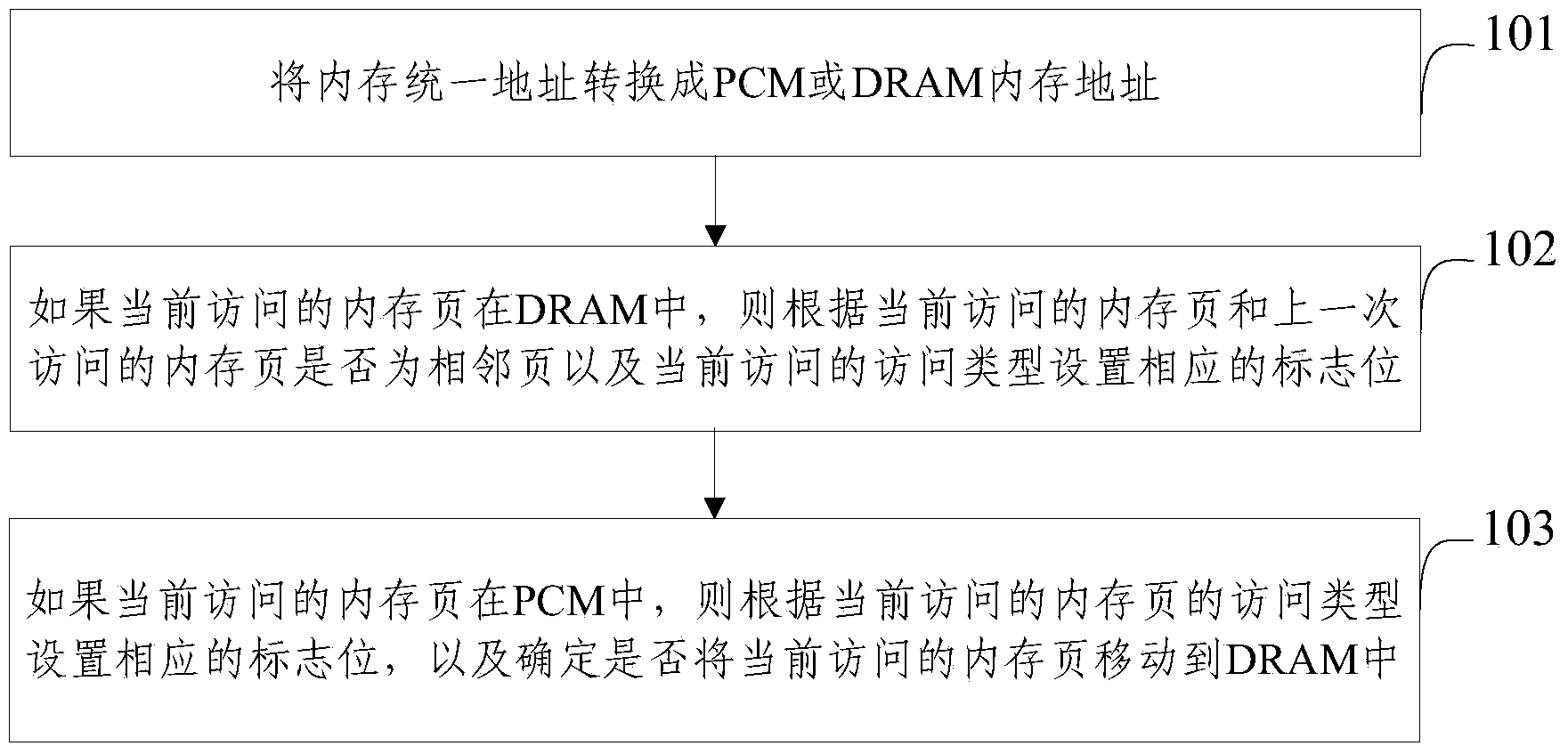

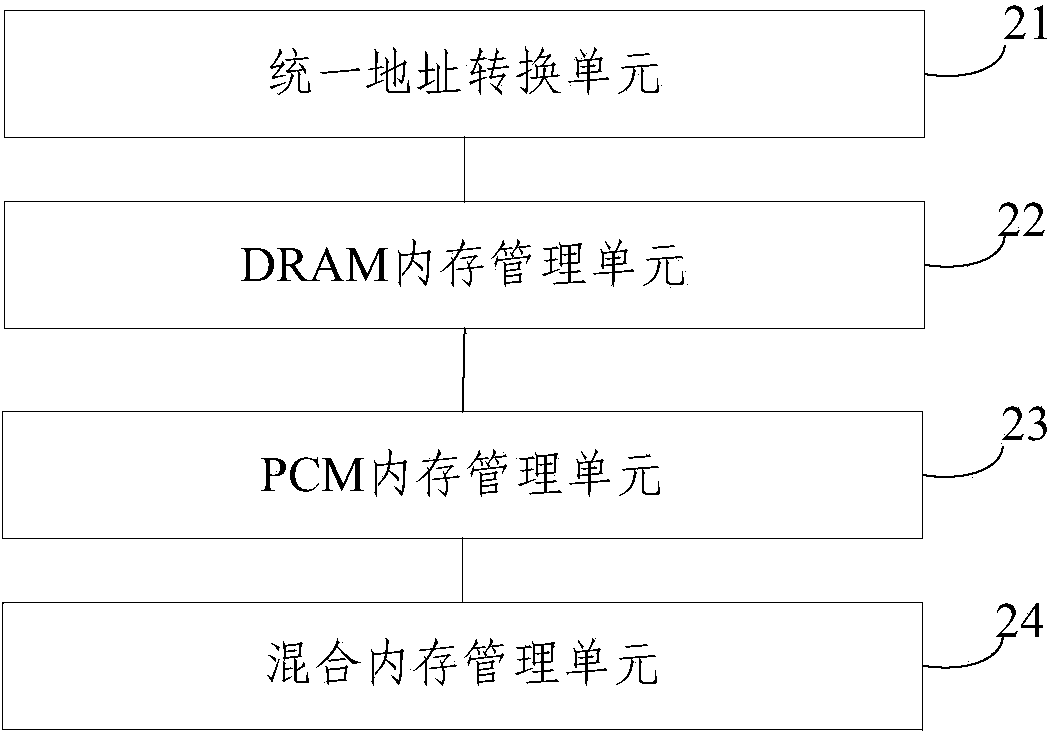

Hybrid memory paging method and device

Inactive | CN104317739A | Reduce the number of writes, Optimizing Wear Progress, Memory addressing/allocation/relocation, Memory address, DRAM memory

The invention provides a hybrid memory paging method and device. The method includes the following steps: 1. a unified memory address is converted into a PCM (phase-change memory) or DRAM (dynamic random access memory) address; 2. if the currently accessed memory page is in the DRAM, a corresponding flag bit is set according to the type of the current access and whether the currently accessed page and the previously visited page are adjacent; 3. if the currently accessed memory page is in the PCM, a corresponding flag bit is set according to the type of the current access and whether the currently accessed page and the previously visited page are adjacent, and it is determined whether the page needs to be moved to the DRAM. With this method and device, memory pages that are read frequently are kept in the PCM as much as possible and memory pages that are written frequently are placed in the DRAM as much as possible, which reduces write operations to the PCM and prolongs its service life.

Owner:TSINGHUA UNIV
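
The three steps can be sketched as follows. The abstract does not say how the flag bits are combined into a migration decision, so this sketch assumes a hypothetical rule: a PCM page is moved to DRAM once its write count reaches `WRITE_THRESHOLD`. DRAM capacity is treated as unlimited, and `access` returns only the device and in-page offset rather than a physical address.

```python
from dataclasses import dataclass

PAGE_SIZE = 4096
WRITE_THRESHOLD = 3  # hypothetical migration threshold


@dataclass
class PageState:
    device: str                     # "DRAM" or "PCM"
    writes: int = 0
    last_was_write: bool = False    # access-type flag
    adjacent_to_prev: bool = False  # adjacency flag


class HybridMemory:
    """Hypothetical DRAM/PCM pager that moves write-hot pages out of PCM."""

    def __init__(self, pcm_pages, dram_pages):
        self.pages = {p: PageState("PCM") for p in pcm_pages}
        self.pages.update({p: PageState("DRAM") for p in dram_pages})
        self.prev_page = None
        self.migrations = 0

    def access(self, address: int, is_write: bool) -> tuple:
        page = address // PAGE_SIZE                     # step 1: unified address -> page
        st = self.pages[page]
        st.last_was_write = is_write                    # steps 2/3: record the flags
        st.adjacent_to_prev = self.prev_page is not None and abs(page - self.prev_page) == 1
        self.prev_page = page
        if is_write:
            st.writes += 1
        if st.device == "PCM" and st.writes >= WRITE_THRESHOLD:
            st.device = "DRAM"                          # step 3: migrate a write-hot page
            self.migrations += 1
        return st.device, address % PAGE_SIZE
```

Read-mostly pages never reach the threshold and stay in PCM, which matches the stated goal of reducing PCM writes.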

© 2025 PatSnap. All rights reserved.