431 results about "Hit ratio" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

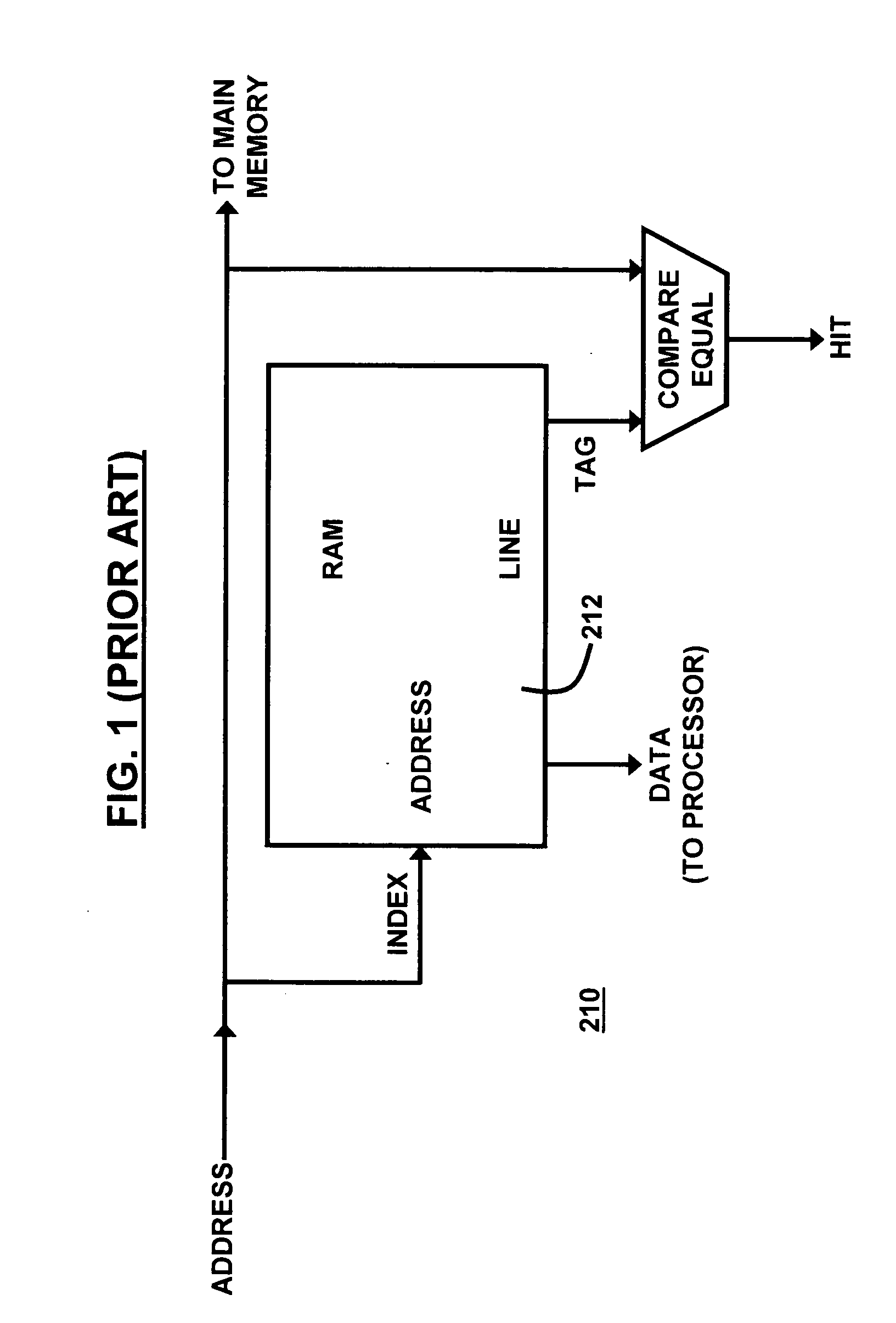

The hit ratio is the fraction of accesses that are hits, and the miss ratio is the fraction of accesses that are misses; the two sum to 1. The hit latency and miss latency (also called access time) are the times it takes to fetch the data on a hit and on a miss, respectively. A hit is fast because the data is already in the cache.
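
These definitions combine into the usual weighted-average access time: hit ratio × hit latency + miss ratio × miss latency. A minimal Python sketch, assuming the miss latency is the full time of a missed access (not just the extra penalty on top of a hit); the `AccessStats` class and its methods are hypothetical names used only for illustration:

```python
from dataclasses import dataclass


@dataclass
class AccessStats:
    """Hit/miss counters; latencies are in whatever unit the caller uses."""
    hits: int = 0
    misses: int = 0

    def record(self, hit: bool) -> None:
        if hit:
            self.hits += 1
        else:
            self.misses += 1

    @property
    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0

    @property
    def miss_ratio(self) -> float:
        total = self.hits + self.misses
        return self.misses / total if total else 0.0

    def average_access_time(self, hit_latency: float, miss_latency: float) -> float:
        # Assumption: miss_latency is the total time of a missed access.
        return self.hit_ratio * hit_latency + self.miss_ratio * miss_latency


stats = AccessStats()
for outcome in [True, True, True, False]:
    stats.record(outcome)
print(stats.hit_ratio)                        # 0.75
print(stats.average_access_time(1.0, 100.0))  # 25.75
```

Even a 75% hit ratio leaves the average dominated by the slow misses, which is why the techniques below work to push the ratio higher.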

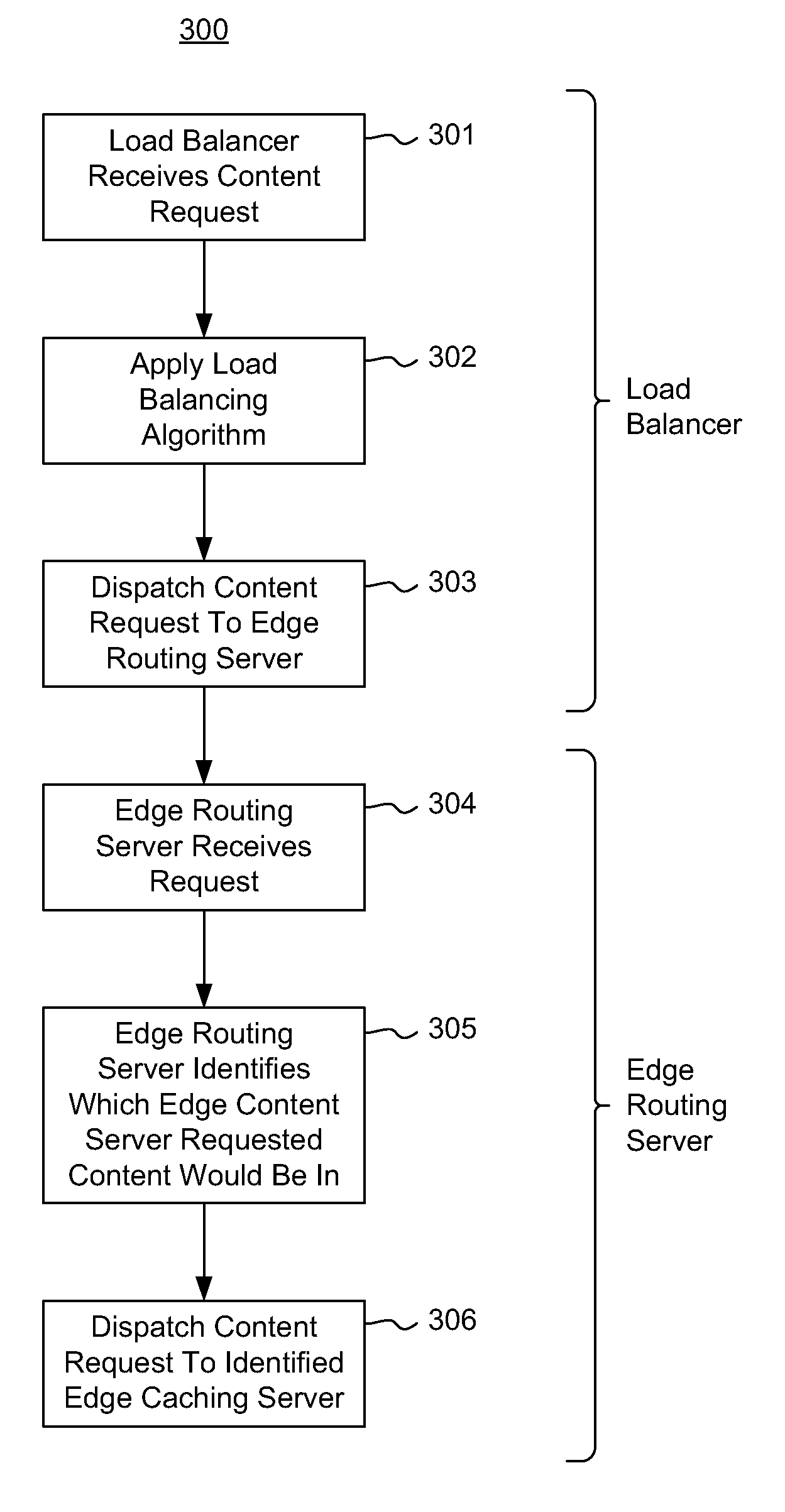

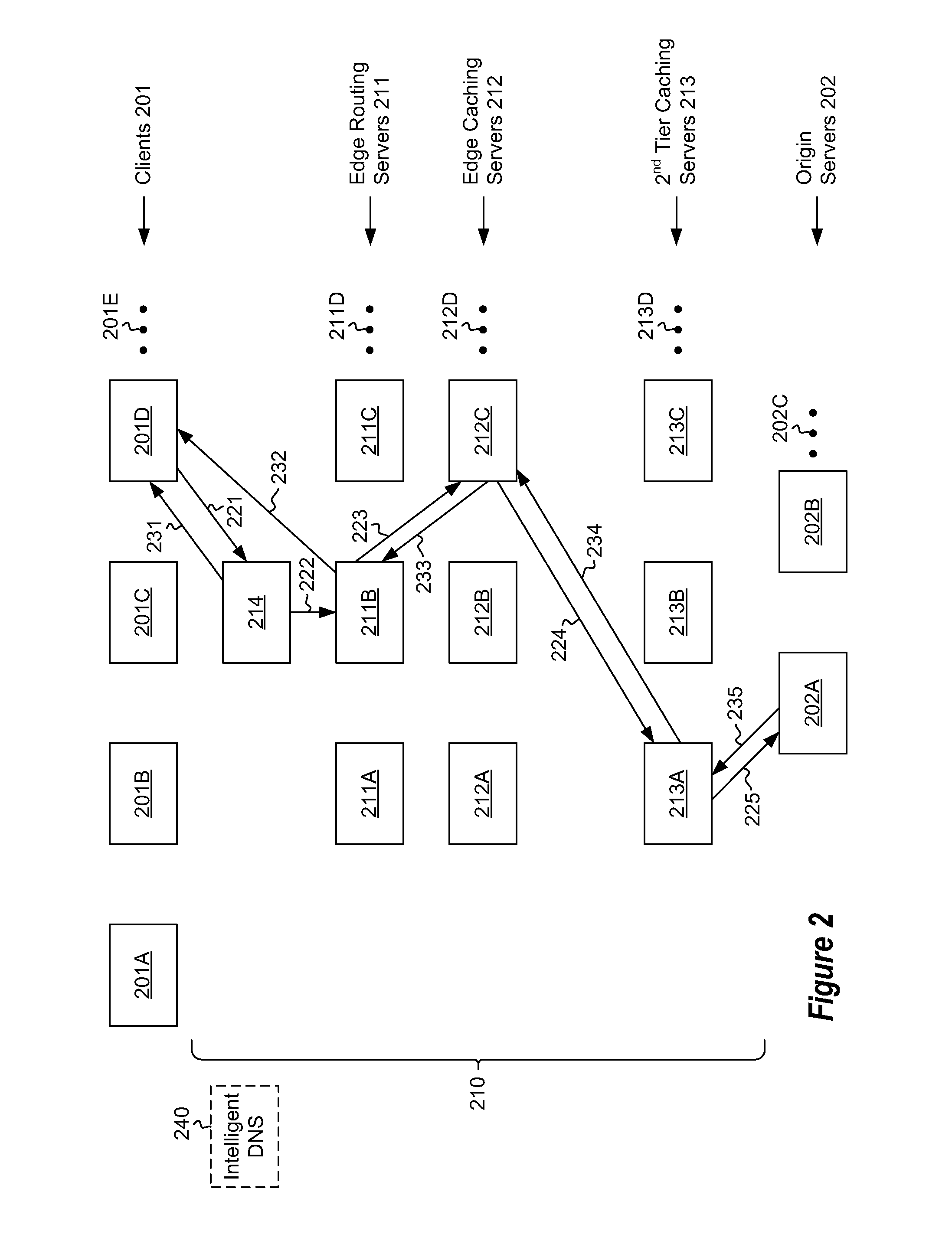

Proxy-based cache content distribution and affinity

Active · US8612550B2 · Maximize likelihood · Multiple digital computer combinations · Transmission · Content distribution · Multiple edges

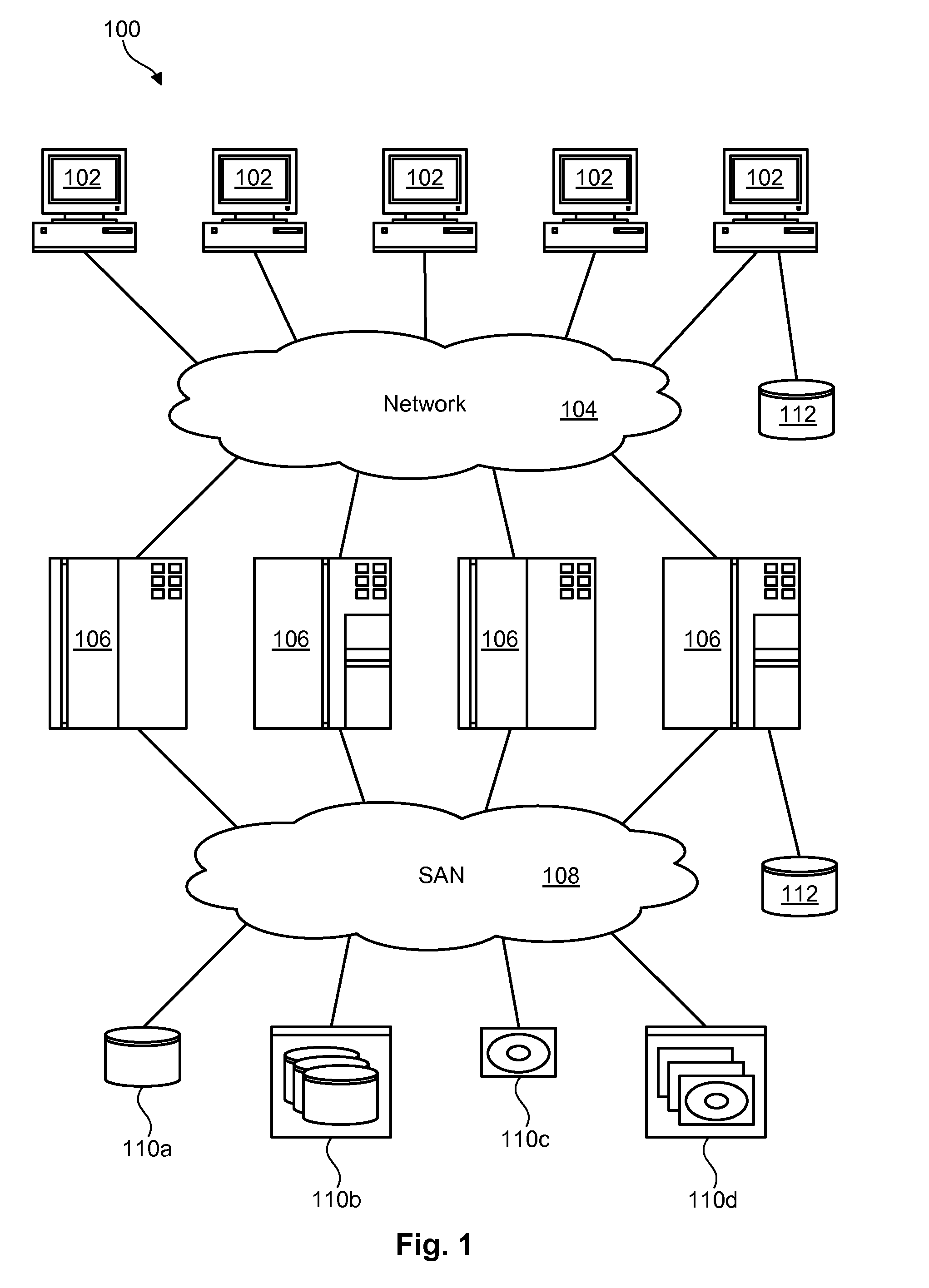

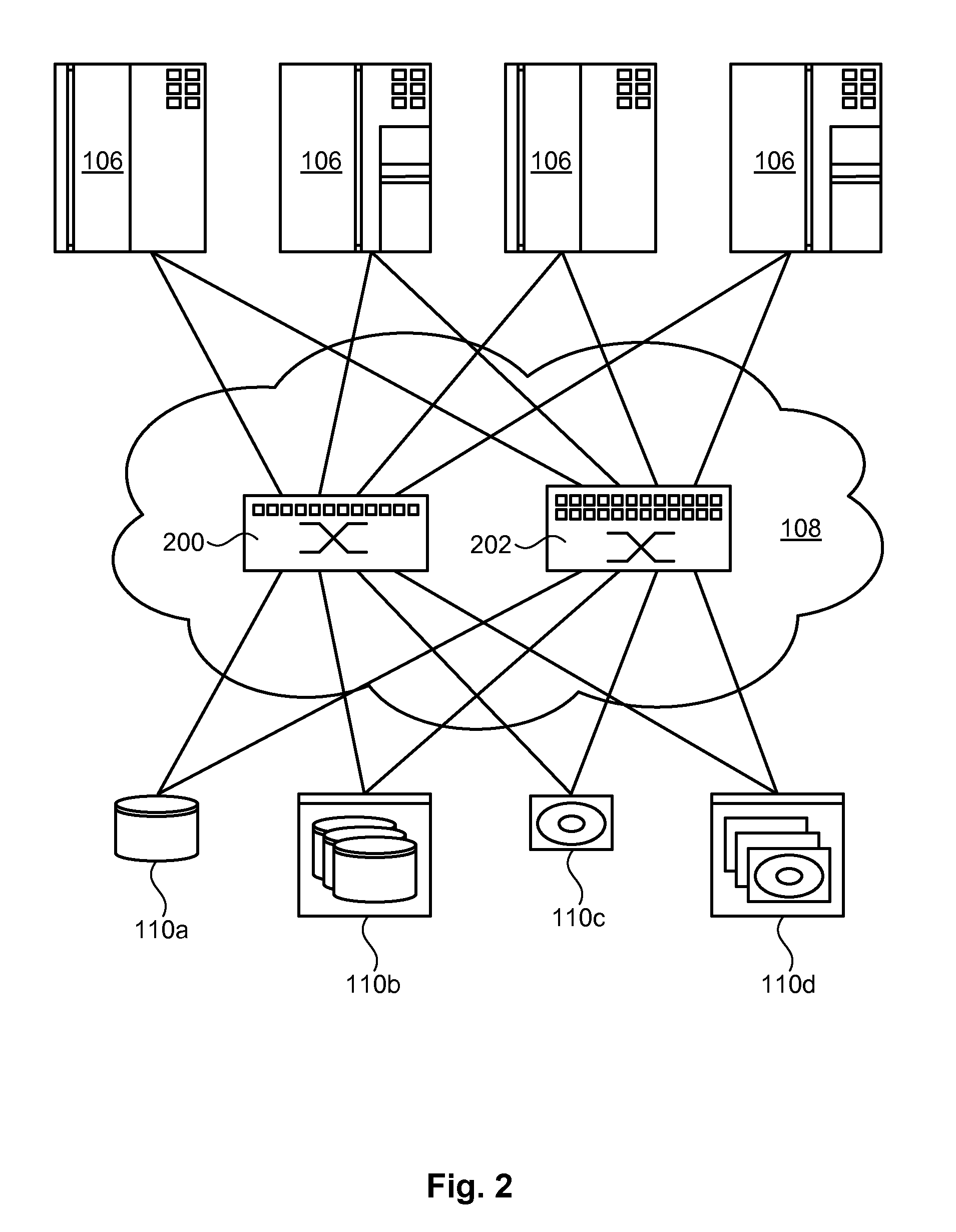

A distributed caching hierarchy that includes multiple edge routing servers, at least some of which receive content requests from client computing systems via a load balancer. When an edge routing server receives a content request, it identifies which of the edge caching servers the requested content would be in if that content were cached within the edge caching servers, and distributes the request to the identified edge caching server in a deterministic and predictable manner, increasing the likelihood of a cache hit and thus the cache-hit ratio.

Owner:MICROSOFT TECH LICENSING LLC
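
The abstract does not say how a routing server maps content to a caching server, only that the mapping is deterministic and predictable. One common way to get that property is rendezvous (highest-random-weight) hashing, sketched below in Python as an assumption rather than as the patented method; `pick_caching_server` and the server names are hypothetical:

```python
import hashlib


def _score(server: str, key: str) -> int:
    # hashlib is stable across processes, unlike the built-in hash() for str,
    # so every routing server computes the same score for the same pair.
    digest = hashlib.sha256(f"{server}|{key}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def pick_caching_server(key: str, caching_servers: list[str]) -> str:
    """Rendezvous hashing: the server with the highest score for this key wins."""
    return max(caching_servers, key=lambda server: _score(server, key))


servers = ["cache-a", "cache-b", "cache-c"]
target = pick_caching_server("/videos/intro.mp4", servers)
print(target)  # same answer on every call and on every routing server
```

Because every routing server computes the same maximum, repeat requests for the same content converge on one cache instead of being spread (and duplicated) across all of them; removing a caching server only remaps the keys that server owned.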

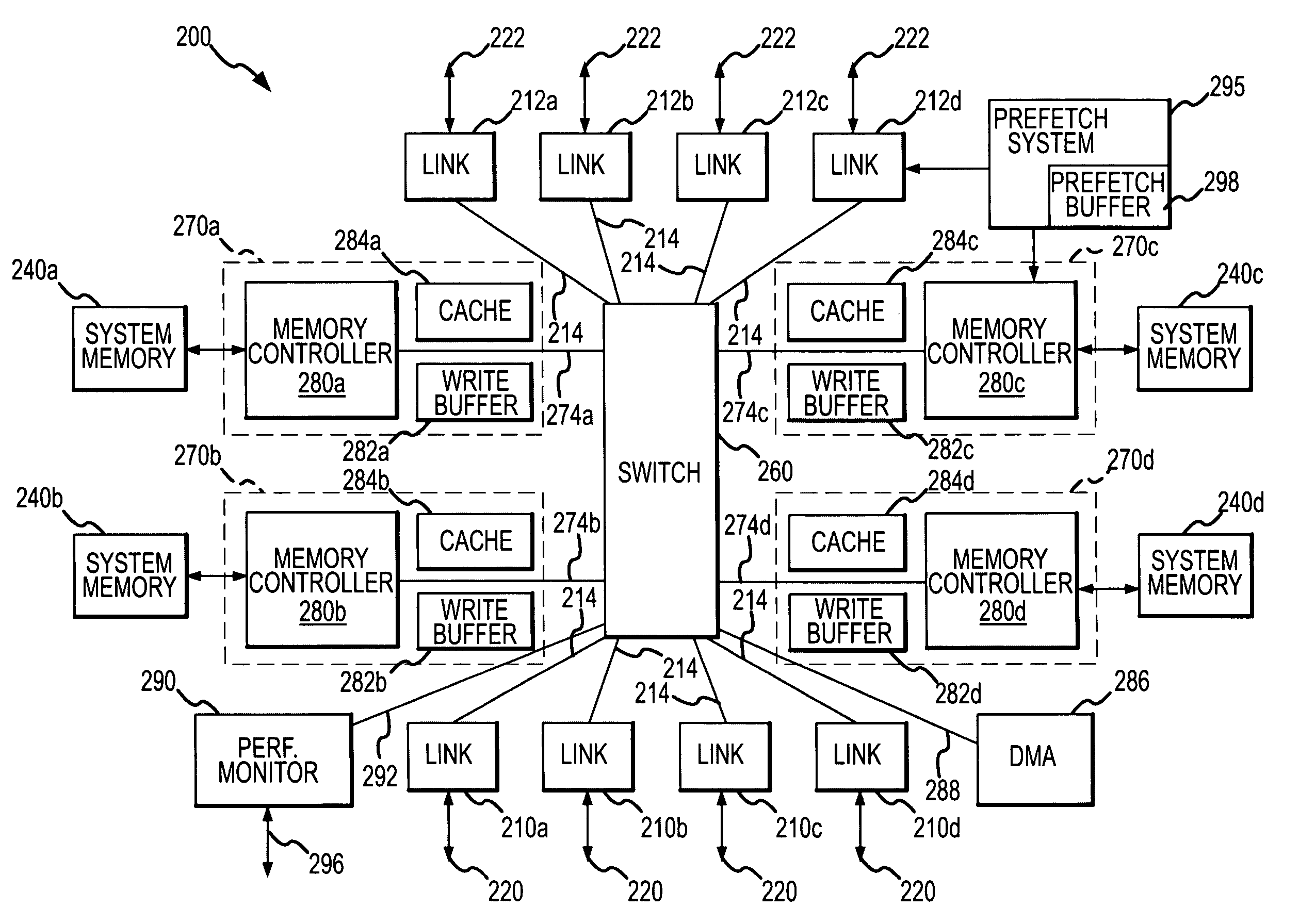

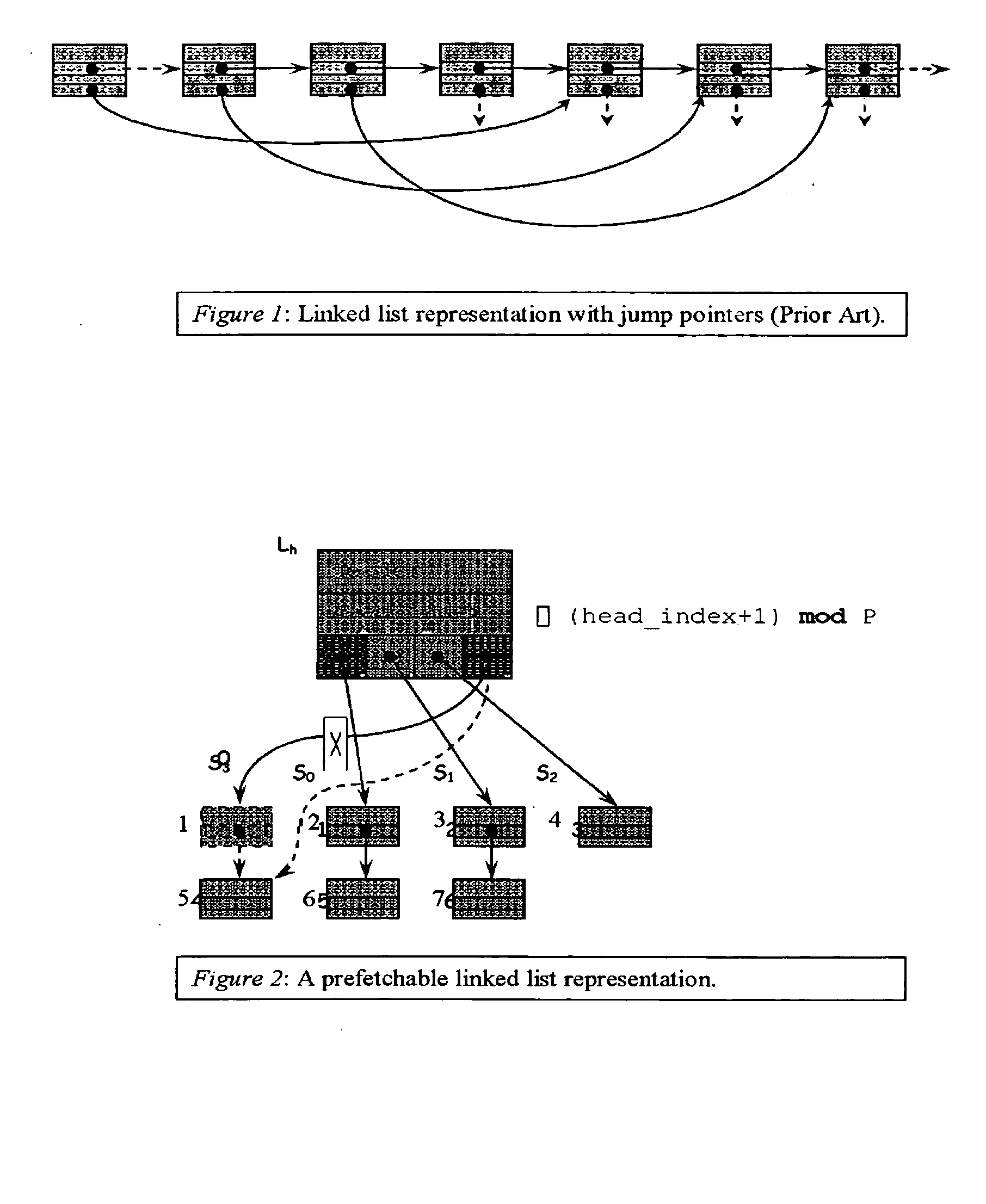

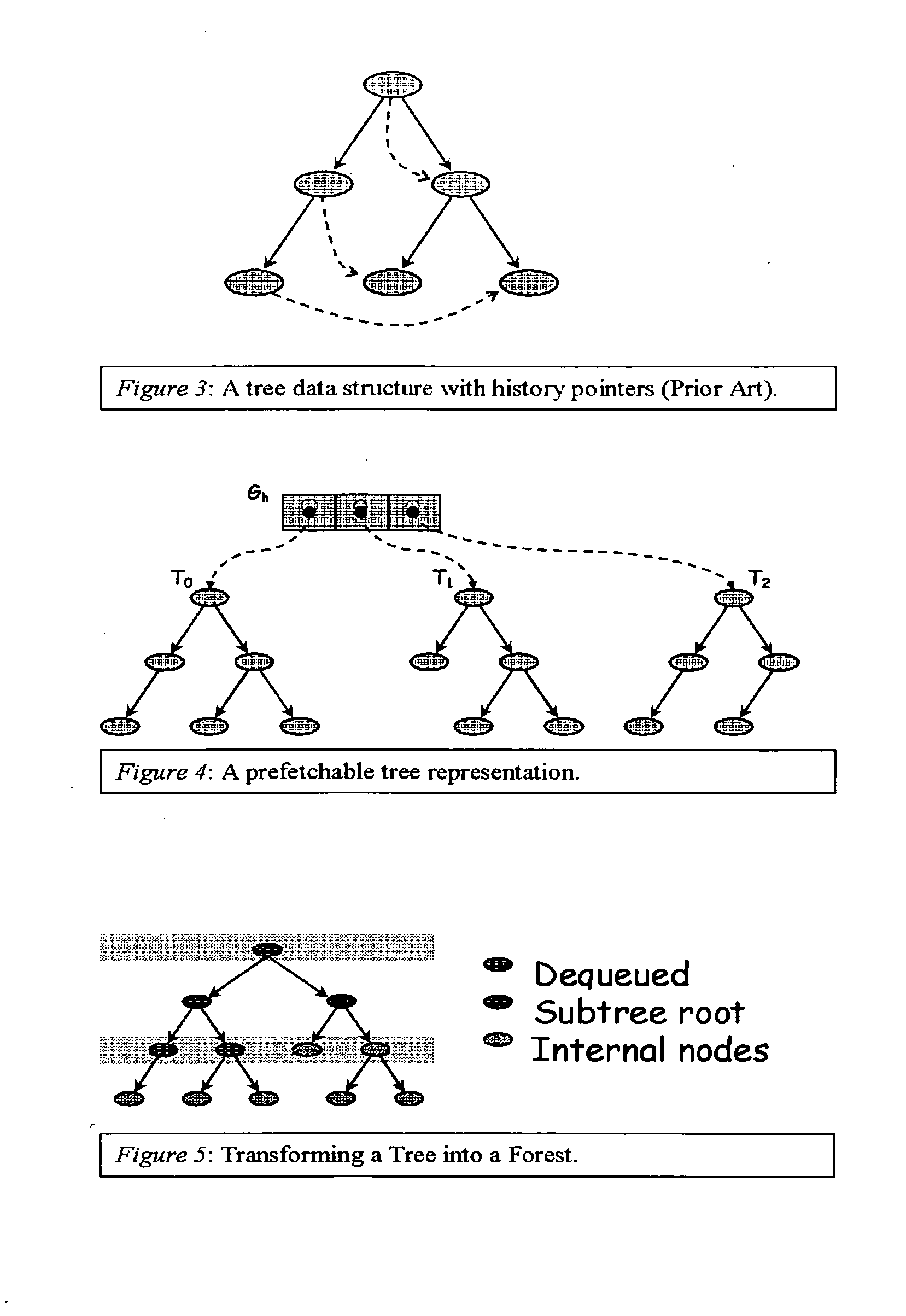

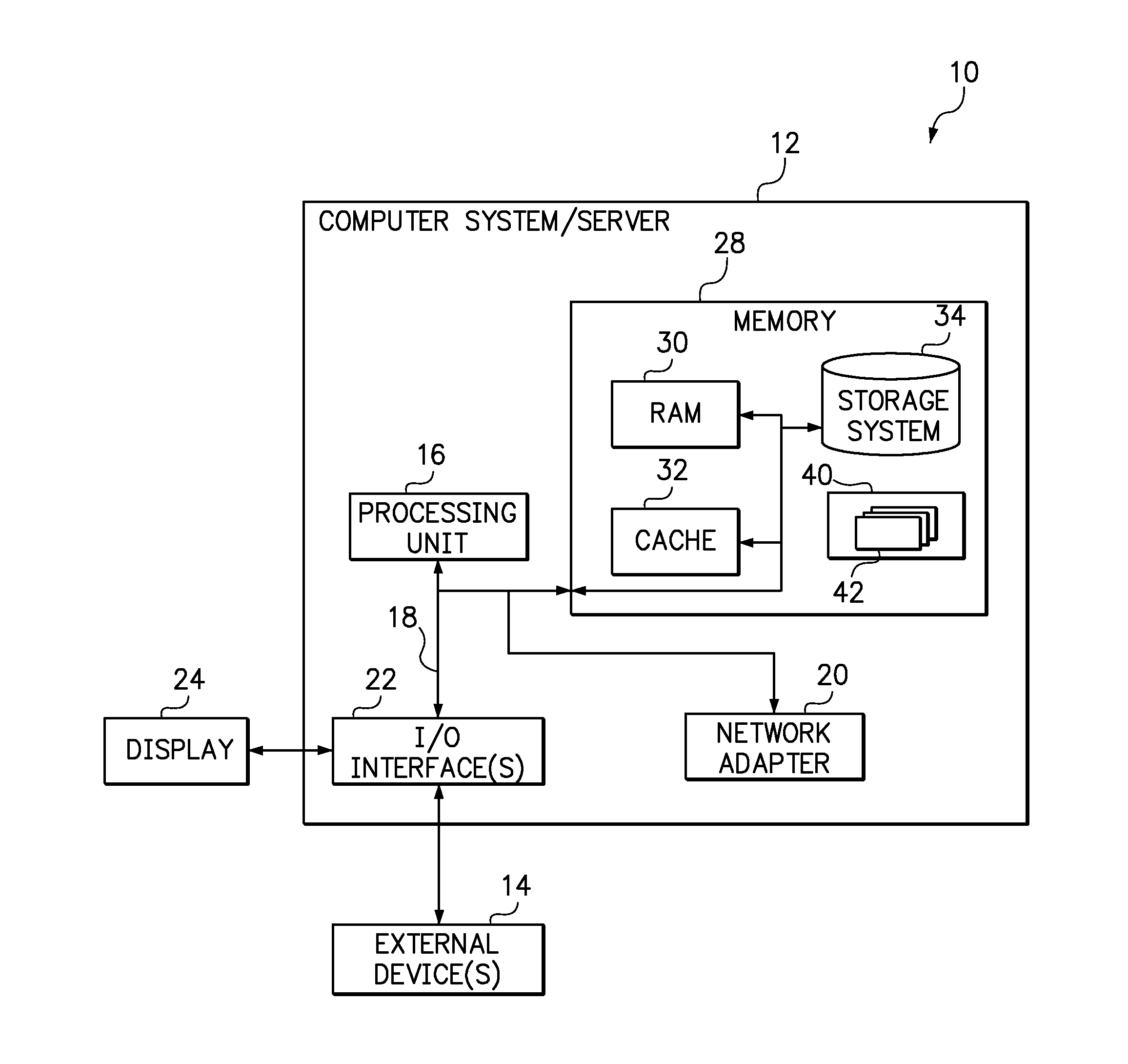

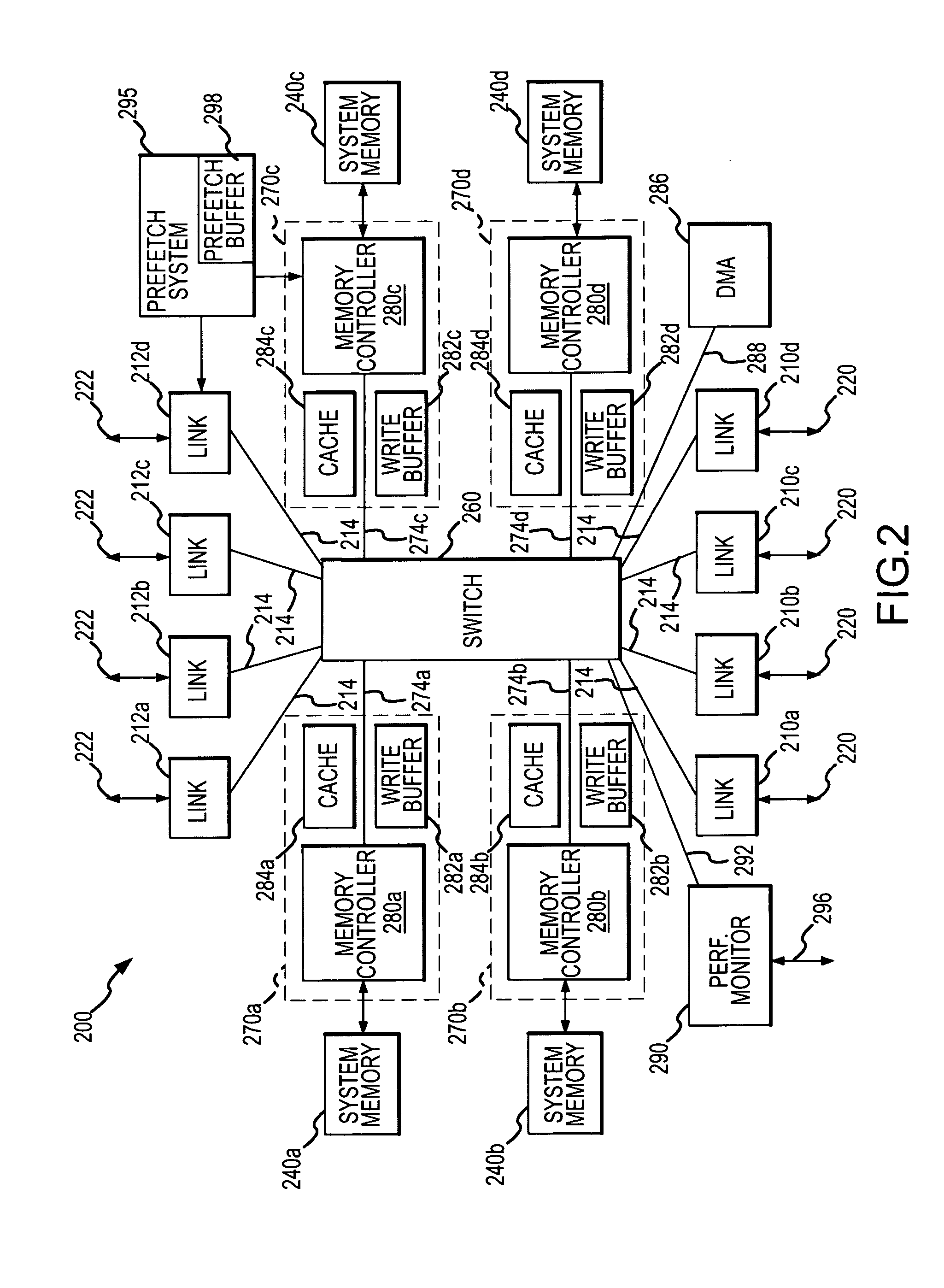

Method and apparatus for prefetching recursive data structures

Inactive · US6848029B2 · Improve cache hit ratio · Potential throughput of the computer system · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Application software · Cache hit rate

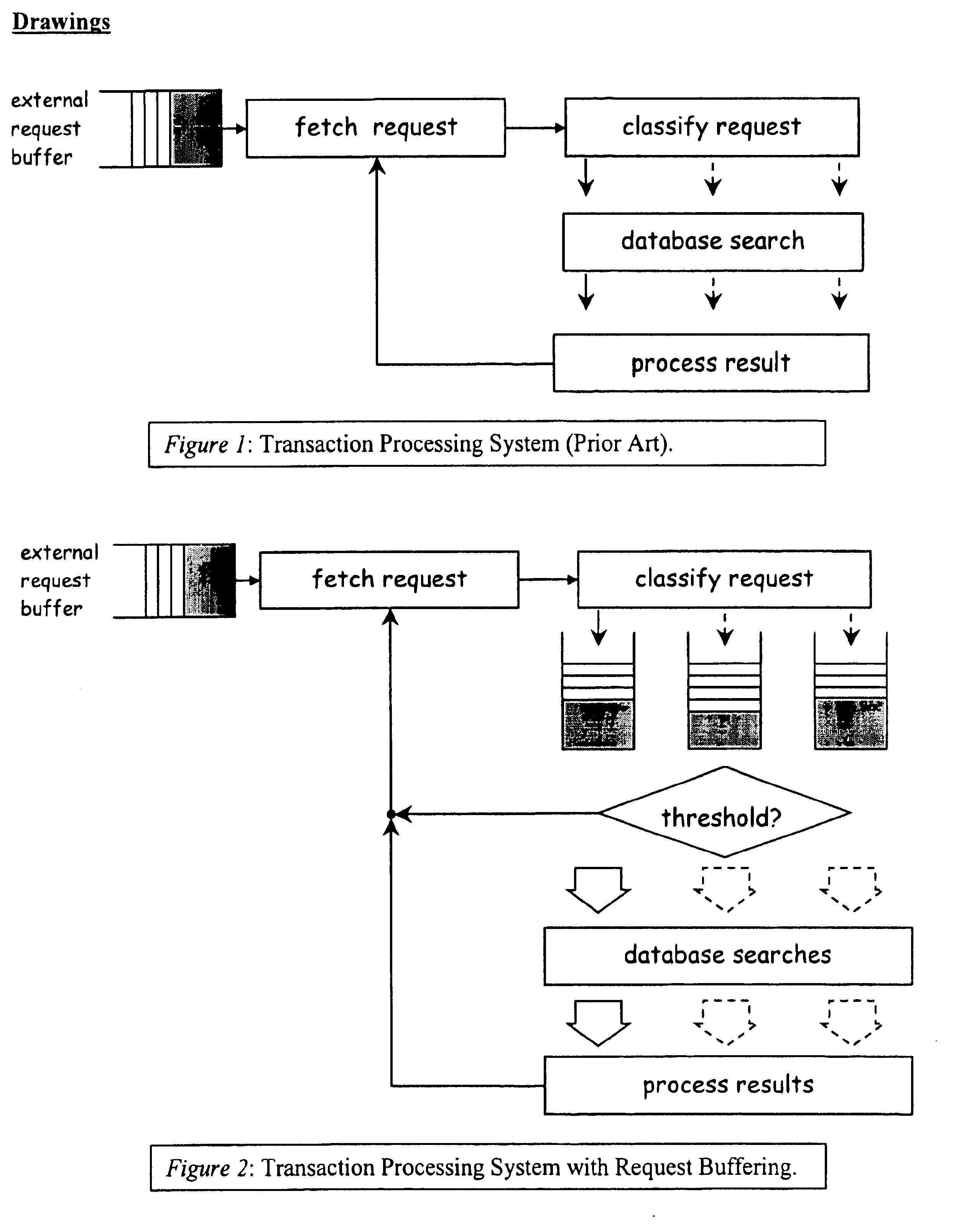

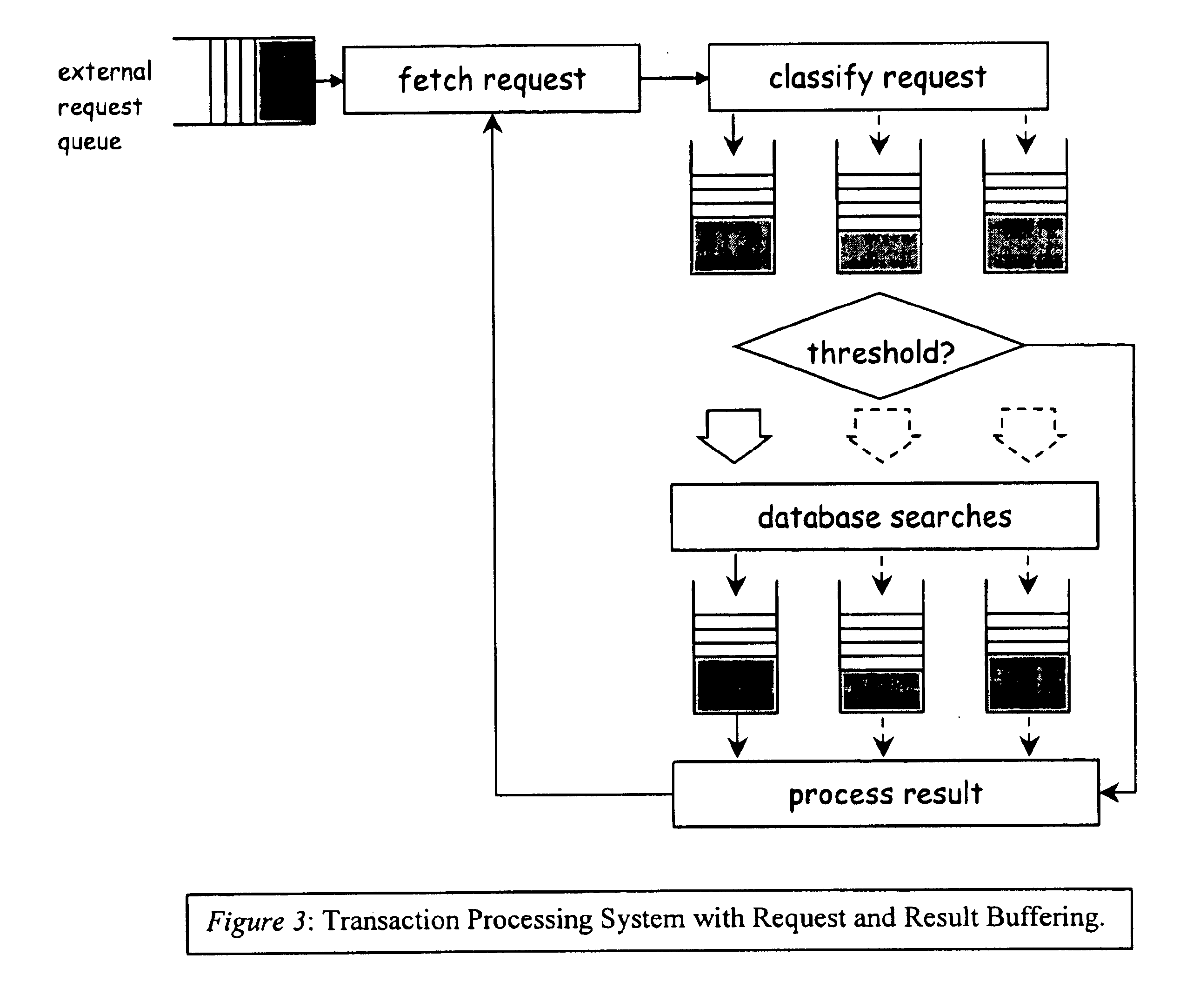

Computer systems are typically designed with multiple levels of memory hierarchy. Prefetching has been employed to overcome the latency of fetching data or instructions from or to memory. Prefetching works well for data structures with regular memory access patterns, but less so for data structures such as trees, hash tables, and other structures in which the datum that will be used is not known a priori. The present invention significantly increases the cache hit rates of many important data structure traversals, and thereby the potential throughput of the computer system and application in which it is employed. The invention is applicable to those data structure accesses in which the traversal path is dynamically determined. The invention does this by aggregating traversal requests and then pipelining the traversal of aggregated requests on the data structure. Once enough traversal requests have been accumulated so that most of the memory latency can be hidden by prefetching the accumulated requests, the data structure is traversed by performing software pipelining on some or all of the accumulated requests. As requests are completed and retired from the set of requests that are being traversed, additional accumulated requests are added to that set. This process is repeated until either an upper threshold of processed requests or a lower threshold of residual accumulated requests has been reached. At that point, the traversal results may be processed.

Owner:DIGITAL CACHE LLC +1
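
The aggregate-then-pipeline idea can be illustrated with a batch of binary-search-tree lookups that each advance one node per round, so that in a compiled language every round could issue prefetches for the next nodes of all in-flight lookups. The Python sketch below shows only that scheduling (Python has no prefetch instruction); the fixed `width` stands in for the patent's upper and lower thresholds, and all names are hypothetical:

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    key: int
    value: str
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def insert(root: Optional[Node], key: int, value: str) -> Node:
    if root is None:
        return Node(key, value)
    if key < root.key:
        root.left = insert(root.left, key, value)
    elif key > root.key:
        root.right = insert(root.right, key, value)
    else:
        root.value = value
    return root


def batched_lookup(root: Optional[Node], keys: list[int], width: int = 4) -> dict[int, Optional[str]]:
    """Run many lookups together, advancing each in-flight lookup one node per round."""
    pending = deque(keys)  # accumulated traversal requests
    active: list[tuple[int, Optional[Node]]] = []  # in-flight (key, current node)
    results: dict[int, Optional[str]] = {}
    while pending or active:
        # Top up the in-flight set from the accumulated requests.
        while pending and len(active) < width:
            active.append((pending.popleft(), root))
        next_round = []
        for key, node in active:
            if node is None:
                results[key] = None  # fell off the tree: not found, retire
            elif key == node.key:
                results[key] = node.value  # found: retire
            else:
                child = node.left if key < node.key else node.right
                # In C, a prefetch of `child` would be issued here so that its
                # cache line arrives while the other lookups are being advanced.
                next_round.append((key, child))
        active = next_round
    return results


root = None
for k in [50, 30, 70, 20, 40, 60, 80]:
    root = insert(root, k, f"v{k}")
print(batched_lookup(root, [20, 80, 55, 50], width=2))
```

As lookups finish and retire, queued requests immediately take their slots, mirroring the abstract's description of adding accumulated requests as others complete.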

Cache device, cache data management method, and computer program

Inactive · US20050172080A1 · Easy to use · Fast data · Memory addressing/allocation/relocation · Data control · Pending status

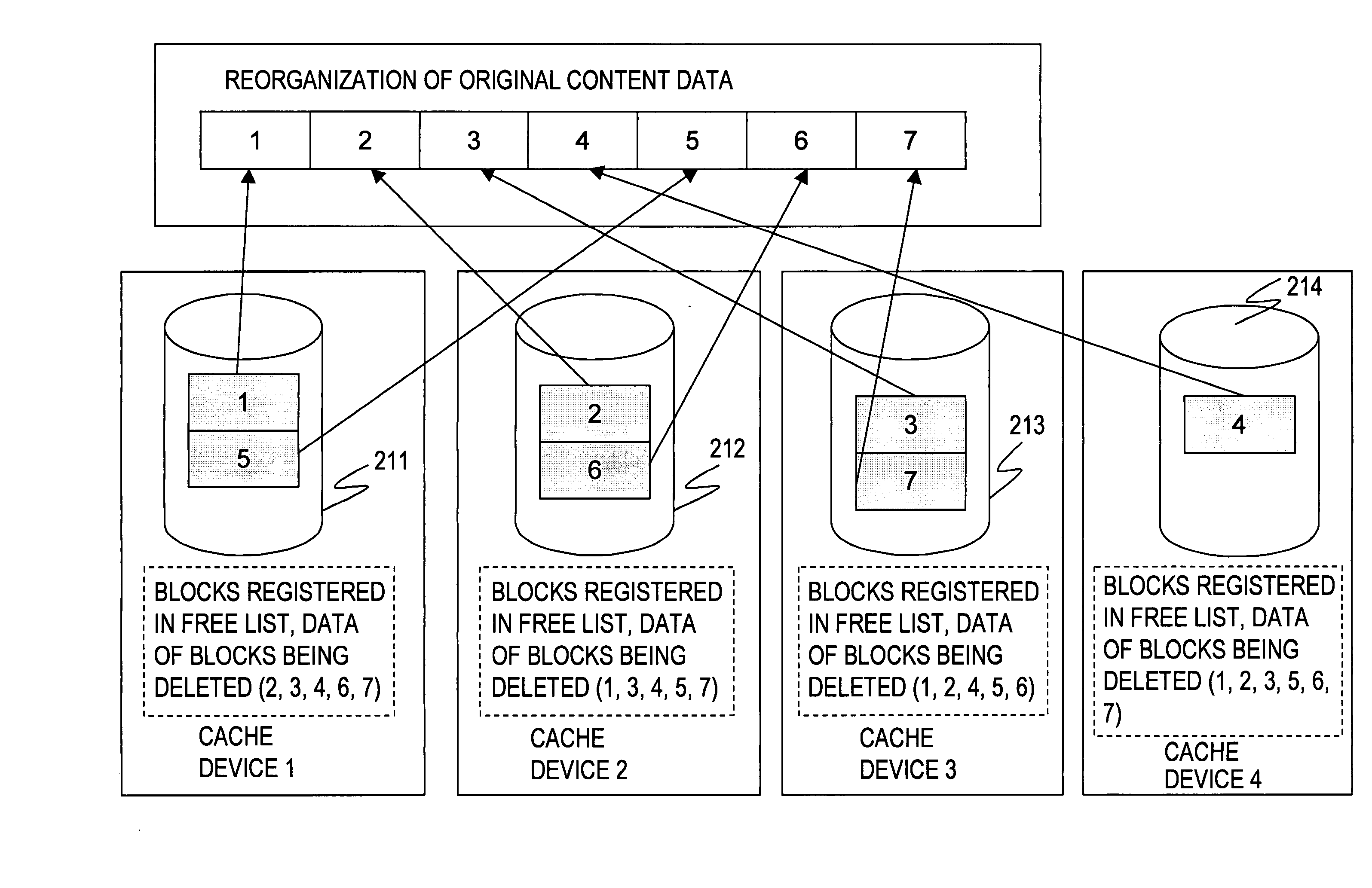

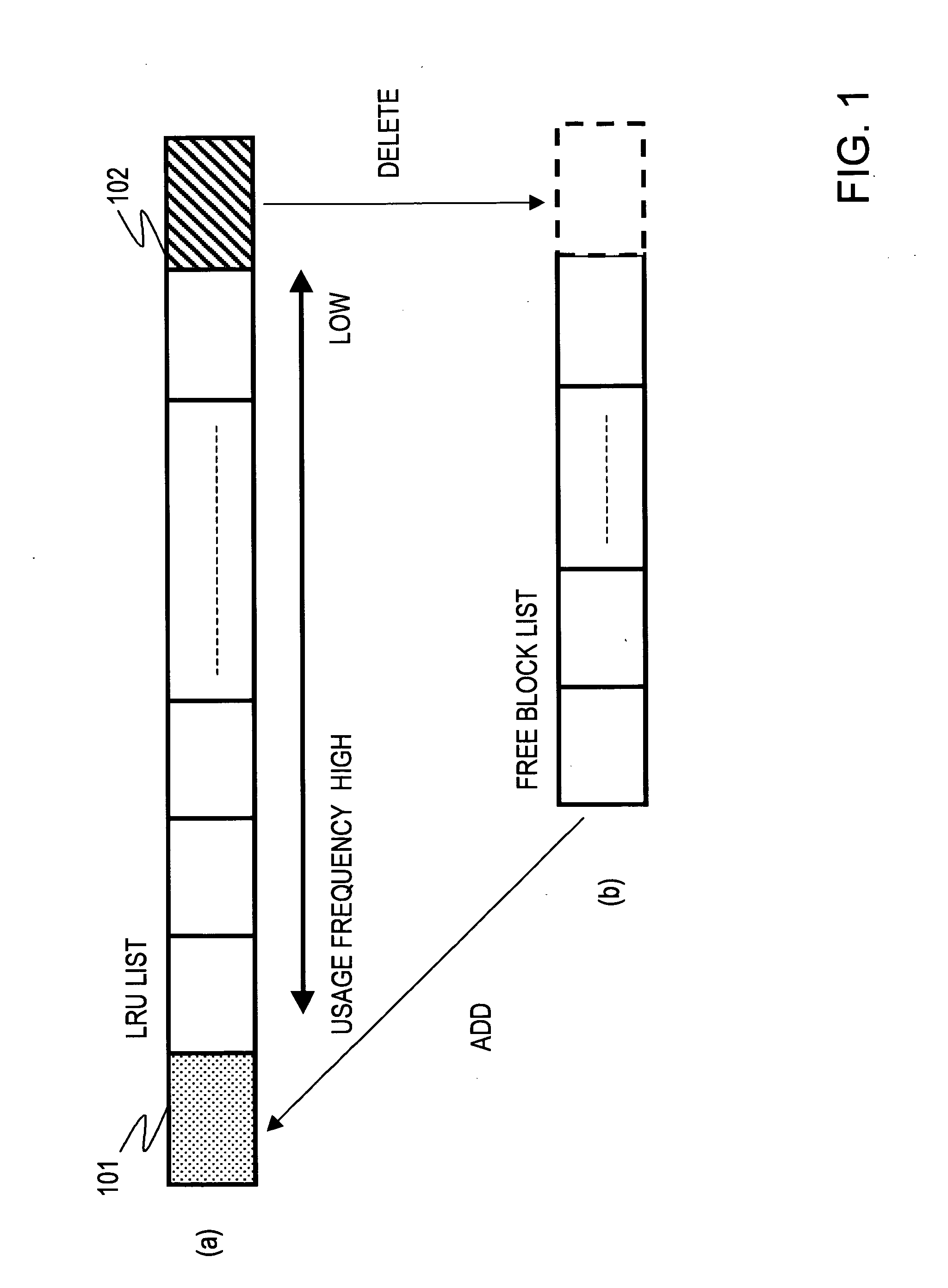

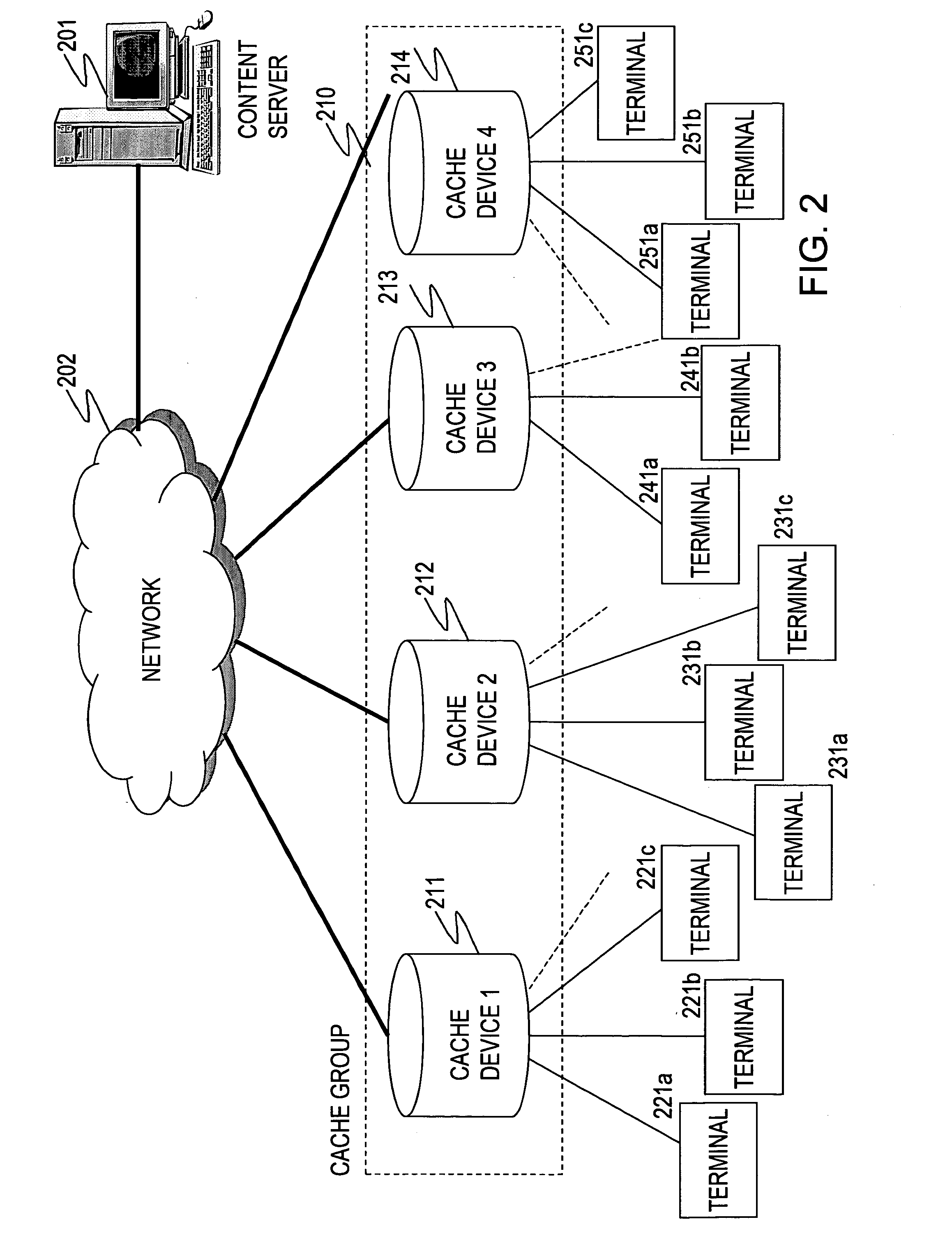

A cache device and a method for controlling cached data are provided that make efficient use of a storage area and improve the hit ratio. When cache replacement is carried out in cache devices connected to each other over a network, each cache device in a cache group maintains a list of the data blocks it has set to a deletion-pending status, and data control is carried out so that the deletion-pending blocks in each cache device differ from those in the other cache devices of the group. In this way, data control is performed using deletion-pending lists. Compared with a case in which each cache device controls cache replacement independently, the system of the present invention uses the storage area more efficiently, and data blocks stored across a number of cache devices are collected and sent to terminals in response to their data acquisition requests, thereby easing network traffic and improving the hit rate of the cache devices.

Owner:SONY CORP

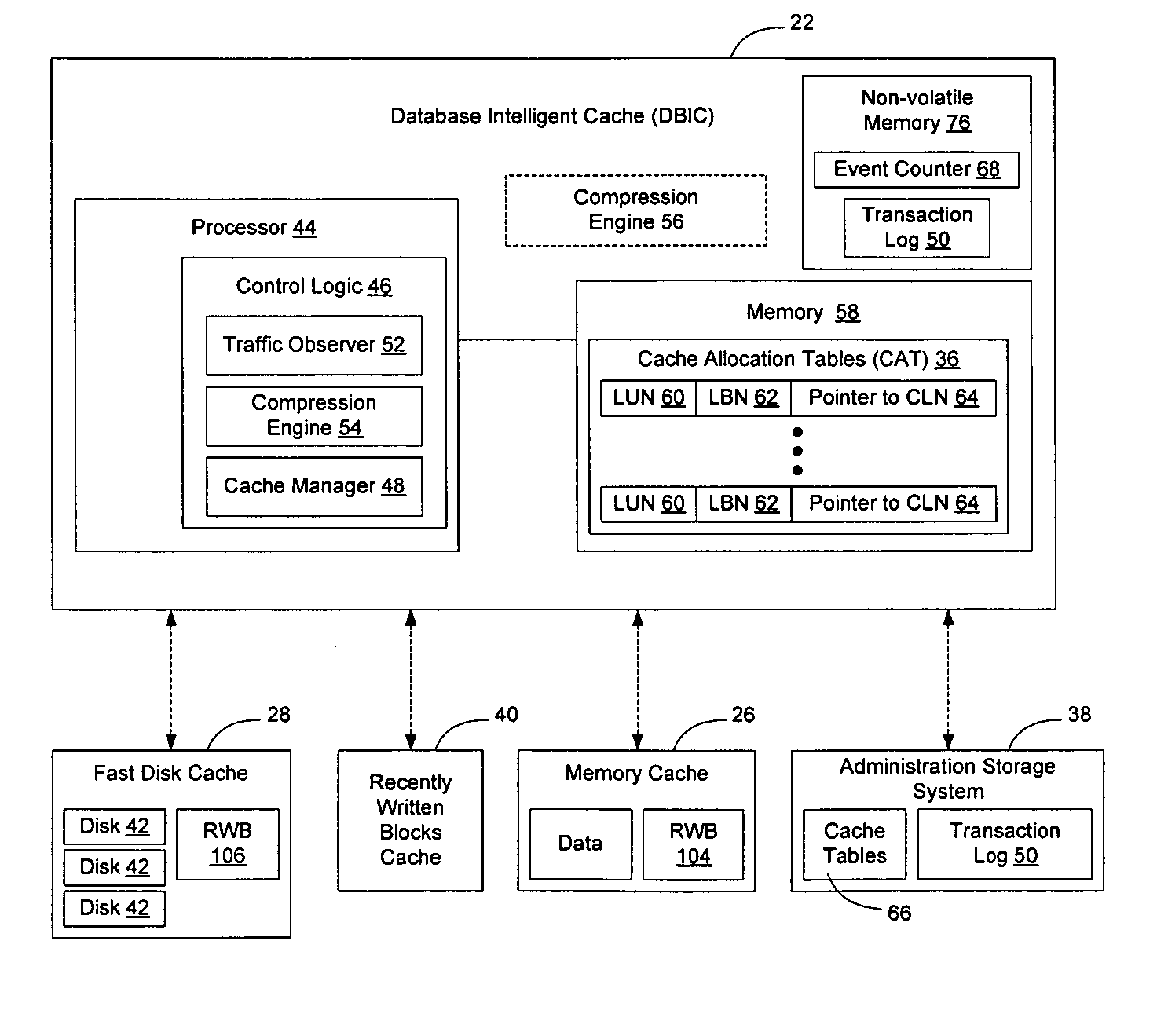

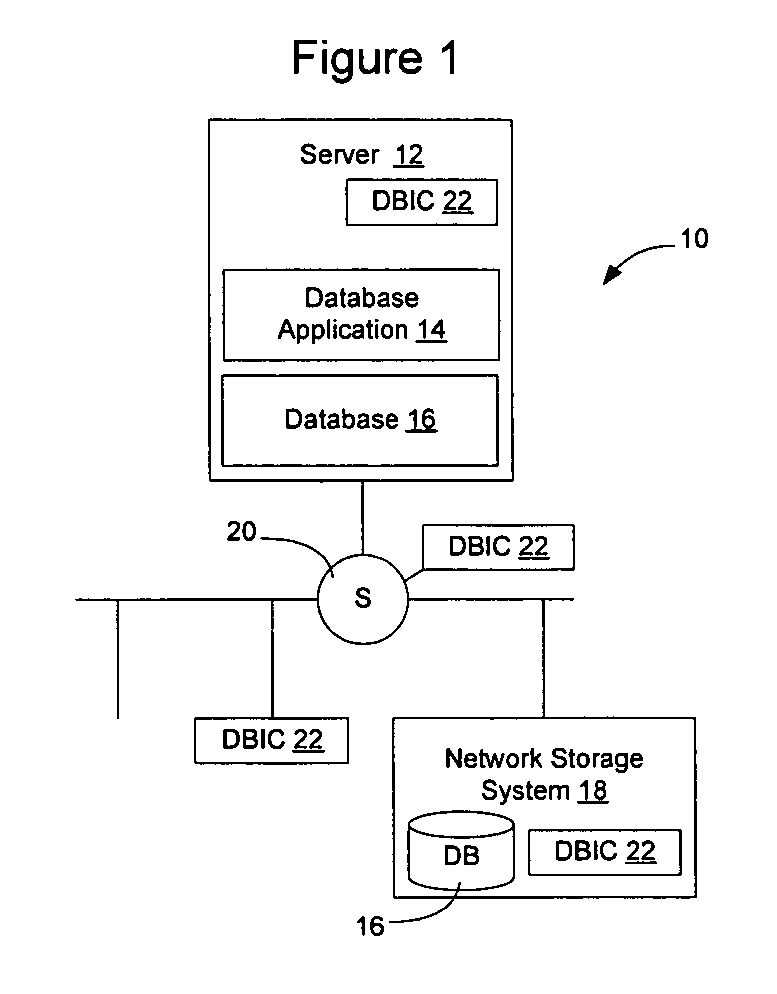

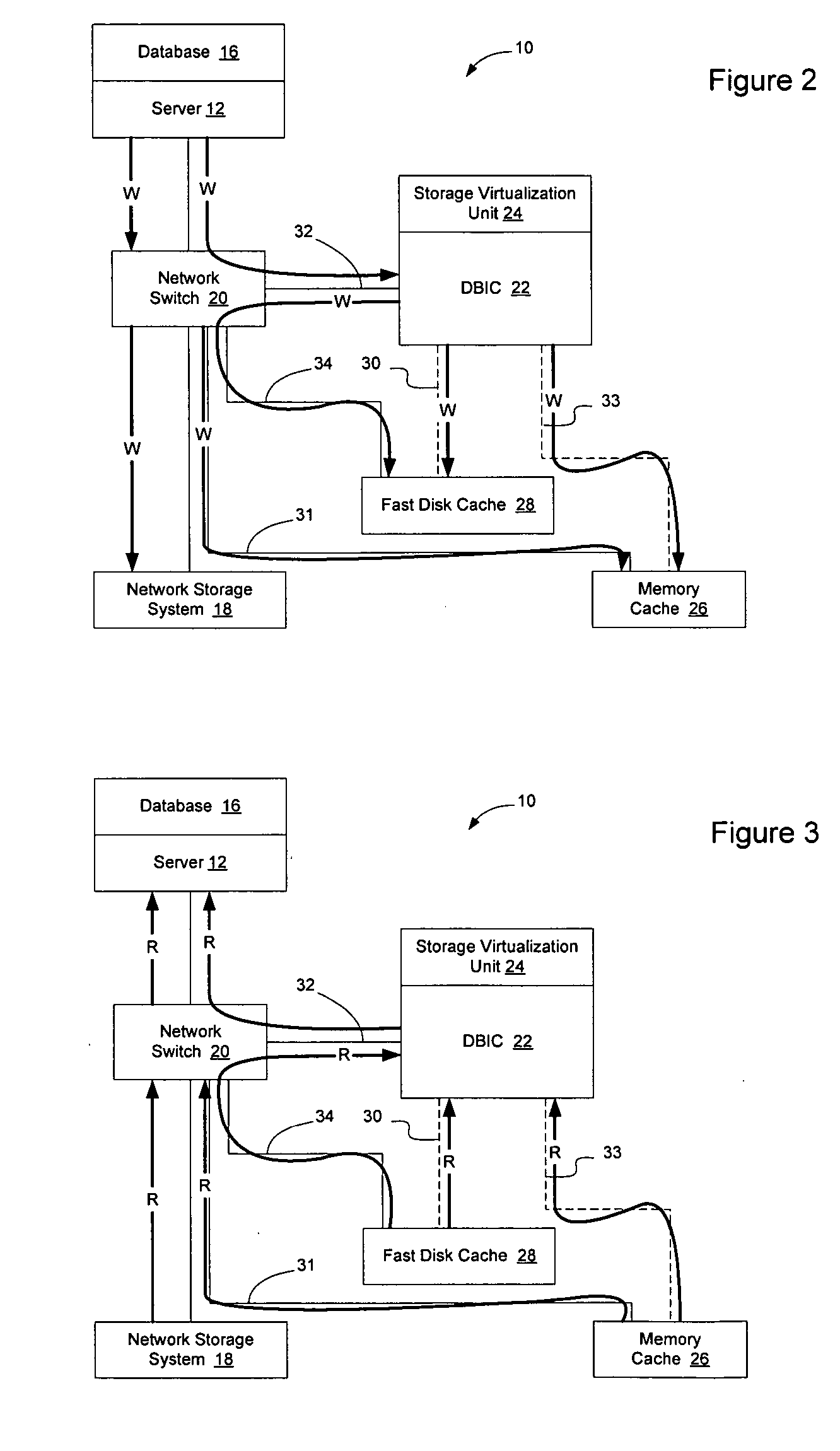

Method and apparatus for accelerating data access operations in a database system

Inactive · US20050198062A1 · Accelerating data access operation · Efficiently streamed · Digital data information retrieval · Special data processing applications · Data operations · Data access

Data access operations in a database system may be accelerated by allowing the memory cache to be supplemented with a disk cache. The disk cache can store data that isn't able to fit in the memory cache and, since it doesn't contain the primary copy of the data for the database, may organize the data in such a way that the data is able to be streamed from the disks in response to data read operations. The reduced number of read data operations allows the data to be read from the disk cache faster than it could be served from the primary storage facilities, which might not allow the data to be organized in the same manner. The cache hit ratio may be increased by compressing data prior to storing it in the cache. Additionally, where a particular portion of data stored on the disk cache is being used heavily, that portion may be pulled into memory cache to accelerate access to that portion of data.

Owner:SHAPIRO RICHARD B

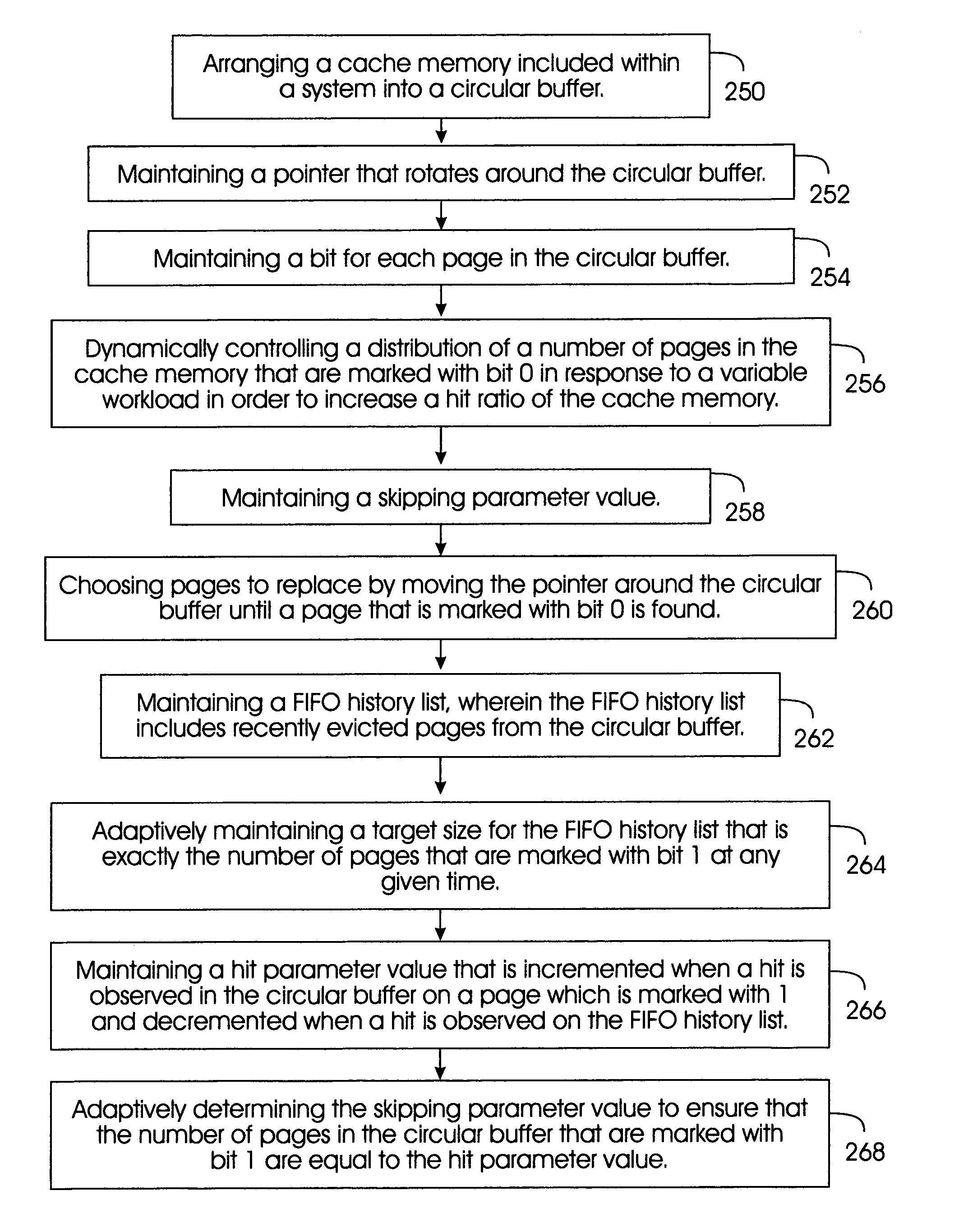

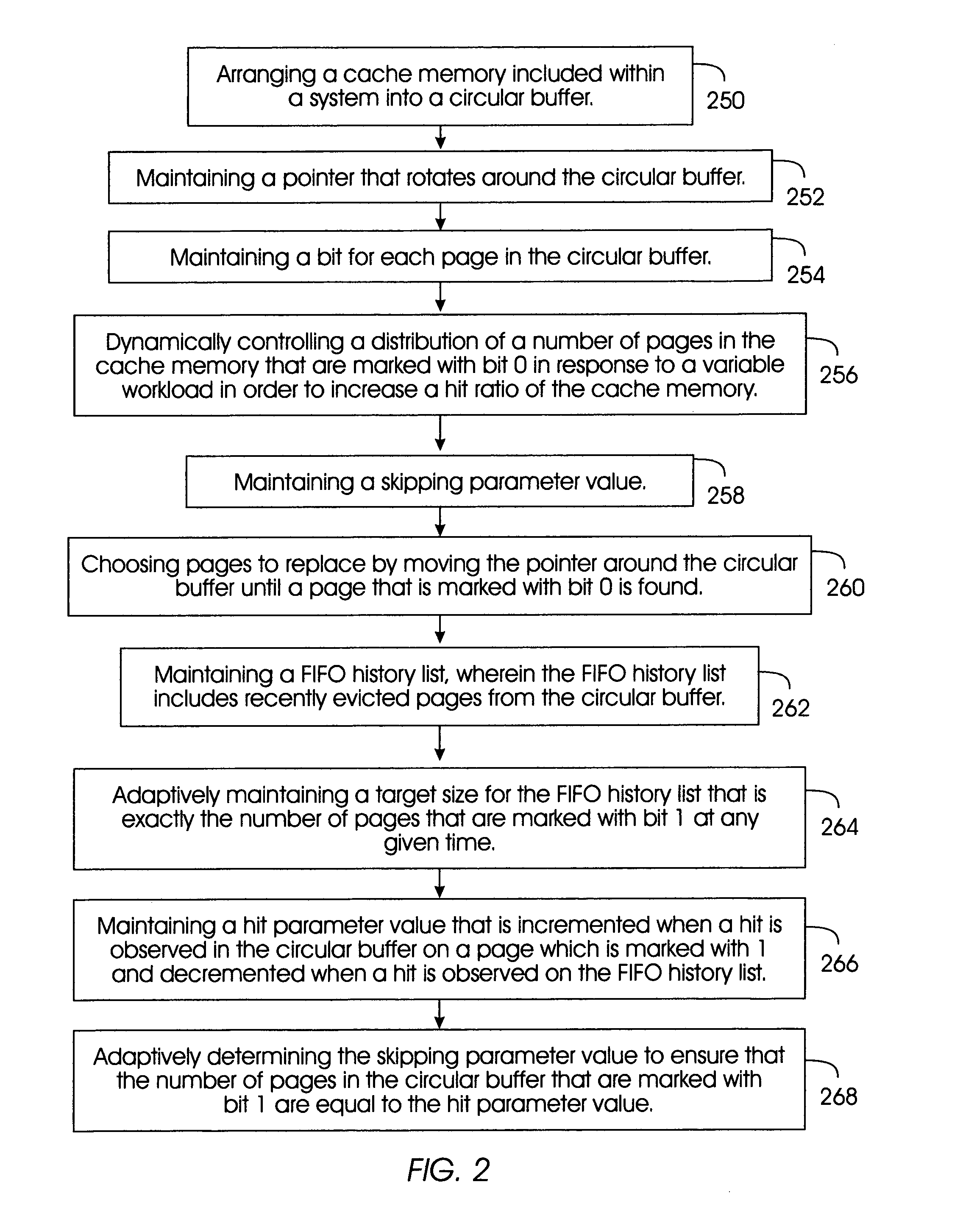

Method and system for a cache replacement technique with adaptive skipping

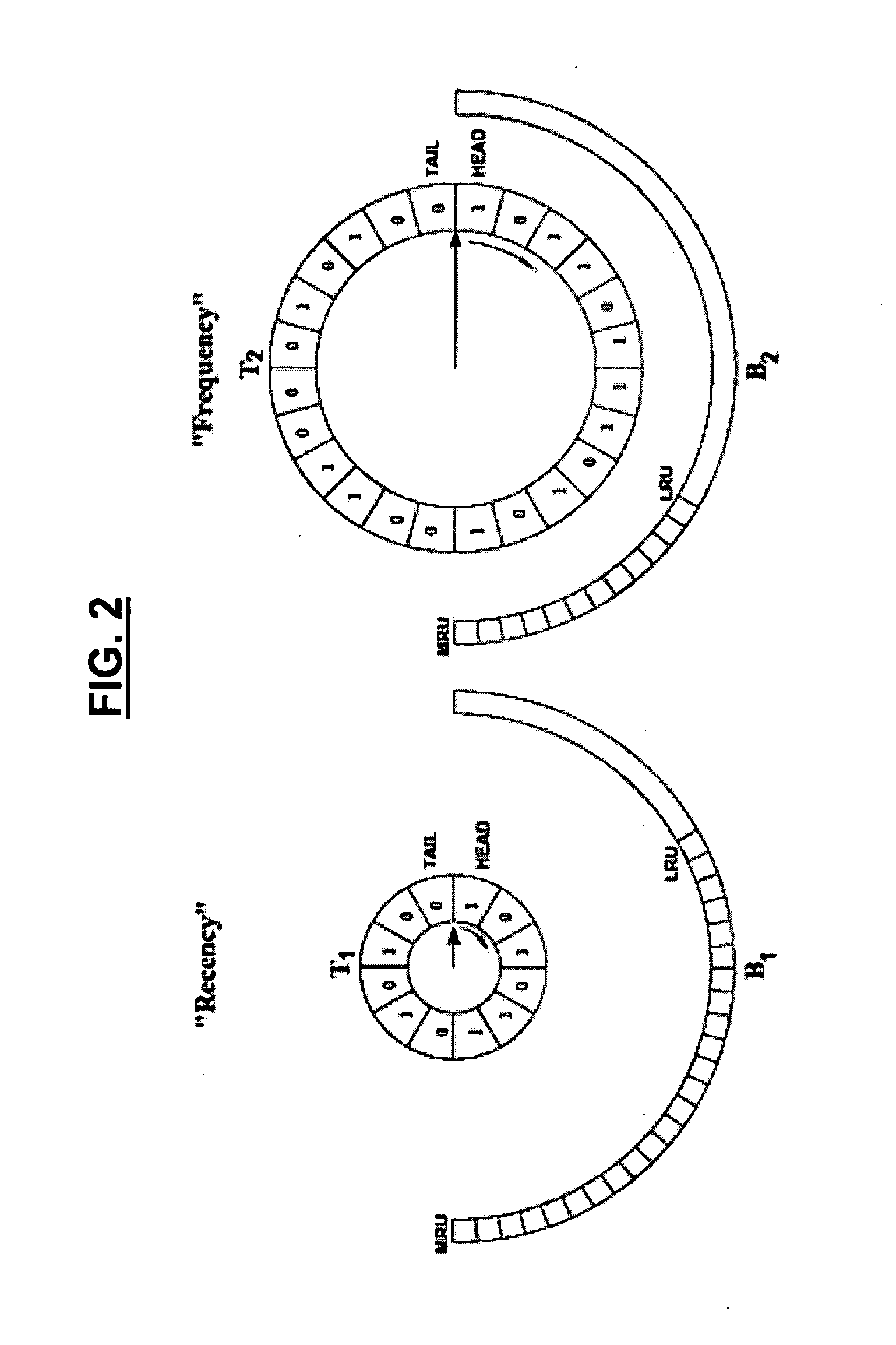

Inactive · US7096321B2 · Improve hit rate · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Circular buffer · Workload

A method, system, and program storage medium for adaptively managing pages in a cache memory included within a system having a variable workload, comprising: arranging a cache memory included within a system into a circular buffer; maintaining a pointer that rotates around the circular buffer; maintaining a bit for each page in the circular buffer, wherein a bit value 0 indicates that the page was not accessed by the system since the last time the pointer traversed over the page, and a bit value 1 indicates that the page has been accessed since the last time the pointer traversed over the page; and dynamically controlling the distribution of the number of pages in the cache memory that are marked with bit 0 in response to a variable workload in order to increase the hit ratio of the cache memory.

Owner:INT BUSINESS MASCH CORP
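
For reference, the basic CLOCK policy that this technique builds on can be sketched as follows. This Python sketch shows only the circular buffer, the rotating pointer (hand) and the per-page bit; the adaptive control of how many pages carry bit 0 is not modeled, and the `ClockCache` name is hypothetical. New pages start with bit 0 here, which is one of several common conventions:

```python
from collections.abc import Hashable


class ClockCache:
    """Basic CLOCK replacement over a fixed-size circular buffer of pages."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.pages: list[Hashable] = []     # the circular buffer
        self.bit: dict[Hashable, int] = {}  # 1 = accessed since the hand last passed
        self.hand = 0                       # index the hand points at
        self.hits = 0
        self.misses = 0

    def access(self, page: Hashable) -> bool:
        """Reference a page; returns True on a hit."""
        if page in self.bit:
            self.bit[page] = 1
            self.hits += 1
            return True
        self.misses += 1
        if len(self.pages) < self.capacity:
            self.pages.append(page)  # buffer not yet full
        else:
            # Rotate: clear 1-bits (second chance) until a 0-bit page is found.
            while self.bit[self.pages[self.hand]] == 1:
                self.bit[self.pages[self.hand]] = 0
                self.hand = (self.hand + 1) % self.capacity
            del self.bit[self.pages[self.hand]]  # evict the 0-bit page
            self.pages[self.hand] = page
            self.hand = (self.hand + 1) % self.capacity
        self.bit[page] = 0  # new pages start unreferenced
        return False


clock = ClockCache(3)
for p in [1, 2, 3, 1, 4]:
    clock.access(p)
print(sorted(clock.pages))  # [1, 3, 4]: page 1 got a second chance, page 2 was evicted
```

The patented refinement would sit on top of this loop, steering how many pages are marked 0 as the workload changes.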

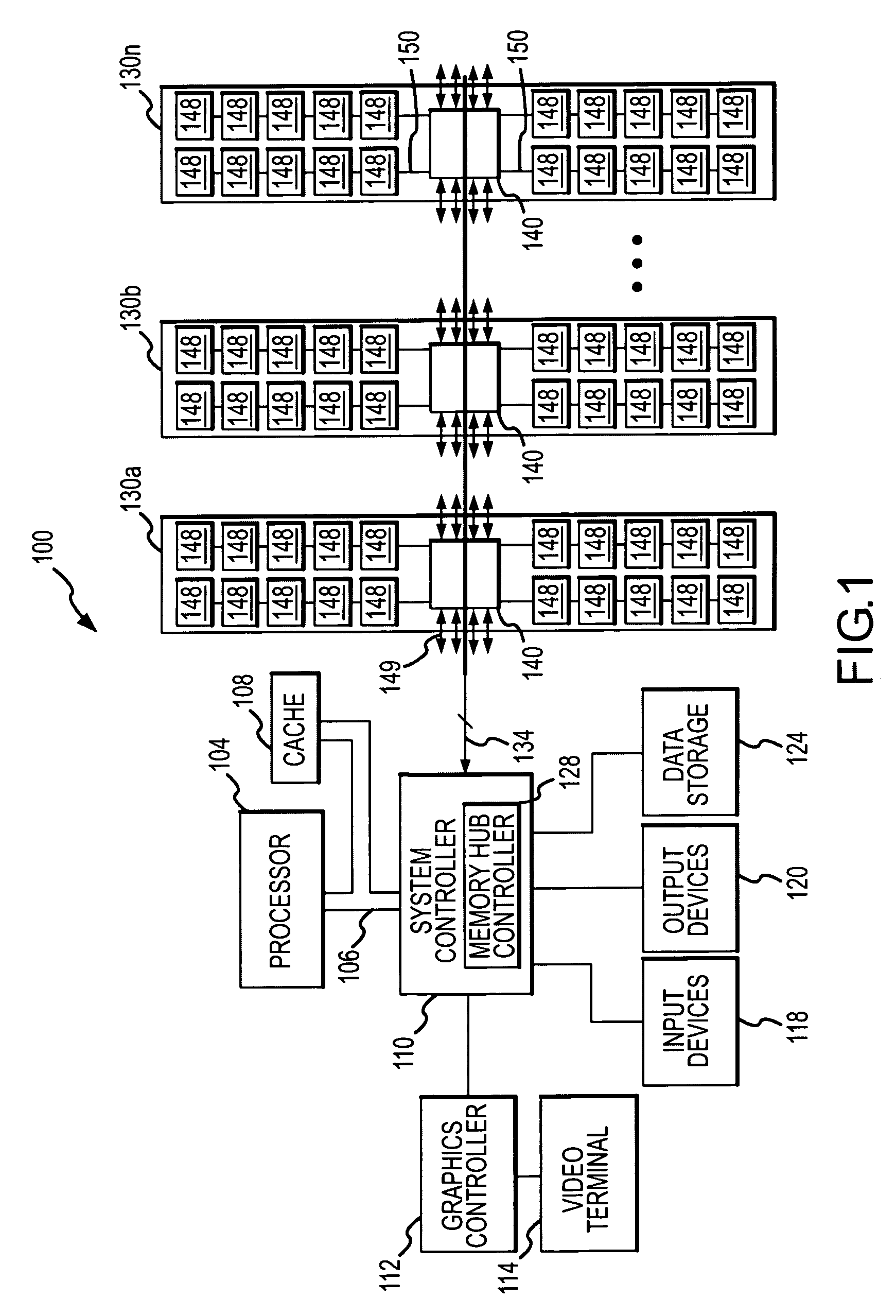

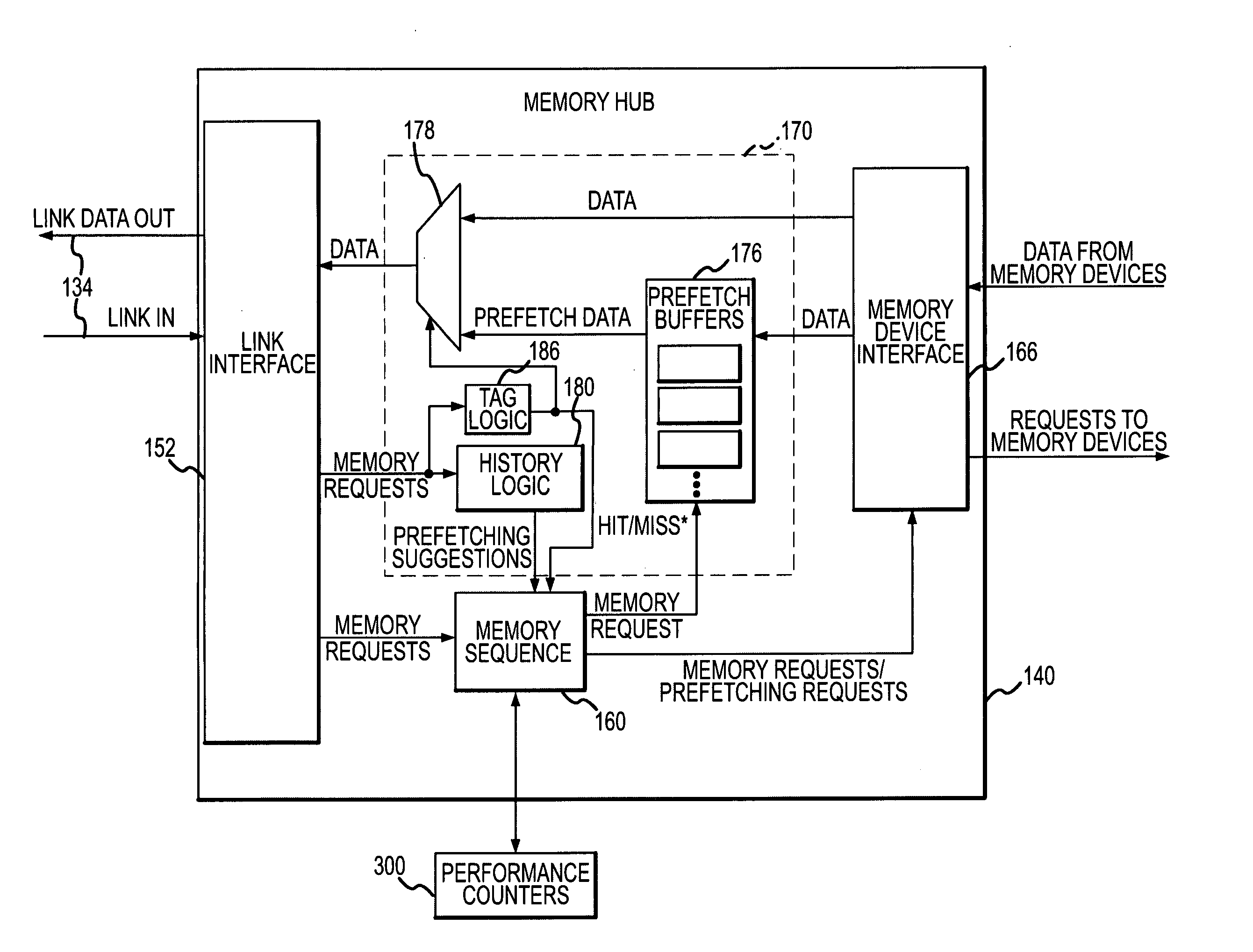

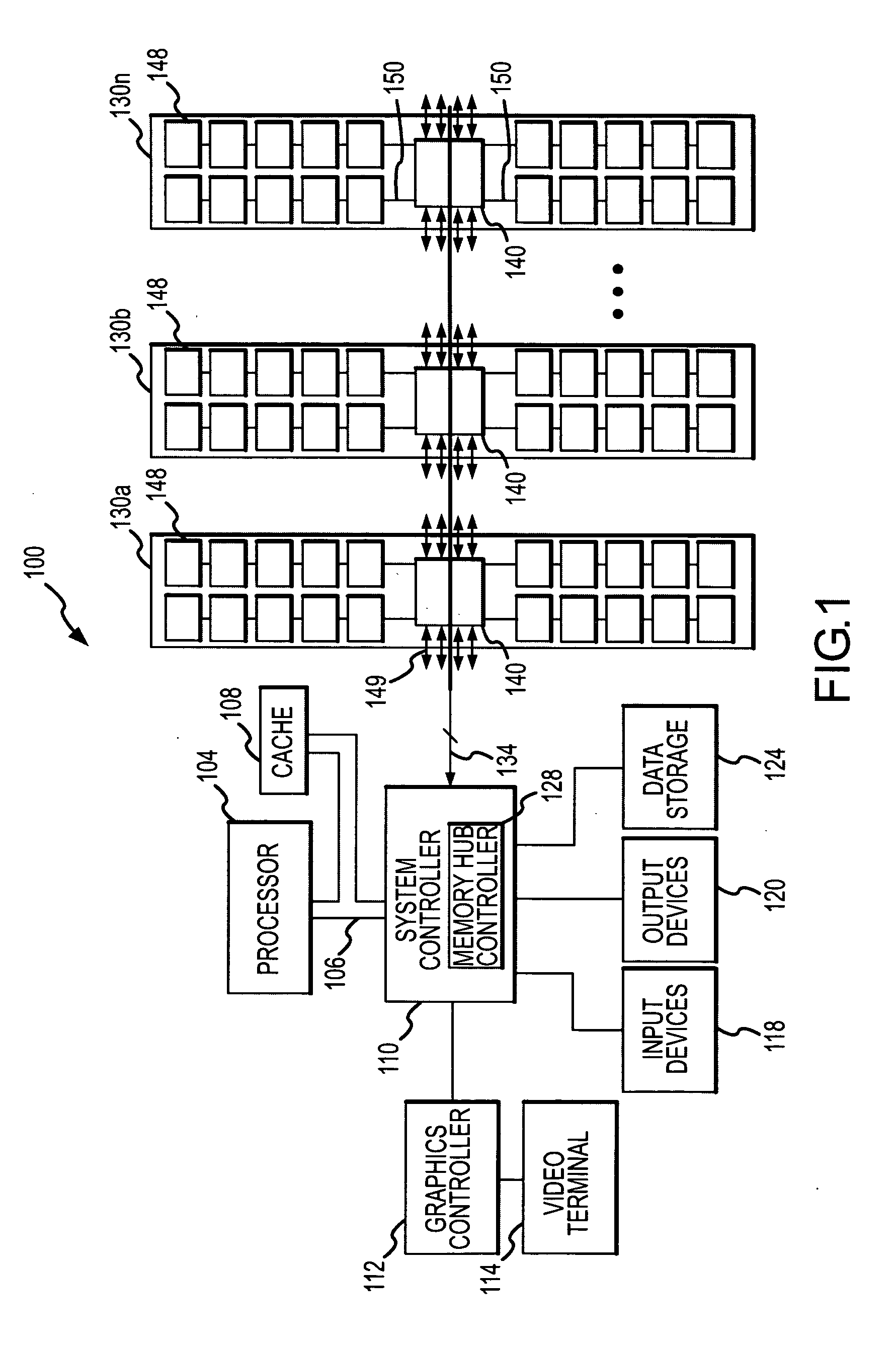

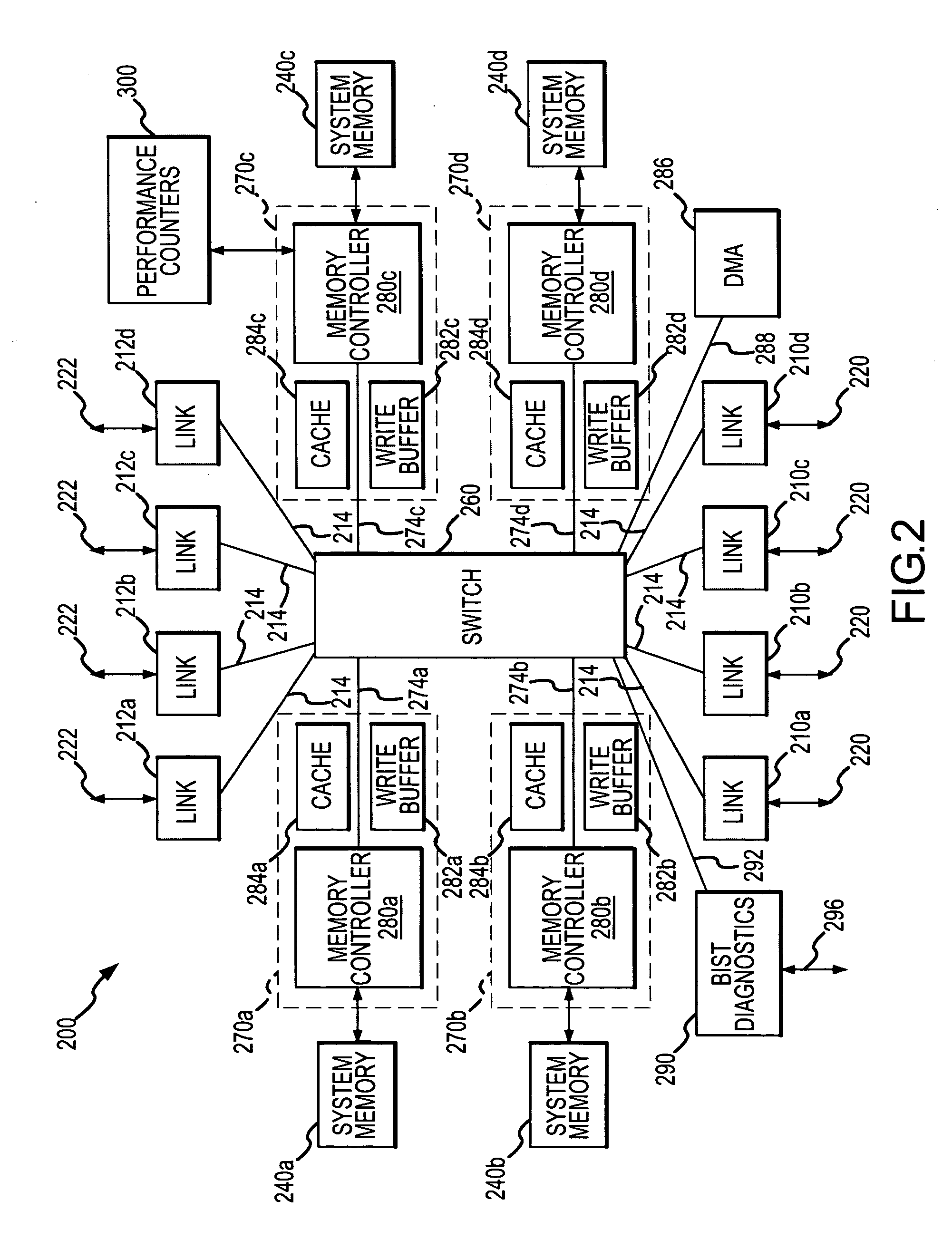

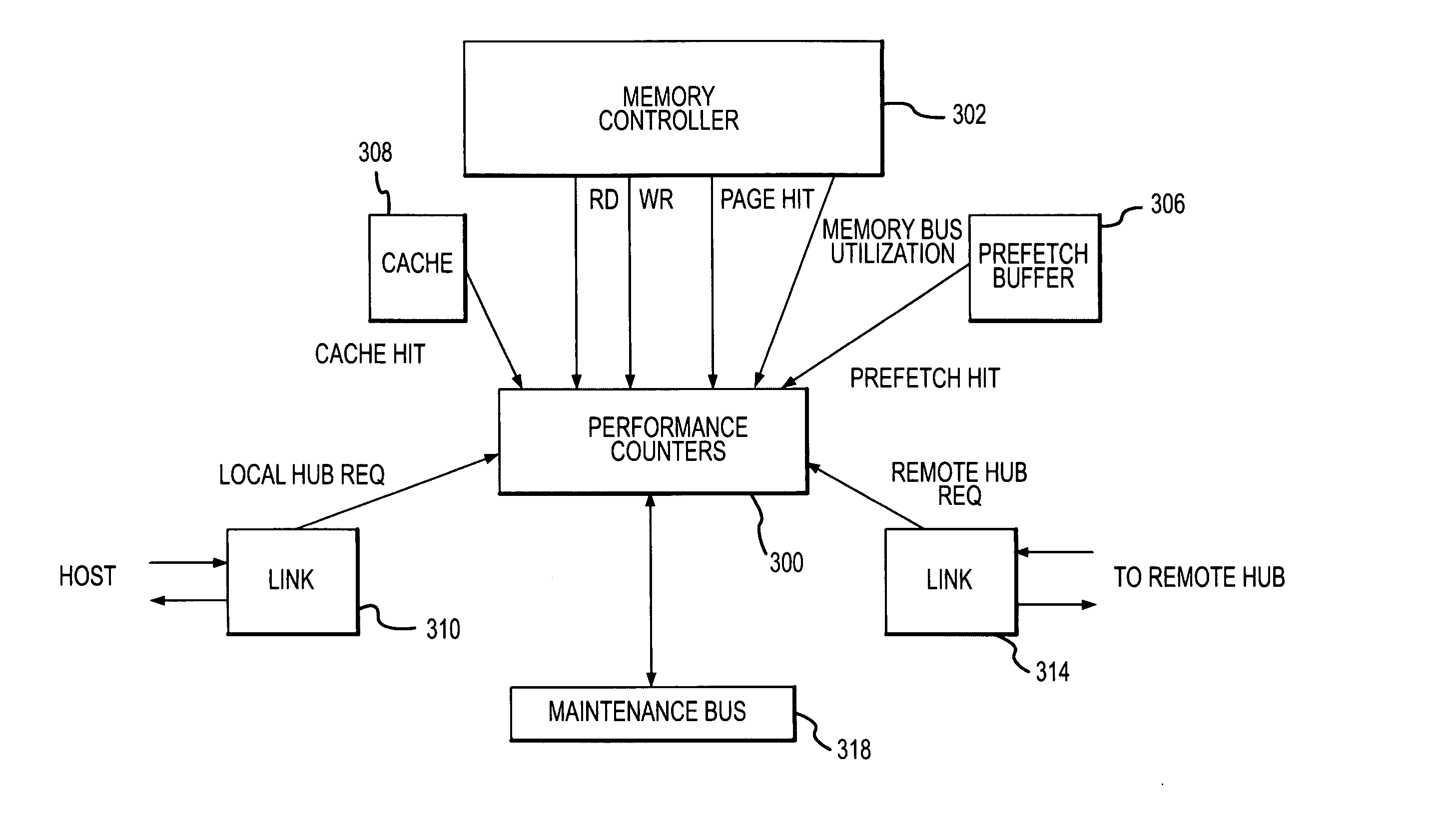

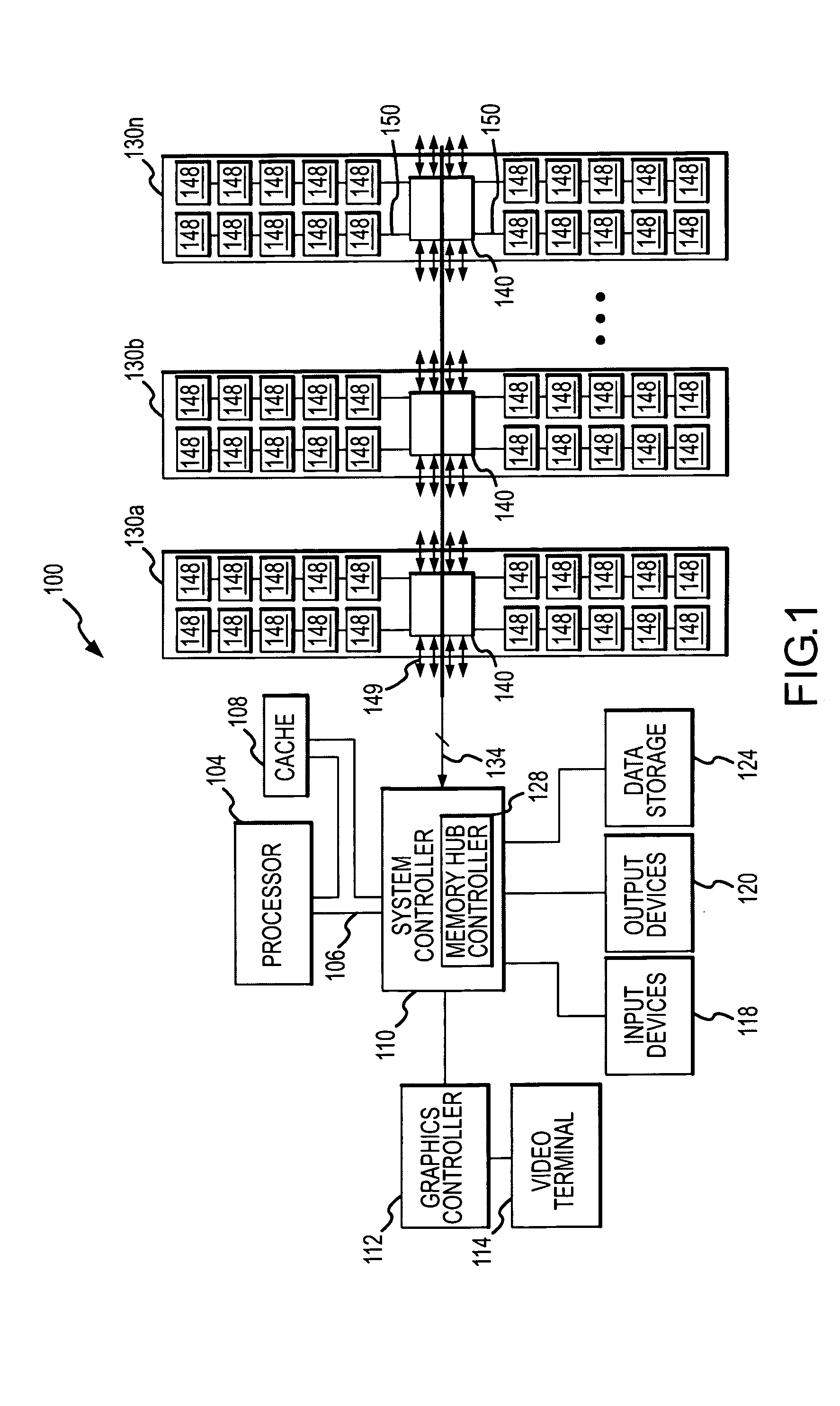

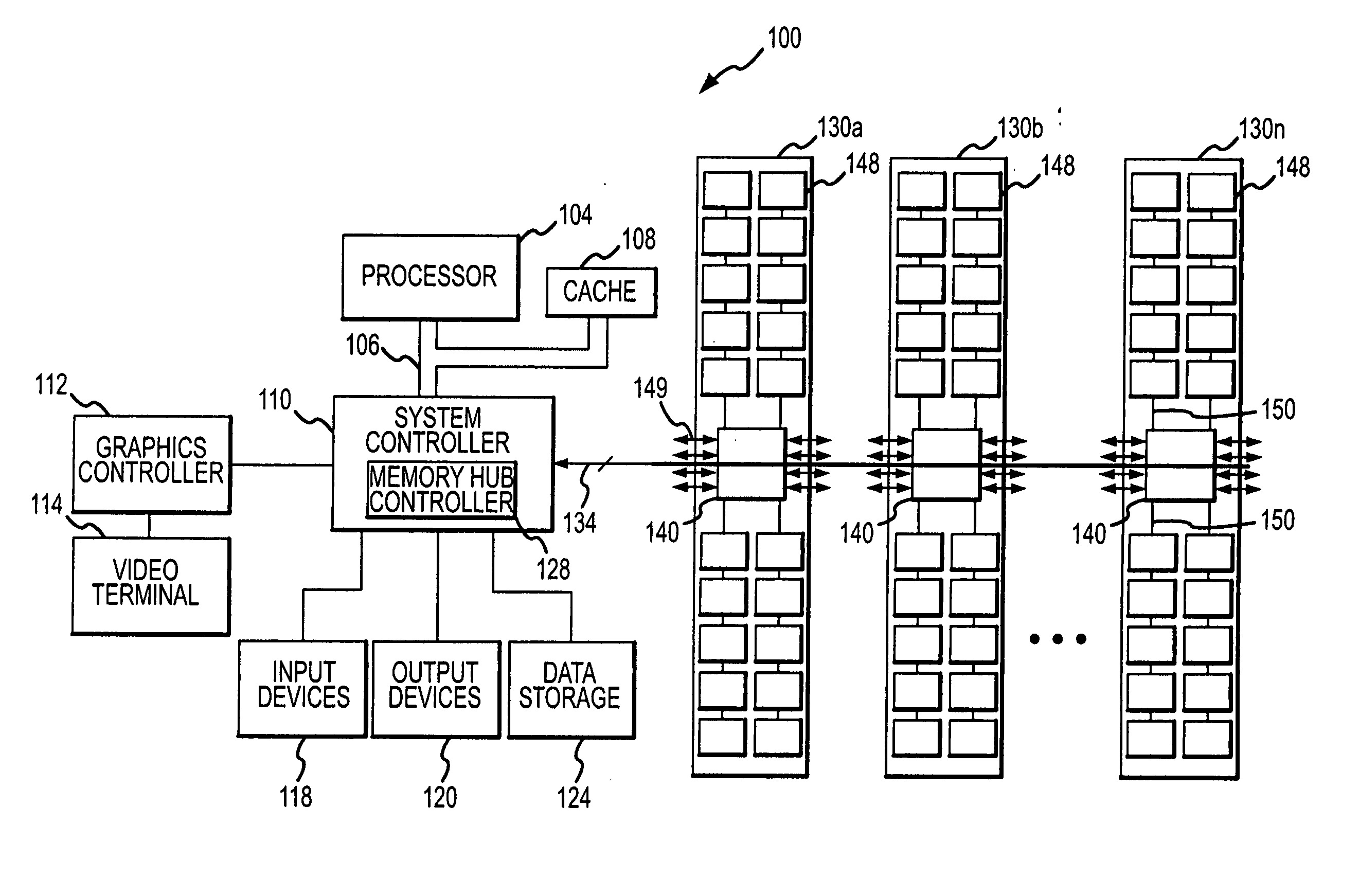

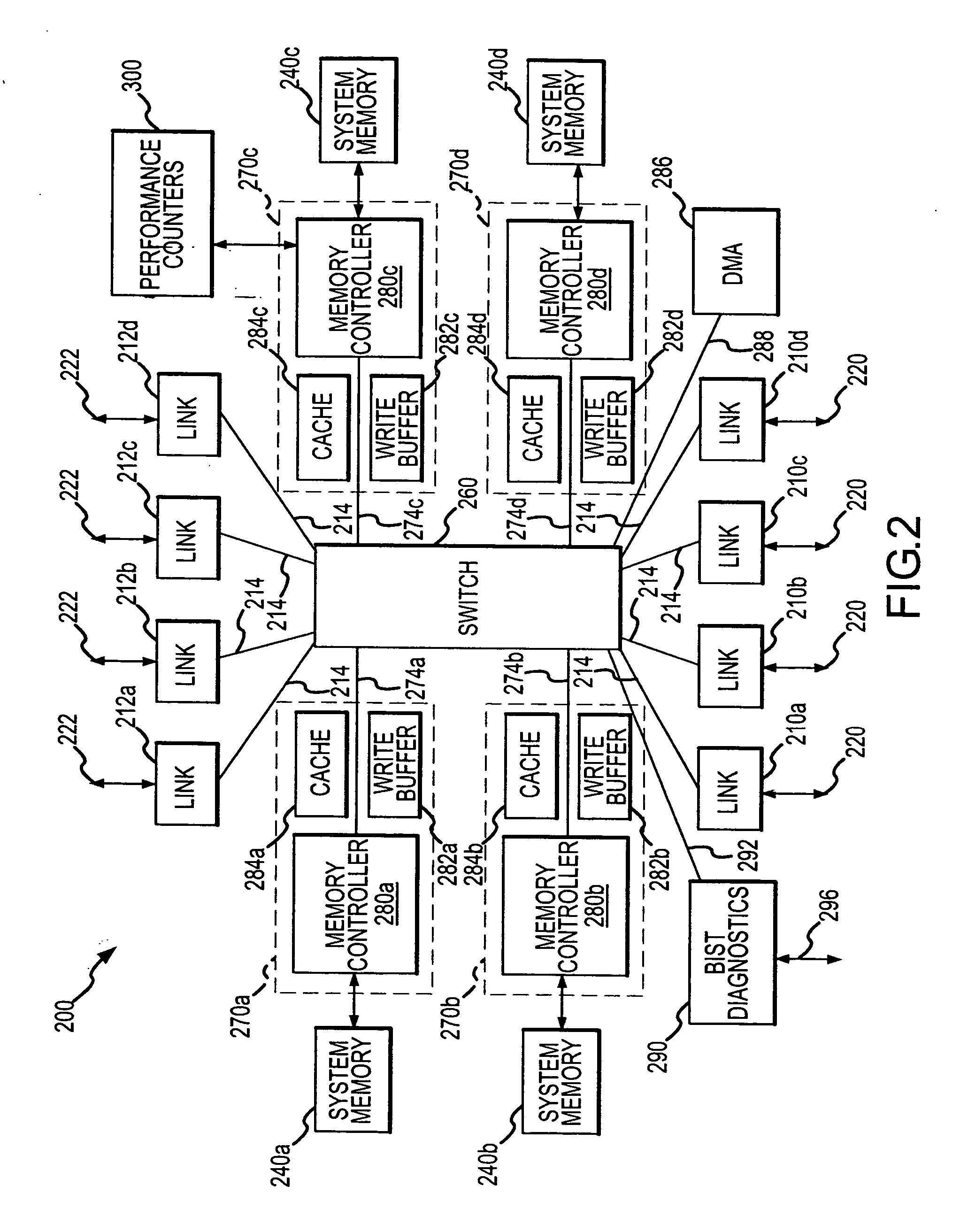

Memory hub and method for memory system performance monitoring

Inactive · US7216196B2 · Error detection/correction · Memory addressing/allocation/relocation · Memory bus · Cache hit rate

A memory module includes a memory hub coupled to several memory devices. The memory hub includes at least one performance counter that tracks one or more system metrics, for example: page hit rate, number or percentage of prefetch hits, cache hit rate or percentage, read rate, number of read requests, write rate, number of write requests, rate or percentage of memory bus utilization, local hub request rate or number, and/or remote hub request rate or number.

Owner:ROUND ROCK RES LLC

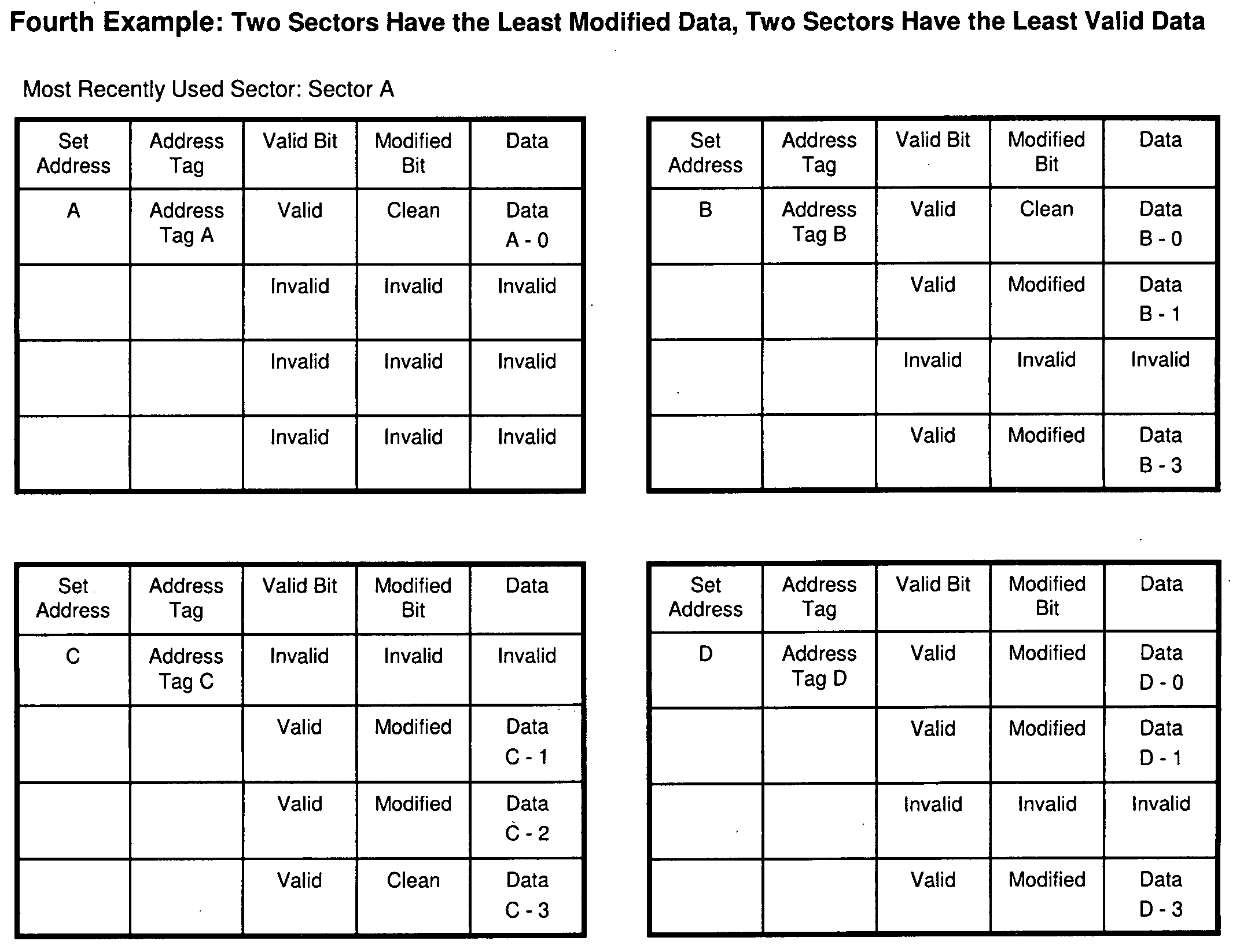

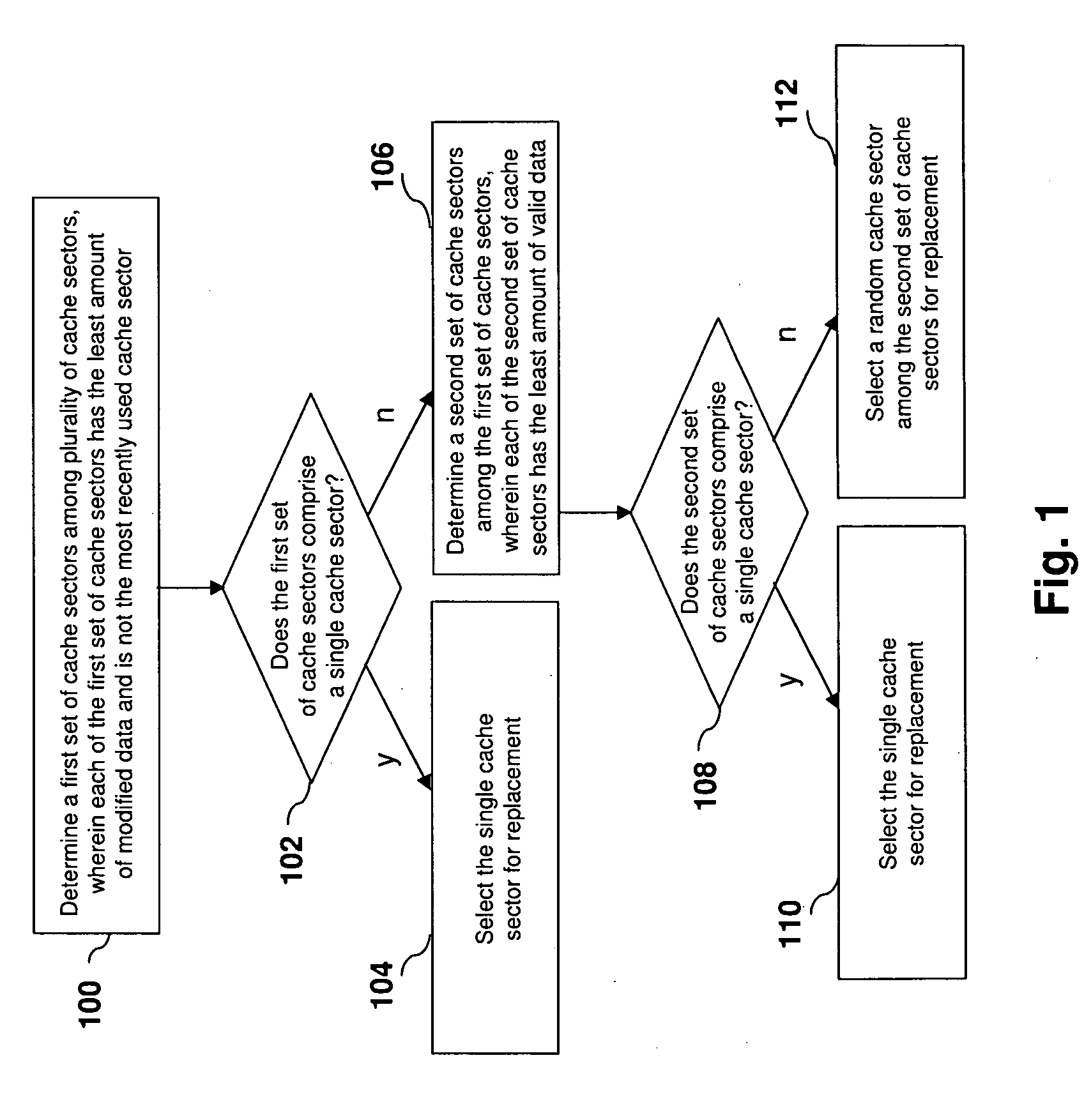

Sectored cache replacement algorithm for reducing memory writebacks

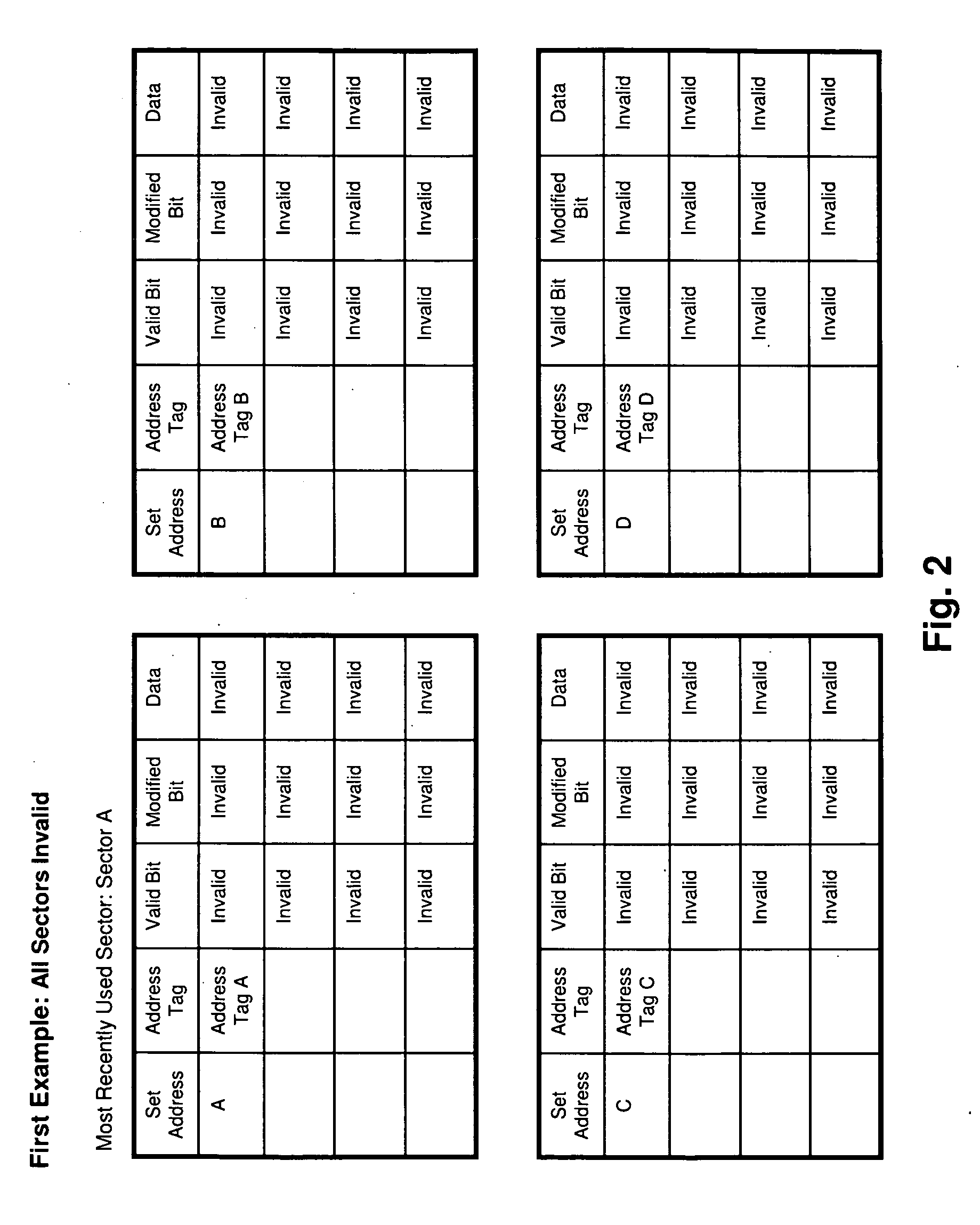

Active · US20100325365A1 · Increase ratings · Reduce memory writebacks · Memory addressing/allocation/relocation · Parallel computing · Computerized system

An improved sectored cache replacement algorithm is implemented via a method and computer program product. The method and computer program product select a cache sector among a plurality of cache sectors for replacement in a computer system. The method may comprise selecting a cache sector to be replaced that is not the most recently used and that has the least amount of modified data. In the case in which there is a tie among cache sectors, the sector to be replaced may be the sector among such cache sectors with the least amount of valid data. In the case in which there is still a tie among cache sectors, the sector to be replaced may be randomly selected among such cache sectors. Unlike conventional sectored cache replacement algorithms, the improved algorithm implemented by the method and computer program product accounts for both hit rate and bus utilization.

Owner:LENOVO GLOBAL TECH INT LTD
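
The three-stage selection rule reads directly as a filter chain. A minimal Python sketch, assuming each sector exposes counts of modified and valid data and that there are at least two sectors (so a non-MRU candidate always exists); the `Sector` type and `choose_victim` function are hypothetical:

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Sector:
    name: str
    modified: int  # amount of modified (dirty) data, e.g. dirty lines
    valid: int     # amount of valid data, e.g. valid lines


def choose_victim(sectors: list[Sector], mru: Sector,
                  rng: Optional[random.Random] = None) -> Sector:
    """Pick a sector to replace: not MRU, least modified, then least valid, then random."""
    rng = rng or random.Random()
    candidates = [s for s in sectors if s is not mru]  # assumes len(sectors) >= 2
    fewest_dirty = min(s.modified for s in candidates)
    candidates = [s for s in candidates if s.modified == fewest_dirty]
    fewest_valid = min(s.valid for s in candidates)  # first tie-break
    candidates = [s for s in candidates if s.valid == fewest_valid]
    return rng.choice(candidates)  # final tie-break


a, b, c, d = Sector("a", 2, 4), Sector("b", 0, 3), Sector("c", 0, 1), Sector("d", 0, 1)
print(choose_victim([a, b, c, d], mru=c).name)  # d
```

Preferring the sector with the least modified data minimizes writebacks (bus traffic), while skipping the MRU sector and then preferring the least valid data protects the hit rate.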

Memory hub and method for memory sequencing

Inactive · US20050257005A1 · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Parallel computing · Cache hit rate

A memory module includes a memory hub coupled to several memory devices. The memory hub includes at least one performance counter that tracks one or more system metrics, for example: page hit rate, prefetch hits, and/or cache hit rate. The performance counter communicates with a memory sequencer that adjusts its operation based on the system metrics tracked by the performance counter.

Owner:ROUND ROCK RES LLC

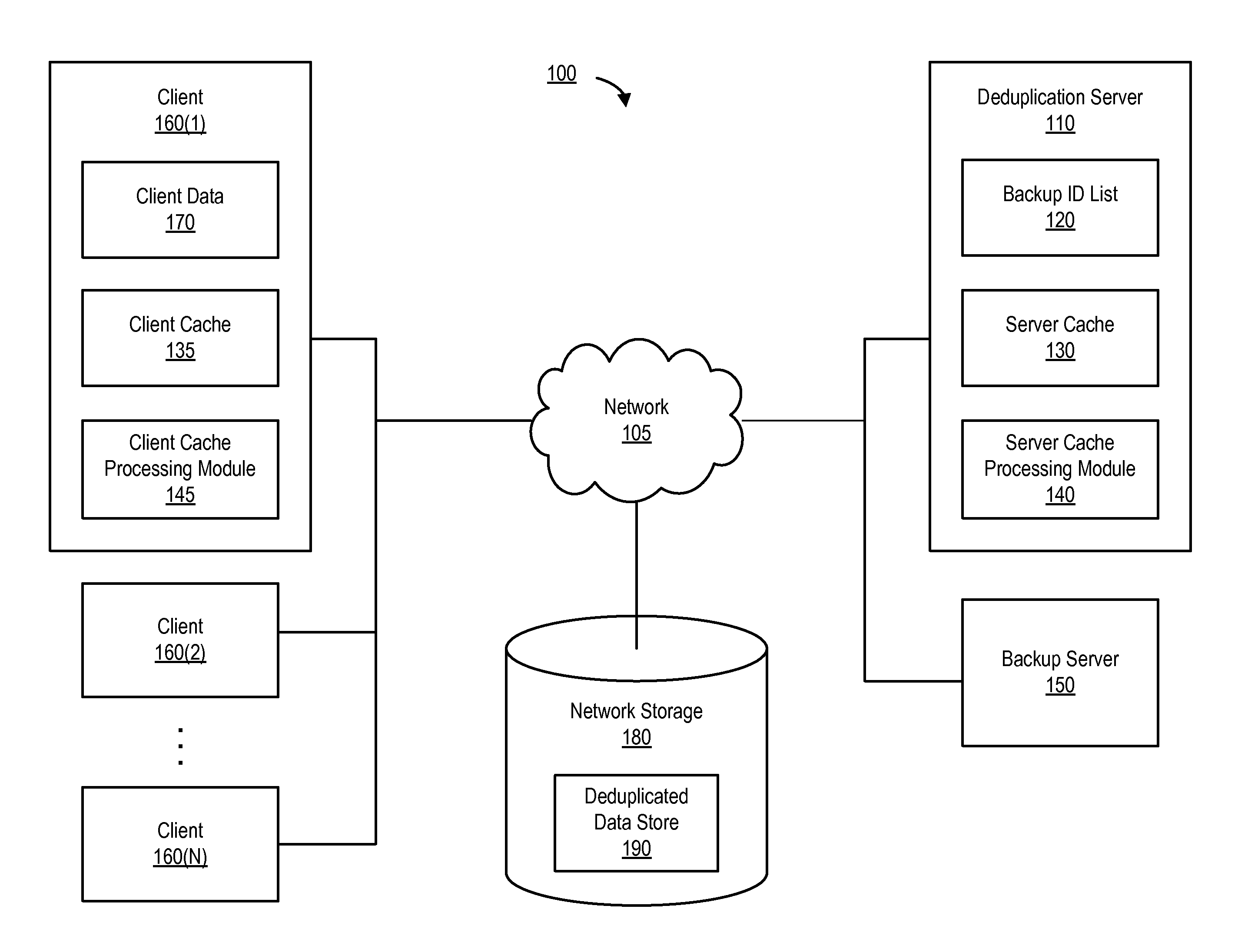

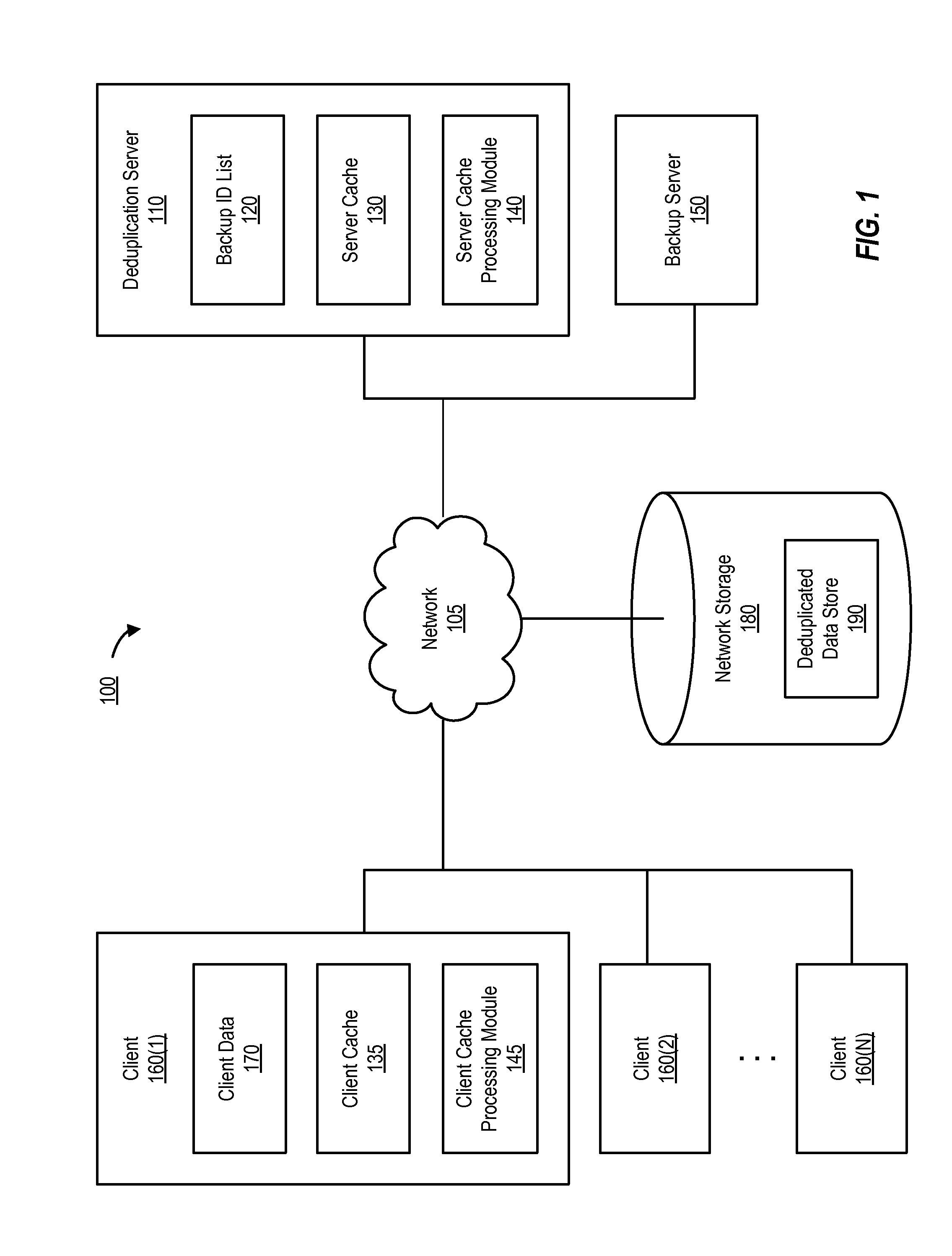

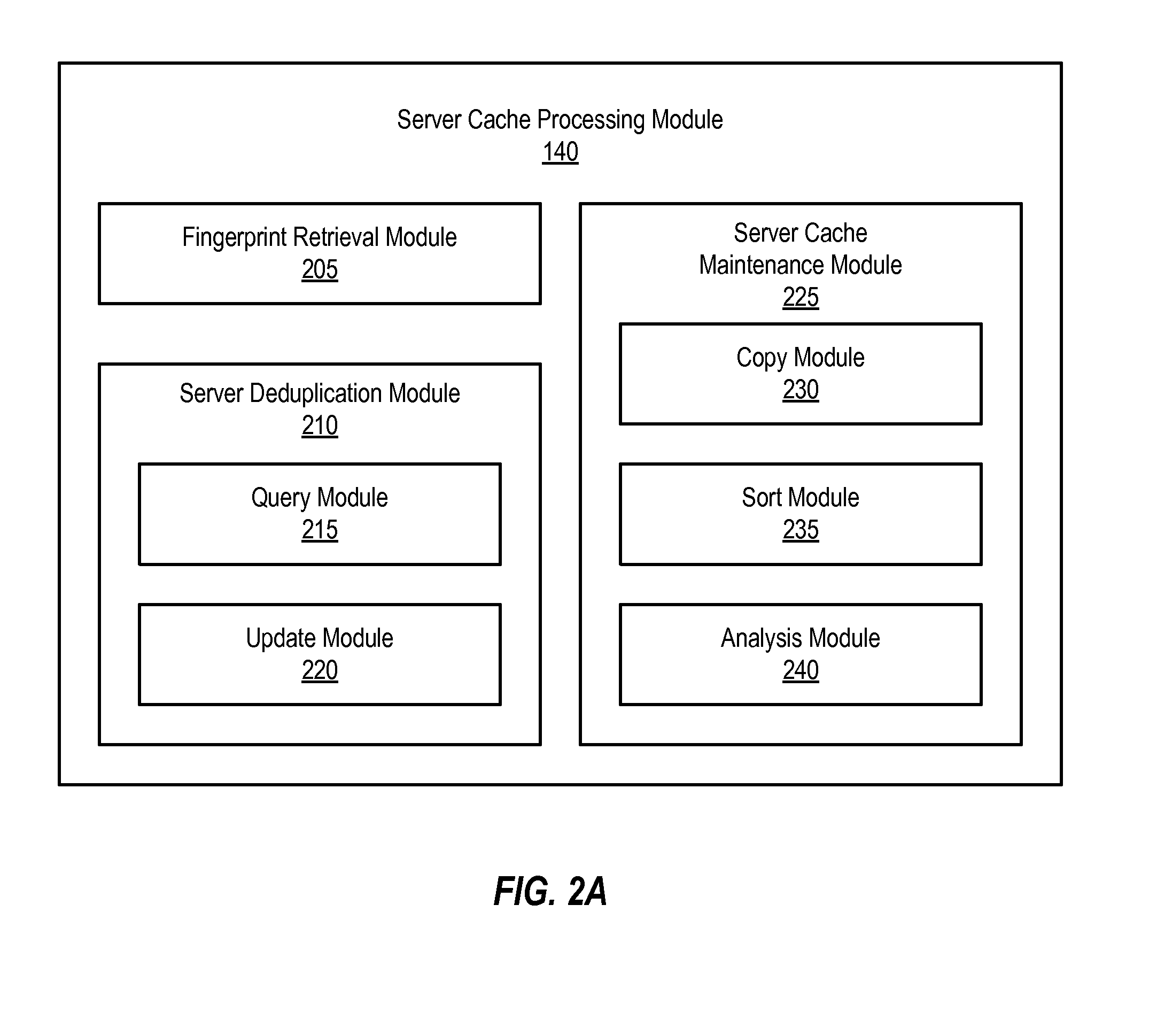

Locality Aware, Two-Level Fingerprint Caching

Active · US20140101113A1 · Error detection/correction · Digital data processing details · Client-side · Incremental backup

The present disclosure provides for implementing a two-level fingerprint caching scheme for a client cache and a server cache. The client cache hit ratio can be improved by pre-populating the client cache with fingerprints that are relevant to the client. Relevant fingerprints include fingerprints used during a recent time period (e.g., fingerprints of segments that are included in the last full backup image and any following incremental backup images created for the client after the last full backup image), and thus are referred to as fingerprints with good temporal locality. Relevant fingerprints also include fingerprints associated with a storage container that has good spatial locality, and thus are referred to as fingerprints with good spatial locality. A pre-set threshold established for the client cache (e.g., threshold Tc) is used to determine whether a storage container (and thus fingerprints associated with the storage container) has good spatial locality.

Owner:VERITAS TECH

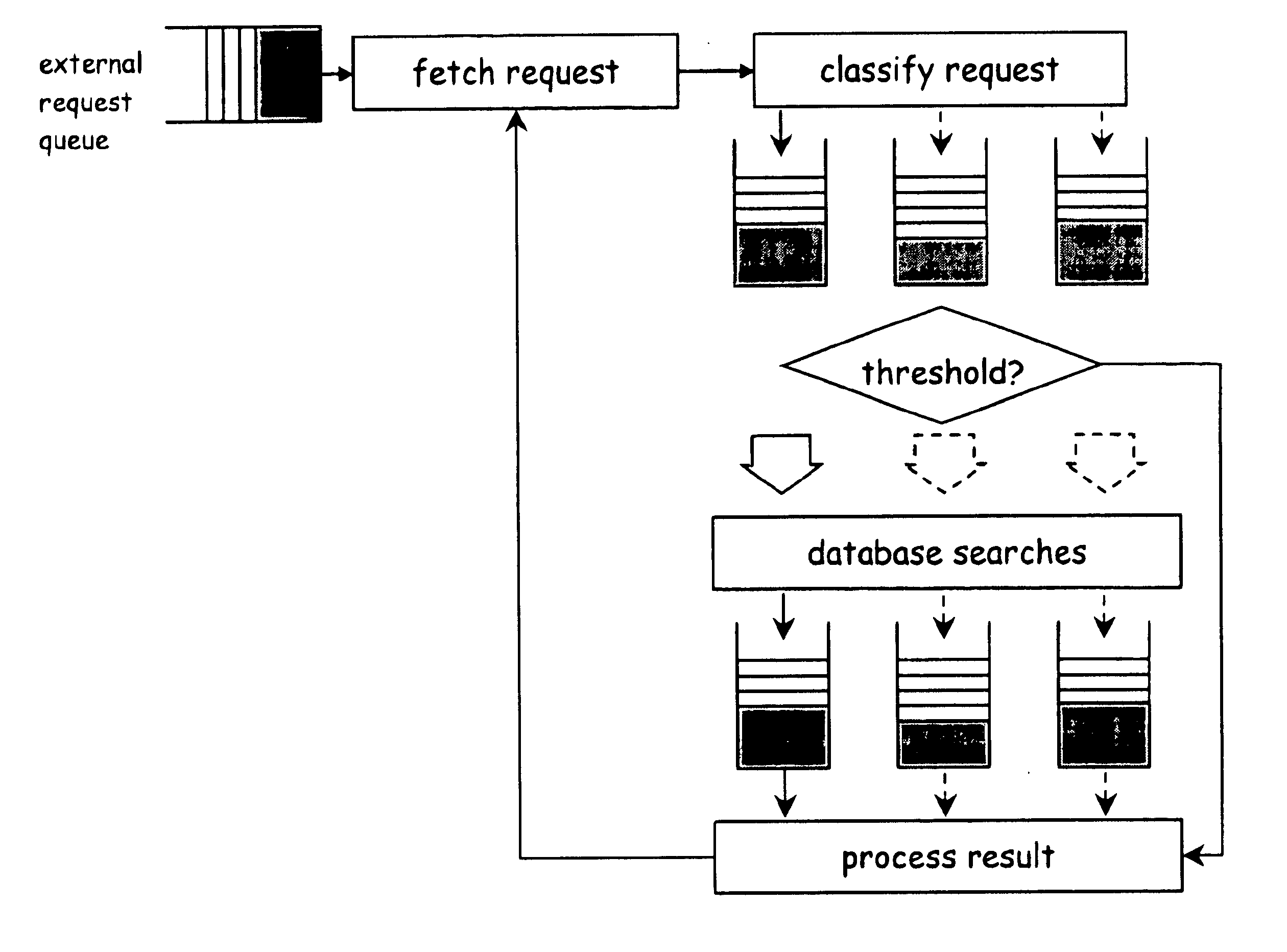

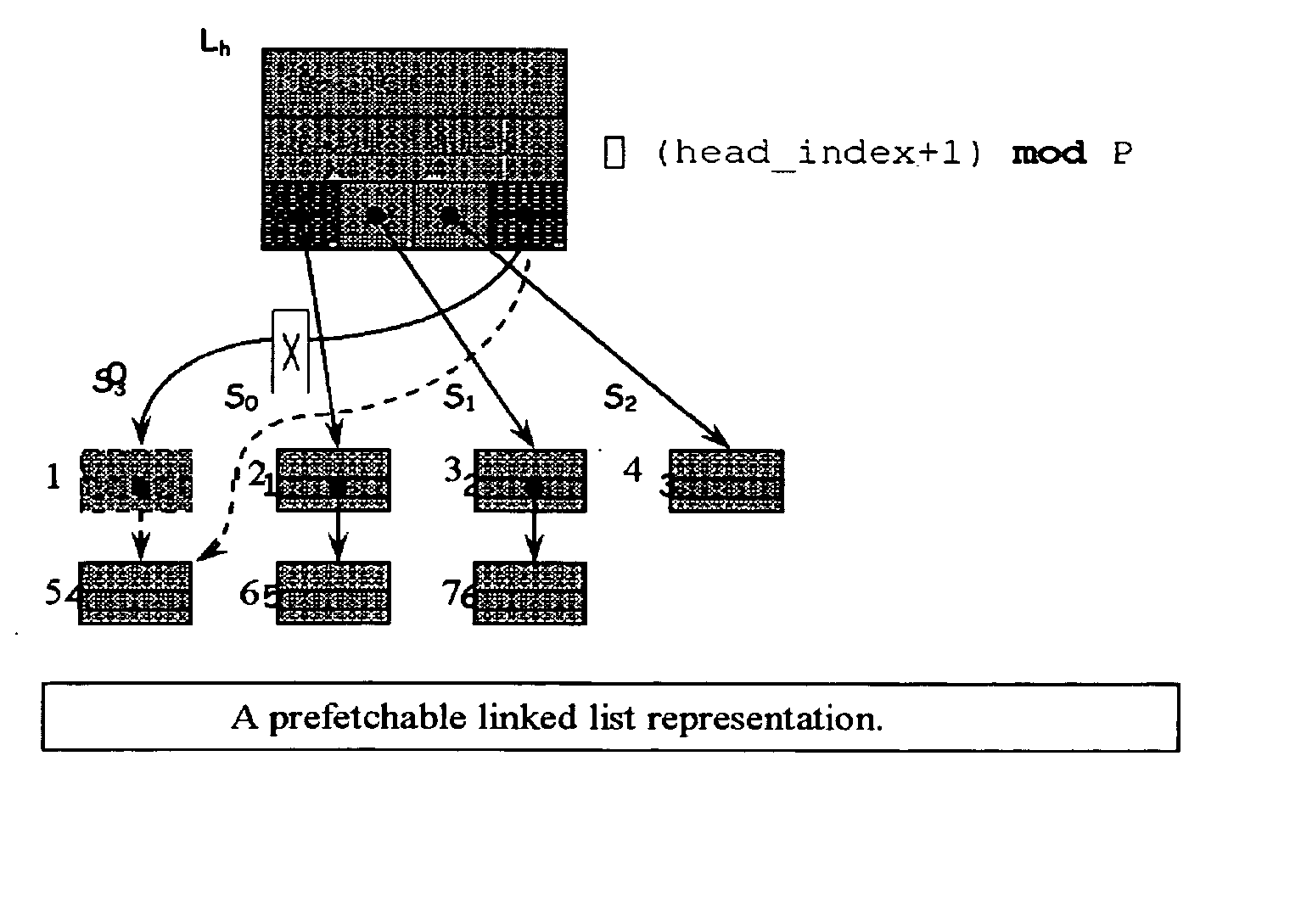

Method for prefetching recursive data structure traversals

Inactive · US20050102294A1 · Improve cache hit ratio · Potential throughput of the computer system · Digital data information retrieval · Data processing applications · Operational system · Term memory

Computer systems are typically designed with multiple levels of memory hierarchy. Prefetching has been employed to overcome the latency of fetching data or instructions from or to memory. Prefetching works well for data structures with regular memory access patterns, but less so for data structures such as trees, hash tables, and other structures in which the datum that will be used is not known a priori. In modern transaction processing systems, database servers, operating systems, and other commercial and engineering applications, information is frequently organized in trees, graphs, and linked lists. Lack of spatial locality results in a high probability that a miss will be incurred at each cache in the memory hierarchy. Each cache miss causes the processor to stall while the referenced value is fetched from lower levels of the memory hierarchy. Because this is likely to be the case for a significant fraction of the nodes traversed in the data structure, processor utilization suffers. The inability to compute the next address to be referenced makes prefetching difficult in such applications. The invention allows compilers and/or programmers to restructure data structures and traversals so that pointers are dereferenced in a pipelined manner, thereby making it possible to schedule prefetch operations in a consistent fashion. The present invention significantly increases the cache hit rates of many important data structure traversals, and thereby the potential throughput of the computer system and application in which it is employed. For data structure traversals in which the traversal path may be predetermined, a transformation is performed on the data structure that permits references to nodes that will be traversed in the future to be computed sufficiently far in advance to prefetch the data into cache.

Owner:DIGITAL CACHE LLC

Dynamic migration of virtual machines based on workload cache demand profiling

Active · US20120226866A1 · Memory addressing/allocation/relocation · Computer security arrangements · Parallel computing · Requirements analysis

A computer-implemented method comprises obtaining a cache hit ratio for each of a plurality of virtual machines and identifying, from among them, a first virtual machine whose cache hit ratio is less than a threshold ratio. The identified first virtual machine is then migrated from a first physical server having a first cache size to a second physical server having a second cache size that is greater than the first cache size. Optionally, a virtual machine whose cache hit ratio is less than a threshold ratio is identified on a class-specific basis, such as for L1 cache, L2 cache and L3 cache.

Owner:IBM CORP
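
A sketch of the selection step in Python, assuming per-VM hit ratios have already been measured; the `Host`/`VM` types and `plan_migrations` are hypothetical, and choosing the host with the largest cache is only an illustrative policy, since the abstract requires just that the destination cache be larger:

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    cache_mb: int


@dataclass
class VM:
    name: str
    host: Host
    hit_ratio: float


def plan_migrations(vms: list[VM], hosts: list[Host], threshold: float) -> list[tuple[str, str]]:
    """Return (vm, destination) pairs for VMs whose hit ratio is below threshold."""
    plan = []
    for vm in vms:
        if vm.hit_ratio >= threshold:
            continue
        larger = [h for h in hosts if h.cache_mb > vm.host.cache_mb]
        if larger:  # no move if no host has a bigger cache
            dest = max(larger, key=lambda h: h.cache_mb)  # illustrative choice
            plan.append((vm.name, dest.name))
    return plan


h1, h2, h3 = Host("h1", 8), Host("h2", 32), Host("h3", 16)
vms = [VM("vm1", h1, 0.60), VM("vm2", h1, 0.95), VM("vm3", h2, 0.50)]
print(plan_migrations(vms, [h1, h2, h3], threshold=0.80))  # [('vm1', 'h2')]
```

Here `vm3` also misses the threshold but stays put, because it already runs on the host with the largest cache.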

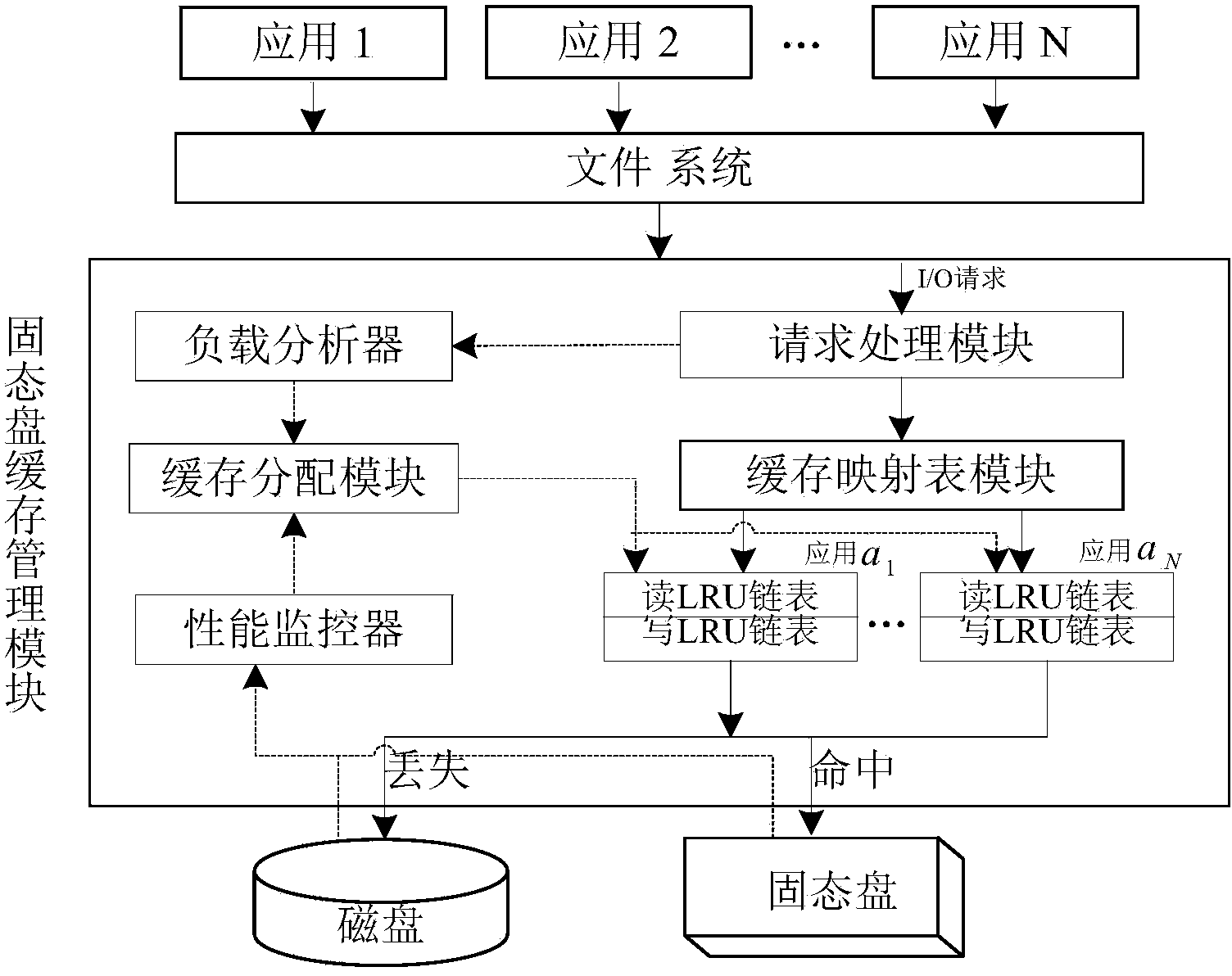

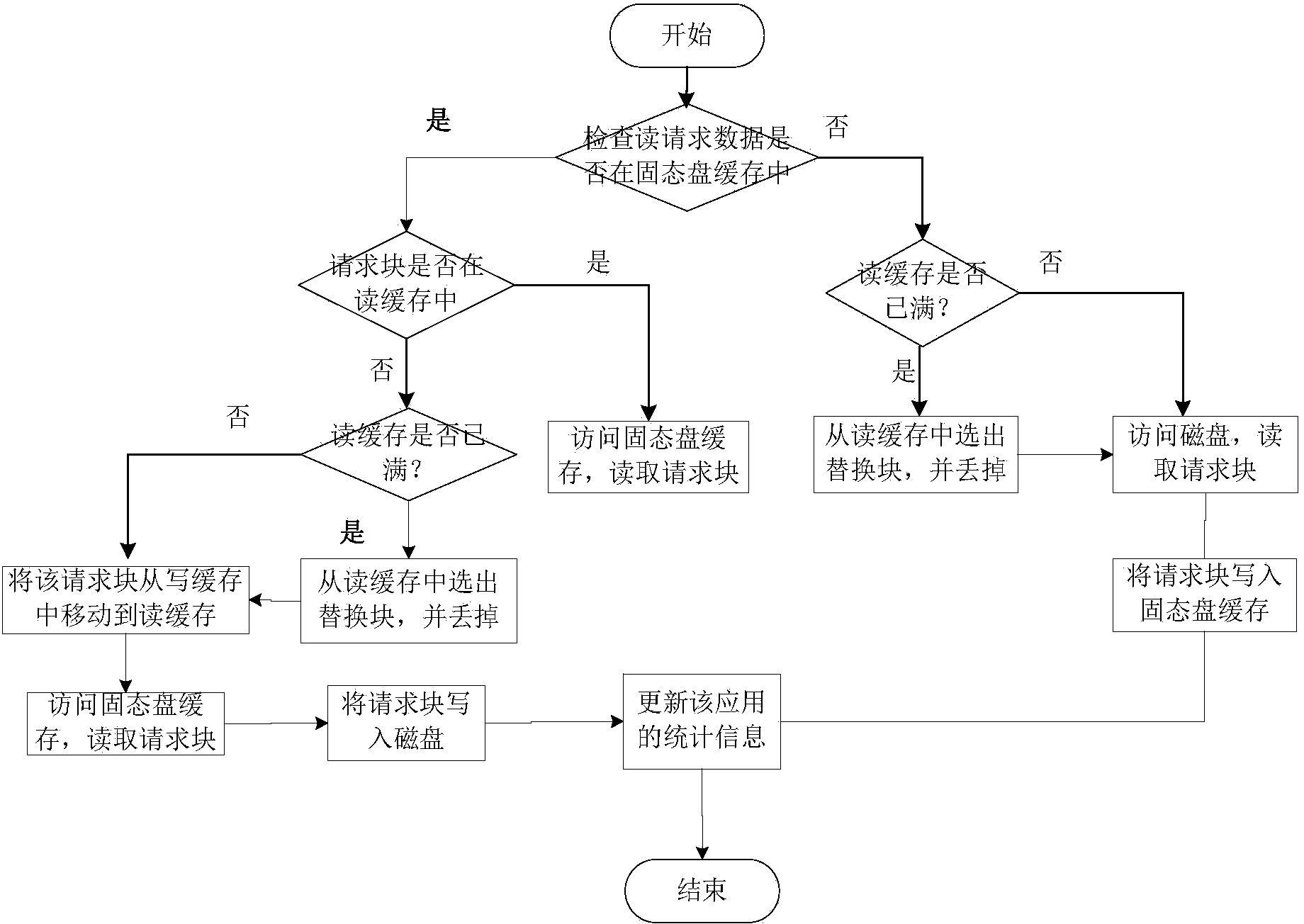

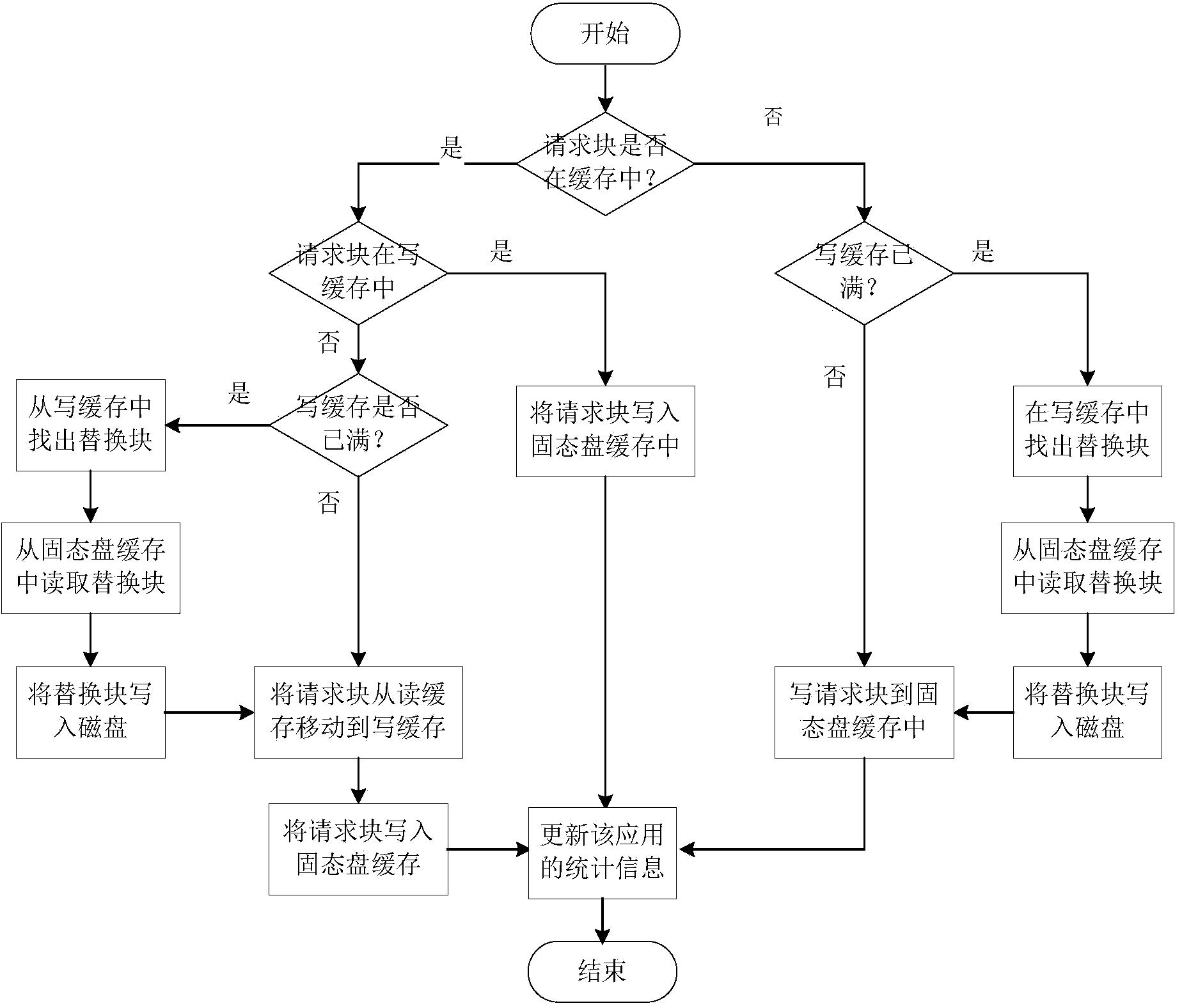

Mixed storage system and method for supporting solid-state disk cache dynamic distribution

Active · CN103902474A · Efficient use of · Avoid performance degradation · Memory addressing/allocation/relocation · Differentiated services · Dirty data

The invention provides a mixed storage system and method that support dynamic allocation of solid-state disk cache. The mixed storage system is built from a solid-state disk and a magnetic disk, with the solid-state disk serving as a cache for the magnetic disk. The load characteristics of applications and the cache hit ratio of the solid-state disk are monitored in real time, performance models of the applications are built, and the solid-state disk's cache space is dynamically allocated according to the applications' performance requirements and changes in their load characteristics. With this cache management method, solid-state disk cache space can be allocated reasonably according to application performance requirements, providing an application-level cache partitioning service. Because each application's share of the solid-state disk cache is further divided into a read cache section and a write cache section, dirty data blocks, and the page-copying and garbage-collection costs they cause, are reduced. Meanwhile, idle solid-state disk cache space is allocated to applications according to how efficiently they use the cache, which improves both the solid-state disk cache hit ratio and the overall performance of the mixed storage system.

Owner:HUAZHONG UNIV OF SCI & TECH

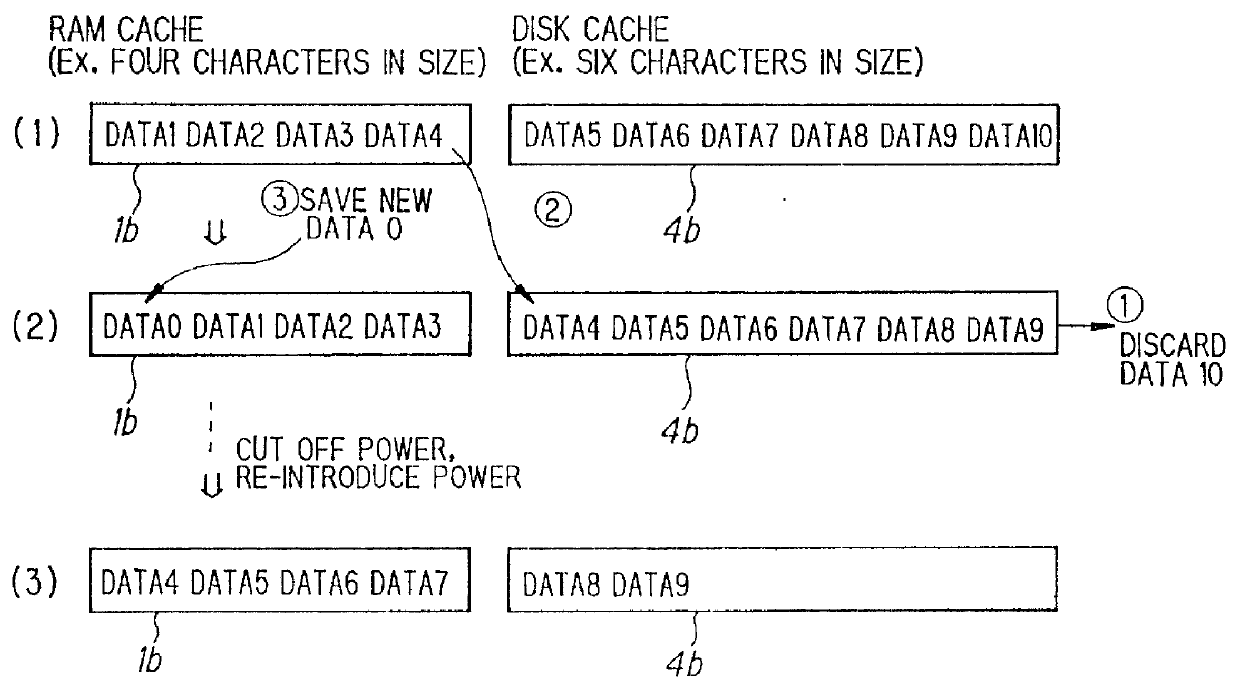

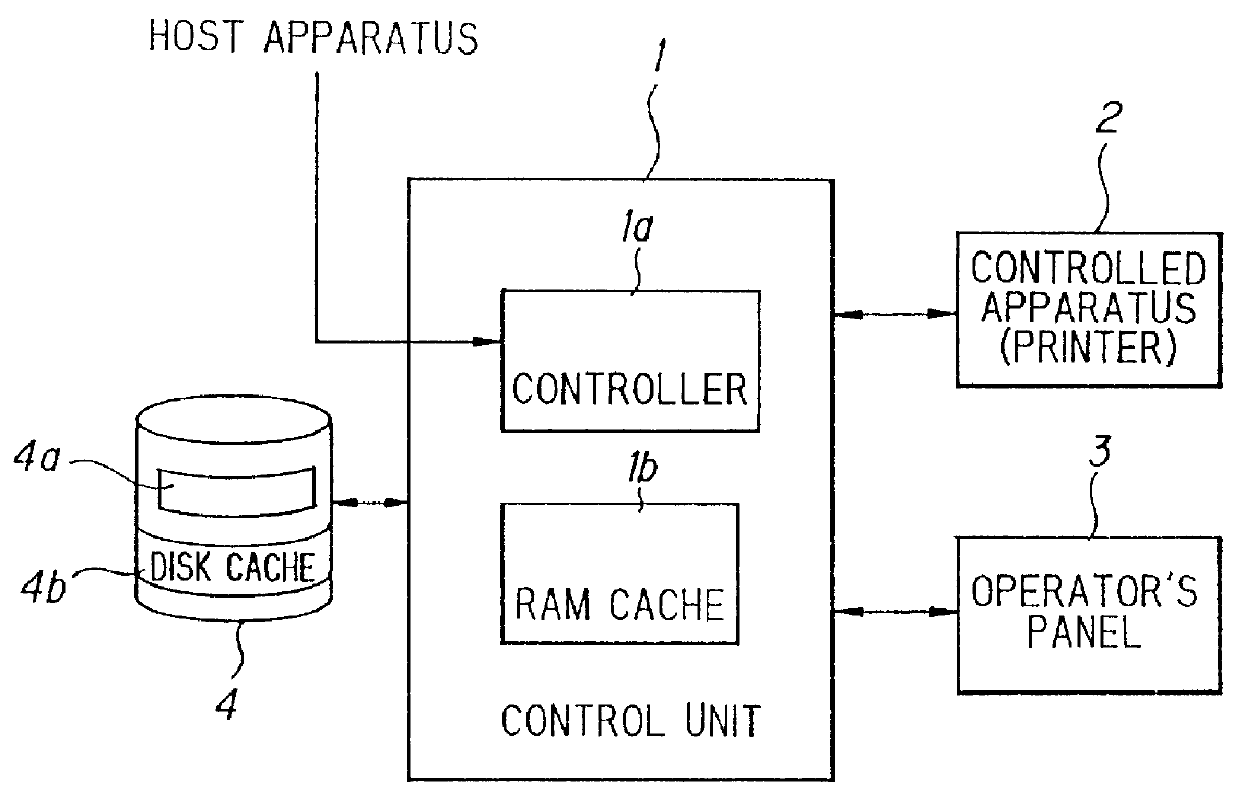

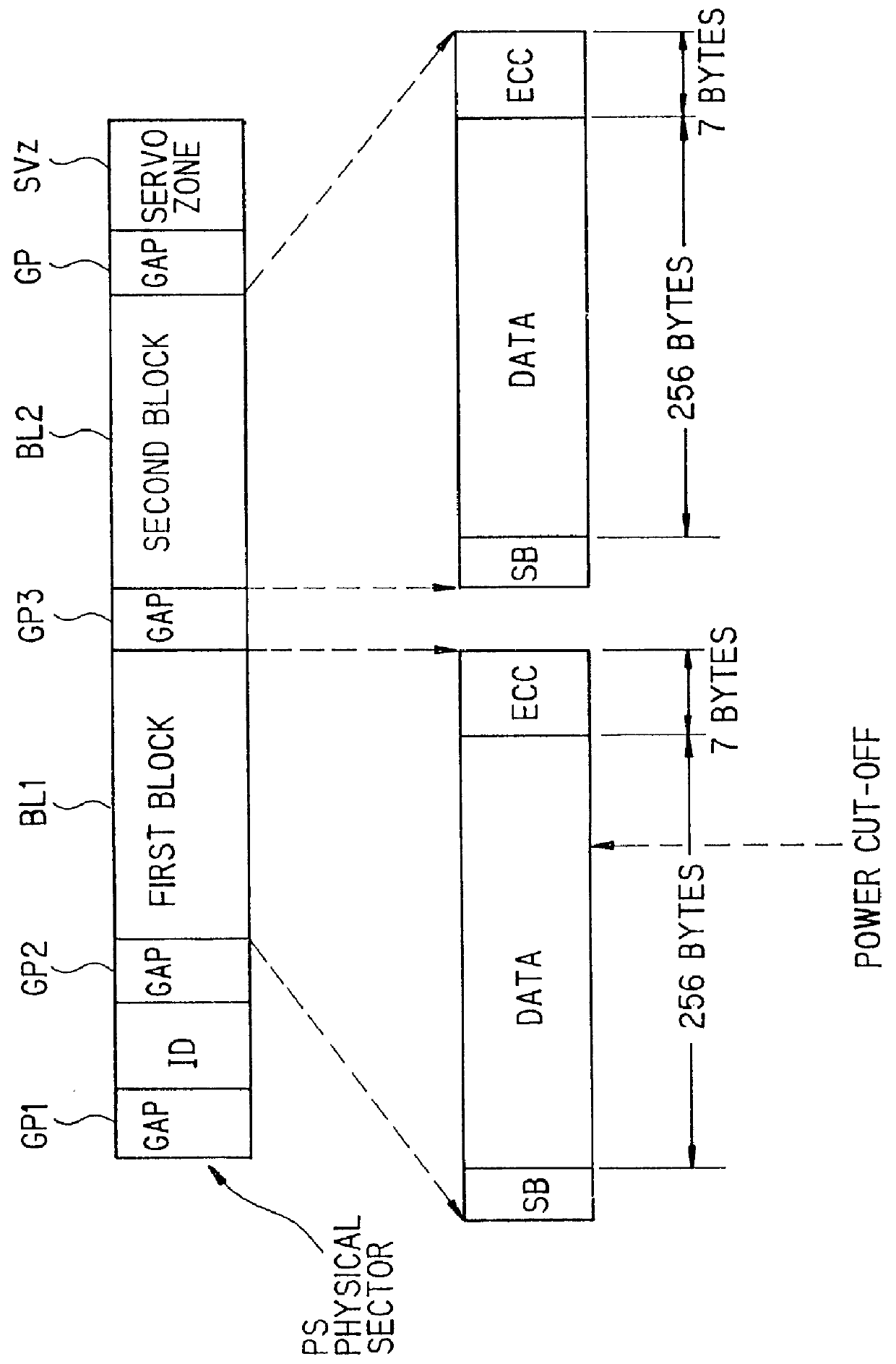

Method for saving generated character image in a cache system including a backup cache

Inactive · US6101576A · Write reliably · Data processing applications · Memory addressing/allocation/relocation · Hit ratio · Imaging data

When a control unit is in a non-operating (non-printing) state, data to be saved (character-image data) in a RAM cache memory is transferred to a disk cache memory at regular intervals in order of priority, thereby saving the data in the disk cache memory. If a power supply is cut off and then power is reintroduced, high-priority character-image data, which has been transferred from the RAM cache memory to the disk cache memory and saved in the disk cache memory when printing is not being carried out, is restored in the RAM cache memory. As a result, the high-priority data saved in the RAM cache memory when the power supply was cut off can be restored in the RAM cache memory, thereby raising the hit rate.

Owner:FUJIFILM BUSINESS INNOVATION CORP

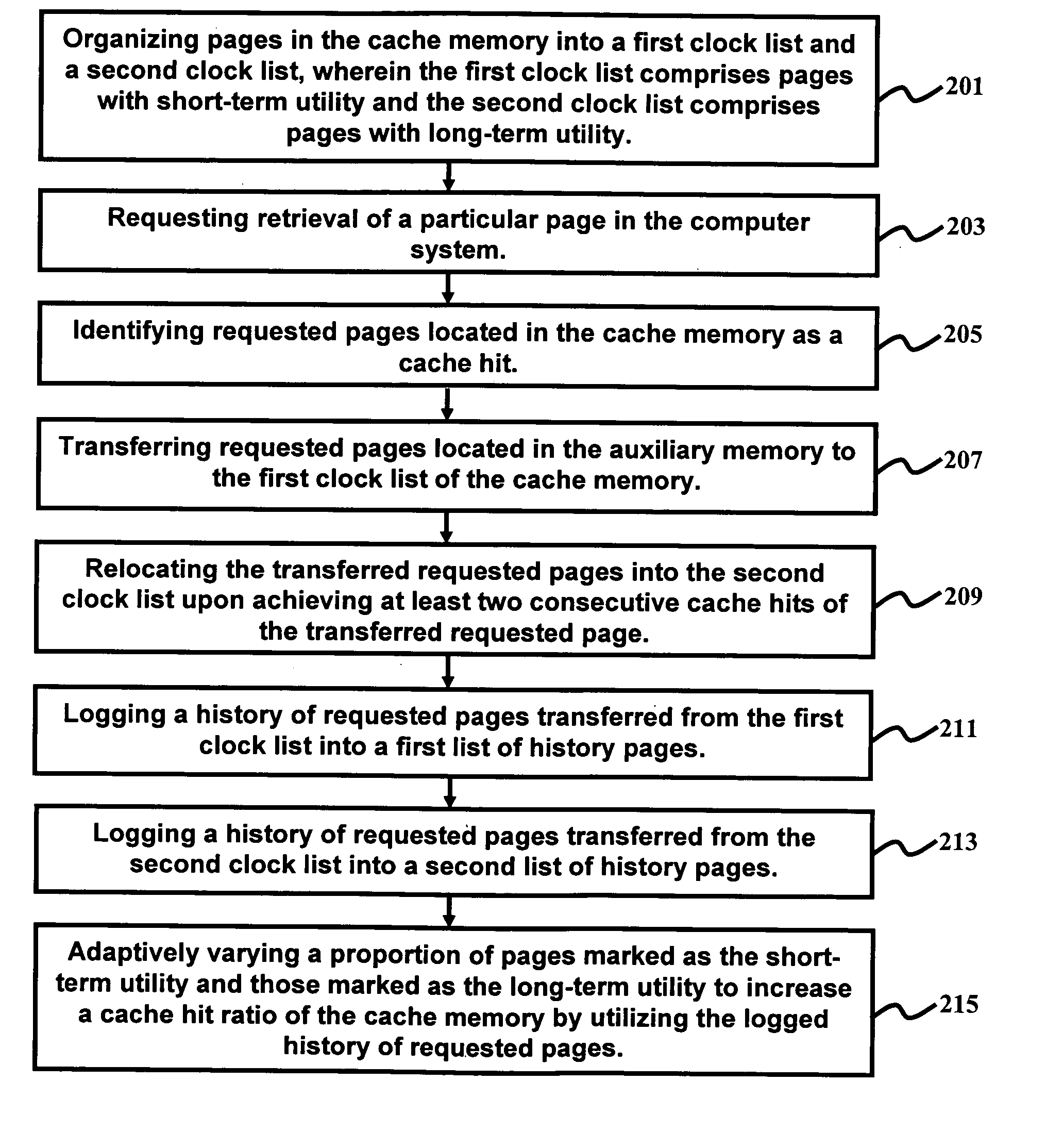

Method and system of clock with adaptive cache replacement and temporal filtering

Inactive · US20060069876A1 · Amenable to implementation · Improve performance · Memory architecture accessing/allocation · Memory systems · Data retrieval · Parallel computing

A method and system of managing data retrieval in a computer comprising a cache memory and auxiliary memory comprises organizing pages in the cache memory into a first and second clock list, wherein the first clock list comprises pages with short-term utility and the second clock list comprises pages with long-term utility; requesting retrieval of a particular page in the computer; identifying requested pages located in the cache memory as a cache hit; transferring requested pages located in the auxiliary memory to the first clock list; relocating the transferred requested pages into the second clock list upon achieving at least two consecutive cache hits of the transferred requested page; logging a history of pages evicted from the cache memory; and adaptively varying a proportion of pages marked as short and long-term utility to increase a cache hit ratio of the cache memory by utilizing the logged history of evicted pages.

Owner:IBM CORP

Memory hub and method for memory system performance monitoring

Inactive · US20050144403A1 · Error detection/correction · Memory addressing/allocation/relocation · Parallel computing · Memory bus

A memory module includes a memory hub coupled to several memory devices. The memory hub includes at least one performance counter that tracks one or more system metrics, for example: page hit rate, number or percentage of prefetch hits, cache hit rate or percentage, read rate, number of read requests, write rate, number of write requests, rate or percentage of memory bus utilization, local hub request rate or number, and/or remote hub request rate or number.

Owner:ROUND ROCK RES LLC

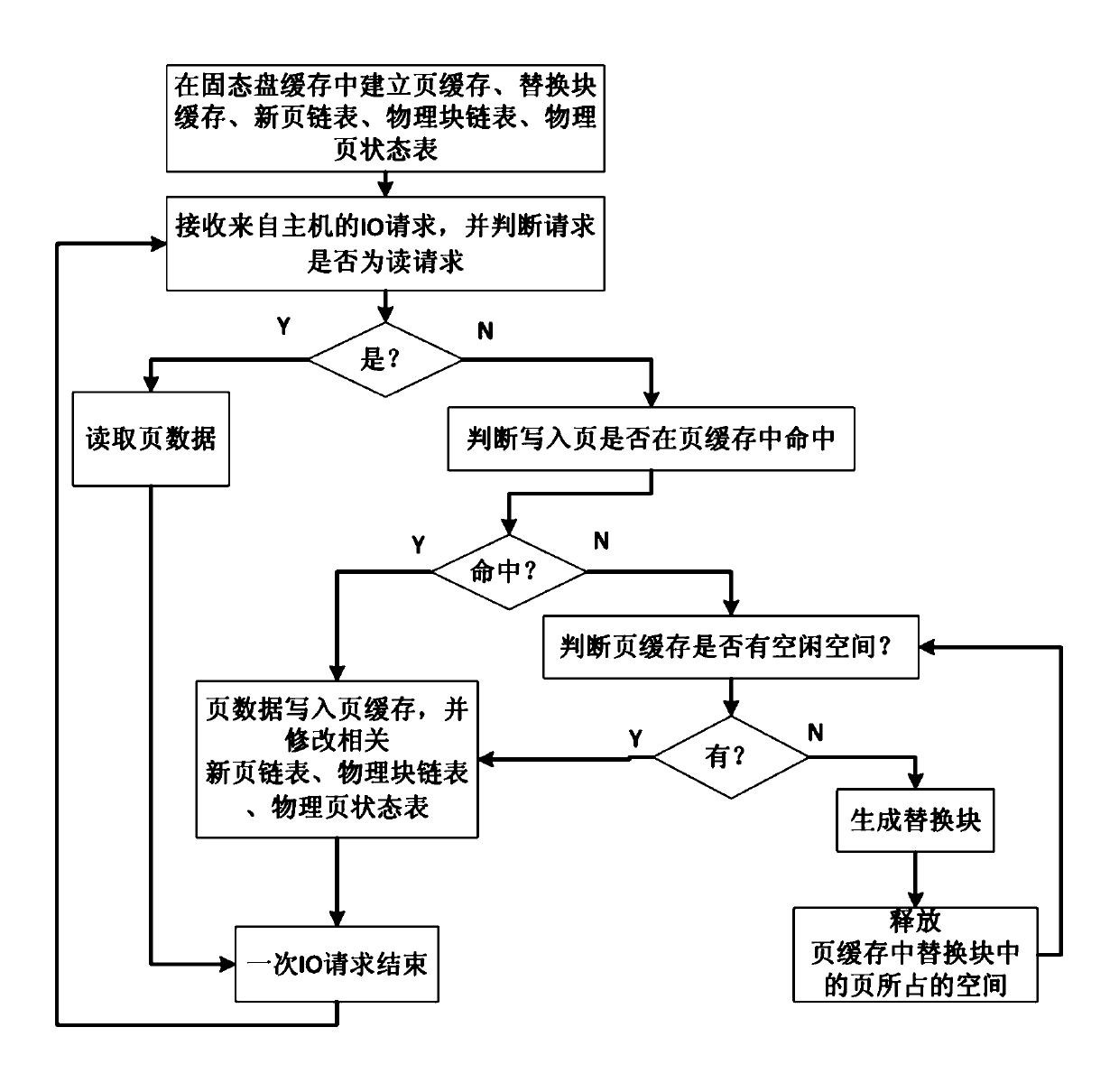

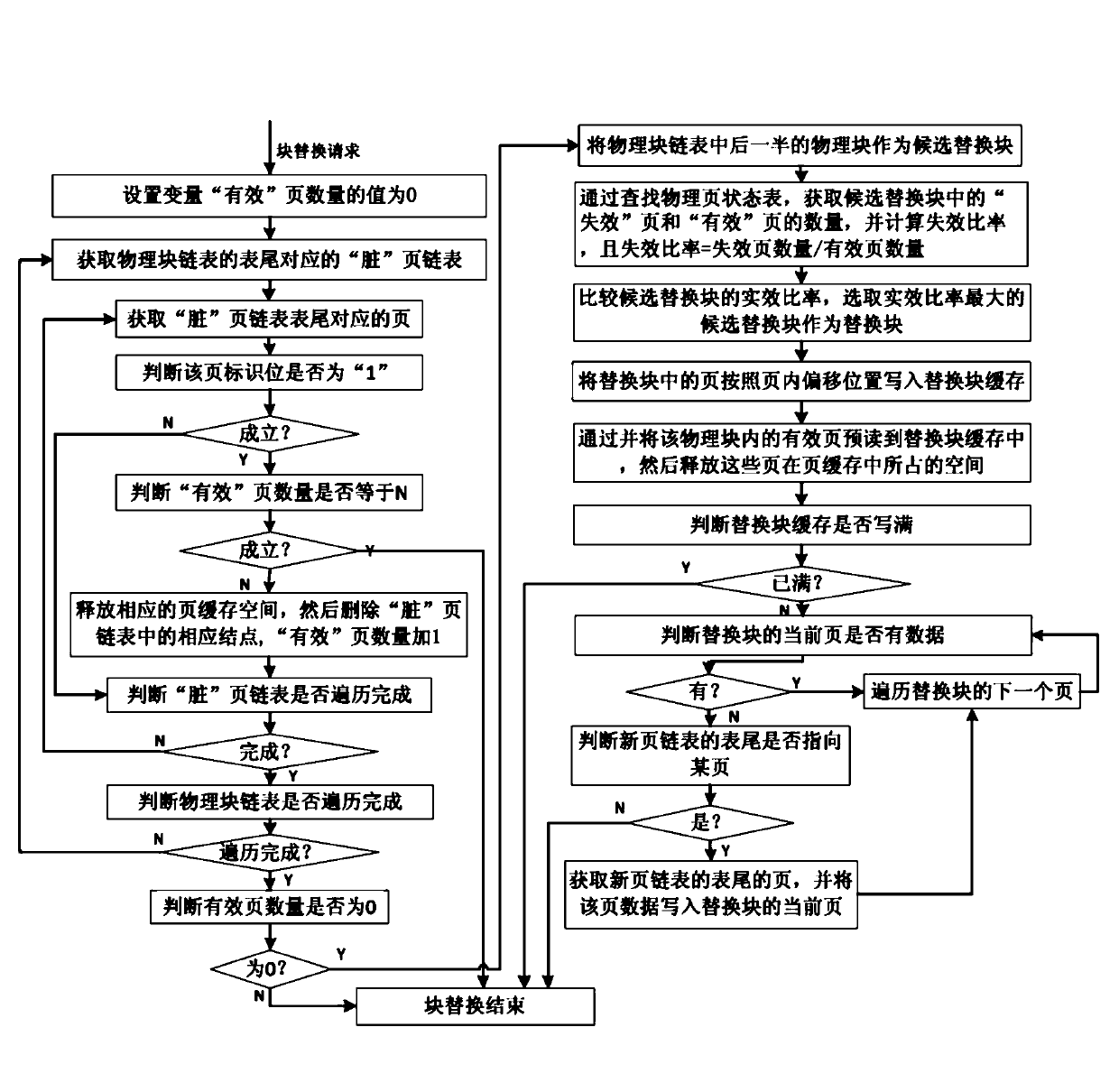

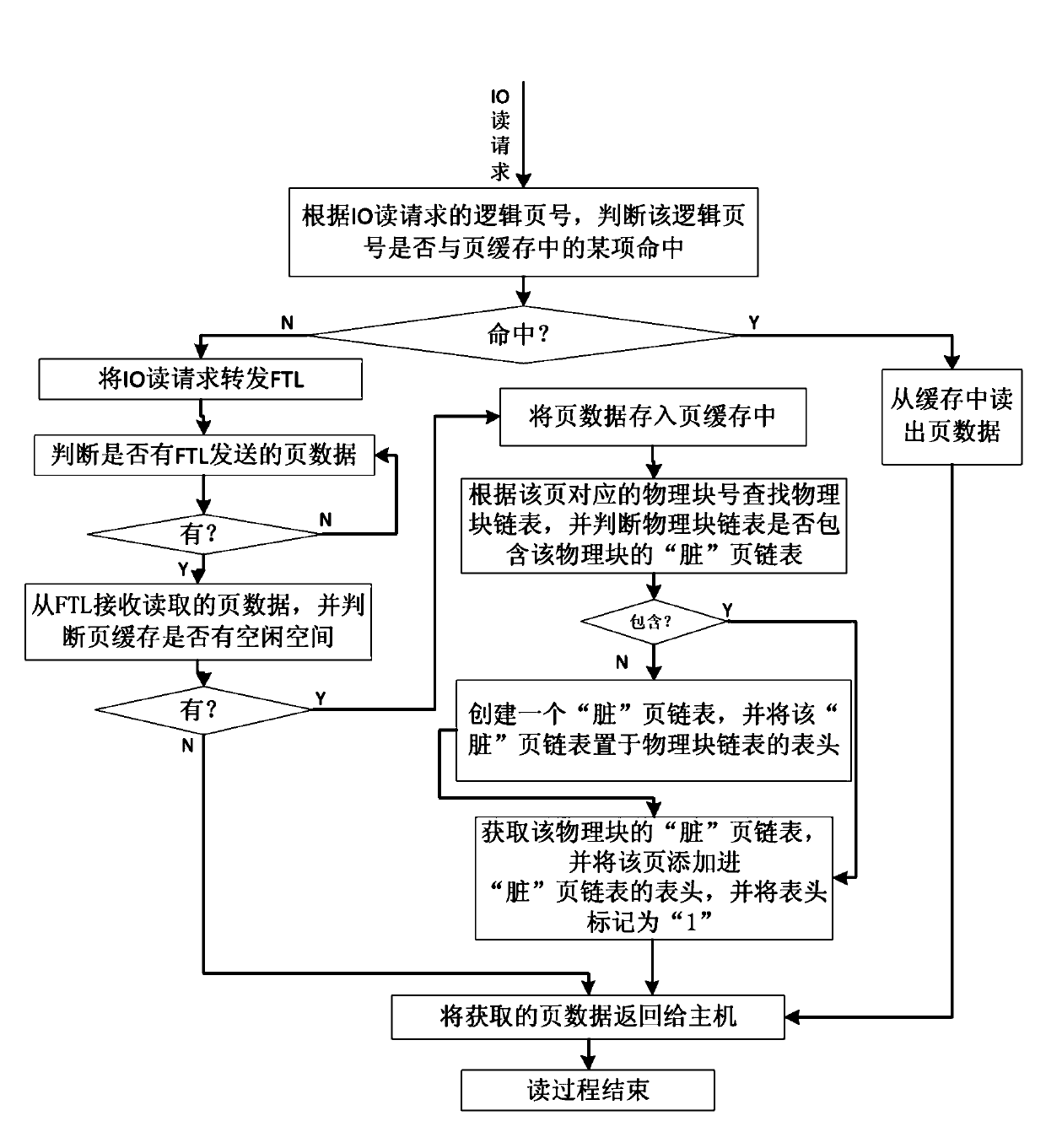

Cache management method for solid-state disc

Active · CN103136121A · Improve hit chance · Improve read and write speed · Memory addressing/allocation/relocation · Dirty data · Cache management

The invention discloses a cache management method for a solid-state disc. The method comprises the following steps: establishing a page cache, a replacement block module, a new-page linked list, a physical block linked list and a physical page state list; and receiving an input/output (IO) request from a host and executing it through the page cache. When a write request misses the page cache and the page cache has no free space, the block replacement process of the solid-state disc is executed: 'effective' page space in the page cache is released first, and when the number of 'effective' pages in the page cache reaches zero, the candidate replacement block with the largest failure ratio in the rear half of the physical block linked list is selected as the replacement block, and the replacement block cache is used to perform the replacement write. The method makes effective use of limited cache space, increases the cache hit rate, and makes each block written to the flash medium contain as many dirty data pages and as few effective data pages as possible, reducing the erase operations, page-copy operations and subsequent garbage collection caused by dirty data pages. The cache management method for the solid-state disc is easy to operate.

Owner:Hunan Great Wall Galaxy Technology Co., Ltd. (湖南长城银河科技有限公司)

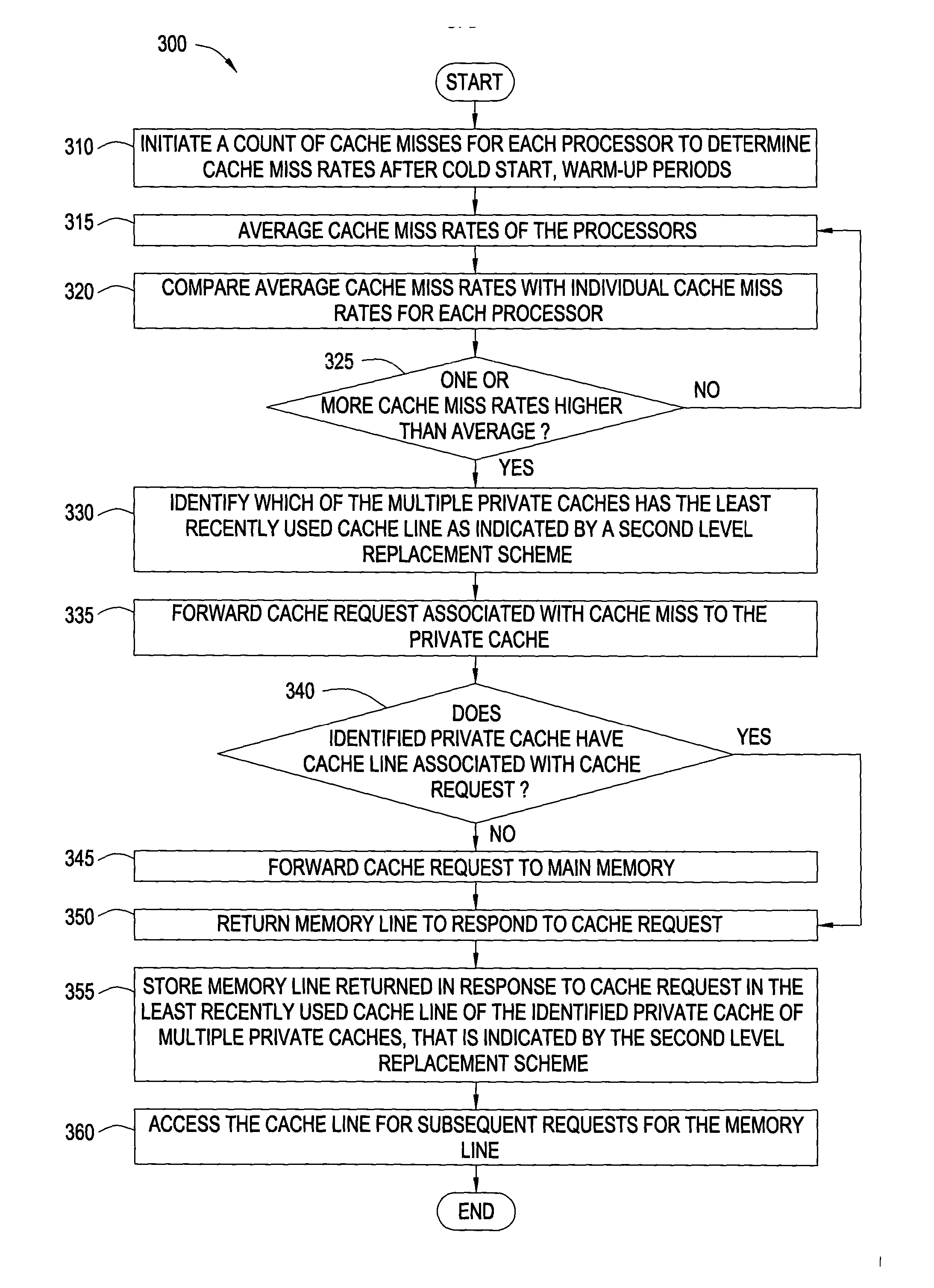

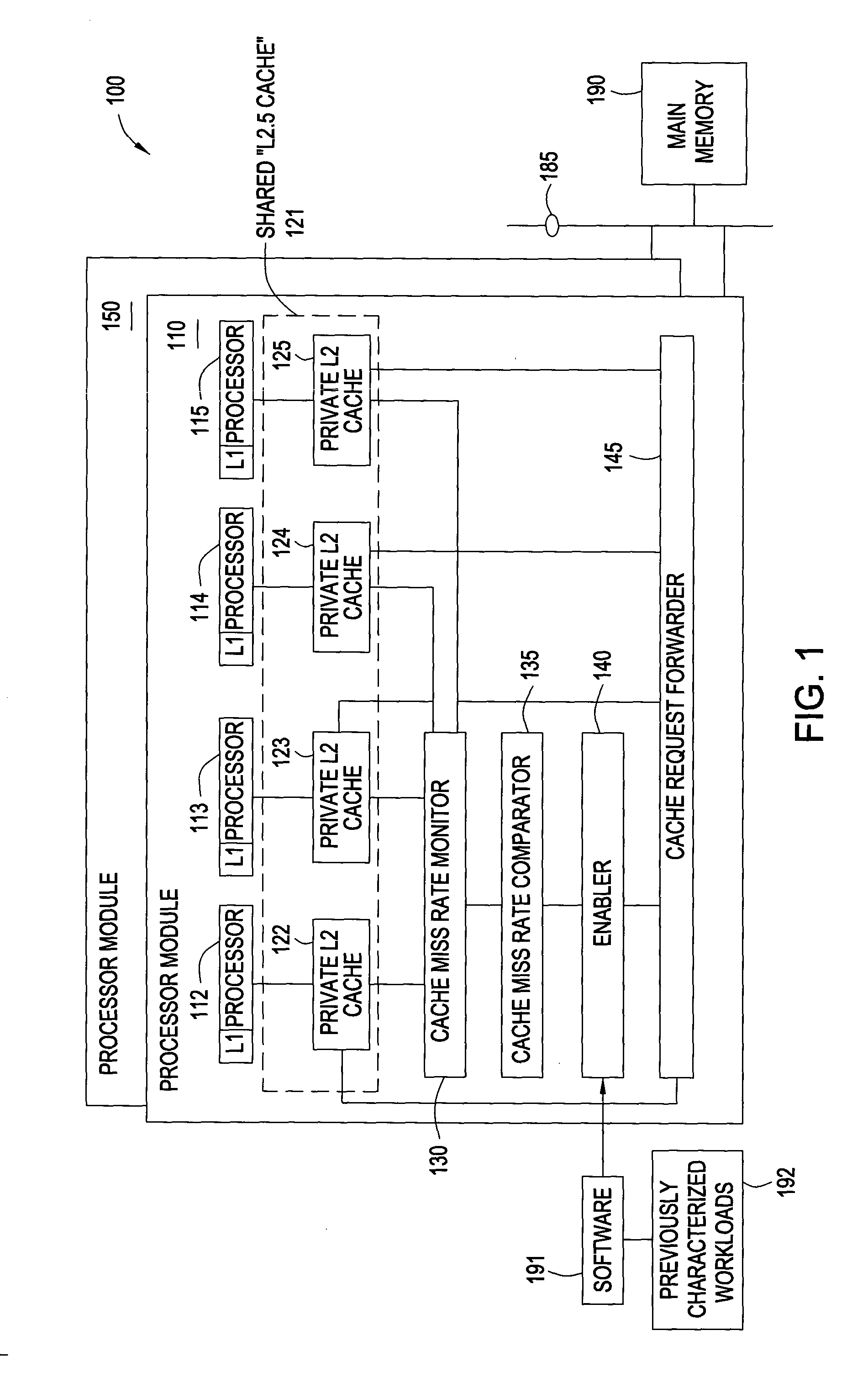

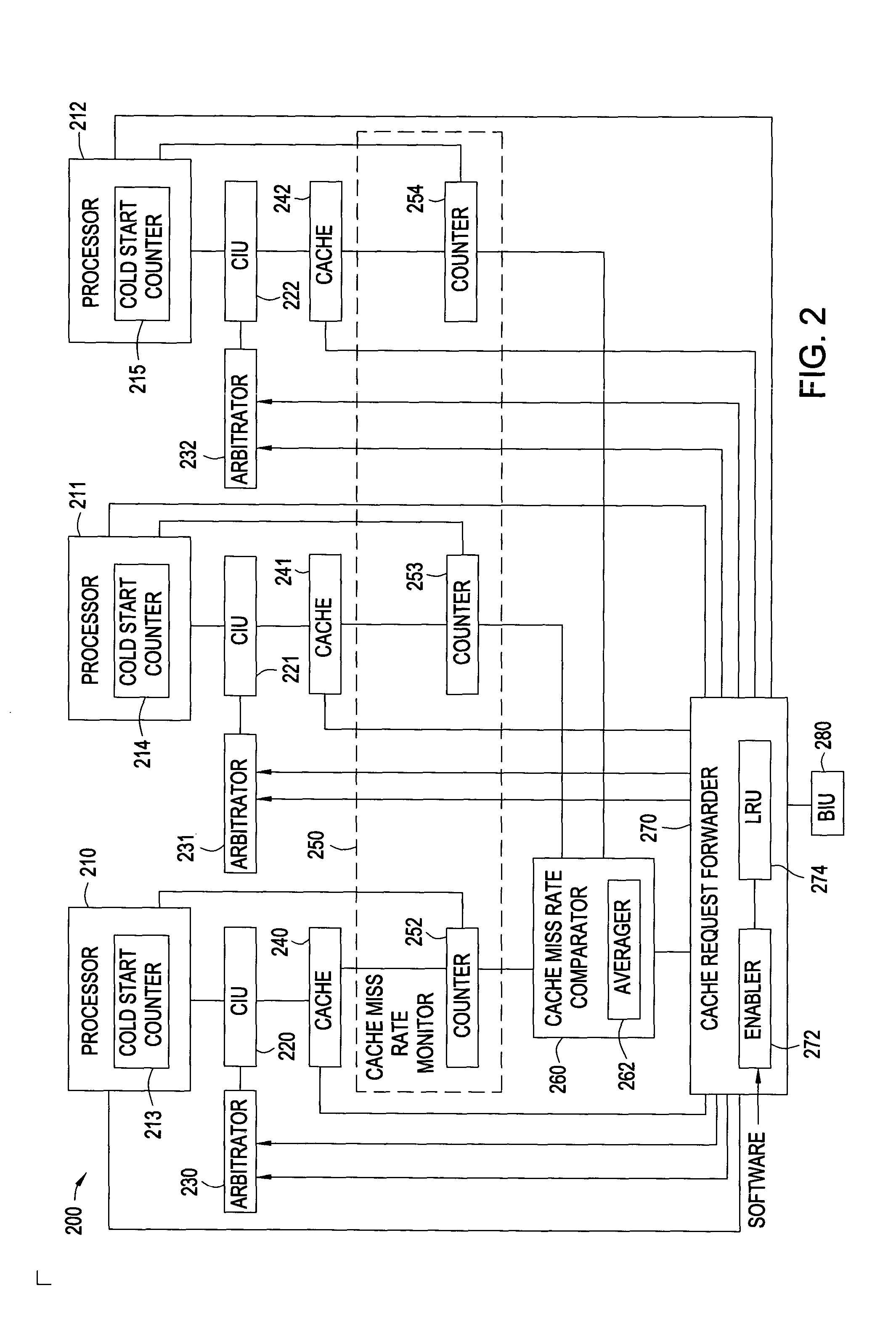

Reduction of cache miss rates using shared private caches

Inactive · US20050071564A1 · Reduce cache miss rate · Reduce rate · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Parallel computing · Workload

Methods and systems for reducing cache miss rates are disclosed. Embodiments may include a computer system with one or more processors, each of which may be coupled to a private cache. Embodiments selectively enable and implement a re-allocation scheme for the cache lines of the private caches based on the actual or expected workload of the processors. In particular, a cache miss rate monitor counts the number of cache misses for each processor. A cache miss rate comparator compares the cache miss rates to determine whether one or more processors have significantly higher cache miss rates than the average within a processor module or overall. If so, cache requests from those processors are forwarded to private caches that have lower cache miss rates and have the least recently used cache lines.

Owner:IBM CORP
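
The monitor and comparator logic can be sketched in Python as below. The `factor` parameter is a hypothetical stand-in for "significantly higher", forwarding is reduced to choosing the non-flagged processor with the lowest miss rate, and the selection of least recently used lines is not modeled; `find_reallocations` is a hypothetical name:

```python
def find_reallocations(misses: dict[str, int], accesses: dict[str, int],
                       factor: float = 1.5) -> dict[str, str]:
    """Map each processor with a significantly high miss rate to the processor
    whose private cache currently has the lowest miss rate."""
    rates = {p: misses[p] / accesses[p] for p in misses if accesses.get(p)}
    if not rates:
        return {}
    average = sum(rates.values()) / len(rates)
    flagged = {p for p, r in rates.items() if r > factor * average}
    donors = [p for p in rates if p not in flagged]
    if not donors:
        return {}
    donor = min(donors, key=rates.get)  # lowest-miss-rate private cache
    return {p: donor for p in sorted(flagged)}


misses = {"cpu0": 10, "cpu1": 50, "cpu2": 5, "cpu3": 8}
accesses = {"cpu0": 100, "cpu1": 100, "cpu2": 100, "cpu3": 100}
print(find_reallocations(misses, accesses))  # {'cpu1': 'cpu2'}
```

With an average miss rate of 0.1825, only `cpu1` (0.5) exceeds 1.5 times the average, so its overflow requests would go to `cpu2`, whose cache misses least.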

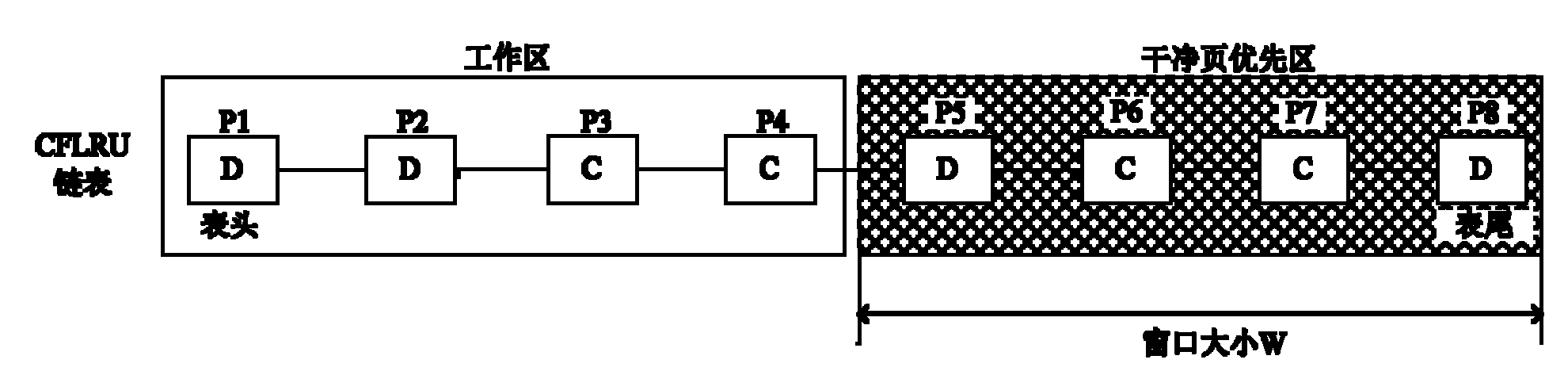

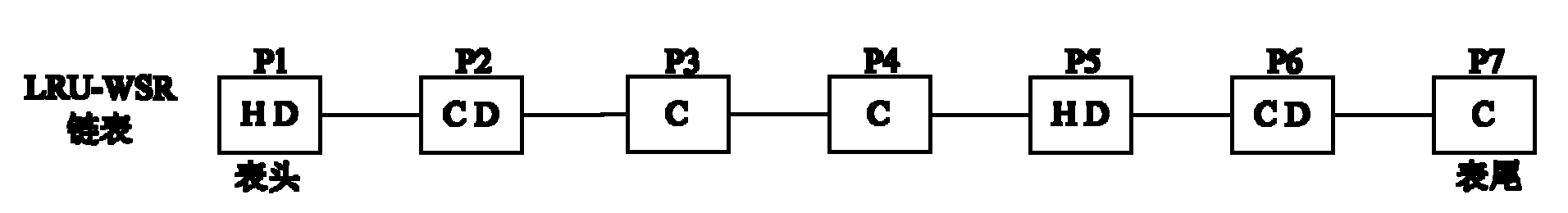

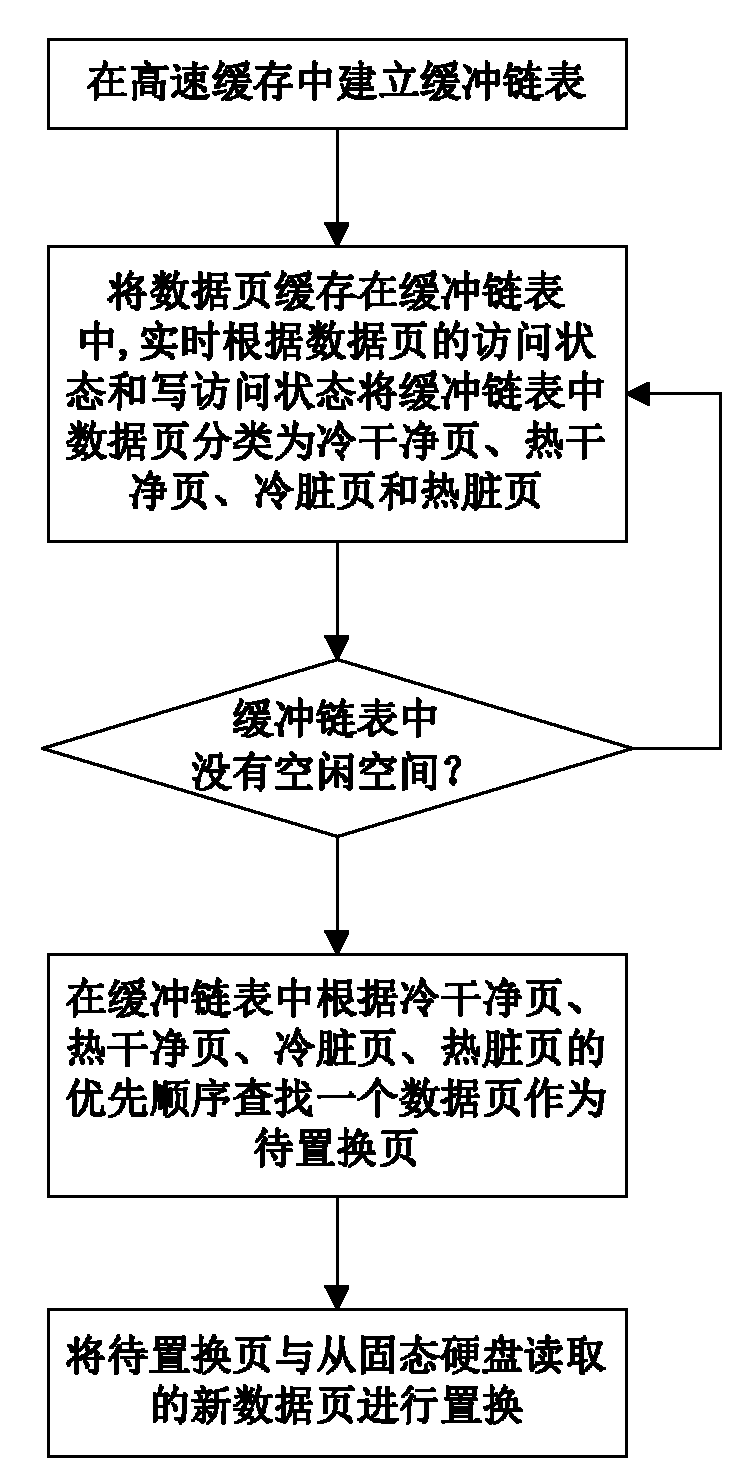

Data page caching method for file system of solid-state hard disc

Active · CN102156753A · Reduce overhead · Improve hit rate · Special data processing applications · Dirty page · External storage

The invention discloses a data page caching method for a file system on a solid-state hard disc, comprising the following steps: (1) establishing a buffer linked list in a high-speed cache for caching data pages; (2) caching data pages read from the solid-state hard disc in the buffer linked list for access, and classifying them in real time as cold clean, hot clean, cold dirty or hot dirty pages according to their access and write-access states; and (3) when the buffer linked list has no free space, searching it for a page to be replaced in priority order (cold clean pages, then hot clean pages, then cold dirty pages, then hot dirty pages) and replacing that page with the new data page read from the solid-state hard disc. The invention makes full use of the characteristics of the solid-state hard disc, effectively relieves the performance bottleneck of external storage, and improves the storage processing performance of the system; the method also offers good I/O (input/output) performance, low replacement cost for cached pages, low overhead and a high hit rate.

Owner:NAT UNIV OF DEFENSE TECH
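
Step (3) amounts to ranking pages by a two-bit class. A Python sketch, assuming the hot/cold and clean/dirty flags are maintained elsewhere (the abstract does not give the classification rule); `Page`, `eviction_class` and `choose_page_to_replace` are hypothetical names:

```python
from dataclasses import dataclass


@dataclass
class Page:
    page_id: int
    hot: bool    # frequently/recently accessed (classification rule assumed)
    dirty: bool  # modified since it was read from the SSD


def eviction_class(page: Page) -> int:
    # 0 = cold clean, 1 = hot clean, 2 = cold dirty, 3 = hot dirty
    return 2 * int(page.dirty) + int(page.hot)


def choose_page_to_replace(buffer: list[Page]) -> Page:
    # min() keeps the first page of the lowest class, i.e. earliest in list order.
    return min(buffer, key=eviction_class)


buffer = [Page(1, hot=True, dirty=True), Page(2, hot=False, dirty=True), Page(3, hot=True, dirty=False)]
print(choose_page_to_replace(buffer).page_id)  # 3 (a hot clean page beats any dirty page)
```

Evicting clean pages first means a replacement rarely triggers a flash write, which is where the low replacement cost comes from.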

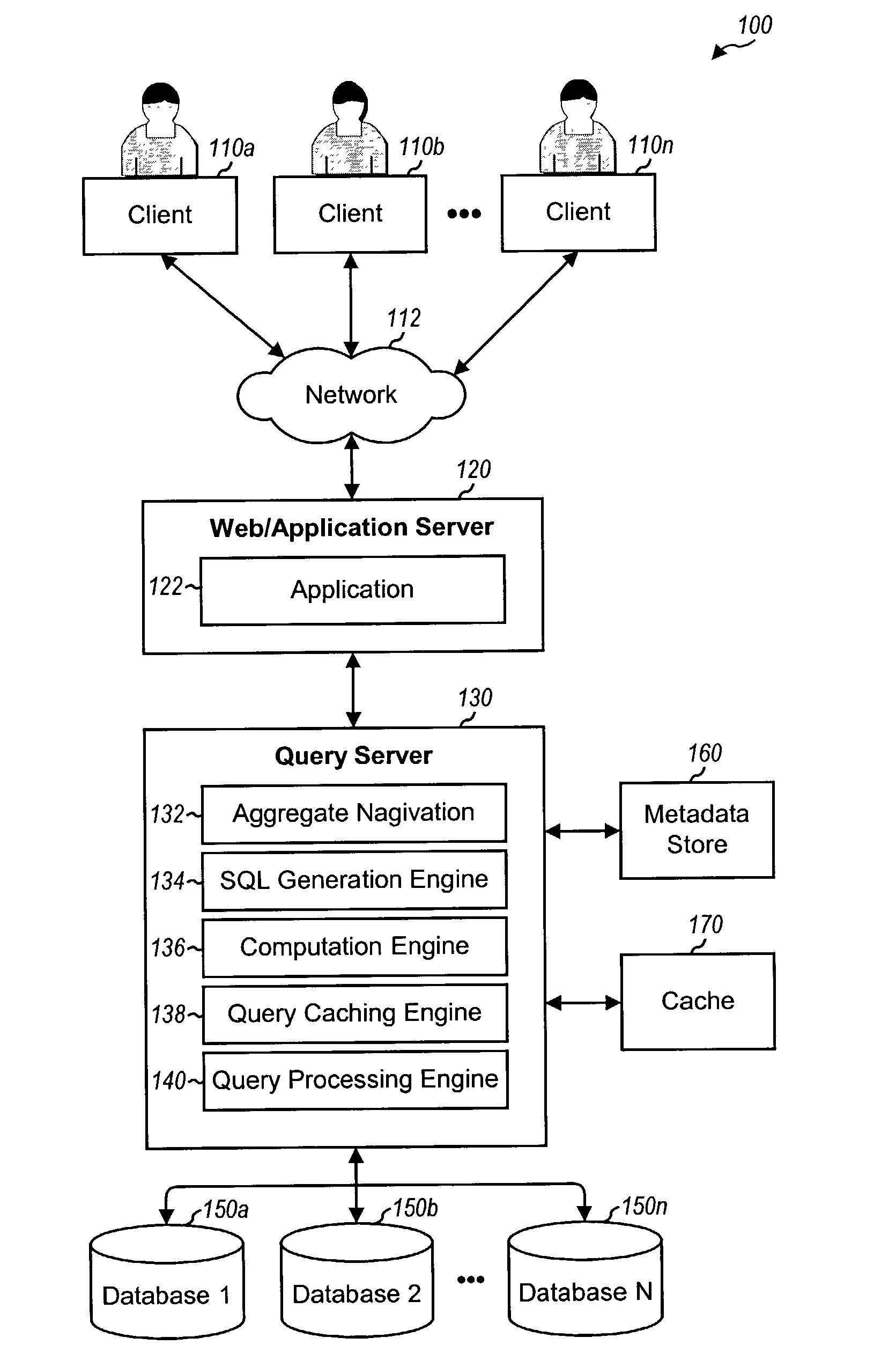

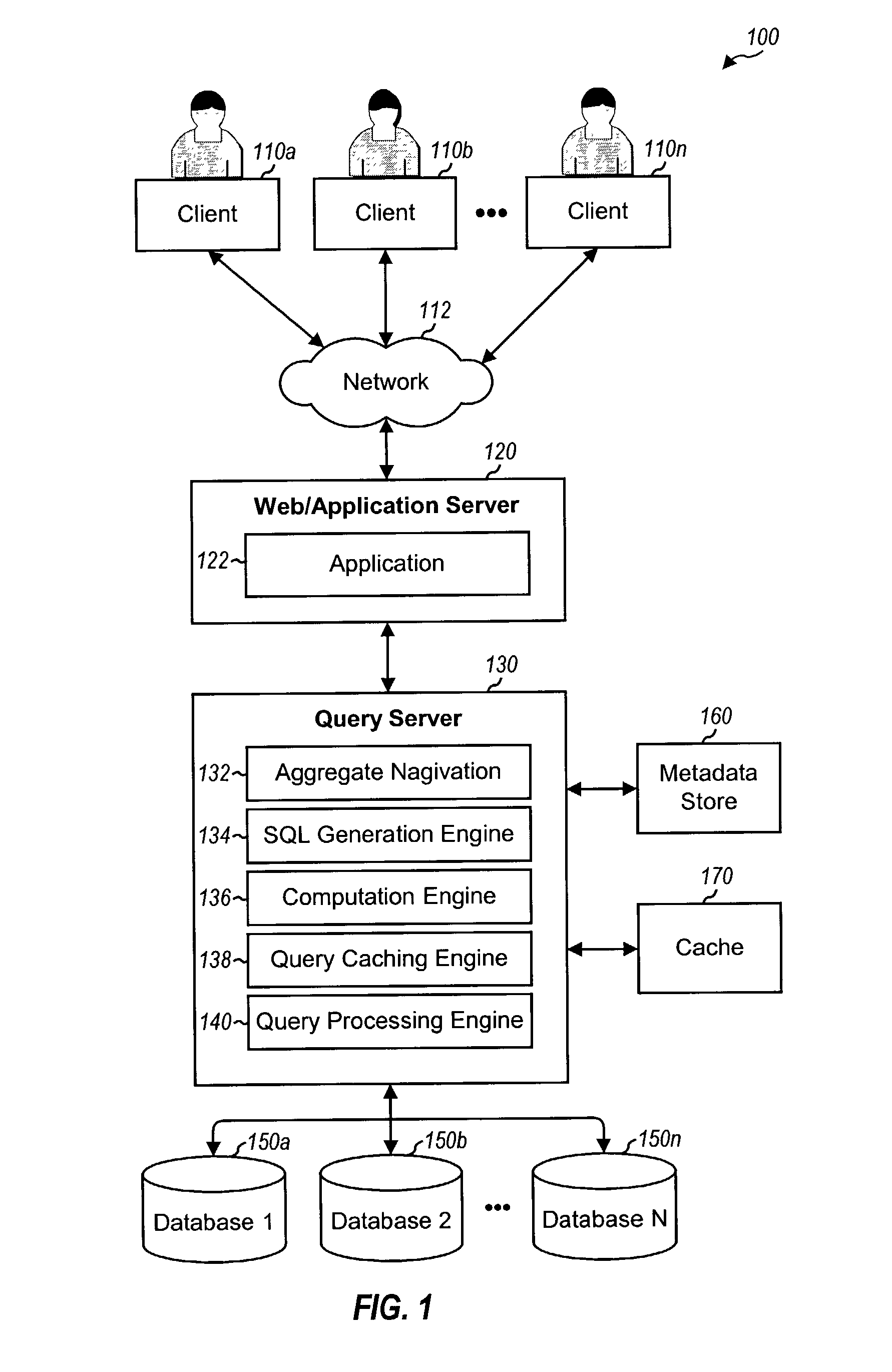

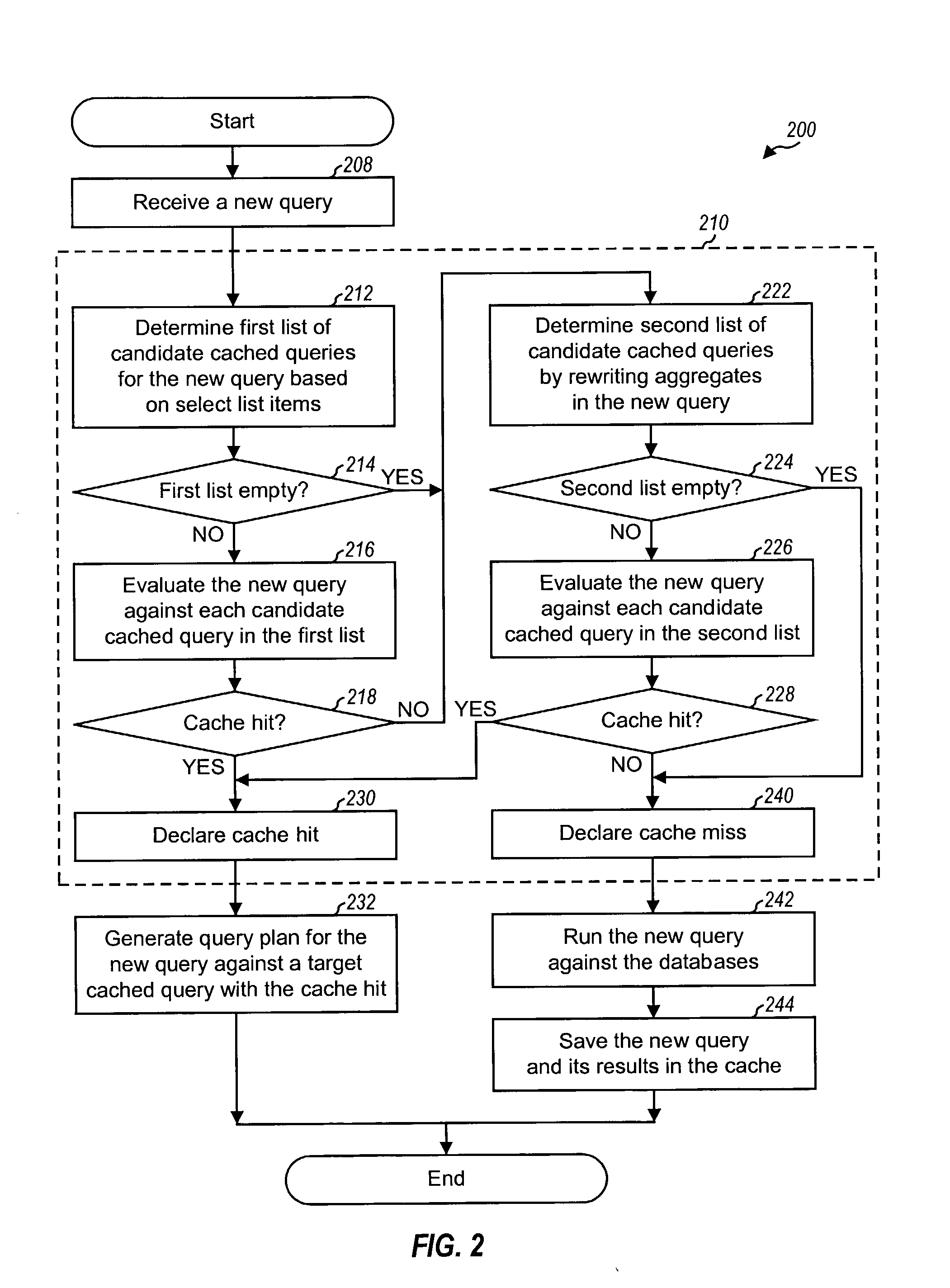

Detecting and processing cache hits for queries with aggregates

Active · US20070208690A1 · Efficiently · Improve query caching performance · Data processing applications · Digital data information retrieval · Exact match · Query plan

Techniques to improve query caching performance by efficiently selecting queries stored in a cache for evaluation and increasing the cache hit rate by allowing for inexact matches. A list of candidate queries stored in the cache that potentially could be used to answer a new query is first determined. This list may include all cached queries, cached queries containing exact matches for select list items, or cached queries containing exact and/or inexact matches. Each of at least one candidate query is then evaluated to determine whether or not there is a cache hit, which indicates that the candidate query could be used to answer the new query. The evaluation is performed using a set of rules that allows for inexact matches of aggregates, if any, in the new query. A query plan is generated for the new query based on a specific candidate query with a cache hit.

Owner:ORACLE INT CORP

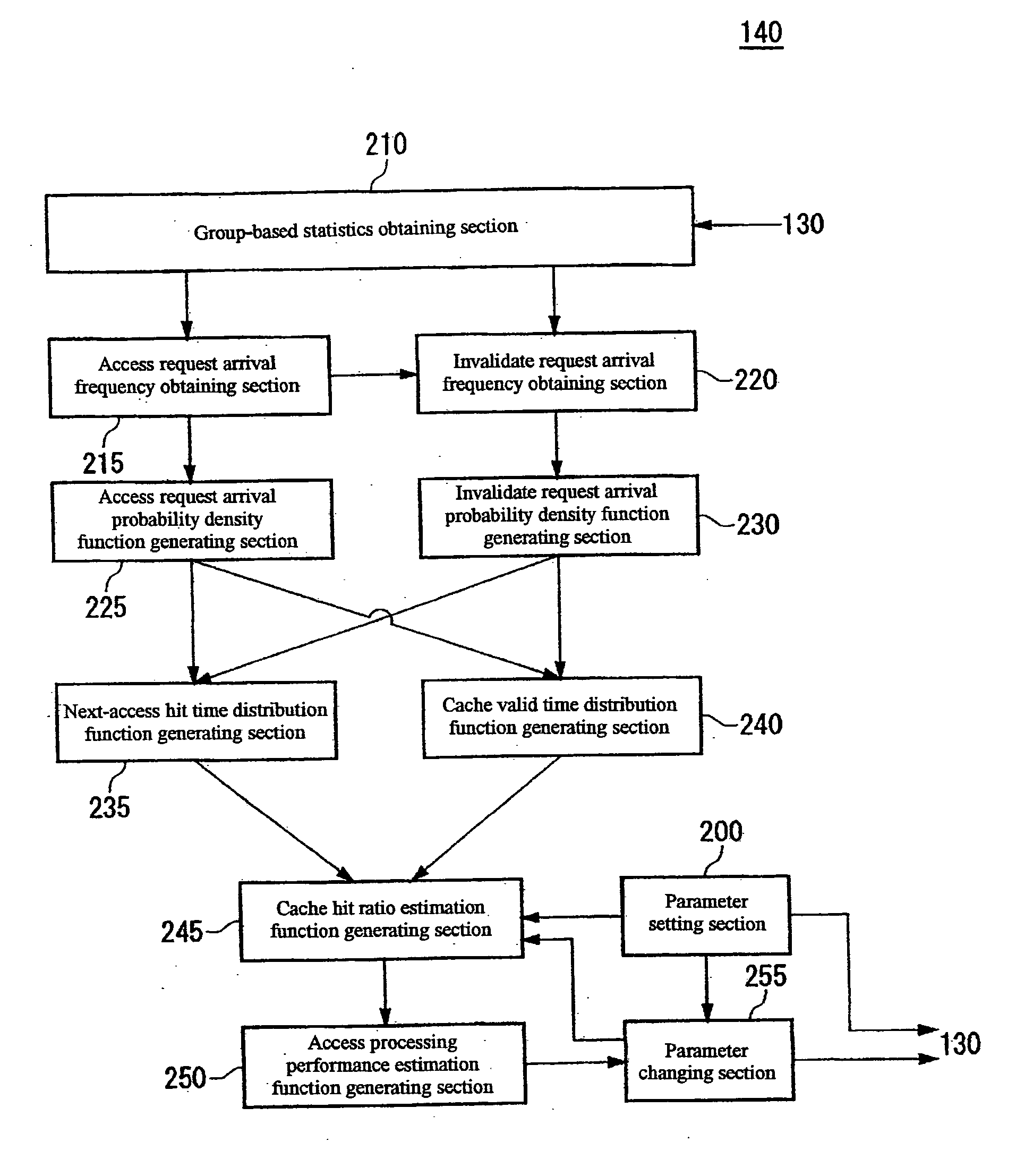

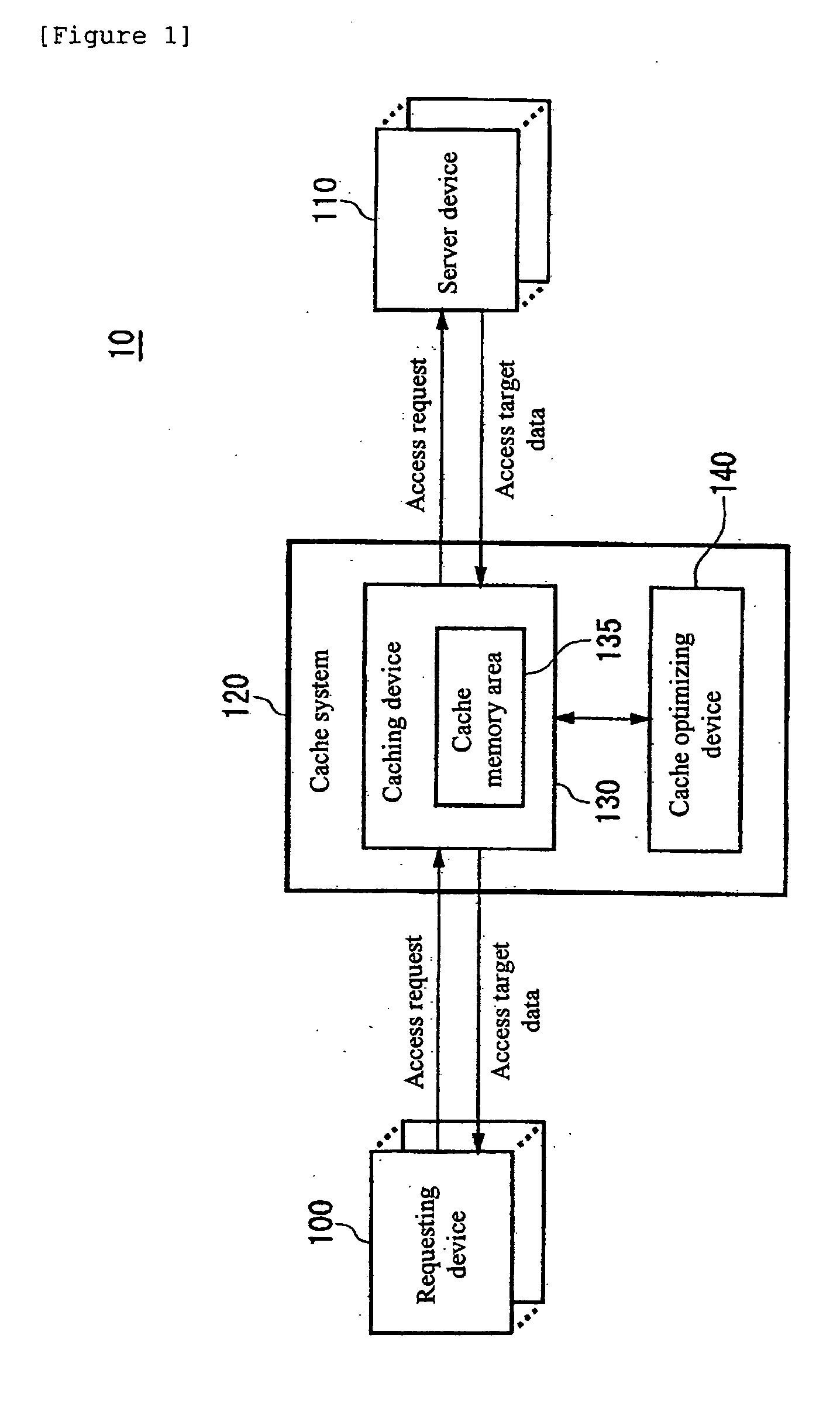

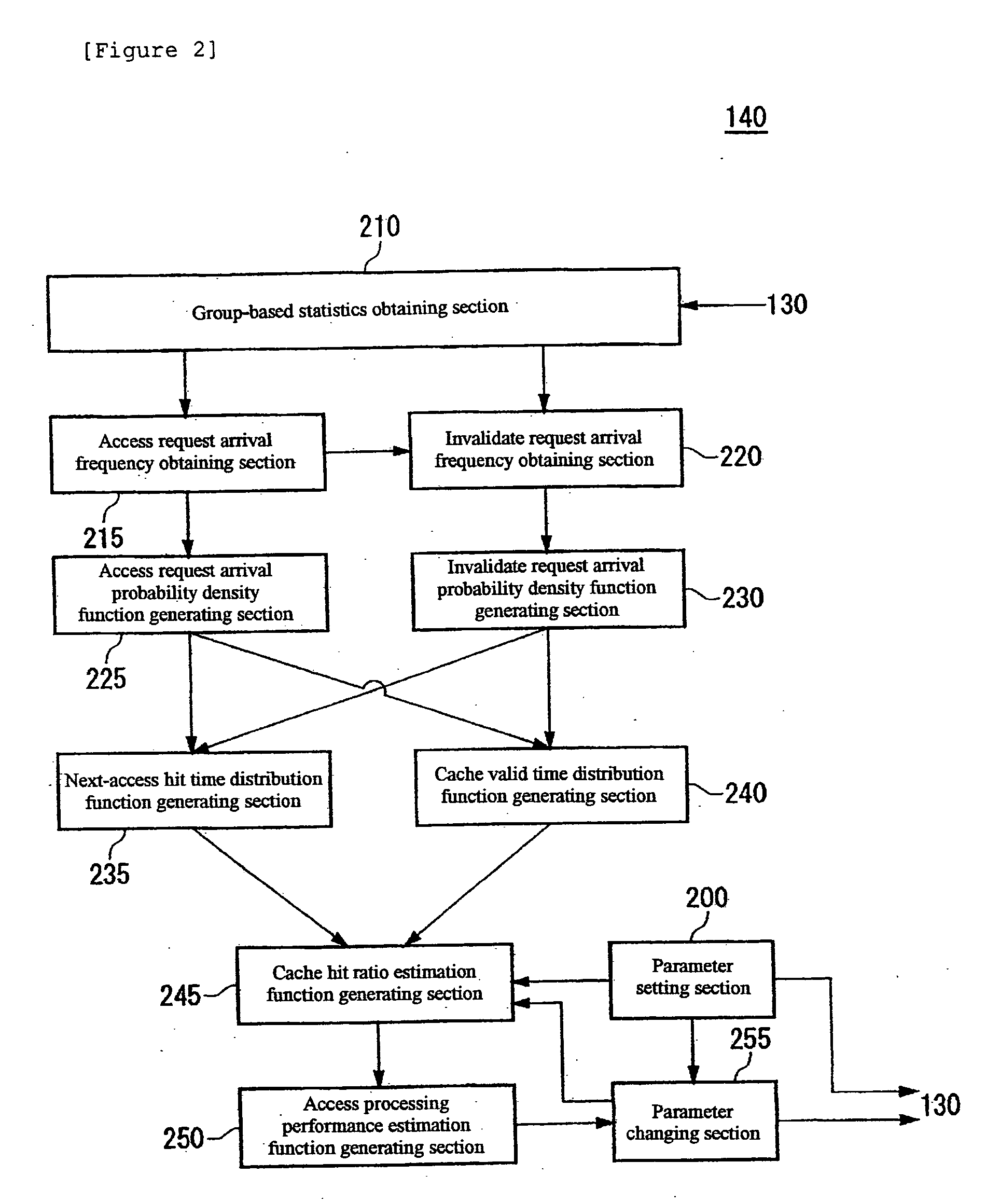

Cache hit ratio estimating apparatus, cache hit ratio estimating method, program, and recording medium

Inactive · US20050268037A1 · Improve accuracy · Easy to process · Input/output to record carriers · Error detection/correction · Cache access · Normal density

Determining a cache hit ratio of a caching device analytically and precisely. There is provided a cache hit ratio estimating apparatus for estimating the cache hit ratio of a caching device, caching access target data accessed by a requesting device, including: an access request arrival frequency obtaining section for obtaining an average arrival frequency measured for access requests for each of the access target data; an access request arrival probability density function generating section for generating an access request arrival probability density function which is a probability density function of arrival time intervals of access requests for each of the access target data on the basis of the average arrival frequency of access requests for the access target data; and a cache hit ratio estimation function generating section for generating an estimation function for the cache hit ratio for each of the access target data on the basis of the access request arrival probability density function for the plurality of the access target data.

Owner:GOOGLE LLC
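
As an illustration of how an arrival-time density can yield a hit-ratio estimate, consider a deliberately simple model that is an assumption, not the patent's estimator: requests for object i arrive as a Poisson process with average frequency λ_i (so inter-arrival times have density λ_i·e^(−λ_i·t)), and the cache keeps an object for a fixed time T after each access to it. A request then hits exactly when the gap since the previous request is shorter than T, so integrating the density from 0 to T gives h_i = 1 − e^(−λ_i·T); the overall ratio weights each object by its share of requests. The function names below are hypothetical:

```python
import math


def per_object_hit_ratio(rate: float, ttl: float) -> float:
    """P(gap since the previous request < ttl) for exponential gaps with this rate."""
    return 1.0 - math.exp(-rate * ttl)


def overall_hit_ratio(rates: list[float], ttl: float) -> float:
    """Weight each object's hit ratio by its share of all requests."""
    total = sum(rates)
    return sum(r * per_object_hit_ratio(r, ttl) for r in rates) / total


# An object requested once per second on average, cached for ln 2 seconds,
# hits half the time: 1 - e^(-ln 2) = 1/2.
print(round(per_object_hit_ratio(1.0, math.log(2)), 6))  # 0.5
```

Frequently requested objects dominate both the request stream and the hit count, which is why per-object arrival frequencies are the natural input to such an estimator.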

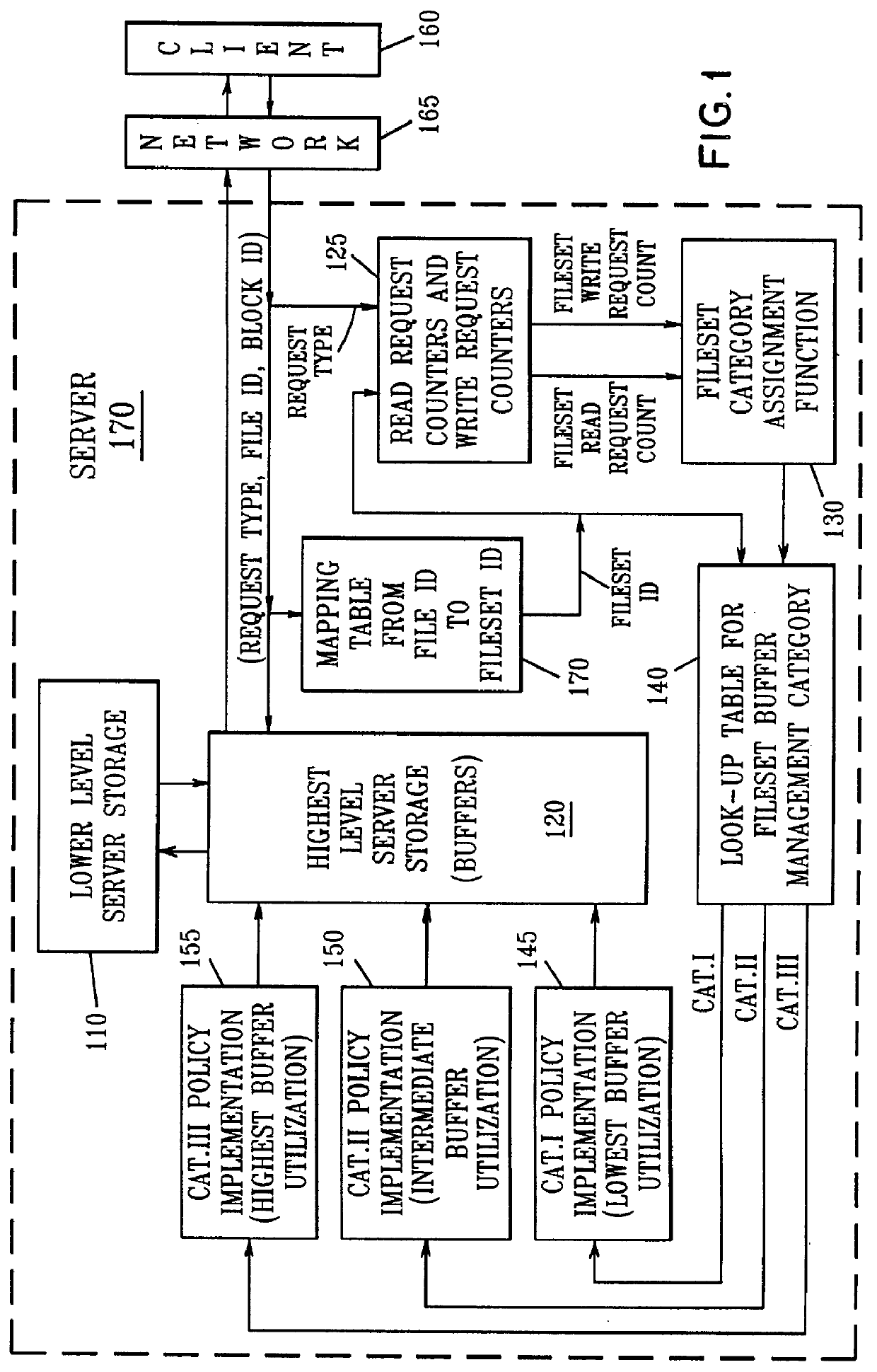

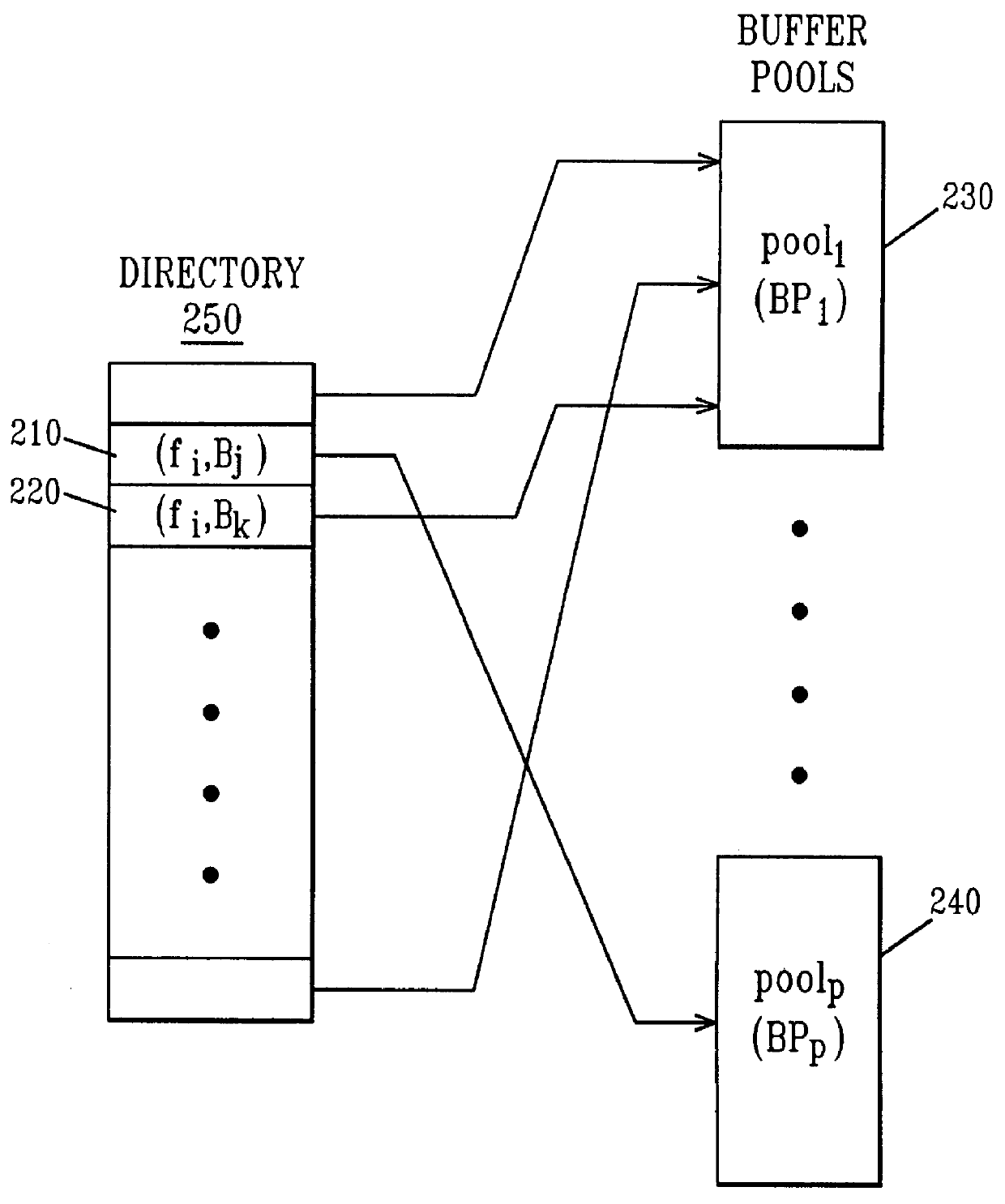

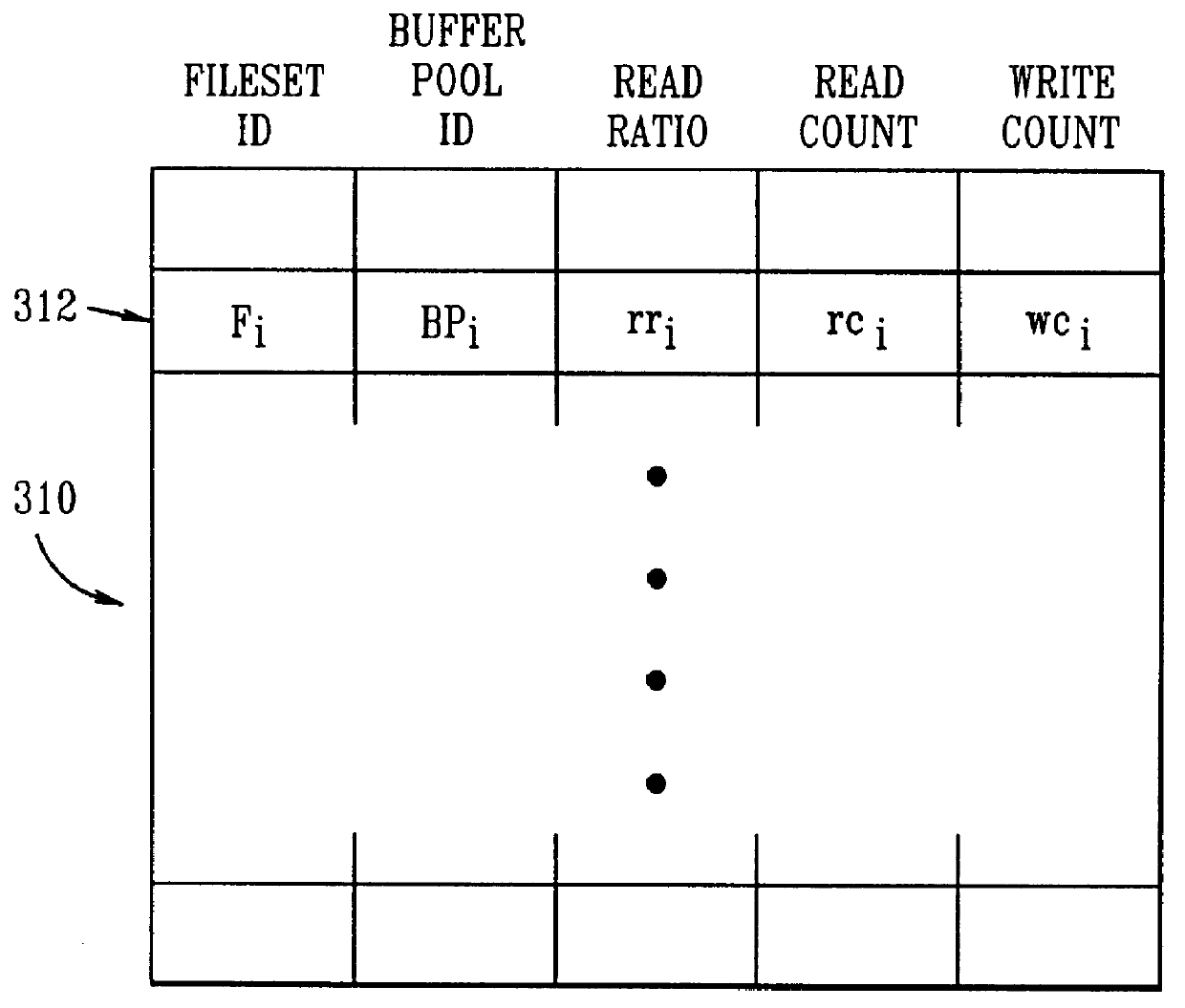

Fileserver buffer manager based on file access operation statistics

Inactive · US6088767A · High hit ratio · Partly effective · Data processing applications · Memory addressing/allocation/relocation · File server · Hit ratio

Fileserver buffers are managed so as to improve the hit ratio for read accesses to the fileserver by clients by grouping related files into filesets, collecting fileserver access operation (i.e., read and write) statistics for each of the filesets, classifying the filesets into a plurality of fileset categories having similar collected access operation statistics and then implementing different fileserver buffer management policies for the blocks (or pages) from each of the different fileset categories. The buffer management policy applied to each of these categories is designed to create a generally higher preference for retaining blocks (or pages) of files in the fileserver buffers having a generally higher read to write ratio.

Owner:IBM CORP

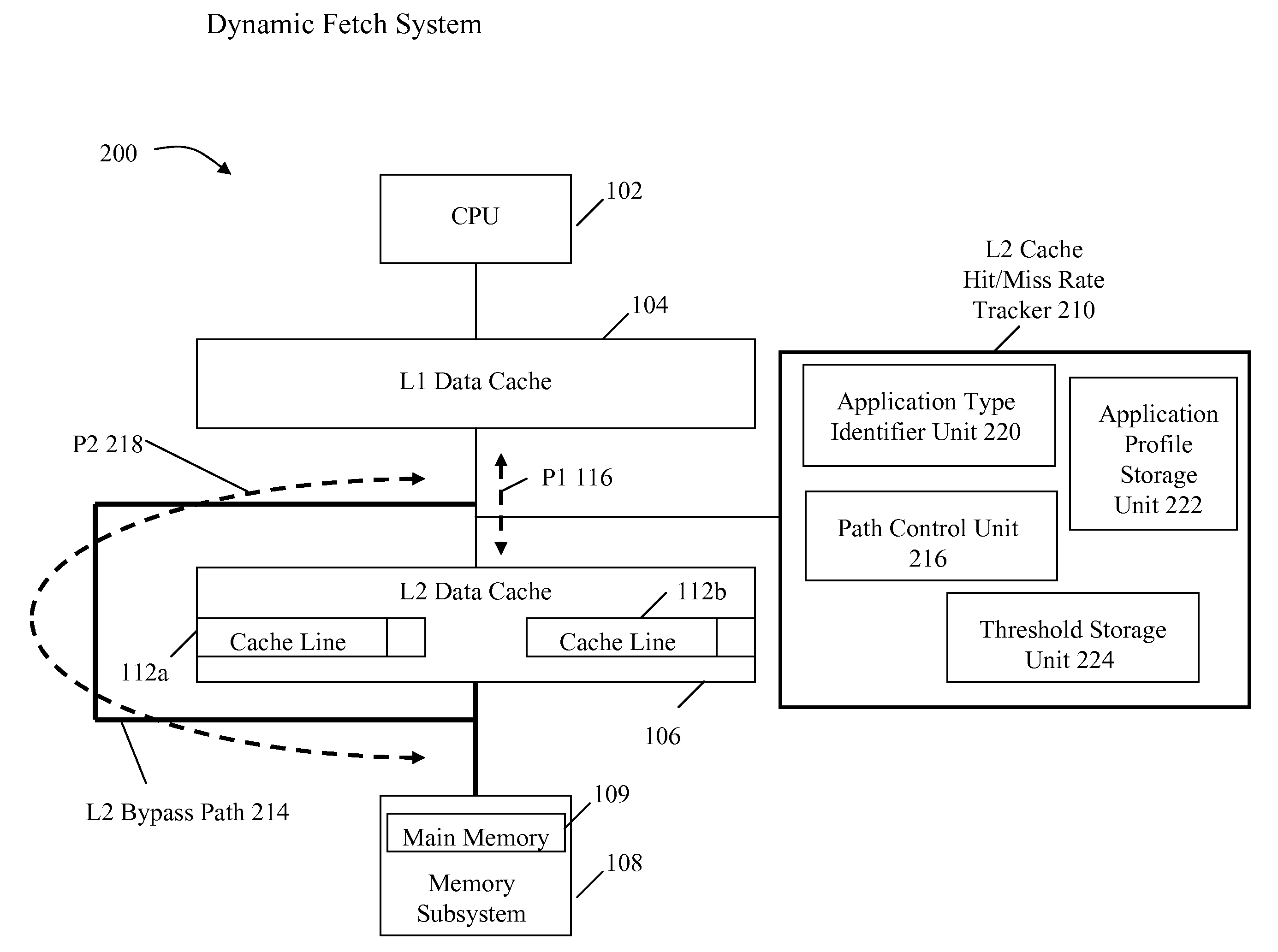

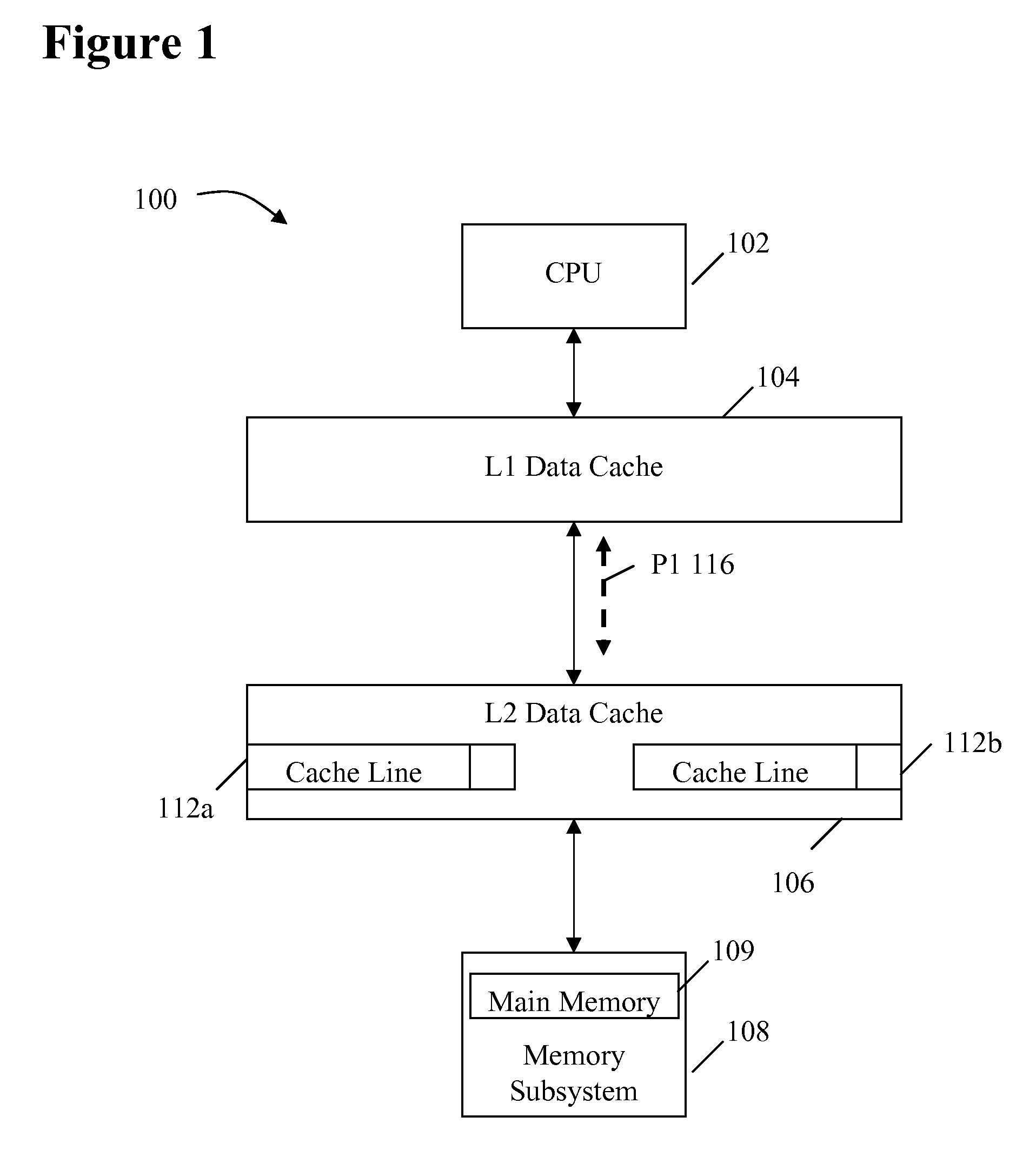

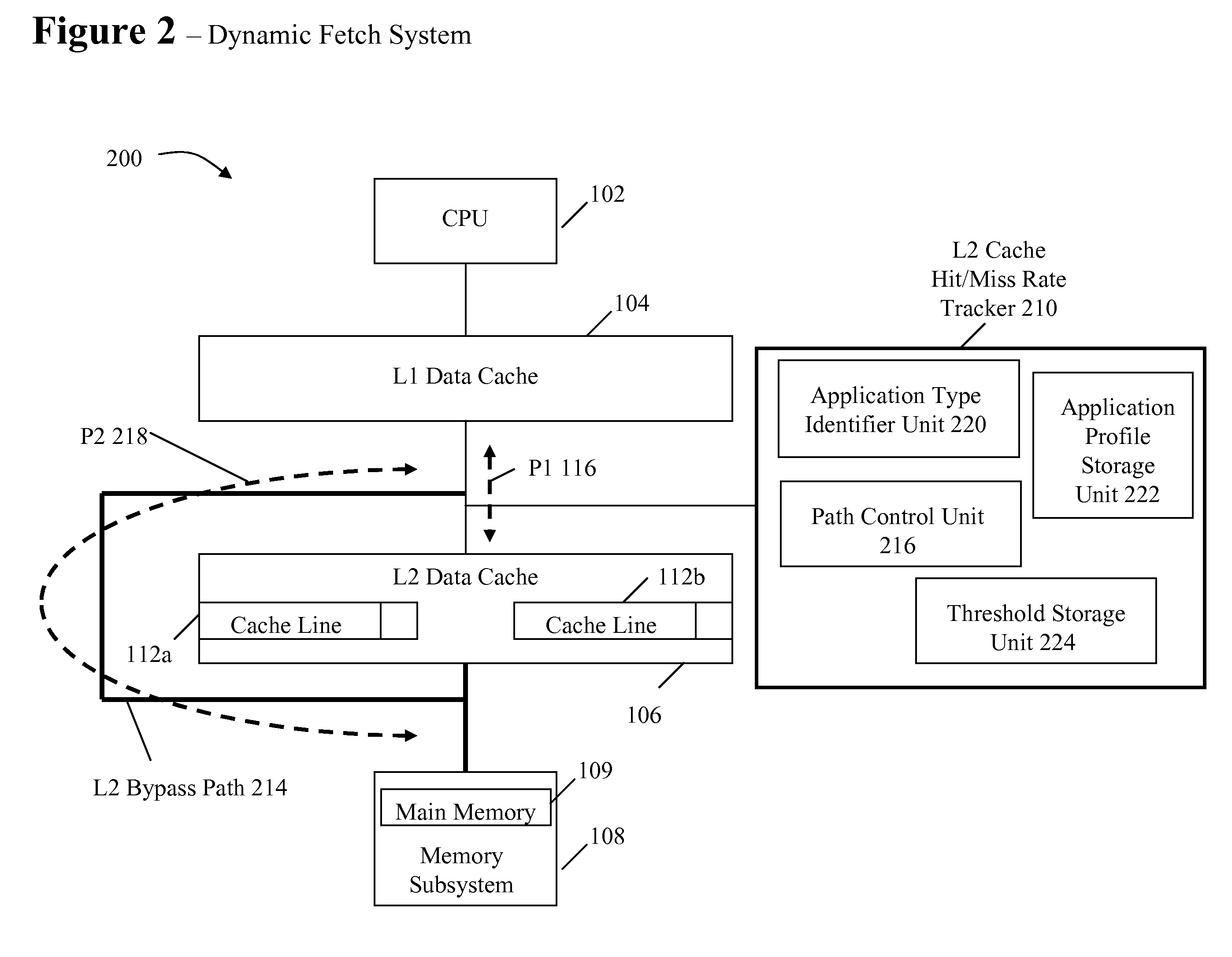

System and method for dynamically selecting the fetch path of data for improving processor performance

Inactive · US20090037664A1 · Improve system performance · Improve latency · Memory addressing/allocation/relocation · Data access · Parallel computing

A system and method for dynamically selecting the data fetch path improve data access latency by dynamically adjusting data fetch paths based on application data fetch characteristics, which are determined by a hit/miss tracker. This reduces data access latency for applications with a low data reuse rate (streaming audio, video, multimedia, games, etc.), improving overall application performance. The scheme is dynamic in the sense that whenever the cache hit rate becomes reasonable (a defined parameter), normal cache lookup operations resume. The hit/miss tracker tracks hits and misses against a cache and, if the miss rate surpasses a prespecified rate or matches an application profile, causes the cache to be bypassed so that the data is pulled from main memory or another cache, thereby improving overall application performance.

Owner:IBM CORP
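
A hit/miss tracker with a bypass decision might look like the Python sketch below. The sliding window, the two thresholds (bypass above one miss rate, resume below a lower one so the decision does not flap) and the `HitMissTracker` name are assumptions; the sketch also assumes hit/miss outcomes can still be observed while bypassing, for example by probing the cache tags, which the abstract does not describe:

```python
from collections import deque


class HitMissTracker:
    """Sliding-window hit/miss tracker that decides when to bypass a cache."""

    def __init__(self, window: int = 100, bypass_above: float = 0.9,
                 resume_below: float = 0.5) -> None:
        self.events: deque = deque(maxlen=window)  # True = hit, False = miss
        self.bypass_above = bypass_above  # start bypassing above this miss rate
        self.resume_below = resume_below  # resume normal lookups below this one
        self.bypassing = False

    def record(self, hit: bool) -> None:
        self.events.append(hit)
        if len(self.events) < self.events.maxlen:
            return  # wait for a full window before deciding
        miss_rate = self.events.count(False) / len(self.events)
        if not self.bypassing and miss_rate > self.bypass_above:
            self.bypassing = True   # fetch from main memory / another cache
        elif self.bypassing and miss_rate < self.resume_below:
            self.bypassing = False  # hit rate is reasonable again


tracker = HitMissTracker(window=10, bypass_above=0.8, resume_below=0.3)
for _ in range(10):
    tracker.record(False)  # streaming access: every lookup misses
print(tracker.bypassing)   # True
```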

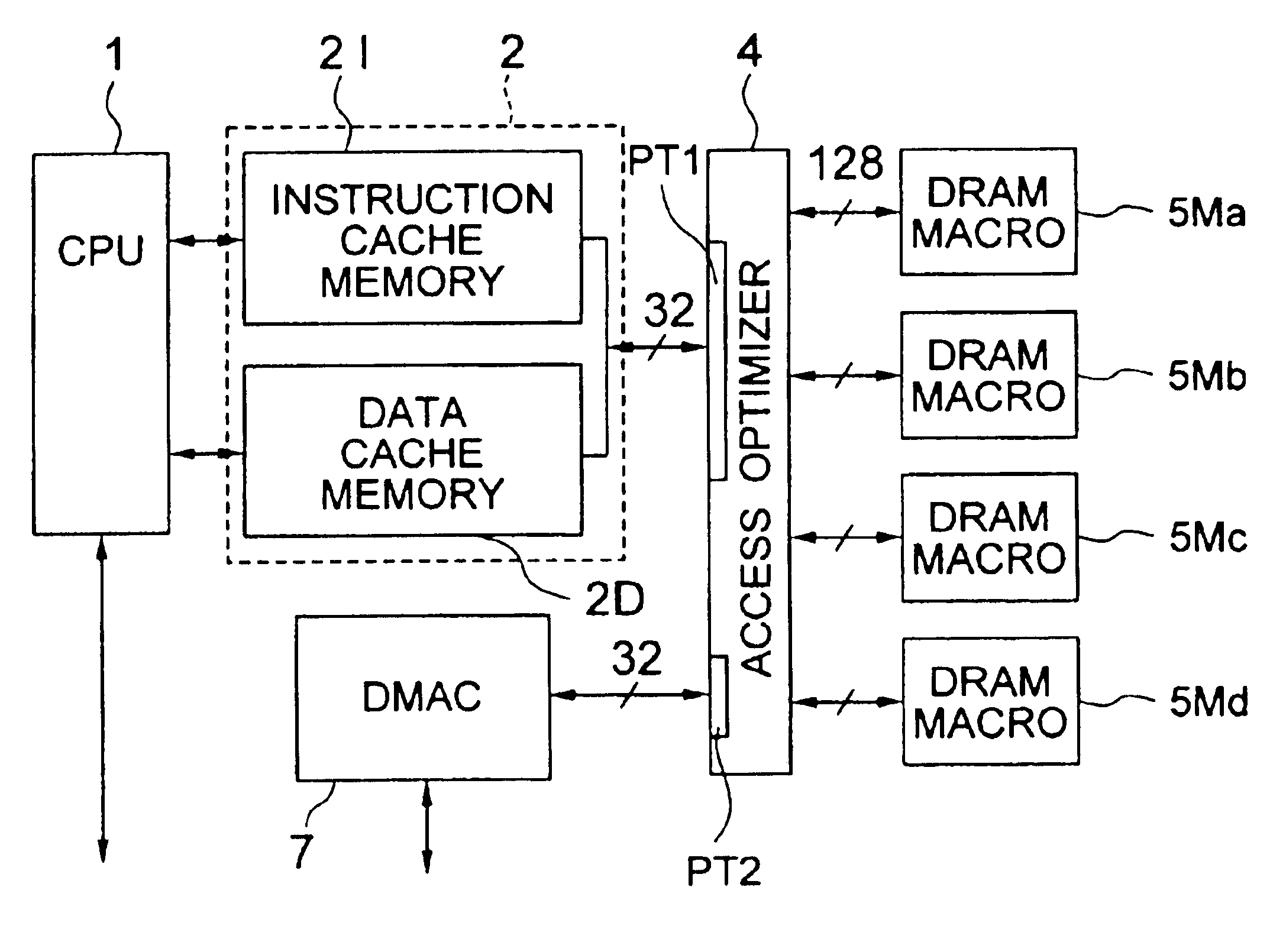

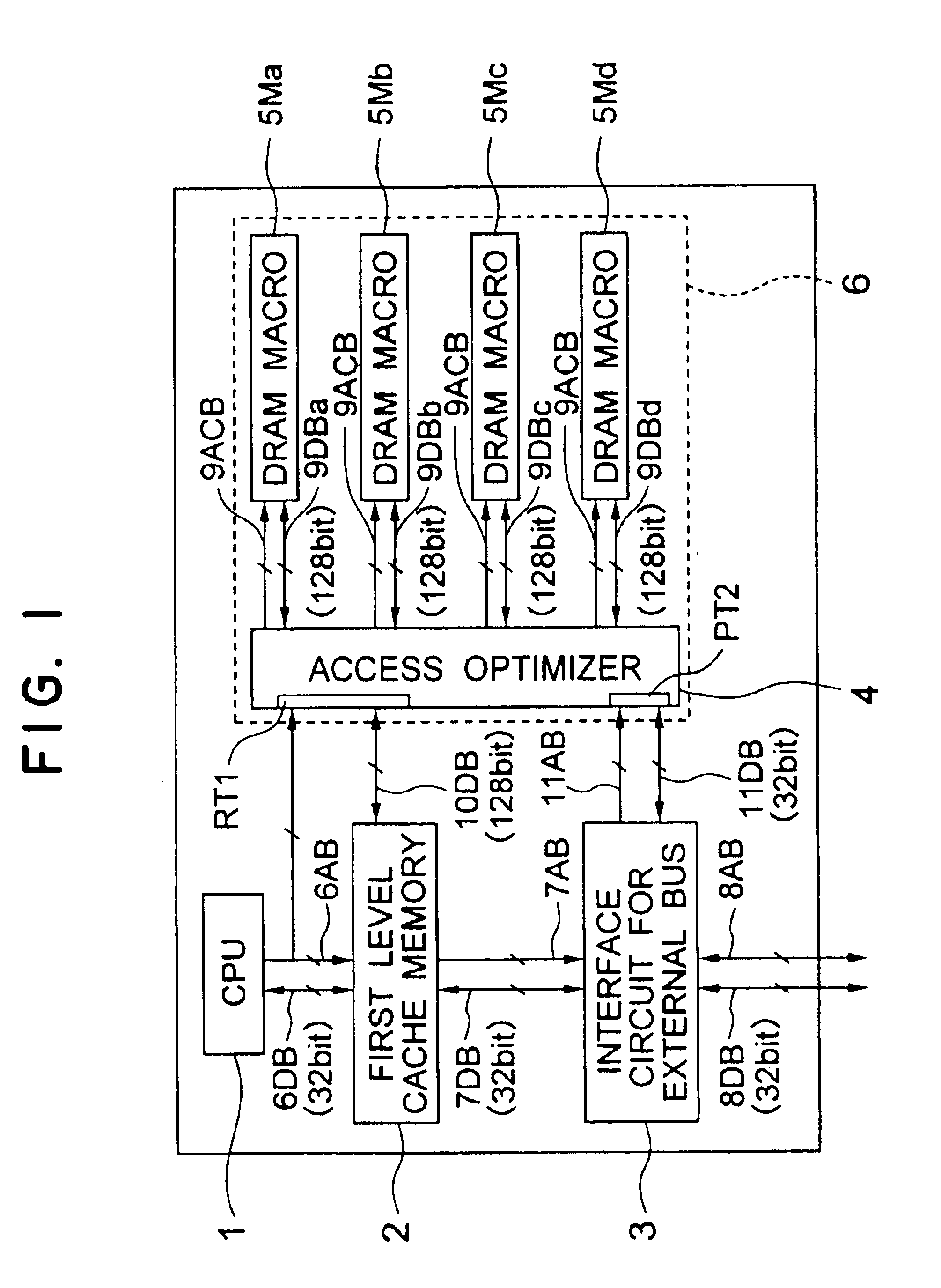

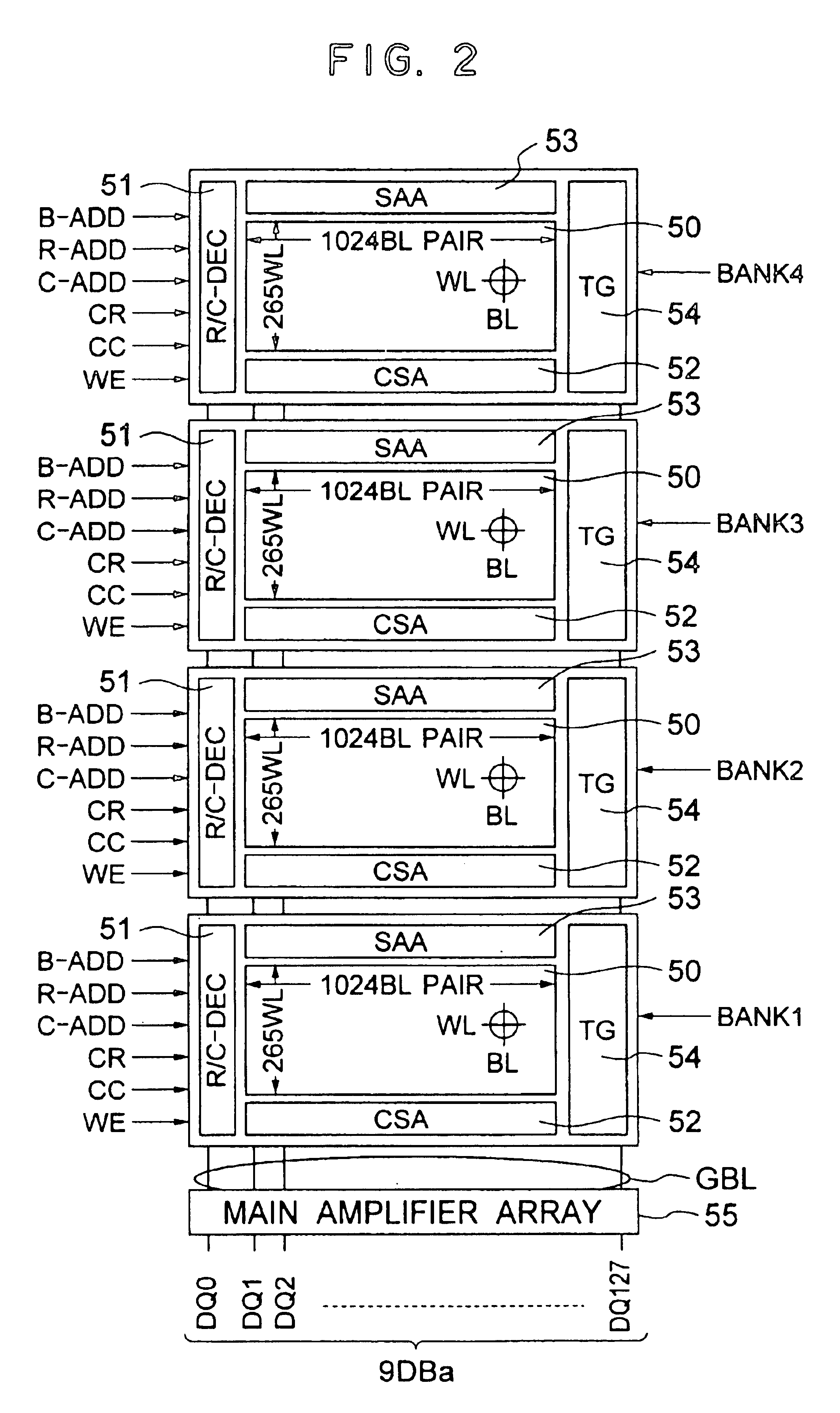

Semiconductor integrated circuit and data processing system

Inactive · US6847578B2 · Increase speed · High data efficiency · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Data processing system · Audio power amplifier

To speed up first access (a read access to a word line different from that of the previous access) to a multi-bank memory, multi-bank memory macro structures are used, and data are held in a sense amplifier for every memory bank. When an access hits the held data, the data latched by the sense amplifier are output, speeding up first access to the memory macro structures; in other words, each memory bank functions as a sense amplifier cache. To raise the hit ratio of such a sense amplifier cache further, an access controller self-prefetches the next address (an address to which a predetermined offset has been added) after each access to a memory macro structure, so that the data at the self-prefetched address are pre-read by a sense amplifier in another memory bank.

Owner:RENESAS ELECTRONICS CORP

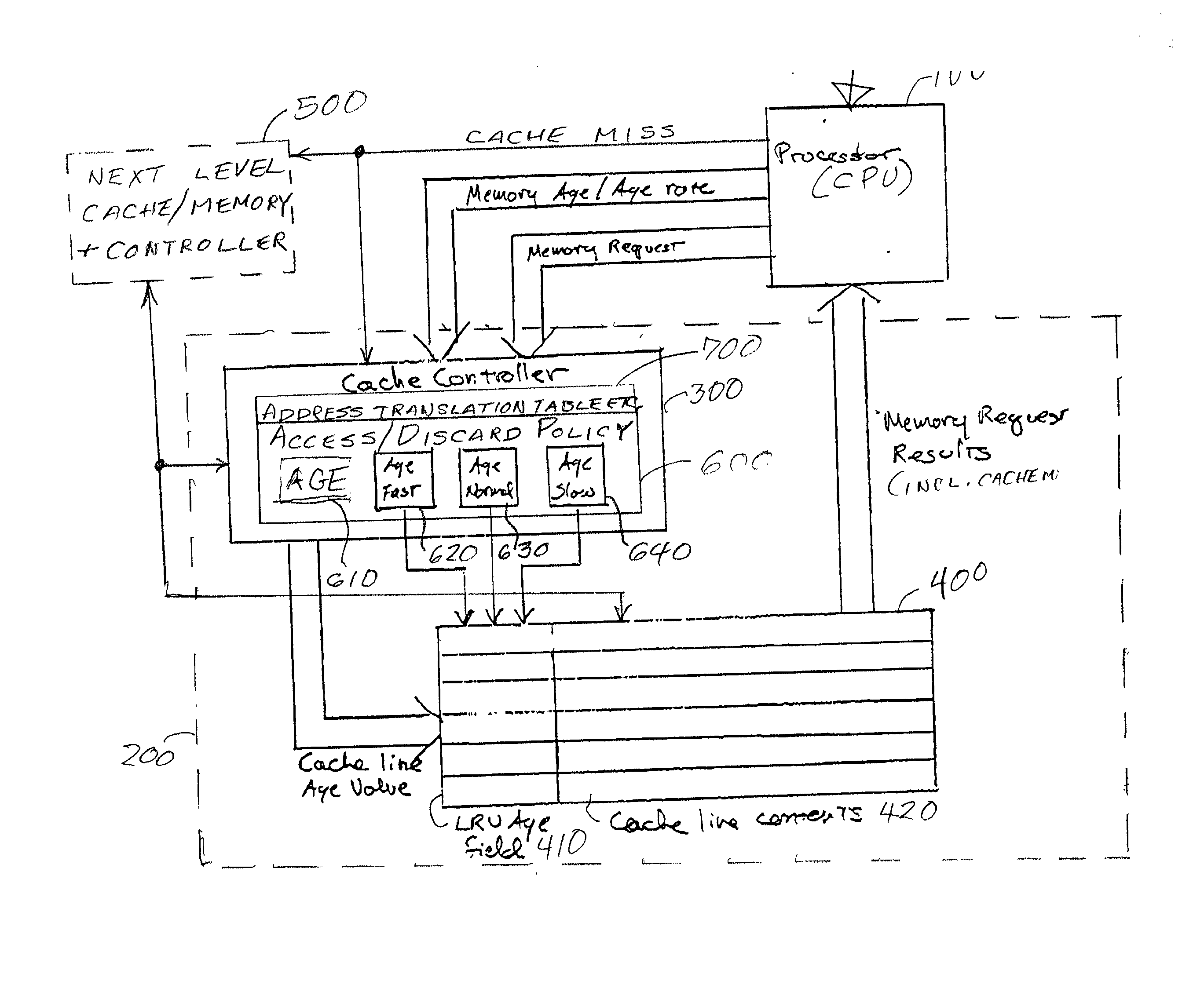

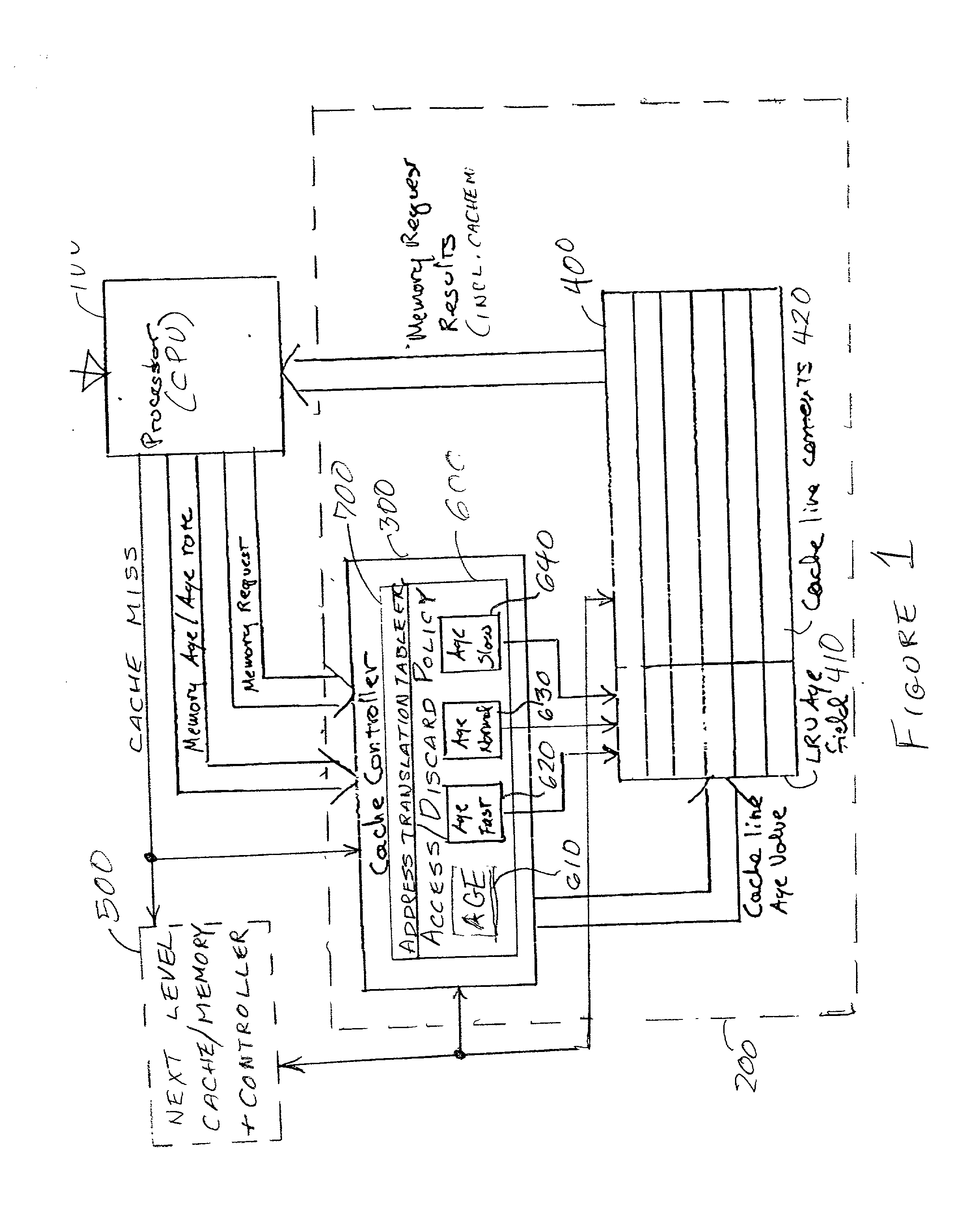

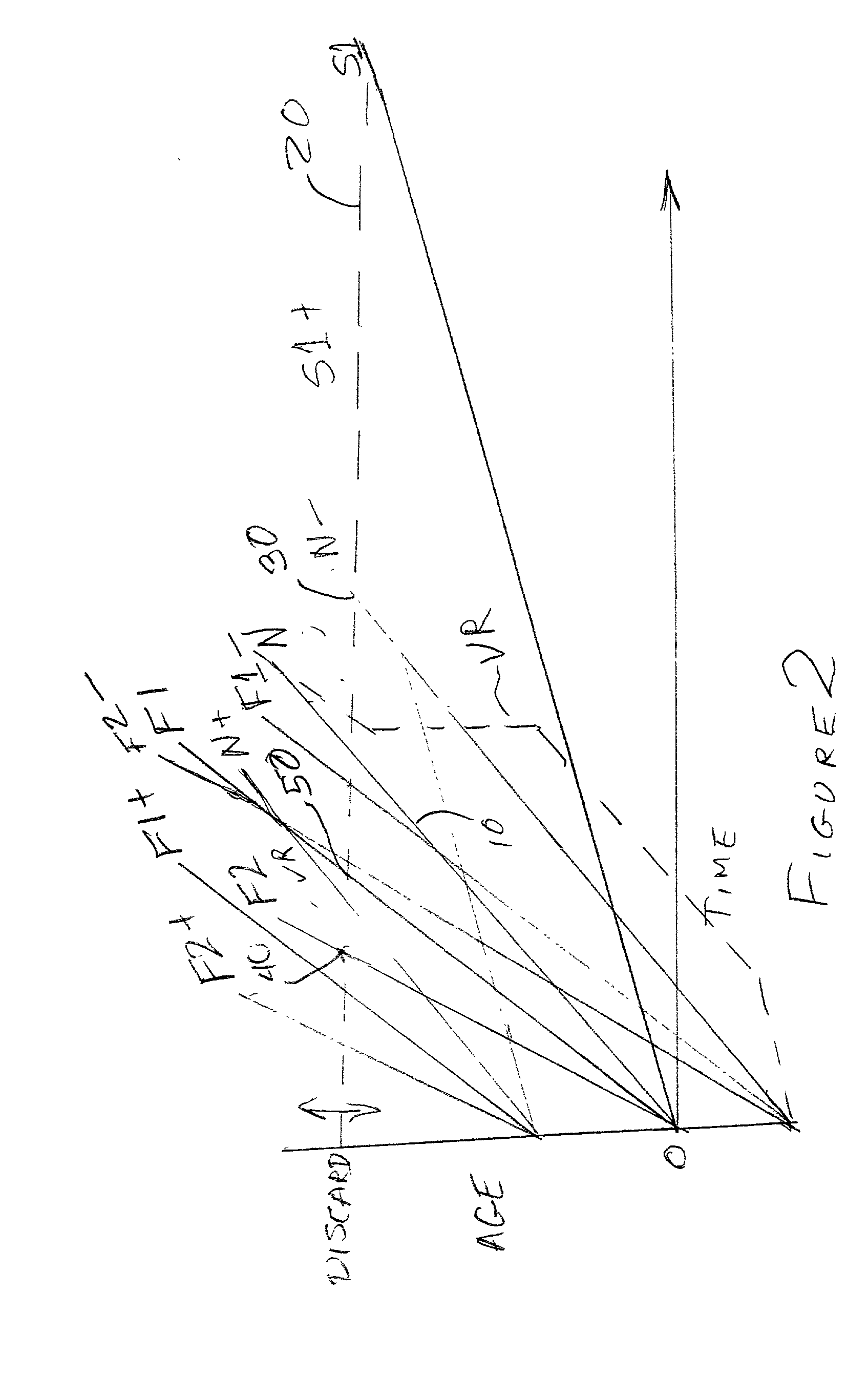

Directed least recently used cache replacement method

Inactive · US20020152361A1 · Performance maximization · Improve cache hit ratio · Energy efficient ICT · Memory addressing/allocation/relocation · Parallel computing · Least recently frequently used

Fine-grained control of cache maintenance improves the cache hit rate and processor performance by storing age values and aging rates for the respective code lines held in the cache. These values direct a least recently used (LRU) strategy for casting out code lines that, over time, become less likely to be needed by the processor, thus improving the performance of a processor accessing the cache. The invention allows an arbitrary age value to be entered when a code line is initially stored in or accessed from the cache, and controls the frequency or rate at which the age of each code line is incremented in response to a limited set of command instructions, which may be placed in a program manually or automatically by an optimizing compiler.

Owner:IBM CORP
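
A minimal Python sketch of age-directed cast-out, assuming a single global `tick()` drives aging and that the command instructions are modeled as the `initial_age` and `rate` arguments of `access`; the `DirectedAgeCache` name and the example line names are hypothetical:

```python
from collections.abc import Hashable
from typing import Optional


class DirectedAgeCache:
    """Each cached line has an age and an aging rate; the oldest line is cast out."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.age: dict[Hashable, int] = {}
        self.rate: dict[Hashable, int] = {}

    def tick(self) -> None:
        """One aging step: every line ages by its own rate."""
        for line in self.age:
            self.age[line] += self.rate[line]

    def access(self, line: Hashable, initial_age: int = 0, rate: int = 1) -> Optional[Hashable]:
        """Store or re-access a line with the given age and aging rate.
        Returns the line that was cast out, if any."""
        victim = None
        if line not in self.age and len(self.age) >= self.capacity:
            victim = max(self.age, key=self.age.__getitem__)  # oldest line
            del self.age[victim]
            del self.rate[victim]
        self.age[line] = initial_age
        self.rate[line] = rate
        return victim


aged = DirectedAgeCache(capacity=2)
aged.access("loop_body", initial_age=0, rate=1)  # hot code: ages slowly
aged.access("init_code", initial_age=5, rate=3)  # run-once code: ages fast
aged.tick()
print(aged.access("handler"))  # init_code
```

With equal starting ages and rates this degenerates to plain LRU; the per-line values are what let a program or compiler "direct" which lines leave first.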

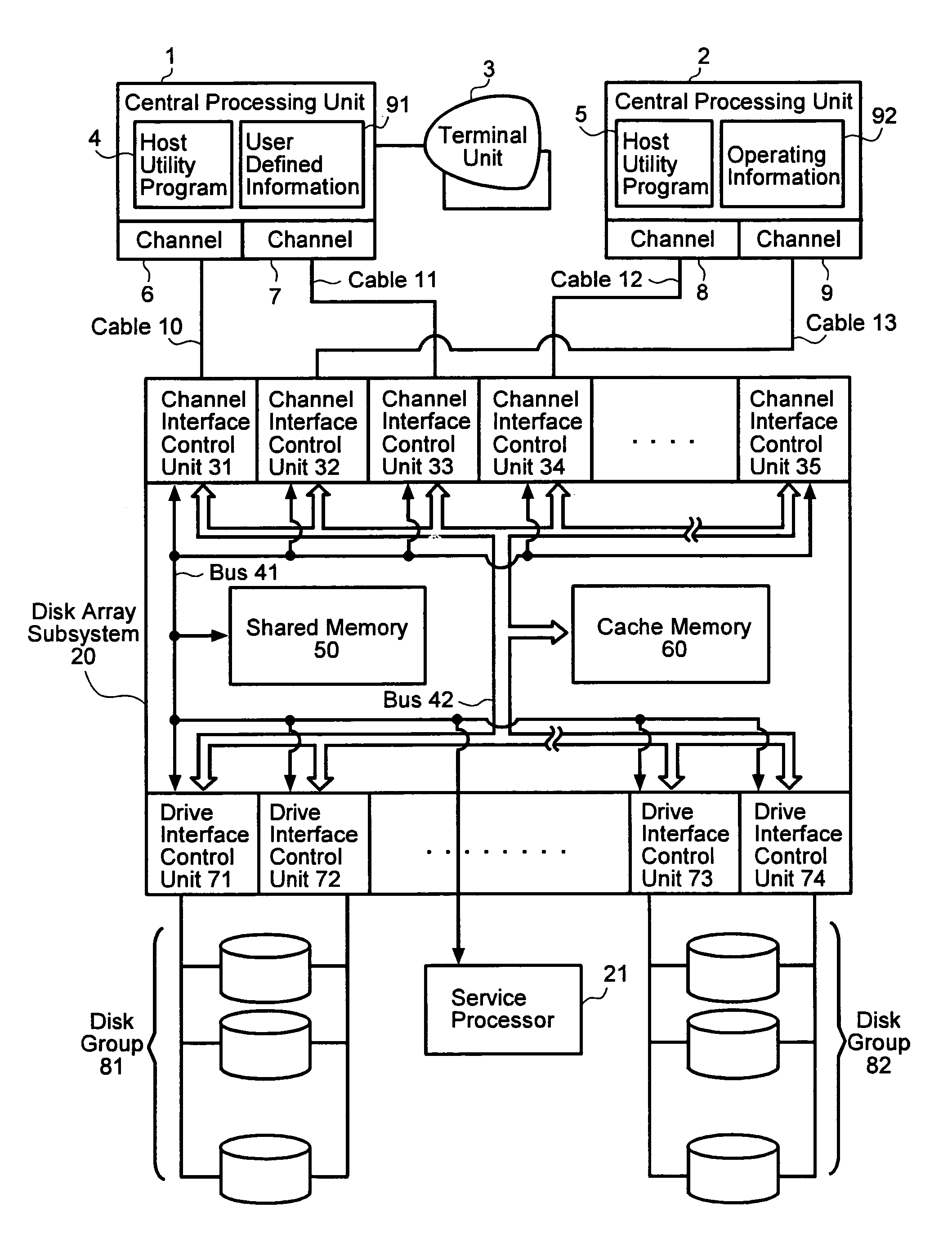

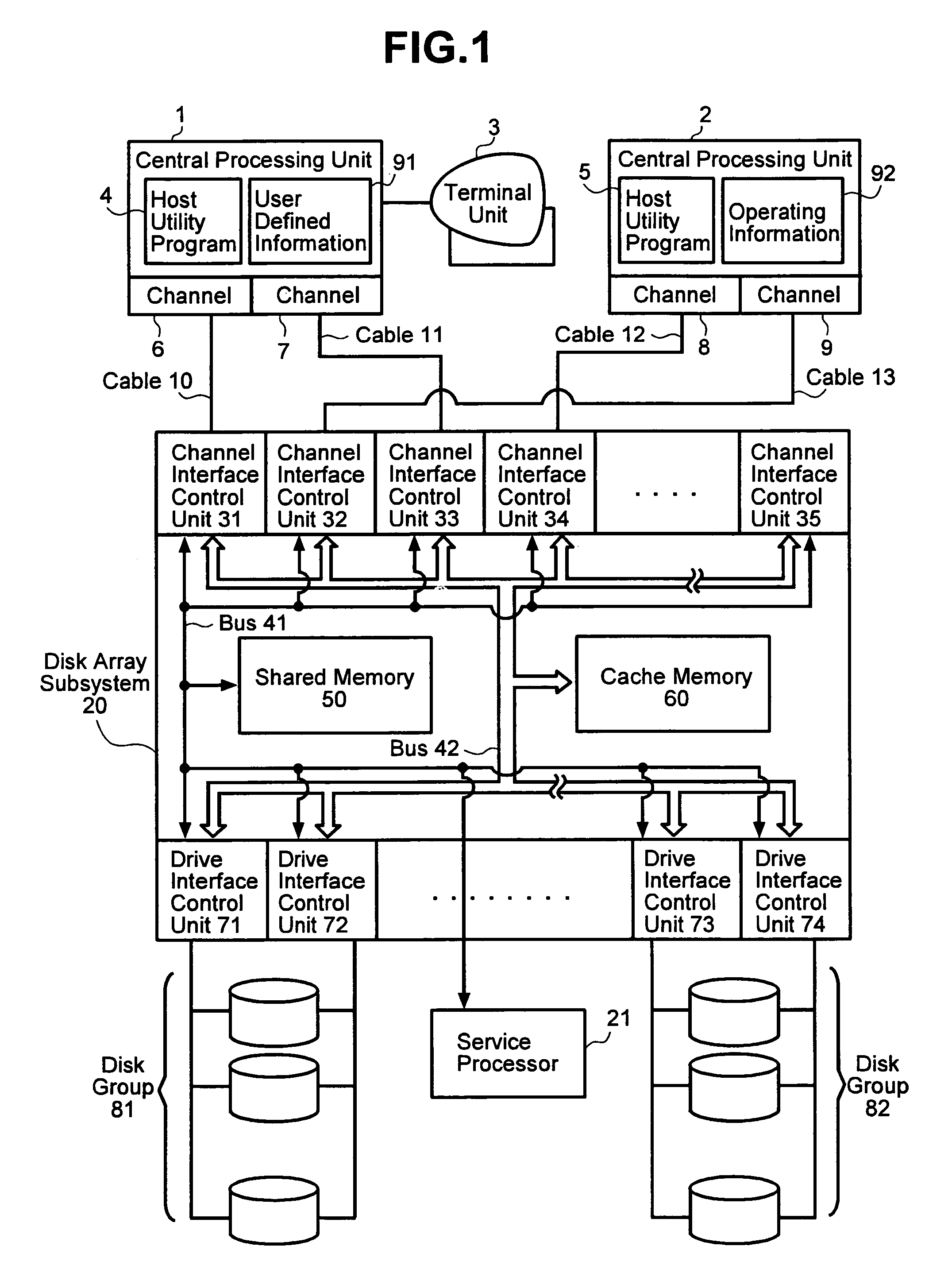

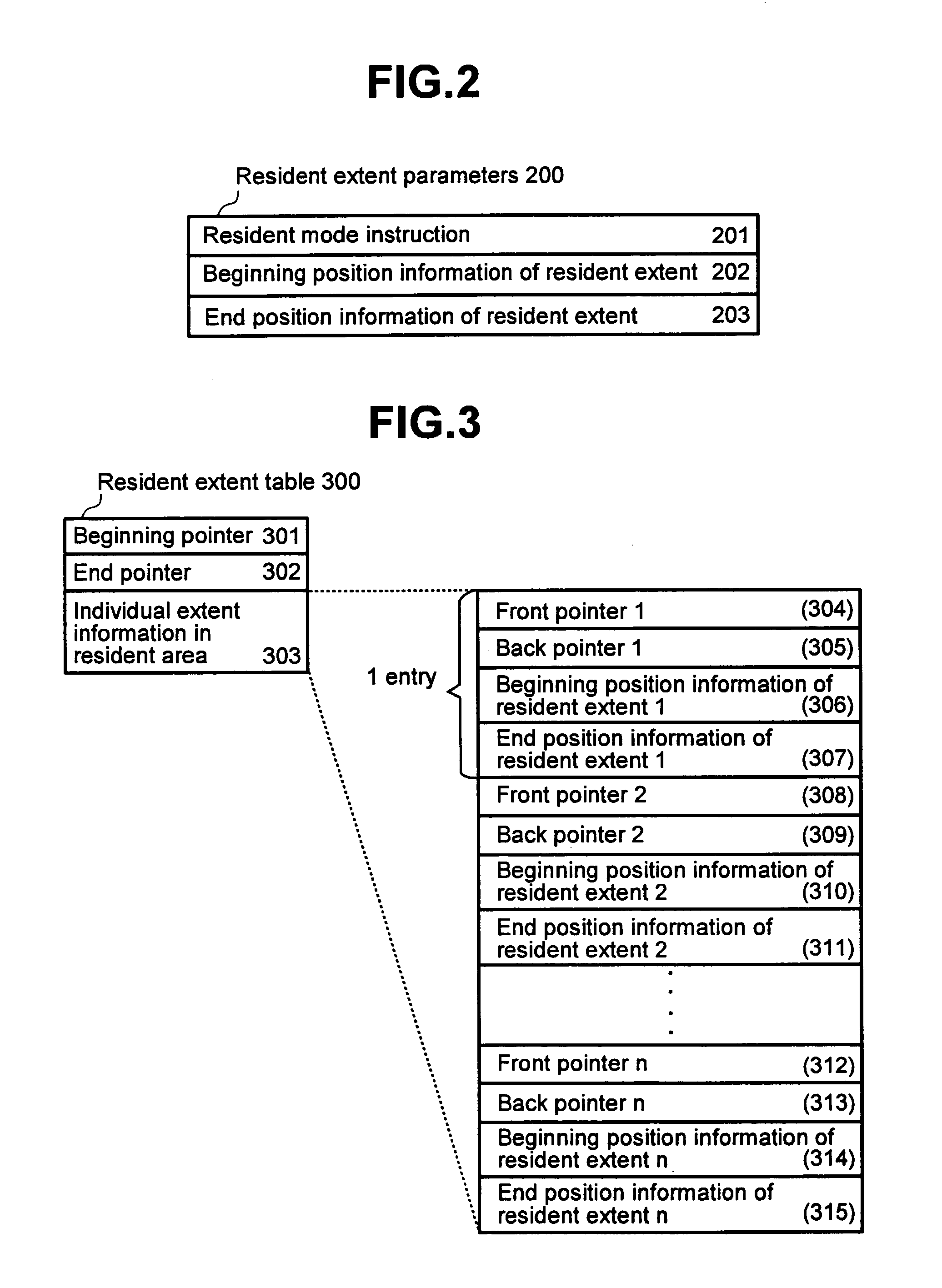

Software prefetch system and method for concurrently overriding data prefetched into multiple levels of cache

Inactive · US6959359B1 · Improve information processing ability · Improve performance · Memory addressing/allocation/relocation · Digital computer details · Parallel computing · Hit ratio

A cache memory control technology by which individual users can execute set-residing-data and reset-residing-data operations on a cache memory. In this system, a utility program executes set-residing-data and reset-residing-data commands on user data spread over plural tracks in the cache memory, improving the hit rate of the cache memory.

Owner:HITACHI LTD +1

Differential caching mechanism based on media I/O speed

Active · US20100318744A1 · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Hit ratio · Computer program

A method for allocating space in a cache based on media I/O speed is disclosed herein. In certain embodiments, such a method may include storing, in a read cache, cache entries associated with faster-responding storage devices and cache entries associated with slower-responding storage devices. The method may further include implementing an eviction policy in the read cache. This eviction policy may include demoting, from the read cache, the cache entries of faster-responding storage devices faster than the cache entries of slower-responding storage devices, all other variables being equal. In certain embodiments, the eviction policy may further include demoting, from the read cache, cache entries having a lower read-hit ratio faster than cache entries having a higher read-hit ratio, all other variables being equal. A corresponding computer program product and apparatus are also disclosed and claimed herein.

Owner:IBM CORP
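The two "all other variables being equal" rules can be combined into a single retention score. The minimal sketch below assumes a score of our own invention, `(1 + read_hit_ratio) * device_latency_ms`, and evicts the entry with the lowest score. This formula is purely illustrative and is not taken from the patent. It is simply monotone in both variables, so the following hold:

- Entries backed by faster devices are demoted first.
- Entries with lower read-hit ratios are demoted first.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    key: str
    device_latency_ms: float  # assumed: response time of the backing device
    reads: int = 0
    read_hits: int = 0

    @property
    def read_hit_ratio(self) -> float:
        return self.read_hits / self.reads if self.reads else 0.0


def retention_score(e: Entry) -> float:
    """Hypothetical score: a higher value means keep the entry longer.

    Slower devices and higher read-hit ratios both raise the score, so with
    everything else equal, entries from faster devices and entries with lower
    read-hit ratios are demoted first.
    """
    return (1.0 + e.read_hit_ratio) * e.device_latency_ms


def choose_victim(entries: list[Entry]) -> Entry:
    """Pick the entry to demote from the read cache."""
    return min(entries, key=retention_score)


ssd = Entry("ssd-block", device_latency_ms=0.2, reads=10, read_hits=5)
hdd = Entry("hdd-block", device_latency_ms=8.0, reads=10, read_hits=5)
victim = choose_victim([ssd, hdd])  # same hit ratio, faster device goes first
```

A multiplicative score lets a very high hit ratio partly offset a fast device. A real policy might instead keep separate queues per device class. That design choice is not specified in the abstract.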

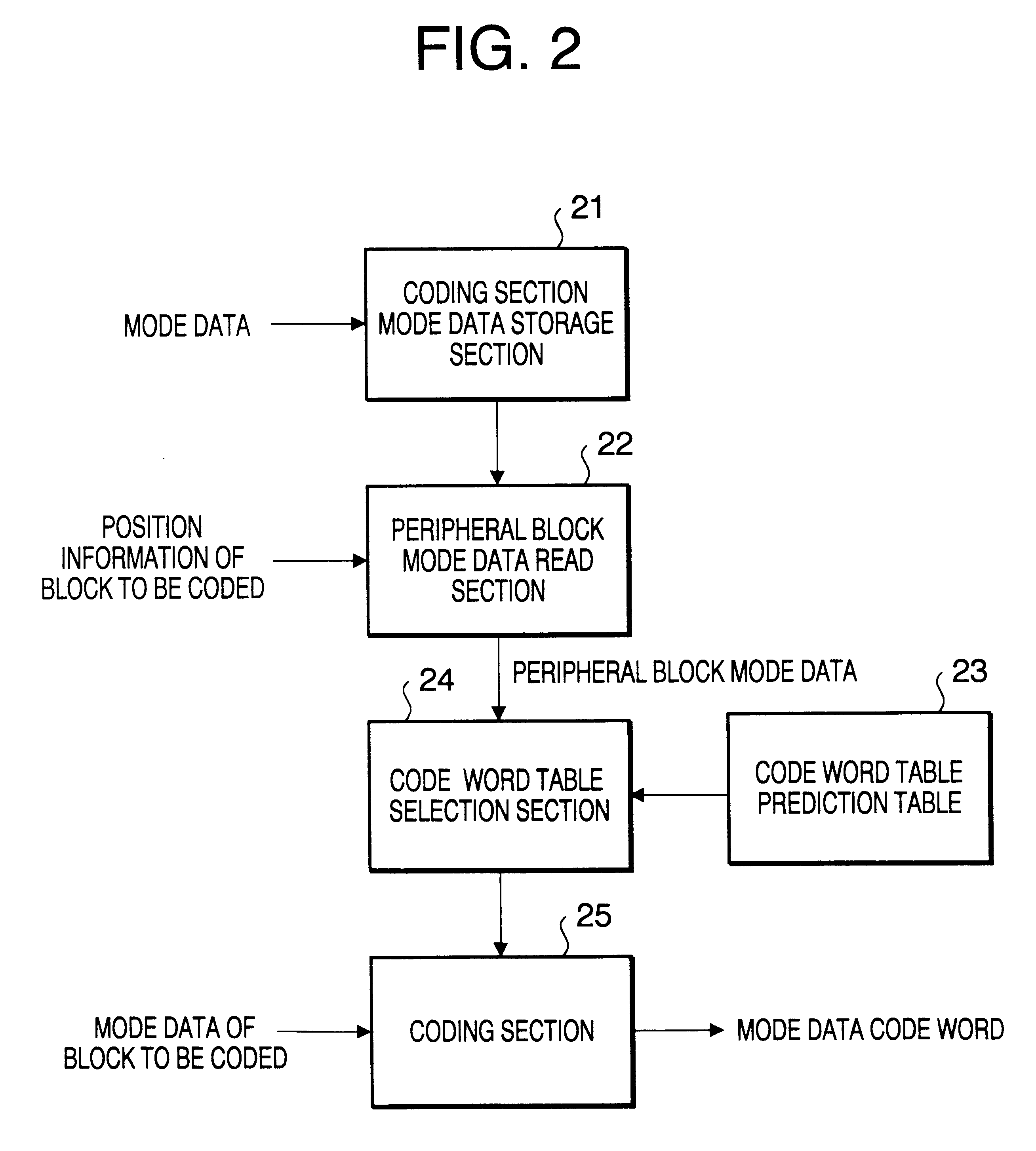

Image encoding apparatus and an image decoding apparatus

Inactive | US6345121B1 | Avoid efficiency; Increase the number of; Pulse modulation television signal transmission; Digital computer details; Computer hardware; Hit ratio

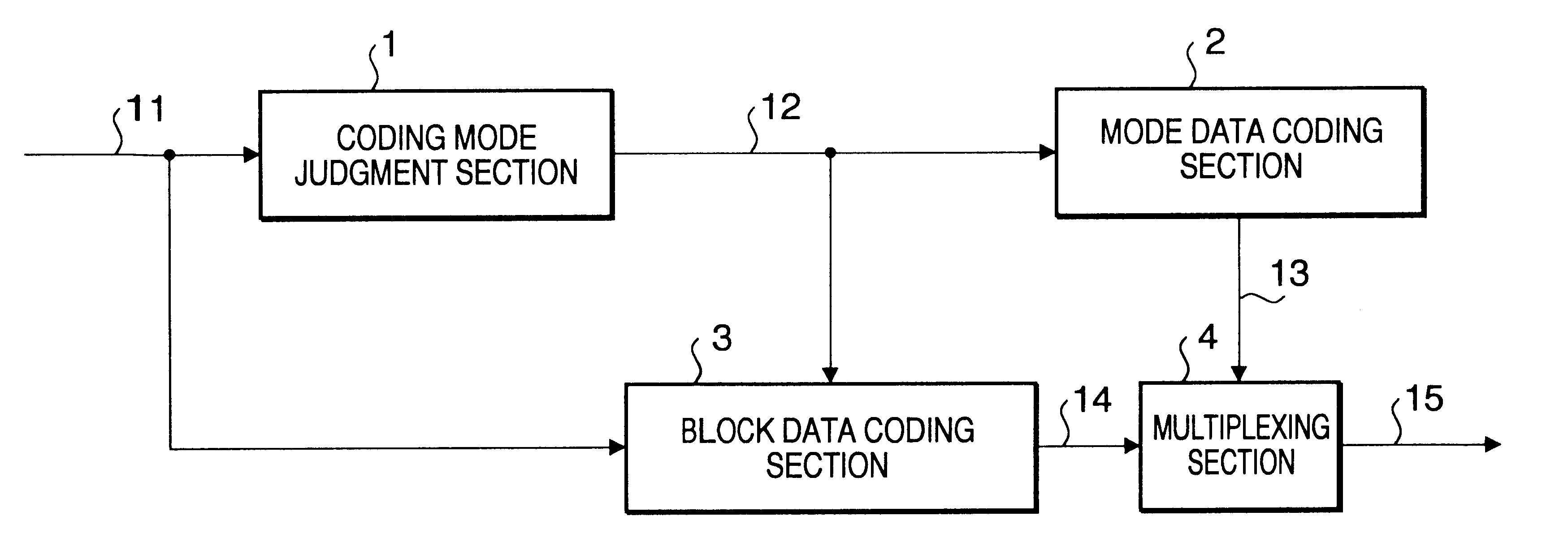

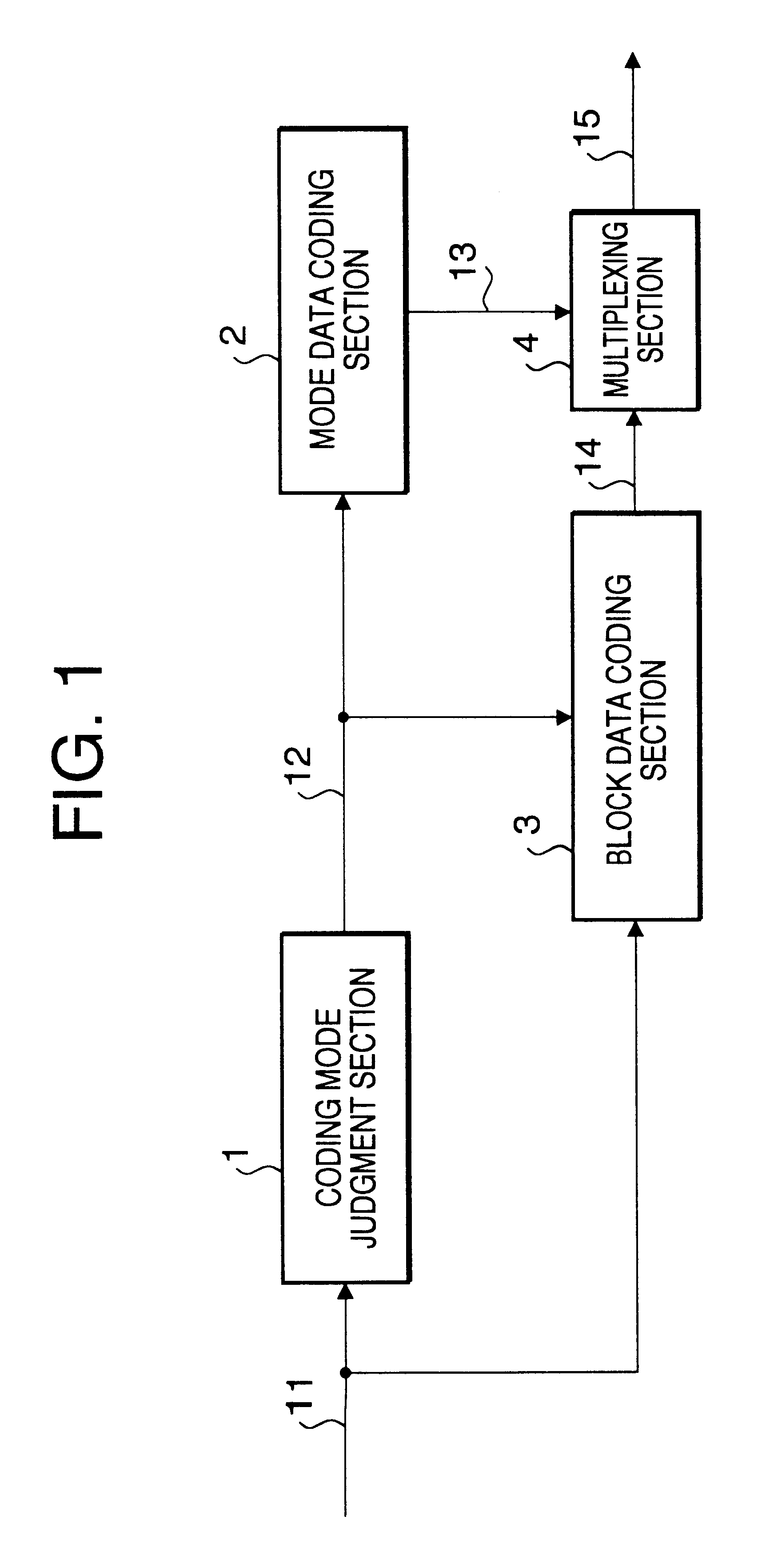

The mode data of a block to be coded is predicted from the mode data of already-coded peripheral blocks. It is then coded using a code word table that is switched according to the hit ratio of the prediction. In the code word table, shorter code words are assigned to coding modes with a high hit ratio.

Owner:GK BRIDGE 1
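Switching code word tables according to the prediction hit ratio can be sketched in a few lines. All of the following are assumptions for illustration only and do not come from the patent:

- The mode set (`intra`, `inter`, `skip`).
- The two prefix-free tables.
- The majority-vote predictor over neighboring blocks.
- The 0.5 switching threshold.

A `"HIT"` symbol means "the mode equals the prediction". When predictions have been accurate so far, the table that gives `"HIT"` the shortest code word is used.

```python
from collections import Counter

# Hypothetical prefix-free code word tables.
TABLE_HIGH_HIT = {"HIT": "0", "intra": "100", "inter": "101", "skip": "110"}
TABLE_LOW_HIT = {"HIT": "00", "intra": "01", "inter": "10", "skip": "11"}


def predict_mode(neighbor_modes: list[str]) -> str:
    """Predict a block's mode as the most common mode among coded neighbors."""
    if not neighbor_modes:
        return "intra"  # assumed default when no neighbor has been coded
    return Counter(neighbor_modes).most_common(1)[0][0]


class ModeEncoder:
    """Hypothetical encoder that switches tables by prediction hit ratio."""

    def __init__(self, threshold: float = 0.5) -> None:
        self.threshold = threshold
        self.hits = 0
        self.total = 0

    def hit_ratio(self) -> float:
        return self.hits / self.total if self.total else 0.0

    def encode(self, mode: str, neighbor_modes: list[str]) -> str:
        # Choose the table from the hit ratio observed *before* this block,
        # so a decoder tracking the same statistics can make the same choice.
        if self.hit_ratio() >= self.threshold:
            table = TABLE_HIGH_HIT
        else:
            table = TABLE_LOW_HIT
        hit = mode == predict_mode(neighbor_modes)
        self.hits += hit
        self.total += 1
        return table["HIT"] if hit else table[mode]


enc = ModeEncoder()
bits = [
    enc.encode("inter", ["inter", "inter"]),  # low-hit table, prediction hit
    enc.encode("inter", ["inter"]),           # high-hit table, prediction hit
    enc.encode("skip", ["inter"]),            # high-hit table, prediction miss
]
```

The table choice depends only on already-coded data. This is what would let a matching decoder stay synchronized, although a decoder is not shown here.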

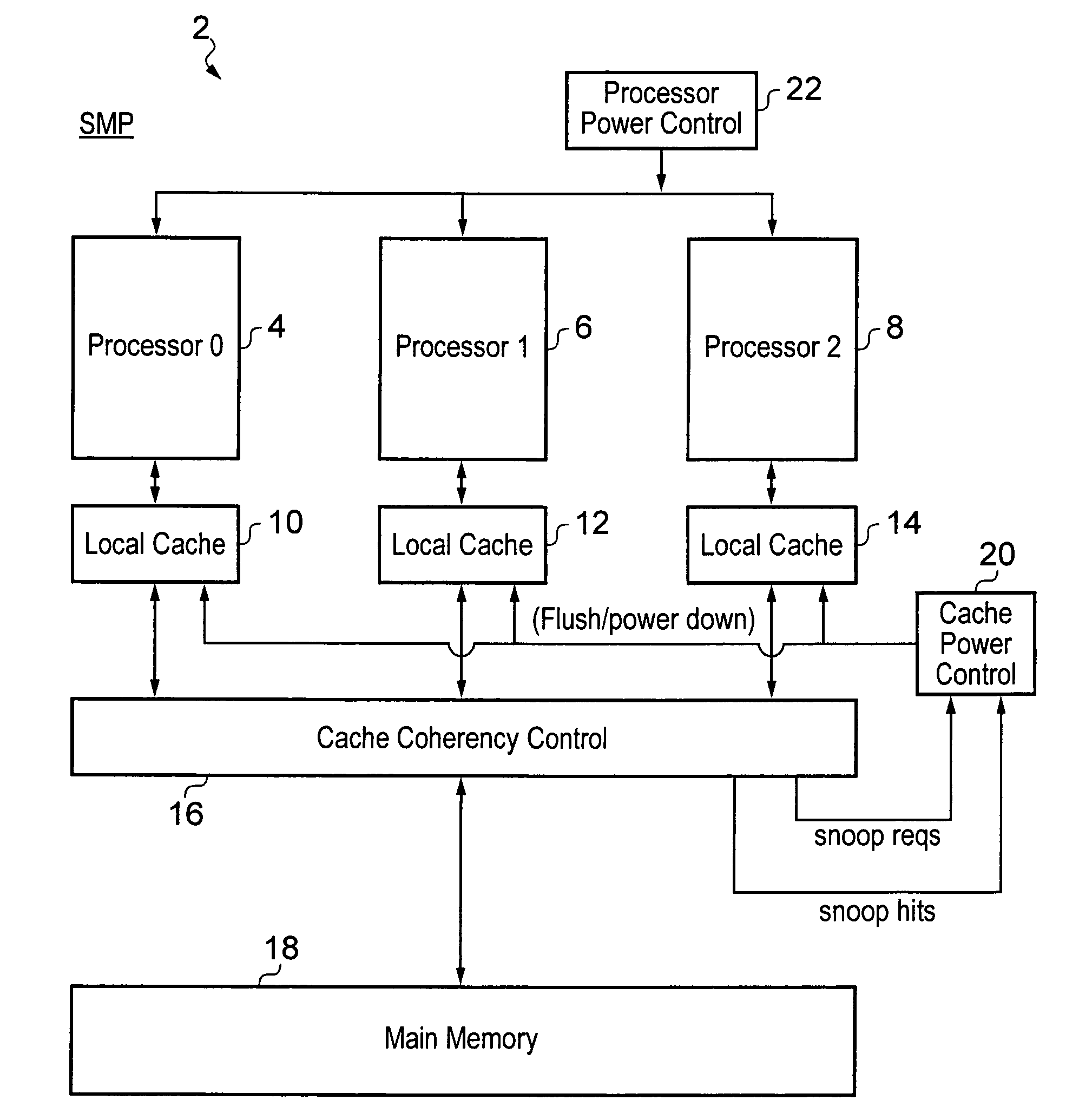

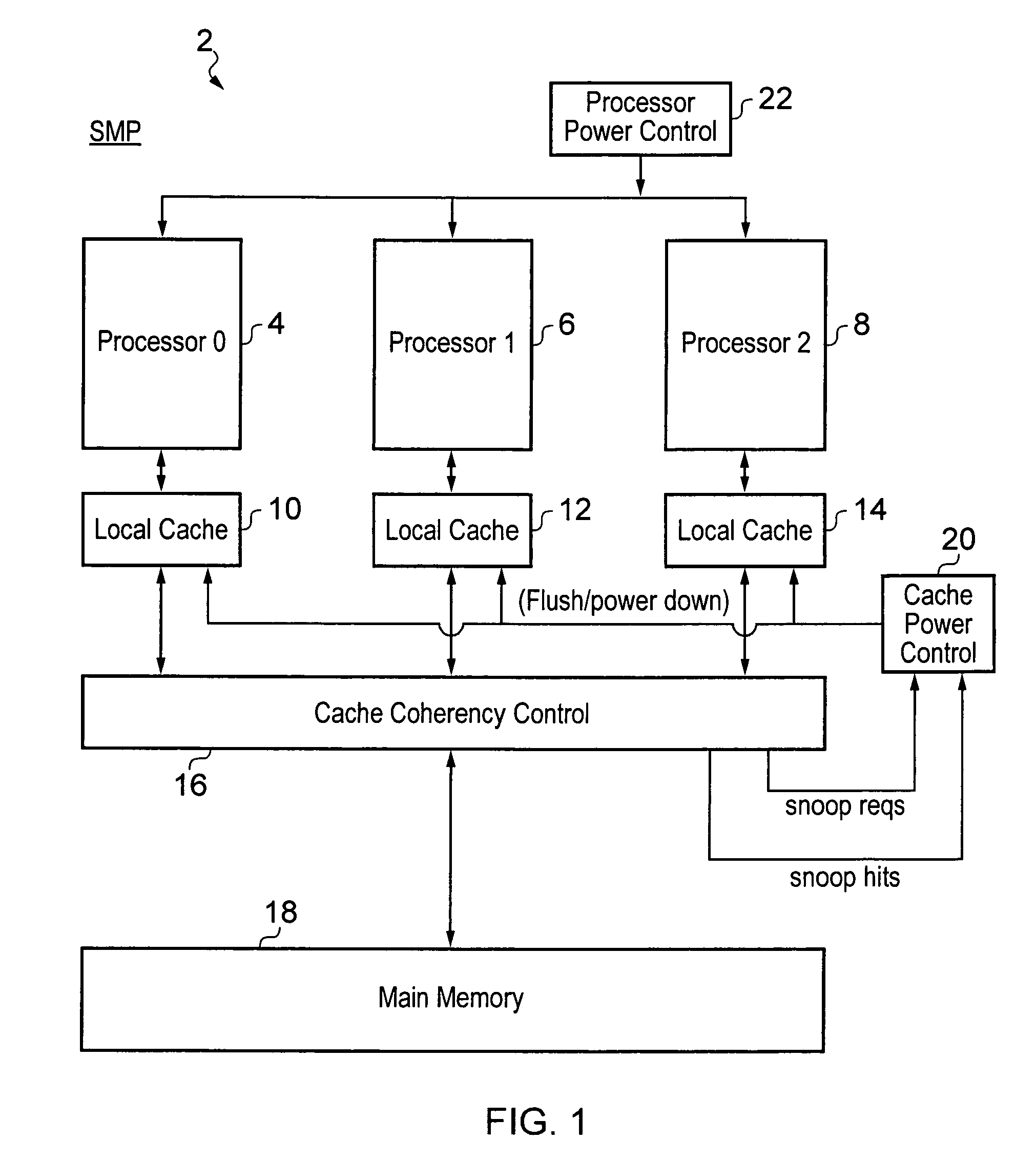

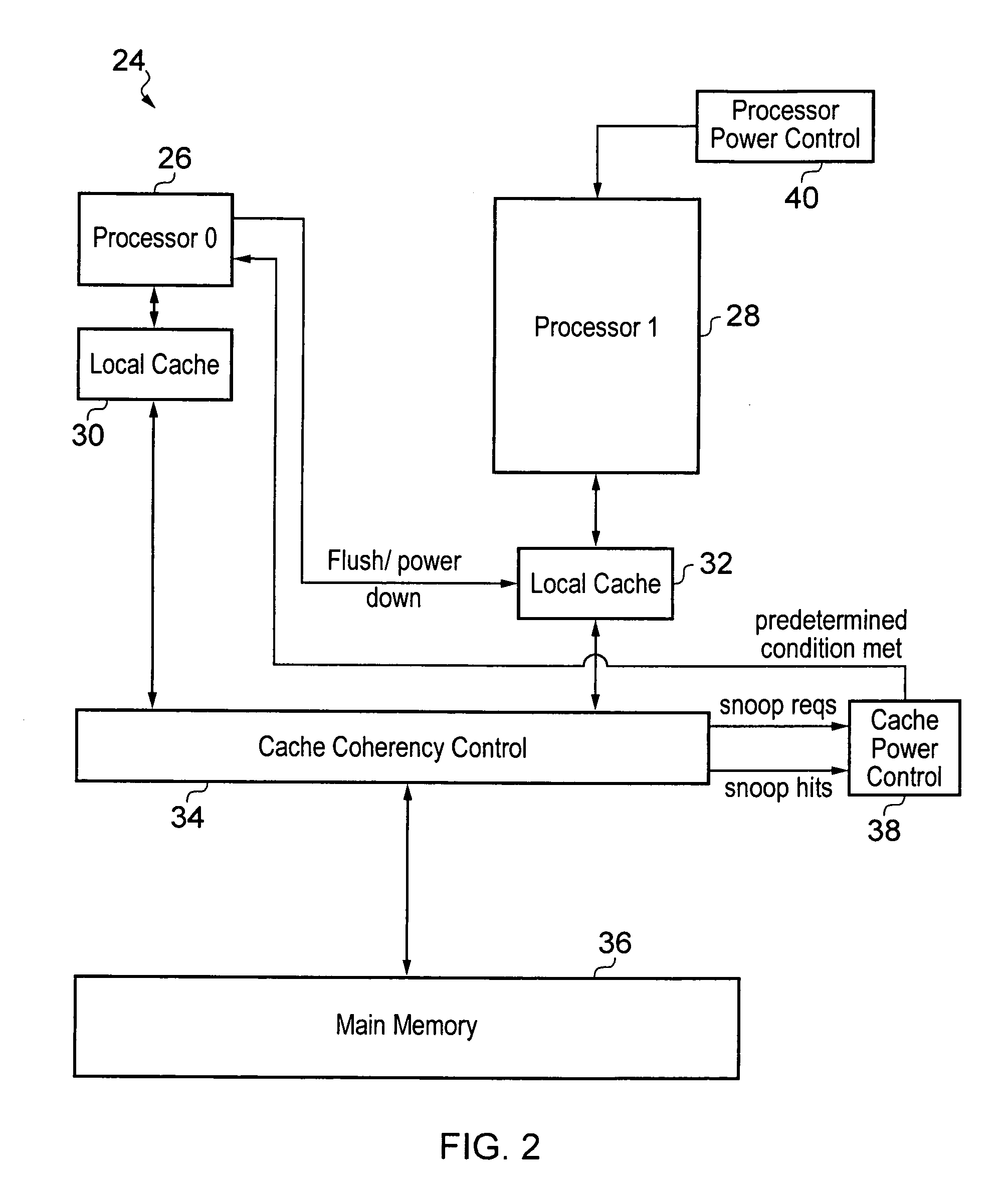

Local cache power control within a multiprocessor system

Active | US20100185821A1 | Great degree; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Data processing system; Multi processor

A data processing system is provided that includes a plurality of processors 4, 6, 8, each having a local cache memory 10, 12, 14. A cache coherency controller 16 maintains cache coherency between the local cache memories 10, 12, 14. When one of the processors 4, 6, 8 is placed into a low power state, its associated local cache memory 10, 12, 14 is kept in a state in which the data it holds remains accessible to the cache coherency controller 16. This continues until a predetermined condition has been met, whereupon that local cache memory is also placed into a low power state. The predetermined condition can take a variety of forms, such as the rate of snoop hits falling below a threshold value, the ratio of snoop hits to snoop requests falling below a threshold value, or a predetermined number of clock cycles having passed since the associated processor was powered down, among other possibilities.

Owner:ARM LTD
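Two of the predetermined conditions listed above can be expressed as a small monitor:

- The ratio of snoop hits to snoop requests falling below a threshold.
- A cycle count elapsing since the processor was powered down.

The sketch below is hypothetical. The class name, the threshold of 0.1, and the 10,000-cycle limit are all assumed values. It also assumes the cache stays powered while no snoop requests have arrived, since the hit ratio is undefined at that point.

```python
from dataclasses import dataclass


@dataclass
class SnoopMonitor:
    """Hypothetical sketch: decides when a local cache whose processor is
    already in a low power state may itself be powered down."""
    hit_ratio_threshold: float = 0.1  # assumed value
    max_idle_cycles: int = 10_000     # assumed value
    snoop_requests: int = 0
    snoop_hits: int = 0
    cycles_since_cpu_off: int = 0

    def record_snoop(self, hit: bool) -> None:
        self.snoop_requests += 1
        self.snoop_hits += hit

    def advance(self, cycles: int) -> None:
        self.cycles_since_cpu_off += cycles

    def should_power_down_cache(self) -> bool:
        # Condition 1: enough clock cycles since the processor powered down.
        if self.cycles_since_cpu_off >= self.max_idle_cycles:
            return True
        # No snoops yet: keep the data accessible to the coherency controller.
        if self.snoop_requests == 0:
            return False
        # Condition 2: snoop hits / snoop requests below the threshold.
        return self.snoop_hits / self.snoop_requests < self.hit_ratio_threshold


busy = SnoopMonitor()
for _ in range(5):
    busy.record_snoop(True)   # other cores still find data here
keep_powered = not busy.should_power_down_cache()
for _ in range(95):
    busy.record_snoop(False)  # 5 hits in 100 requests: ratio 0.05
power_down = busy.should_power_down_cache()
```

While snoops keep hitting, the dormant cache is still useful to other processors, so the monitor keeps it powered. Once hits become rare, the cache's leakage power outweighs its value as a snoop target.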

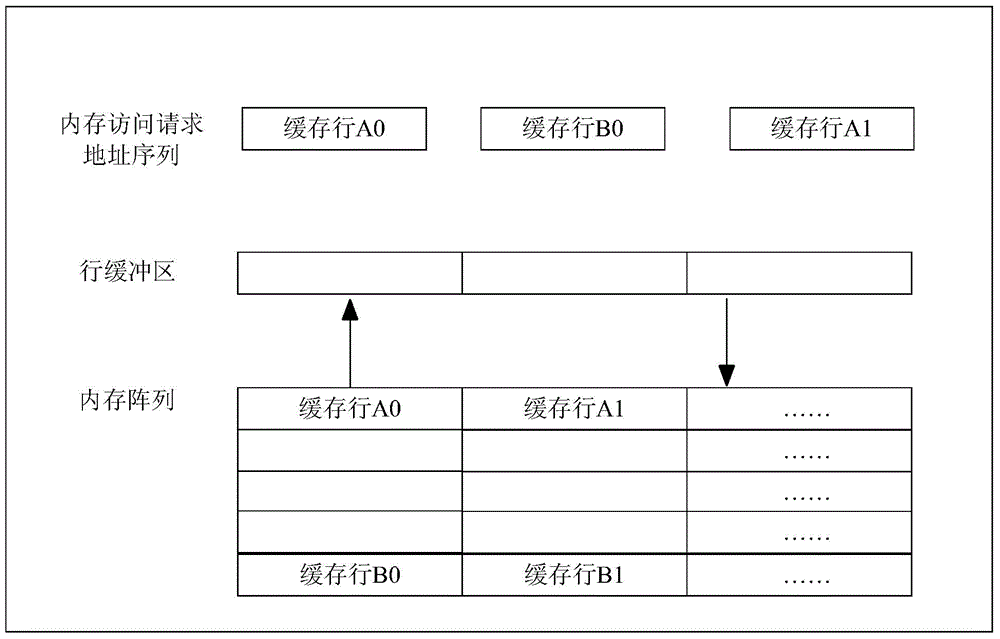

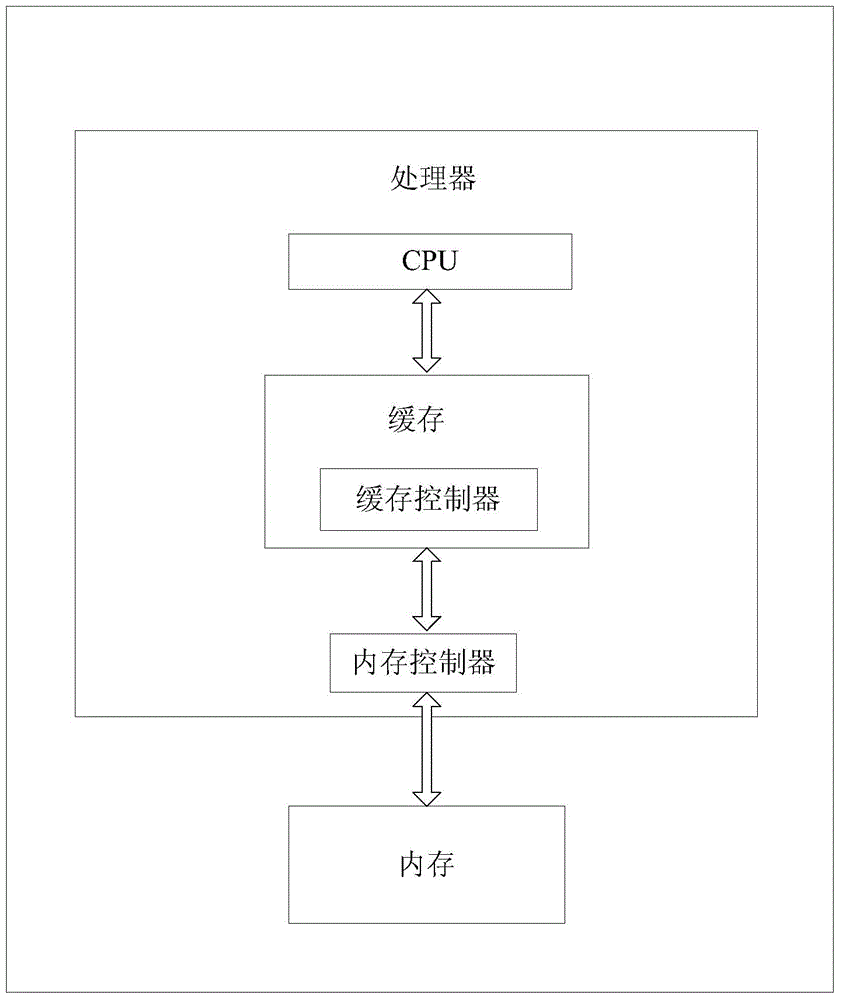

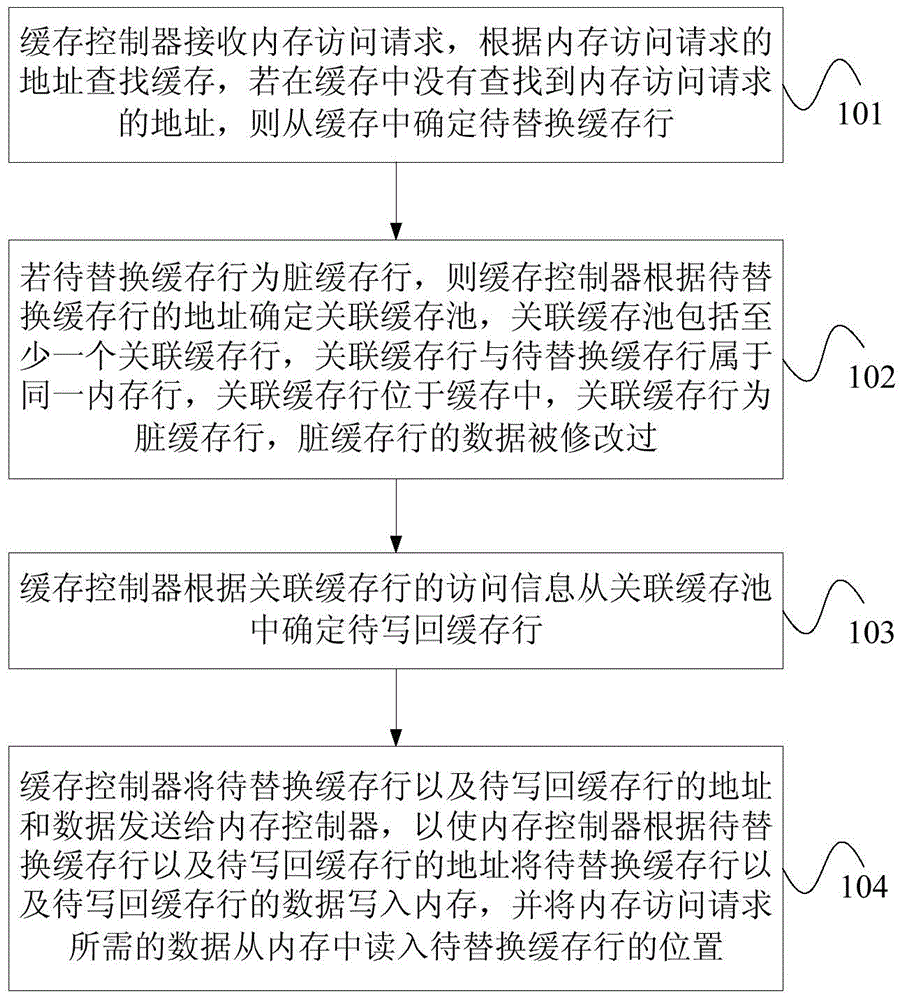

Cache replacing method, cache controller and processor

Active | CN105095116A | Improve access performance; Reduce the number of writes; Memory addressing/allocation/relocation; Parallel computing; Hit ratio

The embodiment of the invention provides a cache replacement method, a cache controller, and a processor. The method comprises the following steps:

- The cache controller determines an associated cache pool for a cache line to be replaced. Each associated cache line in the pool belongs to the same memory row as the cache line to be replaced.
- The controller then determines a cache line to be written back from the associated cache pool, according to the access information of the associated cache lines.
- The data in the cache line to be replaced and in the cache line to be written back are written into memory simultaneously.

Because the cache line to be replaced and the cache line to be written back belong to the same memory row, the hit rate of the cache region can be improved, and memory access performance improves. The cache controller selects the cache line to be written back according to the access information of the associated cache lines, and only that line from the associated cache pool is written into memory. As a result, the number of memory writes is reduced and the service life of the memory is extended.

Owner:HUAWEI TECH CO LTD +1
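The replacement steps above can be sketched as a function that returns the lines to write to memory together. The following are assumptions of our own, not details from the patent:

- The 8 KiB row size.
- The `CacheLine` fields.
- The rule "write back the least-accessed dirty line in the same row".

The abstract only says the choice is made "according to the access information".

```python
from dataclasses import dataclass

ROW_BYTES = 8192  # assumed memory (DRAM) row size


@dataclass
class CacheLine:
    addr: int
    dirty: bool
    access_count: int  # assumed form of the "access information"


def memory_row(addr: int) -> int:
    return addr // ROW_BYTES


def lines_to_write_back(victim: CacheLine, cache: list[CacheLine]) -> list[CacheLine]:
    """Return the victim plus at most one associated line from the same row.

    The associated pool holds the other dirty lines in the victim's memory
    row. The chosen extra line is marked clean because it is flushed now.
    """
    pool = [
        line for line in cache
        if line is not victim
        and line.dirty
        and memory_row(line.addr) == memory_row(victim.addr)
    ]
    writes = [victim]
    if pool:
        # Hypothetical rule: the least-accessed line is the least likely to be
        # written again soon, so flushing it early costs little.
        extra = min(pool, key=lambda line: line.access_count)
        extra.dirty = False
        writes.append(extra)
    return writes


victim = CacheLine(0x0000, dirty=True, access_count=3)
a = CacheLine(0x0040, dirty=True, access_count=7)   # same row, busy
b = CacheLine(0x0080, dirty=True, access_count=1)   # same row, quiet
c = CacheLine(0x4000, dirty=True, access_count=0)   # different row
d = CacheLine(0x00C0, dirty=False, access_count=0)  # same row, but clean
writes = lines_to_write_back(victim, [victim, a, b, c, d])
```

Only one extra line is flushed, not the whole pool. This matches the abstract's point that writing back just the selected line keeps the number of memory writes down.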

Memory hub and method for memory sequencing

Inactive | US20070033353A1 | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Cache hit rate

A memory module includes a memory hub coupled to several memory devices. The memory hub includes at least one performance counter that tracks one or more system metrics, for example page hit rate, prefetch hits, and/or cache hit rate. The performance counter communicates with a memory sequencer that adjusts its operation based on the system metrics tracked by the performance counter.

Owner:ROUND ROCK RES LLC
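A performance counter feeding a memory sequencer can be illustrated as follows. We assume, hypothetically, that the metric is the page hit rate and that the sequencer's adjustment is a choice between an open-page and a closed-page policy at a 0.5 threshold. The abstract does not say which adjustments the sequencer actually makes.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PerformanceCounter:
    """Hypothetical counter tracking the page hit rate of a memory hub."""
    accesses: int = 0
    page_hits: int = 0
    open_row: Optional[int] = None

    def observe_row(self, row: int) -> None:
        # A page hit means the access targets the row that is already open.
        self.accesses += 1
        self.page_hits += row == self.open_row
        self.open_row = row

    @property
    def page_hit_rate(self) -> float:
        return self.page_hits / self.accesses if self.accesses else 0.0


class MemorySequencer:
    """Hypothetical sequencer that adapts its page policy to the counter."""

    def __init__(self, counter: PerformanceCounter, threshold: float = 0.5) -> None:
        self.counter = counter
        self.threshold = threshold
        self.policy = "closed-page"

    def adjust(self) -> str:
        # Keep rows open when accesses tend to reuse the open page;
        # otherwise precharge after each access.
        if self.counter.page_hit_rate >= self.threshold:
            self.policy = "open-page"
        else:
            self.policy = "closed-page"
        return self.policy


local = PerformanceCounter()
for row in [1, 1, 1, 2, 2]:  # 3 page hits out of 5 accesses
    local.observe_row(row)
policy = MemorySequencer(local).adjust()
```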


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com