171 results about "Data reuse" patented technology

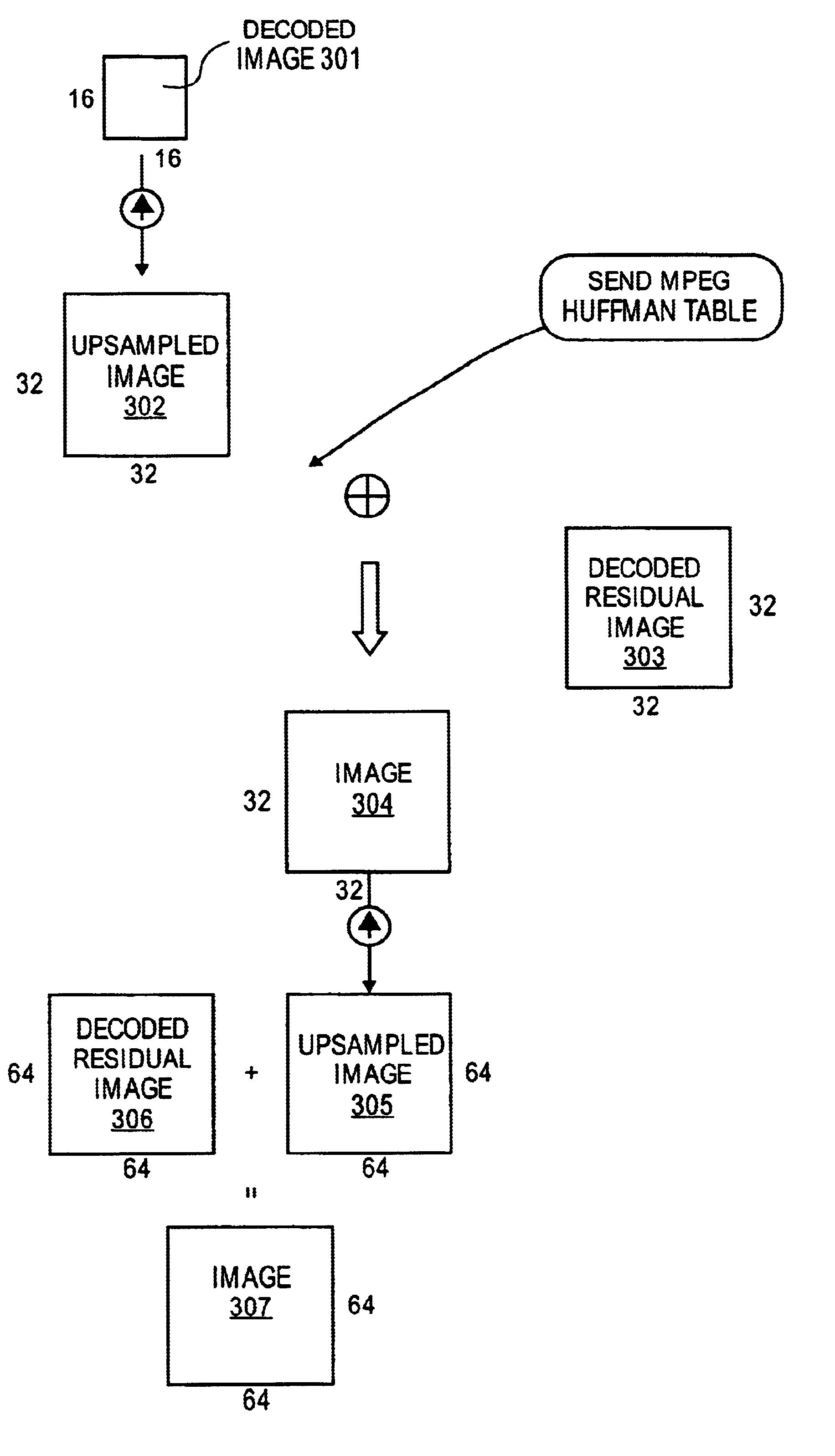

System and method for tiled multiresolution encoding/decoding and communication with lossless selective regions of interest via data reuse

A method and apparatus for processing an image is described. In one embodiment, the method comprises: receiving a portion of an encoded version of a downsized image and one or more encoded residual images, decoding the portion of the encoded version of the downsized image to create a decoded portion of the downsized image, decoding a portion of at least one or more encoded residual images, enlarging the decoded portion of the portion of the downsized image, and combining the enlarged and decoded portion of the downsized image with a first decoded residual image to create a new image.

Owner:QUALCOMM INC

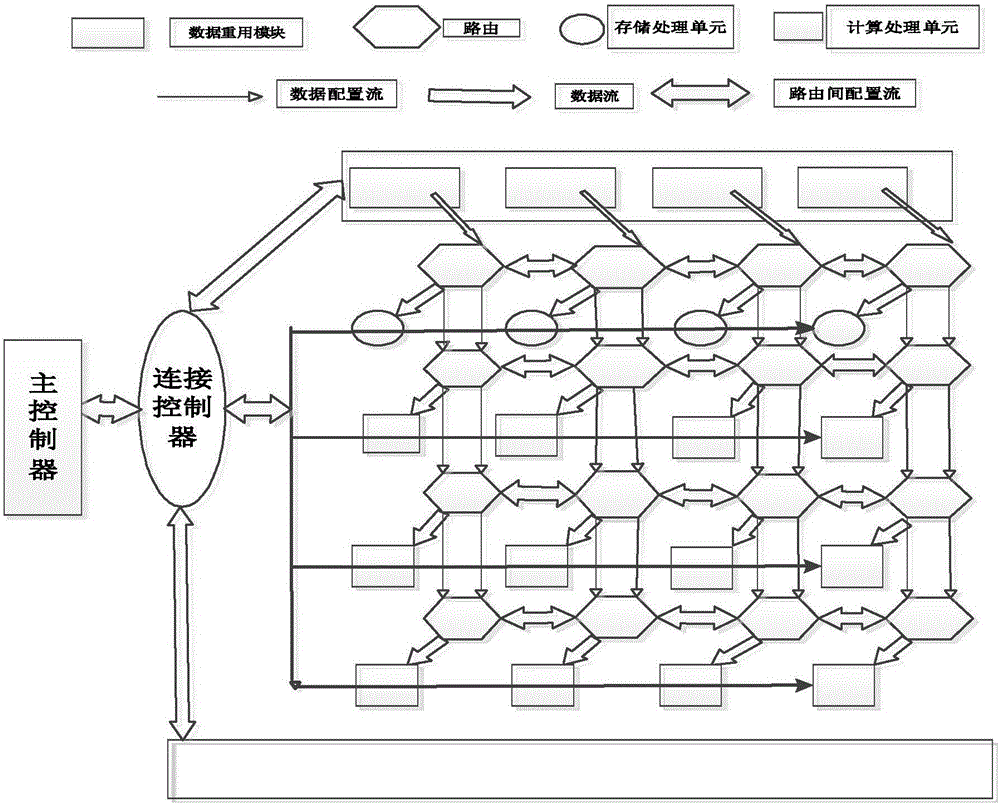

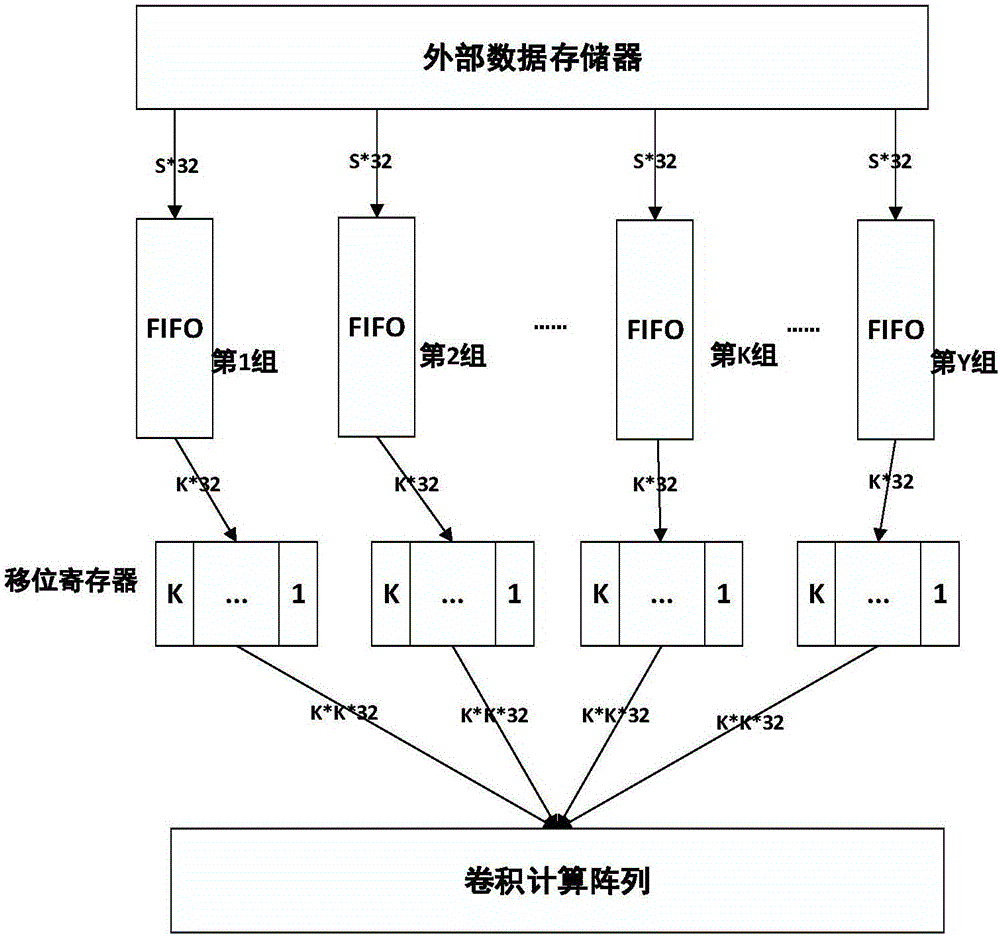

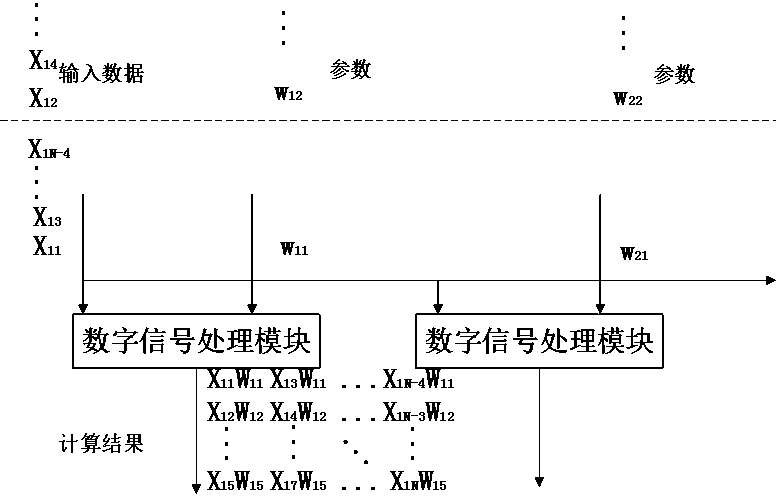

System for circular convolution calculation data reuse of convolutional neural network

Inactive; CN106250103A; Register arrangements; Concurrent instruction execution; Processor register; Computation process

The invention discloses a data reuse system for the convolution loop computation of a convolutional neural network, targeted at a coarse-grained reconfigurable system. It comprises four parts: a main controller and connection control module, an input data reuse module, a convolution loop operation processing array, and data transmission paths. The convolution loop operation is, in essence, the multiplication of multiple two-dimensional input data matrices by multiple two-dimensional weight matrices. These matrices are generally large, and the multiplication accounts for most of the time of the entire convolution calculation. The invention uses a coarse-grained reconfigurable array system to carry out the convolution calculation. After a convolution operation request instruction is received, a register rotation method is used to exploit the reusability of the input data during the convolution loop calculation, which improves data utilization and reduces bandwidth access pressure. The designed array unit is configurable and can perform convolution operations with different convolution loop scales and step sizes.
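The abstract does not spell out the register rotation scheme, so the following is only a minimal one-dimensional sketch of the general idea, under the assumption that a small rotating window of registers holds the current inputs and each input value is fetched from memory once. The function name `conv1d_with_register_reuse` and the fetch counter are illustrative and do not come from the patent.

```python
from collections import deque


def conv1d_with_register_reuse(inputs, weights, stride=1):
    """1-D sliding-window multiply-accumulate with a rotating register window.

    Each input element is fetched exactly once. Between output positions the
    window is rotated, so only `stride` new values enter the registers.
    """
    k = len(weights)
    registers = deque(maxlen=k)  # models the rotating register window
    outputs = []
    fetches = 0                  # number of reads from "memory"
    next_out = k                 # the first window is complete after k fetches
    for x in inputs:
        registers.append(x)      # the oldest value drops out automatically
        fetches += 1
        if fetches == next_out:
            outputs.append(sum(w * r for w, r in zip(weights, registers)))
            next_out += stride
    return outputs, fetches


out, fetches = conv1d_with_register_reuse([1, 2, 3, 4, 5], [1, 0, -1])
print(out, fetches)
```

A naive implementation that reloads the whole window for every output would perform nine reads for this input; the rotating window needs five.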

Owner:SOUTHEAST UNIV

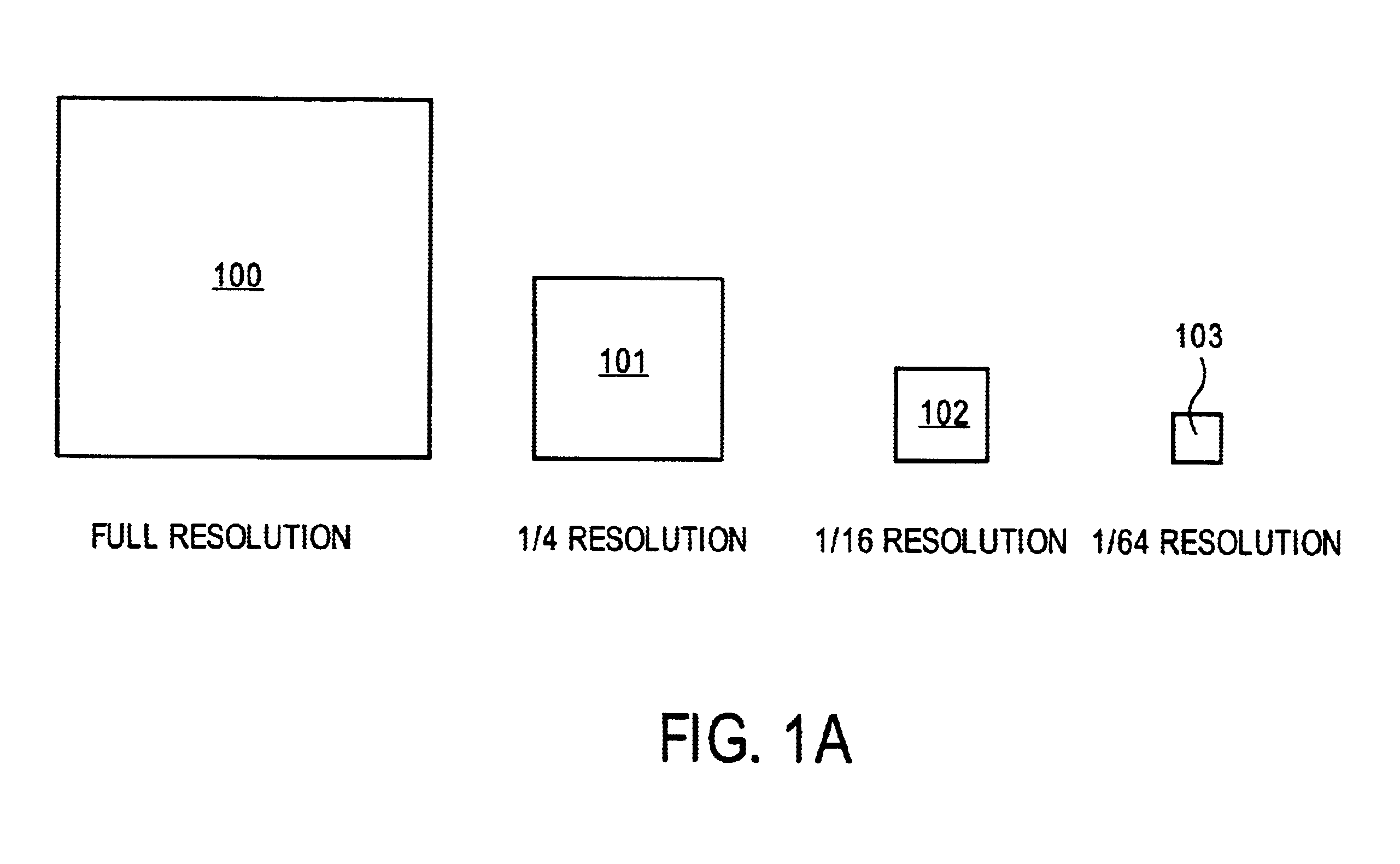

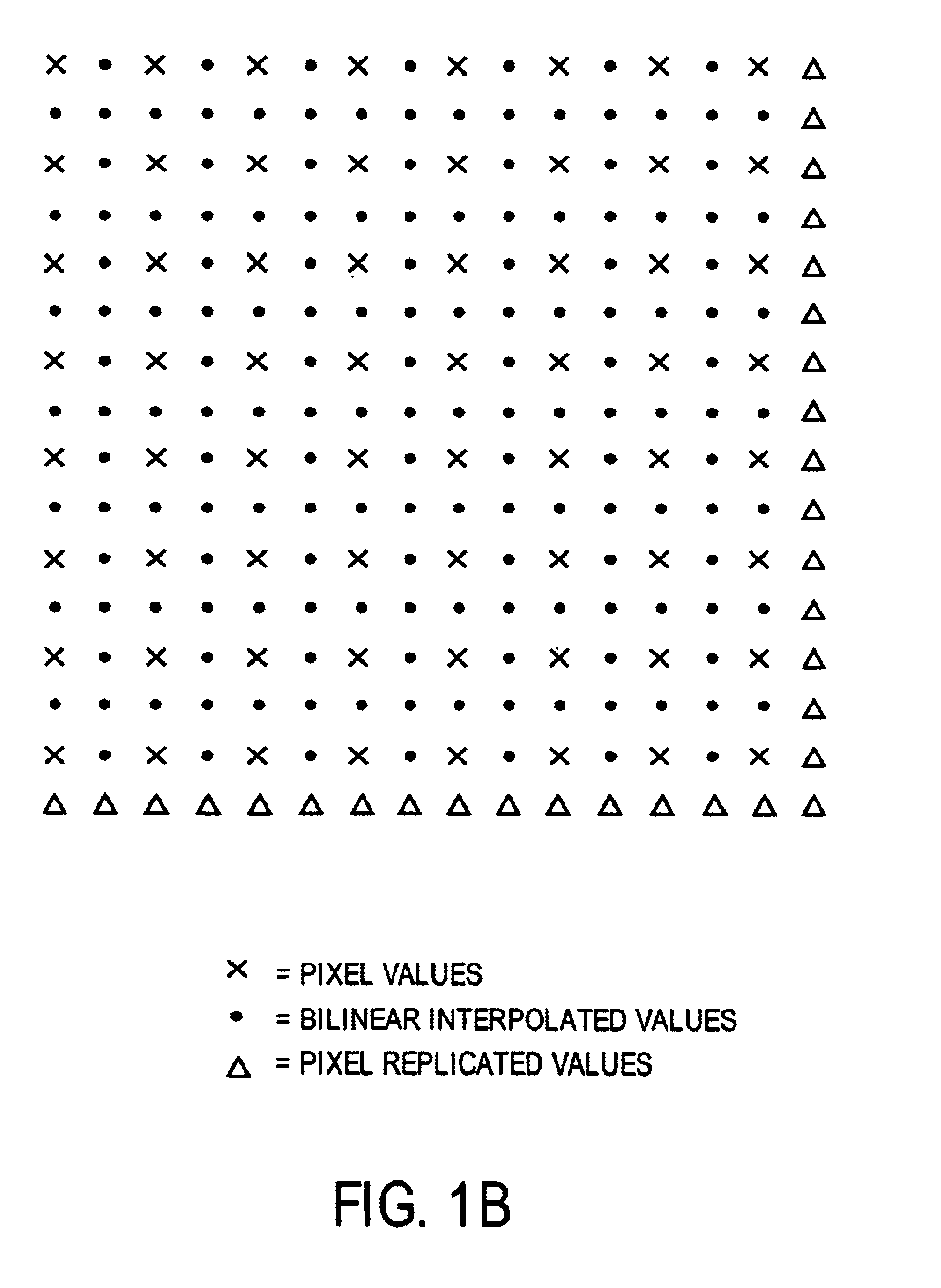

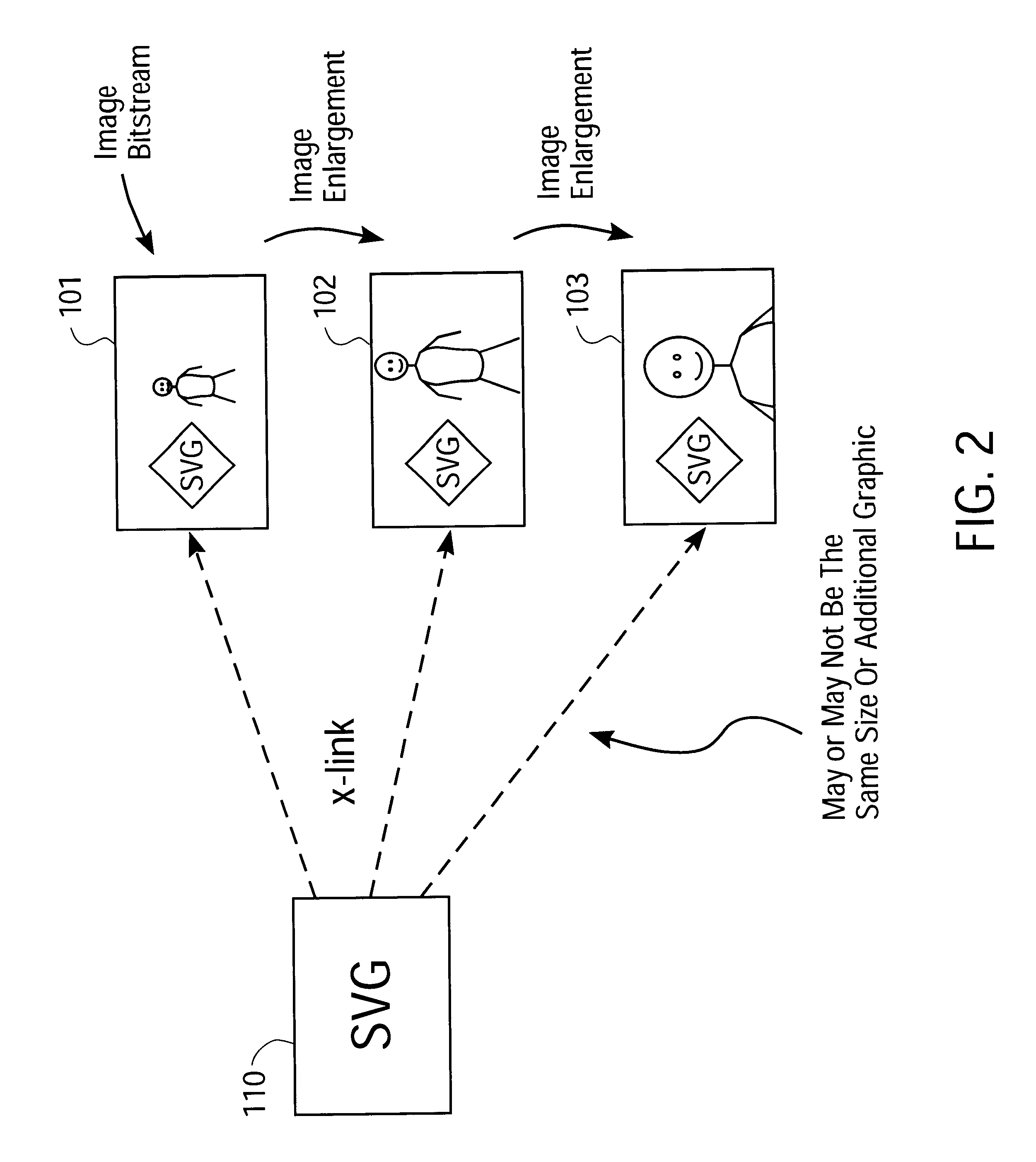

Scalable graphics image drawings on multiresolution image with/without image data re-usage

Inactive; US6873343B2; Quality improvement; Geometric image transformation; Code conversion; Graphics; Image resolution

A method and apparatus for creating a background or foreground image at different resolutions with a scalable graphic thereon is described. In one embodiment, the method comprises selecting a version of an image for display with a scalable graphic. The version of the image is at one of a plurality of resolutions. The method also includes generating the version of the image from a first image bitstream from which versions of the image at two or more of the plurality of resolutions could be generated. One of the versions is generated using a first portion of the first image bitstream and a second of the versions is generated using the first portion of the first image bitstream and a second portion of the first image bitstream.

Owner:QUALCOMM INC

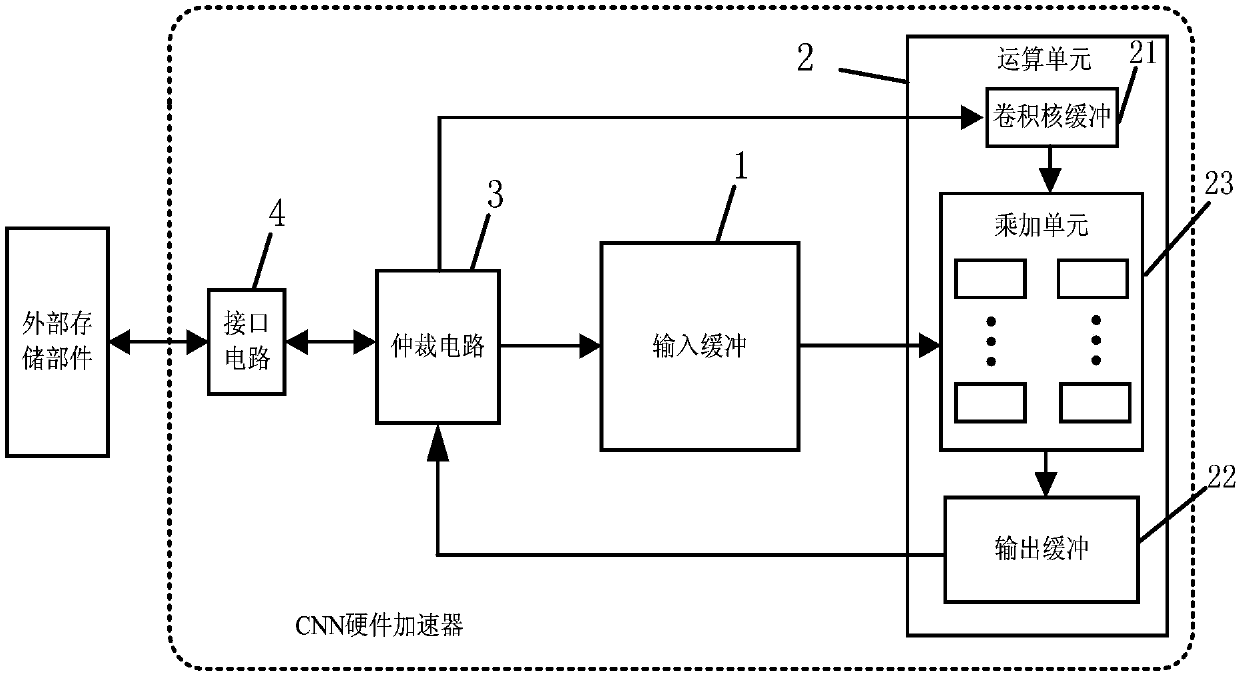

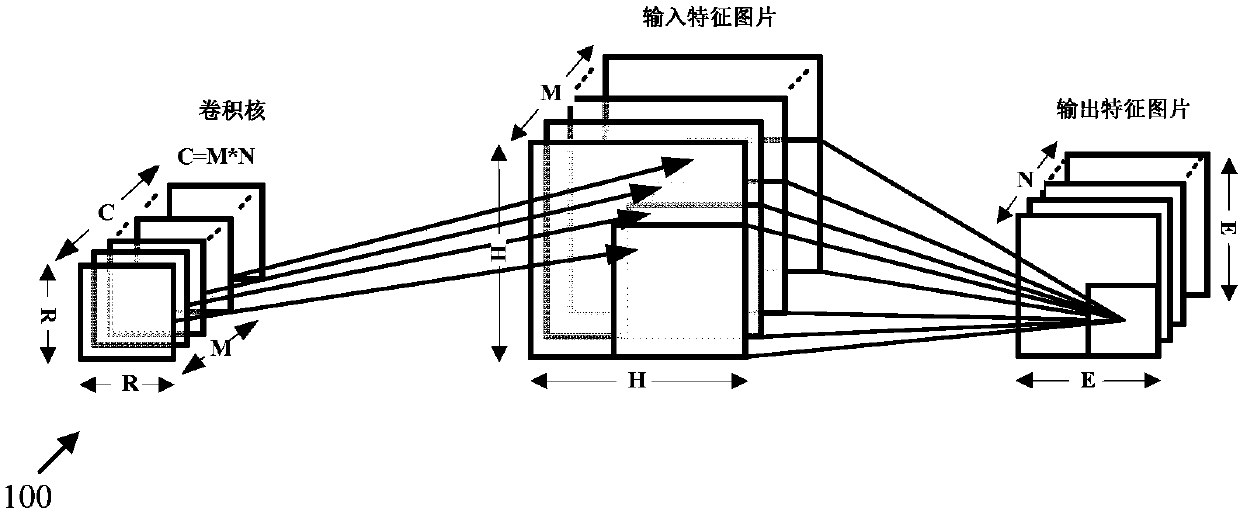

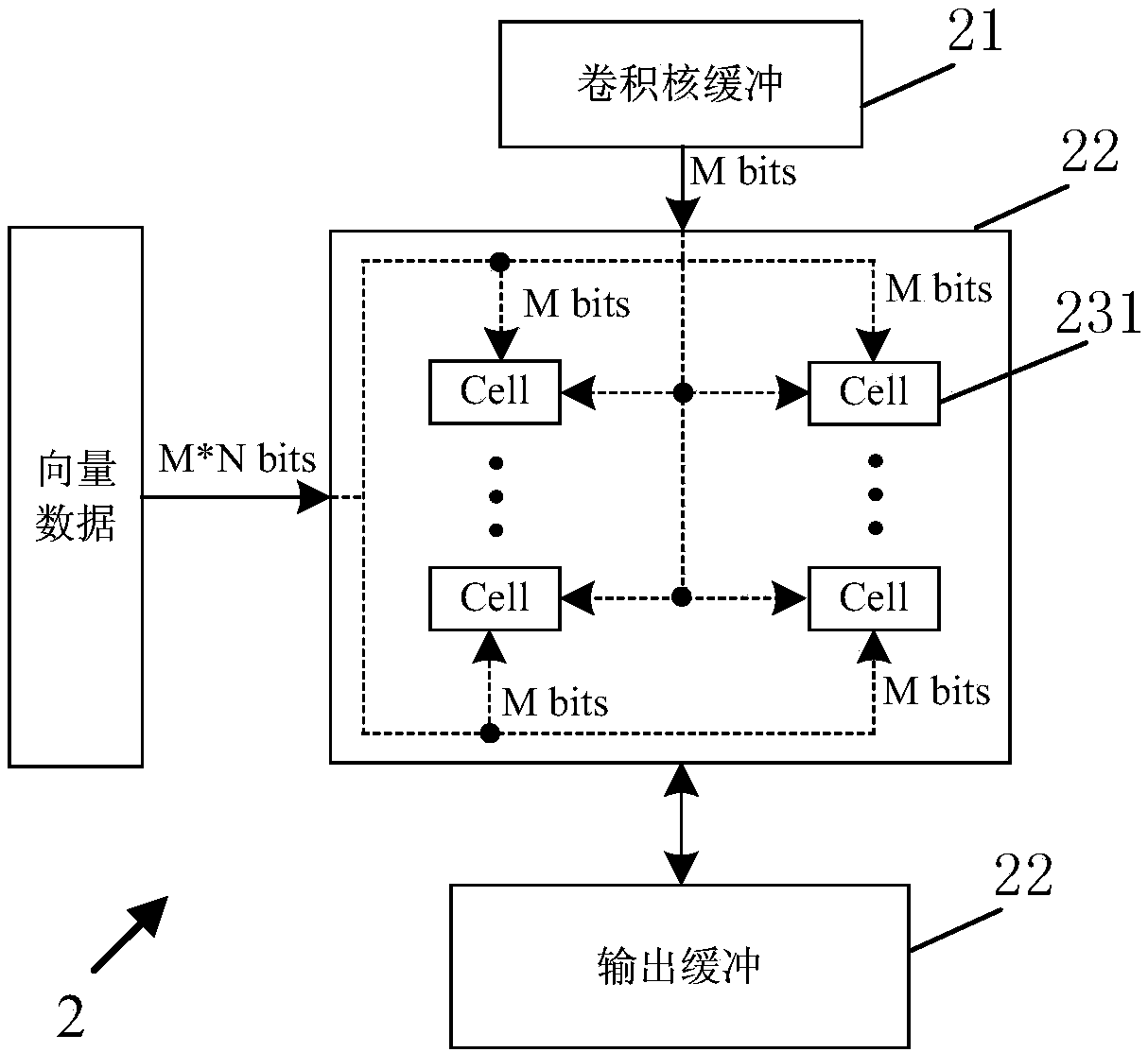

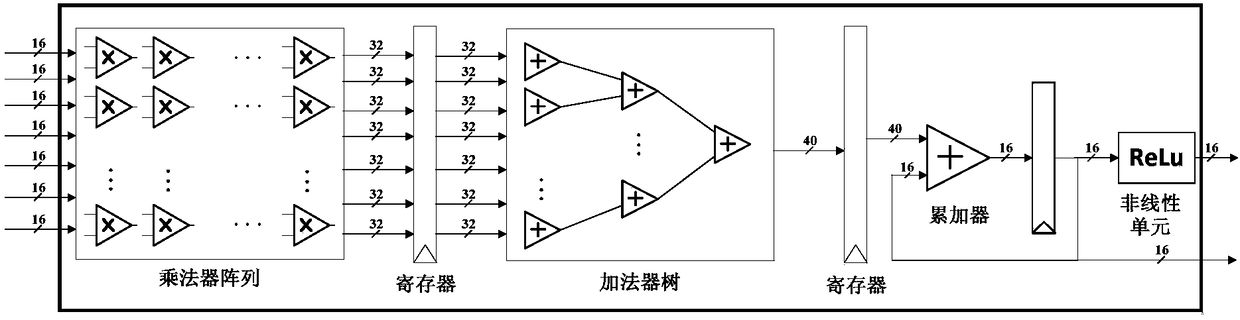

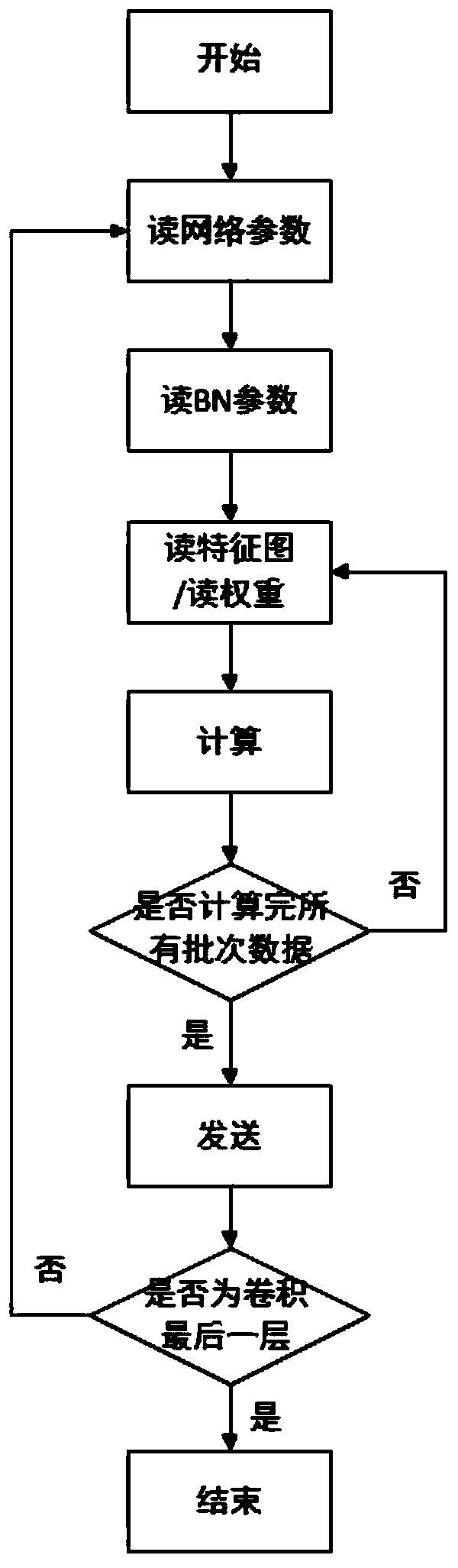

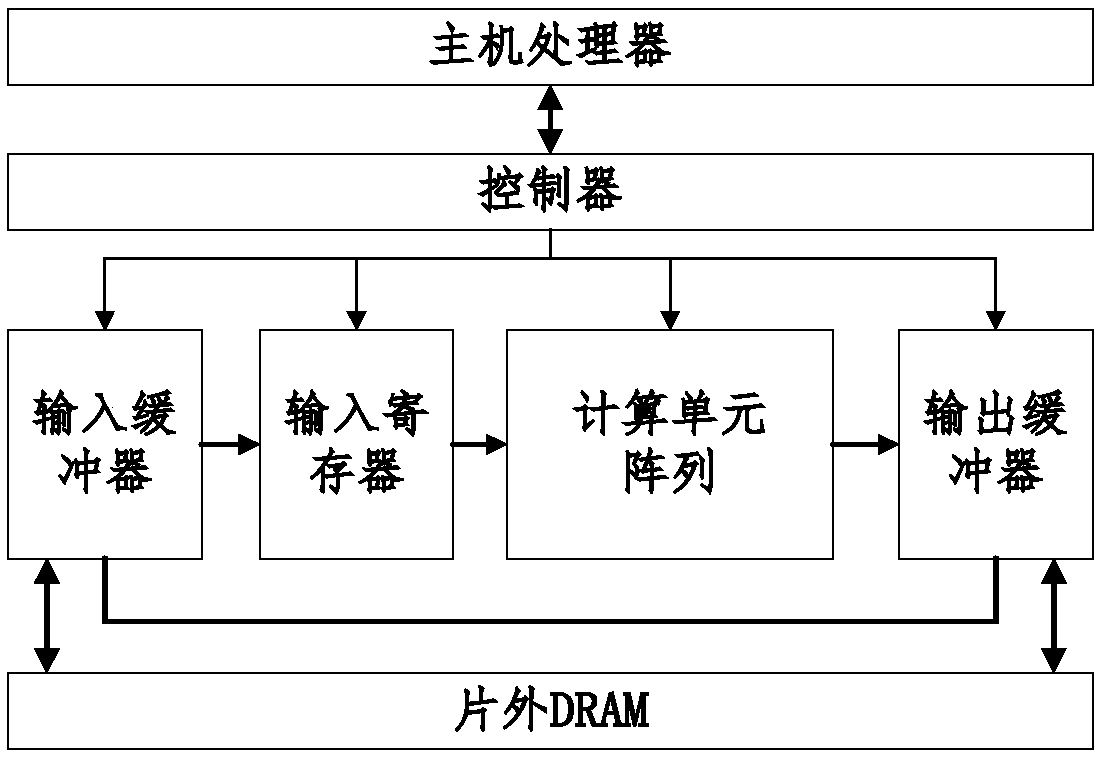

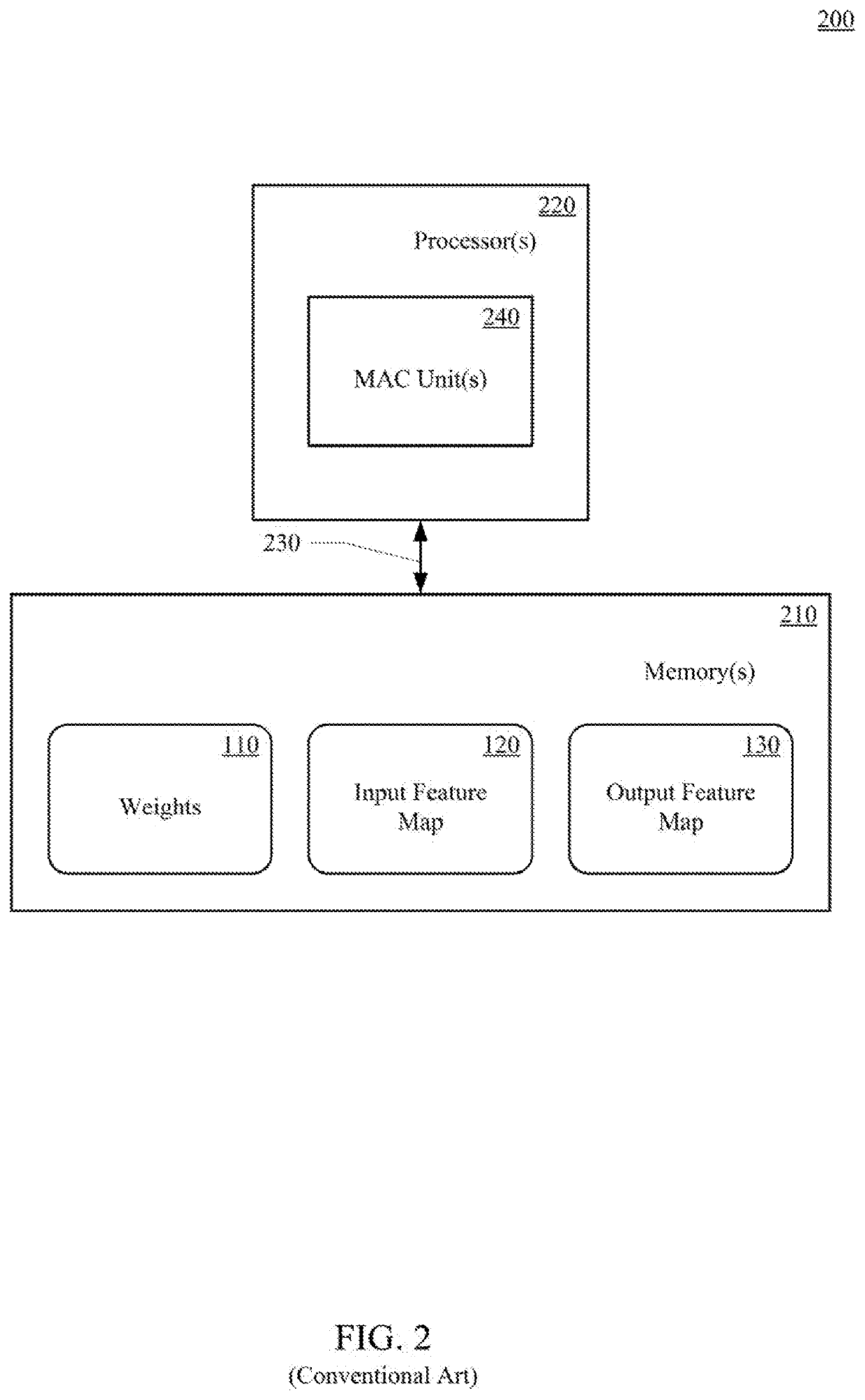

Convolution neural network (CNN) hardware accelerator and acceleration method

Active; CN107657581A; Improve computing efficiency; Improve reuse; Processor architectures/configuration; Physical realisation; Extensibility; External storage

The invention discloses a convolution neural network (CNN) hardware accelerator and an acceleration method. The accelerator comprises an input buffer and a plurality of operation units, and is characterized in that the input buffer is used for caching input feature picture data, the plurality of operation units respectively share the input feature picture data to perform a CNN convolution operation, each operation unit comprises a convolution kernel buffer, an output buffer and a multiplier-adder unit formed by a plurality of MAC components, the convolution kernel buffer receives convolution kernel data returned from an external storage component, the convolution kernel data is provided for each MAC component of the multiplier-adder unit, each MAC component receives the input feature picture data and the convolution kernel data to perform a multiply accumulation operation, and an intermediate result of the operation is written into the output buffer. The acceleration method is a method applying the accelerator. The CNN hardware accelerator and the acceleration method can improve the CNN hardware acceleration performance, and have the advantages of high data reuse rate and efficiency, small amount of data migration, good expansibility, small bandwidth required by the system, small hardware overhead and the like.
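As a rough software analogy for the shared input buffer described above, the sketch below reads each input value once and broadcasts it to several operation units, each holding its own kernel. It is an illustration under simplifying assumptions (a flat input window, one MAC per unit), and the name `shared_input_mac` is hypothetical.

```python
def shared_input_mac(feature_window, kernels):
    """Multiply-accumulate where all operation units share one input stream.

    `kernels[u]` plays the role of the kernel buffer of operation unit `u`;
    `partial_sums[u]` plays the role of its output buffer.
    """
    partial_sums = [0] * len(kernels)
    input_reads = 0
    for i, x in enumerate(feature_window):
        input_reads += 1                      # one read from the shared input buffer
        for u, kernel in enumerate(kernels):  # the same value goes to every unit
            partial_sums[u] += x * kernel[i]
    return partial_sums, input_reads


sums, reads = shared_input_mac([1, 2, 3], [[1, 1, 1], [1, 0, -1]])
print(sums, reads)
```

The number of input reads is independent of the number of kernels, which is where the reuse comes from in this simplified picture.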

Owner:NAT UNIV OF DEFENSE TECH

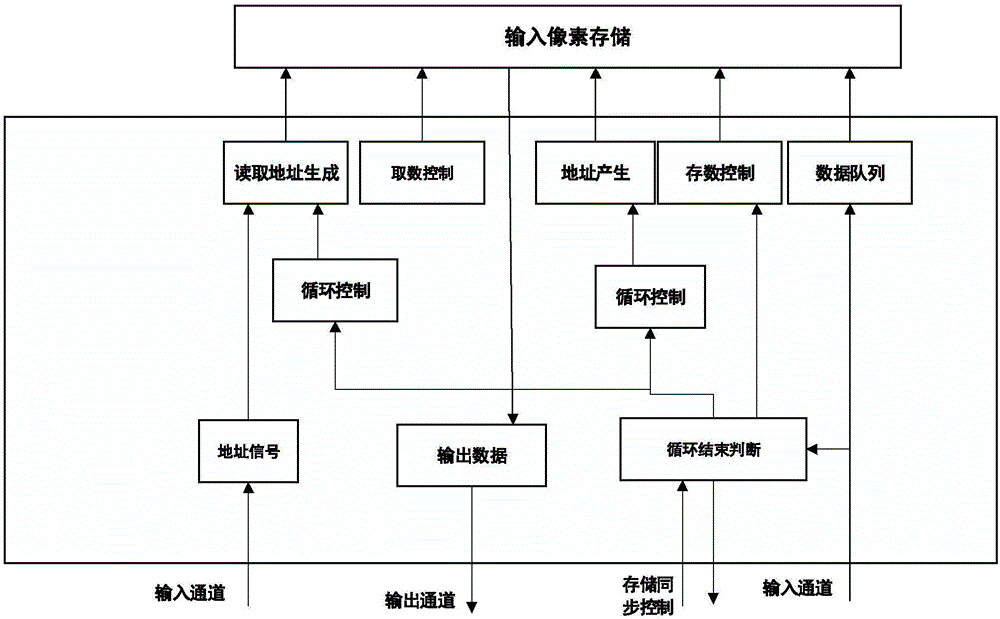

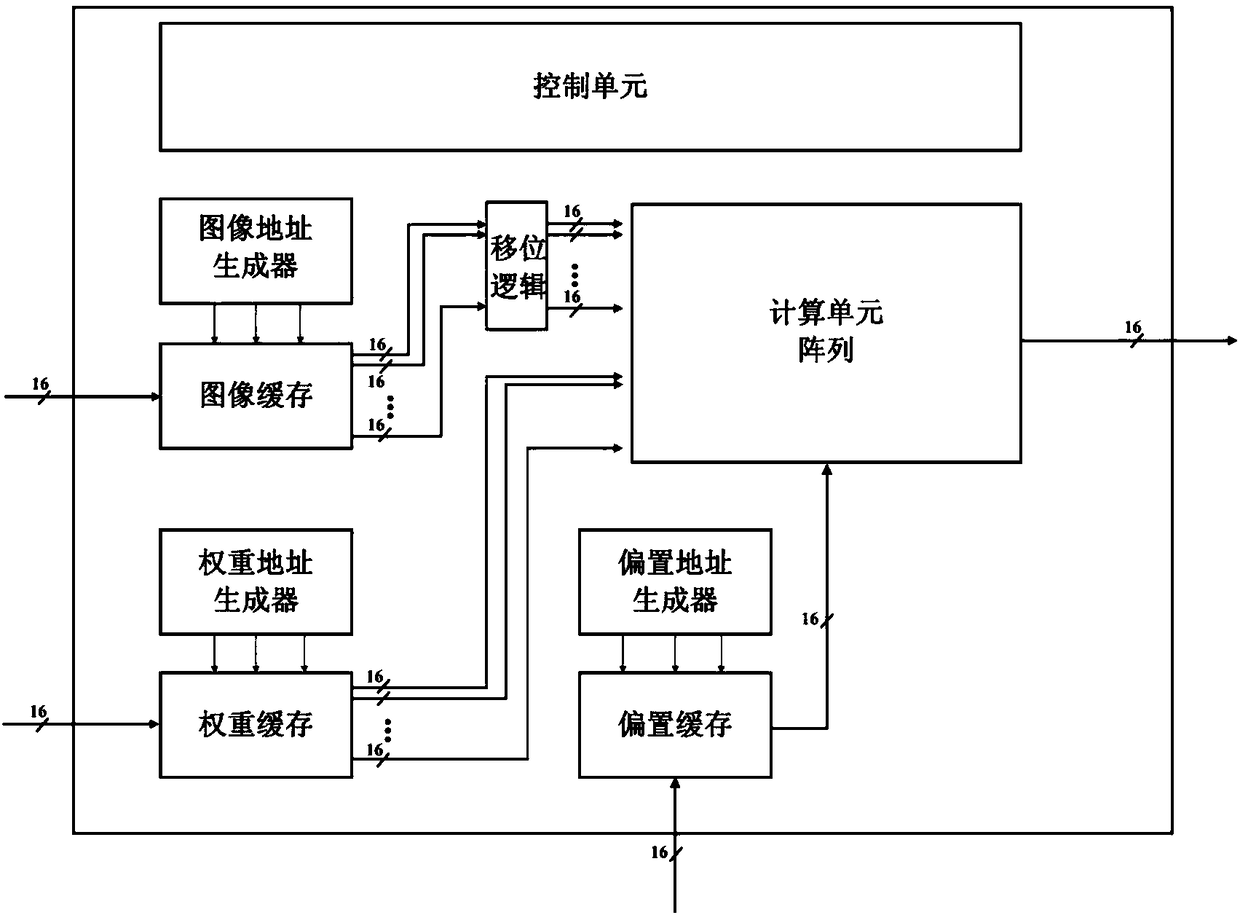

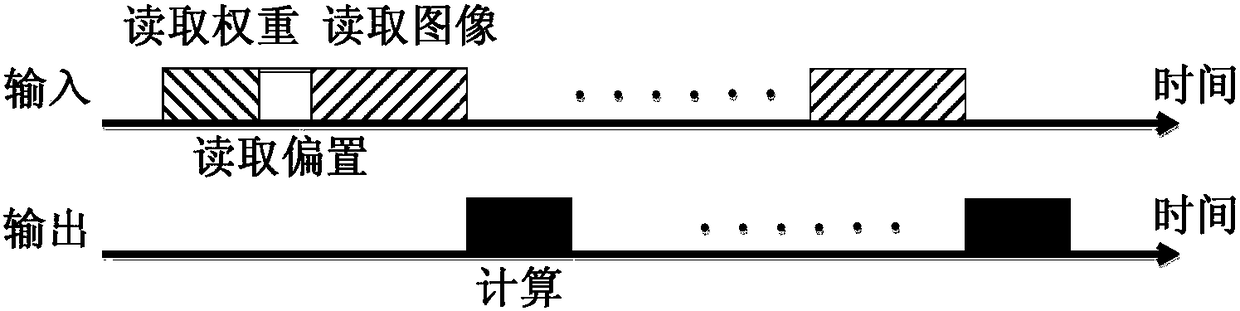

SOC-based data reuse convolutional neural network accelerator

Active; CN108171317A; Improve computing efficiency; Reduce latency; Image memory management; Neural architectures; Address generation unit; Read through

The invention provides an SOC-based data reuse convolutional neural network accelerator. Input data of image input, weight parameters, offset parameters and the like of a convolutional neural network is grouped; a large amount of the input data is classified into reusable block data; and reusable data blocks are read through a control state machine. The convolutional neural network has large parameter quantity and requires strong calculation capability, so that the convolutional neural network accelerator needs to provide very large data bandwidth and calculation capability. A large load is subjected to reusable segmentation, and data reuse is realized through a control unit and an address generation unit, so that the calculation delay and the required bandwidth of the convolutional neural network are shortened and reduced, and the calculation efficiency is improved.

Owner:BEIJING MXTRONICS CORP +1

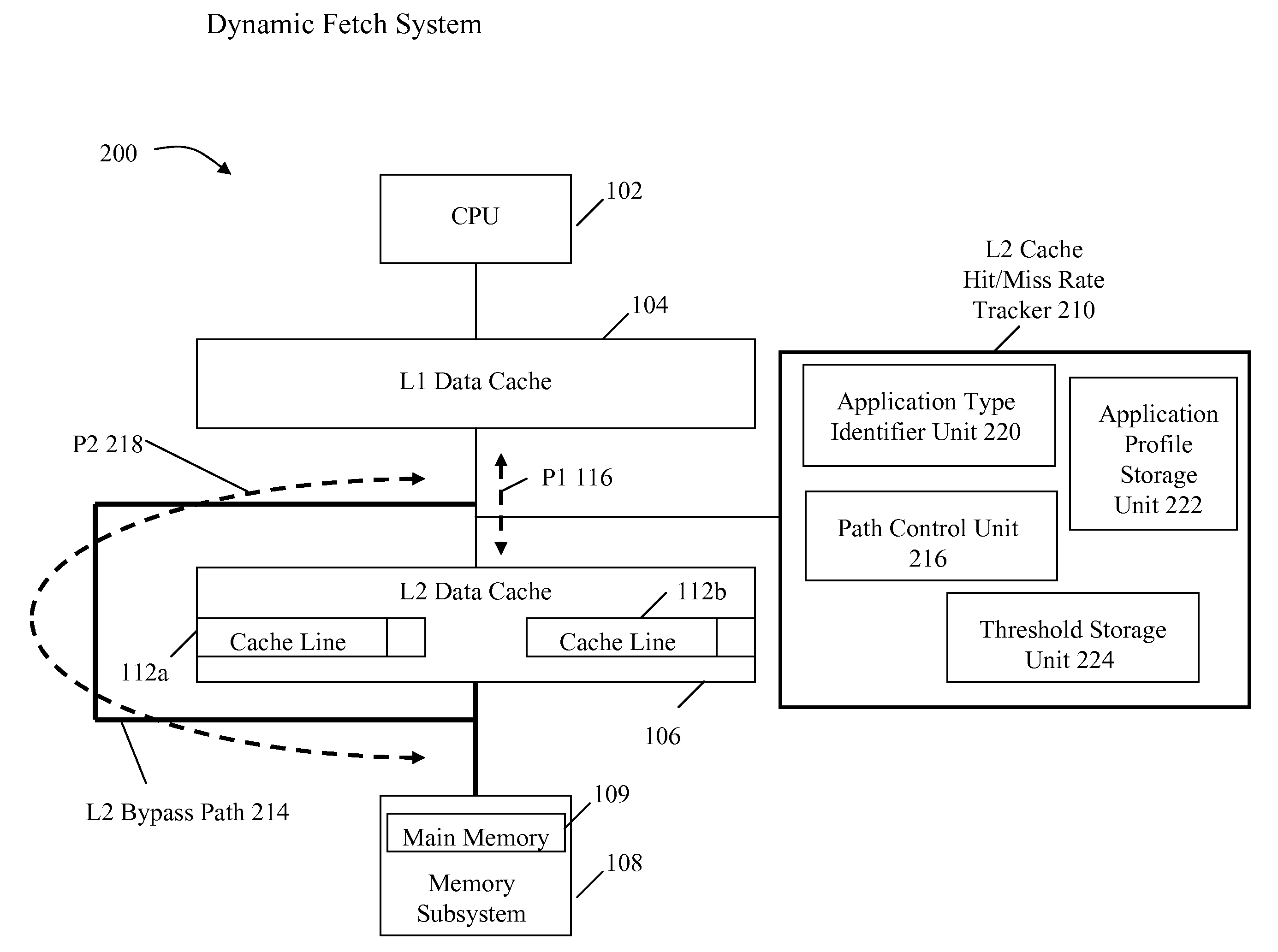

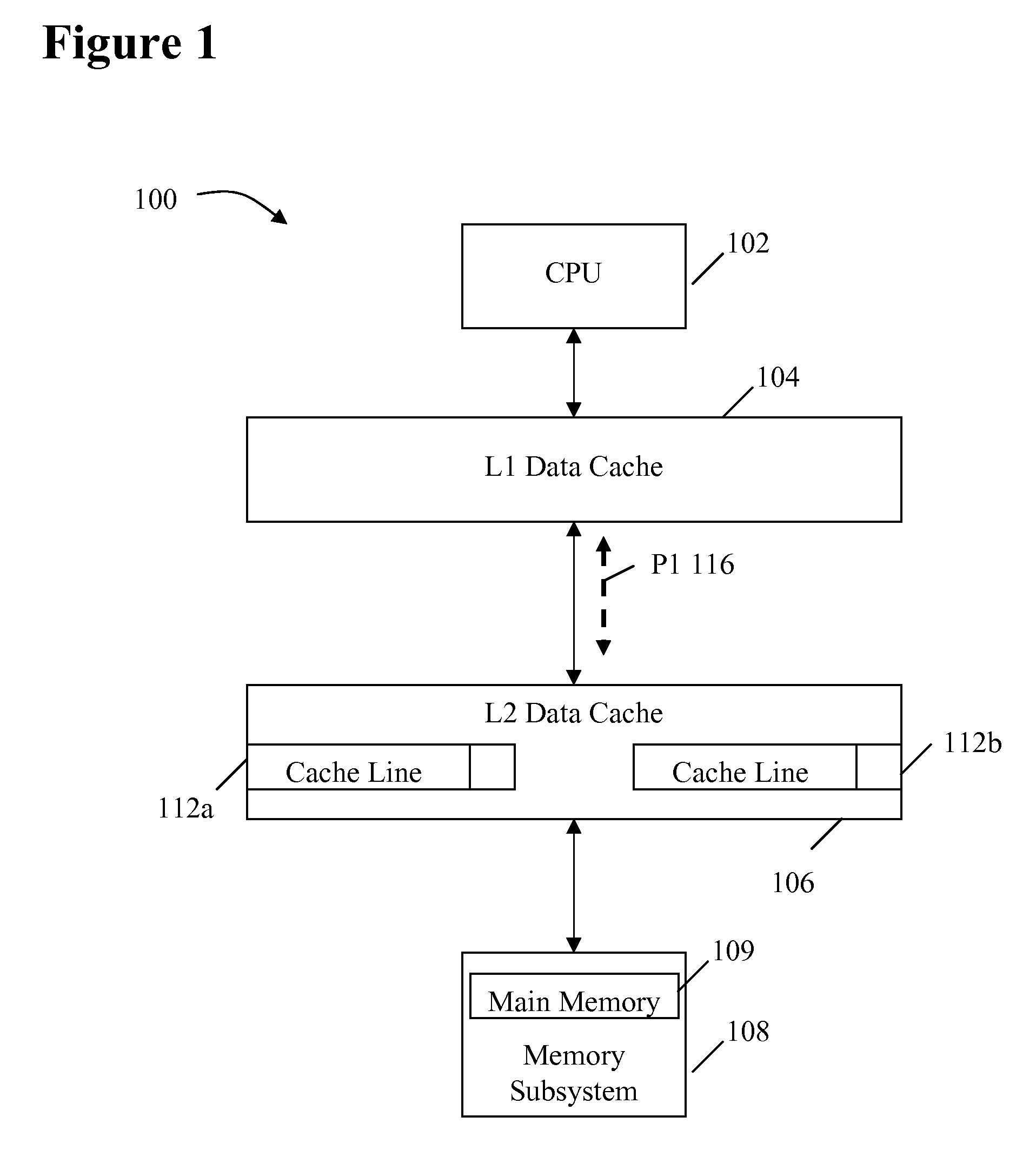

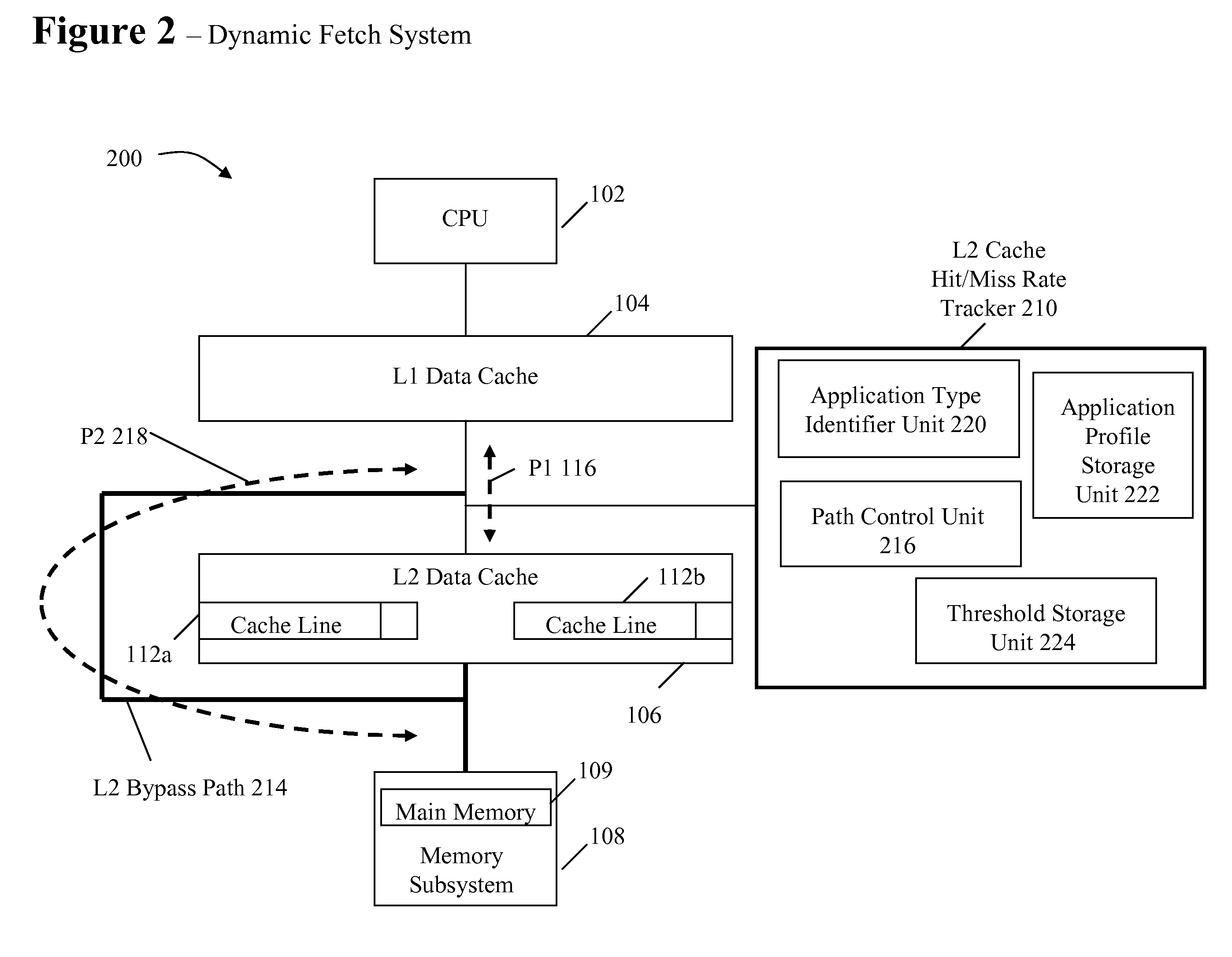

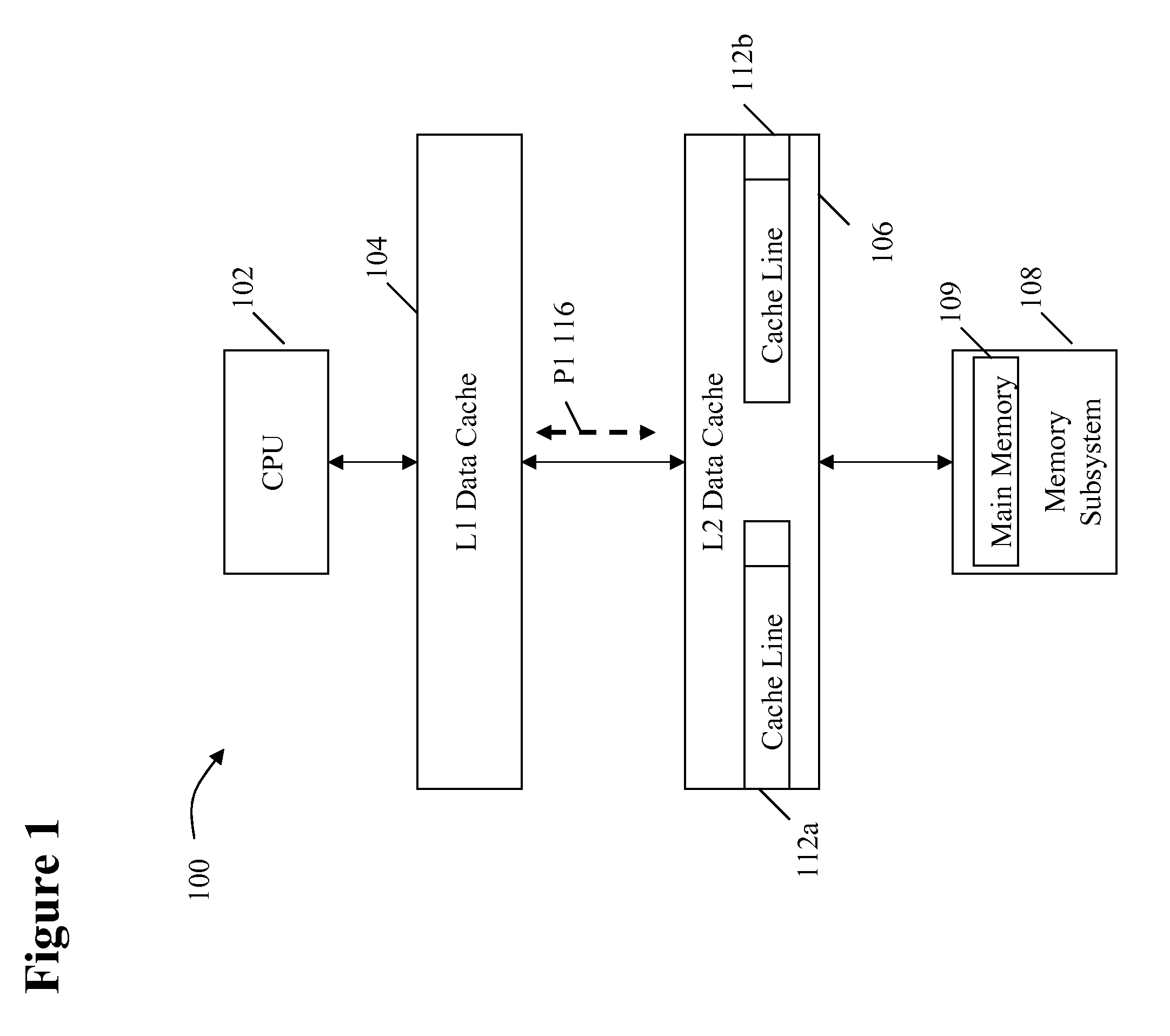

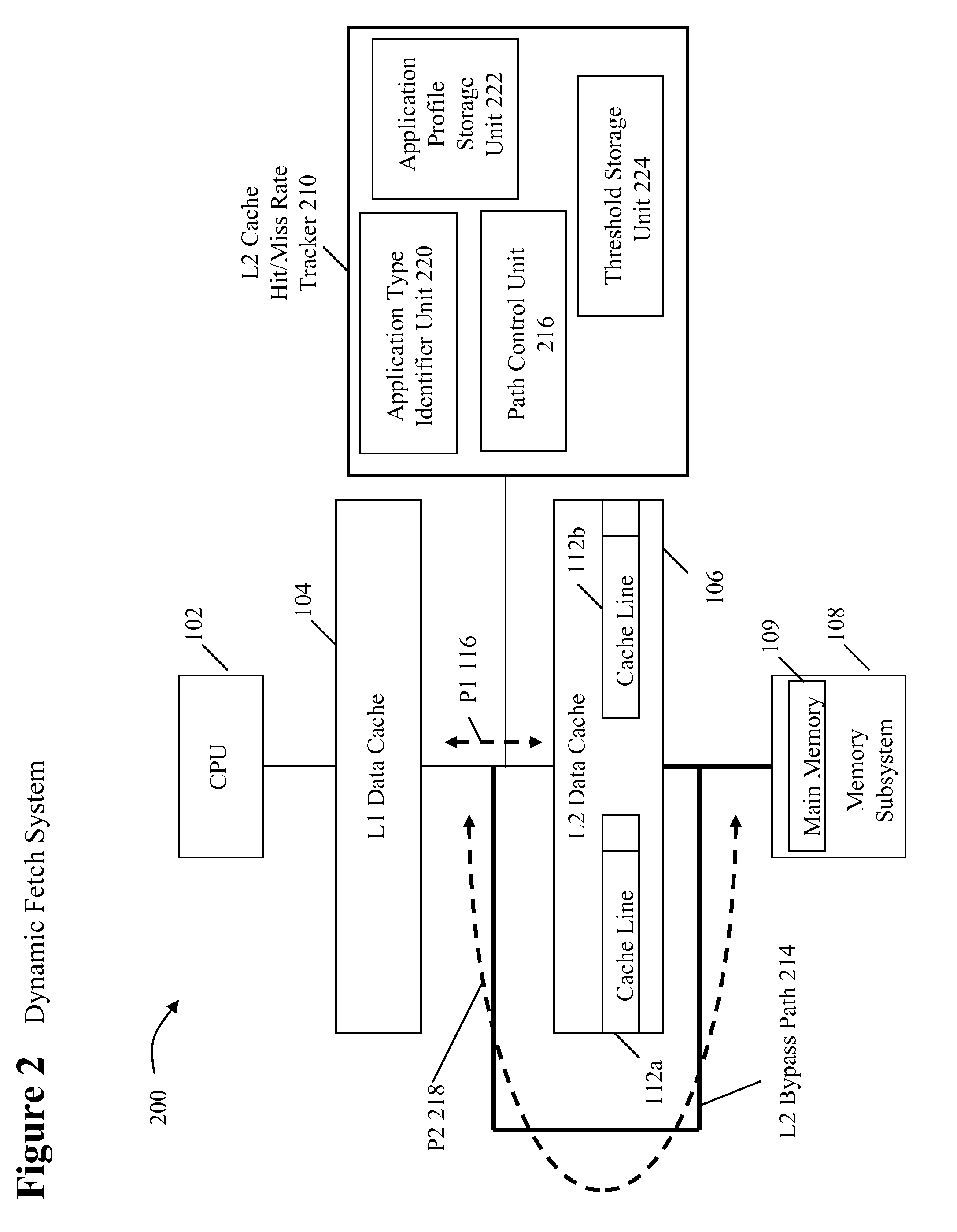

System and method for dynamically selecting the fetch path of data for improving processor performance

Inactive; US20090037664A1; Improve system performance; Improve latency; Memory addressing/allocation/relocation; Data access; Parallel computing

A system and method for dynamically selecting the data fetch path for improving the performance of the system improves data access latency by dynamically adjusting data fetch paths based on application data fetch characteristics. The application data fetch characteristics are determined through the use of a hit / miss tracker. It reduces data access latency for applications that have a low data reuse rate (streaming audio, video, multimedia, games, etc.) which will improve overall application performance. It is dynamic in a sense that at any point in time when the cache hit rate becomes reasonable (defined parameter), the normal cache lookup operations will resume. The system utilizes a hit / miss tracker which tracks the hits / misses against a cache and, if the miss rate surpasses a prespecified rate or matches an application profile, the hit / miss tracker causes the cache to be bypassed and the data is pulled from main memory or another cache thereby improving overall application performance.
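The bypass decision described above can be sketched as a small sliding-window tracker. This is a minimal illustration, not the patented design: the window size, the two thresholds, and the assumption that hits and misses are still observed while the cache is bypassed are all choices made here for the example, and `HitMissTracker` is a hypothetical name.

```python
from collections import deque


class HitMissTracker:
    """Tracks recent cache hits and misses and decides whether to bypass the cache."""

    def __init__(self, window=8, bypass_miss_rate=0.75, resume_hit_rate=0.5):
        self.history = deque(maxlen=window)       # True = hit, False = miss
        self.bypass_miss_rate = bypass_miss_rate  # assumed "prespecified rate"
        self.resume_hit_rate = resume_hit_rate    # assumed "reasonable" hit rate
        self.bypass = False

    def record(self, hit):
        """Record one access outcome and return the current bypass state."""
        self.history.append(hit)
        if len(self.history) < self.history.maxlen:
            return self.bypass                    # not enough samples yet
        hit_rate = sum(self.history) / len(self.history)
        if not self.bypass and (1 - hit_rate) > self.bypass_miss_rate:
            self.bypass = True                    # fetch from main memory instead
        elif self.bypass and hit_rate >= self.resume_hit_rate:
            self.bypass = False                   # resume normal cache lookups
        return self.bypass


tracker = HitMissTracker(window=4, bypass_miss_rate=0.5, resume_hit_rate=0.5)
for outcome in [False, False, False, False, True, True]:
    print(outcome, tracker.record(outcome))
```

Using separate thresholds for entering and leaving bypass mode is a design choice of this sketch; it keeps a streaming workload with an occasional hit from flipping the fetch path on every access.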

Owner:IBM CORP

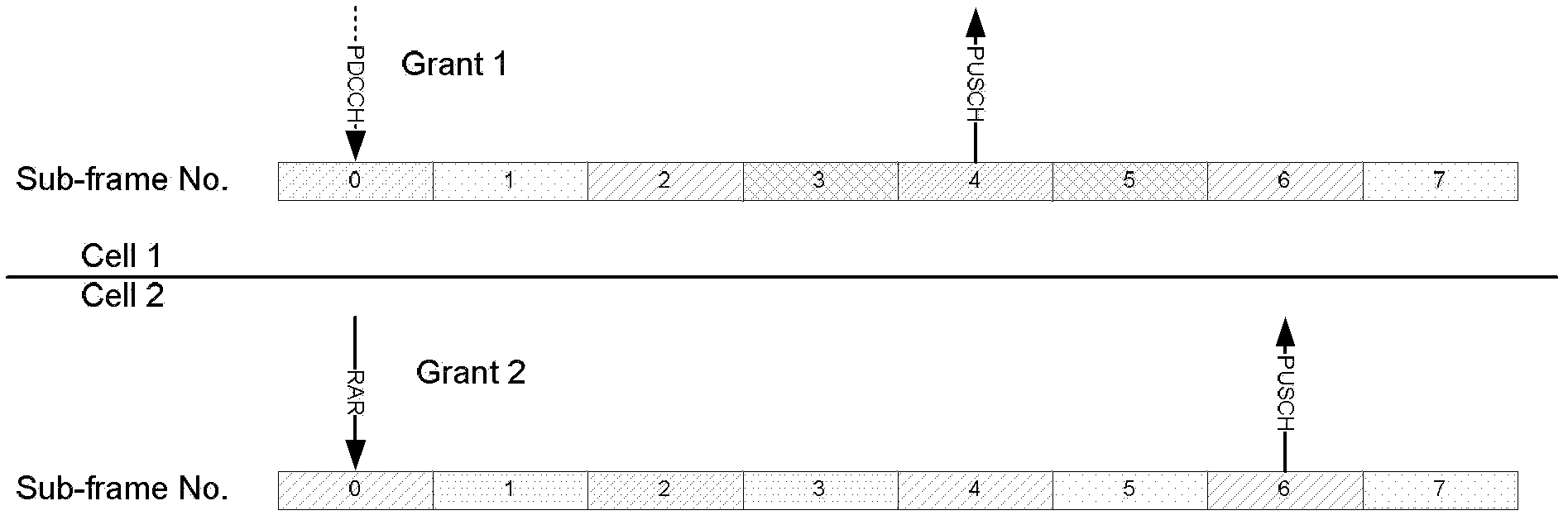

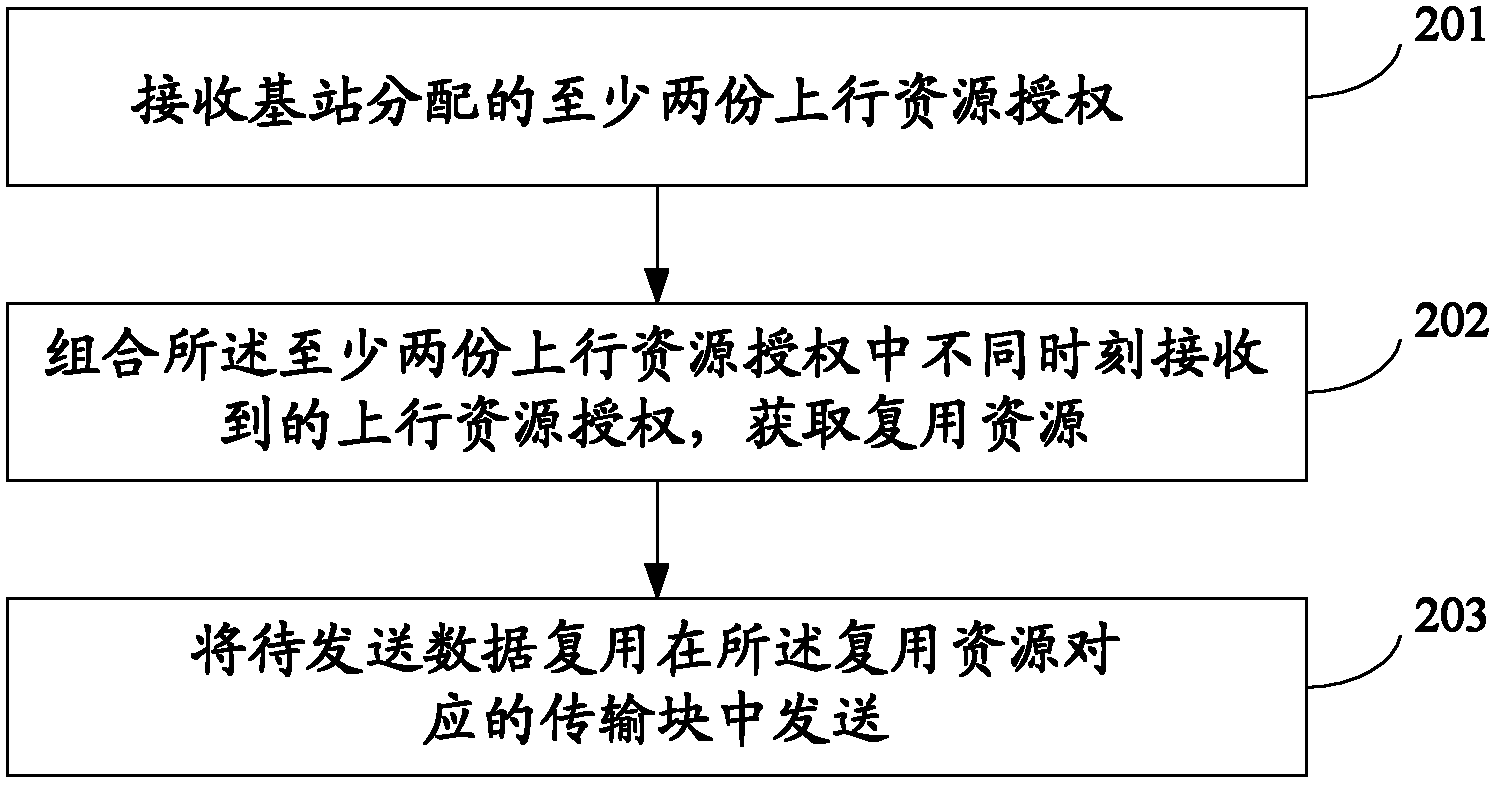

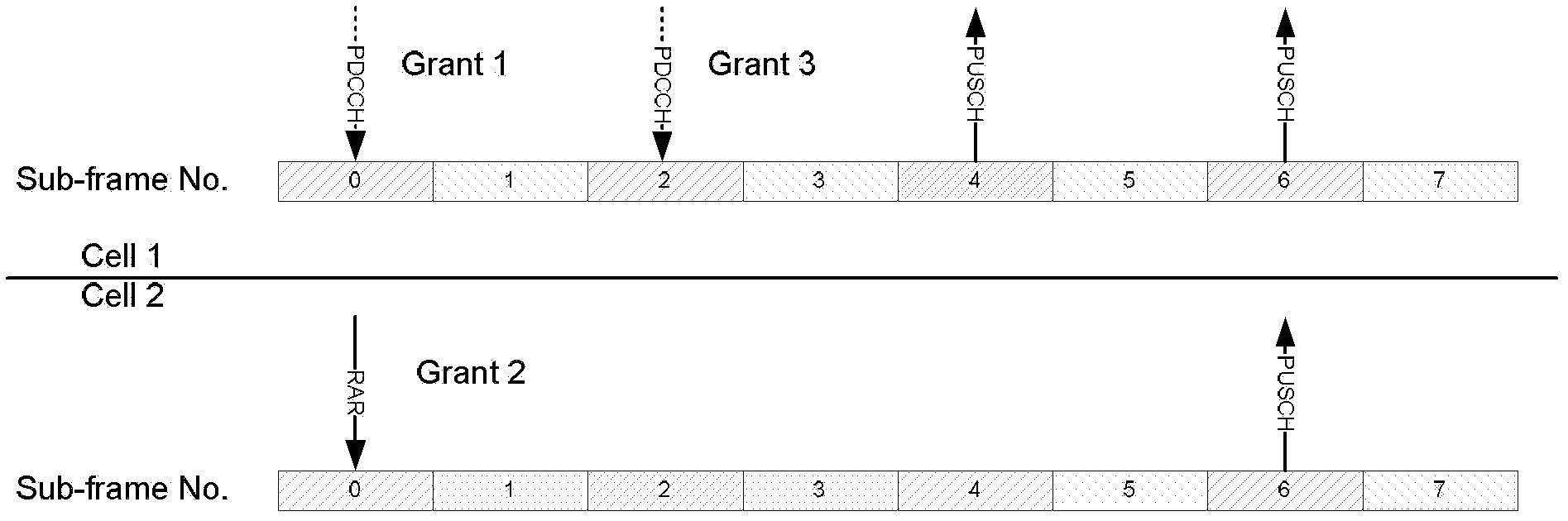

Data transmission method and user device

The invention discloses a data transmission method and a user device, and belongs to the field of mobile communication. The method comprises: receiving at least two parts of uplink resource authorization allocated by a base station; combining the uplink resource authorizations received at different moments among the at least two parts to obtain reuse resources; and reusing the data to be transmitted in the transmission blocks corresponding to the reuse resources. The user device comprises a receiving module, a processing module and a transmission module. In an embodiment, the uplink resource authorizations are grouped according to the transmission moments of their corresponding transmission blocks to determine the reuse resources, and the corresponding transmission blocks are transmitted after data reuse. This enables the user equipment (UE) to determine the delay of the uplink resources from the transmission moments and to adjust the reuse sequence according to the probable delay, avoiding increased delay for high-priority data transmission.

Owner:HUAWEI TECH CO LTD

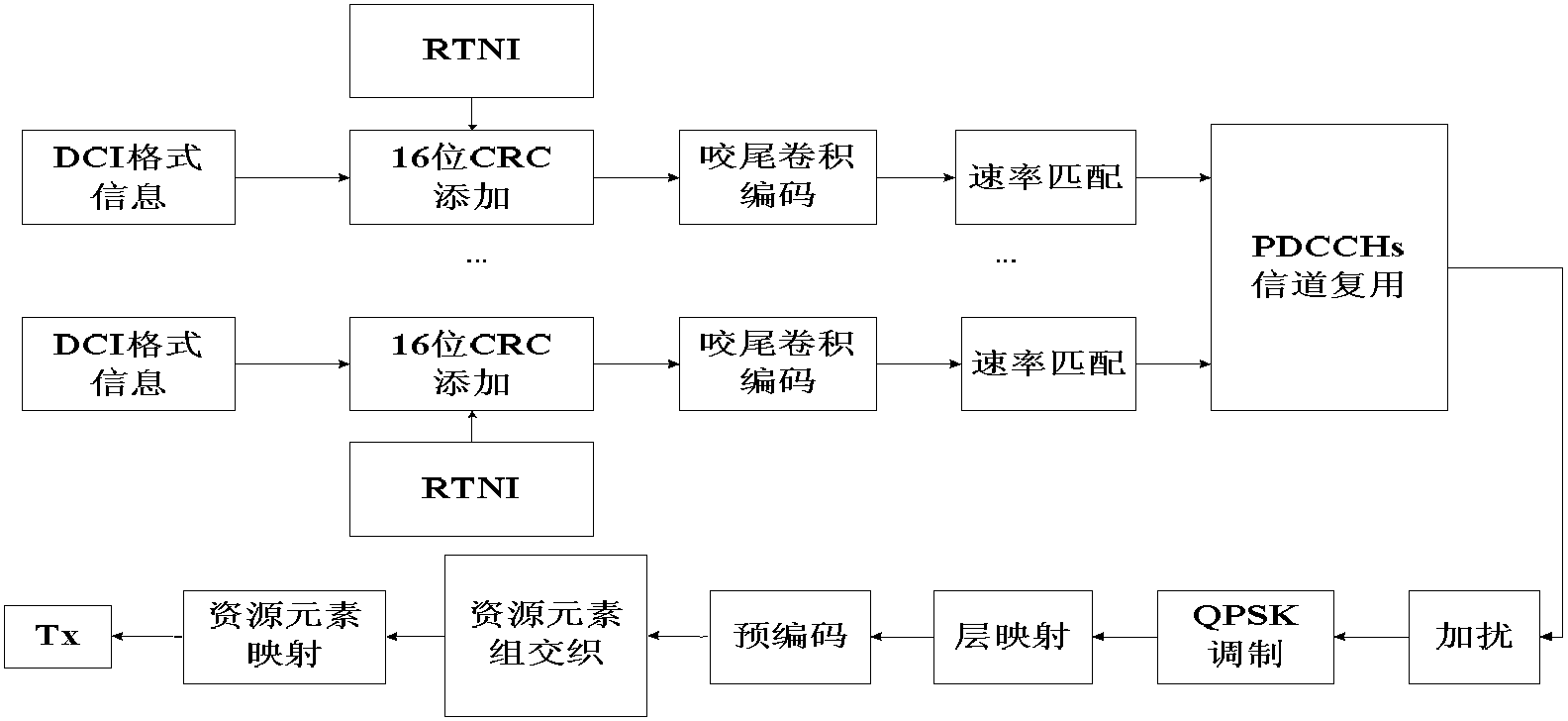

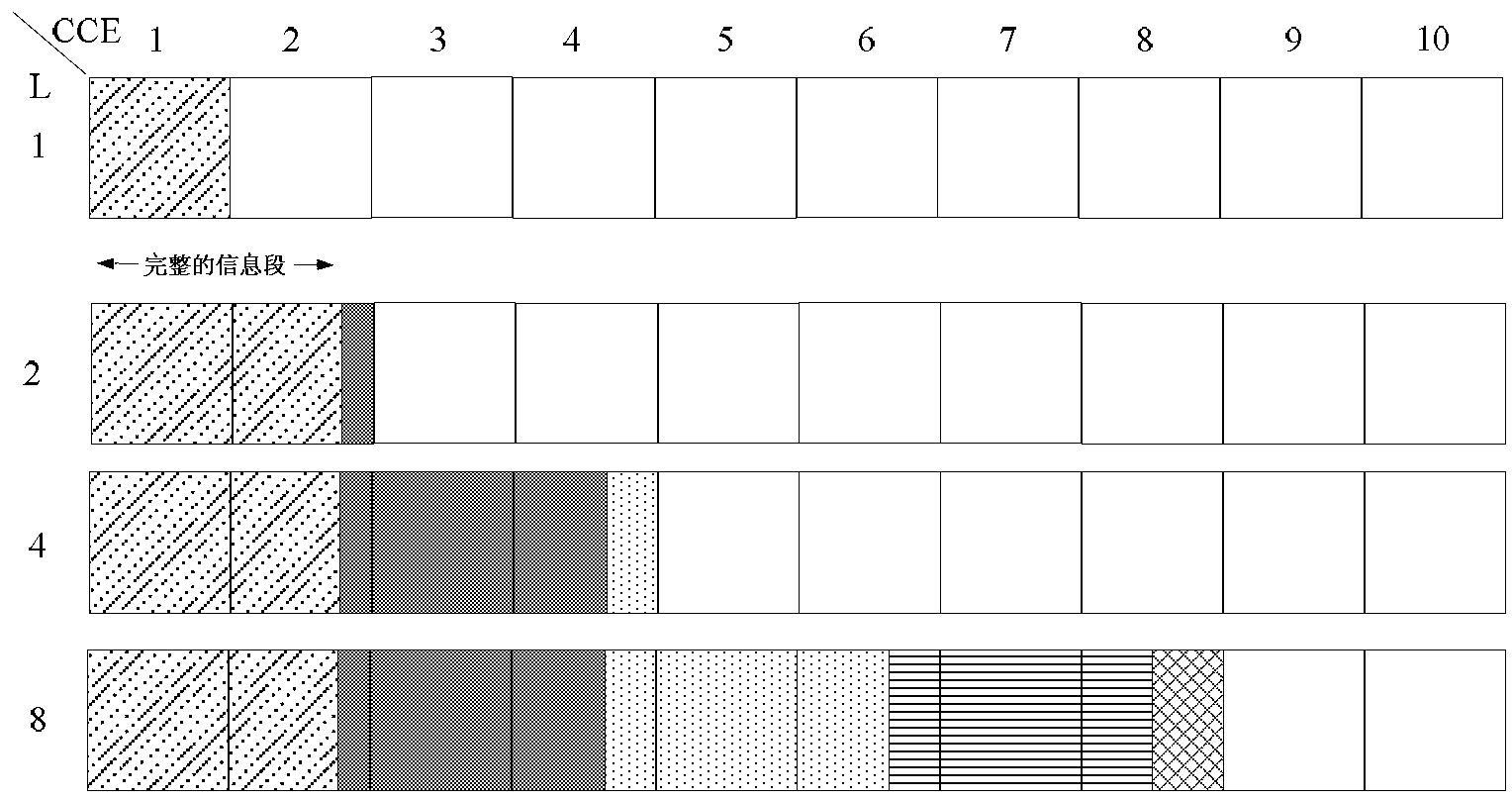

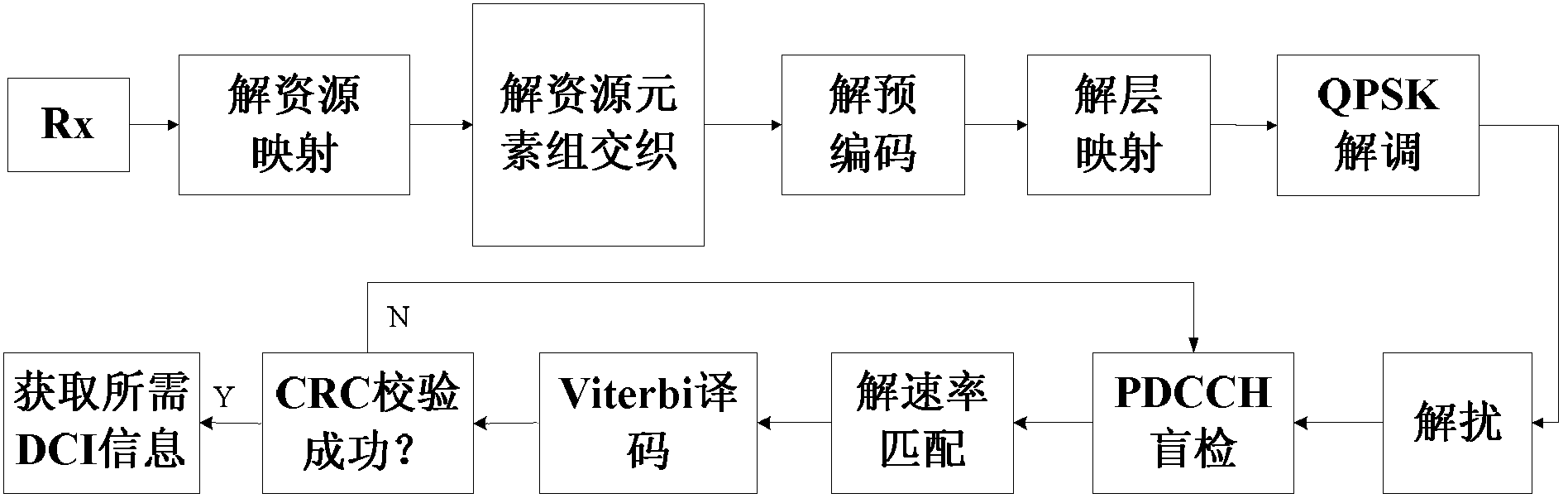

Blind test efficiency improvement method for TD-LTE (Time Division Long Term Evolution) system

Active; CN103297195A; Reduce the number of blind inspections; Reduce the maximum number of blind detections; Error prevention; Transmission path multiple use; Test efficiency; Time-Division Long-Term Evolution

The invention discloses a blind test (blind detection) efficiency improvement method for a TD-LTE (Time Division Long Term Evolution) system. Data repetition is used during rate matching at the transmitter, and sliding autocorrelation is used at the receiver to estimate the starting positions of the repeated data; the candidate sets are ordered by likelihood and blind tests are performed on them in sequence, which improves the accuracy of the blind tests. The two blind test sets with large aggregation levels are combined, which reduces the maximum number of blind tests. When two search spaces exist simultaneously, the correlation value of the common search space can be judged first and the calculation performed afterwards, which increases the speed of the blind tests. The method can effectively improve blind test efficiency and makes the blind tests fast.
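The sliding autocorrelation step can be illustrated with a noise-free toy example. The sketch assumes the received sequence consists of a block of ±1 symbols that wraps around and repeats, and it estimates the offset at which the repetition begins as the lag with the highest normalized autocorrelation. The function name, the `min_overlap` guard, and the test sequence are assumptions for illustration; the patent's actual procedure is not given in the abstract.

```python
def estimate_repeat_start(received, min_lag=1, min_overlap=4):
    """Return the lag at which `received` best matches a shifted copy of itself.

    For a block that is cyclically repeated, the first lag with full
    correlation is the block length, i.e. where the repeated data starts.
    """
    n = len(received)
    best_lag, best_score = None, float("-inf")
    for lag in range(min_lag, n - min_overlap + 1):
        overlap = n - lag
        score = sum(received[i] * received[i + lag] for i in range(overlap)) / overlap
        if score > best_score:  # strict comparison keeps the smallest such lag
            best_lag, best_score = lag, score
    return best_lag


base = [1, 1, 1, -1, -1, 1, -1, 1, 1, -1, -1, -1]  # 12 symbols, no shorter period
received = [base[i % len(base)] for i in range(30)]  # repeated to fill 30 positions
print(estimate_repeat_start(received))
```

With noisy soft values the peak would be less clear-cut, which is presumably why the abstract speaks of ordering candidates by likelihood rather than picking a single one.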

Owner:CHONGQING UNIV OF POSTS & TELECOMM

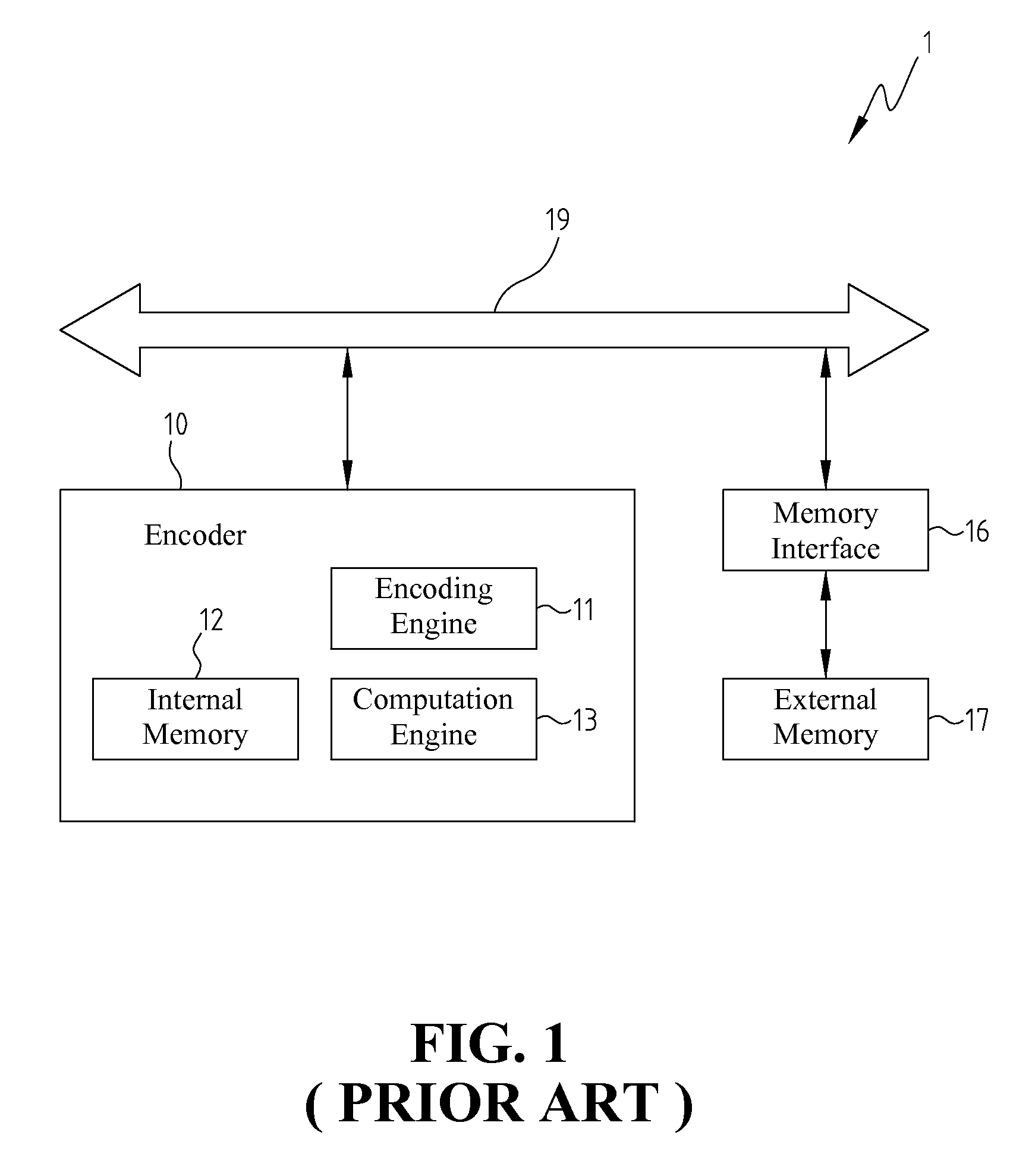

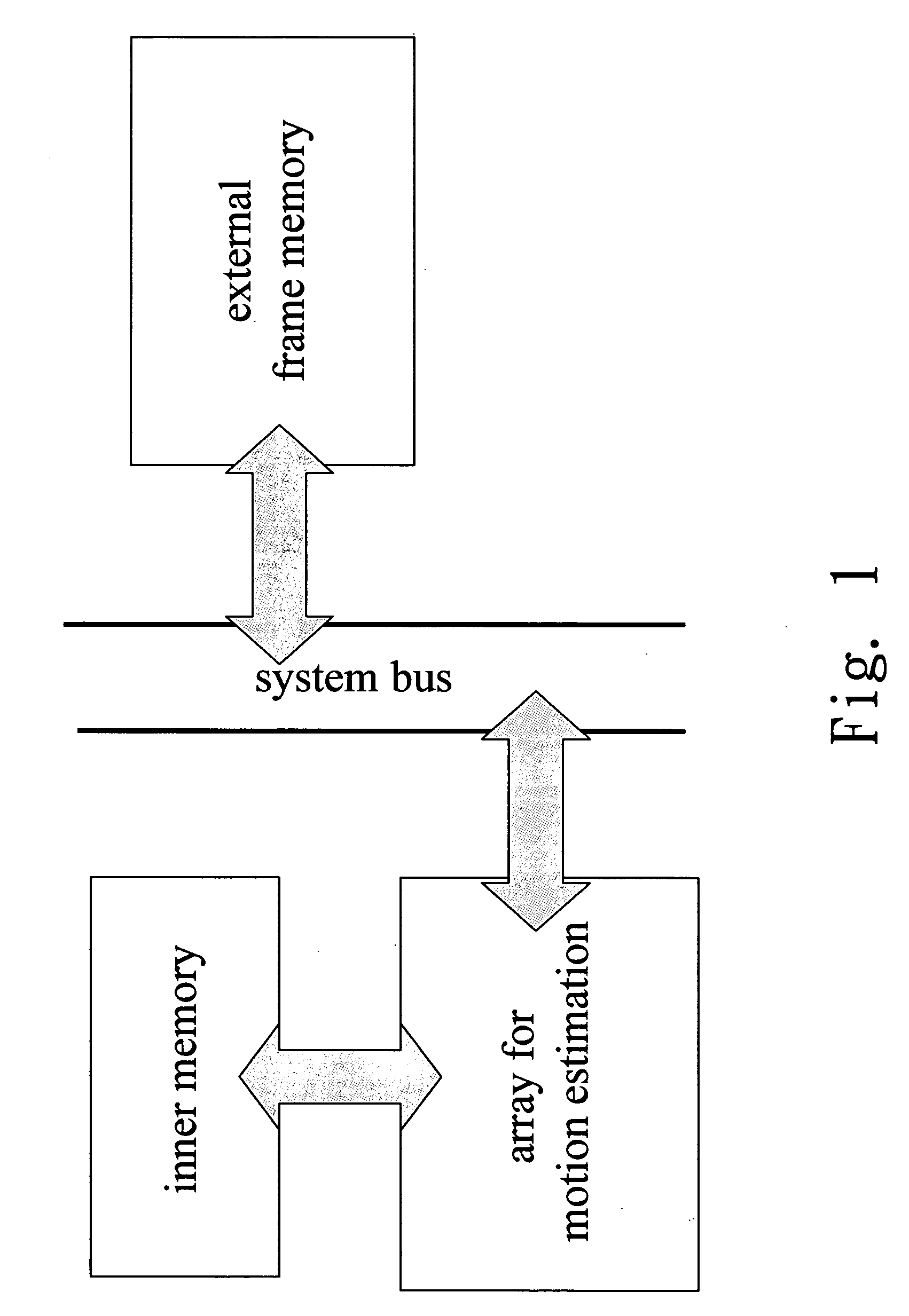

High-Performance Block-Matching VLSI Architecture With Low Memory Bandwidth For Power-Efficient Multimedia Devices

Inactive; US20100091862A1; Reduce memory bandwidth; Reduce frequency; Color television with pulse code modulation; Color television with bandwidth reduction; Computer architecture; Power efficient

A high-performance block-matching VLSI architecture with low memory bandwidth for power-efficient multimedia devices is disclosed. The architecture uses several current blocks with the same spatial address in different current frames to search the best matched blocks in the search window of the reference frame based on the best matching algorithm (BMA) to implement the process of motion estimation in video coding. The scheme of the architecture using several current blocks for one search window greatly increases data reuse, accelerates the process of motion estimation, and reduces the data bandwidth and the power consumption.

Owner:NAT TAIWAN UNIV

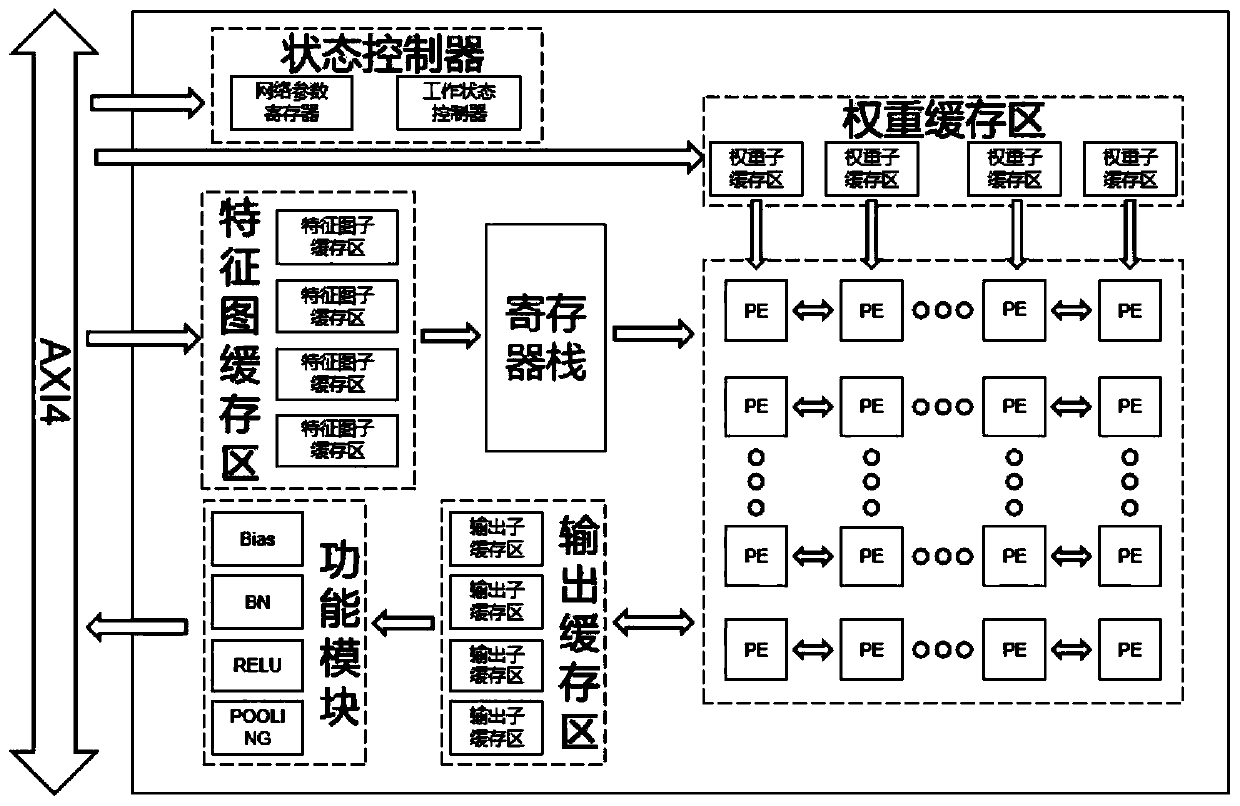

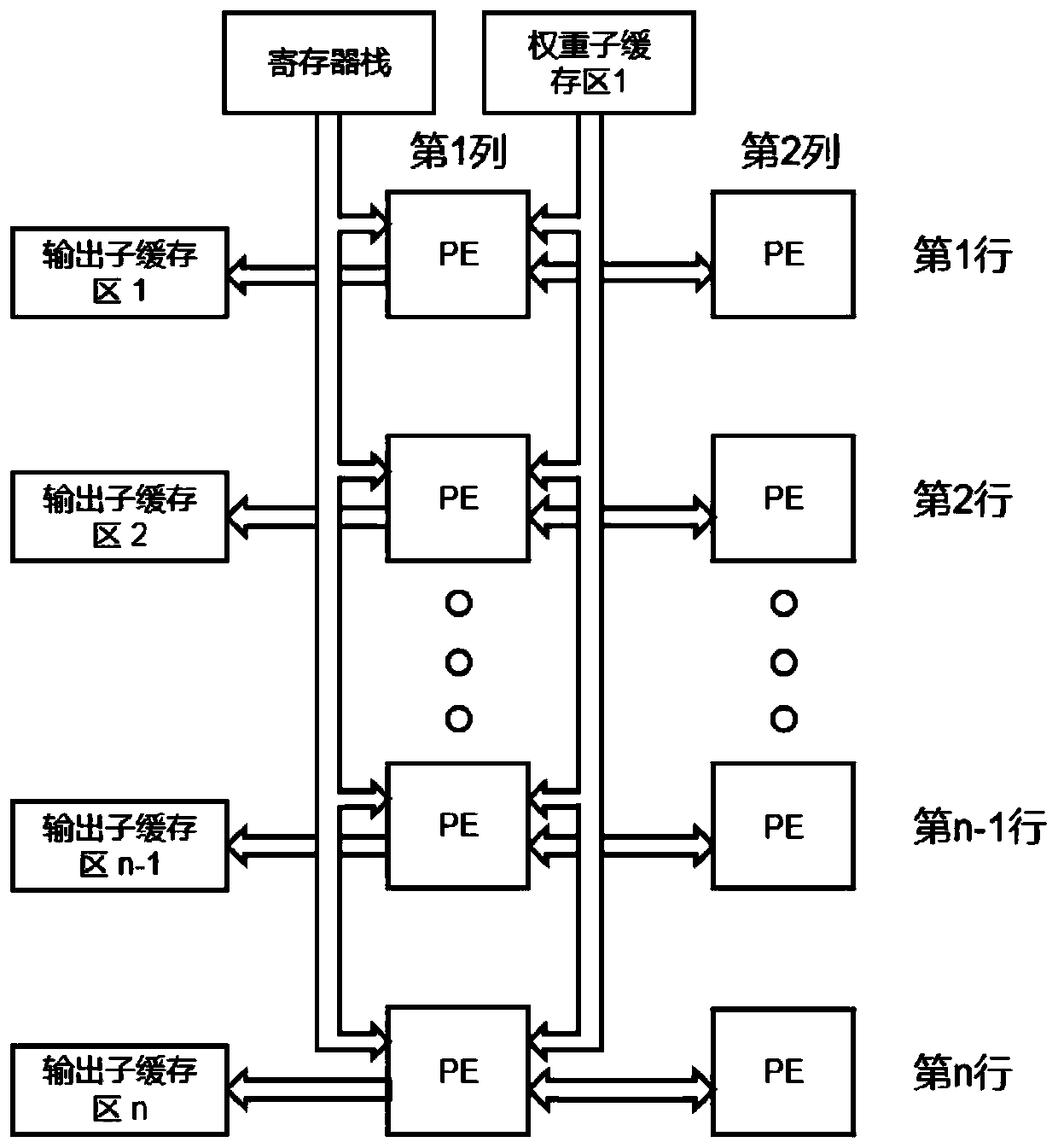

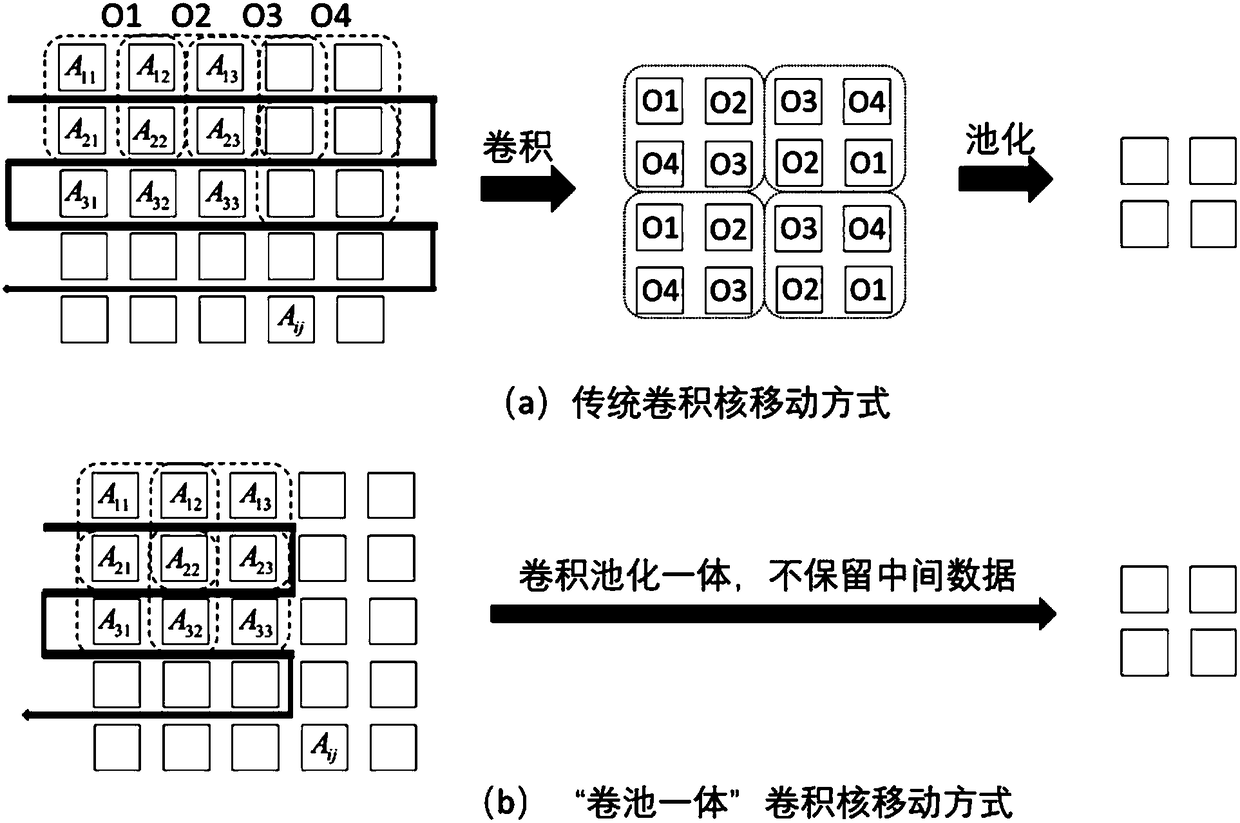

Configurable universal convolutional neural network accelerator

Active; CN110390384A; Meet changing application requirements; The acceleration effect is obvious; Image memory management; Processor architectures/configuration; Control signal; Processor register

The invention discloses a configurable universal convolutional neural network accelerator, and belongs to the technical field of computing, calculating and counting. The accelerator comprises a PE array, a state controller, a function module, a weight cache region, a feature map cache region, an output cache region and a register stack; the state controller comprises a network parameter register and a working state controller. An excellent acceleration effect can be achieved for networks of different scales by configuring the network parameter register, and the working state controller controls switching of the working state of the accelerator and sends control signals to the other modules. The weight cache region, the feature map cache region and the output cache region are each composed of a plurality of data sub-cache regions and are used for storing weight data, feature map data and calculation results. Suitable data reuse modes, array sizes and numbers of sub-cache regions can be configured according to different network characteristics, and the accelerator offers good universality, low power consumption and high throughput.

Owner:SOUTHEAST UNIV

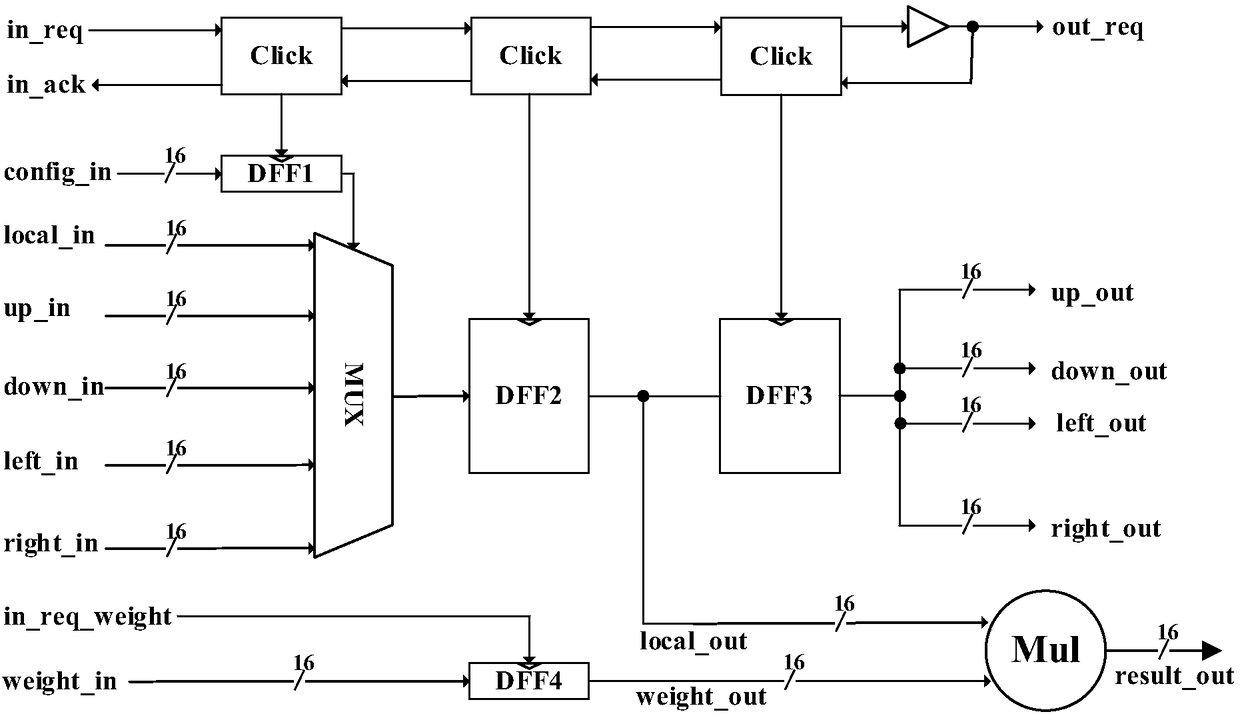

Reconfigurable convolutional neural network acceleration circuit based on asynchronous logic

Inactive; CN108537331A; Realize communication; Improve parallelism; Physical realisation; Asynchronous circuit; Parallel computing

The present invention provides a reconfigurable convolutional neural network acceleration circuit based on asynchronous logic. The circuit comprises three parts: basic processing elements (PEs), a processing array formed by the PEs, and a configurable pooling unit. The circuit uses the basic configuration of a reconfigurable circuit to reconfigure the processing array for different convolutional neural network models. The circuit as a whole is based on asynchronous logic: local clocks generated by Click units in an asynchronous circuit replace the global clock of a synchronous circuit, and an asynchronous pipeline architecture is formed by cascading the Click units. Finally, the circuit uses an asynchronously communicating mesh network to achieve data reuse, reducing power dissipation by reducing the number of memory accesses. The circuit is flexible, has a high degree of parallelism and a high data reuse rate, and has a power consumption advantage over an acceleration circuit implemented with synchronous logic, so that it greatly improves the processing speed of the convolutional neural network at low power consumption.

Owner:TSINGHUA UNIV

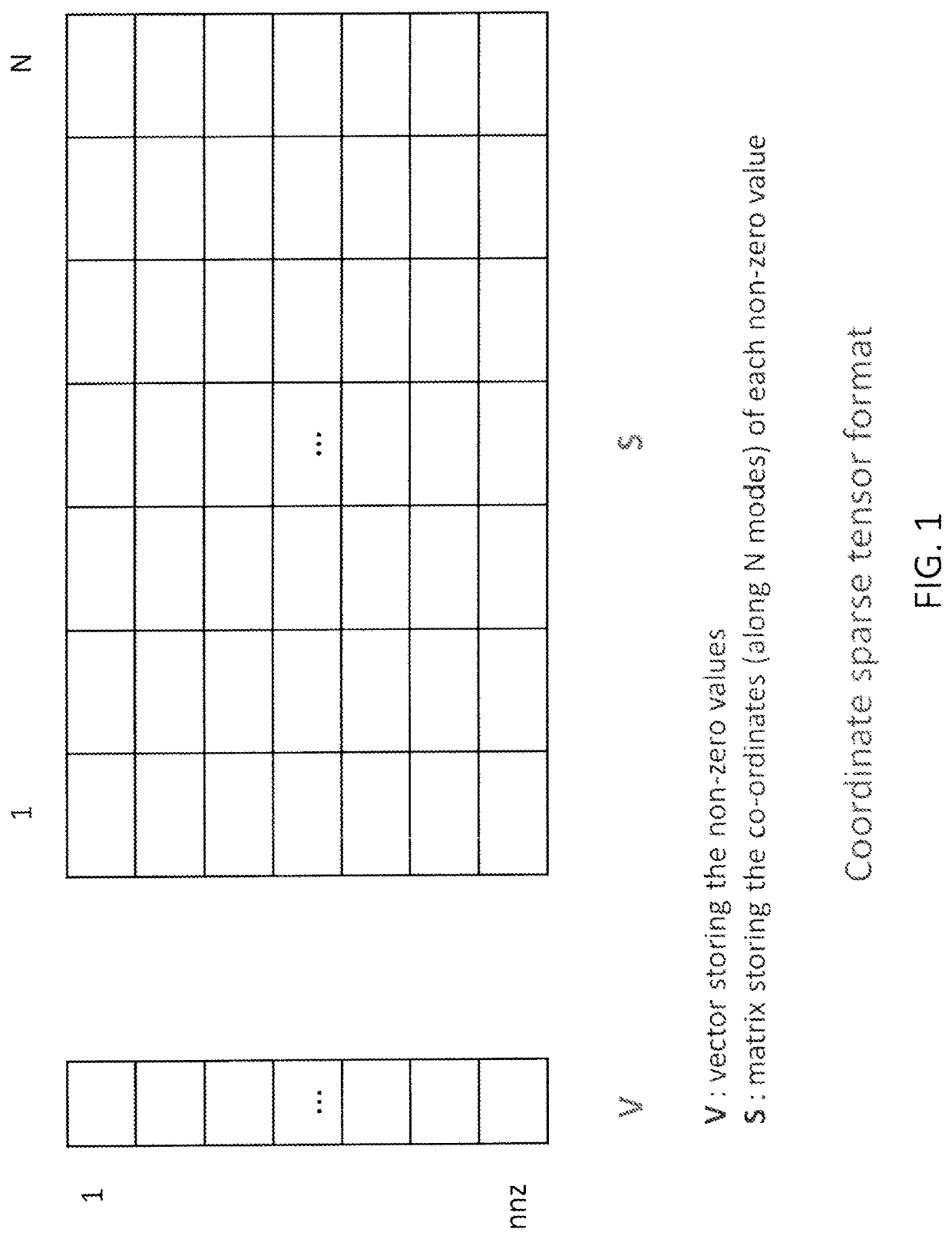

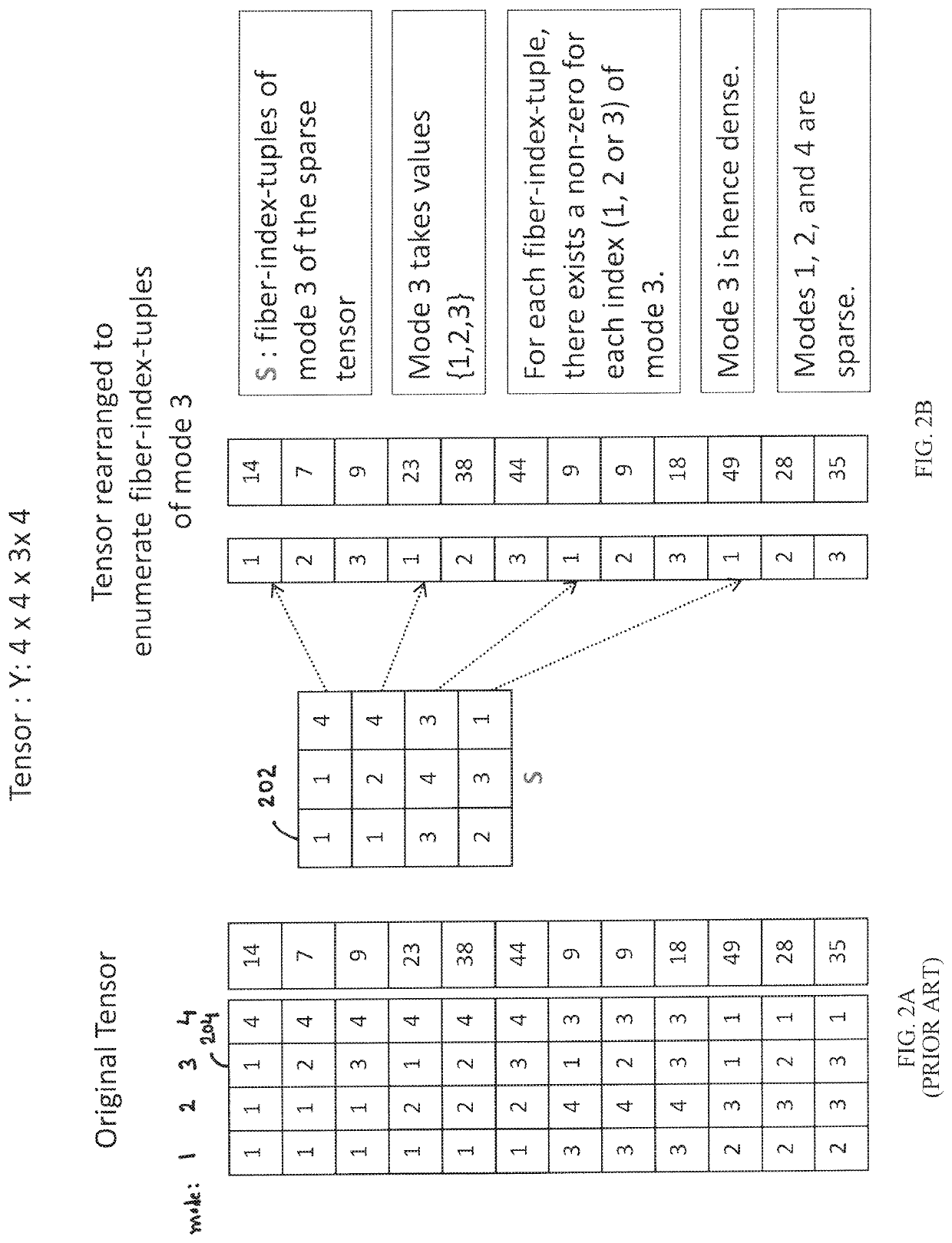

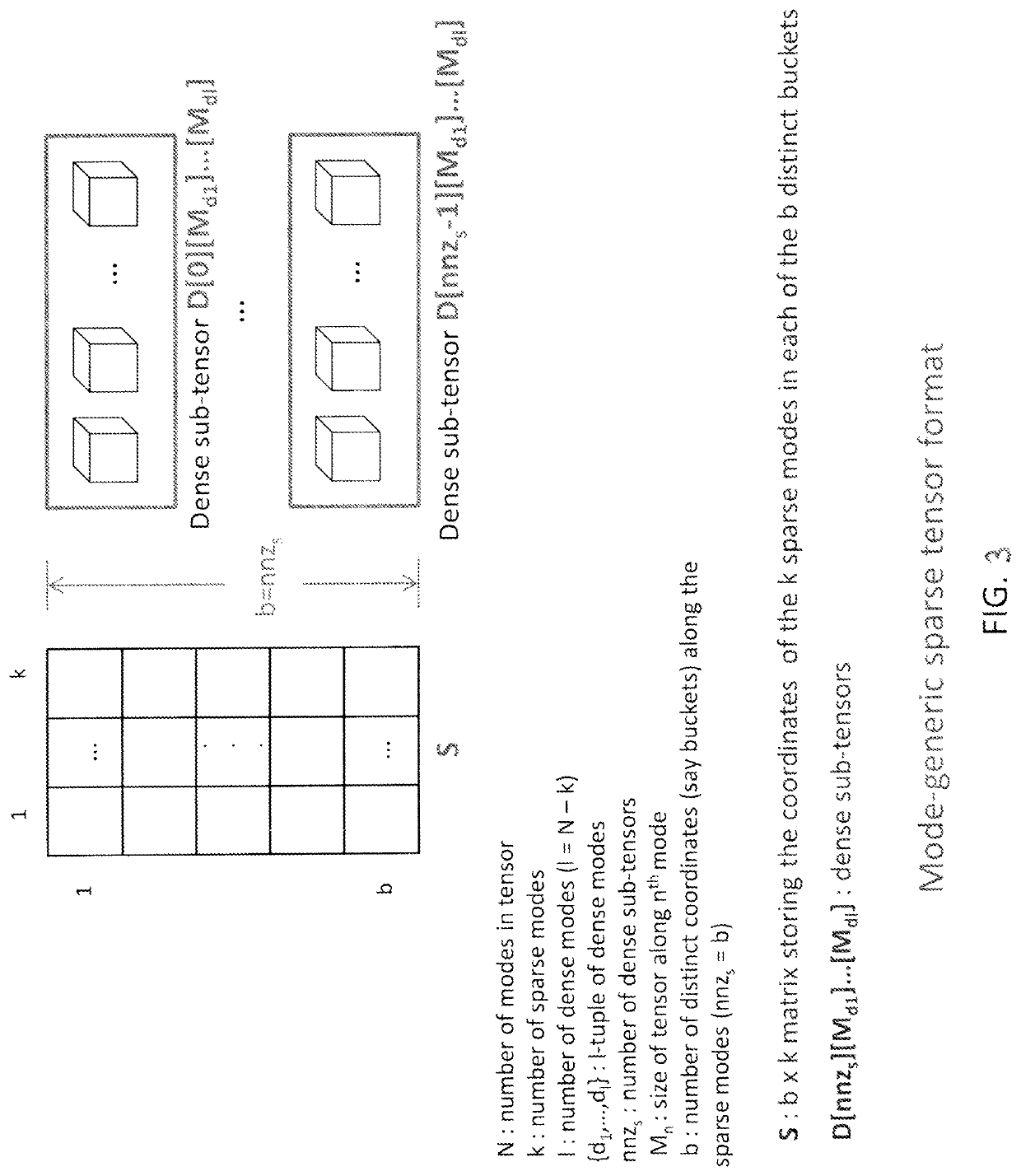

Efficient and scalable computations with sparse tensors

Active; US10936569B1; Improve data locality; Memory storage benefits; Complex mathematical operations; Database indexing; Locality of reference; Algorithm

In a system for storing in memory a tensor that includes at least three modes, elements of the tensor are stored in a mode-based order for improving locality of references when the elements are accessed during an operation on the tensor. To facilitate efficient data reuse in a tensor transform that includes several iterations, on a tensor that includes at least three modes, a system performs a first iteration that includes a first operation on the tensor to obtain a first intermediate result, and the first intermediate result includes a first intermediate-tensor. The first intermediate result is stored in memory, and a second iteration is performed in which a second operation on the first intermediate result accessed from the memory is performed, so as to avoid a third operation, that would be required if the first intermediate result were not accessed from the memory.

Owner:QUALCOMM INC

Circuit structure for accelerating convolutional layer and fully connected layer of neural network

Active; CN108416434A; Reduce memory access performance loss; Increase usage; Physical realisation; Parallel computing; Computer module

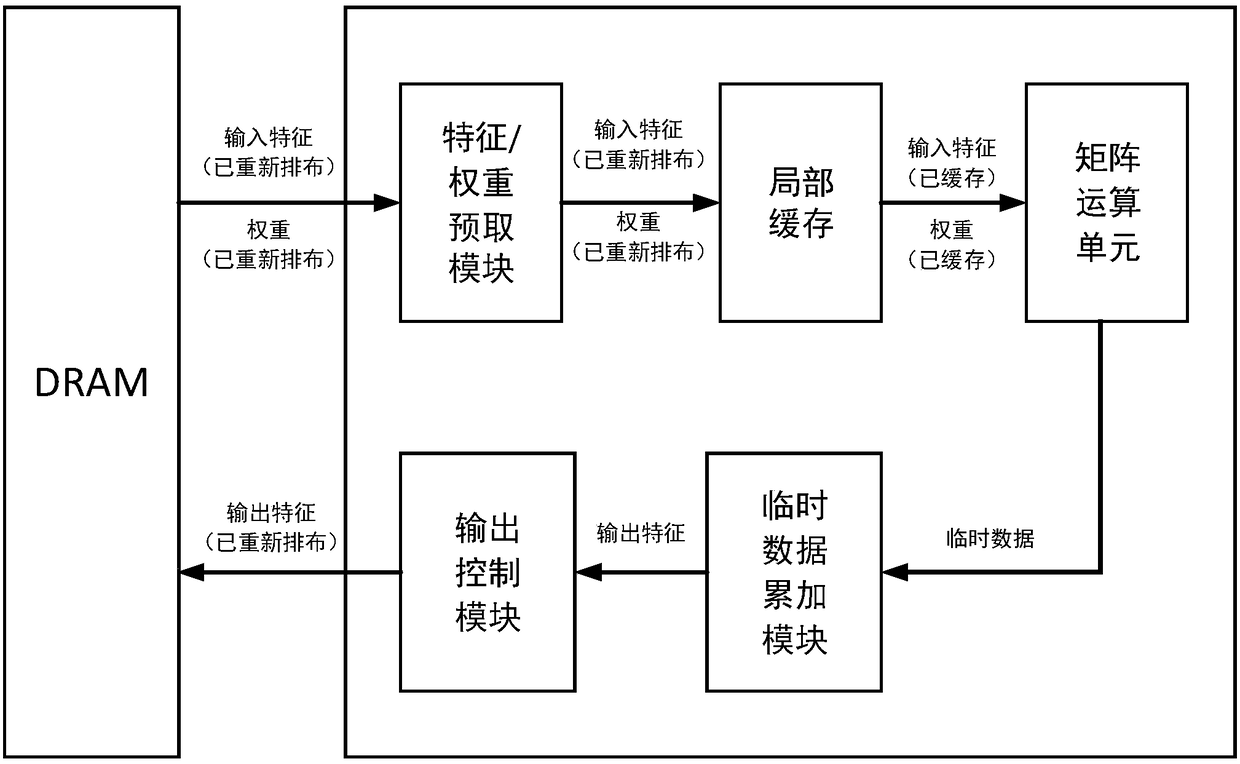

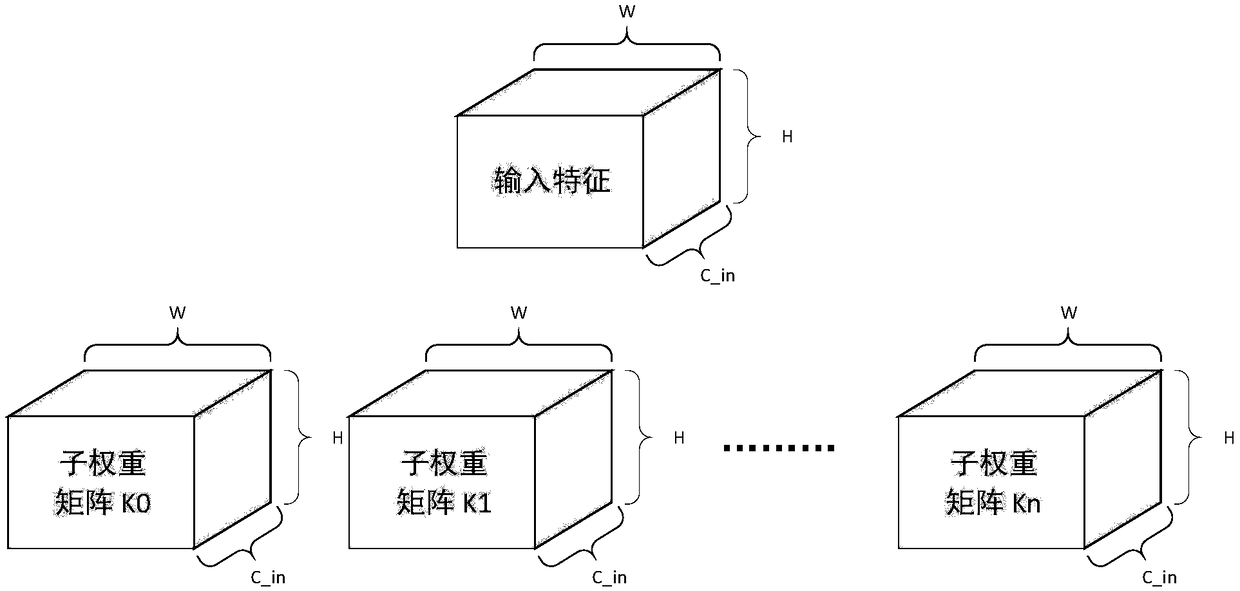

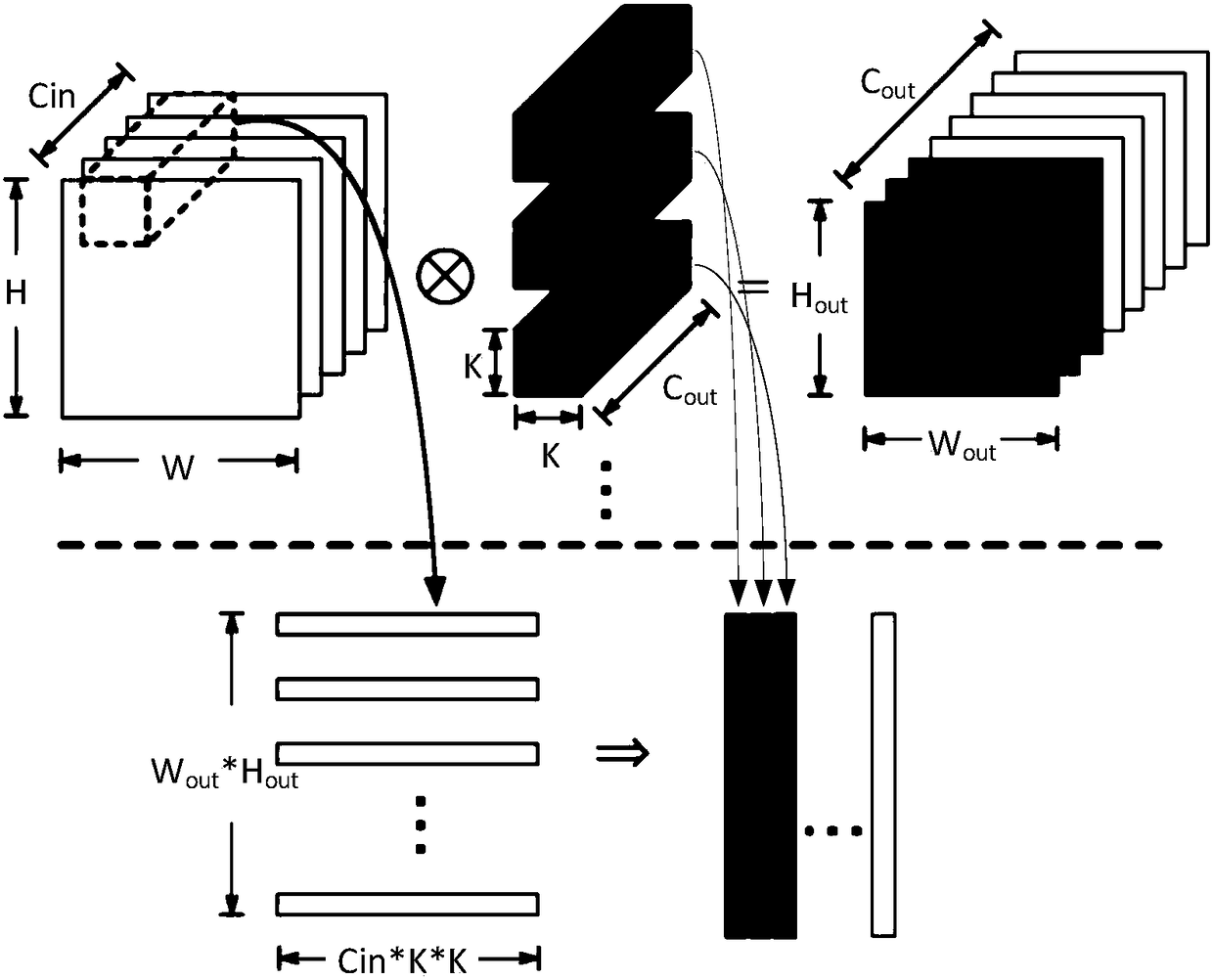

The invention belongs to the technical field of integrated circuit design and particularly relates to a circuit structure capable of accelerating both a convolutional layer and a fully connected layer. The circuit structure comprises five parts: a feature/weight pre-fetching module for reading data, a local cache for improving the data reuse rate, a matrix operation unit for performing matrix multiplication, a temporary data accumulation module for accumulating temporary output results, and an output control module responsible for writing data back. The circuit adopts a special mapping method by which the operations of the convolutional layer and the fully connected layer are mapped onto a matrix operation unit of fixed size. The memory layout of the features and weights is adjusted to greatly improve the access efficiency of the circuit. In addition, a pipeline mechanism is used to schedule the circuit modules so that all hardware units are working in every clock cycle, which improves hardware utilization and the working efficiency of the circuit.

Owner:FUDAN UNIV

System and method for dynamically selecting the fetch path of data for improving processor performance

Inactive; US7865669B2; Improve system performance; Improve latency; Memory addressing/allocation/relocation; Sensu; Data access

A system and method for dynamically selecting the data fetch path for improving the performance of the system improves data access latency by dynamically adjusting data fetch paths based on application data fetch characteristics. The application data fetch characteristics are determined through the use of a hit / miss tracker. It reduces data access latency for applications that have a low data reuse rate (streaming audio, video, multimedia, games, etc.) which will improve overall application performance. It is dynamic in a sense that at any point in time when the cache hit rate becomes reasonable (defined parameter), the normal cache lookup operations will resume. The system utilizes a hit / miss tracker which tracks the hits / misses against a cache and, if the miss rate surpasses a prespecified rate or matches an application profile, the hit / miss tracker causes the cache to be bypassed and the data is pulled from main memory or another cache thereby improving overall application performance.

Owner:INT BUSINESS MASCH CORP

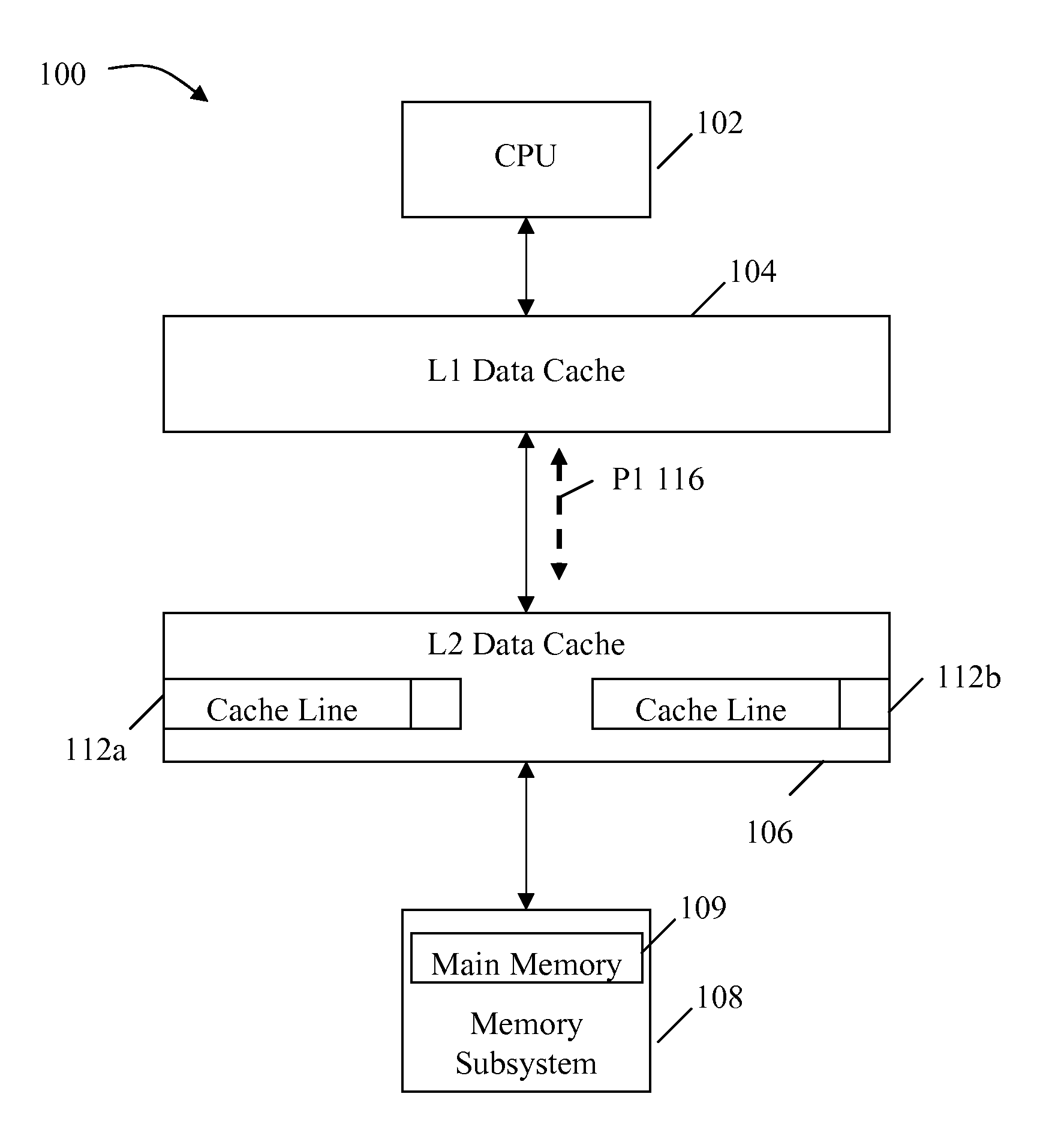

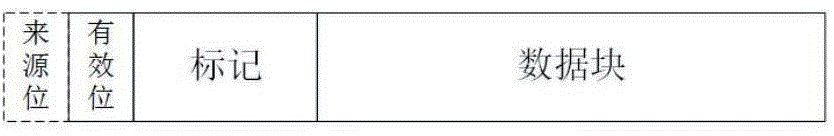

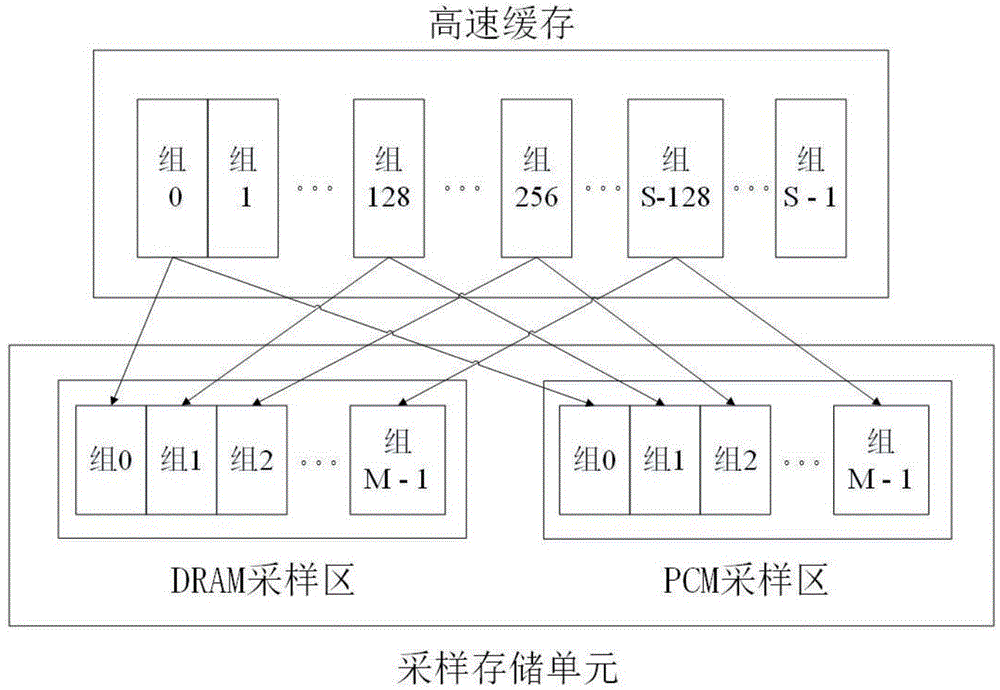

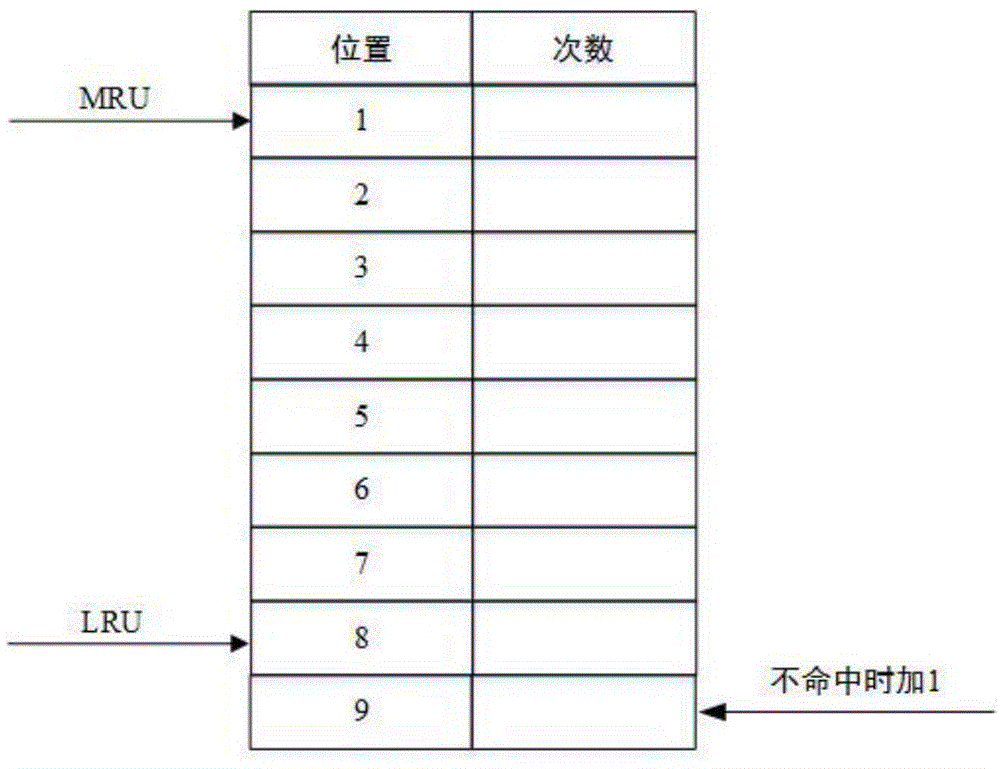

Cache replacement method under heterogeneous memory environment

Active; CN104834608A; Comprehensive consideration of memory access characteristics; Increase space size; Memory addressing/allocation/relocation; Hardware structure; Phase-change memory

The invention discloses a cache replacement method for a heterogeneous memory environment. The method comprises: adding a source flag bit to the cache line hardware structure to flag whether the cache line data comes from DRAM (Dynamic Random Access Memory) or PCM (Phase Change Memory); and adding a new sample storage unit to the CPU (Central Processing Unit) to record the program's cache access behavior and data reuse range information. The method also comprises three sub-methods: a sampling sub-method, which performs sampling statistics on the cache access behavior; an equivalent position calculation sub-method, which calculates equivalent positions; and a replacement sub-method, which determines the cache line to be replaced. The method optimizes the traditional cache replacement policy for the cache access characteristics of programs in a heterogeneous memory environment. It reduces the high latency cost of accessing the PCM after a cache miss and thereby improves the cache access performance of the whole system.

Owner:HUAZHONG UNIV OF SCI & TECH

Method, system, and apparatus for data reuse

Active; US8290958B2; Convenient dictation; Precious time; Data processing applications; Digital data processing details; Document preparation; Documentation

Owner:NUANCE COMM INC

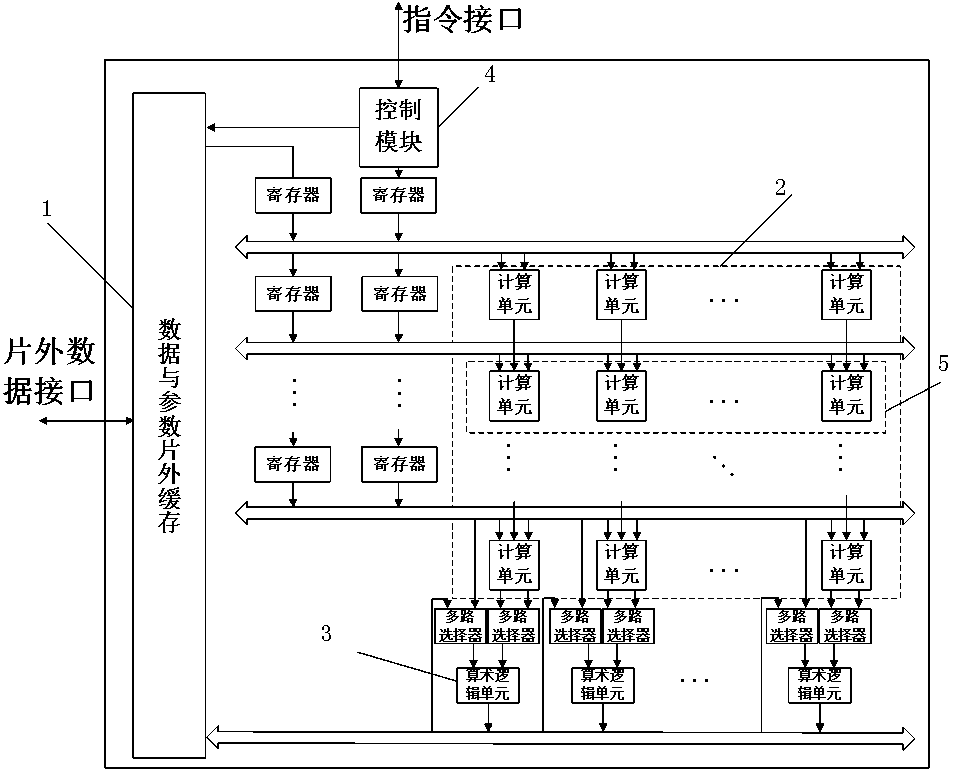

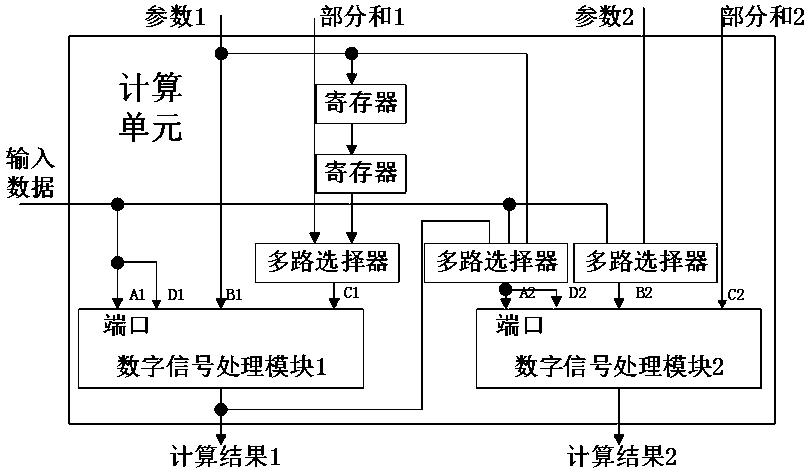

Hardware interconnection architecture of reconfigurable convolutional neural network

The invention belongs to the technical field of hardware design for image processing algorithms, and specifically discloses a hardware interconnection architecture for a reconfigurable convolutional neural network. The interconnection architecture comprises a data and parameter off-chip caching module, a basic calculation unit array module and an arithmetic logic unit calculation module. The data and parameter off-chip caching module caches the pixel data of the input pictures to be processed and the parameters supplied during convolutional neural network calculation. The basic calculation unit array module performs the core calculation of the convolutional neural network. The arithmetic logic unit calculation module processes the calculation results of the basic calculation unit array module, handling down-sampling layers, activation functions and the accumulation of partial sums. The basic calculation unit array module is interconnected as a two-dimensional array: in the row direction, input data is shared and parallel calculation is performed with different parameter data; in the column direction, calculation results are passed row by row to serve as the input of the next row. Through this interconnection structure, the architecture reduces the bandwidth demand while enhancing data reuse.

Owner:FUDAN UNIV

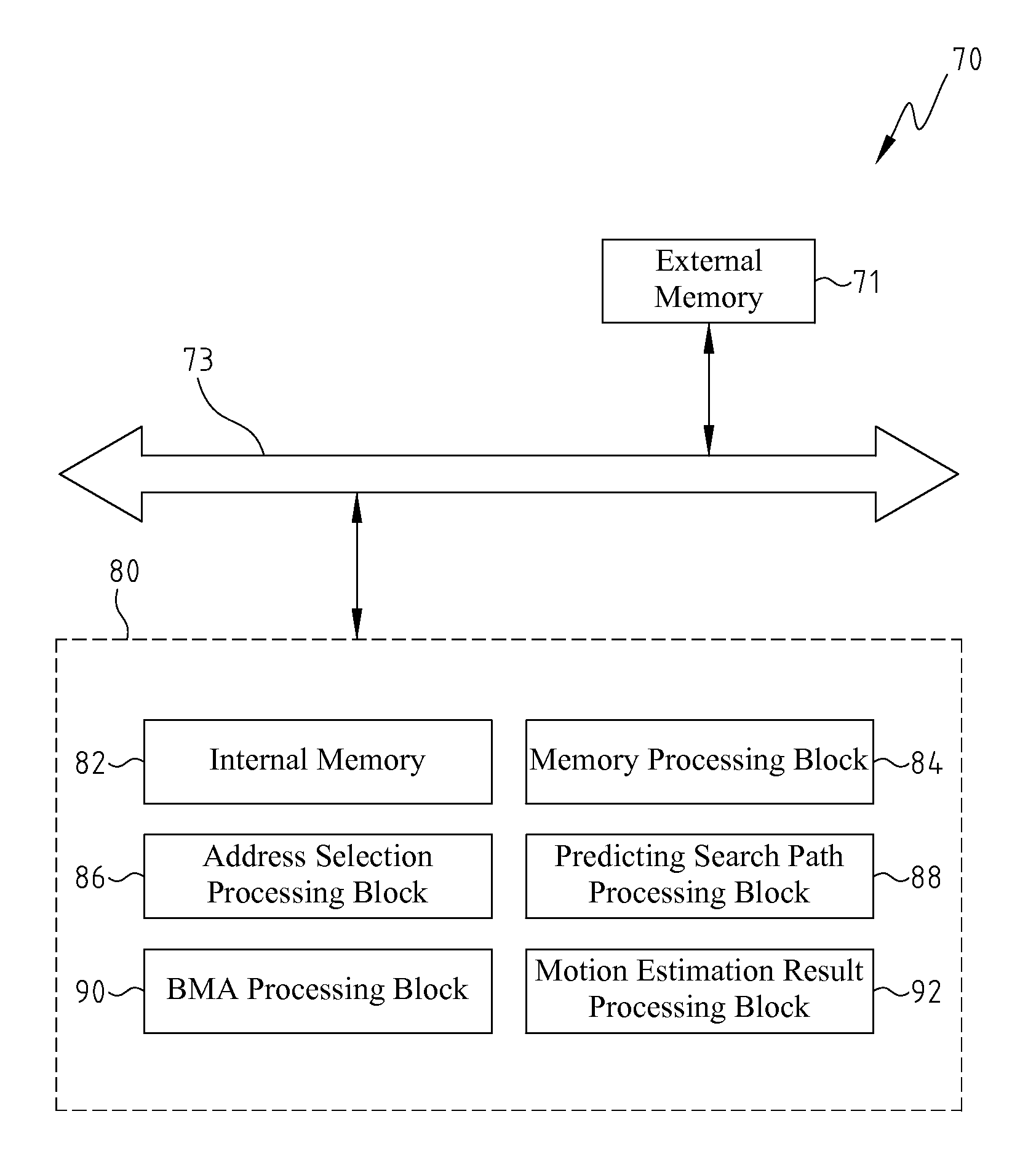

Data reuse method for blocking matching motion estimation

Active; US20070053439A1; Saving more bandwidth; Small size; Color television with pulse code modulation; Color television with bandwidth reduction; Internal memory; External storage

A data reuse method with level C+ for block matching motion estimation is disclosed. Compared to conventional Level C scheme, this invention can save large external memory bandwidth of motion estimation. The main idea is to reuse the overlapped searching region in the horizontal direction and partially reuse the overlapped searching region in the vertical direction. Several vertical successive current macroblocks are stitched, and the searching region of these current macroblocks is loaded, simultaneously. With the small overhead of internal memory, the reduction of external memory bandwidth is large. By case studies of H.264 / AVC, the level C+ scheme can provide a good trade-off between the conventional Level C and D scheme.

Owner:NAT TAIWAN UNIV

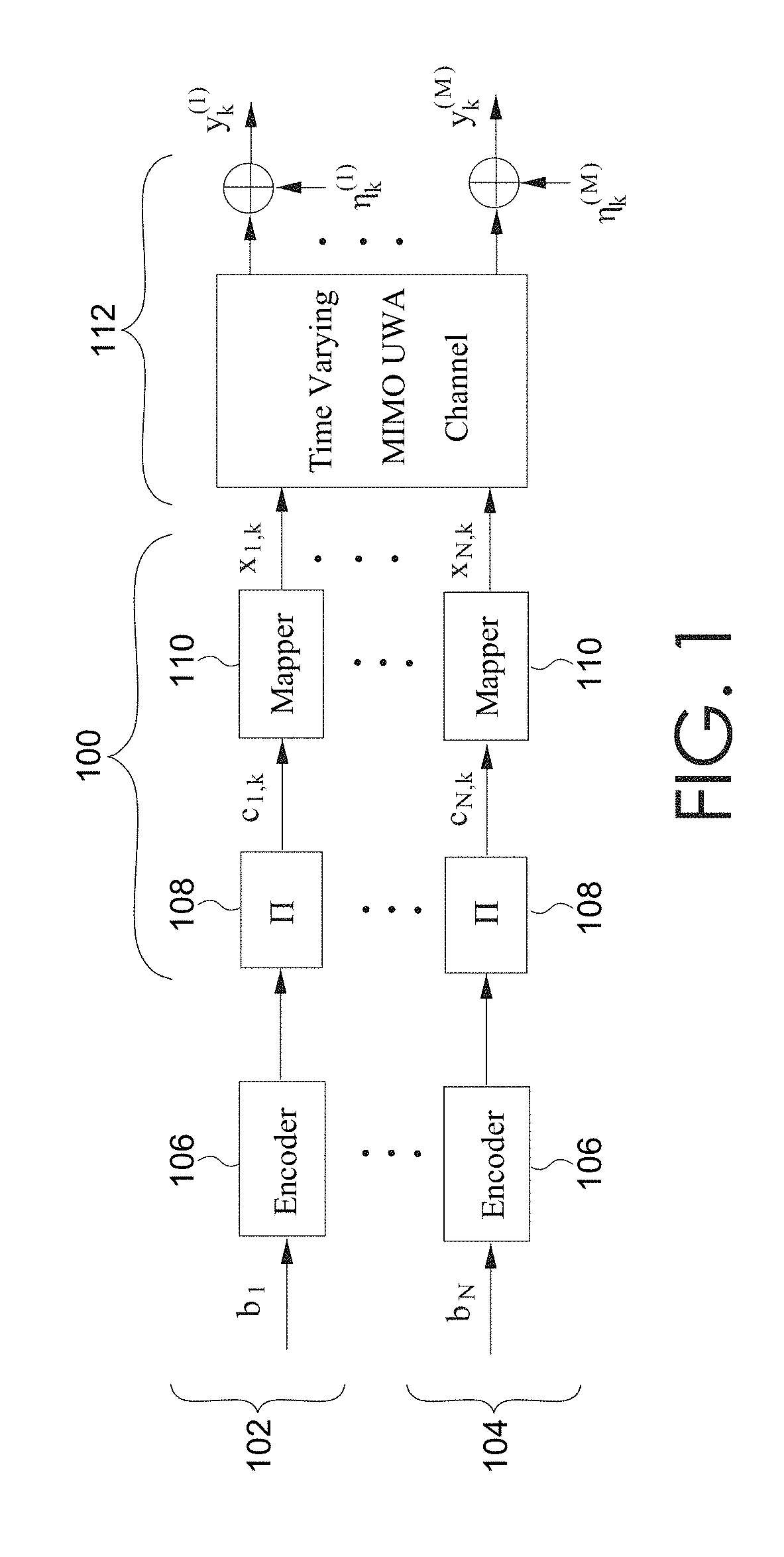

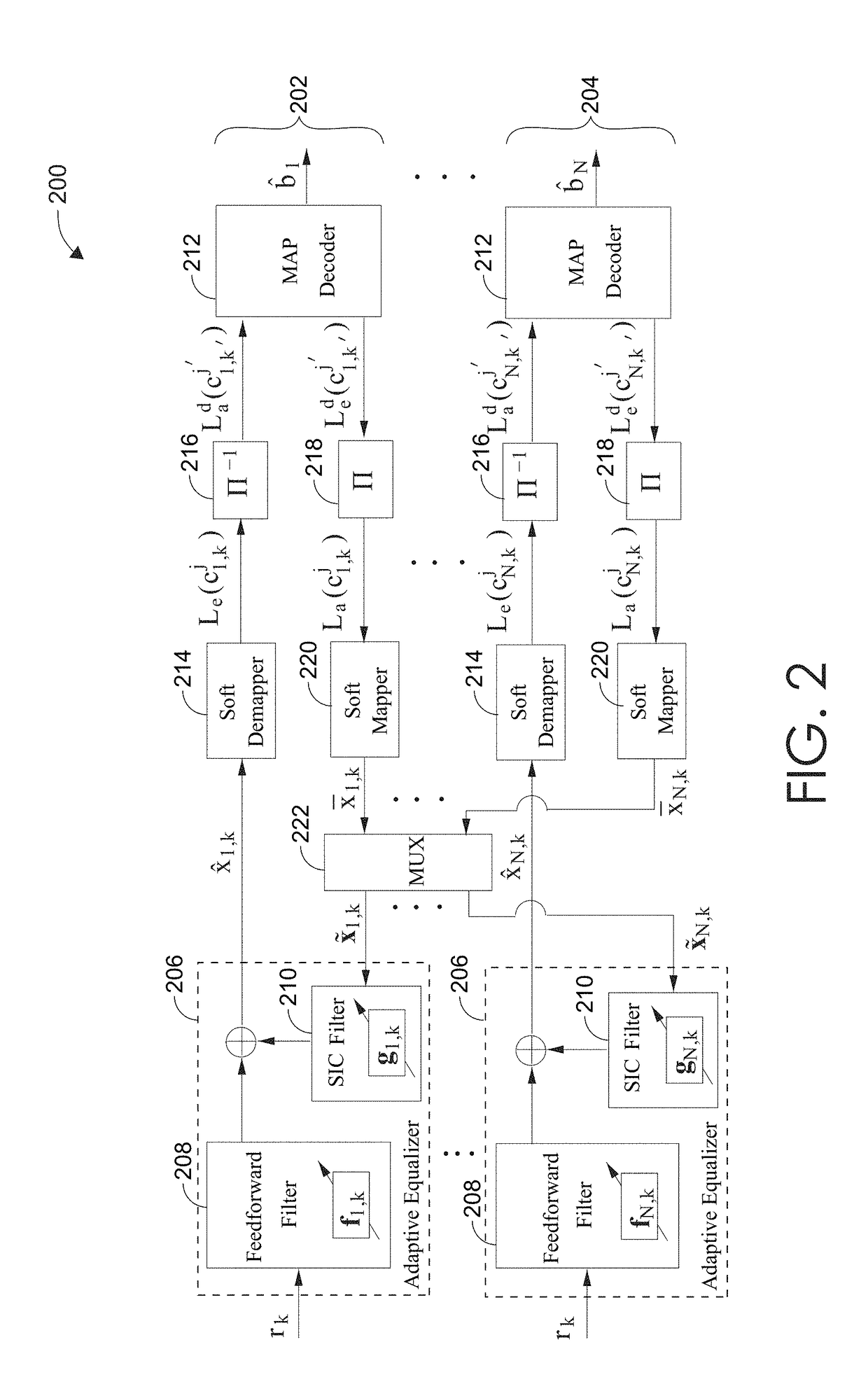

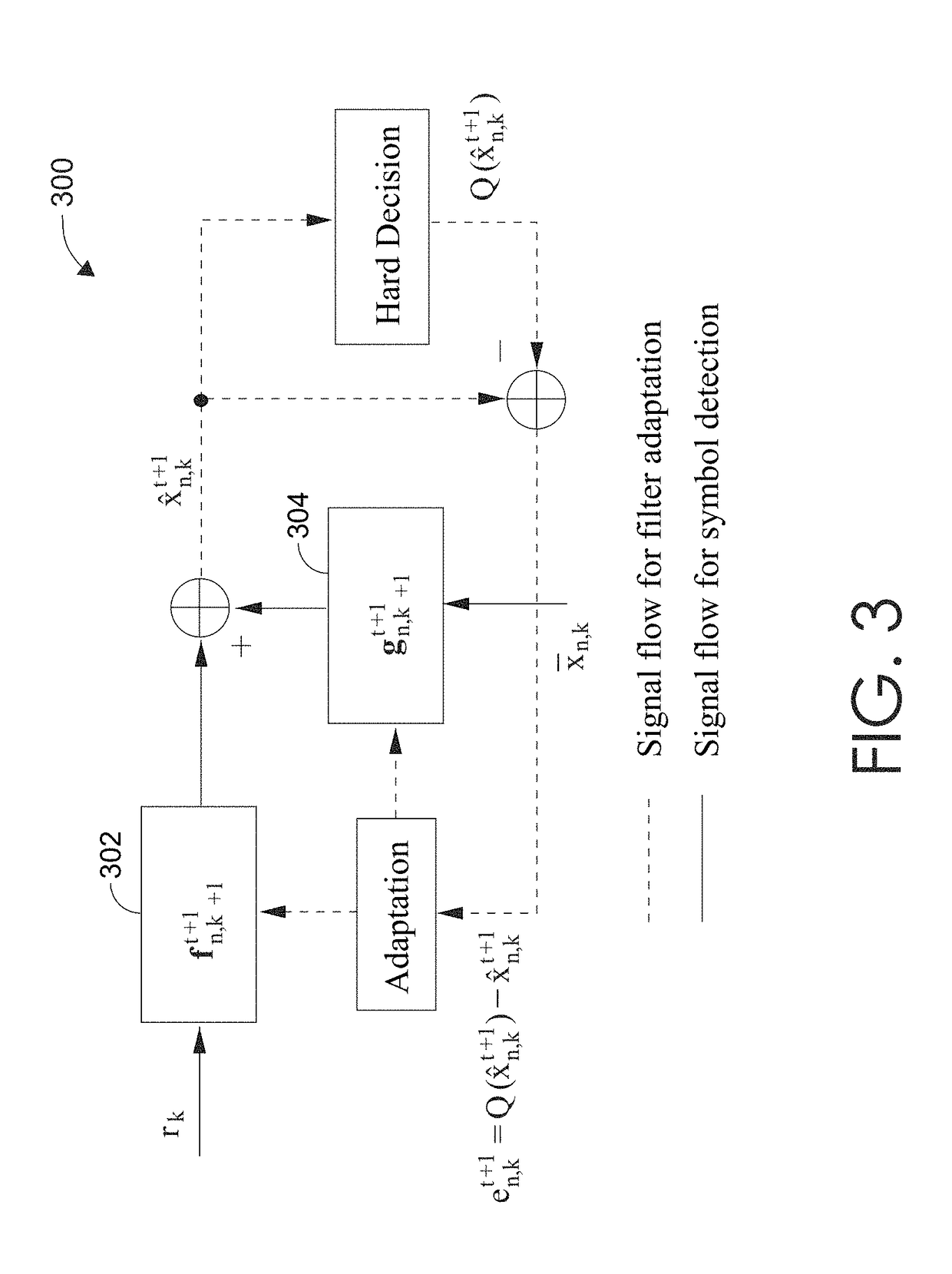

Turbo receivers for multiple-input multiple-output underwater acoustic communications

Active; US20190068294A1; Easy to detect; Improve fidelity; Multiple modulation transmitter/receiver arrangements; Sonic/ultrasonic/infrasonic transmission; Multiple input; Feedforward filter

Systems and methods for underwater communication using a MIMO acoustic channel. An acoustic receiver may receive a signal comprising information encoded in at least one transmitted symbol. Using a two-layer iterative process, the at least one transmitted symbol is estimated. The first layer of the two-layer process uses iterative exchanges of soft-decisions between an adaptive turbo equalizer and a MAP decoder. The second layer of the two-layer process uses a data-reuse procedure that adapts an equalizer vector of both a feedforward filter and a serial interference cancellation filter of the adaptive turbo equalizer using a posteriori soft decisions of the at least one transmitted symbol. After a plurality of iterations, a hard decision of the bits encoded on the at least one transmitted symbol is output from the MAP decoder.

Owner:UNIVERSITY OF MISSOURI

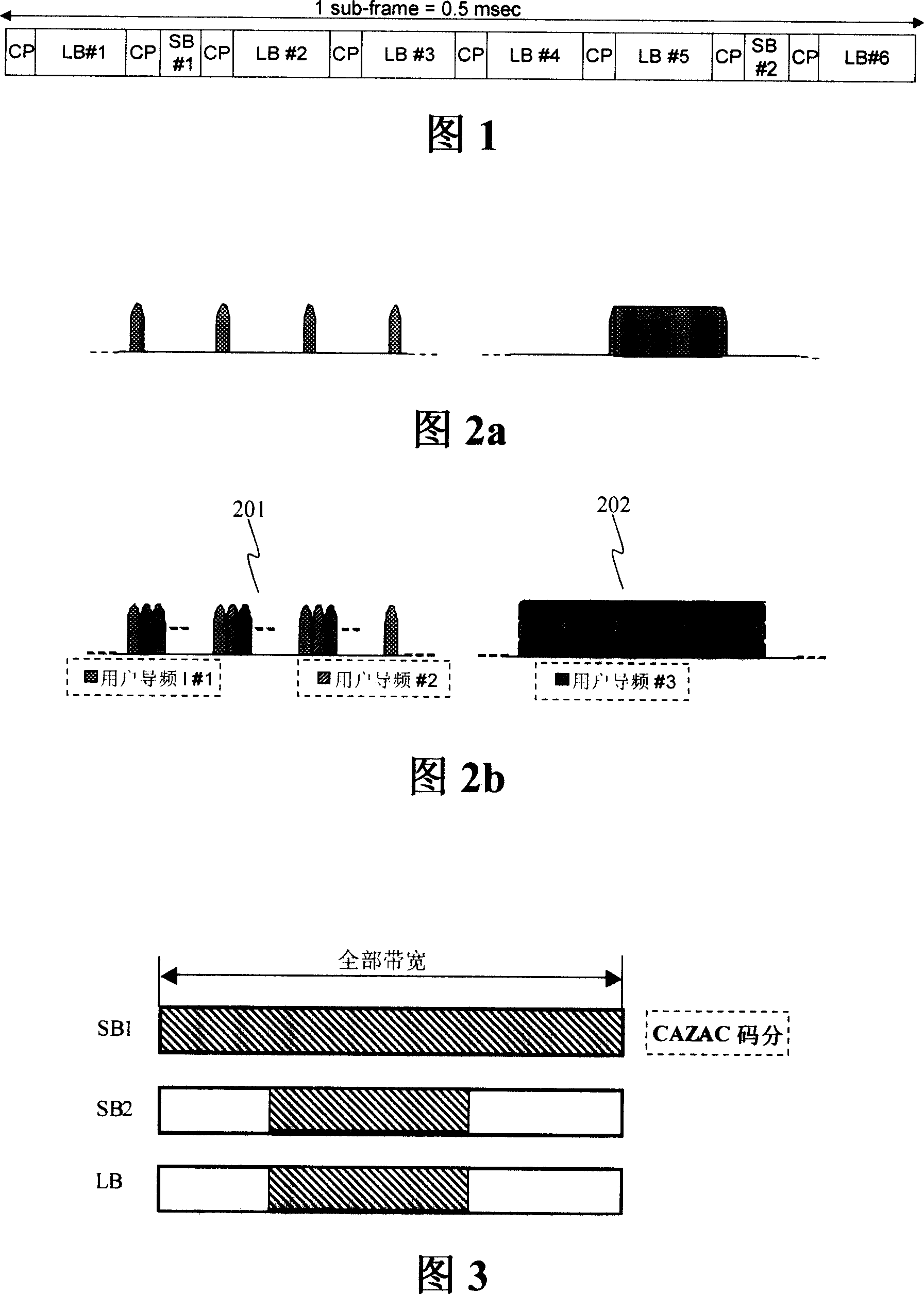

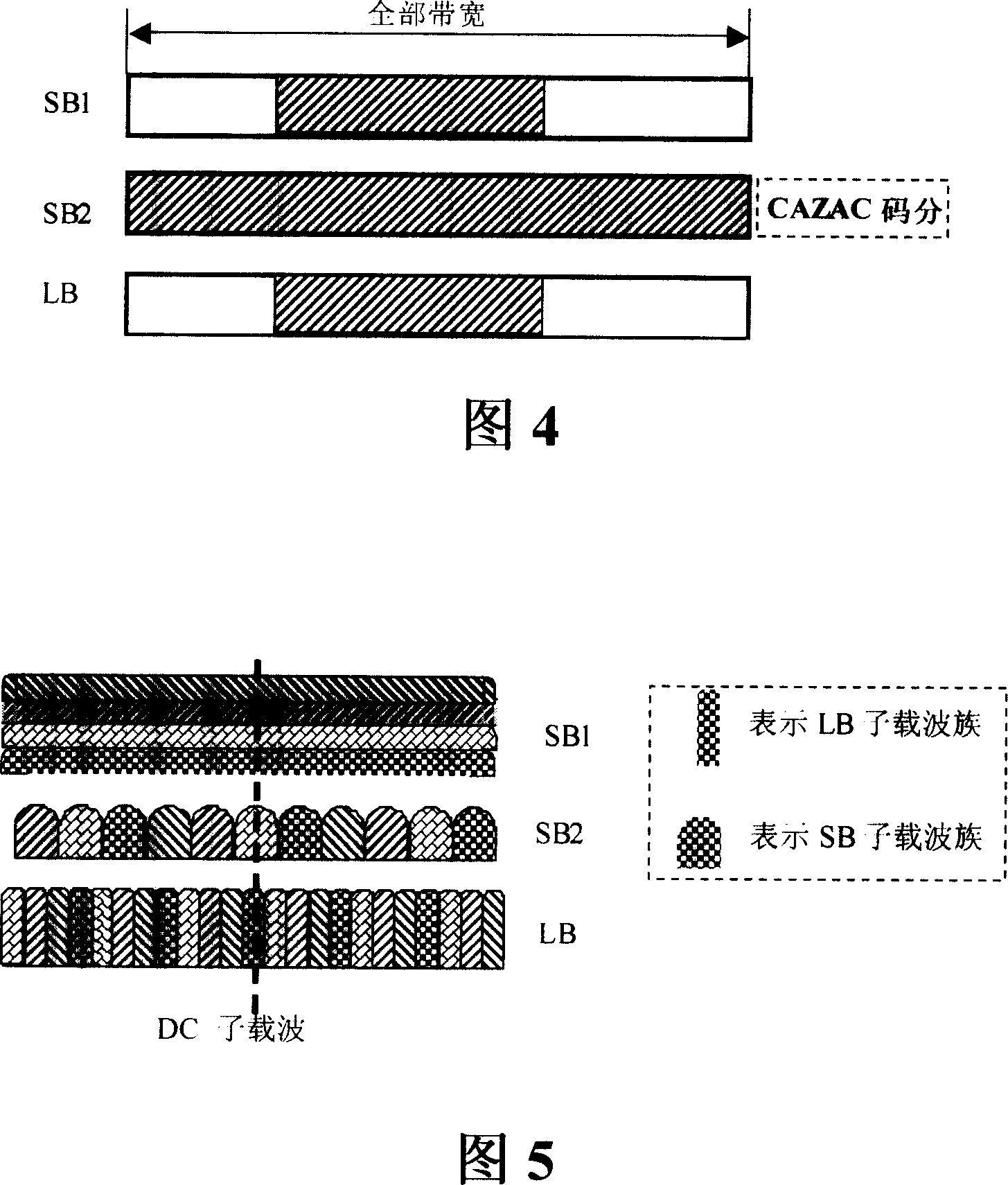

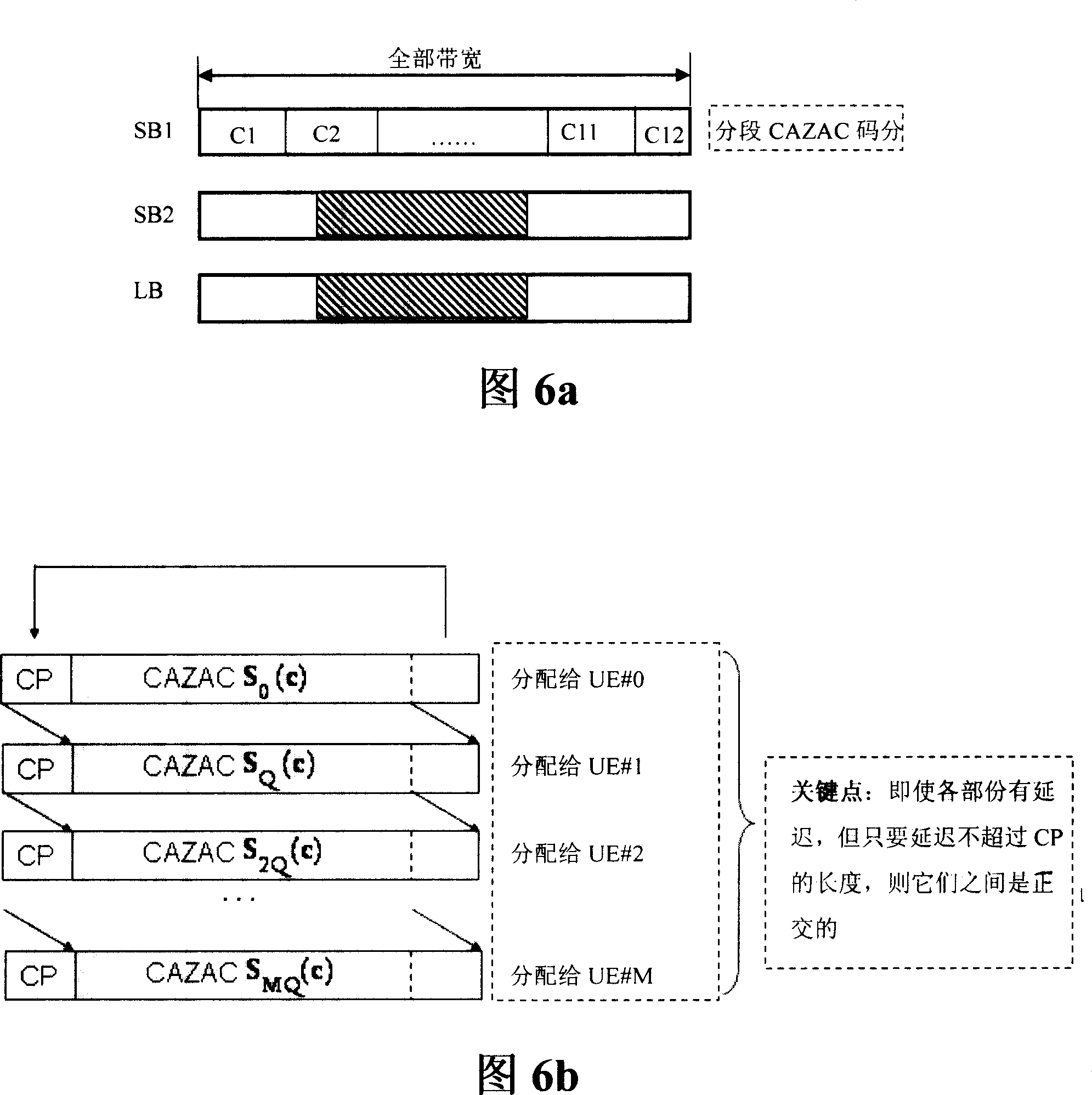

An implementation method for uplink pilot frequency insertion and data reuse

Inactive; CN101009527A; Increase data transfer rate; Flexible scheduling; Frequency-division multiplex; Multiplexing; Time domain

The invention discloses a method for uplink pilot insertion and data multiplexing. It is suited to an uplink pilot structure for single-carrier frequency-division multiplexing in which a subframe includes a first uplink pilot and a second uplink pilot. The method covers four configurations: the data is set in integral mode, the first uplink pilot in time-domain or frequency-domain code-division mode, and the second uplink pilot in frequency-division mode; or the data is set in integral mode, the first uplink pilot in frequency-division mode, and the second uplink pilot in time-domain or frequency-domain code-division mode; or the data is set in subcarrier-distributed mode, the first uplink pilot in time-domain or frequency-domain code-division mode, and the second uplink pilot in frequency-division mode; or the data is set in subcarrier-distributed mode, the first uplink pilot in frequency-division mode, and the second uplink pilot in time-domain or frequency-domain code-division mode. By adopting suitable pilot insertion and data multiplexing techniques, the invention allows the system to achieve channel compensation and flexible scheduling.

Owner:ZTE CORP

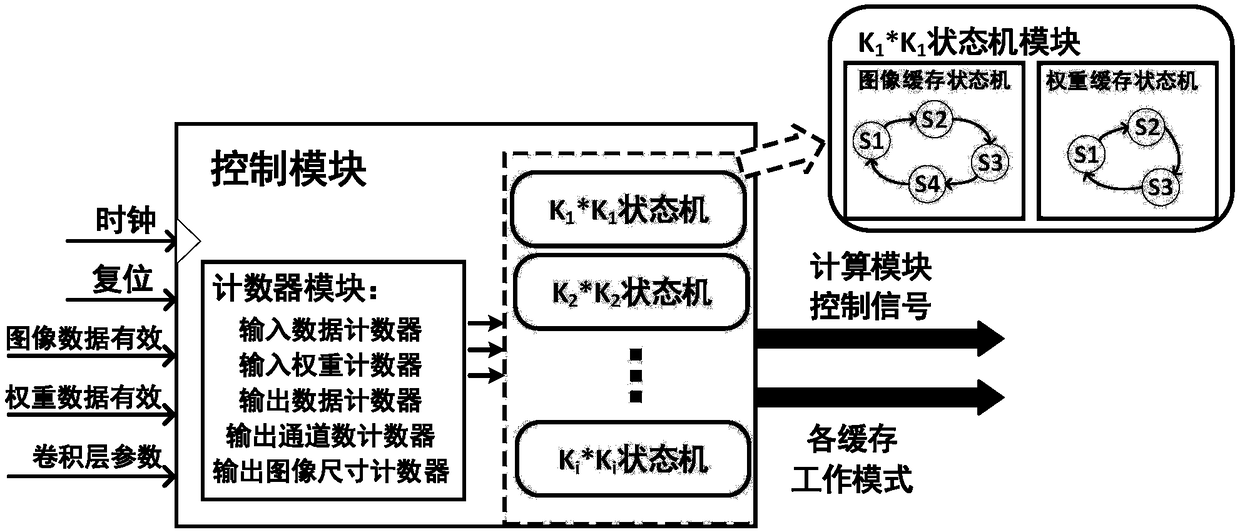

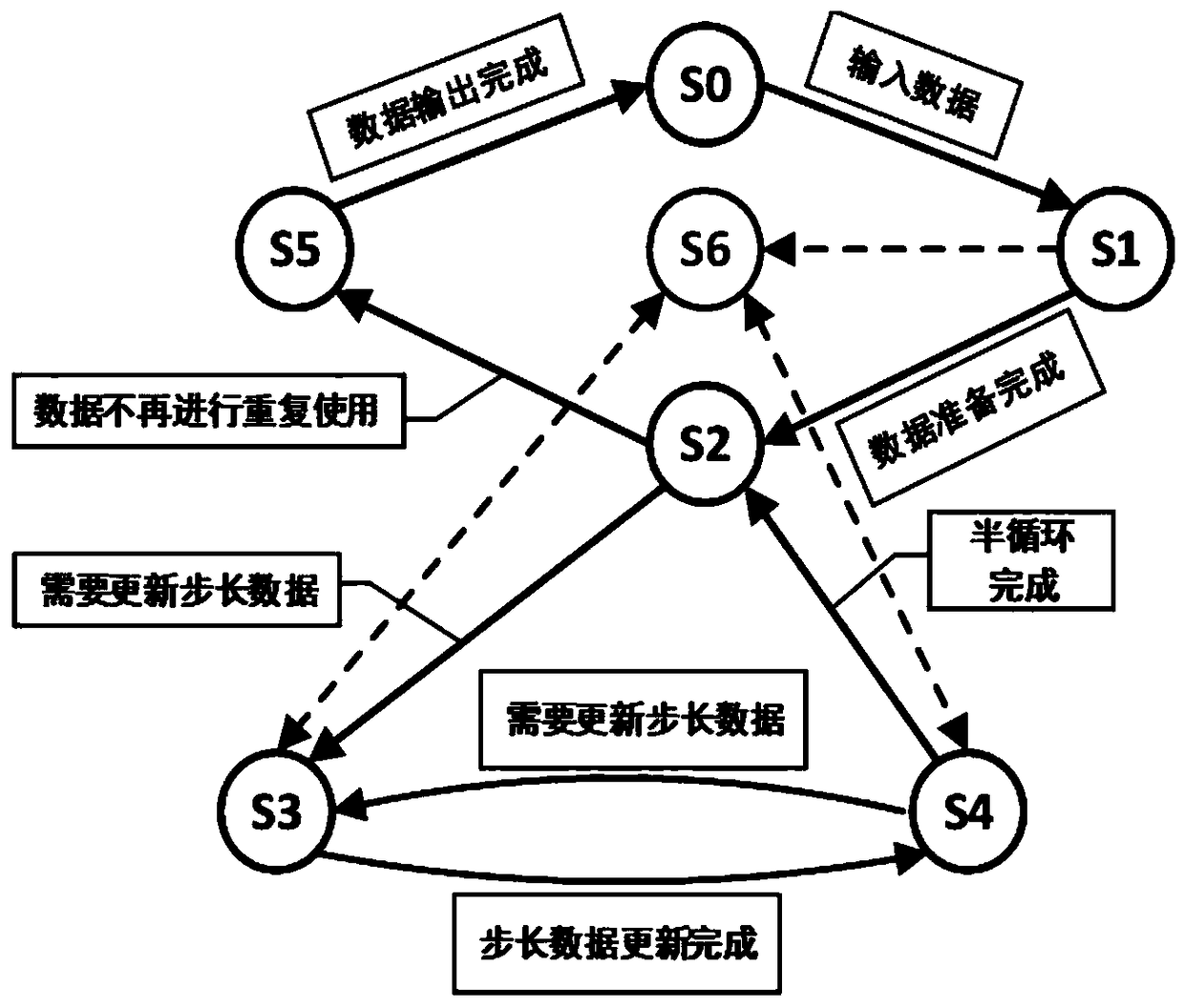

Flexibly configurable neural network computing unit, computing array and construction method thereof

Active; CN109409512A; Guaranteed computing power; Increase flexibility; Neural architectures; Physical realisation; Computer module; Parallel computing

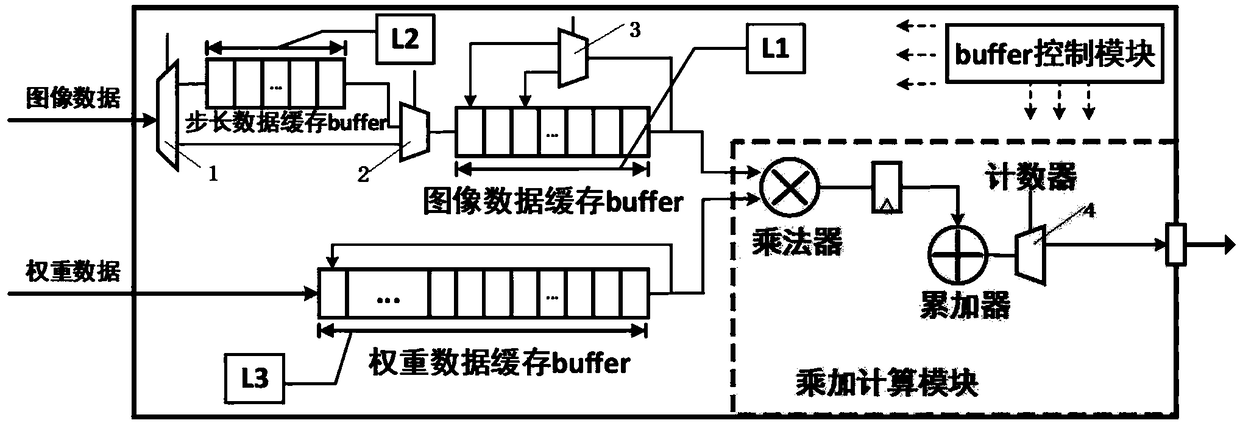

The invention discloses a flexibly configurable neural network computing unit, a computing array and a construction method thereof. The neural network computing unit comprises a configurable storage module, a configurable control module and a time-division multiplexing multiplication and addition computing module. The configurable storage module comprises a feature map data buffer, a step-size data buffer and a weight data buffer. The configurable control module comprises a counter module and a state machine module; the multiplication and addition calculation module comprises a multiplier and an accumulator. The invention can support any type of convolution calculation and parallel calculation of multi-size convolution kernel, fully exploits the flexibility and data reusability of the convolution neural network calculation unit, greatly reduces the system power consumption caused by data moving, and improves the calculation efficiency of the system.

Owner:XI AN JIAOTONG UNIV

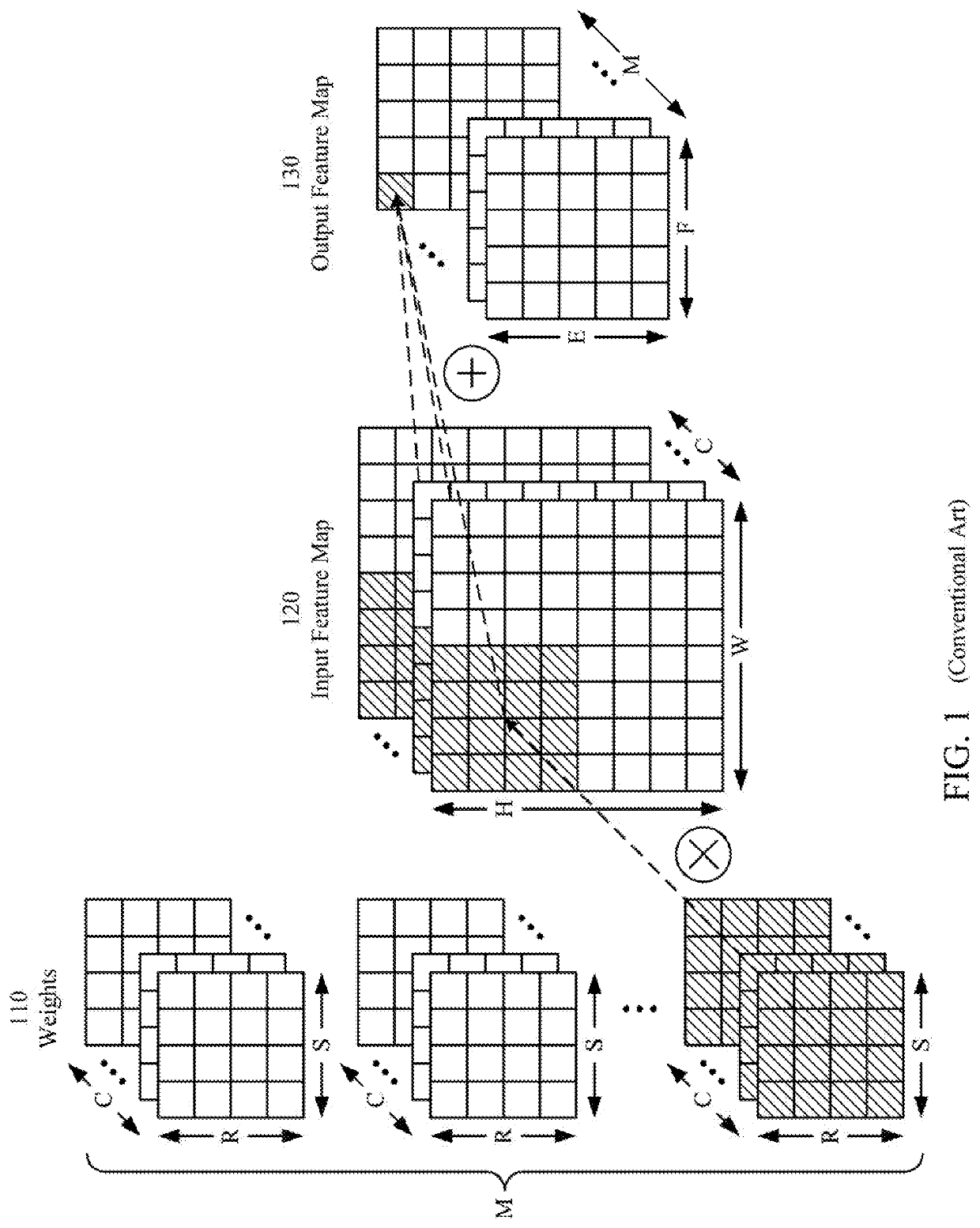

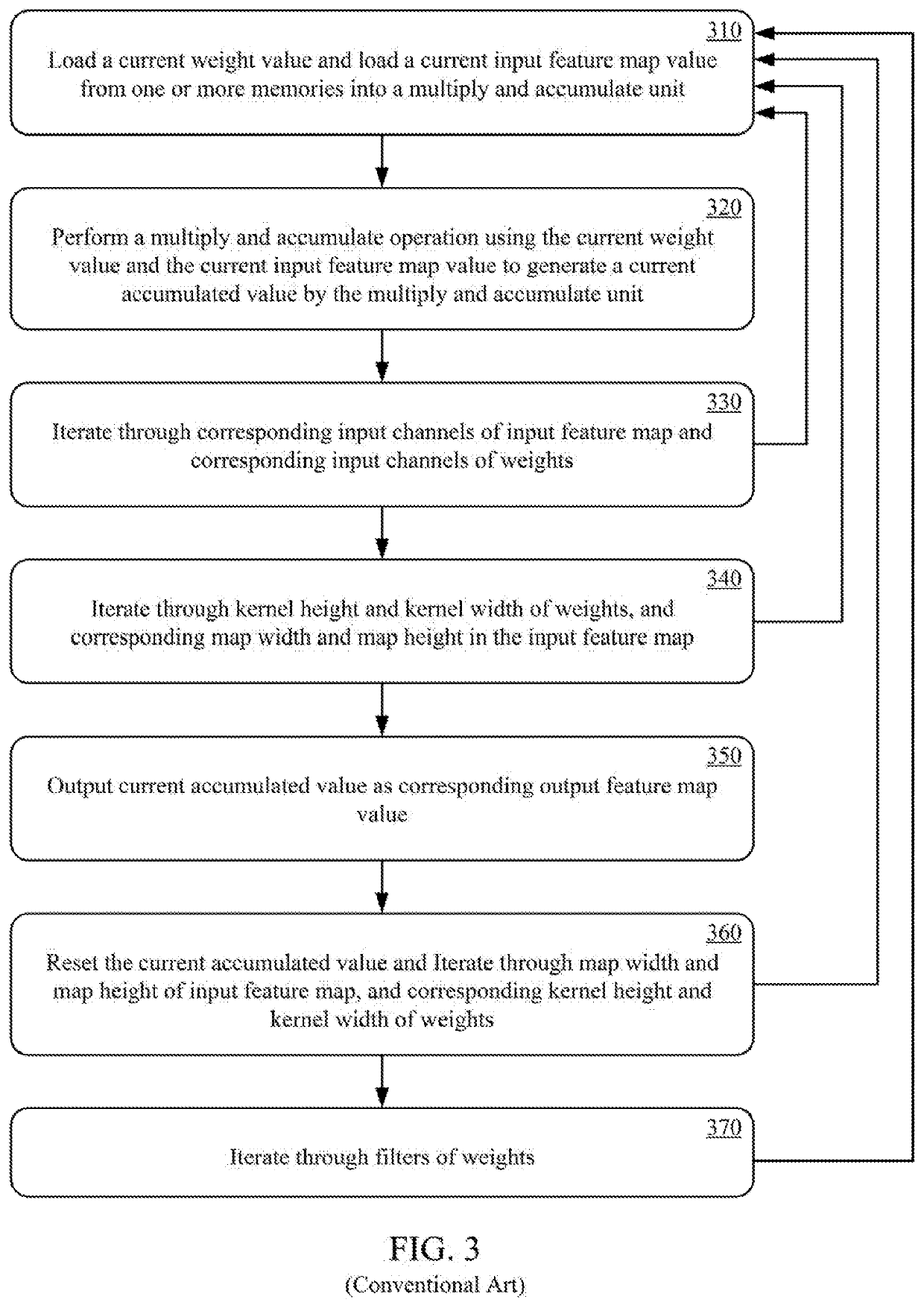

Matrix Data Reuse Techniques in Processing Systems

Pending; US20210011732A1; Reduce duplicate memory access; Reduce bottlenecks; Memory architecture accessing/allocation; Digital data processing details; Computer architecture; Parallel computing

Techniques for computing matrix convolutions in a plurality of multiply and accumulate units including data reuse of adjacent values. The data reuse can include reading a current value of the first matrix in from memory for concurrent use by the plurality of multiply and accumulate units. The data reuse can also include reading a current value of the second matrix in from memory to a serial shift buffer coupled to the plurality of multiply and accumulate units. The data reuse can also include reading a current value of the second matrix in from memory for concurrent use by the plurality of multiply and accumulate units.

Owner:MEMRYX INCORPORATED

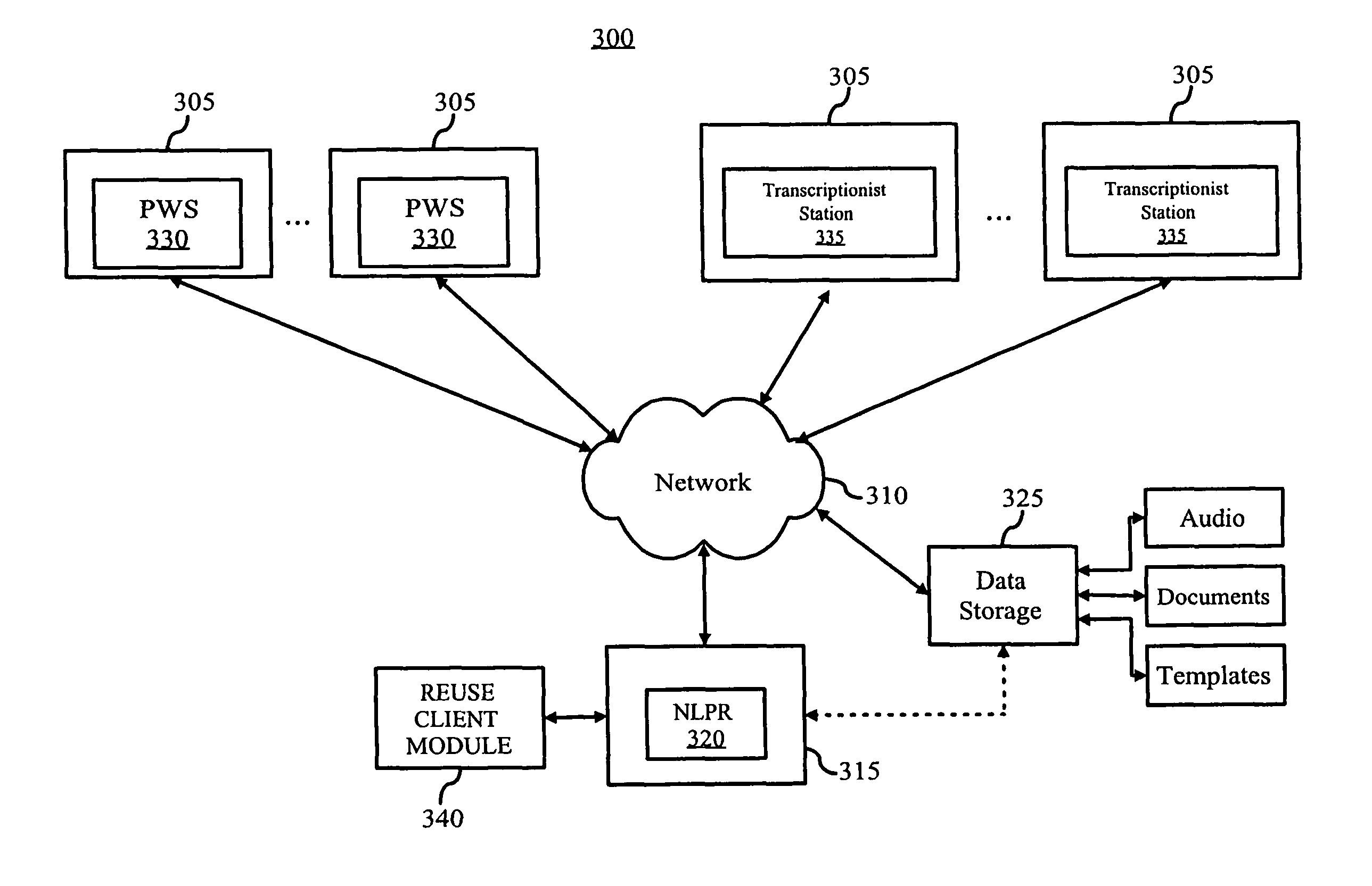

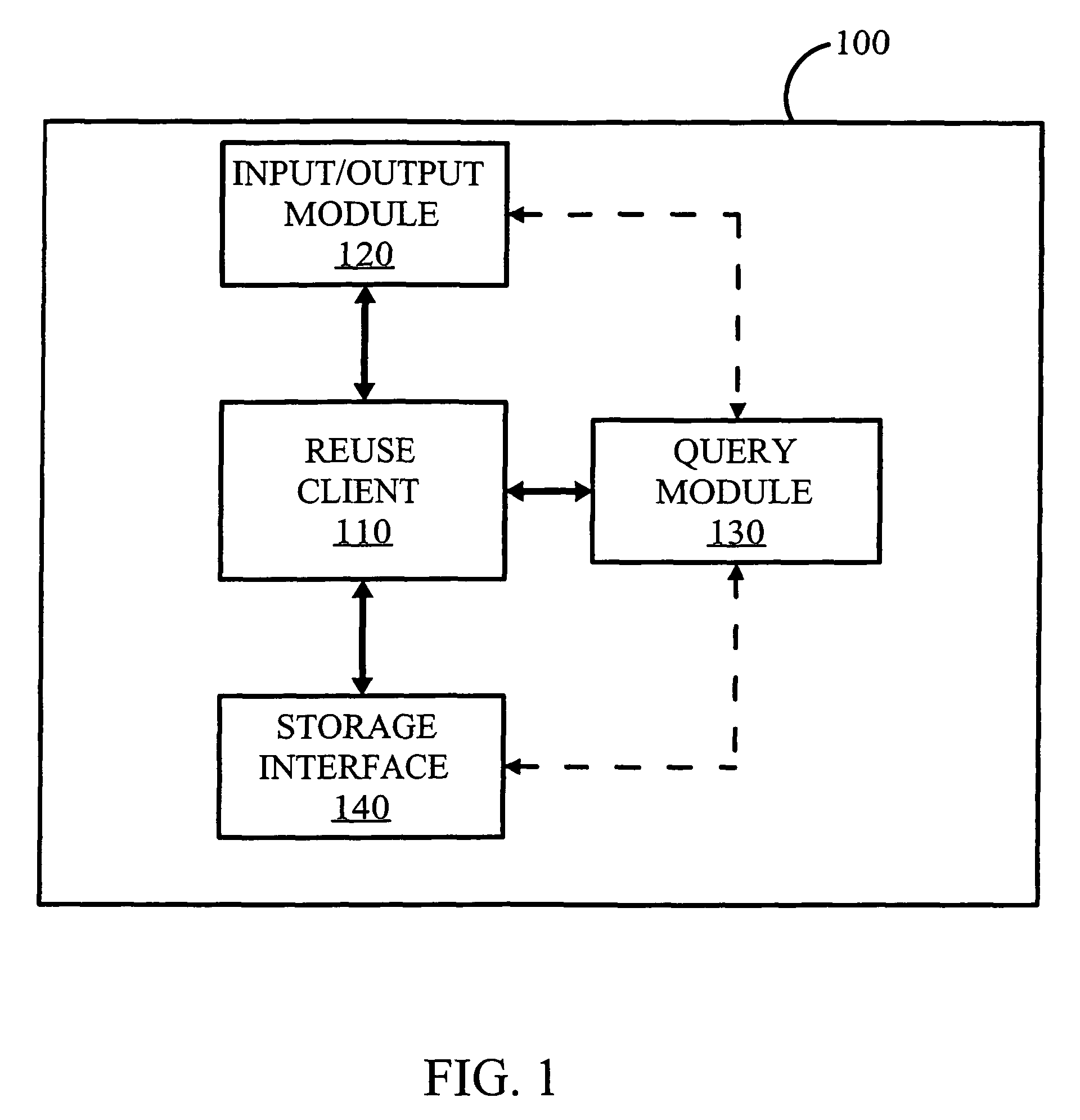

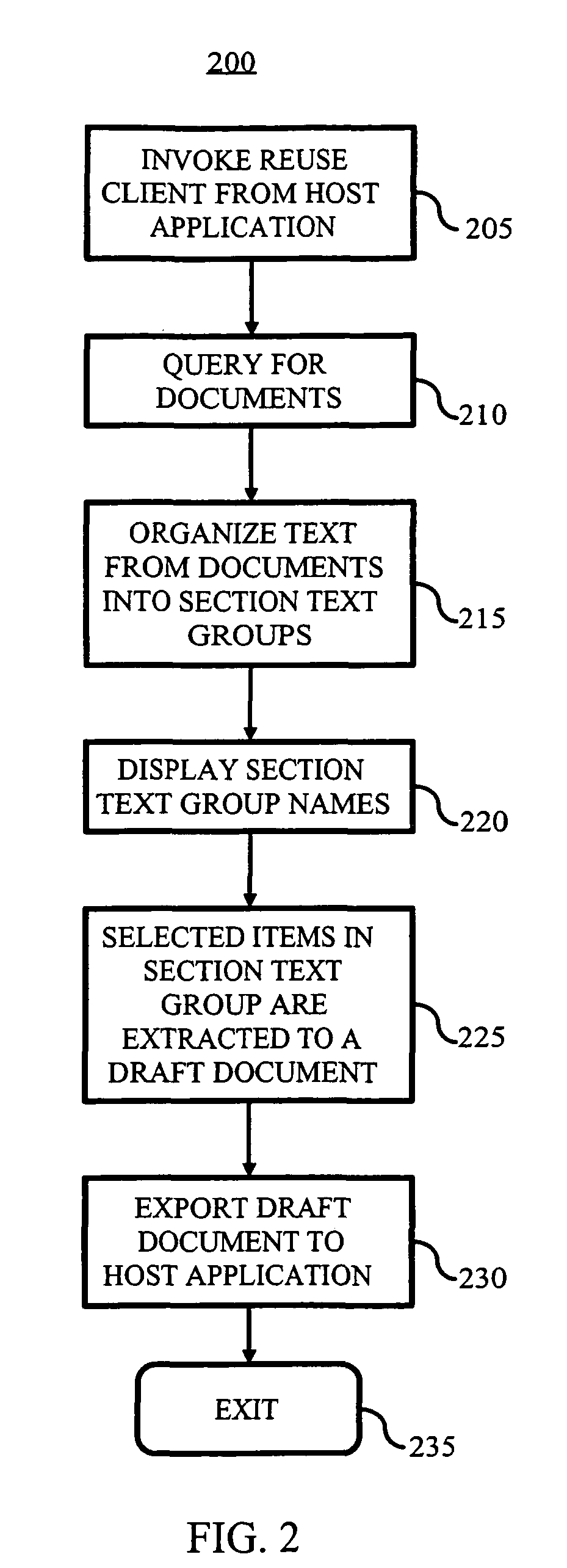

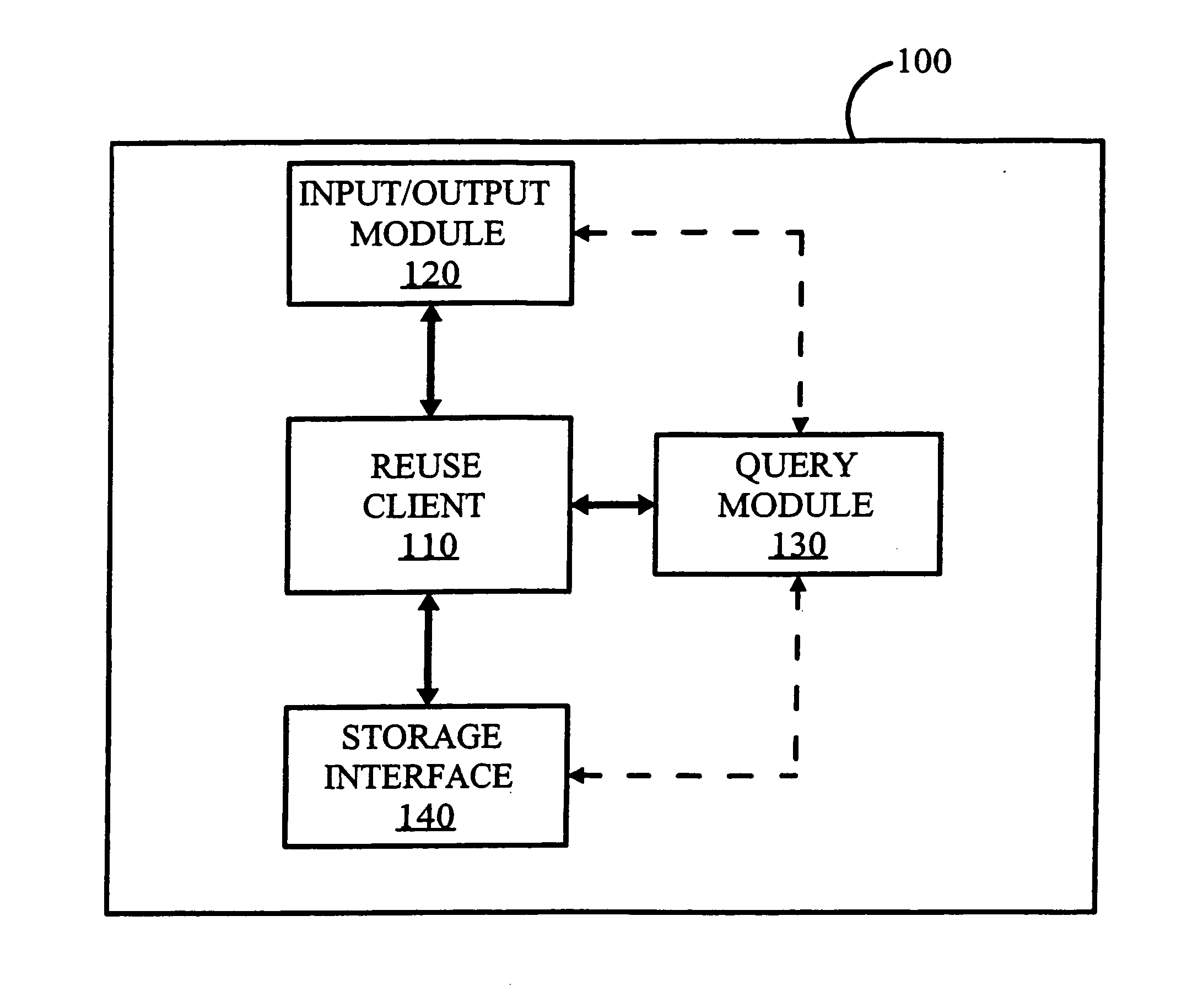

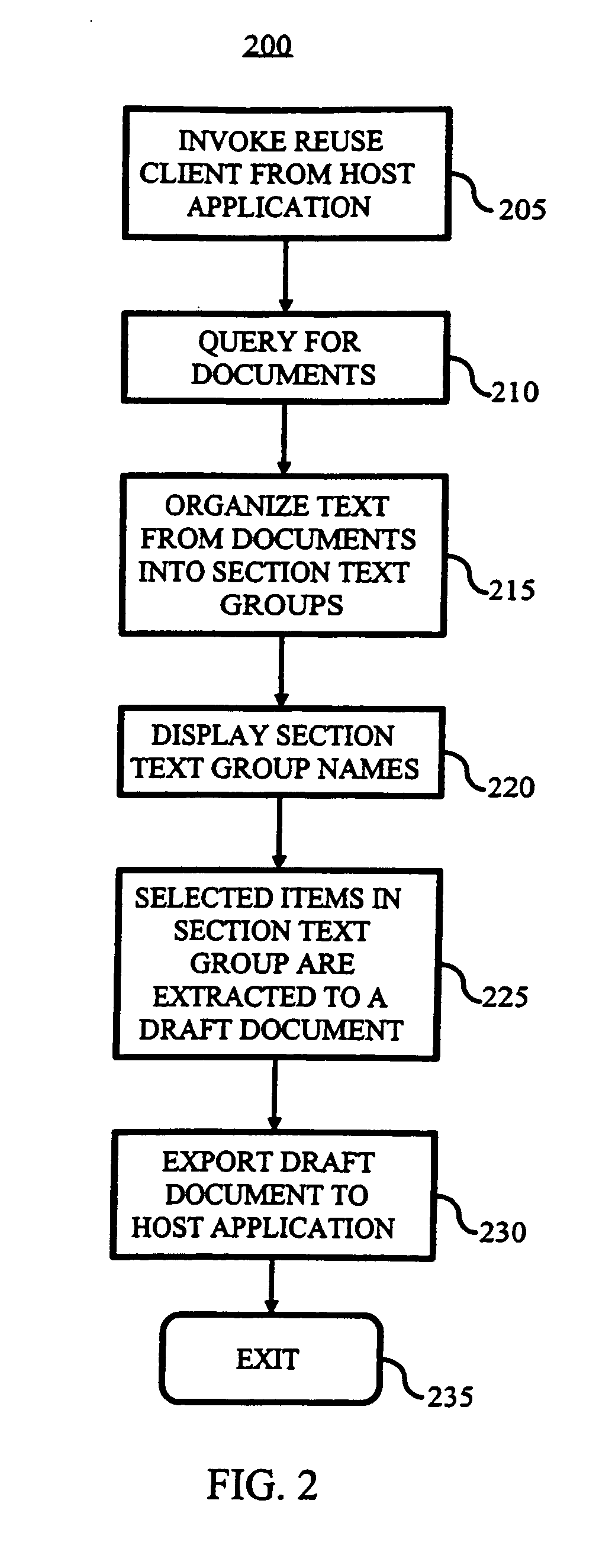

Method, system and apparatus for data reuse

Active; US20070038611A1; Convenient dictation; Precious time; Data processing applications; Text processing; Paper document; Document preparation

A system and method may be disclosed for facilitating the creation or modification of a document by providing a mechanism for locating relevant data from external sources and organizing and incorporating some or all of said data into the document. In the method for reusing data, there may be a set of documents that may be queried, where each document may be divided into a plurality of sections. A plurality of section text groups may be formed based on the set of documents, where each section text group may be associated with a respective section from the plurality of sections and each section group includes a plurality of items. Each item may be associated with a respective section from each document of the set of documents. A selected item within a selected section text group may be focused. The selected item may be extracted to a current document. The current document may be exported to a host application.

Owner:NUANCE COMM INC

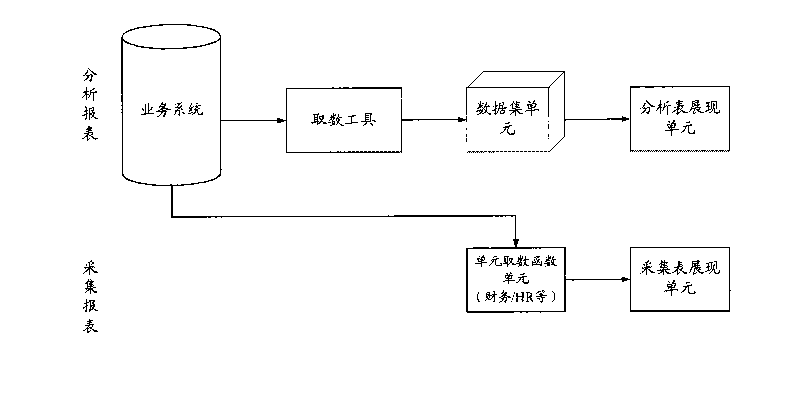

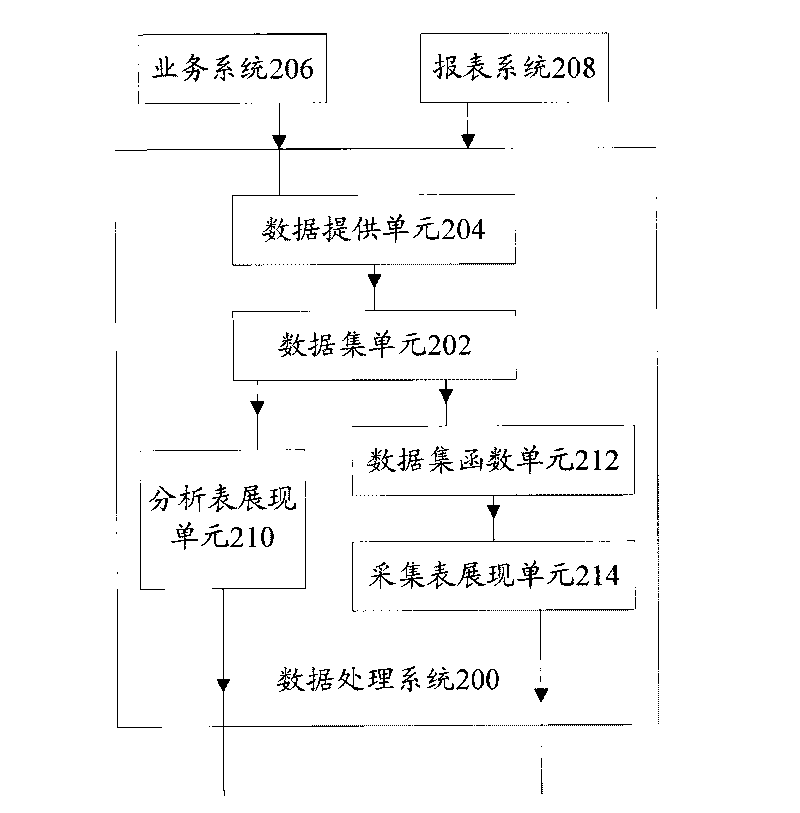

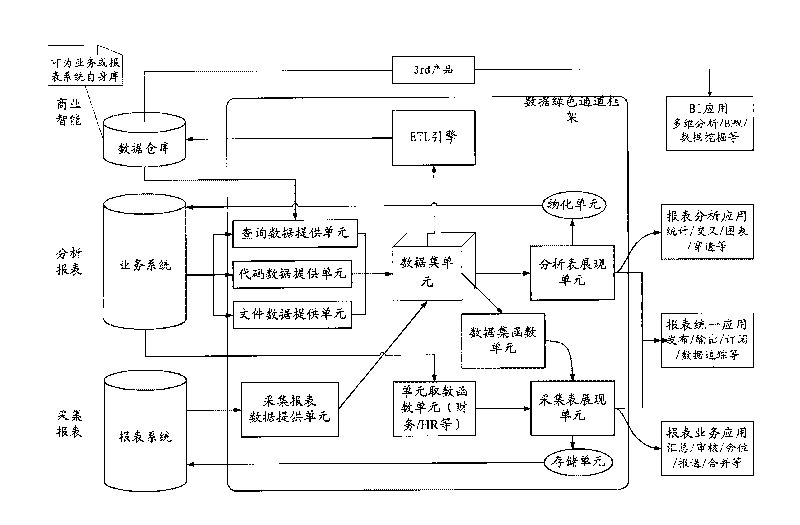

Data processing system

Inactive; CN101739454A; Solve problems in the application field; Special data processing applications; Data processing system; Data set

The invention provides a data processing system used for realizing the data reuse between an analysis report and an acquisition report. The data processing system comprises a dataset unit used for storing data from a data providing unit, the data providing unit connected to a service system which corresponds to the analysis report and a report system which corresponds to the acquisition report and used for acquiring data of the service system and the report system and transmitting the acquired data to the dataset unit, an analysis sheet display unit connected to the dataset unit and used for displaying service data from the dataset unit, a dataset function unit connected to the dataset unit and used for acquiring data from the dataset unit according to the dataset function unit, and an acquisition sheet display unit connected to the dataset function unit and used for displaying data from the dataset function unit. Therefore, the data processing system realizes the data reuse between the analysis report and the acquisition report, and generates significant business management values.

Owner:YONYOU NETWORK TECH CO LTD

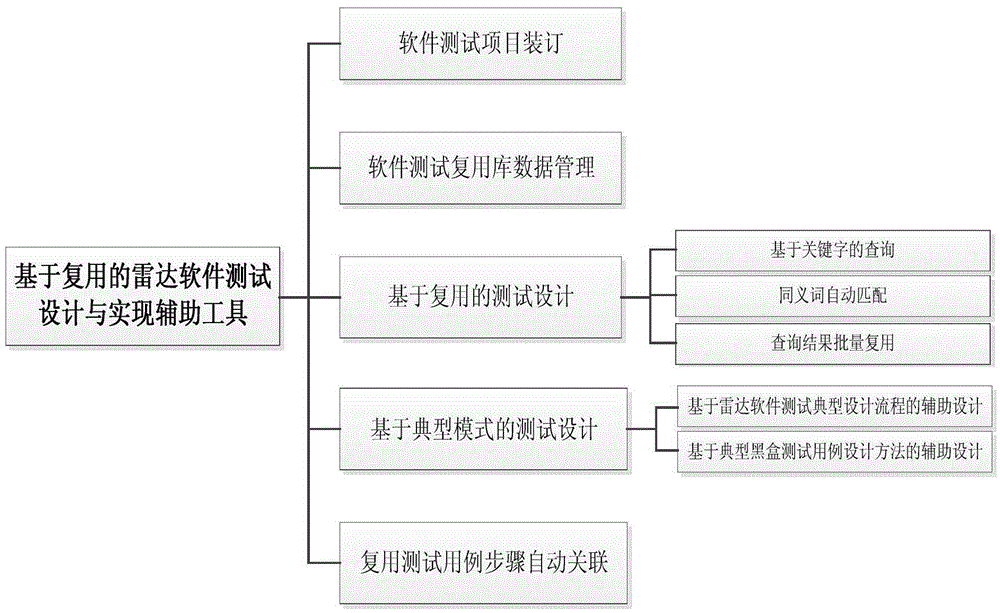

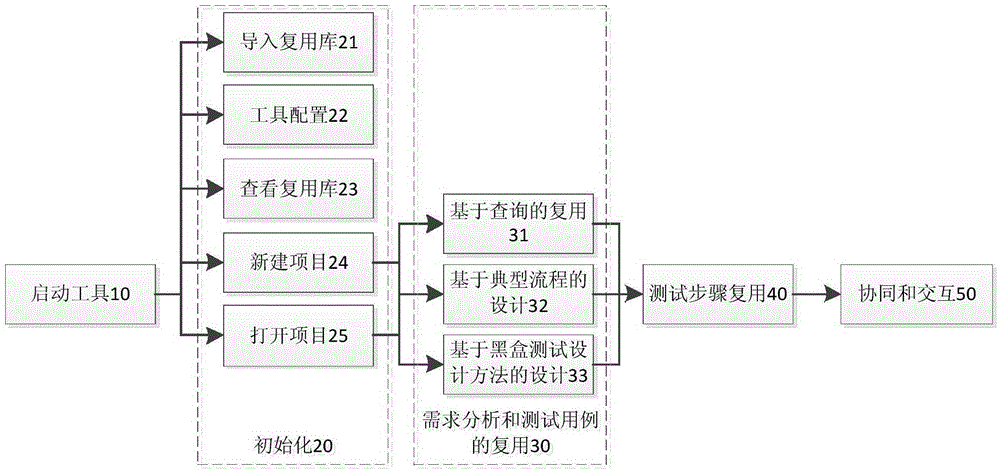

Reuse based design and implementation method for radar software testing

The present invention relates to a reuse based design and implementation method for radar software testing. The method comprises: performing software test project binding; performing data management of a software test reuse library; supporting searching and copying of imported test data in a test typical data reuse library and pasting and (batch) modification in a current project, and supporting addition, deletion, searching and modification on test data in the current project; supporting batch generation of test cases of a typical test type by means of a test design template, and supporting batch generation of test cases by means of a typical black box test case design method; supporting designating a person in charge of node data in a current project, supporting exporting and distribution of the current test project by means of an Excel, and supporting importing and combining of test design Excels prepared by different users; and automatically associating steps of a reused test case. The method of the present invention realizes rapid, batch and patterned reuse and generation of test cases.

Owner:CHINA ELECTRONICS TECH GRP CORP NO 14 RES INST

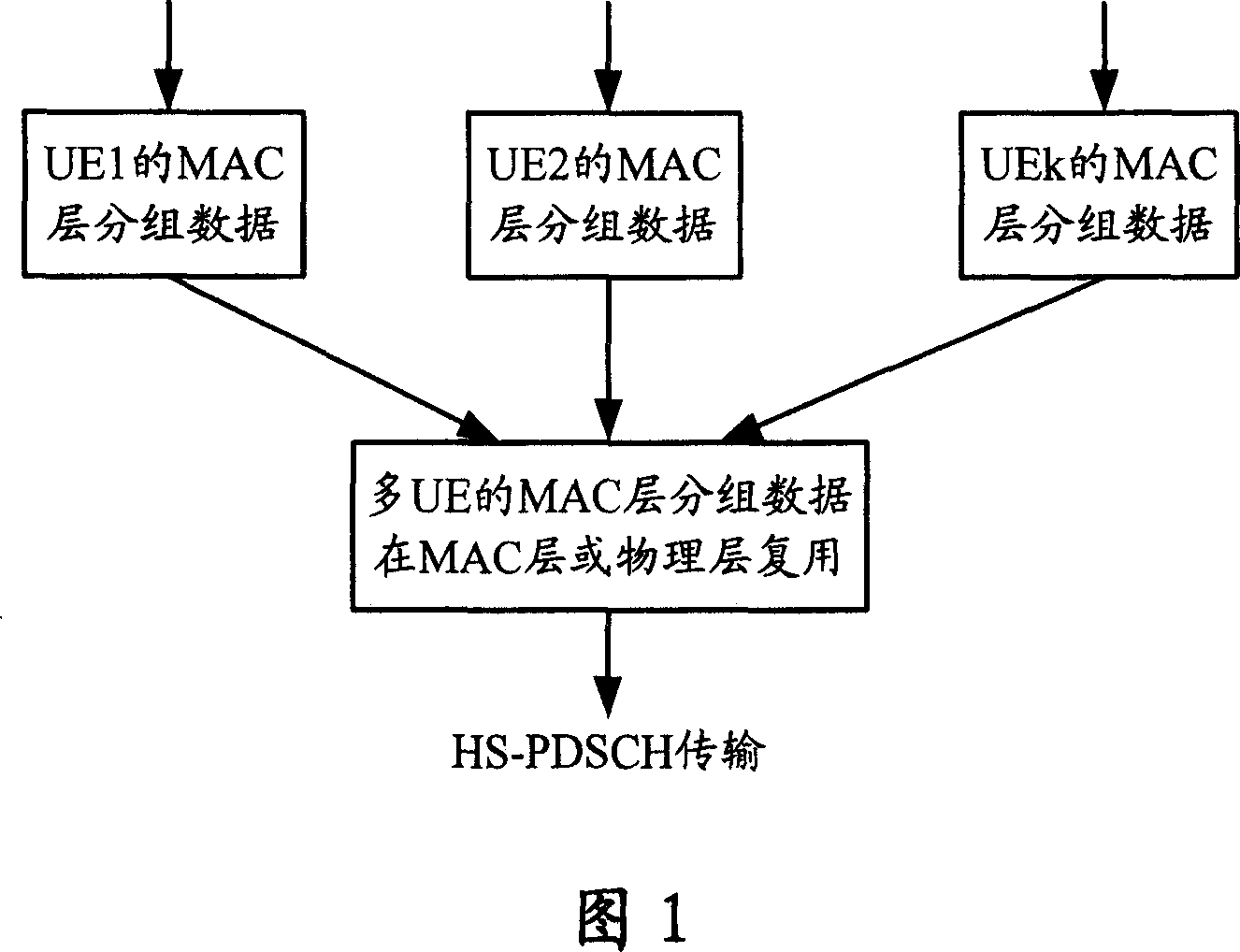

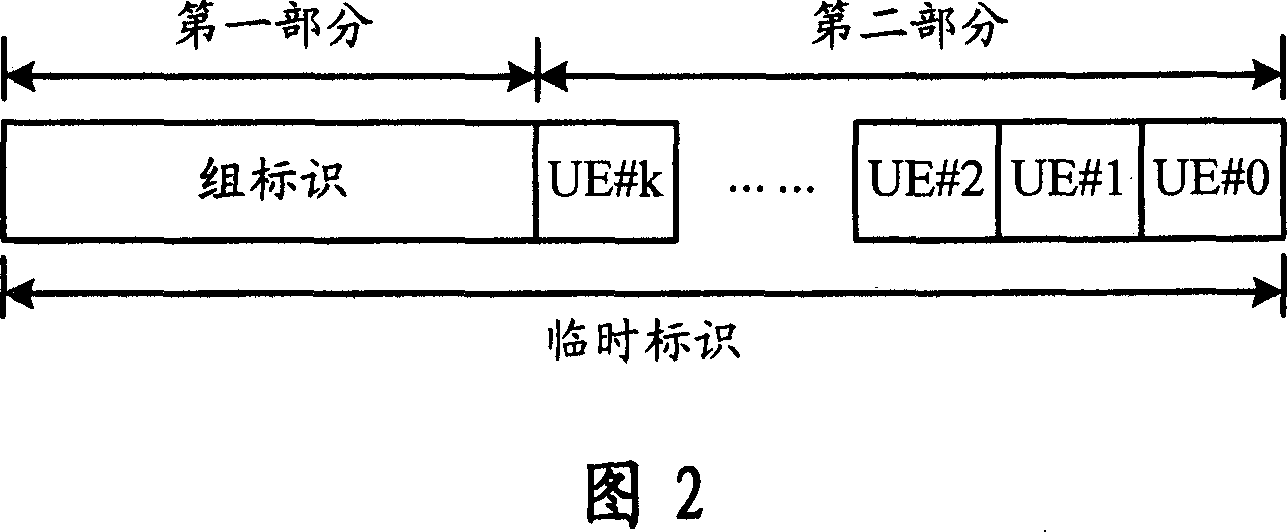

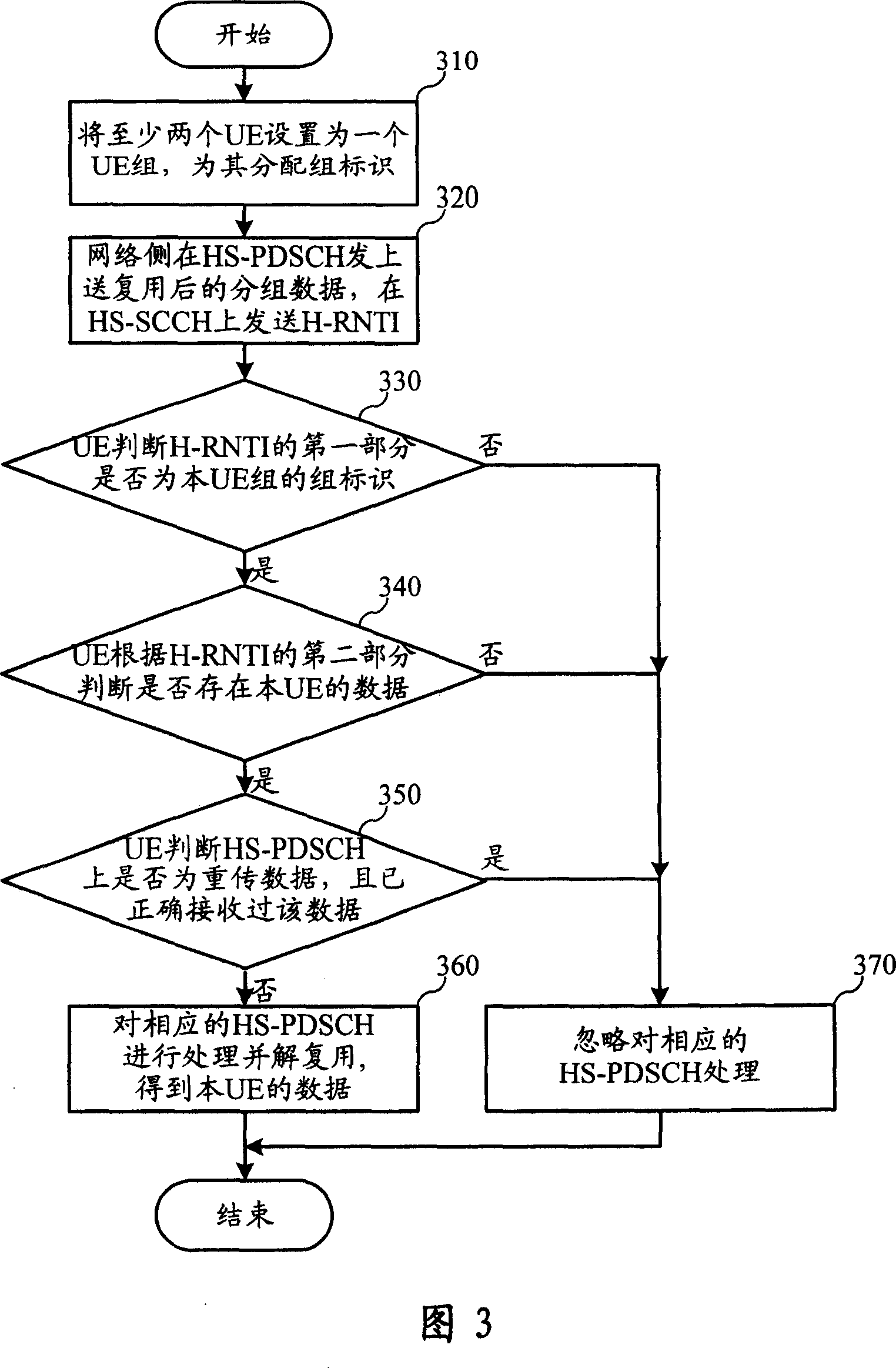

Data multiplexing method of multi-user device for the mobile communication network and its system

Active | CN101034935A | Reduce the burden on | Reduce occupancy | Code division multiplex | Broadcast service distribution | Multiplexing | Data transmission

This invention relates to mobile communications technology and discloses a multi-user data multiplexing transmission method and system for communication network equipment. Several UEs multiplex their data on the HS-PDSCH within one TTI, which can reduce the processing burden on the UE and the retransmission demand on the Node B. In this invention, the UEs are organized into groups, and data is issued to a group after multiplexing. The temporary identifier (H-RNTI) is divided into two parts: the first part identifies the UE group, and the second part indicates, for each UE in the group, whether there is data transmitted for it. Each UE in the group analyzes the received H-RNTI and receives the corresponding data from the shared channel only when data of its own has been transmitted. When such data is present, the UE further checks whether the data is a retransmission; if it is, the UE receives it, and if not, it does not.
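The two-part identifier described above can be illustrated with a small sketch. The abstract does not give a bit layout, so the widths here (a 12-bit group part and a 4-bit per-UE flag part inside a 16-bit value) and the names `pack_identifier` and `should_receive` are assumptions chosen only for illustration.

```python
# Hypothetical layout: upper 12 bits identify the UE group,
# lower 4 bits carry one "has data" flag per UE in the group.
GROUP_BITS = 12  # assumed width, not stated in the abstract
FLAG_BITS = 4    # assumed width, so at most 4 UEs per group here


def pack_identifier(group_id, has_data):
    """Build the two-part identifier from a group id and per-UE flags."""
    if not 0 <= group_id < (1 << GROUP_BITS):
        raise ValueError("group_id does not fit in the group part")
    if len(has_data) > FLAG_BITS:
        raise ValueError("too many UEs for the flag part")
    flags = 0
    for index, flag in enumerate(has_data):
        if flag:
            flags |= 1 << index
    return (group_id << FLAG_BITS) | flags


def should_receive(identifier, my_group, my_index):
    """Return True only if the identifier targets my group and my flag is set."""
    group_id = identifier >> FLAG_BITS
    flags = identifier & ((1 << FLAG_BITS) - 1)
    return group_id == my_group and bool((flags >> my_index) & 1)
```

Under this assumed layout, a UE can decide from the identifier alone whether to read the shared channel at all, which is the source of the reduced UE processing burden the abstract describes. The retransmission check mentioned in the abstract is not modeled here.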

Owner:HUAWEI TECH CO LTD

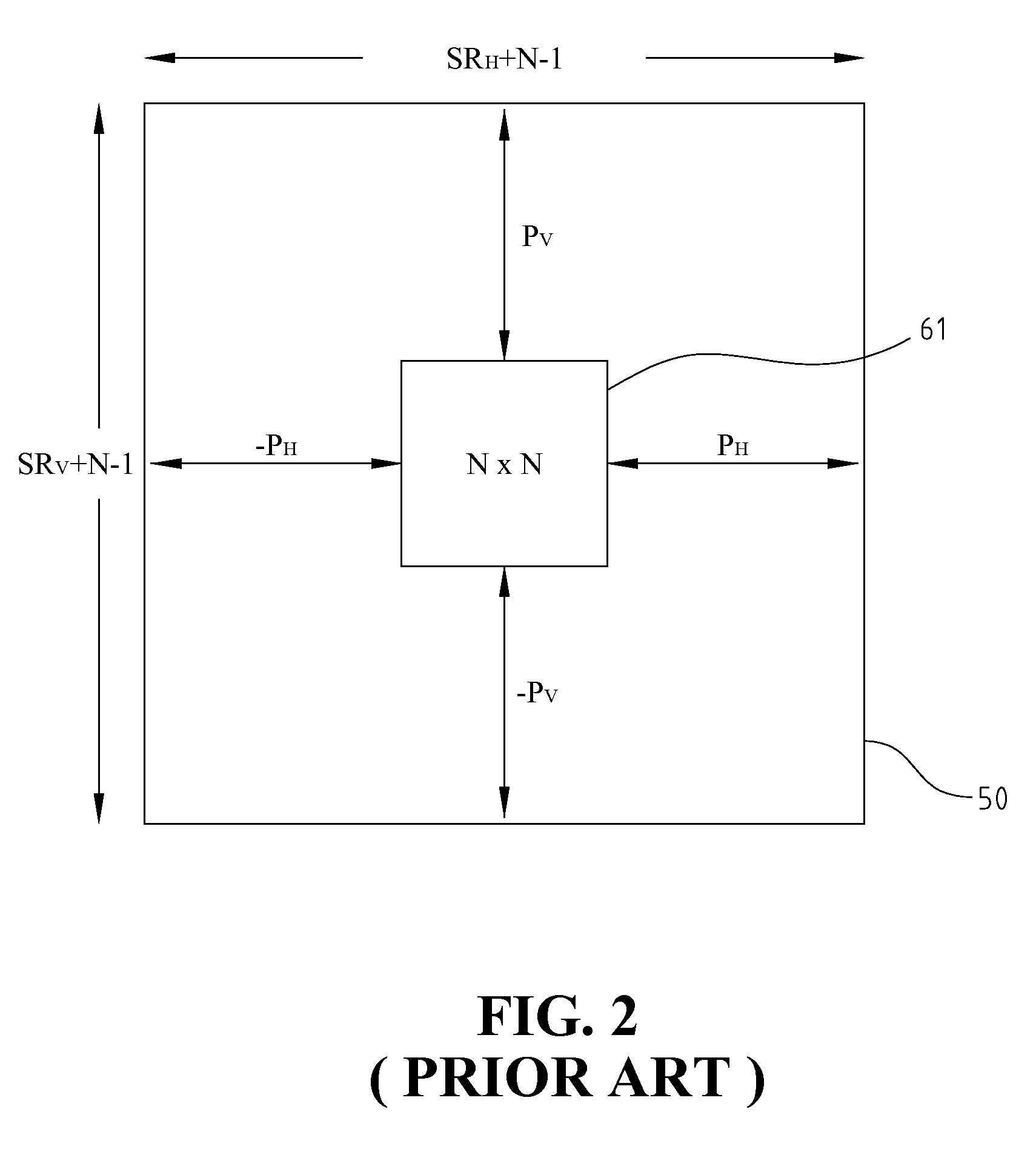

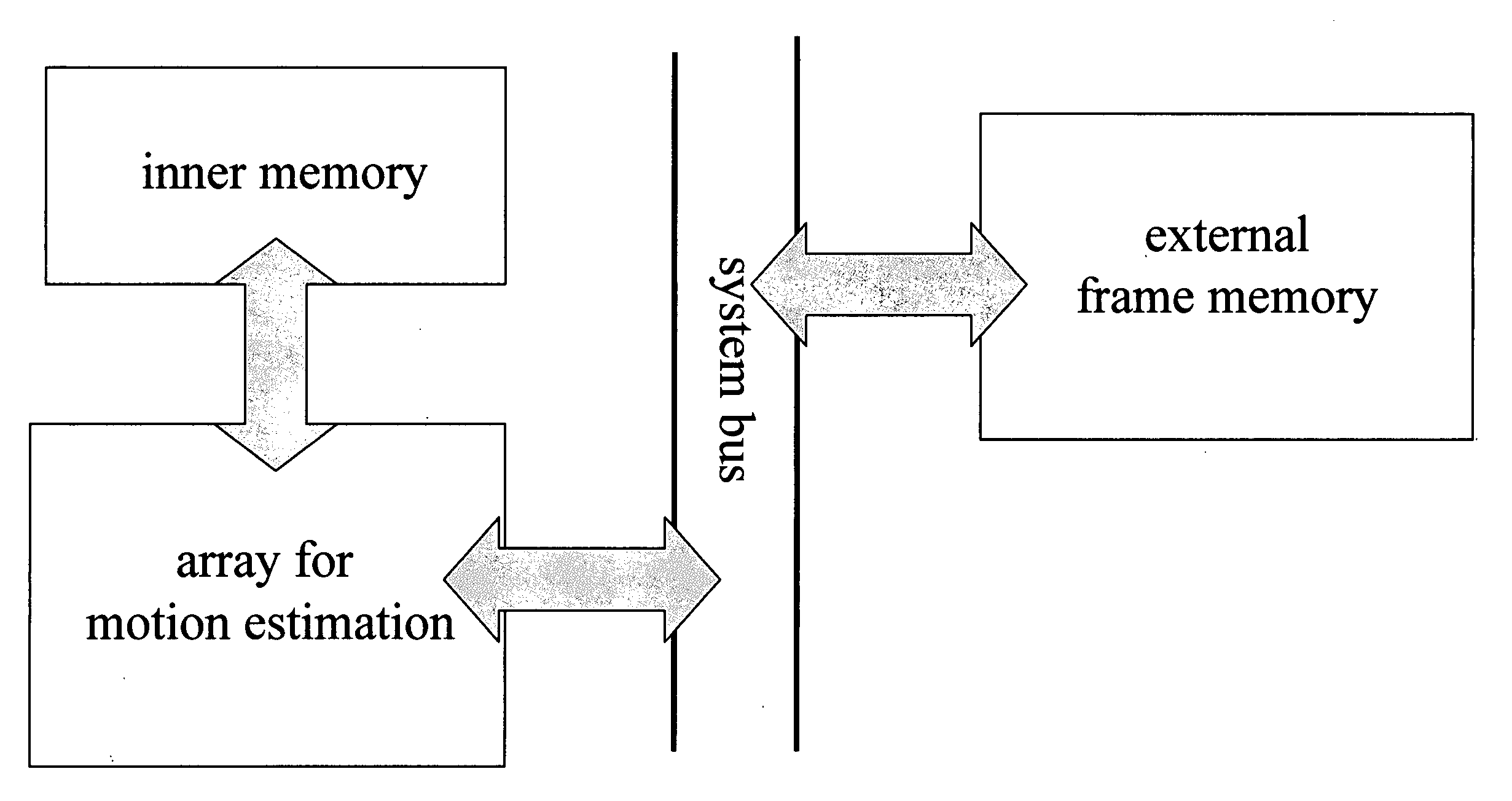

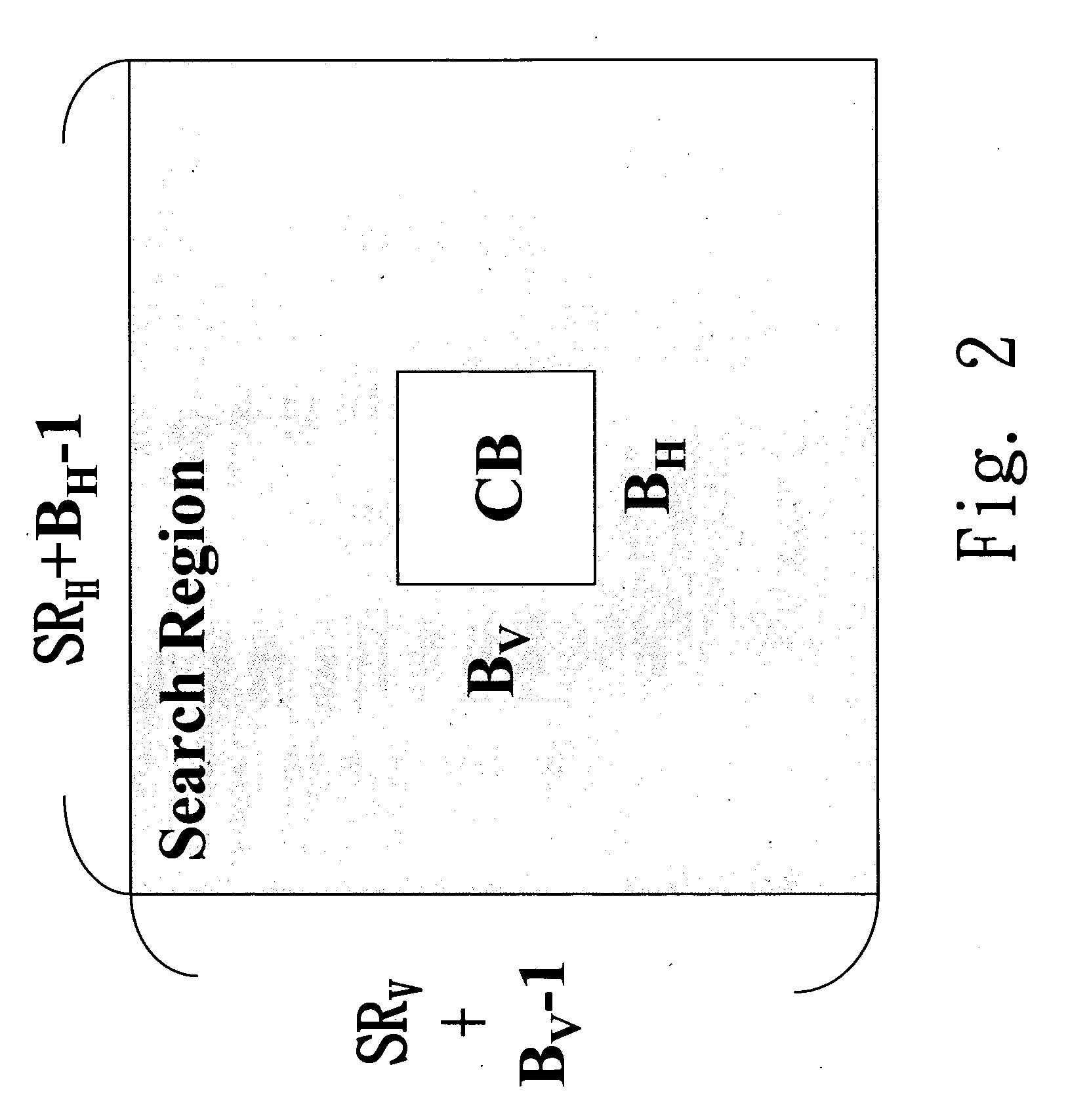

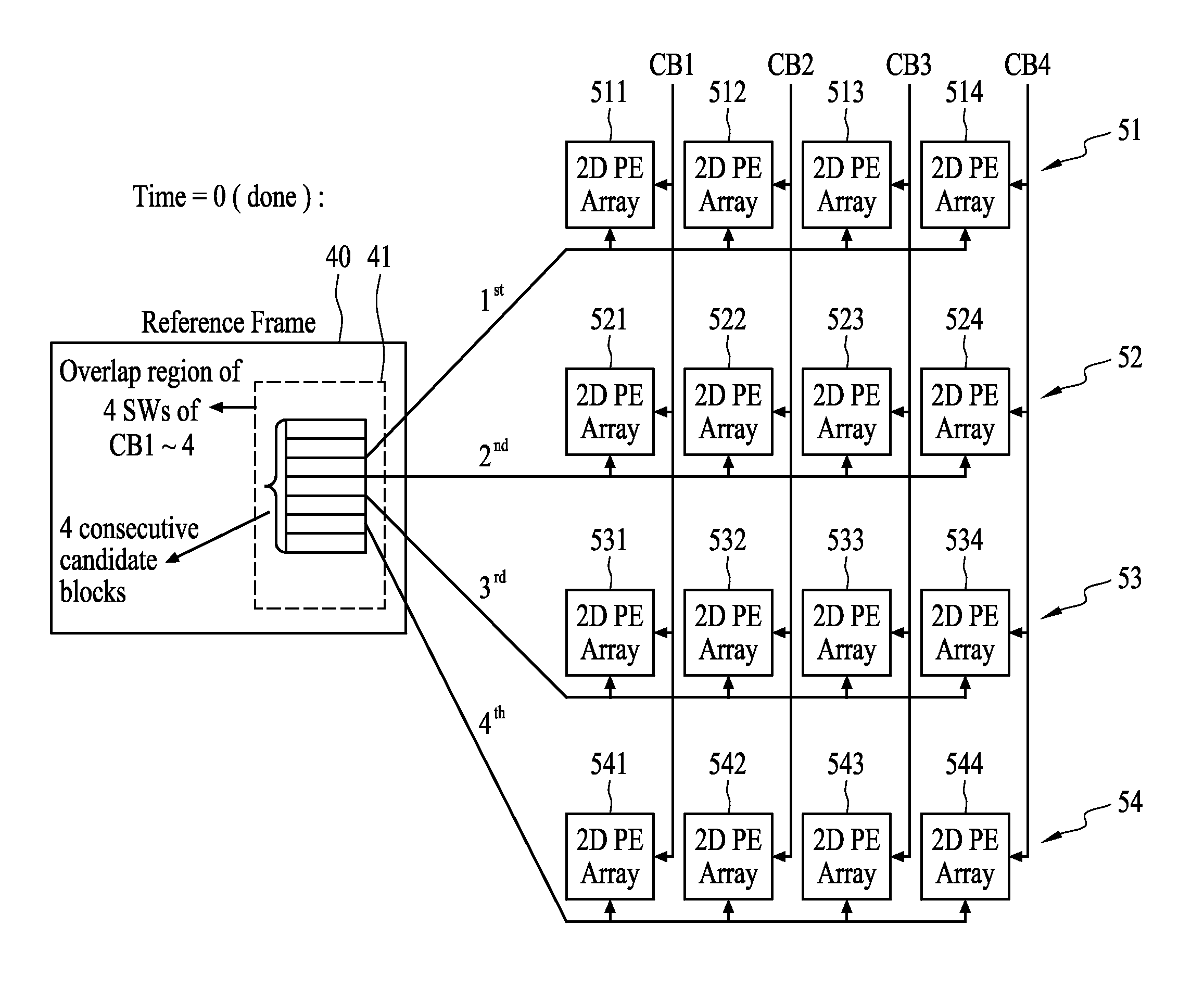

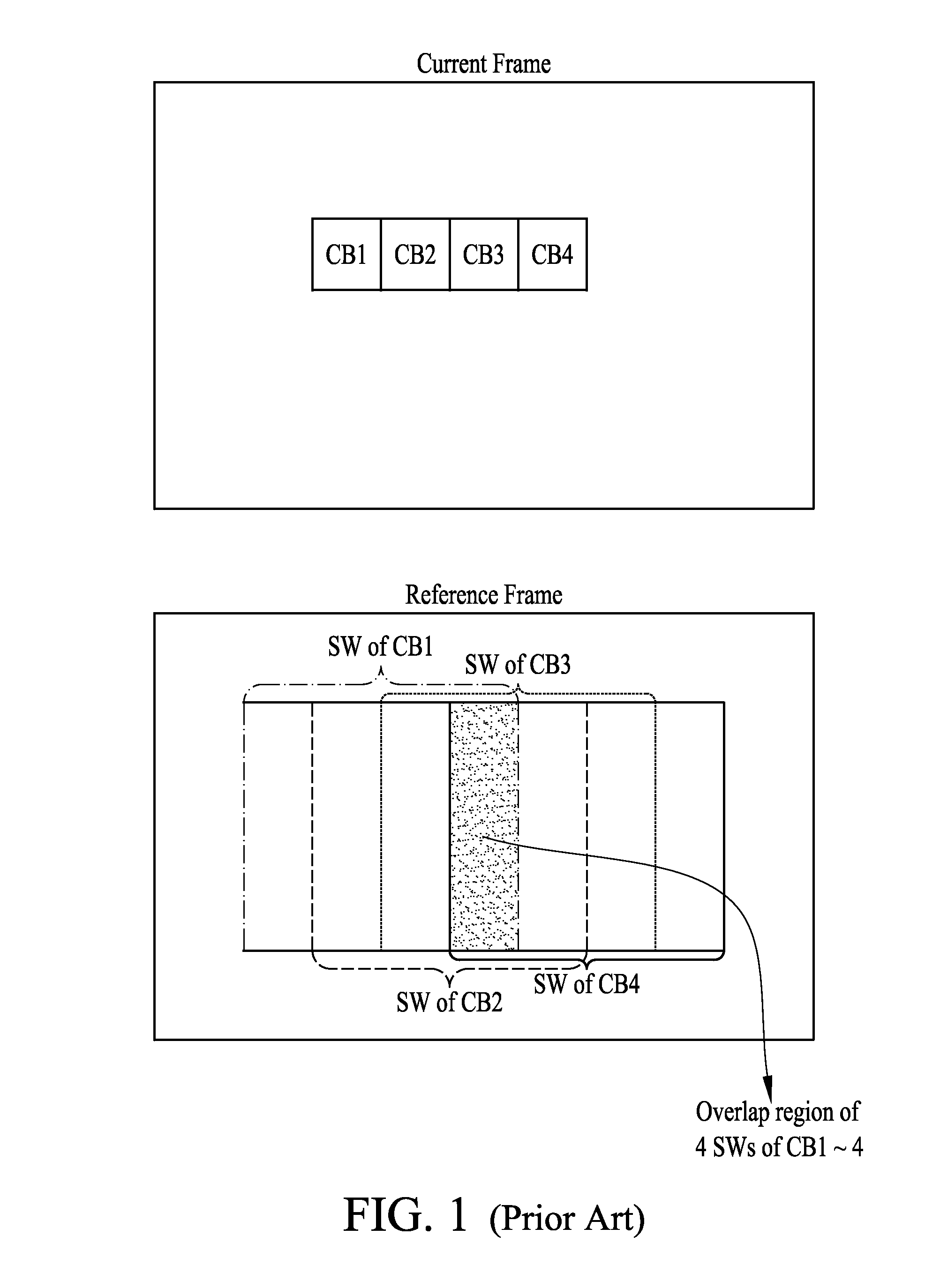

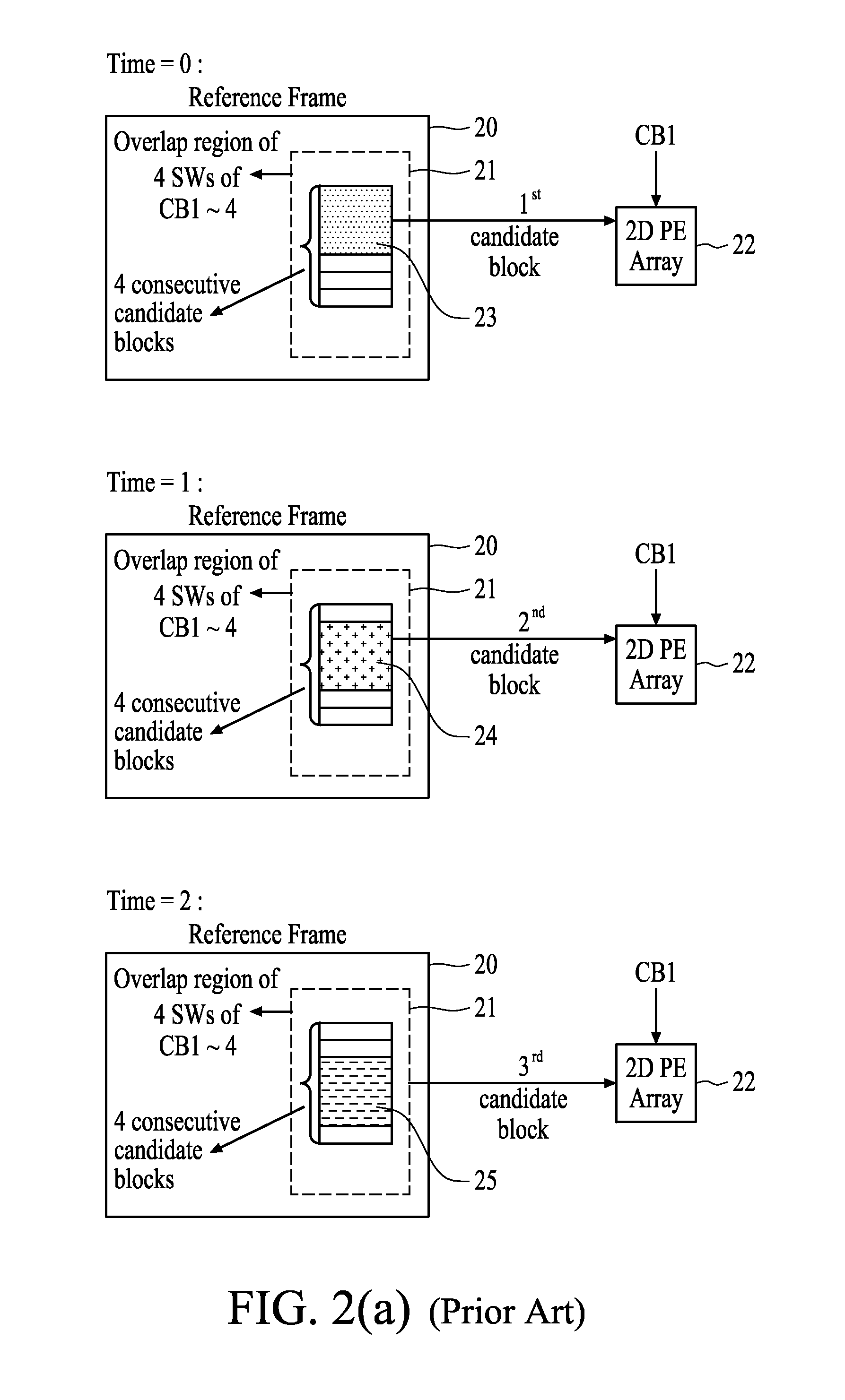

Method of Data Reuse for Motion Estimation

Inactive | US20080225948A1 | Reduce memory bandwidth | Memory access time saving | Color television with pulse code modulation | Color television with bandwidth reduction | Computational science | Computer graphics (images)

A so-called inter-macroblock parallelism is proposed for motion estimation. First, pixel data of one of the consecutive candidate blocks in an overlapped region of search windows of current blocks in a reference frame including reference blocks corresponding to the current blocks are read and transferred to a plurality of processing element (PE) arrays in parallel. The plurality of PE arrays are used to determine the match situation of the current blocks and the reference blocks. Then, the above process is repeated for the rest of the candidate blocks in sequence. For example, if there are four current blocks CB1-CB4 and four consecutive candidate blocks, at the beginning the data of the first candidate block are read and transferred to four PE arrays in parallel, and so to the second, third and fourth candidate blocks in sequence, and the four PE arrays calculate SADs for CB1 to CB4, respectively.
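The read-once, compare-many idea in this abstract can be sketched in software. This is a minimal illustration only: the inner loop stands in for the parallel PE arrays, blocks are plain lists of pixel rows, and the function names `sad` and `shared_candidate_search` are hypothetical, not taken from the patent.

```python
def sad(block_a, block_b):
    """Sum of absolute differences between two equally sized pixel blocks."""
    return sum(
        abs(a - b)
        for row_a, row_b in zip(block_a, block_b)
        for a, b in zip(row_a, row_b)
    )


def shared_candidate_search(current_blocks, candidate_blocks):
    """Fetch each candidate block once and score it against every current block.

    Returns (best, reads): best[i] is (lowest SAD, candidate index) for
    current block i, and reads counts how many candidate fetches occurred.
    """
    reads = 0
    best = [None] * len(current_blocks)
    for index, candidate in enumerate(candidate_blocks):
        reads += 1  # one fetch of the candidate from the overlapped region
        # In hardware these comparisons would run on separate PE arrays in
        # parallel; here they are simply executed one after another.
        for pe, current in enumerate(current_blocks):
            score = sad(current, candidate)
            if best[pe] is None or score < best[pe][0]:
                best[pe] = (score, index)
    return best, reads
```

With four current blocks and four candidates, this performs four candidate fetches, whereas searching each current block independently would fetch the same candidates sixteen times. That difference is the memory bandwidth saving the tag line refers to.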

Owner:NATIONAL TSING HUA UNIVERSITY

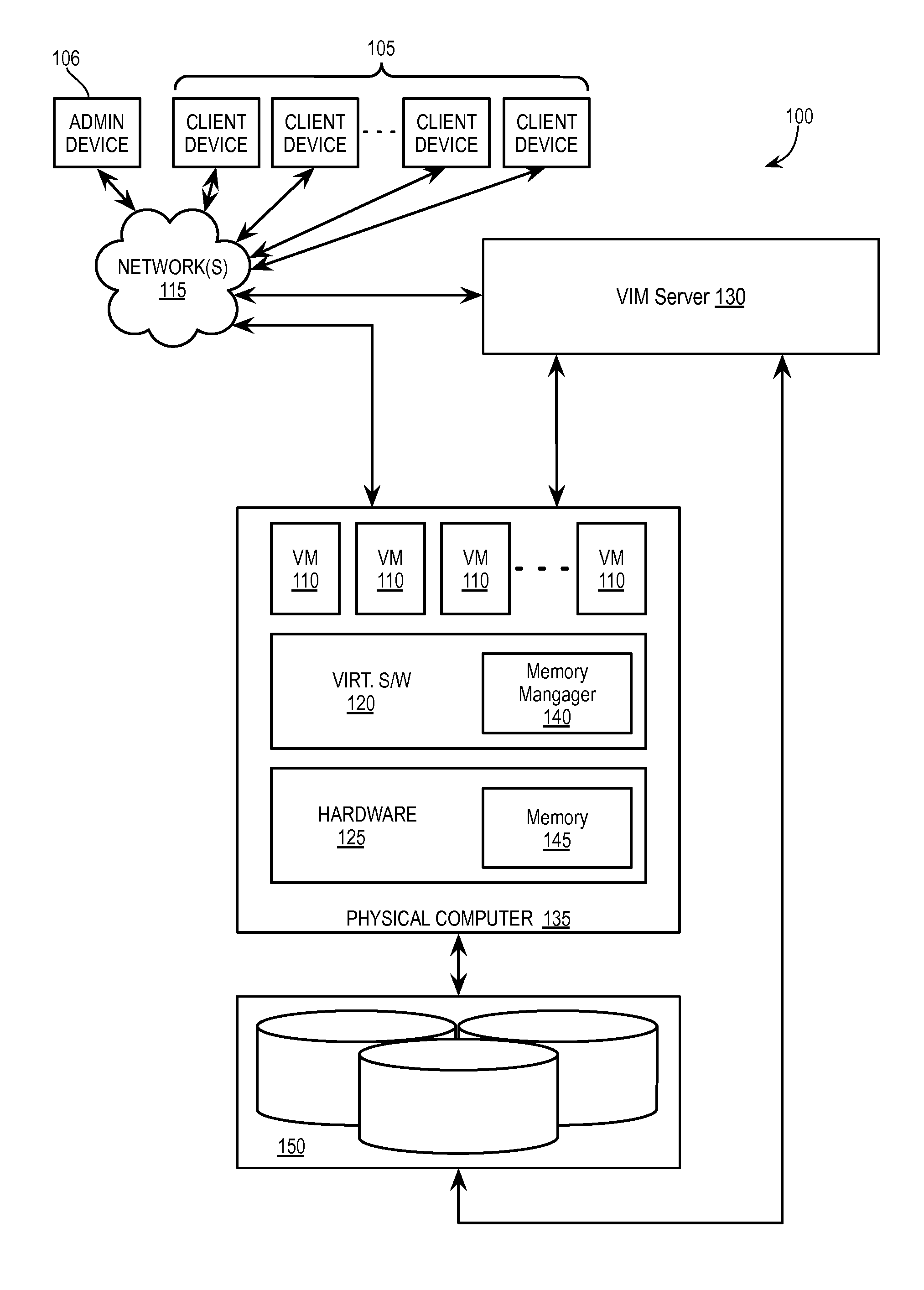

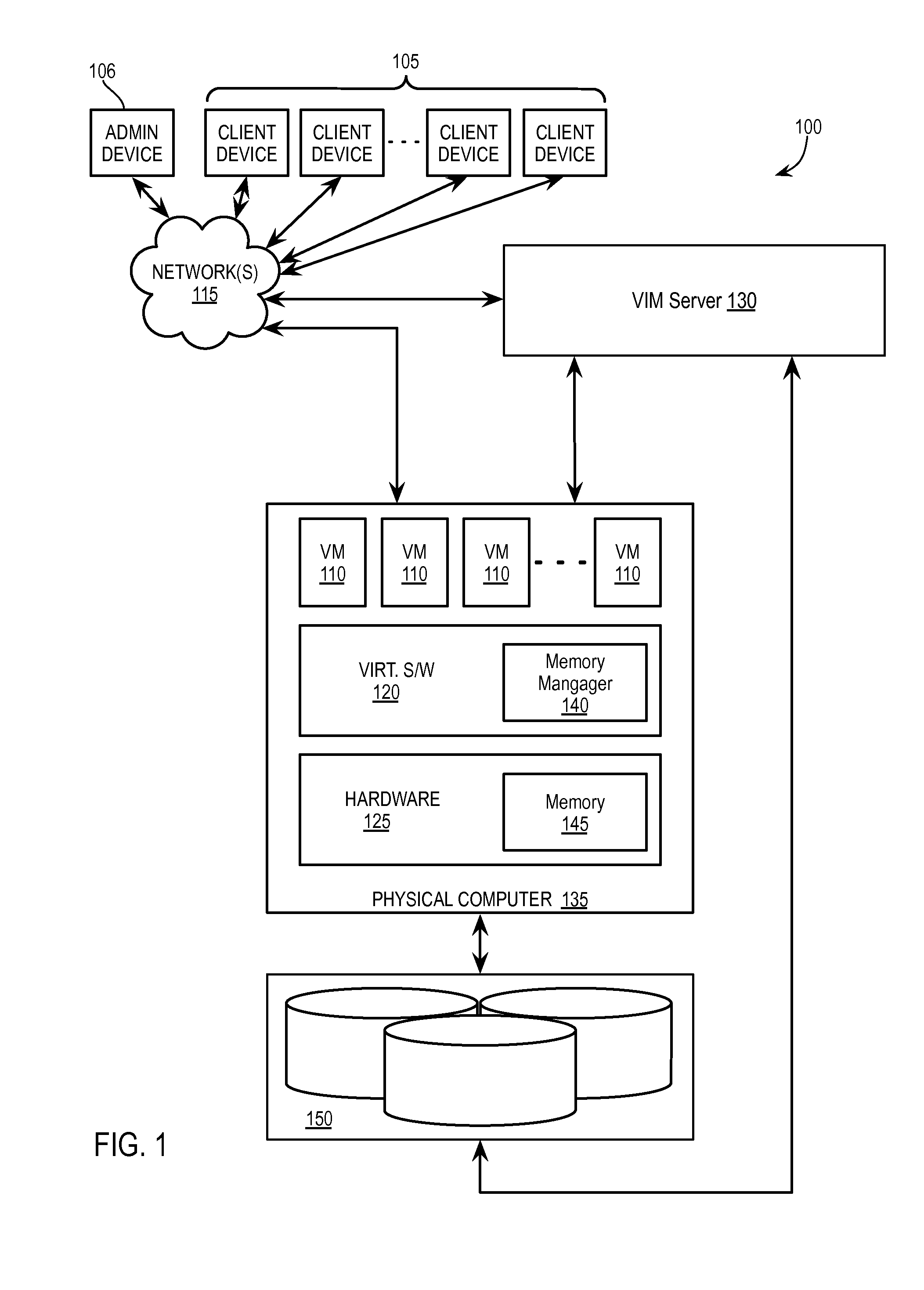

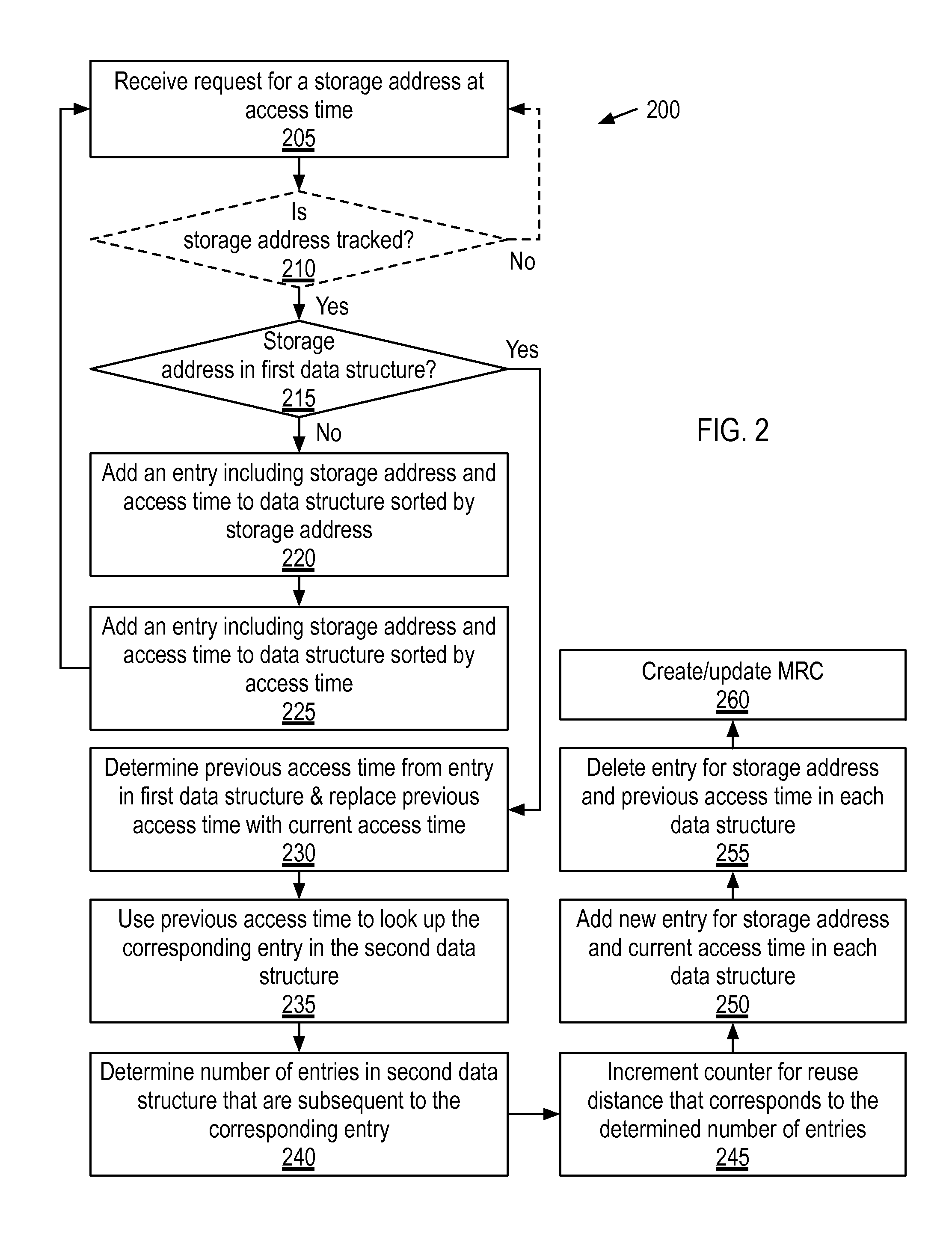

Data reuse tracking and memory allocation management

Active | US20150363236A1 | Memory architecture accessing/allocation | Resource allocation | Access time | Data structure

Exemplary methods, apparatuses, and systems receive a first request for a storage address at a first access time. Entries are added to first and second data structures. Each entry includes the storage address and the first access time. The first data structure is sorted in order of storage addresses. The second data structure is sorted in order of access times. A second request for the storage address is received at a second access time. The first access time is determined by looking up the entry in the first data structure using the storage address received in the second request. The entry in the second data structure is looked up using the determined first access time. The number of entries in the second data structure that are subsequent to that entry is determined. A hit count for a reuse distance corresponding to the determined number of entries is incremented.
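The two-structure lookup described in this abstract can be sketched as follows. Several things here are assumptions made for illustration: a dictionary stands in for the address-sorted structure, access times are taken to be unique and increasing, and the entry is refreshed to the new access time after each hit (the abstract does not say what happens to the old entry). The class name `ReuseTracker` is hypothetical.

```python
import bisect
from collections import Counter


class ReuseTracker:
    """Toy reuse-distance histogram built from two lookup structures."""

    def __init__(self):
        self.time_by_address = {}  # stand-in for the address-sorted structure
        self.access_times = []     # kept sorted by access time
        self.hits = Counter()      # reuse distance -> hit count

    def access(self, address, time):
        previous = self.time_by_address.get(address)
        if previous is not None:
            # Locate the earlier entry in the time-sorted structure.
            position = bisect.bisect_left(self.access_times, previous)
            # Entries after it are the distinct addresses touched since then.
            distance = len(self.access_times) - position - 1
            self.hits[distance] += 1
            # Assumption: drop the old entry so each address appears once.
            del self.access_times[position]
        self.time_by_address[address] = time
        bisect.insort(self.access_times, time)
```

Because each address keeps a single entry in the time-sorted list, the count of later entries equals the number of distinct addresses accessed between the two requests, which is the usual definition of reuse distance.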

Owner:VMWARE INC

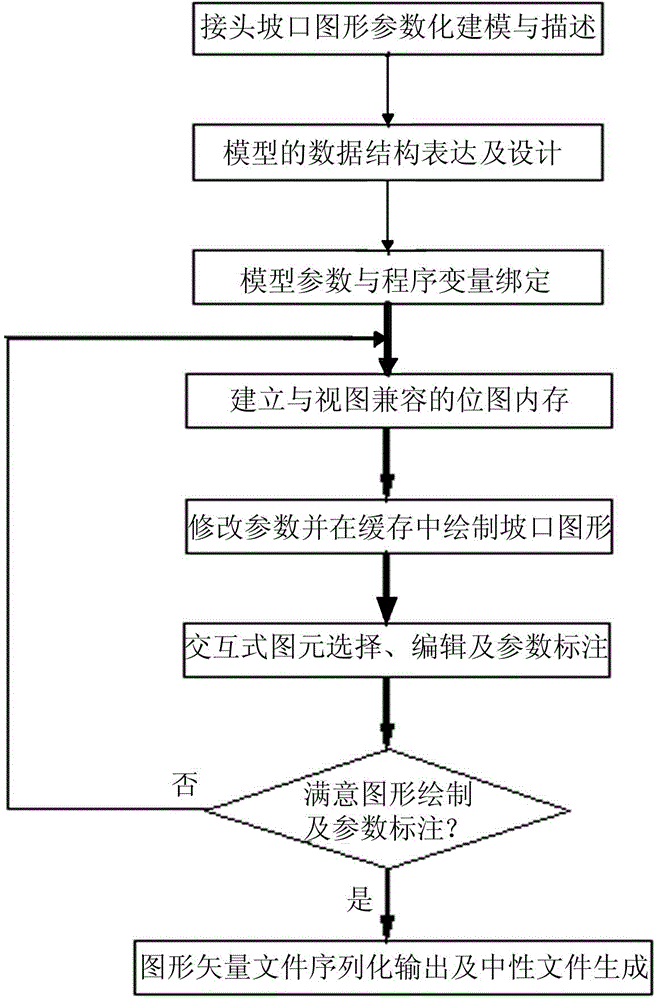

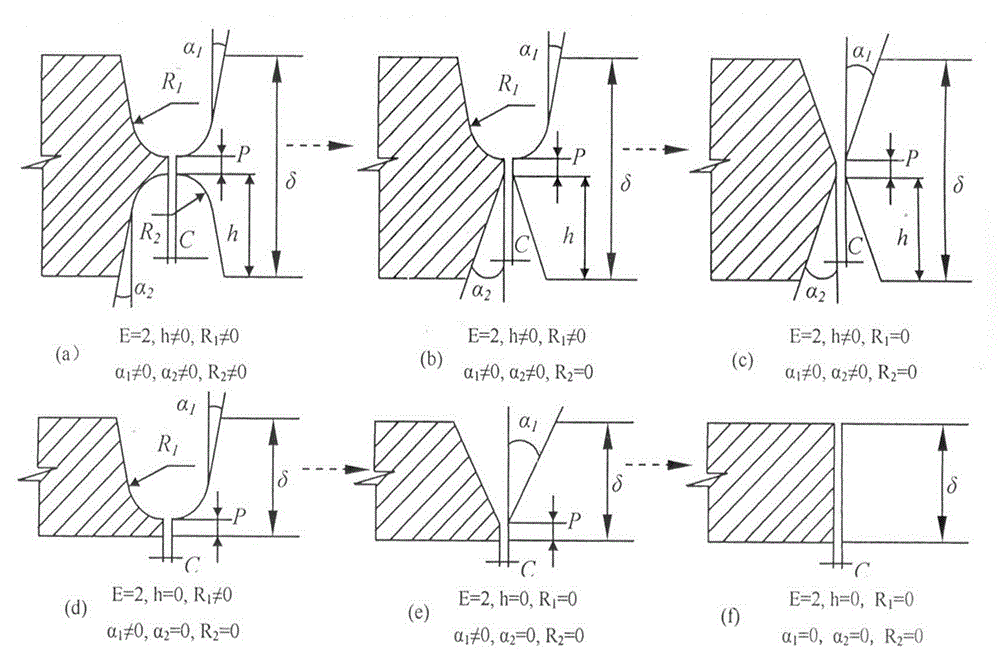

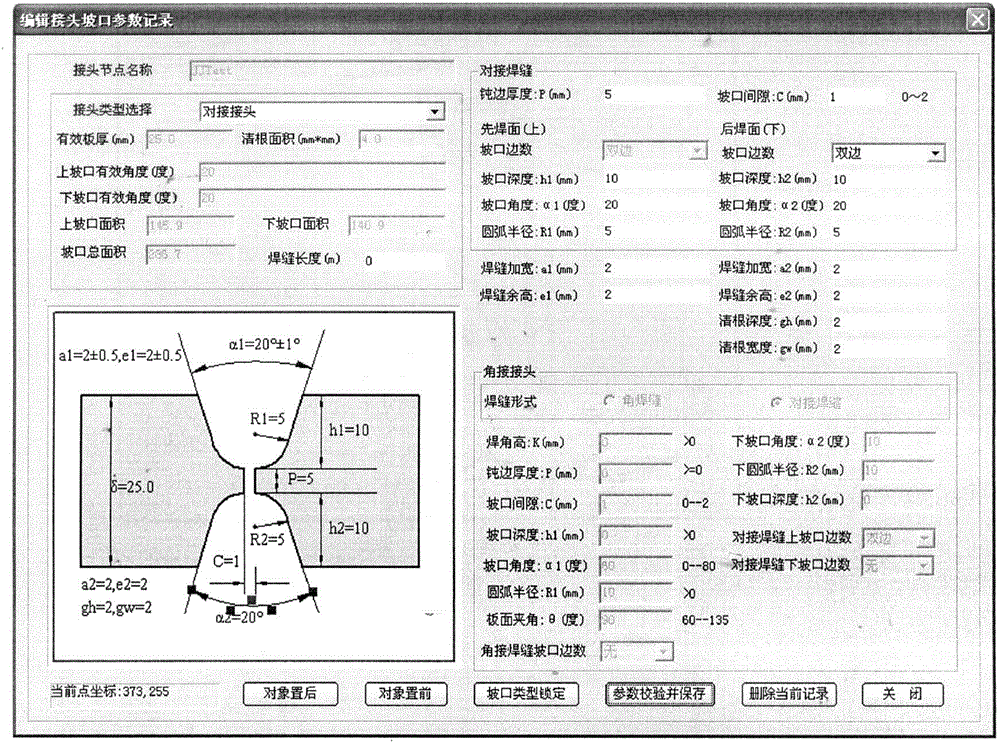

Interactive welding joint groove graph drawing and parameter tagging method

Inactive | CN104462682A | Avoid distortion | Fix description problem | Special data processing applications | Interactive graphics | File size

The invention discloses an interactive method for drawing welding joint groove graphs and tagging their parameters. The method comprises the following steps: (1) conducting parametric modeling of a groove graph; (2) establishing an interactive graphic element construction and parameter tagging method; (3) after the groove graph is drawn, storing the joint groove parameter information and the groove graph in database table fields through database metadata, with the groove graph stored in the database in the neutral vector metafile format WMF. On the one hand, this reduces the size of the graph file, and the vector graph file can be loaded into an application program for parameter tagging and graph property modification, achieving graph data reuse. On the other hand, the neutral groove graph file can be output to a process report file as an embedded graph object; jagged edges, broken graphic elements and other distortions do not occur during stepless zooming in a display area, so the graph file has advantages over a bitmap file in both storage and display.

Owner:Taizhou University (泰州学院)

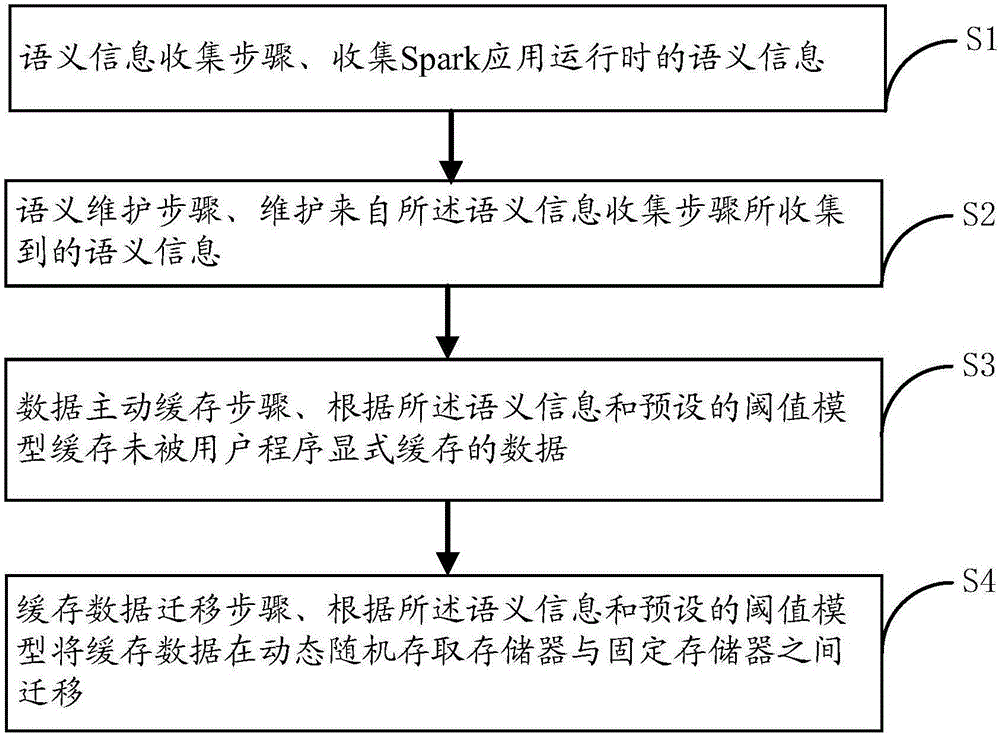

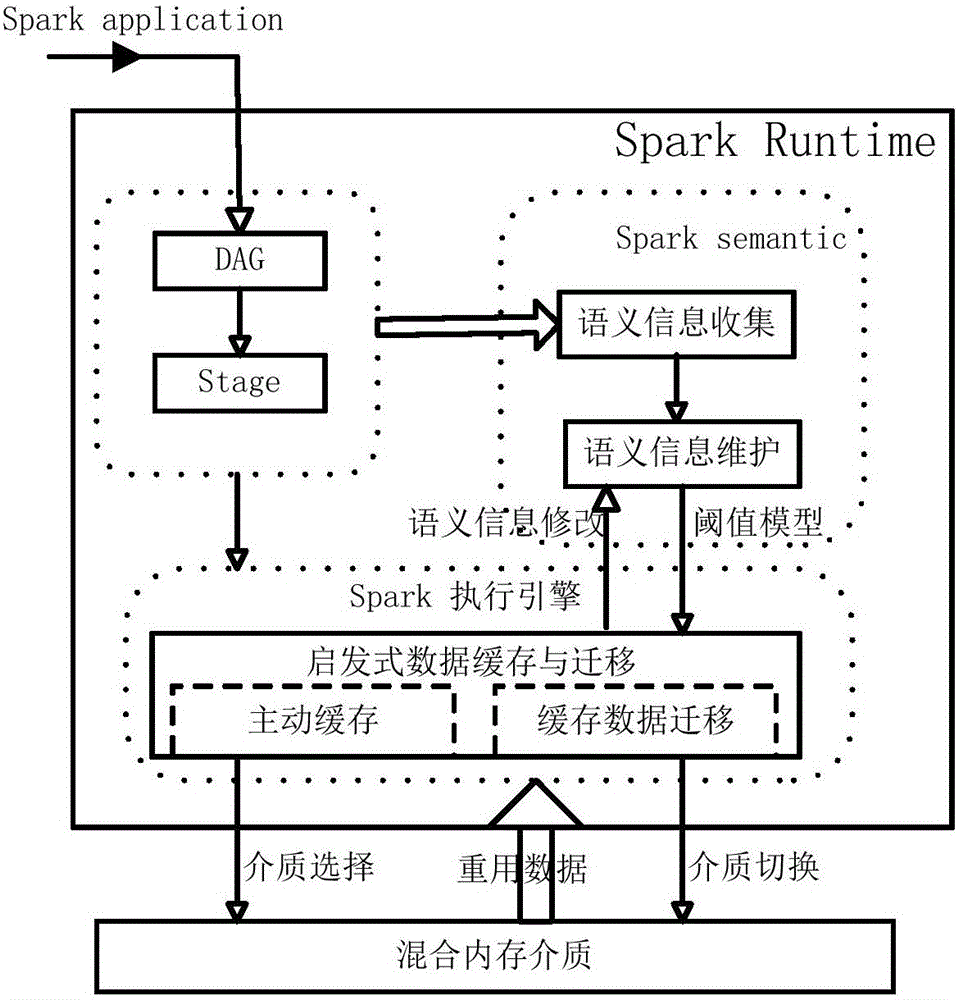

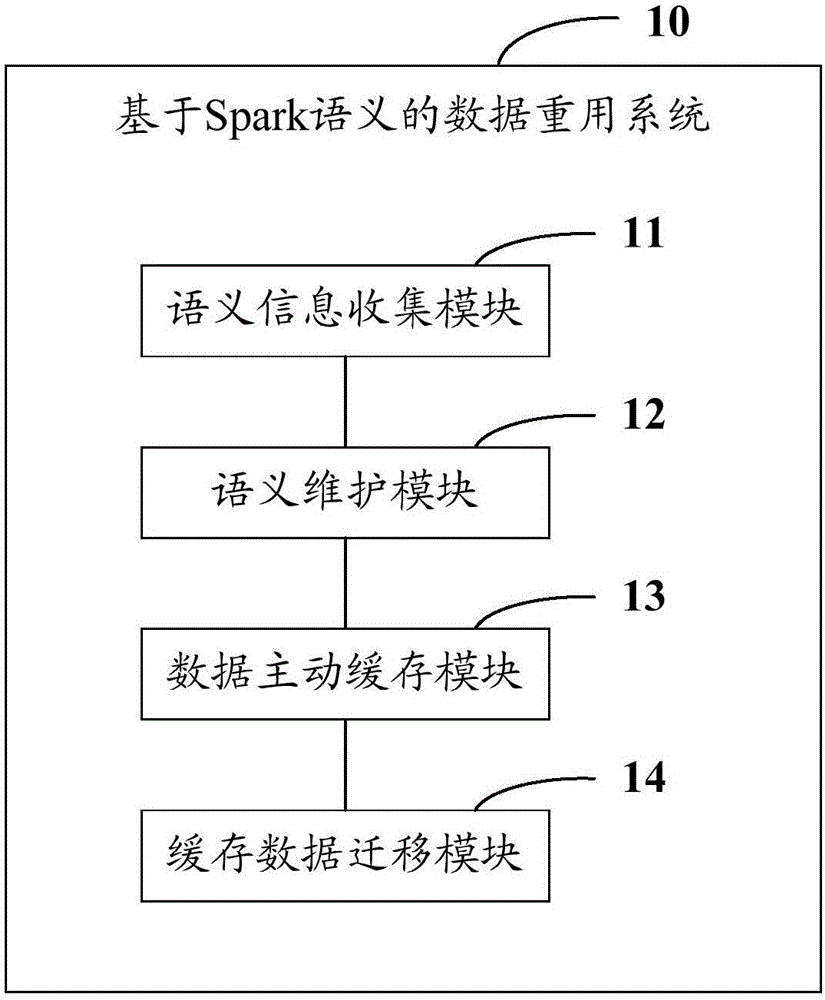

Spark semantics based data reuse method and system thereof

Active | CN106484368A | Large capacity | Reduce calculation | Input/output to record carriers | Register arrangements | Static random-access memory | Random access memory

The present invention provides a Spark semantics based data reuse method. The method comprises: a semantic information collection step of collecting semantic information while a Spark application runs; a semantic maintenance step of maintaining the collected semantic information; an active data caching step of caching data that is not explicitly cached by the user program, according to the semantic information and a preset threshold model; and a cached data migration step of migrating cached data between dynamic random access memory and fixed memory according to the semantic information and the preset threshold model. The present invention further provides a Spark semantics based data reuse system. The technical scheme can reduce repeated data calculation, improve calculation efficiency, and effectively avoid dependence on the experience of developers.

Owner:POWERLEADER TELECOM TECH
