180 results about how to "Reduce bottlenecks": patented technology

Systems and methods for the autonomous production of videos from multi-sensored data

Active | US20120057852A1 | Tags: Quantity minimization; Selection rate can be high; Television system details; Electronic editing digitised analogue information signals; Personalization; Engineering

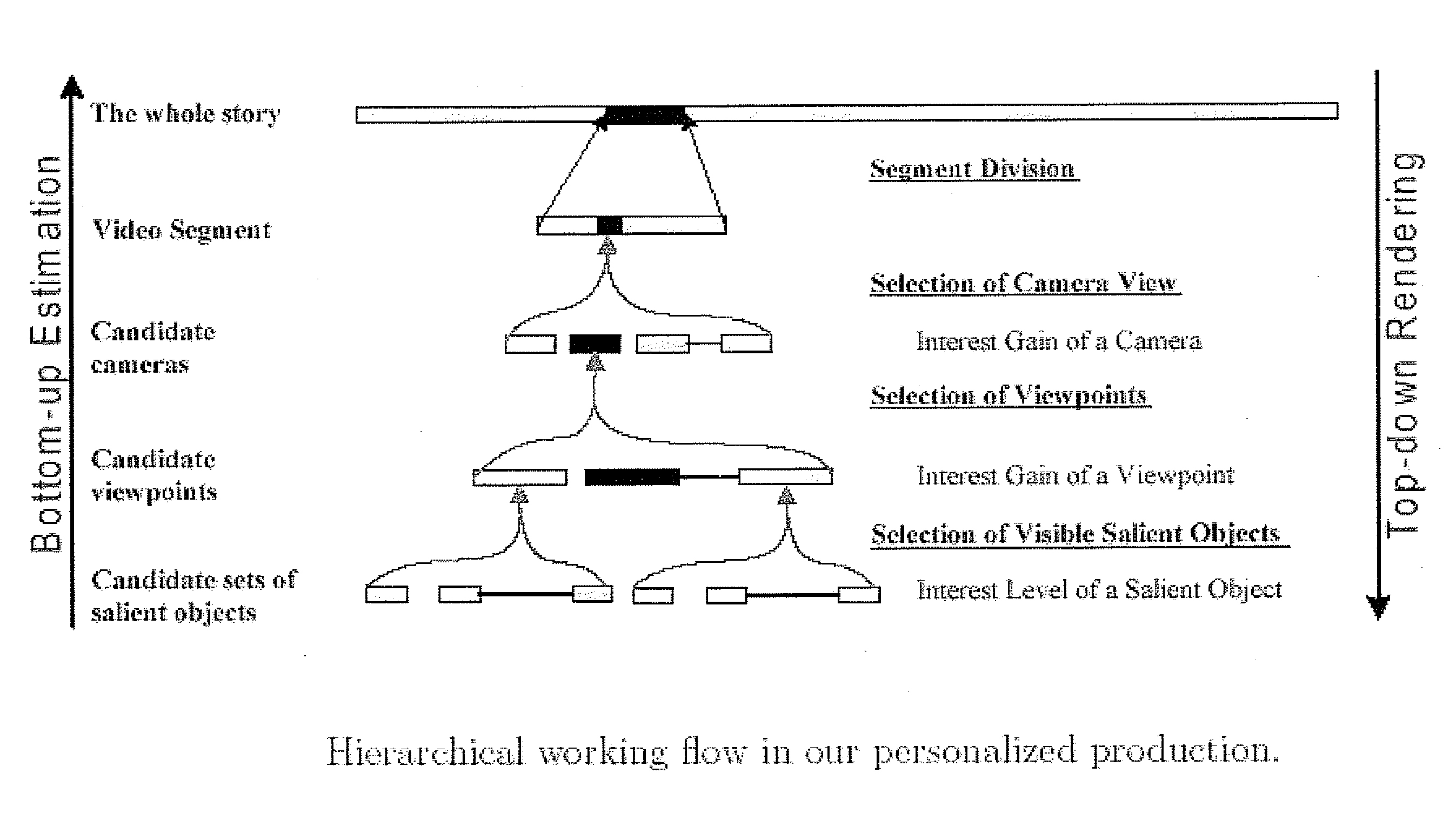

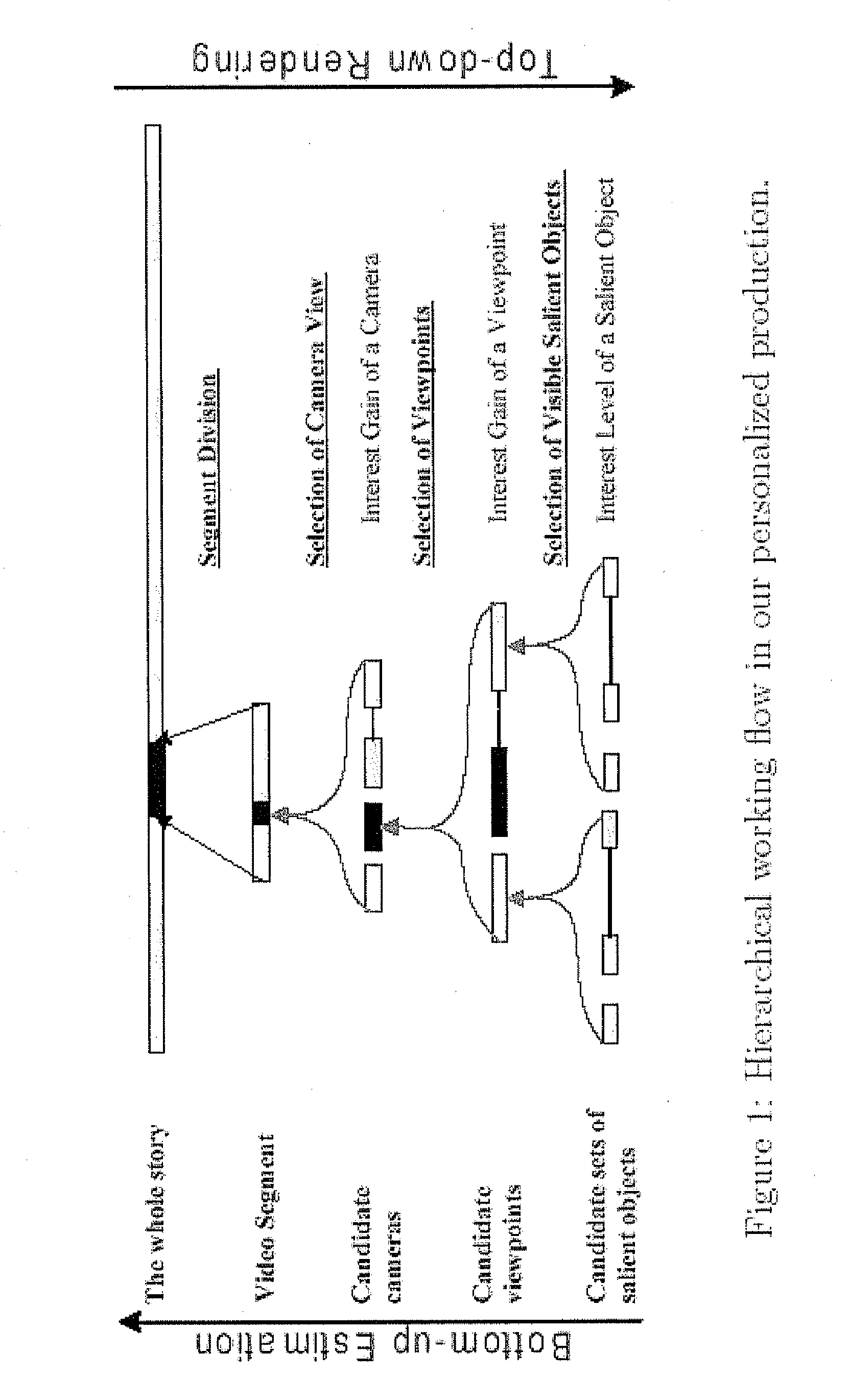

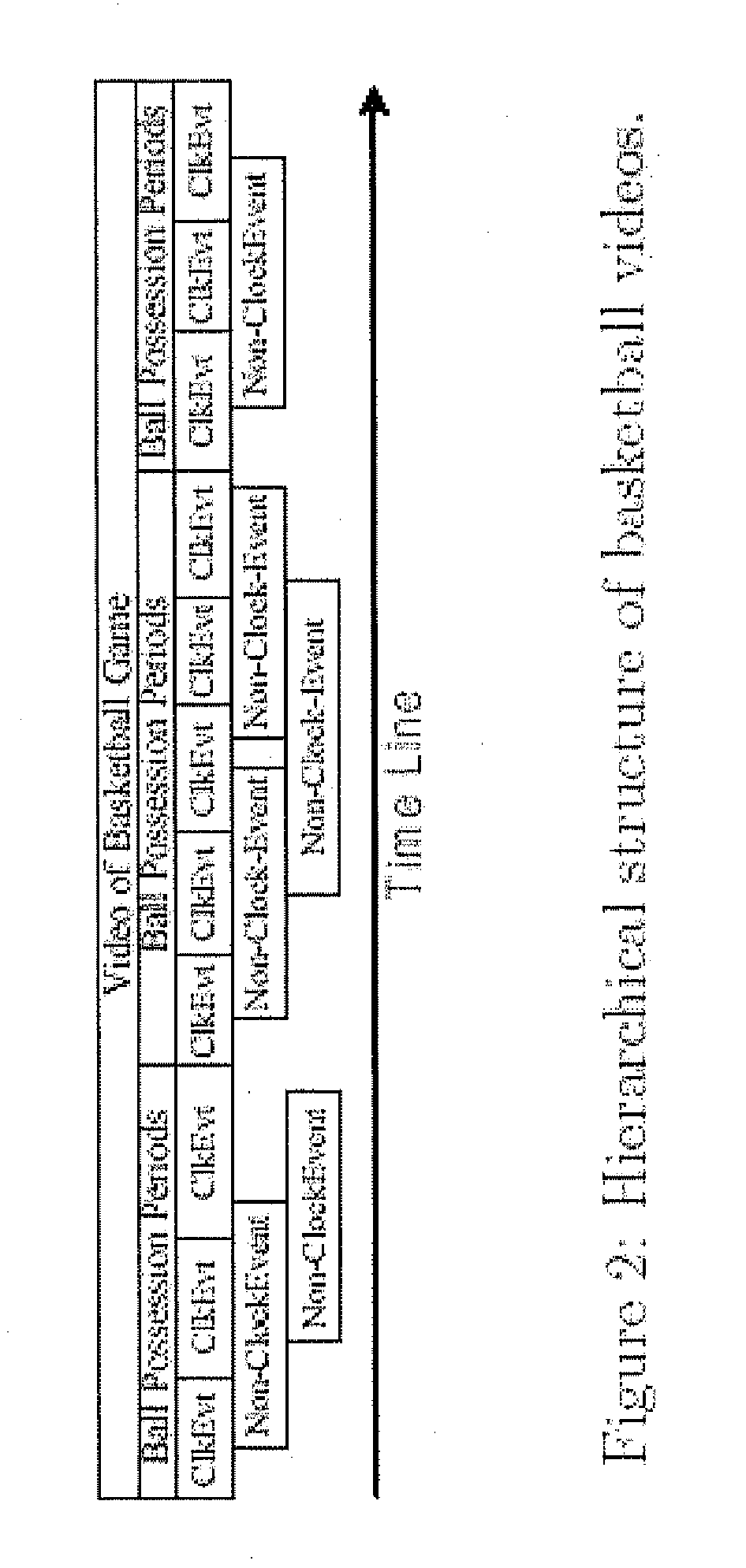

An autonomous, computer-based method and system is described for the personalized production of videos, such as team sport (e.g., basketball) videos, from multi-sensored data under limited display resolution. Embodiments of the present invention relate to the selection of a view to display from among the multiple video streams captured by the camera network. Technical solutions are provided to ensure perceptual comfort and to integrate contextual information efficiently, for example by smoothing the generated viewpoint/camera sequences to alleviate flickering visual artefacts and discontinuous story-telling artefacts. A design and implementation of the viewpoint selection process is disclosed and has been verified by experiments, which show that the method and system of the present invention efficiently distribute the processing load across cameras and effectively select viewpoints that cover the team action at hand while avoiding major perceptual artefacts.

Owner:KEEMOTION
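
The abstract does not spell out its smoothing algorithm. As an illustration only, one common way to suppress camera flicker is to choose one camera per frame by dynamic programming (a Viterbi-style pass) over per-frame view scores, charging a fixed penalty whenever the selected camera changes. The function name, the `scores` matrix, and `switch_penalty` are hypothetical; this is a sketch of the general technique, not KEEMOTION's method.

```python
from typing import List


def smooth_camera_sequence(scores: List[List[float]], switch_penalty: float) -> List[int]:
    """Pick one camera per frame, maximizing the total view score minus a
    penalty for every camera switch (Viterbi-style dynamic programming).

    scores[t][c] is a hypothetical quality score for camera c at frame t.
    """
    if not scores:
        return []
    n_cams = len(scores[0])
    best = list(scores[0])          # best[c]: best total for paths ending at camera c
    back: List[List[int]] = []      # back[t-1][c]: best predecessor of camera c at frame t
    for t in range(1, len(scores)):
        new_best, choices = [], []
        for c in range(n_cams):
            # Staying on the same camera is free; switching costs switch_penalty.
            prev = max(range(n_cams),
                       key=lambda p: best[p] - (0.0 if p == c else switch_penalty))
            cost = 0.0 if prev == c else switch_penalty
            new_best.append(best[prev] - cost + scores[t][c])
            choices.append(prev)
        best = new_best
        back.append(choices)
    # Trace the optimal path backwards from the best final camera.
    cam = max(range(n_cams), key=lambda c: best[c])
    path = [cam]
    for choices in reversed(back):
        cam = choices[cam]
        path.append(cam)
    return path[::-1]
```

With `switch_penalty` at 0 the result is simply the per-frame best camera; raising it trades a little per-frame view quality for fewer cuts.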

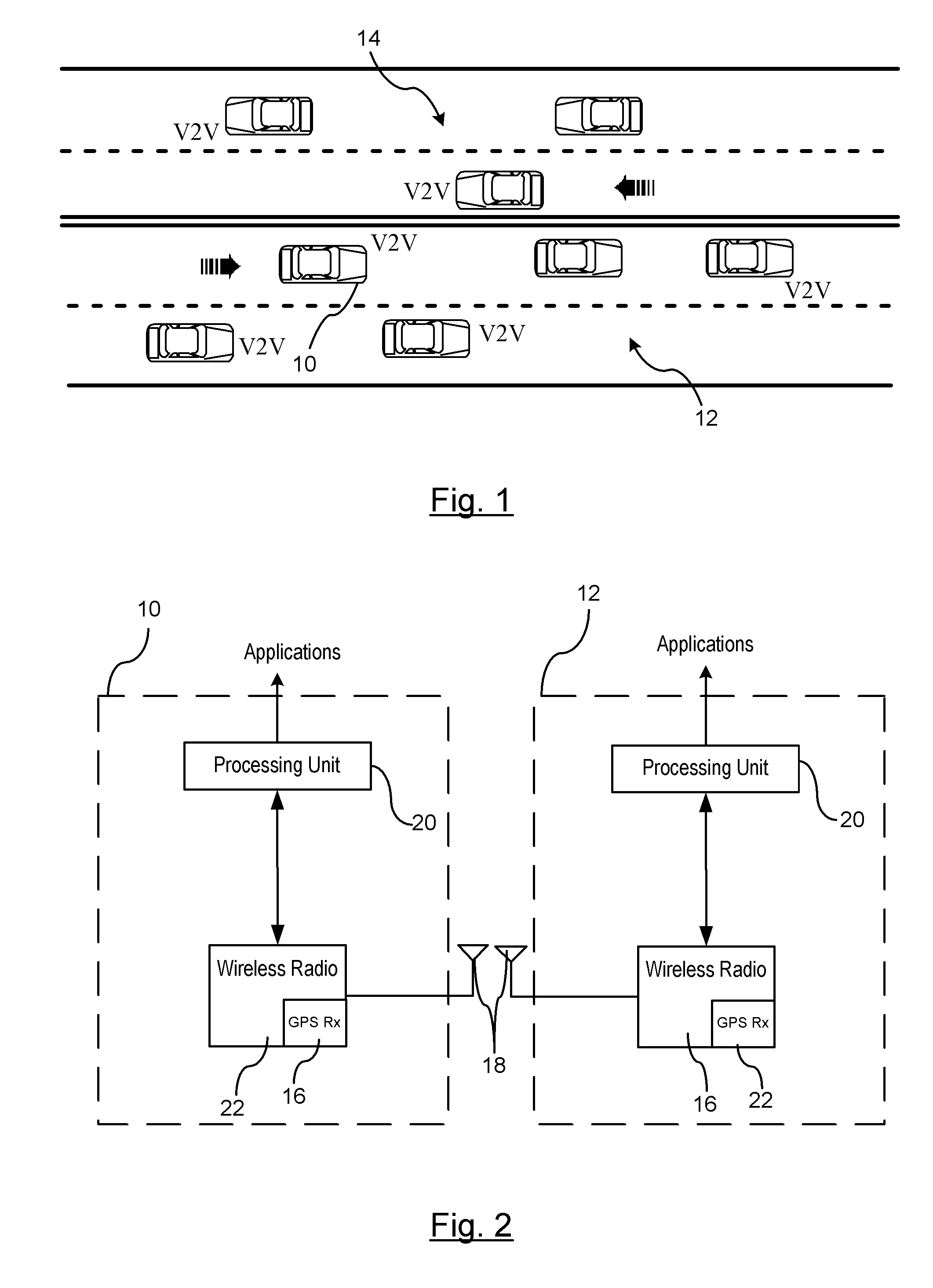

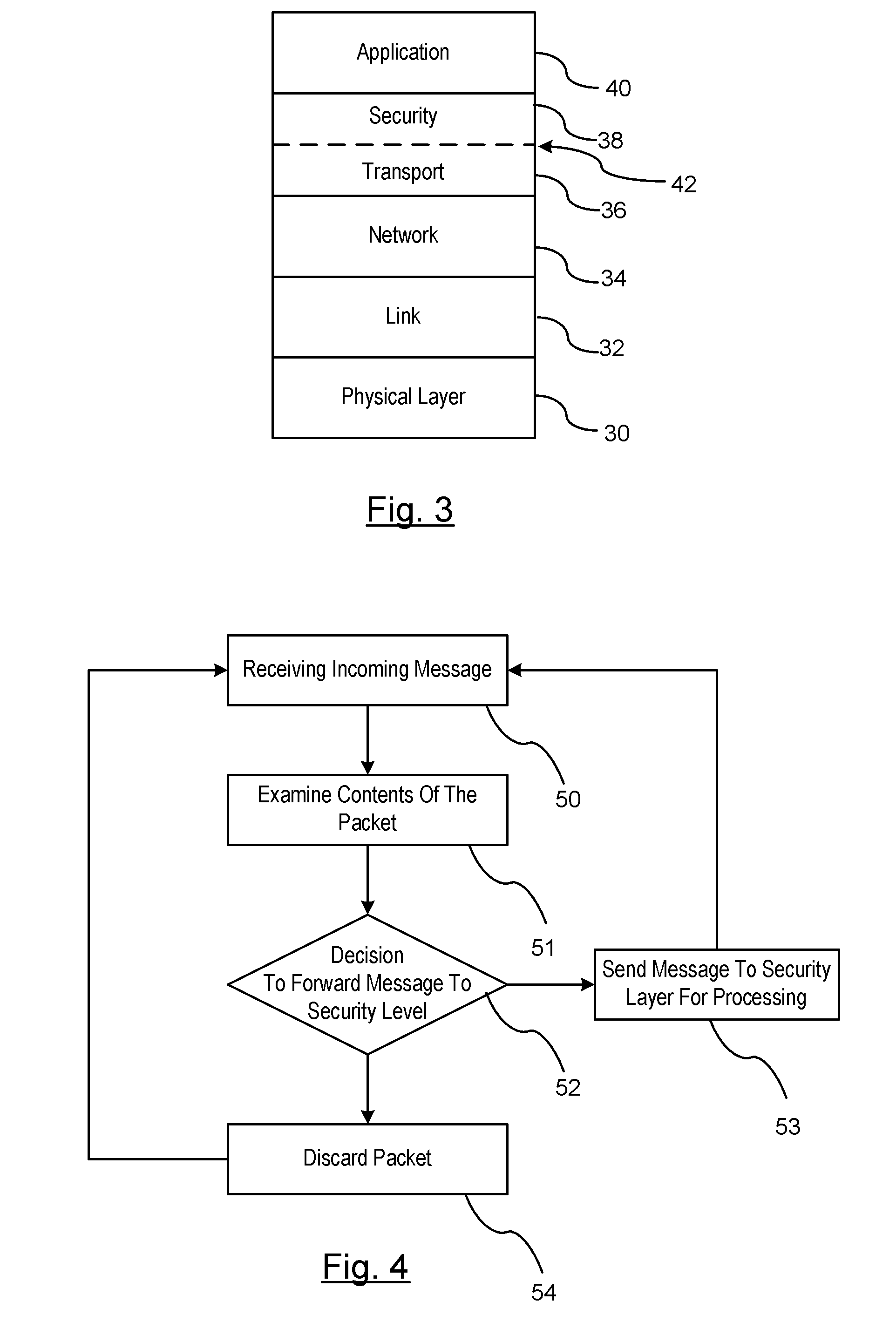

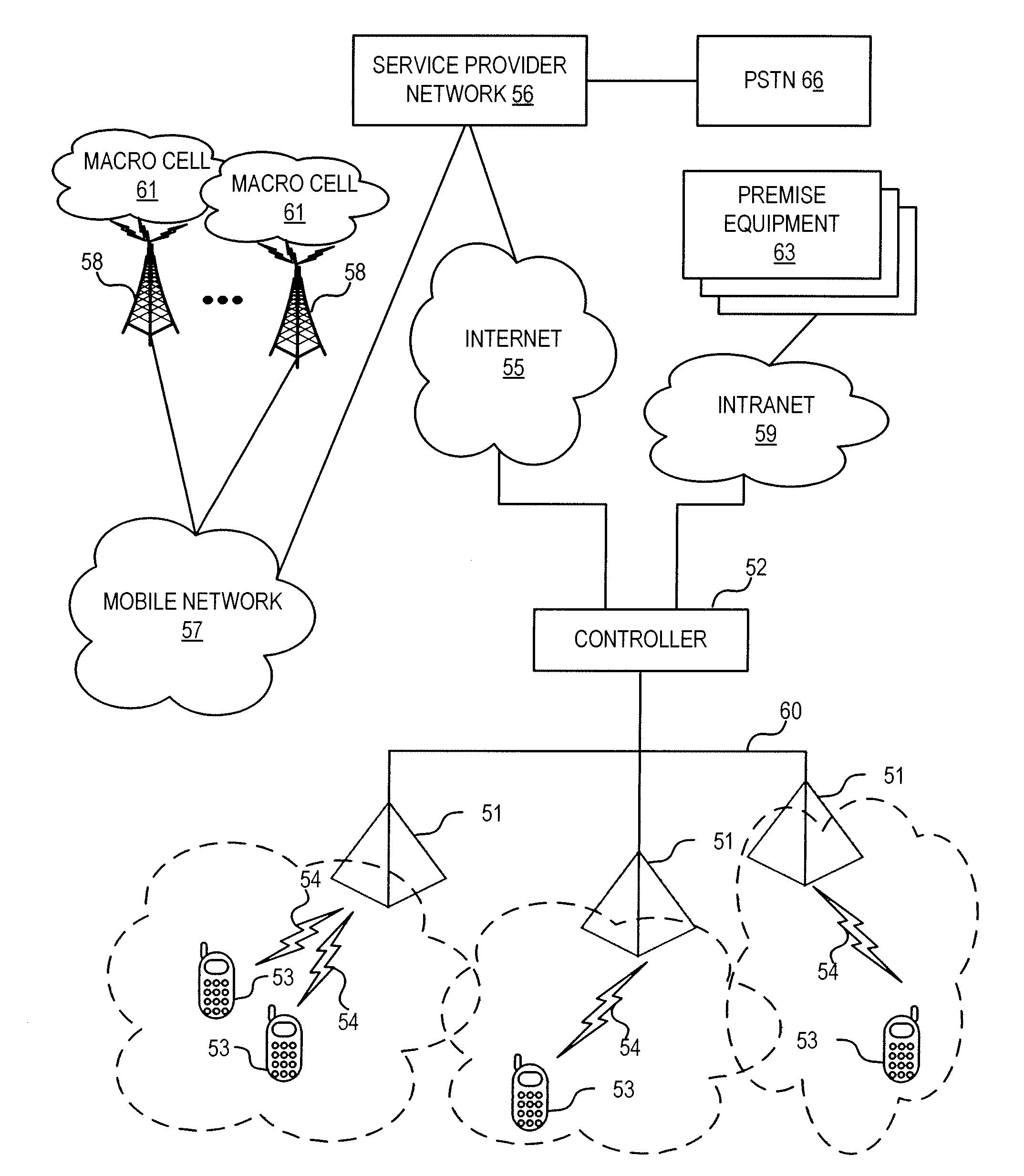

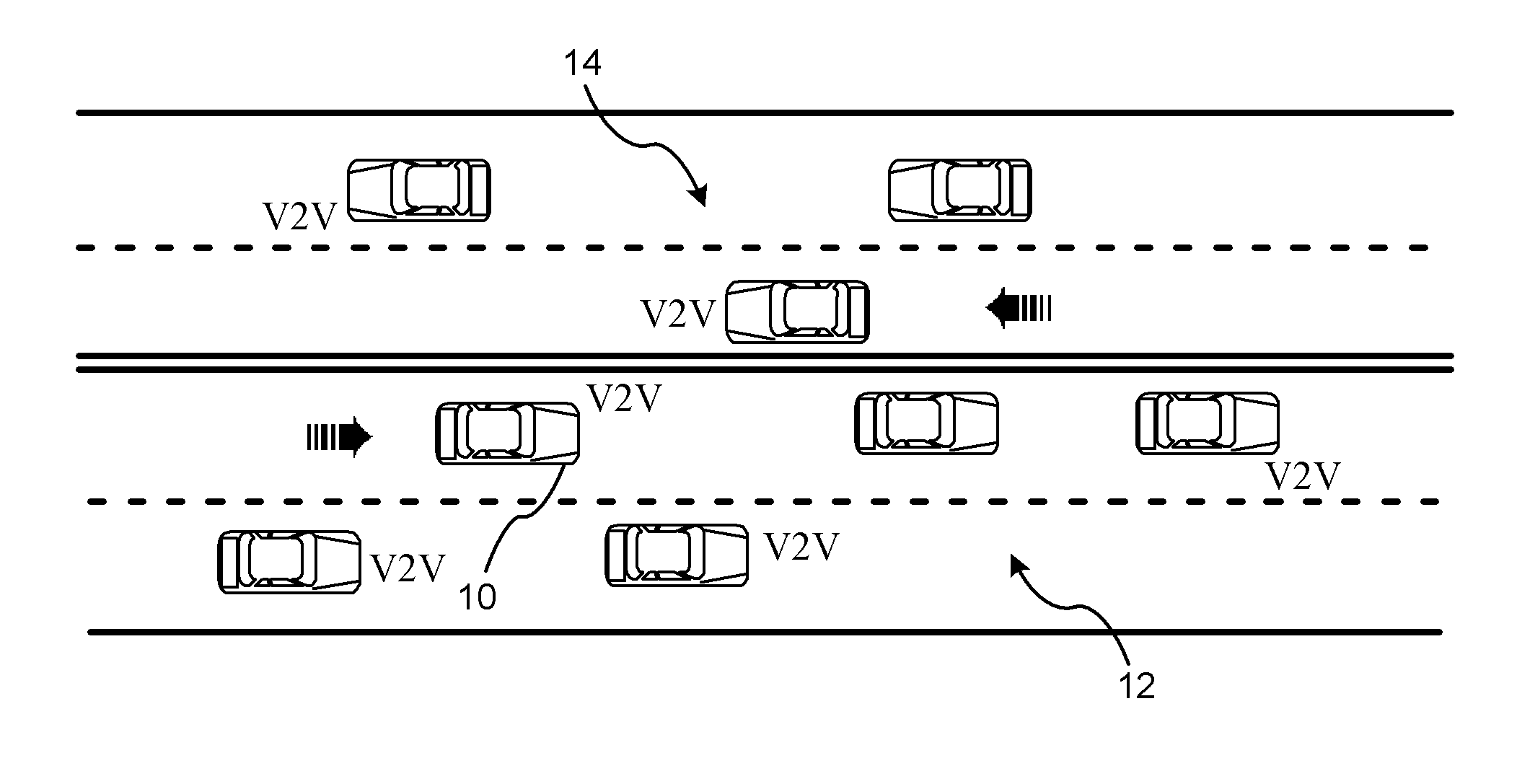

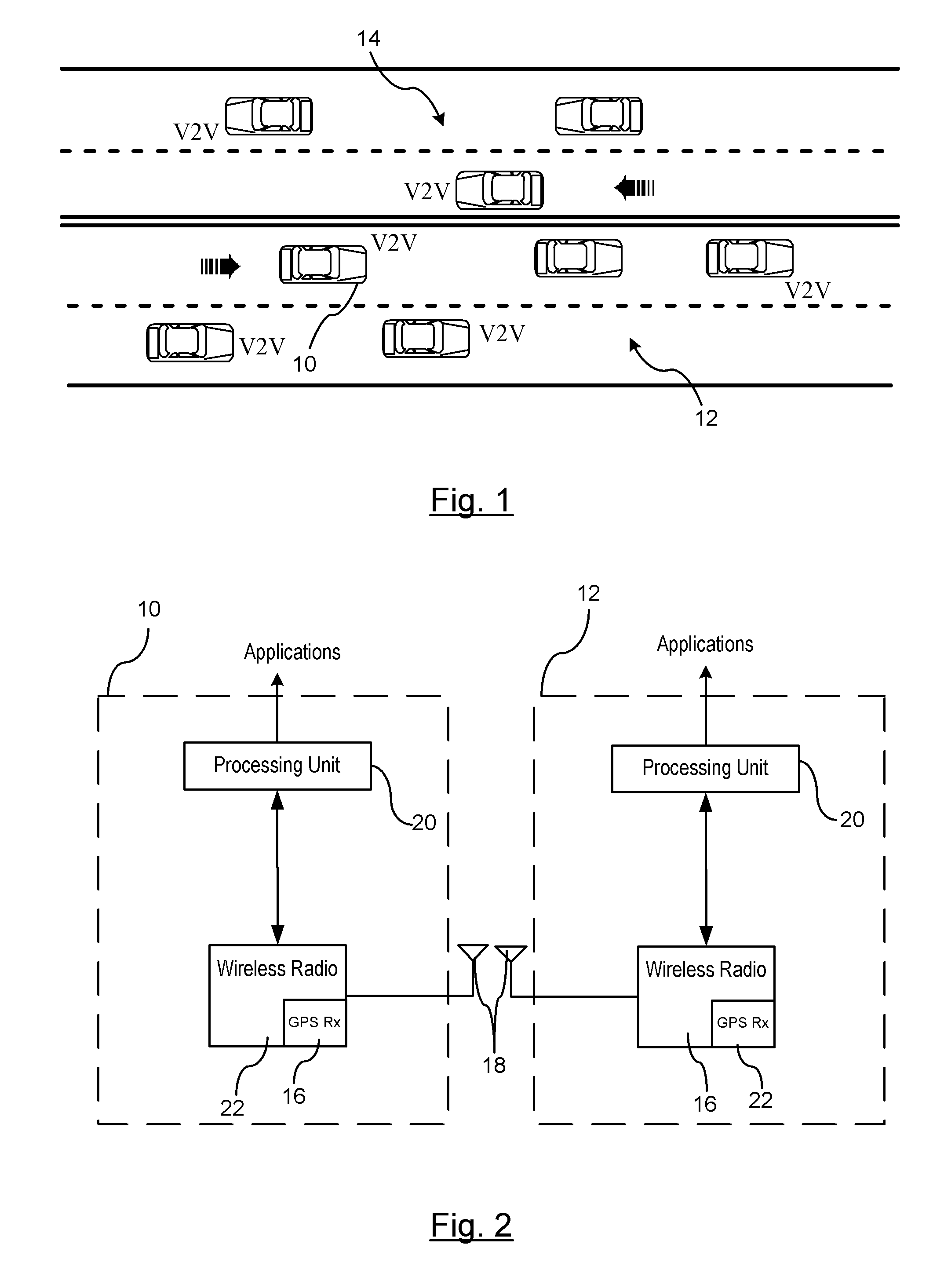

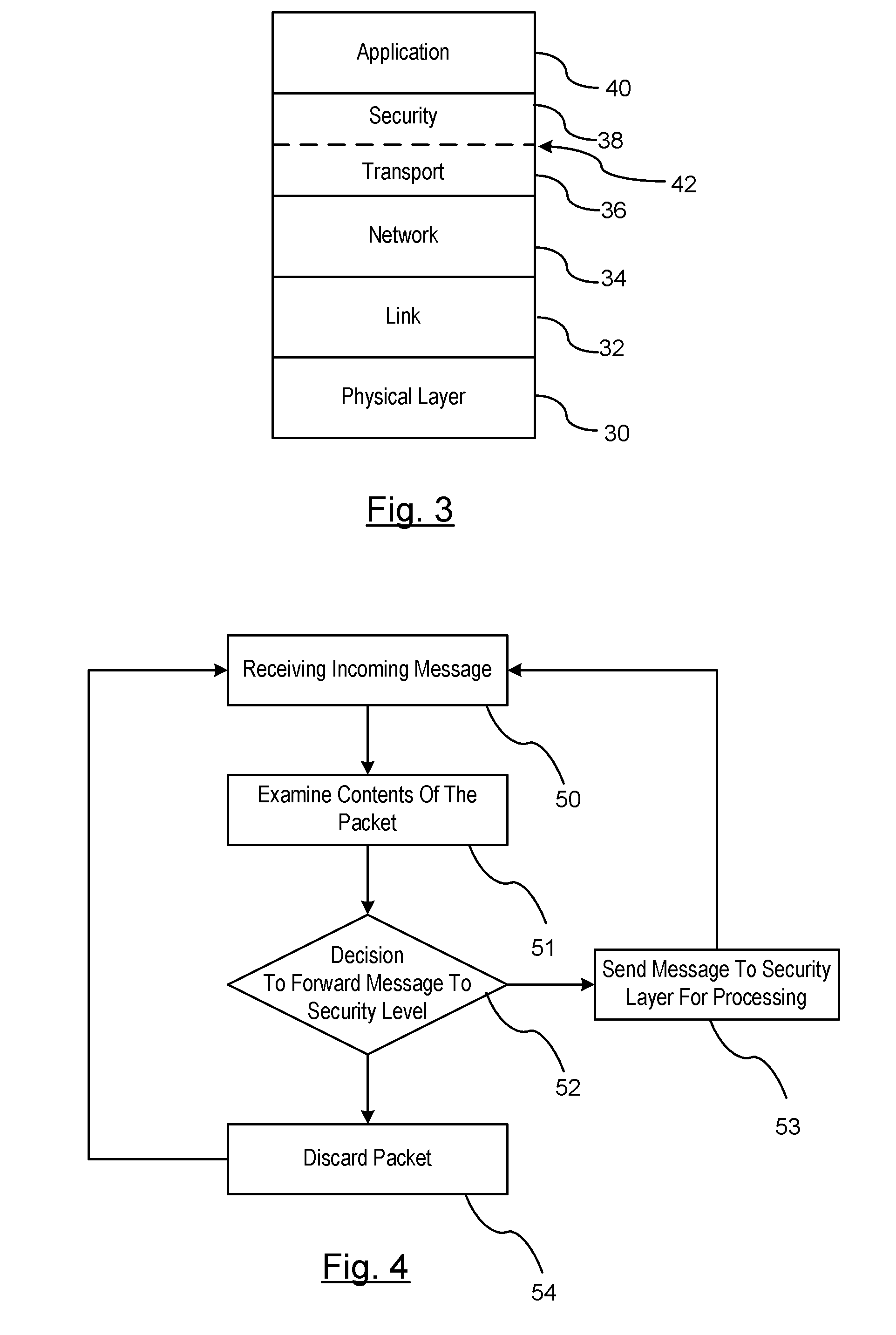

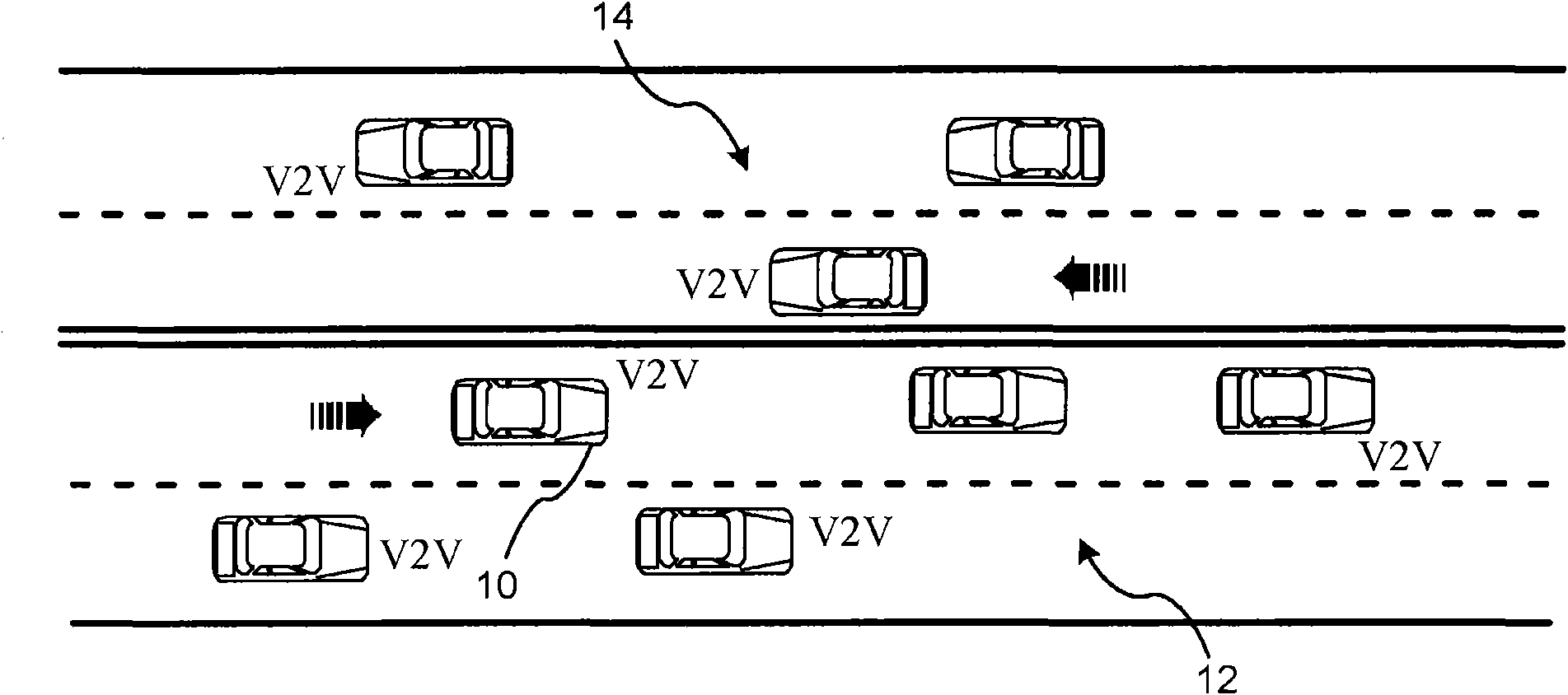

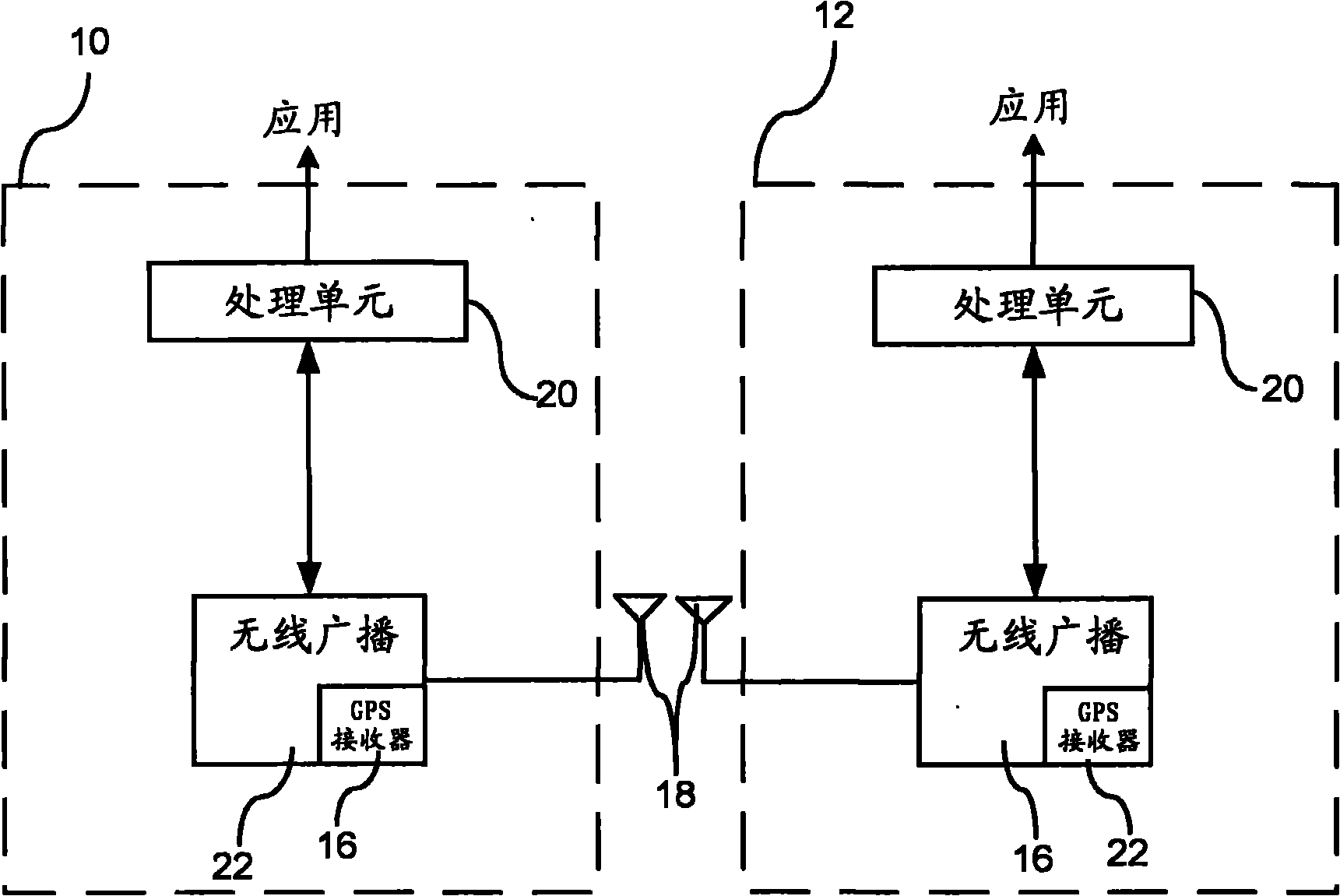

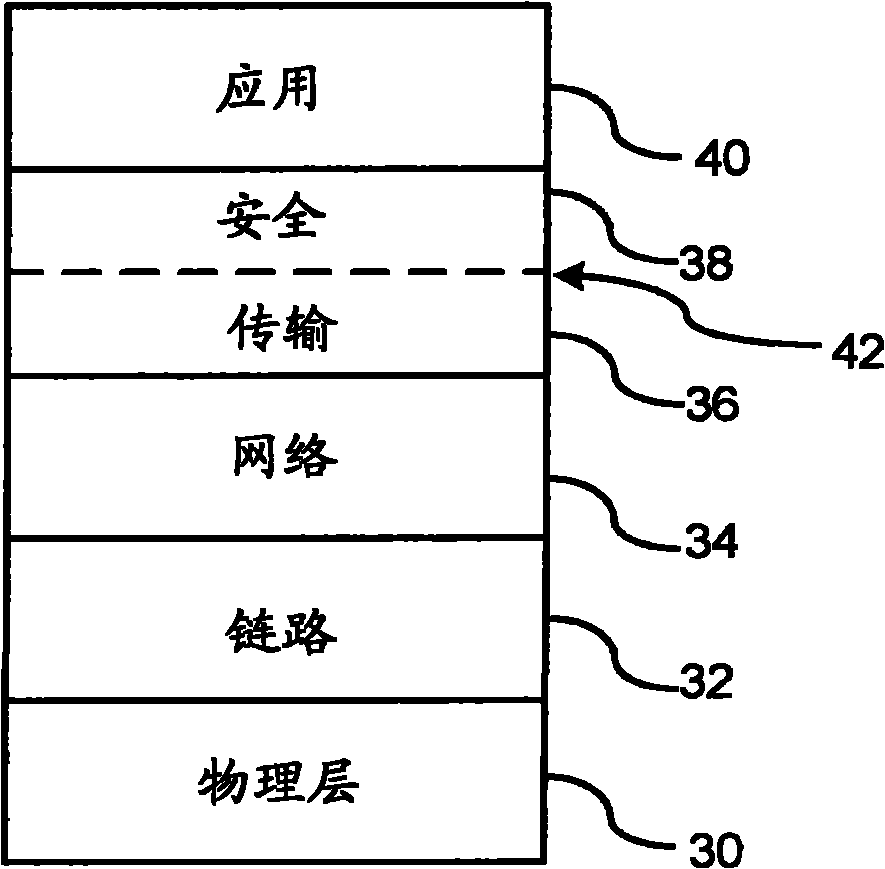

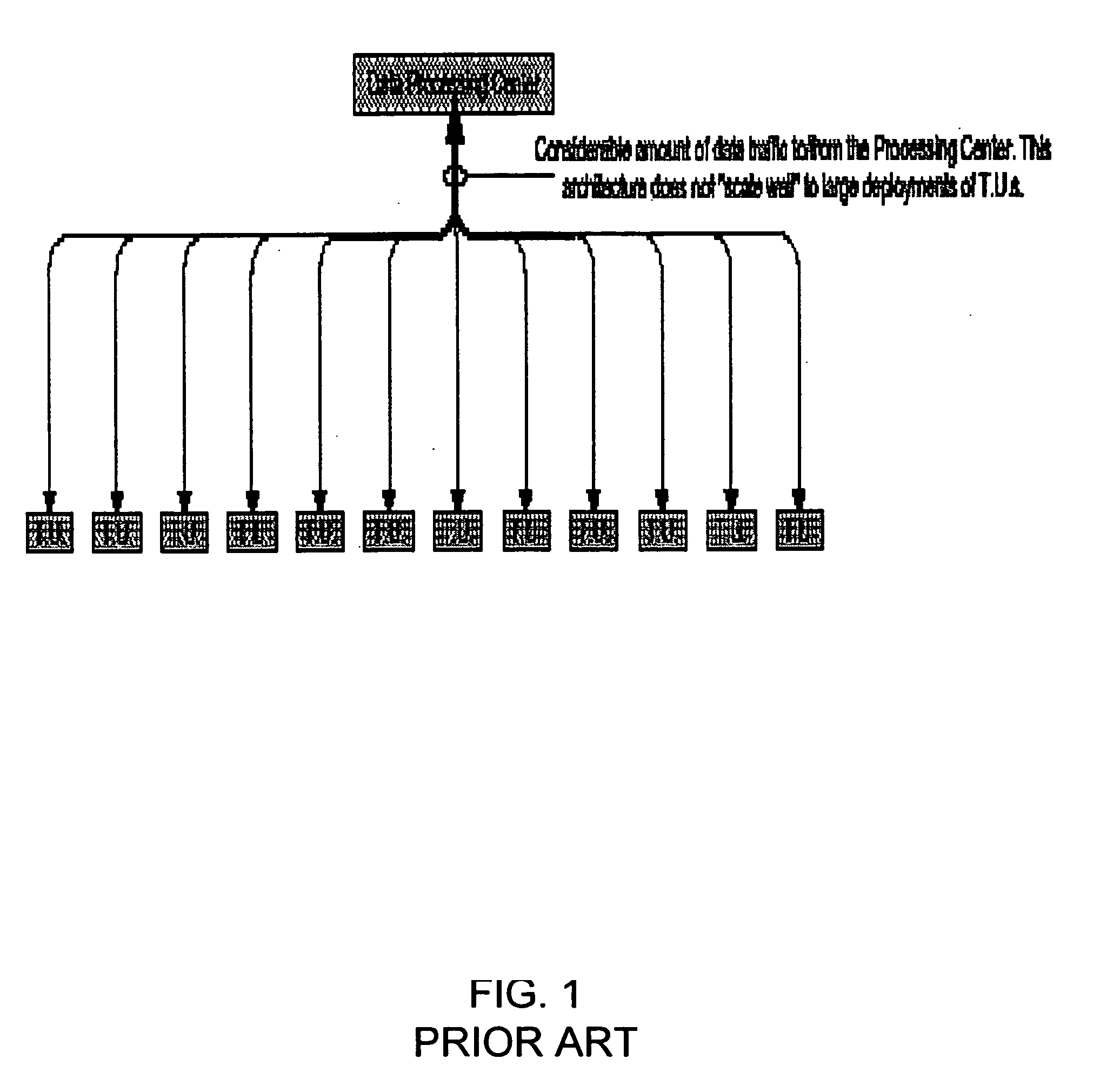

Reducing the Computational Load on Processors by Selectively Discarding Data in Vehicular Networks

Active | US20110080302A1 | Tags: Efficient processing; Reduce computing load; Service provisioning; Particular environment based services; Communications system; Protocol stack

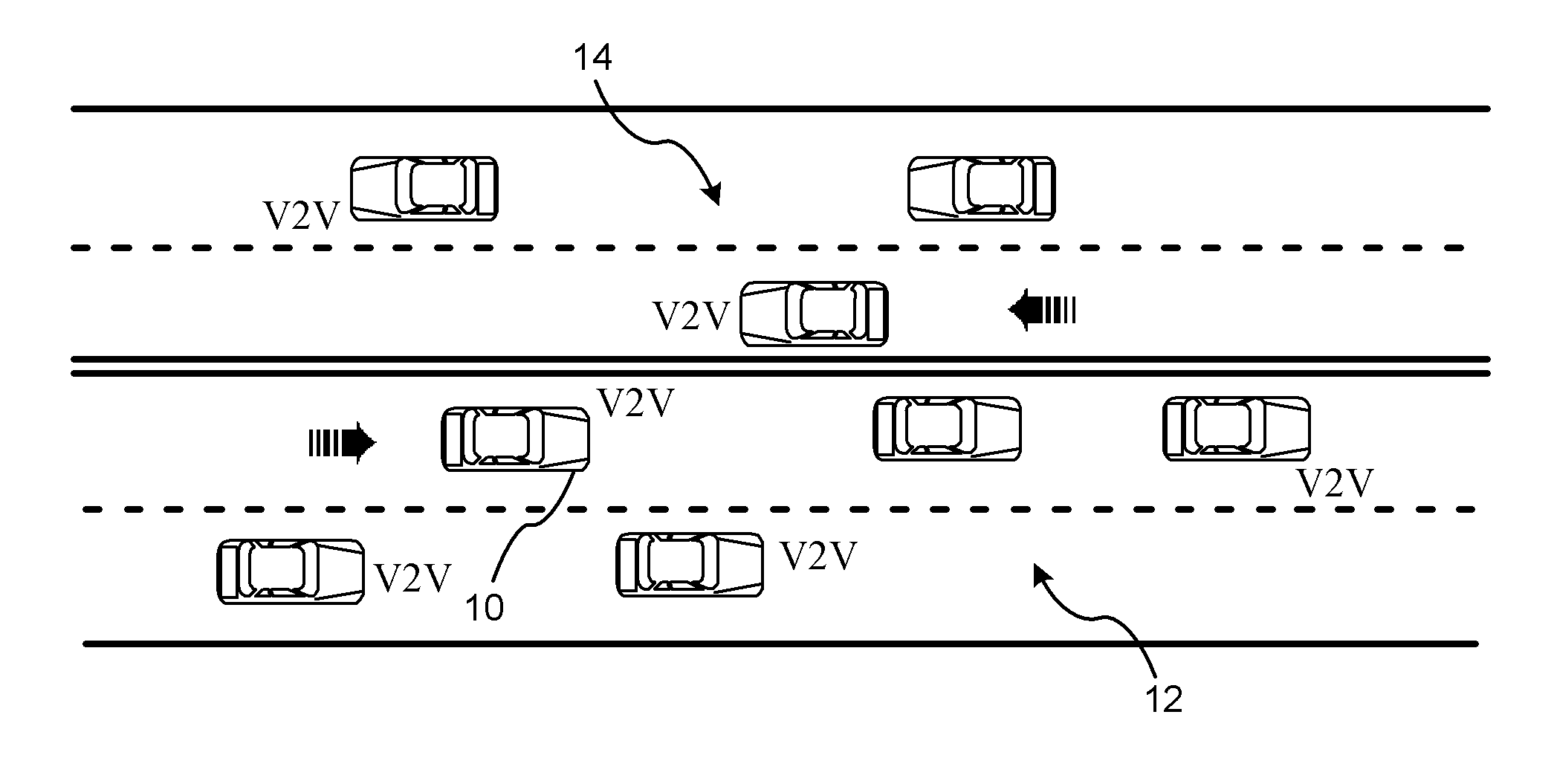

A method is provided for efficiently processing messages staged for authentication in a security layer of a protocol stack in a wireless vehicle-to-vehicle communication system. The vehicle-to-vehicle communication system includes a host vehicle receiver for receiving messages transmitted by one or more remote vehicles. The host receiver is configured to authenticate received messages in a security layer of a protocol stack. A wireless message broadcast by a remote vehicle is received. The wireless message contains characteristic data of the remote vehicle. The characteristic data is analyzed to determine whether the wireless message is in compliance with a predetermined parameter of the host vehicle. The wireless message is discarded prior to a transfer of the wireless message to the security layer in response to a determination that the wireless message is not in compliance with the predetermined parameter of the host vehicle. Otherwise, the wireless message is transferred to the security layer.

Owner:GM GLOBAL TECH OPERATIONS LLC
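
The key idea is ordering: a cheap plausibility check on the message's characteristic data runs before the expensive cryptographic verification in the security layer. A minimal sketch, assuming the "predetermined parameter" is a maximum relevance distance from the host vehicle (a hypothetical choice; the abstract does not name the parameter) and that the `verify` callback stands in for signature checking. `V2VMessage` and its position fields are also hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class V2VMessage:
    sender_id: str
    x: float          # hypothetical position fields carried as characteristic data
    y: float
    signature: bytes


def filter_then_authenticate(messages: List[V2VMessage], host_x: float, host_y: float,
                             max_range_m: float,
                             verify: Callable[[V2VMessage], bool]) -> List[V2VMessage]:
    """Discard out-of-range messages before they reach the costly security layer."""
    accepted = []
    for msg in messages:
        distance = math.hypot(msg.x - host_x, msg.y - host_y)
        if distance > max_range_m:
            continue  # dropped before any cryptographic work is done
        if verify(msg):  # placeholder for the security layer's authentication
            accepted.append(msg)
    return accepted
```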

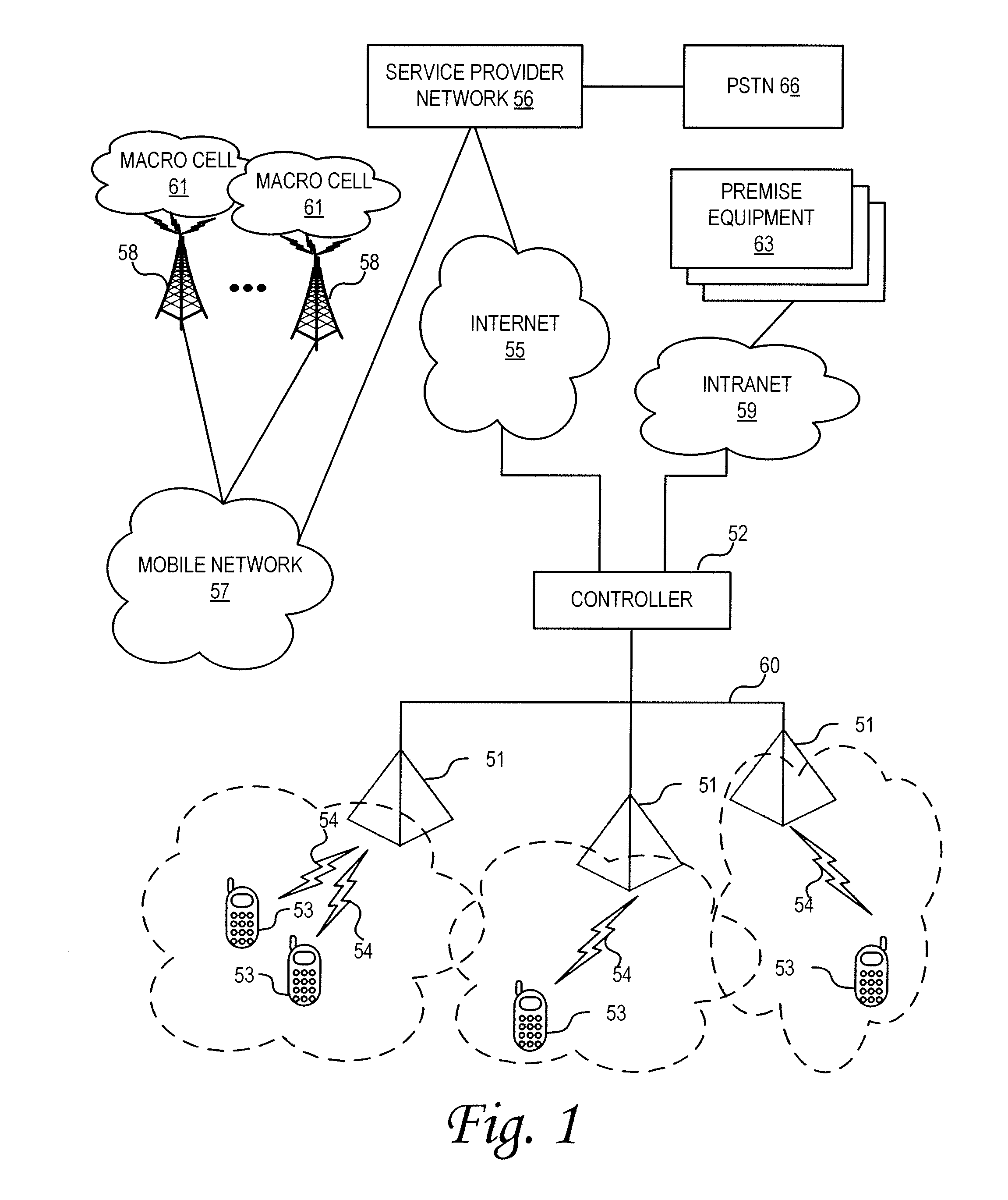

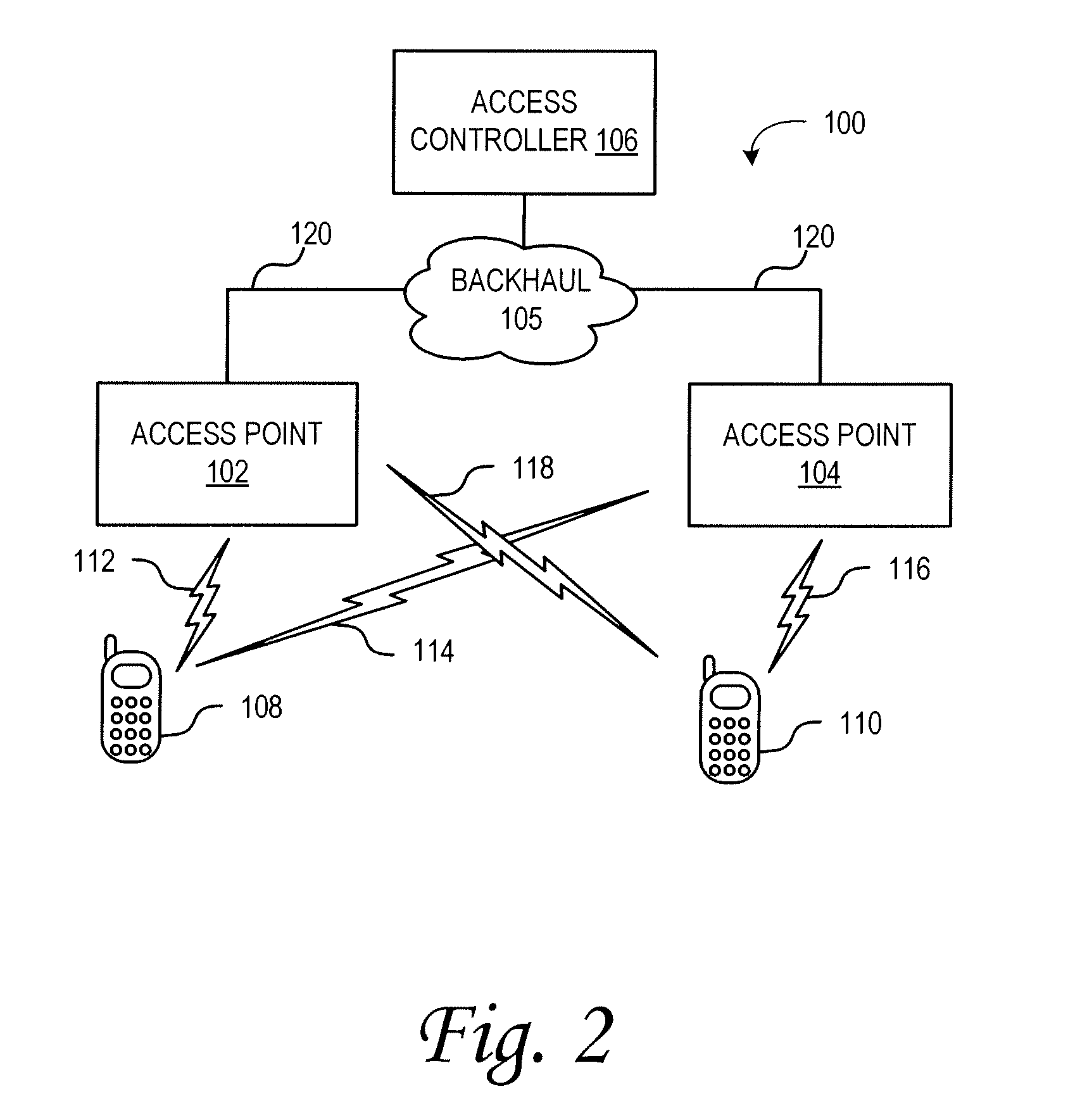

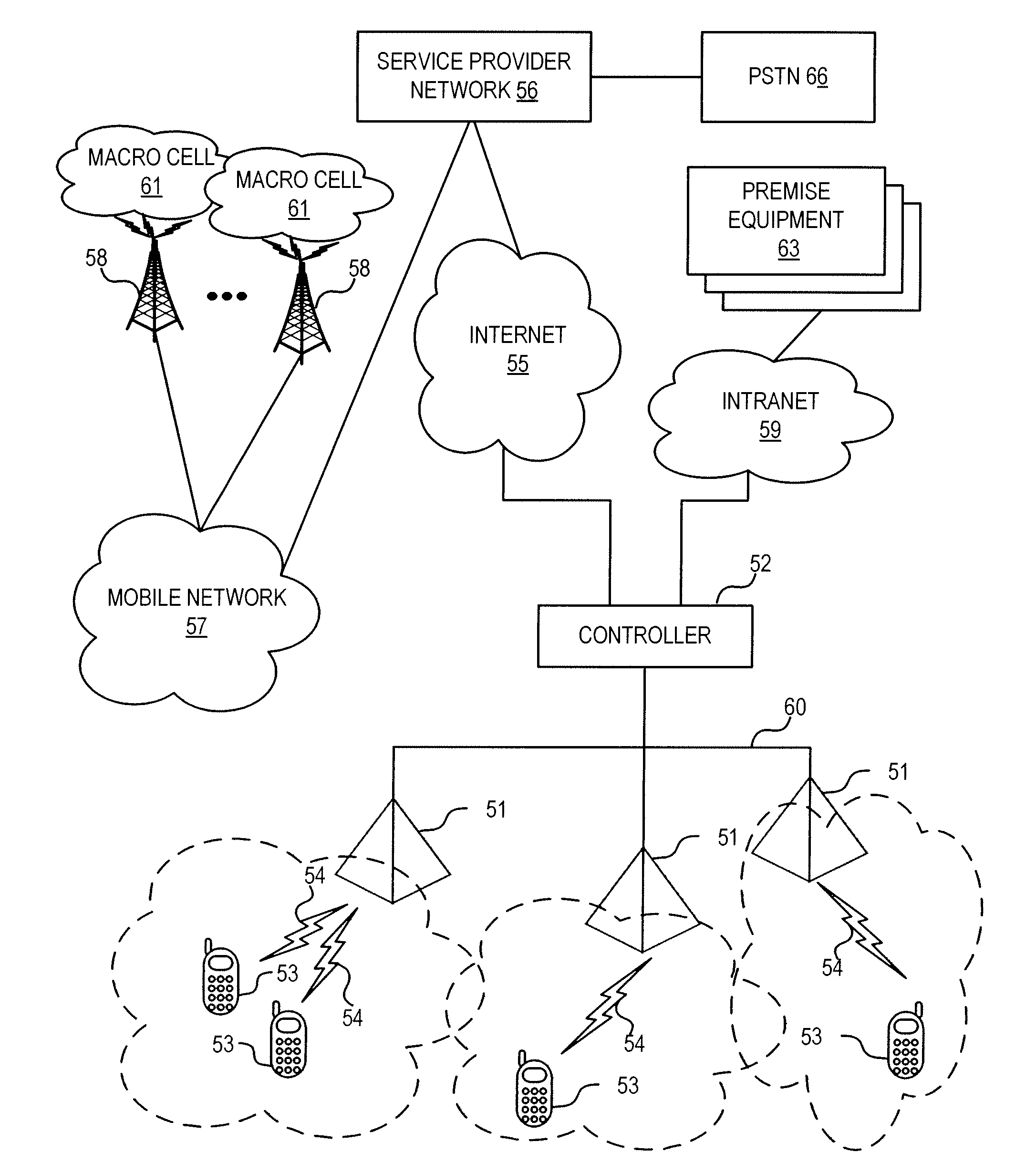

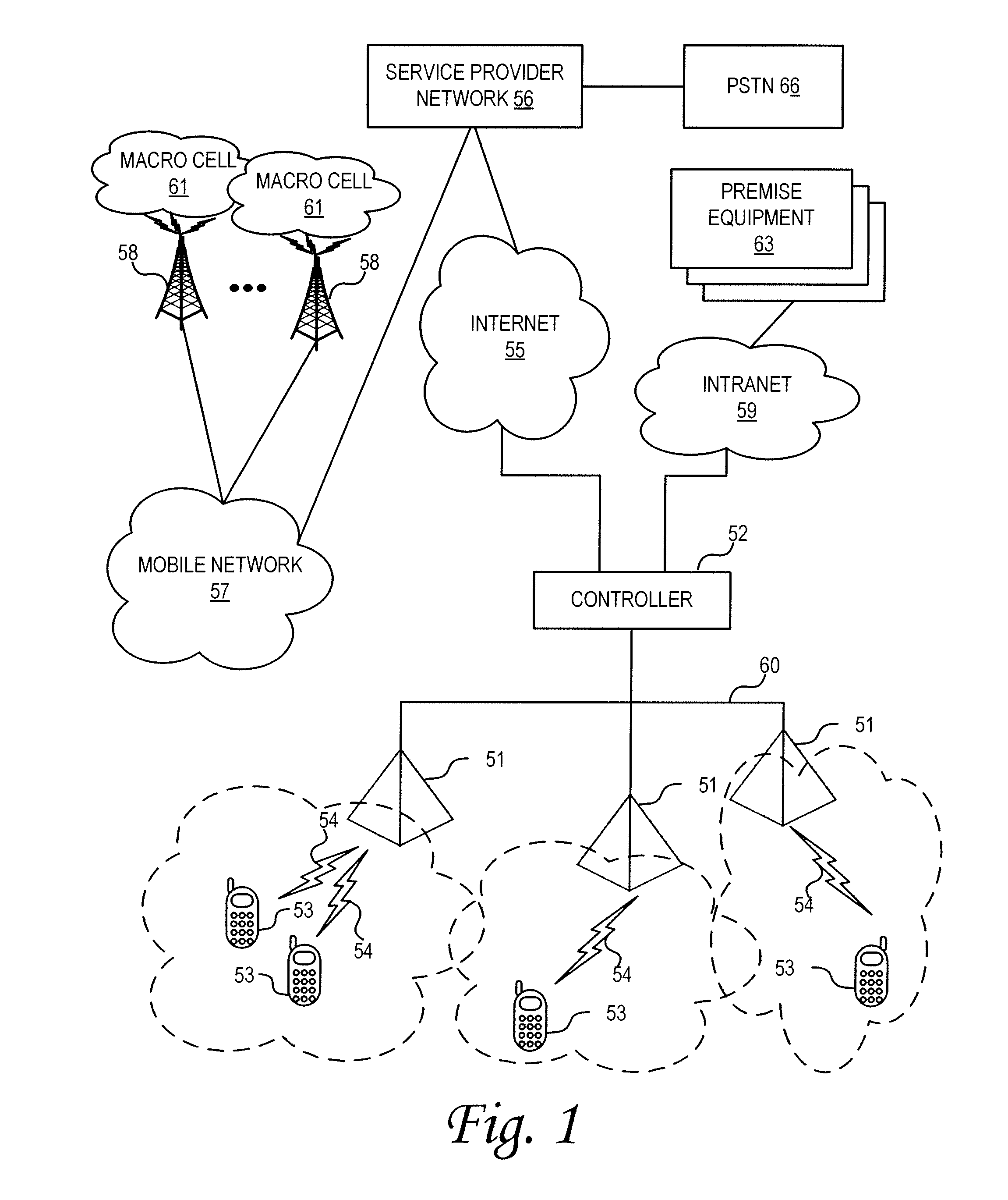

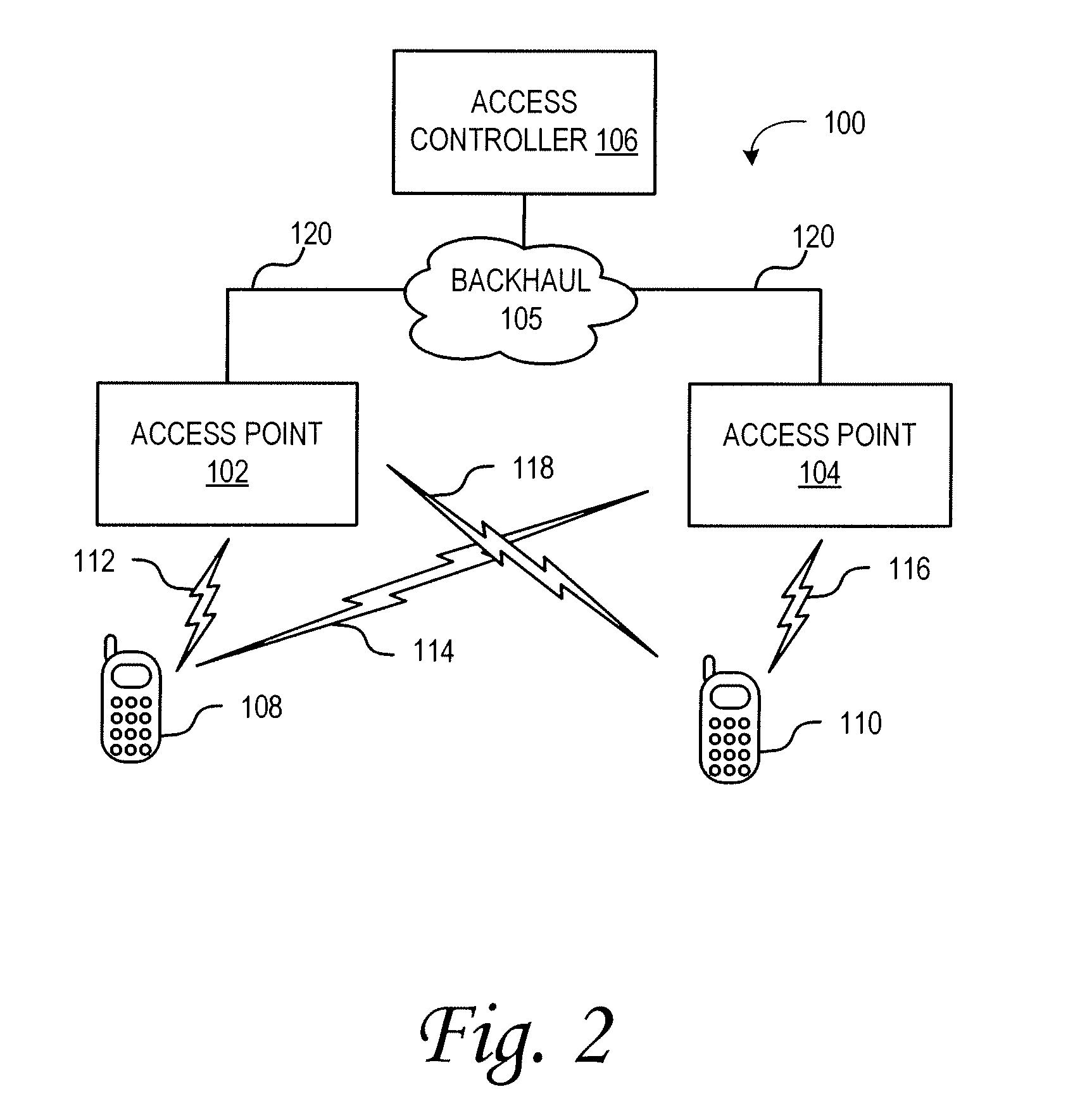

Dynamic topological adaptation

Active | US20100085884A1 | Tags: Reduce bottlenecks; Improve balance; Power management; Error prevention; User equipment; Traffic load

Apparatus and methods for reconfiguration of a communication environment based on loading requirements. Network operations are monitored and analyzed to determine loading balance across the network or a portion thereof. Where warranted, the network is reconfigured to balance the load across multiple network entities. For example, in a cellular-type network, traffic loads and throughput requirements are analyzed for the access points and their user equipment. Where loading imbalances occur, the cell coverage areas of one or more access points can be reconfigured to alleviate bottlenecks or improve balancing.

Owner:CORNING OPTICAL COMM LLC
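
The monitor, analyze, and reconfigure loop can be sketched as follows. This is an illustrative sketch under assumptions the abstract does not state: each access point has a scalar load and a coverage radius, imbalance is measured against the mean load with a tolerance, and reconfiguration means shrinking an overloaded cell or growing an underloaded one by a fixed step. The function and parameter names are hypothetical.

```python
from typing import Dict


def rebalance_coverage(loads: Dict[str, float], radii: Dict[str, float],
                       tolerance: float = 0.2, step: float = 0.1) -> Dict[str, float]:
    """Return new coverage radii: shrink cells loaded above mean*(1+tolerance),
    grow cells loaded below mean*(1-tolerance), and leave the rest unchanged."""
    mean_load = sum(loads.values()) / len(loads)
    new_radii = {}
    for ap, radius in radii.items():
        load = loads[ap]
        if load > mean_load * (1 + tolerance):
            new_radii[ap] = radius * (1 - step)   # push edge users toward neighbours
        elif load < mean_load * (1 - tolerance):
            new_radii[ap] = radius * (1 + step)   # absorb users from busy neighbours
        else:
            new_radii[ap] = radius
    return new_radii
```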

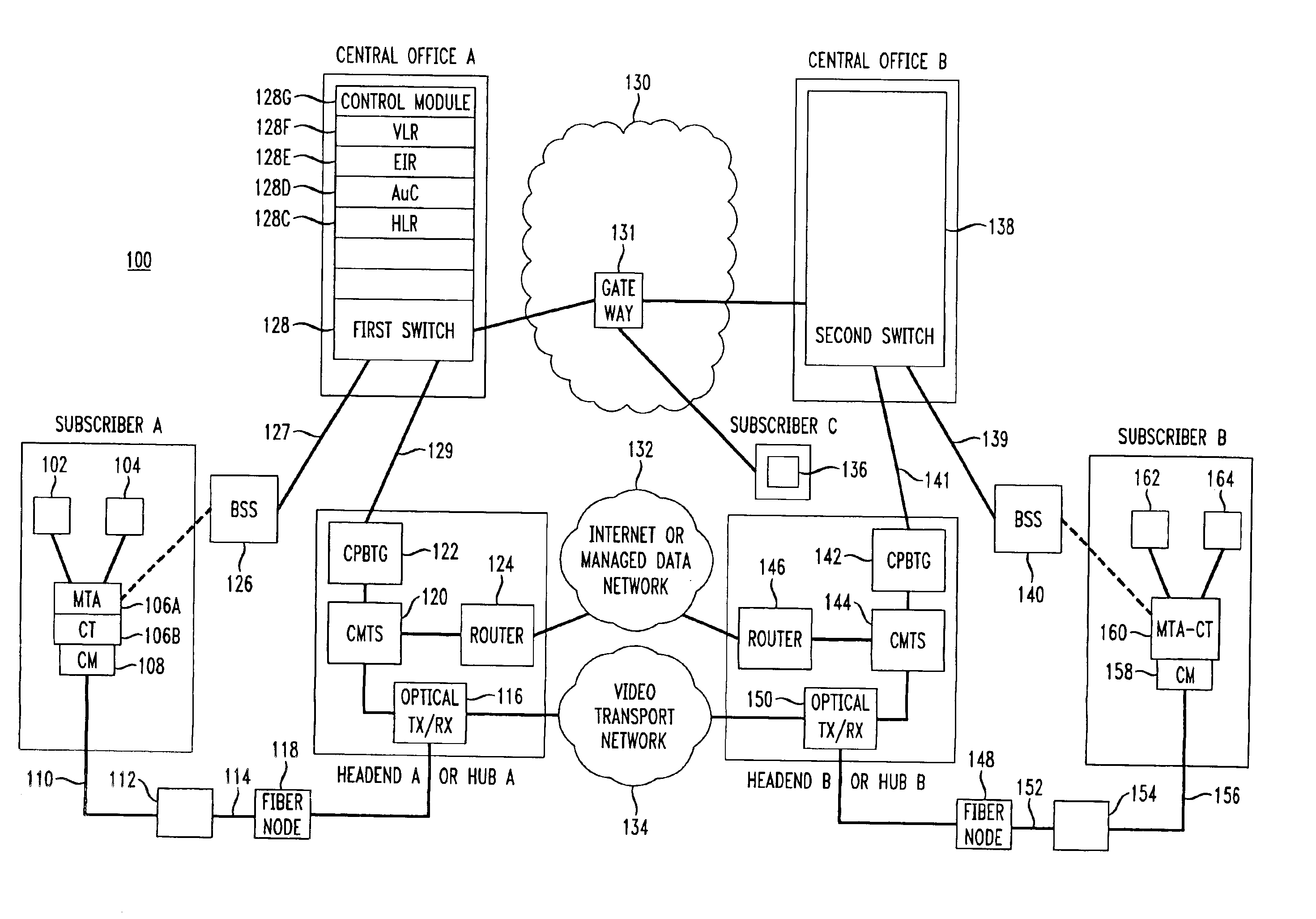

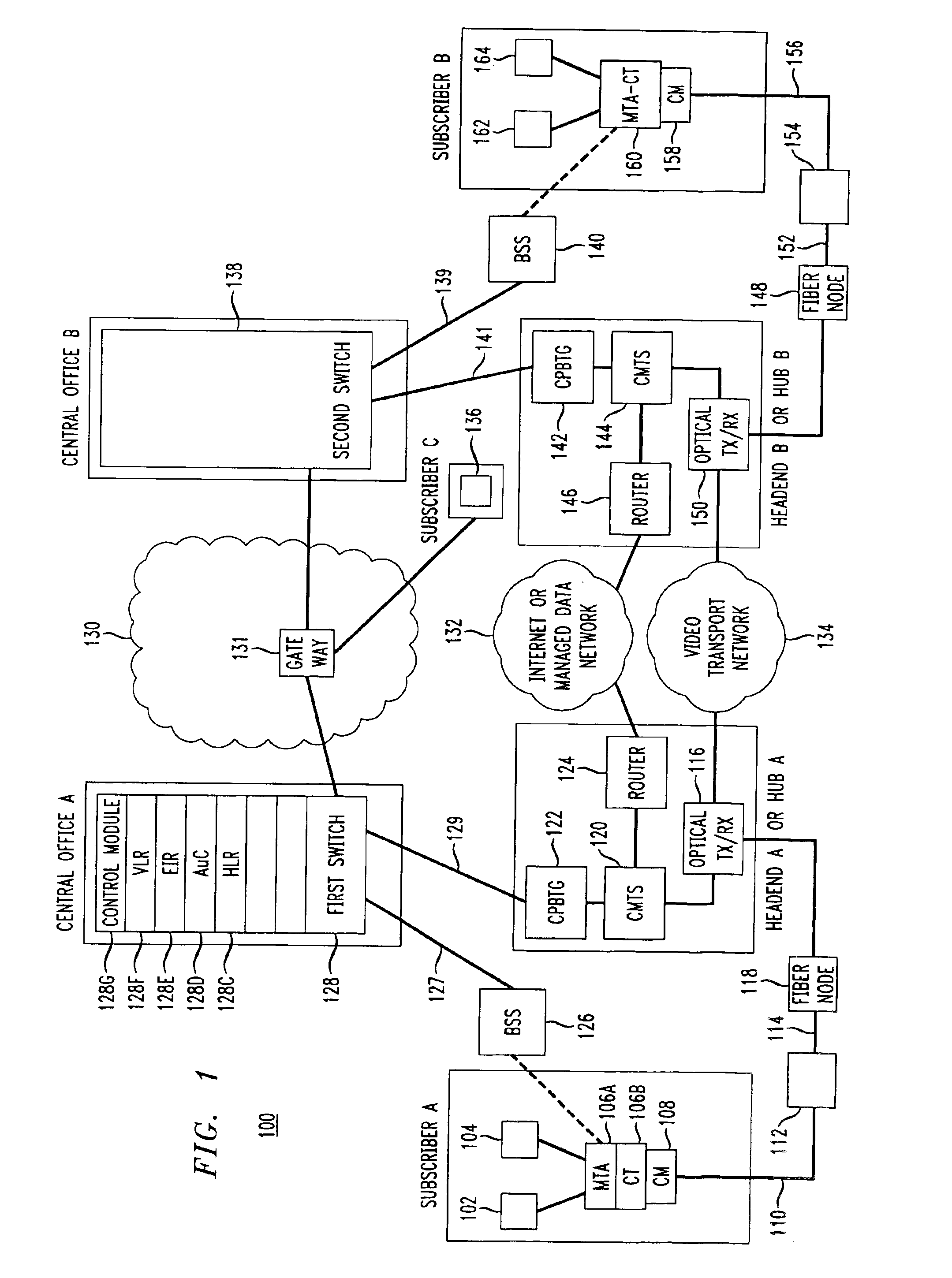

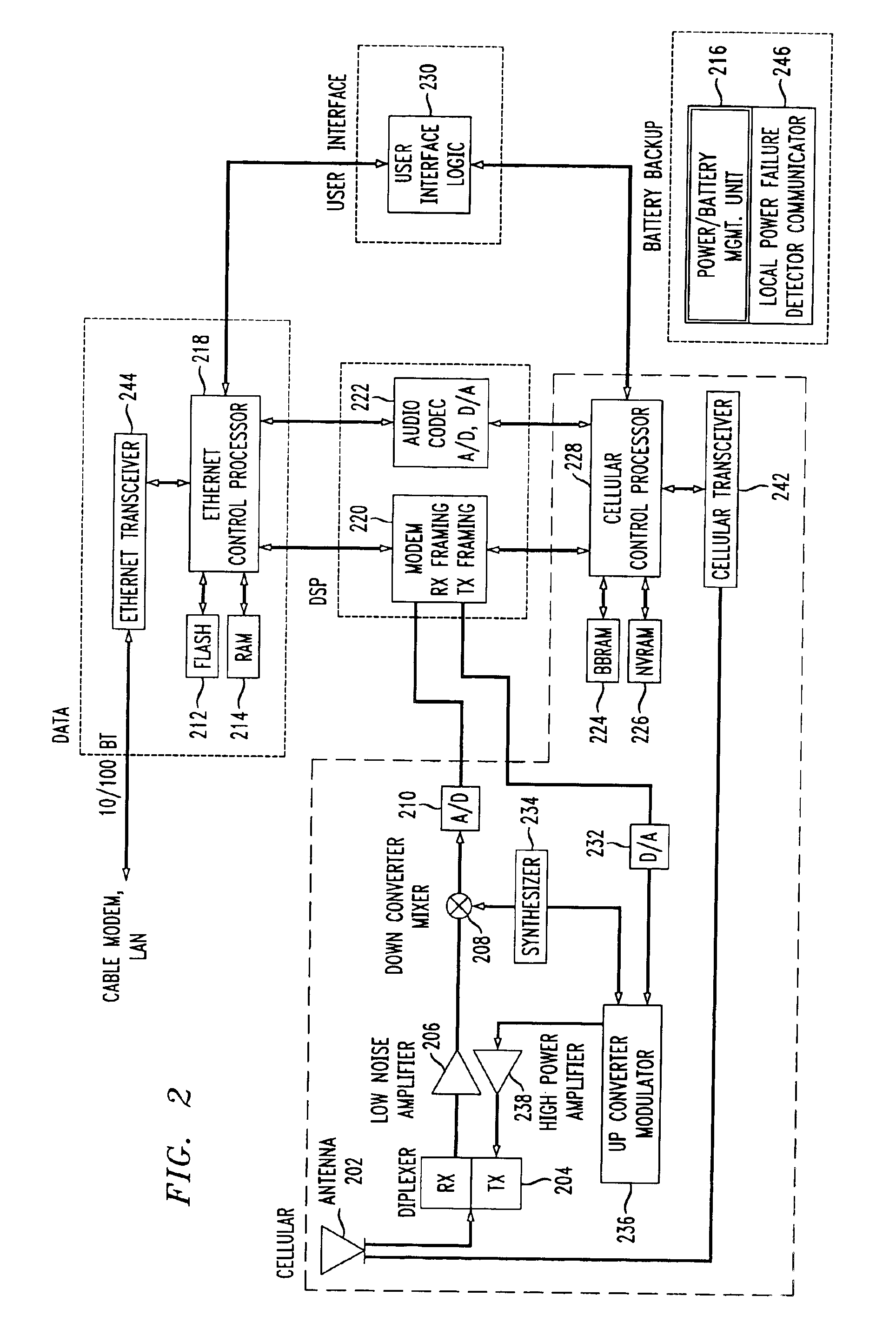

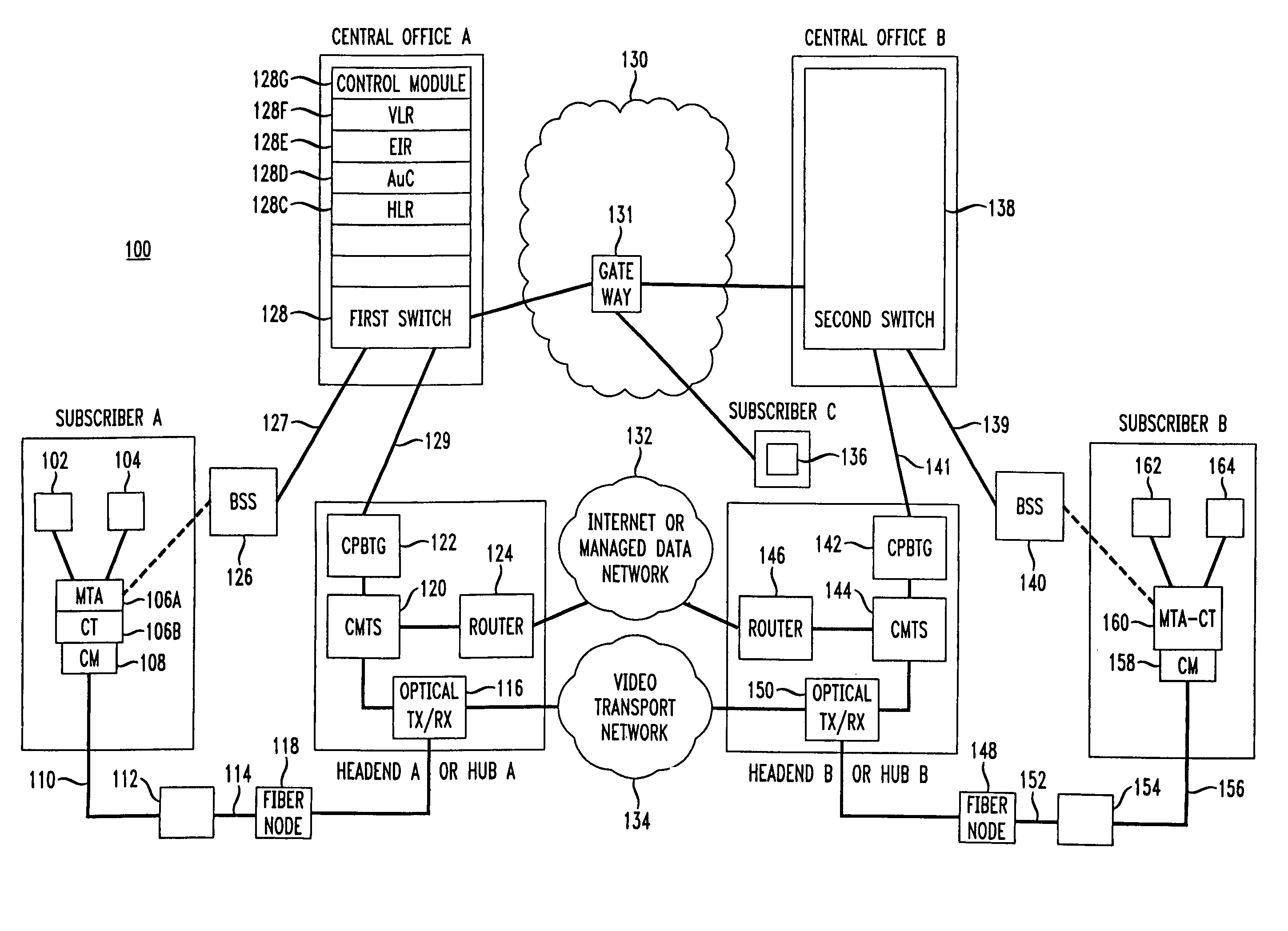

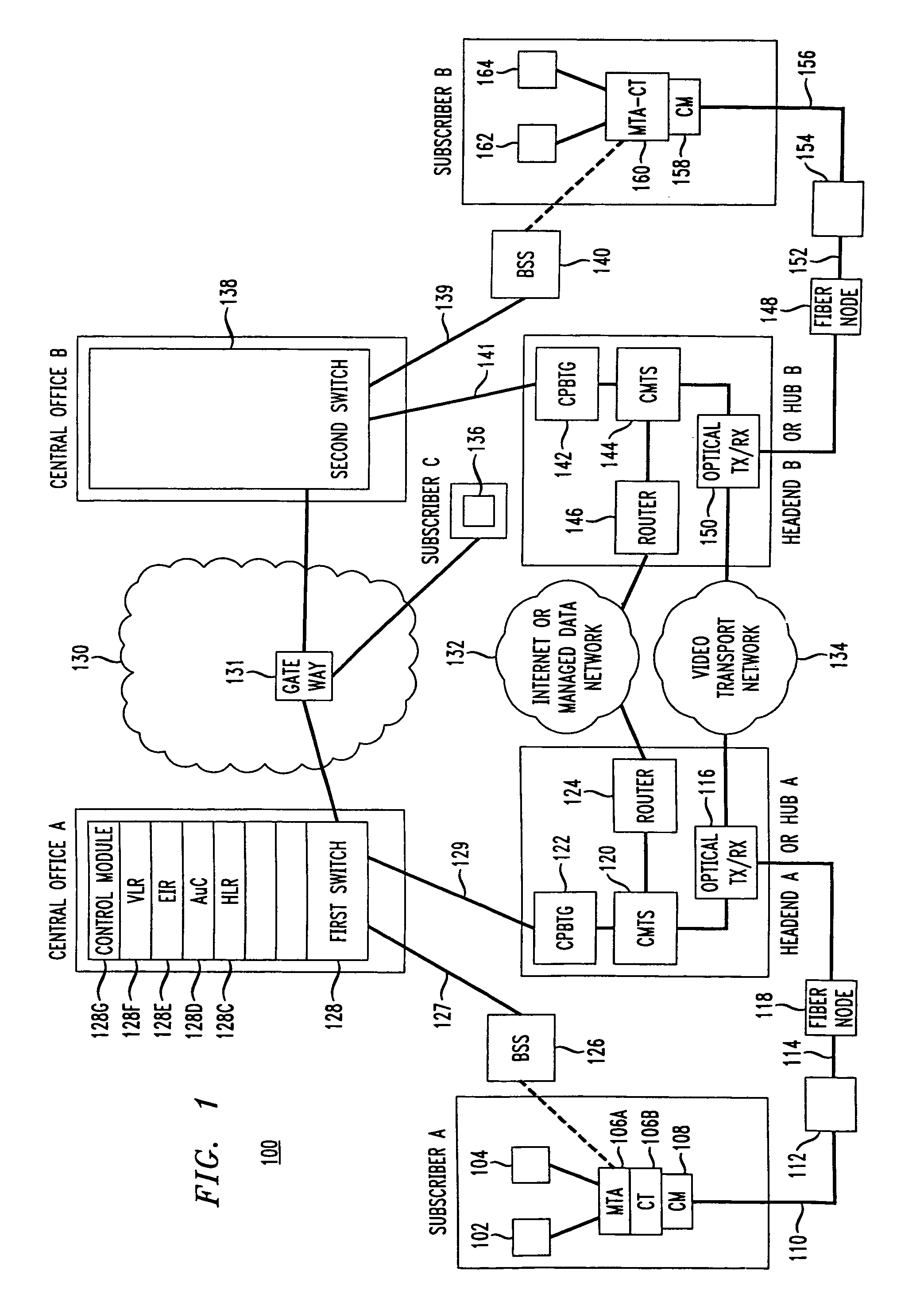

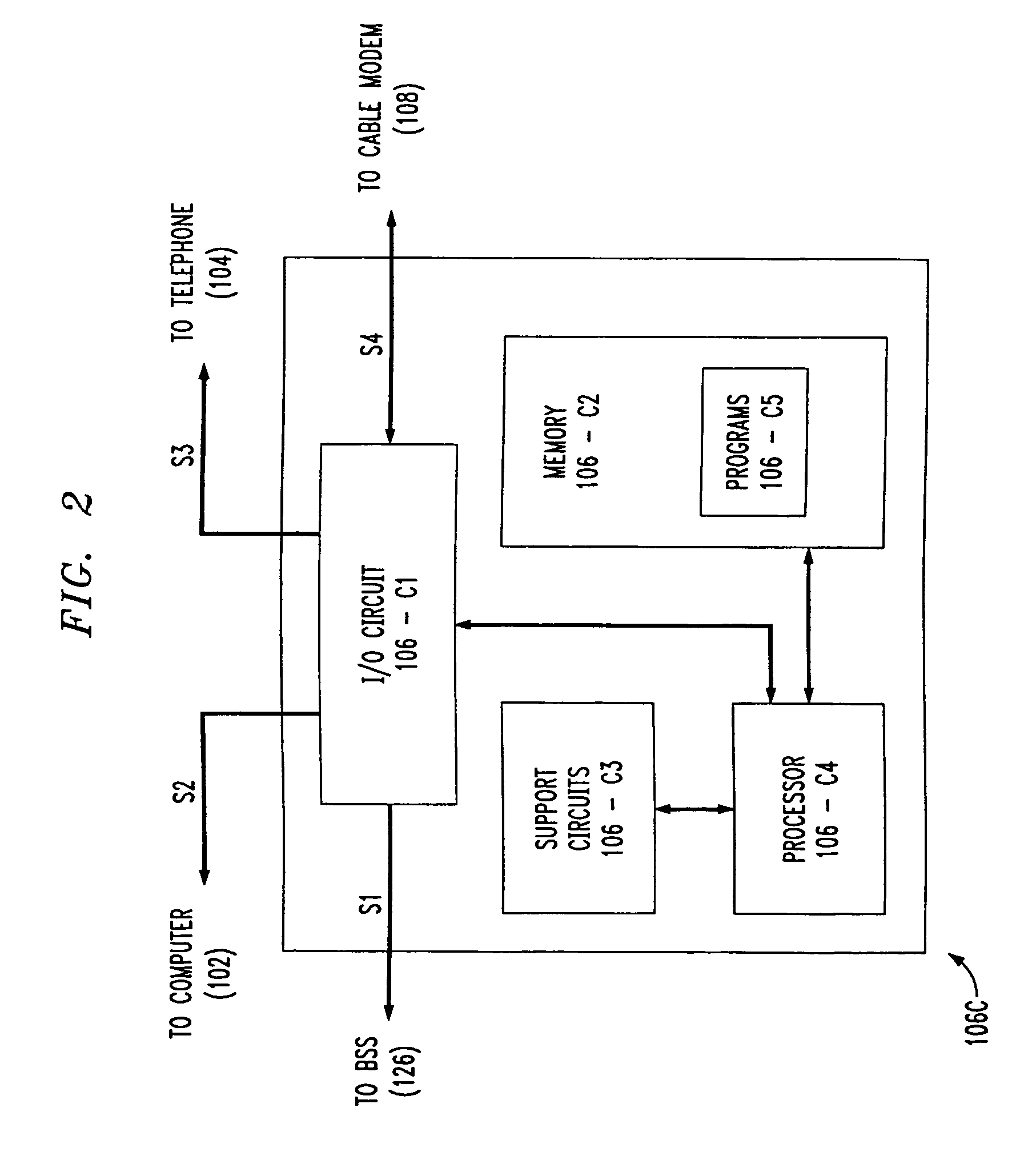

Media terminal adapter-cellular transceiver (MTA-CT)

Inactive | US6879582B1 | Tags: Efficient communication; Reduce bottlenecks; Time-division multiplex; Wireless communication services; Transceiver; Voice traffic

An apparatus for providing bifurcated voice and signaling traffic over a cable telephony architecture by segregating signaling traffic and voice traffic and transmitting the respective traffic over two different mediums to a switch to establish a phone call.

Owner:LUCENT TECH INC

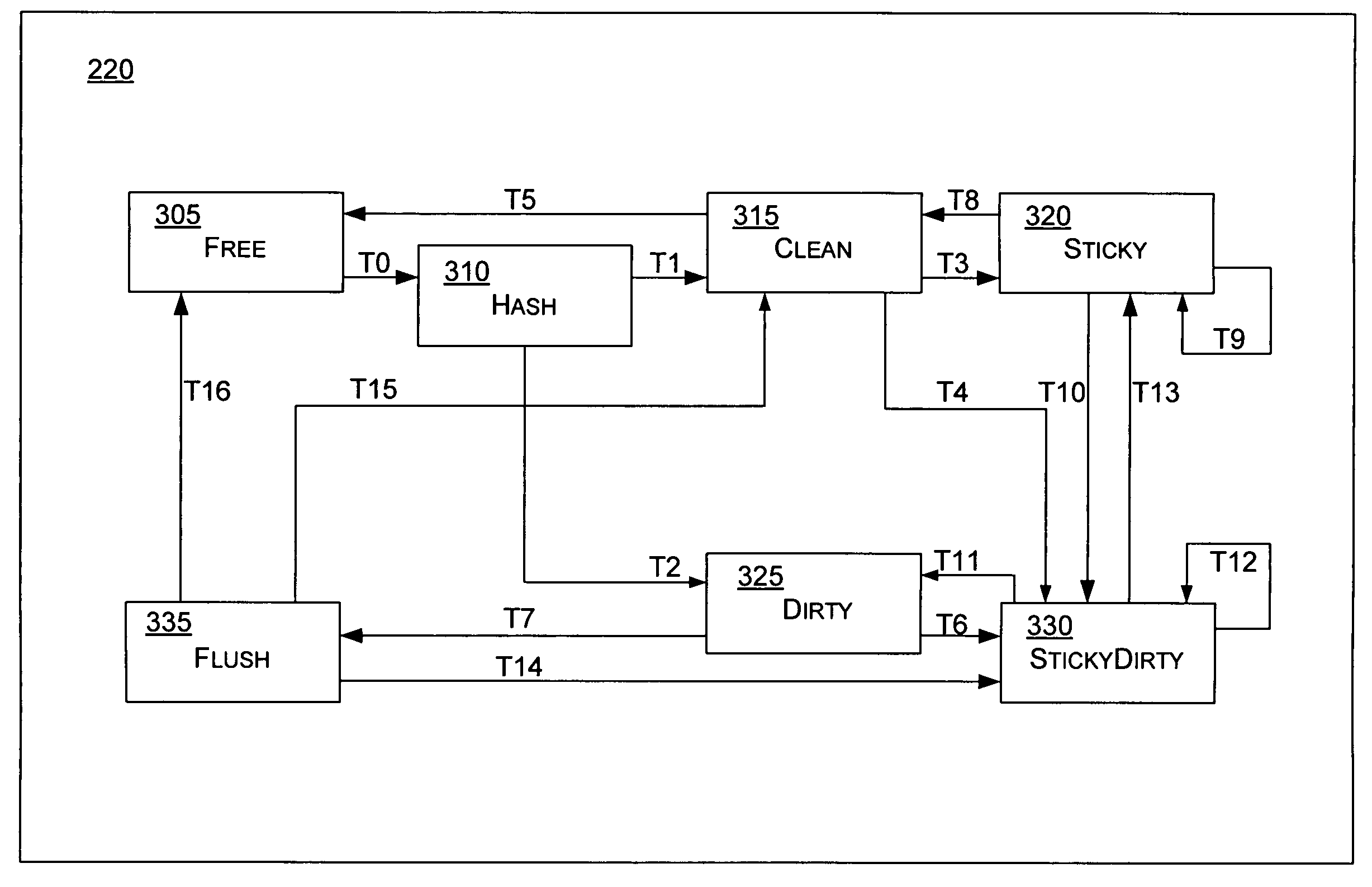

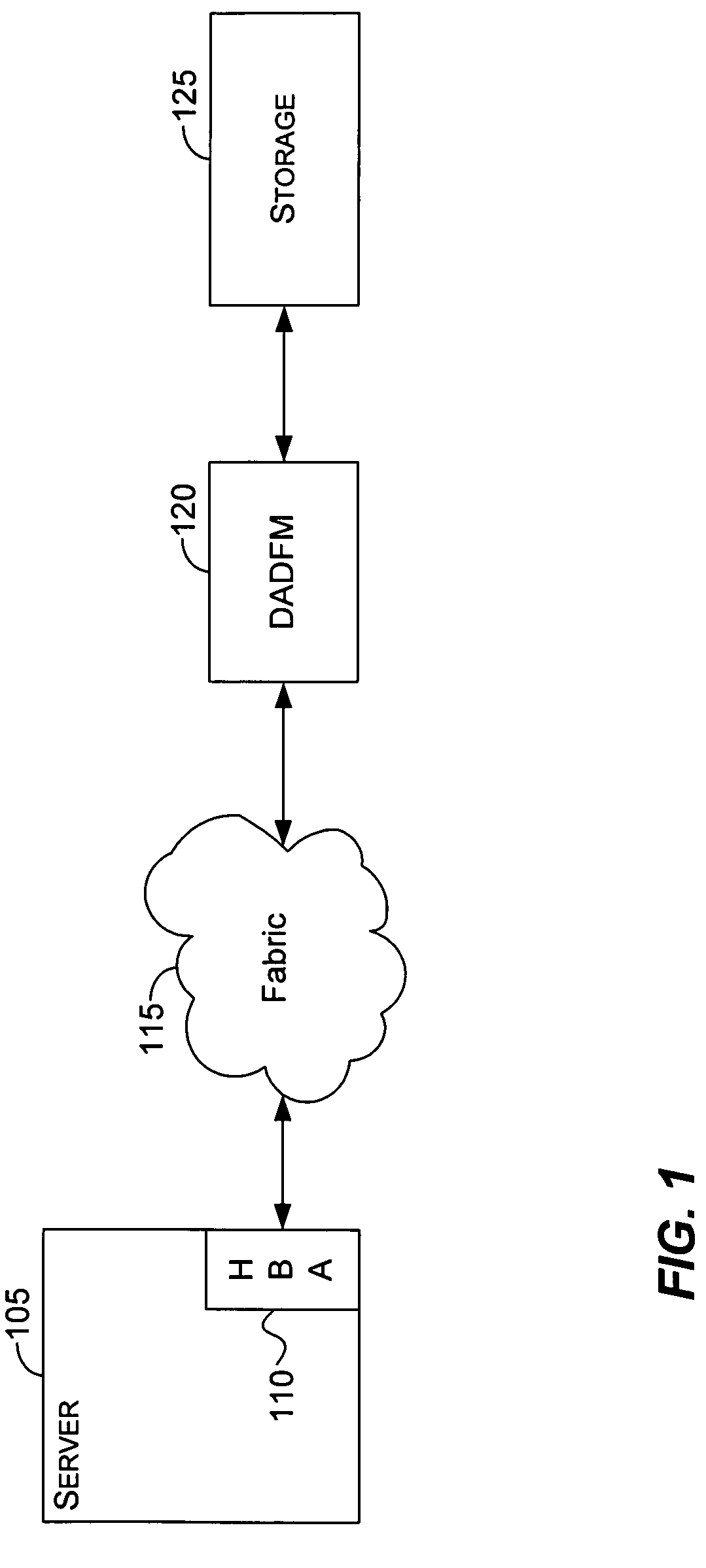

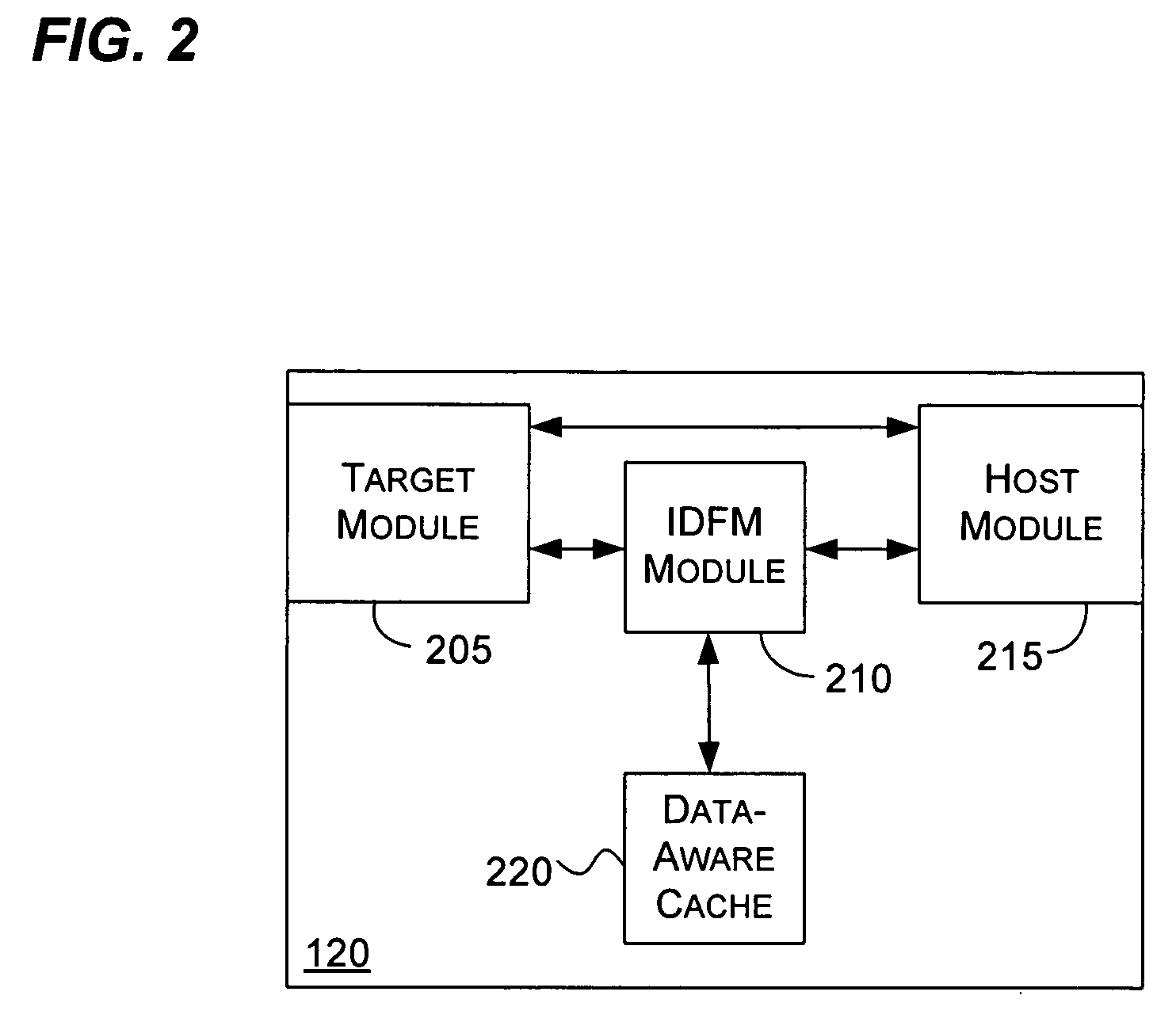

Data-aware cache state machine

Inactive | US20050172082A1 | Tags: Reduce bottlenecks; Improve effectiveness; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Data transformation; Data stream

A method and system directed to improving the effectiveness and efficiency of cache and data management by differentiating data based on certain attributes associated with the data and by reducing the bottleneck to storage. The data-aware cache differentiates and manages data using a state machine having certain states. The data-aware cache may use data pattern and traffic statistics to retain frequently used data in cache longer by transitioning it into Sticky or StickyDirty states. The data-aware cache may also use content- or application-related attributes to differentiate and retain certain data in cache longer. Further, the data-aware cache may provide cache status and statistics information to a data-aware data flow manager, thus assisting the data-aware data flow manager in determining which data to cache and which data to pipe directly through, or in switching cache policies dynamically, thus avoiding some of the overhead associated with caches. The data-aware cache may also place clean and dirty data in separate states, enabling more efficient cache mirroring and flushing and thus improving system reliability and performance.

Owner:SANDISK TECH LLC
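
A toy model of the state machine described above, assuming four states (Clean, Dirty, Sticky, StickyDirty) and a hypothetical promotion rule: a block becomes sticky once it has been hit `sticky_threshold` times. Eviction prefers clean, non-sticky blocks, and a flush turns each dirty state into its clean counterpart, which is what keeping clean and dirty data in separate states makes cheap. The class, constant, and parameter names are illustrative, not SanDisk's.

```python
from enum import Enum
from typing import Dict


class State(Enum):
    CLEAN = "Clean"
    DIRTY = "Dirty"
    STICKY = "Sticky"
    STICKY_DIRTY = "StickyDirty"


# Eviction preference: non-sticky before sticky, clean before dirty.
EVICT_RANK = {State.CLEAN: 0, State.DIRTY: 1, State.STICKY: 2, State.STICKY_DIRTY: 3}


class DataAwareCache:
    """Toy data-aware cache; the threshold and eviction order are illustrative."""

    def __init__(self, capacity: int, sticky_threshold: int = 3) -> None:
        self.capacity = capacity
        self.sticky_threshold = sticky_threshold
        self.state: Dict[int, State] = {}
        self.hits: Dict[int, int] = {}

    def access(self, block: int, write: bool = False) -> None:
        if block in self.state:
            self.hits[block] += 1
        else:
            if len(self.state) >= self.capacity:
                # A real cache would write a dirty victim back before dropping it.
                victim = min(self.state, key=lambda b: EVICT_RANK[self.state[b]])
                del self.state[victim], self.hits[victim]
            self.state[block] = State.CLEAN
            self.hits[block] = 0
        current = self.state[block]
        dirty = write or current in (State.DIRTY, State.STICKY_DIRTY)
        sticky = (current in (State.STICKY, State.STICKY_DIRTY)
                  or self.hits[block] >= self.sticky_threshold)
        if sticky:
            self.state[block] = State.STICKY_DIRTY if dirty else State.STICKY
        else:
            self.state[block] = State.DIRTY if dirty else State.CLEAN

    def flush(self) -> None:
        """Write back dirty blocks: each dirty state becomes its clean counterpart."""
        for block, st in list(self.state.items()):
            if st is State.DIRTY:
                self.state[block] = State.CLEAN
            elif st is State.STICKY_DIRTY:
                self.state[block] = State.STICKY
```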

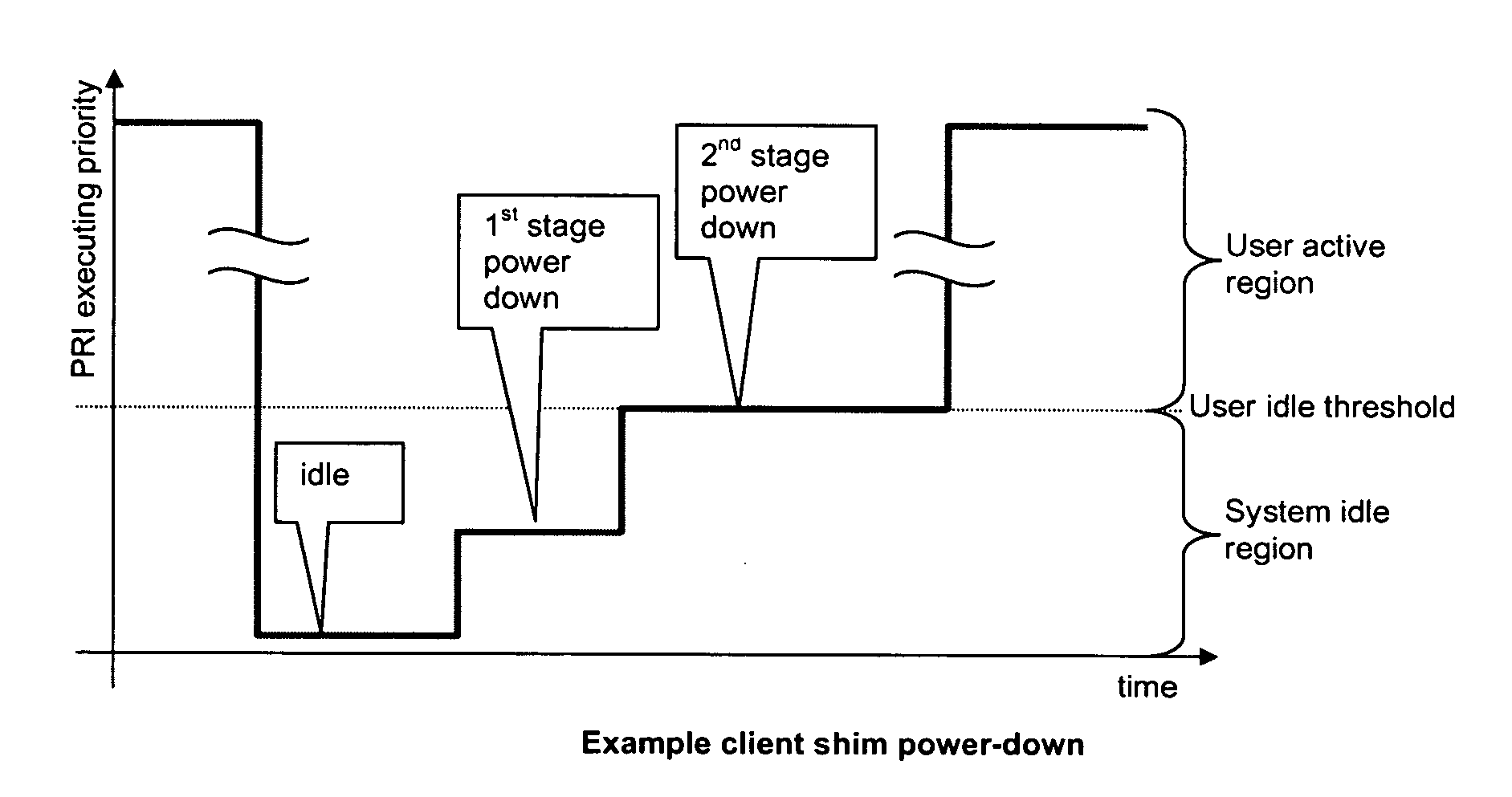

Managing power consumption in a multicore processor

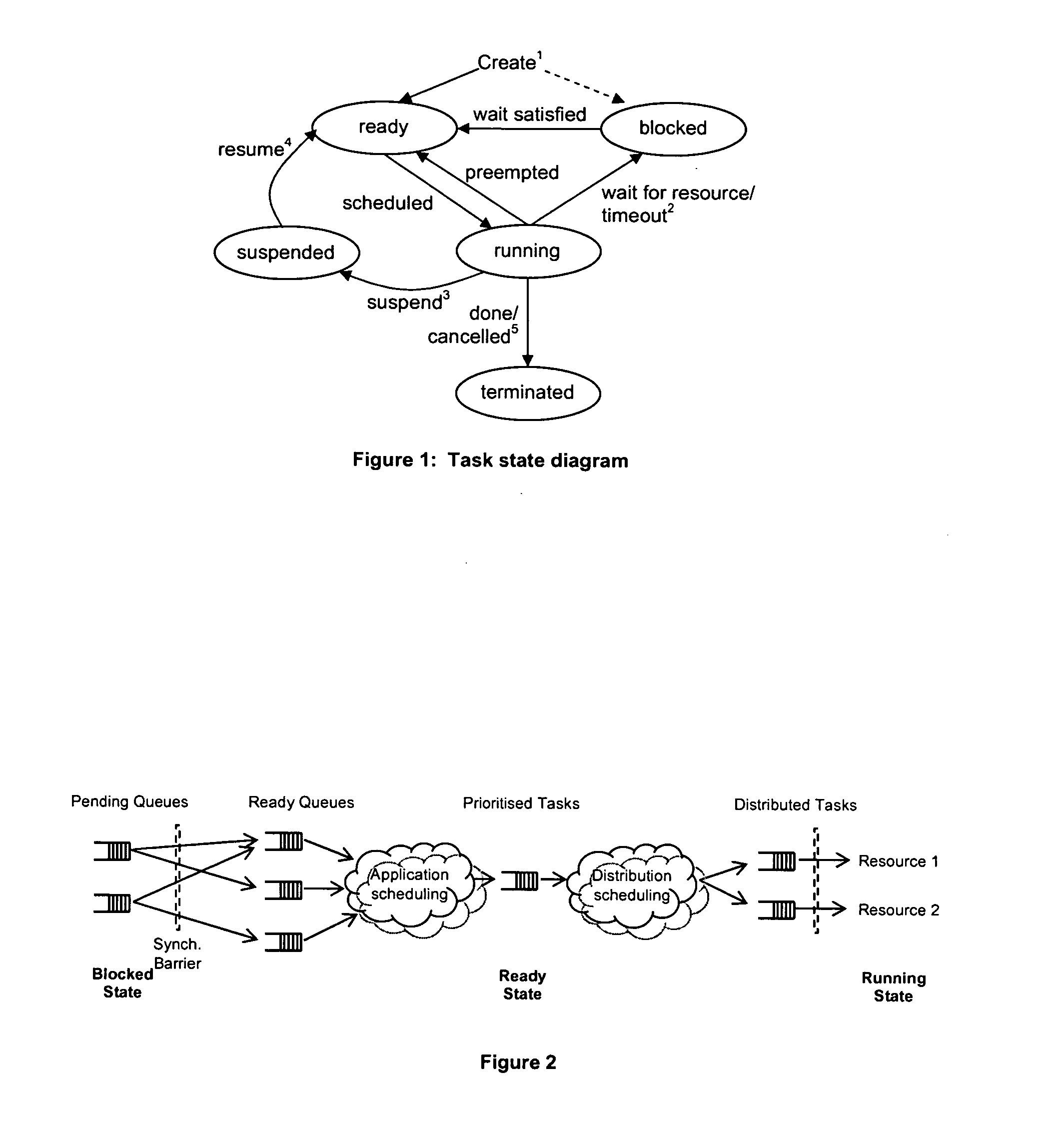

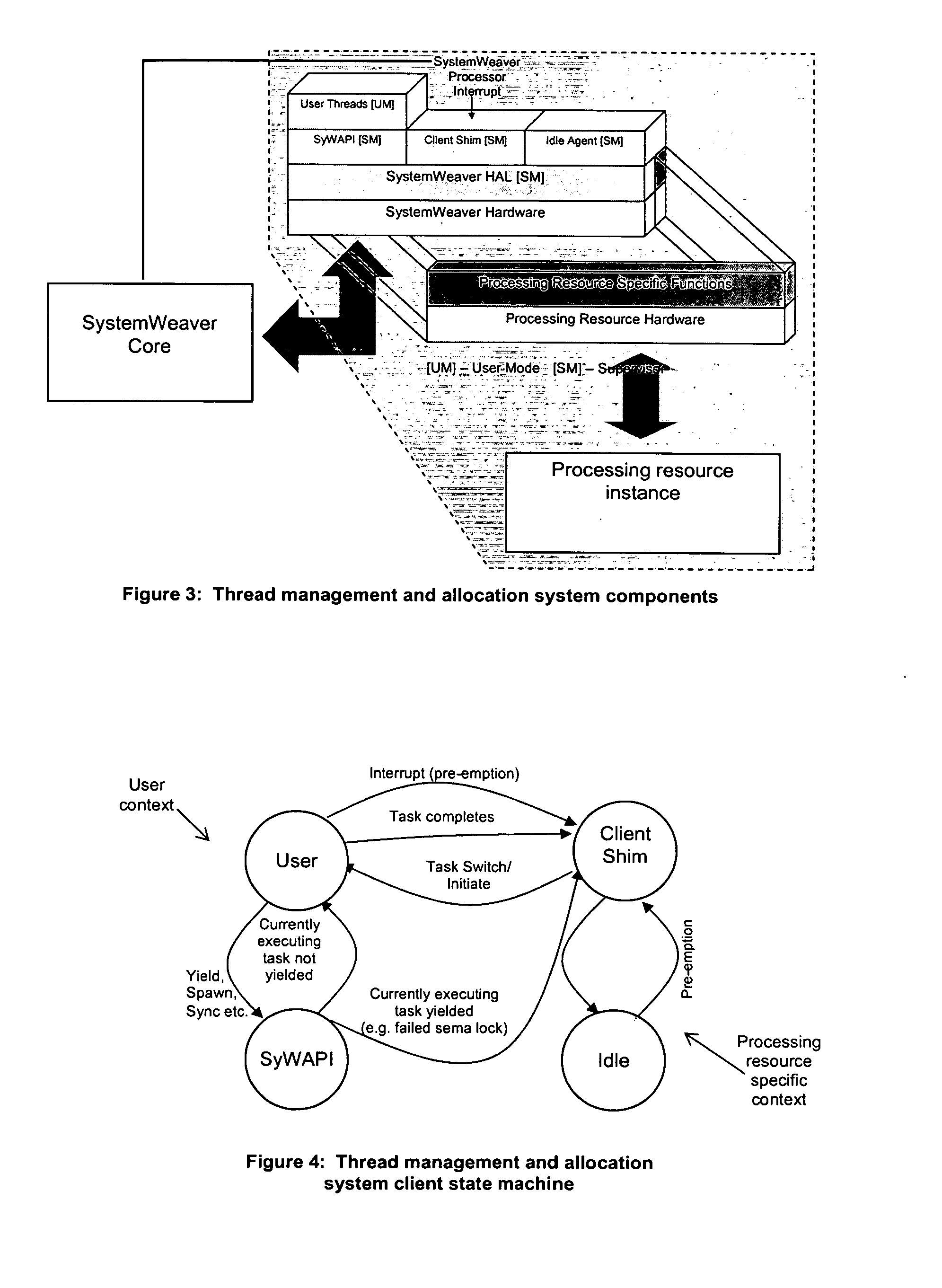

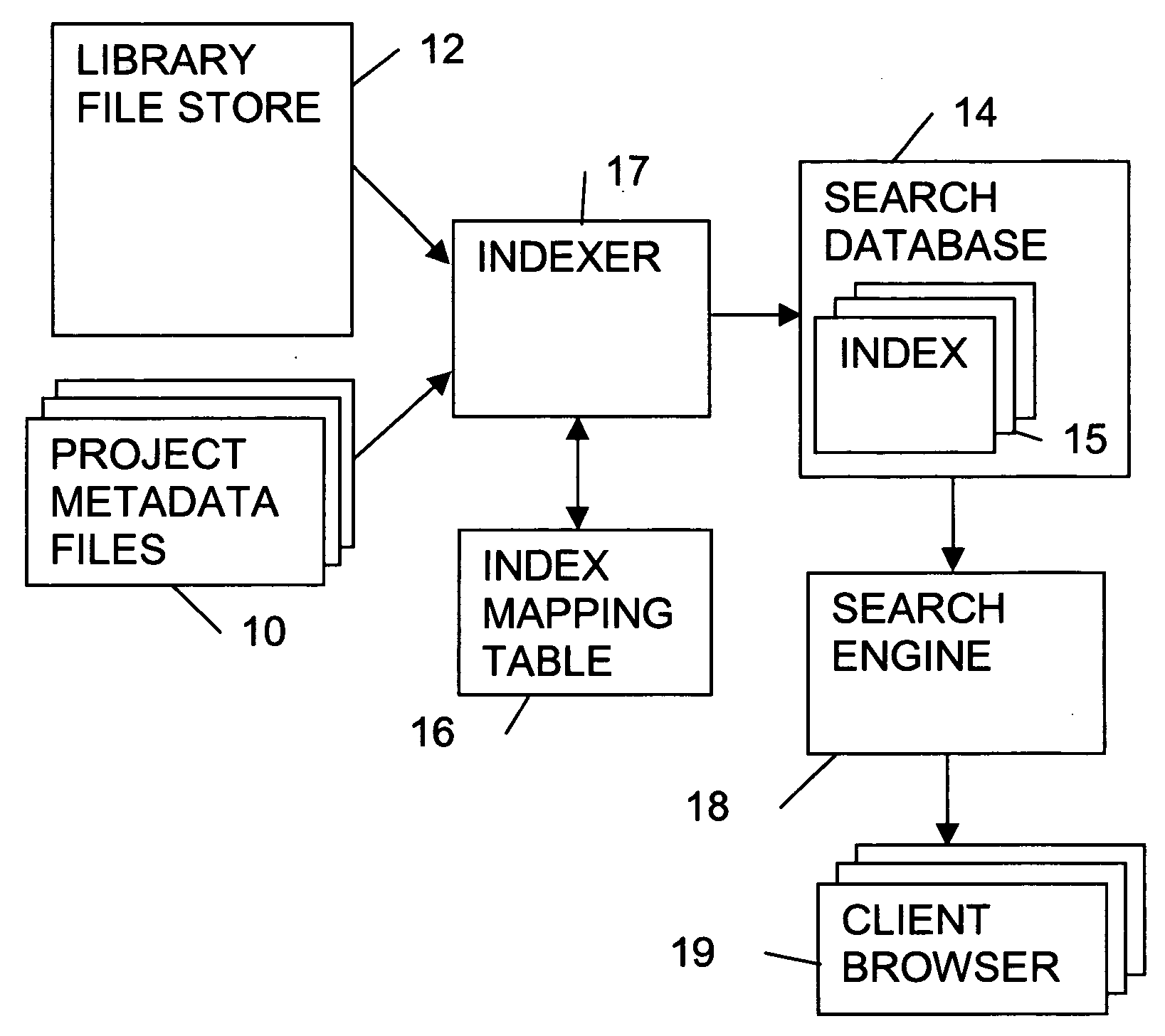

Active | US20070220294A1 | Tags: Improve application performance; Increase speed; Energy efficient ICT; Volume/mass flow measurement; Processor element; Multi-core processor

A method and computer-usable medium including instructions for performing a method of managing power consumption in a multicore processor comprising a plurality of processor elements with at least one power saving mode. The method includes listing, using at least one distribution queue, a portion of the executable transactions in order of eligibility for execution. A plurality of executable transaction schedulers are provided. The executable transaction schedulers are linked together to provide a multilevel scheduler. The most eligible executable transaction is output from the multilevel scheduler to the at least one distribution queue. One or more of the plurality of processor elements are placed into a first power saving mode when the number of executable transactions allocated to the plurality of processor elements is such that only a portion of the available processor elements is used to execute executable transactions.

Owner:FUJITSU SEMICON LTD +1
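
The scheduling and power-gating steps can be sketched under simplifying assumptions: each scheduler level supplies a ready list of `(priority, name)` transactions where a lower number means more eligible, merging those lists stands in for the linked multilevel scheduler, and any processor element left without an allocated transaction is put into a single power-saving mode. All names are hypothetical.

```python
import heapq
from typing import List, Tuple

Transaction = Tuple[int, str]  # (priority, name); a lower number is more eligible


def multilevel_schedule(levels: List[List[Transaction]]) -> List[Transaction]:
    """Merge per-scheduler ready lists into one distribution queue ordered by eligibility."""
    return list(heapq.merge(*(sorted(level) for level in levels)))


def assign_power_modes(queue: List[Transaction], n_elements: int) -> List[str]:
    """Keep only as many processor elements active as there is work; idle the rest."""
    n_active = min(len(queue), n_elements)
    return ["active"] * n_active + ["power_save"] * (n_elements - n_active)
```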

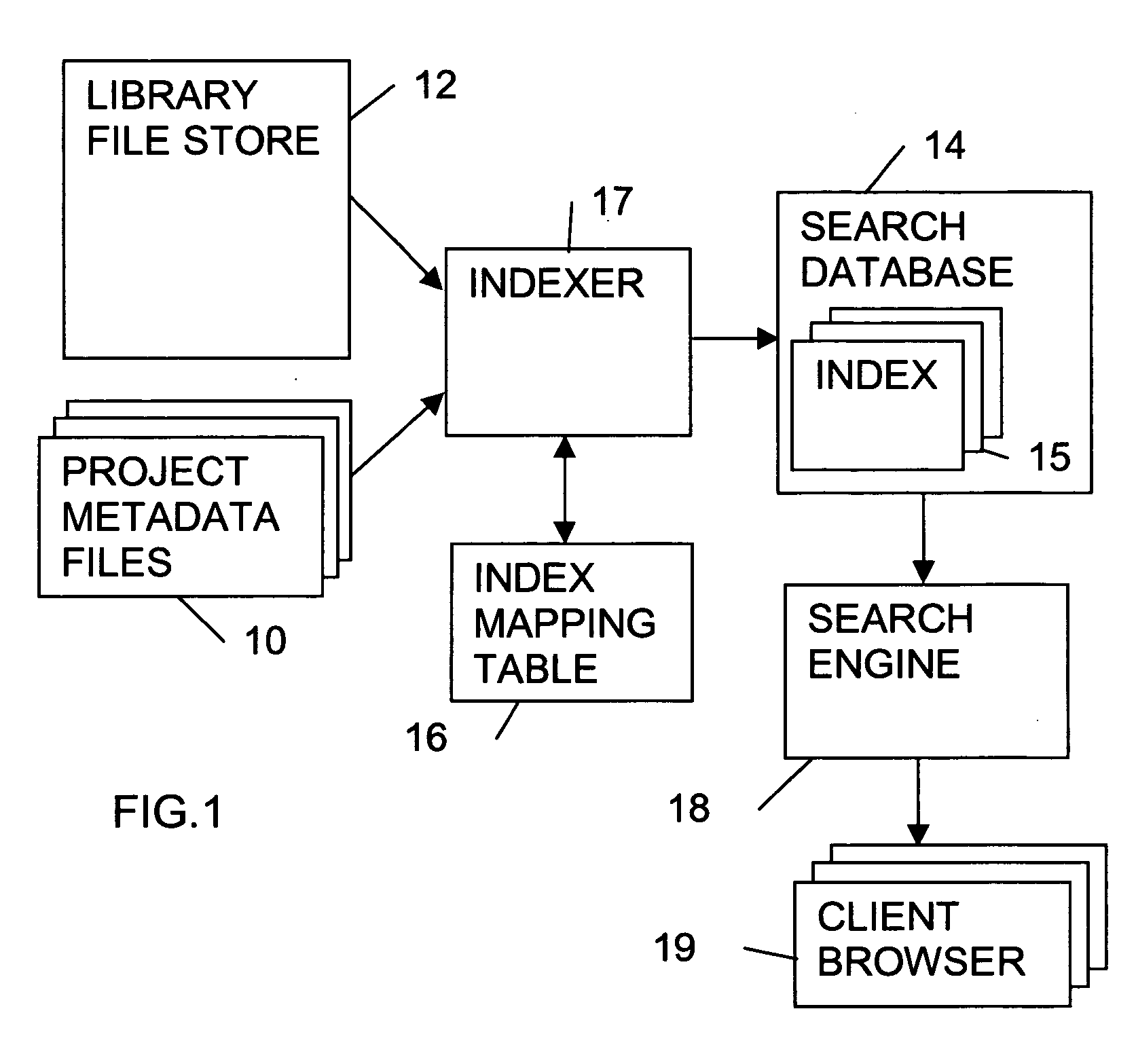

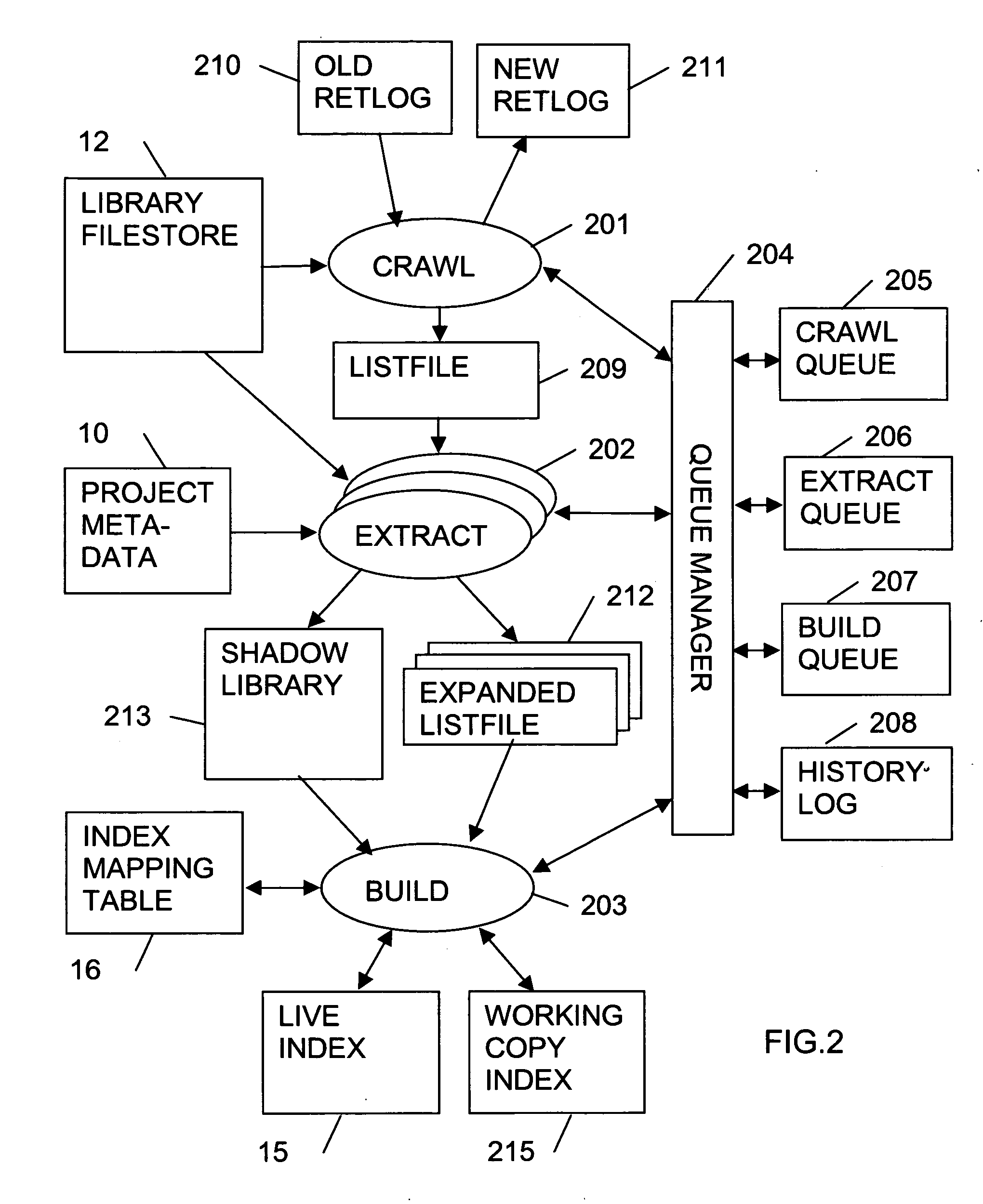

Indexing system for a computer file store

Inactive | US20060041606A1 | Tags: Alleviate bottleneck; Reduce bottlenecks; Text database indexing; Special data processing applications; Paper document; Search engine indexing

A computerized document retrieval system has a file store holding a collection of documents, an indexer for constructing and updating at least one index from the contents of the documents, and a search engine for searching the index to retrieve documents from the file store. The indexer comprises three asynchronously executable processes: (a) a crawl process, which scans the file store to find documents that require indexing, (b) an extract process, which accesses the documents that require indexing and extracts indexing data from them, and (c) a build process, which uses the indexing data to construct or update the index.

Owner:FUJITSU SERVICES
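
The three-stage indexer maps naturally onto a producer/consumer pipeline. This minimal sketch uses Python threads connected by `queue.Queue` in place of separate asynchronous processes, treats the file store as an in-memory dict, and tokenizes by whitespace; the function names and the `STOP` sentinel are illustrative assumptions.

```python
import queue
import threading
from typing import Dict, List, Set

STOP = object()  # sentinel that tells the next stage to shut down


def crawl(file_store: Dict[str, str], indexed: Set[str], out_q: "queue.Queue") -> None:
    """Scan the file store and enqueue documents that still need indexing."""
    for doc_id in file_store:
        if doc_id not in indexed:
            out_q.put(doc_id)
    out_q.put(STOP)


def extract(file_store: Dict[str, str], in_q: "queue.Queue", out_q: "queue.Queue") -> None:
    """Read each queued document and turn it into (doc_id, terms) indexing data."""
    while (doc_id := in_q.get()) is not STOP:
        out_q.put((doc_id, set(file_store[doc_id].lower().split())))
    out_q.put(STOP)


def build(index: Dict[str, List[str]], in_q: "queue.Queue") -> None:
    """Merge indexing data into an inverted index (term -> document ids)."""
    while (item := in_q.get()) is not STOP:
        doc_id, terms = item
        for term in terms:
            index.setdefault(term, []).append(doc_id)


def run_indexer(file_store: Dict[str, str]) -> Dict[str, List[str]]:
    index: Dict[str, List[str]] = {}
    q1: "queue.Queue" = queue.Queue()
    q2: "queue.Queue" = queue.Queue()
    stages = [
        threading.Thread(target=crawl, args=(file_store, set(), q1)),
        threading.Thread(target=extract, args=(file_store, q1, q2)),
        threading.Thread(target=build, args=(index, q2)),
    ]
    for stage in stages:
        stage.start()
    for stage in stages:
        stage.join()
    return index
```

Because each stage only talks to its queues, a slow extract step delays the build step without blocking the crawl.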

Reducing the computational load on processors by selectively discarding data in vehicular networks

Active | US8314718B2 | Tags: Reduce computing load; Reduce bottlenecks; Service provisioning; Particular environment based services; Communications system; Computer science

Owner:GM GLOBAL TECH OPERATIONS LLC

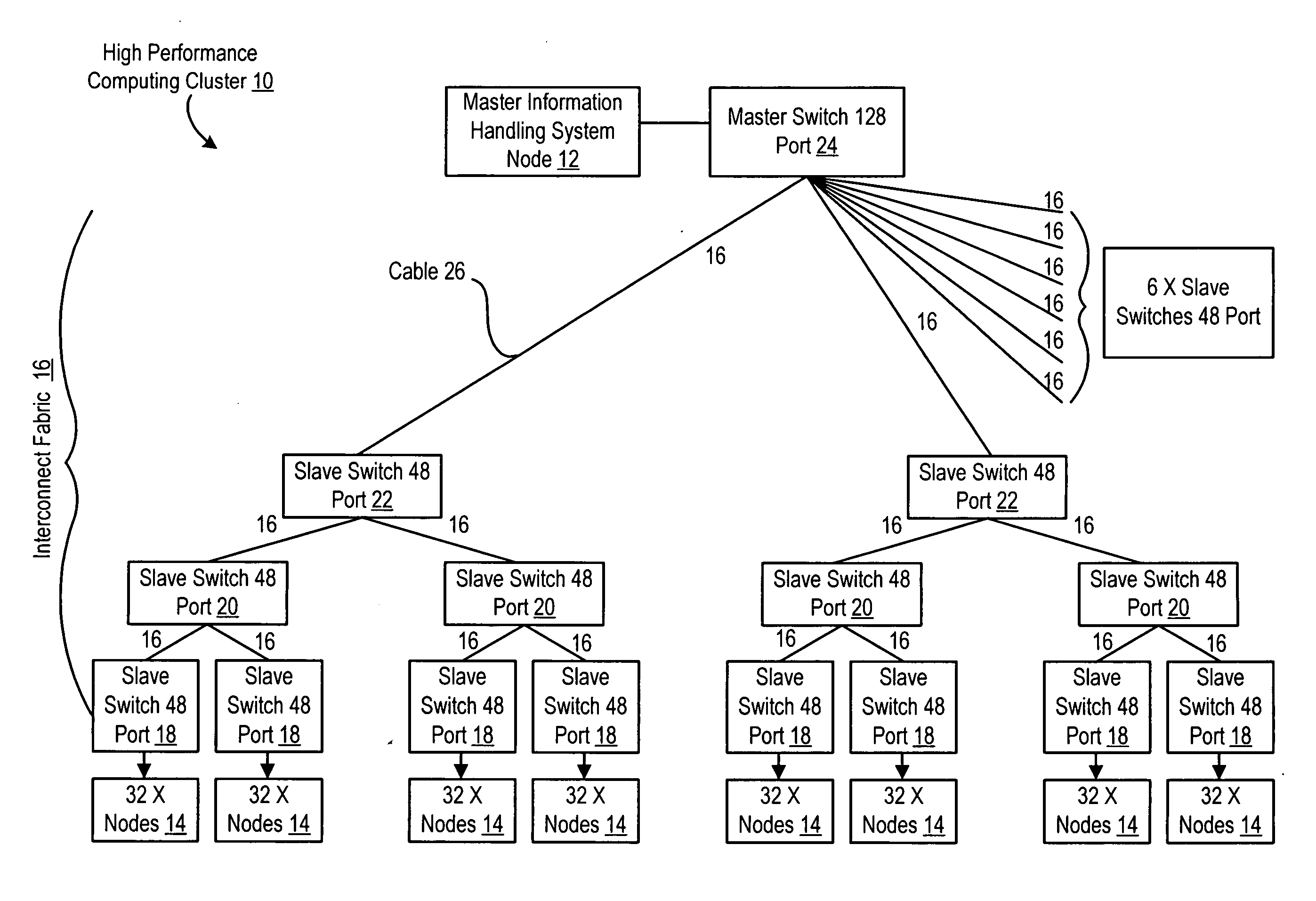

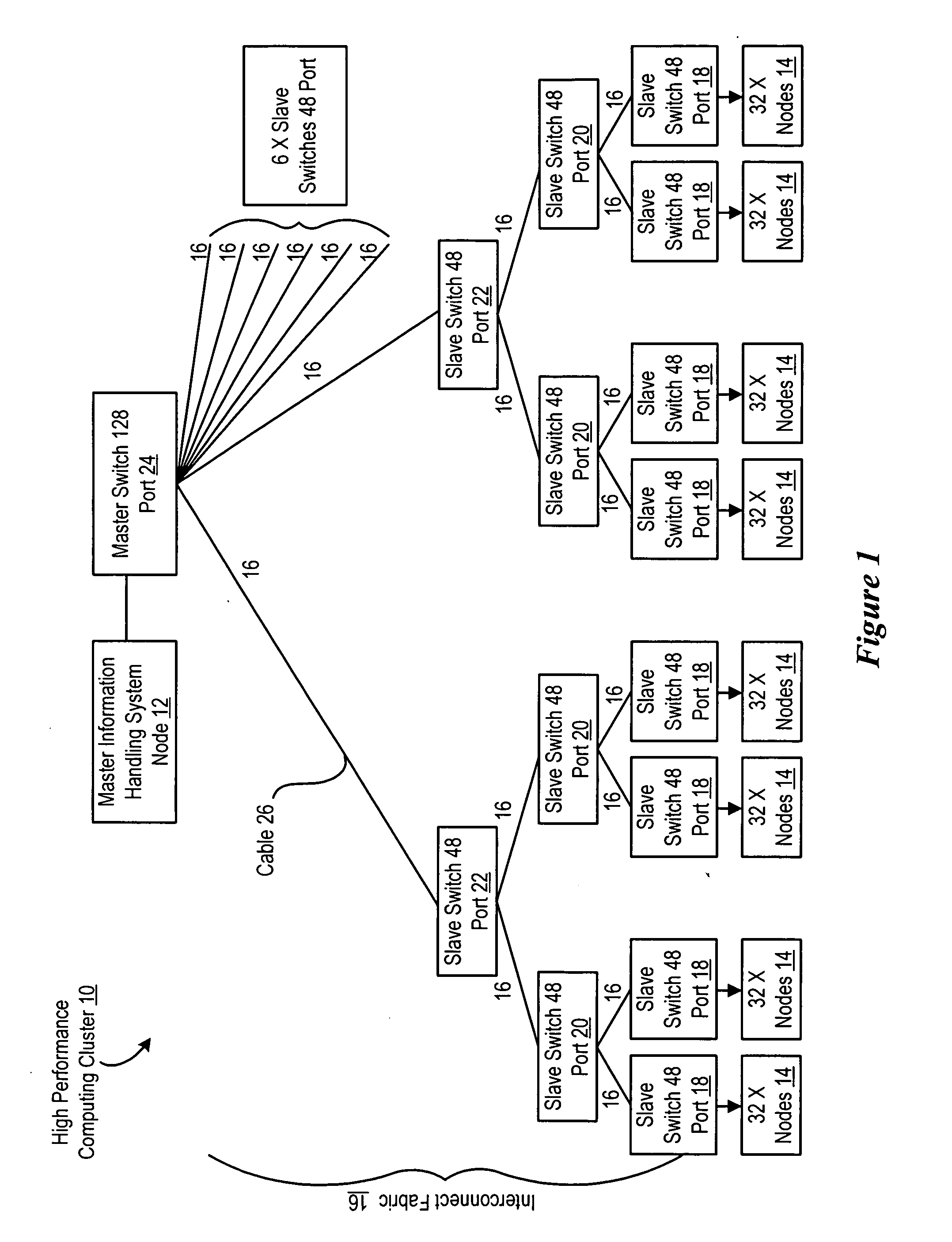

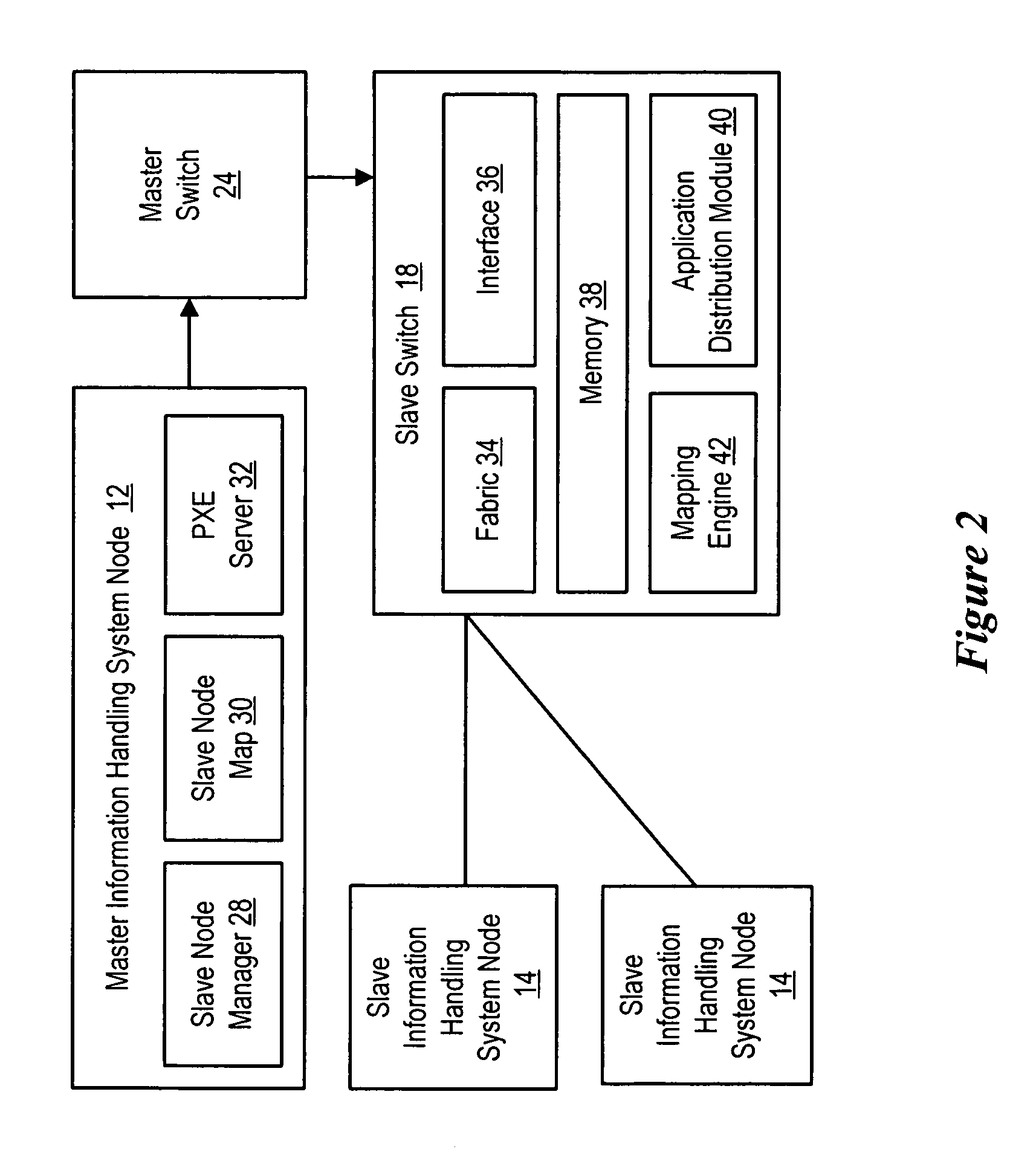

System and method for intelligent information handling system cluster switches

Inactive | US20070253437A1 | Tags: Network degradation; Reduce disadvantages; Data switching by path configuration; Multiple digital computer combinations; Operational system; Computer network

Information is more efficiently distributed between master and slave information handling systems interfaced through a blocking network of switches by storing the information on switches within the blocking network and distributing the information from the switches. As an example, an application distribution module located on a leaf switch distributes an application, such as an operating system, to connected slave nodes so that the slave nodes do not have to retrieve the operating system from the master node through the blocking network. For instance, a PXE boot request from a slave node to the master node is intercepted at the leaf switch to allow the slave node to boot from an image of the operating system stored in local memory of the leaf switch.

Owner:DELL PROD LP
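
The interception idea can be sketched as a switch-side cache keyed by image name: the first node's request goes through to the master, and later requests are answered from the switch's local memory. The real mechanism involves PXE's DHCP and TFTP exchanges, which this sketch abstracts away; `LeafSwitch`, `handle_boot_request`, and `fetch_from_master` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Dict, List


def fetch_from_master(image_name: str) -> bytes:
    """Stand-in for the slow path through the blocking network to the master node."""
    return f"image:{image_name}".encode()


@dataclass
class LeafSwitch:
    """Hypothetical leaf switch that keeps OS images in its local memory."""
    cached_images: Dict[str, bytes] = field(default_factory=dict)
    forwarded: List[str] = field(default_factory=list)  # nodes whose request reached the master

    def handle_boot_request(self, node: str, image_name: str) -> bytes:
        if image_name in self.cached_images:
            # Intercepted: answered locally, nothing crosses the blocking network.
            return self.cached_images[image_name]
        self.forwarded.append(node)
        image = fetch_from_master(image_name)
        self.cached_images[image_name] = image  # later nodes boot from the switch
        return image
```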

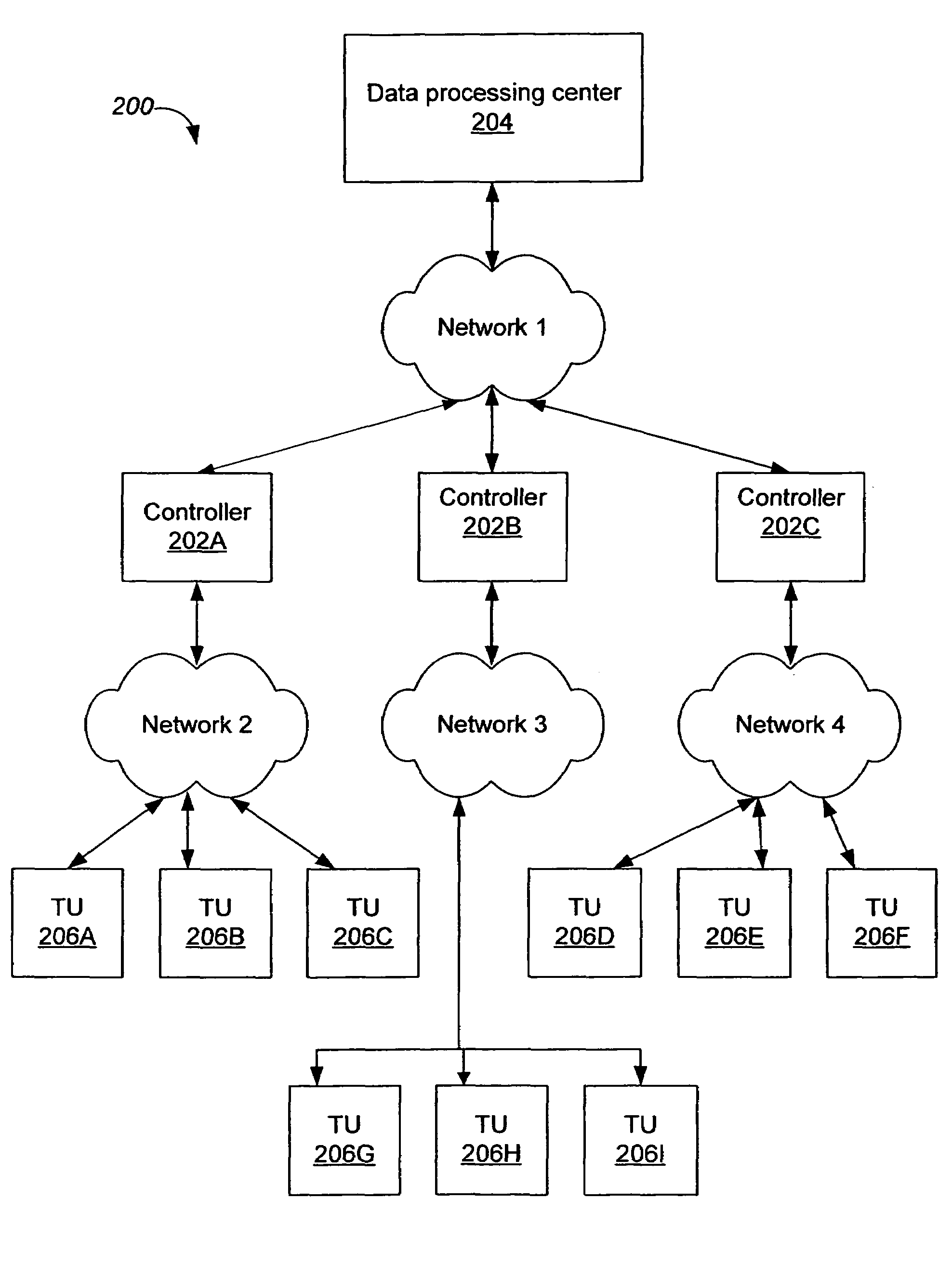

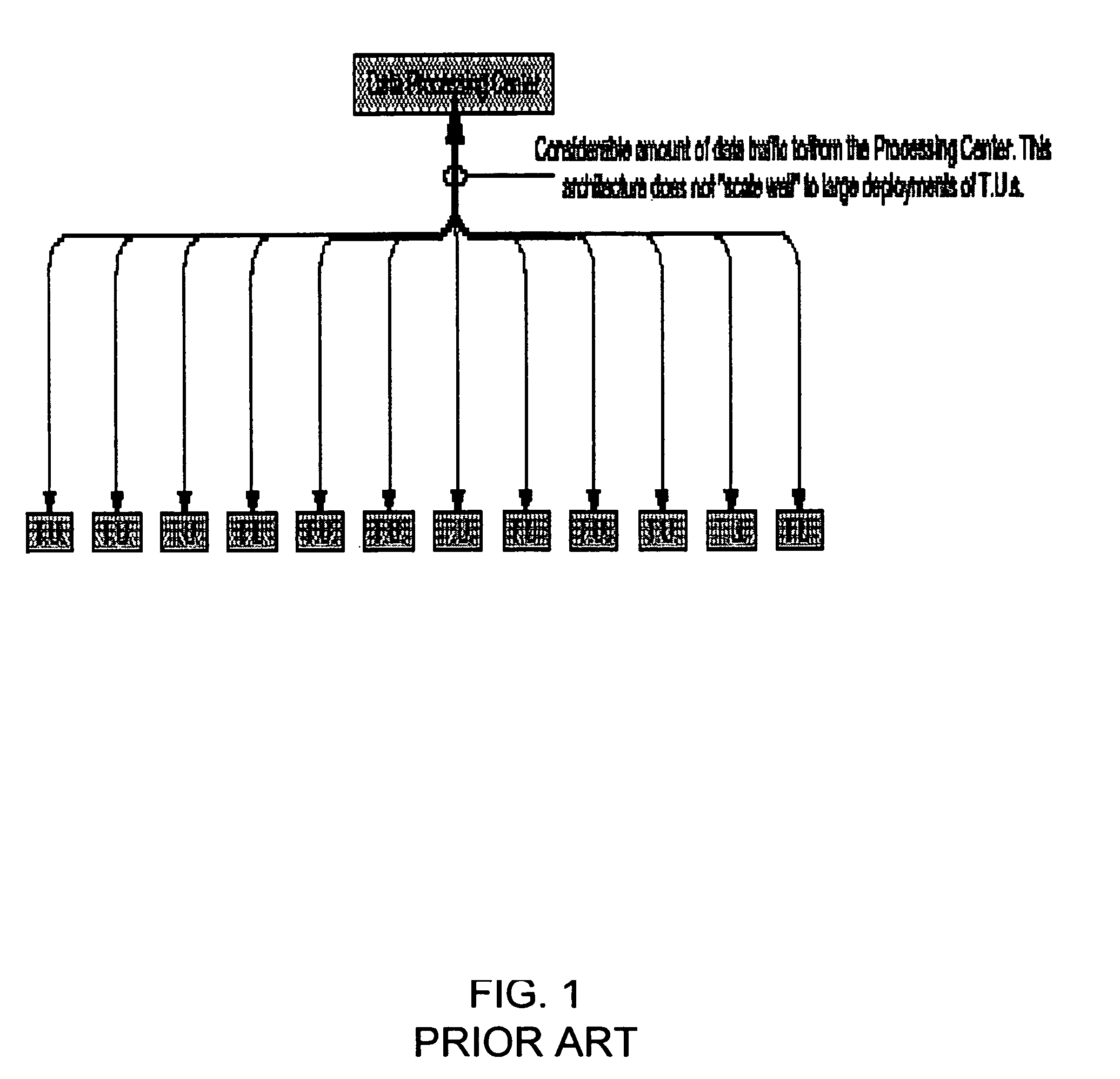

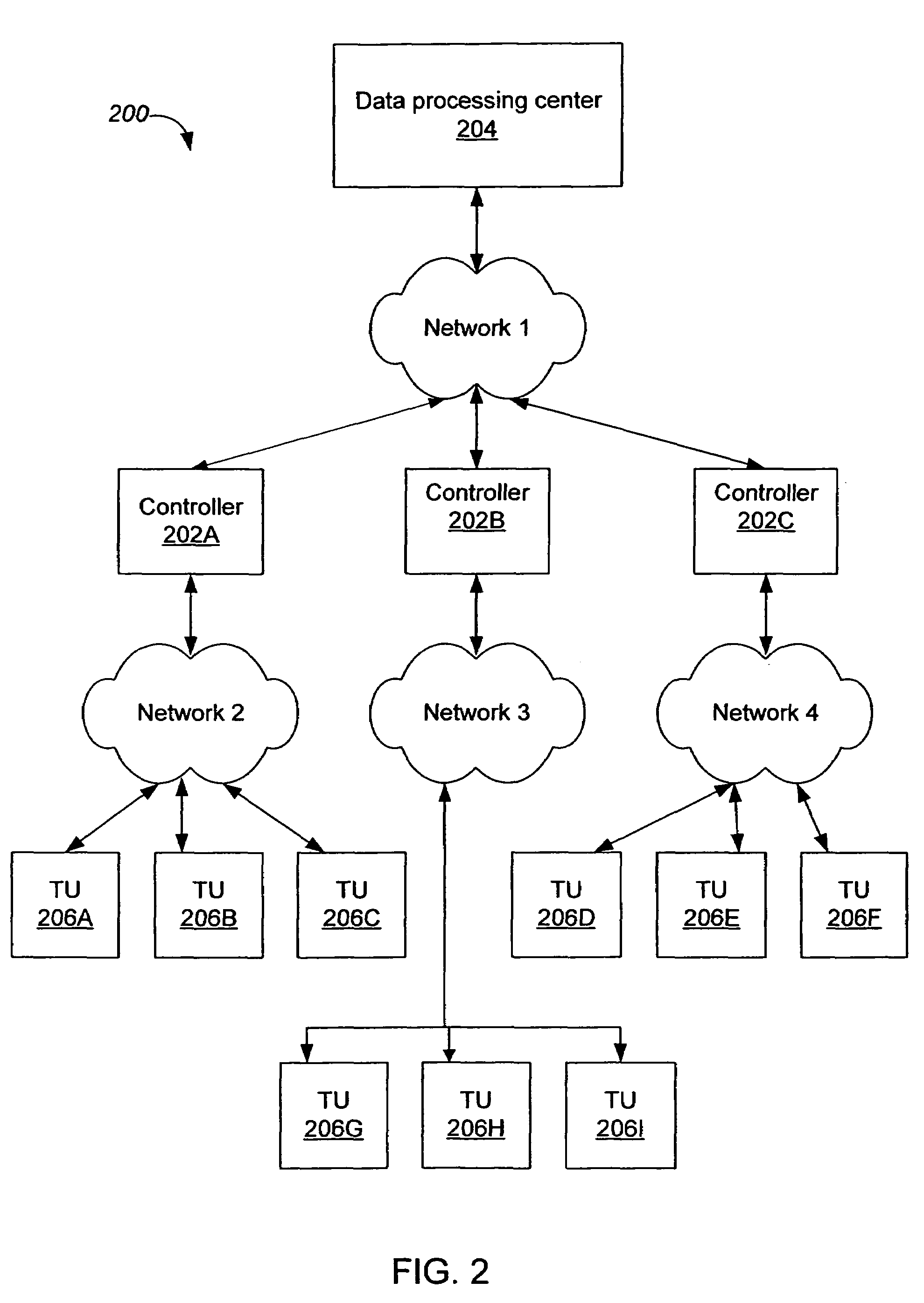

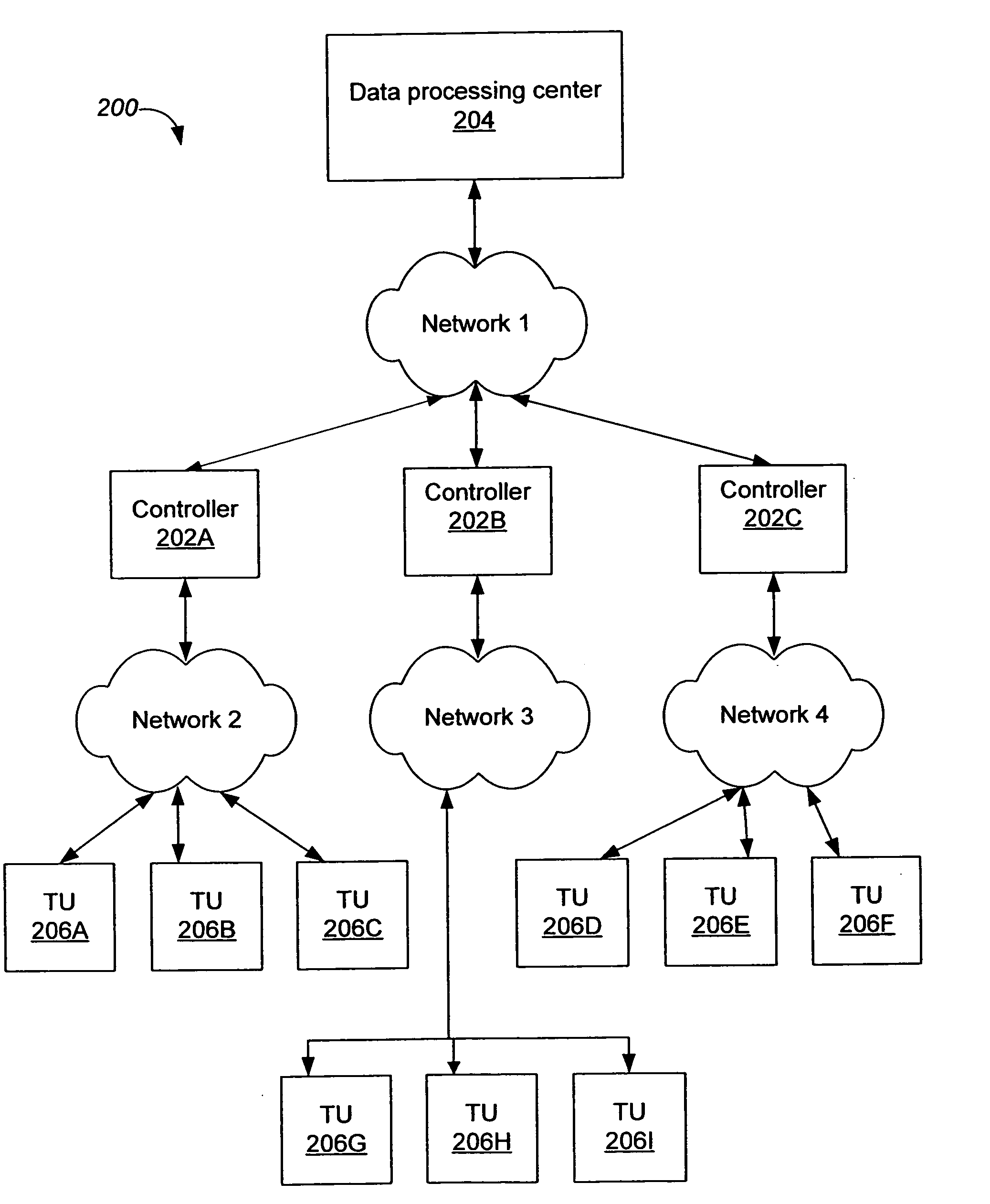

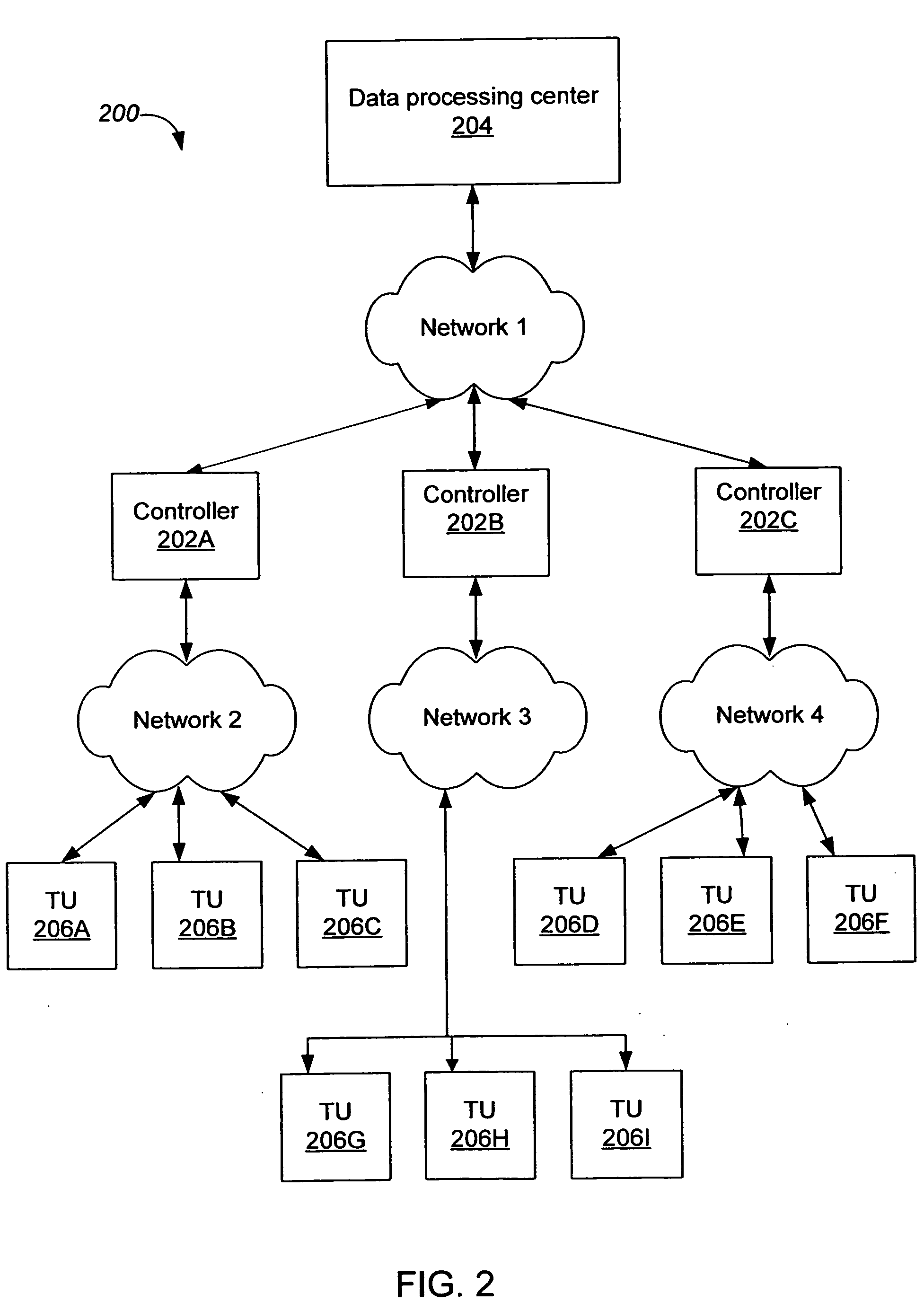

Intelligent two-way telemetry

Inactive | US7230544B2 | Tags: Reduce data congestion; Simple and efficient and economical approach; Electric signal transmission systems; Level control; Engineering; Telemetry Equipment

Methods and apparatus, including computer program products, implementing and using techniques for intelligent two-way telemetry. A telemetry system in accordance with the invention includes one or more telemetry units that can receive and send data. The system further includes one or more controllers that include intelligence for processing data from the one or more telemetry units and for autonomously communicating with the one or more telemetry units. The controllers are located separately from a data processing center of the telemetry system such that the controllers alleviate data congestion going to and coming from the data processing center.

Owner:LANDISGYR INNOVATIONS INC
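
One way a field controller can relieve the data processing center is to reduce raw readings locally before forwarding them. The sketch below assumes per-unit averaging as that local processing step, which is a hypothetical choice; the abstract only says that the controllers process data and communicate with the units autonomously.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple

Reading = Tuple[str, float]  # (telemetry unit id, measured value)


def controller_summarize(readings: List[Reading]) -> Dict[str, float]:
    """Run at a field controller: collapse many raw readings per unit into one
    average, so only the summary travels on to the data processing center."""
    per_unit: Dict[str, List[float]] = defaultdict(list)
    for unit, value in readings:
        per_unit[unit].append(value)
    return {unit: mean(values) for unit, values in per_unit.items()}
```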

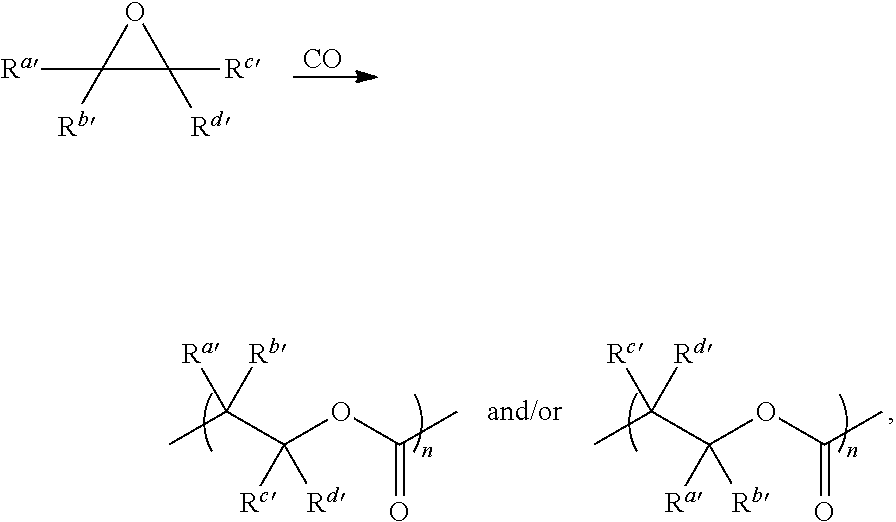

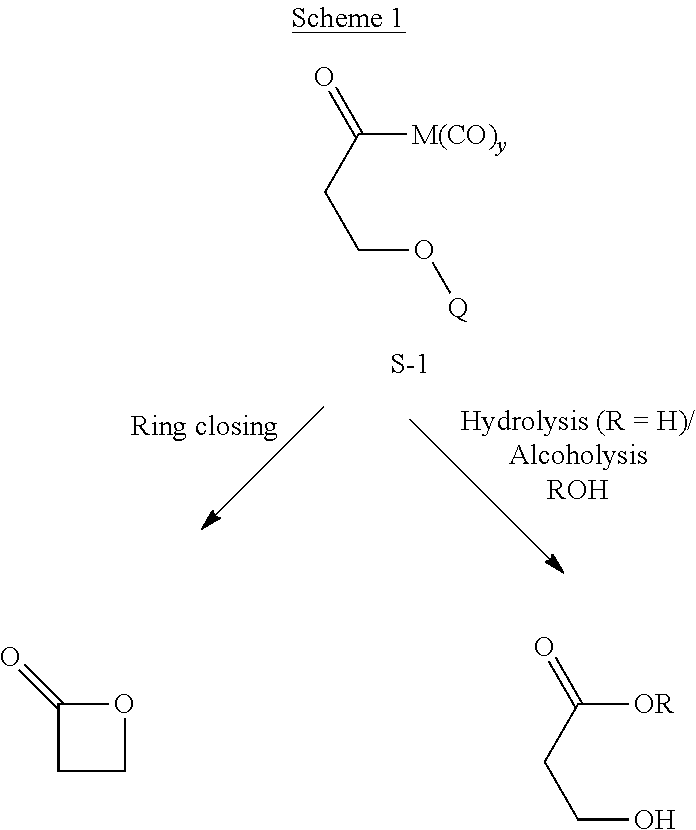

Catalysts and methods for polyester production

Inactive | US20150368394A1 | Tags: High yield; Increase ratings; Physical/chemical process catalysts; Other chemical processes; Polyester; Metal carbonyl

Disclosed are polymerization systems and methods for the formation of polyesters from epoxides and carbon monoxide. The inventive polymerization systems feature the combination of metal carbonyl compounds and polymerization initiators and are characterized in that the metal carbonyl compound, the polymerization initiators, and the provided epoxides are present in certain molar ratios.

Owner:NOVOMER INC

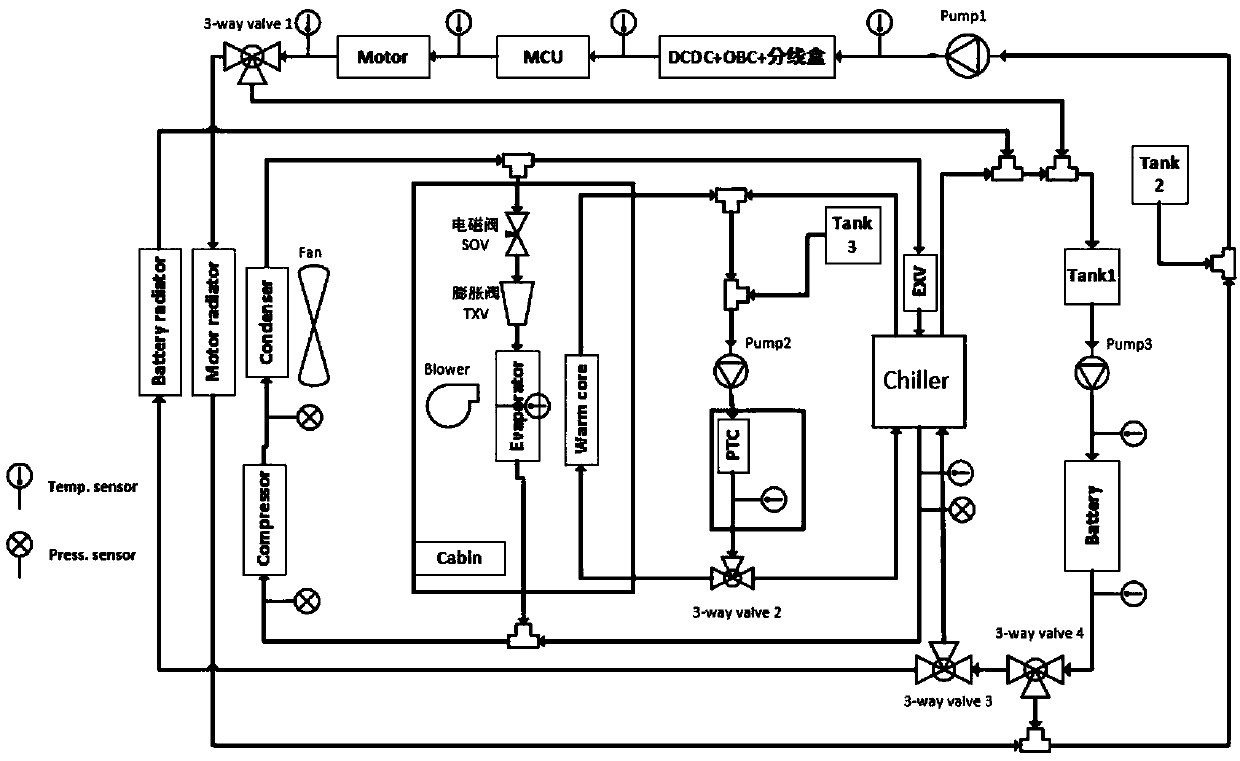

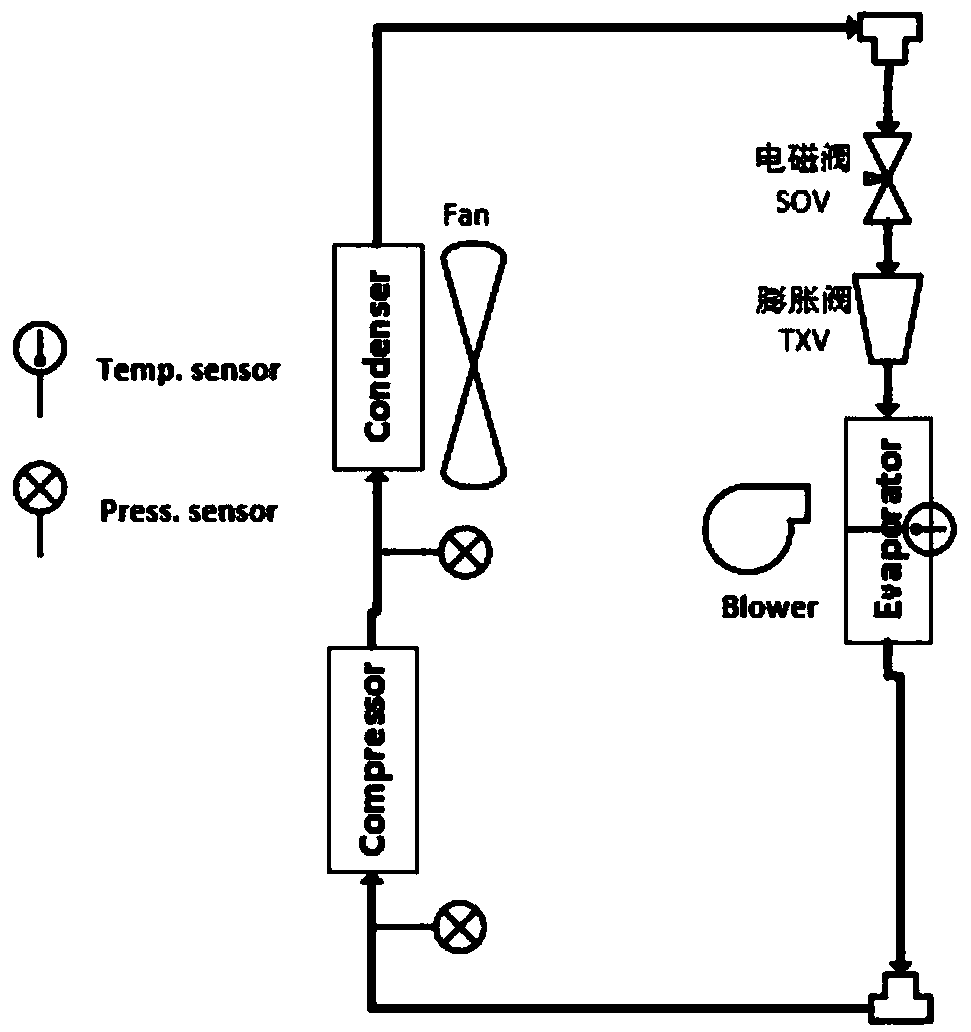

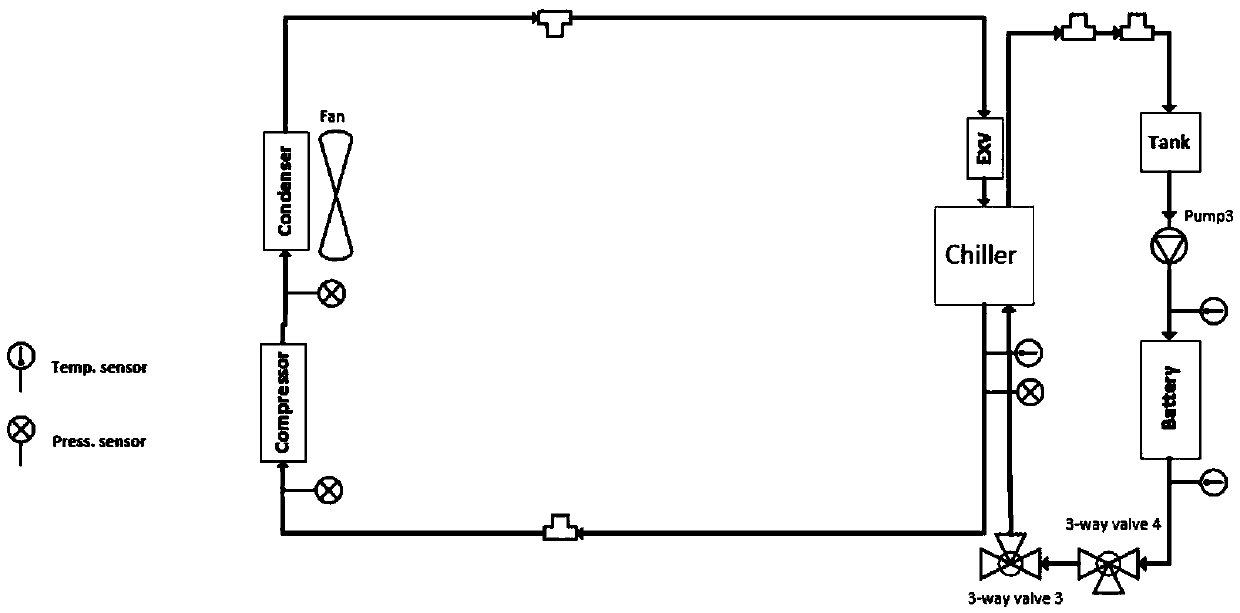

Low-power-consumption heat management system of electric car

Inactive | CN109532563A | Tags: Reduce heating effect; Reduce cooling effect; Air-treating devices; Secondary cells; Electrical battery; Solenoid valve

The invention relates to an electric car heat management system, in particular to a low-power-consumption heat management system of an electric car. The system comprises an electric compressor, a condenser, a refrigerant solenoid valve, a thermostatic expansion valve, an HVAC assembly, an electronic expansion valve, a cooler, a first expansion kettle, a third electronic water pump, a fourth three-way valve and a third three-way valve. Combined, these components form a passenger compartment refrigerating cycle loop, a battery forced cooling cycle loop, a battery low-temperature heat radiation cycle loop, a passenger compartment heating cycle loop, a battery forced heating cycle loop, a battery waste heat utilization cycle loop, a battery temperature equalization cycle loop and a motor cooling cycle loop. The system effectively reduces the use of a PTC heater and the electric compressor for heating and cooling the battery, thereby reducing the power consumption of the whole car and increasing its driving range.

Owner:JIANGSU MINAN AUTOMOTIVE CO LTD

Reducing the computational load on processors by selectively discarding data in vehicular networks

Active | CN102035874A | Tags: Reduce in quantity; Small amount of calculation; Service provisioning; Particular environment based services; Communications system; Protocol stack

A method is provided for efficiently processing messages staged for authentication in a security layer of a protocol stack in a wireless vehicle-to-vehicle communication system. The vehicle-to-vehicle communication system includes a host vehicle receiver for receiving messages transmitted by one or more remote vehicles. The host receiver is configured to authenticate received messages in a security layer of a protocol stack. A wireless message broadcast by a remote vehicle is received. The wireless message contains characteristic data of the remote vehicle. The characteristic data is analyzed to determine whether the wireless message is in compliance with a predetermined parameter of the host vehicle. The wireless message is discarded prior to a transfer of the wireless message to the security layer in response to a determination that the wireless message is not in compliance with the predetermined parameter of the host vehicle. Otherwise, the wireless message is transferred to the security layer.

Owner:GM GLOBAL TECH OPERATIONS LLC

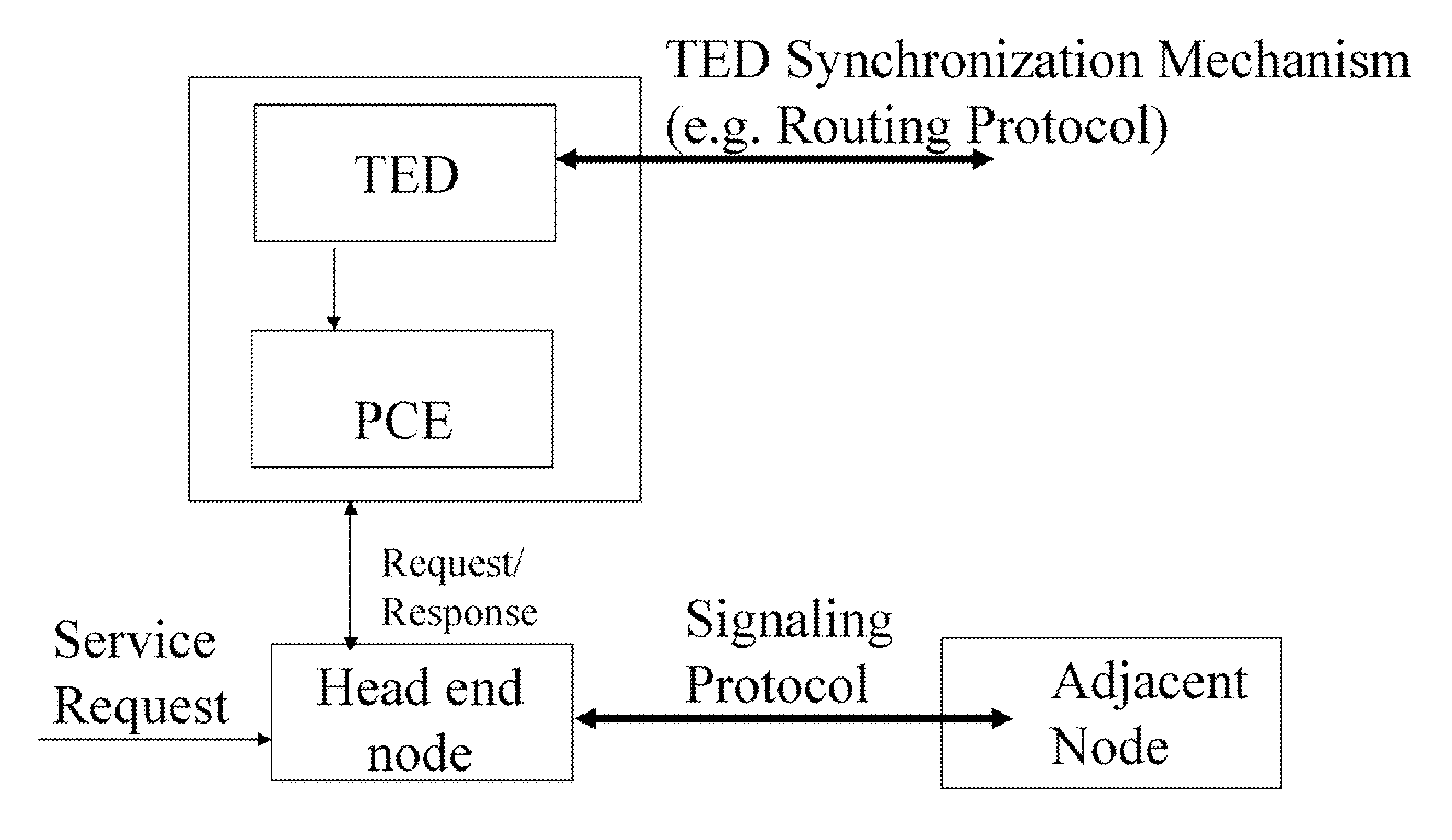

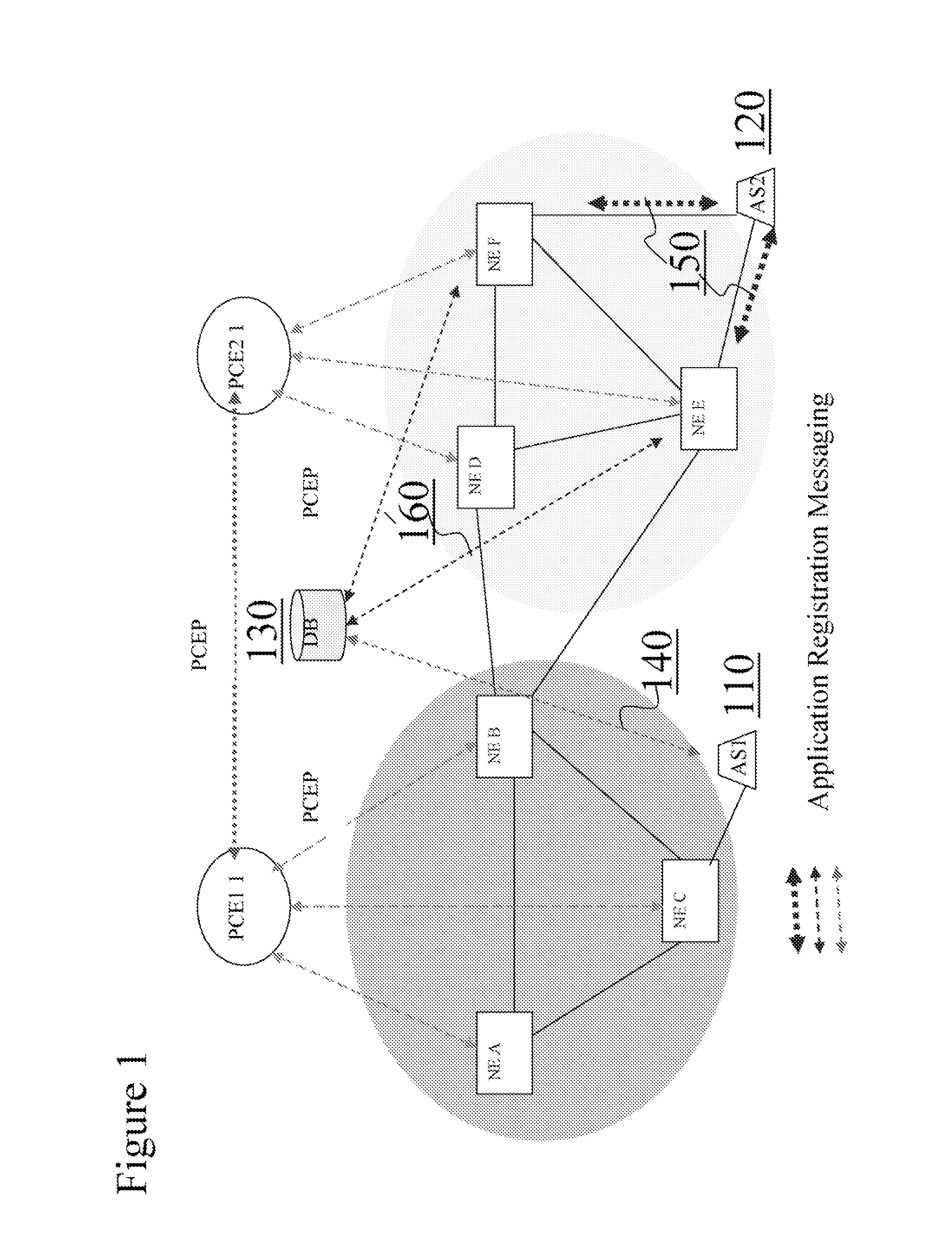

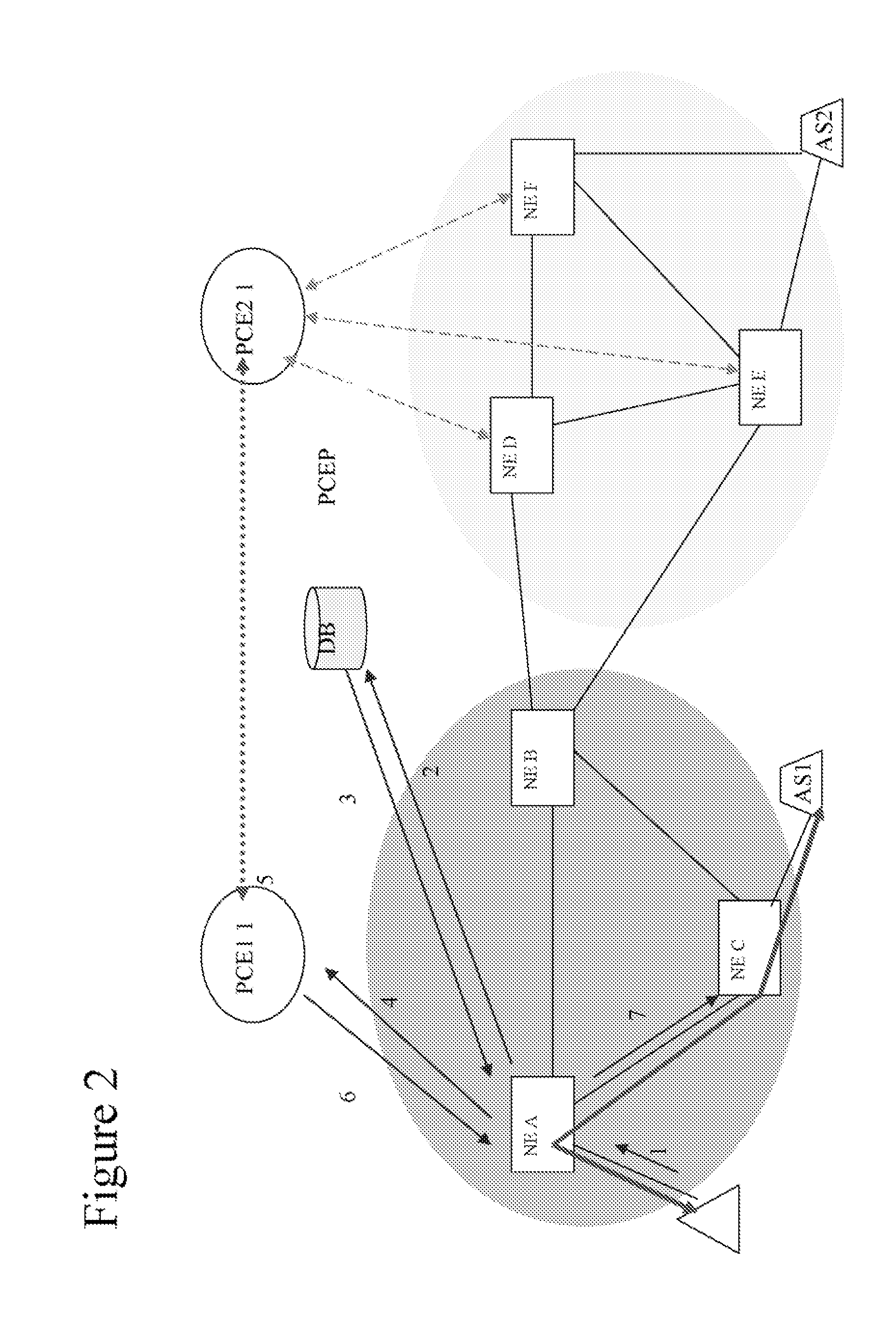

Optimal Path Selection for Accessing Networked Applications

Active | US20080267088A1 | Tags: Easy to route; Quality improvement; Data switching by path configuration; Multiple digital computer combinations; Access method; Application server

An efficient and user-friendly application service access method that utilizes dynamic network conditions and application traffic requirements. The method allows applications to register their servers' location information and traffic requirements with the network, and it provides end users with an optimal connection to an application service, based on network conditions and application requirements, without requiring them to specify an application server location.

Owner:FUTUREWEI TECH INC
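
A minimal sketch of the register-then-select flow, assuming that the only registered traffic requirement is a minimum bandwidth and that "optimal" means the lowest measured latency among servers that meet it. `AppRegistry`, `Registration`, and the metric dictionaries are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Registration:
    app: str
    server: str
    min_bandwidth_mbps: float  # traffic requirement registered by the application


class AppRegistry:
    """Hypothetical network-side registry: applications register their servers and
    requirements, and end users ask only for the application by name."""

    def __init__(self) -> None:
        self.regs: List[Registration] = []

    def register(self, reg: Registration) -> None:
        self.regs.append(reg)

    def select(self, app: str, latency_ms: Dict[str, float],
               bandwidth_mbps: Dict[str, float]) -> Optional[str]:
        """Pick the lowest-latency server whose path meets the app's bandwidth need."""
        candidates = [r.server for r in self.regs
                      if r.app == app
                      and bandwidth_mbps.get(r.server, 0.0) >= r.min_bandwidth_mbps]
        if not candidates:
            return None
        return min(candidates, key=lambda s: latency_ms.get(s, float("inf")))
```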

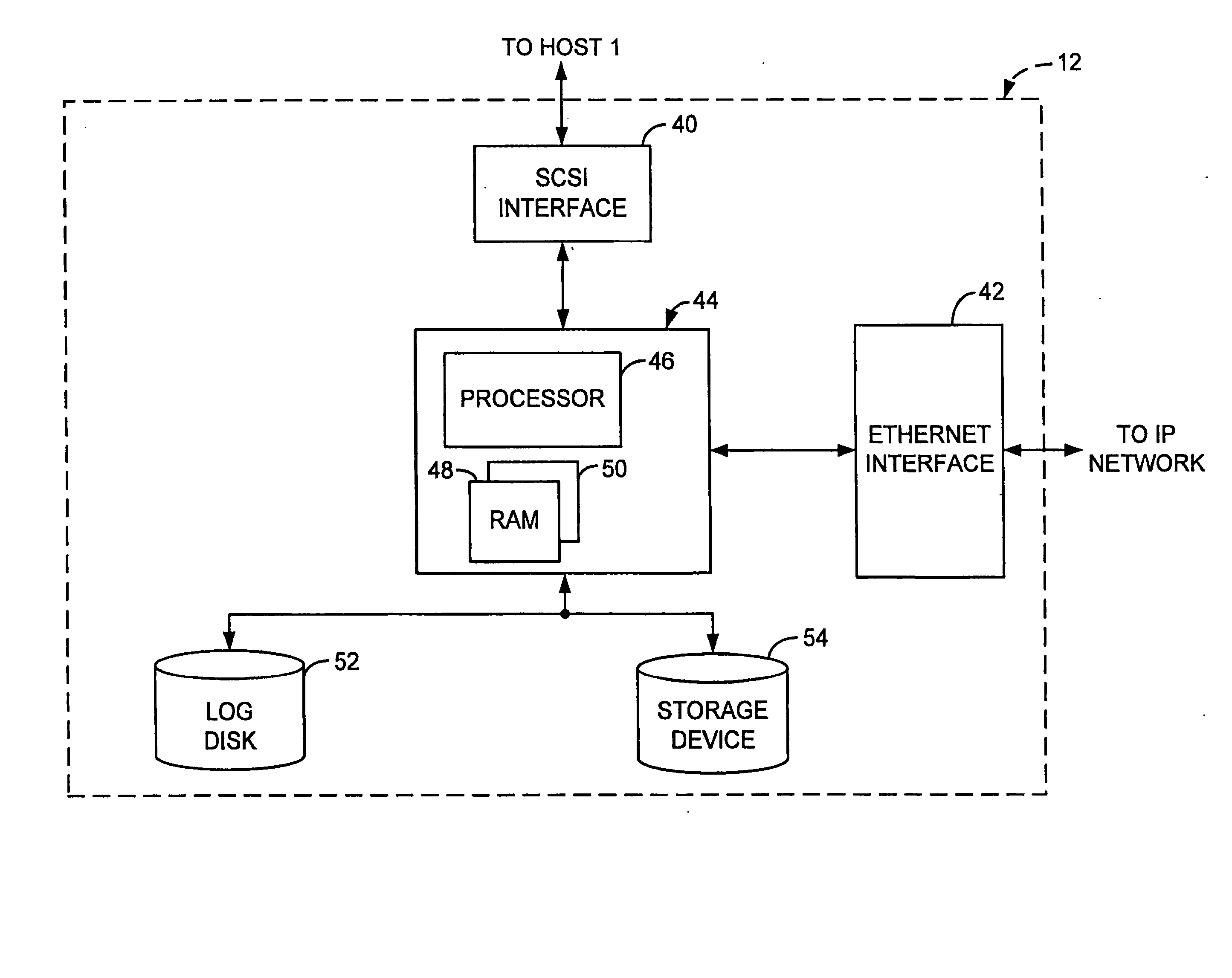

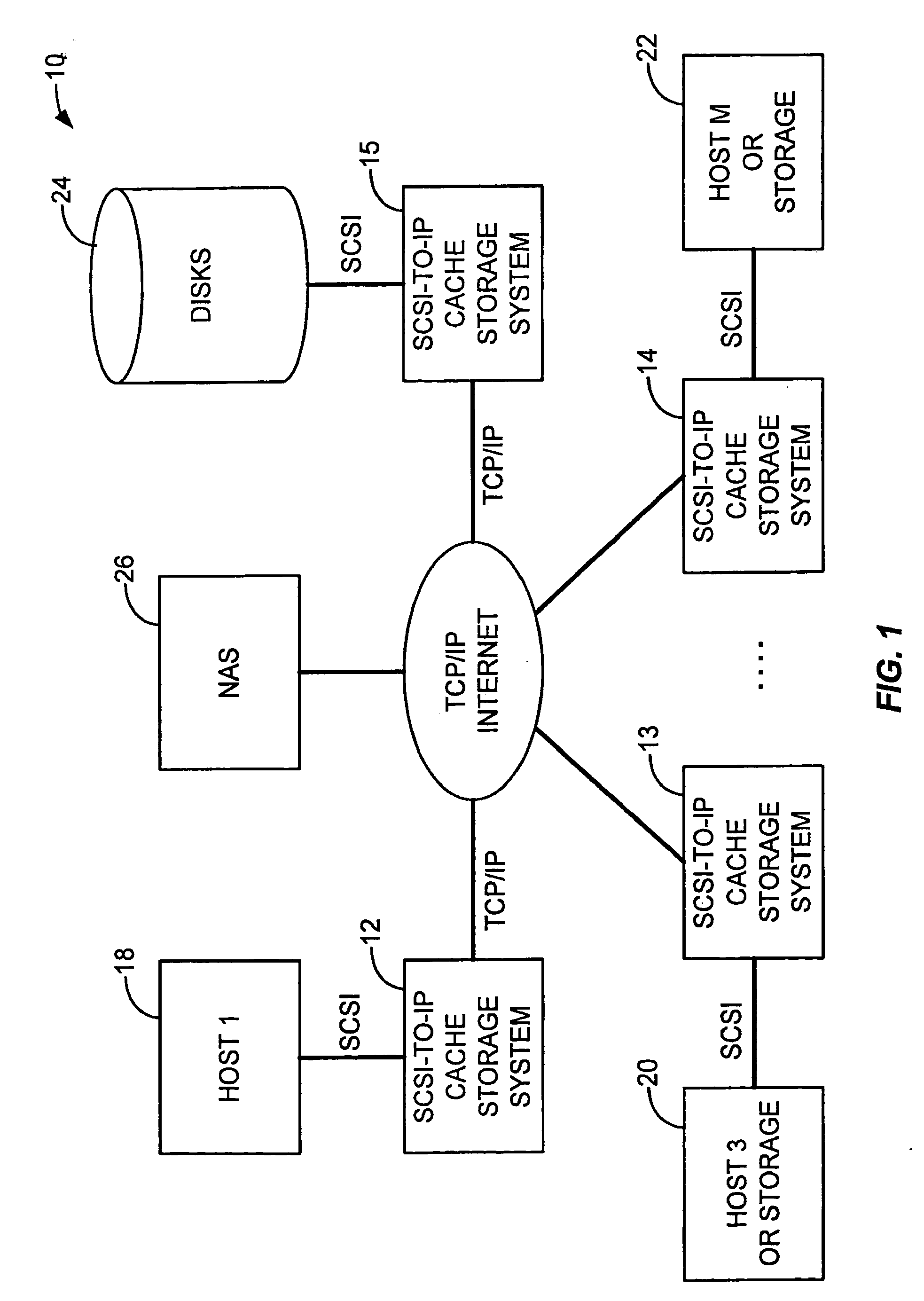

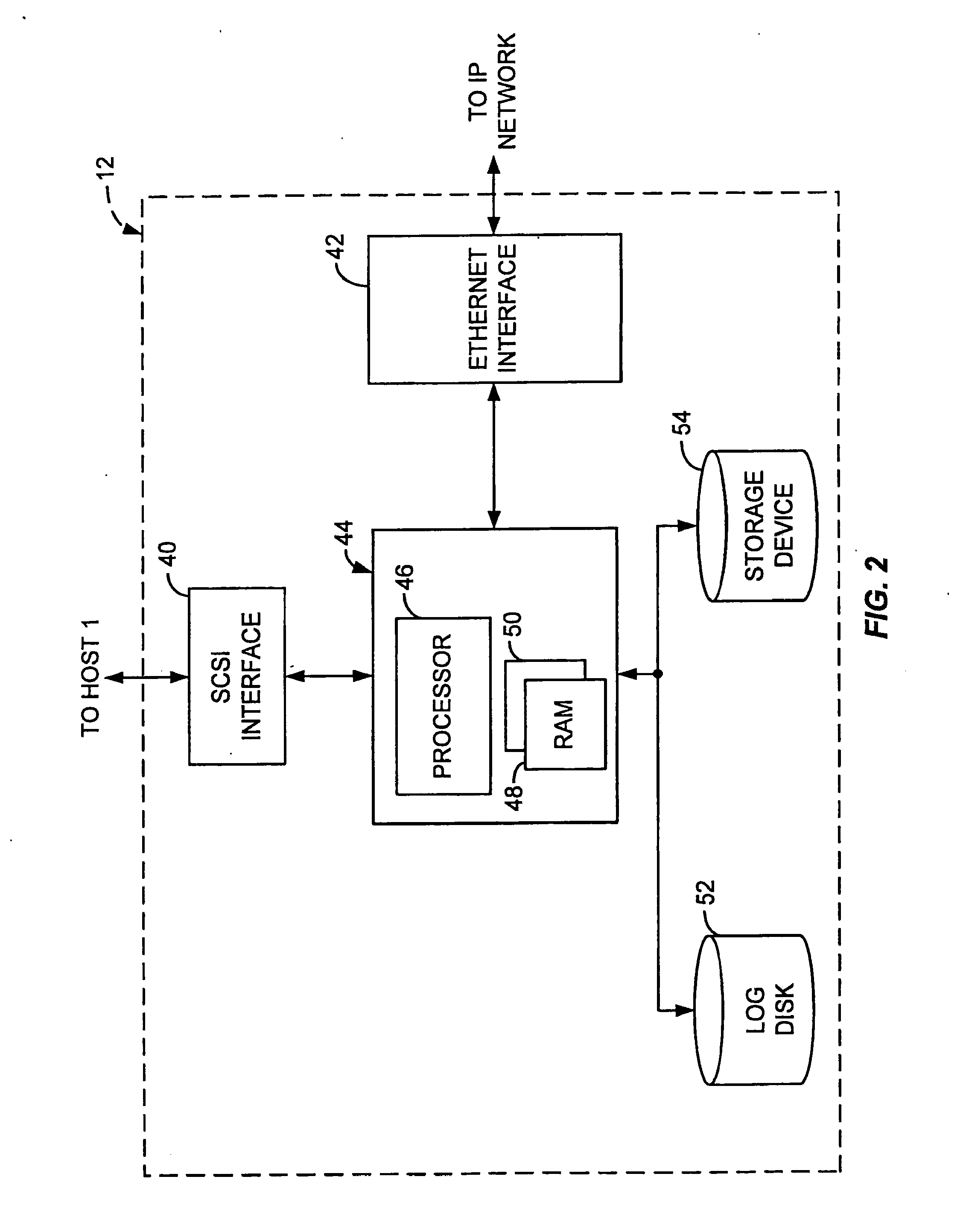

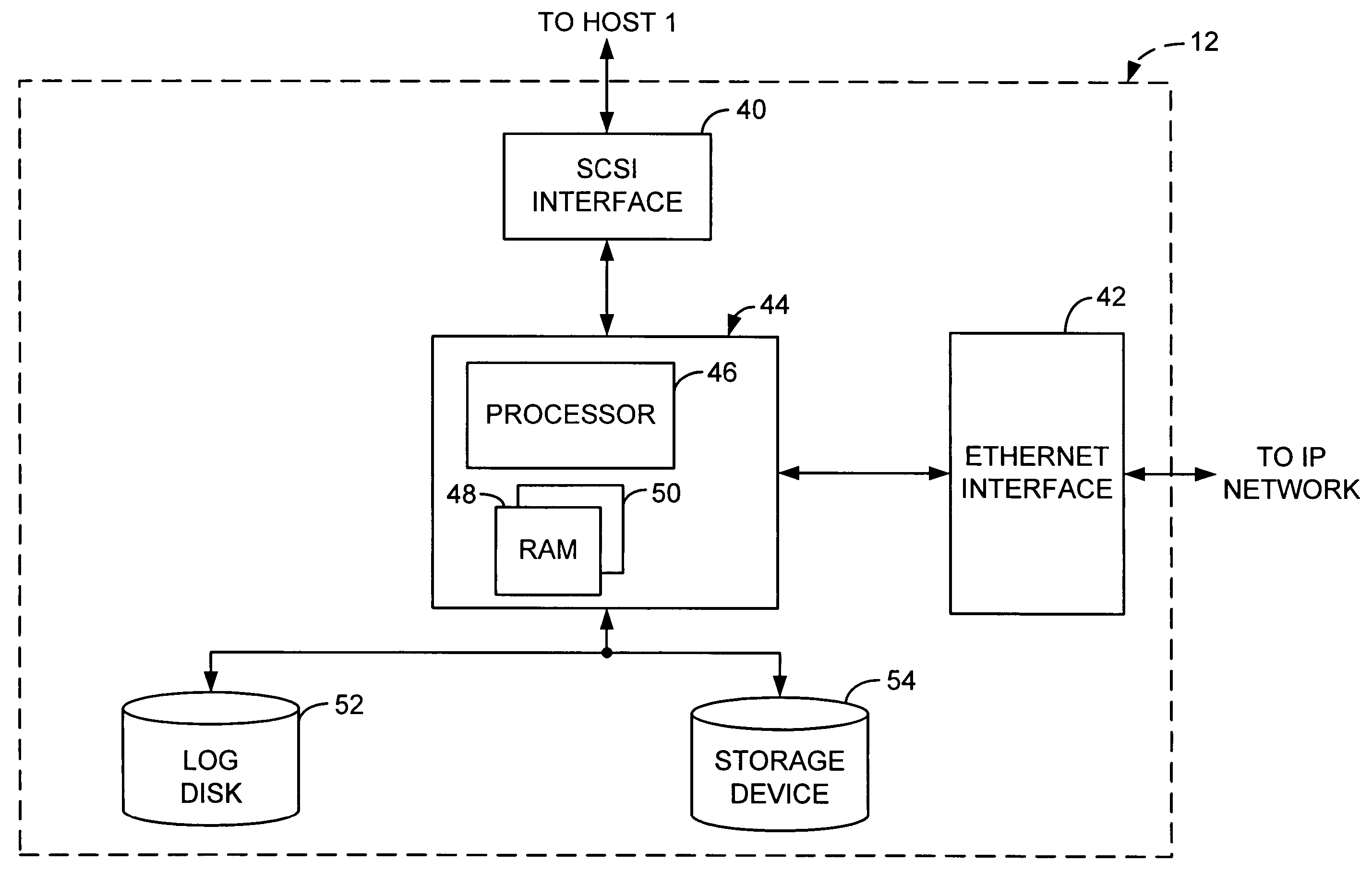

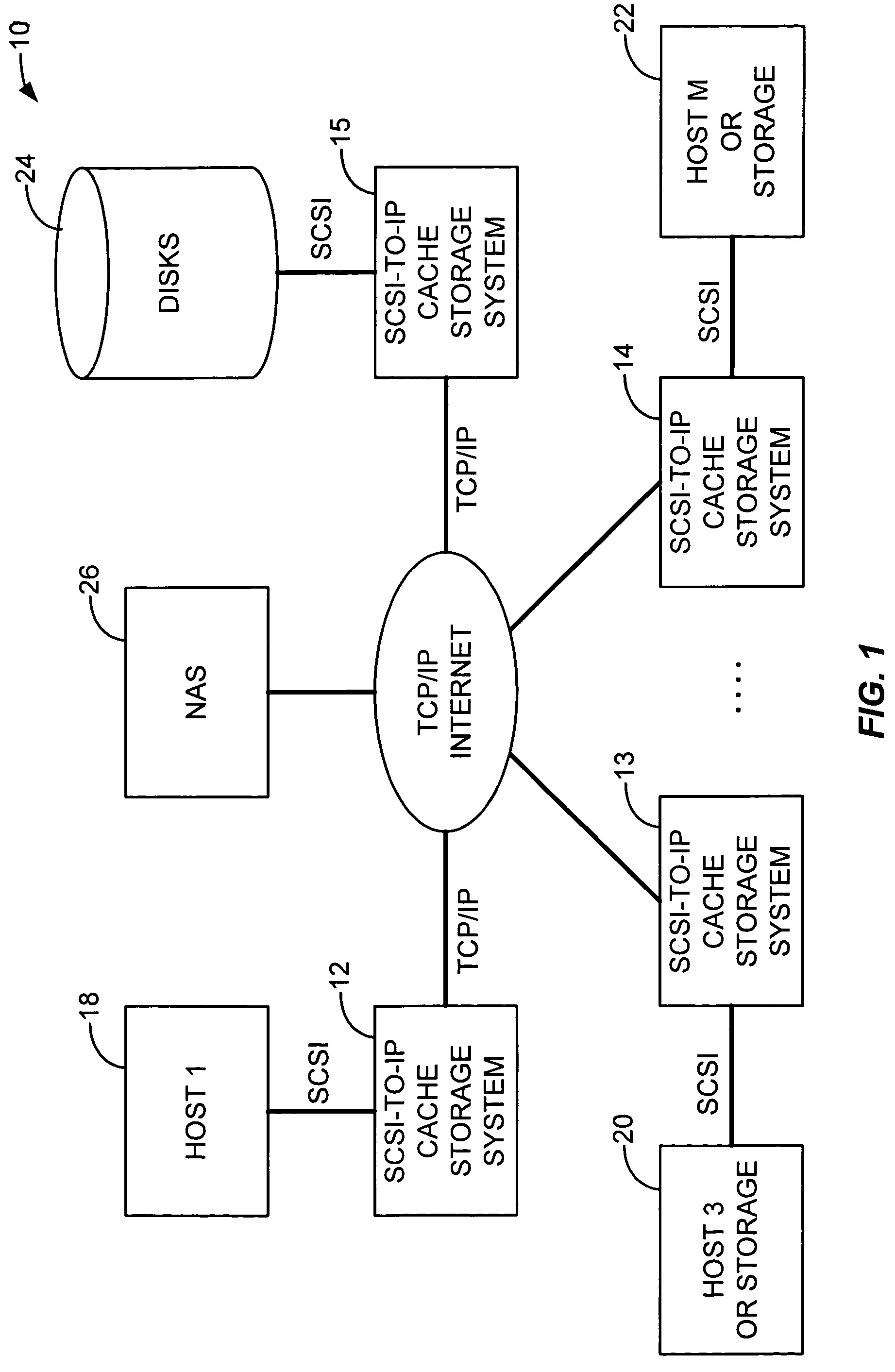

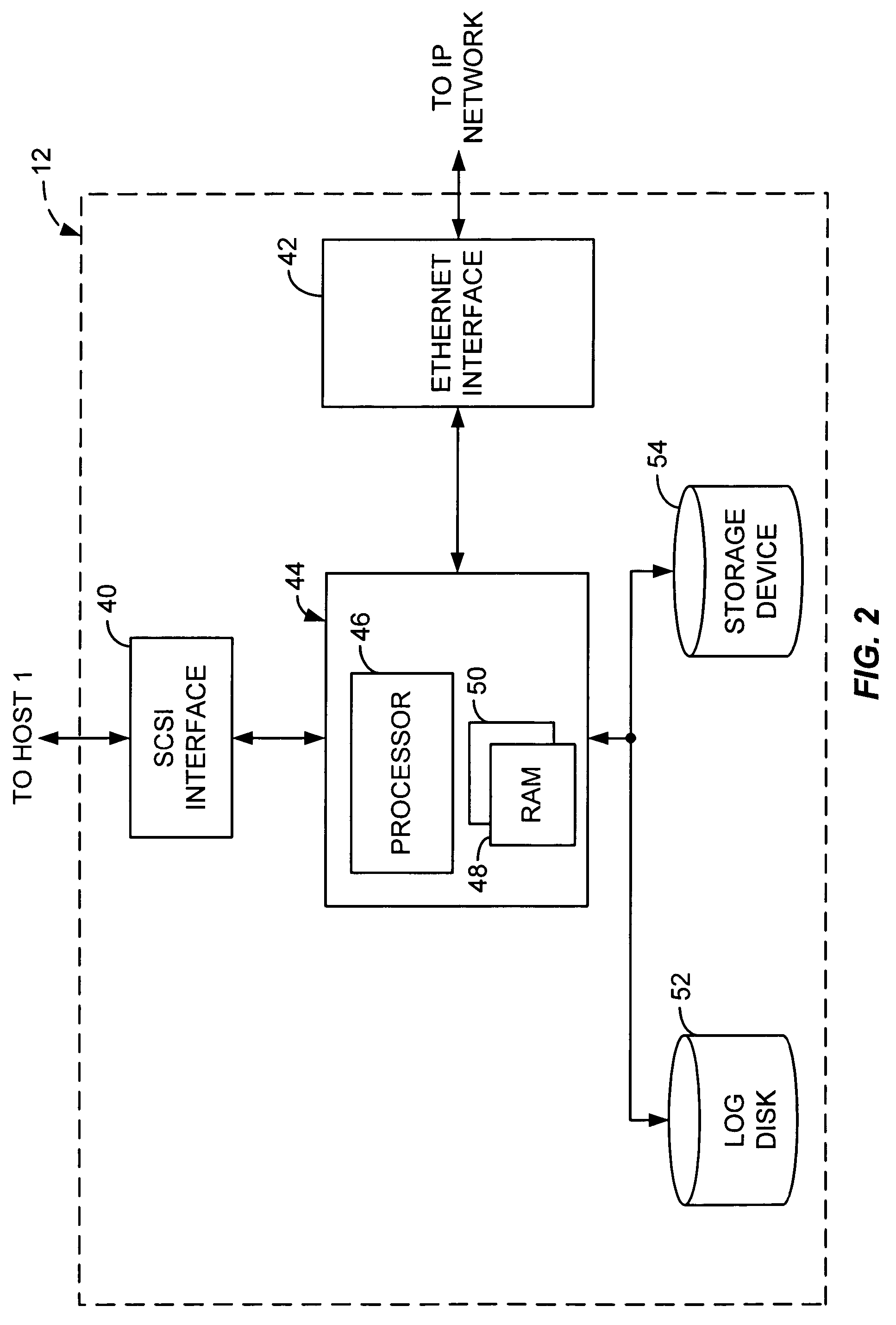

SCSI-to-IP Cache Storage Device and Method

Inactive | US20080010411A1 | Tags: Reduction of unnecessary traffic; Reduce bottlenecks; Multiple digital computer combinations; Memory systems; SCSI; The Internet

A SCSI-to-IP cache storage system interconnects a host computing device or a storage unit to a switched packet network. The cache storage system includes a SCSI interface (40) that facilitates system communications with a host computing device or the storage unit, and an Ethernet interface (42) that allows the system to receive data from and send data to the Internet. The cache storage system further comprises a processing unit (44) that includes a processor (46), a memory (48) and a log disk (52) configured as a sequential access device. The log disk (52) caches data along with the memory (48) resident in the processing unit (44), wherein the log disk (52) and the memory (48) are configured as a two-level hierarchical cache.

Owner:BOARD OF GOVERNORS FOR HIGHER EDUCATION STATE OF RHODE ISLAND & PROVIDENCE PLANTATIONS
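
The two-level arrangement can be approximated with an LRU memory cache whose evicted blocks are appended to a log, so the log disk only ever sees sequential writes. In this sketch a Python list stands in for the log disk, block numbers and capacities are hypothetical, and log compaction and destaging to the storage unit are omitted.

```python
from collections import OrderedDict
from typing import Dict, List, Optional, Tuple


class TwoLevelCache:
    """Level 1: LRU blocks in memory. Level 2: an append-only log ('log disk').

    `log_index` remembers where the newest logged copy of each block lives.
    """

    def __init__(self, mem_blocks: int) -> None:
        self.mem_blocks = mem_blocks
        self.mem: "OrderedDict[int, bytes]" = OrderedDict()
        self.log: List[Tuple[int, bytes]] = []
        self.log_index: Dict[int, int] = {}

    def write(self, block: int, data: bytes) -> None:
        self.mem[block] = data
        self.mem.move_to_end(block)
        if len(self.mem) > self.mem_blocks:
            old_block, old_data = self.mem.popitem(last=False)  # evict the LRU block
            self.log_index[old_block] = len(self.log)
            self.log.append((old_block, old_data))              # sequential append only

    def read(self, block: int) -> Optional[bytes]:
        if block in self.mem:                # level-1 hit
            self.mem.move_to_end(block)
            return self.mem[block]
        pos = self.log_index.get(block)      # level-2 lookup
        return None if pos is None else self.log[pos][1]
```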

Method and apparatus for providing bifurcated transport of signaling and informational voice traffic

Inactive | US7203185B1 | Tags: Reduce bottlenecks; Efficient communication; Interconnection arrangements; Error prevention; Voice traffic; Cable telephony

A method and apparatus for providing bifurcated voice and signaling traffic over a cable telephony architecture by segregating signaling traffic and voice traffic and transmitting the respective traffic over two different mediums to a controller to establish a phone call.

Owner:LUCENT TECH INC

Dynamic topological adaptation

Active | US8169933B2 | Tags: Reduce bottlenecks; Improve balance; Power management; Error prevention; User equipment; Traffic load

Apparatus and methods for reconfiguration of a communication environment based on loading requirements. Network operations are monitored and analyzed to determine loading balance across the network or a portion thereof. Where warranted, the network is reconfigured to balance the load across multiple network entities. For example, in a cellular-type network, traffic loads and throughput requirements are analyzed for the access points and their user equipment. Where loading imbalances occur, the cell coverage areas of one or more access points can be reconfigured to alleviate bottlenecks or improve balancing.

Owner:CORNING OPTICAL COMM LLC

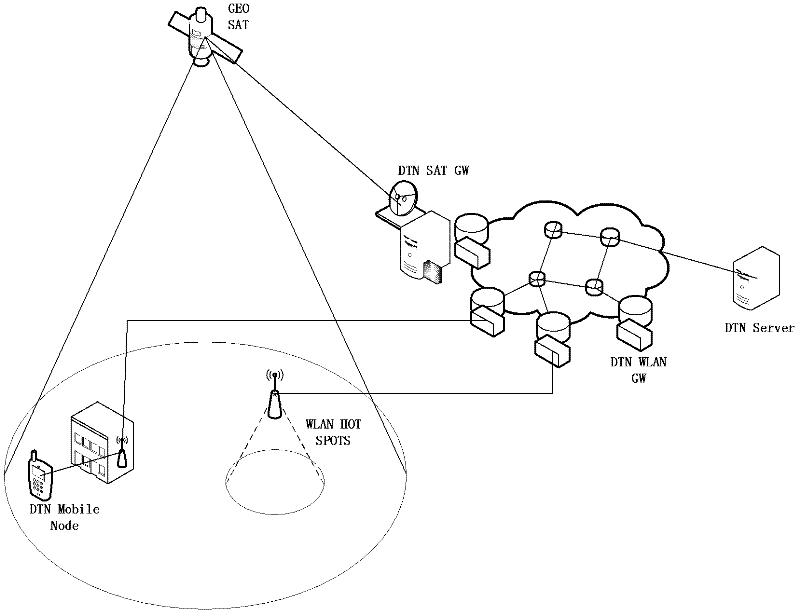

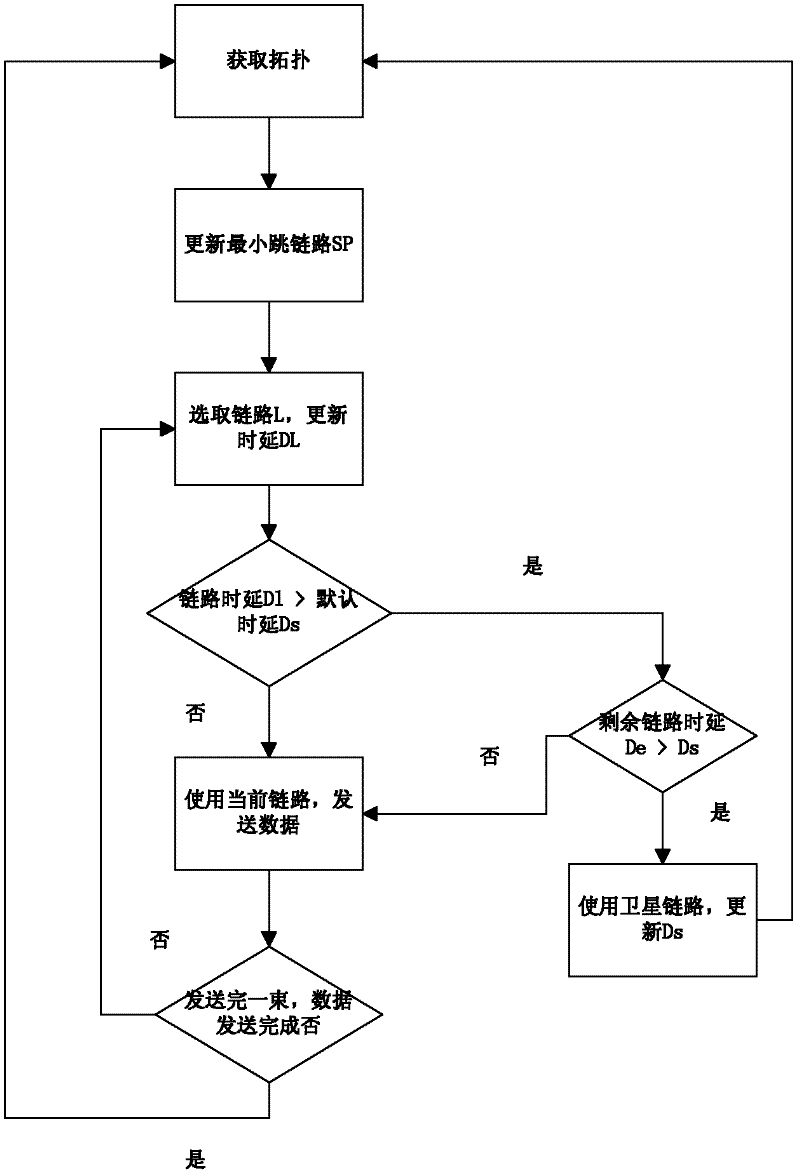

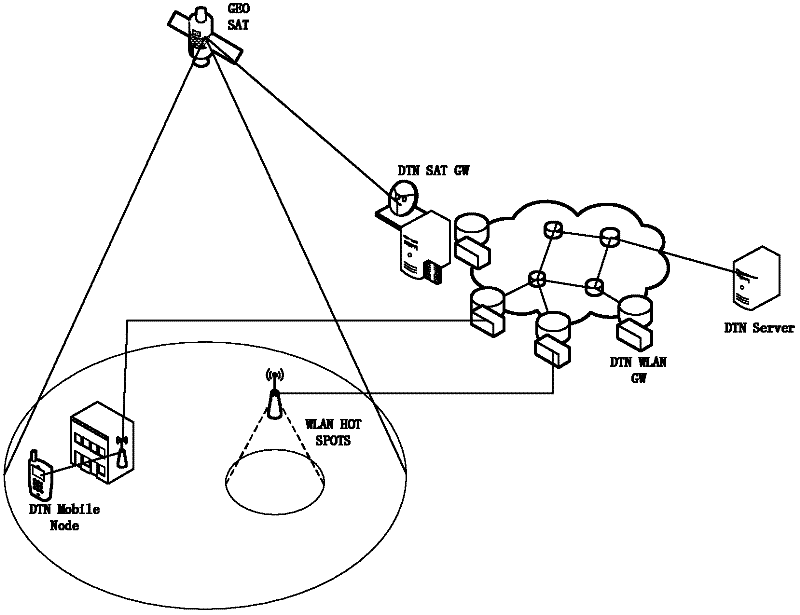

Multilayer effective routing method applied to delay tolerant network (DTN)

Inactive | CN102571571A | Tags: Efficient use of; Improve throughput; Data switching networks; Area network; Bundle protocol

The invention discloses a multi-layer effective routing method applied to a delay tolerant network (DTN). At present, the Internet, based on the TCP (Transmission Control Protocol)/IP (Internet Protocol) protocol suite, is widely used. However, TCP/IP is poorly suited to networks such as high-altitude area networks and satellite networks. A DTN is a delay/disruption-tolerant network characterized by long delays, the absence of an end-to-end path or frequent link interruptions at a given moment, and limited node storage and computing capacity. The DTN architecture and the bundle protocol are briefly introduced, and by improving the routing algorithm used by the DTN, the DTN's throughput is increased and the overall channel utilization ratio is lowered.

Owner:NANJING UNIV OF POSTS & TELECOMM

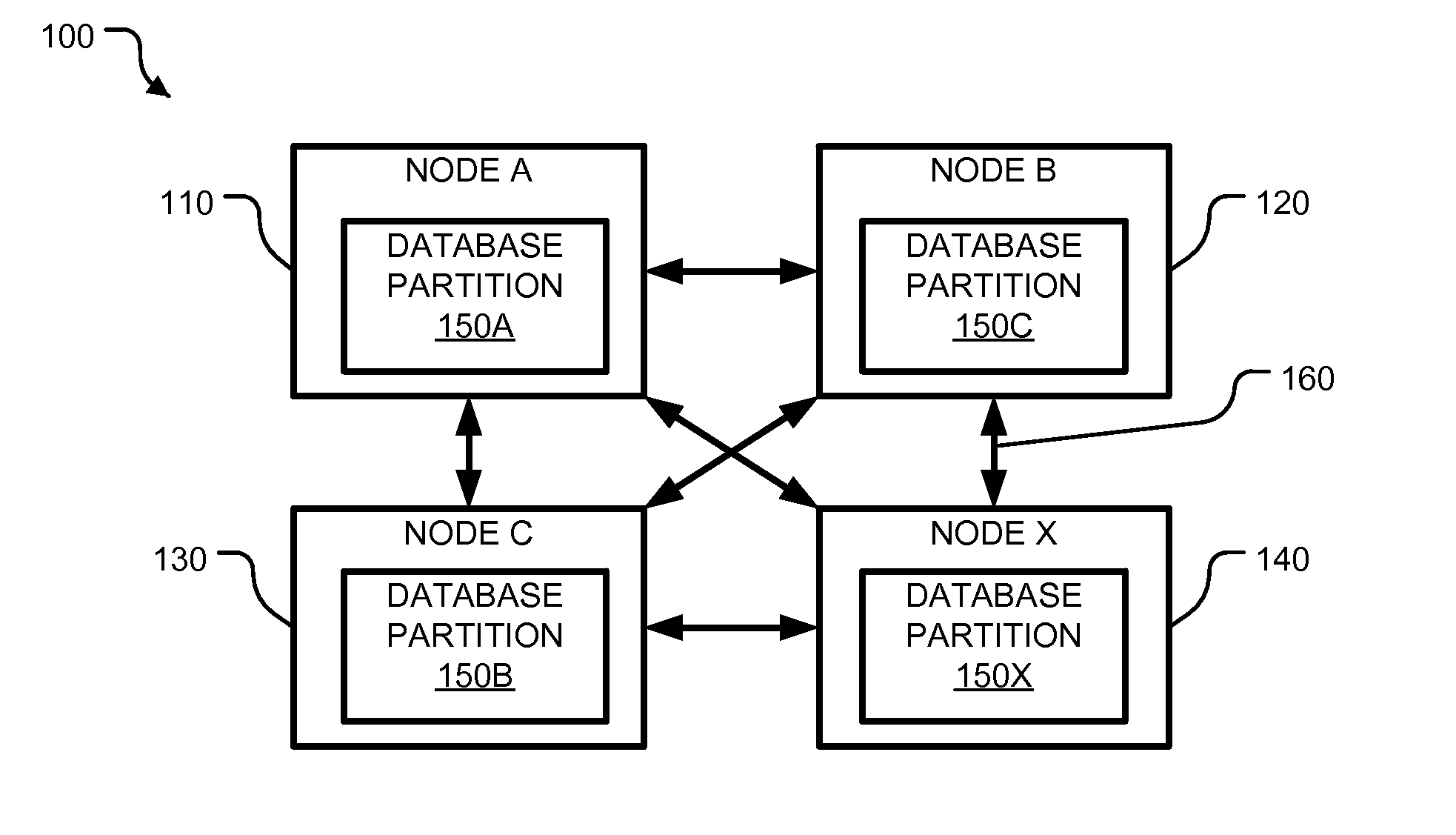

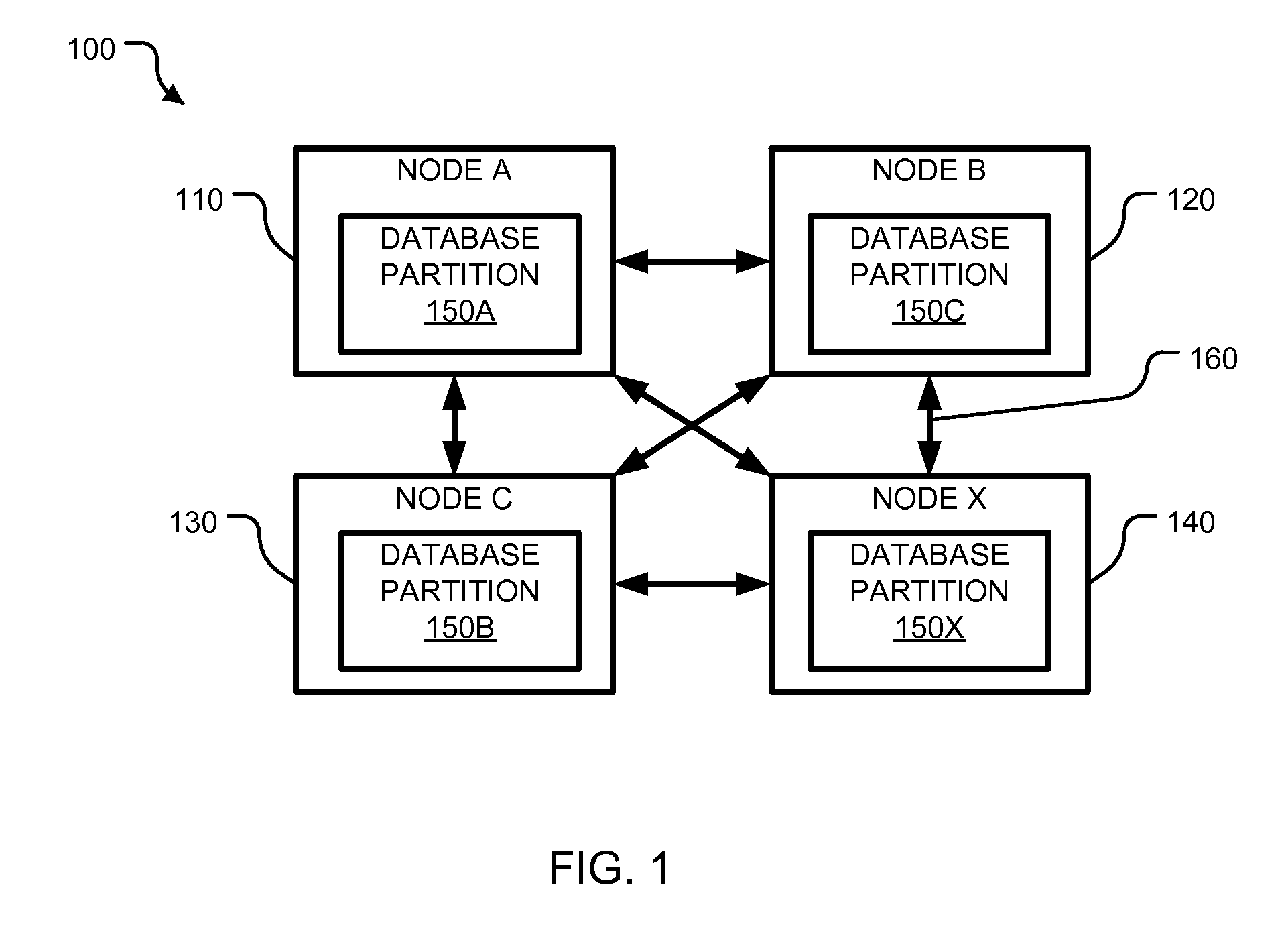

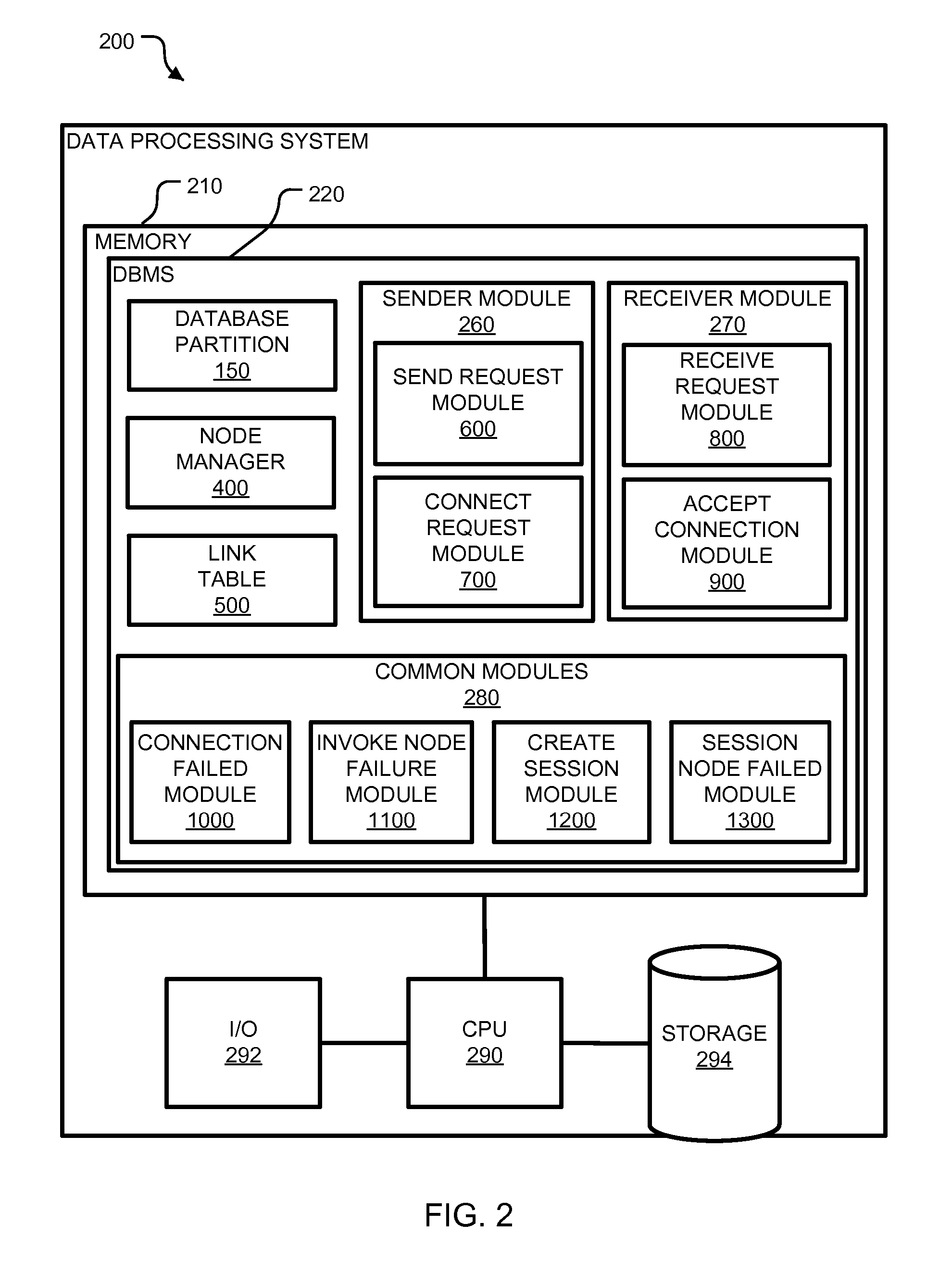

Asynchronous interconnect protocol for a clustered dbms

Inactive | US20070260714A1 | Tags: Good scalability; Improve good performance; Digital data information retrieval; Multiple digital computer combinations; Protocol for Carrying Authentication for Network Access; Timestamp

A method, system and computer program product for an asynchronous interconnection between nodes of a clustered database management system (DBMS). Node timestamps are provided when each of the nodes in the cluster is started. Two or more communication conduits are established between the nodes. Each communication conduit between a local node and a remote node has an associated session identifier. The session identifiers and the timestamp from the remote node are associated with each communication conduit and the associated local node in the cluster. When communication is being established, the local node receives a timestamp from the remote node to determine whether the remote node corresponds to the remote node incarnation identified by the timestamp and whether DBMS communication between the nodes can be initiated.

Owner:IBM CORP
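
The incarnation check can be sketched as follows. It assumes that each conduit records the remote node's start timestamp when it is opened and that a mismatching timestamp means the remote node has restarted, so its old conduits are stale and are dropped. The class and method names are hypothetical, not IBM's API.

```python
import itertools
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Conduit:
    session_id: int
    remote_node: str
    remote_start_ts: float  # incarnation timestamp learned from the remote node


@dataclass
class LocalNode:
    name: str
    start_ts: float
    conduits: Dict[int, Conduit] = field(default_factory=dict)
    _ids: "itertools.count" = field(default_factory=itertools.count)

    def open_conduit(self, remote_node: str, remote_start_ts: float) -> Conduit:
        conduit = Conduit(next(self._ids), remote_node, remote_start_ts)
        self.conduits[conduit.session_id] = conduit
        return conduit

    def handshake_ok(self, remote_node: str, received_ts: float) -> bool:
        """Allow DBMS traffic only if the received timestamp matches the incarnation
        recorded on every conduit to that node; otherwise drop the stale conduits."""
        known = [c for c in self.conduits.values() if c.remote_node == remote_node]
        if all(c.remote_start_ts == received_ts for c in known):
            return True
        for c in known:
            del self.conduits[c.session_id]
        return False
```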

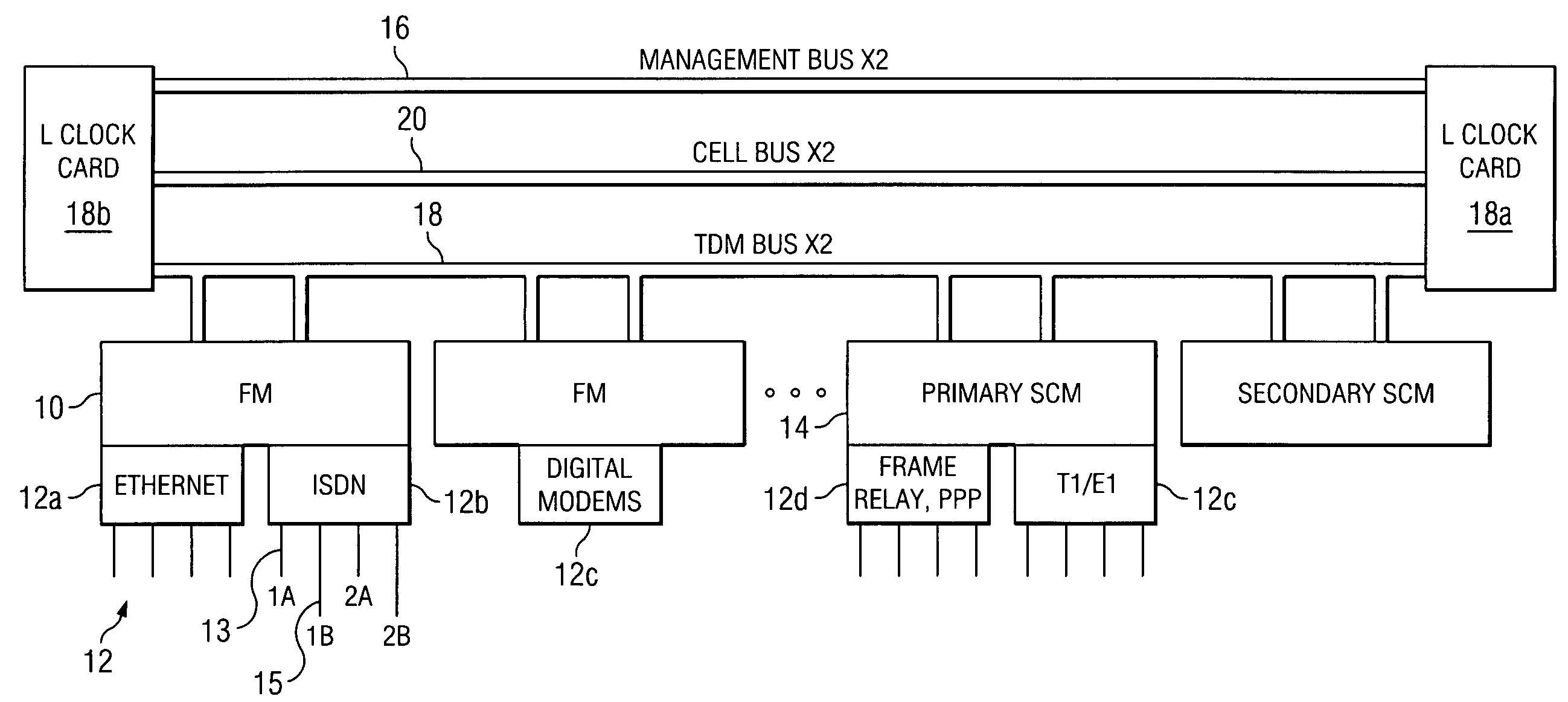

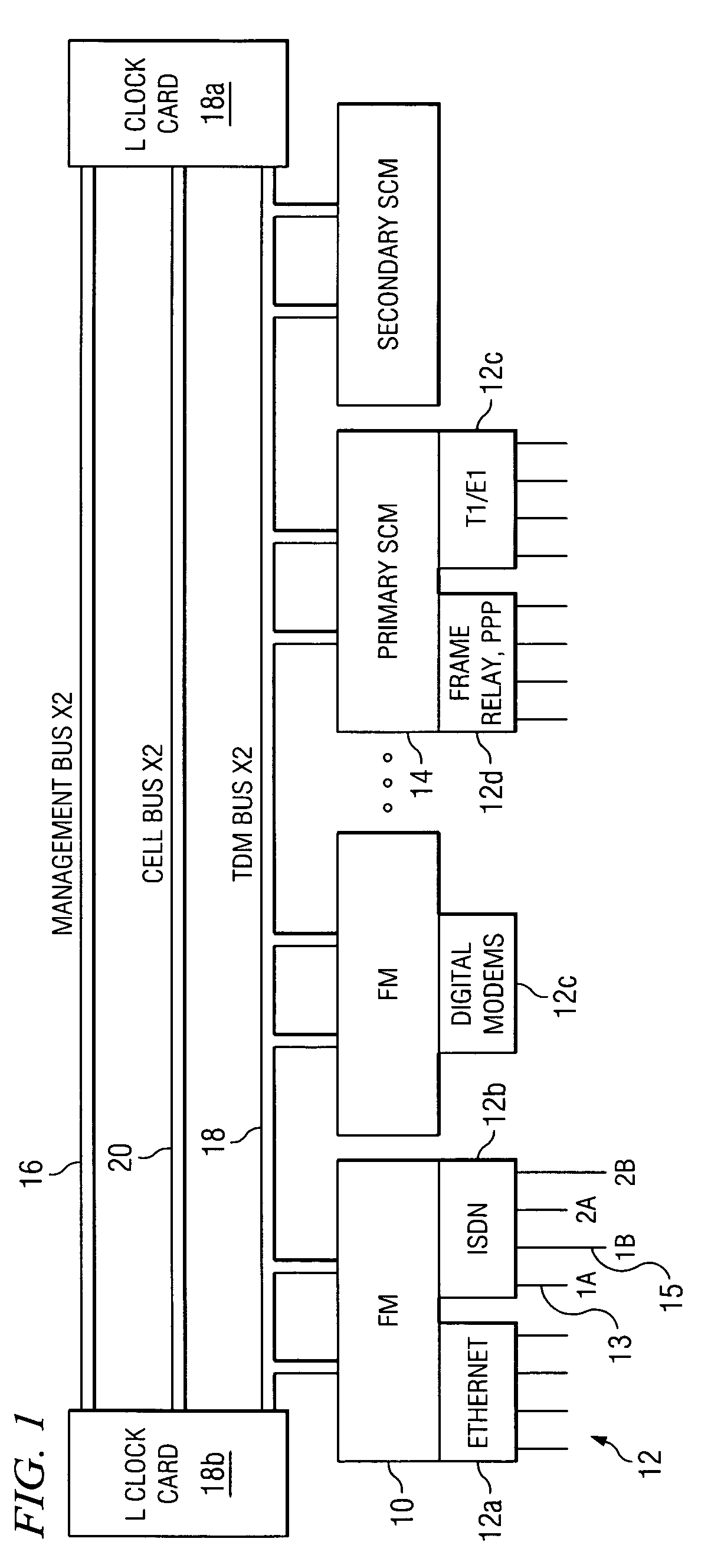

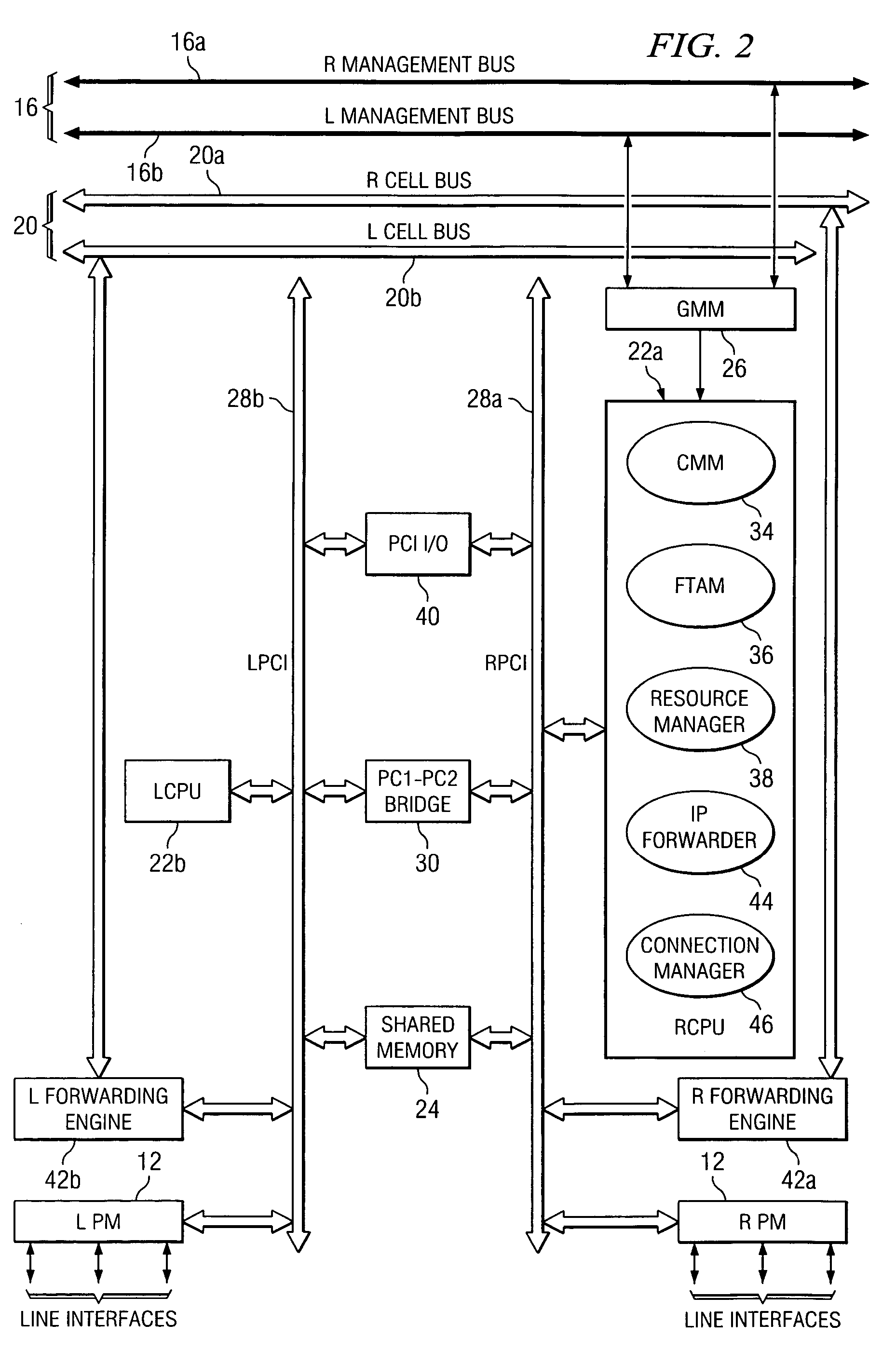

Multi-service network switch with independent protocol stack architecture

Inactive | US7327757B2 | Tags: Reduce bottlenecks; Improve throughput; Multiplex system selection arrangements; Time-division multiplex; Domain name; Routing table

A multi-service network switch capable of providing multiple network services from a single platform. The switch incorporates a distributed packet forwarding architecture in which each of the various cards is capable of making independent forwarding decisions. The switch further allows for dynamic resource management for dynamically assigning modem and ISDN resources to an incoming call. The switch may also include fault management features to guard against single points of failure within the switch. The switch further allows the partitioning of the switch into multiple virtual routers, where each virtual router has its own set of resources and a routing table. Each virtual router is further partitioned into virtual private networks for further controlling access to the network. The switch supports policy-based routing, in which specific routing paths are selected based on a domain name, a telephone number, and the like. The switch also provides tiered access to the Internet by defining quality-of-access levels for each incoming connection request. The switch may further support an IP routing protocol and architecture in which the layer two protocols are independent of the physical interface they run on. Furthermore, the switch includes generic forwarding interface software that hides the details of transmitting and receiving packets over different interface types.

Owner:ALCATEL LUCENT SAS
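
Per-virtual-router routing tables can be sketched with Python's standard `ipaddress` module: each virtual router performs a longest-prefix match over its own table only, so two partitions may even use overlapping address space. The router names, prefixes, and port labels are hypothetical.

```python
import ipaddress
from typing import Dict, Optional


class VirtualRouter:
    """One partition of the switch with its own routing table (prefix -> next hop)."""

    def __init__(self, routes: Dict[str, str]) -> None:
        self.routes = {ipaddress.ip_network(p): hop for p, hop in routes.items()}

    def lookup(self, dst: str) -> Optional[str]:
        """Longest-prefix match within this virtual router's table only."""
        addr = ipaddress.ip_address(dst)
        matches = [net for net in self.routes if addr in net]
        if not matches:
            return None
        return self.routes[max(matches, key=lambda net: net.prefixlen)]


# Two partitions share the 10.0.0.0/8 space without interfering.
switch = {
    "vr-a": VirtualRouter({"10.0.0.0/8": "port1", "10.1.0.0/16": "port2"}),
    "vr-b": VirtualRouter({"10.0.0.0/8": "port7"}),
}
```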

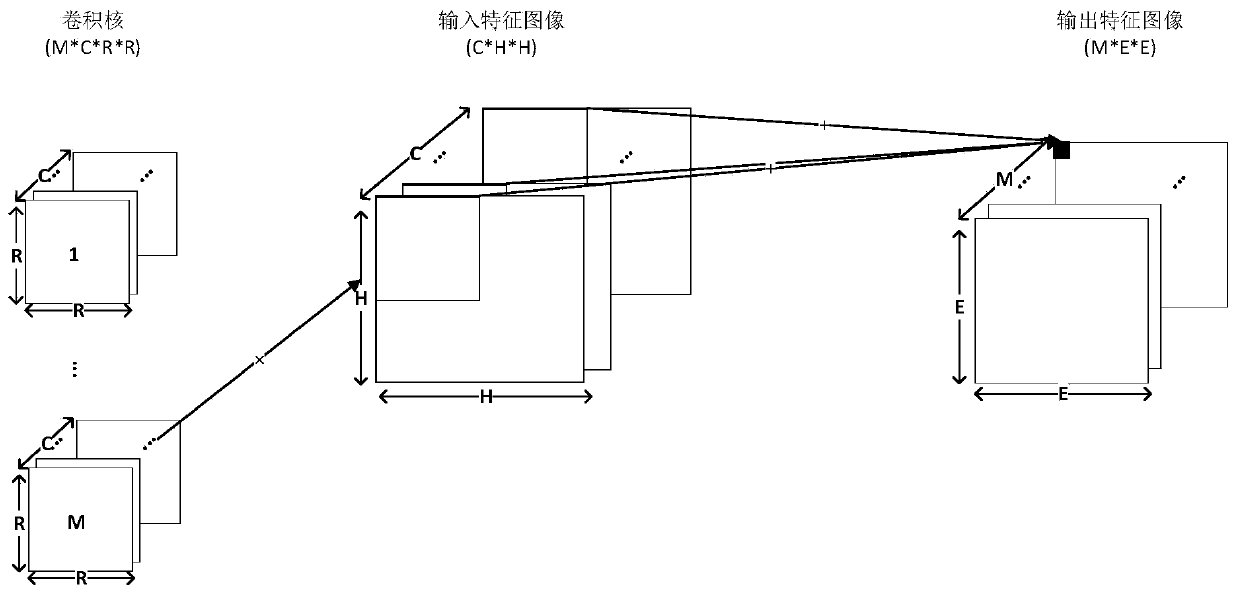

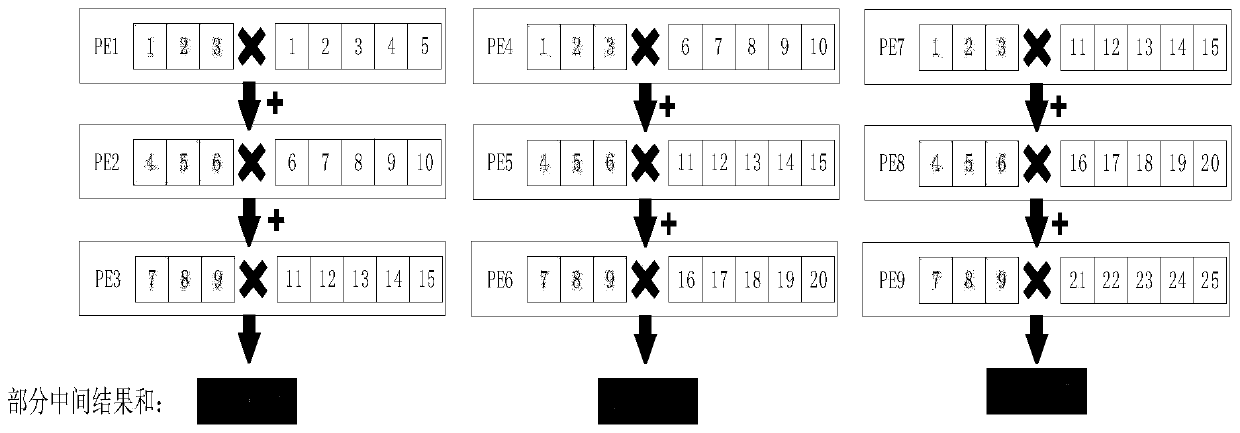

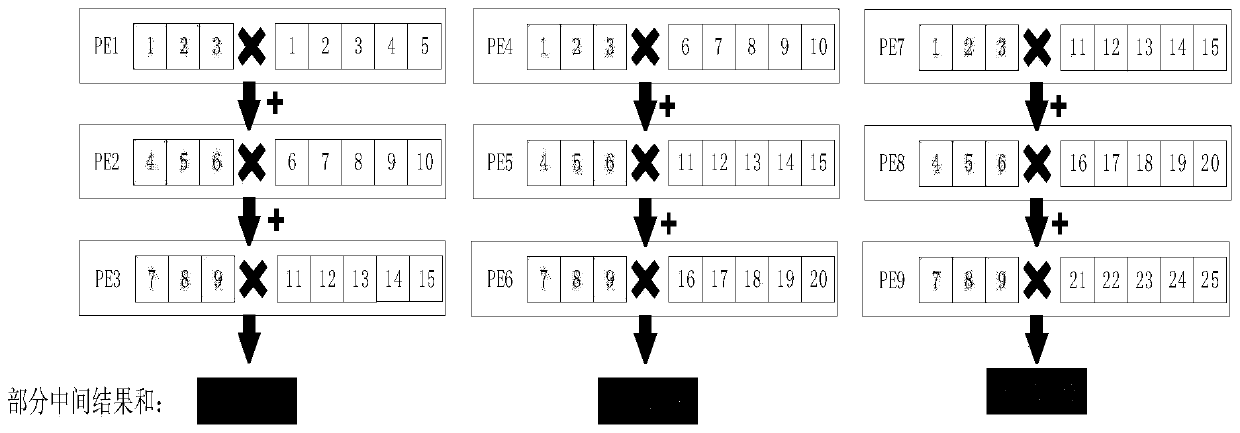

Convolutional neural network acceleration engine, convolutional neural network acceleration system and method

Active | CN111178519A | Tags: Reduce data access; Improve performance; Neural architectures; Physical realisation; Algorithm; Term memory

The invention discloses a convolutional neural network (CNN) acceleration engine, a CNN acceleration system, and a CNN acceleration method in the field of heterogeneous computing acceleration. In the engine, a physical PE matrix comprises a plurality of physical PE units that execute row convolution operations and the related partial-sum accumulation; an XY interconnection bus transmits input feature map data, output feature map data, and convolution kernel parameters from the global cache to the physical PE matrix, or transmits operation results generated by the physical PE matrix back to the global cache; and an adjacent interconnection bus transmits intermediate results between physical PE units in the same column. The system comprises a 3D-Memory in which a CNN acceleration engine is integrated into the memory controller of each Vault unit and completes a subset of the CNN computation task; the method optimizes the system layer by layer. According to the invention, the performance and energy efficiency of CNN processing can be improved.

Owner:HUAZHONG UNIV OF SCI & TECH
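
The abstract assigns row convolutions to PE units and accumulates partial sums between PEs in the same column. The sketch below shows why that decomposition is valid, assuming stride 1, no padding, and CNN-style cross-correlation: each output row is the sum of the 1D convolutions of K input rows with the K kernel rows. Function names are illustrative, and nothing here models the buses or the 3D-Memory vaults.

```python
from typing import List

Matrix = List[List[float]]


def row_conv(inp_row: List[float], ker_row: List[float]) -> List[float]:
    """What one PE computes: a 1D sliding dot product of an input row and a kernel row."""
    k = len(ker_row)
    return [sum(inp_row[i + j] * ker_row[j] for j in range(k))
            for i in range(len(inp_row) - k + 1)]


def conv2d_by_rows(inp: Matrix, ker: Matrix) -> Matrix:
    """Valid 2D convolution assembled from row convolutions.

    Output row y accumulates the partial sums for kernel rows r = 0..K-1 applied to
    input rows y + r, mimicking accumulation along one column of PEs.
    """
    kh = len(ker)
    out = []
    for y in range(len(inp) - kh + 1):
        acc = row_conv(inp[y], ker[0])
        for r in range(1, kh):
            partial = row_conv(inp[y + r], ker[r])
            acc = [a + p for a, p in zip(acc, partial)]
        out.append(acc)
    return out


def conv2d_direct(inp: Matrix, ker: Matrix) -> Matrix:
    """Reference implementation used to check the row-wise decomposition."""
    kh, kw = len(ker), len(ker[0])
    return [[sum(inp[y + r][x + c] * ker[r][c] for r in range(kh) for c in range(kw))
             for x in range(len(inp[0]) - kw + 1)]
            for y in range(len(inp) - kh + 1)]
```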

Intelligent two-way telemetry

Inactive | US20060111796A1 | Tags: Reduces bottleneck; High ratio; Electric signal transmission systems; Level control; Telemetry Equipment; Engineering

Methods and apparatus, including computer program products, implementing and using techniques for intelligent two-way telemetry. A telemetry system in accordance with the invention includes one or more telemetry units that can receive and send data. The system further includes one or more controllers that include intelligence for processing data from the one or more telemetry units and for autonomously communicating with the one or more telemetry units. The controllers are located separately from a data processing center of the telemetry system such that the controllers alleviate data congestion going to and coming from the data processing center.

Owner:LANDISGYR INNOVATIONS INC

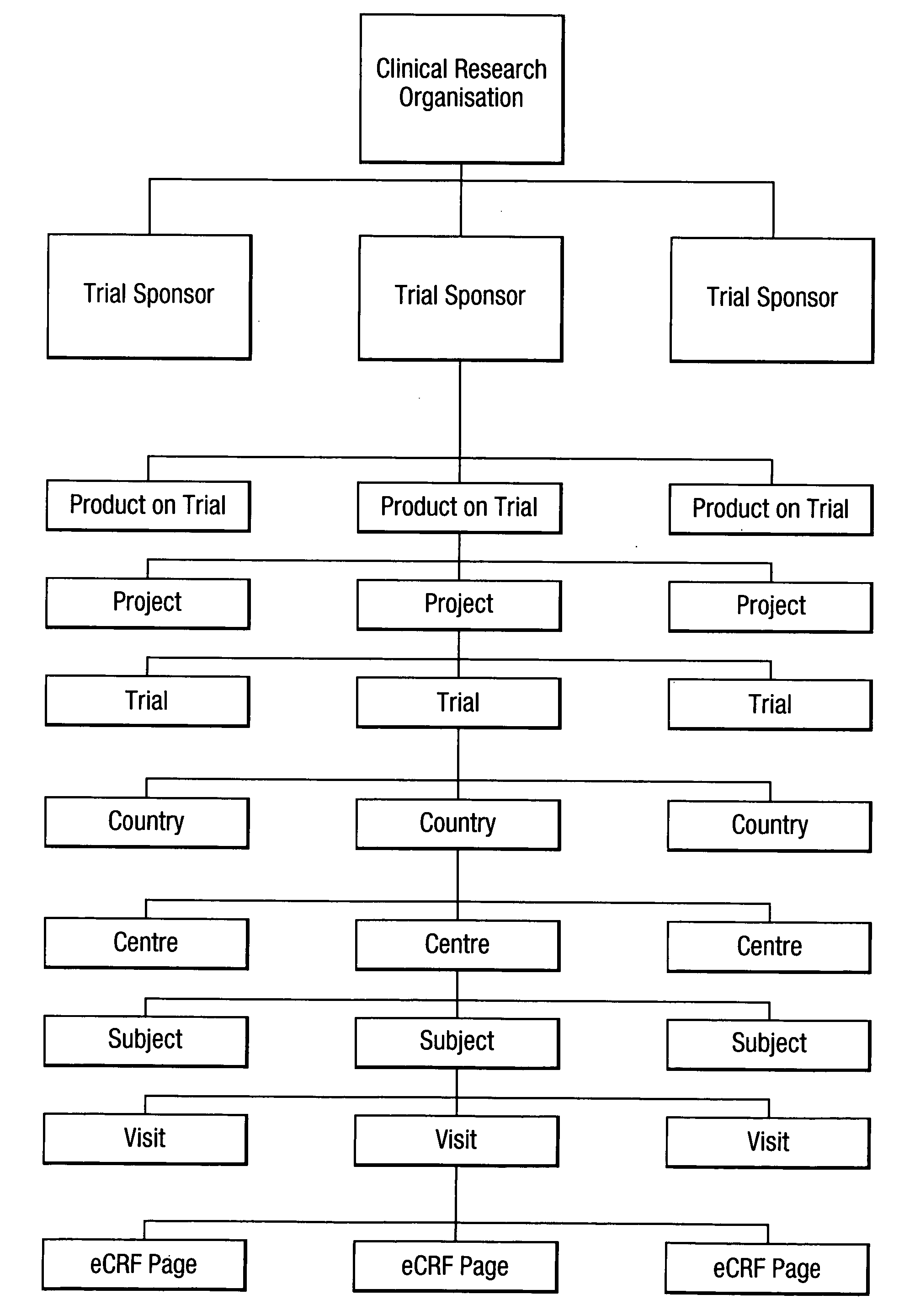

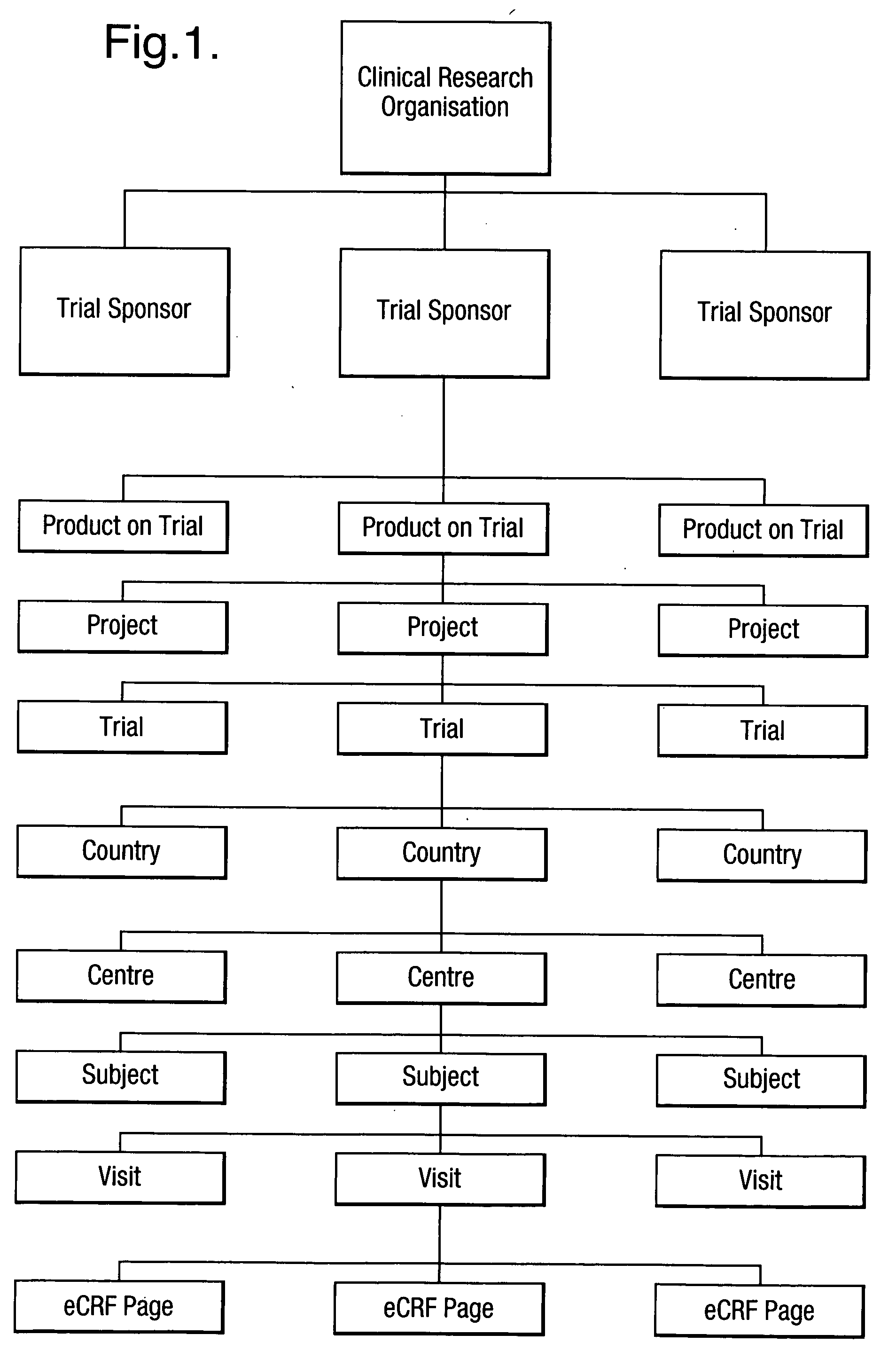

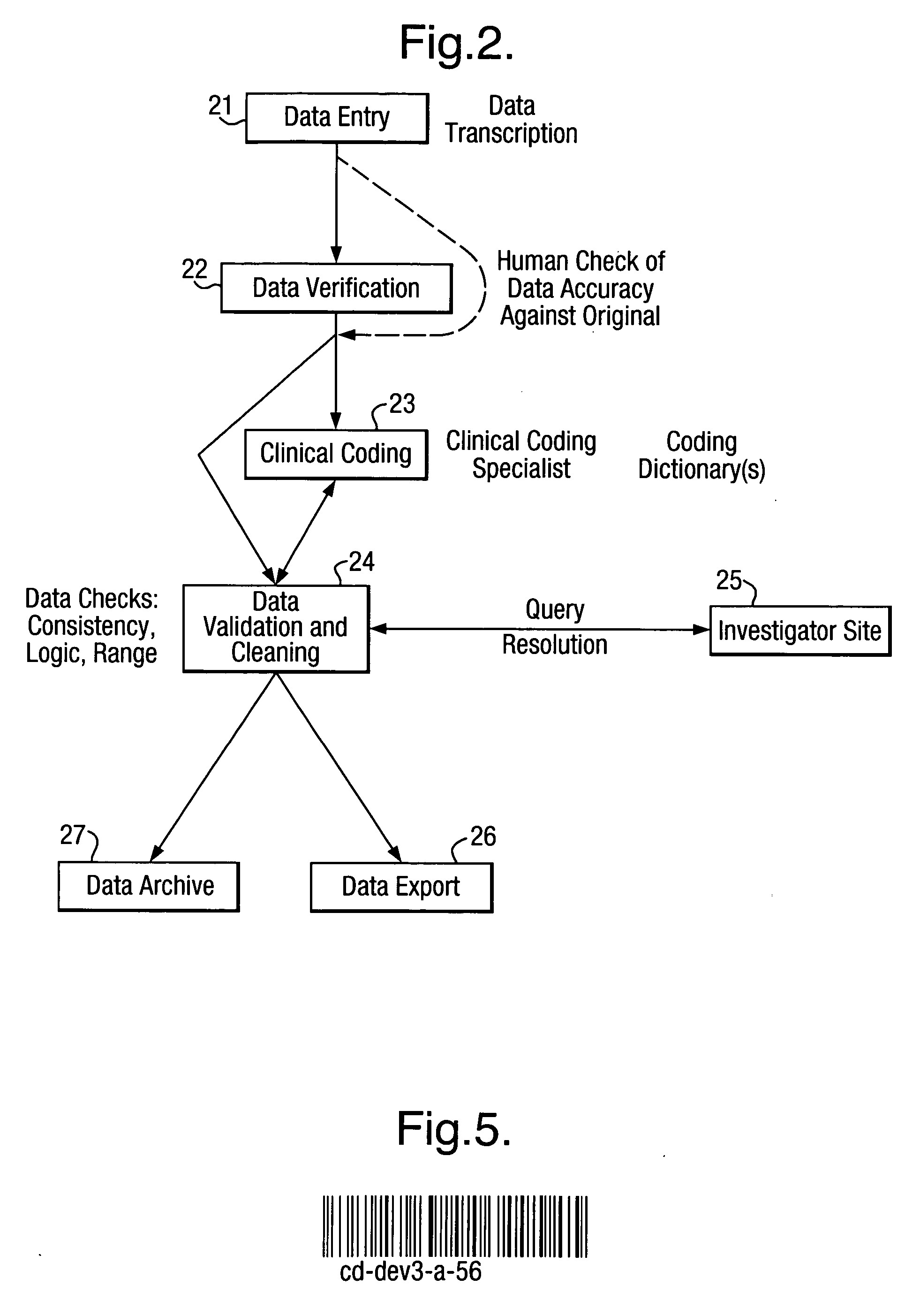

Database system

Inactive | US20040177090A1 | Tags: Easily exposed; Reduce distance; Object oriented databases; Special data processing applications; Data storing; Data store

A database system which uses a hierarchical structure of data nodes. The nodes are of two types: tag nodes, which form the hierarchical structure, and data-storing audit nodes, with each tag node having one or more audit nodes as children. Data entered into the database is stored in the audit nodes, and changes to the stored data are made by adding new versions of the audit nodes, so that the audit nodes form an audit trail for the database. Current data is viewed by having the tag node supply its most recently added child, and older versions of the database are similarly supplied via the tag nodes, which transparently present older versions of the time-stamped audit nodes. The tag nodes may store data that has been automatically deduced from the data in the audit nodes. Both the data and the data structure may be selectively replicated throughout the database using an efficient algorithm.

Owner:CMED GROUP
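
A minimal in-memory sketch of the tag-node/audit-node idea, assuming a counter in place of real timestamps: every write appends a new audit node instead of overwriting, so both the current value and any earlier version can be read from the same tag node. The class names and the `set`, `current`, and `as_of` methods are hypothetical.

```python
import itertools
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional


@dataclass
class AuditNode:
    value: Any
    version: int  # monotonically increasing stand-in for a timestamp


@dataclass
class TagNode:
    name: str
    children: Dict[str, "TagNode"] = field(default_factory=dict)  # hierarchy of tags
    audits: List[AuditNode] = field(default_factory=list)         # audit trail

    def set(self, value: Any, clock: "itertools.count") -> None:
        # Never overwrite: every change appends a new audit node.
        self.audits.append(AuditNode(value, next(clock)))

    def current(self) -> Optional[Any]:
        """The most recently added audit node holds the current data."""
        return self.audits[-1].value if self.audits else None

    def as_of(self, version: int) -> Optional[Any]:
        """The value as it stood at a given version, read from the audit trail."""
        older = [a for a in self.audits if a.version <= version]
        return older[-1].value if older else None
```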

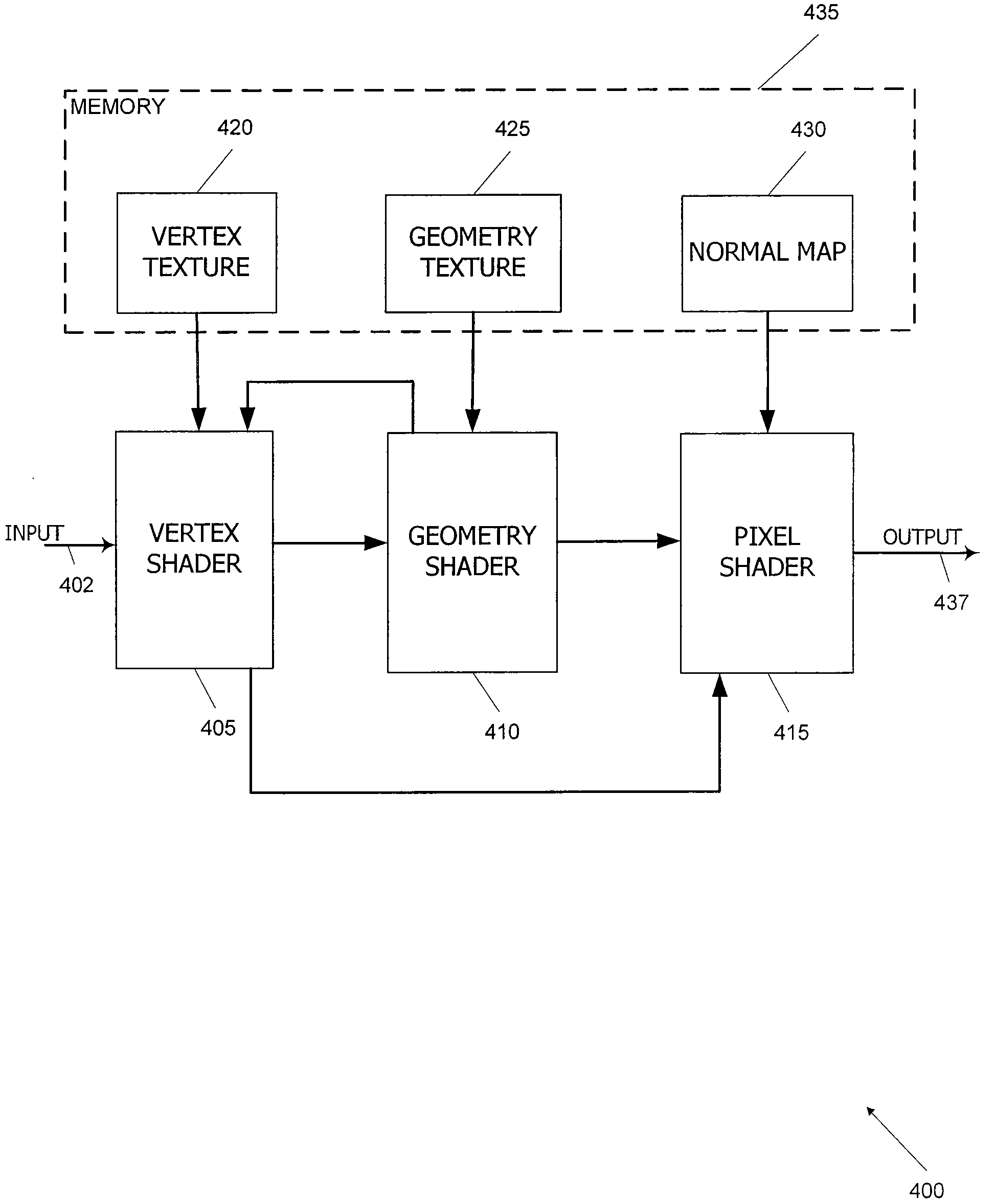

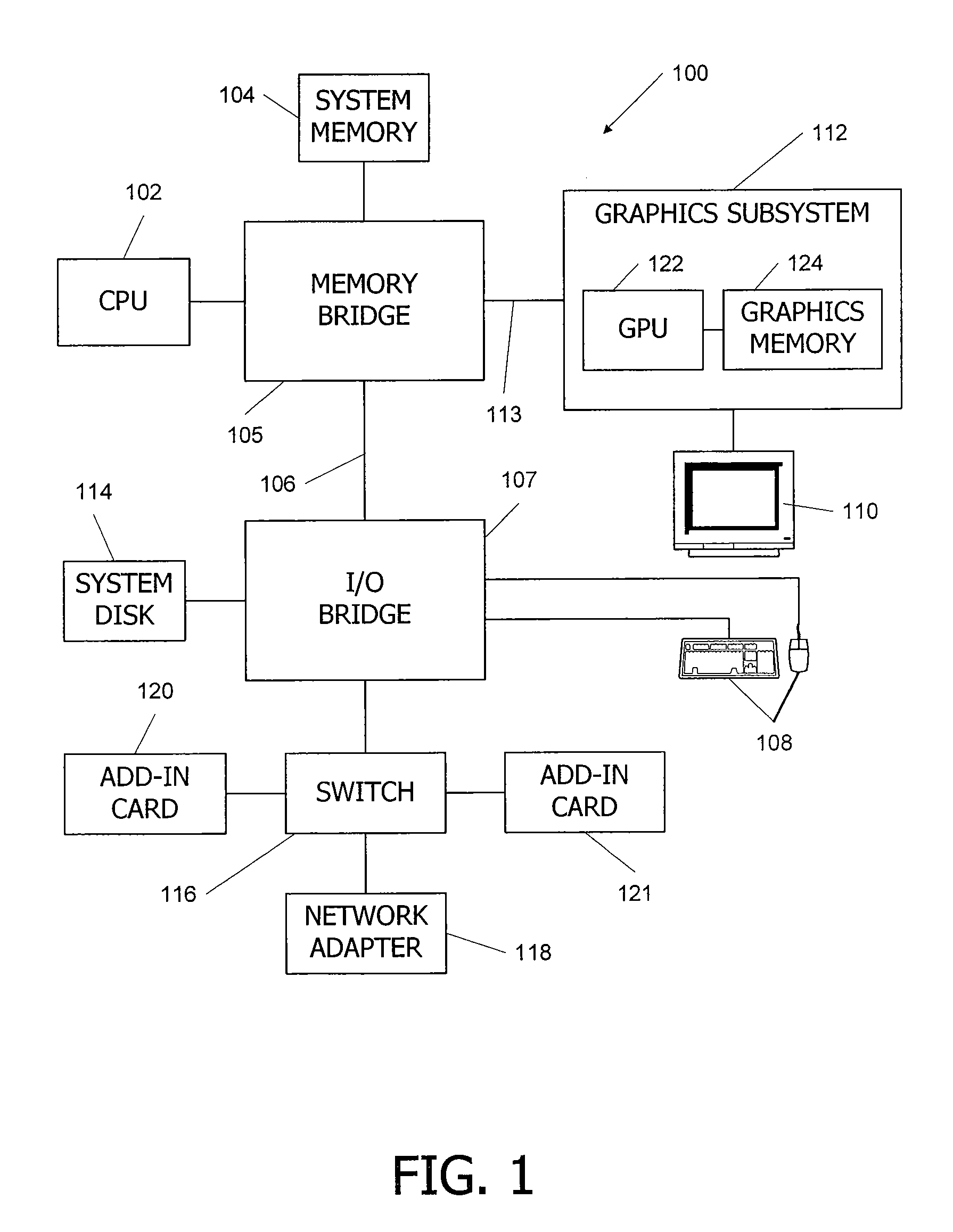

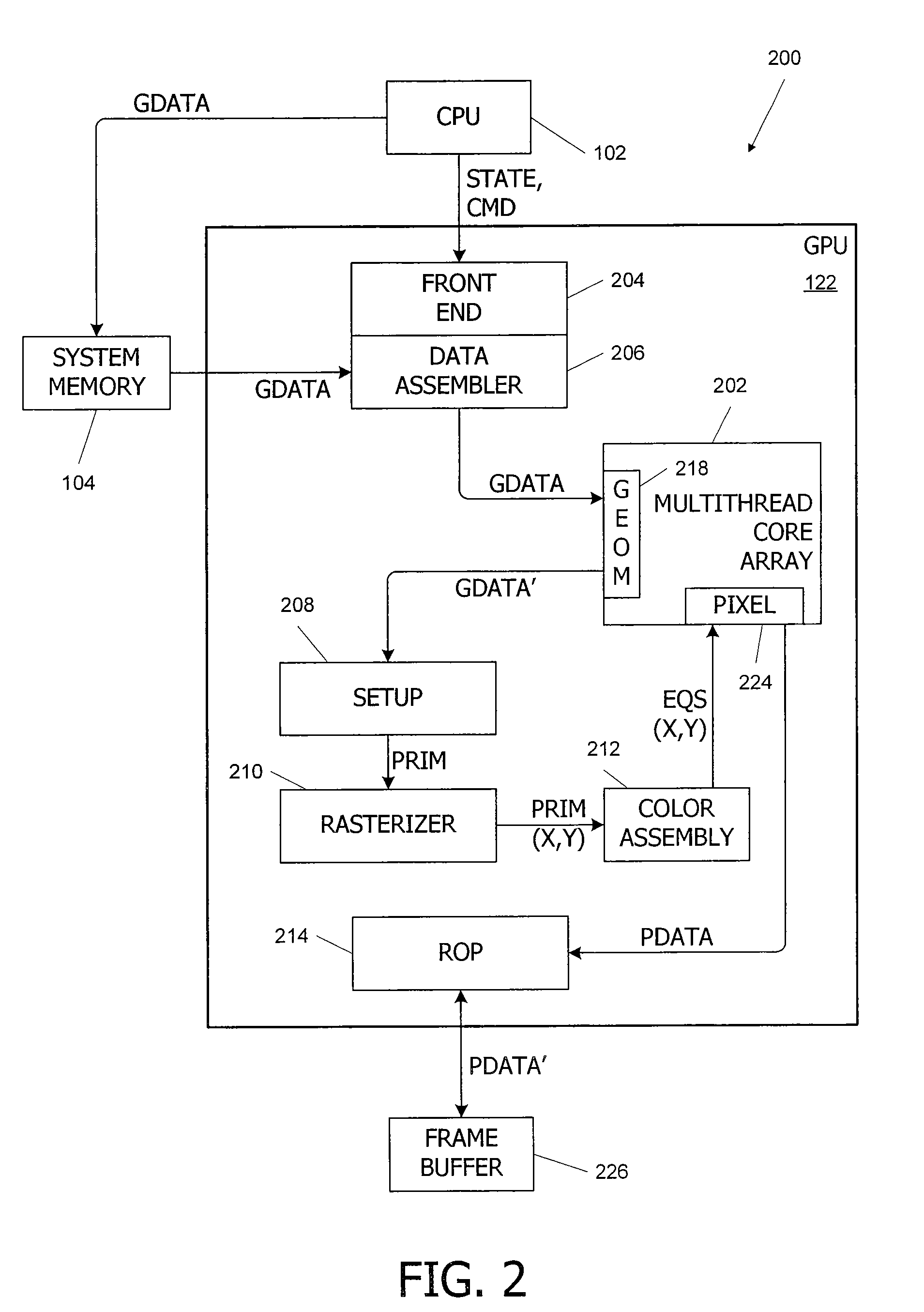

Decompression of vertex data using a geometry shader

Inactive | US20080266287A1 | Tags: Fewer deliveries; Improve throughput; 3D-image rendering; 3D modelling; Computational science; Geometry shader

A geometry shader of a graphics processor decompresses a set of vertex data representing a simplified model to create a more detailed representation. The geometry shader receives vertex data including a number of vertices representative of a simplified model. The geometry shader decompresses the vertex data by computing additional vertices to create the more detailed representation. In some embodiments, the geometry shader also receives rules data including information on how the vertex data is to be decompressed.

Owner:NVIDIA CORP
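
The abstract leaves the decompression rules open. A simple stand-in, sketched on the CPU for clarity rather than as shader code, is midpoint subdivision: each pass computes new vertices at edge midpoints and splits every triangle into four, with the hypothetical `levels` argument playing the role of the rules data.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def midpoint(a: Vec3, b: Vec3) -> Vec3:
    return ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2, (a[2] + b[2]) / 2)


def subdivide(tris: List[Triangle], levels: int) -> List[Triangle]:
    """Expand a coarse mesh: each level splits every triangle into four by
    inserting edge midpoints (a CPU stand-in for per-primitive shader work)."""
    for _ in range(levels):
        out: List[Triangle] = []
        for a, b, c in tris:
            ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
            out += [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]
        tris = out
    return tris
```

Each level multiplies the triangle count by four, which illustrates how a small vertex payload can expand into a much more detailed model on the GPU side.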

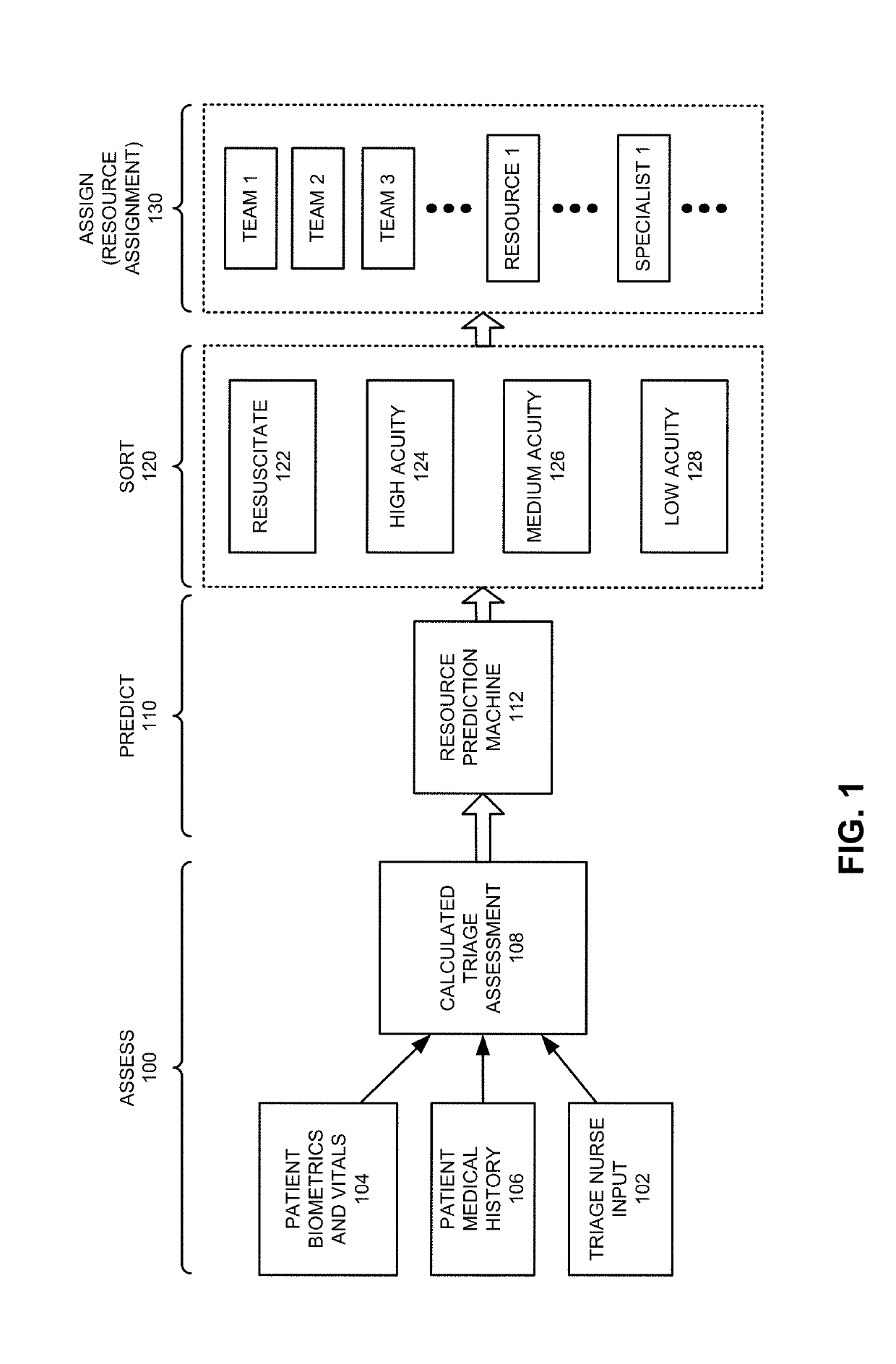

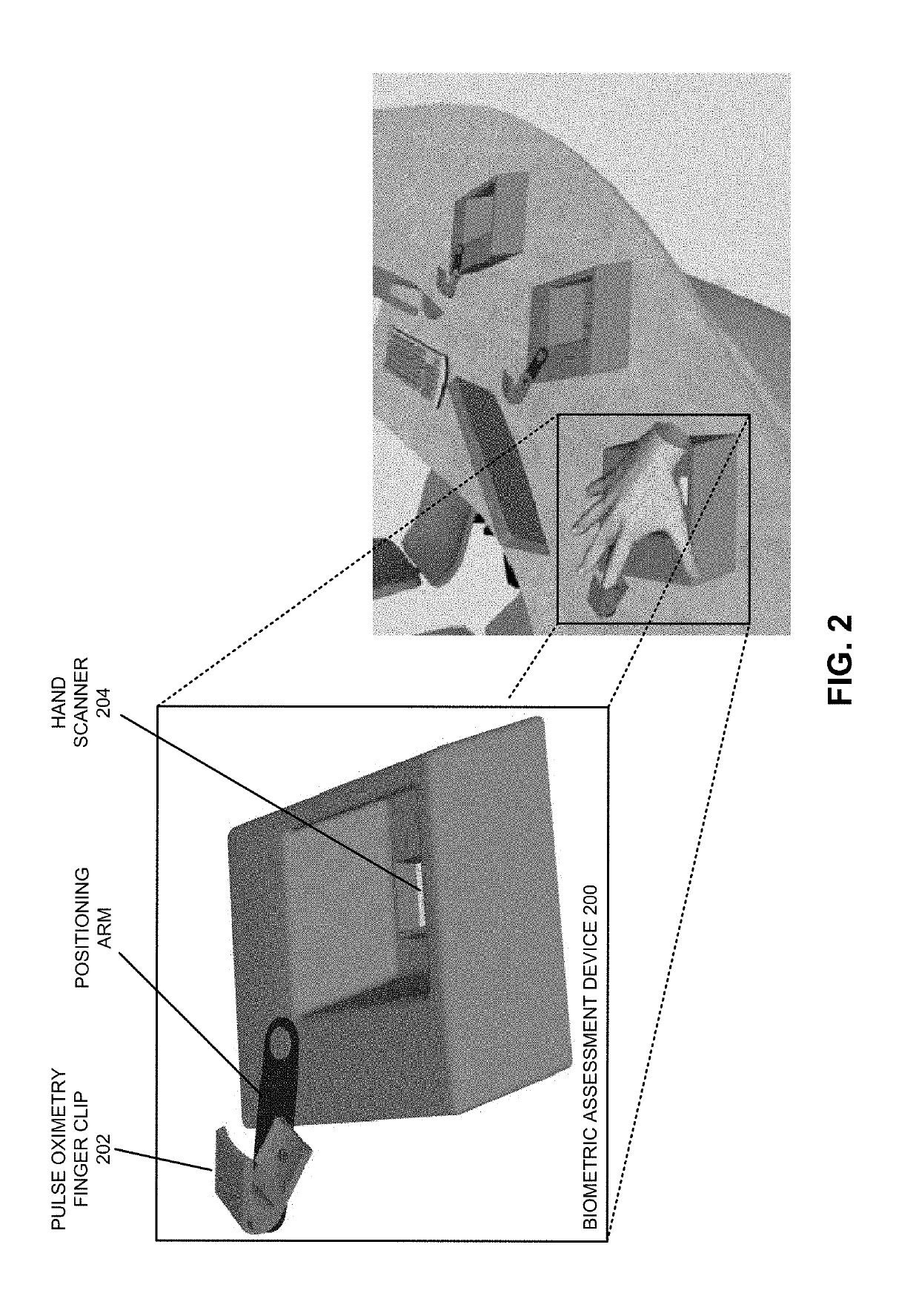

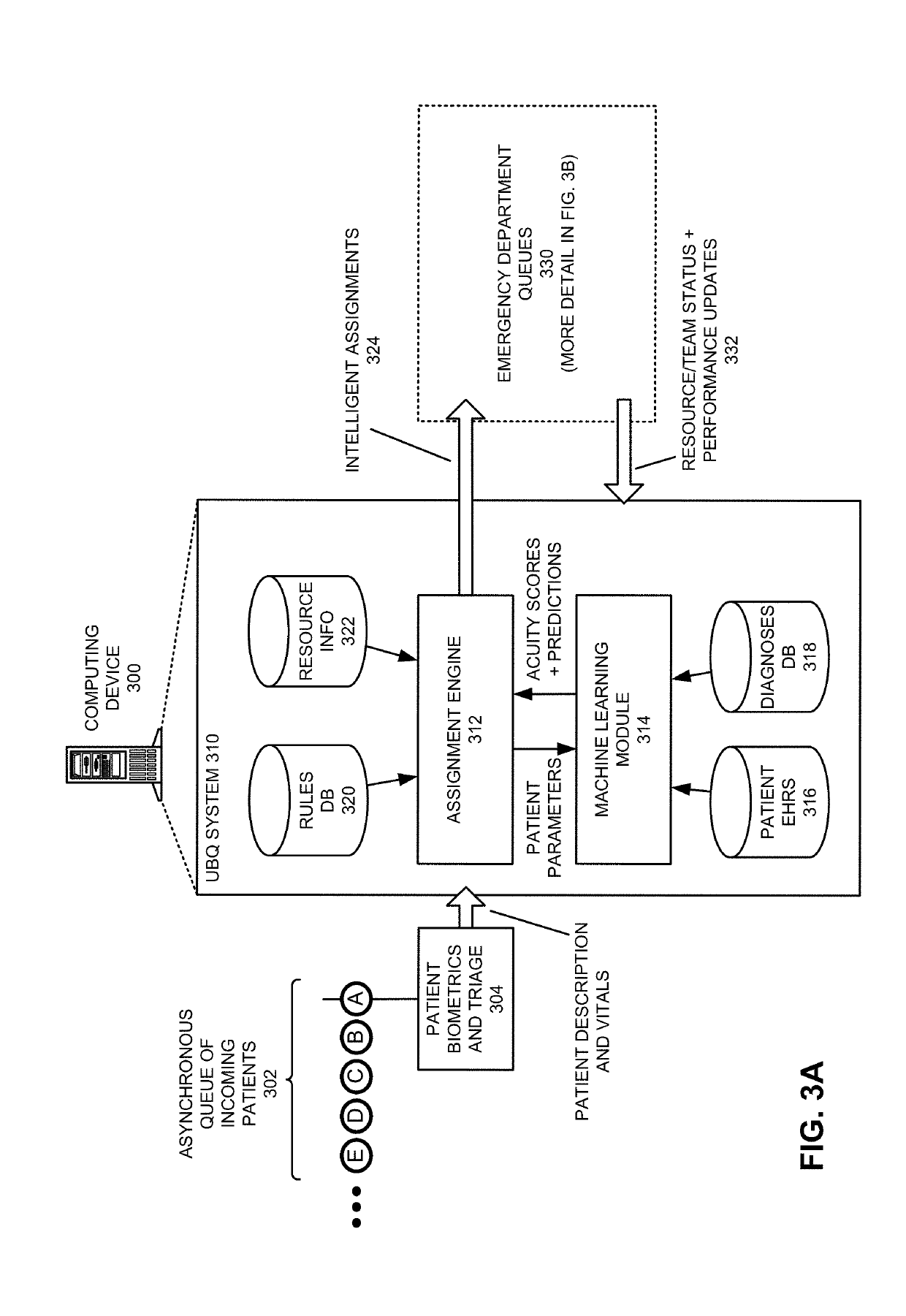

Optimizing emergency department resource management

Inactive | US20190180868A1 | Tags: Good care and efficiency; Reduce bottlenecks; Medical data mining; Health-index calculation; Systems analysis; Resource management

The disclosed embodiments disclose techniques for optimizing emergency department resource management. During operation, a system receives a set of parameters that is associated with a patient entering an emergency department. The system analyzes the set of parameters in a machine learning module to determine (1) a calculated acuity score that indicates an estimated severity of illness for the patient and (2) a set of workload predictions that predict a set of resources that will be needed to treat the patient in the emergency department. The system then uses the acuity score and the workload predictions to assign a set of predicted tasks that are associated with treating the patient into the work queues of the emergency department.

Owner:UBQ INC
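
Once the acuity score and the predicted tasks exist, placing tasks into work queues can be as simple as one priority queue per resource. This sketch assumes that a higher acuity score means a more severe case and takes the scores as given (the machine-learning module is out of scope); the function names and the resource and task names are hypothetical.

```python
import heapq
import itertools
from typing import Dict, List, Tuple

_arrival = itertools.count()  # tie-breaker so equal acuities keep arrival order


def enqueue_patient(queues: Dict[str, list], patient: str, acuity: float,
                    predicted_tasks: List[Tuple[str, str]]) -> None:
    """Push each predicted (resource, task) into that resource's work queue.

    heapq is a min-heap, so the acuity is negated to serve the most severe first.
    """
    for resource, task in predicted_tasks:
        heapq.heappush(queues[resource], (-acuity, next(_arrival), patient, task))


def next_task(queues: Dict[str, list], resource: str) -> Tuple[str, str]:
    """Pop the highest-acuity pending task for one resource."""
    _, _, patient, task = heapq.heappop(queues[resource])
    return patient, task
```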

SCSI-to-IP cache storage device and method

Active | US7275134B2 | Tags: Reduction of unnecessary traffic; Limited bandwidth; Multiple digital computer combinations; Data switching networks; Sequential access; Storage cell

A SCSI-to-IP cache storage system interconnects a host computing device or a storage unit to a switched packet network. The cache storage system includes a SCSI interface (40) that facilitates system communications with a host computing device or the storage unit, and an Ethernet interface (42) that allows the system to receive data from and send data to the Internet. The cache storage system further comprises a processing unit (44) that includes a processor (46), a memory (48) and a log disk (52) configured as a sequential access device. The log disk (52) caches data along with the memory (48) resident in the processing unit (44), wherein the log disk (52) and the memory (48) are configured as a two-level hierarchical cache.

Owner:BOARD OF GOVERNORS FOR HIGHER EDUCATION STATE OF RHODE ISLAND & PROVIDENCE PLANTATIONS

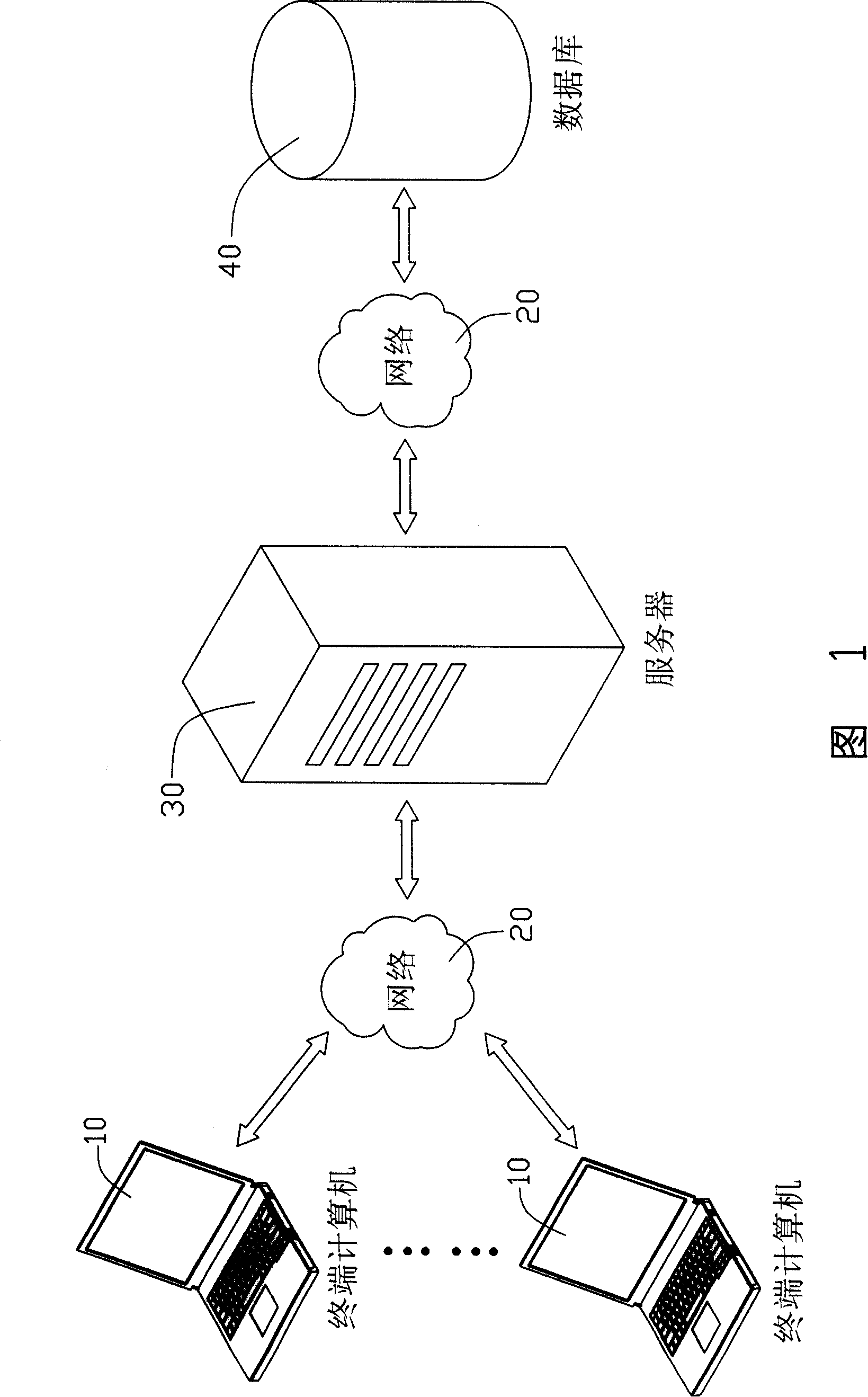

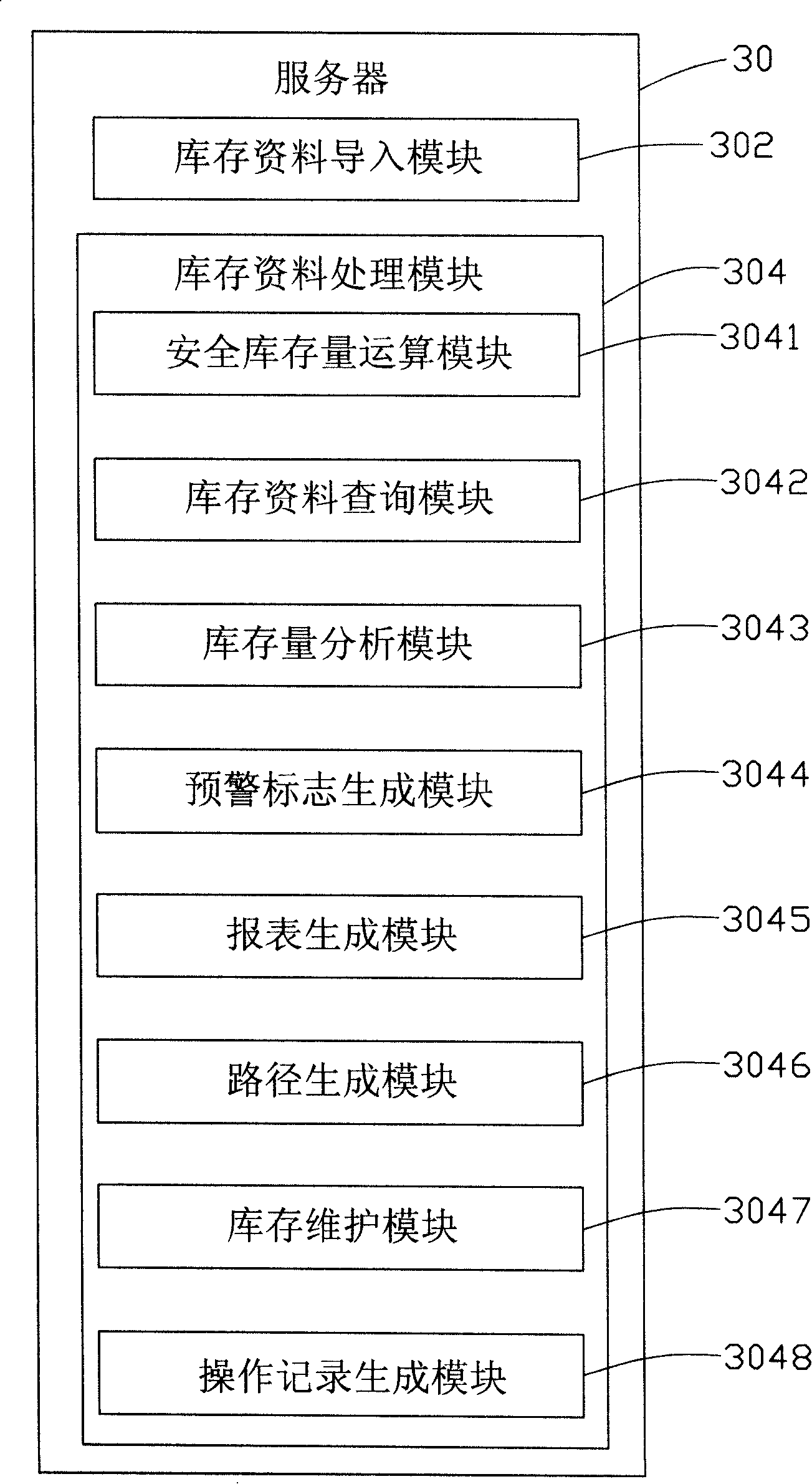

Inventory management system

Inactive | CN101206729A | Tags: Reduce bottlenecks; Avoid deficiencies; Special data processing applications; Information processing; Computer science

The invention provides an inventory management system which comprises at least one terminal computer, a database and a server. The terminal computer is used to perform management operations on inventory information; the database stores the inventory information; and the server is connected to the terminal computer and the database through a network. The server comprises an inventory information import module and an inventory information processing module. The inventory information import module imports all inventory information that is to be processed into the database. Using mathematical statistics on the utilization rate of each raw material, the inventory information processing module determines the standard deviation of raw material usage over a certain period, combines it with the safety factor of the raw material and the suppliers' average delivery time to forecast the safety stock for a certain future period, queries the current inventory, judges whether the current inventory is excessive or inadequate relative to the safety stock, produces an inventory state table with warning marks, and delivers the state table to the relevant external systems and the suppliers.

Owner:SHENZHEN FUTAIHONG PRECISION IND CO LTD
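
The safety-stock forecast combines a usage standard deviation, a safety factor, and the supplier lead time. The abstract does not give the formula; a common textbook form, assumed here, is SS = z · σ_d · √L, where σ_d is the standard deviation of daily usage, z is the safety factor, and L is the average lead time in days. The `max_stock` upper bound used to flag excess inventory is a hypothetical parameter.

```python
import math
import statistics
from typing import List


def safety_stock(daily_usage: List[float], safety_factor: float, lead_time_days: float) -> float:
    """Textbook safety stock z * sigma_d * sqrt(L) (an assumed formula).

    daily_usage: historical daily consumption of one raw material (at least two values)
    safety_factor: z, e.g. about 1.65 for roughly a 95% service level
    lead_time_days: the suppliers' average delivery time
    """
    sigma = statistics.stdev(daily_usage)  # sample standard deviation
    return safety_factor * sigma * math.sqrt(lead_time_days)


def stock_status(current: float, safety: float, max_stock: float) -> str:
    """Warning mark for the inventory state table."""
    if current < safety:
        return "INADEQUATE"
    if current > max_stock:
        return "EXCESSIVE"
    return "OK"
```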

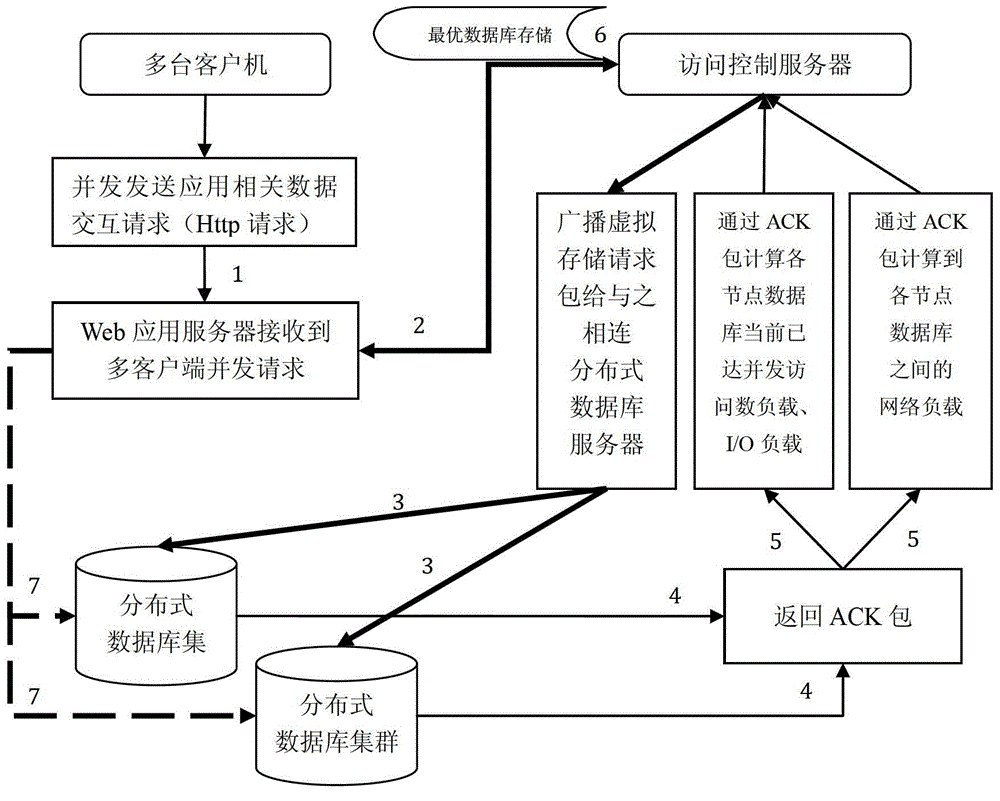

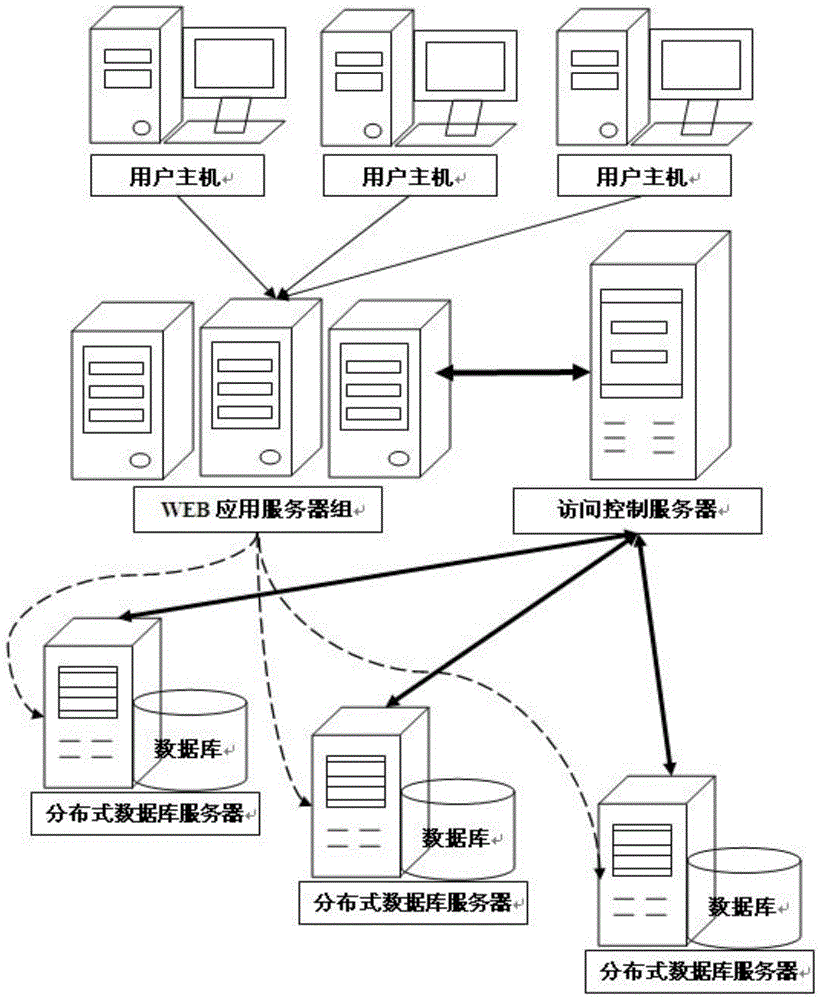

Distributed database concurrence storage virtual request mechanism

Active | CN103338252A | Tags: Improve QoS; Improve throughput; Transmission; Special data processing applications; Traffic capacity; Data stream

A system implementing a distributed database concurrent-storage virtual request mechanism comprises a client that sends storage requests, a web application server, an access control server, and a distributed database cluster. Based on this system, a distributed database storage management scheme is proposed that takes into account the request traffic generated by concurrent users in order to store user data flows in a reasonable, distributed manner: within a cloud database framework, each user data flow is routed to the most suitable database cluster for storage according to the current network load and the current concurrent-connection load of the databases. The mechanism manages the distributed database cluster through the access control server, calculates an optimized storage strategy from the ACK (acknowledgement) packets fed back by the database clusters, and completes the final data storage through a corresponding flexible algorithm.

Owner:NANJING UNIV OF POSTS & TELECOMM
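
Cluster selection from the current network load and concurrent-connection load can be sketched as a weighted score. The linear weighting, the `w_net` parameter, and the field names are assumptions standing in for the unspecified "flexible algorithm".

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ClusterStatus:
    name: str
    network_load: float   # 0..1, e.g. estimated from ACK feedback
    connections: int      # current concurrent database connections
    max_connections: int


def choose_cluster(clusters: List[ClusterStatus], w_net: float = 0.5) -> str:
    """Route a user's data flow to the cluster with the lowest combined load."""
    def score(c: ClusterStatus) -> float:
        connection_load = c.connections / c.max_connections
        return w_net * c.network_load + (1 - w_net) * connection_load
    return min(clusters, key=score).name
```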

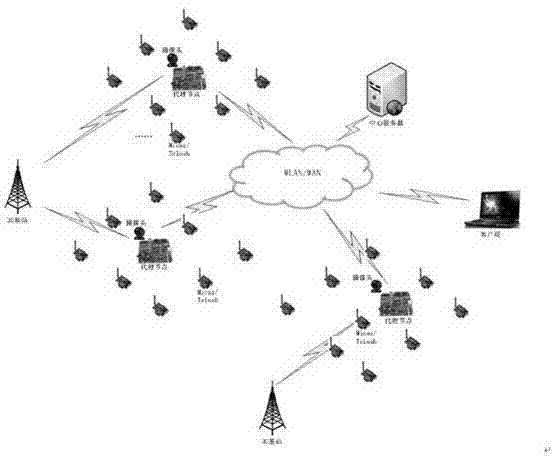

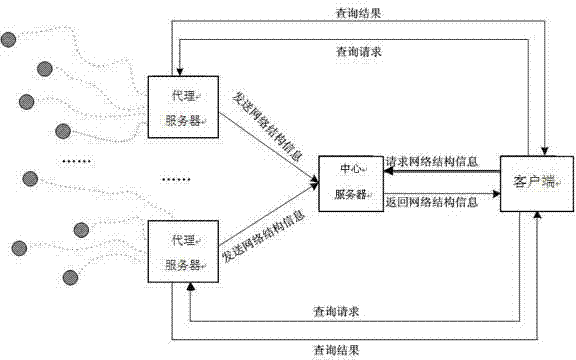

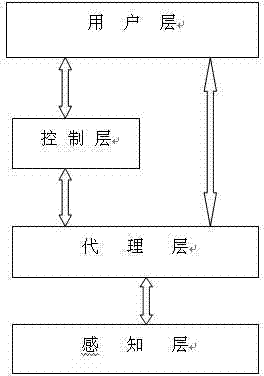

Data management system for distributed heterogeneous sensing network and data management method for data management system

Inactive | CN102932846A | Tags: Rich application requirements; Reduce bottlenecks; Network traffic/resource management; Network topologies; Sensor node; Data management

The invention discloses a data management system for a distributed heterogeneous sensing network and a data management method for the data management system. The heterogeneous sensor network is managed with a multi-layer architecture, so that the whole sensing network is convenient and easy to deploy and adjust. Furthermore, the system stores the data acquired by wireless sensor nodes in a distributed manner, which solves the bottleneck problem of centralized data processing and greatly improves data processing efficiency. The large quantities of data acquired by camera sensing nodes are quickly transmitted over a wireless local area network, meeting client requirements for image and video applications. The system provides clients with efficient data query and real-time topology display functions, meets client requirements for complex processing of the data acquired by the sensor nodes, and promptly reports the running state of the whole sensor network to the client.

Owner:NANJING UNIV

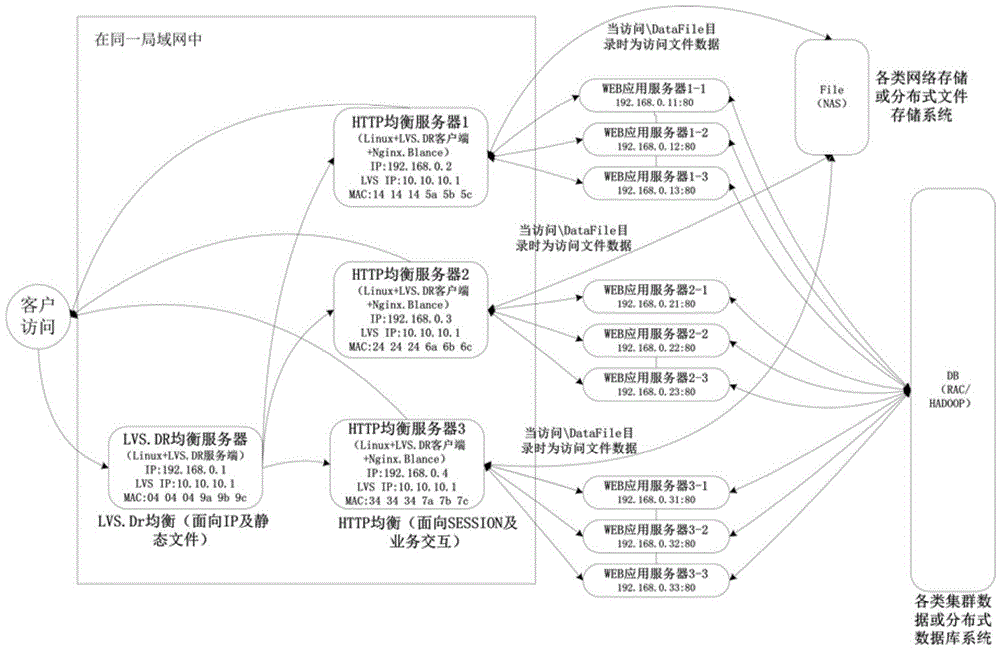

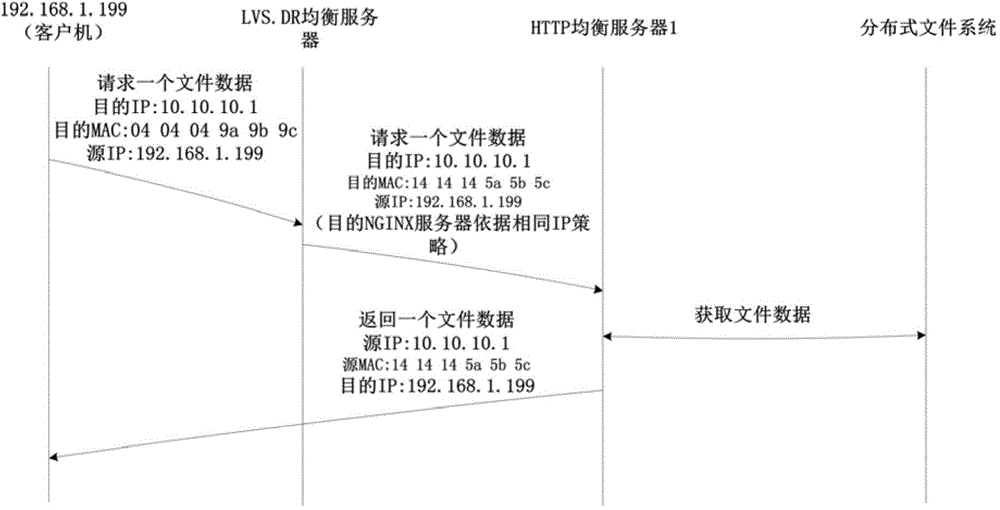

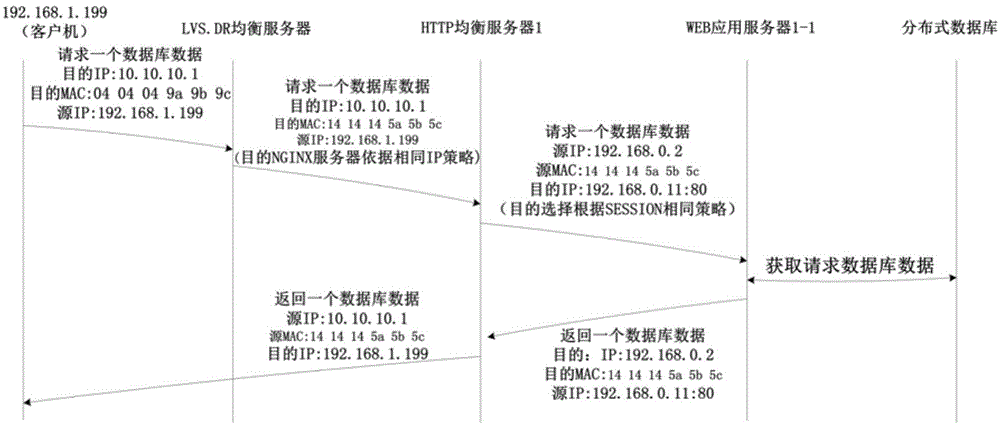

Combined equalizing method based on large-scale website

The invention discloses a combined equalizing (load-balancing) method for a large-scale website. The method comprises the following steps: classifying the information of the comprehensive large-scale website into data files and database data; balancing the data files through LVS-DR (Linux Virtual Server Direct Routing) and performing IP binding on the IPVS (IP Virtual Server) module to achieve an IP-level balancing strategy; meanwhile, accessing files locally in an independent folder through NGINX so that data files are returned directly to the client, which solves the network bottleneck problem of the load-balancing device; and reverse-proxying the database data through NGINX to balance it across the corresponding application servers, which obtain data support from a distributed database. Session values need not be shared through shared memory; each session is simply kept connected (sticky) to its corresponding application server, which reduces the potential bottleneck caused by session sharing.

Owner:JIANGSU AISINO TECH
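
The session-stickiness idea can be sketched as a stable hash from session ID to application server, with static data files short-circuited to local serving. The `/static/` prefix, the function names, and the server names are hypothetical; in an actual NGINX deployment, the upstream module's `hash` and `ip_hash` directives provide comparable affinity.

```python
import hashlib
from typing import List


def pick_server(session_id: str, servers: List[str]) -> str:
    """Sticky routing: the same session always maps to the same application server,
    so session state never has to be shared between servers."""
    digest = hashlib.sha256(session_id.encode("utf-8")).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


def route(path: str, session_id: str, servers: List[str]) -> str:
    """Serve static data files locally; proxy everything else to a sticky app server."""
    if path.startswith("/static/"):
        return "local"
    return pick_server(session_id, servers)
```

Plain modulo hashing remaps most sessions when the server list changes; consistent hashing is the usual remedy if servers are added or removed often.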

© 2025 PatSnap. All rights reserved.