38 results about How to "Reduce data access" patented technology

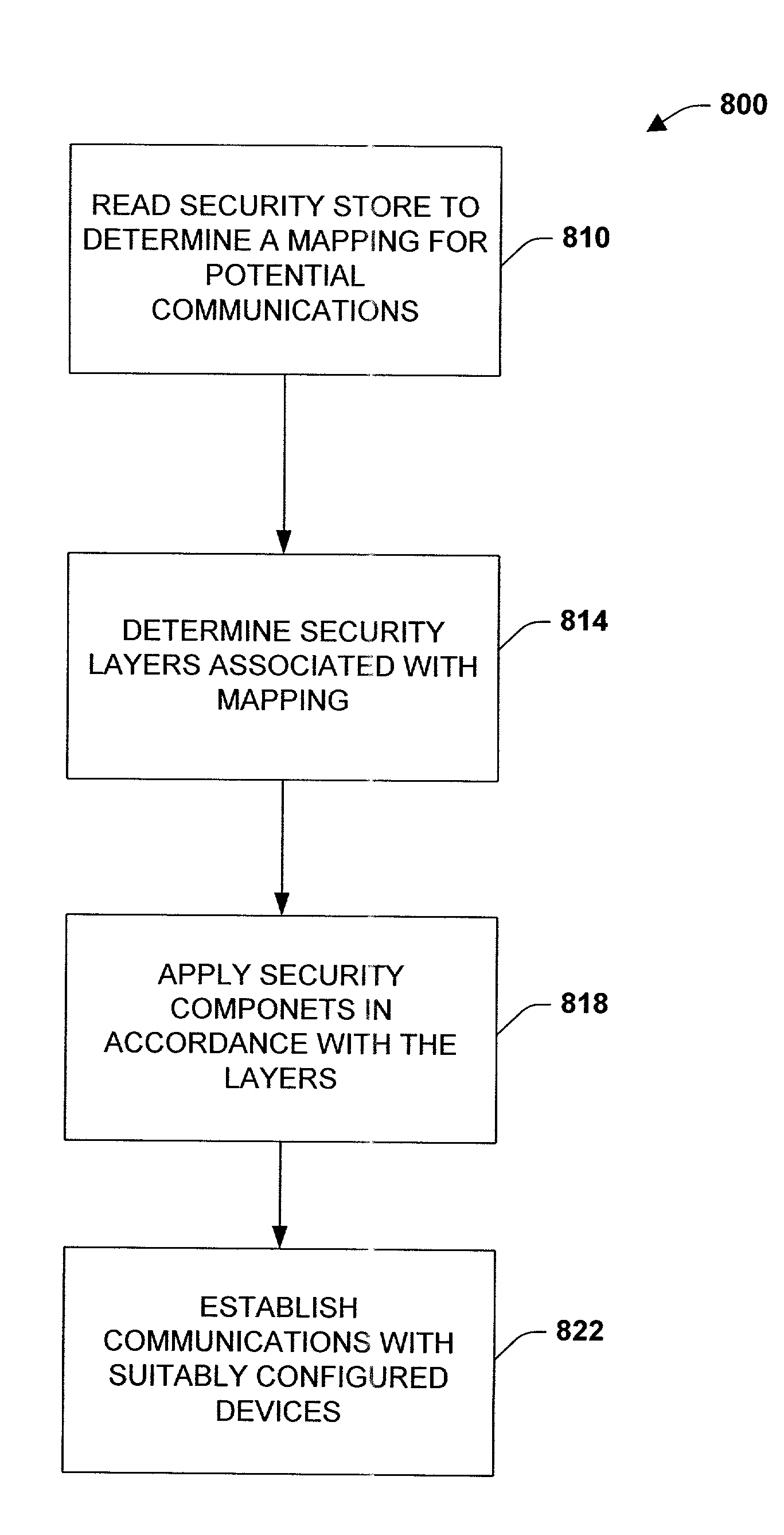

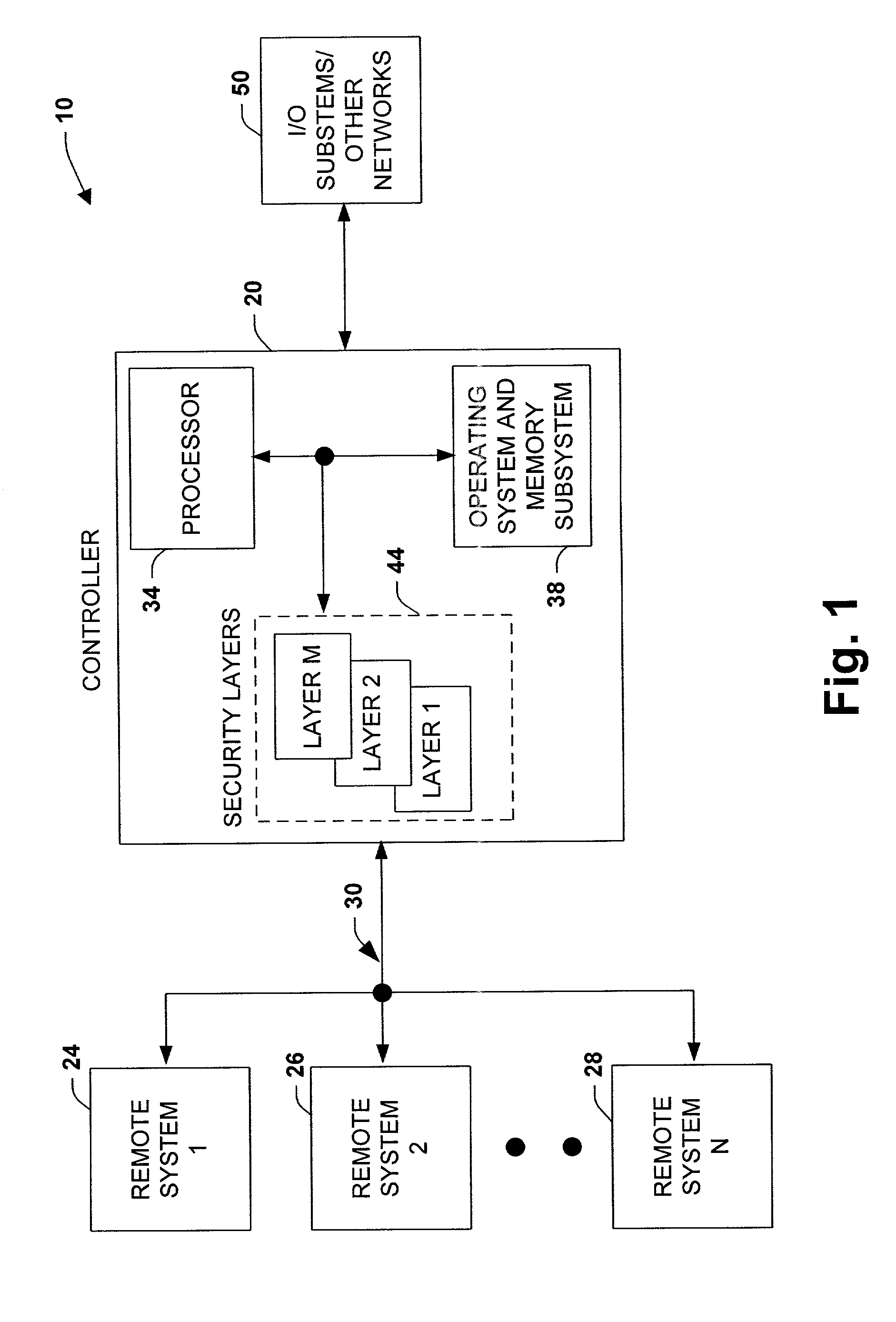

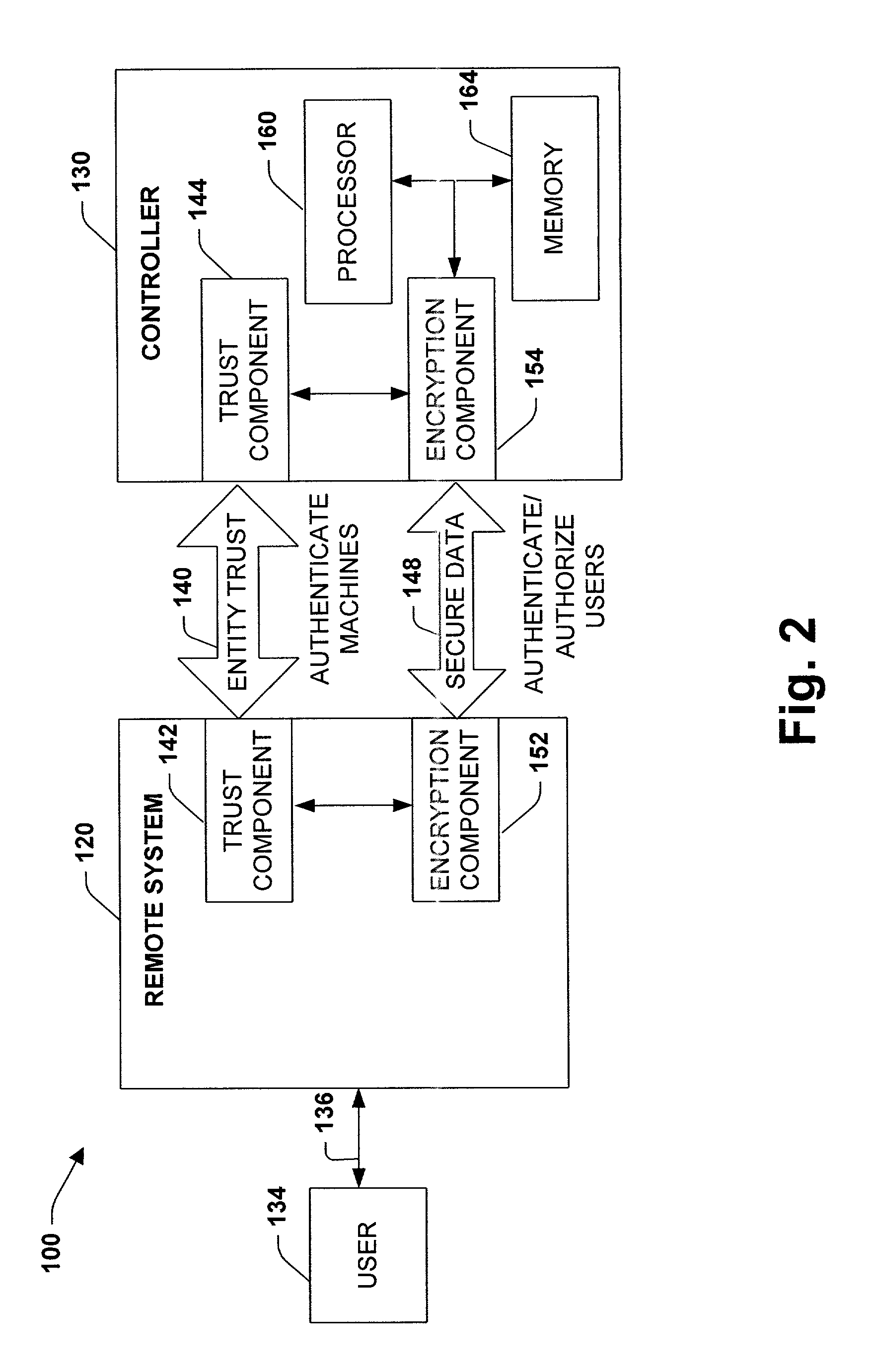

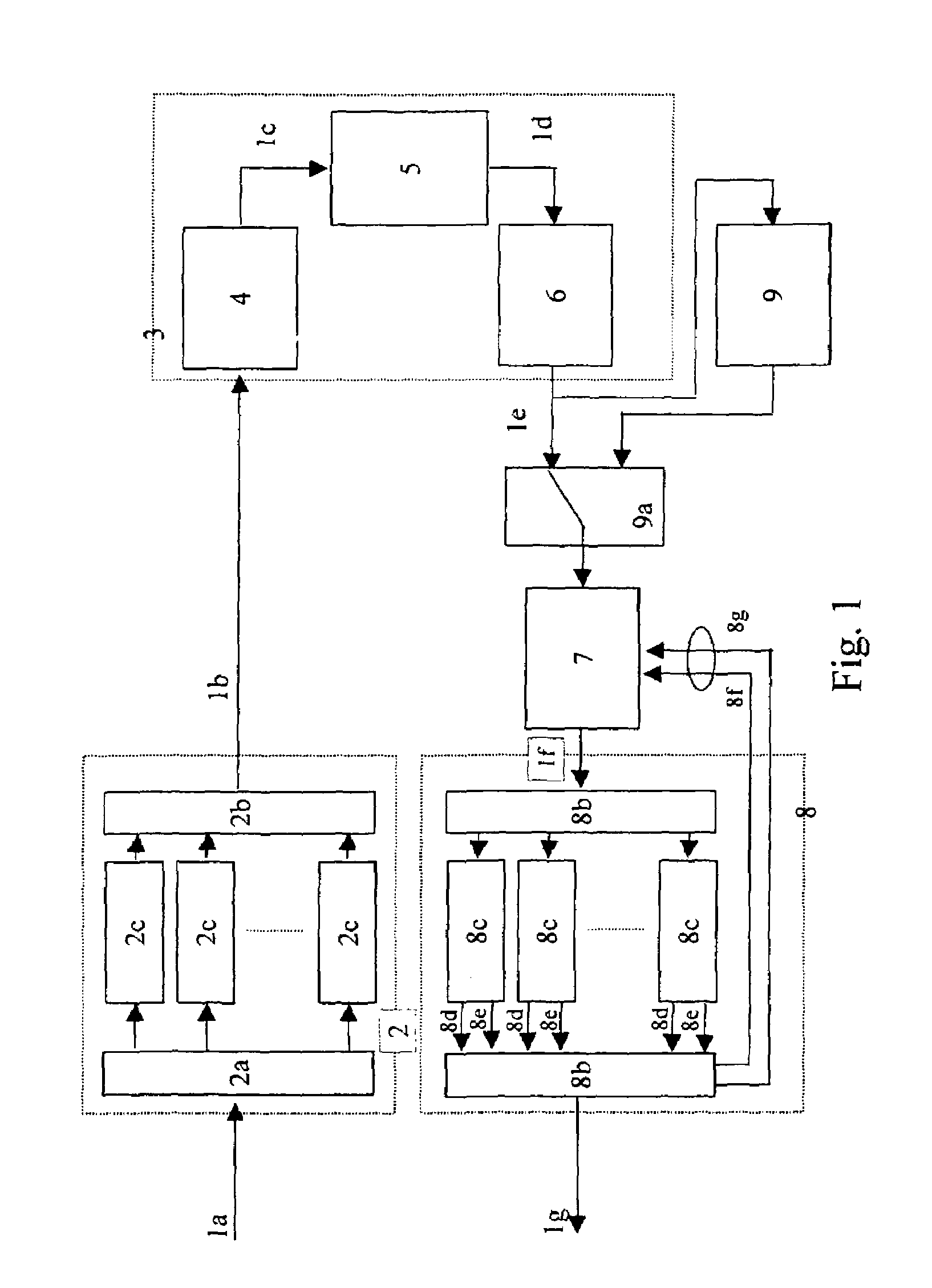

System and methodology providing multi-tier-security for network data exchange with industrial control components

Active | US7536548B1 | Well formed | Mitigates unauthorized data access | Digital data processing details | User identity/authority verification | Operational system | Data access

The present invention relates to a system and methodology facilitating network security and data access in an industrial control environment. An industrial control system is provided that includes an industrial controller to communicate with a network. At least one security layer can be configured in the industrial controller, wherein the security layer can be associated with one or more security components to control and/or restrict data access to the controller. An operating system manages the security layer in accordance with a processor to limit or mitigate communications from the network based upon the configured security layer or layers.

Owner:ROCKWELL AUTOMATION TECH

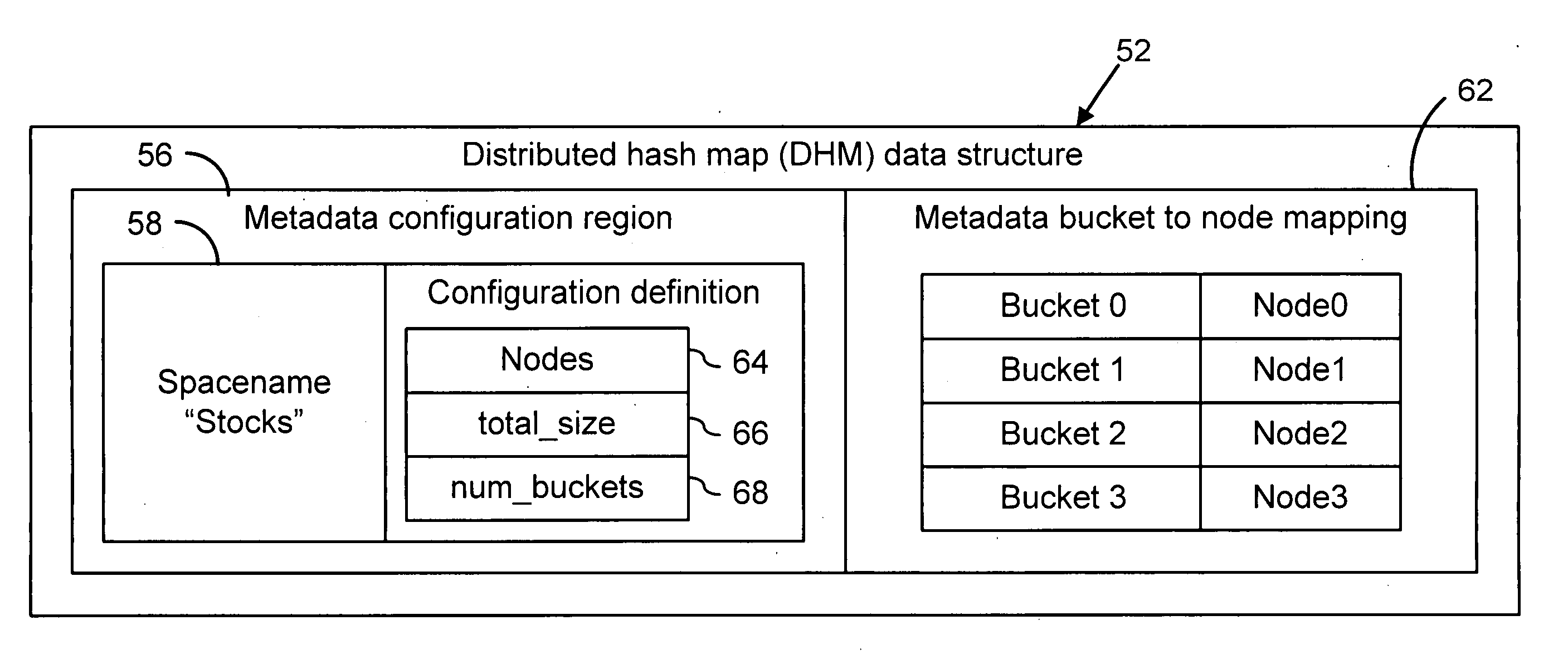

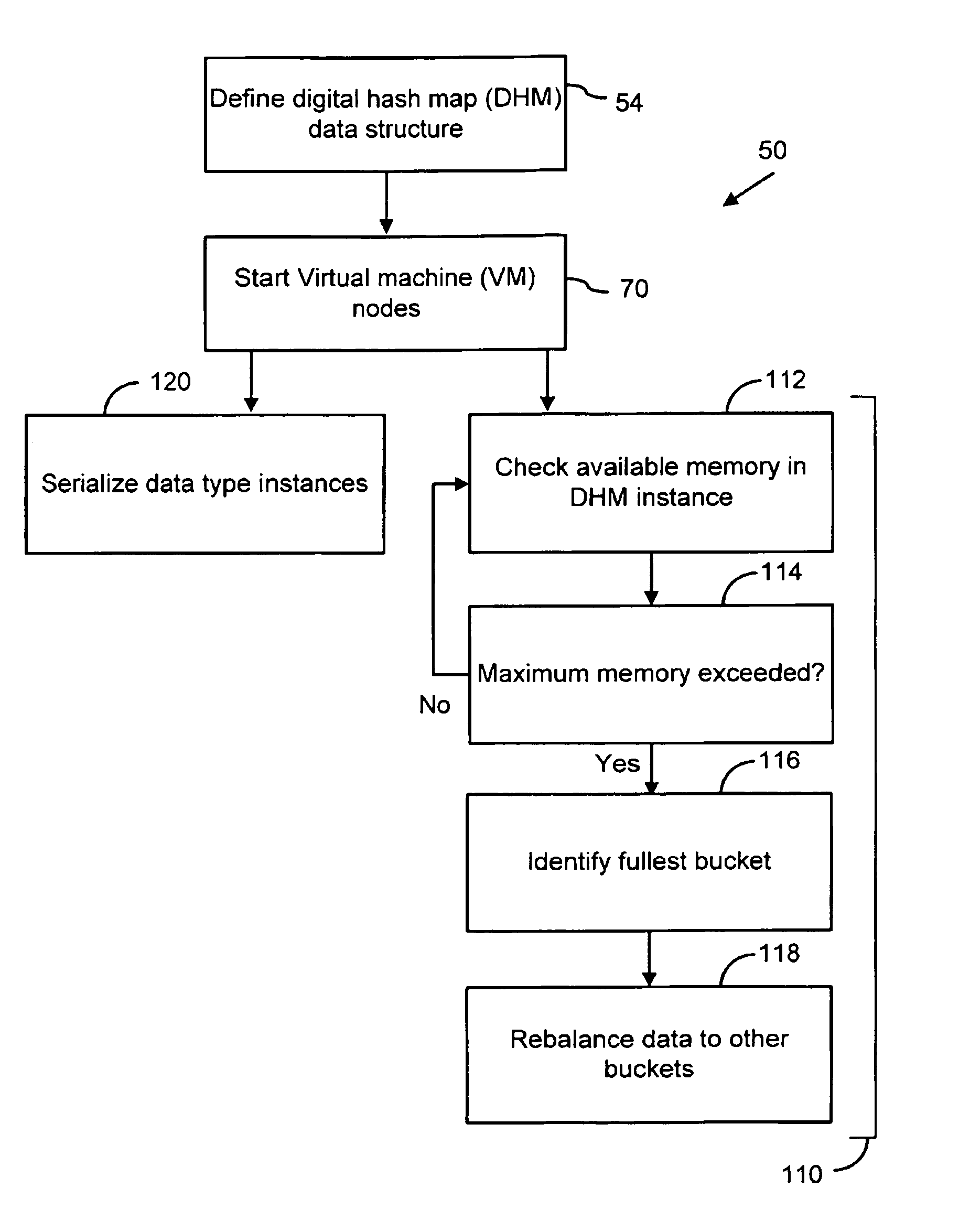

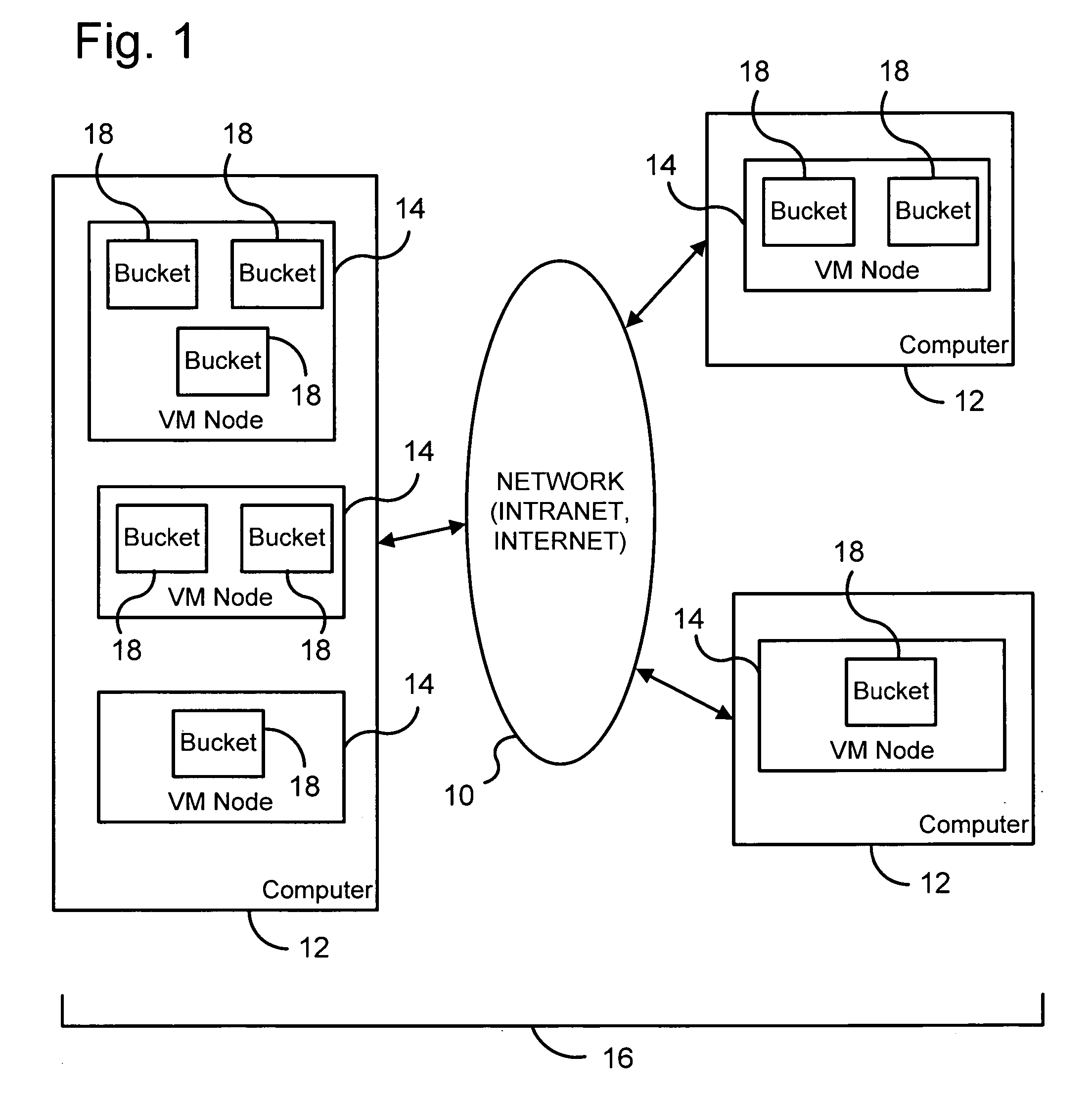

Distributed data management system

Active | US20060277180A1 | Improve scalability | Reduce performance | Digital data information retrieval | Digital data processing details | Extensibility | Data management

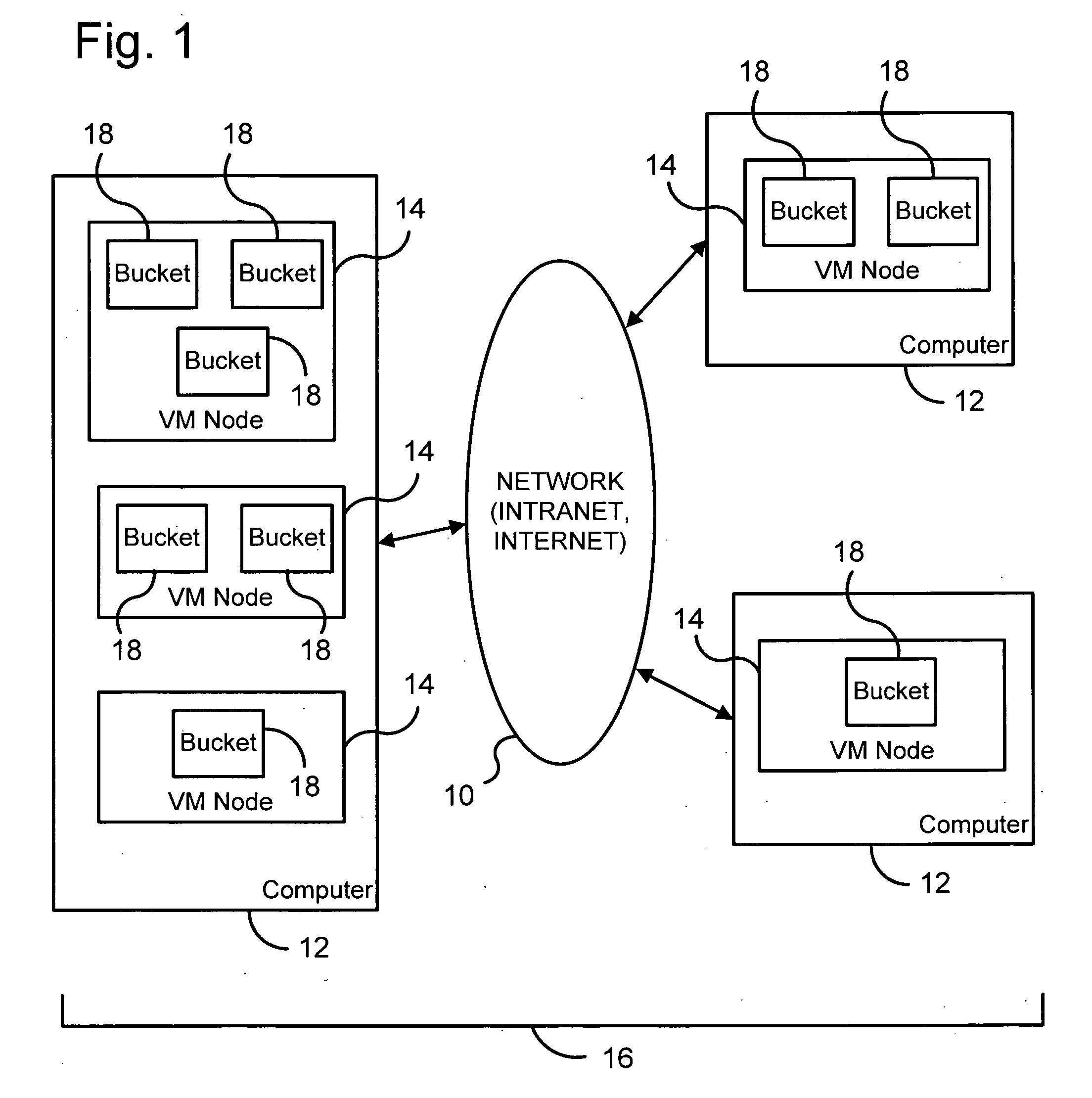

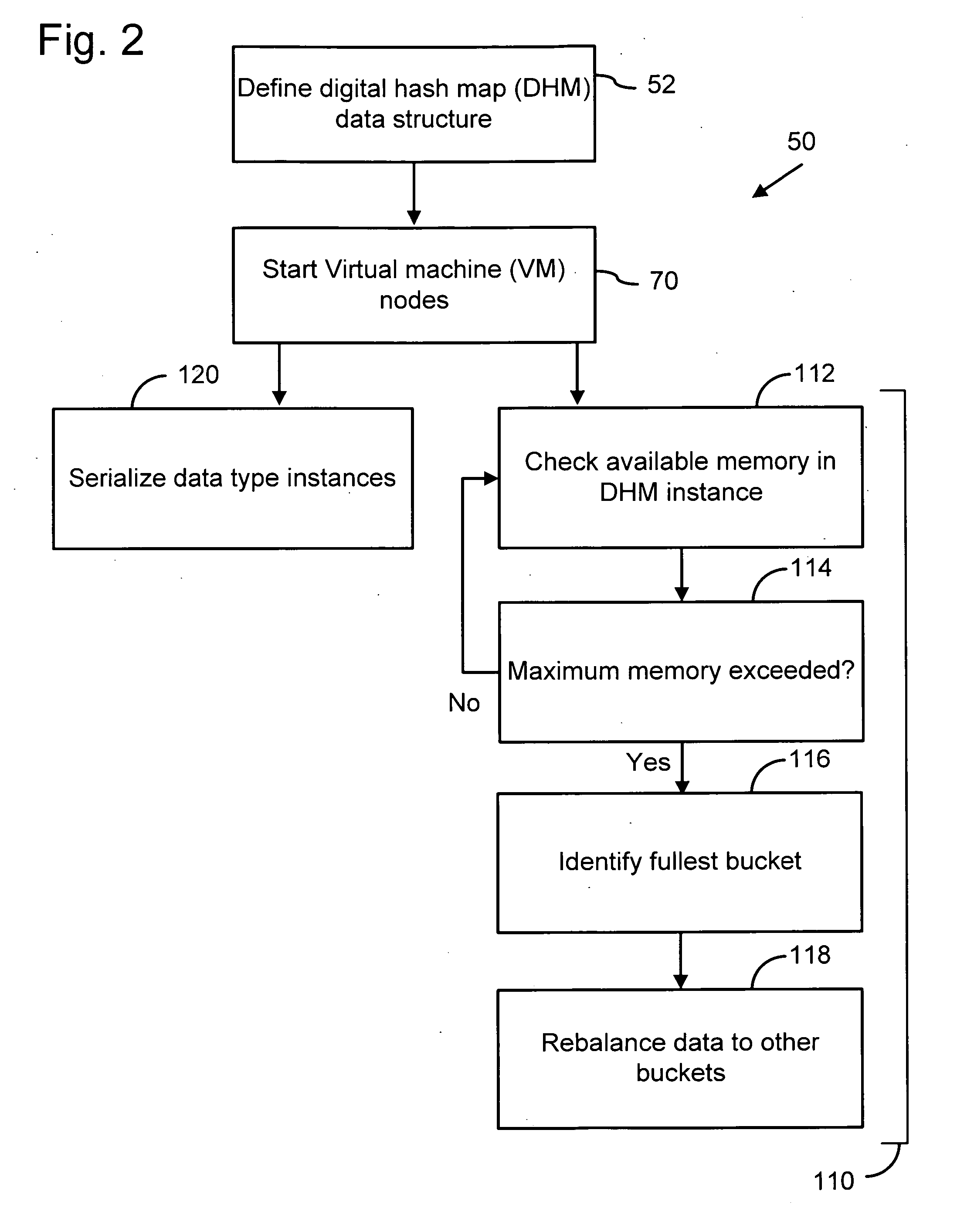

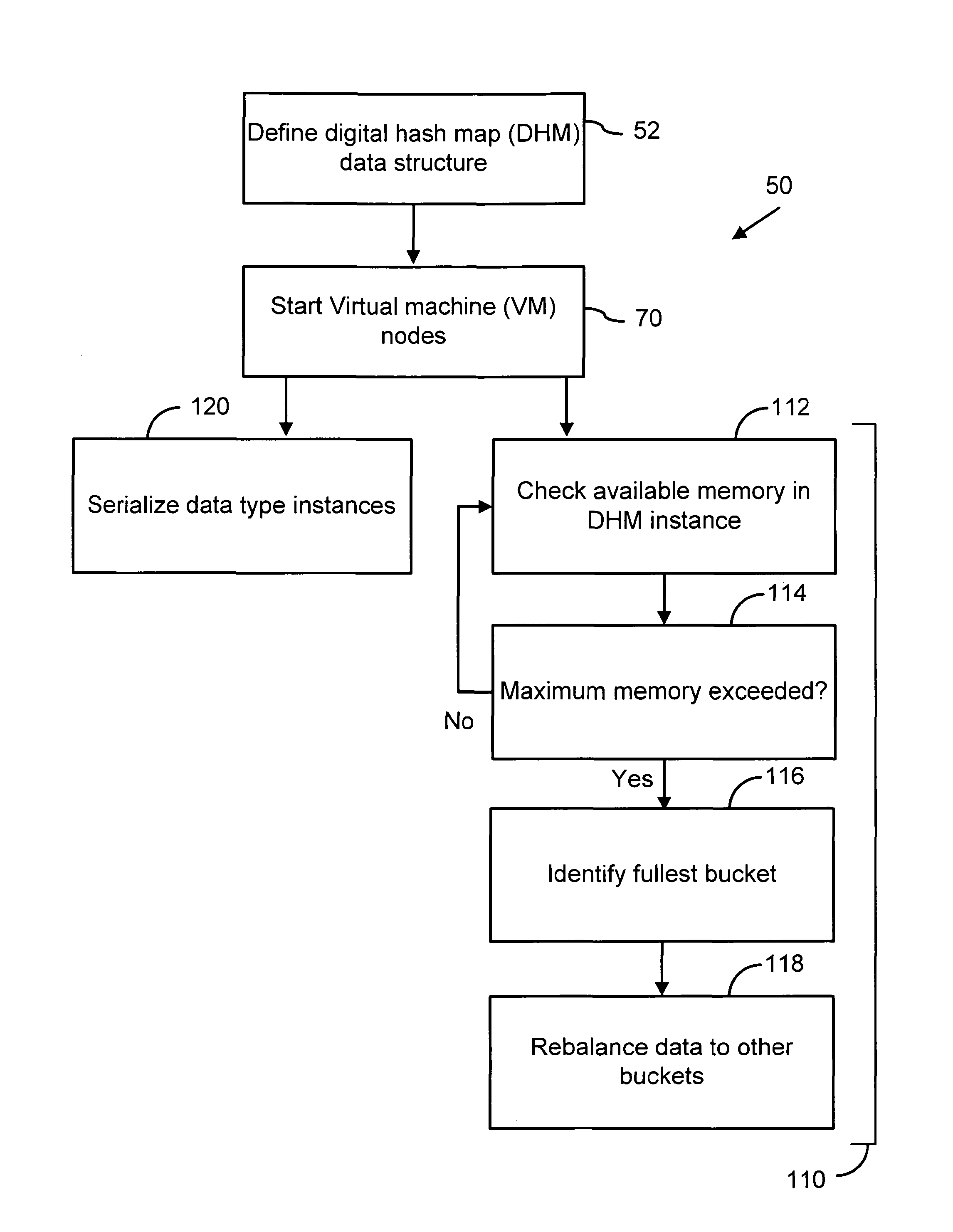

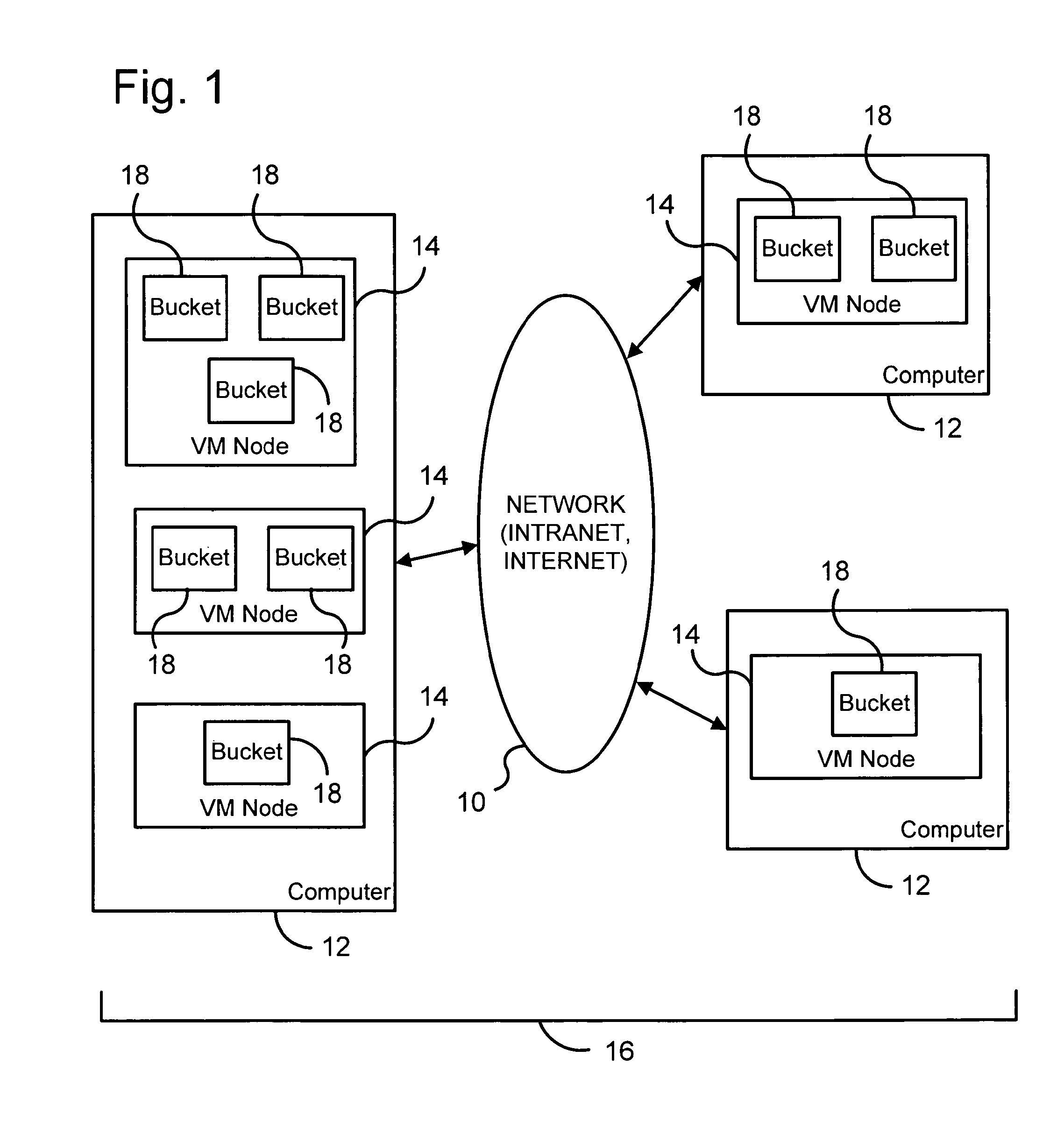

A distributed data management system has multiple virtual machine nodes operating on multiple computers that are in communication with each other over a computer network. Each virtual machine node includes at least one data store or “bucket” for receiving data. A digital hash map data structure is stored in a computer readable medium of at least one of the multiple computers to configure the multiple virtual machine nodes and buckets to provide concurrent, non-blocking access to data in the buckets, the digital hash map data structure including a mapping between the virtual machine nodes and the buckets. The distributed data management system employs dynamic scalability, in which one or more buckets from a virtual machine node that has reached a memory capacity threshold are transferred to another virtual machine node that is below its memory capacity threshold.

Owner:GOPIVOTAL
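
The bucket-to-node mapping and the threshold-driven bucket transfer described in this abstract can be illustrated with a small sketch. It is not the patented implementation: the class names `Node` and `Cluster`, the byte-count size estimate, the toy hash, and the "move the largest bucket to the least-loaded node" policy are all assumptions made for illustration, and the concurrent, non-blocking access the abstract mentions is left out.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A virtual machine node holding zero or more buckets (hypothetical model)."""
    name: str
    capacity: int                                  # assumed memory capacity threshold, in bytes
    buckets: dict = field(default_factory=dict)    # bucket id -> {key: value}

    def used(self) -> int:
        # Rough size estimate: total length of all stored values.
        return sum(len(v) for b in self.buckets.values() for v in b.values())


class Cluster:
    def __init__(self, nodes, num_buckets):
        self.nodes = {n.name: n for n in nodes}
        self.num_buckets = num_buckets
        names = list(self.nodes)
        # The mapping between buckets and nodes, assigned round-robin to start.
        self.bucket_to_node = {b: names[b % len(names)] for b in range(num_buckets)}
        for b, name in self.bucket_to_node.items():
            self.nodes[name].buckets[b] = {}

    def _bucket_of(self, key: str) -> int:
        return sum(key.encode()) % self.num_buckets   # deterministic toy hash

    def put(self, key: str, value: bytes) -> None:
        b = self._bucket_of(key)
        self.nodes[self.bucket_to_node[b]].buckets[b][key] = value
        self.rebalance()

    def get(self, key: str) -> bytes:
        b = self._bucket_of(key)
        return self.nodes[self.bucket_to_node[b]].buckets[b][key]

    def rebalance(self) -> None:
        """Move buckets off any node that has reached its capacity threshold."""
        for node in self.nodes.values():
            while node.used() >= node.capacity and node.buckets:
                targets = [n for n in self.nodes.values() if n.used() < n.capacity]
                if not targets:
                    return
                target = min(targets, key=Node.used)
                # Assumed policy: transfer the largest bucket first.
                largest = max(
                    node.buckets,
                    key=lambda i: sum(len(v) for v in node.buckets[i].values()),
                )
                target.buckets[largest] = node.buckets.pop(largest)
                self.bucket_to_node[largest] = target.name
```

Because lookups always go through `bucket_to_node`, a key stays reachable after its bucket has moved to another node.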

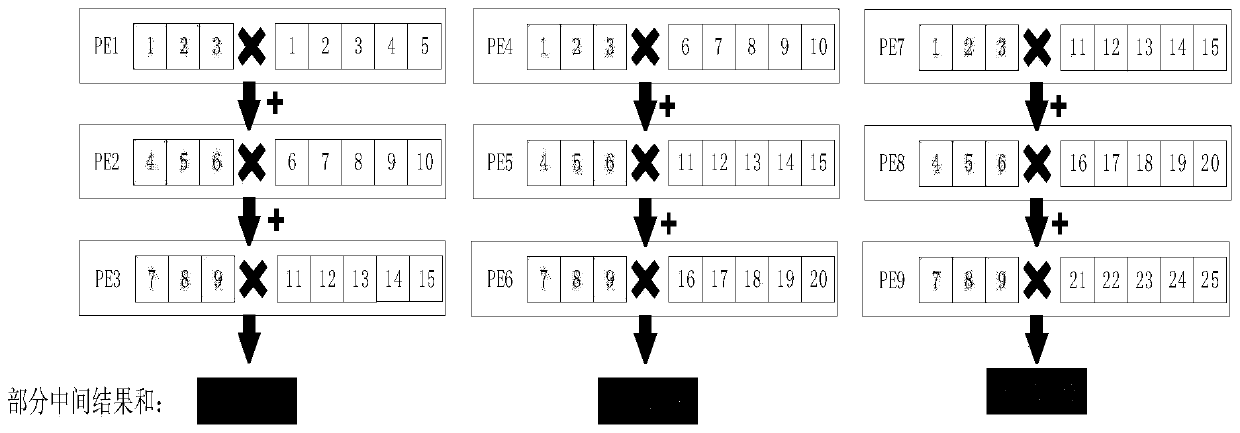

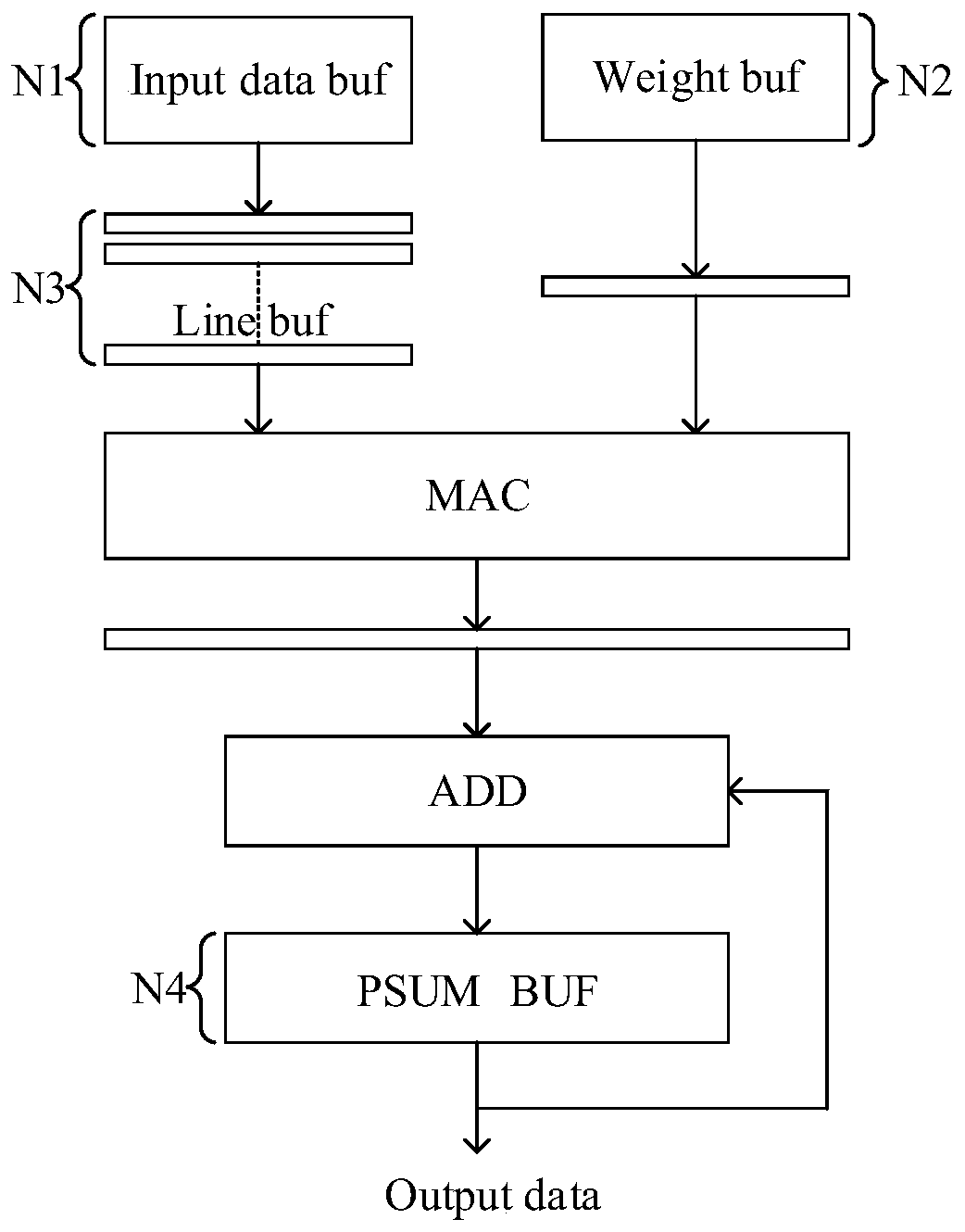

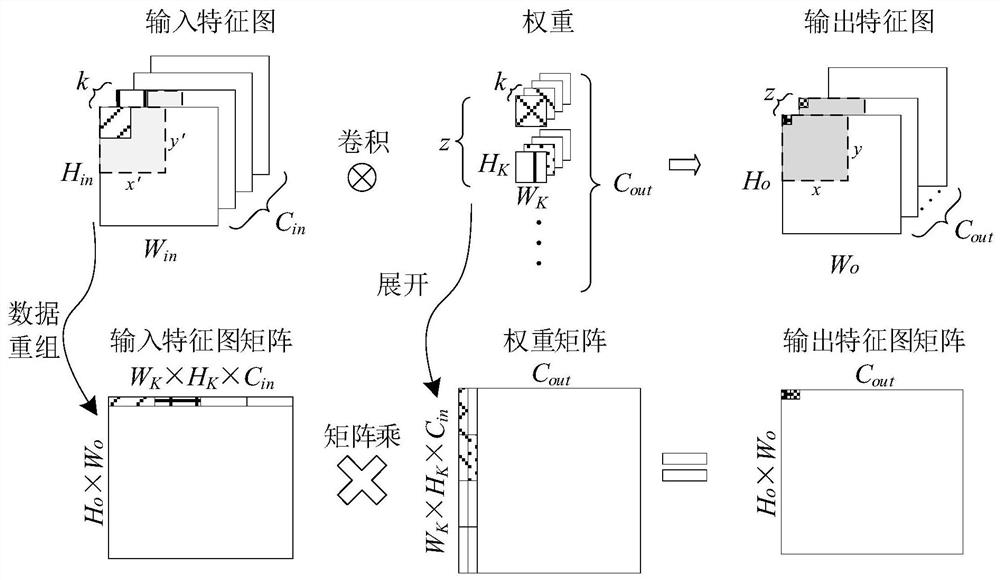

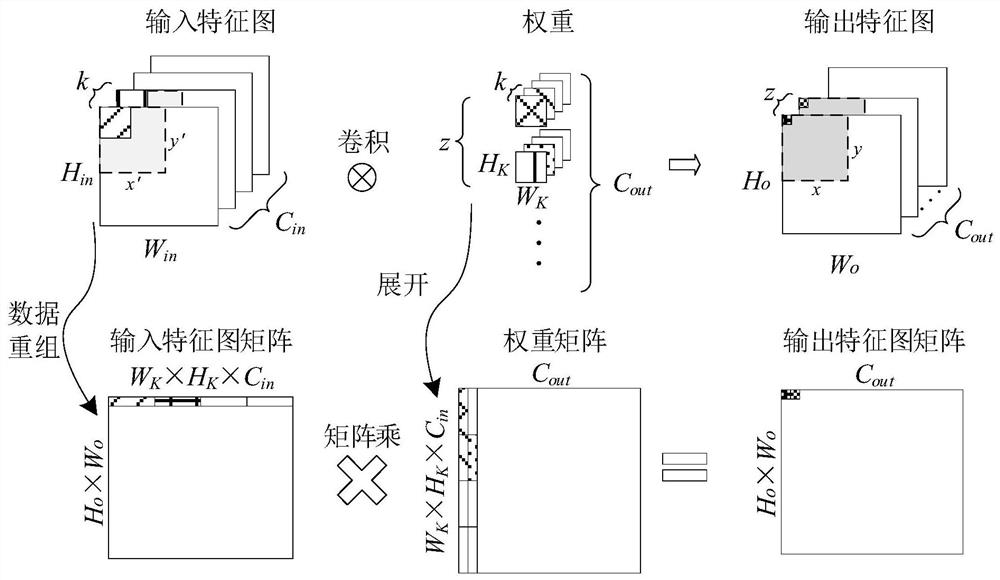

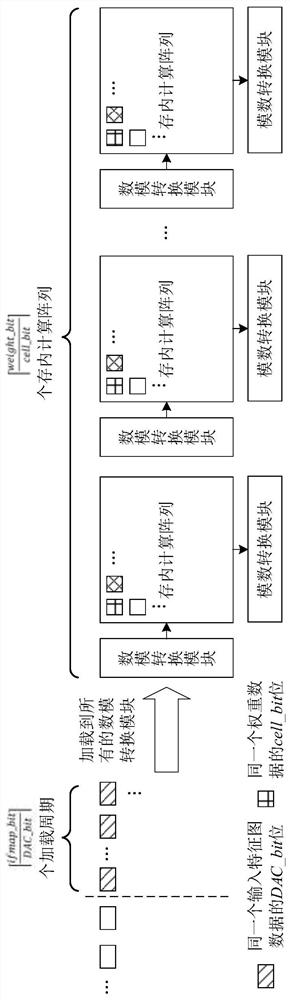

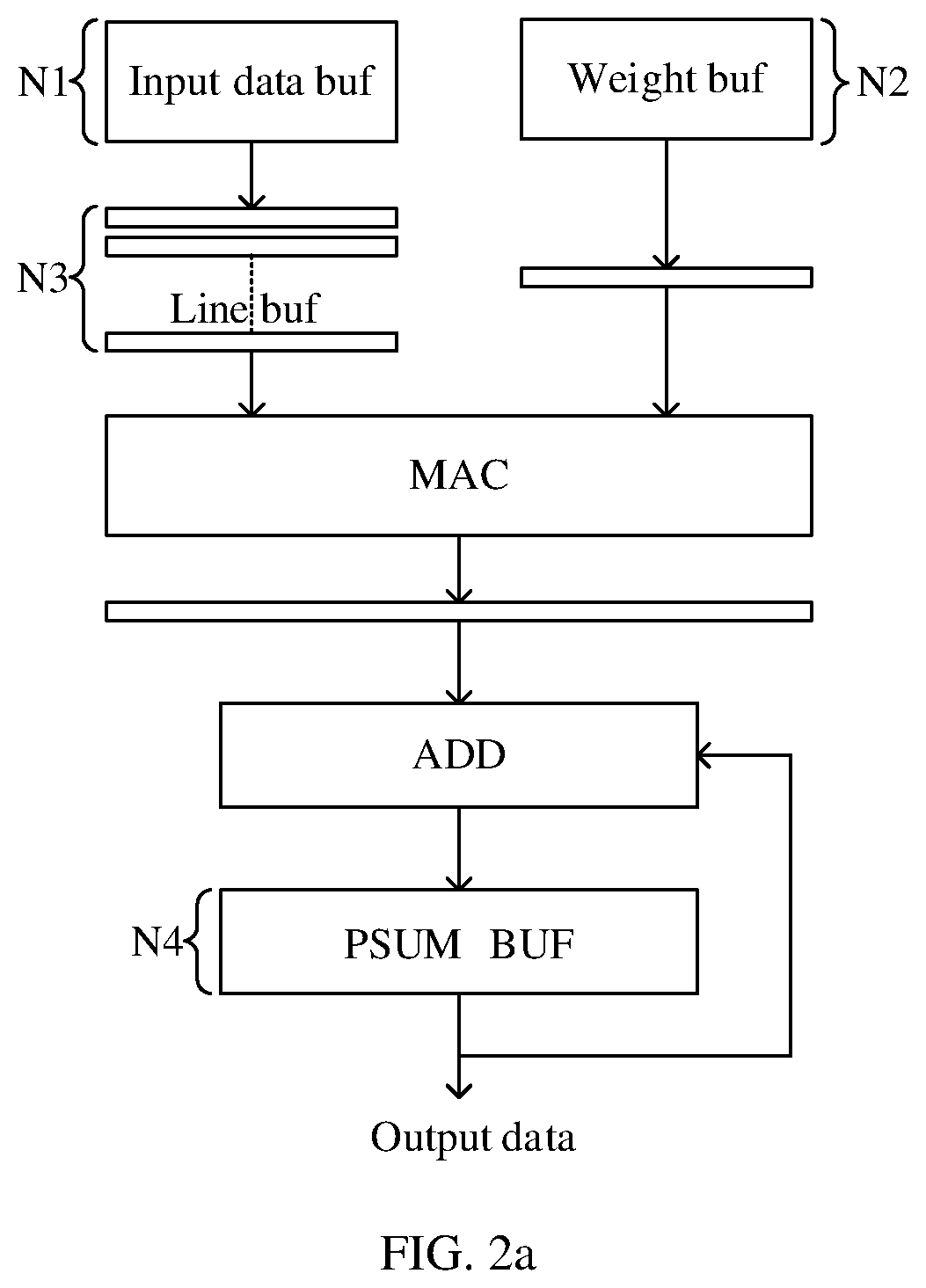

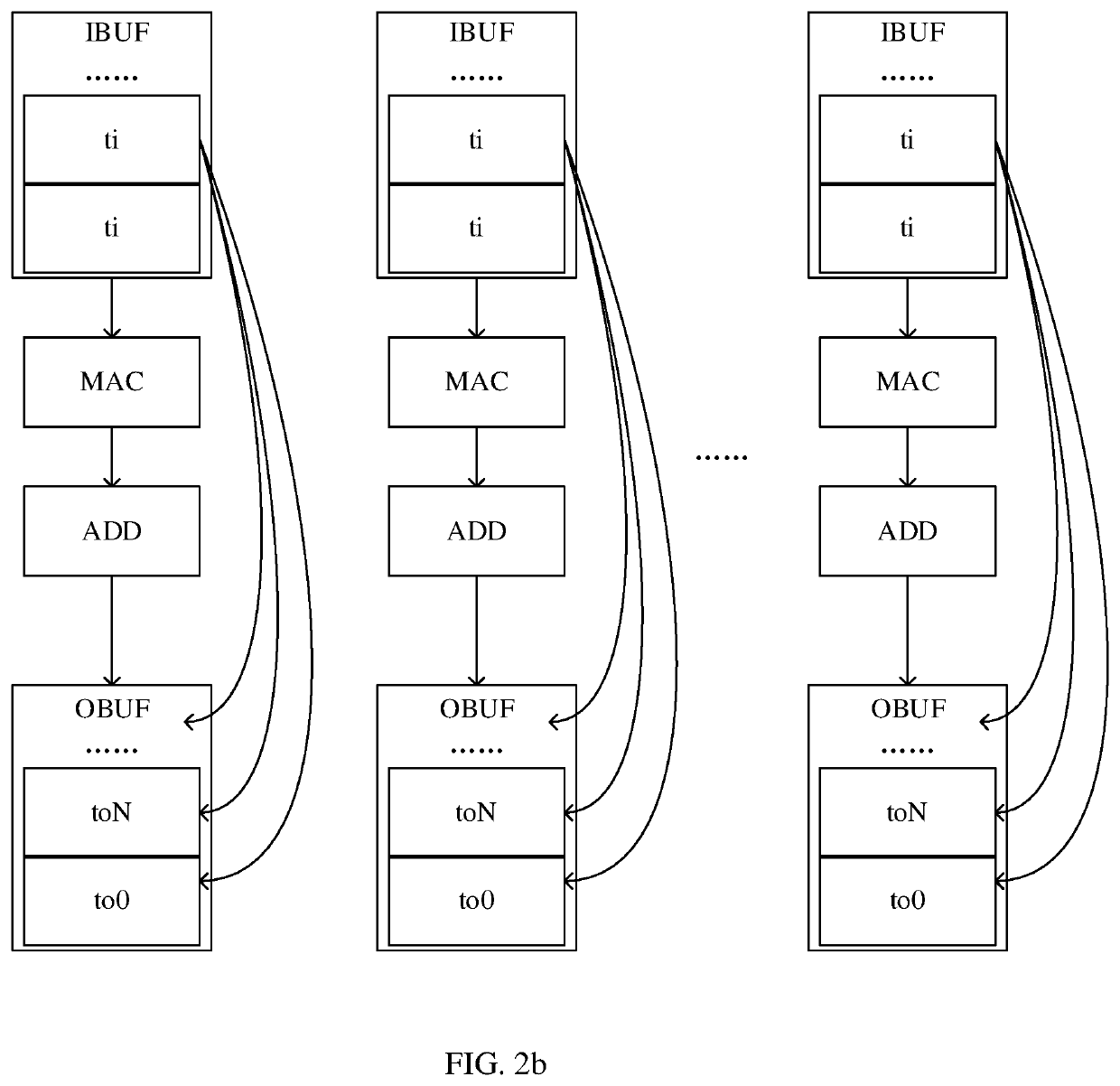

Convolutional neural network acceleration engine, convolutional neural network acceleration system and method

Active | CN111178519A | Reduce data access | Improve performance | Neural architectures | Physical realisation | Algorithm | Term memory

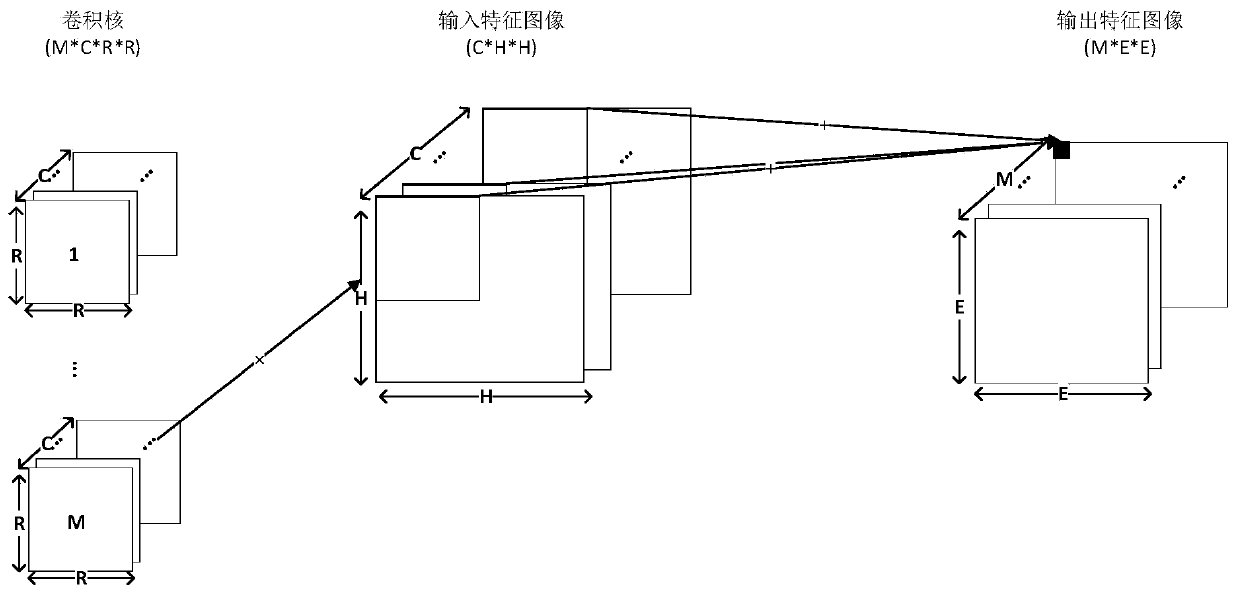

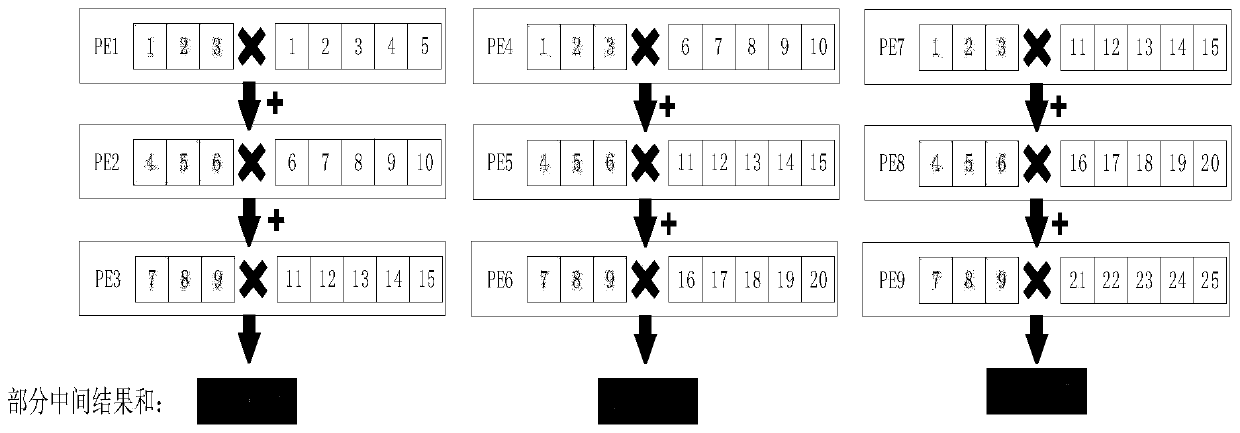

The invention discloses a convolutional neural network acceleration engine, a convolutional neural network acceleration system and a convolutional neural network acceleration method, and belongs to the field of heterogeneous computing acceleration. In the engine, a physical PE matrix comprises a plurality of physical PE units, which execute row convolution operations and the related partial-sum accumulation; an XY interconnection bus transmits the input feature image data, the output feature image data and the convolution kernel parameters from the global cache to the physical PE matrix, or transmits an operation result generated by the physical PE matrix to the global cache; and an adjacent interconnection bus transmits intermediate results between physical PE units in the same column. The system comprises a 3D-Memory, with a convolutional neural network acceleration engine integrated in the memory controller of each Vault unit to complete a subset of a convolutional neural network calculation task. The method is optimized layer by layer on the basis of the system. According to the invention, the performance and energy consumption of the convolutional neural network can be improved.

Owner:HUAZHONG UNIV OF SCI & TECH

Distributed data management system

Active | US7941401B2 | Reduce data access | Control performance | Digital data information retrieval | Digital data processing details | Data management | Data store

A distributed data management system has multiple virtual machine nodes operating on multiple computers that are in communication with each other over a computer network. Each virtual machine node includes at least one data store or “bucket” for receiving data. A digital hash map data structure is stored in a computer readable medium of at least one of the multiple computers to configure the multiple virtual machine nodes and buckets to provide concurrent, non-blocking access to data in the buckets, the digital hash map data structure including a mapping between the virtual machine nodes and the buckets. The distributed data management system employs dynamic scalability, in which one or more buckets from a virtual machine node that has reached a memory capacity threshold are transferred to another virtual machine node that is below its memory capacity threshold.

Owner:GOPIVOTAL

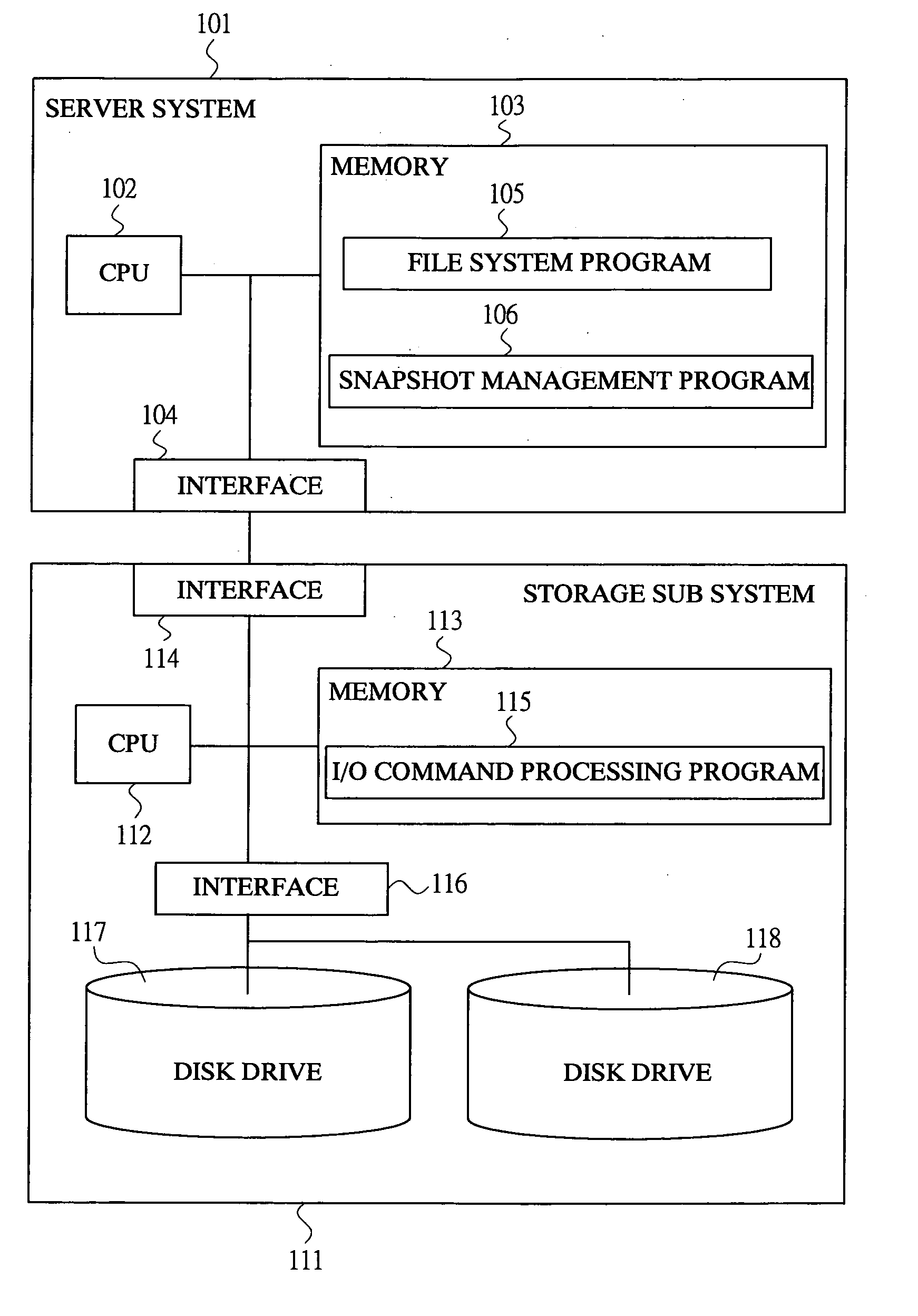

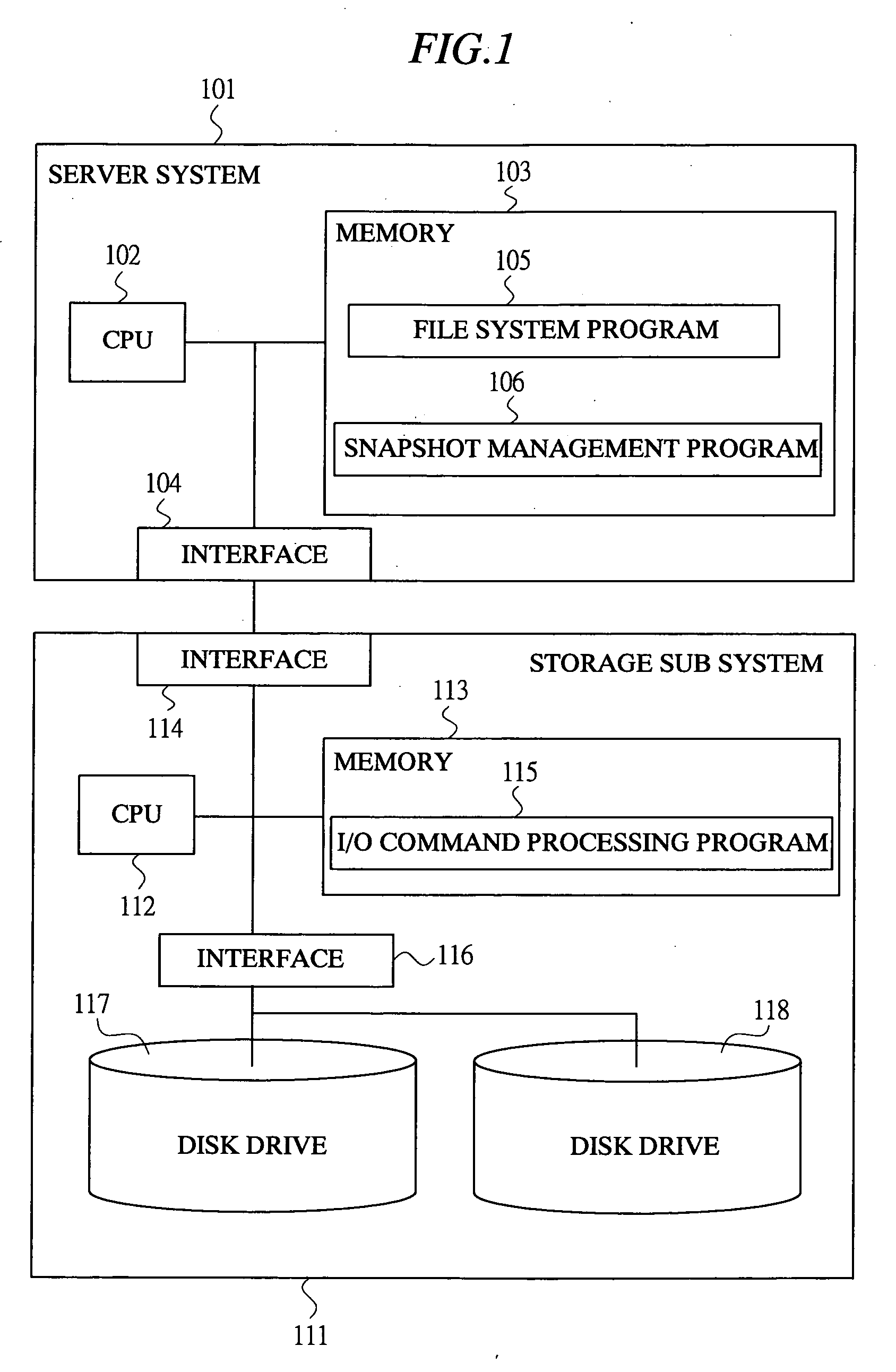

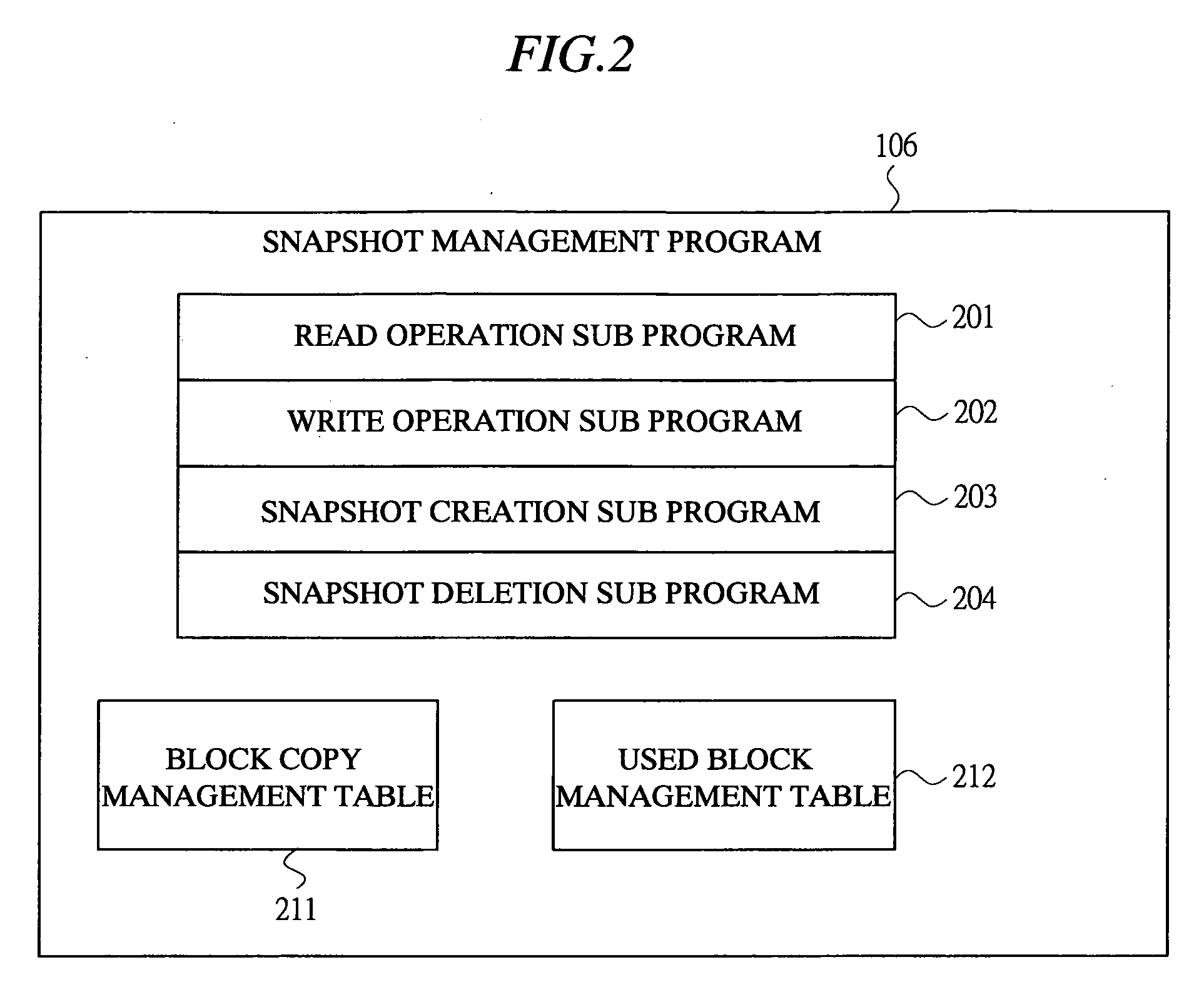

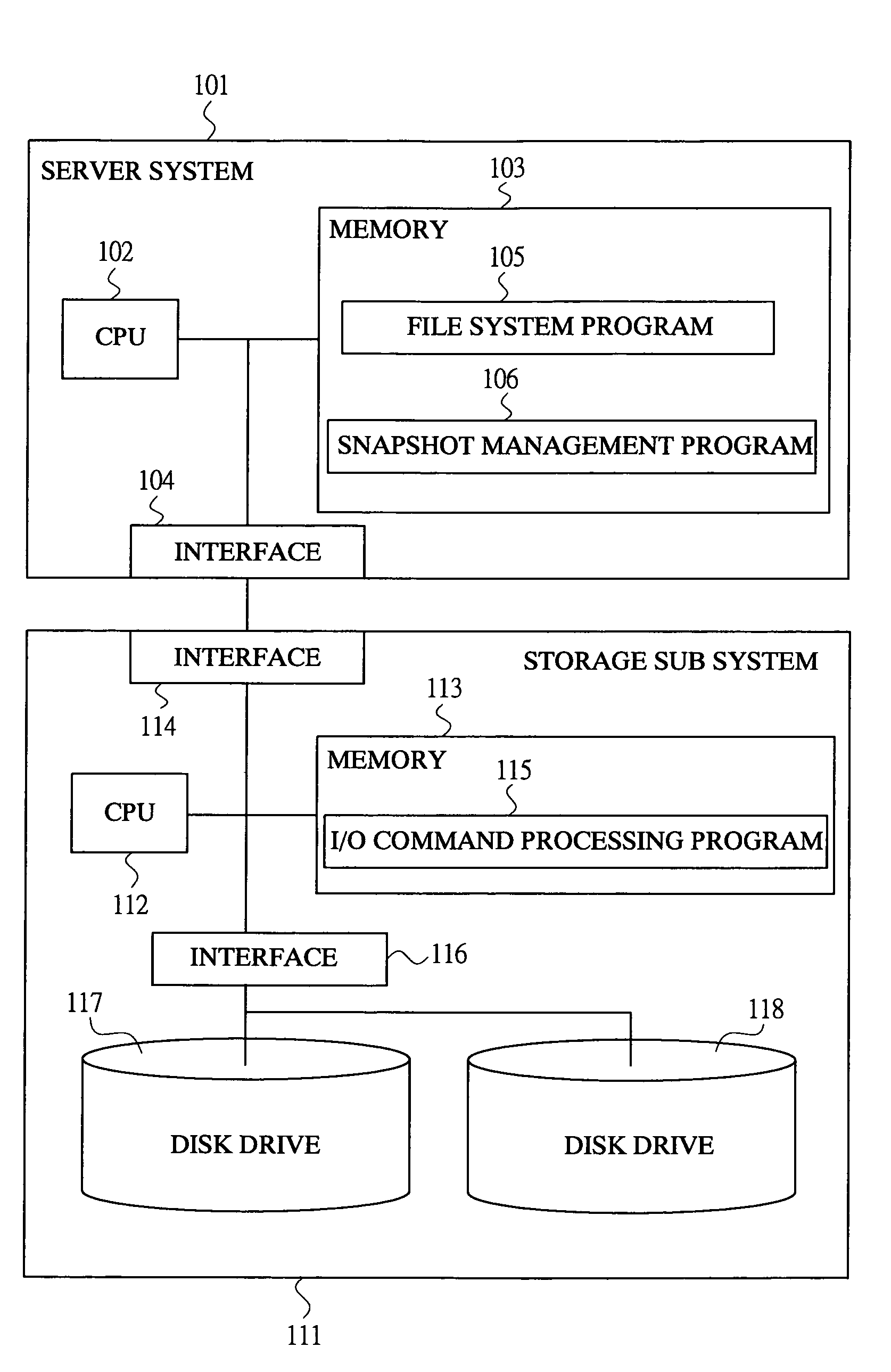

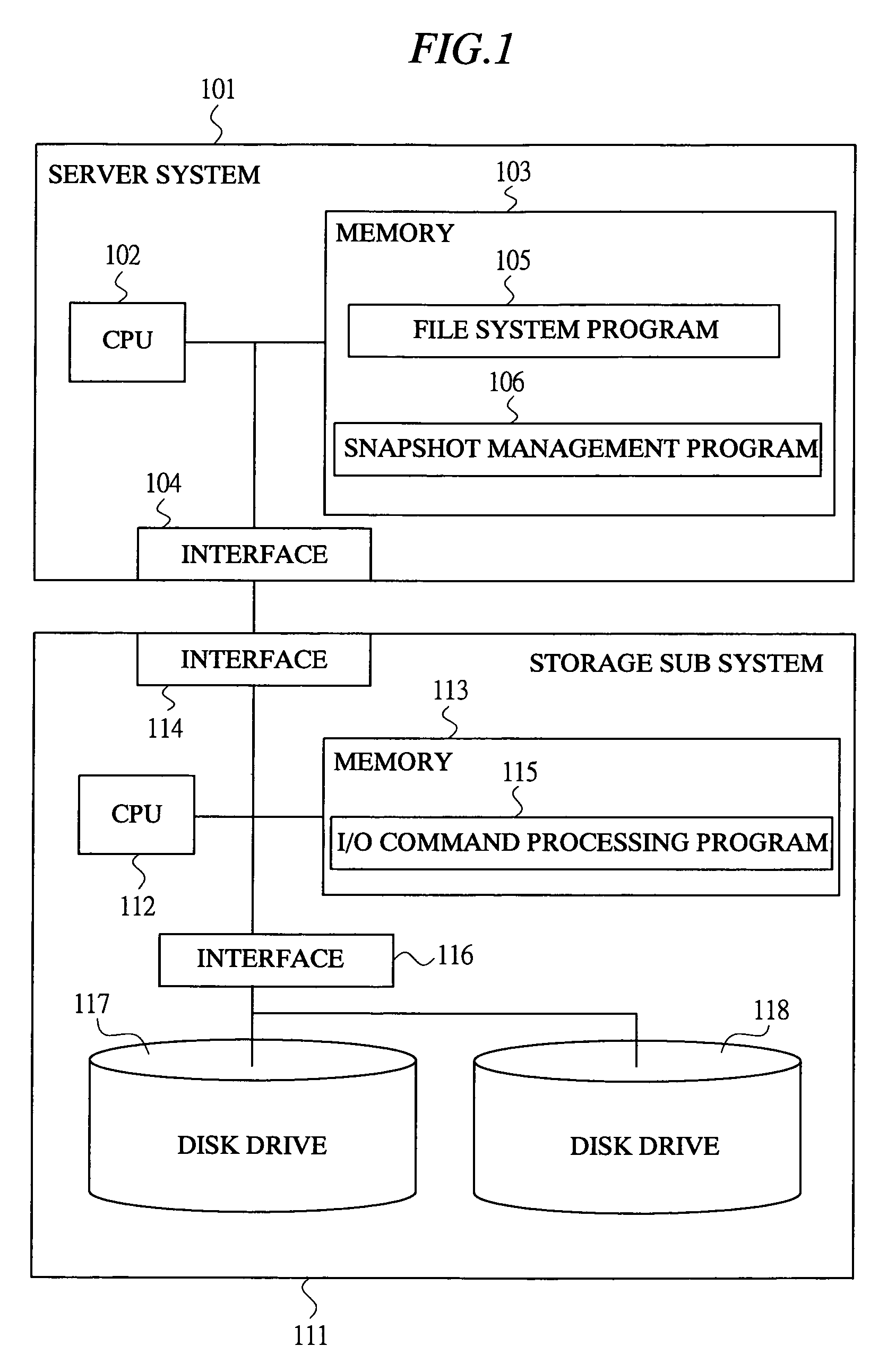

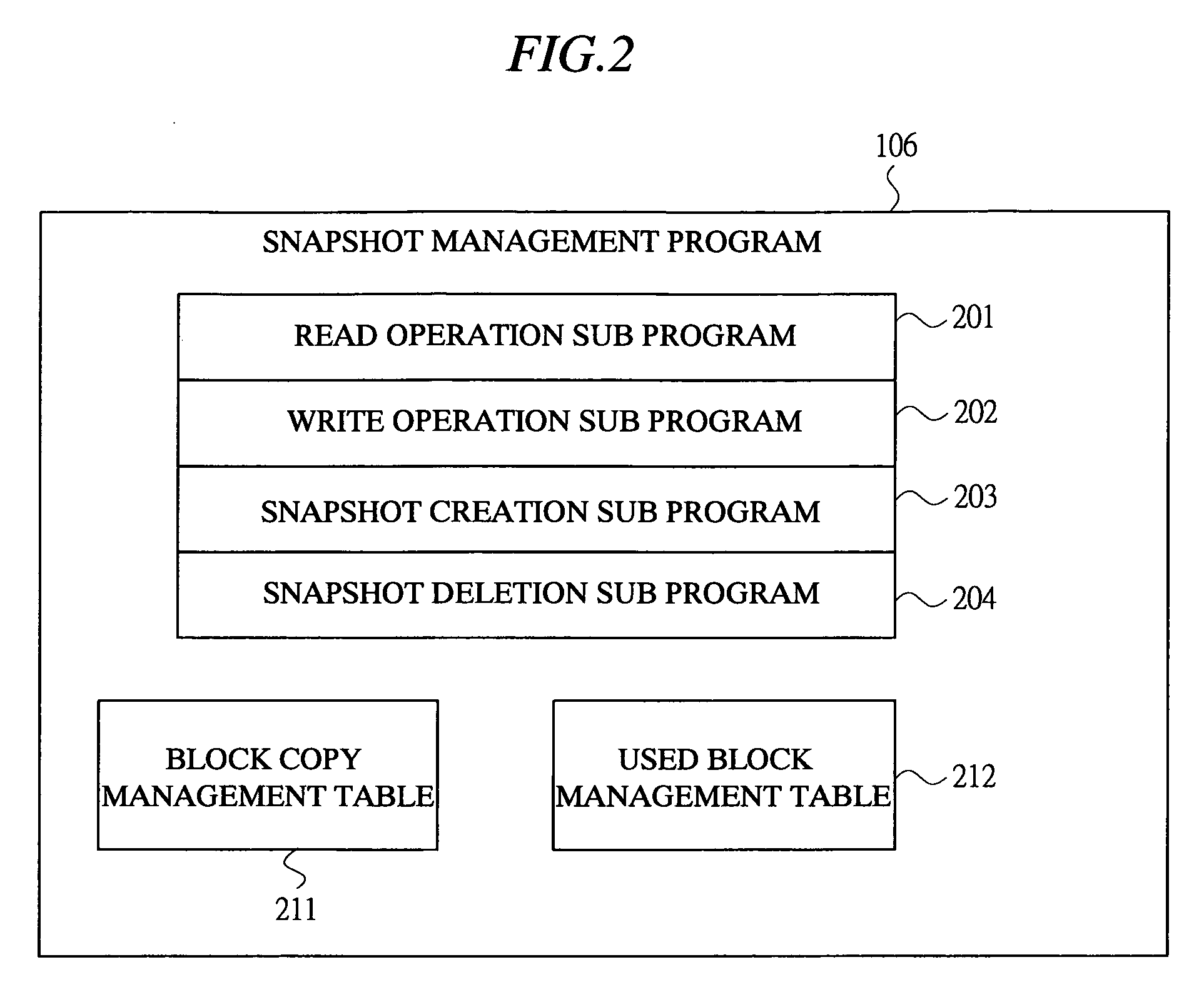

Method for keeping snapshot image in a storage system

Active | US20060085663A1 | Reduce data transfer | Reduce unnecessary consumption | Error detection/correction | Memory systems | Data transmission | Operating system

A technique for realizing a snapshot function is provided, which can reduce data transfer between a server system and a storage subsystem which is necessary in data copy in storages and reduce the degradation of data access performance of the storage in operation. In a storage system, a command processed by a CPU of a storage subsystem includes a COPY and WRITE command for performing a data copy process and a data storage process in accordance with a predetermined sequence, and a server system issues the command to the storage subsystem. After receiving the command, the storage subsystem executes a data copy process from a first disk drive to a second disk drive, and subsequently executes a data storage process to the first disk drive, thereby keeping a snapshot of the data stored in the first disk drive.

Owner:GOOGLE LLC
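
A minimal sketch of the copy-then-write sequence follows, assuming a block-addressed toy model. The names `StorageSubsystem`, `copy_and_write` and `read_snapshot` are hypothetical, and copying each block to the second drive only on its first overwrite is an assumption of this sketch rather than something the abstract states. The point it shows is that the server sends only the new data, while the copy of the old data happens inside the storage subsystem.

```python
class StorageSubsystem:
    """Toy storage subsystem with a first (primary) and second (snapshot) drive."""

    def __init__(self, num_blocks: int, block_size: int = 4):
        self.primary = [bytes(block_size)] * num_blocks   # first disk drive
        self.snapshot = {}                                # second disk drive (sparse)
        self.transfers_from_server = 0

    def copy_and_write(self, block: int, data: bytes) -> None:
        """Hypothetical combined command: copy the old block, then store new data."""
        self.transfers_from_server += 1        # only the new data crosses the link
        if block not in self.snapshot:         # assumption: preserve old contents once
            self.snapshot[block] = self.primary[block]
        self.primary[block] = data             # data storage process on the first drive

    def read_snapshot(self, block: int) -> bytes:
        # Blocks that were never overwritten are still read from the first drive.
        return self.snapshot.get(block, self.primary[block])
```

With separate read and write commands, the server would have to read the old block and write it back to the second drive itself, which is the extra transfer the abstract says is avoided.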

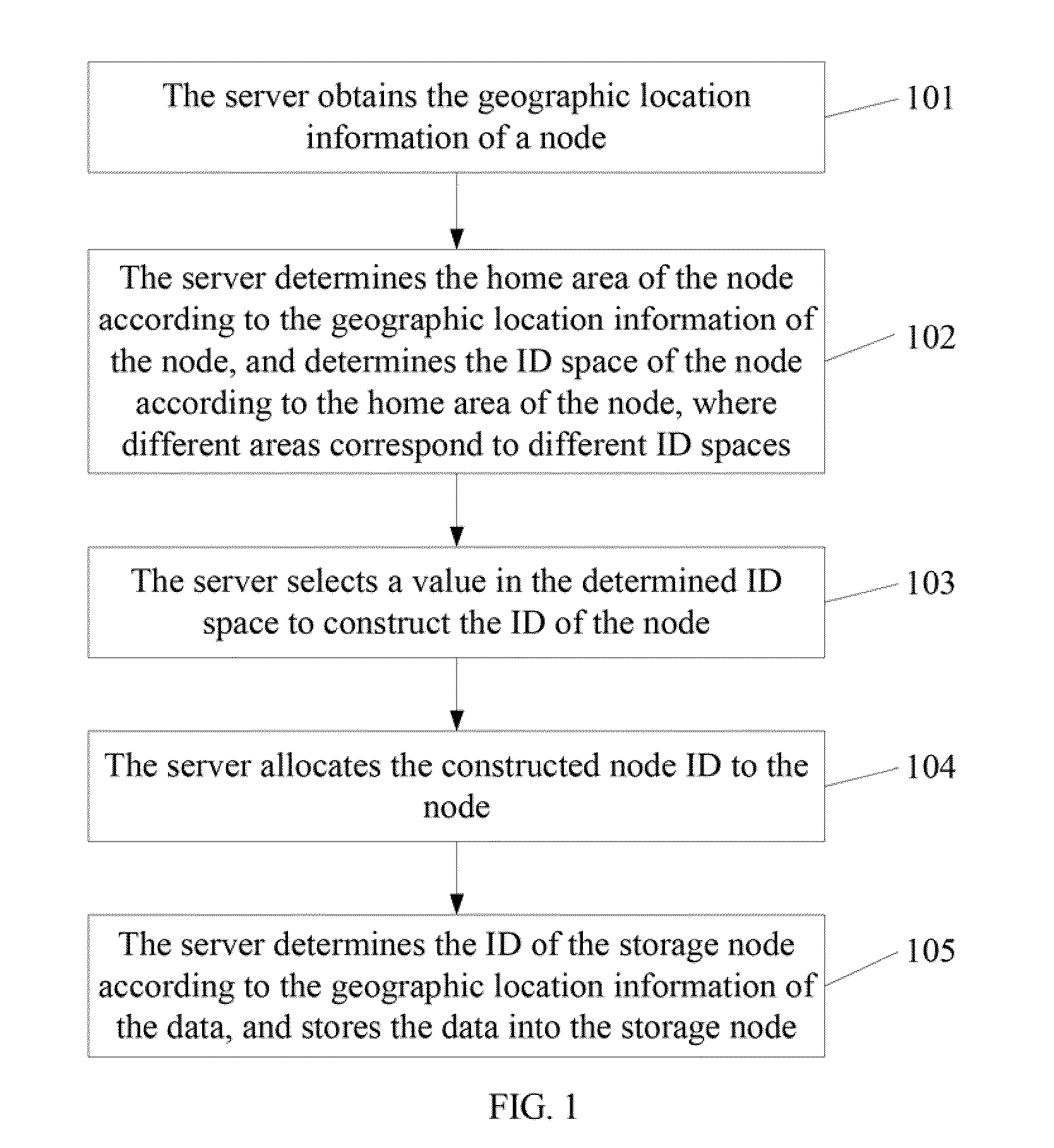

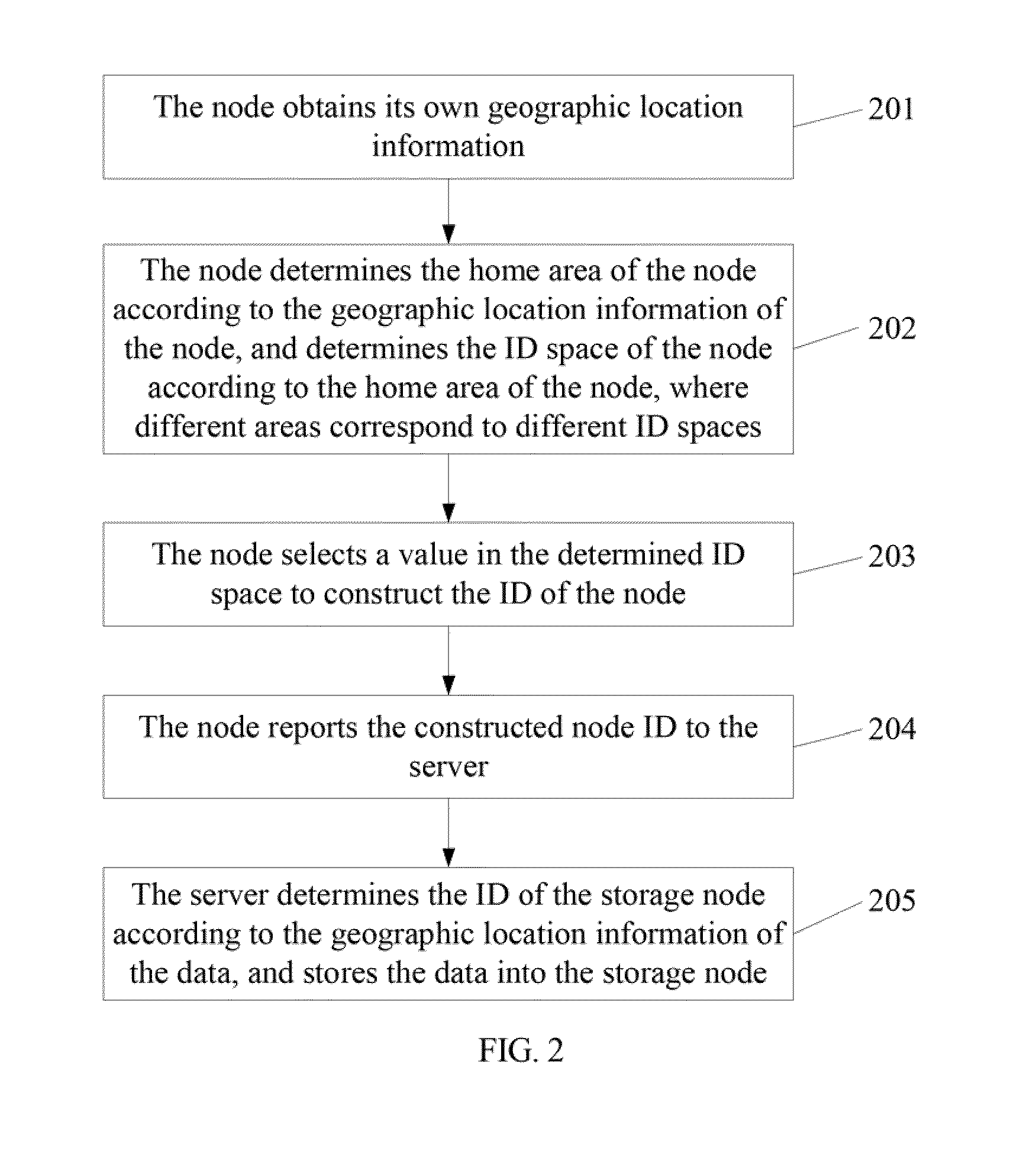

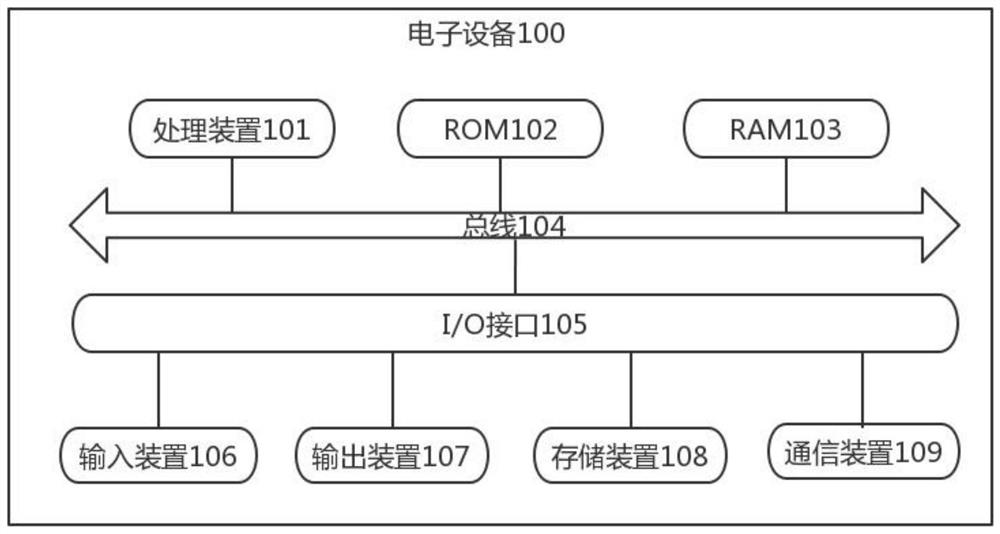

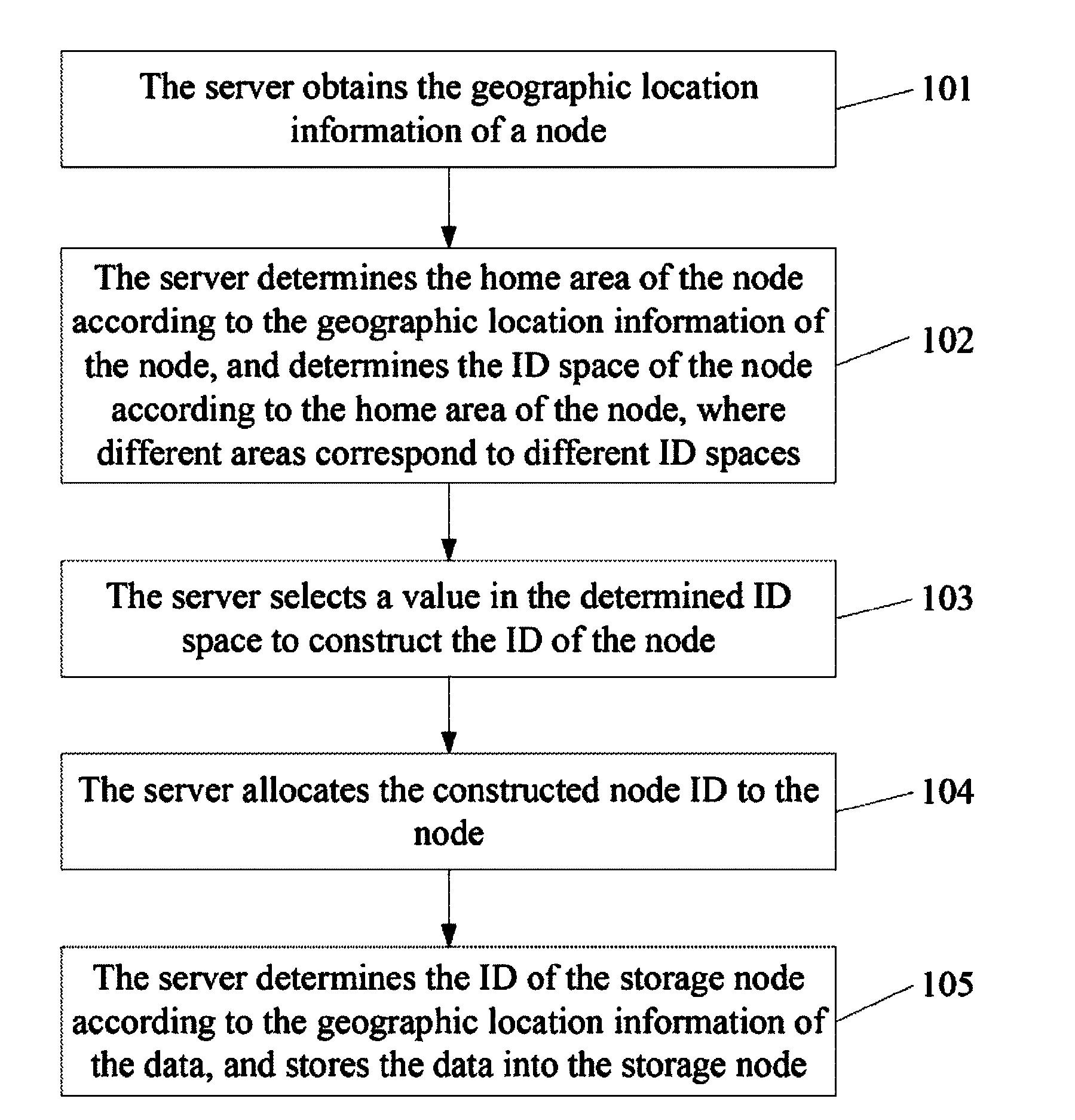

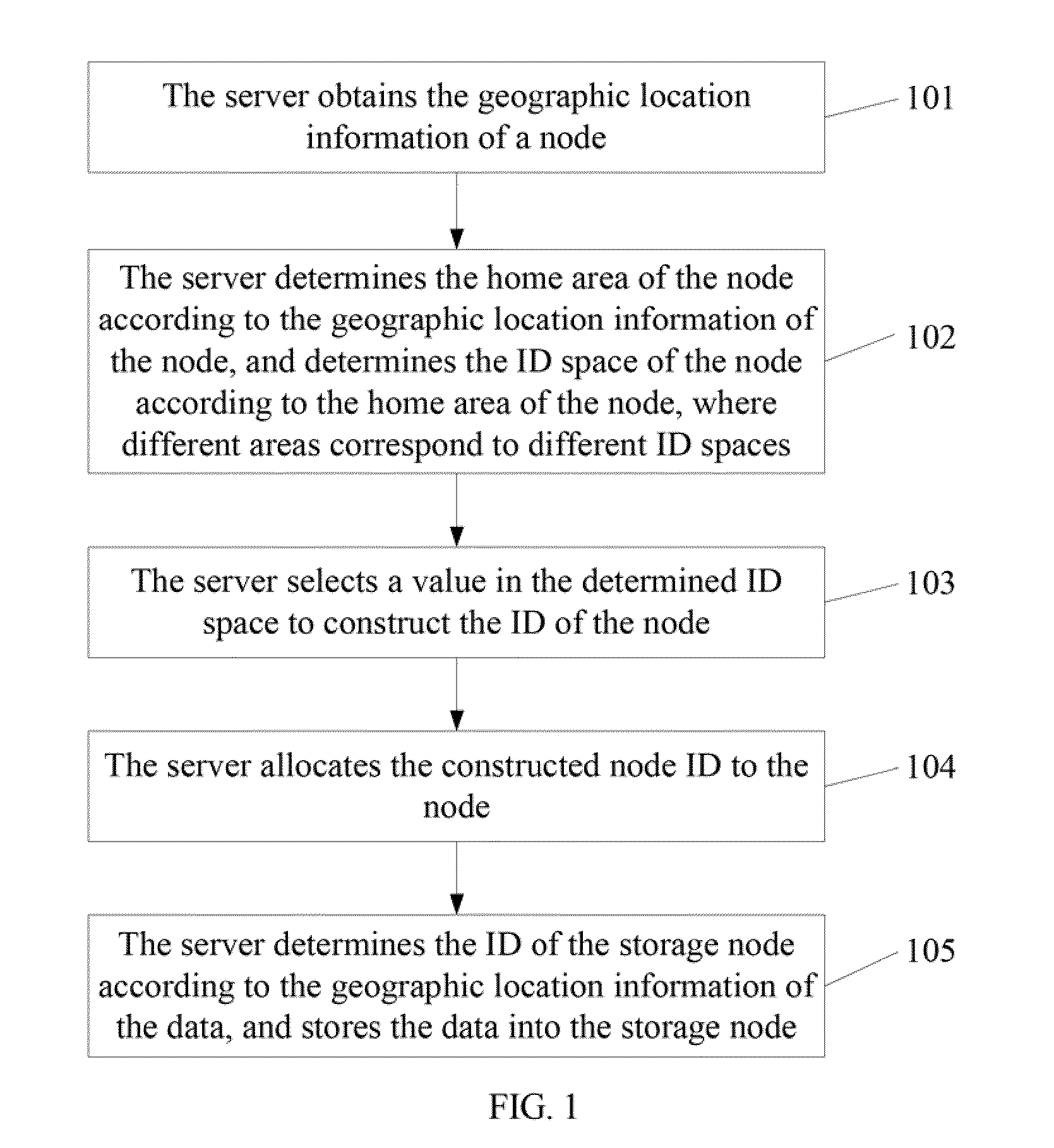

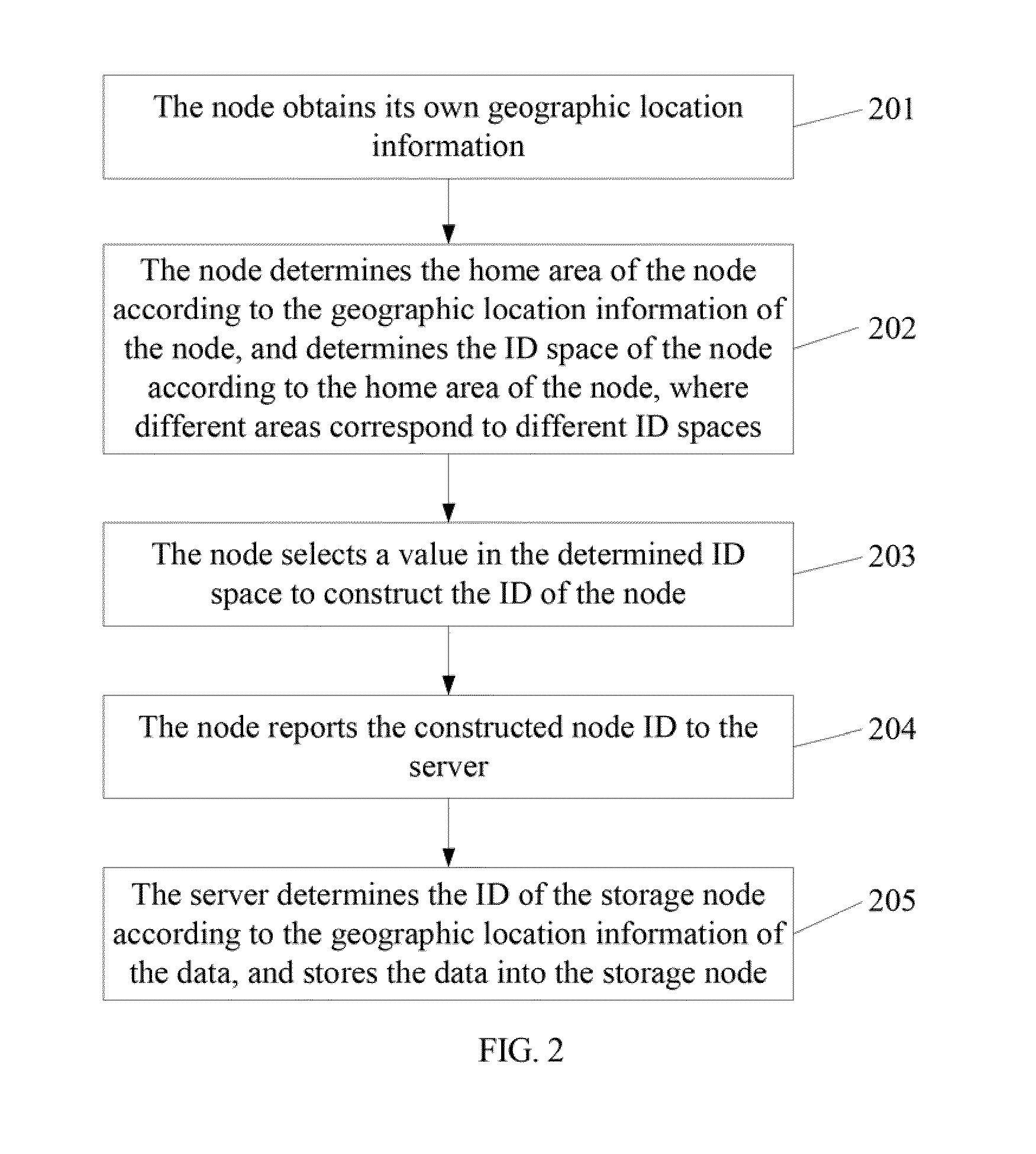

Distributed network construction and storage method, apparatus and system

Active | US20100169280A1 | Reduce network load | Reduce instability | Digital data processing details | Multiple digital computer combinations | Traffic capacity | Data access

The disclosure relates to distributed network communications, and in particular, to a distributed network construction method and apparatus, a distributed data storage method and apparatus, and a distributed network system. When a node joins a distributed network, the ID of the node is determined according to the geographic location information about the node. Therefore, all the nodes in the same area belong to the same ID range, and the node IDs are allocated according to the area. Because the node IDs are determined according to the area, local data can be stored on nodes in that area according to the geographic information, which reduces inter-area data access. Therefore, the method, the apparatus, and the system provided herein reduce the data load on the backbone network, balance the data traffic and the bandwidth overhead of the entire network, and reduce the network instability.

Owner:HUAWEI TECH CO LTD
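
One way to picture area-based ID allocation is to reserve the high bits of each ID for an area code, so that every node in an area falls into one contiguous ID range. The sketch below is only an illustration under that assumption: the 8/24 bit split, the `AREA_CODES` table and the function names are hypothetical and do not come from the disclosure.

```python
import hashlib

ID_BITS = 32
AREA_BITS = 8   # assumed split: high 8 bits = area, low 24 bits = position in area

# Hypothetical area table; a real system would derive this from location data.
AREA_CODES = {"beijing": 1, "shanghai": 2, "shenzhen": 3}


def node_id(area: str, node_name: str) -> int:
    """Build an ID whose prefix is the area code, so one area owns one ID range."""
    local_bits = ID_BITS - AREA_BITS
    digest = hashlib.sha256(node_name.encode()).digest()
    local = int.from_bytes(digest[:4], "big") % (1 << local_bits)
    return (AREA_CODES[area] << local_bits) | local


def area_range(area: str) -> range:
    """The contiguous ID range that belongs to one area."""
    local_bits = ID_BITS - AREA_BITS
    start = AREA_CODES[area] << local_bits
    return range(start, start + (1 << local_bits))


def data_key(area: str, content_name: str) -> int:
    """Place data produced in an area inside that area's ID range."""
    return node_id(area, content_name)
```

Since a data key and the nodes of its area share a prefix, the node responsible for the key is found inside the same area, which is how local storage keeps traffic off the backbone in this sketch.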

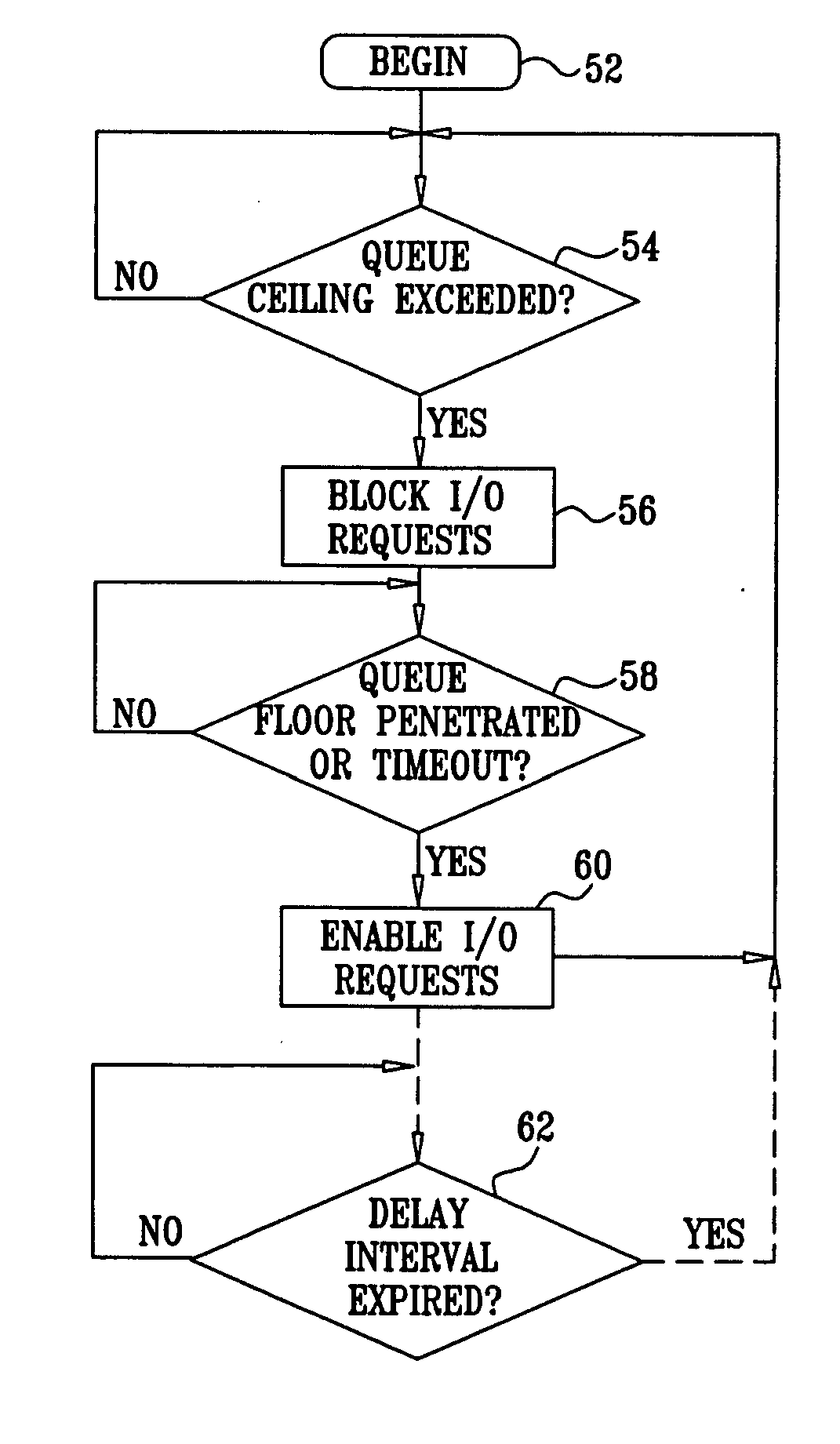

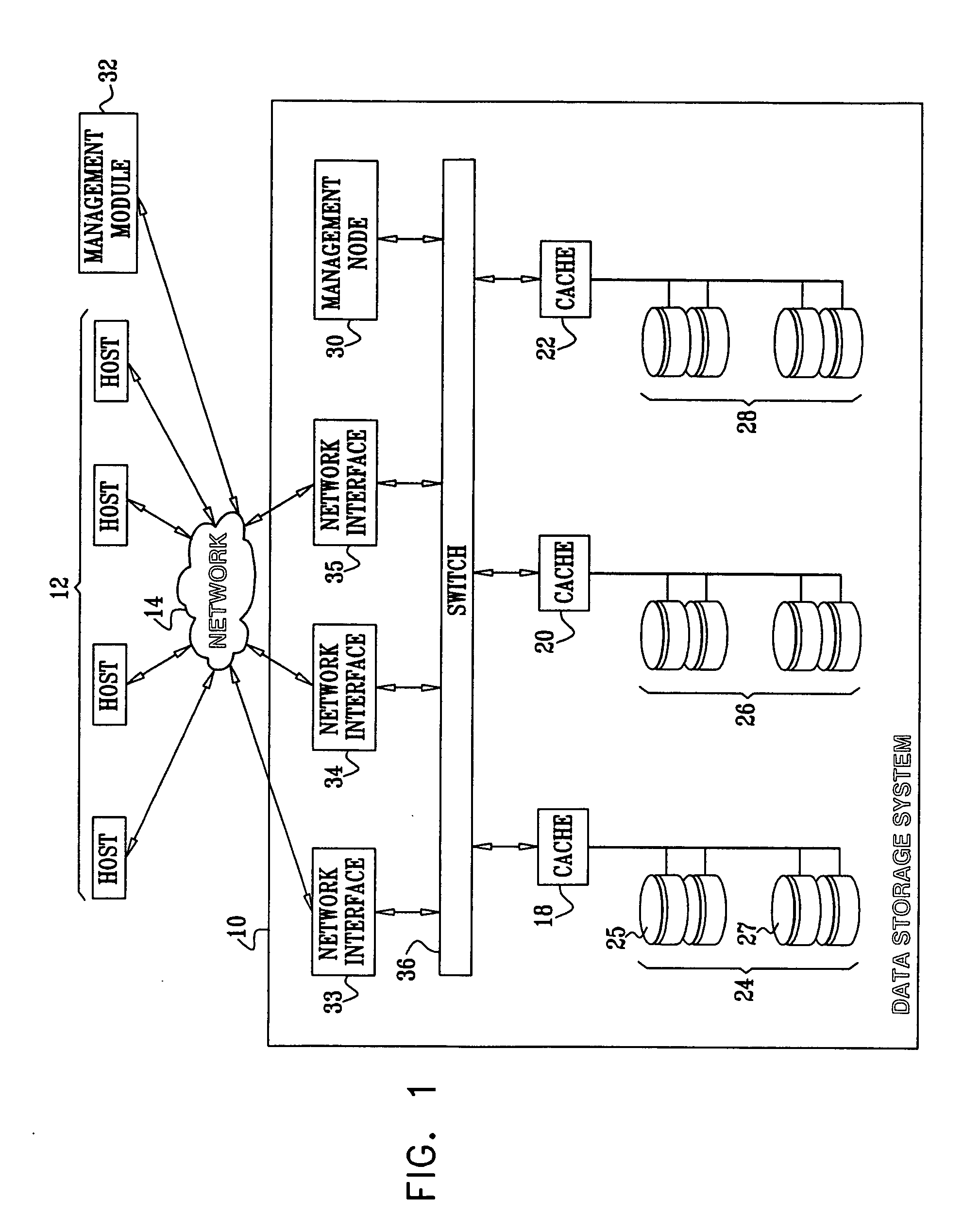

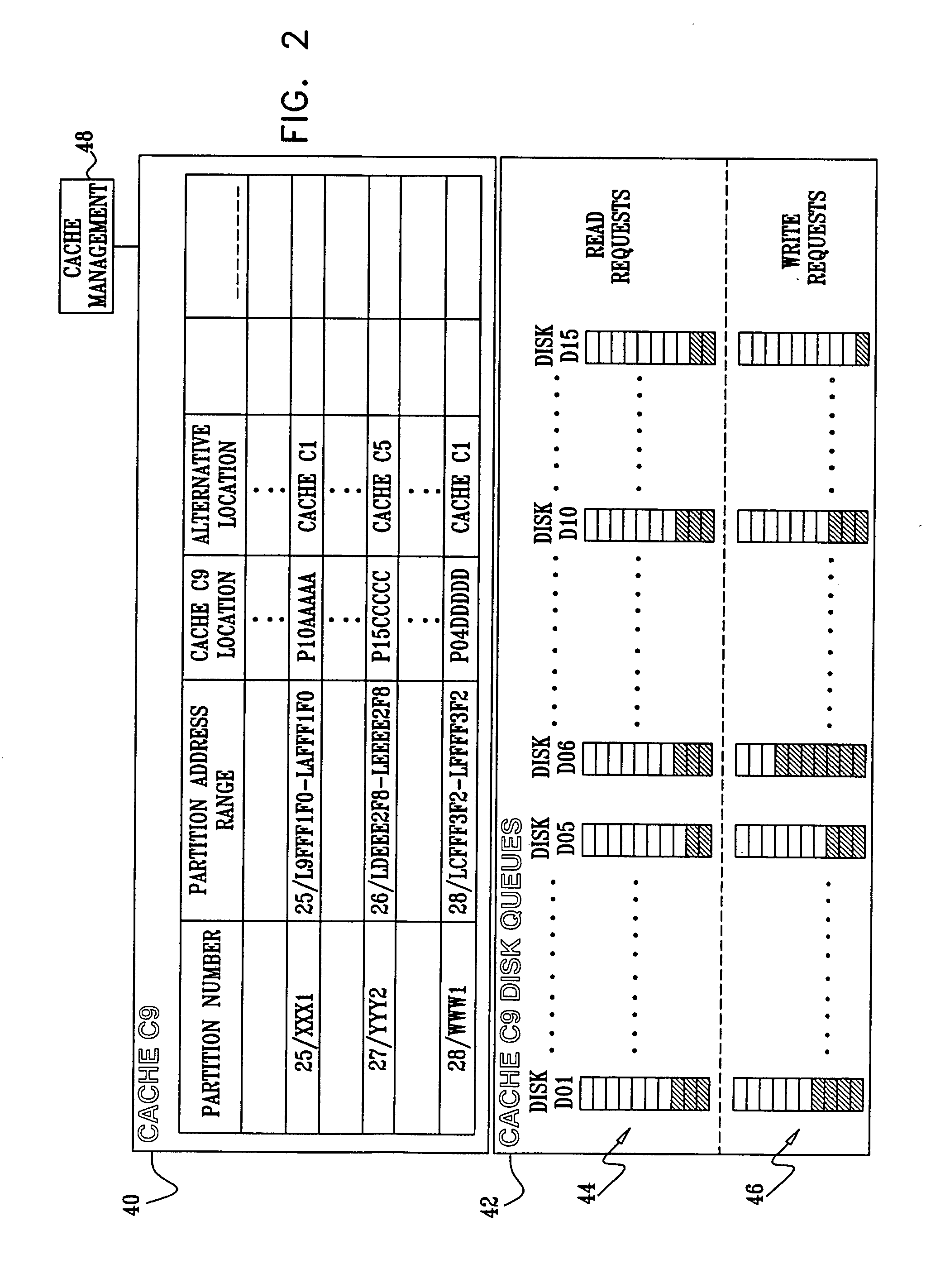

Restricting access to improve data availability

Inactive | US20070124555A1 | Reduce data access latency | Reduce data access | Memory architecture accessing/allocation | Unauthorized memory use protection | Rate limiting | Limited access

I/O requests from hosts in a data storage system are blocked or rate-restricted upon detection of an unbalanced or overload condition in order to prevent timeouts by host computers, and achieve an aggregate reduction of data access latency. The blockages are generally of short duration, and are transparent to hosts, so that host timeouts are unlikely to occur. During the transitory suspensions of new I/O requests, server queues shorten, after which I/O requests are again enabled.

Owner:IBM CORP
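
The suspend-and-resume behaviour can be sketched with a queue and two thresholds. This is a simplified model, not the patented mechanism: the class `ThrottledServer`, the high and low water marks, and the use of queue length as the overload signal are assumptions for illustration.

```python
from collections import deque


class ThrottledServer:
    """Toy model: suspend new I/O while overloaded, resume once the queue drains."""

    def __init__(self, high_water: int = 8, low_water: int = 2):
        self.queue = deque()
        self.high_water = high_water   # assumed overload threshold
        self.low_water = low_water     # assumed resume threshold
        self.blocked = False

    def submit(self, request) -> bool:
        """Return True if accepted; False means the host should retry shortly."""
        if self.blocked:
            return False
        self.queue.append(request)
        if len(self.queue) >= self.high_water:
            self.blocked = True        # overload detected: stop admitting new I/O
        return True

    def service_one(self):
        """Complete one queued request; re-enable I/O when the queue is short."""
        done = self.queue.popleft() if self.queue else None
        if self.blocked and len(self.queue) <= self.low_water:
            self.blocked = False
        return done
```

Using two thresholds instead of one keeps the server from flipping between blocked and unblocked on every request, so each suspension stays brief but effective.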

Method for keeping snapshot image in a storage system

Active | US7398420B2 | Reduce data transfer | Reduce data access | Memory loss protection | Error detection/correction | Data access | Data transmission

A technique for realizing a snapshot function is provided, which can reduce data transfer between a server system and a storage subsystem which is necessary during data copy operations between storage devices and reduce the degradation of data access performance of the storage device in operation. In a storage system, a command processed by a CPU of a storage subsystem includes a COPY and WRITE command for performing a data copy process and a data storage process in accordance with a predetermined sequence, and a server system issues the command to the storage subsystem. After receiving the command, the storage subsystem executes a data copy process from a first disk drive to a second disk drive, and subsequently executes a data storage process to the first disk drive, thereby keeping a snapshot of the data stored in the first disk drive.

Owner:GOOGLE LLC

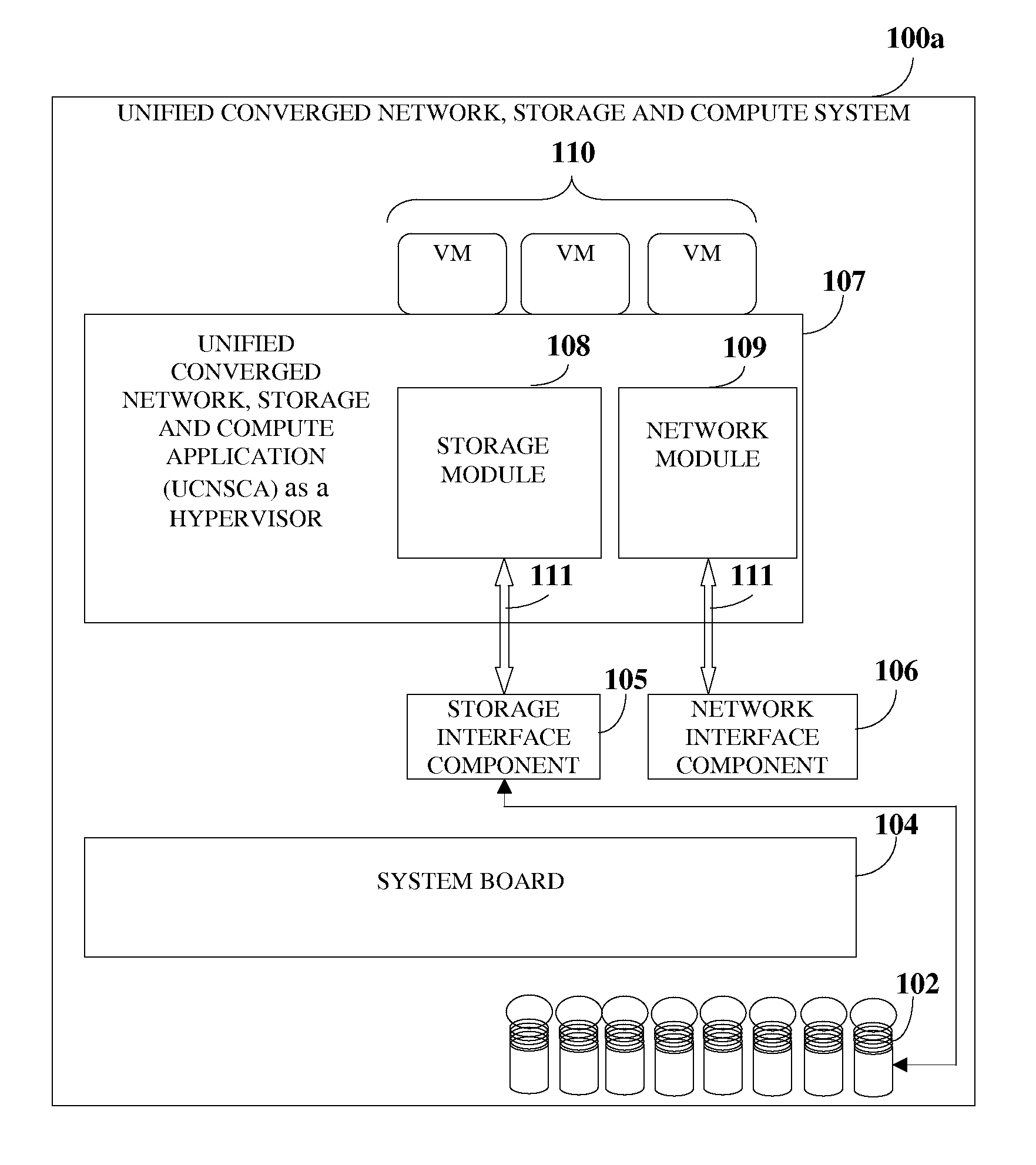

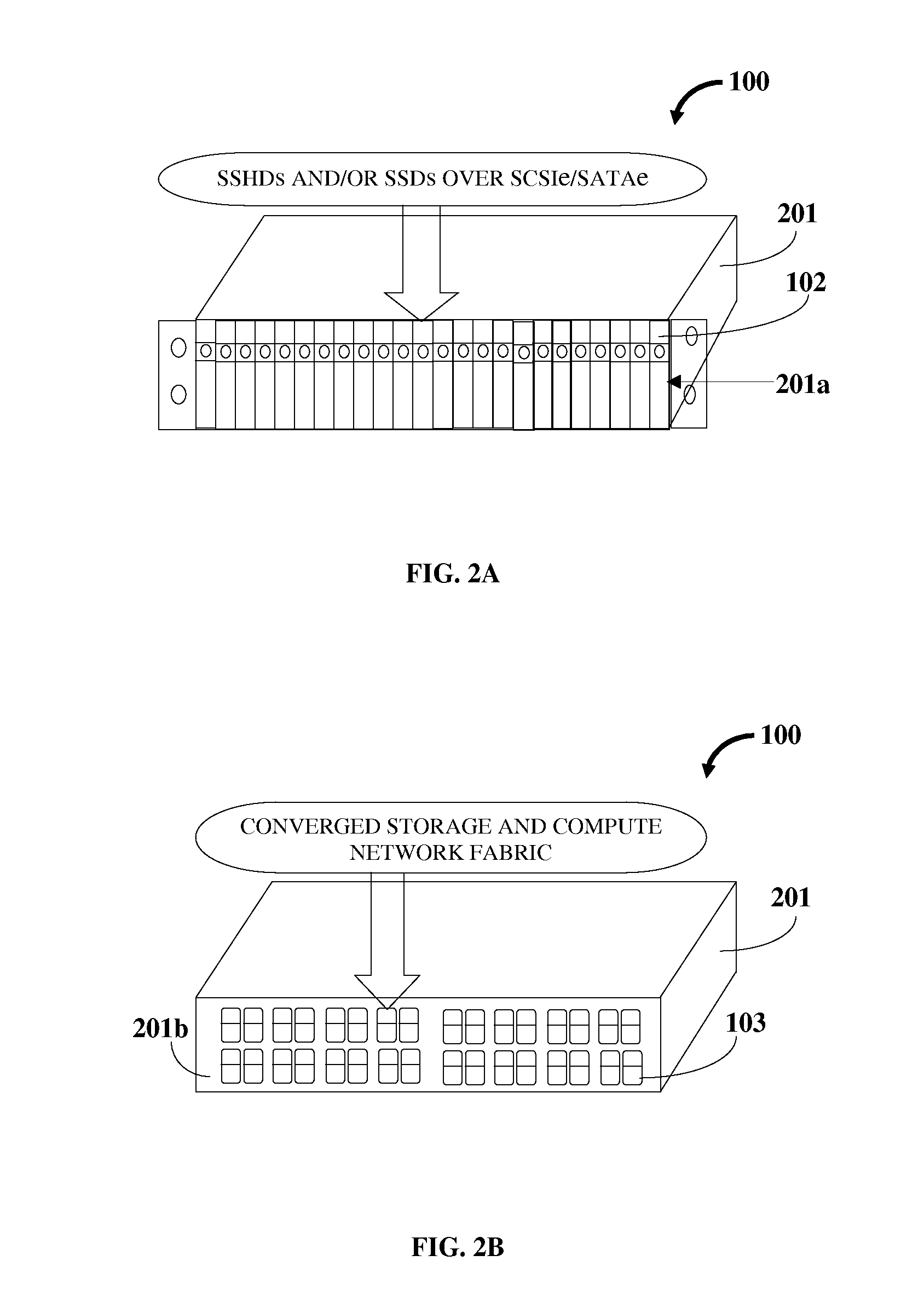

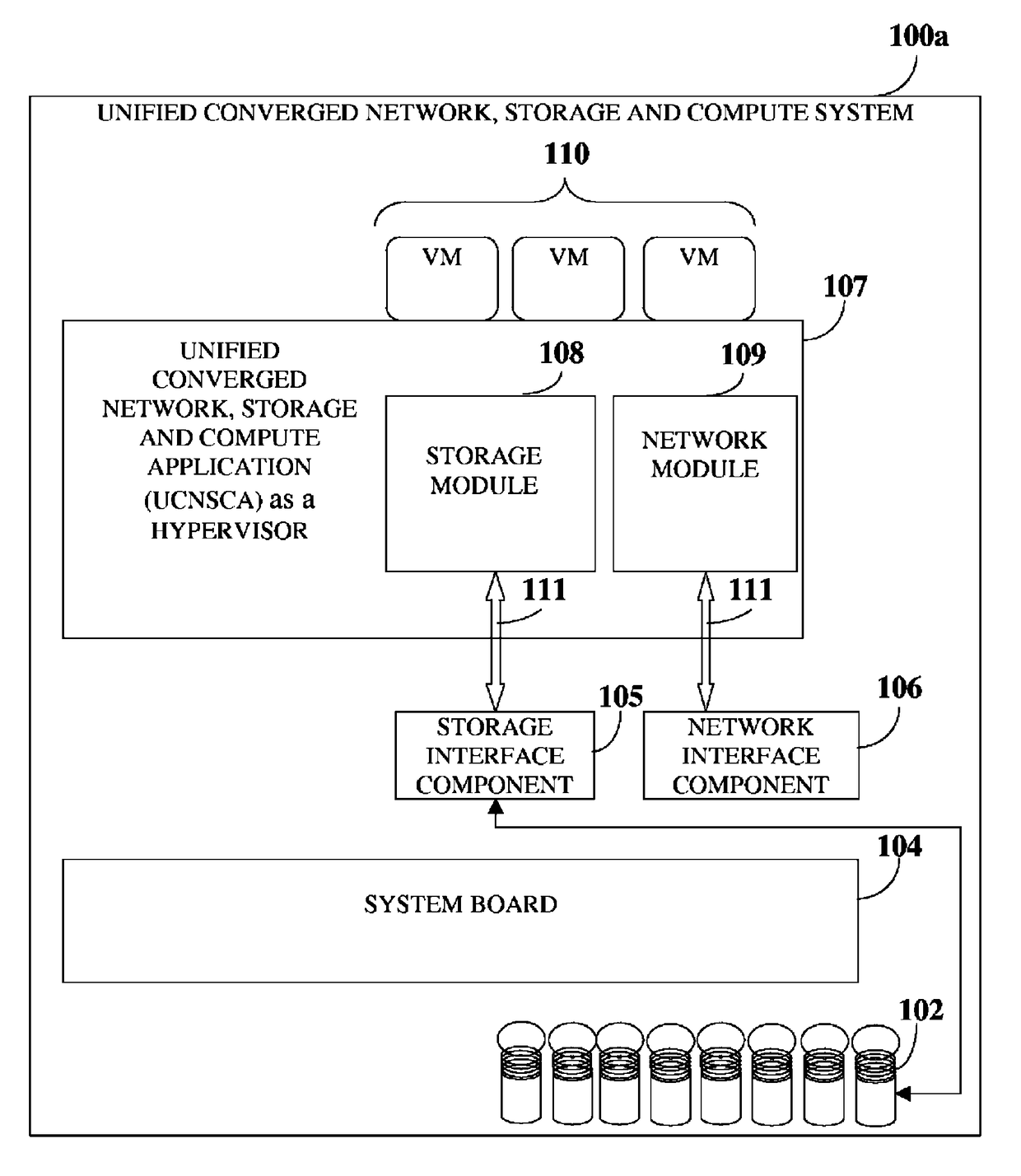

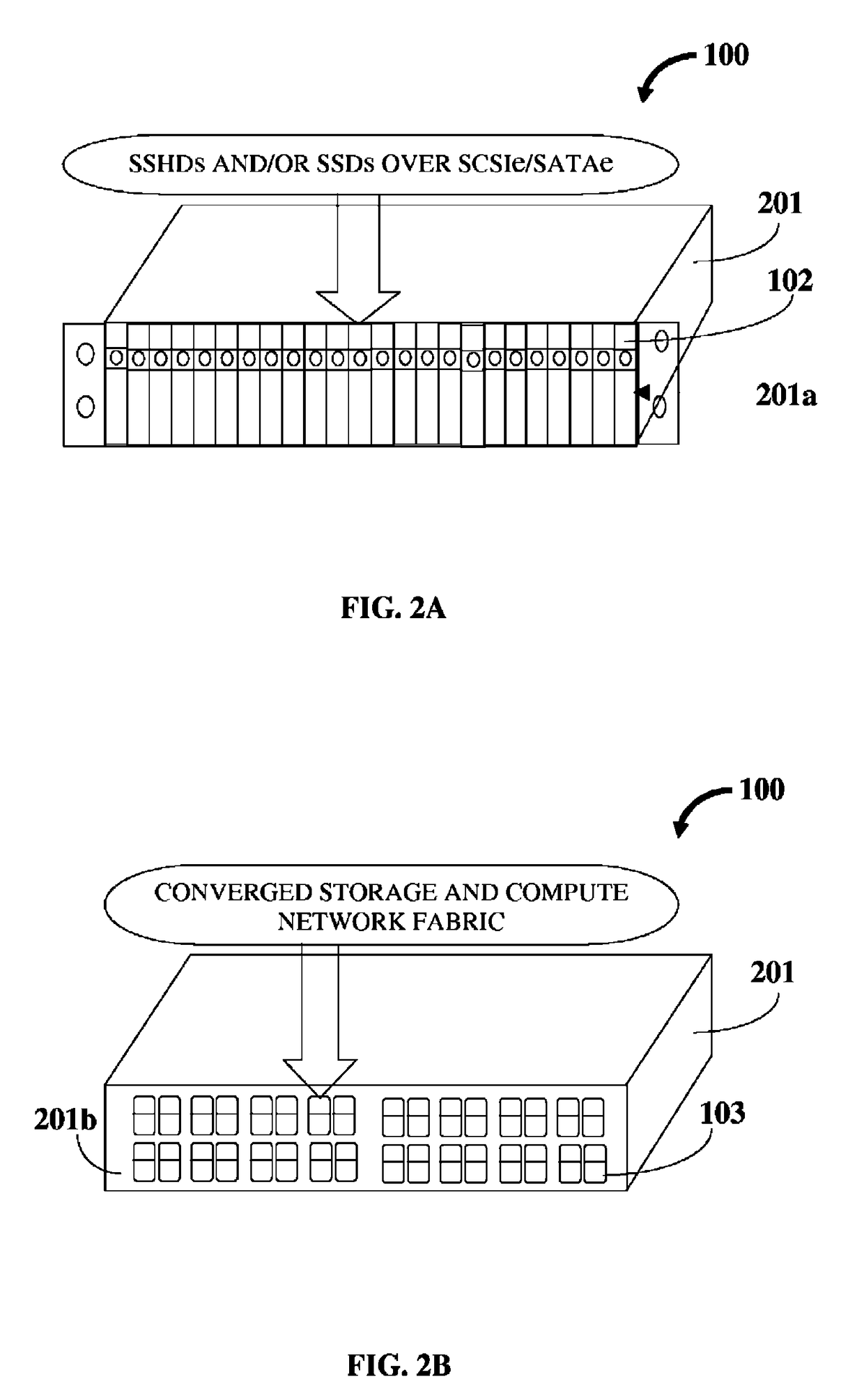

Unified Converged Network, Storage And Compute System

Inactive | US20160026592A1 | Function increase | Hinder software implementation | Energy efficient computing | Software simulation/interpretation/emulation | Computer module | Network switch

A unified converged network, storage and compute system (UCNSCS) converges functionalities of a network switch, a network router, a storage array, and a server in a single platform. The UCNSCS includes a system board, interface components free of a system on chip (SoC) such as a storage interface component and a network interface component operably connected to the system board, and a unified converged network, storage and compute application (UCNSCA). The storage interface component connects storage devices to the system board. The network interface component forms a network of UCNSCSs or connects to a network. The UCNSCA functions as a hypervisor that hosts virtual machines or as a virtual machine on a hypervisor and incorporates a storage module and a network module therewithin for controlling and managing operations of the UCNSCS and expanding functionality of the UCNSCS to operate as a converged network switch, network router, and storage array.

Owner:CIMWARE PVT LTD

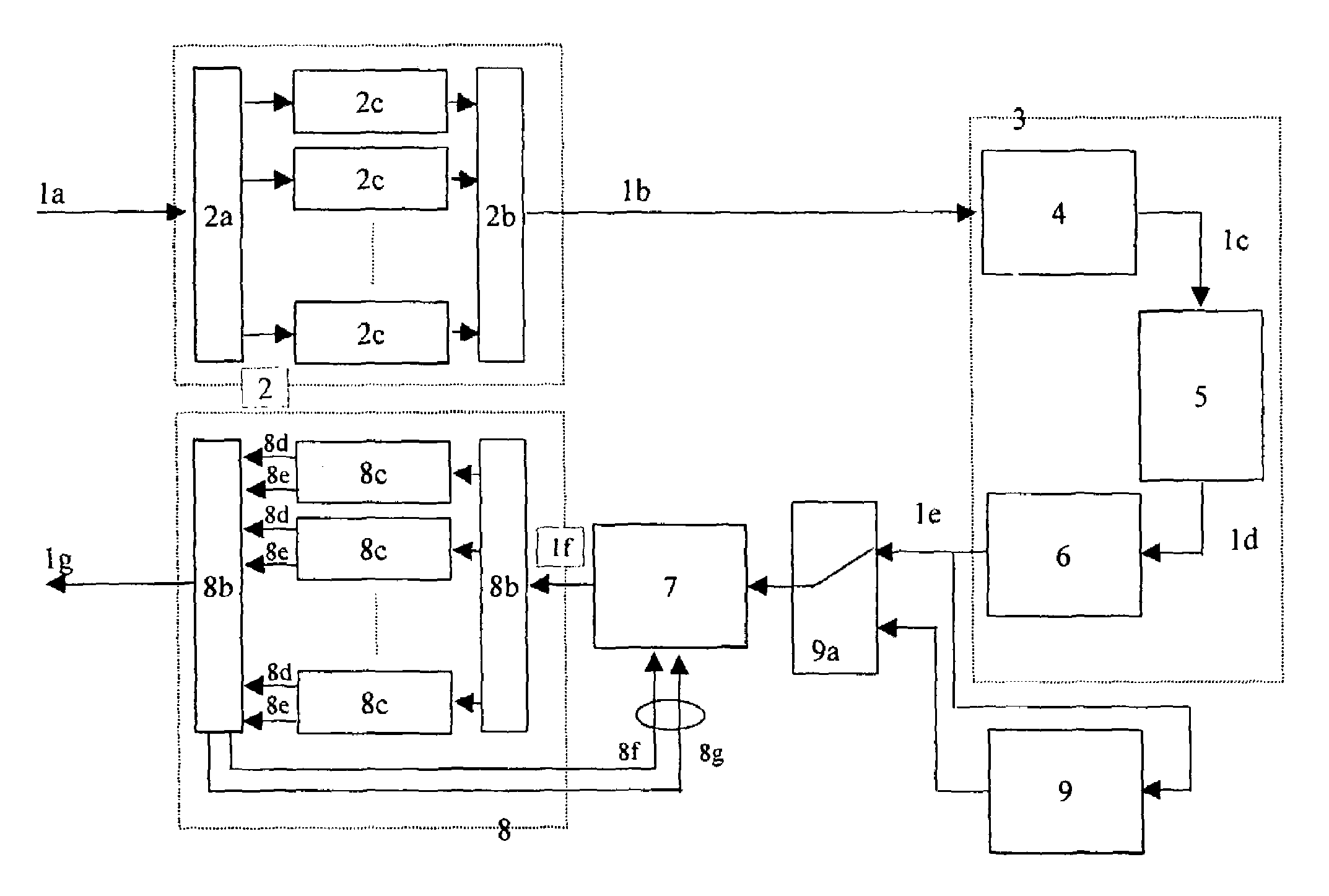

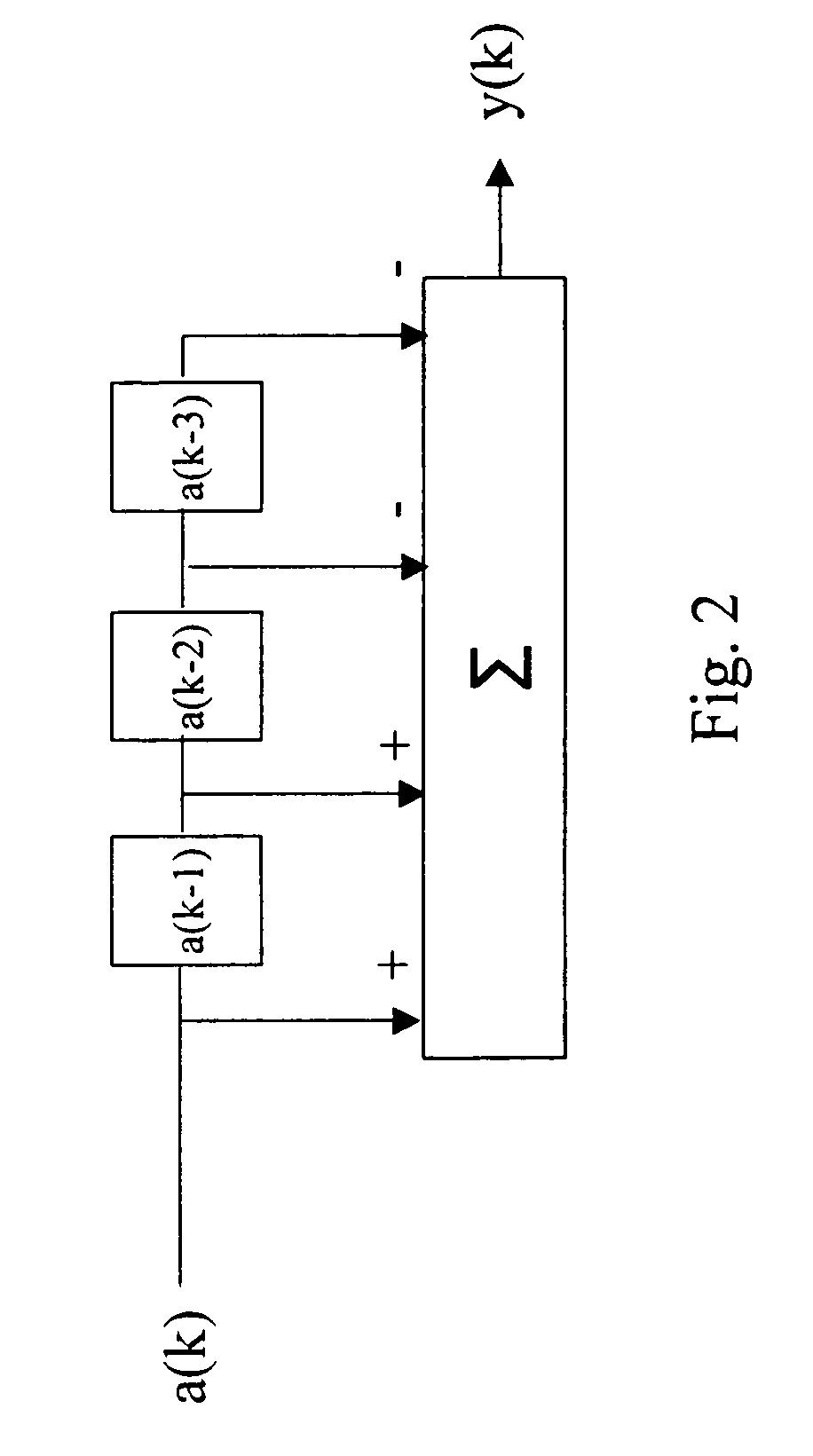

Data recording/readback method and data recording/readback device for the same

Inactive | US7076721B2 | Accurately decode | High recording density | Modification of read/write signals | Other decoding techniques | Theoretical computer science | Data recording

The present invention discloses an information processing method including the following steps:

(1) a first step receiving an encoded information data series as input;

(2) a second step selecting a candidate decoded data code series from a first candidate decoded data code series group, decoding the encoded information data series, and generating a first decoded data code series;

(3) a third step detecting a position and contents of erroneous decoded data codes in the first decoded data code series that cannot exist in the information data code;

(4) a fourth step correcting the erroneous decoded data code and generating a corrected data code;

(5) a fifth step selecting a single decoded data code series out of a second candidate decoded data code series group, decoding the encoded information data code series again, and generating a second decoded data code series;

(6) The second candidate decode data code series group includes candidate decoded data code series from the first candidate decoded data code series group that fulfills at least one of the following conditions:

1. A candidate decoded data code series that does not contain erroneous decoded data codes that were detected at the third step and that could not be corrected at the fourth step.

2. A candidate data code series that contains: data codes that were determined at the third step to not contain erroneous decoded data codes; and corrected data codes corrected at the fourth step.

Owner:HITACHI GLOBAL STORAGE TECH JAPAN LTD +1

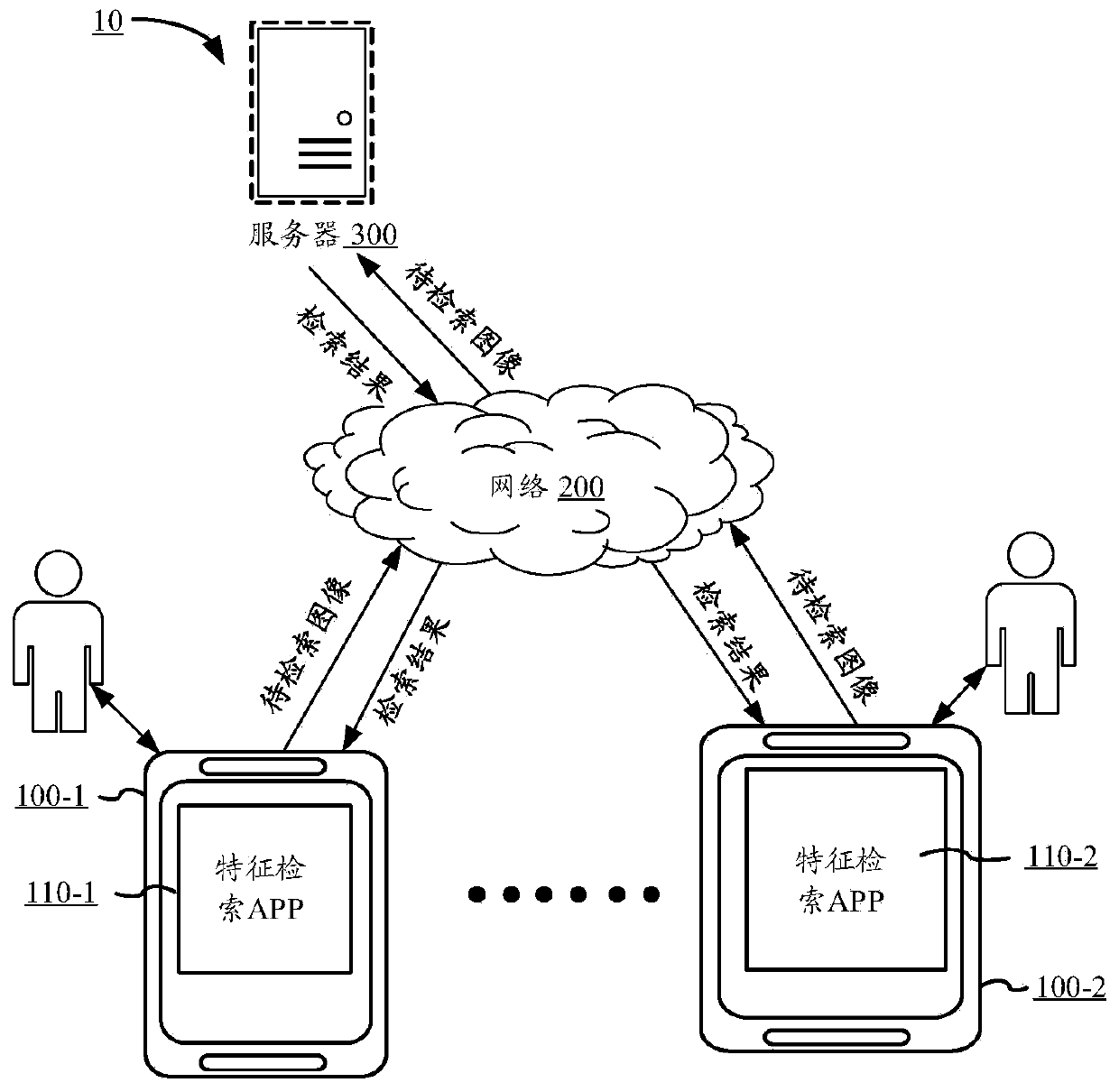

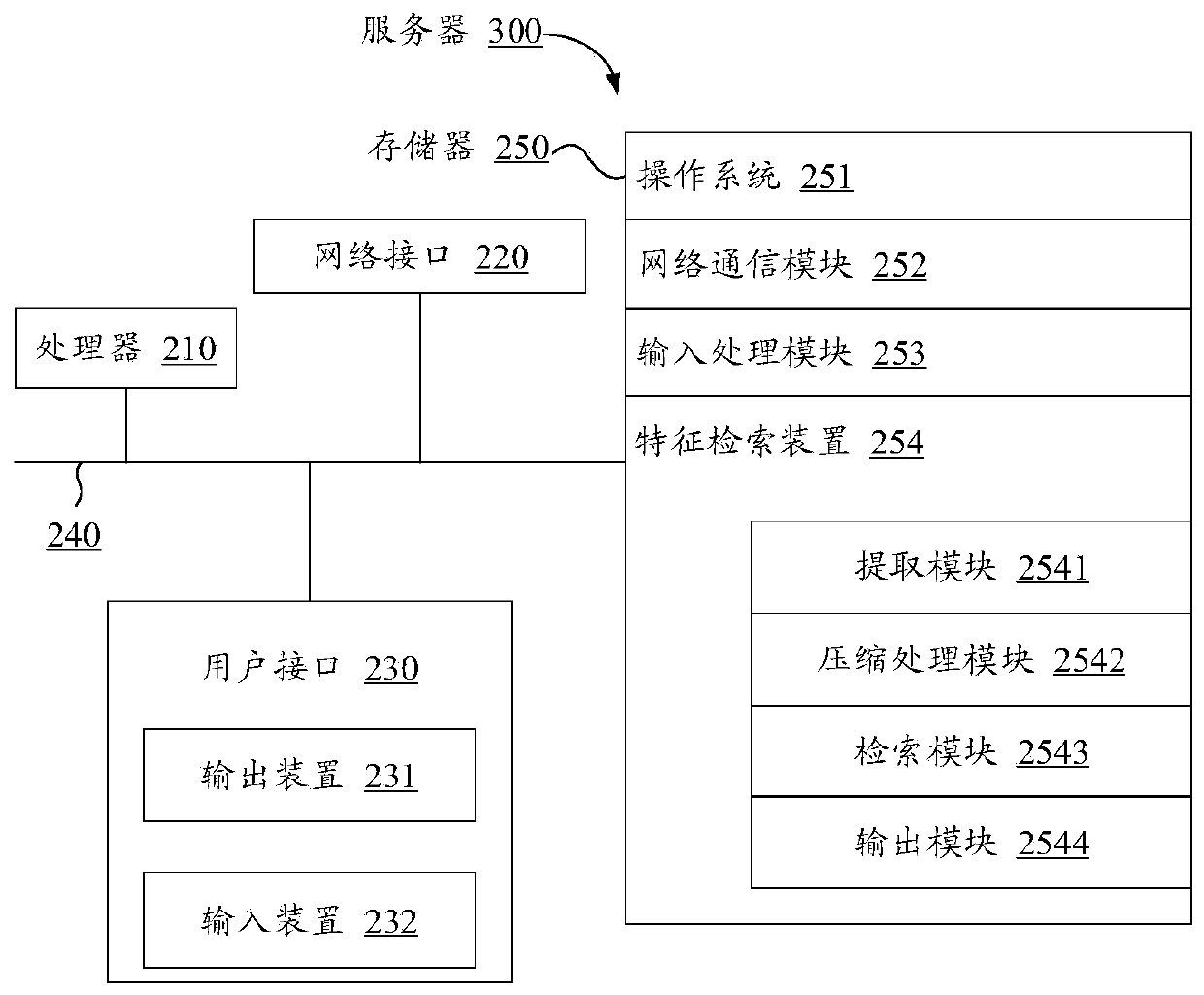

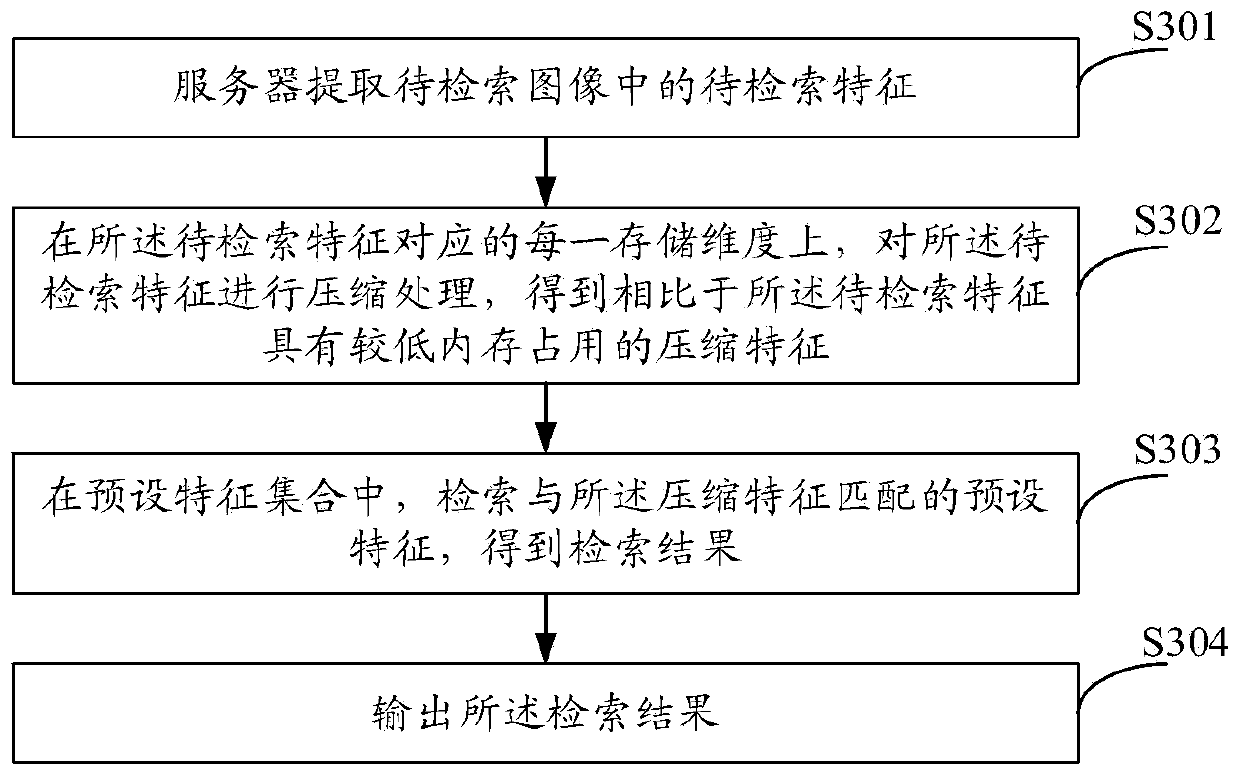

Feature retrieval method and device and storage medium

Pending | CN110647649A | Reduce memory usage | Reduce data access | Digital data information retrieval | Special data processing applications | Computer vision | Feature set

The embodiment of the invention provides a feature retrieval method and device and a storage medium, and the method comprises the steps: extracting a to-be-retrieved feature in a to-be-retrieved image; on each storage dimension corresponding to the to-be-retrieved feature, performing compression processing on the to-be-retrieved feature to obtain a compression feature with lower memory occupation compared with the to-be-retrieved feature; retrieving preset features matched with the compression features in a preset feature set to obtain a retrieval result; outputting the retrieval result. The space consumption of feature storage can be reduced, the feature retrieval time delay is reduced, and the feature retrieval throughput is improved.

Owner:TENCENT CLOUD COMPUTING BEIJING CO LTD

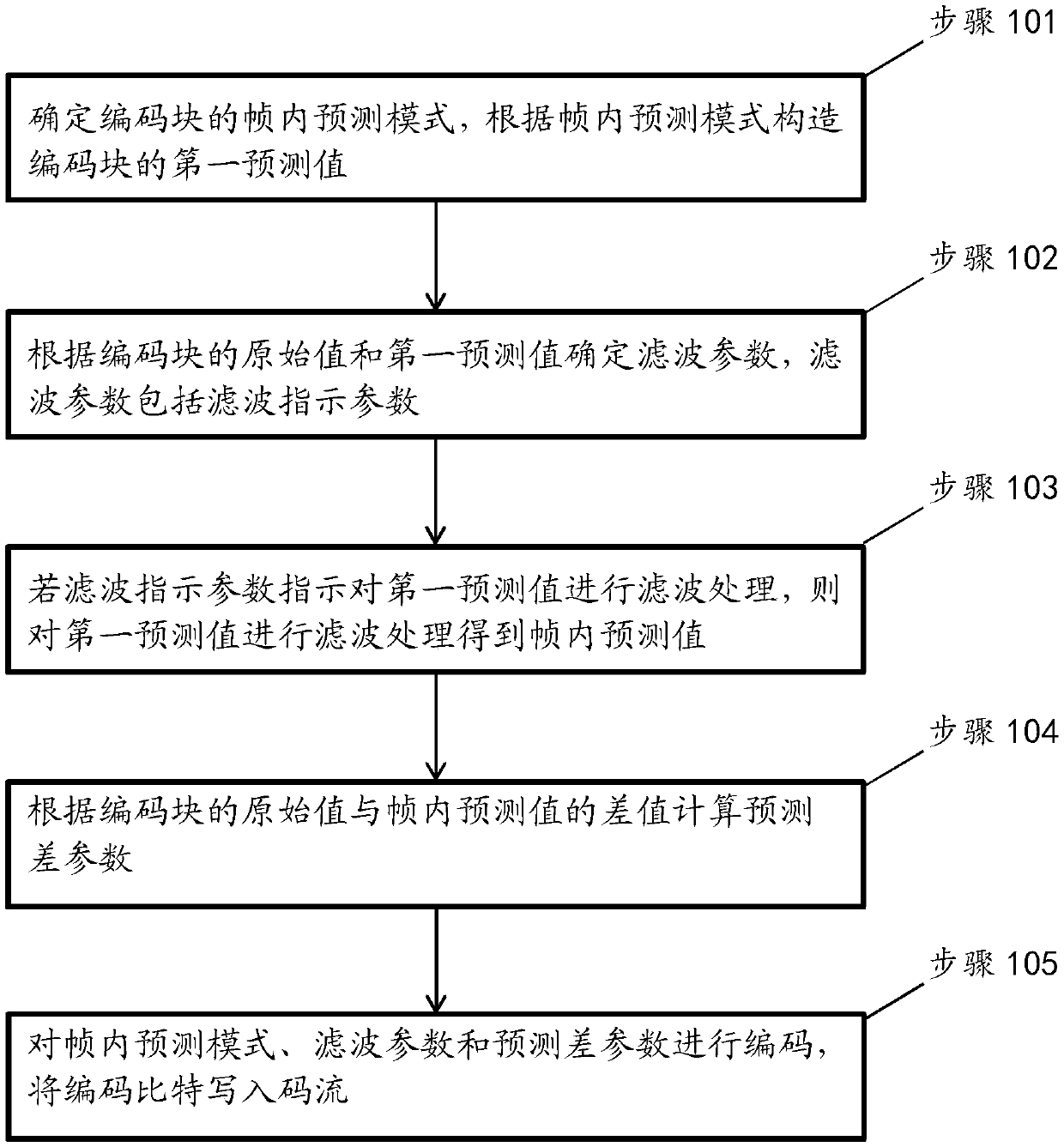

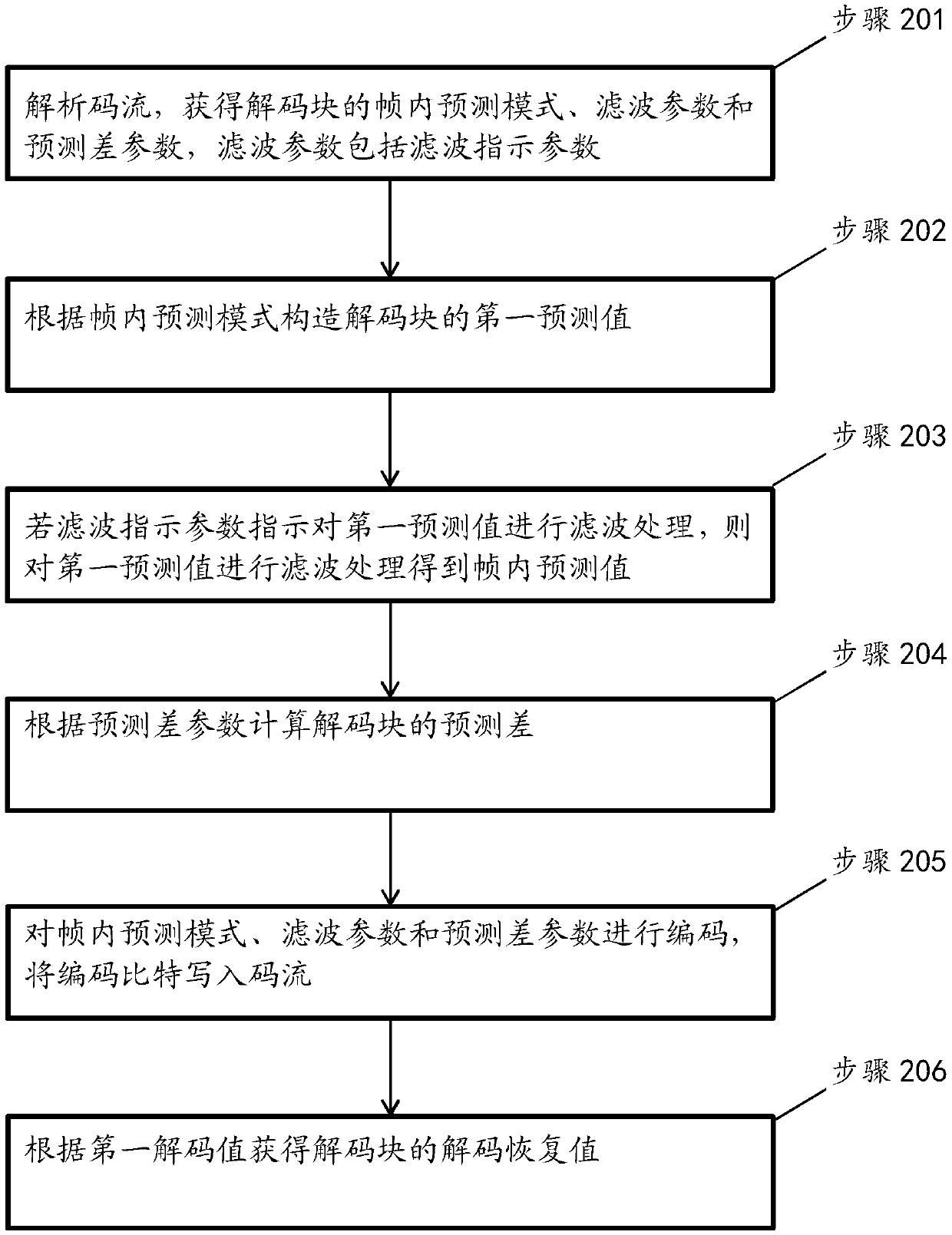

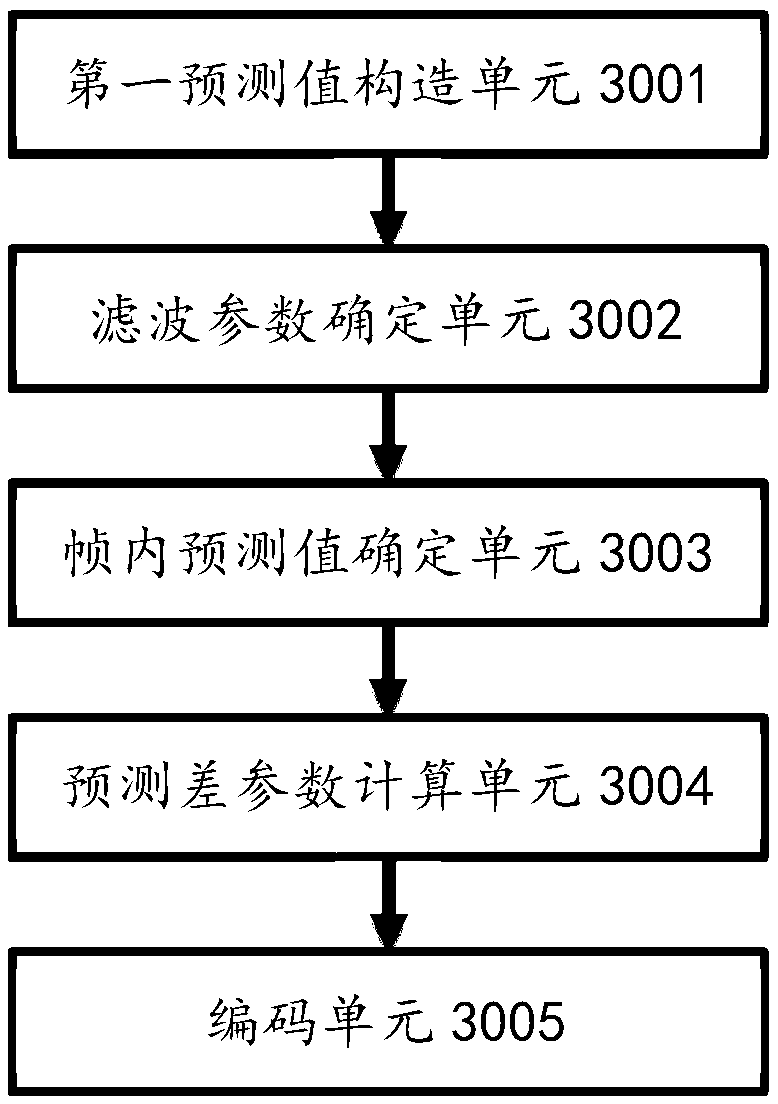

Image encoding method, image decoding method, encoder, decoder and storage medium

Pending | CN110650349A | Reduce in quantity | Reduce overhead | Digital video signal modification | Coding block | Encoder decoder

Provided in an embodiment of the present invention is an image encoding method, comprising: determining an intra prediction mode of an encoded block, and constructing a first prediction value of the encoded block according to the intra prediction mode; determining a filtering parameter according to the original value of the coding block and the first prediction value, wherein the filtering parameter comprises a filtering indication parameter; if the filtering indication parameter indicates to perform filtering processing on the first prediction value, performing filtering processing on the first prediction value to obtain an intra-frame prediction value; calculating a prediction difference parameter according to the difference between the original value of the coding block and the intra-frame prediction value; and encoding the intra-frame prediction mode, the filtering parameter and the prediction difference parameter, and writing encoding bits into a code stream. The invention further provides an image decoding method, an encoder, a decoder and a computer storage medium.

Owner:ZTE CORP

Distributed data management system

Active | US8504521B2 | Reduce data access | Control performance | Digital data information retrieval | Digital data processing details | Extensibility | Data management

A distributed data management system has multiple virtual machine nodes operating on multiple computers that are in communication with each other over a computer network. Each virtual machine node includes at least one data store or “bucket” for receiving data. A digital hash map data structure is stored in a computer readable medium of at least one of the multiple computers to configure the multiple virtual machine nodes and buckets to provide concurrent, non-blocking access to data in the buckets, the digital hash map data structure including a mapping between the virtual machine nodes and the buckets. The distributed data management system employs dynamic scalability, in which one or more buckets from a virtual machine node that has reached a memory capacity threshold are transferred to another virtual machine node that is below its memory capacity threshold.

Owner:GOPIVOTAL

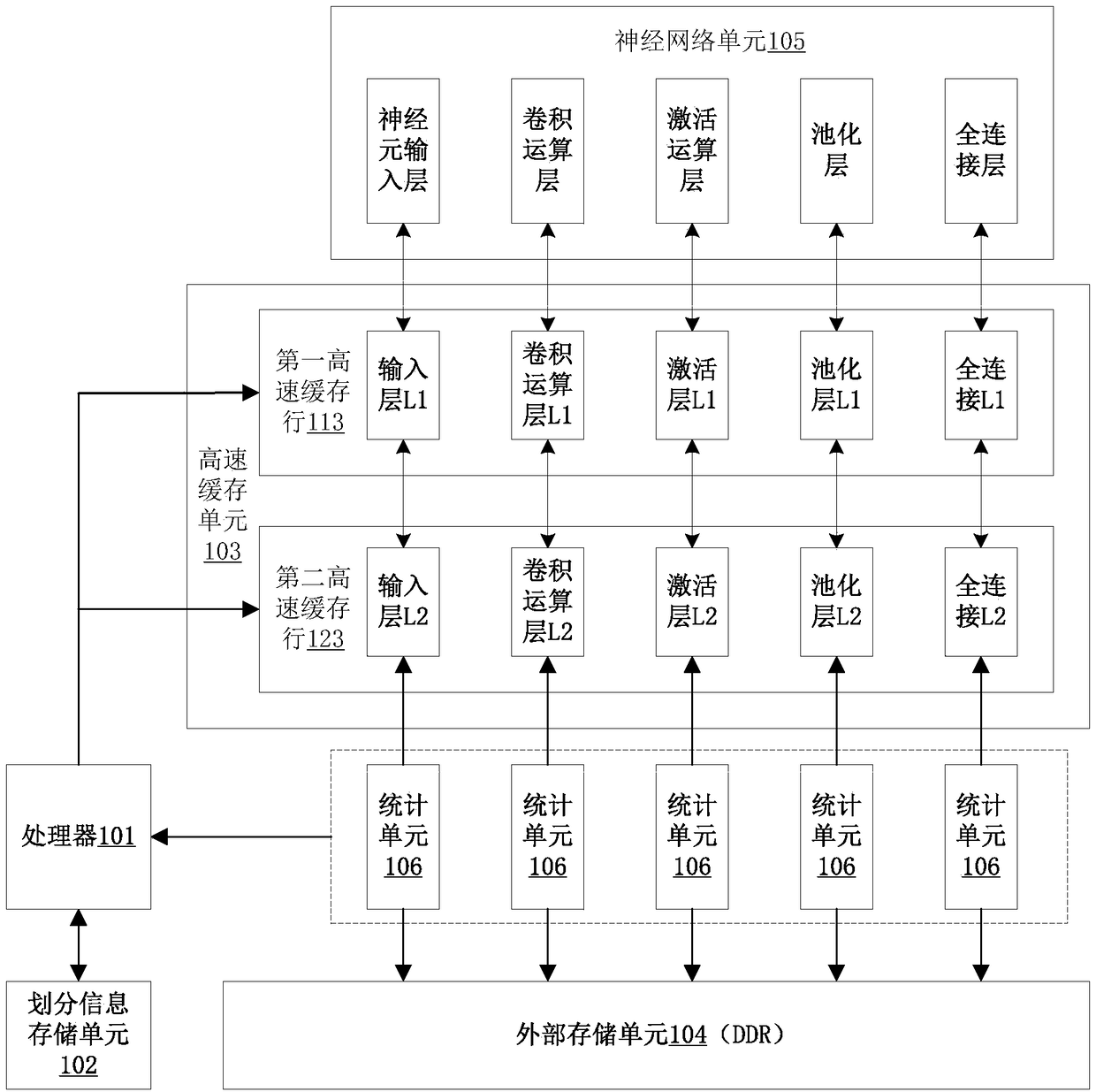

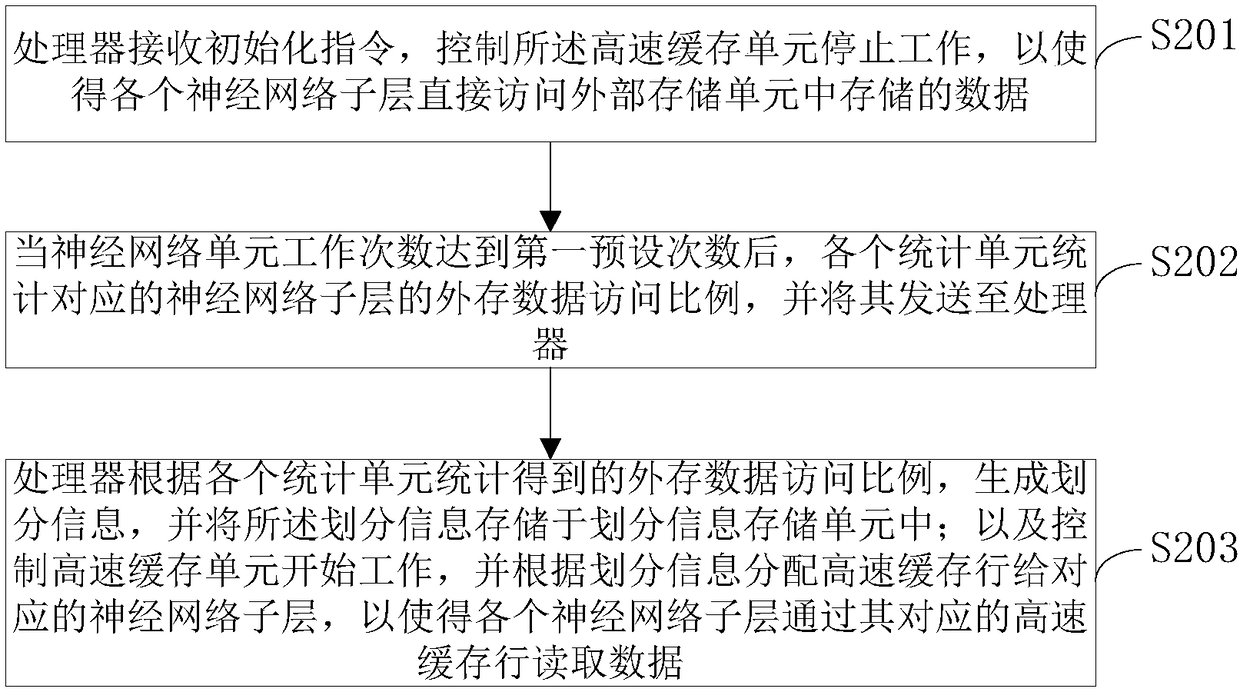

Deep learning chip based dynamic cache allocation method and device

Active | CN108520296A | Reduce data access | Improve computing efficiency | Neural architectures | Physical realisation | External storage | Nerve network

The invention provides a deep learning chip based dynamic cache allocation method and device. With a cache unit, the device enables a large amount of data access of the neural network to be completed inside the chip, which reduces the data access of the neural network to the external storage, reduces the bandwidth requirement for external storage, and finally achieves the purpose of reducing the bandwidth. At the same time, the allocation of the cache unit ratio is determined according to the external data throughput of each neural network sub-layer, so that the limited cache space is more rationally allocated, and the computational efficiency of the neural network is effectively improved.

Owner:FUZHOU ROCKCHIP SEMICON
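
A throughput-proportional split is one simple reading of "the allocation of the cache unit ratio is determined according to the external data throughput of each neural network sub-layer". The function below is a sketch under that assumption; the name `allocate_cache`, the sub-layer names, and the rule for distributing the integer-division remainder are hypothetical.

```python
def allocate_cache(total_cache: int, throughput: dict) -> dict:
    """Split total_cache across sub-layers in proportion to external data throughput."""
    total = sum(throughput.values())
    if total == 0:
        return {name: 0 for name in throughput}
    shares = {name: total_cache * t // total for name, t in throughput.items()}
    # Hand any remainder from integer division to the most demanding sub-layer.
    busiest = max(throughput, key=throughput.get)
    shares[busiest] += total_cache - sum(shares.values())
    return shares


# Example: 1024 cache units shared by three sub-layers with assumed throughputs.
plan = allocate_cache(1024, {"conv1": 300, "pool1": 100, "fc": 600})
```

Sub-layers that would otherwise generate the most external-memory traffic receive the largest share of on-chip cache, which is where caching saves the most bandwidth.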

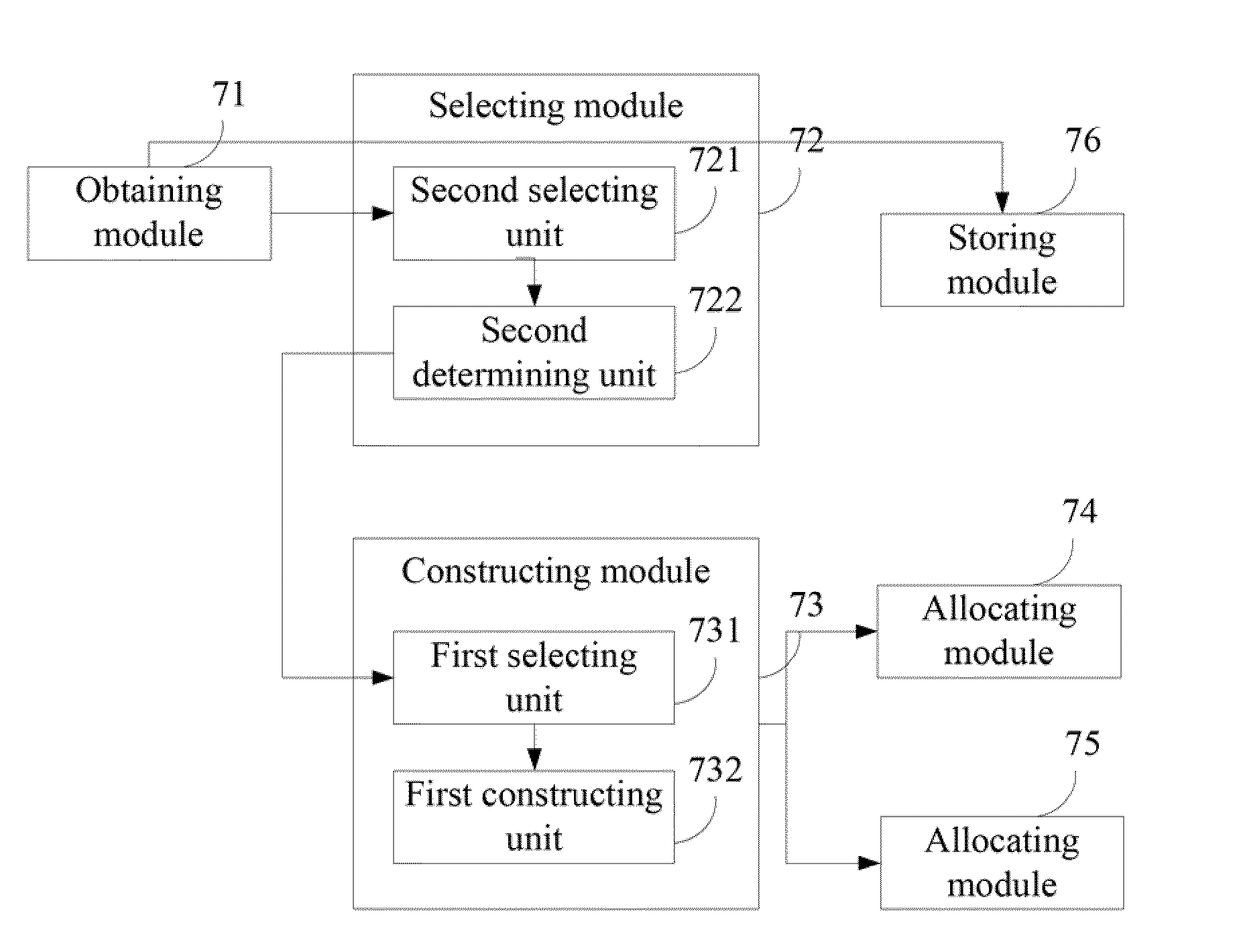

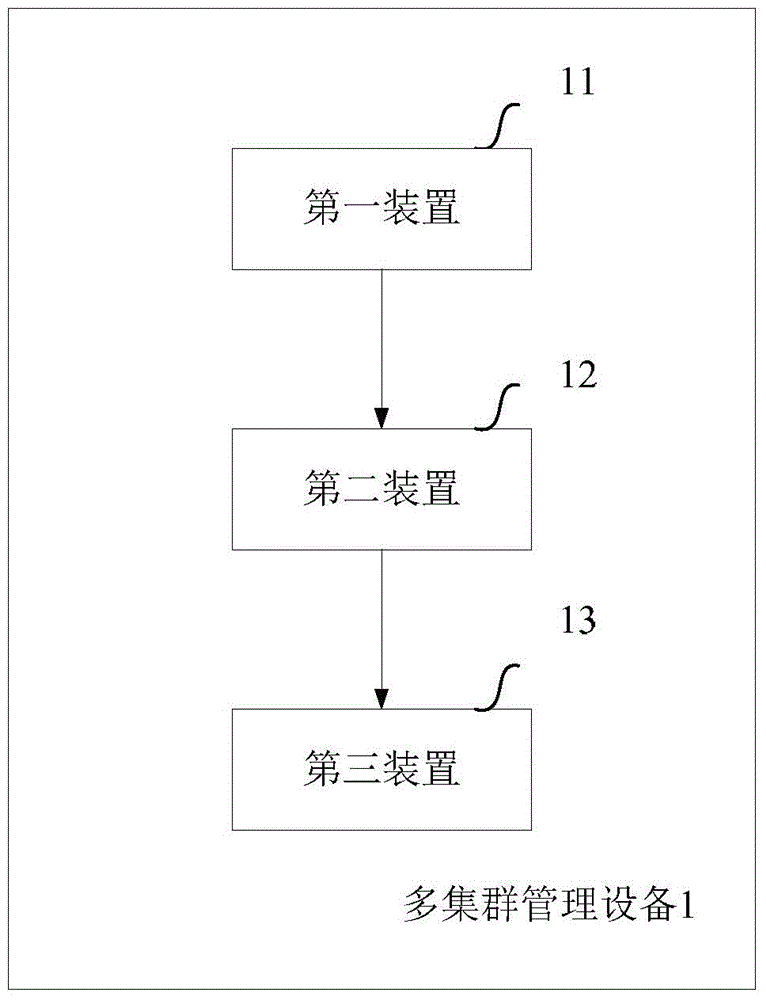

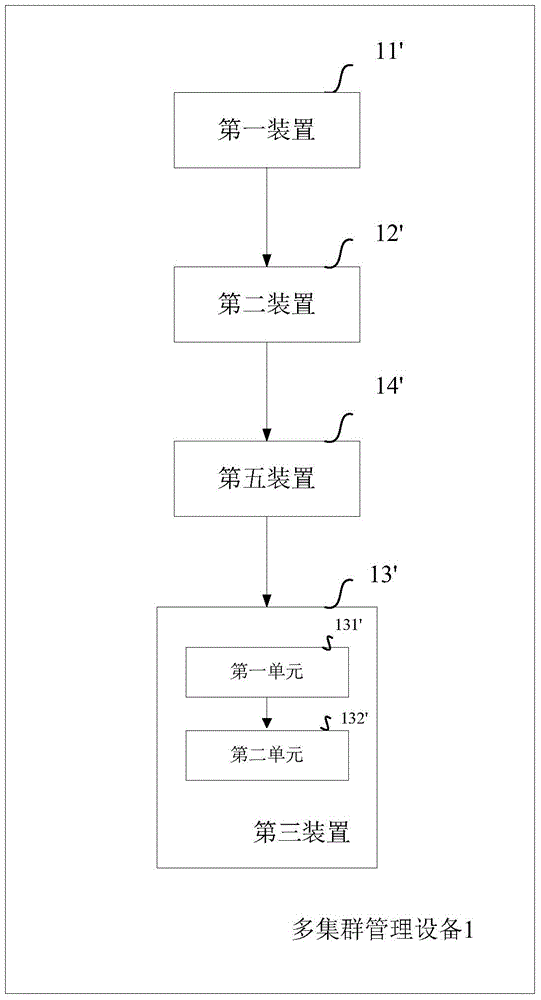

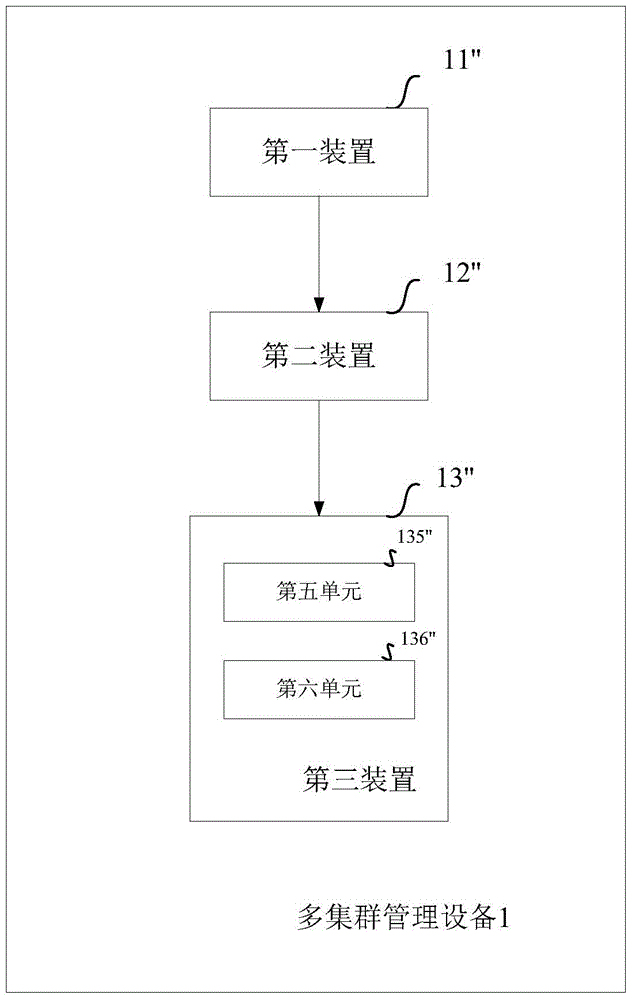

Multi-cluster management method and device

Active | CN106161525A | Realize rationality | Implement configuration | Data switching networks | Instruments | Multi cluster | Data access

The object of the invention is to provide a multi-cluster management method and device. The multi-cluster management method comprises the following steps: acquiring historical operation data of a plurality of clusters; determining future demand information of the plurality of clusters based on the historical operation data; and determining cluster configuration information of the plurality of clusters based on the future demand information. Compared with the prior art, in the multi-cluster management method provided by the invention, the acquired historical operation data of the plurality of clusters is processed and analyzed to acquire the future demand information of the plurality of clusters, and the cluster configuration information of the plurality of clusters is determined based on the future demand information. Based on the cluster configuration information, the multi-cluster management method provided by the invention can be used for realizing reasonable distribution and configuration of resources of the plurality of clusters in a cross-regional multi-cluster and large-scale data processing environment to achieve the balance optimization of global resources, and can also be used for efficiently realizing cross-cluster data access maximally under the allowance of resource conditions between the clusters.

Owner:ALIBABA GRP HLDG LTD

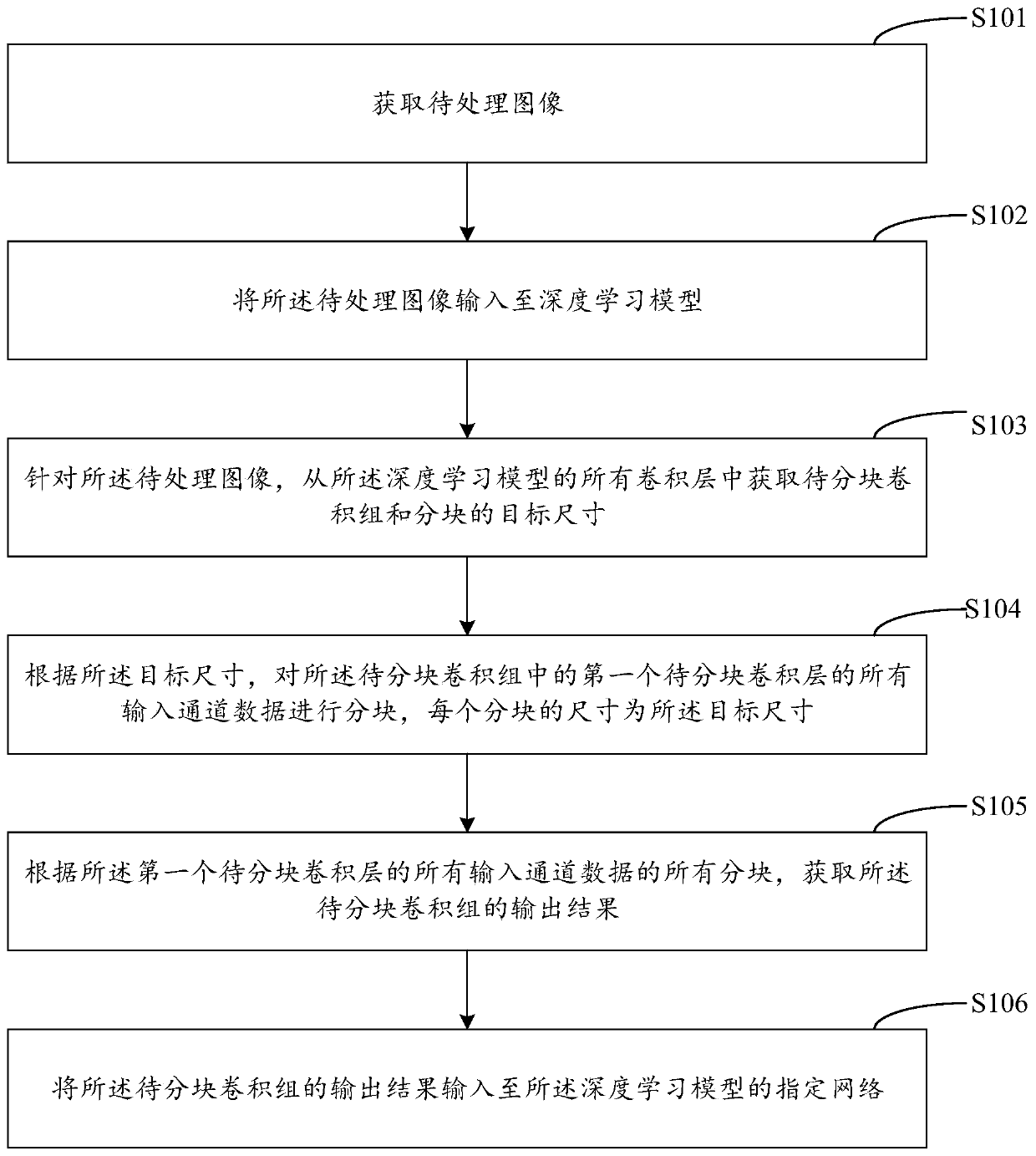

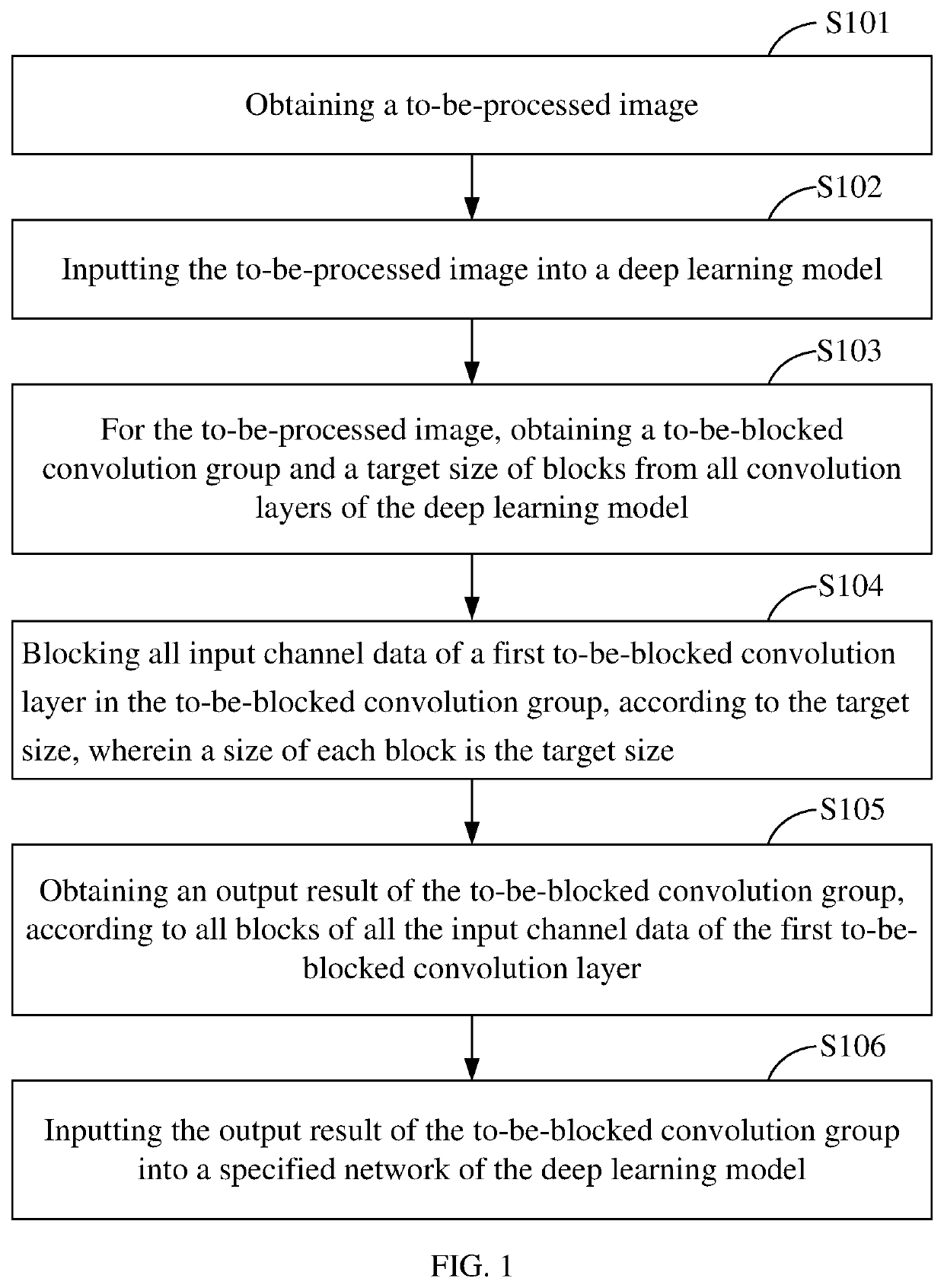

Convolution calculation method, convolution calculation device and terminal equipment

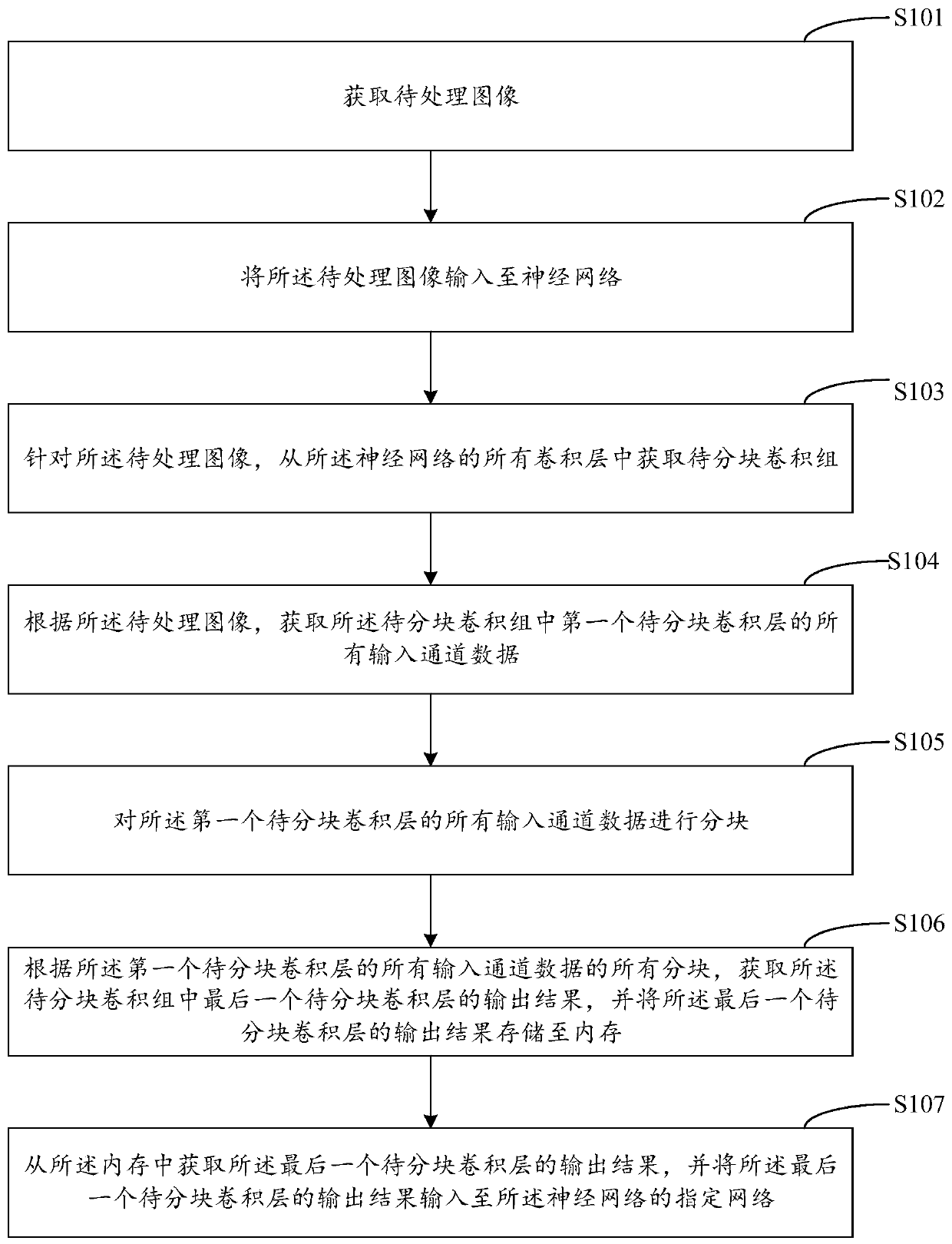

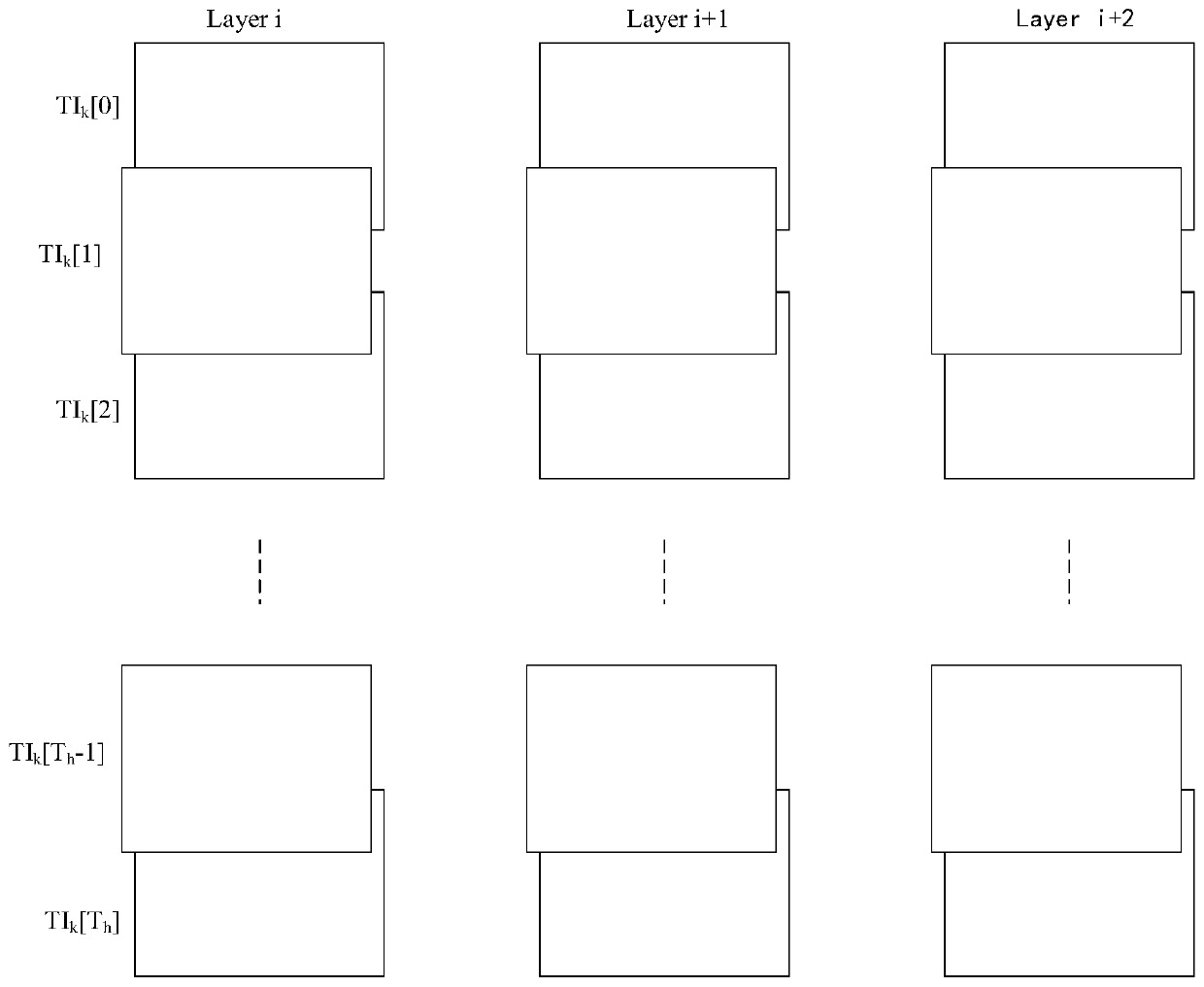

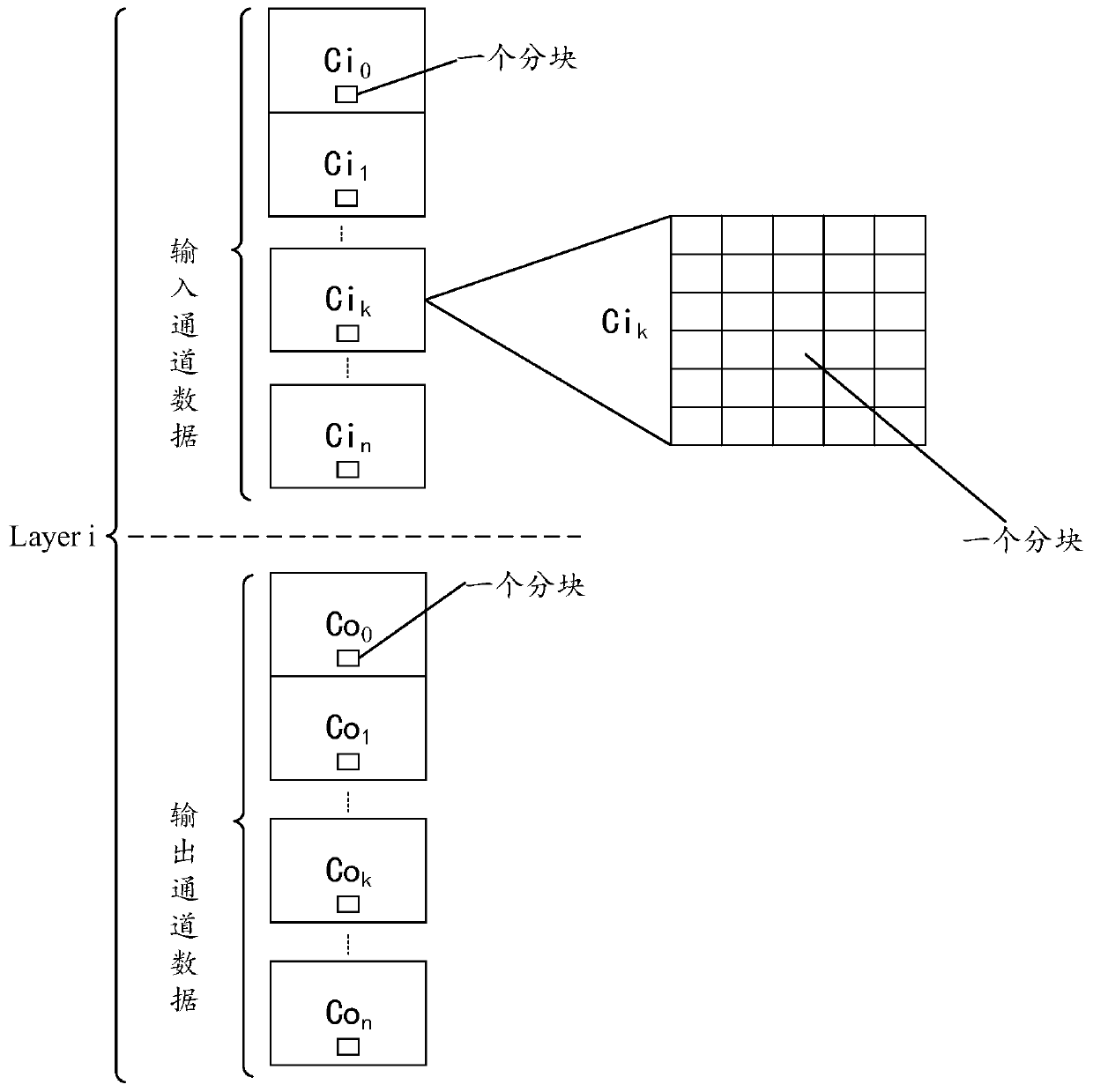

Active | CN111210004A | Improve processing efficiency | Reduce data access | Character and pattern recognition | Neural architectures | Channel data | Algorithm

The invention is suitable for the technical field of deep learning, and provides a convolution calculation method, a convolution calculation device, terminal equipment and a computer readable storage medium, and the method comprises the steps: inputting a to-be-processed image into a deep learning model, and obtaining a to-be-blocked convolution group and a blocked target size from all convolution layers of the deep learning model; according to the target size, blocking all input channel data of a first to-be-blocked convolution layer in the to-be-blocked convolution group, and the size of each block being the target size; according to all blocks of all input channel data of the first to-be-blocked convolution layer, obtaining an output result of the to-be-blocked convolution group; and inputting an output result of the convolution group to be partitioned into a specified network of the deep learning model. According to the method, the bandwidth consumption can be adjusted by adjusting the partitioning size of the convolution layer to be partitioned, and frequent updating and upgrading of the deep learning model are self-adapted.

Owner:SHENZHEN INTELLIFUSION TECHNOLOGIES CO LTD

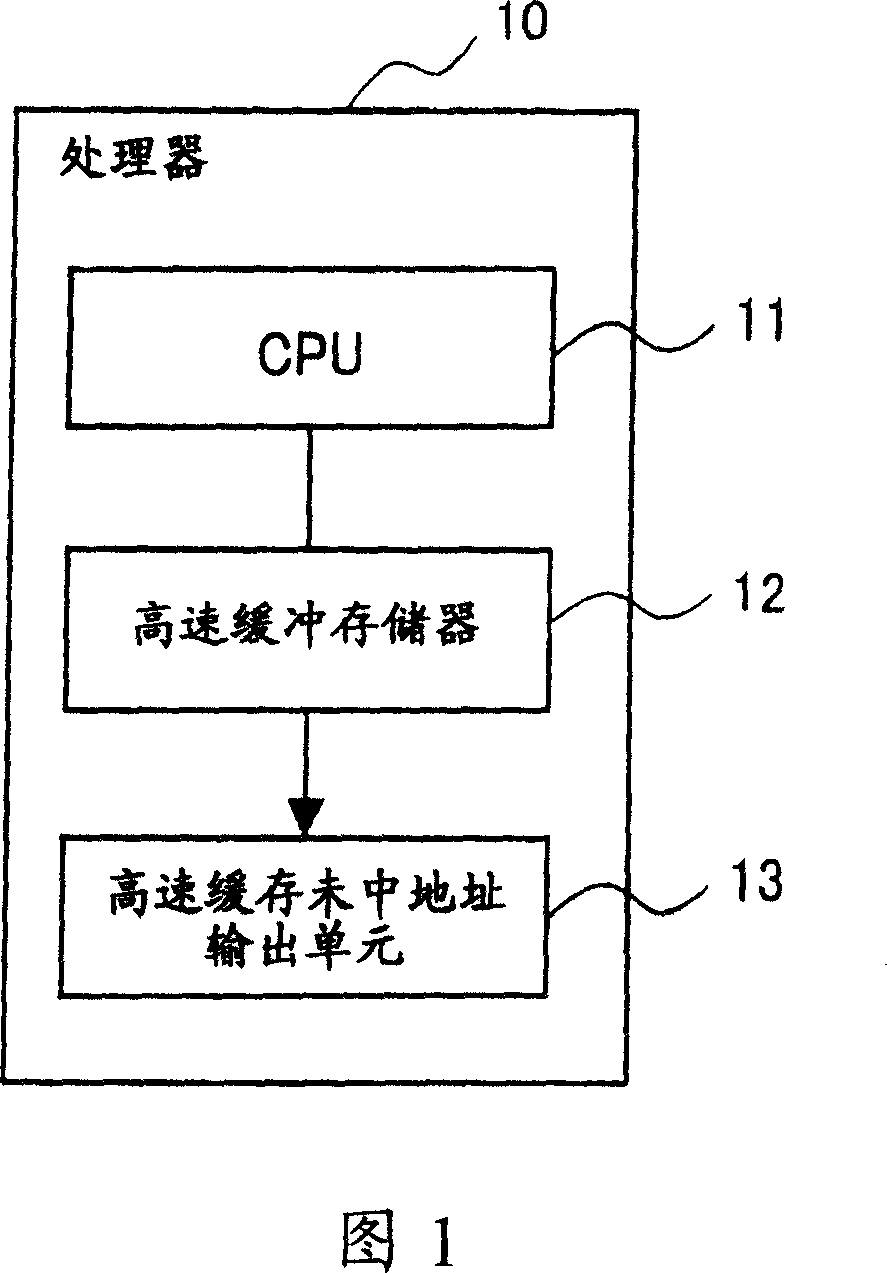

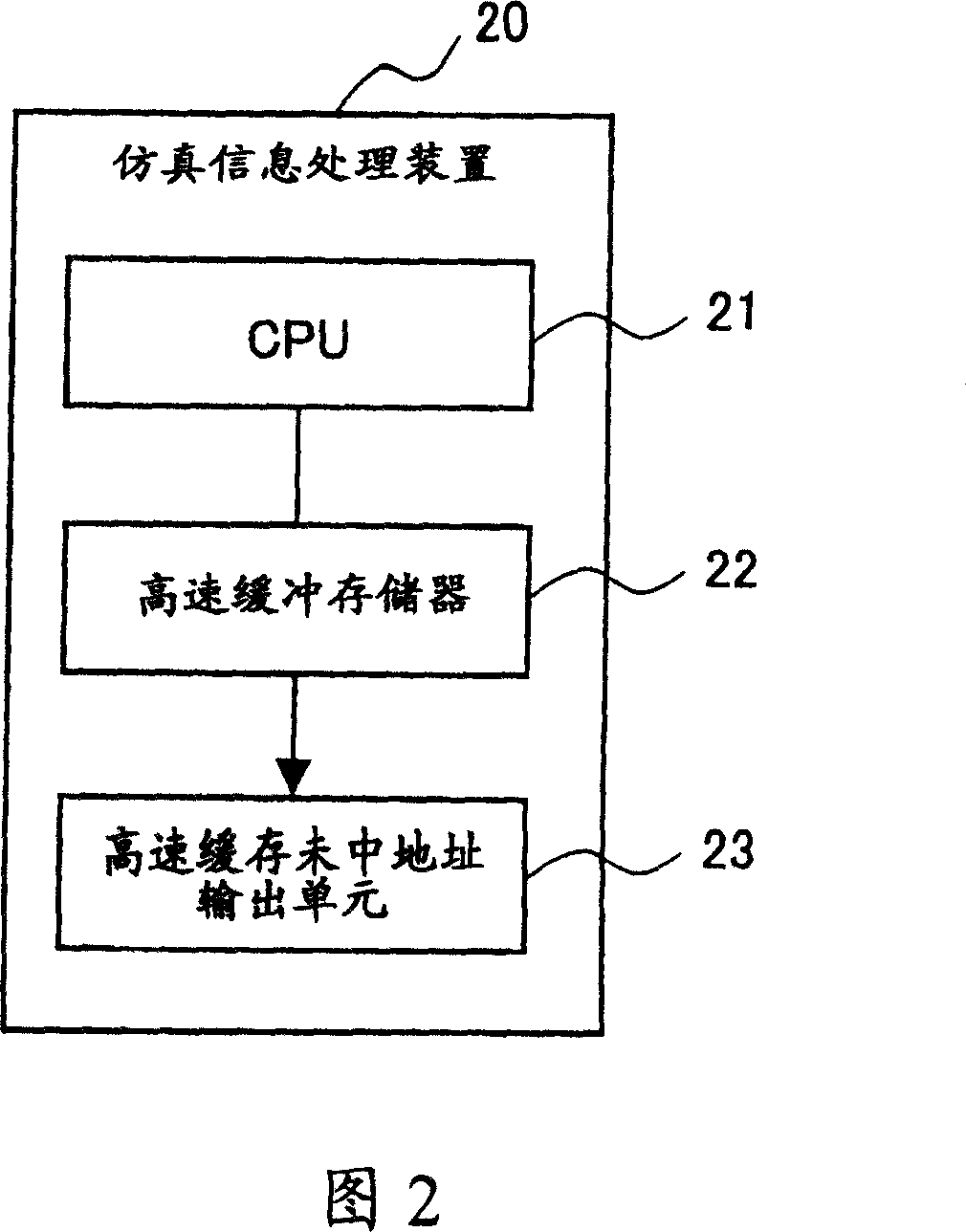

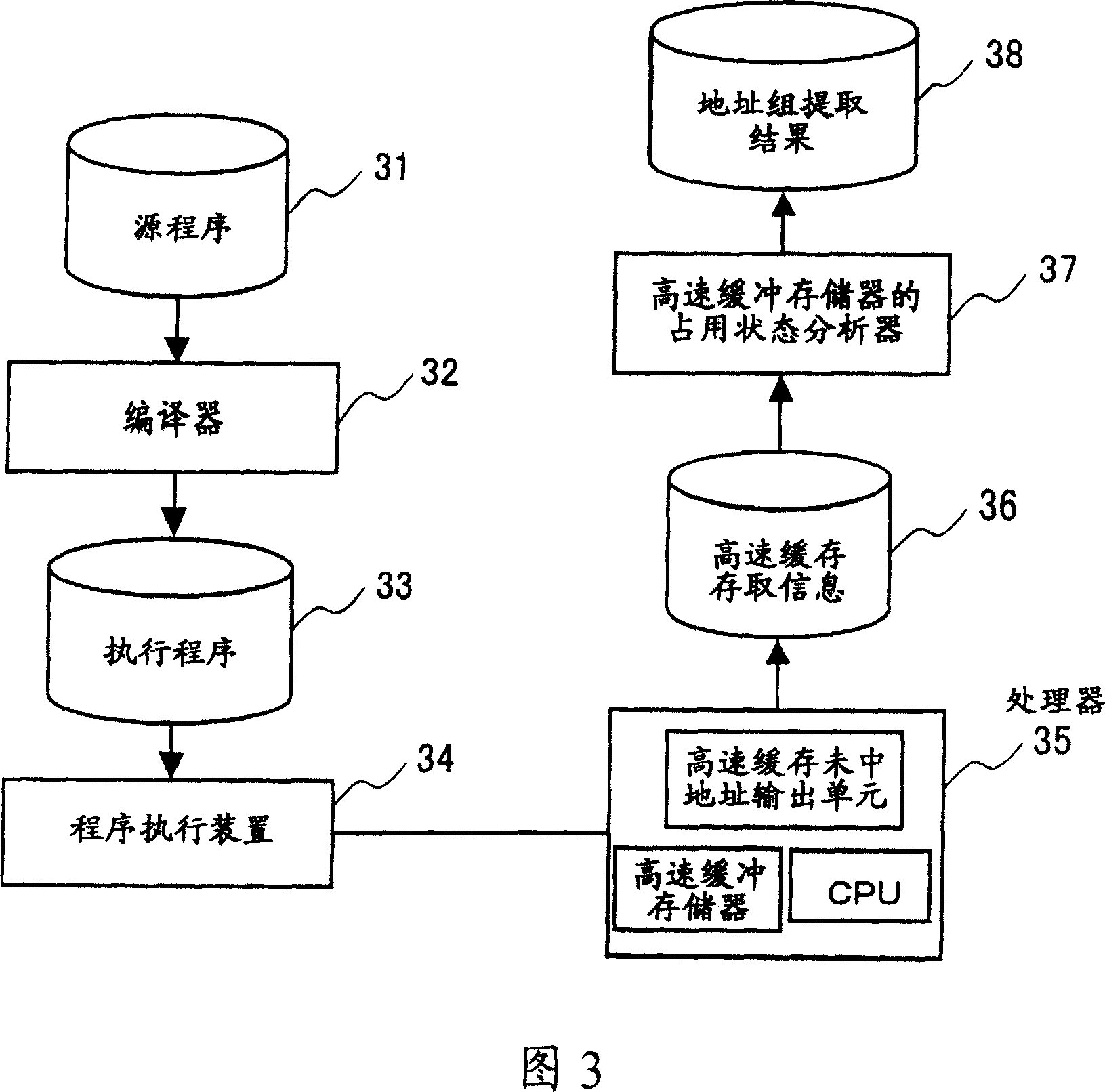

Cache memory analyzing method

Active | CN1928841A | Reduce data access | Easy to handle | Memory addressing/allocation/relocation | Memory address | Parallel computing

Information containing the memory addresses that caused cache misses is read from the cache. The number of cache misses occurring at each cache-miss address included in the information is totaled. The cache-miss addresses whose miss counts have been totaled are then segmented by set. Further, a group of addresses whose cache-miss counts are identical or close is extracted from the cache-miss addresses classified into the same set.

Owner:SOCIONEXT INC
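
The three analysis steps (total the misses per address, segment the addresses by cache set, extract addresses with identical or close counts) can be sketched as follows. The cache geometry (`LINE_SIZE`, `NUM_SETS`), the `tolerance` parameter that defines "close", and the function names are assumptions for illustration; the abstract does not specify them.

```python
from collections import Counter, defaultdict

LINE_SIZE = 64    # assumed cache line size in bytes
NUM_SETS = 128    # assumed number of cache sets


def set_index(addr: int) -> int:
    """Cache set that an address maps to under the assumed geometry."""
    return (addr // LINE_SIZE) % NUM_SETS


def analyze(miss_addresses, tolerance: int = 1):
    """Count misses per address, group by set, then cluster similar counts."""
    counts = Counter(miss_addresses)                   # total misses per address
    by_set = defaultdict(list)
    for addr, n in counts.items():
        by_set[set_index(addr)].append((addr, n))      # segment by set

    groups = {}
    for s, entries in by_set.items():
        entries.sort(key=lambda e: e[1])
        clusters, current = [], [entries[0]]
        for entry in entries[1:]:
            # Same cluster when the count is within `tolerance` of the previous one.
            if entry[1] - current[-1][1] <= tolerance:
                current.append(entry)
            else:
                clusters.append(current)
                current = [entry]
        clusters.append(current)
        # Keep only clusters with at least two addresses.
        groups[s] = [[a for a, _ in c] for c in clusters if len(c) > 1]
    return groups
```

Addresses in the same set with near-identical miss counts are likely evicting one another, so the extracted groups point at candidate conflict misses.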

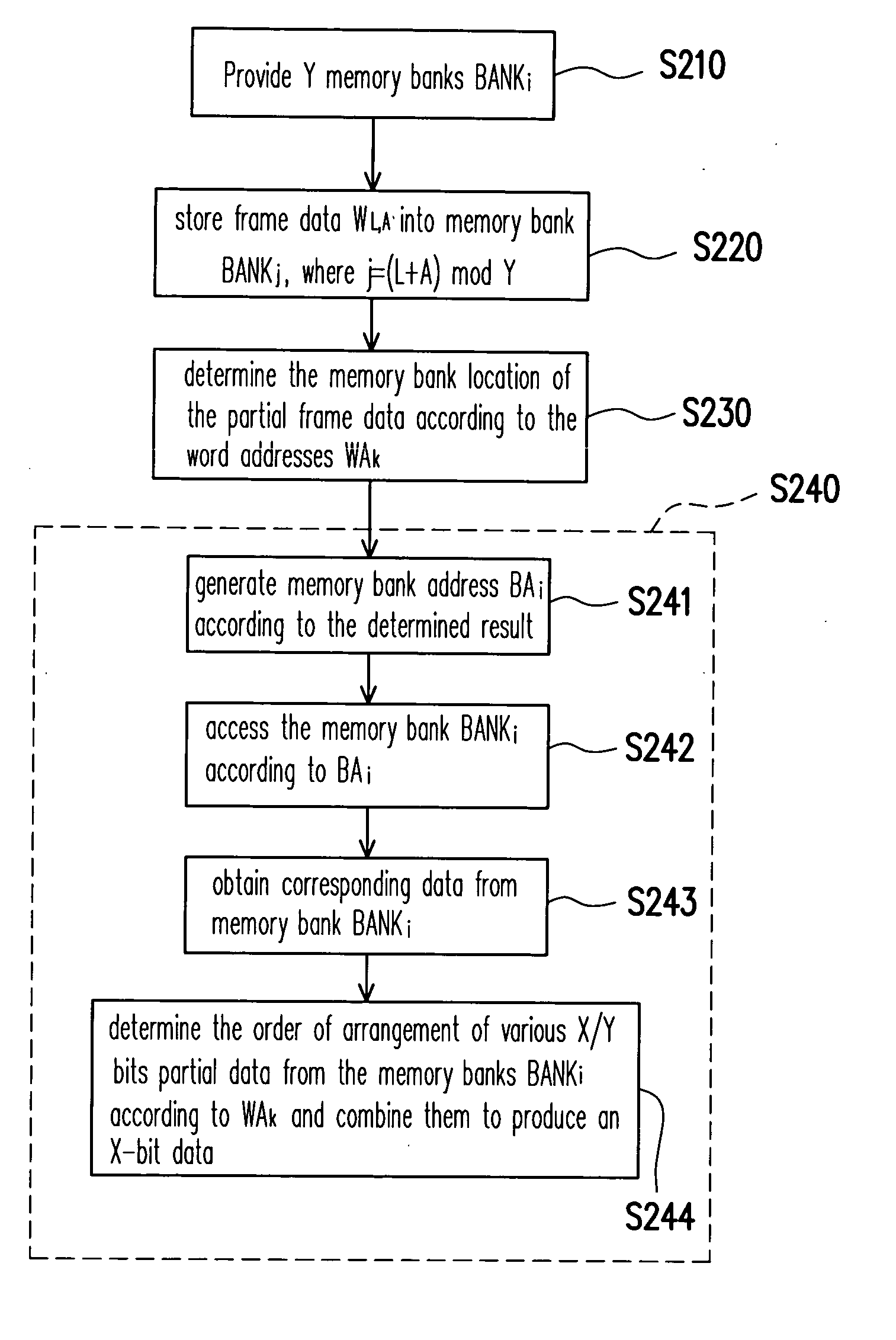

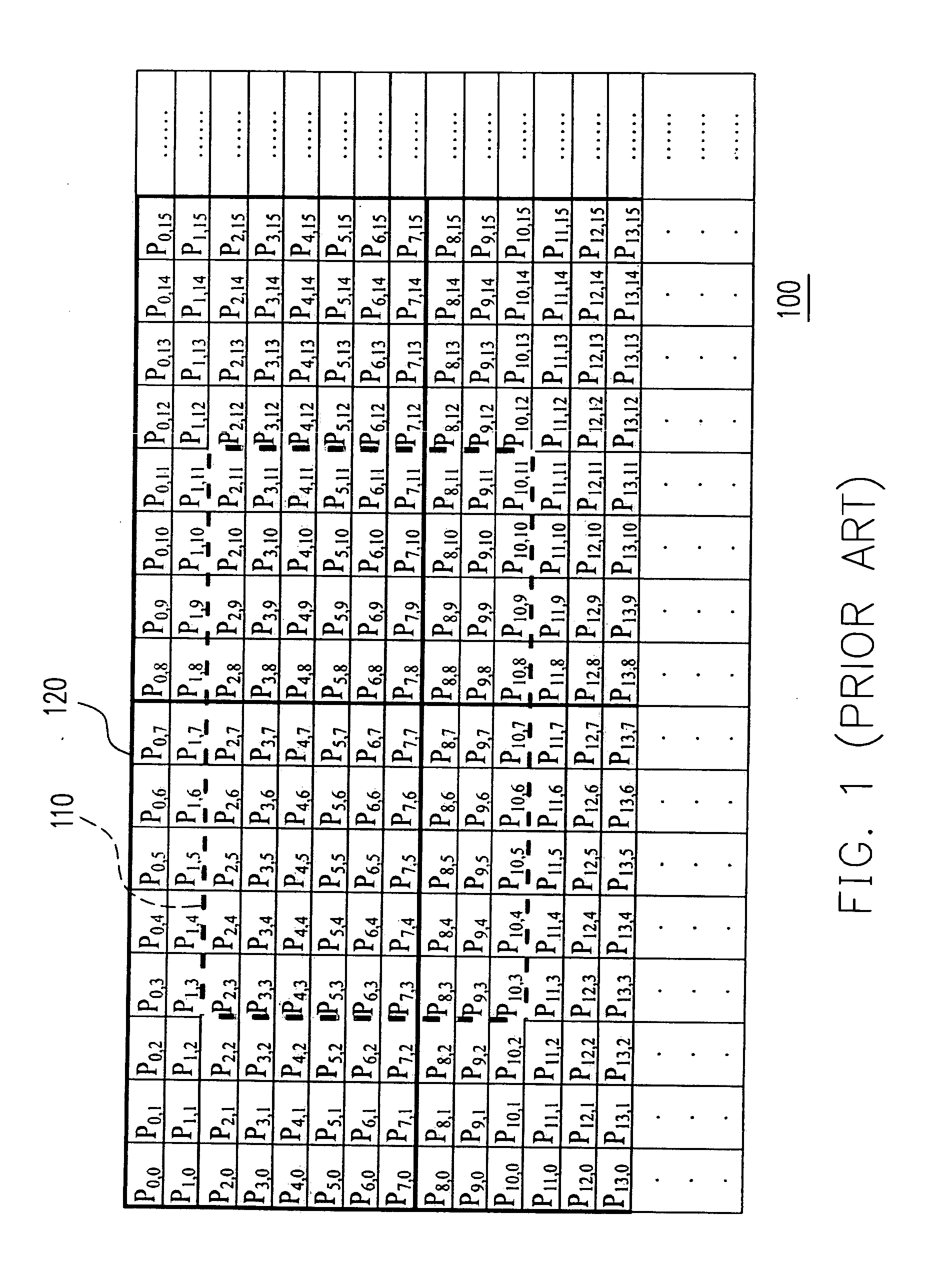

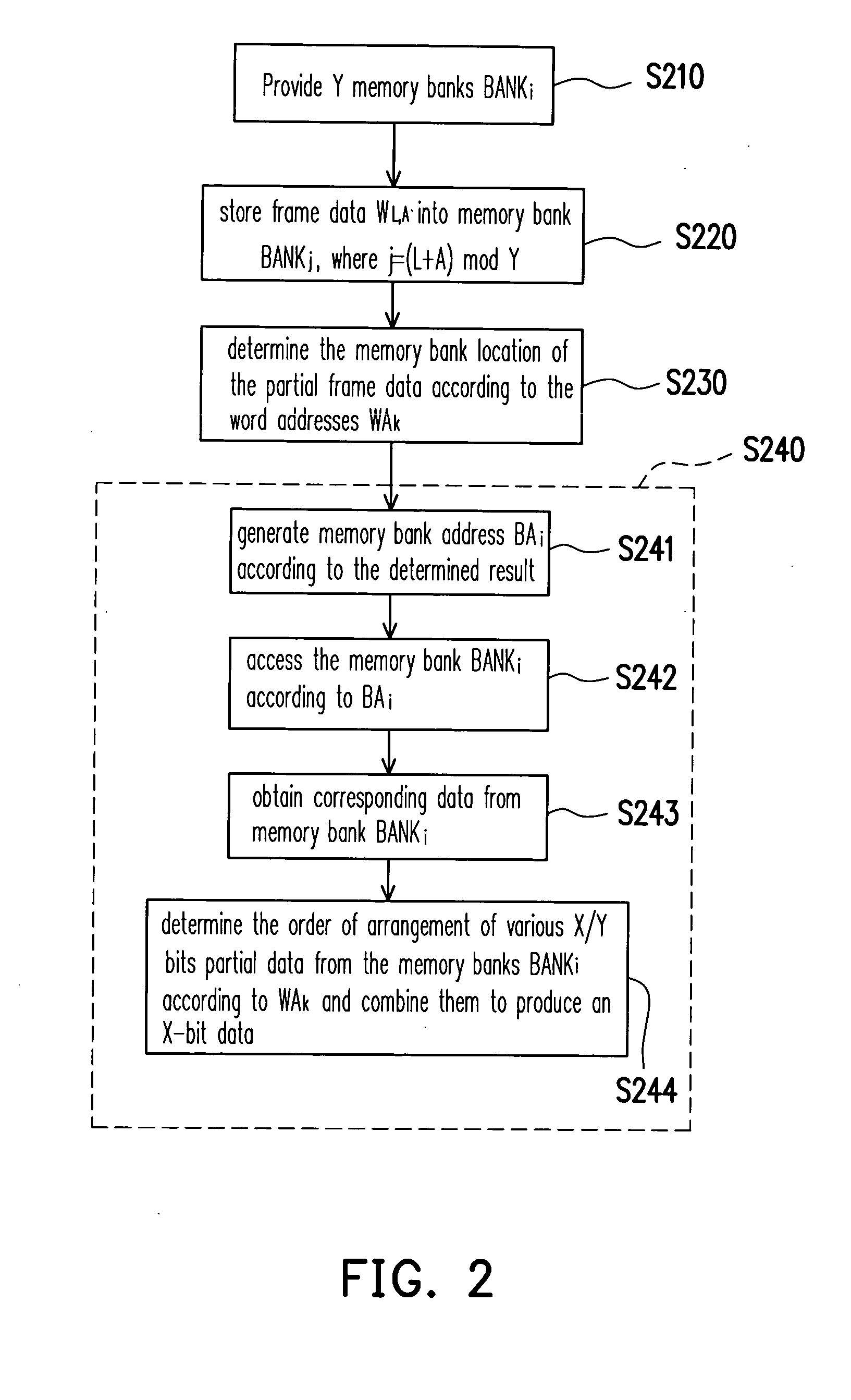

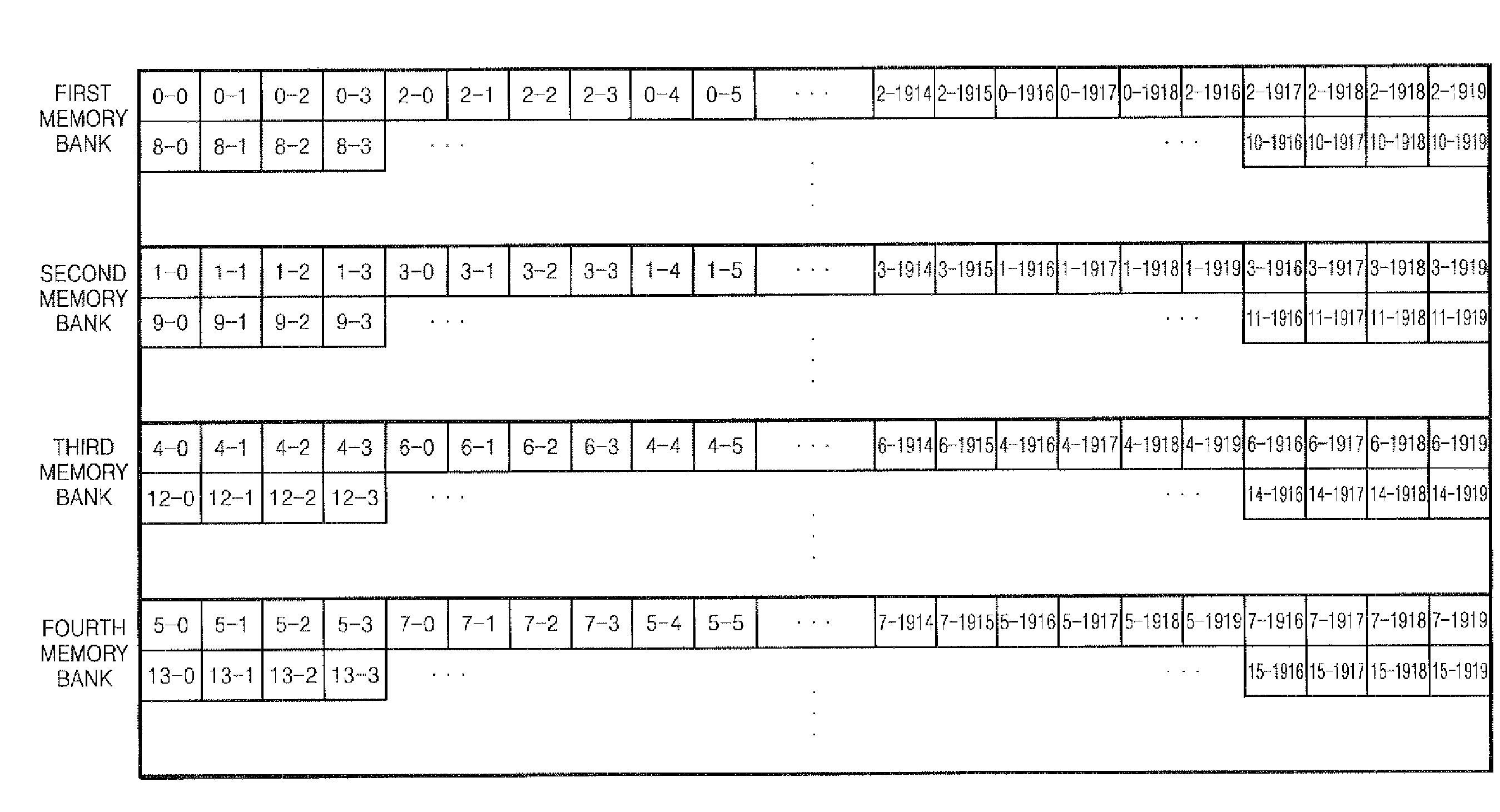

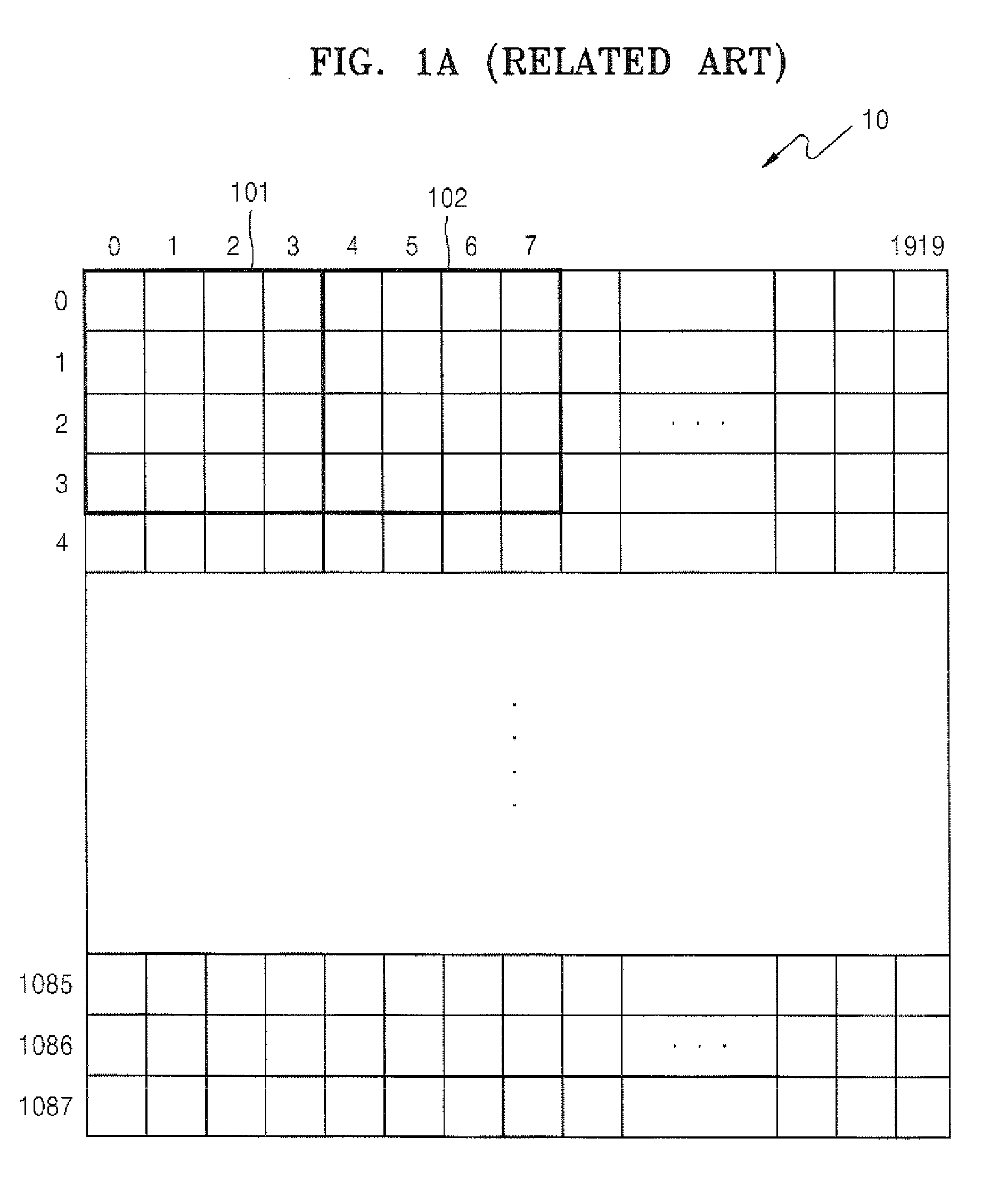

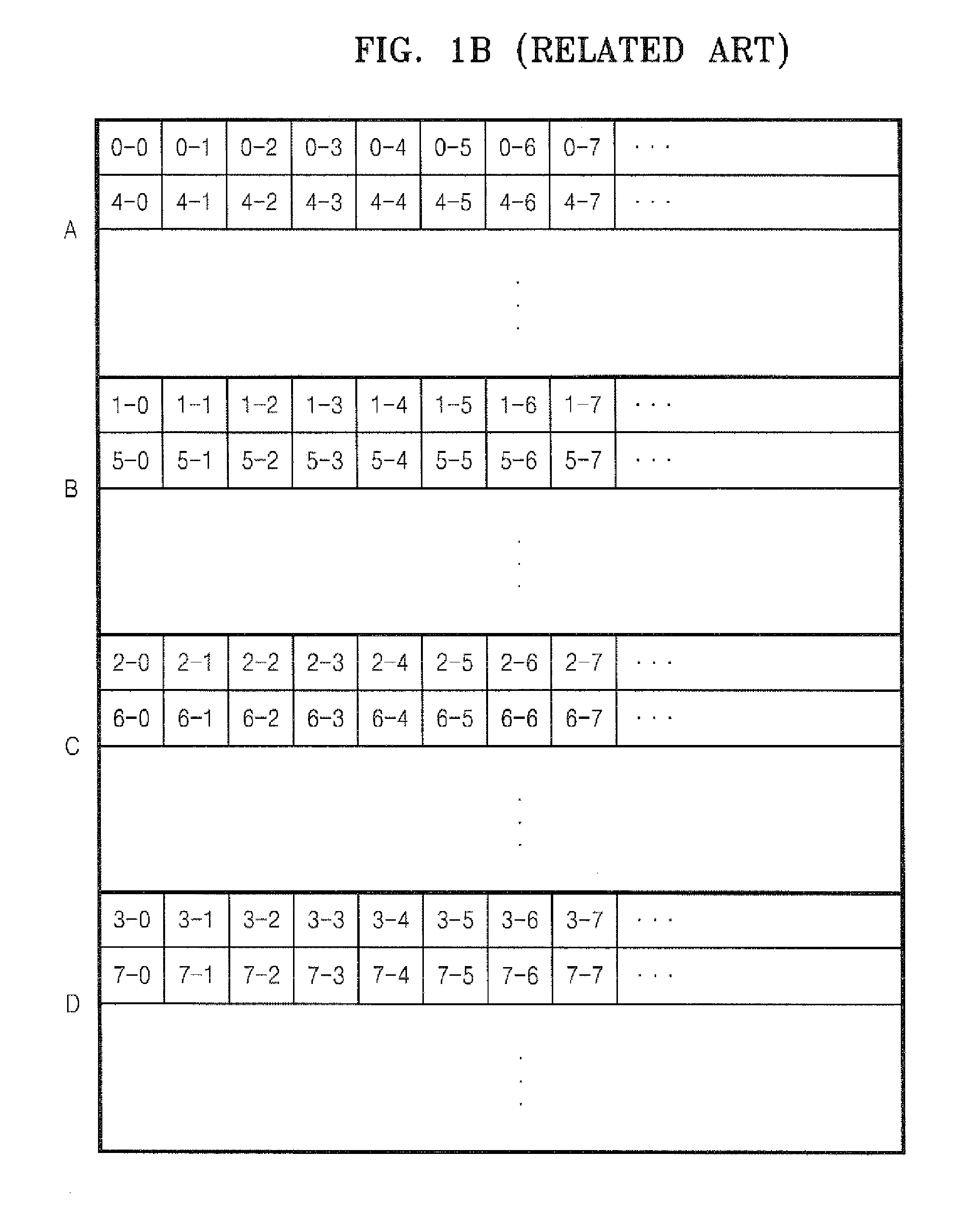

Method of accessing frame data and data accessing device thereof

Inactive | US20060007235A1 | Save bandwidth | Improve system performance | Memory architecture accessing/allocation | Energy efficient ICT | Computer hardware | Memory bank

A method for accessing frame data and a data accessing device thereof are provided to access X-bit frame data. The method comprises providing Y memory banks BANK_i (1<Y≦X), where BANK_i represents the i-th memory bank (0≦i<Y); arranging partial frame data W_{L,A} (X/Y bits) to be held in BANK_j, where W_{L,A} represents the A-th frame data word of the L-th line and j=(L+A) mod Y; receiving Y word addresses WA_k and determining from them the memory banks where W_{L,A} is located, where WA_k represents the address of the k-th partial frame data (0≦k<Y); and obtaining the partial frame data (X/Y bits) from each BANK_i according to the determined results and combining them to form the frame data (X bits).

Owner:FARADAY TECH CORP
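
The bank-selection rule j = (L + A) mod Y is a skewed mapping, and a short sketch makes its effect visible. The function names are hypothetical, and reading the abstract as "Y partial words are fetched together, one from each bank" is an interpretation, not a statement from the source.

```python
def bank_of(line: int, word: int, num_banks: int) -> int:
    """Bank index j = (L + A) mod Y for the A-th word of line L."""
    return (line + word) % num_banks


def banks_for_row_access(line: int, first_word: int, num_banks: int):
    """Banks touched when reading Y consecutive words of one line."""
    return [bank_of(line, first_word + k, num_banks) for k in range(num_banks)]


def banks_for_column_access(first_line: int, word: int, num_banks: int):
    """Banks touched when reading the same word position on Y consecutive lines."""
    return [bank_of(first_line + k, word, num_banks) for k in range(num_banks)]
```

Under this mapping, Y consecutive words along a line and Y words down a column both land in Y distinct banks, so either access pattern can be served without two requests hitting the same bank.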

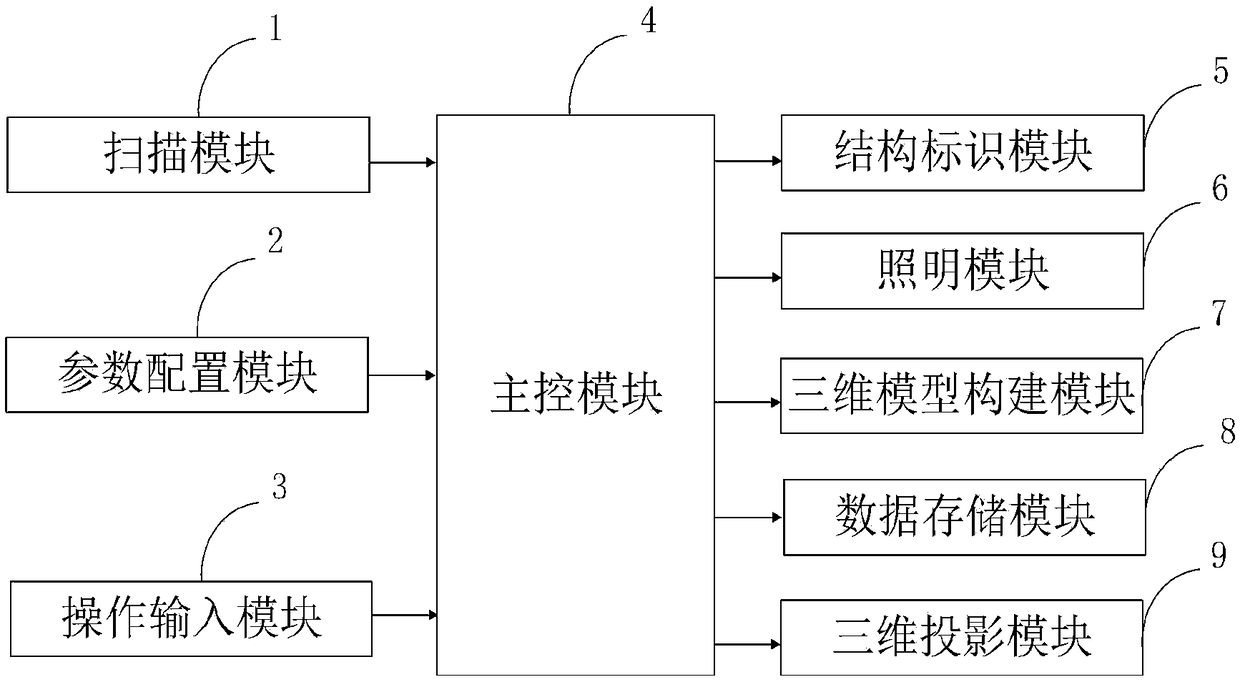

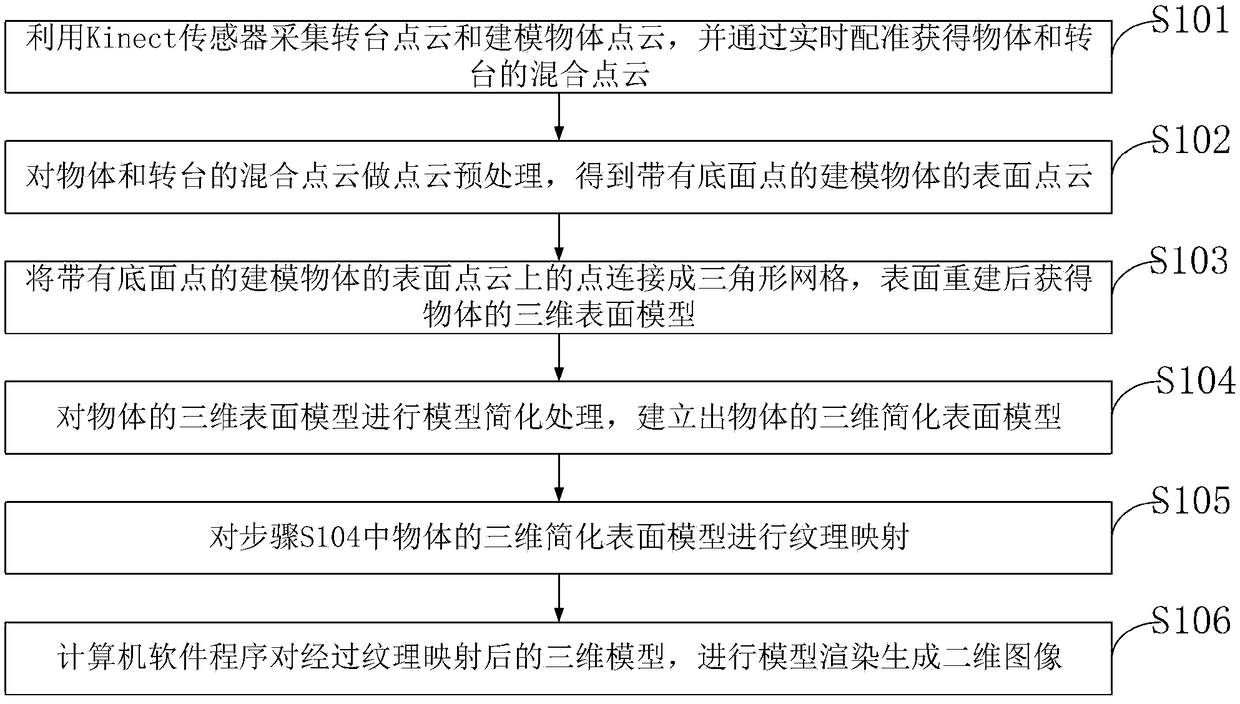

A mechanical manufacturing display auxiliary system

The invention belongs to the technical field of mechanical manufacturing, and discloses a mechanical manufacturing display auxiliary system. The mechanical manufacturing display auxiliary system comprises a scanning module, a parameter configuration module, an operation input module, a main control module, a structure identification module, a lighting module, a three-dimensional model construction module, a data storage module and a three-dimensional projection module. The system of the invention realizes the real-time automatic point cloud registration by using a function interface provided by Microsoft KinectFusion through a three-dimensional model construction module, greatly shortens modeling time, and finally the precision of the obtained model can meet general requirements. At the same time, the process of constructing three-dimensional projection image by three-dimensional projection module and the process of outputting three-dimensional projection image can be carried out simultaneously and in real time, and the redundant data access is reduced by line buffer segmentation, so that the three-dimensional projection efficiency is improved, the mechanical manufacturing products are displayed more intuitively and stereoscopically, and the display effect is good.

Owner:HUNAN MECHANICAL & ELECTRICAL POLYTECHNIC

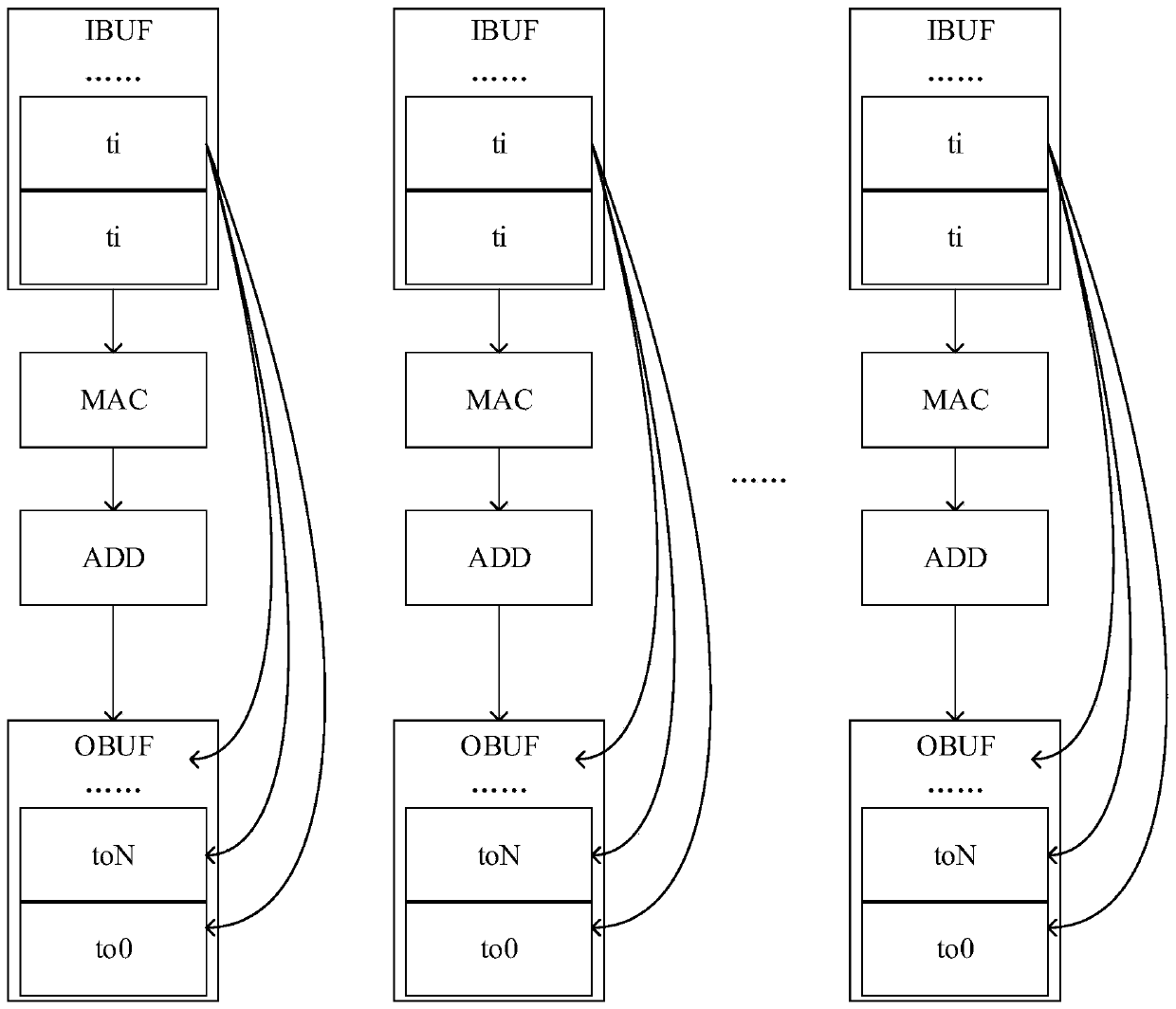

Convolution implementation method and convolution implementation device of neural network, and terminal equipment

Active | CN111178513A | Improve processing efficiency | Reduce data access | Neural architectures | Channel data | Algorithm

The invention is applicable to the technical field of neural networks, and provides a convolutional implementation method and a convolution implementation device of a neural network, terminal equipment and a computer readable storage medium. The method comprises the steps of inputting a to-be-processed image into the neural network; obtaining convolution groups to be partitioned from all convolution layers of the neural network; obtaining all input channel data of a first to-be-blocked convolution layer in the to-be-blocked convolution group according to the to-be-processed image; partitioning all input channel data of the first convolution layer to be partitioned; according to all blocks of all input channel data of the first to-be-blocked convolution layer, obtaining an output result of the last to-be-blocked convolution layer in the to-be-blocked convolution group, and storing the output result of the last to-be-blocked convolution layer into a memory; and inputting the output result of the last to-be-blocked convolutional layer into a specified network of the neural network. According to the method and the device, data access to the memory in the convolution calculation process can be reduced, so that the convolution calculation efficiency is improved.

Owner:SHENZHEN INTELLIFUSION TECHNOLOGIES CO LTD

Method of and apparatus for saving video data

Inactive | US20080062188A1 | Saving data | Reduce data access | Television system details | Memory addressing/allocation/relocation | Memory bank | Byte

A method of and apparatus for saving video data are provided. The method for saving video data in a memory includes mapping the memory such that data of a plurality of consecutive lines in a video frame is recorded one after another in a single memory bank at intervals of a specified number of bytes, and recording data of the lines in the memory according to the mapping.

Owner:SAMSUNG ELECTRONICS CO LTD

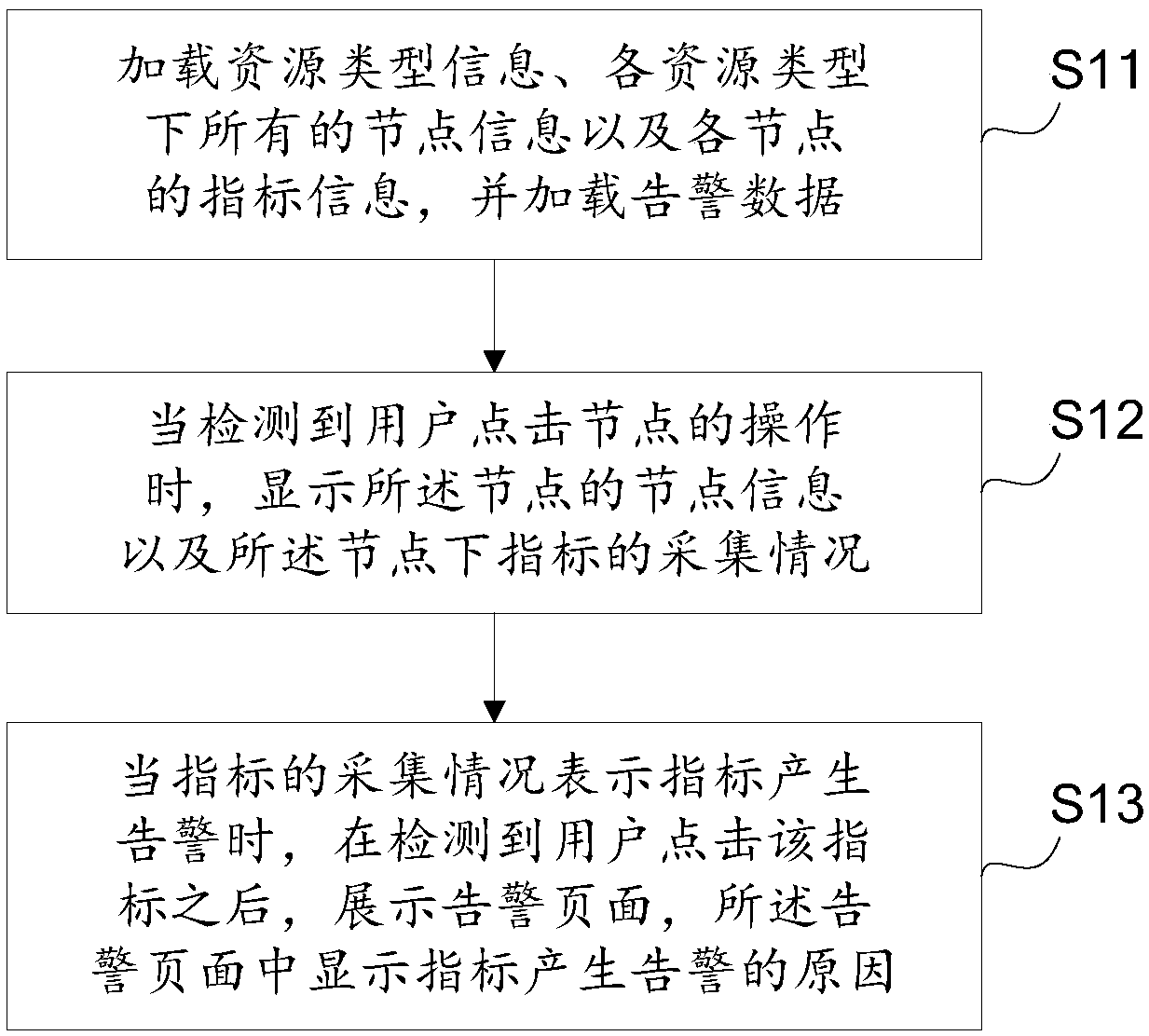

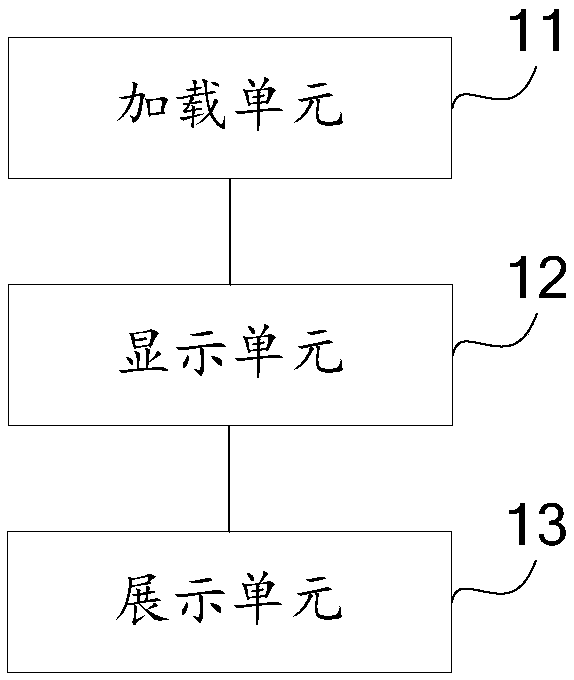

Realization method of cluster index alarm and cluster index alarm system

Inactive | CN109062772A | Reduce data access | Reduce query time | Hardware monitoring | Multiple digital computer combinations | Real-time computing | Data access

The invention provides a method for realizing cluster index alarm and a cluster index alarm system. The method comprises the following steps: loading resource type information, all node information under each resource type and index information of each node, and loading alarm data; when the operation of clicking a node by a user is detected, displaying the node information of the node and the collection situation of indicators under the node; and when the collection condition of an indicator shows that the indicator has generated an alarm, displaying an alarm page after the user clicks on the indicator, the alarm page showing the reason why the indicator generated the alarm. The invention can reduce the amount of data access, shorten the inquiry time and facilitate the operation of the user.

Owner:DAWNING INFORMATION IND BEIJING

Unified converged network, storage and compute system

Inactive | US9892079B2 | Function increase | Hinder software implementation | Software simulation/interpretation/emulation | Single machine energy consumption reduction | Network switch | Computing systems

A unified converged network, storage and compute system (UCNSCS) converges functionalities of a network switch, a network router, a storage array, and a server in a single platform. The UCNSCS includes a system board, interface components free of a system on chip (SoC) such as a storage interface component and a network interface component operably connected to the system board, and a unified converged network, storage and compute application (UCNSCA). The storage interface component connects storage devices to the system board. The network interface component forms a network of UCNSCSs or connects to a network. The UCNSCA functions as a hypervisor that hosts virtual machines or as a virtual machine on a hypervisor and incorporates a storage module and a network module therewithin for controlling and managing operations of the UCNSCS and expanding functionality of the UCNSCS to operate as a converged network switch, network router, and storage array.

Owner:CIMWARE PVT LTD

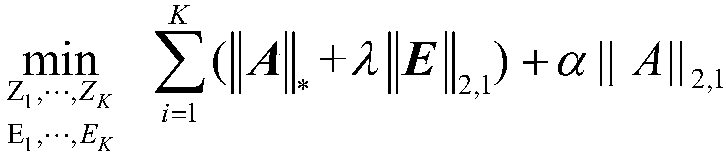

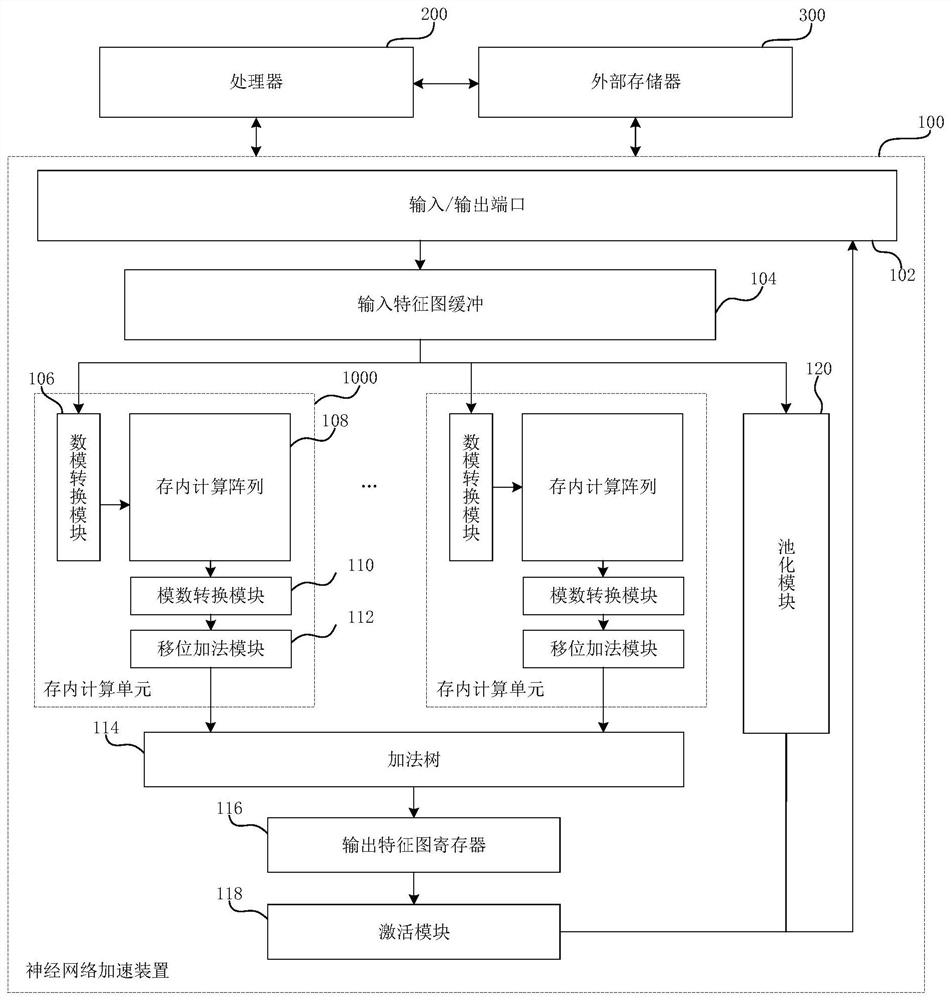

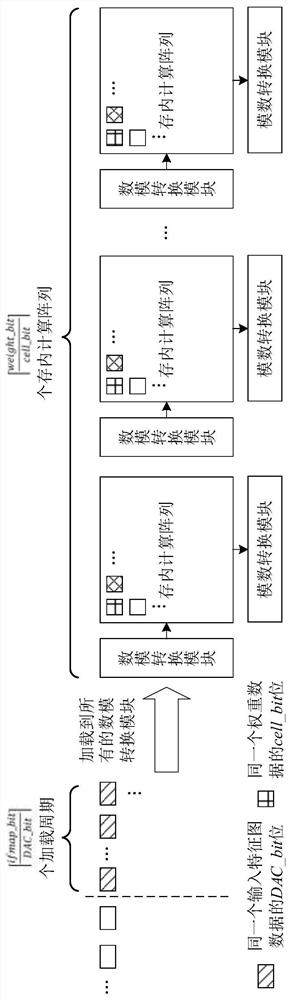

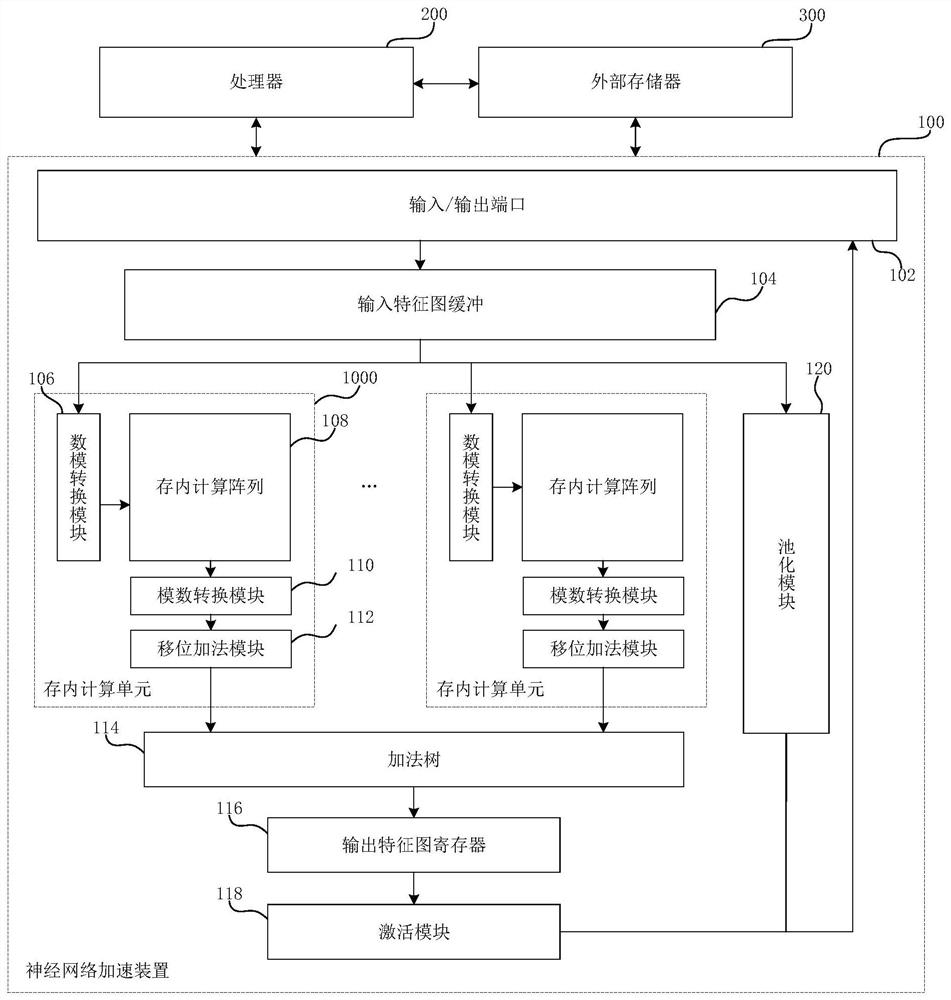

Neural network in-memory computing device based on communication lower bound and acceleration method

Active | CN113052299A | Reduce data access | Neural architectures | Physical realisation | Computer hardware | Multiplexing

The invention relates to the field of neural network algorithms and computer hardware design, in particular to a neural network in-memory computing device based on a communication lower bound and an acceleration method. The invention discloses a neural network in-memory computing device based on a communication lower bound, which comprises a processor, an external memory and a neural network acceleration device. The invention further discloses an acceleration method using the neural network in-memory computing device based on the communication lower bound. According to the method, off-chip-on-chip communication lower bound analysis is taken as a theoretical support, output feature map multiplexing, convolution window multiplexing, balance weight multiplexing and input feature map multiplexing are utilized, and a neural network acceleration device under an in-memory computing architecture and a corresponding data flow scheme are provided, so that off-chip-on-chip data access amount is reduced.

Owner:ZHEJIANG UNIV

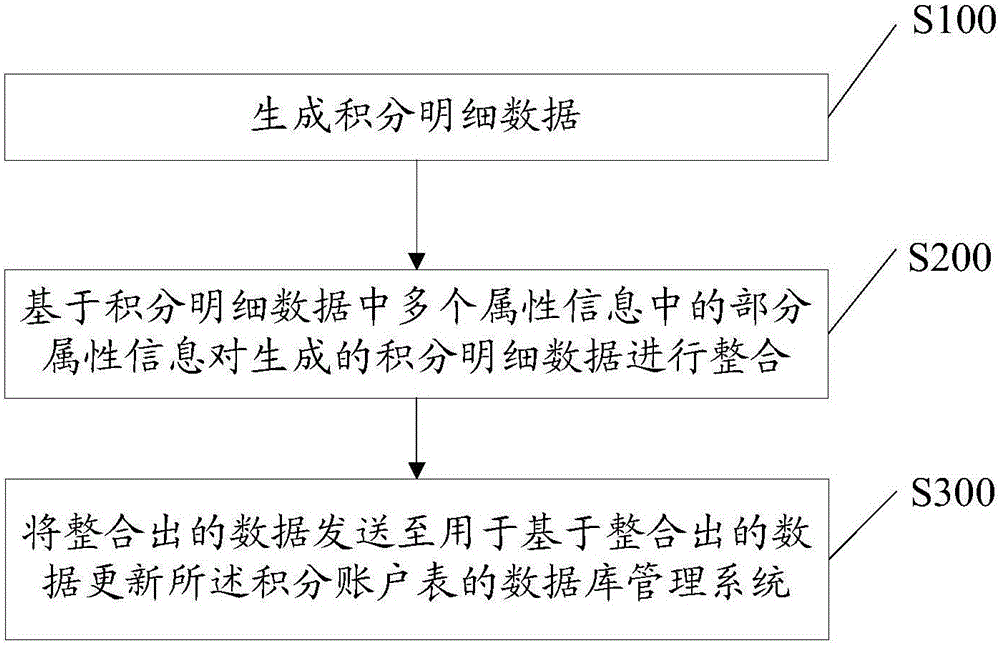

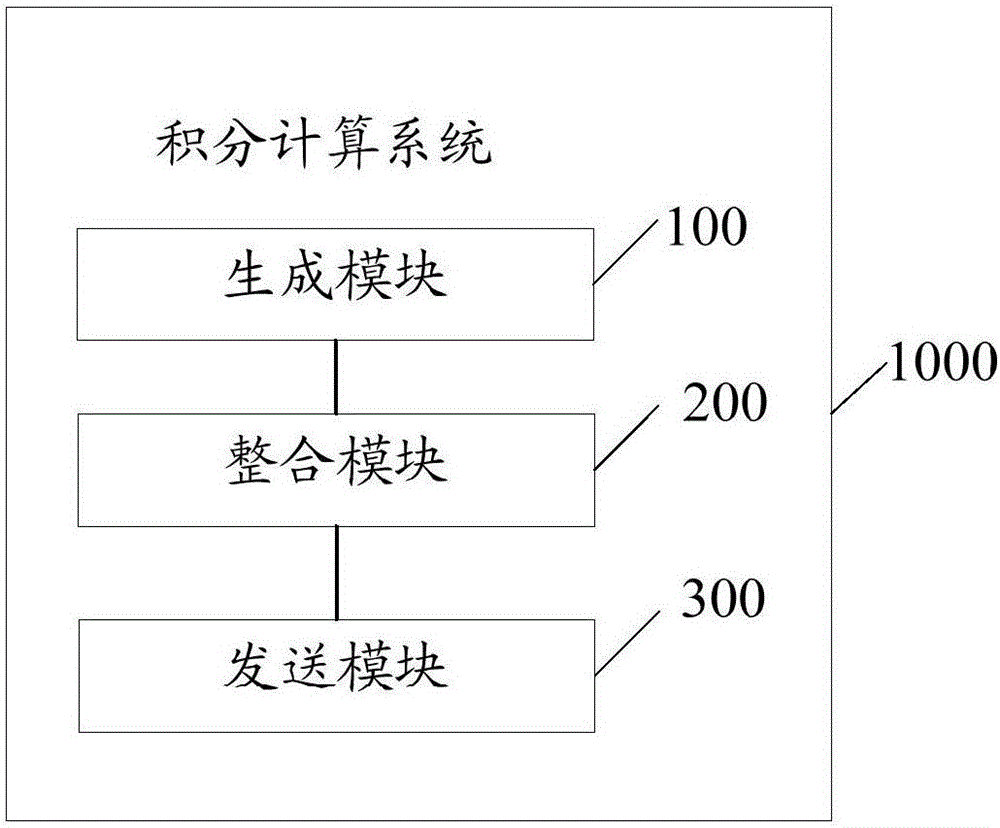

Data table update method for bonus point account adjustment and bonus point calculating system

Inactive | CN106056410A | Timely update | Guaranteed accuracy | Marketing | Special data processing applications | Data access | Data mining

The invention provides a data table update method for bonus point account adjustment and a bonus point calculating system. The method comprises the following steps: generating bonus point detail data, wherein the bonus point detail data comprise the bonus point value and a plurality of attribute information corresponding to the bonus point value; carrying out integration on the generated bonus point detail data based on a part of attribute information in the plurality of attribute information, wherein the part of attribute information comprises attribute information corresponding to a main key of a bonus point account table to be updated; and sending the integrated data to a database management system for updating the bonus point account table based on the integrated data. According to the method and system, through integration of the bonus point detail data, data page view of the database management system during bonus point account table updating is reduced, response speed of the database management system is improved, the database management system is allowed to update the bonus point account table in time, and accuracy and reliability of the data in the bonus point account table are ensured.

Owner:CHINA CONSTRUCTION BANK
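
The integration step amounts to pre-aggregating detail rows on the attributes that form the account table's primary key, so the database receives one update per account row instead of one per detail record. The sketch below assumes hypothetical field names (`account_id`, `point_type`, `points`) and a hypothetical function name `integrate`; none of them come from the source.

```python
from collections import defaultdict


def integrate(details, key_fields=("account_id", "point_type")):
    """Sum point values over the attributes that form the account table's primary key."""
    totals = defaultdict(int)
    for row in details:
        totals[tuple(row[f] for f in key_fields)] += row["points"]
    return dict(totals)


# Three detail records collapse into two account-level updates.
details = [
    {"account_id": 1, "point_type": "base", "points": 10, "txn": "a"},
    {"account_id": 1, "point_type": "base", "points": 5, "txn": "b"},
    {"account_id": 2, "point_type": "base", "points": 7, "txn": "c"},
]
updates = integrate(details)
```

Each entry of `updates` would then be sent to the database management system as a single adjustment to the matching row of the bonus point account table.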

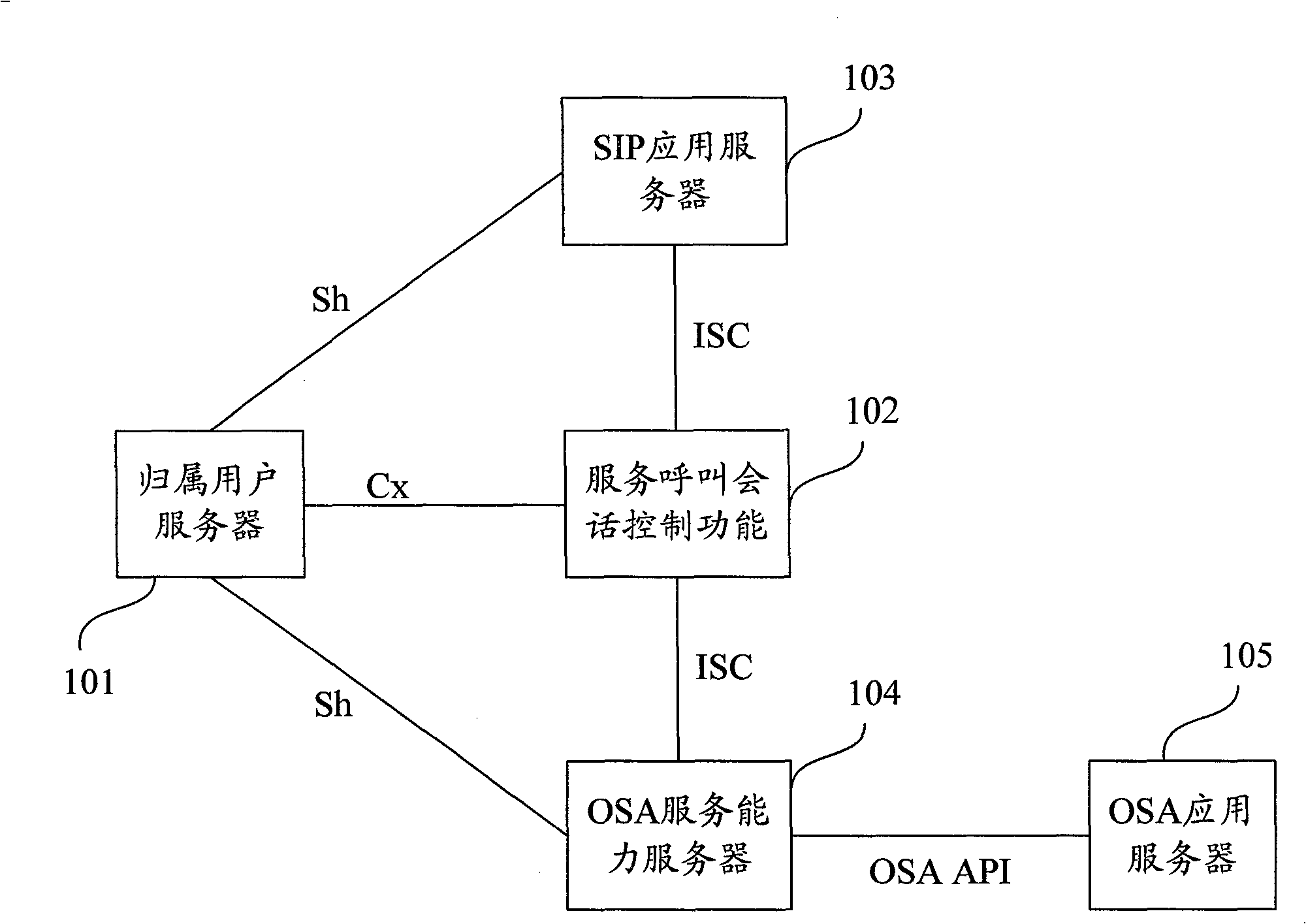

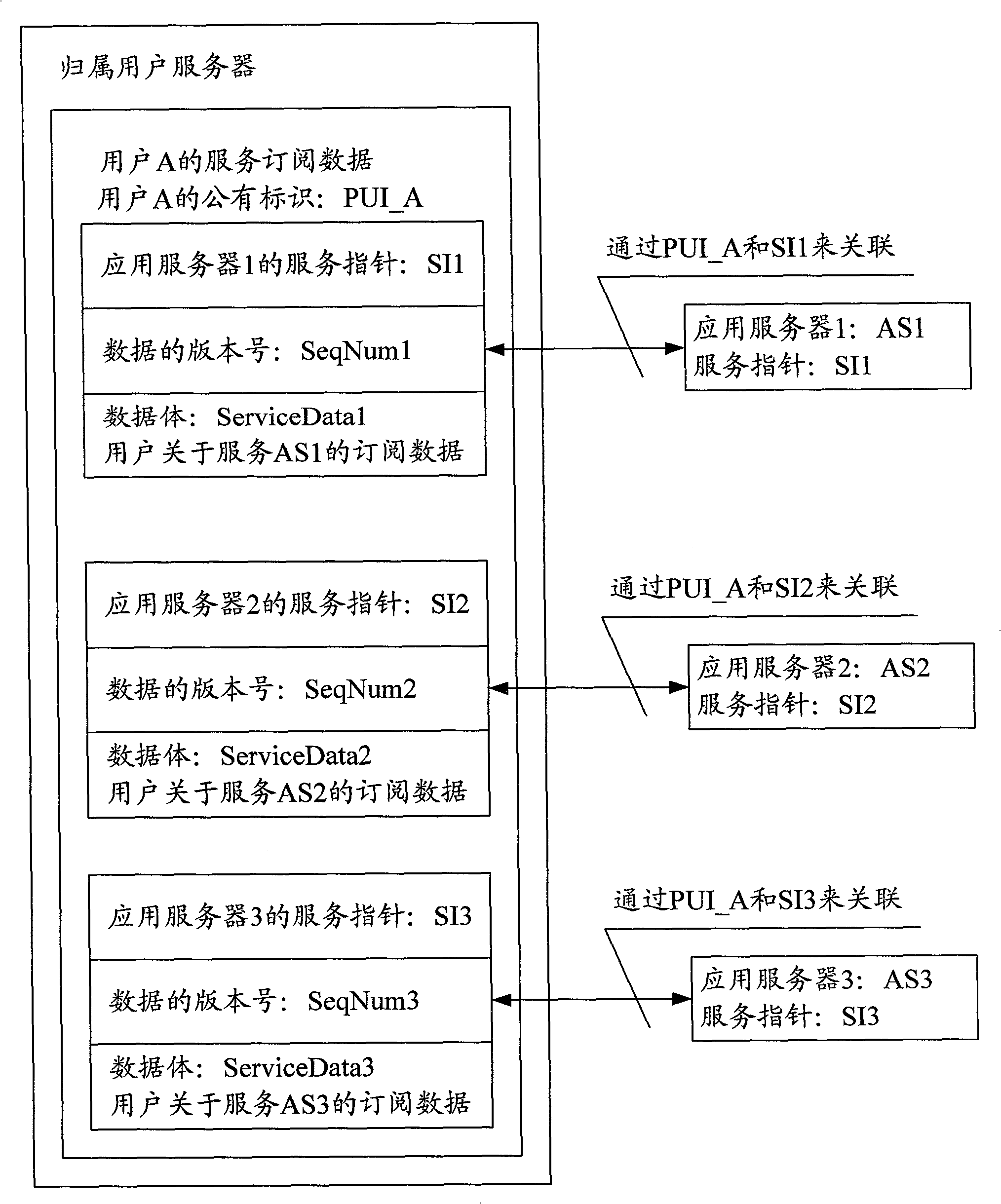

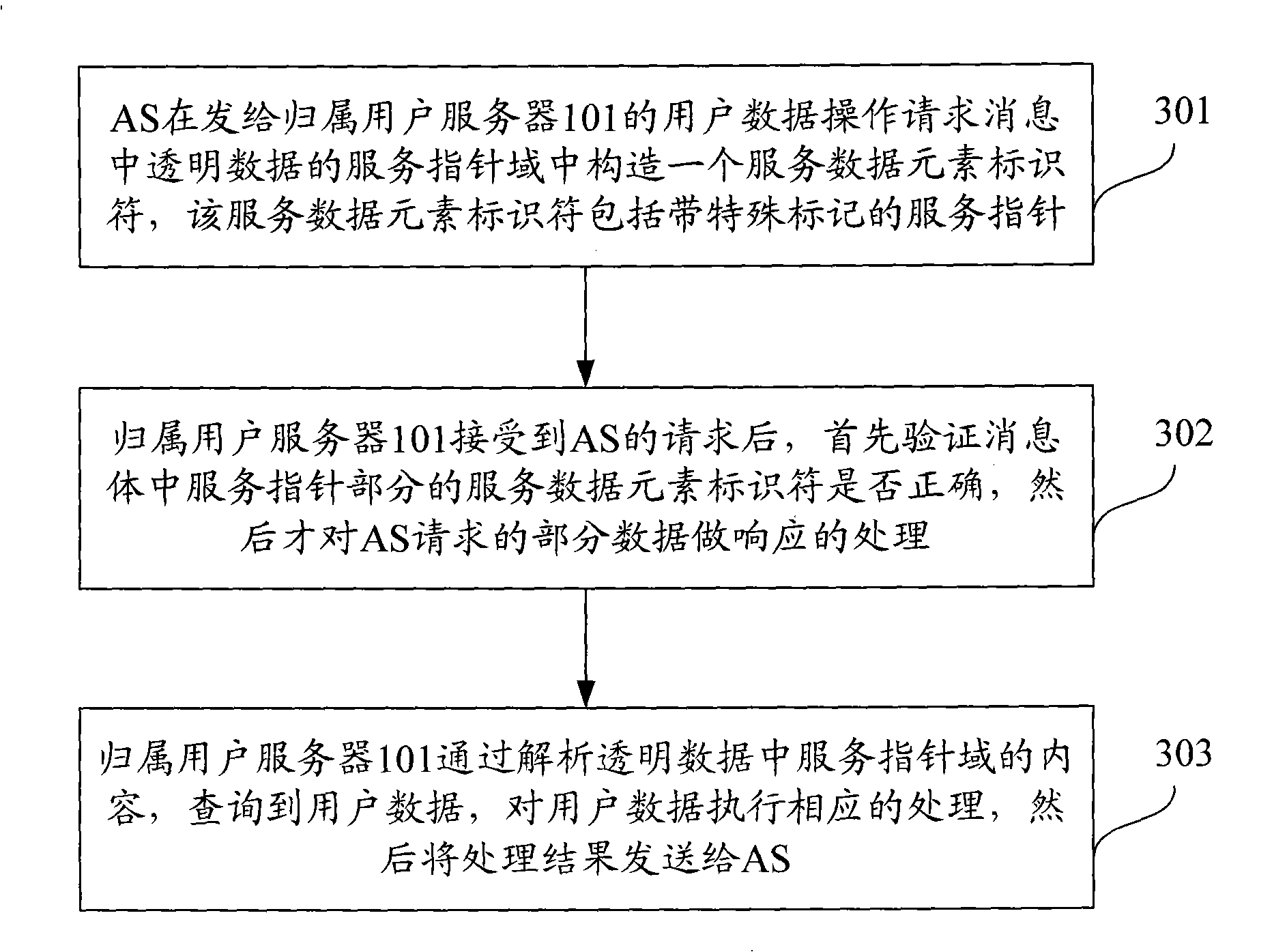

Method for transmitting Sh interface transparent data

Inactive | CN101330456B | Overcoming the defects of block transfer | Overcoming transmission deficiencies | Data switching networks | Network data management | Telecommunications | Application server

The invention discloses a method for transmitting Sh interface transparent data, which is used for transmitting subscriber data between a home subscriber server (HSS) and an application server (AS), so as to improve the transmission efficiency of the subscriber data. The method comprises the following steps: the AS sends a subscriber data operation request to the HSS, and indicates that the subscriber data is transmitted by using data segmentation transmission characteristic in a service indication region of the transparent data mentioned in the request using a service indication with a special mark; and the HSS searches the subscriber data according to the service indication with the special mark, and conducts corresponding operations on the subscriber data according to the subscriber data operation request. The method overcomes the shortcoming with the transmission of entire Sh interface subscriber data blocks in the prior art.

Owner:ZTE CORP
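
As a rough illustration of the Sh interface method above, the sketch below models an HSS that stores transparent data per user and service indication, with the AS addressing individual segments through a marked service indication. The `#seg=` marker, the `ToyHss` class, and the operation names are all hypothetical; the abstract does not specify the actual encoding, and no real Diameter messages are involved.

```python
SEGMENT_MARK = "#seg="  # hypothetical marker format, not taken from the patent


def marked_indication(service, index):
    """Build a service indication that carries the (hypothetical) segment mark."""
    return f"{service}{SEGMENT_MARK}{index}"


def split_segments(data, size):
    """Cut a transparent-data blob into fixed-size segments."""
    return [data[i:i + size] for i in range(0, len(data), size)]


class ToyHss:
    """Stores transparent data per (user, service indication)."""

    def __init__(self):
        self.store = {}

    def handle(self, user, service_indication, operation, payload=None):
        key = (user, service_indication)
        if operation == "update":
            self.store[key] = payload
            return len(payload)  # bytes carried by this request
        if operation == "pull":
            return self.store.get(key)
        raise ValueError(f"unsupported operation: {operation}")


hss = ToyHss()
user = "sip:alice@example.com"
blob = b"AAAABBBBCCCC"

# Initial upload: one request per segment.
for i, segment in enumerate(split_segments(blob, 4)):
    hss.handle(user, marked_indication("svc", i), "update", segment)

# Later change: only segment 1 is sent, not the whole 12-byte block.
sent = hss.handle(user, marked_indication("svc", 1), "update", b"XXXX")

rebuilt = b"".join(
    hss.handle(user, marked_indication("svc", i), "pull") for i in range(3)
)
print(sent, rebuilt)
```

The point of the sketch is only that a change to one segment travels as one small request, rather than as a retransmission of the whole data block.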

Neural network in-memory computing device and acceleration method based on communication lower bound

The invention relates to the fields of neural network algorithms and computer hardware design, and specifically proposes a neural network in-memory computing device and an acceleration method based on a communication lower bound. The device includes a processor, an external memory, and a neural network acceleration device. The invention also discloses an acceleration method performed using this device. Taking analysis of the lower bound on off-chip/on-chip communication as its theoretical support, the invention uses output feature map reuse and convolution window reuse, balances weight reuse against input feature map reuse, and proposes a neural network acceleration device under an in-memory computing architecture together with a corresponding data flow scheme, thereby reducing off-chip/on-chip data access.

Owner:ZHEJIANG UNIV
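
To give a feel for why keeping output data on chip reduces off-chip traffic, here is a generic counting model. It is not the patent's data flow scheme or its lower bound: it assumes a layer that can be viewed as an M x K by K x N matrix product, an on-chip buffer large enough for one output tile plus the operands it needs, and tile sizes that divide M and N evenly. All names are hypothetical.

```python
def off_chip_words(M, N, K, tile_m, tile_n):
    """Count words moved between off-chip and on-chip memory.

    Each tile_m x tile_n output tile stays on chip while it accumulates,
    so it is written out once. Computing it requires loading tile_m x K
    input values and K x tile_n weights.
    """
    if M % tile_m or N % tile_n:
        raise ValueError("tile sizes must divide M and N in this sketch")
    tiles = (M // tile_m) * (N // tile_n)
    per_tile = tile_m * K + K * tile_n + tile_m * tile_n
    return tiles * per_tile


no_reuse = off_chip_words(64, 64, 64, 1, 1)     # one output at a time
with_reuse = off_chip_words(64, 64, 64, 16, 16)  # 16 x 16 output tiles
print(no_reuse, with_reuse)
```

Under these assumptions, larger output tiles amortize each loaded input and weight over more outputs, so the traffic count falls as the tile grows, up to the limit set by on-chip capacity.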

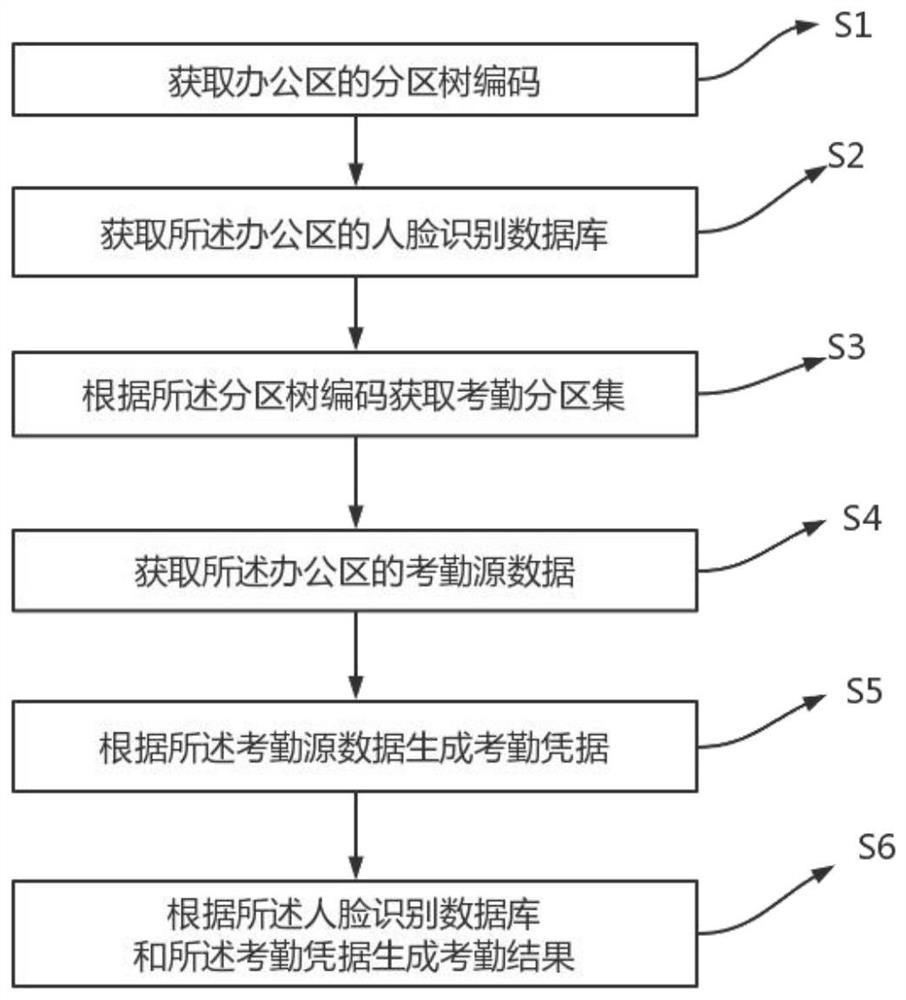

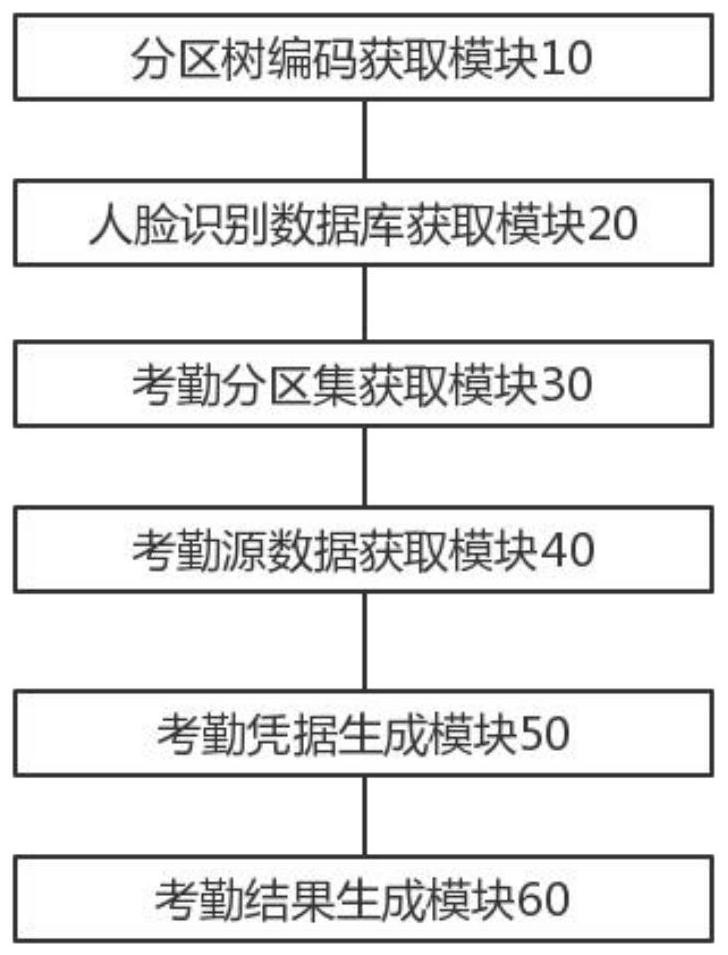

Face recognition non-inductive attendance checking method and device and storage medium

Pending | CN114358710A | Extension of time | Reduce data access | Still image data indexing | Office automation | Tree code | Data mining

The invention discloses a face recognition non-inductive attendance checking method and device and a storage medium. The method comprises the following steps: acquiring a partition tree code of an office area; acquiring a face recognition database of the office area; acquiring an attendance partition set according to the partition tree code; acquiring attendance source data of the office area; generating attendance credentials according to the attendance source data; and generating an attendance result according to the face recognition database and the attendance credentials. Because no attendance gate is installed, the time needed for personnel to enter the office site is greatly shortened; personnel do not need to queue for face capture, which saves labor; attendance equipment and dedicated attendance sites are no longer needed, which saves cost; data access to the attendance system is reduced and kept secure; and whether personnel are on duty can be verified directly and accurately.

Owner:EZHOU INST OF IND TECH HUAZHONG UNIV OF SCI & TECH +1

Distributed network construction and storage method, apparatus and system

Active | US8645433B2 | Reduce network load | Reduce instability | Digital data processing details | Digital computer details | Instability | Data access

The disclosure relates to distributed network communications, and in particular to a distributed network construction method and apparatus, a distributed data storage method and apparatus, and a distributed network system. When a node joins a distributed network, the ID of the node is determined according to the geographic location information of the node. As a result, all nodes in the same area belong to the same ID range, and node IDs are allocated by area. Because node IDs are determined by area, local data can be stored on a node within that area according to the geographic information, which reduces inter-area data access. The method, apparatus, and system provided herein therefore reduce the data load on the backbone network, balance the data traffic and bandwidth overhead of the entire network, and reduce network instability.

Owner:HUAWEI TECH CO LTD
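
One way to picture area-based node IDs is to reserve the high bits of the ID for an area code and derive the low bits from a hash. The sketch below does this under stated assumptions: the bit widths, the area table, the use of SHA-256, and the successor-style placement rule are all hypothetical and are not described in the abstract.

```python
import hashlib

LOCAL_BITS = 24  # hypothetical: low bits distinguish nodes within an area
AREA_CODES = {"north": 1, "south": 2}  # hypothetical area table


def _local_hash(text):
    """Map a string to a value that fits in LOCAL_BITS bits."""
    digest = hashlib.sha256(text.encode()).digest()
    return int.from_bytes(digest[:4], "big") % (1 << LOCAL_BITS)


def make_id(area, name):
    """Area code in the high bits, hash of the name in the low bits."""
    return (AREA_CODES[area] << LOCAL_BITS) | _local_hash(name)


def area_range(area):
    """Inclusive ID range shared by every node in the area."""
    low = AREA_CODES[area] << LOCAL_BITS
    return low, low + (1 << LOCAL_BITS) - 1


def home_node(area, key, node_ids):
    """Pick a node inside the key's own area (first ID >= key ID, wrapping)."""
    low, high = area_range(area)
    local = sorted(n for n in node_ids if low <= n <= high)
    if not local:
        raise LookupError(f"no nodes in area {area!r}")
    key_id = make_id(area, key)
    return next((n for n in local if n >= key_id), local[0])


north_nodes = [make_id("north", f"10.0.0.{i}") for i in range(5)]
south_nodes = [make_id("south", f"10.1.0.{i}") for i in range(5)]
home = home_node("north", "profile:alice", north_nodes + south_nodes)
print(home in north_nodes)
```

Because a key created in an area is always placed on a node from the same ID range, lookups for local data stay within the area in this toy model.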

Convolution calculation method, convolution calculation apparatus, and terminal device

Active | US20220351490A1 | Reduce bandwidth consumption | Reduce data access | Character and pattern recognition | Energy efficient computing | Channel data | Algorithm

The present application provides a convolution calculation method, a convolution calculation apparatus, a terminal device, and a computer readable storage medium. The method includes: inputting an image to be processed into a deep learning model, and obtaining a to-be-blocked convolution group and a target size of a block from all convolution layers of the deep learning model; blocking all input channel data of a first to-be-blocked convolution layer in said convolution group according to the target size, a size of each block being the target size; obtaining an output result of said convolution group according to all blocks of all input channel data of said first convolution layer; and inputting the output result of said convolution group to a specified network of the deep learning model. The block sizes of the to-be-blocked convolution layer and the bandwidth consumption can be adjusted to accommodate frequent updates and upgrades of the deep learning model.

Owner:SHENZHEN INTELLIFUSION TECHNOLOGIES CO LTD
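
The blocking step for one input channel can be sketched as follows. This is a simplified illustration, not the patented procedure: it zero-pads edge blocks so that every block has exactly the target size, and it ignores the overlap between neighboring blocks that a real convolution window would need. The function name and the padding choice are assumptions.

```python
def split_into_blocks(channel, block_h, block_w, pad=0):
    """Split one 2-D channel (a list of rows) into block_h x block_w blocks.

    Returns a list of ((top, left), block) pairs. Positions that fall
    outside the channel are filled with `pad`.
    """
    height, width = len(channel), len(channel[0])
    blocks = []
    for top in range(0, height, block_h):
        for left in range(0, width, block_w):
            block = [
                [
                    channel[r][c] if r < height and c < width else pad
                    for c in range(left, left + block_w)
                ]
                for r in range(top, top + block_h)
            ]
            blocks.append(((top, left), block))
    return blocks


# A 5 x 5 channel whose value at (r, c) is r * 5 + c.
channel = [[r * 5 + c for c in range(5)] for r in range(5)]
blocks = split_into_blocks(channel, 2, 3)
print(len(blocks), blocks[-1])
```

Changing `block_h` and `block_w` changes how much data must be resident at once, which is the adjustable quantity the abstract ties to bandwidth consumption.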

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com