Neural network in-memory computing device and acceleration method based on communication lower bound

The technology concerns neural networks and computing devices and is applied in biological neural network models, neural architectures, energy-saving computing, and related fields. It addresses problems such as the inability to guarantee that a data flow scheme is optimal and the lack of supporting theoretical analysis, and it achieves the effect of reducing the amount of data access.


Examples

Embodiment 1

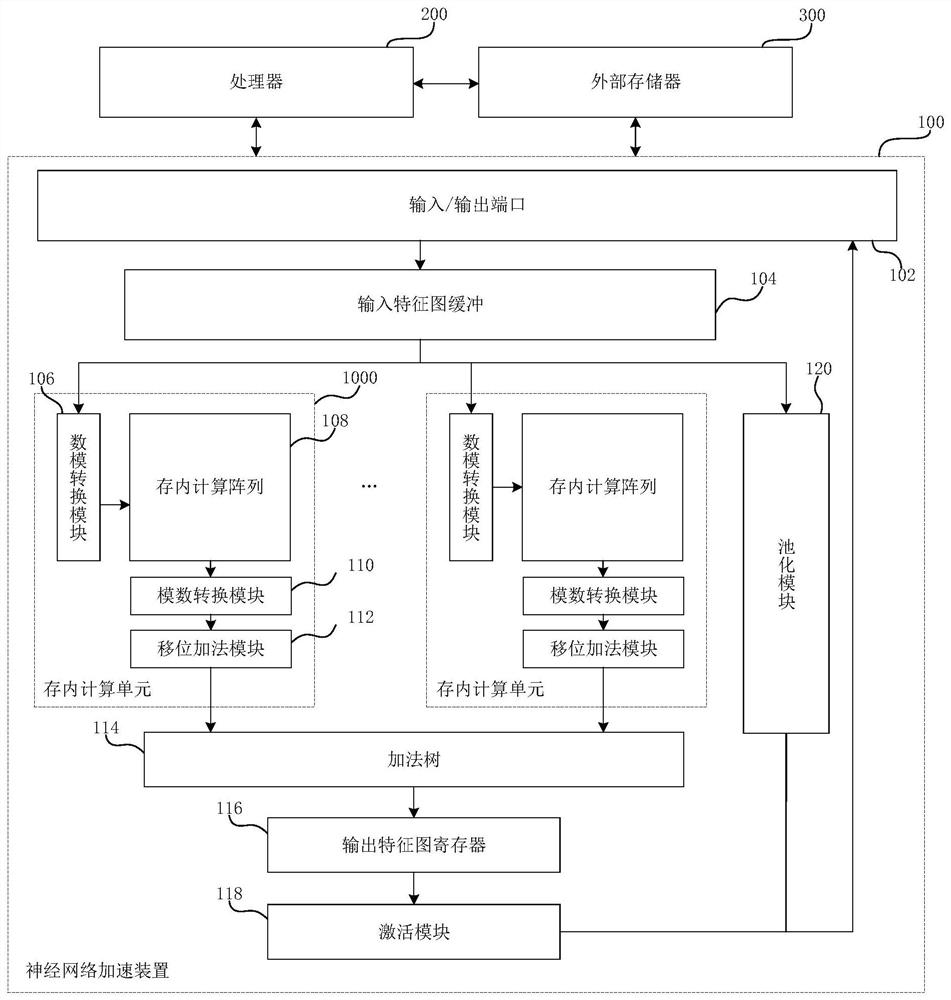

[0045] Embodiment 1. As shown in Figures 1-5, the neural network in-memory computing device based on the communication lower bound includes a neural network acceleration device 100, a processor 200 and an external memory 300. The neural network acceleration device 100 is signal-connected to the processor 200 and to the external memory 300. The processor 200 controls the flow of the neural network acceleration device 100 and performs the calculation of some special layers (such as the Softmax layer). The external memory 300 stores the weight data, input feature map data and output feature map data of each layer required in the neural network calculation process.

[0046] The processor 200 and the external memory 300 are also signal-connected to each other. Both belong to the prior art, so they are not described in detail.
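The division of labor among the three components in paragraphs [0045] and [0046] can be illustrated with a minimal Python sketch. It is not the patented design: the class names `ExternalMemory` and `Accelerator`, the `run_layer` interface, the function `run_network` and the single fully connected layer are all hypothetical stand-ins chosen for illustration, and the accelerator's actual data flow is not modelled.

```python
import math
from dataclasses import dataclass, field


@dataclass
class ExternalMemory:
    """Stands in for external memory 300: per-layer weights and feature maps."""
    weights: dict = field(default_factory=dict)
    feature_maps: dict = field(default_factory=dict)


class Accelerator:
    """Stands in for acceleration device 100 (hypothetical interface).

    Only a fully connected layer is modelled here.
    """

    def run_layer(self, weights, inputs):
        # One output per weight row: dot product of that row with the inputs.
        return [sum(w * x for w, x in zip(row, inputs)) for row in weights]


def softmax(values):
    """Special layer computed on the processor rather than the accelerator."""
    peak = max(values)  # subtract the maximum for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def run_network(layers, memory, accelerator):
    """Stands in for processor 200: walks the layers and dispatches each one."""
    current = memory.feature_maps["input"]
    for name, kind in layers:
        if kind == "softmax":
            current = softmax(current)  # handled by the processor itself
        else:
            current = accelerator.run_layer(memory.weights[name], current)
        memory.feature_maps[name] = current  # write the output feature map back
    return current


# Assumed toy data: an identity weight matrix and a two-element input.
memory = ExternalMemory(
    weights={"fc1": [[1.0, 0.0], [0.0, 1.0]]},
    feature_maps={"input": [2.0, 2.0]},
)
probs = run_network([("fc1", "dense"), ("prob", "softmax")], memory, Accelerator())
```

In this sketch the processor only sequences layers and handles Softmax, while every layer's input and output feature maps pass through the memory object, mirroring the roles the text assigns to components 200 and 300.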

[0047] The neural network acceleration apparatus 100 includes an input / output port 102, a...
