444 results about how to "Reduce query time" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

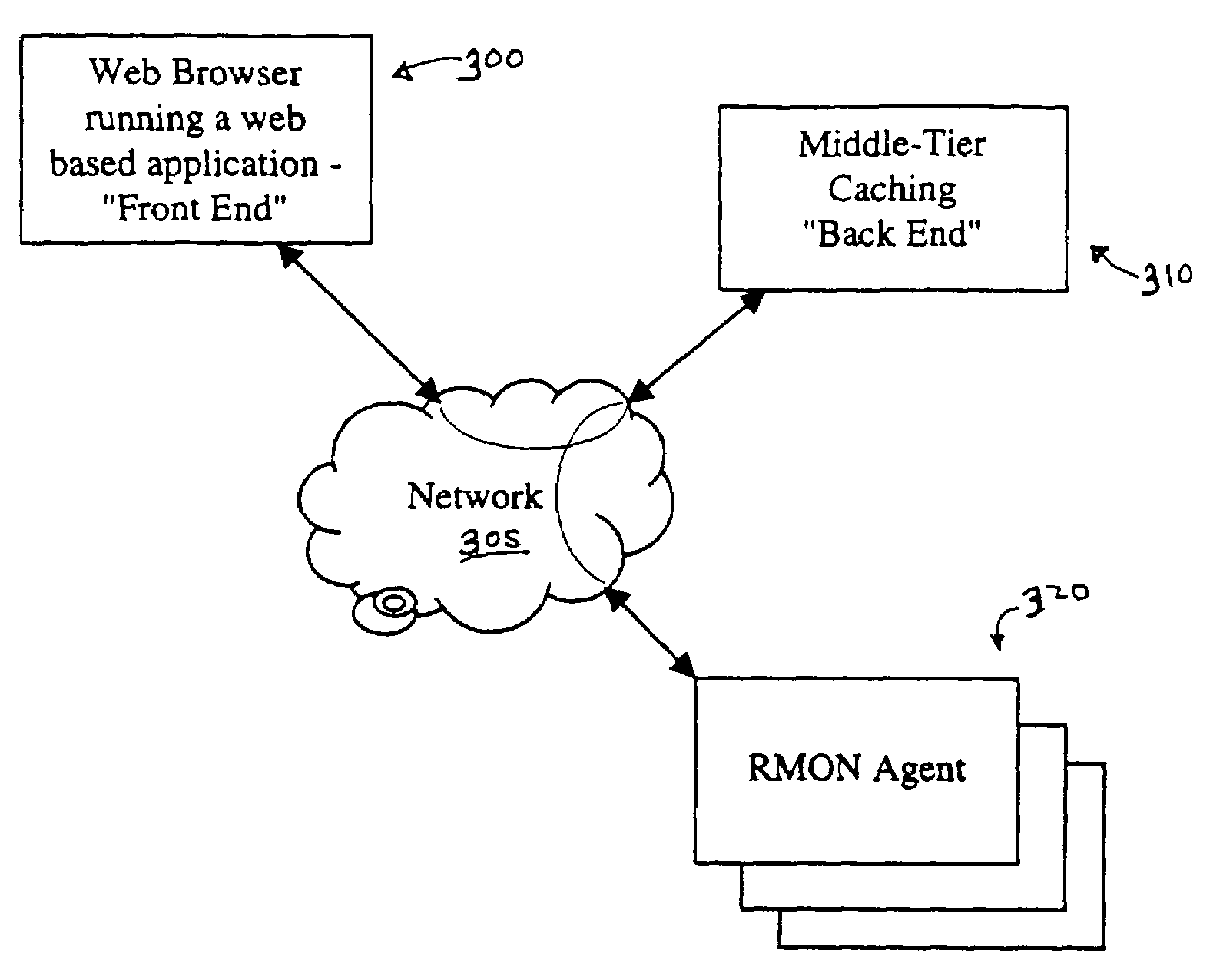

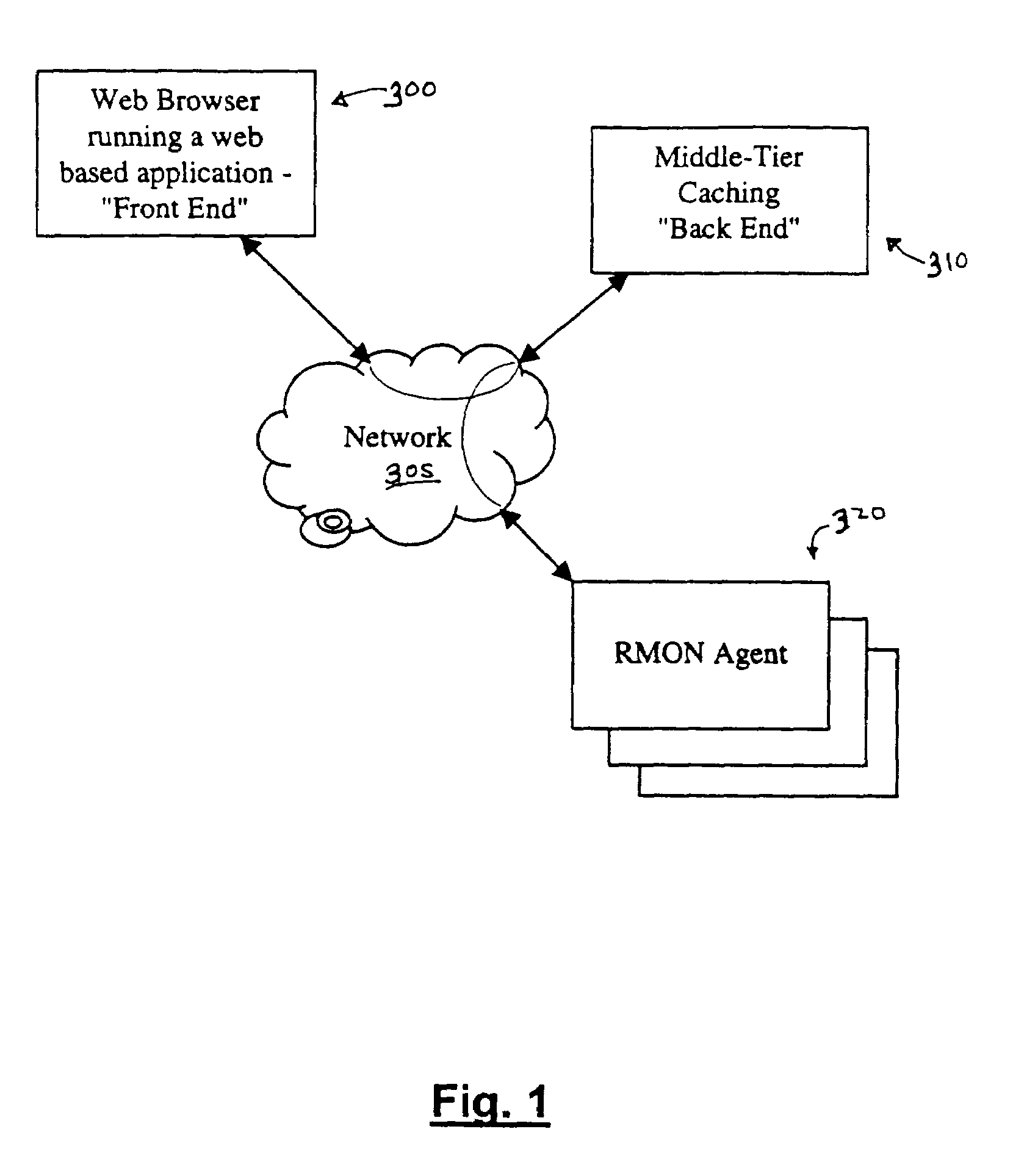

Method and architecture for a high performance cache for distributed, web-based management solutions

Inactive | US6954786B1 | Improve performance; Reduce query time; Multiple digital computer combinations; Data switching networks; Information repository; Management information base

An architecture for a management information system includes a backend that services information requests from a front end. The backend retrieves data from one or more management information bases (MIBs) and prepares a response to each request. It maintains a cache of data recently retrieved from the MIBs and serves data from that cache instead of issuing additional requests to the MIBs. Responses may be prepared from cache data only, MIB data only, or a combination of both. The backend is preferably located on the same network segment as the MIBs. Multiple MIBs may be queried from the backend, and the backend may coordinate with backends on other network segments.

Owner:VALTRUS INNOVATIONS LTD
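
A minimal read-through cache in Python illustrates the backend behaviour described above: serve a value from the cache when it is still fresh, otherwise fetch it from the MIB and remember it. This is a sketch under stated assumptions, not the patented design: the `fetch` callable stands in for a real MIB request (for example an SNMP GET), and the time-to-live freshness rule and the `MibCache` class are hypothetical.

```python
import time
from typing import Callable, Dict, Iterable, Tuple


class MibCache:
    """Hypothetical backend cache placed in front of a MIB fetch function."""

    def __init__(self, fetch: Callable[[str], str], ttl_seconds: float = 30.0) -> None:
        self._fetch = fetch  # assumed: fetch(oid) performs the real MIB request
        self._ttl = ttl_seconds  # assumed freshness window for cached values
        self._entries: Dict[str, Tuple[float, str]] = {}

    def get(self, oid: str) -> str:
        now = time.monotonic()
        hit = self._entries.get(oid)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # fresh cache hit: no request goes to the MIB
        value = self._fetch(oid)  # miss or stale entry: ask the MIB
        self._entries[oid] = (now, value)
        return value

    def respond(self, oids: Iterable[str]) -> Dict[str, str]:
        # One response may mix cached values with freshly fetched ones.
        return {oid: self.get(oid) for oid in oids}
```

Because `respond` goes through `get` for every object identifier, a single response can combine cached values with values fetched from the MIB, which covers the "cache only, MIB only, or both" cases in the abstract.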

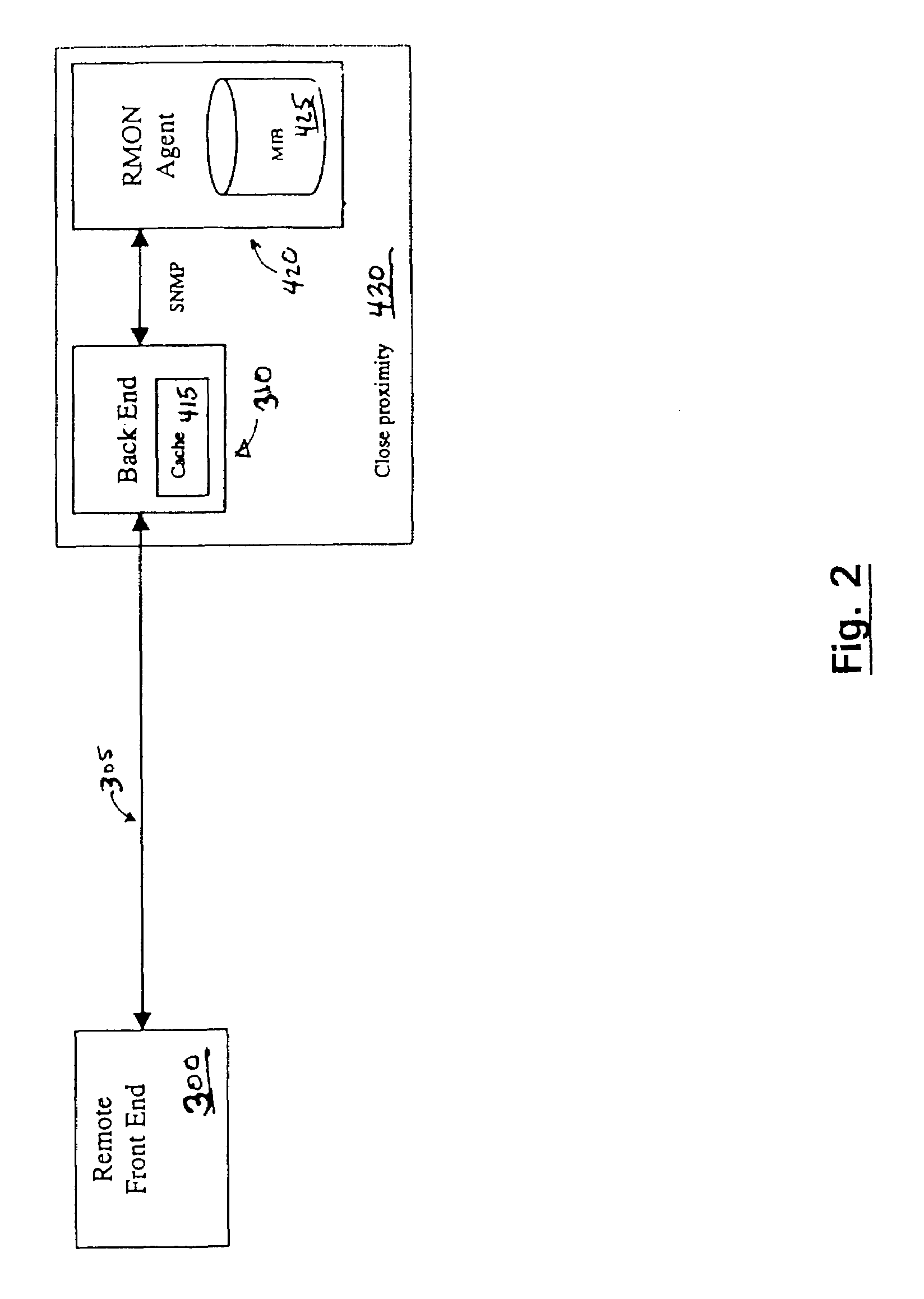

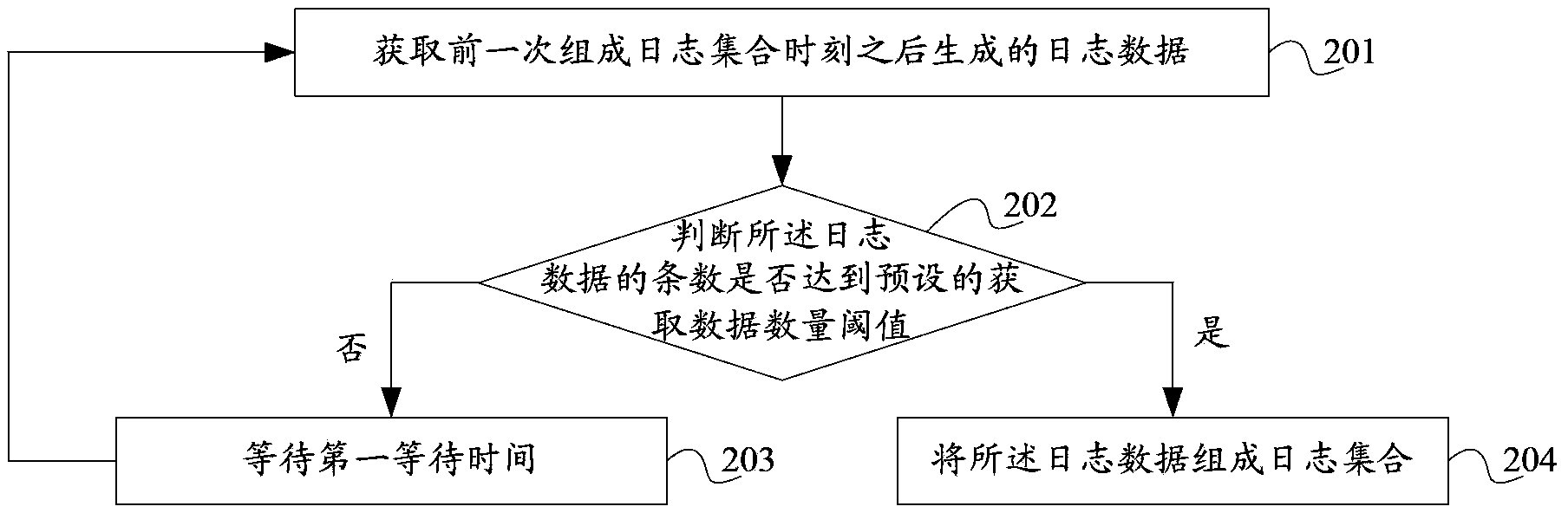

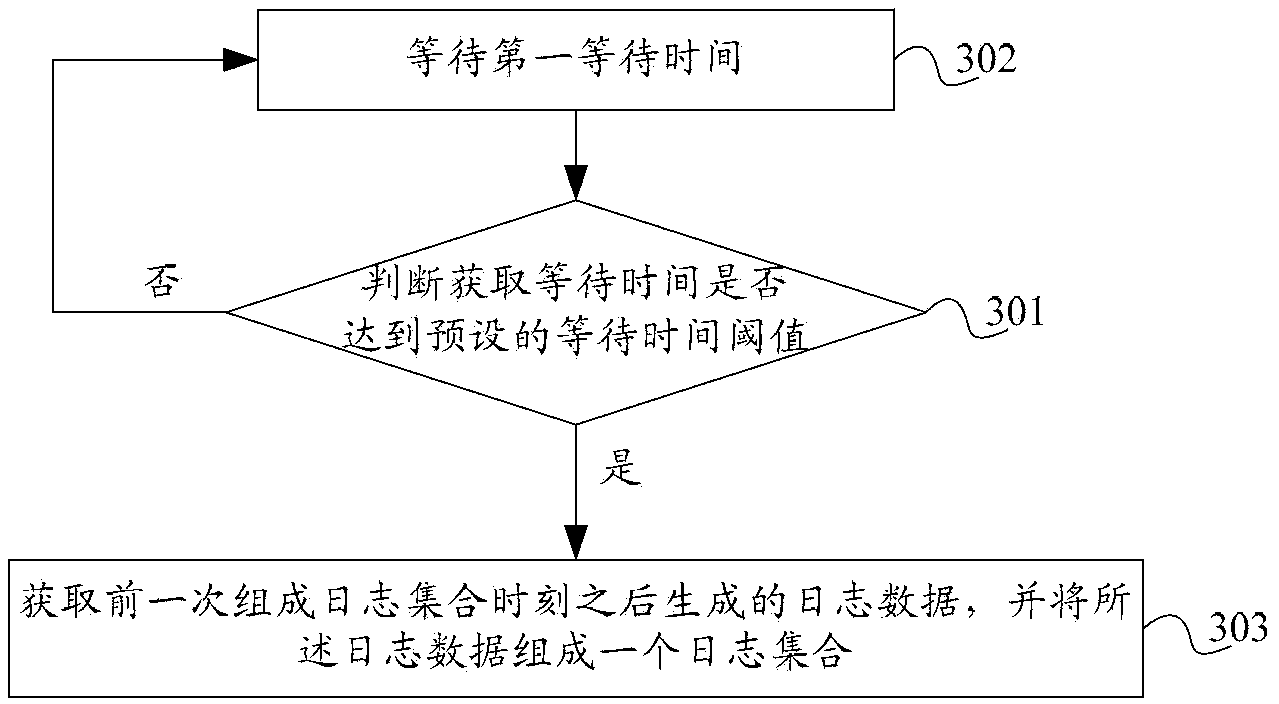

Method, log server and system for recording log data

The invention discloses a method, log server and system for recording log data. In the method, log data obtained according to a log acquisition condition form a log set; a data storage server for storing the log set is determined; the log set is stored on that server, and a data table for the log set is created there; and only after all log data of the log set have been imported into the data table is an index created on that table. The method, log server and system improve the storage speed of log data, allow a network platform to record in time the large volume of log data generated within a short period, prevent data loss, and shorten query latency.

Owner:ALIBABA GRP HLDG LTD
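
The key ordering in the abstract (load the whole log set into its table first, create the index afterwards) can be illustrated with Python's built-in `sqlite3` module. SQLite, the three-column layout and the `store_log_set` helper are assumptions chosen for illustration; the patent does not name a storage engine.

```python
import sqlite3
from typing import Iterable, Tuple


def store_log_set(conn: sqlite3.Connection, table: str,
                  rows: Iterable[Tuple[float, str, str]]) -> None:
    """Create a table for one log set, bulk-insert it, then index it."""
    # `table` is assumed to be a trusted, generated name such as logs_20240101.
    conn.execute(f"CREATE TABLE {table} (ts REAL, level TEXT, message TEXT)")
    with conn:  # one transaction for the whole log set
        conn.executemany(f"INSERT INTO {table} VALUES (?, ?, ?)", rows)
    # Index only after every row is in, so inserts skip per-row index upkeep.
    conn.execute(f"CREATE INDEX idx_{table}_ts ON {table} (ts)")
```

Building the index once over the finished table avoids updating it on every insert, which is the usual reason this ordering speeds up bulk loads.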

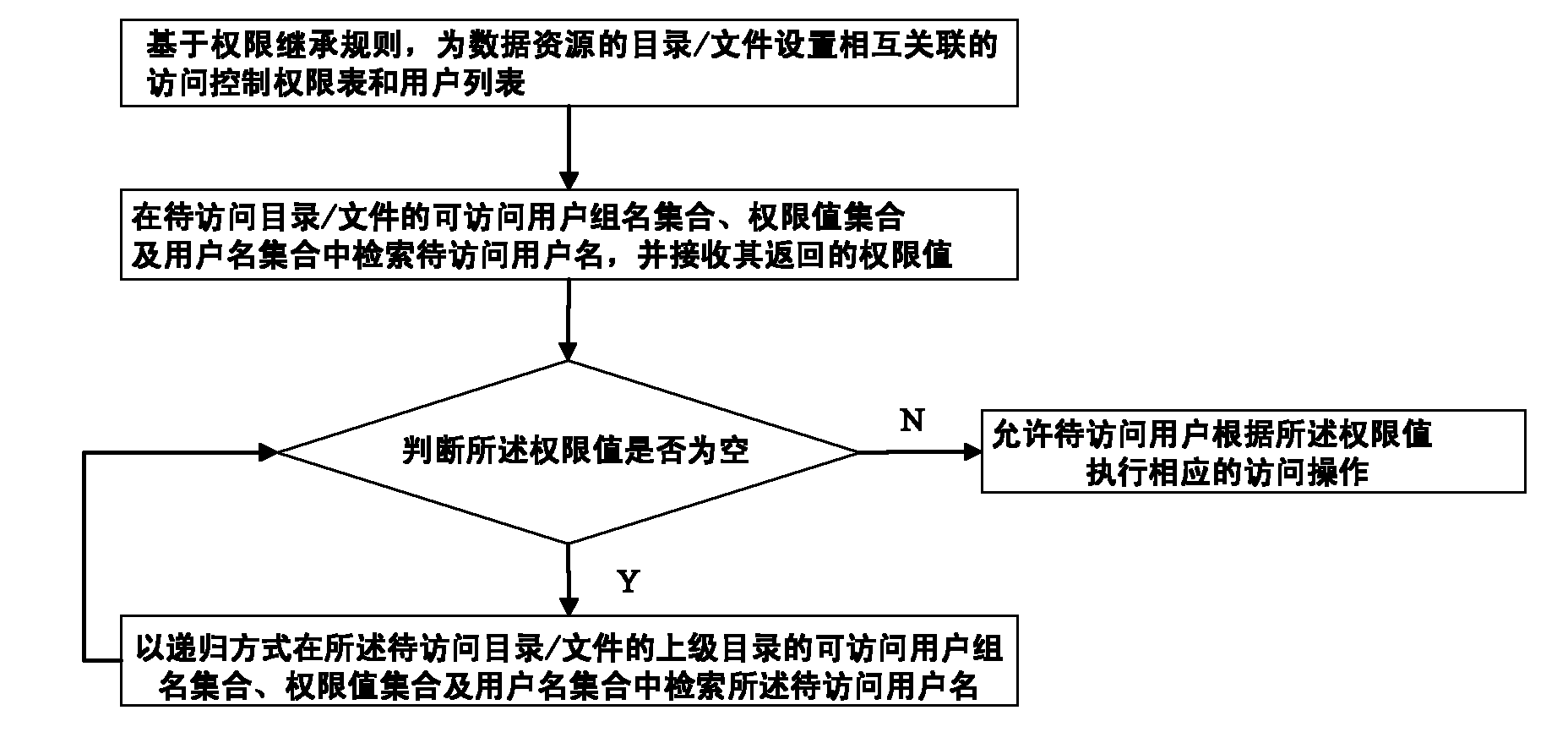

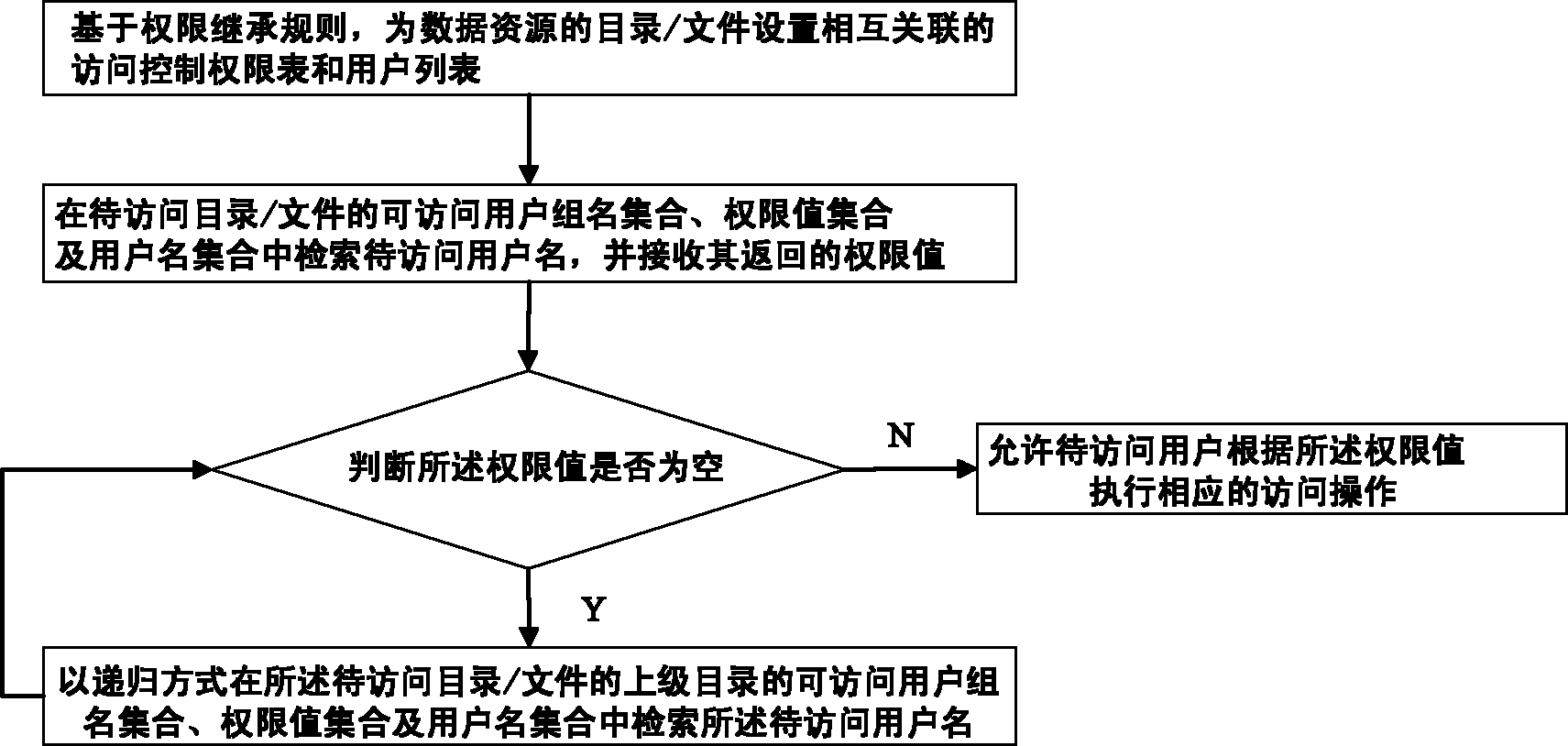

Data resource authority management method based on access control list

The invention provides a data resource authority management method based on an access control list (ACL), comprising the following steps. S1: set an access permission list and an associated user list for each directory/file of the data resources, based on permission inheritance rules. S2: look up the name of the requesting user in the accessible user group name set, permission value set and user name set of the directory/file to be accessed, and receive the returned permission value. S3: determine whether the permission value is null; if it is not, proceed to S4; if it is null, look up the requesting user's name recursively and return to S3. S4: permit the requesting user to perform the corresponding access operation according to the permission value. The method improves the efficiency of permission queries over large volumes of data resources and enables simple, independent management and control of data resource permissions.

Owner:TSINGHUA UNIV
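
The lookup loop in steps S2 to S4 can be sketched as a recursive walk. The sketch assumes that "recursive" retrieval means falling back to the ACL of the parent directory when a node has no entry for the user; the `Node` structure, the group membership map and the string permission values are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class Node:
    """A directory or file carrying its own ACL (hypothetical structure)."""
    name: str
    parent: Optional["Node"] = None
    user_perms: Dict[str, str] = field(default_factory=dict)   # user -> permission
    group_perms: Dict[str, str] = field(default_factory=dict)  # group -> permission


def lookup_permission(node: Optional[Node], user: str,
                      groups: Dict[str, Set[str]]) -> Optional[str]:
    """Return the first non-null permission, walking up through ancestors."""
    if node is None:
        return None  # past the root: the user has no permission
    if user in node.user_perms:  # S2: the user's own entry
        return node.user_perms[user]
    for group, perm in node.group_perms.items():  # S2: group entries
        if user in groups.get(group, set()):
            return perm
    # S3: the value is null at this node, so recurse into the inherited ACL.
    return lookup_permission(node.parent, user, groups)
```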

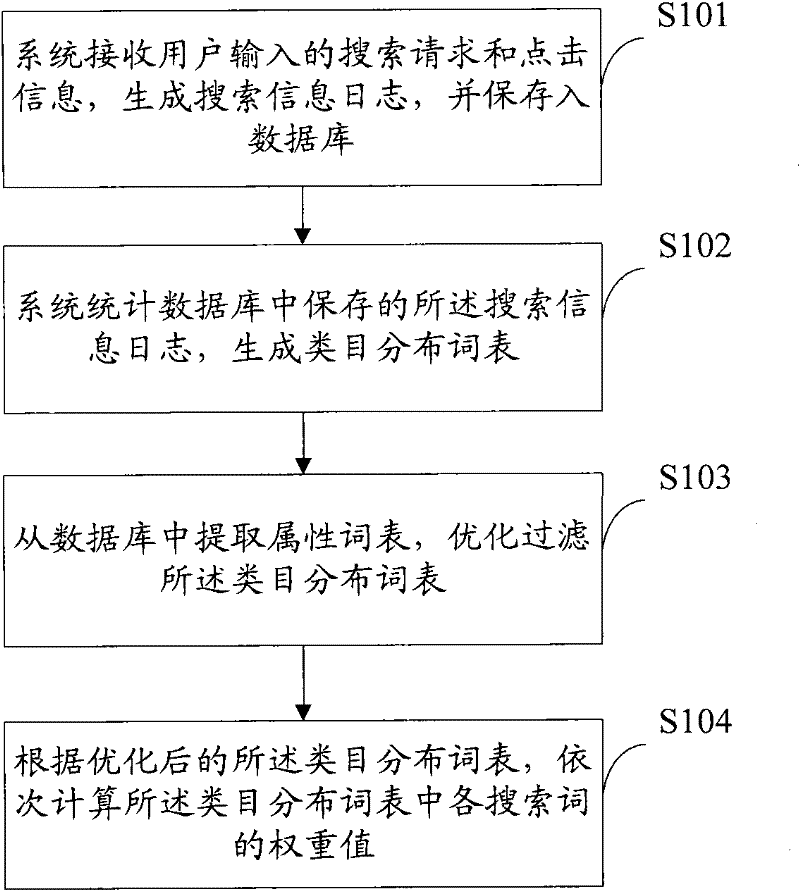

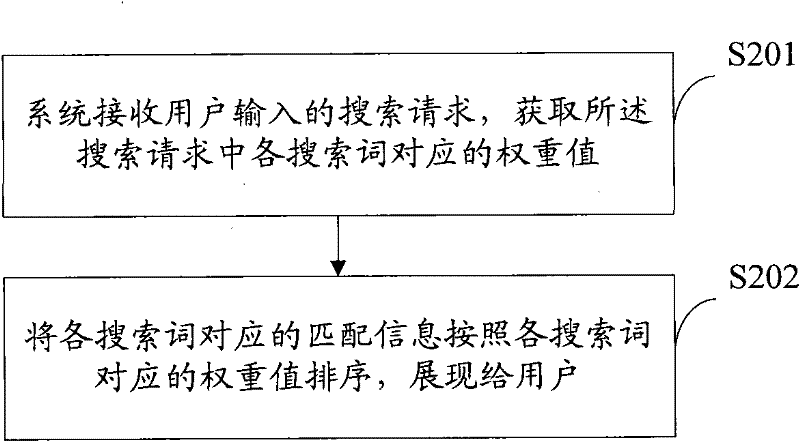

Method and device for determining search word weight value, method and device for generating search results

Inactive | CN102289436A | Improve versatility; Less important; Digital data information retrieval; Special data processing applications; Search words; Statistical database

Owner:ALIBABA GRP HLDG LTD

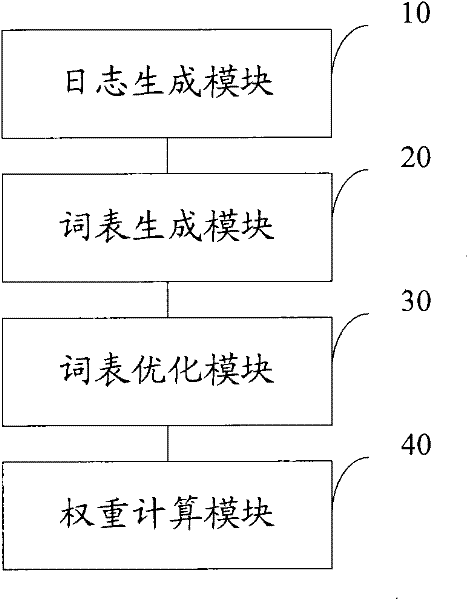

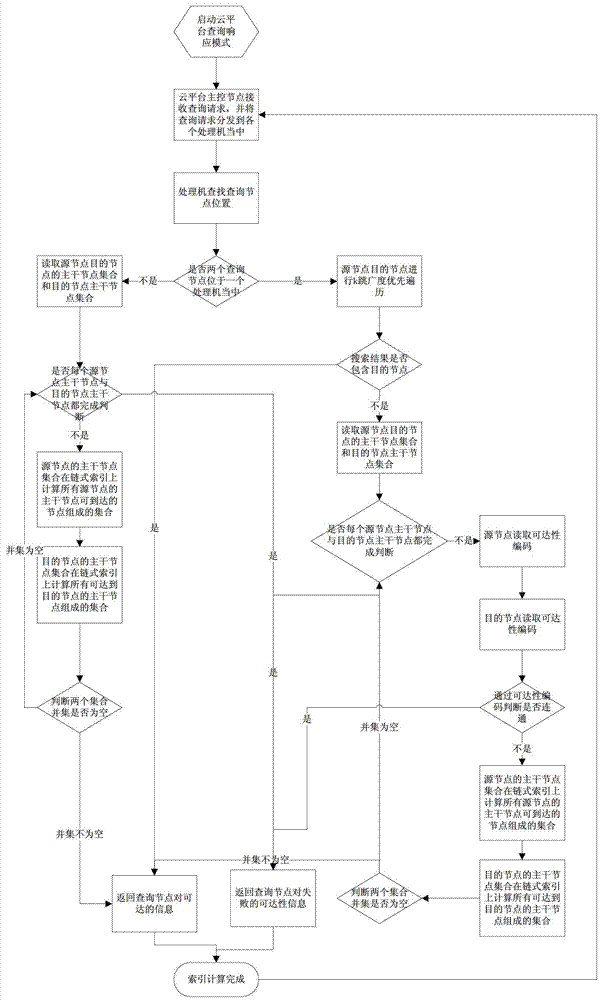

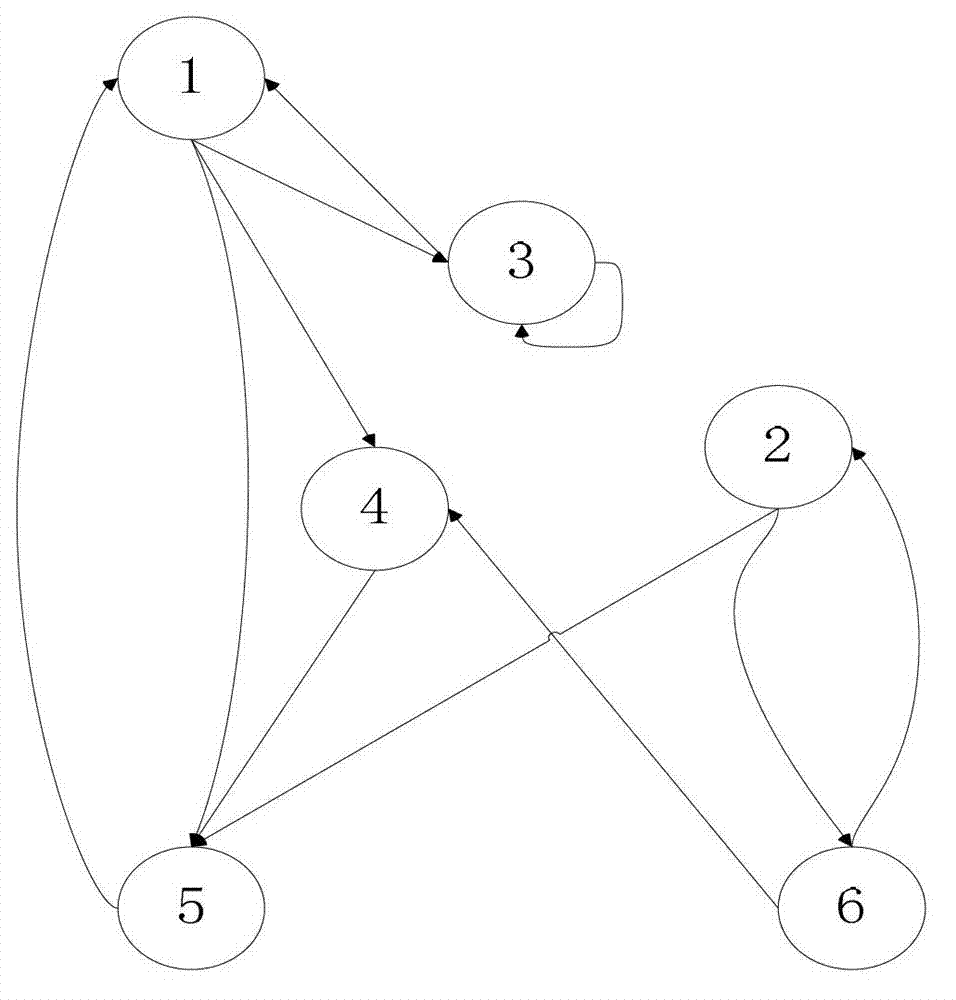

Generation and search method for reachability chain list of directed graph in parallel environment

Active | CN103399902A | Reduce size; Reduce computing load; Special data processing applications; Data compression; Skip list

The invention belongs to the field of large-graph data processing and relates to a method for generating and searching a reachability chain list of a directed graph in a parallel environment. The method includes: distributing the directed graph across the processors, each of which stores its nodes and their corresponding child nodes; compressing the graph data assigned to each processor; computing reachability codes for the backbone nodes of the backbone graph; building a chain index; building a skip list on the chain index; exchanging data among the processors, with each processor sending its skip list information to the others and updating its own skip list; and building a reachability index for the whole graph. By applying graph reachability compression in a parallel environment, the size of the graph data is greatly reduced, the system's computing load drops, and larger graphs can be processed. Data are read from disk faster, which indirectly speeds up search; the accuracy of search results is preserved; and network communication cost and search time for a parallel computing system are greatly reduced.

Owner:NORTHEASTERN UNIV

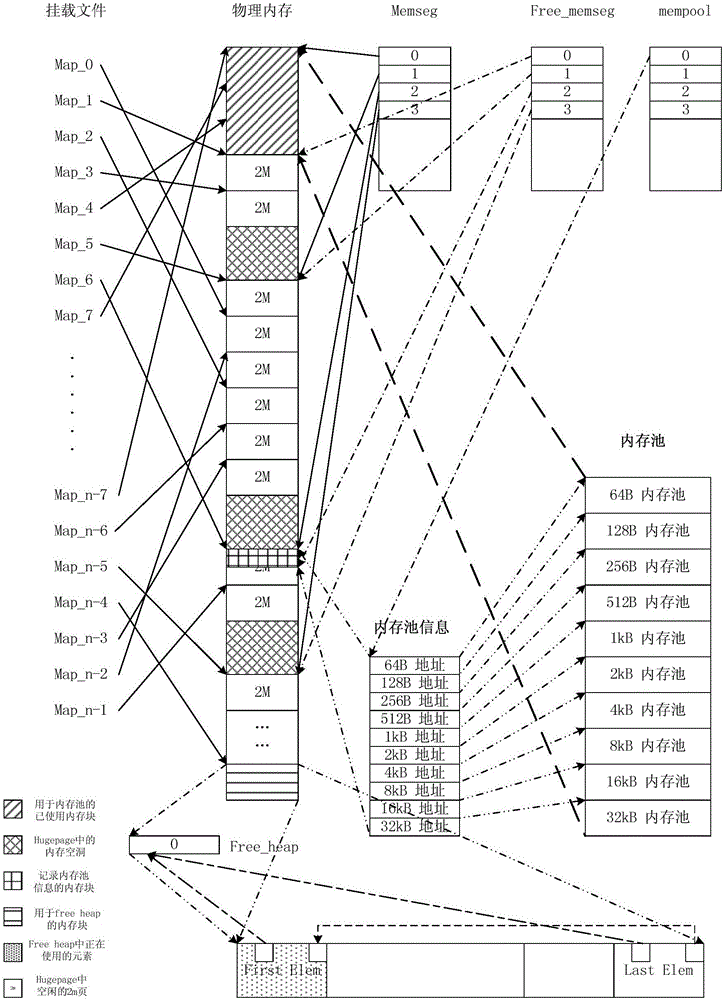

Memory management method used in Linux system

Active | CN105893269A | Improve efficiency; Improve access speed; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Static memory allocation; GNU/Linux

The invention provides a memory management method for Linux systems. Huge-page memory is used in the Linux environment, and the memory configuration, memory allocation and memory release processes are all performed on huge-page memory. The memory configuration process computes the mapping between virtual and physical addresses to determine which NUMA node each mapped huge page belongs to, and sorts the pages by physical address; the memory allocation process covers both memory-pool allocation and ordinary memory allocation. The method retains the efficiency advantage of static memory allocation and uses 2 MB huge pages instead of 4 KB pages, which saves page-lookup time and reduces the probability of TLB misses. In addition, huge-page memory is never swapped to disk, so it always remains available to the application that requested it, and local memory is preferred during allocation, which improves memory access speed.

Owner:WUHAN HONGXIN TECH SERVICE CO LTD
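
The effect of replacing 4 KB pages with 2 MB huge pages can be checked with simple arithmetic: each page needs its own page-table entry and, when accessed, its own TLB entry, so mapping the same region with fewer pages means fewer lookups and fewer TLB misses. The 1 GiB region below is an arbitrary example.

```python
def pages_needed(region_bytes: int, page_bytes: int) -> int:
    """Number of pages (and page-table entries) needed to map a region."""
    return -(-region_bytes // page_bytes)  # ceiling division


GiB = 1 << 30
small = pages_needed(GiB, 4 * 1024)         # 4 KB pages
huge = pages_needed(GiB, 2 * 1024 * 1024)   # 2 MB huge pages
print(small, huge, small // huge)           # 262144 512 512
```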

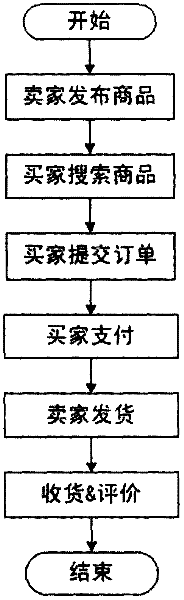

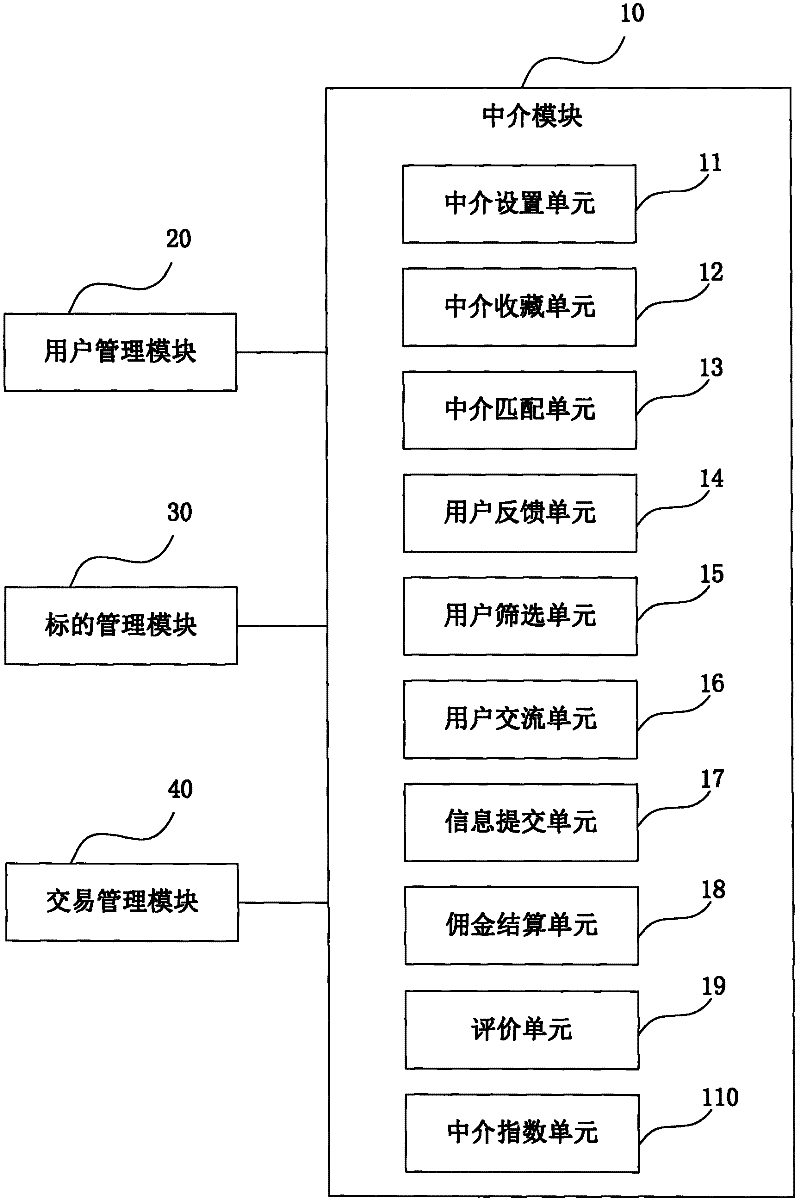

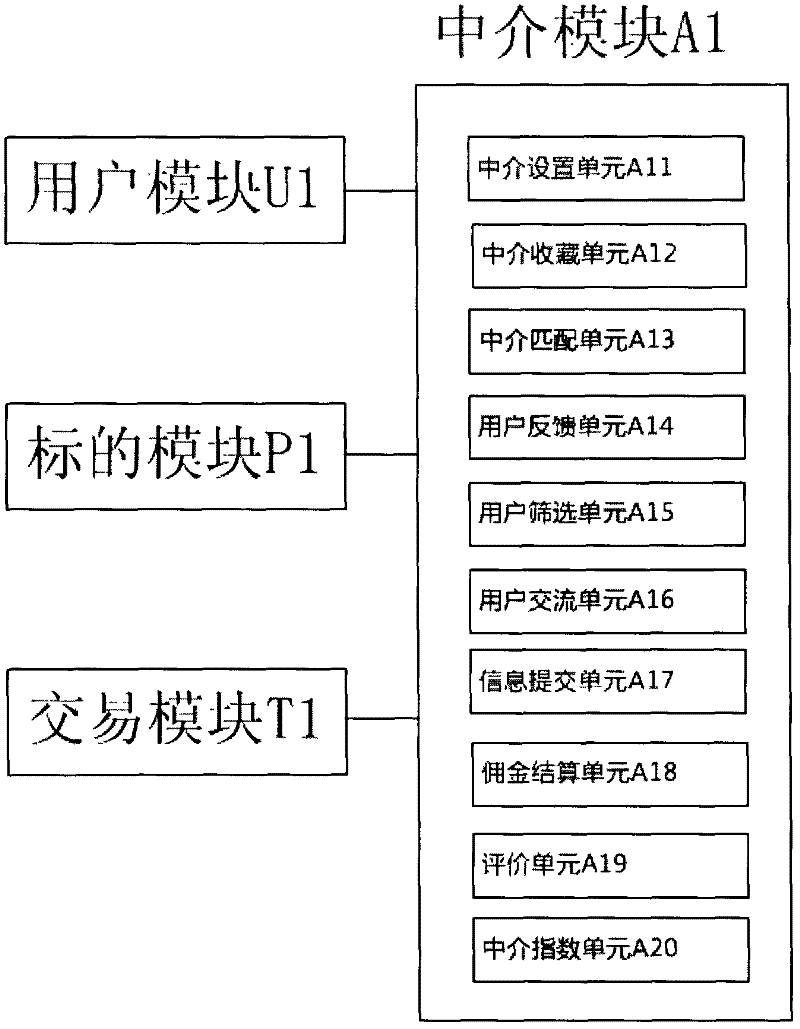

System and method for matchmaking and transaction of electronic commerce

Inactive | CN102609870A | Reduce query time; Low cost; Buying/selling/leasing transactions; Deep knowledge; Transaction management

The invention discloses a system and method for e-commerce matchmaking and transactions. The system comprises a user management module, a target management module, a transaction management module and an intermediary module. Through the intermediary module, an intermediary user recommends commodities, services or users to a buyer or a seller. The intermediary module receives and stores in a database the commodities, services or user parameter information wanted by the buyer and the commodities, services or user parameter information offered by the seller; it then matches the two sides' information, calculates a matching coincidence rate, and, according to that rate, presents one or more groups of commodities, services or users for the buyer and the seller to choose from. An intermediary user with deep knowledge of a given product category can thus effectively help both trading parties reduce information query time and cost, and the presence of intermediary users greatly improves the success rate of online transactions.

Owner:Chen Xiaoliang (陈晓亮)
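
The abstract does not define the "matching coincidence rate". One plausible reading, used here purely as an assumption, is the share of the buyer's required parameters that an offer satisfies exactly; offers are then ranked by that rate and the top few are shown to both parties. The parameter dictionaries and function names are hypothetical.

```python
from typing import Dict, List, Tuple


def coincidence_rate(wanted: Dict[str, str], offered: Dict[str, str]) -> float:
    """Share of the buyer's wanted parameters that the offer matches exactly."""
    if not wanted:
        return 0.0
    hits = sum(1 for key, value in wanted.items() if offered.get(key) == value)
    return hits / len(wanted)


def top_matches(wanted: Dict[str, str], offers: Dict[str, Dict[str, str]],
                k: int = 3) -> List[Tuple[str, float]]:
    """Rank offers by coincidence rate and keep the best k."""
    scored = [(offer_id, coincidence_rate(wanted, attrs))
              for offer_id, attrs in offers.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]
```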

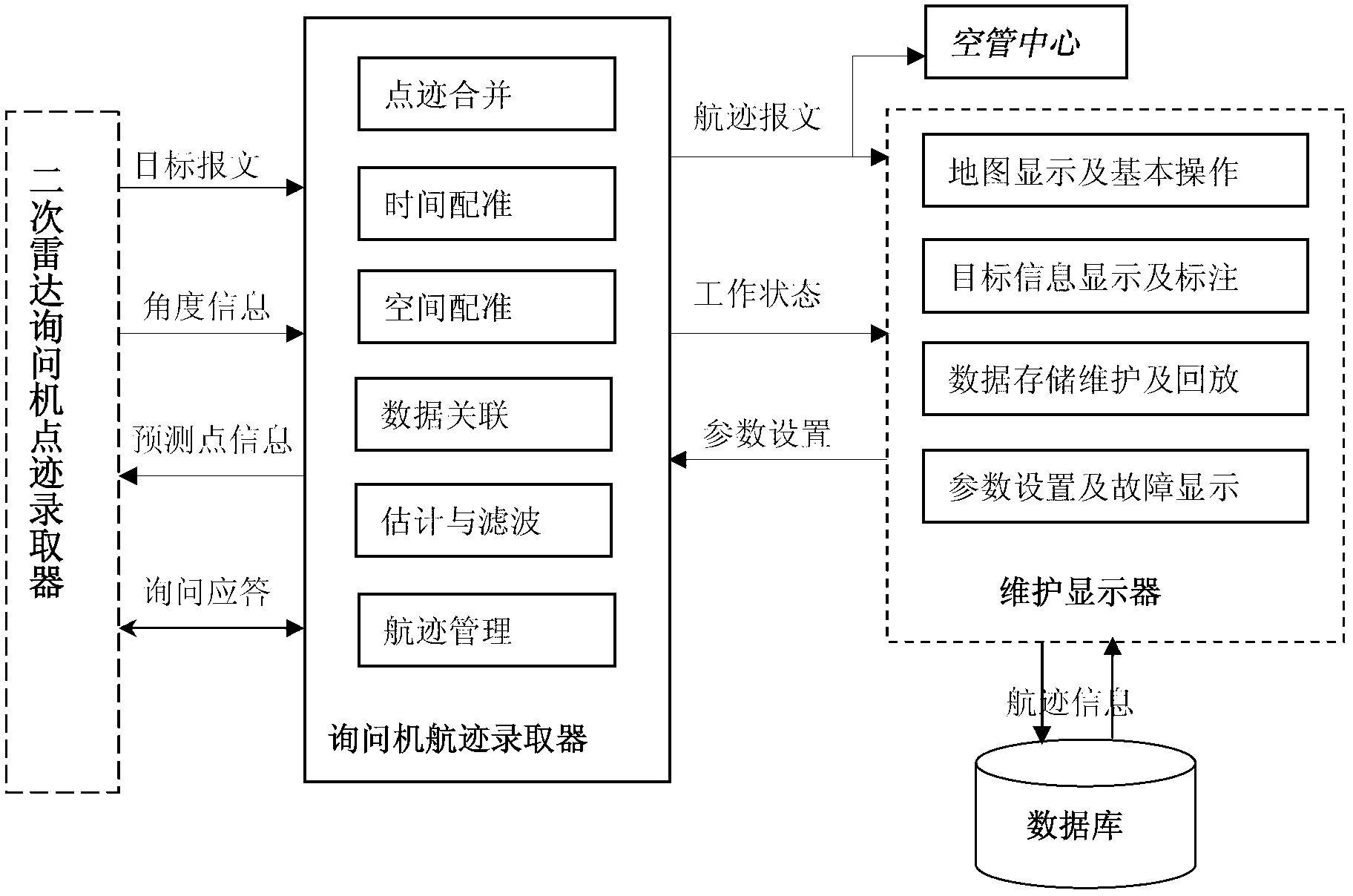

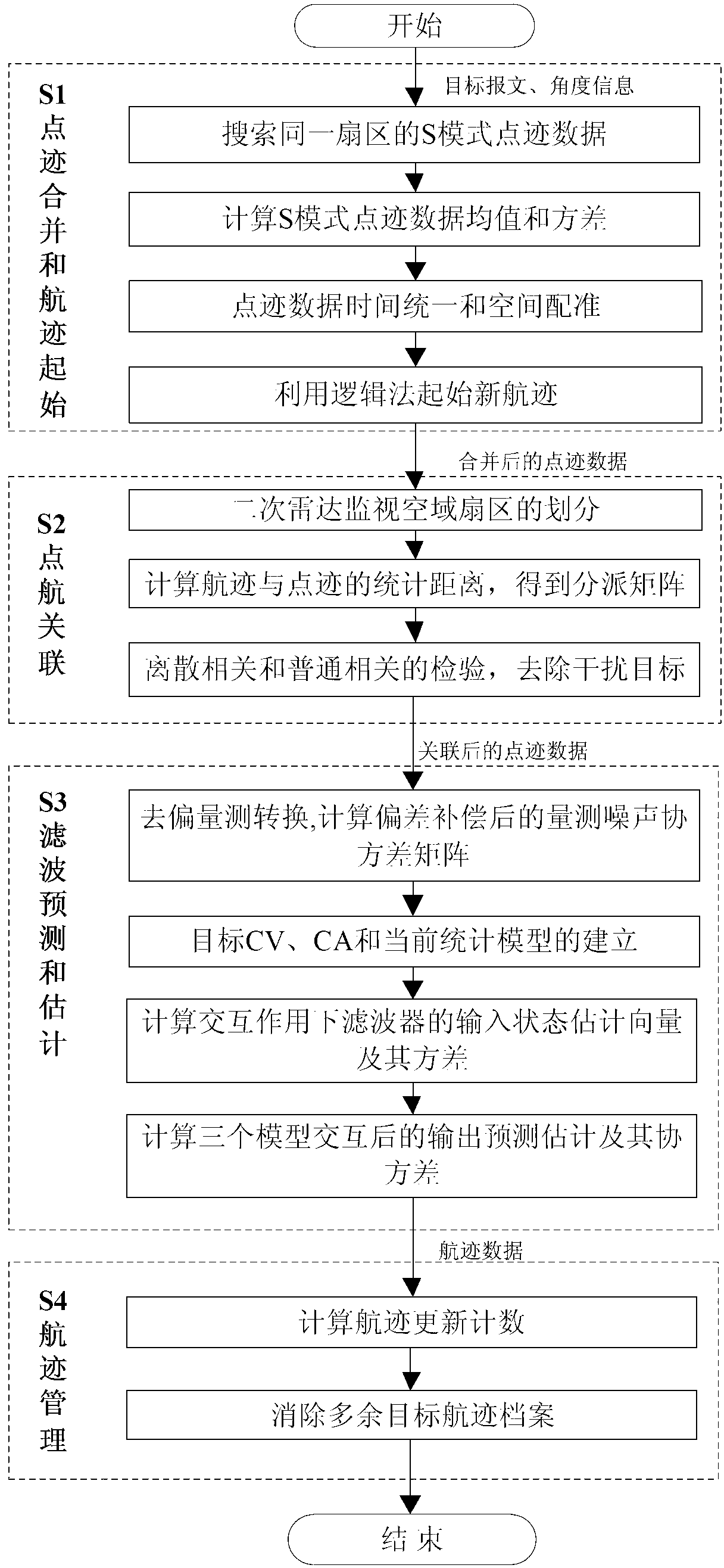

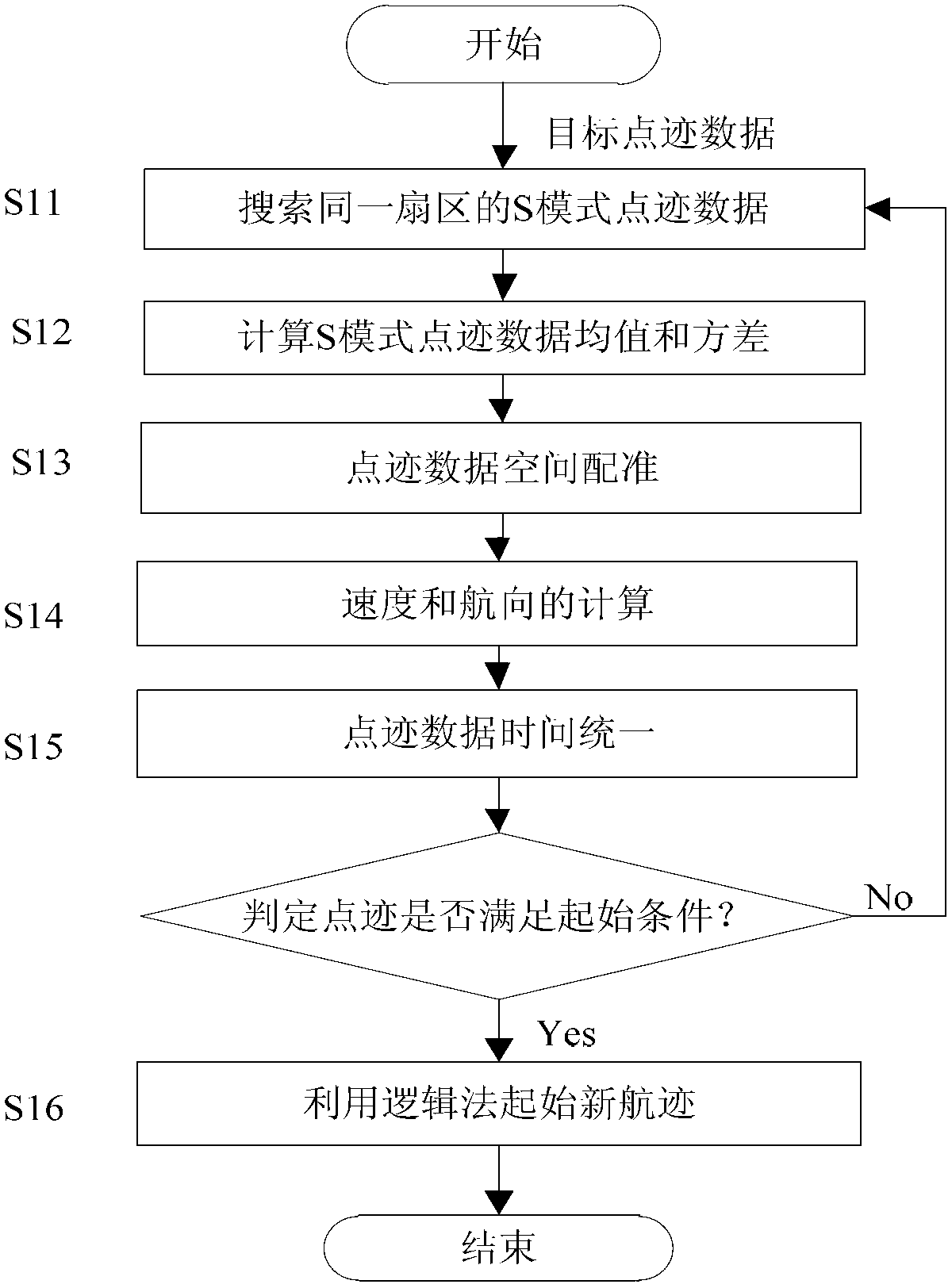

Secondary surveillance radar track extraction method for multimode polling and S-mode roll-call interrogation

Inactive | CN103076605A | Solve technical problems that are difficult to remove; Improve accuracy; Radio wave reradiation/reflection; Secondary surveillance radar; Time space

The invention provides a secondary surveillance radar (SSR) track extraction method for multimode polling and S-mode roll-call interrogation. The method effectively removes false targets in an interference environment and improves the accuracy and real-time performance of S-mode interrogation. It works as follows: S-mode plot data detected in the same sector of the SSR interrogator are combined, the mean and variance of the plot data are calculated, and a true track is initiated after spatio-temporal registration; the combined plot data are partitioned according to the radar's sector structure, and an associated sector window is created to feed the plot and track processing flow; debiased measurement conversion is first applied to the associated plot data, a constant-velocity (CV) model, a constant-acceleration (CA) model and a current statistical model are combined into an interacting multiple-model target model, and the mixed input state estimate and variance of each filter under the interaction are calculated; interference targets are removed using interference-target removal strategies; and finally, redundant target files are removed through track management, tracking is terminated, and the correct SSR target track extraction result is output.

Owner:10TH RES INST OF CETC

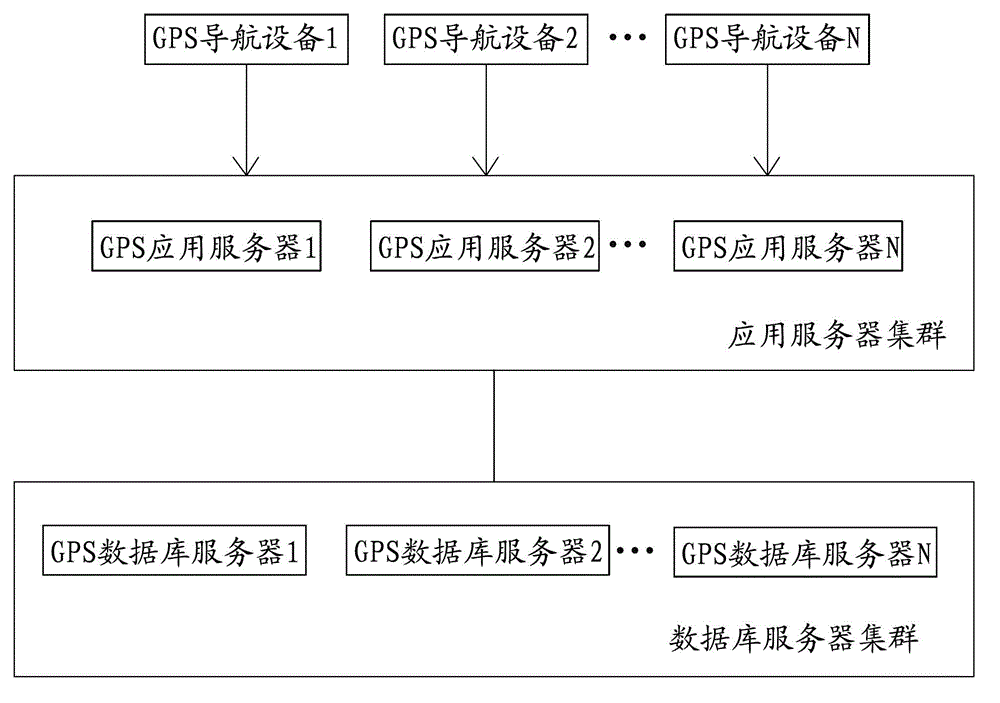

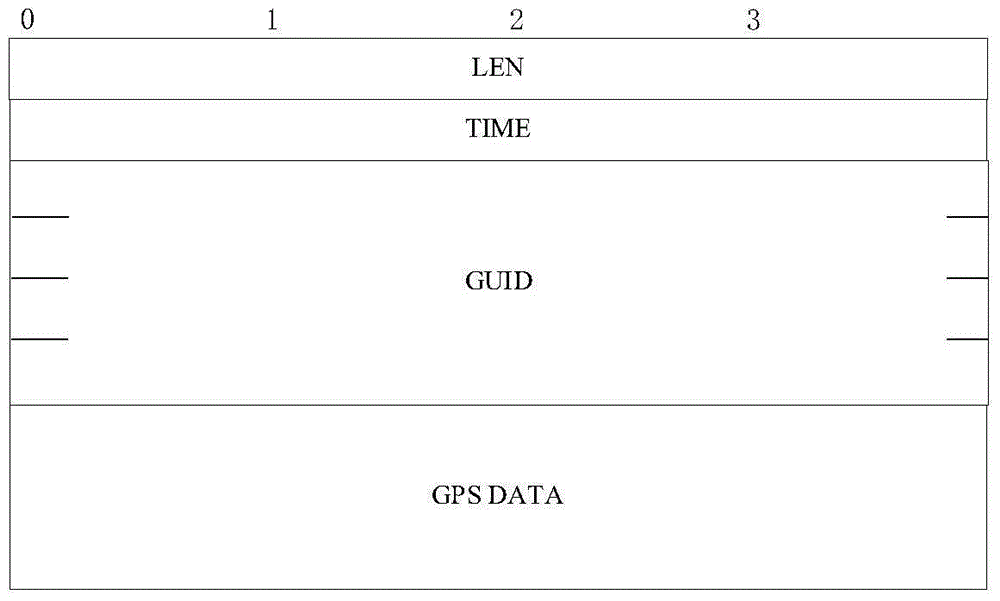

Global Positioning System (GPS) mass data processing method

Inactive | CN103064890A | Reduce storage time; Reduce query time; Special data processing applications; Application server; Database server

The invention relates to the field of mass data processing, specifically to a Global Positioning System (GPS) mass data processing method. The method comprises: (1) deploying multiple GPS database servers as a distributed database server cluster and multiple GPS application servers as a distributed application server cluster; (2) dividing the GPS data by region and distributing each region's data to a different GPS application server; (3) having the GPS application servers receive and classify the GPS data and send the classified data to different GPS database servers for storage; and (4) when GPS data must be queried, having a GPS application server receive the user's query request, which contains a positioning terminal ID and a positioning time; the application server first identifies the GPS database server that stores the data from the terminal ID, and then locates the record using the classification table of the GPS data.

Owner:QUANZHOU HAOJIE INFORMATION TECH DEV
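
The two routing decisions in steps (2) and (4) can be sketched as deterministic functions. The one-degree grid cells used as regions and the CRC32-based mapping of keys to servers are assumptions for illustration; the patent only says that data are divided by region and that the terminal ID identifies the database server that stores the data.

```python
import zlib
from typing import List, Tuple


def region_of(lat: float, lon: float, cell_deg: float = 1.0) -> Tuple[int, int]:
    """Map a GPS fix to a grid cell; the 1-degree cell size is an assumption."""
    return (int(lat // cell_deg), int(lon // cell_deg))


def app_server_for(lat: float, lon: float, app_servers: List[str]) -> str:
    """Step (2): all data from one region go to the same application server."""
    cell = region_of(lat, lon)
    key = f"{cell[0]},{cell[1]}".encode()
    return app_servers[zlib.crc32(key) % len(app_servers)]


def db_server_for(terminal_id: str, db_servers: List[str]) -> str:
    """Step (4): locate the database server holding a terminal's records."""
    return db_servers[zlib.crc32(terminal_id.encode()) % len(db_servers)]
```

A stable hash such as CRC32 (rather than Python's per-process randomized `hash`) keeps the mapping identical across processes, so every application server resolves a terminal ID to the same database server.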

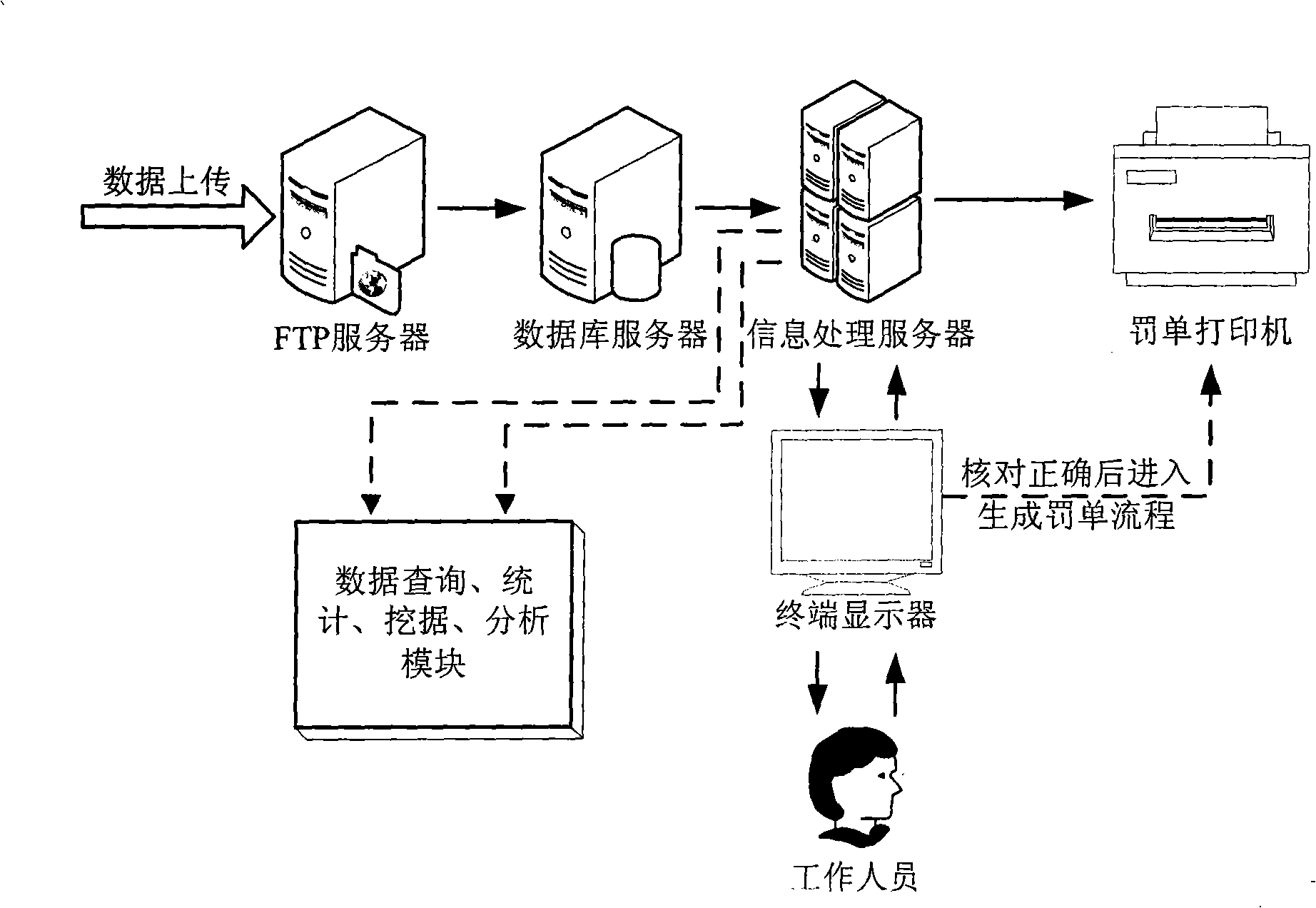

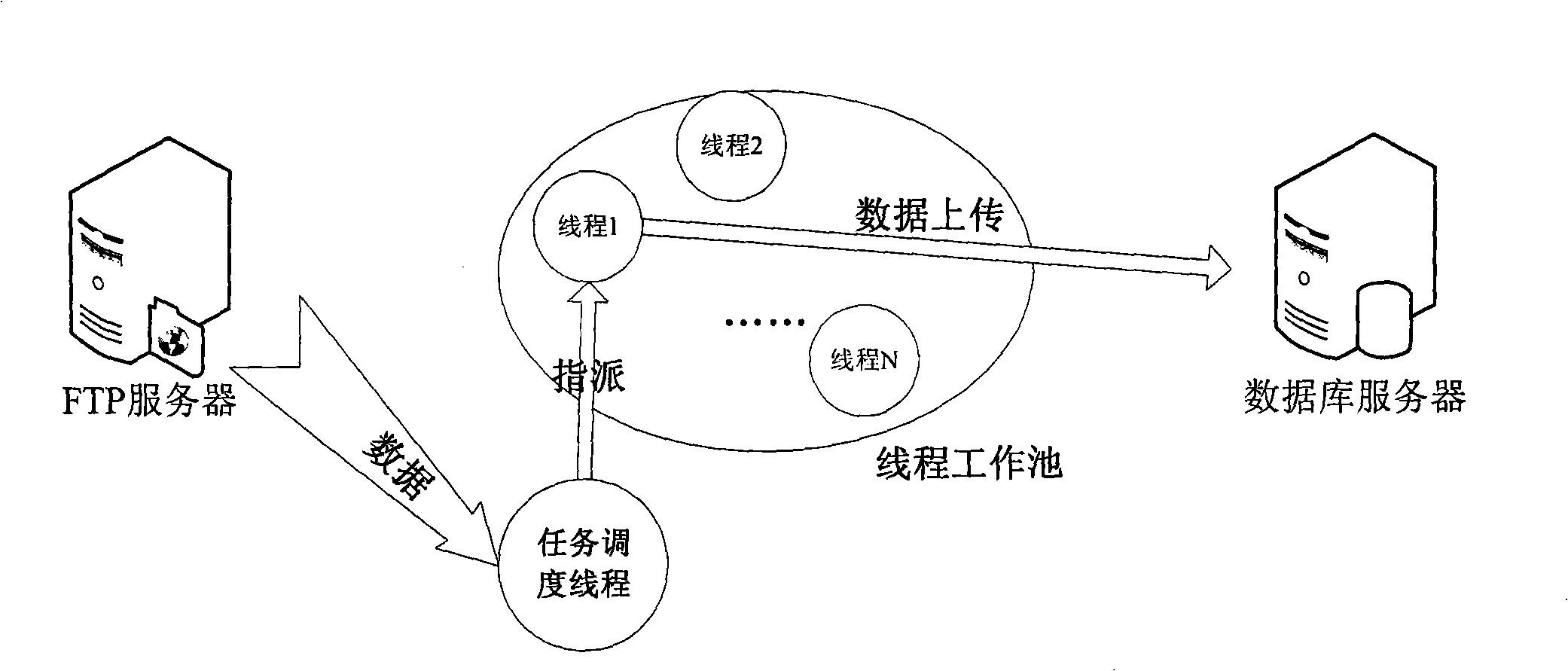

Electronic police background intelligent management and automatic implementation system

Inactive | CN101261722A | High speed; Improve experience; Data processing applications; Multiprogramming arrangements; Data file; Unexpected events

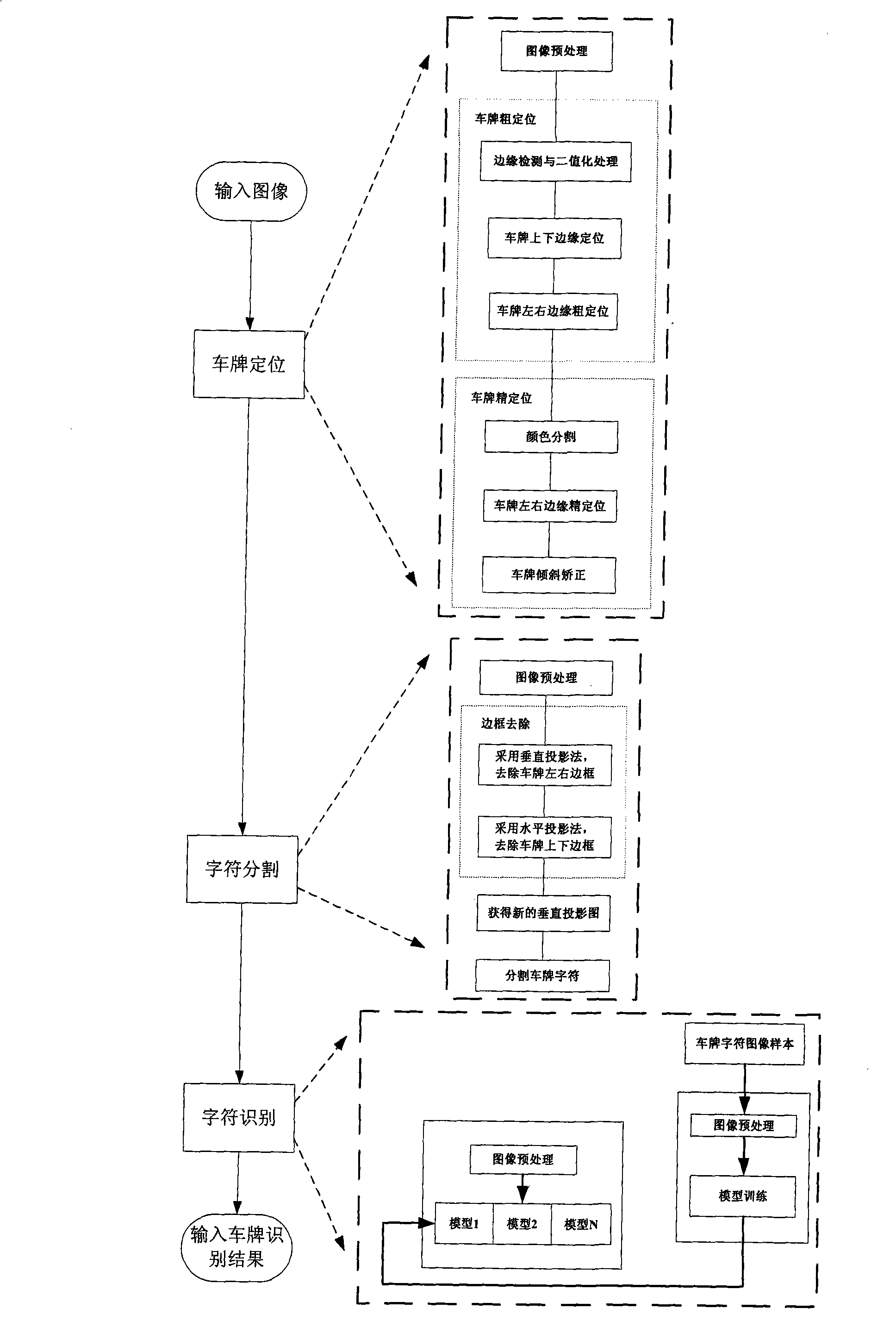

An electronic police back-end intelligent management and automatic enforcement system includes a data processing workflow module and a data discovery module. The workflow module transfers data files from front-end data acquisition devices to the back-end system, which automatically recognizes license plates, looks up vehicle information and generates traffic tickets; the data discovery module queries, cleans and compiles statistics on the data, issues alerts about repeat-offending vehicle owners, and helps schedule police duties rationally. By coordinating a range of advanced devices and technologies, the system automates enforcement, adds intelligence to it, and builds a real-time, accurate and effective integrated traffic management system with wide-ranging impact. It is intended to improve traffic safety, reduce police workload, free a large number of officers to handle emergencies, and protect lives and property, with benefits for traffic, society, the economy, the population, the environment and technology, contributing to a harmonious society.

Owner:BEIHANG UNIV

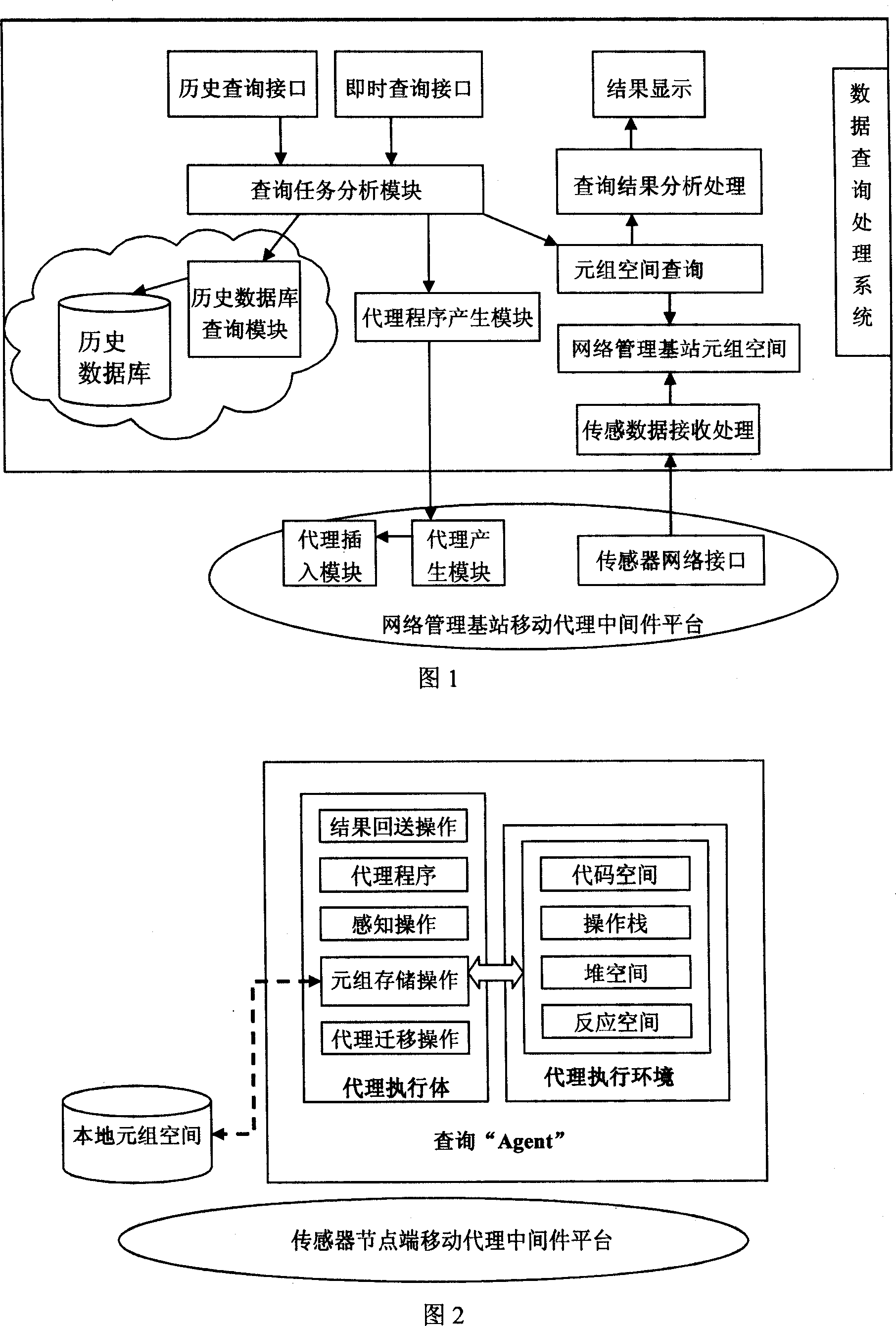

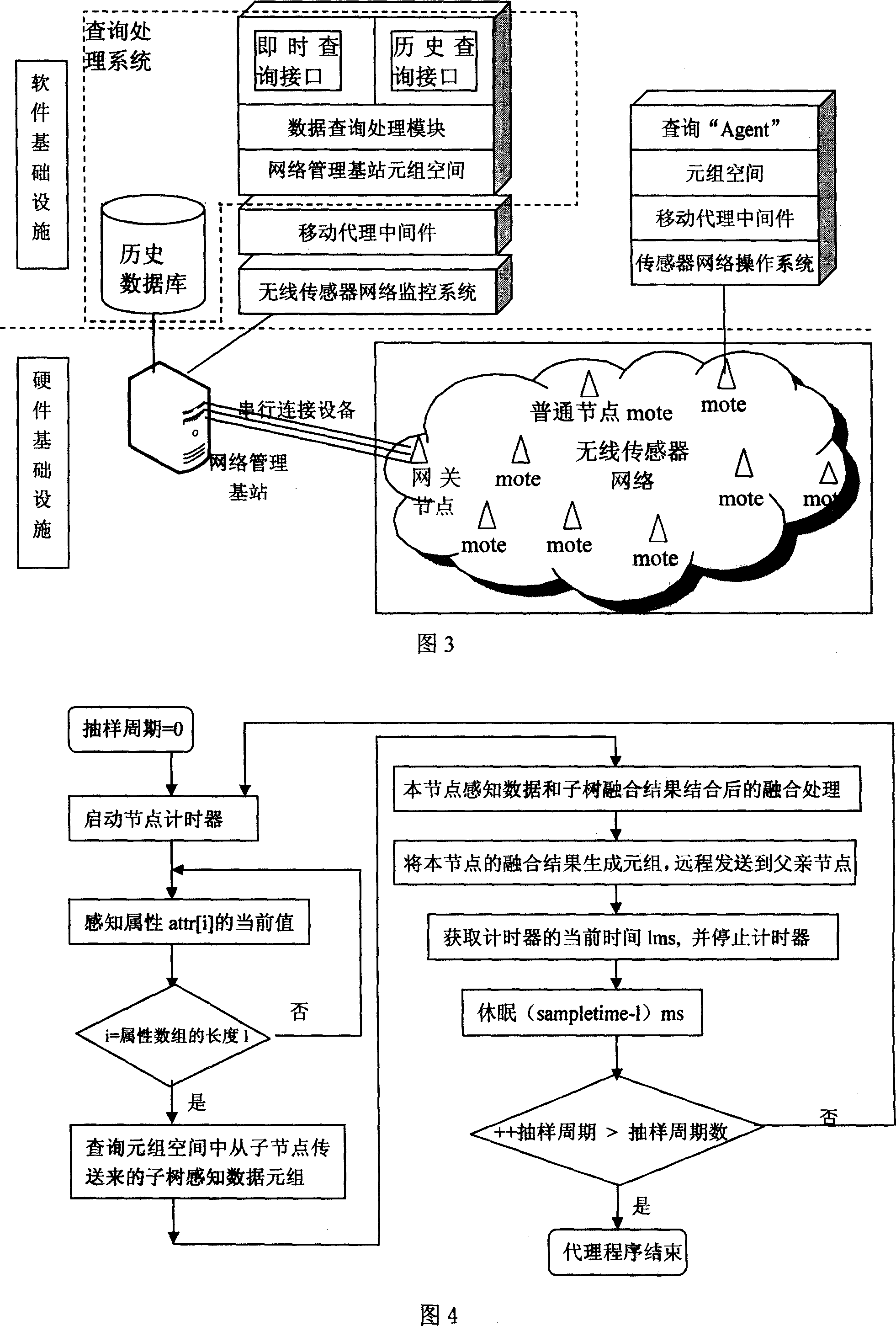

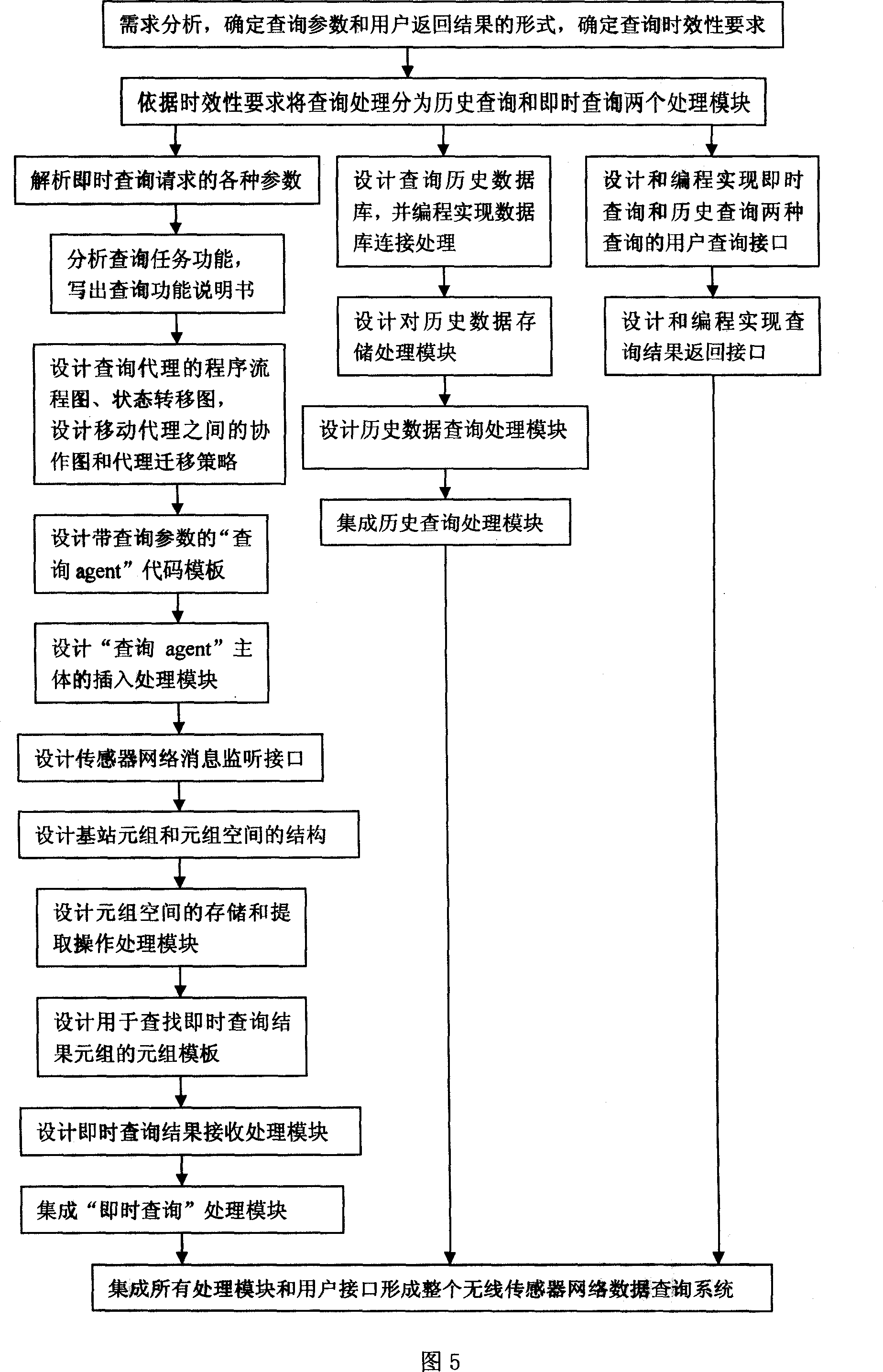

Method for implementing a sensor network data query system based on mobile agent middleware

Inactive | CN101013986A | Improve development efficiency; Improve deployment efficiency; Data switching by path configuration; Special data processing applications; Process systems; Handling system

The invention relates to a sensor network data query processing method based on mobile agent middleware, comprising the following: the entire wireless sensor network is treated as a single database; the system is implemented on mobile agent middleware; a mobile agent executing a user's query task migrates to designated nodes and performs its operations there, ending its life cycle once the query task is complete or moving on to other nodes as the task requires; and the query processing system is designed so that the network base station can modify the design of query agents.

Owner:NANJING UNIV OF POSTS & TELECOMM

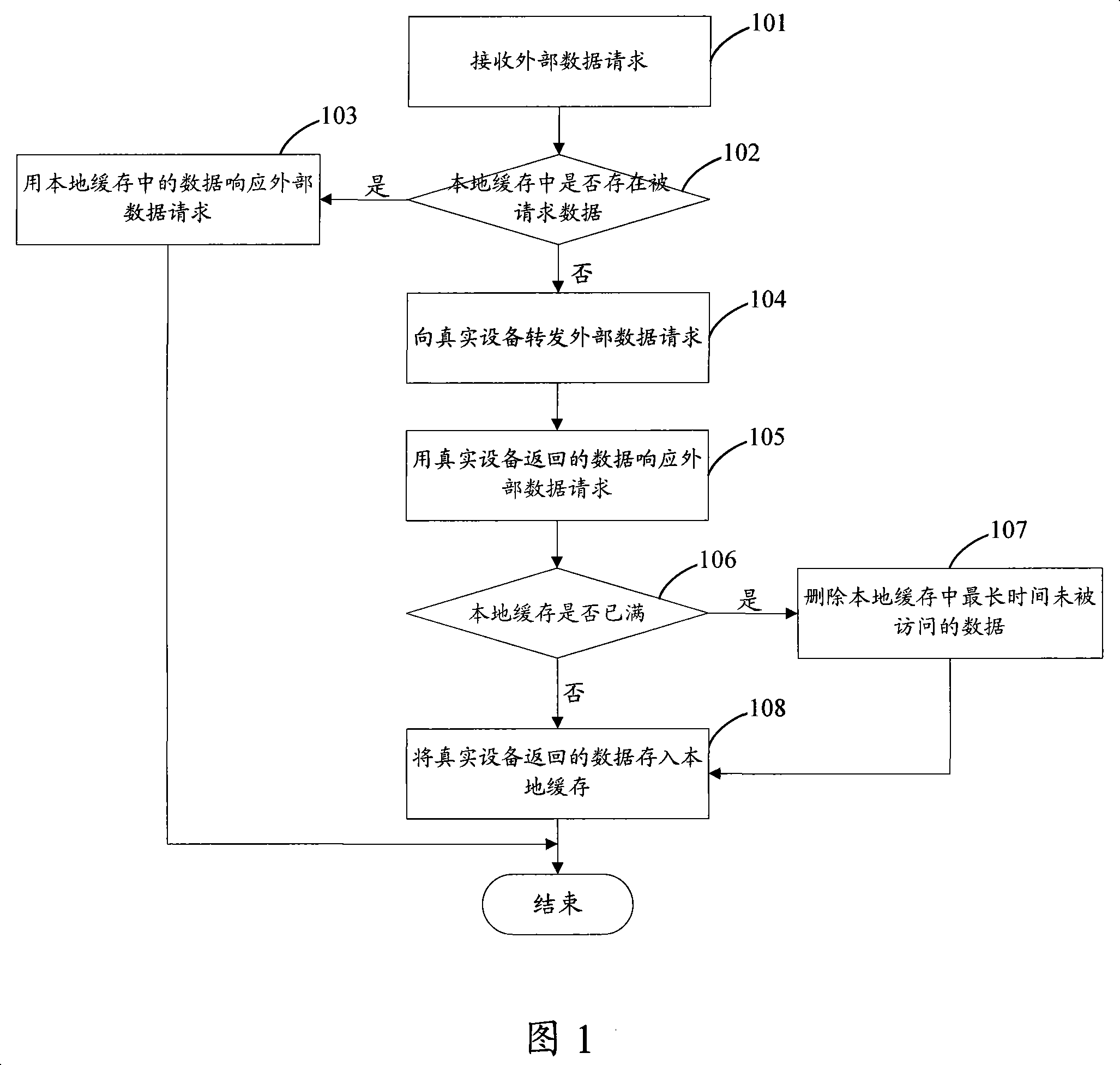

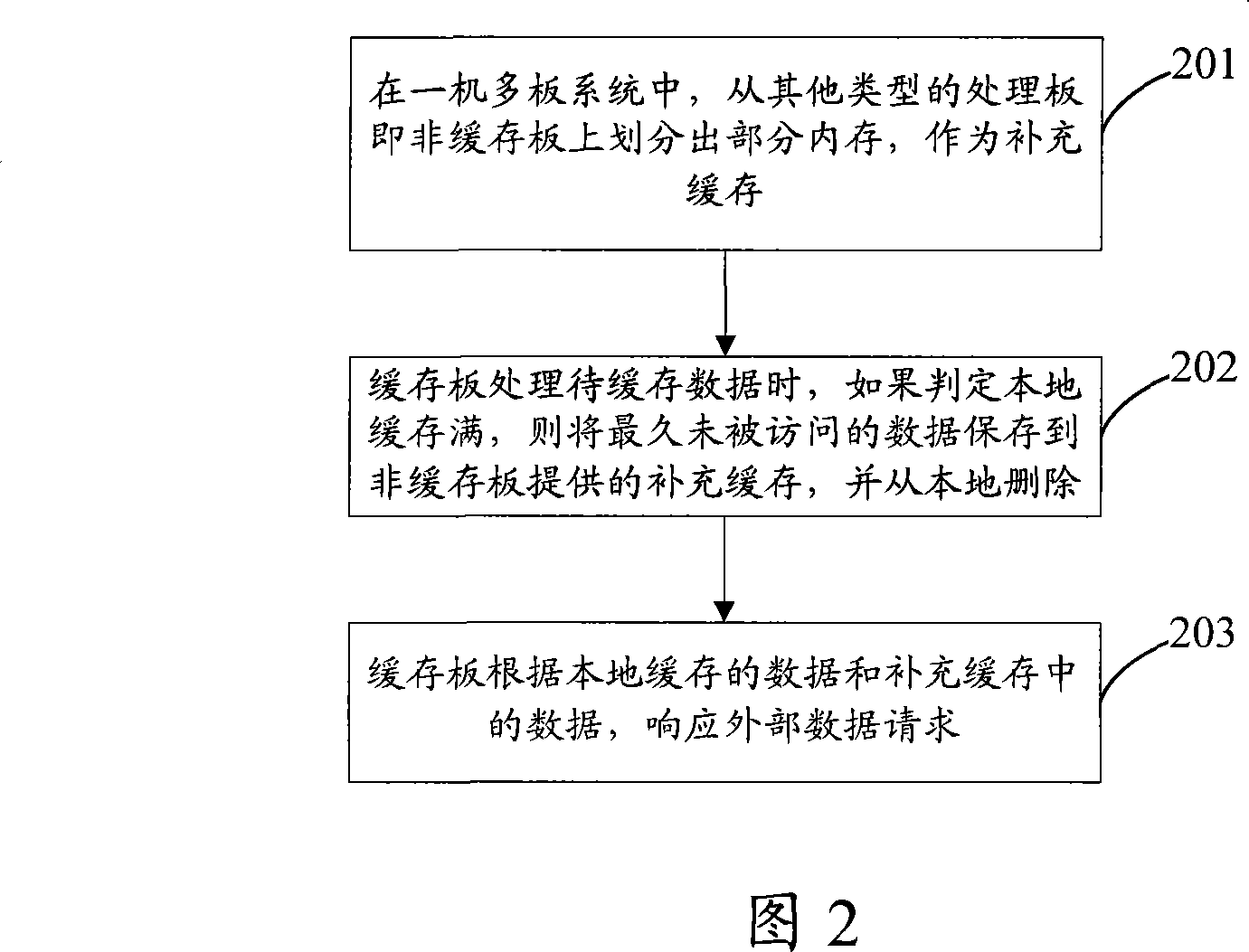

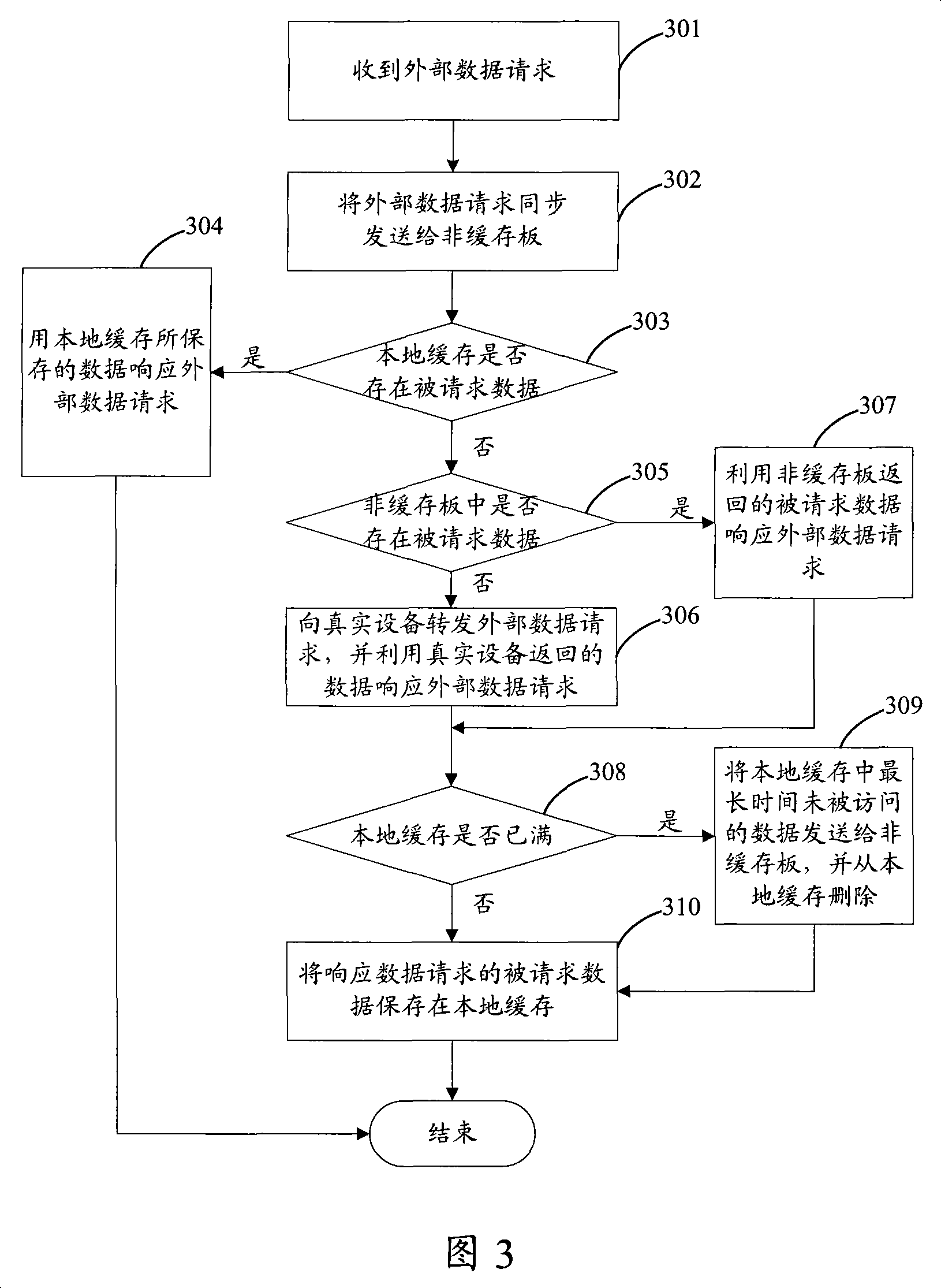

Distributed caching method and system, caching equipment and non-caching equipment

Inactive | CN101196852A | Fast memory access; Improve access response speed; Memory addressing/allocation/relocation; External data; Single plate

The invention discloses a distributed caching method for a system with one chassis and multiple single boards. The method comprises: a cache board in the system records a non-cache board that contributes part of its own memory as a supplementary cache; when its local cache is full, the cache board stores the data that would otherwise be deleted in the supplementary cache provided by the non-cache board and deletes that data from its own memory; and the cache board responds to external data requests using the data in its local cache and in the supplementary cache. The invention also provides another distributed caching method, a distributed caching system, and the caching equipment and non-caching equipment in that system. The invention makes it possible to expand cache capacity without adding extra storage media.

Owner:NEW H3C TECH CO LTD
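
The spill-over behaviour (data evicted from a full local cache move into memory lent by a non-cache board instead of being discarded) can be sketched with two ordered dictionaries. Least-recently-used eviction, the capacities and the `BoardCache` class are assumptions; the patent does not specify an eviction policy.

```python
from collections import OrderedDict
from typing import Hashable, Optional


class BoardCache:
    """Local LRU cache that spills evictions into memory lent by another board."""

    def __init__(self, local_capacity: int, supplementary_capacity: int) -> None:
        self.local: "OrderedDict[Hashable, bytes]" = OrderedDict()
        self.supplementary: "OrderedDict[Hashable, bytes]" = OrderedDict()
        self.local_capacity = local_capacity
        self.supplementary_capacity = supplementary_capacity

    def put(self, key: Hashable, value: bytes) -> None:
        self.supplementary.pop(key, None)  # keep only one copy of each key
        self.local[key] = value
        self.local.move_to_end(key)
        if len(self.local) > self.local_capacity:
            old_key, old_value = self.local.popitem(last=False)  # LRU victim
            # Instead of discarding the victim, store it on the non-cache board.
            self.supplementary[old_key] = old_value
            if len(self.supplementary) > self.supplementary_capacity:
                self.supplementary.popitem(last=False)  # now truly dropped

    def get(self, key: Hashable) -> Optional[bytes]:
        if key in self.local:
            self.local.move_to_end(key)
            return self.local[key]
        return self.supplementary.get(key)  # served from the lent memory
```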

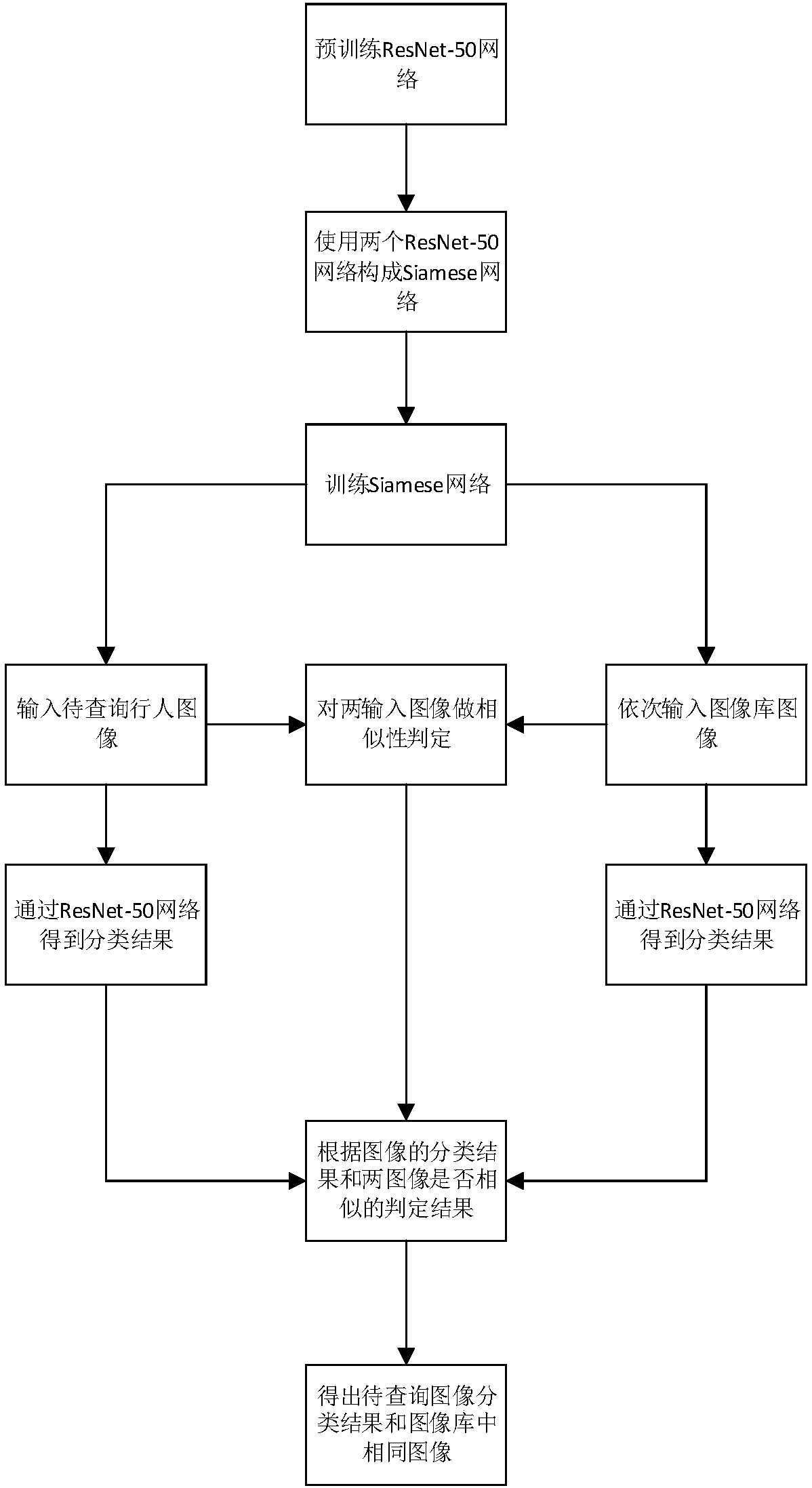

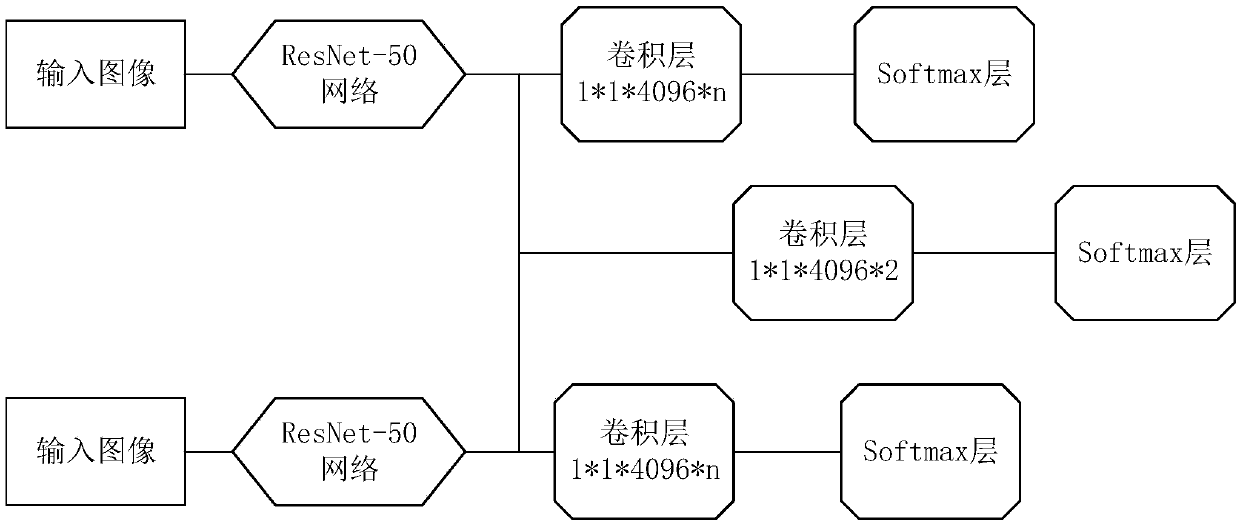

Siamese network-based method for pedestrian re-identification

Active | CN108171184A | Increase the degree of differentiation; Reduce query time; Biometric pattern recognition; Training data sets; Re-identification

The invention discloses a Siamese network-based method for pedestrian re-identification. Two identical ResNet-50 convolutional neural networks form a Siamese network: the ResNet-50 networks are pre-trained and fine-tuned, then used to build the Siamese network, which is further improved. A training data set is preprocessed and used to train the Siamese network. An image to be queried is paired with images in the training data set, and pedestrian re-identification is performed on each pair; using the classification results of the two images and their consistency as the decision criteria, the images in the training data set that belong to the same person as the query image are determined. The method improves the discriminability of deep image features and the accuracy of pedestrian re-identification, and can reduce the time needed to query images in a large-scale image set.

Owner:NANJING UNIV OF SCI & TECH

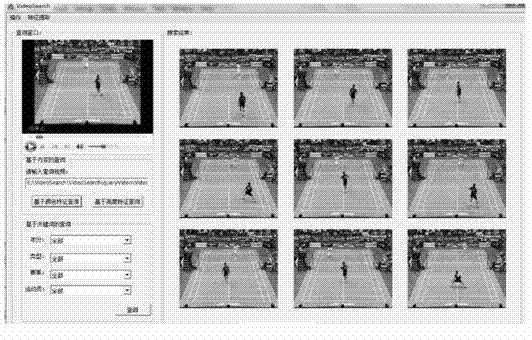

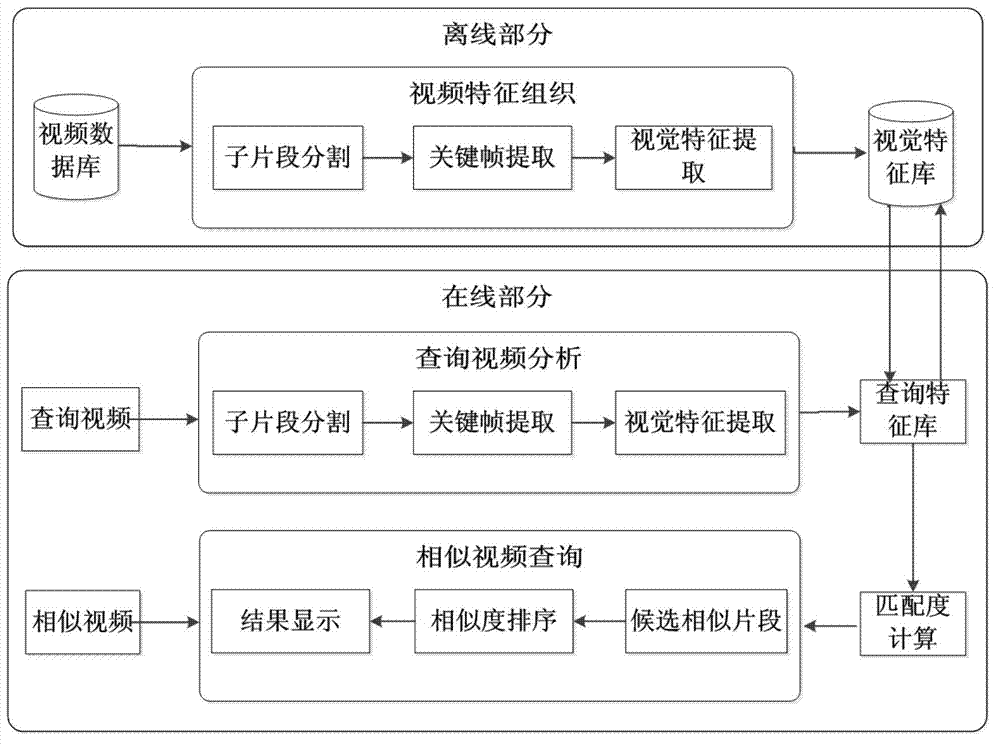

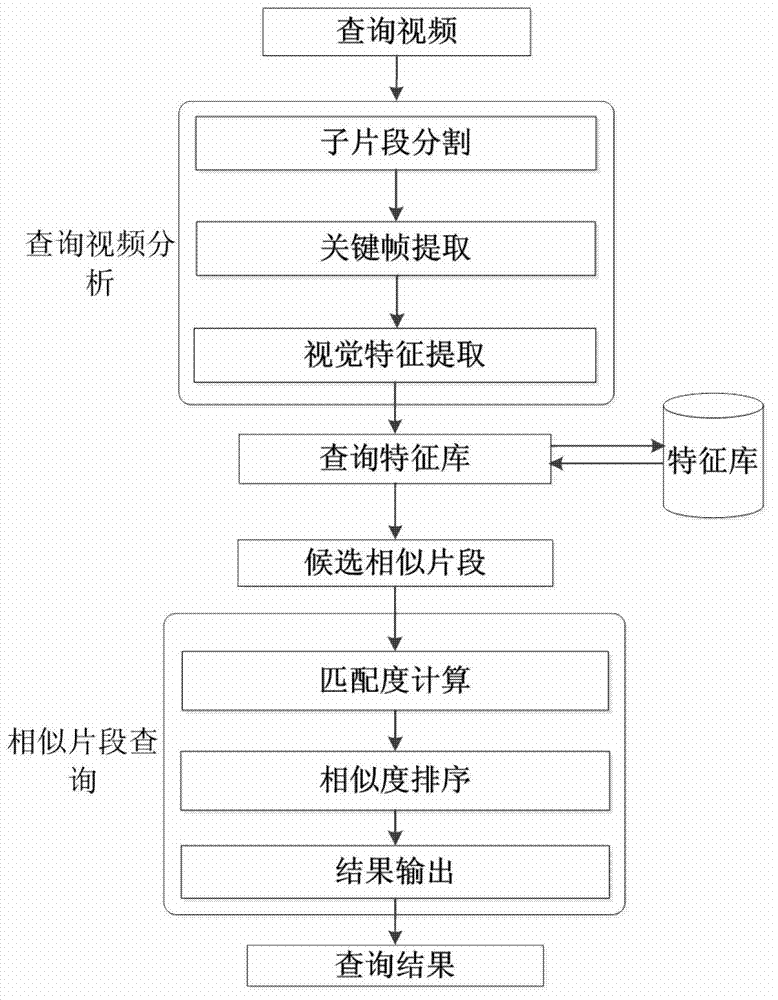

Method for retrieving similar video clips based on sports competition videos

Active | CN102890700A | Guaranteed retrieval accuracy; Small amount of calculation; Special data processing applications; Feature extraction; Interference factor

The invention relates to a method for retrieving similar video clips from sports competition videos. The method comprises: an offline stage that preprocesses all videos in the video library to build a video feature library; an online stage that performs structural analysis and feature extraction on the query clip; and two rounds of retrieval at different granularities against the video feature library, where the first, coarse-grained round determines a set of candidate videos and the second, fine-grained round locates the exact positions of the similar clips within each video. All video features are organized in a K-dimensional (k-d) tree to accelerate the query; the influence of visual content, temporal order and interference factors on similarity is considered jointly, and the similarities of the similar clips are calculated and ranked.

Owner:CHANGCHUN YIHANG INTELLIGENT TECH CO LTD
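
The patent organizes video features in a k-d tree to accelerate retrieval. A minimal k-d tree with nearest-neighbour search is sketched below; the two-dimensional feature vectors and clip labels are hypothetical, and a real system would use the higher-dimensional features produced by the offline preprocessing stage.

```python
from typing import List, Optional, Sequence, Tuple

Vector = Tuple[float, ...]


class KDNode:
    def __init__(self, point: Vector, label: str, axis: int,
                 left: "Optional[KDNode]", right: "Optional[KDNode]") -> None:
        self.point, self.label, self.axis = point, label, axis
        self.left, self.right = left, right


def build(items: List[Tuple[Vector, str]], depth: int = 0) -> Optional[KDNode]:
    """Build a k-d tree by splitting at the median along cycling axes."""
    if not items:
        return None
    axis = depth % len(items[0][0])
    items = sorted(items, key=lambda it: it[0][axis])
    mid = len(items) // 2
    return KDNode(items[mid][0], items[mid][1], axis,
                  build(items[:mid], depth + 1), build(items[mid + 1:], depth + 1))


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(node: Optional[KDNode], q: Vector,
            best: Optional[Tuple[float, str]] = None) -> Optional[Tuple[float, str]]:
    """Return (squared distance, label) of the closest stored feature."""
    if node is None:
        return best
    d = sq_dist(node.point, q)
    if best is None or d < best[0]:
        best = (d, node.label)
    diff = q[node.axis] - node.point[node.axis]
    near, far = (node.left, node.right) if diff < 0 else (node.right, node.left)
    best = nearest(near, q, best)
    if diff ** 2 < best[0]:  # the far side might still hold a closer point
        best = nearest(far, q, best)
    return best
```

The pruning test `diff ** 2 < best[0]` is what saves time: a subtree on the far side of a splitting plane is visited only if that plane is closer than the best match found so far.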

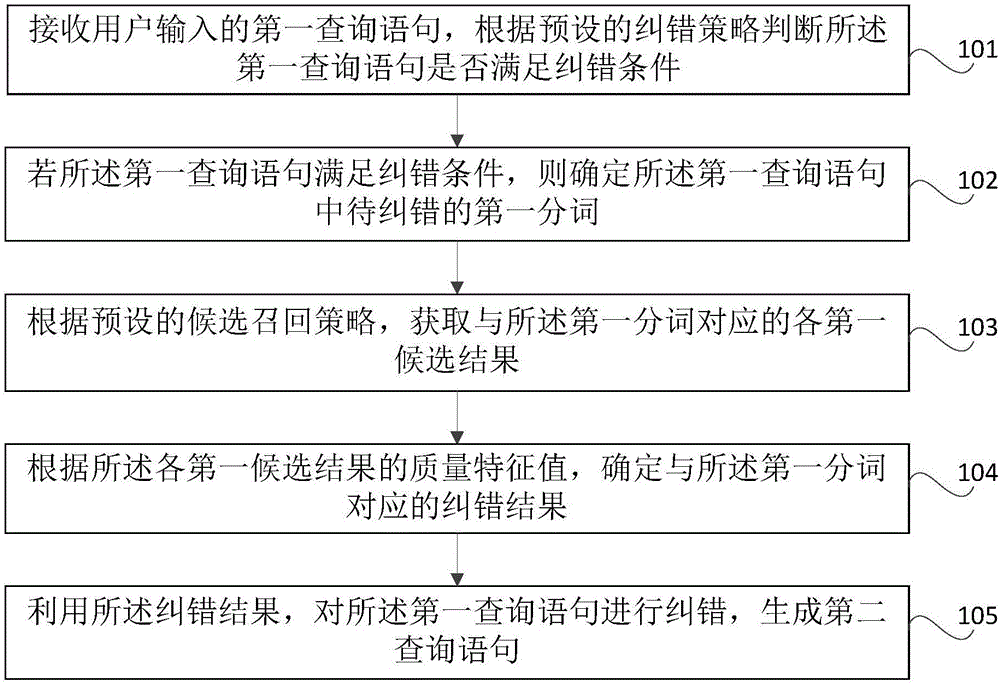

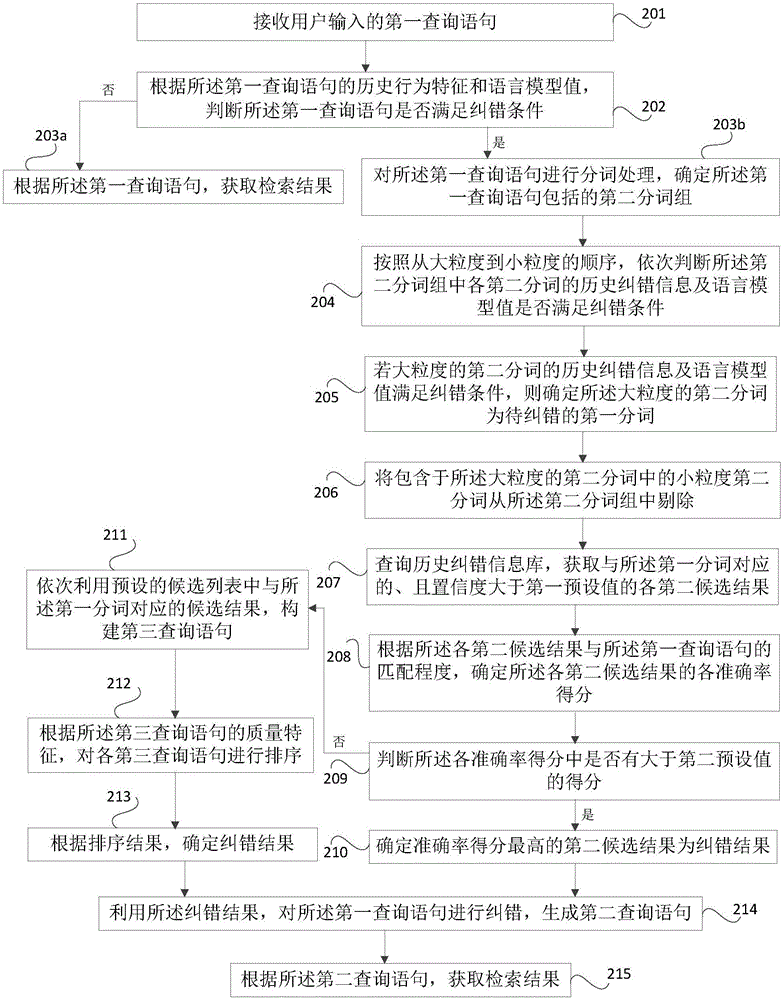

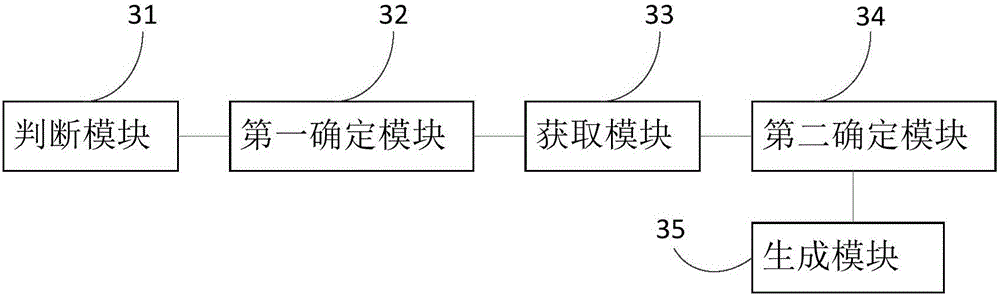

Artificial intelligence-based searching error correction method and apparatus

Active | CN106528845A | Reduce query time; Improve experience; Web data indexing; Natural language data processing; User input; Algorithm

The invention provides an artificial intelligence-based search error correction method and apparatus. The method comprises: receiving a first query statement entered by a user and determining, according to a preset error correction strategy, whether it satisfies an error correction condition; if it does, identifying the first word segments to be corrected in the first query statement; obtaining candidate results for each first word segment according to a preset candidate recall strategy; determining the error correction result for the first word segments from the quality feature values of their candidate results; and correcting the first query statement based on that result to generate a second query statement. The method uses historical data to determine accurately whether a query needs correction, and screens the correction candidates accurately to choose the correction result, which improves the efficiency and accuracy of search-engine error correction, reduces the user's query time and improves user experience.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD
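
The correction pipeline (decide whether to correct, recall candidates, pick the best by a quality score, rewrite the query) can be sketched with a much simpler stand-in for each stage. Here the error condition is "token not in the vocabulary", candidate recall returns all known words one edit away, and the quality score is historical frequency; all three are assumptions, not the strategies described in the patent.

```python
from typing import Dict, List, Set

LETTERS = "abcdefghijklmnopqrstuvwxyz"


def edits1(word: str) -> Set[str]:
    """All strings one deletion, adjacent swap, replacement or insertion away."""
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = {l + r[1:] for l, r in splits if r}
    swaps = {l + r[1] + r[0] + r[2:] for l, r in splits if len(r) > 1}
    replaces = {l + c + r[1:] for l, r in splits if r for c in LETTERS}
    inserts = {l + c + r for l, r in splits for c in LETTERS}
    return deletes | swaps | replaces | inserts


def correct_query(query: str, freq: Dict[str, int]) -> str:
    """Rewrite each unknown token to its most frequent known neighbour."""
    out: List[str] = []
    for token in query.lower().split():
        if token in freq:  # assumed error condition: only unknown tokens change
            out.append(token)
            continue
        candidates = [w for w in edits1(token) if w in freq]  # candidate recall
        # Quality score assumed to be historical frequency (ties broken by text).
        best = max(candidates, key=lambda w: (freq[w], w)) if candidates else token
        out.append(best)
    return " ".join(out)
```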

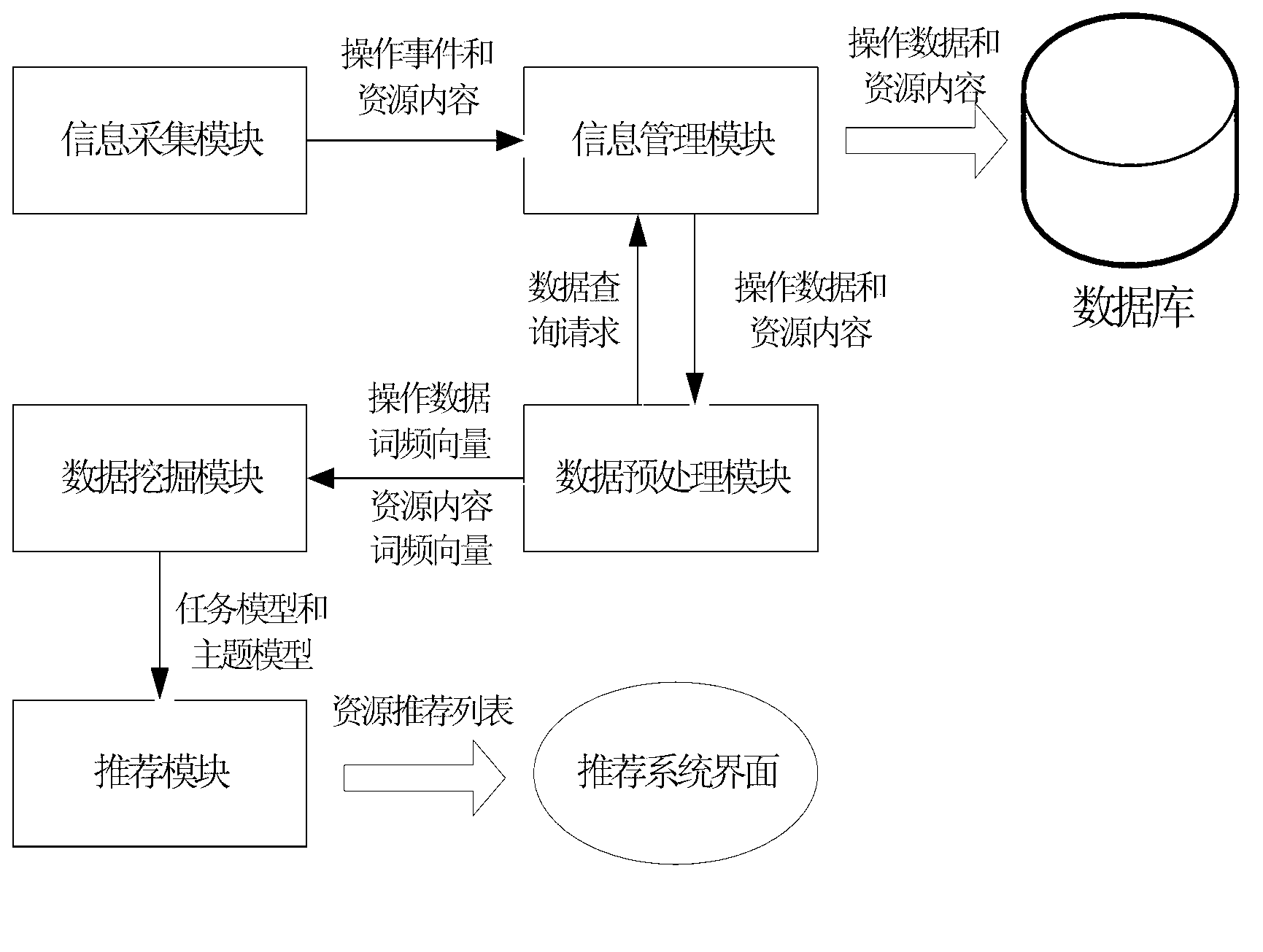

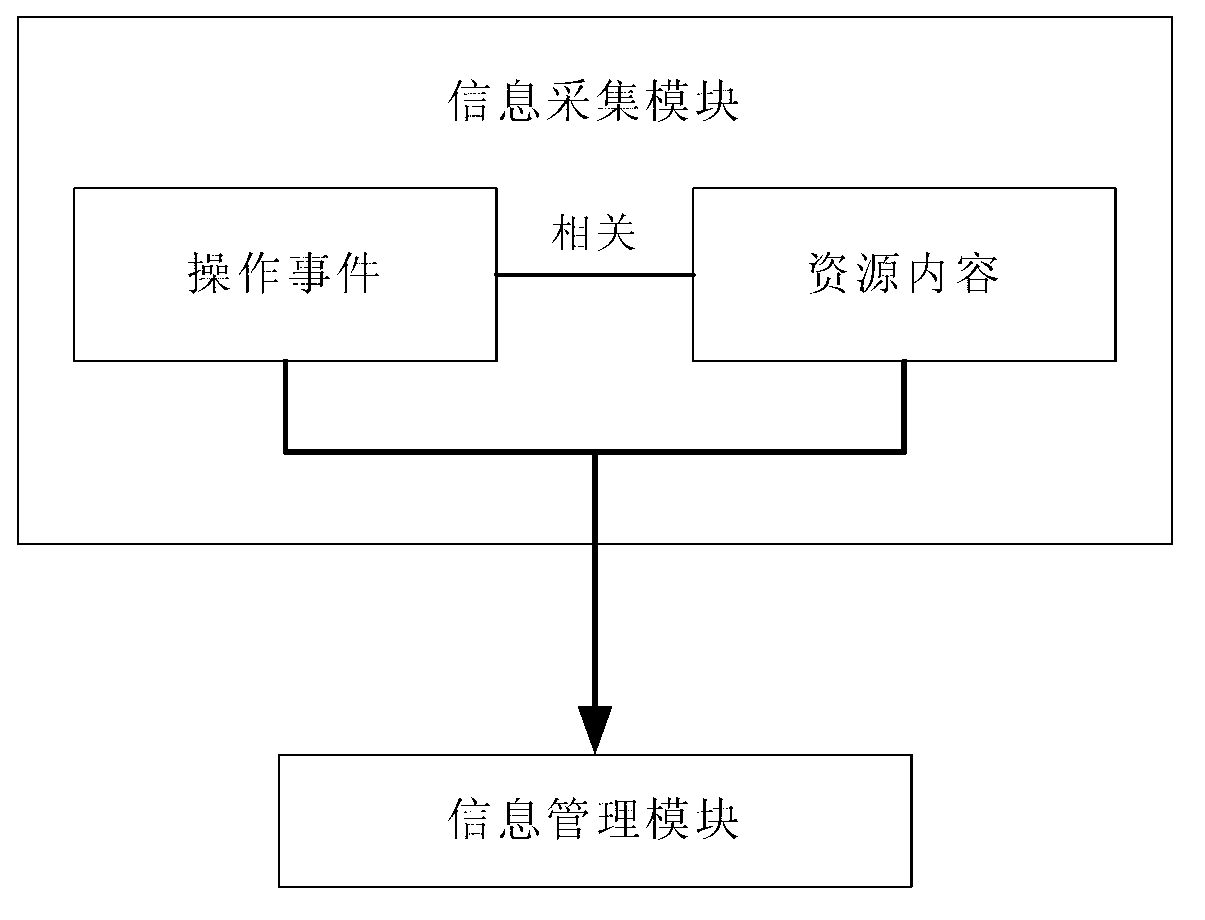

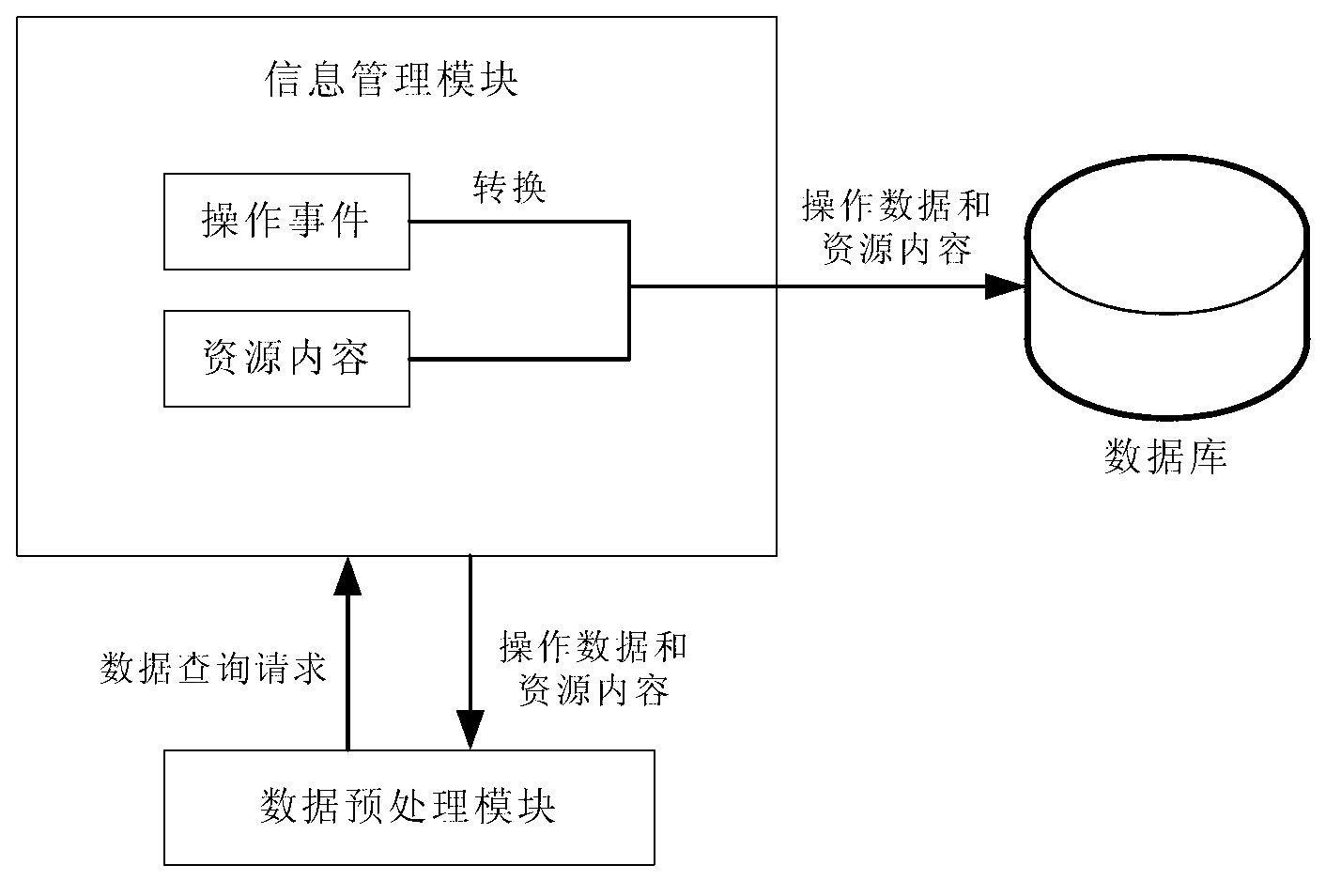

Information associating method based on user operation record and resource content

Active | CN102915335A | Reduce the burden on; Reduce query time; Specific program execution arrangements; Special data processing applications; Resource based; Personal computer

The invention relates to an information association method based on user operation records and resource content. First, from the user's operation history on a personal computer and the content of the resources involved, the method automatically mines a task model based on the operation records and a topic model based on the resource content. It then combines the task model and the topic model to measure the association between pieces of information, and finally, whenever the user opens a resource, finds the other resources most relevant to it and recommends them, without requiring any extra action from the user at any point. The invention aims to save the time users spend looking through files, preserve the continuity of user tasks as far as possible, and effectively reduce the burden of switching between tasks.

Owner:PEKING UNIV

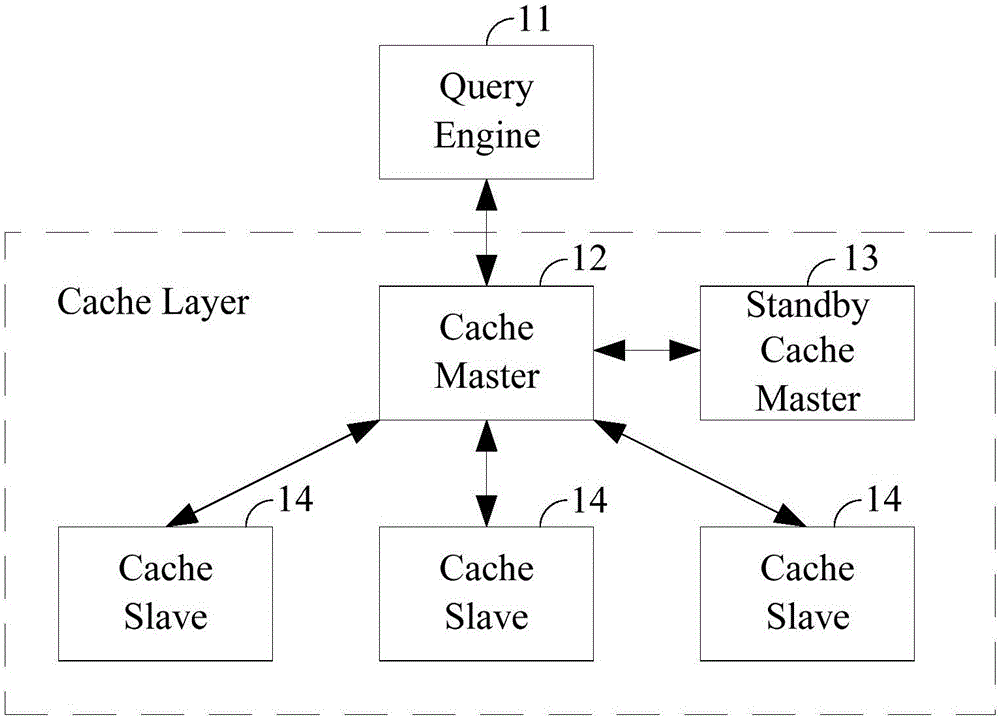

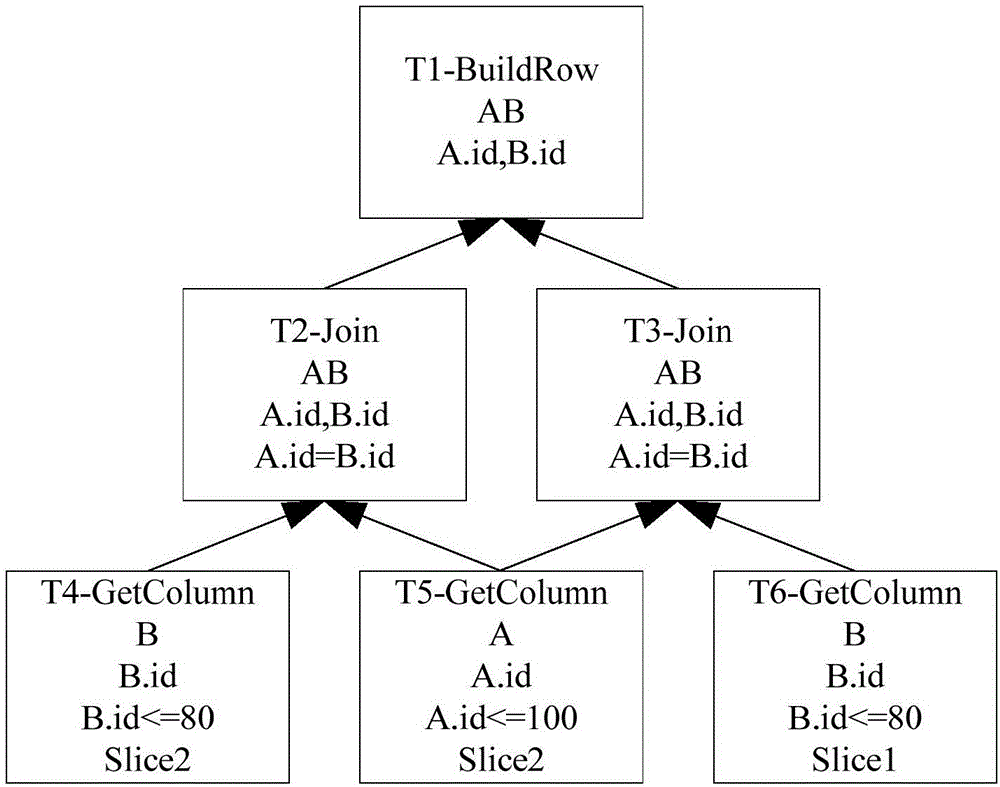

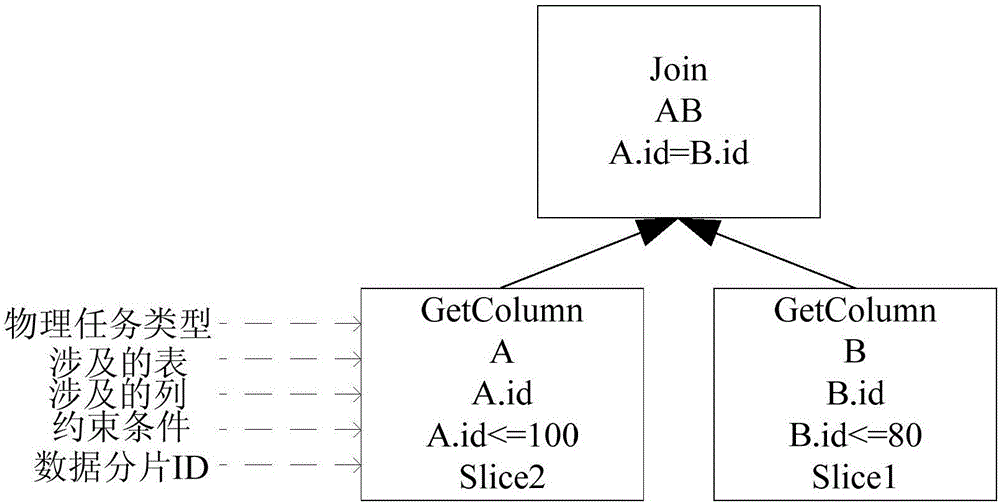

Cache management method for a distributed in-memory columnar database

Active | CN106294772A | Reduce calculations for repetitive tasks; Reduce query time; Special data processing applications; Execution plan; Distributed memory

The invention discloses a cache management method for a distributed in-memory columnar database. The method comprises: establishing cache queues on the cache master node; cutting the physical execution plan at each physical task, using that task as the root node, to obtain the cache computation trace of each physical task; building cache feature trees on the cache master node from those cache computation traces; when a query request arrives, having the query execution engine parse the SQL statement into a physical execution plan; traversing the nodes of the physical execution plan level by level, starting from its root, and checking whether the cache computation trace of each physical task matches the corresponding cache feature tree; and, if it does, reading the cached data for that physical task directly from the cache node, otherwise computing the physical task. An efficient cache matching algorithm quickly detects whether the cache is hit, improving query efficiency.

Owner:UNIV OF ELECTRONIC SCI & TECH OF CHINA
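
Reusing cached results for identical plan subtrees can be sketched by treating each subtree of a physical plan as its own cache key, which plays the role of the "cache computation trace" in the abstract. The tuple plan format, the `execute_op` callback and the top-down recursive check (used here instead of the level-order traversal described) are assumptions.

```python
from typing import Any, Callable, Dict, Tuple

# A physical plan node: (operator, params, child_1, child_2, ...); hypothetical shape.
Plan = Tuple[Any, ...]


class PlanCache:
    def __init__(self, execute_op: Callable[[str, Any, list], Any]) -> None:
        self.execute_op = execute_op  # assumed executor for a single operator
        self.store: Dict[Plan, Any] = {}
        self.hits = 0

    def run(self, plan: Plan) -> Any:
        # The whole subtree rooted at this task is its computation trace;
        # an identical trace means the cached result can be reused as-is.
        if plan in self.store:
            self.hits += 1
            return self.store[plan]
        op, params, *children = plan
        inputs = [self.run(child) for child in children]
        result = self.execute_op(op, params, inputs)
        self.store[plan] = result
        return result
```

Because matching happens at every subtree, a query that differs from an earlier one only above a shared subtree (for example, a different filter over the same scan) still reuses that subtree's cached result.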

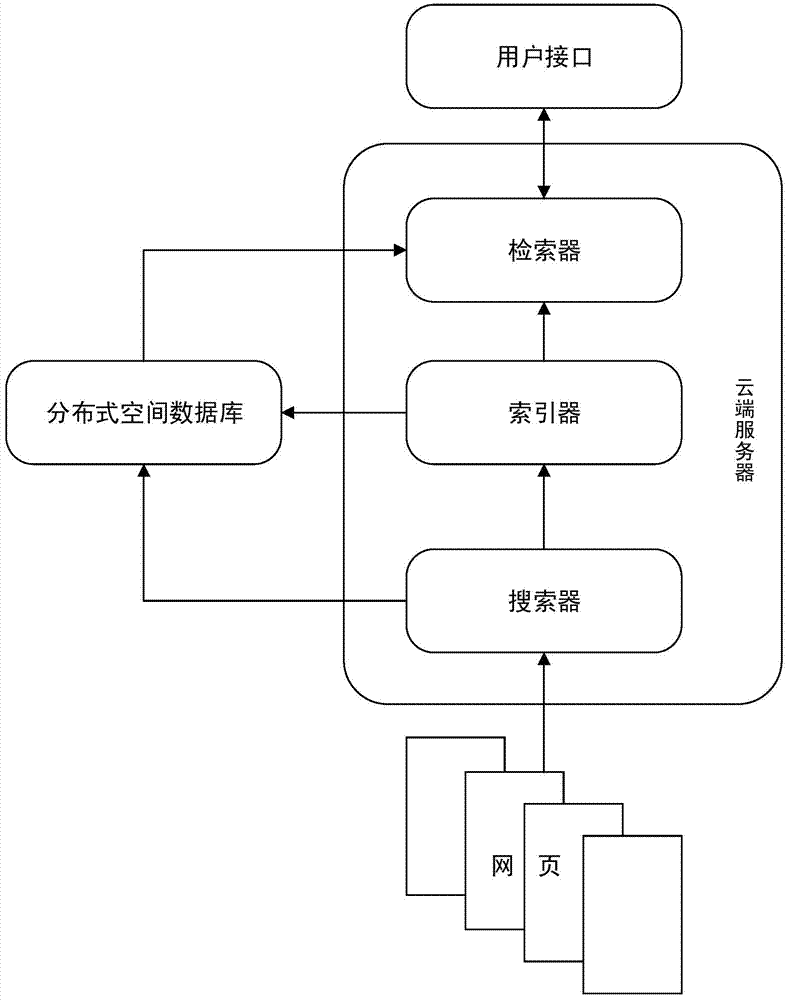

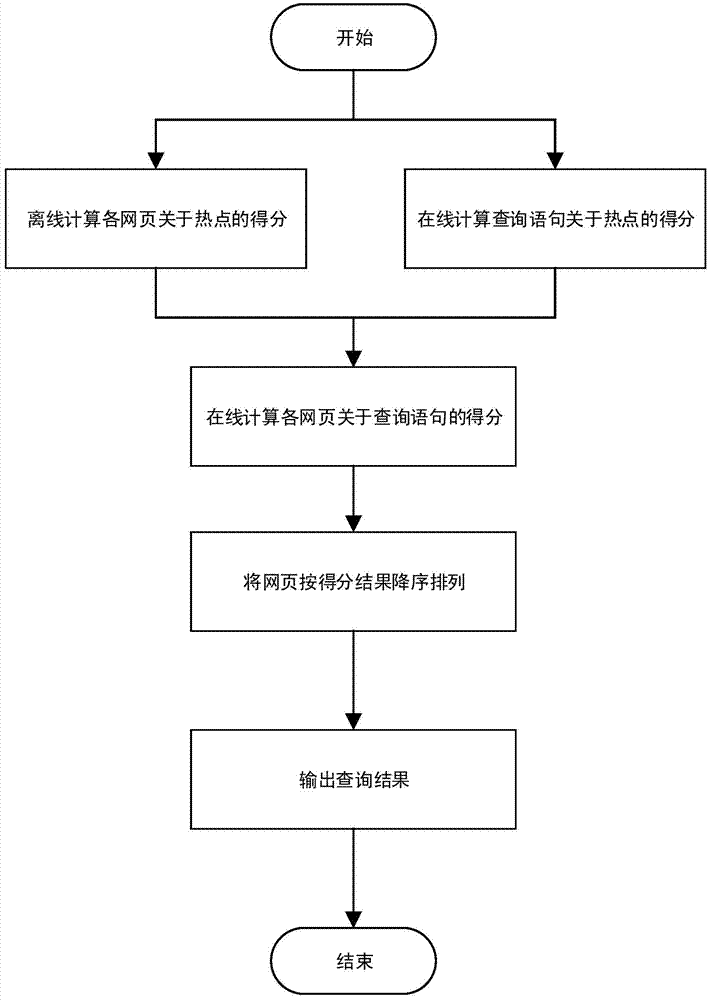

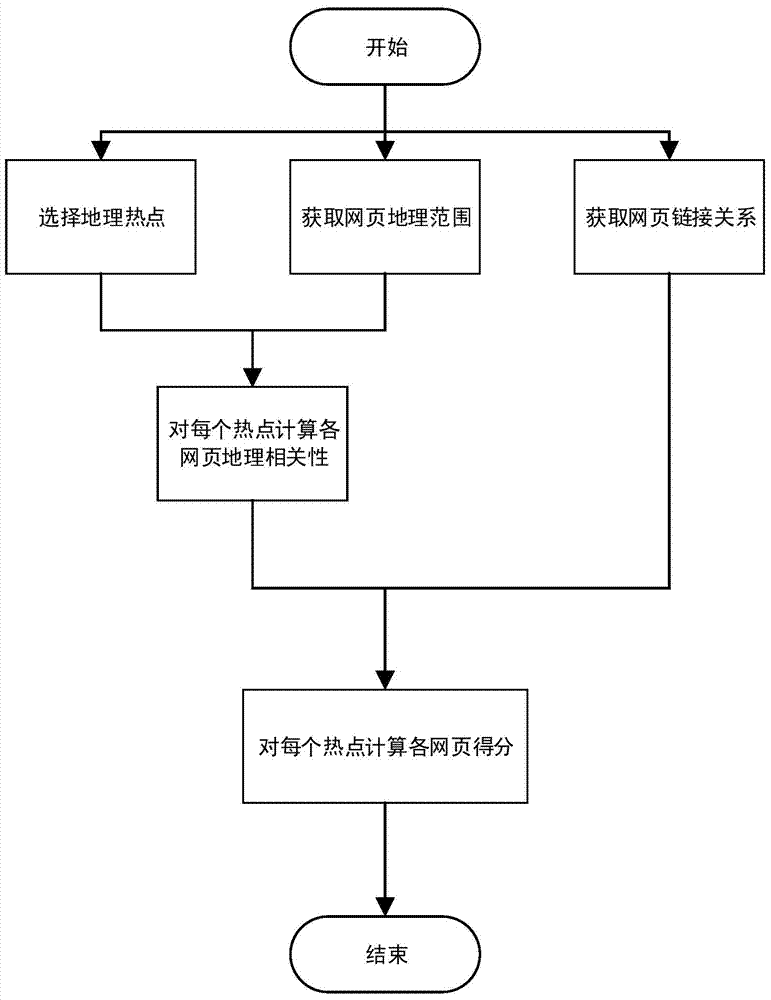

Search engine method and system sensitive to geographical position

Inactive | CN103678629A | Improve query accuracy; Reduce workload; Geographical information databases; Special data processing applications; Geographic site; Network link

The invention provides a geographically sensitive web page retrieval method, a search engine method and a search engine system. First, offline, a cloud server calculates the geographic relevance of each web page to selected geographic hot spots and, combining this with the link structure obtained by a crawler unit, scores the importance of each geographic hot spot for each web page; the scores are recorded as fields in each page's metadata, which is stored in the server's spatial database. When a user submits a query online, the server determines the geographic range of the query through natural language processing, calculates the query's geographic relevance to each hot spot from the distance between them, retrieves the corresponding hot-spot scores of the web pages from the spatial database, computes each page's score for that query online, sorts the results in descending order, and returns the retrieval results to the user.

Owner:PEKING UNIV
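
The online scoring step can be sketched as follows, assuming the query's geographic range has already been resolved to a single point, that query-to-hotspot relevance decays exponentially with great-circle distance, and that a page's final score is the relevance-weighted sum of its offline hotspot scores. The decay function, the 10 km scale and the data layout are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def haversine_km(a: Tuple[float, float], b: Tuple[float, float]) -> float:
    """Great-circle distance between two (lat, lon) points in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def rank_pages(query_point: Tuple[float, float],
               hotspots: Dict[str, Tuple[float, float]],
               page_scores: Dict[str, Dict[str, float]],
               decay_km: float = 10.0) -> List[Tuple[str, float]]:
    """Online step: combine query-to-hotspot relevance with offline page scores."""
    # Assumed relevance model: exponential decay with distance.
    rel = {h: math.exp(-haversine_km(query_point, p) / decay_km)
           for h, p in hotspots.items()}
    scored = [(page, sum(rel[h] * s for h, s in scores.items() if h in rel))
              for page, scores in page_scores.items()]
    scored.sort(key=lambda item: item[1], reverse=True)  # descending order
    return scored
```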

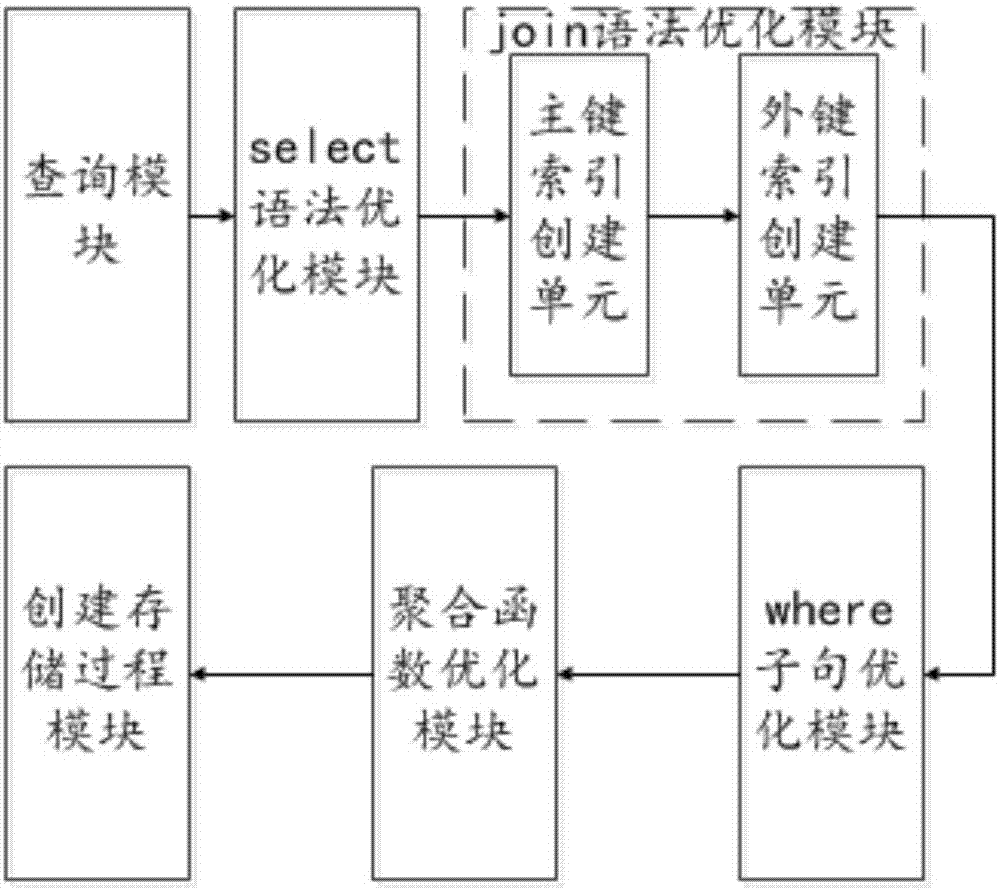

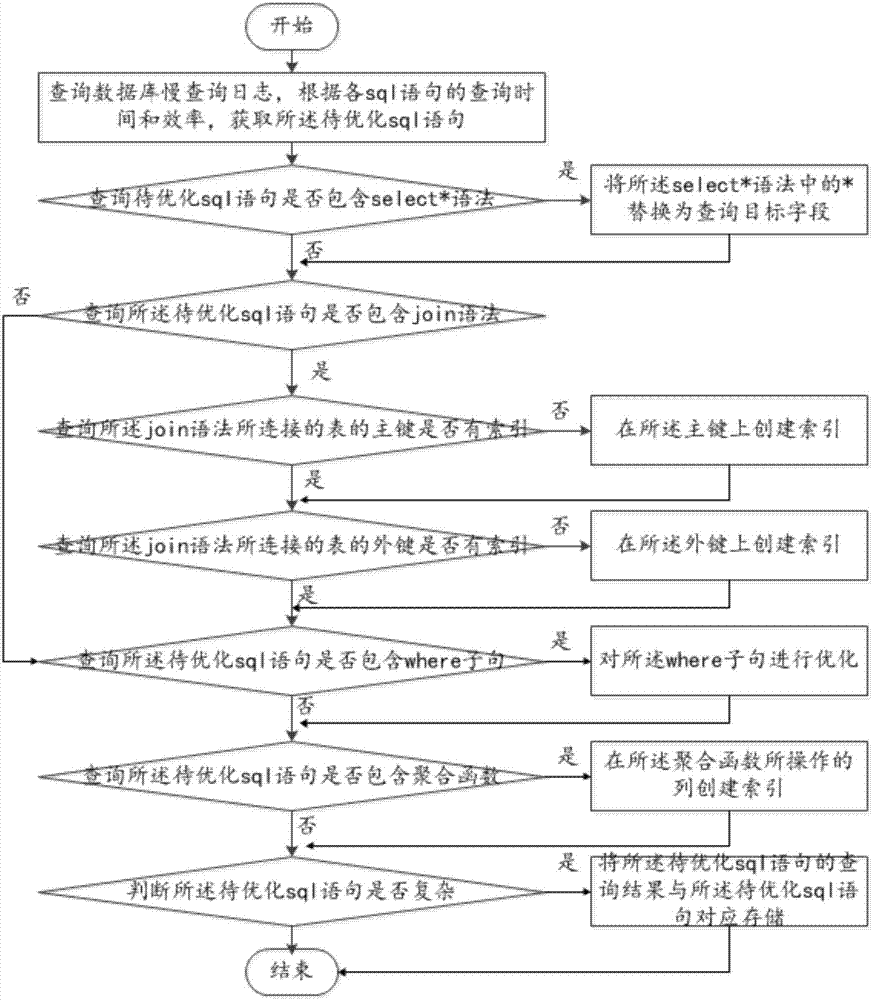

System and method for database query optimization

Inactive | CN106919678A | Realize dynamic self-tuning; Good effect; Special data processing applications; Database query; Query optimization

The invention relates to a system and method for database query optimization. The system comprises a SELECT syntax optimization module, a JOIN syntax optimization module, a WHERE clause optimization module and an aggregate function optimization module. SQL statements with long query times and low query efficiency are rewritten, and indexes are created on the columns that those statements filter or sort on, so that SQL statements can be dynamically self-tuned and self-optimized. The optimization is effective, does not change query results, effectively reduces query time and increases query efficiency.

Owner:WUHAN LUOJIA WEIYE TECH CO LTD
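
Of the techniques listed, indexing the filtered and sorted columns is the easiest to demonstrate. The sketch below uses SQLite's `EXPLAIN QUERY PLAN` to compare plans before and after adding a composite index; the table, data and index name are hypothetical, and SQLite stands in for whatever database the patent targets. The exact wording of the plan text varies between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
conn.executemany("INSERT INTO orders (customer, total) VALUES (?, ?)",
                 [(f"c{i % 100}", float(i)) for i in range(10_000)])

query = "SELECT id, total FROM orders WHERE customer = ? ORDER BY total"


def plan(sql: str) -> str:
    """Return SQLite's textual query plan (the 'detail' column) for `sql`."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, ("c7",))
    return " | ".join(row[3] for row in rows)


before = plan(query)  # typically a full table scan plus a temp B-tree for ORDER BY
# Index the filtered column first and the sorted column second.
conn.execute("CREATE INDEX idx_orders_customer_total ON orders (customer, total)")
after = plan(query)   # typically a SEARCH that uses the new index
print(before)
print(after)
```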

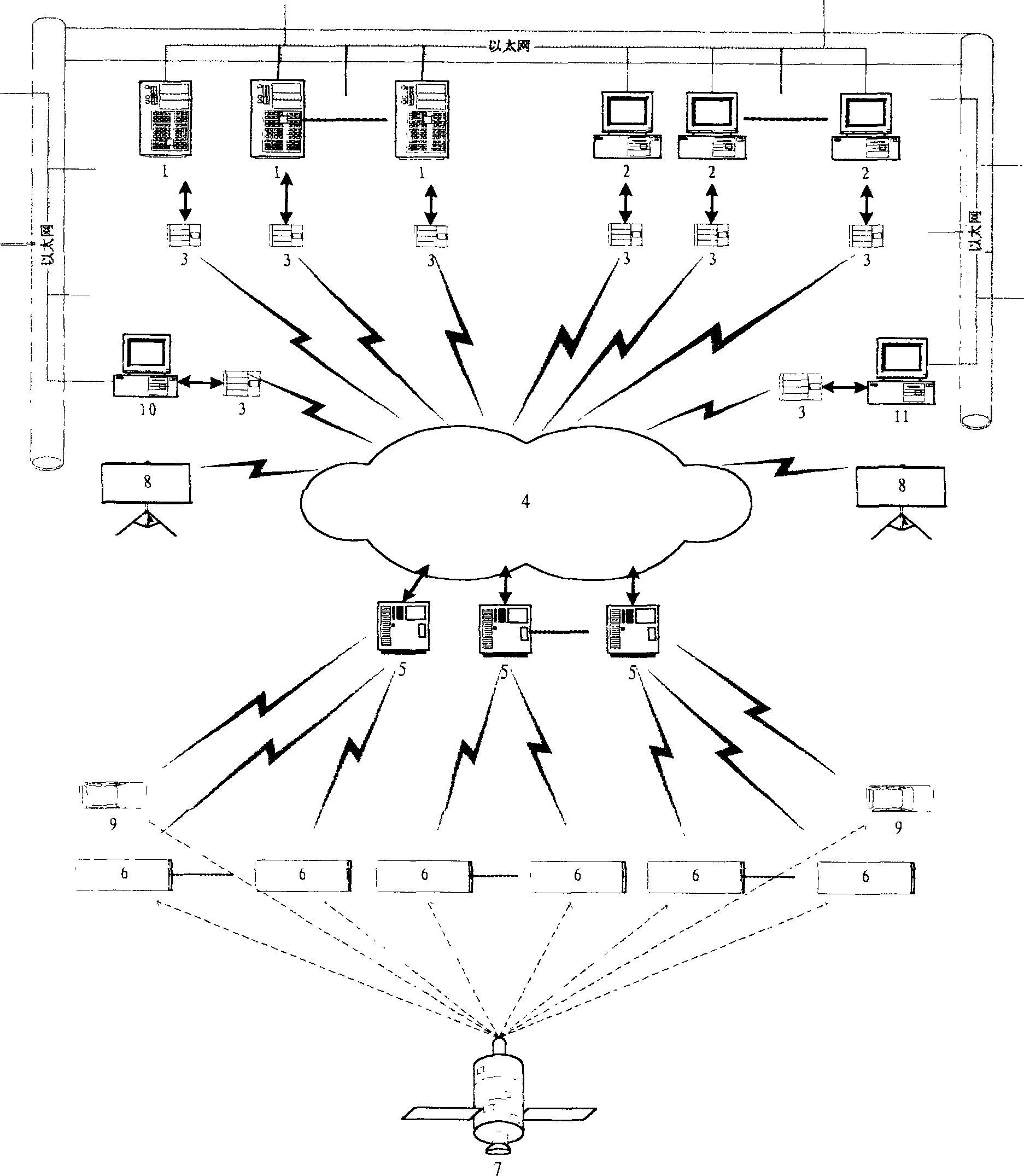

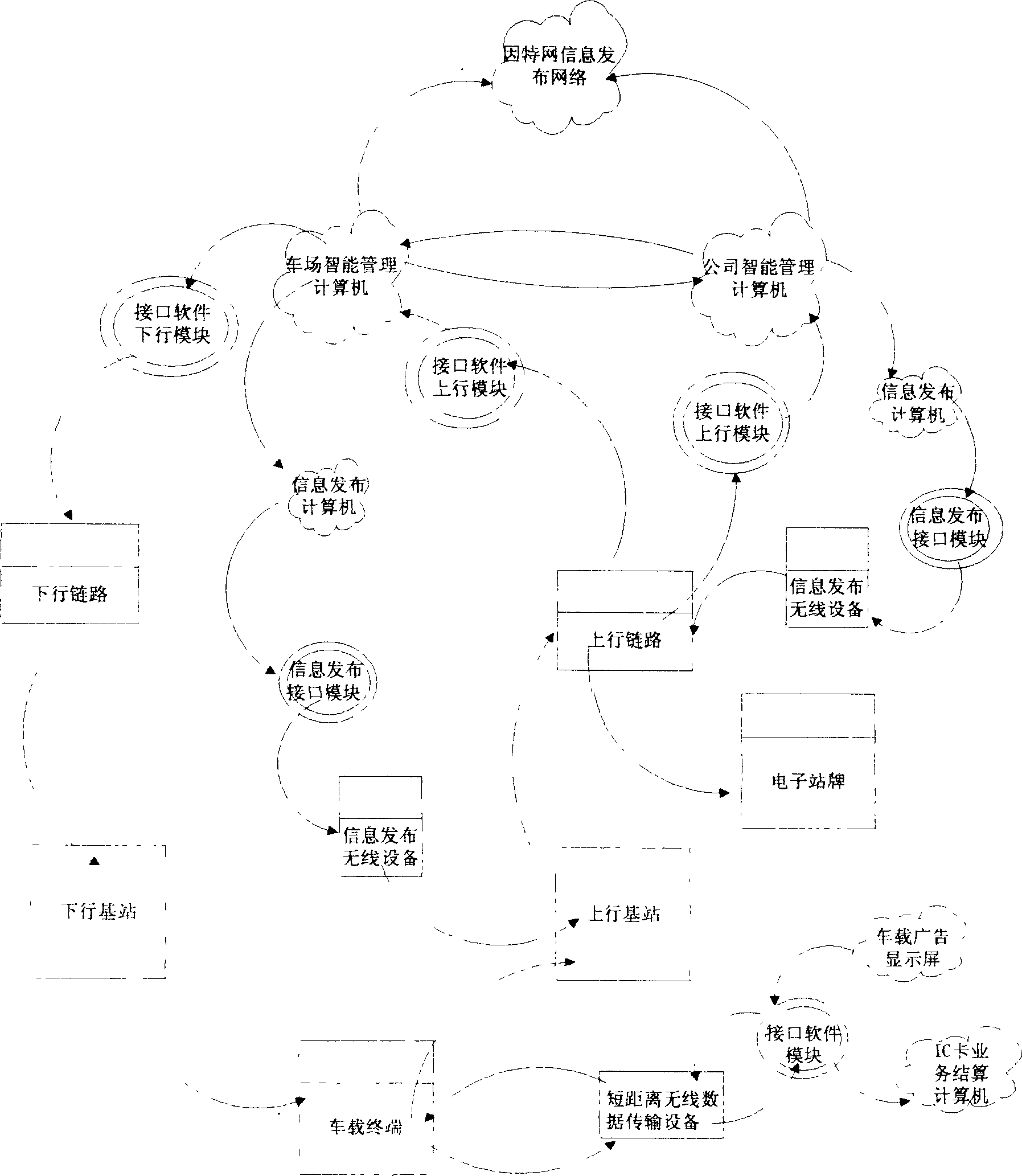

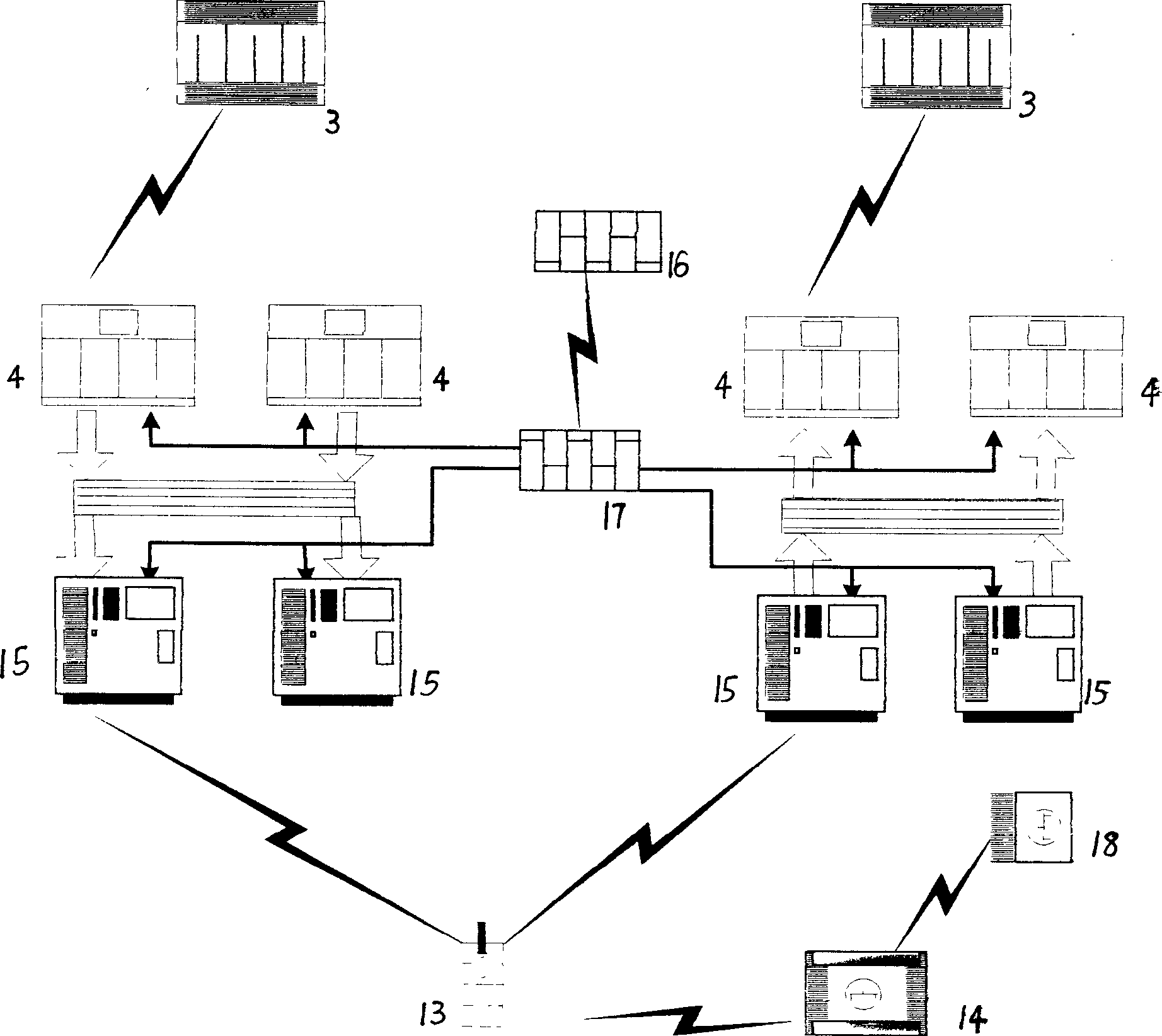

Intelligent public traffic system

Active | CN1529527A | Realize automatic statistics; Achieve over-the-air updates; Road vehicles traffic control; Network topologies; Dynamic monitoring; Transit bus

The system comprises a dedicated wireless communication network and a computer software management system. The dedicated network consists of a dedicated frequency channel, base station equipment, link transmission equipment and on-board bus terminals. The software management system consists of an electronic bus scheduling subsystem and a dynamic monitoring subsystem. The system offers very low operating costs, large user capacity on a single frequency, fast information response, and powerful base-station calling functions (individual, group and cluster calls); it also counts boarding and alighting passengers automatically and updates and downloads on-board bus data wirelessly. The operating condition of the wireless network is monitored, and faults are analyzed, remotely. The software management system is made up of multiple functional subsystems that the user can assemble. The invention is also suitable for logistics, special-purpose vehicles and similar fields.

Owner:HUALU ZHIDA TECH

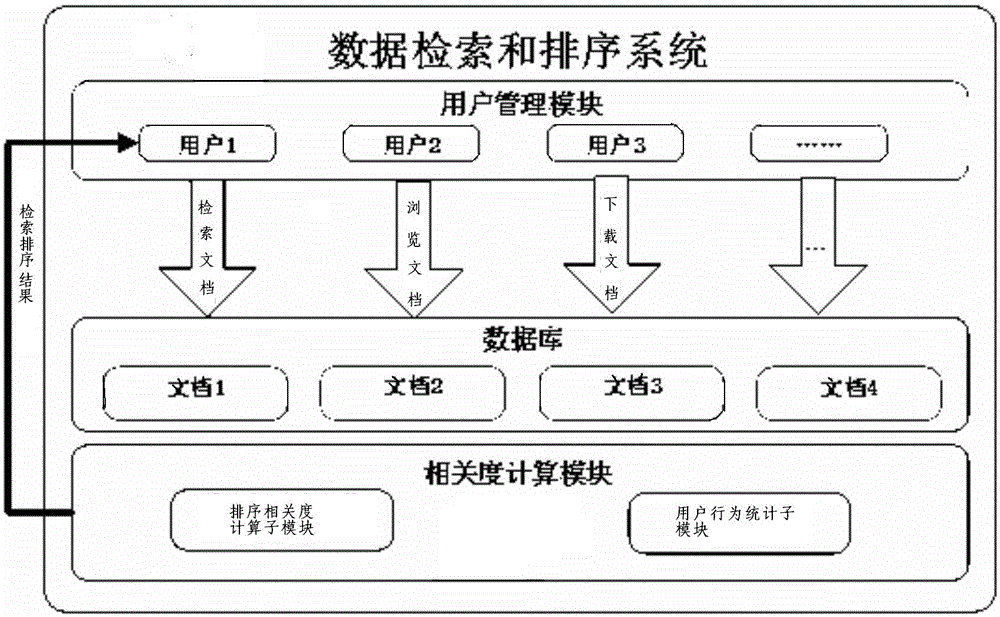

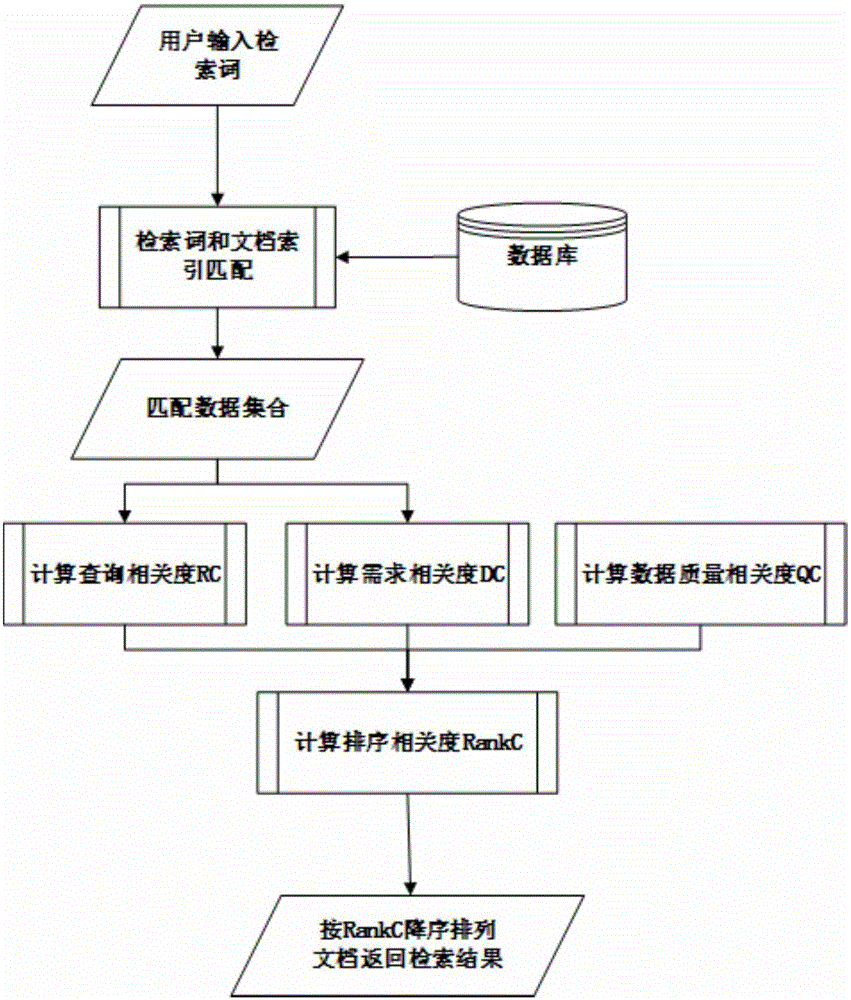

Data retrieving and sorting system and method

Active | CN105159932A | Reduce query time; Improve relevance; Web data indexing; Special data processing applications; User needs; Data set

The invention relates to a data retrieval and ranking system and method. The system comprises: a user management module for managing user information; a database that stores documents by category and responds to user requests; and a relevance calculation module that computes relevance for the retrieval results and ranks them. The relevance calculation module includes a user behavior statistics sub-module, which collects statistics on the user's preferred keywords, the user's comments on documents and the user's application behavior, and a ranking relevance sub-module, which calculates a ranking relevance and sorts the retrieval results accordingly. The data set matching the search terms is further analyzed for query relevance (RC), demand relevance (DC) and data quality relevance (QC), so that the retrieved data reflect the user's preferences, comments on documents and application behavior; this improves the relevance of the results to user needs and shortens the user's query time.

Owner:CRRC QINGDAO SIFANG CO LTD
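
How RC, DC and QC are combined into a single ranking relevance is not stated in the abstract. A weighted sum is one simple possibility, sketched here with hypothetical weights and with each score assumed to be normalized to [0, 1].

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Scored:
    doc_id: str
    rc: float  # query relevance, assumed normalized to [0, 1]
    dc: float  # demand relevance from user behavior statistics
    qc: float  # data quality relevance


def rank(results: List[Scored], w_rc: float = 0.5, w_dc: float = 0.3,
         w_qc: float = 0.2) -> List[str]:
    """Sort by a weighted sum of RC, DC and QC; the weights are assumptions."""
    def score(r: Scored) -> float:
        return w_rc * r.rc + w_dc * r.dc + w_qc * r.qc
    return [r.doc_id for r in sorted(results, key=score, reverse=True)]
```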

Geographical-tag-oriented hot spot area event detection system applied to LBSN

Active | CN103995859A | Solve the defect of low precision; Reduce data volume; Location information based service; Special data processing applications; Calculated data; Geographic area

Owner:Beijing Zhongshi Information Technology Co., Ltd. (北京中实信息技术有限公司)

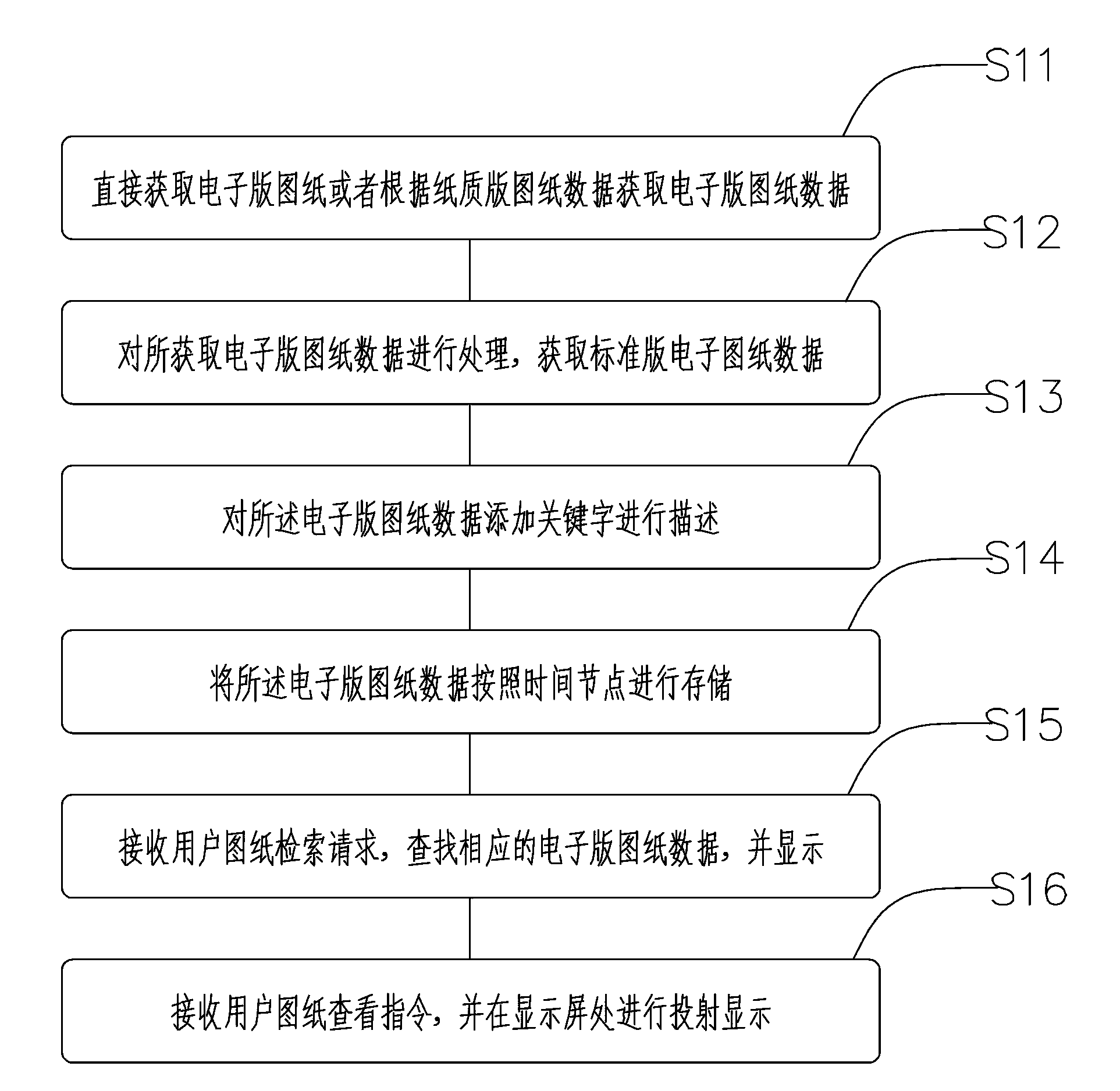

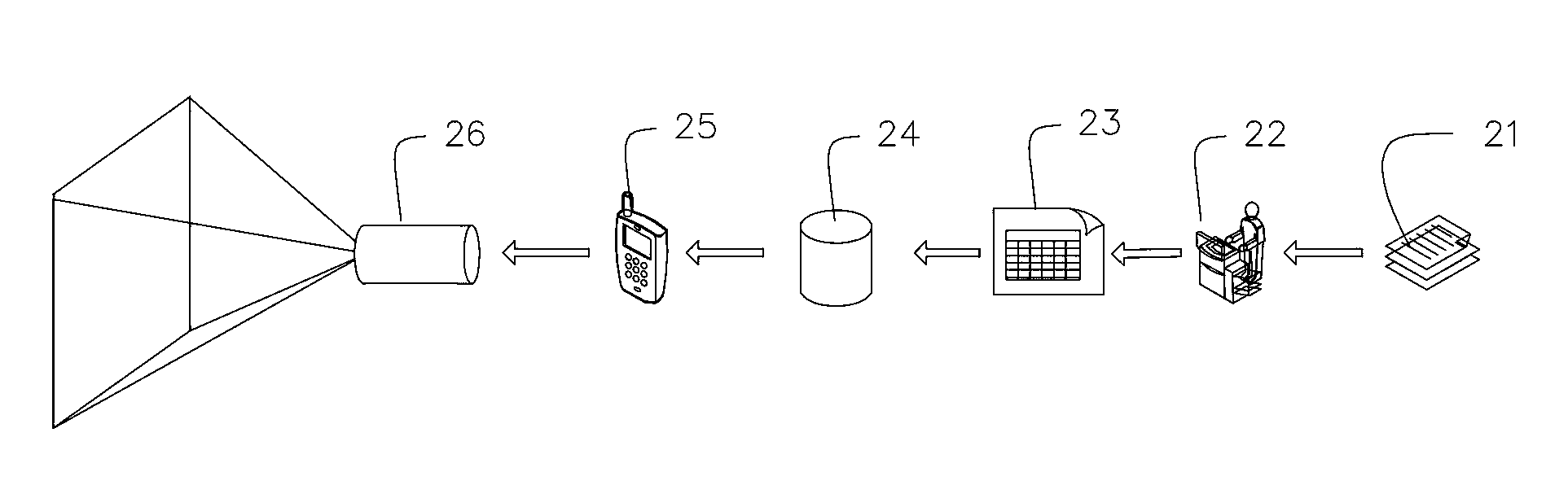

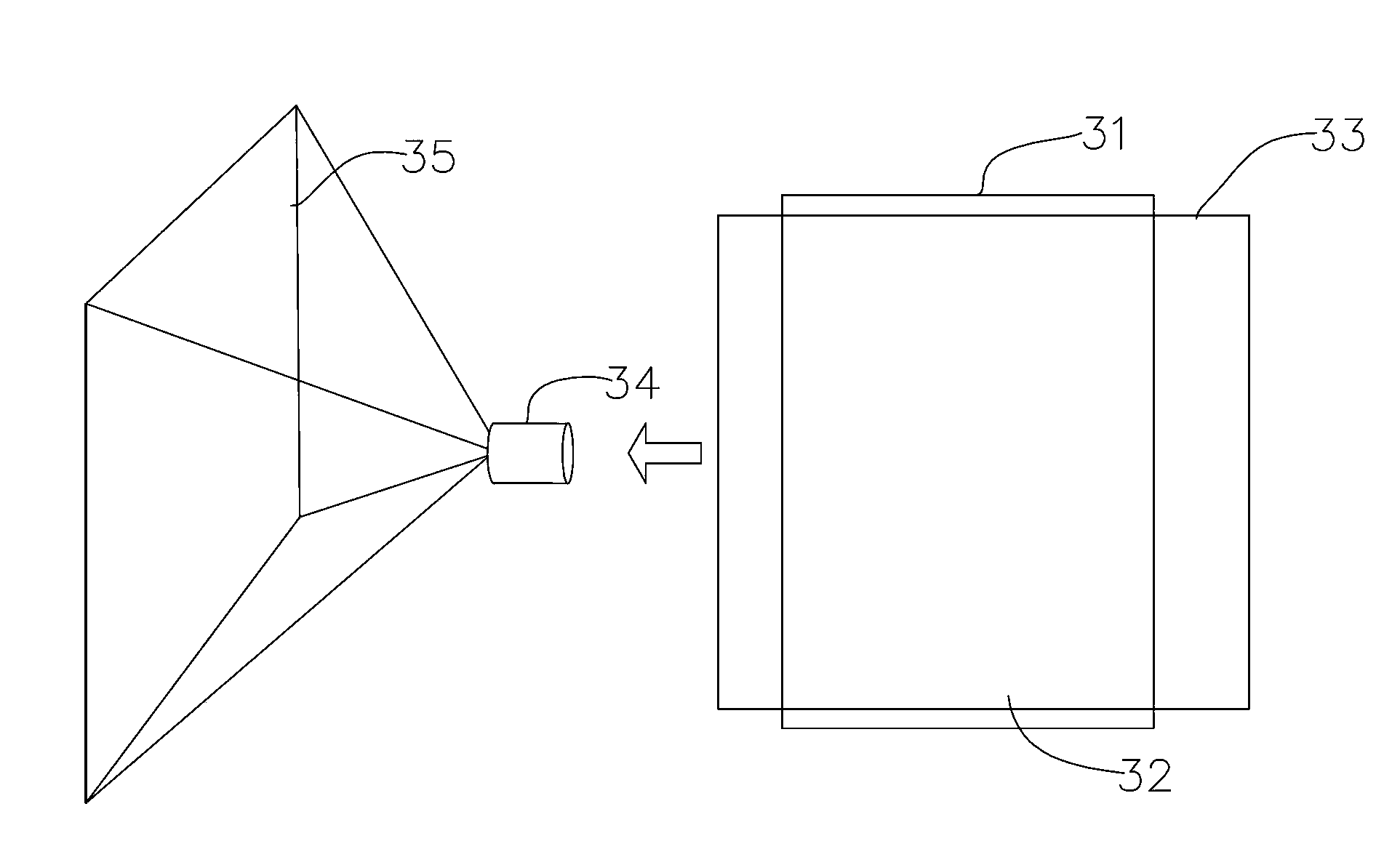

Method and system for viewing drawings of substation

Inactive | CN103984774A | Quick search; Reduce query time; Special data processing applications; Input/output processes for data processing; Computer science; Electric power

The invention relates to a method and system for viewing substation drawings, applied in the field of electric power construction. The method and system let users conveniently view drawings in different formats at the substation work site, making on-site work easier. The method comprises: acquiring electronic drawings directly, or generating them from the data of paper drawings; processing the acquired electronic drawing data into standardized electronic drawing data that support editing and annotation; and adding descriptive keywords to the electronic drawing data, where keywords are added by creating two data tables in a standard format and adding and editing fields in them. With the method and system, the contents of required drawings can be queried and the relevant information found quickly, which saves query time and increases work efficiency.

Owner:STATE GRID CORP OF CHINA +1

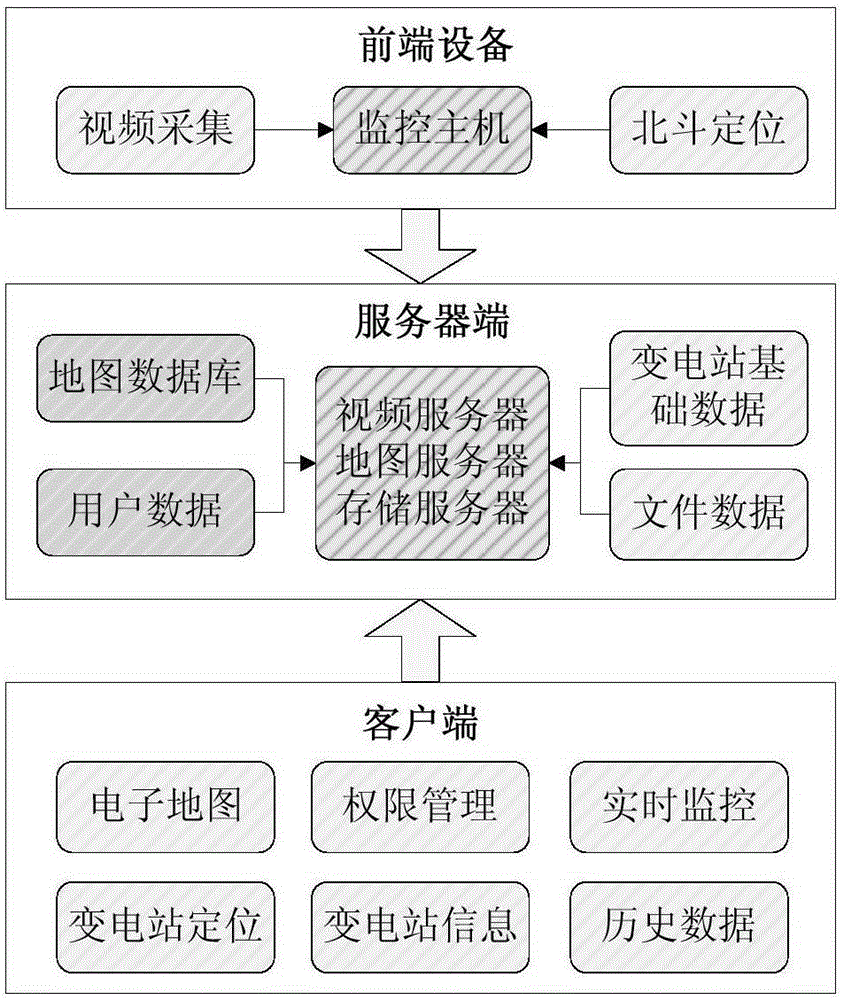

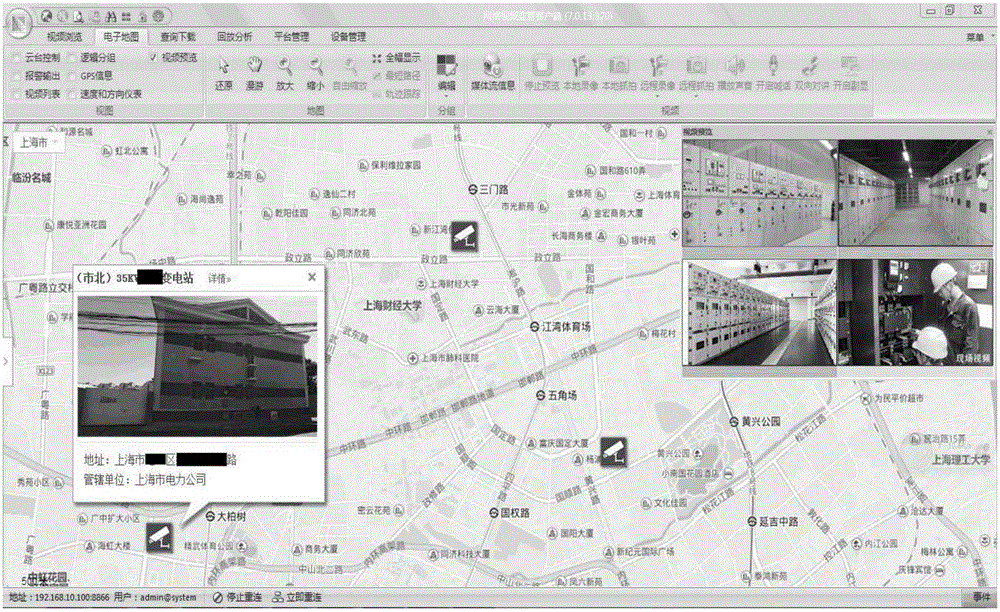

Video monitoring method based on map positioning of transformer station

Inactive | CN105245825A | Understand location; Improve work efficiency; Closed circuit television systems; Video monitoring; Transformer

The invention relates to a video monitoring method based on map positioning of transformer stations and belongs to the field of monitoring. The video capture unit of each front-end device, or the monitoring host, is equipped with a GPS positioning module; the monitoring host transmits video signals and GPS positioning signals over a 4G network to a remote server side, which comprises at least a video server, a map server and a storage server forming a server platform. A client device, either a computer or a mobile communication terminal, runs client software with an electronic map; clicking a front-end device on the map pops up an image of that device's location, so the user can see the locations, equipment and operating states of different stations more intuitively. The method can be widely applied to video monitoring of unmanned transformer stations.

Owner:SHANGHAI MUNICIPAL ELECTRIC POWER CO +1

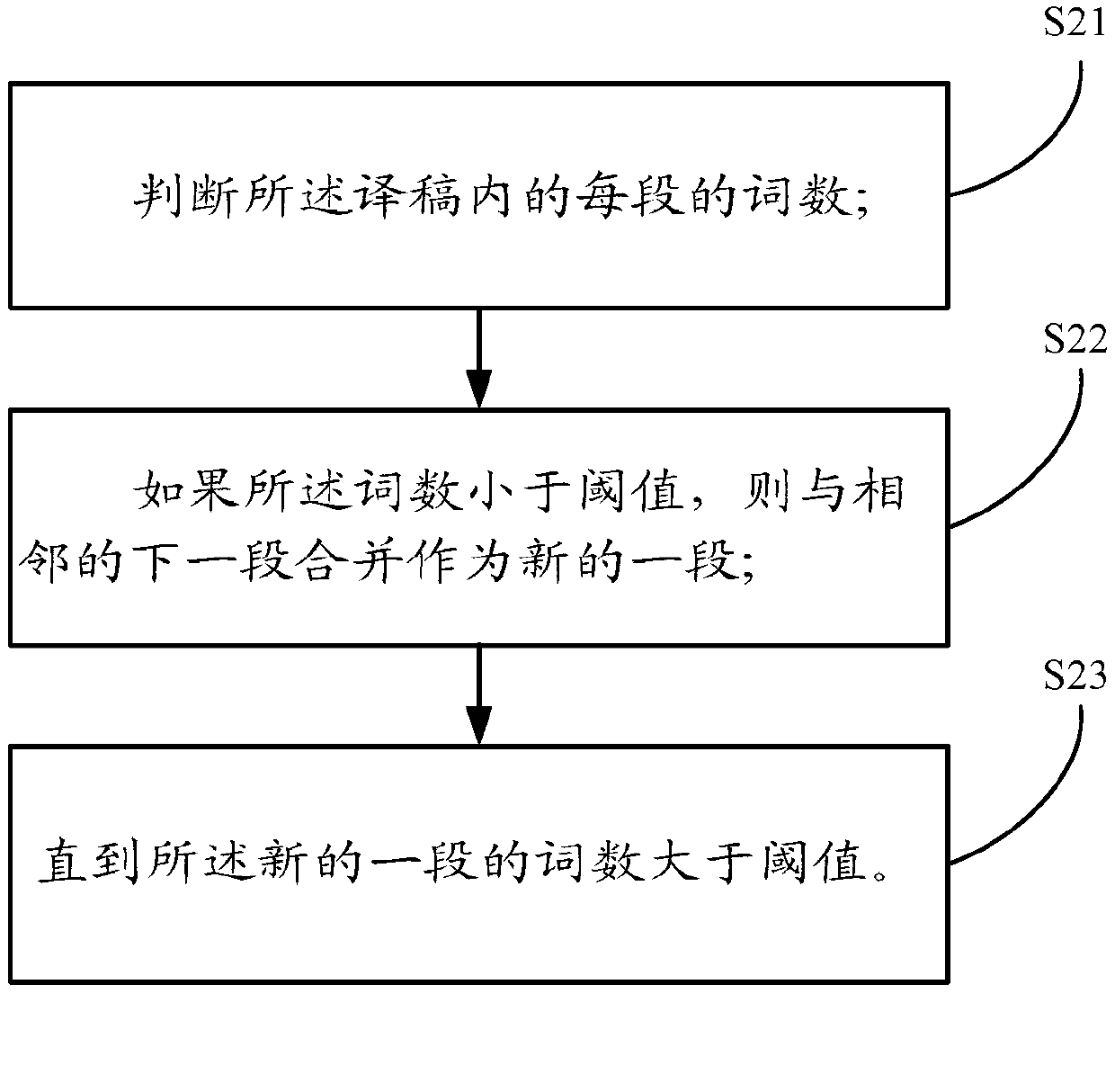

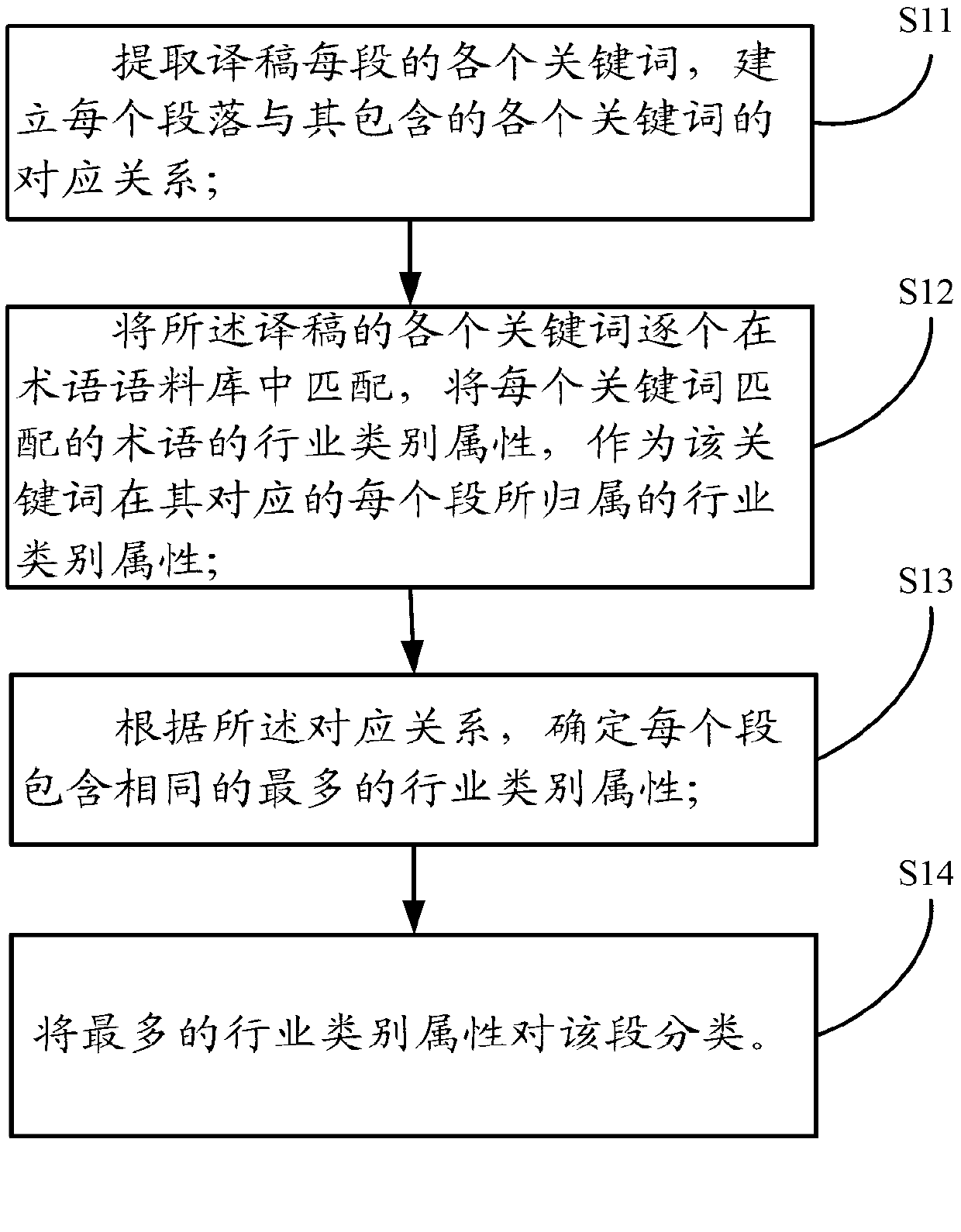

Method for automatically classifying and fragmenting translation manuscripts based on a large-scale term corpus

Inactive | CN103106245A | Shorten the time; Reduce query time; Special data processing applications; Pattern matching; Paragraph

The invention provides a method for classifying a translation manuscript for automatic fragmentation based on a large-scale term corpus. The method comprises: segmenting the translation manuscript into words, removing stop words to obtain a keyword set, extracting the keywords of each paragraph, and recording the correspondence between each paragraph and the keywords it contains; matching the manuscript's keywords one by one against the term corpus, and assigning the industry category attributes of the matched terms to each paragraph that contains the corresponding keyword; using the correspondence, determining for each paragraph the category attribute that occurs most often; and classifying the paragraph under that attribute. Because the manuscript contains far fewer words than the term corpus, and the corpus can be looked up in alphabetical order, no pattern matching algorithm is needed when matching keywords against the corpus; lookup time is therefore greatly reduced, the fragmentation time of the manuscript is shortened, and fragmentation efficiency is improved.

Owner:IOL WUHAN INFORMATION TECH CO LTD

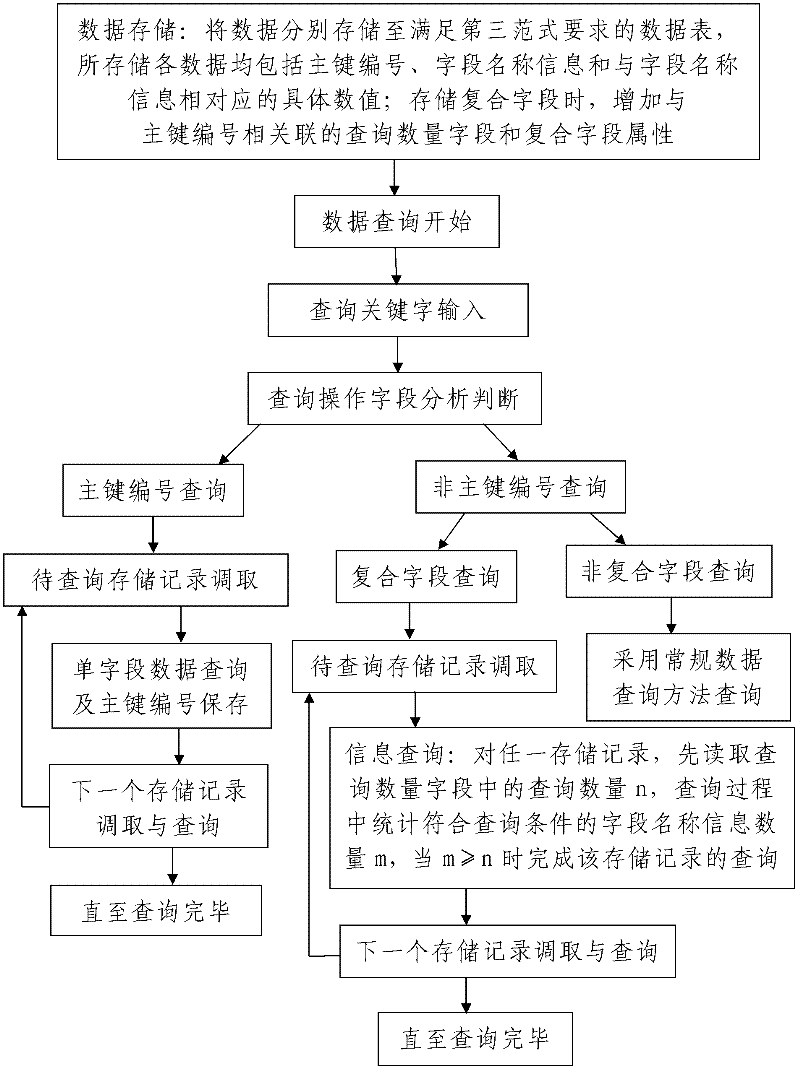

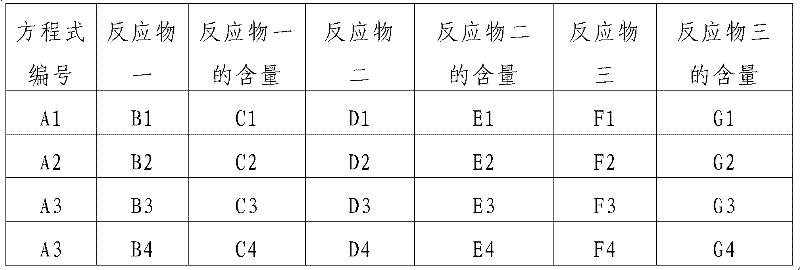

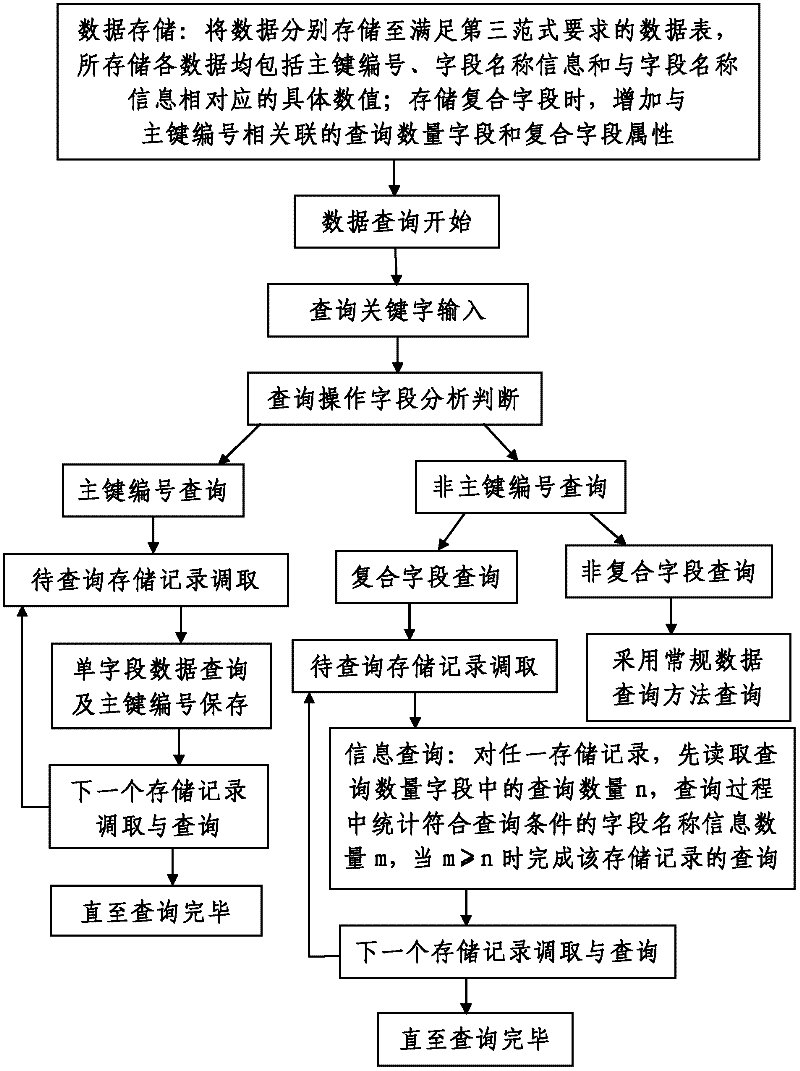

Data storage and query method for compound fields

Inactive | CN102243664A | Reasonable design | Easy to operate | Special data processing applications | Information quantity | Data query

The invention discloses a data storage and query method for compound fields. The method comprises the following steps: 1. Data storage: multiple data items are stored in a data table that satisfies the third normal form, where every stored record contains a field name, a specific value and the serial number of its primary key; when a compound field is stored, a query quantity field and the attributes of the compound field are added, associated with the primary-key serial number. 2. Data query: when a compound field is queried, the query quantity n in the query quantity field is first read for each stored record; during the query, the number m of field names that meet the query requirements is counted, and the query of that record is complete once m is not less than n; the query then continues until all data tables have been searched. The method is reasonably designed, makes storage and querying convenient, involves little data processing and gives high query efficiency; it addresses the low query efficiency, long query time and slow, inefficient searching found in existing compound-field queries.
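One reading of the query step is sketched below, under stated assumptions: records are kept as hypothetical `(pk, field_name, value)` rows, `query_quantity` maps each primary key to its stored n (assumed to be at least 1), and a record counts as a hit once m matching field names reach n. The address example (province plus city) is illustrative only.

```python
from collections import defaultdict


def query_compound(rows, query_quantity, conditions):
    """Return primary keys whose compound field meets the query.

    rows: (pk, field_name, value) triples, one per stored value (hypothetical layout)
    query_quantity: pk -> n, the stored number of sub-fields that must match
    conditions: field_name -> required value
    """
    by_pk = defaultdict(list)
    for pk, name, value in rows:
        by_pk[pk].append((name, value))

    hits = []
    for pk in sorted(by_pk):
        n = query_quantity[pk]      # step 1: read the stored query quantity n
        m = 0                       # number of field names meeting the query
        for name, value in by_pk[pk]:
            if name in conditions and conditions[name] == value:
                m += 1
                if m >= n:          # step 2: record satisfied, stop scanning it
                    hits.append(pk)
                    break
    return hits
```

Stopping as soon as m reaches n is what keeps the per-record work small.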

Owner:NORTHWEST UNIV(CN) +1

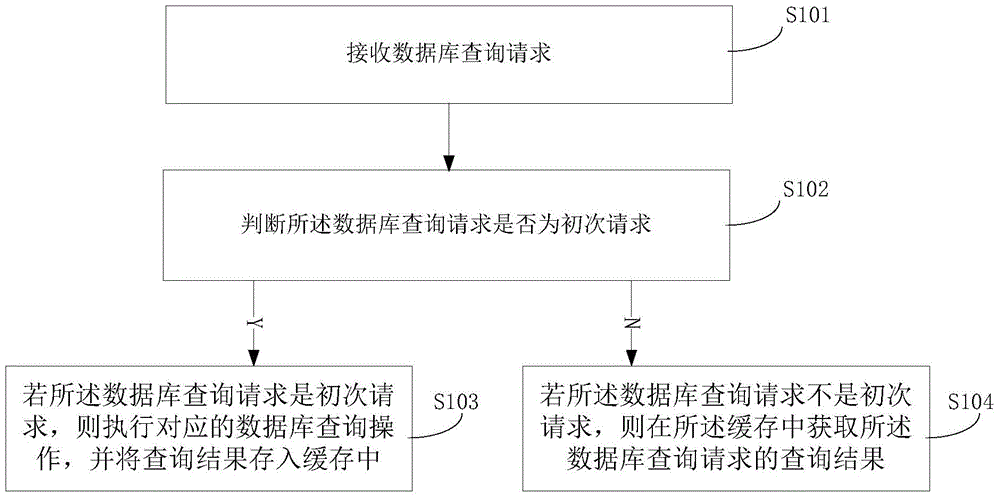

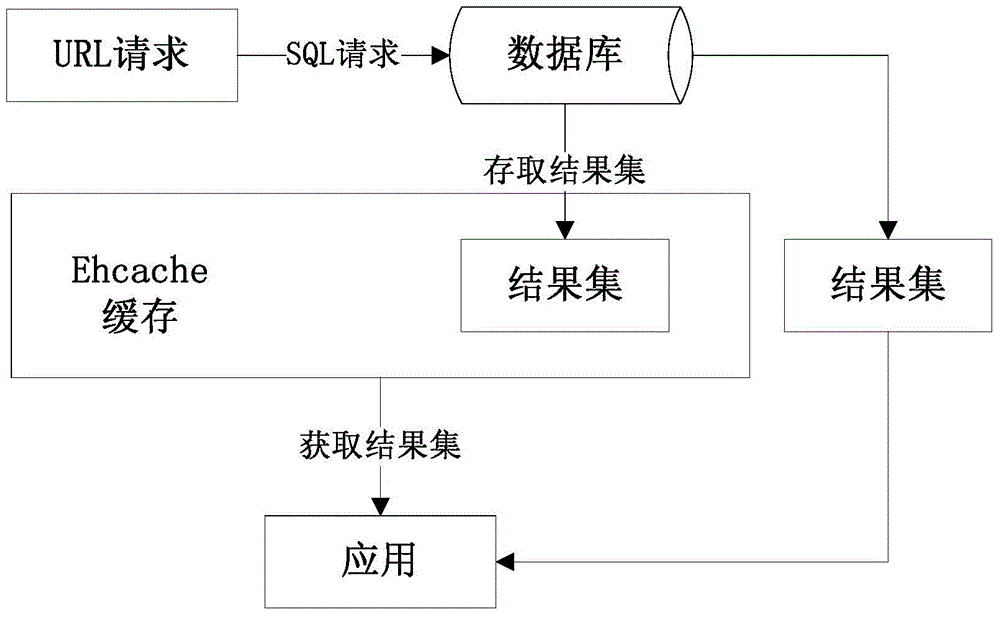

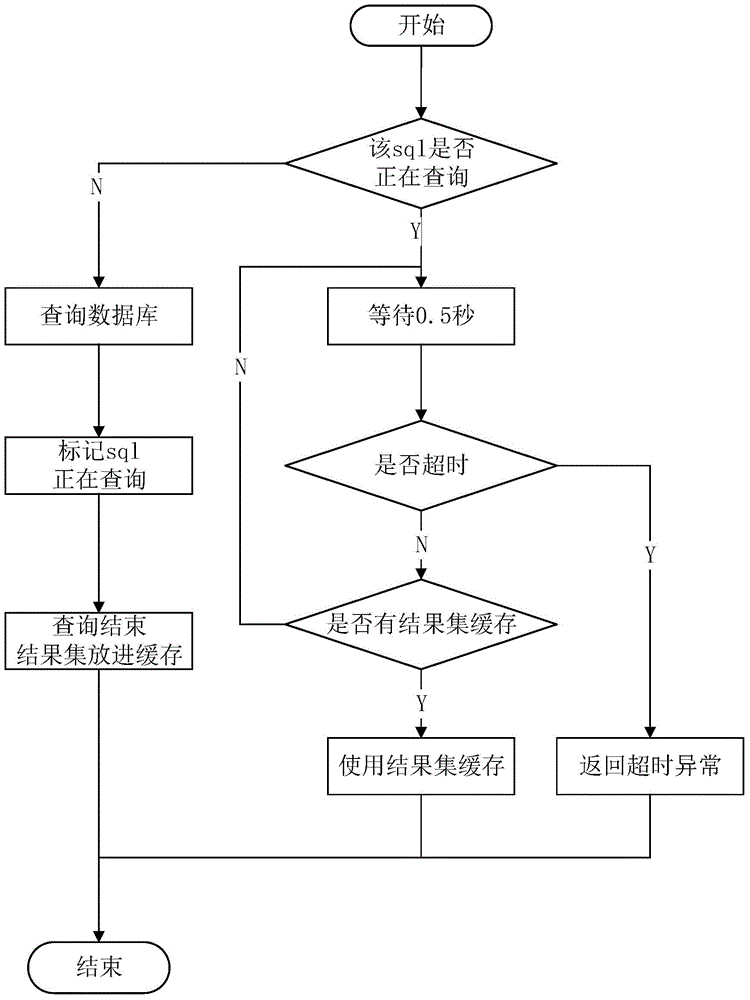

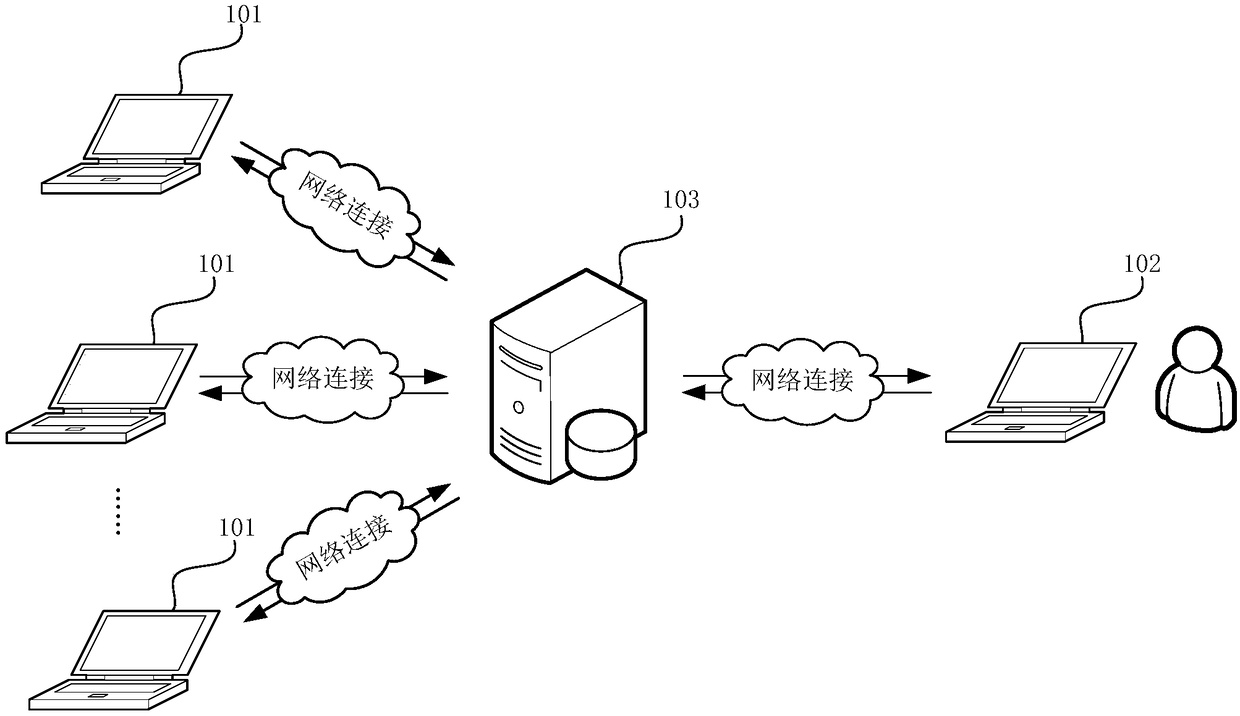

Database query method and system

Inactive | CN105243072A | Reduce the burden on | Solve technical problems of congestion | Special data processing applications | Result set | Computer science

The invention discloses a database query method and system, and relates to the technical field of databases. The method of the embodiment comprises the following steps: receiving a database query request; determining whether the request is a primary request; if it is, executing the corresponding database query operation and storing the query result in a cache; if it is not, obtaining the query result for the request from the cache. When identical SQL statements query the database at the same time, the database is queried only once and the other requests receive the result of the first request; that is, under certain conditions, only the first SQL query takes effect within a given period, and the remaining requests wait for its result. Multiple identical SQL requests are thus compressed into a single request, which lightens the load on the database and solves the technical problem of database congestion caused by repeated requests. The result set of an SQL query is returned faster, subsequent requests reuse the result set of the first request, and query time is greatly shortened.
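The primary/waiting split described above is commonly called request coalescing (or "single-flight"). A minimal sketch, assuming a hypothetical `execute(sql)` callable as the database hook: the first caller for a given SQL string runs it, concurrent callers wait on an event and reuse its result. Unlike the patent's cache, the entry is dropped once the query finishes; a time-to-live cache would be needed to extend reuse over "a period of time".

```python
import threading


class QueryCoalescer:
    """Collapse concurrent identical SQL queries into one database call."""

    def __init__(self, execute):
        self._execute = execute        # hypothetical hook: callable(sql) -> result
        self._lock = threading.Lock()
        self._inflight = {}            # sql -> (done_event, result_holder)

    def query(self, sql):
        with self._lock:
            entry = self._inflight.get(sql)
            primary = entry is None    # the first request for this SQL is primary
            if primary:
                entry = (threading.Event(), {})
                self._inflight[sql] = entry
        done, holder = entry
        if primary:
            try:
                holder["result"] = self._execute(sql)
            except Exception as exc:
                holder["error"] = exc  # waiting requests re-raise the same error
            finally:
                with self._lock:
                    del self._inflight[sql]  # later requests start a fresh query
                done.set()
        else:
            done.wait()                # non-primary requests wait for the result
        if "error" in holder:
            raise holder["error"]
        return holder["result"]
```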

Owner:ULTRAPOWER SOFTWARE

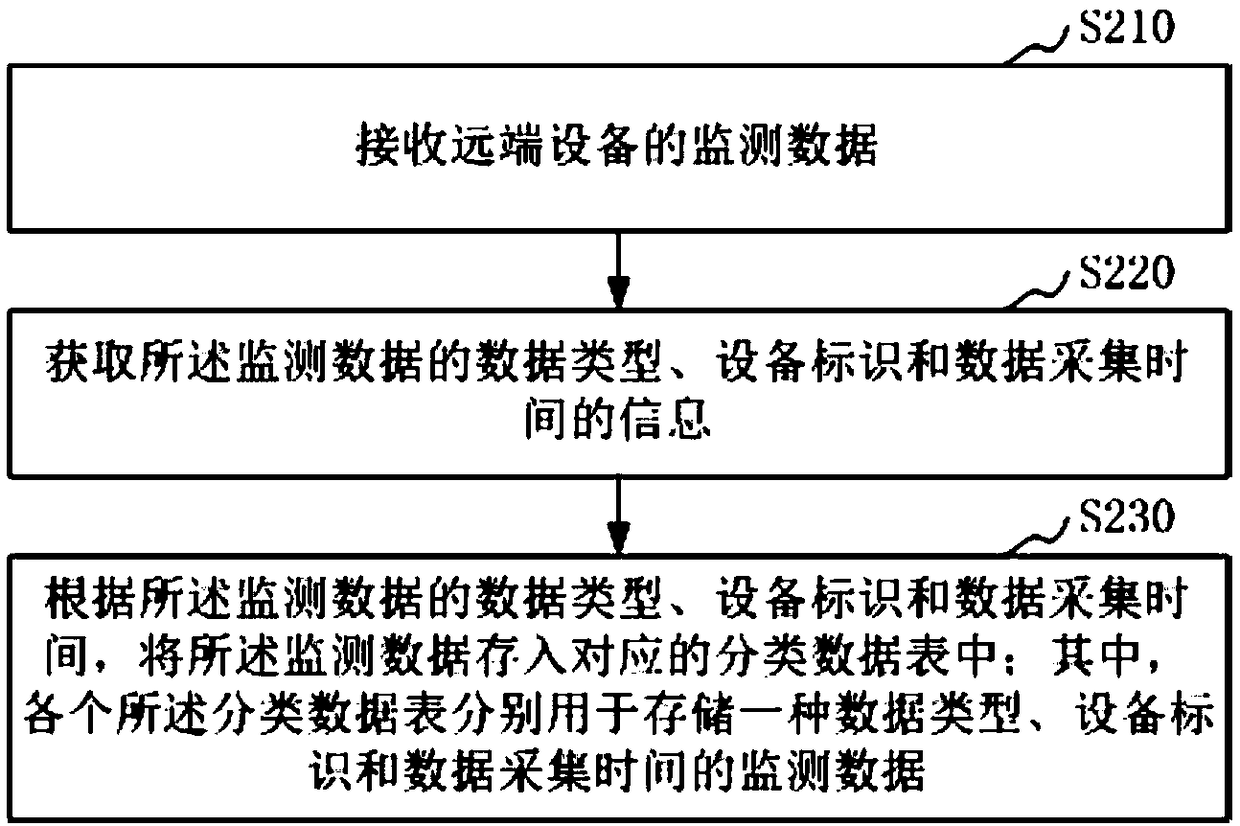

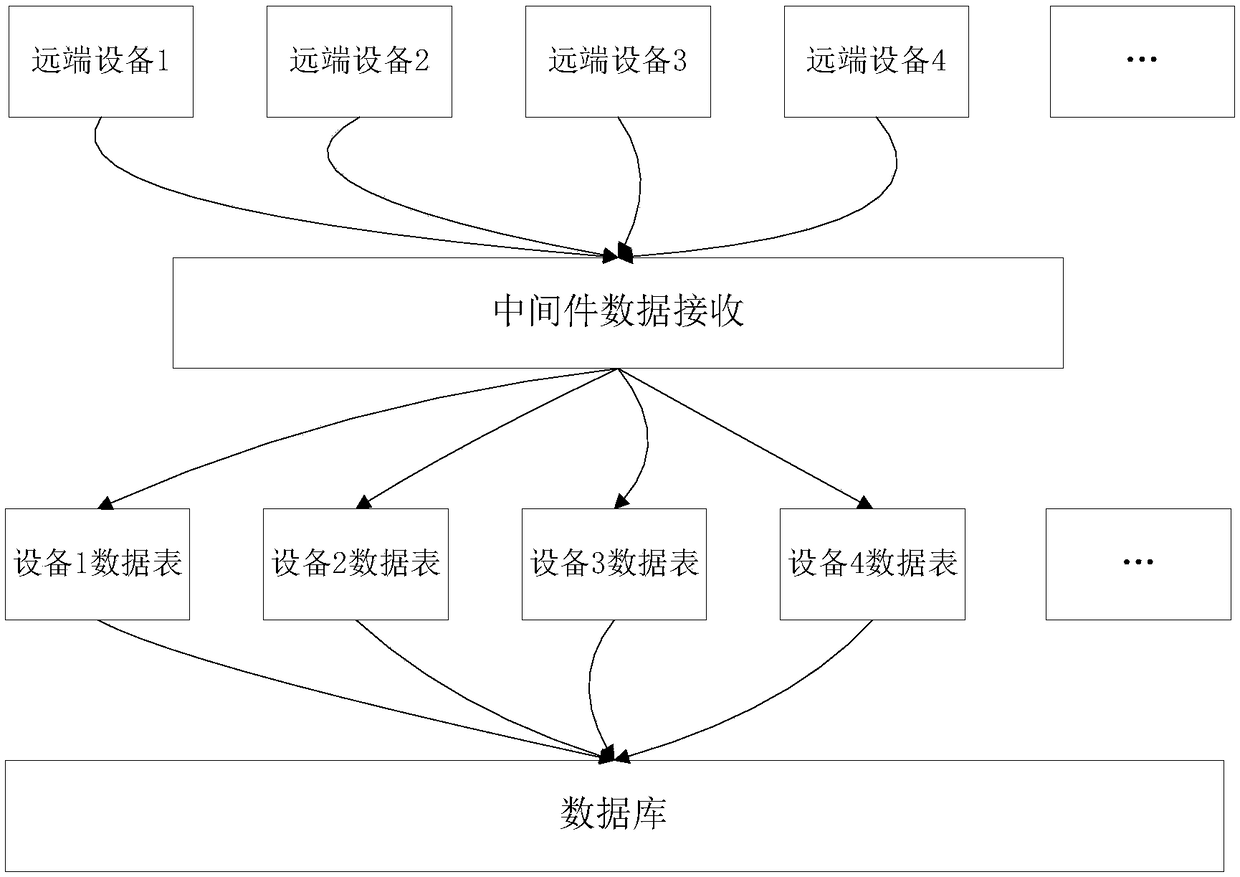

Monitoring data storing method and device, monitoring data querying method and device, computer device

Inactive | CN109408508A | Reduce query time | Improve storage efficiency | Special data processing applications | Database indexing | Data acquisition | Data needs

The invention relates to a monitoring data storage method and device, a monitoring data query method and device, a computer device and a computer-readable storage medium. The method comprises the following steps: receiving monitoring data from a remote device; obtaining the data type, device identifier and data acquisition time of the monitoring data; and storing the monitoring data in the corresponding classification data table according to its data type, device identifier and acquisition time, where each classification data table stores the monitoring data of one data type, one device identifier and one data acquisition time. Because monitoring data with different data types, device identifiers and acquisition times are stored in separate classification data tables, a query only needs to search the classification data table that corresponds to the requested data, which reduces query time and improves the efficiency of querying monitoring data.
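A sketch of the routing idea, assuming one table per data type, device and calendar day; the day granularity and the `table_name` scheme are hypothetical, and in-memory lists stand in for database tables. A query touches only the tables whose names its parameters select.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def table_name(data_type, device_id, day):
    """Hypothetical naming scheme: one table per data type, device and day."""
    return f"{data_type}_{device_id}_{day:%Y%m%d}"


class MonitoringStore:
    def __init__(self):
        self.tables = defaultdict(list)   # table name -> list of (timestamp, value)

    def store(self, data_type, device_id, acquired_at, value):
        # Route each reading to its classification table.
        self.tables[table_name(data_type, device_id, acquired_at)].append((acquired_at, value))

    def query(self, data_type, device_id, start, end):
        """Scan only the per-day tables that can hold matching rows."""
        hits = []
        day = start.date()
        while day <= end.date():
            # .get() avoids creating empty tables for days with no data.
            for ts, value in self.tables.get(table_name(data_type, device_id, day), []):
                if start <= ts <= end:
                    hits.append((ts, value))
            day += timedelta(days=1)
        return sorted(hits)
```

Coarser or finer time buckets trade the number of tables against how much each query must scan.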

Owner:广州恩业电子科技有限公司

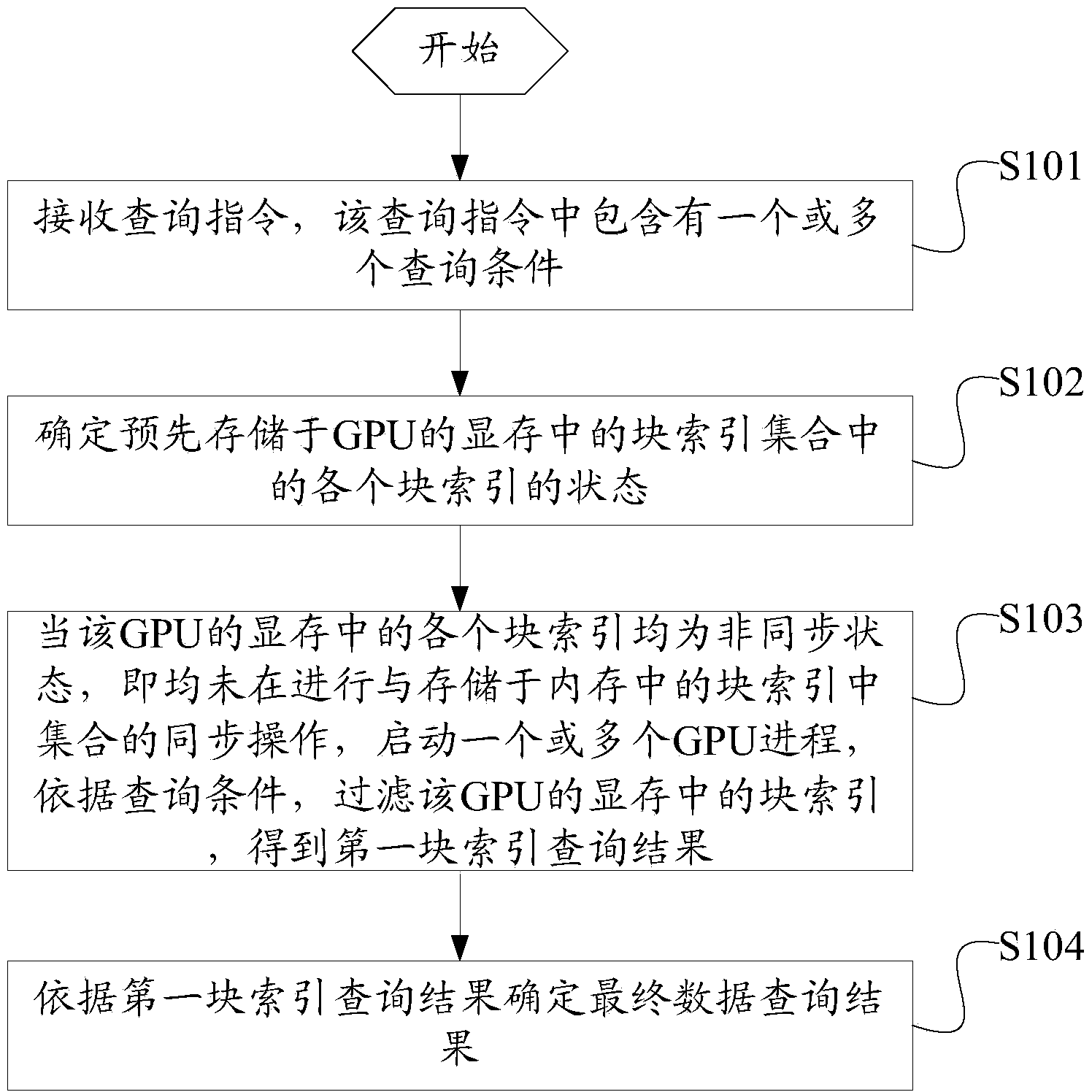

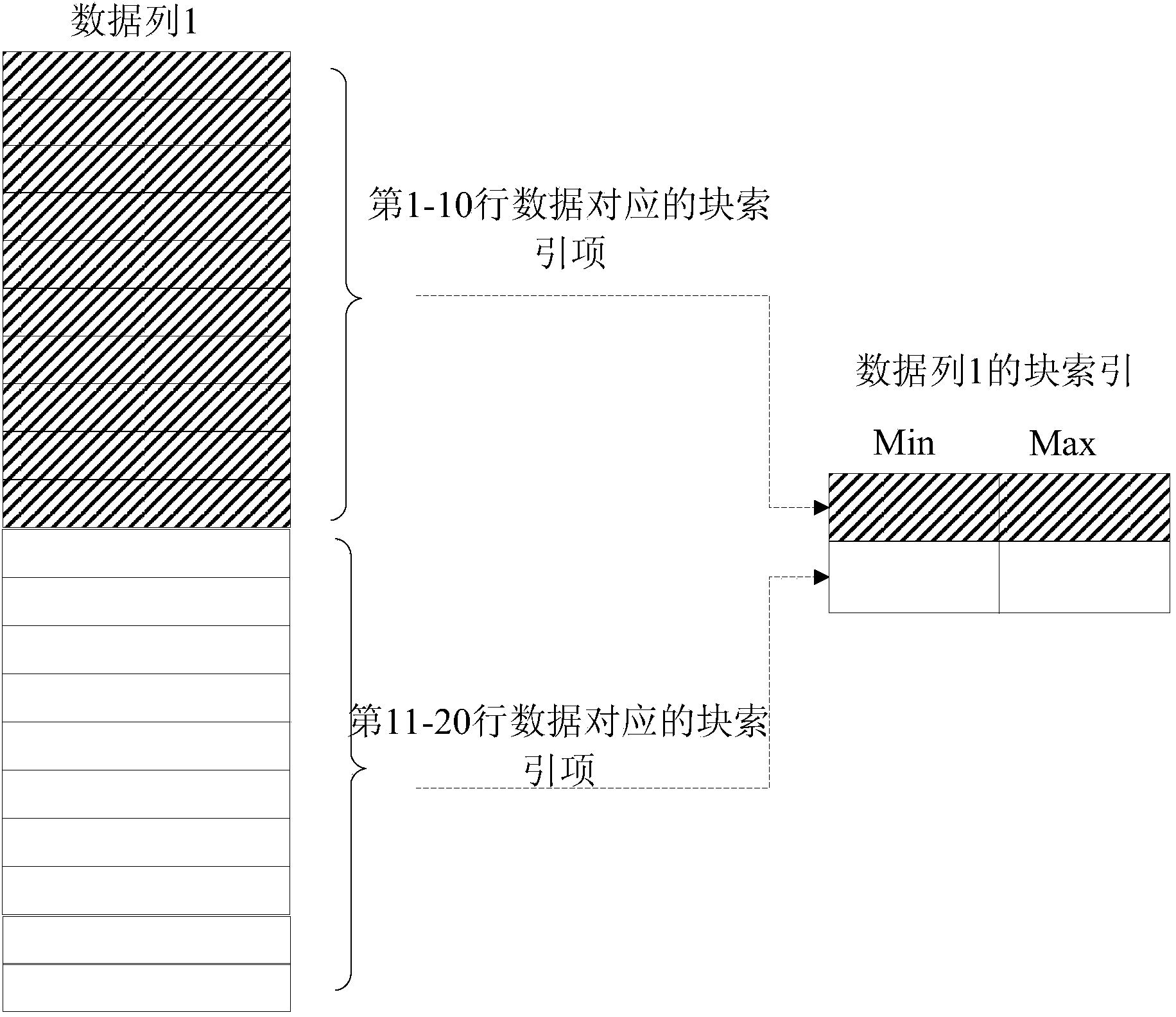

Method and device for inquiring data in database

Active | CN103984695A | Simplify the query process | Reduce query time | Special data processing applications | Video memory | Original data

The application discloses a method and a device for querying data in a database. The method comprises the following steps: receiving a query instruction; determining the state of each block index in a block index set pre-stored in the video memory of a GPU (Graphics Processing Unit); when every block index in the GPU video memory is in the asynchronous state, starting one or more GPU processes and filtering the block indexes in the GPU video memory according to the query conditions to obtain a first block index query result; and determining the final data query result from the first block index query result. In this method, a CPU (Central Processing Unit) generates the block index set for the database in advance. Because the block index set is smaller than the original data or the partitioned data, the whole block index set can be copied into the global memory of the GPU; when all block indexes in the GPU global memory are in the asynchronous state, the query is performed directly by the GPU processes. This avoids the prior-art step of copying partitioned data from memory multiple times, shortens query time and improves query efficiency.
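The abstract does not say what a block index contains. Assuming each entry records the minimum and maximum key of its block (a "zone map"), the filter-then-fetch flow can be sketched in plain Python; the GPU filtering step is replaced by an ordinary loop, and all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BlockIndex:
    block_id: int
    min_val: int   # assumed: smallest key stored in the block
    max_val: int   # assumed: largest key stored in the block


def build_block_indexes(data, block_size):
    """CPU side: split the data into blocks and record a compact index per block."""
    indexes = []
    for start in range(0, len(data), block_size):
        block = data[start:start + block_size]
        indexes.append(BlockIndex(start // block_size, min(block), max(block)))
    return indexes


def filter_blocks(indexes, low, high):
    """Stand-in for the GPU filter: keep blocks whose range overlaps [low, high].
    Each comparison is independent, which is what makes it easy to parallelise."""
    return [ix.block_id for ix in indexes if ix.max_val >= low and ix.min_val <= high]


def range_query(data, block_size, indexes, low, high):
    """Read only the candidate blocks to produce the final result."""
    result = []
    for b in filter_blocks(indexes, low, high):
        block = data[b * block_size:(b + 1) * block_size]
        result.extend(v for v in block if low <= v <= high)
    return result
```

Because the index holds two numbers per block, it stays small enough to keep resident in device memory, which matches the abstract's argument for copying only the index.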

Owner:HUAWEI TECH CO LTD

High-speed charging method and system based on block chain

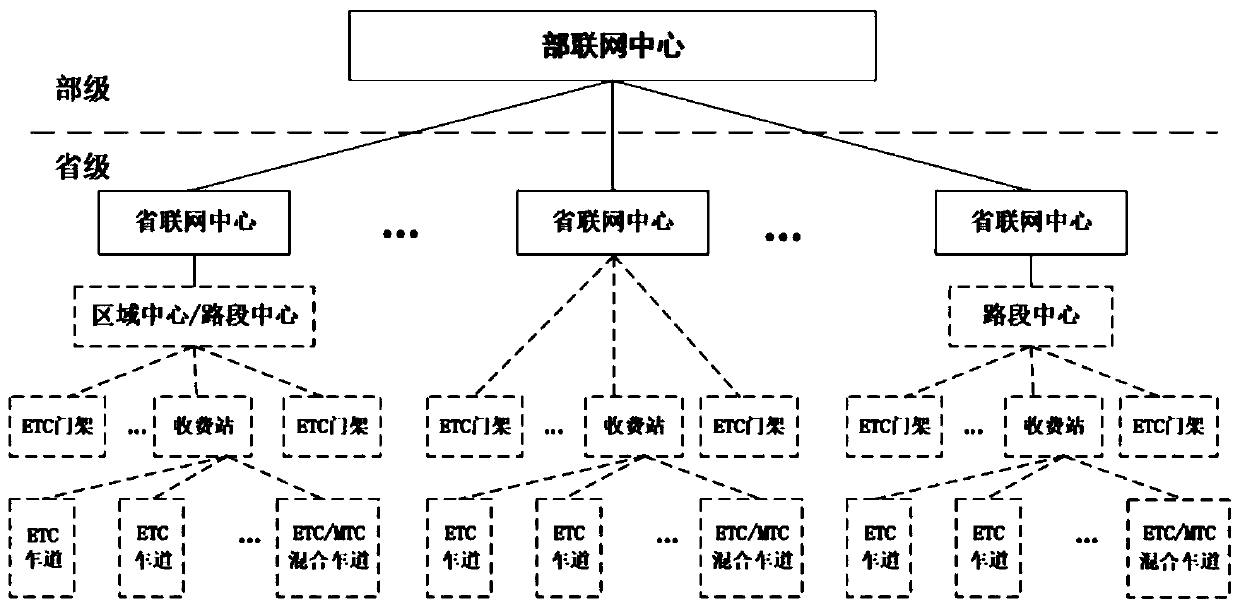

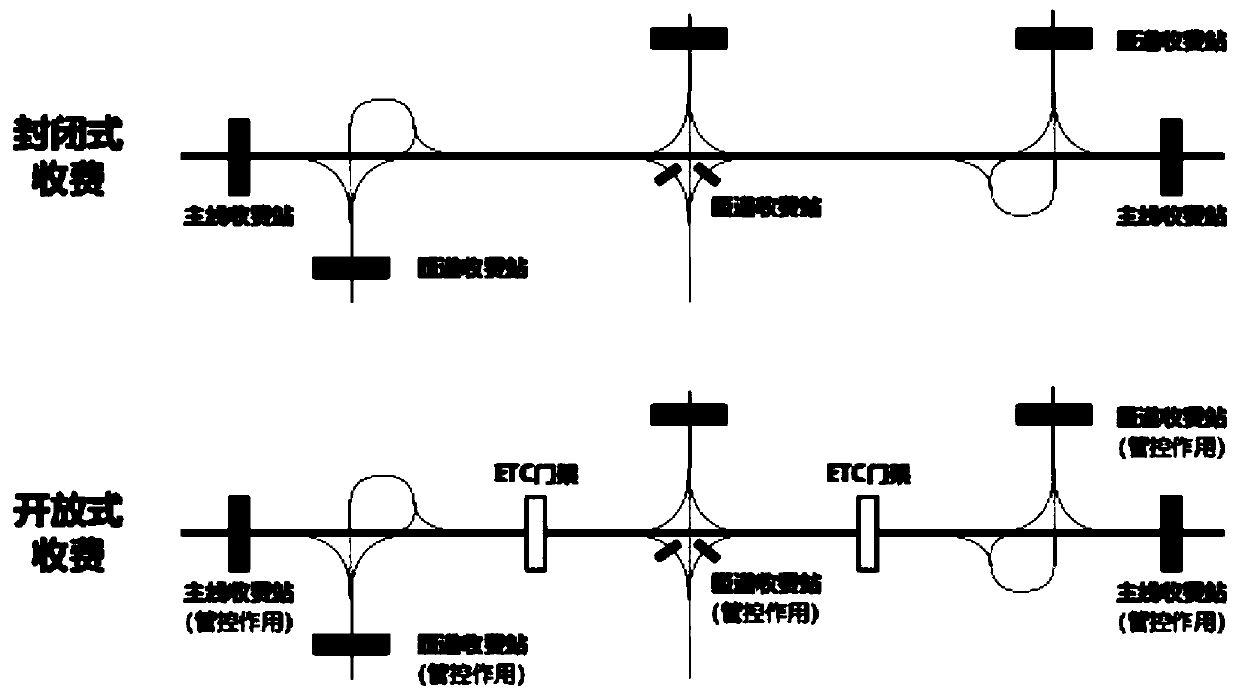

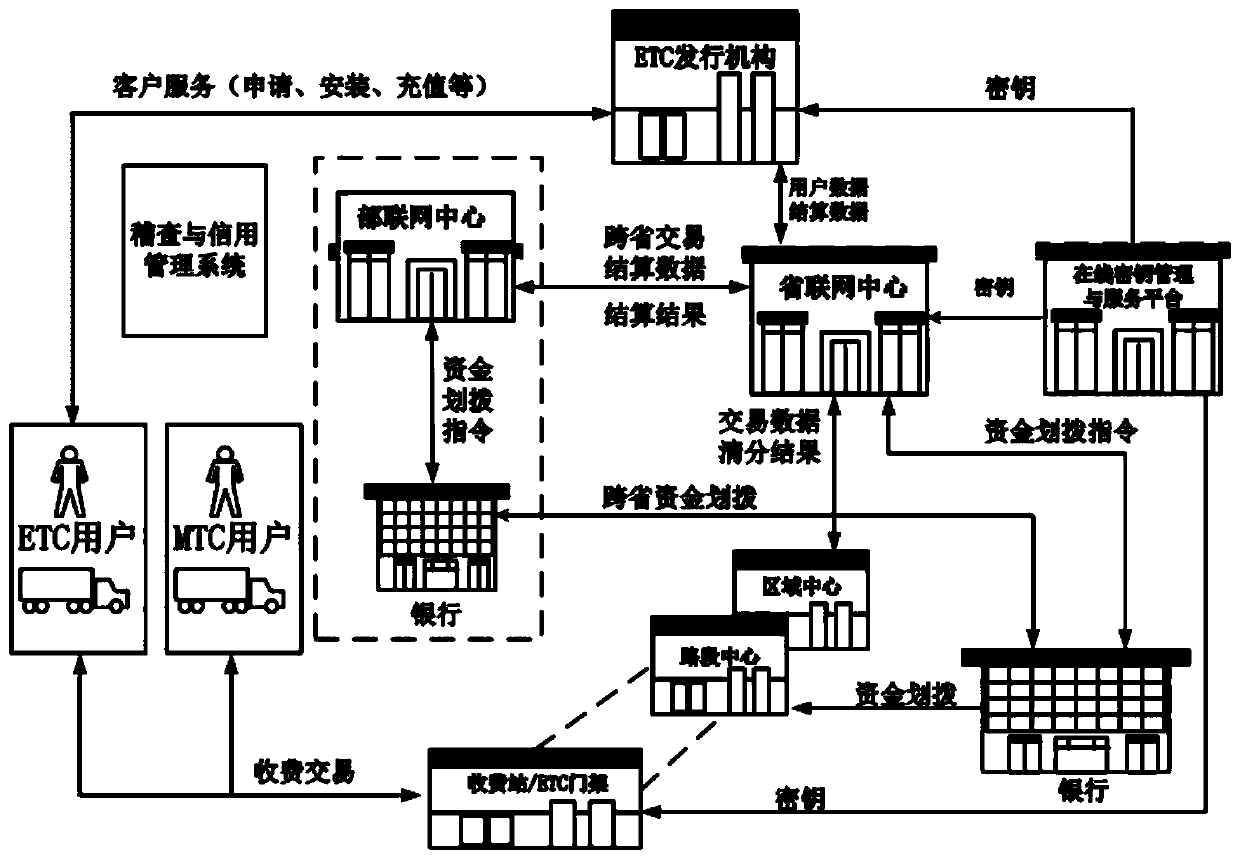

Pending | CN110992499A | Realize distributed storage | Avoid loss | Ticket-issuing apparatus | Individual entry/exit registers | Simulation | Financial transaction

The invention discloses an expressway toll collection method and system based on blockchain. The method comprises the following steps: S100, when a vehicle passes the entrance of a toll station, obtaining the vehicle information, generating first transaction flow data and uploading it to the road-section center to which the toll station belongs; S200, when the vehicle passes an ETC gantry, acquiring the vehicle's path information and fee information and uploading them to the road-section center to which the gantry belongs; and S300, when the vehicle passes the exit of a toll station, acquiring the vehicle's path information and fee information and uploading them to the road-section center to which the toll station belongs. By combining blockchain technology with expressway CPC toll collection technology, the system achieves distributed data storage, raises the data security level, prevents data loss and illegal tampering, enables point-to-point data transmission between road-section centers, speeds up path backtracking and reduces network load for path queries.
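The tamper-evidence and path-backtracking properties can be illustrated with a minimal hash chain. This is a simplification under stated assumptions: a single hypothetical `TollLedger` stands in for the ledgers of several road-section centers, there is no consensus or replication, and the record fields (`vehicle`, `point`, `fee`) are illustrative.

```python
import hashlib
import json


def block_hash(body):
    """SHA-256 over a canonical JSON encoding of the block body."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class TollLedger:
    """Append-only hash chain of toll events (no consensus is modelled)."""

    def __init__(self):
        genesis = {"index": 0, "prev": "0" * 64, "record": None}
        self.chain = [dict(genesis, hash=block_hash(genesis))]

    def append(self, record):
        prev = self.chain[-1]
        body = {"index": prev["index"] + 1, "prev": prev["hash"], "record": record}
        self.chain.append(dict(body, hash=block_hash(body)))

    def verify(self):
        """Detect tampering: each block must hash correctly and link to its parent."""
        for prev, cur in zip(self.chain, self.chain[1:]):
            body = {k: cur[k] for k in ("index", "prev", "record")}
            if cur["prev"] != prev["hash"] or cur["hash"] != block_hash(body):
                return False
        return True

    def path_of(self, vehicle_id):
        """Backtrack a vehicle's path: entrance (S100), gantries (S200), exit (S300)."""
        return [b["record"]["point"] for b in self.chain[1:]
                if b["record"]["vehicle"] == vehicle_id]
```

Editing any stored record changes its recomputed hash, so `verify()` fails from that block onward.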

Owner:WATCHDATA SYST

© 2025 PatSnap. All rights reserved.