Dynamic cache allocation method and device based on a deep learning chip

This deep learning chip technology, applied in the computer field, addresses the problem that a chip's storage structure becomes overwhelmed and cannot meet the demands of large-scale computation. It reduces data accesses, reduces bandwidth requirements, and allocates cache resources rationally.


Detailed Description of Embodiments

[0040] To explain the technical content, structural features, objectives, and effects of the technical solution in detail, the solution is described below with reference to specific embodiments and the accompanying drawings.

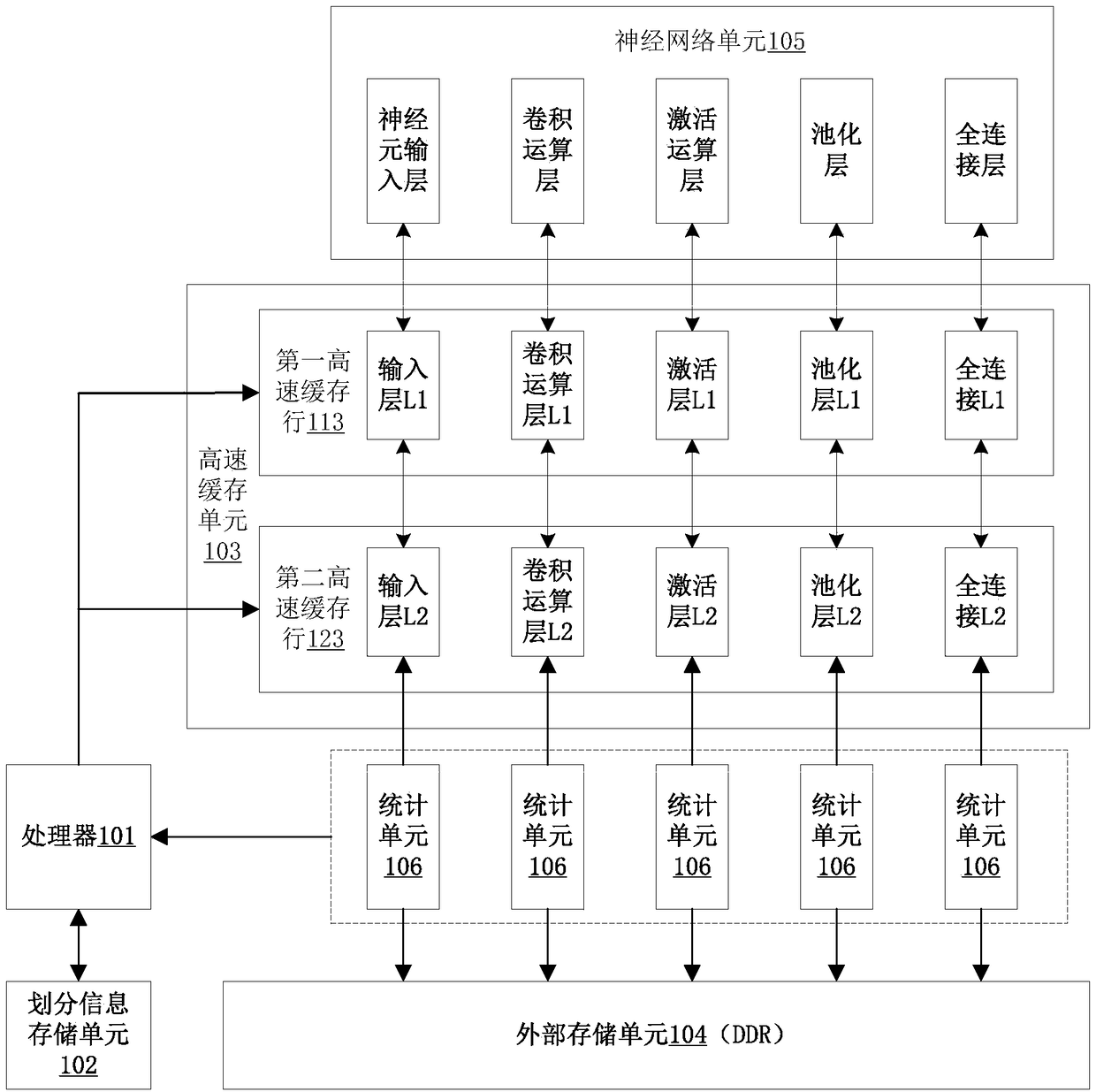

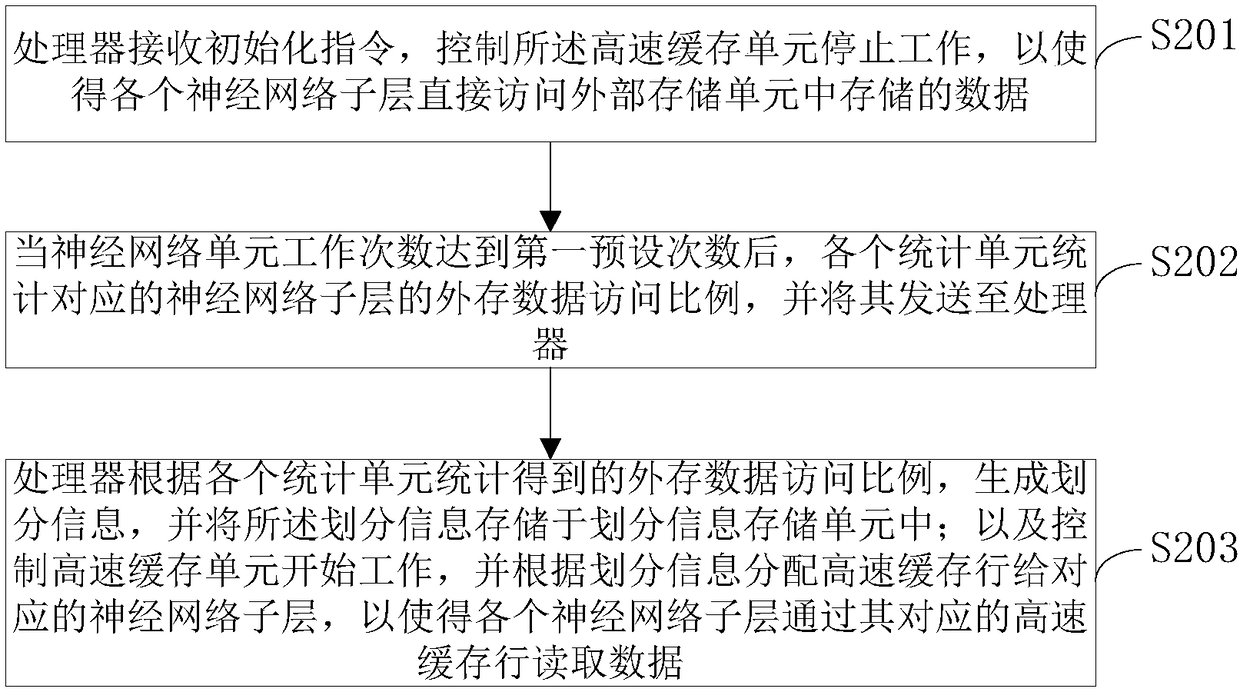

[0041] Referring to Figure 1, which is a schematic structural diagram of a device for dynamic cache allocation based on a deep learning chip according to an embodiment of the present invention, the device includes a processor 101, a division information storage unit 102, a cache unit 103, an external storage unit 104, a neural network unit 105, and a statistical unit 106. The cache unit 103 includes a plurality of cache lines. The neural network unit 105 includes a plurality of neural network sublayers, and each neural network sublayer corresponds to one statistical unit 106. The neural network unit 105 is connected to the cache unit 103, and the cache unit 103 is connected to the processor 101 and to the statistical unit 106; the...
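Paragraph [0041] is cut off before it explains how the processor uses the per-sublayer statistics to divide the cache lines. The Python sketch below is only an illustration of how the named components could fit together. It assumes that each statistical unit counts the data accesses of its sublayer. It also assumes that the processor splits the cache lines in proportion to those counts and records the result as "division information". The class and function names (`StatisticalUnit`, `CacheUnit`, `divide_cache_lines`) and the proportional, largest-remainder policy are hypothetical. They are not the allocation rule disclosed in the patent.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class StatisticalUnit:
    """Hypothetical model of a statistical unit 106: one access counter per sublayer."""
    access_count: int = 0

    def record(self, n: int = 1) -> None:
        # Accumulate the number of data accesses observed for this sublayer.
        self.access_count += n


@dataclass
class CacheUnit:
    """Cache unit 103 modeled only as a fixed number of cache lines."""
    num_lines: int


def divide_cache_lines(stats: dict[str, StatisticalUnit],
                       cache: CacheUnit) -> dict[str, range]:
    """Hypothetical policy: give each sublayer a contiguous block of cache
    lines proportional to its access count (largest-remainder rounding)."""
    names = list(stats)
    total = sum(s.access_count for s in stats.values())
    if total == 0:
        # No statistics collected yet: fall back to an even split.
        weights = {n: 1 for n in names}
        total = len(names)
    else:
        weights = {n: stats[n].access_count for n in names}

    # Integer floor of each sublayer's exact share, plus its remainder.
    counts = {n: cache.num_lines * weights[n] // total for n in names}
    remainders = {n: cache.num_lines * weights[n] % total for n in names}

    # Hand out the lines lost to flooring, largest remainder first
    # (sorted() is stable, so ties keep the original sublayer order).
    leftover = cache.num_lines - sum(counts.values())
    for n in sorted(names, key=lambda n: remainders[n], reverse=True)[:leftover]:
        counts[n] += 1

    # "Division information": the range of cache-line indices per sublayer.
    division: dict[str, range] = {}
    start = 0
    for n in names:
        division[n] = range(start, start + counts[n])
        start += counts[n]
    return division


if __name__ == "__main__":
    stats = {"conv1": StatisticalUnit(), "conv2": StatisticalUnit(), "fc": StatisticalUnit()}
    stats["conv1"].record(600)
    stats["conv2"].record(300)
    stats["fc"].record(100)
    division = divide_cache_lines(stats, CacheUnit(num_lines=16))
    print({n: len(r) for n, r in division.items()})  # {'conv1': 10, 'conv2': 5, 'fc': 1}
```

Largest-remainder rounding is one simple way to guarantee that every cache line is assigned exactly once. A real chip would more likely apply its own rule in hardware and store the resulting boundaries in the division information storage unit 102.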
