Patents

Literature

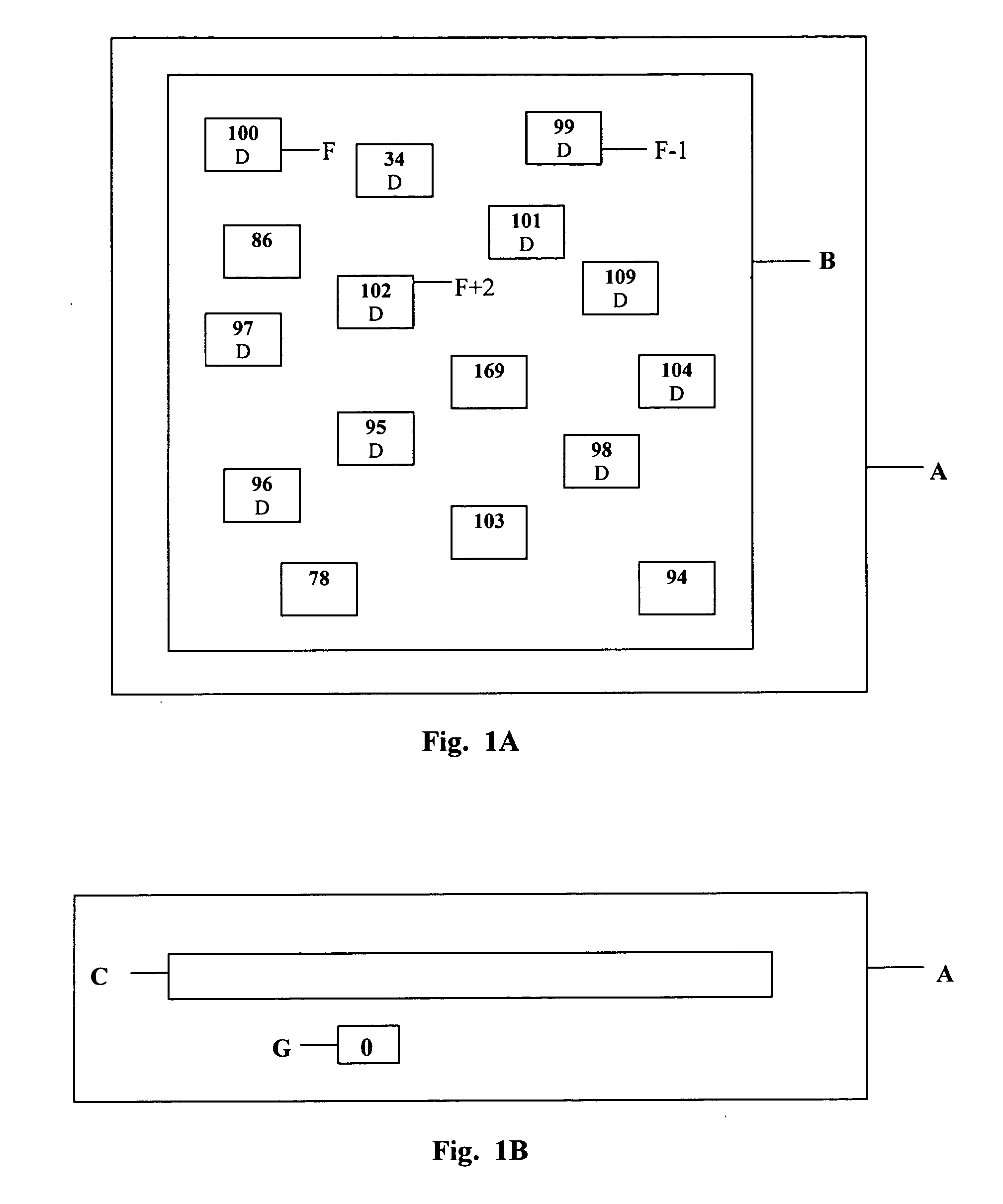

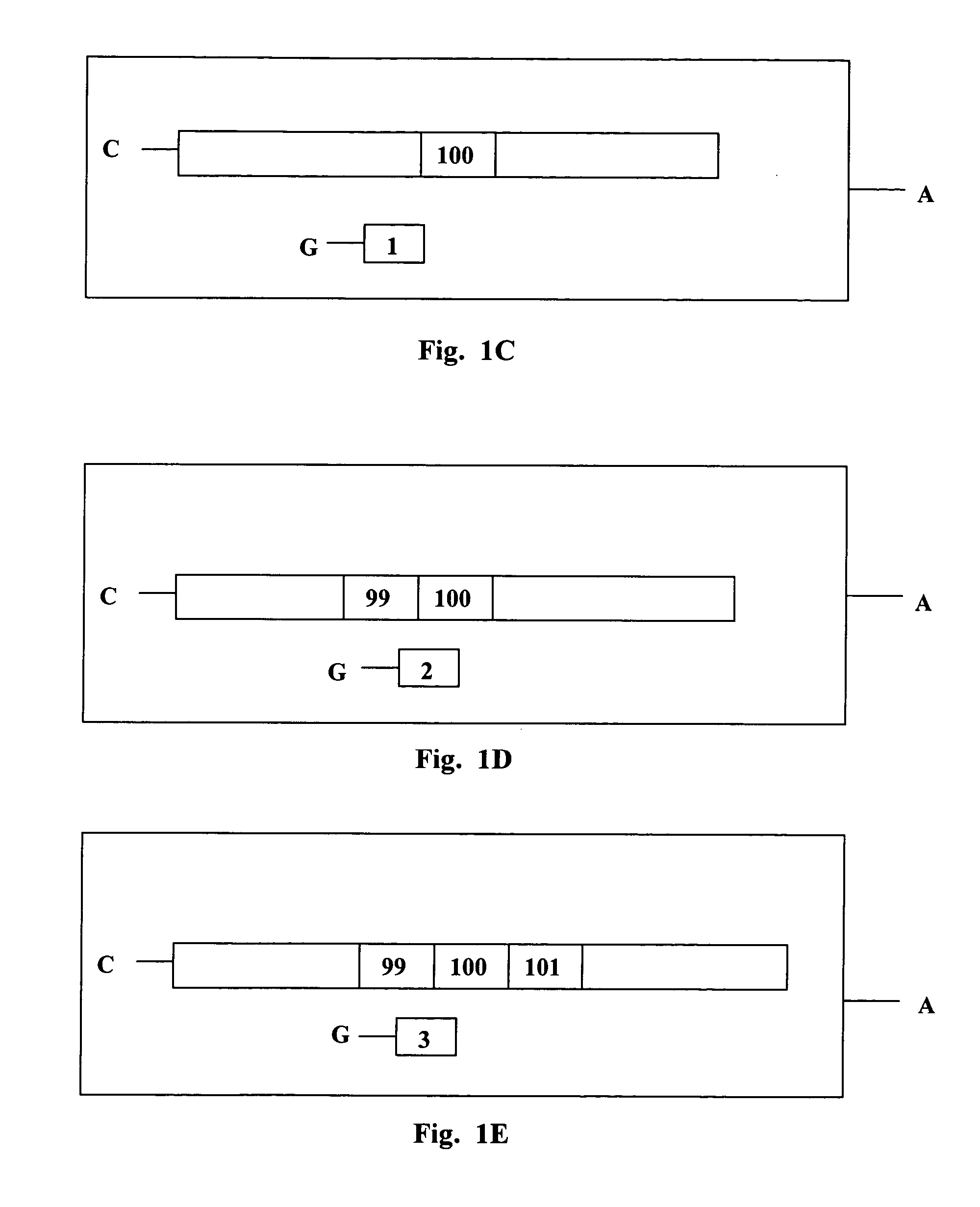

538 results for "Dirty data" patented technology

Dirty data, also known as rogue data, are inaccurate, incomplete or inconsistent data, especially in a computer system or database. Dirty data can contain such mistakes as spelling or punctuation errors, incorrect data associated with a field, incomplete or outdated data, or even data that has been duplicated in the database. They can be cleaned through a process known as data cleansing.

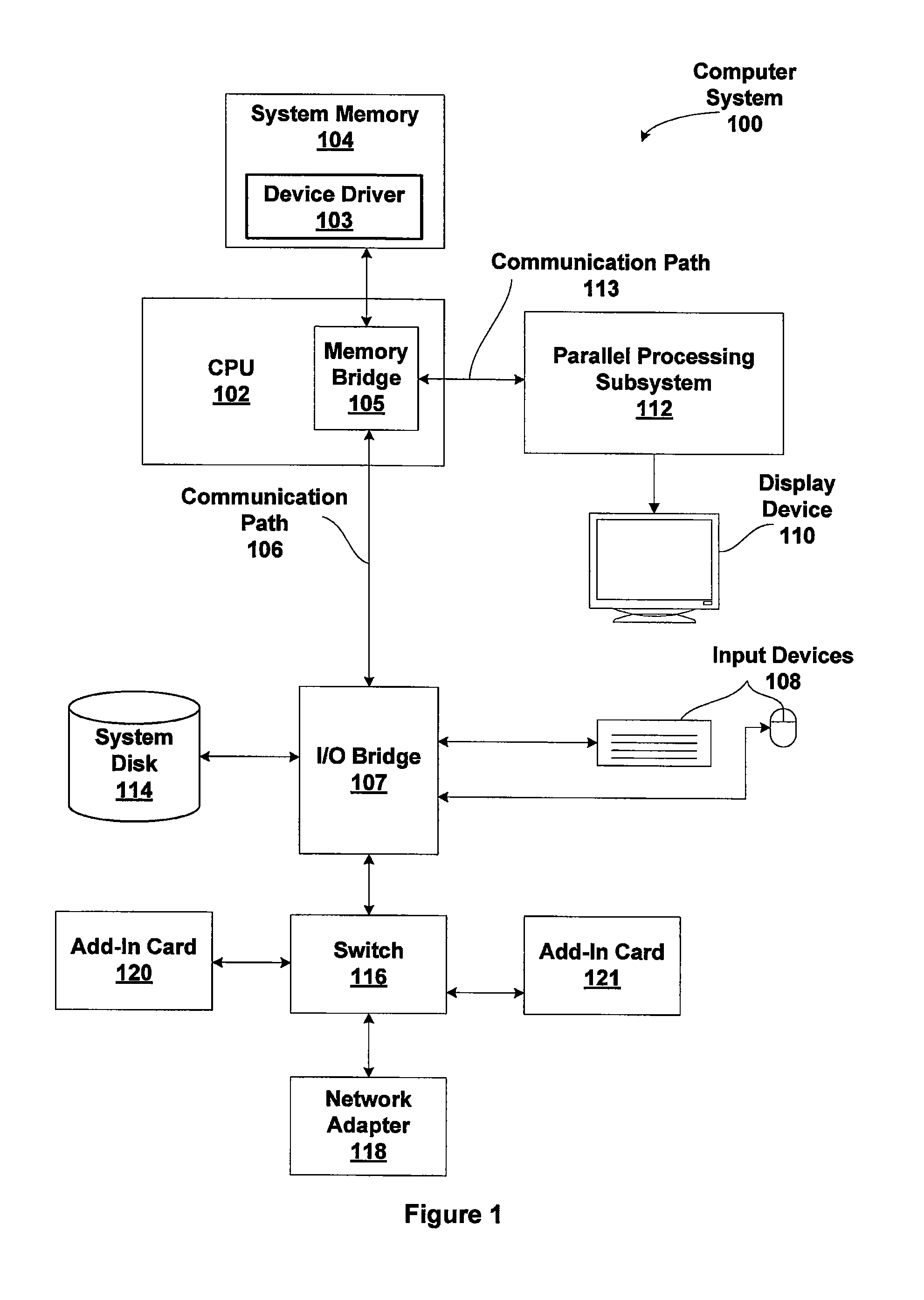

Lease based safety protocol for distributed system with multiple networks

Inactive | US6775703B1 | Memory addressing/allocation/relocation; Multiple digital computer combinations; Dirty data; Client-side

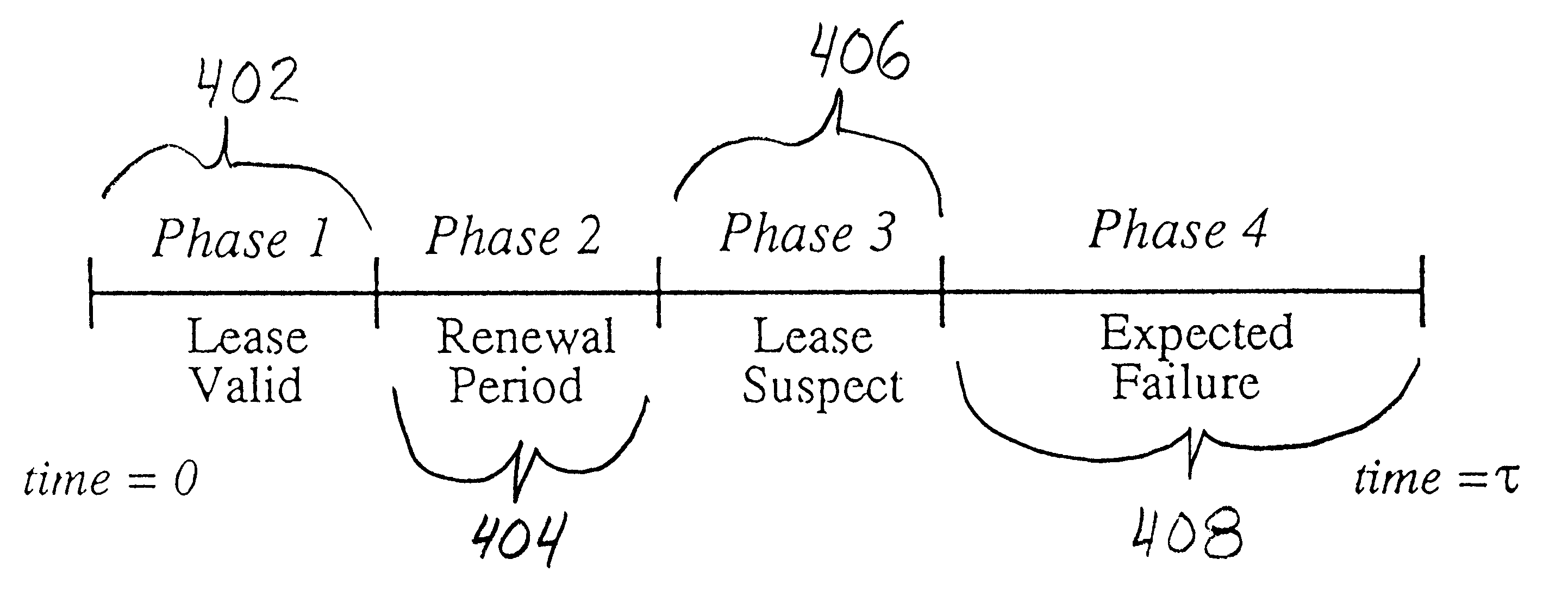

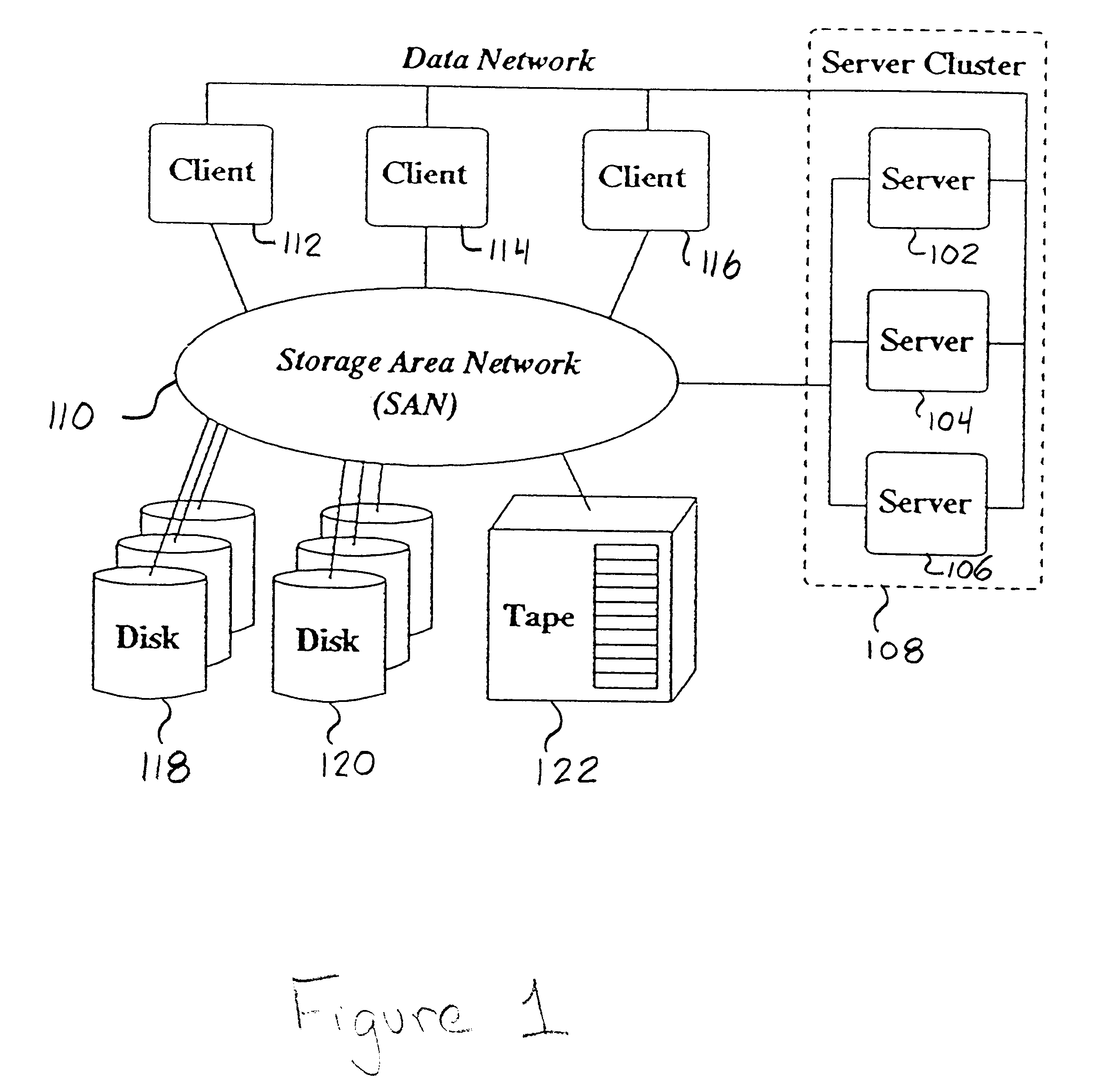

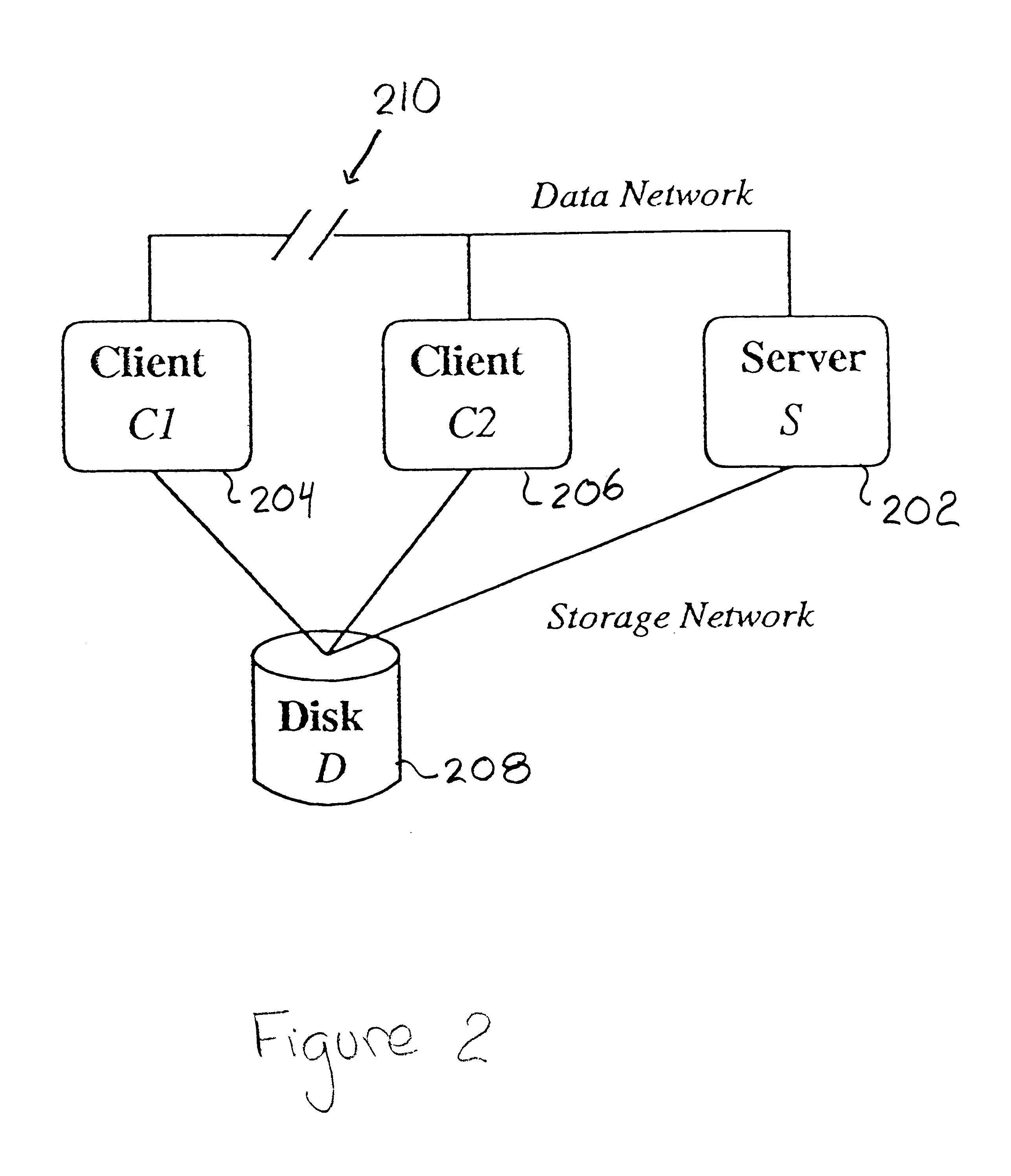

A system, method, and computer program product for a lease-based timeout scheme that addresses the shortcomings of fencing. Unlike fencing, this scheme (or protocol) enables an isolated computer to realize that it is disconnected from the distributed system and to write its dirty data out to storage before its locks are stolen. In accordance with the invention, data consistency during a partition in a distributed system is ensured by establishing a lease-based protocol in the distributed system, wherein a client can hold a lease with a server. The lease represents a contract between a client and a server in which the server promises to respect the client for a period of time. The server respects the contract even when it detects a partition between the client and itself.

Owner:IBM CORP
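
The lease contract can be sketched as a small simulation. This is a minimal illustration, not IBM's implementation. It assumes one server and one client sharing a single simulated clock (a real protocol must also tolerate clock drift), a fixed `LEASE_PERIOD`, and a hypothetical `SAFETY_MARGIN` that gives the client time to flush. All class and method names are invented for the example.

```python
from dataclasses import dataclass, field

LEASE_PERIOD = 10.0   # hypothetical lease length, in seconds
SAFETY_MARGIN = 2.0   # hypothetical head start for flushing before expiry


@dataclass
class Server:
    # client id -> time at which that client's lease expires
    leases: dict = field(default_factory=dict)

    def renew(self, client_id: str, now: float) -> float:
        self.leases[client_id] = now + LEASE_PERIOD
        return self.leases[client_id]

    def may_steal_locks(self, client_id: str, now: float) -> bool:
        # The server keeps its promise even if it detects a partition:
        # the client's locks are reassigned only after its lease runs out.
        return now >= self.leases.get(client_id, float("-inf"))


@dataclass
class Client:
    client_id: str
    lease_expiry: float = 0.0
    dirty: list = field(default_factory=list)
    storage: list = field(default_factory=list)  # stands in for shared storage

    def on_renewal(self, expiry: float) -> None:
        self.lease_expiry = expiry

    def tick(self, now: float) -> None:
        # No renewal arrived in time: assume isolation and write dirty data out
        # while the lease still protects this client's locks.
        if self.dirty and now >= self.lease_expiry - SAFETY_MARGIN:
            self.storage.extend(self.dirty)
            self.dirty.clear()
```

During a partition, renewals stop arriving. The client flushes at t = 8 (expiry minus margin), and the server waits until t = 10 before reassigning locks, so the flush always happens first.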

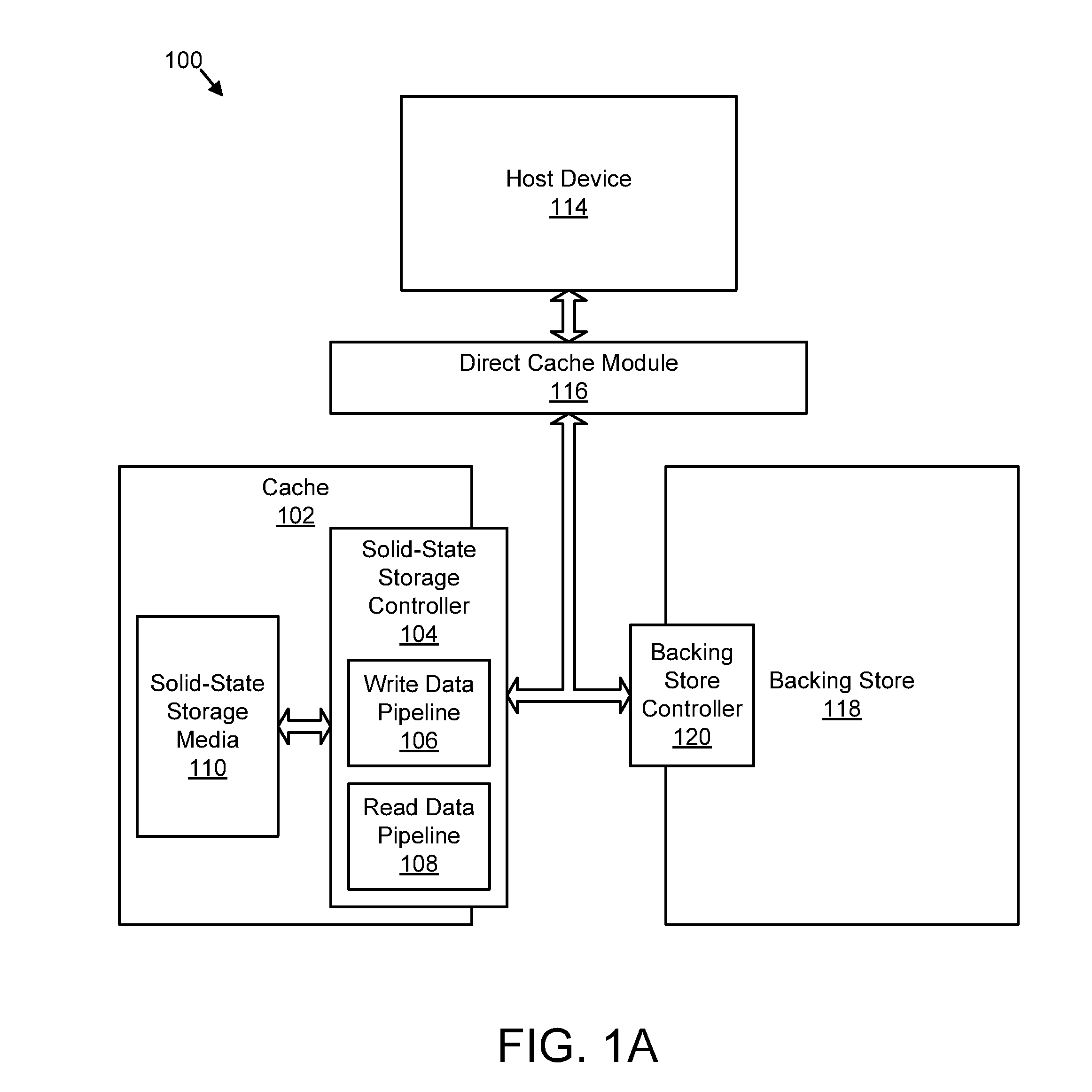

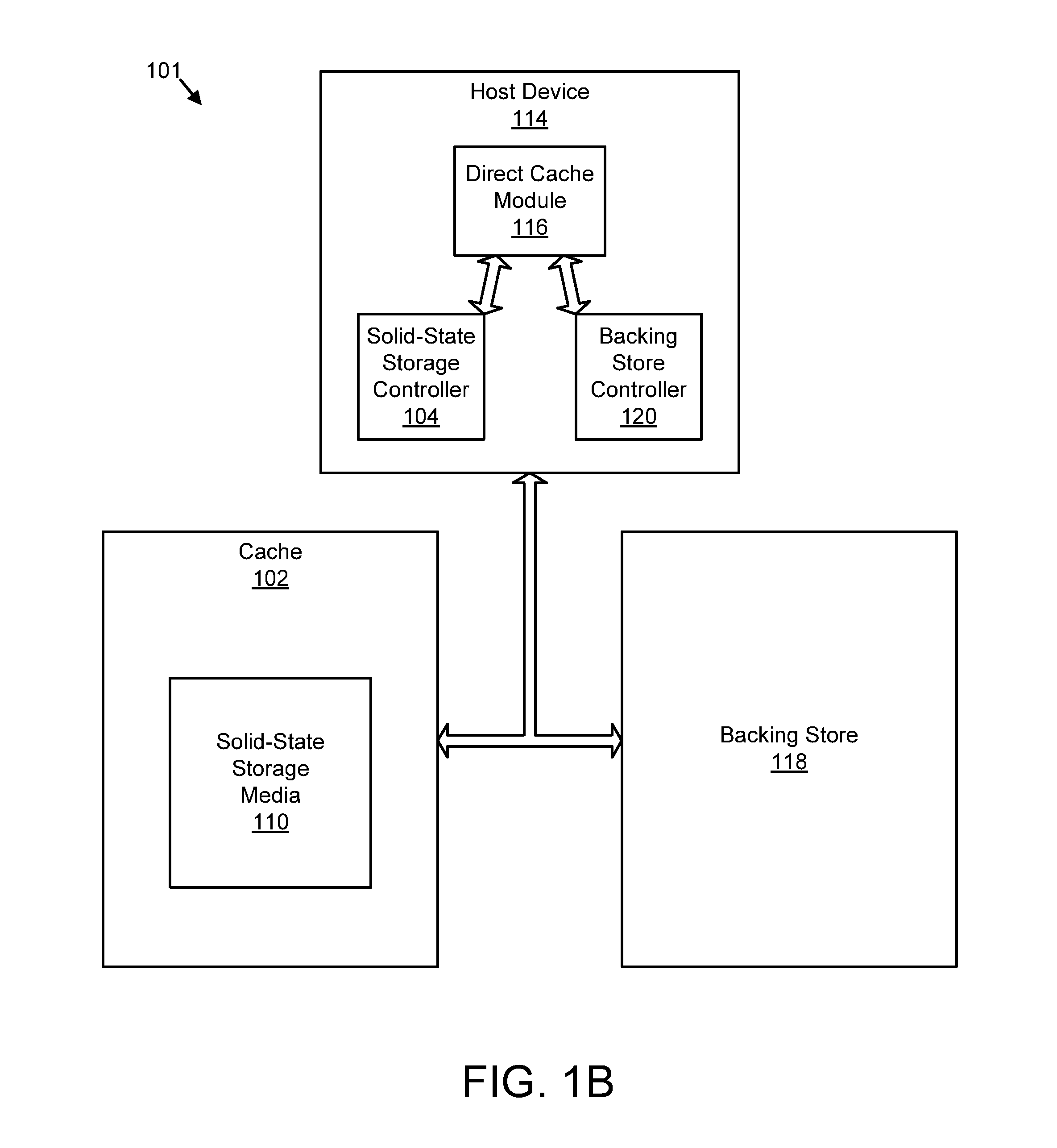

Apparatus, system, and method for destaging cached data

Active | US20120124294A1 | Memory architecture accessing/allocation; Error detection/correction; Dirty data; Data rate

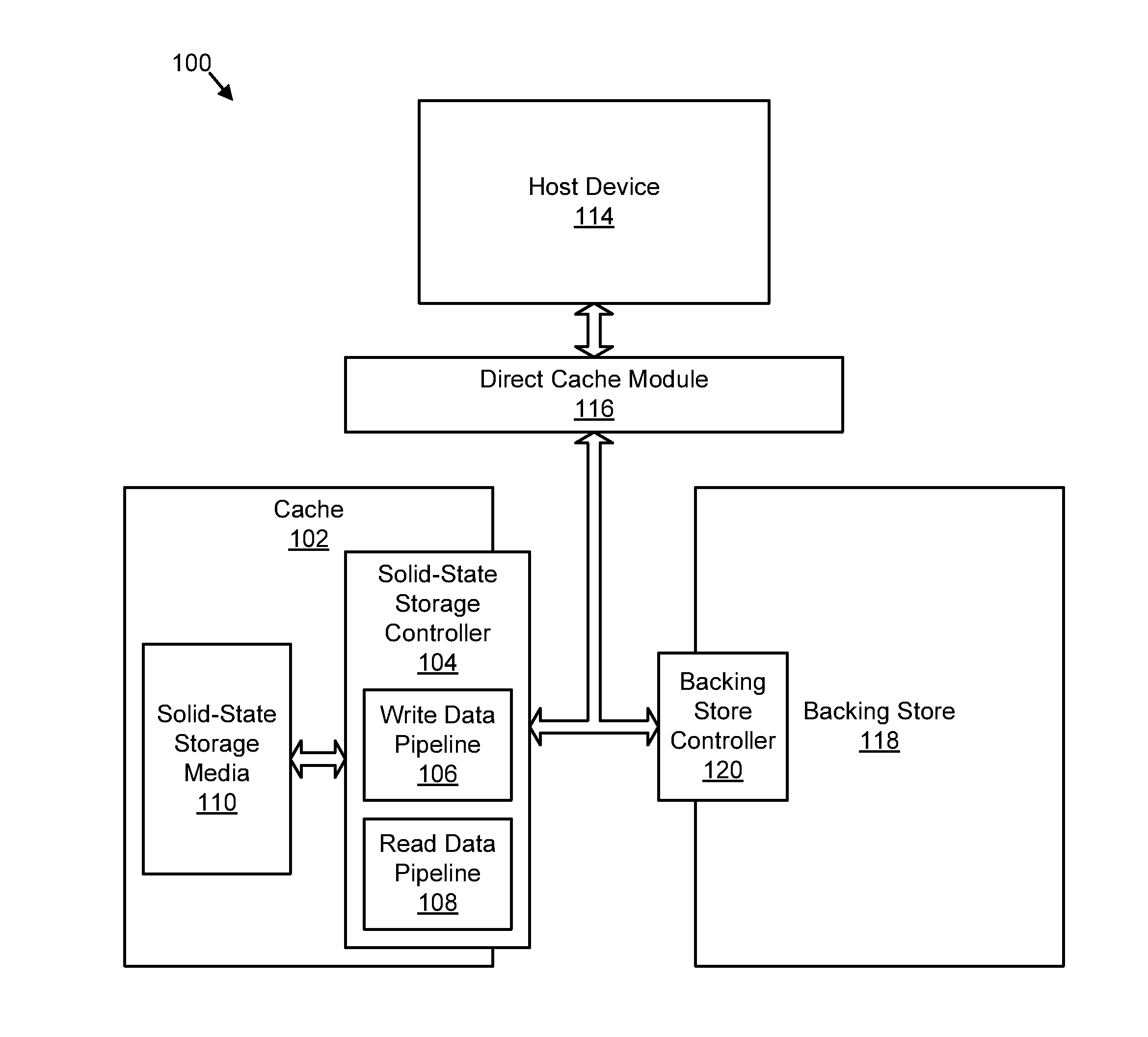

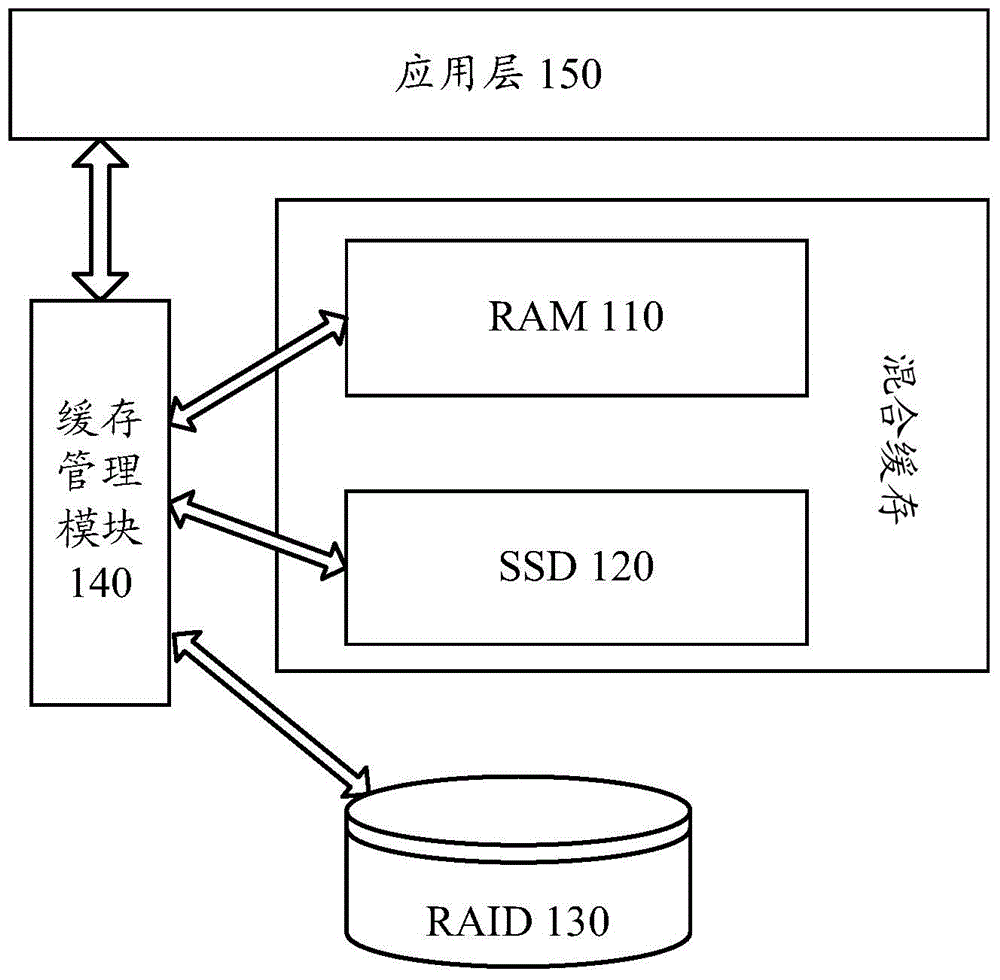

An apparatus, system, and method are disclosed for satisfying storage requests while destaging cached data. A monitor module samples a destage rate for a nonvolatile solid-state cache, a total cache write rate for the cache, and a dirtied data rate. The dirtied data rate comprises a rate at which write operations increase an amount of dirty data in the cache. A target module determines a target cache write rate for the cache based on the destage rate, the total cache write rate, and the dirtied data rate to target a destage write ratio. The destage write ratio comprises a predetermined ratio between the dirtied data rate and the destage rate. A rate enforcement module enforces the target cache write rate such that the total cache write rate satisfies the target cache write rate.

Owner:SANDISK TECH LLC
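
Here is one way the three sampled rates could be combined into a target write rate. The formula is an assumption for illustration, not the patent's claimed computation. It treats the fraction of writes that dirty new data as stable between samples, and it scales the total write rate so that the dirtied rate equals `target_ratio` times the destage rate.

```python
def target_cache_write_rate(destage_rate: float,
                            total_write_rate: float,
                            dirtied_rate: float,
                            target_ratio: float = 1.0) -> float:
    """Return a total cache write rate that keeps dirtied/destage at target_ratio.

    Assumes the fraction of writes that dirty new data stays roughly constant
    between samples (an illustrative model, not the patent's formula).
    """
    if dirtied_rate <= 0 or total_write_rate <= 0:
        # Writes are not growing the dirty set, so no throttling is needed.
        return float("inf")
    dirtied_fraction = dirtied_rate / total_write_rate
    allowed_dirtied_rate = target_ratio * destage_rate
    return allowed_dirtied_rate / dirtied_fraction
```

For example, suppose the cache destages 100 MB/s and half of its 400 MB/s of writes dirty new data. A 1:1 target ratio then caps total writes at 200 MB/s. A rate enforcement module would throttle incoming writes to that value.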

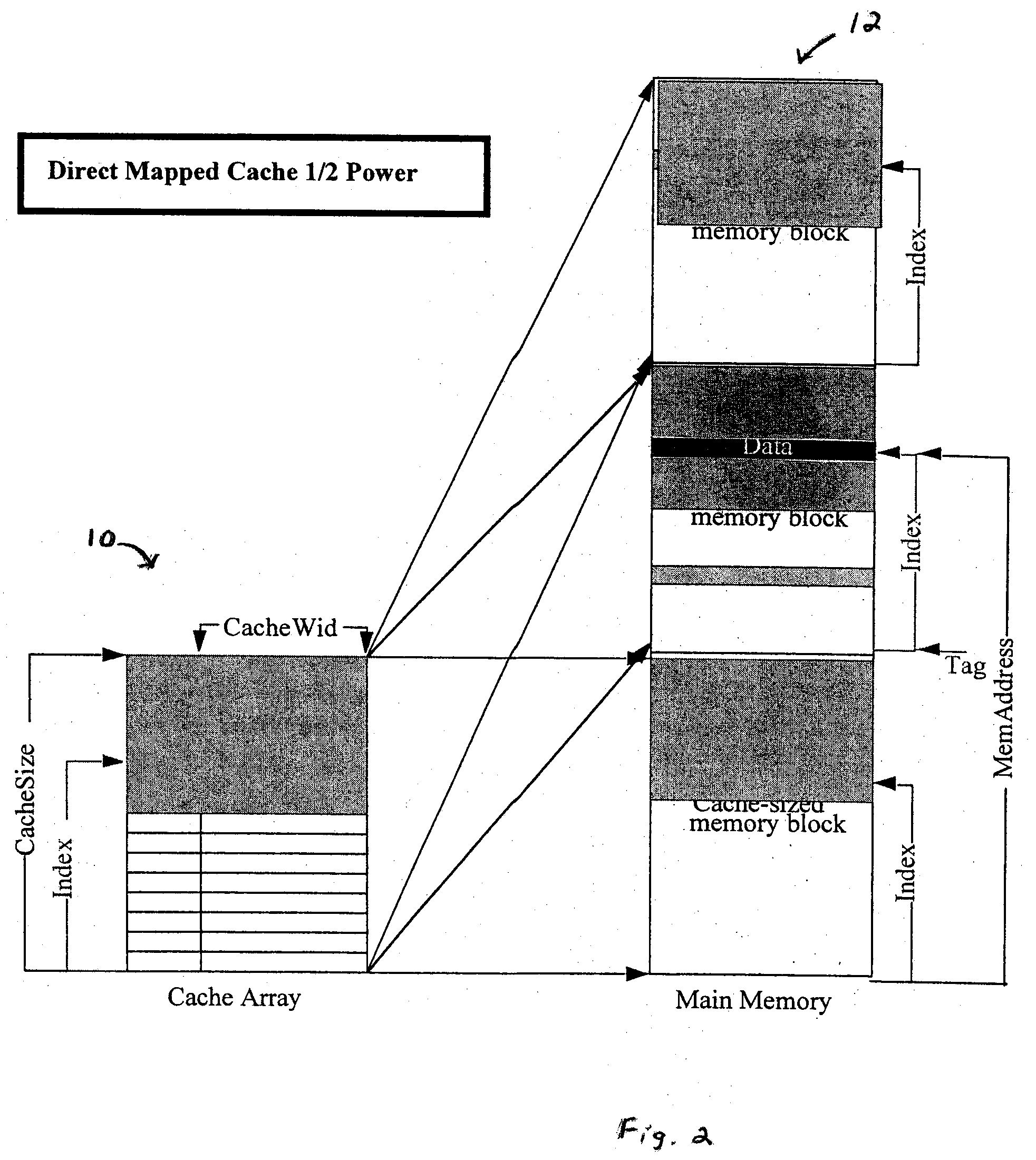

Method of dynamically controlling cache size

Active | US20050080994A1 | Minimize power consumption; Not impact performance; Memory architecture accessing/allocation; Energy efficient ICT; Power saving; Software

A power saving cache and a method of operating a power saving cache. The power saving cache includes circuitry to dynamically reduce the logical size of the cache in order to save power. Preferably, a method is used to determine the optimal cache size for balancing power and performance, using a variety of combinable hardware and software techniques. Also, in a preferred embodiment, steps are used for maintaining coherency during cache resizing, including the handling of modified (“dirty”) data in the cache, and steps are provided for partitioning a cache in one of several ways to provide an appropriate configuration and granularity when resizing.

Owner:GLOBALFOUNDRIES US INC

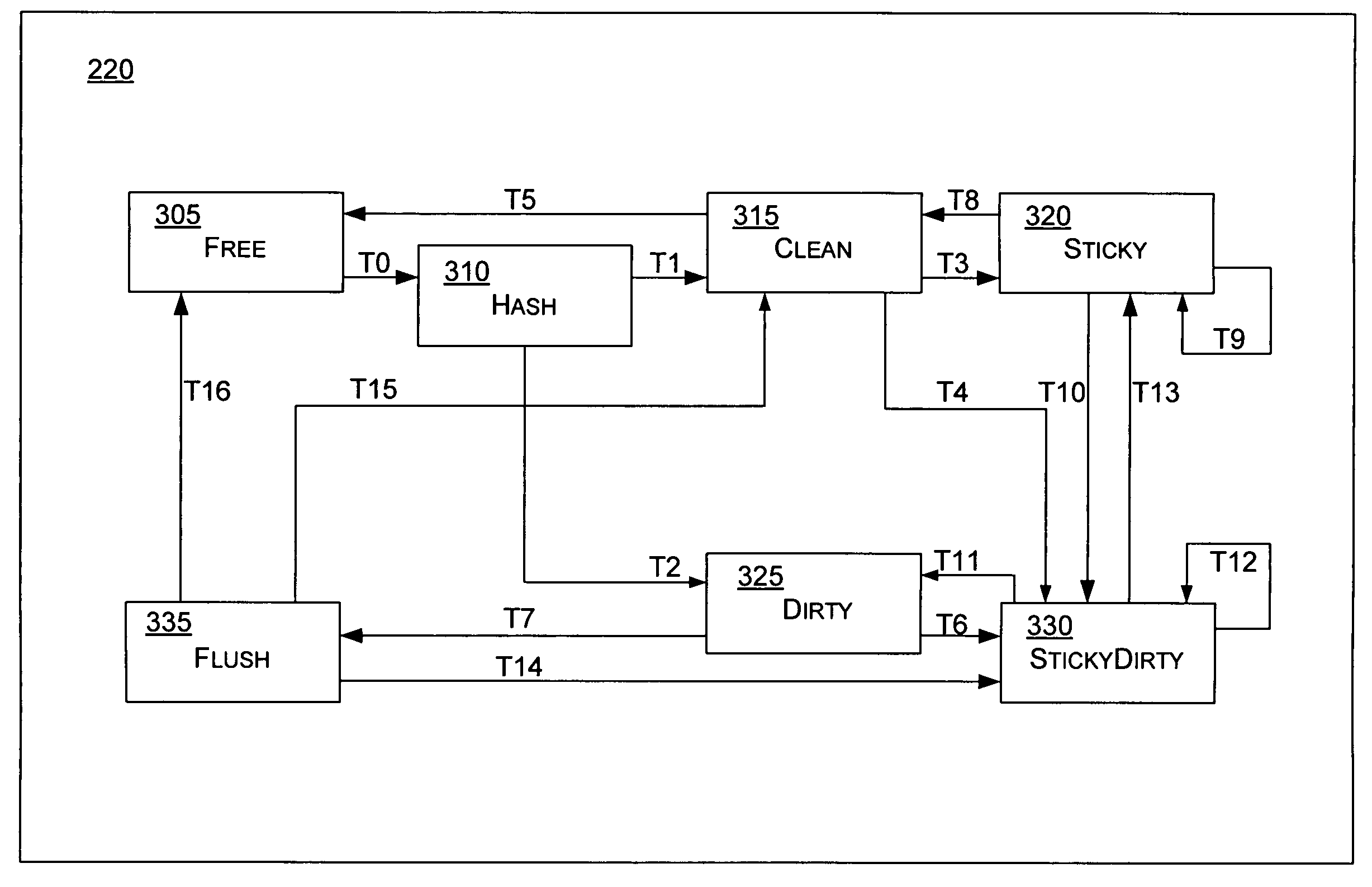

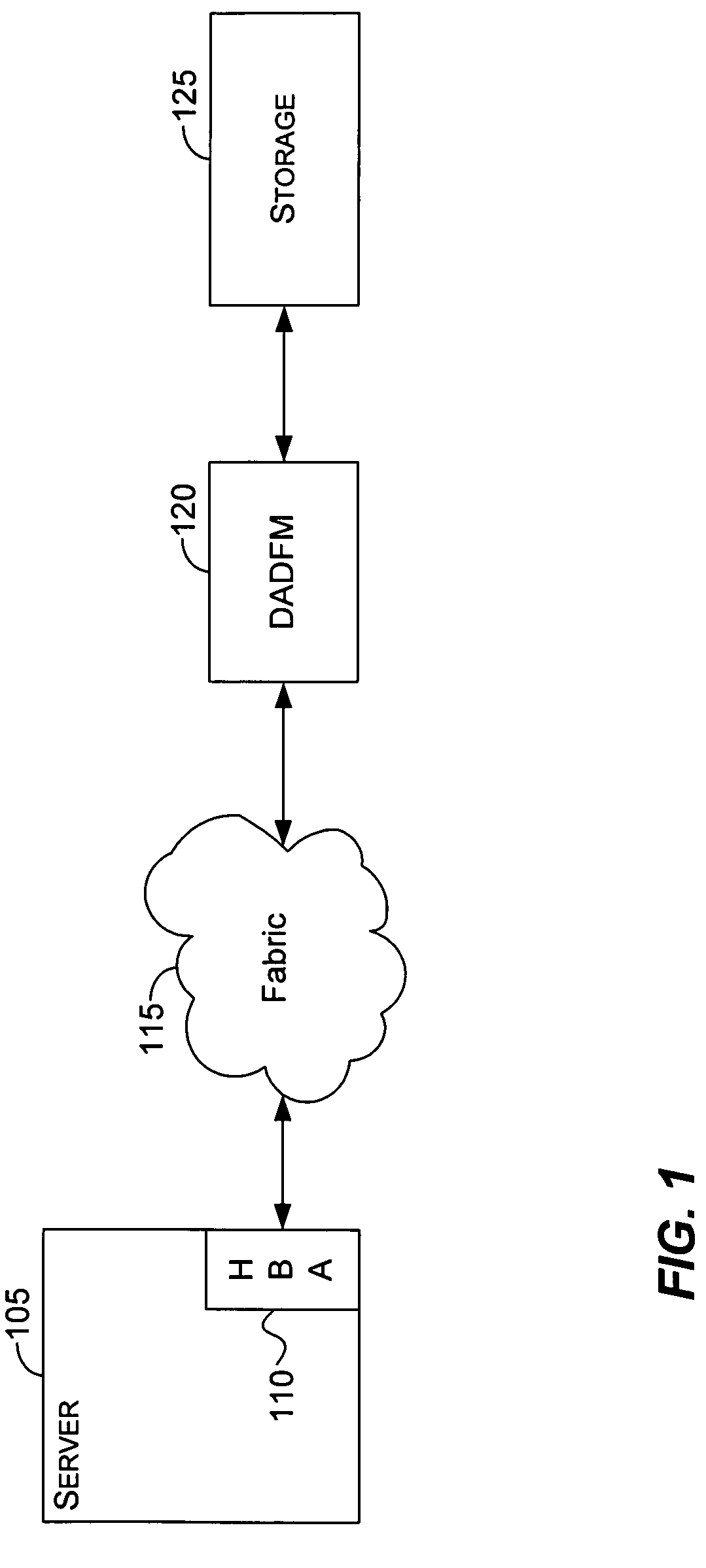

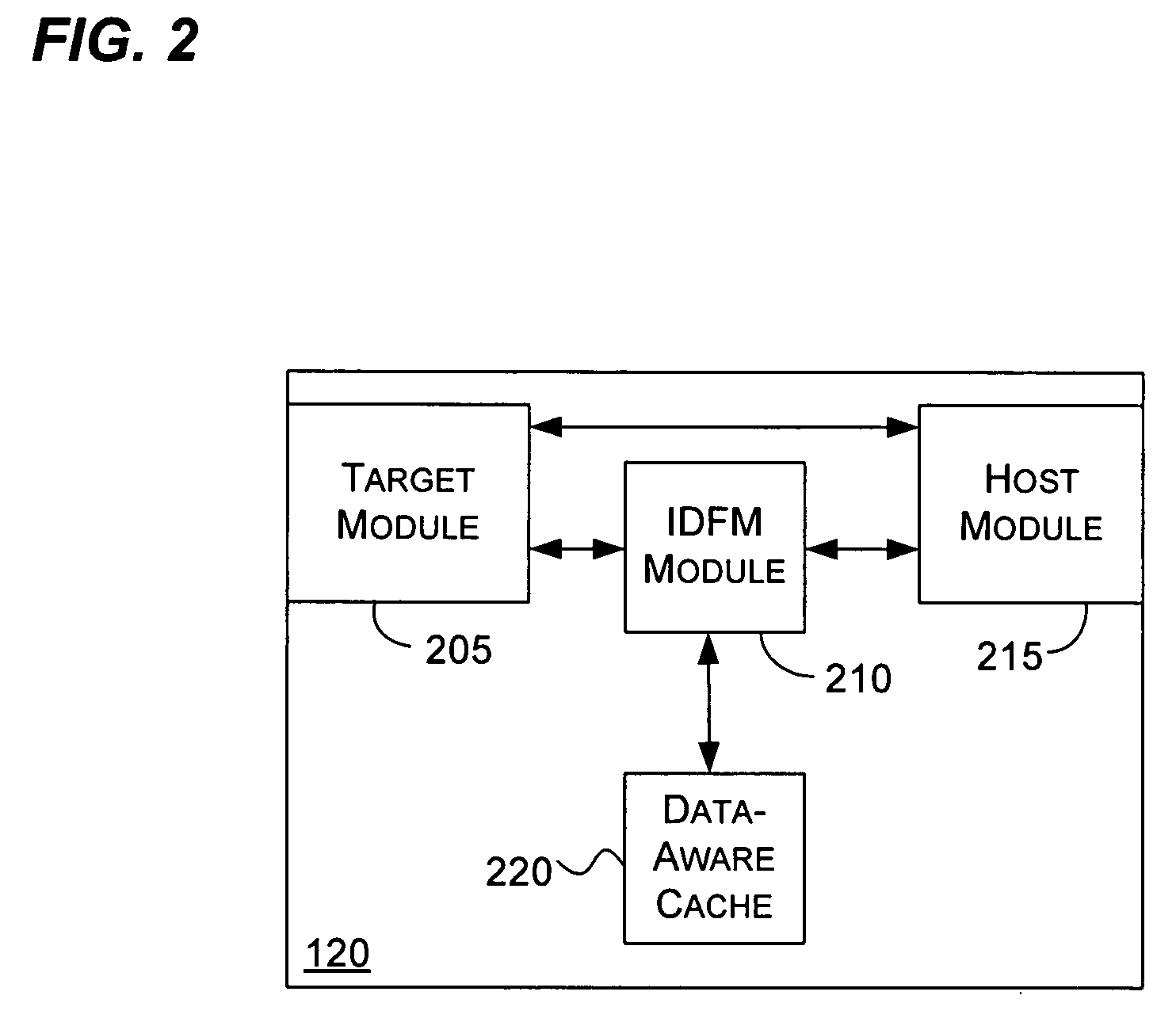

Data-aware cache state machine

Inactive | US20050172082A1 | Reduce bottlenecks; Improve effectiveness; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Data transformation; Data stream

A method and system directed to improving the effectiveness and efficiency of cache and data management by differentiating data based on certain attributes associated with the data and reducing the bottleneck to storage. The data-aware cache differentiates and manages data using a state machine having certain states. The data-aware cache may use data pattern and traffic statistics to retain frequently used data in cache longer by transitioning it into Sticky or StickyDirty states. The data-aware cache may also use content- or application-related attributes to differentiate and retain certain data in cache longer. Further, the data-aware cache may provide cache status and statistics information to a data-aware data flow manager, assisting the data-aware data flow manager in determining which data to cache and which data to pipe directly through, or in switching cache policies dynamically, thus avoiding some of the overhead associated with caches. The data-aware cache may also place clean and dirty data in separate states, enabling more efficient cache mirroring and flushing, thereby improving system reliability and performance.

Owner:SANDISK TECH LLC
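
Below is a minimal sketch of a per-block state machine using the Sticky and StickyDirty states named in the abstract. The promotion rule (a hypothetical `STICKY_THRESHOLD` access count) and the eviction ordering are assumptions made for illustration. Keeping clean and dirty states separate means a flush only has to visit dirty blocks.

```python
from enum import Enum, auto


class State(Enum):
    CLEAN = auto()
    DIRTY = auto()
    STICKY = auto()
    STICKY_DIRTY = auto()


STICKY_THRESHOLD = 3  # hypothetical access count that promotes a block to sticky


class CacheBlock:
    def __init__(self) -> None:
        self.state = State.CLEAN
        self.hits = 0

    @property
    def dirty(self) -> bool:
        return self.state in (State.DIRTY, State.STICKY_DIRTY)

    @property
    def sticky(self) -> bool:
        return self.state in (State.STICKY, State.STICKY_DIRTY)

    def _set(self, dirty: bool, sticky: bool) -> None:
        if sticky:
            self.state = State.STICKY_DIRTY if dirty else State.STICKY
        else:
            self.state = State.DIRTY if dirty else State.CLEAN

    def access(self, is_write: bool) -> None:
        # Frequently used blocks become sticky so they are retained longer.
        self.hits += 1
        sticky = self.sticky or self.hits >= STICKY_THRESHOLD
        self._set(dirty=self.dirty or is_write, sticky=sticky)

    def flush(self) -> None:
        # Writing back to storage clears the dirty bit but keeps stickiness.
        self._set(dirty=False, sticky=self.sticky)


def eviction_order(blocks):
    """Non-sticky before sticky, clean before dirty (clean blocks need no write-back)."""
    return sorted(blocks, key=lambda b: (b.sticky, b.dirty))
```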

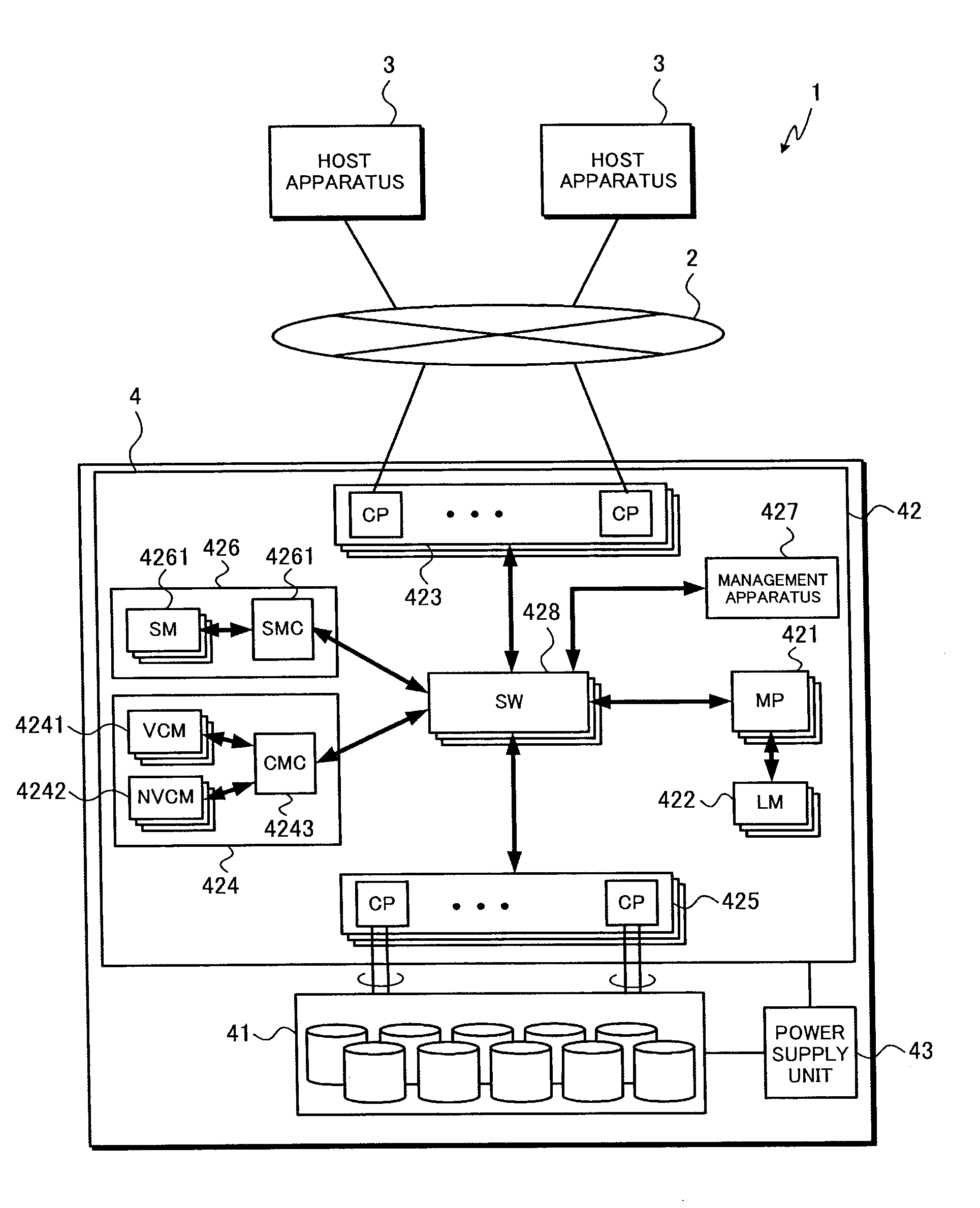

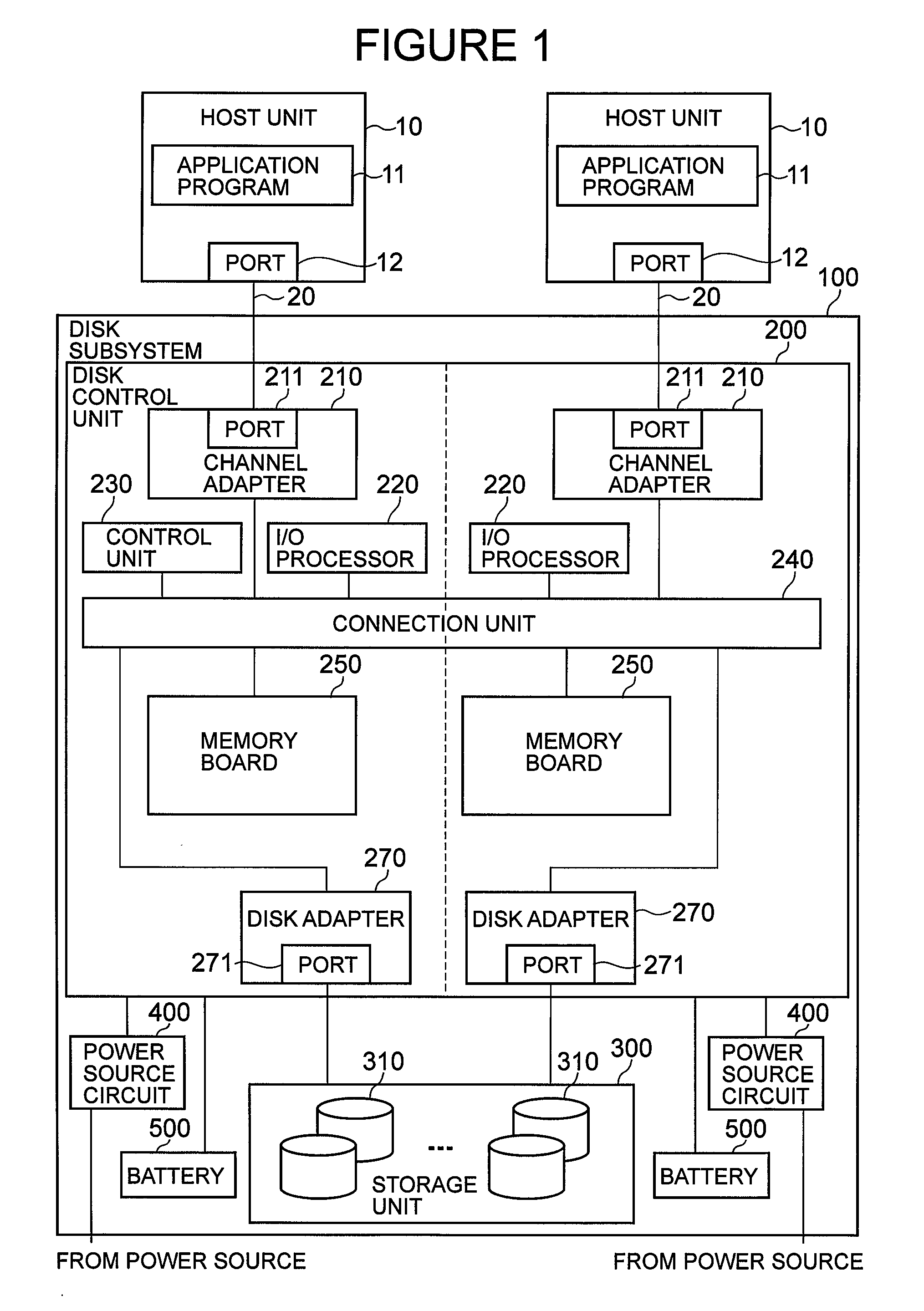

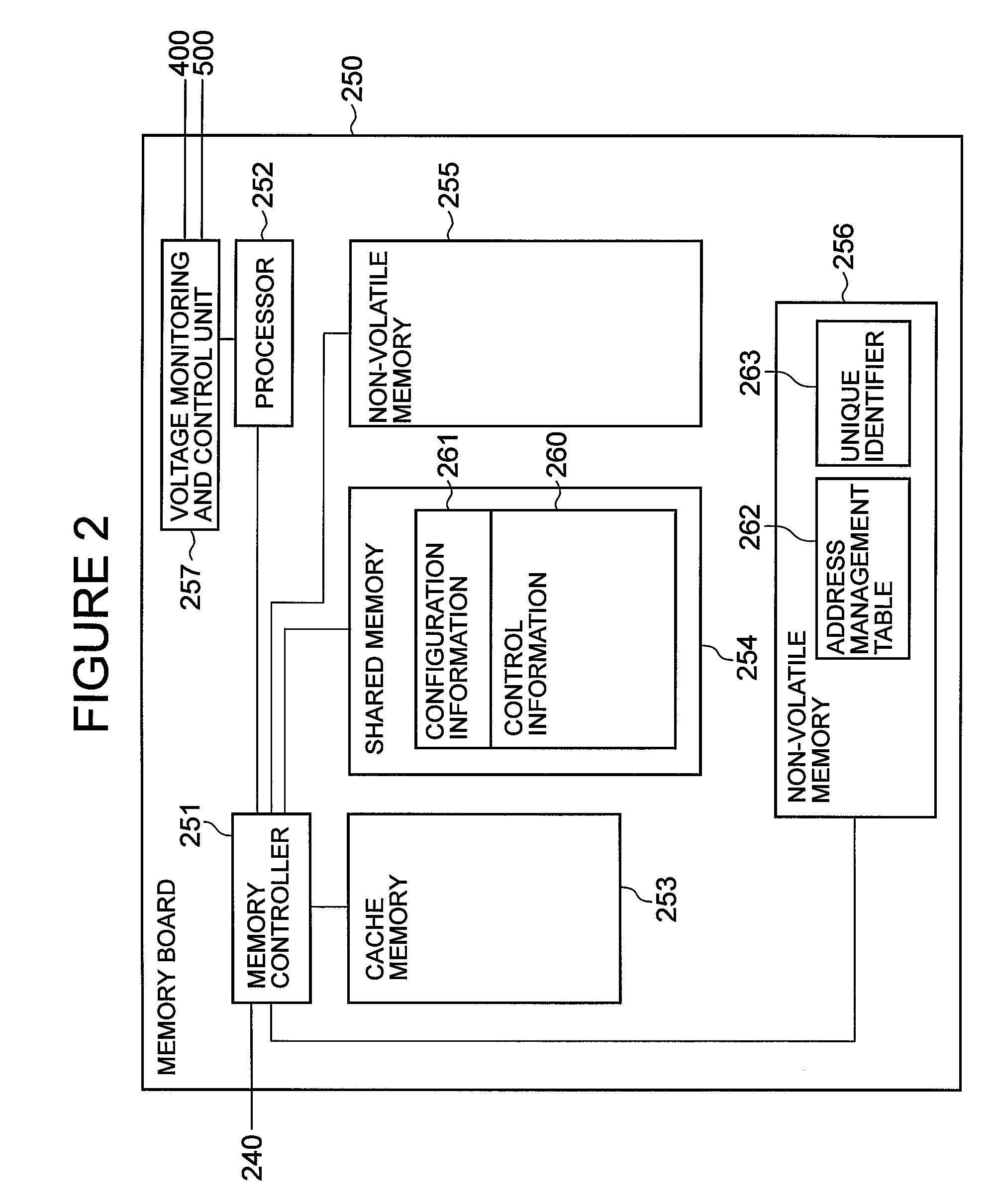

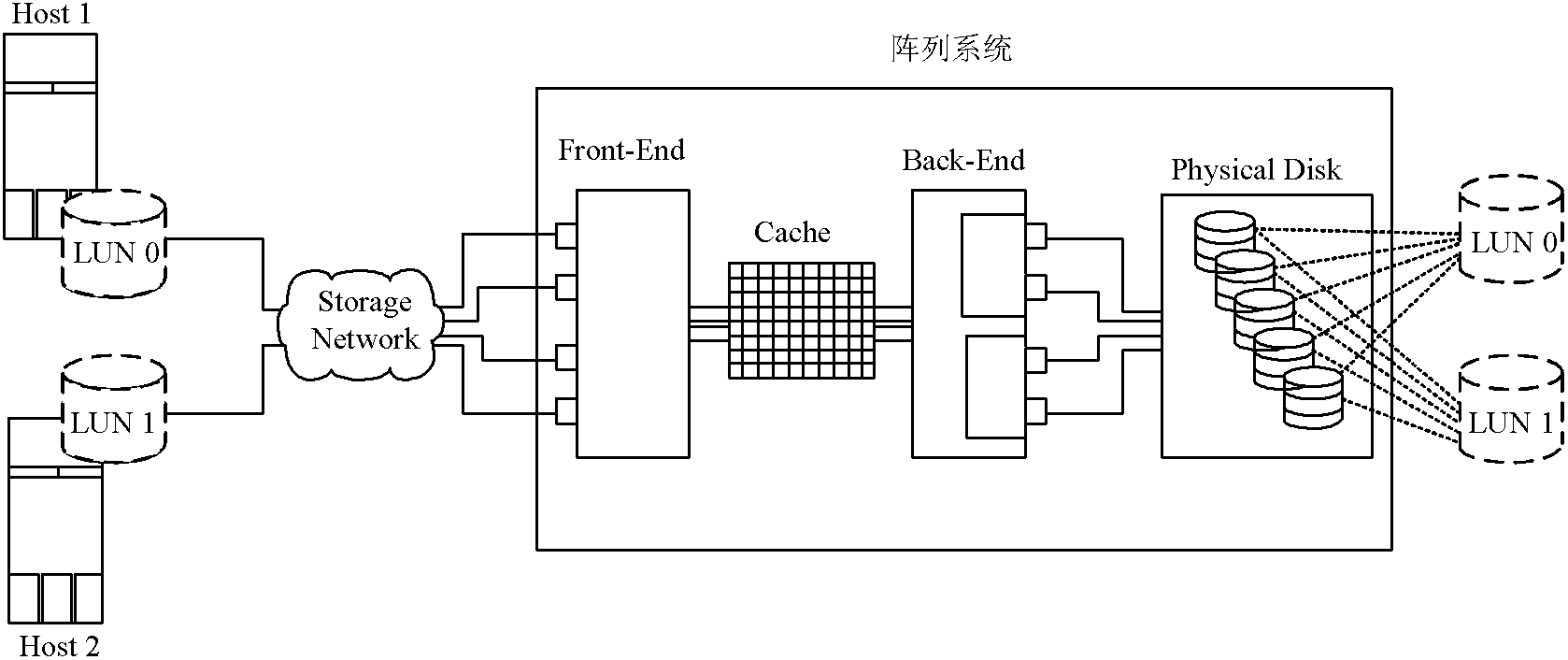

Storage apparatus and data management method in the storage apparatus

Active | US20090077312A1 | Effective support; Efficiently and reliably stored; Memory architecture accessing/allocation; Energy efficient ICT; Dirty data; Data management

A storage apparatus sets up part of non-volatile cache memory as a cache-resident area, and in an emergency such as an unexpected power shutdown, backs up dirty data of data cached in volatile memory to an area other than the cache-resident area in the non-volatile cache memory, together with the relevant cache management information. Further, the storage apparatus monitors the amount of the dirty data in the volatile cache memory so that the dirty data cached in the volatile cache memory is reliably contained in a backup area in the non-volatile memory, and when the dirty data amount exceeds a predetermined threshold value, the storage apparatus releases the cache-resident area to serve as the backup area.

Owner:HITACHI LTD

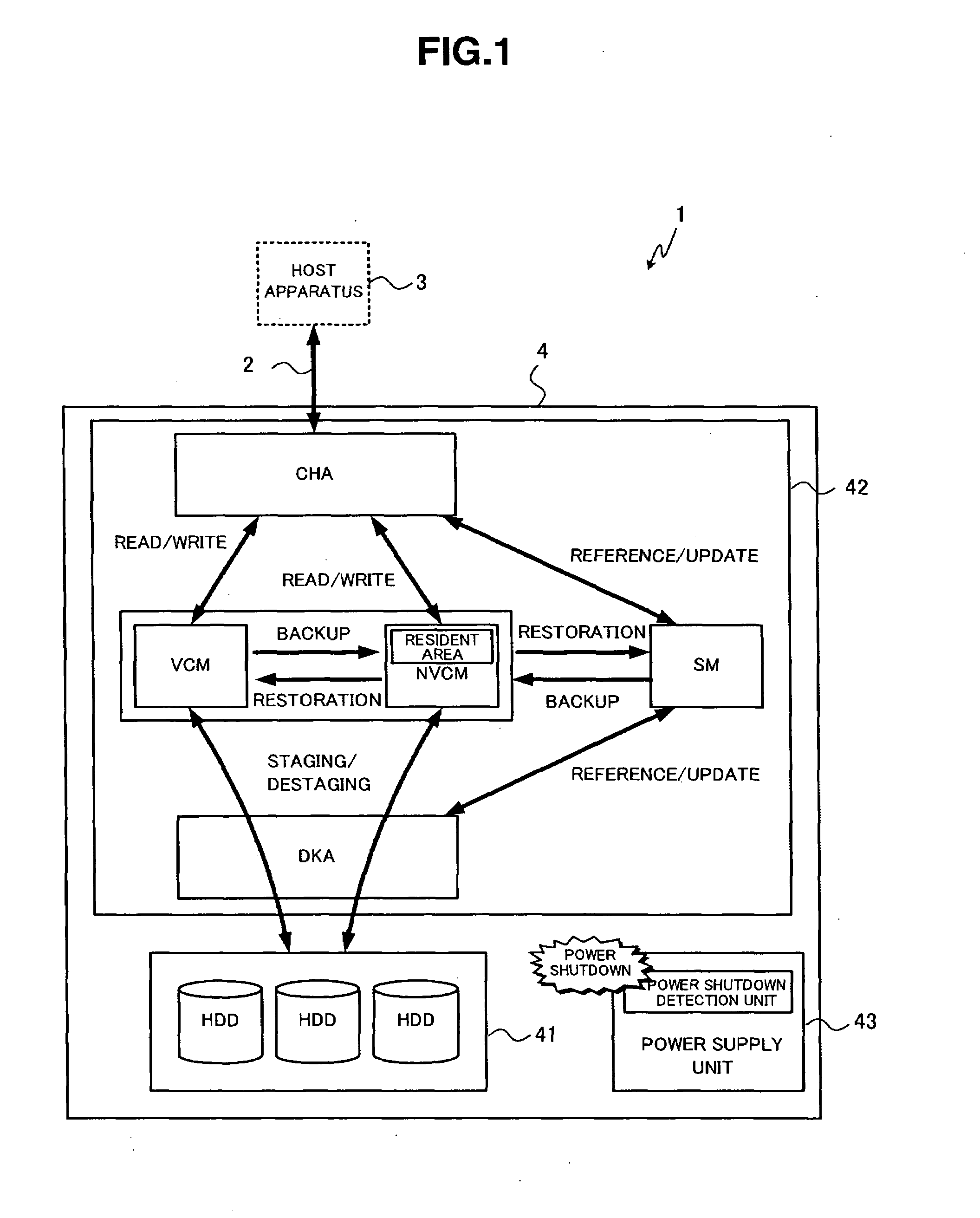

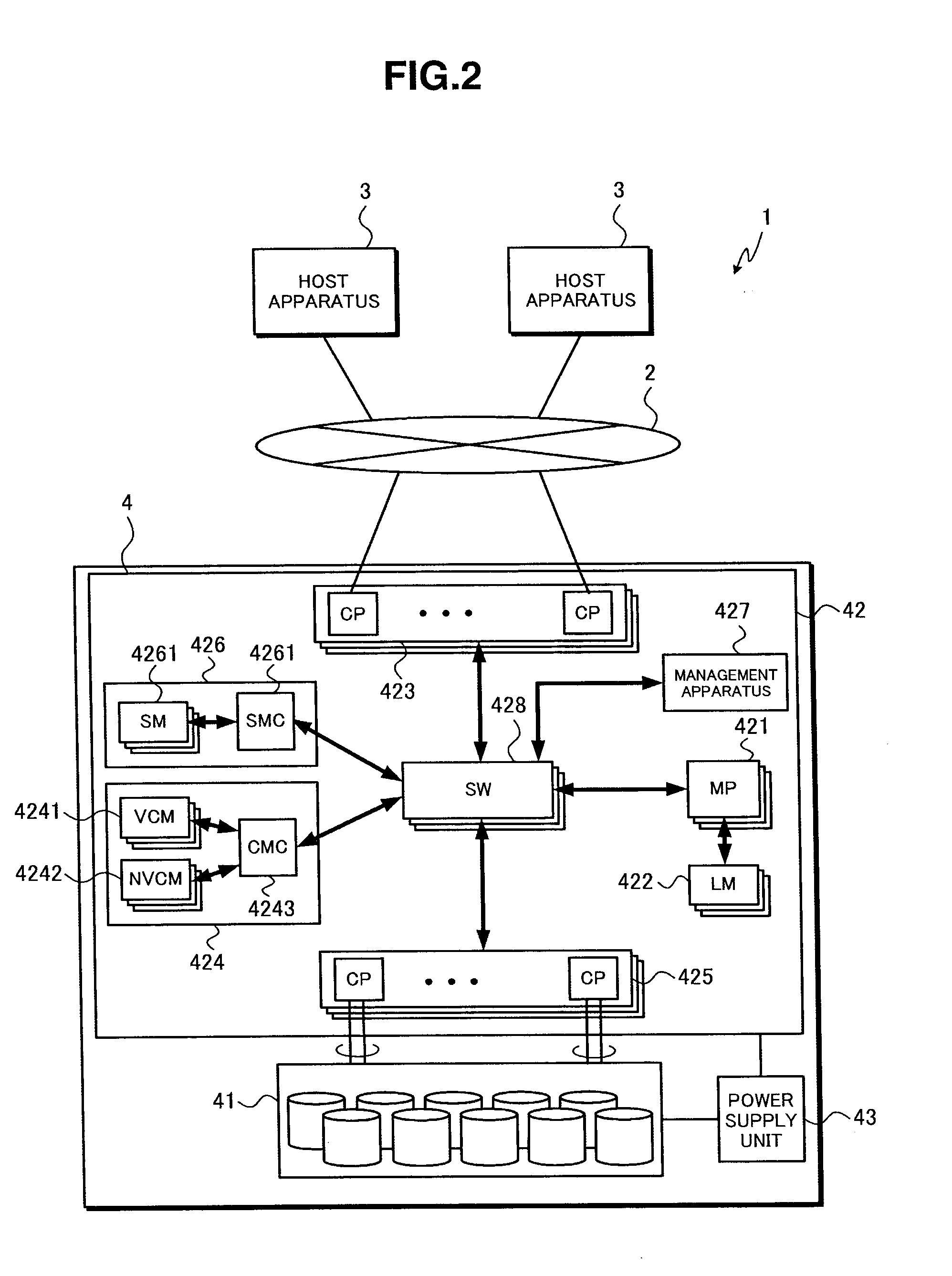

Storage control unit and data management method

Inactive | US20080189484A1 | Fully preserved; Reduce capacity; Energy efficient ICT; Volume/mass flow measurement; Dirty data; Data management

An I/O processor determines whether the amount of dirty data in a cache memory exceeds a threshold value and, if it does, writes a portion of the dirty data in the cache memory to a storage device. If a power source monitoring and control unit detects a voltage abnormality in the supplied power, it maintains the power supply using a battery, so that a processor is powered by the battery and saves the dirty data stored in the cache memory to a non-volatile memory.

Owner:HITACHI LTD
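
The threshold-driven destage and the battery-backed save can be sketched together as follows. `DIRTY_THRESHOLD`, `DESTAGE_BATCH`, oldest-first destage order, and the dictionaries standing in for the disk and the non-volatile memory are all assumptions made for illustration.

```python
from collections import OrderedDict

DIRTY_THRESHOLD = 0.6   # hypothetical fraction of cache lines allowed to be dirty
DESTAGE_BATCH = 2       # hypothetical number of lines written per destage pass


class WriteBackCache:
    def __init__(self, capacity: int) -> None:
        self.capacity = capacity    # cache size in lines
        self.dirty = OrderedDict()  # address -> data, oldest write first
        self.disk = {}              # stands in for the storage device
        self.nvram = {}             # stands in for the non-volatile memory

    def write(self, addr: int, data: bytes) -> None:
        self.dirty[addr] = data
        self.dirty.move_to_end(addr)
        if len(self.dirty) / self.capacity > DIRTY_THRESHOLD:
            self.destage(DESTAGE_BATCH)

    def destage(self, count: int) -> None:
        # Write only a portion of the dirty data (oldest first) to the storage device.
        for _ in range(min(count, len(self.dirty))):
            addr, data = self.dirty.popitem(last=False)
            self.disk[addr] = data

    def on_voltage_abnormality(self) -> None:
        # Now running on battery: save all remaining dirty data to non-volatile memory.
        self.nvram.update(self.dirty)
        self.dirty.clear()
```

Keeping the dirty amount below the threshold bounds how much data the battery-powered save must handle.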

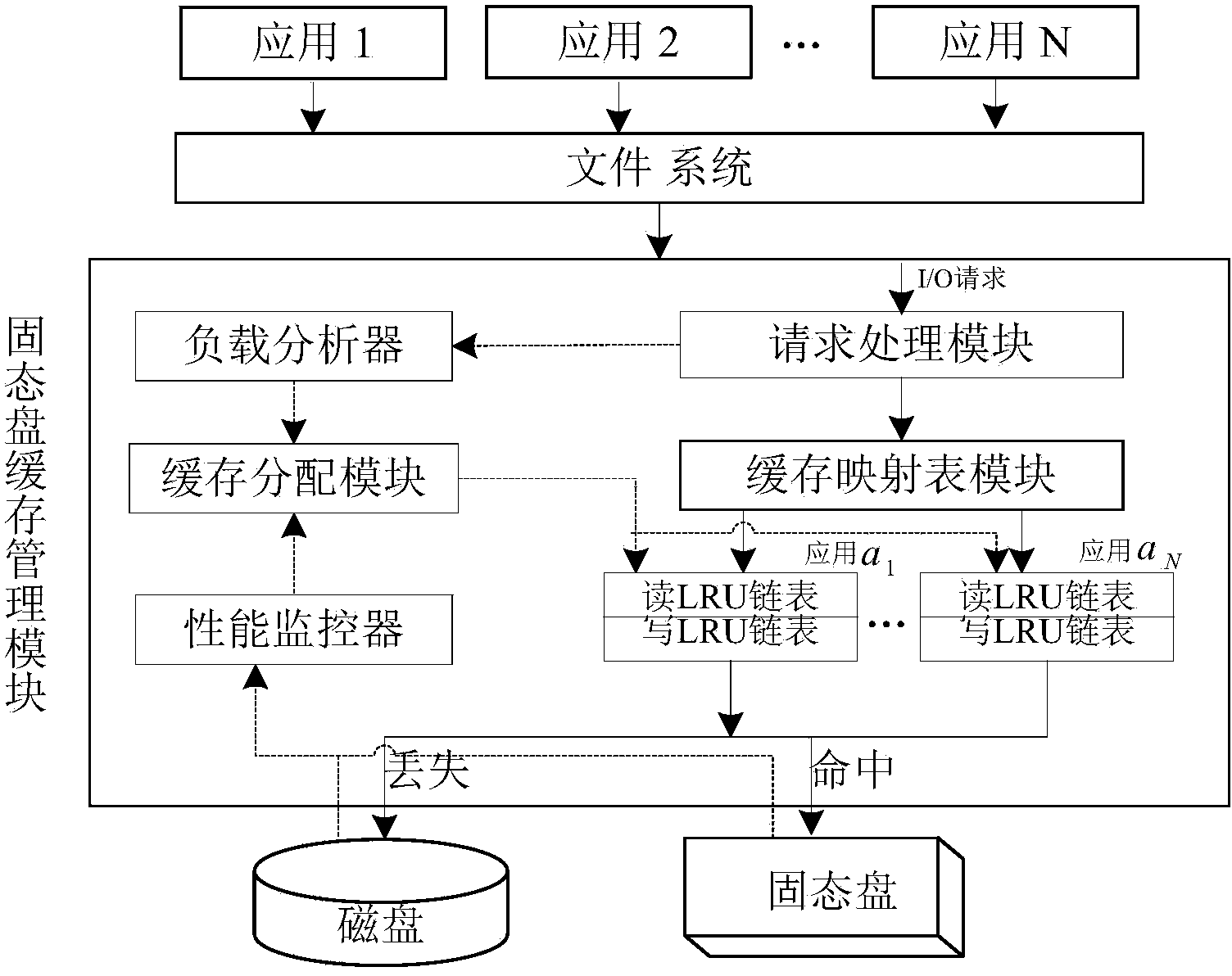

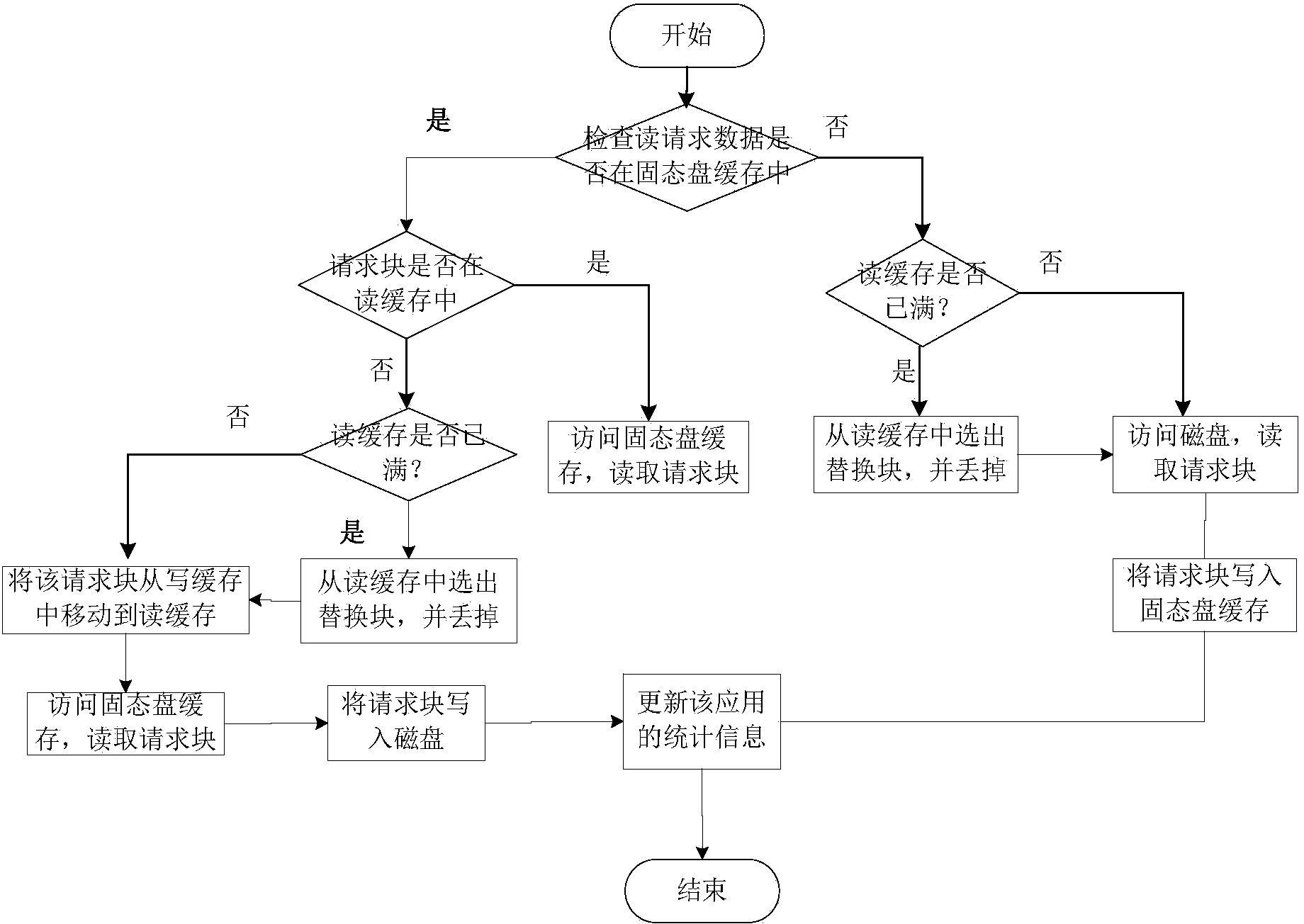

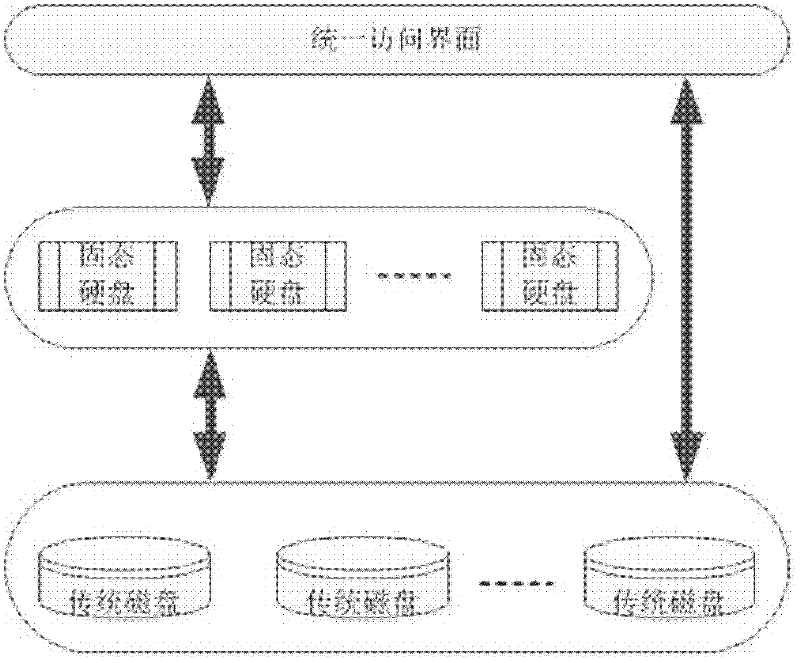

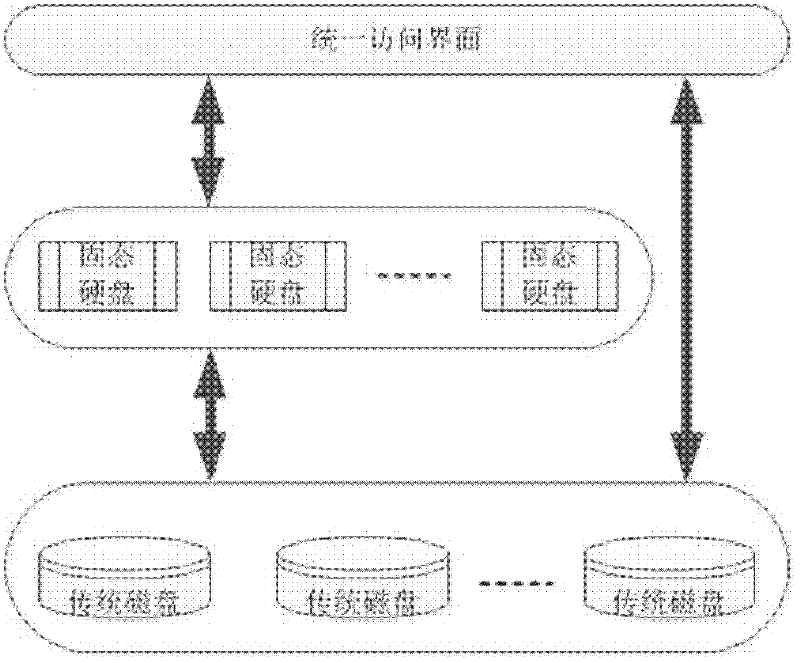

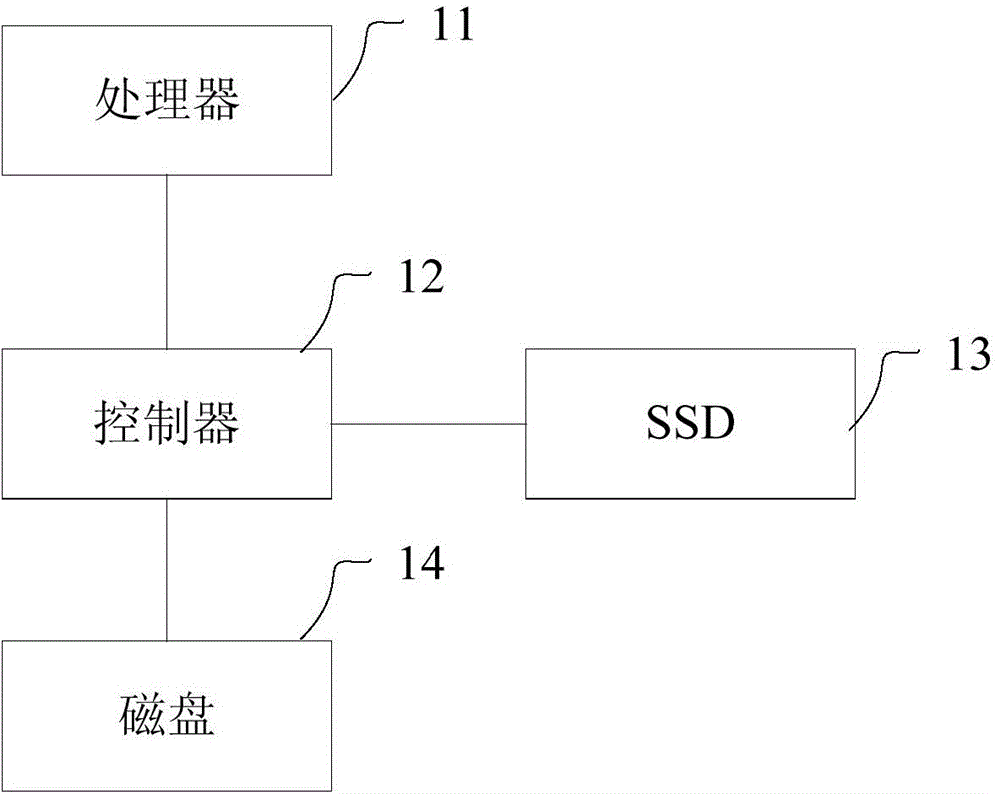

Mixed storage system and method for supporting solid-state disk cache dynamic distribution

Active | CN103902474A | Efficient use of; Avoid performance degradation; Memory addressing/allocation/relocation; Differentiated services; Dirty data

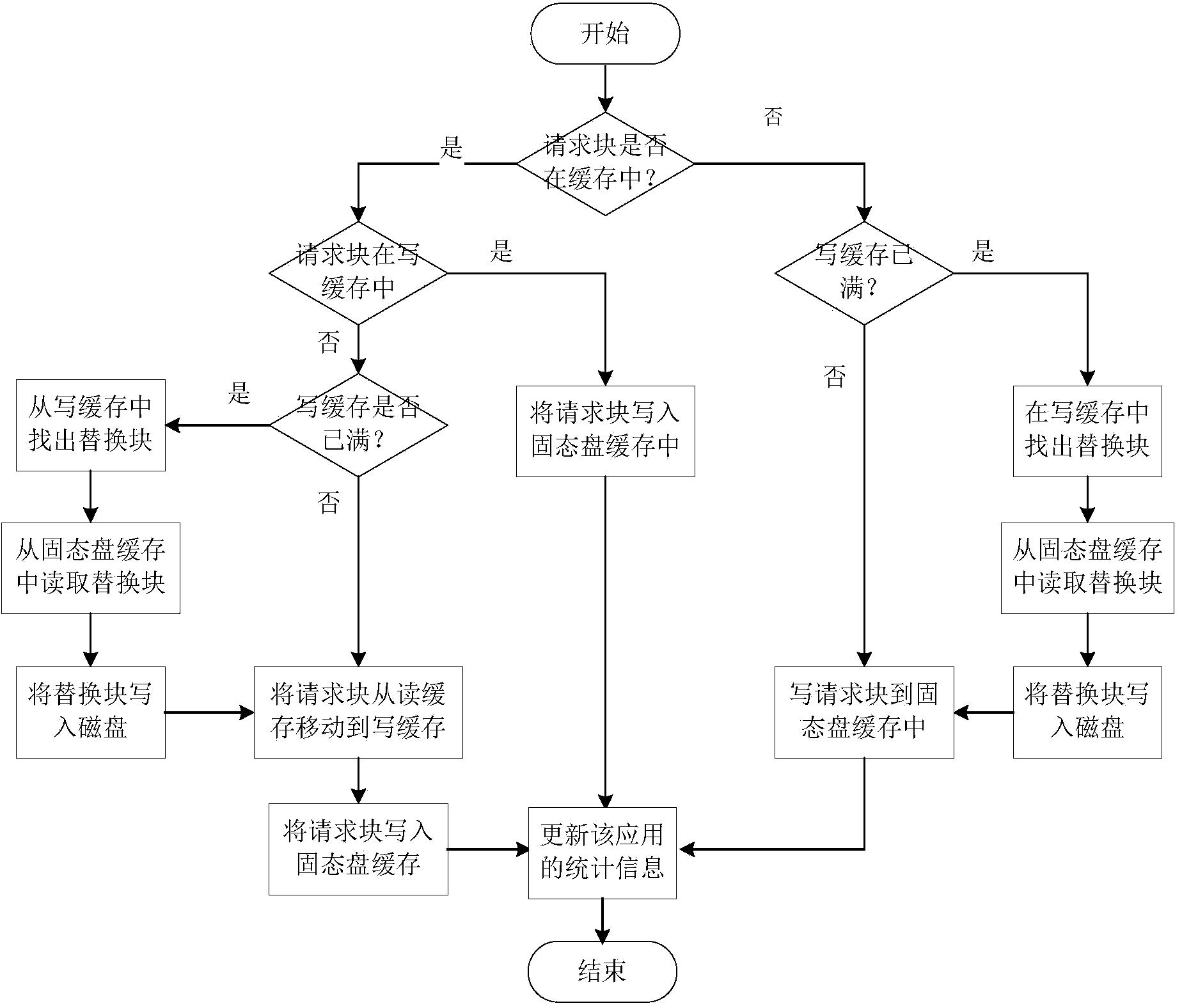

The invention provides a mixed storage system and method that support dynamic allocation of solid-state disk (SSD) cache. The mixed storage system is built from an SSD and a magnetic disk, with the SSD serving as a cache for the magnetic disk. The load characteristics of applications and the SSD cache hit ratio are monitored in real time, performance models of the applications are built, and SSD cache space is dynamically allocated according to the applications' performance requirements and changes in their load characteristics. With this cache management method, SSD cache space can be allocated reasonably according to application performance requirements, providing an application-level cache partitioning service. Because each application's SSD cache space is further divided into a read cache section and a write cache section, dirty data blocks, and the page copying and garbage collection costs they cause, are reduced. Meanwhile, idle SSD cache space is allocated to applications according to how efficiently they use the cache, which improves both the SSD cache hit ratio and the overall performance of the mixed storage system.

Owner:HUAZHONG UNIV OF SCI & TECH

Cache management method for solid-state disc

Active | CN103136121A | Improve hit chance; Improve read and write speed; Memory addressing/allocation/relocation; Dirty data; Cache management

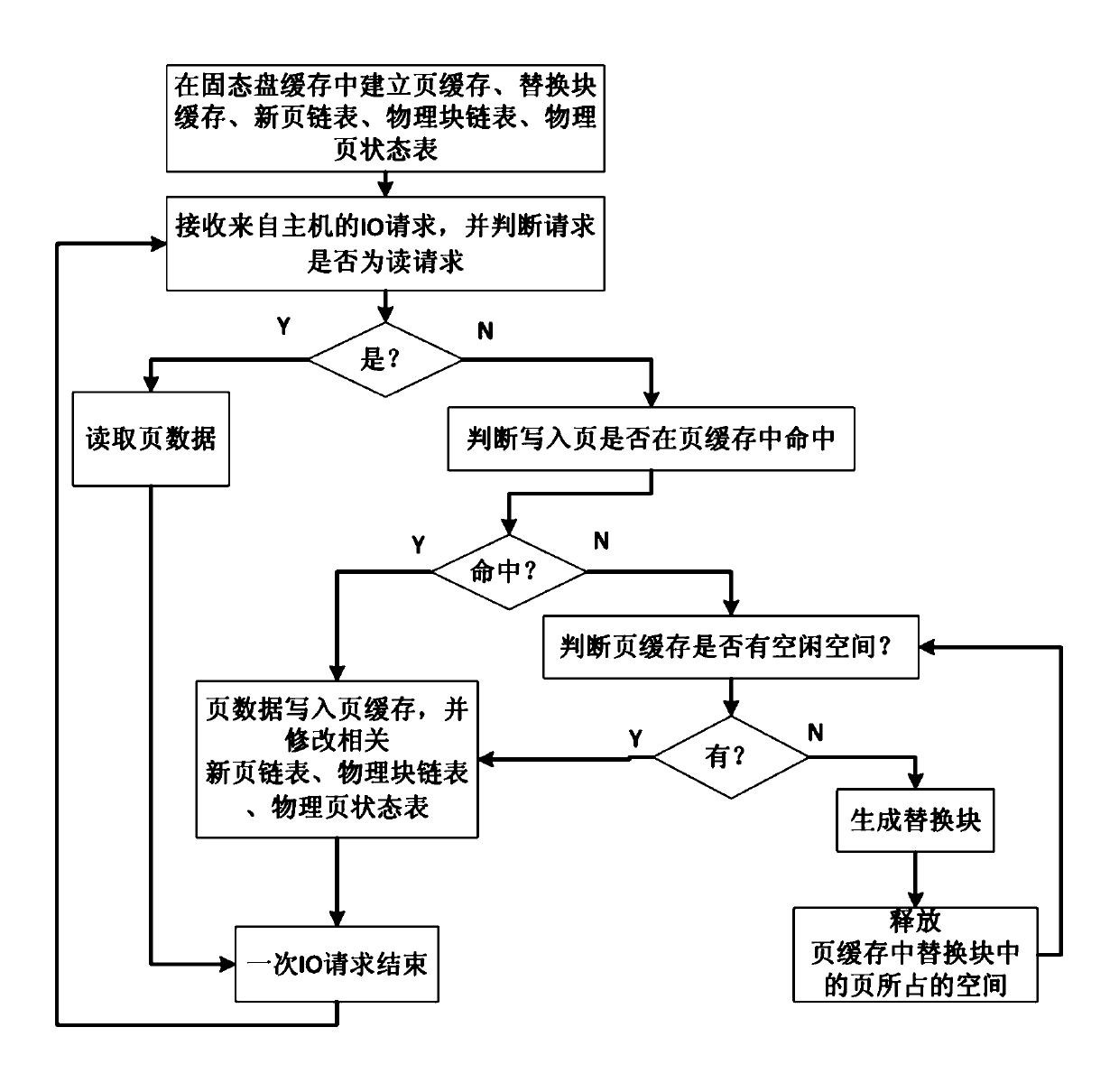

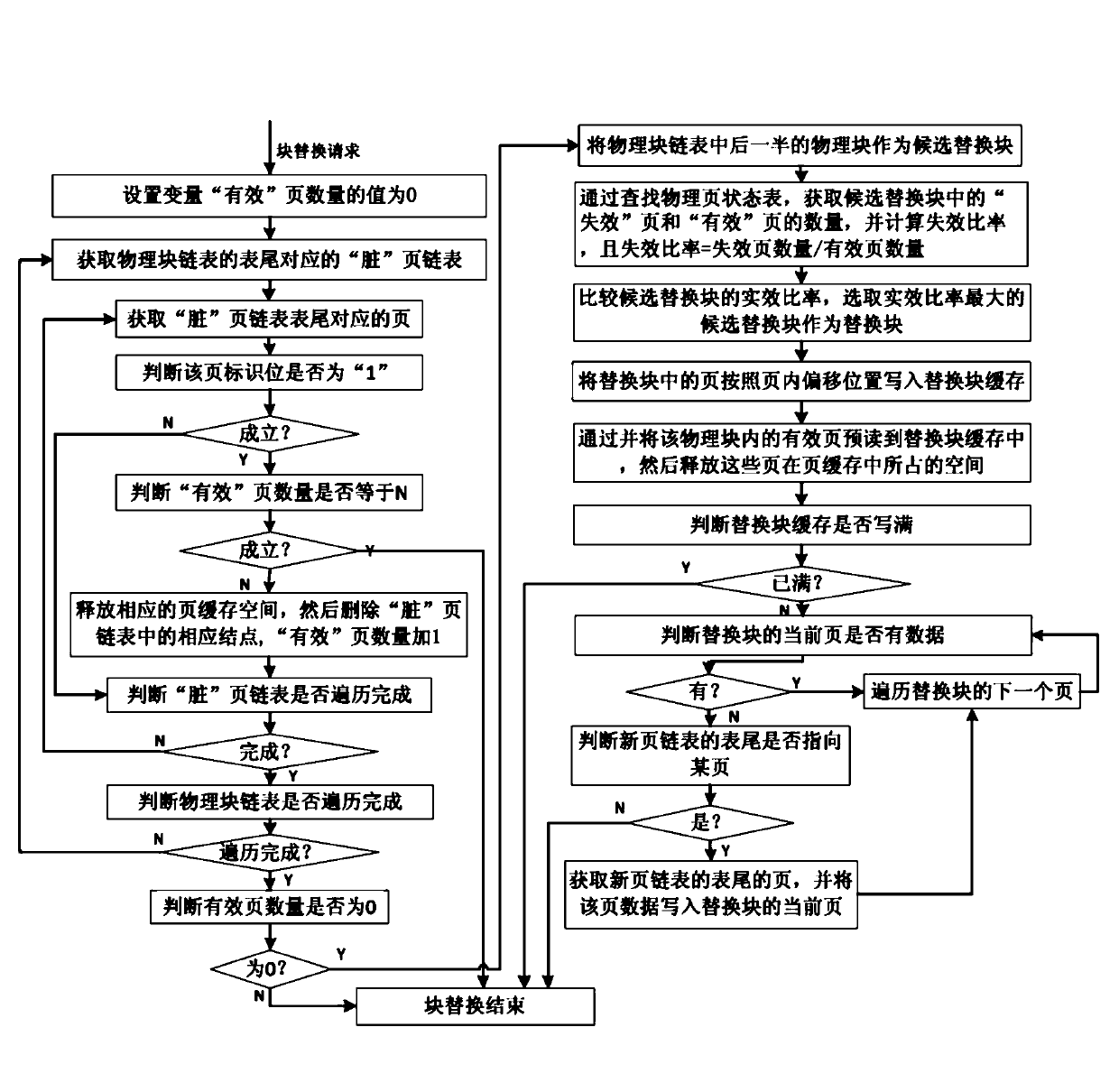

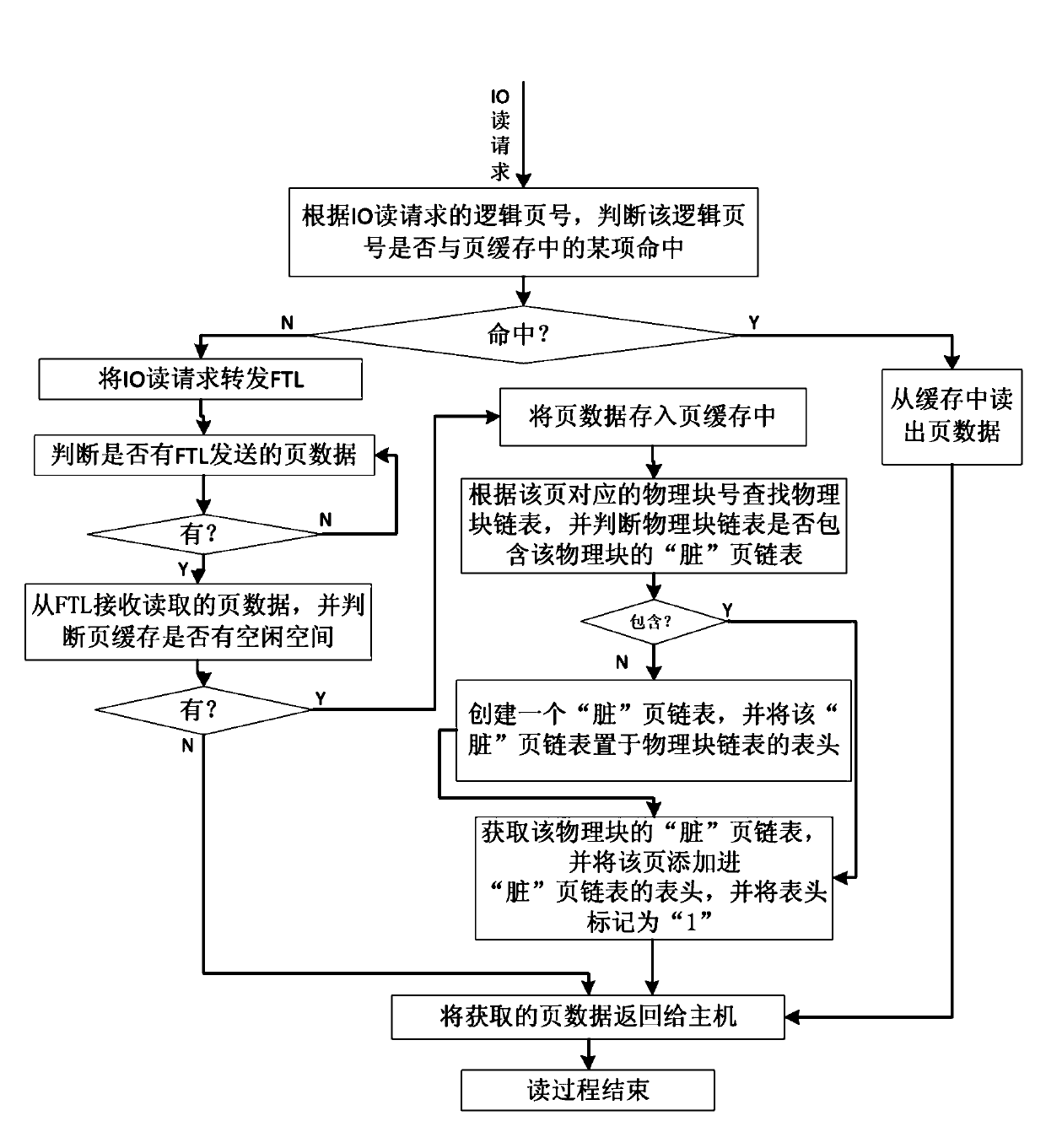

The invention discloses a cache management method for a solid-state disc, implemented in the following steps. A page cache, a replace block module, a new page linked list, a physical block chain list, and a physical page state list are established. An input/output (IO) request from the host is received and executed through the page cache. When a write request misses in the page cache and the page cache has no free space, the block replacement process of the solid-state disc is executed, in which 'effective' page space in the page cache is released first. When the number of 'effective' pages in the page cache reaches zero, the candidate replace block with the largest failure ratio in the rear half of the physical block chain list is selected as the replace block, and the replace block cache is used to execute the replacement write process. The method makes effective use of the limited cache space and increases the cache hit rate. It also makes each block written to the flash medium contain as many dirty data pages and as few 'effective' data pages as possible, which reduces erase operations, page copy operations, and the subsequent garbage collection caused by dirty data pages. The method is easy to implement.

Owner:湖南长城银河科技有限公司
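
The replace-block selection step can be sketched as follows. The abstract does not define "failure ratio". This sketch assumes it means the fraction of a block's pages that are already invalid. It also assumes the physical block chain list is ordered from most to least recently used, so the rear half holds the colder blocks. Both readings are guesses, and the names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PhysicalBlock:
    block_id: int
    pages_total: int
    pages_invalid: int  # pages whose data is no longer current (assumed meaning)

    @property
    def failure_ratio(self) -> float:
        return self.pages_invalid / self.pages_total


def choose_replace_block(chain: list) -> PhysicalBlock:
    """Pick the block with the largest failure ratio from the rear half of the chain."""
    if not chain:
        raise ValueError("physical block chain is empty")
    rear_half = chain[len(chain) // 2:]
    return max(rear_half, key=lambda b: b.failure_ratio)
```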

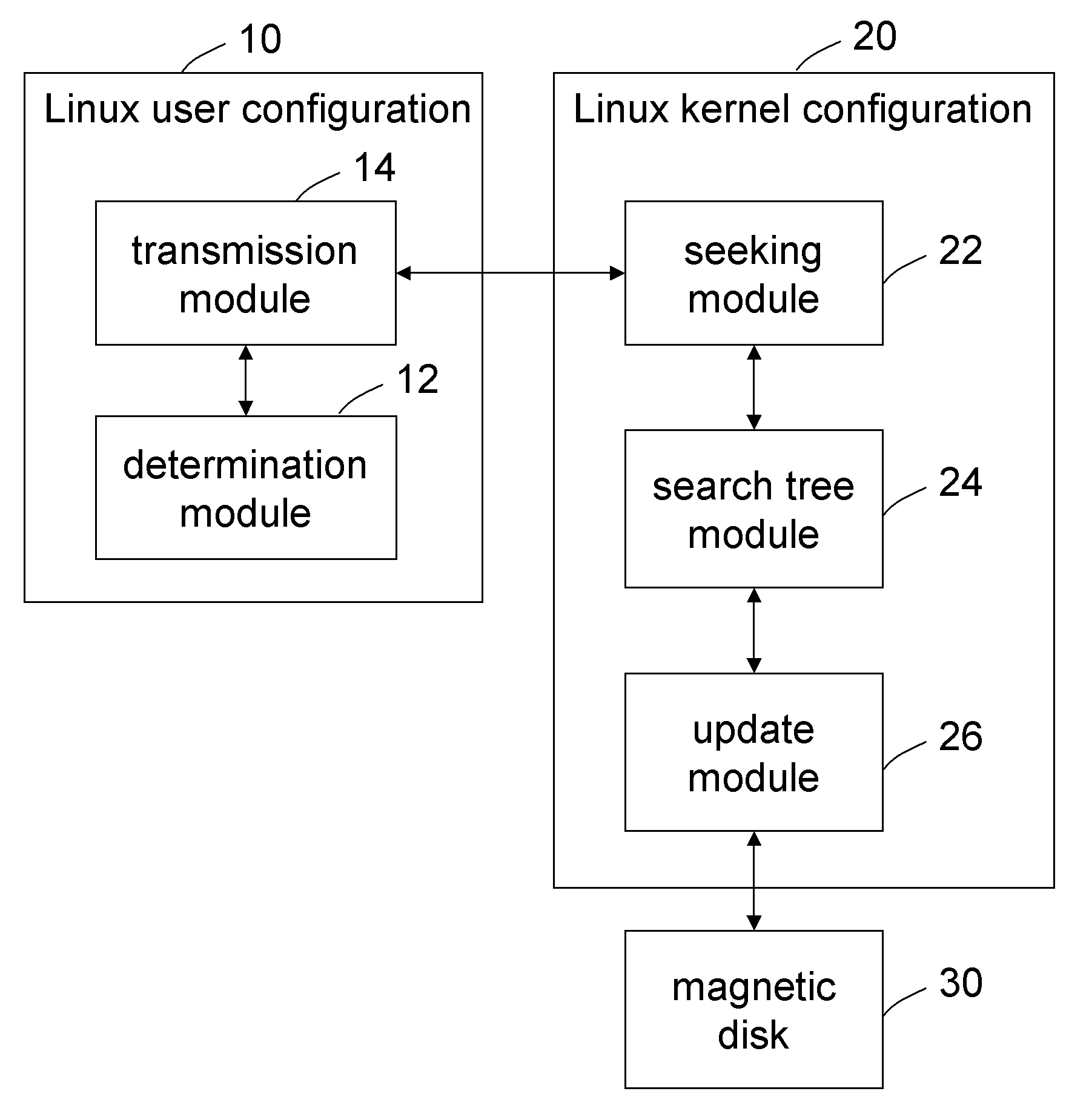

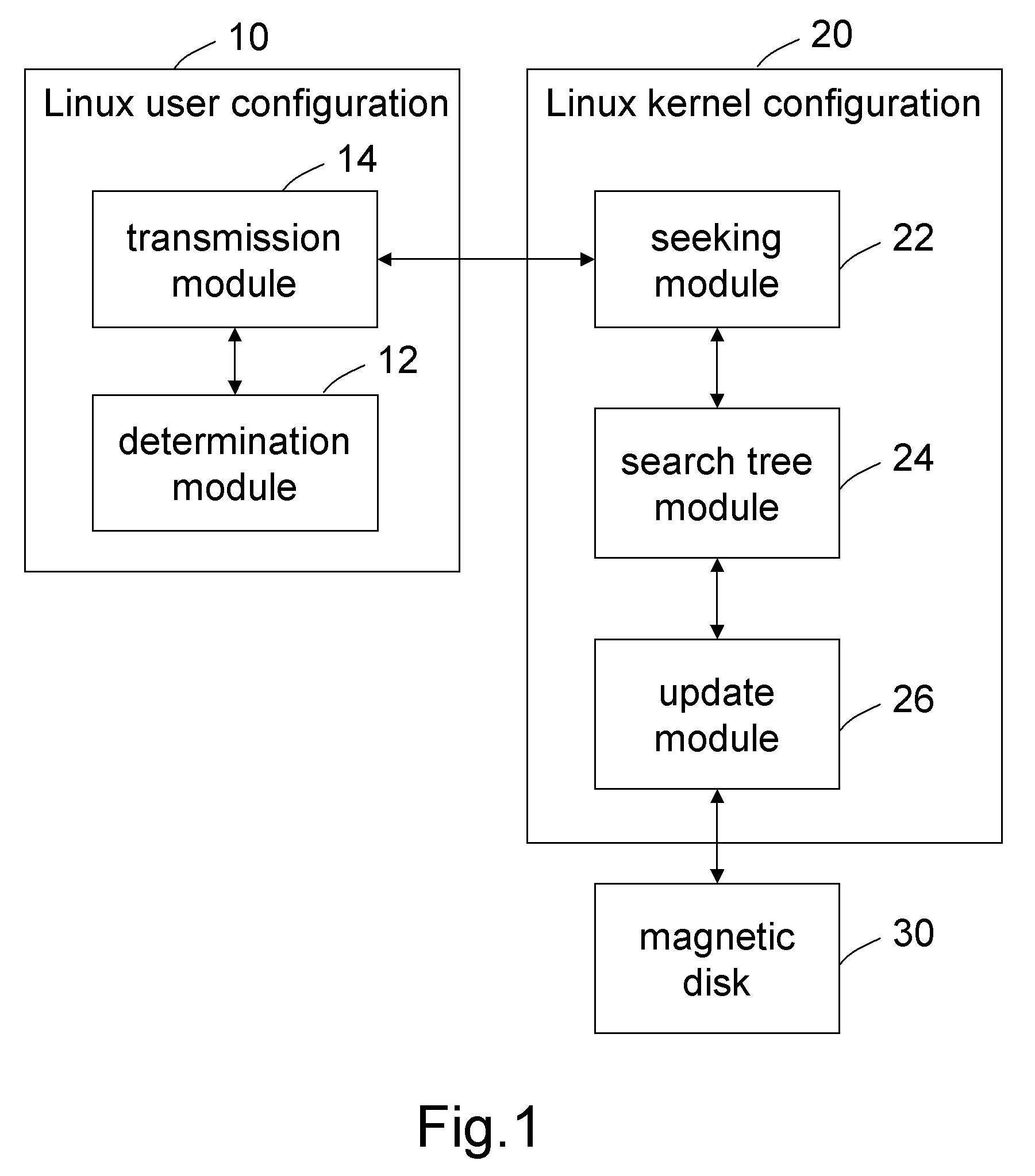

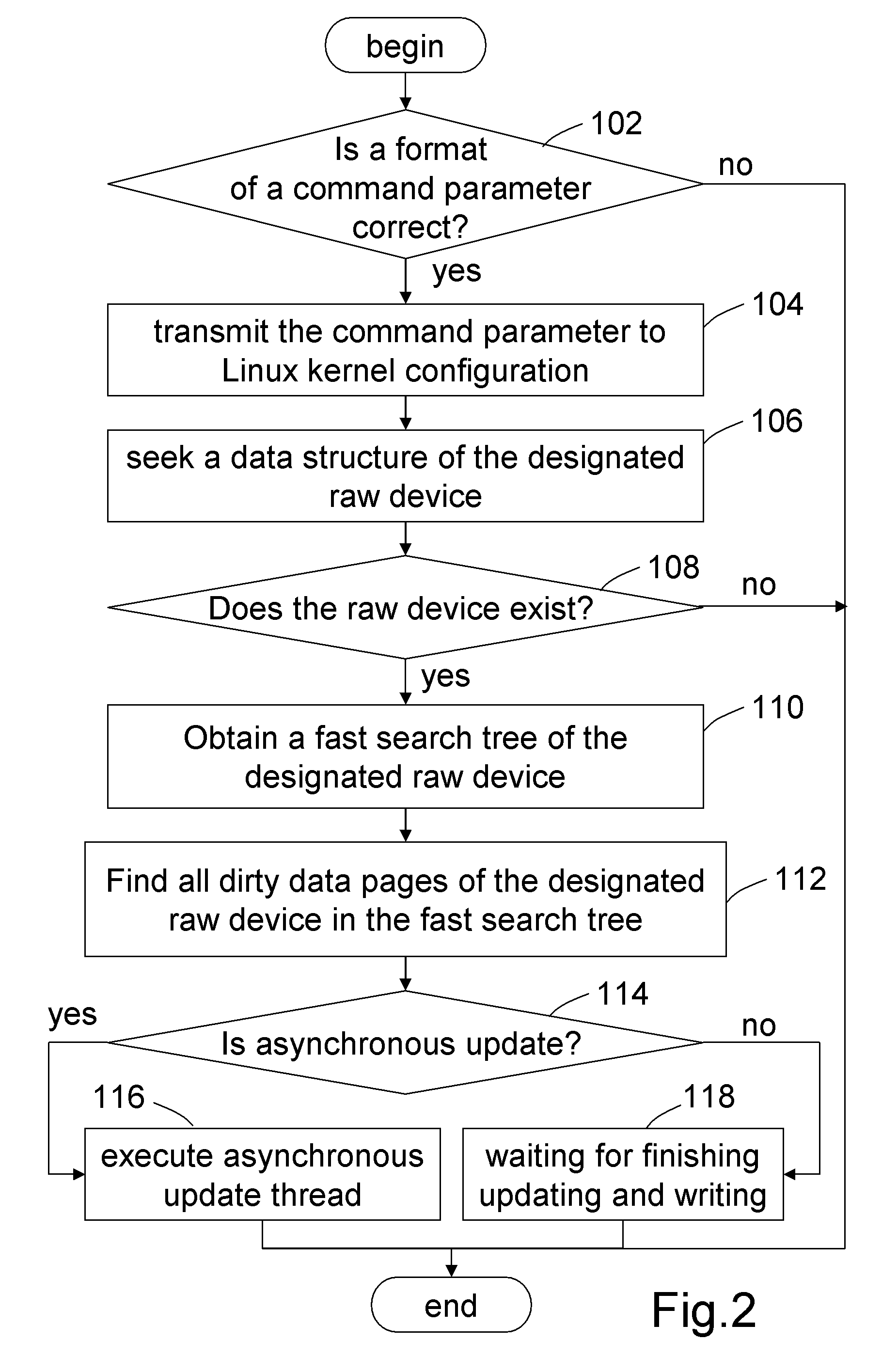

System and method for updating dirty data of designated raw device

Active | US20090113130A1 | Shorten the time; Memory addressing/allocation/relocation; Micro-instruction address formation; GNU/Linux; Dirty data

A system and method for updating the dirty data of a designated raw device are applied in a Linux system. The format of a command parameter for updating the dirty data of the designated raw device is checked, so that a correctly formatted command parameter is obtained and passed into the kernel of the Linux system. The data structure of the designated raw device is then located based on the command parameter, to obtain the fast search tree of the designated raw device. Finally, all dirty data pages of the designated raw device are found through the fast search tree and written to the magnetic disk synchronously or asynchronously. The dirty data of an individual raw device can therefore be written to the magnetic disk without interrupting the normal operation of the system, which ensures secure, convenient, and efficient updating of dirty data.

Owner:INVENTEC CORP

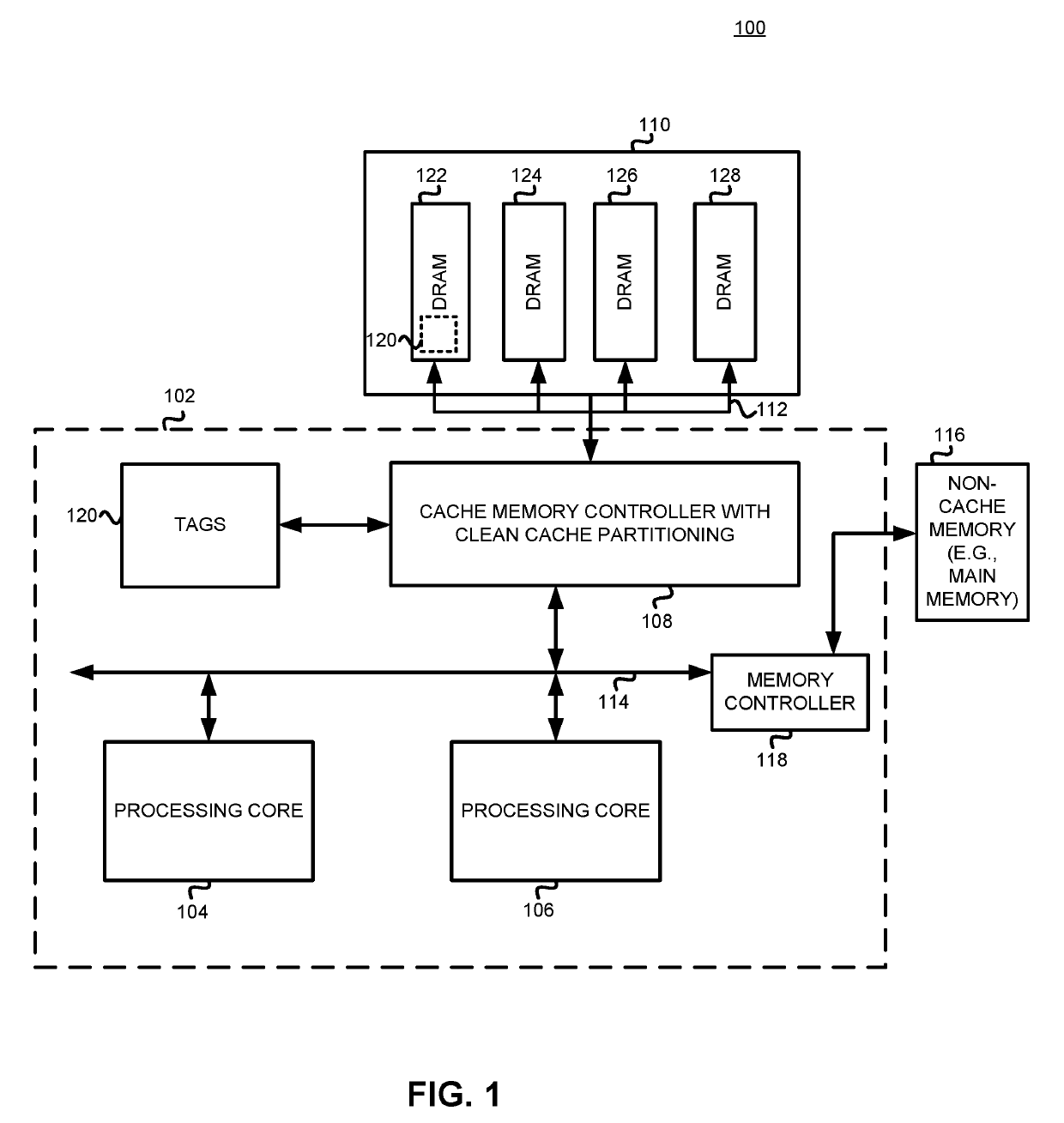

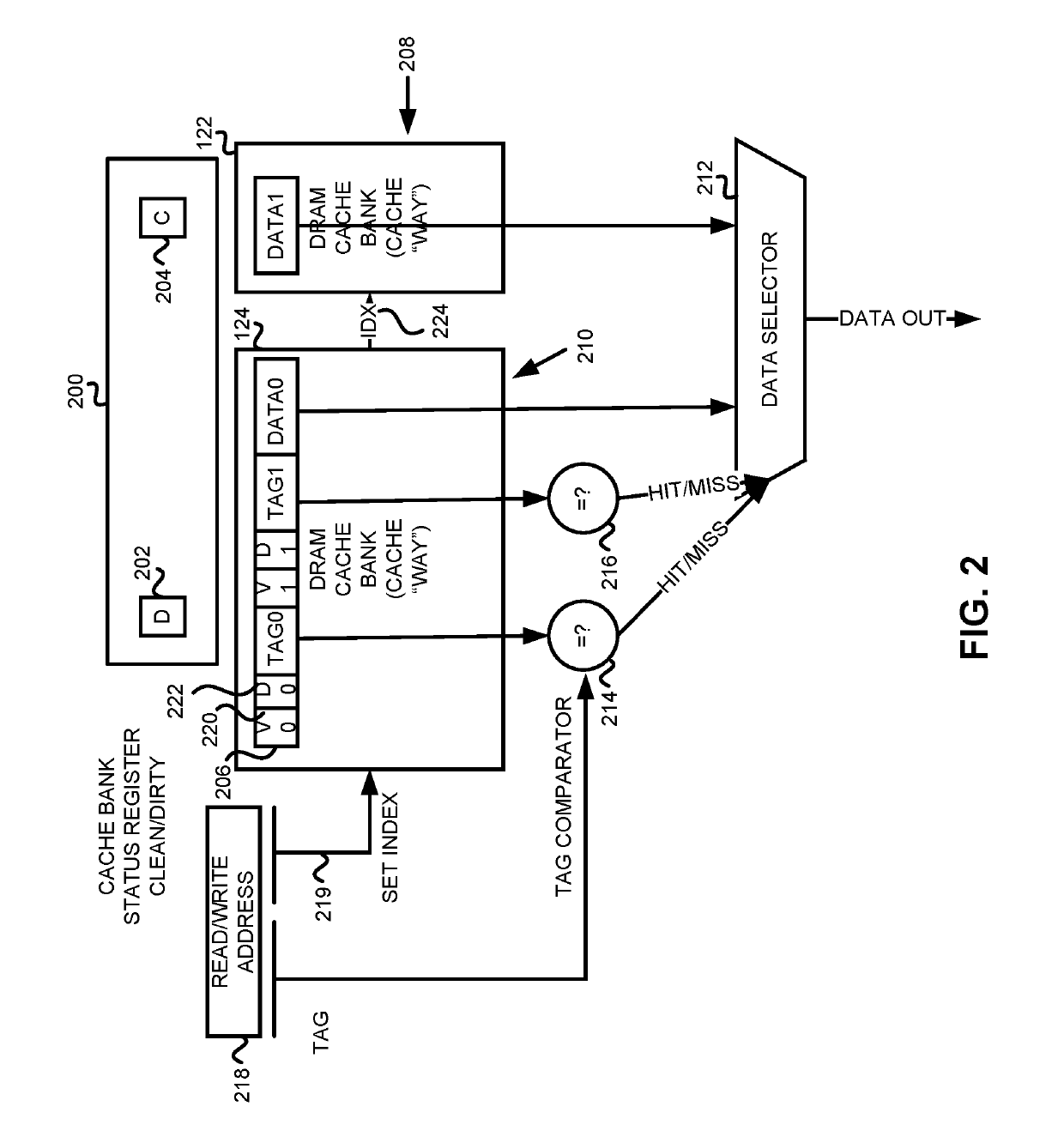

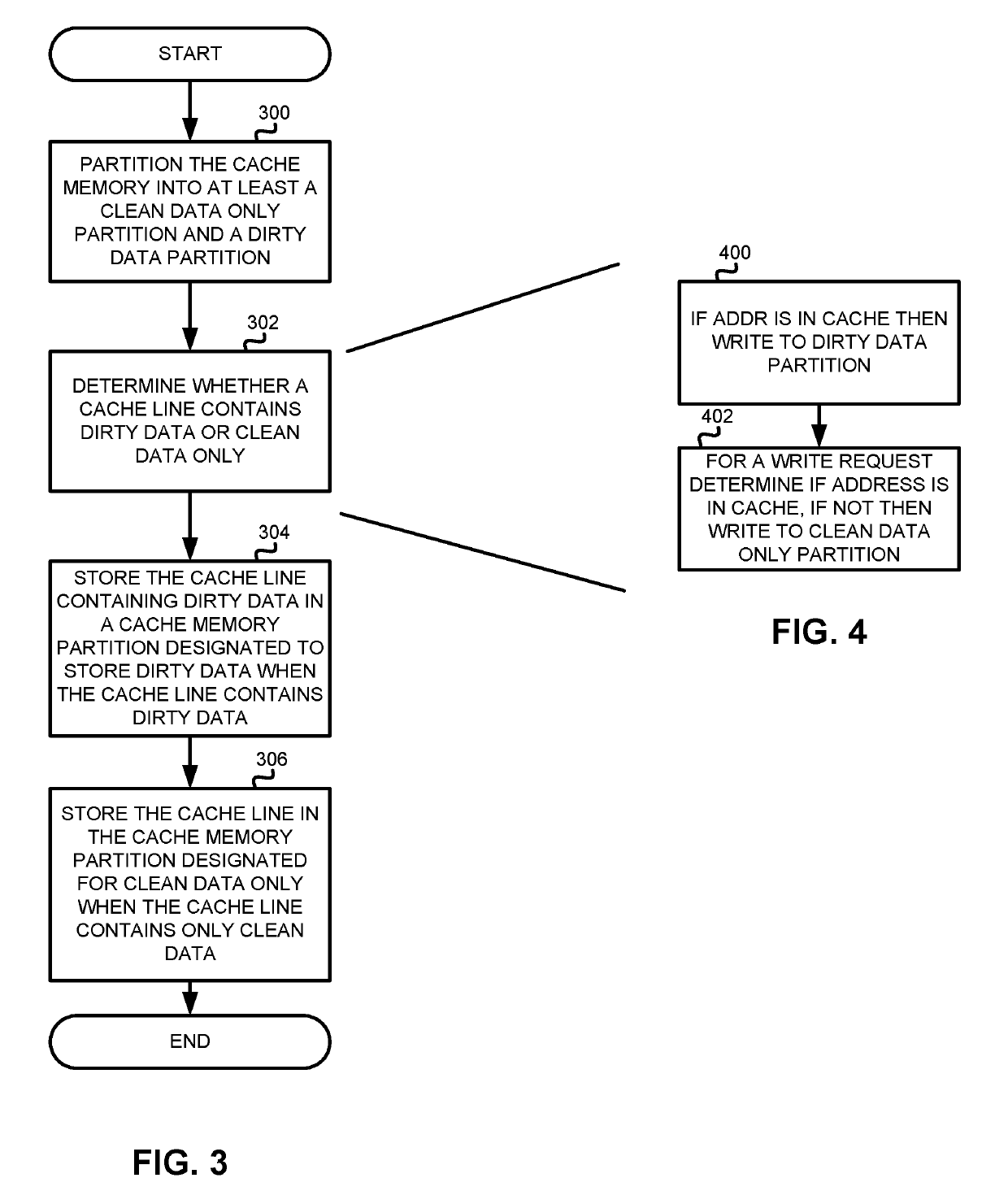

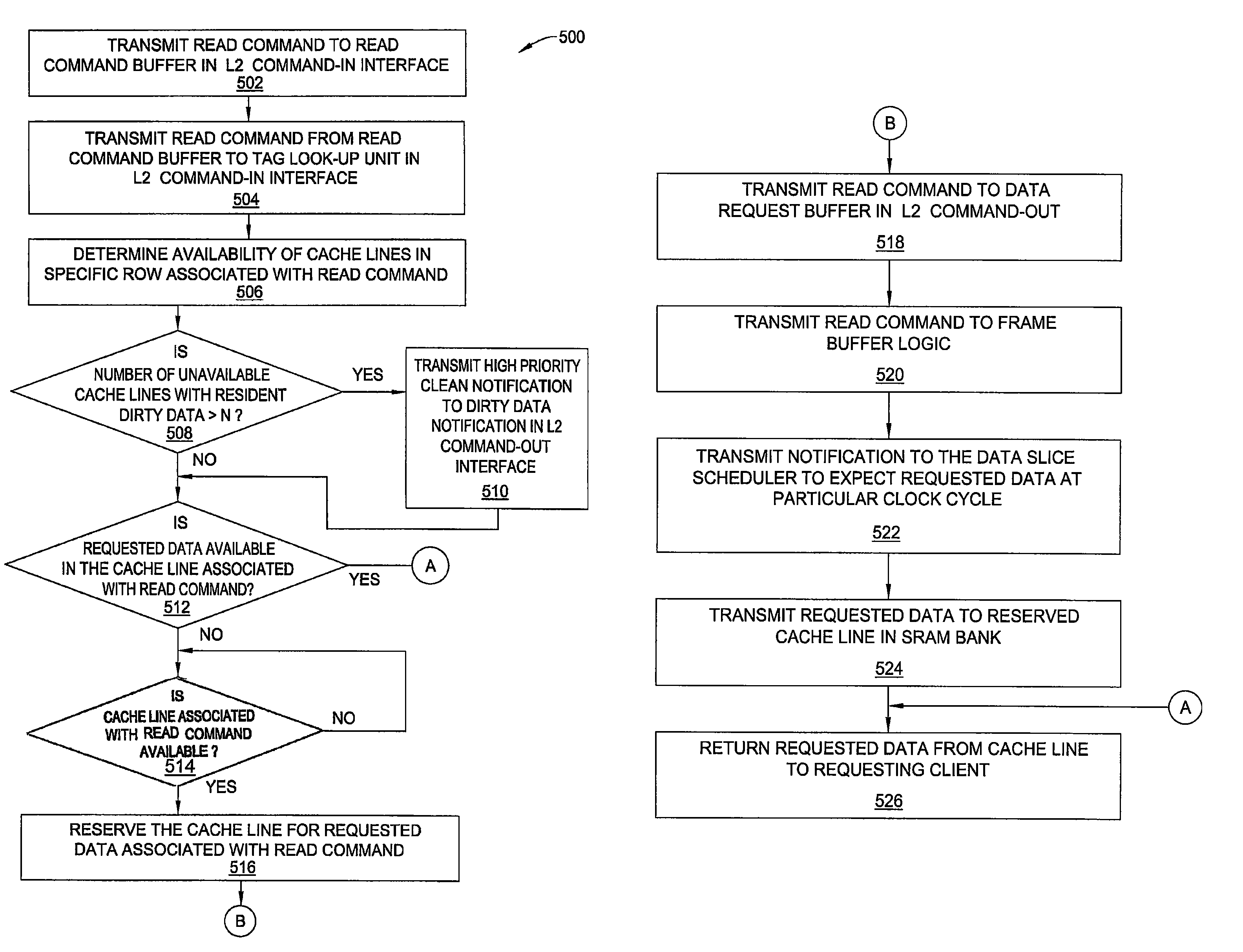

Method and apparatus for controlling cache line storage in cache memory

Active | US20190205253A1 | Memory architecture accessing/allocation; Energy efficient computing; Dirty data; Memory controller

A method and apparatus physically partitions clean and dirty cache lines into separate memory partitions, such as one or more banks, so that during low-power operation a cache memory controller reduces the power consumption of the cache memory partitions that contain only clean data. The cache memory controller controls the refresh operation so that data refresh does not occur, or occurs at a reduced rate, for banks that hold only clean data. Partitions that store dirty data can also store clean data; however, other partitions are designated for storing only clean data so that their refresh rate can be reduced or their refresh stopped for periods of time. When multiple DRAM dies or packages are employed, the partitioning can occur at the die or package level rather than at the bank level within a die.

Owner:ADVANCED MICRO DEVICES INC

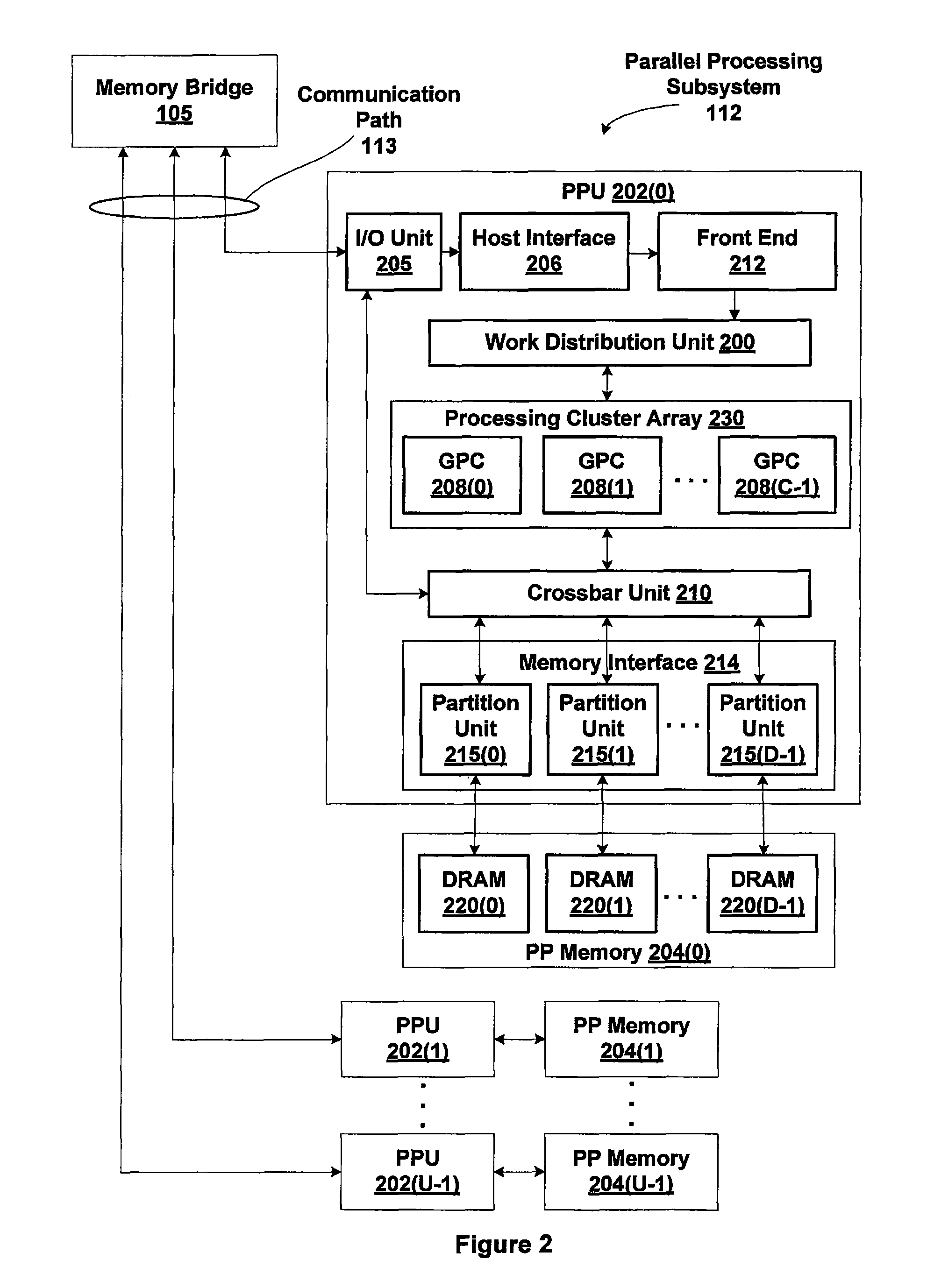

Control mechanism for fine-tuned cache to backing-store synchronization

Active | US20140122809A1 | Decreased write bandwidth; Attenuation bandwidth; Memory addressing/allocation/relocation; Memory address; Dirty data

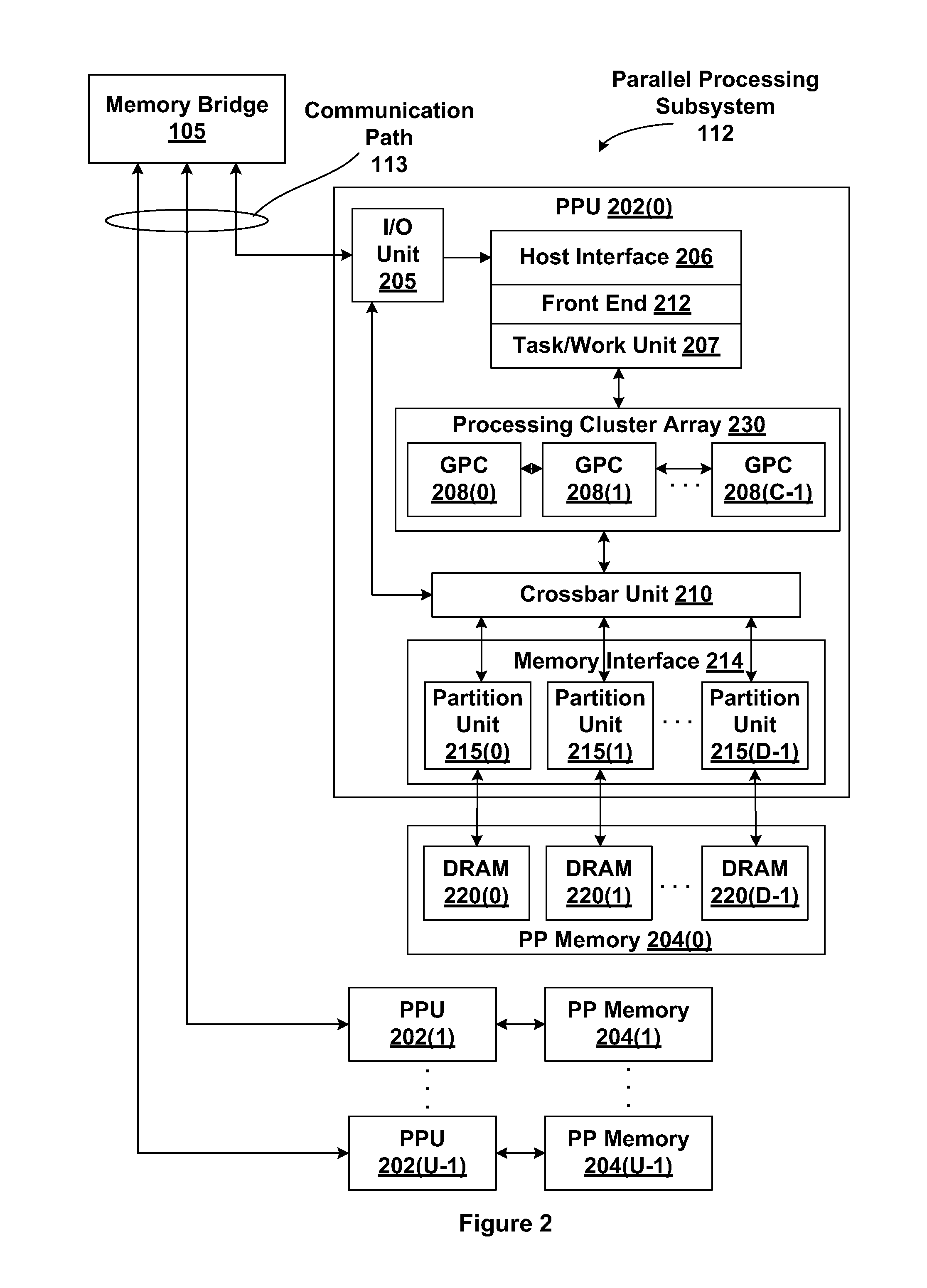

One embodiment of the present invention sets forth a technique for processing commands received by an intermediary cache from one or more clients. The technique involves receiving a first write command from an arbiter unit, where the first write command specifies a first memory address, determining that a first cache line related to a set of cache lines included in the intermediary cache is associated with the first memory address, causing data associated with the first write command to be written into the first cache line, and marking the first cache line as dirty. The technique further involves determining whether a total number of cache lines marked as dirty in the set of cache lines is less than, equal to, or greater than a first threshold value, and: not transmitting a dirty data notification to the frame buffer logic when the total number is less than the threshold value, or transmitting a dirty data notification to the frame buffer logic when the total number is equal to or greater than the first threshold value.

Owner:NVIDIA CORP
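
The per-set threshold check can be sketched as follows. It tracks which addresses occupy a set's lines, marks the written line dirty, and sends a dirty data notification (here, a callback standing in for the frame buffer logic) only once the set's dirty count reaches the threshold. The threshold value and data layout are hypothetical. The written data itself and eviction are left out.

```python
from dataclasses import dataclass, field

DIRTY_THRESHOLD = 3  # hypothetical per-set threshold


@dataclass
class CacheSet:
    ways: int
    tags: list = field(default_factory=list)  # memory address held by each line
    dirty: set = field(default_factory=set)   # indices of dirty lines


def handle_write(cache_set: CacheSet, address: int, notify) -> None:
    # Find (or allocate) the line associated with the address and mark it dirty.
    if address in cache_set.tags:
        line = cache_set.tags.index(address)
    elif len(cache_set.tags) < cache_set.ways:
        cache_set.tags.append(address)
        line = len(cache_set.tags) - 1
    else:
        raise RuntimeError("set full; eviction is outside this sketch")
    cache_set.dirty.add(line)
    # Only notify the frame buffer logic once enough lines in the set are dirty.
    if len(cache_set.dirty) >= DIRTY_THRESHOLD:
        notify(sorted(cache_set.tags[i] for i in cache_set.dirty))
```

Rewriting an already-dirty line does not raise the count, so repeated writes to hot addresses do not trigger extra write-back traffic.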

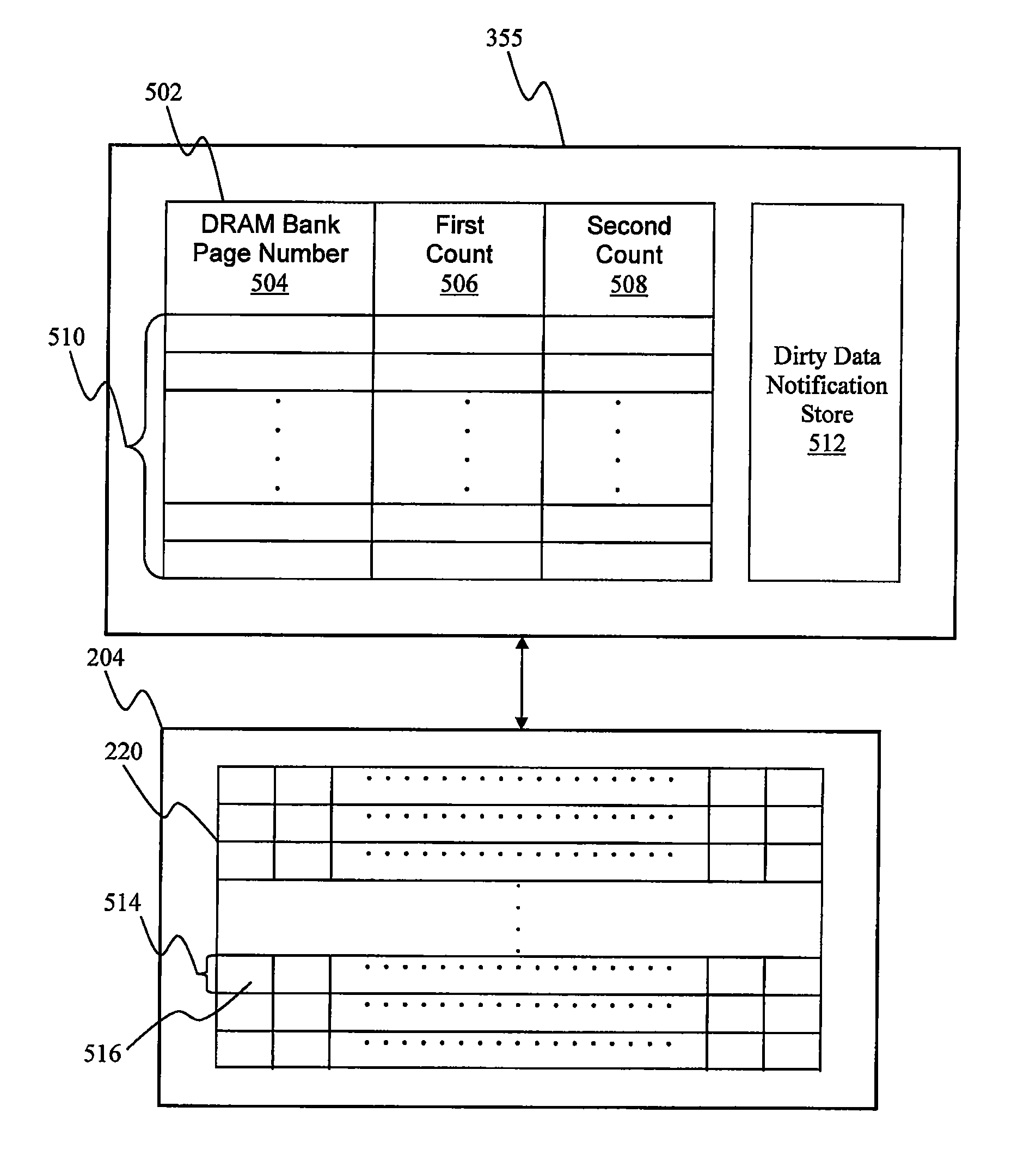

System and method for cleaning dirty data in an intermediate cache using a data class dependent eviction policy

Active | US8244984B1 | Raise priority; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Data class; Memory address

In one embodiment, a method for managing information related to dirty data stored in an intermediate cache coupled to one or more clients and to an external memory includes receiving a dirty data notification related to dirty data residing in the intermediate cache, the dirty data notification including a memory address indicating a location in the external memory where the dirty data should be stored and a data type associated with the dirty data, and extracting a bank page number from the memory address that identifies a bank page within the external memory where the dirty data should be stored. The embodiment also includes incrementing a first count associated with a first entry in a notification sorter that is affirmatively associated with the bank page, determining that the dirty data has a first data type, and incrementing a second count associated with the first entry.

Owner:NVIDIA CORP
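
The notification sorter's bookkeeping can be sketched like this. It assumes a hypothetical address layout in which the bank page number is simply the address shifted right by `PAGE_SHIFT` (8 KiB pages). Real DRAM address mappings interleave banks differently, and the data-type label used here is invented.

```python
from collections import defaultdict
from dataclasses import dataclass

PAGE_SHIFT = 13  # hypothetical: bank pages are 8 KiB, so the page number is addr >> 13


@dataclass
class SorterEntry:
    total: int = 0       # dirty data notifications seen for this bank page
    first_type: int = 0  # of those, how many carried the tracked data type


class NotificationSorter:
    def __init__(self, tracked_type: str) -> None:
        self.tracked_type = tracked_type
        self.entries = defaultdict(SorterEntry)  # bank page number -> counts

    def on_dirty_notification(self, address: int, data_type: str) -> None:
        bank_page = address >> PAGE_SHIFT        # extract the bank page number
        entry = self.entries[bank_page]
        entry.total += 1                         # first count
        if data_type == self.tracked_type:
            entry.first_type += 1                # second, type-specific count
```

Keeping a separate per-type count lets an eviction policy weigh pages by the class of dirty data they hold, not just by how much dirty data they hold.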

Keypress behavior pattern construction and analysis system of touch screen user and identity recognition method thereof

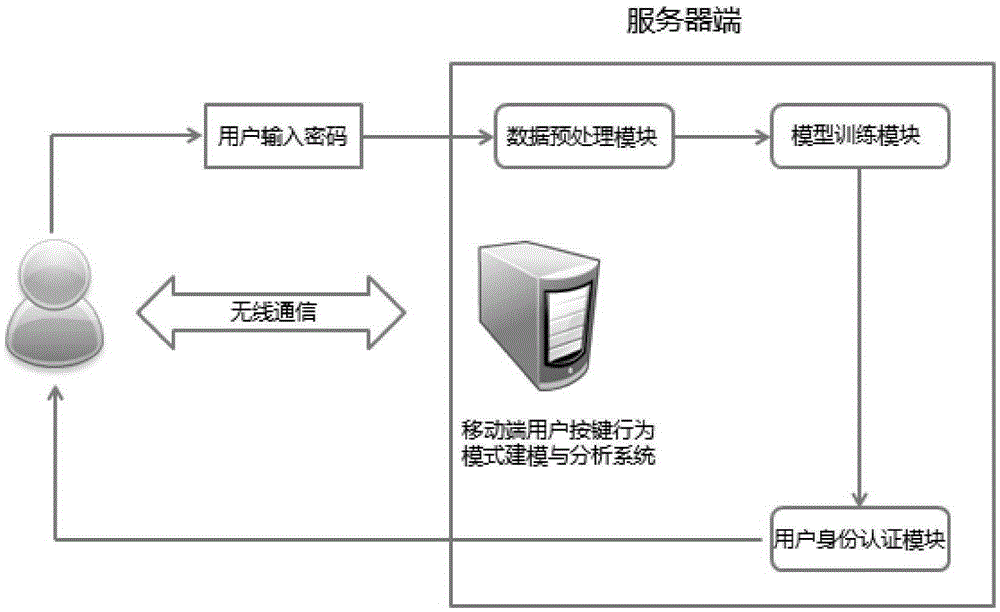

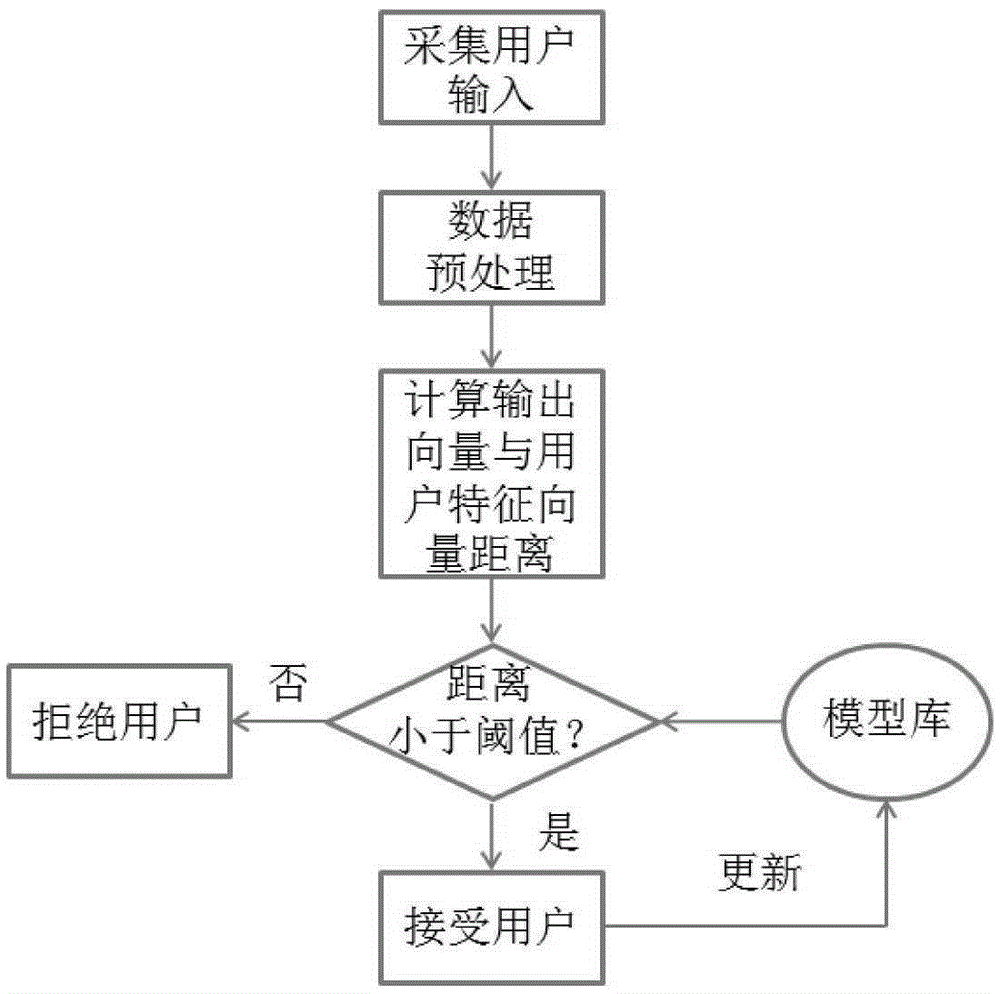

Active | CN105279405A | Fix security issues; The realization process is convenient; Digital data authentication; Transmission; Identity recognition; Data acquisition

The invention provides a keypress behavior pattern construction and analysis system for touch screen users, and an identity recognition method based on it. Data analysis is performed on historical keypress information recorded while users enter a password on a soft keyboard on a mobile phone touch screen. A corresponding neural network model is built, and the model is applied to new test data to recognize the user's identity. The system comprises a user data acquisition module, a data pre-processing module, a model training module, and a user identity identification module. The user data acquisition module acquires the time series of a user tapping the soft keyboard to enter the password, together with pressure and contact area information. The data pre-processing module pre-processes the acquired data, removing dirty data and normalizing the data. The model training module analyzes and models the key-in patterns of all users. The user identity identification module applies the model to new test data to recognize the user's identity, improving the security of the user's account password.

Owner:TONGJI UNIV
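
The pre-processing step (remove dirty data, then normalize) can be sketched as follows. The abstract does not say what counts as dirty. This sketch assumes a record is dirty if it has the wrong number of features or contains a missing, non-finite, or negative value, since timings, pressures, and contact areas cannot be negative. It also assumes min-max scaling to [0, 1] as the normalization.

```python
import math


def is_dirty(record, n_features: int) -> bool:
    # Assumed rule: wrong length, missing, non-finite, or negative values are dirty.
    if len(record) != n_features:
        return True
    return any(v is None or not math.isfinite(v) or v < 0 for v in record)


def preprocess(samples, n_features: int):
    clean = [list(r) for r in samples if not is_dirty(r, n_features)]
    if not clean:
        return []
    # Min-max normalize each feature column to [0, 1].
    lows = [min(col) for col in zip(*clean)]
    highs = [max(col) for col in zip(*clean)]
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0
         for v, lo, hi in zip(r, lows, highs)]
        for r in clean
    ]
```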

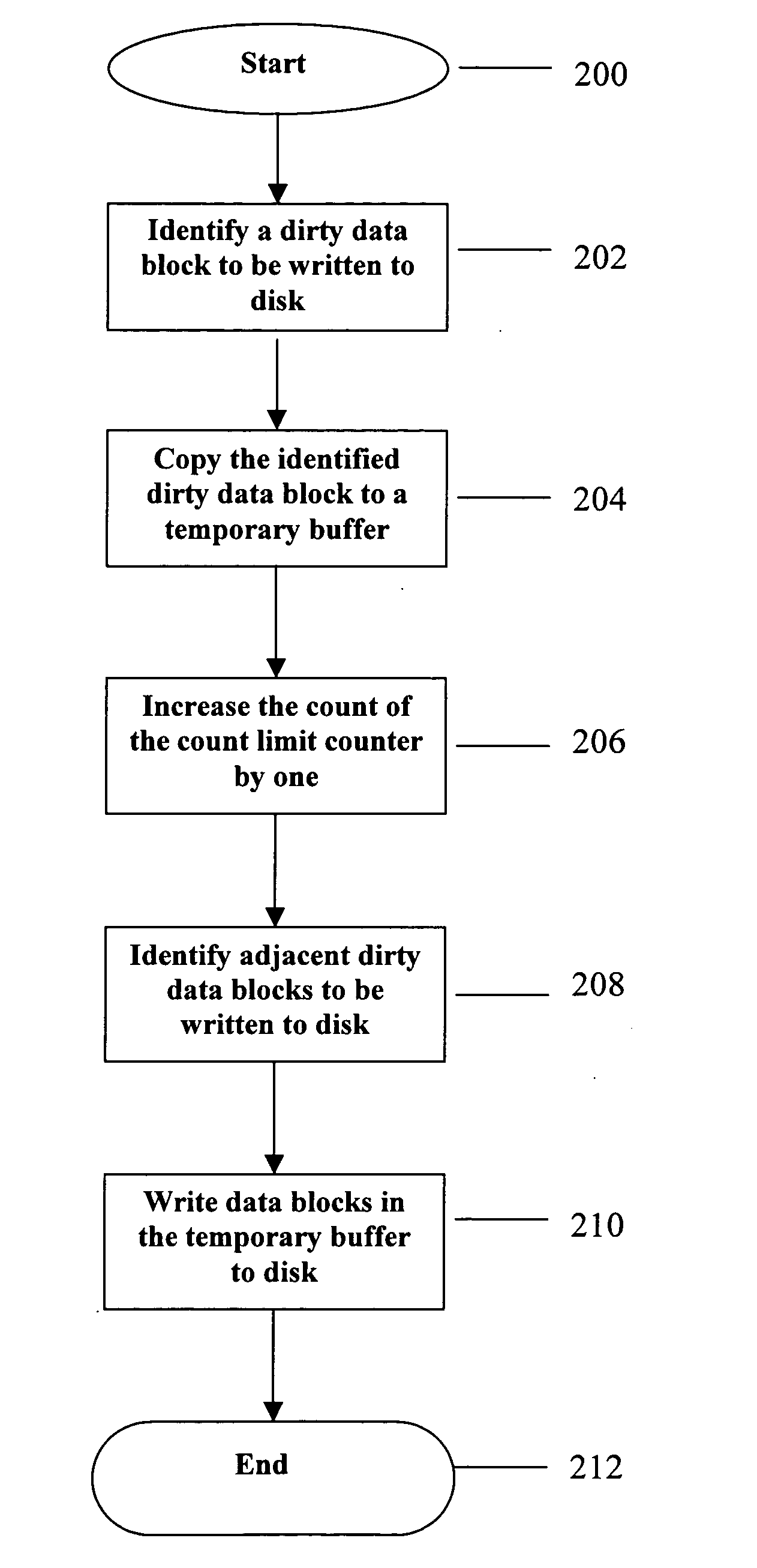

Reducing disk IO by full-cache write-merging

Active | US20050044311A1 | Reduced head movement; Improve performance; Memory addressing/allocation/relocation; Head movements; Dirty data

A method and system for coalescing write IOs in an electronic and computerized system is disclosed. The electronic and computerized system has a buffer cache storing data blocks waiting to be written to a disk of the system. Dirty data blocks with consecutive data block addresses in the buffer cache are coalesced and written to the disk together. This significantly reduces the disk head movements needed to perform the write IOs, allowing the electronic and computerized system to maintain high IO throughput and high peak performance with fewer disks.

Owner:ORACLE INT CORP
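
The core of write coalescing is grouping dirty block addresses into runs of consecutive addresses, so that each run can go to disk as one IO. A minimal sketch follows. The function name and the `(start, count)` run representation are choices made for this example.

```python
def coalesce(dirty_blocks):
    """Group dirty block addresses into runs of consecutive addresses.

    Returns (start_address, block_count) pairs; each run can be issued as a
    single disk write instead of one write per block.
    """
    runs = []
    for addr in sorted(set(dirty_blocks)):
        if runs and addr == runs[-1][0] + runs[-1][1]:
            start, count = runs[-1]
            runs[-1] = (start, count + 1)   # extend the current run
        else:
            runs.append((addr, 1))          # start a new run
    return runs
```

For example, `coalesce([7, 3, 4, 5, 10, 8])` returns `[(3, 3), (7, 2), (10, 1)]`, so six block writes become three.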

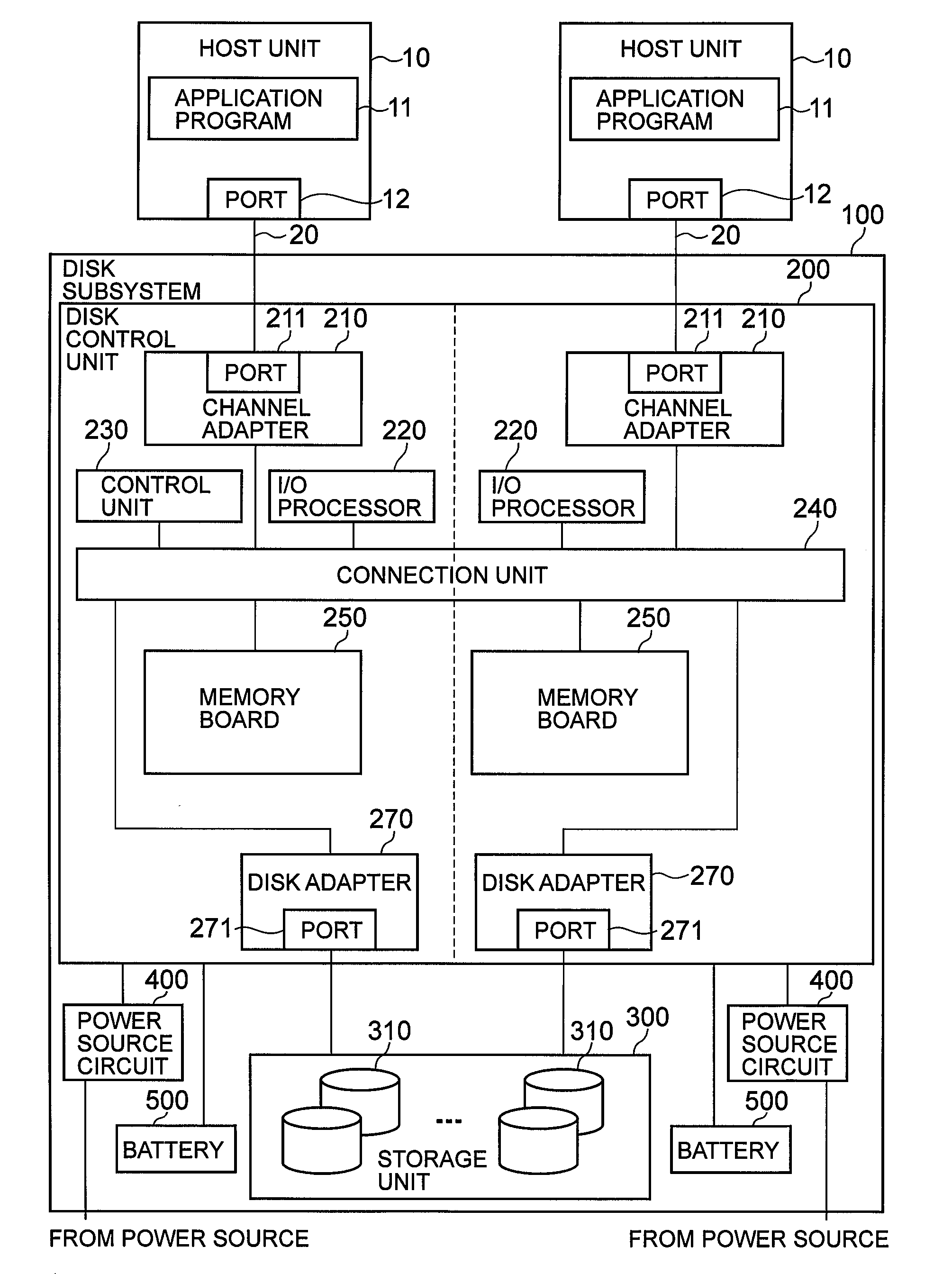

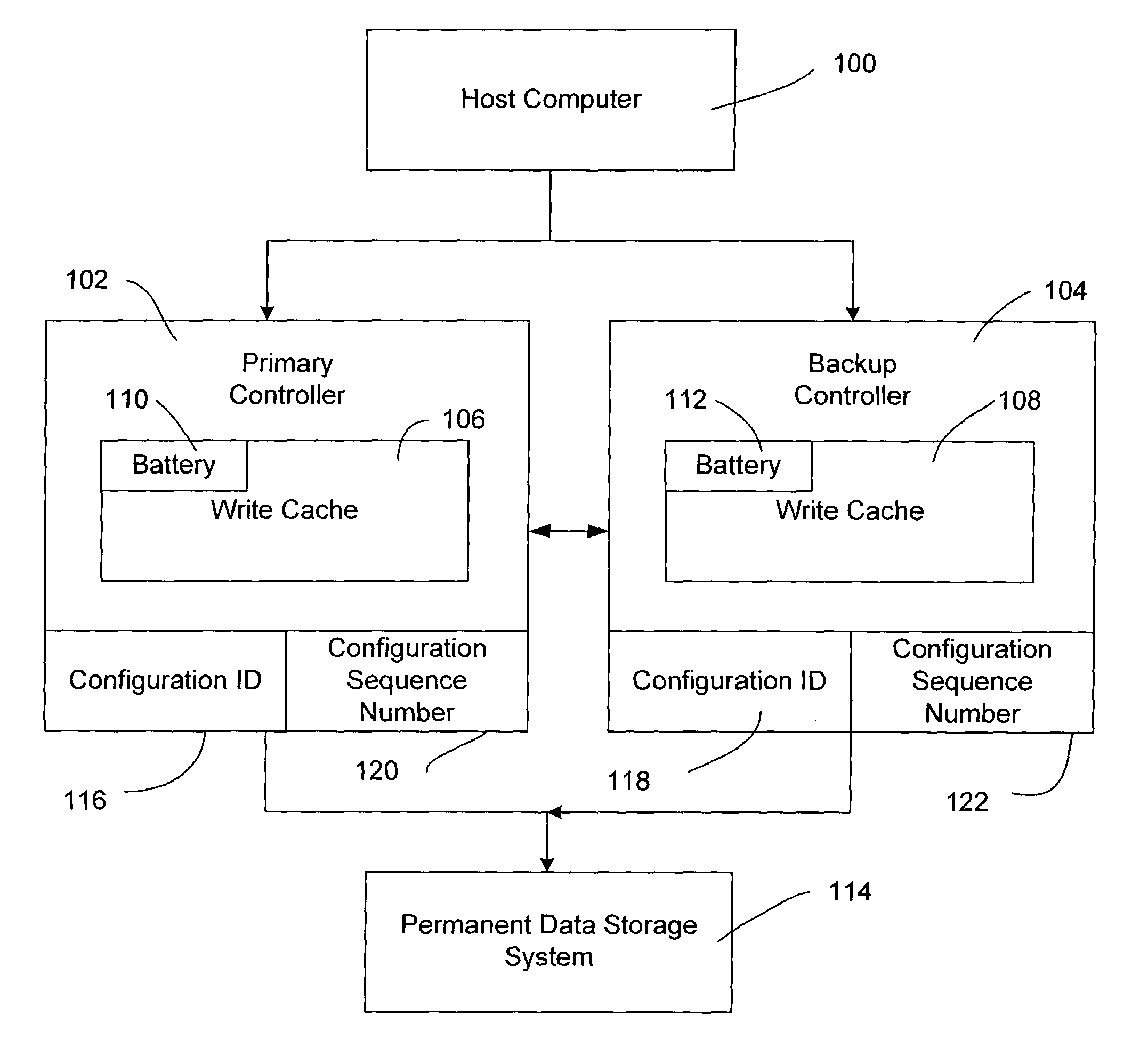

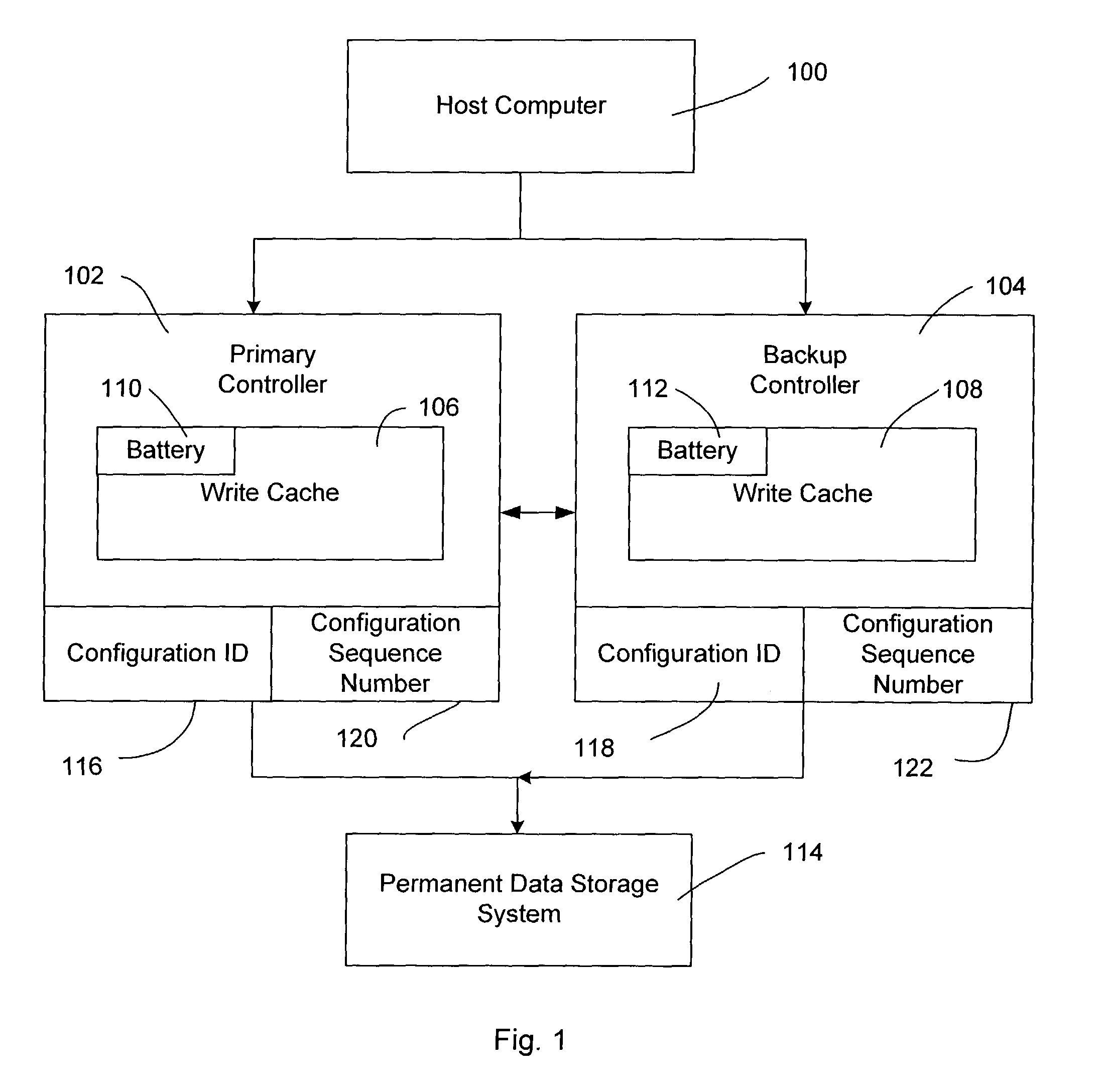

Write cache recovery after loss of power

A method for recovering dirty write cache data after controller power loss or failure from one of two independently battery-backed, mirrored write caches. Two independent controllers jointly operate with a permanent data storage system. Each controller has a write cache that mirrors the write cache in the other controller. Upon proper shutdown, the primary controller resets a power down flag stored in each write cache, and it increments and stores a configuration sequence number in each write cache. If the primary controller powers up and determines from the state of the power down flag that the write cache was not properly shut down, it flushes the dirty data in the write cache only if the configuration sequence number in the write cache is the same as the configuration sequence number in the primary controller. If the configuration sequence number in the primary controller is higher than the one in the write cache, the dirty data was previously flushed to permanent data storage with the other write cache.

Owner:XIOTECH CORP
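
The power-up decision can be sketched as a small function. It assumes that a power down flag still set at power-up means the cache was not shut down properly, because the abstract says the flag is reset on proper shutdown. It also assumes that sequence-number mismatches other than "controller higher" are simply skipped, since the abstract does not describe them. The names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class WriteCache:
    power_down_flag: bool  # still set => the last shutdown was not proper (assumed)
    config_seq: int        # configuration sequence number stored at shutdown
    dirty: list


def recover(cache: WriteCache, controller_seq: int, flush) -> bool:
    """Return True if this cache's dirty data was flushed on power-up."""
    if not cache.power_down_flag:
        return False  # flag was reset at a proper shutdown: nothing to recover
    if cache.config_seq == controller_seq:
        flush(cache.dirty)  # write dirty data to permanent storage
        cache.dirty.clear()
        return True
    # Controller number is higher: the mirrored copy was already flushed via
    # the other write cache, so this copy is stale and must not be replayed.
    return False
```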

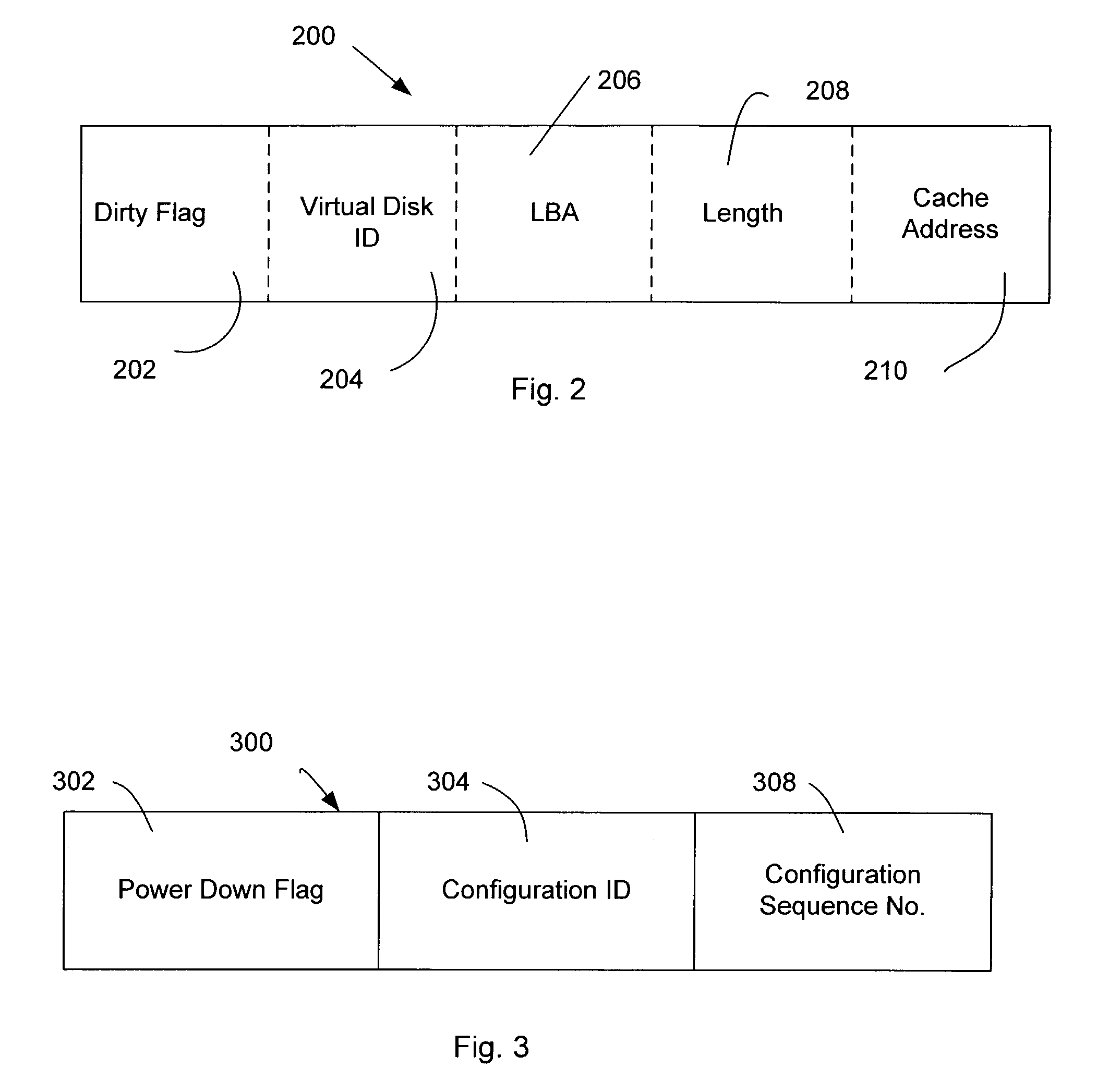

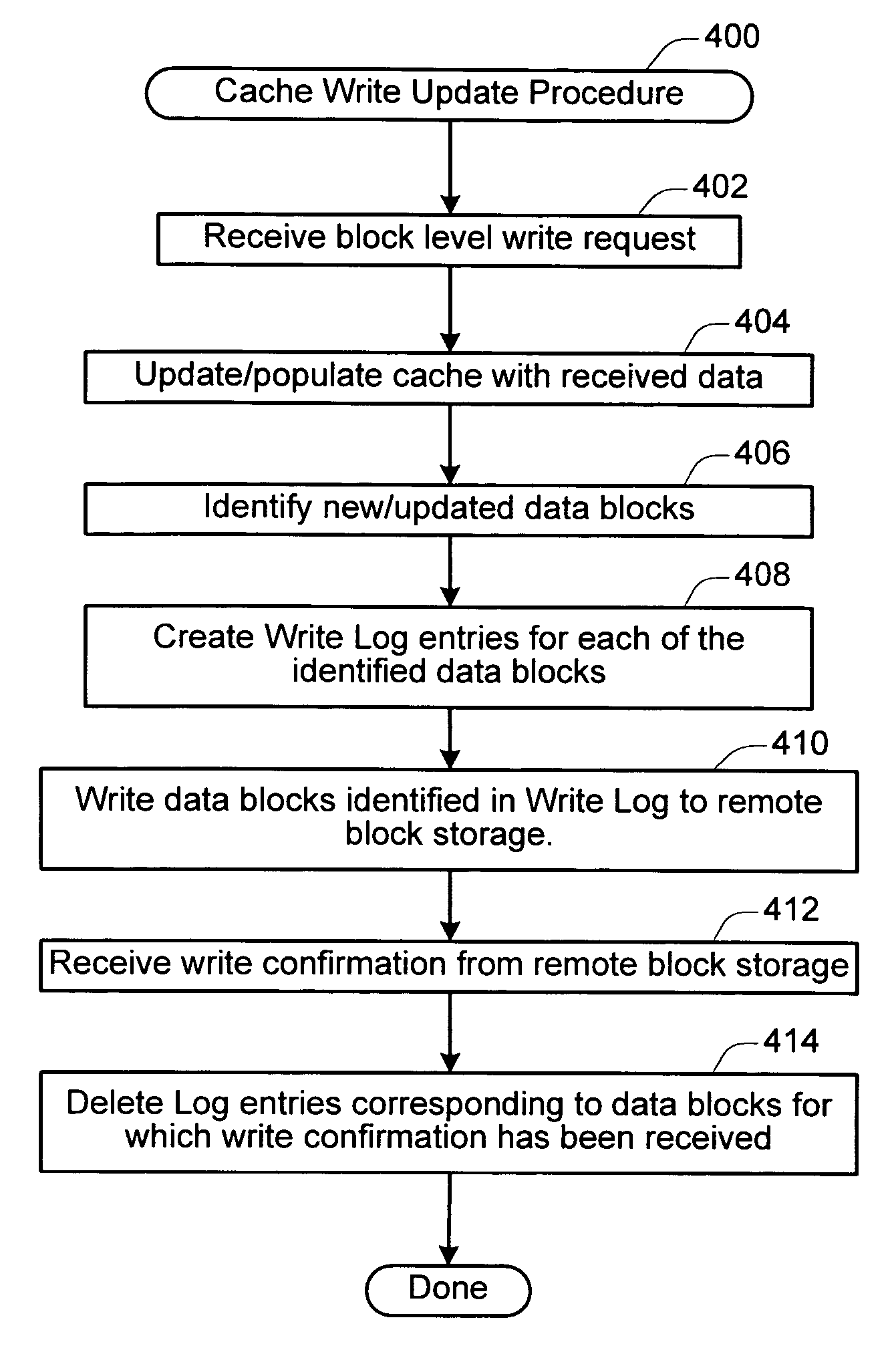

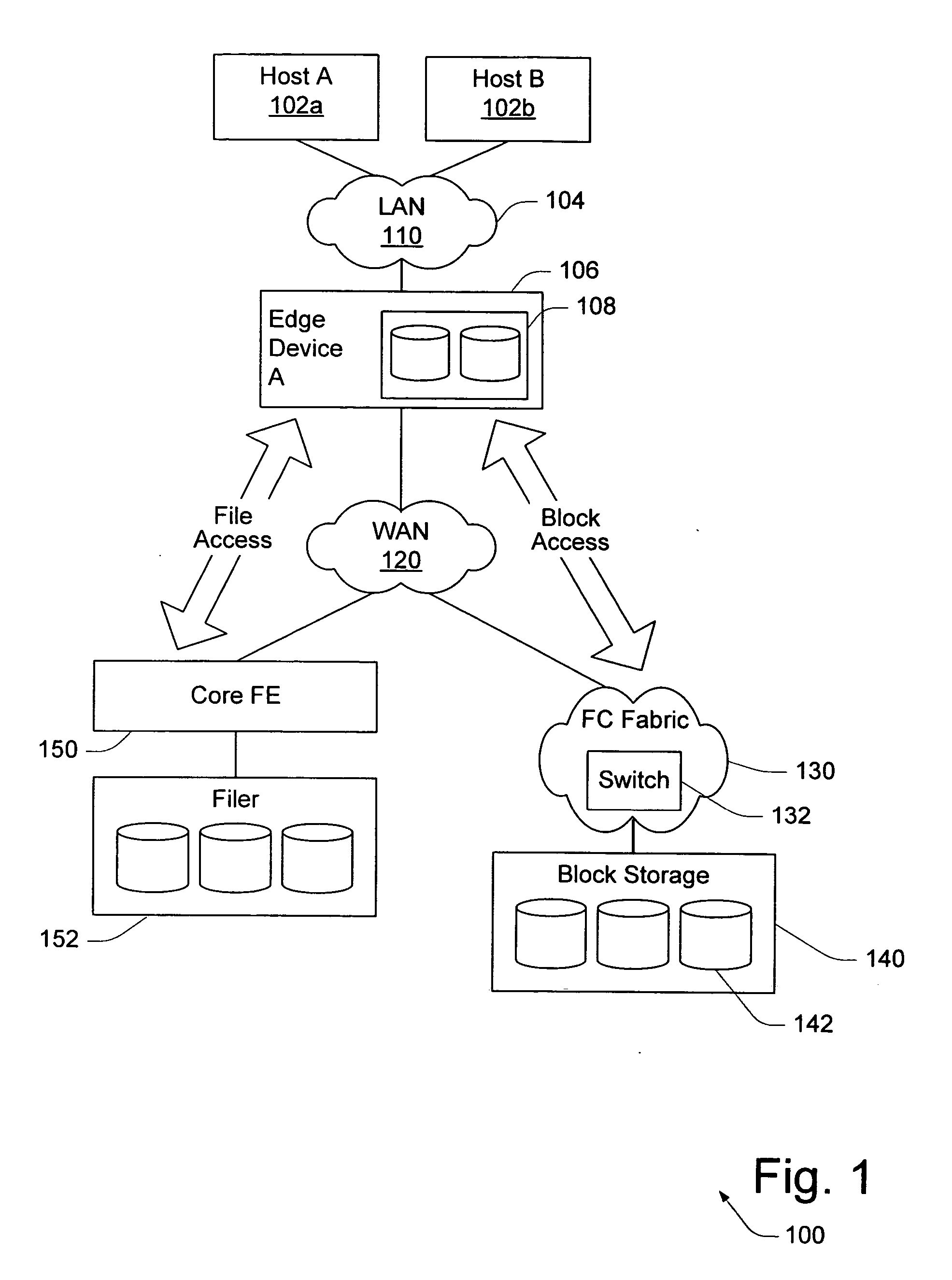

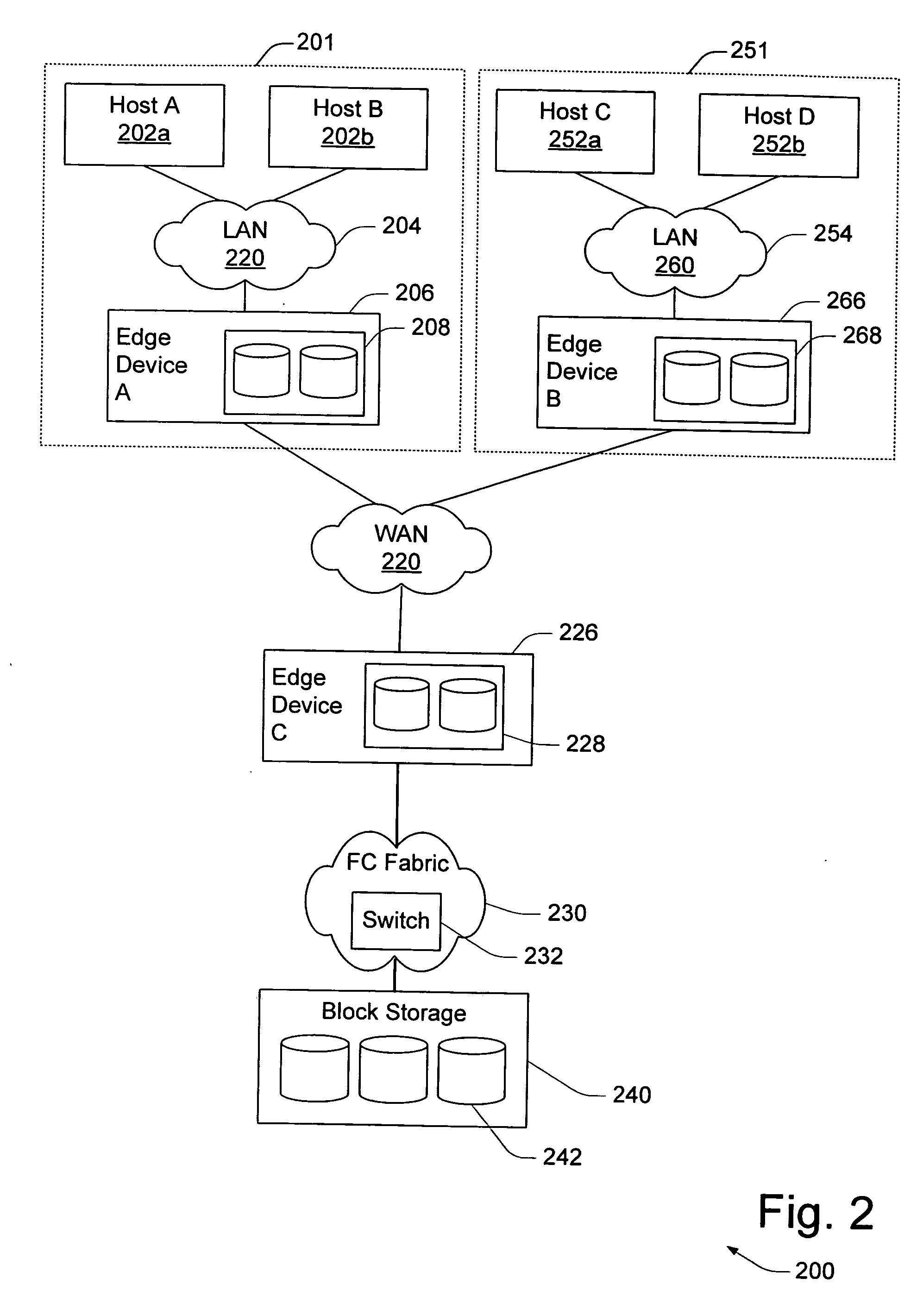

ISCSI block cache and synchronization technique for WAN edge device

Active | US20060282618A1 | Memory architecture accessing/allocation; Memory systems; Dirty data; File area network

A technique is described for facilitating block level access operations to be performed at a remote volume via a wide area network (WAN). The block level access operations may be initiated by at least one host which is a member of a local area network (LAN). The LAN includes a block cache mechanism configured or designed to cache block data in accordance with a block level protocol. A block level access request is received from a host on the LAN. In response to the block level access request, a portion of block data may be cached in the block cache mechanism using a block level protocol. In at least one implementation, portions of block data in the block cache mechanism may be identified as “dirty” data which has not yet been stored in the remote volume. Block level write operations may be performed over the WAN to cause the identified dirty data in the block cache mechanism to be stored at the remote volume.

Owner:CISCO TECH INC

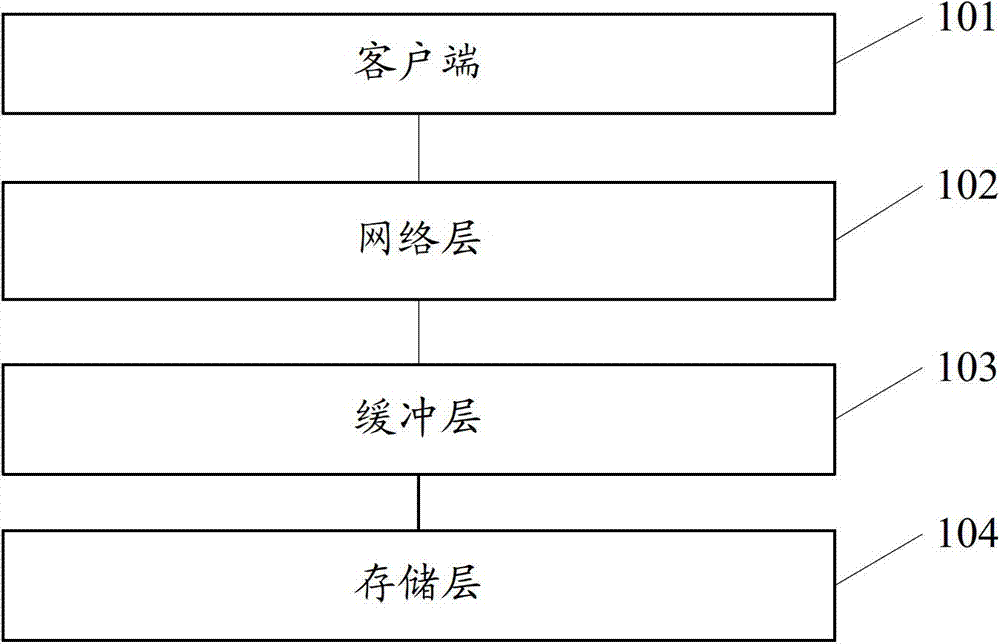

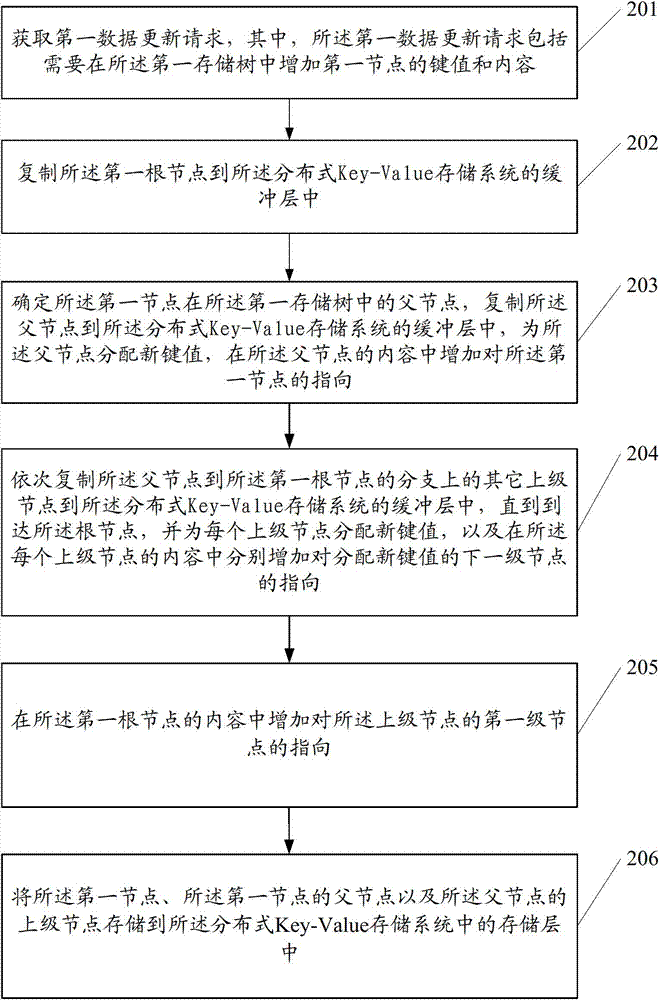

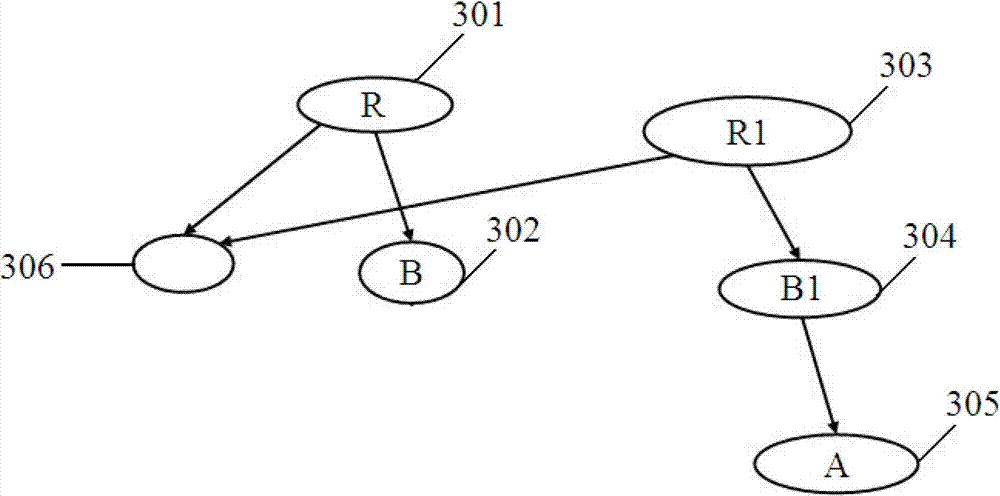

Data update method for distributed storage system and server

Active | CN103518364A | Avoid reading; Avoid searching; Transmission; Special data processing applications; Relationship - Father; Dirty data

An embodiment of the invention provides a data update method for a distributed Key-Value storage system. The nodes requiring update, their father nodes, their ancestor nodes, and the root nodes are copied into a buffer layer of the Key-Value storage system. The keys and contents of the nodes requiring update, the father nodes, and the ancestor nodes, together with the contents of the root nodes, are then modified, so that these nodes differ from the original nodes in the storage layer. The nodes requiring update, the father nodes, and the ancestor nodes are stored in the storage layer first, and the root nodes are stored in the key-value pair system afterwards. Because the updated nodes in the buffer layer reach the storage layer before the root nodes do, a read performed while the updated nodes are being stored cannot reach those nodes, which prevents dirty data from being read. The invention also provides a corresponding method for reading index nodes, and a server.

Owner:HUAWEI CLOUD COMPUTING TECH CO LTD
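
The ordering rule (store the copied non-root nodes first, the root last) works like shadow paging. Below is a minimal sketch, assuming a toy store that holds nodes under version-suffixed keys plus one root pointer. The store layout, key naming, and function names are hypothetical. Until the root pointer changes, no reader can reach the new, possibly half-written nodes.

```python
class KVStore:
    """Toy storage layer: node key -> node contents, plus one root pointer."""

    def __init__(self) -> None:
        self.nodes = {}
        self.root_key = None

    def get_root(self):
        return self.nodes.get(self.root_key)


def publish_update(store: KVStore, new_nodes: dict, new_root_key: str, new_root) -> None:
    """Persist modified copies first, then the root, so readers never see a mix.

    `new_nodes` holds the copied, modified leaf/father/ancestor nodes under
    fresh keys, so nothing reachable from the old root is overwritten.
    """
    for key, node in new_nodes.items():
        store.nodes[key] = node    # unreachable from the current root: invisible to readers
    store.nodes[new_root_key] = new_root
    store.root_key = new_root_key  # single step that makes the new version visible
```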

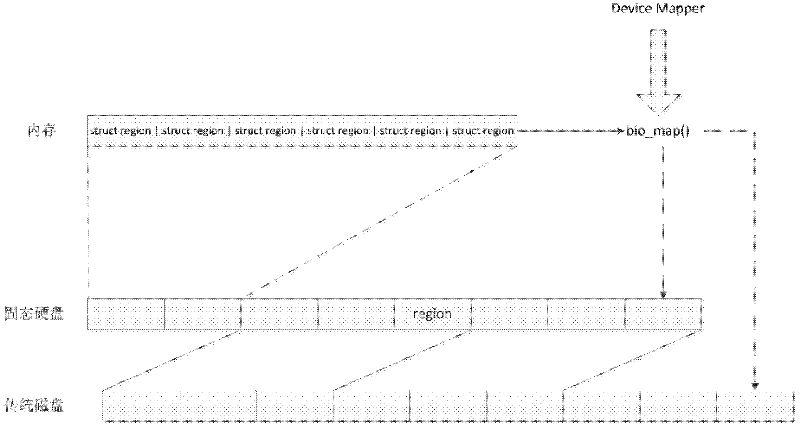

Management method by using rapid non-volatile medium as cache

The invention provides a management method that uses a fast non-volatile medium as a cache. In this method, a solid-state hard disk serves as the caching device; each caching device is shared by multiple disks, and each disk can use only one caching device. The caching device is divided into regions, and a mapping is maintained between the caching devices and the disks. The caching device itself is split into two parts: the front part stores the region data structures that are held in memory, and the back part is the cache. To prevent data loss, the method writes metadata to the solid-state hard disk in real time. Whenever dirty data is written to the solid-state hard disk, the metadata that manages that dirty data is synchronized to an assigned position on the same disk. When the system restarts, all data cached on the solid-state hard disk but not yet written to the conventional disk can be located simply by reading the metadata, so no data is lost if the system crashes or loses power.

Owner:DAWNING INFORMATION IND BEIJING +1

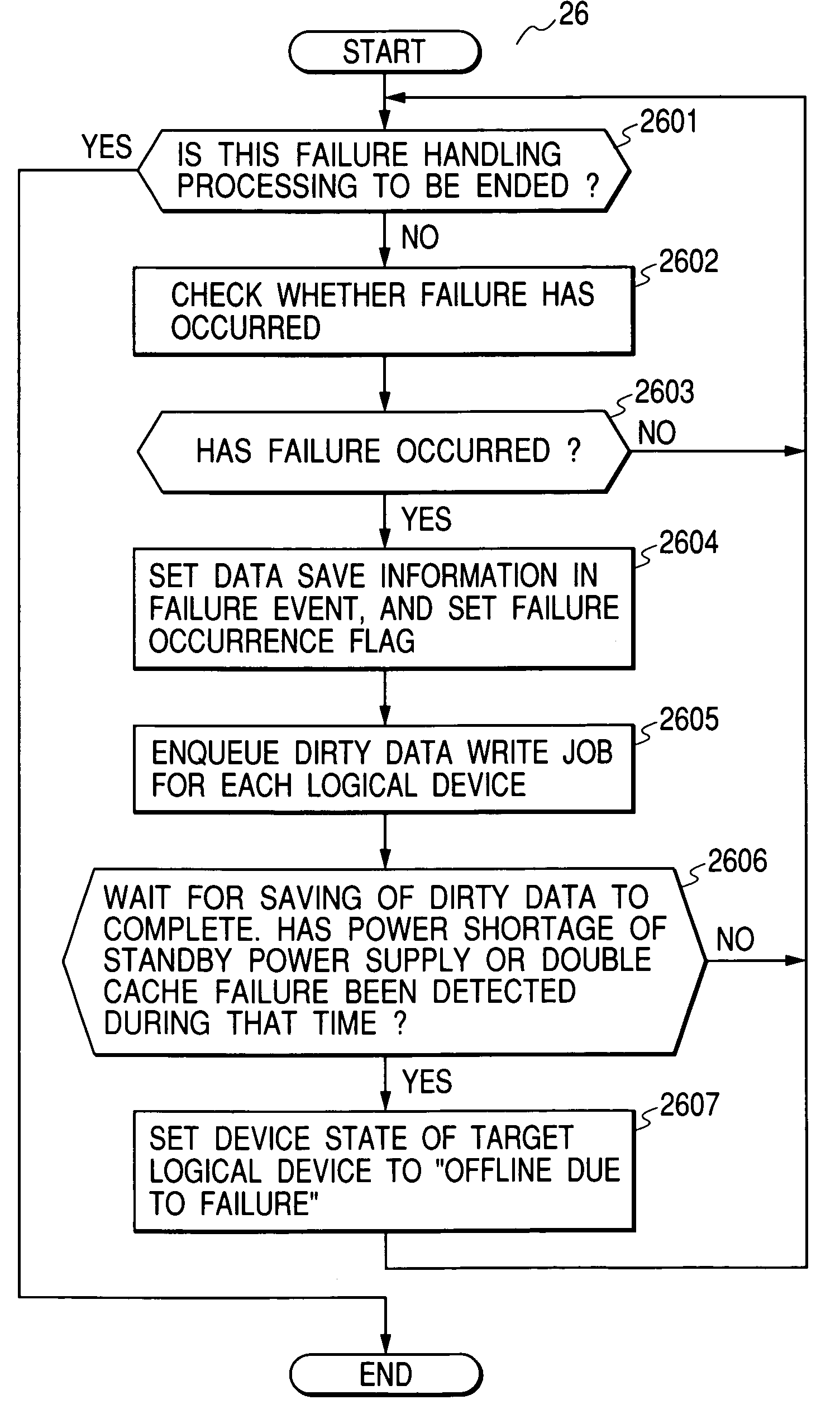

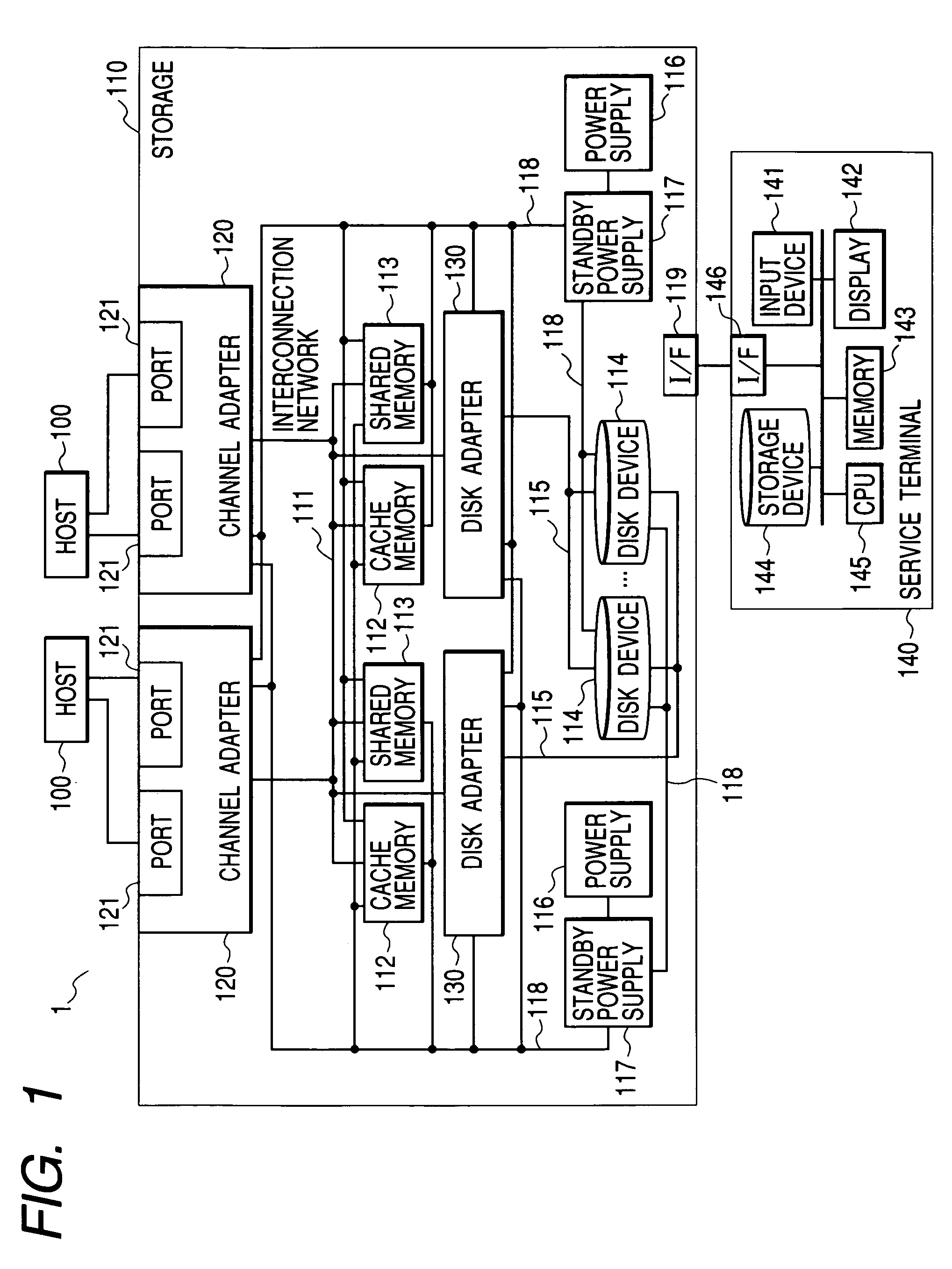

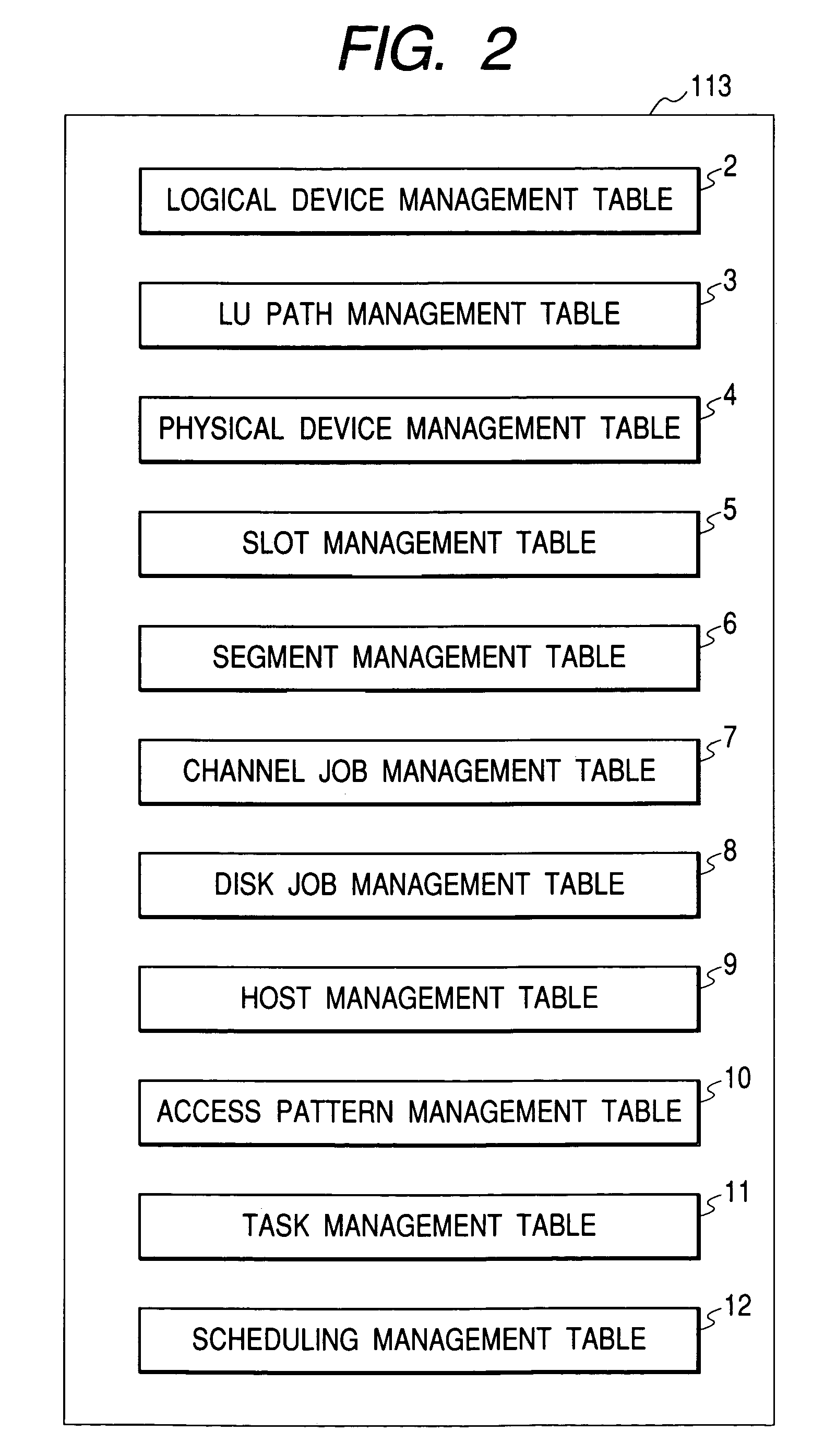

Storage system, and control method, job scheduling processing method, and failure handling method therefor, and program for each method

Inactive | US7100074B2 | Reduces total input/output processing performance of storage; Quick save; Memory architecture accessing/allocation; Input/output to record carriers; Computer hardware; Dirty data

An arbitrary number of a plurality of physical devices are mapped to each logical device provided for a host as a working unit, while considering a priority given to each logical device, and with each physical device being made up of a plurality of disk devices. This arrangement allows dirty data to be quickly saved to the disk devices in the order of logical device priority in the event of a failure. Furthermore, jobs are preferentially processed for important tasks in the event of a failure to reduce deterioration of the host processing performance.

Owner:HITACHI LTD
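
The failure-time save order can be sketched as follows. It covers only the idea of saving dirty data in logical-device priority order, not the mapping of physical devices to logical devices. It assumes a lower number means higher priority, and the device names are hypothetical.

```python
def save_order(dirty_by_ldev: dict, priority: dict) -> list:
    """Order (logical_device, block) pairs so higher-priority devices are saved first.

    Lower priority number = more important logical device (assumed convention).
    """
    order = []
    for ldev in sorted(dirty_by_ldev, key=lambda d: priority[d]):
        order.extend((ldev, blk) for blk in dirty_by_ldev[ldev])
    return order
```

If the battery runs out partway through the save, only the least important logical devices lose data.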

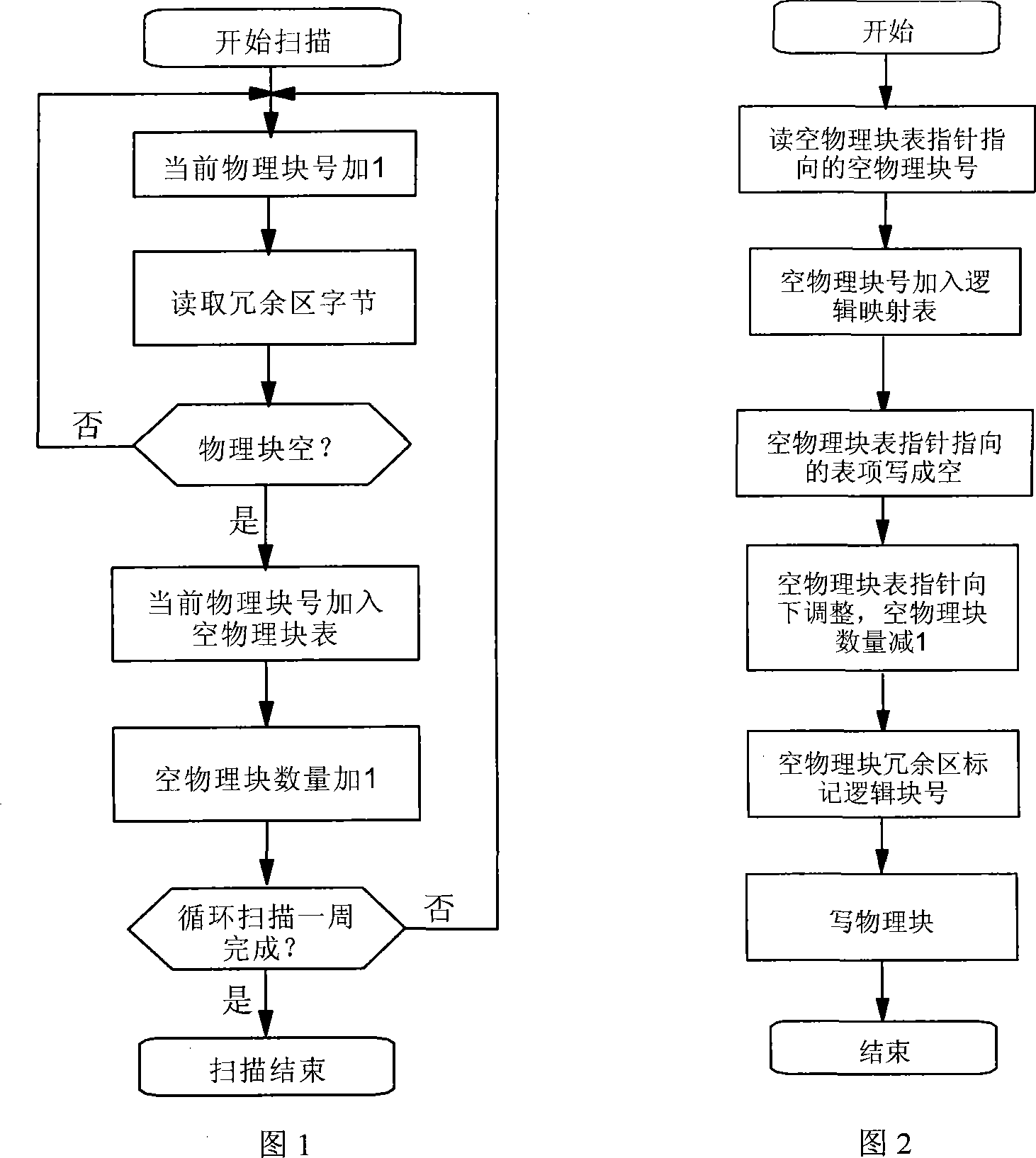

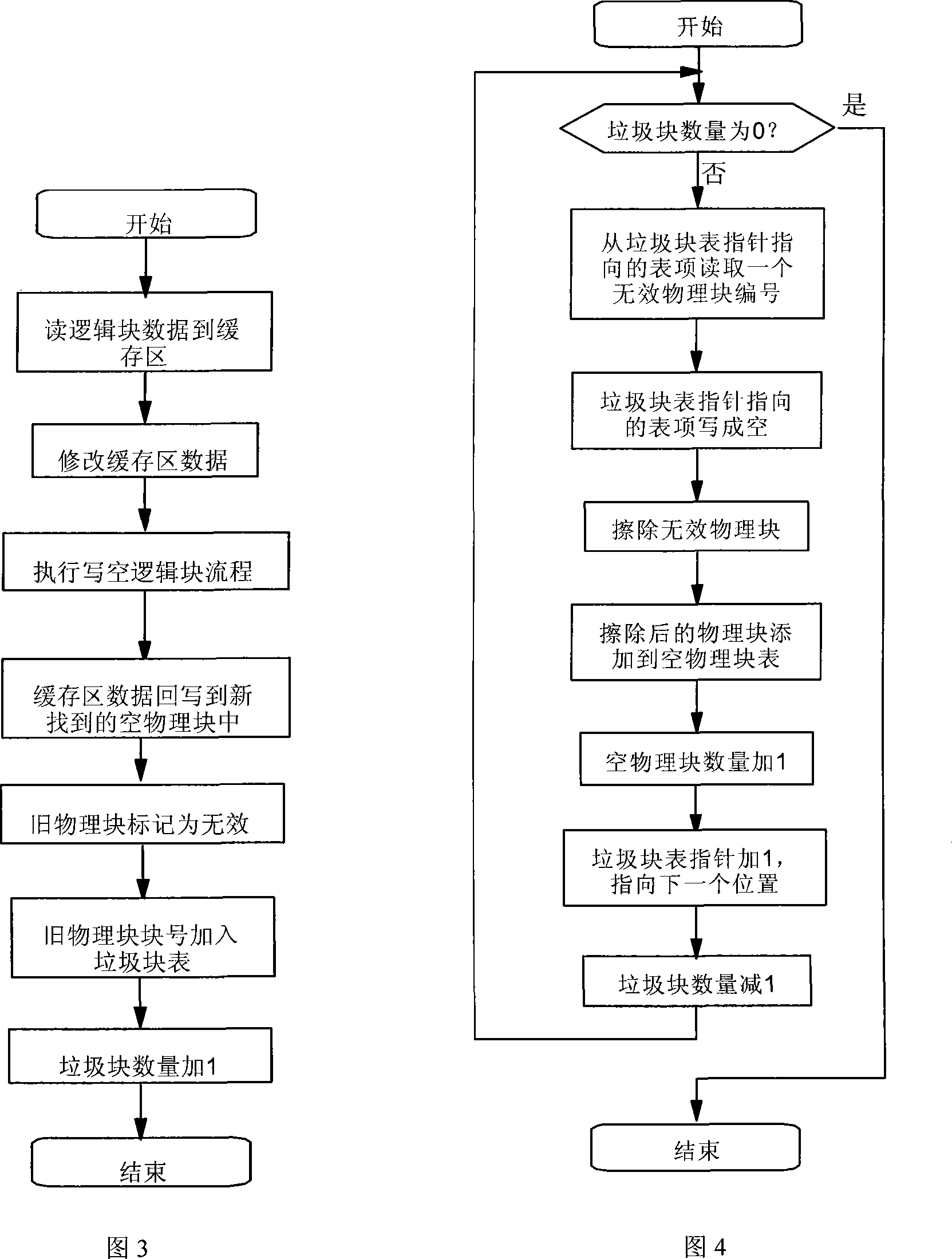

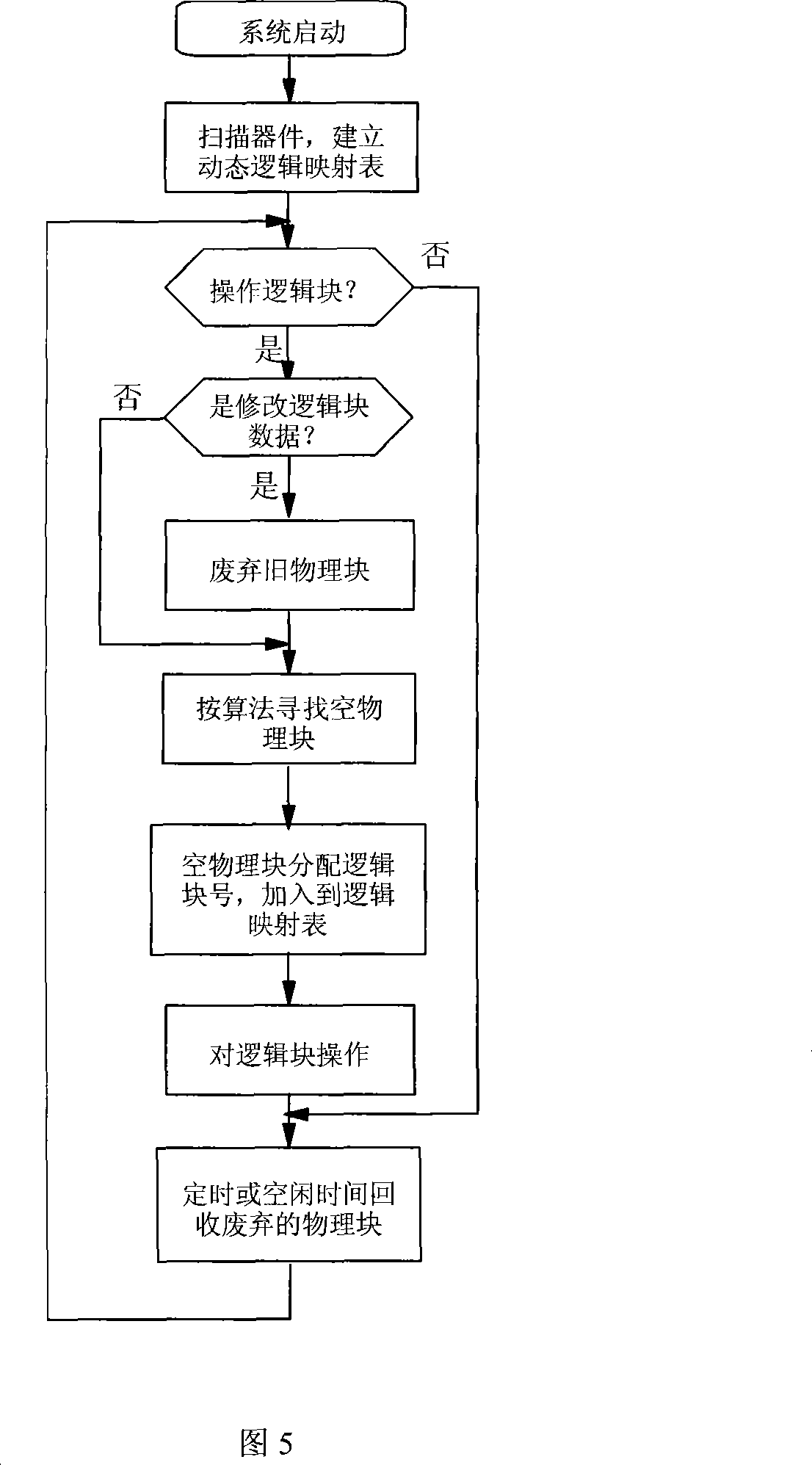

Dynamic state management techniques of NAND flash memory

Inactive | CN101178689A | Improve efficiency; Easy to use; Memory addressing/allocation/relocation; Dirty data; State management

The invention relates to a dynamic management method for NAND flash memory. The invention uses a dynamic logical mapping table, in which no physical block is allocated to a logical block until that logical block is used. When a logical block is used, the table searches for an empty physical block in a rolling manner according to an equal-selection principle, and this dynamic rolling balances the usage frequency of the physical blocks in the NAND flash. An empty physical block is taken, assigned a logical number so that it operates as a logical block, and added to the logical mapping table. The logical mapping table fills gradually as the system runs. When logical block data is modified, the old physical block is discarded and reclaimed, and a new physical block is found and the data rewritten to it. The user bytes in the redundant area of each page are given a specific definition, so dirty data can be identified simply by comparing a byte value. The software is highly efficient, balances the use of physical blocks, and prolongs the service life of the NAND flash memory.

Owner:HANGZHOU NENGLIAN SCI & TECH

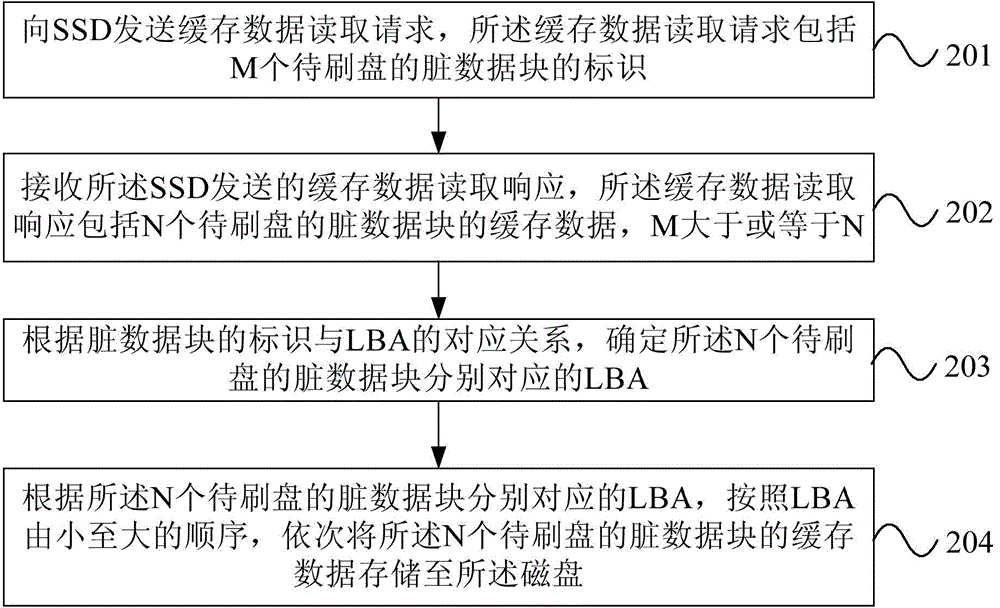

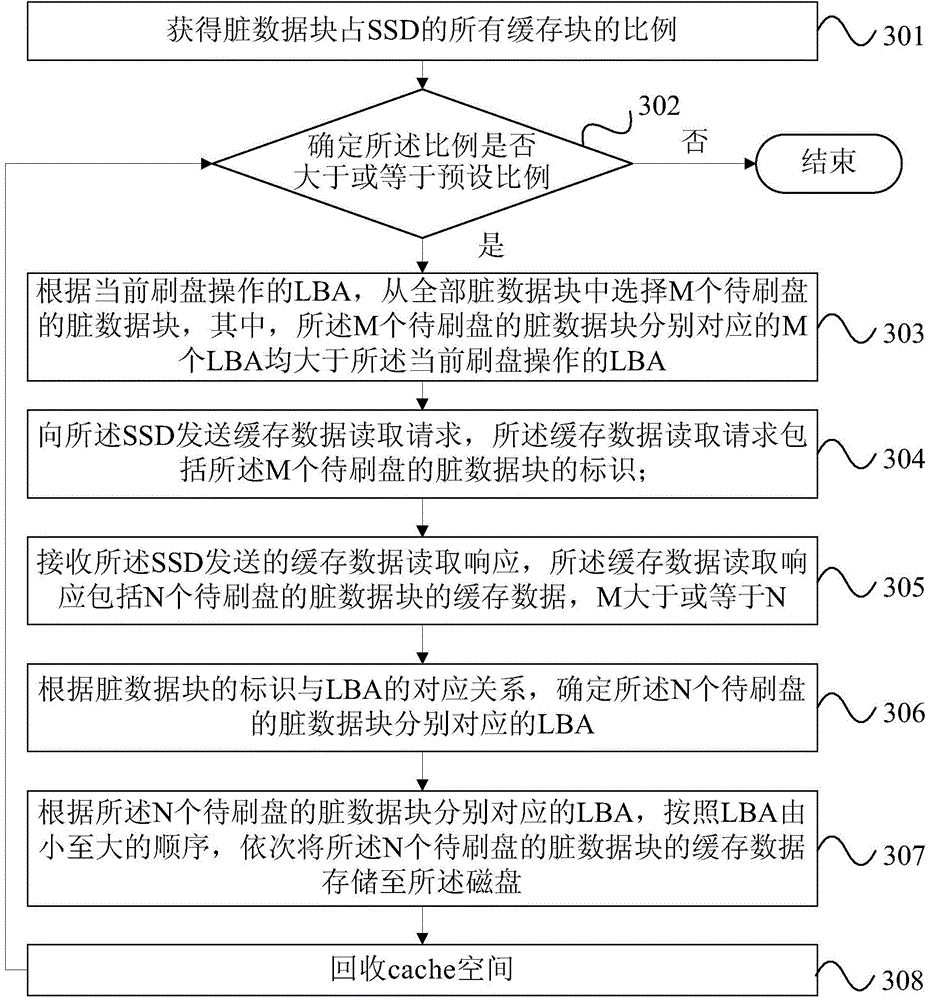

Cached data disk brushing method and device

Active | CN104461936A | Improve brushing efficiency; Avoid brushing time; Input/output to record carriers; Memory addressing/allocation/relocation; Dirty data; Request–response

The invention provides a cached data disk brushing method and device. The cached data disk brushing method is applied to a storage system. The storage system comprises a controller, a disk and an SSD, wherein the SSD serves as a cache of the disk. The cached data disk brushing method is executed by the controller and comprises the steps of sending a cached data reading request to the SSD, wherein the cached data reading request includes identifications of dirty data blocks of M disks to be brushed; receiving cached data reading request response sent by the SSD, wherein the cached data reading request response includes cached data of dirty data blocks of N disks to be brushed, and the M is greater than or equal to the N; determining LBA respectively corresponding to the dirty data blocks of the N disks according to the corresponding relation of the identifications of the dirty data blocks and the LBA; sequentially storing the cached data of the dirty data blocks of the N disks in the disk according to the LBA respectively corresponding to the dirty data blocks of the N disks and in an LBA sequence from small to large.

Owner:HUAWEI CLOUD COMPUTING TECH CO LTD
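
The controller-side flush ("disk brushing") can be sketched as follows. The SSD read and disk write are passed in as plain callables standing in for the real request/response exchange, and their signatures are hypothetical. The SSD may return only N of the M requested dirty blocks. The blocks it returns are mapped to LBAs and written in ascending LBA order, which keeps disk access sequential.

```python
def flush_dirty_blocks(requested_ids, ssd_read, id_to_lba: dict, disk_write) -> int:
    """Flush cached dirty blocks from the SSD to the disk in ascending LBA order.

    `ssd_read` may return data for only some of the requested ids (N <= M).
    Returns the number of blocks written.
    """
    returned = ssd_read(requested_ids)  # {block_id: data}, N entries
    ordered = sorted(returned, key=lambda bid: id_to_lba[bid])
    for bid in ordered:
        disk_write(id_to_lba[bid], returned[bid])  # smallest LBA first
    return len(ordered)
```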

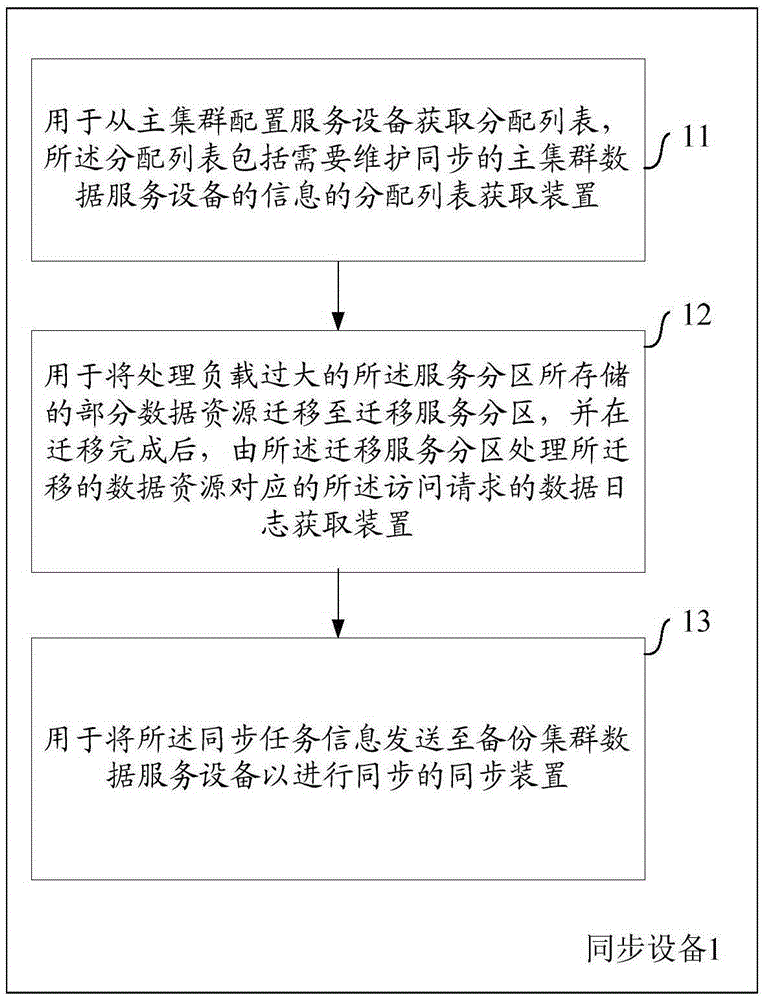

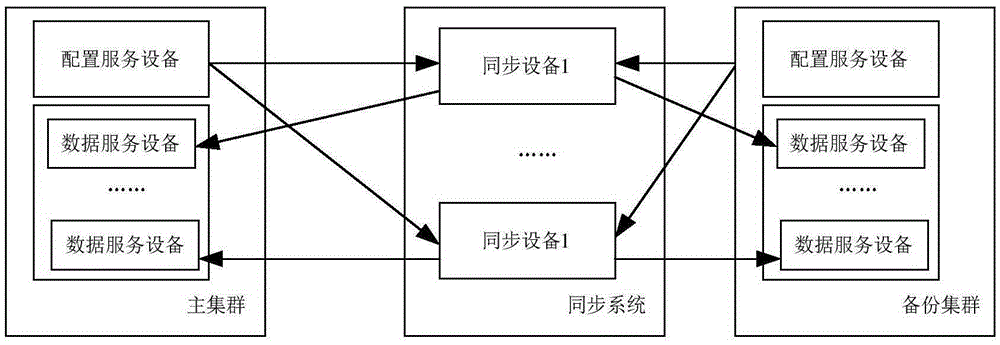

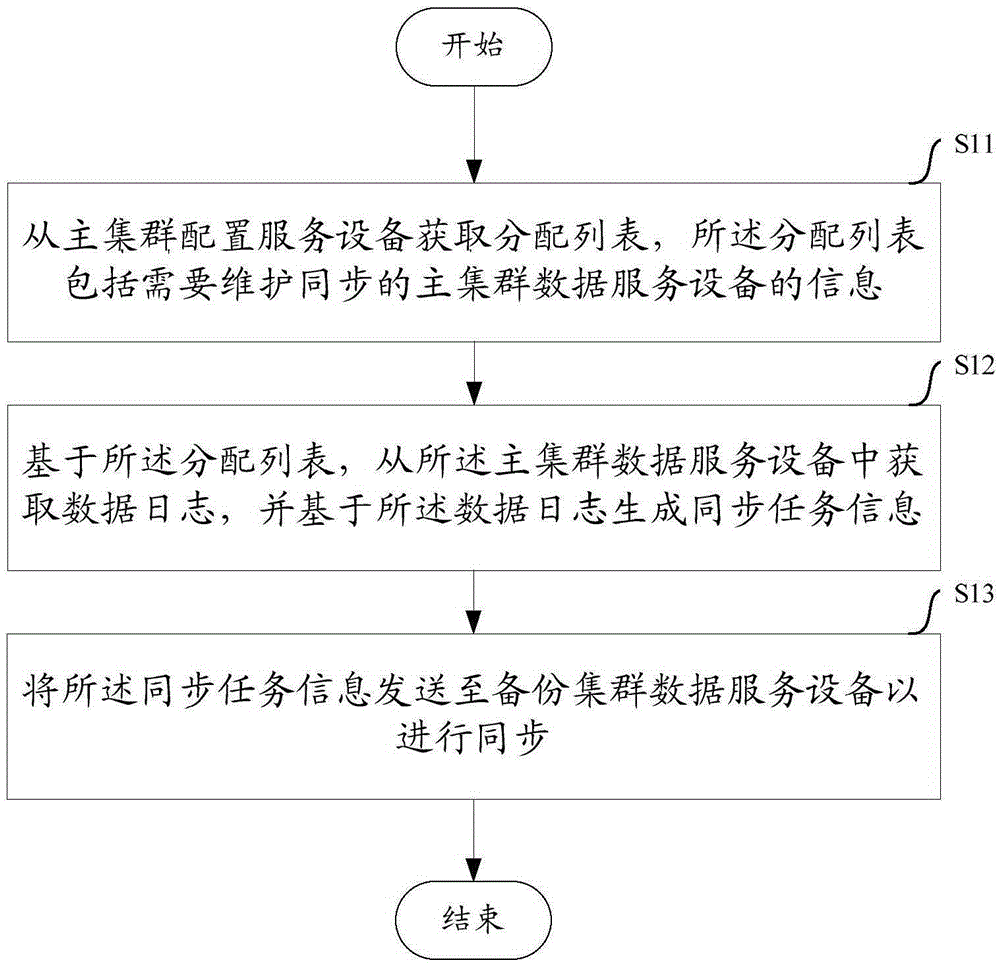

Method and equipment for data synchronization of distributed caching system

Inactive | CN106570007A | Avoid loss; Database distribution/replication; Special data processing applications; Data synchronization; Clustered data

The invention aims to provide a method and equipment for synchronization of a distributed caching system. The method comprises the steps of acquiring a distribution list from main cluster configuration service equipment, wherein the distribution list comprises information of the main cluster data service equipment which requires maintenance synchronization; based on the distribution list, acquiring a data log from the main cluster data service equipment, and generating synchronization task information based on the data log; and transmitting the synchronization task information to backup cluster data service equipment for synchronization, thereby continuously synchronizing the particular state of the data service equipment before shutdown to taking-over data service equipment when shutdown of the main cluster data service equipment or shutdown of the integral main cluster occurs, and preventing dirty data or data loss accordingly.

Owner:ALIBABA GRP HLDG LTD

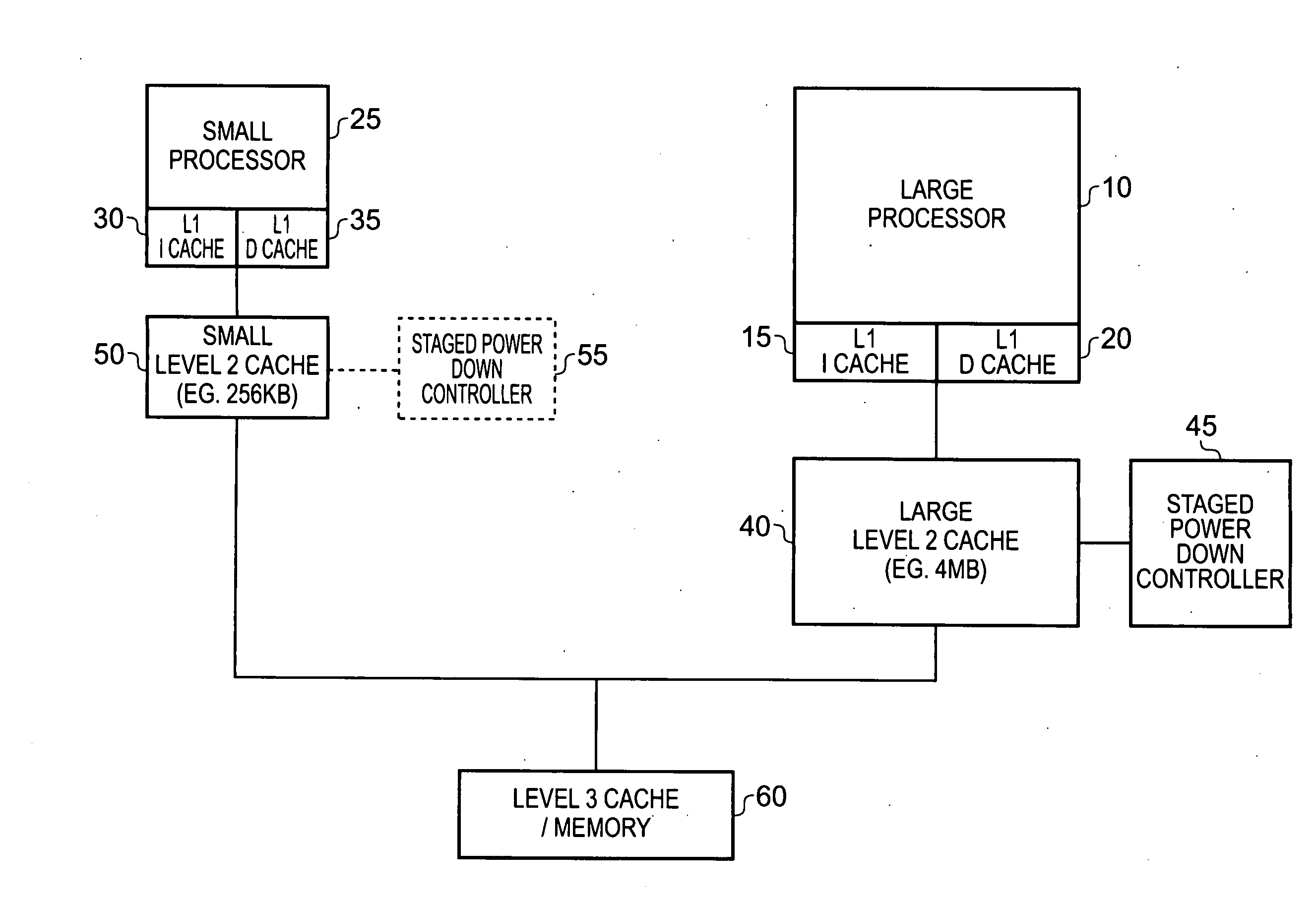

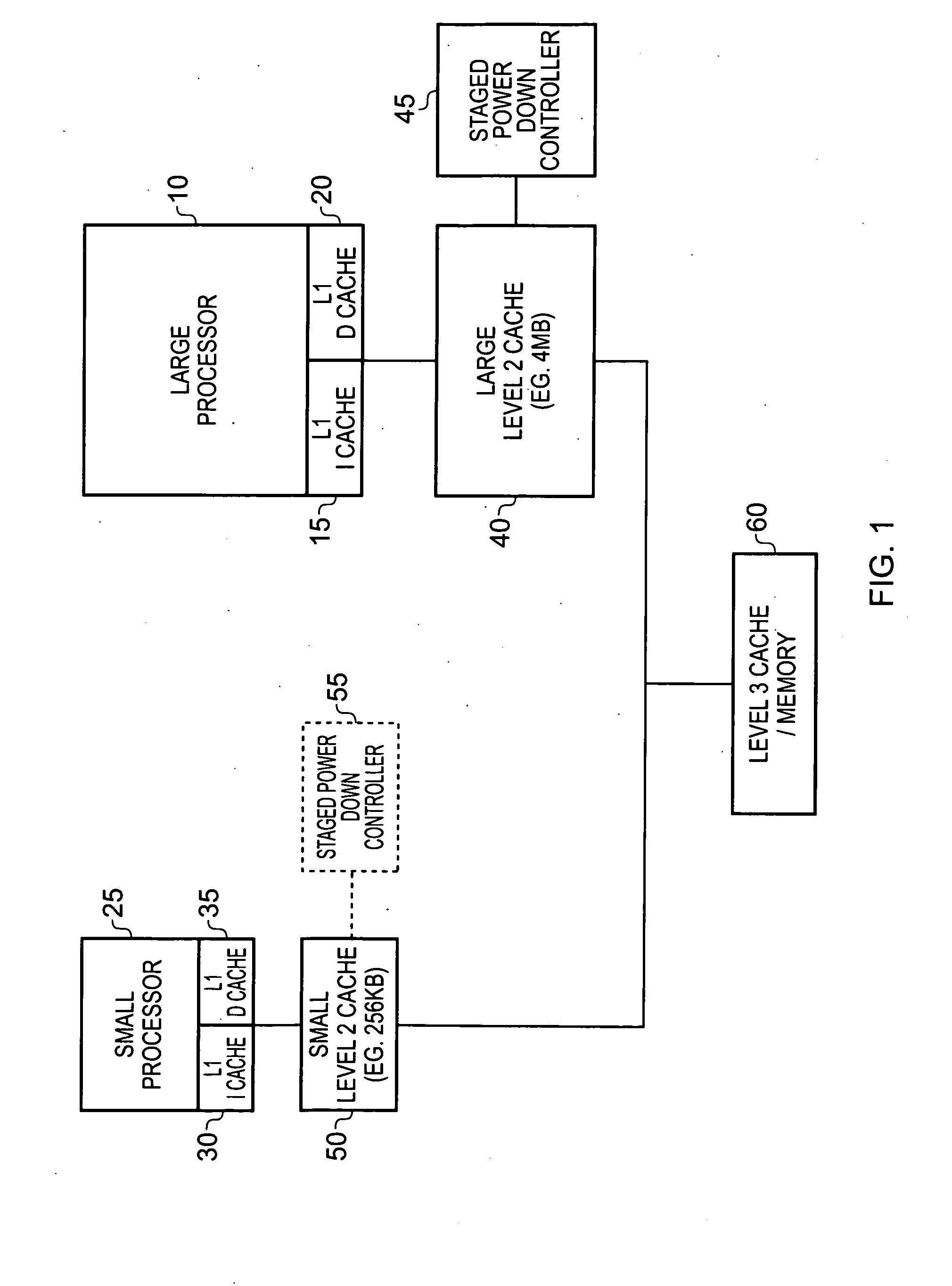

Data processing apparatus and method for powering down a cache

Inactive | US20130036270A1 | Technique is effective; Fast output; Memory architecture accessing/allocation; Energy efficient ICT; Memory address; Electricity

A data processing apparatus is provided comprising a processing device, and an N-way set associative cache for access by the processing device, each way comprising a plurality of cache lines for temporarily storing data for a subset of memory addresses of a memory device, and a plurality of dirty fields, each dirty field being associated with a way portion and being set when the data stored in that way portion is dirty data. Dirty way indication circuitry is configured to generate an indication of the degree of dirty data stored in each way. Further, staged way power down circuitry is responsive to at least one predetermined condition, to power down at least a subset of the ways of the N-way set associative cache in a plurality of stages, the staged way power down circuitry being configured to reference the dirty way indication circuitry in order to seek to power down ways with less dirty data before ways with more dirty data. This approach provides a particularly quick and power efficient technique for powering down the cache in a plurality of stages.

Owner:RGT UNIV OF MICHIGAN +1
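
The staged ordering can be sketched as a function that splits way indices into stages, least-dirty ways first. It takes a per-way dirty count, as the dirty way indication circuitry would provide. Splitting into equal-sized stages is an assumption made for the example. The point is that early stages need the fewest write-backs before their ways can be switched off.

```python
def power_down_stages(dirty_counts, stages: int):
    """Split way indices into `stages` groups, least-dirty ways first.

    dirty_counts[w] is the number of dirty portions in way w; each stage's
    dirty lines are written back before that stage is powered down.
    """
    order = sorted(range(len(dirty_counts)), key=lambda w: dirty_counts[w])
    size = max(1, -(-len(order) // stages))  # ways per stage, rounded up
    return [order[i:i + size] for i in range(0, len(order), size)]
```

For a 4-way cache with dirty counts `[5, 0, 9, 2]` and two stages, ways 1 and 3 are powered down first and ways 0 and 2 second.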

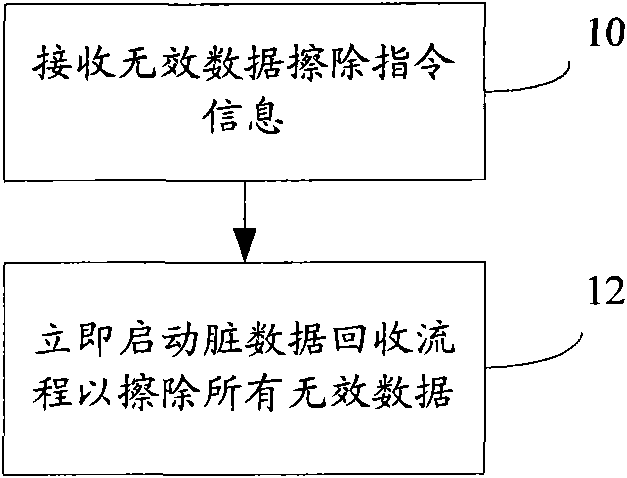

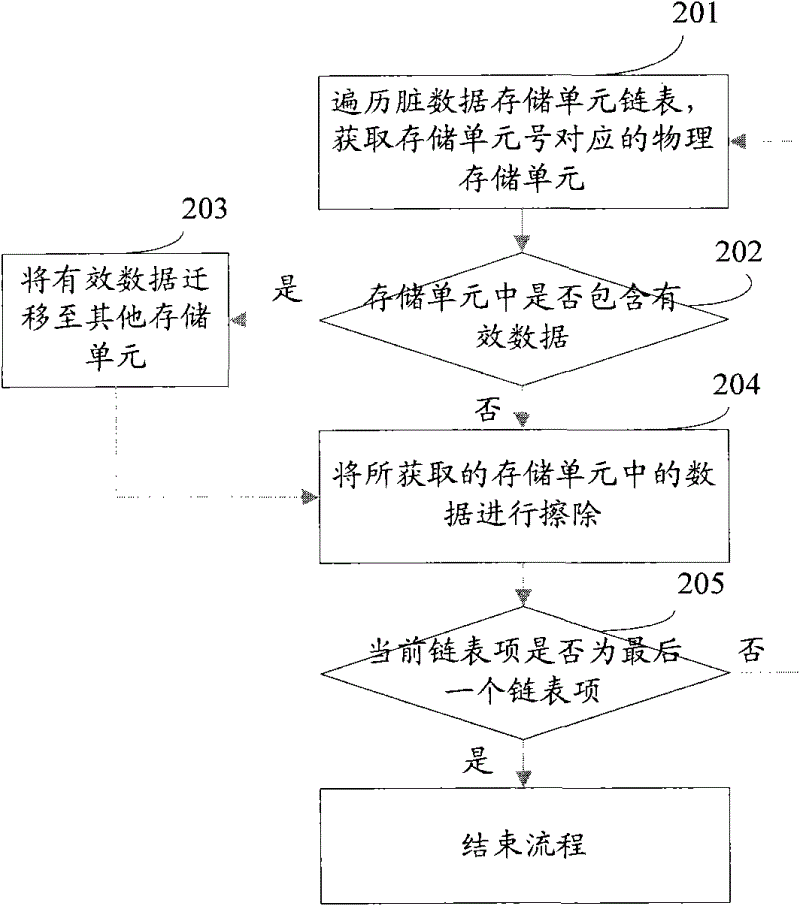

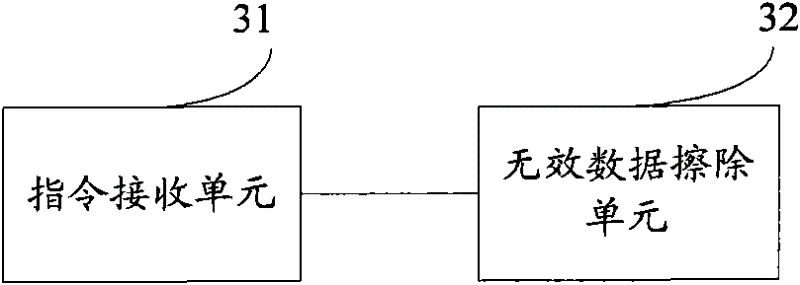

Invalid data erasing method, device and system

Inactive | CN102622310A | Protecting Security Information; Memory architecture accessing/allocation; Unauthorized memory use protection; Dirty data; Invalid Data

The embodiment of the invention provides an invalid data erasing method, device and system. Through the embodiment of the invention, after invalid data erasing instruction information is received, a dirty data recovery procedure is started immediately so as to erase all invalid data, so that all the invalid data can be erased from a memory medium, thereby effectively protecting the safety information of users.

Owner:HUAWEI DIGITAL TECH (CHENGDU) CO LTD

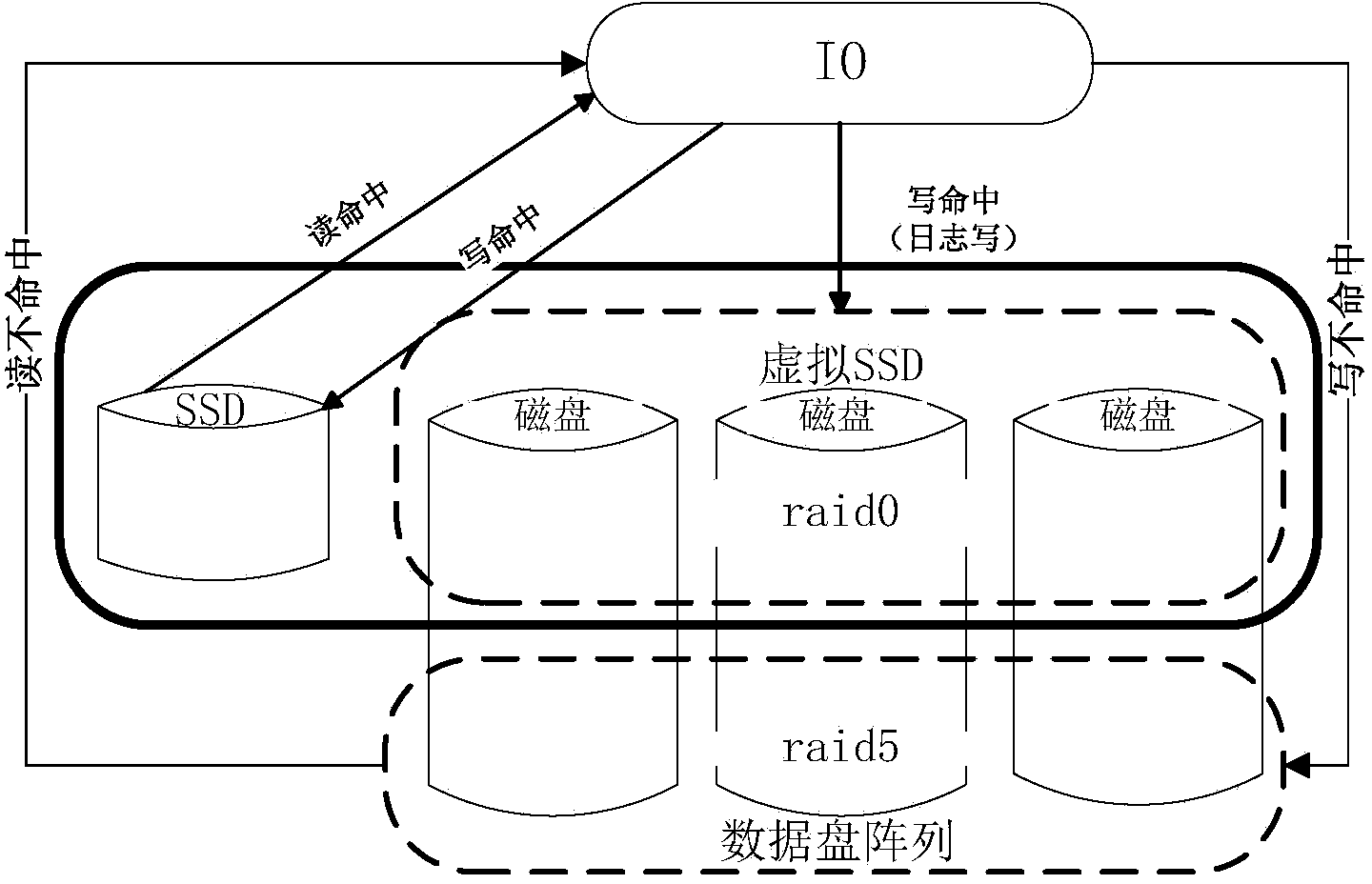

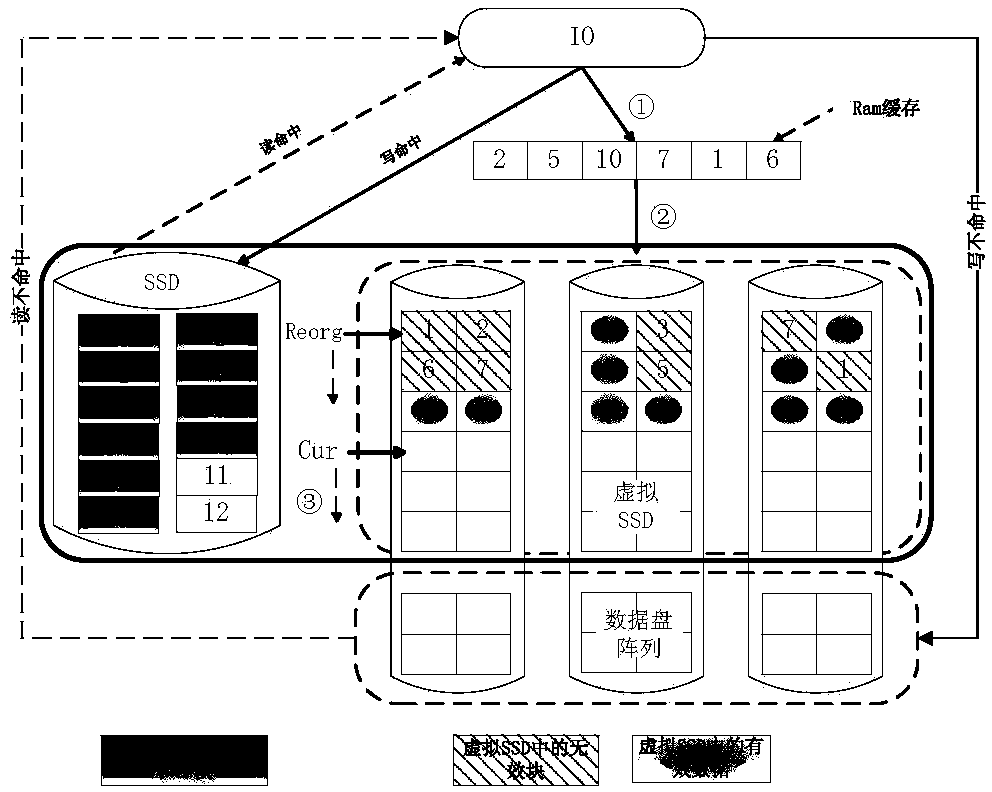

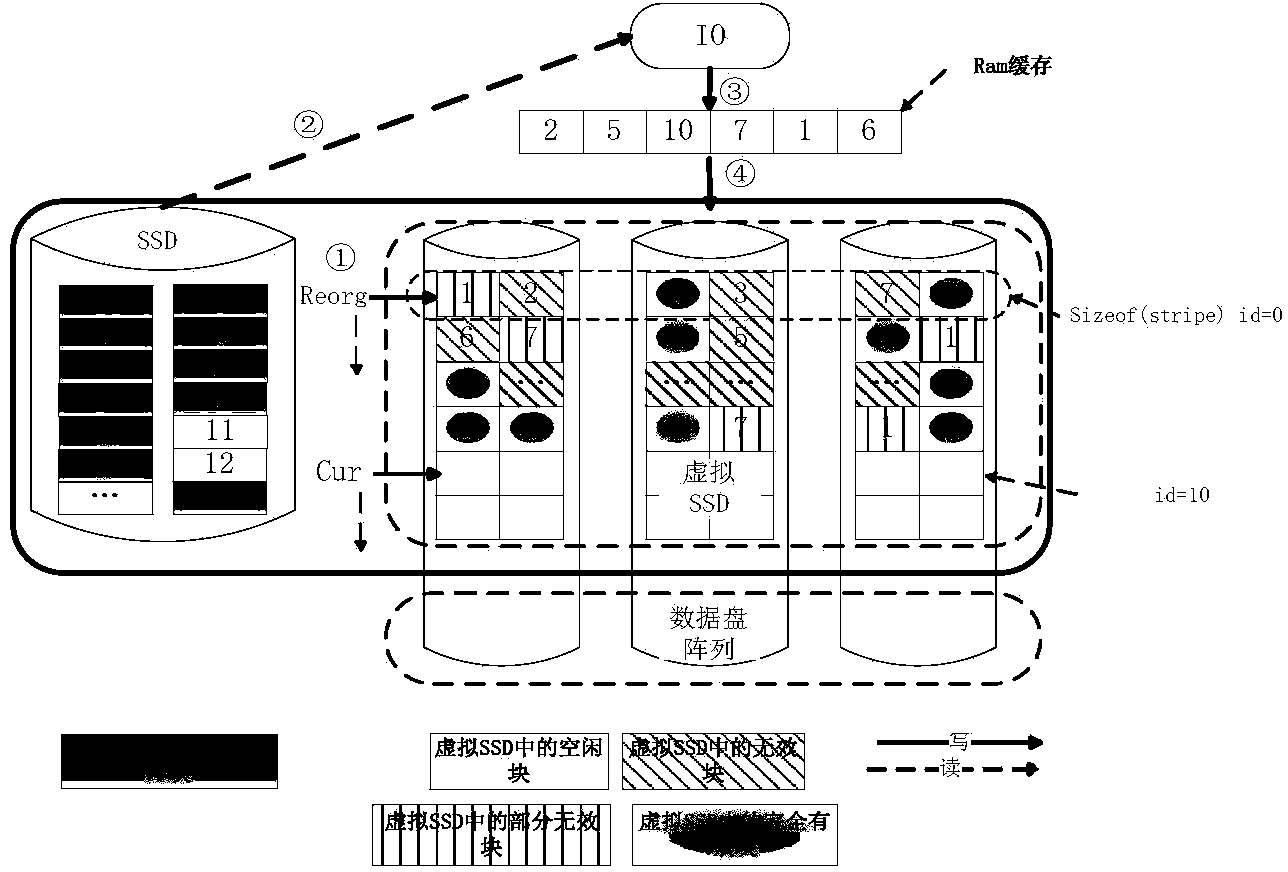

Disk array caching method for virtual SSD and SSD isomerous mirror image

Active | CN103645859A | Reduce the number of writes; Reduce overhead; Input/output to record carriers; RAID; Dirty data

The invention discloses a disk array caching method based on a virtual SSD and heterogeneous SSD mirroring. The disks are logically divided into two parts. The upper half forms a RAID0 or RAID5 structure that serves as the virtual SSD and backs up the dirty data held in the real SSD; the virtual SSD operates in log-write mode and has a RAM write cache. The lower half is organized into whatever RAID structures users require and serves as the data array that stores cold data. An address-mapping hash lookup table between the data disks and the real SSD cache is built. A timer periodically writes the data in the virtual SSD's RAM write cache into the virtual SSD. Requests from the upper-layer file system are received, and a read request that misses in the real SSD is served from the data array. The method balances performance, reliability, and cost.

Owner:HUAZHONG UNIV OF SCI & TECH

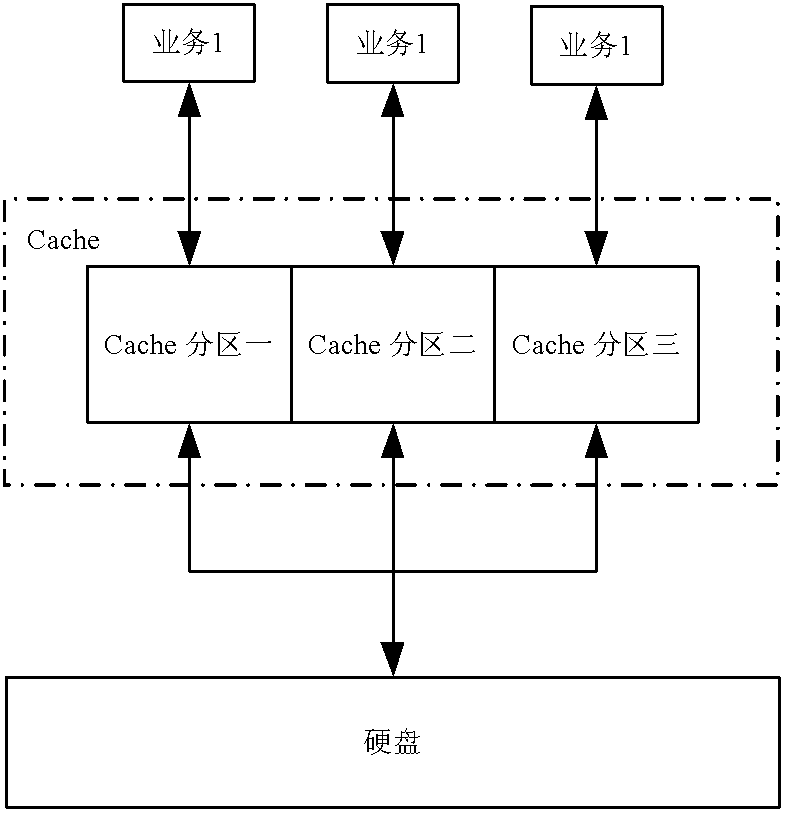

Memory system, and method and system for controlling service quality of memory system

Active | CN102508619A | Overcoming preemption; Avoid performance impact; Memory architecture accessing/allocation; Input/output to record carriers; Dirty data; Control system

The invention provides a memory system, together with a method and a system for controlling the memory system's quality of service. The method comprises the following steps:

- Collect processing-capability information for the hard disks in the memory system, and derive each disk's processing capability from it.
- Divide the cache into multiple levels according to those capabilities. Each level corresponds to one or more hard disks, and its page quota is pre-allocated according to the processing-capability information of those disks.
- When the dirty data in a level reaches a preset proportion, write that level's cached data to its corresponding hard disks.

The control system comprises a collecting module, a first dividing module and a disk-writing module. The memory system comprises a host, a cache and the control system. Because each level uses only its own page quota, preemption of cache page resources is avoided.
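The quota allocation and the threshold-triggered flush can be sketched in a few lines. This is a minimal sketch with several assumptions:

- Capability is a single number per disk (for example, measured IOPS).
- Quotas are strictly proportional to capability.
- A full level raises an error in place of making the caller wait.

The class name, parameters and the proportional rule are all hypothetical, not the patent's exact formula.

```python
class LeveledCache:
    """Hypothetical sketch: cache levels with page quotas sized by disk capability."""

    def __init__(self, total_pages, disk_capability, level_of_disk, flush_ratio=0.8):
        # disk_capability: disk id -> measured processing capability (e.g. IOPS)
        # level_of_disk:   disk id -> cache level that serves the disk
        self.flush_ratio = flush_ratio
        level_cap = {}
        for disk, cap in disk_capability.items():
            level = level_of_disk[disk]
            level_cap[level] = level_cap.get(level, 0) + cap
        total_cap = sum(level_cap.values())
        # Pre-allocate each level's page quota in proportion to its disks' capability.
        self.quota = {lvl: total_pages * cap // total_cap for lvl, cap in level_cap.items()}
        self.disks = {lvl: [d for d, l in level_of_disk.items() if l == lvl] for lvl in level_cap}
        self.dirty = {lvl: [] for lvl in level_cap}
        self.flushed = []  # log of (level, target disks, pages written)

    def write(self, level, page):
        pages = self.dirty[level]
        if len(pages) >= self.quota[level]:
            # A level never borrows pages from another level's quota.
            raise RuntimeError(f"level {level!r} quota exhausted; caller must wait")
        pages.append(page)
        if len(pages) >= self.flush_ratio * self.quota[level]:
            # Dirty data reached the preset proportion: write this level to its disks.
            self.flushed.append((level, self.disks[level], list(pages)))
            pages.clear()
```

Because slow disks drain slowly, isolating their dirty pages in a small level keeps them from occupying pages that faster disks could turn over quickly.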

Owner:CHENGDU HUAWEI TECH

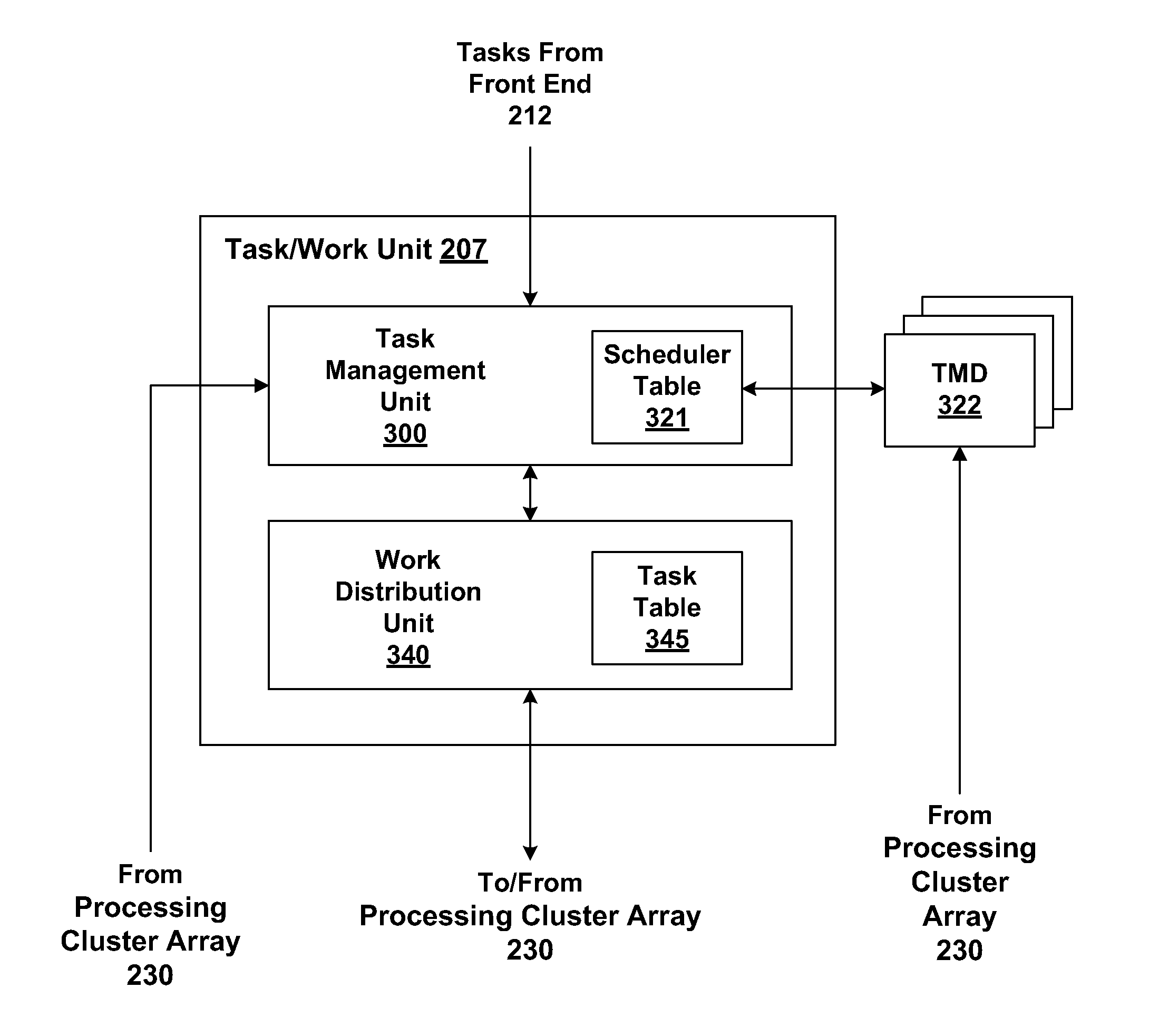

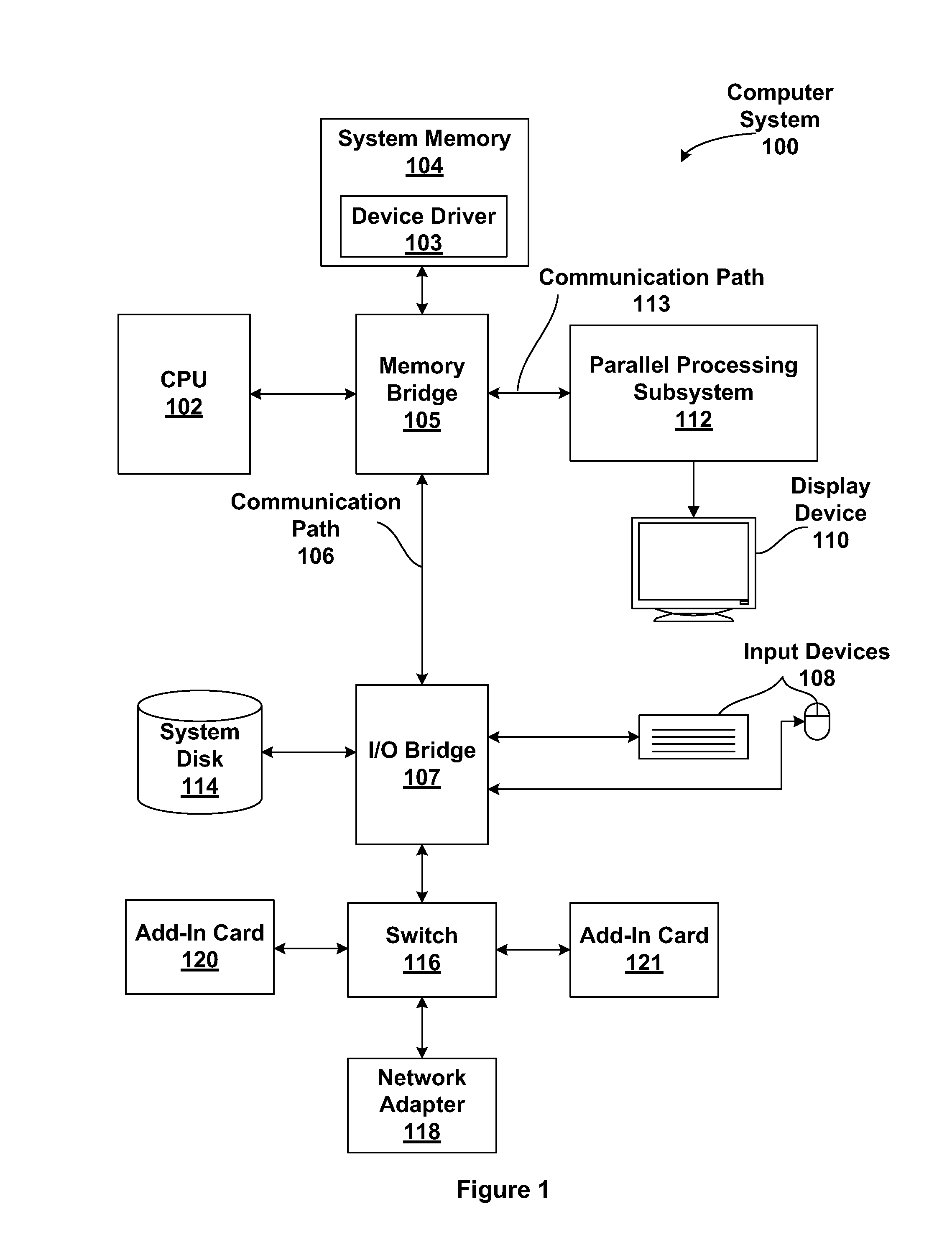

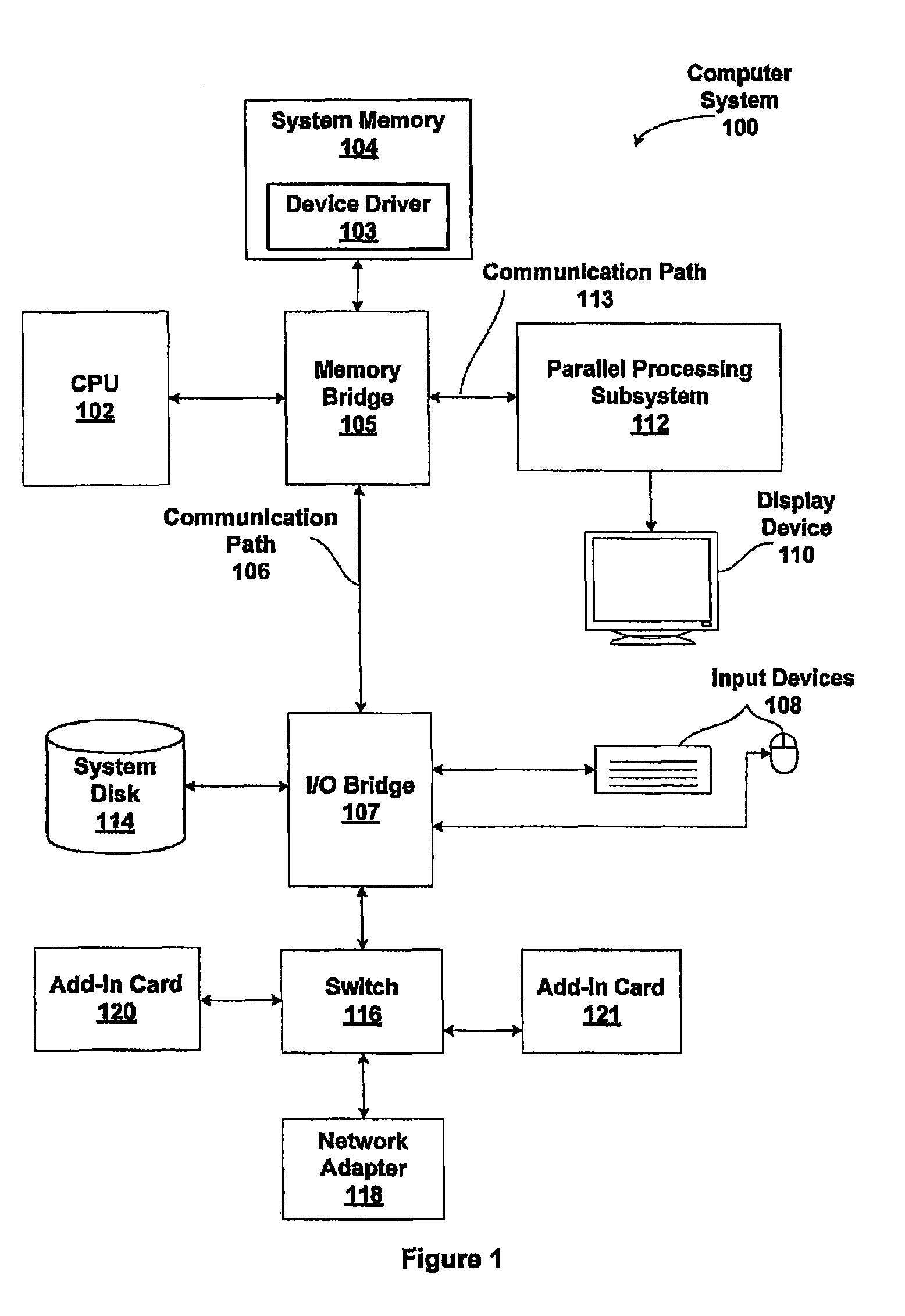

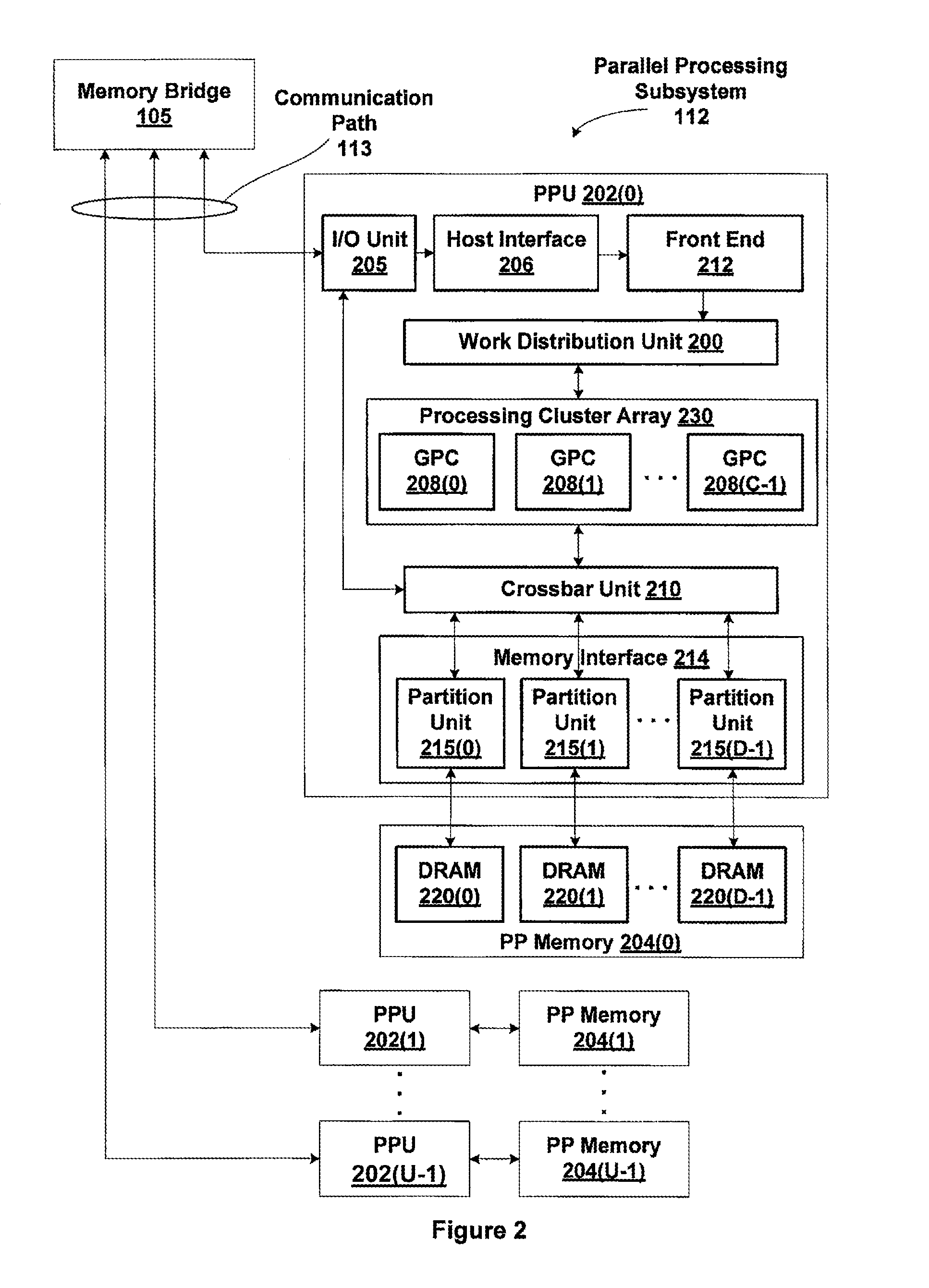

Using a data cache array as a DRAM load/store buffer

One embodiment of the invention sets forth a mechanism for using the L2 cache as a buffer for data associated with read/write commands processed by the frame buffer logic. Three parts cooperate:

- A tag look-up unit tracks the availability of each cache line in the L2 cache. It reserves the cache lines needed for read/write operations and transmits read commands to the frame buffer logic for processing.
- A data slice scheduler transmits a dirty data notification to the frame buffer logic when data associated with a write command is stored in an SRAM bank.
- The data slice scheduler also schedules accesses to the SRAM banks. It gives priority to accesses requested by the frame buffer logic to store or retrieve data for read/write commands.

This prioritization lets cache lines reserved for frame-buffer read/write commands be released at the earliest possible clock cycle.
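The interplay between line reservation and prioritized SRAM access can be sketched as an ordering model. This is a minimal Python sketch under several assumptions:

- There is a single SRAM bank.
- There are two priority classes, with frame-buffer requests served first.
- All names (`TagLookup`, `DataSliceScheduler`, `FB_PRIORITY`, `CLIENT_PRIORITY`) are hypothetical.

It models only the ordering of accesses, not hardware timing.

```python
import heapq
from itertools import count

FB_PRIORITY, CLIENT_PRIORITY = 0, 1  # lower value is served first (assumption)

class TagLookup:
    """Tracks which L2 lines are free and reserves them for read/write commands."""

    def __init__(self, num_lines):
        self.free_lines = list(range(num_lines))

    def reserve(self):
        return self.free_lines.pop(0) if self.free_lines else None

    def release(self, line):
        self.free_lines.append(line)

class DataSliceScheduler:
    """Orders SRAM-bank accesses, favoring requests from the frame buffer logic."""

    def __init__(self):
        self.queue = []
        self.seq = count()        # tie-breaker keeps FIFO order within a priority
        self.notifications = []   # messages sent to the frame buffer logic

    def request(self, priority, op, line):
        heapq.heappush(self.queue, (priority, next(self.seq), op, line))

    def step(self):
        _, _, op, line = heapq.heappop(self.queue)
        if op == "store_write_data":
            # Write data now sits in SRAM: tell the frame buffer logic it is dirty.
            self.notifications.append(("dirty", line))
        return op, line
```

Serving frame-buffer accesses first shortens the time a reserved line sits waiting, so the tag look-up unit can release it sooner.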

Owner:NVIDIA CORP

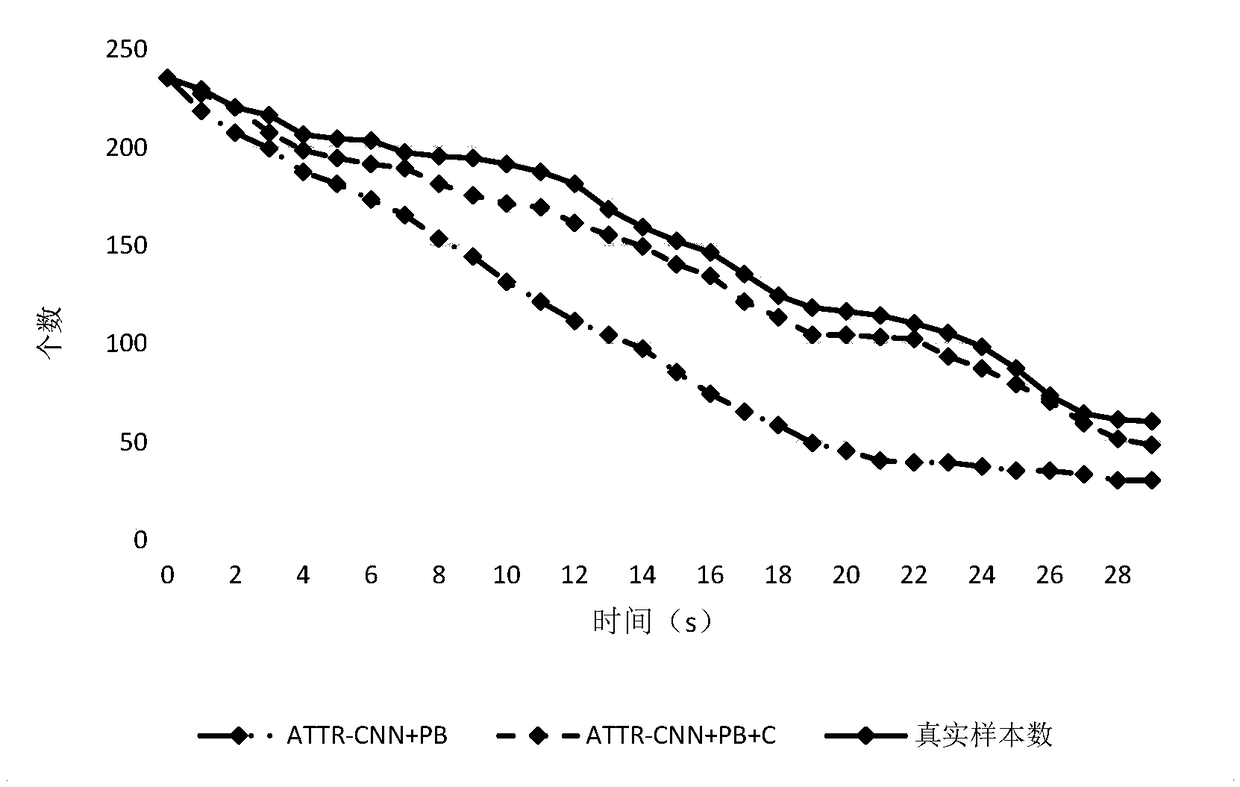

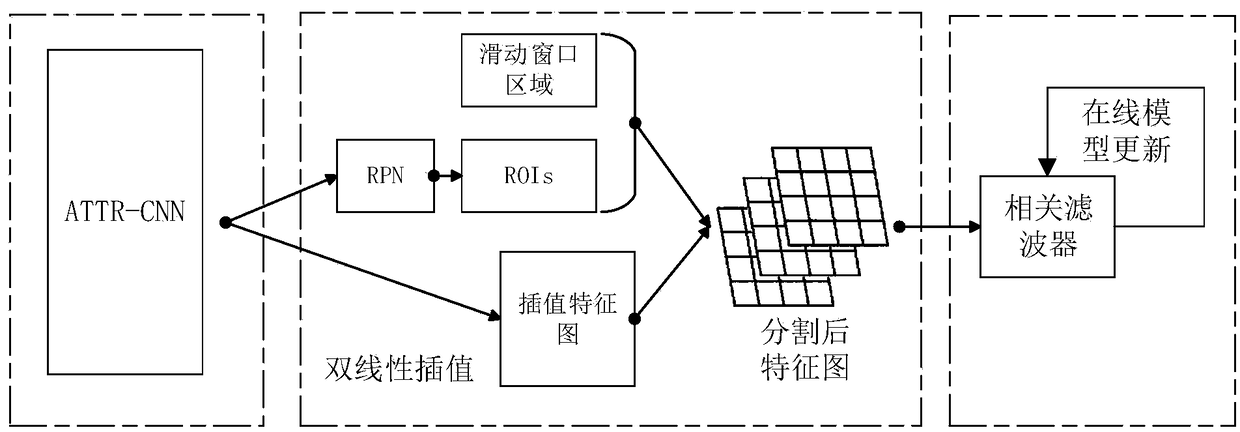

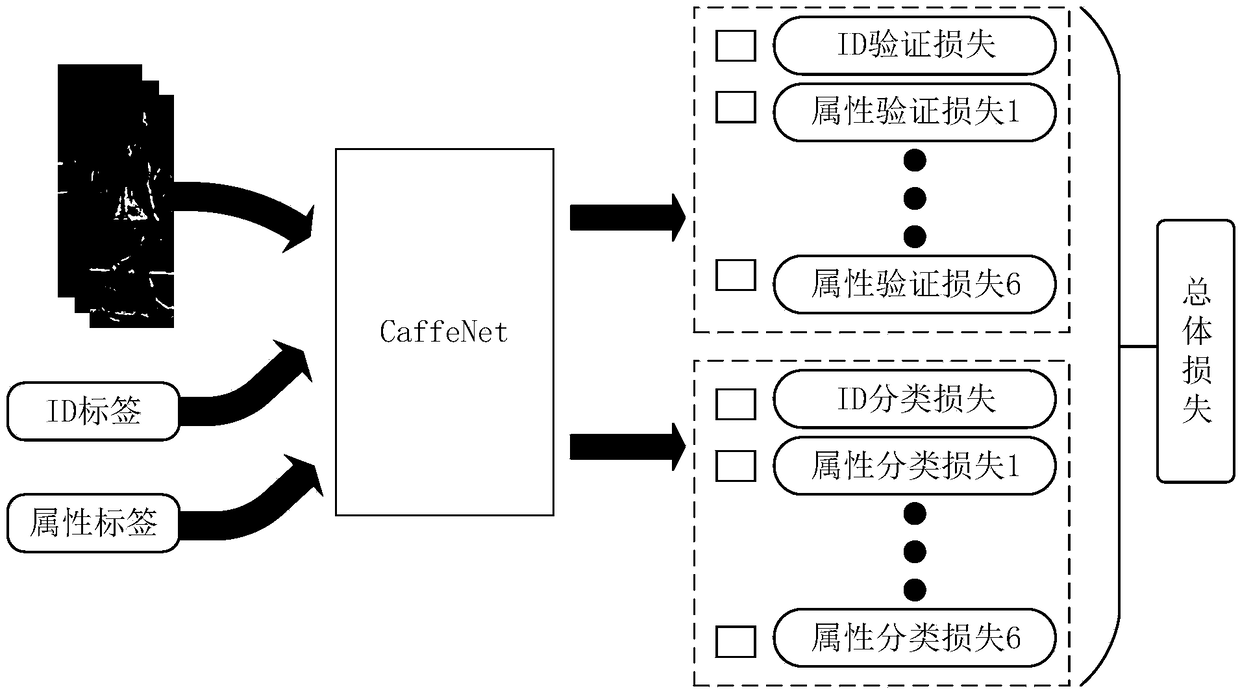

Pedestrian target tracking method based on depth learning

Active | CN109146921A | Improve accuracy | Avoid introducing | Image enhancement | Image analysis | Cosine similarity | Feature extraction

The invention discloses a pedestrian target tracking method based on deep learning. It combines deep learning with correlation filtering and improves tracking accuracy while keeping tracking real-time. It addresses four problems:

- Large changes in target pose: deep convolutional features based on pedestrian attributes are applied to tracking.
- Occlusion: a cosine similarity measure detects occlusion, so that dirty data caused by occlusion are not introduced.
- Efficiency when using deep convolutional features in correlation filters: bilinear interpolation eliminates quantization errors and avoids repeated feature extraction, which greatly improves efficiency.
- High-speed target motion: a preselection algorithm searches the whole image and also supplies strong negative samples for training, improving the discriminative ability of the correlation filter.
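The occlusion check can be read as a gate on the model update. If the new feature vector is too dissimilar to the stored template, the frame is treated as occluded and not learned from. This is a minimal pure-Python sketch. The threshold `0.7`, the linear learning rate `lr`, and the function name `update_template` are hypothetical choices, not values from the patent.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        return 0.0
    return dot / (na * nb)

def update_template(template, feature, lr=0.1, occlusion_threshold=0.7):
    """Blend a new feature into the template unless occlusion is suspected.

    Returns (new_template, occluded).
    """
    if cosine_similarity(template, feature) < occlusion_threshold:
        # Likely occluded: keep the old template so occluder features
        # (dirty data) are not absorbed into the model.
        return template, True
    return [(1 - lr) * t + lr * f for t, f in zip(template, feature)], False
```

Skipping the update, rather than down-weighting it, is the simplest policy. A real tracker would tune the threshold on validation sequences.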

Owner:HUAZHONG UNIV OF SCI & TECH

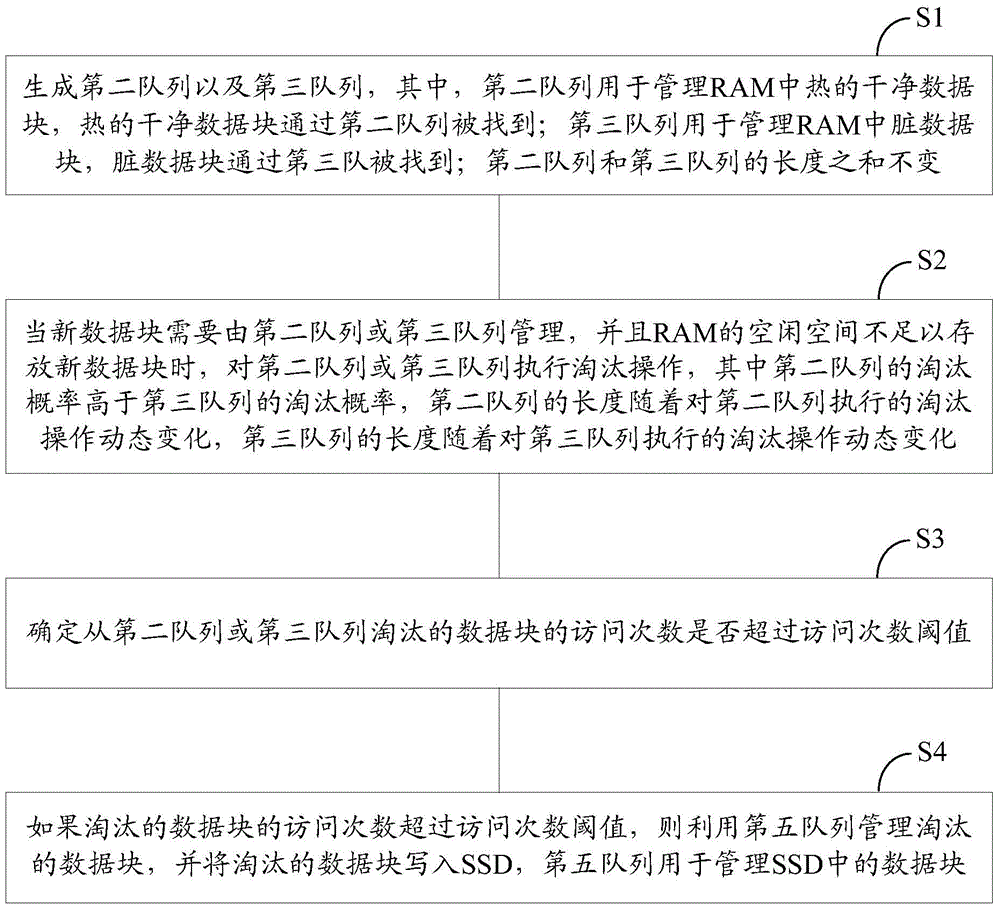

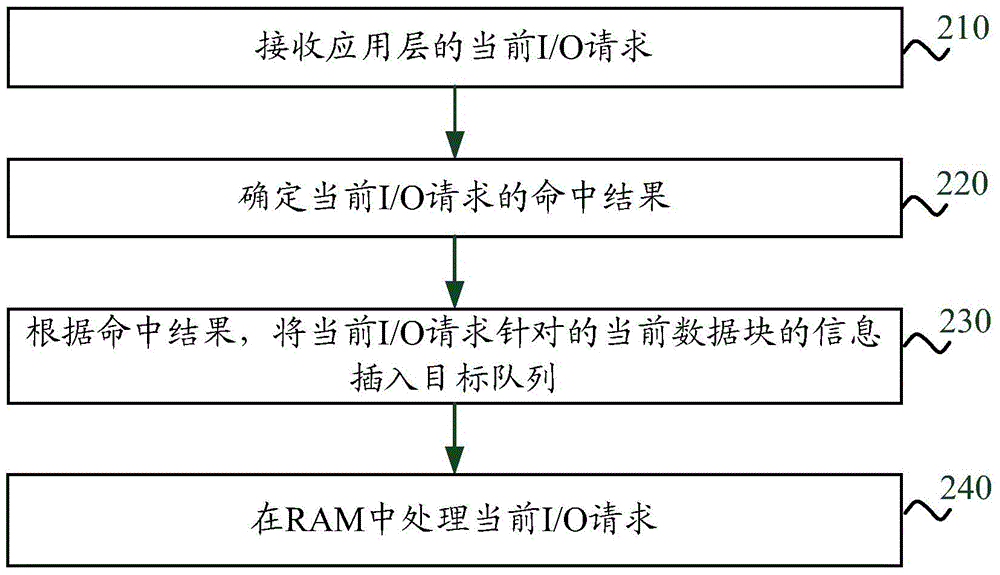

Method and equipment for managing hybrid cache

Active | CN104090852A | Reduce frequent write operations | Reasonable distribution | Input/output to record carriers | Memory addressing/allocation/relocation | Solid-state storage | Dirty data

The embodiment of the invention provides a method for managing a hybrid cache consisting of RAM and a solid-state drive (SSD). The method generates two queues:

- A second queue, which manages hot clean data blocks in the RAM.
- A third queue, which manages dirty data blocks in the RAM.

The sum of the two queue lengths is fixed. The second queue has a higher eviction probability than the third, and each queue's length changes dynamically as evictions are performed on it. For each data block evicted from the second or third queue, the method checks whether its access frequency exceeds an access-frequency threshold. If it does, the block is judged to be a long-term hot data block. It is written to the SSD and managed by a fifth queue, which manages the data blocks held in the SSD.
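The queue interplay can be sketched with ordered dictionaries used as LRU lists. This is a simplified sketch under several assumptions:

- A fixed total RAM capacity stands in for the abstract's constant sum of queue lengths; the adaptive per-queue lengths are not modeled.
- The biased eviction is a single probability `p_evict_clean`.
- Write-back of dirty victims to the backing disk is recorded but not performed.

The queue names follow the abstract. The class name and parameters are hypothetical.

```python
import random
from collections import OrderedDict

class HybridCache:
    """Hypothetical sketch of the RAM + SSD queue scheme (queue numbers follow the abstract)."""

    def __init__(self, ram_slots, freq_threshold, p_evict_clean=0.8, seed=0):
        self.ram_slots = ram_slots            # len(q2) + len(q3) never exceeds this
        self.freq_threshold = freq_threshold
        self.p_evict_clean = p_evict_clean    # > 0.5: the clean queue is evicted more often
        self.rng = random.Random(seed)
        self.q2_clean = OrderedDict()         # hot clean blocks in RAM, LRU first
        self.q3_dirty = OrderedDict()         # dirty blocks in RAM, LRU first
        self.q5_ssd = OrderedDict()           # long-term hot blocks held on the SSD
        self.disk_writebacks = []             # dirty victims that must reach the disk
        self.freq = {}

    def access(self, key, data, write=False):
        self.freq[key] = self.freq.get(key, 0) + 1
        was_dirty = key in self.q3_dirty
        self.q2_clean.pop(key, None)
        self.q3_dirty.pop(key, None)
        if len(self.q2_clean) + len(self.q3_dirty) >= self.ram_slots:
            self._evict()
        target = self.q3_dirty if (write or was_dirty) else self.q2_clean
        target[key] = data  # reinserting moves the block to the MRU end

    def _evict(self):
        use_clean = bool(self.q2_clean) and (
            not self.q3_dirty or self.rng.random() < self.p_evict_clean)
        queue = self.q2_clean if use_clean else self.q3_dirty
        key, data = queue.popitem(last=False)  # evict the least recently used block
        if not use_clean:
            self.disk_writebacks.append(key)
        if self.freq.get(key, 0) > self.freq_threshold:
            self.q5_ssd[key] = data  # long-term hot: keep a copy on the SSD
        return key
```

Biasing eviction toward clean blocks keeps dirty blocks in RAM longer, so repeated writes to them are absorbed in RAM instead of reaching the SSD.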

Owner:XFUSION DIGITAL TECH CO LTD

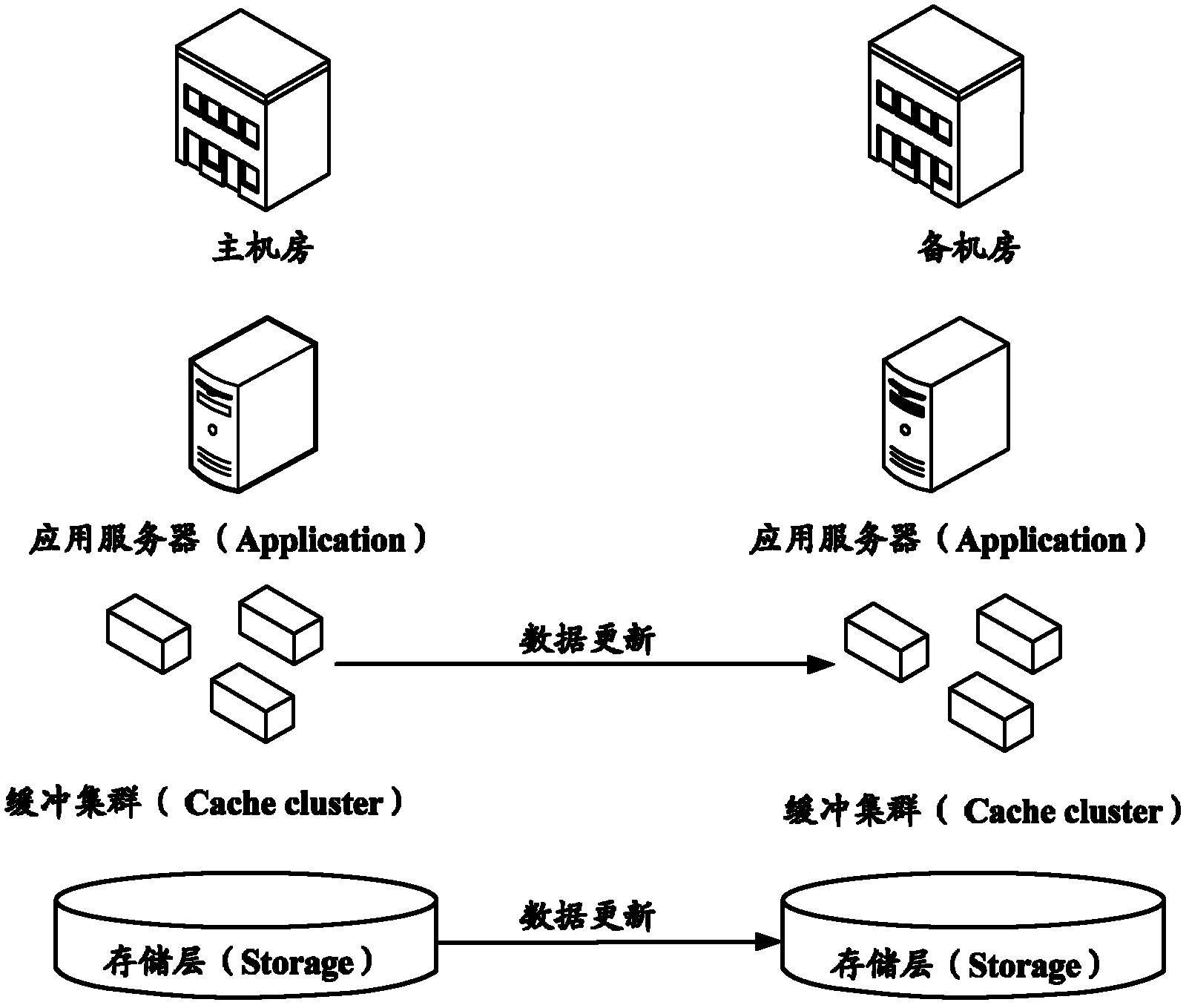

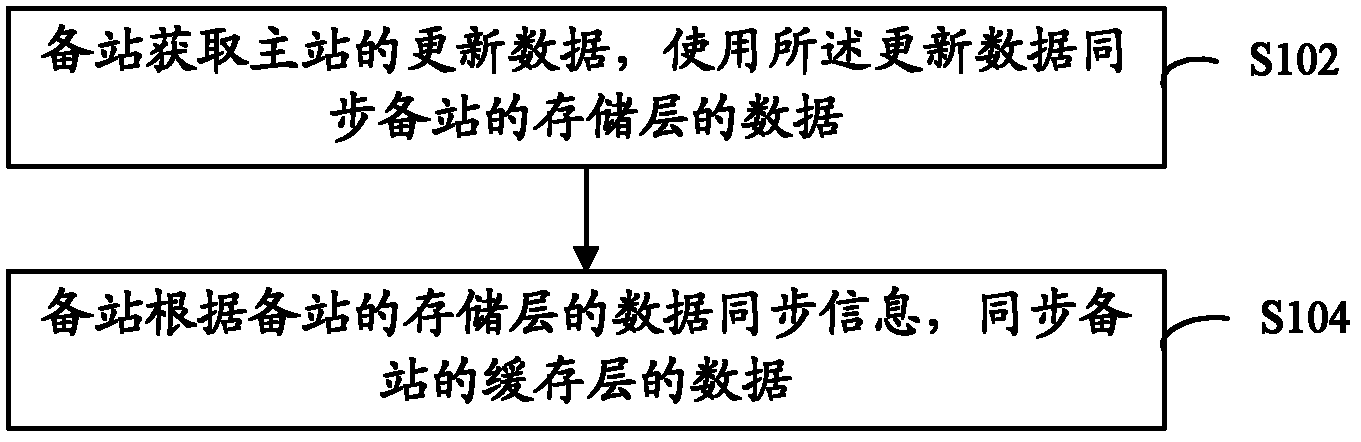

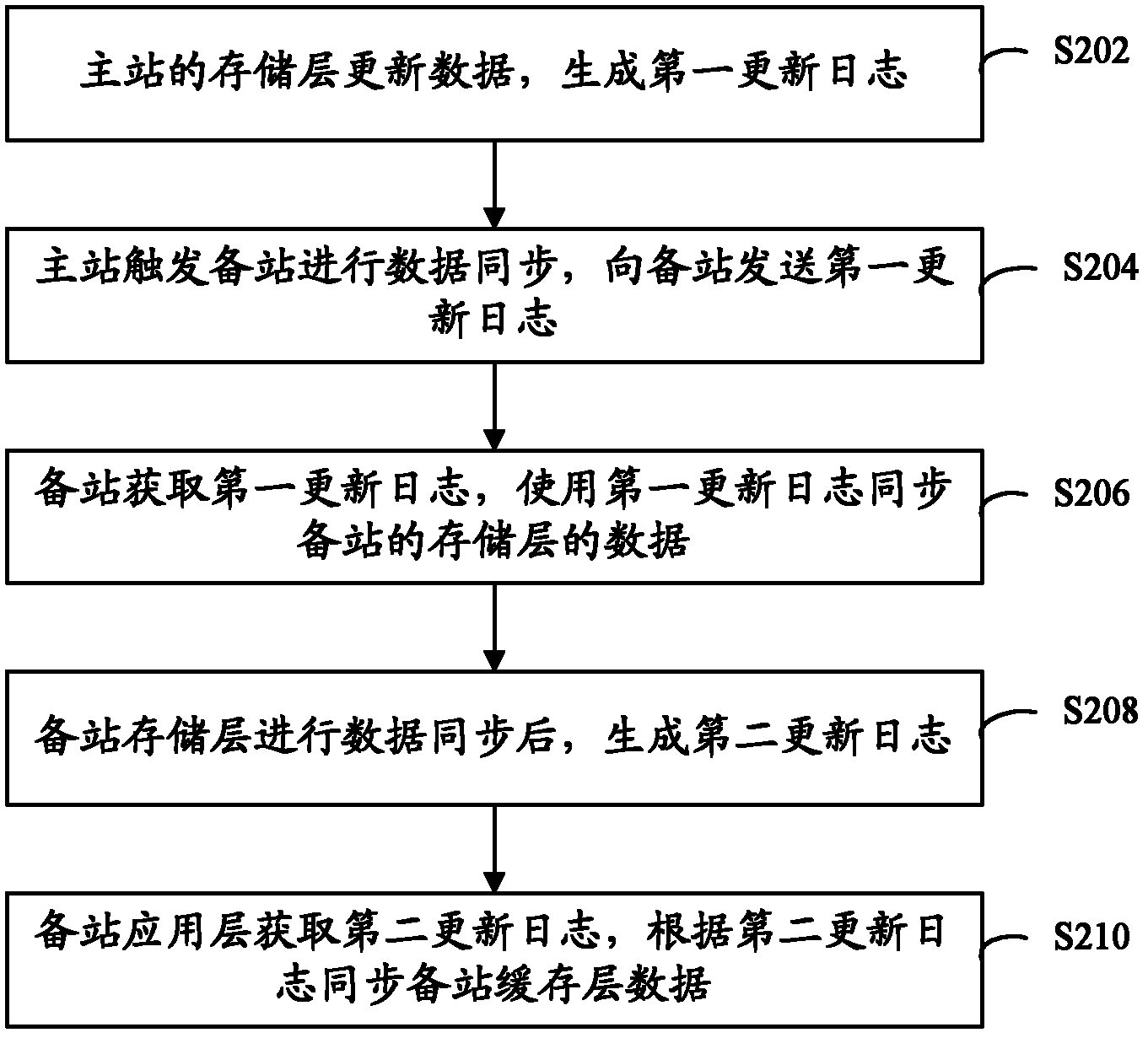

Data synchronizing method and data synchronizing system

Active | CN103138912A | Prevent the occurrence | Consistent data | Error prevention | Data switching networks | Data synchronization | Dirty data

The invention provides a data synchronizing method and a data synchronizing system. An auxiliary (secondary) site acquires update information from the master site and uses it to synchronize the data in its storage layer. The data in the auxiliary site's cache layer are then synchronized according to the synchronization information of its storage layer. This guarantees the reliability of data in the auxiliary computer room and prevents dirty data from being generated.
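The key point is ordering: the storage layer is brought up to date first, and only the entries reported as changed are then refreshed in the cache. As a result, the cache never holds a value the storage layer does not. This is a minimal sketch assuming a hypothetical update-log format of `(version, key, value)` tuples with unique versions. The class `SecondarySite` is illustrative, not the patent's design.

```python
class SecondarySite:
    """Hypothetical auxiliary site: storage layer first, then cache layer."""

    def __init__(self):
        self.storage = {}        # storage layer
        self.cache = {}          # cache (buffer memory) layer
        self.applied_version = 0

    def sync(self, update_log):
        """Apply master updates given as (version, key, value) tuples."""
        changed = []
        # Step 1: bring the storage layer up to date, skipping already-applied versions.
        for version, key, value in sorted(update_log, key=lambda e: e[0]):
            if version <= self.applied_version:
                continue
            self.storage[key] = value
            self.applied_version = version
            changed.append(key)
        # Step 2: using the storage layer's sync result, refresh affected cache entries.
        for key in changed:
            if key in self.cache:
                self.cache[key] = self.storage[key]
        return changed

    def read(self, key):
        if key not in self.cache:
            self.cache[key] = self.storage.get(key)
        return self.cache[key]
```

Refreshing the cache from the storage layer, rather than directly from the master's log, is one way to keep the two layers from diverging into dirty data.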

Owner:ALIBABA GRP HLDG LTD

© 2025 PatSnap. All rights reserved.