Method and equipment for managing hybrid cache

A hybrid cache management method and device, applied in the information technology field, that address the problem of a low cache hit rate.


Examples

Embodiment 1

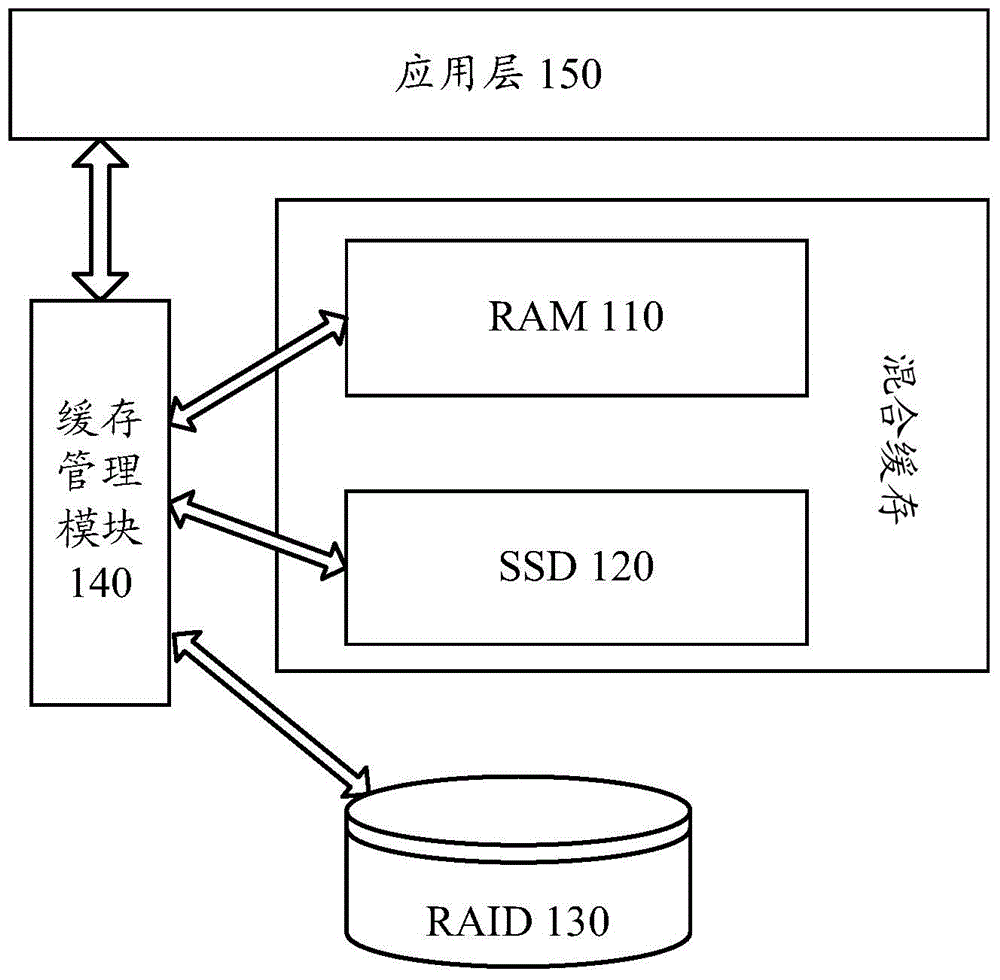

[0089] Figure 1 is a schematic diagram of the architecture to which Embodiment 1 of the present invention applies.

[0090] As shown in Figure 1, in this architecture the storage system includes RAM 110, SSD 120, and a disk system. To ensure performance and reliability, the disks are generally organized as a RAID (although a RAID need not be constructed if these advantages are not required); the figure shows RAID 130 as an example. RAM 110 and SSD 120 together form a hybrid cache for RAID 130.

[0091] In Figure 1, the cache management module 140 manages the hybrid cache and RAID 130. The cache management module is a logical division and can be implemented in various forms. For example, it may be a software module running on a host that manages storage devices attached to the host directly (direct-attached) or through a network (such as a storage area network), including RAM and SSD s...

Embodiment 2

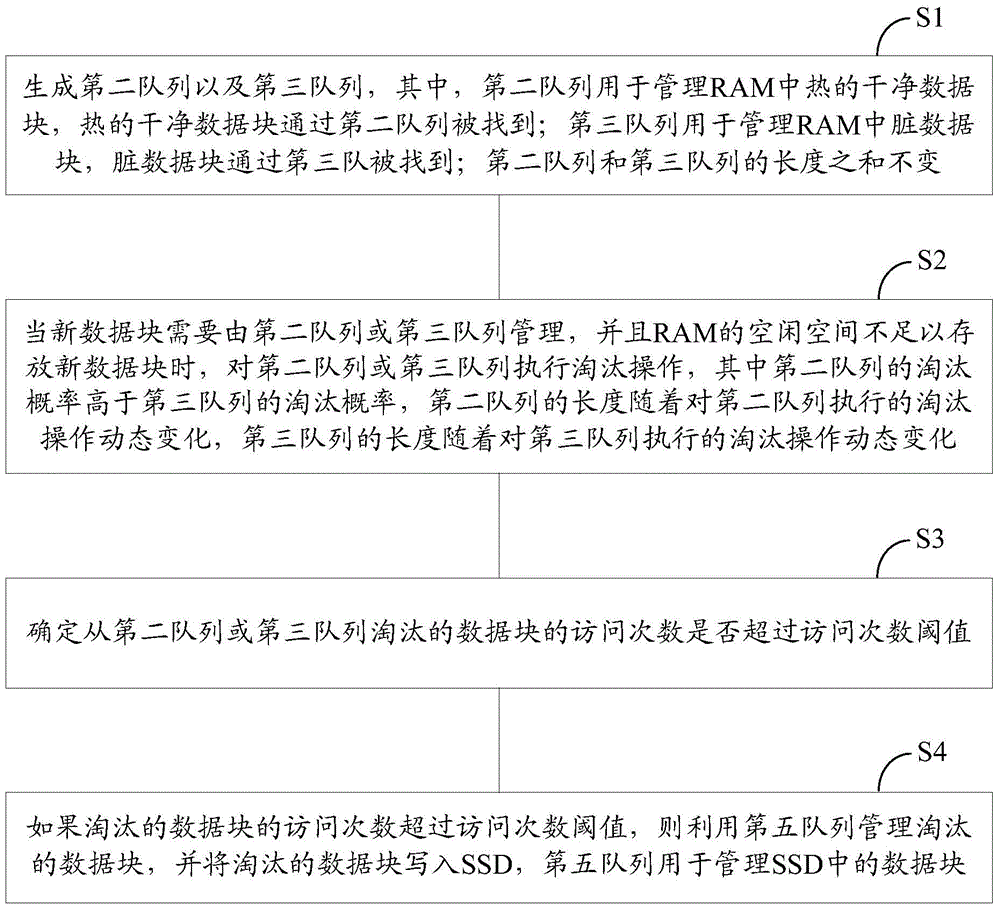

[0133] Building on Embodiment 1, this embodiment describes the above solution through a concrete execution process, which includes the following steps:

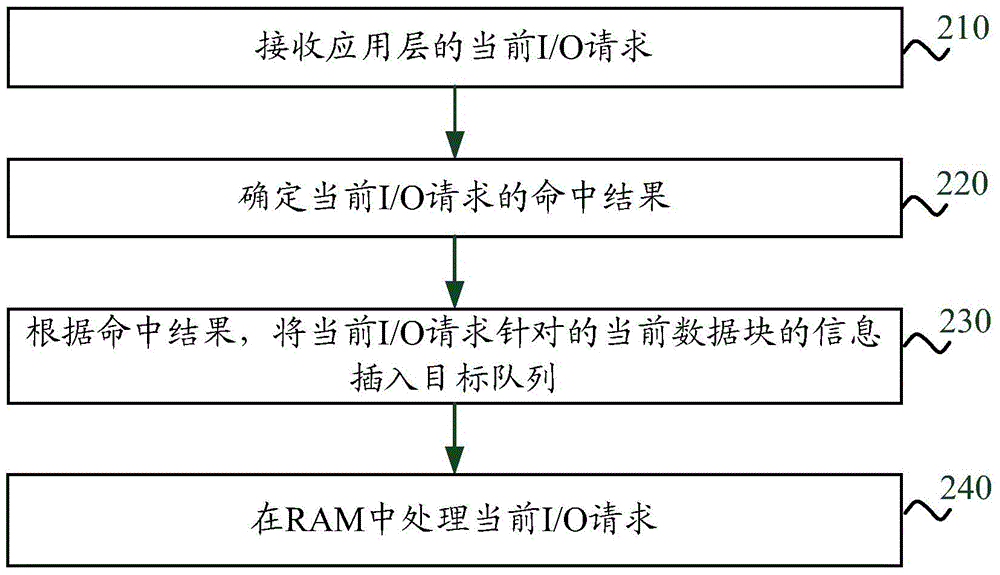

[0134] Figure 3 is a schematic flowchart of a method for managing a hybrid cache according to an embodiment of the present invention. The method of Figure 3 is executed by a device that manages the hybrid cache, for example the cache management module 140 shown in Figure 1.

[0135] The hybrid cache includes RAM and an SSD, which together serve as the cache for the RAID.
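The two-tier arrangement can be pictured with a minimal sketch. It is an illustration under stated assumptions rather than the patented method: the class name `HybridCache`, the RAM-then-SSD lookup order, and the rule that a miss is admitted to RAM are all hypothetical, and a plain dictionary stands in for the RAID.

```python
from typing import Dict, Tuple


class HybridCache:
    """Hypothetical two-tier read path: RAM, then SSD, then the disk system."""

    def __init__(self, backing: Dict[int, bytes]) -> None:
        self.ram: Dict[int, bytes] = {}   # fast tier
        self.ssd: Dict[int, bytes] = {}   # larger, slower tier
        self.backing = backing            # stands in for the RAID

    def read(self, block: int) -> Tuple[bytes, str]:
        """Return the block data and the tier that served it."""
        if block in self.ram:
            return self.ram[block], "ram"
        if block in self.ssd:
            return self.ssd[block], "ssd"
        data = self.backing[block]
        # Assumption: a miss is admitted to RAM; the excerpt does not say this.
        self.ram[block] = data
        return data, "raid"
```

With this sketch, the first read of a block is served from the backing store and a repeated read of the same block is served from RAM.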

[0136] 210. Receive the current I/O request from the application layer.

[0137] 220. Determine the hit result of the current I/O request. The hit result indicates whether the I/O request hits one of the first, second, third, fourth, and fifth queues, where the first queue is used to record information about a first part of the data blocks in RAM, the ...
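Step 220 amounts to finding which of the five queues, if any, records the requested block. The following is a minimal sketch of that check only. It assumes each queue can be modeled as a set of block addresses and that a block is recorded in at most one queue; the function name `hit_result` is hypothetical, and no meaning is assigned to the individual queues beyond what the text states.

```python
from typing import Optional, Sequence, Set


def hit_result(block: int, queues: Sequence[Set[int]]) -> Optional[int]:
    """Return the 1-based number of the queue that records `block`, or None on a miss."""
    for number, queue in enumerate(queues, start=1):
        if block in queue:
            return number
    return None


# Usage: five queues, with block 3 recorded in the third one.
queues = [{1}, {2}, {3}, set(), {5}]
third = hit_result(3, queues)   # hit in queue 3
missed = hit_result(9, queues)  # miss in every queue
```

Returning the queue number rather than a boolean lets the caller branch on which queue was hit, which is what the later steps of such a method would need.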

Embodiment 3

[0347] Referring to Figure 16, and based on the above embodiments, an embodiment of the present invention provides a device 300 for managing a hybrid cache. The hybrid cache includes random access memory (RAM) and a solid-state drive (SSD), and the RAM and SSD together serve as the cache of a disk system composed of one or more disks.

[0348] The device includes:

[0349] A generating unit 301, configured to generate a second queue, a third queue, and a fifth queue. The second queue is used to manage hot clean data blocks in the RAM, which are located through the second queue; the third queue is used to manage dirty data blocks in the RAM, which are located through the third queue; the sum of the lengths of the second queue and the third queue remains constant; and the fifth queue is used to manage data blocks in the SSD.

[0350] An elimination unit 302: when a new data block needs to be managed by the second queue or the ...
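The constraint that the lengths of the second and third queues sum to a constant can be sketched as a shared budget: enlarging one queue's share shrinks the other's by the same amount. The class `PairedQueueBudget` and its method names are hypothetical, and the excerpt does not say when or by how much the split changes, so the shift operations below are assumptions for illustration only.

```python
class PairedQueueBudget:
    """Hypothetical length budget shared by the clean (second) and dirty (third) queues."""

    def __init__(self, total: int, clean_len: int) -> None:
        if not 0 <= clean_len <= total:
            raise ValueError("clean_len must lie in [0, total]")
        self.total = total          # constant sum of the two lengths
        self.clean_len = clean_len  # length allotted to the second queue

    @property
    def dirty_len(self) -> int:
        """Length allotted to the third queue; derived so the sum never drifts."""
        return self.total - self.clean_len

    def shift_toward_clean(self, n: int = 1) -> None:
        # Assumption: the split moves in whole blocks and is clamped at the total.
        self.clean_len = min(self.total, self.clean_len + n)

    def shift_toward_dirty(self, n: int = 1) -> None:
        self.clean_len = max(0, self.clean_len - n)
```

Deriving `dirty_len` from `clean_len` instead of storing both keeps the invariant true by construction.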
