678 results for patented technology related to "Cache hit rate"

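The hit rate is the number of cache hits divided by the total number of memory requests over a given time interval, expressed as a percentage:

$$\text{hit rate} = \frac{\text{cache hits}}{\text{total memory requests}} \times 100\%$$

The miss rate has the same form: the total number of cache misses divided by the total number of memory requests over the interval, expressed as a percentage:

$$\text{miss rate} = \frac{\text{cache misses}}{\text{total memory requests}} \times 100\%$$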
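
As a quick illustration of the two definitions above, the Python sketch below computes both rates from raw counts for one interval. It assumes every memory request is counted exactly once, as either a hit or a miss, so the total number of requests is hits + misses; the function name is illustrative.

```python
def hit_and_miss_rate(hits: int, misses: int) -> tuple[float, float]:
    """Return (hit rate %, miss rate %) for one measurement interval."""
    total = hits + misses  # assumption: every request is either a hit or a miss
    if total == 0:
        raise ValueError("no memory requests in this interval")
    hit_rate = 100.0 * hits / total
    miss_rate = 100.0 * misses / total
    return hit_rate, miss_rate


# Example: 950 hits and 50 misses in one interval.
print(hit_and_miss_rate(950, 50))  # (95.0, 5.0)
```

Under that assumption the two rates always sum to 100%.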

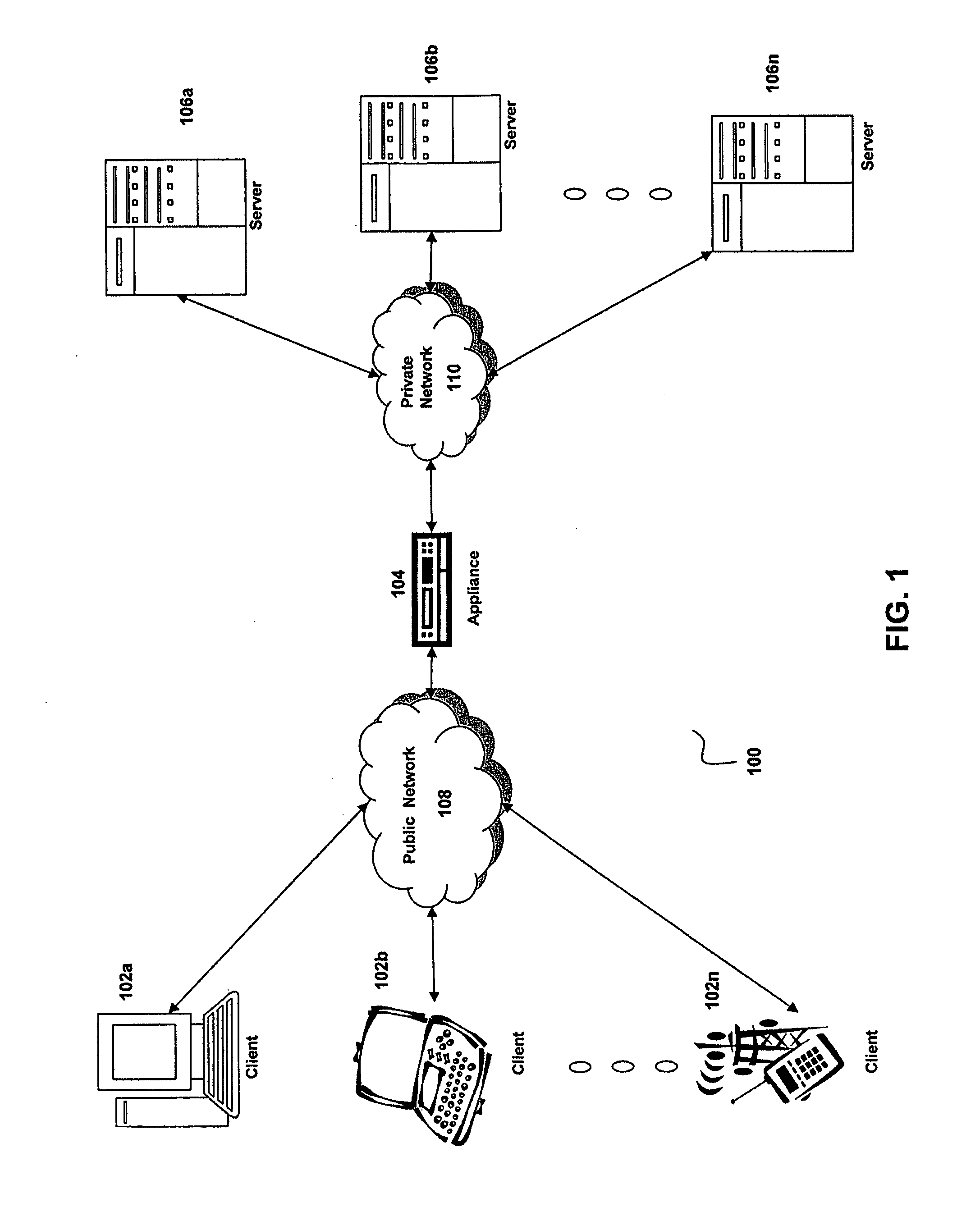

Proxy-based cache content distribution and affinity

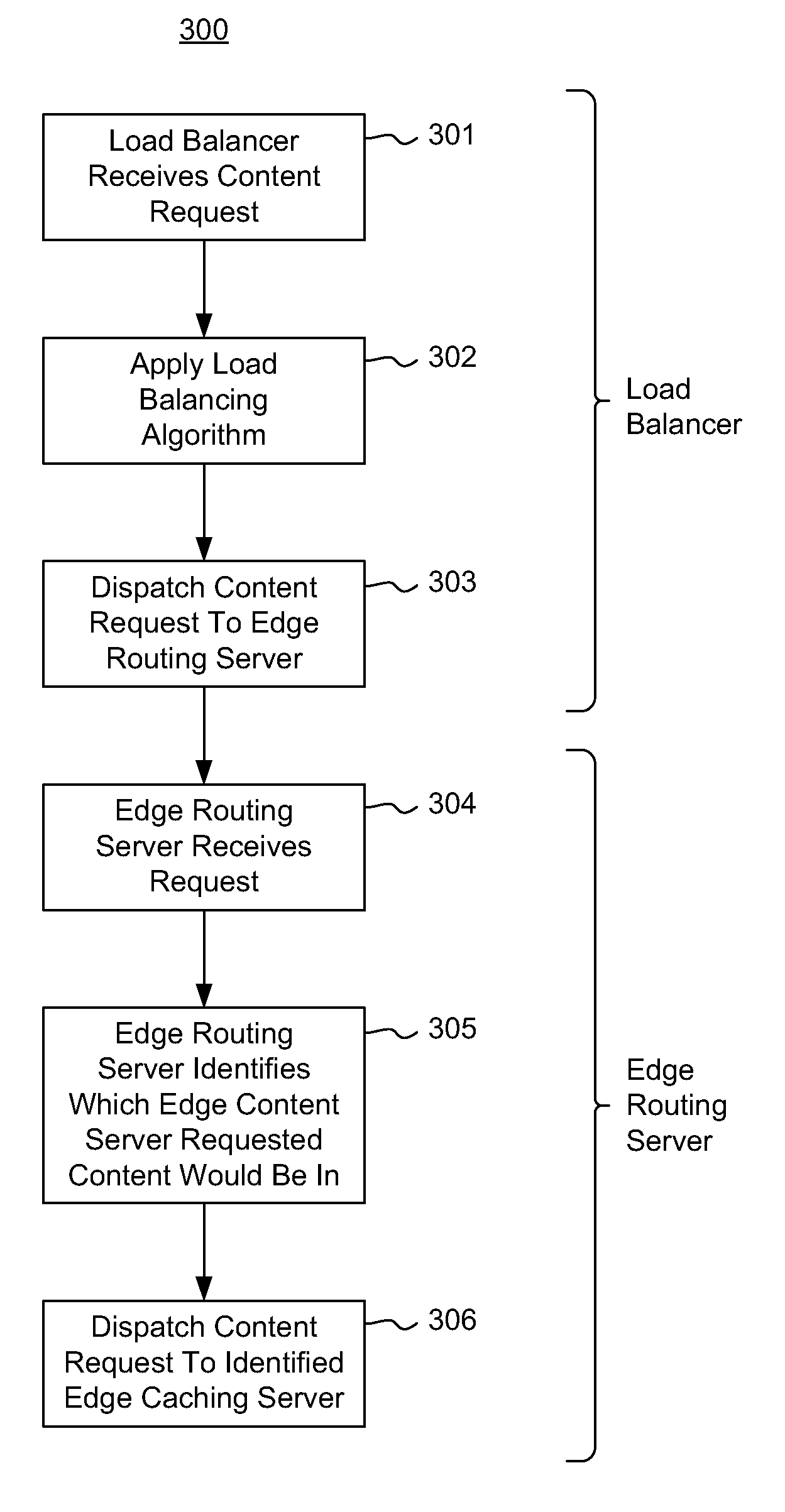

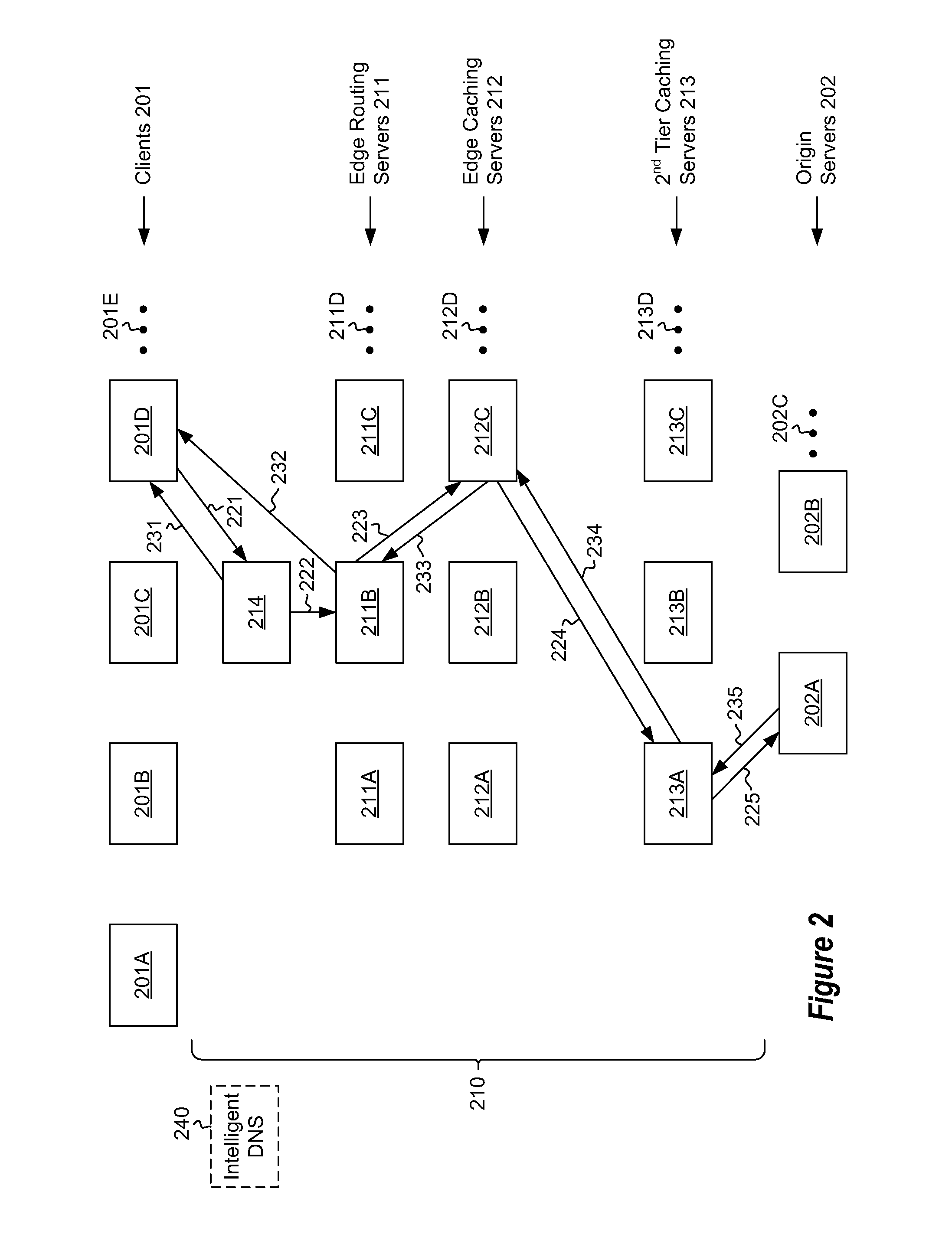

Active | US8612550B2 | Tags: Maximize likelihood; Multiple digital computer combinations; Transmission; Content distribution; Multiple edges

A distributed caching hierarchy includes multiple edge routing servers, at least some of which receive content requests from client computing systems via a load balancer. When an edge routing server receives a content request, it identifies which of the edge caching servers would hold the requested content if that content were cached, and forwards the request to that edge caching server in a deterministic and predictable manner, increasing the likelihood of a cache hit and thus the cache-hit ratio.

Owner:MICROSOFT TECH LICENSING LLC
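
The abstract does not say how an edge routing server maps a request to an edge caching server, only that the mapping is deterministic and predictable. One common way to get that property is rendezvous (highest-random-weight) hashing, sketched below in Python as an assumption rather than the patented method; the server names and function names are hypothetical.

```python
import hashlib


def _score(server: str, key: str) -> int:
    # SHA-256 (unlike Python's per-process salted hash()) yields the same
    # score on every routing server, which keeps the mapping deterministic.
    digest = hashlib.sha256(f"{server}|{key}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def pick_cache_server(url: str, cache_servers: list[str]) -> str:
    """Rendezvous hashing: the caching server with the highest score for
    this URL is where the content would be cached."""
    return max(cache_servers, key=lambda server: _score(server, url))


servers = ["cache-a", "cache-b", "cache-c"]  # hypothetical edge caching servers
owner = pick_cache_server("/videos/intro.mp4", servers)
print(owner)  # the same server on every call and on every routing server
```

Because every routing server computes the same scores, requests for the same URL always converge on one caching server, and removing a caching server only remaps the URLs it owned.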

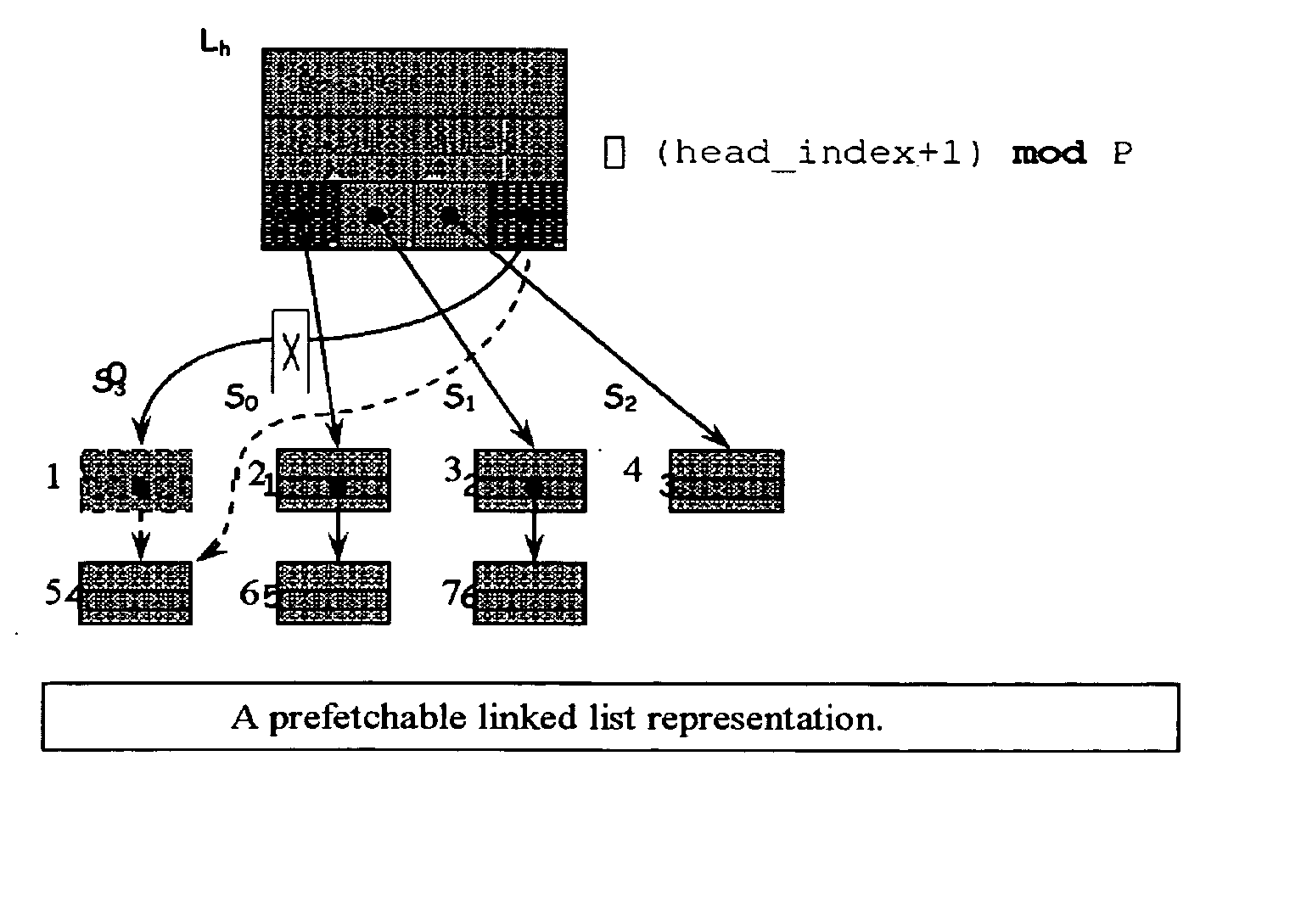

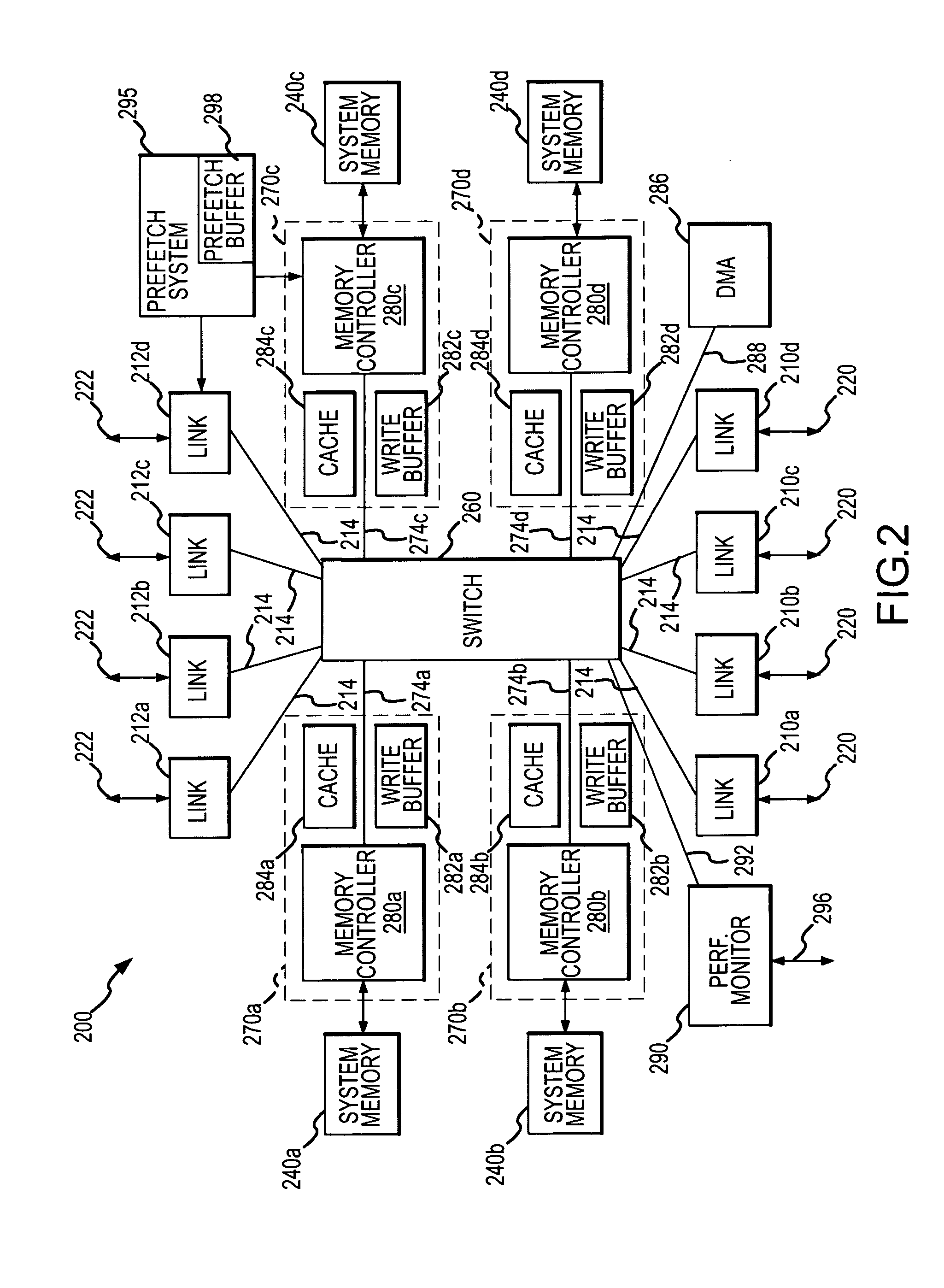

Method and apparatus for prefetching recursive data structures

Inactive | US6848029B2 | Tags: Improve cache hit ratio; Potential throughput of the computer system; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Application software; Cache hit rate

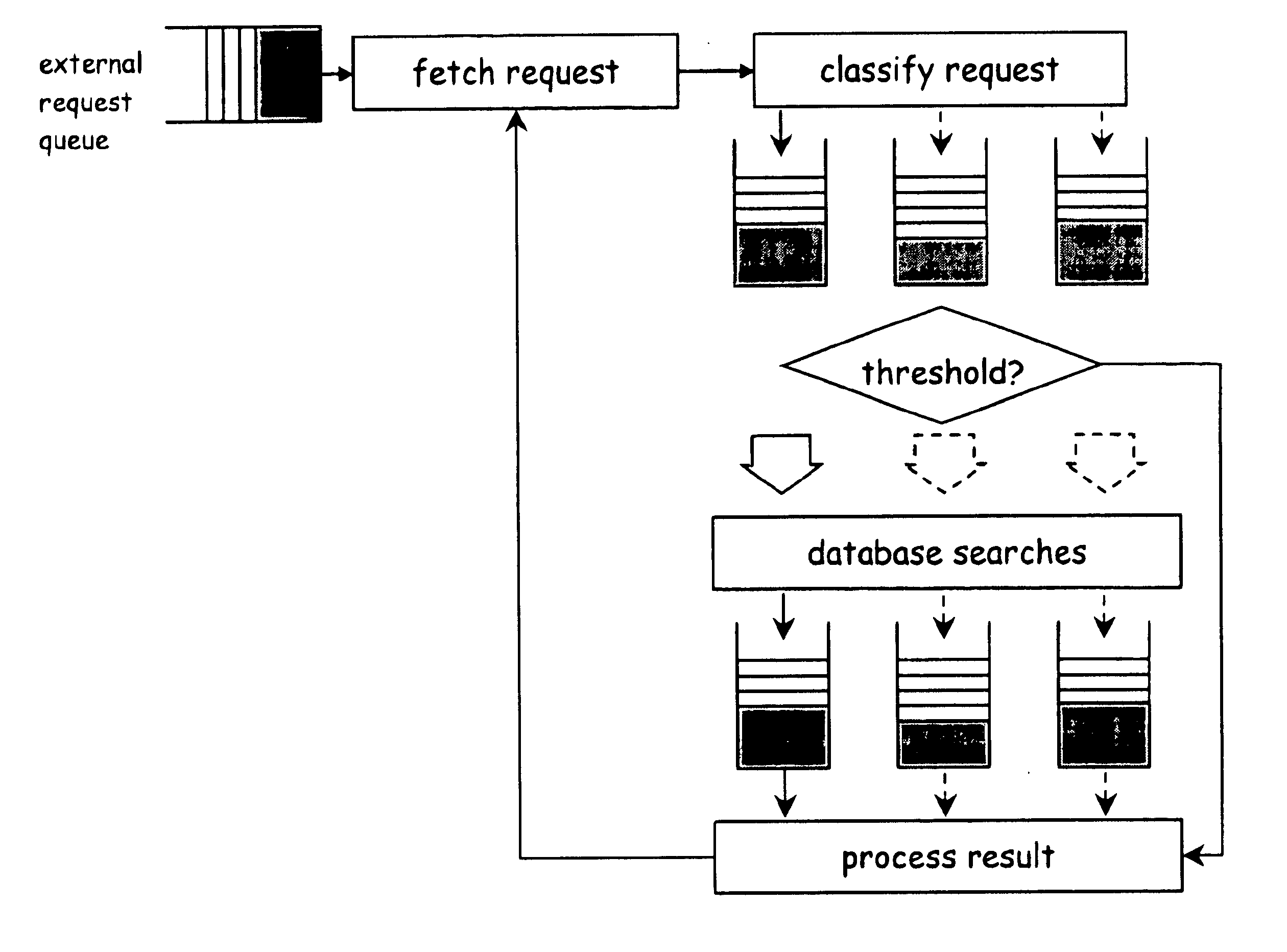

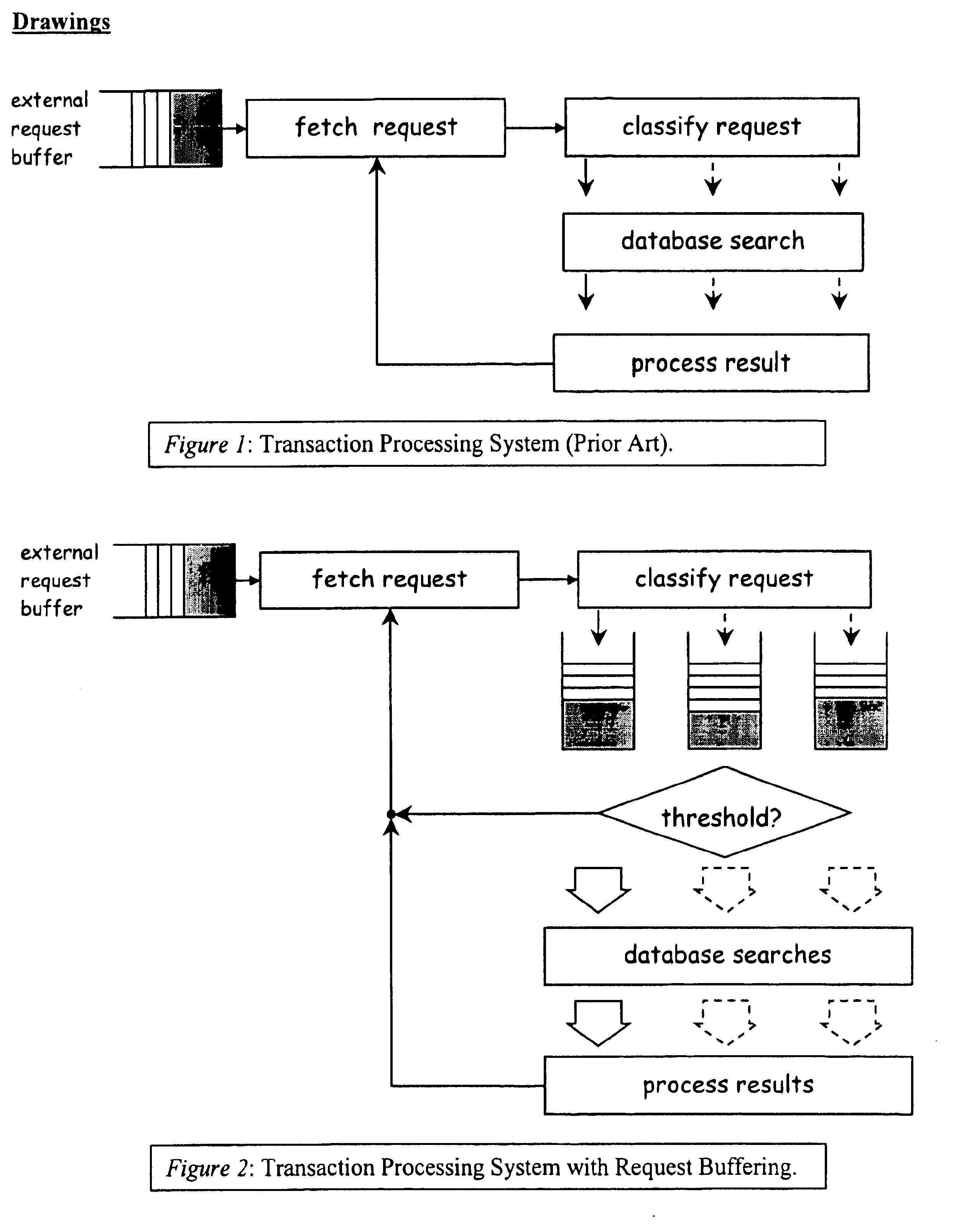

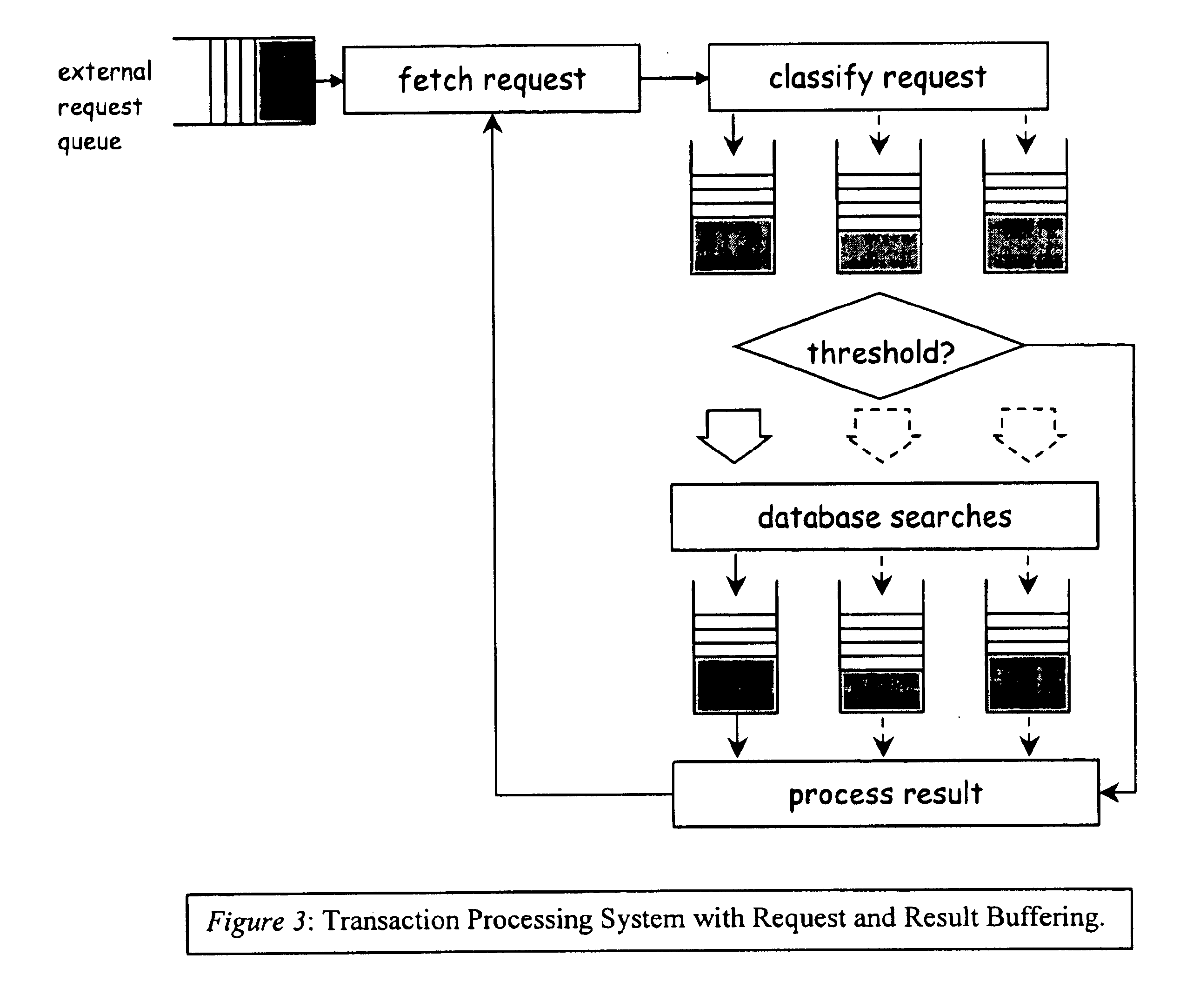

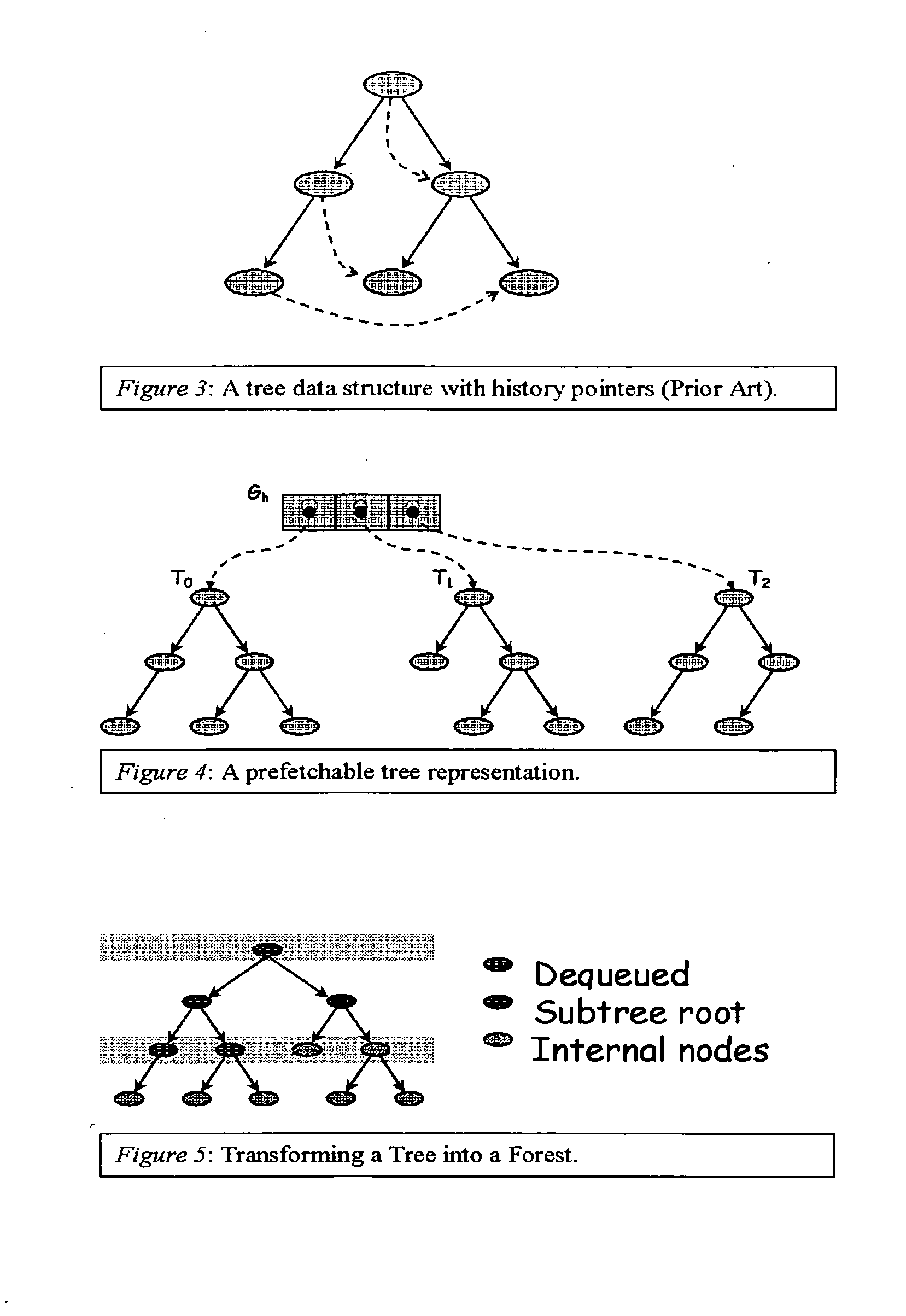

Computer systems are typically designed with multiple levels of memory hierarchy. Prefetching has been employed to overcome the latency of fetching data or instructions from or to memory. Prefetching works well for data structures with regular memory access patterns, but less so for data structures such as trees, hash tables, and other structures in which the datum that will be used is not known a priori. The present invention significantly increases the cache hit rates of many important data structure traversals, and thereby the potential throughput of the computer system and application in which it is employed. The invention is applicable to those data structure accesses in which the traversal path is dynamically determined. The invention does this by aggregating traversal requests and then pipelining the traversal of aggregated requests on the data structure. Once enough traversal requests have been accumulated so that most of the memory latency can be hidden by prefetching the accumulated requests, the data structure is traversed by performing software pipelining on some or all of the accumulated requests. As requests are completed and retired from the set of requests that are being traversed, additional accumulated requests are added to that set. This process is repeated until either an upper threshold of processed requests or a lower threshold of residual accumulated requests has been reached. At that point, the traversal results may be processed.

Owner:DIGITAL CACHE LLC +1
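
The aggregation-plus-pipelining idea can be sketched as follows, with a binary search tree standing in for a traversal whose path is determined dynamically. Python cannot issue hardware prefetches, so this only models the scheduling: up to `width` accumulated lookups advance one tree level per round, and retired lookups are replaced from the pending queue. The `width` parameter and all names are hypothetical, and the upper and lower thresholds from the abstract are omitted.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    key: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def insert(root: Optional[Node], key: int) -> Node:
    """Iterative BST insert; duplicate keys are ignored."""
    if root is None:
        return Node(key)
    cur = root
    while True:
        if key == cur.key:
            return root
        side = "left" if key < cur.key else "right"
        child = getattr(cur, side)
        if child is None:
            setattr(cur, side, Node(key))
            return root
        cur = child


def batched_lookup(root: Optional[Node], queries: list[int], width: int = 4) -> list[bool]:
    """Advance up to `width` lookups in lockstep, one tree level per round."""
    pending = deque(enumerate(queries))  # accumulated requests not yet started
    active = []                          # in-flight (index, key, current node)
    found = [False] * len(queries)
    while pending or active:
        # Refill the in-flight set as earlier traversals retire.
        while pending and len(active) < width:
            i, key = pending.popleft()
            active.append((i, key, root))
        next_round = []
        for i, key, node in active:
            if node is None:
                continue                 # miss: retire this request
            if node.key == key:
                found[i] = True          # hit: retire this request
                continue
            child = node.left if key < node.key else node.right
            # A native version would prefetch `child` here, so its cache line
            # arrives while the other in-flight lookups are being processed.
            next_round.append((i, key, child))
        active = next_round
    return found


root = None
for k in [50, 30, 70, 20, 40, 60, 80]:
    root = insert(root, k)
print(batched_lookup(root, [40, 65, 80, 10]))  # [True, False, True, False]
```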

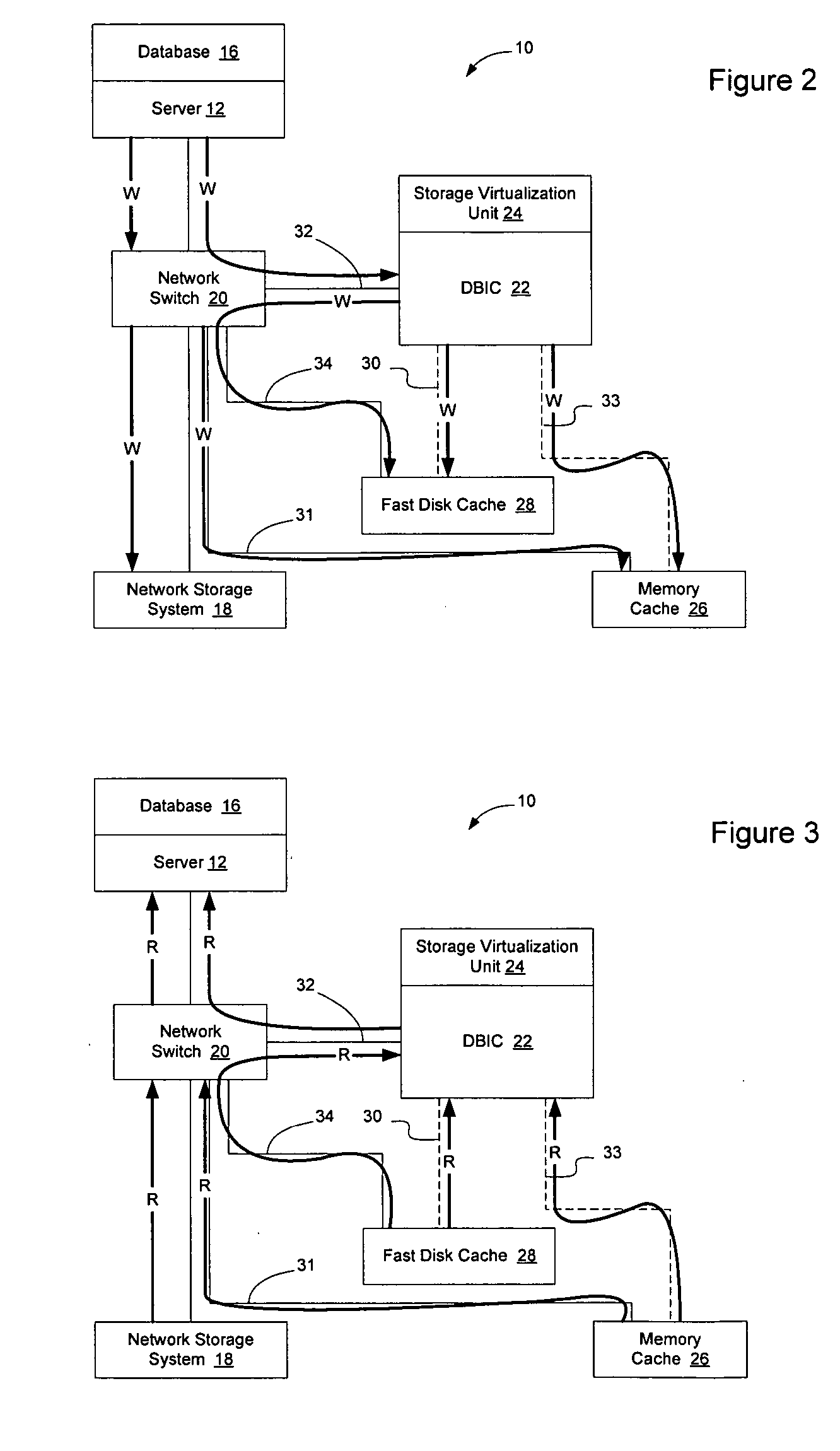

Method and apparatus for accelerating data access operations in a database system

Inactive | US20050198062A1 | Tags: Accelerating data access operation; Efficiently streamed; Digital data information retrieval; Special data processing applications; Data operations; Data access

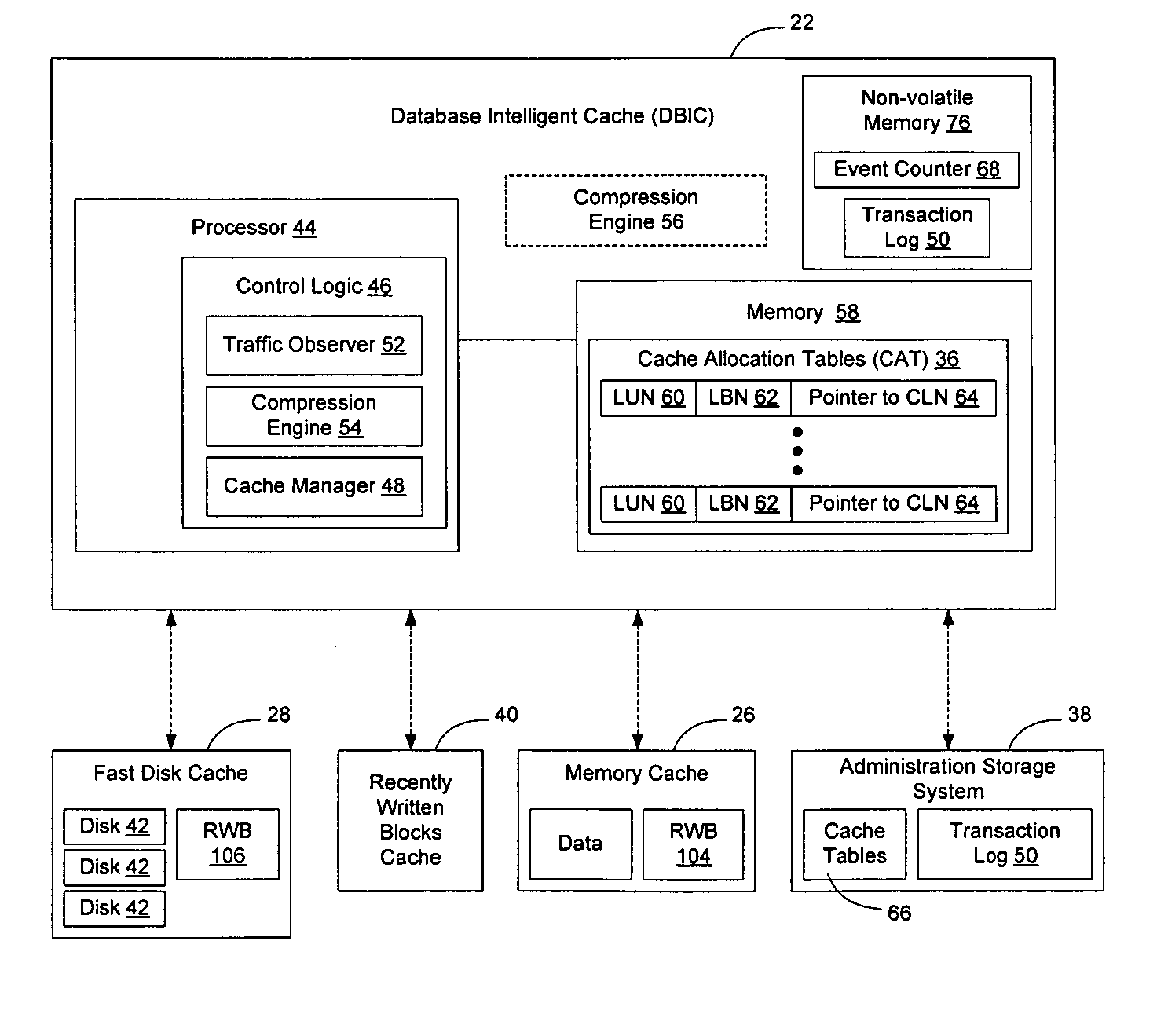

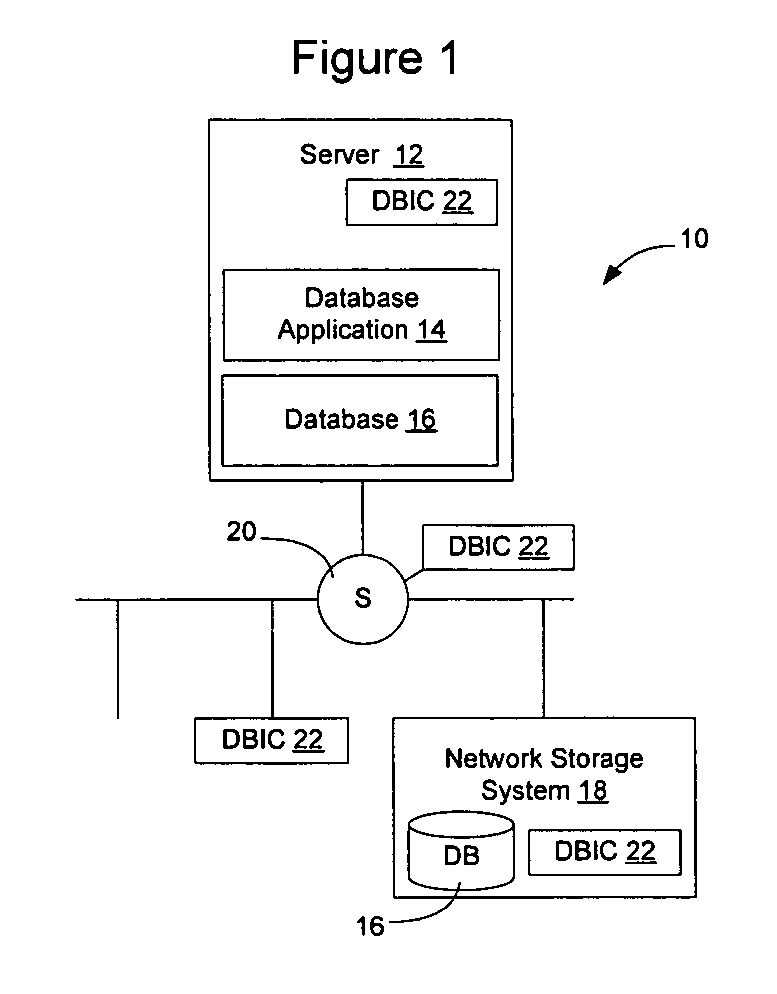

Data access operations in a database system may be accelerated by supplementing the memory cache with a disk cache. The disk cache can store data that does not fit in the memory cache and, because it does not hold the primary copy of the database's data, it may organize that data so that it can be streamed from the disks in response to read operations. Fewer read operations are then needed, so data can be read from the disk cache faster than it could be served from the primary storage facilities, which might not allow the data to be organized the same way. The cache hit ratio may be increased by compressing data before storing it in the cache. Additionally, when a particular portion of the data on the disk cache is used heavily, that portion may be pulled into the memory cache to accelerate access to it.

Owner:SHAPIRO RICHARD B
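
A minimal Python sketch of the two-tier arrangement, under stated assumptions: the "disk" tier is simulated with an in-memory dict holding zlib-compressed values, a key is promoted to the memory tier after a hypothetical number of disk-tier hits (`promote_after`), and the memory tier uses plain LRU eviction. None of these parameters come from the patent.

```python
import zlib
from collections import OrderedDict


class TwoTierCache:
    """Memory tier (LRU, uncompressed) backed by a simulated disk tier that
    stores every cached value zlib-compressed."""

    def __init__(self, memory_slots: int, promote_after: int = 3):
        self.memory: OrderedDict = OrderedDict()  # key -> bytes, LRU order
        self.disk: dict = {}          # key -> compressed bytes
        self.disk_hits: dict = {}     # key -> hits served by the disk tier
        self.memory_slots = memory_slots
        self.promote_after = promote_after

    def put(self, key, value: bytes) -> None:
        self.memory.pop(key, None)    # drop any stale in-memory copy
        self.disk[key] = zlib.compress(value)  # compression fits more entries
        self.disk_hits[key] = 0

    def get(self, key):
        if key in self.memory:
            self.memory.move_to_end(key)   # memory hit: refresh LRU position
            return self.memory[key]
        if key not in self.disk:
            return None                    # miss in both tiers
        value = zlib.decompress(self.disk[key])
        self.disk_hits[key] += 1
        if self.disk_hits[key] >= self.promote_after:
            self._promote(key, value)      # heavily used: pull into memory
        return value

    def _promote(self, key, value: bytes) -> None:
        self.memory[key] = value
        if len(self.memory) > self.memory_slots:
            self.memory.popitem(last=False)  # evict the least recently used


cache = TwoTierCache(memory_slots=1, promote_after=2)
cache.put("row:1", b"x" * 1000)
cache.get("row:1")
cache.get("row:1")
print("row:1" in cache.memory)  # True: promoted after two disk-tier hits
```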

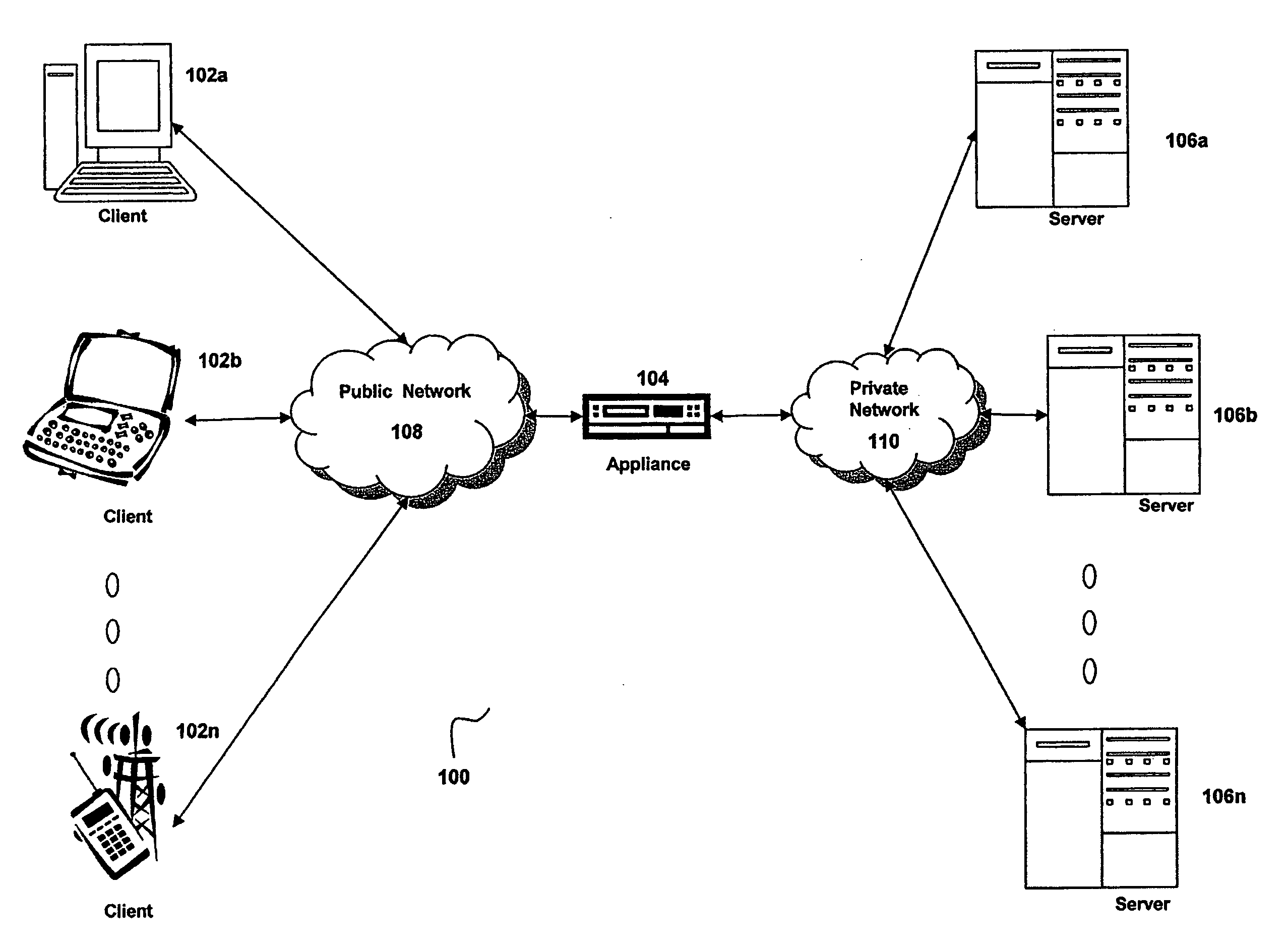

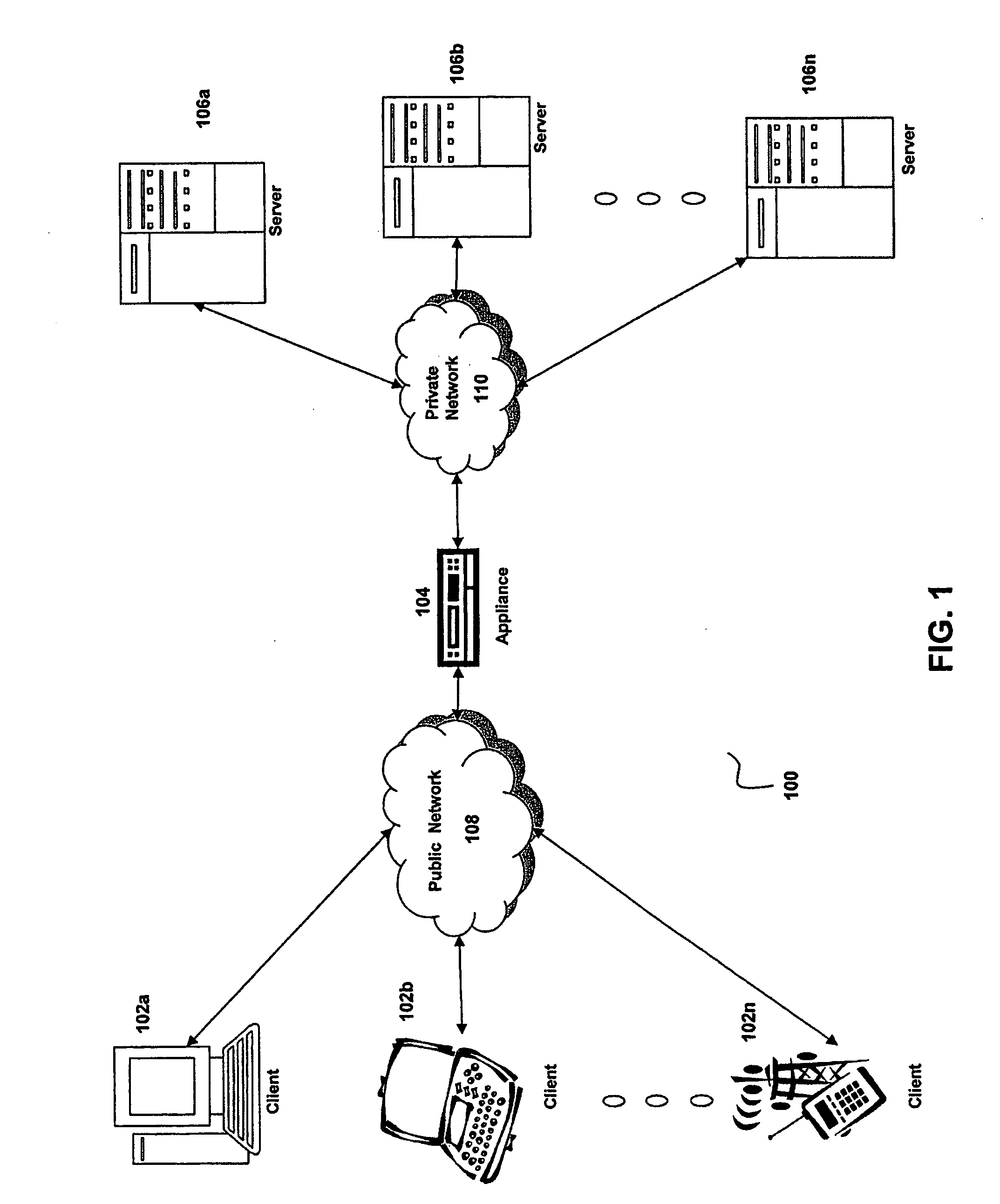

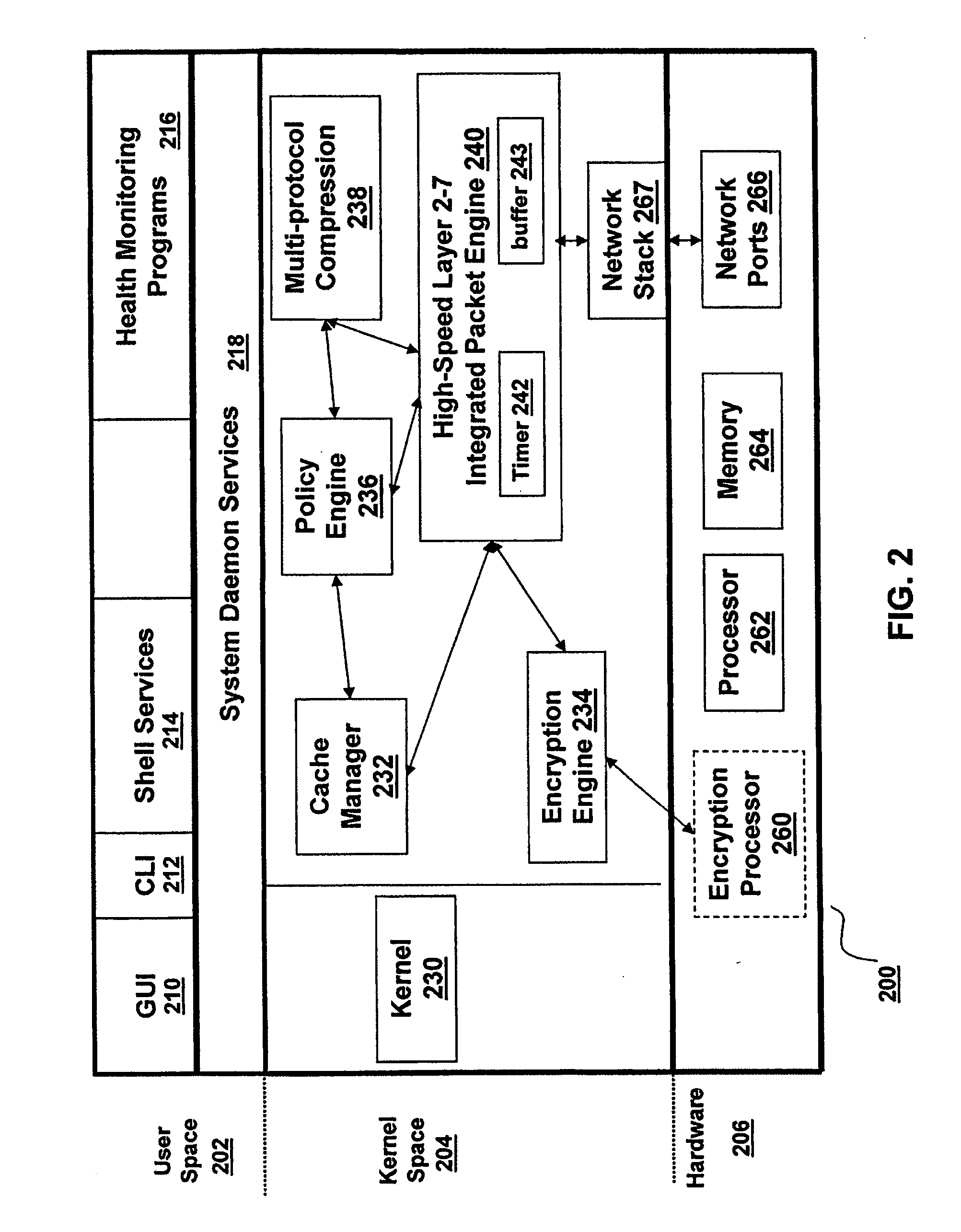

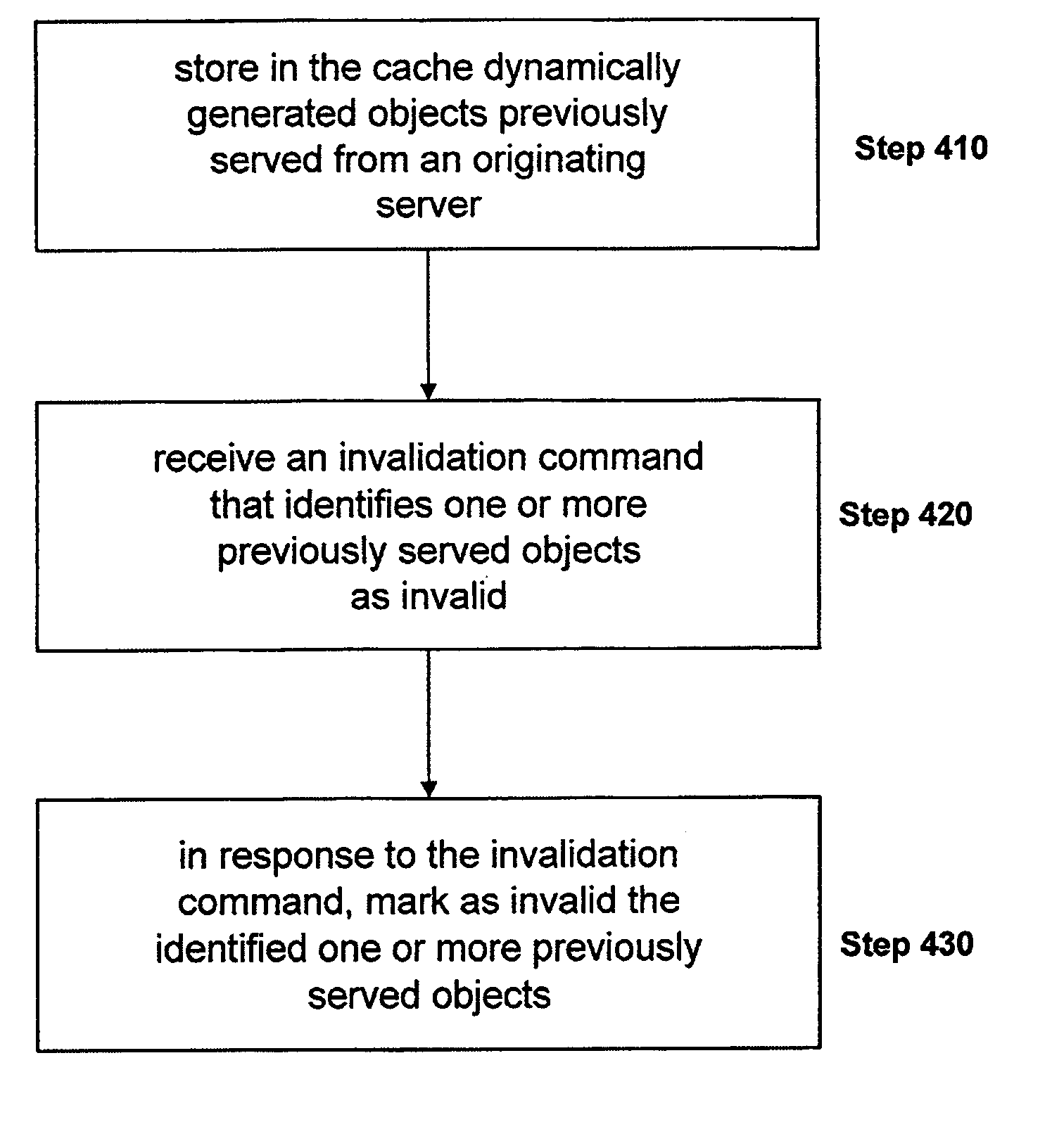

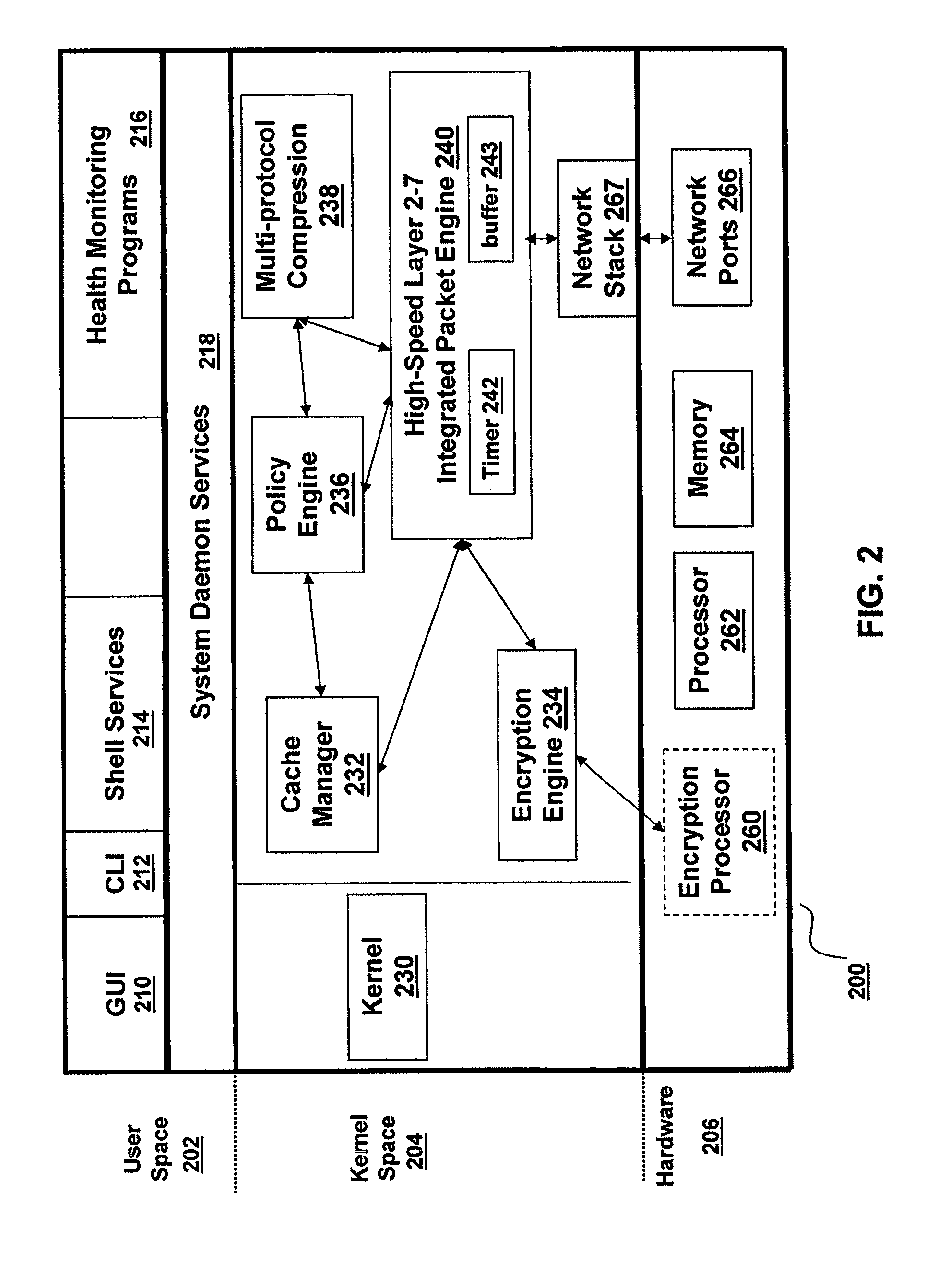

System and method for performing flash caching of dynamically generated objects in a data communication network

Active | US20070156876A1 | Tags: Improve cache hit ratio; Quick change; Multiprogramming arrangements; Multiple digital computer combinations; Object store; Client-side

The present invention is directed towards a method and system for providing a technique referred to as flash caching to respond to requests for an object, such as a dynamically generated object, from multiple clients. This technique of the present invention uses a dynamically generated object stored in a buffer for transmission to a client, for example in response to a request from the client, to also respond to additional requests for the dynamically generated object from other clients while the object is stored in the buffer. Using this technique, the present invention is able to increase cache hit rates for extremely fast changing dynamically generated objects that may not otherwise be cacheable.

Owner:CITRIX SYST INC
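
The core of flash caching, reusing an object only for as long as it sits in the transmit buffer, can be sketched in a few lines of Python. This single-threaded sketch assumes the caller signals when transmission finishes; a real proxy would also need locking between concurrent requests. Class, method, and function names are hypothetical.

```python
class FlashBuffer:
    """Reuses a dynamically generated object only while it is buffered for
    transmission; afterwards the next request regenerates it."""

    def __init__(self):
        self._buffer = {}        # key -> object currently being transmitted
        self.generated = 0       # objects produced by the origin
        self.buffer_hits = 0     # requests answered from the buffer

    def request(self, key, generate):
        if key in self._buffer:
            self.buffer_hits += 1
            return self._buffer[key]
        obj = generate()
        self.generated += 1
        self._buffer[key] = obj  # held until transmission completes
        return obj

    def transmission_complete(self, key):
        # The response has been sent, so the object is no longer reused.
        self._buffer.pop(key, None)


def render_quote_page():         # hypothetical fast-changing dynamic object
    return "<html>quote</html>"


fb = FlashBuffer()
for _ in range(3):               # three clients ask while the object is buffered
    fb.request("/quote", render_quote_page)
fb.transmission_complete("/quote")
fb.request("/quote", render_quote_page)  # buffer window closed: regenerate
print(fb.generated, fb.buffer_hits)      # 2 2
```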

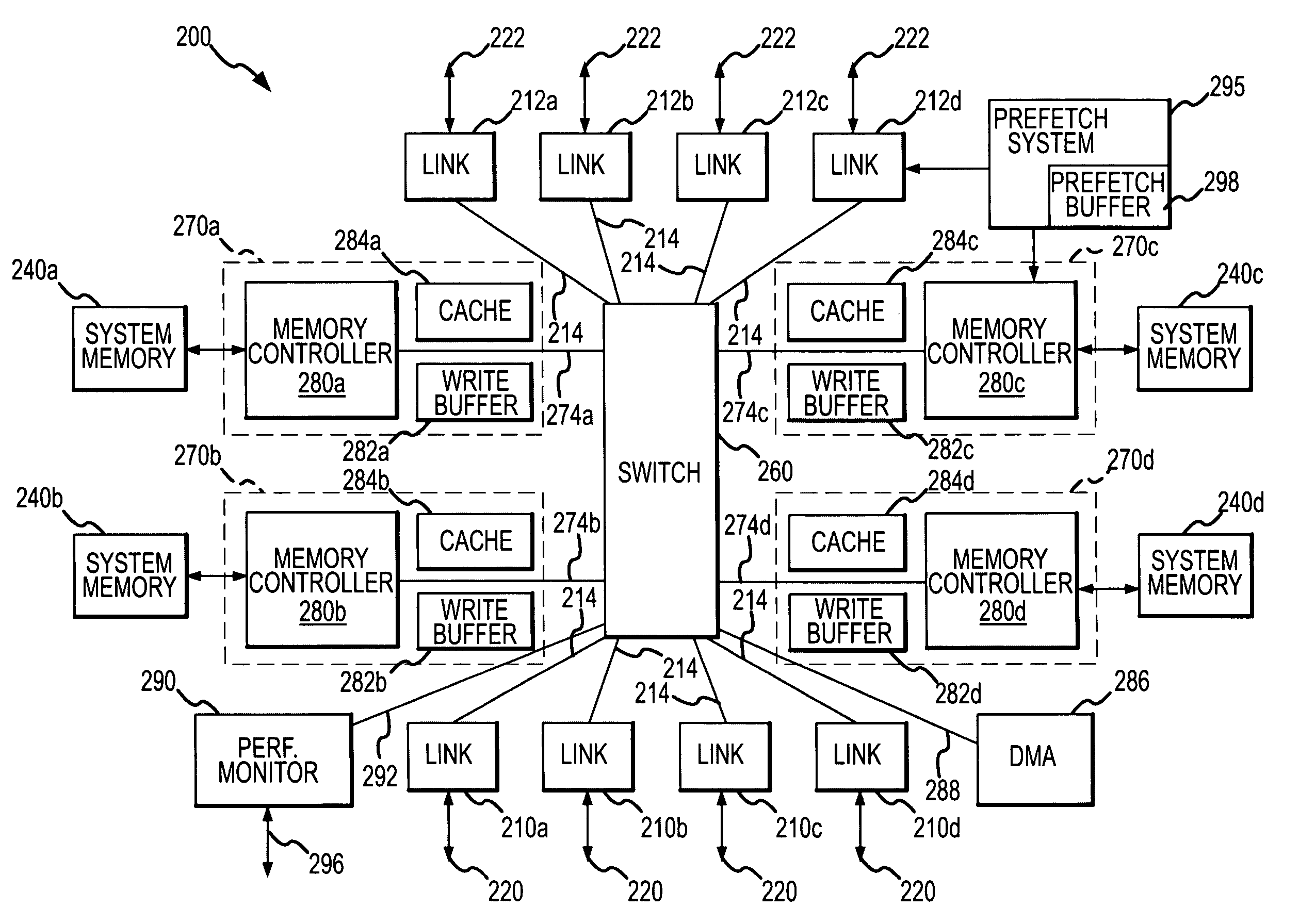

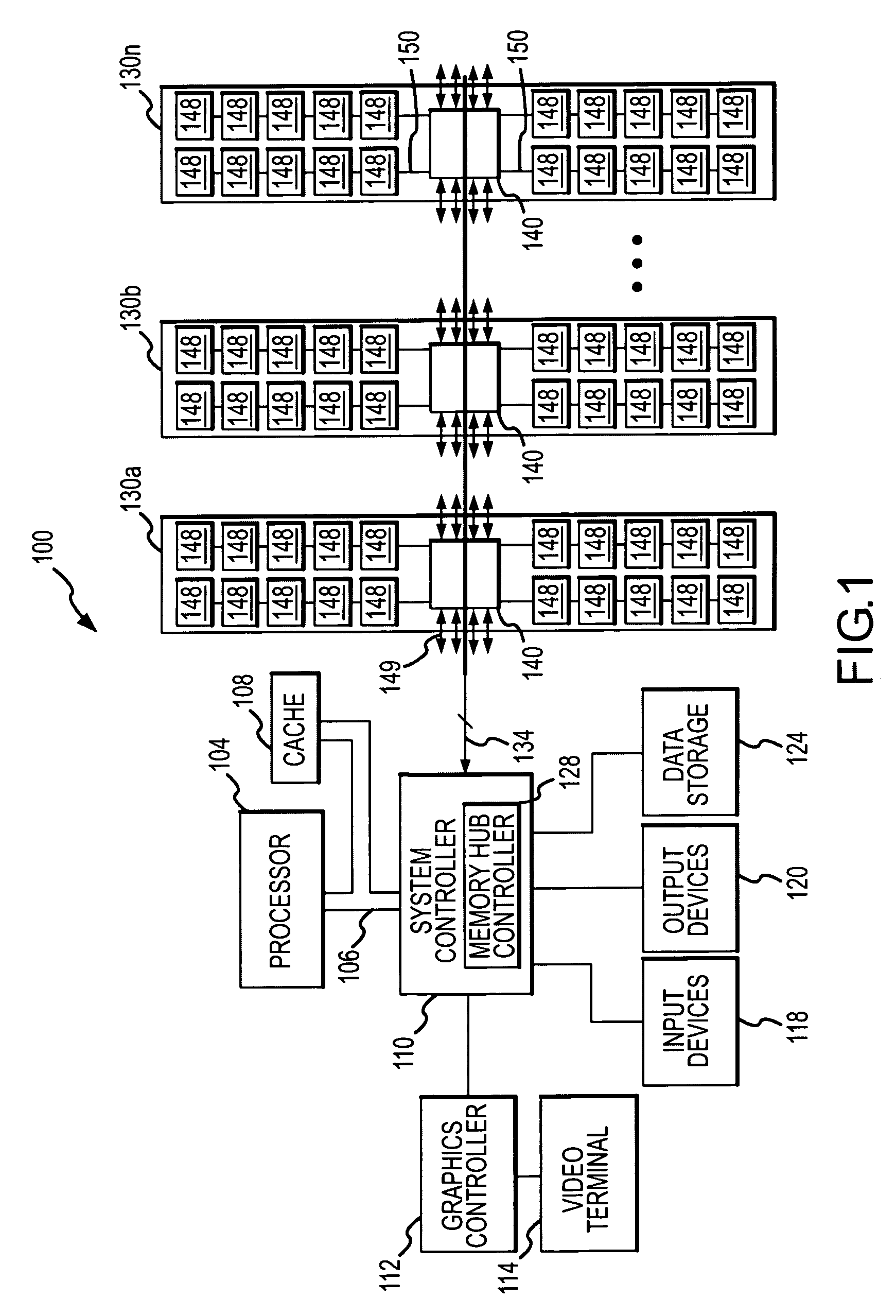

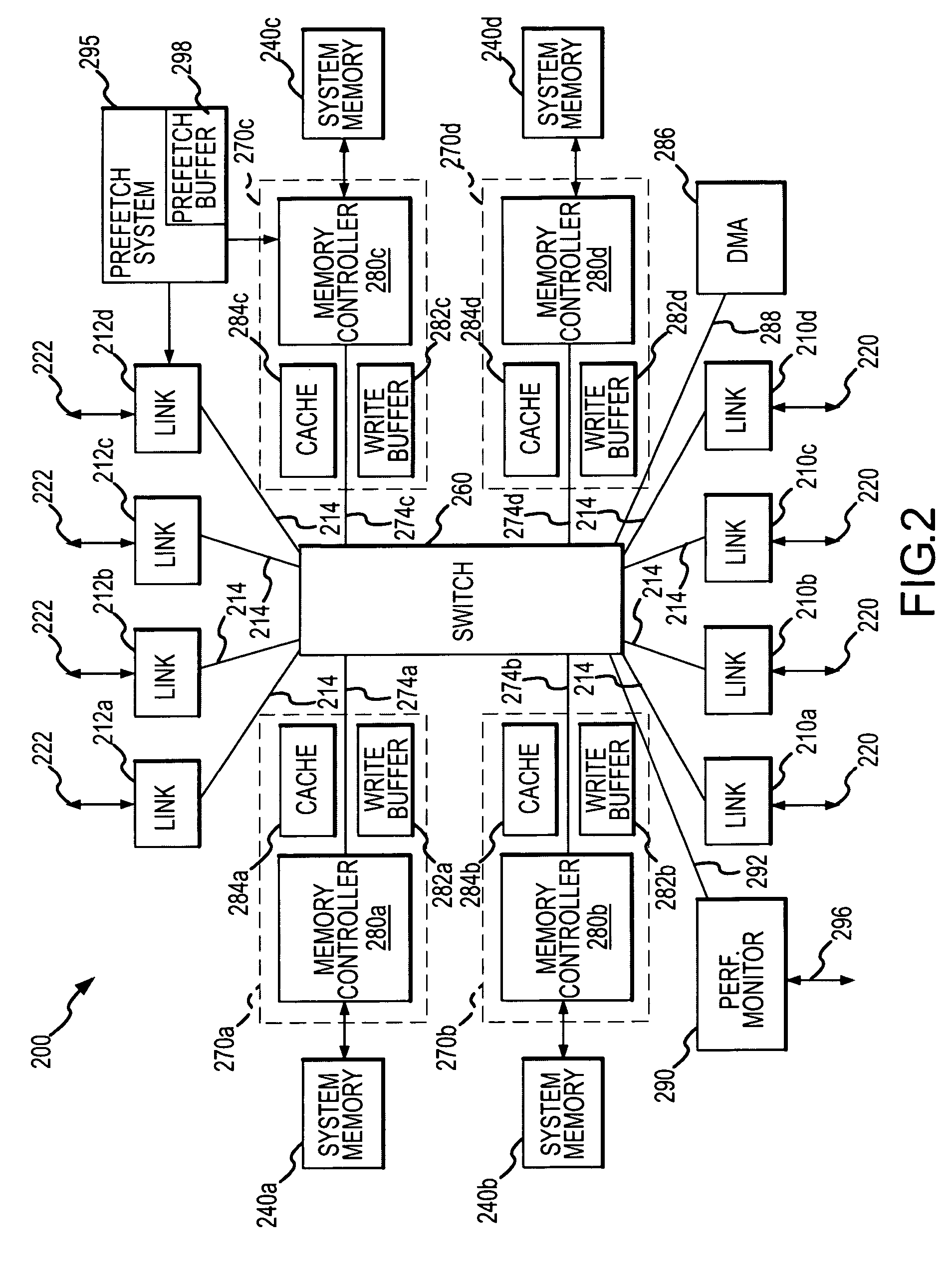

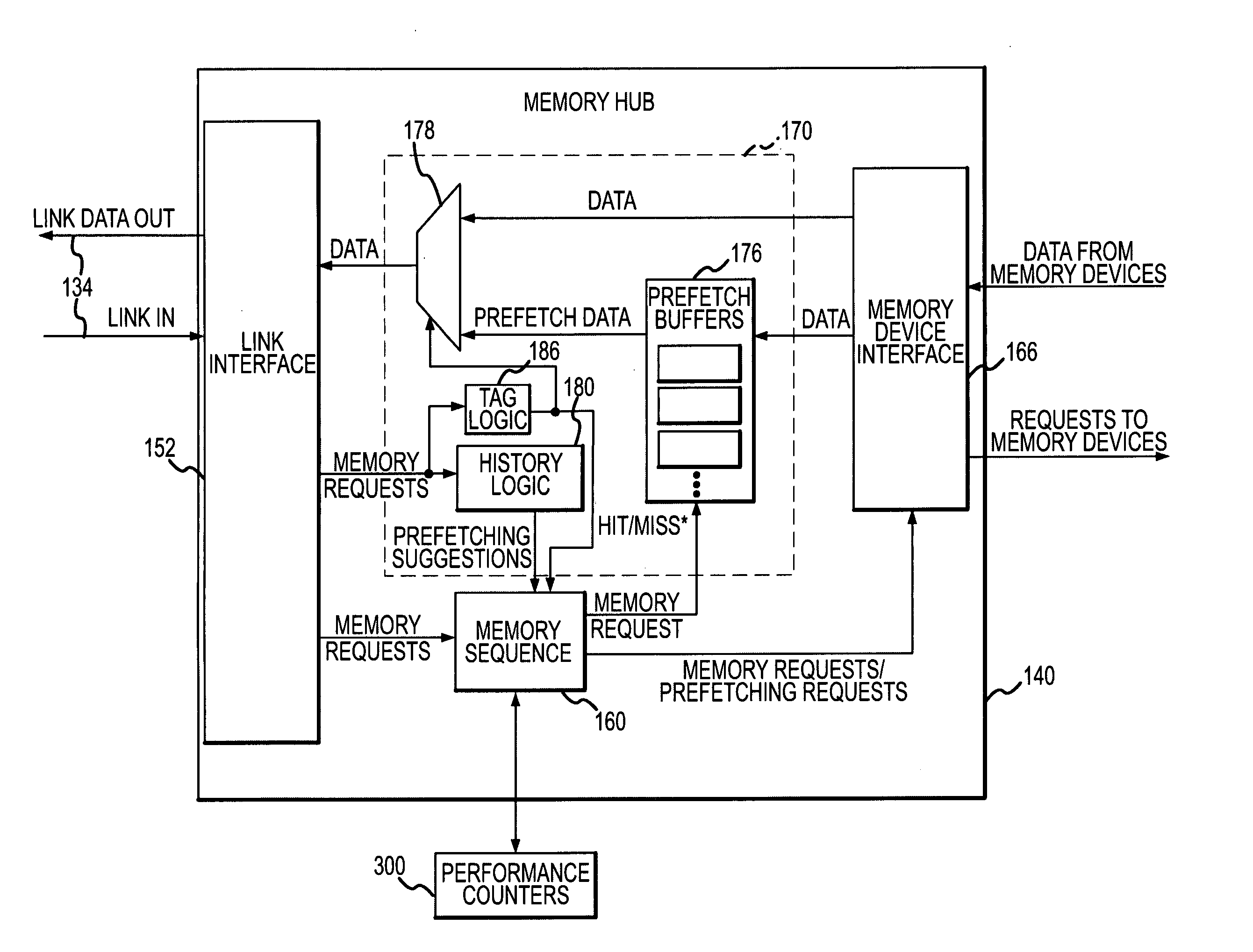

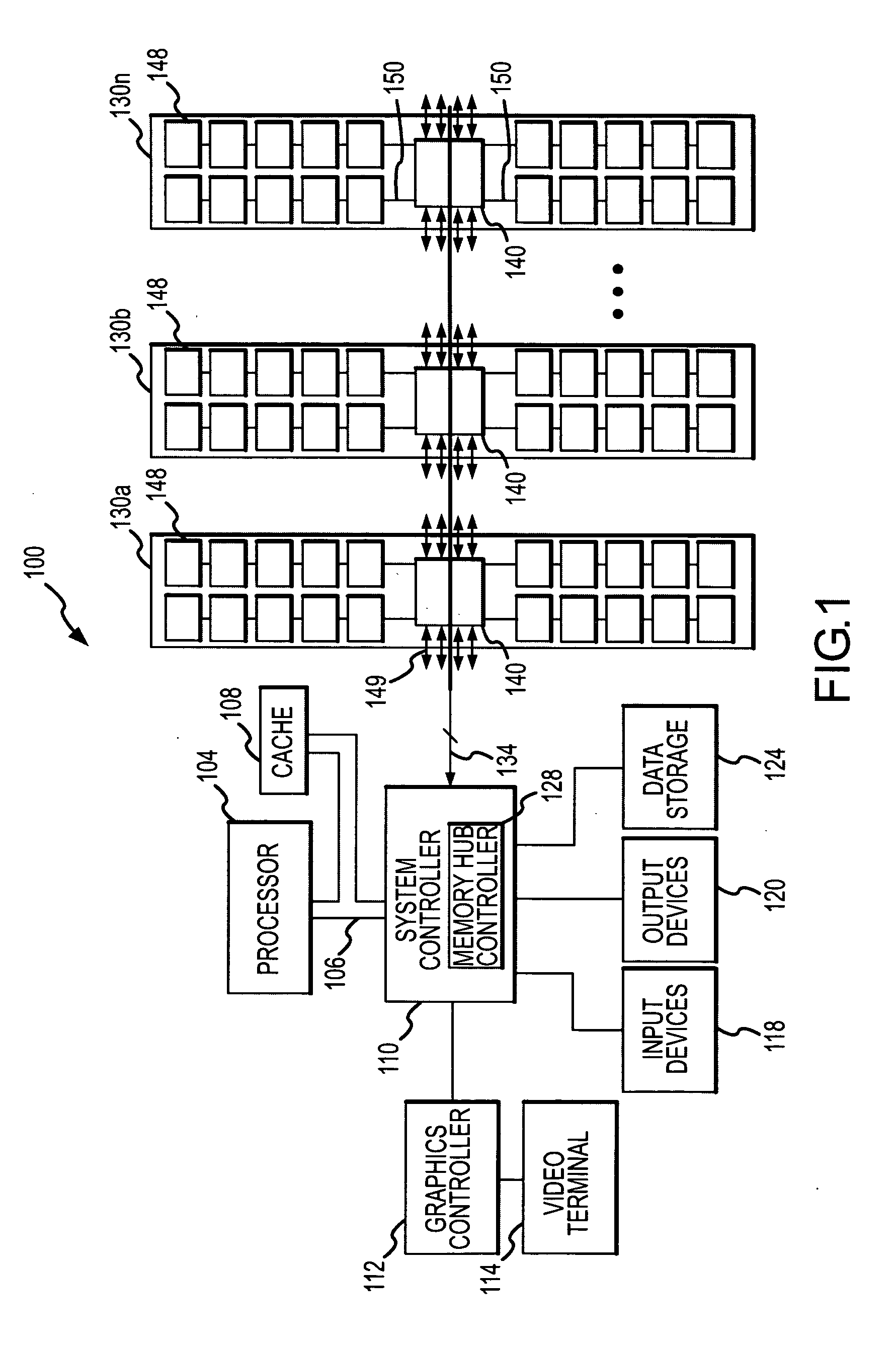

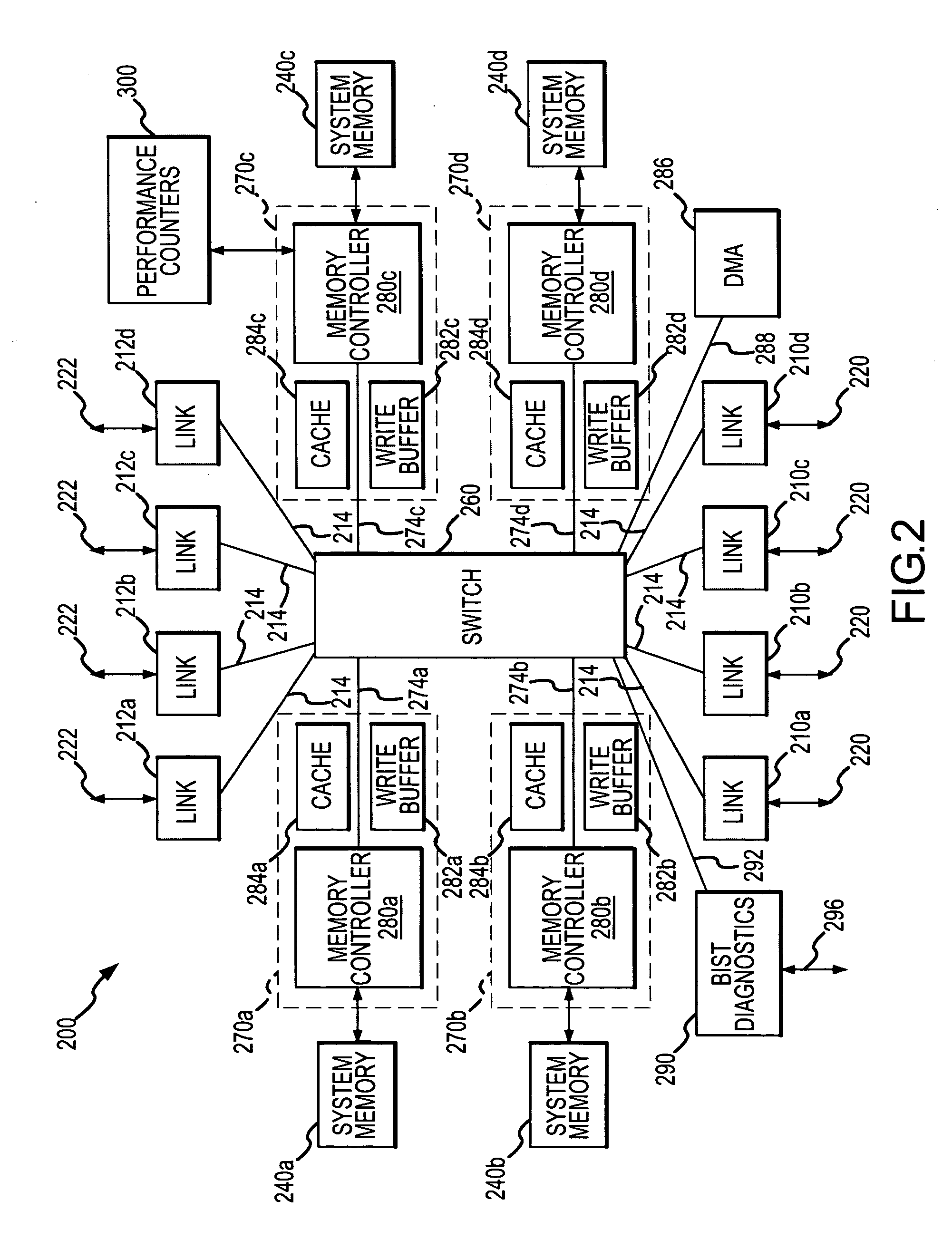

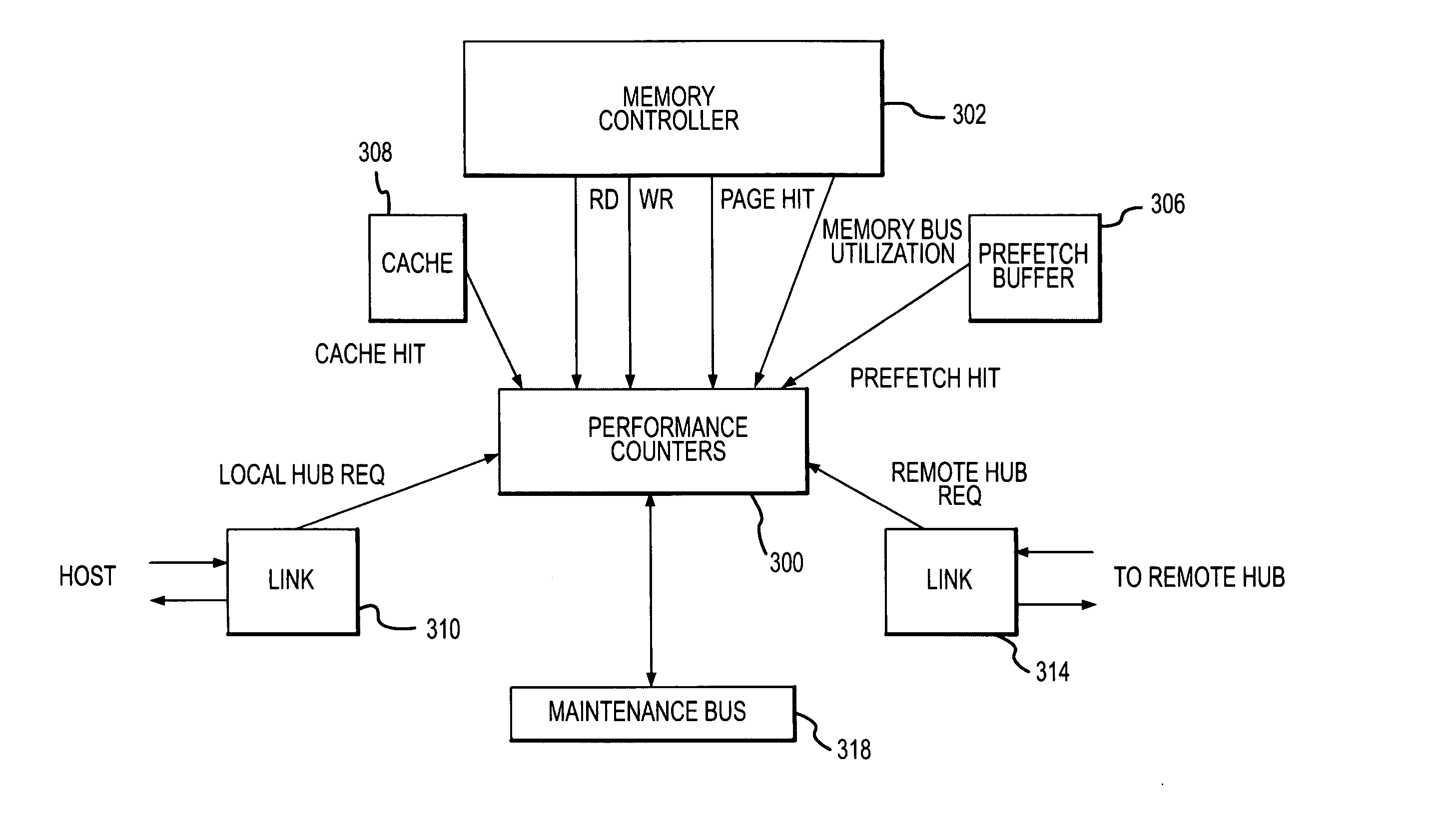

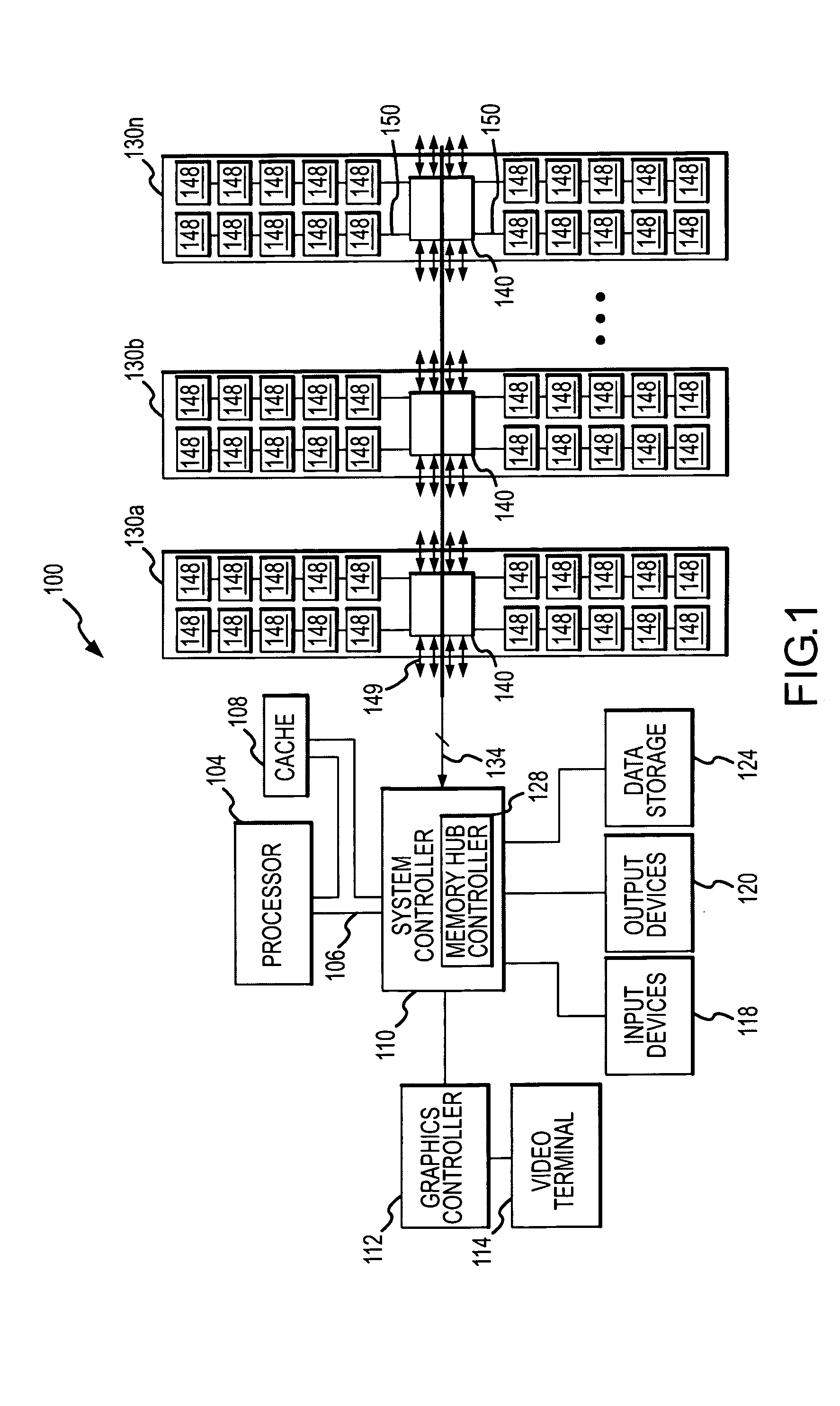

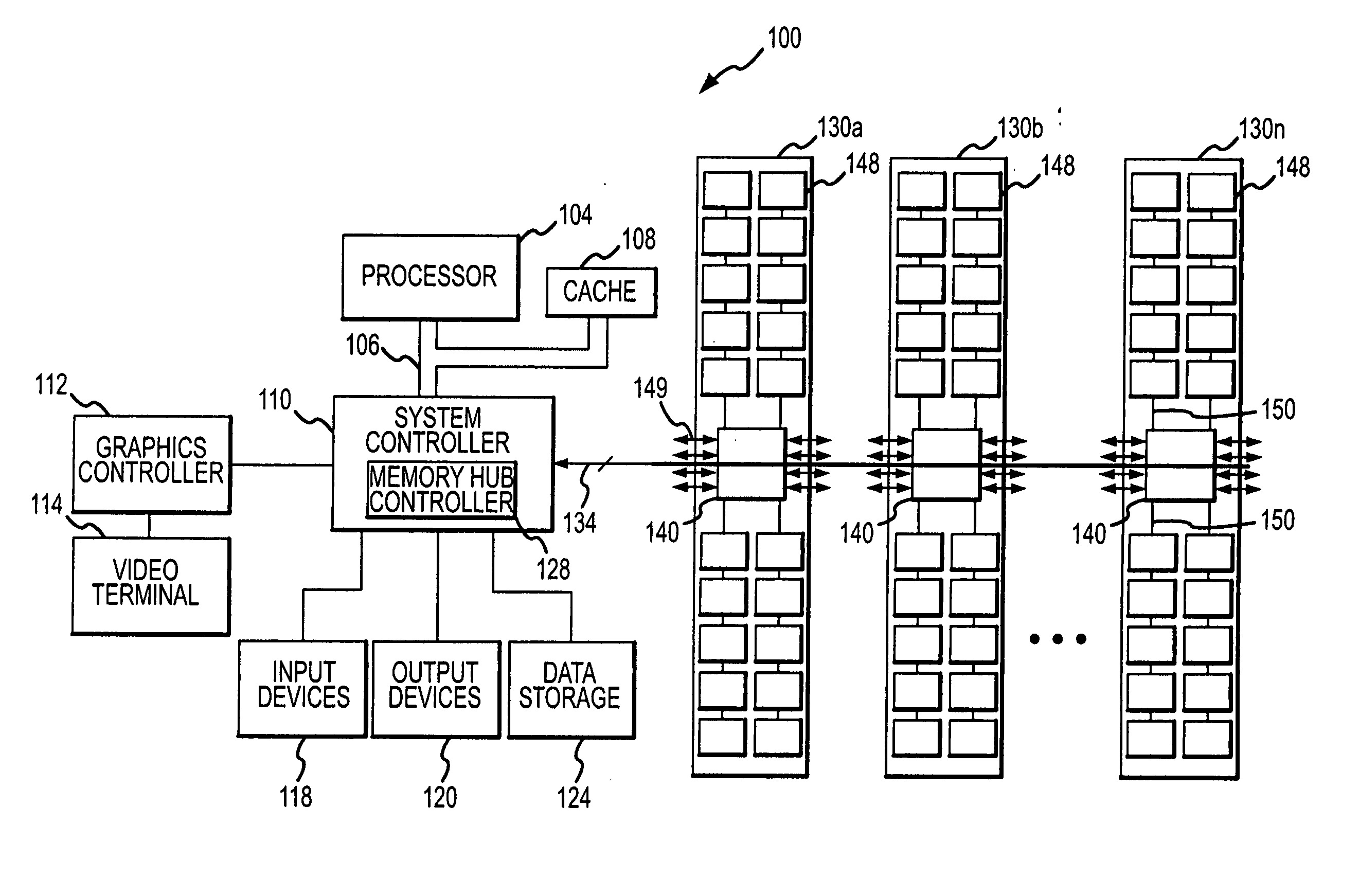

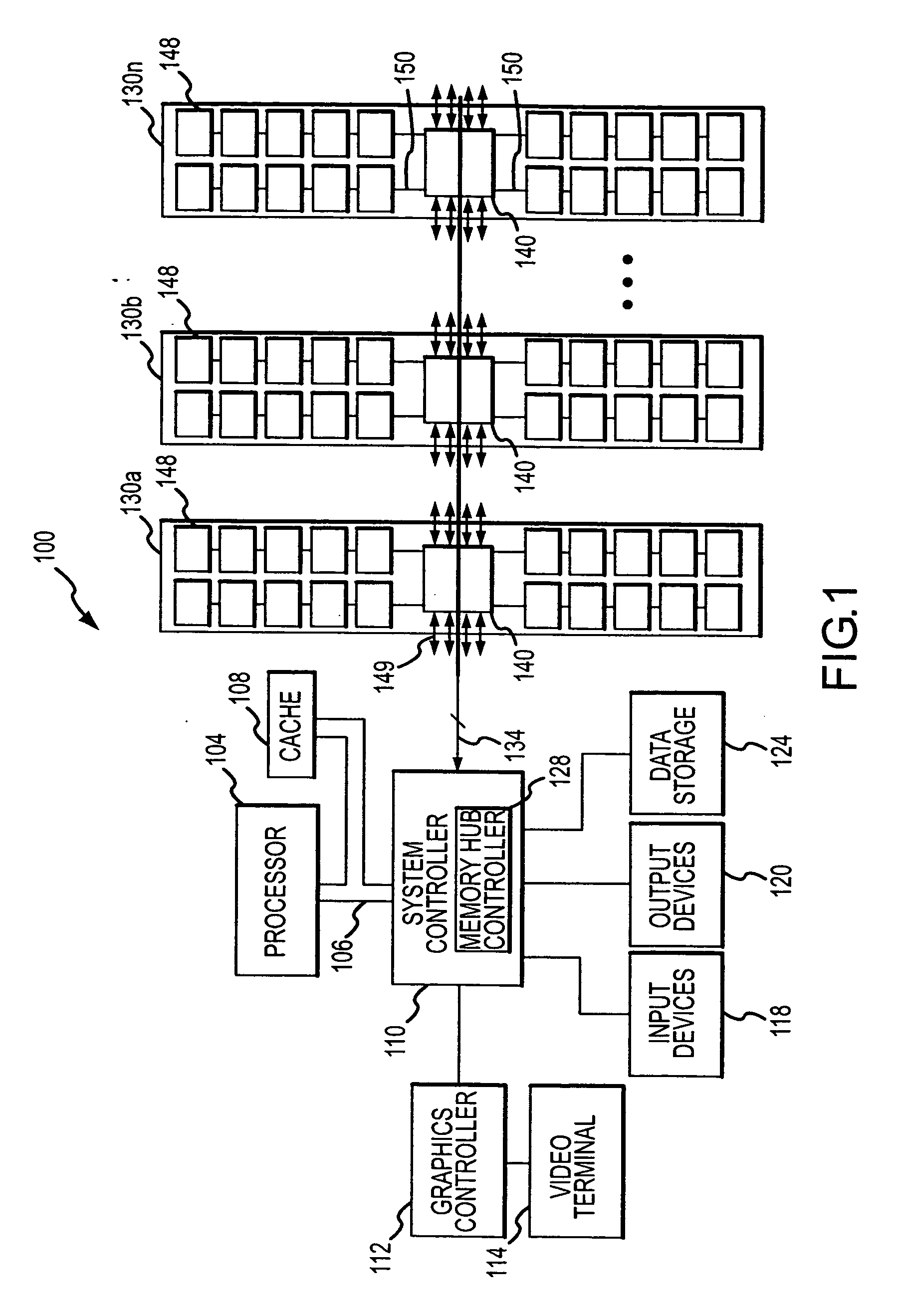

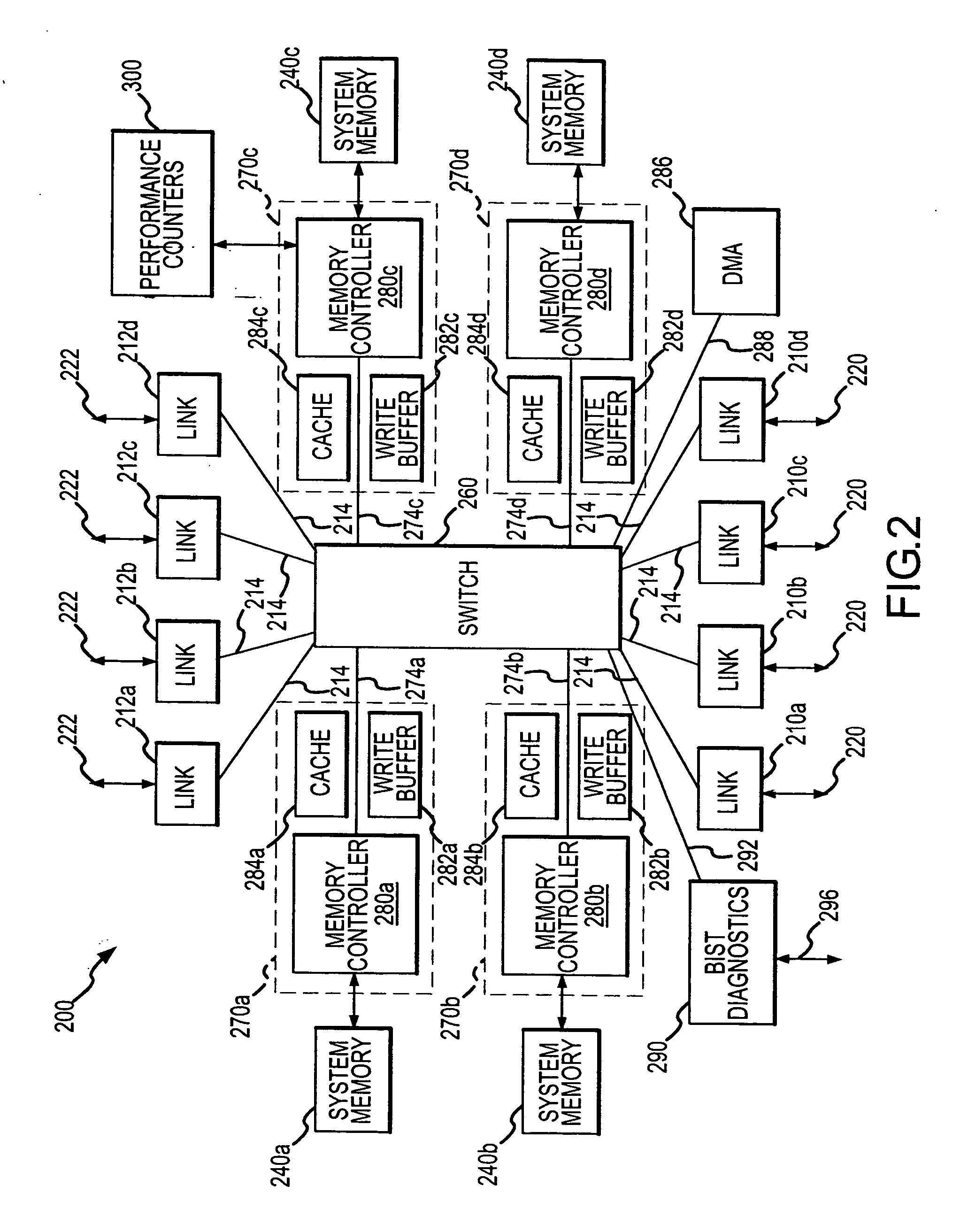

Memory hub and method for memory system performance monitoring

Inactive | US7216196B2 | Tags: Error detection/correction; Memory addressing/allocation/relocation; Memory bus; Cache hit rate

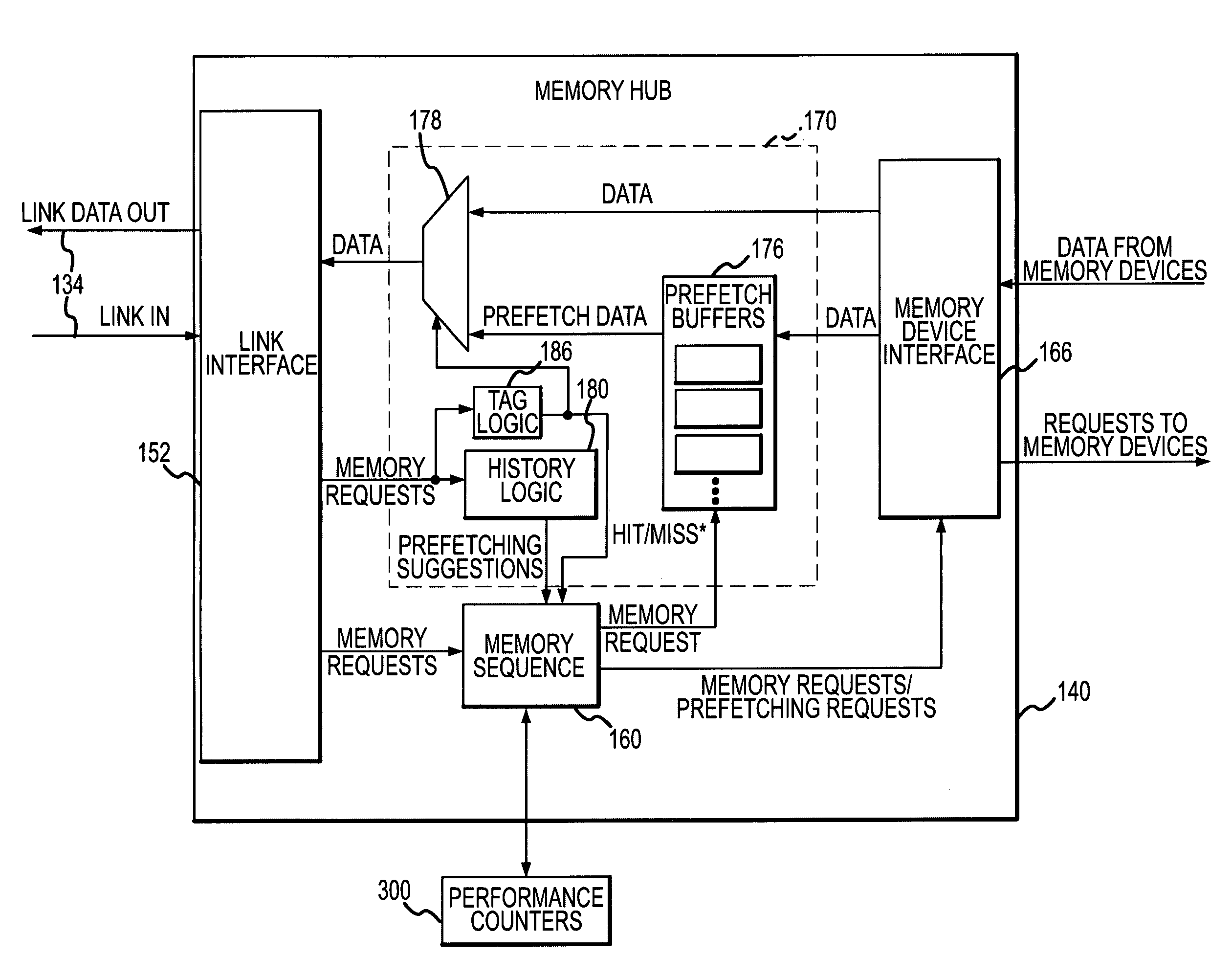

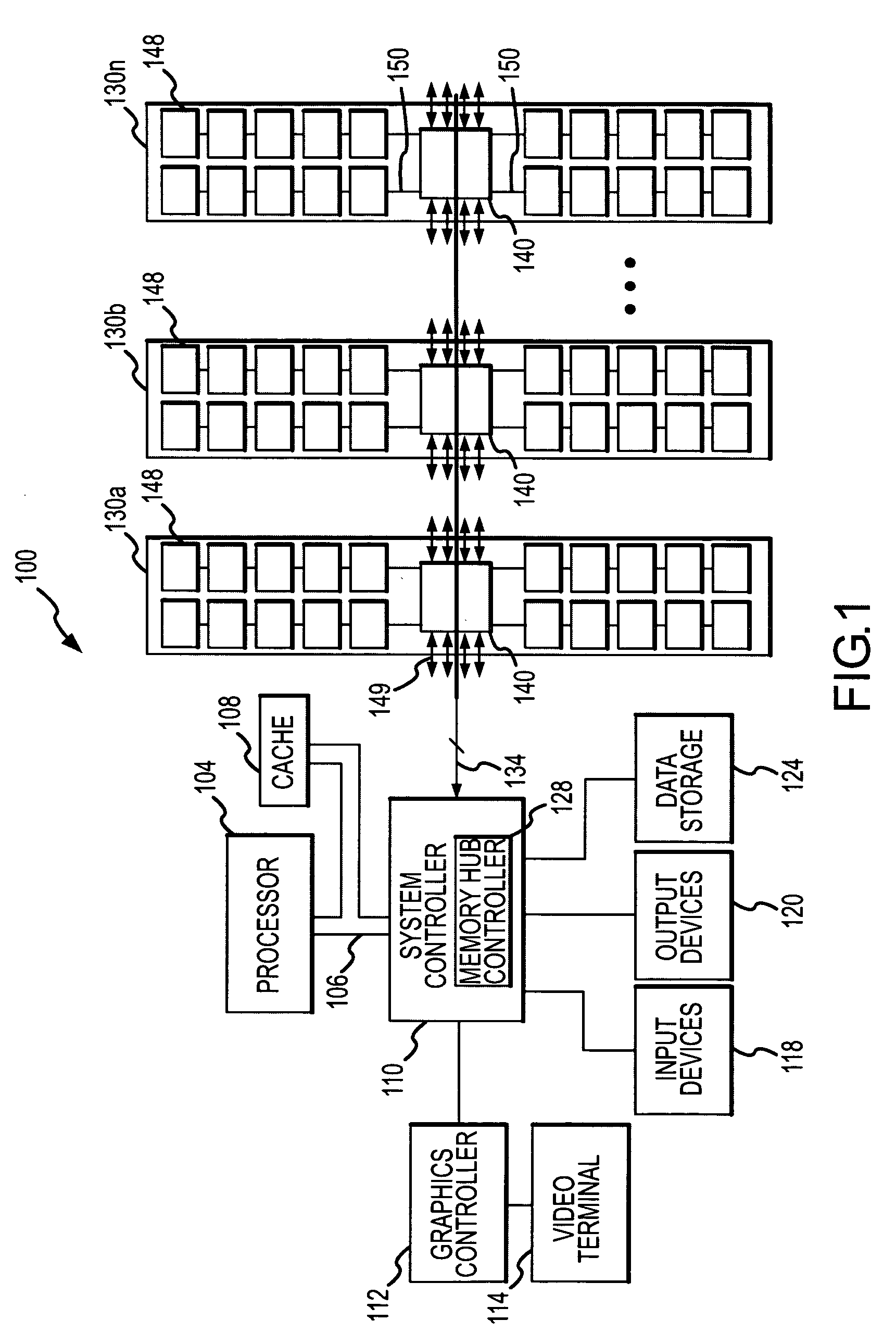

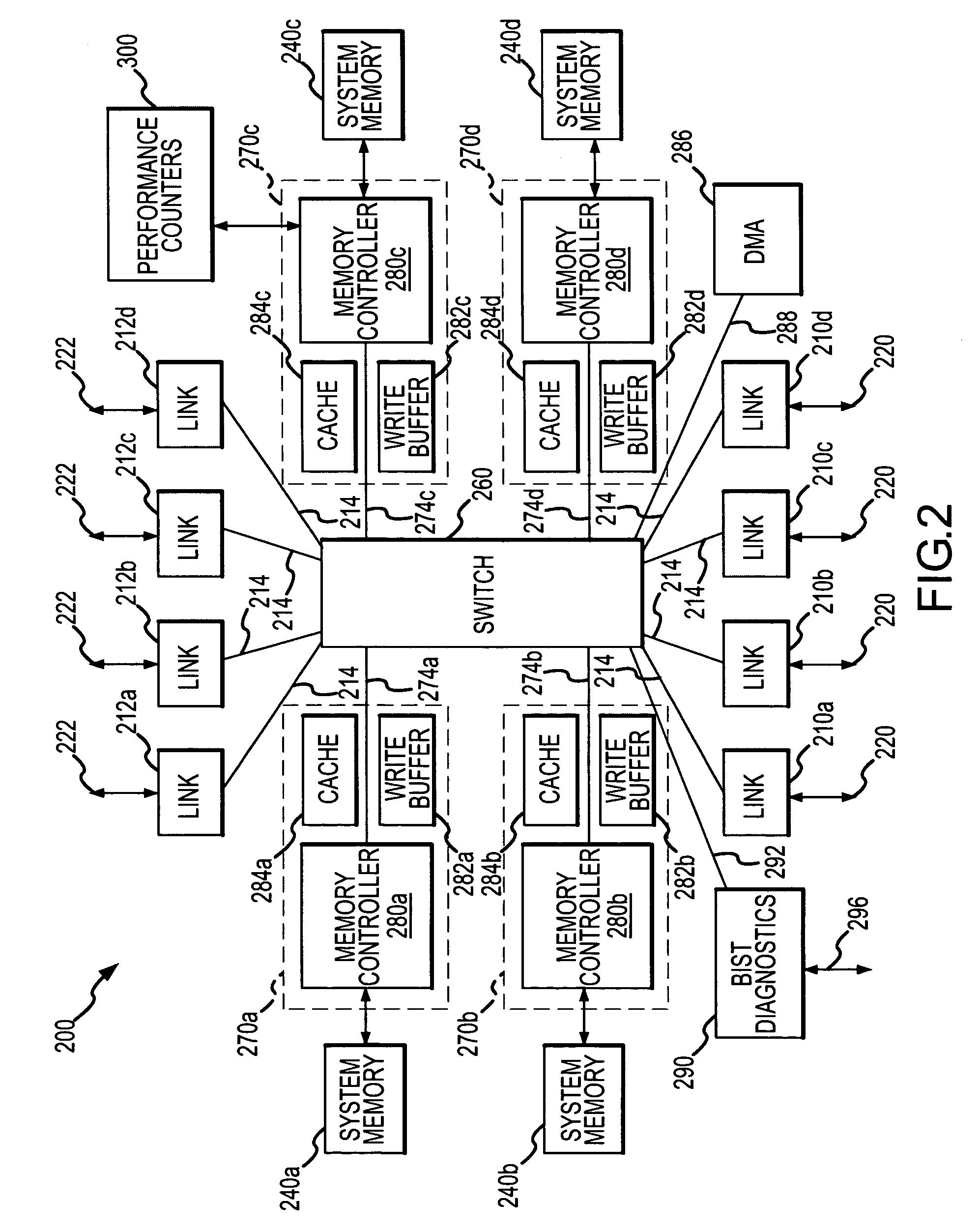

A memory module includes a memory hub coupled to several memory devices. The memory hub includes at least one performance counter that tracks one or more system metrics—for example, page hit rate, number or percentage of prefetch hits, cache hit rate or percentage, read rate, number of read requests, write rate, number of write requests, rate or percentage of memory bus utilization, local hub request rate or number, and / or remote hub request rate or number.

Owner:ROUND ROCK RES LLC

Memory hub and method for memory sequencing

Inactive | US20050257005A1 | Tags: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Cache hit rate

A memory module includes a memory hub coupled to several memory devices. The memory hub includes at least one performance counter that tracks one or more system metrics—for example, page hit rate, prefetch hits, and / or cache hit rate. The performance counter communicates with a memory sequencer that adjusts its operation based on the system metrics tracked by the performance counter.

Owner:ROUND ROCK RES LLC
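
The abstract does not say which aspect of its operation the sequencer adjusts. As one hypothetical example, the Python sketch below has a performance counter measure the page (row-buffer) hit rate and a sequencer switch between open-page and closed-page policies around an assumed threshold of 0.5. All names and the threshold are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class PerformanceCounter:
    """Counts accesses that reuse the row already open in the row buffer."""
    page_hits: int = 0
    accesses: int = 0

    def record(self, page_hit: bool) -> None:
        self.accesses += 1
        self.page_hits += int(page_hit)

    @property
    def page_hit_rate(self) -> float:
        return self.page_hits / self.accesses if self.accesses else 0.0


class MemorySequencer:
    """Hypothetical sequencer that picks a row-buffer policy from the counter."""

    def __init__(self, counter: PerformanceCounter, threshold: float = 0.5):
        self.counter = counter
        self.threshold = threshold
        self.policy = "open-page"

    def adjust(self) -> str:
        if self.counter.page_hit_rate >= self.threshold:
            self.policy = "open-page"    # rows are often reused: keep them open
        else:
            self.policy = "closed-page"  # little reuse: precharge right away
        return self.policy


def replay(rows, counter: PerformanceCounter) -> None:
    """Feed a single-bank row-address trace to the counter; an access is a
    page hit when it targets the previously accessed row."""
    open_row = None
    for row in rows:
        counter.record(row == open_row)
        open_row = row


streaming = PerformanceCounter()
replay([1, 1, 1, 1, 2, 2, 2, 2], streaming)
print(streaming.page_hit_rate, MemorySequencer(streaming).adjust())  # 0.75 open-page
```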

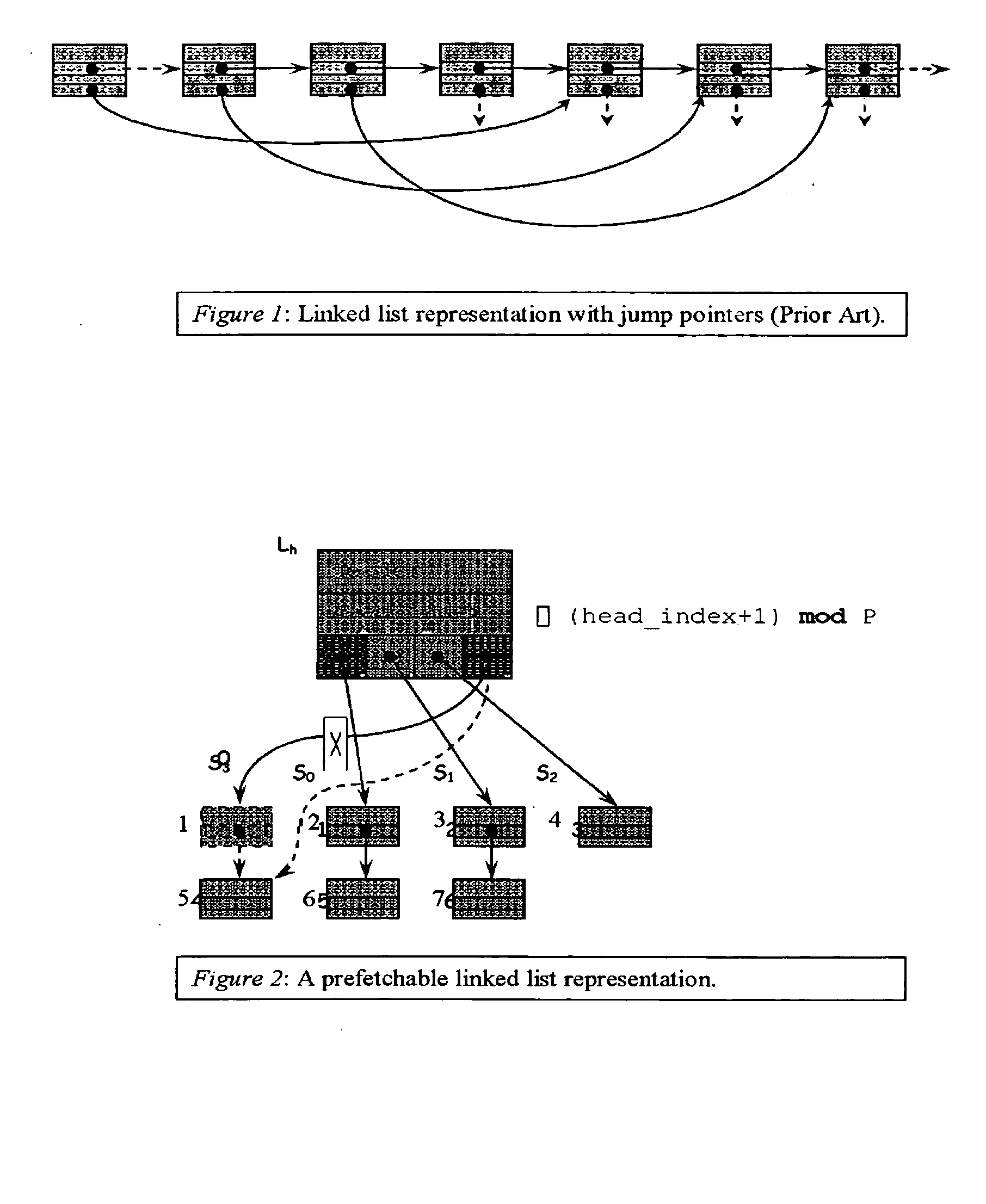

Method for prefetching recursive data structure traversals

Inactive | US20050102294A1 | Tags: Improve cache hit ratio; Potential throughput of the computer system; Digital data information retrieval; Data processing applications; Operational system; Term memory

Computer systems are typically designed with multiple levels of memory hierarchy. Prefetching has been employed to overcome the latency of fetching data or instructions from or to memory. Prefetching works well for data structures with regular memory access patterns, but less so for data structures such as trees, hash tables, and other structures in which the datum that will be used is not known a priori. In modern transaction processing systems, database servers, operating systems, and other commercial and engineering applications, information is frequently organized in trees, graphs, and linked lists. Lack of spatial locality results in a high probability that a miss will be incurred at each cache in the memory hierarchy. Each cache miss causes the processor to stall while the referenced value is fetched from lower levels of the memory hierarchy. Because this is likely to happen for a significant fraction of the nodes traversed in the data structure, processor utilization suffers. The inability to compute the address of the next node to be referenced makes prefetching difficult in such applications. The invention allows compilers and / or programmers to restructure data structures and traversals so that pointers are dereferenced in a pipelined manner, making it possible to schedule prefetch operations consistently. The present invention significantly increases the cache hit rates of many important data structure traversals, and thereby the potential throughput of the computer system and application in which it is employed. For data structure traversals in which the traversal path may be predetermined, a transformation is performed on the data structure that allows references to nodes that will be traversed in the future to be computed sufficiently far in advance to prefetch the data into cache.

Owner:DIGITAL CACHE LLC
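
One well-known transformation of this kind is jump-pointer prefetching: each node of a linked list gets an extra pointer to the node a fixed distance ahead, so the address of a future node is available early enough to prefetch it. The Python sketch below builds such pointers as an illustration, not as the patented transformation; the `distance` parameter and all names are assumptions, and the prefetch itself is only marked in a comment because Python has no prefetch instruction.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ListNode:
    value: int
    next: Optional["ListNode"] = None
    jump: Optional["ListNode"] = None   # node `distance` hops ahead, if any


def build_list(values):
    head = None
    for v in reversed(values):
        head = ListNode(v, head)
    return head


def add_jump_pointers(head, distance):
    """One pass with a leading pointer: node i gets jump -> node i + distance."""
    lead = head
    for _ in range(distance):
        if lead is None:
            return head                 # list shorter than the distance
        lead = lead.next
    trail = head
    while lead is not None:
        trail.jump = lead
        trail = trail.next
        lead = lead.next
    return head


def traverse_sum(head):
    total = 0
    node = head
    while node is not None:
        # A native version would prefetch node.jump here: its address is
        # known `distance` iterations before the traversal reaches it.
        total += node.value
        node = node.next
    return total


head = add_jump_pointers(build_list(list(range(10))), distance=3)
print(head.jump.value, traverse_sum(head))  # 3 45
```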

Memory hub and method for memory sequencing

Inactive | US7162567B2 | Tags: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Cache hit rate

A memory module includes a memory hub coupled to several memory devices. The memory hub includes at least one performance counter that tracks one or more system metrics—for example, page hit rate, prefetch hits, and / or cache hit rate. The performance counter communicates with a memory sequencer that adjusts its operation based on the system metrics tracked by the performance counter.

Owner:ROUND ROCK RES LLC

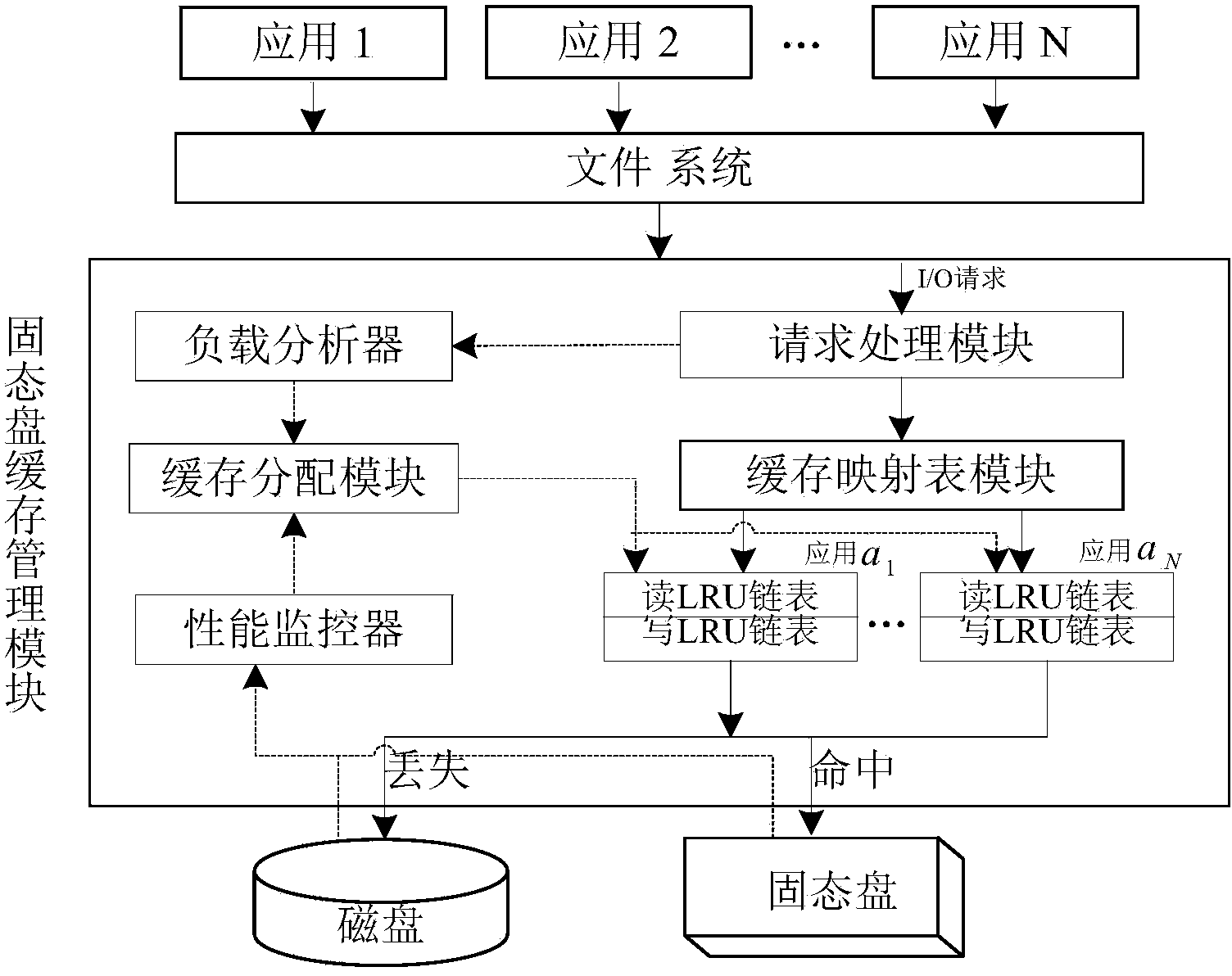

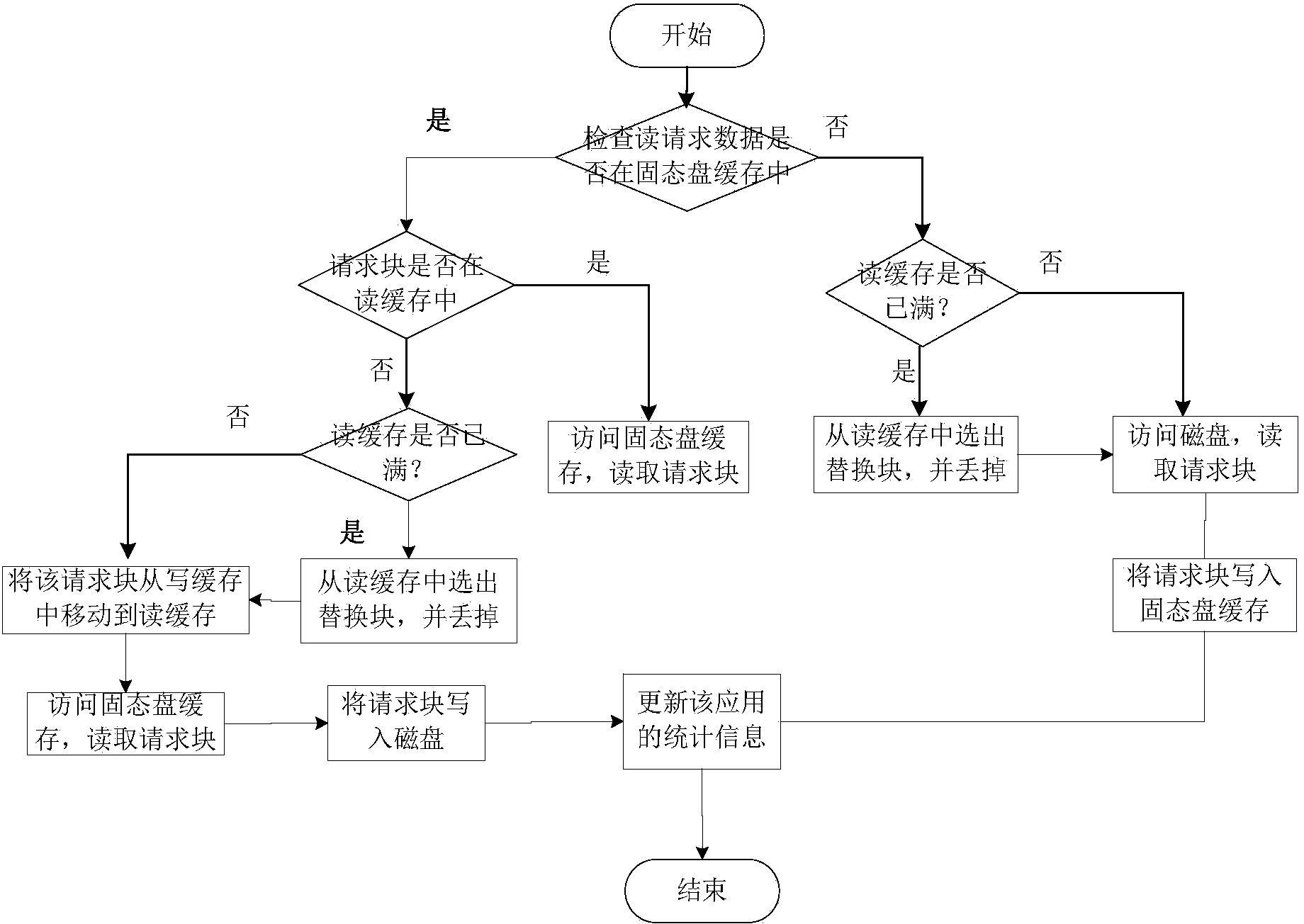

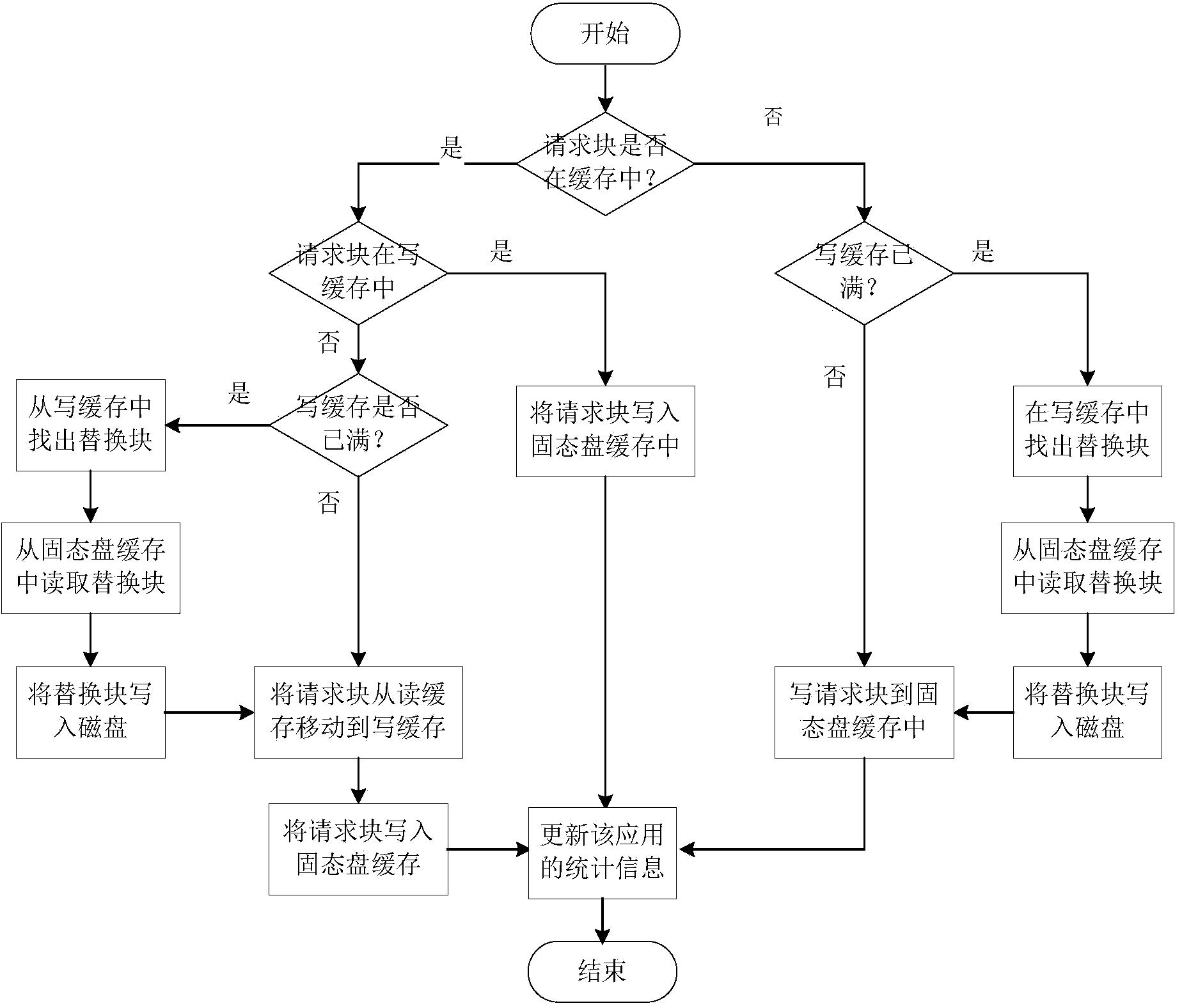

Mixed storage system and method for supporting solid-state disk cache dynamic distribution

Active | CN103902474A | Tags: Efficient use of; Avoid performance degradation; Memory addressing/allocation/relocation; Differentiated services; Dirty data

The invention provides a mixed (hybrid) storage system and method that support dynamic allocation of a solid-state disk (SSD) cache. The mixed storage system is built from an SSD and a magnetic disk, with the SSD serving as a cache for the magnetic disk. The load characteristics of applications and the hit ratio of the SSD cache are monitored in real time, a performance model is built for each application, and SSD cache space is allocated dynamically according to the applications' performance requirements and changes in their load characteristics. With this cache management method, SSD cache space can be allocated sensibly according to each application's performance requirements, providing an application-level cache partitioning service. Because each application's SSD cache space is further divided into a read cache section and a write cache section, dirty data blocks, and the page-copying and garbage-collection costs they cause, are reduced. Meanwhile, idle SSD cache space is allocated to applications according to how efficiently they use the cache, which improves both the SSD cache hit ratio and the overall performance of the mixed storage system.

Owner:HUAZHONG UNIV OF SCI & TECH

Memory hub and method for memory system performance monitoring

Inactive | US20050144403A1 | Tags: Error detection/correction; Memory addressing/allocation/relocation; Parallel computing; Memory bus

A memory module includes a memory hub coupled to several memory devices. The memory hub includes at least one performance counter that tracks one or more system metrics, for example page hit rate, number or percentage of prefetch hits, cache hit rate or percentage, read rate, number of read requests, write rate, number of write requests, rate or percentage of memory bus utilization, local hub request rate or number, and / or remote hub request rate or number.

Owner:ROUND ROCK RES LLC

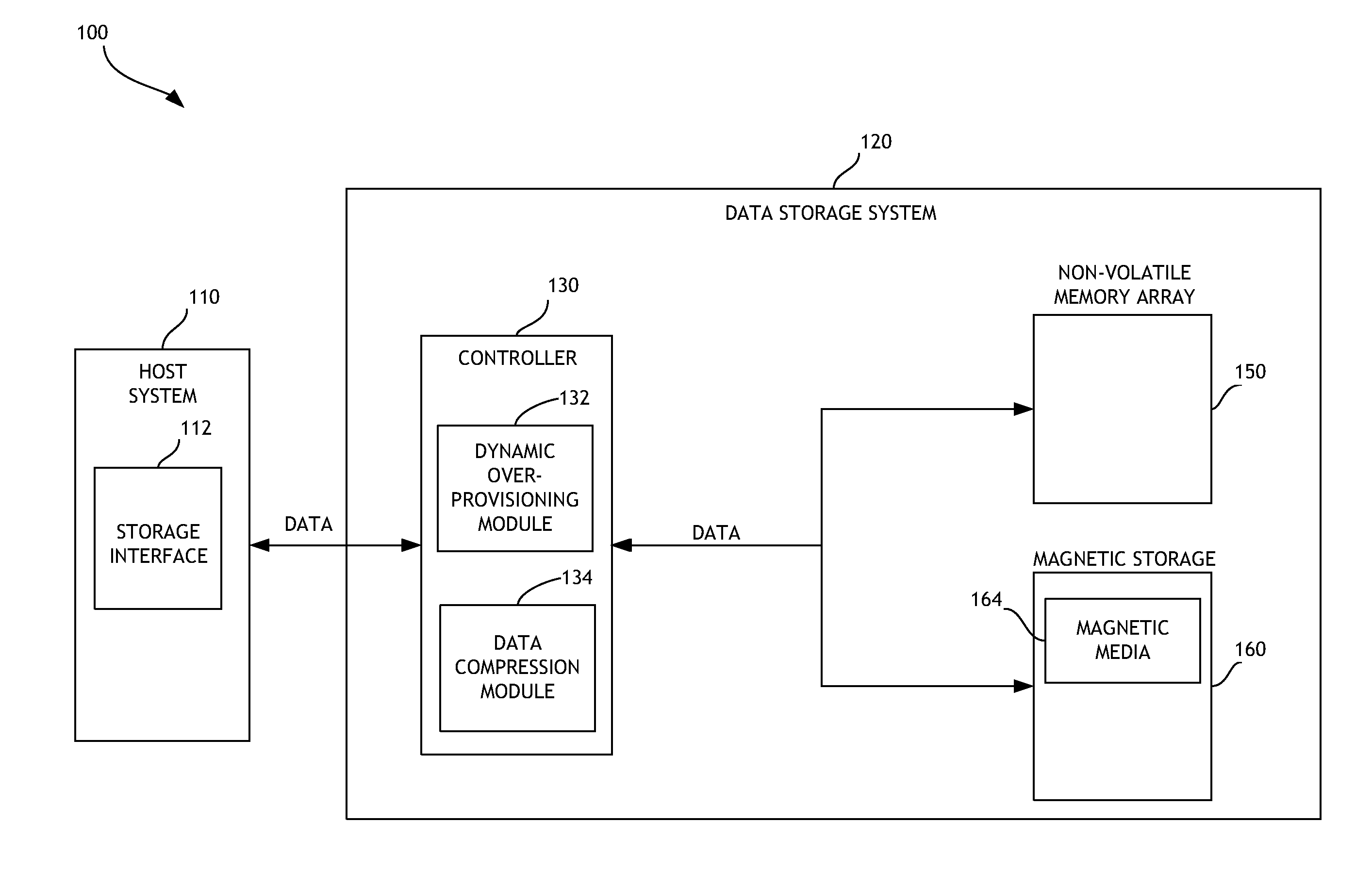

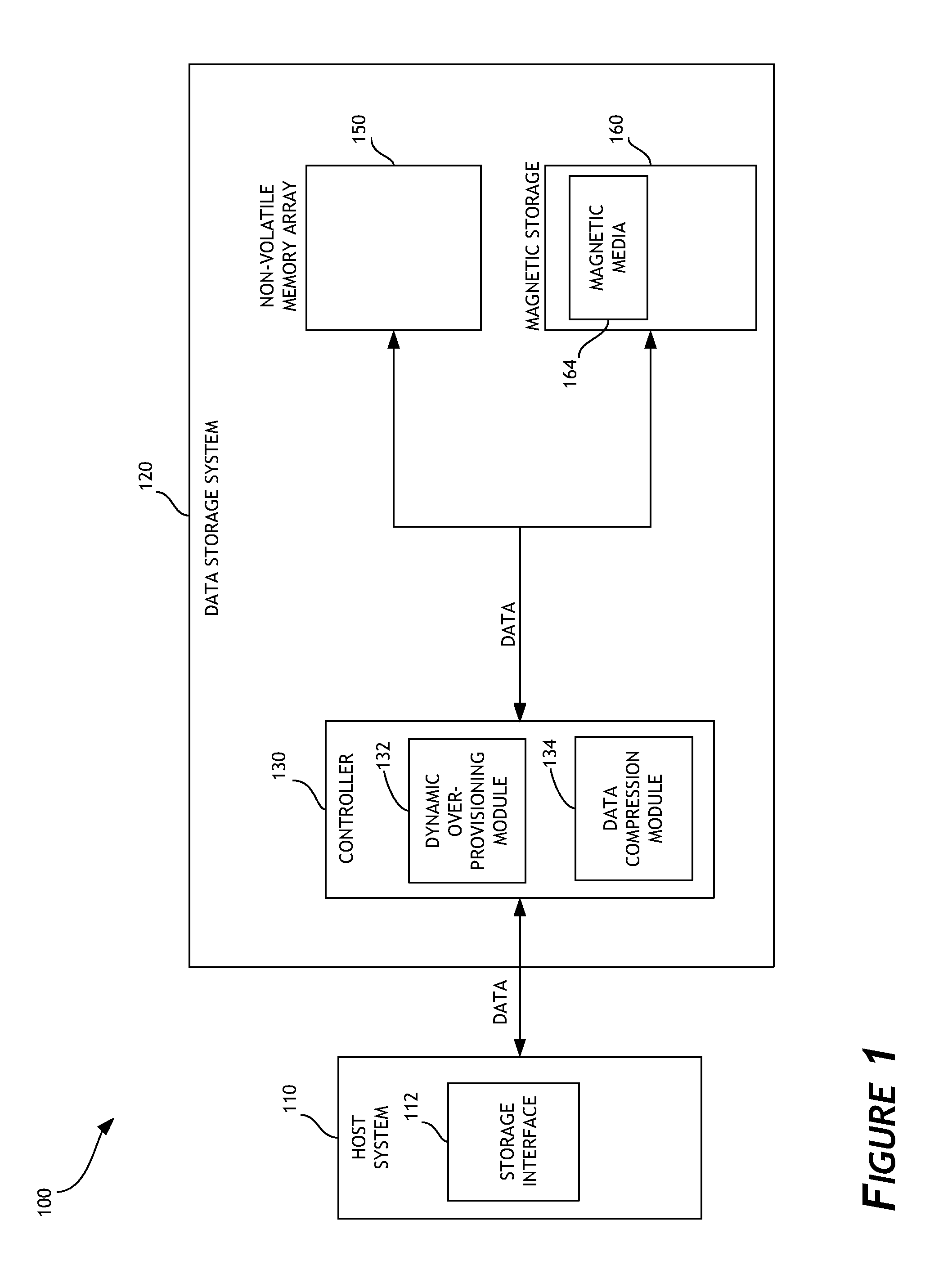

Dynamic overprovisioning for data storage systems

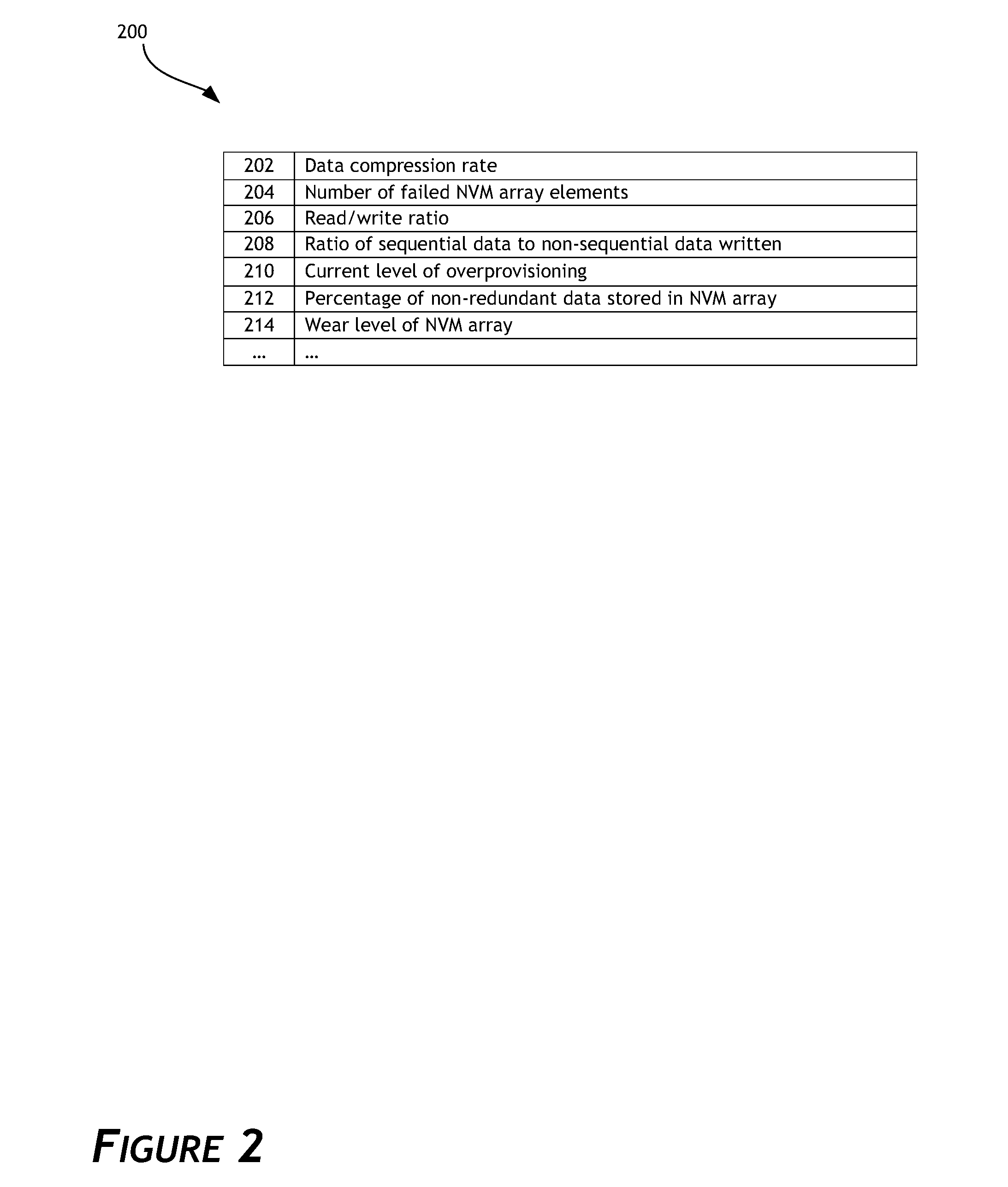

Active | US20140181369A1 | Tags: Improve efficiency; Increased longevity; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Write amplification; Cache hit rate

Disclosed embodiments are directed to systems and methods for dynamic overprovisioning for data storage systems. In one embodiment, a data storage system can reserve a portion of memory, such as non-volatile solid-state memory, for overprovisioning. Depending on various overprovisioning factors, storage space recovered by compressing user data can be allocated for storing user data and / or for overprovisioning. Using the disclosed dynamic overprovisioning systems and methods can result in more efficient utilization of cache memory, reduced write amplification, an increased cache hit rate, and the like. Improved data storage system performance and increased endurance and longevity can thereby be attained.

Owner:WESTERN DIGITAL TECH INC

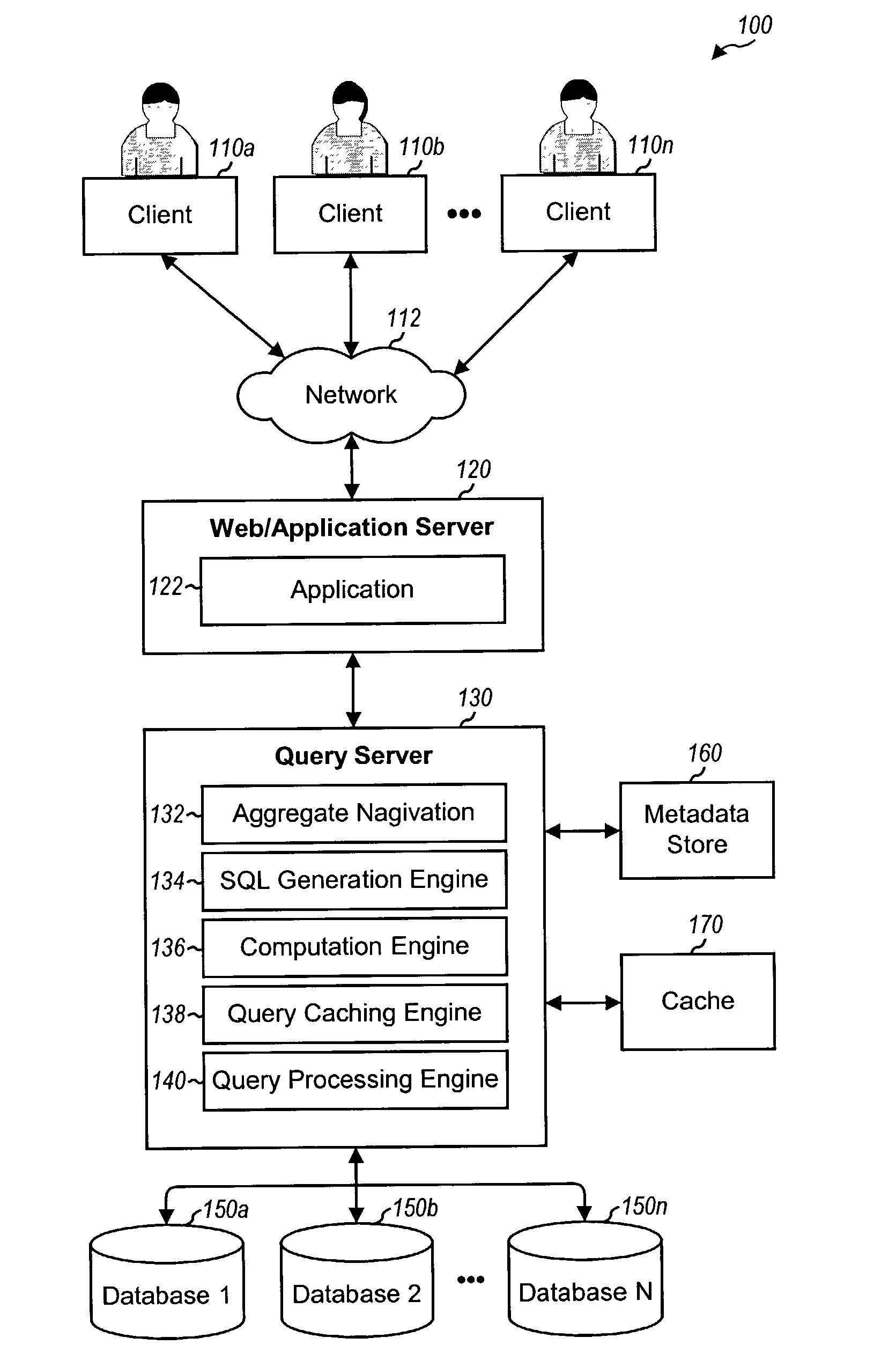

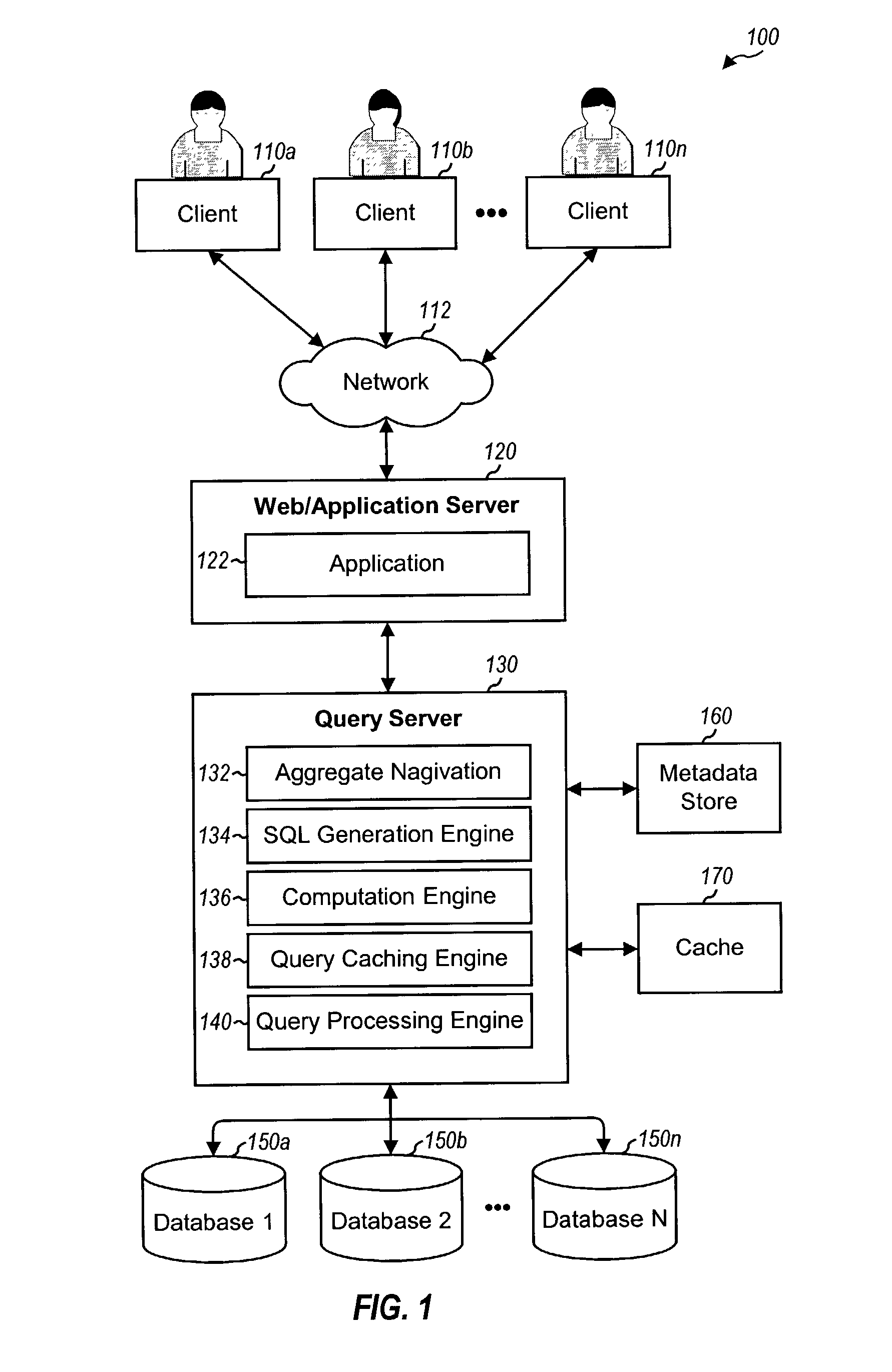

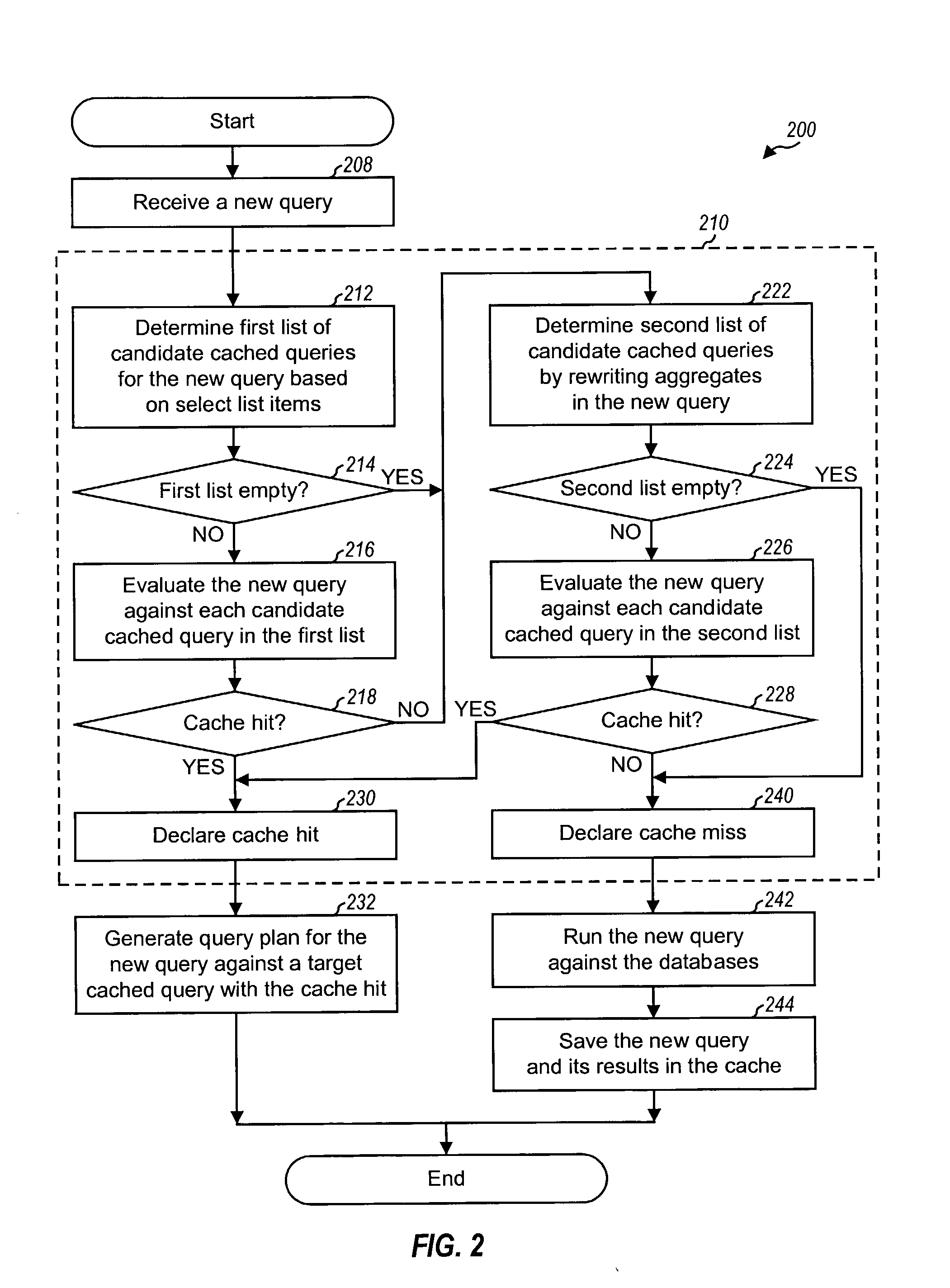

Detecting and processing cache hits for queries with aggregates

Active | US20070208690A1 | Tags: Efficiently; Improve query caching performance; Data processing applications; Digital data information retrieval; Exact match; Query plan

Techniques to improve query caching performance by efficiently selecting queries stored in a cache for evaluation and increasing the cache hit rate by allowing for inexact matches. A list of candidate queries stored in the cache that potentially could be used to answer a new query is first determined. This list may include all cached queries, cached queries containing exact matches for select list items, or cached queries containing exact and / or inexact matches. Each of at least one candidate query is then evaluated to determine whether or not there is a cache hit, which indicates that the candidate query could be used to answer the new query. The evaluation is performed using a set of rules that allows for inexact matches of aggregates, if any, in the new query. A query plan is generated for the new query based on a specific candidate query with a cache hit.

Owner:ORACLE INT CORP
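
The idea of inexact aggregate matching can be illustrated with a rollup: a cached result grouped by (region, month) with SUM and COUNT can answer a new query grouped by region that asks for AVG, since AVG = SUM / COUNT. The Python sketch below encodes that with a small, hypothetical rule table; it is not Oracle's rule set, it supports only SUM, COUNT, and AVG, and the function names are illustrative.

```python
from collections import defaultdict

# Hypothetical rule table: cached aggregates needed to derive each requested one.
DERIVABLE = {
    "sum": {"sum"},
    "count": {"count"},
    "avg": {"sum", "count"},   # inexact match: AVG = SUM / COUNT
}


def is_cache_hit(cached_groups, cached_aggs, new_groups, new_aggs):
    """The cached result can answer the new query if it is grouped at least
    as finely and every requested aggregate is derivable from cached ones."""
    if not set(new_groups) <= set(cached_groups):
        return False
    return all(a in DERIVABLE and DERIVABLE[a] <= set(cached_aggs) for a in new_aggs)


def roll_up(cached_groups, rows, new_groups, new_aggs):
    """rows maps a group tuple at the cached grain to {"sum": x, "count": n}."""
    idx = [list(cached_groups).index(g) for g in new_groups]
    acc = defaultdict(lambda: {"sum": 0, "count": 0})
    for key, aggs in rows.items():
        coarse = tuple(key[i] for i in idx)   # project onto the coarser grouping
        acc[coarse]["sum"] += aggs.get("sum", 0)
        acc[coarse]["count"] += aggs.get("count", 0)
    return {
        key: {name: a["sum"] / a["count"] if name == "avg" else a[name]
              for name in new_aggs}
        for key, a in acc.items()
    }


cached = ("region", "month")
rows = {("east", "jan"): {"sum": 100, "count": 4},
        ("east", "feb"): {"sum": 50, "count": 1},
        ("west", "jan"): {"sum": 30, "count": 3}}
if is_cache_hit(cached, ["sum", "count"], ("region",), ["avg"]):
    print(roll_up(cached, rows, ("region",), ["avg"]))
    # {('east',): {'avg': 30.0}, ('west',): {'avg': 10.0}}
```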

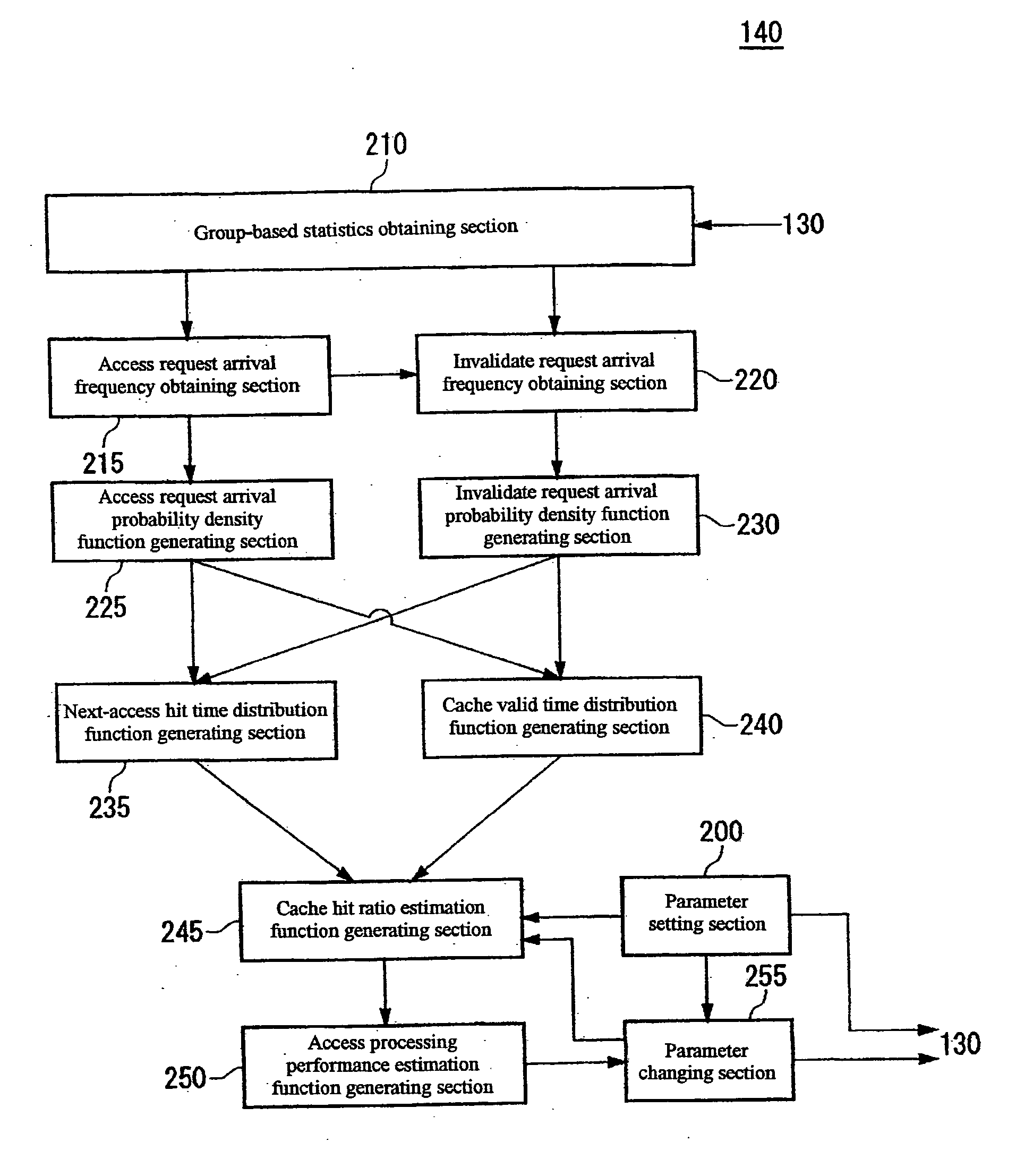

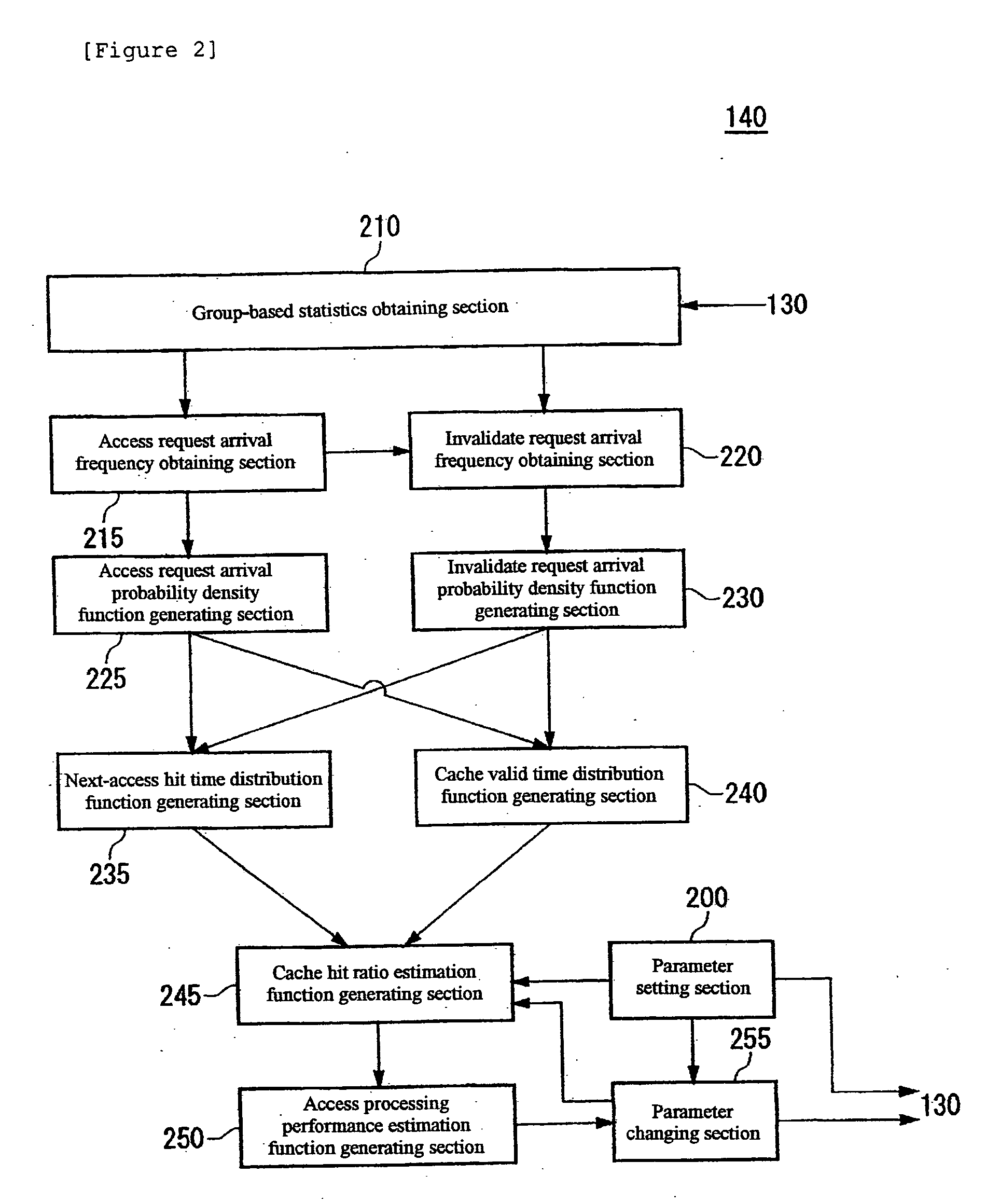

Cache hit ratio estimating apparatus, cache hit ratio estimating method, program, and recording medium

Inactive | US20050268037A1 | Tags: Improve accuracy; Easy to process; Input/output to record carriers; Error detection/correction; Cache access; Normal density

Determining a cache hit ratio of a caching device analytically and precisely. There is provided a cache hit ratio estimating apparatus for estimating the cache hit ratio of a caching device, caching access target data accessed by a requesting device, including: an access request arrival frequency obtaining section for obtaining an average arrival frequency measured for access requests for each of the access target data; an access request arrival probability density function generating section for generating an access request arrival probability density function which is a probability density function of arrival time intervals of access requests for each of the access target data on the basis of the average arrival frequency of access requests for the access target data; and a cache hit ratio estimation function generating section for generating an estimation function for the cache hit ratio for each of the access target data on the basis of the access request arrival probability density function for the plurality of the access target data.

Owner:GOOGLE LLC
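
For a concrete analytical estimate, a standard model (swapped in here, not the patent's method) assumes Poisson arrivals, so the arrival intervals of object i follow an exponential density with mean 1/λ_i, and an LRU cache. Che's approximation then gives the hit ratio of object i as 1 - exp(-λ_i T_C), where the characteristic time T_C solves Σ_i (1 - exp(-λ_i T_C)) = C for a cache holding C objects. The Python sketch finds T_C by bisection; the function name is illustrative.

```python
import math


def che_hit_ratios(rates, capacity, tol=1e-9):
    """Che's approximation for an LRU cache under independent Poisson requests.

    rates[i] is the mean request rate of object i; capacity is the number of
    objects the cache holds. Returns (per-object hit ratios, overall hit ratio).
    """
    if capacity <= 0 or any(lam <= 0 for lam in rates):
        raise ValueError("capacity and all request rates must be positive")
    if capacity >= len(rates):
        return [1.0] * len(rates), 1.0   # everything fits (cold misses ignored)

    def occupancy(t):
        # Expected number of distinct objects requested within a window t.
        return sum(1.0 - math.exp(-lam * t) for lam in rates)

    lo, hi = 0.0, 1.0
    while occupancy(hi) < capacity:      # grow until the root is bracketed
        hi *= 2.0
    while hi - lo > tol * hi:            # bisection on the characteristic time
        mid = (lo + hi) / 2.0
        if occupancy(mid) < capacity:
            lo = mid
        else:
            hi = mid
    t_c = (lo + hi) / 2.0
    per_object = [1.0 - math.exp(-lam * t_c) for lam in rates]
    # Overall hit ratio weights each object by its share of the requests.
    overall = sum(lam * h for lam, h in zip(rates, per_object)) / sum(rates)
    return per_object, overall


zipf_rates = [1.0 / (i + 1) for i in range(100)]   # Zipf-like popularity
per_object, overall = che_hit_ratios(zipf_rates, capacity=10)
print(round(overall, 3))
```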

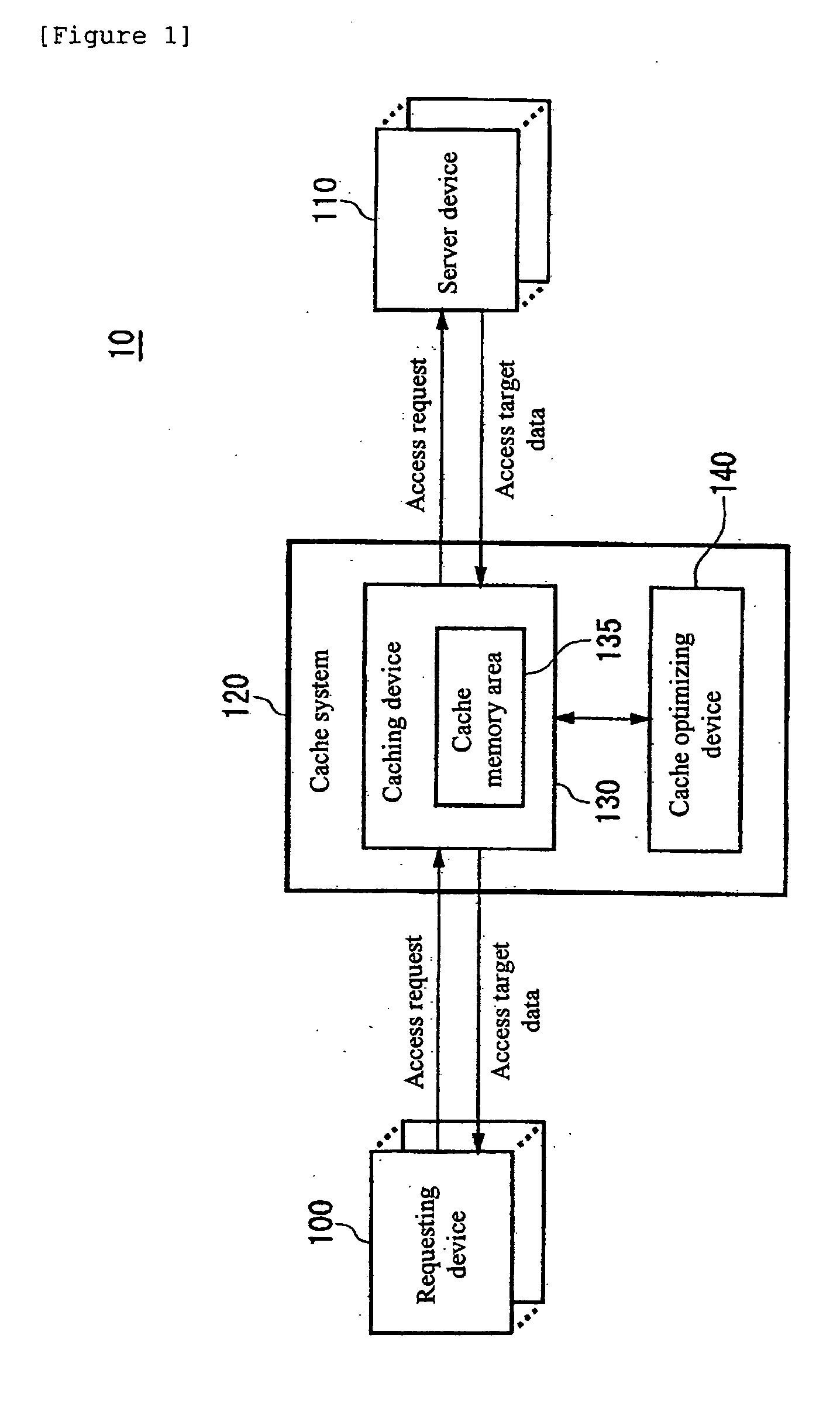

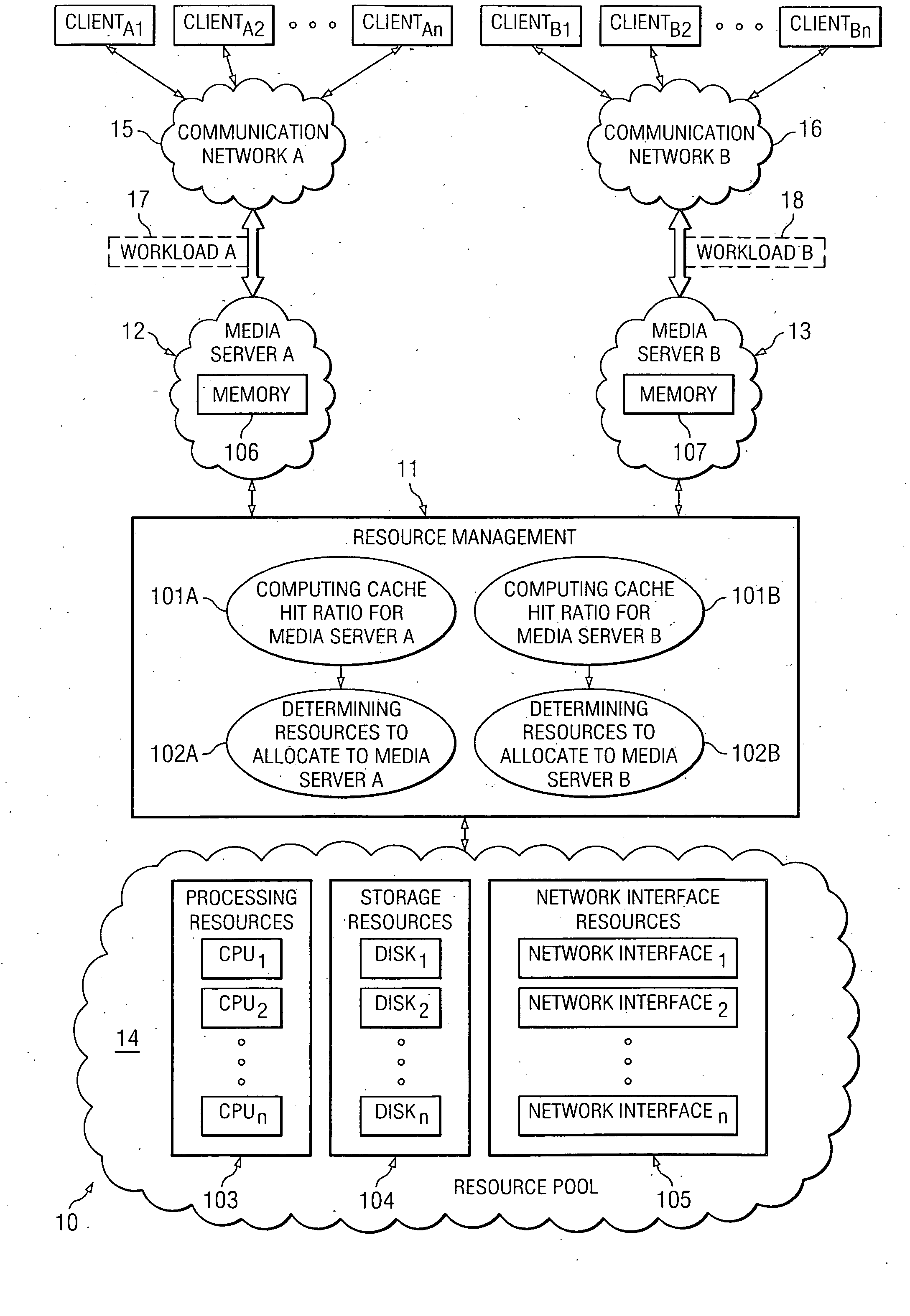

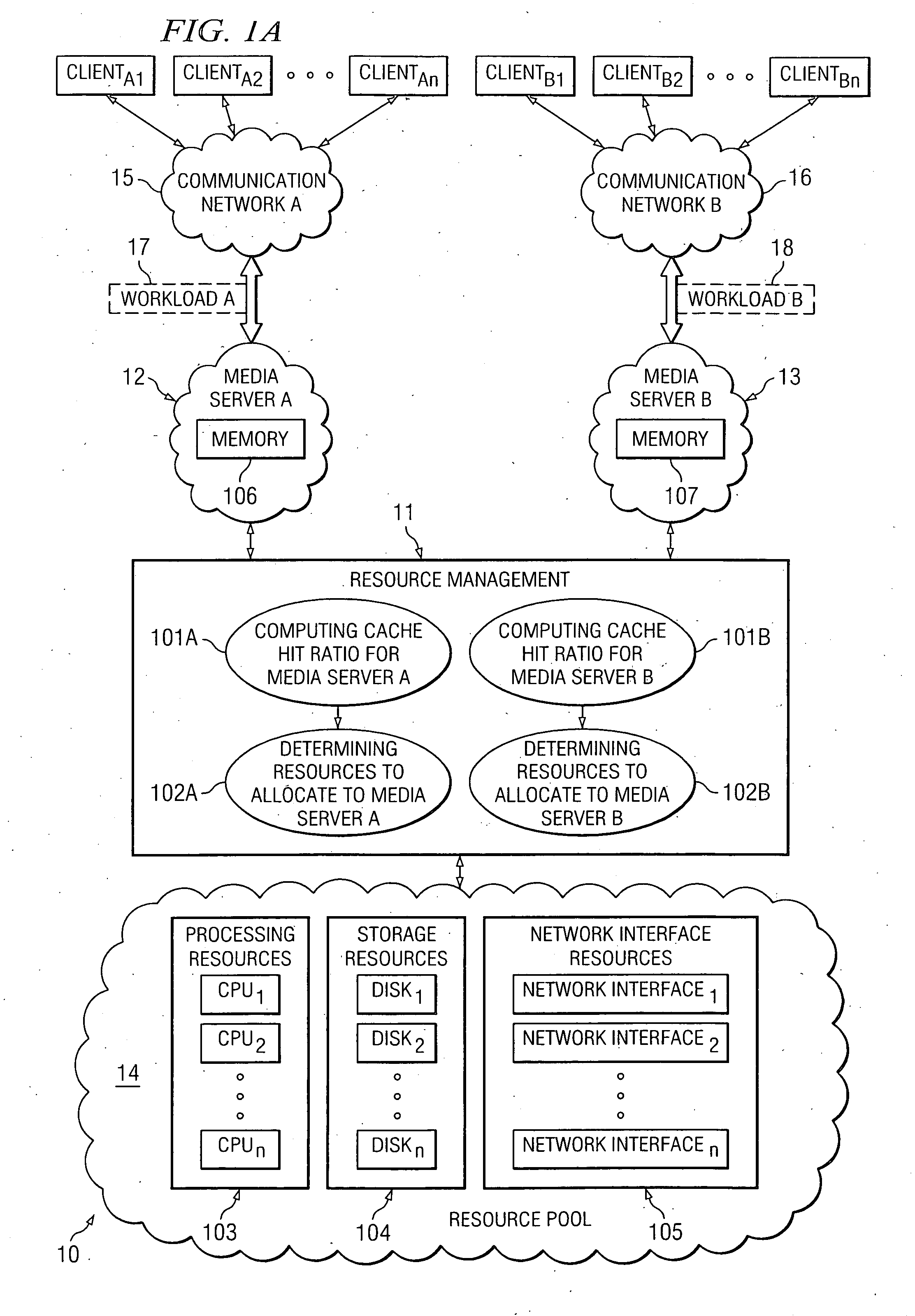

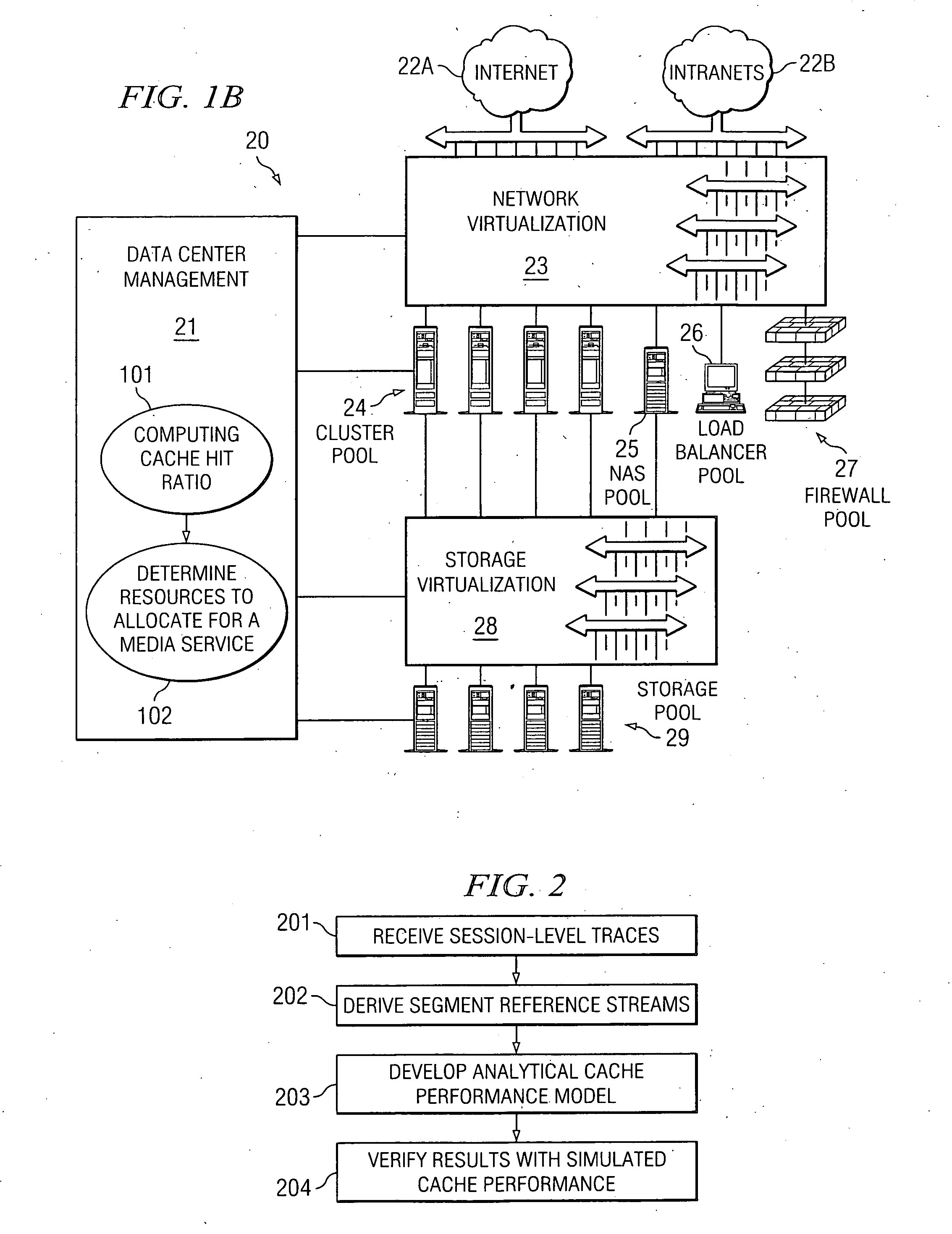

Analytical cache performance model for a media server

Active | US20050138165A1 | Tags: Digital computer details; Selective content distribution; Client-side; Cache hit rate

According to at least one embodiment, a method comprises receiving a session trace log identifying a plurality of sessions accessing streaming media files from a media server. The method further comprises deriving from the session trace log a segment trace log that identifies for each of a plurality of time intervals the segments of the streaming media files accessed, and using the segment trace log to develop an analytical cache performance model. According to at least one embodiment, a method comprises receiving workload information representing client accesses of streaming media files from a media server, and using an analytical cache performance model to compute a cache hit ratio for the media server under the received workload.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

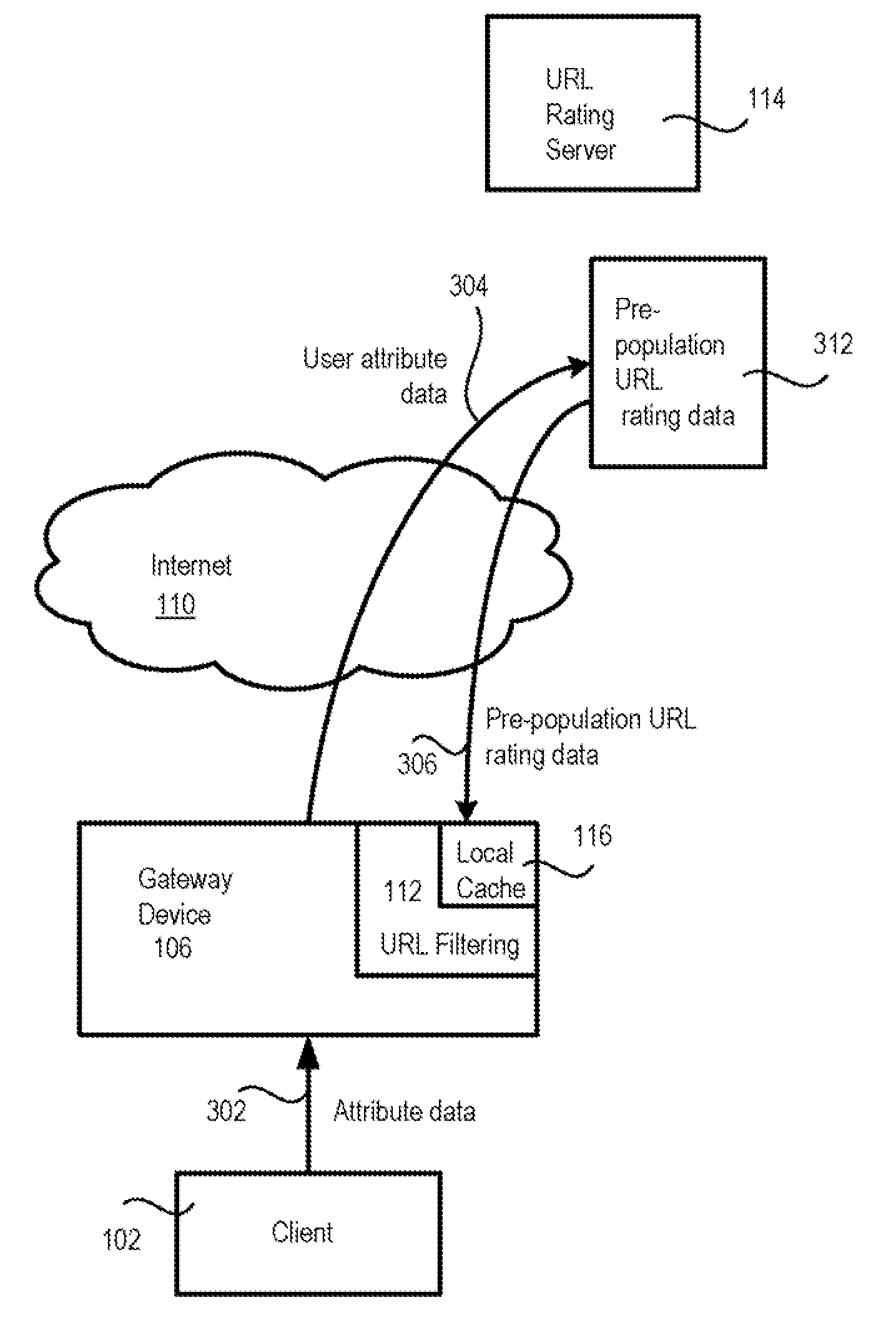

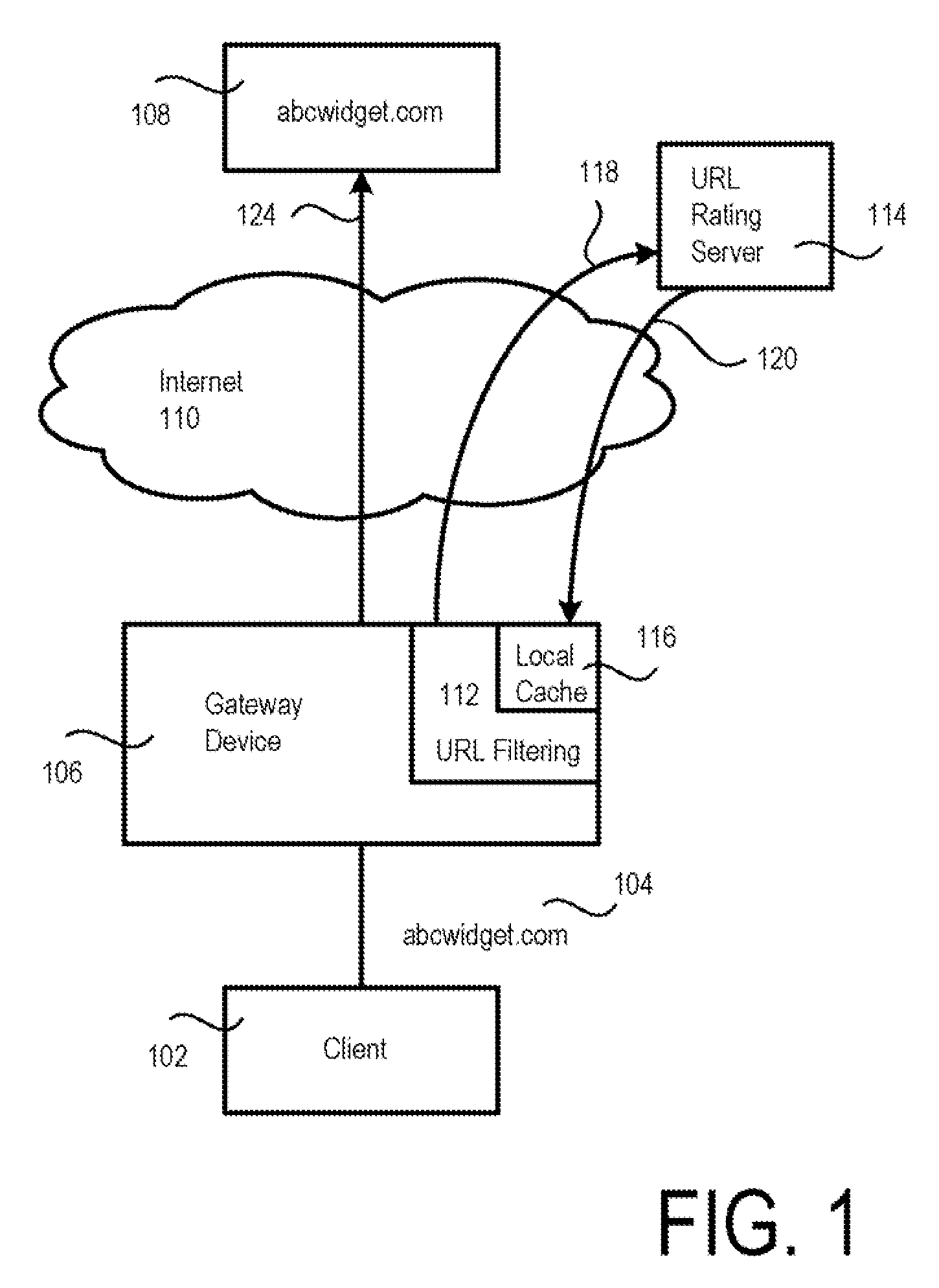

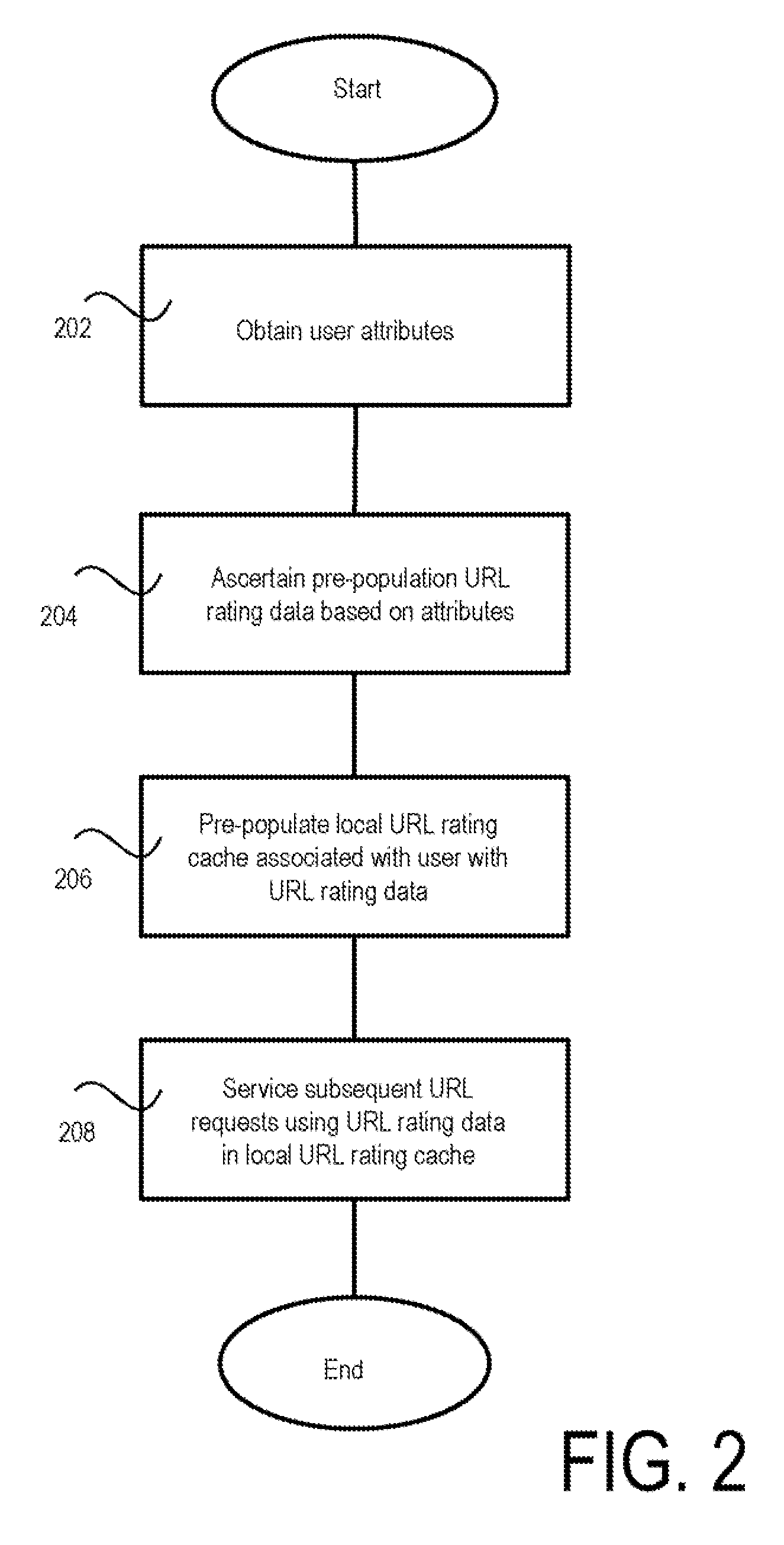

Pre-populating local URL rating cache

Active | US20080163380A1 | Tags: Digital data information retrieval; Digital data processing details; Uniform resource locator; Cache hit rate

A method and apparatus for improving the system response time when URL filtering is employed to provide security for web access. The method involves gathering the attributes of the user, and pre-populating a local URL-rating cache with URLs and corresponding ratings associated with analogous attributes from a URL cache database. Thus, the cache hit rate is higher with a pre-populated local URL rating cache, and the system response time is also improved.

Owner:TREND MICRO INC

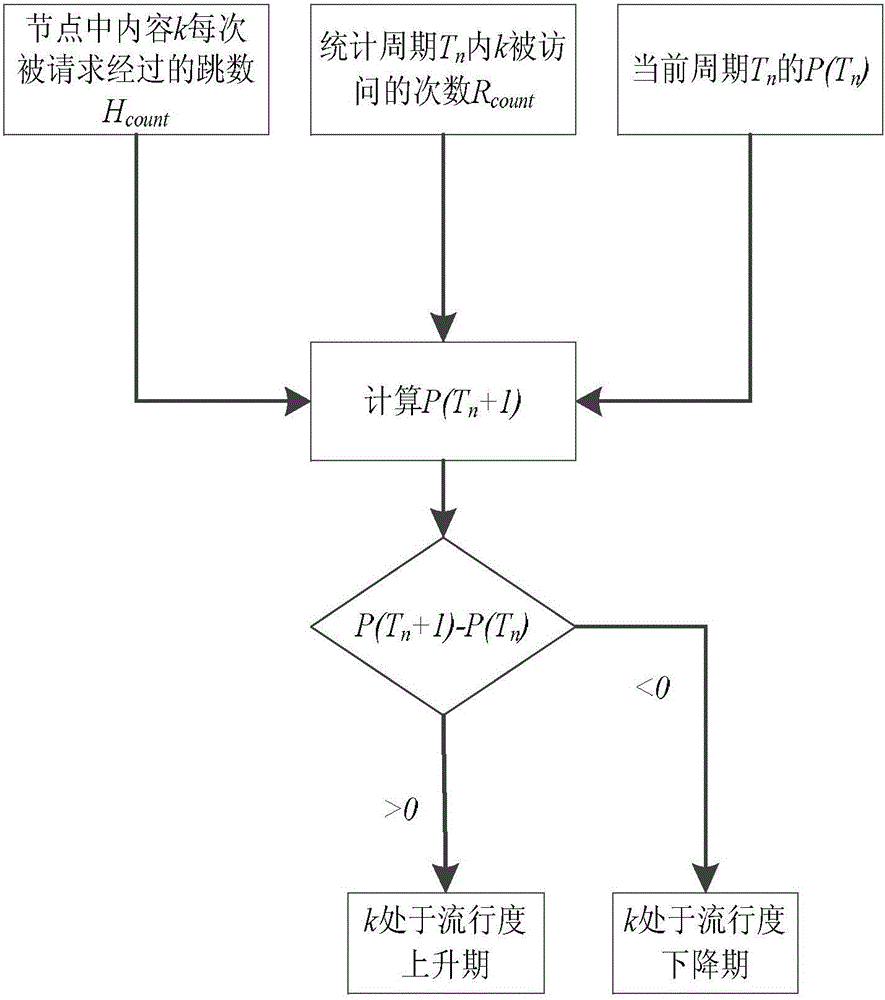

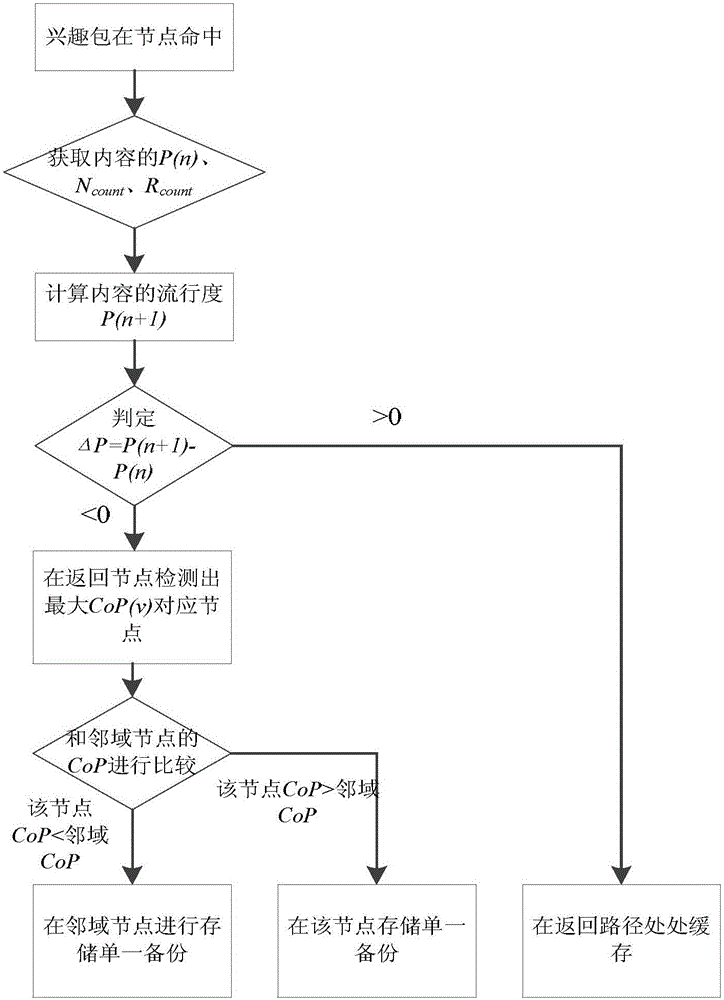

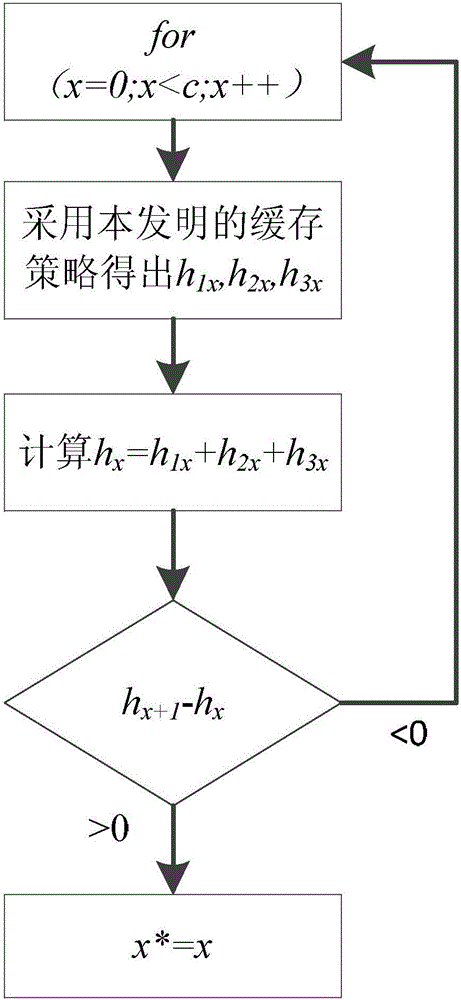

Cooperative caching method based on popularity prediction in named data networking

Active | CN106131182A | Tags: Fast push; Satisfy low frequency requests; Transmission; Cache hit rate; Distributed computing

The invention discloses a cooperative caching method based on popularity prediction in named data networking (NDN). When content is stored in an NDN, strategies that cache at multiple points along a path, or only at important nodes, cause high redundancy of node data and low utilization of the network's caching space. The method uses a "partial cooperative caching" mode: first, the future popularity of content is predicted; then an optimal proportion of each node's caching space is set aside as local caching space for highly popular content. The remaining space on each node stores less popular content through cooperation with neighboring nodes. The optimal space-division proportion is calculated by considering the hop count of Interest packets among the nodes and the request hits at the server side of the network. Compared with conventional caching strategies, the method increases the utilization of caching space in the network, reduces caching redundancy, improves the cache hit rate of the network's nodes, and improves the performance of the whole network.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

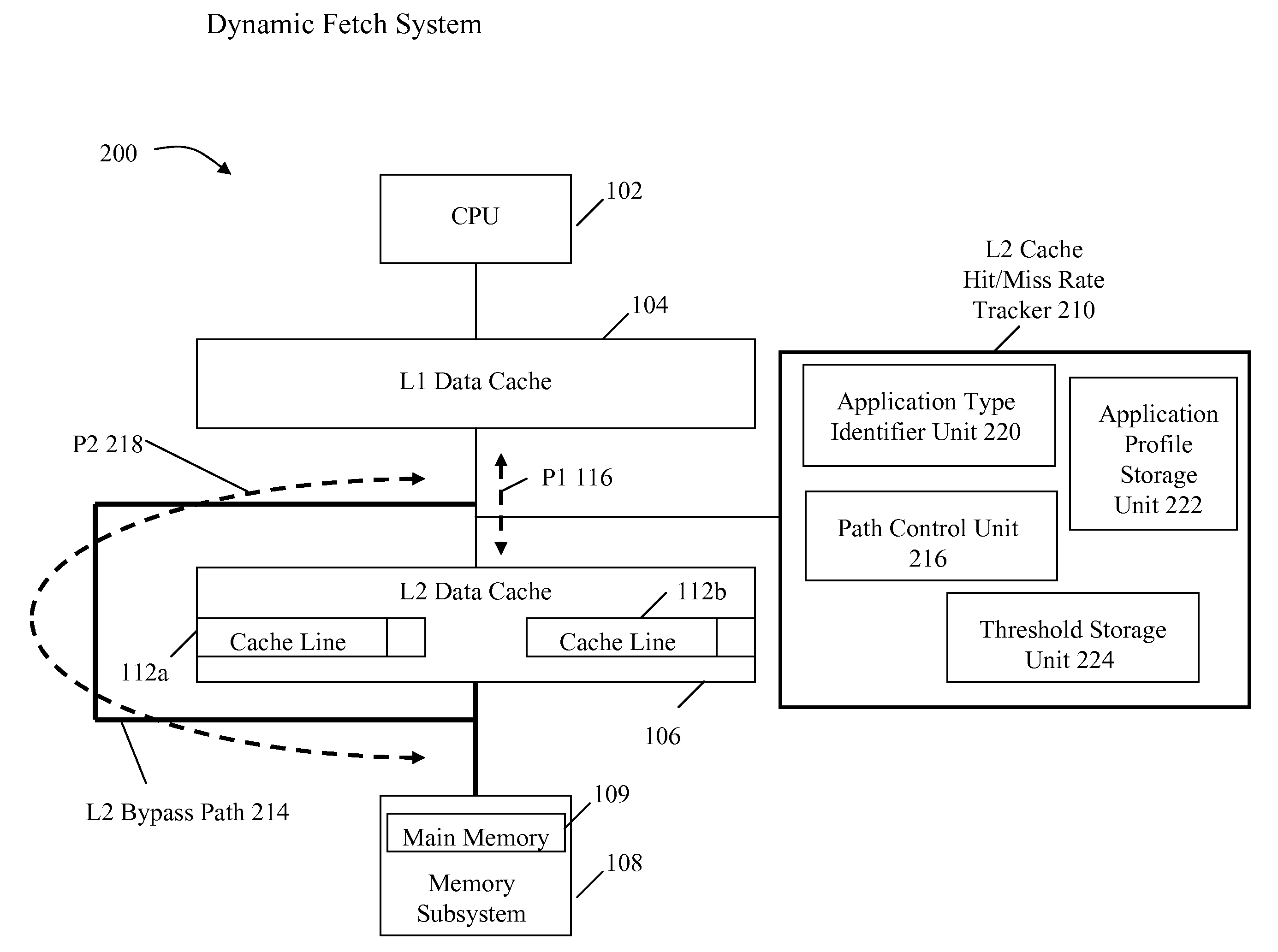

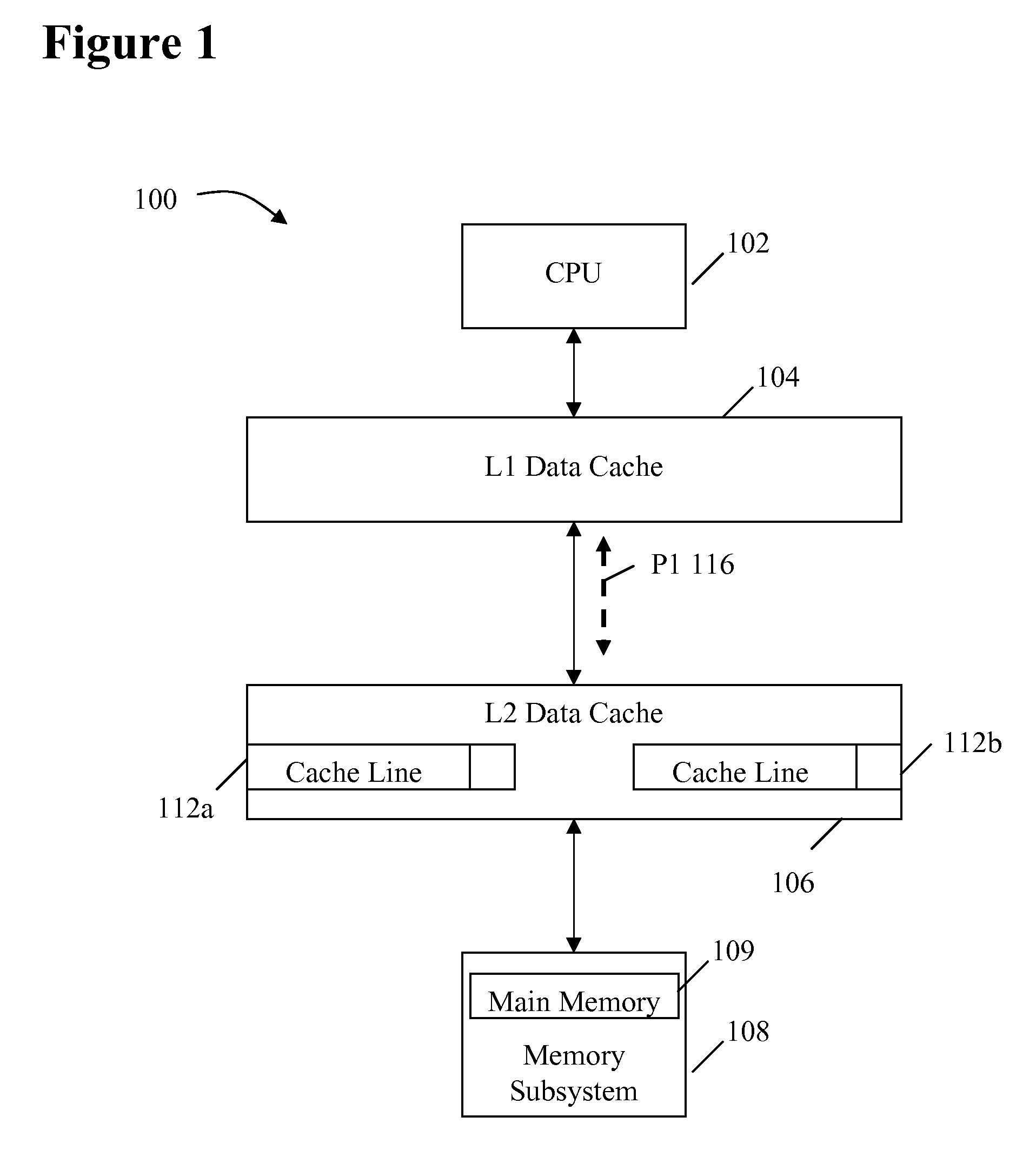

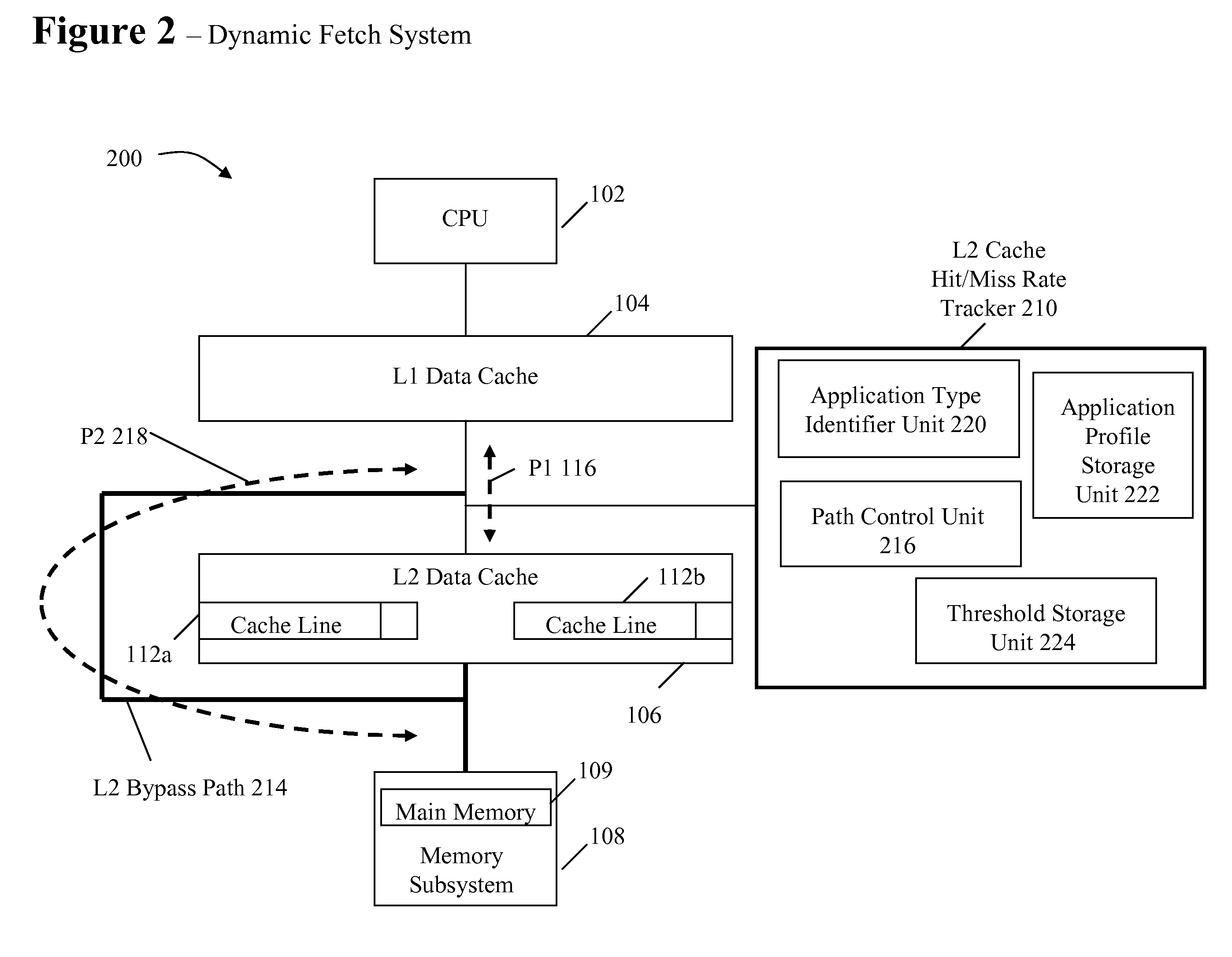

System and method for dynamically selecting the fetch path of data for improving processor performance

Inactive | US20090037664A1 | Tags: Improve system performance; Improve latency; Memory addressing/allocation/relocation; Data access; Parallel computing

A system and method for dynamically selecting the data fetch path improves system performance by reducing data access latency: data fetch paths are adjusted dynamically based on an application's data fetch characteristics, which are determined with a hit / miss tracker. This reduces data access latency for applications with a low data reuse rate (streaming audio, video, multimedia, games, etc.), improving overall application performance. The approach is dynamic in the sense that whenever the cache hit rate becomes reasonable (as defined by a parameter), normal cache lookup operations resume. The hit / miss tracker tracks the hits and misses against a cache; if the miss rate exceeds a prespecified rate or matches an application profile, the tracker causes the cache to be bypassed and the data to be pulled from main memory or another cache, thereby improving overall application performance.

Owner:IBM CORP
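
A minimal Python sketch of such a tracker, assuming a sliding window of lookup outcomes and two hypothetical thresholds (bypass when the miss rate exceeds 0.9, resume at 0.5 or lower) to avoid flapping. It also assumes tag lookups continue while the cache is bypassed, so the tracker can notice when the hit rate recovers; the abstract does not say how that is observed.

```python
from collections import deque


class HitMissTracker:
    """Sliding-window tracker that decides whether to bypass a cache."""

    def __init__(self, window=64, bypass_above=0.9, resume_below=0.5):
        self.outcomes = deque(maxlen=window)   # True = hit, False = miss
        self.bypass_above = bypass_above
        self.resume_below = resume_below
        self.bypassing = False

    def record(self, hit: bool) -> None:
        # Assumption: tag lookups keep running during bypass, so outcomes
        # are still recorded and the tracker can see reuse return.
        self.outcomes.append(hit)
        if len(self.outcomes) < self.outcomes.maxlen:
            return                             # not enough history yet
        miss_rate = self.outcomes.count(False) / len(self.outcomes)
        if not self.bypassing and miss_rate > self.bypass_above:
            self.bypassing = True              # streaming-like: go to memory
        elif self.bypassing and miss_rate <= self.resume_below:
            self.bypassing = False             # reuse is back: use the cache


tracker = HitMissTracker(window=10)
for _ in range(10):
    tracker.record(False)                      # a burst of streaming misses
print(tracker.bypassing)                       # True
```

The gap between the two thresholds is the design choice that keeps the tracker from toggling on every lookup near a single cut-off.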

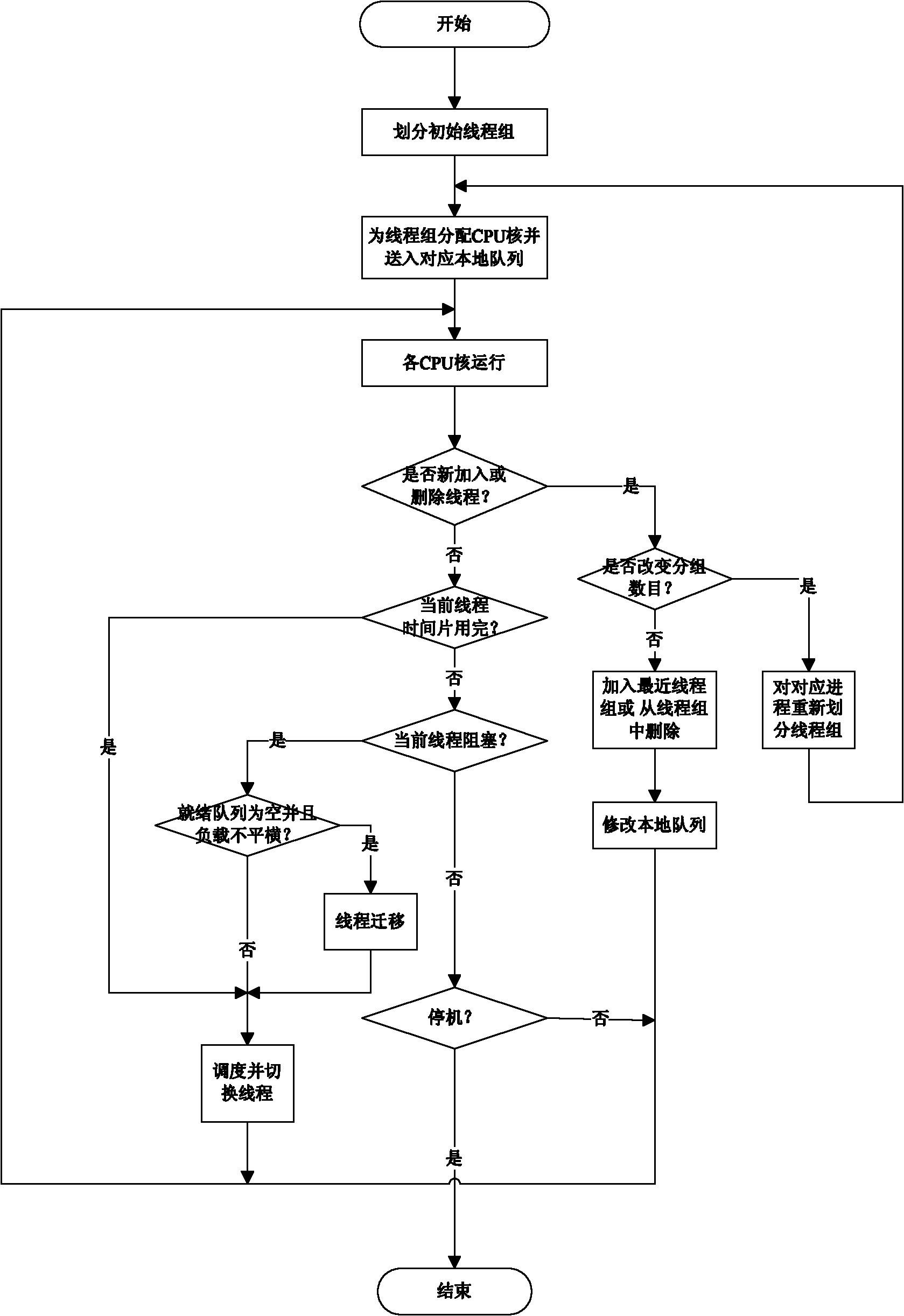

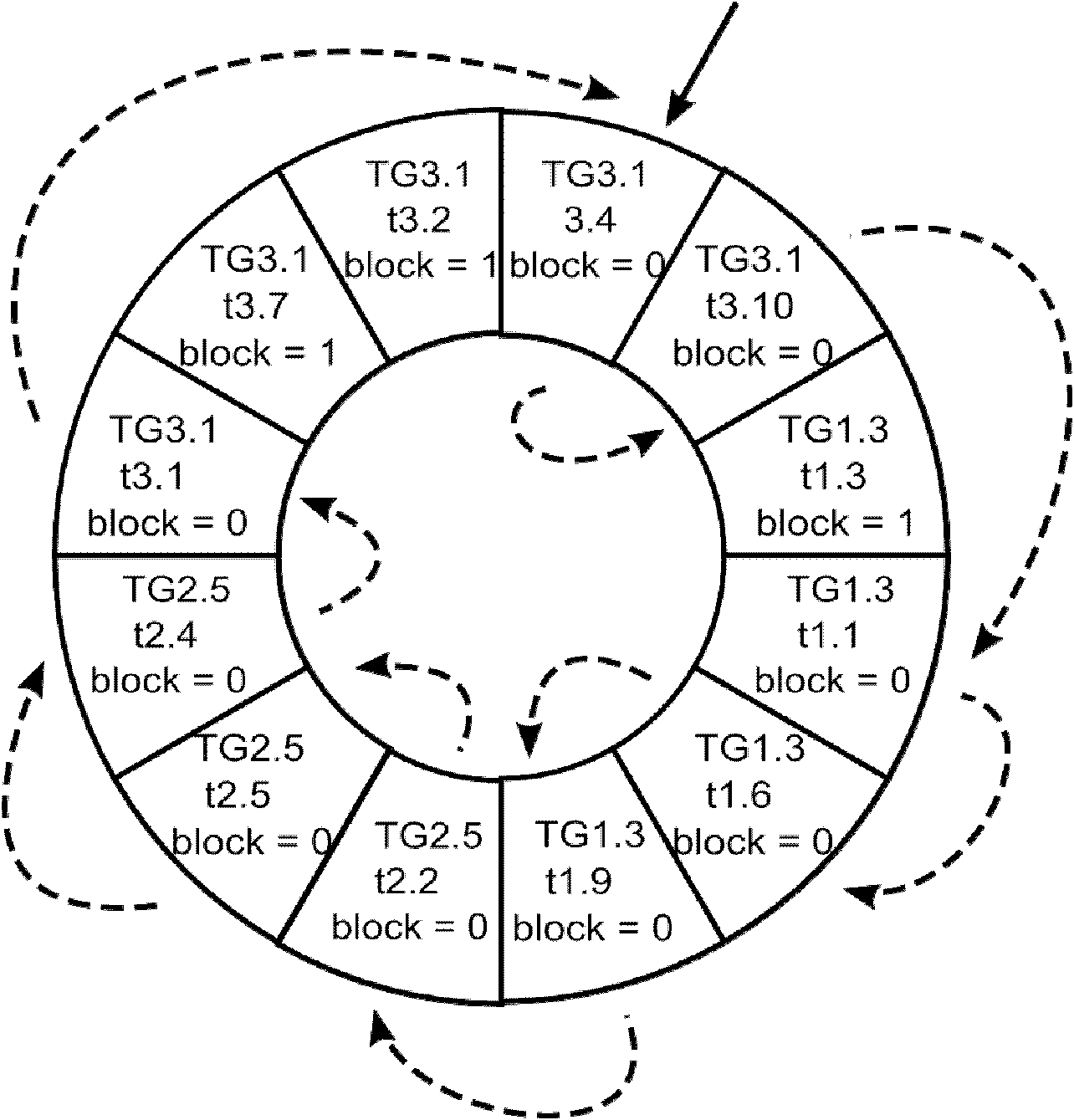

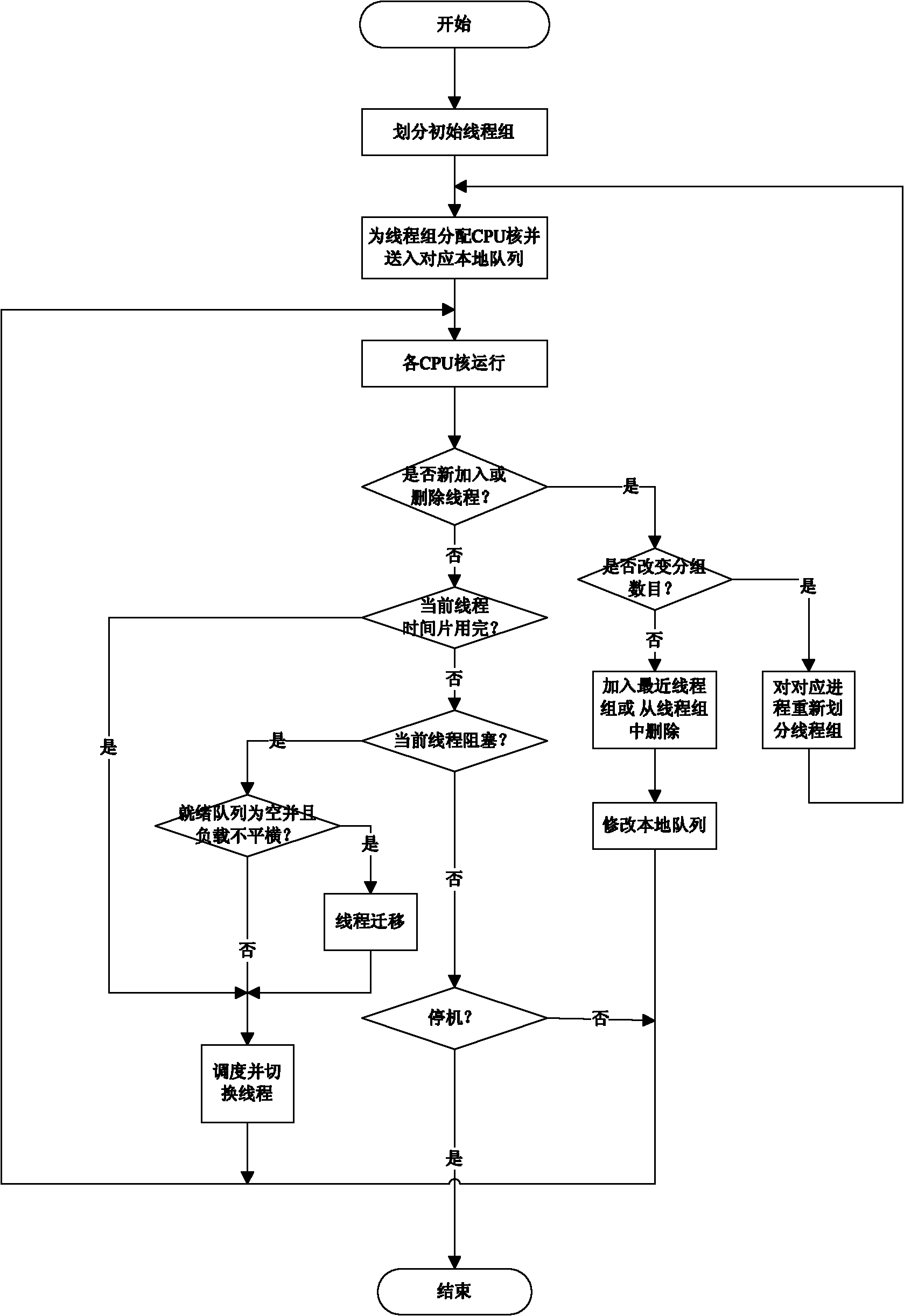

Thread group address space scheduling and thread switching method under multi-core environment

Inactive | CN101923491A | Tags: Improve hit rate; Reduce switching times; Program initiation/switching; Resource allocation; Parallel computing; Combined use

The invention relates to a thread-group address-space scheduling and thread-switching method for multi-core environments in the field of computing. A thread-grouping strategy gathers threads that can benefit from being assigned to the same CPU core and scheduled in a suitable order, which reduces the frequency of address-space switches during scheduling and improves the cache hit rate, thereby increasing system throughput and overall performance. The grouping can be adjusted flexibly according to the characteristics of the hardware platform and the application, so that thread groups suited to a specific situation can be created, and the method can also be combined with other scheduling methods. By grouping threads, each processor core is given its own task queue, and threads that benefit from being scheduled together are scheduled in sequence; the method has low scheduling overhead, high task throughput, and high scheduling flexibility.

Owner:SHANGHAI JIAO TONG UNIV
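
The effect of grouping on address-space switches can be illustrated with a small Python sketch. Threads are (thread id, address space id) pairs; the grouping policy below, which keeps each address-space group together and gives each group to the least-loaded core's queue, is a hypothetical stand-in for the patent's strategy.

```python
def address_space_switches(schedule):
    """Count consecutive thread pairs on one core whose address spaces differ."""
    return sum(prev[1] != cur[1] for prev, cur in zip(schedule, schedule[1:]))


def assign_groups(threads, cores):
    """Group threads by address space, then append each whole group to the
    per-core task queue that currently holds the fewest threads."""
    groups = {}
    for tid, asid in threads:
        groups.setdefault(asid, []).append((tid, asid))
    queues = [[] for _ in range(cores)]
    for group in sorted(groups.values(), key=len, reverse=True):
        min(queues, key=len).extend(group)
    return queues


threads = [(1, "A"), (2, "B"), (3, "A"), (4, "B"), (5, "A"), (6, "C")]
print(address_space_switches(threads))                       # 5 (arrival order)
print(address_space_switches(assign_groups(threads, 1)[0]))  # 2 (grouped)
```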

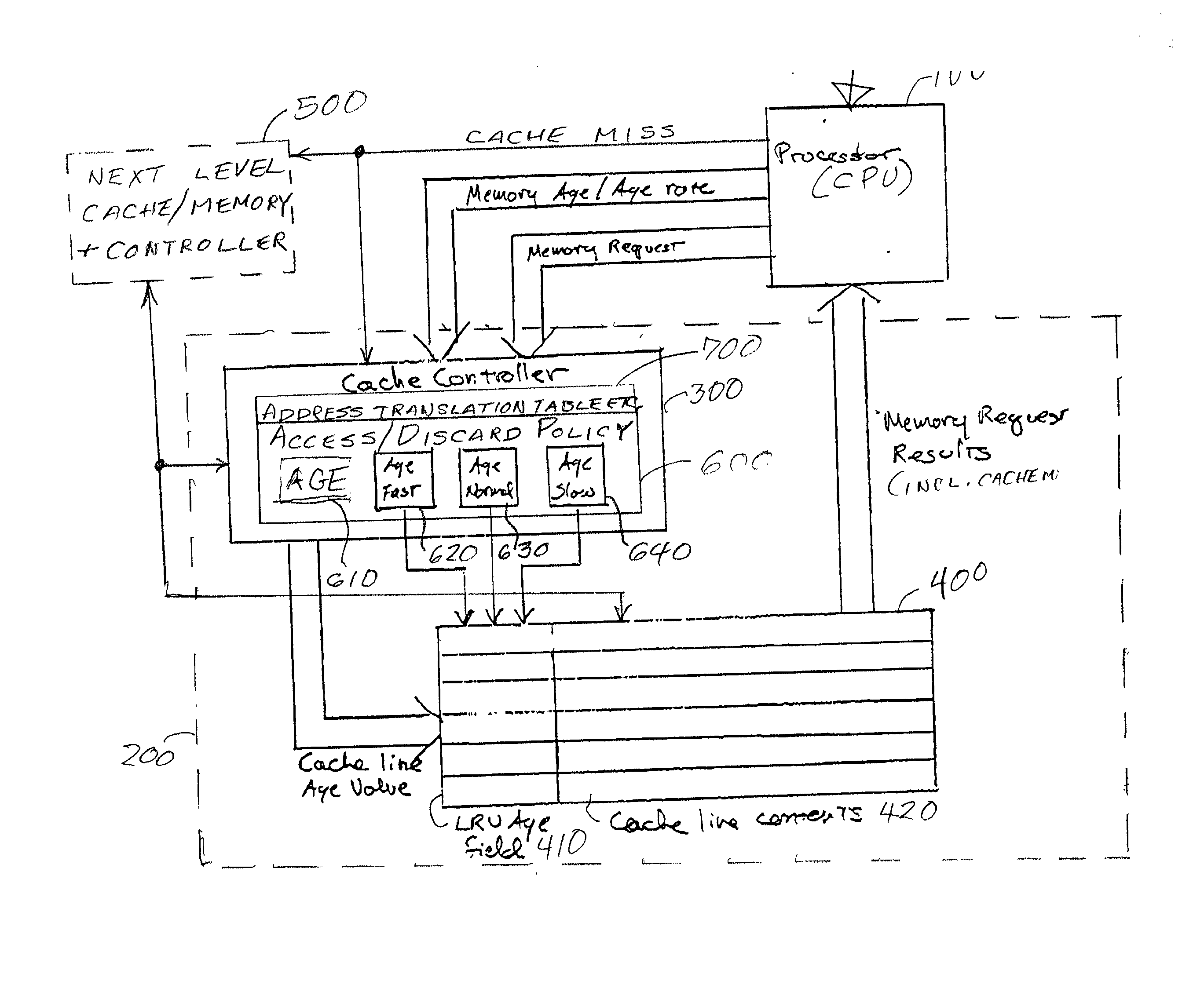

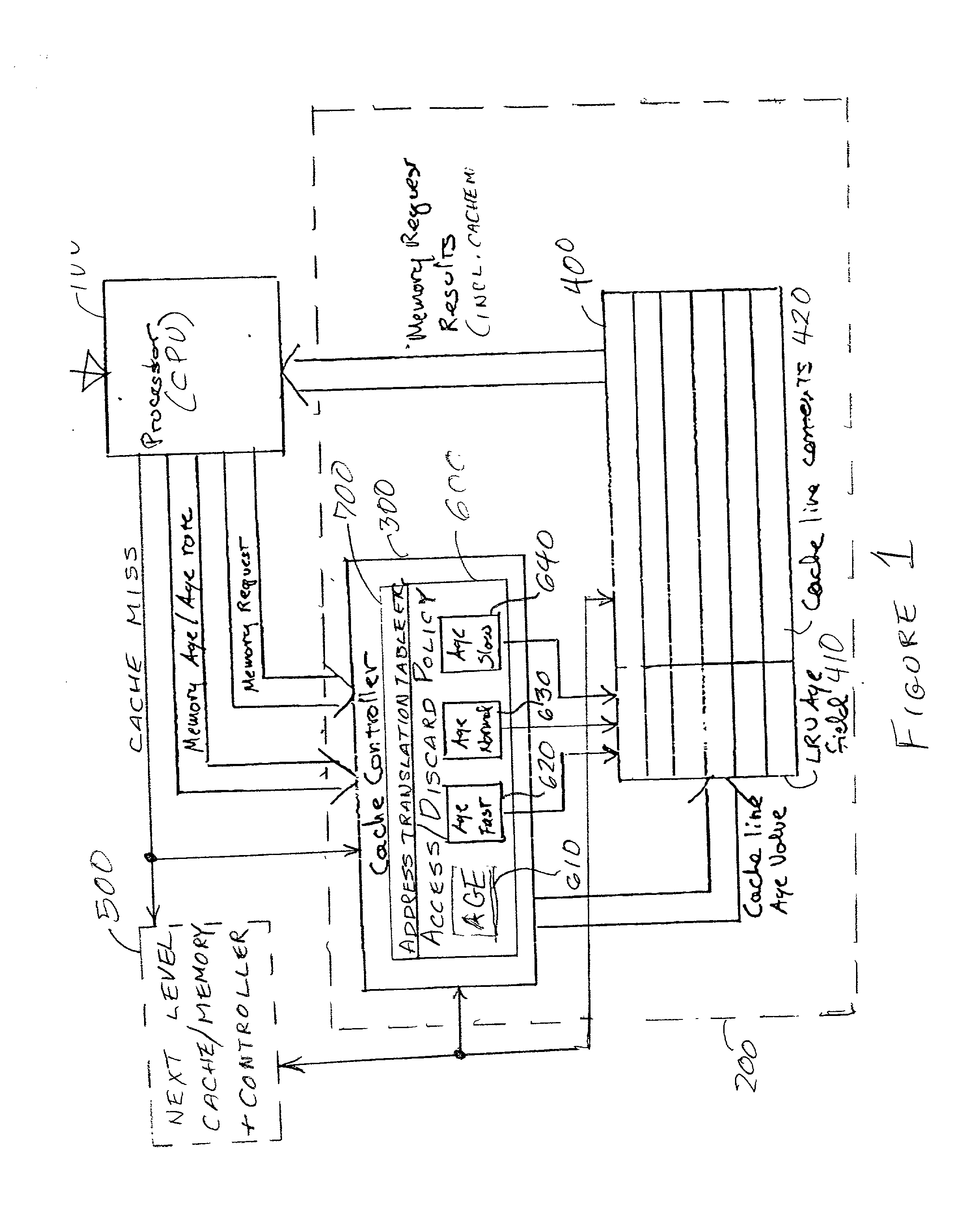

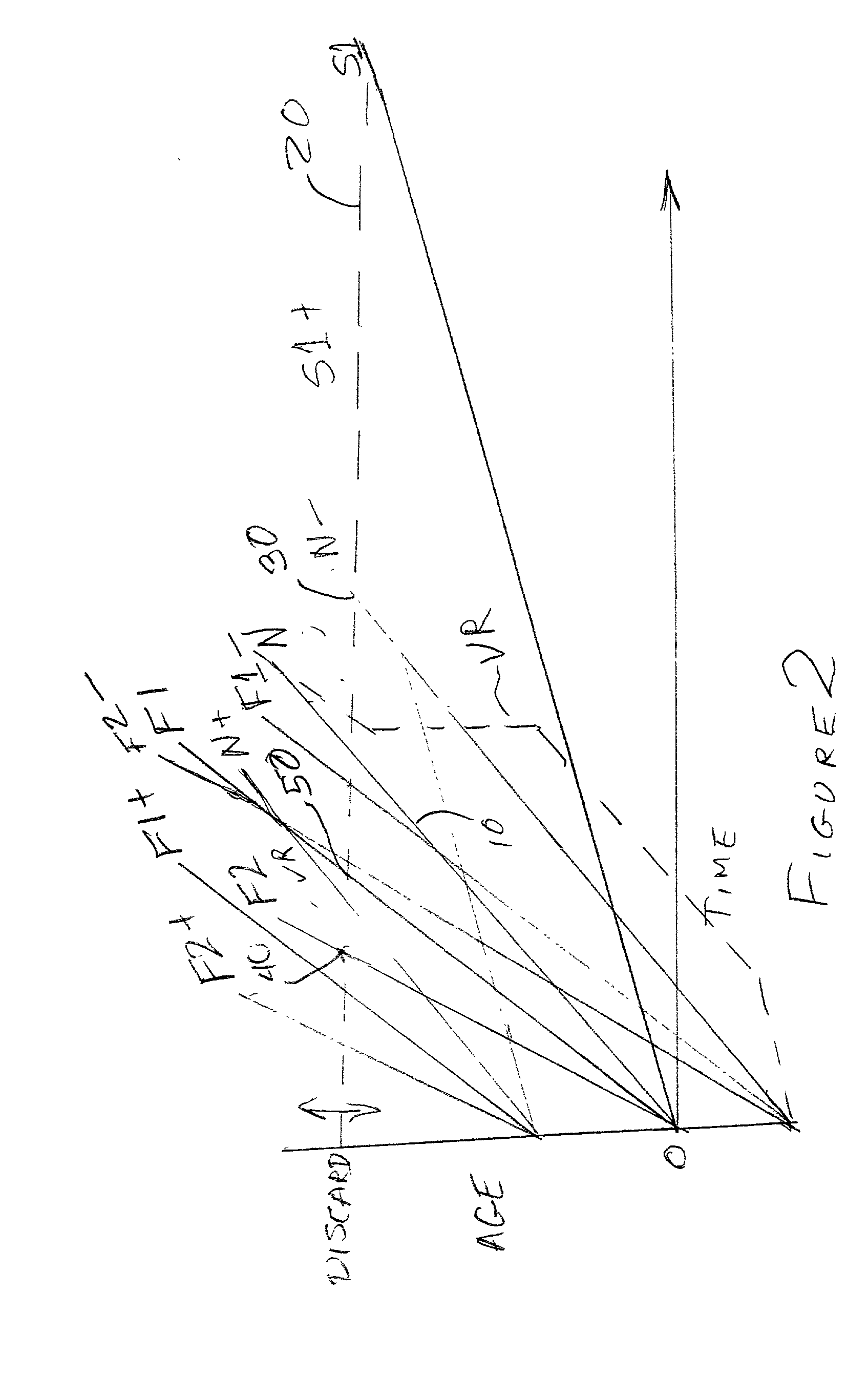

Directed least recently used cache replacement method

Inactive | US20020152361A1 | Tags: Performance maximization; Improve cache hit ratio; Energy efficient ICT; Memory addressing/allocation/relocation; Parallel computing; Least recently frequently used

Fine-grained control of cache maintenance improves the cache hit rate and processor performance by storing an age value and an aging rate for each code line held in the cache. These values direct a least recently used (LRU) strategy for casting out code lines that, over time, become less likely to be needed by the processor, improving the performance of a processor that accesses the cache. The invention allows an arbitrary age value to be entered when a code line is first stored in, or accessed from, the cache, and controls the frequency or rate at which the age of each code line is incremented in response to a limited set of command instructions, which may be placed in a program manually or automatically by an optimizing compiler.

Owner:IBM CORP
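
A minimal Python sketch of age-directed replacement under assumed semantics: each line stores an age and an aging rate, a periodic `tick()` adds each line's rate to its age, an access resets the line to a supplied initial age and rate, and the line with the largest age is cast out. The parameter names stand in for the hints a program or optimizing compiler would provide and are hypothetical.

```python
class DirectedLRUCache:
    """Replacement directed by per-line age values and aging rates."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = {}   # address -> [age, aging rate]

    def access(self, address, initial_age=0, rate=1):
        """Touch a line; returns True on a hit, False on a miss."""
        hit = address in self.lines
        if not hit and len(self.lines) >= self.capacity:
            # Cast out the oldest line according to its directed age.
            victim = max(self.lines, key=lambda a: self.lines[a][0])
            del self.lines[victim]
        self.lines[address] = [initial_age, rate]
        return hit

    def tick(self):
        """Periodic aging: each line ages at its own rate."""
        for entry in self.lines.values():
            entry[0] += entry[1]


cache = DirectedLRUCache(capacity=2)
cache.access("loop", rate=0)     # hot loop body: never ages
cache.access("stream", rate=4)   # streaming code: ages quickly
cache.tick()
cache.tick()
cache.access("other")            # needs a slot
print(sorted(cache.lines))       # ['loop', 'other']
```

Here plain LRU would evict `loop`, the least recently used line; with its aging rate set to 0 it survives, and the fast-aging `stream` line is cast out instead.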

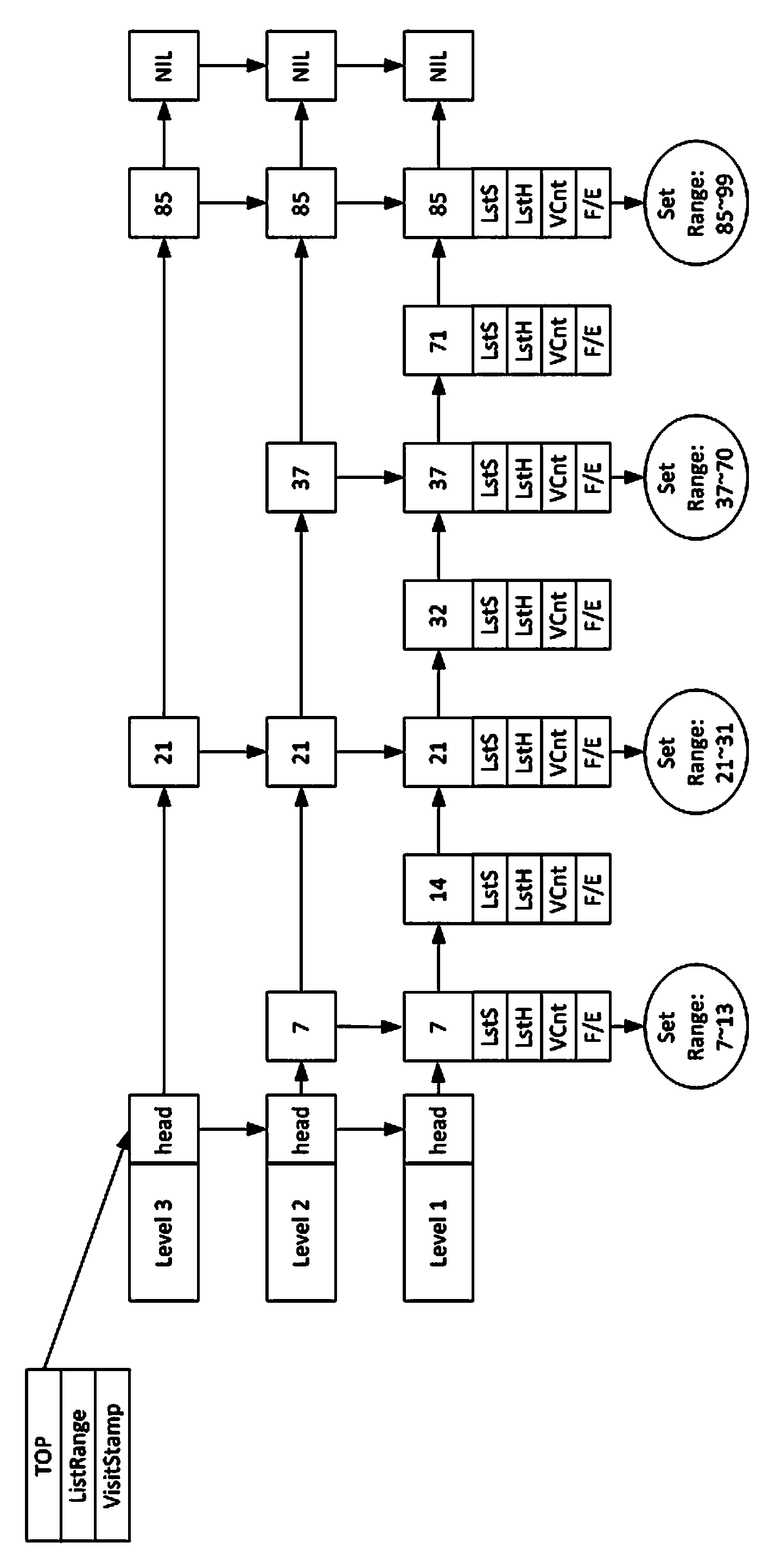

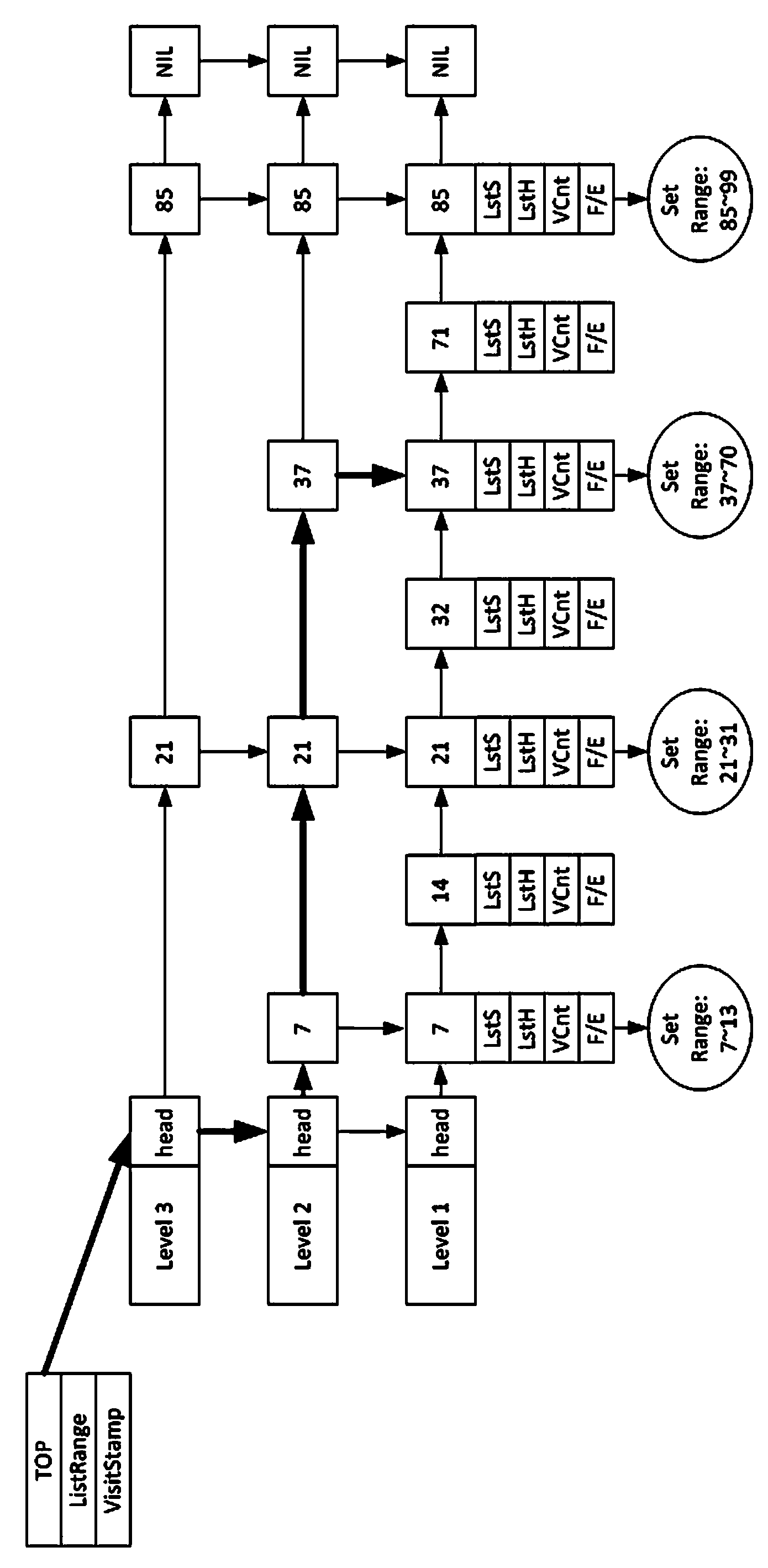

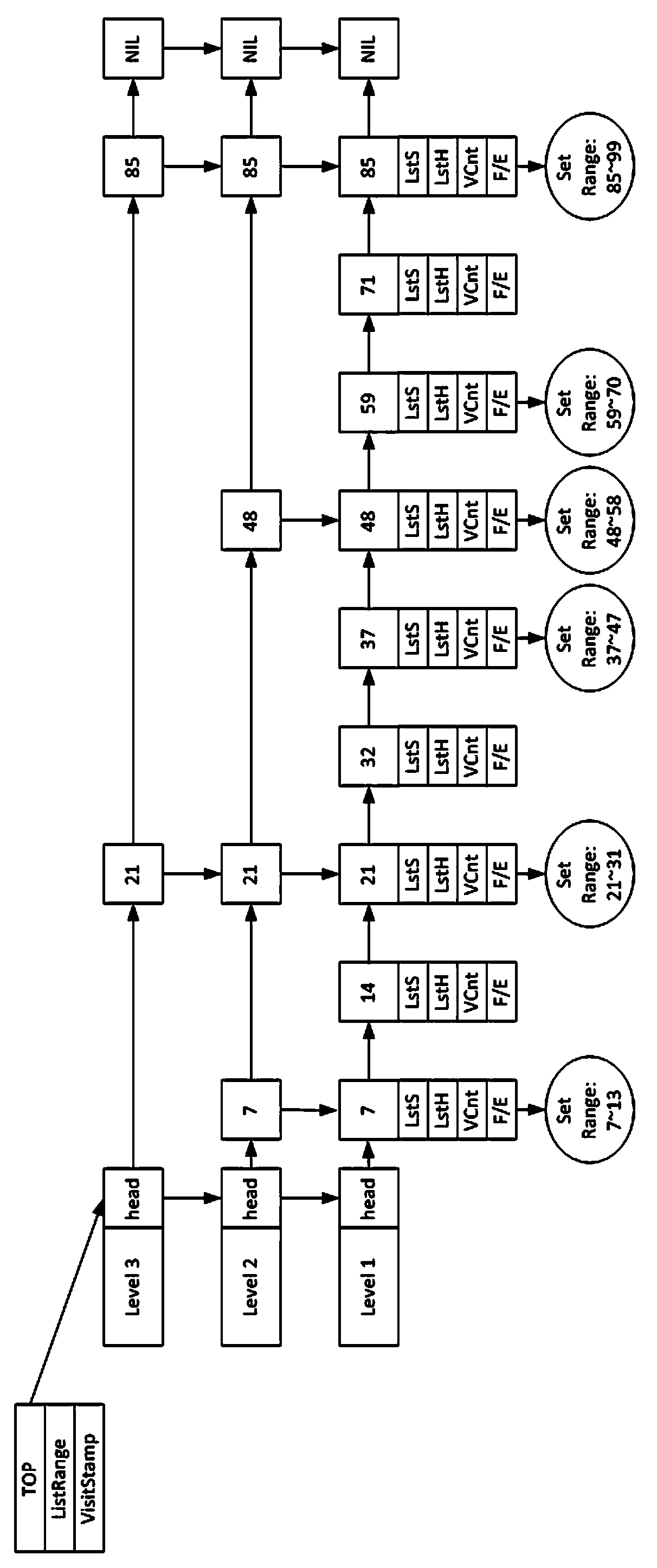

Memory caching method oriented to range querying on Hadoop

Inactive | CN103942289A | Tags: Adaptive Query Requirements; Improve query cache hit ratio; Special data processing applications; Cache hit rate; Self adaptive

The invention discloses an in-memory caching method for range queries on Hadoop, comprising the following steps: (1) an index is built on the query attributes of the massive Hadoop data set and stored in HBase; (2) an in-memory cache is built over the HBase index data and managed in fragments: frequently accessed index data are selected and kept in memory, the data are initially divided into fixed-length fragments of equal size, and the fragments are organized with a skiplist; (3) query hits are recorded, and the heat of each data fragment is measured with exponential smoothing; (4) the memory cache is updated. The method combines a skiplist with a collection and supports dynamic adjustment of fragment boundaries on this structure, so that data fragments adapt to query demand. This raises the query cache hit rate for hot data fragments, lowers the disk-access overhead of queries, and thus greatly improves range-query performance.

Owner:GUANGXI NORMAL UNIV
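
The heat measurement in step (3) can be sketched with simple exponential smoothing, heat_t = α · hits_t + (1 - α) · heat_{t-1}. In this Python sketch a sorted list searched with `bisect` stands in for the skiplist, fragment boundaries are fixed rather than adjusted, and α = 0.5 is an arbitrary choice; the class and method names are hypothetical.

```python
import bisect


class FragmentHeat:
    """Per-fragment heat via exponential smoothing; fragment i covers keys
    in [bounds[i], bounds[i + 1])."""

    def __init__(self, bounds, alpha=0.5):
        self.bounds = bounds                 # sorted fragment start keys
        self.alpha = alpha                   # smoothing factor (assumed)
        self.heat = [0.0] * len(bounds)
        self.hits = [0] * len(bounds)        # hits in the current period

    def record_query(self, low, high):
        # Count a hit on every fragment that the range [low, high] touches.
        first = max(bisect.bisect_right(self.bounds, low) - 1, 0)
        last = max(bisect.bisect_right(self.bounds, high) - 1, 0)
        for i in range(first, last + 1):
            self.hits[i] += 1

    def end_period(self):
        # heat_t = alpha * hits_t + (1 - alpha) * heat_{t-1}
        for i, h in enumerate(self.hits):
            self.heat[i] = self.alpha * h + (1 - self.alpha) * self.heat[i]
        self.hits = [0] * len(self.bounds)

    def hottest(self, k):
        """Indices of the k hottest fragments, candidates for the memory cache."""
        return sorted(range(len(self.heat)), key=lambda i: self.heat[i], reverse=True)[:k]


fh = FragmentHeat([0, 100, 200, 300])
fh.record_query(10, 50)
fh.record_query(150, 250)
fh.record_query(20, 30)
fh.end_period()
print(fh.heat, fh.hottest(1))  # [1.0, 0.5, 0.5, 0.0] [0]
```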

Memory hub and method for memory sequencing

Inactive | US20070033353A1 | Tags: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Cache hit rate

A memory module includes a memory hub coupled to several memory devices. The memory hub includes at least one performance counter that tracks one or more system metrics—for example, page hit rate, prefetch hits, and / or cache hit rate. The performance counter communicates with a memory sequencer that adjusts its operation based on the system metrics tracked by the performance counter.

Owner:ROUND ROCK RES LLC

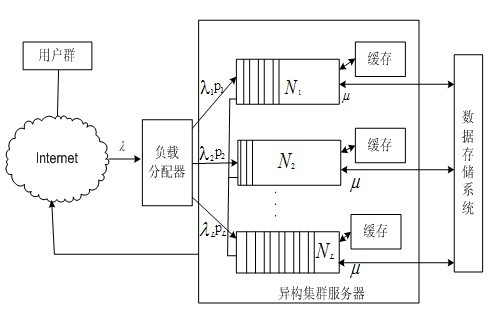

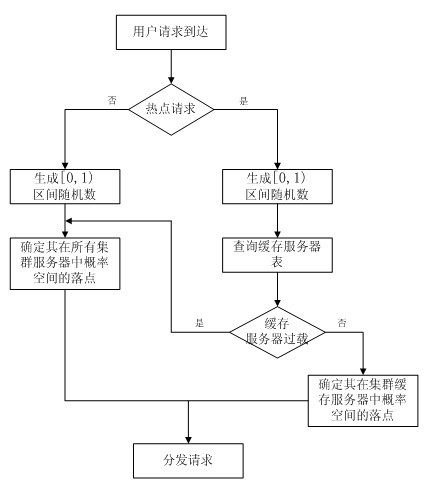

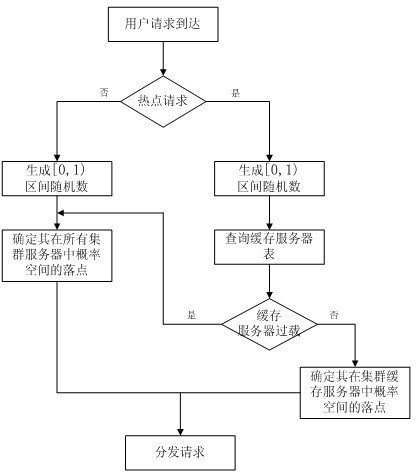

Method for balancing load of network GIS heterogeneous cluster server

The invention discloses a method for balancing the load of a heterogeneous cluster server in a network GIS (Geographic Information System). The method exploits an inherent property of GIS data access, namely that it follows a Zipf distribution, together with the heterogeneous processing capacity of the servers. It adapts cluster cache distribution to dense user access, balancing the access load on hot-spot data while improving the cache hit rate. Starting from the overall performance of the heterogeneous cluster service system, it solves for the minimum processing cost the cluster needs to serve a data request, optimizing user response time while balancing server load. Requests are distributed according to the requested data content, which prevents the access load on hot-spot data from becoming overly concentrated. The method matches the highly clustered access pattern of large numbers of users in a network GIS, balances load distribution against access locality, ensures service efficiency and load optimization, and effectively improves both the service performance of practical network GIS deployments and the utilization of the heterogeneous cluster service system.

Owner:WUHAN UNIV

Physical Rendering With Textured Bounding Volume Primitive Mapping

Inactive | US20100238169A1 | Tags: Reduce overhead; Reduce memory usage; Image data processing details; Special data processing applications; Computer graphics (images); Memory footprint

A circuit arrangement and program product use a textured bounding volume to reduce the overhead associated with generating and using an Accelerated Data Structure (ADS) for physical rendering. In particular, a subset of the primitives in a scene may be mapped to the surfaces of a bounding volume to generate textures on those surfaces that can be used during physical rendering. The primitives mapped to the bounding volume surfaces can then be omitted from the ADS, reducing the processing overhead of both generating the ADS and using it during physical rendering. In many instances the size of the ADS is also reduced, which shrinks its memory footprint and often improves cache hit rates and reduces memory bandwidth.

Owner:IBM CORP

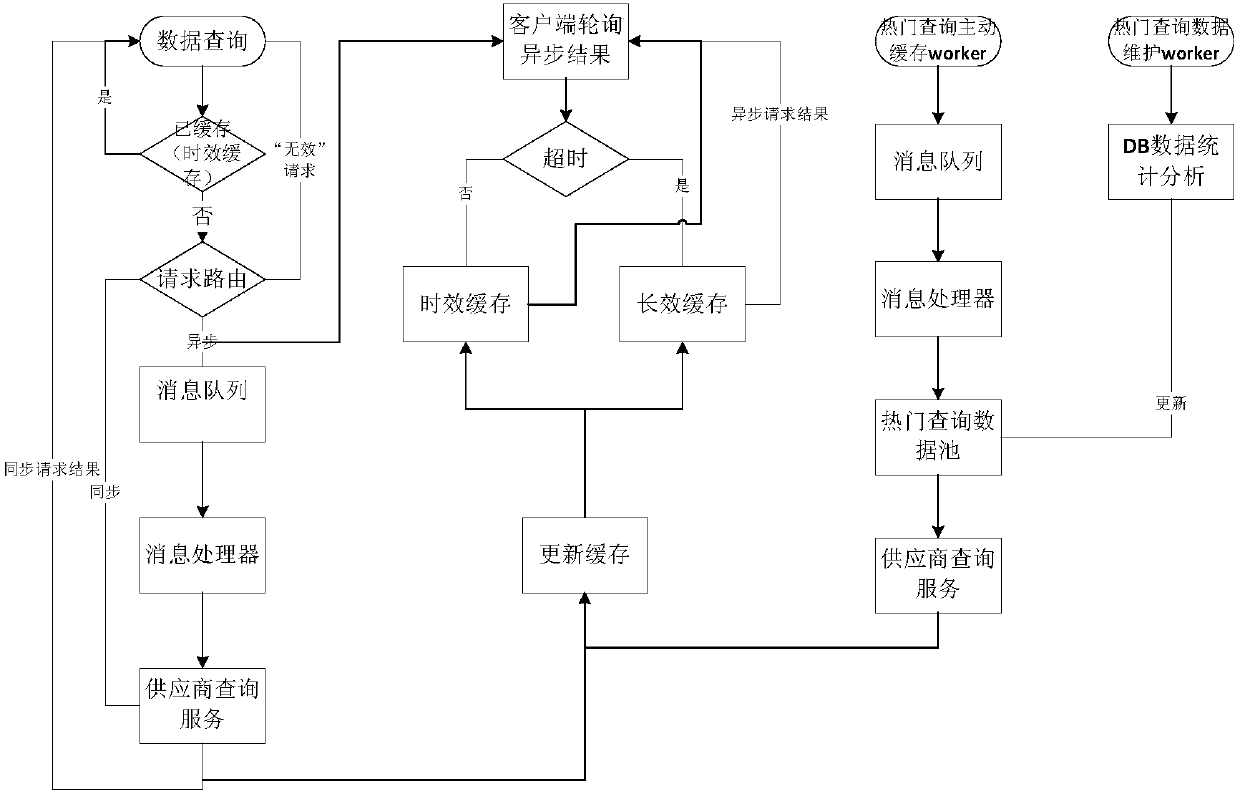

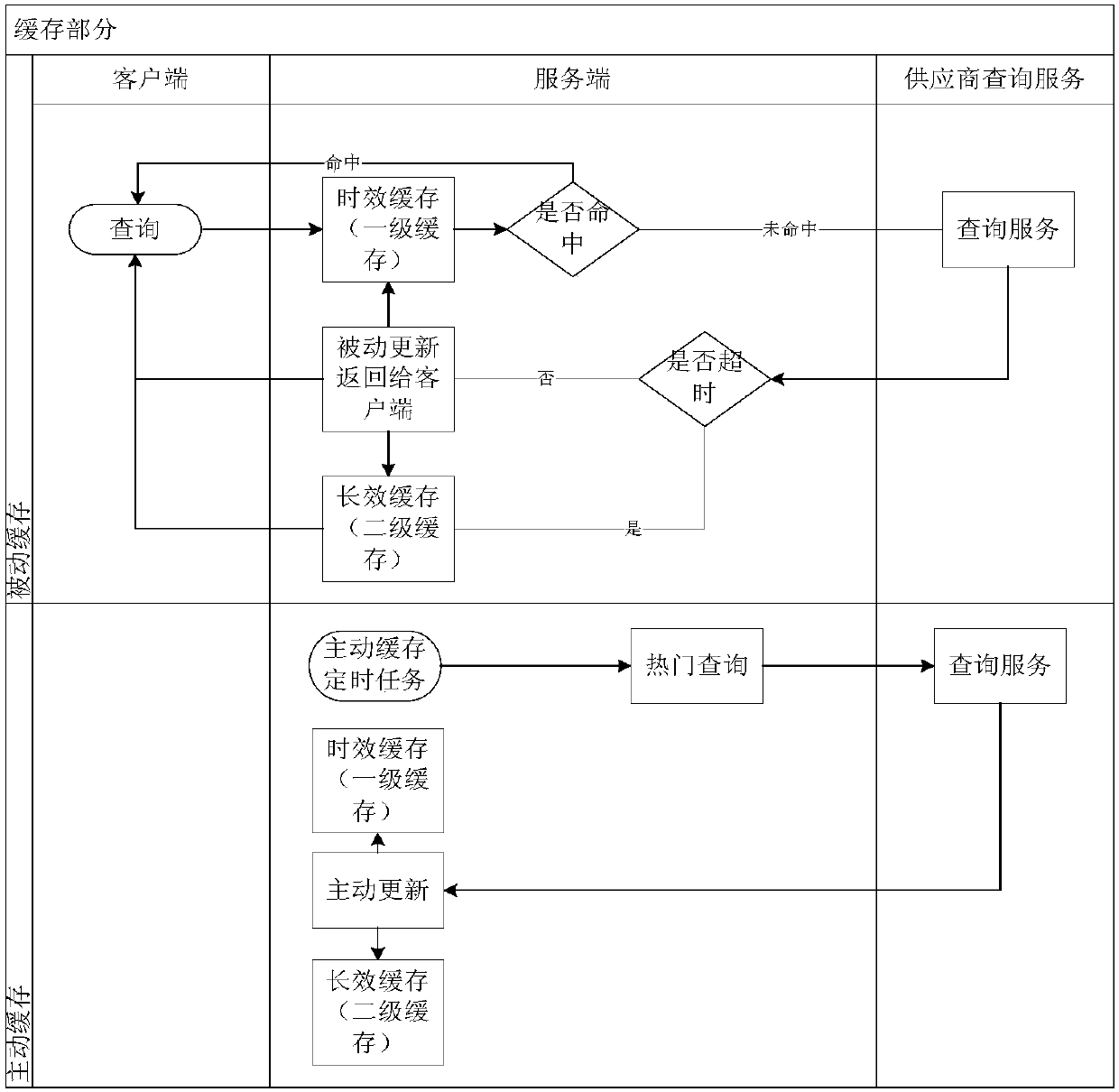

Data query method and device

Active | CN109684358A | Tags: Improve usability; Increase risk resistance; Digital data information retrieval; Special data processing applications; Cache hit rate; Data query

The invention provides a data query method and device that dynamically analyze hot queries, improve the cache hit rate, and reduce query response latency by combining a first-level cache with a second-level cache, and that improve the accuracy of cached data by combining active and passive cache updates. The method comprises the following steps: data is queried from the first-level cache according to a received query request and returned if found; otherwise, an external query service interface is called to query the data. If that query does not time out, the queried data is returned and written to both the first-level and the second-level cache; if it times out, the data is queried from the second-level cache and returned. The first-level cache has a first preset data expiration (deletion) time, the second-level cache has a second preset data expiration time, and the first preset expiration time is shorter than the second.

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1
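
The query flow above maps naturally onto a small class. In this Python sketch, `fetch(key, timeout)` is a hypothetical stand-in for the external query service that raises `TimeoutError` on timeout, expiry is checked lazily on read, the TTL values are illustrative, and `None` is treated as "not cached". The active/passive update mechanism is not modeled.

```python
import time


class TwoLevelQueryCache:
    """Short-lived first-level cache, long-lived second-level fallback."""

    def __init__(self, fetch, l1_ttl=60.0, l2_ttl=3600.0, clock=time.monotonic):
        if l1_ttl >= l2_ttl:
            raise ValueError("first-level entries must expire sooner")
        self.fetch, self.clock = fetch, clock
        self.l1_ttl, self.l2_ttl = l1_ttl, l2_ttl
        self.l1 = {}   # key -> (value, expiry time)
        self.l2 = {}

    def _lookup(self, level, key):
        entry = level.get(key)
        if entry is None:
            return None
        value, expires = entry
        if self.clock() >= expires:
            del level[key]                     # expired: delete on read
            return None
        return value

    def get(self, key, timeout=0.2):
        value = self._lookup(self.l1, key)
        if value is not None:
            return value                       # first-level hit
        try:
            value = self.fetch(key, timeout)   # call the external service
        except TimeoutError:
            return self._lookup(self.l2, key)  # fall back to the older copy
        now = self.clock()
        self.l1[key] = (value, now + self.l1_ttl)
        self.l2[key] = (value, now + self.l2_ttl)
        return value
```

With a fake clock, a value fetched at time 0 is served from the first level until 60 s; after that, a timed-out fetch still returns it from the second level until 3600 s.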

Tunable processor performance benchmarking

Inactive | US20070136726A1 | Tags: Error detection/correction; Multiprogramming arrangements; Resource consumption; Parallel computing

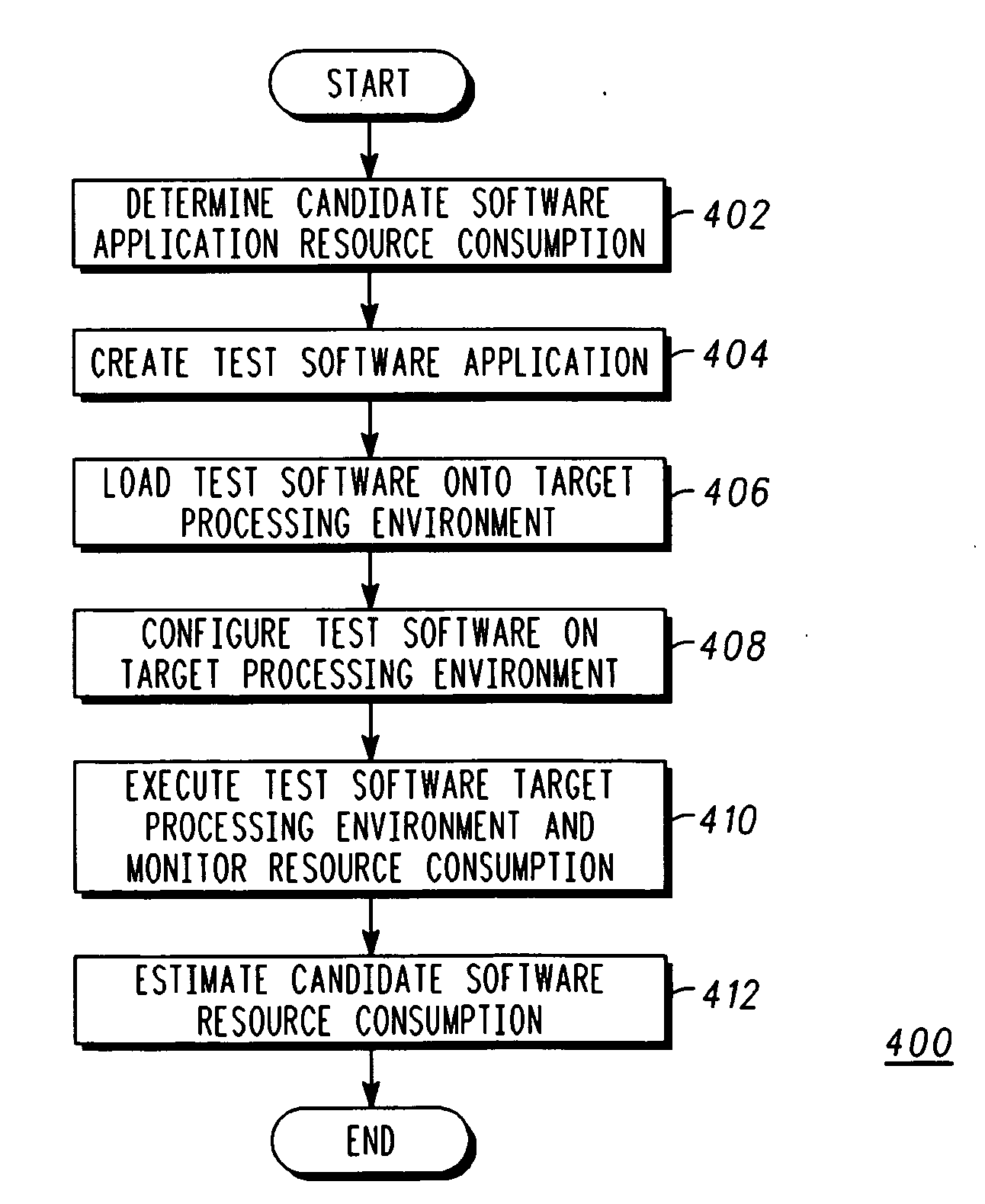

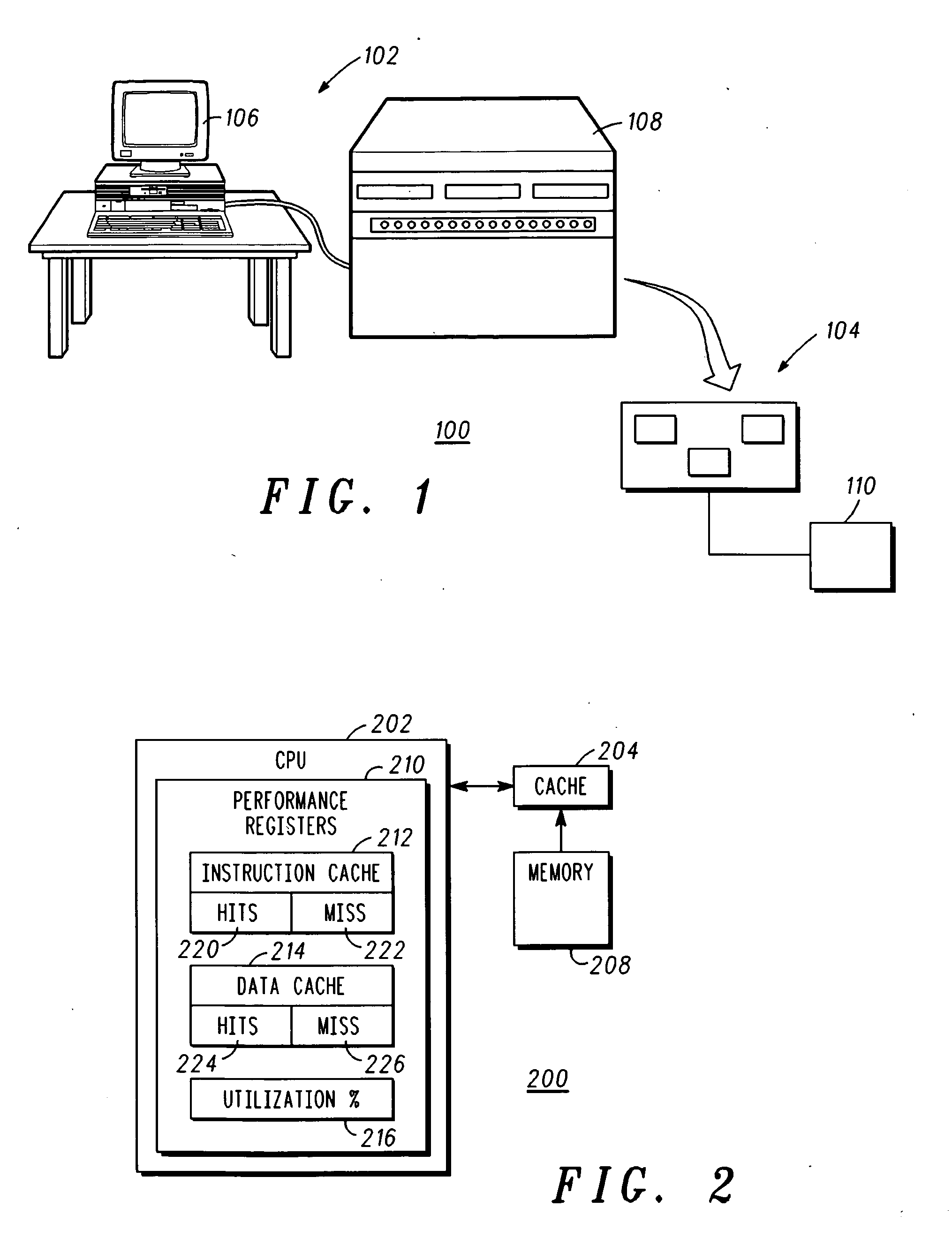

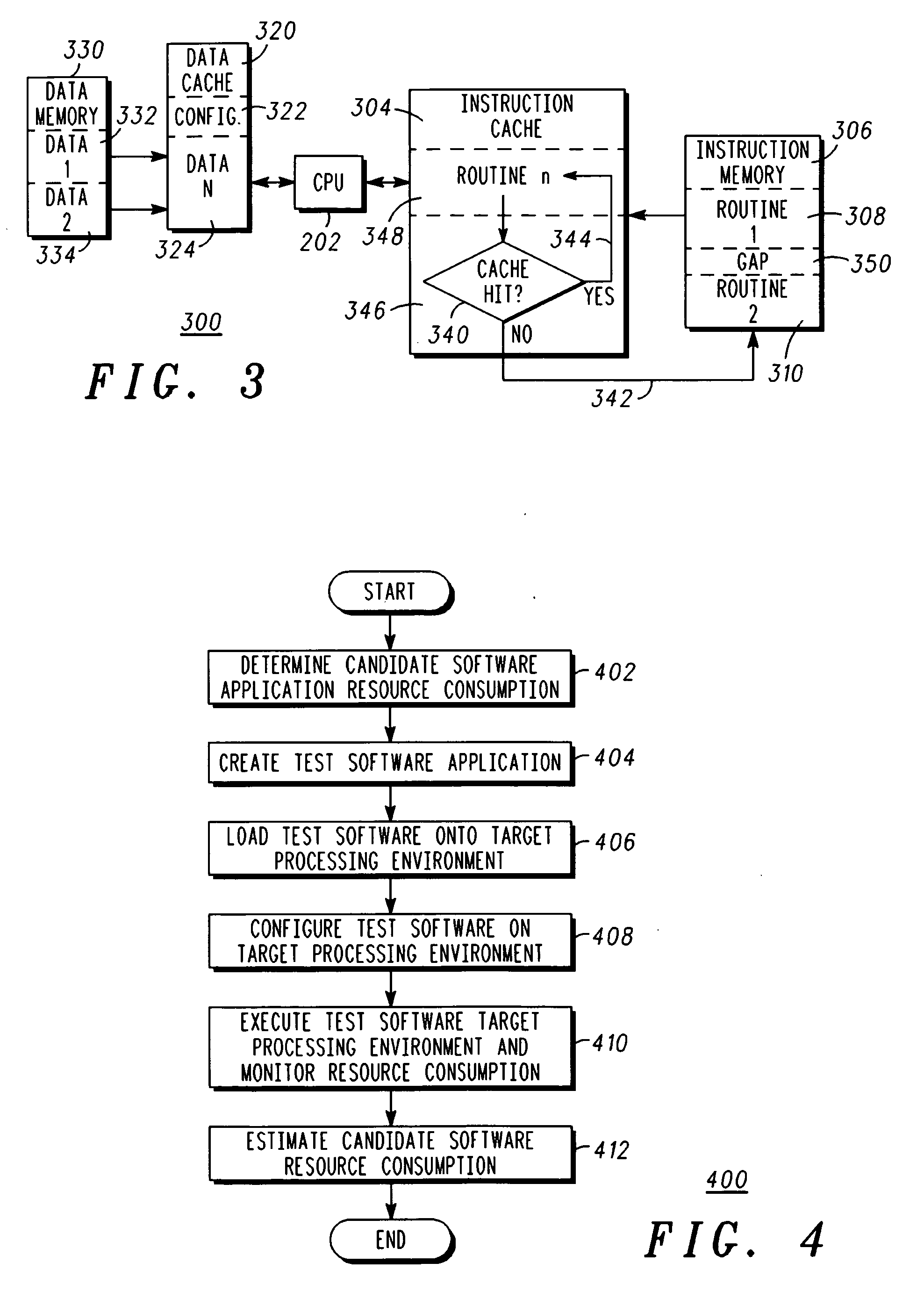

A tunable processor performance benchmarking method and system (100) estimates candidate software performance on a target processing environment (104) without porting the application. The candidate software's resource consumption is characterized to determine cache hit or miss rates. A test software generator (102) generates test software that is configured to have substantially the same cache miss rates and processor utilization, and its performance is measured when executing on the target processing environment (104). Instruction cache hit rates are maintained for the test software by selectively branching either within a routine (308, 310) that is resident in the instruction cache or to a routine (308, 310) that is not within the instruction cache. Data blocks (332, 334) are also selectively accessed in order to maintain a desired data cache miss rate.

Owner:MOTOROLA INC

System and method for performing entity tag and cache control of a dynamically generated object not identified as cacheable in a network

Active | US20060195660A1 | Tags: Improve cache hit ratio; Memory loss protection; Assess restriction; Parallel computing; Client-side

The present invention is directed towards a method and system by which a cache modifies server responses that do not identify a dynamically generated object as cacheable, so that the response identifies the object to the client as cacheable. In some embodiments, such as one handling HTTP requests and responses for objects, the techniques of the present invention insert an entity tag, or “etag”, into the response to provide cache control for objects served without entity tags and / or cache control information from the originating server. By inserting such information, for example entity tag and cache control information for an object, into the response to a client, this technique lets the cache check for a hit on a subsequent request and thereby increases cache hit rates.

Owner:CITRIX SYST INC
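A minimal Python sketch of the idea, assuming responses are represented as plain header dictionaries: the cache adds a body-derived `ETag` and a `Cache-Control` header only when the origin omitted them, and later answers a matching `If-None-Match` with `304 Not Modified`. The hashing scheme, the `max-age=0, must-revalidate` policy, and the helper names are assumptions for illustration, not the patented implementation; weak validators (`W/"..."`) are not handled.

```python
from __future__ import annotations

import hashlib


def get_header(headers: dict[str, str], name: str) -> str | None:
    """Case-insensitive header lookup (HTTP field names are case-insensitive)."""
    for key, value in headers.items():
        if key.lower() == name.lower():
            return value
    return None


def add_cache_validators(headers: dict[str, str], body: bytes, max_age: int = 0) -> dict[str, str]:
    """Return a copy of the origin's headers with an ETag and Cache-Control added if absent."""
    out = dict(headers)
    if get_header(out, "ETag") is None:
        # Assumed scheme: a strong validator derived from the response body bytes.
        out["ETag"] = '"' + hashlib.sha256(body).hexdigest()[:16] + '"'
    if get_header(out, "Cache-Control") is None:
        # Assumed policy: cacheable, but revalidate with the ETag on every reuse.
        out["Cache-Control"] = f"max-age={max_age}, must-revalidate"
    return out


def answer_conditional(
    request_headers: dict[str, str], cached_headers: dict[str, str], cached_body: bytes
) -> tuple[int, dict[str, str], bytes]:
    """Serve from cache: 304 if If-None-Match matches the cached ETag, else 200 with the body."""
    etag = get_header(cached_headers, "ETag")
    if_none_match = get_header(request_headers, "If-None-Match")
    if etag is not None and if_none_match is not None:
        candidates = [t.strip() for t in if_none_match.split(",")]
        if "*" in candidates or etag in candidates:
            return 304, {"ETag": etag}, b""
    return 200, cached_headers, cached_body
```

Deriving the tag from the body means identical dynamic output yields the same tag, so a client revalidation becomes a cache hit even though the origin never marked the object as cacheable.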

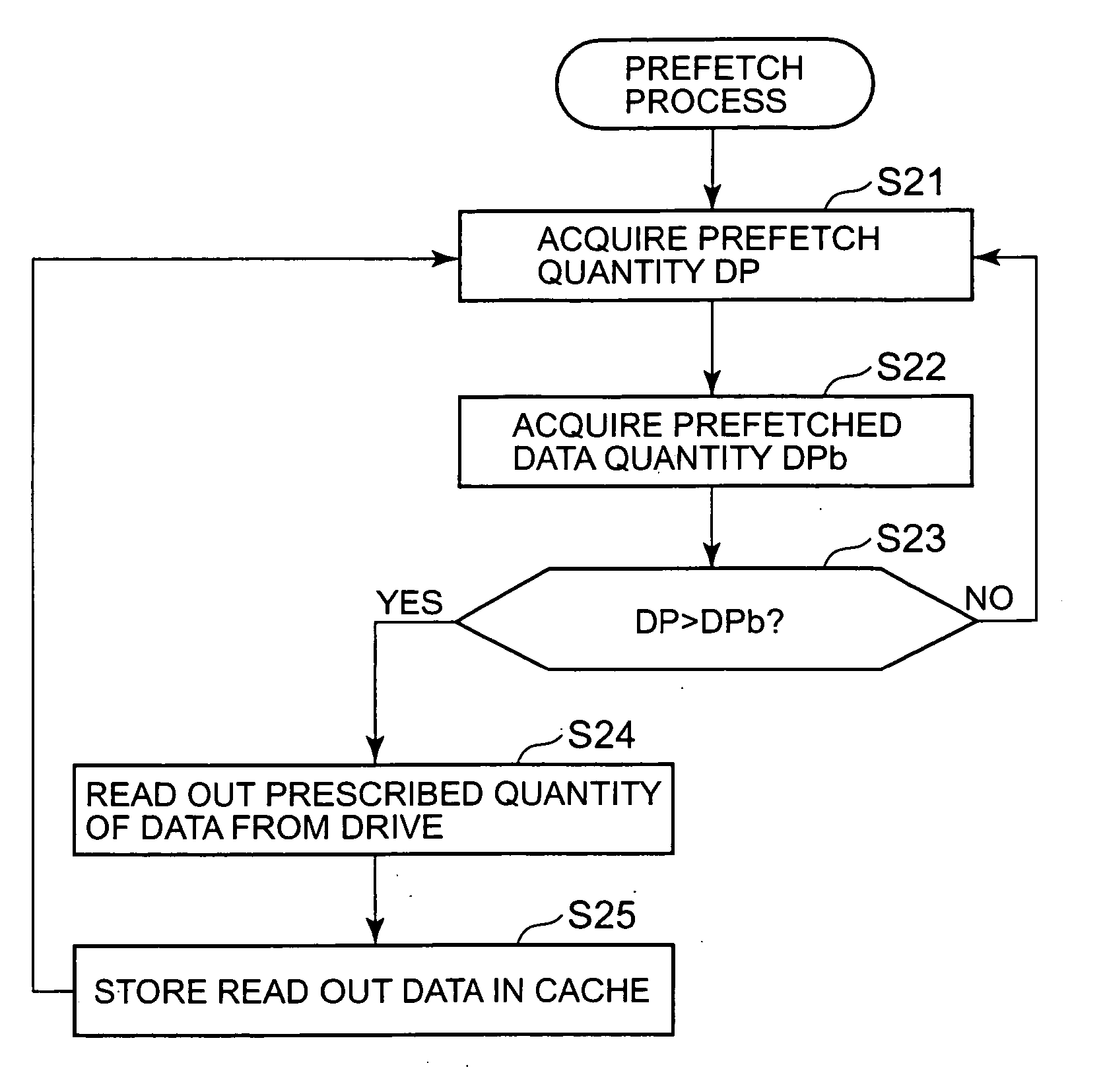

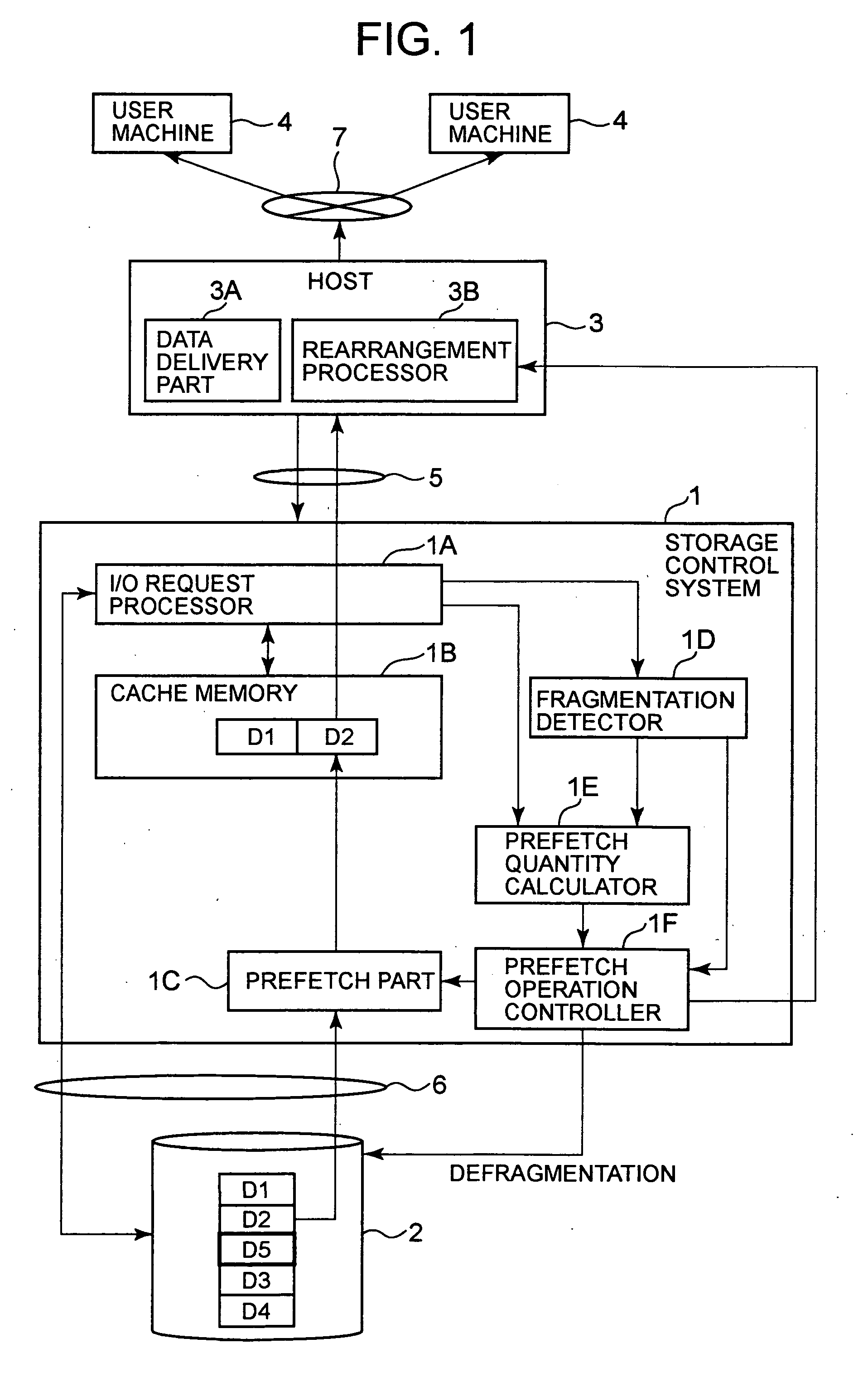

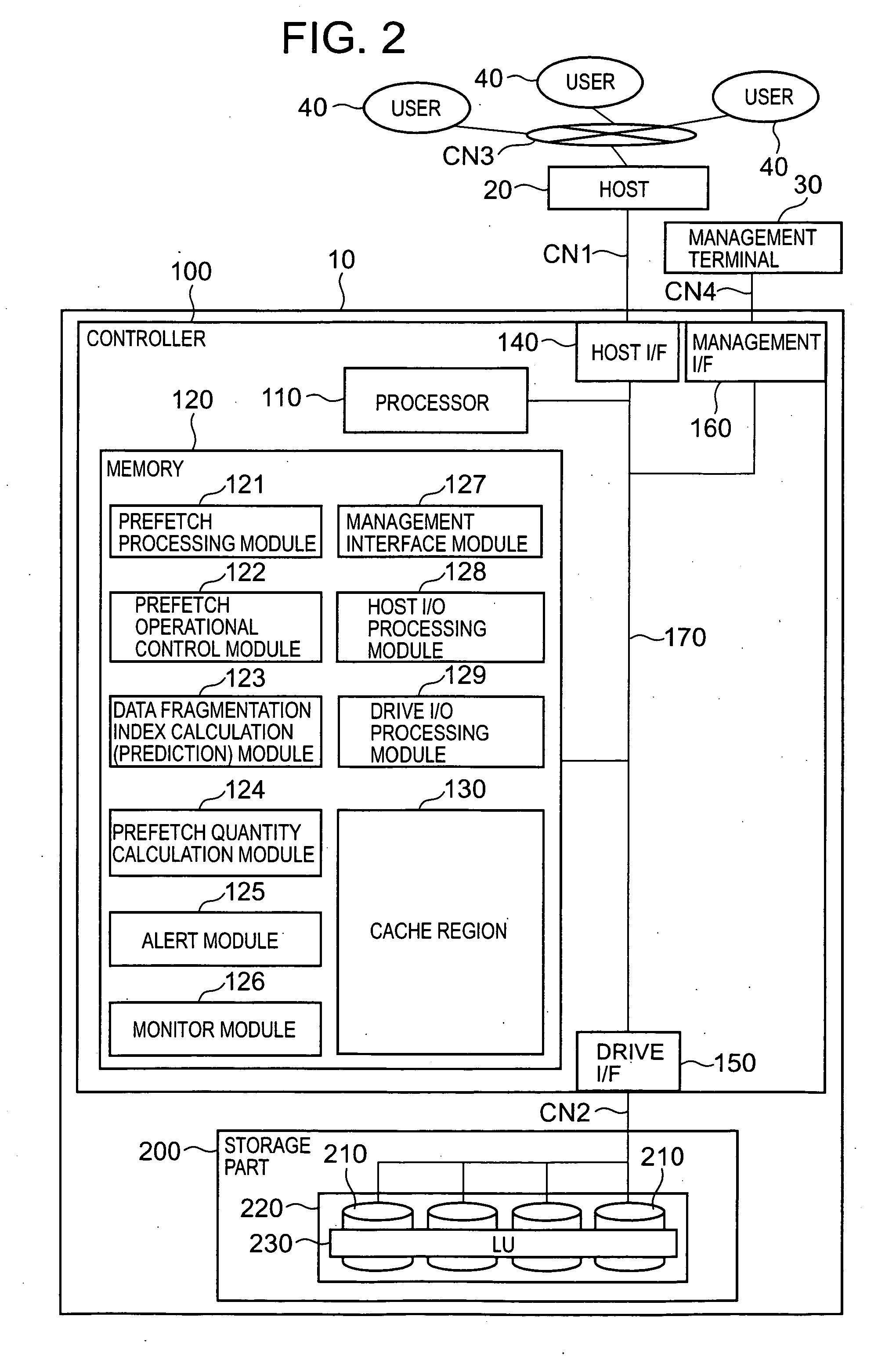

Storage system and storage system control method

Inactive · US20070220208A1 · Improve responsiveness · Avoid performance · Memory architecture accessing/allocation · Memory systems · Cache hit rate · Hit ratio

A storage system of the present invention improves the response performance of sequential access to data whose arrangement is expected to be sequential. Data to be transmitted via streaming delivery is stored in a storage section, and a host sends data read out from the storage section to the respective user machines. A prefetch section reads ahead, from the storage section, the data that the host will read and stores it in a cache memory. A fragmentation detector detects the extent of fragmentation of the data arrangement on the basis of the cache hit rate. The greater the extent of fragmentation, the smaller the prefetch quantity calculated by a prefetch quantity calculator. A prefetch operation controller halts prefetching when the extent of fragmentation is great and restarts it when the extent of fragmentation decreases.

Owner:HITACHI LTD
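One way to read the prefetch controller is as a small feedback loop driven by the measured hit rate. The Python sketch below assumes that, for a sequential stream, the miss rate of prefetched data is a usable proxy for fragmentation, that the prefetch quantity scales linearly with `1 - fragmentation`, and that halting and restarting use two hypothetical thresholds (hysteresis) so the controller does not oscillate. None of these specifics come from the abstract; `PrefetchController` and its fields are illustrative names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PrefetchController:
    max_prefetch_blocks: int = 64   # hypothetical upper bound on read-ahead
    halt_threshold: float = 0.7     # hypothetical: stop prefetching at or above this fragmentation
    restart_threshold: float = 0.4  # hypothetical: resume at or below this (hysteresis gap)
    enabled: bool = True

    @staticmethod
    def fragmentation(hit_rate: float) -> float:
        # Assumption: prefetched blocks that go unused indicate a non-contiguous
        # layout, so fragmentation is approximated by the miss rate.
        return min(1.0, max(0.0, 1.0 - hit_rate))

    def update(self, hit_rate: float) -> int:
        """Return the number of blocks to prefetch for the next interval (0 = halted)."""
        frag = self.fragmentation(hit_rate)
        if self.enabled and frag >= self.halt_threshold:
            self.enabled = False
        elif not self.enabled and frag <= self.restart_threshold:
            self.enabled = True
        if not self.enabled:
            return 0
        # Greater fragmentation -> smaller prefetch quantity (at least one block).
        return max(1, round(self.max_prefetch_blocks * (1.0 - frag)))
```

The gap between the two thresholds is a design choice: with a single threshold, a hit rate hovering around it would toggle prefetching on every interval.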

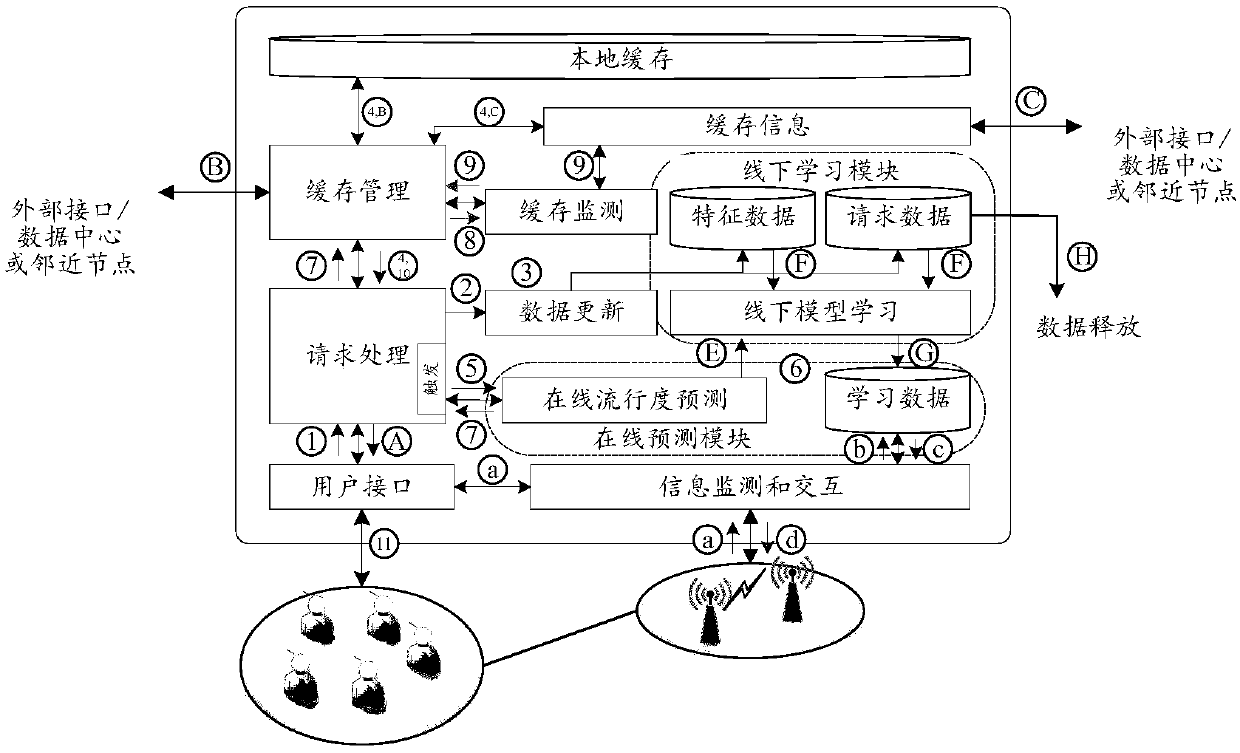

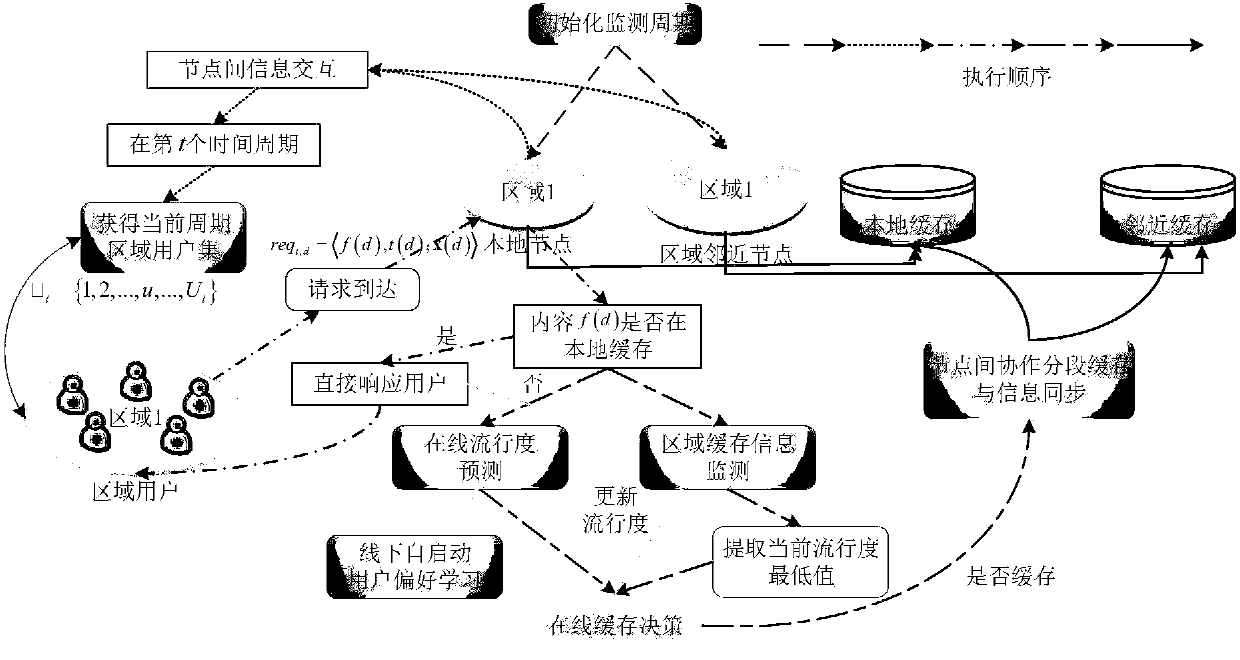

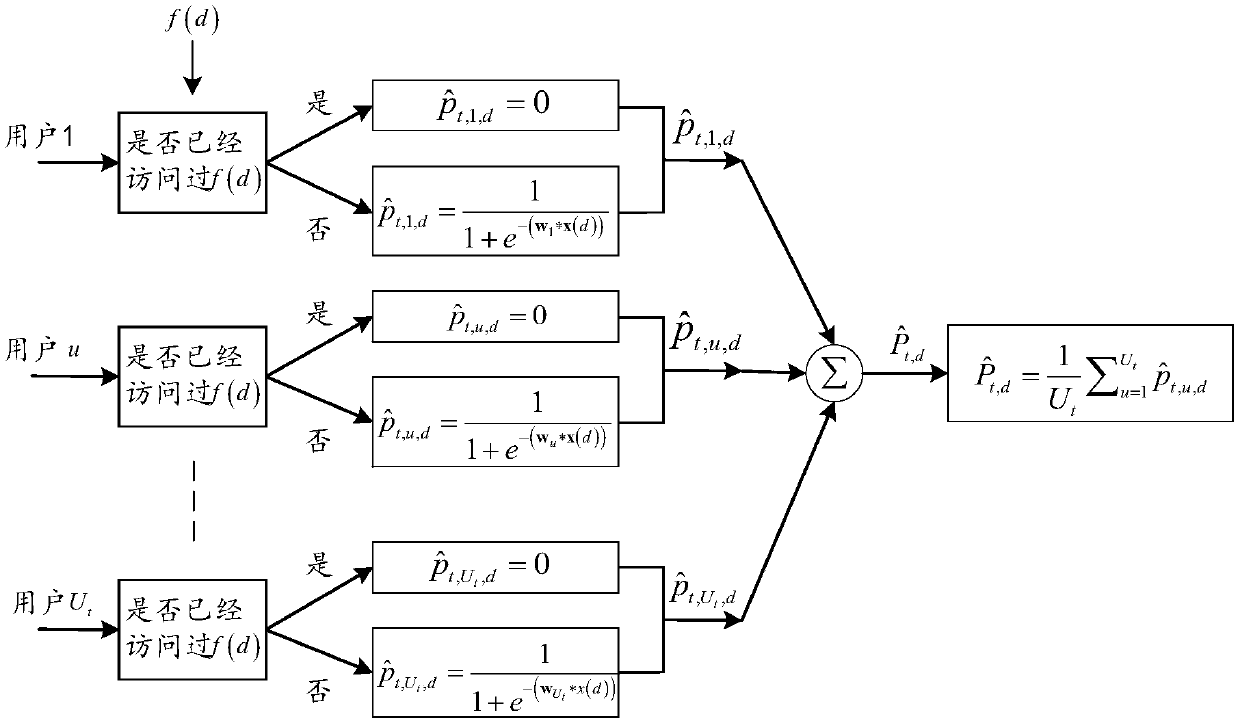

Edge caching system and method based on content popularity prediction

Active · CN107909108A · Improve accuracy · Improve real-time performance · Database updating · Character and pattern recognition · Edge node · Cache hit rate

The invention discloses an edge caching system and method based on content popularity prediction. The method comprises the following steps: (1) training, offline, a preference model for each user in a node's coverage region from that user's historical request information; (2) when a request arrives and the requested content is not present in the cache, predicting the content's popularity online on the basis of the user preference models; (3) comparing the predicted popularity with the minimum content popularity in the cache and making the corresponding caching decision; and (4) updating the content popularity value for the current moment, evaluating the user preference models, and determining whether to start offline learning of the preference models. With this method, an edge node can predict content popularity online and track changes in popularity in real time. Because caching decisions are based on the predicted popularity, the edge node continuously caches popular content and achieves a cache hit rate approaching that of an ideal caching method.

Owner:SOUTHEAST UNIV
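Steps (2) to (4) can be sketched as a fixed-capacity cache that admits a missed item only when its predicted popularity beats the least popular cached item. In this hypothetical Python version, each user's offline-trained preference model is reduced to a dictionary of category probabilities, predicted popularity is the sum of those probabilities over the users in the coverage region, and a hit simply adds one to the item's popularity. The patent's actual model, its update rule, and the retraining check in step (4) are not reproduced; `PopularityCache` and its parameters are illustrative names.

```python
from __future__ import annotations


class PopularityCache:
    def __init__(
        self,
        capacity: int,
        user_prefs: dict[str, dict[str, float]],
        content_category: dict[str, str],
    ) -> None:
        self.capacity = capacity
        self.user_prefs = user_prefs              # user -> {category: probability}, assumed trained offline
        self.content_category = content_category  # content id -> category
        self.popularity: dict[str, float] = {}    # popularity of each cached content

    def predict(self, content: str) -> float:
        """Step (2), simplified: expected demand summed over users in the coverage region."""
        category = self.content_category[content]
        return sum(prefs.get(category, 0.0) for prefs in self.user_prefs.values())

    def request(self, content: str) -> bool:
        """Serve one request; return True on a cache hit."""
        if content in self.popularity:
            # Step (4), simplified: an observed request raises current popularity (assumed rule).
            self.popularity[content] += 1.0
            return True
        score = self.predict(content)
        if len(self.popularity) < self.capacity:
            self.popularity[content] = score
            return False
        # Step (3): replace the least popular cached item only if the newcomer beats it.
        victim = min(self.popularity, key=self.popularity.__getitem__)
        if score > self.popularity[victim]:
            del self.popularity[victim]
            self.popularity[content] = score
        return False
```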

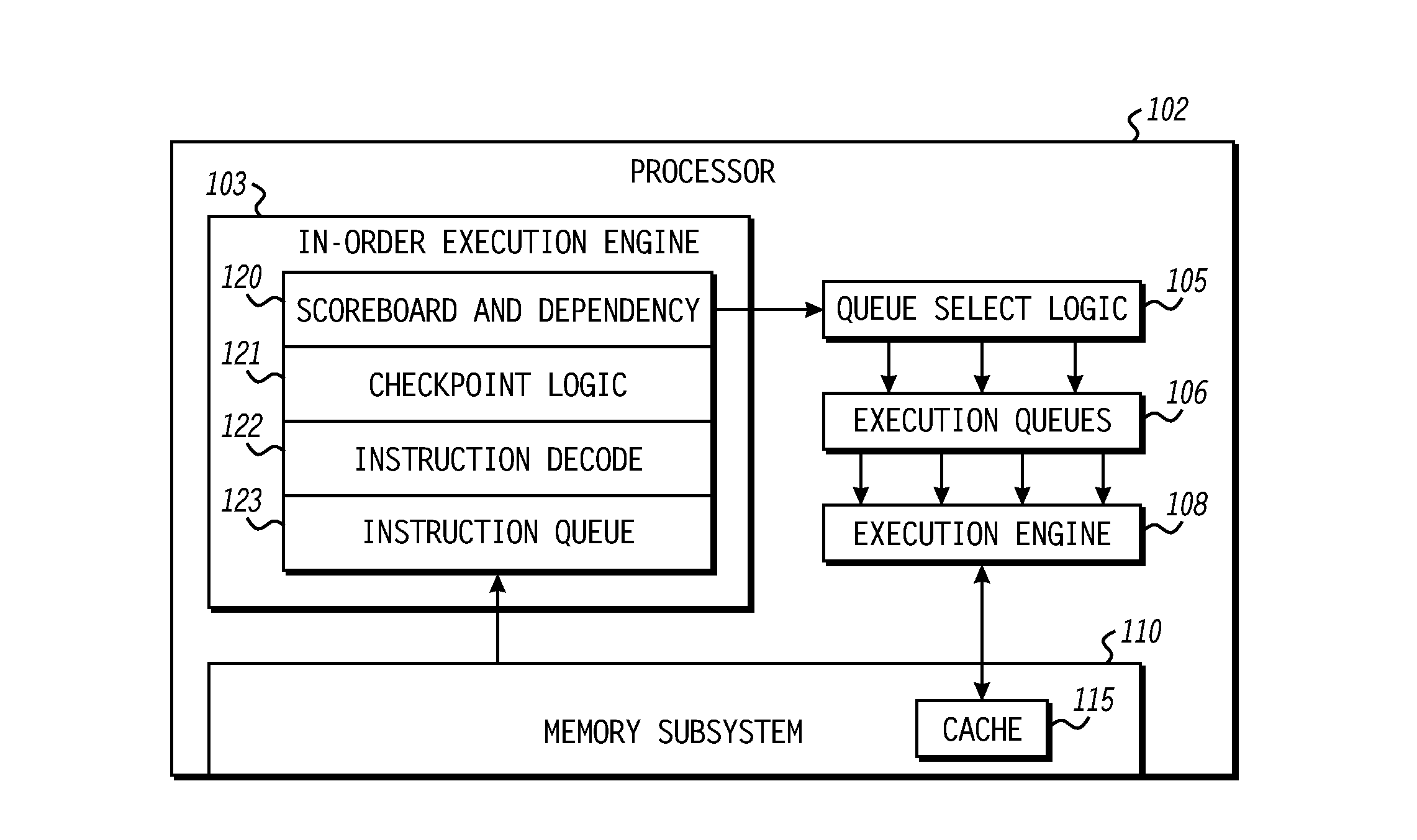

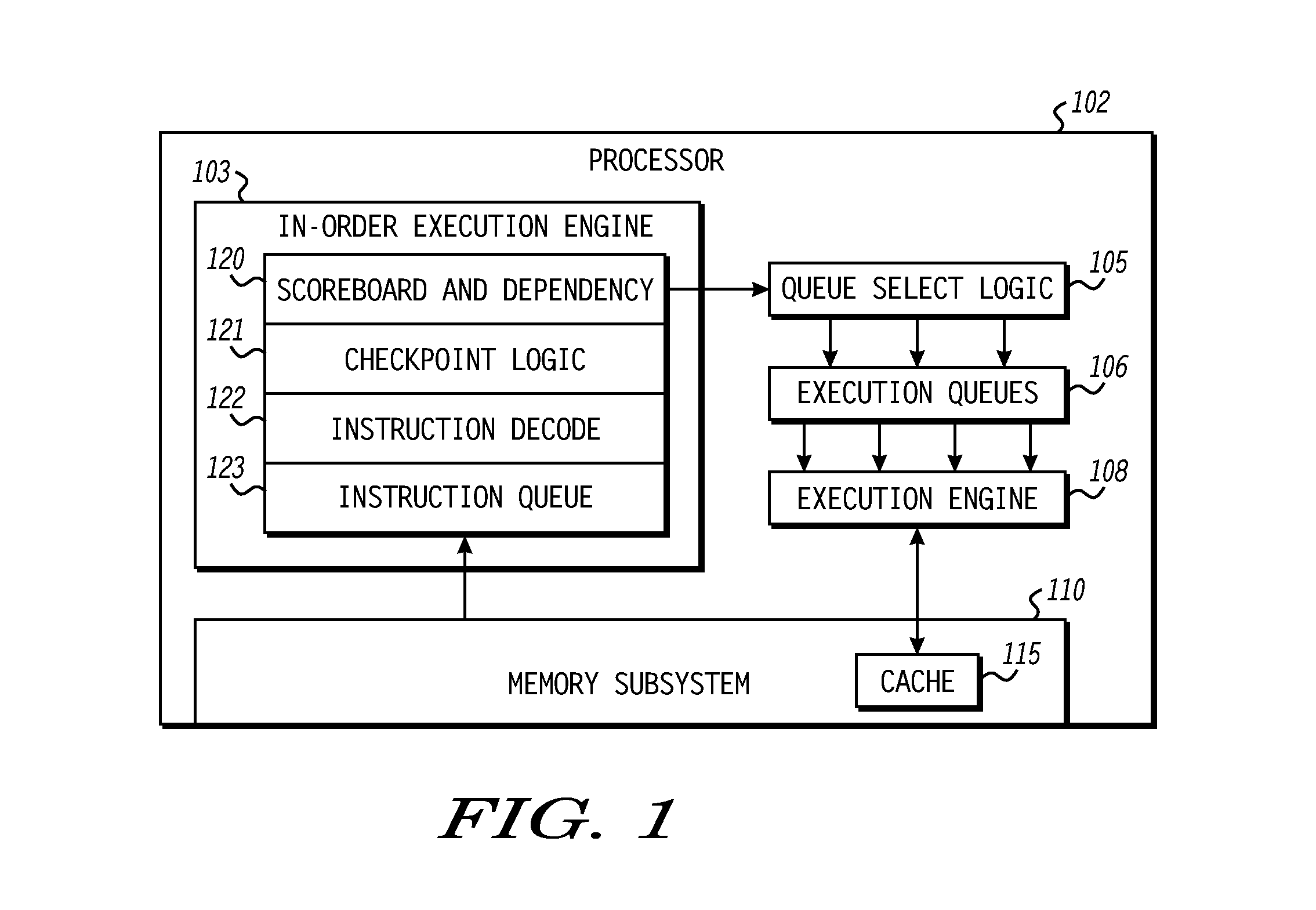

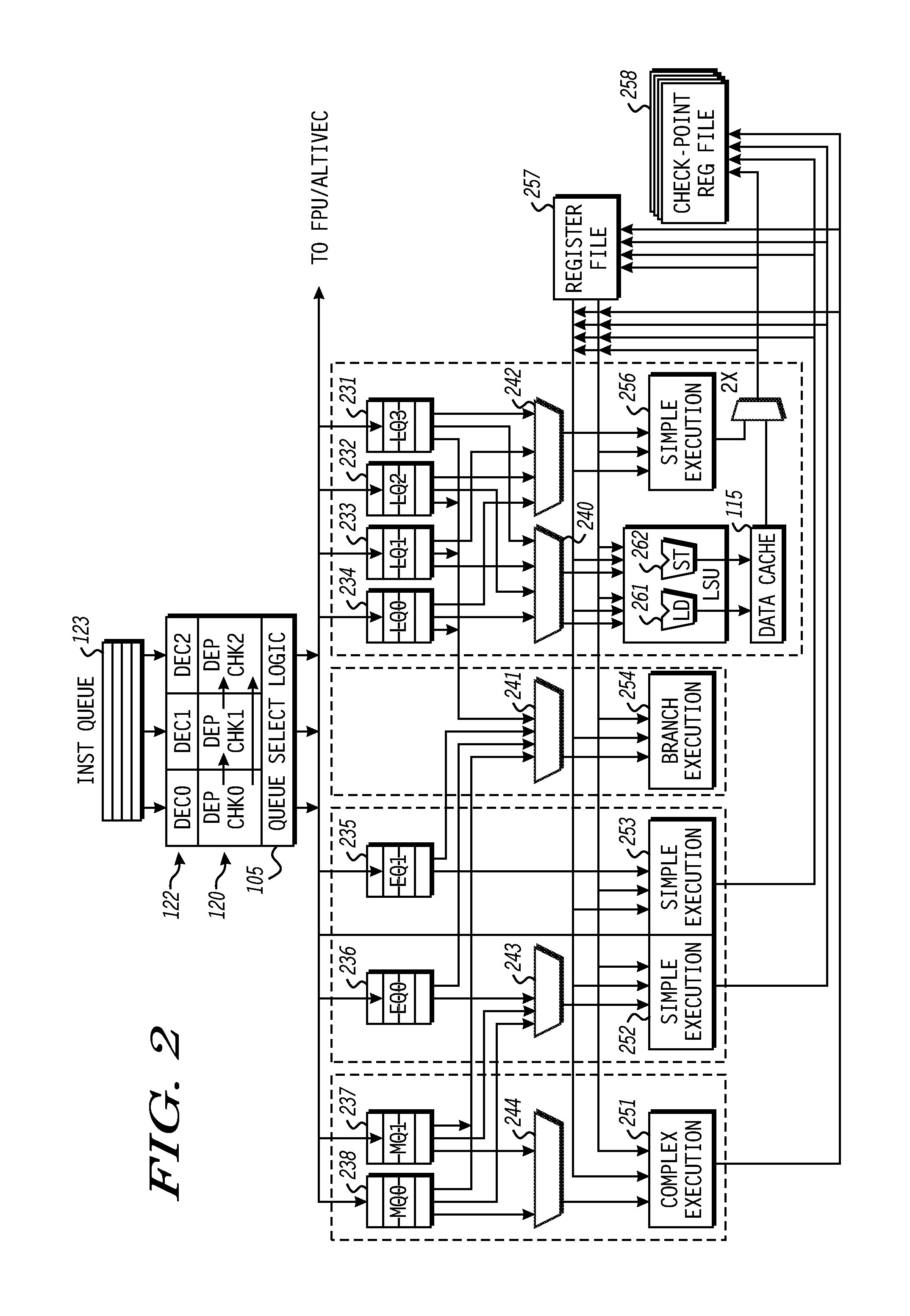

Apparatus and method for dynamic allocation of execution queues

A processor reduces the likelihood of stalls at an instruction pipeline by dynamically extending the size of a full execution queue. To extend the full execution queue, the processor temporarily repurposes another execution queue to store instructions on behalf of the full execution queue. The execution queue to be repurposed can be selected based on a number of factors, including the type of instructions it is generally designated to store, whether it is empty of other instruction types, and the rate of cache hits at the processor. By selecting the repurposed queue based on dynamic factors such as the cache hit rate, the likelihood of stalls at the dispatch stage is reduced for different types of program flows, improving overall efficiency of the processor.

Owner:FREESCALE SEMICON INC
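The selection step can be illustrated with a hypothetical policy that weighs the three factors named above. In this Python sketch only empty queues are eligible, and when the cache hit rate falls below an assumed threshold the memory-operation queue is kept out of the pool (on the assumption that misses will keep it busy); among the remaining candidates the largest queue is chosen. The class, field, and threshold names, and the rule linking hit rate to queue type, are illustrative and not Freescale's design.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(eq=False)
class ExecQueue:
    name: str
    designated_type: str  # e.g. "int", "fp", "mem" (hypothetical labels)
    capacity: int
    entries: list[str] = field(default_factory=list)

    def is_full(self) -> bool:
        return len(self.entries) >= self.capacity

    def is_empty(self) -> bool:
        return not self.entries


def pick_queue_to_repurpose(
    queues: list[ExecQueue],
    full_queue: ExecQueue,
    cache_hit_rate: float,
    low_hit_threshold: float = 0.9,  # hypothetical tuning parameter
) -> ExecQueue | None:
    """Choose a queue to temporarily hold instructions on behalf of full_queue."""
    # Only queues currently empty of their own instruction types are eligible.
    candidates = [q for q in queues if q is not full_queue and q.is_empty()]
    if cache_hit_rate < low_hit_threshold:
        # Assumed rule: with frequent misses, memory operations will need their queue soon.
        candidates = [q for q in candidates if q.designated_type != "mem"]
    if not candidates:
        return None
    # Prefer the queue that adds the most extra capacity.
    return max(candidates, key=lambda q: q.capacity)
```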

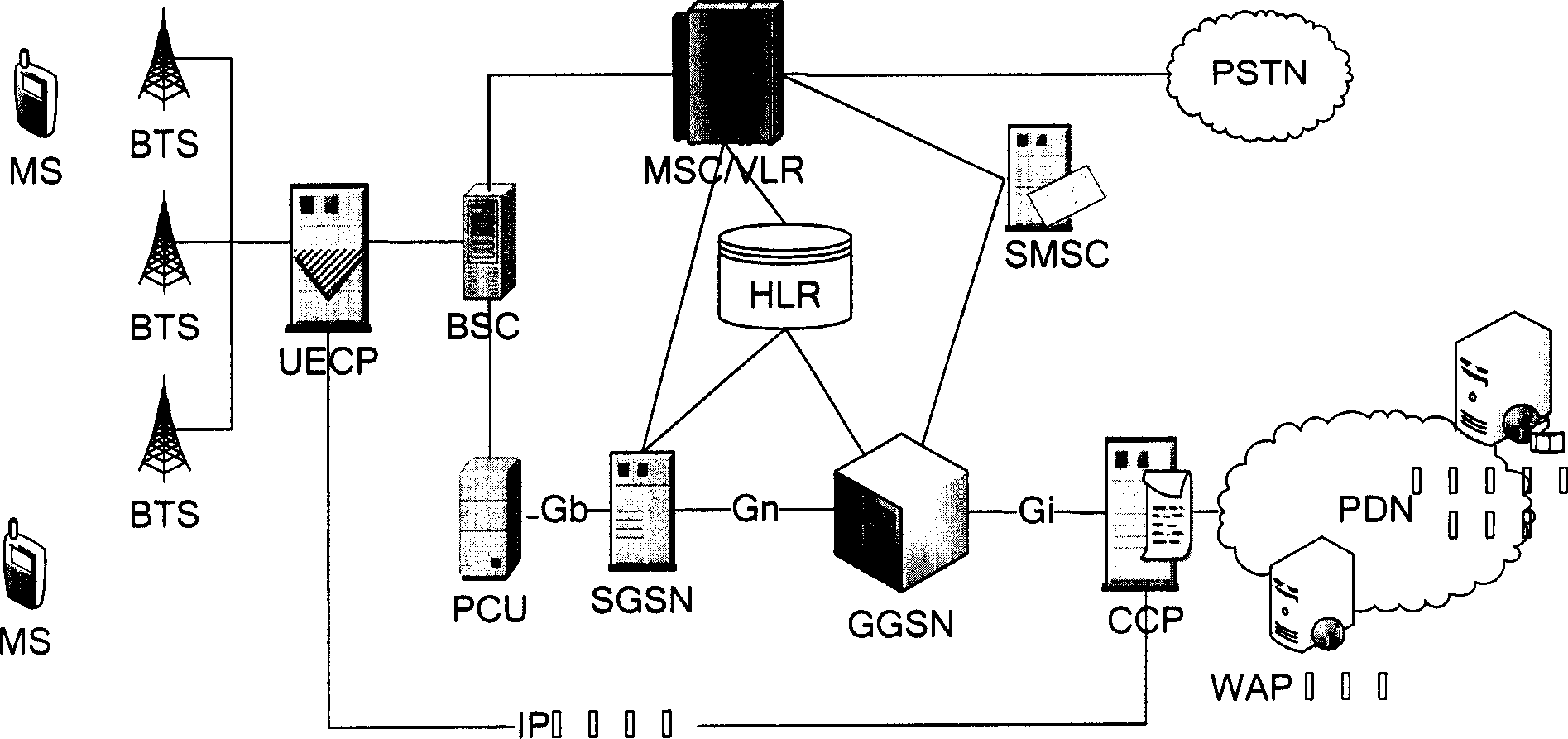

Mobile video order service system with optimized performance and realizing method

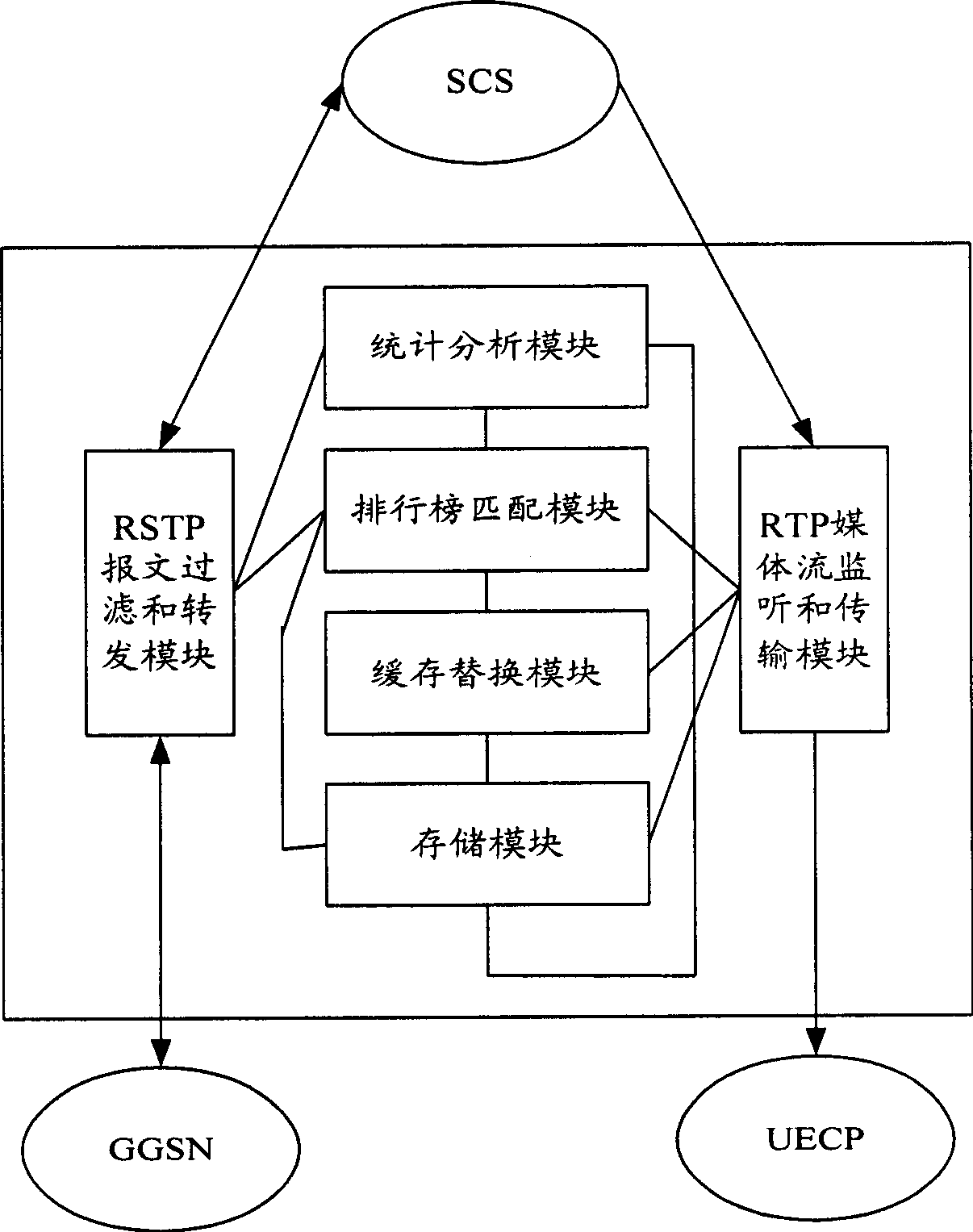

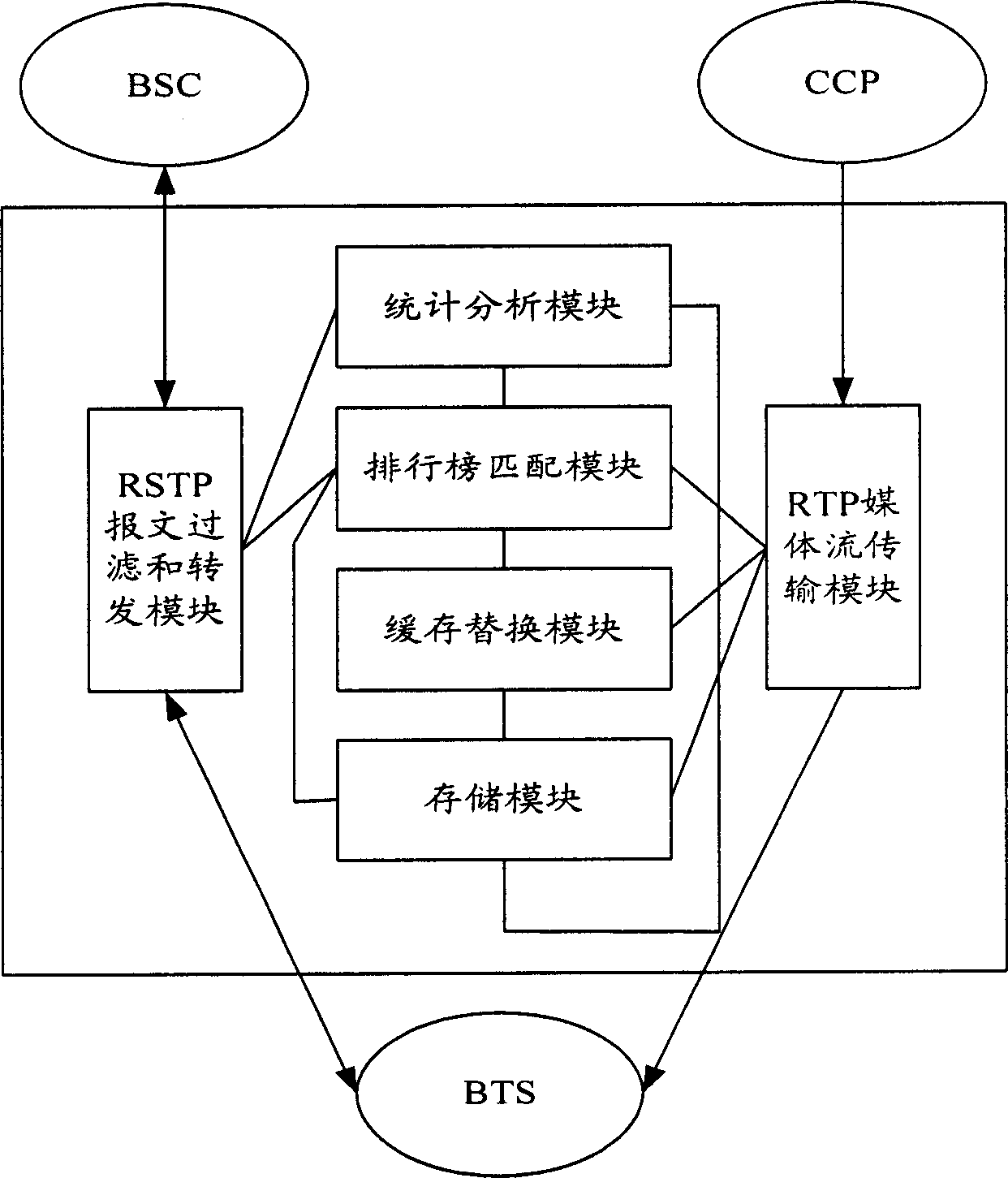

Inactive · CN1791213A · Reduce data traffic · Reduce processing load · Transmission · Selective content distribution · Traffic capacity · Alternative strategy

The mobile VOD service system with optimized performance is based on a GPRS mobile network. In addition to the conventional network elements, it comprises: a CCCP server at the boundary between the GGSN and the PDN, which offloads the SCS and reduces bandwidth consumption between the GGSN and the SGS; a UECP server in the BSS, between the BSC and the BTS, which adaptively improves the cache hit rate using a cache replacement strategy and ensures a fast response to most user requests; and a high-speed IP DL between the CCP and the UECP for transmitting streaming media content. The two proxy servers form a two-level cache that bypasses large media streams, reduces data traffic and load on the other links, and eliminates the impact of VOD on network performance.

Owner:BEIJING UNIV OF POSTS & TELECOMM
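The two-proxy arrangement behaves like a two-level cache in front of the streaming server. The Python sketch below assumes LRU replacement at both levels (the abstract only says that a replacement strategy is used) and models the UECP server as a small edge cache near the BTS and the CCCP server as a larger core cache at the GGSN/PDN boundary. The class names, capacities, and the `origin` callable are hypothetical.

```python
from __future__ import annotations

from collections import OrderedDict
from typing import Callable


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used item (assumed policy)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.data: OrderedDict[str, str] = OrderedDict()

    def get(self, key: str) -> str | None:
        if key not in self.data:
            return None
        self.data.move_to_end(key)  # mark as most recently used
        return self.data[key]

    def put(self, key: str, value: str) -> None:
        self.data[key] = value
        self.data.move_to_end(key)
        if len(self.data) > self.capacity:
            self.data.popitem(last=False)  # evict least recently used


class TwoLevelProxy:
    def __init__(self, edge_capacity: int, core_capacity: int, origin: Callable[[str], str]) -> None:
        self.edge = LRUCache(edge_capacity)  # role of the UECP server in the BSS
        self.core = LRUCache(core_capacity)  # role of the CCCP server at the GGSN/PDN boundary
        self.origin = origin                 # fetches content from the streaming server
        self.origin_fetches = 0

    def fetch(self, item: str) -> tuple[str, str]:
        """Return (content, level that served it): "edge", "core", or "origin"."""
        value = self.edge.get(item)
        if value is not None:
            return value, "edge"
        value = self.core.get(item)
        source = "core"
        if value is None:
            value = self.origin(item)
            self.origin_fetches += 1
            self.core.put(item, value)
            source = "origin"
        self.edge.put(item, value)  # fill the edge on the way back to the user
        return value, source
```

With an edge capacity of one item, a repeat request for content already evicted at the edge is served by the core cache rather than the origin, which is how the second level relieves the upstream link.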


© 2025 PatSnap. All rights reserved.