184 results about "Least recently frequently used" patented technology

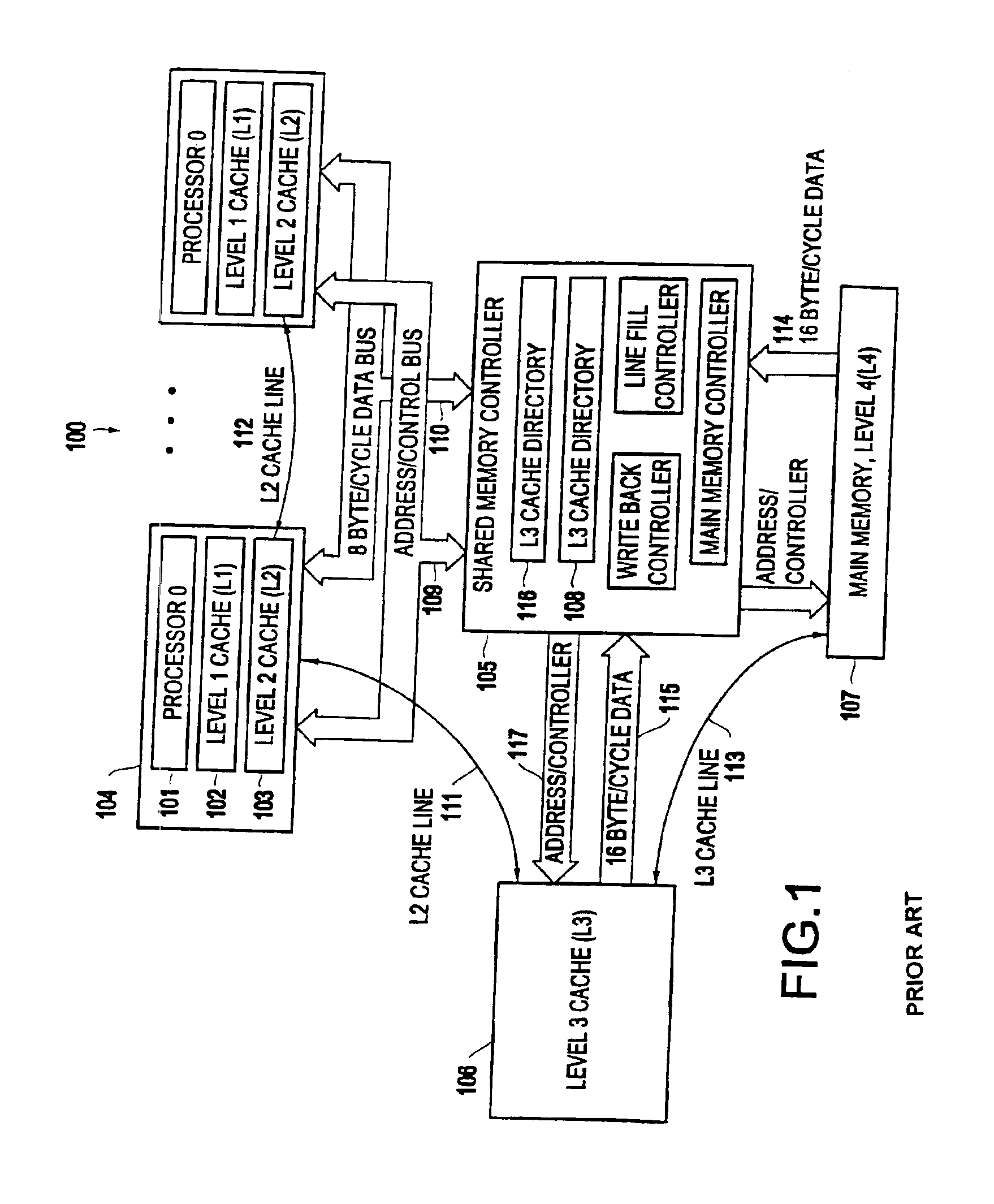

Digital signal processor containing scalar processor and a plurality of vector processors operating from a single instruction

Inactive | US6317819B1 | Register arrangements | Memory addressing/allocation/relocation | Crossbar switch | Digital data

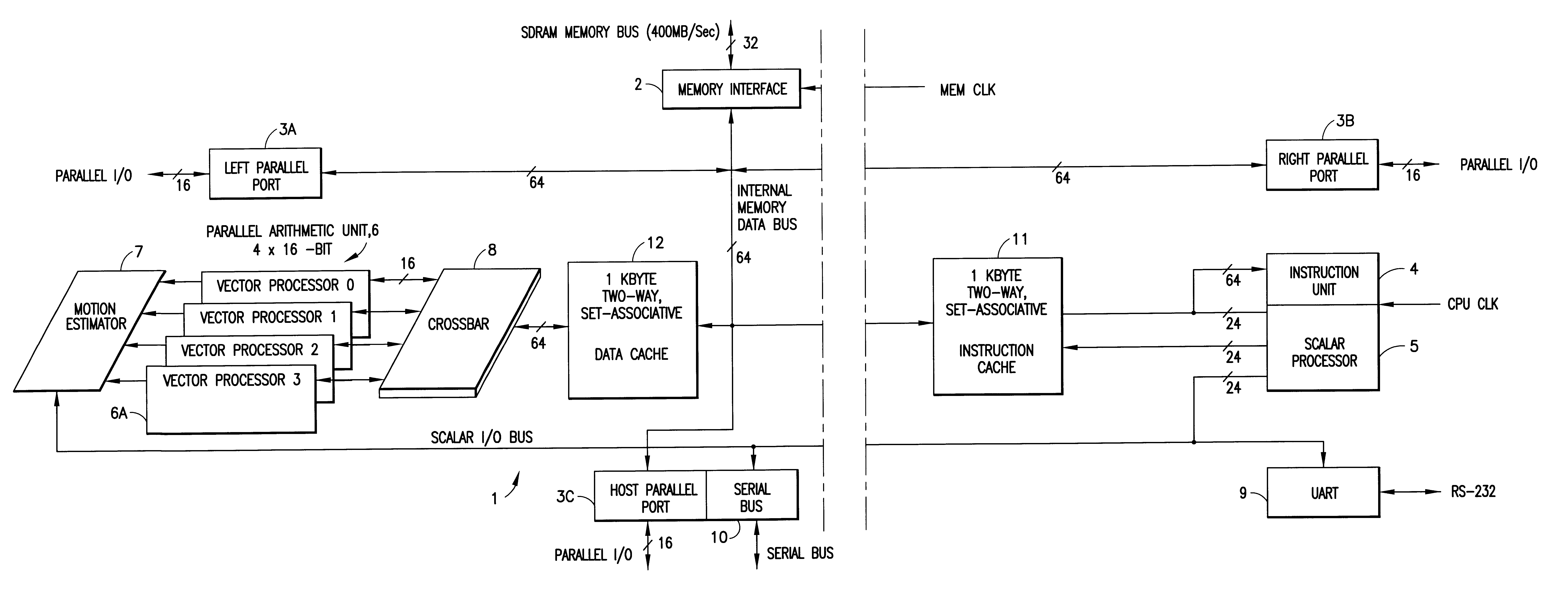

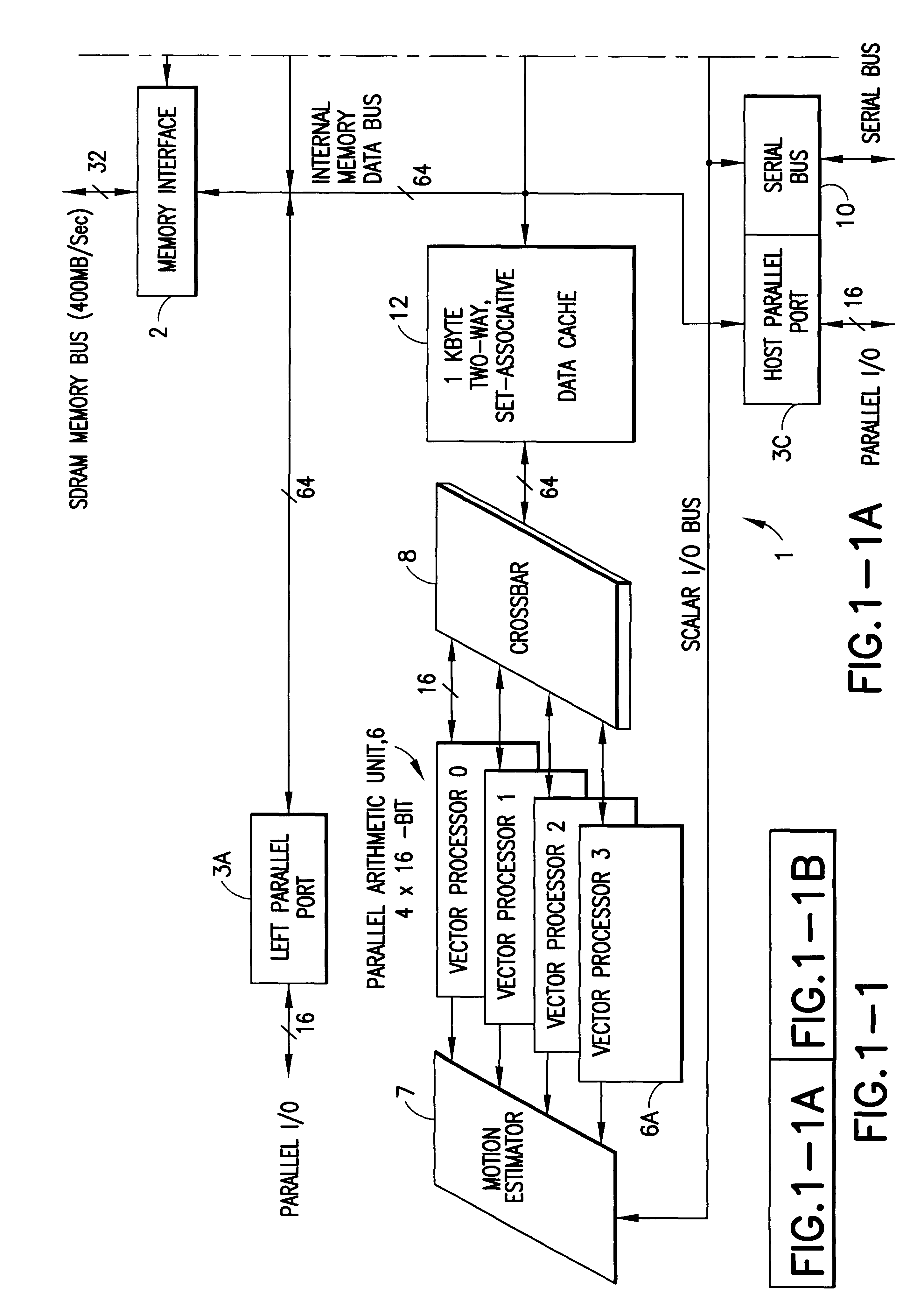

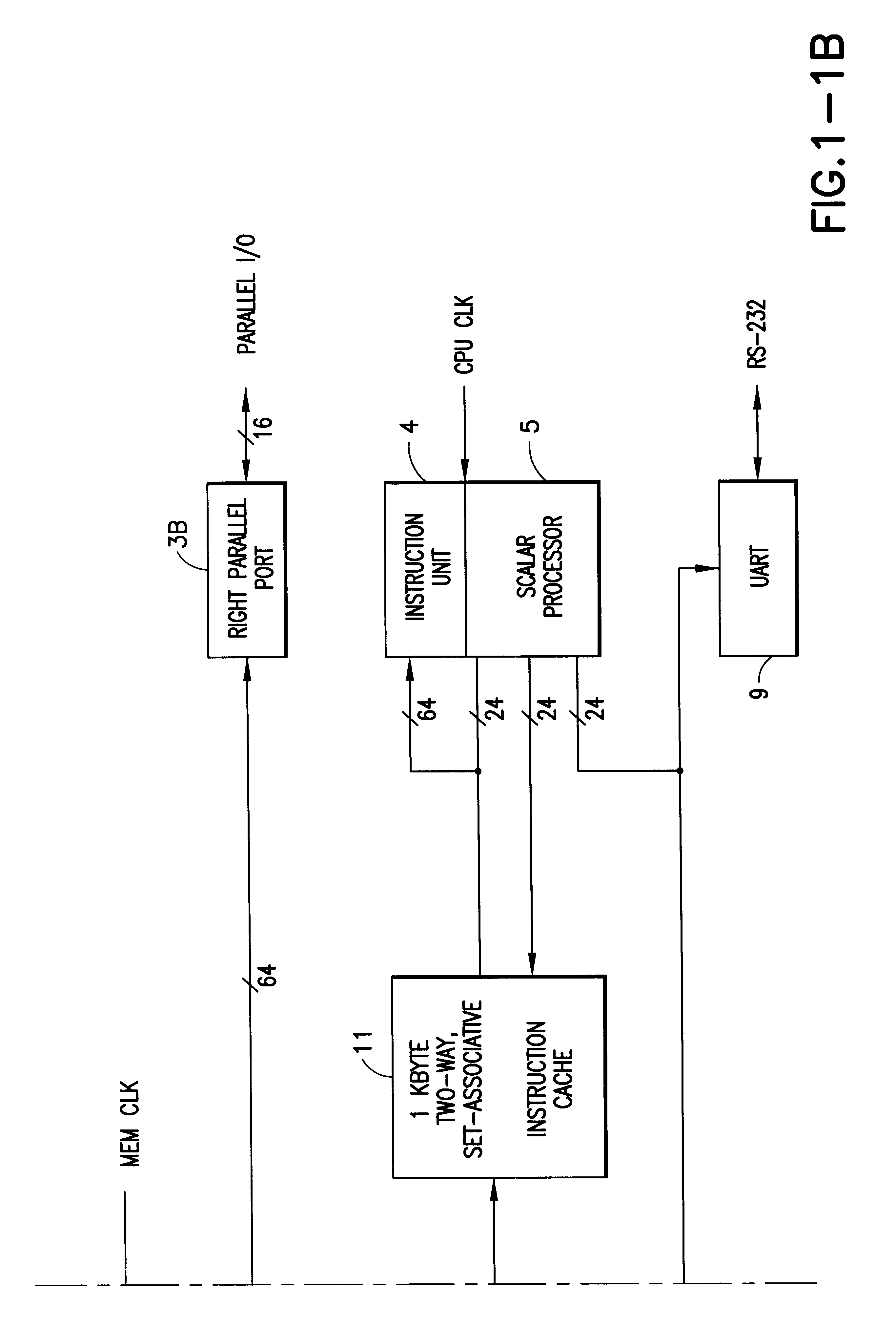

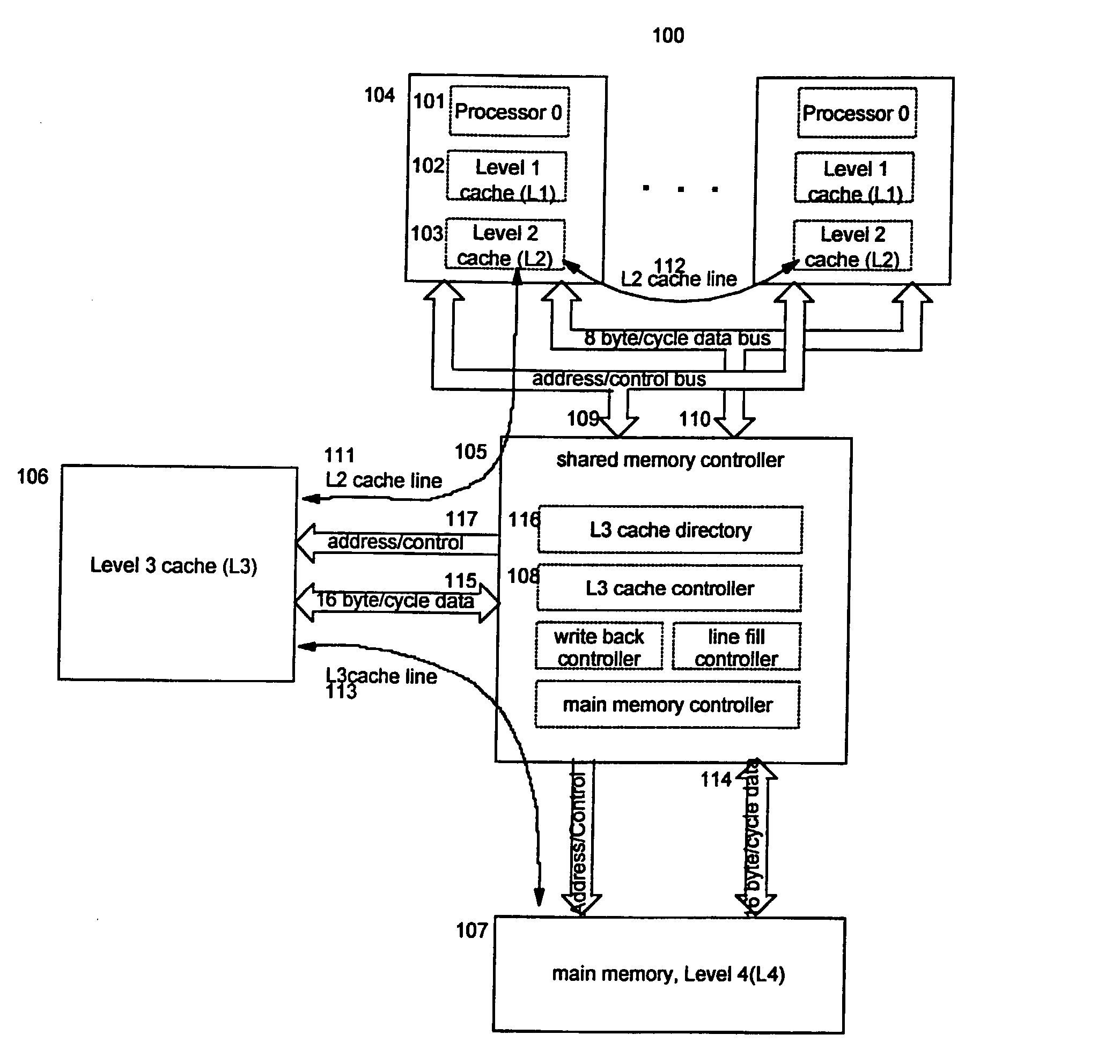

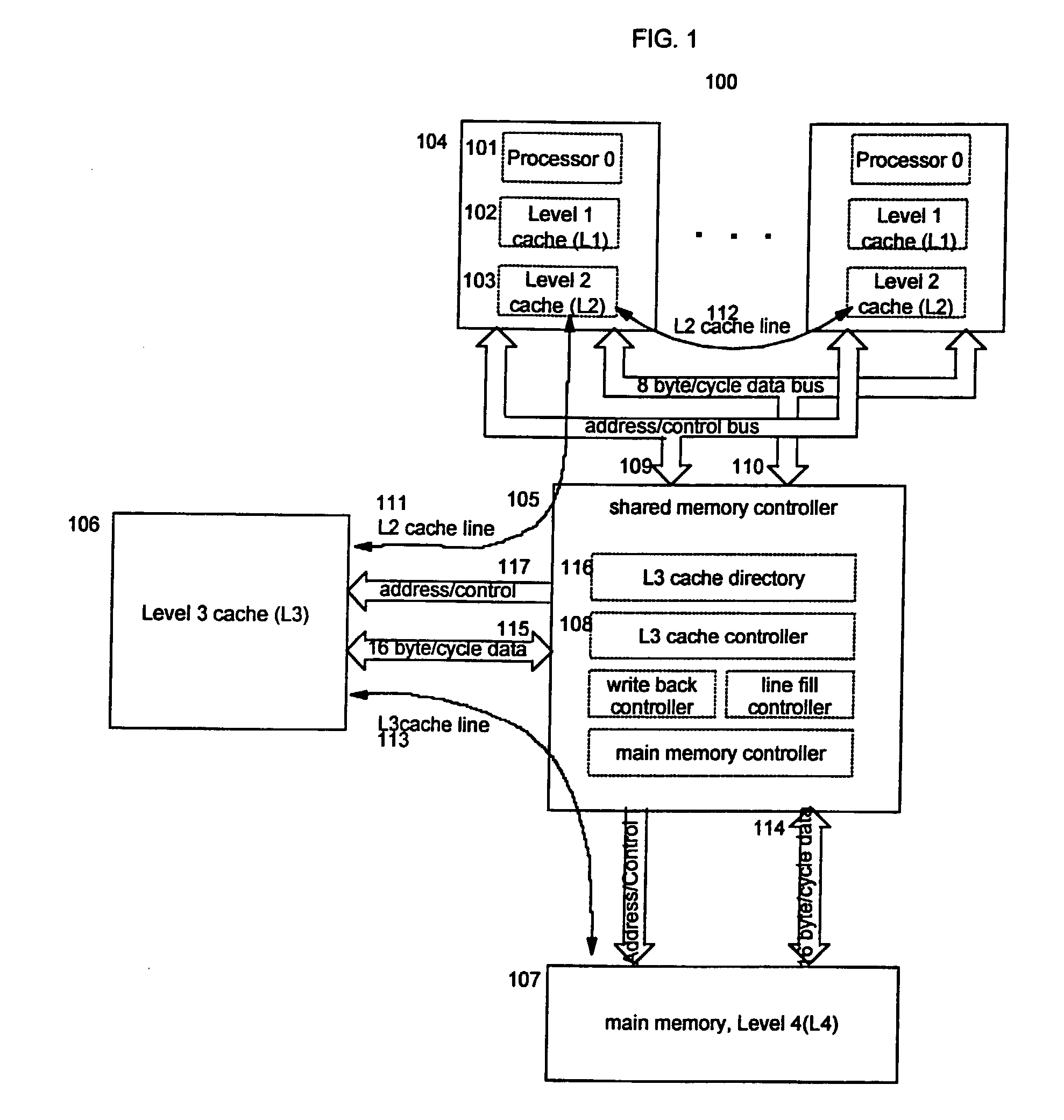

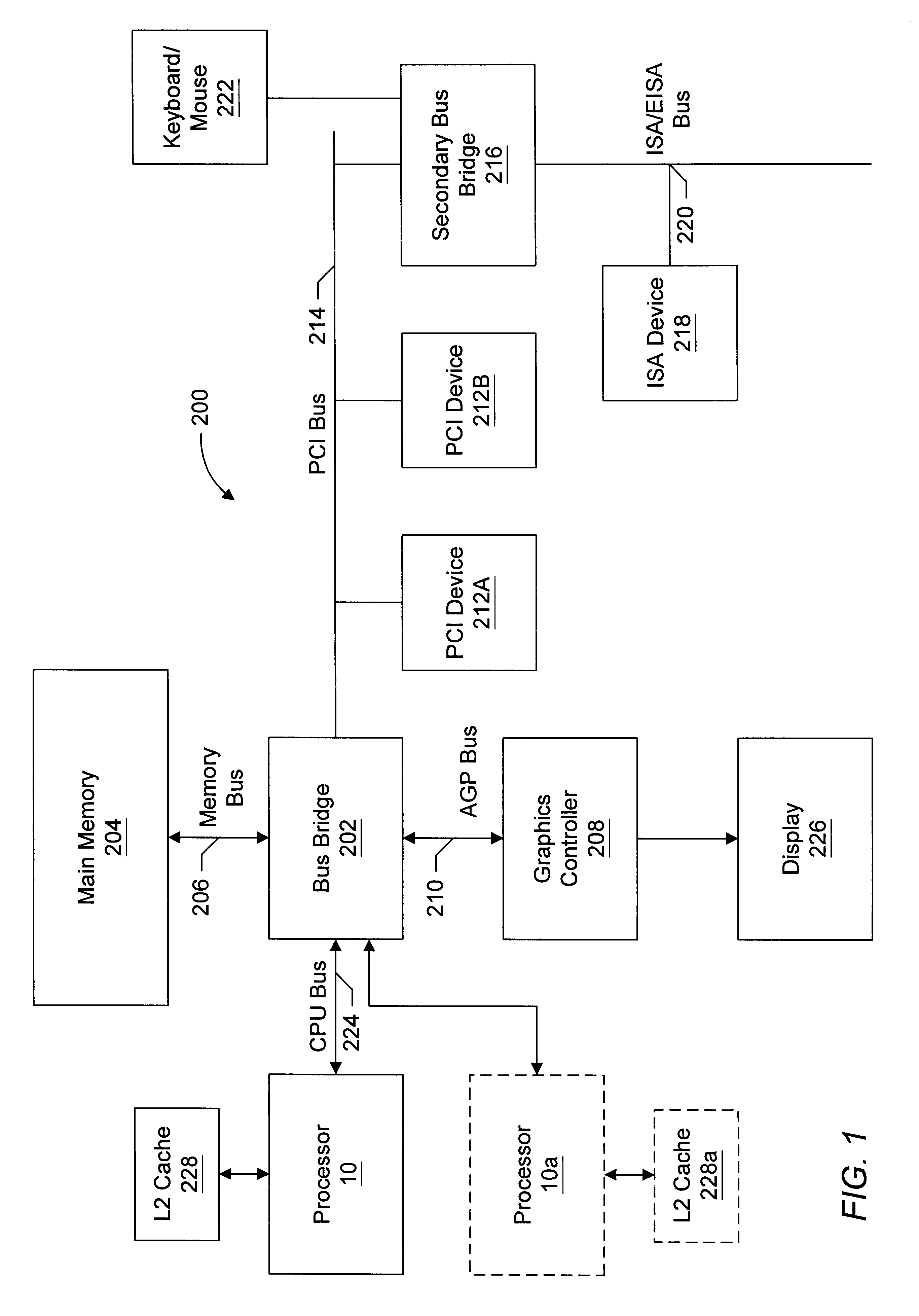

A digital data processor integrated circuit (1) includes a plurality of functionally identical first processor elements (6A) and a second processor element (5). The first processor elements are bidirectionally coupled to a first cache (12) via a crossbar switch matrix (8). The second processor element is coupled to a second cache (11). Each of the first cache and the second cache contains a two-way, set-associative cache memory that uses a least-recently-used (LRU) replacement algorithm and that operates with a use-as-fill mode to minimize a number of wait states said processor elements need experience before continuing execution after a cache-miss. An operation of each of the first processor elements and an operation of the second processor element are locked together during an execution of a single instruction read from the second cache. The instruction specifies, in a first portion that is coupled in common to each of the plurality of first processor elements, the operation of each of the plurality of first processor elements in parallel. A second portion of the instruction specifies the operation of the second processor element. Also included is a motion estimator (7) and an internal data bus coupling together a first parallel port (3A), a second parallel port (3B), a third parallel port (3C), an external memory interface (2), and a data input / output of the first cache and the second cache.

Owner:CUFER ASSET LTD LLC

Method and system for managing data in cache using multiple data structures

Inactive | US6141731A | Efficient management | Choose accurately | Memory addressing/allocation/relocation | Least recently frequently used | Cache management

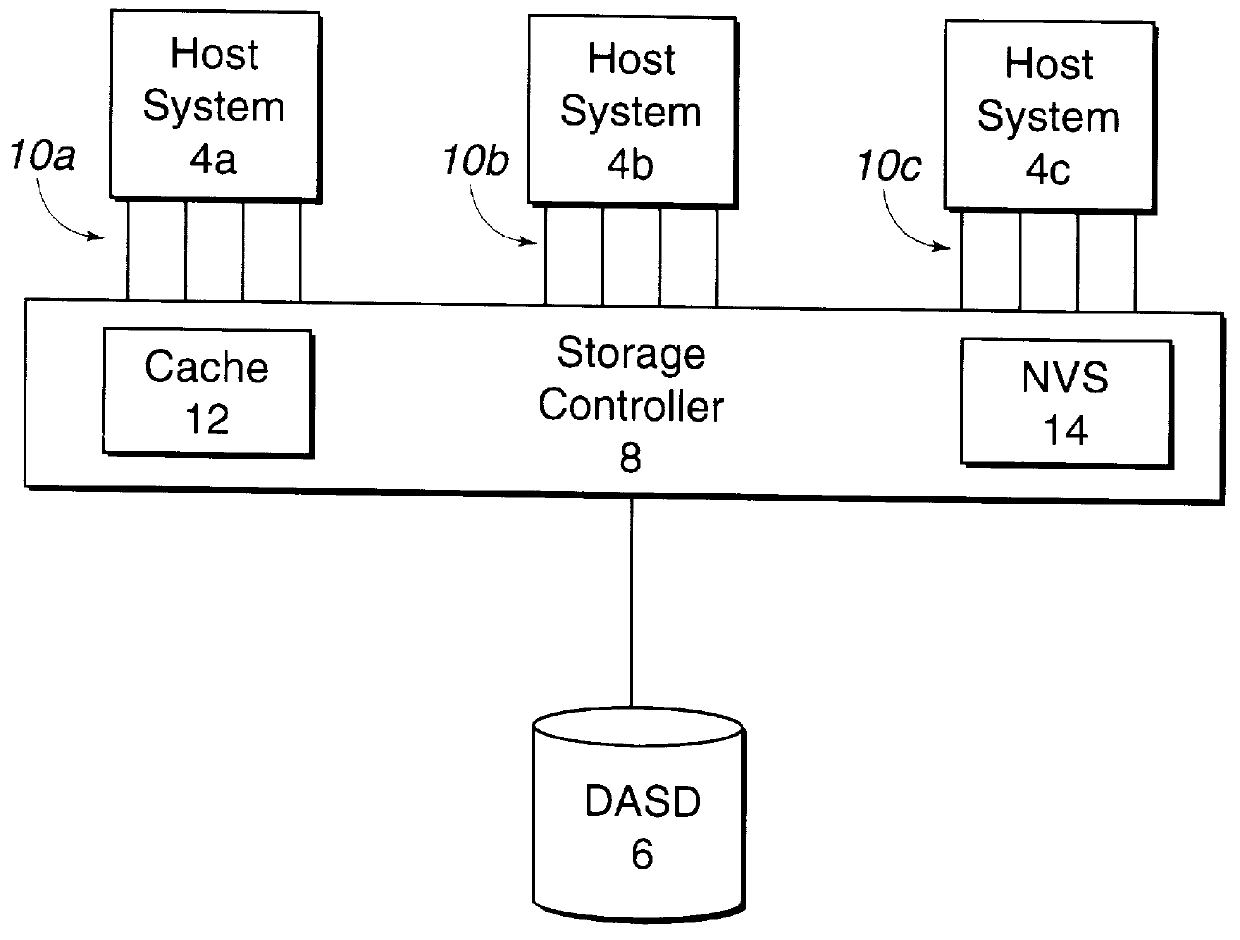

Disclosed is a cache management scheme using multiple data structures. A first and second data structures, such as linked lists, indicate data entries in a cache. Each data structure has a most recently used (MRU) entry, a least recently used (LRU) entry, and a time value associated with each data entry indicating a time the data entry was indicated as added to the MRU entry of the data structure. A processing unit receives a new data entry. In response, the processing unit processes the first and second data structures to determine a LRU data entry in each data structure and selects from the determined LRU data entries the LRU data entry that is the least recently used. The processing unit then demotes the selected LRU data entry from the cache and data structure including the selected data entry. The processing unit adds the new data entry to the cache and indicates the new data entry as located at the MRU entry of one of the first and second data structures.

Owner:IBM CORP
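
The selection step in the abstract above (compare the LRU entry of each list and demote the older one) can be illustrated with a minimal Python sketch. The class name `TwoListCache`, the logical clock, and the `which` parameter are assumptions made for illustration, not details taken from the patent, and the sketch assumes a capacity of at least one.

```python
import itertools
from collections import OrderedDict


class TwoListCache:
    """Hypothetical cache whose entries are tracked by two LRU lists."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.clock = itertools.count()  # logical time source (assumption)
        # Each list maps key -> (time added at MRU end, value); first item is the LRU end.
        self.lists = [OrderedDict(), OrderedDict()]

    def __len__(self):
        return sum(len(lst) for lst in self.lists)

    def _demote_oldest(self):
        # Look at the LRU entry of every non-empty list and pick the older one.
        candidates = [lst for lst in self.lists if lst]
        victim_list = min(candidates, key=lambda lst: next(iter(lst.values()))[0])
        key, _ = victim_list.popitem(last=False)
        return key

    def add(self, key, value, which):
        """Add an entry at the MRU end of list `which` (0 or 1); return any evicted key."""
        for lst in self.lists:
            lst.pop(key, None)  # drop a stale copy so the key lives in one list only
        evicted = None
        if len(self) >= self.capacity:
            evicted = self._demote_oldest()
        self.lists[which][key] = (next(self.clock), value)
        return evicted
```

With a capacity of three, adding `a` and `c` to list 0 and `b` to list 1 and then adding a fourth entry evicts `a`, because its time value is older than that of `b`, the LRU entry of the other list.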

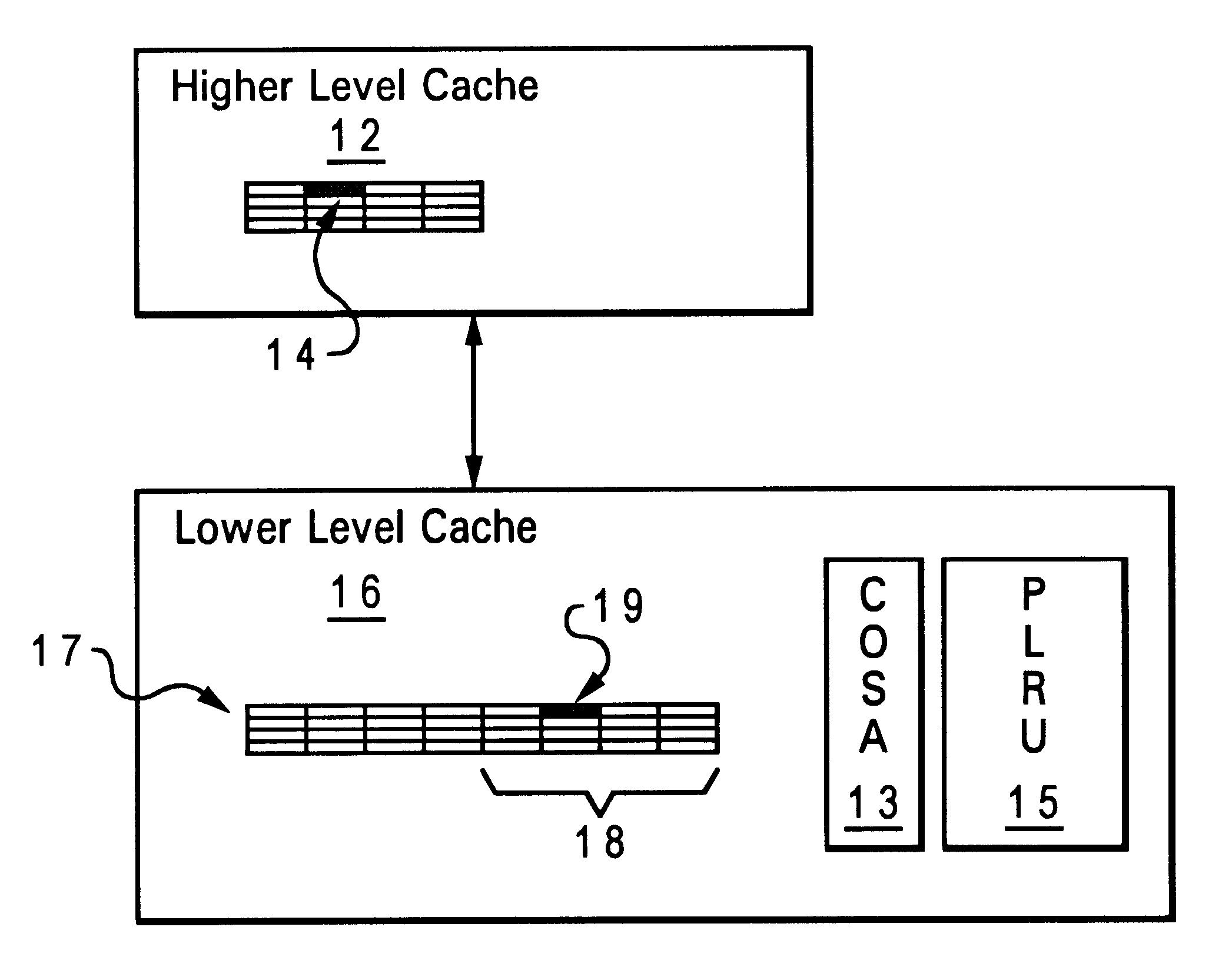

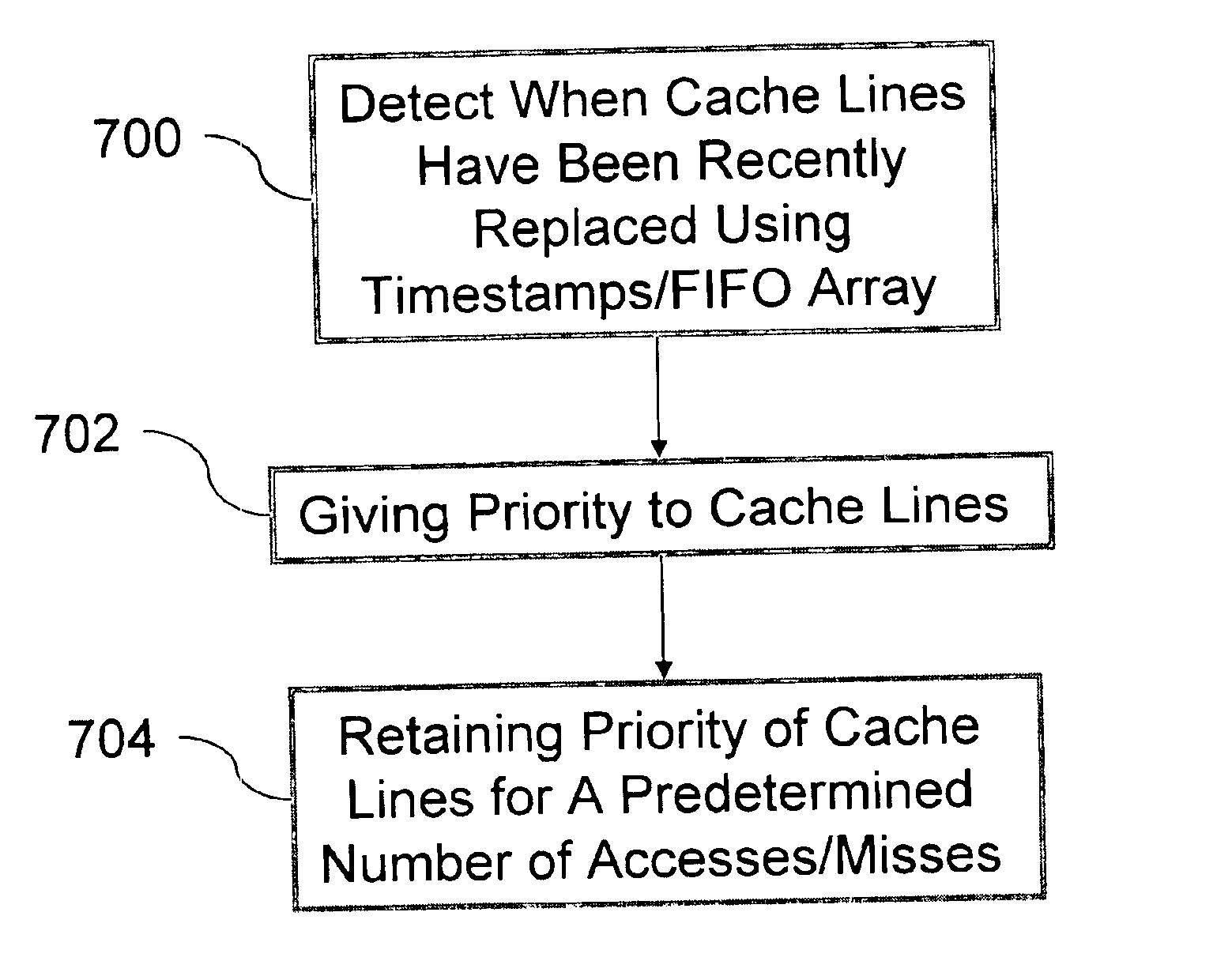

Weighted cache line replacement

Inactive | US20040083341A1 | Avoid replacement | Memory addressing/allocation/relocation | Cache hierarchy | Parallel computing

A method for selecting a line to replace in an inclusive set-associative cache memory system which is based on a least recently used replacement policy but is enhanced to detect and give special treatment to the reloading of a line that has been recently cast out. A line which has been reloaded after having been recently cast out is assigned a special encoding which temporarily gives priority to the line in the cache so that it will not be selected for replacement in the usual least recently used replacement process. This method of line selection for replacement improves system performance by providing better hit rates in the cache hierarchy levels above, by ensuring that heavily used lines in the levels above are not aged out of the levels below due to lack of use.

Owner:IBM CORP

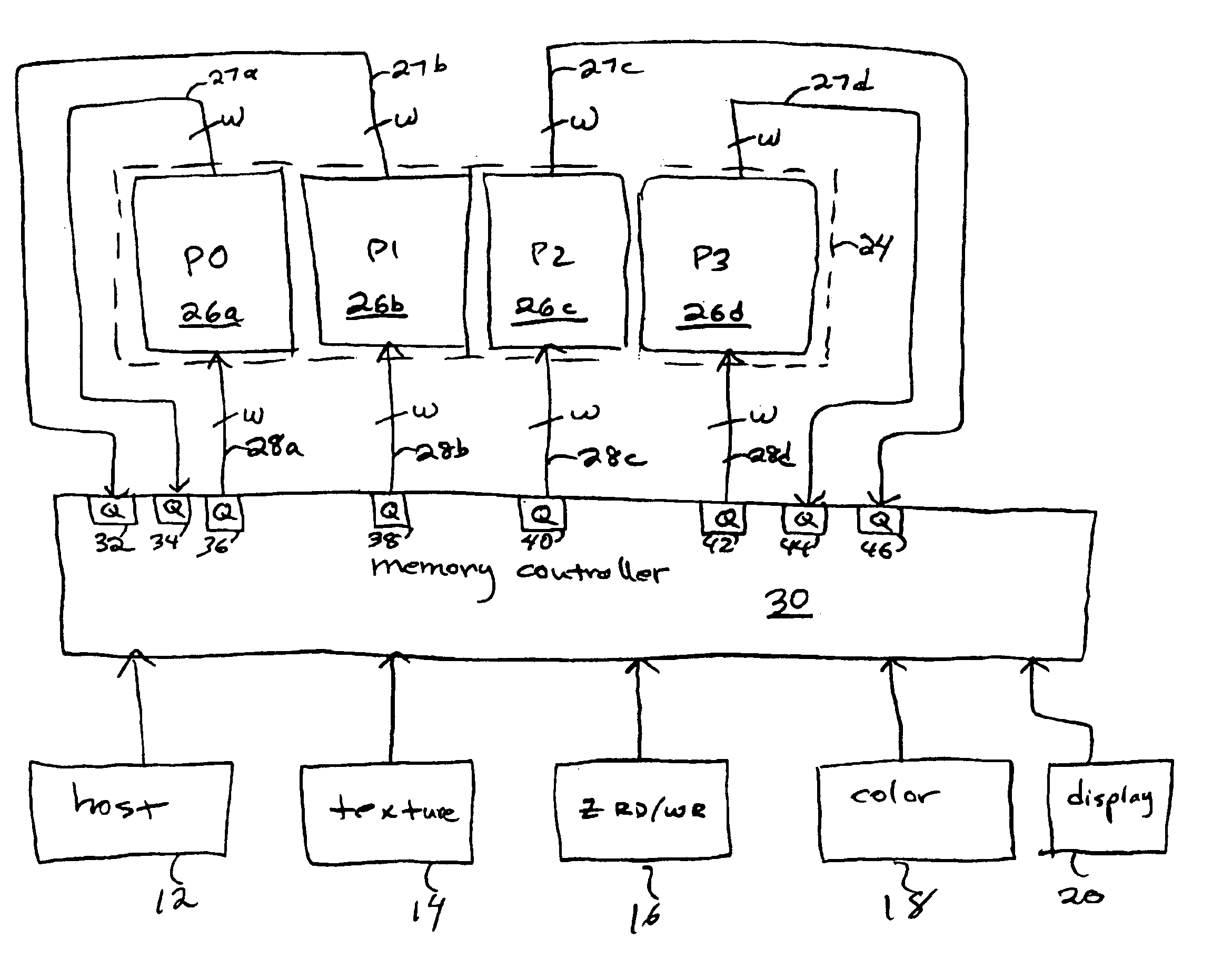

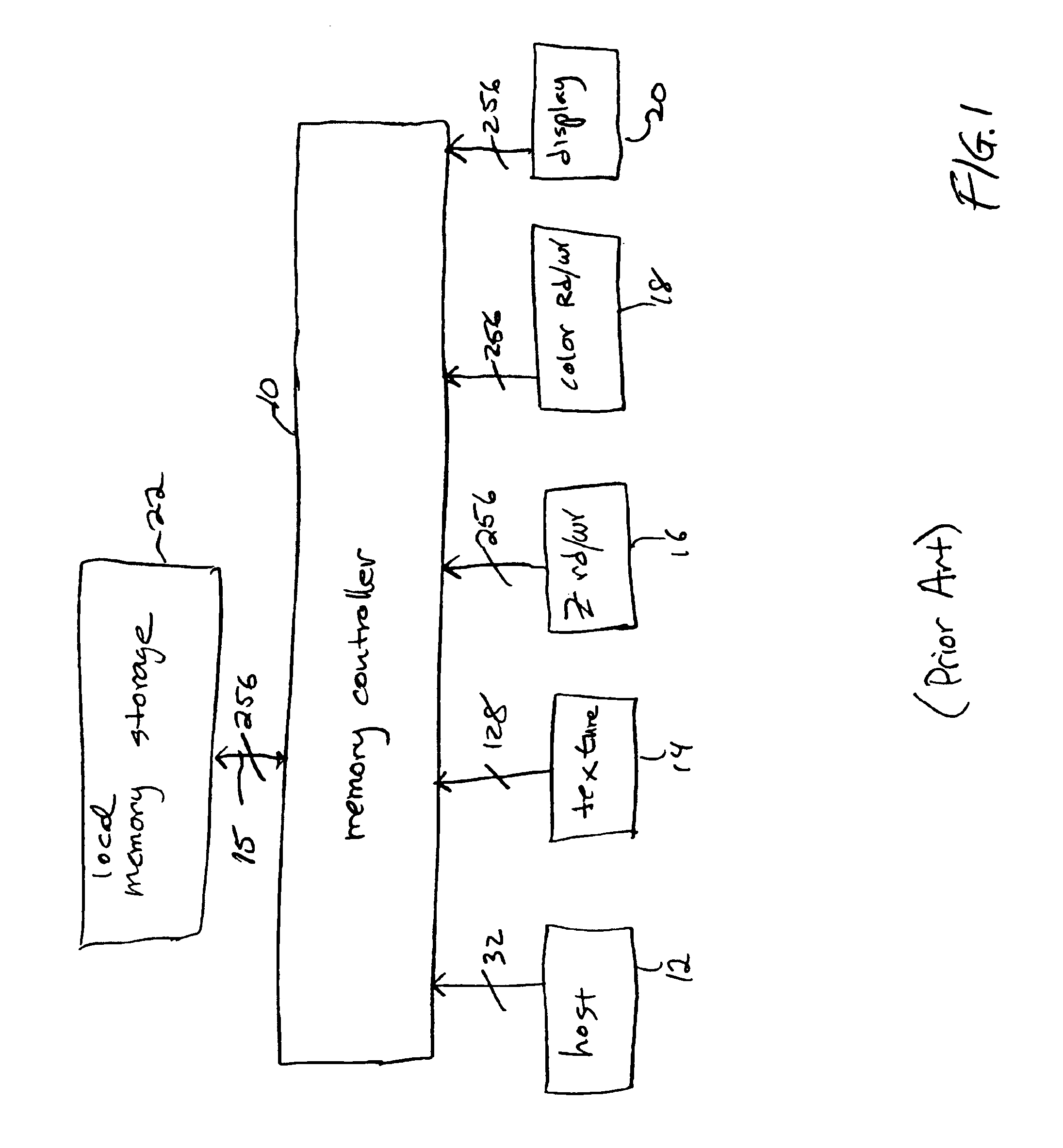

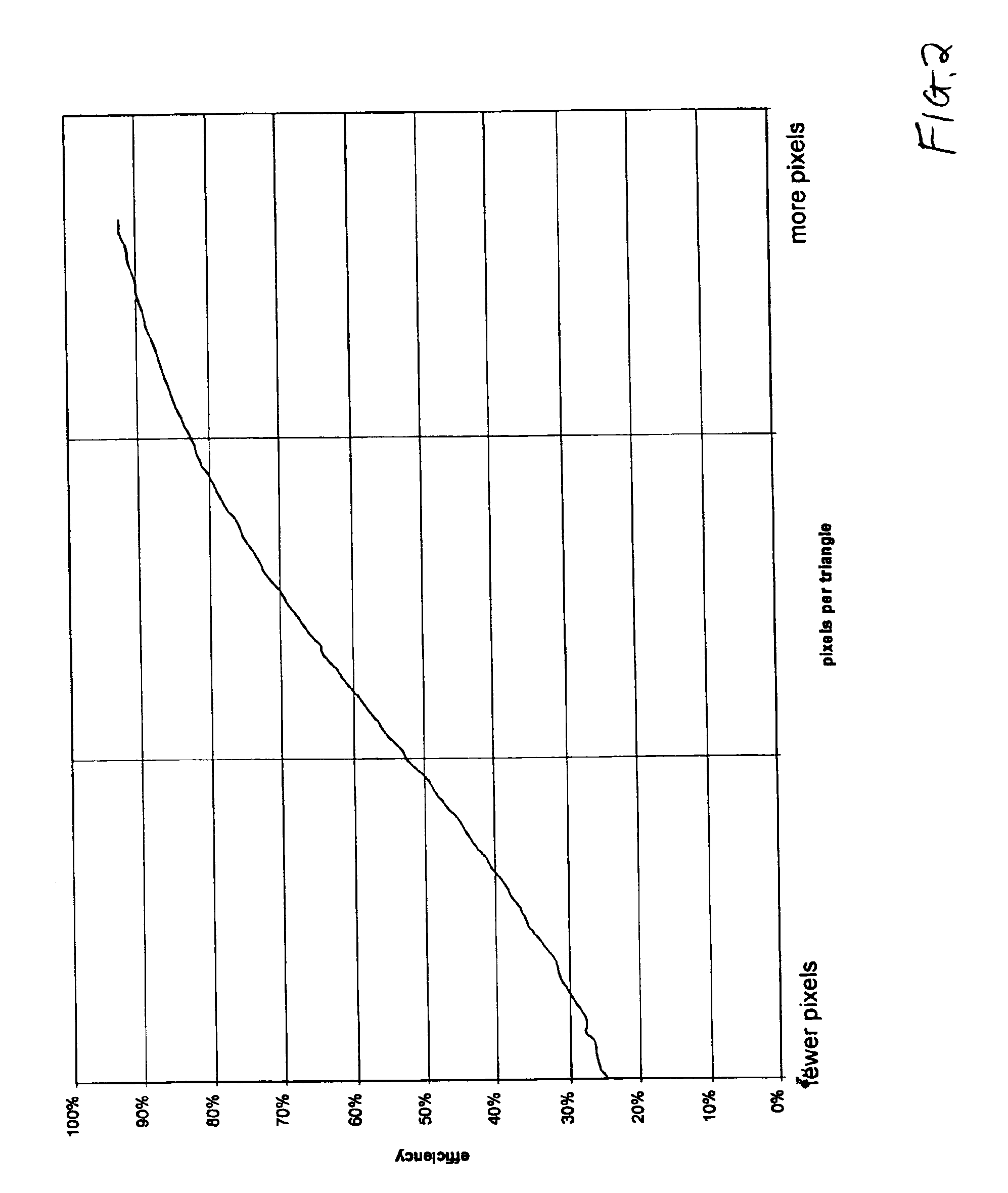

Controller for a memory system having multiple partitions

Inactive | US6853382B1 | Improving topology | Extend cycle time | Memory addressing/allocation/relocation | Image memory management | Least recently frequently used | Client-side

A memory system having a number of partitions each operative to independently service memory requests from a plurality of memory clients while maintaining the appearance to the memory client of a single partition memory subsystem. The memory request specifies a location in the memory system and a transfer size. A partition receives input from an arbiter circuit which, in turn, receives input from a number of client queues for the partition. The arbiter circuit selects a client queue based on a priority policy such as round robin or least recently used or a static or dynamic policy. A router receives a memory request, determines the one or more partitions needed to service the request and stores the request in the client queues for the servicing partitions. In one embodiment, an additional arbiter circuit selects memory requests from one of a subset of the memory clients and forwards the requests to a routing circuit, thereby providing a way for the subset of memory clients to share the client queues and routing circuit. Alternatively, a memory client can make requests directed to a particular partition in which case no routing circuit is required. For a read request that requires more than one partition to service, the memory system must collect the read data from read queues for the various partitions and deliver the collected data back to the proper client. Read queues can provide data in non-FIFO order to satisfy a memory client that can receive data out-of-order.

Owner:NVIDIA CORP

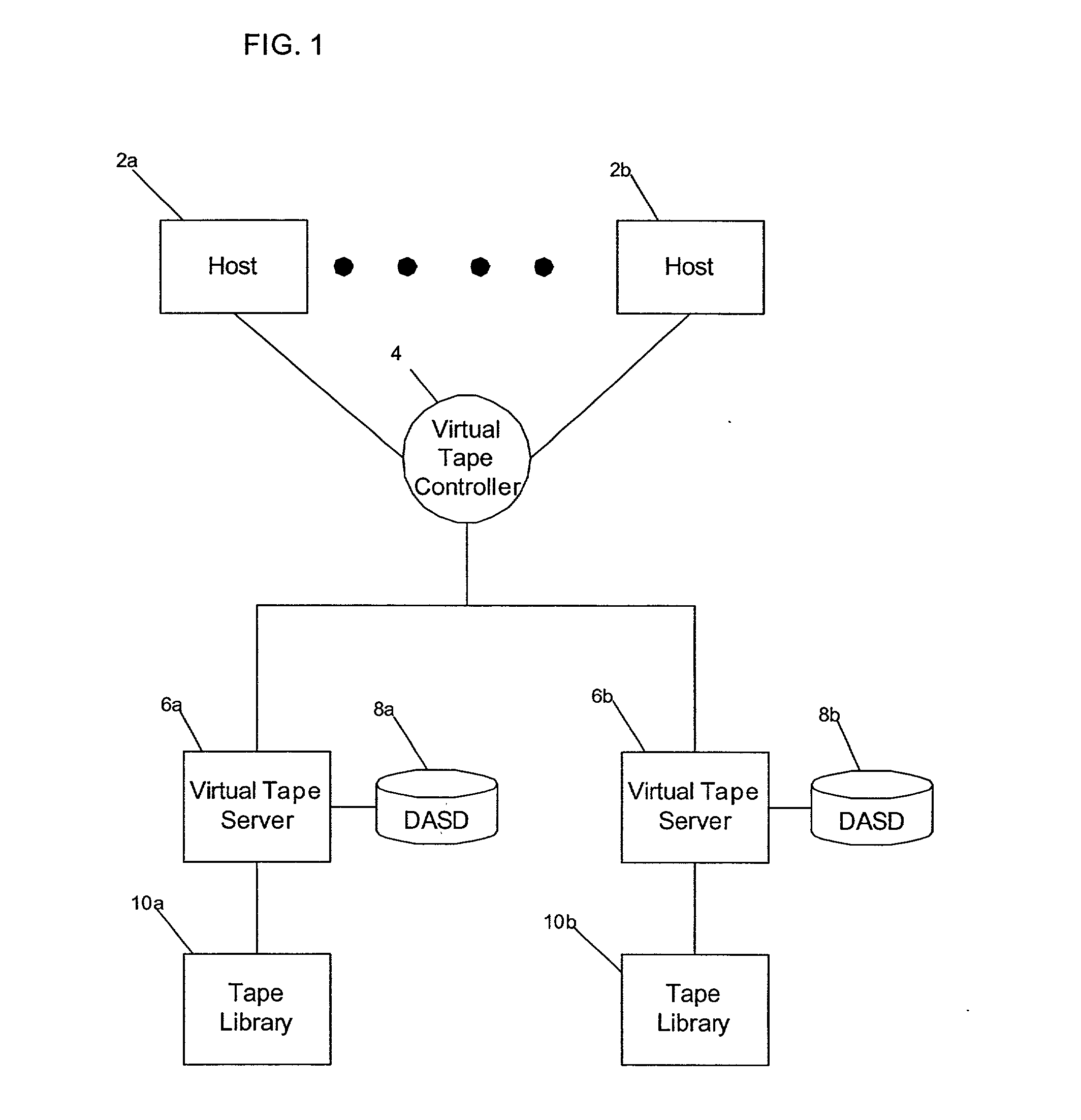

Preferential caching of uncopied logical volumes in a peer-to-peer virtual tape server

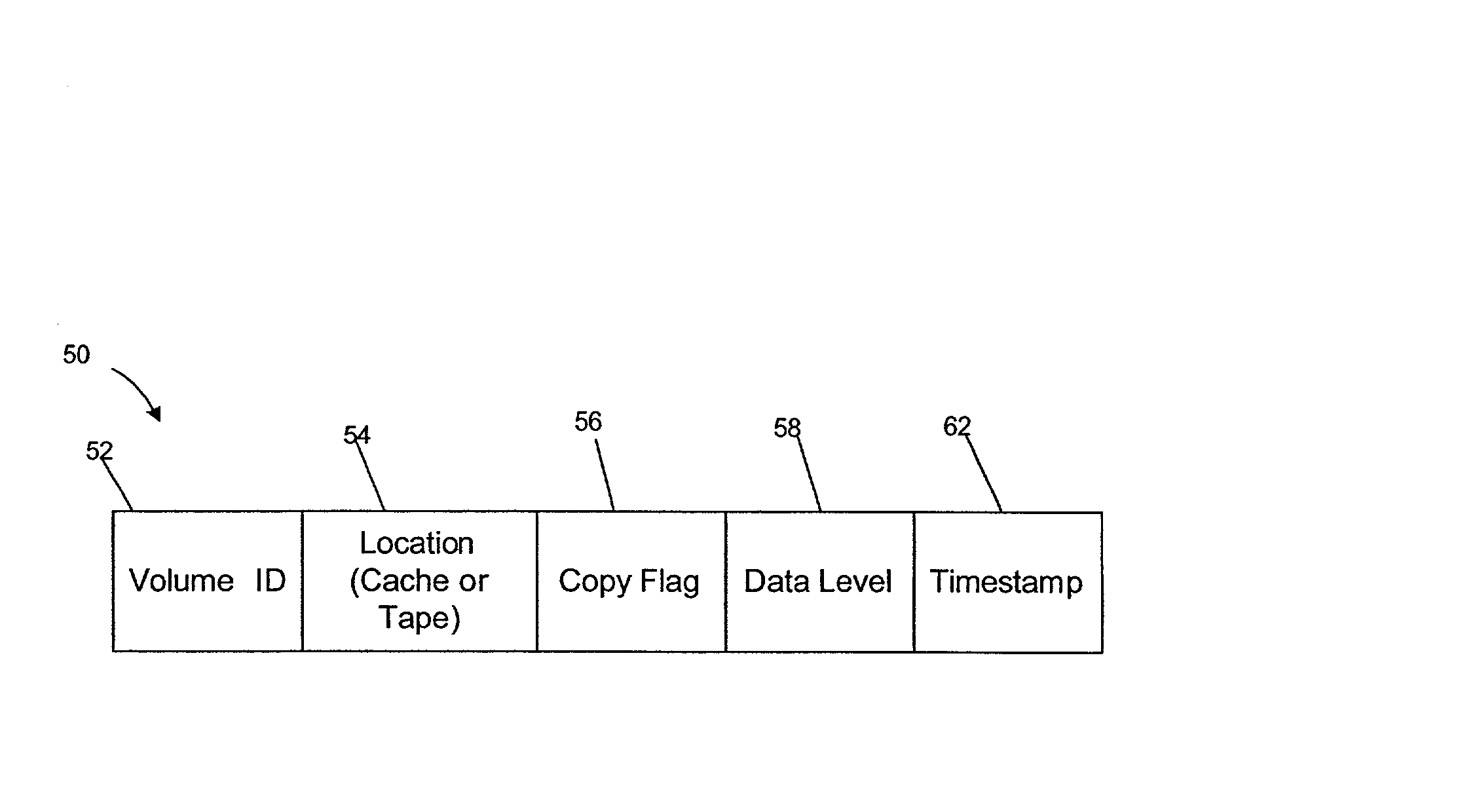

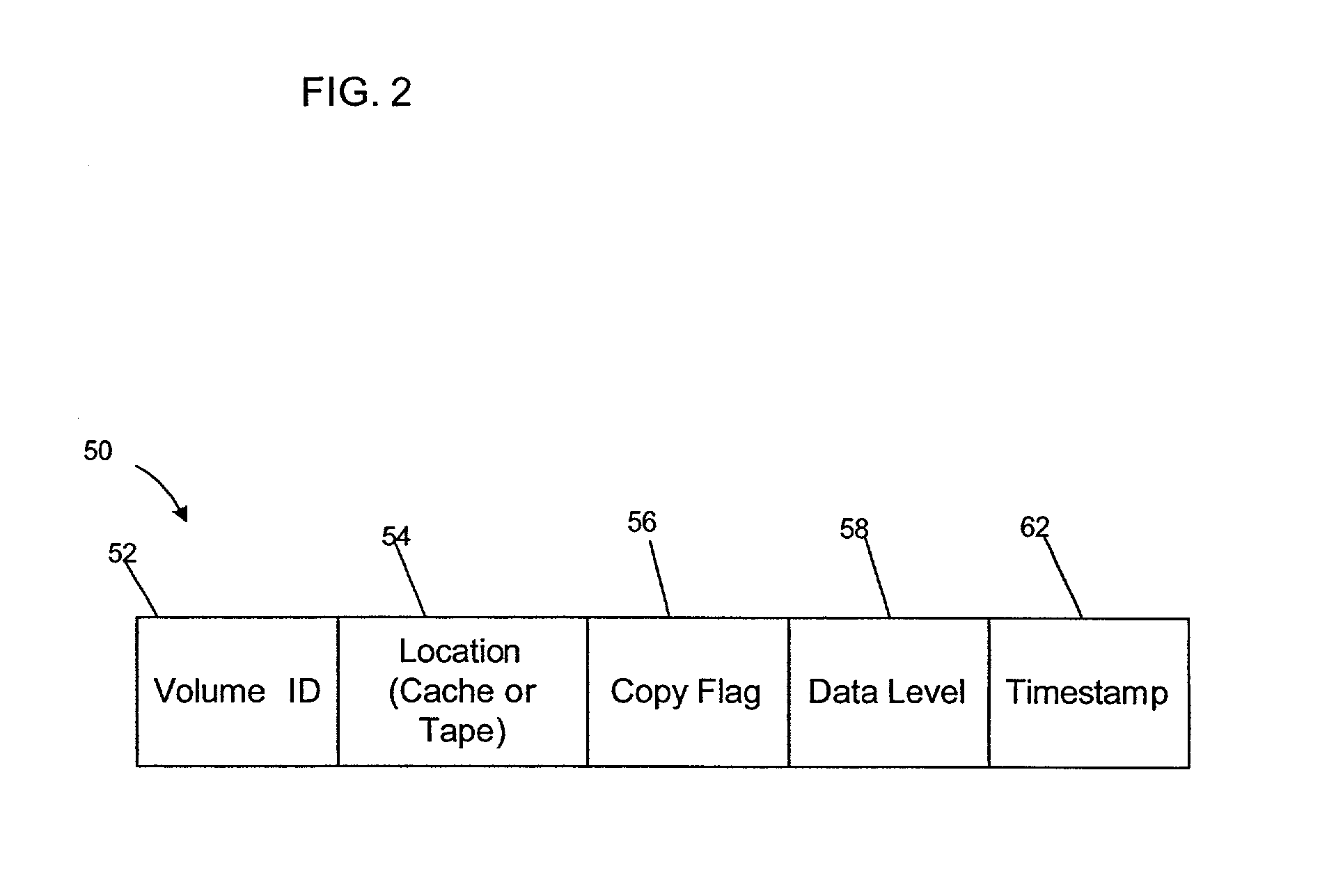

Inactive | US20030004980A1 | Delay the time of being blocked | Improved performance benefit | Input/output to record carriers | Data processing applications | Data set | Timestamp

Disclosed is a system, method, and an article of manufacture for preferentially keeping an uncopied data set in one of two storage devices in a peer-to-peer environment when data needs to be removed from the storage devices. Each time a data set is modified or newly created, flags are used to denote whether the data set needs to be copied from one storage device to the other. The preferred embodiments modify the timestamp for each uncopied data set by adding a period of time, and thus give preference to the uncopied data set when the data from the storage device is removed based on the least recently used as denoted by timestamp of each data set. Once the data set is copied, the timestamp is set back to normal by subtracting the same period of time added on when the data set was flagged as needing to be copied.

Owner:IBM CORP
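
The timestamp adjustment described in the abstract above can be sketched in a few lines of Python. The names `DataSet`, `flag_uncopied`, `mark_copied`, and `pick_victim`, and the one-hour bias, are illustrative assumptions; the abstract only states that a period of time is added and later subtracted.

```python
from dataclasses import dataclass

PREFERENCE_SECONDS = 3600.0  # hypothetical bias; the abstract does not give a value


@dataclass
class DataSet:
    name: str
    timestamp: float          # last-use time consulted by the LRU policy
    needs_copy: bool = False  # flag: not yet copied to the peer storage device


def flag_uncopied(ds):
    """Mark a modified or new data set and push its timestamp into the future."""
    if not ds.needs_copy:
        ds.needs_copy = True
        ds.timestamp += PREFERENCE_SECONDS


def mark_copied(ds):
    """After the copy completes, restore the normal timestamp."""
    if ds.needs_copy:
        ds.needs_copy = False
        ds.timestamp -= PREFERENCE_SECONDS


def pick_victim(data_sets):
    """Plain LRU: the data set with the oldest (possibly biased) timestamp goes first."""
    return min(data_sets, key=lambda ds: ds.timestamp)
```

Because the bias lives in the timestamp itself, the removal routine stays an ordinary LRU scan and needs no knowledge of the copy flags.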

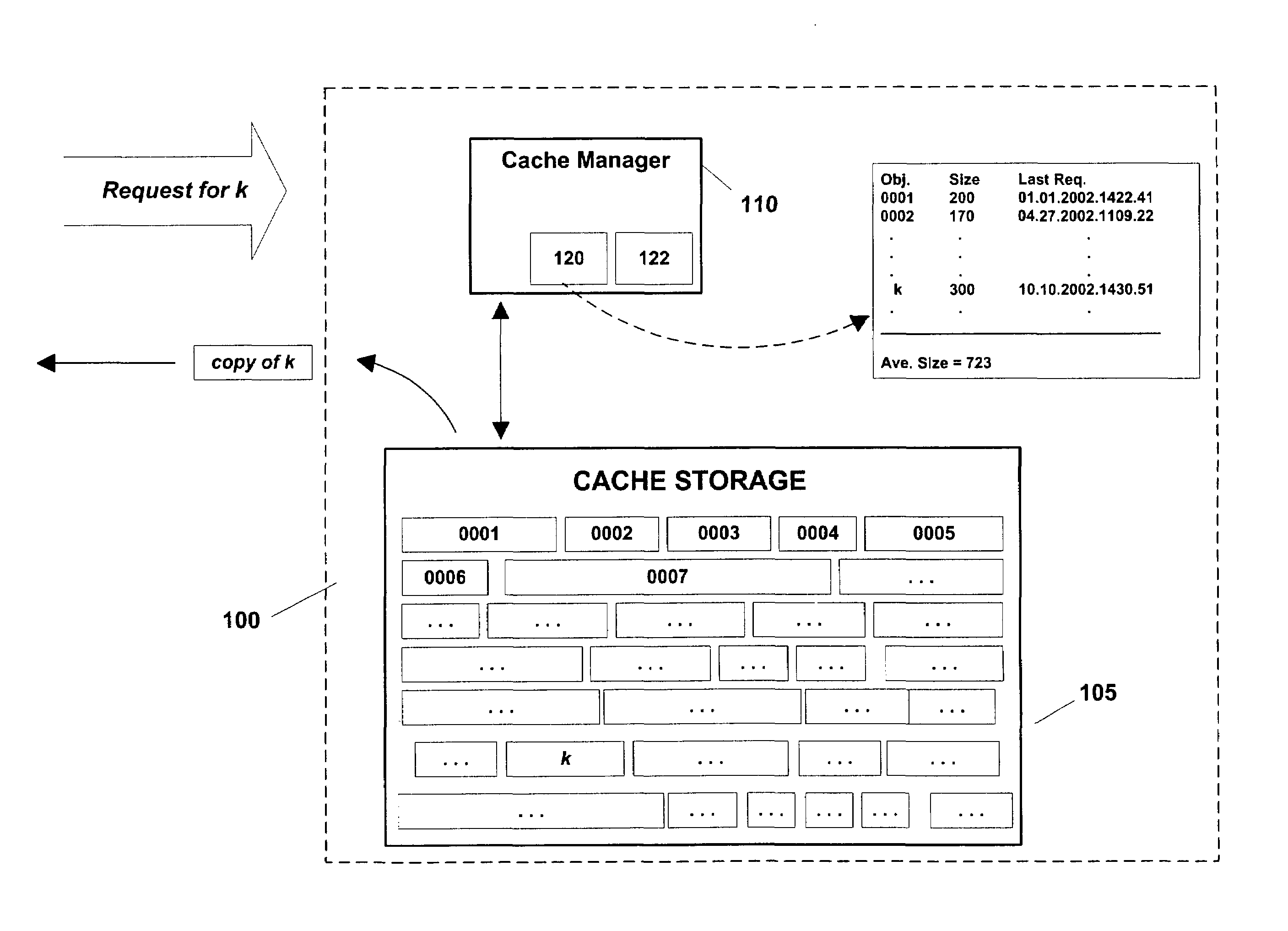

Memory admission control based on object size or request frequency

Inactive | US7051161B2 | Improved memory operation | Improve operation | Digital data information retrieval | Special data processing applications | Object based | Parallel computing

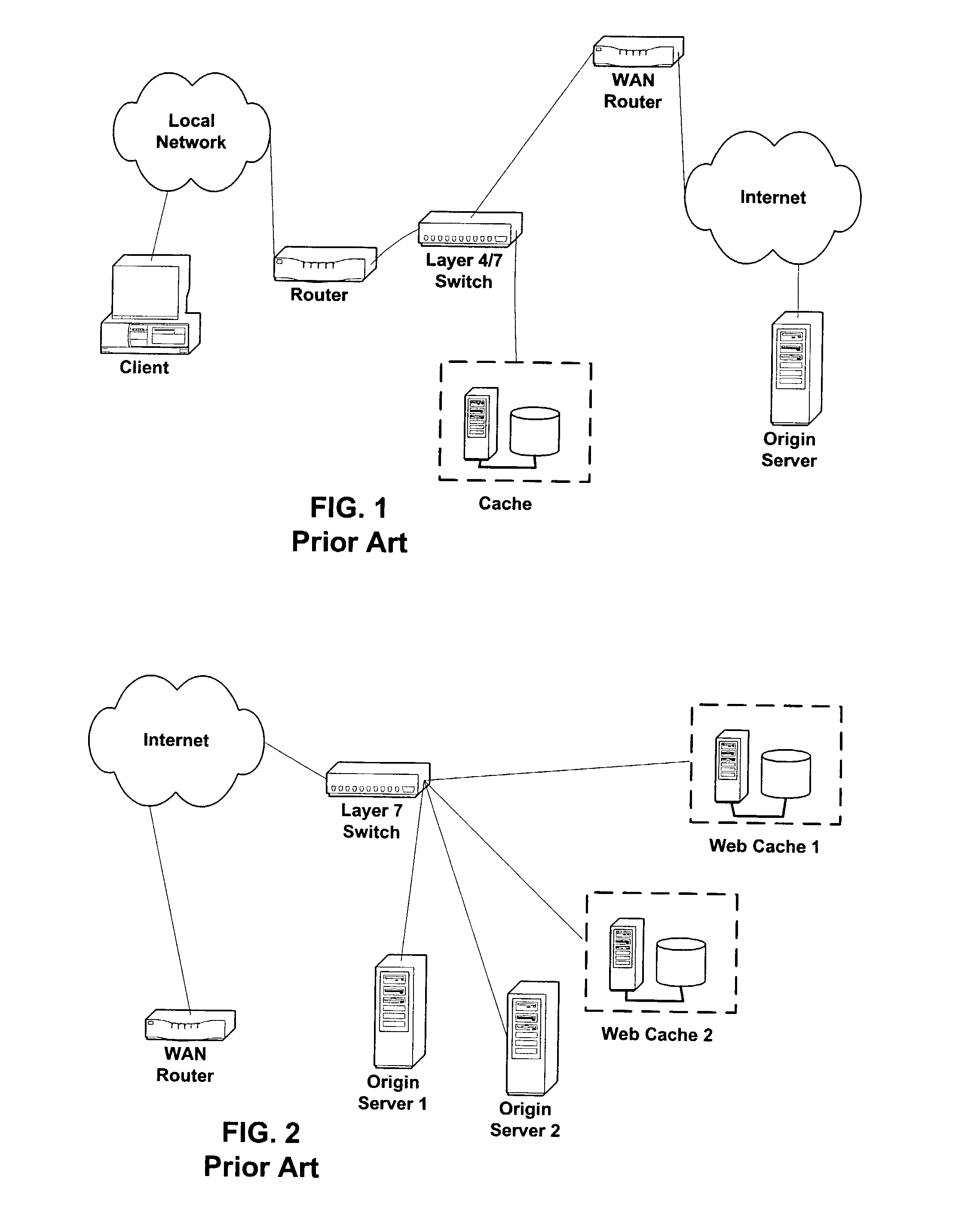

Admission of new objects into a memory such as a web cache is selectively controlled. If an object is not in the cache, but has been requested a specified number of prior occasions (e.g., if the object has been requested at least once before), it is admitted into the cache regardless of size. If the object has not previously been requested the specified number of times, the object is admitted into the cache if the object satisfies a specified size criterion (e.g., if it is smaller than the average size of objects currently stored in the cache). To make room for new objects, other objects are evicted from the cache on, e.g., a Least Recently Used (LRU) basis. The invention could be implemented on existing web caches, on distributed web caches, in client-side web caching, and in contexts unrelated to web object caching.

Owner:III HLDG 3
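
As a rough illustration of the admission rule in the abstract above, the following Python sketch admits an object if it has been requested often enough before, or otherwise only if it is smaller than the current average cached object size, and evicts on an LRU basis. The class `AdmissionCache`, its parameters, and the choice to admit anything into an empty cache are assumptions for the sketch.

```python
from collections import Counter, OrderedDict


class AdmissionCache:
    """Hypothetical size/frequency admission filter in front of an LRU cache."""

    def __init__(self, capacity_bytes, min_prior_requests=1):
        self.capacity_bytes = capacity_bytes
        self.min_prior_requests = min_prior_requests
        self.store = OrderedDict()  # key -> size; first item is the LRU object
        self.used = 0
        self.requests = Counter()   # how often each key was requested before

    def _average_size(self):
        # Assumption: with an empty cache every object passes the size test.
        return self.used / len(self.store) if self.store else float("inf")

    def request(self, key, size):
        """Return 'hit', 'admitted', or 'rejected' for one request."""
        prior = self.requests[key]
        self.requests[key] += 1
        if key in self.store:
            self.store.move_to_end(key)
            return "hit"
        if prior < self.min_prior_requests and size >= self._average_size():
            return "rejected"
        if size > self.capacity_bytes:
            return "rejected"
        while self.used + size > self.capacity_bytes:
            _, evicted_size = self.store.popitem(last=False)  # evict LRU object
            self.used -= evicted_size
        self.store[key] = size
        self.used += size
        return "admitted"
```

A large object is therefore turned away on its first request but admitted on its second, which keeps one-off large downloads from flushing many small, popular objects.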

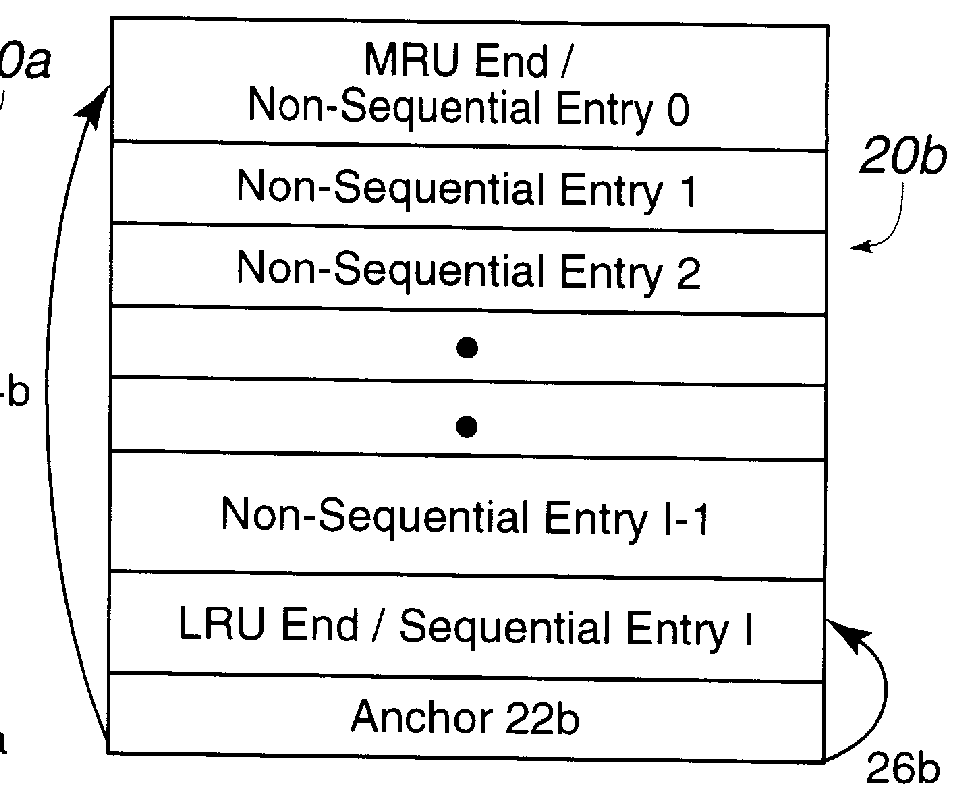

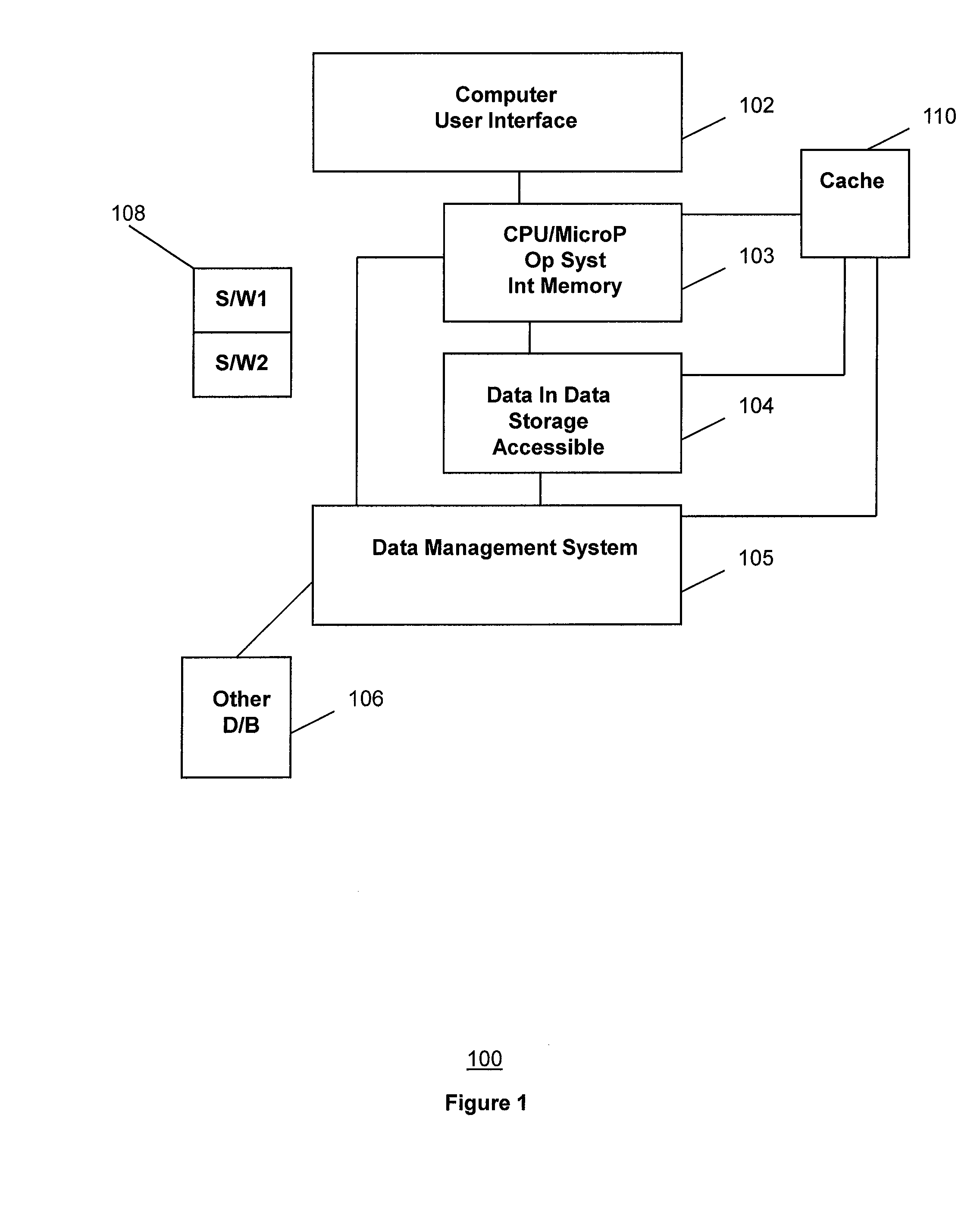

Method and system for managing data in cache

Inactive | US6327644B1 | Limited duration | Maximize availability | Memory addressing/allocation/relocation | Least recently frequently used | Computer science

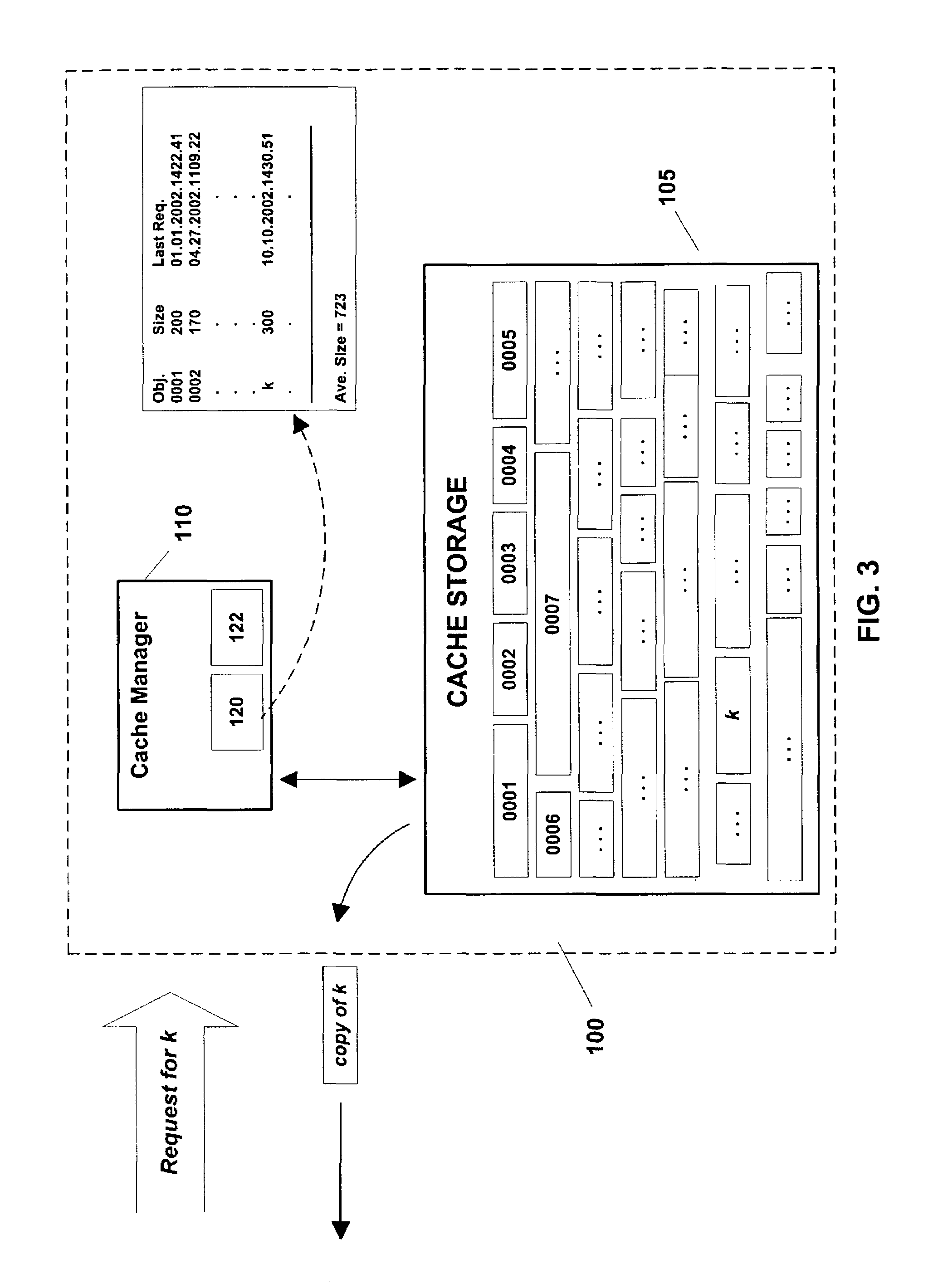

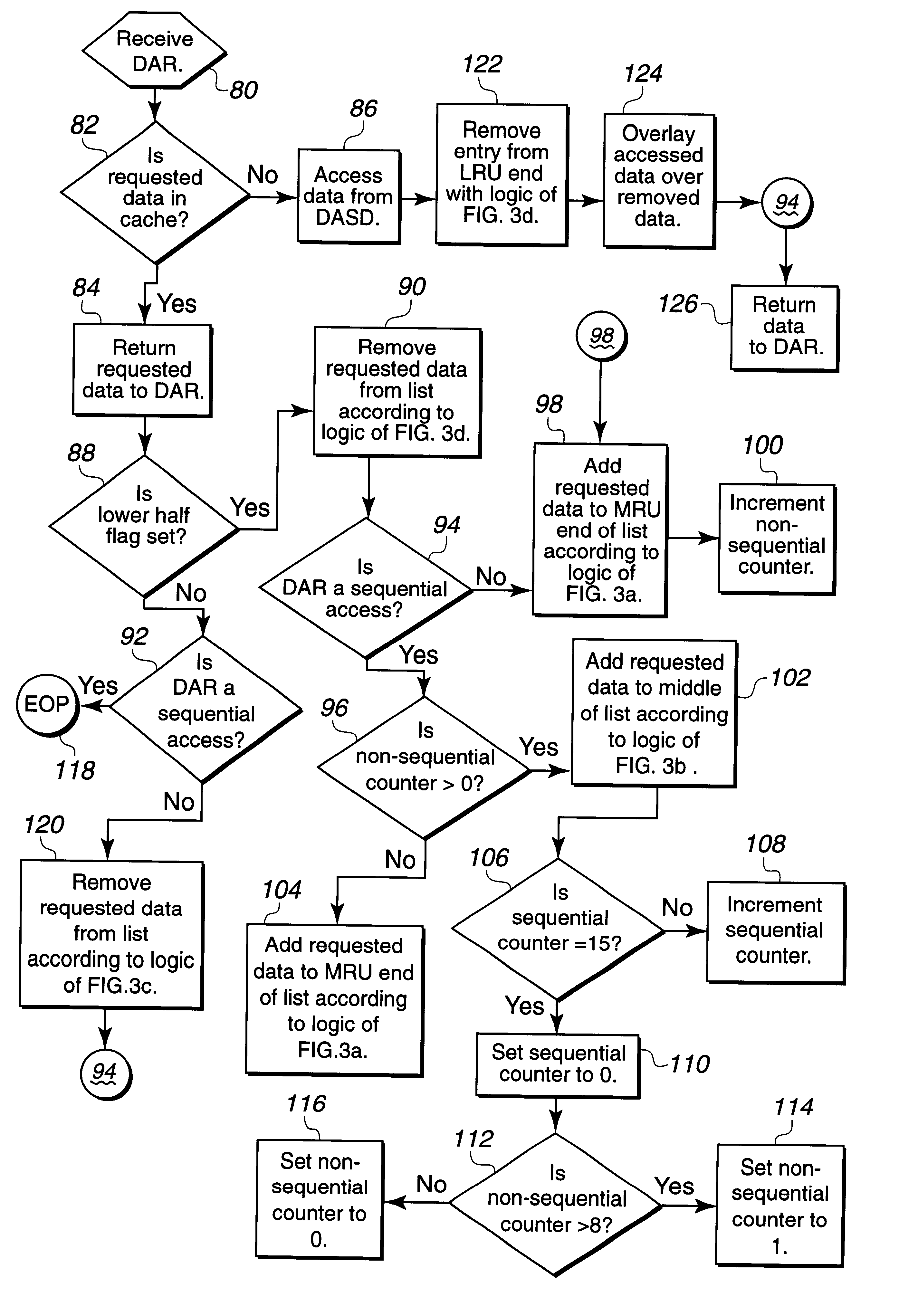

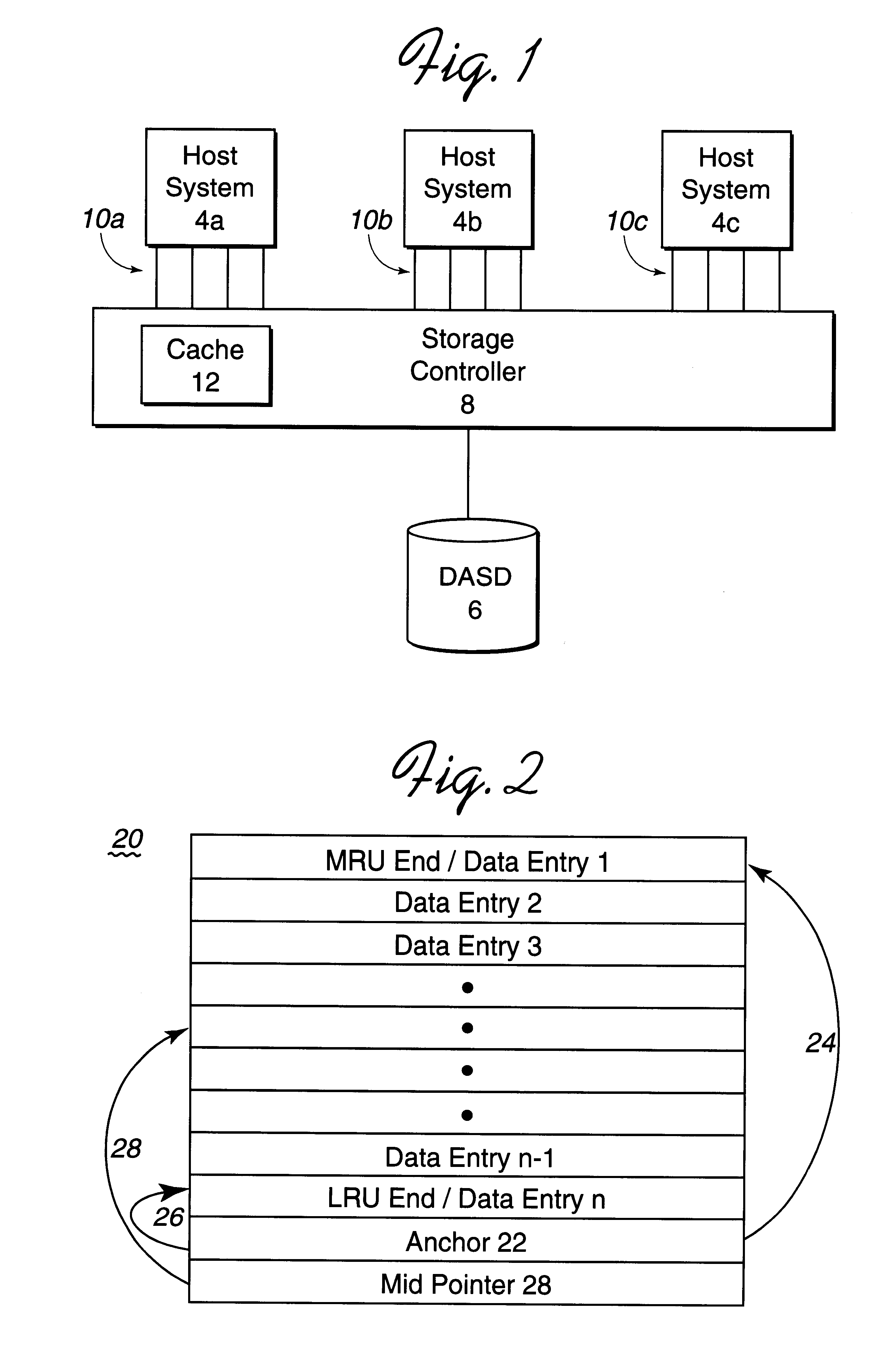

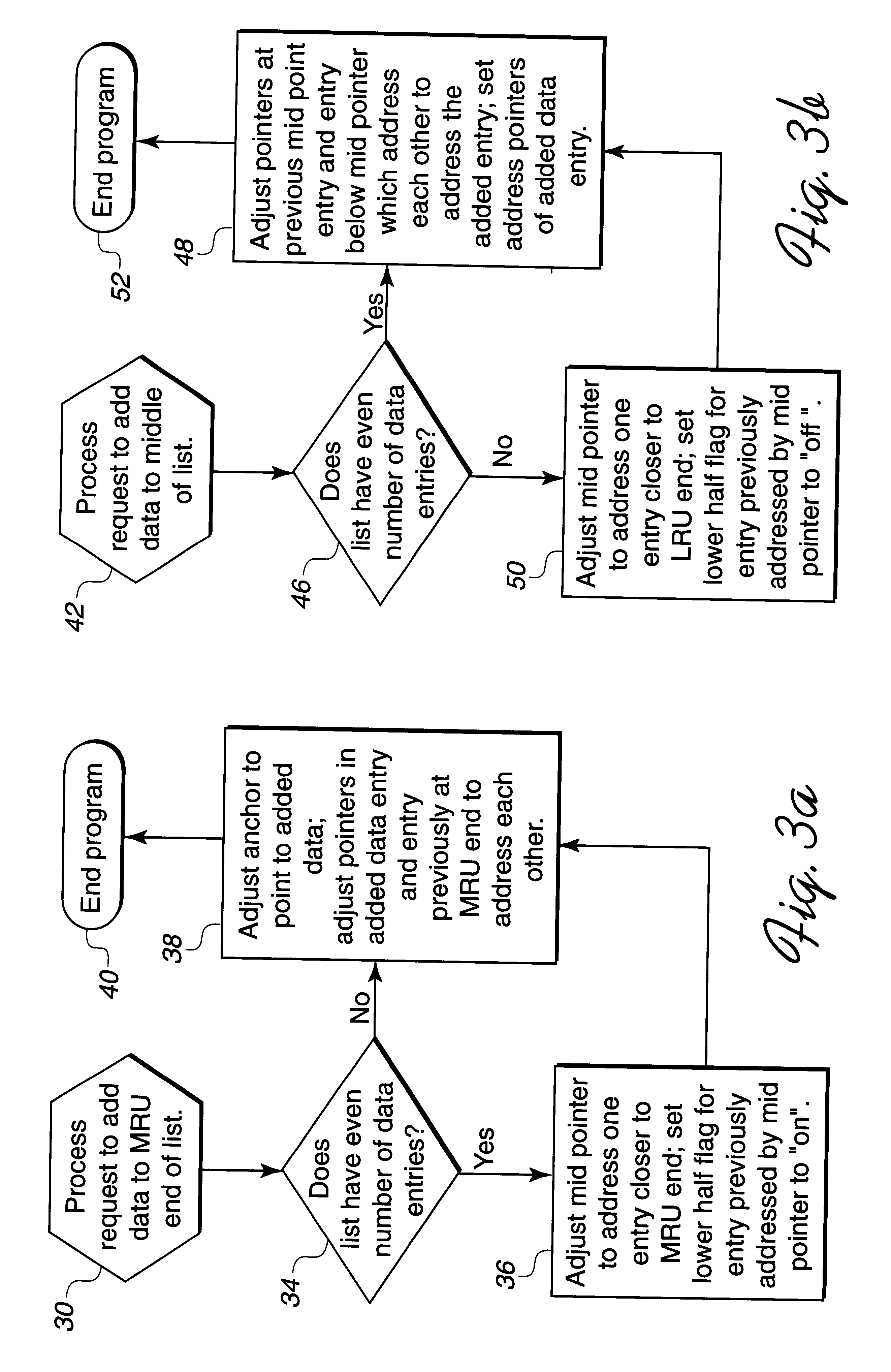

Disclosed is a system for managing data in cache. A list of data entries in a first memory area has a first end and a second end, such as a most recently used (MRU) end and least recently used (LRU) end. A first pointer addresses a data entry in the list and a second pointer addresses another data entry in the list that is not at the first and second ends. Data from a second memory area is provided to add to the list. A determination is made as to whether the provided data to add to the list is one of a first type and second type of data, such as sequentially accessed data or non-sequentially accessed data. The provided data is stored in the first memory area as a new data entry in the list. The first pointer is modified to address the new data entry after determining that the provided data is of the first type. After determining that the provided data is of the second type, the second pointer is processed to determine where to add the new data entry to the list between the first and second ends.

Owner:IBM CORP

Data block frequency map dependent caching

Inactive | US20090204765A1 | Improve caching capacity | Improve system performance | Memory systems | Parallel computing | Cache algorithms

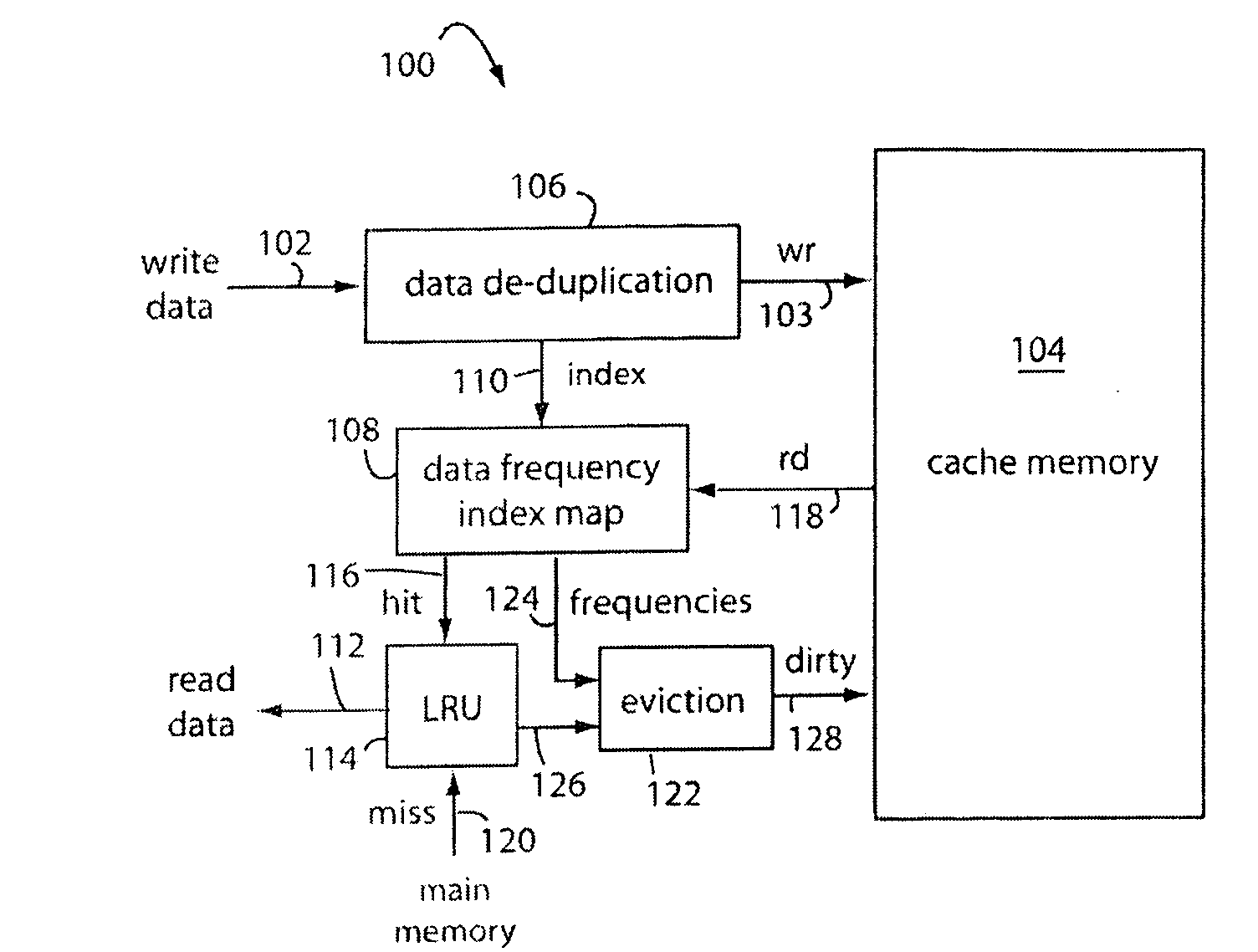

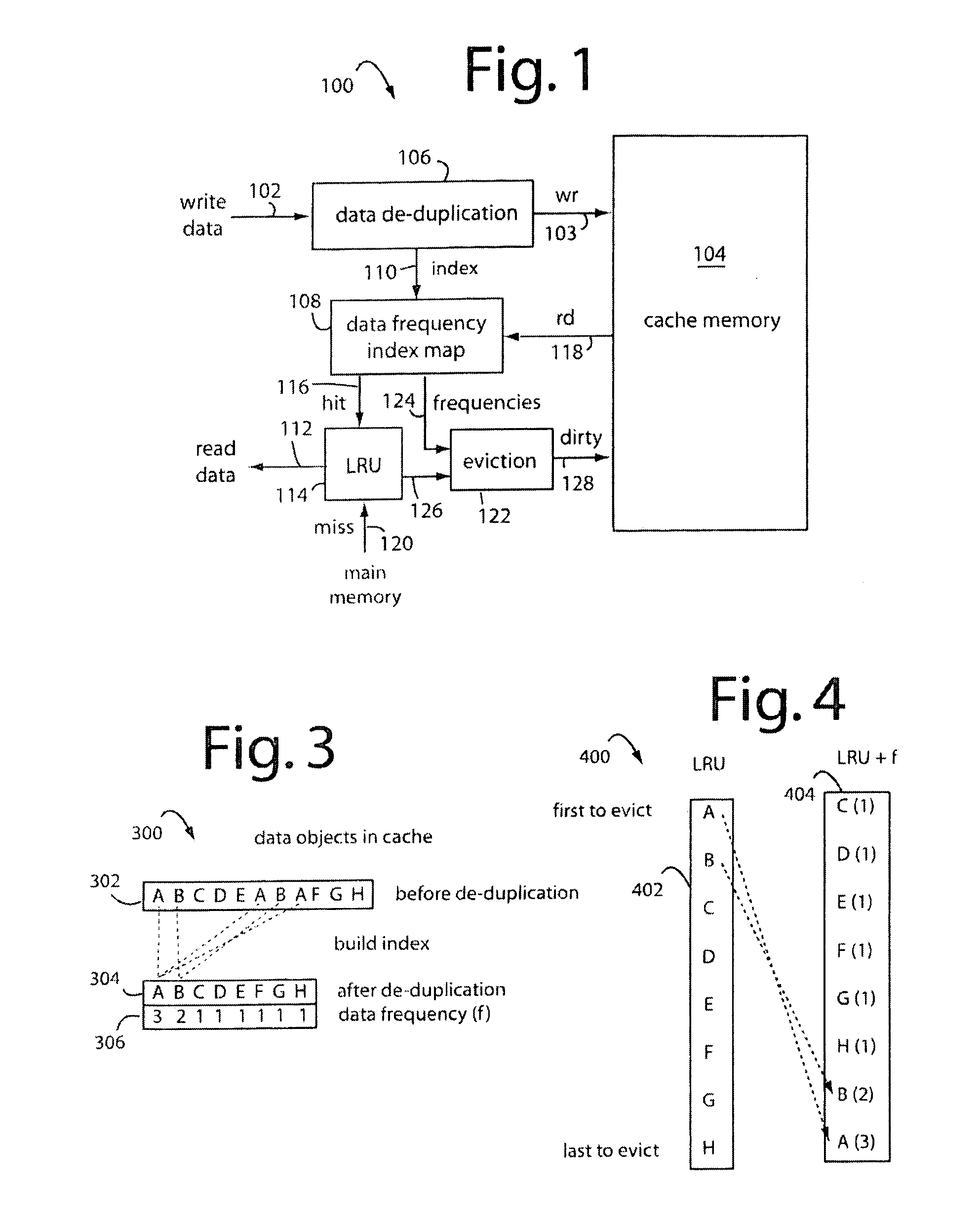

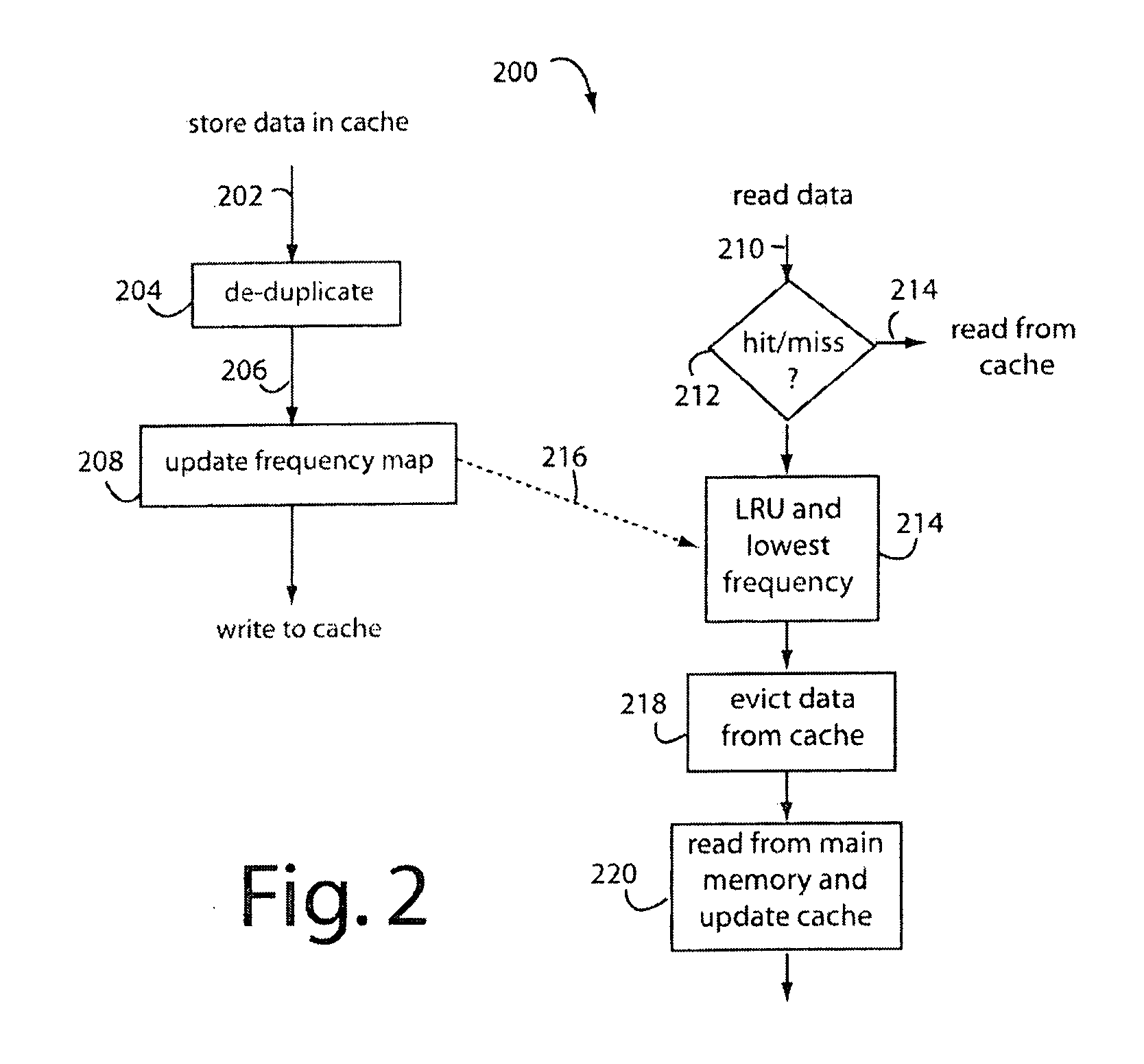

A method for increasing the performance and utilization of cache memory by combining the data block frequency map generated by data de-duplication mechanism and page prefetching and eviction algorithms like Least Recently Used (LRU) policy. The data block frequency map provides weight directly proportional to the frequency count of the block in the dataset. This weight is used to influence the caching algorithms like LRU. Data blocks that have lesser frequency count in the dataset are evicted before those with higher frequencies, even though they may not have been the topmost blocks for page eviction by caching algorithms. The method effectively combines the weight of the block in the frequency map and its eviction status by caching algorithms like LRU to get an improved performance and utilization of the cache memory.

Owner:IBM CORP
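
The abstract above does not say exactly how the frequency weight and the LRU position are combined. One possible reading, shown here purely as an assumption, is to consider a small window of the least recently used blocks and evict the one with the lowest frequency count; the function name `choose_victim` and the `window` parameter are hypothetical.

```python
def choose_victim(lru_order, block_frequency, window=4):
    """Pick an eviction victim from the `window` least recently used blocks.

    lru_order: block ids ordered from least to most recently used.
    block_frequency: block id -> count from the de-duplication frequency map.
    Ties are broken in favor of the least recently used block, because
    min() returns the first minimal candidate.
    """
    candidates = list(lru_order)[:window]
    return min(candidates, key=lambda block: block_frequency.get(block, 0))
```

With `window=1` this degenerates to plain LRU; a larger window lets rarely duplicated blocks leave the cache ahead of frequently duplicated ones even when they are not at the very end of the LRU order.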

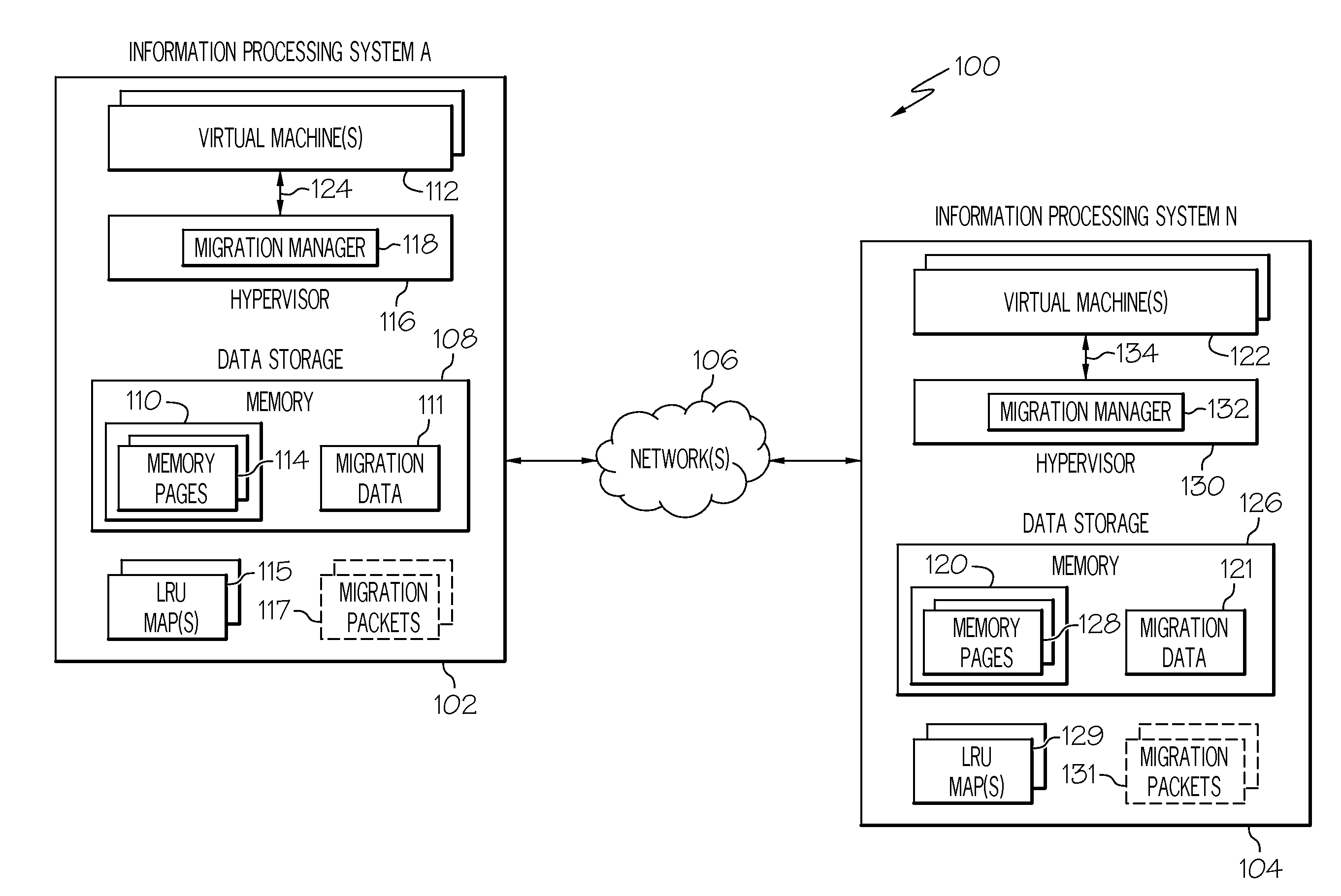

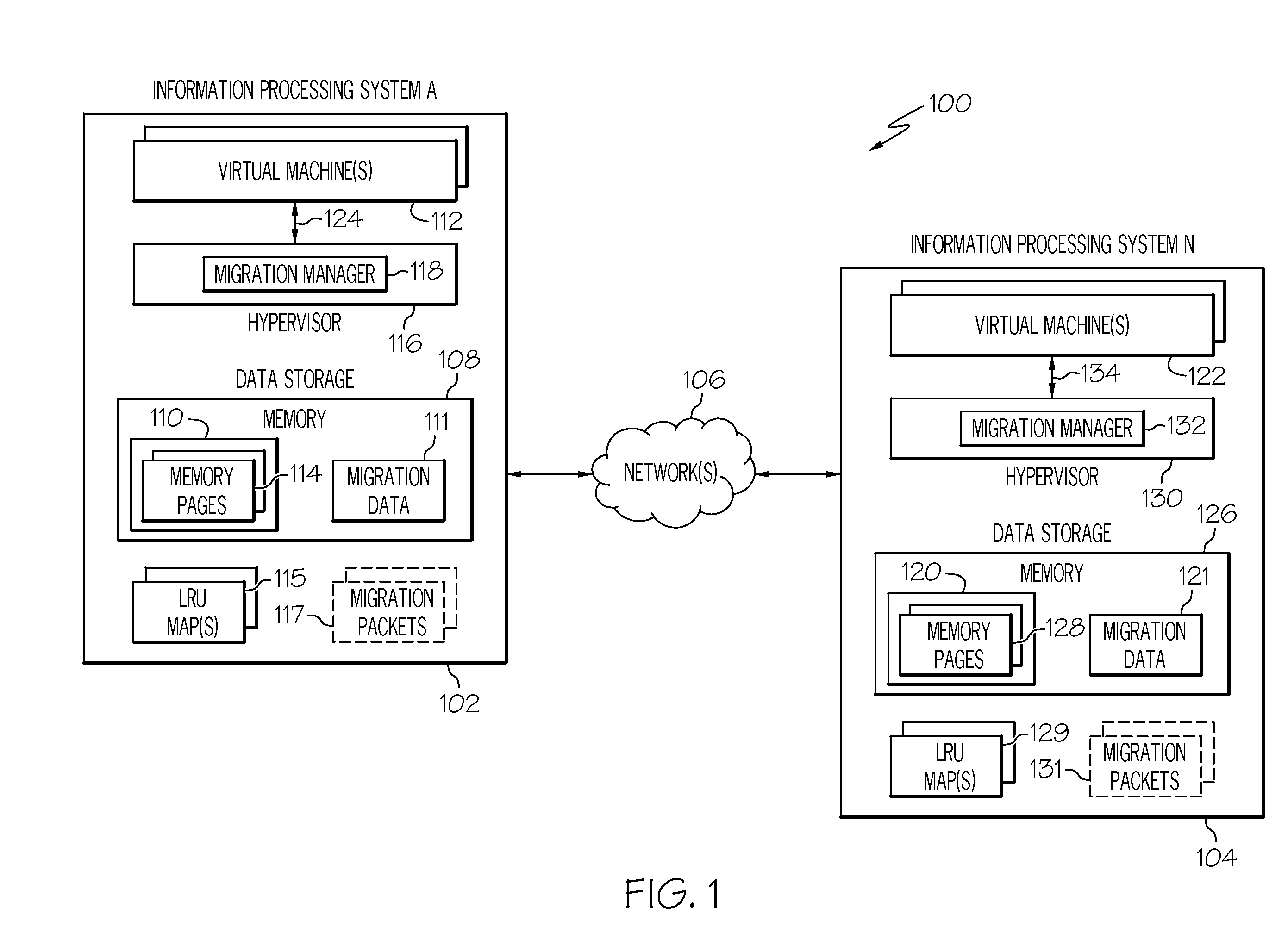

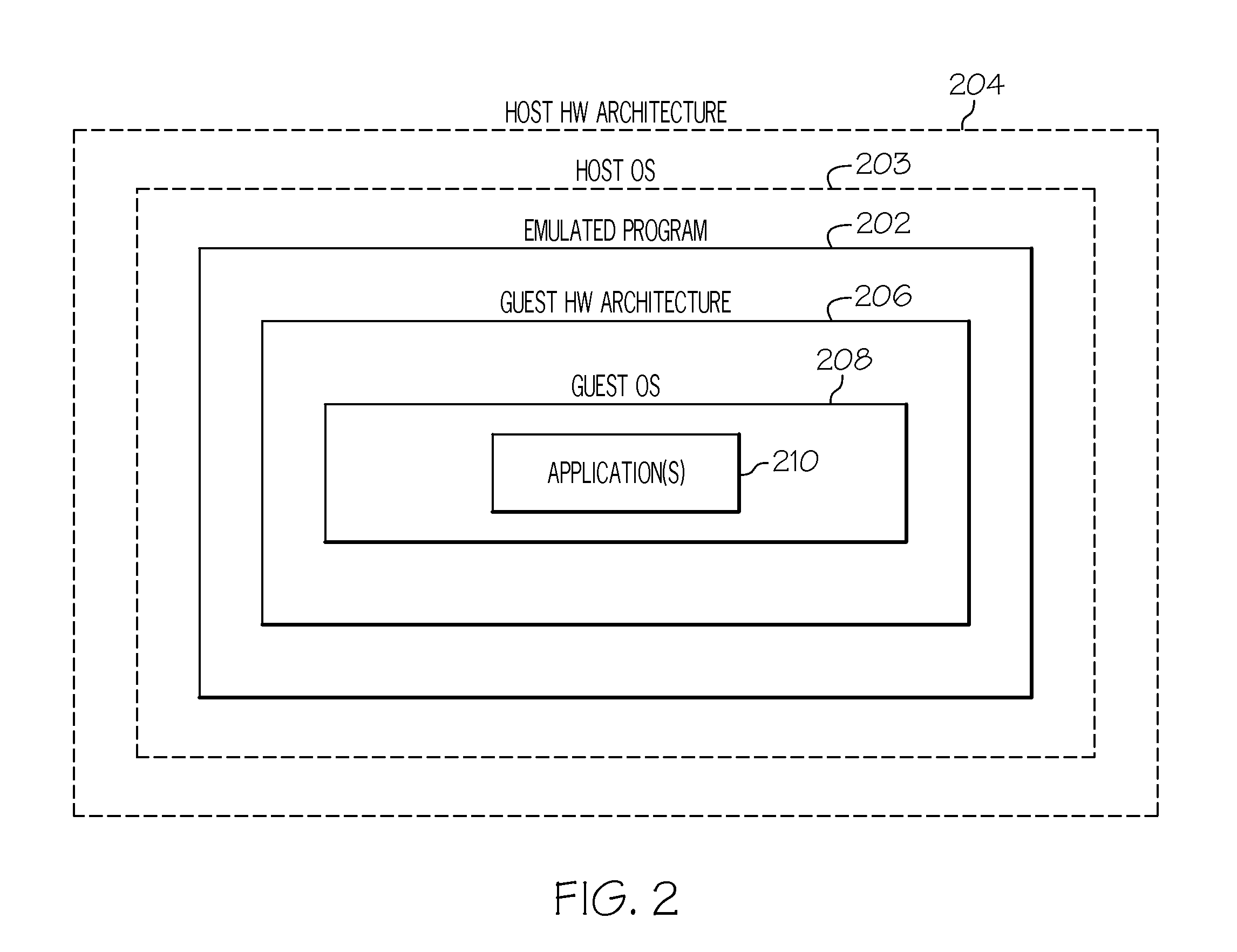

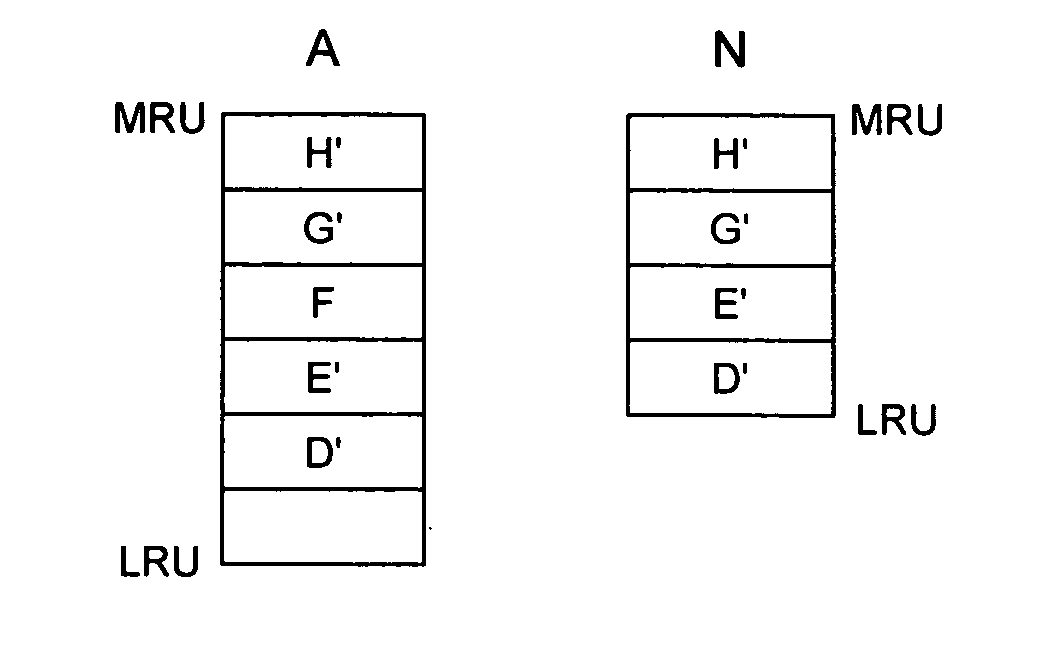

Symmetric live migration of virtual machines

Inactive | US20110119427A1 | Memory addressing/allocation/relocation | Multiprogramming arrangements | Physical address | Least recently frequently used

A first least recently used map is generated for a set of memory pages of a first virtual machine. The first least recently used map includes metadata including memory page physical address location information. A first of the memory pages of the first virtual machine and the metadata for the first memory page is sent from the first virtual machine to a second virtual machine while the first virtual machine is executing. A first memory page and meta data associated therewith of the second virtual machine is received from the second virtual machine at the first virtual machine. The memory pages of the first virtual machine are ordered from a first location of the first least recently used map to a last location of the first least recently used map based on how recently each of the memory pages of the first virtual machine has been used.

Owner:IBM CORP

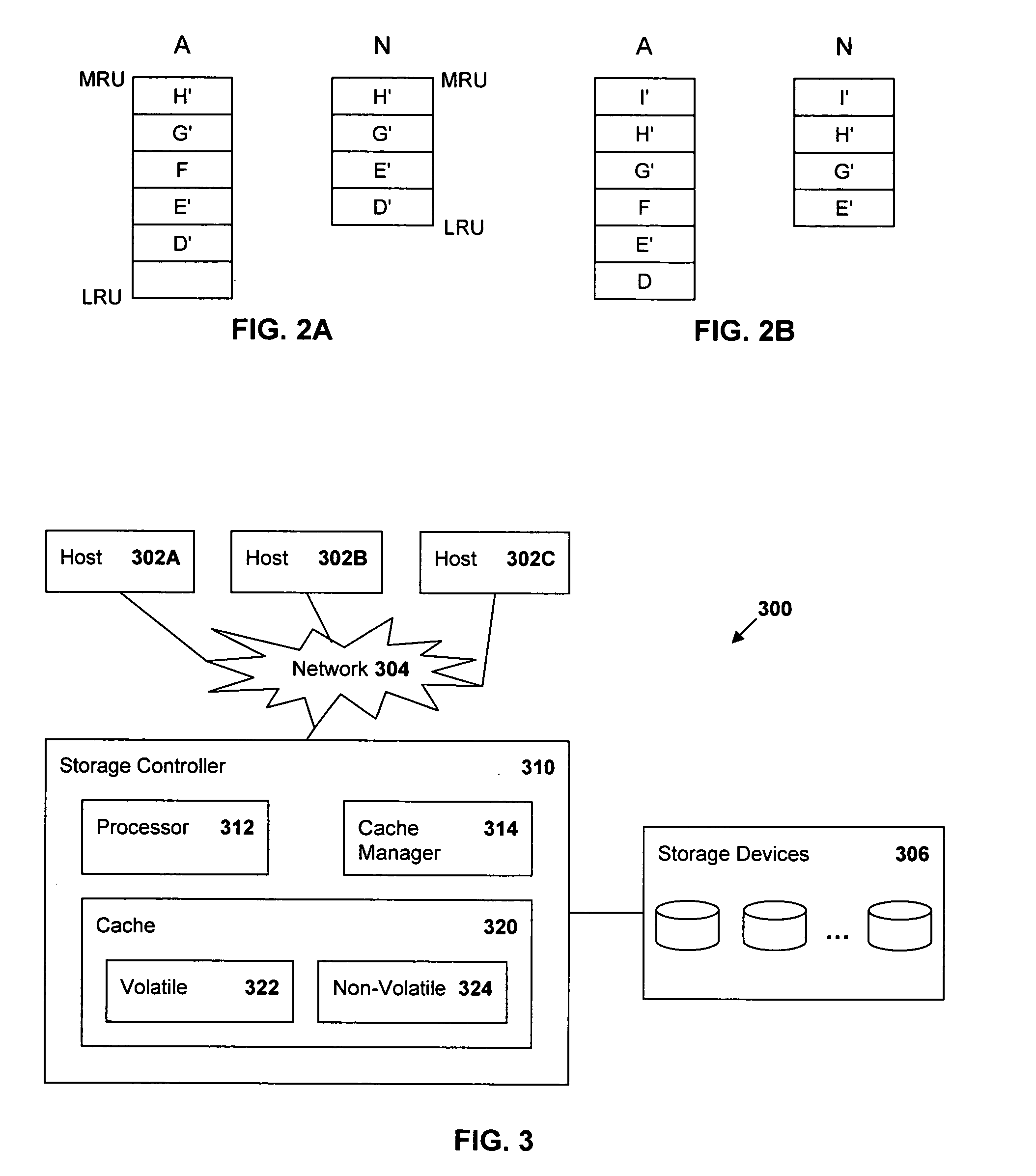

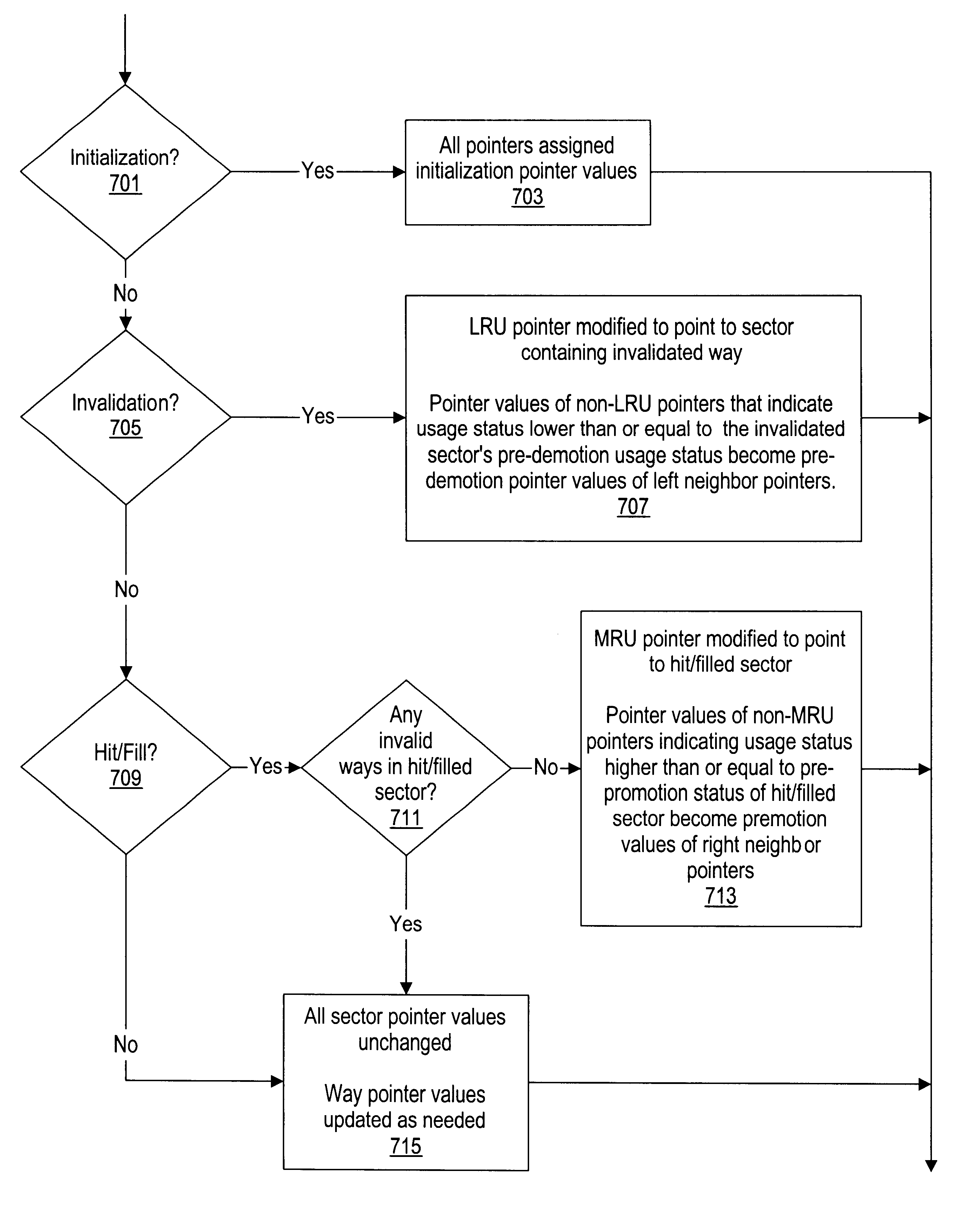

Decoupling storage controller cache read replacement from write retirement

In a data storage controller, accessed tracks are temporarily stored in a cache, with write data being stored in a first cache (such as a volatile cache) and a second cache and read data being stored in a second cache (such as a non-volatile cache). Corresponding least recently used (LRU) lists are maintained to hold entries identifying the tracks stored in the caches. When the list holding entries for the first cache (the A list) is full, the list is scanned to identify unmodified (read) data which can be discarded from the cache to make room for new data. Prior to or during the scan, modified (write) data entries are moved to the most recently used (MRU) end of the list, allowing the scans to proceed in an efficient manner and reducing the number of times the scan has to skip over modified entries. Optionally, a status bit may be associated with each modified data entry. When the modified entry is moved to the MRU end of the A list without being requested to be read, its status bit is changed from an initial state (such as 0) to a second state (such as 1), indicating that it is a candidate to be discarded. If the status bit is already set to the second state (such as 1), then it is left unchanged. If a modified track is moved to the MRU end of the A list as a result of being requested to be read, the status bit of the corresponding A list entry is changed back to the first state, preventing the track from being discarded. Thus, write tracks are allowed to remain in the first cache only as long as necessary.

Owner:IBM CORP

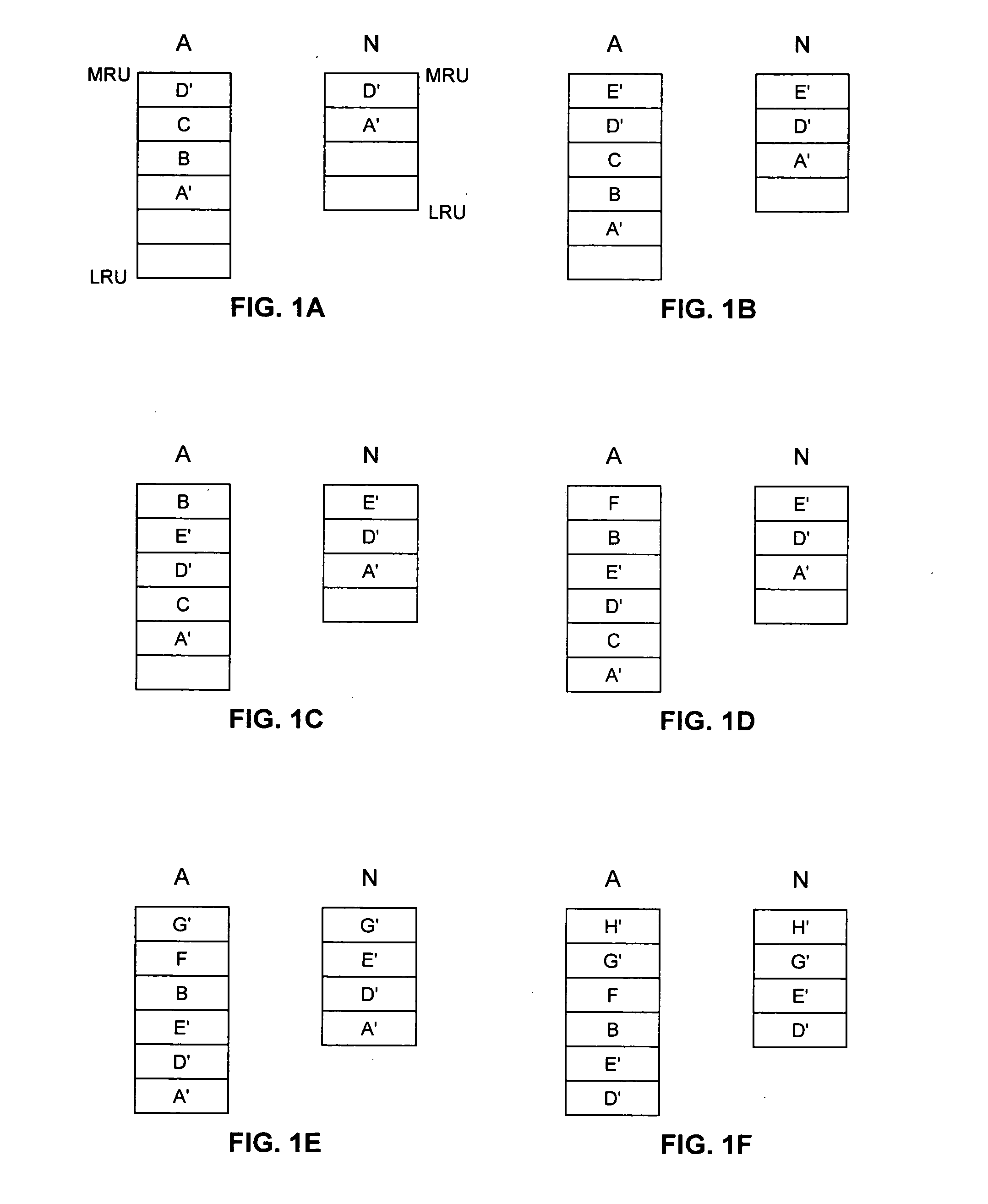

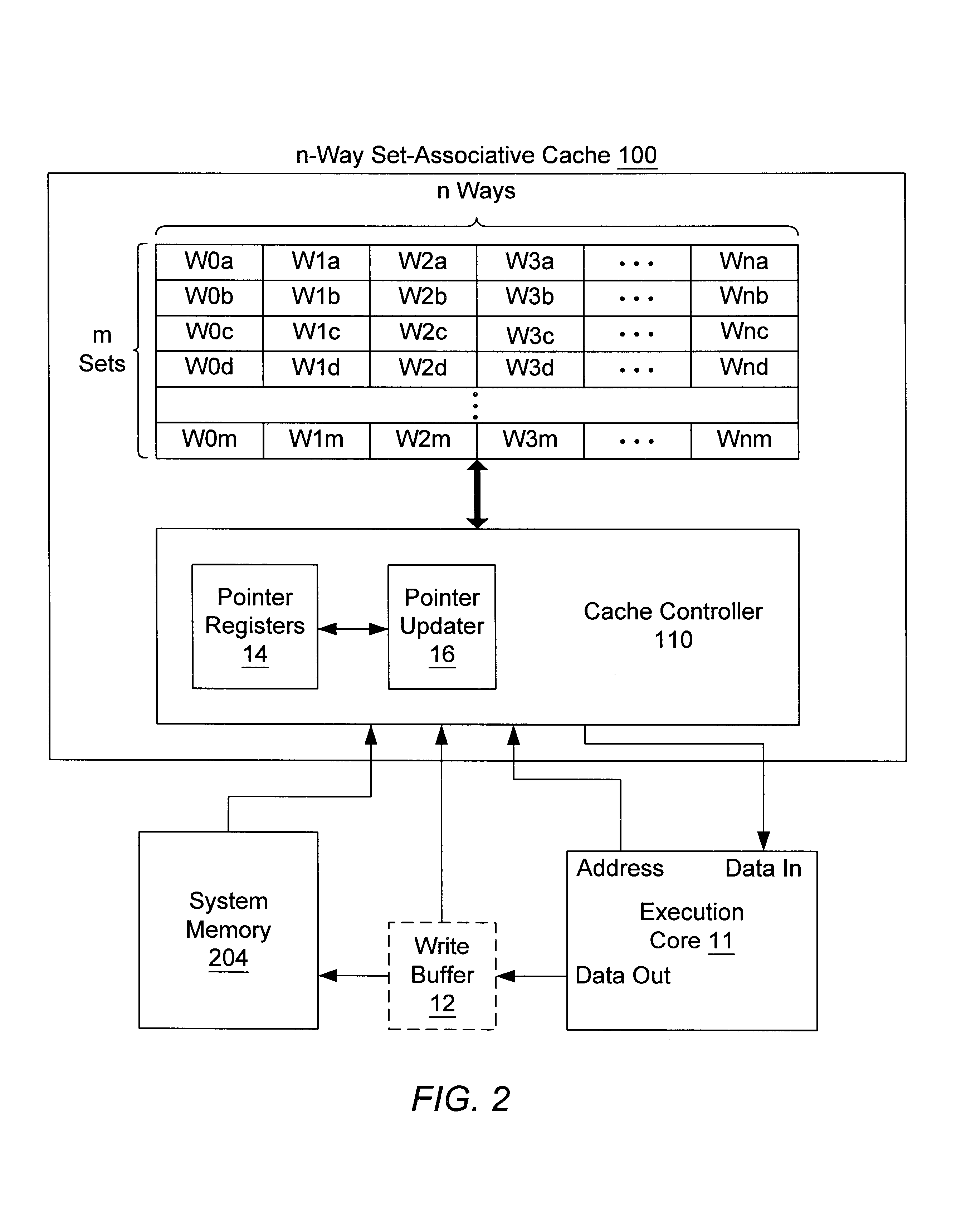

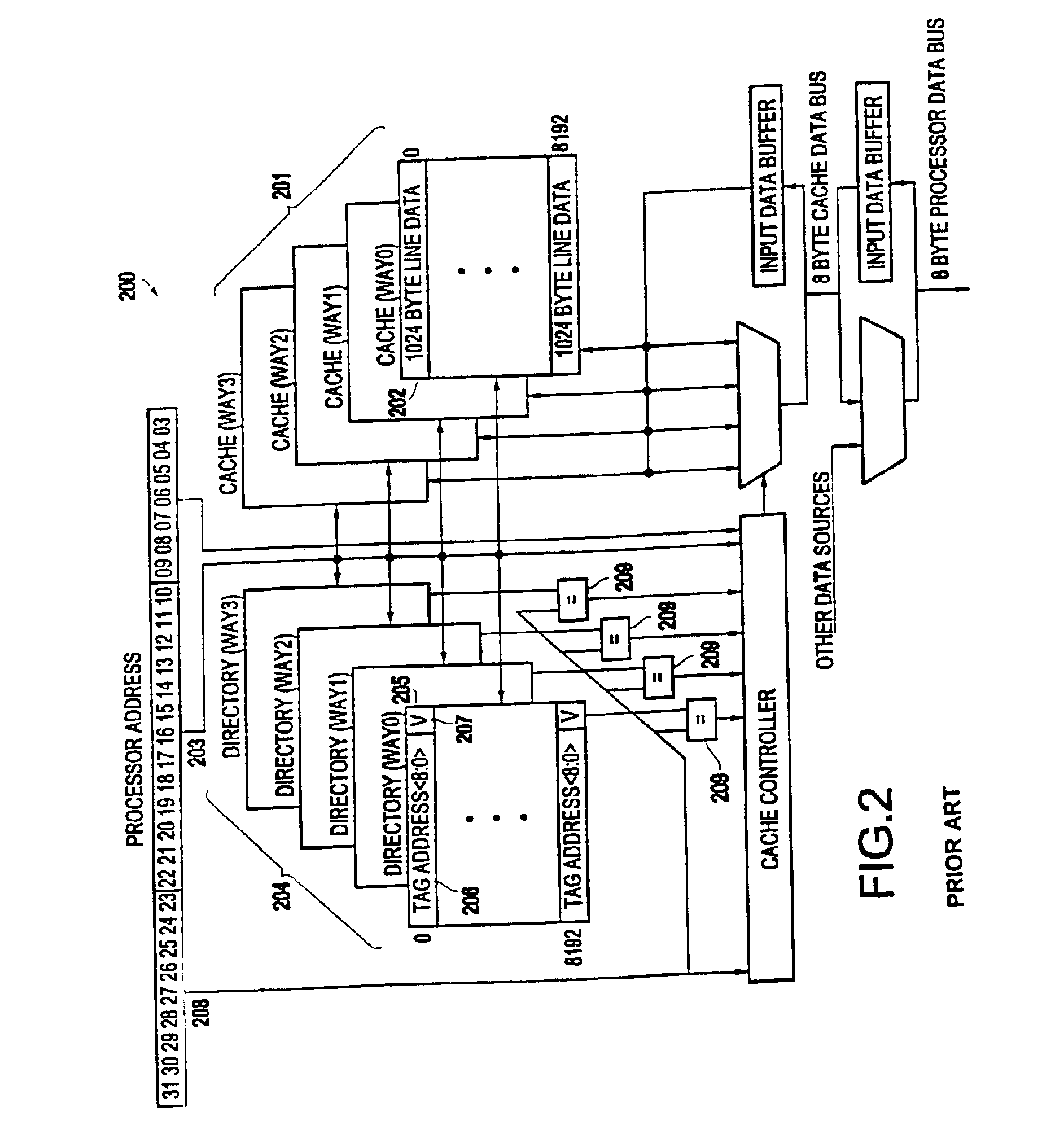

Sectored least-recently-used cache replacement

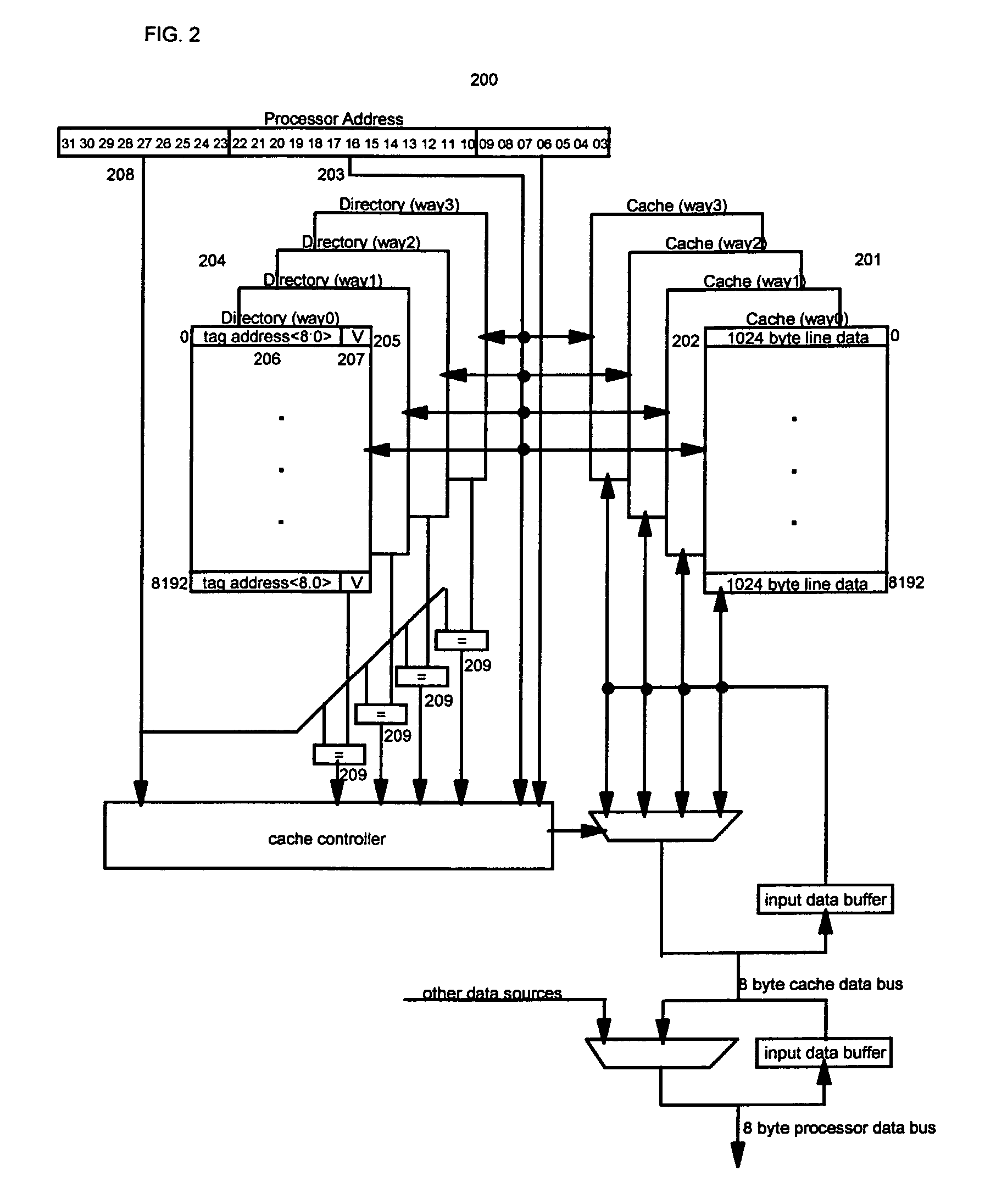

Inactive | US6823427B1 | Memory addressing/allocation/relocation | Least recently frequently used | Operating system

Various methods and systems for implementing a sectored least recently used (LRU) cache replacement algorithm are disclosed. Each set in an N-way set-associative cache is partitioned into several sectors that each include two or more of the N ways. Usage status indicators such as pointers show the relative usage status of the sectors in an associated set. For example, an LRU pointer may point to the LRU sector, an MRU pointer may point to the MRU sector, and so on. When a replacement is performed, a way within the LRU sector identified by the LRU pointer is filled.

Owner:ADVANCED MICRO DEVICES INC
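
To make the sector idea in the abstract above concrete, here is a minimal Python model of a single cache set whose usage order is tracked per sector rather than per way. The class `SectoredSet` is hypothetical, and the round-robin choice of a way inside the LRU sector is an assumption, since the abstract only says that a way within that sector is filled.

```python
class SectoredSet:
    """Hypothetical model of one set in an N-way cache with sector-level LRU."""

    def __init__(self, ways=8, ways_per_sector=2):
        assert ways % ways_per_sector == 0
        self.ways_per_sector = ways_per_sector
        sectors = ways // ways_per_sector
        self.tags = [None] * ways
        self.sector_order = list(range(sectors))  # index 0 = LRU sector, last = MRU
        self.next_way = [0] * sectors             # round-robin fill per sector (assumption)

    def _touch(self, sector):
        # Move the sector to the MRU position.
        self.sector_order.remove(sector)
        self.sector_order.append(sector)

    def access(self, tag):
        """Return True on a hit; on a miss, fill a way in the LRU sector."""
        if tag in self.tags:
            self._touch(self.tags.index(tag) // self.ways_per_sector)
            return True
        sector = self.sector_order[0]
        way = sector * self.ways_per_sector + self.next_way[sector]
        self.next_way[sector] = (self.next_way[sector] + 1) % self.ways_per_sector
        self.tags[way] = tag
        self._touch(sector)
        return False
```

Tracking order for sectors instead of individual ways shrinks the usage state that must be stored per set, at the cost of a coarser approximation of true LRU.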

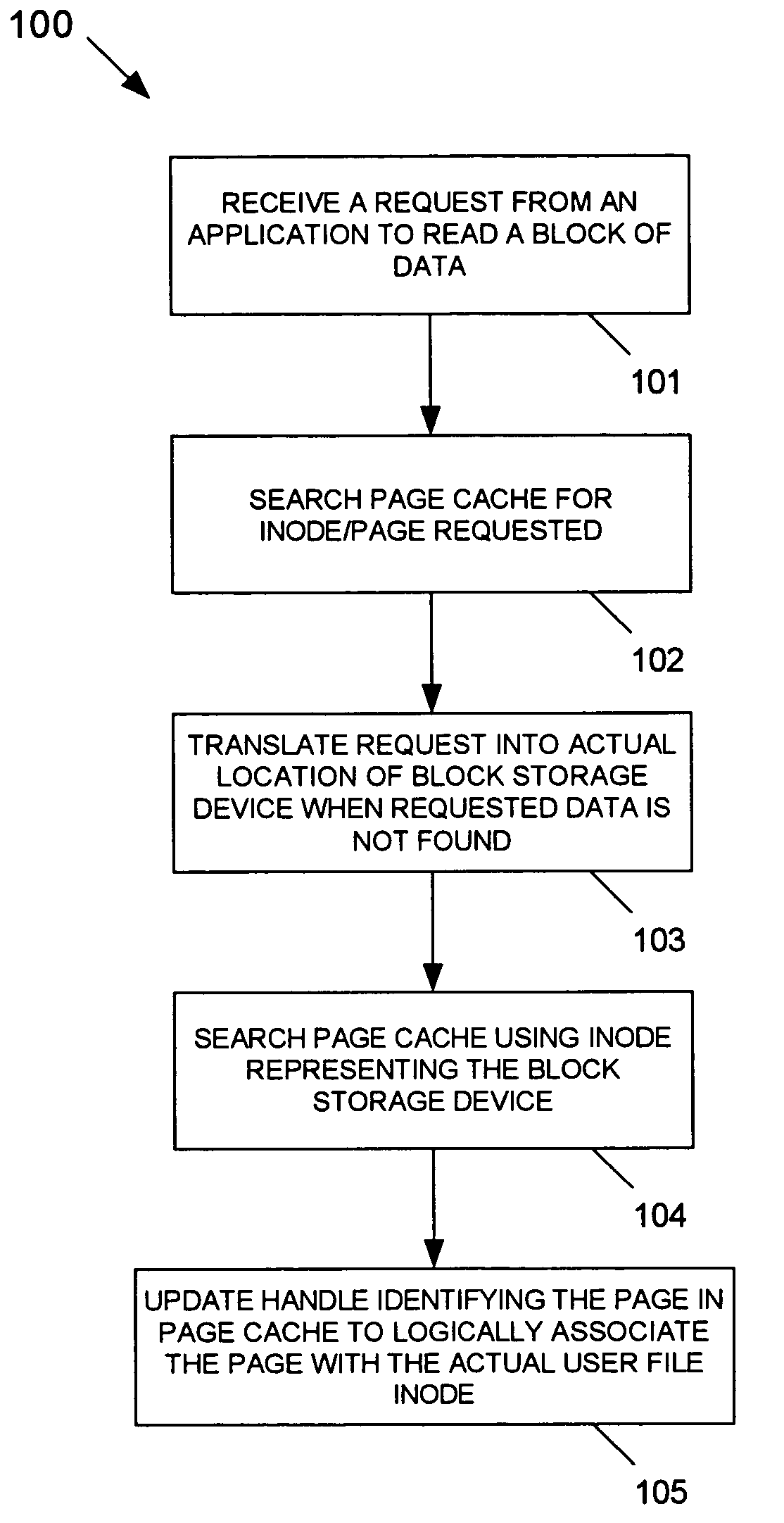

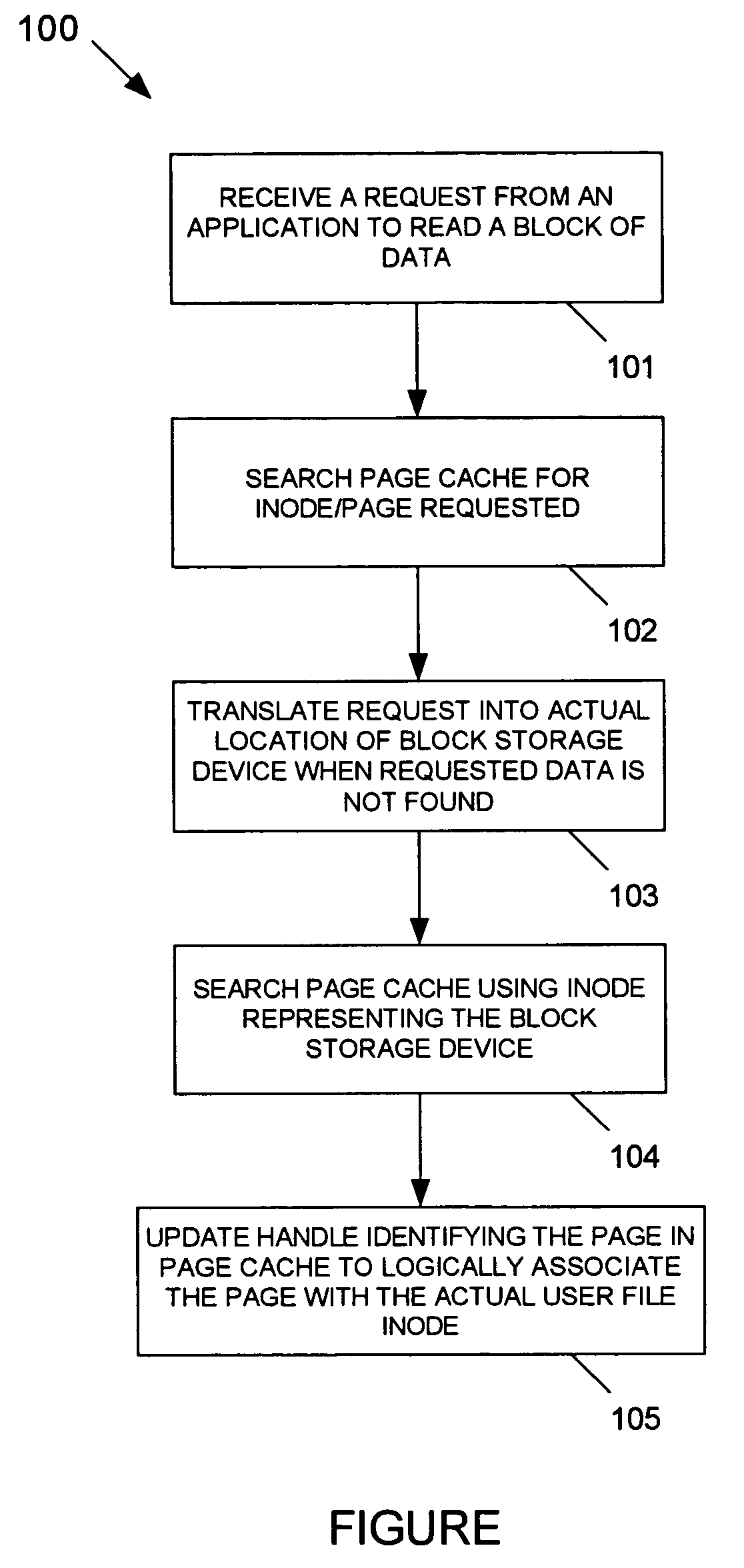

Multi-level page cache for enhanced file system performance via read ahead

Inactive | US7203815B2 | Improve efficiency | Memory architecture accessing/allocation | Memory systems | Inode | Least recently frequently used

Owner:INT BUSINESS MASCH CORP

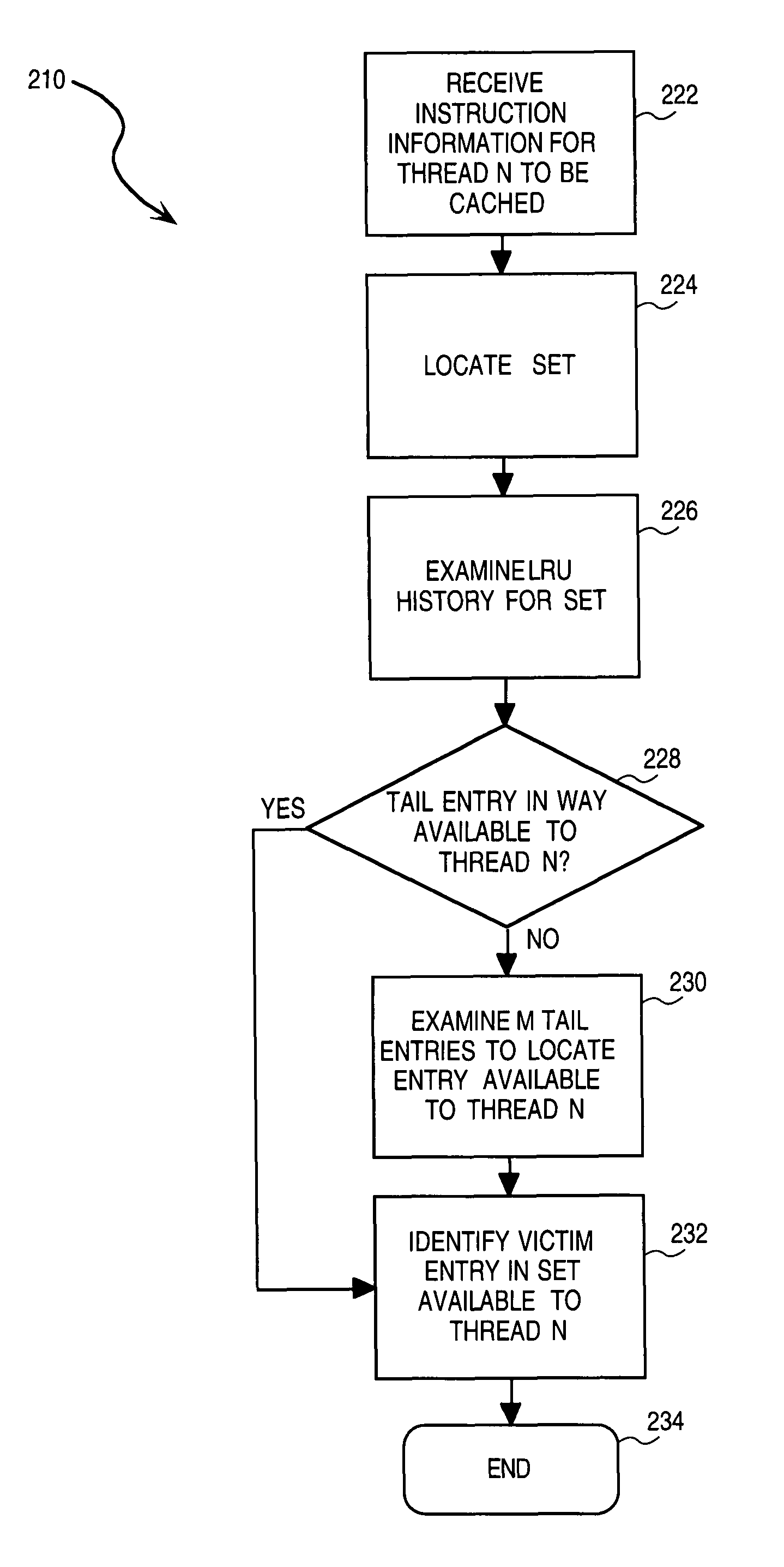

LRU cache replacement for a partitioned set associative cache

Inactive | US7856633B1 | Efficiently share resource | Resource allocation | Runtime instruction translation | Least recently frequently used | Operating system

A method of partitioning a memory resource, associated with a multi-threaded processor, includes defining the memory resource to include first and second portions that are dedicated to the first and second threads respectively. A third portion of the memory resource is then designated as being shared between the first and second threads. Upon receipt of an information item, (e.g., a microinstruction associated with the first thread and to be stored in the memory resource), a history of Least Recently Used (LRU) portions is examined to identify a location in either the first or the third portion, but not the second portion, as being a least recently used portion. The second portion is excluded from this examination on account of being dedicated to the second thread. The information item is then stored within a location, within either the first or the third portion, identified as having been least recently used.

Owner:INTEL CORP
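
The restricted LRU examination described in the abstract above can be sketched as a scan of the usage history that skips locations dedicated to the other thread. The function `pick_way` and the `owner` table are illustrative assumptions about how the partitioning might be represented.

```python
def pick_way(lru_history, thread, owner):
    """Return the least recently used way that `thread` is allowed to replace.

    lru_history: way indices ordered from least to most recently used.
    owner: per-way owner, either a thread id (0 or 1) or the string "shared".
    Ways dedicated to the other thread are excluded from the examination.
    """
    for way in lru_history:
        if owner[way] == thread or owner[way] == "shared":
            return way
    raise ValueError("no way is available to this thread")
```

For example, with `owner = [0, 0, 1, 1, "shared", "shared"]` and a history of `[2, 3, 4, 0, 1, 5]`, thread 0 skips ways 2 and 3 and receives way 4, while thread 1 receives way 2.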

Method of efficiently choosing a cache entry for castout

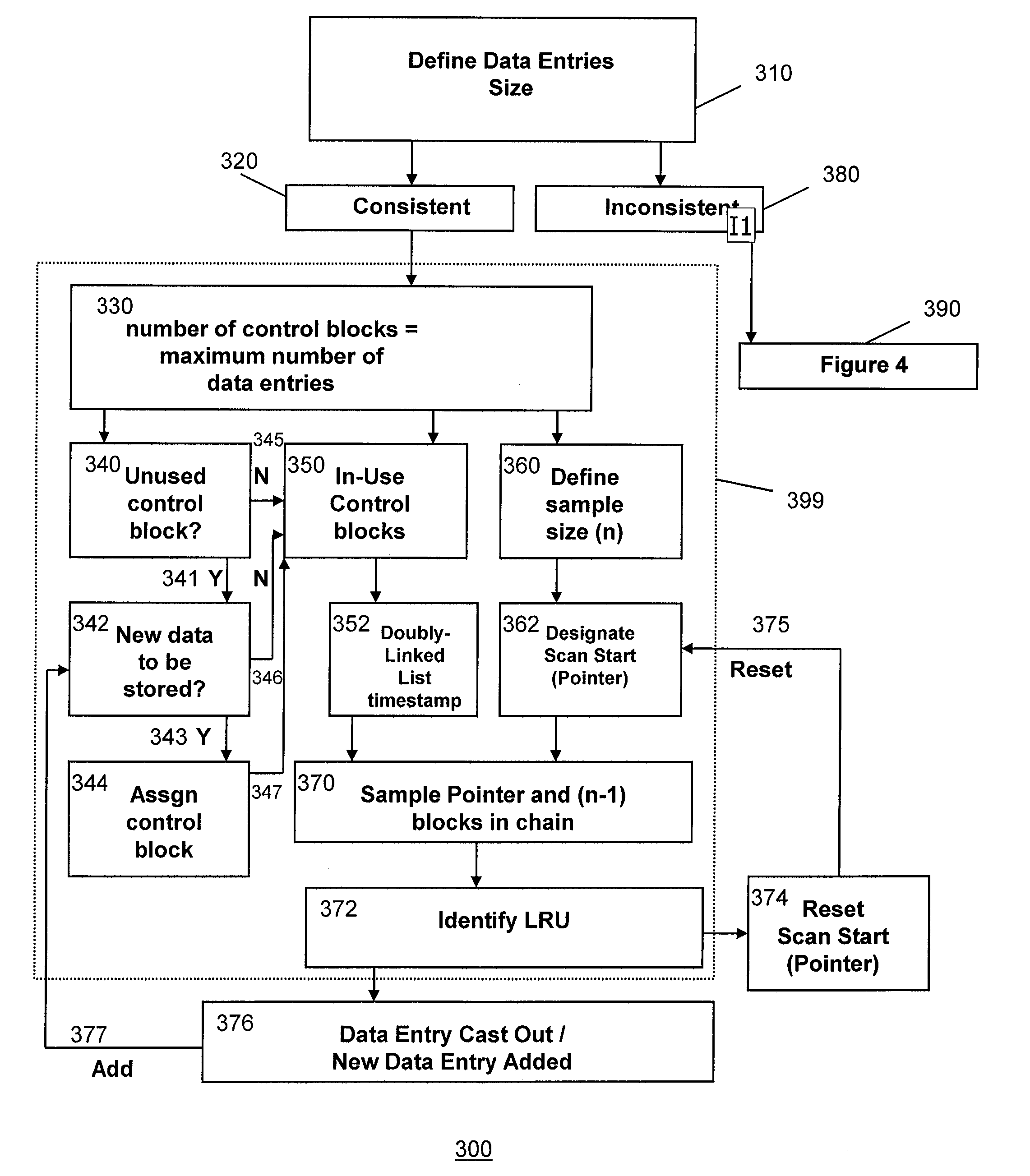

Inactive | US20090177844A1 | Efficient identification | Memory addressing/allocation/relocation | Timestamp | Parallel computing

The present invention relates generally to a method and system for efficiently identifying a cache entry for cast out in relation to scanning a predetermined sampling subset of pseudo-randomly sampled cached entries and determining a least recently used (LRU) entry from the scanned cached entries subset, thereby avoiding a comprehensive review of all of or groups of the cached entries in the cache at any instant. In one or more implementations, a subset of the data entries in a cache are randomly sampled, assessed by timestamp in a doubly-linked listing and a least recently used data entry to cast out is identified.

Owner:IBM CORP
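
A minimal sketch of sampled castout selection, assuming the cache keeps a last-use timestamp per entry: draw a small pseudo-random subset and cast out the oldest entry in it. The function `choose_castout` and its parameters are hypothetical, and the doubly-linked listing mentioned in the abstract is not modeled here.

```python
import random


def choose_castout(last_used, sample_size=5, rng=random):
    """Pick a castout candidate from a pseudo-random sample of cache entries.

    last_used: dict mapping entry key -> last access timestamp.
    rng: any object with a sample() method, such as random.Random(seed).
    Only the sampled entries are compared, so the cost does not grow with
    the total number of cached entries.
    """
    keys = list(last_used)
    sample = rng.sample(keys, min(sample_size, len(keys)))
    return min(sample, key=last_used.__getitem__)
```

The result approximates LRU: the victim is the oldest entry among those sampled, which is not necessarily the oldest in the whole cache. Sampling every entry reduces to exact LRU.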

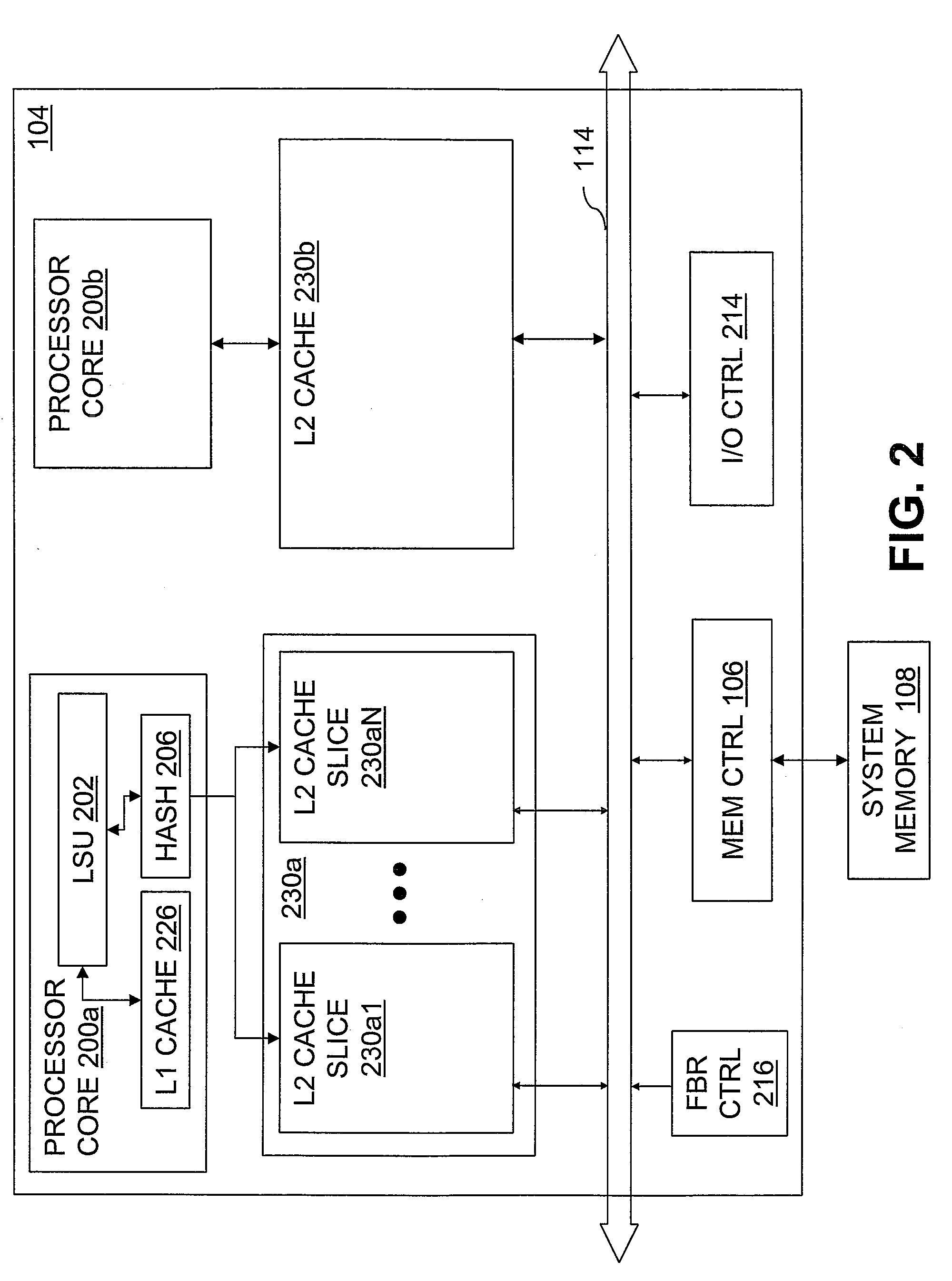

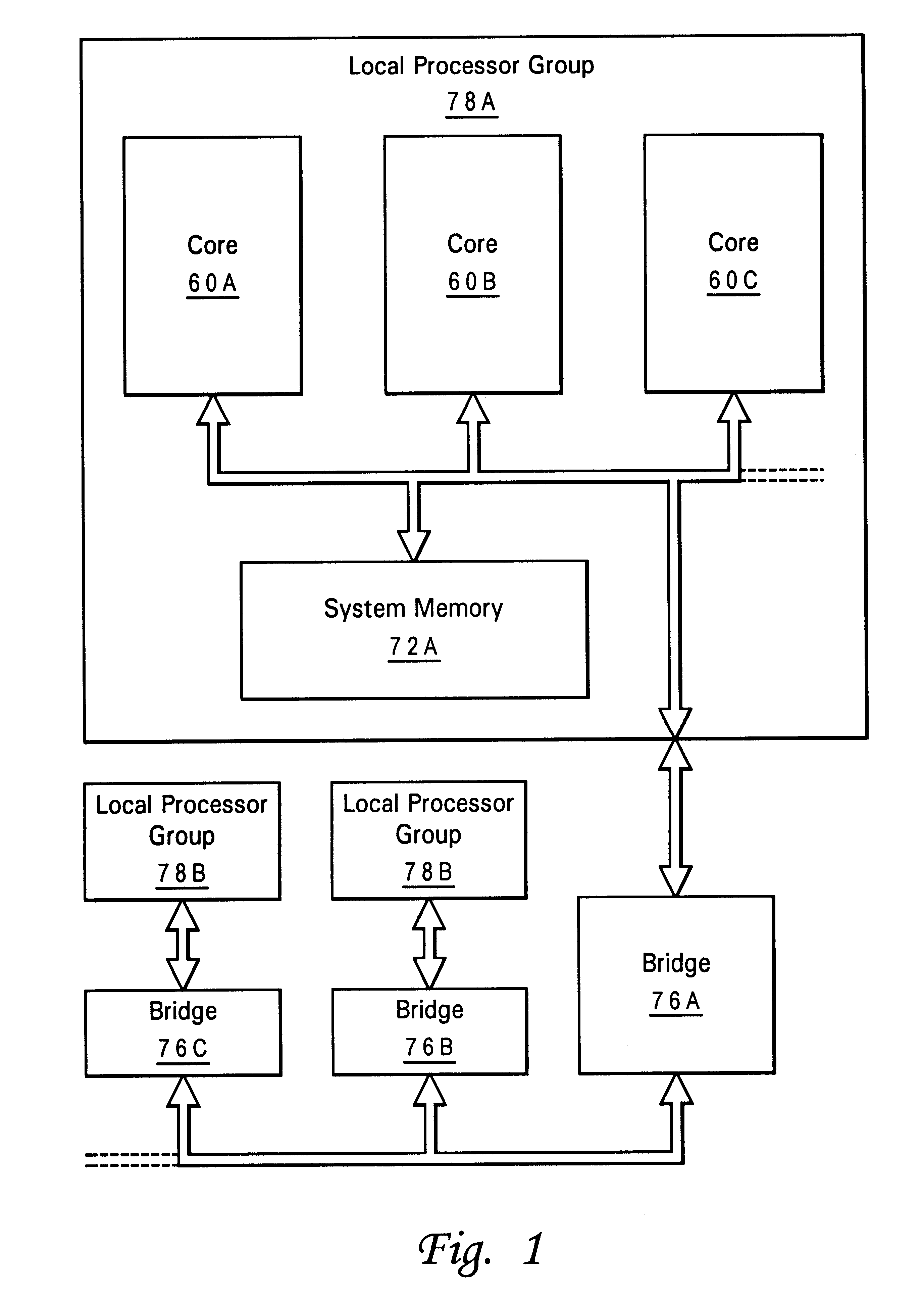

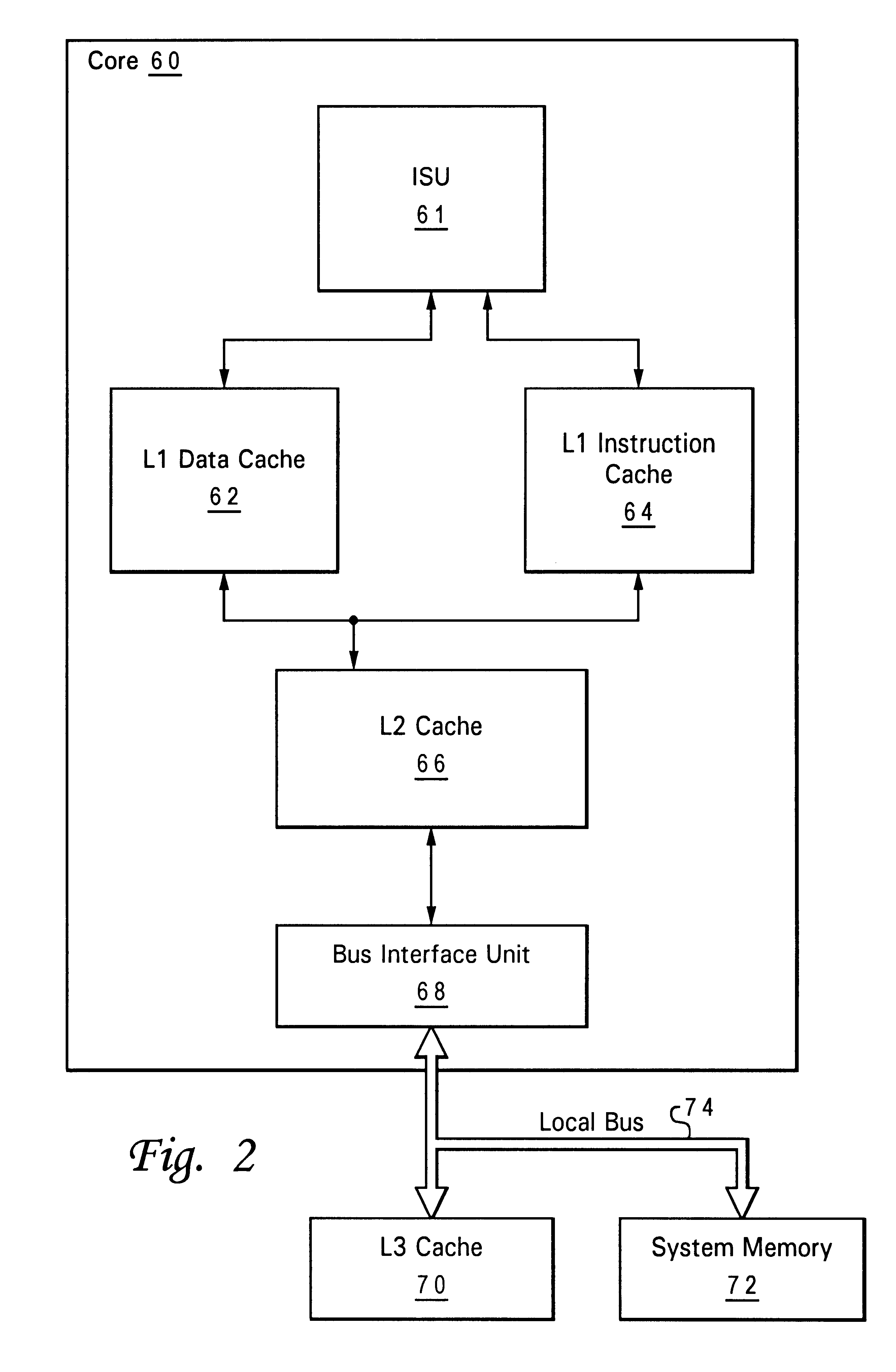

Load request scheduling in a cache hierarchy

Inactive | US20100268882A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Cache hierarchy | Least recently frequently used

A system and method for tracking core load requests and providing arbitration and ordering of requests. When a core interface unit (CIU) receives a load operation from the processor core, a new entry is allocated in a queue of the CIU. In response to allocating the new entry in the queue, the CIU detects contention between the load request and another memory access request. In response to detecting contention, the load request may be suspended until the contention is resolved. Received load requests may be stored in the queue and tracked using a least recently used (LRU) mechanism. The load request may then be processed when the load request resides in a least recently used entry in the load request queue. CIU may also suspend issuing an instruction unless a read claim (RC) machine is available. In another embodiment, CIU may issue stored load requests in a specific priority order.

Owner:IBM CORP

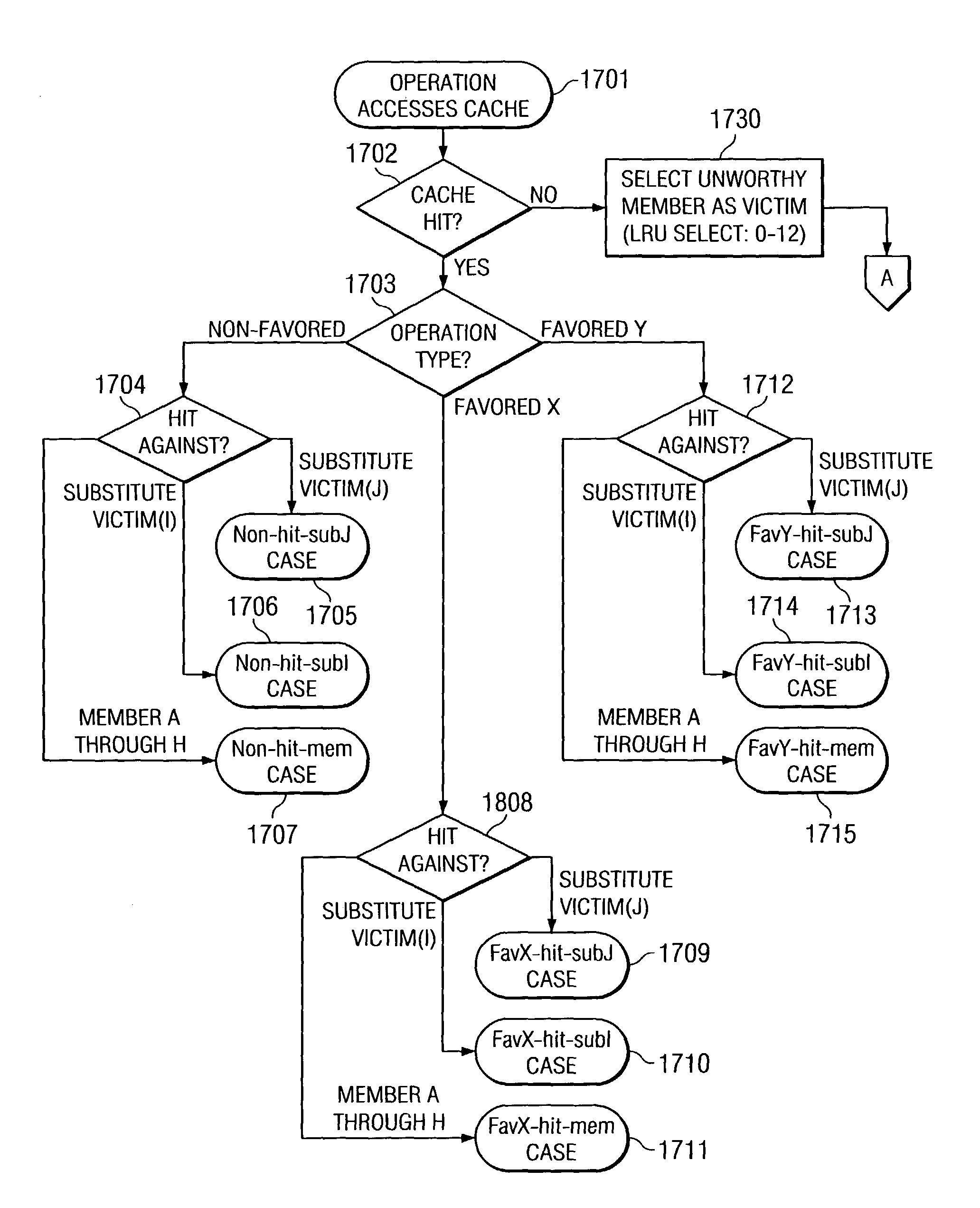

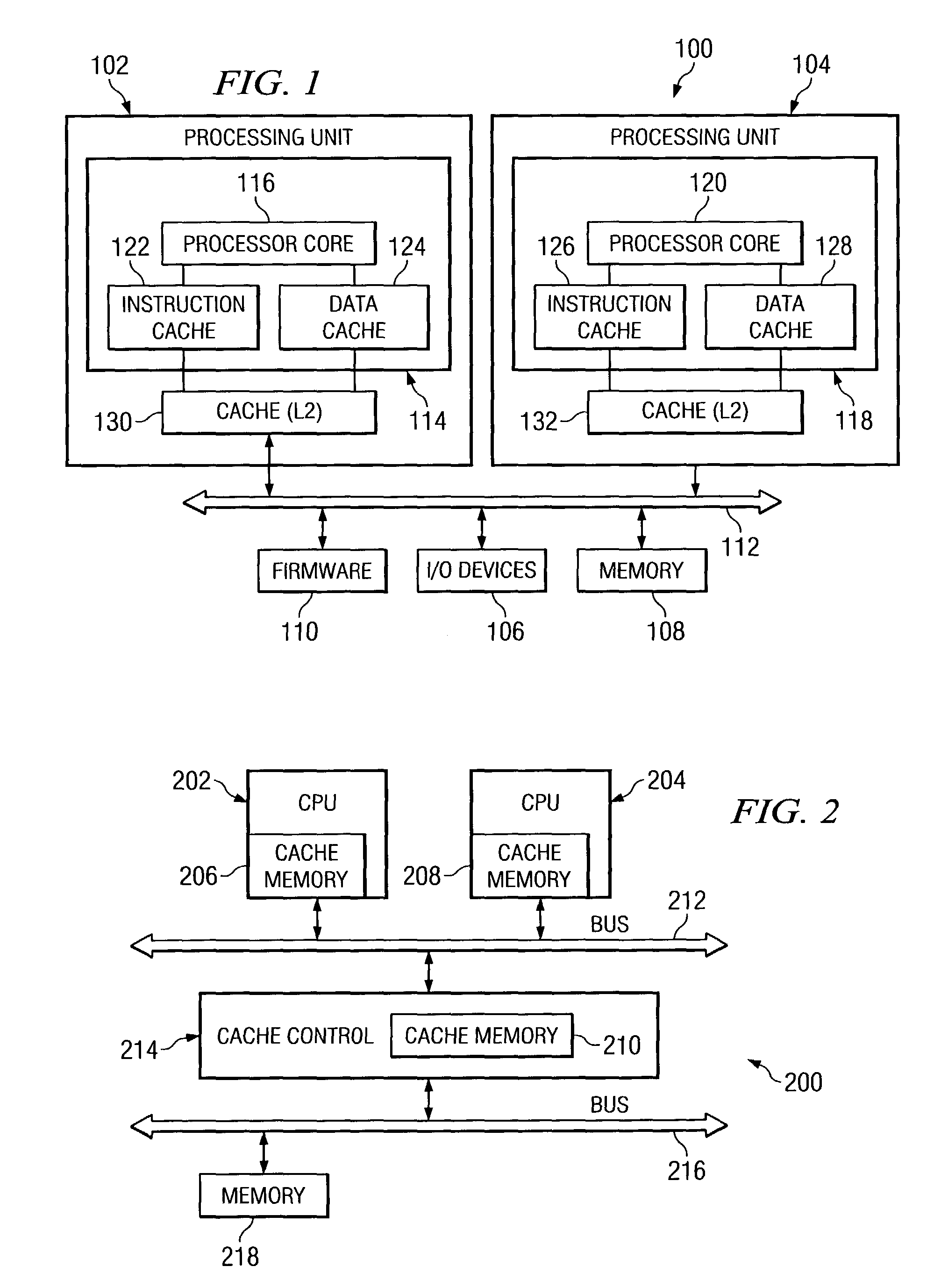

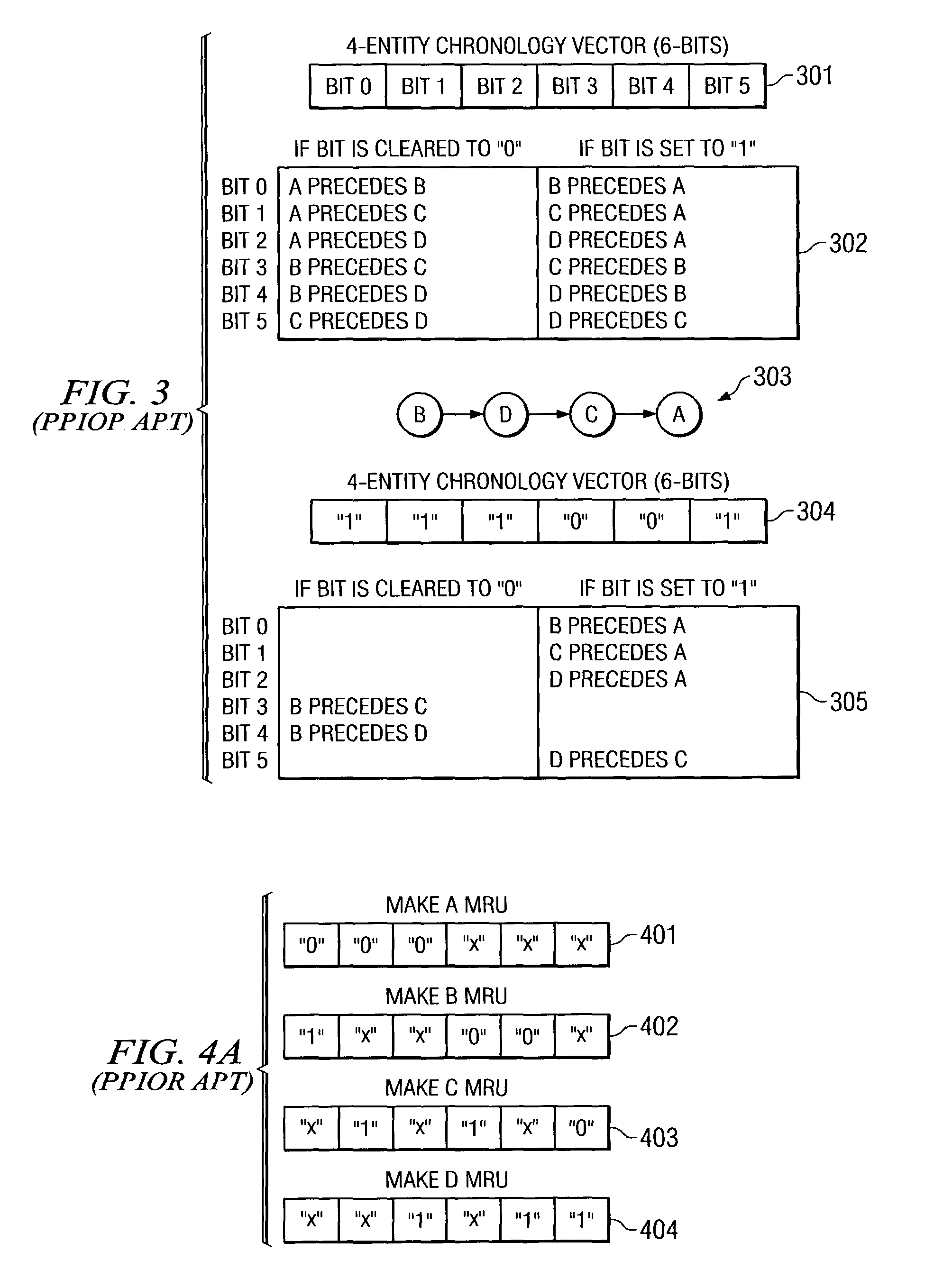

Cache allocation mechanism for saving multiple elected unworthy members via substitute victimization and imputed worthiness of multiple substitute victim members

A method and apparatus in a data processing system for protecting against displacement of two types of cache lines using a least recently used cache management process. A first member in a class of cache lines is selected as a first substitute victim. The first substitute victim is unselectable by the least recently used cache management process, and the second substitute victim is associated with a selected member in the class of cache lines. A second member in the class of cache lines is selected as a second substitute victim. The second victim is unselectable by the least recently used cache management process, and the second substitute victim is associated with the selected member in the class of cache lines. One of the first or second substitute victims is replaced in response to a selection of the selected member as a victim when a cache miss occurs, wherein the selected member remains in the class of cache lines.

Owner:TWITTER INC

Directed least recently used cache replacement method

Inactive | US20020152361A1 | Performance maximization | Improve cache hit ratio | Energy efficient ICT | Memory addressing/allocation/relocation | Parallel computing | Least recently frequently used

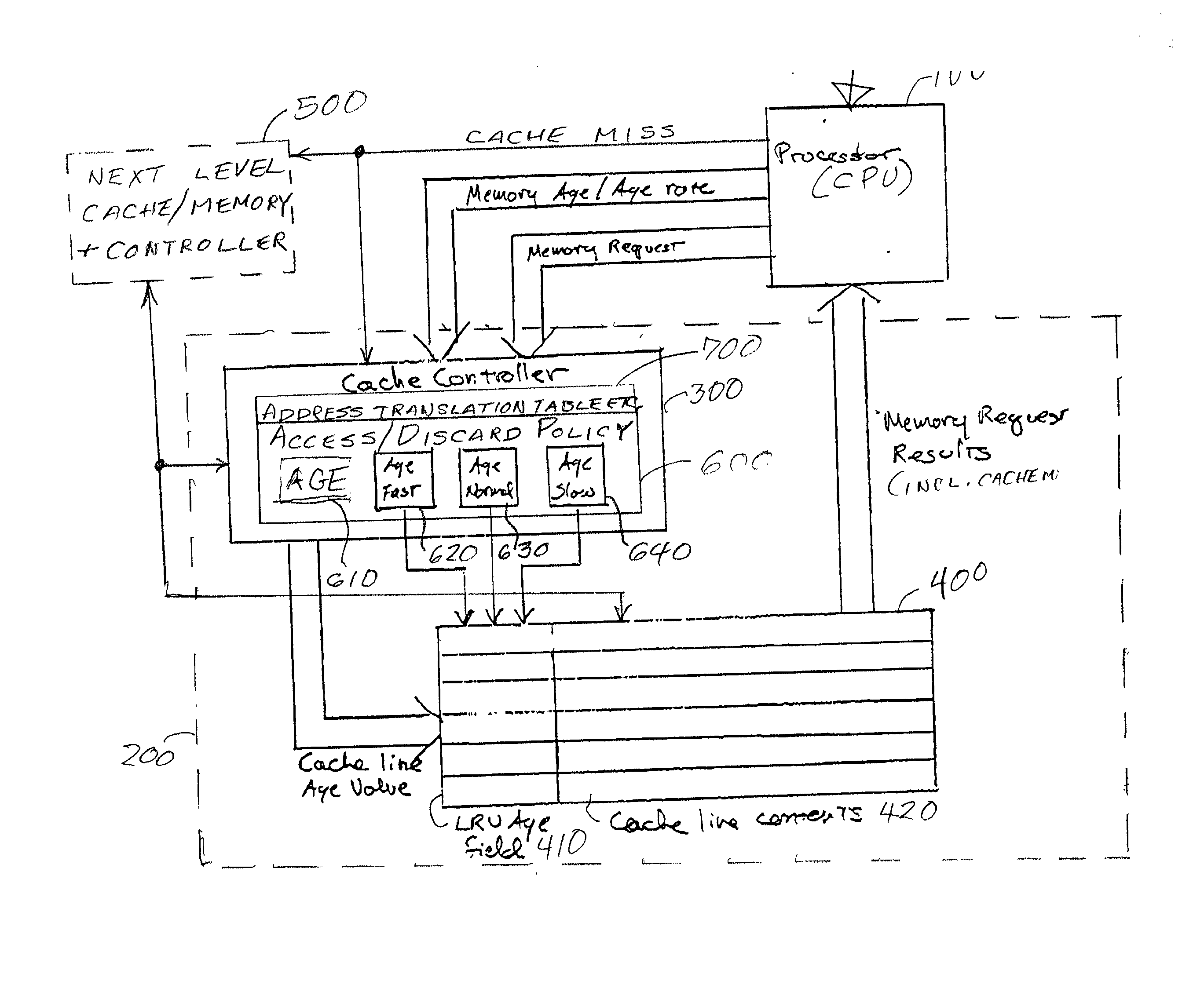

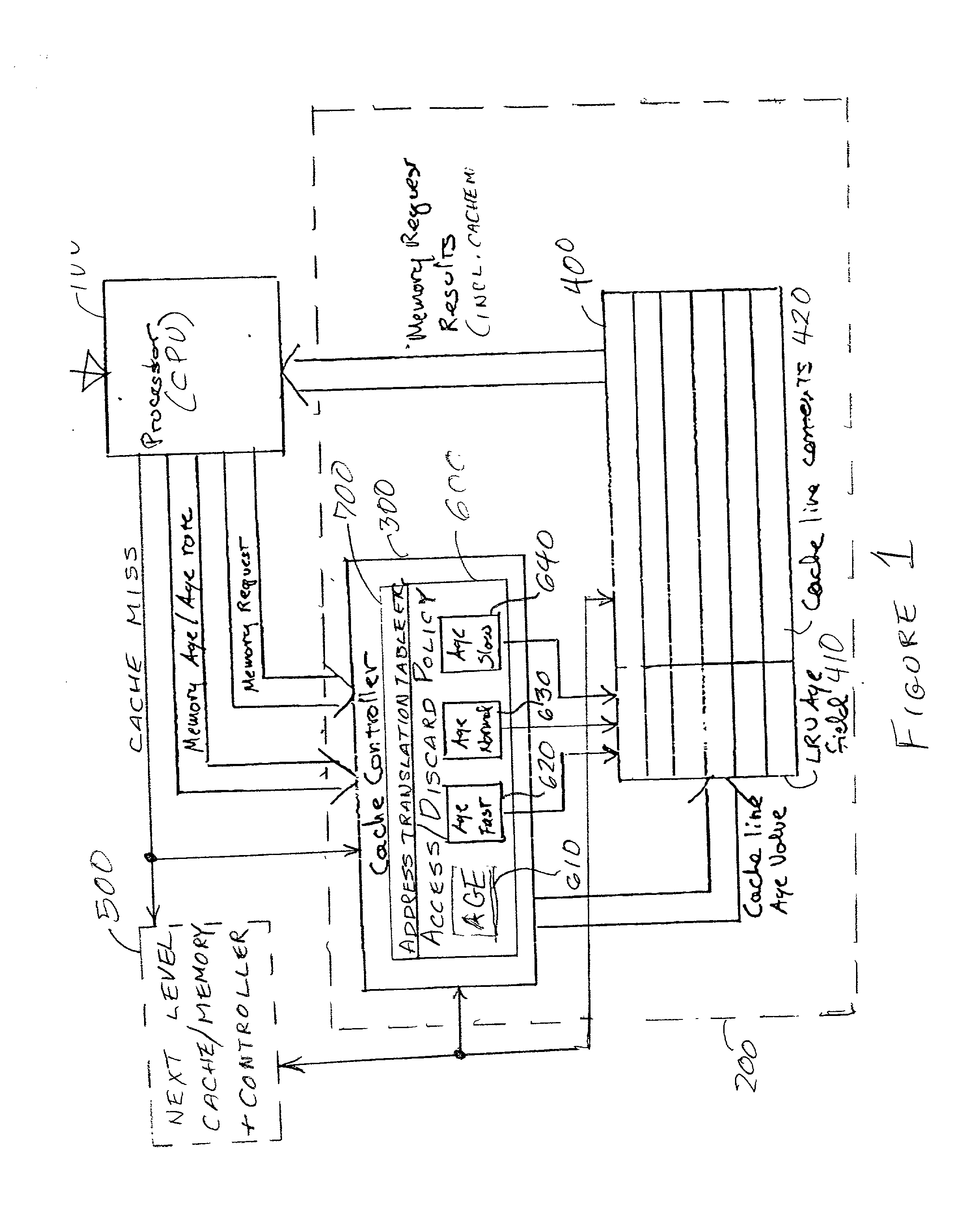

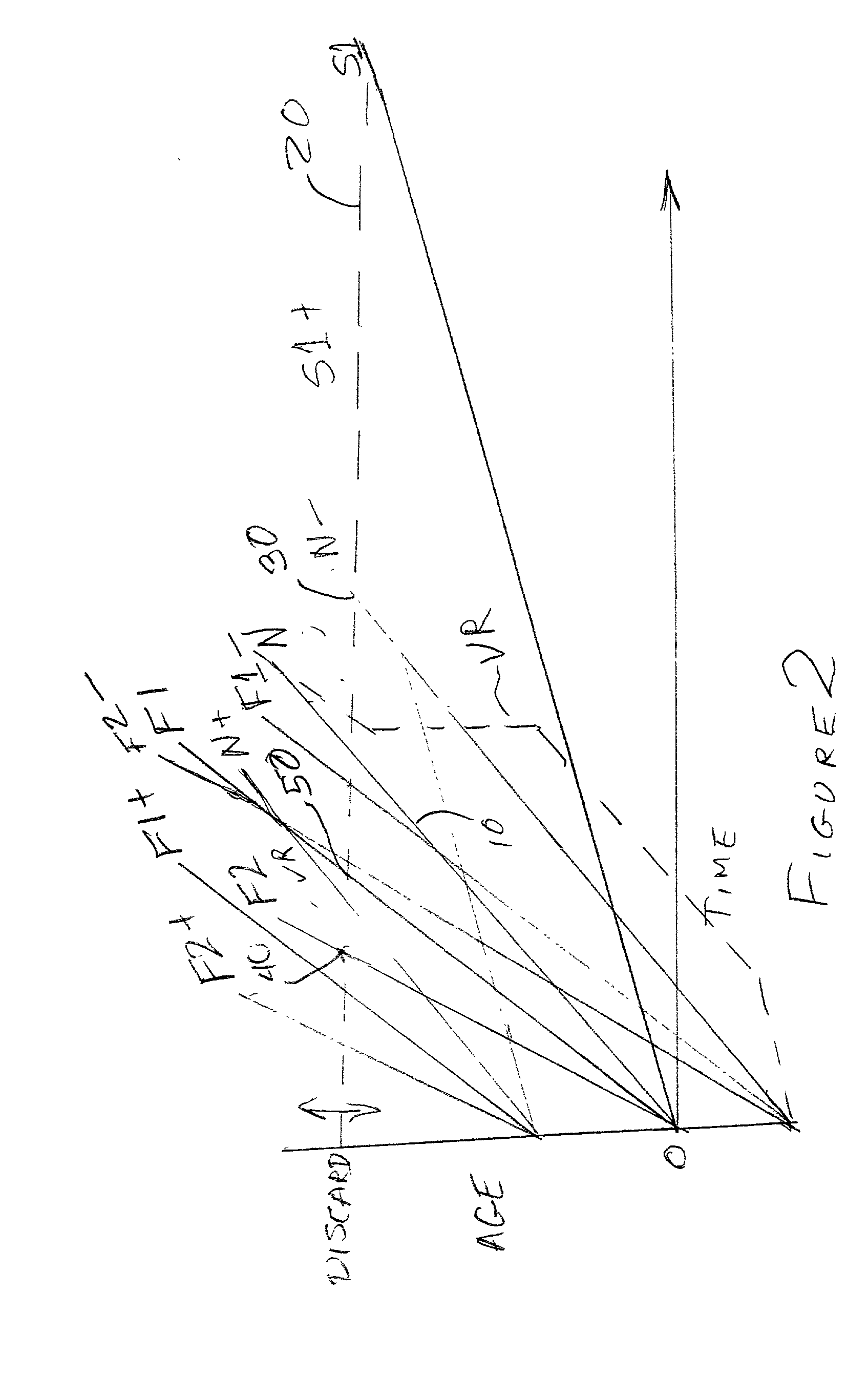

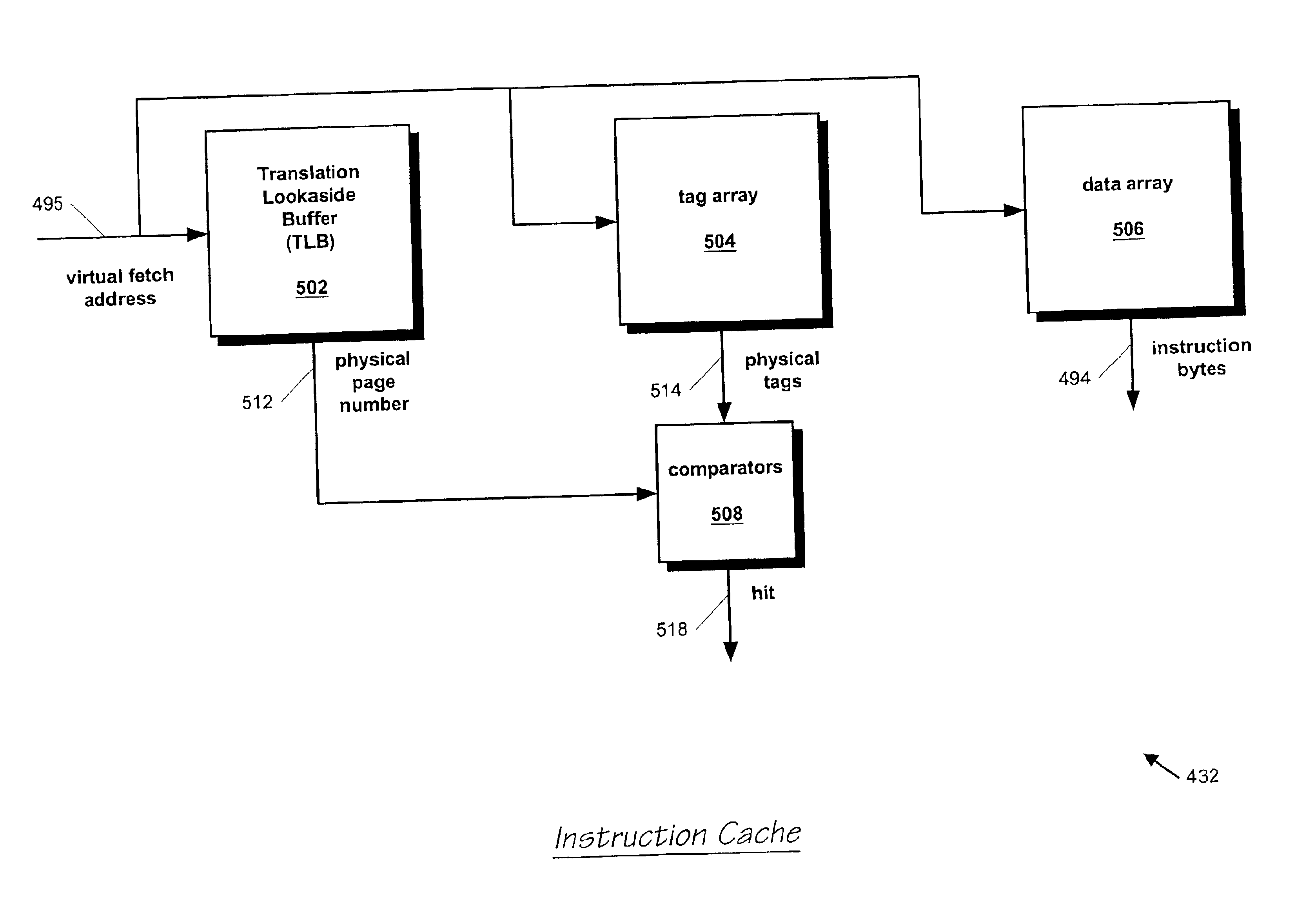

Fine grained control of cache maintenance resulting in improved cache hit rate and processor performance by storing age values and aging rates for respective code lines stored in the cache to direct performance of a least recently used (LRU) strategy for casting out lines of code from the cache which become less likely, over time, of being needed by a processor, thus supporting improved performance of a processor accessing the cache. The invention is implemented by the provision for entry of an arbitrary age value when a corresponding code line is initially stored in or accessed from the cache and control of the frequency or rate at which the age of each code is incremented in response to a limited set of command instructions which may be placed in a program manually or automatically using an optimizing compiler.

Owner:IBM CORP

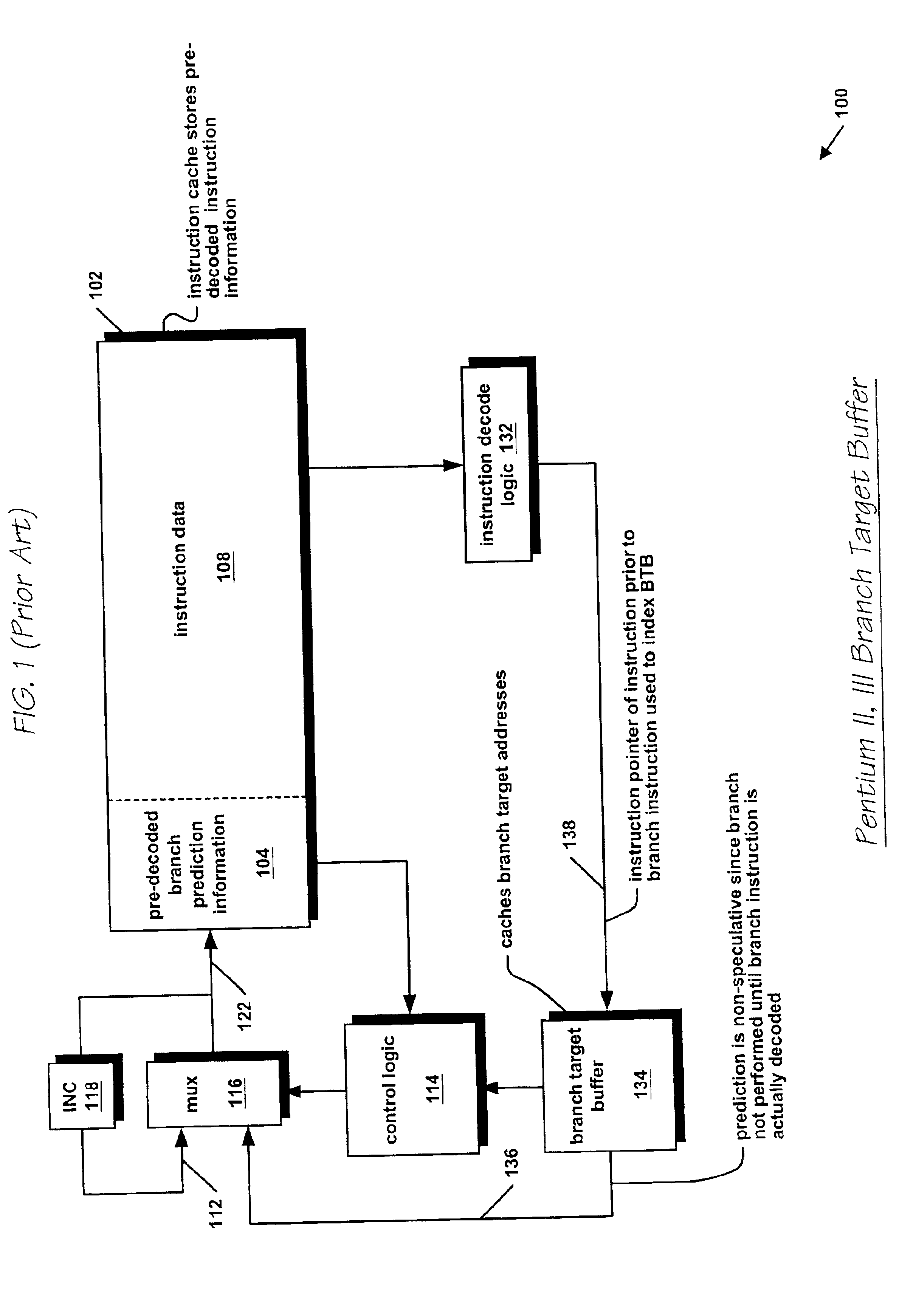

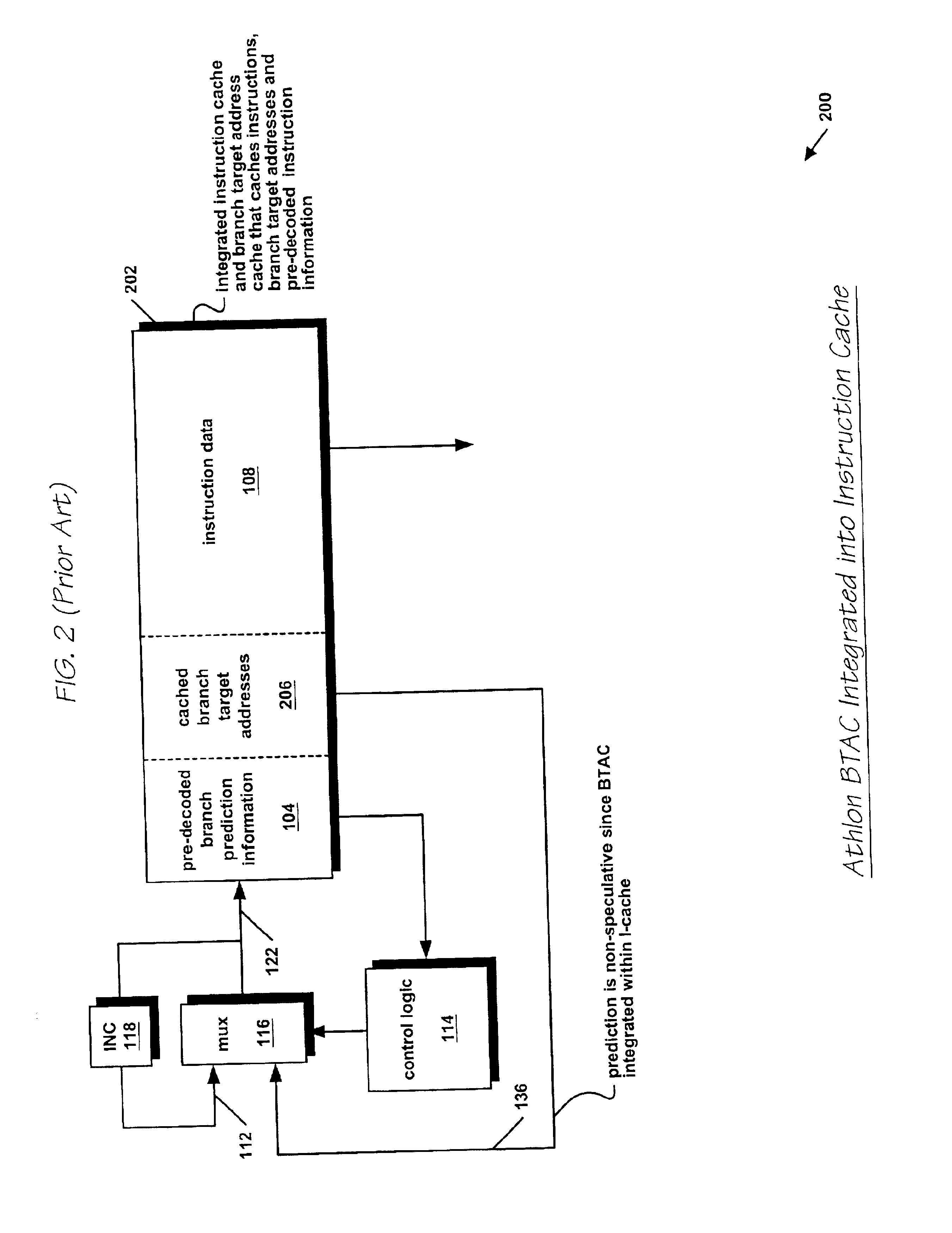

Apparatus and method for target address replacement in speculative branch target address cache

Inactive | US6895498B2 | Easy to use | Memory addressing/allocation/relocation | Digital computer details | Processor register | Branch target address cache

An apparatus and method in a pipelined microprocessor for replacing one of two target addresses in a branch target address cache (BTAC) line. If only one of the two entries is invalid, the invalid entry is replaced. If both entries are valid, the least recently used entry is replaced. If both entries are invalid, the entry is replaced corresponding to the side of the BTAC, indicated by a global status register, not last written to with an invalid entry. In one embodiment, the global status is updated only if a side is written when both entries are invalid. In another embodiment, the BTAC stores N entries per line, where N is greater than 1. The status register maintains information for determining which of the N sides is least recently written. The least recently written side is chosen for replacement.

Owner:IP FIRST
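
The three-case replacement rule for the two-entry embodiment in the abstract above can be written out as a small decision function. The class `BtacReplacer`, the encoding of sides as 0 and 1, and the initial value of the global status are assumptions for this sketch.

```python
class BtacReplacer:
    """Hypothetical chooser for which of two BTAC entries (side 0 or 1) to replace."""

    def __init__(self):
        # Global status: the side last written while both entries were invalid.
        # The starting value is an assumption.
        self.last_invalid_write = 1

    def choose(self, valid_a, valid_b, lru_side):
        """Return the side to replace; lru_side is the per-line LRU indication."""
        if valid_a and valid_b:
            return lru_side               # both valid: replace the LRU entry
        if valid_a != valid_b:
            return 0 if not valid_a else 1  # exactly one invalid: replace it
        # Both invalid: use the side not last written with an invalid entry,
        # and update the global status only in this case.
        side = 1 - self.last_invalid_write
        self.last_invalid_write = side
        return side
```

Alternating sides when both entries are invalid spreads new target addresses across the two sides instead of repeatedly filling the same one.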

Method and system for maintaining allocation information on data castout from an upper level cache

Inactive | US6385695B1 | Easy to use | Memory addressing/allocation/relocation | Memory hierarchy | Parallel computing

A method and system for maintaining allocation information on data castout from an upper level cache provides a cache control with the ability to select victims based on whether a cache entry is present due to a read request from a higher level in the memory hierarchy or is present due to being modified in the higher level and then castout to the lower level. The information maintained may be a single bit indicating this status, may be a separate least-recently-used (LRU) array value indicating the order of allocation in the lower level for storage of cache entries castout from the higher level.

Owner:IBM CORP

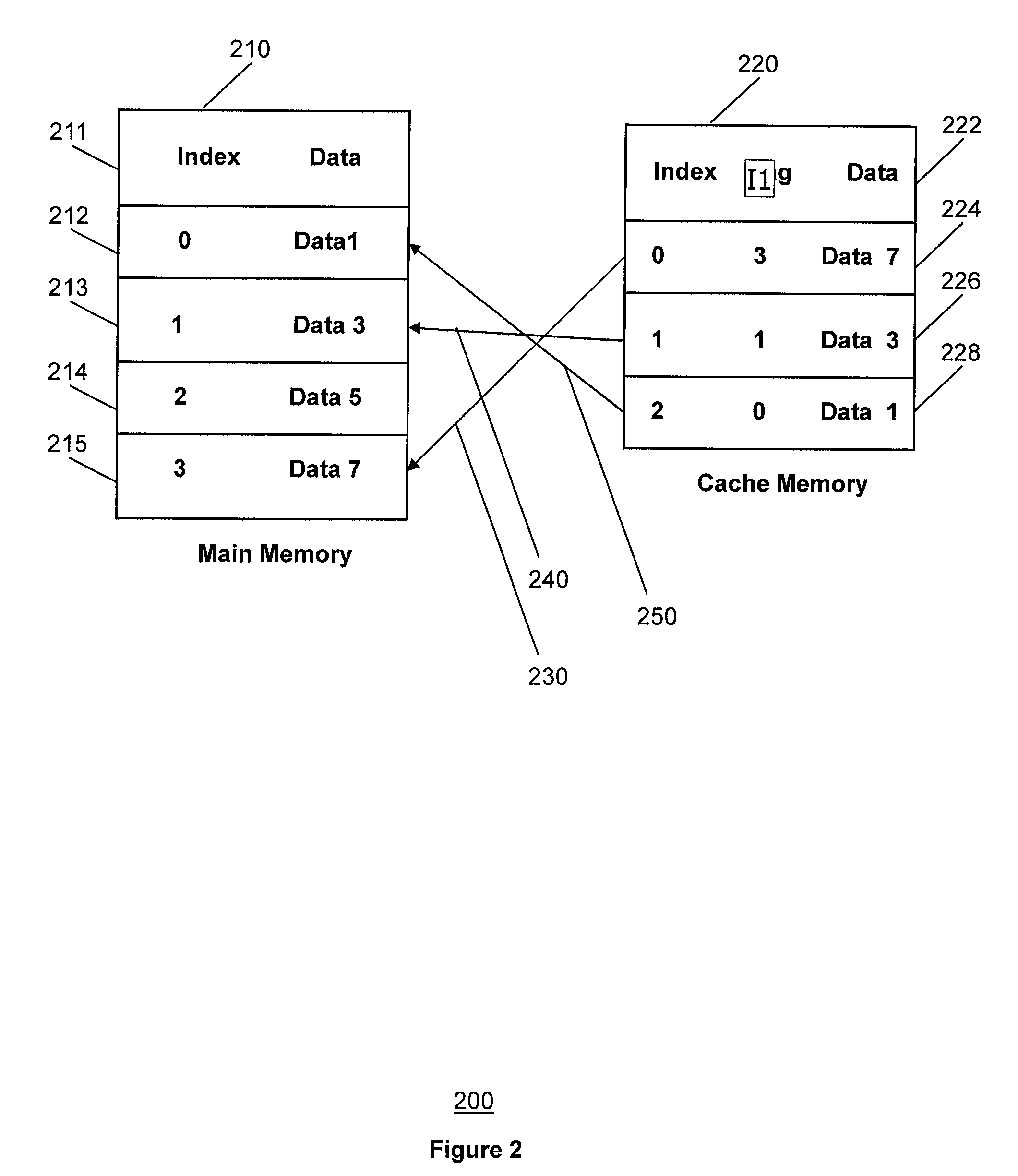

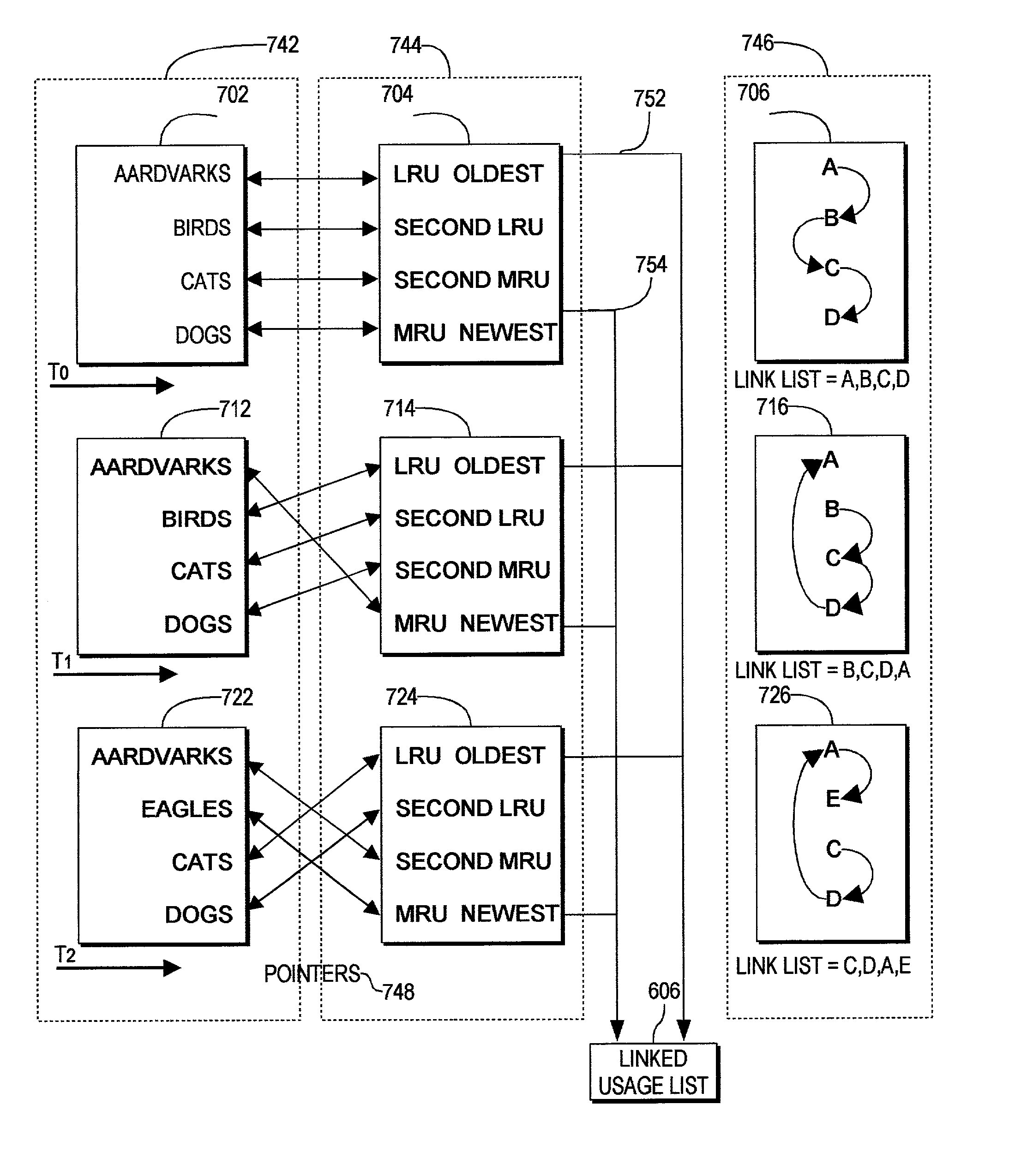

Dual organization of cache contents

A method and computer-readable medium for control of data in a caching application. An indexed list is used to hold cache elements for ease of lookup, while a linked usage list is maintained for the Most Recently Used / Least Recently Used elements. Pointers between the lists are also maintained. This allows the cache to find a specific entry if it exists and, if it does not, to locate the LRU element without the need for a sequential search. Each element in the linked list holds a pointer to a cache element in the indexed list, and each cache element record in the indexed list also holds a pointer to its corresponding record in the linked list, in addition to the actual cached data.

Owner:DALEEN TECH
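
The dual organization in the abstract above corresponds closely to the familiar pairing of a hash index with a doubly linked usage list. The sketch below uses a Python dict as a stand-in for the indexed list; the names `DualIndexCache` and `_Node` are hypothetical, and a capacity of at least one is assumed.

```python
class _Node:
    """Usage-list record; `key` is the pointer back into the index."""
    __slots__ = ("key", "prev", "next")

    def __init__(self, key=None):
        self.key = key
        self.prev = self.next = None


class DualIndexCache:
    def __init__(self, capacity):
        self.capacity = capacity
        self.index = {}       # key -> (cached value, usage-list node)
        self.head = _Node()   # sentinel: head.next is the MRU element
        self.tail = _Node()   # sentinel: tail.prev is the LRU element
        self.head.next, self.tail.prev = self.tail, self.head

    def _unlink(self, node):
        node.prev.next, node.next.prev = node.next, node.prev

    def _push_front(self, node):
        node.prev, node.next = self.head, self.head.next
        self.head.next.prev = node
        self.head.next = node

    def get(self, key):
        entry = self.index.get(key)
        if entry is None:
            return None
        value, node = entry
        self._unlink(node)       # follow the pointer into the usage list
        self._push_front(node)   # mark as most recently used
        return value

    def put(self, key, value):
        if key in self.index:
            _, node = self.index[key]
            self._unlink(node)
        else:
            if len(self.index) >= self.capacity:
                lru = self.tail.prev      # LRU found without a sequential search
                self._unlink(lru)
                del self.index[lru.key]   # follow the pointer back into the index
            node = _Node(key)
        self.index[key] = (value, node)
        self._push_front(node)
```

Both lookups and evictions take constant time here: the index answers "is this entry cached?", and the list tail answers "which entry should go?".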

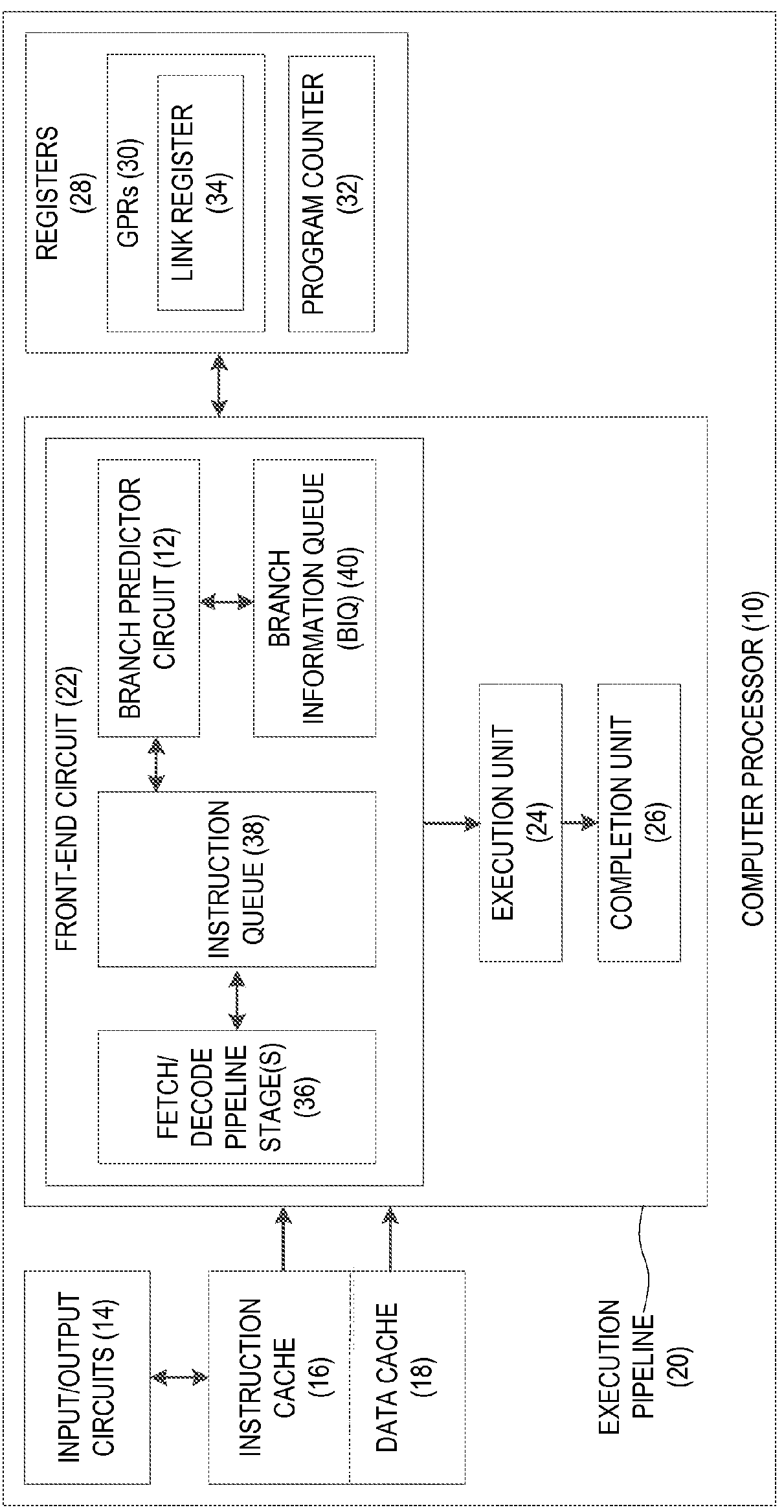

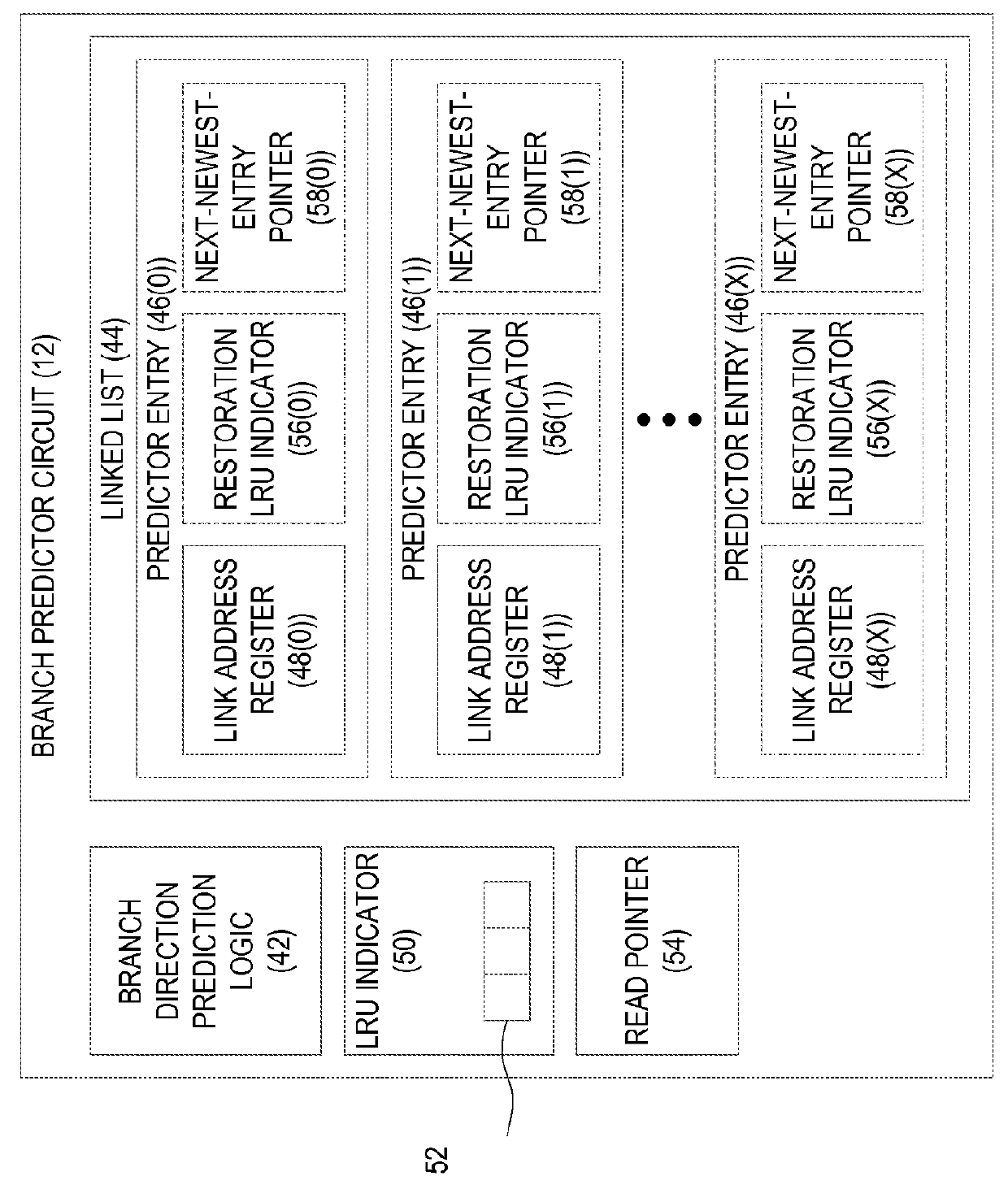

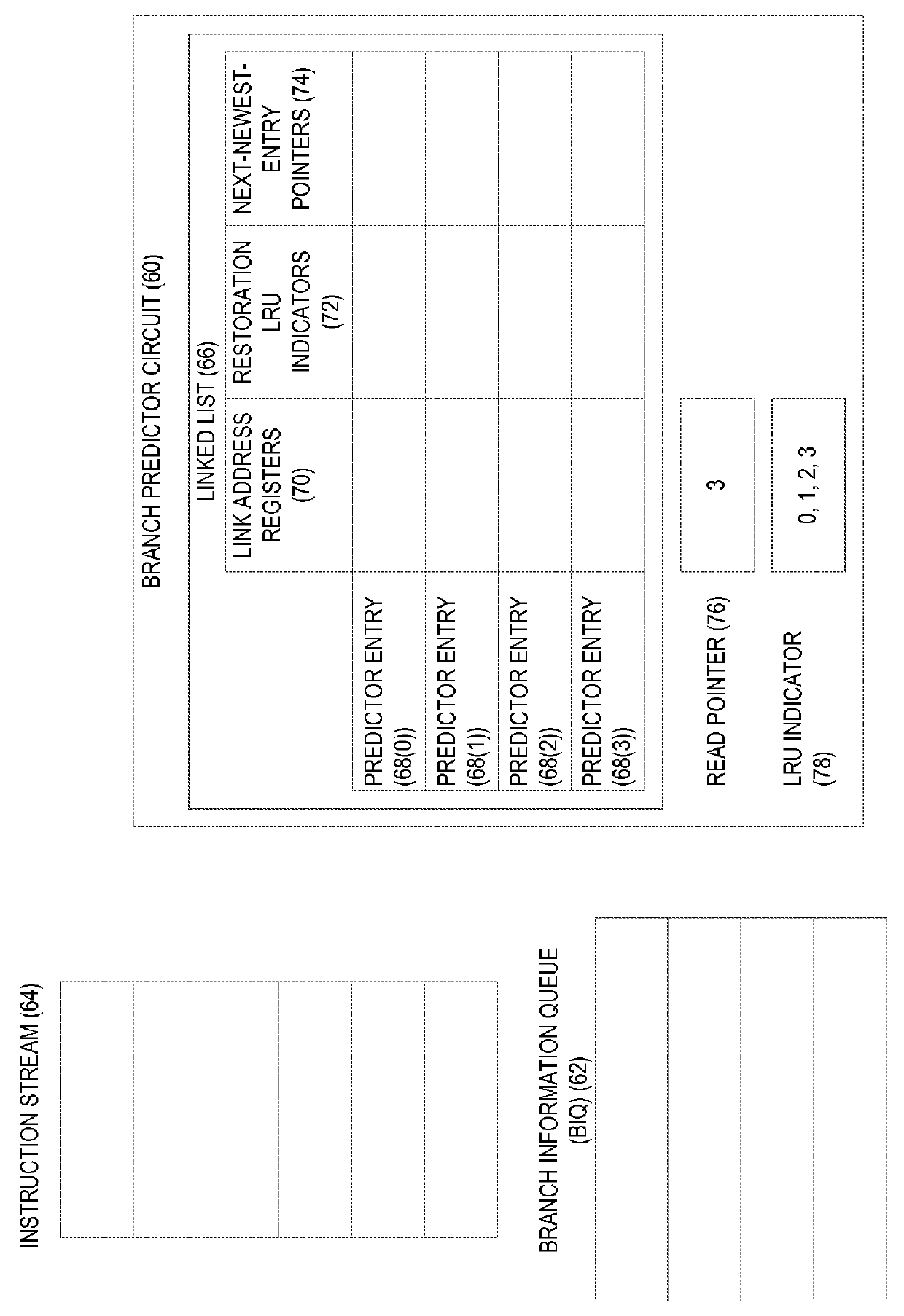

Branch prediction using least-recently-used (LRU)-class linked list branch predictors, and related circuits, methods, and computer-readable media

Inactive | US20160055003A1 | Decrease sensitivity to speculative corruption | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Processor register | Least recently frequently used

Branch prediction using Least-Recently-Used (LRU)-class linked list branch predictors, and related circuits, methods, and computer-readable media are disclosed. In one aspect, a branch predictor circuit comprises branch direction prediction logic and a linked list comprising a plurality of predictor entries, each comprising a link address register. The branch predictor circuit also comprises a LRU indicator indicative of a relative age of each of the predictor entries. The branch predictor circuit is configured to detect a first branch instruction in an instruction stream, and determine whether the first branch instruction is predicted to be taken. Responsive to determining that the first branch instruction is predicted to be taken, the branch predictor circuit allocates a least-recently-used entry of the plurality of predictor entries of the linked list based on the LRU indicator, and stores a sequential address for the first branch instruction in the link address register of the least-recently-used predictor entry.

Owner:QUALCOMM INC

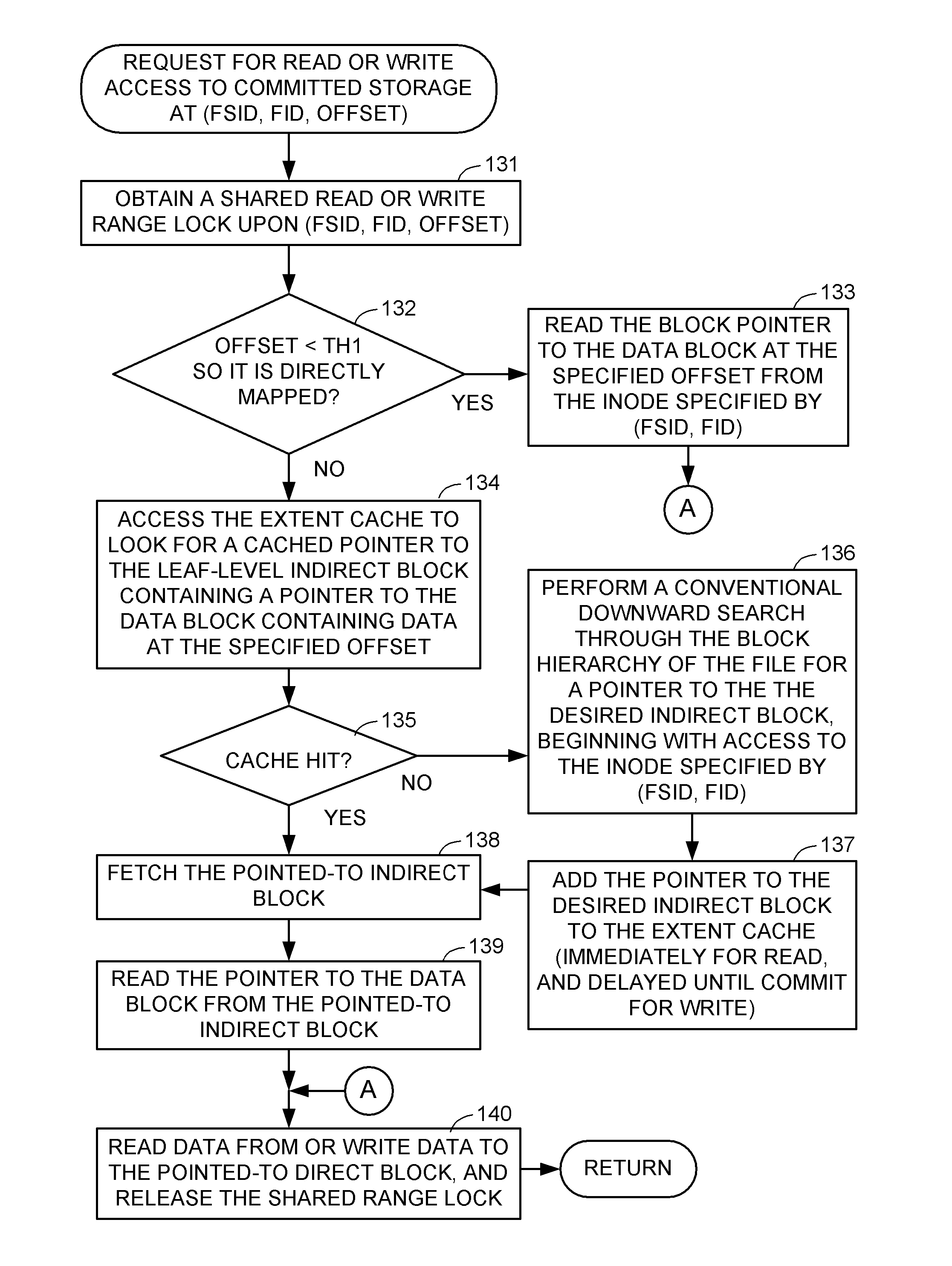

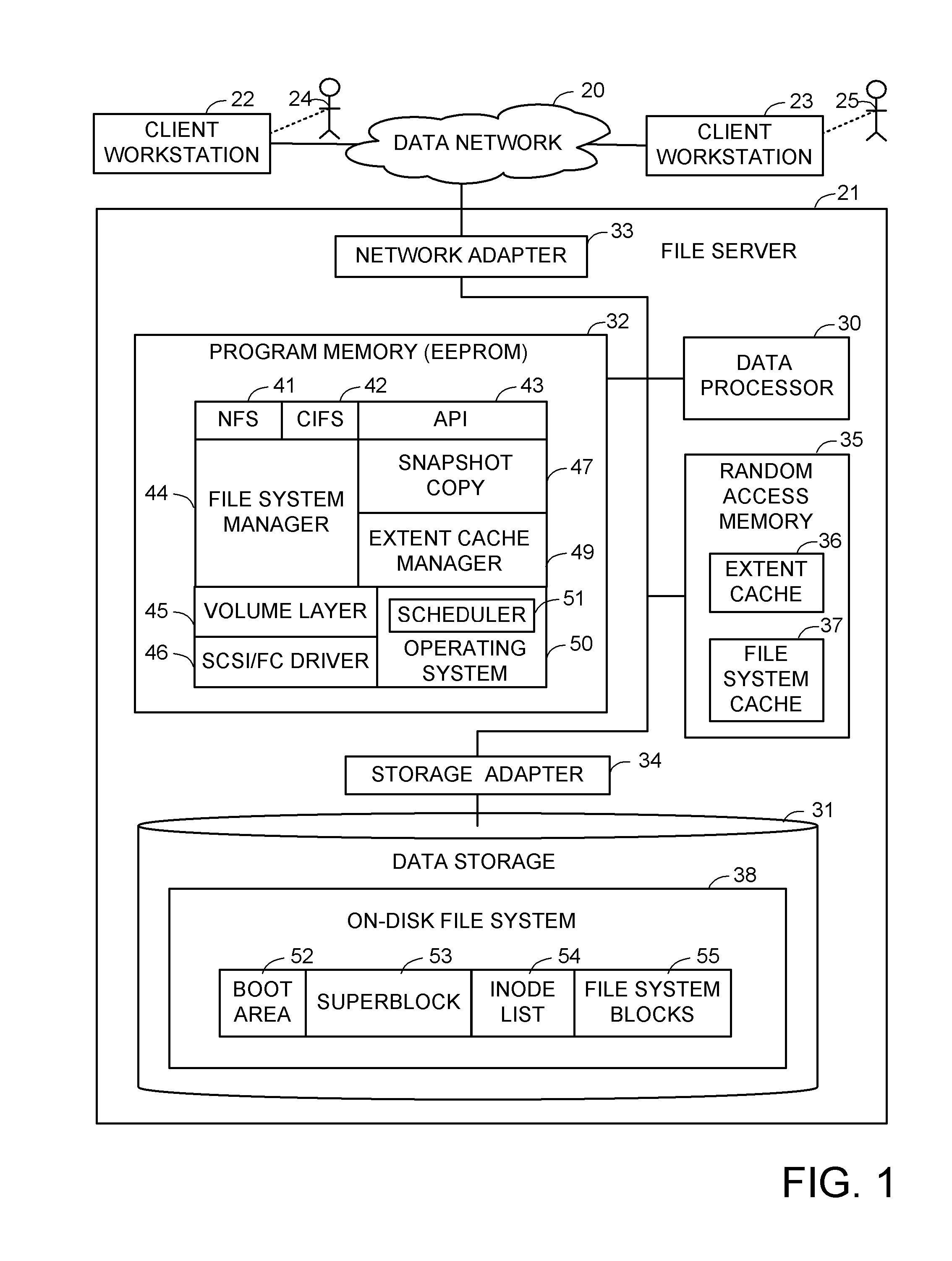

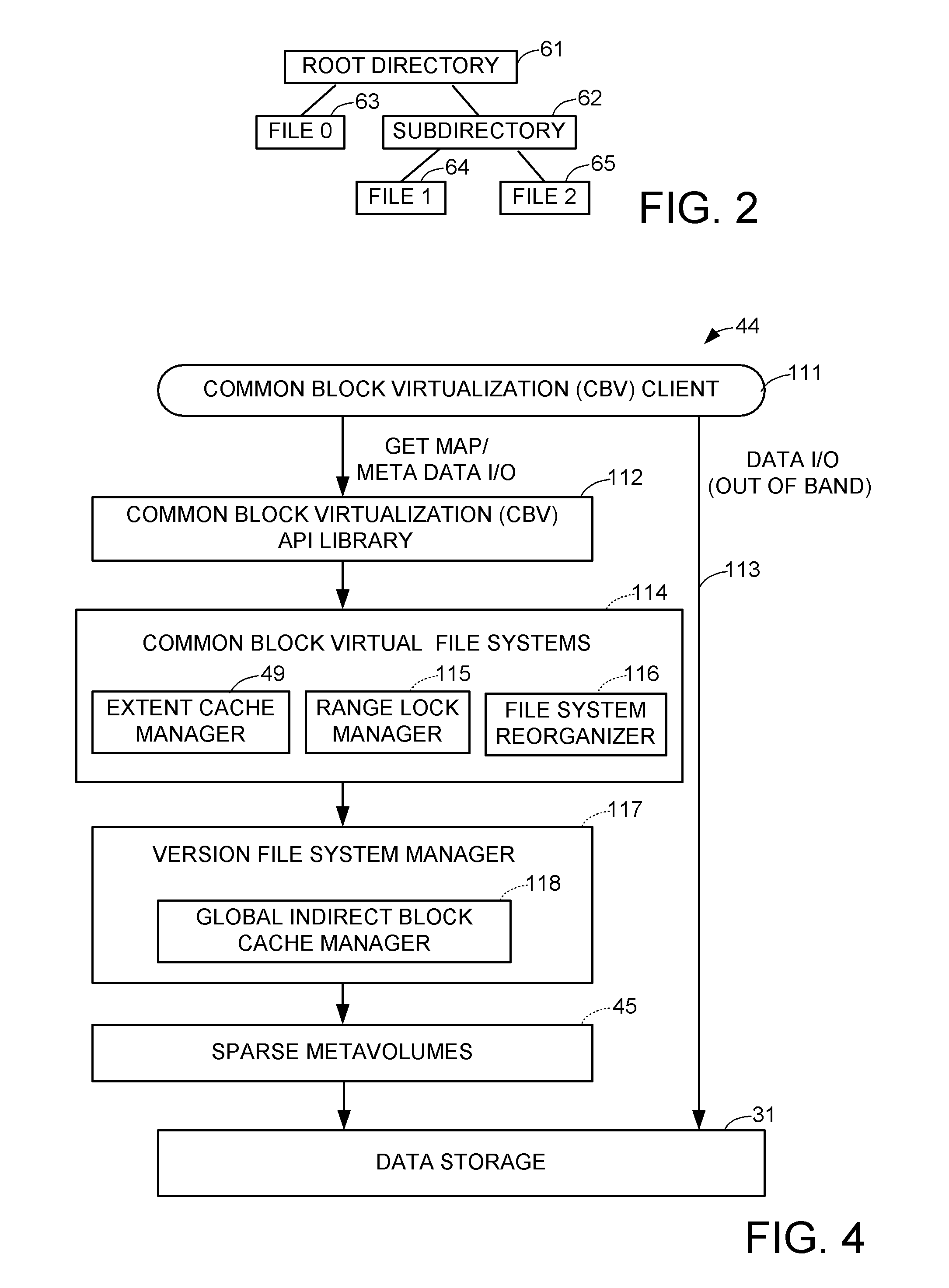

Extended file mapping cache for fast input-output

Active | US8204871B1 | Easy to find | Timing offset | Digital data information retrieval | Digital data processing details | File system | Mostly True

A file server has an extent cache of pointers to leaf-level indirect blocks containing file mapping metadata. The extent cache improves file access read and write performance by returning a mapping for the data blocks to be read or written without having to iterate through intermediate level indirect blocks of the file. In addition, the extent cache contains pointers to the leaf-level indirect blocks in the file system cache. Therefore, in most cases, the time spent looking up pointers in the extent cache is offset by a reduction in the time that would otherwise be spent in locating the leaf-level indirect blocks in the file system cache. In a preferred implementation, the extent cache has a first least recently used (LRU) list and cache entry allocation for production files, and a second LRU list and cache entry allocation for snapshot copies of the production files.

Owner:EMC IP HLDG CO LLC

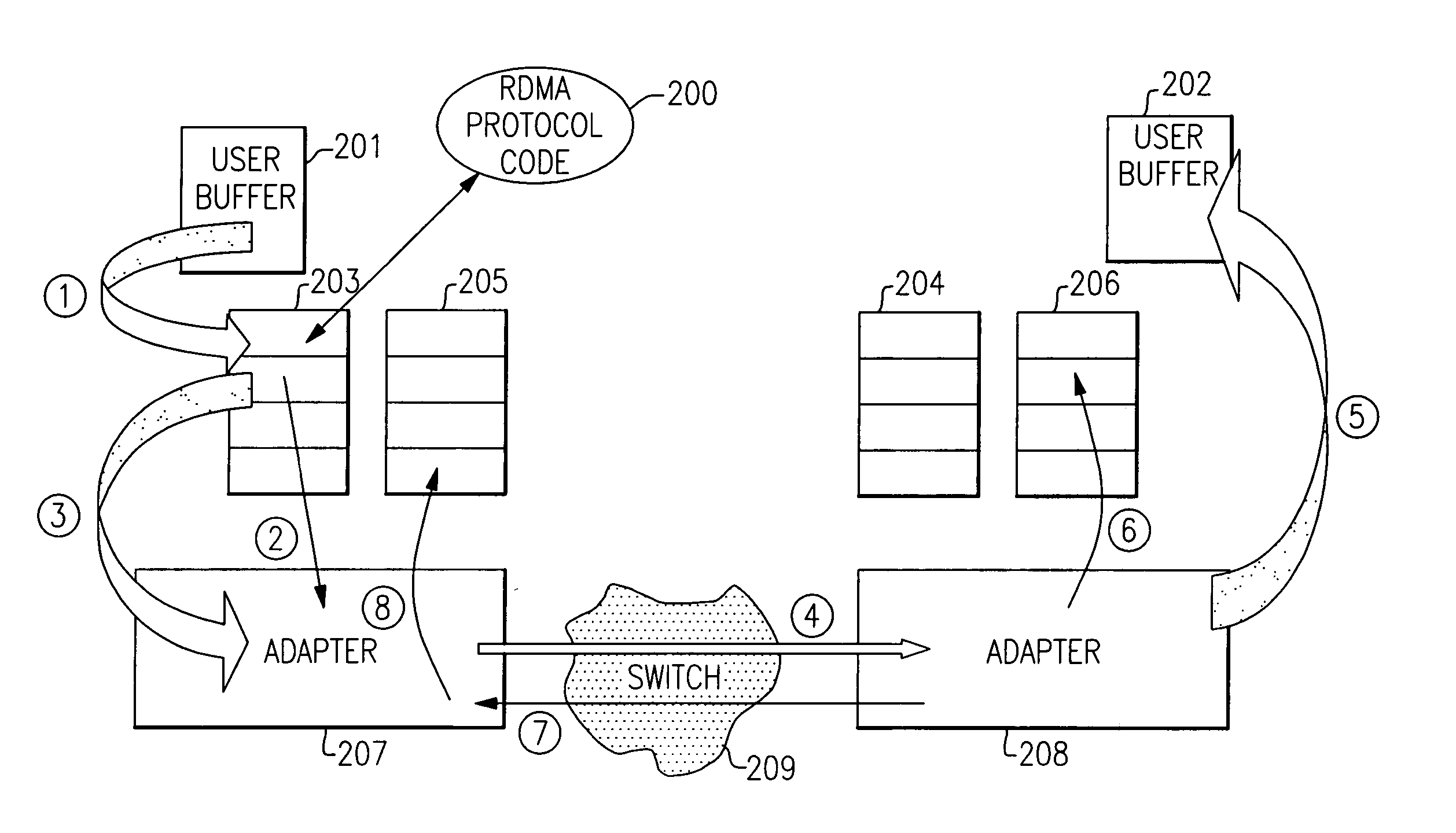

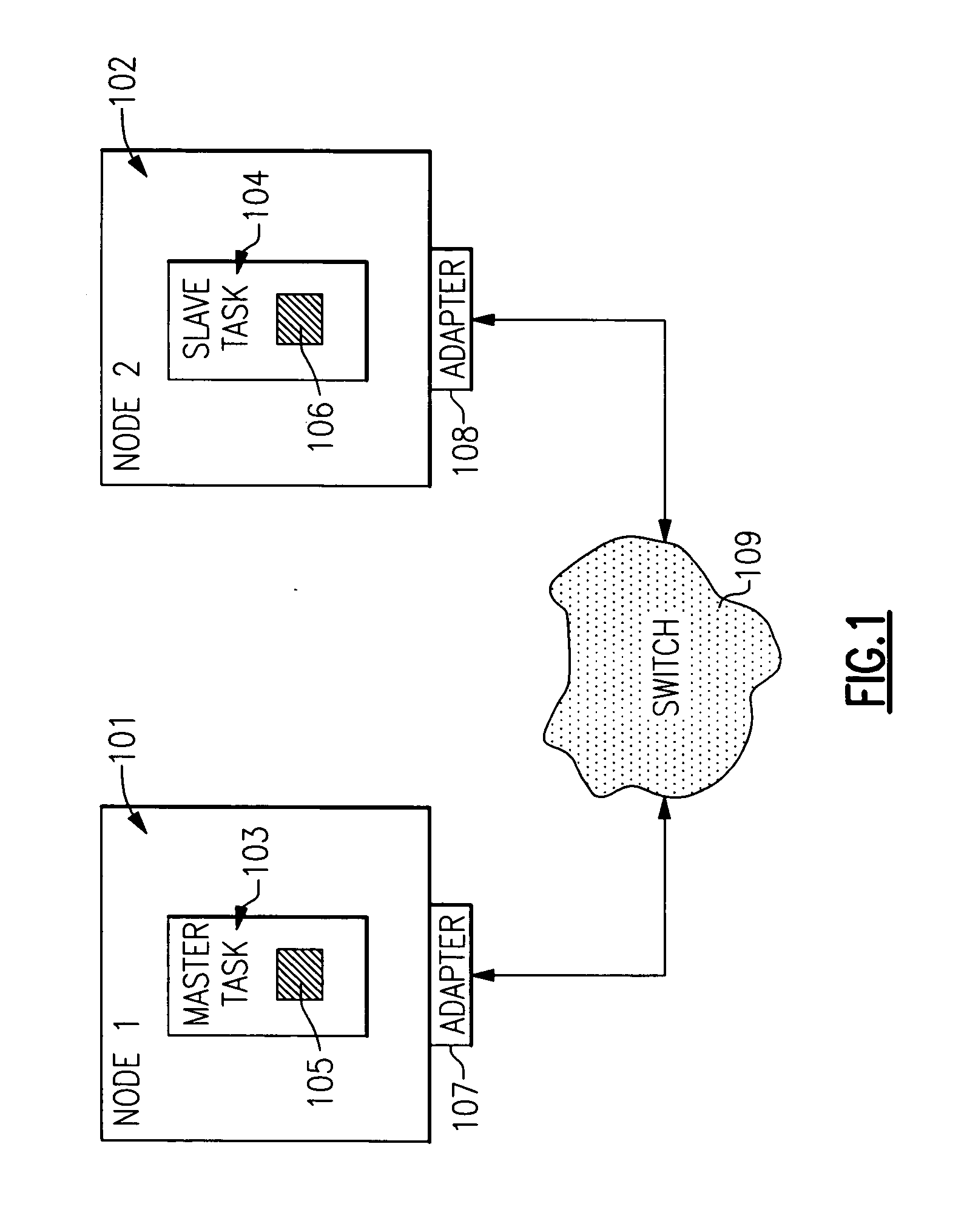

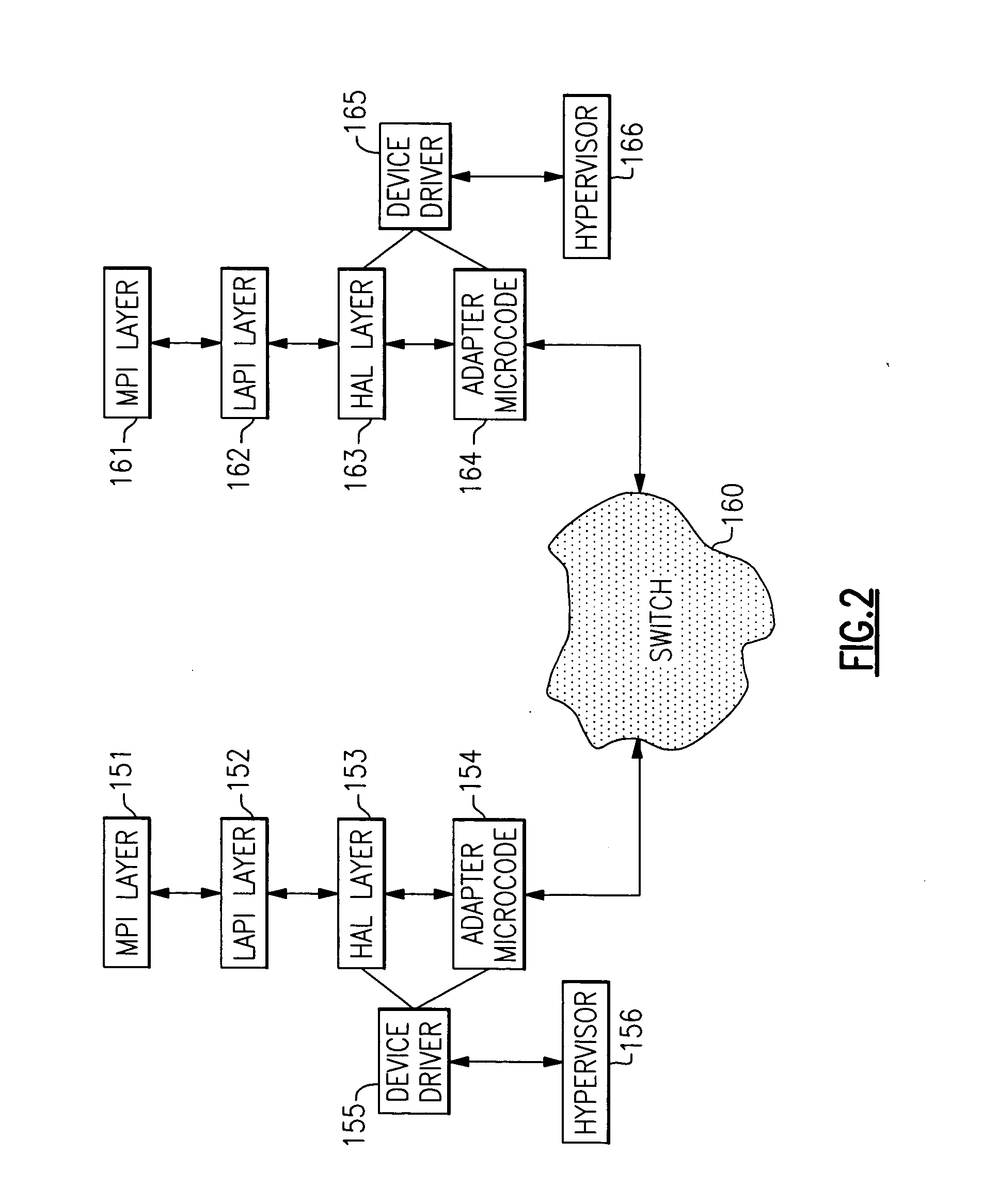

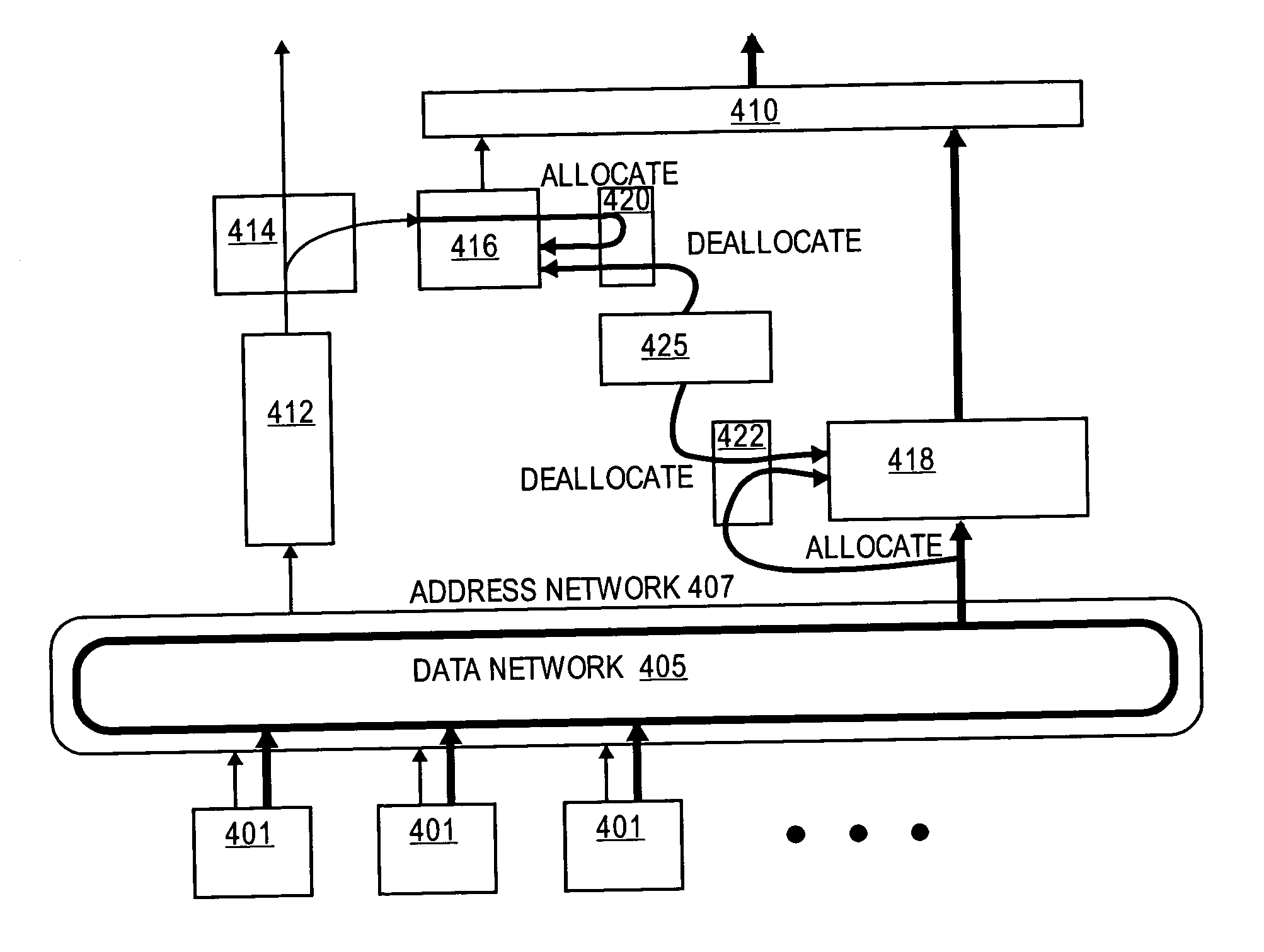

Lazy deregistration of user virtual machine to adapter protocol virtual offsets

Inactive | US20060059242A1 | Data switching by path configuration | Multiple digital computer combinations | Data processing system | Remote direct memory access

A method is provided for operating a communications adapter employed in a multinode data processing system in a fashion which enhances the performance of remote direct memory access data transfers. The system is provided with pointers and a table which are employed to determine whether or not an address which has been supplied for the transfer has already been mapped to a real address at the source or destination node. The table is also preferably provided with counters which can be incremented or decremented to enable the use of least recently used mechanisms at the upper level protocol layers to more efficiently control the setting and resetting of table entries.

Owner:IBM CORP

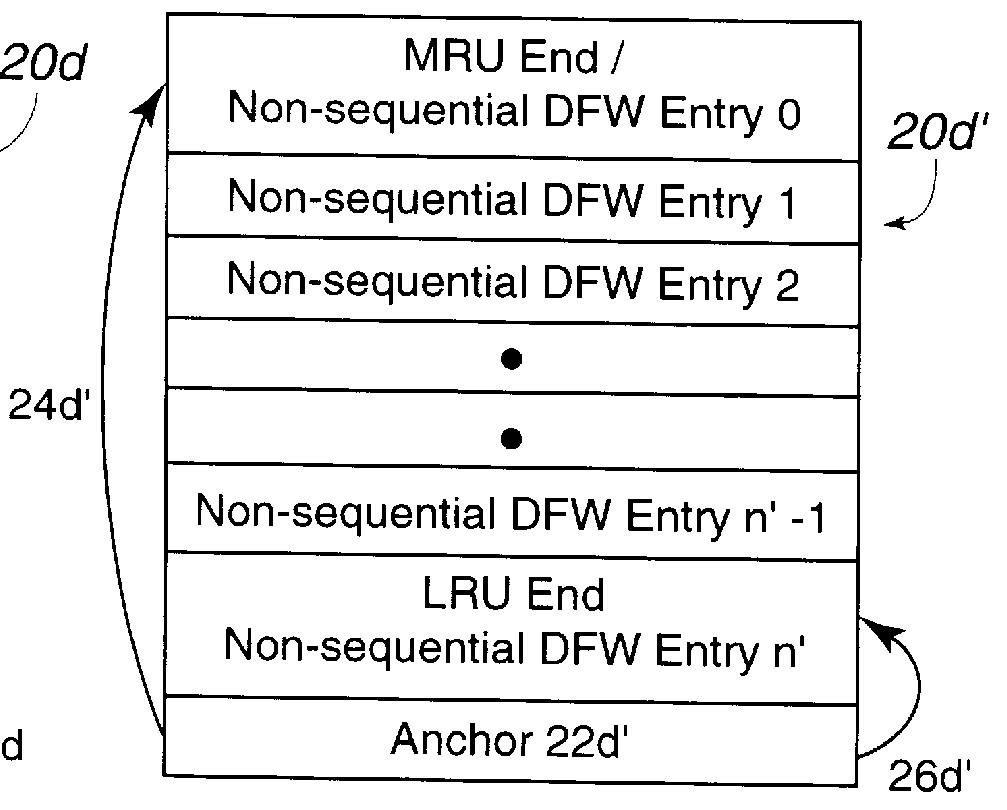

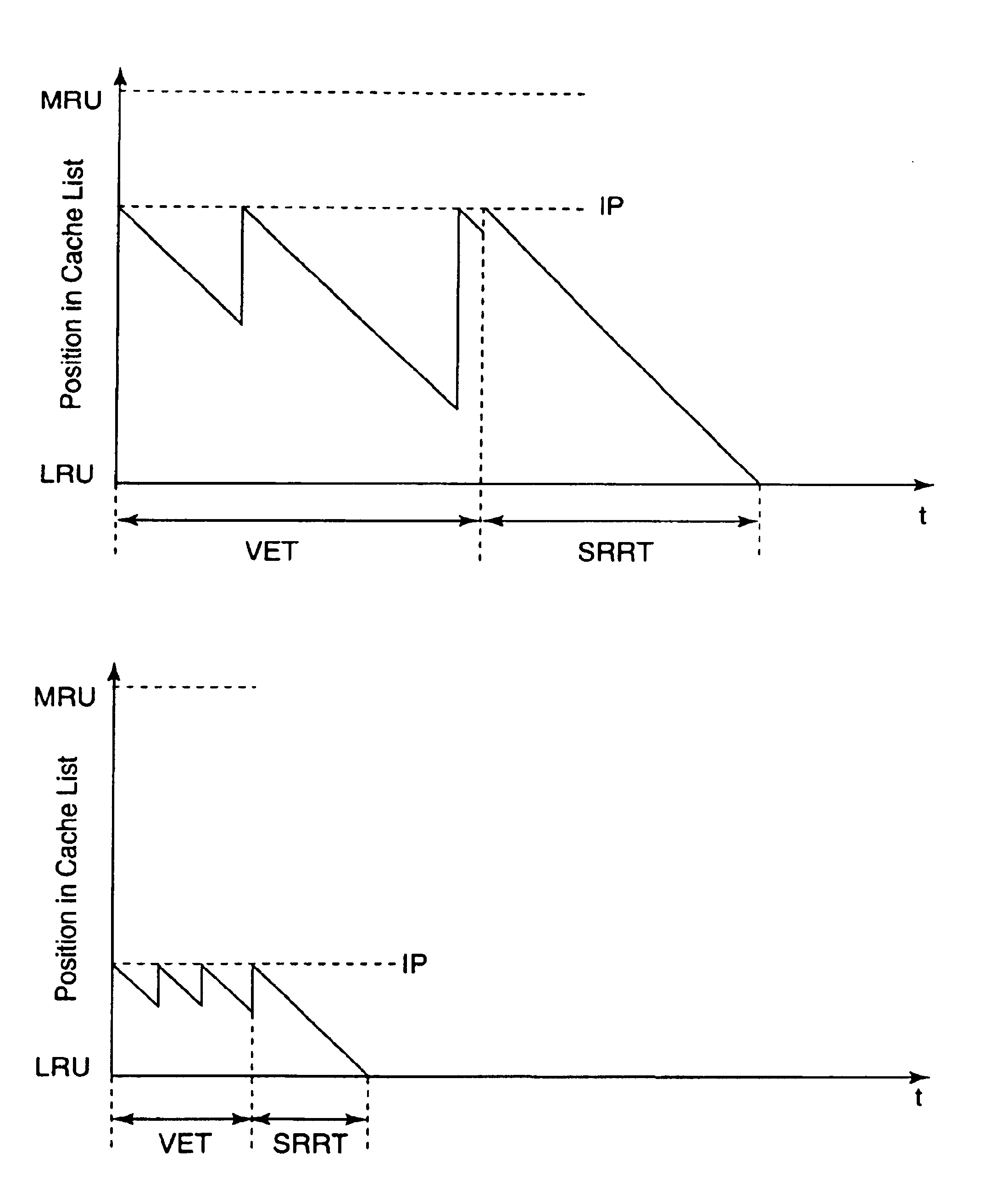

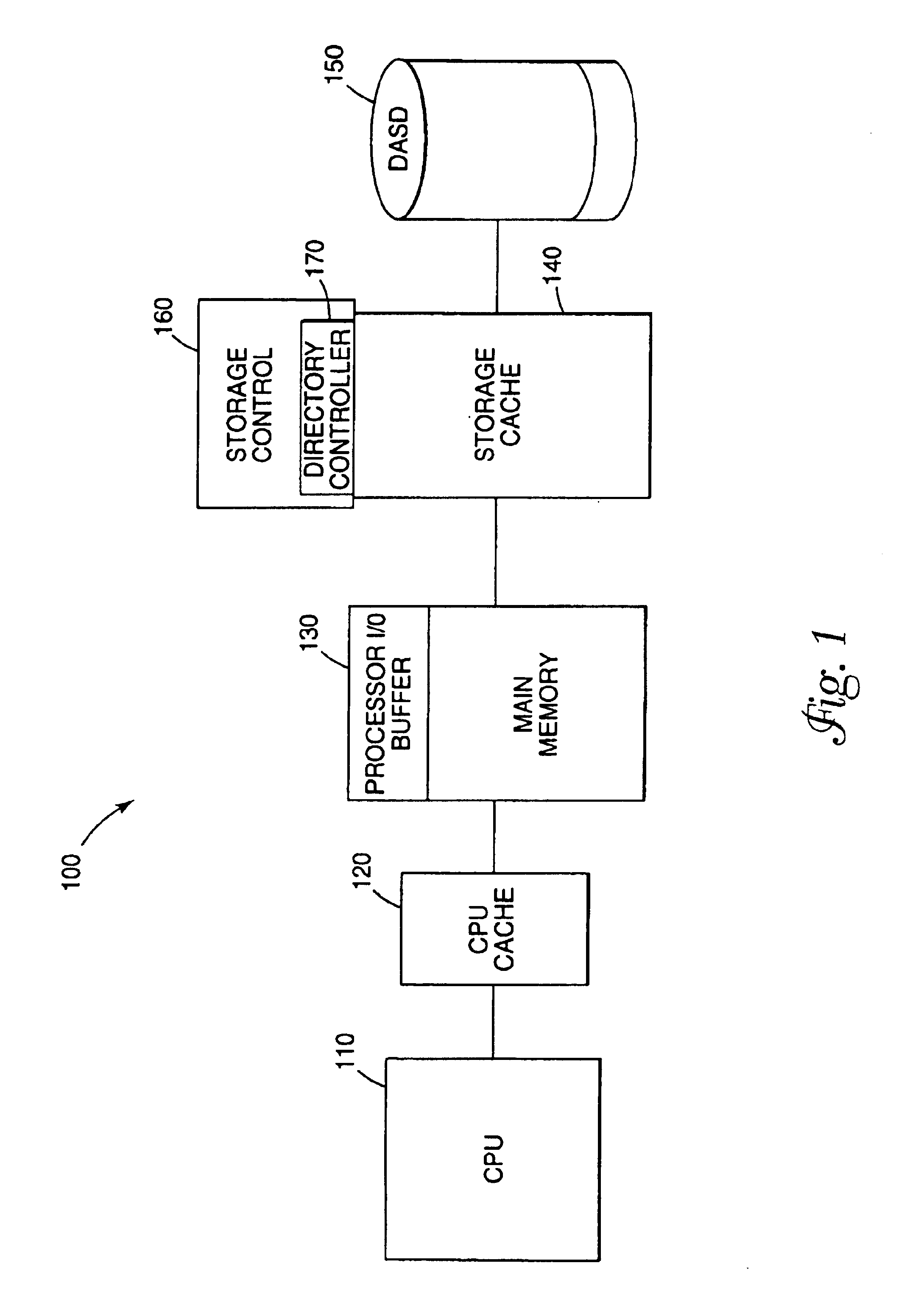

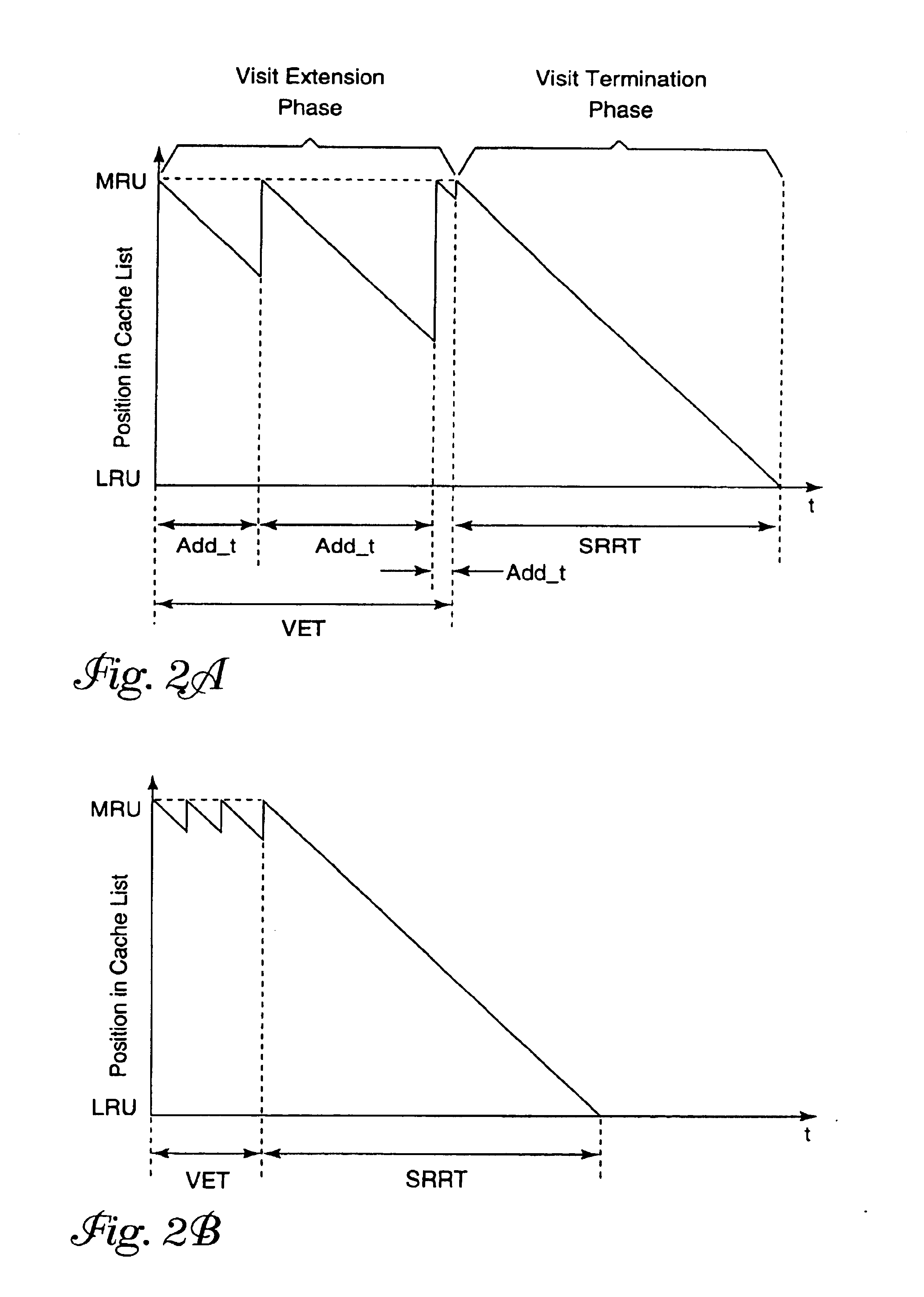

Method and apparatus for providing efficient management of least recently used (LRU) algorithm insertion points corresponding to defined times-in-cache

Inactive | US6842826B1 | Memory addressing/allocation/relocation | Computer graphics (images) | Least recently frequently used

A method and apparatus for providing efficient management of LRU insertion points corresponding to defined times-in-cache is disclosed. Insertion points are implemented as “dummy entries” in the LRU list. As such, they undergo the standard process for aging out of cache, along with all other entries. A circular queue of insertion points is maintained. At regular intervals, a new insertion point is placed at the top of the LRU list, and at the tail of the queue. When an insertion point reaches the bottom of the LRU list (“ages out”), it is removed from the head of the queue. Since insertion points are added to the list at regular intervals, the remaining time for data at the corresponding LRU list positions to age out must increase in the same, regular steps, as we consider insertion points from the bottom to the top of the LRU list. Therefore, we can find an insertion point which exhibits any desired age-out time, by indexing into the circular queue.

Owner:IBM CORP

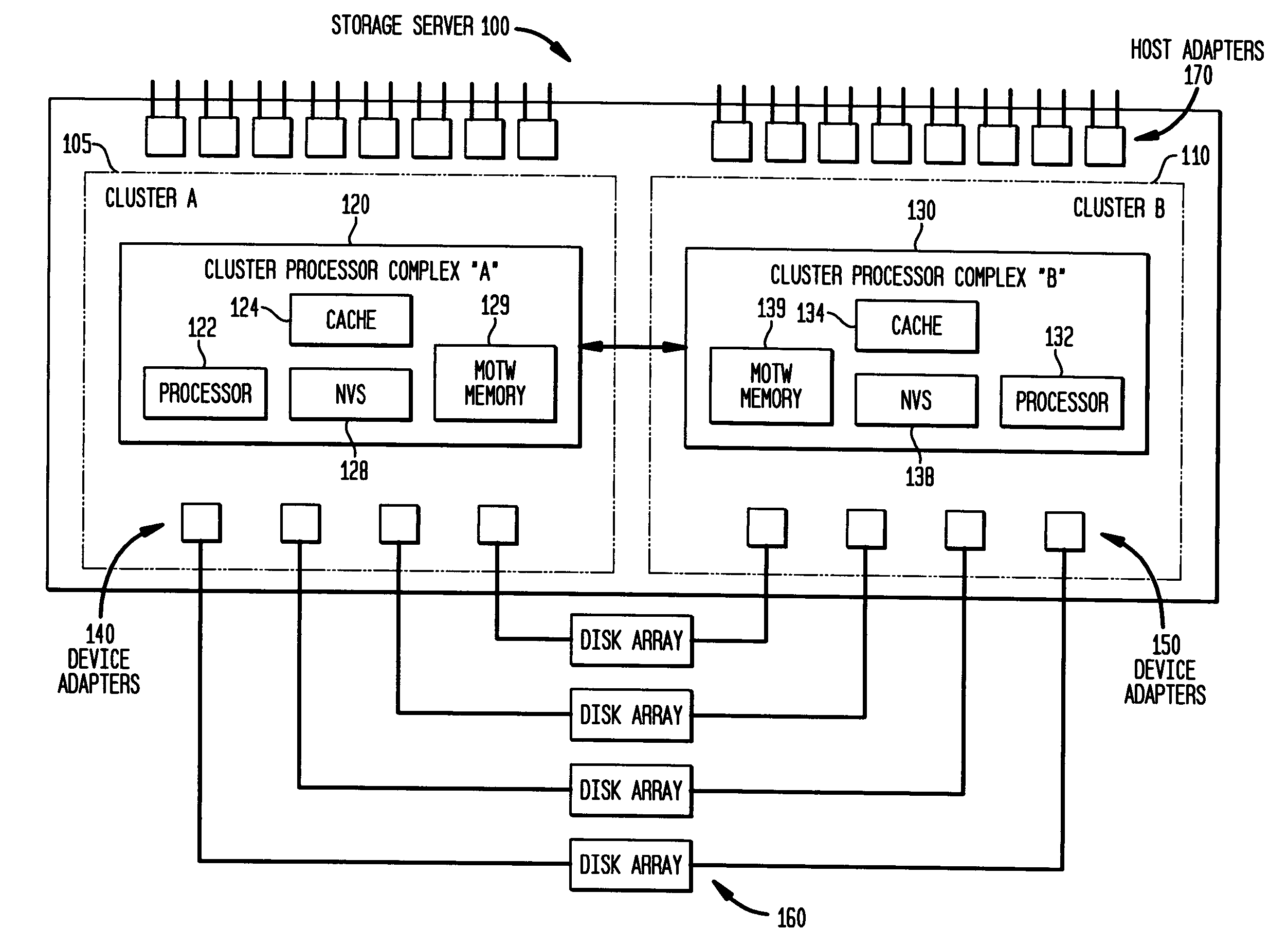

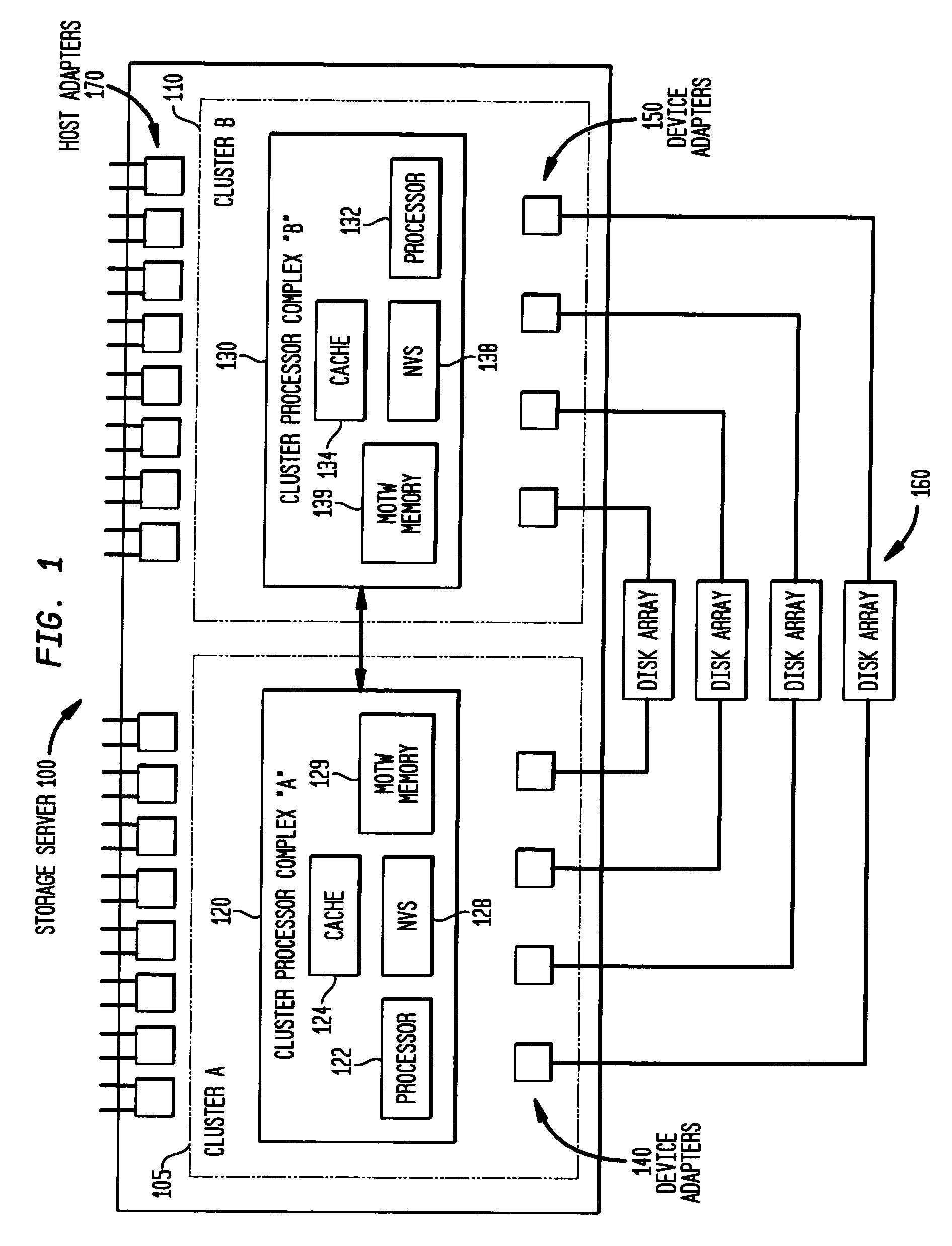

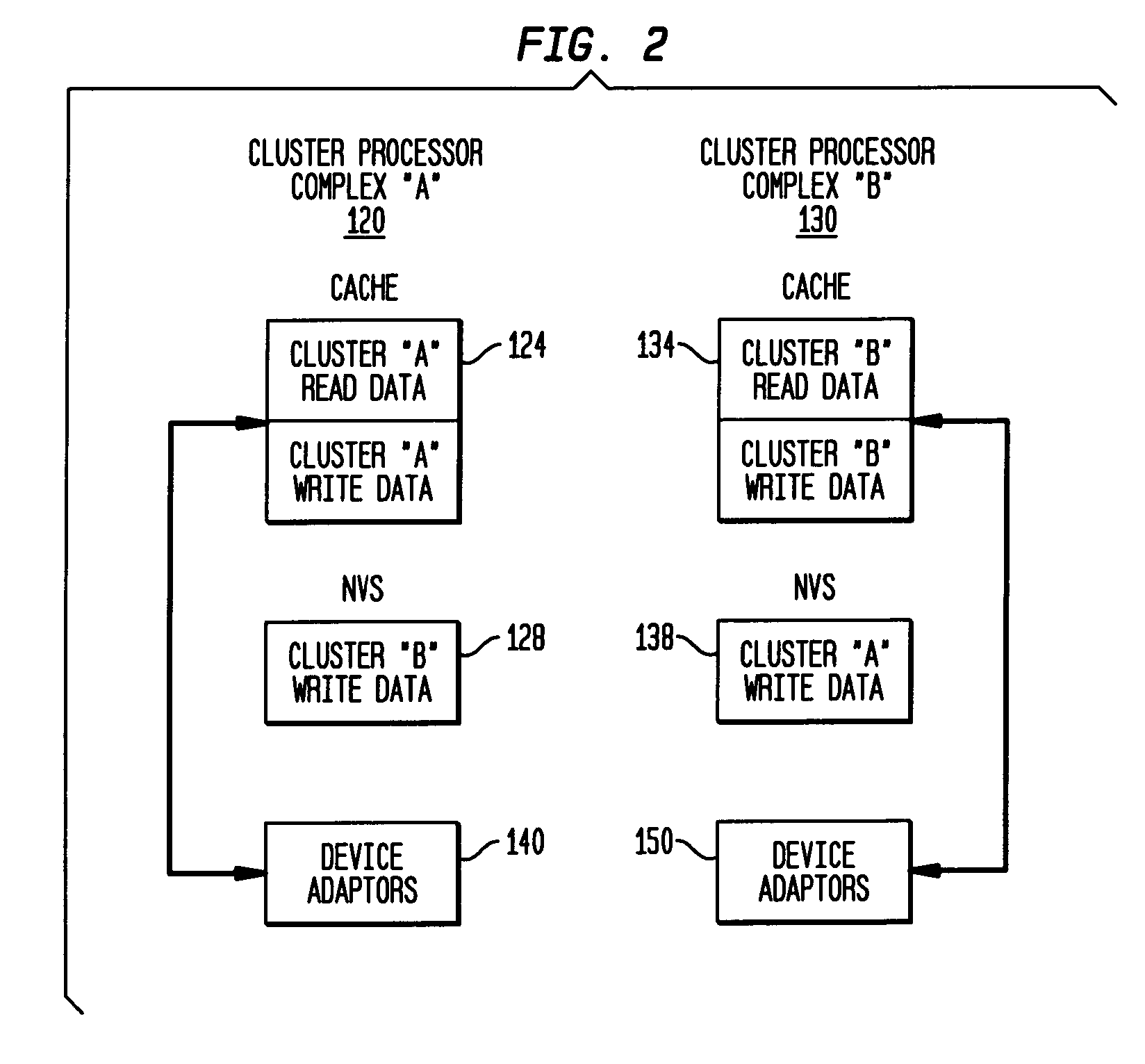

Preserving cache data against cluster reboot

Inactive | US20050005188A1 | Error detection/correction | Emergency protective arrangements for automatic disconnection | Data description | Least recently frequently used

A dual cluster storage server maintains track control blocks (TCBs) in a data structure to describe the data stored in cache in corresponding track images or segments. Following a cluster failure and reboot, the surviving cluster uses the TCBs to rebuild data structures such as a scatter table, which is a hash table that identifies a location of a track image, and a least recently used (LRU) / most recently used (MRU) list for the track images. This allows the cache data to be recovered. The TCBs describe whether the data in the track images is modified and valid, and describe forward and backward pointers for the data in the LRU / MRU lists. A separate non-volatile memory that is updated as the track images are updated is used to verify the integrity of the TCBs.

Owner:LINKEDIN

Prioritizing and locking removed and subsequently reloaded cache lines

Inactive | US6901483B2 | Low cache hit rate | Inefficiency described above will be avoided | Memory addressing/allocation/relocation | Cache hierarchy | Parallel computing

Owner:INT BUSINESS MASCH CORP

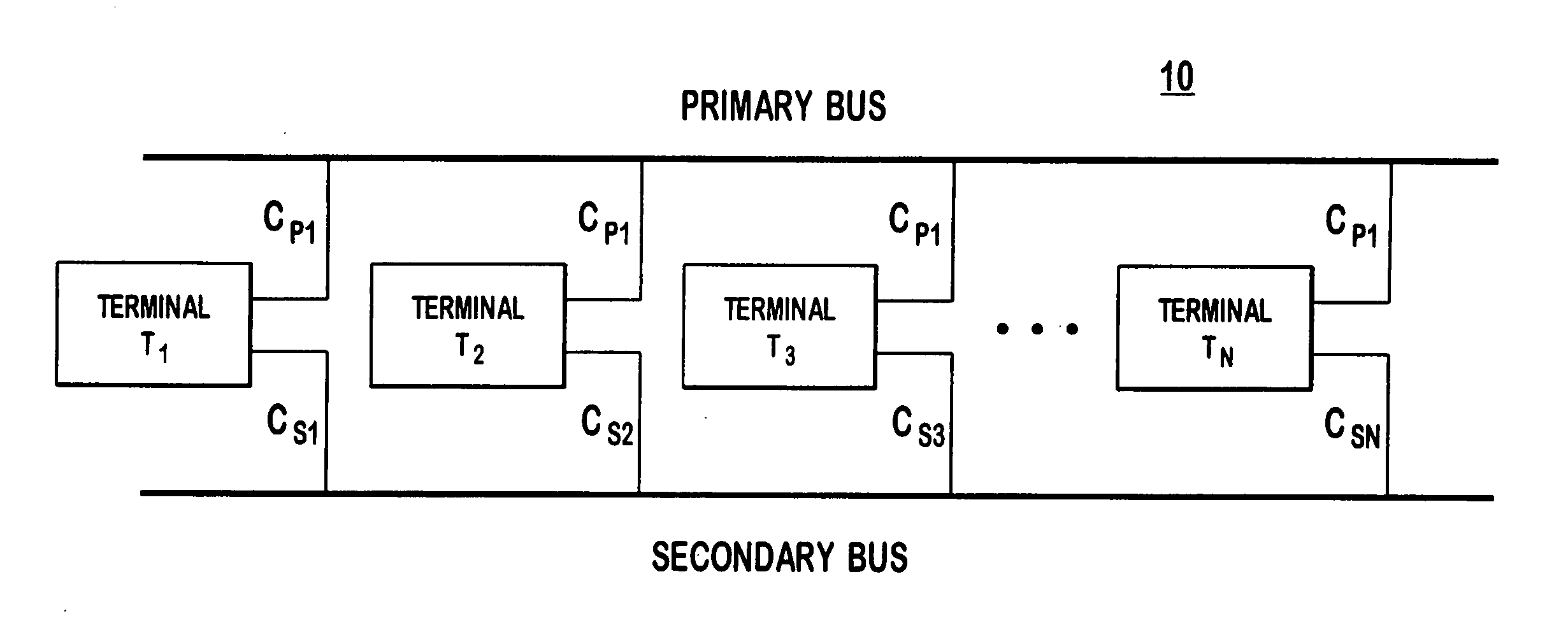

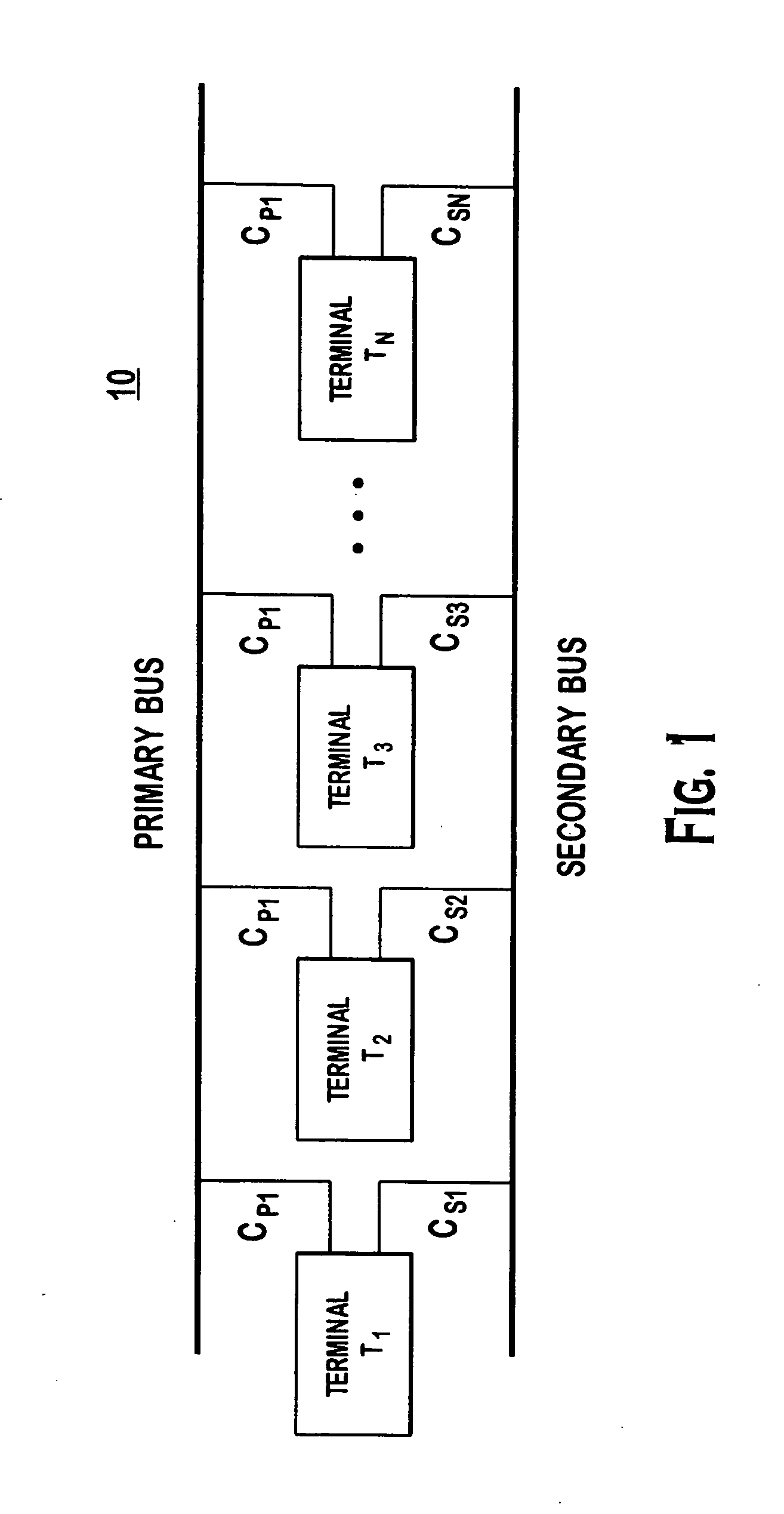

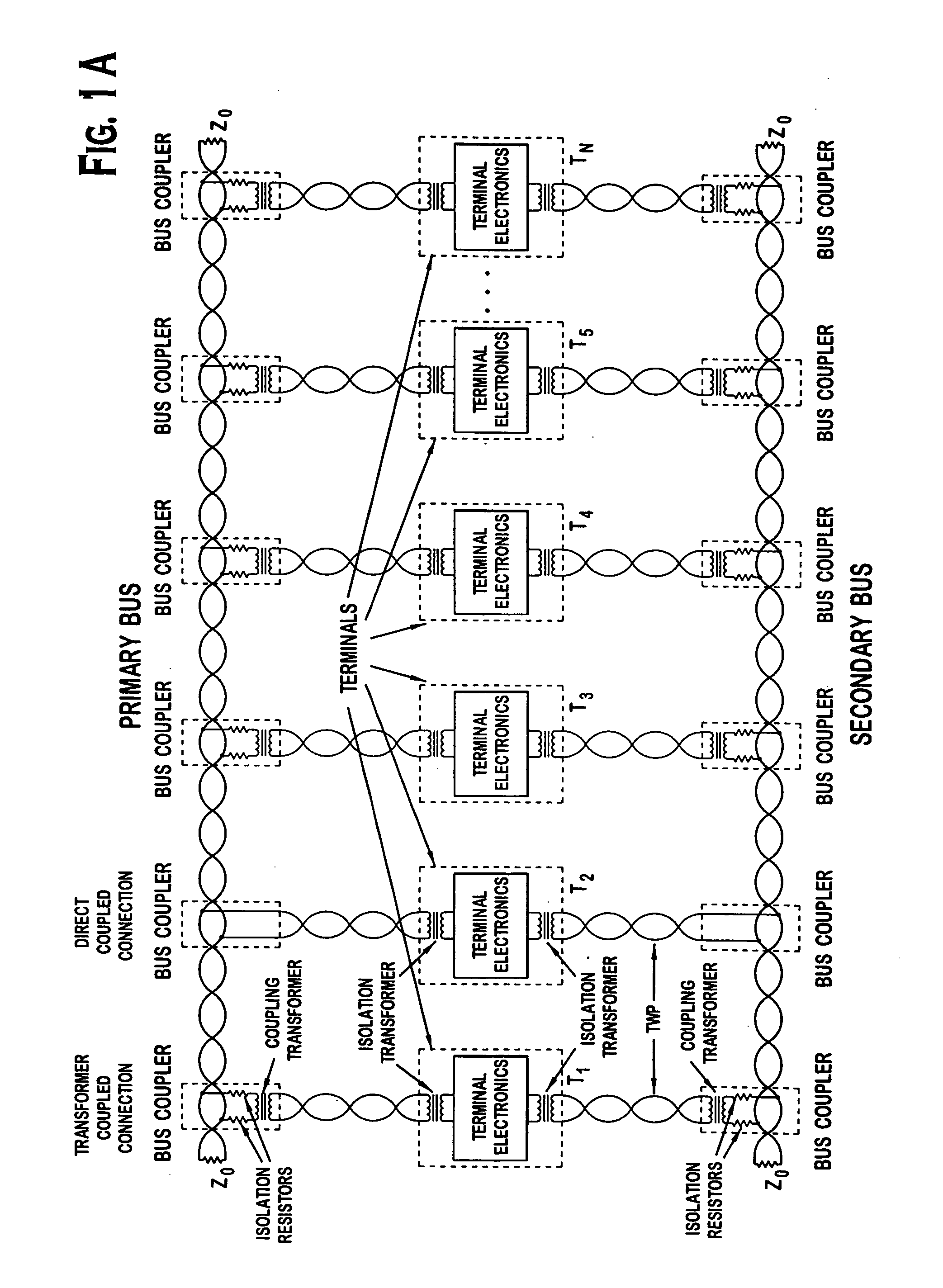

Dual speed/dual redundant bus system

Inactive | US20060101184A1 | Increase data rate | Decreased SNR | Error detection/correction | Data switching networks | Multiplexing | Fault tolerance

Method and apparatus for use of the dual redundant bus network utilizing time and frequency multiplexing techniques to provide a high bit rate system that maintains a lower bit error rate with good fault tolerance by sending a low speed message on one bus and a high speed message on the remaining bus when operating in a dual bus mode, and by reducing the speed of the high speed message to a rate between the high speed and low speed message rates and multiplexing the low speed and reduced high speed messages when a fault condition is detected on one of the buses. Techniques are provided for continuously monitoring the buses to identify the least recently used (LRU) to facilitate bus selection and selection of one of the dual bus and concurrent modes for operation.

Owner:DATA DEVICE CORP

Buffer allocation for split data messages

A technique to store a plurality of addresses and data to address and data buffers, respectively, in an ordered manner. More particularly, one embodiment of the invention stores a plurality of addresses to a plurality of address buffer entries and a plurality of data to a plurality of data buffer entries according to a true least-recently-used (LRU) allocation algorithm.

Owner:BEIJING XIAOMI MOBILE SOFTWARE CO LTD

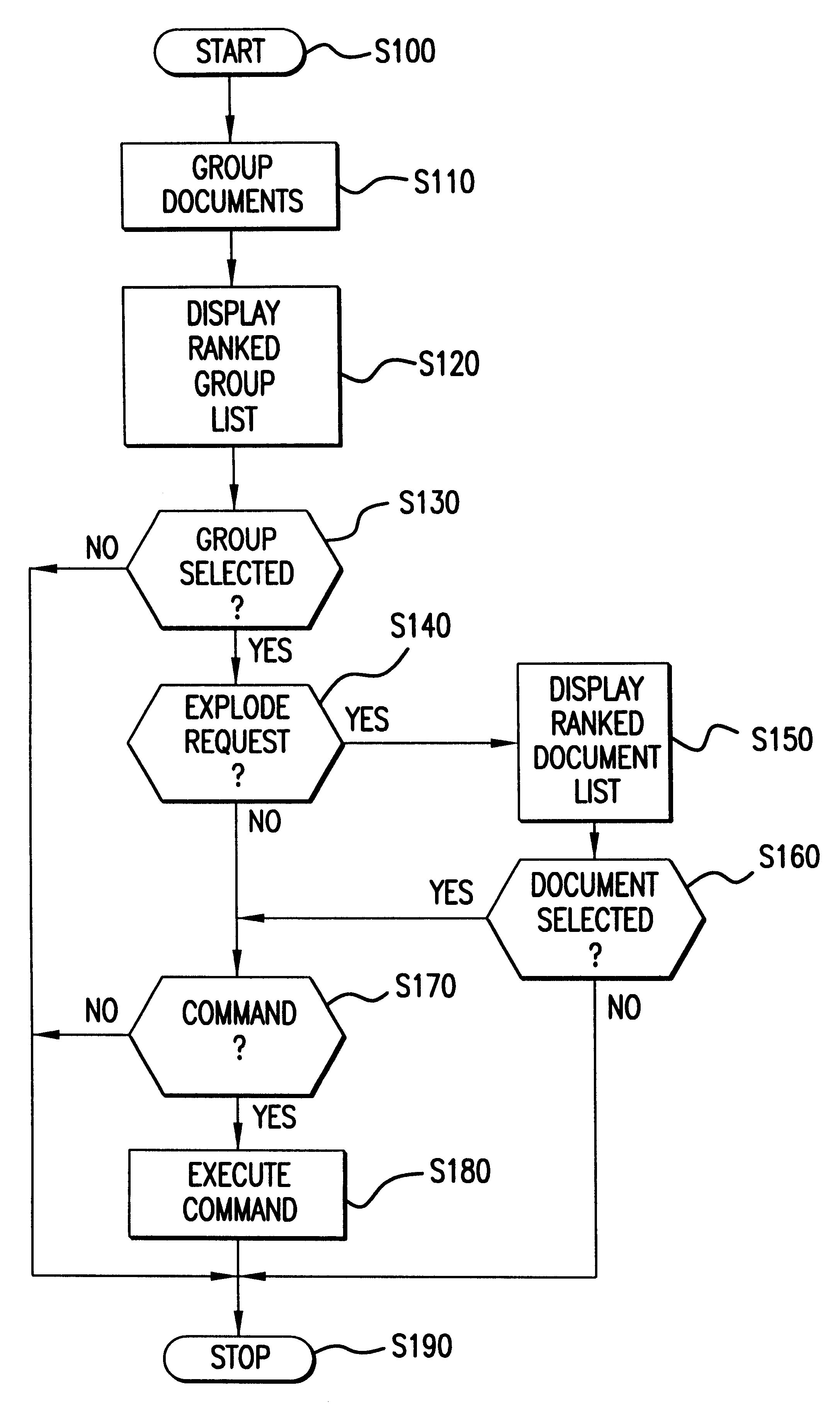

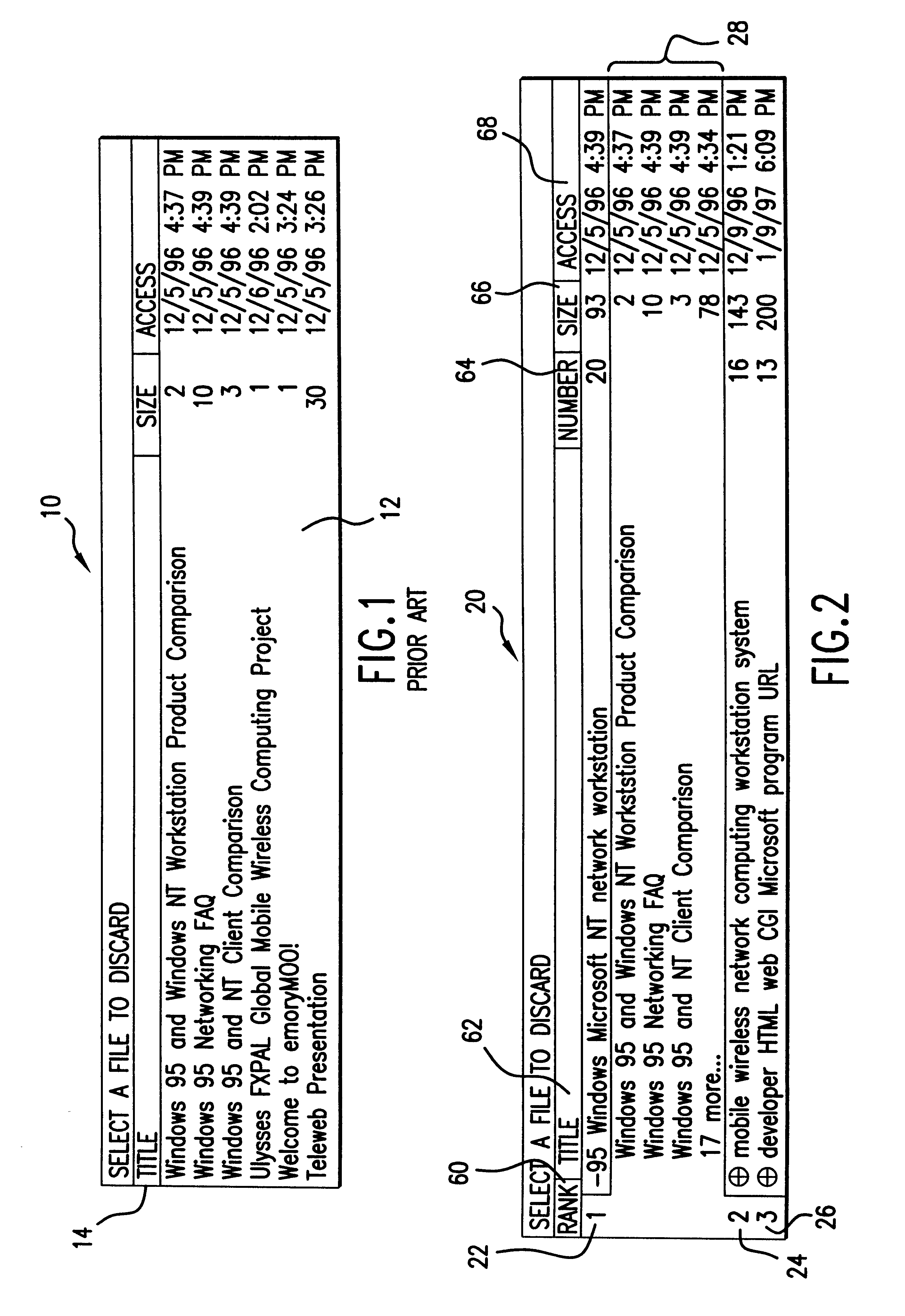

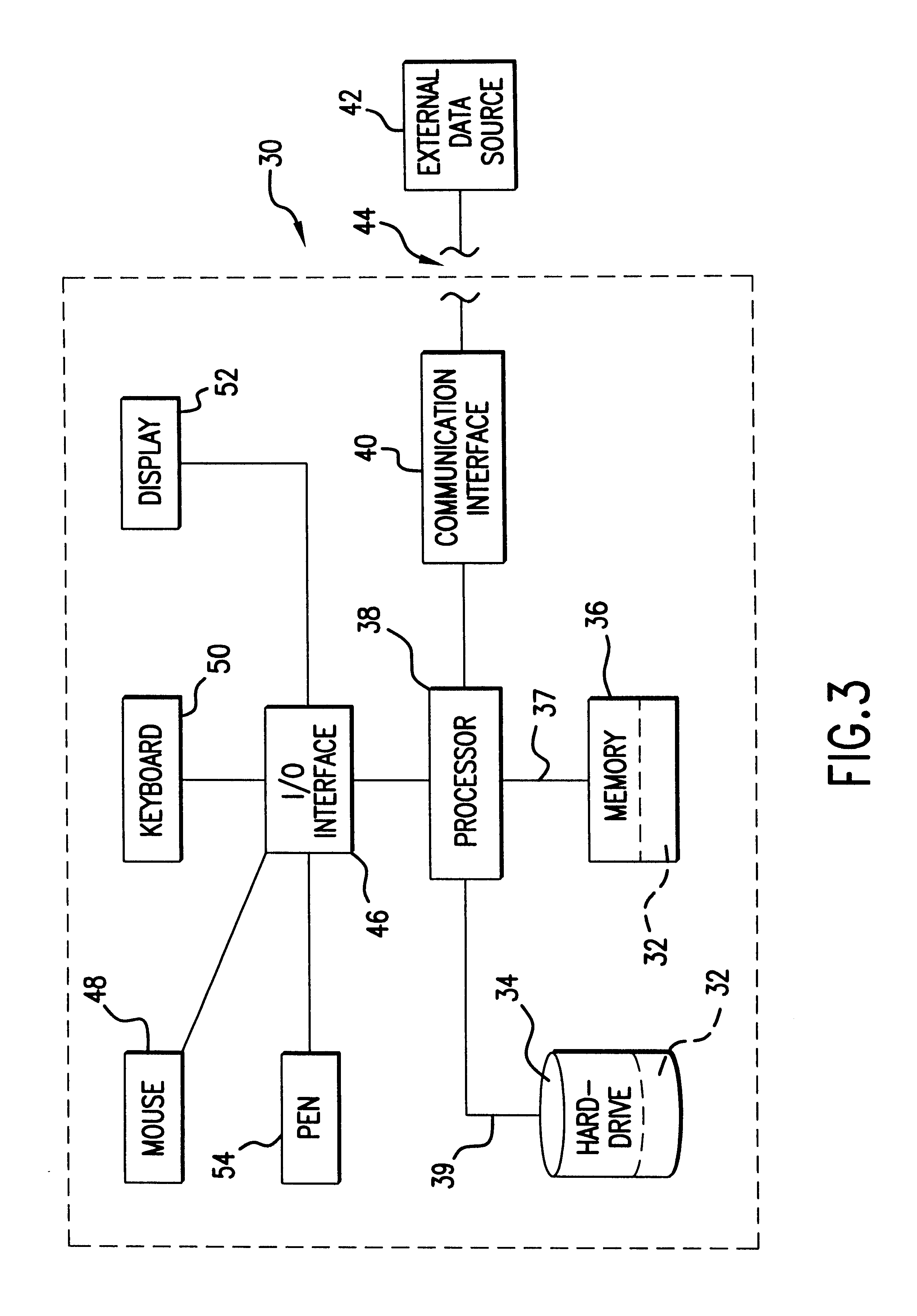

Document cache replacement policy for automatically generating groups of documents based on similarity of content

Inactive | US6842876B2 | Avoiding expensive file-by-file decision | Free up space | Digital data information retrieval | Special data processing applications | Documentation procedure | Document preparation

A document storage management system and method that manages the storage of documents based upon the similarity of the content of the documents. Groups of documents are created based upon the similarity of the contents of the documents. Those groups are displayed to the user in a ranked list of selectable groups to permit selection of a group or document. The storage of the selected group or document is then managed by, for example, deleting, compressing, or copying. The displayed list may be ranked based upon a least recently used policy, the relevance to a predetermined topic, the size of the group, the radius of the group based upon the maximum distance of any document from the group centroid, the number of documents in the group and any other combination of parameters.

Owner:MAJANDRO LLC

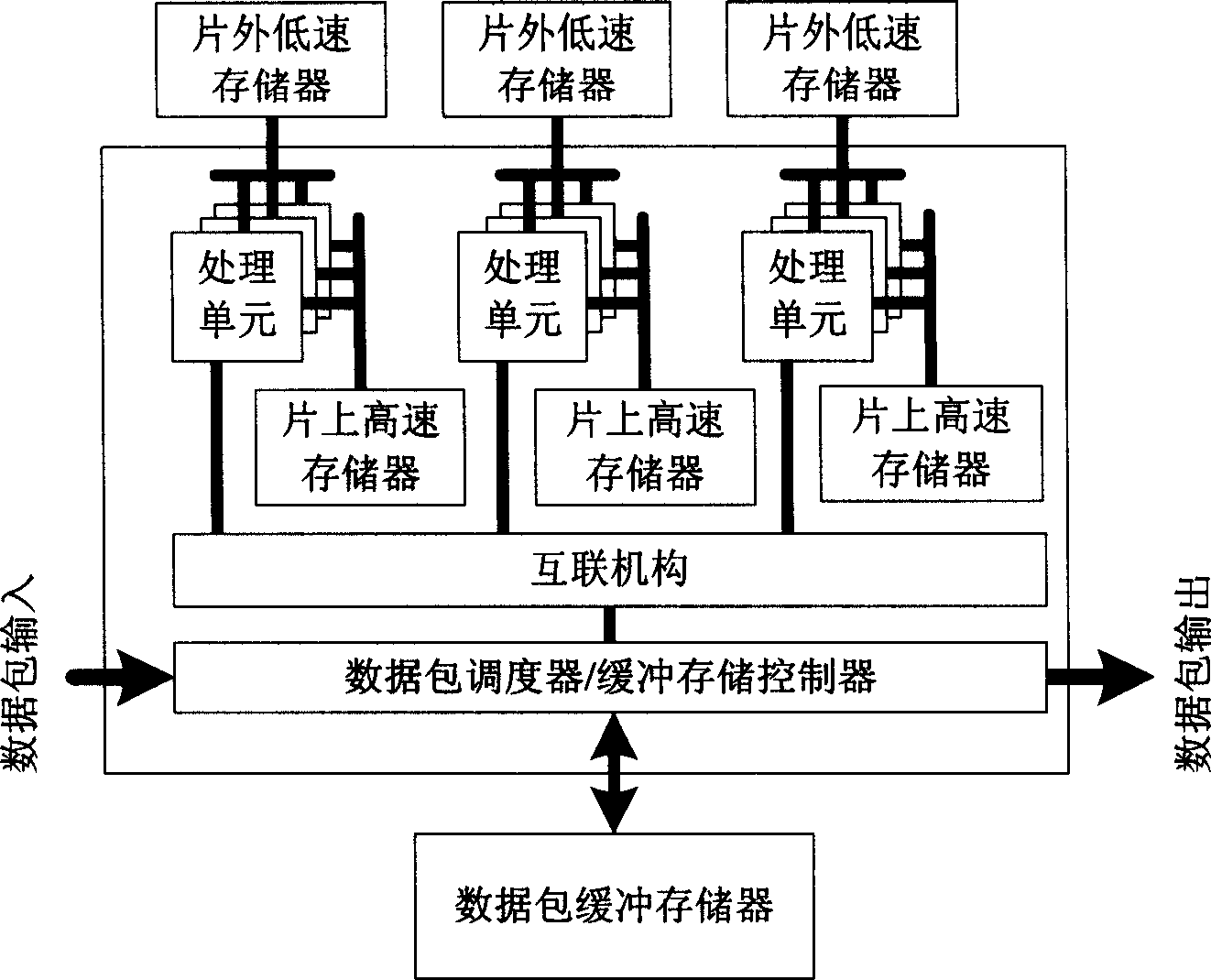

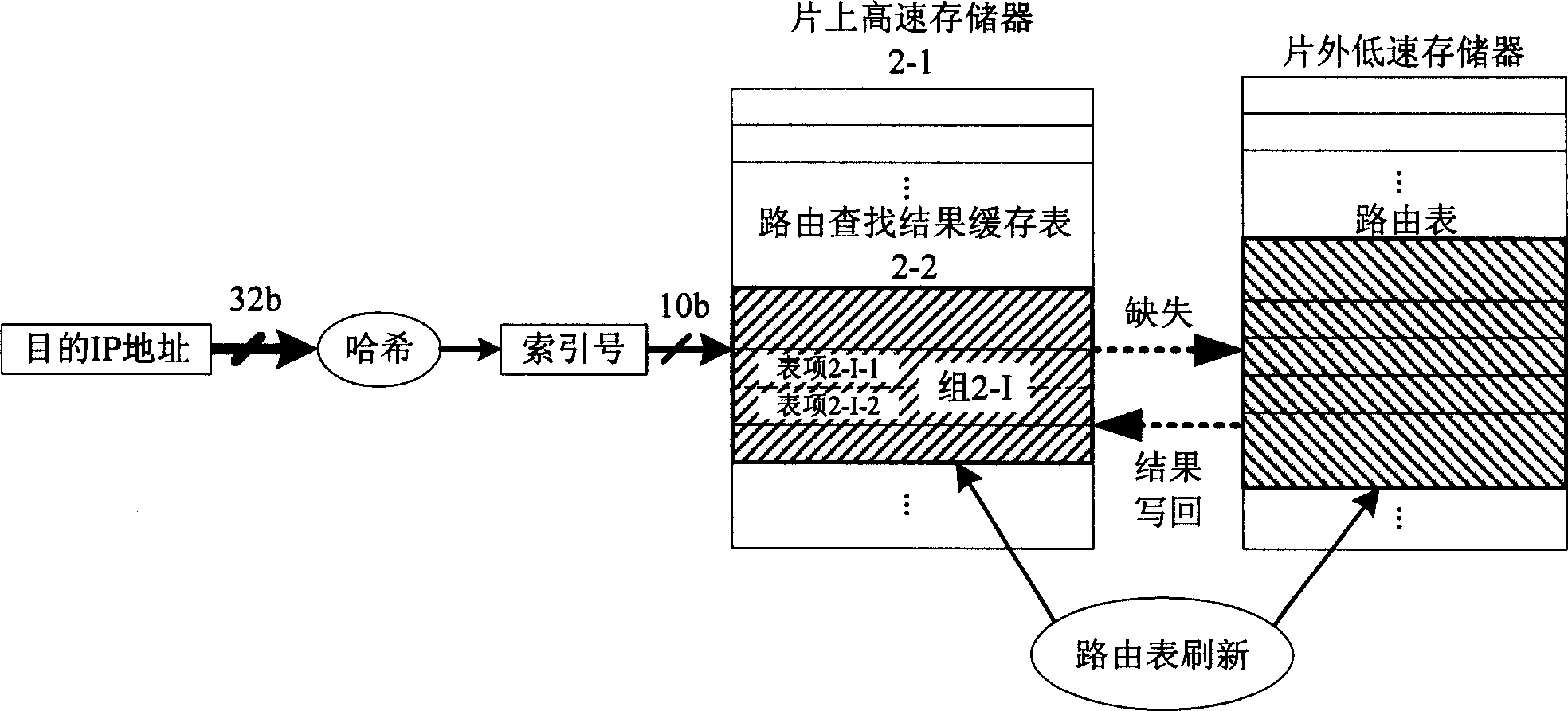

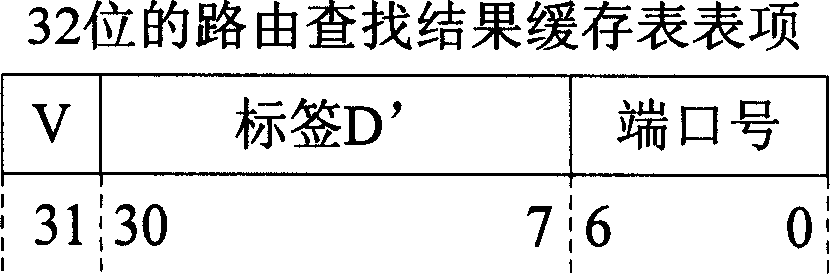

Route searching result cache method based on network processor

Inactive | CN1863169A | Improve search efficiency | Evenly distributed | Data switching networks | Low speed | Routing table

The invention is a network-processor-based method for caching route lookup results, belonging to the computer field. It builds and maintains a route lookup result cache table in the on-chip high-speed memory of the network processor. When the network processor receives a destination IP address to look up, it first performs a fast search of the cache table using a hash function. If the result is already in the cache table, the method returns the route lookup result directly and reorders the cache entries by how recently they were used, so that subsequent lookups find results as quickly as possible. Otherwise, it searches the routing table stored in off-chip low-speed memory, returns the result to the application program, and writes the result back into the cache table. The invention reduces the number of accesses to off-chip low-speed memory for route lookups and reduces memory bandwidth occupation.

Owner:TSINGHUA UNIV
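
The lookup flow in the abstract above (hash lookup in a fast cache first, slow routing table on a miss, write-back of the result) can be sketched with an ordered dictionary standing in for the on-chip cache table. The class `RouteCache`, the `slow_lookup` callable, and the miss counter are assumptions for illustration; a real slow path would perform a longest-prefix match rather than an exact-match dictionary lookup.

```python
from collections import OrderedDict


class RouteCache:
    """Hypothetical LRU cache of route lookup results keyed by destination IP."""

    def __init__(self, slow_lookup, capacity=1024):
        self.slow_lookup = slow_lookup  # stand-in for the off-chip routing table search
        self.capacity = capacity
        self.table = OrderedDict()      # dst_ip -> next hop; first item is the LRU entry
        self.slow_accesses = 0          # counts accesses to the slow table

    def next_hop(self, dst_ip):
        if dst_ip in self.table:
            self.table.move_to_end(dst_ip)  # reorder by recency of use
            return self.table[dst_ip]
        self.slow_accesses += 1
        hop = self.slow_lookup(dst_ip)
        self.table[dst_ip] = hop            # write the result back into the cache
        if len(self.table) > self.capacity:
            self.table.popitem(last=False)  # drop the least recently used entry
        return hop
```

Repeated lookups for the same destination then touch only the cache, which is the bandwidth saving the abstract claims.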
