497 results about "Remote direct memory access" patented technology

In computing, remote direct memory access (RDMA) is a direct memory access from the memory of one computer into that of another without involving either one's operating system. This permits high-throughput, low-latency networking, which is especially useful in massively parallel computer clusters.

Remote direct memory access system and method

Inactive | US20060075057A1 | Digital computer details | Transmission | Multi processor | Remote direct memory access

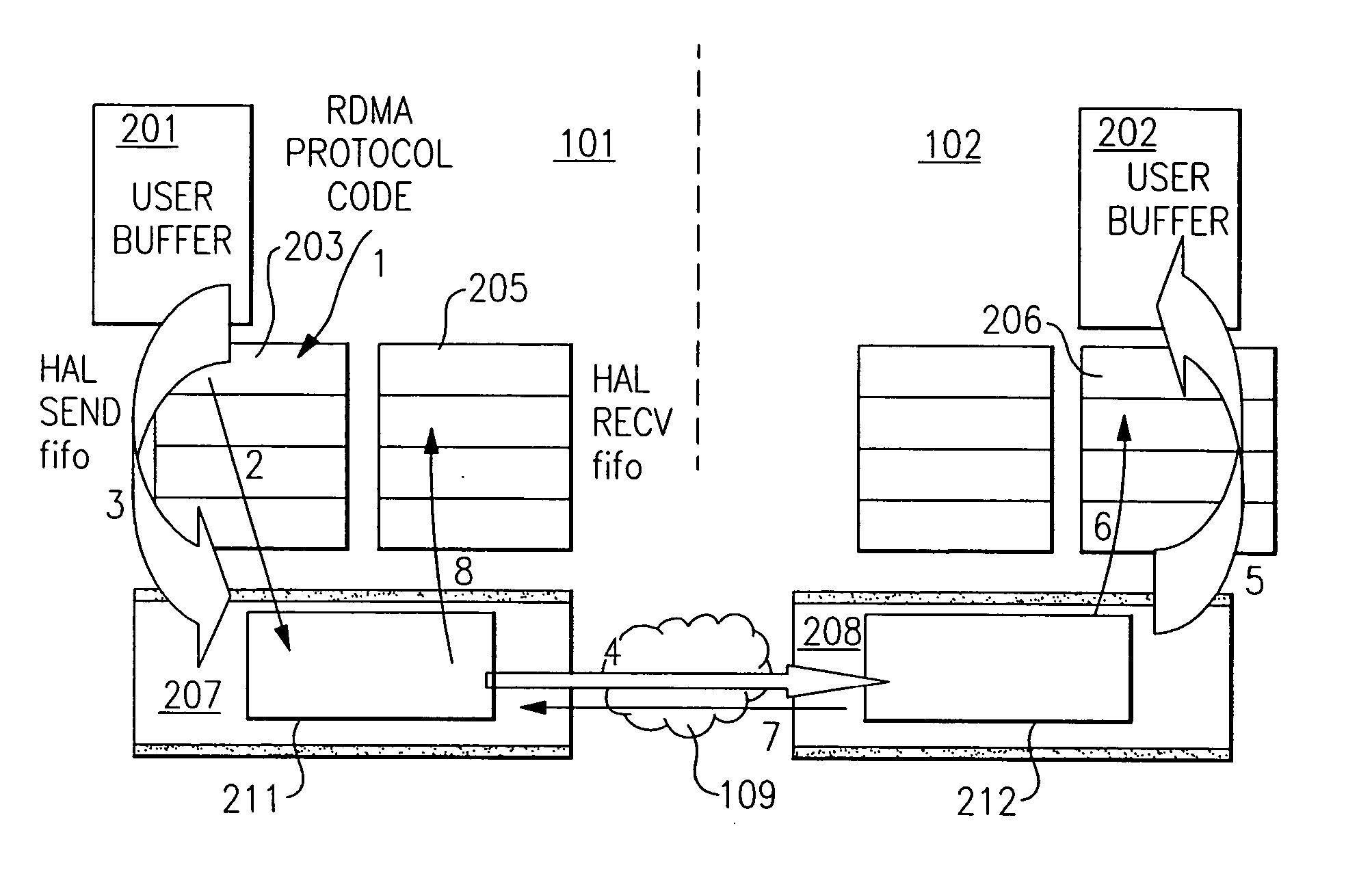

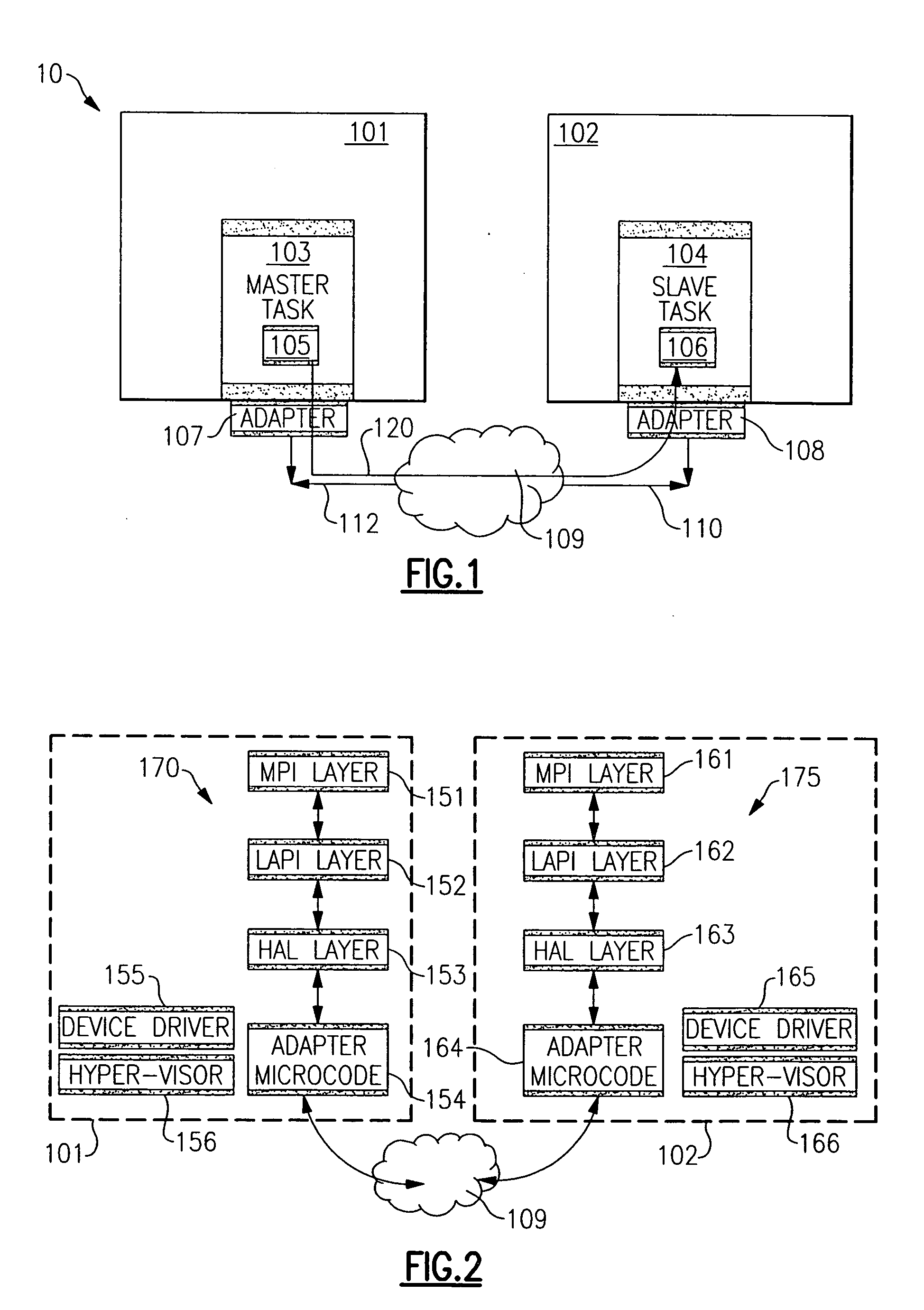

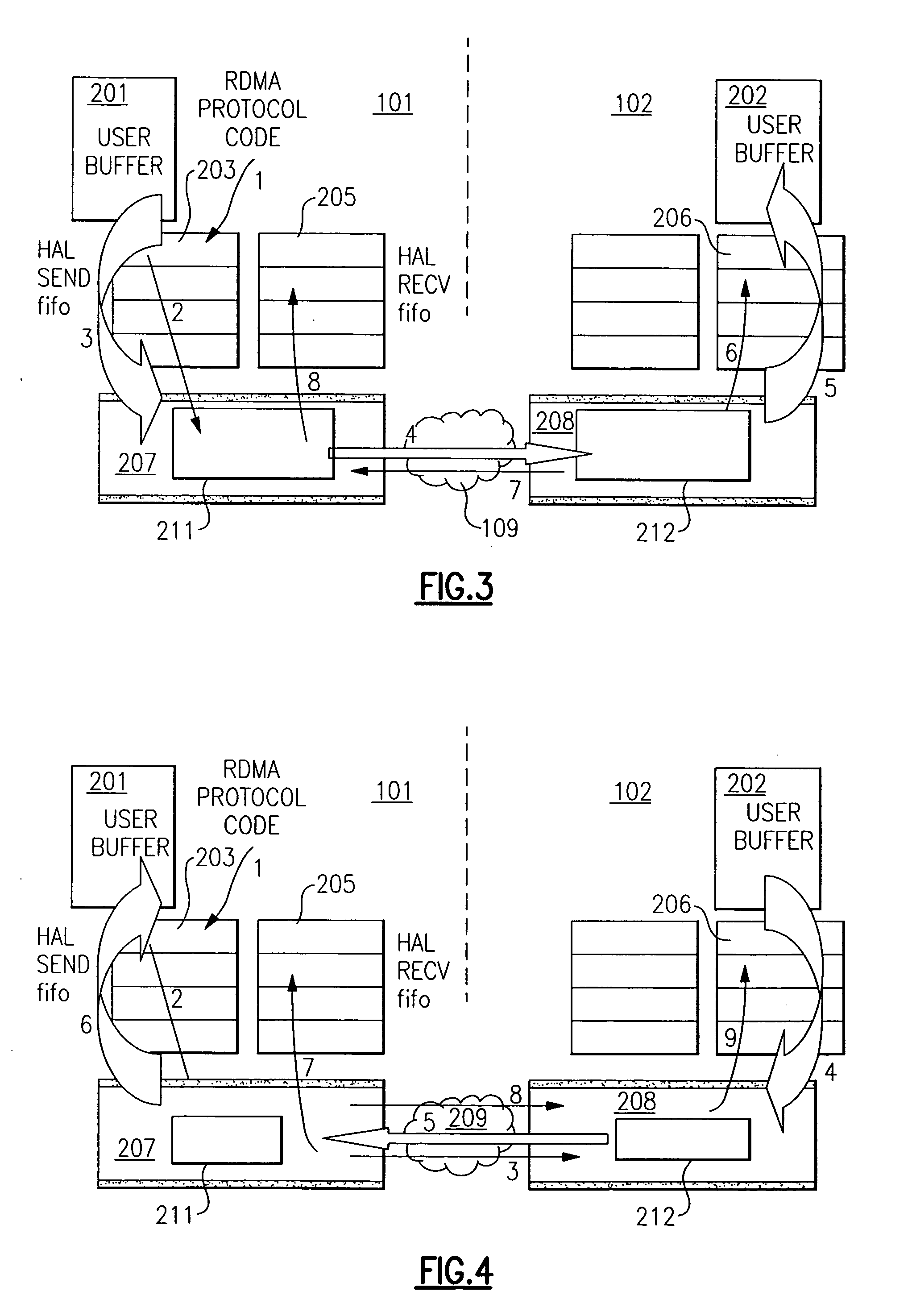

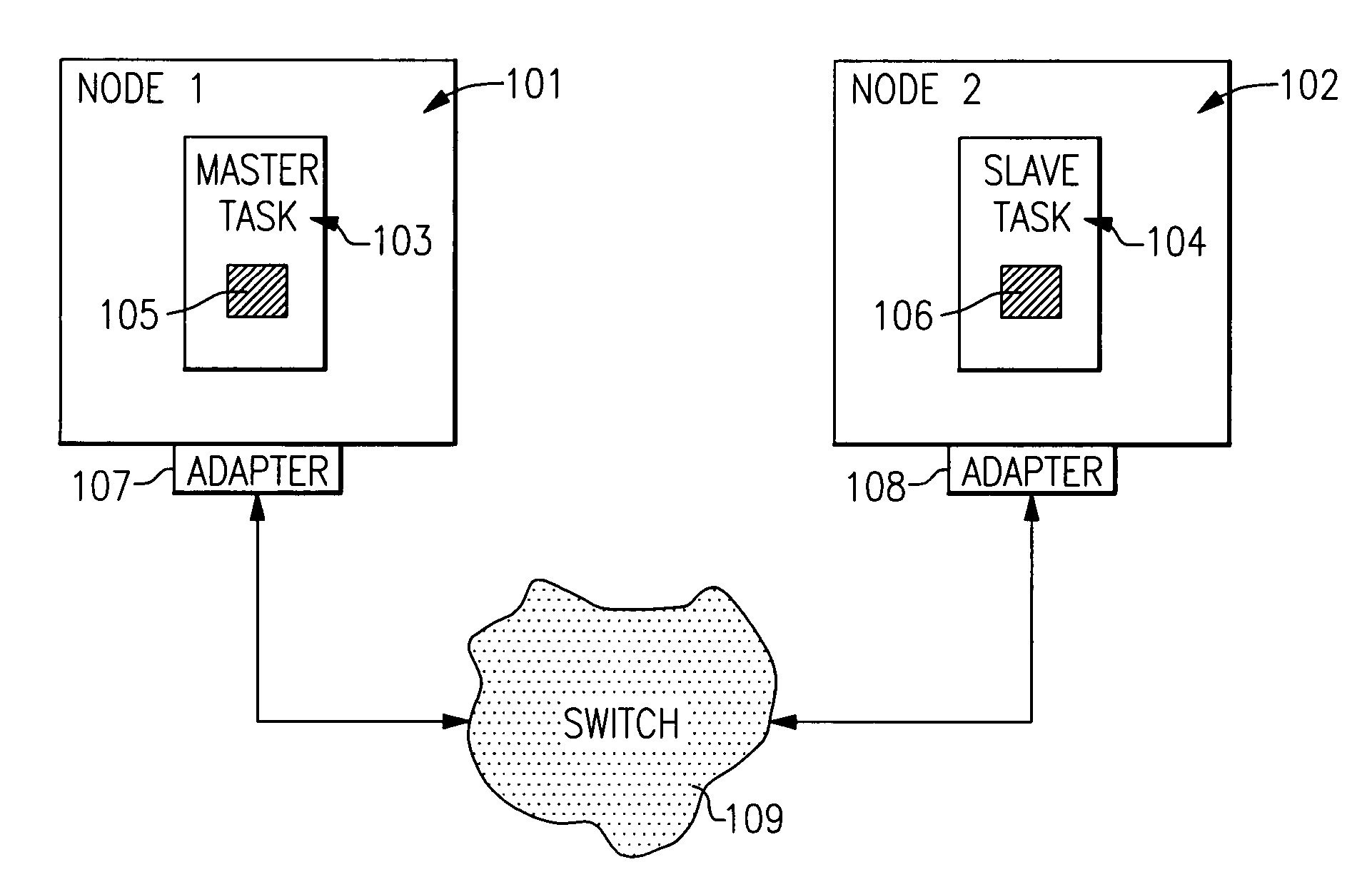

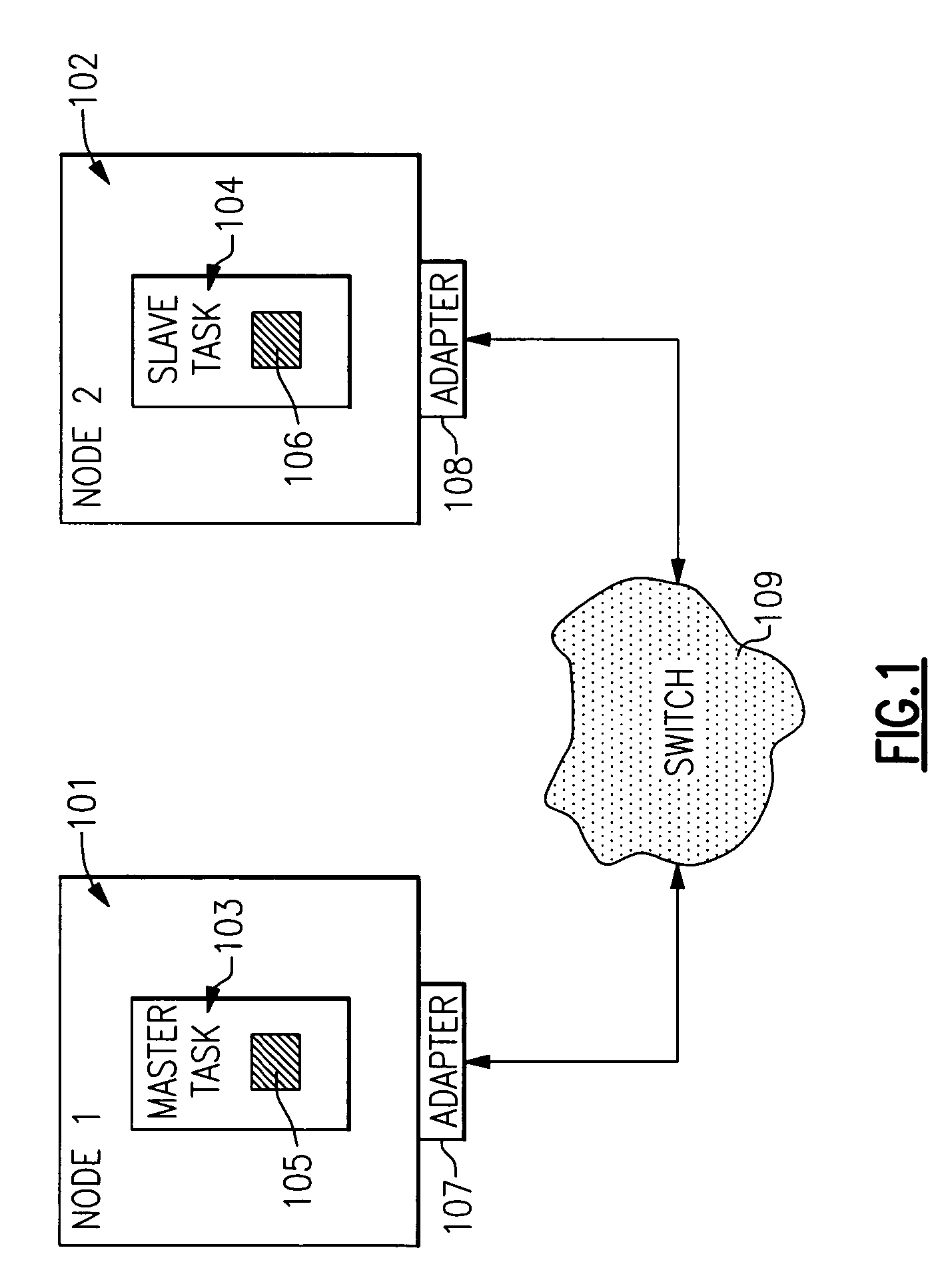

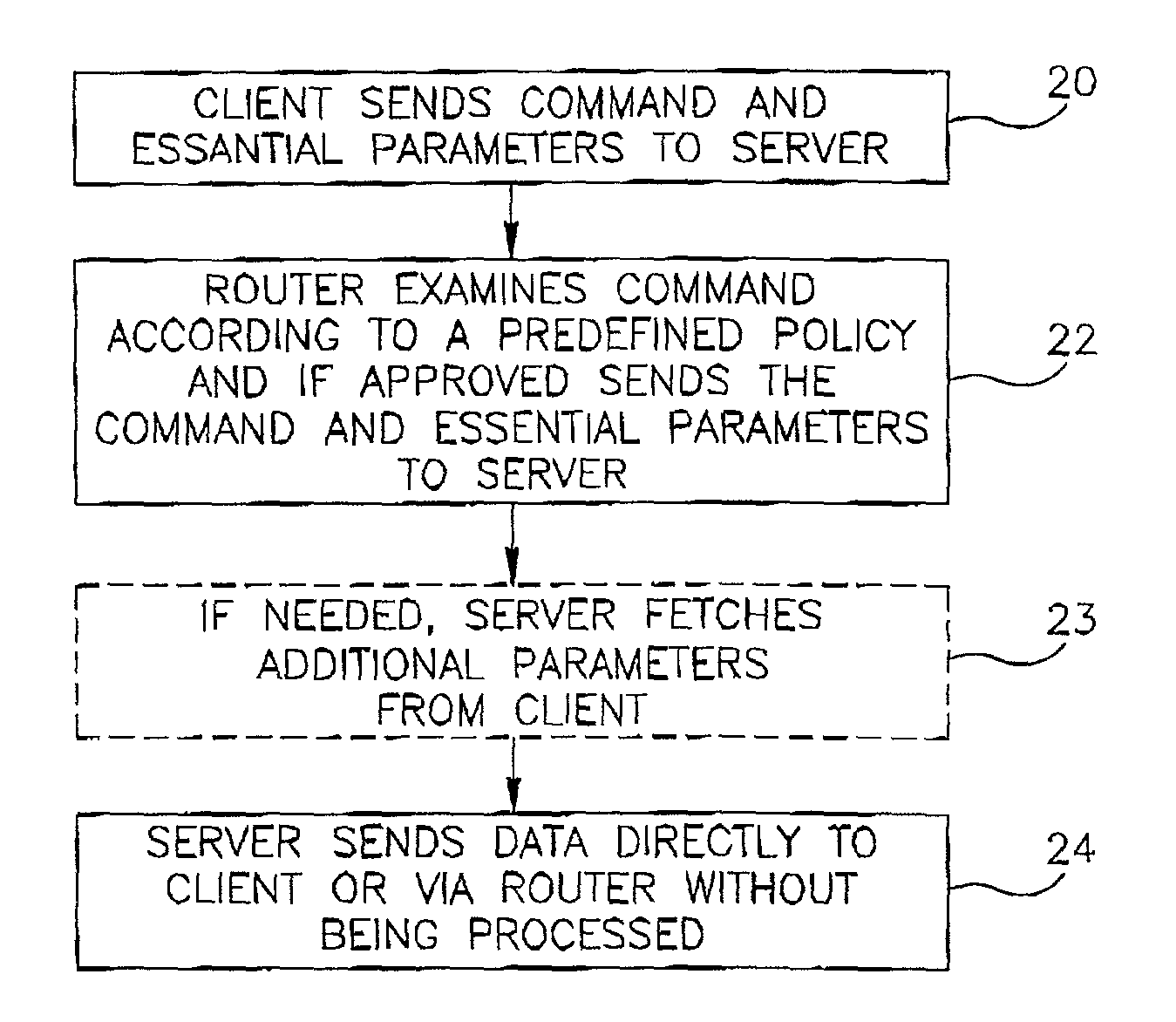

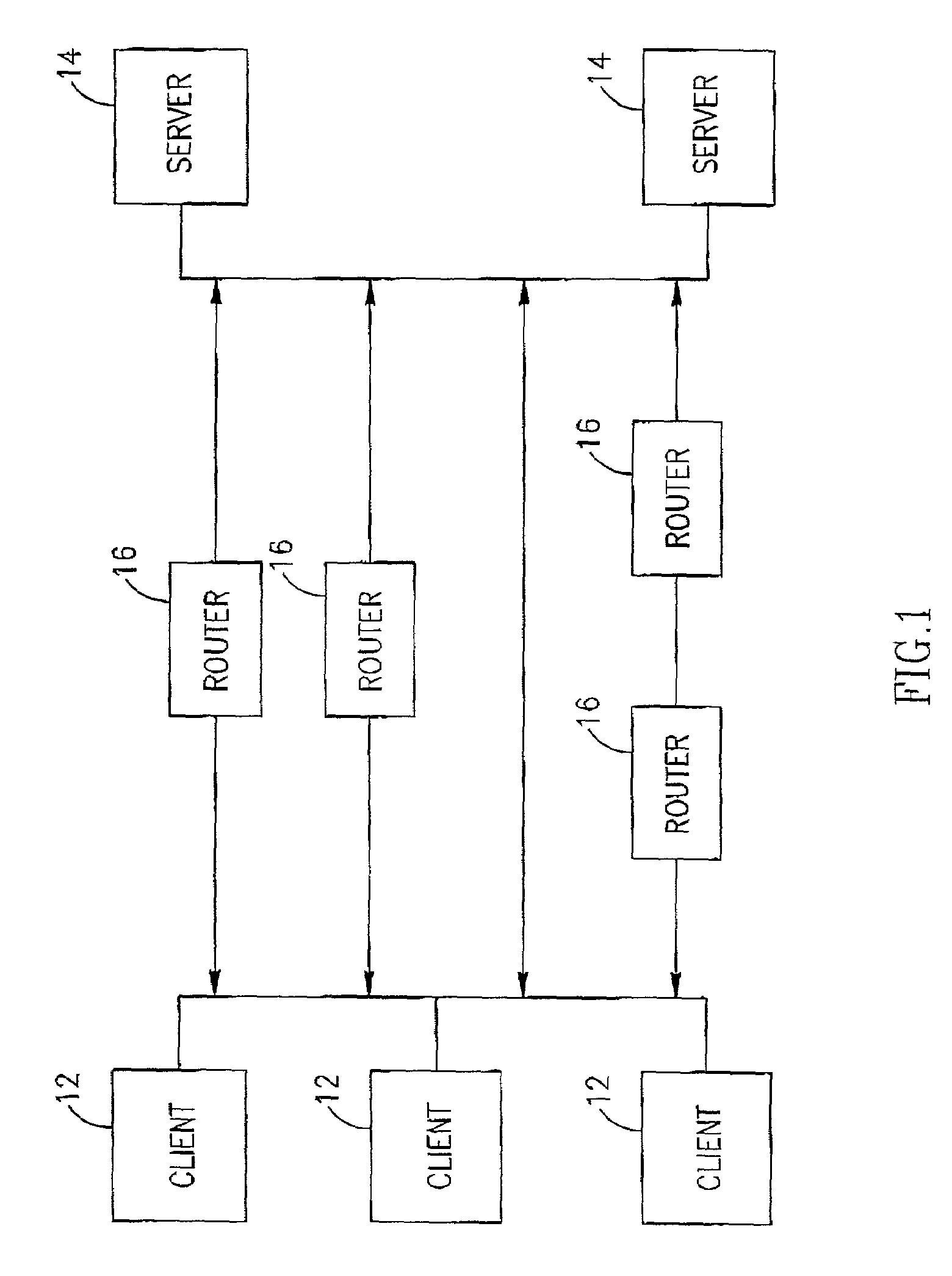

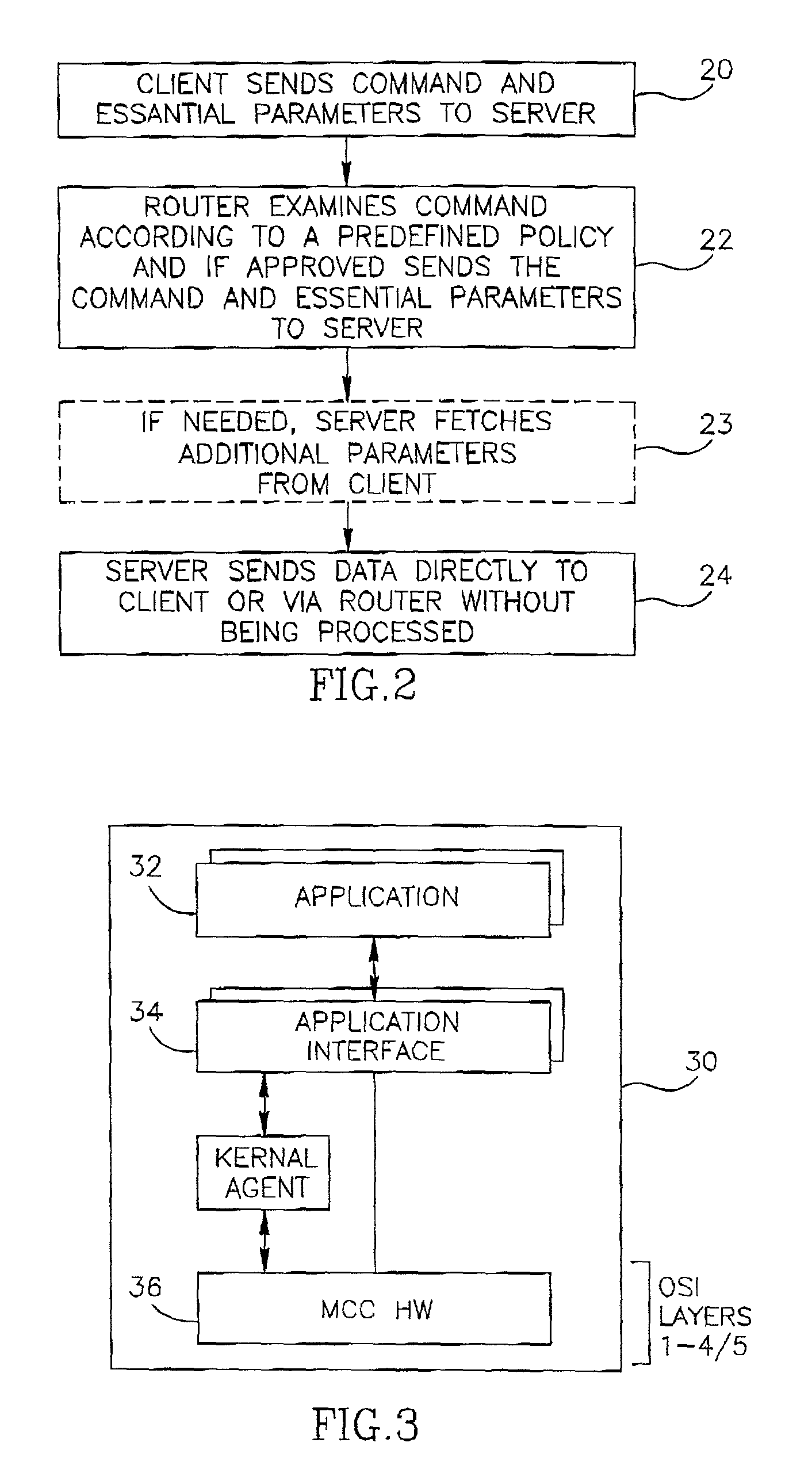

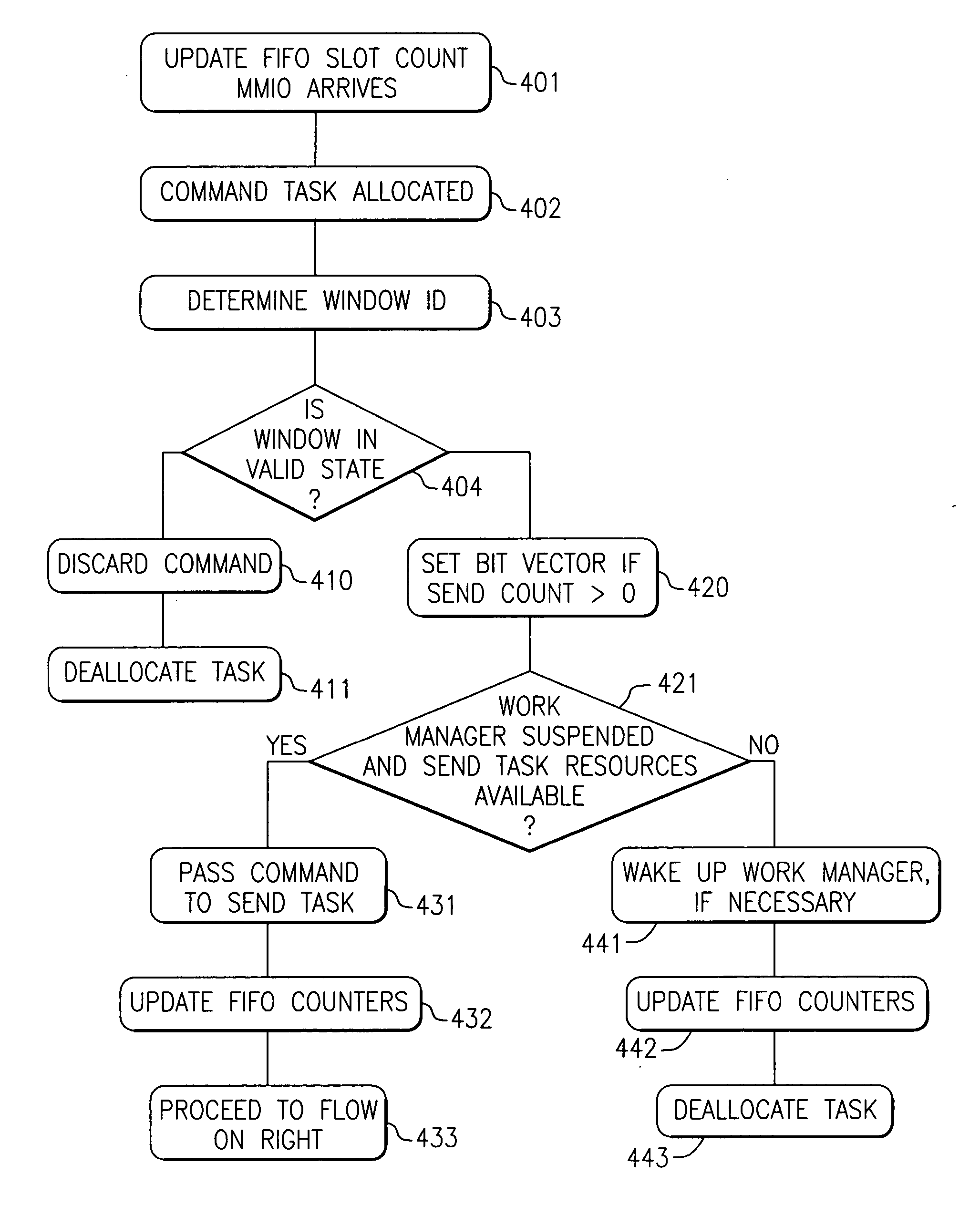

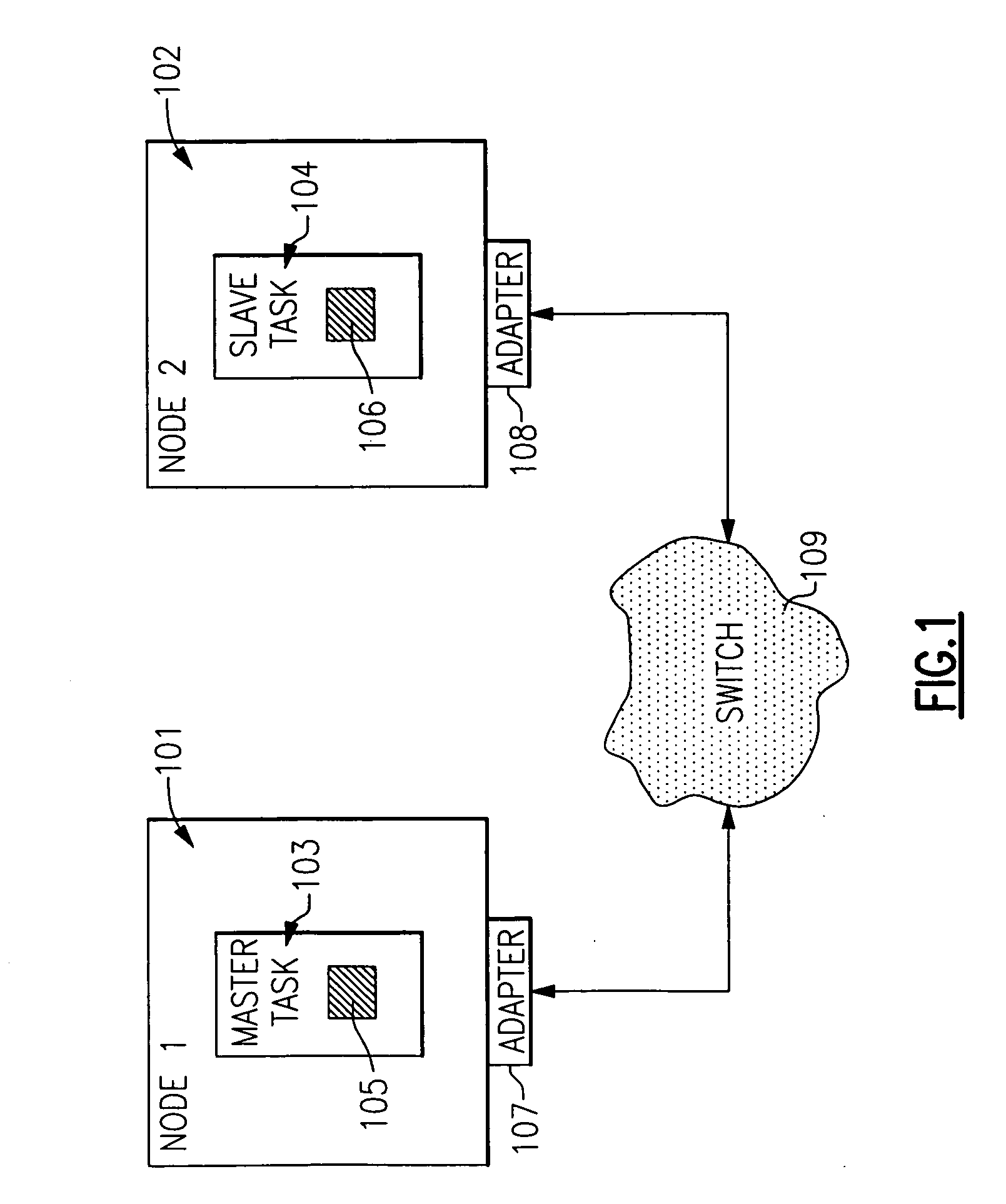

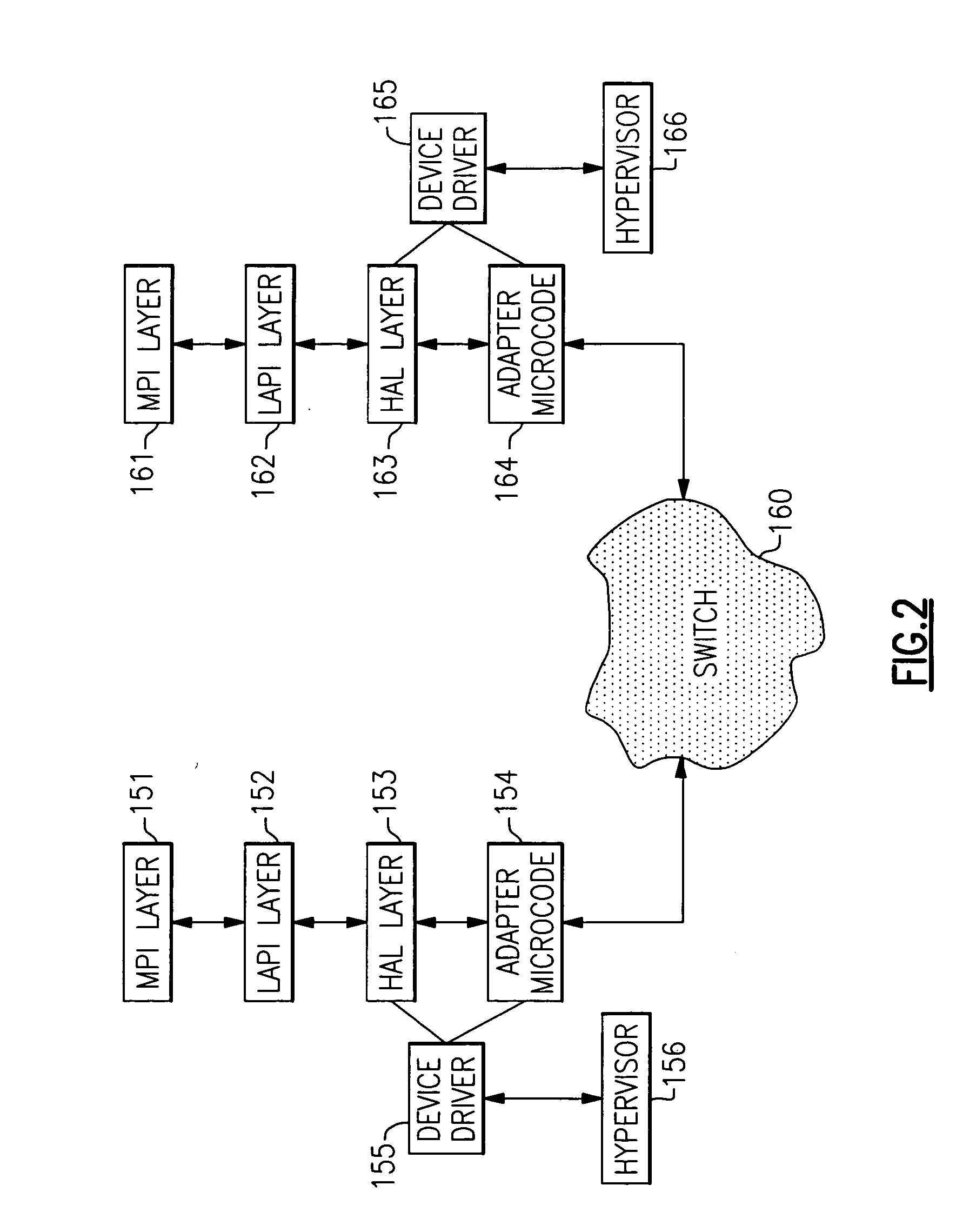

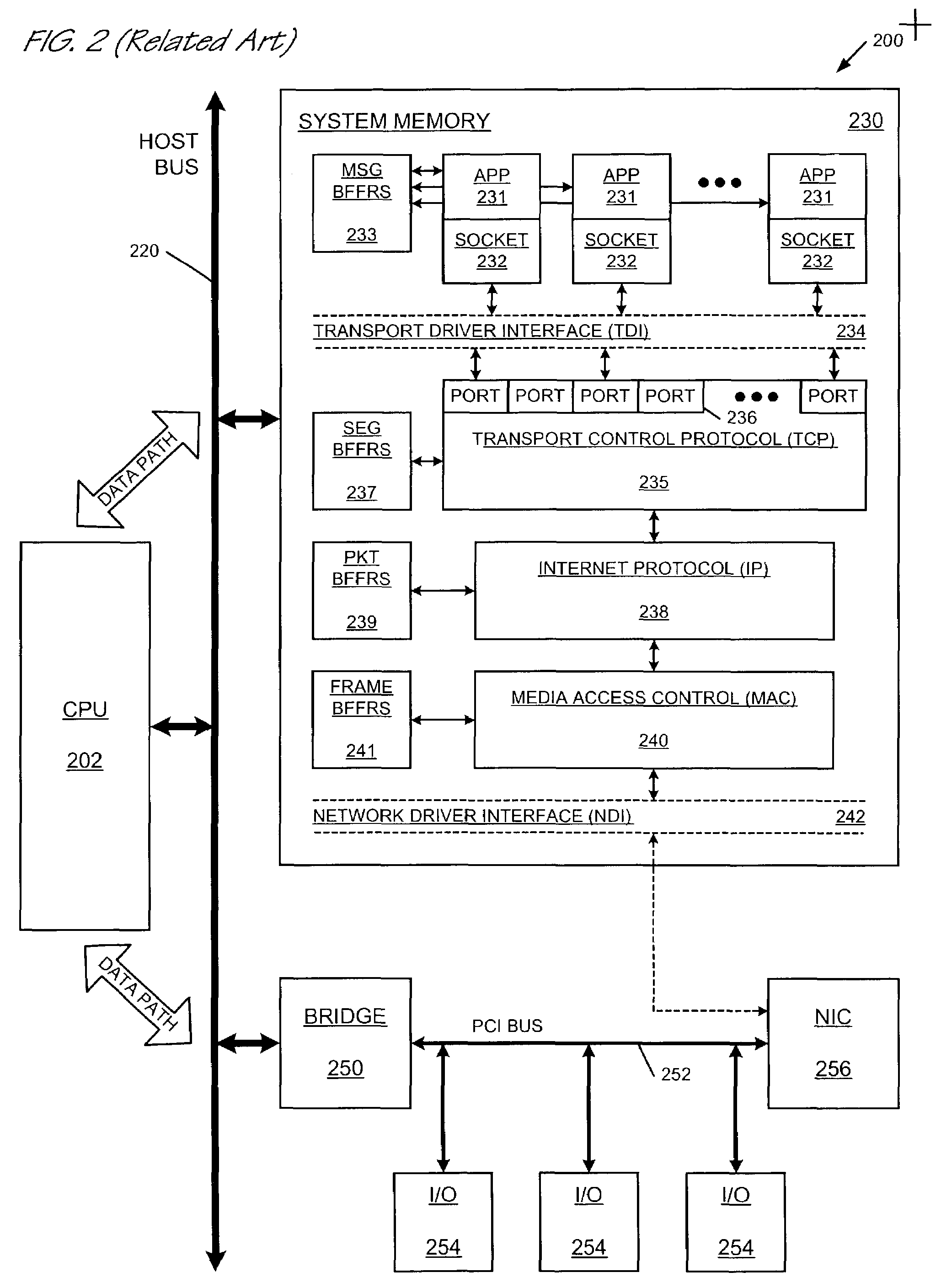

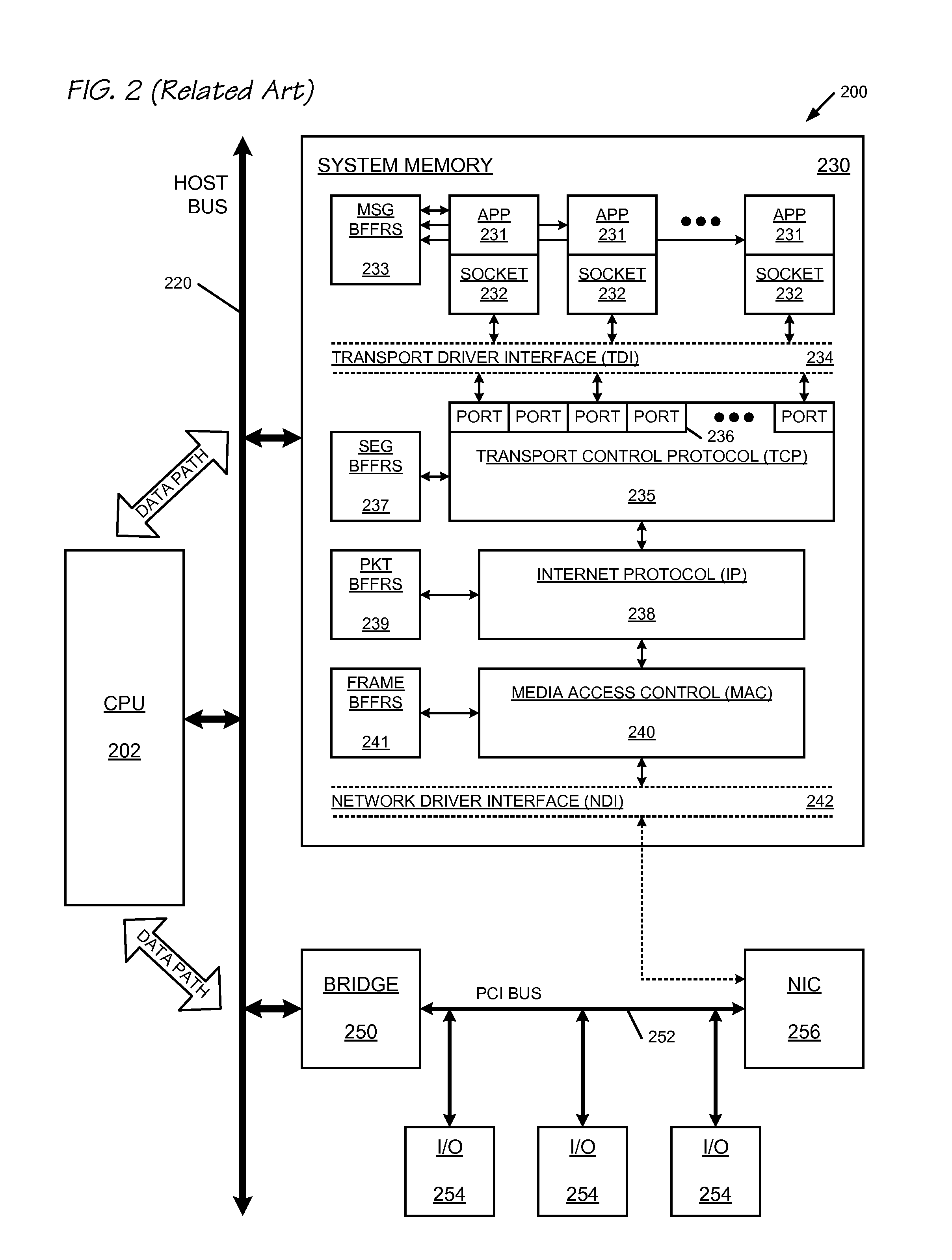

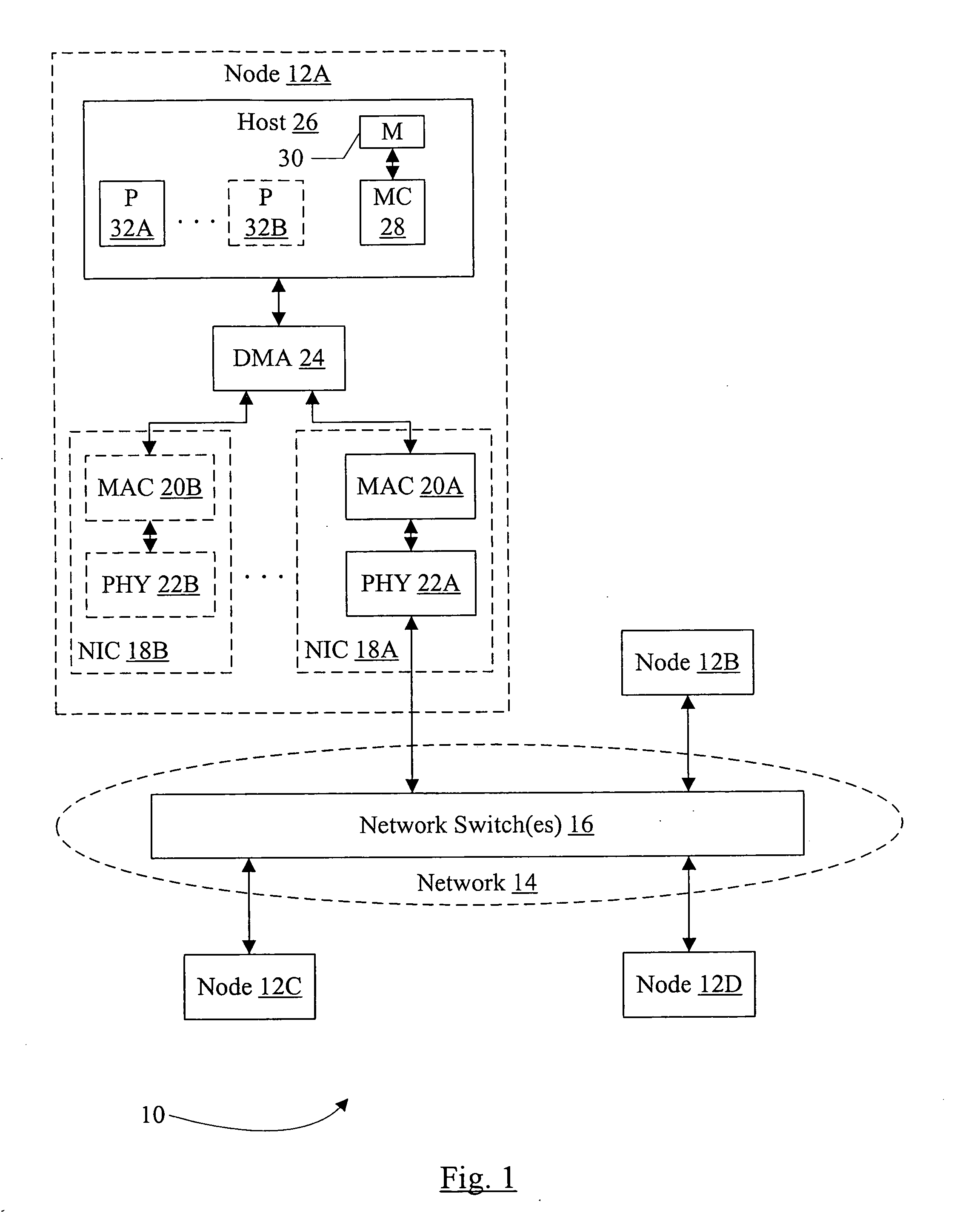

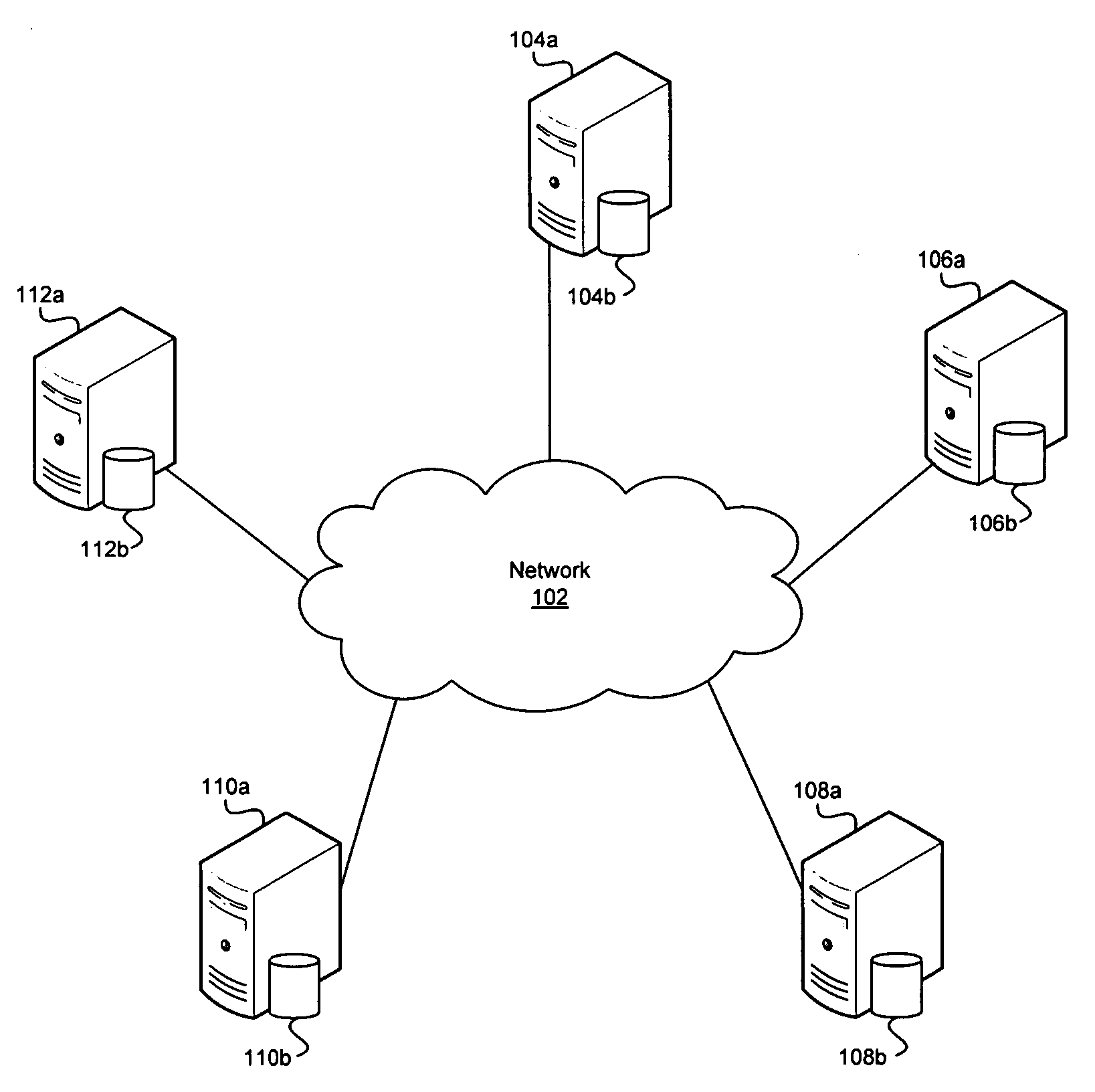

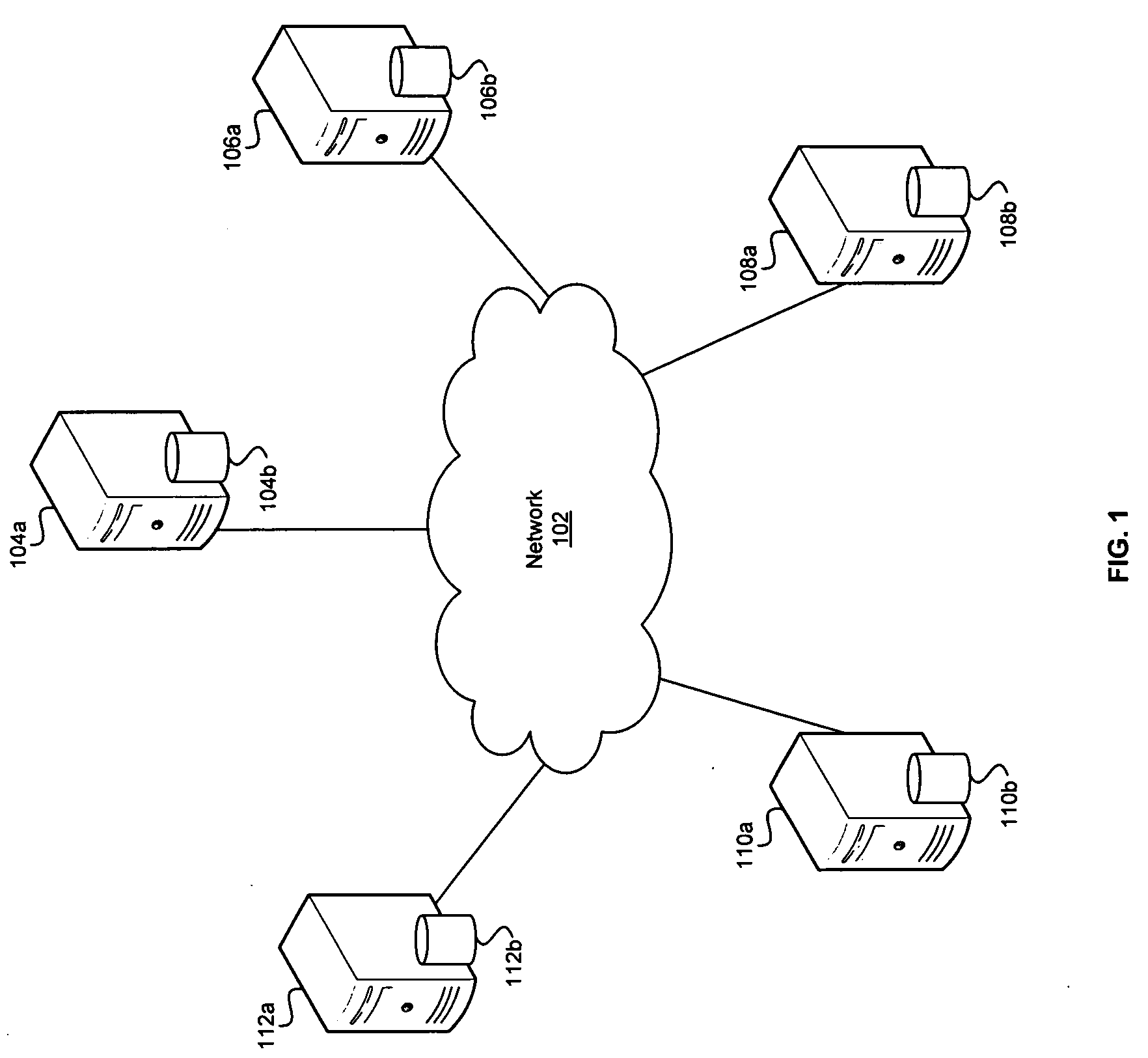

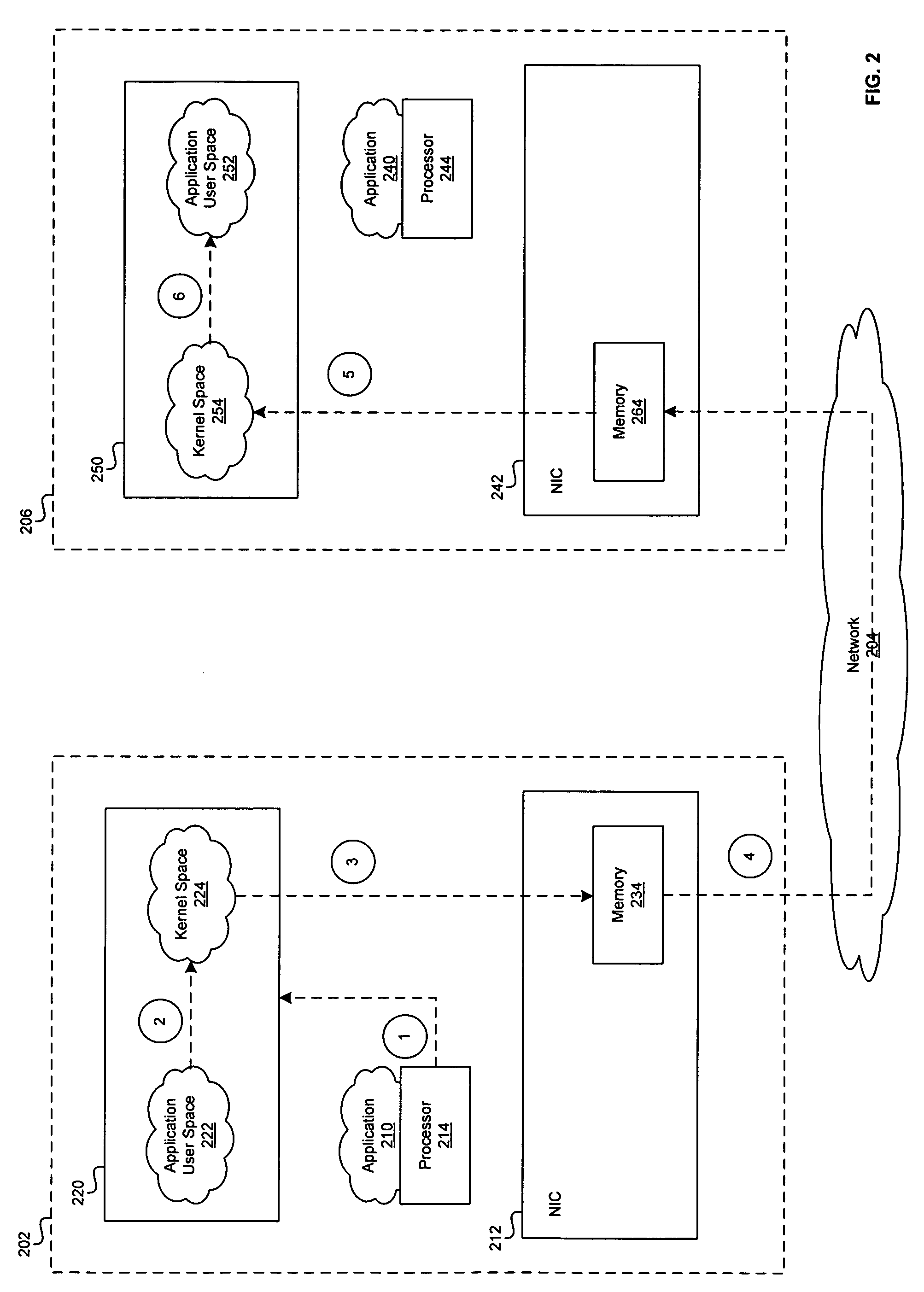

A remote direct memory access (RDMA) system is provided in which data is transferred over a network by DMA between a memory of a first node of a multi-processor system having a plurality of nodes connected by a network and a memory of a second node of the multi-processor system. The system includes a first network adapter at the first node, operable to transmit data stored in the memory of the first node to the second node in a plurality of portions in fulfillment of a DMA request. The first network adapter is operable to transmit each portion together with identifying information and information identifying a location for storing the transmitted portion in the memory of the second node, such that each portion is capable of being received independently by the second node according to the identifying information. Each portion is further capable of being stored in the memory of the second node at the location identified by the location identifying information.

Owner:IBM CORP
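
The idea in the abstract above, that each portion carries its own identifier and destination location so the receiver can place it independently, can be illustrated with a minimal Python sketch. The `Portion` fields, the function names, and the 100-byte portion size are illustrative assumptions and are not taken from the patent.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Portion:
    transfer_id: int  # hypothetical field: which DMA request this belongs to
    offset: int       # hypothetical field: destination location in the buffer
    payload: bytes


def split_into_portions(transfer_id, data, portion_size):
    """Cut one transfer into self-describing portions."""
    return [
        Portion(transfer_id, off, data[off:off + portion_size])
        for off in range(0, len(data), portion_size)
    ]


def place(portion, dest):
    """Store a portion using only the information it carries."""
    end = portion.offset + len(portion.payload)
    dest[portion.offset:end] = portion.payload


source = bytes(range(256)) * 4
portions = split_into_portions(7, source, 100)

# Simulate arbitrary arrival order at the second node.
random.Random(0).shuffle(portions)

dest = bytearray(len(source))
for p in portions:
    place(p, dest)

print(bytes(dest) == source)
```

Because placement depends only on fields inside each portion, the receiver in this sketch needs no ordering state between portions.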

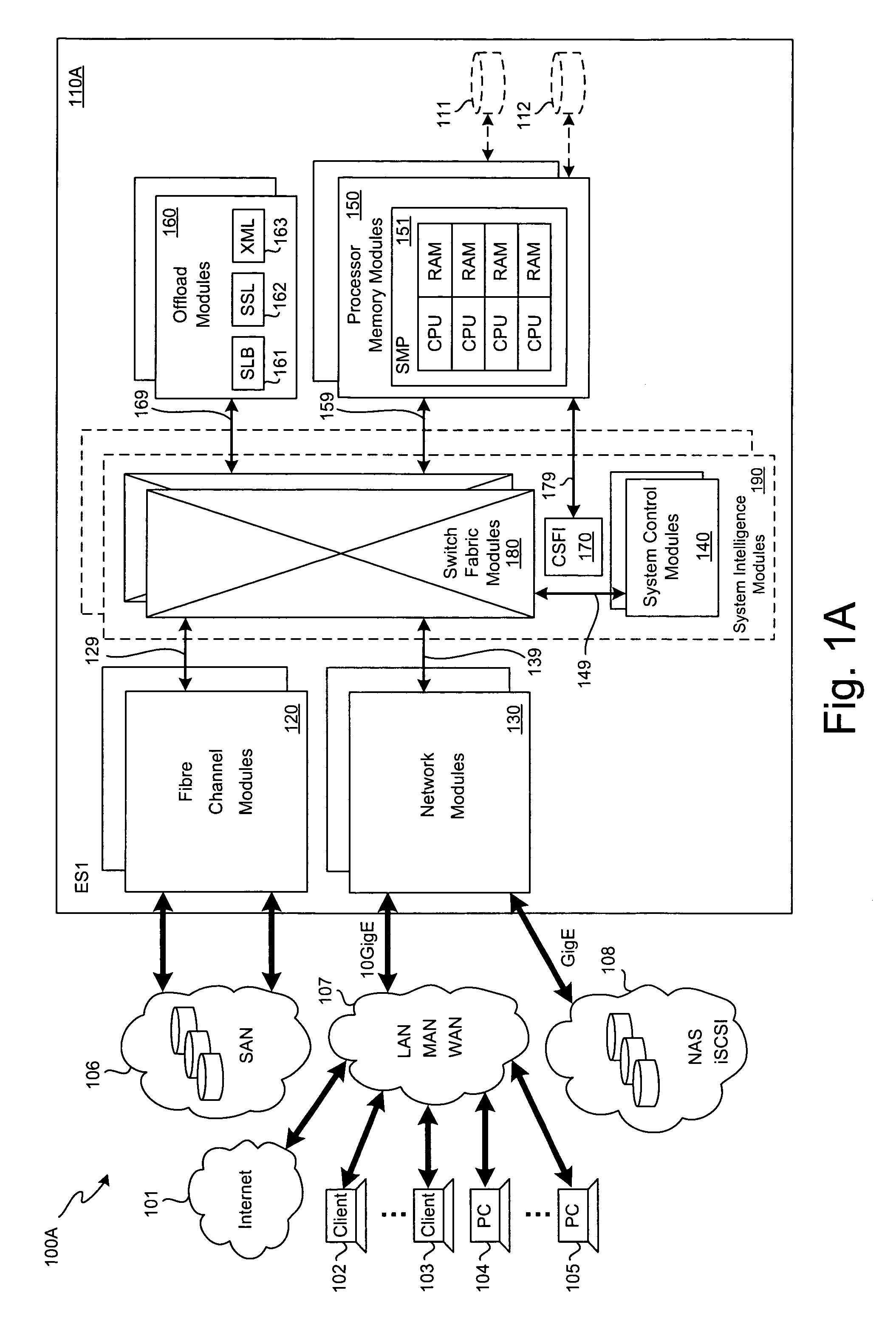

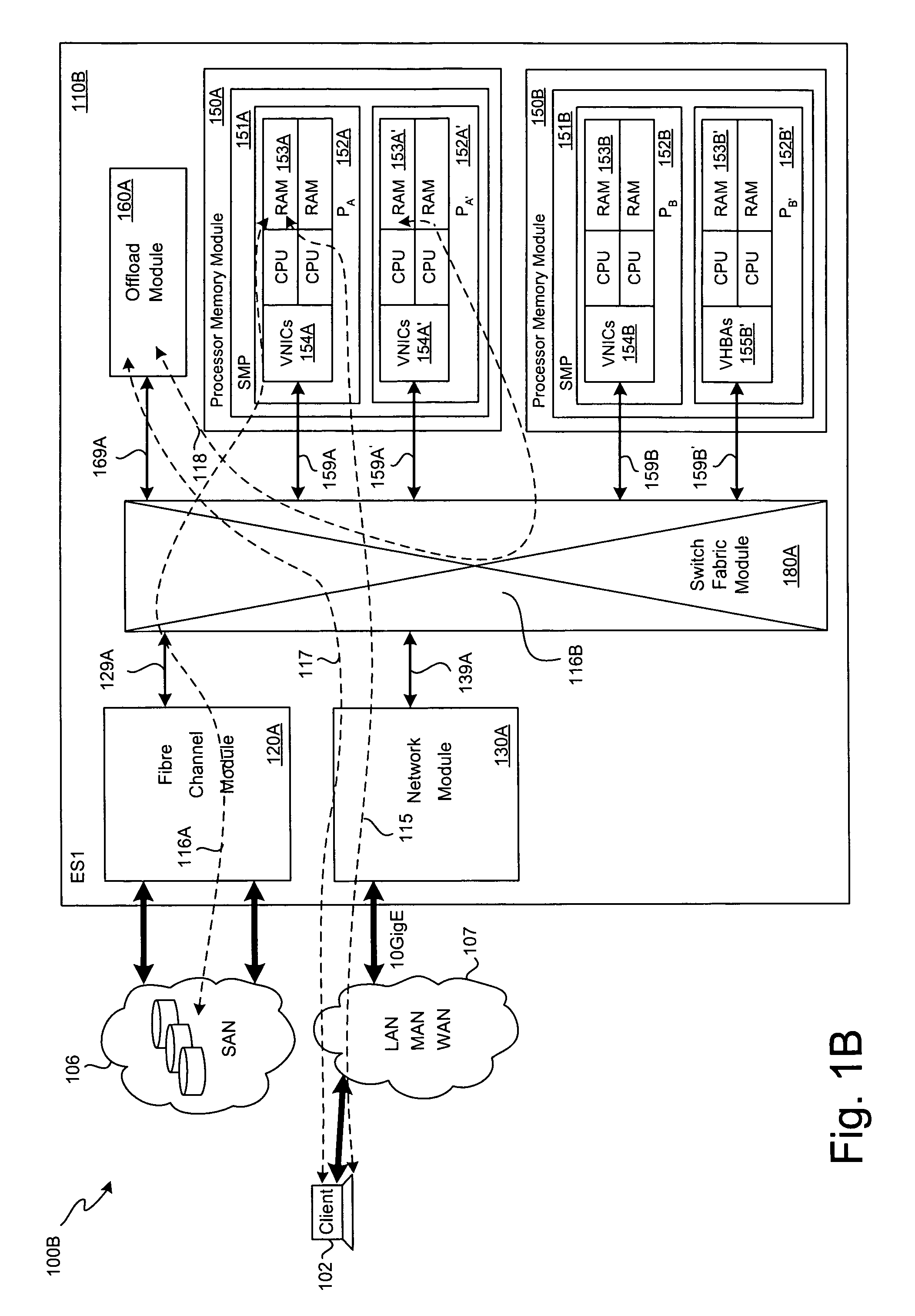

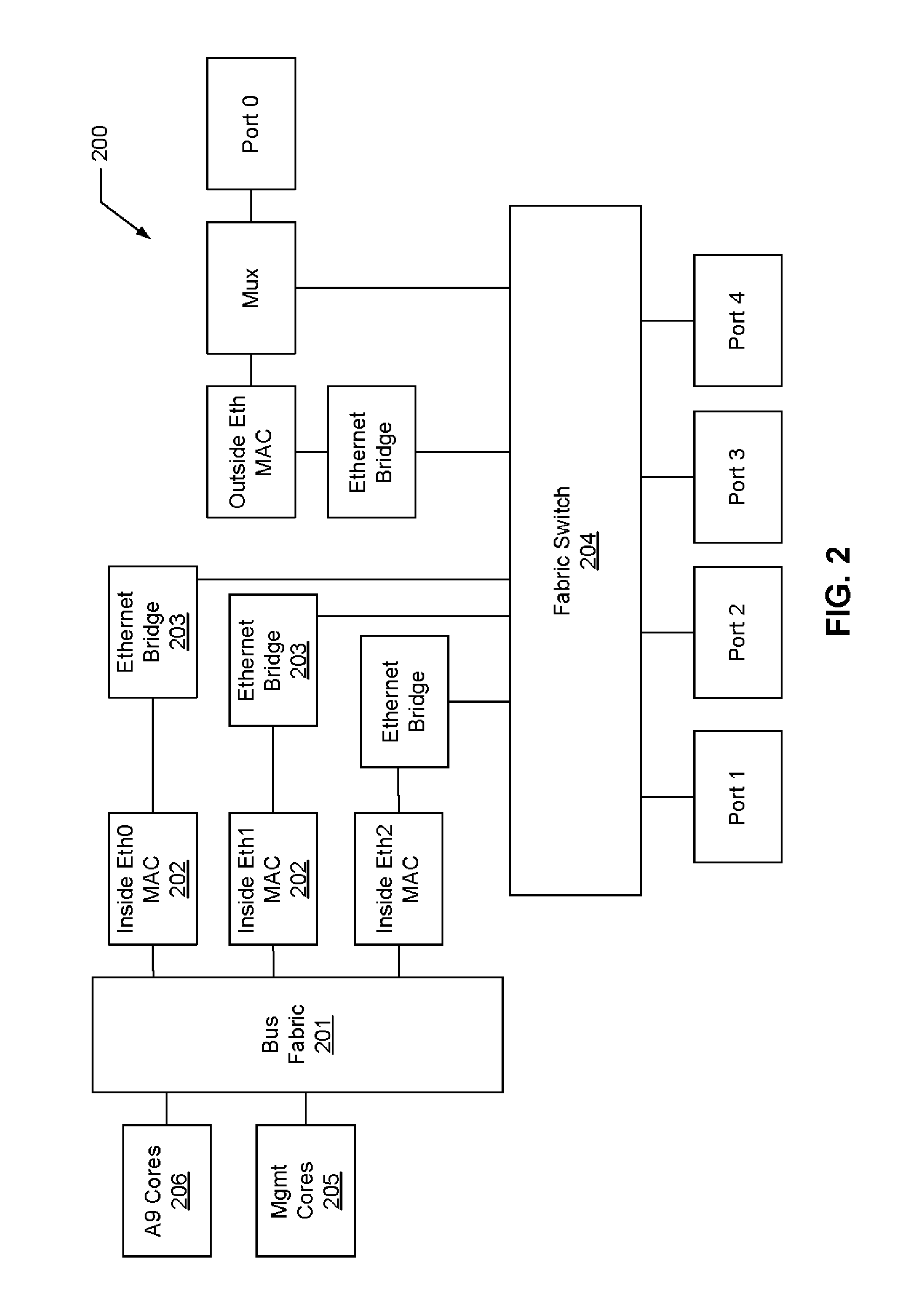

SCSI transport for fabric-backplane enterprise servers

Active | US7633955B1 | Improve performance | Improve efficiency | Digital computer details | Data switching by path configuration | SCSI initiator and target | Fiber

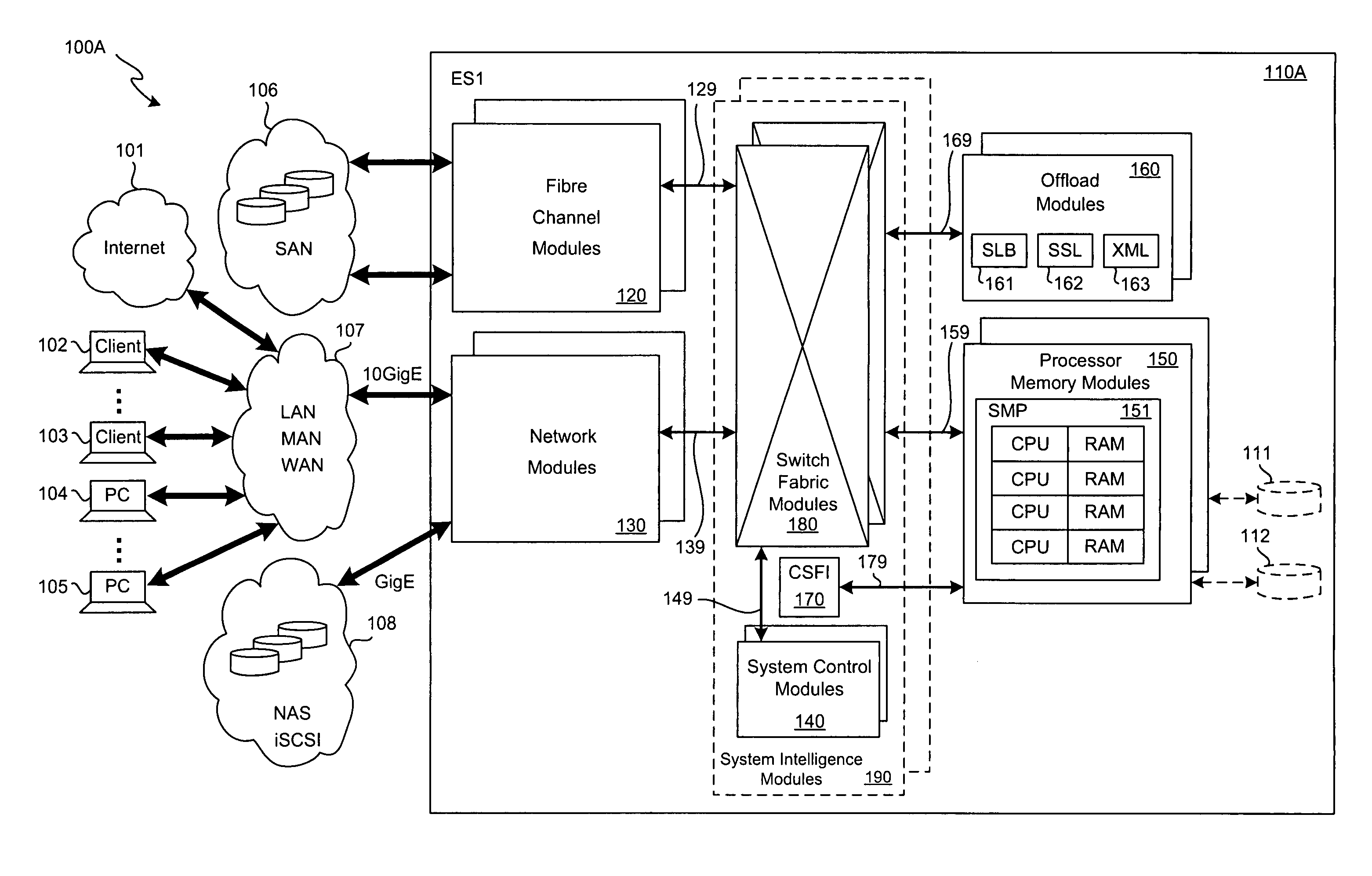

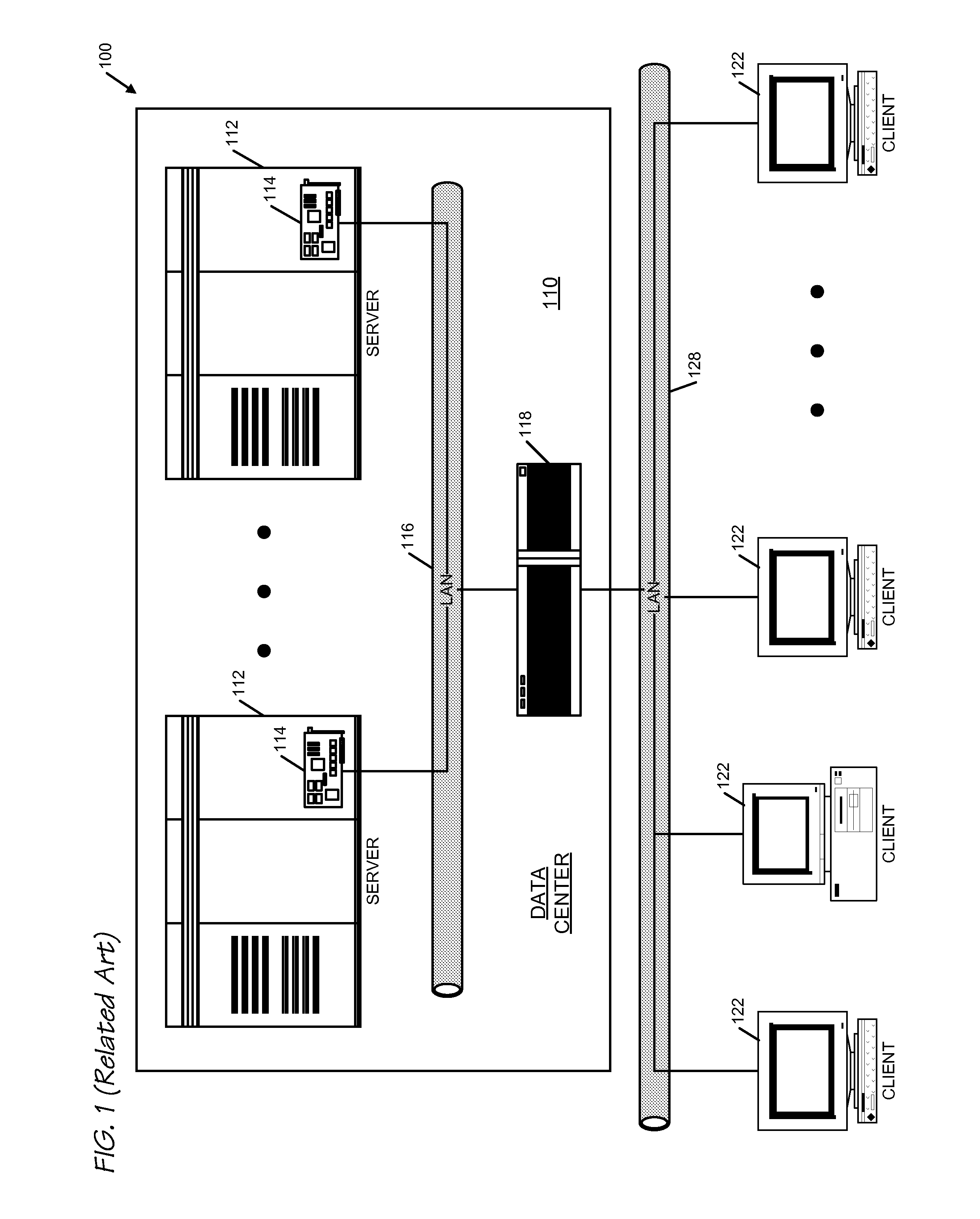

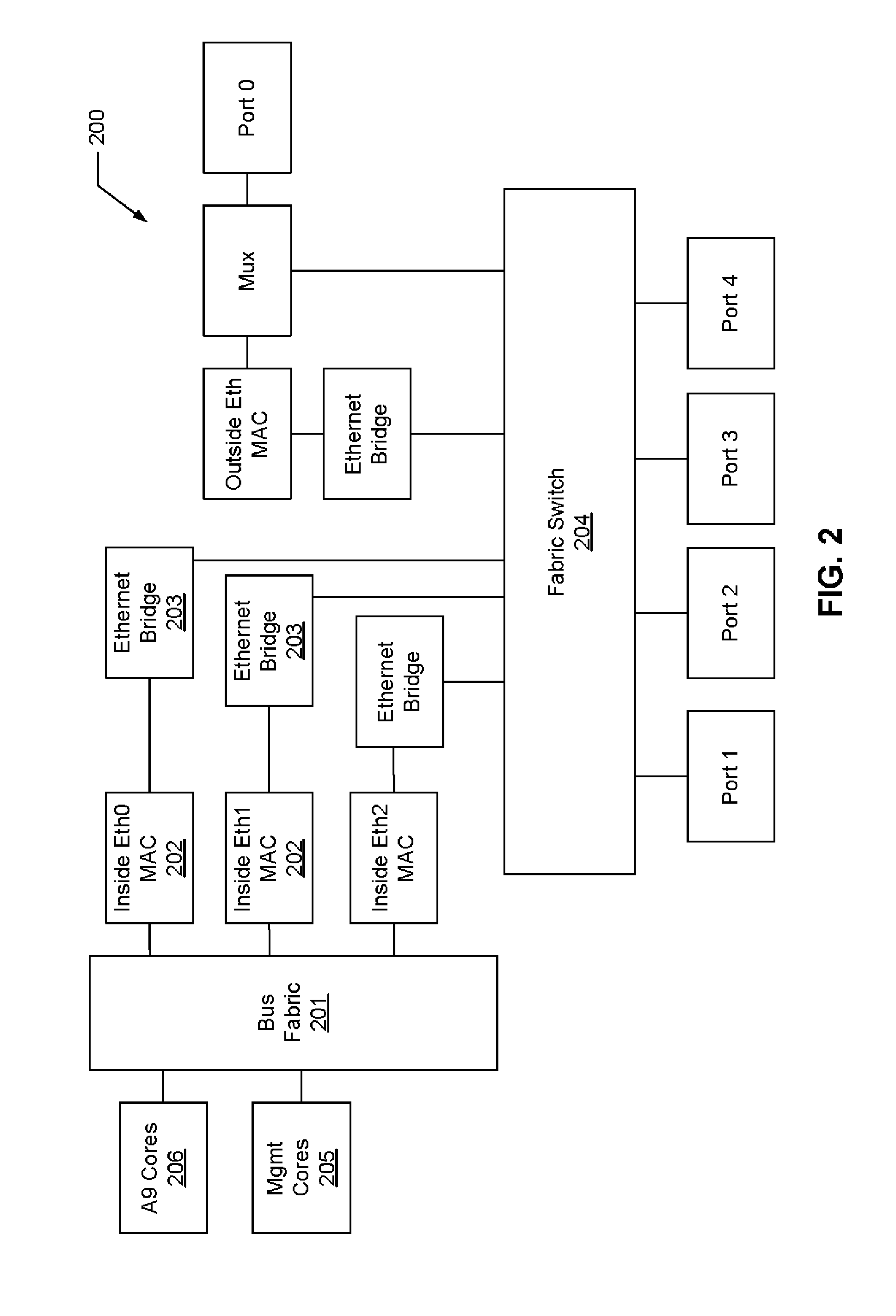

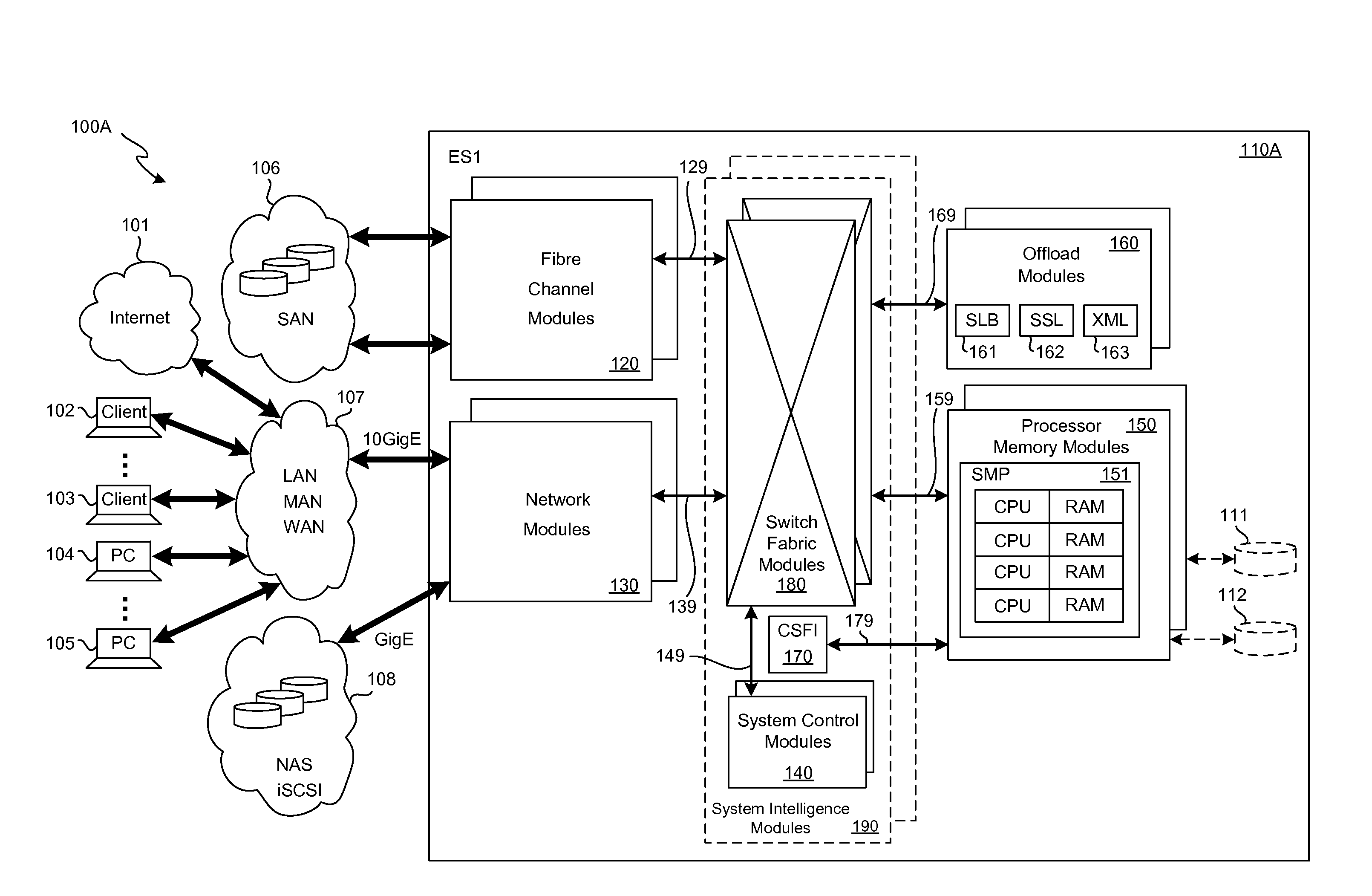

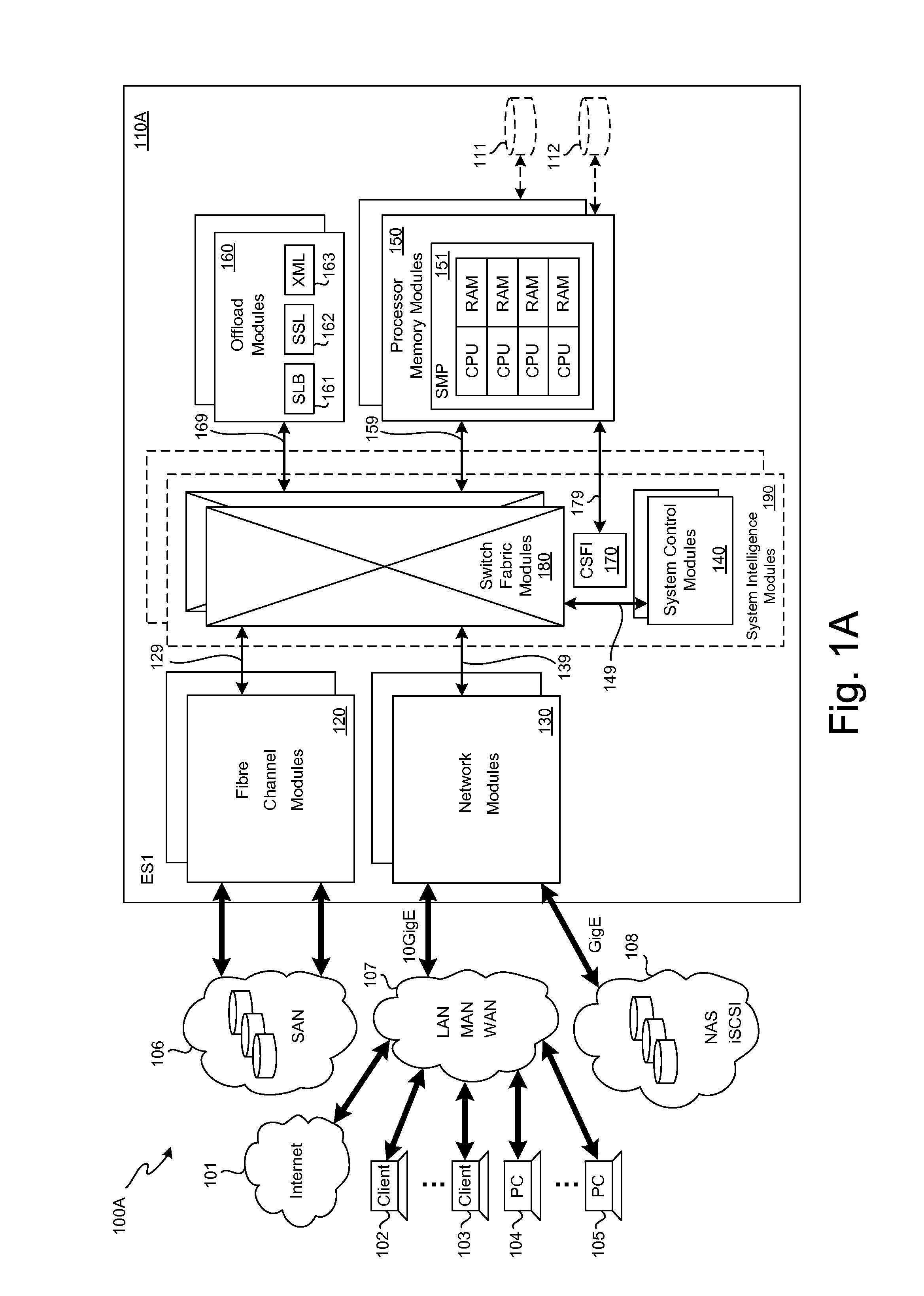

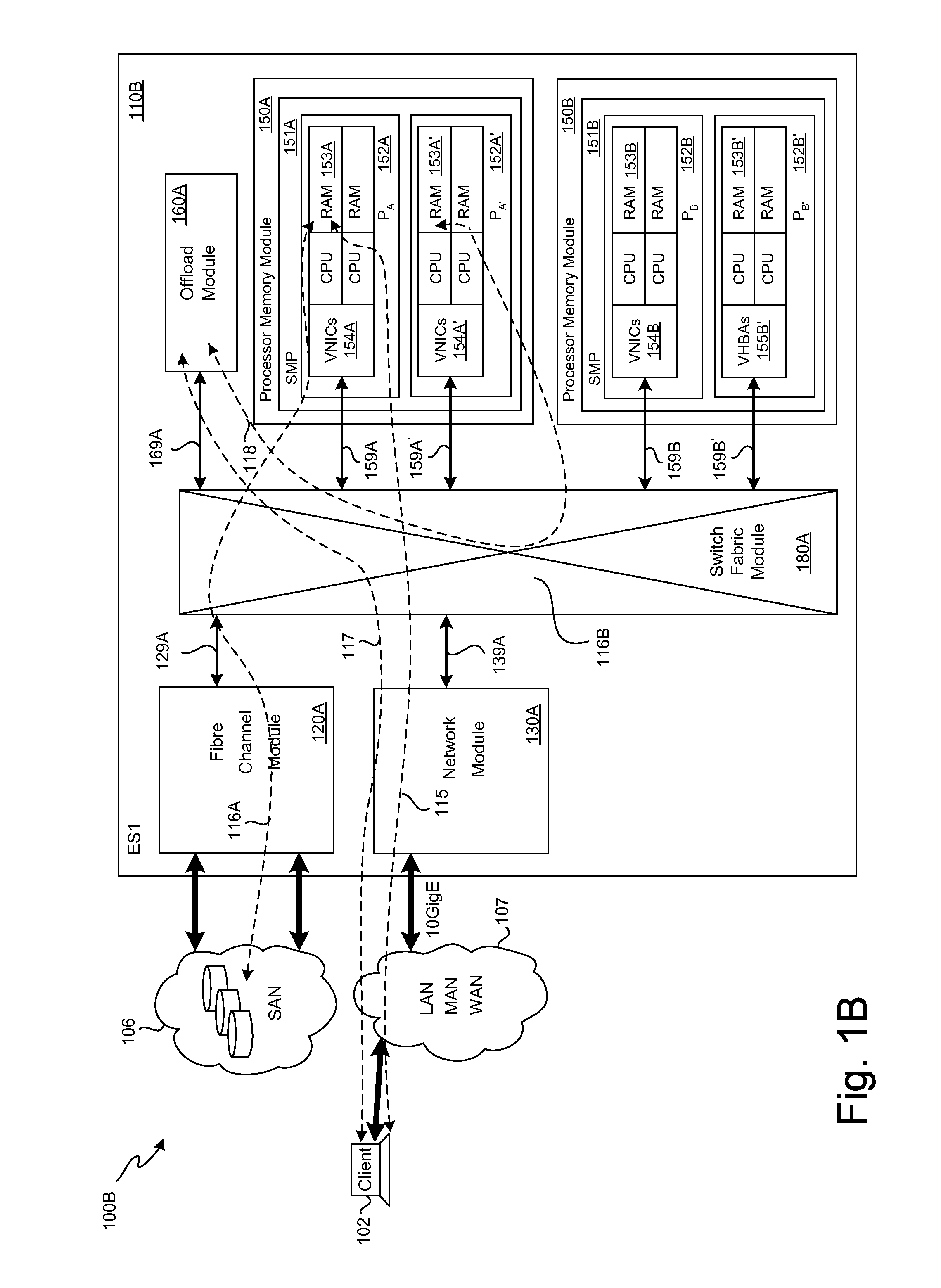

A Small Computer System Interface (SCSI) transport for fabric backplane enterprise servers provides for local and remote communication of storage system information between storage sub-system elements of an ES system and other elements of an ES system via a storage interface. The transport includes encapsulation of information for communication via a reliable transport implemented in part across a cellifying switch fabric. The transport may optionally include communication via Ethernet frames over any of a local network or the Internet. Remote Direct Memory Access (RDMA) and Direct Data Placement (DDP) protocols are used to communicate the information (commands, responses, and data) between SCSI initiator and target end-points. A Fibre Channel Module (FCM) may be operated as a SCSI target providing a storage interface to any of a Processor Memory Module (PMM), a System Control Module (SCM), and an OffLoad Module (OLM) operated as a SCSI initiator.

Owner:ORACLE INT CORP

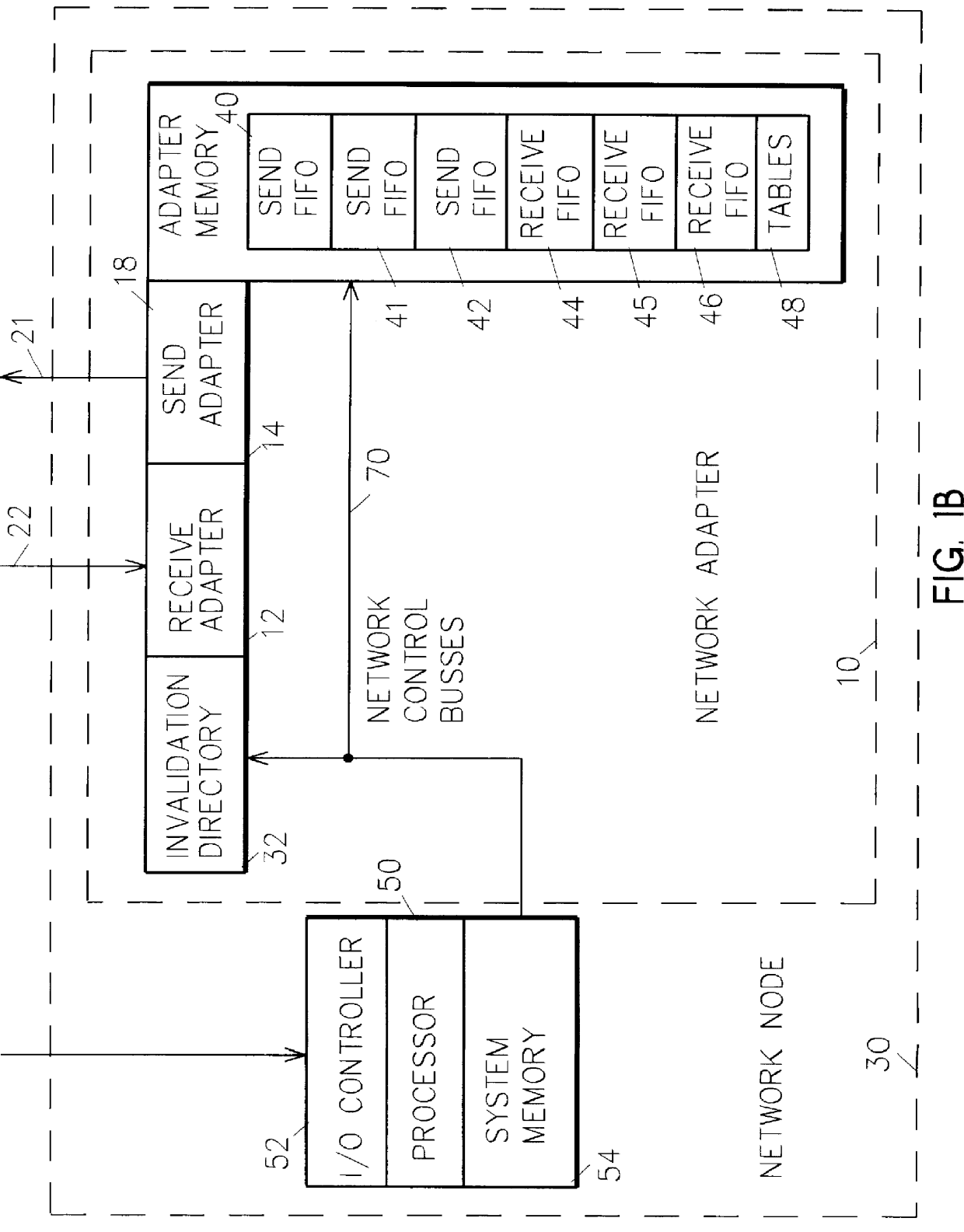

Memory controller for controlling memory accesses across networks in distributed shared memory processing systems

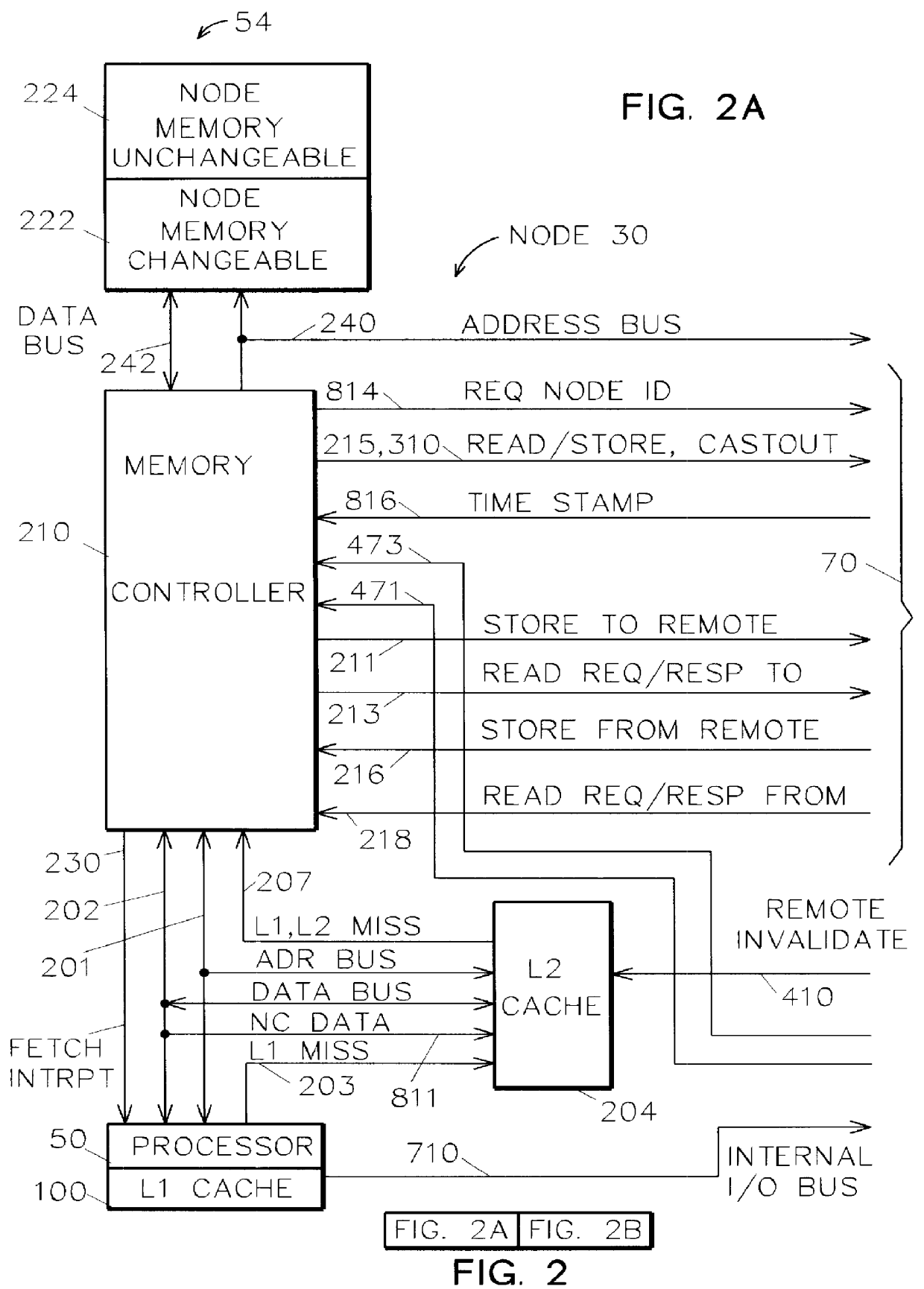

Inactive | US6044438A | More efficient cache coherent system | Data processing applications | Memory addressing/allocation/relocation | Remote memory access | Remote direct memory access

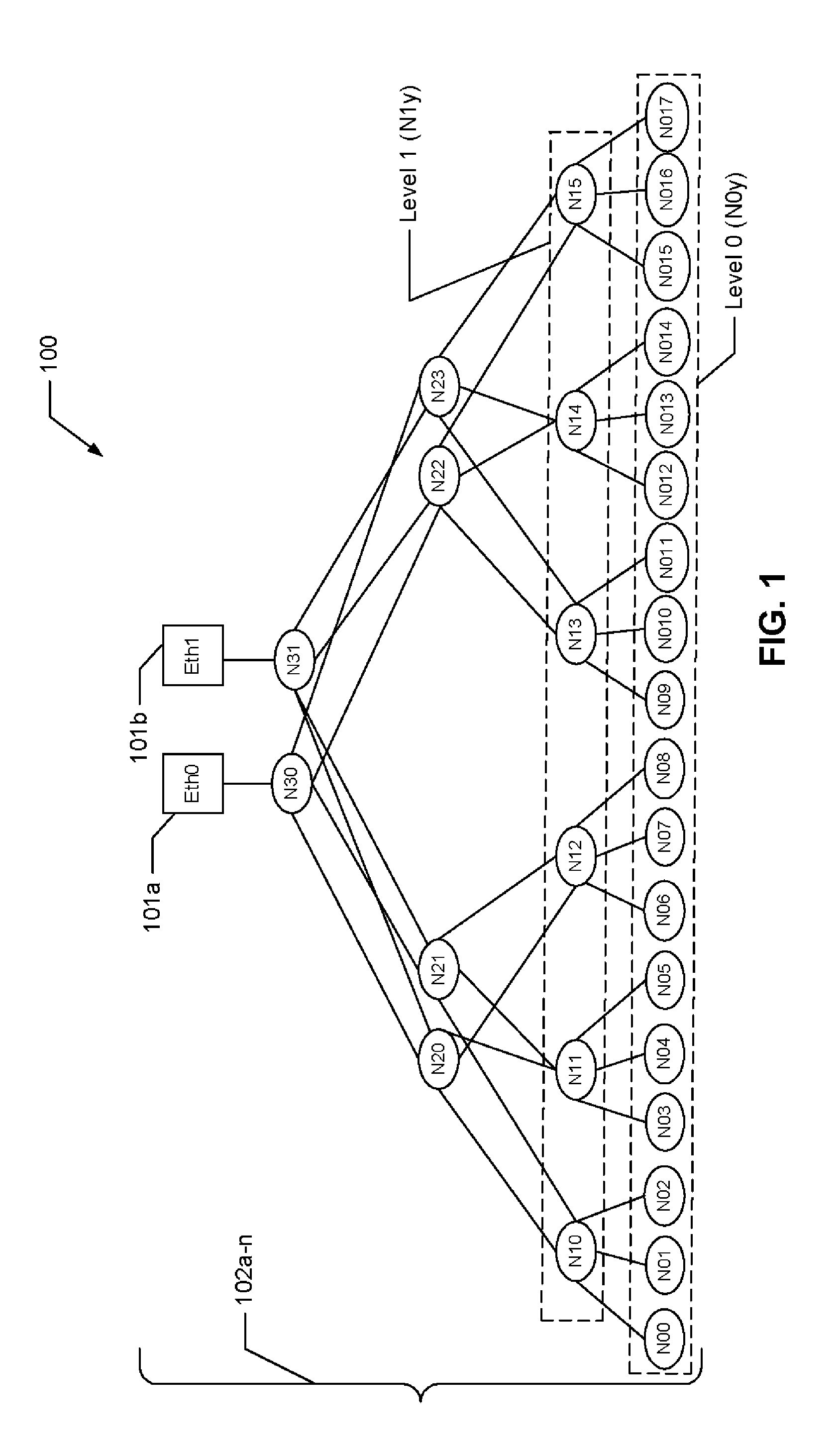

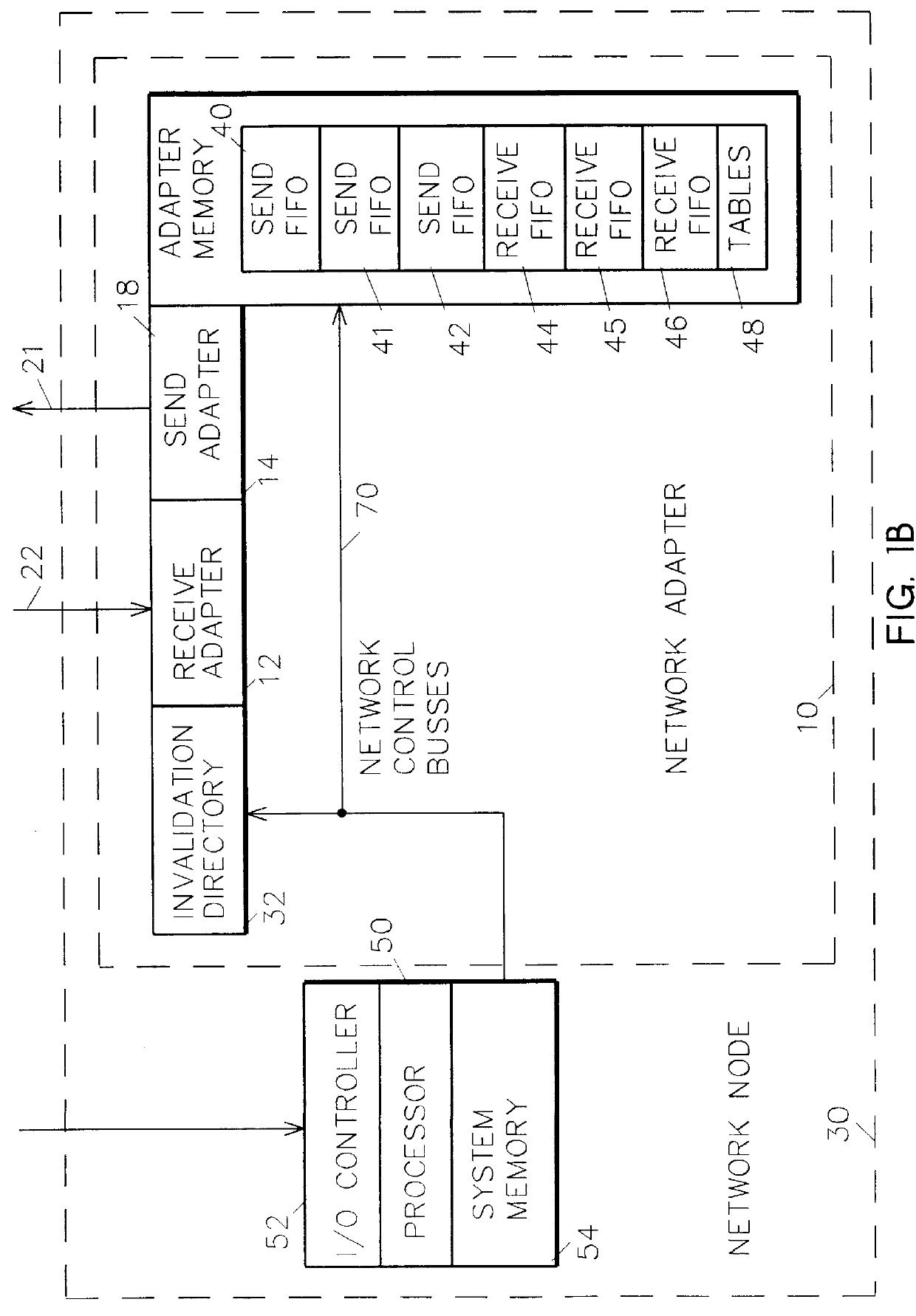

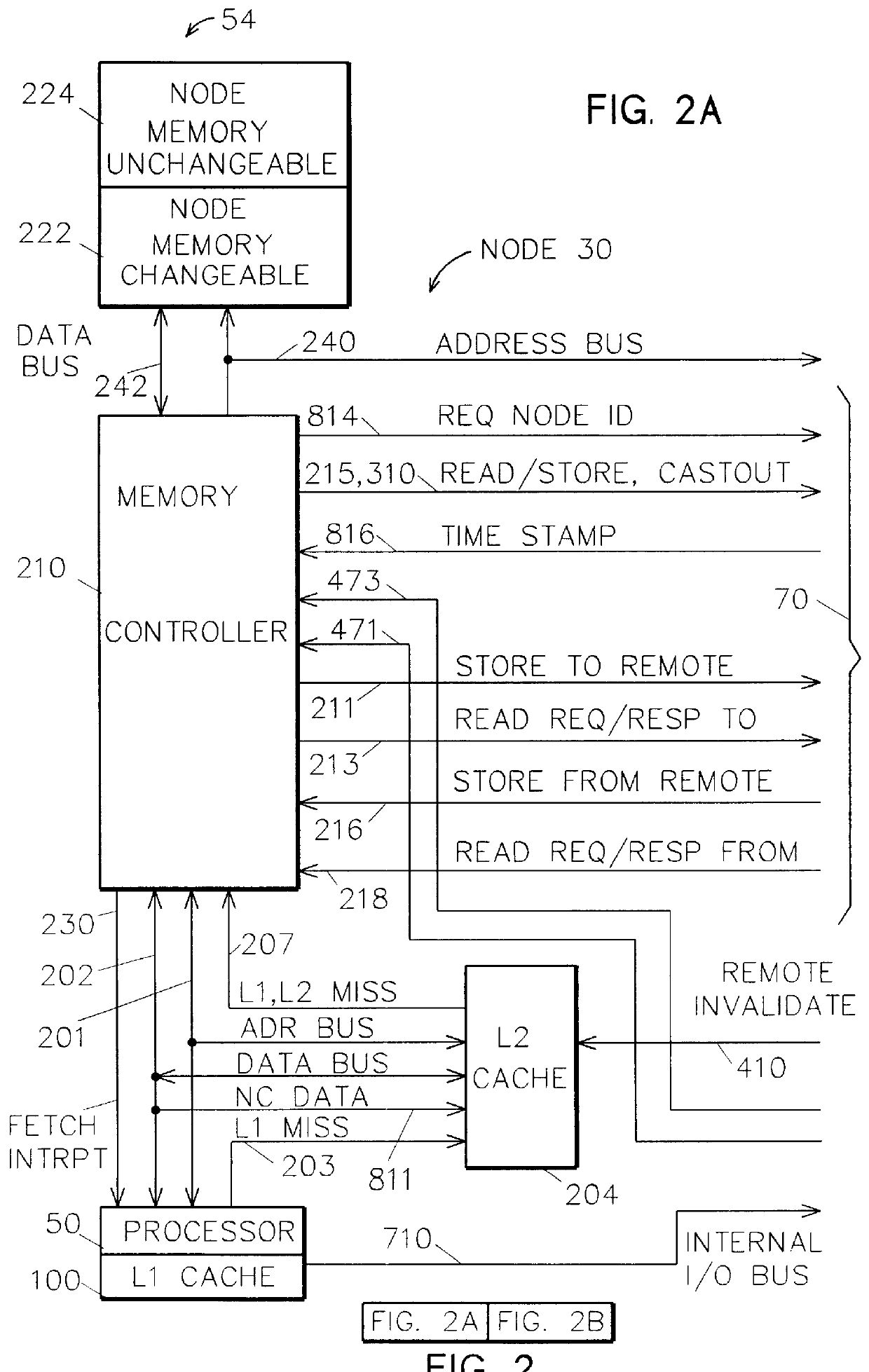

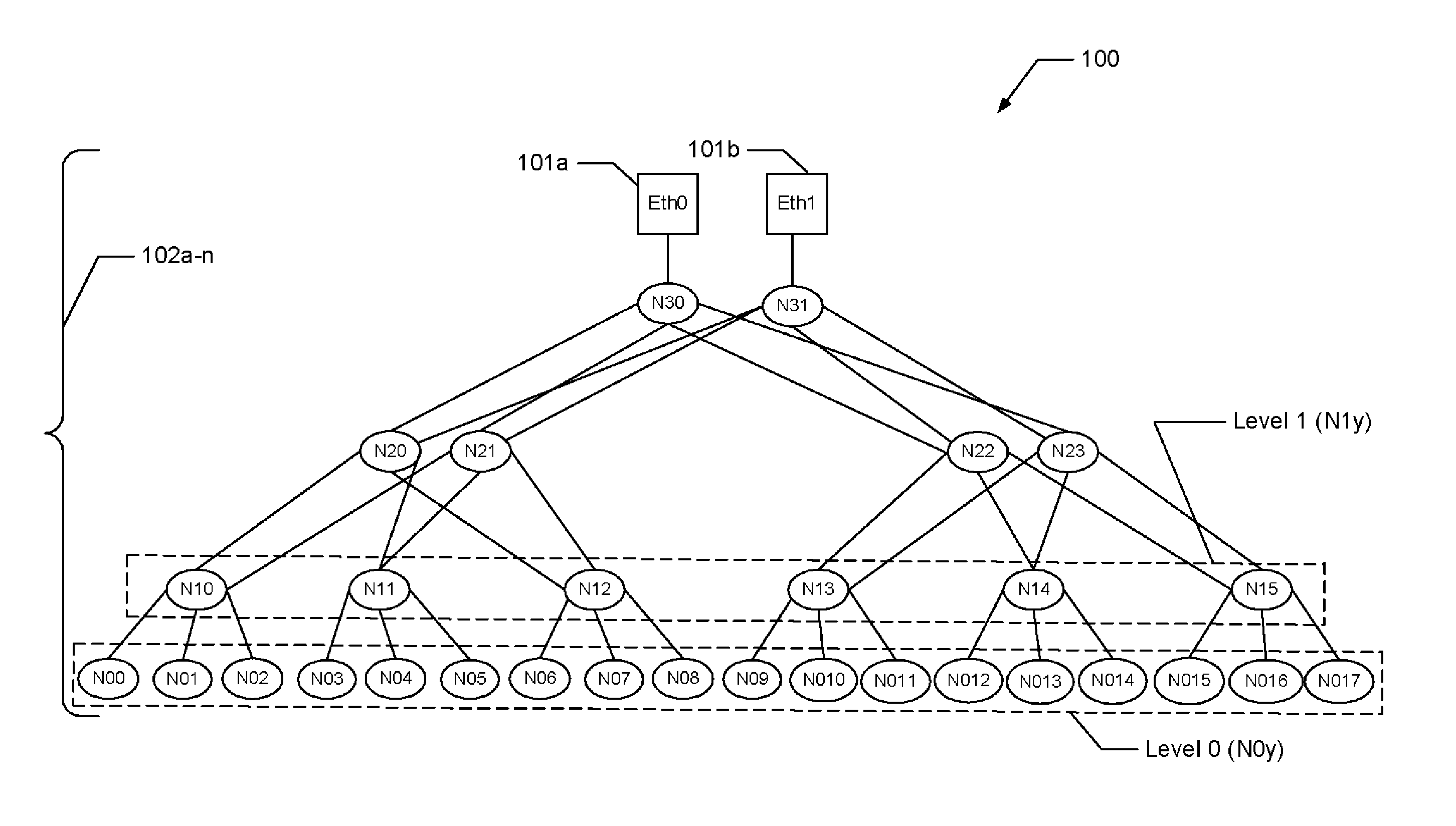

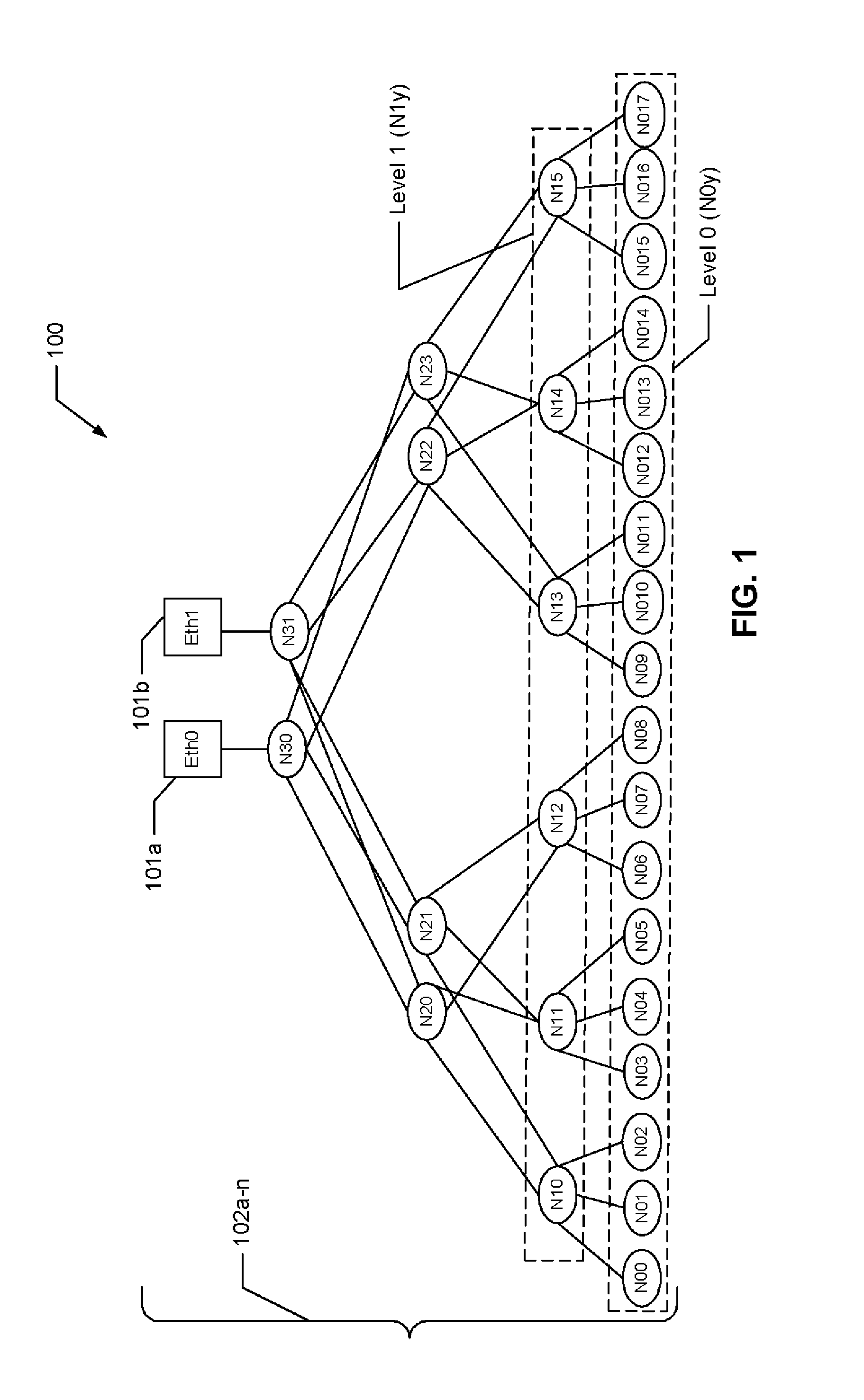

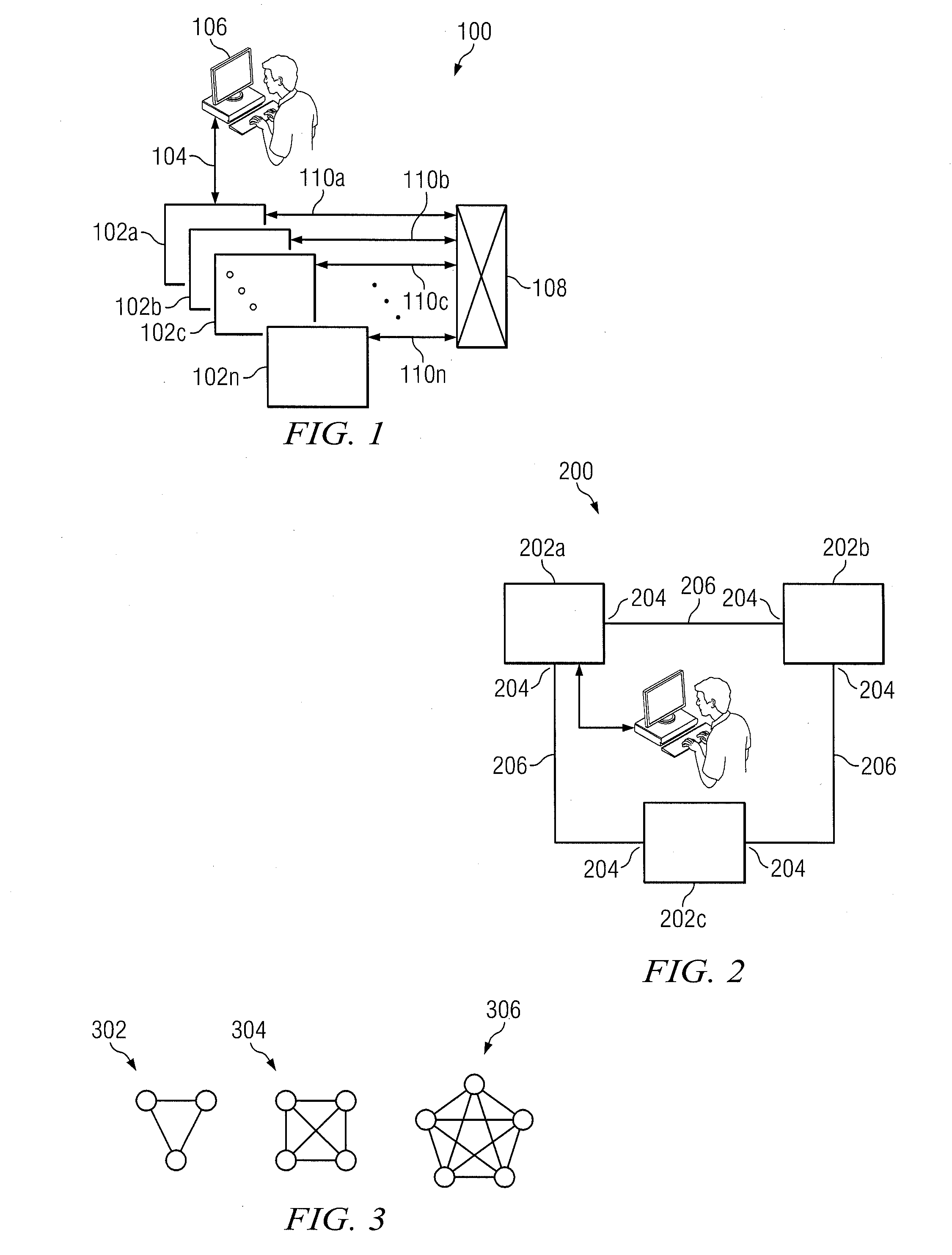

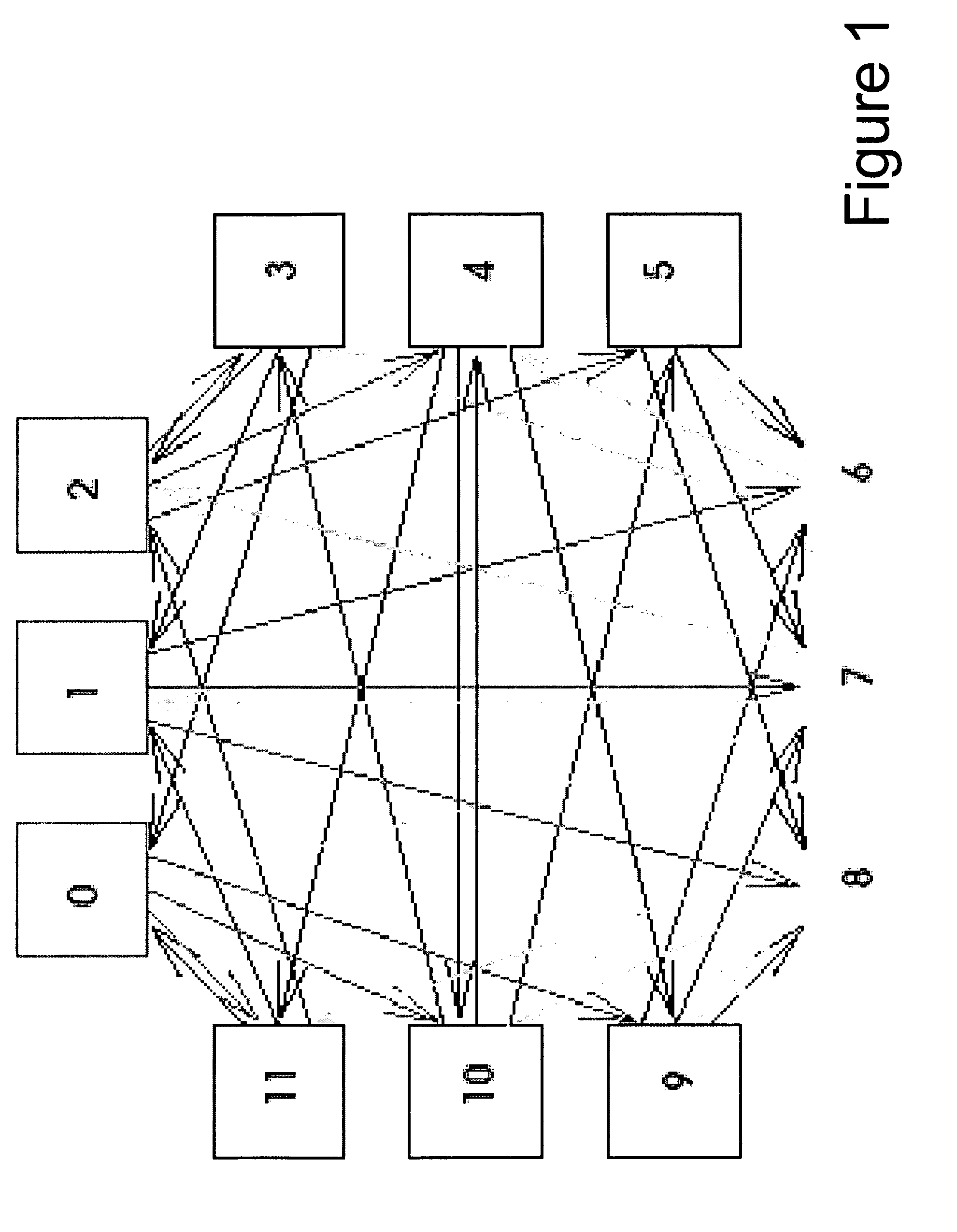

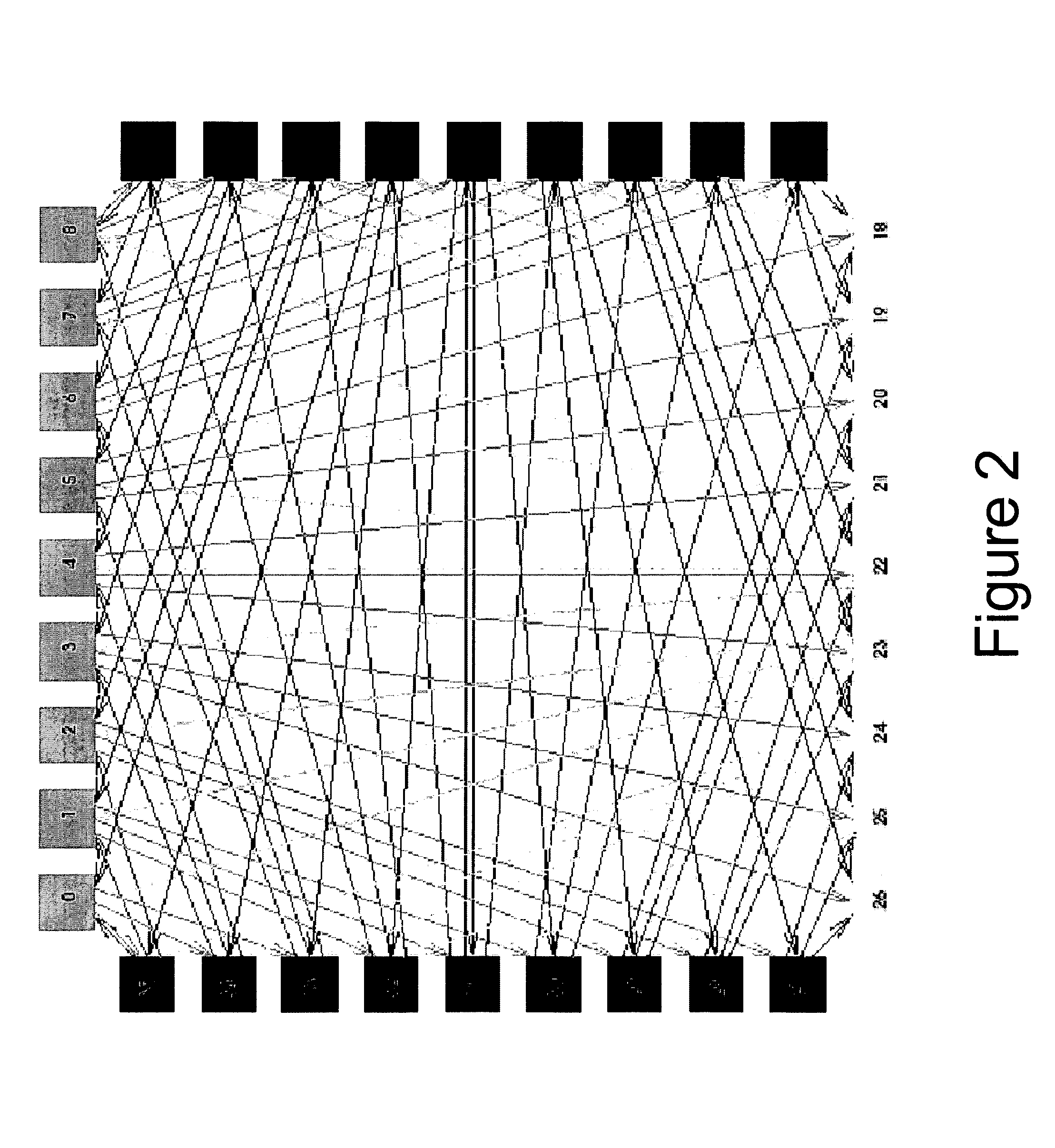

A shared memory parallel processing system interconnected by a multi-stage network combines new system configuration techniques with special-purpose hardware to provide remote memory accesses across the network, while controlling cache coherency efficiently across the network. The system configuration techniques include a systematic method for partitioning and controlling the memory in relation to local versus remote accesses and changeable versus unchangeable data. Most of the special-purpose hardware is implemented in the memory controller and network adapter, which implements three send FIFOs and three receive FIFOs at each node to segregate and handle efficiently invalidate functions, remote stores, and remote accesses requiring cache coherency. The segregation of these three functions into different send and receive FIFOs greatly facilitates the cache coherency function over the network. In addition, the network itself is tailored to provide the best efficiency for remote accesses.

Owner:IBM CORP

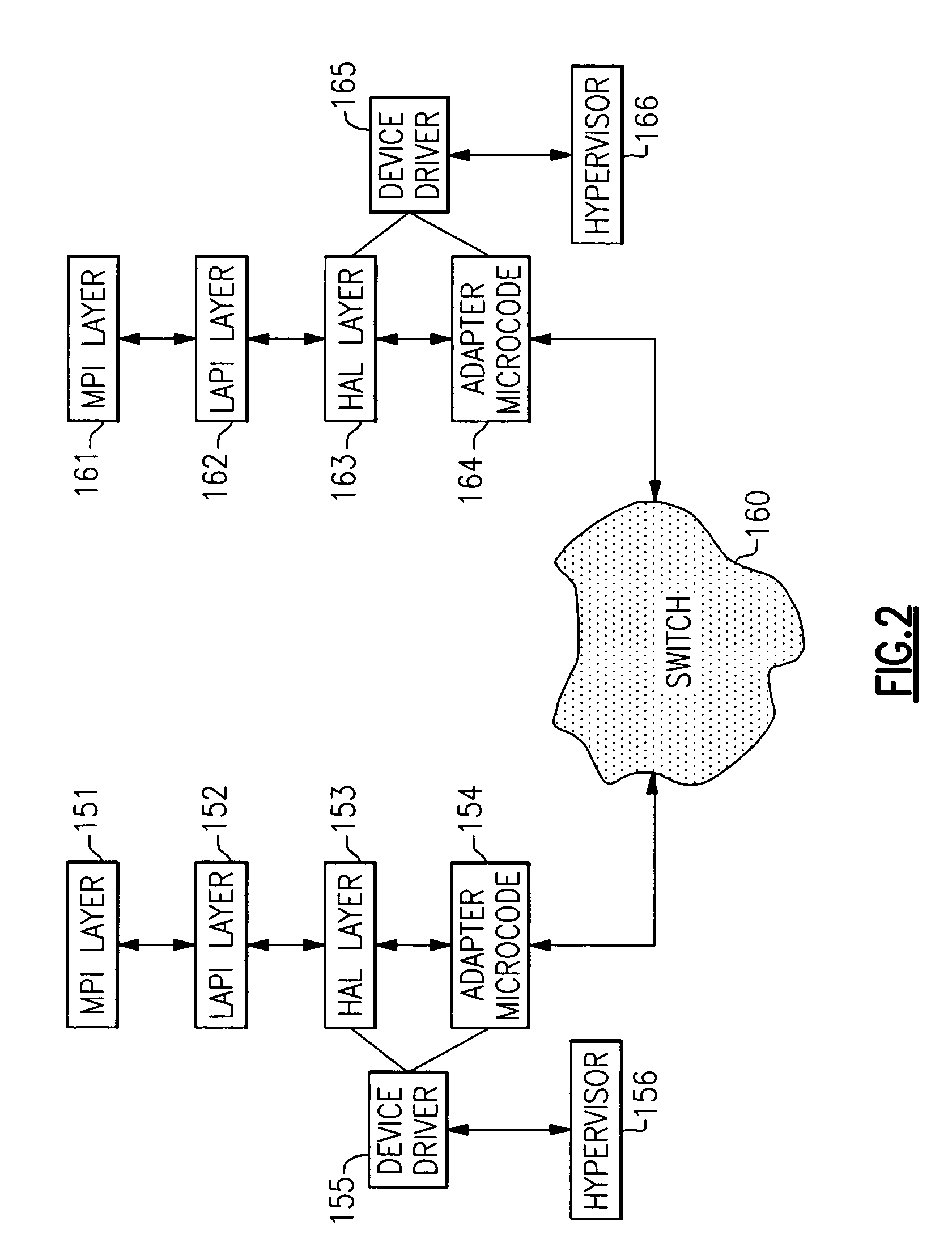

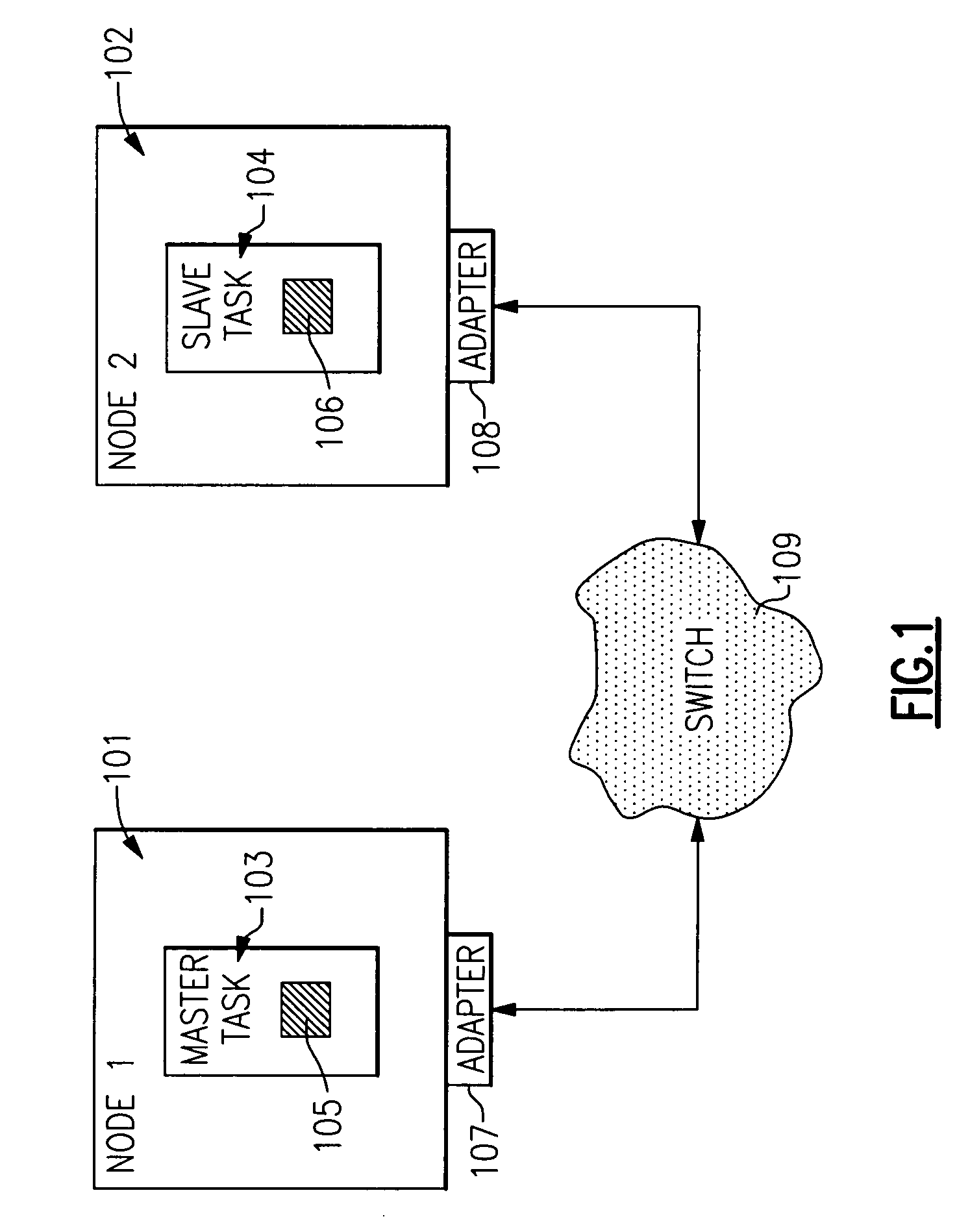

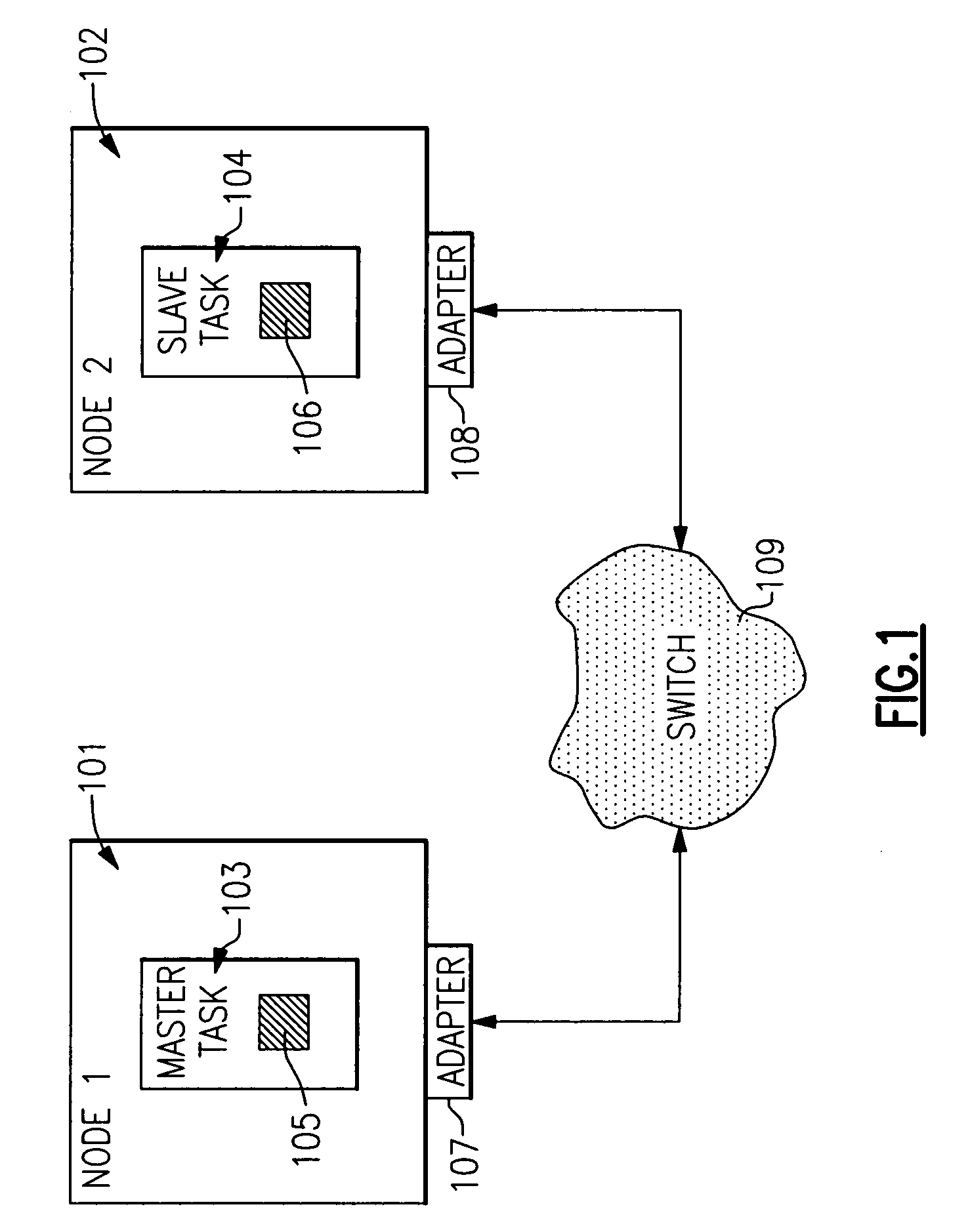

Failover mechanisms in RDMA operations

Inactive | US20060045005A1 | Frequency-division multiplex details | Transmission systems | Failover | Data processing system

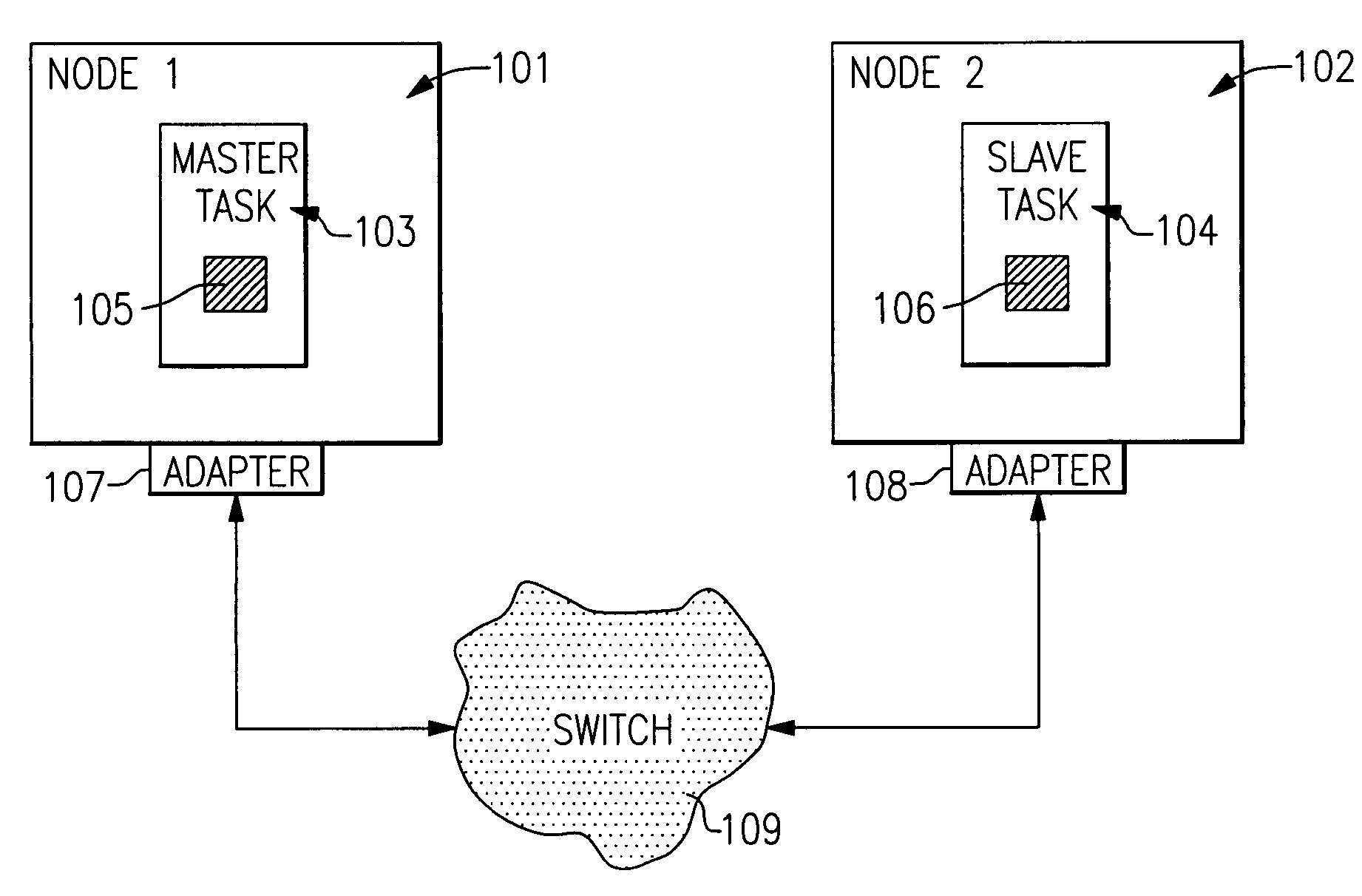

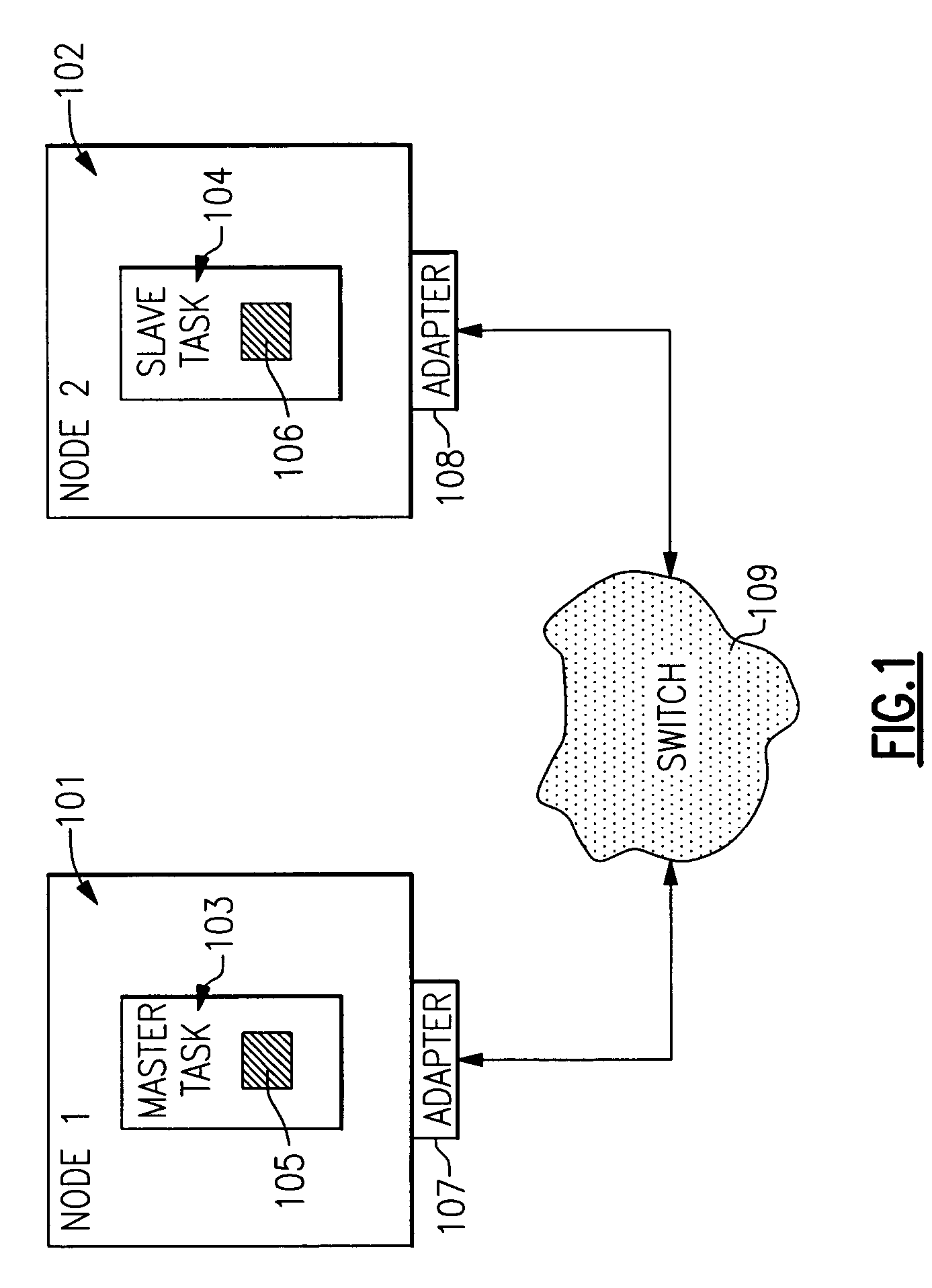

In remote direct memory access transfers in a multinode data processing system in which the nodes communicate with one another through communication adapters coupled to a switch or network, failures in the nodes or in the communication adapters can produce the phenomenon known as trickle traffic: data that has been received from the switch or from the network that is stale but which may have all the signatures of valid packet data. The present invention addresses the trickle traffic problem in two situations: node failure and adapter failure. In the node failure situation, randomly generated keys are used to reestablish connections to the adapter while providing a mechanism for the recognition of stale packets. In the adapter failure situation, a round robin context allocation approach is used, with adapter state contexts being provided with state information which helps to identify stale packets. In another approach to handling the adapter failure situation, counts are assigned which provide an adapter failure number to the node which will not match a corresponding number in a context field in the adapter, thus enabling the identification of stale packets.

Owner:IBM CORP
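
As a rough illustration of the node-failure case described above, the following Python sketch shows how a freshly generated random key per connection lets an adapter recognize stale ("trickle") packets that still carry the key from before the failure. The `Adapter` class, its methods, and the 64-bit key width are assumptions made for this example, not details from the patent.

```python
import secrets


class Adapter:
    """Toy adapter that accepts packets only if they carry the current key."""

    def __init__(self):
        self.keys = {}  # connection id -> key currently in force

    def establish(self, conn_id):
        """(Re)establish a connection with a new randomly generated key."""
        key = secrets.randbits(64)
        # Regenerate on the very unlikely chance the key repeats.
        while key == self.keys.get(conn_id):
            key = secrets.randbits(64)
        self.keys[conn_id] = key
        return key

    def accept(self, conn_id, key):
        """A packet is valid only if its key matches the current one."""
        return self.keys.get(conn_id) == key


adapter = Adapter()
old_key = adapter.establish(1)
fresh_before_failure = adapter.accept(1, old_key)

# The node fails and reconnects: a new key replaces the old one.
new_key = adapter.establish(1)

stale_accepted = adapter.accept(1, old_key)   # delayed packet from before
fresh_accepted = adapter.accept(1, new_key)   # packet from the new session
print(stale_accepted, fresh_accepted)
```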

System and method for highly scalable high-speed content-based filtering and load balancing in interconnected fabrics

Inactive | US7346702B2 | Digital computer details | Multiprogramming arrangements | Traffic capacity | Networking hardware

In some embodiments of the present invention, a system includes one or more server computers having multi-channel reliable network hardware and a proxy. The proxy is able to receive packet-oriented traffic from a client computer, to convert a session of the packet-oriented traffic into transactions, and to send the transactions to one of the server computers. The transactions include remote direct memory access messages.

Owner:MELLANOX TECH TLV

Apparatus and method for stateless CRC calculation

Active | US20070165672A1 | Computationally efficient | Code conversion | Time-division multiplex | Remote direct memory access | Computer science

A mechanism for performing remote direct memory access (RDMA) operations between a first server and a second server. The apparatus includes a packet parser and a protocol engine. The packet parser processes a TCP segment within an arriving network frame, where the packet parser performs one or more speculative CRC checks according to an upper layer protocol (ULP), and where the one or more speculative CRC checks are performed concurrent with arrival of the network frame. The protocol engine is coupled to the packet parser. The protocol engine receives results of the one or more speculative CRC checks, and selectively employs the results for validation of a framed protocol data unit (FPDU) according to the ULP.

Owner:INTEL CORP

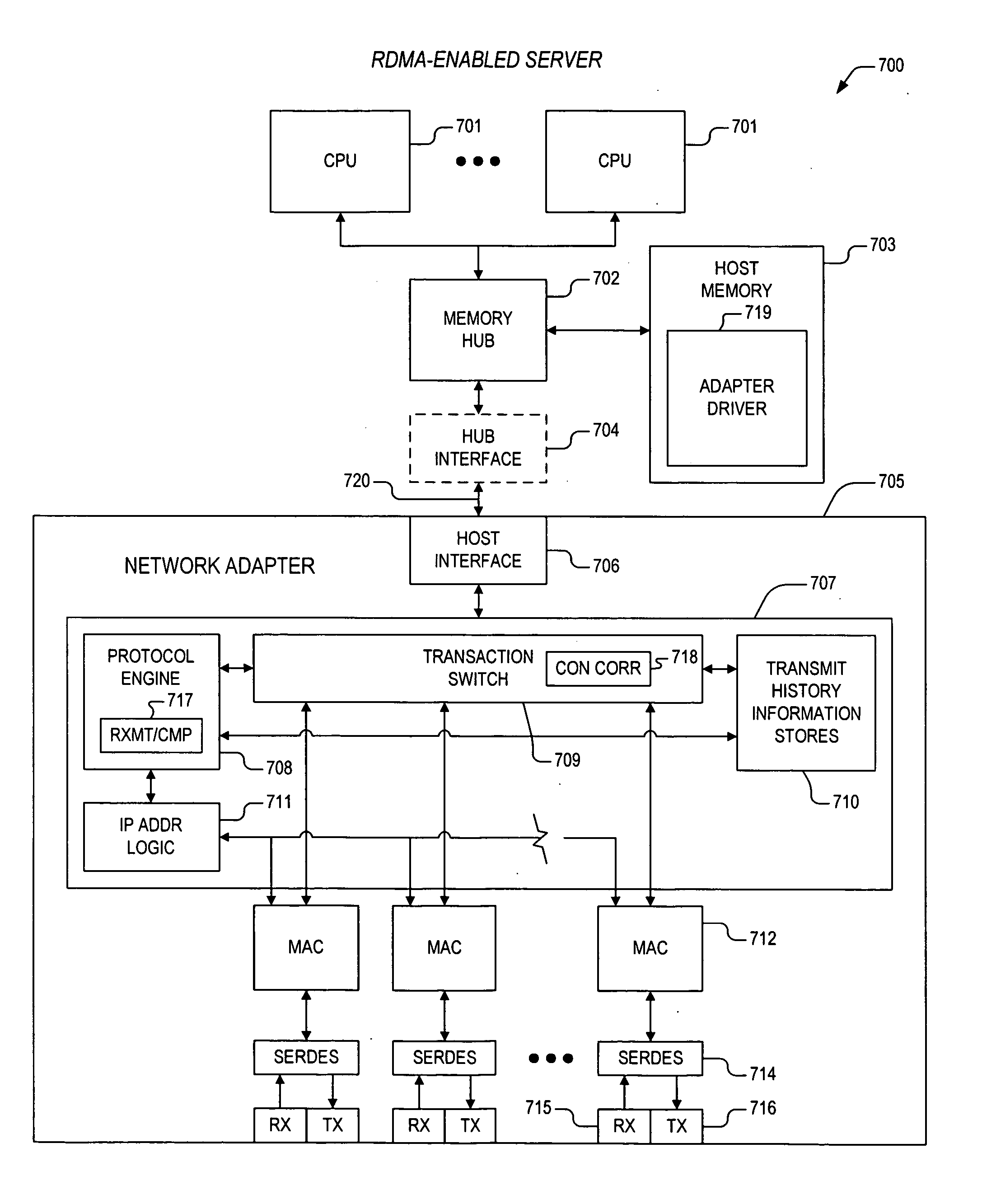

Apparatus and method for out-of-order placement and in-order completion reporting of remote direct memory access operations

Inactive | US20070208820A1 | Multiple digital computer combinations | Transmission | Remote direct memory access | Network structure

A mechanism for performing RDMA operations over a network fabric. The apparatus includes transaction logic to process work queue elements, and to accomplish the RDMA operations over a TCP/IP interface between first and second servers. The transaction logic has out-of-order segment range record stores and a protocol engine. The out-of-order segment range record stores maintain parameters associated with one or more out-of-order segments, the one or more out-of-order segments having been received and corresponding to one or more RDMA messages that are associated with the work queue elements. The protocol engine is coupled to the out-of-order segment range record stores and is configured to access the parameters to enable in-order completion tracking and reporting of the one or more RDMA messages.

Owner:INTEL CORP
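
The combination of out-of-order placement with in-order completion reporting can be sketched in Python as follows. This is only an illustration of the general technique: the `CompletionTracker` class, the merged `(start, end)` range records, and the message lengths are assumptions for the example and do not describe the patented hardware.

```python
def add_range(ranges, start, end):
    """Insert the byte range [start, end) and merge touching ranges."""
    merged = []
    for s, e in sorted(ranges + [(start, end)]):
        if merged and s <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], e))
        else:
            merged.append((s, e))
    return merged


class CompletionTracker:
    """Records segments in any order, reports completions in posting order."""

    def __init__(self, message_lengths):
        self.lengths = list(message_lengths)      # index = message id
        self.ranges = [[] for _ in self.lengths]  # received byte ranges
        self.next_to_report = 0

    def on_segment(self, msg, start, end):
        self.ranges[msg] = add_range(self.ranges[msg], start, end)
        completed = []
        # Report only while the oldest unreported message is fully received.
        while (self.next_to_report < len(self.lengths)
               and self.ranges[self.next_to_report]
               == [(0, self.lengths[self.next_to_report])]):
            completed.append(self.next_to_report)
            self.next_to_report += 1
        return completed


tracker = CompletionTracker([10, 6])
first = tracker.on_segment(1, 0, 6)    # message 1 is whole, message 0 is not
second = tracker.on_segment(0, 5, 10)  # tail of message 0 arrives early
third = tracker.on_segment(0, 0, 5)    # head arrives; both can now complete
print(first, second, third)
```

In this sketch message 1 finishes first but is held back until message 0 completes, which is the in-order reporting behaviour the abstract refers to.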

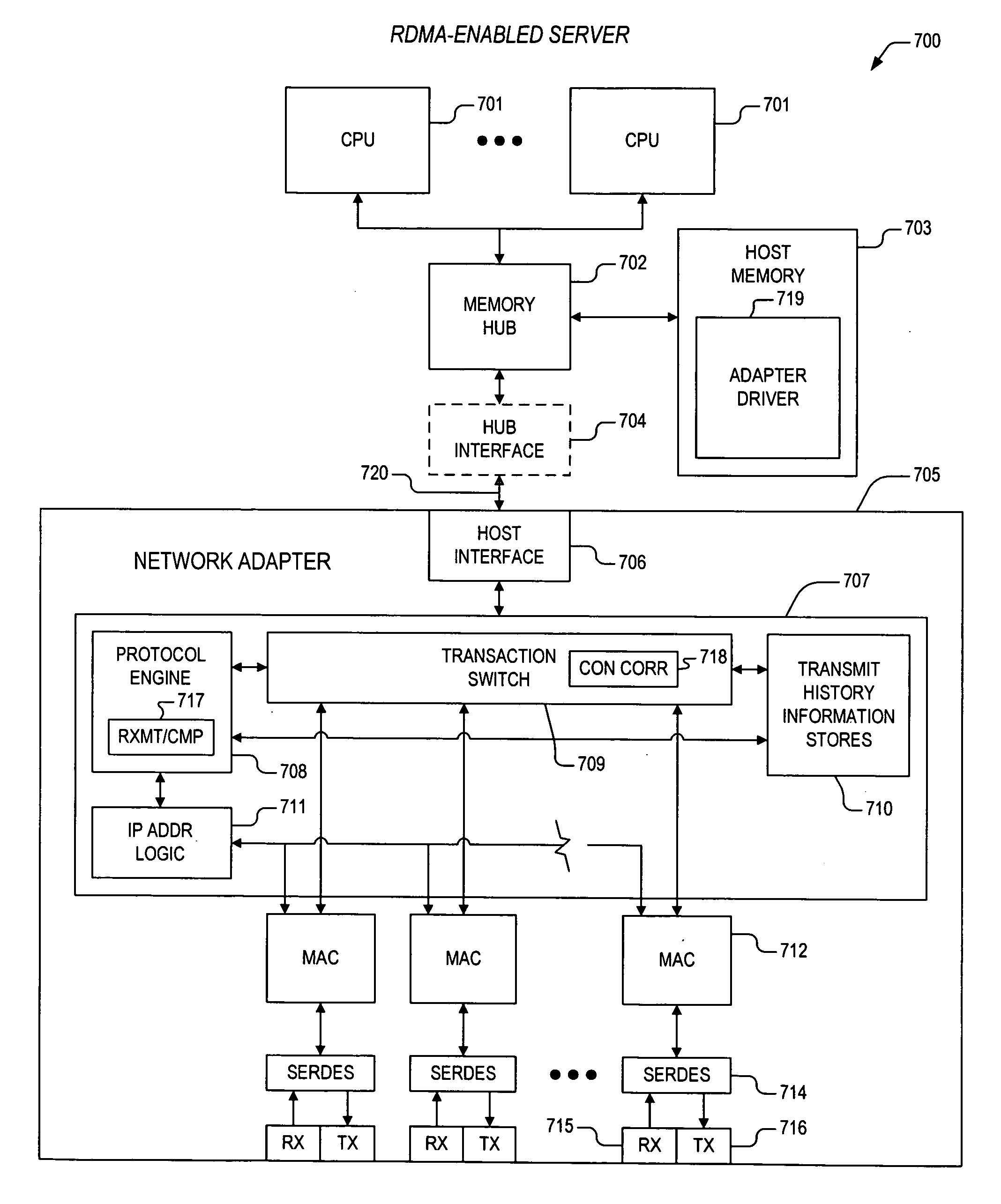

Apparatus and method for packet transmission over a high speed network supporting remote direct memory access operations

Active | US20060230119A1 | Efficient and effective rebuild | Digital computer details | Transmission | Remote direct memory access | Term memory

A mechanism for performing remote direct memory access (RDMA) operations between a first server and a second server over an Ethernet fabric. The RDMA operations are initiated by execution of a verb according to a remote direct memory access protocol. The verb is executed by a CPU on the first server. The apparatus includes transaction logic that is configured to process a work queue element corresponding to the verb, and that is configured to accomplish the RDMA operations over a TCP/IP interface between the first and second servers, where the work queue element resides within first host memory corresponding to the first server. The transaction logic includes transmit history information stores and a protocol engine. The transmit history information stores maintain parameters associated with said work queue element. The protocol engine is coupled to the transmit history information stores and is configured to access the parameters to enable retransmission of one or more TCP segments corresponding to the RDMA operations.

Owner:INTEL CORP

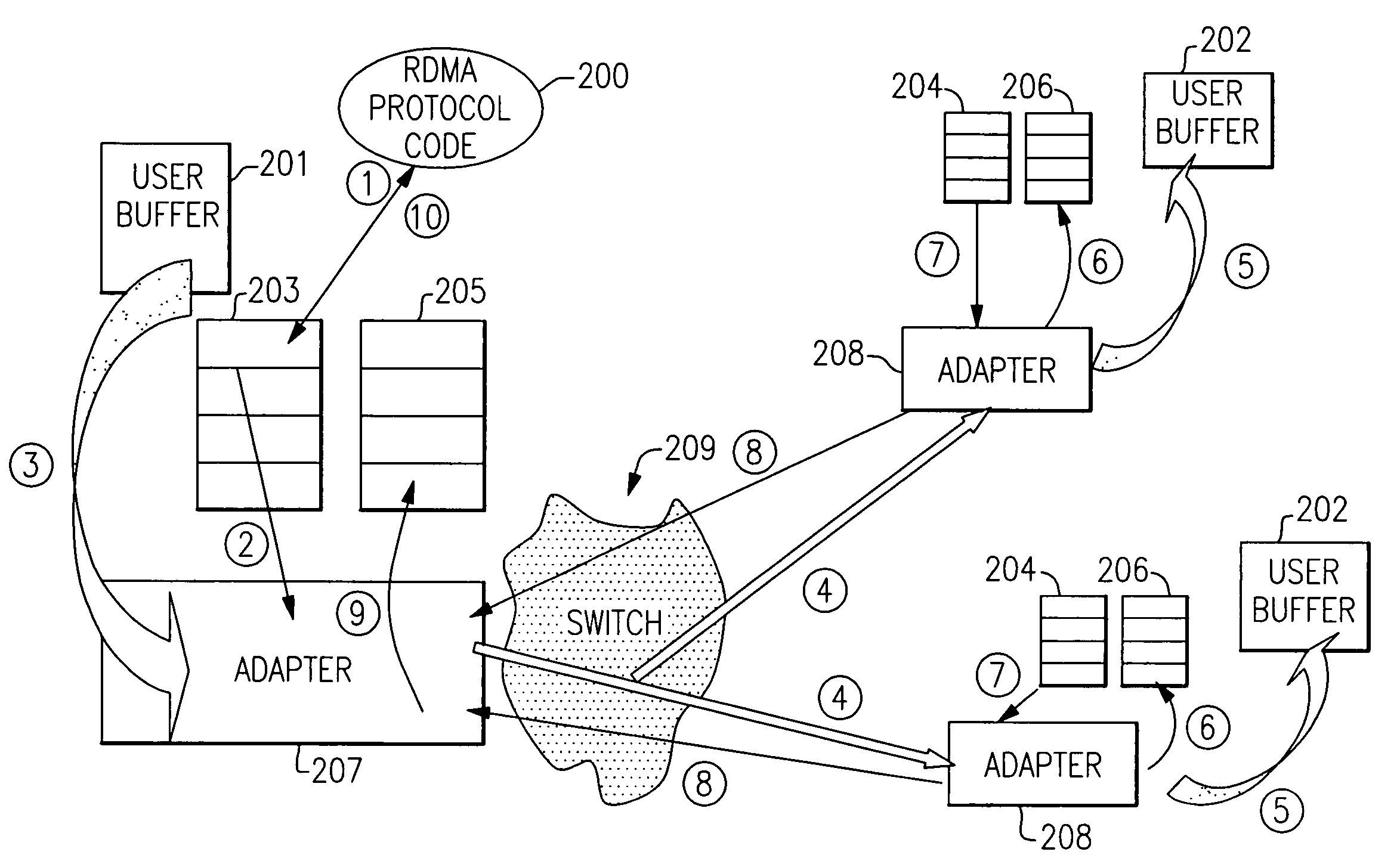

Remote direct memory access with striping over an unreliable datagram transport

In a multinode data processing system in which nodes exchange information over a network or through a switch, a structure and mechanism are provided which enable data packets to be sent and received in any order. Normally, if in-order transmission and receipt are required, then transmission over a single path is essential to ensure proper reassembly. However, the present mechanism avoids this necessity and permits Remote Direct Memory Access (RDMA) operations to be carried out simultaneously over multiple paths. This provides a data striping mode of operation in which data transfers can be carried out much faster since packets of single or multiple RDMA messages can be portioned and transferred over several paths simultaneously, thus providing the ability to utilize the full system bandwidth that is available.

Owner:IBM CORP

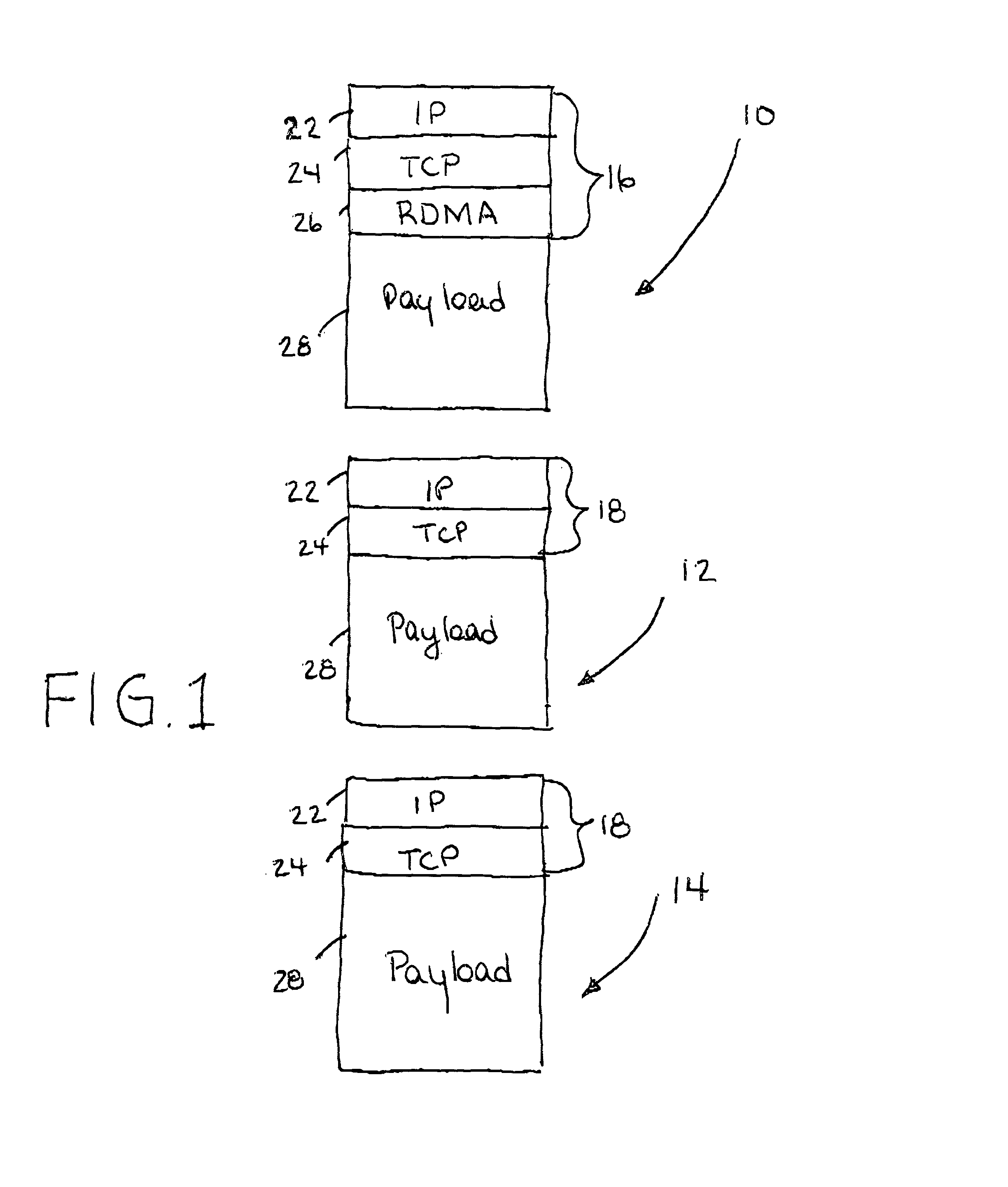

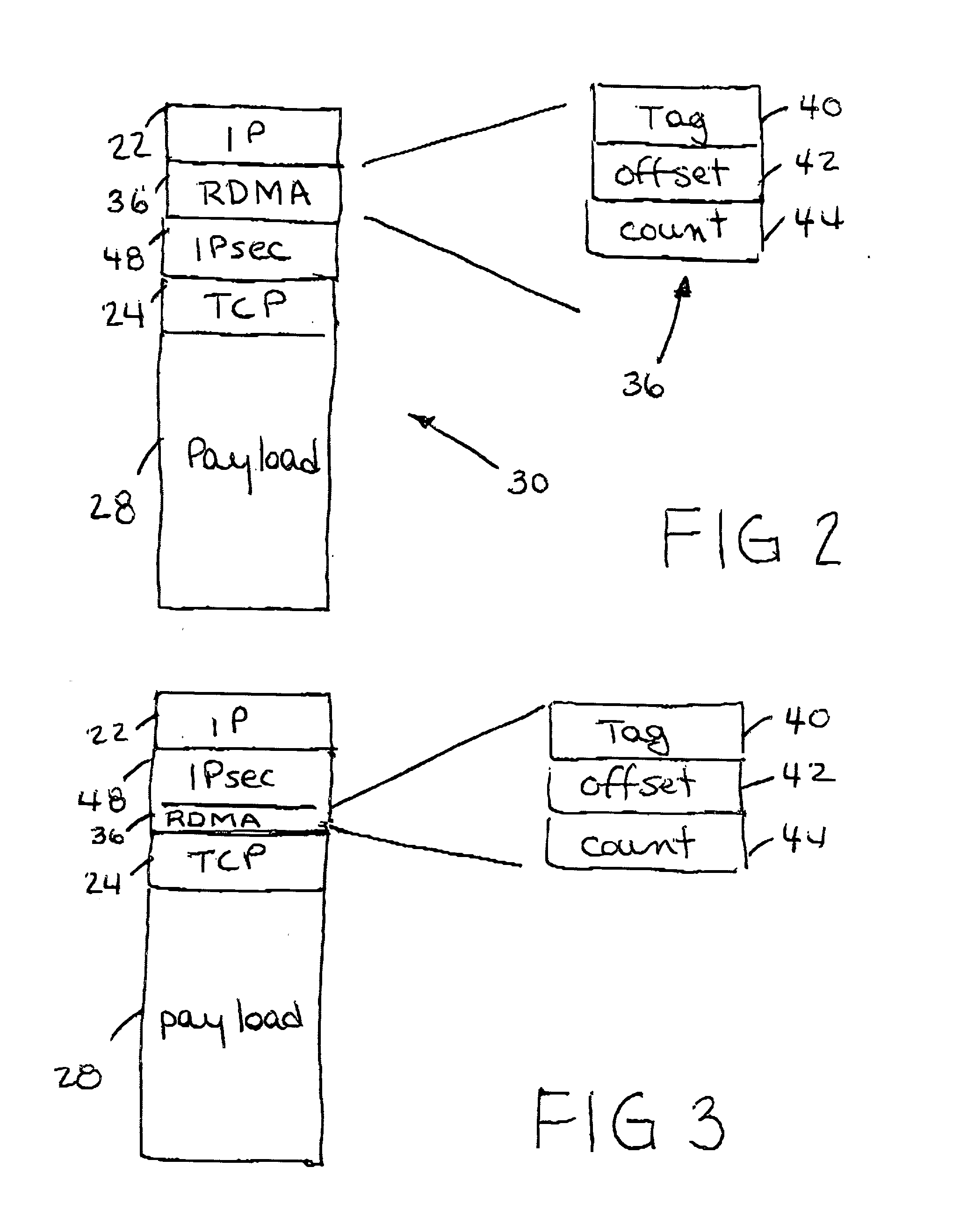

IP headers for remote direct memory access and upper level protocol framing

Inactive | US20020085562A1 | Time-division multiplex | Data switching by path configuration | Direct memory access | Remote direct memory access

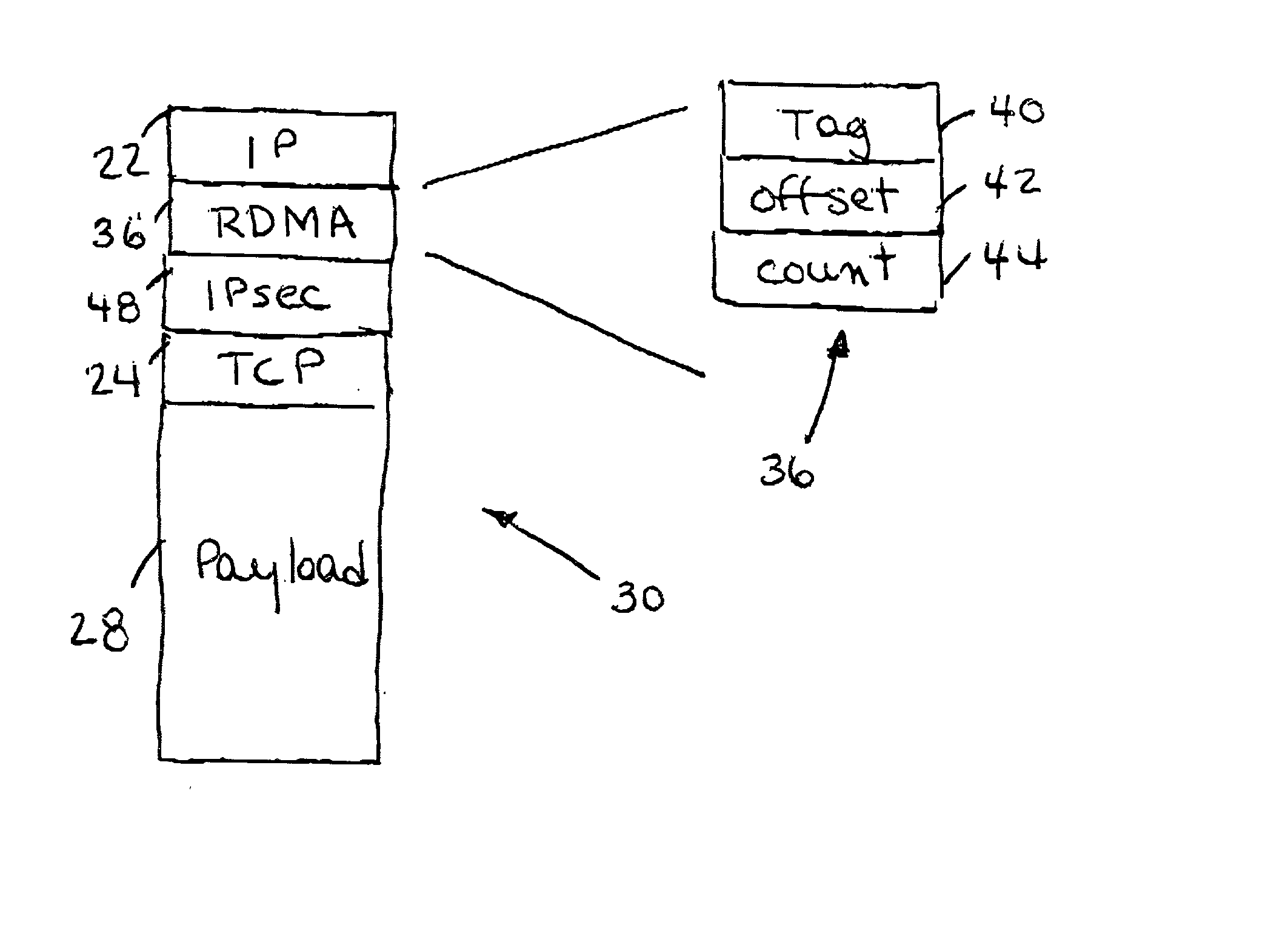

A data packet header including an internet protocol (IP) header, a remote direct memory access (RDMA) header, and a transmission control protocol (TCP) header, wherein the RDMA header is between the IP header and the TCP header.

Owner:IBM CORP

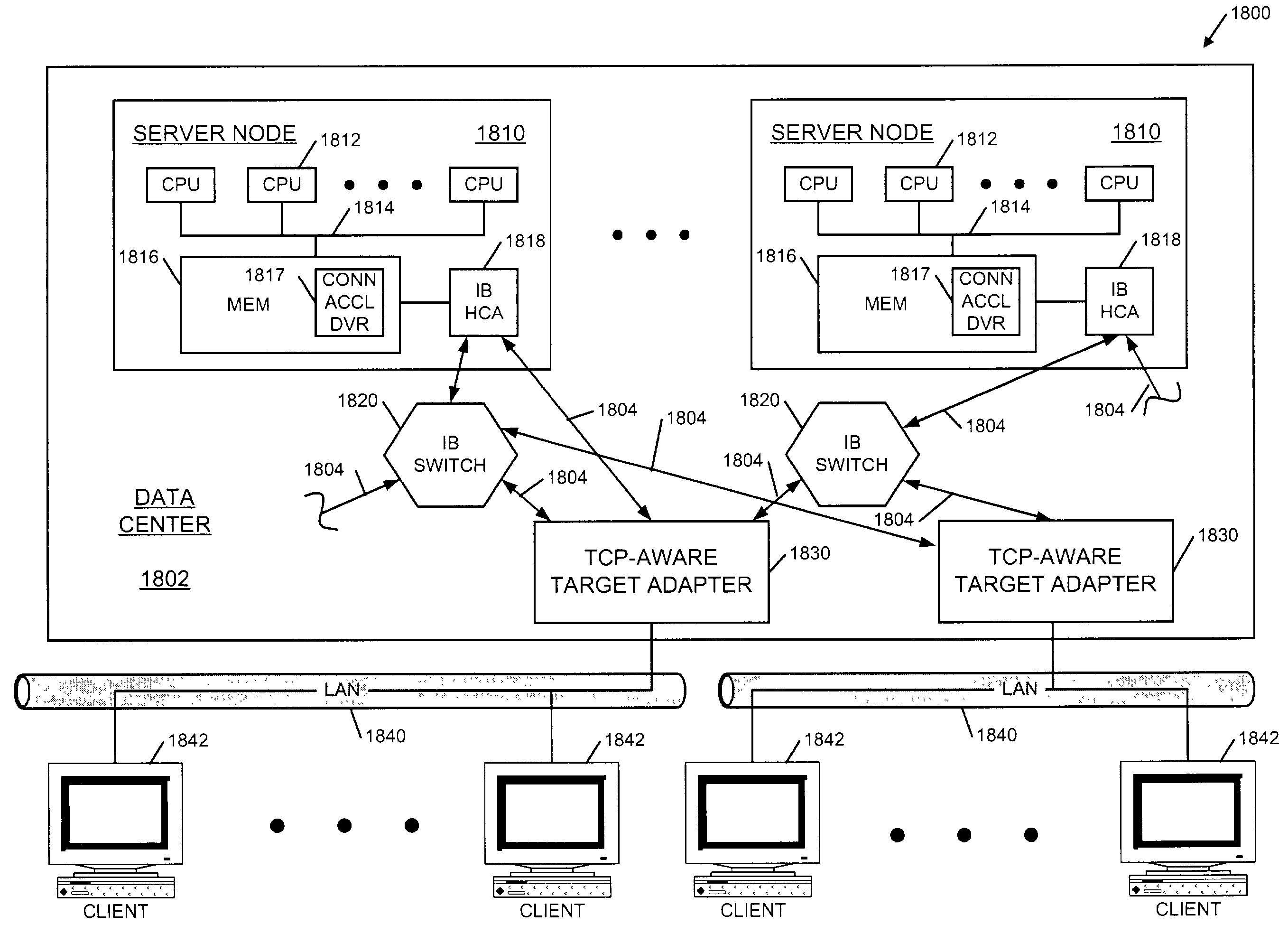

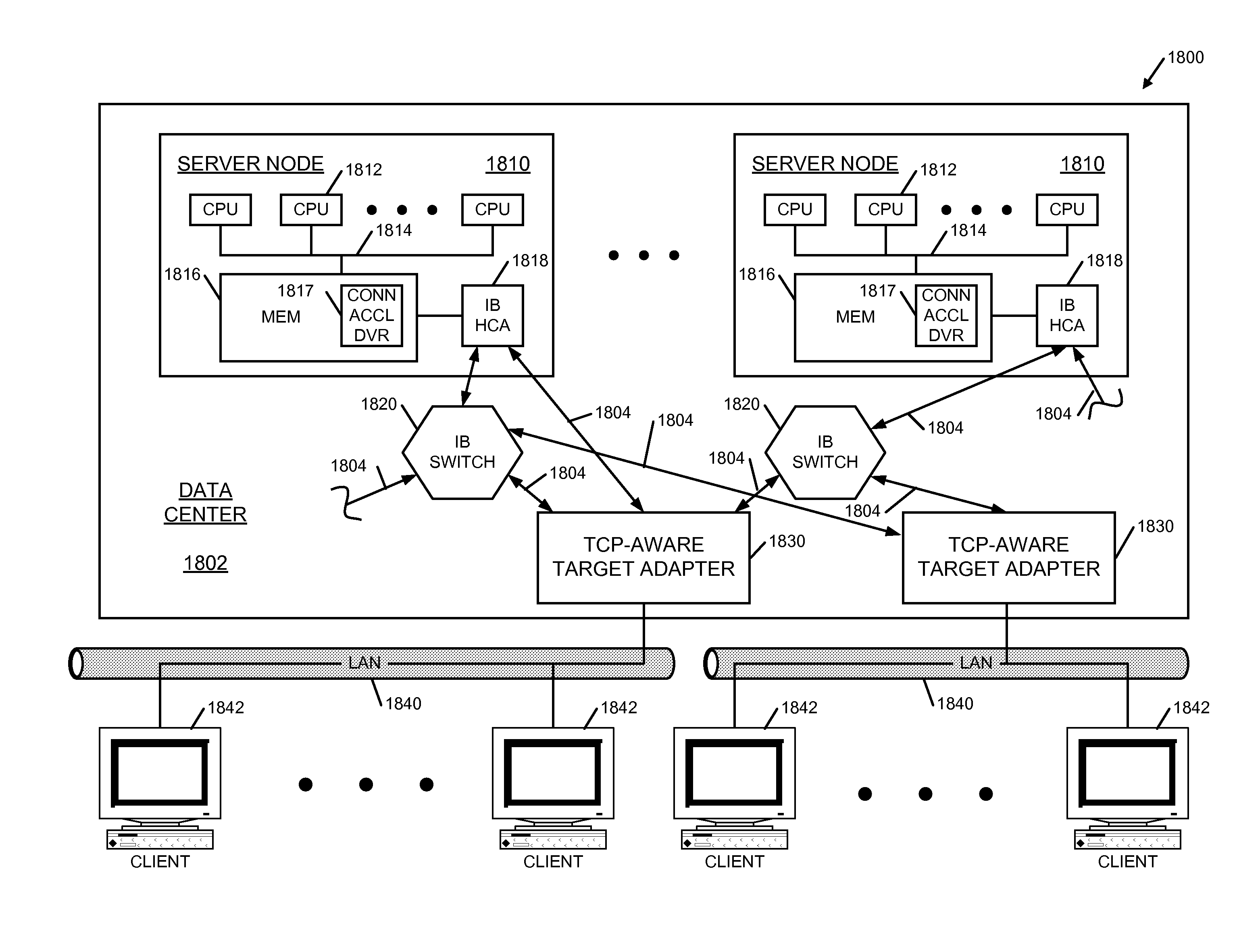

Infiniband™ work queue to TCP/IP translation

Inactive | US7149817B2 | Increase capacity | Fast data transfer | Multiple digital computer combinations | Memory systems | Direct memory access | IP processing

A TCP-aware target adapter for accelerating TCP/IP connections between clients and servers, where the servers are interconnected over an Infiniband™ fabric and the clients are interconnected over a TCP/IP-based network. The TCP-aware target adapter includes an accelerated connection processor and a target channel adapter. The accelerated connection processor bridges TCP/IP transactions between the clients and the servers. The accelerated connection processor accelerates the TCP/IP connections by prescribing Infiniband remote direct memory access operations to retrieve/provide transaction data from/to the servers. The target channel adapter is coupled to the accelerated connection processor. The target channel adapter supports Infiniband operations with the servers, including execution of the remote direct memory access operations to retrieve/provide the transaction data. The TCP/IP connections are accelerated by offloading TCP/IP processing otherwise performed by the servers to retrieve/provide said transaction data.

Owner:INTEL CORP

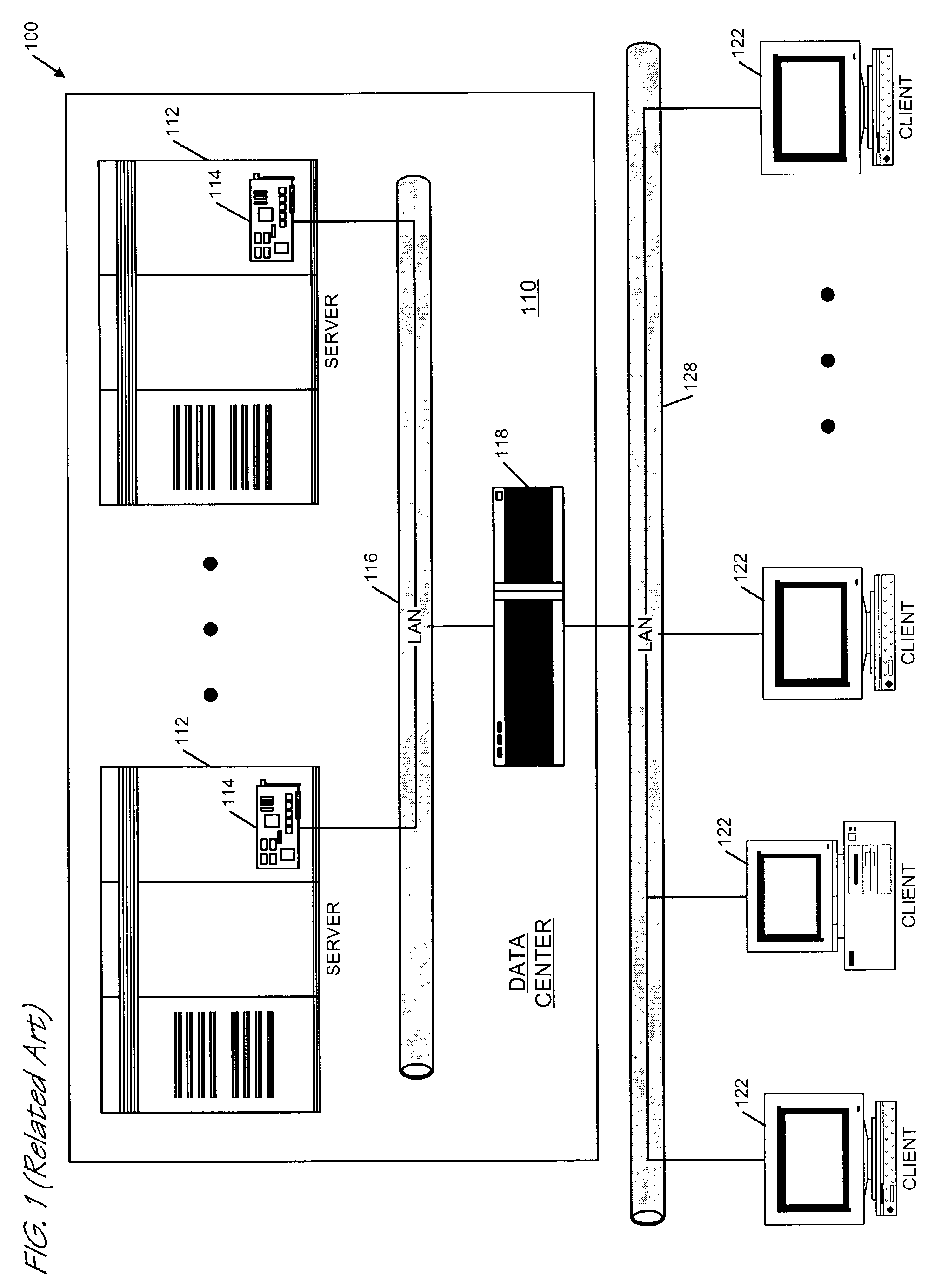

Work queue to TCP/IP translation

Inactive | US7149819B2 | Increase capacity | Fast data transfer | Multiple digital computer combinations | Securing communication | IP processing | Transaction data

An apparatus and method are provided to offload TCP/IP-related processing, where a server is connected to a plurality of clients, and the plurality of clients is accessed via a TCP/IP network. TCP/IP connections between the plurality of clients and the server are accelerated. The apparatus includes an accelerated connection processor and a target channel adapter. The accelerated connection processor bridges TCP/IP transactions between the plurality of clients and the server, where the accelerated connection processor accelerates the TCP/IP connections by prescribing remote direct memory access operations to retrieve/provide transaction data from/to the server. The target channel adapter is coupled to the accelerated connection processor. The target channel adapter executes the remote direct memory access operations to retrieve/provide the transaction data. The TCP/IP transactions are accelerated by offloading TCP/IP processing otherwise performed by the server to retrieve/provide transaction data.

Owner:INTEL CORP

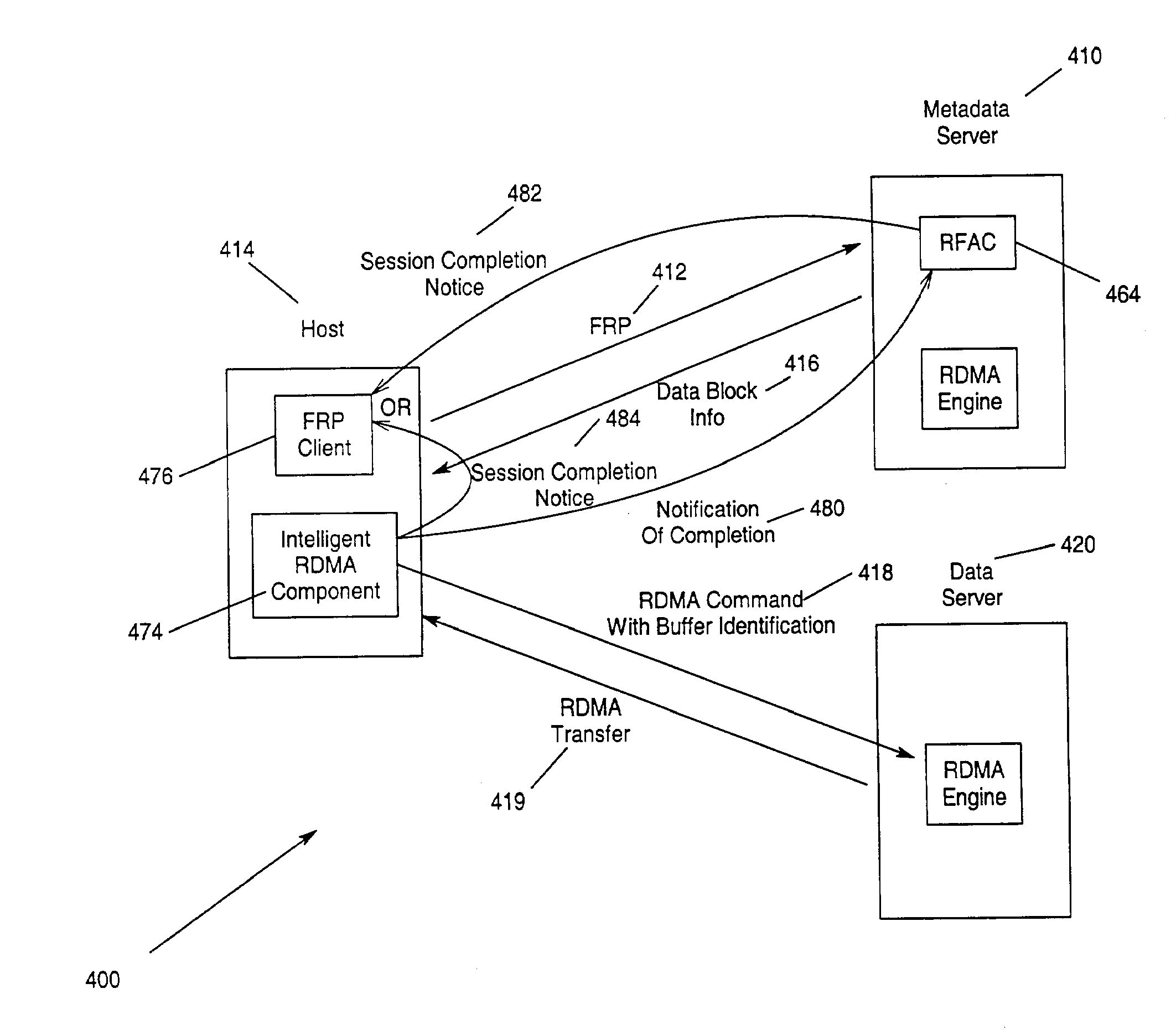

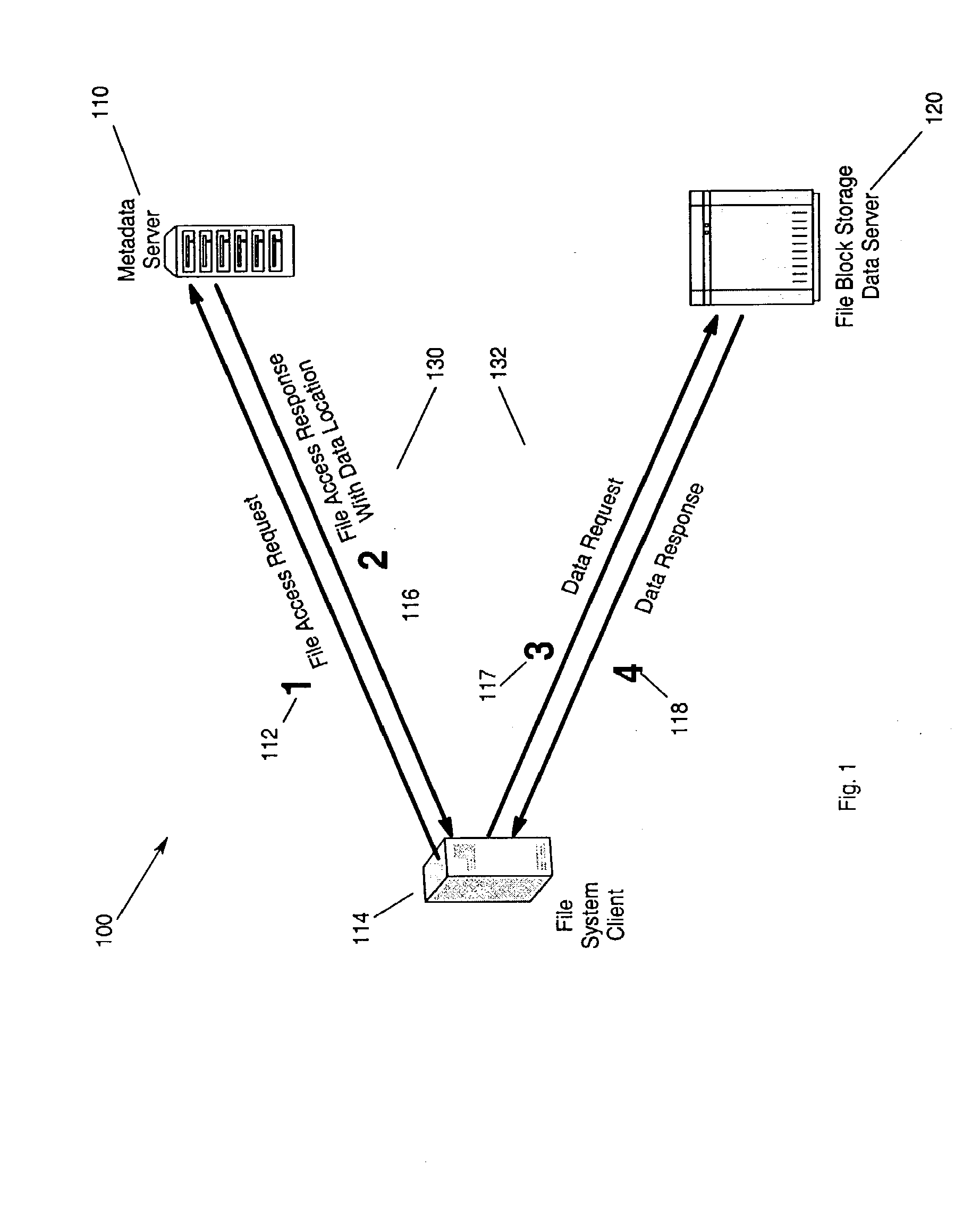

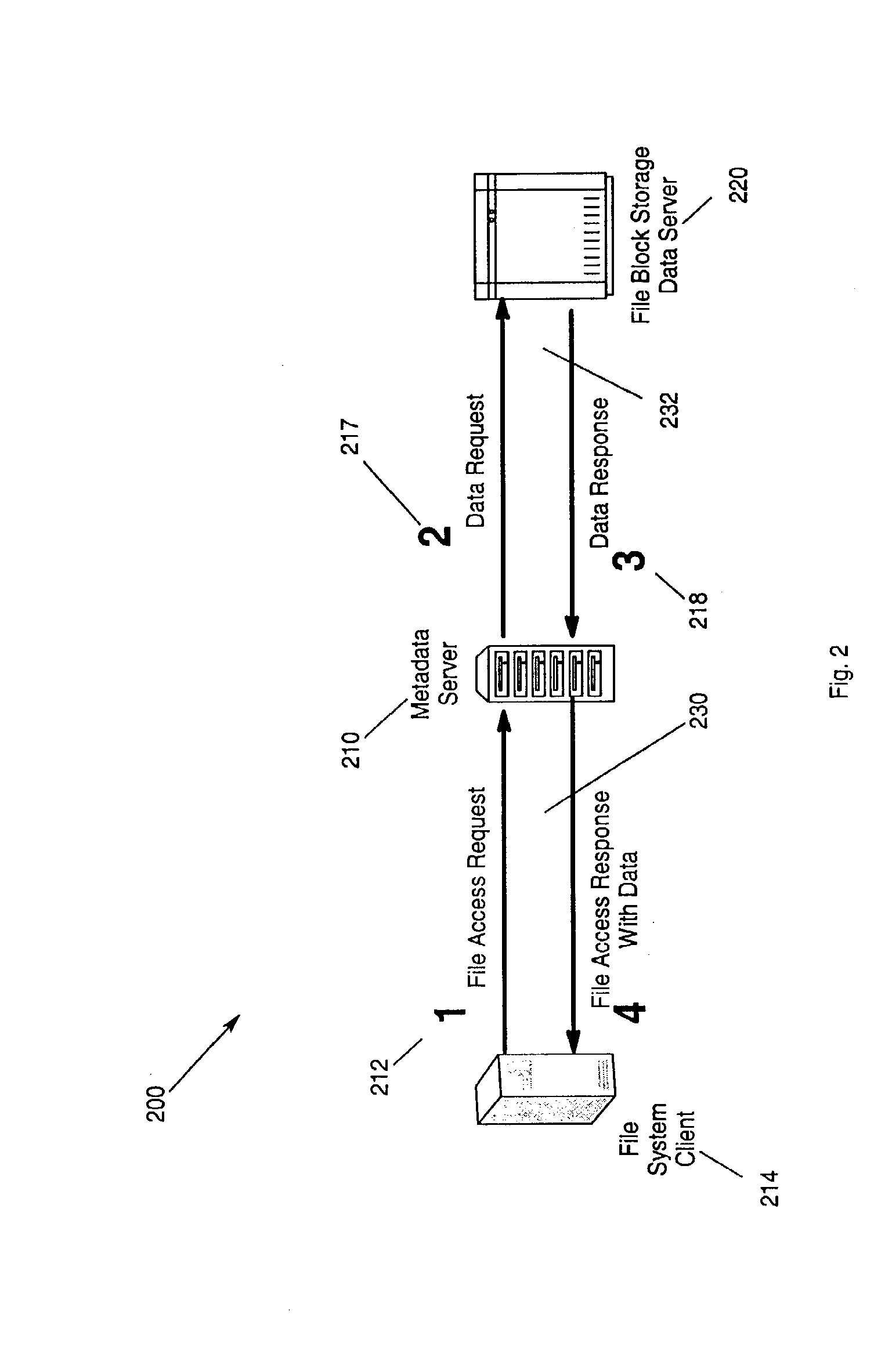

Distributed file serving architecture system with metadata storage virtualization and data access at the data server connection speed

Inactive | US20100095059A1 | Efficient access | Sufficient operation | Memory loss protection | Multiple digital computer combinations | Data access | Remote direct memory access

A method, apparatus, and program storage device are provided for a distributed file serving architecture with metadata storage virtualization and data access at the data server connection speed. A host issues a file access request including data target locations. The file access request including data target locations is processed. Remote direct memory access (RDMA) channel endpoint connections are issued in response to the processing of the file access request. An RDMA transfer of the file-block data associated with the file access request is made directly between a memory at the host and a data server.

Owner:IBM CORP

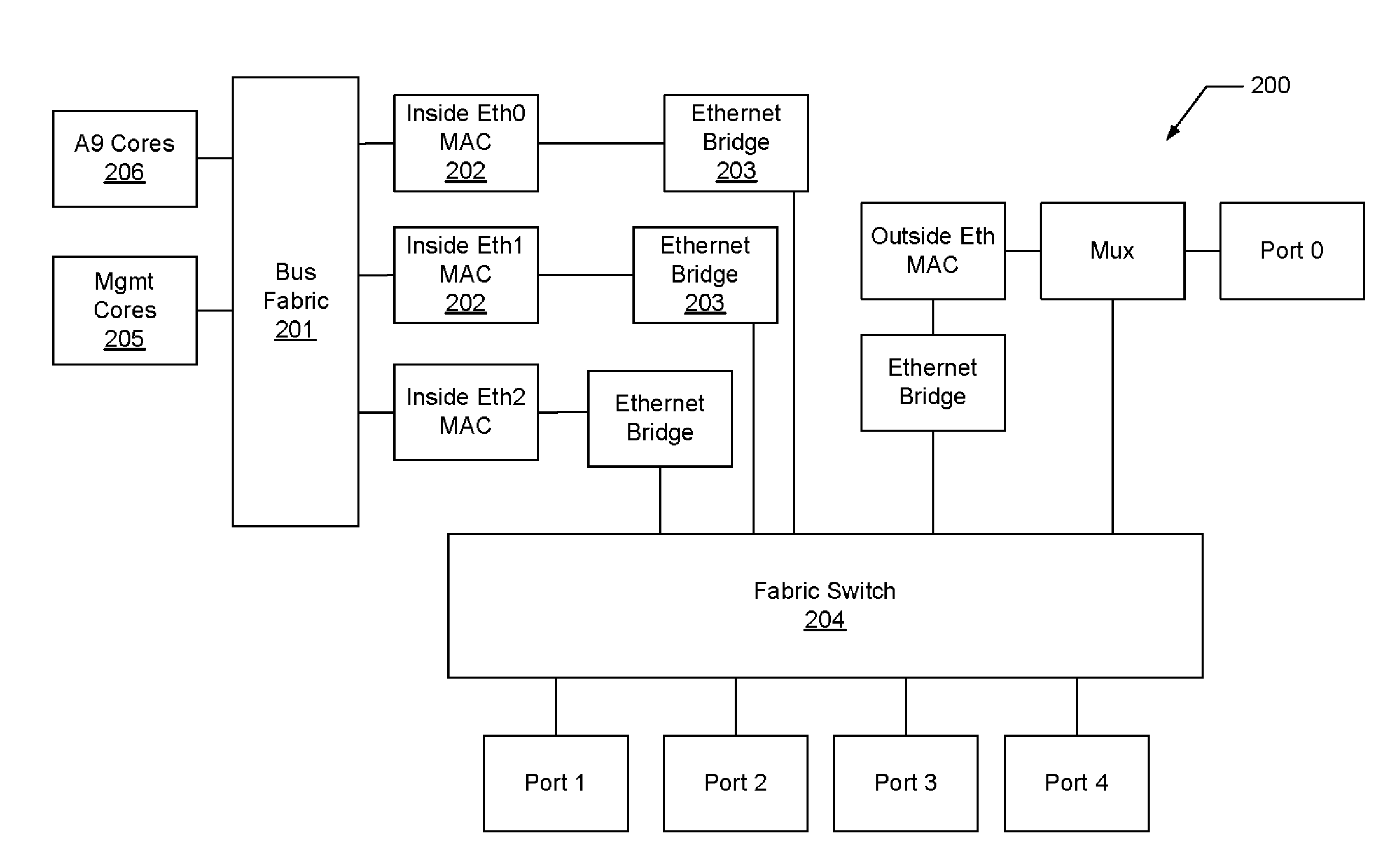

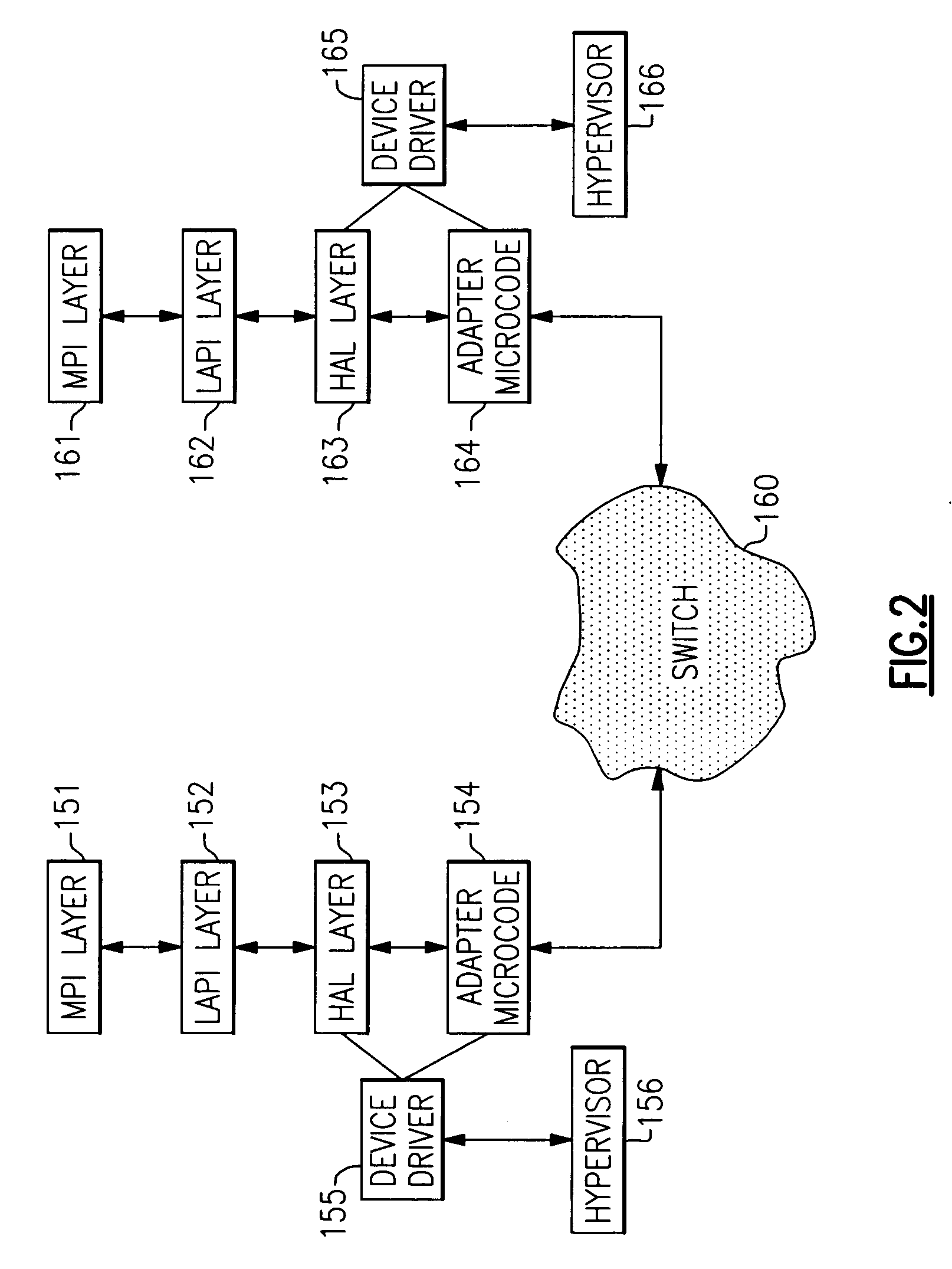

System and method for using a multi-protocol fabric module across a distributed server interconnect fabric

Active | US8599863B2 | Extend performance and power optimization and functionality | Digital computer details | Data switching by path configuration | Personalization | Structure of Management Information

A multi-protocol personality module enabling load/store from remote memory, remote Direct Memory Access (DMA) transactions, and remote interrupts, which permits enhanced performance, power utilization and functionality. In one form, the module is used as a node in a network fabric and adds a routing header to packets entering the fabric, maintains the routing header for efficient node-to-node transport, and strips the header when the packet leaves the fabric. In particular, a remote bus personality component is described. Several use cases of the Remote Bus Fabric Personality Module are disclosed: 1) memory sharing across a fabric connected set of servers; 2) the ability to access physically remote Input Output (I/O) devices across this fabric of connected servers; and 3) the sharing of such physically remote I/O devices, such as storage peripherals, by multiple fabric connected servers.

Owner:III HLDG 2
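
The add, keep, and strip life cycle of the routing header mentioned in the abstract above might look like the Python sketch below. The header layout (a 16-bit destination node id followed by a 16-bit payload length) and all function names are hypothetical choices for this illustration; the abstract does not specify a format.

```python
import struct

# Hypothetical routing header: destination node id, payload length.
HEADER = struct.Struct("!HH")


def enter_fabric(packet, dest_node):
    """Prepend a routing header when a packet enters the fabric."""
    return HEADER.pack(dest_node, len(packet)) + packet


def route(frame):
    """Intermediate nodes read the header and leave the payload untouched."""
    dest_node, _length = HEADER.unpack_from(frame)
    return dest_node


def leave_fabric(frame):
    """Strip the routing header when the packet leaves the fabric."""
    _dest_node, length = HEADER.unpack_from(frame)
    return frame[HEADER.size:HEADER.size + length]


original = b"load 0x1000"
frame = enter_fabric(original, dest_node=3)
next_hop = route(frame)
delivered = leave_fabric(frame)
print(next_hop, delivered == original)
```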

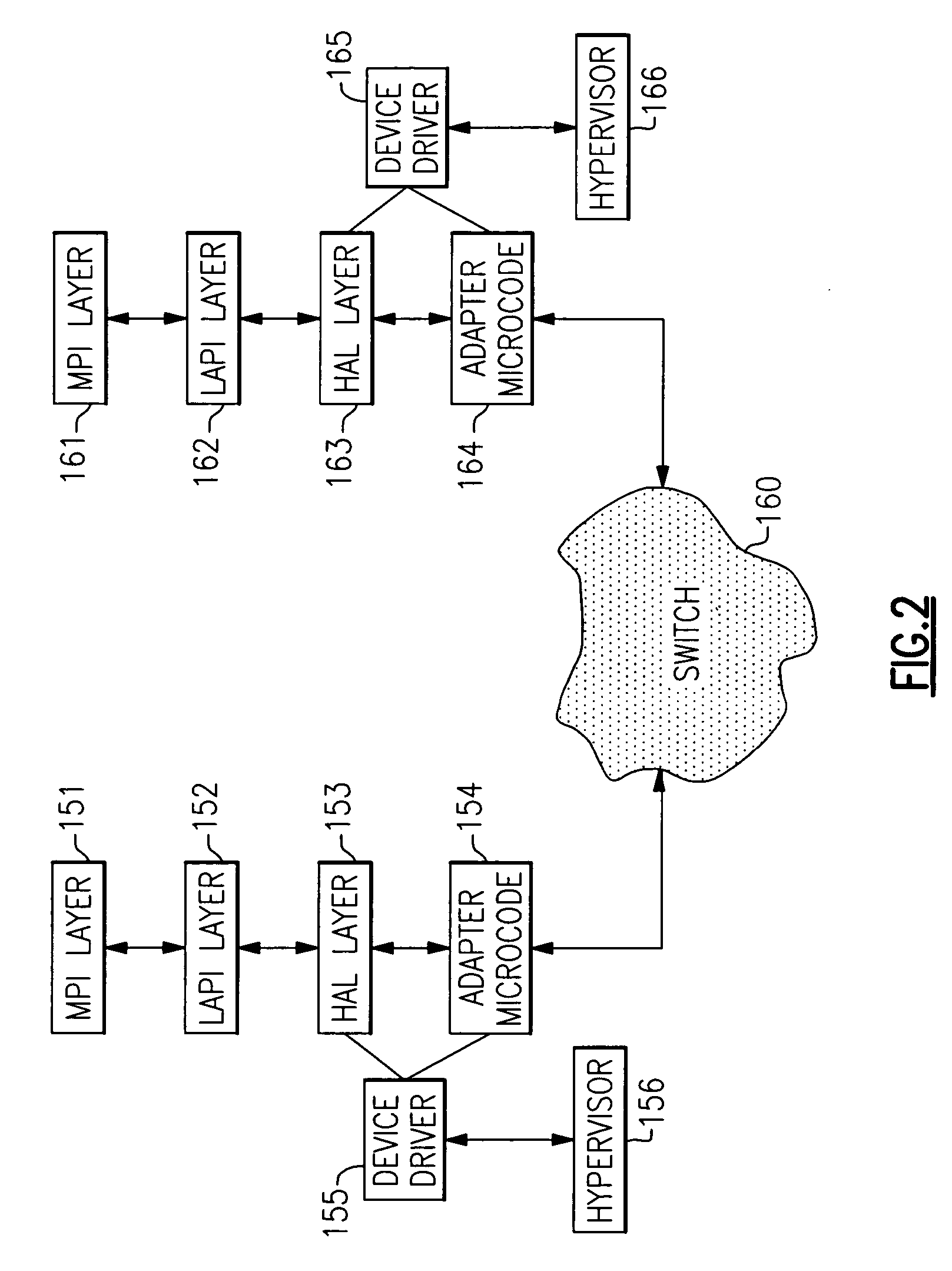

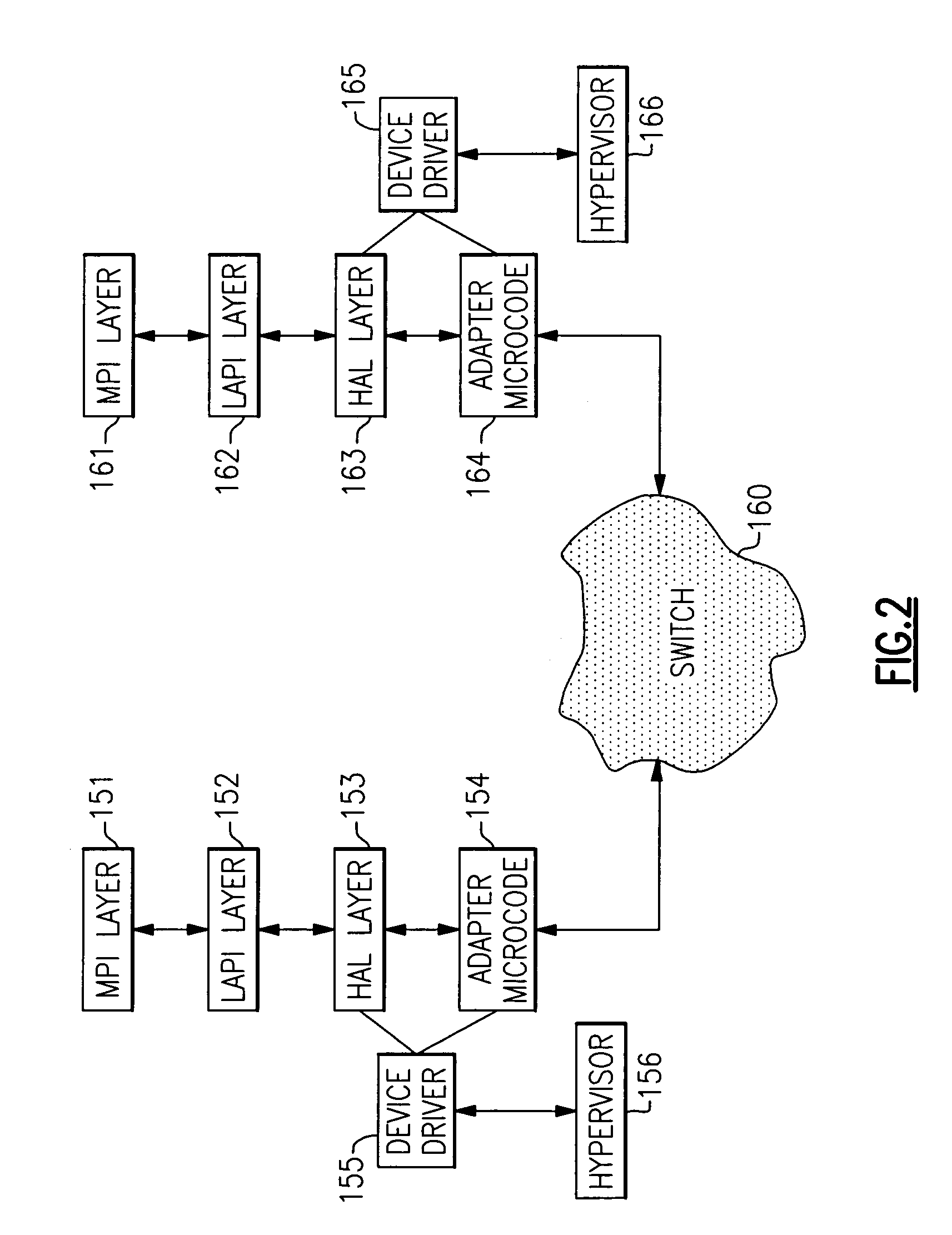

RDMA server (OSI) global TCE tables

Active | US20060047771A1 | Multiple digital computer combinations | Transmission | Virtual memory | Data processing system

In remote direct memory access (RDMA) transfers in a multinode data processing system in which the nodes communicate with one another through communication adapters coupled to a switch or network, there is a need for the system to ensure efficient memory protection mechanisms across jobs. A method is thus desired for addressing virtual memory on local and remote servers that is independent of the process ID on the local and/or remote node. The use of global Translation Control Entry (TCE) tables that are accessed/owned by RDMA jobs and are managed by a device driver in conjunction with a Protocol Virtual Offset (PVO) address format solves this problem.

Owner:IBM CORP

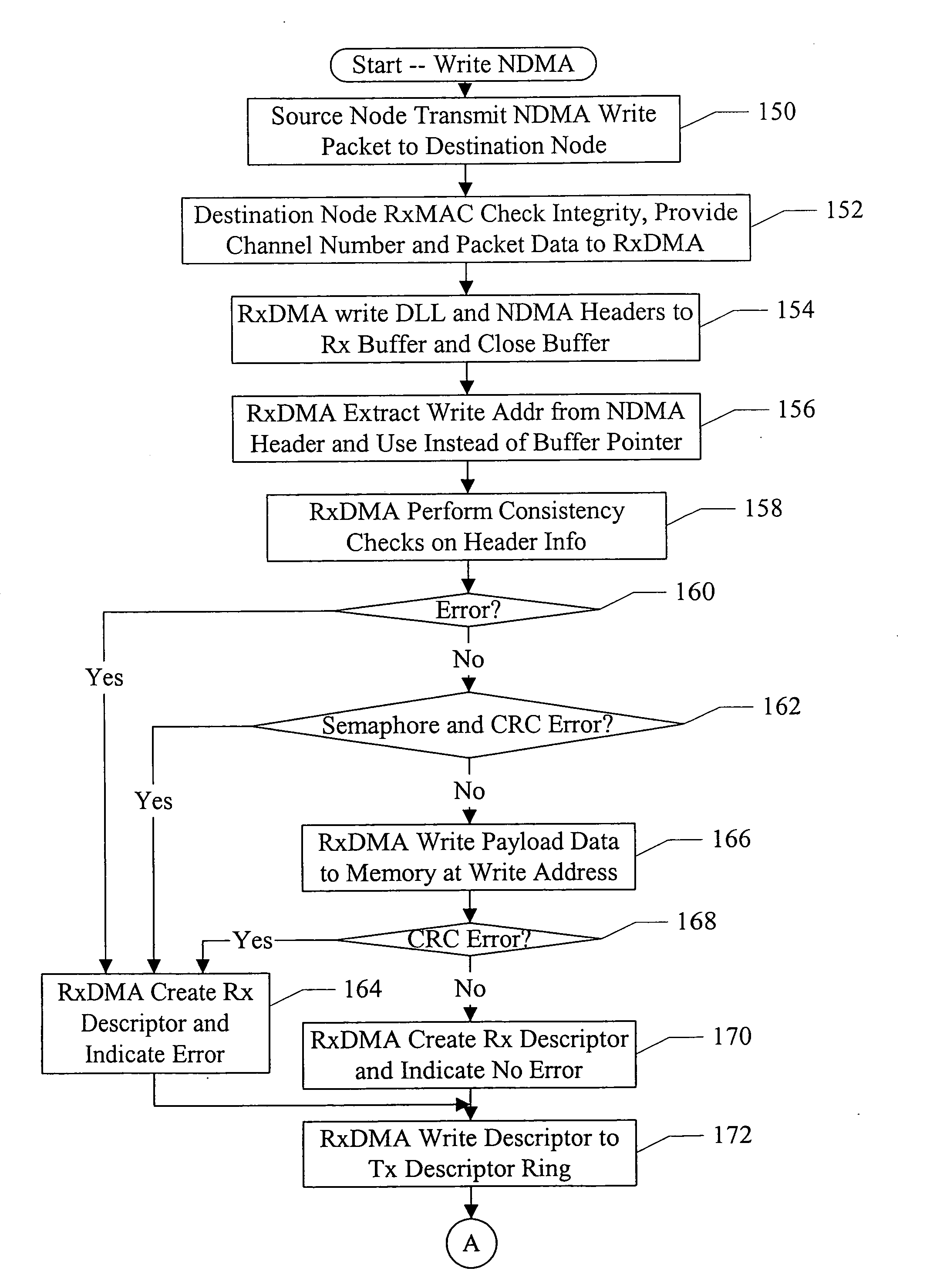

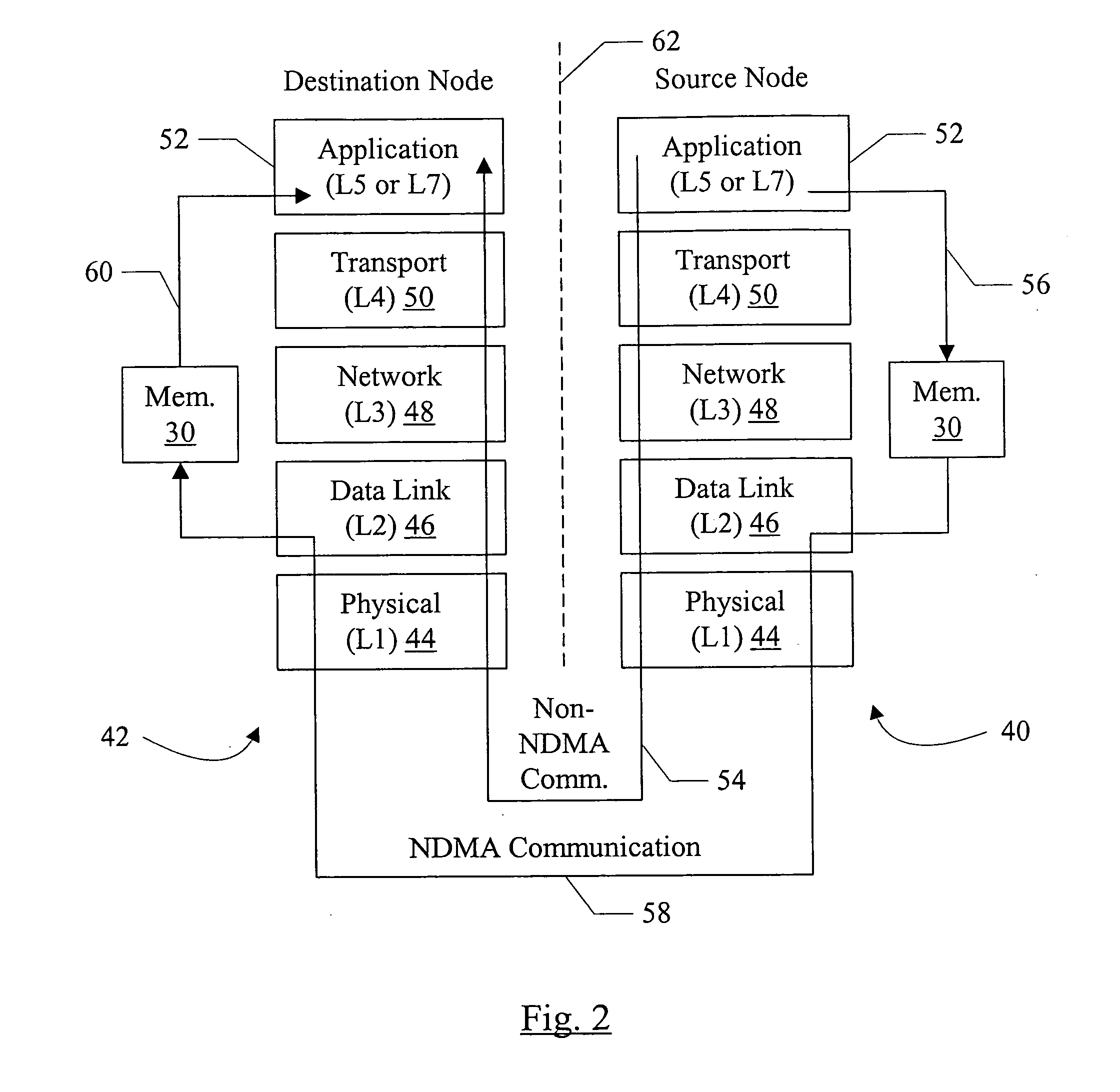

Network direct memory access

Inactive | US20080043732A1 | Data switching by path configuration | Multiple digital computer combinations | Direct memory access | Remote direct memory access

In one embodiment, a system comprises at least a first node and a second node coupled to a network. The second node comprises a local memory and a direct memory access (DMA) controller coupled to the local memory. The first node is configured to transmit at least a first packet to the second node to access data in the local memory and at least one other packet that is not coded to access the local memory. The second node is configured to capture the packet from a data link layer of a protocol stack, and the DMA controller is configured to perform one or more transfers with the local memory to access the data specified by the first packet responsive to the first packet received from the data link layer. The second node is configured to process the other packet to a top of the protocol stack.

Owner:APPLE INC

SCSI transport for servers

Active | US20130117621A1 | Error prevention/detection by using return channel | Transmission systems | Fiber | SCSI initiator and target

A Small Computer System Interface (SCSI) transport for fabric backplane enterprise servers provides for local and remote communication of storage system information between storage sub-system elements of an ES system and other elements of an ES system via a storage interface. The transport includes encapsulation of information for communication via a reliable transport implemented in part across a cellifying switch fabric. The transport may optionally include communication via Ethernet frames over any of a local network or the Internet. Remote Direct Memory Access (RDMA) and Direct Data Placement (DDP) protocols are used to communicate the information (commands, responses, and data) between SCSI initiator and target end-points. A Fibre Channel Module (FCM) may be operated as a SCSI target providing a storage interface to any of a Processor Memory Module (PMM), a System Control Module (SCM), and an OffLoad Module (OLM) operated as a SCSI initiator.

Owner:ORACLE INT CORP

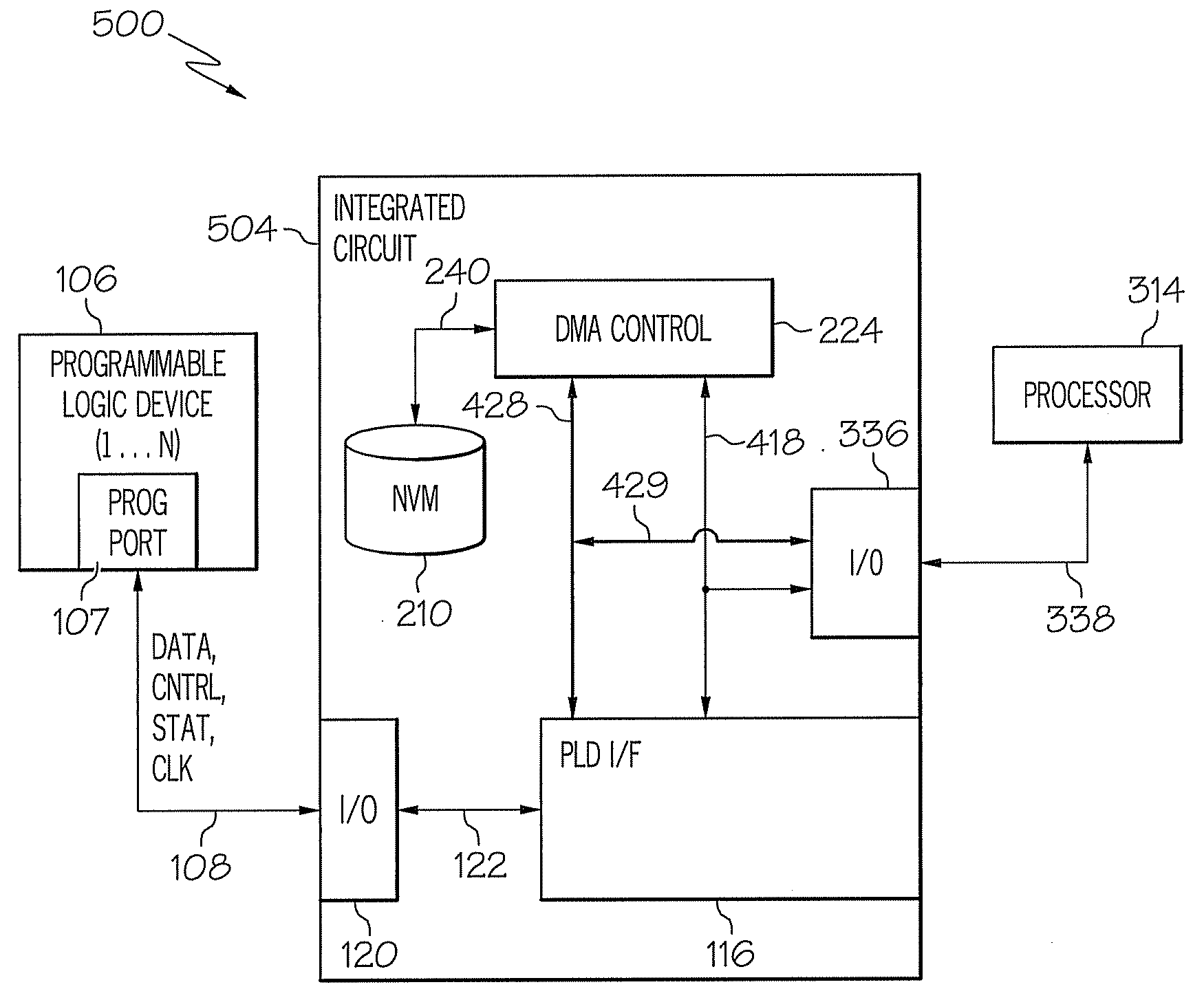

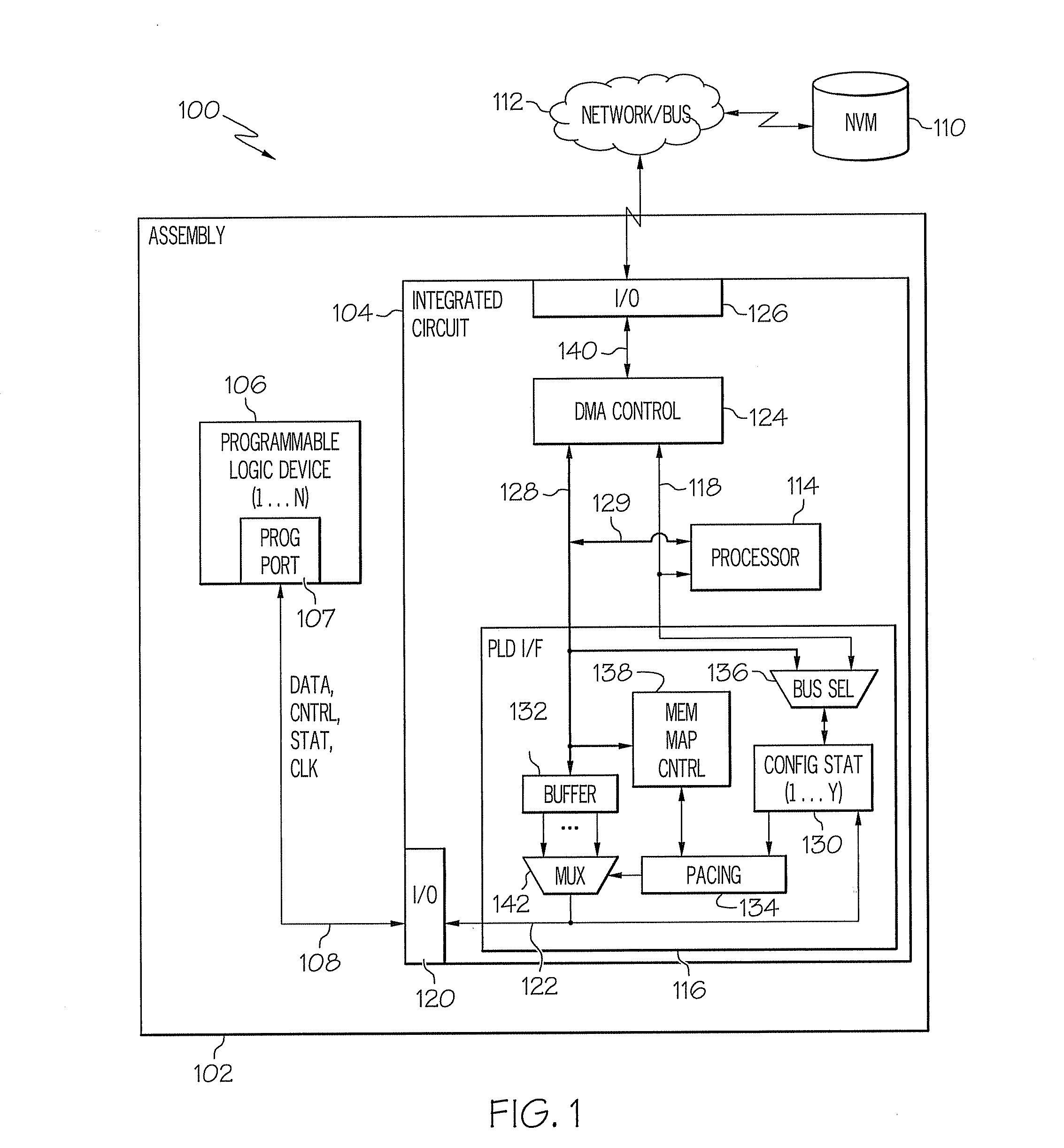

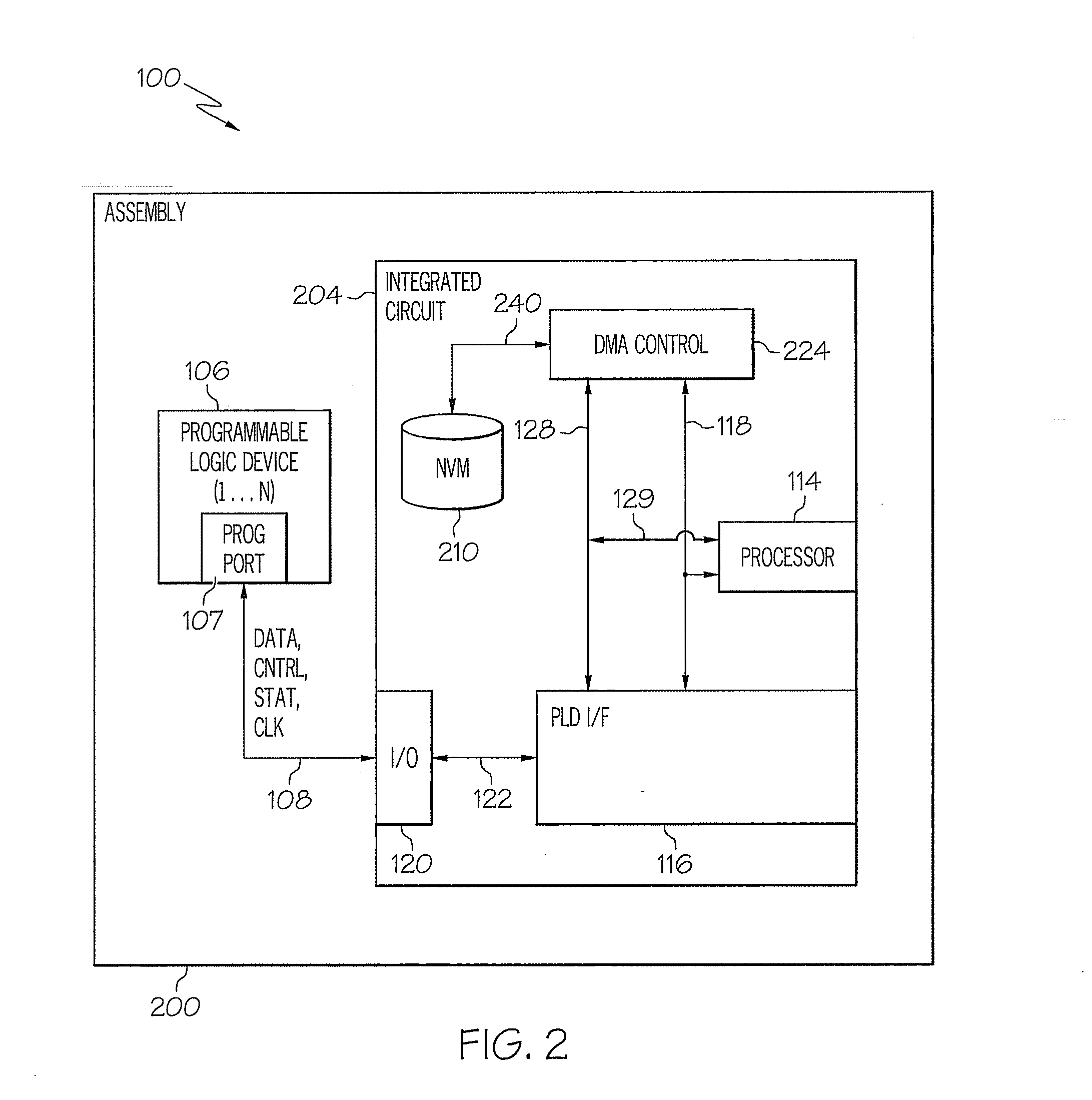

Methods, systems, and computer program products for using direct memory access to initialize a programmable logic device

Active | US20080186052A1 | Special data processing applications | Logic circuits using elementary logic circuit components | Direct memory access | Programmable logic device

Methods, systems, and computer program products for using direct memory access (DMA) to initialize a programmable logic device (PLD) are provided. A method includes manipulating a control line of the PLD to configure the PLD in a programming mode, receiving PLD programming data from a DMA control at a DMA speed, and writing the PLD programming data to a data buffer. The method also includes reading the PLD programming data from the data buffer, and transmitting the PLD programming data to a programming port on the PLD at a PLD programming speed.

Owner:GLOBALFOUNDRIES US INC

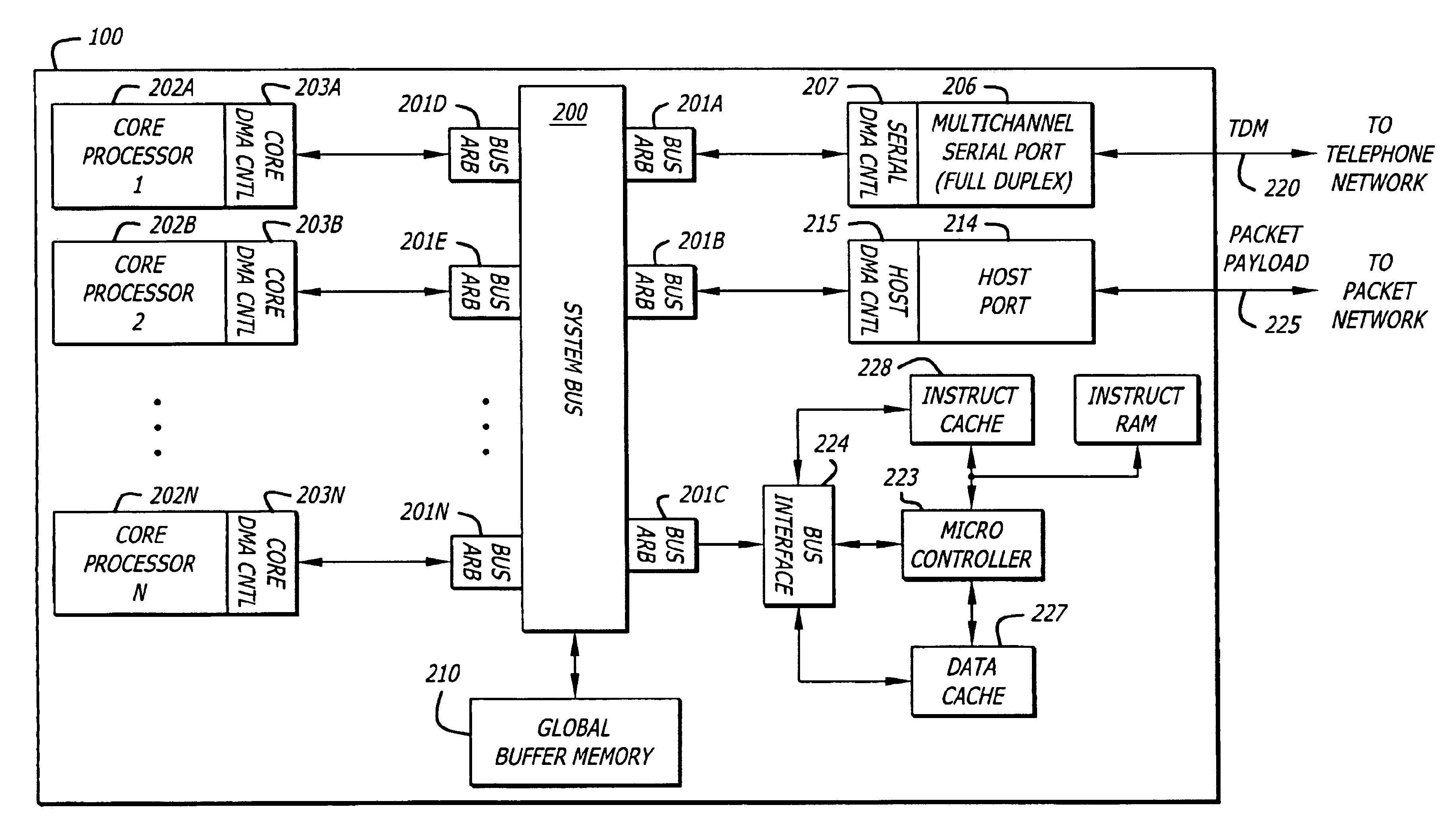

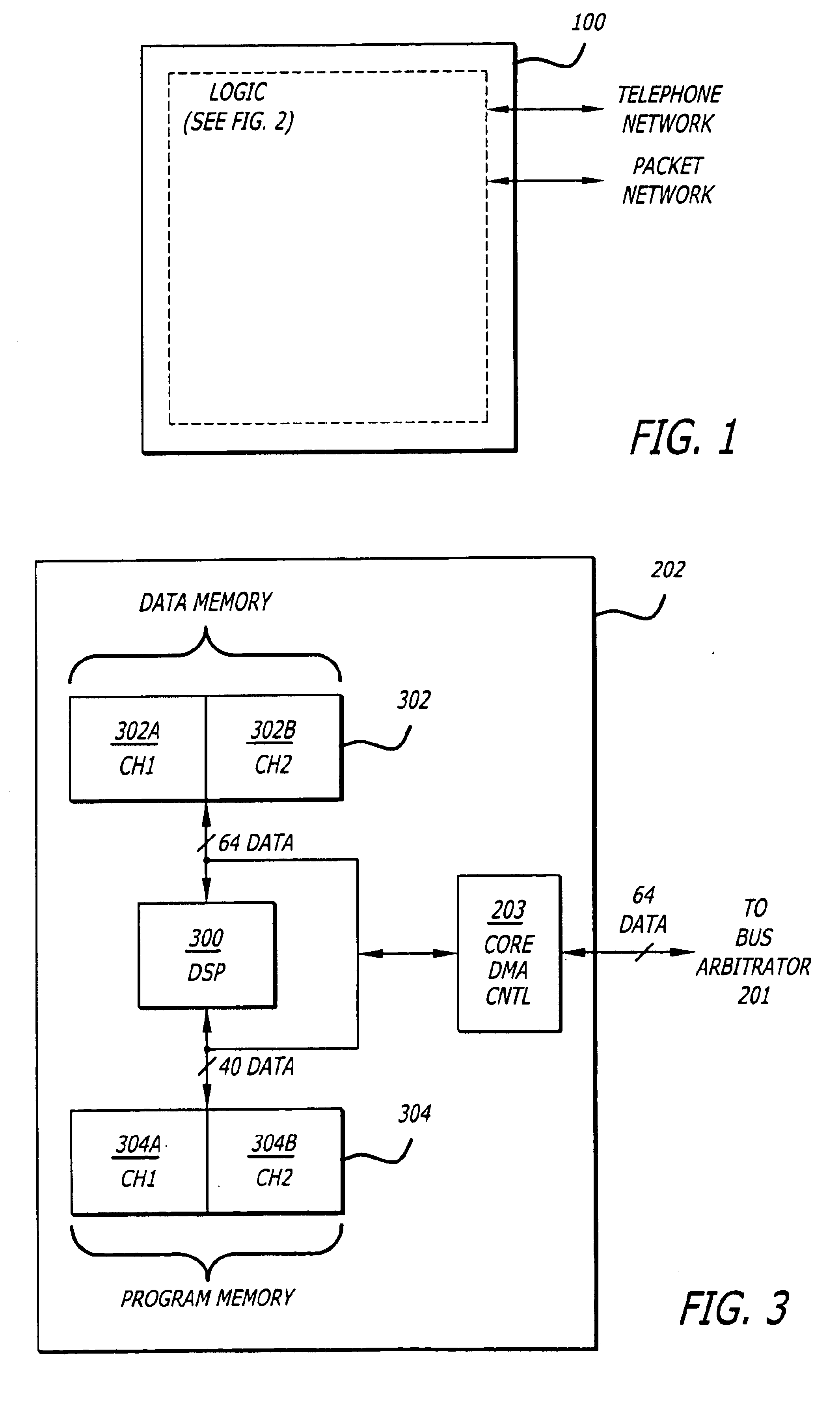

Method and apparatus for distributed direct memory access for systems on chip

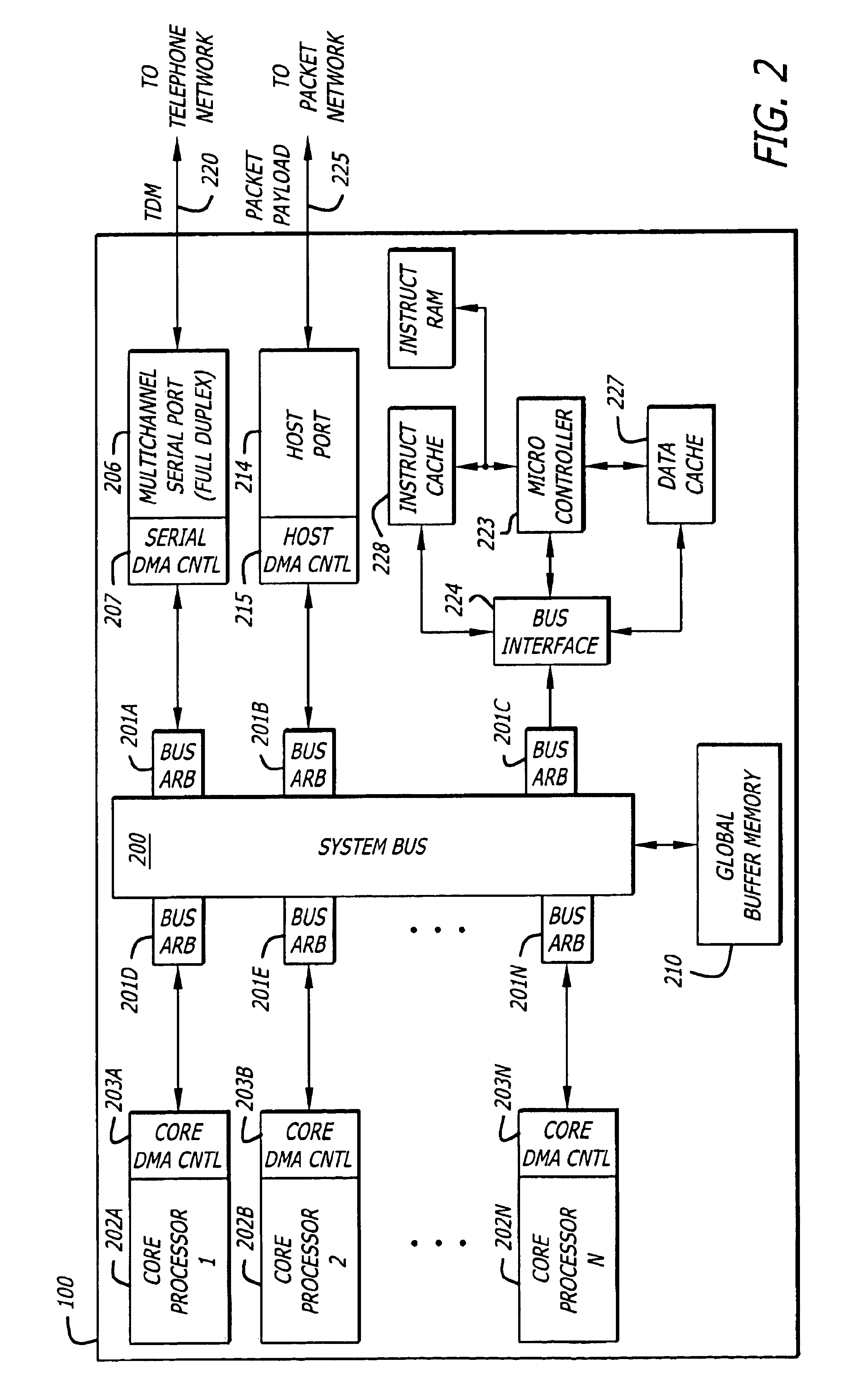

Inactive | US6874039B2 | High bandwidth | Arbitration is simplified | Memory addressing/allocation/relocation | Digital storage | Direct memory access | Remote direct memory access

A distributed direct memory access (DMA) method, apparatus, and system is provided within a system on chip (SOC). DMA controller units are distributed to various functional modules desiring direct memory access. The functional modules interface to a system bus over which the direct memory access occurs. A global buffer memory, to which the direct memory access is desired, is coupled to the system bus. Bus arbitrators are utilized to arbitrate which functional modules have access to the system bus to perform the direct memory access. Once a functional module is selected by the bus arbitrator to have access to the system bus, it can establish a DMA routine with the global buffer memory.

Owner:INTEL CORP

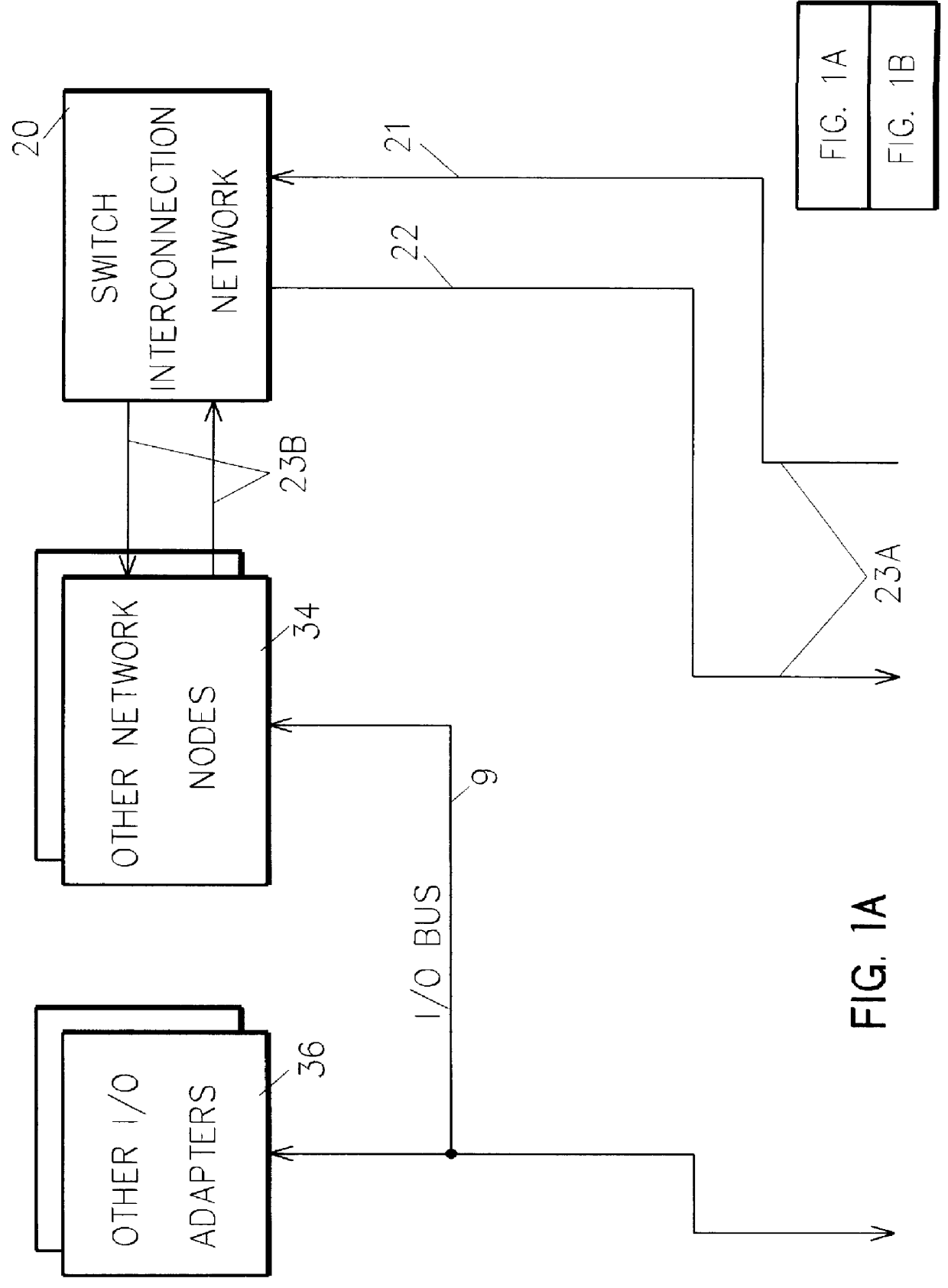

Bi-directional network adapter for interfacing local node of shared memory parallel processing system to multi-stage switching network for communications with remote node

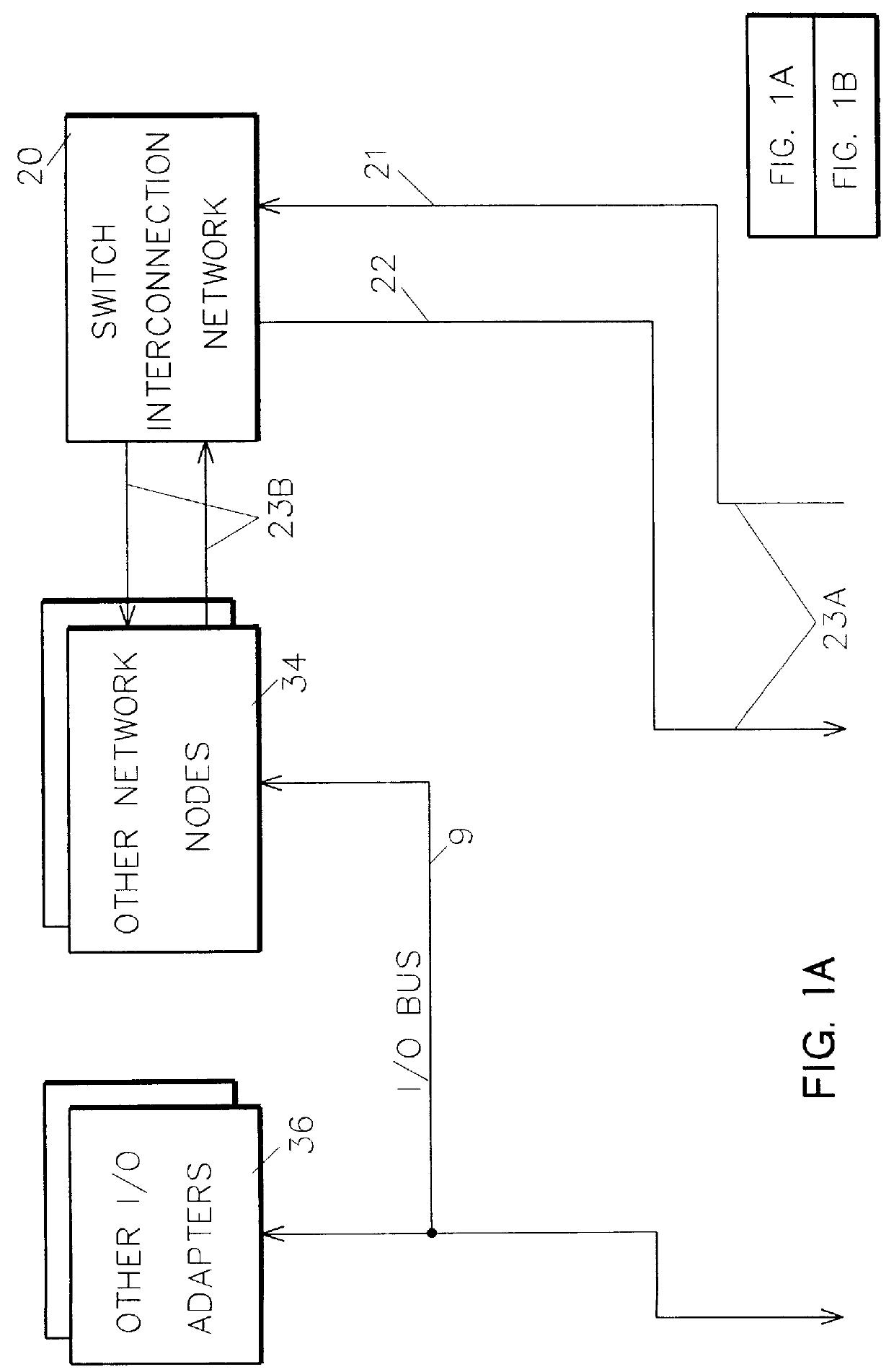

Inactive | US6122674A | Optimize network | Data processing applications | Memory addressing/allocation/relocation | Remote memory access | Exchange network

A shared memory parallel processing system interconnected by a multi-stage network combines new system configuration techniques with special-purpose hardware to provide remote memory accesses across the network, while controlling cache coherency efficiently across the network. The system configuration techniques include a systematic method for partitioning and controlling the memory in relation to local versus remote accesses and changeable versus unchangeable data. Most of the special-purpose hardware is implemented in the memory controller and network adapter, which implements three send FIFOs and three receive FIFOs at each node to segregate and handle efficiently invalidate functions, remote stores, and remote accesses requiring cache coherency. The segregation of these three functions into different send and receive FIFOs greatly facilitates the cache coherency function over the network. In addition, the network itself is tailored to provide the best efficiency for remote accesses.

Owner:IBM CORP

System and method for using a multi-protocol fabric module across a distributed server interconnect fabric

Active | US20150103826A1 | Extend performance and power optimization and functionality | Data switching by path configuration | Personalization | Structure of Management Information

A multi-protocol personality module enabling load/store from remote memory, remote Direct Memory Access (DMA) transactions, and remote interrupts, which permits enhanced performance, power utilization and functionality. In one form, the module is used as a node in a network fabric and adds a routing header to packets entering the fabric, maintains the routing header for efficient node-to-node transport, and strips the header when the packet leaves the fabric. In particular, a remote bus personality component is described. Several use cases of the Remote Bus Fabric Personality Module are disclosed: 1) memory sharing across a fabric connected set of servers; 2) the ability to access physically remote Input Output (I/O) devices across this fabric of connected servers; and 3) the sharing of such physically remote I/O devices, such as storage peripherals, by multiple fabric connected servers.

Owner:III HLDG 2

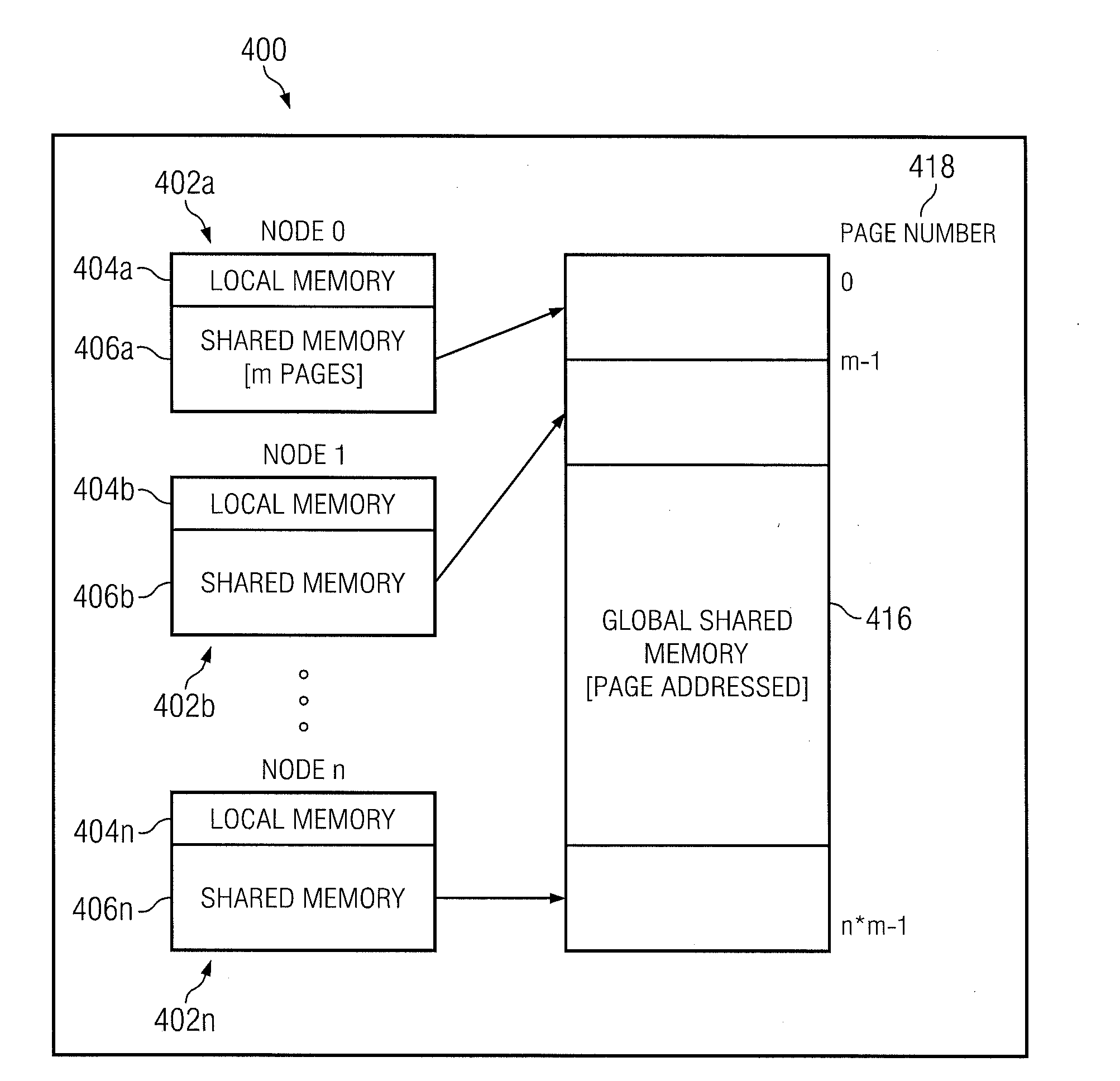

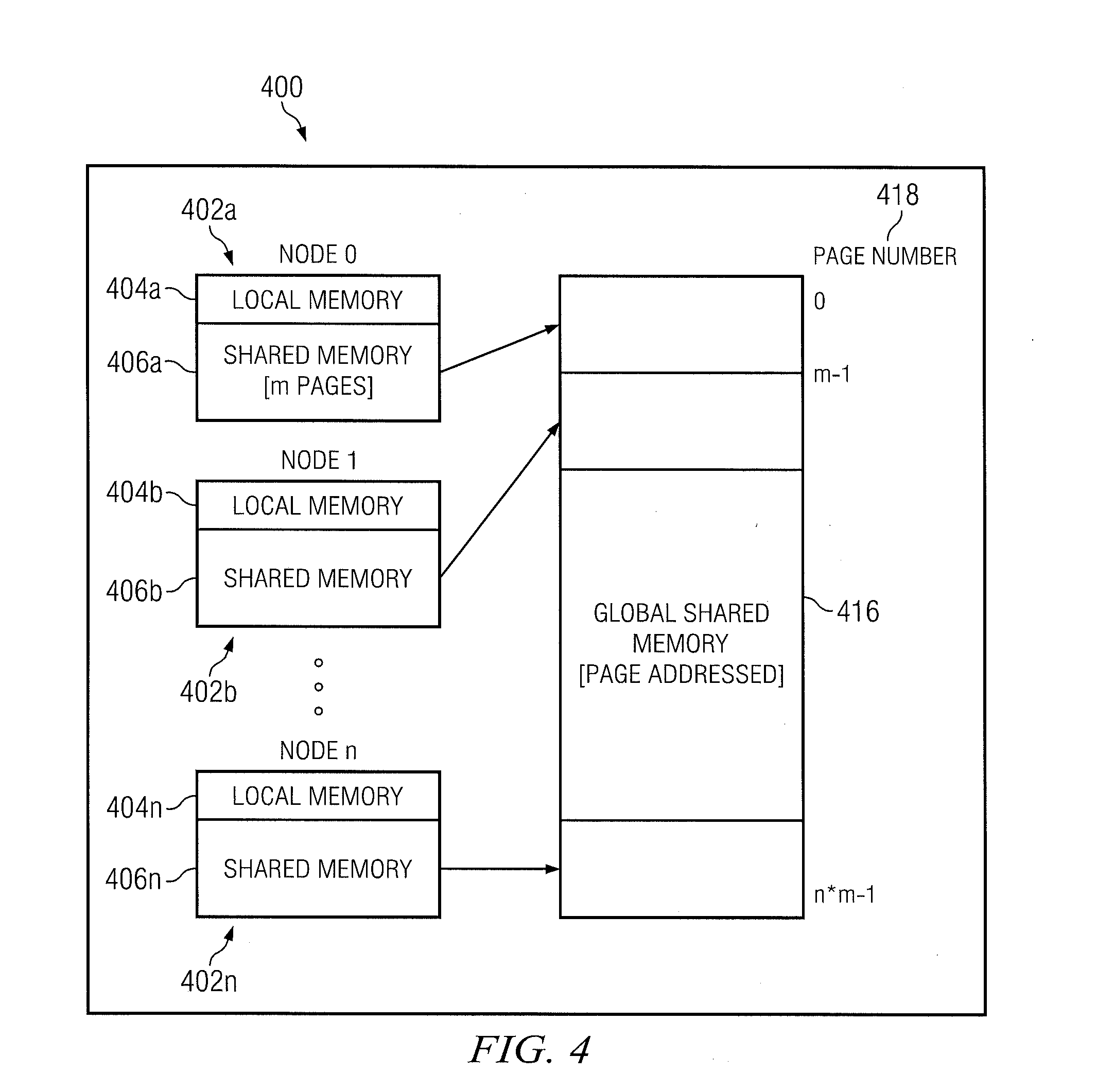

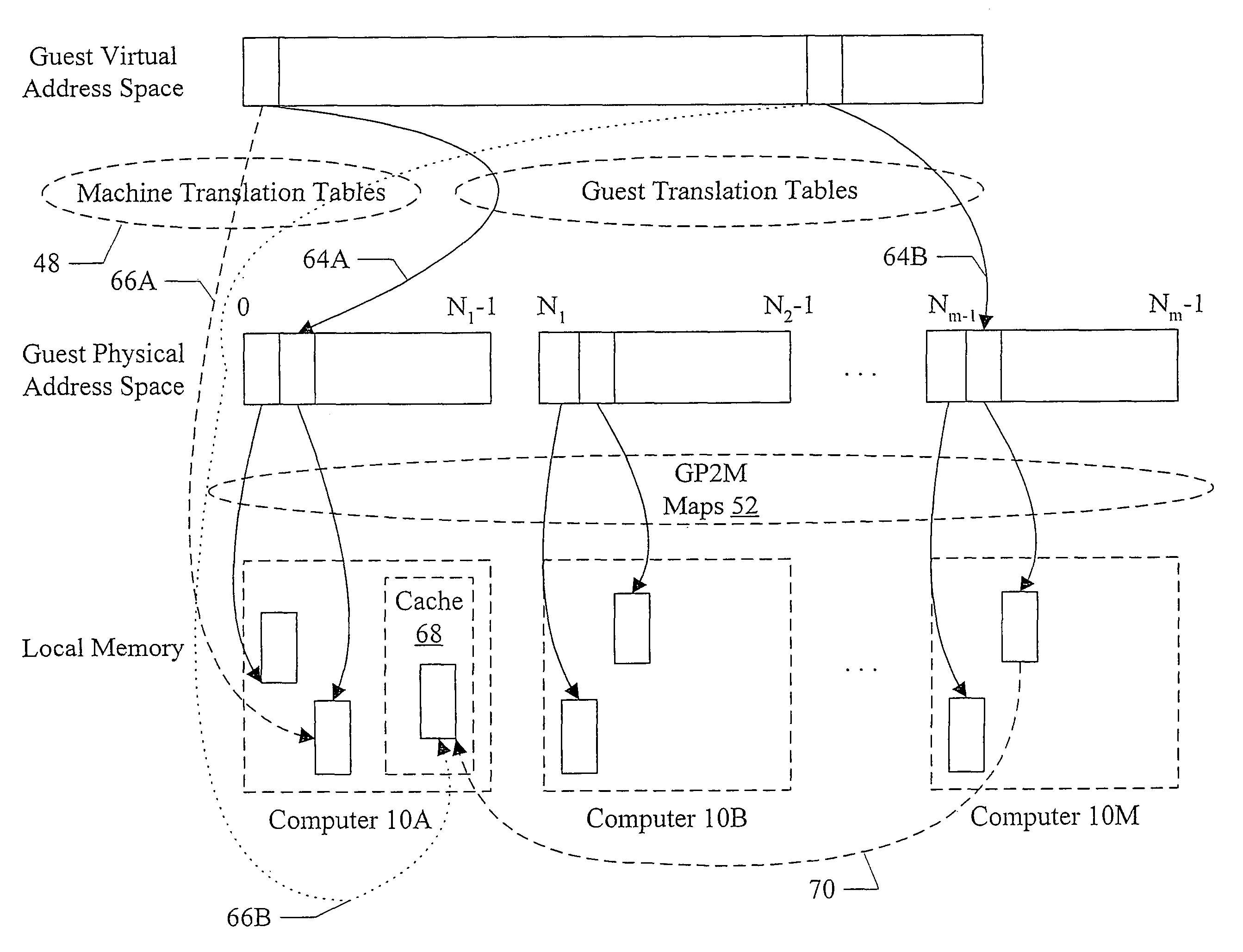

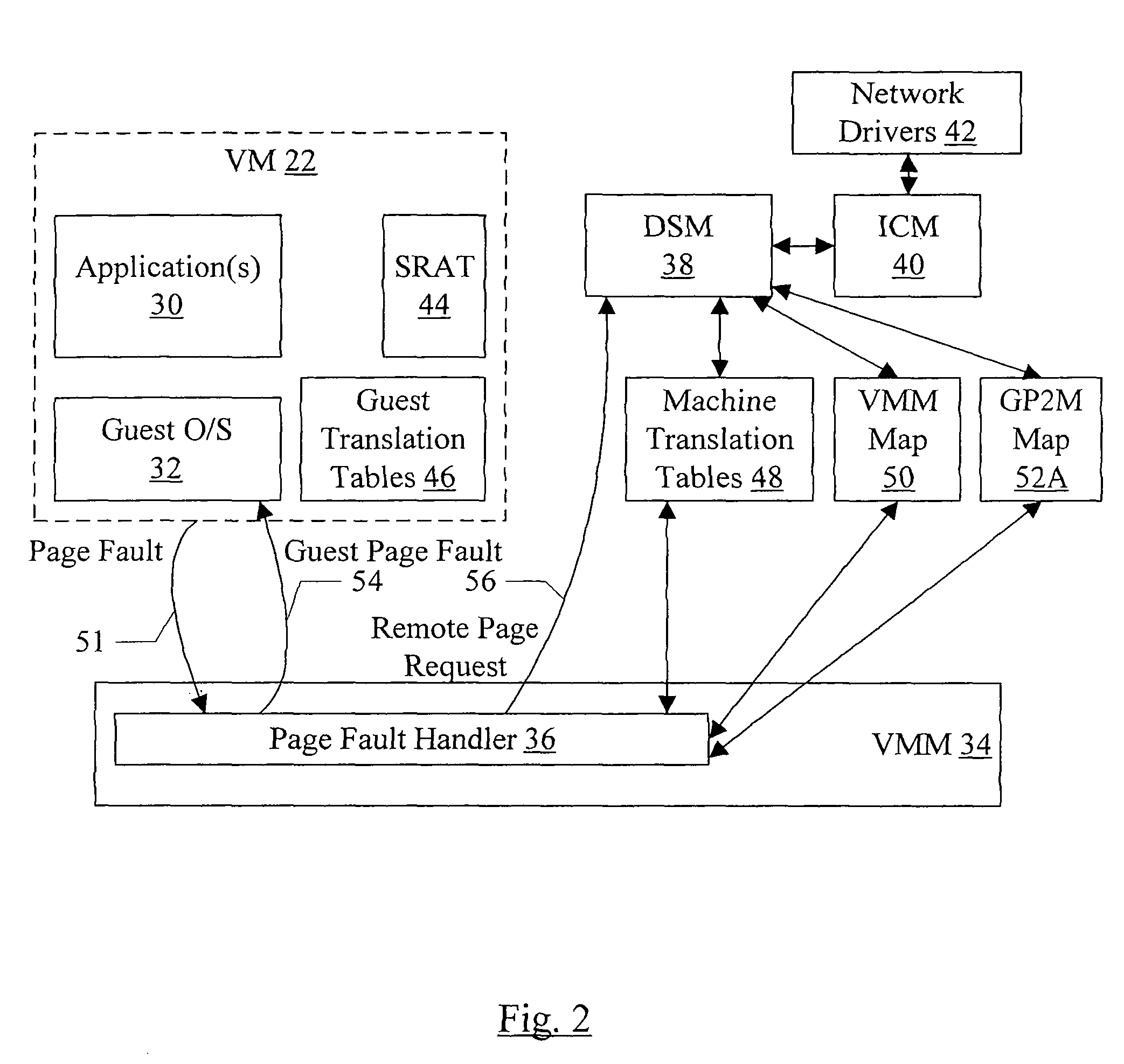

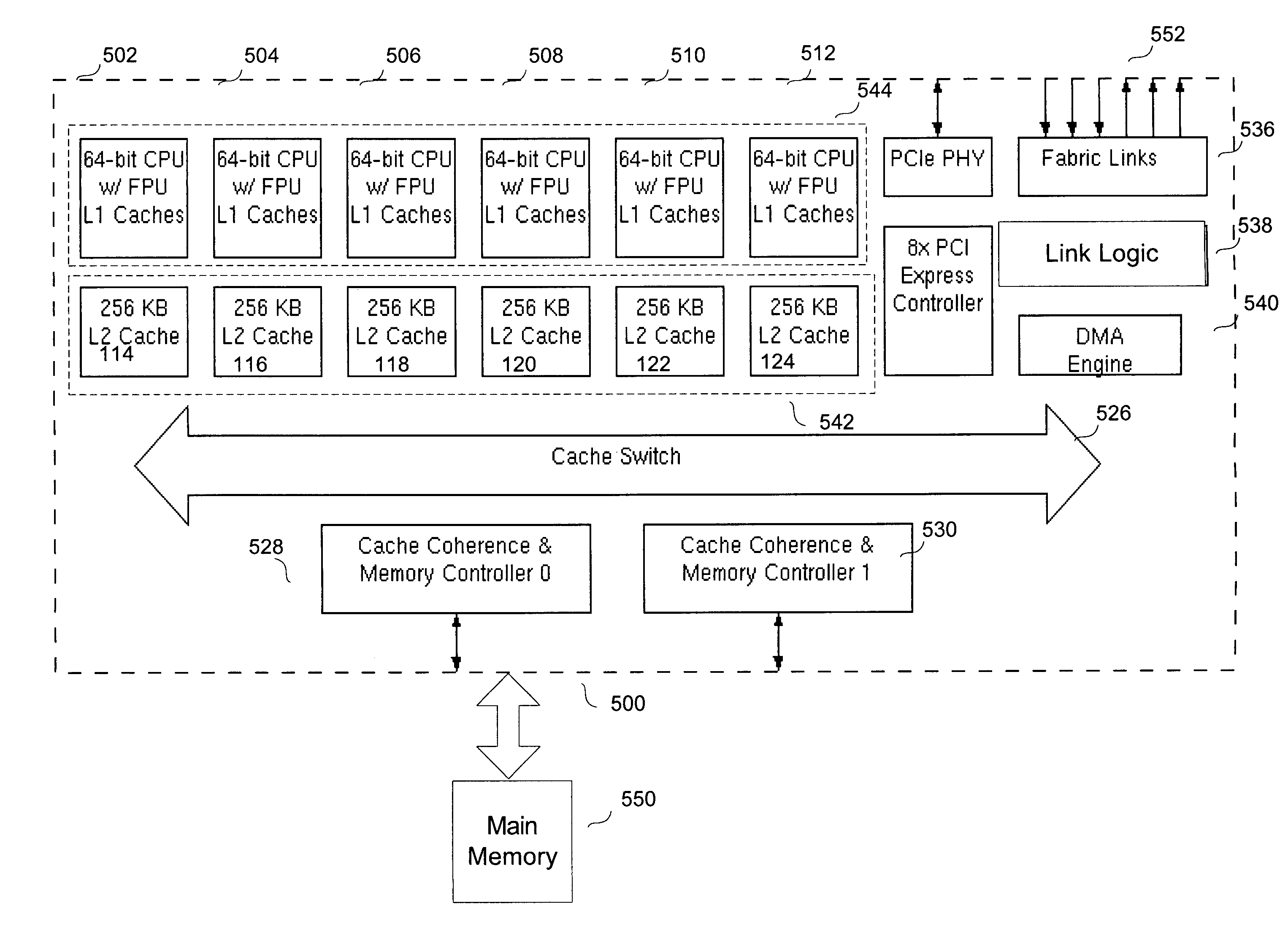

Distributed symmetric multiprocessing computing architecture

Active | US20110125974A1 | Shorten the progress | Memory addressing/allocation/relocation | Digital computer details | Supercomputer | Global address space

Example embodiments of the present invention include systems and methods for implementing a scalable symmetric multiprocessing (shared memory) computer architecture using a network of homogeneous multi-core servers. The level of processor and memory performance achieved is suitable for running applications that currently require cache coherent shared memory mainframes and supercomputers. The architecture combines new operating system extensions with a high-speed network that supports remote direct memory access to achieve an effective global distributed shared memory. A distributed thread model allows a process running in a head node to fork threads in other (worker) nodes that run in the same global address space. Thread synchronization is supported by a distributed mutex implementation. A transactional memory model allows a multi-threaded program to maintain global memory page consistency across the distributed architecture. A distributed file access implementation supports non-contentious file I/O for threads. These and other functions provide a symmetric multiprocessing programming model consistent with standards such as Portable Operating System Interface for Unix (POSIX).

Owner:ANDERSON RICHARD S

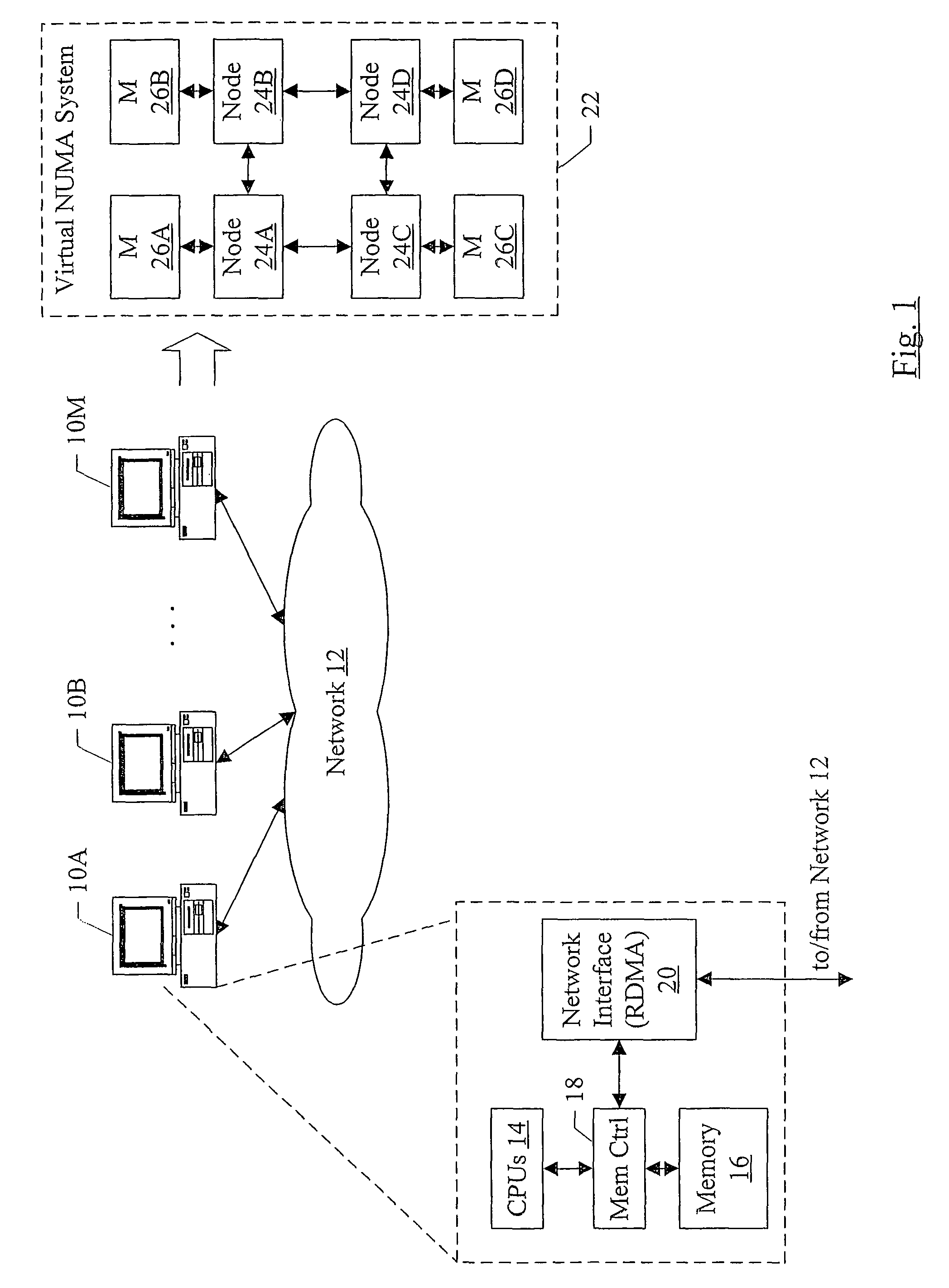

Efficient data transfer between computers in a virtual NUMA system using RDMA

Inactive | US7756943B1 | Remote access be reduced | Improve performance | Digital computer details | Program control | Physical address | Remote direct memory access

In one embodiment, a virtual NUMA machine is formed from multiple computers coupled to a network. Each computer includes a memory and a hardware device coupled to the memory. The hardware device is configured to communicate on the network, and more particularly is configured to perform remote direct memory access (RDMA) communications on the network to access the memory in other computers. A guest physical address space of the virtual NUMA machine spans a portion of the memories in each of the computers, and each computer serves as a home node for at least one respective portion of the guest physical address space. A software module on a first computer uses RDMA to coherently access data on a home node of the data without interrupting execution in the home node.

Owner:SYMANTEC OPERATING CORP

Early interrupt notification in RDMA and in DMA operations

Inactive | US20060045109A1 | Data switching by path configuration | Data processing system | Direct memory access

In a multinode data processing system in which data is transferred, via direct memory access (DMA) or remote direct memory access (RDMA), from a source node to at least one destination node through communication adapters coupling each node to a network or switch, a method is provided in which interrupt handling is overlapped with data transfer so as to allow interrupt processing overhead to run in parallel at the destination node with the movement of data to provide performance benefits. The method is also applicable to situations involving multiple interrupt levels corresponding to multithreaded handling capabilities.

Owner:IBM CORP

System and method for remote direct memory access without page locking by the operating system

Inactive | US7533197B2 | Digital computer details | Electric digital data processing | Direct memory access | Operational system

A multi-node computer system with a plurality of interconnected processing nodes, including a method of using DMA engines without page locking by the operating system. The method includes a sending node with a first virtual address space and a receiving node with a second virtual address space. The method includes performing a DMA data transfer operation between the first virtual address space on the sending node and the second virtual address space on the receiving node via a DMA engine and, if the DMA operation refers to a virtual address within the second virtual address space that is not in physical memory, causing the DMA operation to fail. The method further includes causing the receiving node to map the referenced virtual address within the second virtual address space to a physical address, and causing the sending node to retry the DMA operation, wherein the retried DMA operation is performed without page locking.

Owner:SICORTEX
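
The fail, map, and retry sequence in the abstract above can be modelled with a small Python simulation. It is a sketch only: the `Receiver` class, the `PageFault` exception, the 4096-byte page size, and the retry limit are all assumptions introduced for the example, and the toy transfer is required to stay within one page.

```python
PAGE = 4096  # assumed page size for this illustration


class PageFault(Exception):
    """Raised when a DMA write targets a page that is not resident."""

    def __init__(self, vaddr):
        super().__init__(f"virtual address {vaddr:#x} is not mapped")
        self.vaddr = vaddr


class Receiver:
    def __init__(self):
        self.pages = {}  # virtual page number -> resident page contents

    def dma_write(self, vaddr, data):
        offset = vaddr % PAGE
        # Simplification: a transfer must not cross a page boundary.
        assert offset + len(data) <= PAGE
        page = self.pages.get(vaddr // PAGE)
        if page is None:
            raise PageFault(vaddr)  # the DMA operation fails, nothing locked
        page[offset:offset + len(data)] = data

    def map_page(self, vaddr):
        """Bring the referenced page into (simulated) physical memory."""
        self.pages.setdefault(vaddr // PAGE, bytearray(PAGE))


def send(receiver, vaddr, data, max_retries=3):
    """Try the DMA write; on failure let the receiver map, then retry."""
    for attempt in range(1, max_retries + 1):
        try:
            receiver.dma_write(vaddr, data)
            return attempt
        except PageFault as fault:
            receiver.map_page(fault.vaddr)
    raise RuntimeError("DMA transfer did not succeed")


receiver = Receiver()
attempts = send(receiver, 2 * PAGE + 16, b"hello")
stored = bytes(receiver.pages[2][16:21])
print(attempts, stored)
```

No page is pinned in advance in this model; a page becomes resident only after a transfer has actually failed against it.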

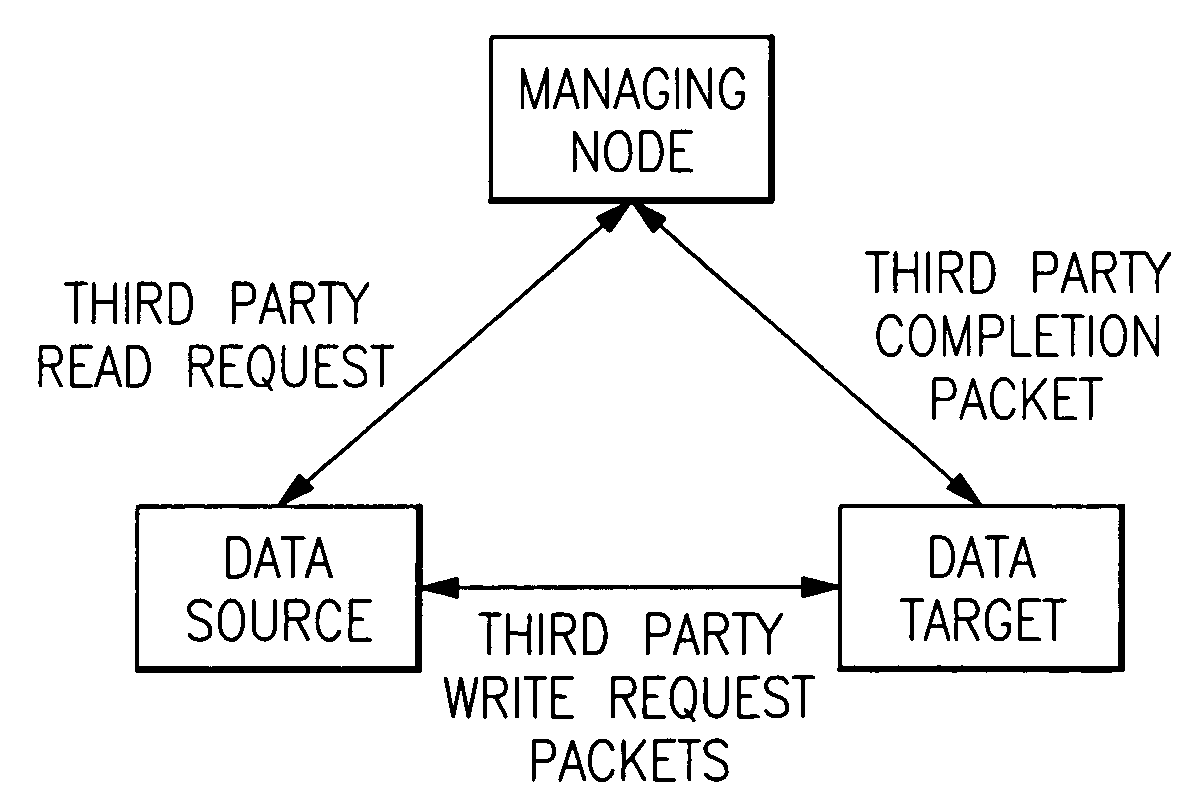

Method for third party, broadcast, multicast and conditional RDMA operations

Active | US7478138B2 | Data switching by path configuration | Multiple digital computer combinations | Data processing system | Third party

In a multinode data processing system in which nodes exchange information over a network or through a switch, the mechanism which enables out-of-order data transfer via Remote Direct Memory Access (RDMA) also provides a corresponding ability to carry out broadcast operations, multicast operations, third party operations and conditional RDMA operations. In a broadcast operation a source node transfers data packets in RDMA fashion to a plurality of destination nodes. Multicast operation works similarly except that distribution is selective. In third party operations a single central node in a cluster or network manages the transfer of data in RDMA fashion between other nodes or creates a mechanism for allowing a directed distribution of data between nodes. In conditional operation mode the transfer of data is conditioned upon one or more events occurring in either the source node or in the destination node.

Owner:IBM CORP

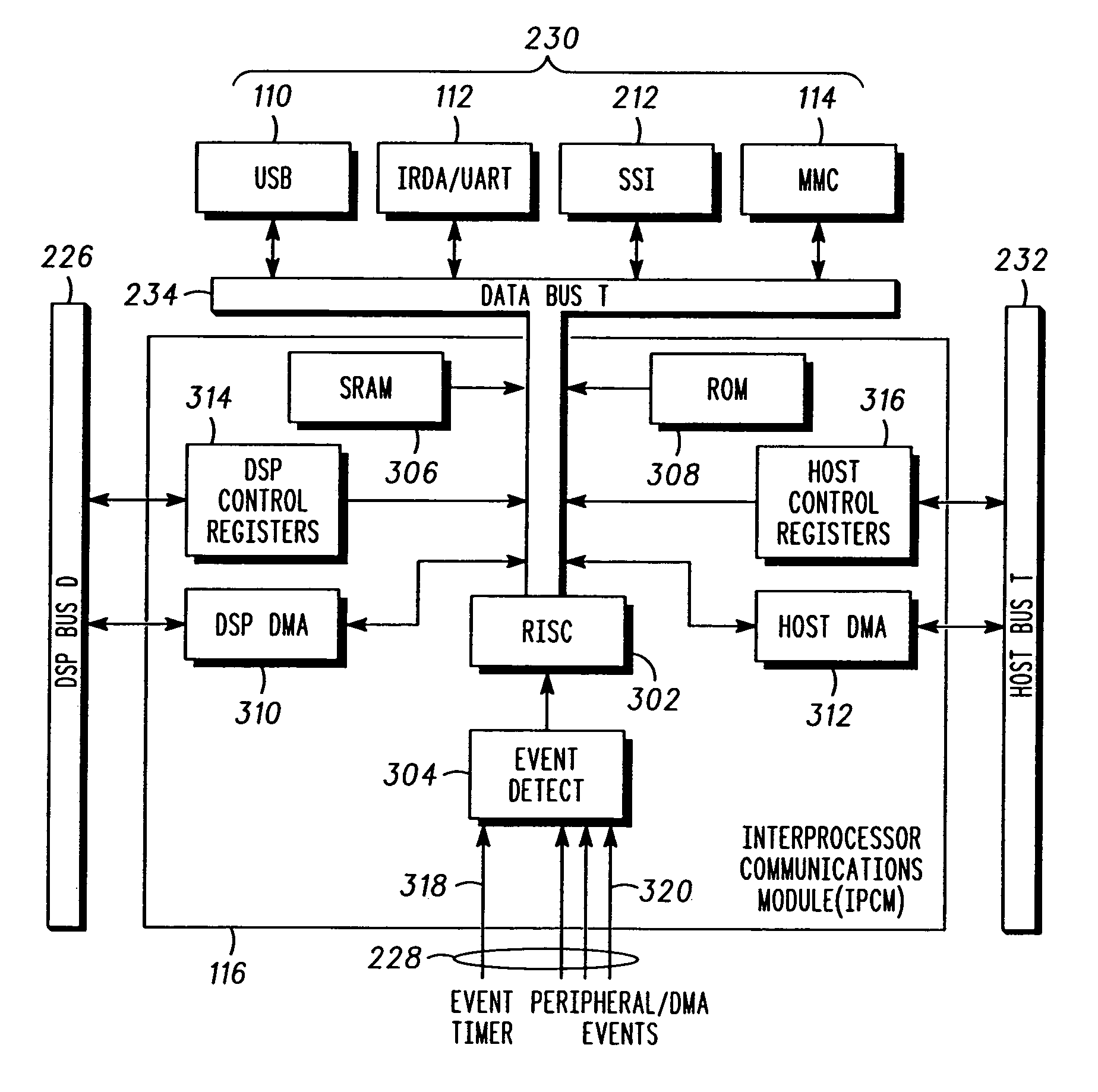

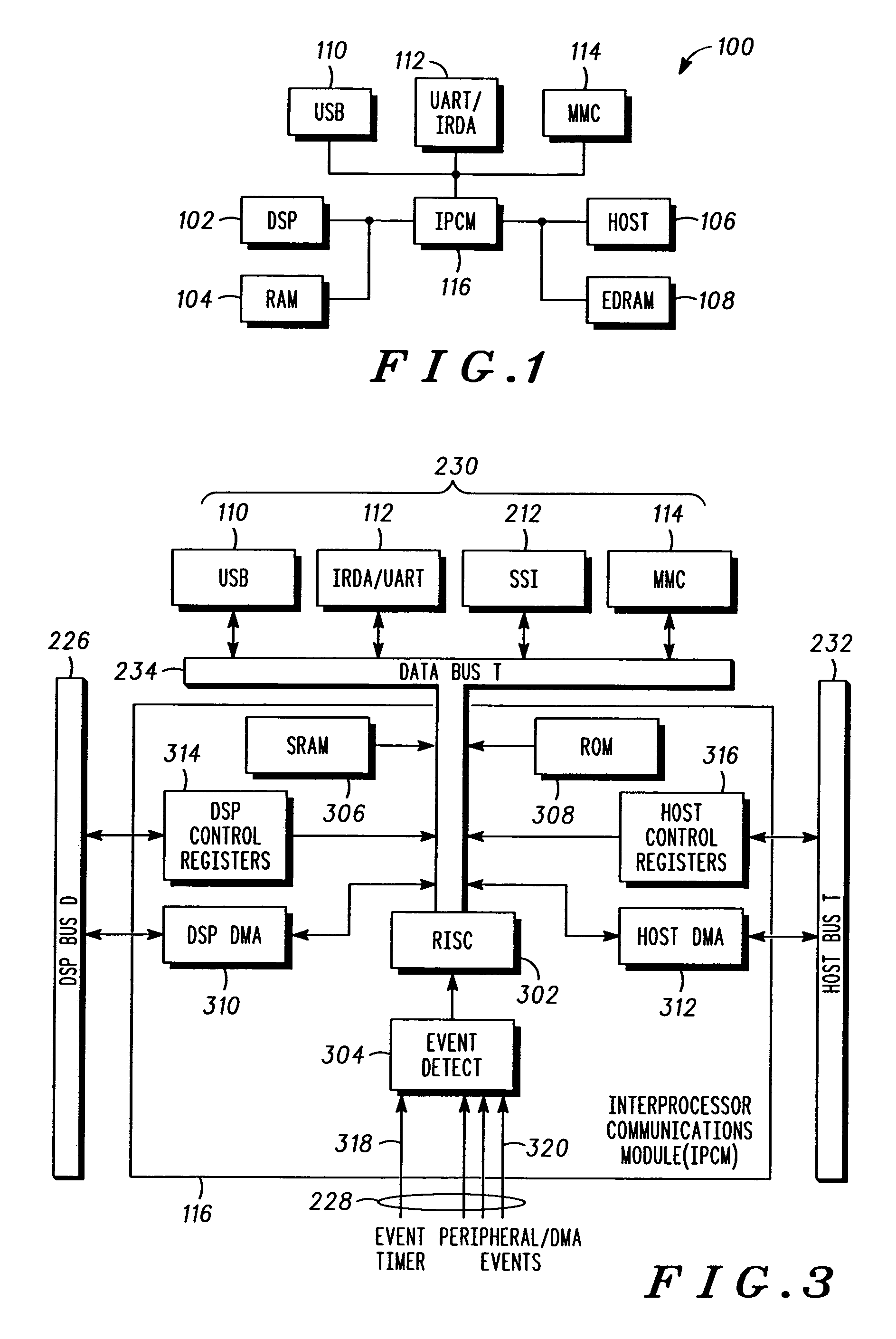

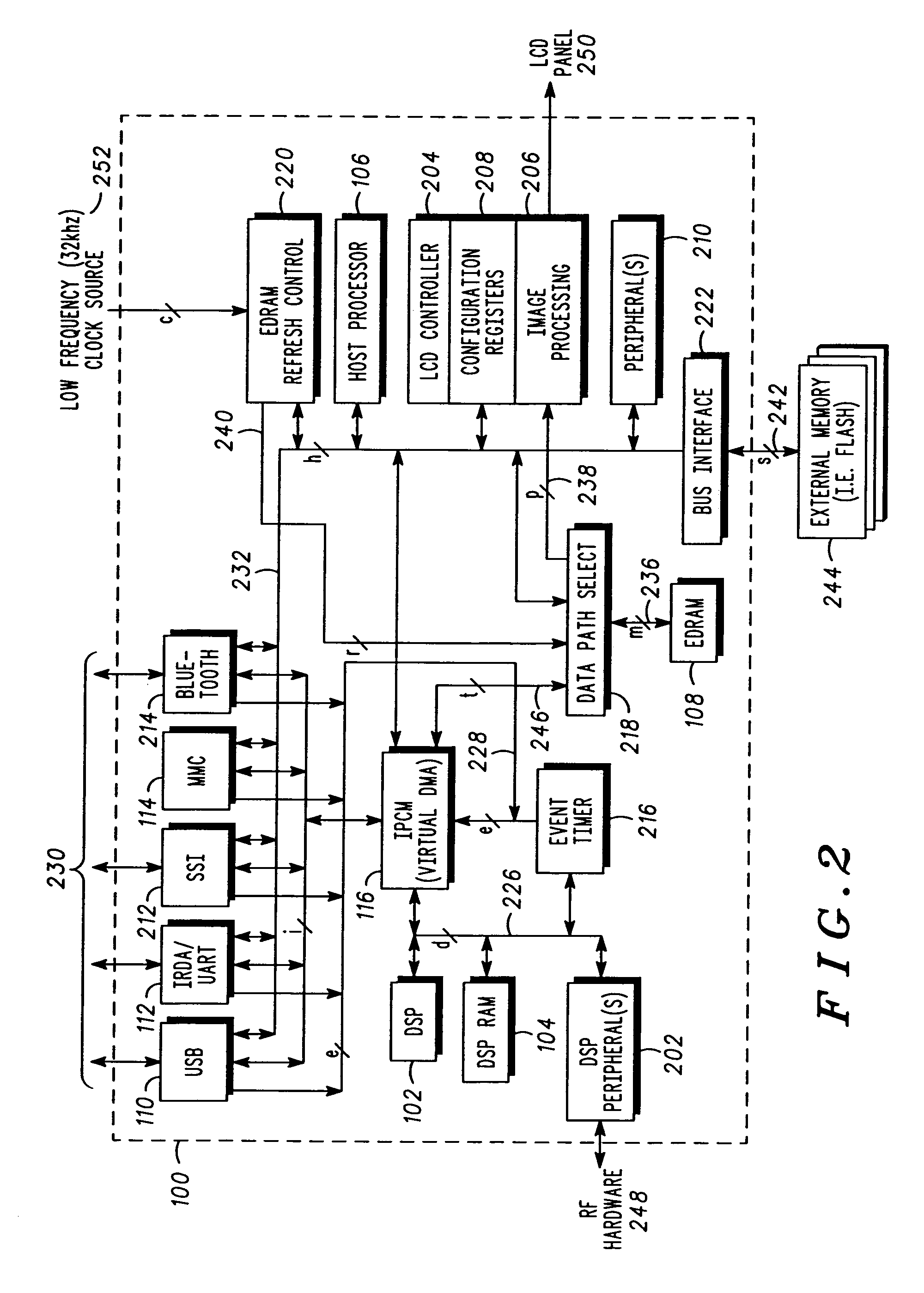

Integrated processor platform supporting wireless handheld multi-media devices

Inactive | US7089344B1 | Energy efficient ICT | Energy efficient computing | Direct memory access | Remote direct memory access

A direct memory access system consists of a direct memory access controller establishing a direct memory access data channel and including a first interface for coupling to a memory, a second interface for coupling to a plurality of nodes, and a processor coupled to the direct memory access controller and to the second interface, wherein the processor configures the direct memory access data channel to transfer data between a programmably selectable respective one or more of the plurality of nodes and the memory. In some embodiments, the plurality of nodes are a digital signal processor memory and a host processor memory of a multi-media processor platform to be implemented in a wireless multi-media handheld telephone.

Owner:GOOGLE TECH HLDG LLC

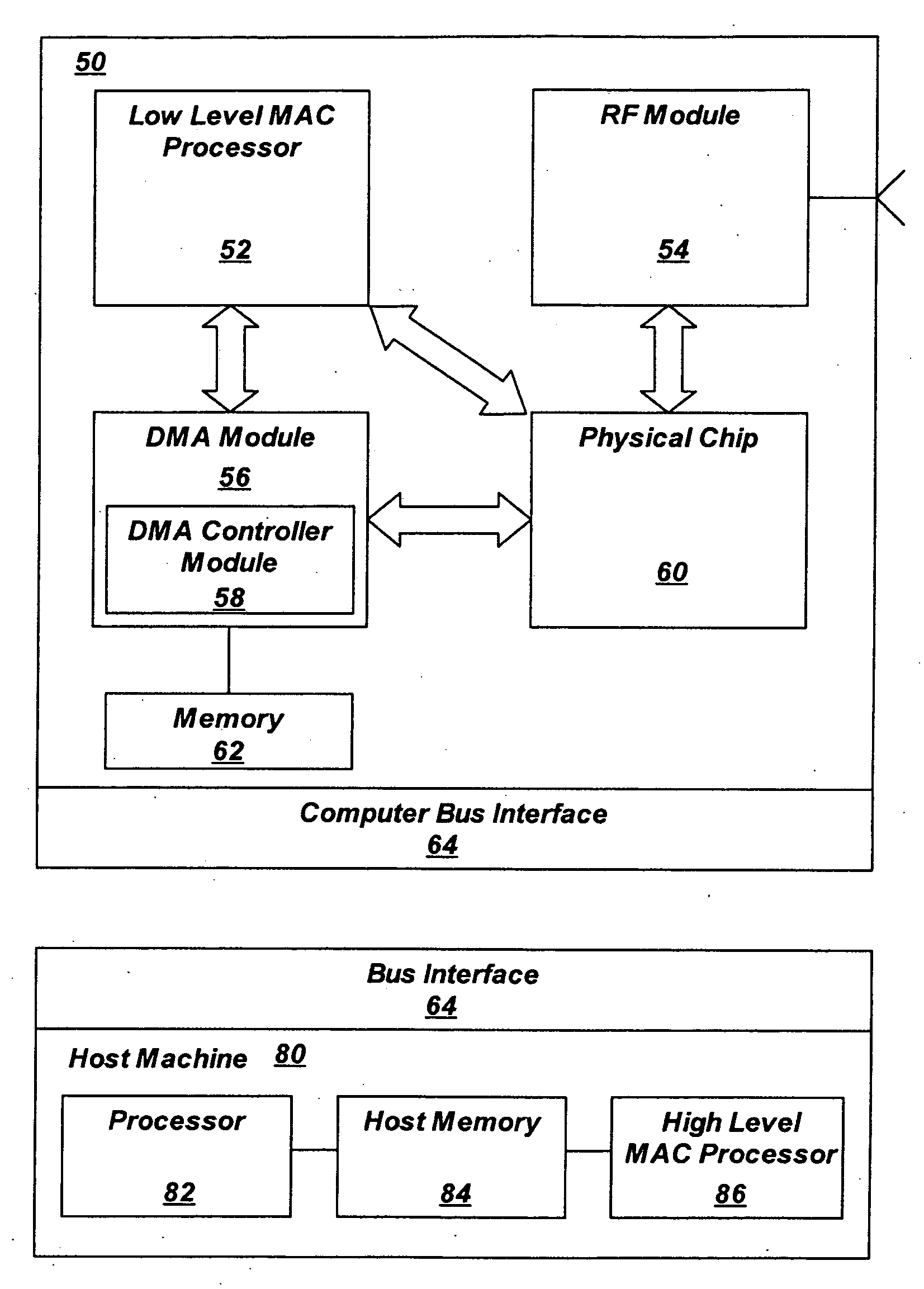

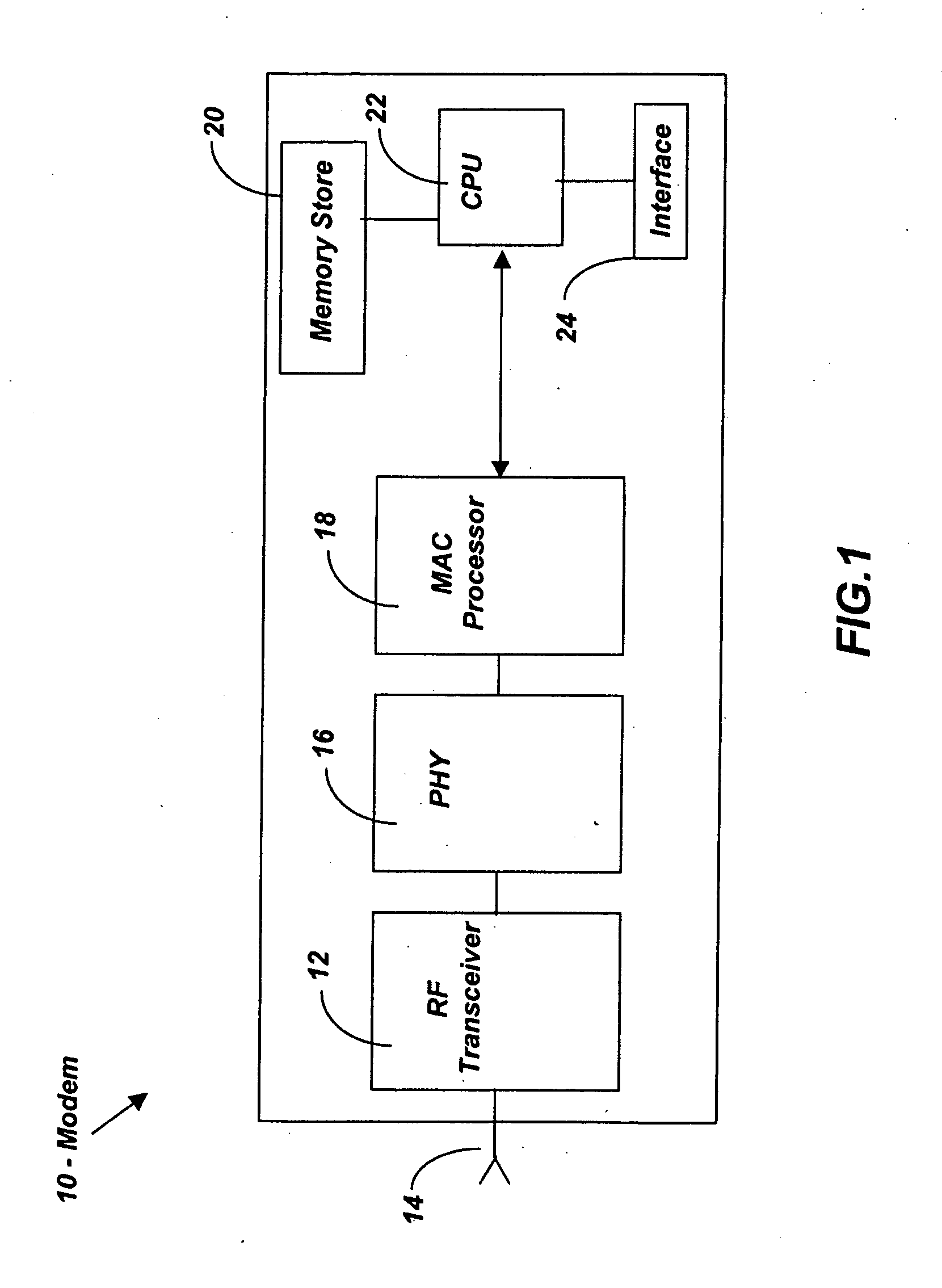

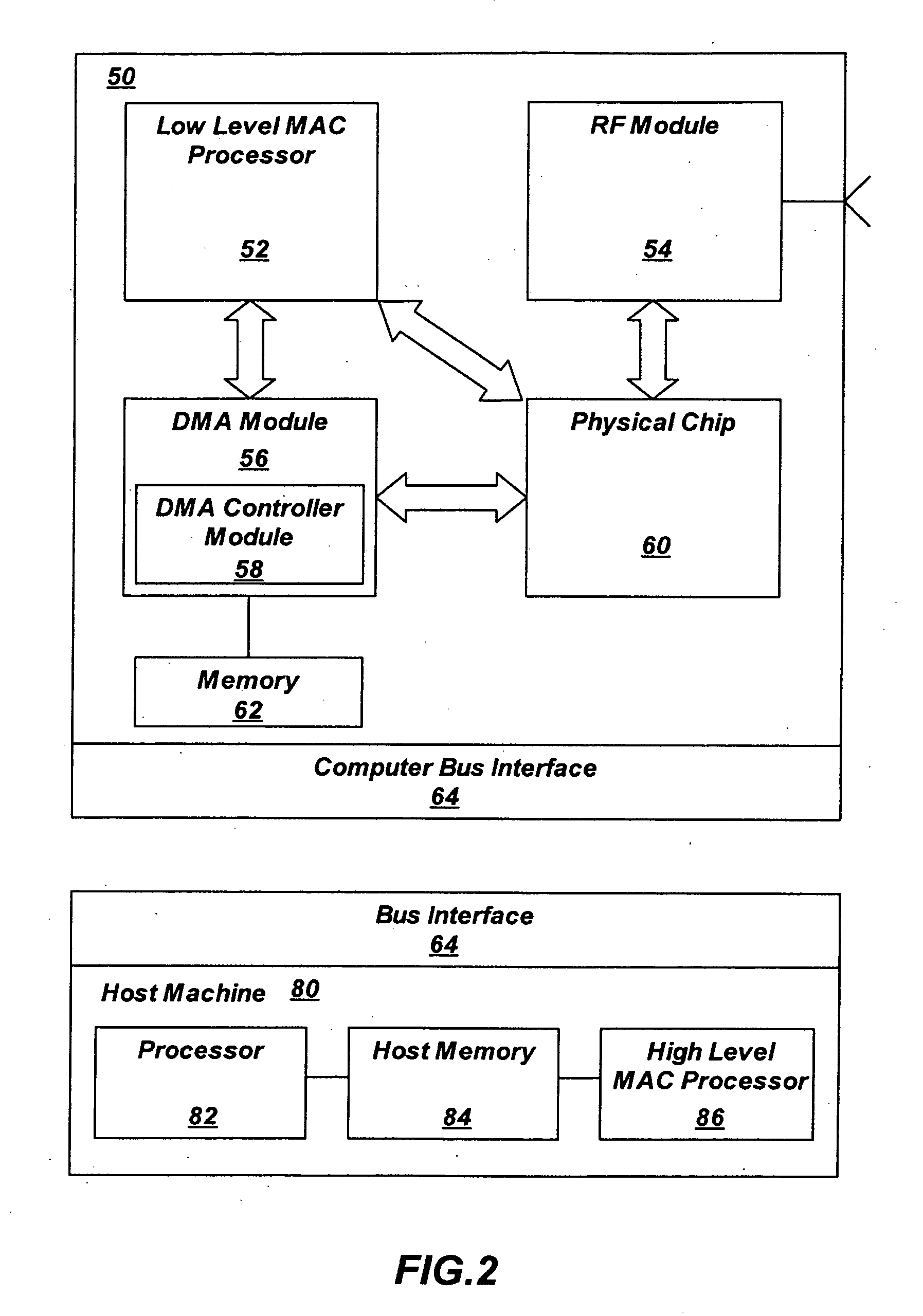

Wireless modem

Inactive | US20070147425A1 | Time-division multiplex | Wireless network protocols | Modem device | Direct memory access

A wireless modem includes an RF module adapted to be connected to an antenna, comprising an intermediate frequency input and an intermediate frequency output, a PHY module receiving the intermediate frequency output and generating the intermediate frequency input, and a low level media access control (LL-MAC) module connected to the PHY and using a direct memory access (DMA) connection for data exchange with a high level media access control module (HL-MAC). A direct memory access (DMA) to computer bus interface module is adapted to be connected to the DMA connection of the LL-MAC and to a computer bus. The DMA interface module responds to the LL-MAC as memory and is adapted to cooperate with the HL-MAC connected to the computer bus. The architecture allows for a modem to be placed on a mini-PCI module with the HL-MAC residing in software on a host computer.

Owner:WAVESAT

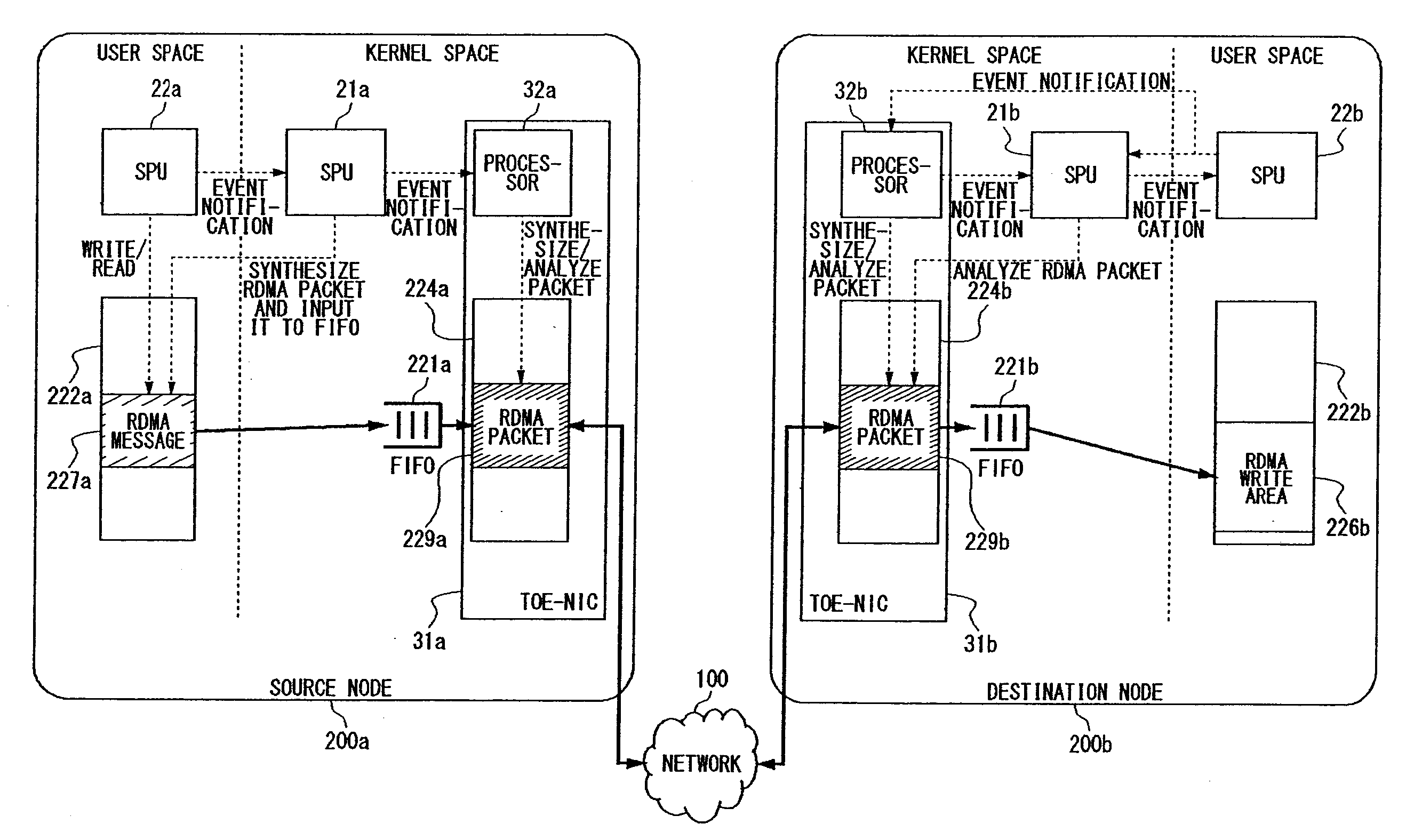

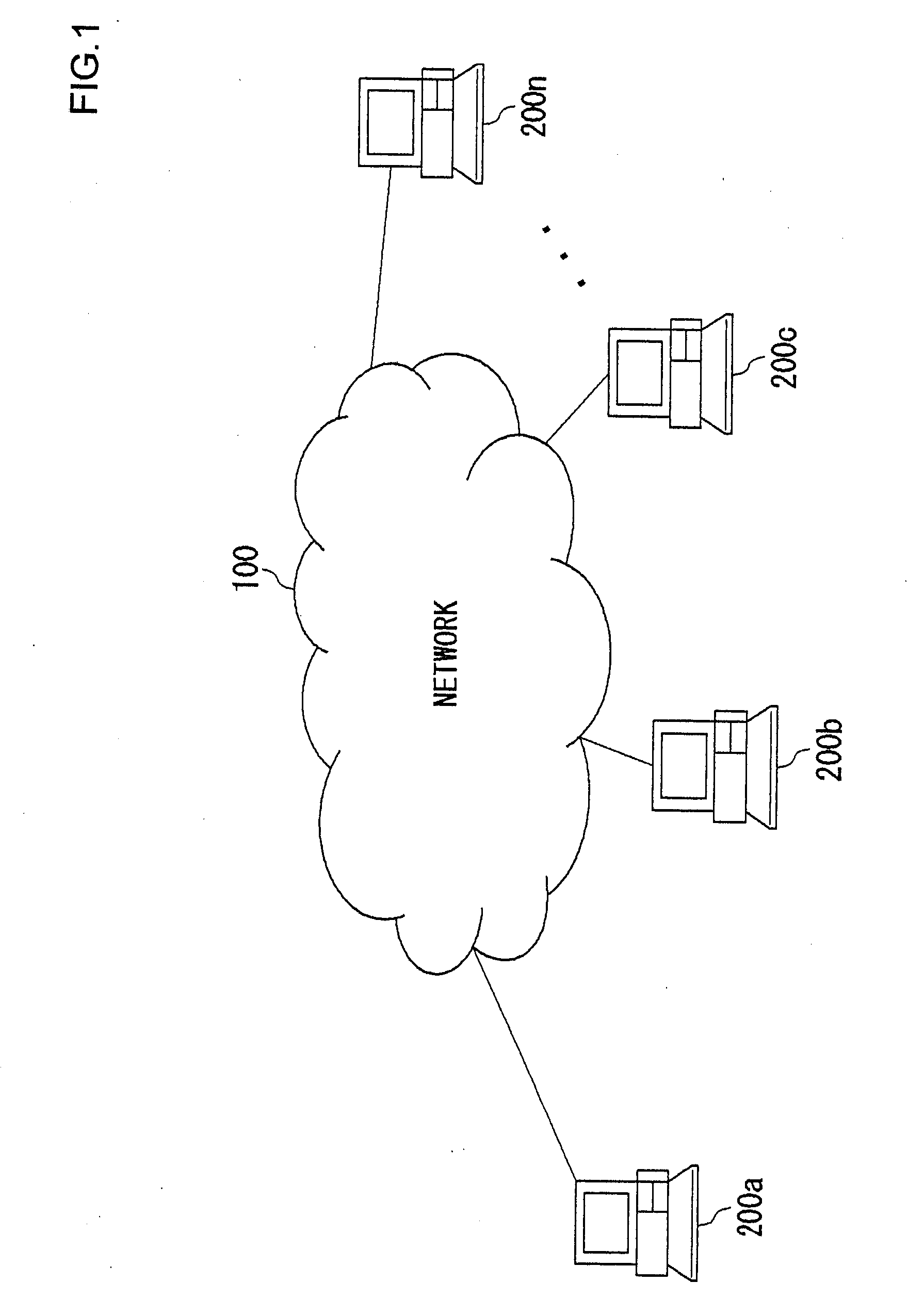

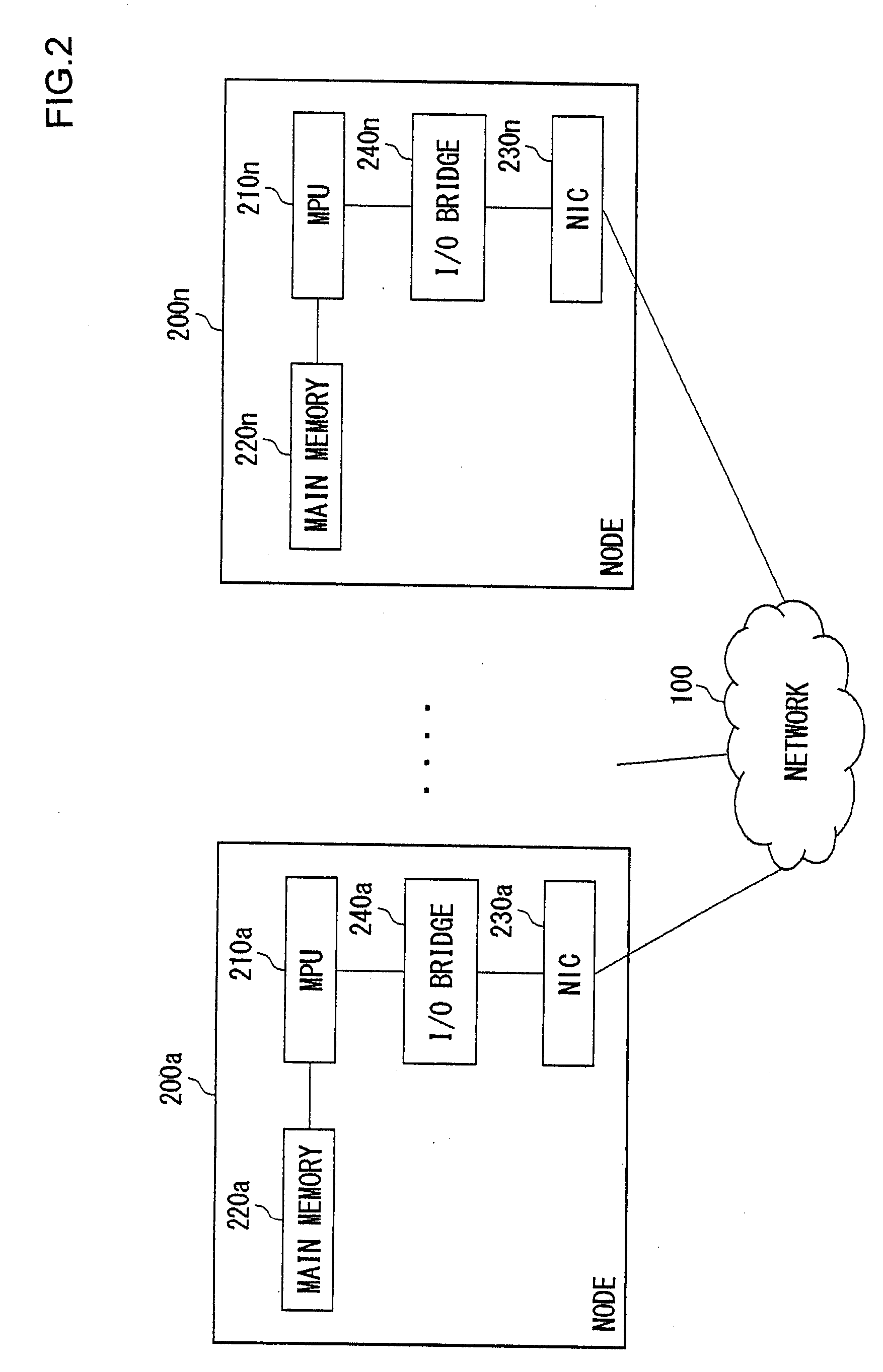

Network Processor System and Network Protocol Processing Method

Active | US20080013448A1 | Error prevention | Frequency-division multiplex details | Networking protocol | Protocol processing

A multiprocessor system is provided for emulating remote direct memory access (RDMA) functions. The first sub-processing unit (SPU) generates a message to be transmitted. The second SPU is a processor for emulating the RDMA functions. Upon receiving a notification from the first SPU, the second SPU synthesizes the message into a packet according to an RDMA protocol. The third SPU is a processor for performing TCP/IP protocol processing. Upon receiving a notification from the second SPU, the third SPU synthesizes the packet generated according to the RDMA protocol into a TCP/IP packet and then sends it out from a network interface card.

Owner:SONY COMPUTER ENTERTAINMENT INC

Method and system for a multi-stream tunneled marker-based protocol data unit aligned protocol

Inactive | US20060101225A1 | Memory addressing/allocation/relocation | Data switching networks | Communications system | Remote direct memory access

Aspects of a system for transporting information via a communications system may include a processor that enables establishing, from a local remote direct memory access (RDMA) enabled network interface card (RNIC), one or more communication channels, based on the transmission control protocol (TCP), between the local RNIC and at least one remote RNIC via at least one network. The processor may enable establishing at least one RDMA connection between one of a plurality of local RDMA endpoints and at least one remote RDMA endpoint utilizing the one or more communication channels. The processor may further enable communicating messages via the established RDMA connections between one of the plurality of local RDMA endpoints and at least one remote RDMA endpoint independent of whether the messages are in-sequence or out-of-sequence.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
