59 results about "Remote memory access" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

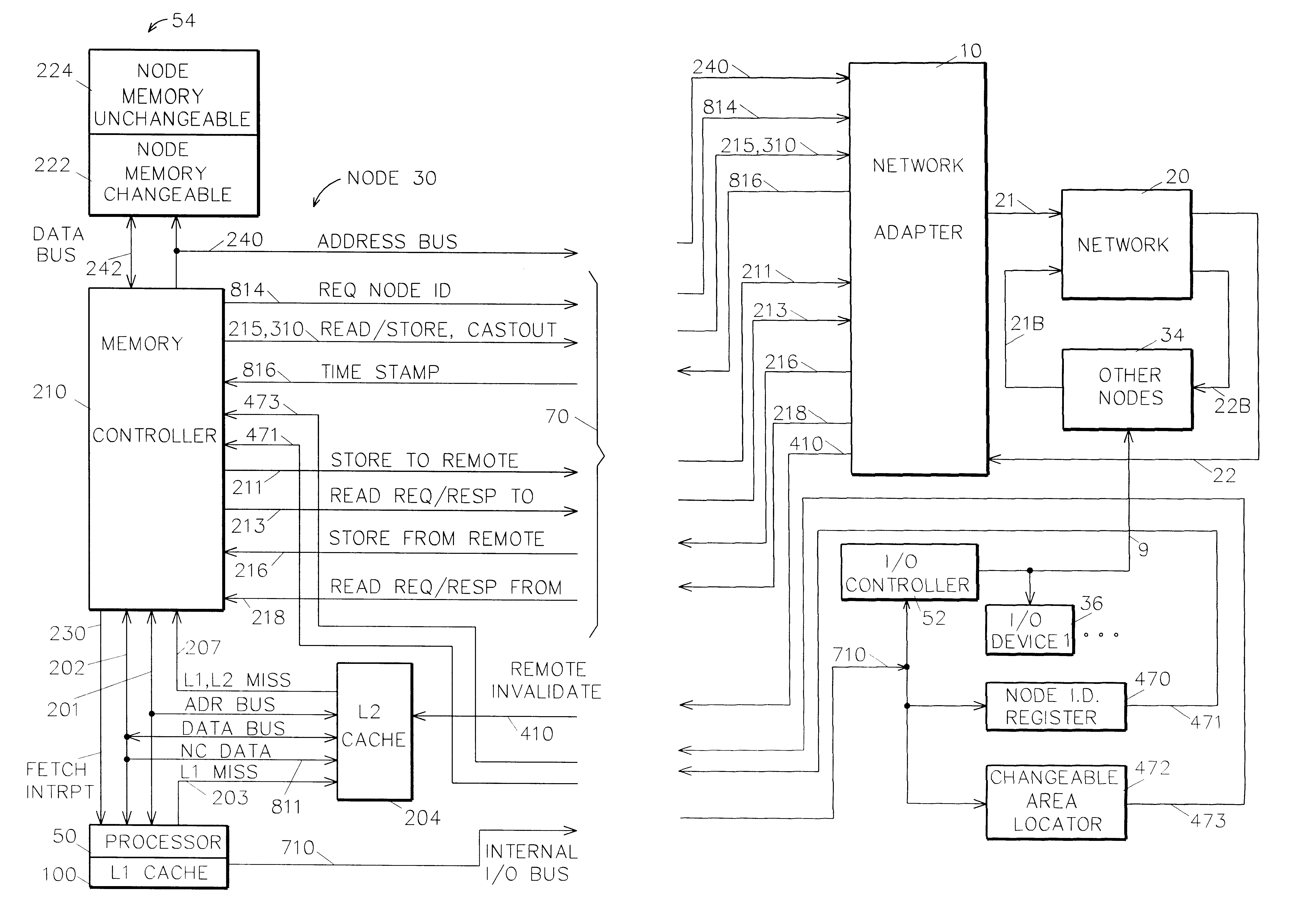

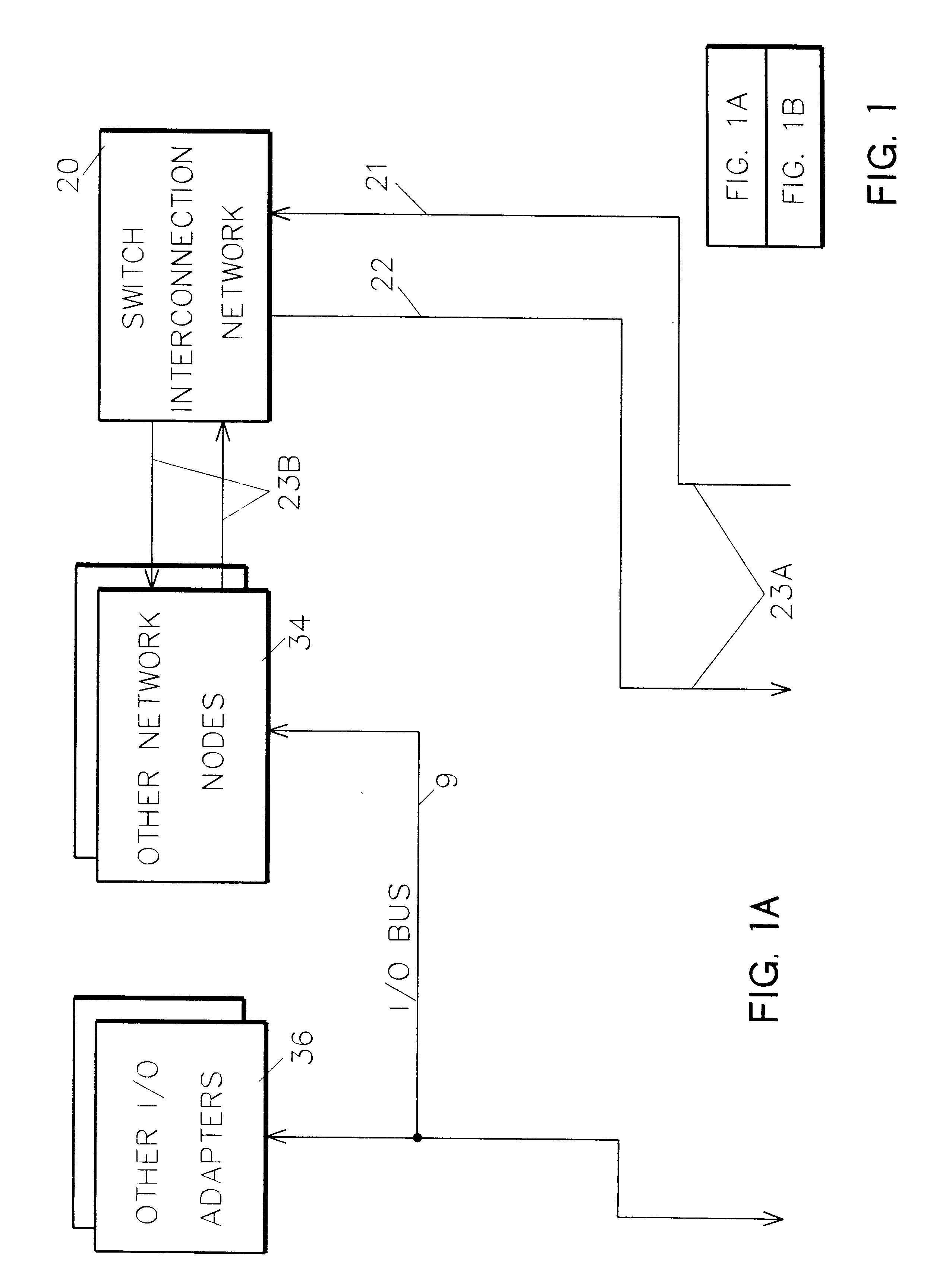

Memory controller for controlling memory accesses across networks in distributed shared memory processing systems

Inactive | US6044438A | More efficient cache coherent system | Data processing applications | Memory addressing/allocation/relocation | Remote memory access | Remote direct memory access

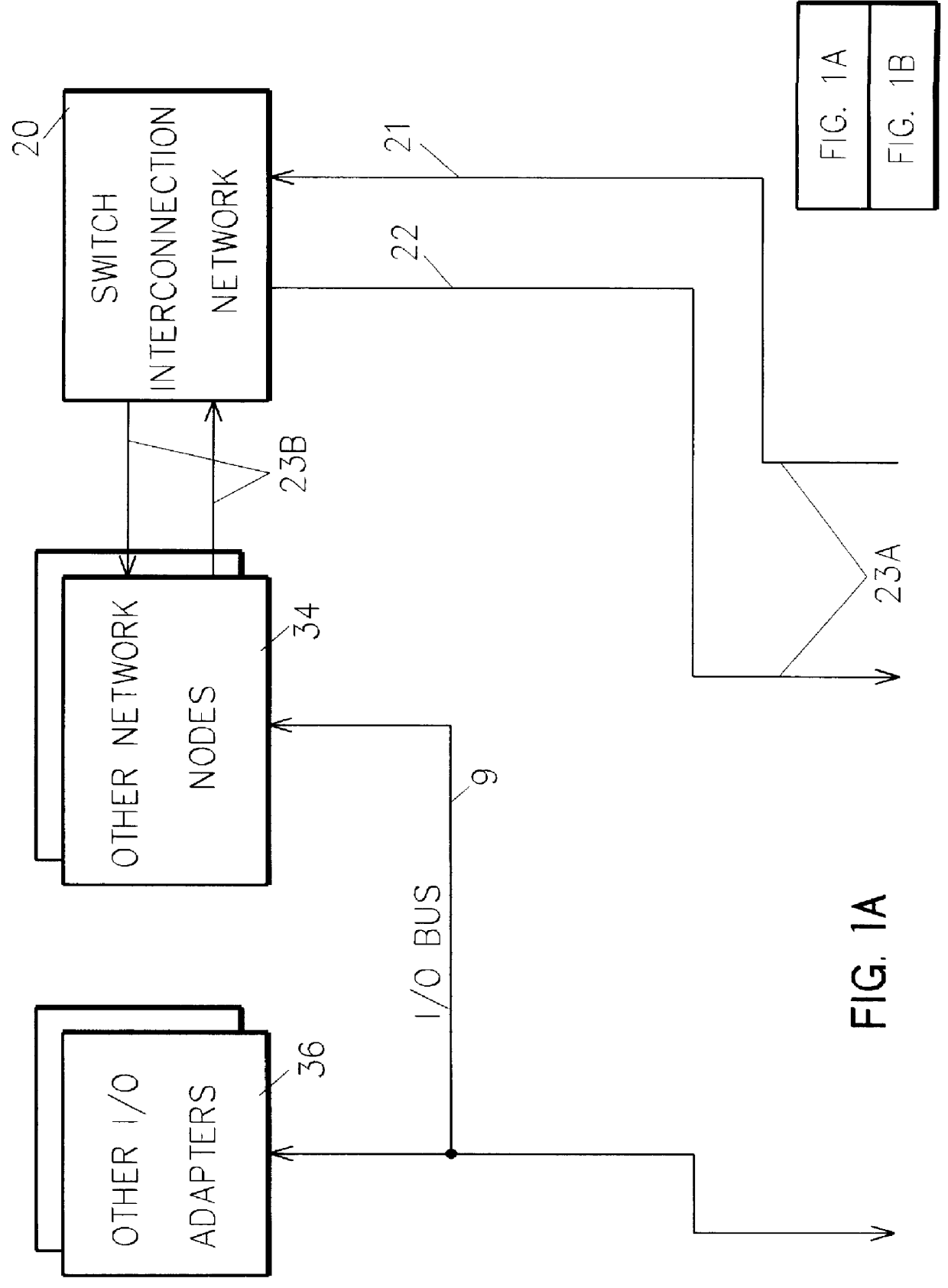

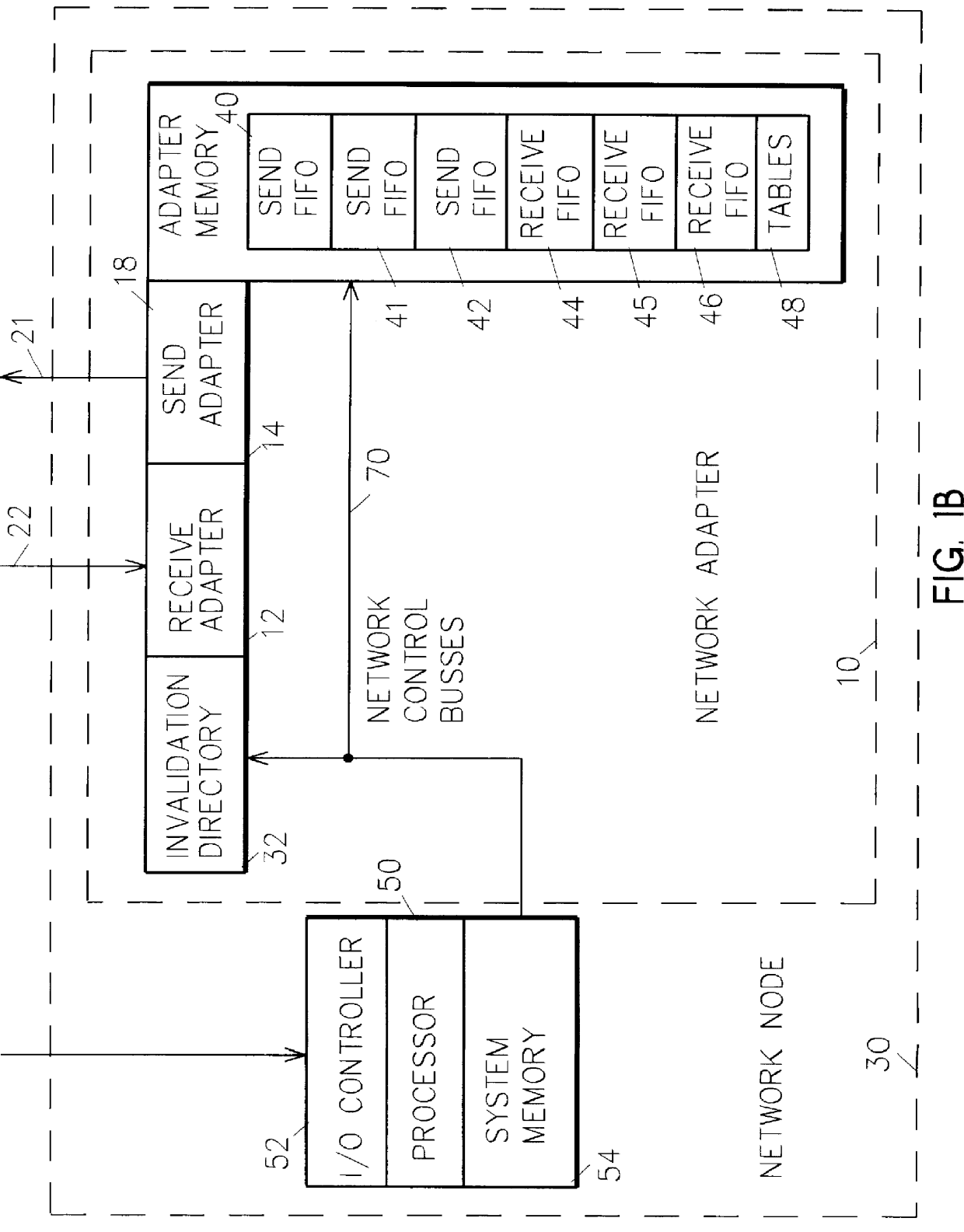

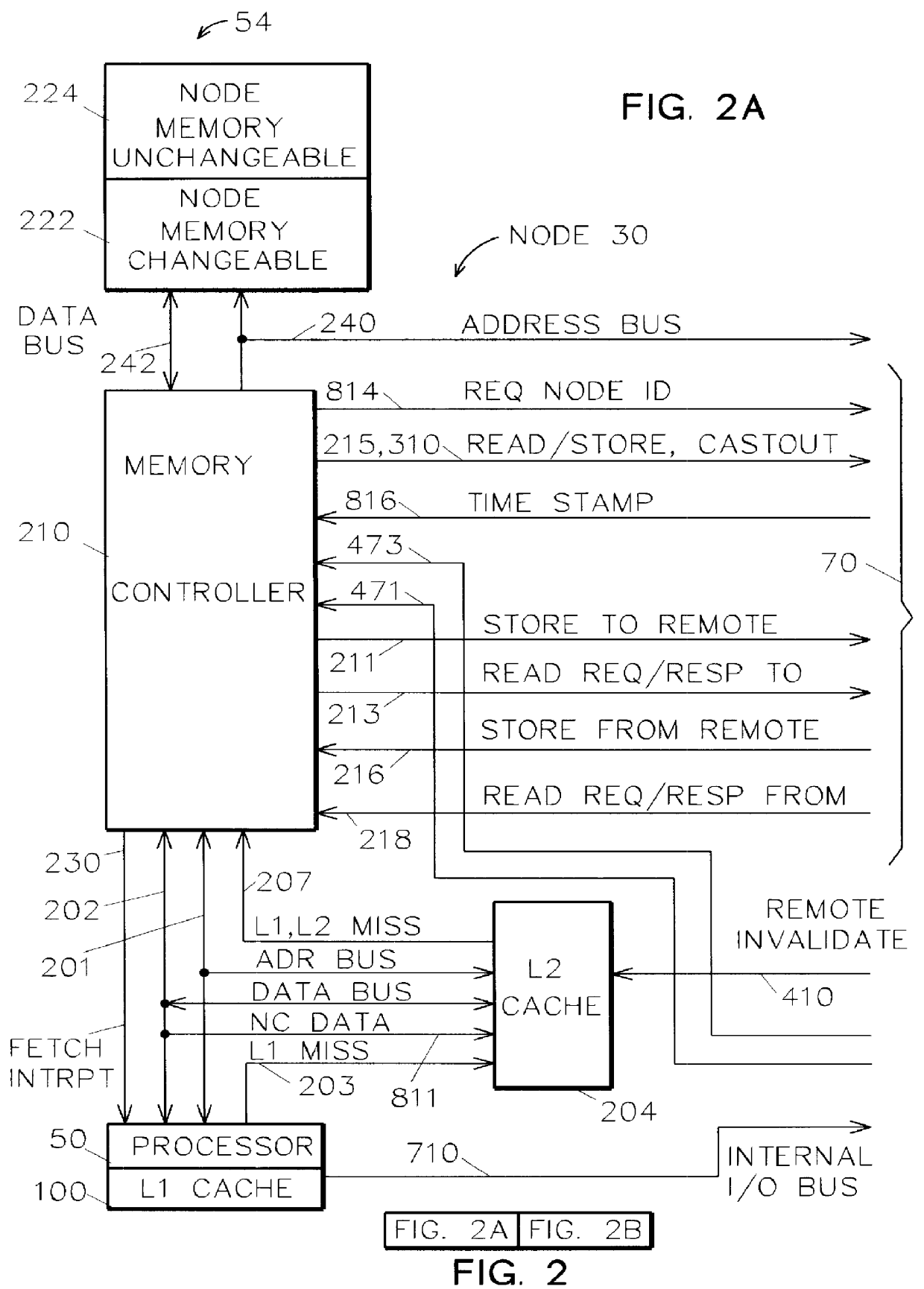

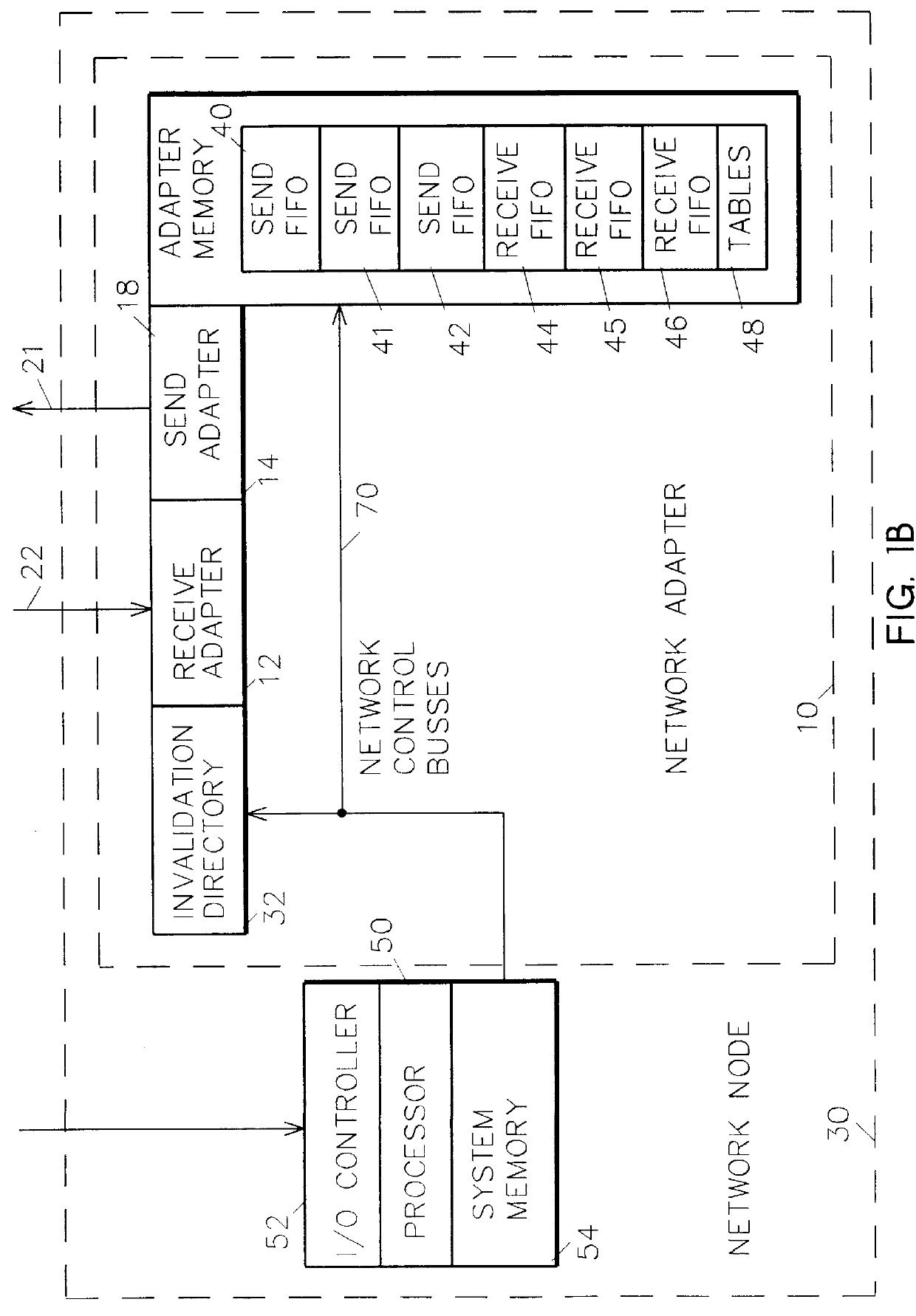

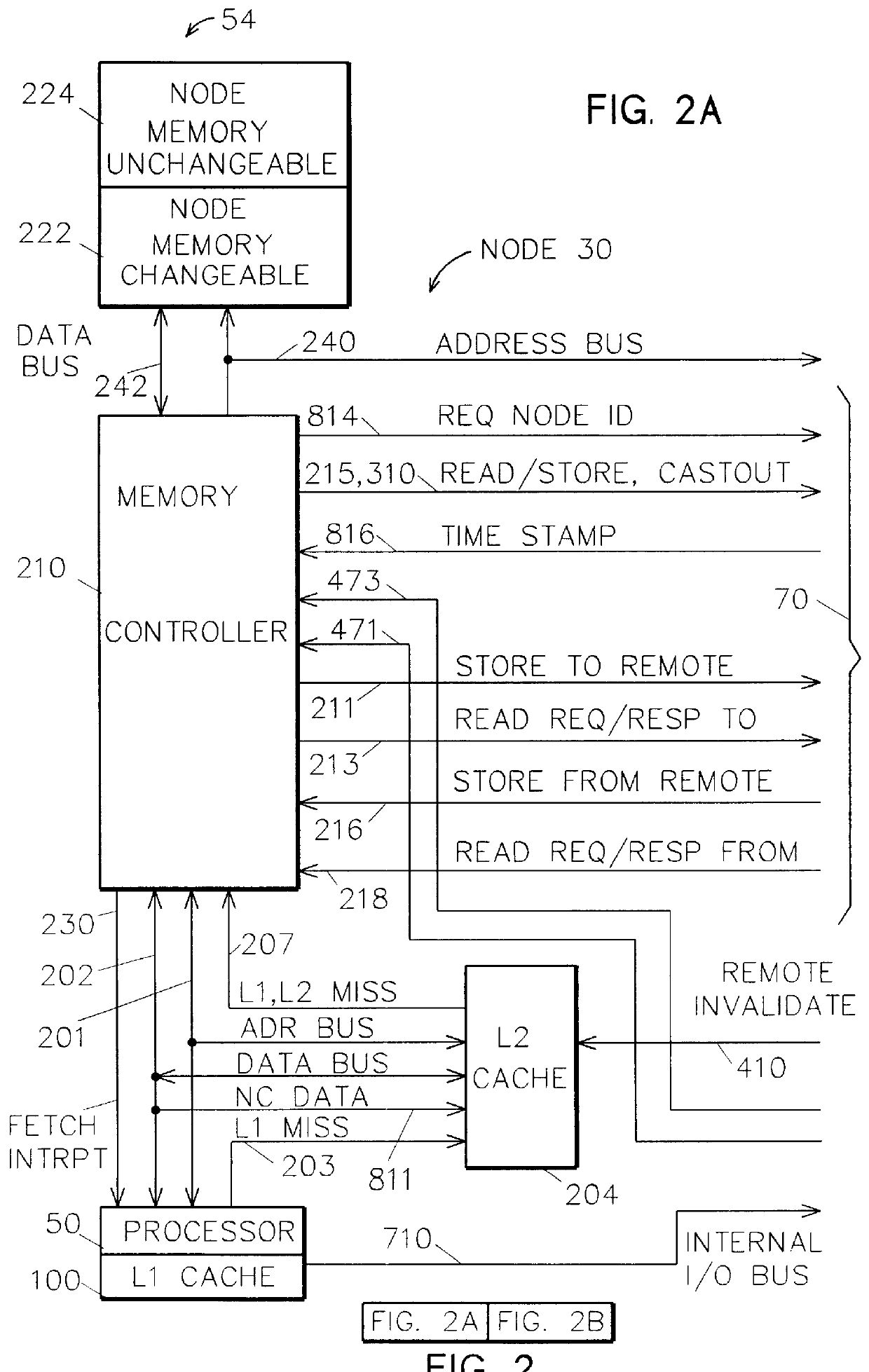

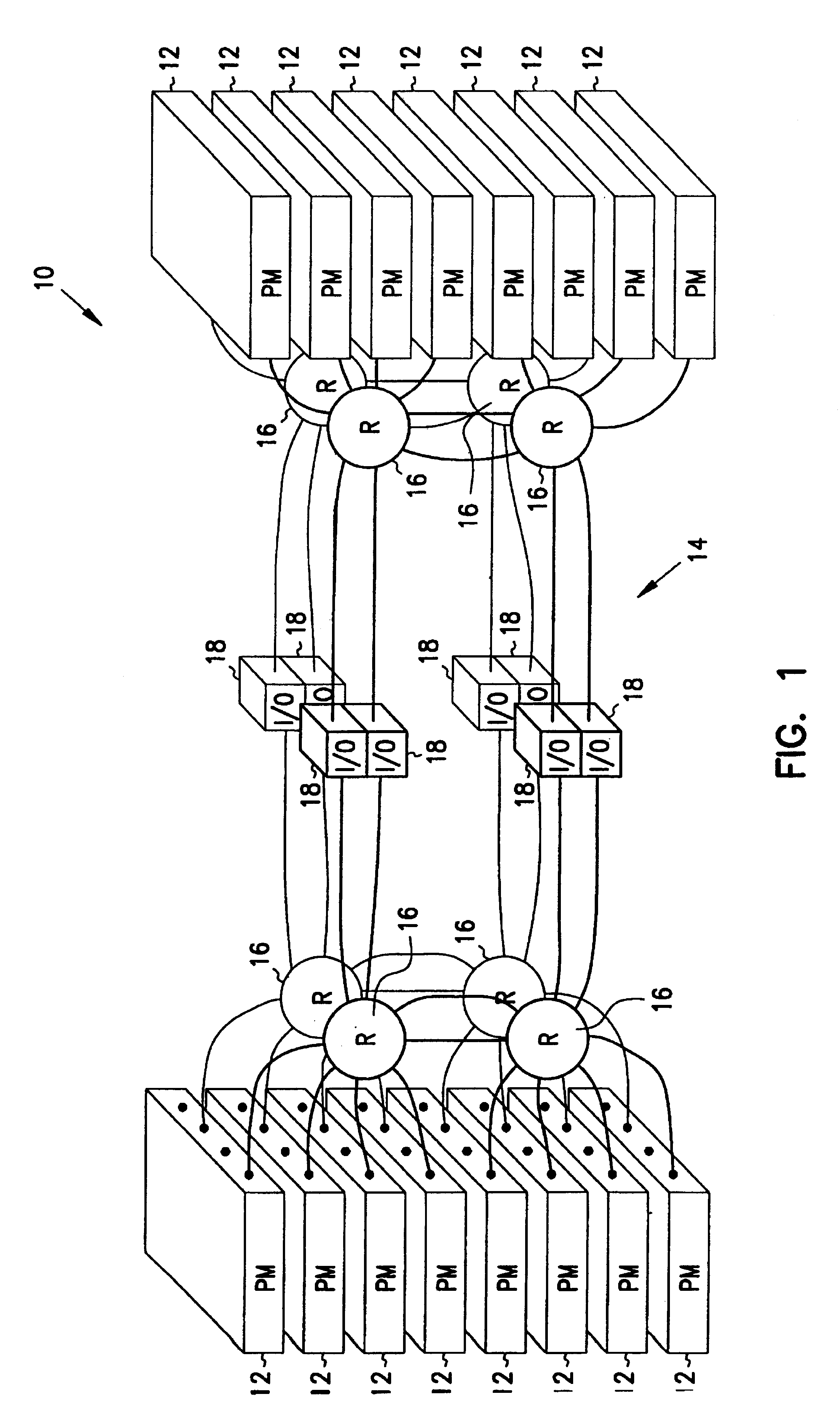

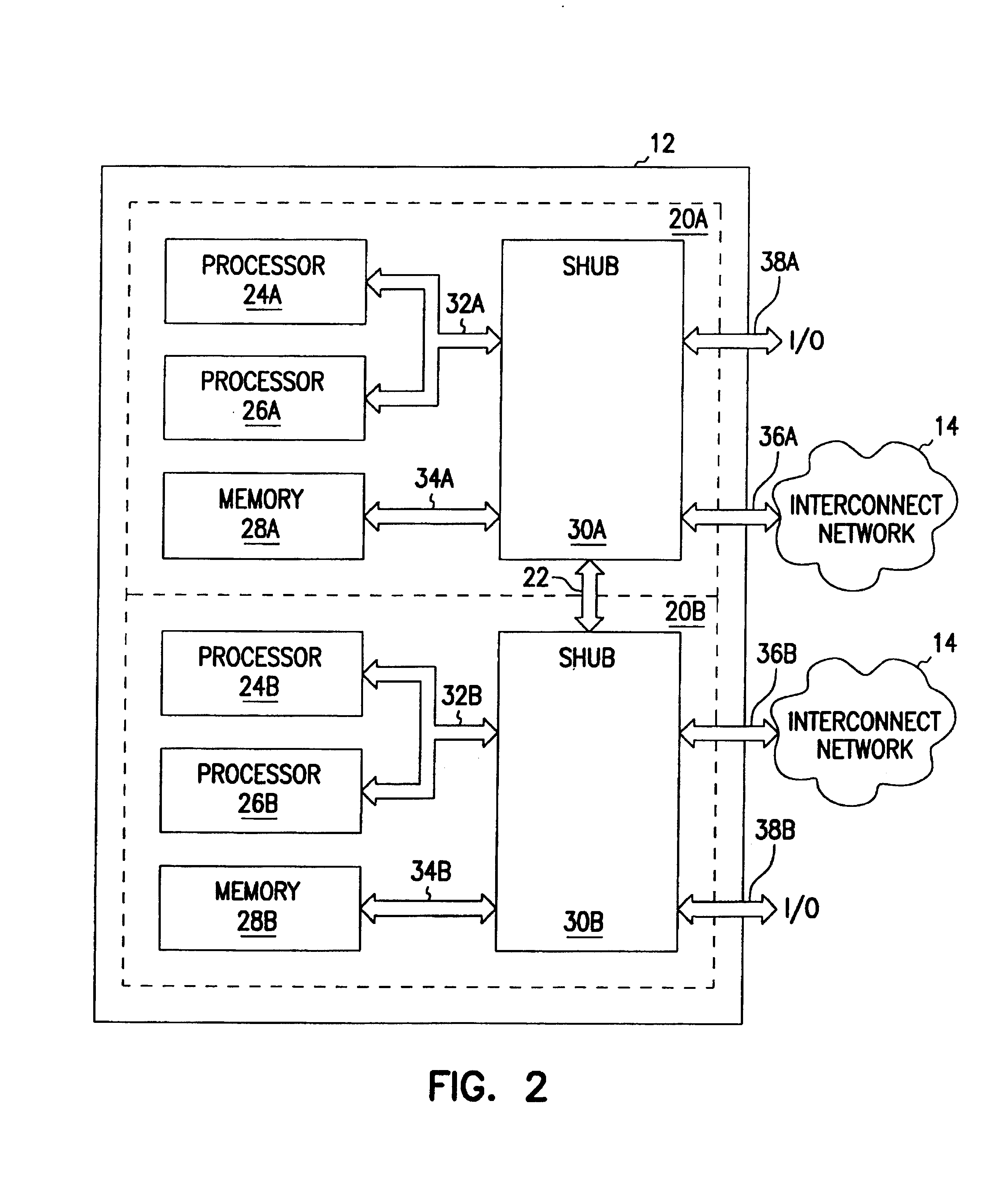

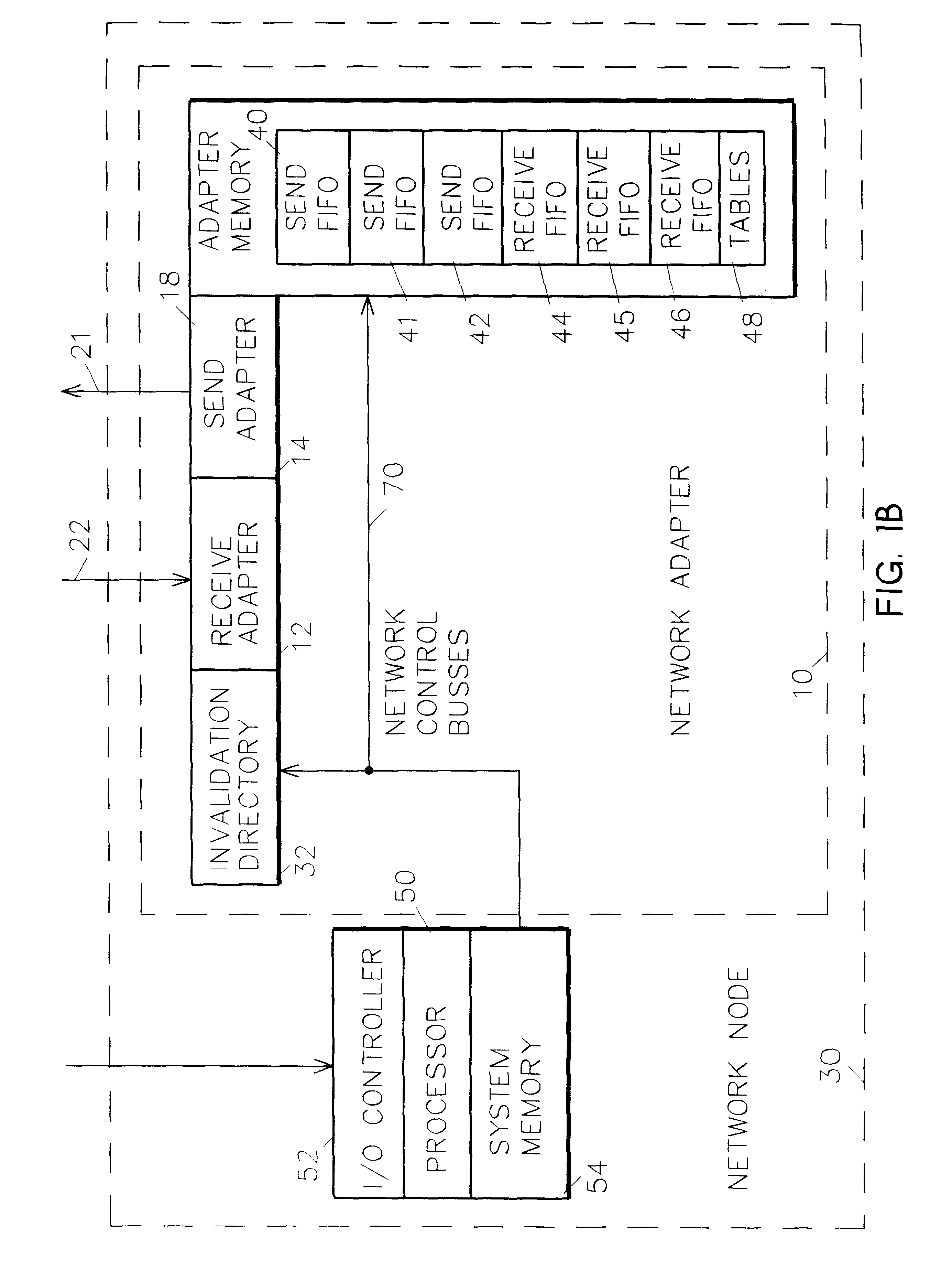

A shared memory parallel processing system interconnected by a multi-stage network combines new system configuration techniques with special-purpose hardware to provide remote memory accesses across the network while efficiently controlling cache coherency. The system configuration techniques include a systematic method for partitioning and controlling the memory in relation to local versus remote accesses and changeable versus unchangeable data. Most of the special-purpose hardware is implemented in the memory controller and network adapter, which implements three send FIFOs and three receive FIFOs at each node to segregate and efficiently handle invalidate functions, remote stores, and remote accesses requiring cache coherency. The segregation of these three functions into different send and receive FIFOs greatly facilitates the cache coherency function over the network. In addition, the network itself is tailored to provide the best efficiency for remote accesses.

Owner:IBM CORP

Bi-directional network adapter for interfacing local node of shared memory parallel processing system to multi-stage switching network for communications with remote node

Inactive | US6122674A | Optimize network | Data processing applications | Memory addressing/allocation/relocation | Remote memory access | Exchange network

A shared memory parallel processing system interconnected by a multi-stage network combines new system configuration techniques with special-purpose hardware to provide remote memory accesses across the network while efficiently controlling cache coherency. The system configuration techniques include a systematic method for partitioning and controlling the memory in relation to local versus remote accesses and changeable versus unchangeable data. Most of the special-purpose hardware is implemented in the memory controller and network adapter, which implements three send FIFOs and three receive FIFOs at each node to segregate and efficiently handle invalidate functions, remote stores, and remote accesses requiring cache coherency. The segregation of these three functions into different send and receive FIFOs greatly facilitates the cache coherency function over the network. In addition, the network itself is tailored to provide the best efficiency for remote accesses.

Owner:IBM CORP

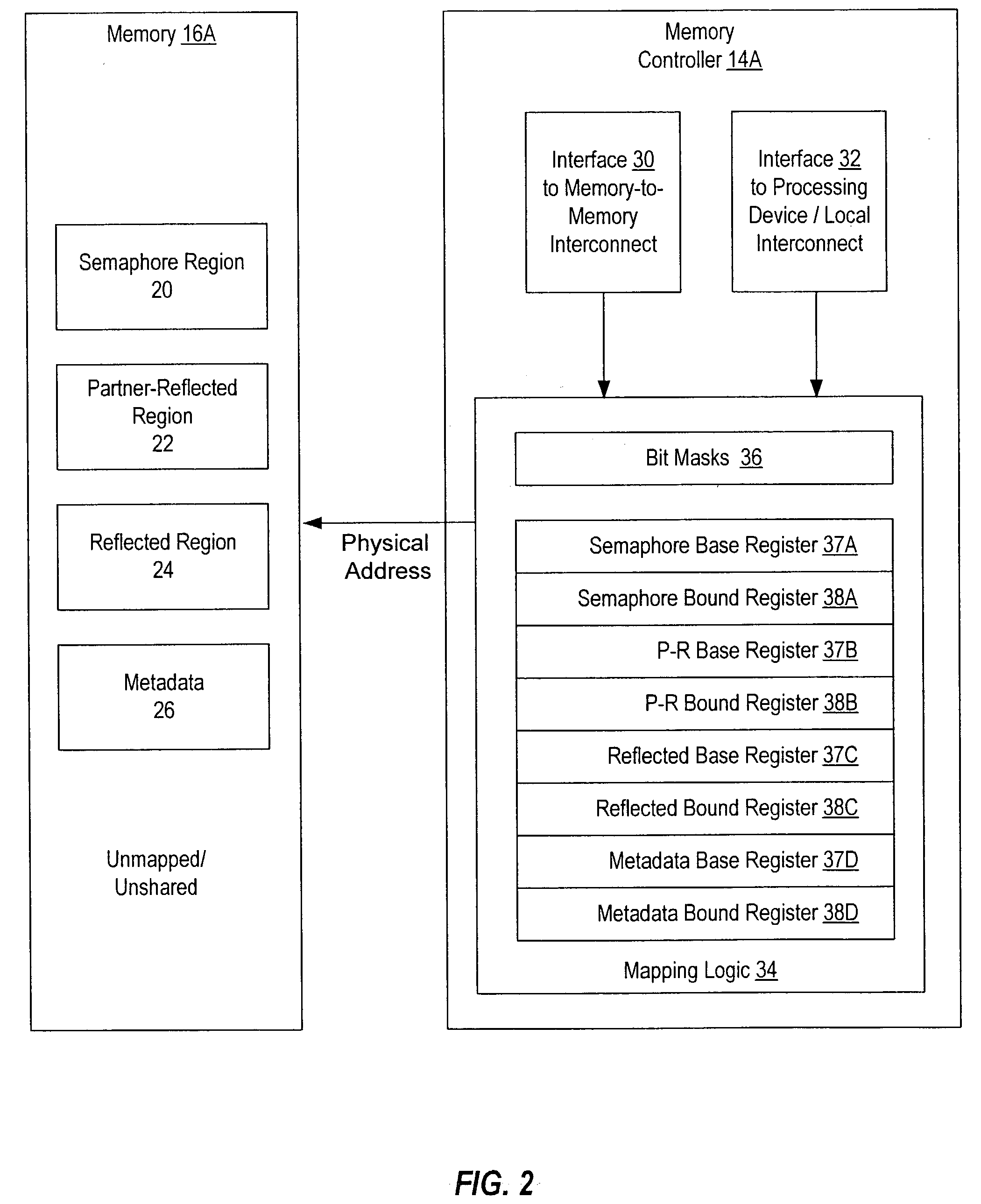

System and method for sharing memory among multiple storage device controllers

Inactive | US20040117562A1 | Memory addressing/allocation/relocation | Digital computer details | Remote memory access | Network Communication Protocols

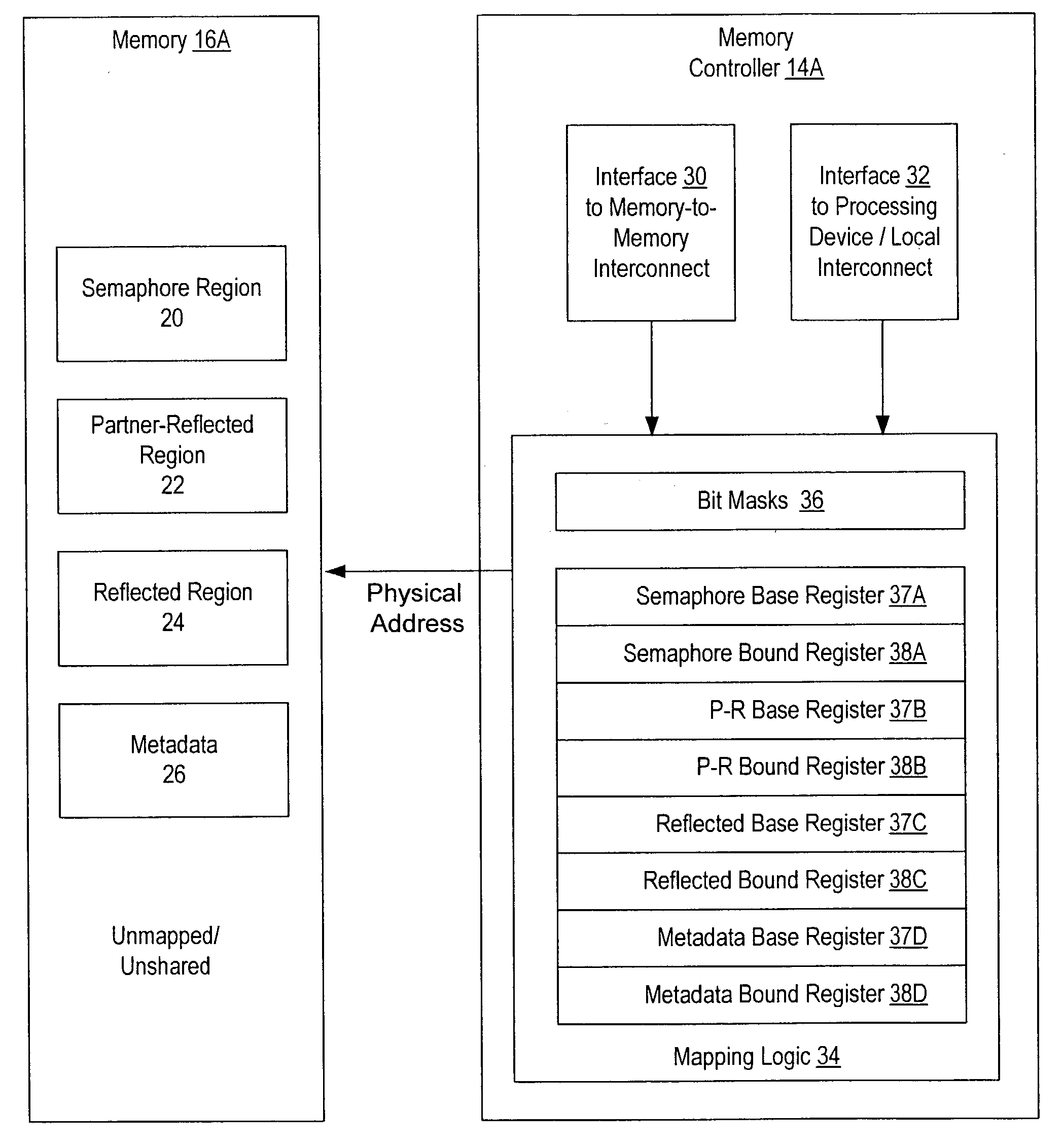

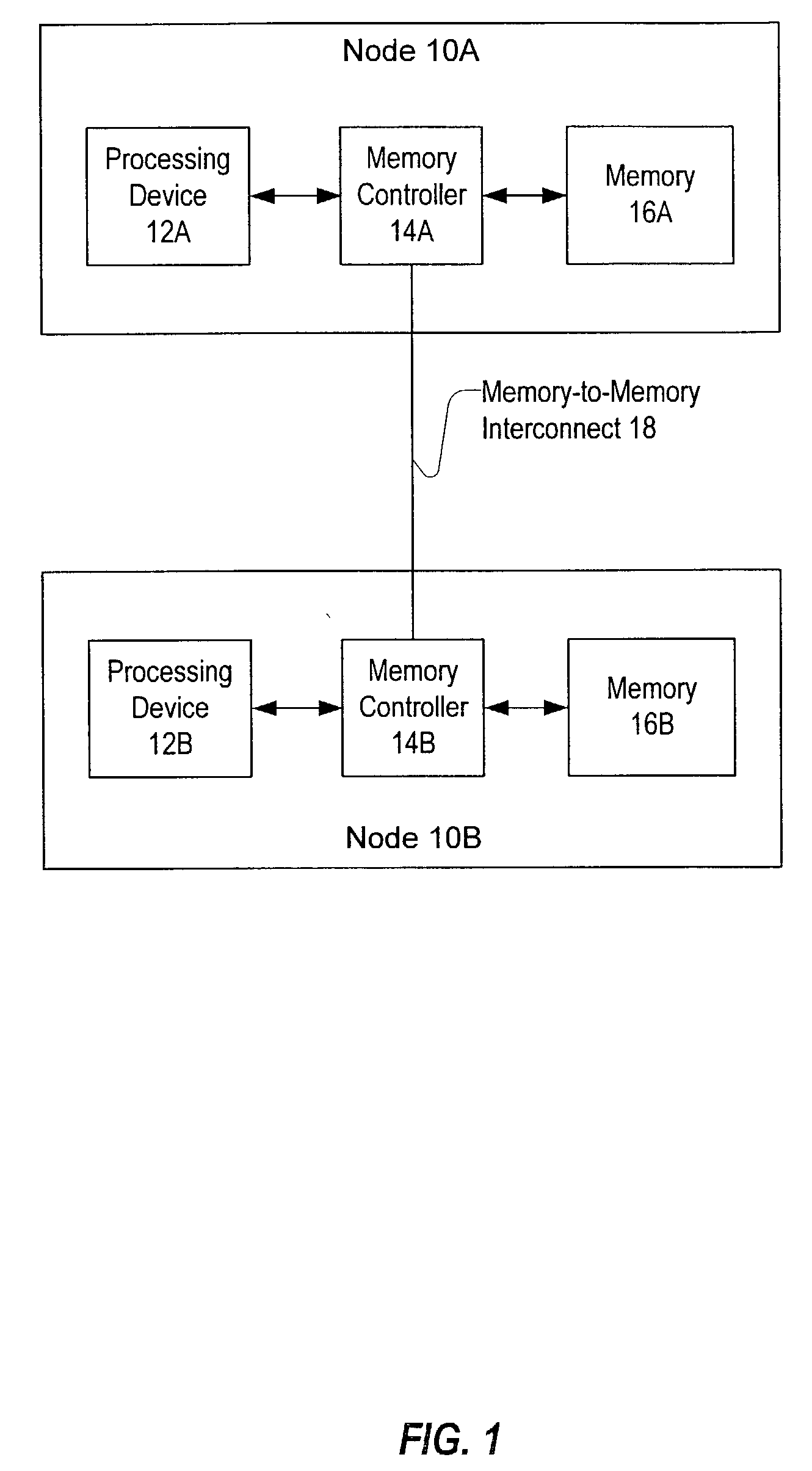

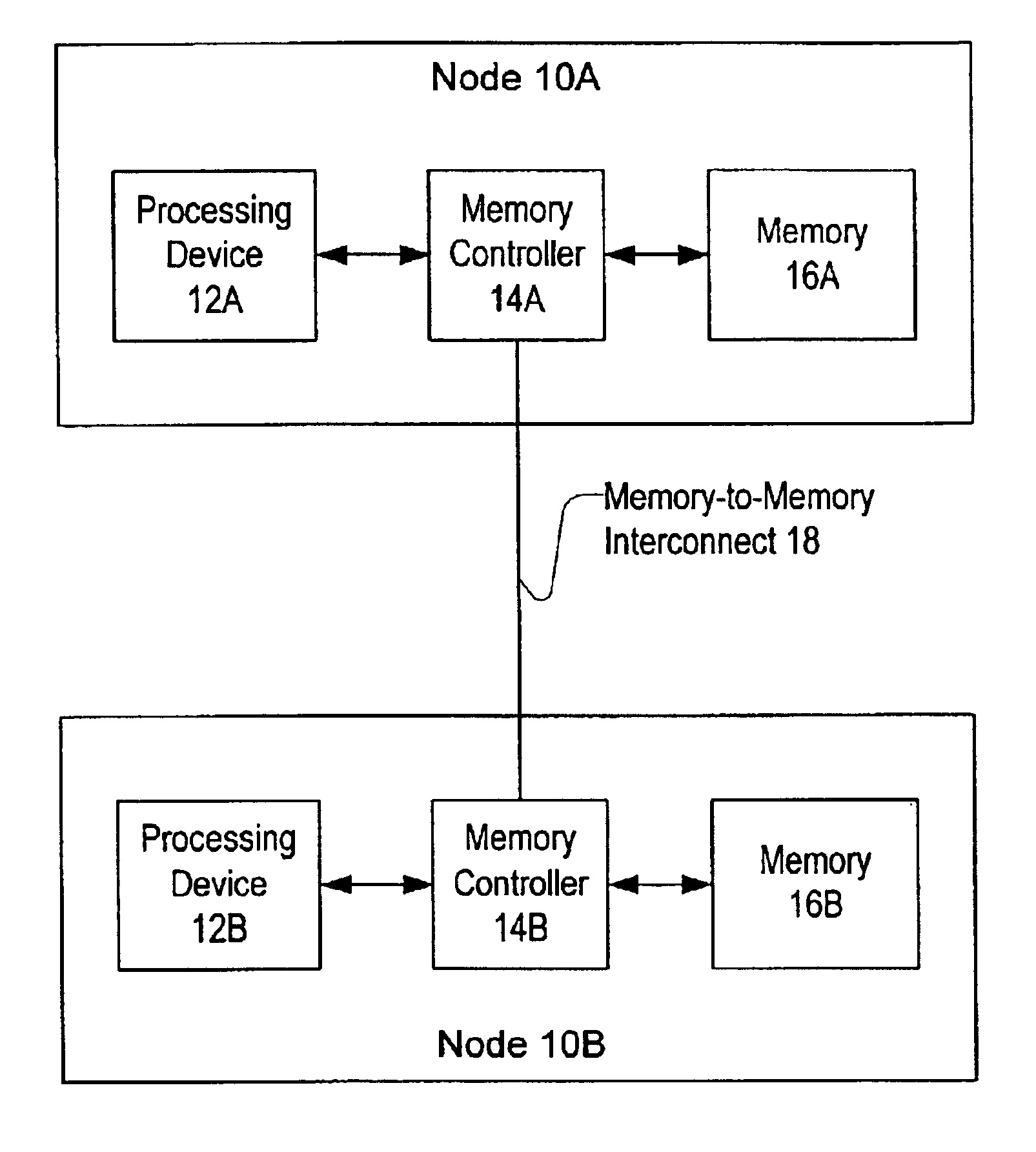

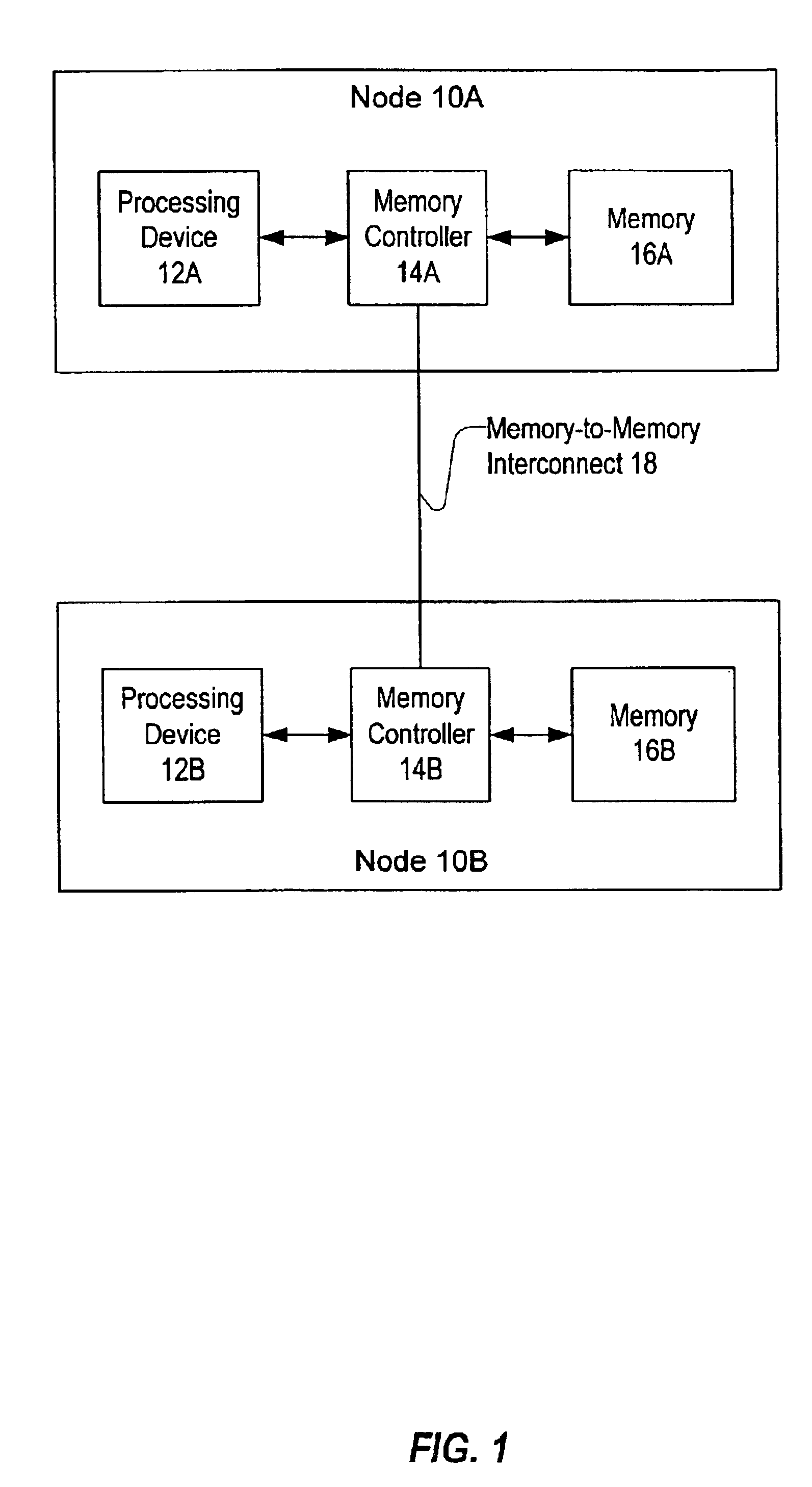

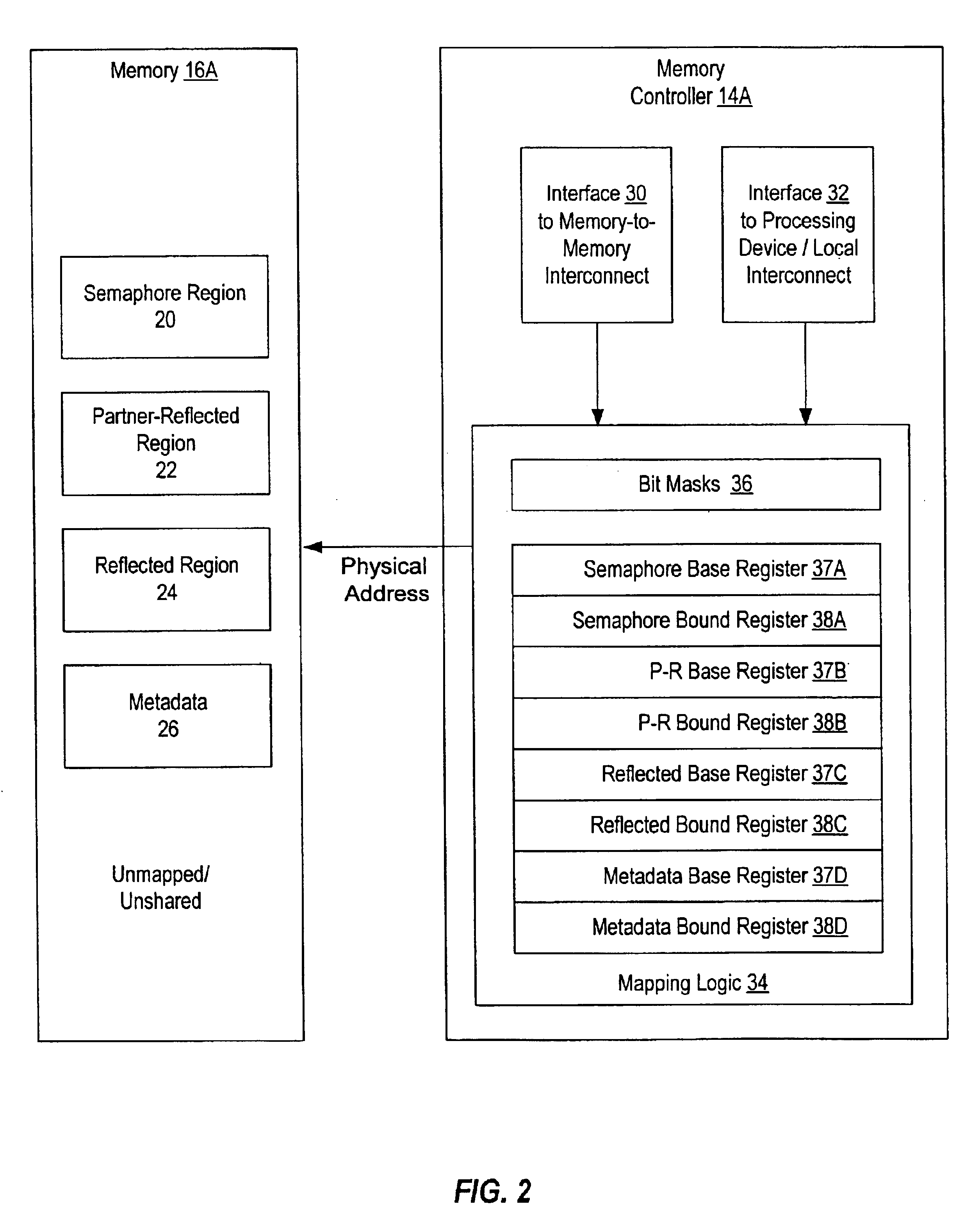

Each node's memory controller may be configured to send and receive messages on a dedicated memory-to-memory interconnect according to the communication protocol and to responsively perform memory accesses in a local memory. The type of message sent on the interconnect may depend on which memory region is targeted by a memory access request local to the sending node. If certain regions are targeted locally, a memory controller may delay performance of a local memory access until the memory access has been performed remotely. Remote nodes may confirm performance of the remote memory accesses via the memory-to-memory interconnect.

Owner:ORACLE INT CORP

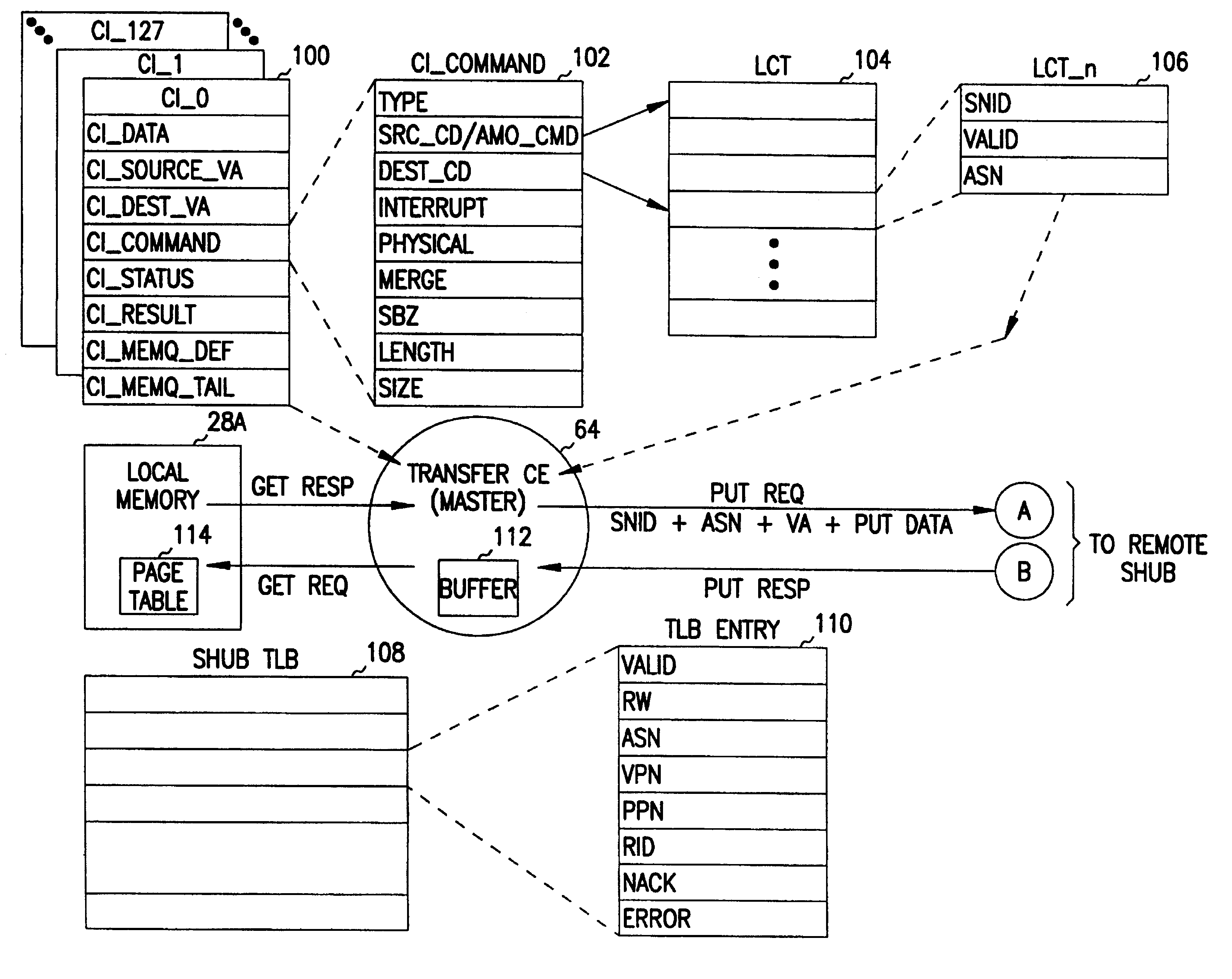

Remote address translation in a multiprocessor system

Inactive | US6925547B2 | Memory addressing/allocation/relocation | Data switching by path configuration | Remote memory access | Connection table

A method of performing remote address translation in a multiprocessor system includes determining a connection descriptor and a virtual address at a local node, accessing a local connection table at the local node using the connection descriptor to produce a system node identifier for a remote node and a remote address space number, communicating the virtual address and remote address space number to the remote node, and translating the virtual address to a physical address at the remote node (qualified by the remote address space number). A user process running at the local node provides the connection descriptor and virtual address. The translation is performed by matching the virtual address and remote address space number with an entry of a translation-lookaside buffer (TLB) at the remote node. Performing the translation at the remote node reduces the amount of translation information needed at the local node for remote memory accesses. The method supports communication within a scalable multiprocessor, and across the machine boundaries in a cluster.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP +1
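
The translation flow described in this abstract can be sketched as follows. This is only an illustrative model, not the patented implementation: the `Connection` and `RemoteNode` names, the dictionary-based TLB, and the 4 KiB page size are all assumptions made for the example.

```python
from dataclasses import dataclass

PAGE_SIZE = 4096  # assumed page size; the abstract does not specify one


@dataclass(frozen=True)
class Connection:
    """Hypothetical entry of the local connection table."""
    node_id: int  # system node identifier of the remote node
    asn: int      # remote address space number


class RemoteNode:
    """Hypothetical remote node holding a TLB keyed by (ASN, virtual page)."""

    def __init__(self, tlb):
        # tlb maps (asn, virtual page number) -> physical page number
        self.tlb = dict(tlb)

    def translate(self, asn, vaddr):
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        ppn = self.tlb[(asn, vpn)]  # a KeyError stands in for a TLB miss
        return ppn * PAGE_SIZE + offset


def remote_translate(connection_table, nodes, descriptor, vaddr):
    """Local lookup by connection descriptor, then translation at the remote node."""
    conn = connection_table[descriptor]
    physical = nodes[conn.node_id].translate(conn.asn, vaddr)
    return conn.node_id, physical
```

In this sketch the local node stores only the small connection table, while the page-level mappings stay at the remote node, which mirrors the abstract's point about reducing translation state at the local node.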

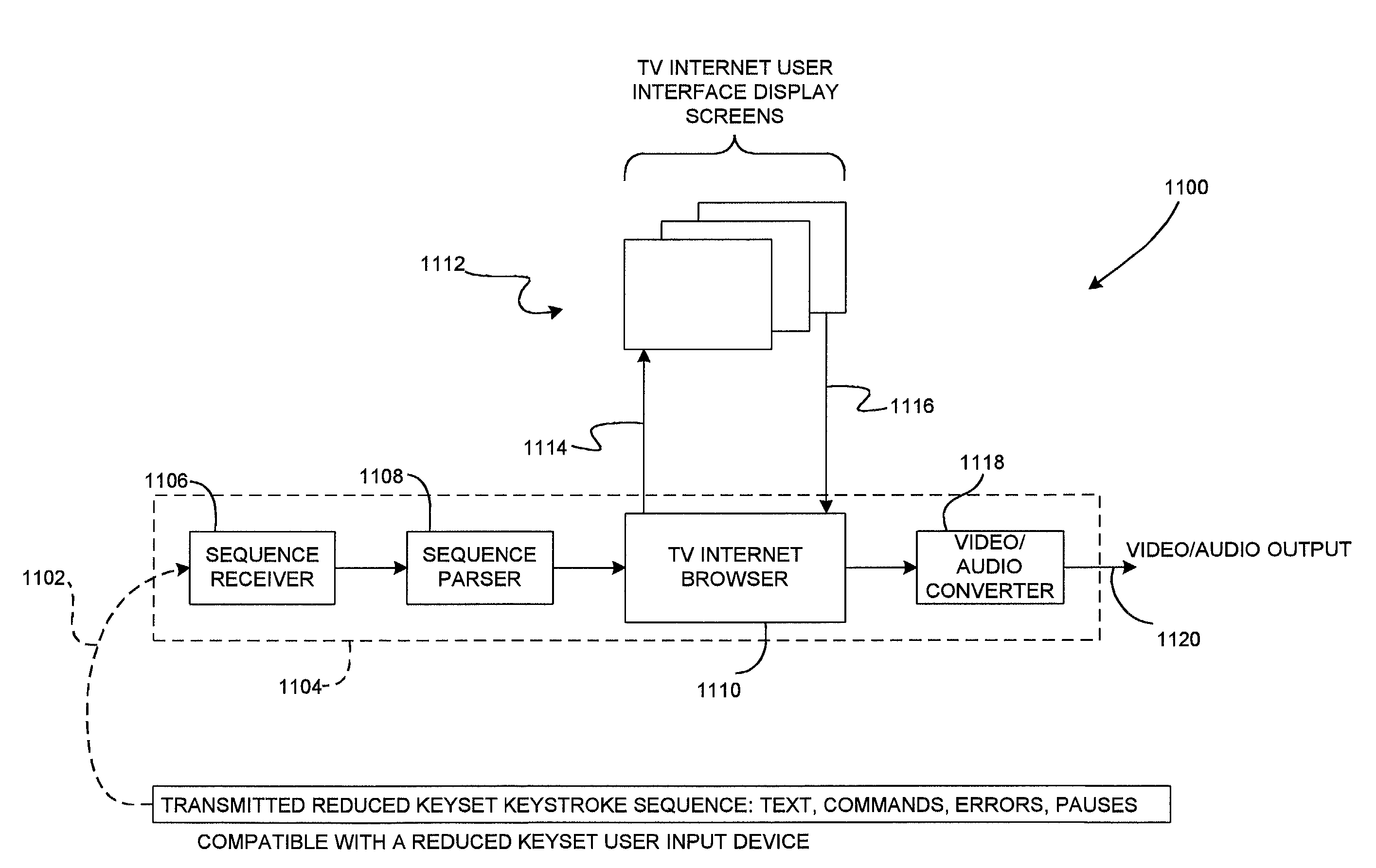

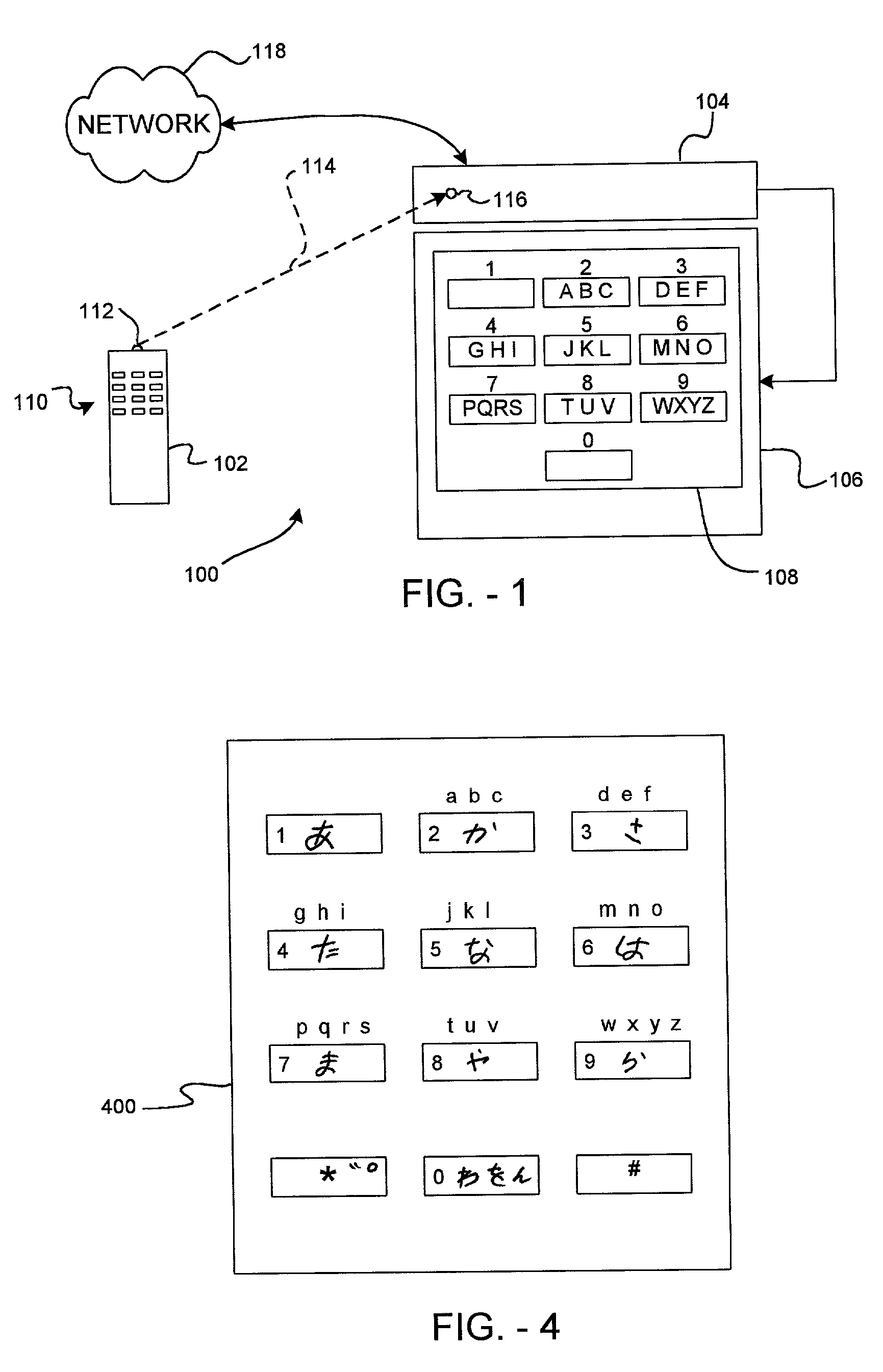

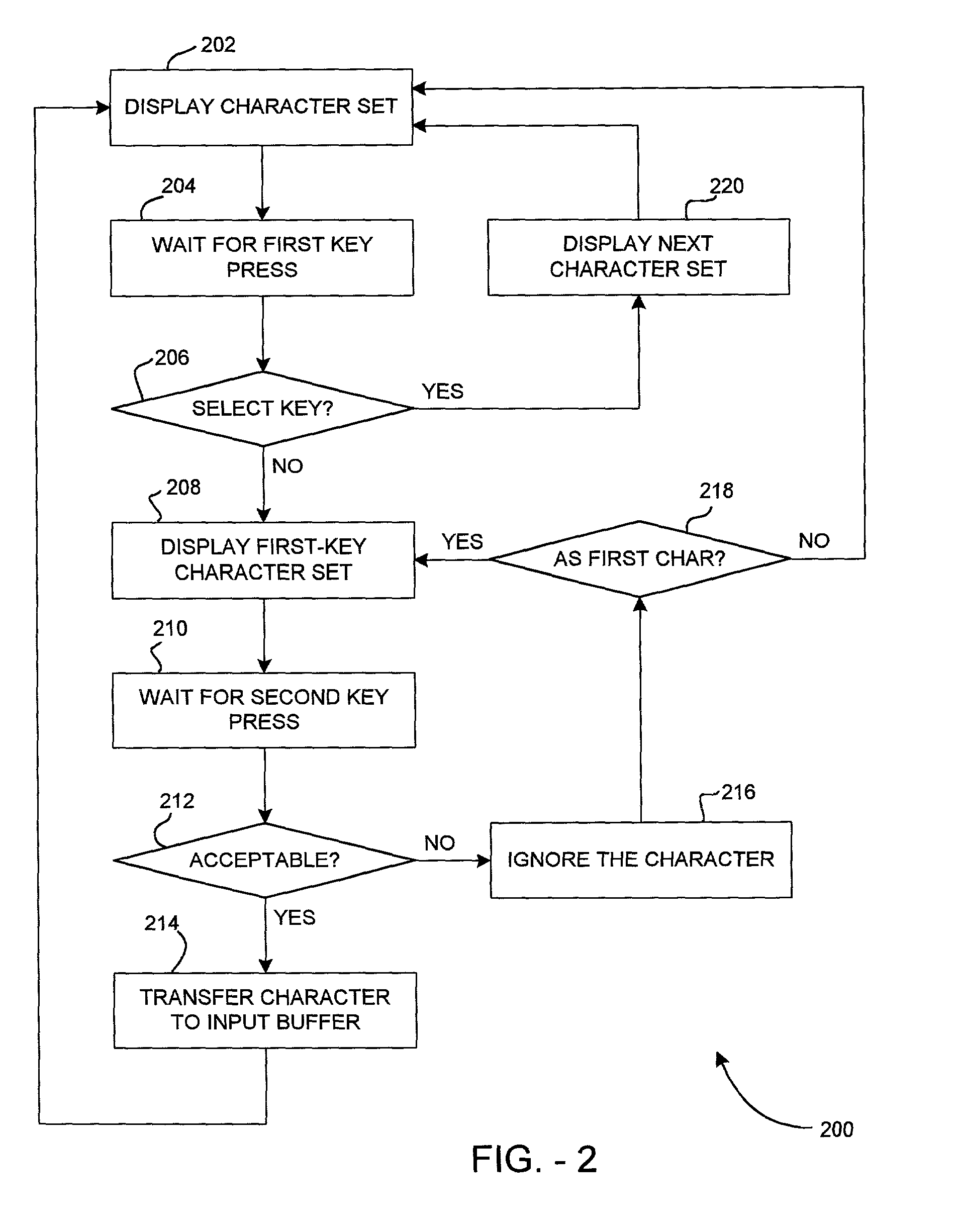

System and method for internet appliance data entry and navigation

Inactive | US7245291B2 | Input/output for user-computer interaction | Television system details | Television receivers | Remote memory access

A system for Internet appliance data entry and navigation includes a reduced keyset remote control unit transmitting a user input keystroke sequence. An Internet appliance receives and parses the keystroke sequence, placing the parsed data into an input buffer. An Internet appliance browser accesses user interface display screens from remote storage via a communications network. The buffer contents define a window within an accessed display screen. The Internet appliance converts the composite display screen for output to a standard television receiver. A user makes option choices and navigates the user interface display screens by activating hyperlinks within the accessed display screens. A standard telephone keypad arrangement is used to create the keystroke sequence, permitting use of a standard or wireless telephone and a hand-held remote control unit for system input and control. An alternative embodiment permits voice input of text, numbers, special symbols, and shortcuts in many languages.

Owner:SHARIF IMRAN +5

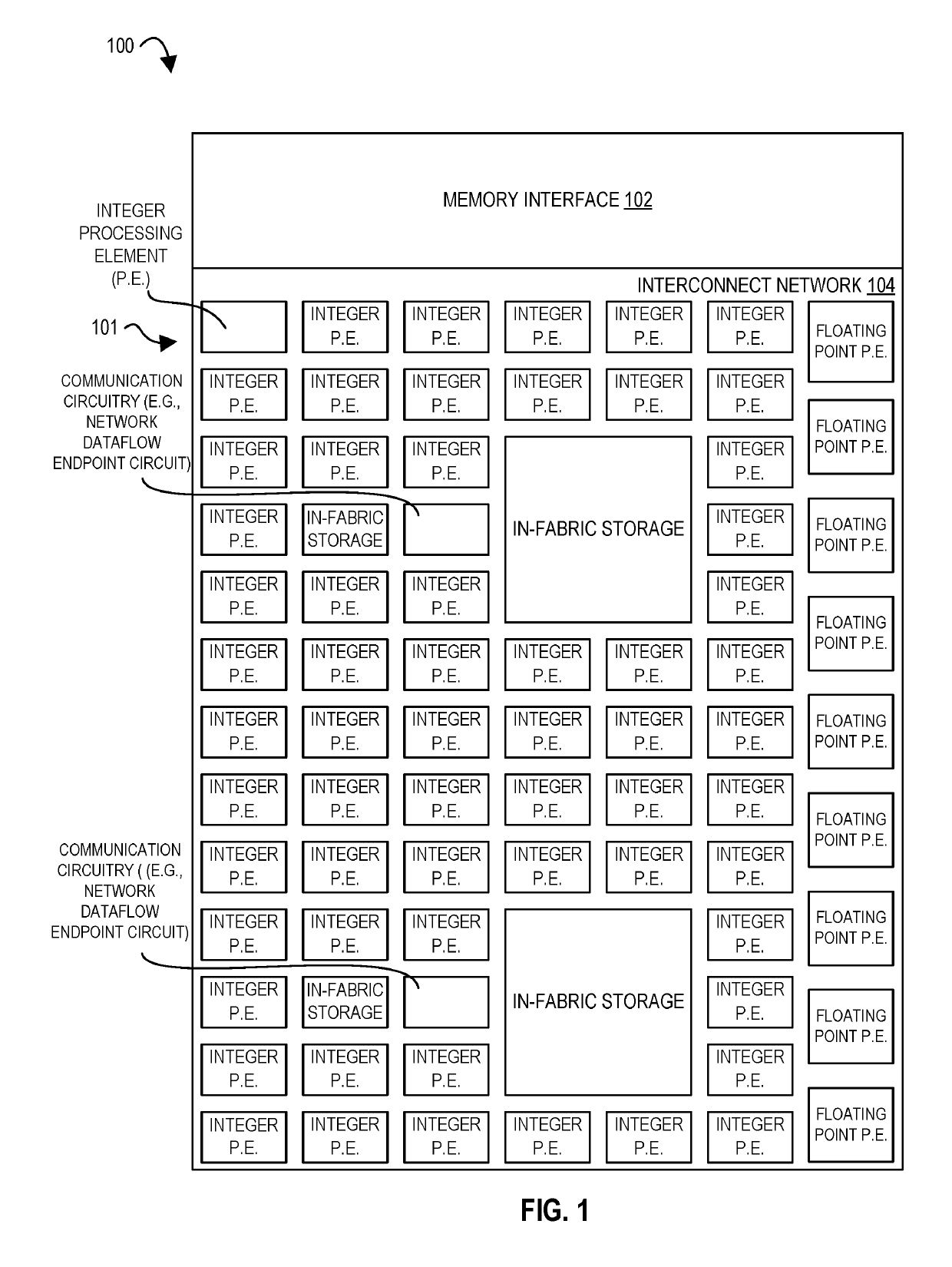

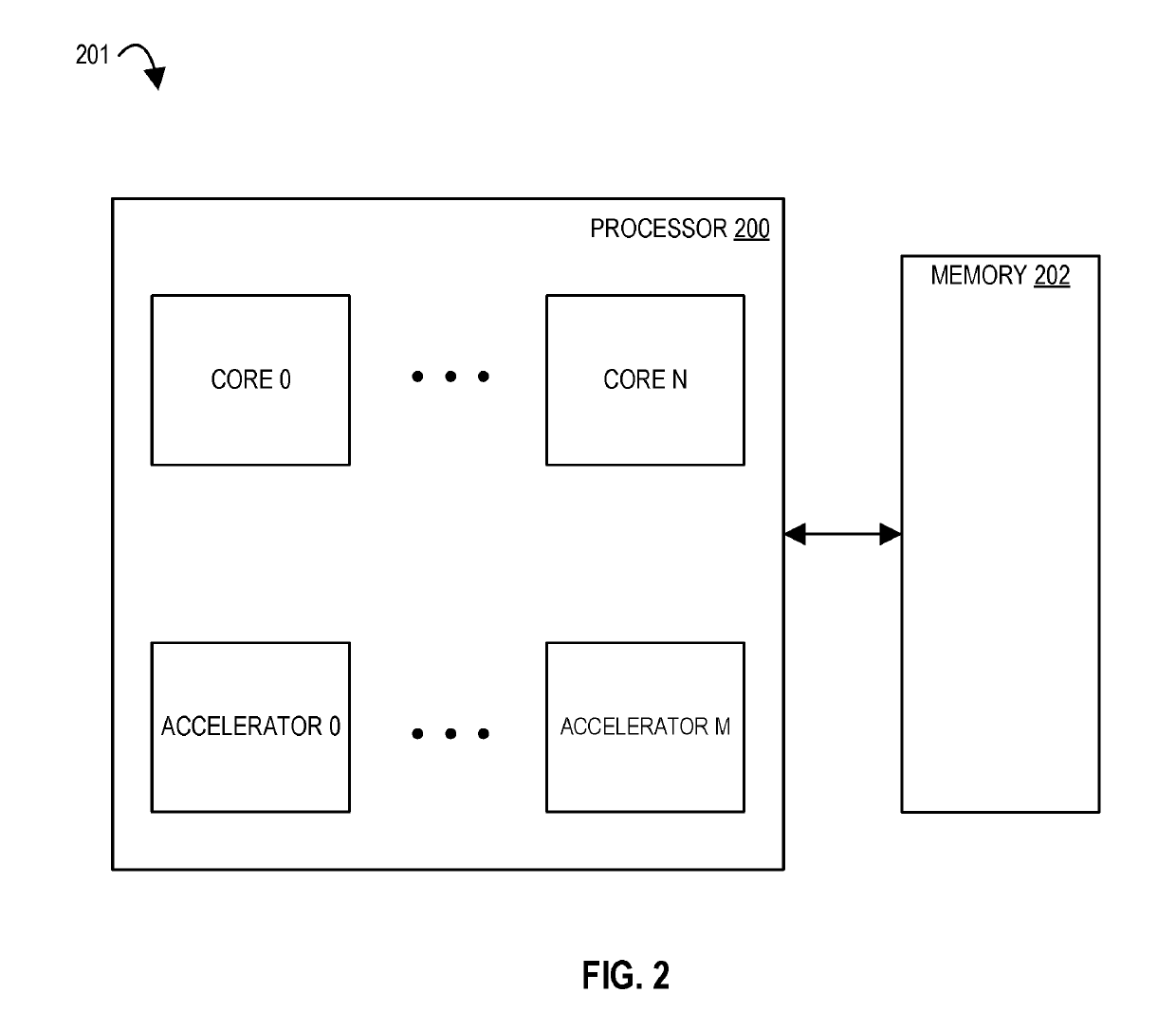

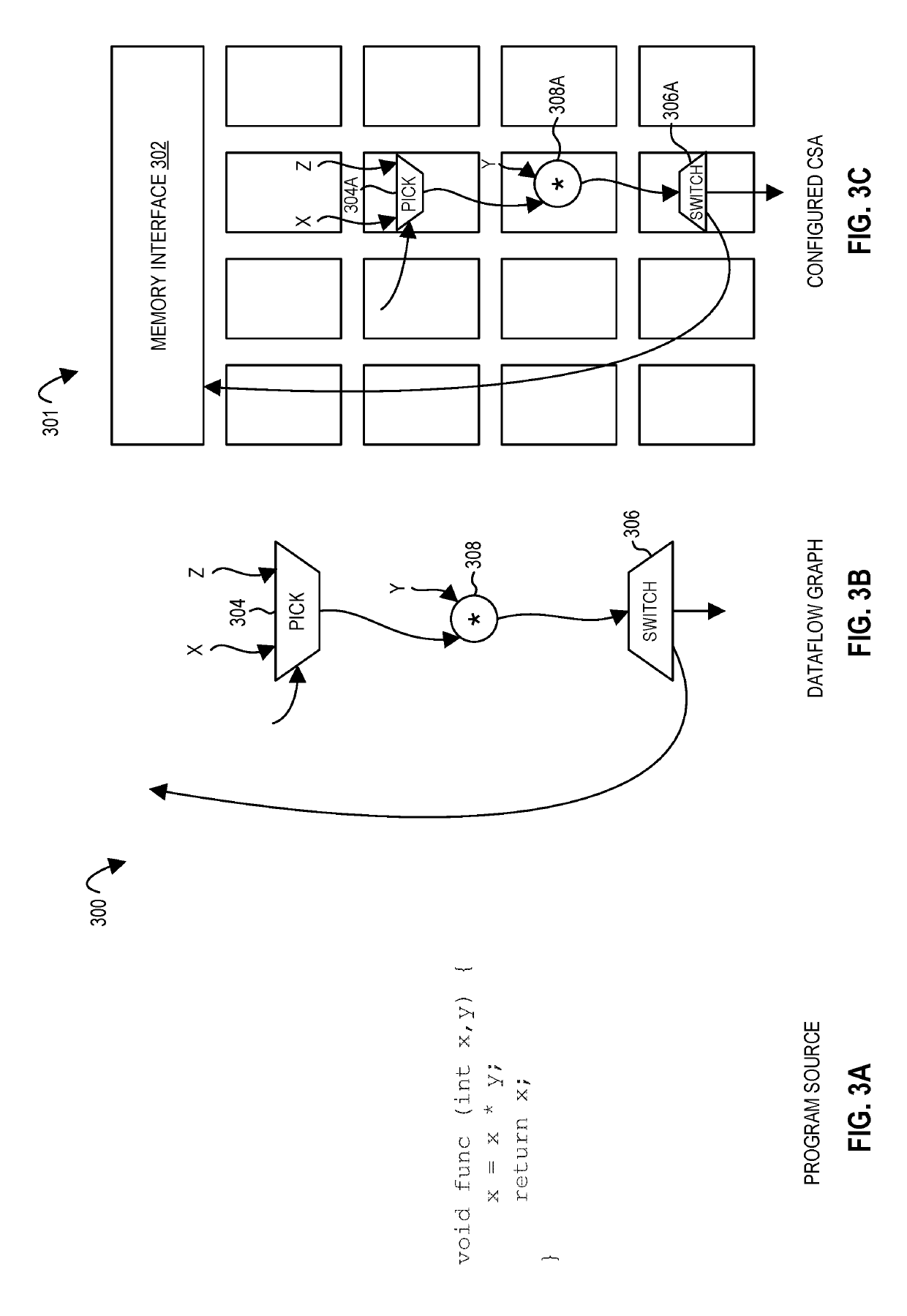

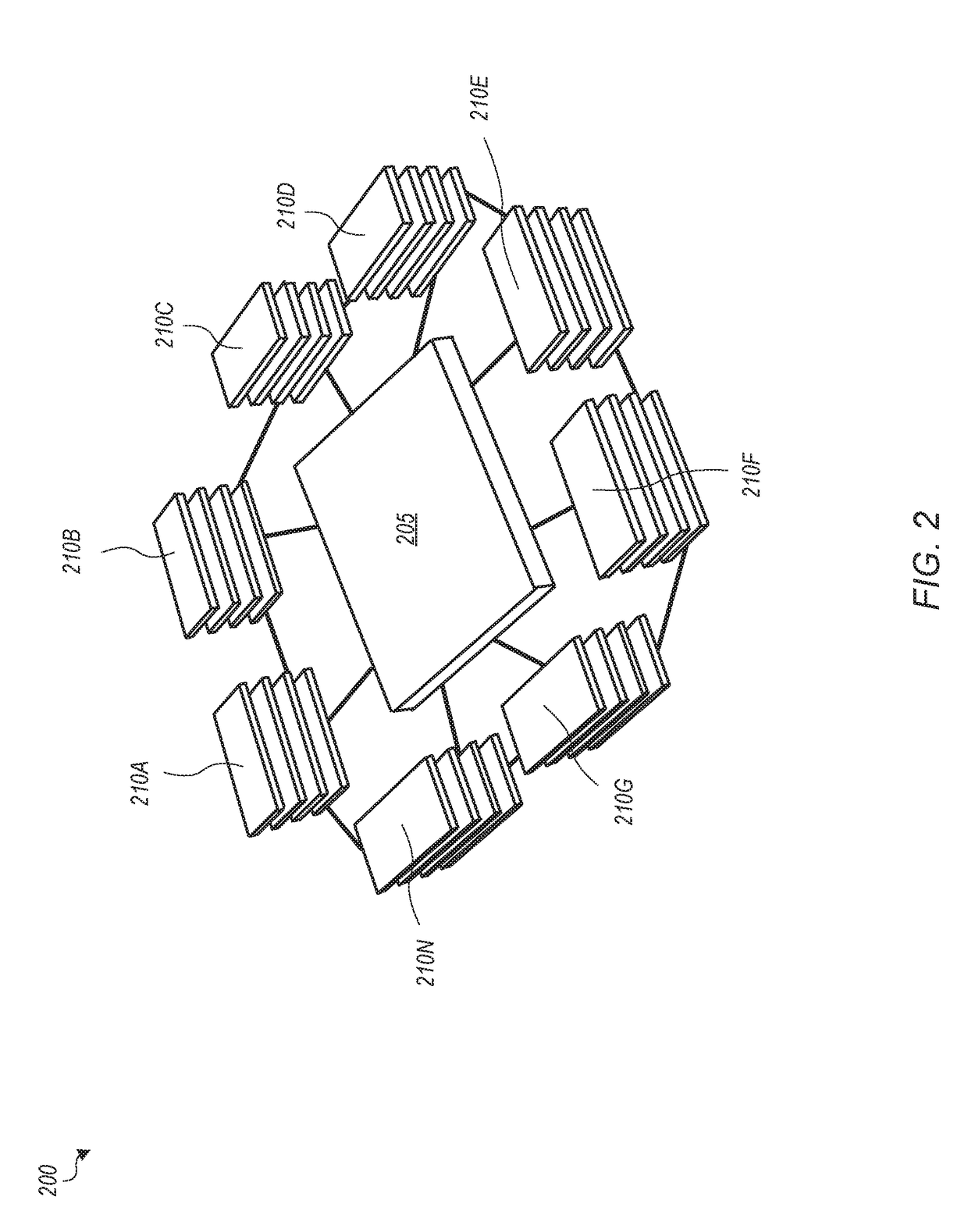

Apparatus, methods, and systems for remote memory access in a configurable spatial accelerator

Inactive | US20190303297A1 | Easy to adapt | Improve performance | Memory architecture accessing/allocation | Digital computer details | Direct memory access | Remote memory access

Systems, methods, and apparatuses relating to remote memory access in a configurable spatial accelerator are described. In one embodiment, a configurable spatial accelerator includes a first memory interface circuit coupled to a first processing element and a cache, the first memory interface circuit to issue a memory request to the cache, the memory request comprising a field to identify a second memory interface circuit as a receiver of data for the memory request; and the second memory interface circuit coupled to a second processing element and the cache, the second memory interface circuit to send a credit return value to the first memory interface circuit, to cause the first memory interface circuit to mark the memory request as complete, when the data for the memory request arrives at the second memory interface circuit and a completion configuration register of the second memory interface circuit is set to a remote response value.

Owner:INTEL CORP

Cache coherent network adapter for scalable shared memory processing systems

Inactive | US6343346B1 | Memory addressing/allocation/relocation | Remote memory access | Remote direct memory access

A shared memory parallel processing system interconnected by a multi-stage network combines new system configuration techniques with special-purpose hardware to provide remote memory accesses across the network while efficiently controlling cache coherency. The system configuration techniques include a systematic method for partitioning and controlling the memory in relation to local versus remote accesses and changeable versus unchangeable data. Most of the special-purpose hardware is implemented in the memory controller and network adapter, which implements three send FIFOs and three receive FIFOs at each node to segregate and efficiently handle invalidate functions, remote stores, and remote accesses requiring cache coherency. The segregation of these three functions into different send and receive FIFOs greatly facilitates the cache coherency function over the network. In addition, the network itself is tailored to provide the best efficiency for remote accesses.

Owner:IBM CORP

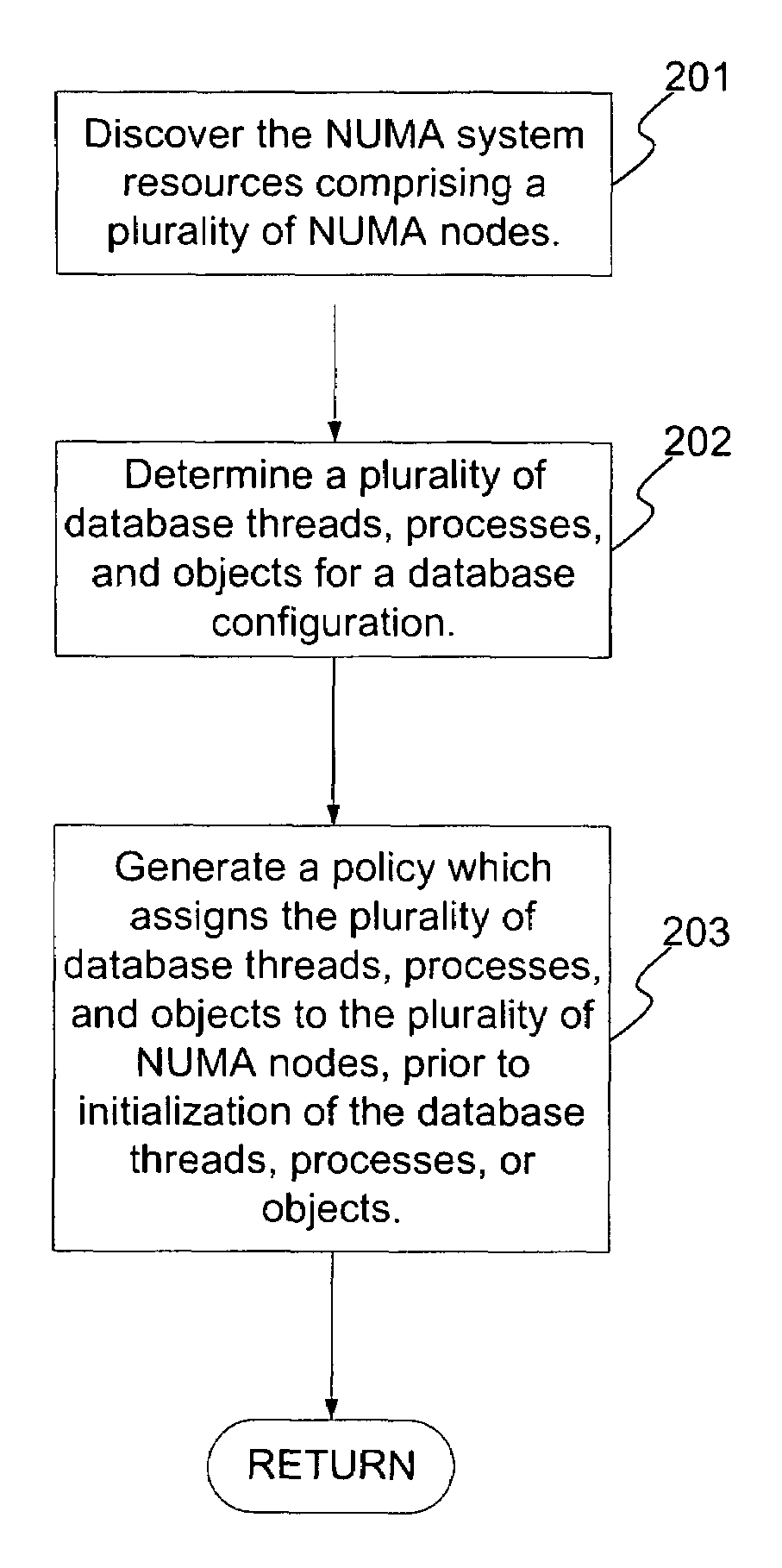

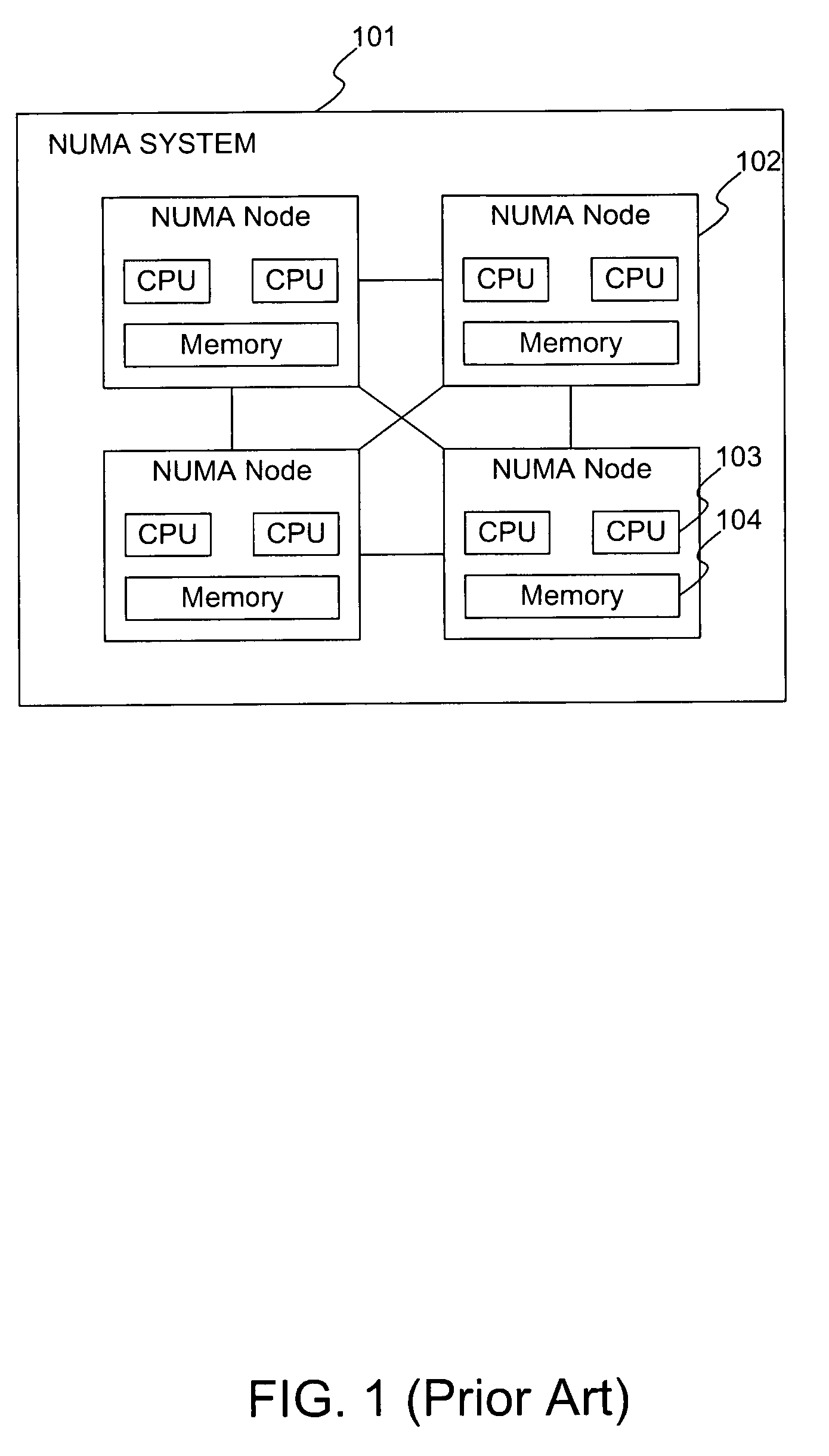

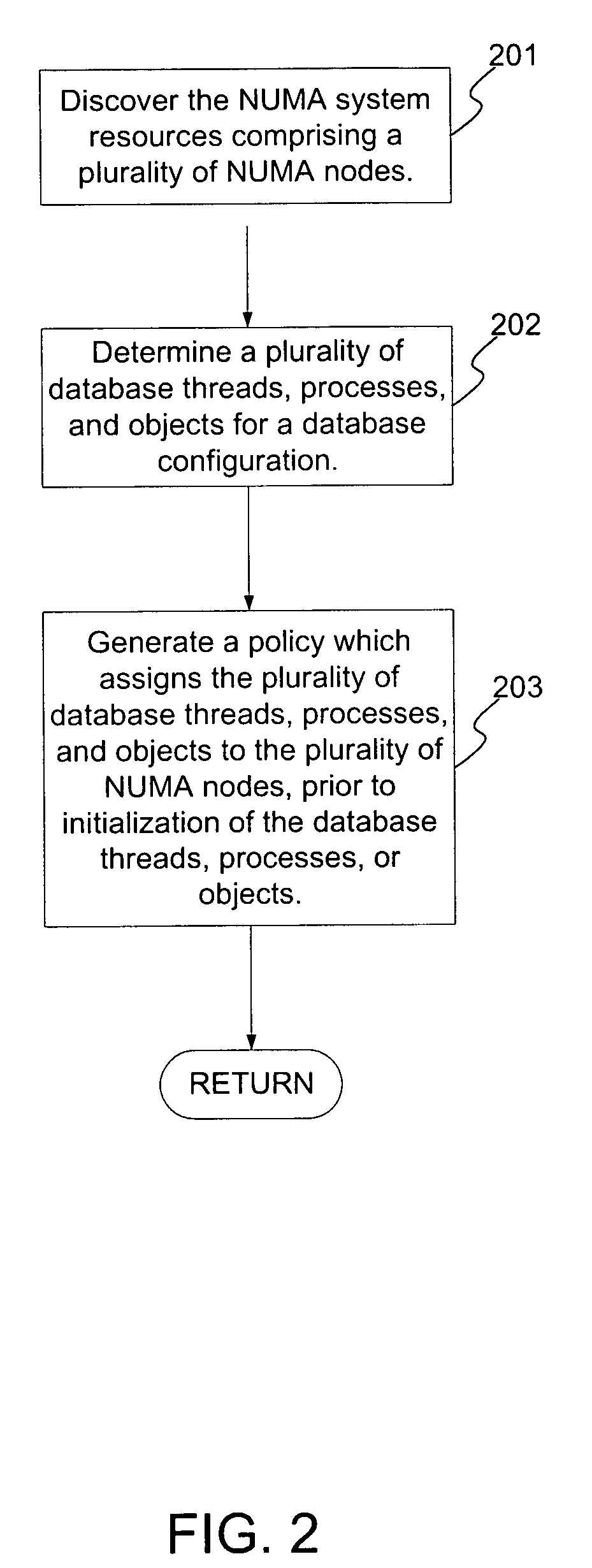

System and method for optimally configuring software systems for a NUMA platform

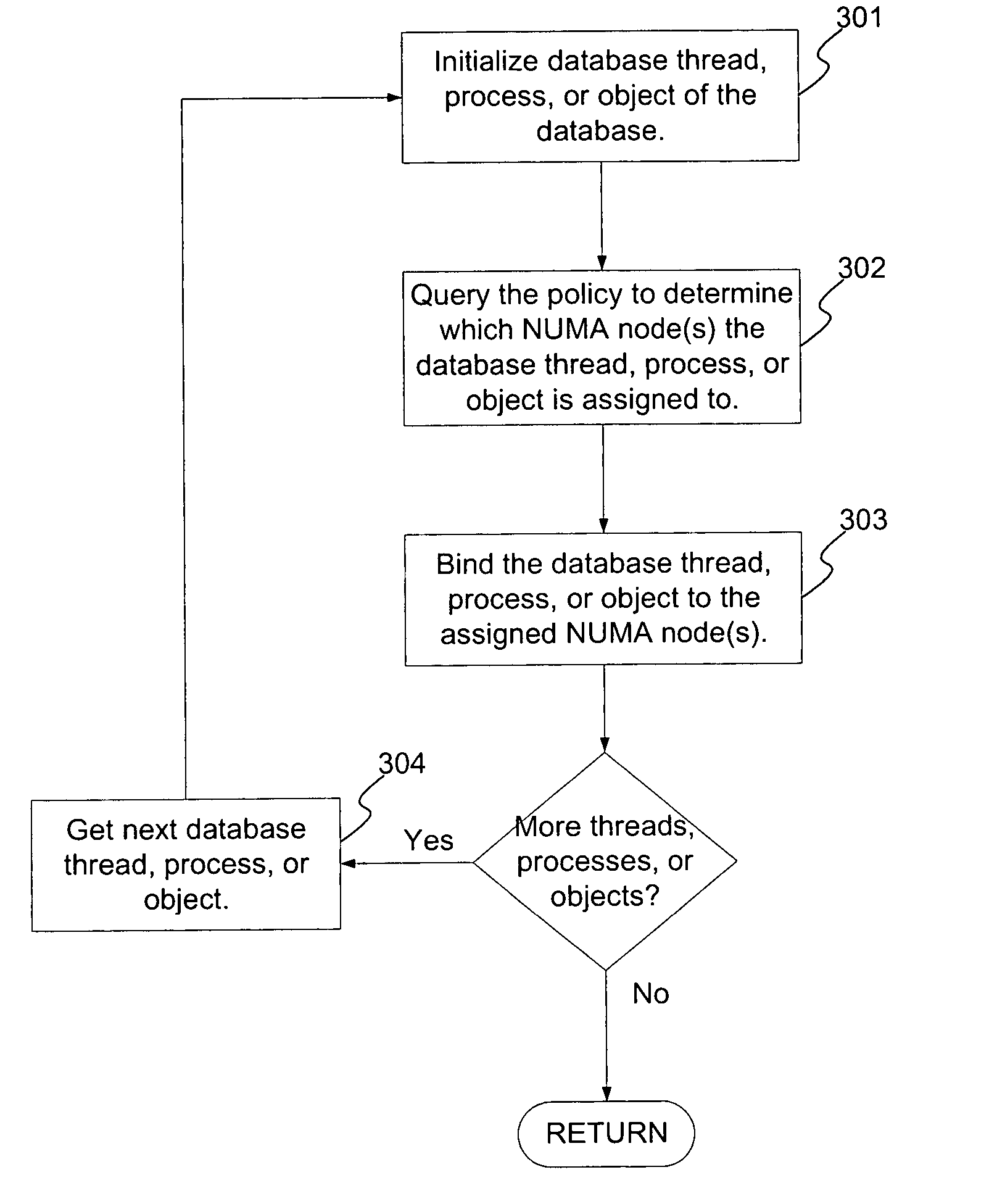

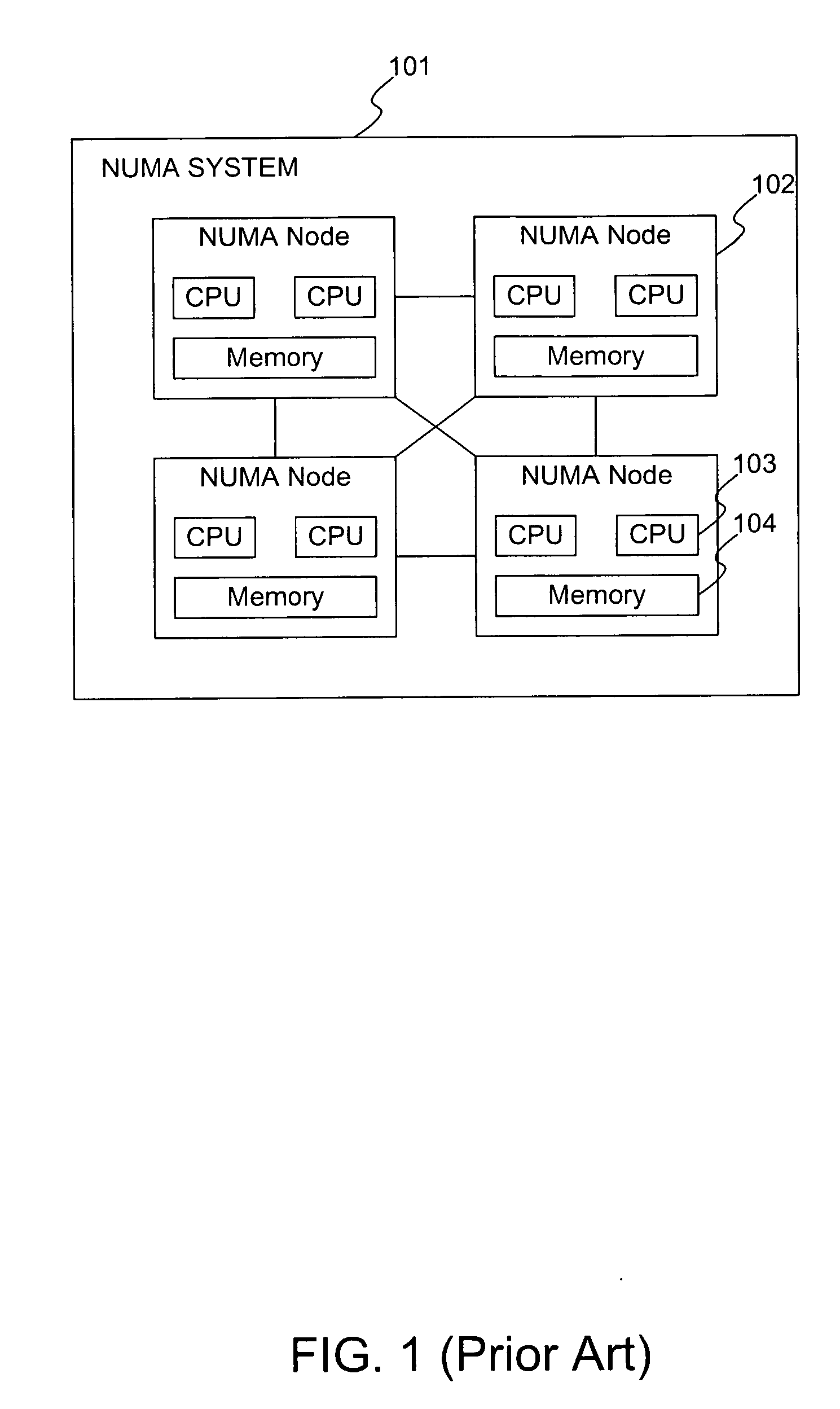

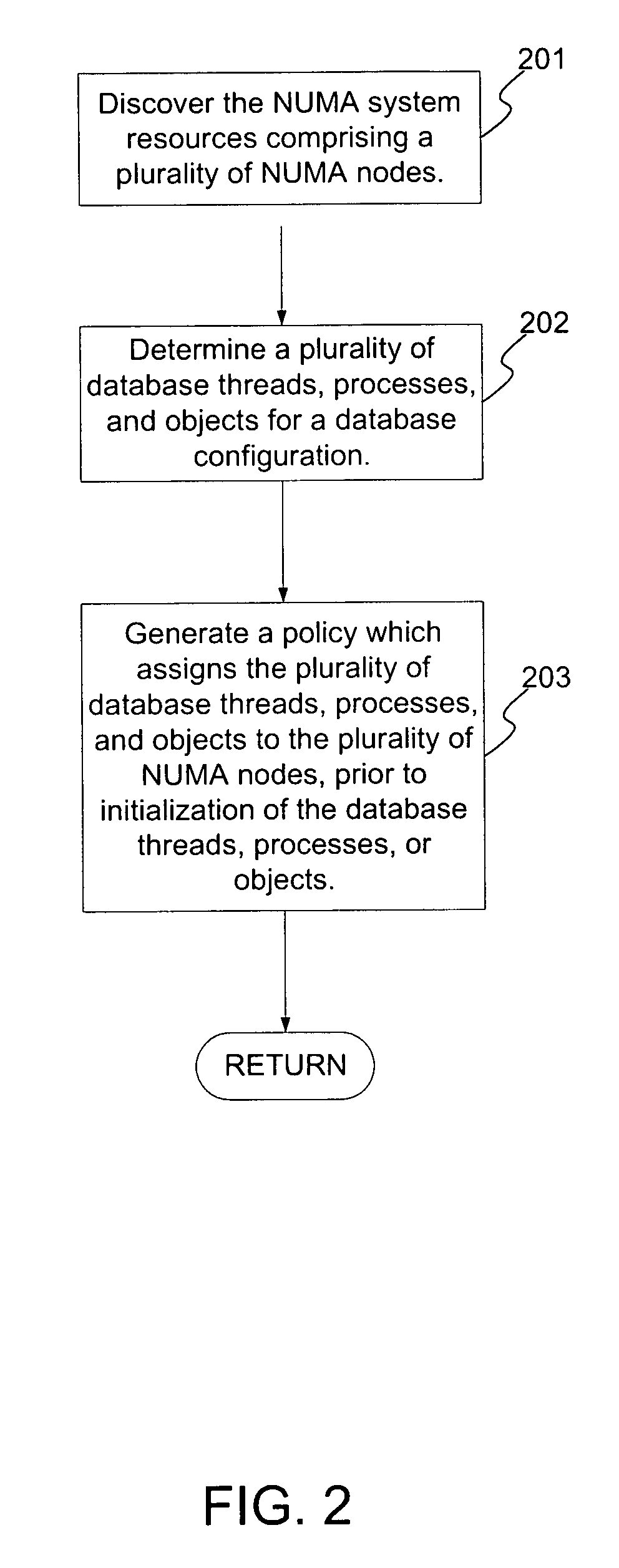

Active | US20060206489A1 | Expedites memory access | Reduce the amount required | Program control | Special data processing applications | Remote memory access | Software system

A method and system for improving the memory access patterns of software systems on NUMA systems discovers NUMA system resources, where the NUMA system resources comprise a plurality of NUMA nodes; determines a plurality of database threads, processes, and objects for a database configuration; and generates a policy that assigns the database threads, processes, and objects to the NUMA nodes, wherein the generating is performed prior to initialization of the database threads, processes, and objects. The database threads, processes, or objects are assigned to NUMA nodes such that the number of remote memory accesses is reduced. When a database thread, process, or object initializes, the database server queries the policy for its assigned NUMA node(s). The database thread, process, or object is then bound to the assigned NUMA node(s). In this manner, the costs of remote memory accesses are significantly reduced.

Owner:GOOGLE LLC

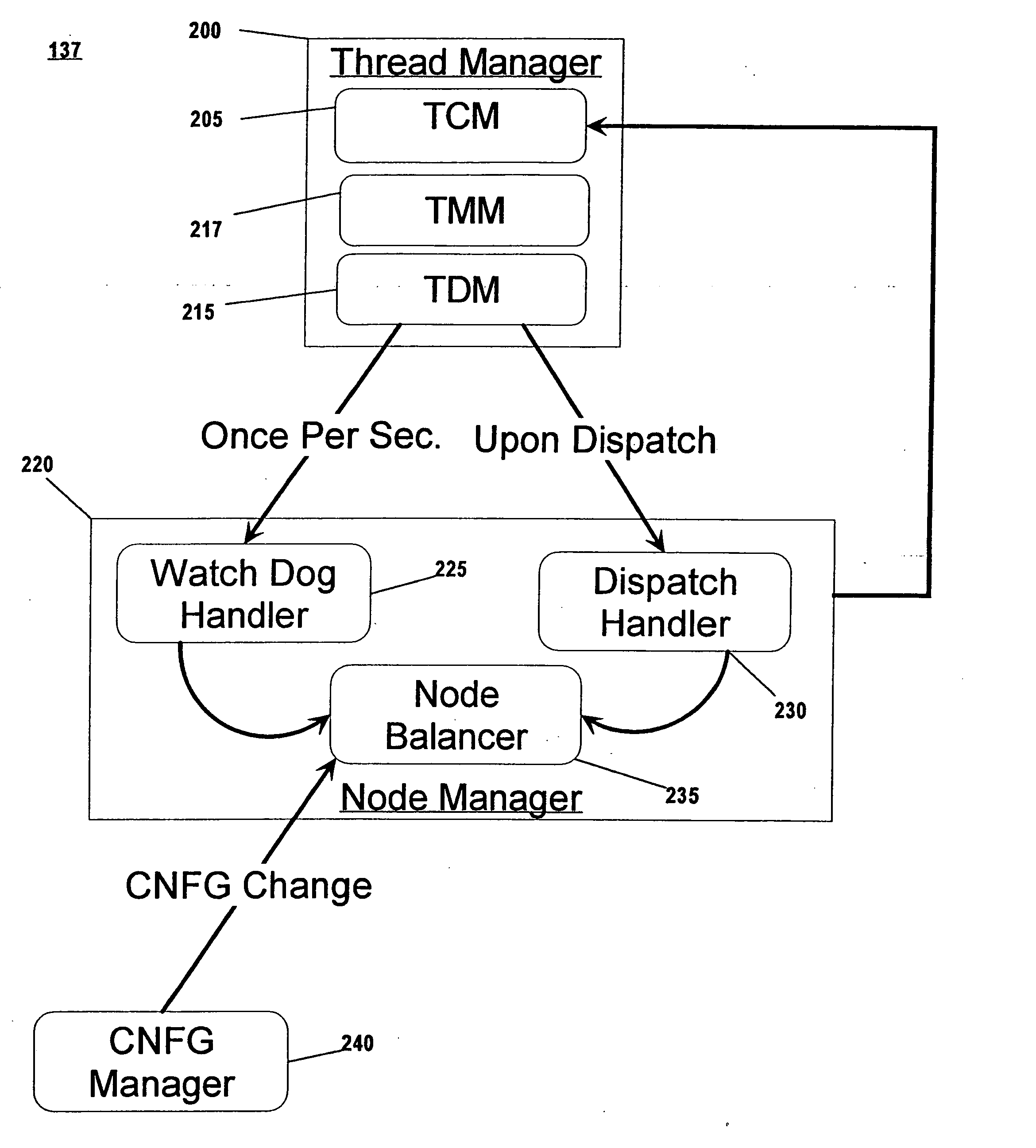

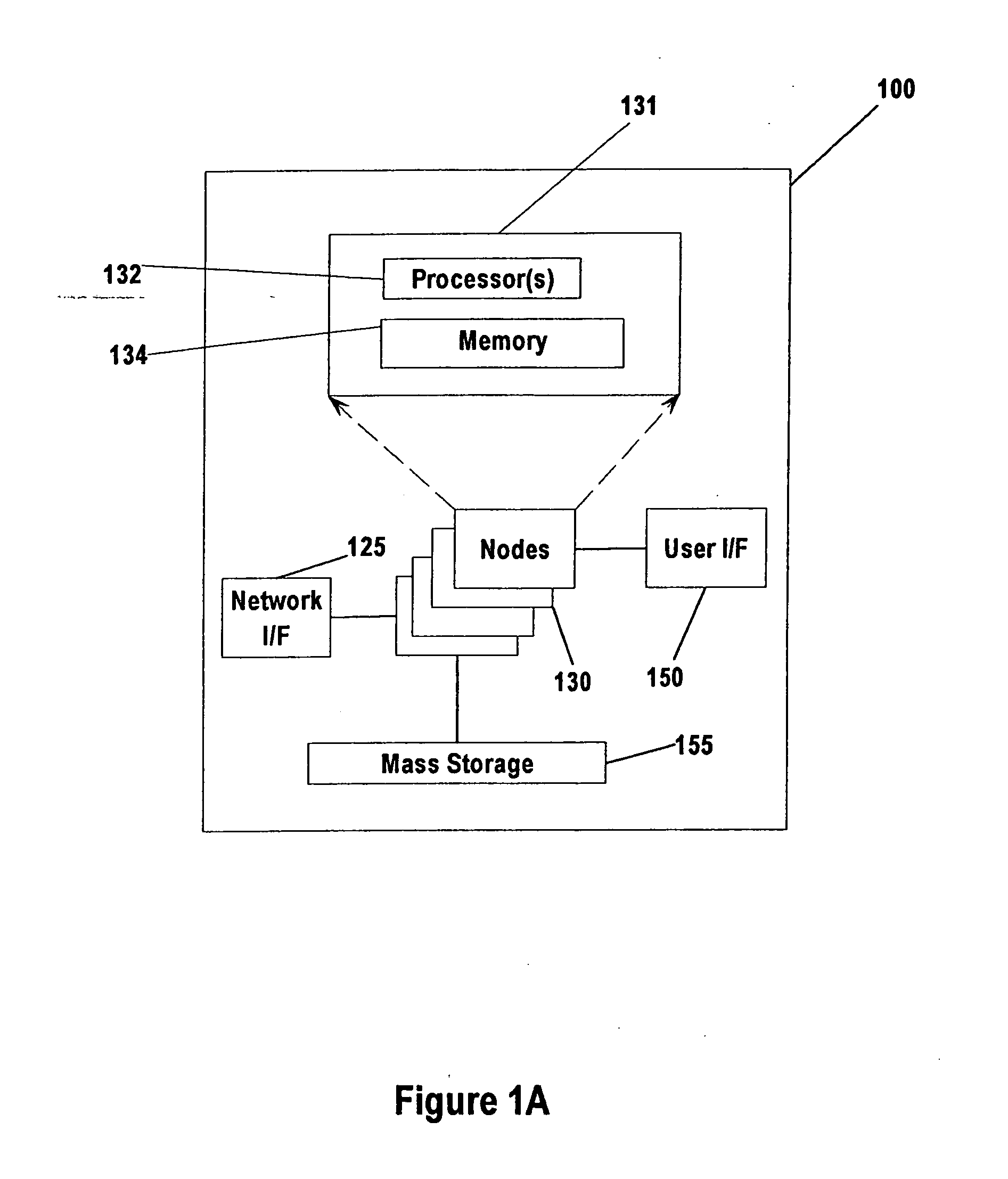

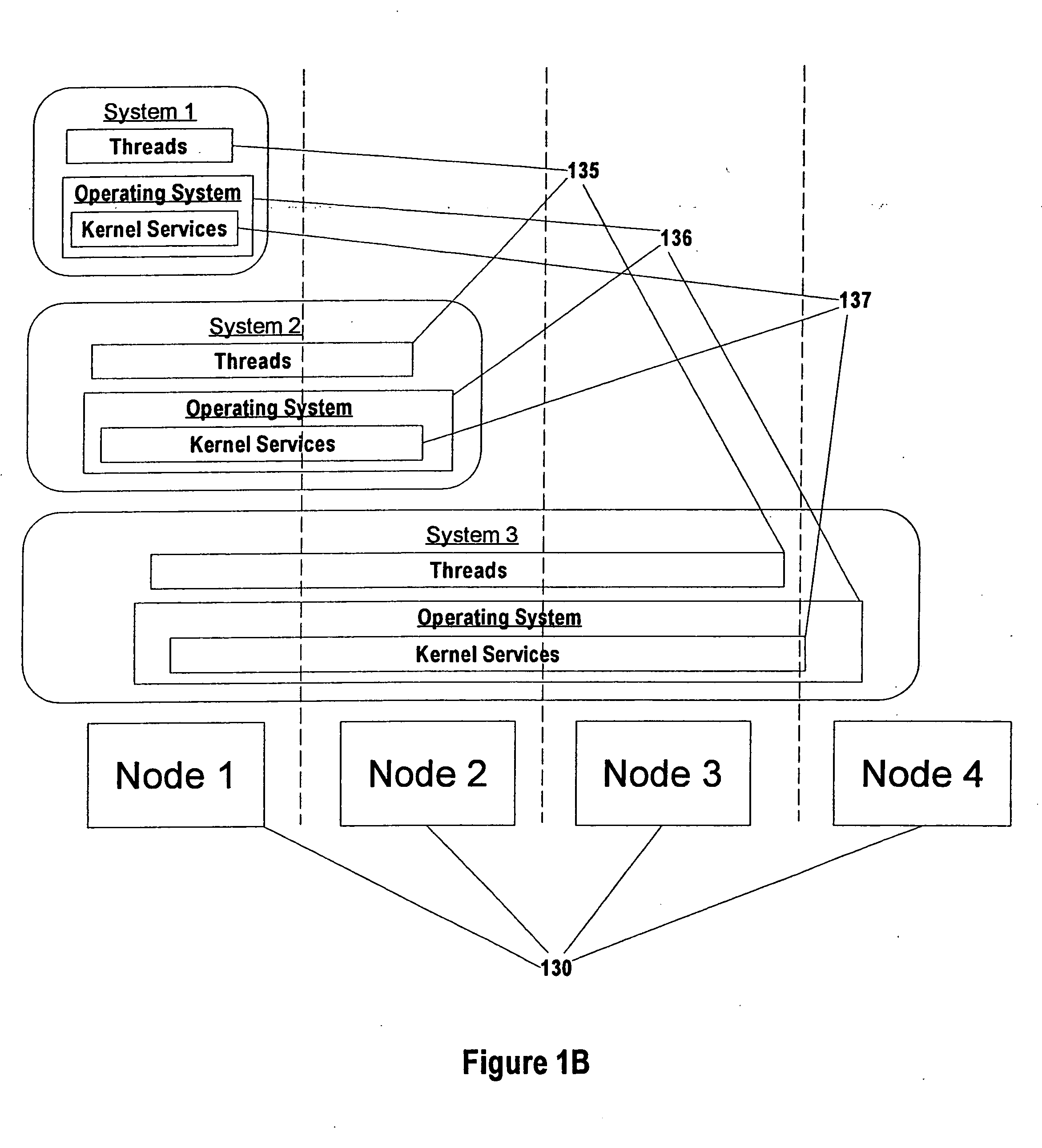

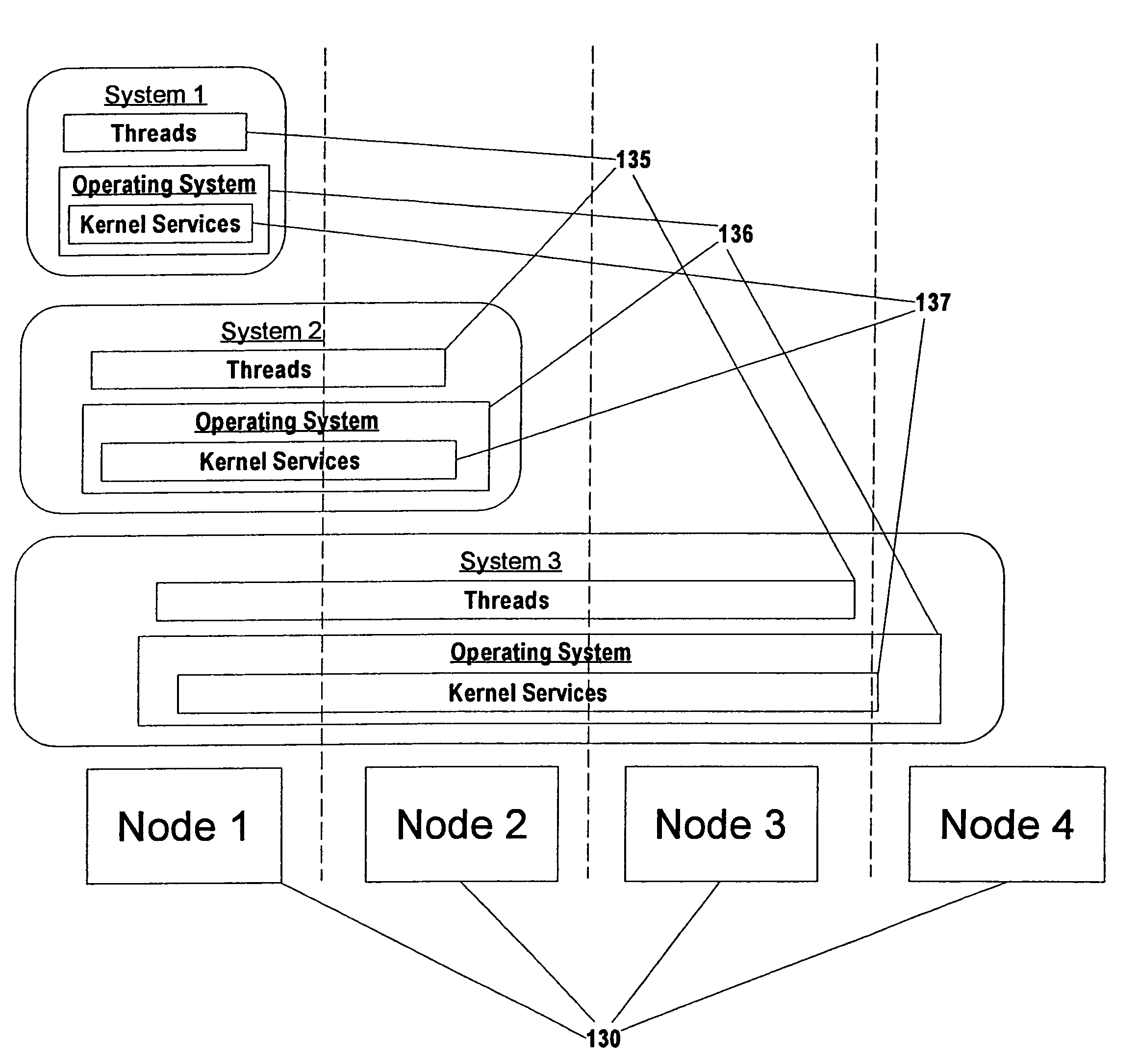

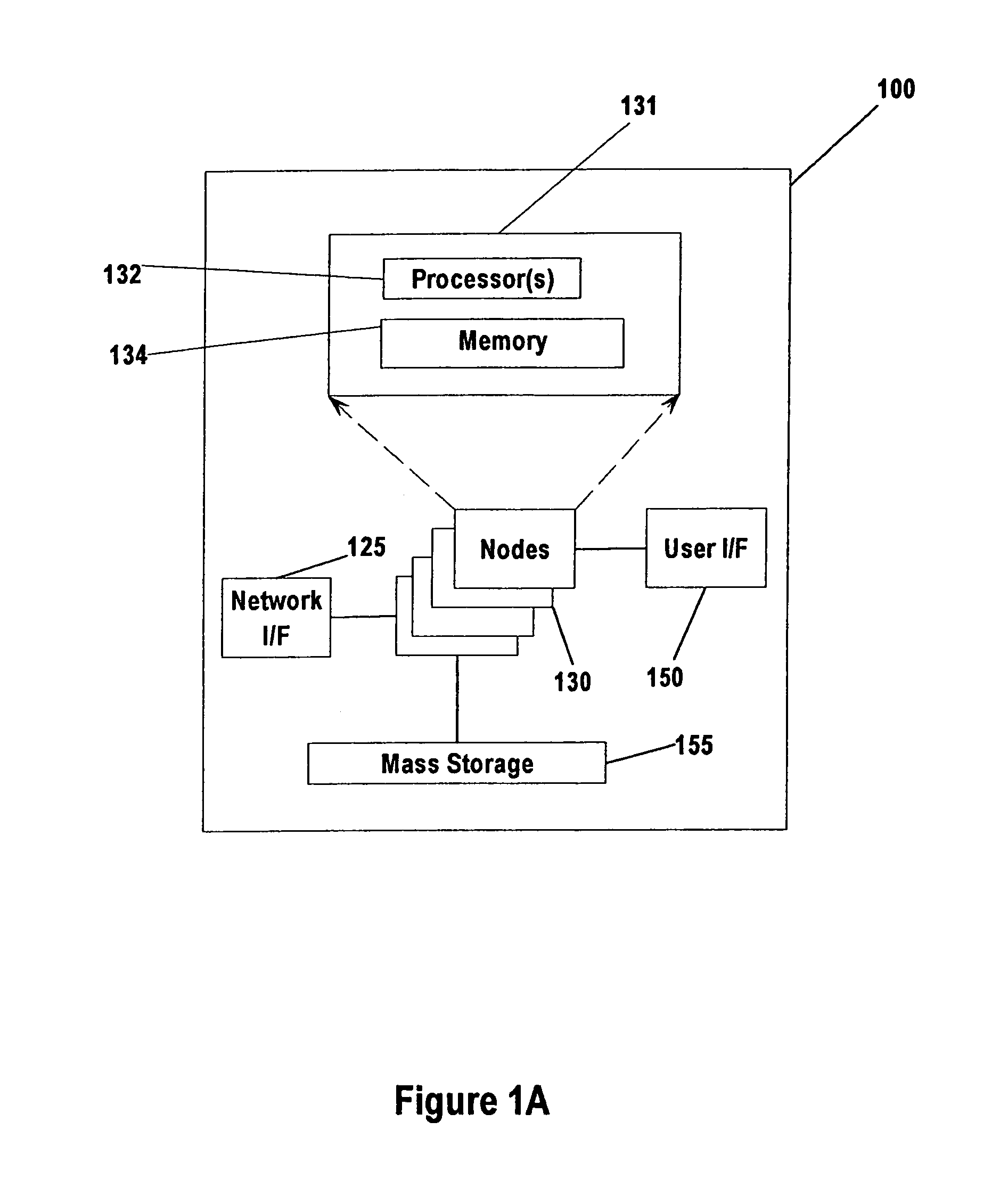

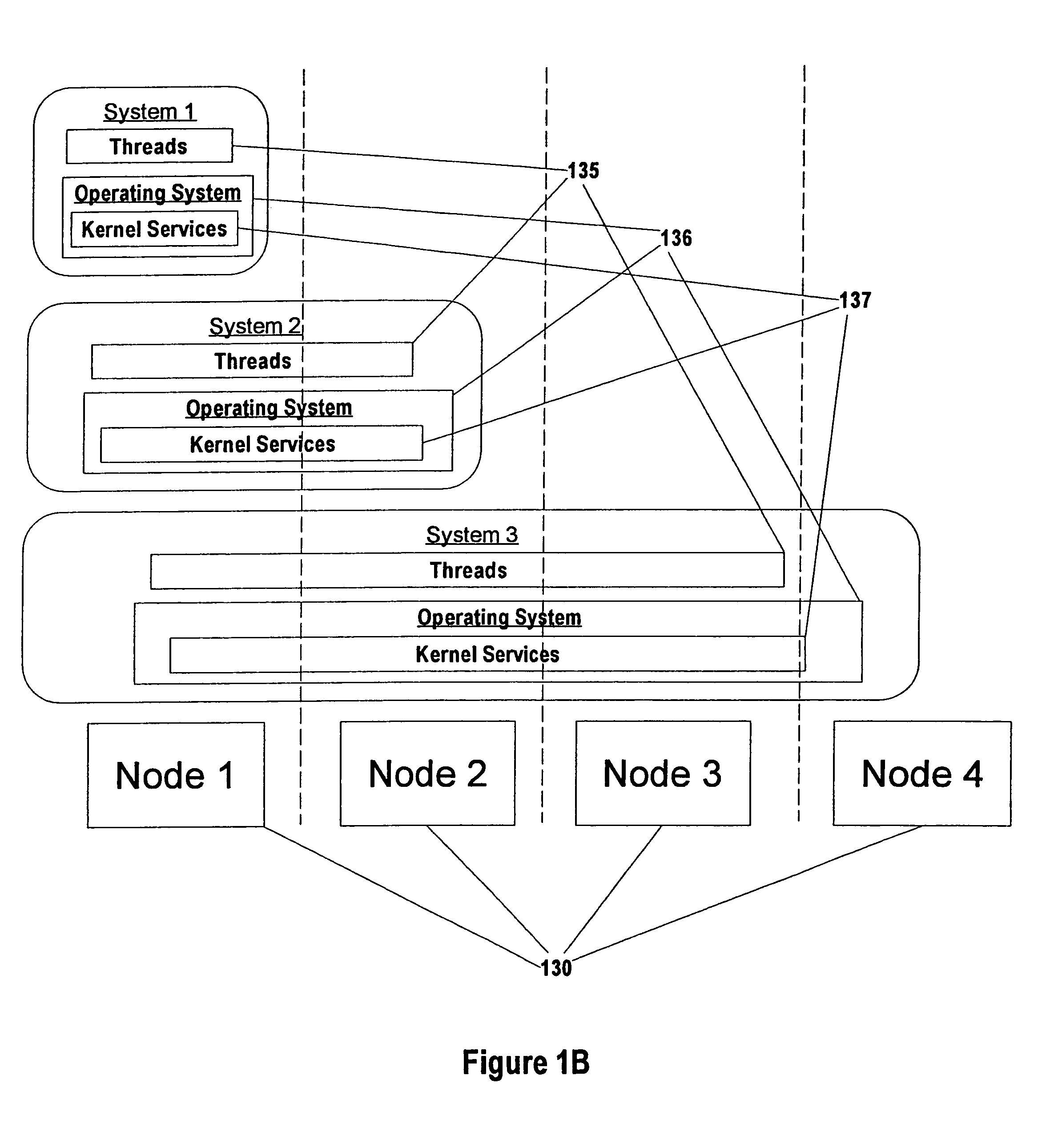

Mechanism for reducing remote memory accesses to shared data in a multi-nodal computer system

Inactive | US20050210468A1 | Program initiation/switching | Resource allocation | Remote memory access | Data access

Disclosed is an apparatus, method, and program product for identifying and grouping threads that have interdependent data access needs. The preferred embodiment of the present invention utilizes two different constructs to accomplish this grouping. A Memory Affinity Group (MAG) is disclosed. The MAG construct enables multiple threads to be associated with the same node without any foreknowledge of which threads will be involved in the association, and without any control over the particular node with which they are associated. A Logical Node construct is also disclosed. The Logical Node construct enables multiple threads to be associated with the same specified node without any foreknowledge of which threads will be involved in the association. While logical nodes do not explicitly identify the underlying physical nodes comprising the system, they provide a means of associating particular threads with the same node and other threads with other node(s).

Owner:IBM CORP

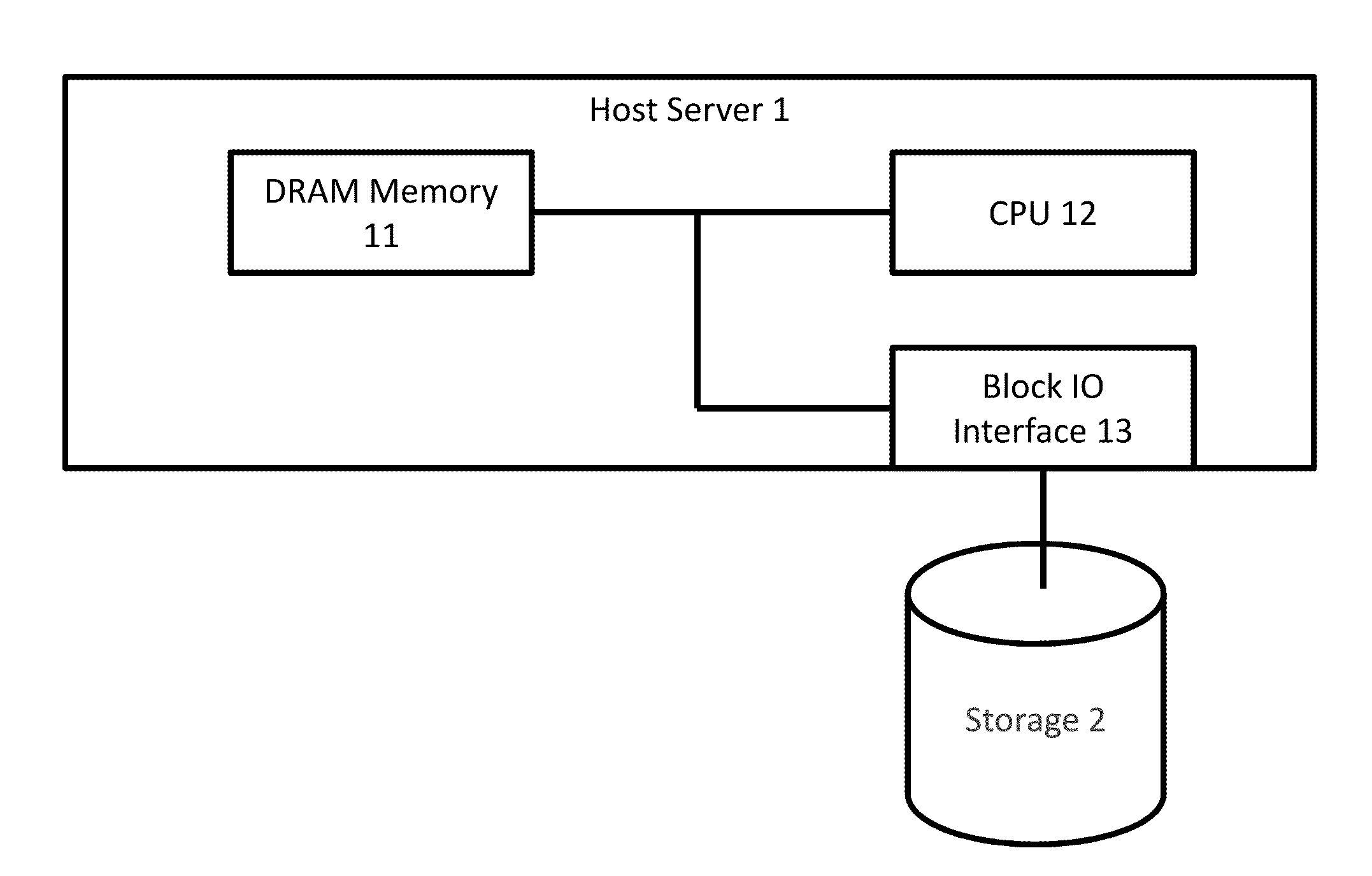

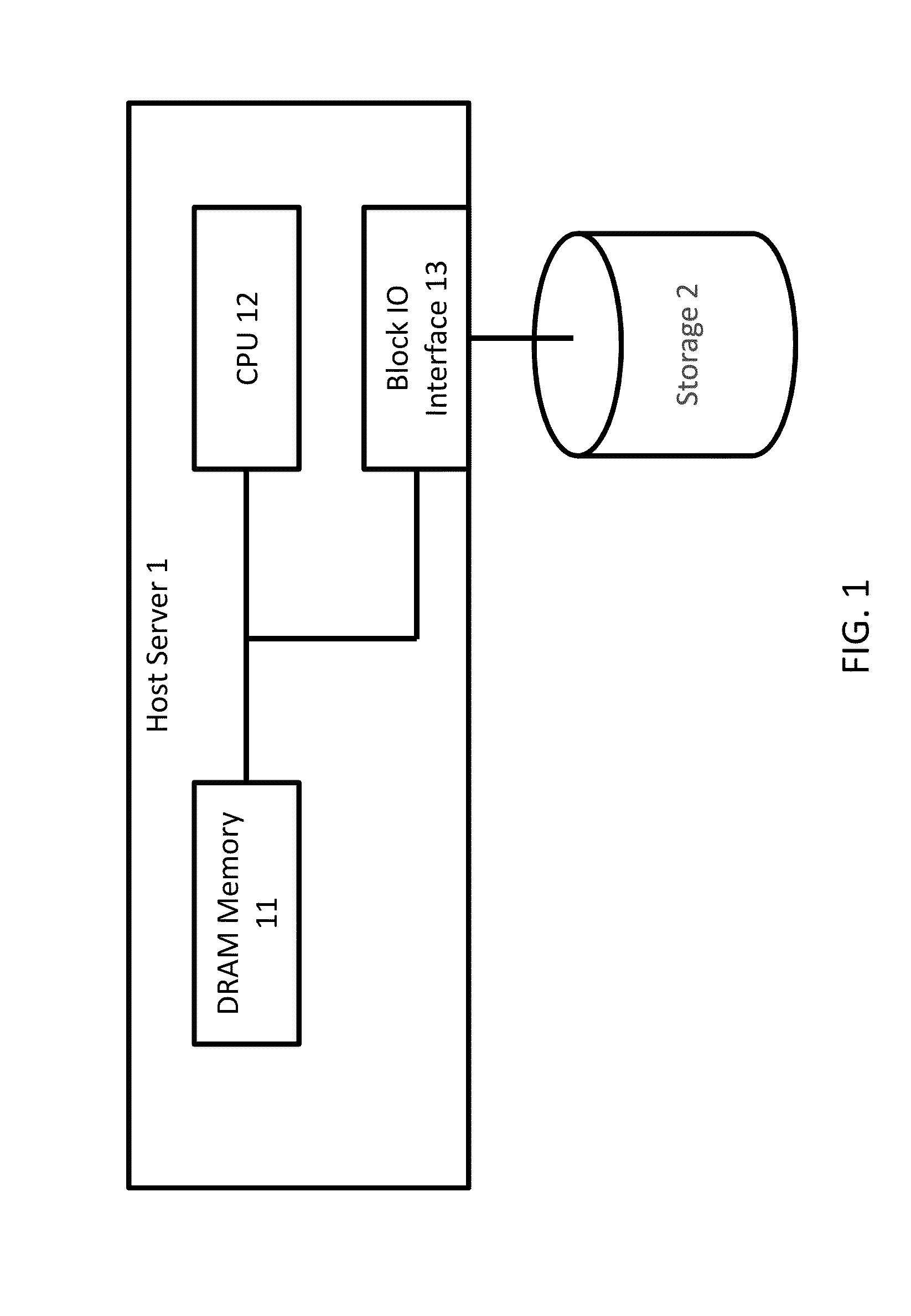

Hierarchy memory management

Active | US20140089585A1 | Improve scalability | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Remote memory access | Operational system

In one embodiment, a storage system comprises: a first type interface being operable to communicate with a server using a remote memory access; a second type interface being operable to communicate with the server using a block I/O (input/output) access; a memory; and a controller being operable to manage (1) a first portion of storage areas of the memory to allocate for storing data, which is to be stored in a physical address space managed by an operating system on the server and which is sent from the server via the first type interface, and (2) a second portion of the storage areas of the memory to allocate for caching data, which is sent from the server to a logical volume of the storage system via the second type interface and which is to be stored in a storage device of the storage system corresponding to the logical volume.

Owner:HITACHI LTD

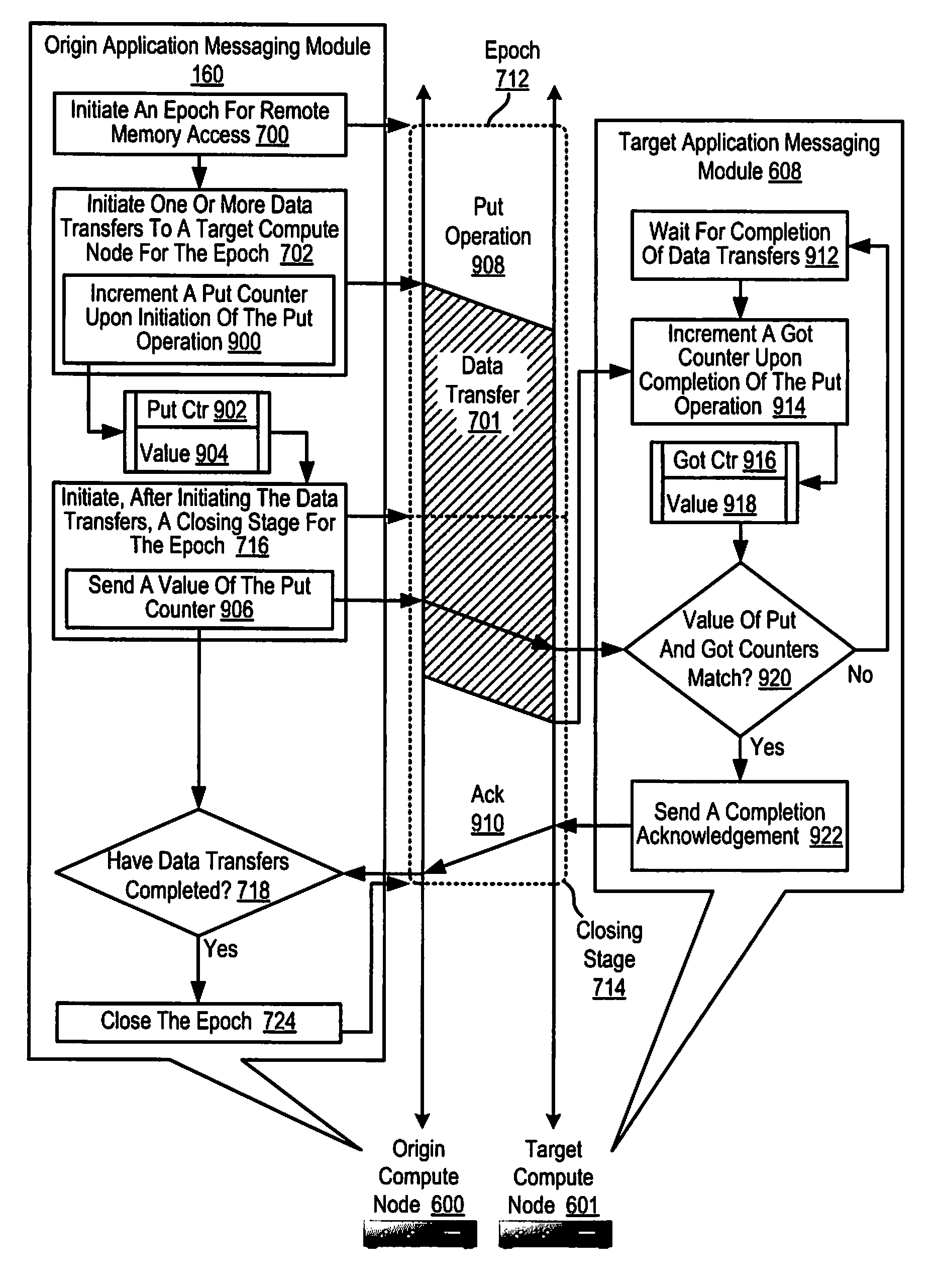

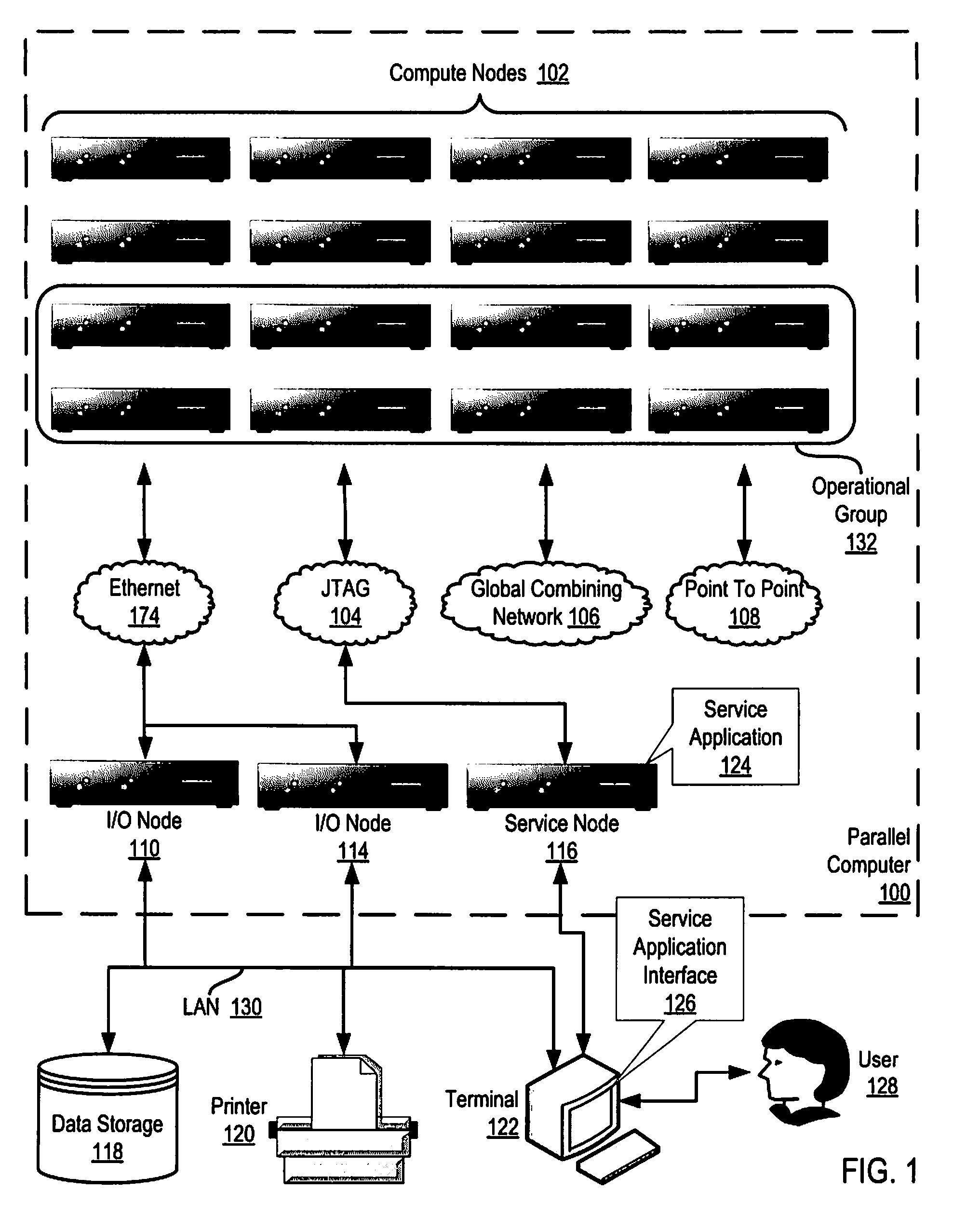

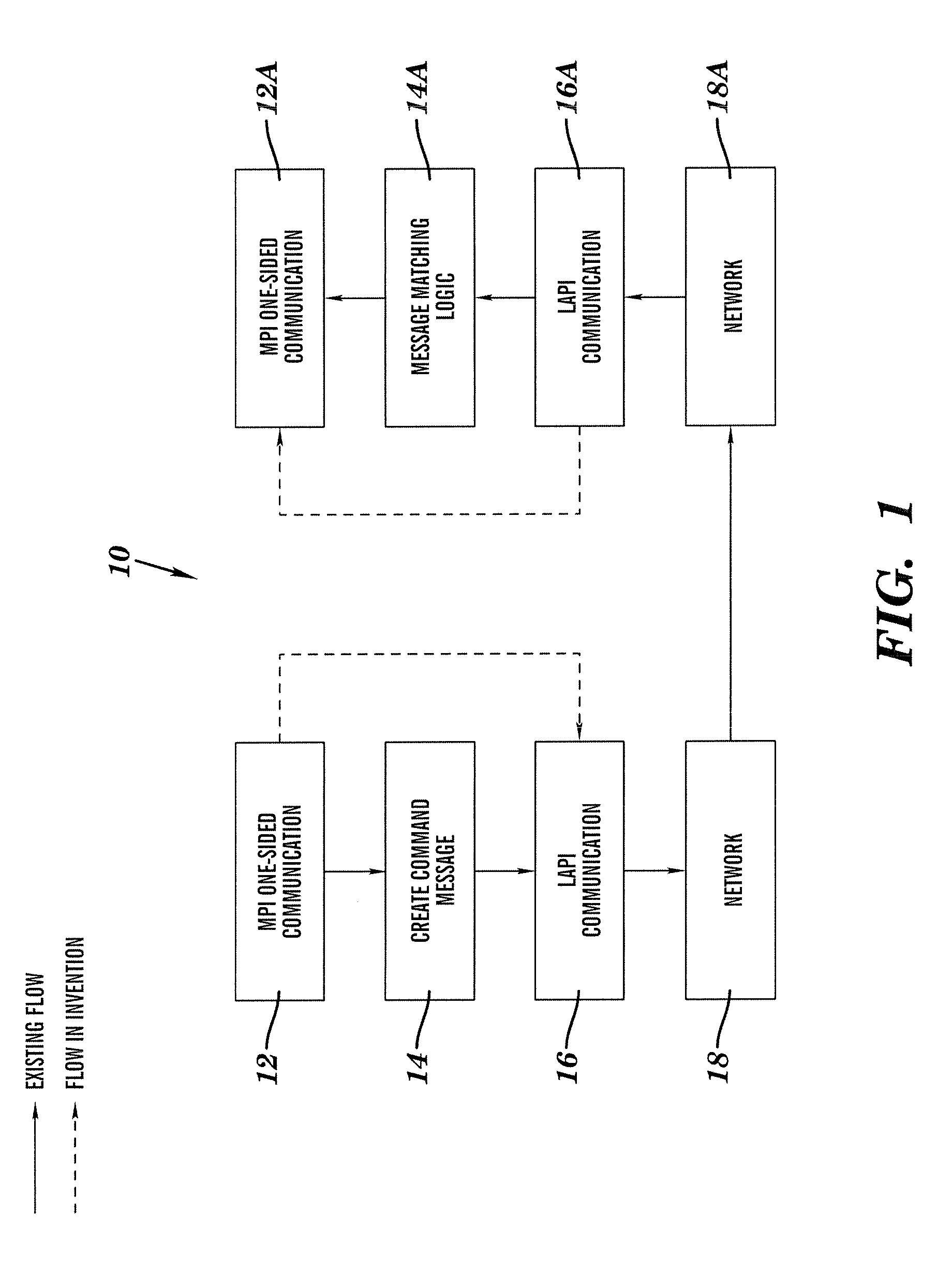

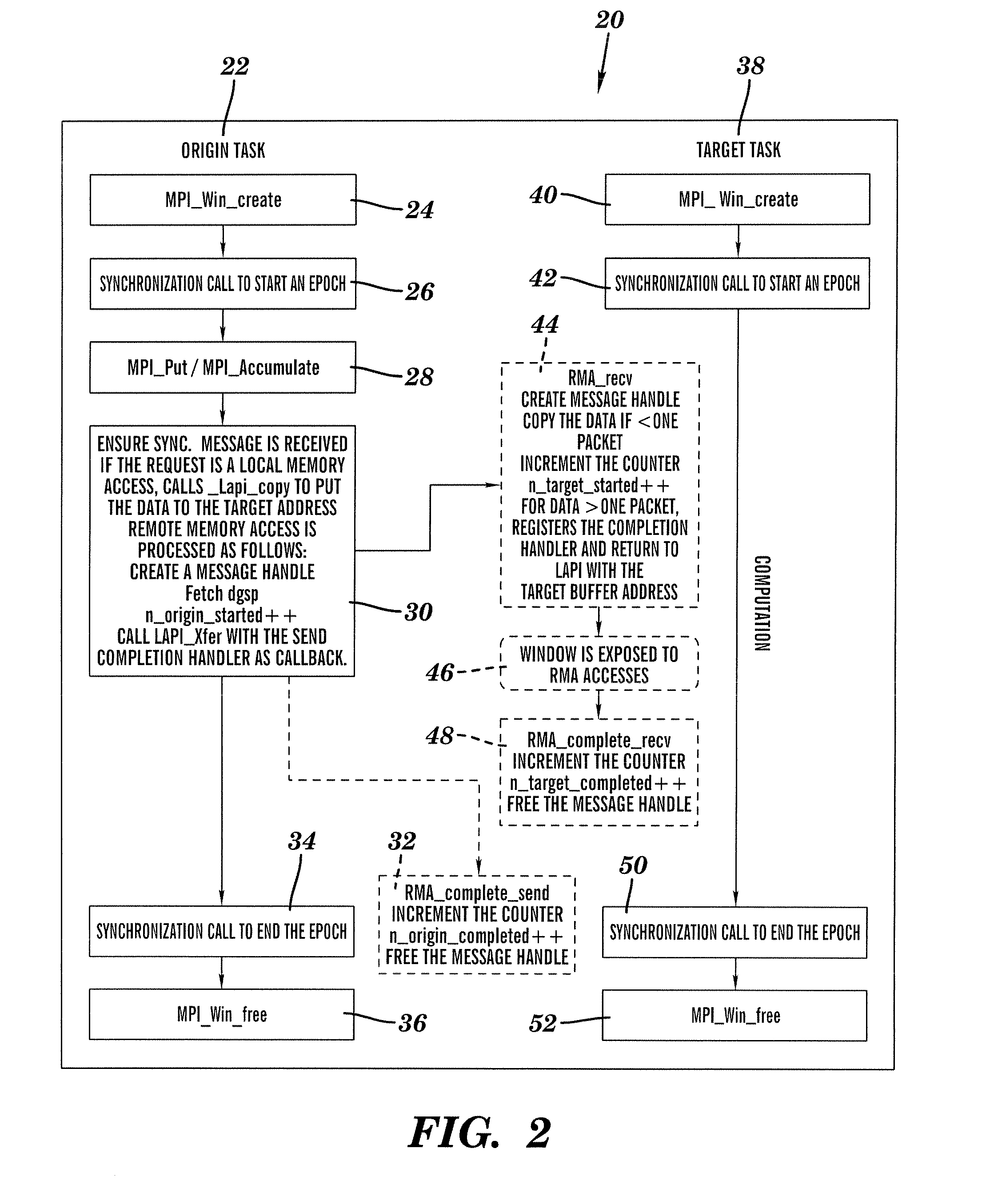

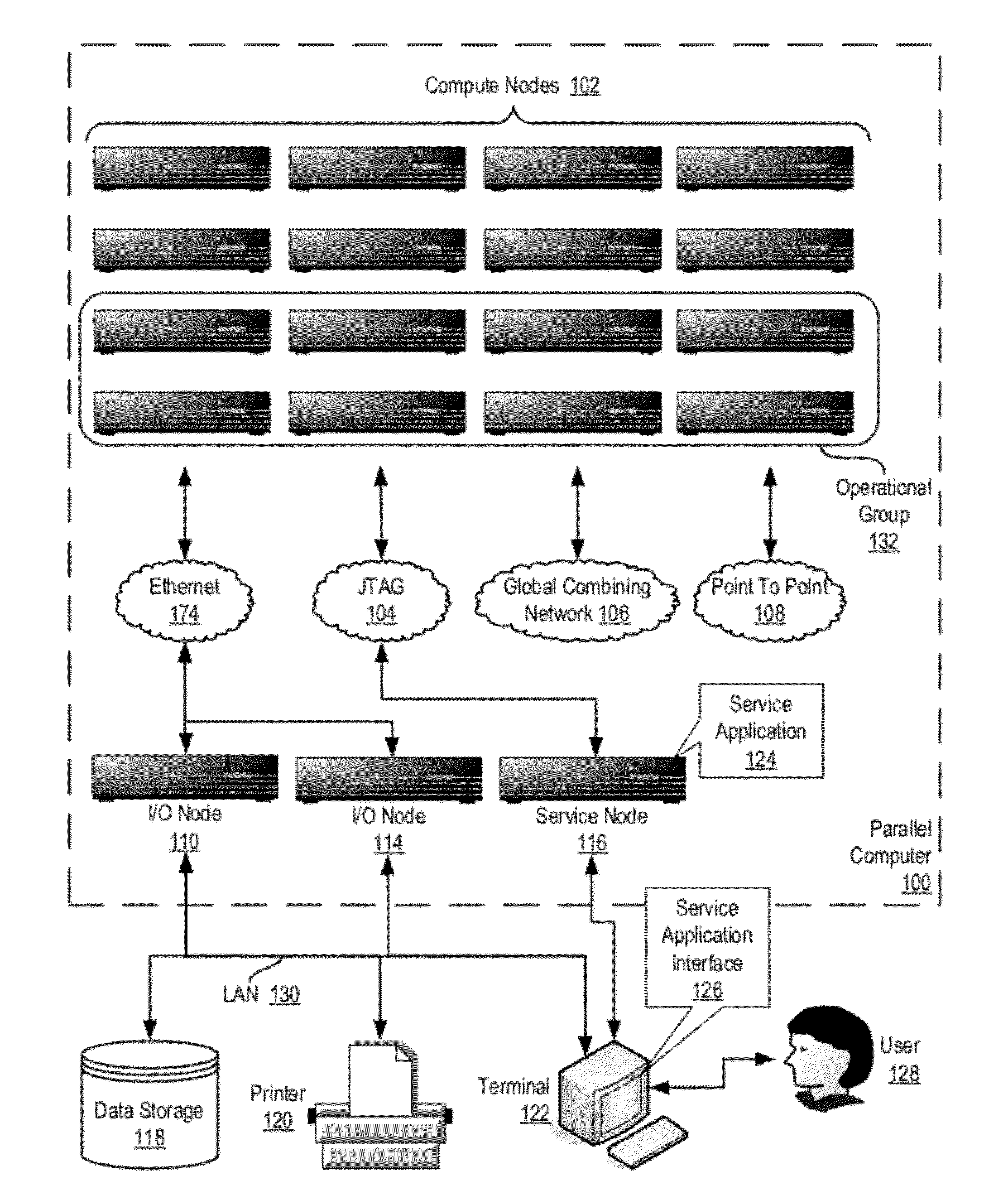

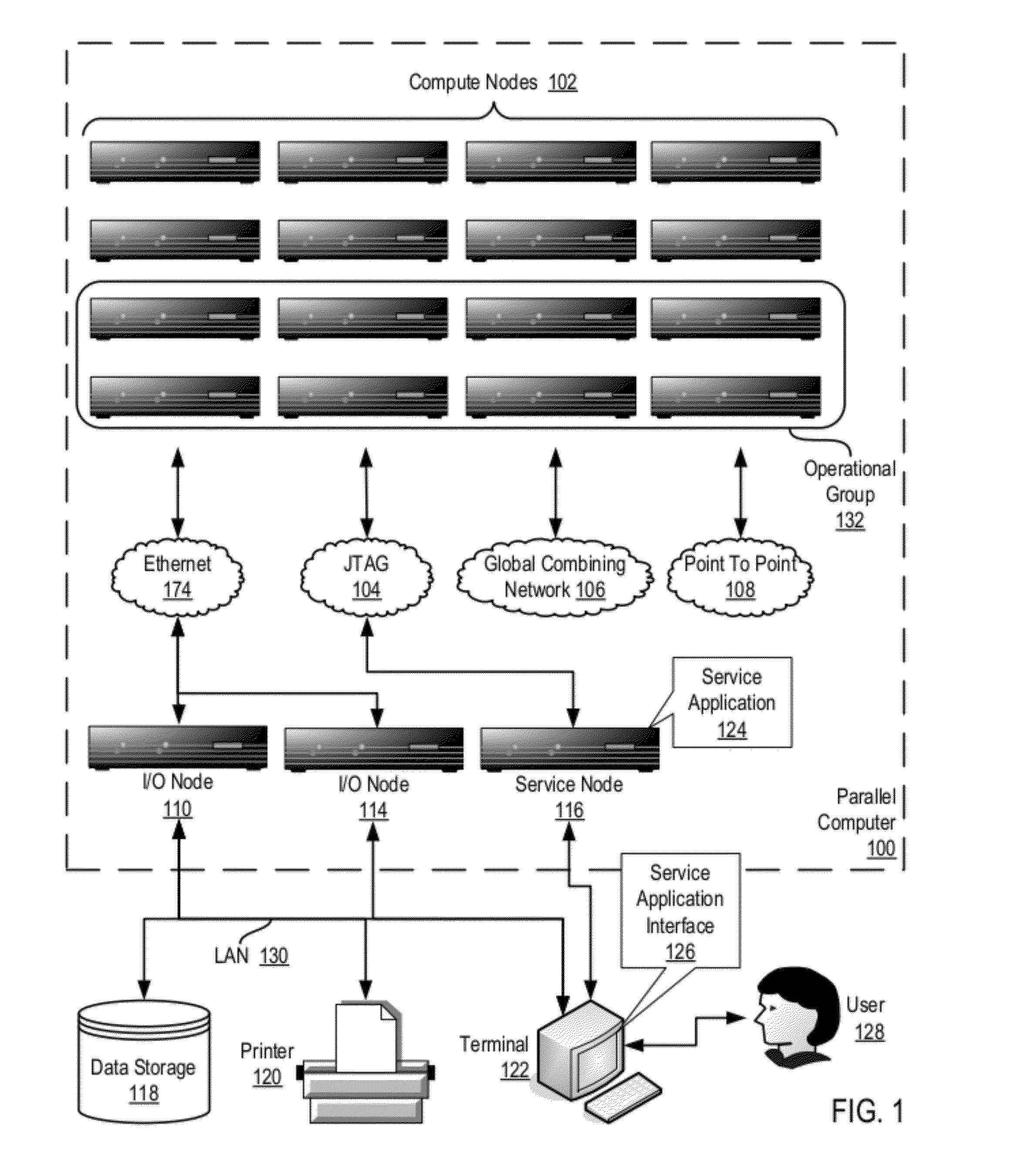

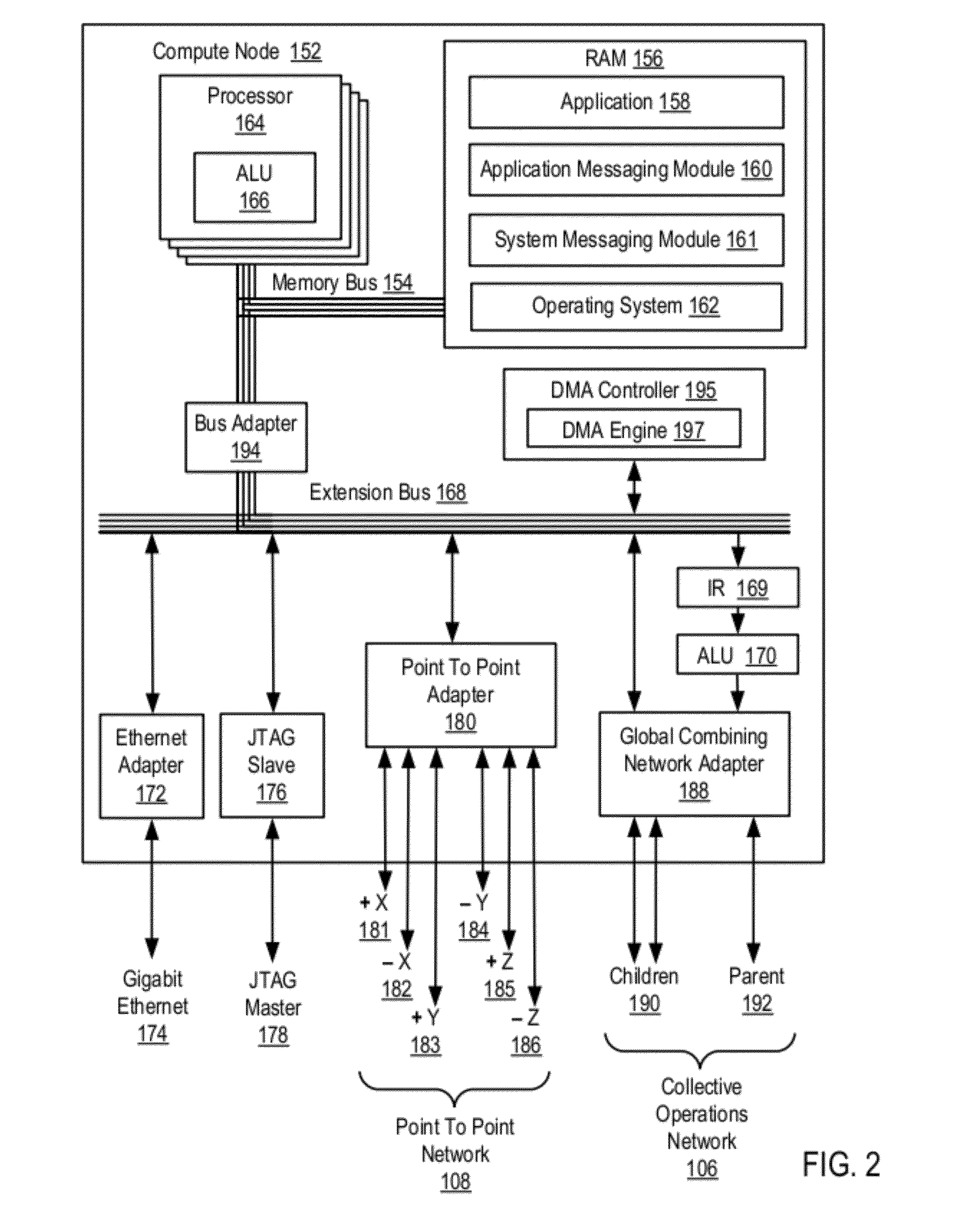

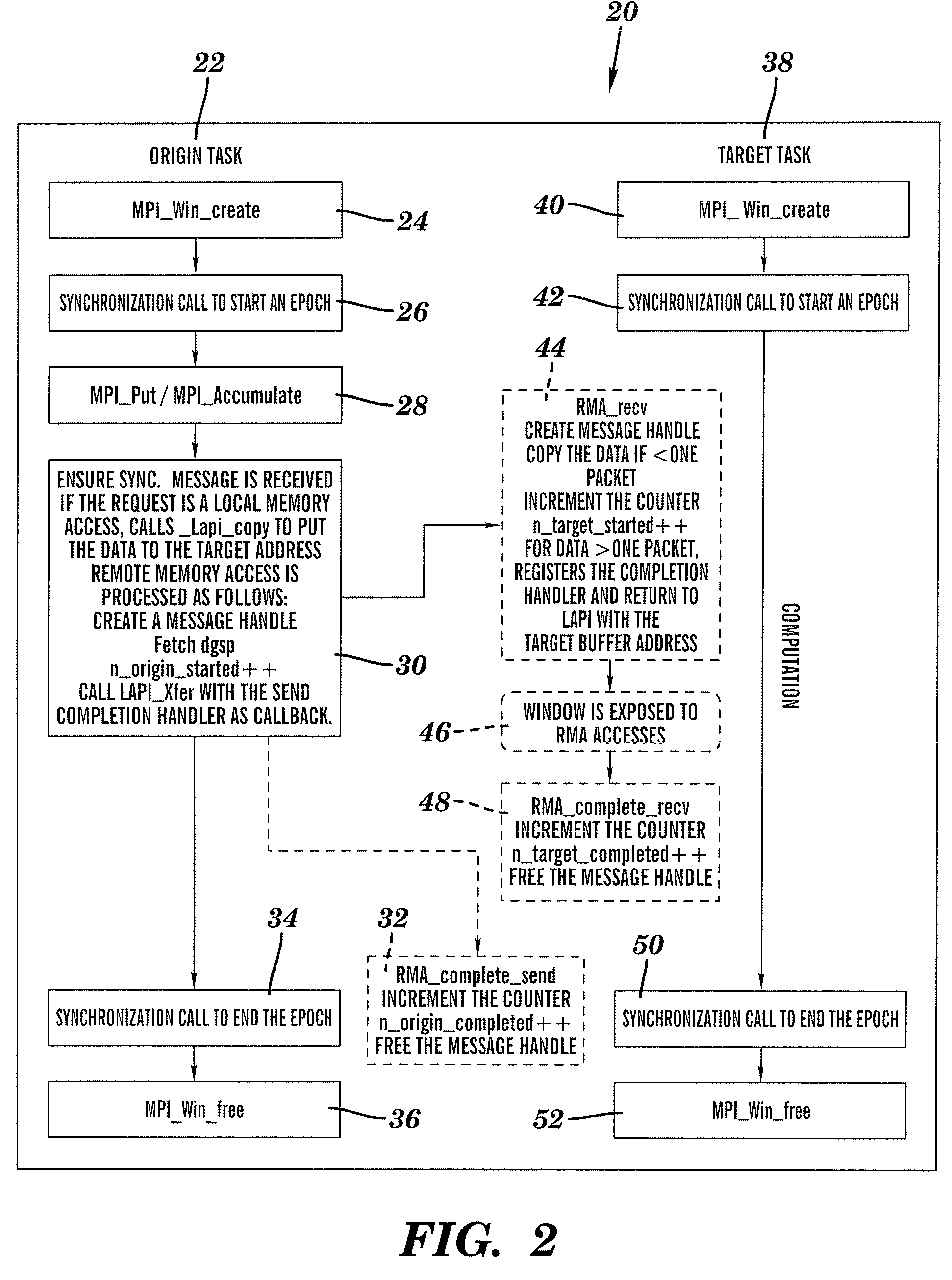

Administering an Epoch Initiated for Remote Memory Access

Inactive | US20080313661A1 | Program synchronisation | Multiple digital computer combinations | Remote memory access | Application software

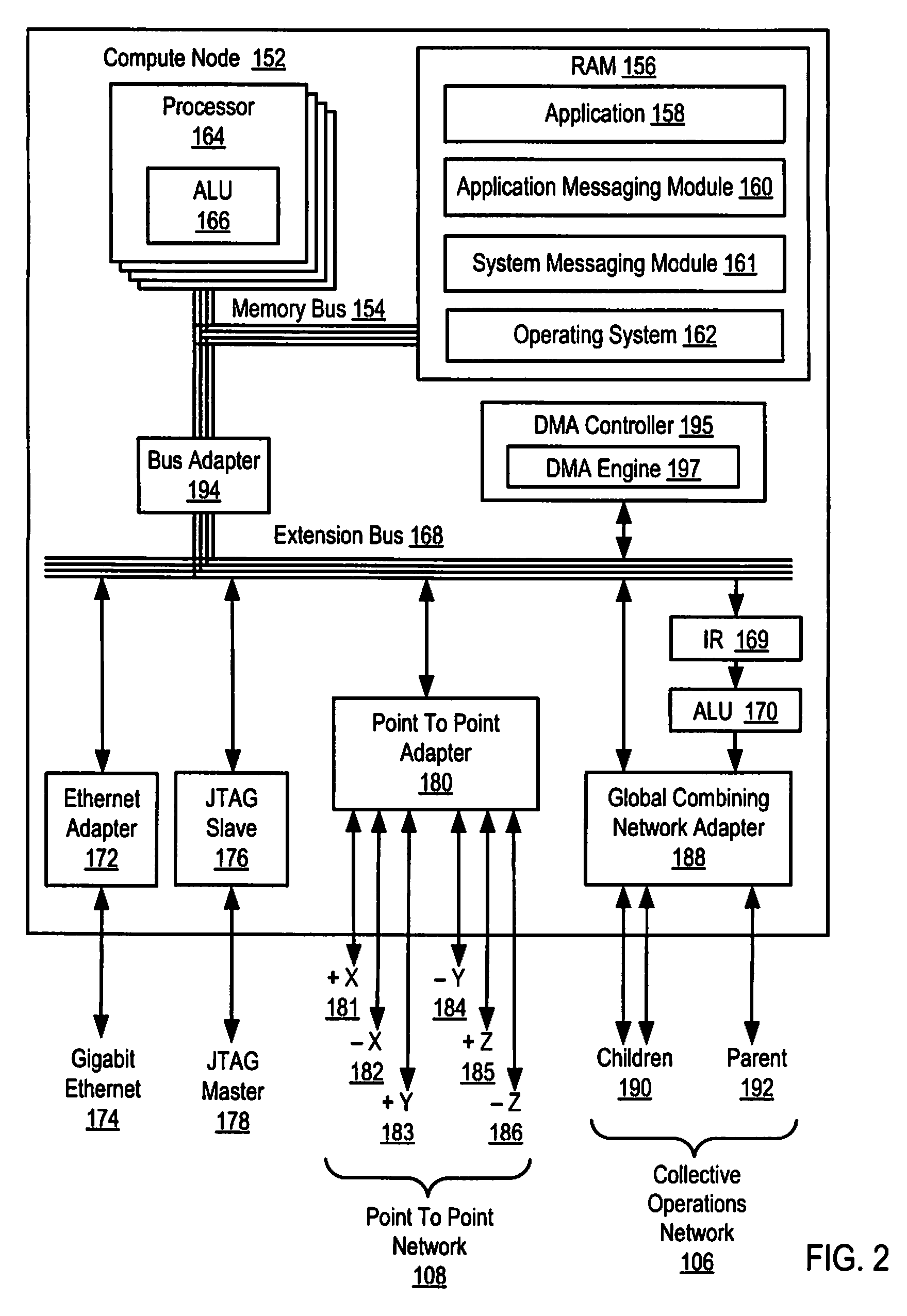

Methods, systems, and products are disclosed for administering an epoch initiated for remote memory access that include: initiating, by an origin application messaging module on an origin compute node, one or more data transfers to a target compute node for the epoch; initiating, by the origin application messaging module after initiating the data transfers, a closing stage for the epoch, including rejecting any new data transfers after initiating the closing stage for the epoch; determining, by the origin application messaging module, whether the data transfers have completed; and closing, by the origin application messaging module, the epoch if the data transfers have completed.

Owner:IBM CORP
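
The epoch lifecycle in this abstract (initiate transfers, enter a closing stage that rejects new transfers, close once outstanding transfers complete) can be modelled as a small state machine. The sketch below is an assumption-laden illustration: the `Epoch` class, its method names, and the integer transfer IDs are hypothetical and are not taken from the patent or from any messaging library.

```python
class Epoch:
    """Hypothetical model of an RMA epoch on the origin compute node."""

    def __init__(self):
        self.state = "open"     # one of: open, closing, closed
        self.pending = set()    # IDs of transfers not yet completed
        self._next_id = 0

    def start_transfer(self):
        """Initiate a data transfer; rejected once the closing stage has begun."""
        if self.state != "open":
            raise RuntimeError("epoch is closing or closed; transfer rejected")
        transfer_id = self._next_id
        self._next_id += 1
        self.pending.add(transfer_id)
        return transfer_id

    def complete_transfer(self, transfer_id):
        """Record that a previously initiated transfer has completed."""
        self.pending.discard(transfer_id)

    def begin_close(self):
        """Enter the closing stage; new transfers are rejected from now on."""
        if self.state == "open":
            self.state = "closing"

    def try_close(self):
        """Close the epoch only if every initiated transfer has completed."""
        if self.state == "closing" and not self.pending:
            self.state = "closed"
        return self.state == "closed"
```

A caller would typically invoke `try_close()` repeatedly (or on each completion event) after `begin_close()`, since the abstract only closes the epoch once the data transfers have completed.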

System and method for sharing memory among multiple storage device controllers

Inactive | US6795850B2 | Memory addressing/allocation/relocation | Digital computer details | Remote memory access | Message type

Each node's memory controller may be configured to send and receive messages on a dedicated memory-to-memory interconnect according to the communication protocol and to responsively perform memory accesses in a local memory. The type of message sent on the interconnect may depend on which memory region is targeted by a memory access request local to the sending node. If certain regions are targeted locally, a memory controller may delay performance of a local memory access until the memory access has been performed remotely. Remote nodes may confirm performance of the remote memory accesses via the memory-to-memory interconnect.

Owner:ORACLE INT CORP

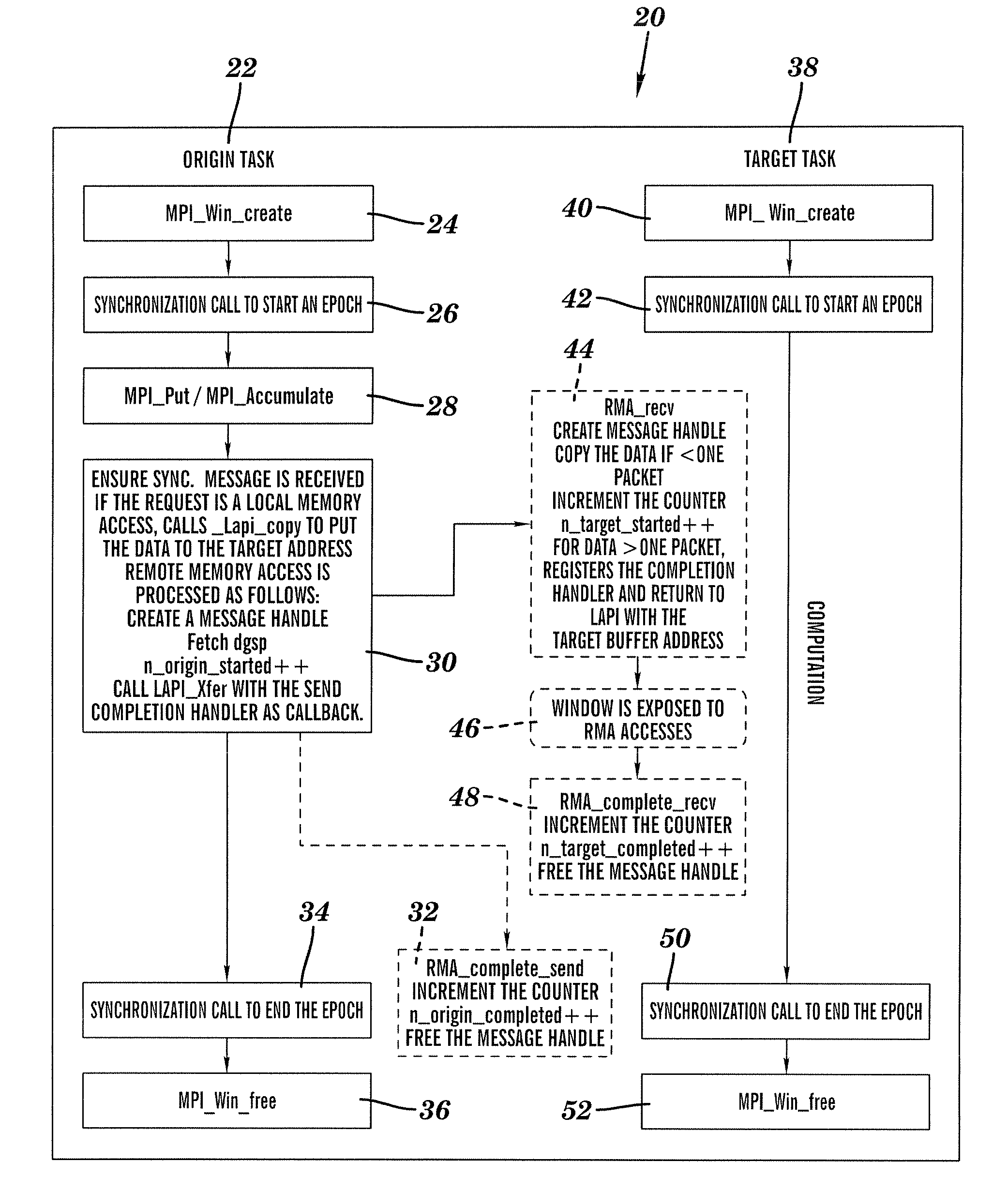

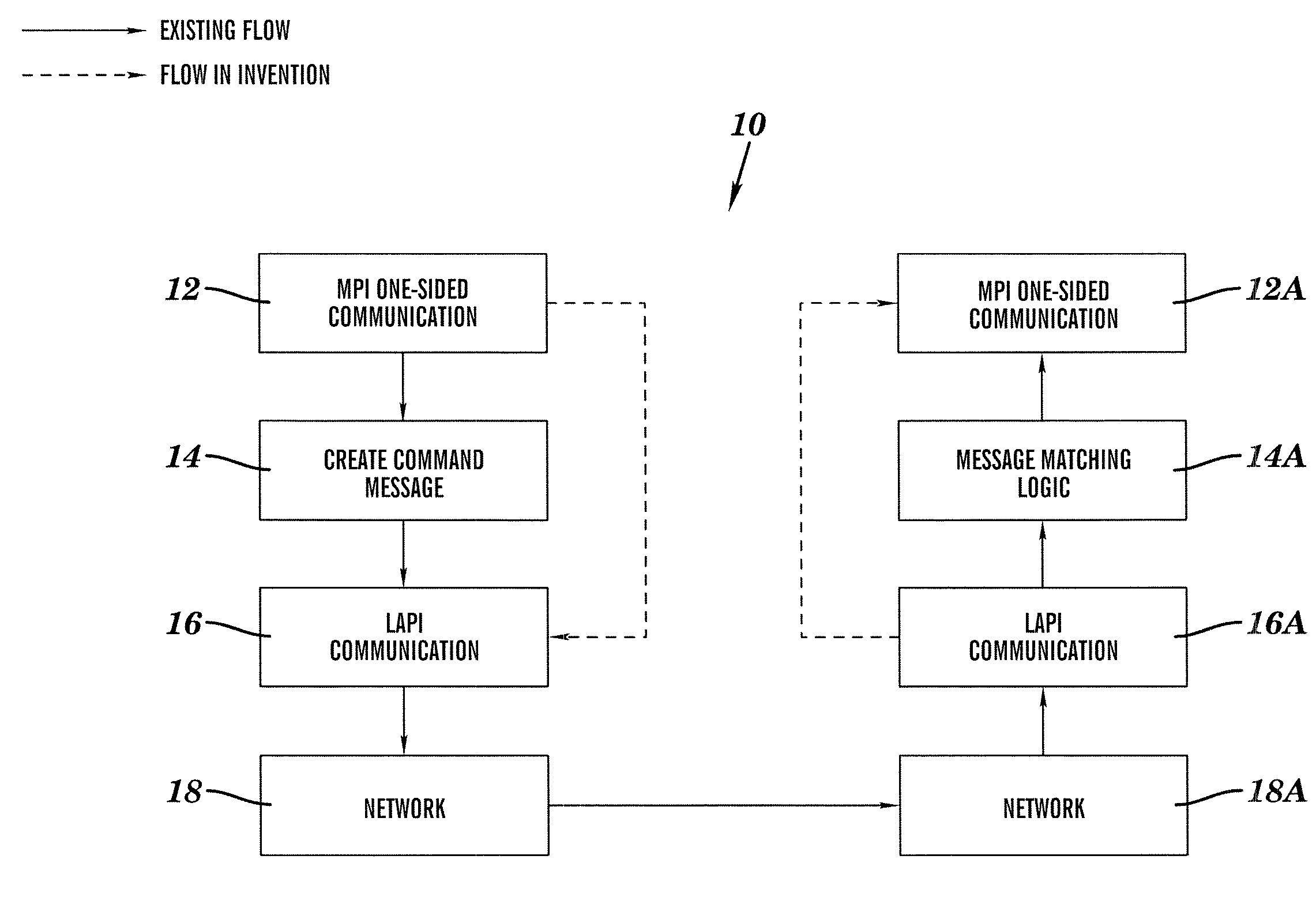

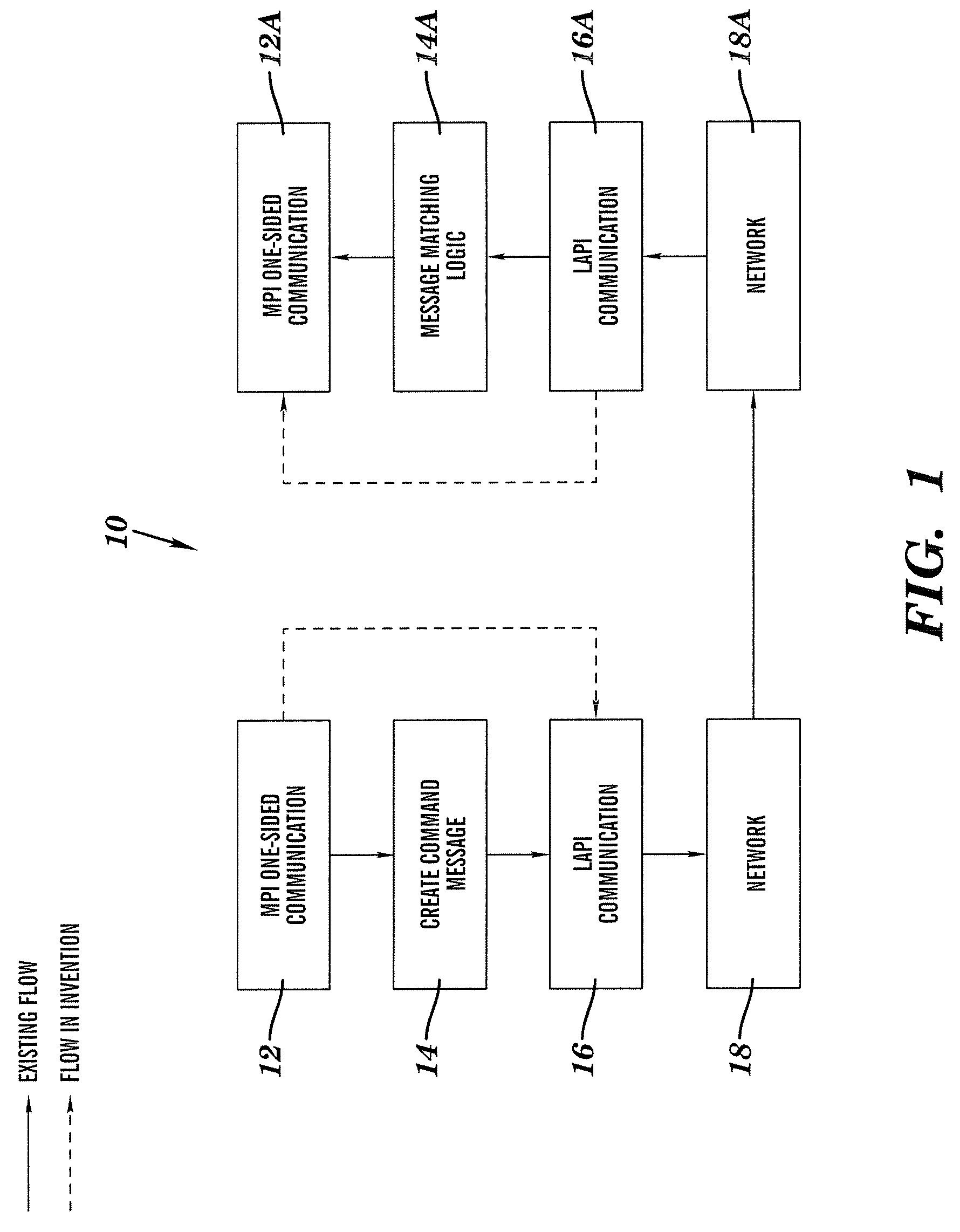

Method for implementing MPI-2 one sided communication

A method for implementing Message Passing Interface (MPI-2) one-sided communication by using Low-level Applications Programming Interface (LAPI) active messaging capabilities, including providing at least three data transfer types, one of which is used to send a message with a message header greater than one packet where Data Gather and Scatter Programs (DGSP) are placed as part of the message header; allowing a multi-packet header by using a LAPI data transfer type; sending the DGSP and data as one message; reading the DGSP with a header handler; registering the DGSP with the LAPI to allow the LAPI to scatter the data to one or more memory locations; defining two sets of counters, one counter set for keeping track of a state of a prospective communication partner, and another counter set for recording activities of local and Remote Memory Access (RMA) operations; comparing local and remote counts of completed RMA operations to complete synchronization mechanisms; and creating an mpci_wait_loop function.

Owner:TWITTER INC

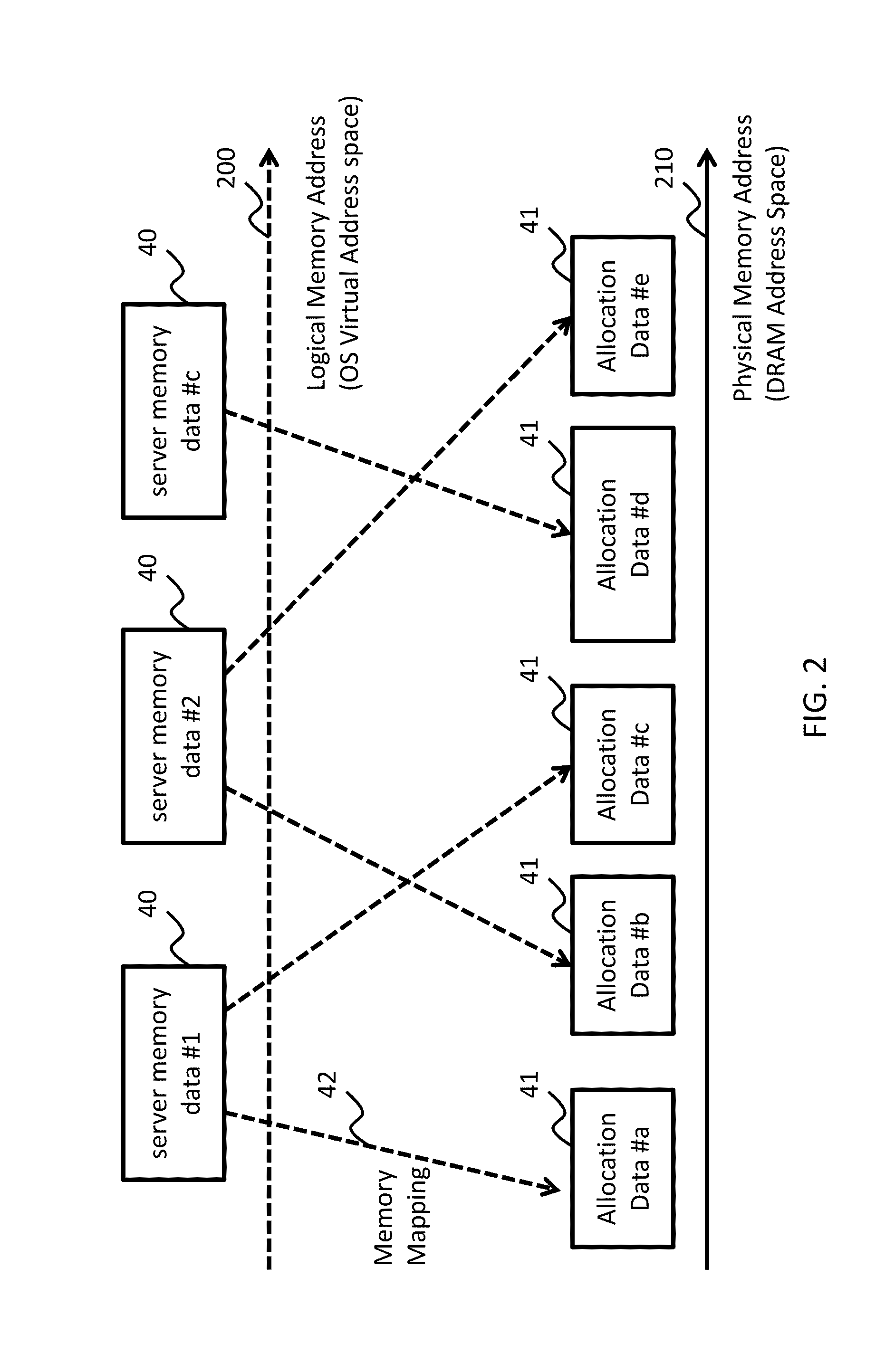

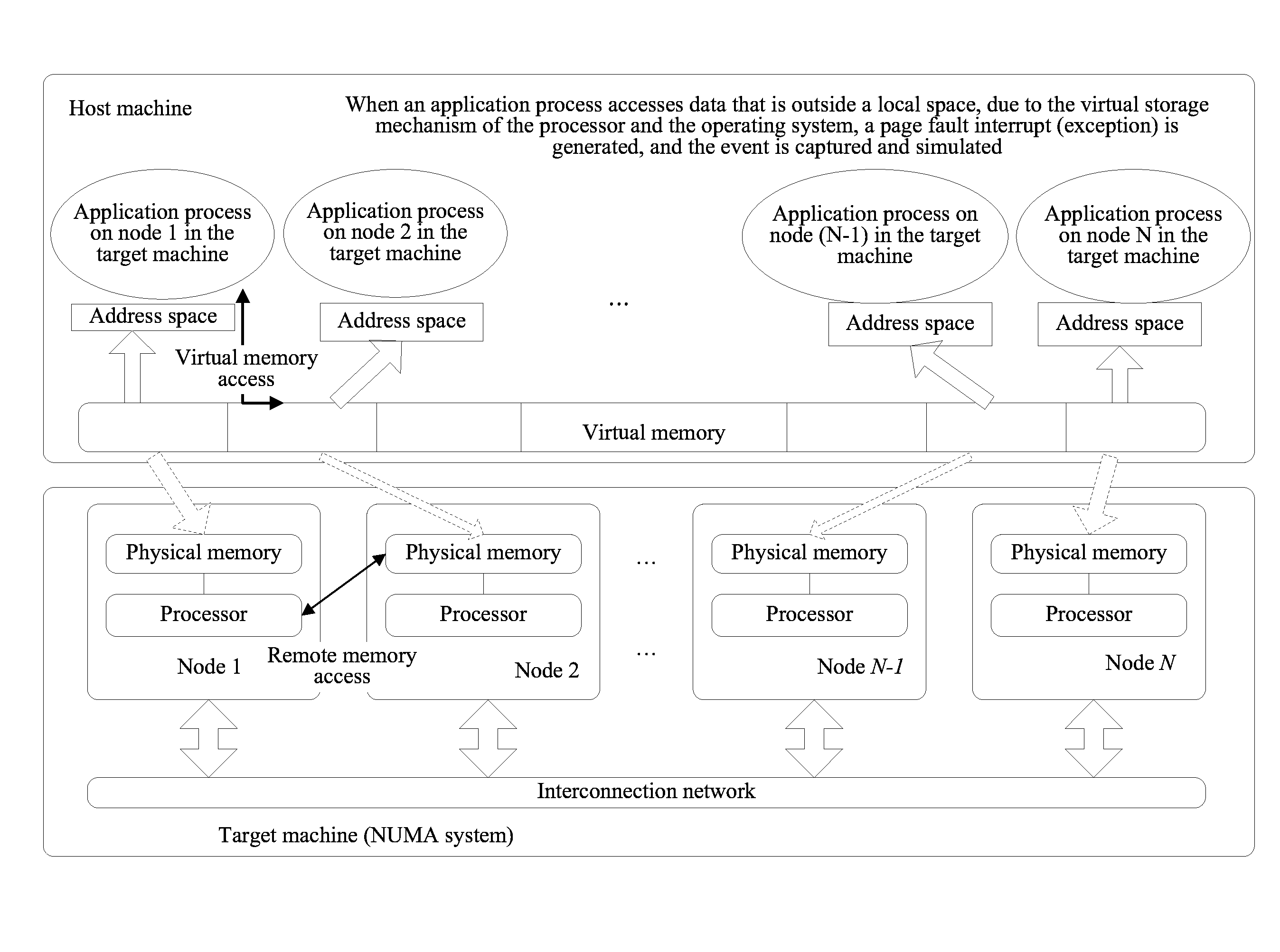

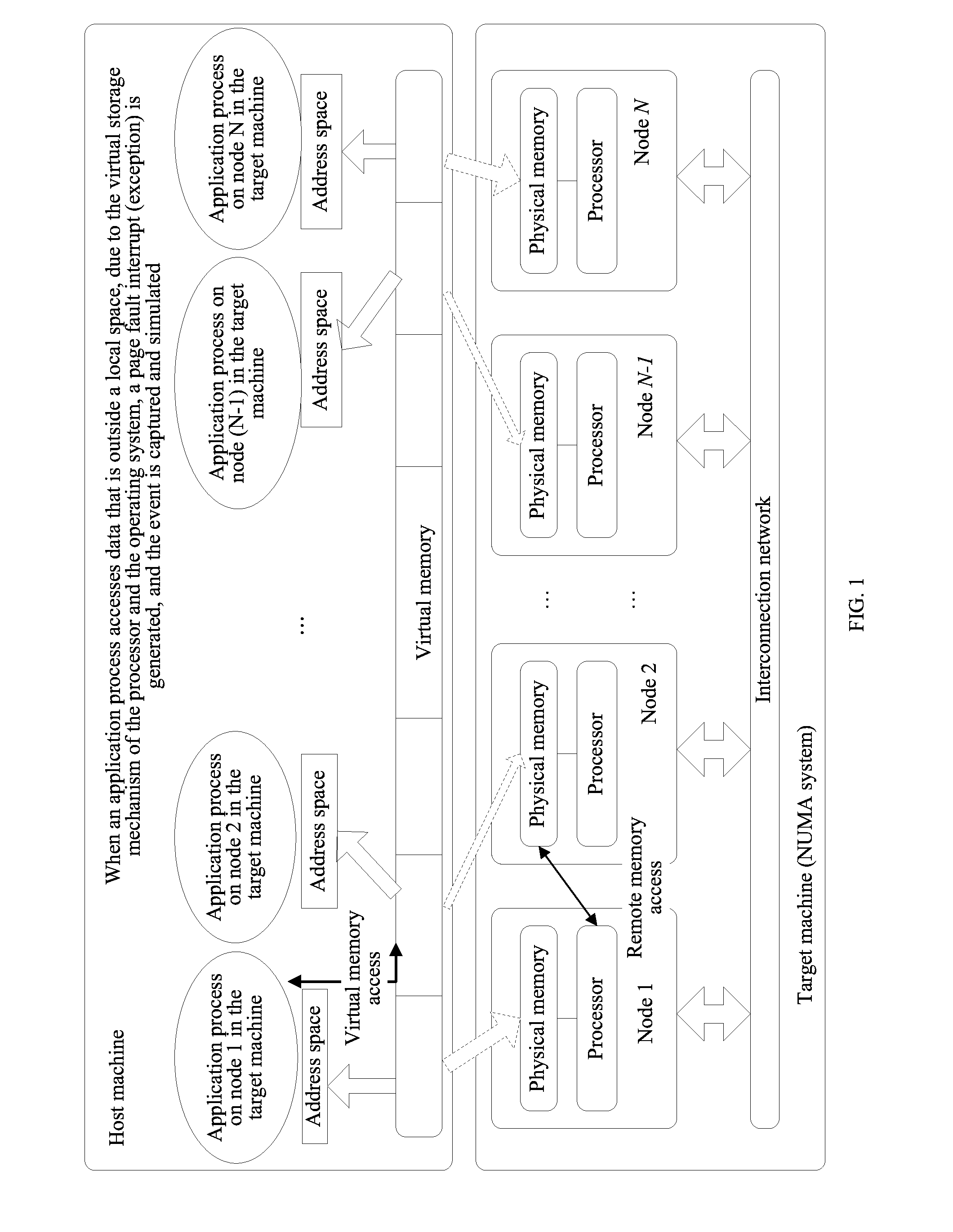

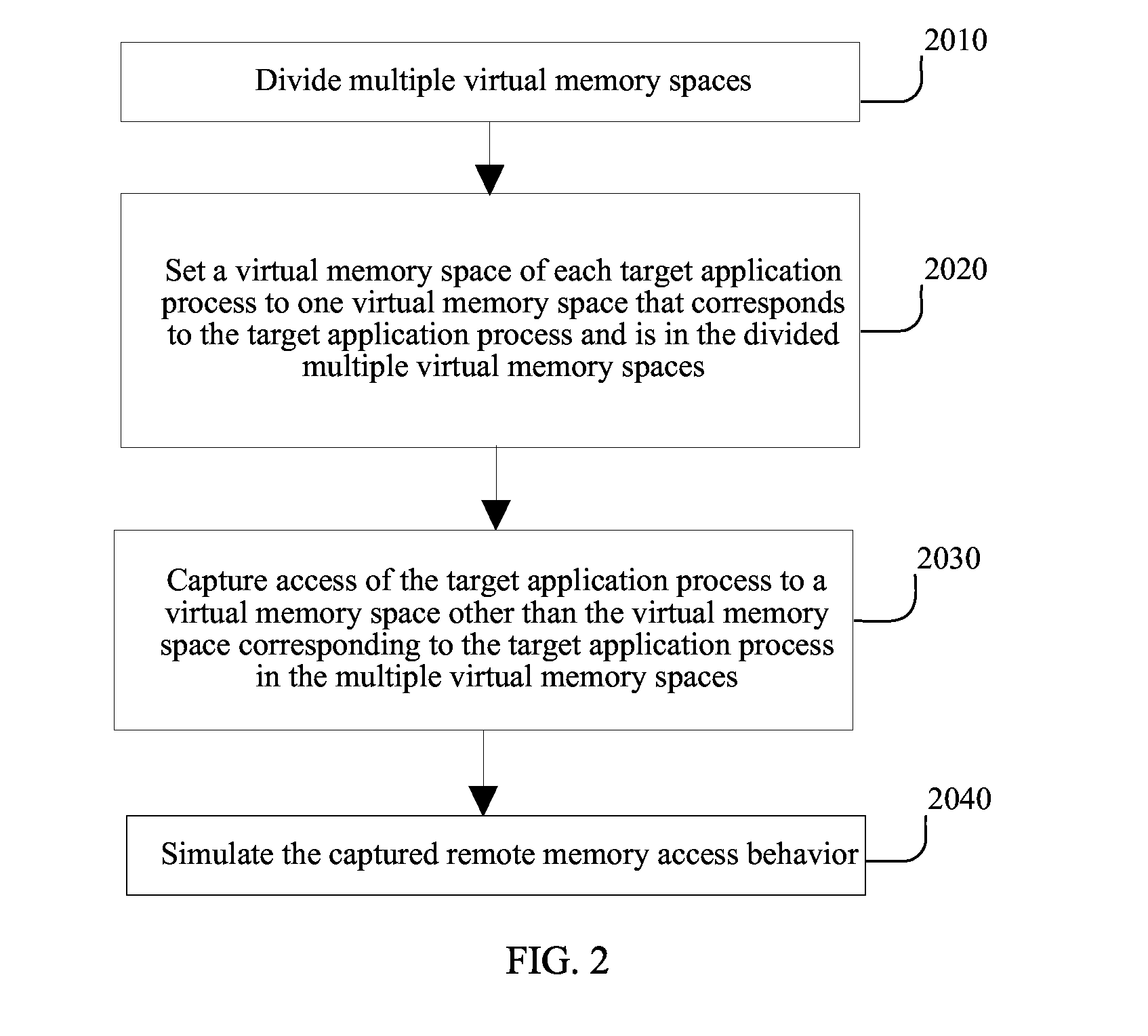

Method and Simulator for Simulating Multiprocessor Architecture Remote Memory Access

Inactive | US20130024646A1 | Improve efficiency | Simplifies complex modeling procedure | Memory addressing/allocation/relocation | CAD circuit design | Virtual memory | Remote memory access

A method for simulating remote memory access in a target machine on a host machine is disclosed. Multiple virtual memory spaces are partitioned in the host machine, and the virtual address space of each target application process is set to the one of these virtual memory spaces that corresponds to that process. Any access by a target application process to a virtual memory space other than the one corresponding to it is captured.

Owner:HUAWEI TECH CO LTD

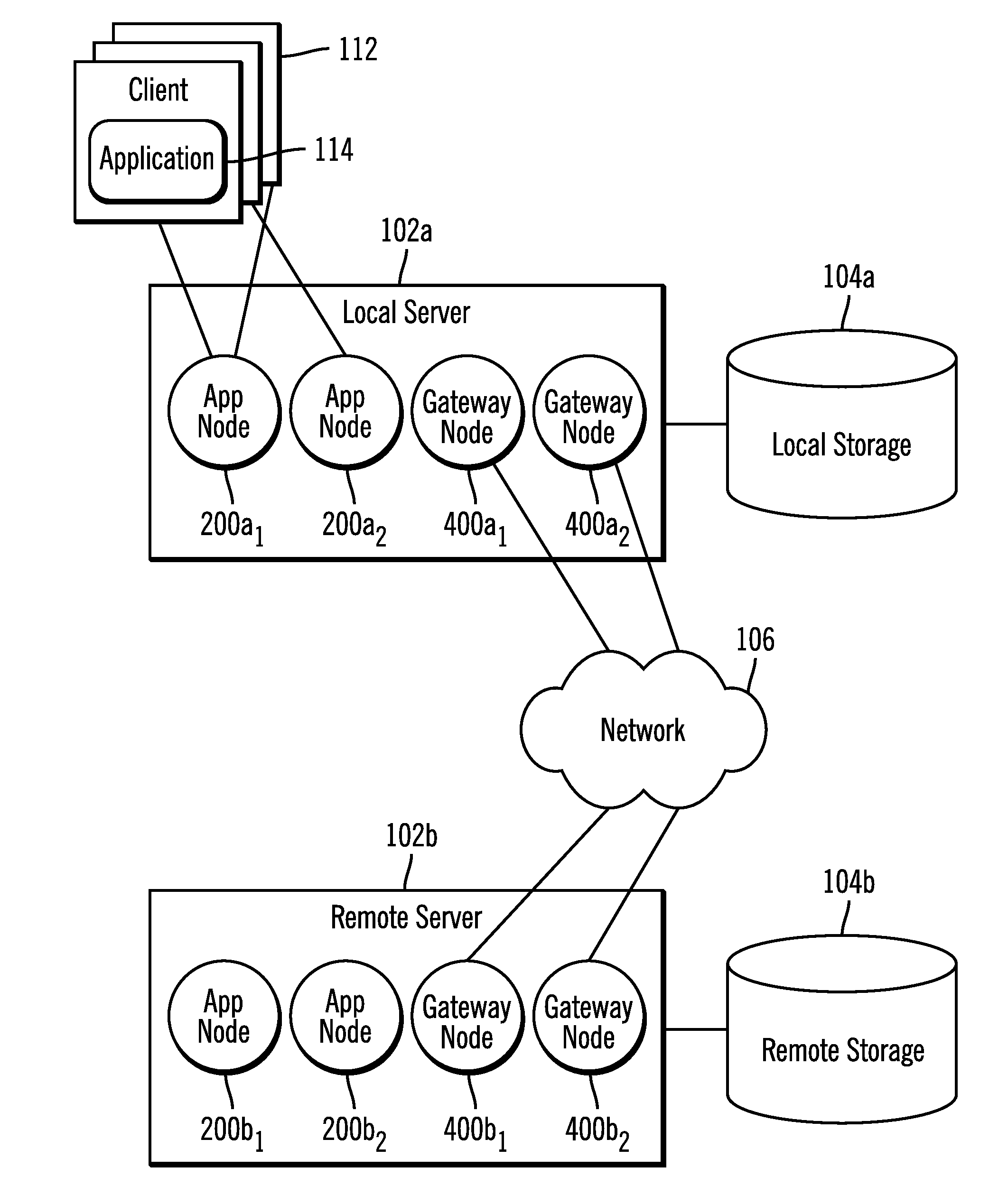

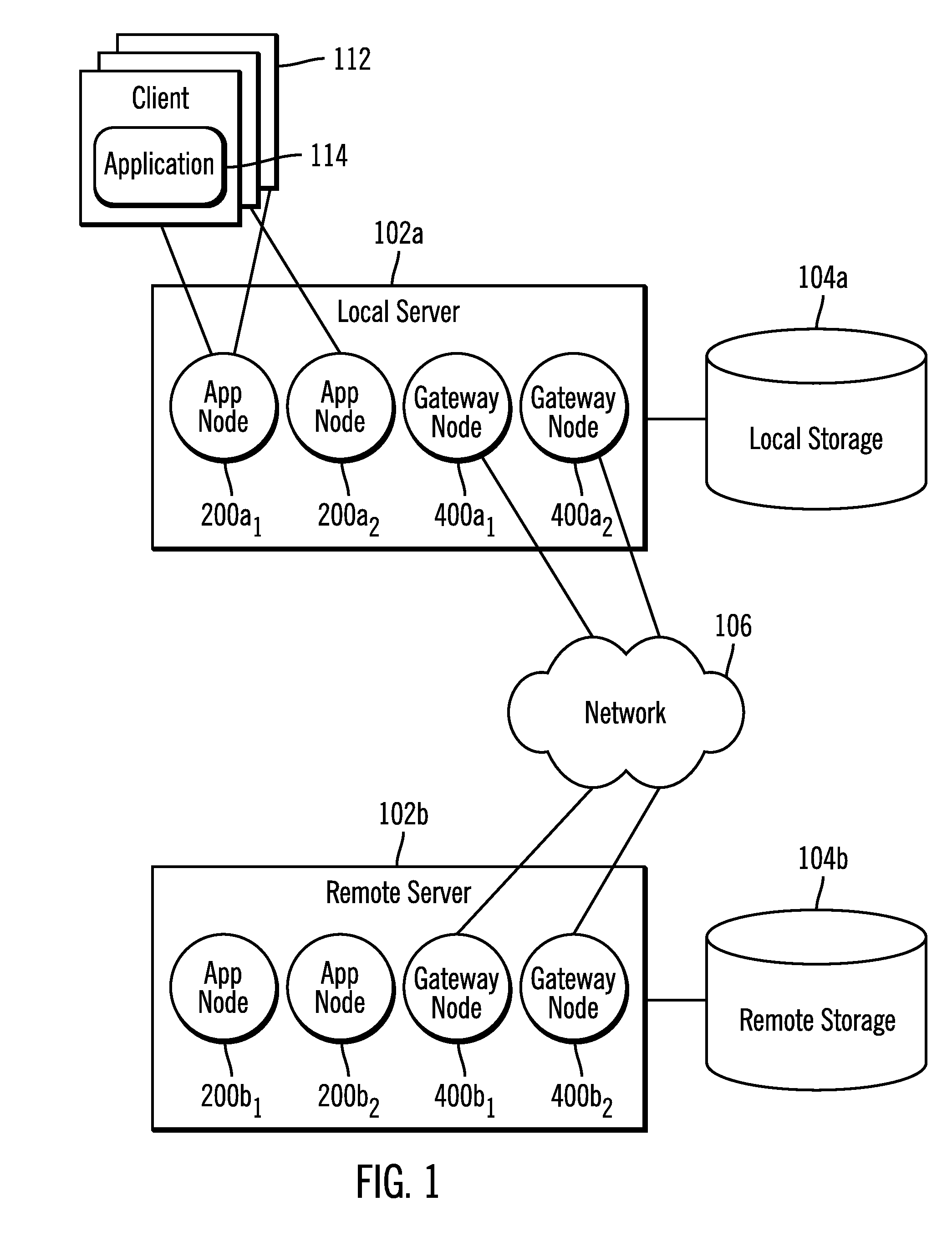

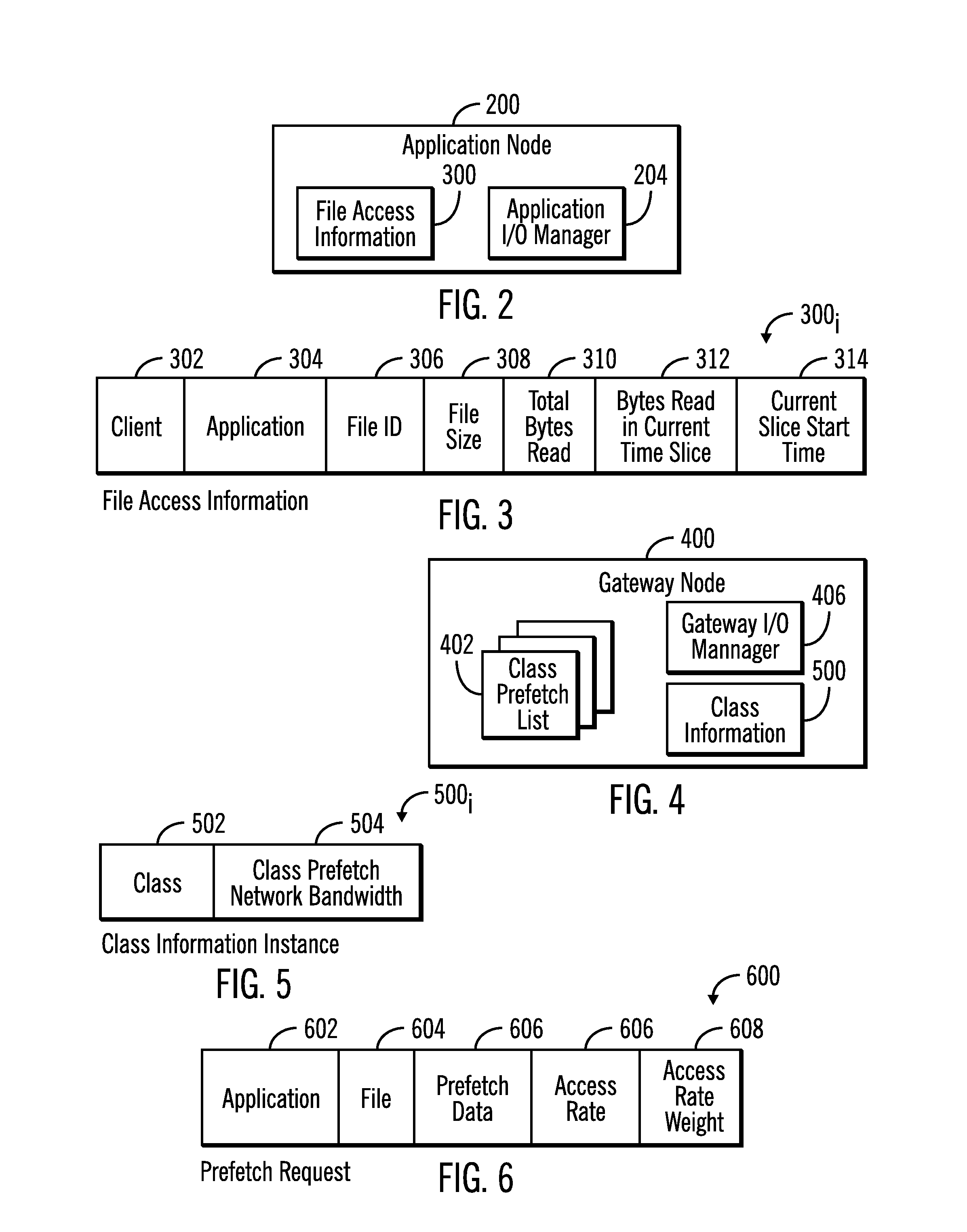

Allocating network bandwidth to prefetch requests to prefetch data from a remote storage to cache in a local storage

Inactive | US20150271287A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Remote memory | Remote memory access

Provided are a computer program product, system, and method for allocating network bandwidth to prefetch requests to prefetch data from a remote storage to cache in a local storage. A determination is made of access rates for applications accessing a plurality of files, wherein the access rate is based on a rate of application access of the file over a period of time. A determination is made of an access rate weight for each of the files based on the access rates of the plurality of files. The determined access rate weight for each of the files is used to determine network bandwidth to assign to access the files from the remote storage to store in the local storage.

Owner:IBM CORP
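
As a rough illustration of weighting prefetch bandwidth by access rate, the sketch below splits a total bandwidth budget in proportion to each file's access rate. The proportional rule, the `allocate_bandwidth` name, and the even split when all rates are zero are assumptions for the example; the abstract says only that a per-file weight is derived from the access rates of all files.

```python
def allocate_bandwidth(access_rates, total_bandwidth):
    """Split total_bandwidth across files in proportion to their access rates.

    access_rates: dict mapping file name -> accesses per unit time.
    Returns a dict mapping file name -> assigned bandwidth.
    """
    if not access_rates:
        return {}
    total_rate = sum(access_rates.values())
    if total_rate == 0:
        # Assumed fallback: no file has been accessed, so share evenly.
        share = total_bandwidth / len(access_rates)
        return {name: share for name in access_rates}
    # Weight of a file = its access rate relative to the sum over all files.
    return {
        name: total_bandwidth * rate / total_rate
        for name, rate in access_rates.items()
    }
```

For example, with access rates of 30 and 10 and a budget of 100 units, the two files would receive 75 and 25 units respectively under this proportional rule.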

Administering An Epoch Initiated For Remote Memory Access

Inactive | US20120246256A1 | Program synchronisation | Multiple digital computer combinations | Remote memory access | Application software

Methods, systems, and products are disclosed for administering an epoch initiated for remote memory access that include: initiating, by an origin application messaging module on an origin compute node, one or more data transfers to a target compute node for the epoch; initiating, by the origin application messaging module after initiating the data transfers, a closing stage for the epoch, including rejecting any new data transfers after initiating the closing stage for the epoch; determining, by the origin application messaging module, whether the data transfers have completed; and closing, by the origin application messaging module, the epoch if the data transfers have completed.

Owner:INT BUSINESS MASCH CORP

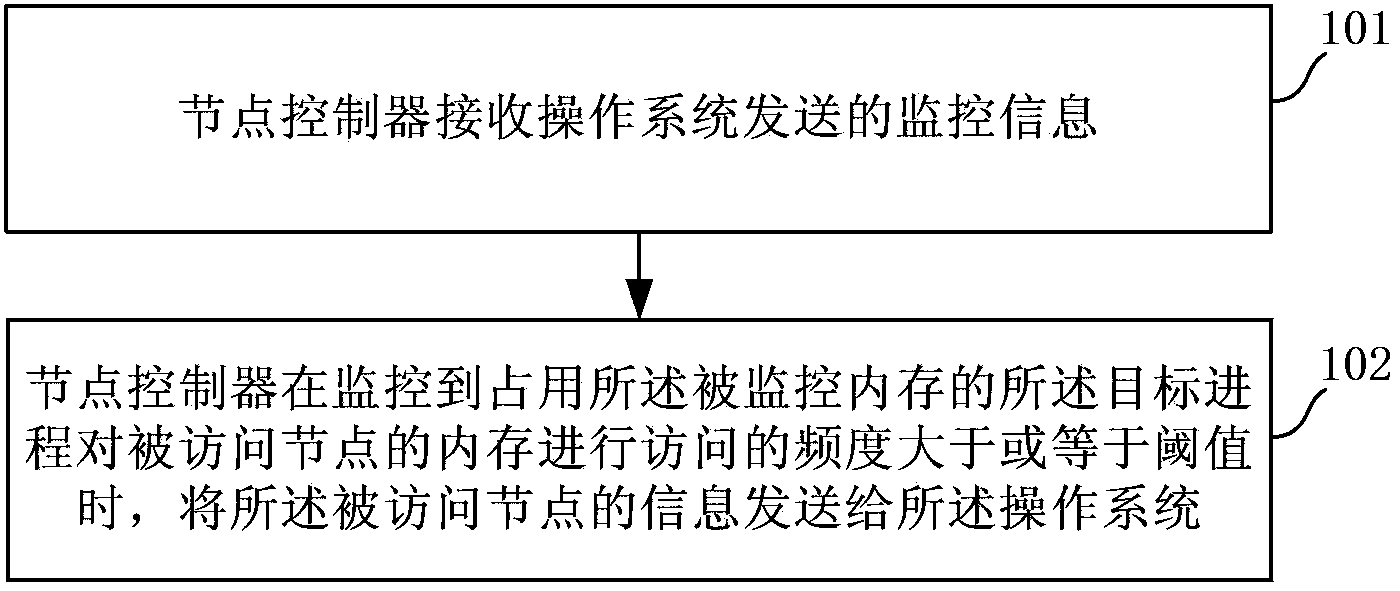

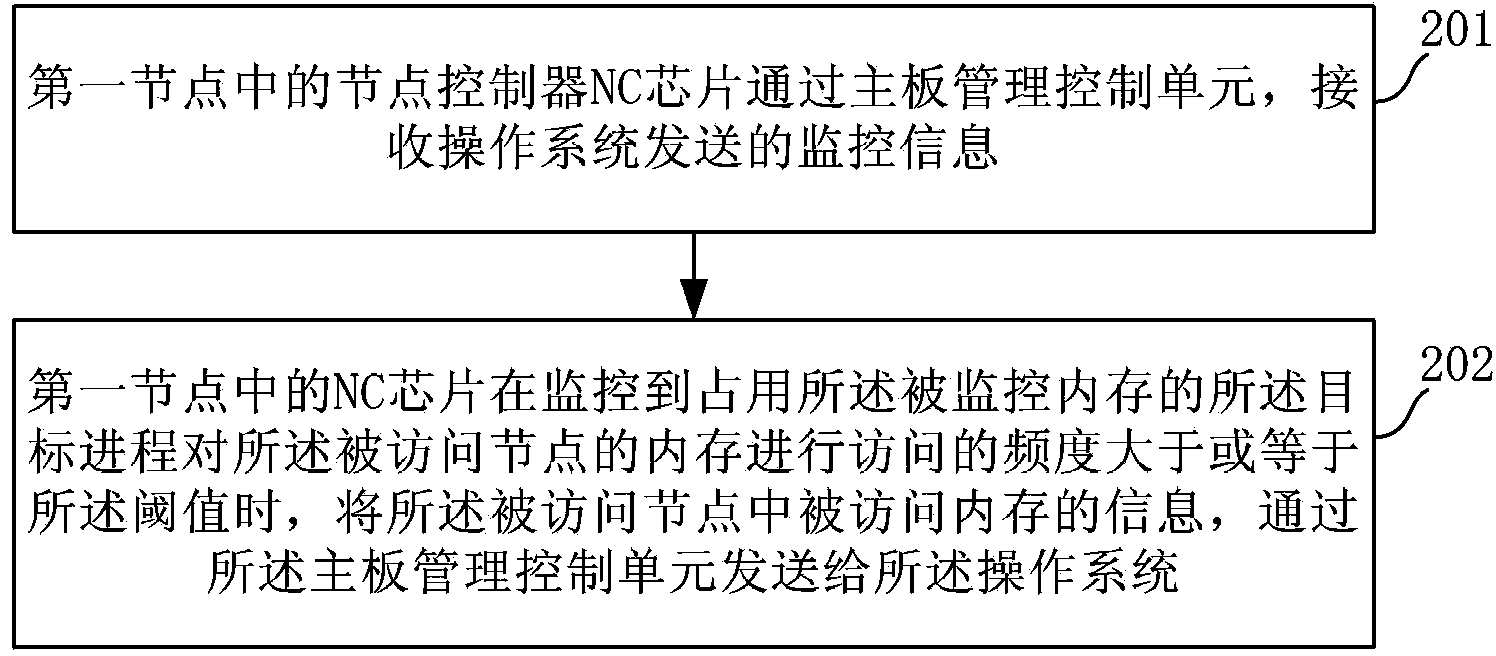

Memory access method, device and system

Active | CN103365717A | Improve performance | Shorten the time | Program initiation/switching | Direct memory access | Access method

The embodiments of the invention provide a memory access method, device, and system. In the method, a node controller receives monitoring information sent by an operating system. The monitoring information carries information about the monitored memory in a first node to which the node controller belongs; the monitored memory is the memory resource occupied by a target process on the first node, and the target process runs on a central processing unit (CPU) of the first node and accesses the memory of an accessed node of the server system other than the first node. If the node controller detects that the frequency with which the target process occupying the monitored memory accesses the memory of the accessed node is greater than or equal to a threshold, it sends information about the accessed node to the operating system, so that the target process is migrated to the accessed node according to that information. Remote memory access is thereby switched to local or nearby memory access, which reduces the time the target process spends accessing memory and improves the performance of the server system.

Owner:HUAWEI TECH CO LTD
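
The threshold check in this abstract can be illustrated with a small counter-based monitor. This is a minimal sketch under stated assumptions: the `NodeAccessMonitor` class and its methods are hypothetical, and counting accesses per remote node in software merely stands in for whatever the node controller hardware actually measures.

```python
from collections import Counter


class NodeAccessMonitor:
    """Hypothetical model of a node controller tracking one target process."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.counts = Counter()  # accessed node id -> number of remote accesses

    def record_remote_access(self, node_id):
        """Count one access by the target process to memory on node_id."""
        self.counts[node_id] += 1

    def migration_target(self):
        """Return the most-accessed node if it has reached the threshold, else None.

        A non-None result models the information about the accessed node that
        would be reported to the operating system so it can migrate the process.
        """
        if not self.counts:
            return None
        node_id, count = self.counts.most_common(1)[0]
        return node_id if count >= self.threshold else None
```

The comparison uses "greater than or equal to", matching the wording of the abstract.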

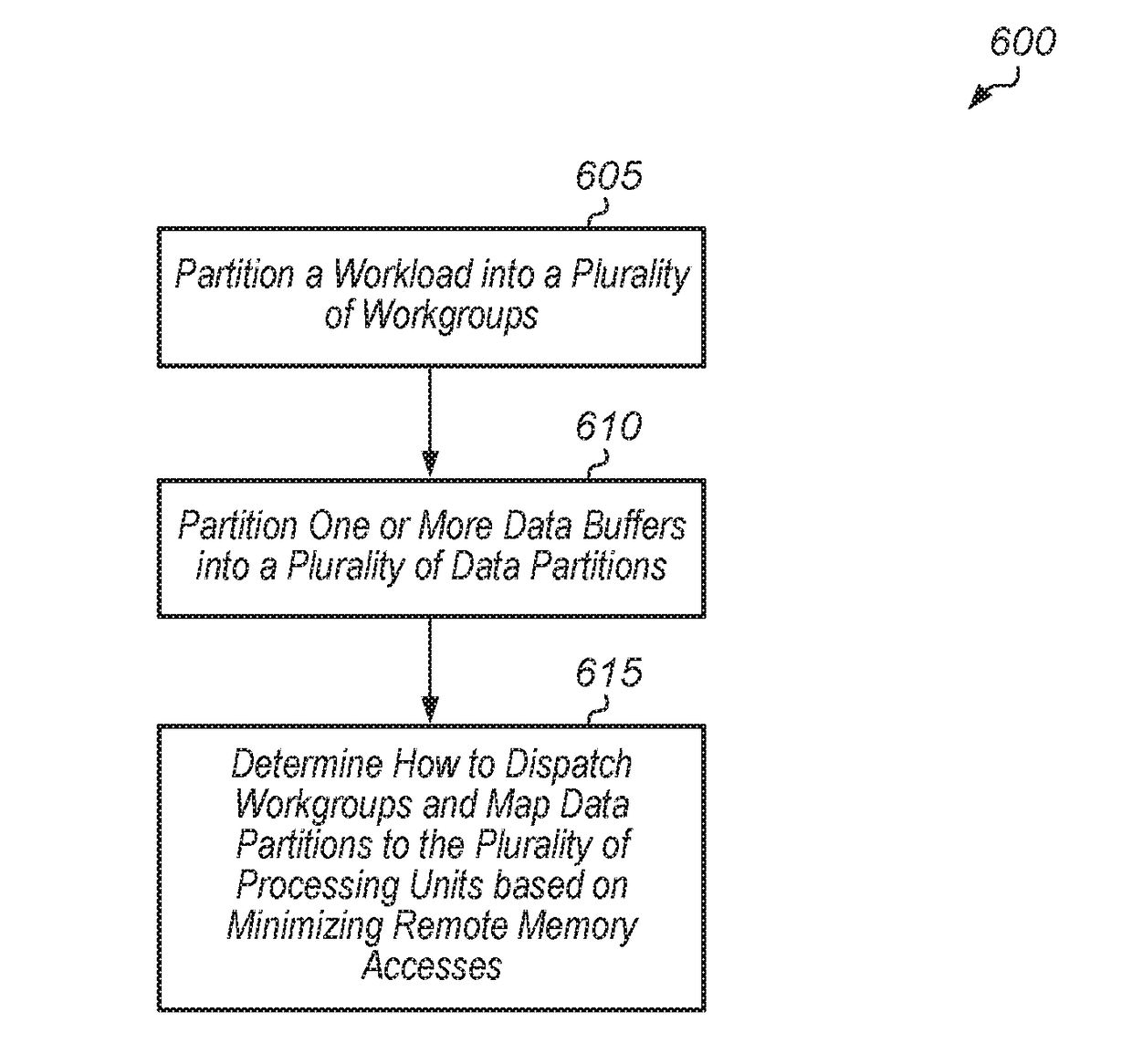

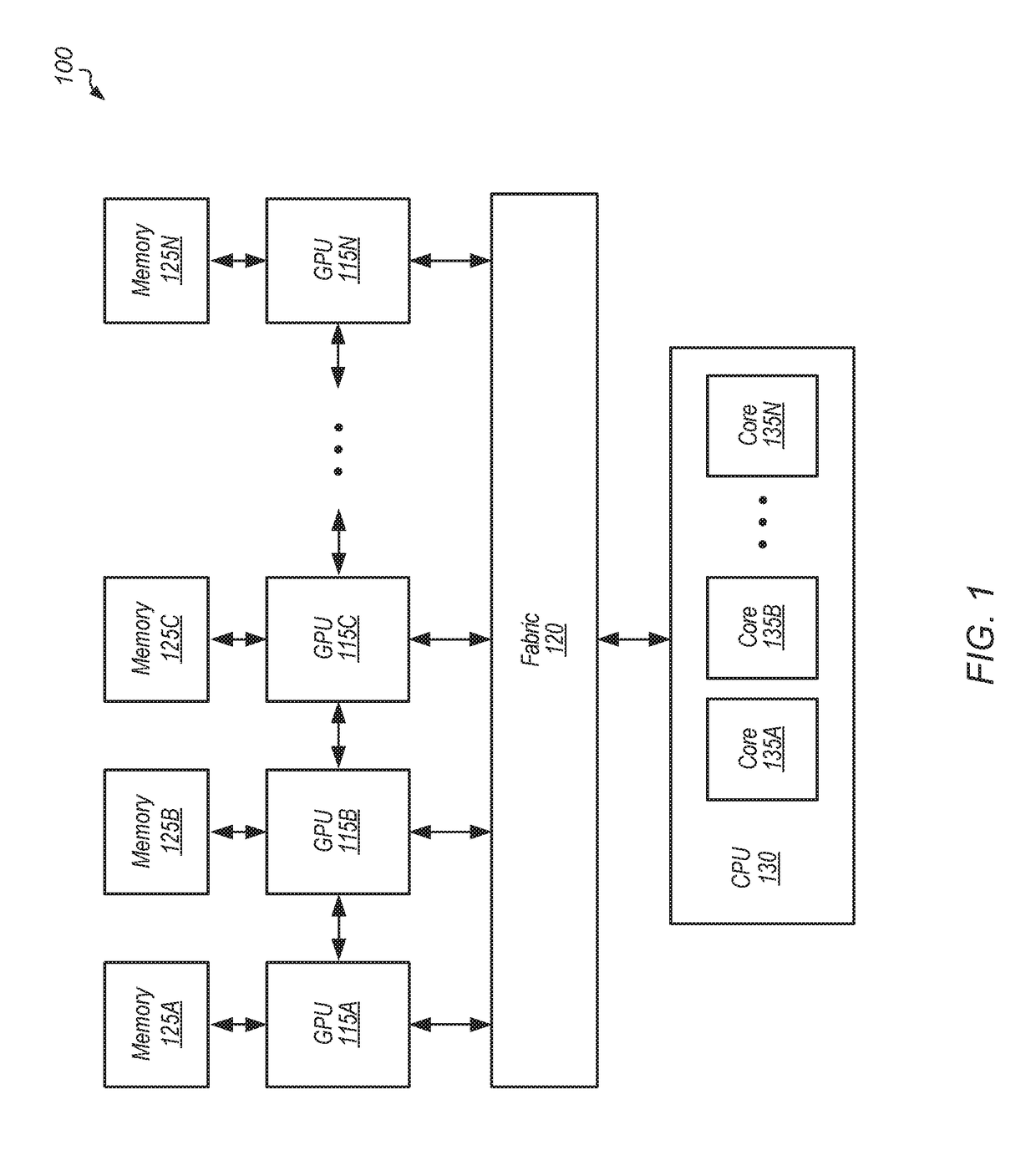

Mechanisms to improve data locality for distributed GPUs

Systems, apparatuses, and methods for implementing mechanisms to improve data locality for distributed processing units are disclosed. A system includes a plurality of distributed processing units (e.g., GPUs) and memory devices. Each processing unit is coupled to one or more local memory devices. The system determines how to partition a workload into a plurality of workgroups based on maximizing data locality and data sharing. The system determines which subset of the plurality of workgroups to dispatch to each processing unit of the plurality of processing units based on maximizing local memory accesses and minimizing remote memory accesses. The system also determines how to partition data buffer(s) based on data sharing patterns of the workgroups. The system maps to each processing unit a separate portion of the data buffer(s) so as to maximize local memory accesses and minimize remote memory accesses.

Owner:ADVANCED MICRO DEVICES INC

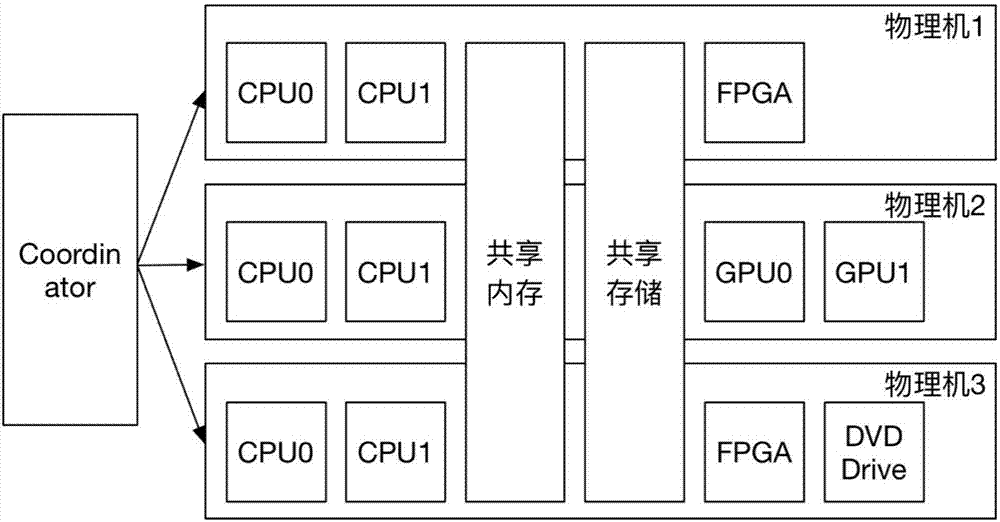

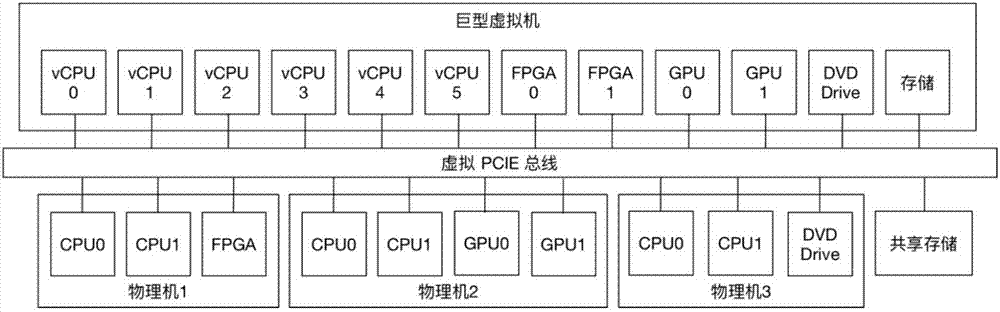

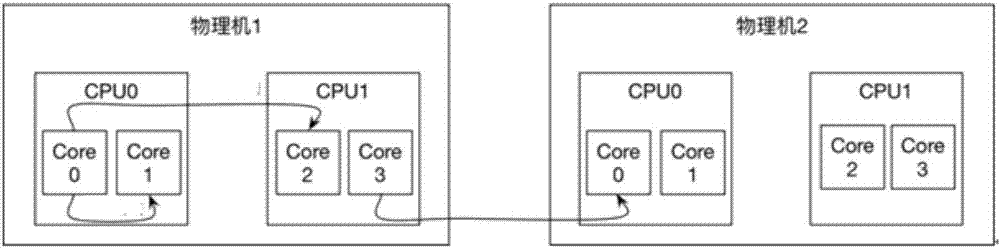

Realization method for cross-physical-machine huge virtual machine

Active | CN107491340A | Implement virtualization | Enabling High Performance Computing | Interprogram communication | Software simulation/interpretation/emulation | Direct memory access | Remote memory access

The invention provides a method for implementing a huge virtual machine that spans physical machines. The method comprises: first, creating the huge virtual machine; second, performing interrupt processing for the huge virtual machine; third, performing device interrupt processing; and fourth, performing remote access based on a high-speed shared memory access technology. With this method, multiple physical machines provide computing, I/O, and other resources to a single virtual machine, and the machines access each other's memory through the high-speed remote memory access technology, so that a high-speed distributed shared memory is realized.

Owner:SHANGHAI JIAO TONG UNIV

Method for implementing MPI-2 one sided communication

Active | US7694310B2 | Digital computer details | Multiprogramming arrangements | Computer hardware | Remote memory access

Owner:TWITTER INC

Mechanism for reducing remote memory accesses to shared data in a multi-nodal computer system

Inactive | US7584476B2 | Program initiation/switching | Resource allocation | Direct memory access | Remote memory access

Owner:INT BUSINESS MASCH CORP

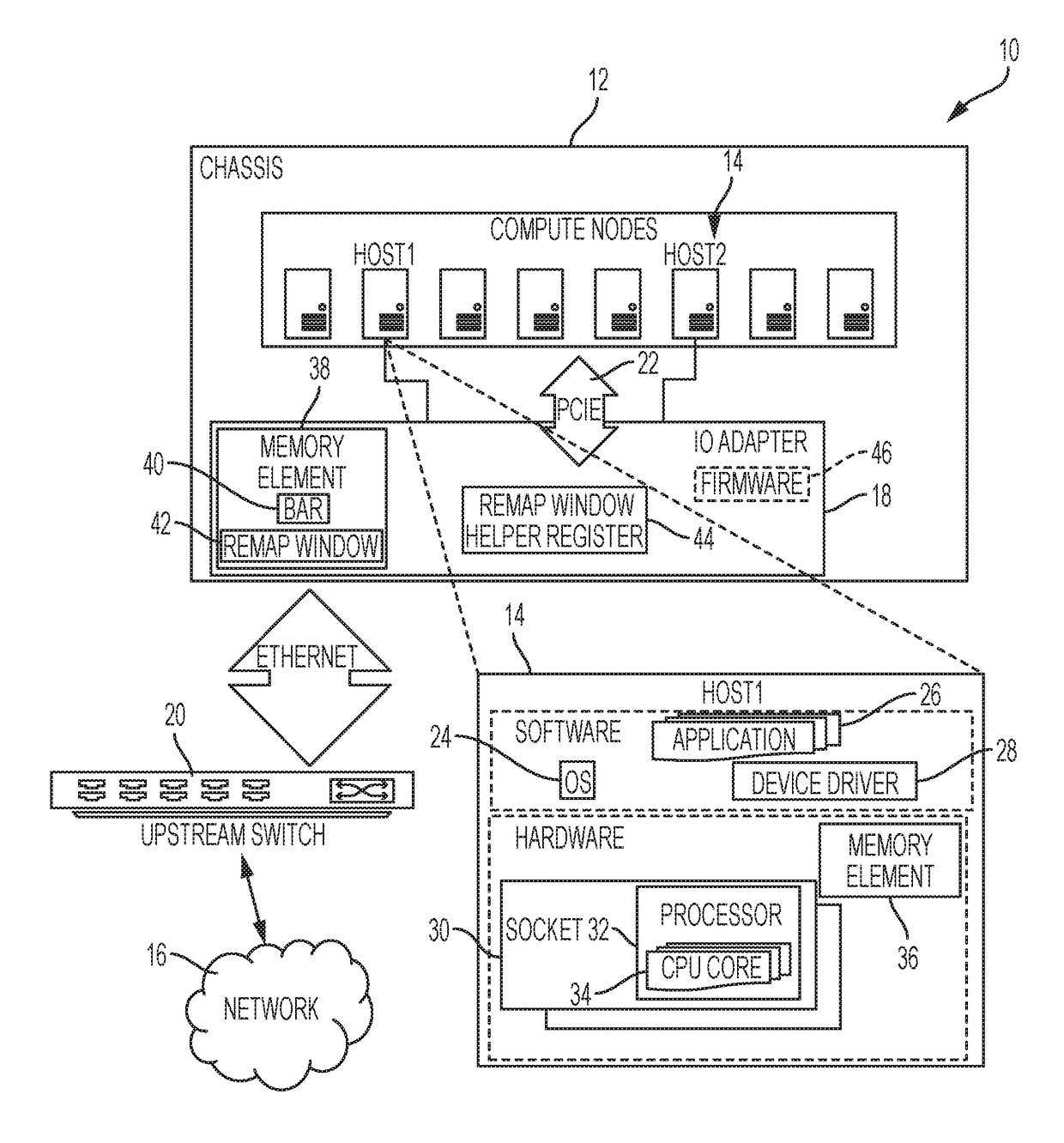

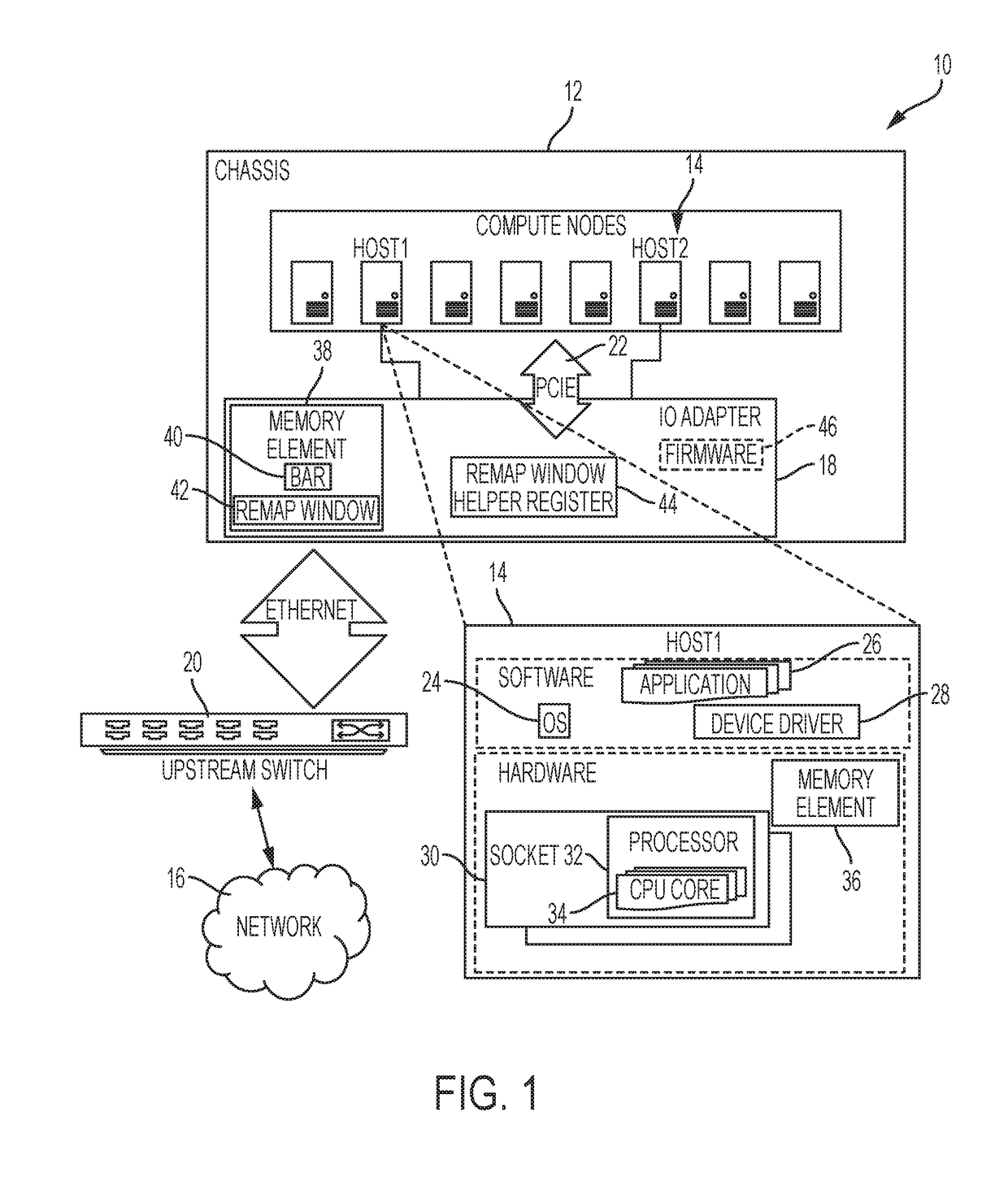

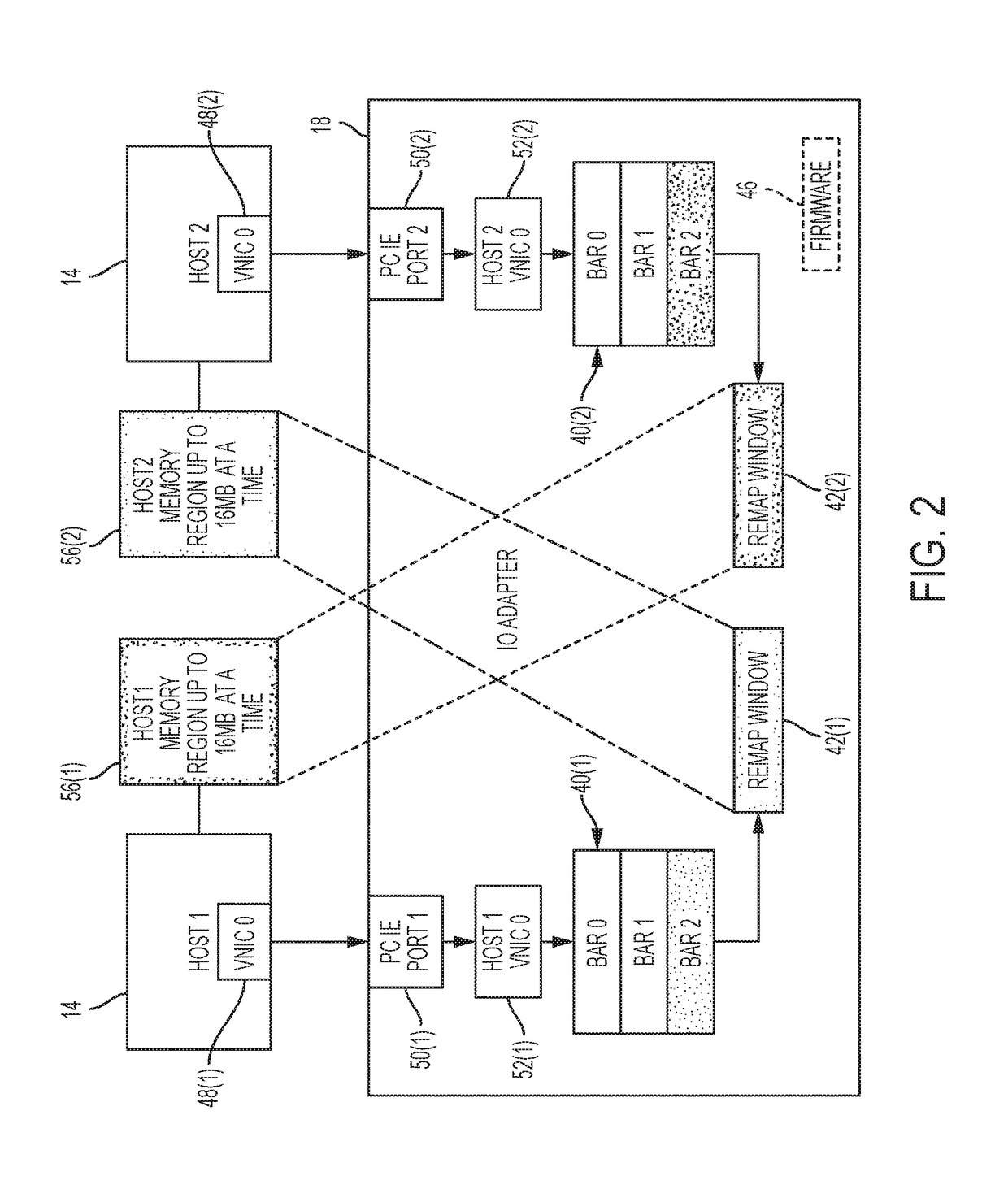

Remote memory access using memory mapped addressing among multiple compute nodes

An example method for facilitating remote memory access with memory mapped addressing among multiple compute nodes is executed at an input/output (I/O) adapter in communication with the compute nodes over a Peripheral Component Interconnect Express (PCIe) bus, the method including: receiving a memory request from a first compute node to permit access by a second compute node to a local memory region of the first compute node; generating a remap window region in a memory element of the I/O adapter, the remap window region corresponding to a base address register (BAR) of the second compute node; and configuring the remap window region to point to the local memory region of the first compute node, wherein access by the second compute node to the BAR corresponding with the remap window region results in direct access of the local memory region of the first compute node by the second compute node.

Owner:CISCO TECH INC

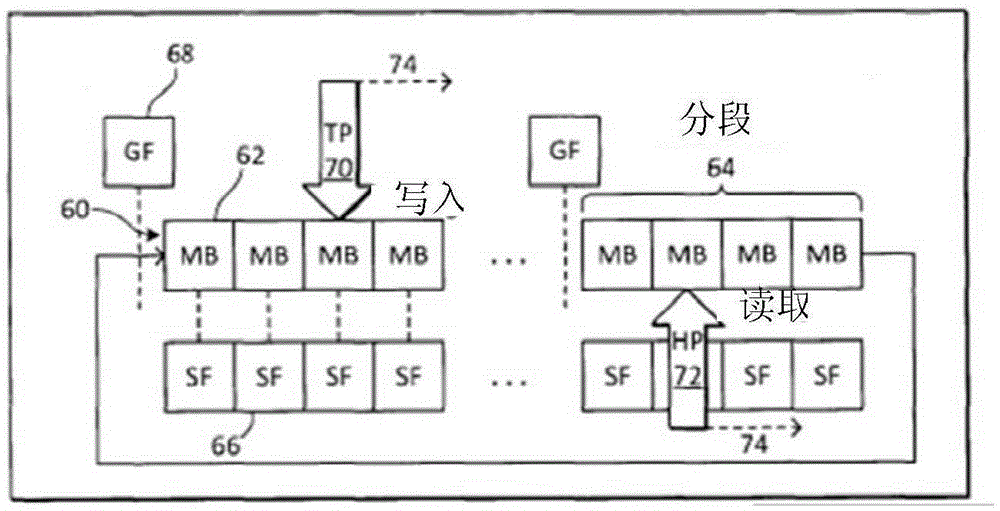

Low latency device interconnect using remote memory access with segmented queues

Inactive | CN105379209A | Digital computer details | Answer-back mechanisms | Direct memory access | Remote memory access

A writing application on a computing device can reference a tail pointer to write messages to message buffers that a peer-to-peer data link replicates in memory of another computing device. The message buffers are divided into at least two queue segments, where each segment has several buffers. Messages are read from the buffers by a reading application on one of the computing devices using an advancing head pointer by reading a message from a next message buffer when determining that the next message buffer has been newly written. The tail pointer is advanced from one message buffer to another within a same queue segment after writing messages. The tail pointer is advanced from a message buffer of a current queue segment to a message buffer of a next queue segment when determining that the head pointer does not indicate any of the buffers of the next queue segment.

Owner:TSX
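
The head/tail pointer rules in this abstract can be sketched in a single process as follows. Several things here are assumptions made for illustration: the `SegmentedQueue` class and its methods are hypothetical, a per-buffer boolean stands in for whatever "newly written" marker the real system uses, and the segment-crossing check is done before the write rather than after it, which is a simplification of the order described in the abstract. The peer-to-peer replication of buffers between devices is not modelled.

```python
class SegmentedQueue:
    """Hypothetical ring of message buffers divided into queue segments."""

    def __init__(self, segments=2, buffers_per_segment=4):
        self.per_seg = buffers_per_segment
        self.size = segments * buffers_per_segment
        self.buffers = [None] * self.size
        self.written = [False] * self.size  # assumed "newly written" marker
        self.head = 0  # next buffer the reader will read
        self.tail = 0  # next buffer the writer will write

    def _segment(self, index):
        return index // self.per_seg

    def write(self, message):
        """Write at the tail; return False if the tail may not enter the next segment."""
        nxt = (self.tail + 1) % self.size
        crossing = self._segment(nxt) != self._segment(self.tail)
        if crossing and self._segment(self.head) == self._segment(nxt):
            return False  # the head still points into the next segment
        self.buffers[self.tail] = message
        self.written[self.tail] = True
        self.tail = nxt
        return True

    def read(self):
        """Read the next buffer if it has been newly written, else return None."""
        if not self.written[self.head]:
            return None
        message = self.buffers[self.head]
        self.written[self.head] = False
        self.head = (self.head + 1) % self.size
        return message
```

Within a segment the writer never consults the head pointer; it only does so when the tail is about to move into a different segment, which is the property the abstract attributes to the segmented layout.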

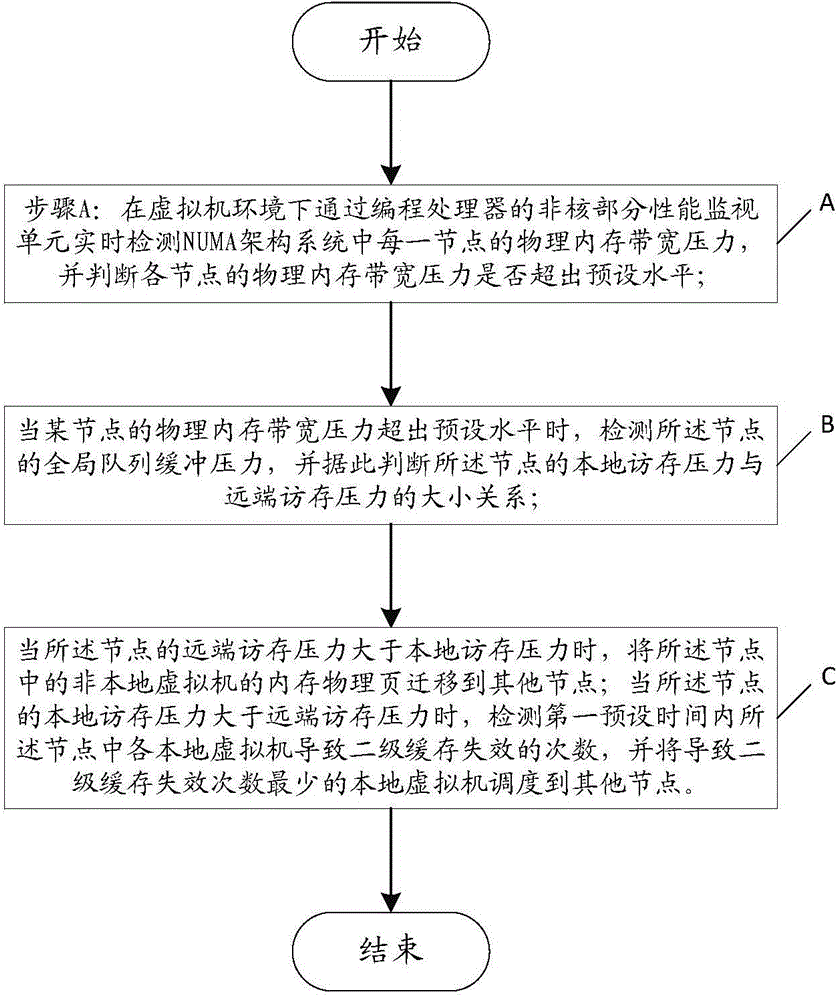

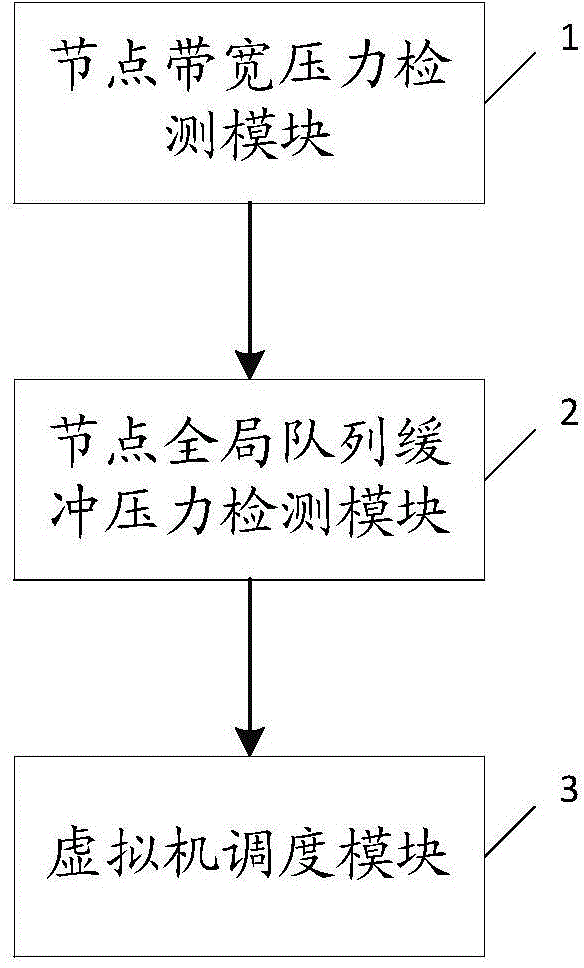

Memory access optimization method and memory access optimization system for NUMA (Non-Uniform Memory Access) architecture system in virtual machine environment

Active | CN104657198A | Memory access optimization is easy to implement | Resource allocation | Software simulation/interpretation/emulation | Remote memory access | Non local

The invention relates to a memory access optimization method and system for a NUMA (Non-Uniform Memory Access) architecture system in a virtual machine environment. The method includes the following steps. In the virtual machine environment, the bandwidth pressure on the physical memory of each node in the NUMA system is measured in real time by the uncore (non-core) performance monitoring unit of a programmable processor, and it is determined whether that pressure exceeds a preset level. When the bandwidth pressure on the physical memory of a node exceeds the preset level, the global queue buffer pressure of the node is measured to determine the relation between the node's local and remote memory access pressure. When the remote memory access pressure is higher, the physical memory pages belonging to non-local virtual machines on the node are migrated to other nodes. When the local memory access pressure is higher, the number of second-level cache misses caused by each local virtual machine on the node within a first preset time is measured, and the local virtual machines causing the fewest second-level cache misses are scheduled to other nodes.

Owner:SHENZHEN POLYTECHNIC

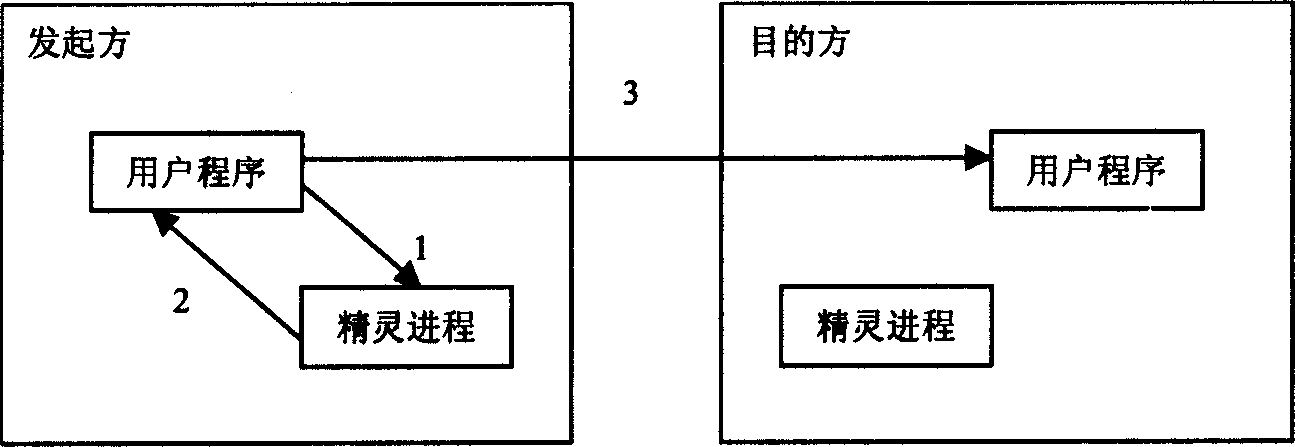

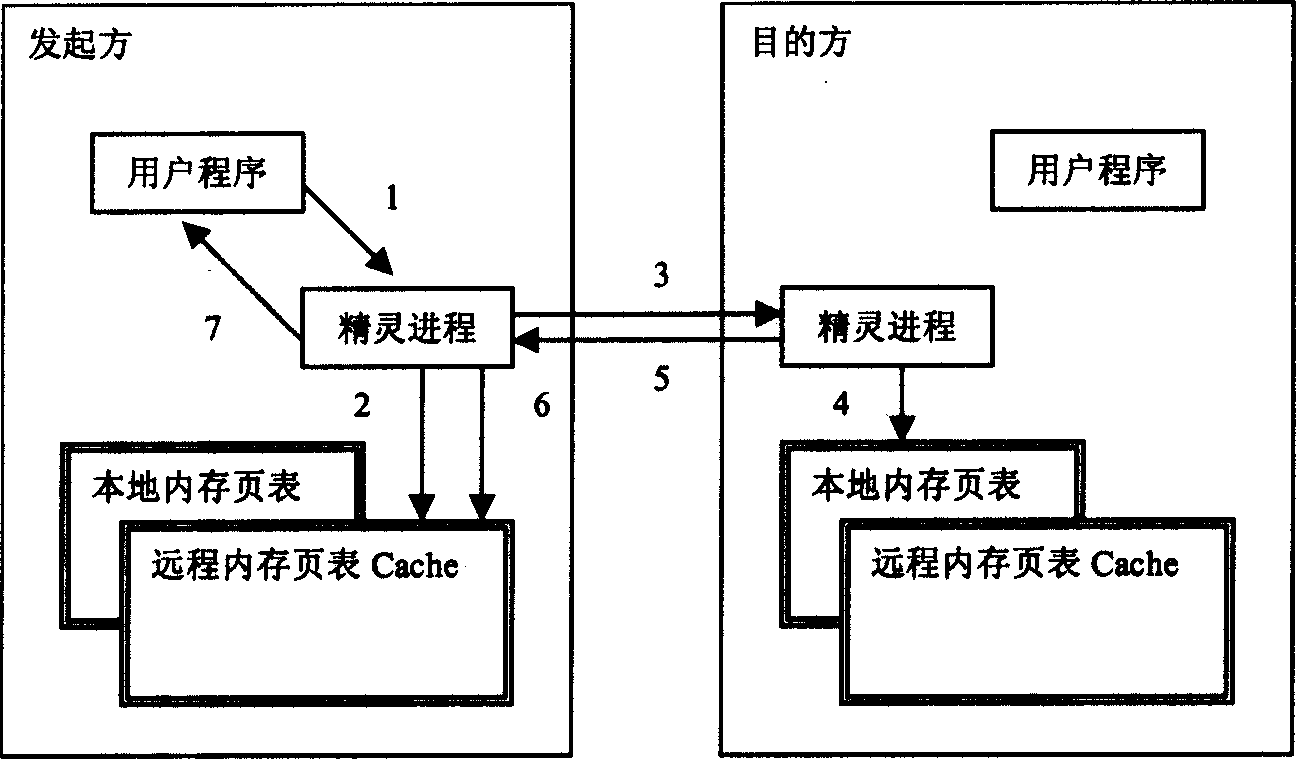

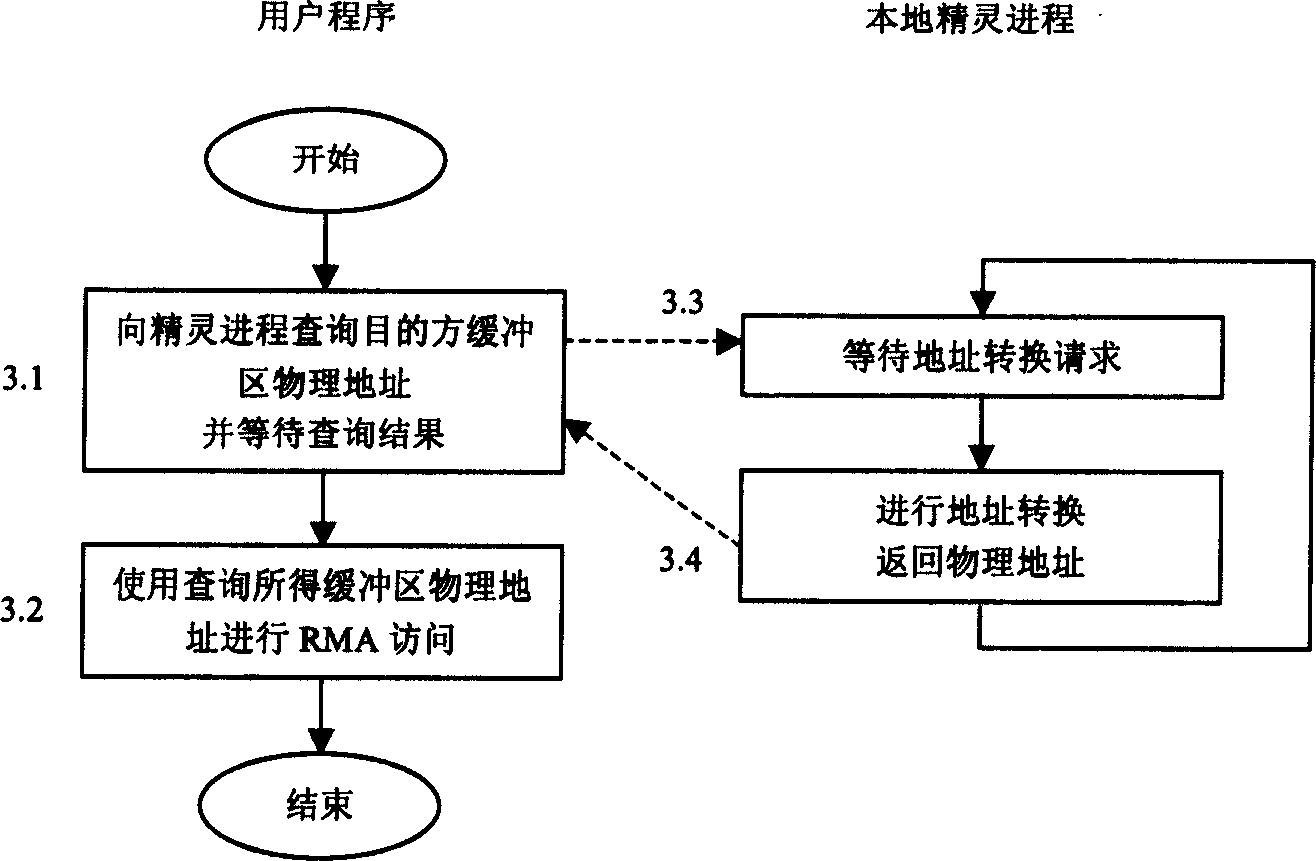

Initiator triggered remote memory access virtual-physical address conversion method

Inactive | CN1547126A | Easy to implement | Improve scalability | Memory addressing/allocation/relocation | Extensibility | Remote memory access

The invention relates to a virtual-to-physical address translation method for initiator-triggered remote memory access (RMA) operations, in the field of computer memory access technology. The method performs the virtual-to-physical translation of remote memory addresses at the initiator of the RMA operation, that is, on the computer that triggers the operation. A system daemon process runs on each computer in the distributed memory system to maintain a cache for local memory pages and remote memory pages. The translation is accomplished through communication between the local daemon process and the daemon processes on other computers. The invention can improve the scalability of RMA operations in a communication system.

Owner:潍坊中科智视信息技术有限公司

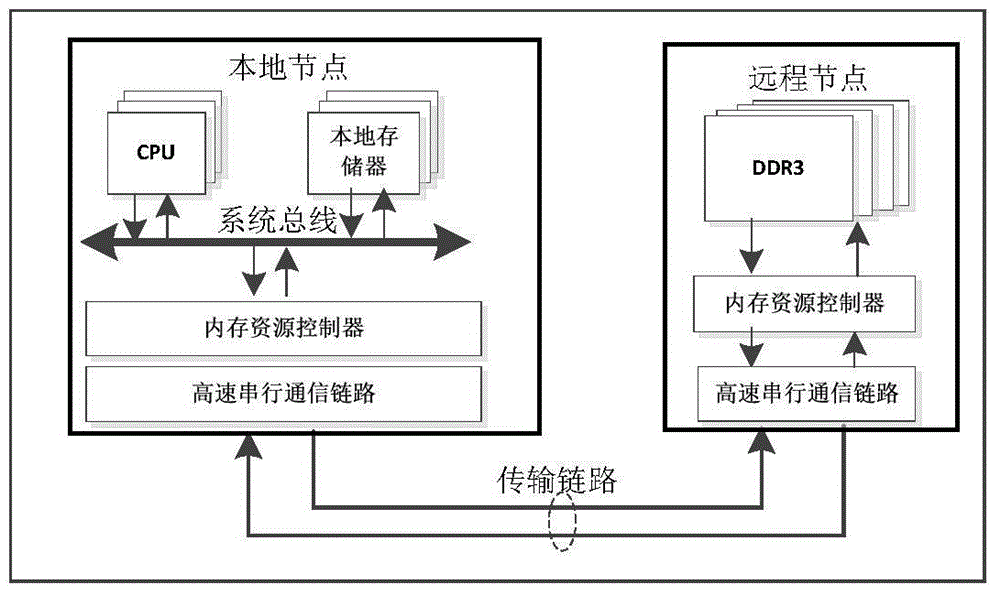

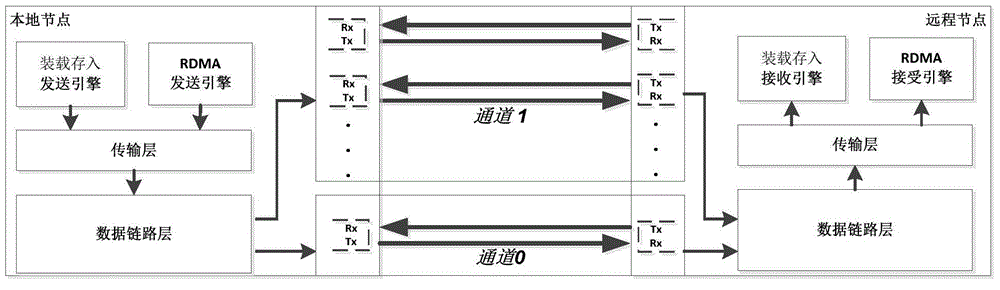

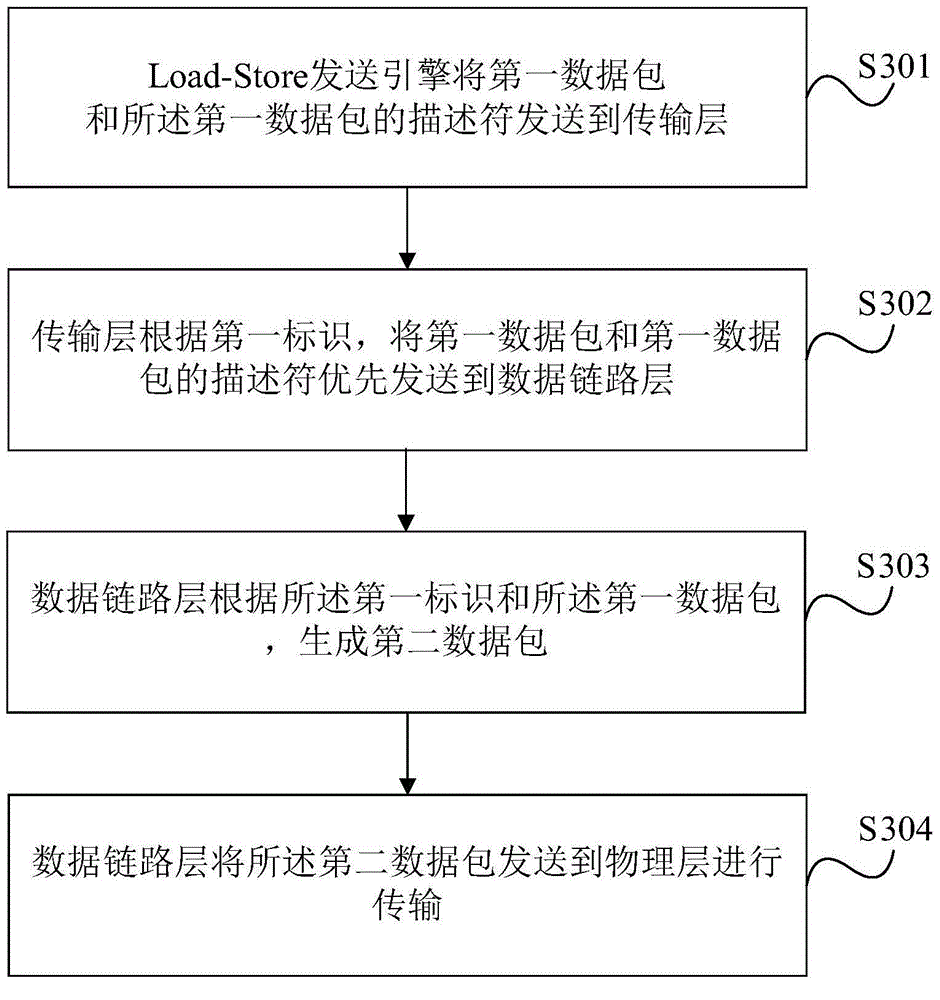

Remote memory access method, apparatus and system

Active | CN106372013A | Lower latency | Transmission | Electric digital data processing | Computer hardware | Access method

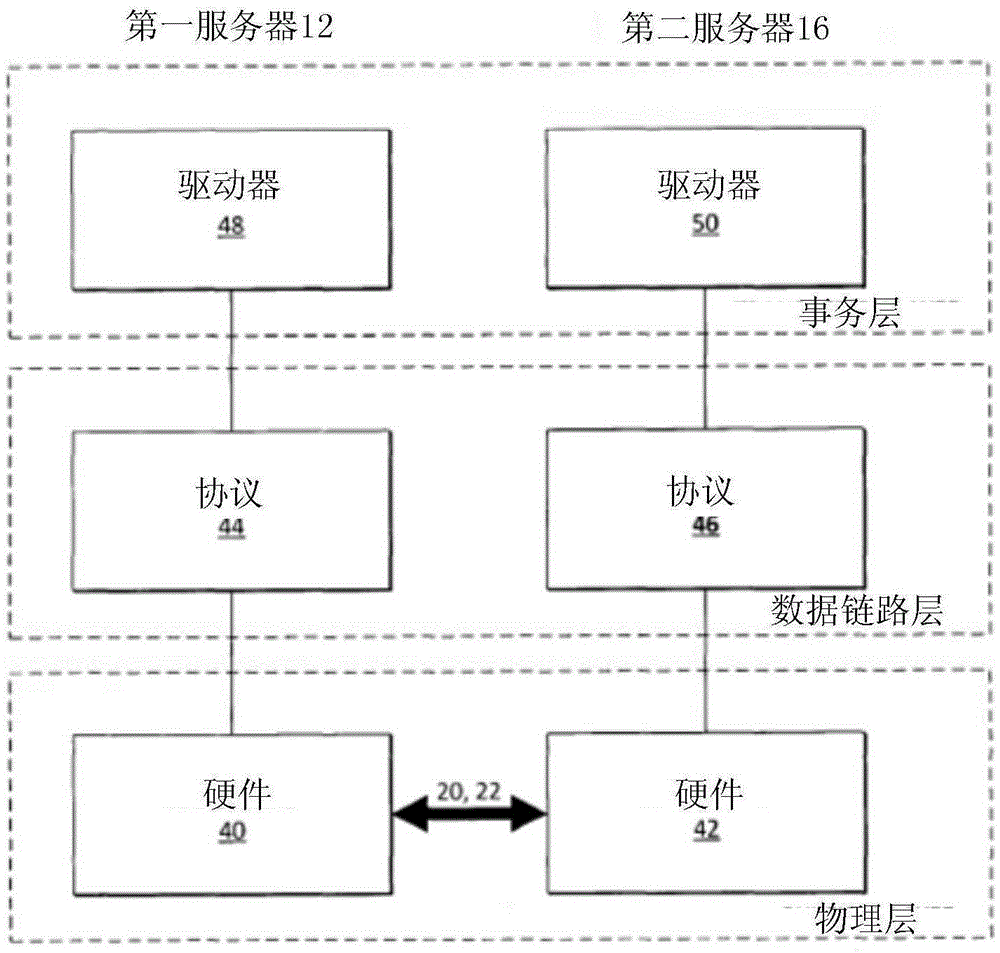

Embodiments of the invention provide a remote memory access method, apparatus, and system. The method comprises: setting, by a Load-Store sending engine, a first identifier in a descriptor of a first data package, and sending the first data package and its descriptor to a transmission layer, wherein the first identifier indicates that the data package comes from the Load-Store sending engine; preferentially sending, by the transmission layer according to the first identifier, the first data package and its descriptor to a data link layer; and generating, by the data link layer according to the first identifier and the first data package, a second data package that contains the first identifier, and sending the second data package to a physical layer for transmission. Because data packages from the Load-Store sending engine are processed preferentially, the latency of Load-Store memory access is reduced.

Owner:HUAWEI TECH CO LTD +1

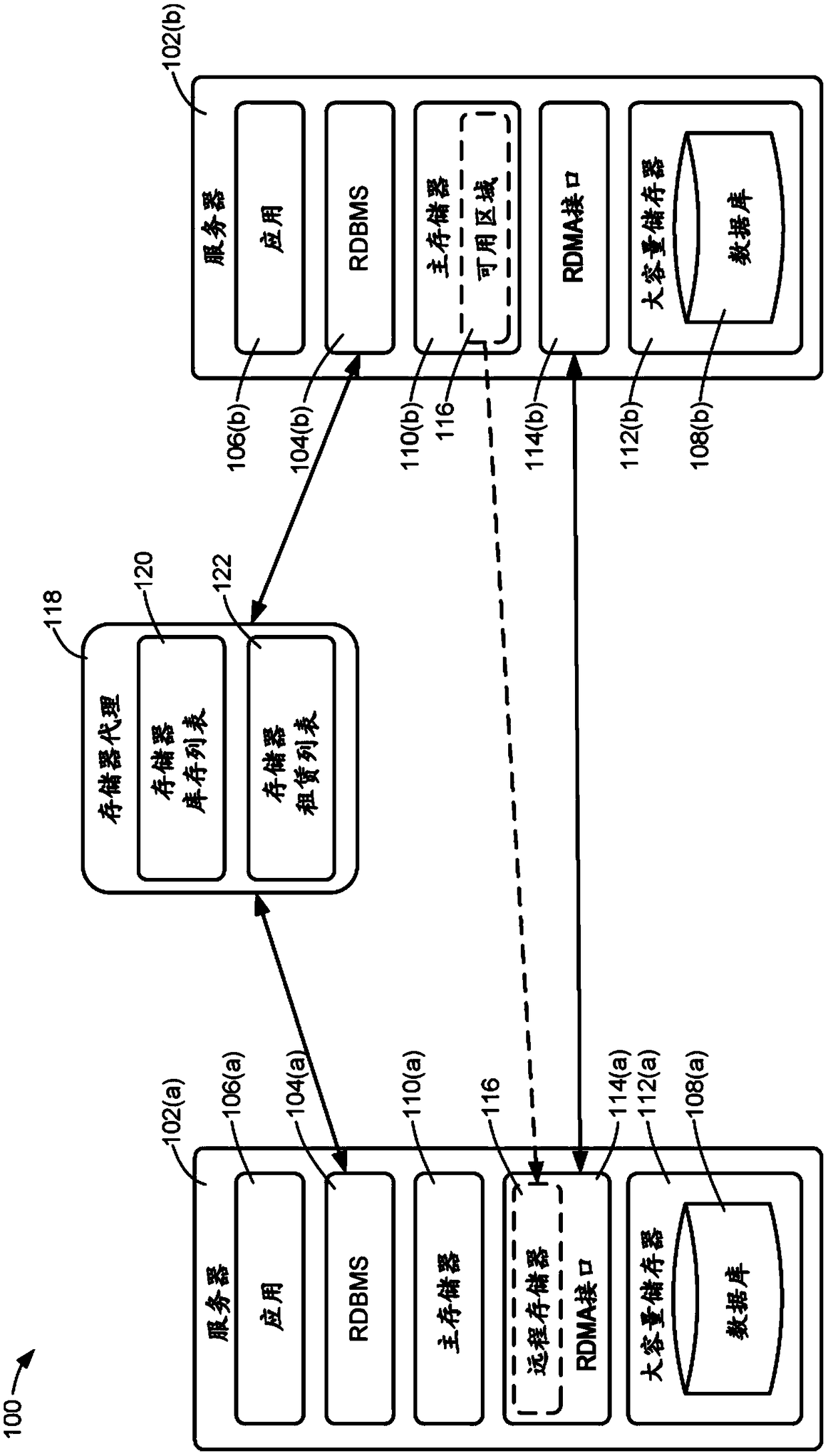

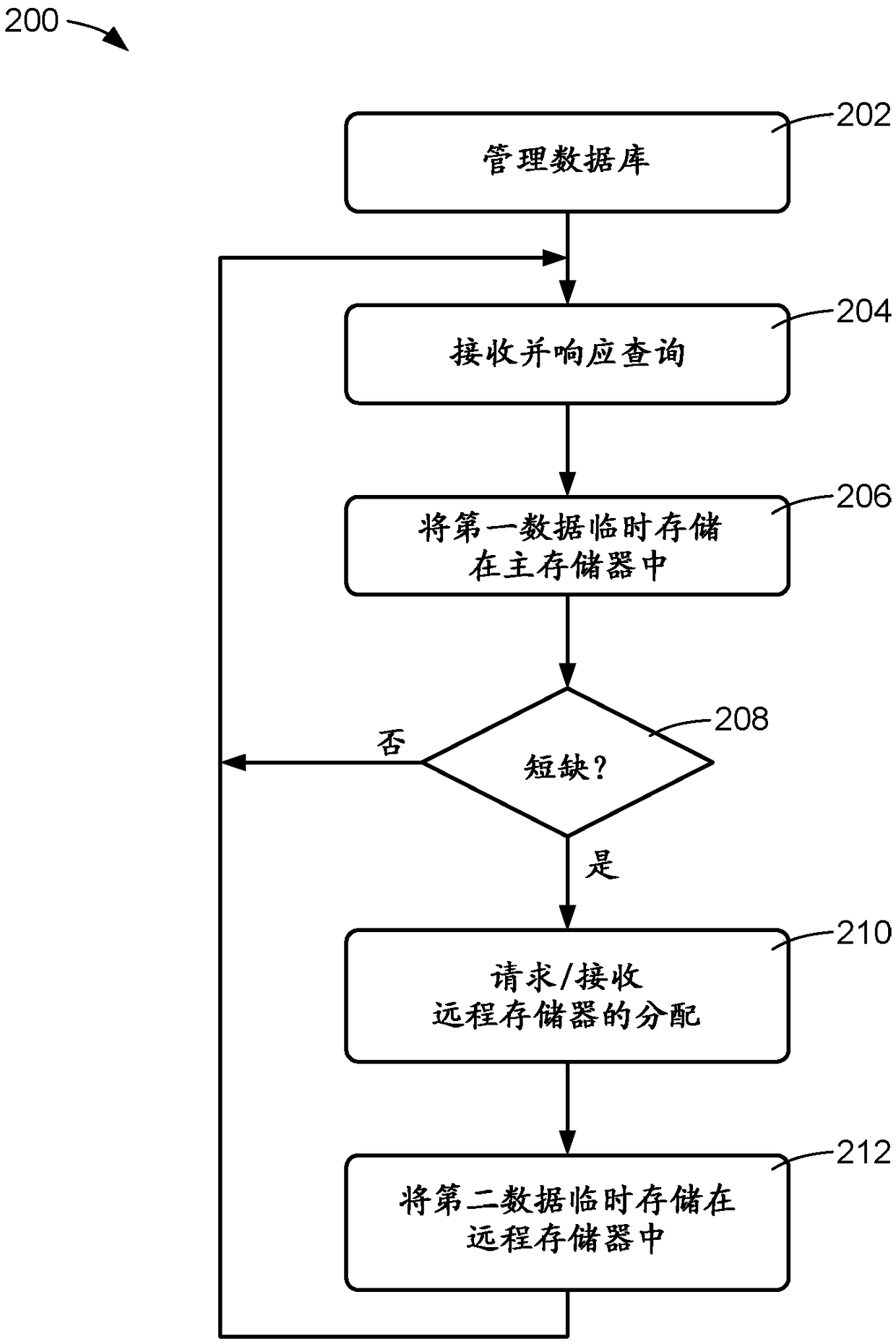

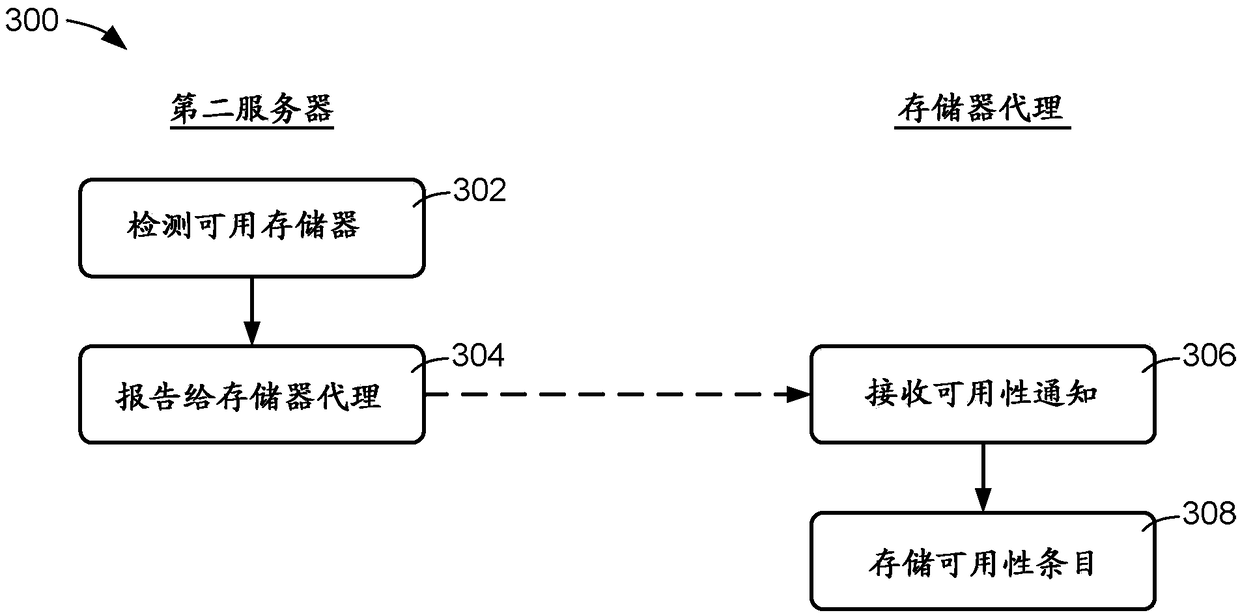

Memory sharing for working data using RDMA

A server system may include a cluster of multiple computers that are networked for high-speed data communications. Each of the computers has a remote direct memory access (RDMA) network interface to allow high-speed memory sharing between computers. A relational database engine of each computer is configured to utilize a hierarchy of memory for temporary storage of working data, including, in order of decreasing access speed, (a) local main memory, (b) remote memory accessed via RDMA, and (c) mass storage. The database engine uses the local main memory for working data, and additionally uses the RDMA-accessible memory for working data when the local main memory becomes depleted. The server system may include a memory broker to which individual computers report their available or unused memory, and which leases shared memory to requesting computers.

Owner:MICROSOFT TECH LICENSING LLC

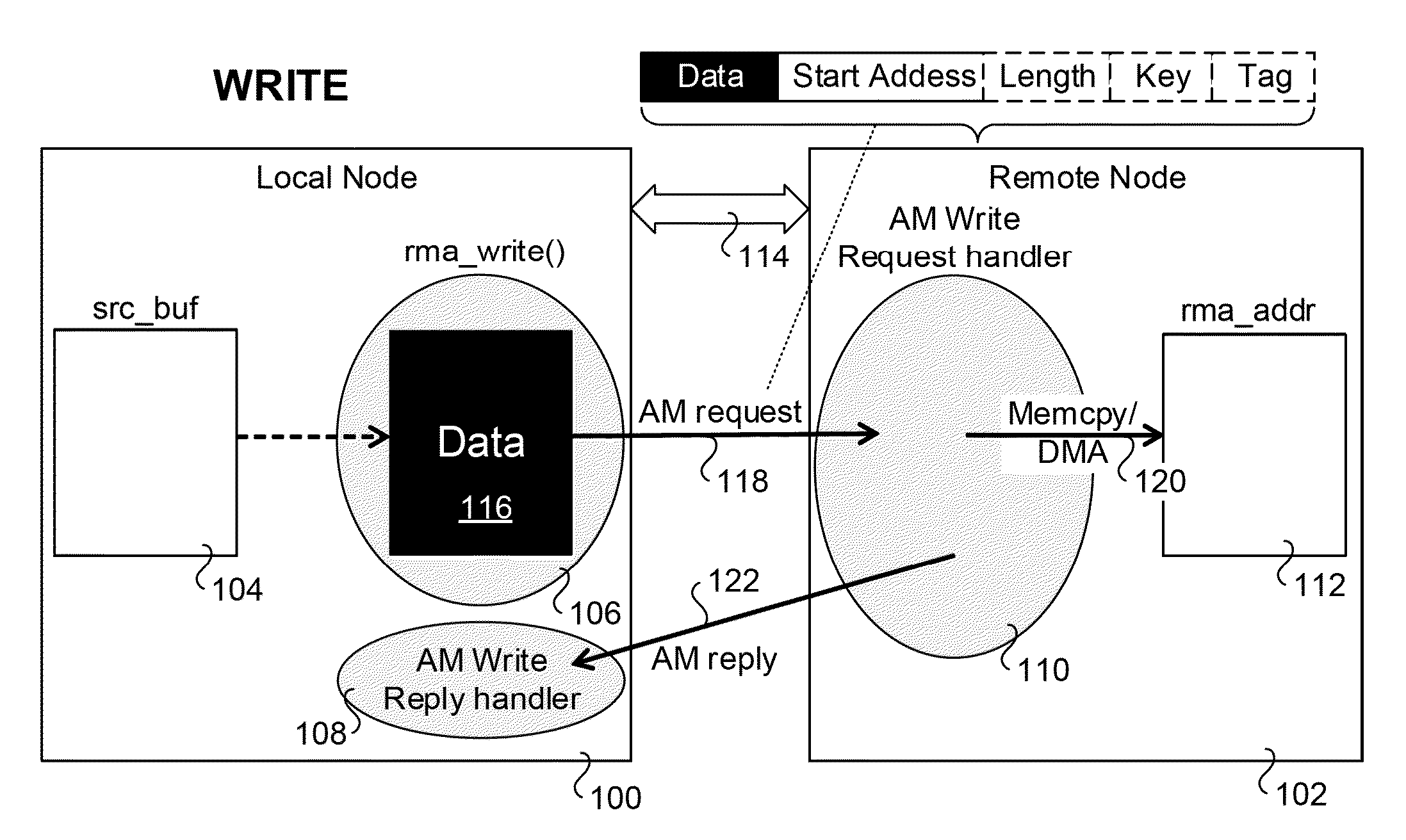

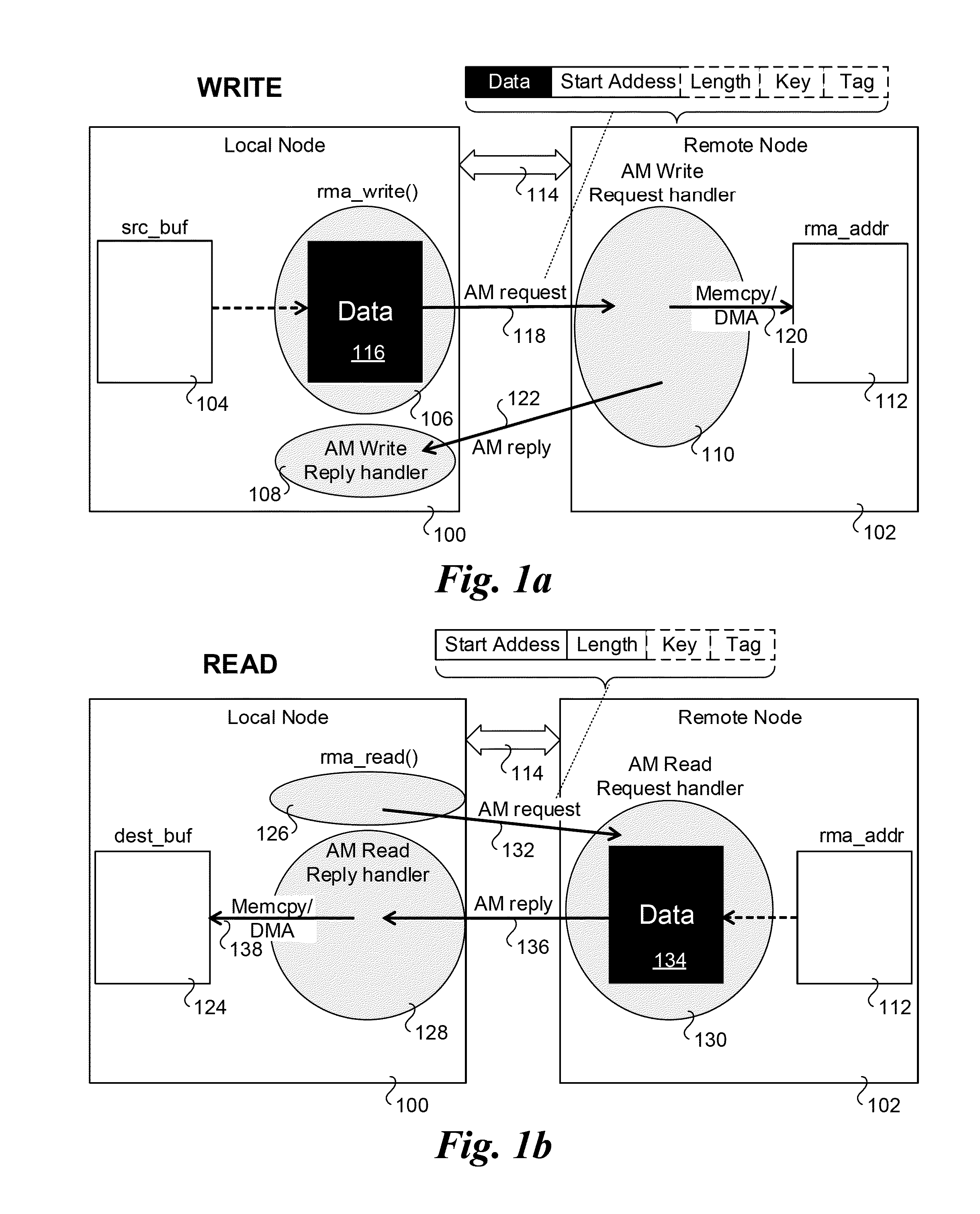

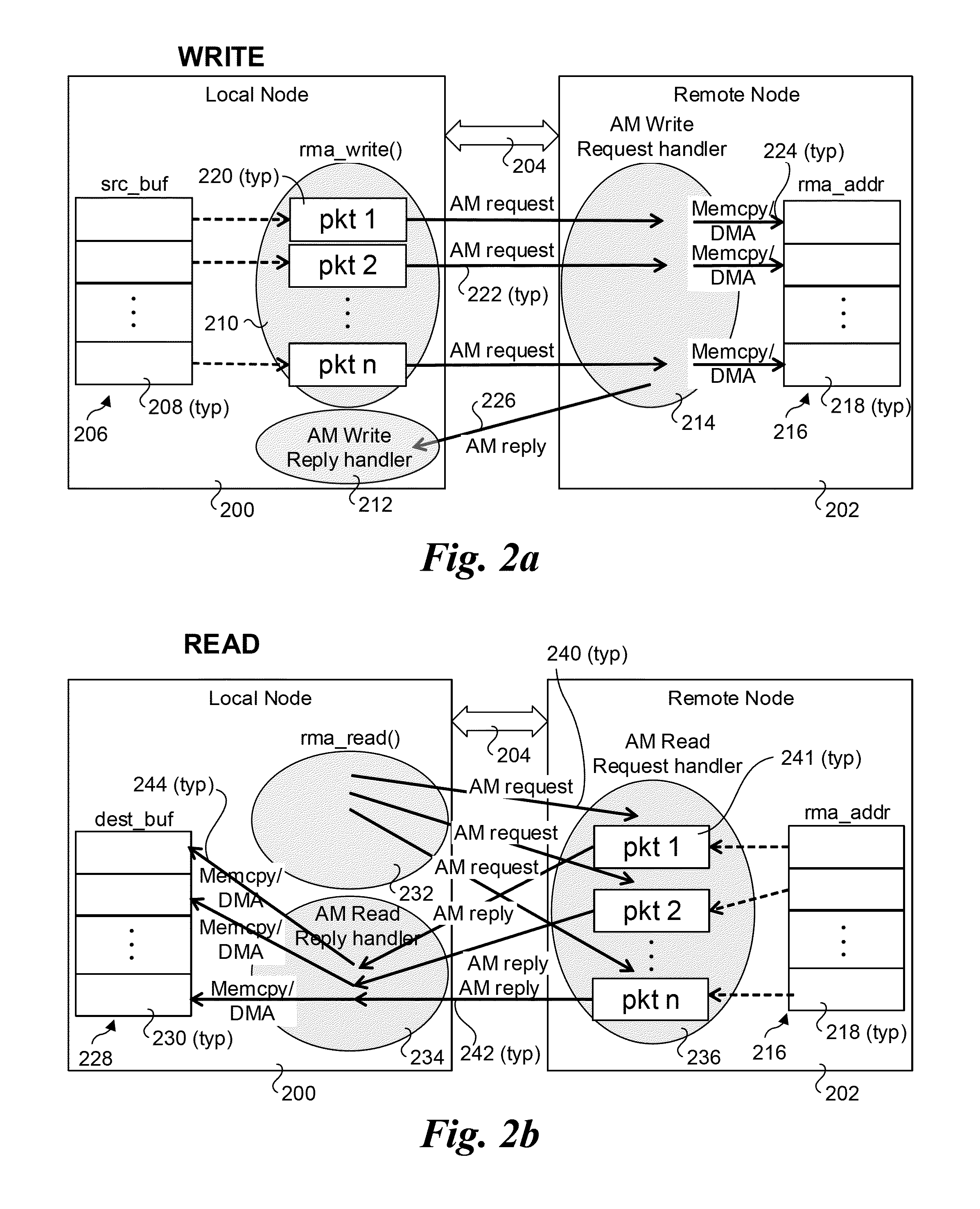

Supporting RMA API over Active Message

Active | US20160062944A1 | Digital computer details | Program control | Remote memory access | Application programming interface

Methods, apparatus, and software for implementing RMA application programming interfaces (APIs) over Active Message (AM). AM write and AM read requests are sent from a local node to a remote node to write data to or read data from memory on the remote node using Remote Memory Access (RMA) techniques. The AM requests are handled by corresponding AM handlers, which automatically perform operations associated with the requests. For example, for AM write requests, an AM write request handler may write data contained in an AM write request to a remote address space in memory on the remote node, or generate a corresponding RMA write request that is enqueued into an RMA queue used in accordance with a tagged message scheme. Similar operations are performed by AM read request handlers. RMA reads and writes using AM are further facilitated through use of associated read, write, and RMA progress modules.

Owner:INTEL CORP

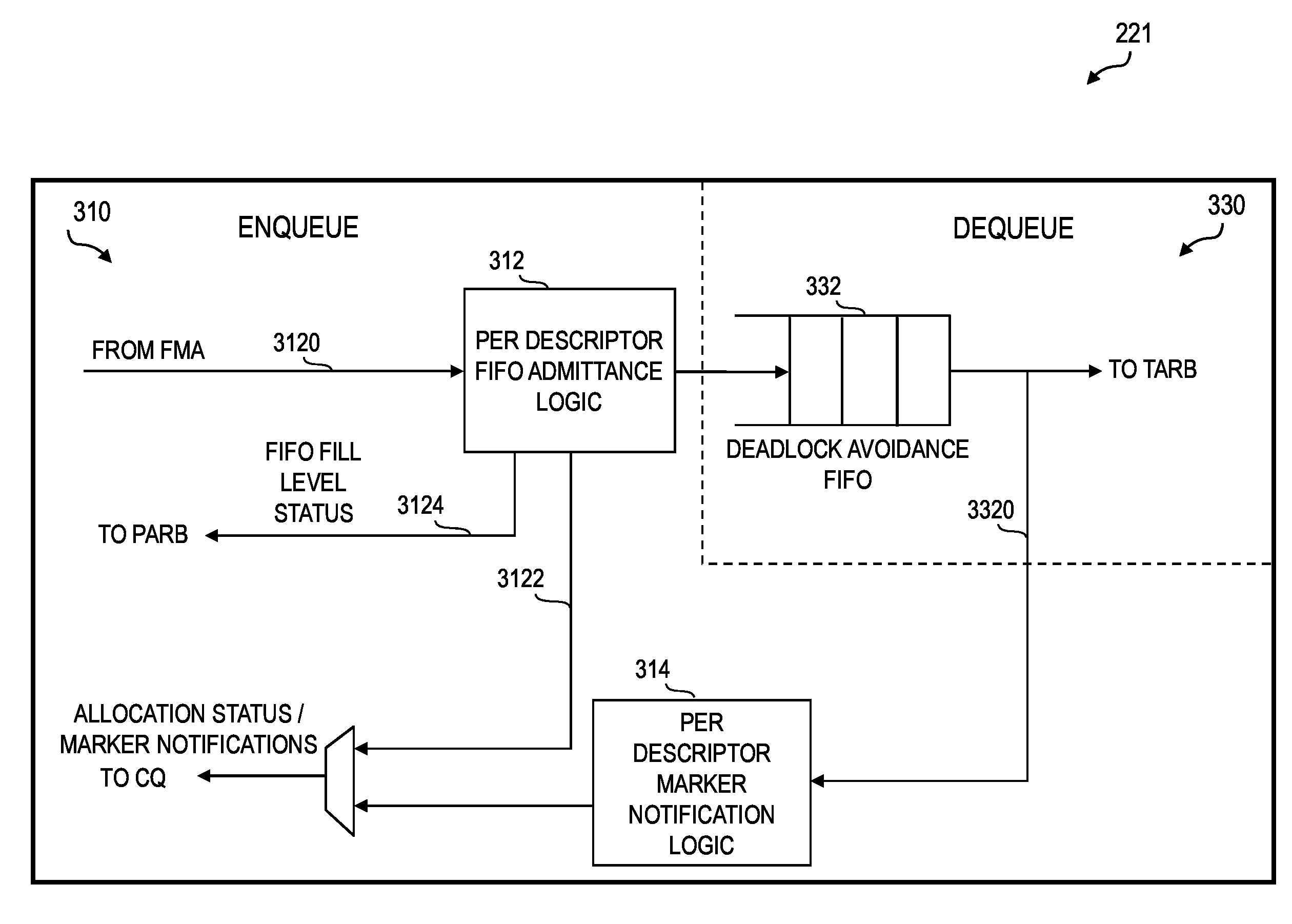

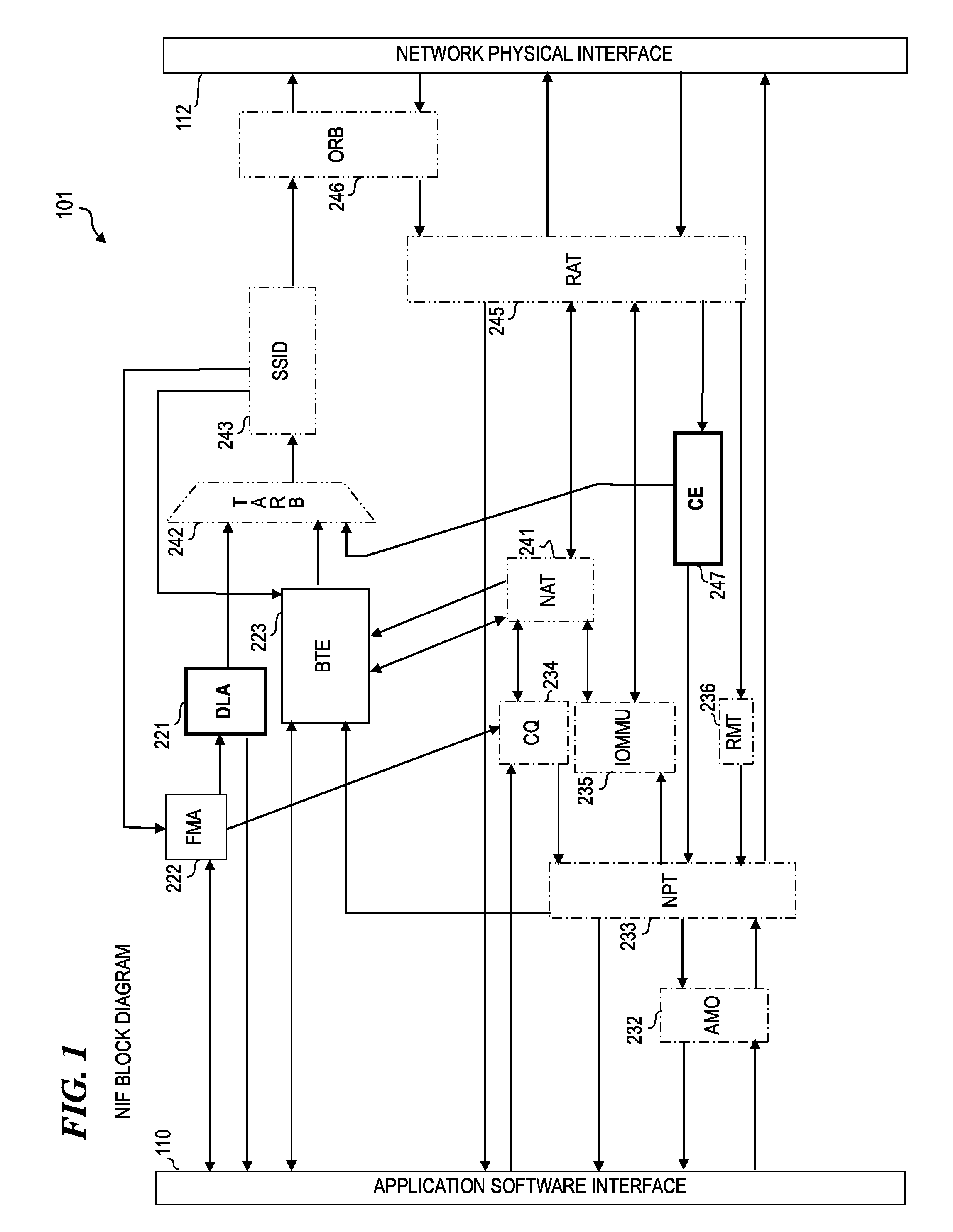

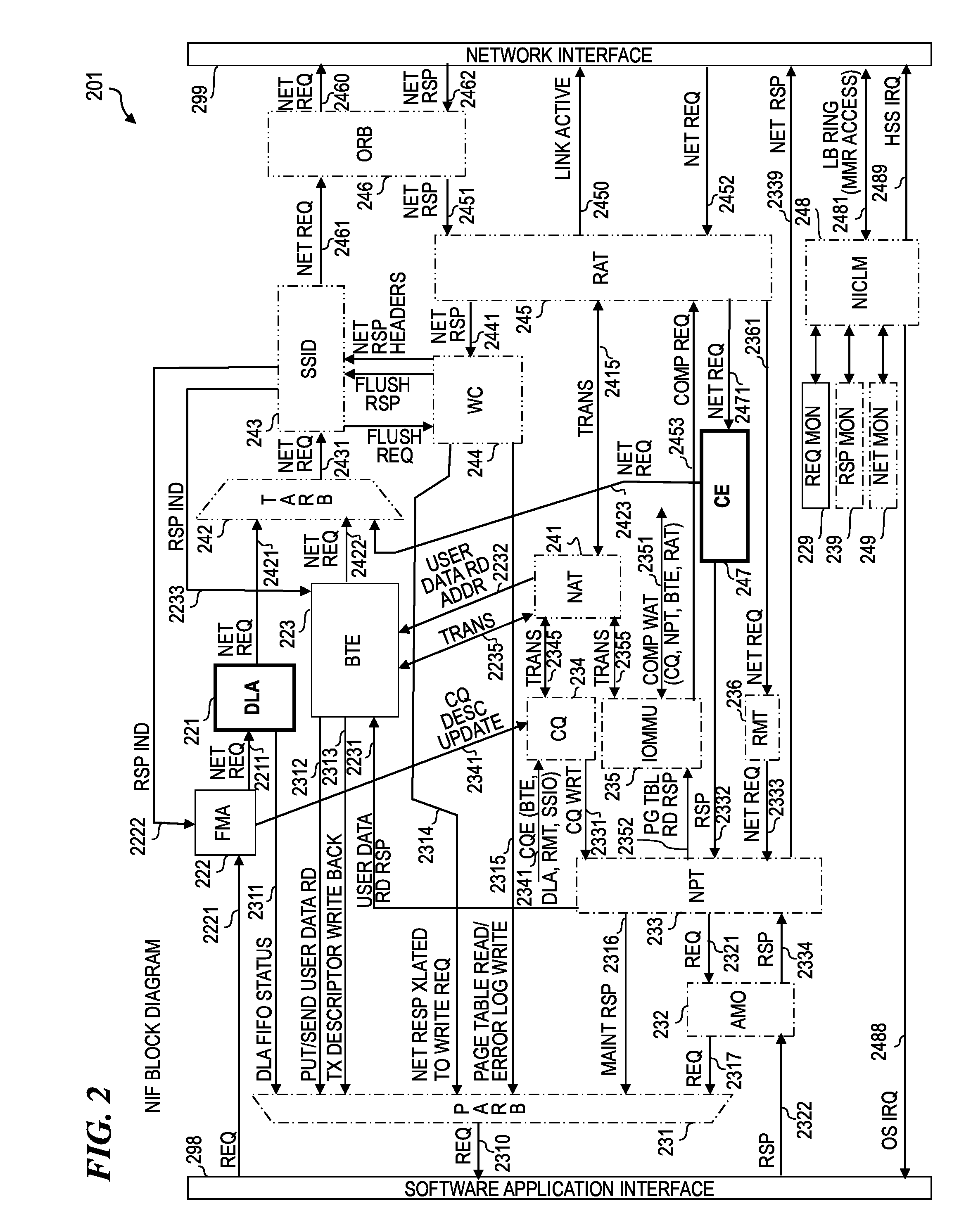

Apparatus and method for deadlock avoidance

Active | US20160077997A1 | Avoid deadlock | Prevent deadlock cycles | Program synchronisation | Software engineering | Massively parallel | Remote memory access

An improved method for the prevention of deadlock in a massively parallel processor (MPP) system wherein, prior to a process sending messages to another process running on a remote processor, the process allocates space in a deadlock-avoidance FIFO. The allocated space provides a “landing zone” for requests that the software process (the application software) will subsequently issue using a remote-memory-access function. In some embodiments, the deadlock-avoidance (DLA) function provides two different deadlock-avoidance schemes: controlled discard and persistent reservation. In some embodiments, the software process determines which scheme will be used at the time the space is allocated.

Owner:CRAY
