Remote memory access using memory mapped addressing among multiple compute nodes

A memory-mapped addressing technology for compute nodes, applied in the field of communication, aimed at problems such as making inter-process communication efficient.


example embodiments

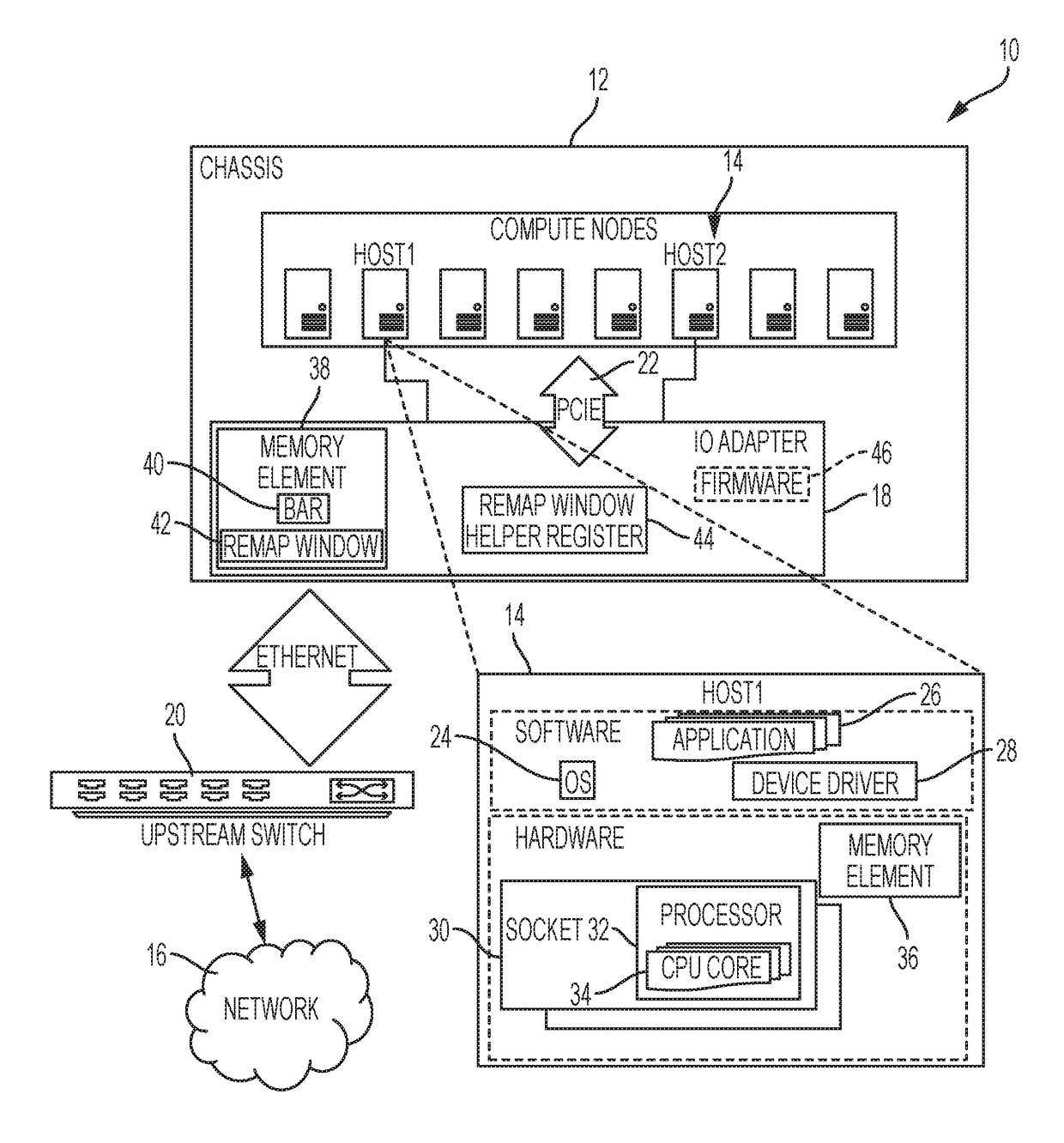

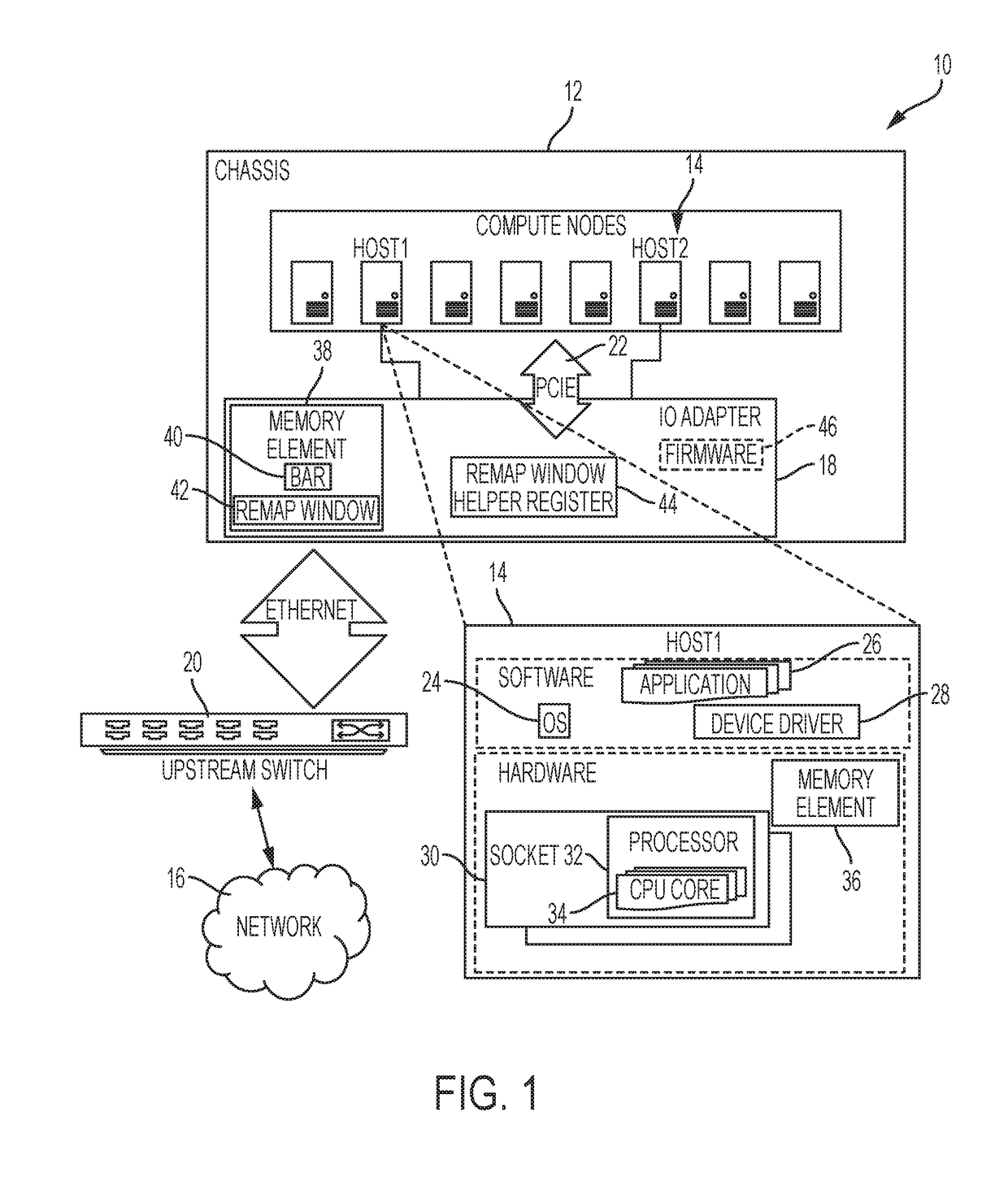

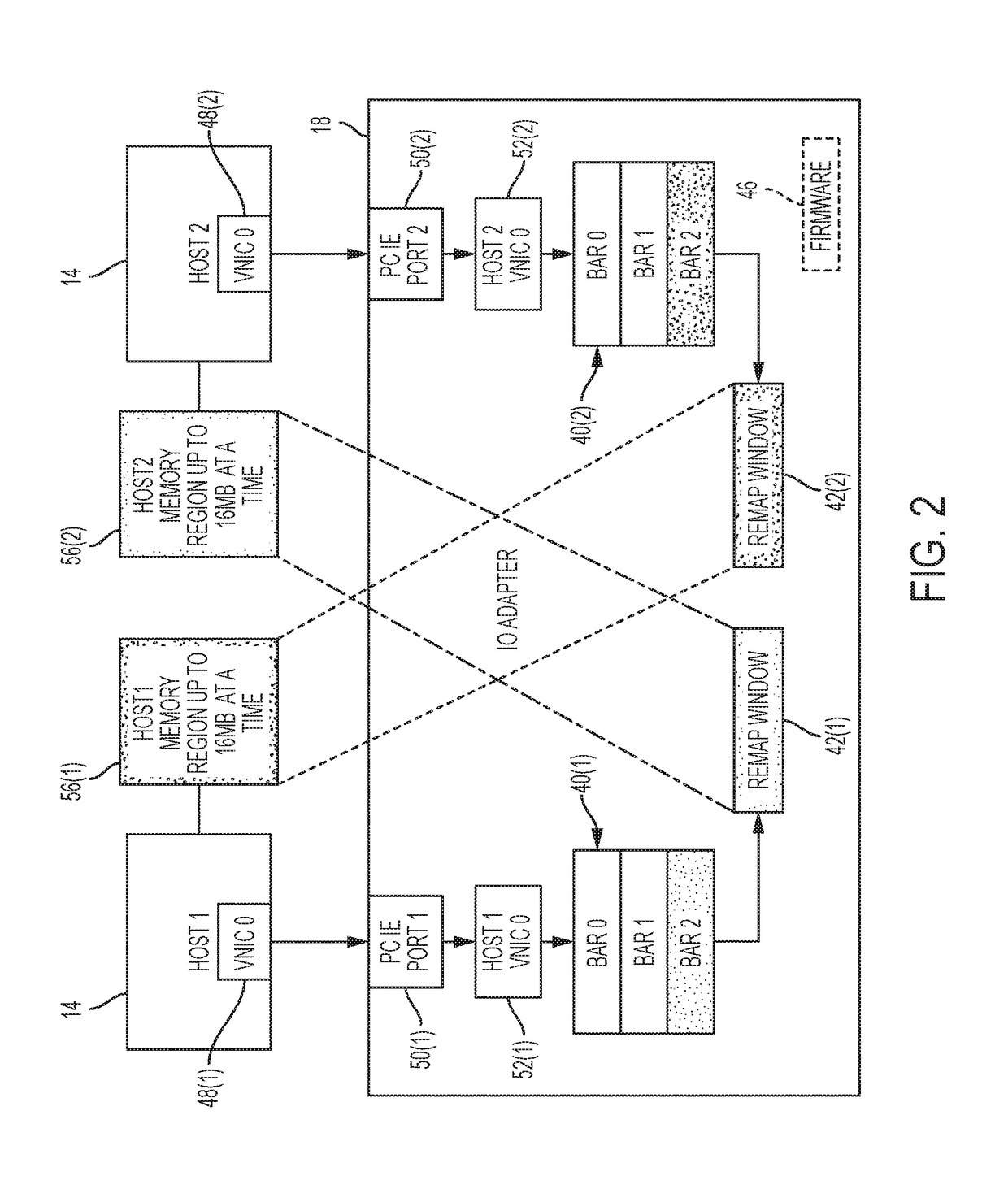

[0014] FIG. 1 is a simplified block diagram illustrating a communication system 10 for facilitating remote memory access with memory mapped addressing among multiple compute nodes, in accordance with one example embodiment. Communication system 10 comprises a chassis 12, which includes a plurality of compute nodes 14 that communicate with network 16 through a common input/output (I/O) adapter 18. An upstream switch 20 facilitates north-south traffic between compute nodes 14 and network 16. Shared I/O adapter 18 presents network and storage devices on a Peripheral Component Interconnect Express (PCIE) bus 22 to compute nodes 14. In various embodiments, each compute node appears as a PCIE device to other compute nodes in chassis 12.
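The idea that each compute node appears as a device to its peers can be illustrated with a small simulation. The following is a minimal sketch only, not the mechanism of the embodiment: it assumes that each node exposes one fixed-size memory window and that the windows are laid out back to back in a single chassis-wide address space. The names `Chassis`, `ComputeNode`, `window_size`, `window_base`, and the node `Host2` are hypothetical and do not come from the source.

```python
from dataclasses import dataclass, field


@dataclass(eq=False)  # compare nodes by identity, not by contents
class ComputeNode:
    """One compute node; its local memory is modeled as a bytearray."""
    name: str
    memory: bytearray


@dataclass
class Chassis:
    """Toy chassis: every node is visible to its peers through an address
    window (assumed layout: equal-size windows, back to back)."""
    window_size: int
    nodes: list = field(default_factory=list)

    def add_node(self, name: str) -> ComputeNode:
        node = ComputeNode(name, bytearray(self.window_size))
        self.nodes.append(node)
        return node

    def window_base(self, node: ComputeNode) -> int:
        # Assumption: windows are assigned in the order nodes were added.
        return self.nodes.index(node) * self.window_size

    def _resolve(self, address: int, length: int):
        # Translate a chassis-wide address into (target node, local offset).
        index, offset = divmod(address, self.window_size)
        if address < 0 or index >= len(self.nodes):
            raise ValueError(f"address {address:#x} maps to no node")
        if offset + length > self.window_size:
            raise ValueError("access crosses a window boundary")
        return self.nodes[index], offset

    def write(self, address: int, data: bytes) -> None:
        node, offset = self._resolve(address, len(data))
        node.memory[offset:offset + len(data)] = data

    def read(self, address: int, length: int) -> bytes:
        node, offset = self._resolve(address, length)
        return bytes(node.memory[offset:offset + length])


# Usage: a write to an address inside Host2's window lands in Host2's memory.
chassis = Chassis(window_size=4096)
host1 = chassis.add_node("Host1")
host2 = chassis.add_node("Host2")  # hypothetical second node
chassis.write(chassis.window_base(host2) + 0x10, b"ping")
```

In this sketch a remote access is just an ordinary read or write to an address; the translation step decides which node's memory it touches. A real PCIE topology would involve device enumeration and address windows assigned by the platform, which the sketch does not attempt to model.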

[0015] In a general sense, compute nodes 14 include capabilities for processing, memory, network and storage resources. For example, as shown in greater detail in the figure, compute node Host1 runs (e.g., executes) an o...
