606 results about "Memory map" patented technology

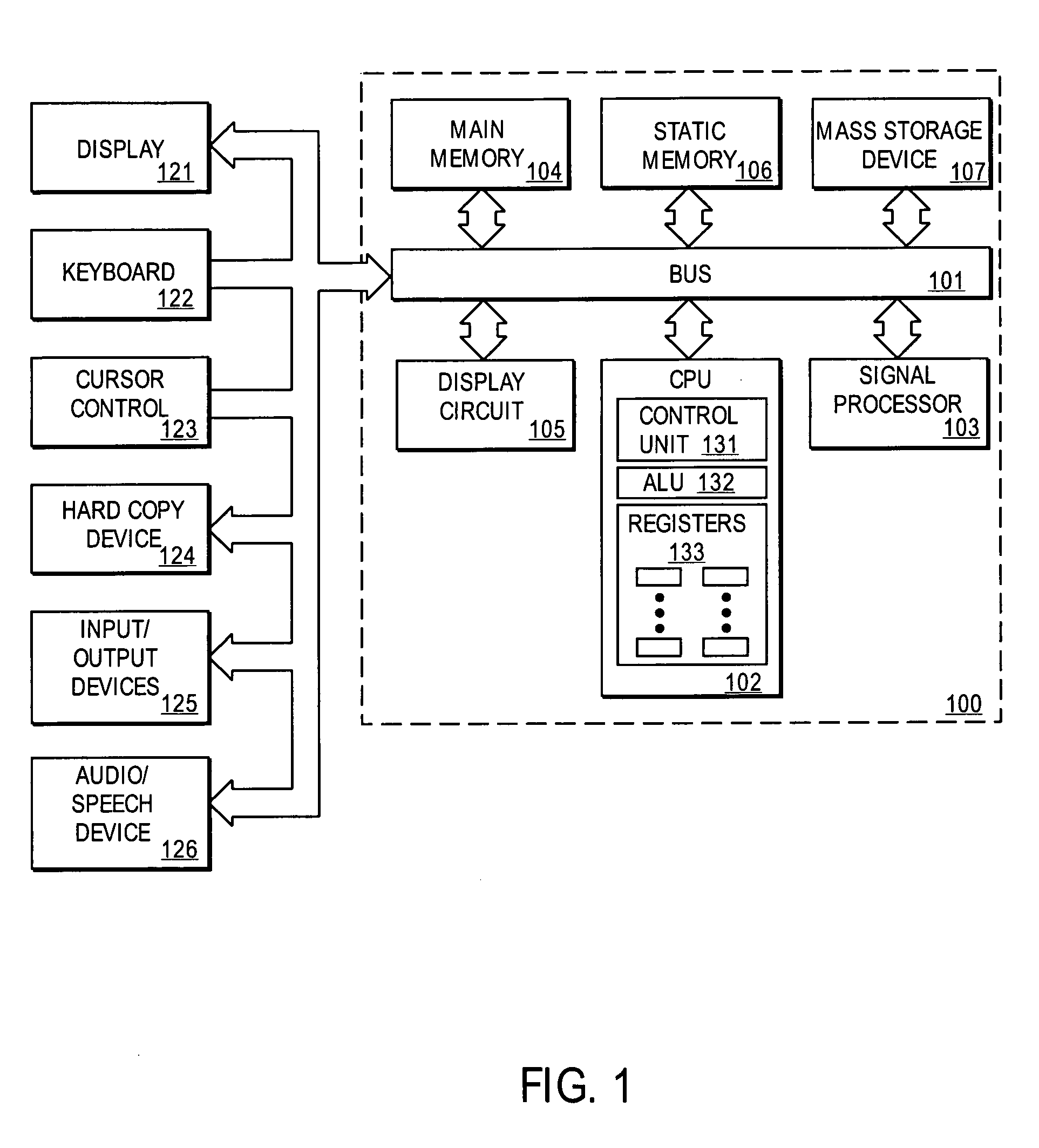

In computer science, a memory map is a data structure (which usually resides in memory itself) that indicates how memory is laid out. The term means different things in different parts of a system: for example, the layout of physical address ranges reported by firmware, the regions of a process's virtual address space, or a file mapped into memory. It is also used for cache mapping schemes. Fully associative mapping, the most flexible cache organization, uses an associative memory that stores both the address and the content of each memory word.
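As a rough illustration of "a data structure that indicates how memory is laid out", the sketch below models a memory map as a sorted list of address regions with a lookup function. The `Region` and `MemoryMap` names, the region labels, and the addresses are hypothetical and are not taken from any patent listed here.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    start: int  # first address covered by the region
    size: int   # length of the region in bytes
    name: str   # hypothetical label such as "RAM" or "MMIO"

    @property
    def end(self) -> int:
        # Exclusive end address.
        return self.start + self.size


class MemoryMap:
    """A sorted, non-overlapping list of regions with address lookup."""

    def __init__(self, regions):
        self.regions = sorted(regions, key=lambda r: r.start)
        for a, b in zip(self.regions, self.regions[1:]):
            if a.end > b.start:
                raise ValueError(f"{a.name} overlaps {b.name}")
        self._starts = [r.start for r in self.regions]

    def lookup(self, addr):
        # Find the last region whose start is <= addr, then check its bounds.
        i = bisect_right(self._starts, addr) - 1
        if i >= 0 and addr < self.regions[i].end:
            return self.regions[i]
        return None


# Made-up layout for illustration only.
memory_map = MemoryMap([
    Region(0x0000_0000, 0x0010_0000, "ROM"),
    Region(0x2000_0000, 0x0004_0000, "RAM"),
    Region(0x4000_0000, 0x0000_1000, "MMIO"),
])
```

Calling `memory_map.lookup(addr)` returns the region that contains `addr`, or `None` for an unmapped address.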

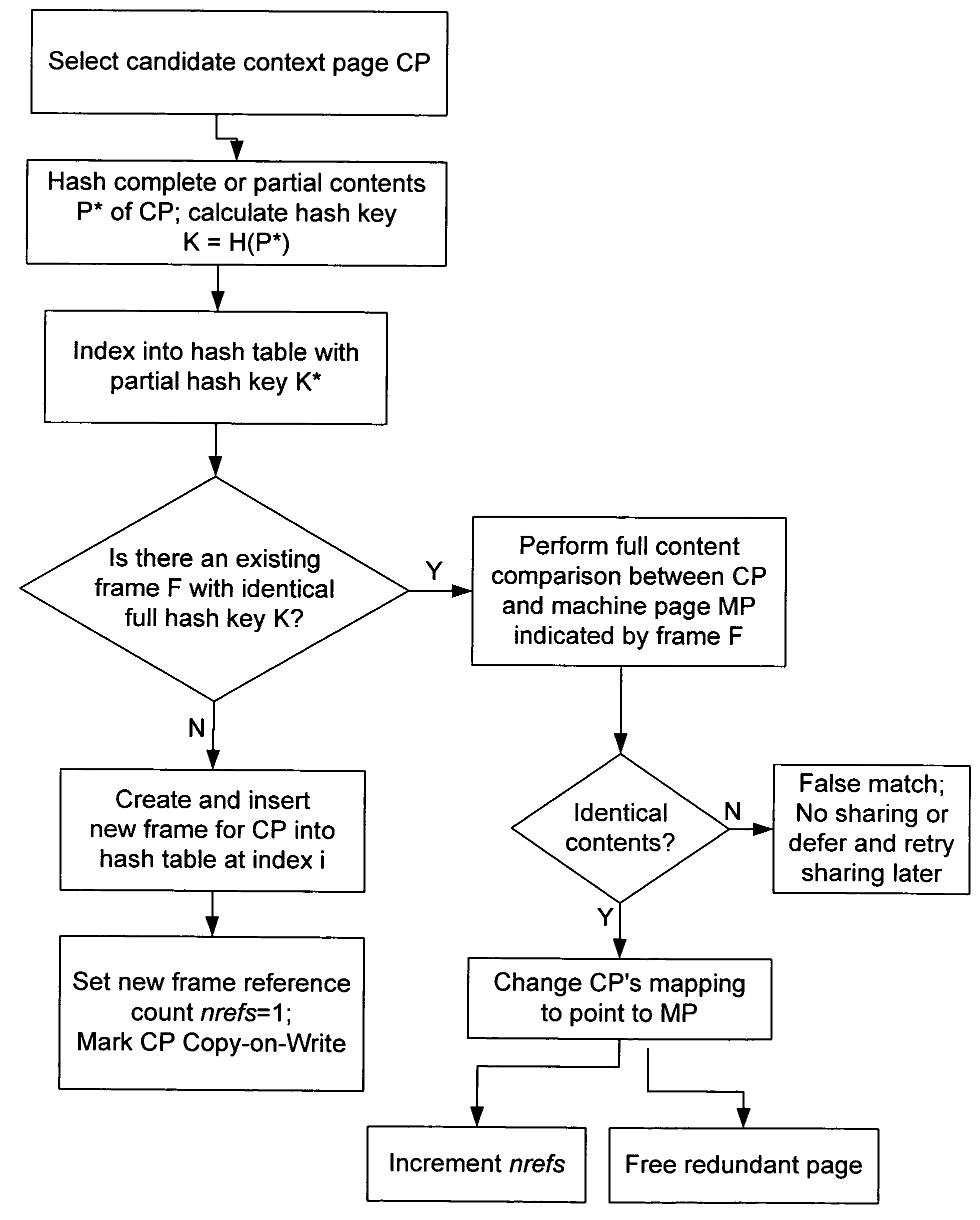

Content-based, transparent sharing of memory units

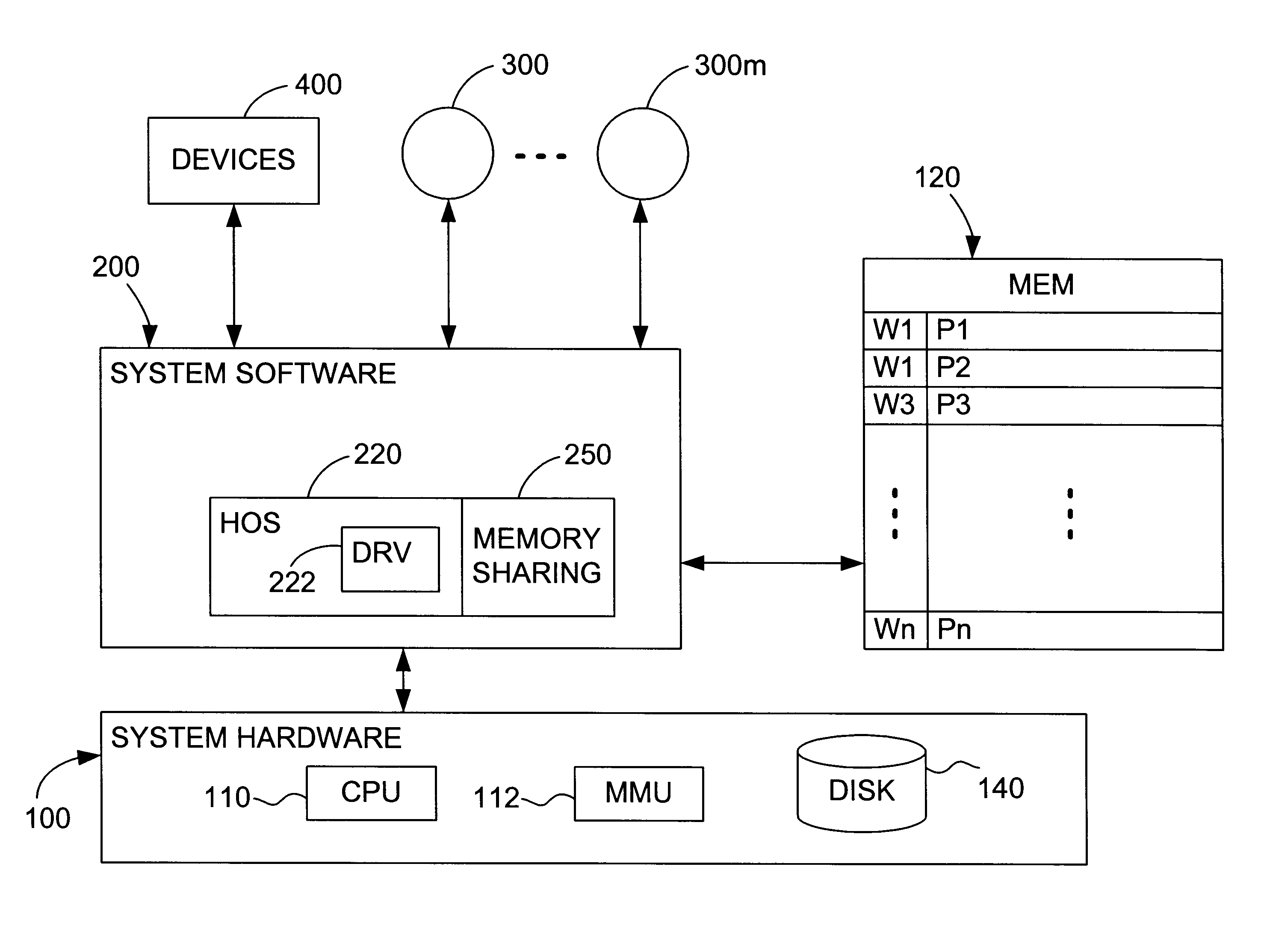

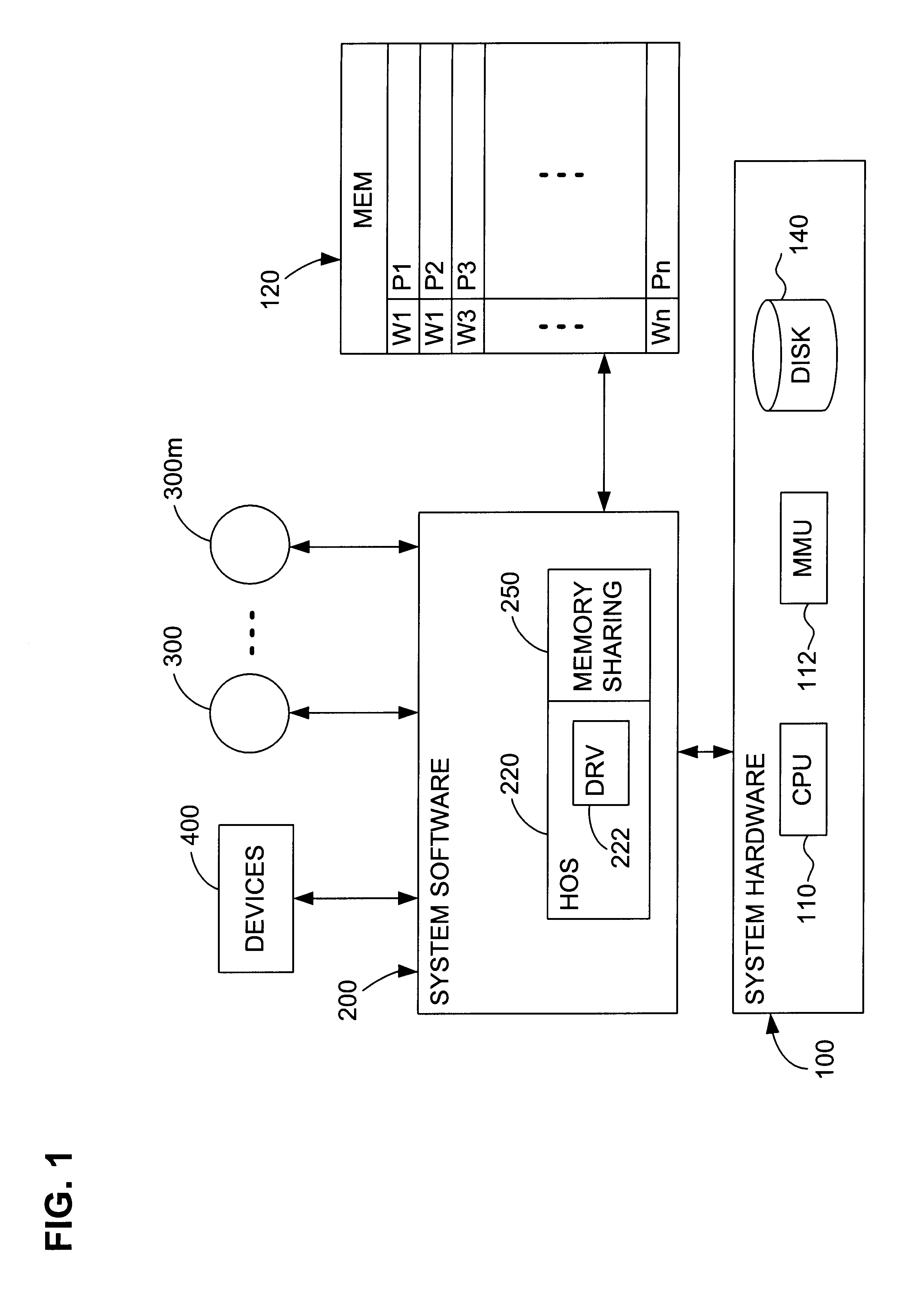

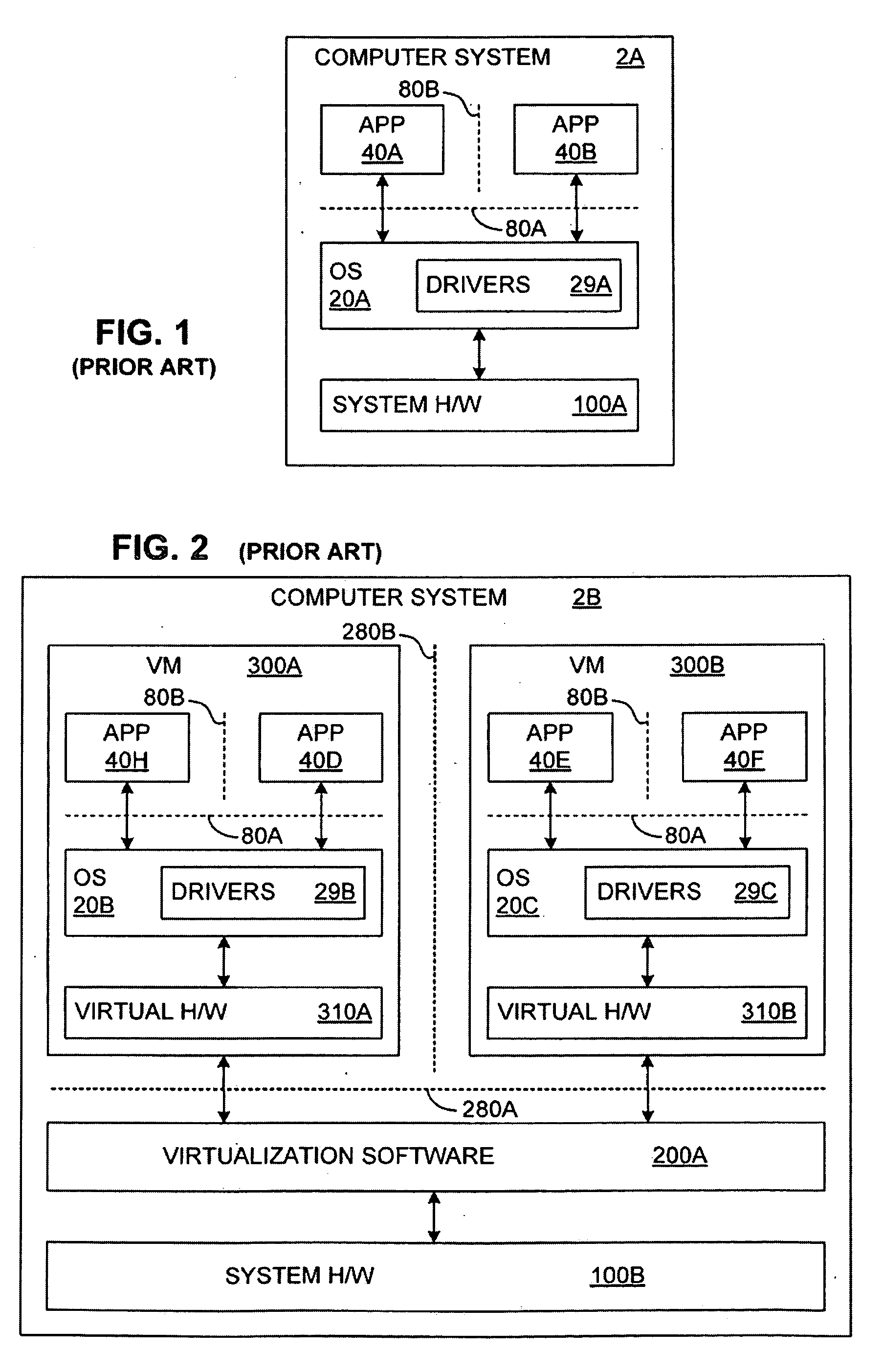

Inactive · US6789156B1 · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Computer hardware · Multiple context

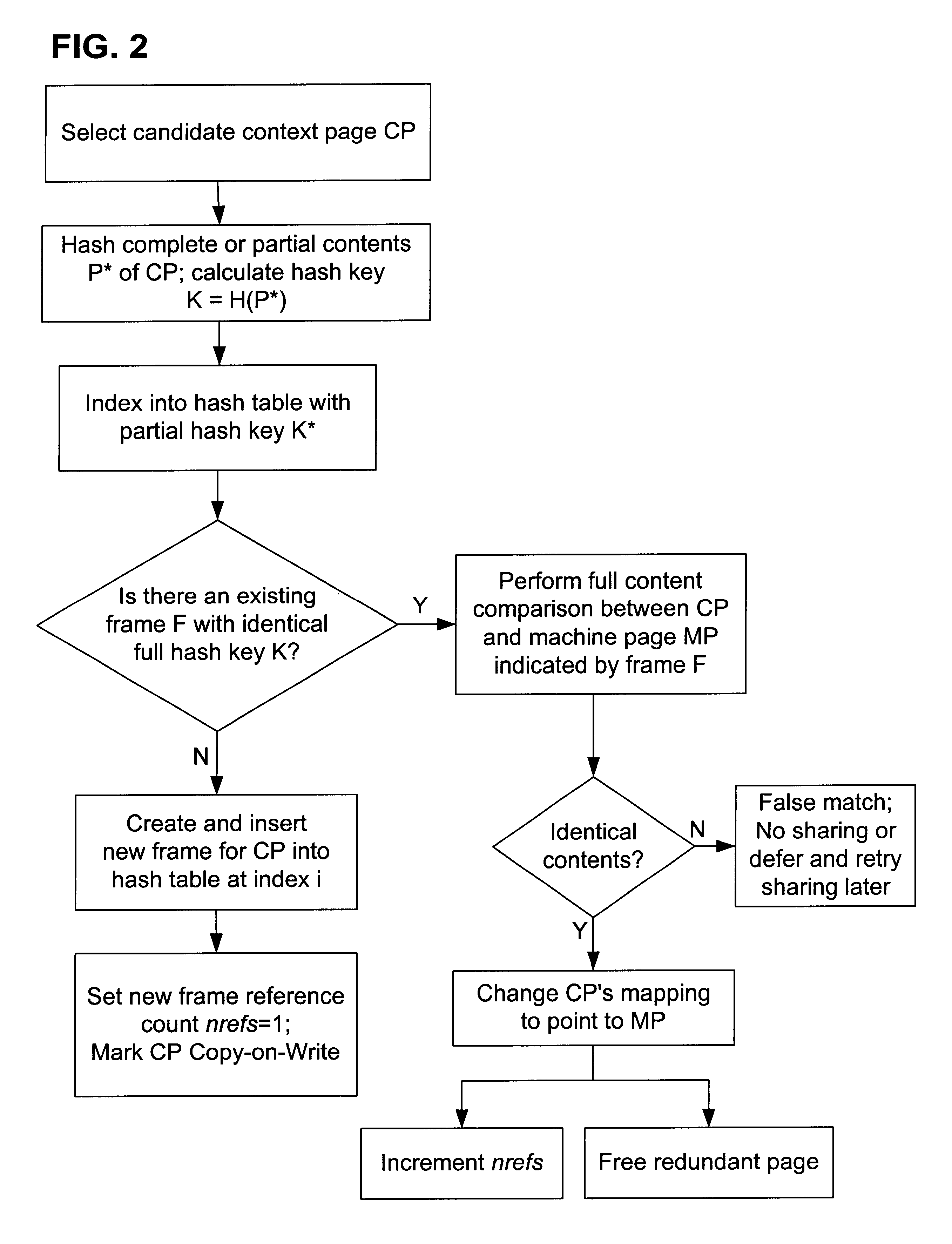

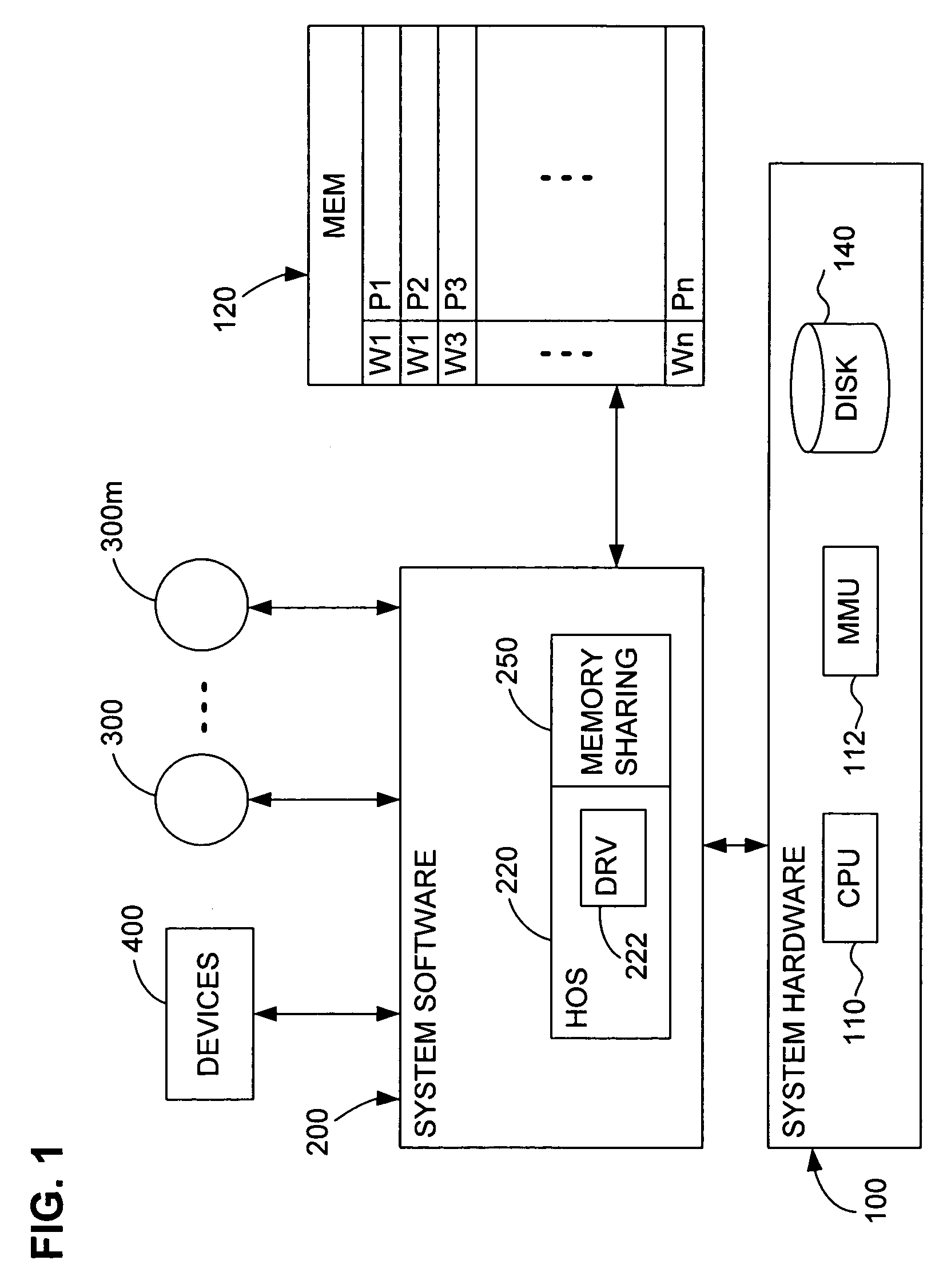

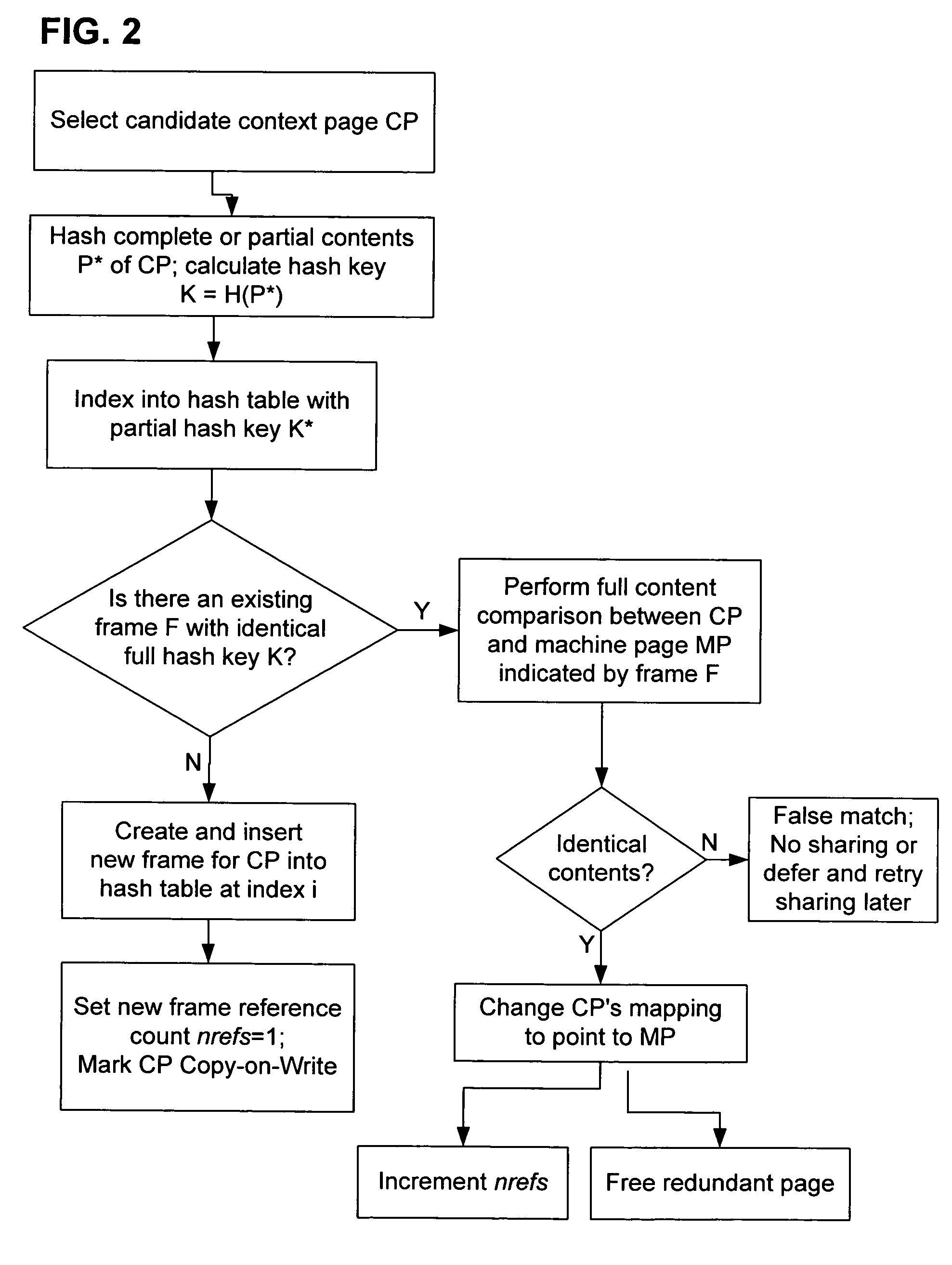

A computer system has one or more software contexts that share use of a memory that is divided into units such as pages. In the preferred embodiment of the invention, the contexts are, or include, virtual machines running on a common hardware platform. The contents, as opposed to merely the addresses or page numbers, of virtual memory pages that are accessible to one or more contexts are examined. If two or more context pages are identical, then their memory mappings are changed to point to a single, shared copy of the page in the hardware memory, thereby freeing the memory space taken up by the redundant copies. The shared copy is then preferably marked copy-on-write. Sharing is preferably dynamic, whereby the presence of redundant copies of pages is preferably determined by hashing page contents and performing full content comparisons only when two or more pages hash to the same key.

Owner:VMWARE INC
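The sharing procedure in the abstract above (hash page contents, run a full comparison only when hashes match, remap duplicates to one shared copy marked copy-on-write) can be sketched roughly as follows. This is a toy model, not the patented implementation: the dictionary-based page table, the `share_identical_pages` name, and the choice of SHA-256 are all assumptions made for illustration.

```python
import hashlib

PAGE_SIZE = 4096  # assumed page size


def share_identical_pages(page_table, frames):
    """Remap identical pages to a single shared frame.

    page_table: dict mapping (context, virtual page number) -> frame number
    frames:     dict mapping frame number -> page contents (bytes)
    Returns (copy-on-write frame numbers, freed frame numbers).
    """
    kept_by_digest = {}  # digest -> frames kept as canonical copies
    cow_frames = set()
    for key in sorted(page_table):
        frame = page_table[key]
        digest = hashlib.sha256(frames[frame]).digest()
        bucket = kept_by_digest.setdefault(digest, [])
        for kept in bucket:
            if kept == frame:
                break  # already mapped to the shared copy
            # Full comparison only happens when the hashes match.
            if frames[kept] == frames[frame]:
                page_table[key] = kept
                cow_frames.add(kept)  # shared copy must be copy-on-write
                break
        else:
            bucket.append(frame)
    in_use = set(page_table.values())
    freed = sorted(f for f in frames if f not in in_use)
    for f in freed:
        del frames[f]  # redundant copies are released
    return cow_frames, freed


# Two hypothetical contexts; three of the four pages are zero-filled.
frames = {0: bytes(PAGE_SIZE), 1: b"A" * PAGE_SIZE,
          2: bytes(PAGE_SIZE), 3: bytes(PAGE_SIZE)}
page_table = {("vm1", 0): 0, ("vm1", 1): 1, ("vm2", 0): 2, ("vm2", 1): 3}
cow, freed = share_identical_pages(page_table, frames)
```

In this run the two zero-filled pages of `vm2` are remapped to frame 0, which becomes copy-on-write, and frames 2 and 3 are freed.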

Transparent Memory-Mapped Emulation of I/O Calls

Active · US20090113425A1 · Memory architecture accessing/allocation · Error detection/correction · Semantics · Memory map

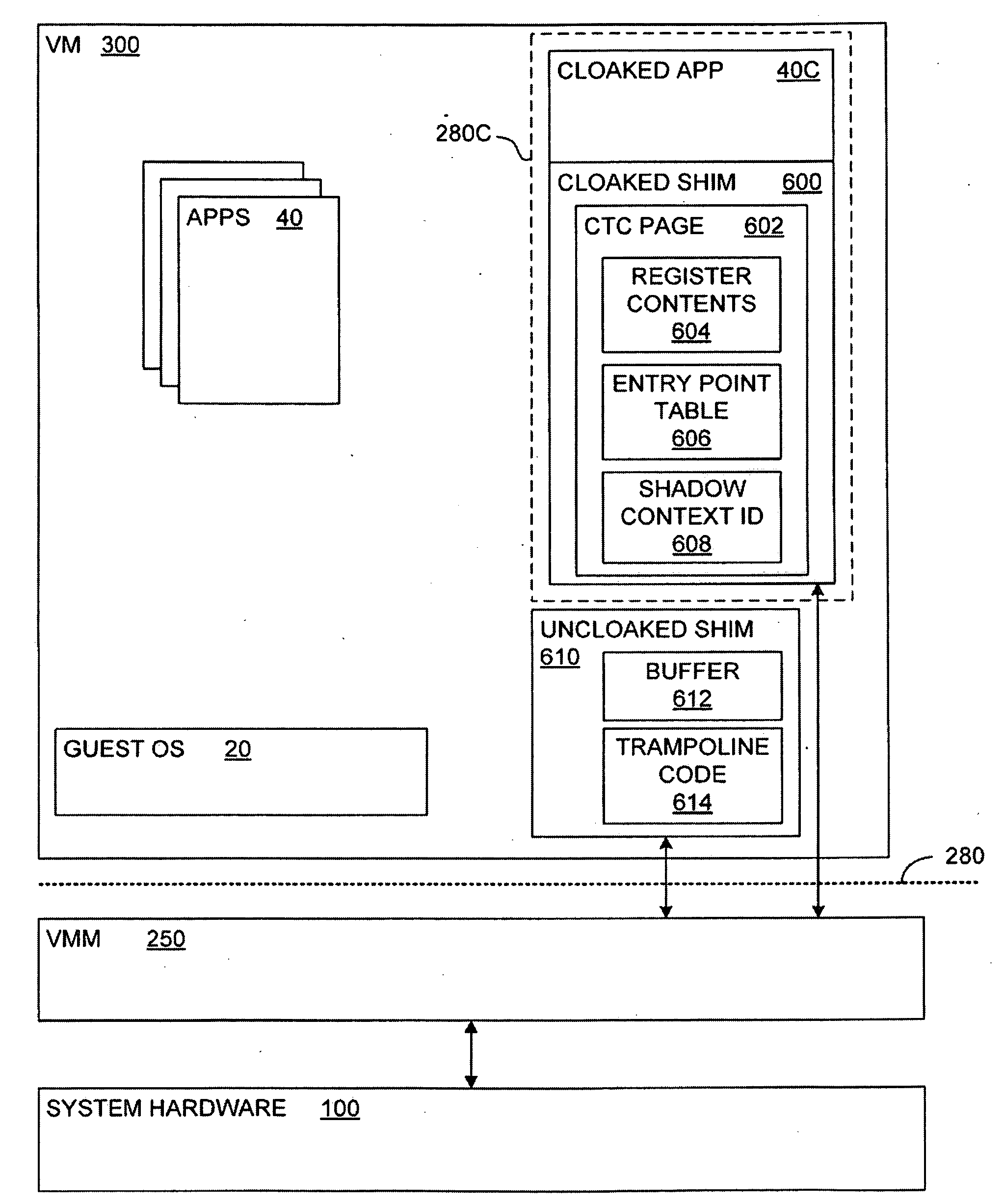

A virtual-machine-based system provides a mechanism to implement application file I/O operations of protected data by implementing the I/O operations semantics in a shim layer with memory-mapped regions. The semantics of these I/O operations are emulated in a shim layer with memory-mapped regions by using a mapping between a process' address space and a file or shared memory object. Data that is protected from viewing by a guest OS running in a virtual machine may nonetheless be accessed by the process.

Owner:VMWARE INC
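The basic idea of serving file reads and writes through a mapping between a process's address space and a file can be shown with Python's standard `mmap` module. The helpers `mapped_read` and `mapped_write` are hypothetical names. The sketch covers only the memory-mapped access pattern and does not model the shim layer or the data protection that the abstract describes.

```python
import mmap
import os
import tempfile


def mapped_read(mapping, offset, length):
    # Emulates a positional read by slicing the mapped region.
    return bytes(mapping[offset:offset + length])


def mapped_write(mapping, offset, data):
    # Emulates a positional write by storing into the mapped region.
    mapping[offset:offset + len(data)] = data
    return len(data)


with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "data.bin")
    with open(path, "wb") as f:
        f.write(b"hello, mapped world")
    # Map the whole file read/write; length 0 means "entire file".
    with open(path, "r+b") as f, mmap.mmap(f.fileno(), 0) as mapping:
        first = mapped_read(mapping, 0, 5)
        mapped_write(mapping, 7, b"MAPPED")
        mapping.flush()  # push the modified bytes back to the file
    with open(path, "rb") as f:
        on_disk = f.read()
```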

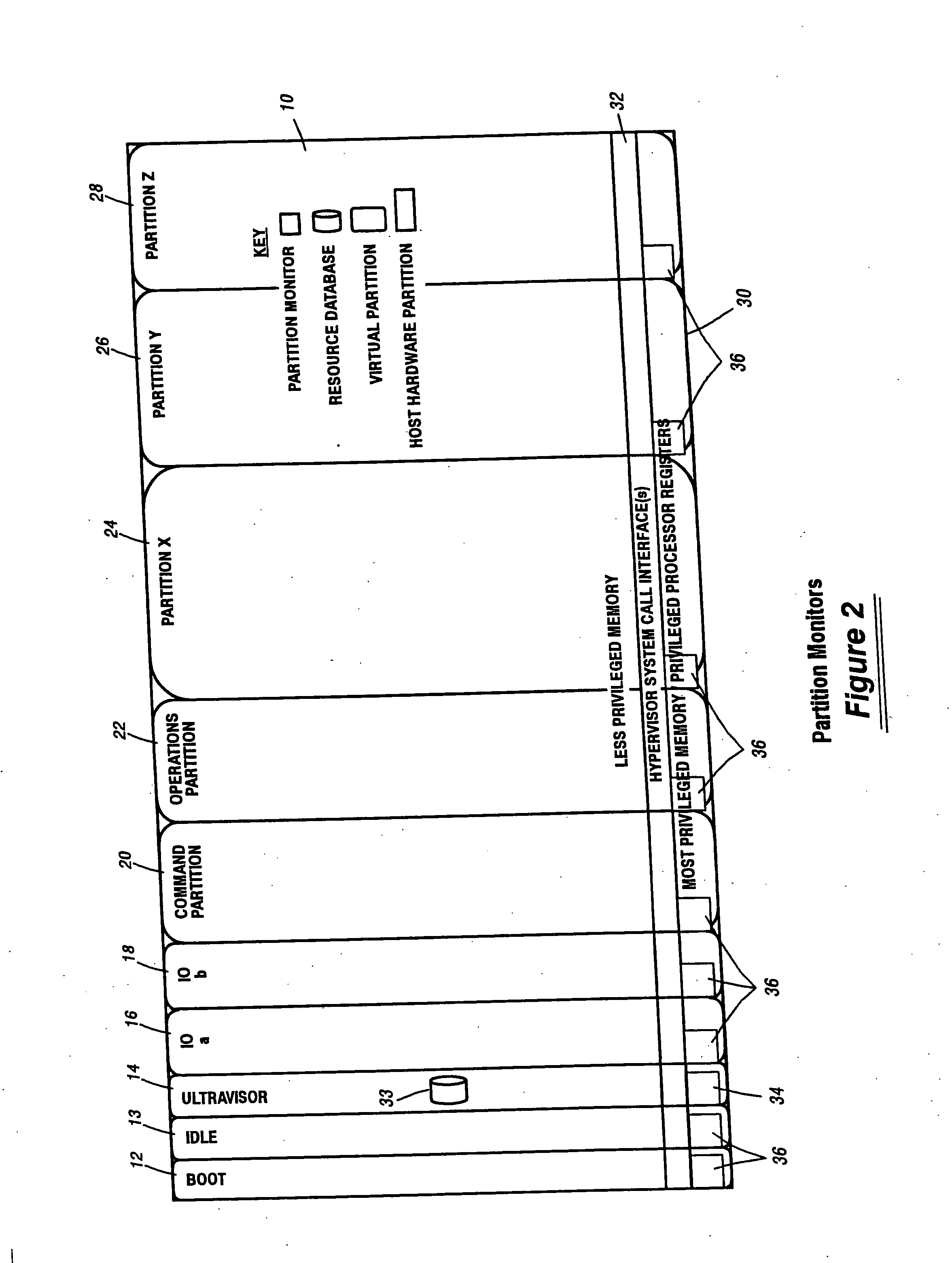

Scalable partition memory mapping system

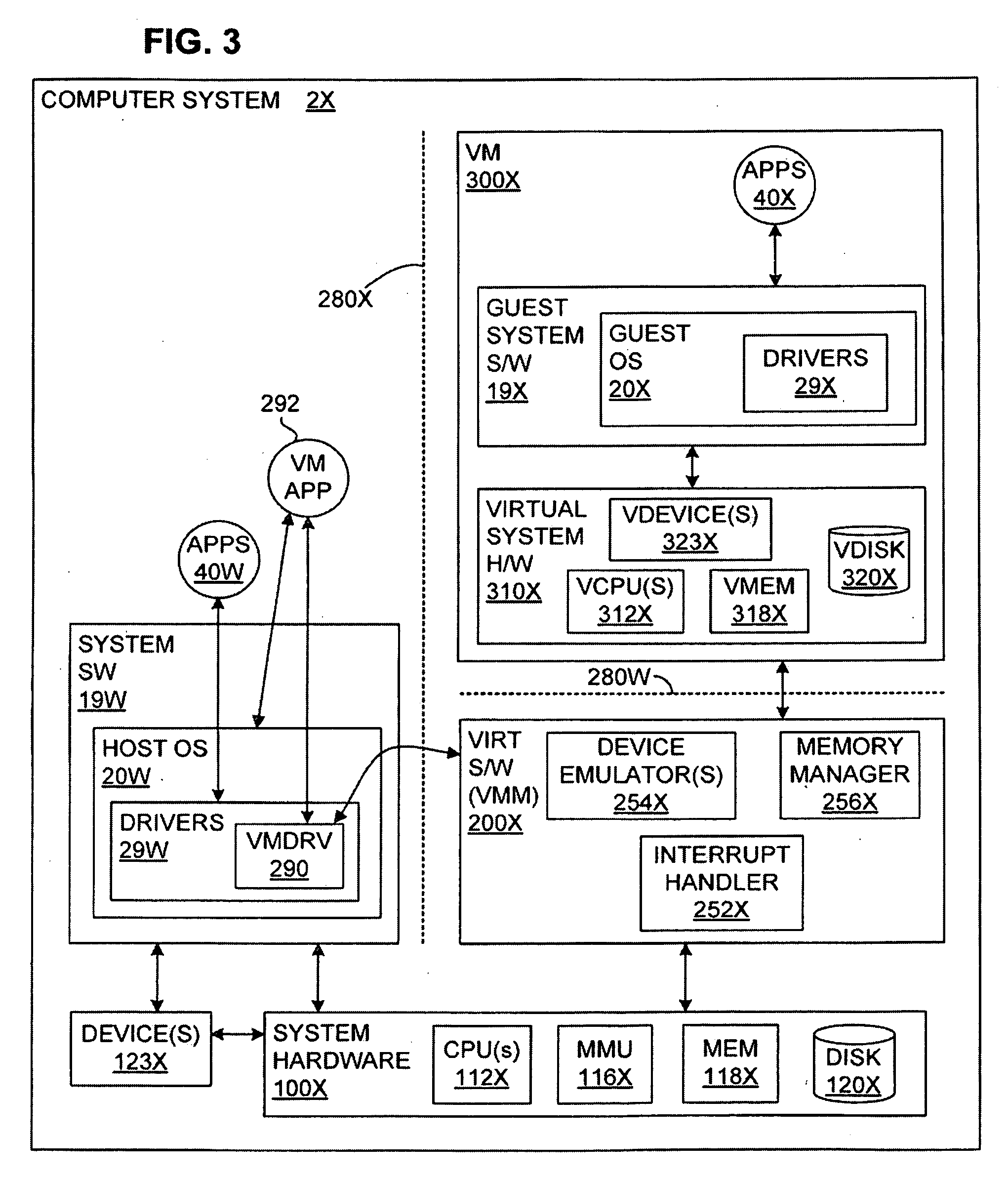

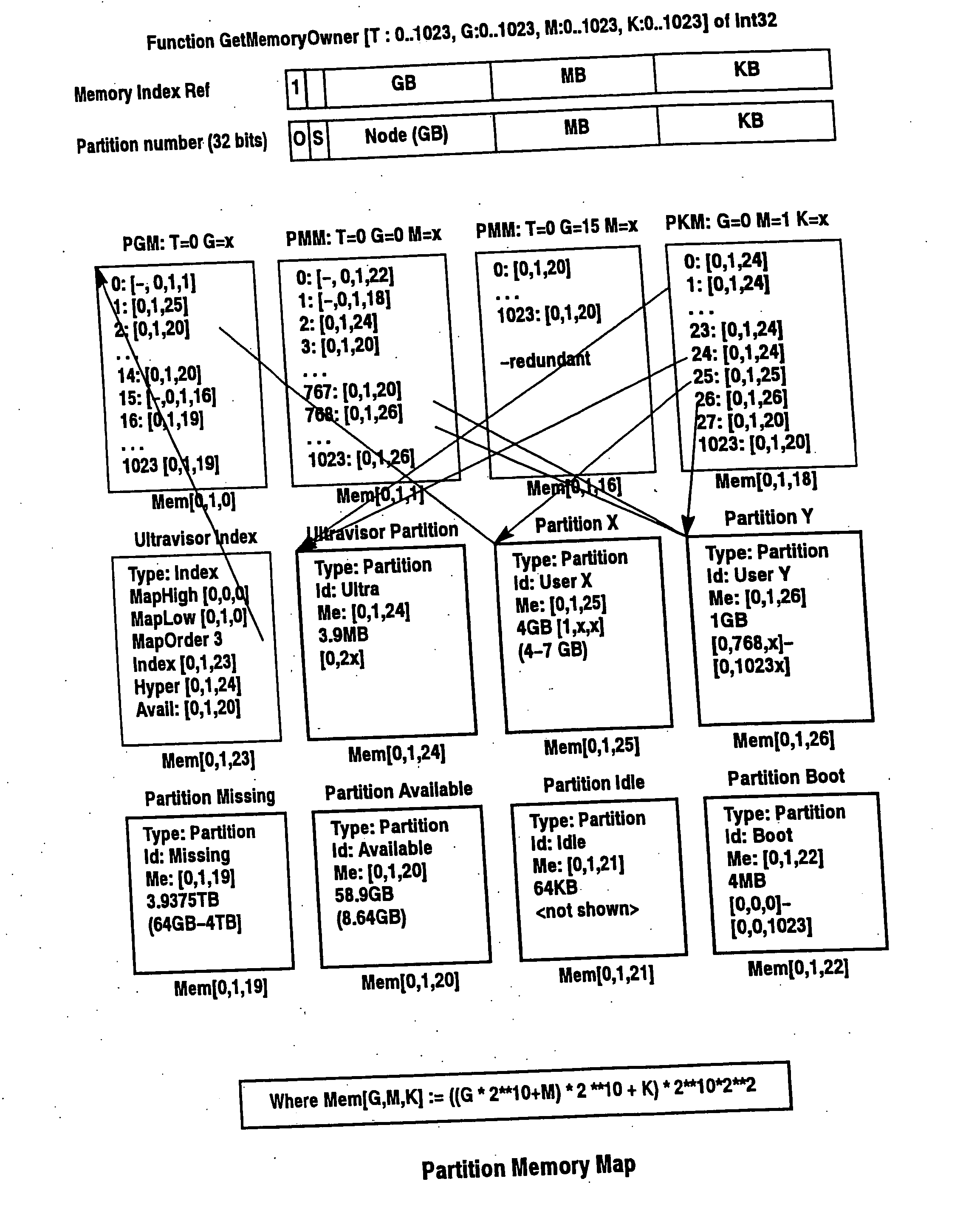

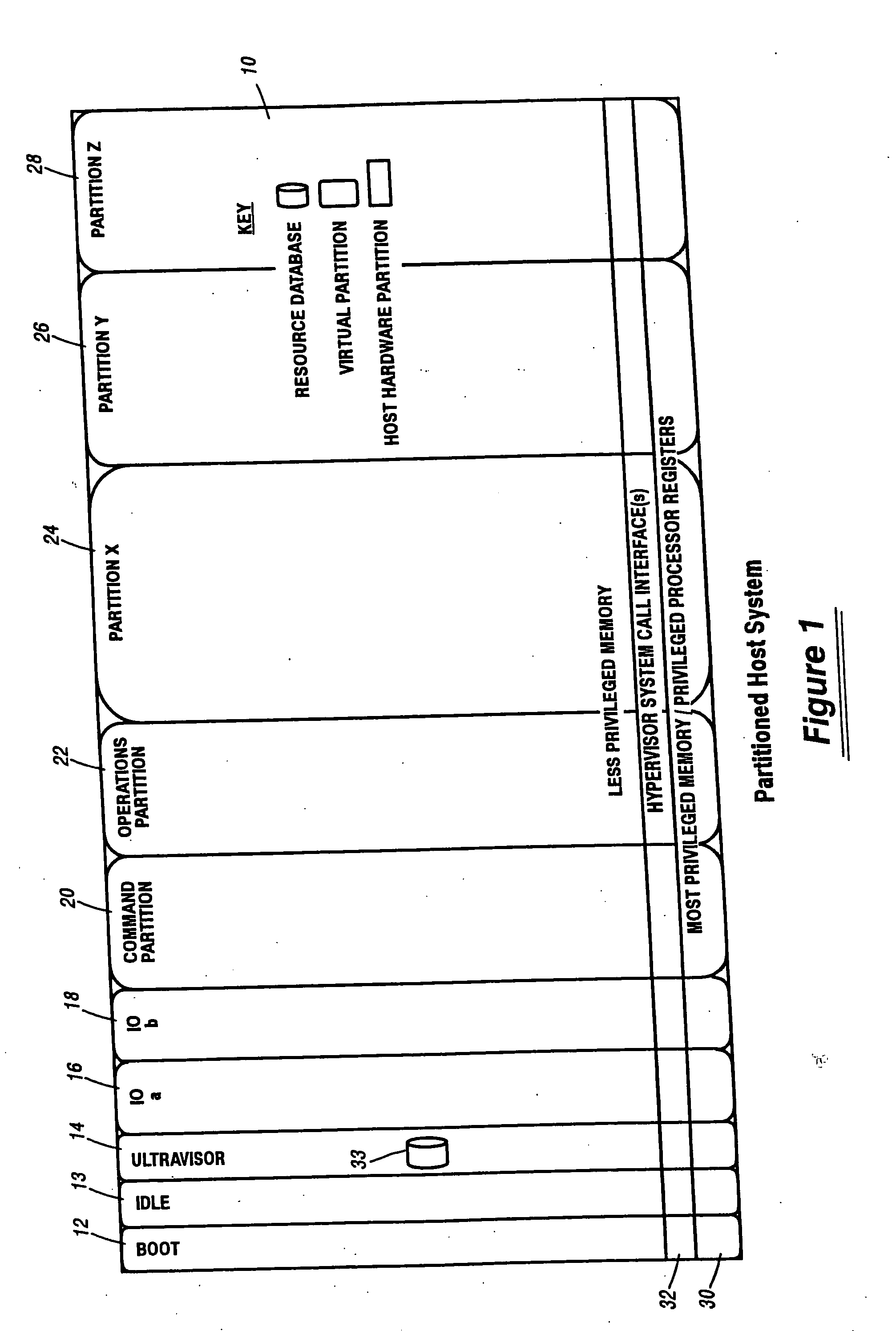

Inactive · US20070067366A1 · Improve efficiency · Improve security · Memory architecture accessing/allocation · Software simulation/interpretation/emulation · In-memory database · Operational system

A virtualization infrastructure that allows multiple guest partitions to run within a host hardware partition. The host system is divided into distinct logical or virtual partitions and special infrastructure partitions are implemented to control resource management and to control physical I/O device drivers that are, in turn, used by operating systems in other distinct logical or virtual guest partitions. Host hardware resource management runs as a tracking application in a resource management “ultravisor” partition, while host resource management decisions are performed in a higher level command partition based on policies maintained in a separate operations partition. The conventional hypervisor is reduced to a context switching and containment element (monitor) for the respective partitions, while the system resource management functionality is implemented in the ultravisor partition. The ultravisor partition maintains the master in-memory database of the hardware resource allocations and serves a command channel to accept transactional requests for assignment of resources to partitions. It also provides individual read-only views of individual partitions to the associated partition monitors. Host hardware I/O management is implemented in special redundant I/O partitions. A scalable partition memory mapping system is implemented in the ultravisor partition so that the virtualized system is scalable to a virtually unlimited number of pages. A log (210) based allocation allows the virtual partition memory sizes to grow over multiple generations without increasing the overhead of managing the memory allocations. Each page of memory is assigned to one partition descriptor in the page hierarchy and is managed by the ultravisor partition.

Owner:UNISYS CORP

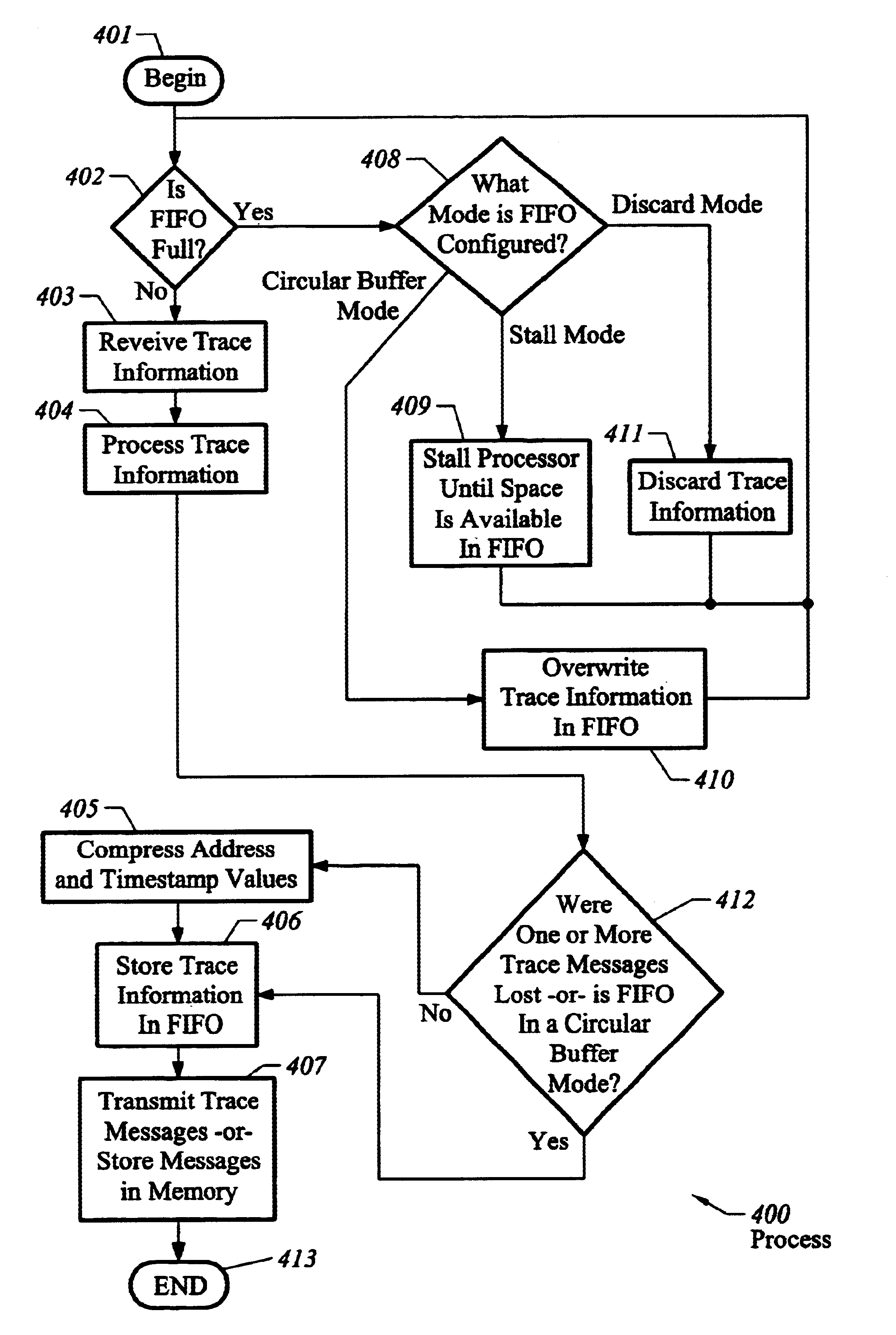

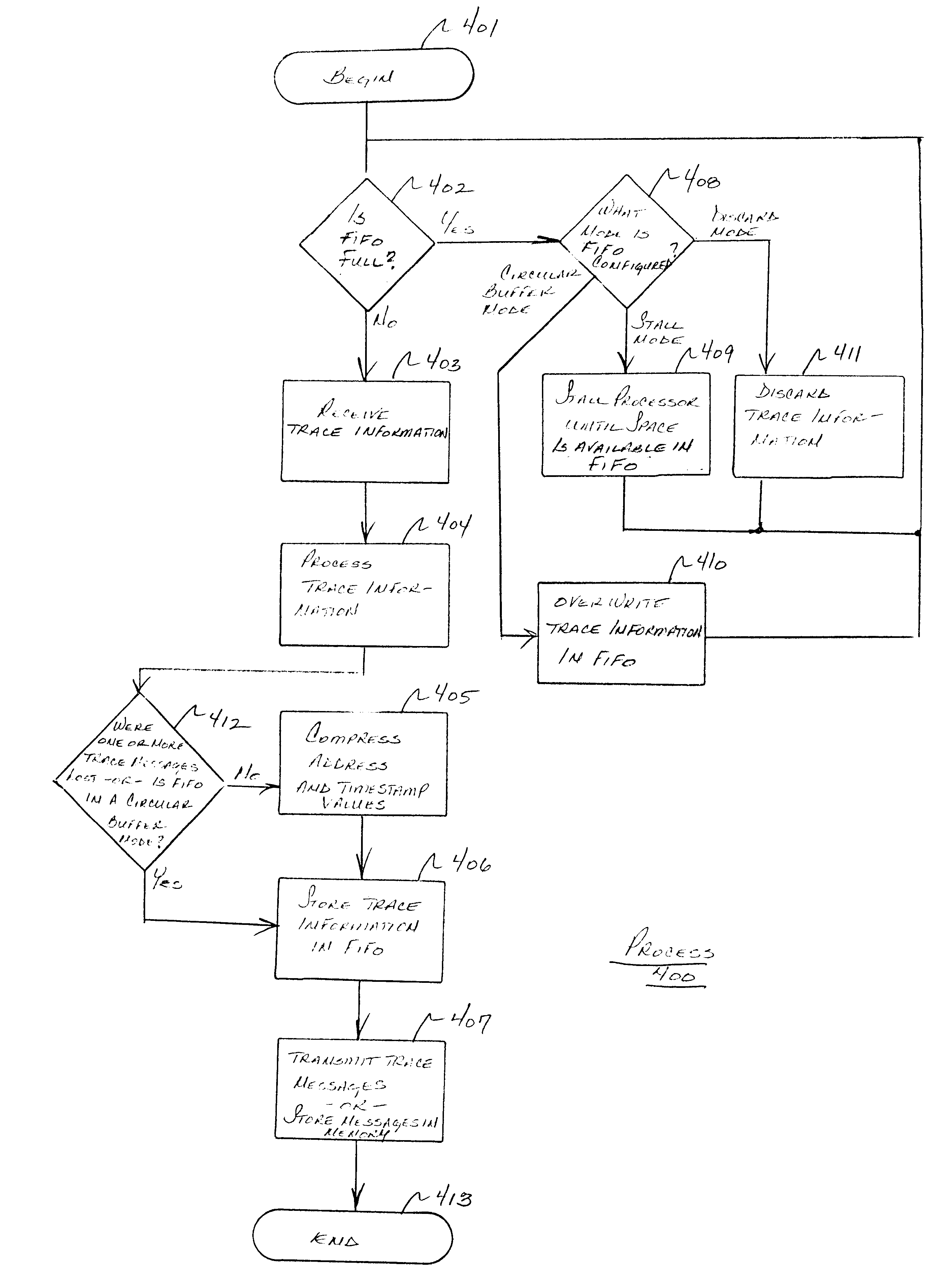

Method for compressing and decompressing trace information

Inactive · US6918065B1 · Maximizes on-chip trace storage · Eliminating duplicate information · Code conversion · Hardware monitoring · Parallel computing · Memory map

A system for performing non-intrusive trace is provided which receives trace information from one or more processors. The trace system may be configured by a user to operate in various modes for flexibly storing or transmitting the trace information. The trace system includes a FIFO which is memory-mapped and is capable of being accessed without affecting processor performance. In one aspect, the trace system includes a trace buffer which receives trace information at an internal clock speed of the processor. In another embodiment, a compression protocol is provided for compressing trace messages on-chip prior to transmitting the messages to an external system or storing the messages in memory.

Owner:RENESAS ELECTRONICS CORP

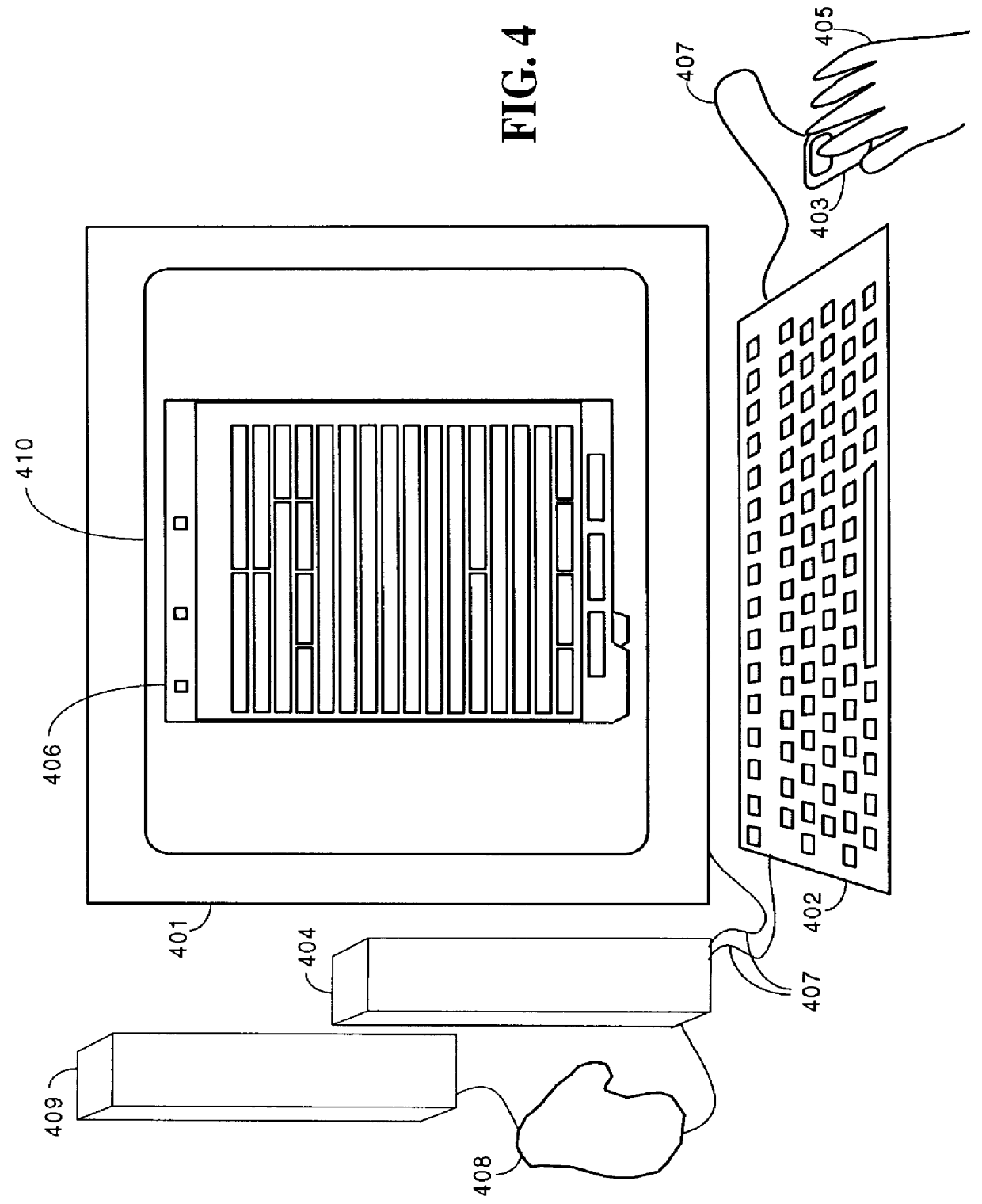

Method for configuring circuits over a data communications link

Inactive · US6023565A · CAD circuit design · Special data processing applications · Telecommunications link · Computer architecture

A method of specifying design parameters is provided for configuring circuits for programmable ICs. A design database describing a circuit is displayed in table-based format on a computer screen display. The design database may include a memory map including data to be placed in bit-storage space in the target programmable IC. This design database requires no schematics or HDL description of the circuit, even for complicated application-specific circuits. The desired parameters are entered by the user, typically using toggle buttons, pull-down menus, or keyboard entry. The selected parameters are then entered into the design database, thereby configuring the design database in accordance with the selected parameters. Next, the design database is transmitted over a data communications link such as the internet to a second computer, on which the compilation software resides. The design is then compiled and the resulting netlist is transmitted back to the originating computer. In one embodiment, a schematic symbol or HDL instantiation is also generated by the second computer, and transmitted back to the originating computer.

Owner:XILINX INC

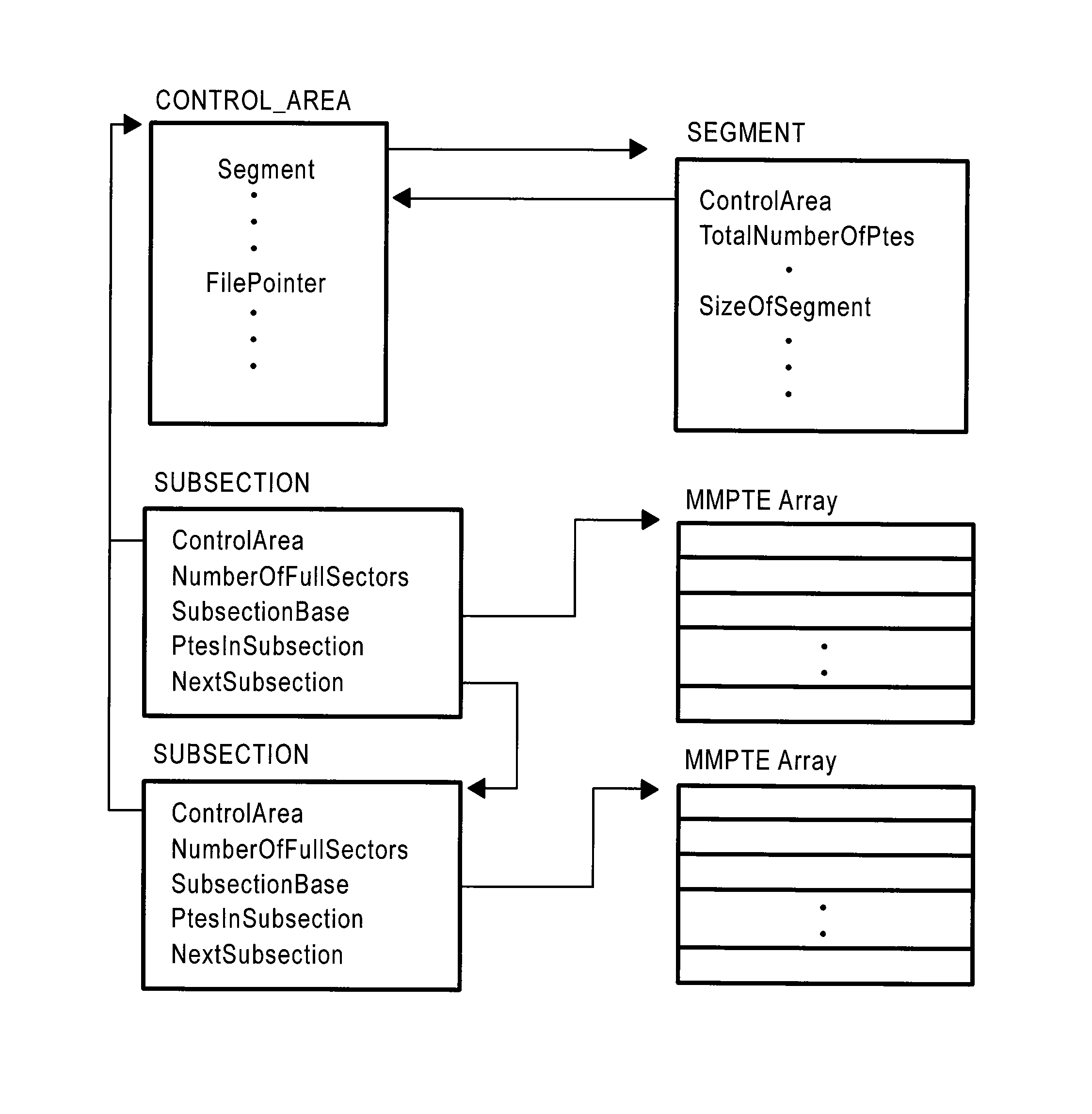

Physical memory forensics system and method

Active · US9268936B2 · Memory addressing/allocation/relocation · Digital data protection · Memory forensics · Operational system

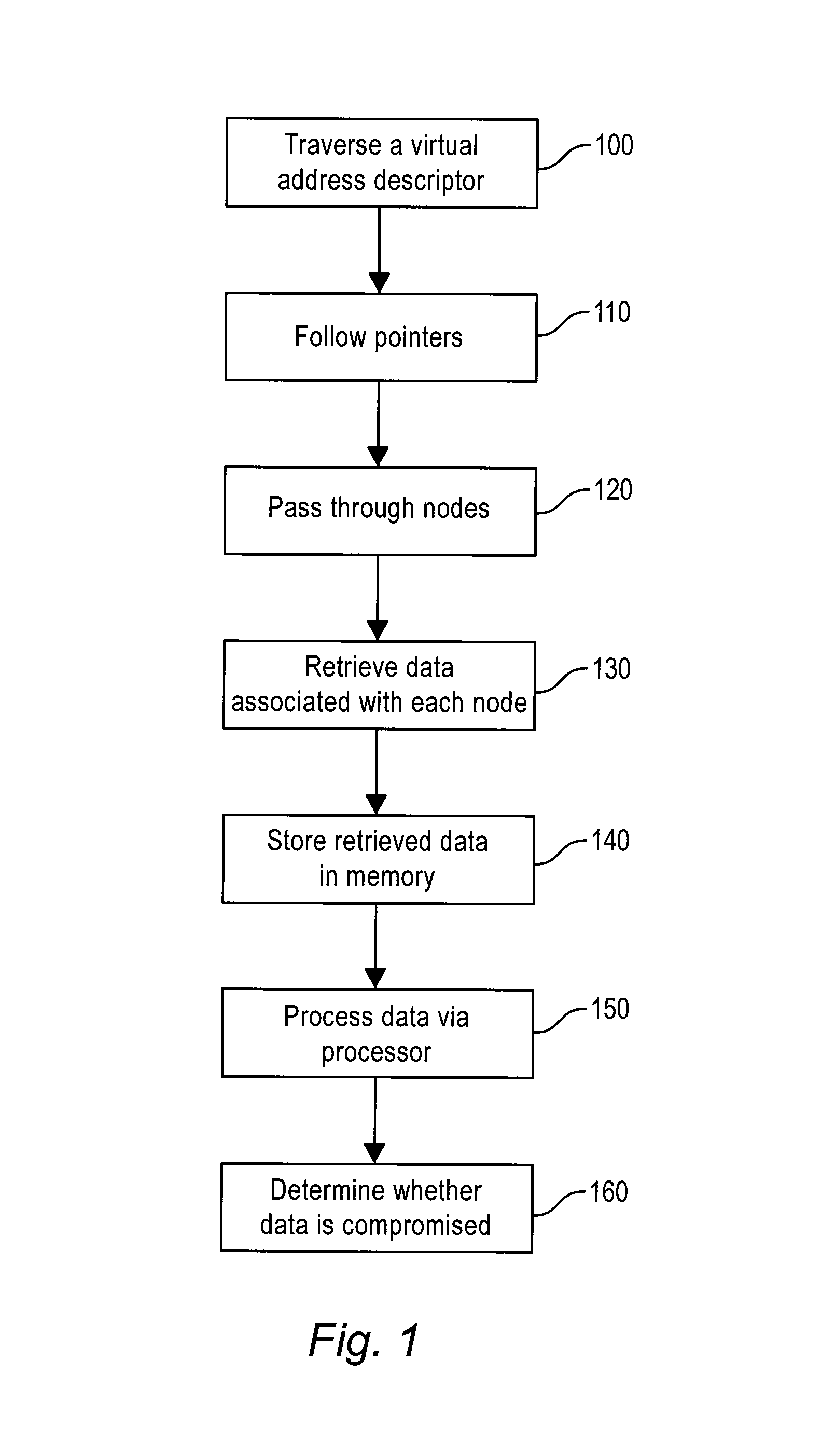

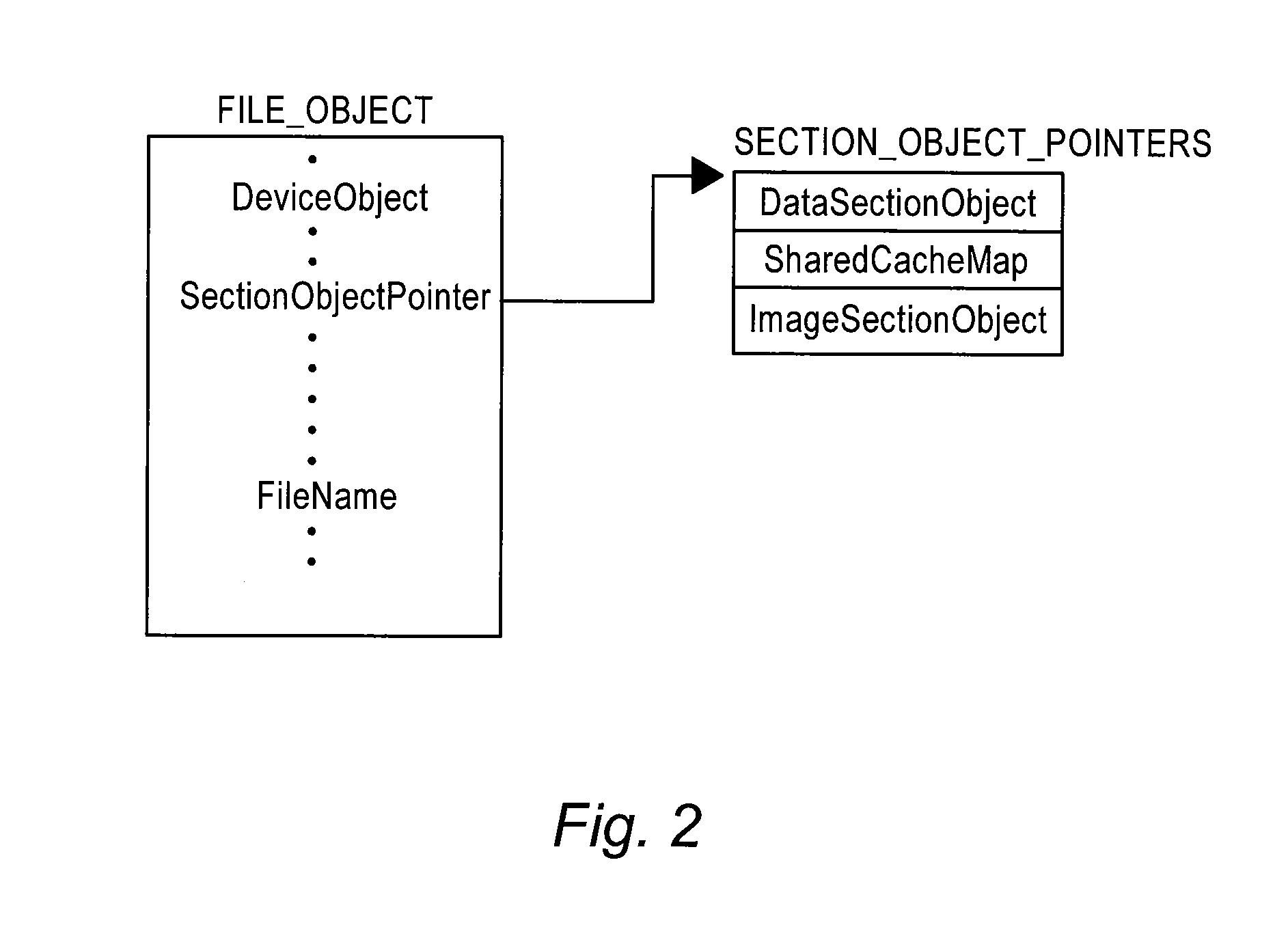

The method of the present inventive concept is configured to utilize Operating System data structures related to memory-mapped binaries to reconstruct processes. These structures provide a system configured to facilitate the acquisition of data that traditional memory analysis tools fail to identify, including by providing a system configured to traverse a virtual address descriptor, determine a pointer to a control area, traverse a PPTE array, copy binary data identified in the PPTE array, generate markers to determine whether the binary data is compromised, and utilize the binary data to reconstruct a process.

Owner:FIREEYE SECURITY HLDG US LLC

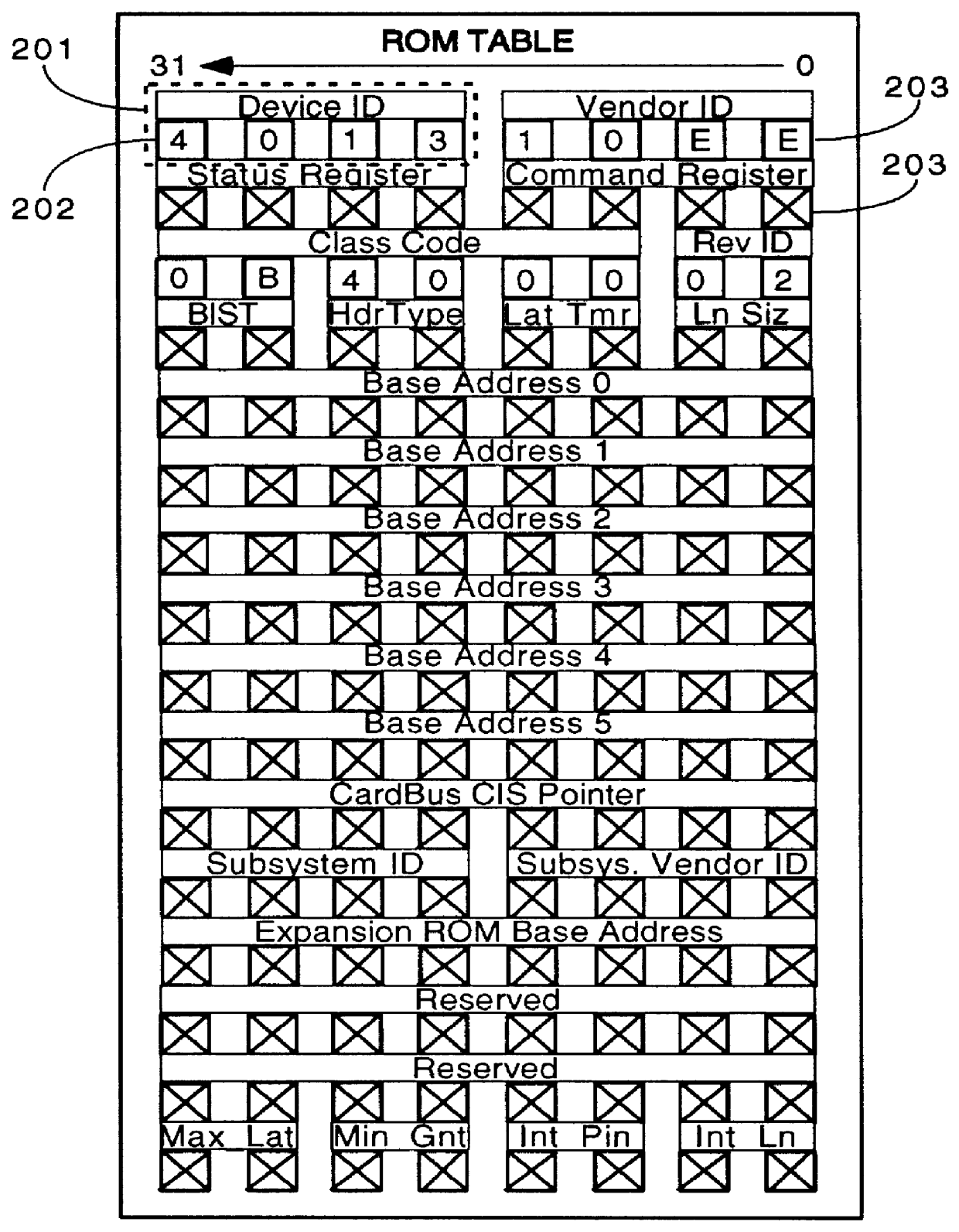

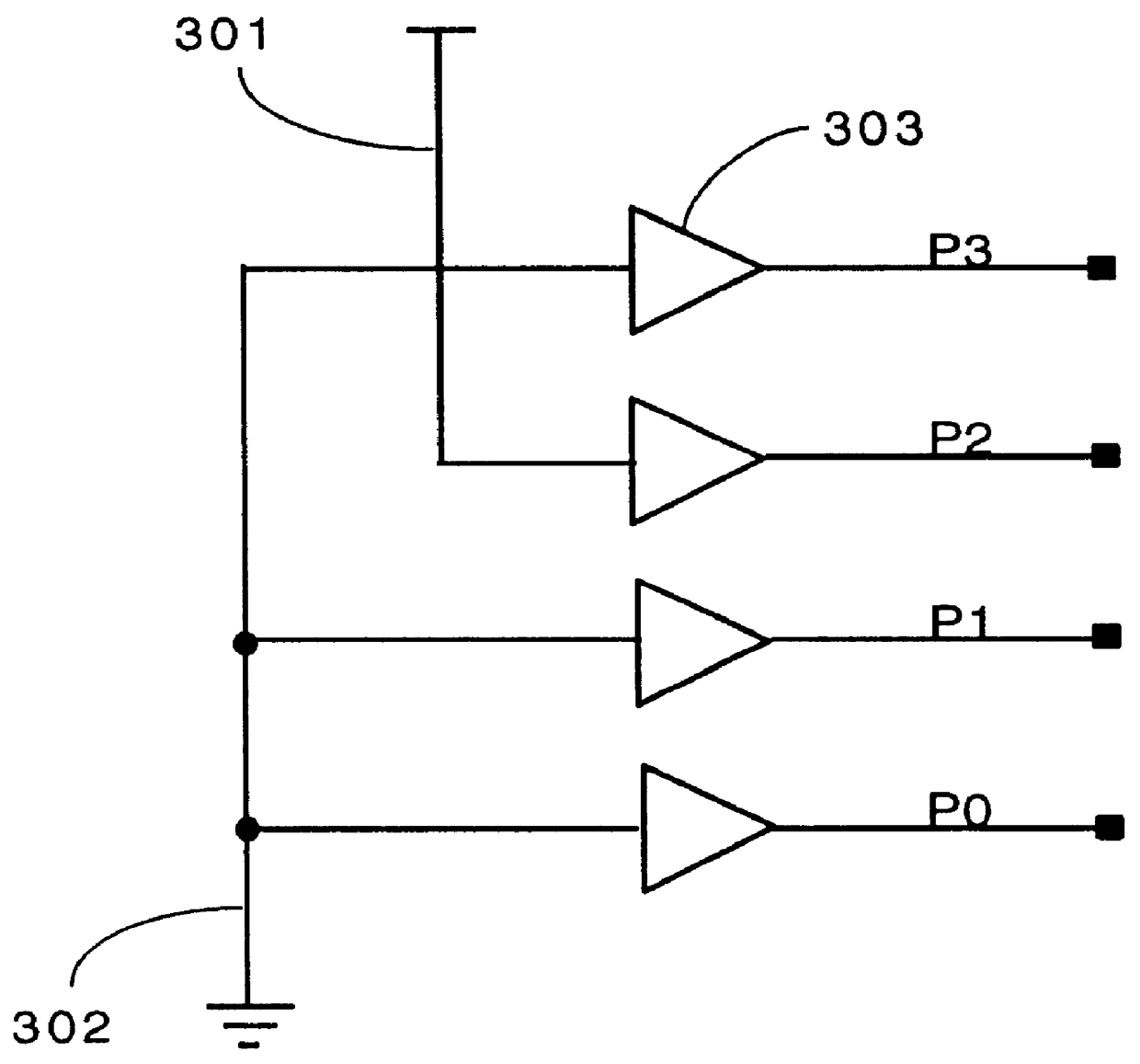

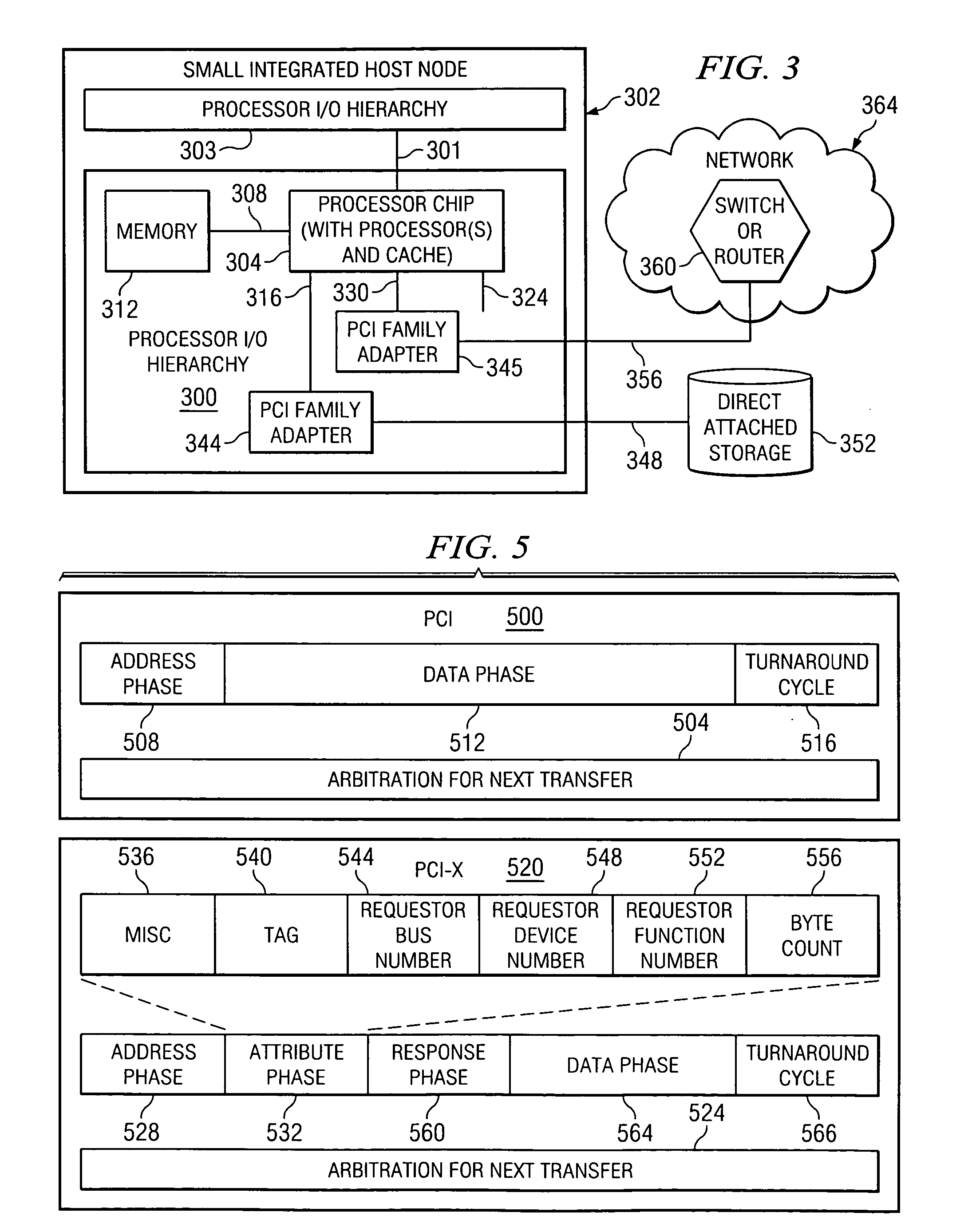

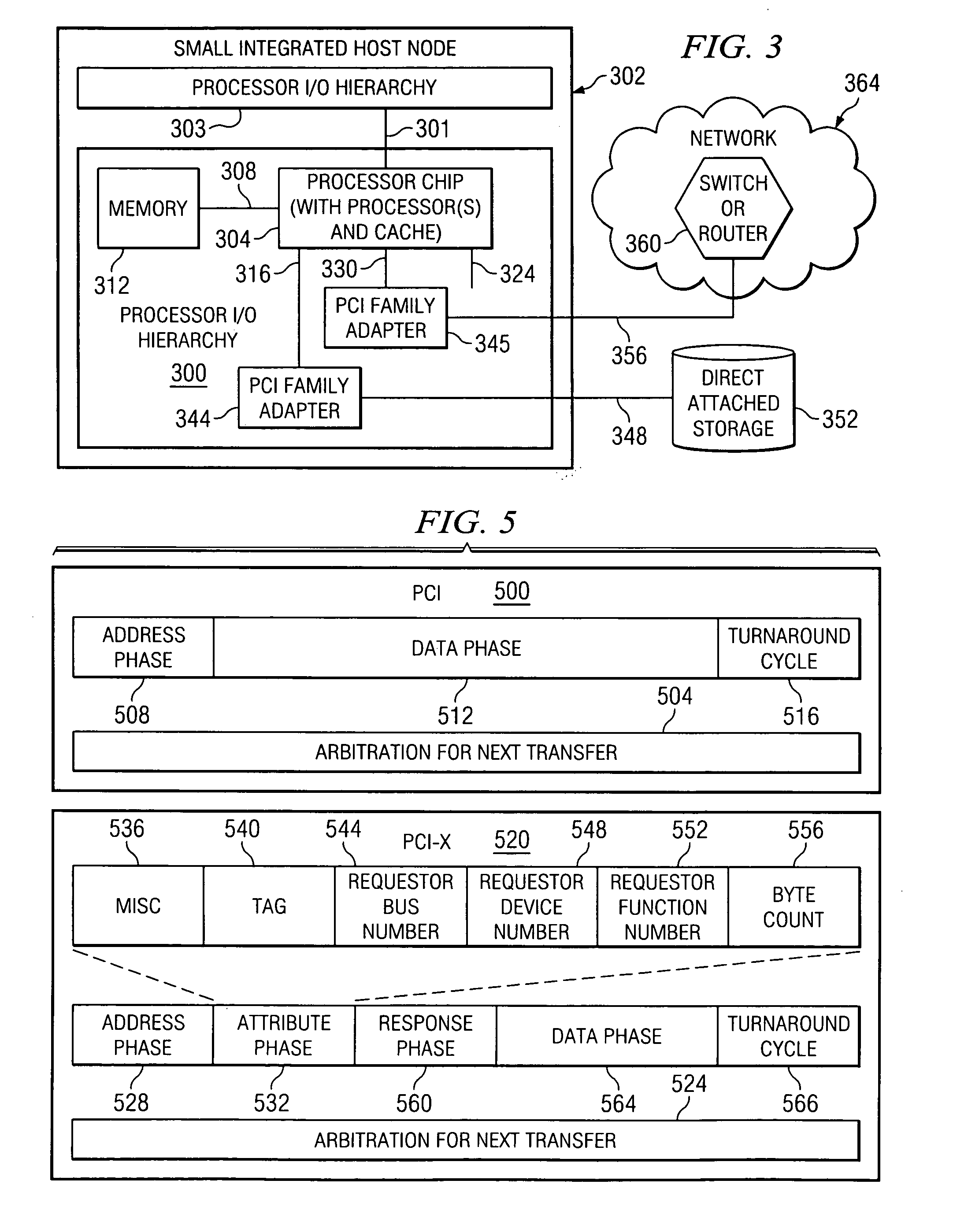

Method and system for native virtualization on a partially trusted adapter using adapter bus, device and function number for identification

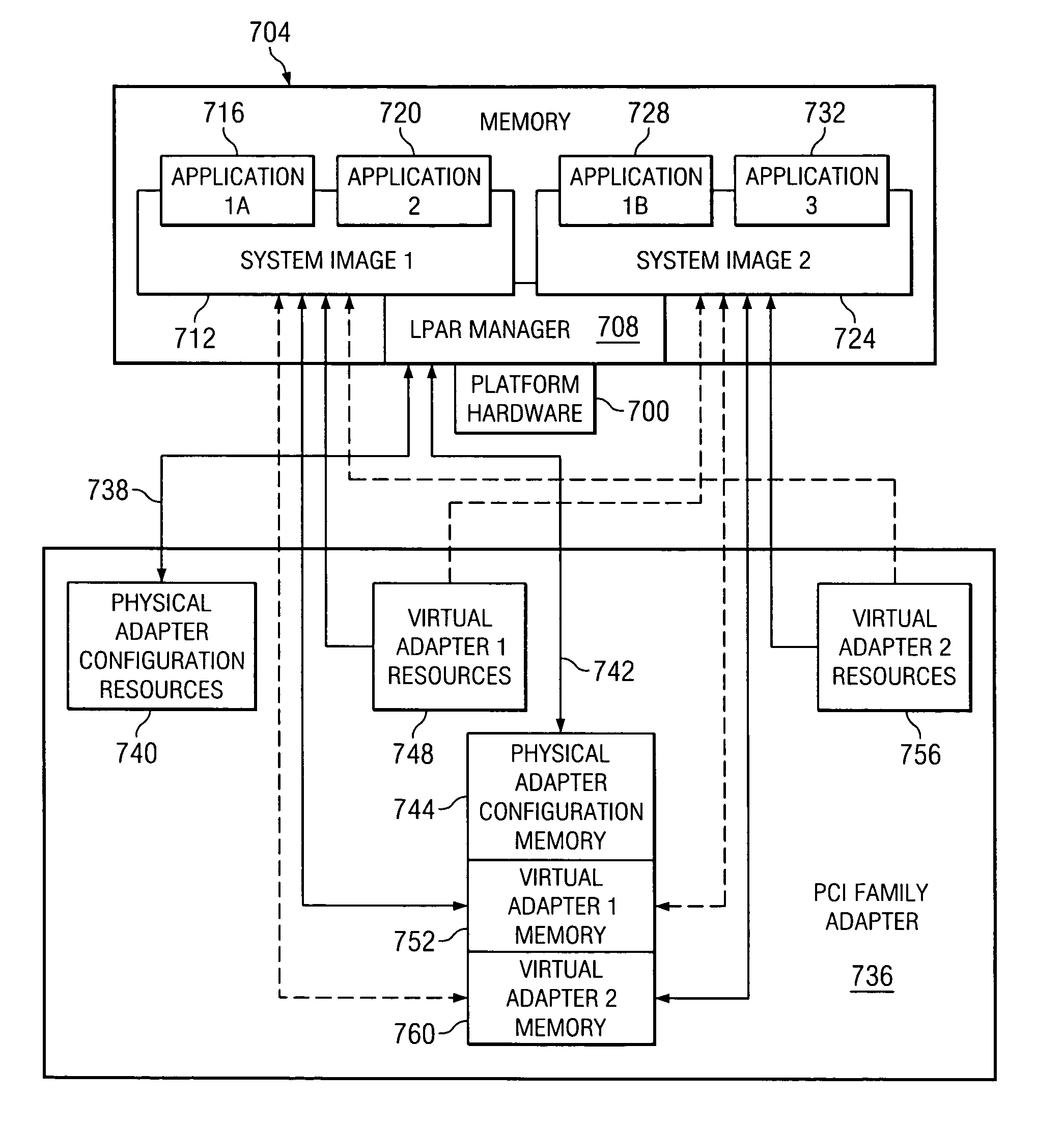

A method, computer program product, and distributed data processing system are provided that allow a single physical I/O adapter, such as a PCI, PCI-X, or PCI-E adapter, to use a PCI adapter identifier to associate its resources to a system image and isolate them from other system images, thereby providing I/O virtualization. Specifically, the present invention is directed to a mechanism for sharing among multiple system images conventional PCI (Peripheral Component Interconnect) I/O adapters, PCI-X I/O adapters, PCI-Express I/O adapters, and, in general, any I/O adapter that uses a memory mapped I/O interface for communications.

Owner:IBM CORP

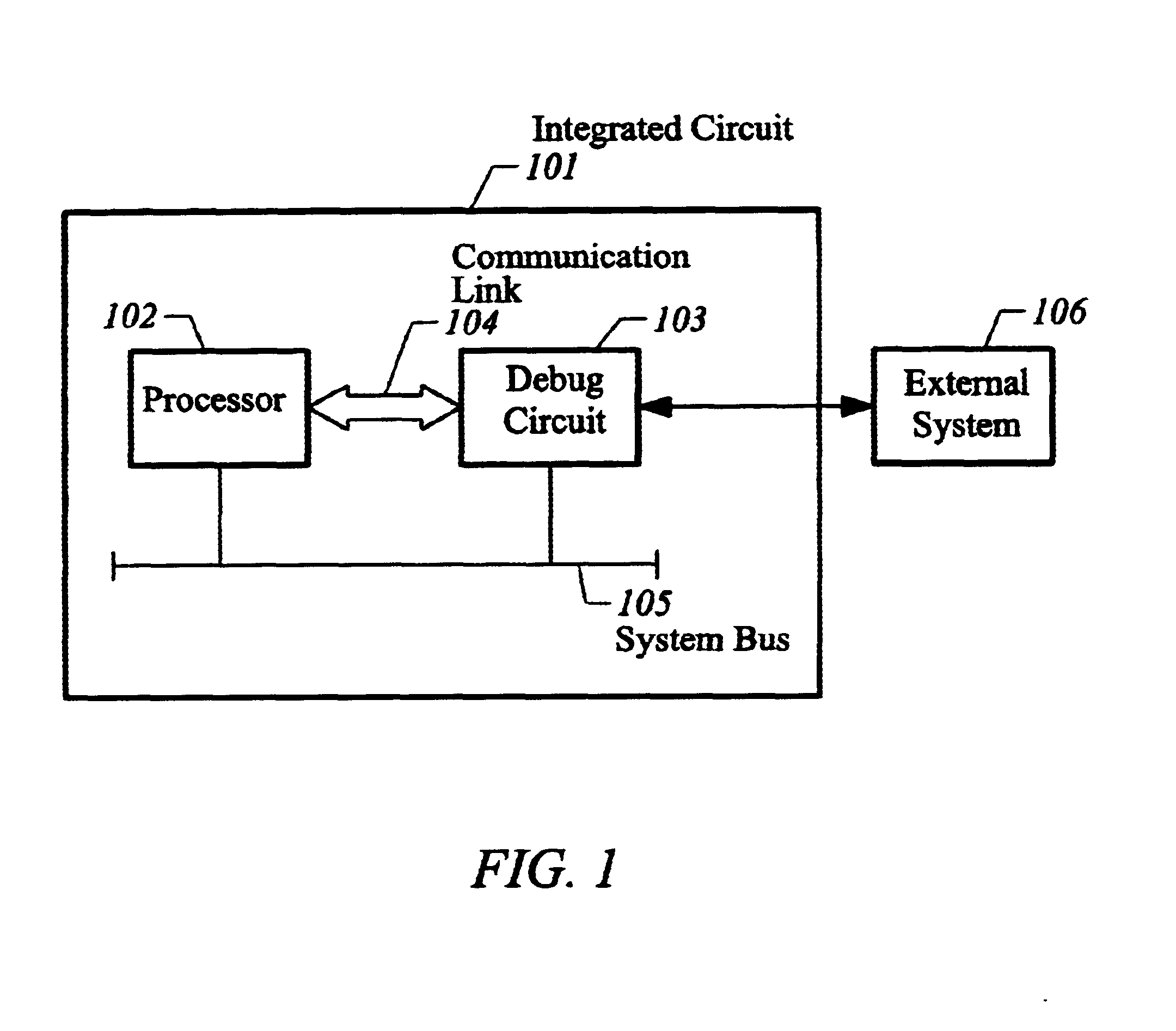

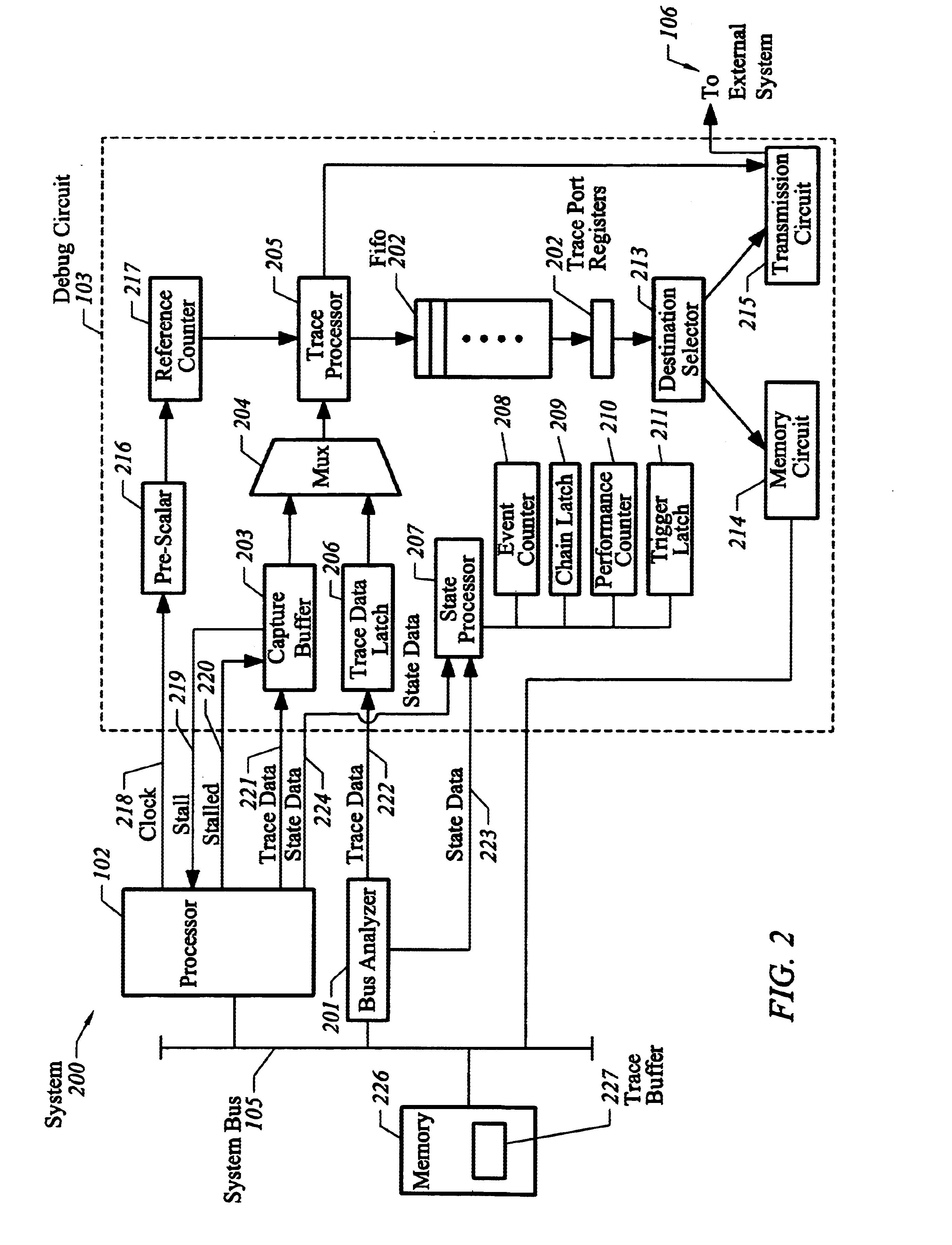

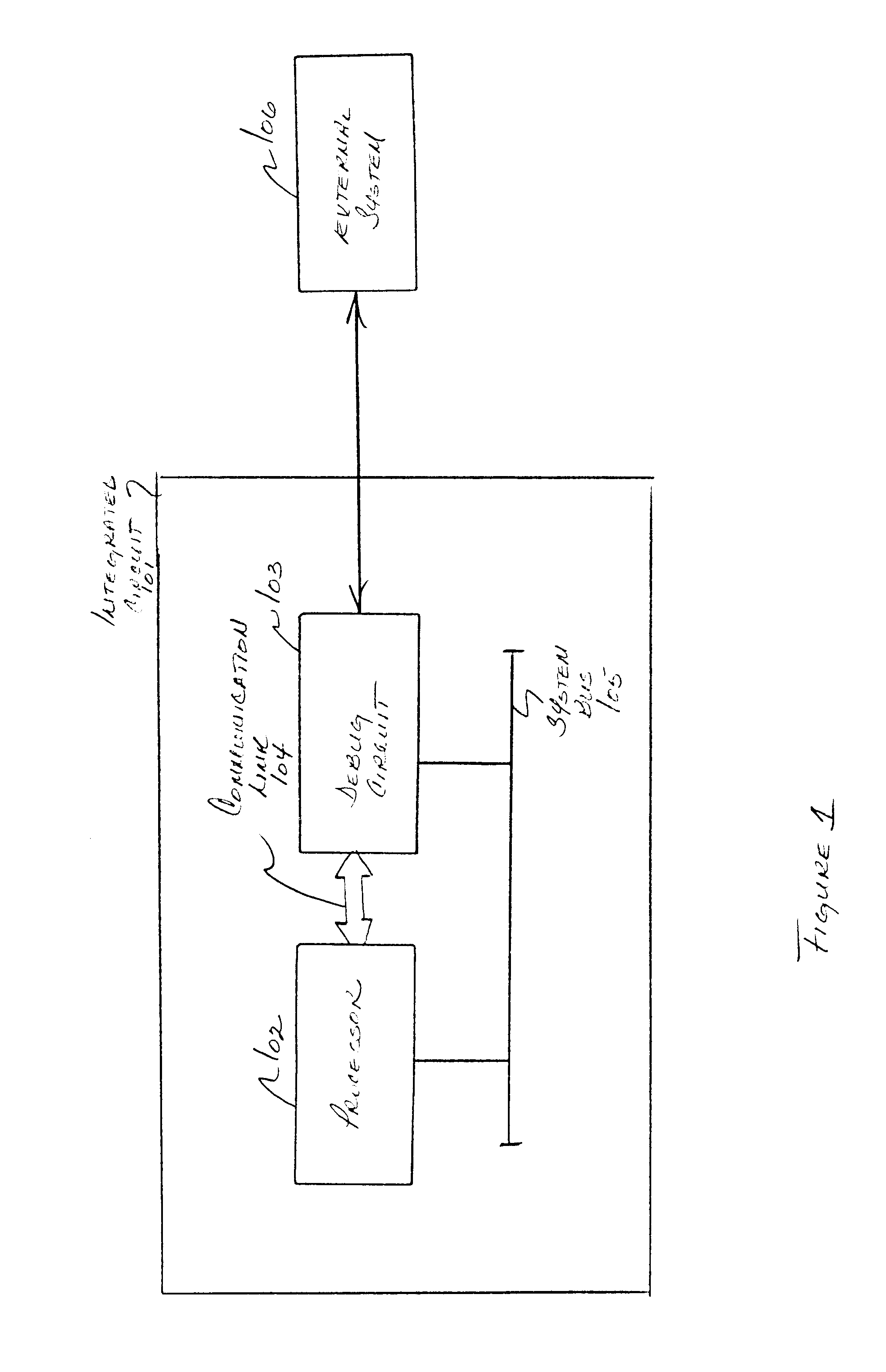

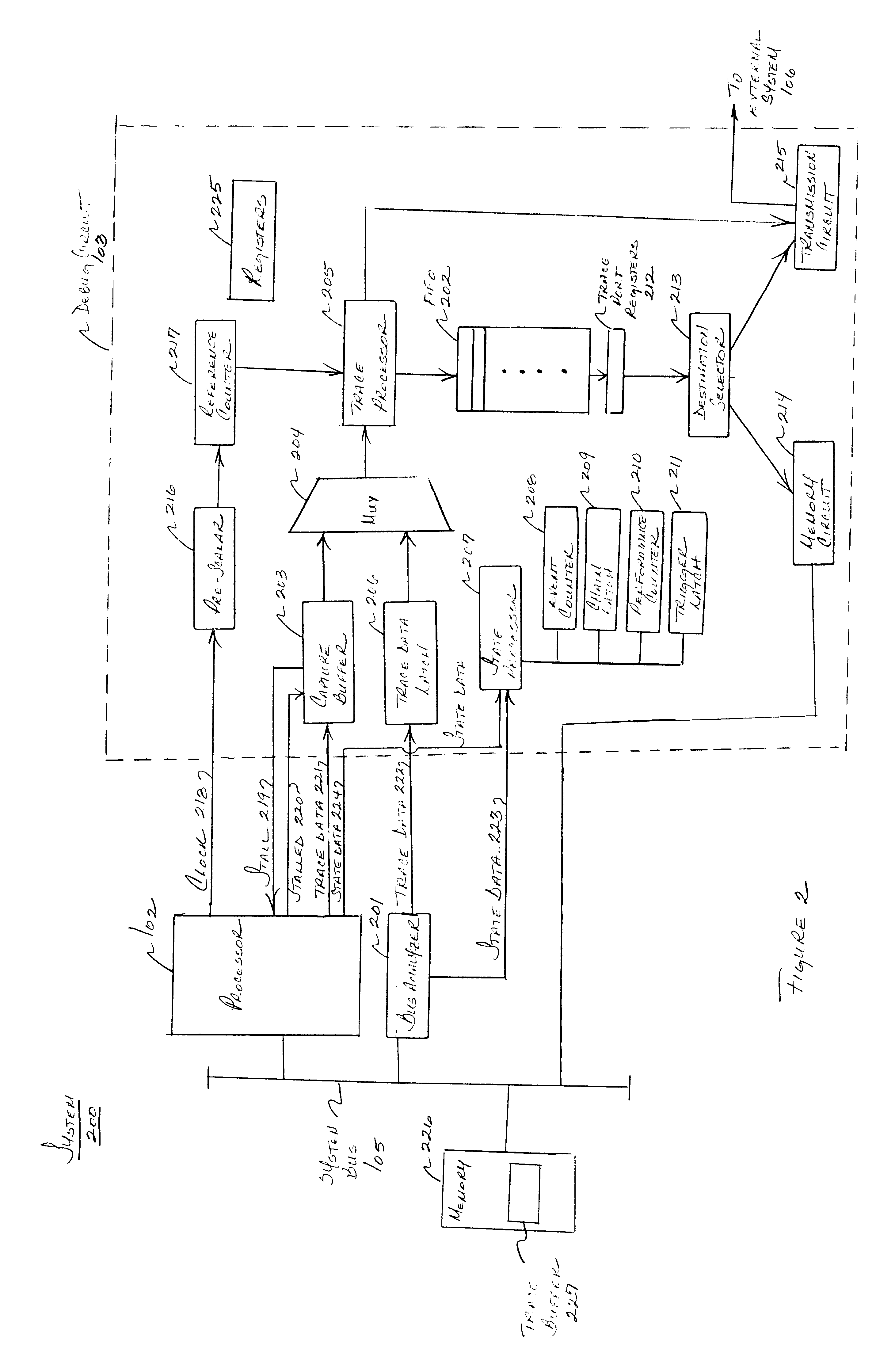

Circuit for processing trace information

Inactive · US6684348B1 · Maximizes on-chip trace storage · Eliminating duplicate information · Software testing/debugging · Architecture with single central processing unit · Memory map · Trace buffer

A system for performing non-intrusive trace is provided which receives trace information from one or more processors. The trace system may be configured by a user to operate in various modes for flexibly storing or transmitting the trace information. The trace system includes a FIFO which is memory-mapped and is capable of being accessed without affecting processor performance. In one aspect, the trace system includes a trace buffer which receives trace information at an internal clock speed of the processor. In another embodiment, a compression protocol is provided for compressing trace messages on-chip prior to transmitting the messages to an external system or storing the messages in memory.

Owner:RENESAS ELECTRONICS CORP

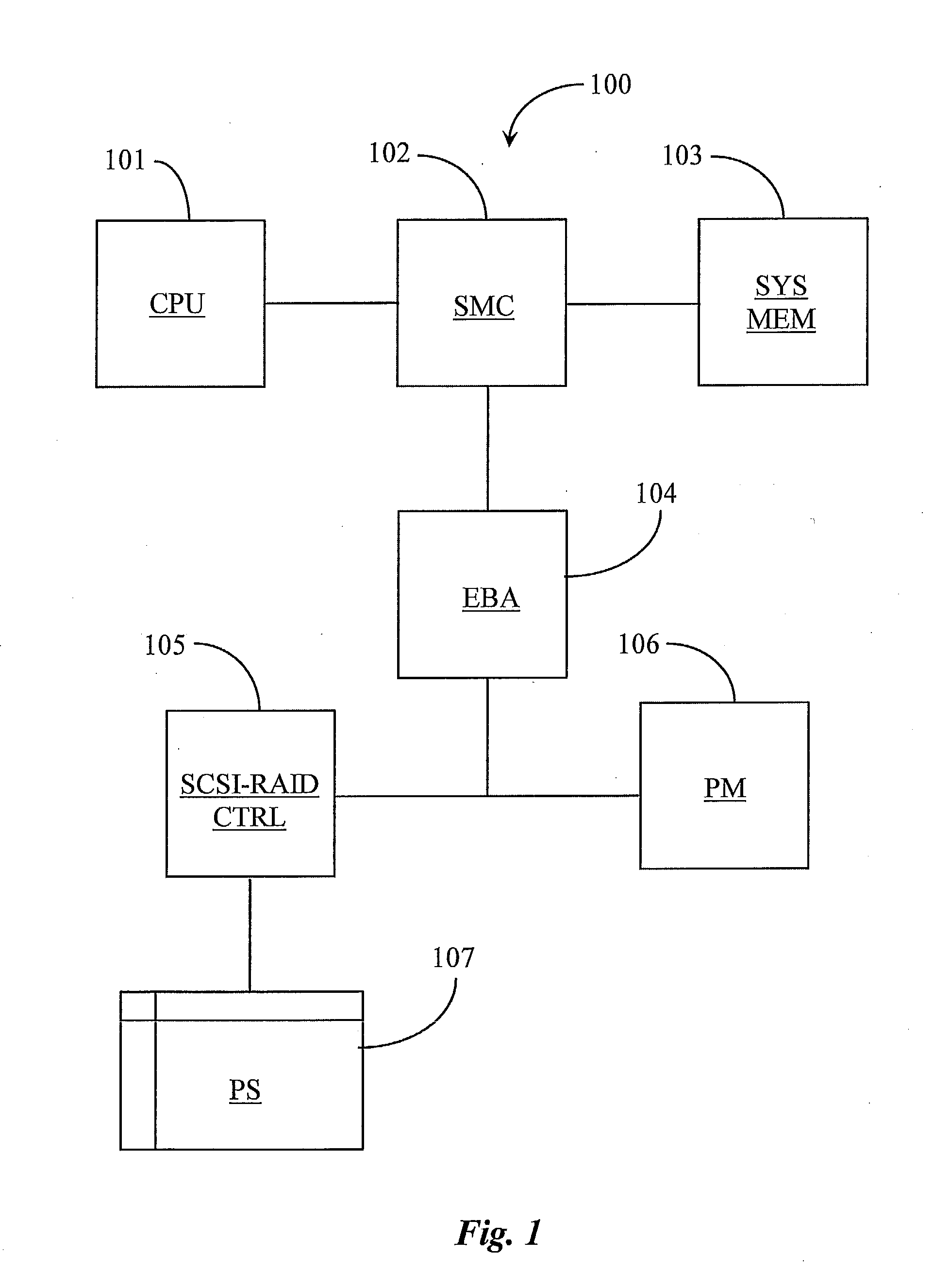

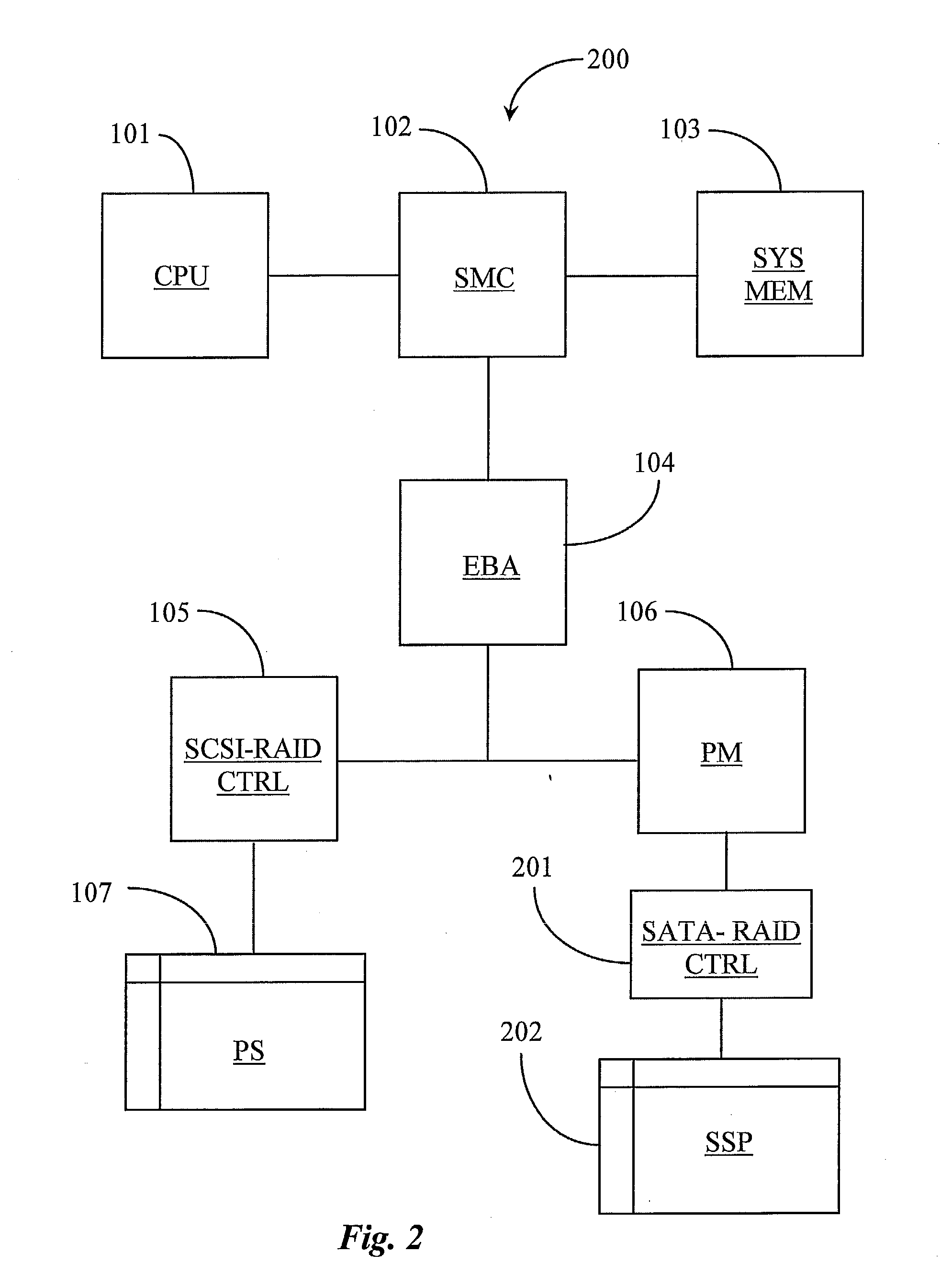

System for Enabling Secure and Automatic Data Backup and Instant Recovery

Inactive · US20070033356A1 · Improve performance · Memory architecture accessing/allocation · Memory loss protection · Operational system · Latency (engineering)

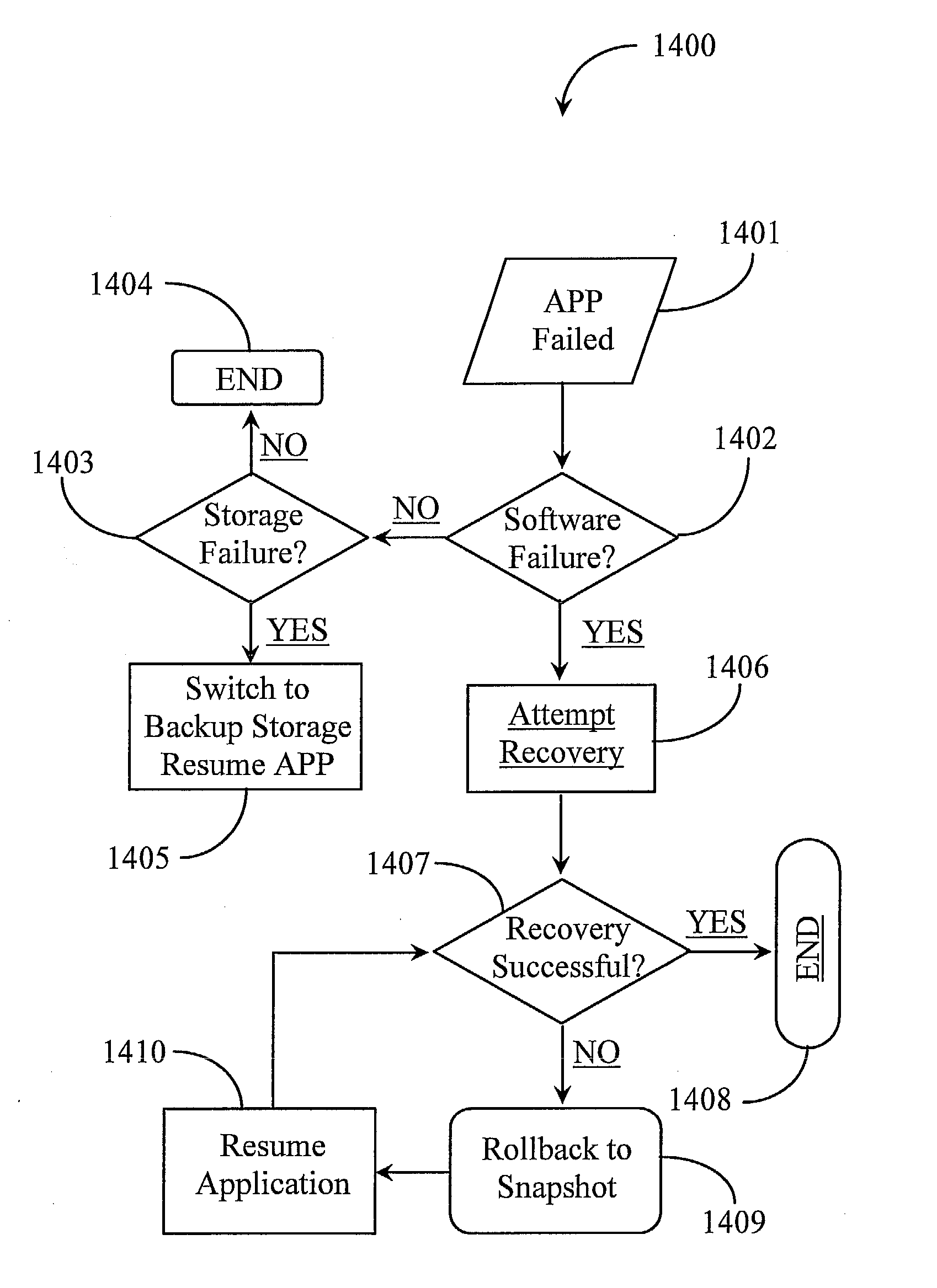

A host-based system for enhancing performance for a computing appliance has a central processing unit, an operating system, a long-term disk storage medium, and a persistent low latency memory (PLLM). Writes to disk storage at random addresses are first made to the PLLM, which also stores a memory map of the disk storage medium, and later made, in sequence, to the disk storage medium according to the memory map. In another aspect the host-based system is for continuous data protection and backup for a computing appliance, and has a central processing unit, an operating system, a long-term disk storage medium, and a persistent low latency memory (PLLM). In this aspect periodic system state snapshots are stored in the PLLM associated with sequence of writes to memory made between snapshots, enabling restoration of the host to any state of a prior snapshot stored in the PLLM, and then adjustment, via the record of writes to memory between snapshots, to any state desired between the snapshot states.

Owner:BACCEL
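The first aspect of the abstract above, where random writes land in a low-latency store and are later written to disk in sequence according to a memory map, might be modeled as below. The `StagedDisk` class is hypothetical, and reading "in sequence" as ascending block address is an assumption, not something the abstract states.

```python
class StagedDisk:
    """Toy model: writes go to a staging store first, then to disk in order."""

    def __init__(self, num_blocks):
        self.disk = [b""] * num_blocks  # stands in for the disk medium
        # The "memory map": disk block address -> latest staged data.
        self.staged = {}
        self.disk_write_order = []      # recorded only for illustration

    def write(self, block, data):
        # Random-address writes are absorbed by the staging store.
        self.staged[block] = data

    def read(self, block):
        # Staged data is newer than whatever is on disk.
        return self.staged.get(block, self.disk[block])

    def flush(self):
        # Assumed policy: replay staged writes in ascending block order.
        for block in sorted(self.staged):
            self.disk[block] = self.staged[block]
            self.disk_write_order.append(block)
        self.staged.clear()


store = StagedDisk(num_blocks=8)
for block, data in [(7, b"g"), (2, b"old"), (5, b"e"), (2, b"new")]:
    store.write(block, data)
read_before_flush = store.read(2)
store.flush()
```

After the flush, `store.disk_write_order` holds the blocks in ascending order, and block 2 contains only the latest write.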

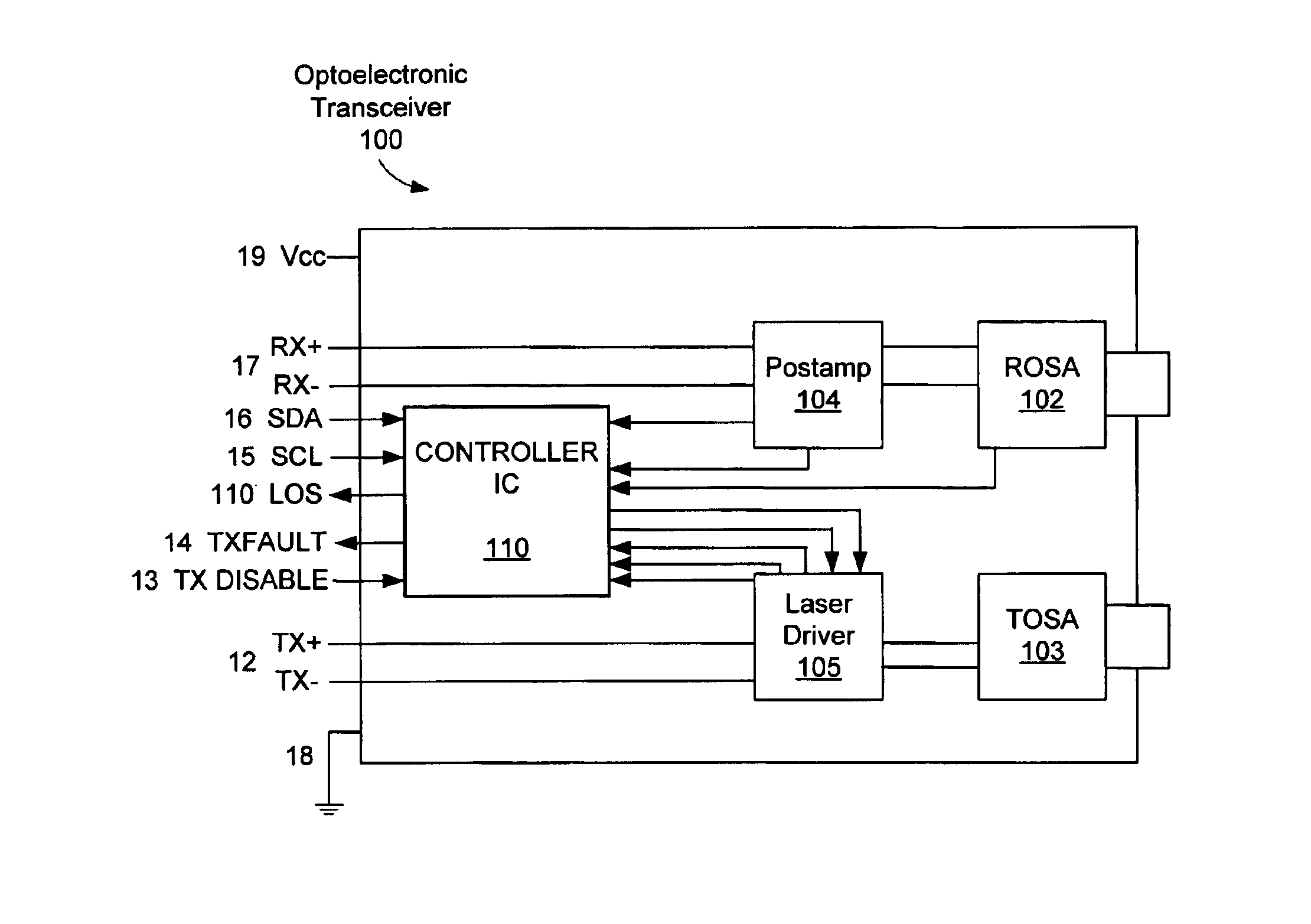

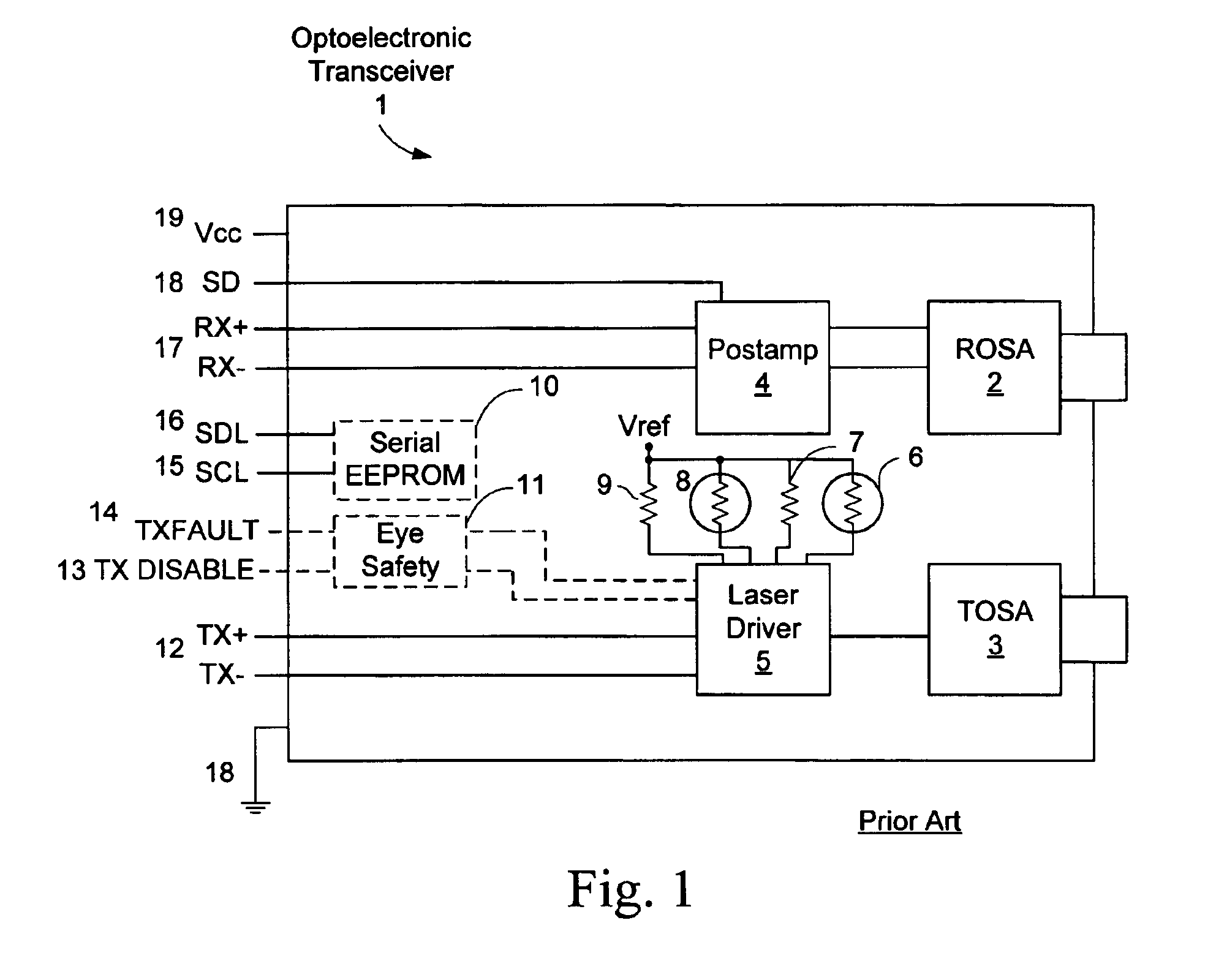

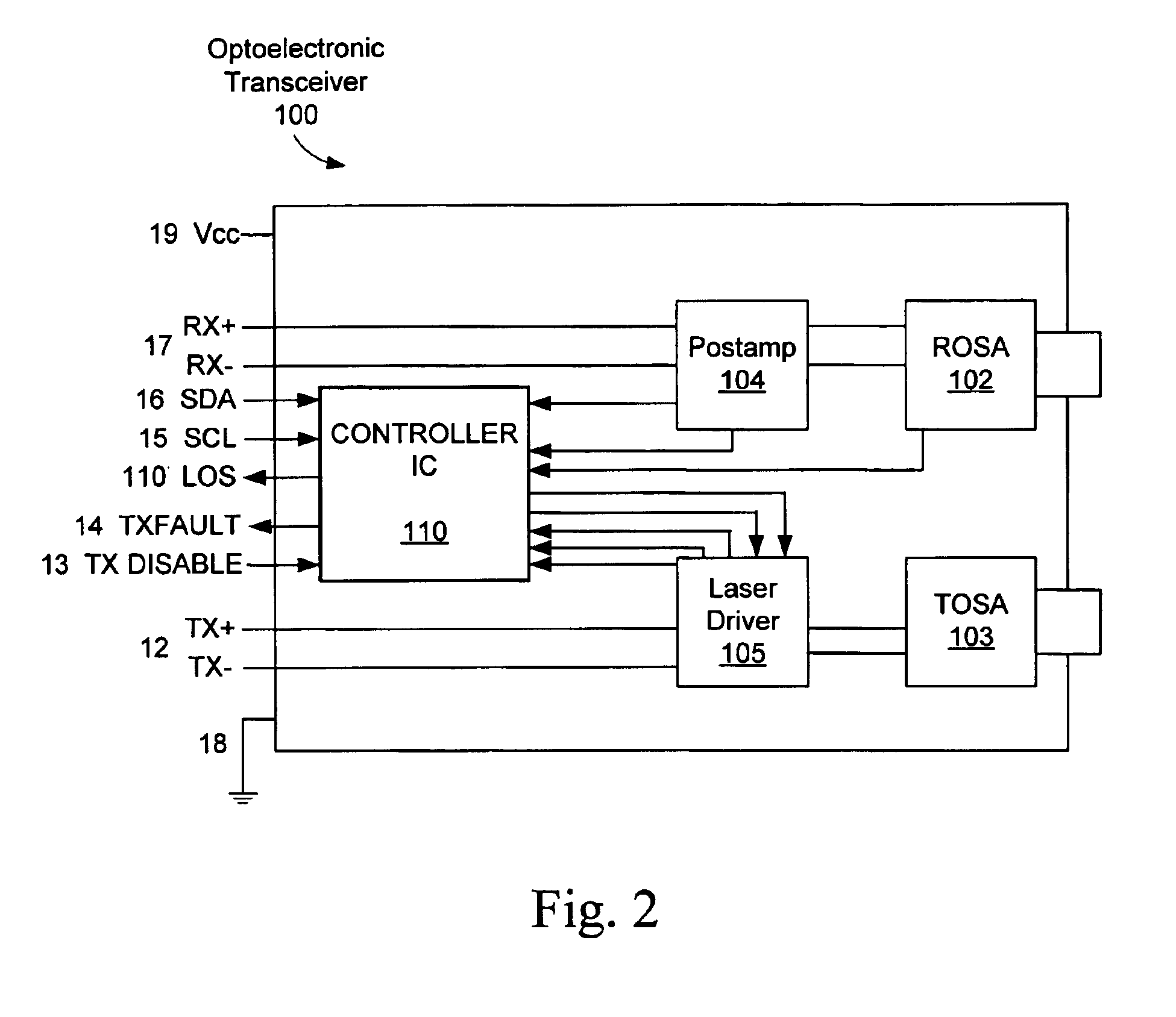

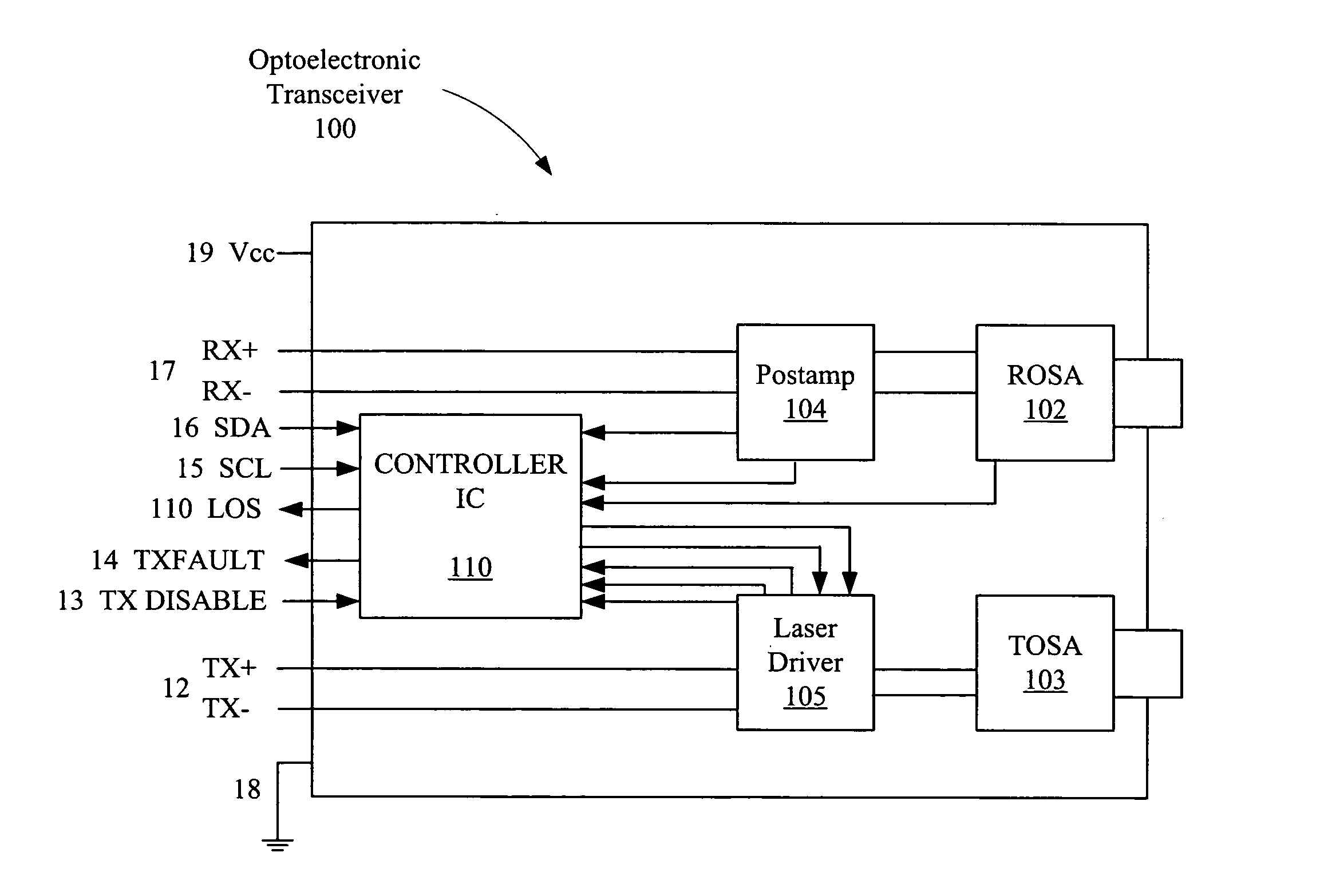

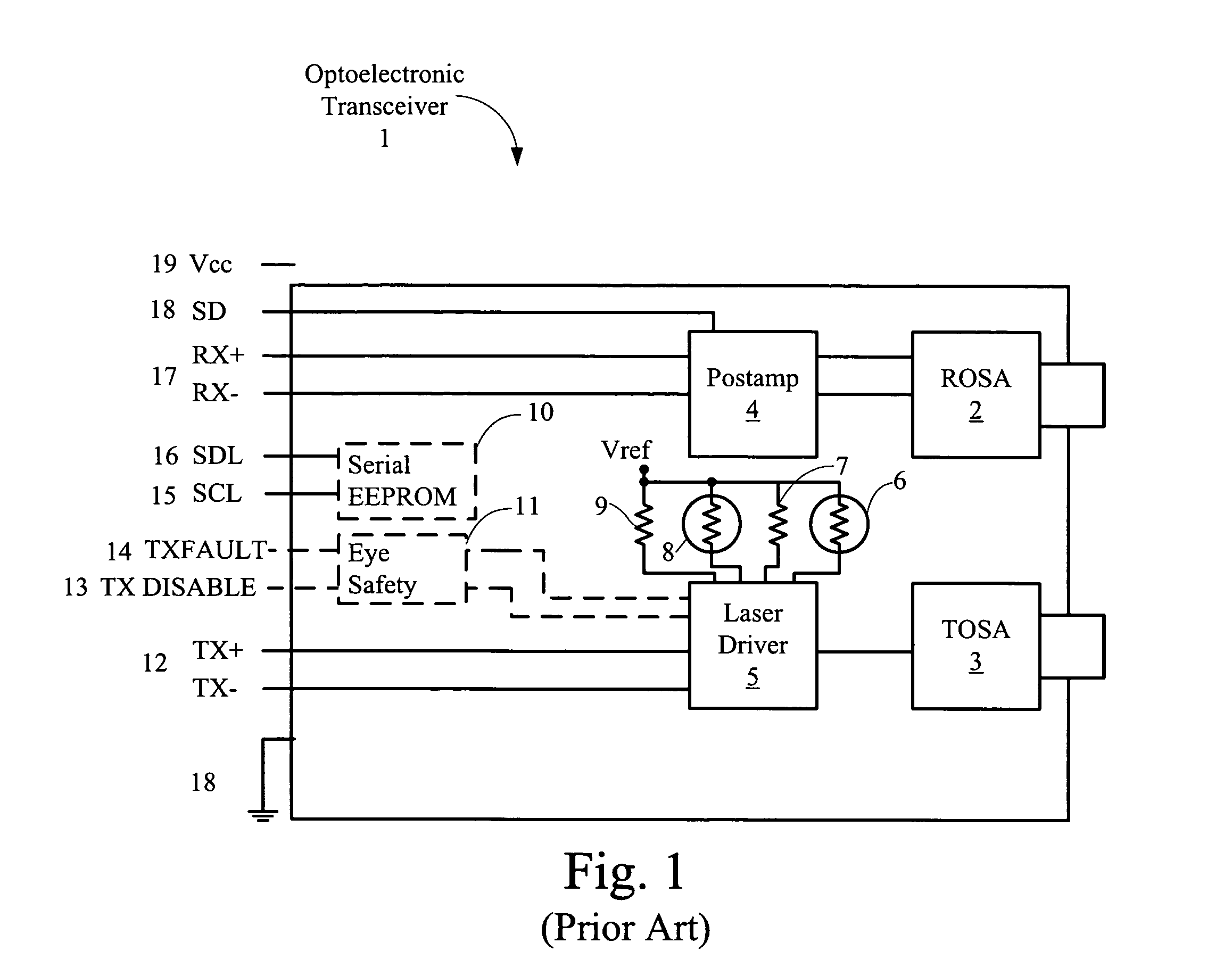

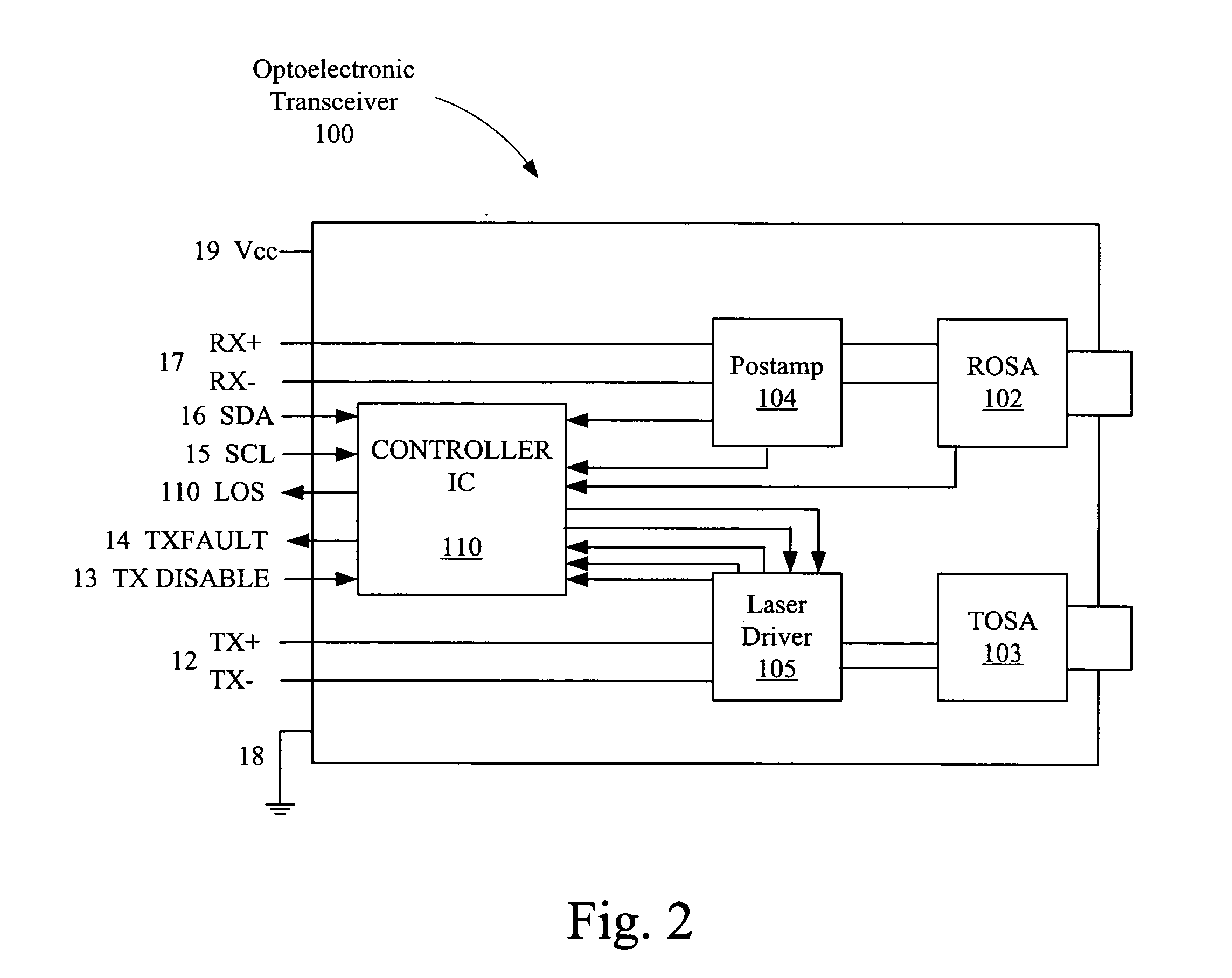

Memory mapped monitoring circuitry for optoelectronic device

Inactive · US6941077B2 · Laser details · Transmission monitoring/testing/fault-measurement systems · Laser transmitter · Analog signal

Circuitry for monitoring operation of an optoelectronic device having a laser transmitter and a photodiode receiver includes analog to digital conversion circuitry for receiving a plurality of analog signals from the laser transmitter and photodiode receiver, converting the received analog signals into digital values, and storing the digital values in predefined memory-mapped locations within the optoelectronic device. Comparison logic compares one or more of these digital values with limit values, generates flag values based on the comparisons, and stores the flag values in predefined locations within the optoelectronic device. An interface enables a host device to read from and write to host-specified memory mapped locations within the optoelectronic device.

Owner:II VI DELAWARE INC
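A minimal sketch of the monitoring scheme in the abstract above: digitized values are stored at predefined memory-mapped locations, comparison logic derives flag values from limit values, and a host reads by address. Every address, signal name, limit, and bit assignment here is invented for illustration and is not taken from the patent.

```python
memory = bytearray(16)  # stands in for the device's memory-mapped space

# Hypothetical layout: one byte per digitized signal, one flag byte.
VALUE_ADDR = {"temperature": 0, "tx_power": 1}
LIMITS = {"temperature": (10, 200), "tx_power": (20, 180)}  # (low, high)
FLAG_ADDR = 8


def store_sample(memory, name, value):
    # The A/D conversion result is written to its predefined location.
    memory[VALUE_ADDR[name]] = value


def update_flags(memory):
    # Each signal gets two bits: high alarm (even bit), low alarm (odd bit).
    flags = 0
    for index, name in enumerate(sorted(VALUE_ADDR)):
        low, high = LIMITS[name]
        value = memory[VALUE_ADDR[name]]
        if value > high:
            flags |= 1 << (2 * index)
        if value < low:
            flags |= 1 << (2 * index + 1)
    memory[FLAG_ADDR] = flags


def host_read(memory, addr):
    # The host interface reads whatever location the host specifies.
    return memory[addr]


store_sample(memory, "temperature", 230)  # above the high limit
store_sample(memory, "tx_power", 5)       # below the low limit
update_flags(memory)
flag_byte = host_read(memory, FLAG_ADDR)
```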

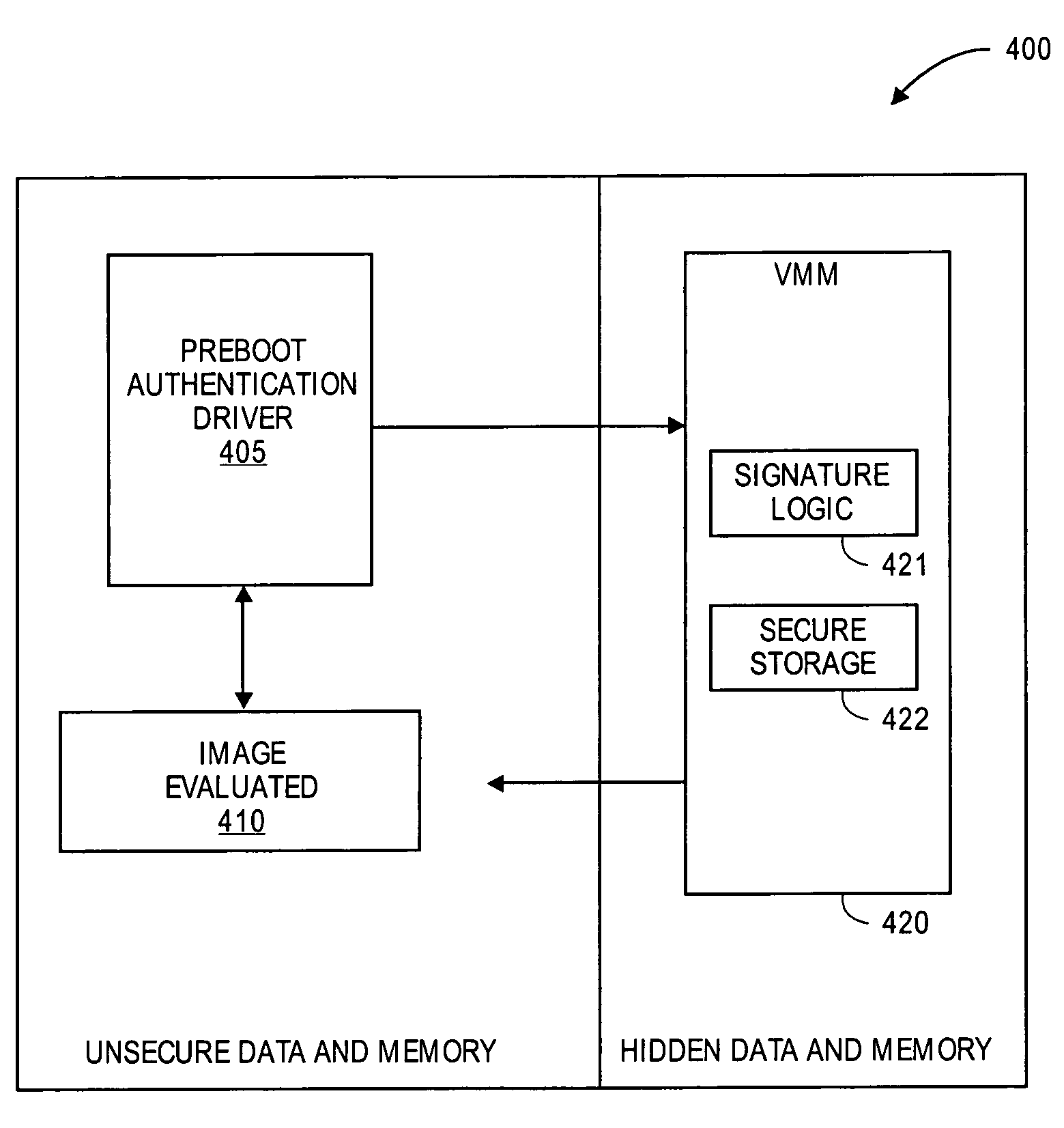

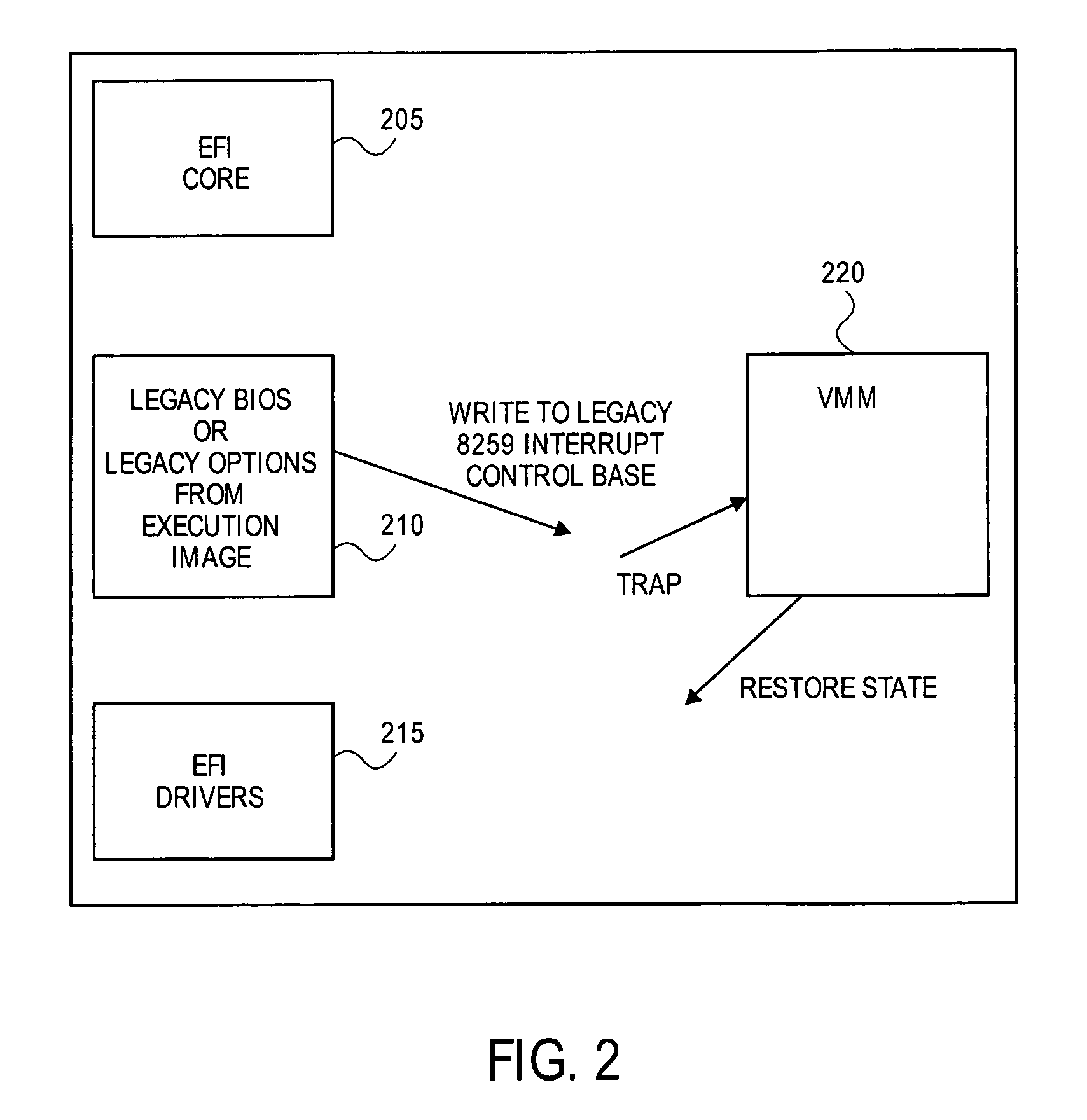

Method for providing system integrity and legacy environment emulation

Inactive · US7103529B2 · Protective structure · Program control using stored programs · Digital computer details · Virtualization · Computer compatibility

A method and apparatus to provide pre-boot security and legacy hardware and environment support for a computing system having an extensible firmware architecture is described. A virtual machine monitor is employed to provide the virtualization of system state for the purposes of running legacy compatibility code or protecting key data and code regions for safety and security. An application may be given access to a subset of the system resources, and access to portions of the memory map not designated for updates would trap (program interrupt) to the VMM. A VMM pre-boot policy agent may then protect state and unload any problematic software.

Owner:INTEL CORP

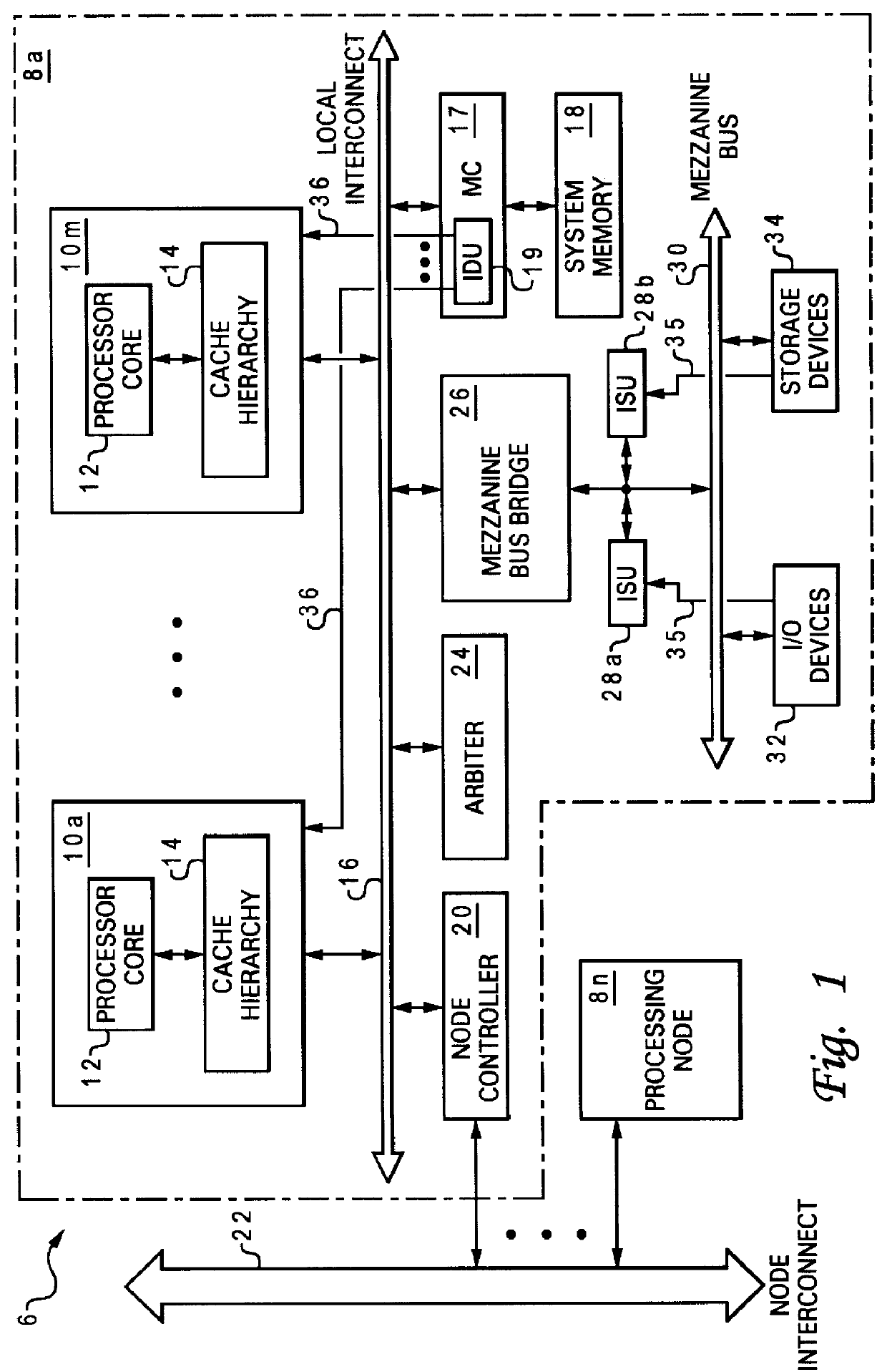

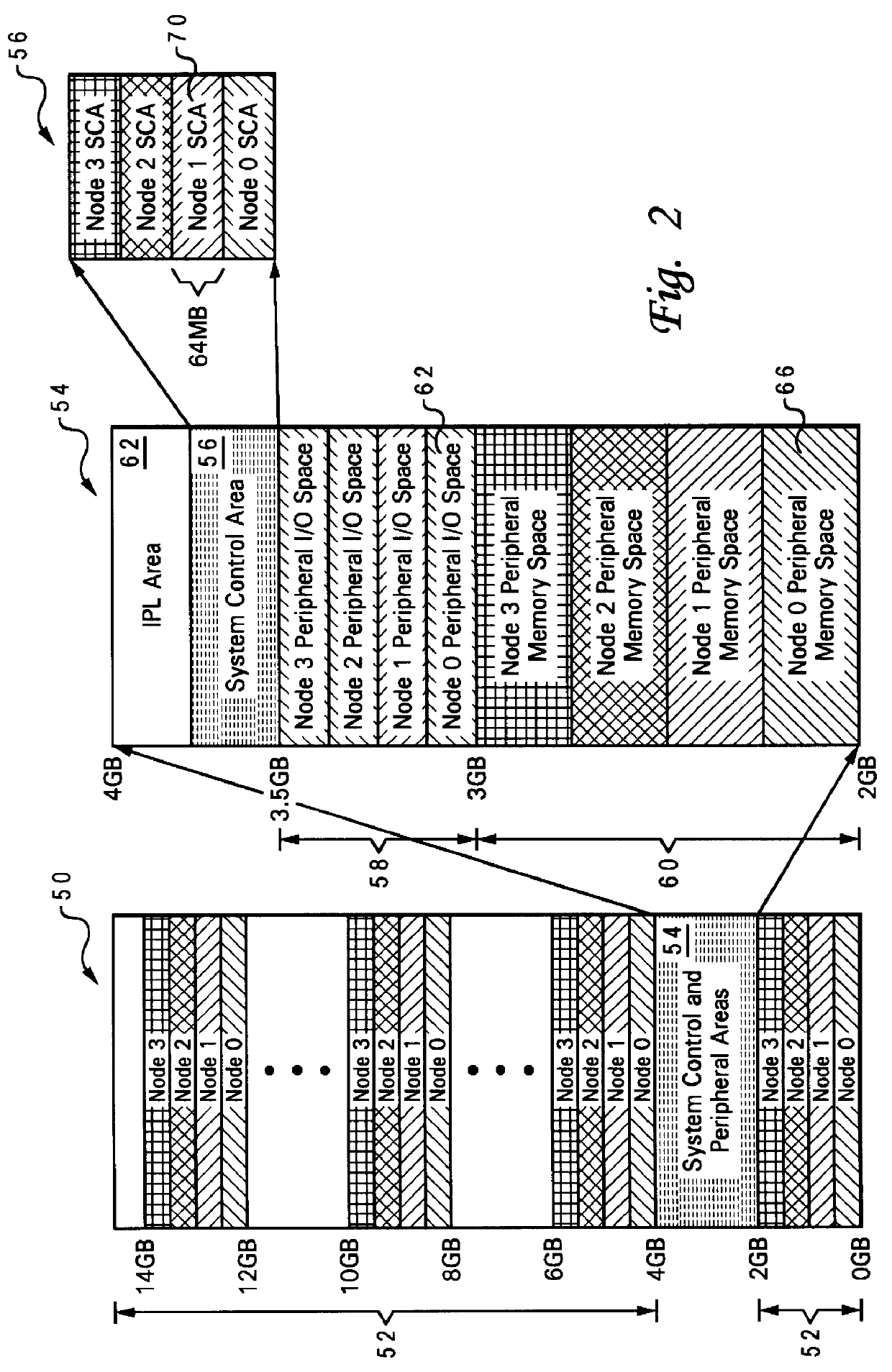

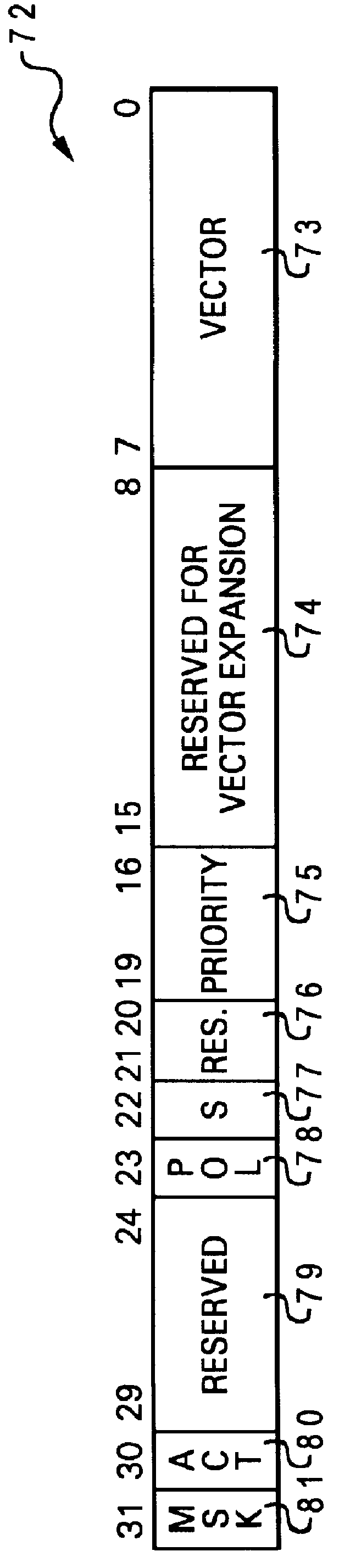

Interrupt architecture for a non-uniform memory access (NUMA) data processing system

Inactive · US6148361A · Small size · Promote dissemination · Program initiation/switching · General purpose stored program computer · Extensibility · Data processing system

A non-uniform memory access (NUMA) computer system includes at least two nodes coupled by a node interconnect, where at least one of the nodes includes a processor for servicing interrupts. The nodes are partitioned into external interrupt domains so that an external interrupt is always presented to a processor within the external interrupt domain in which the interrupt occurs. Although each external interrupt domain typically includes only a single node, interrupt channeling or interrupt funneling may be implemented to route external interrupts across node boundaries for presentation to a processor. Once presented to a processor, interrupt handling software may then execute on any processor to service the external interrupt. Servicing external interrupts is expedited by reducing the size of the interrupt handler polling chain as compared to prior art methods. In addition to external interrupts, the interrupt architecture of the present invention supports inter-processor interrupts (IPIs) by which any processor may interrupt itself or one or more other processors in the NUMA computer system. IPIs are triggered by writing to memory mapped registers in global system memory, which facilitates the transmission of IPIs across node boundaries and permits multicast IPIs to be triggered simply by transmitting one write transaction to each node containing a processor to be interrupted. The interrupt hardware within each node is also distributed for scalability, with the hardware components communicating via interrupt transactions conveyed across shared communication paths.

Owner:IBM CORP

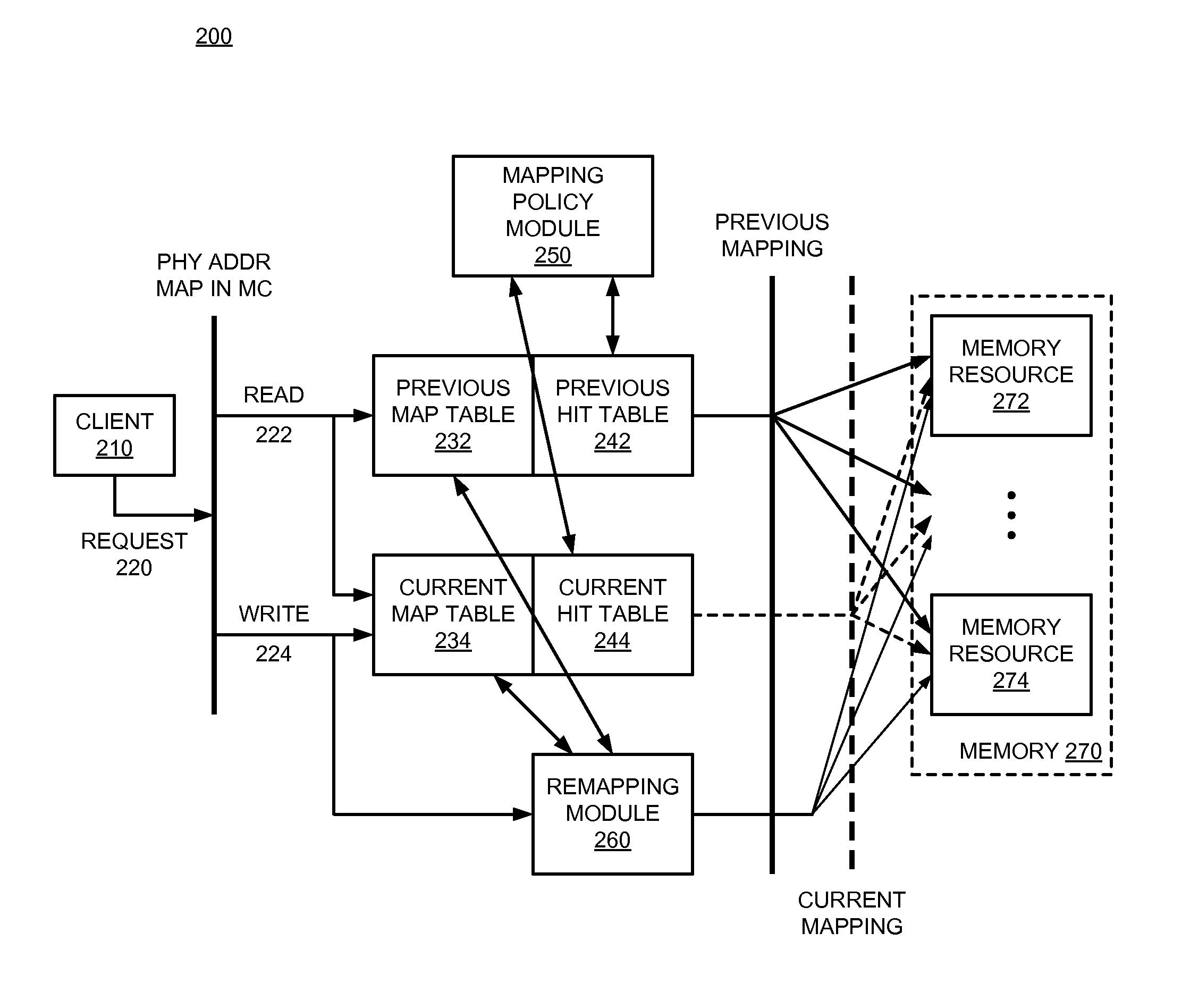

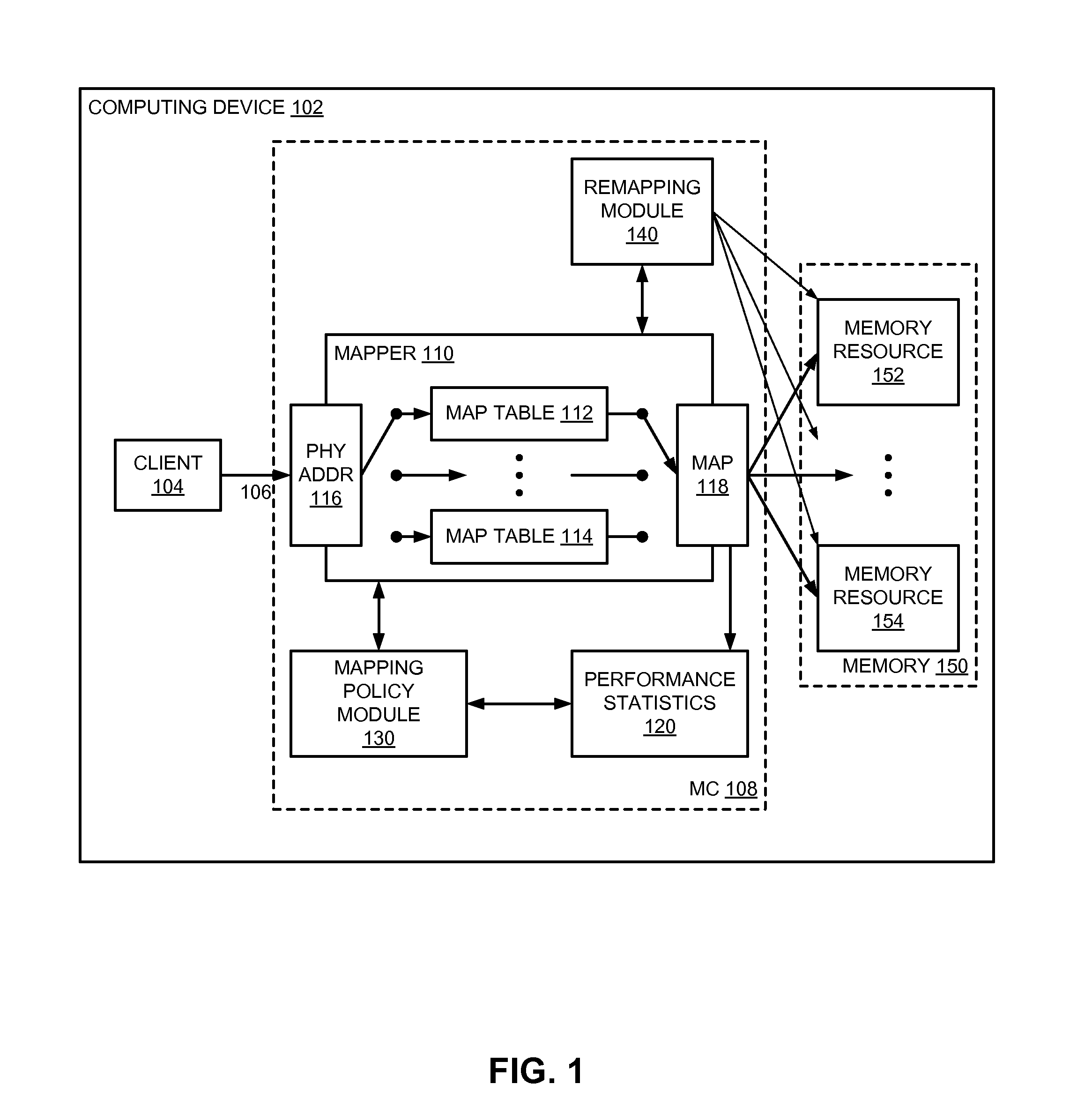

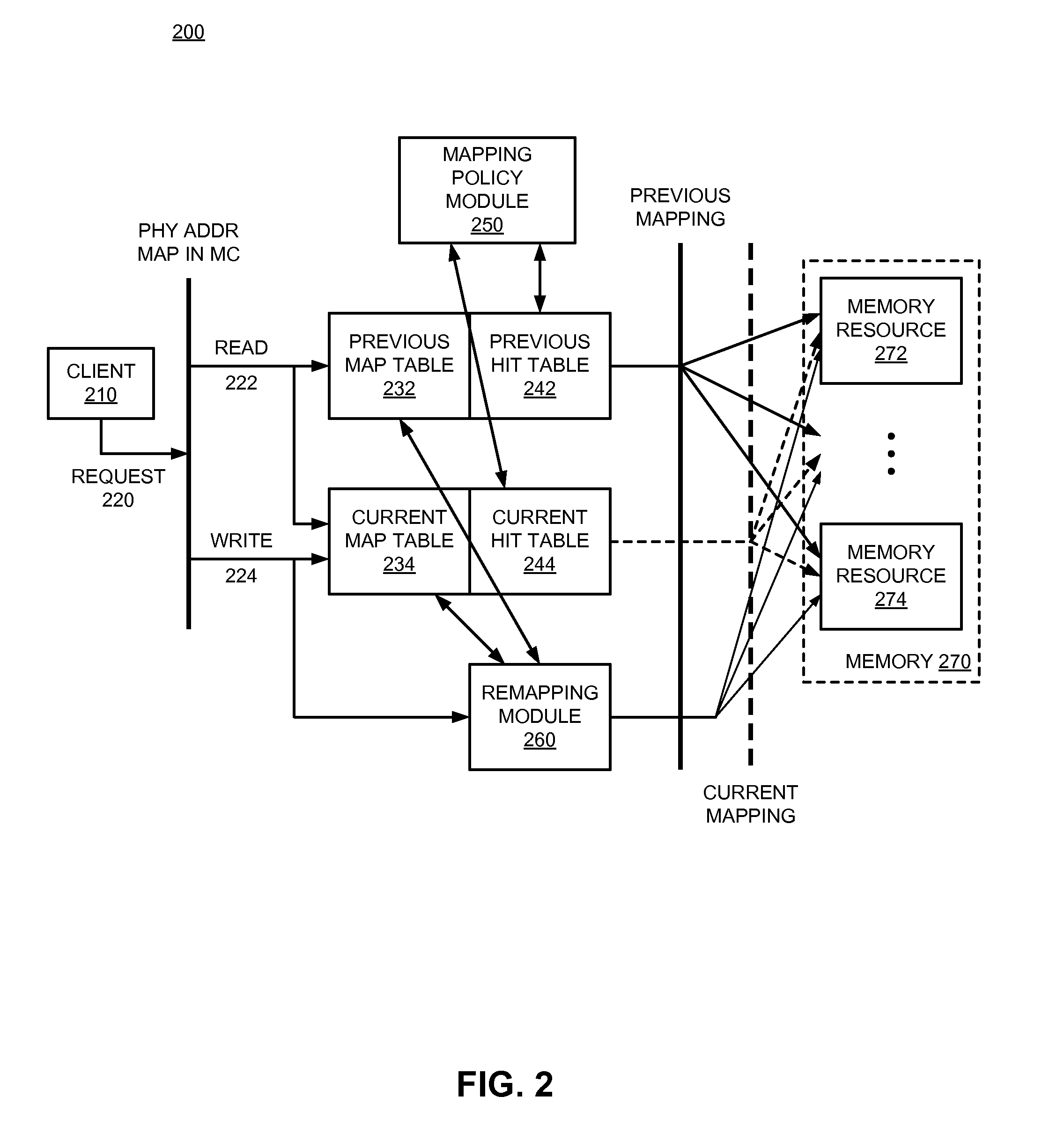

Adaptive address mapping with dynamic runtime memory mapping selection

Inactive · US20110153908A1 · Energy efficient ICT · Memory addressing/allocation/relocation · Granularity · Parallel computing

A system monitors and dynamically changes memory mapping in a runtime of a computing system. The computing system has various memory resources, and multiple possible mappings that indicate how data is to be stored in and subsequently accessed from the memory resources. The performance of each memory mapping may be different under different runtime or load conditions of the computing device. A memory controller can monitor runtime performance of the current memory mapping and dynamically change memory mappings at runtime based on monitored or observed performance of the memory mappings. The performance monitoring can be modified for any of a number of different granularities possible within the system, from the byte level to memory channel.

Owner:INTEL CORP

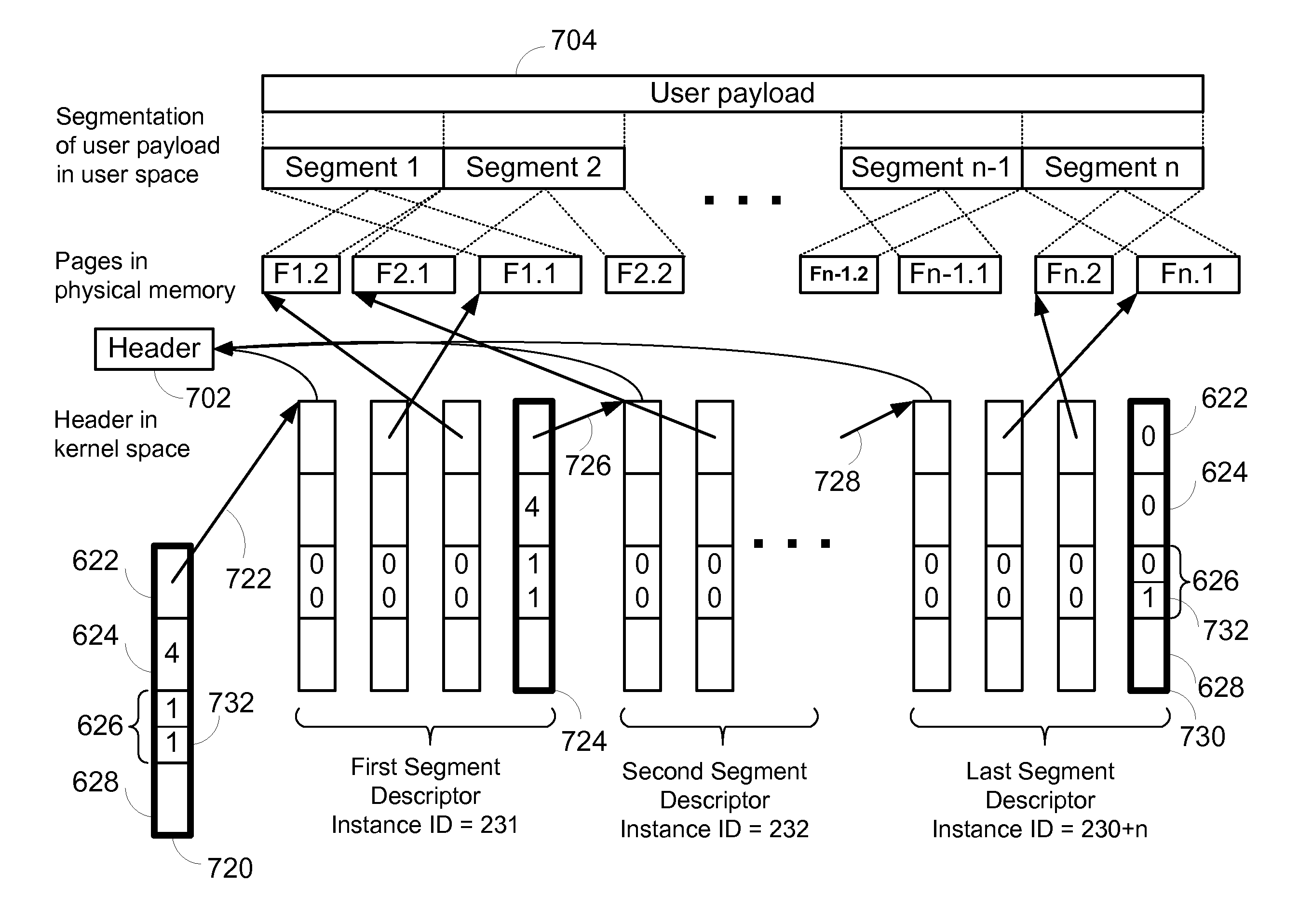

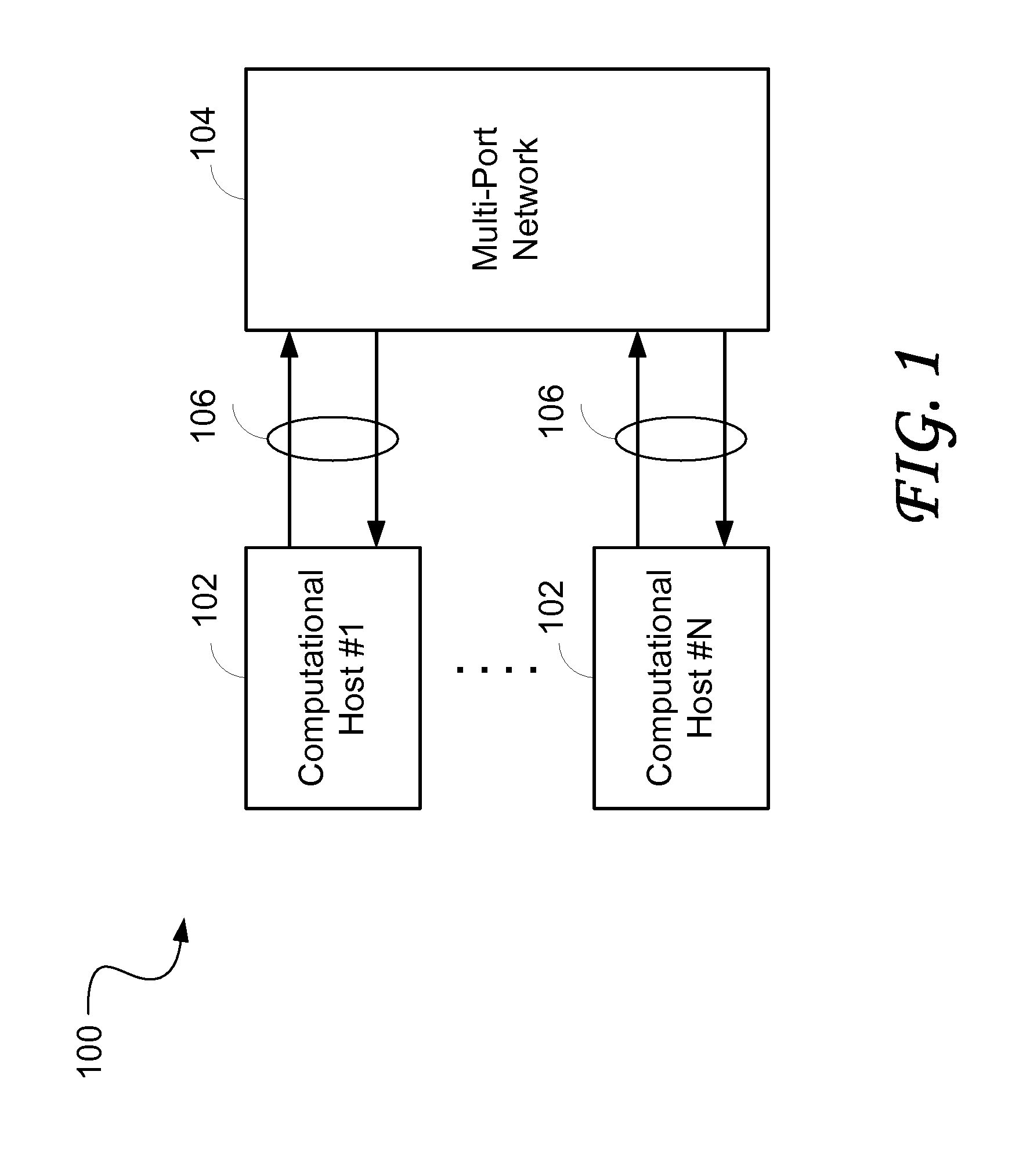

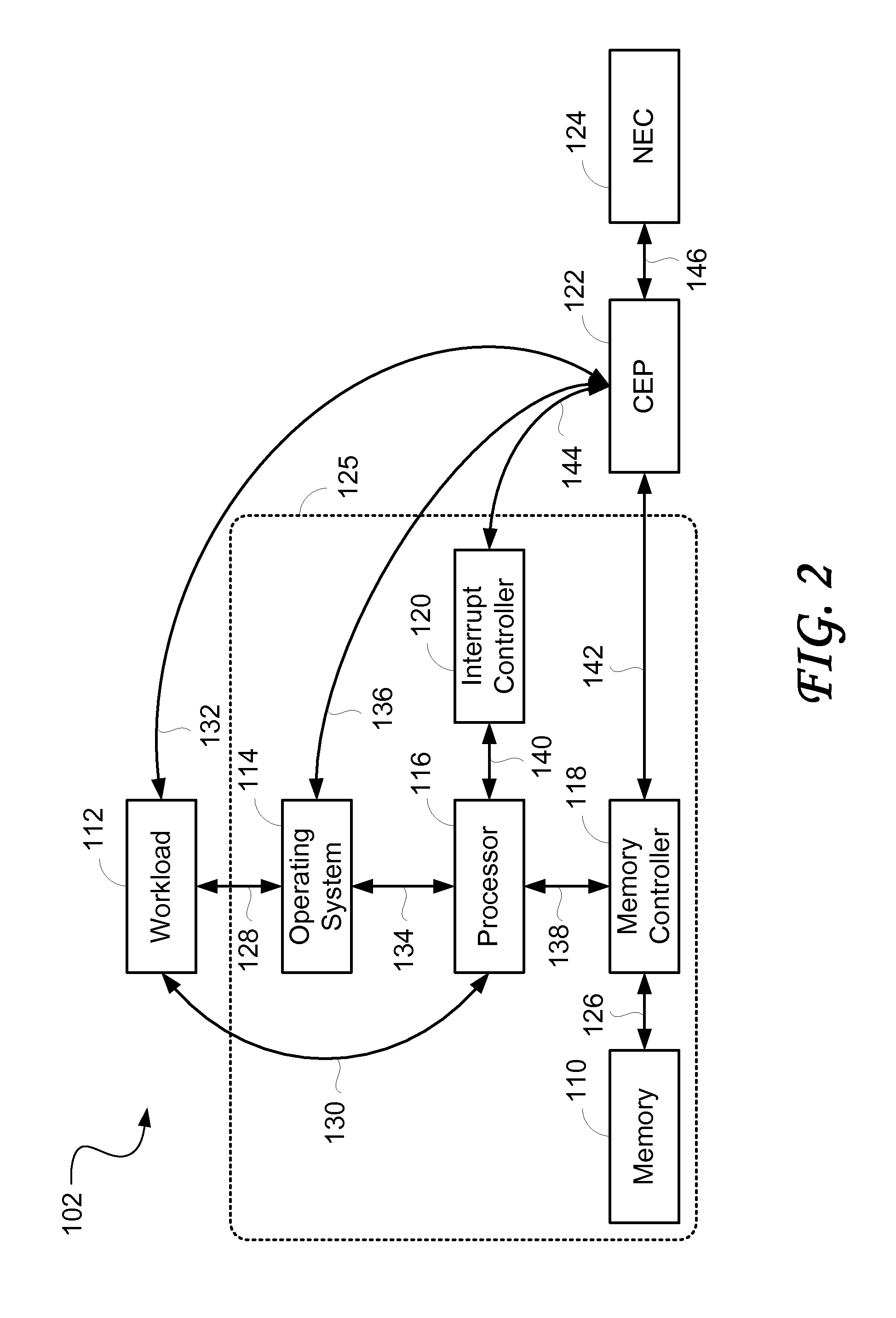

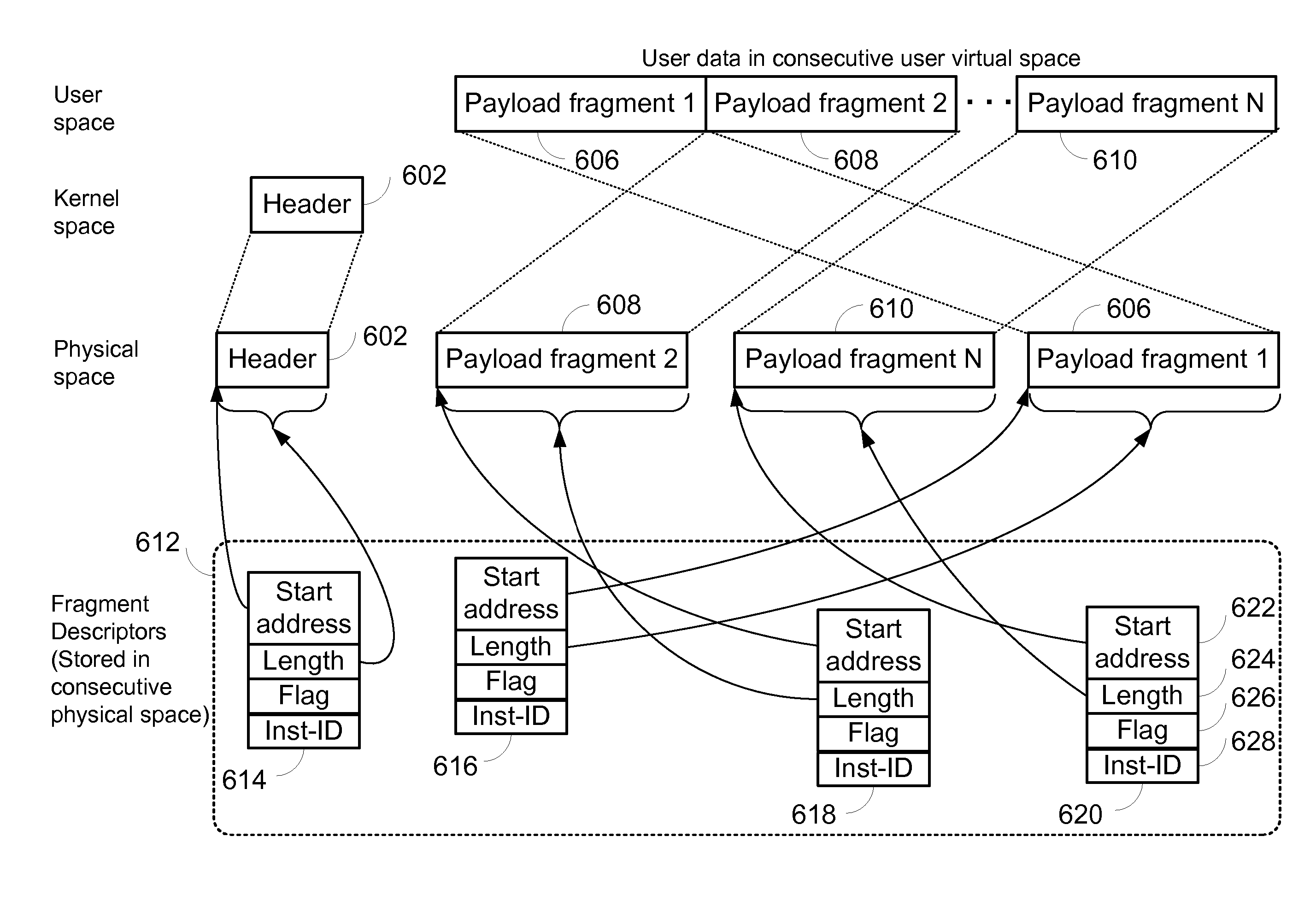

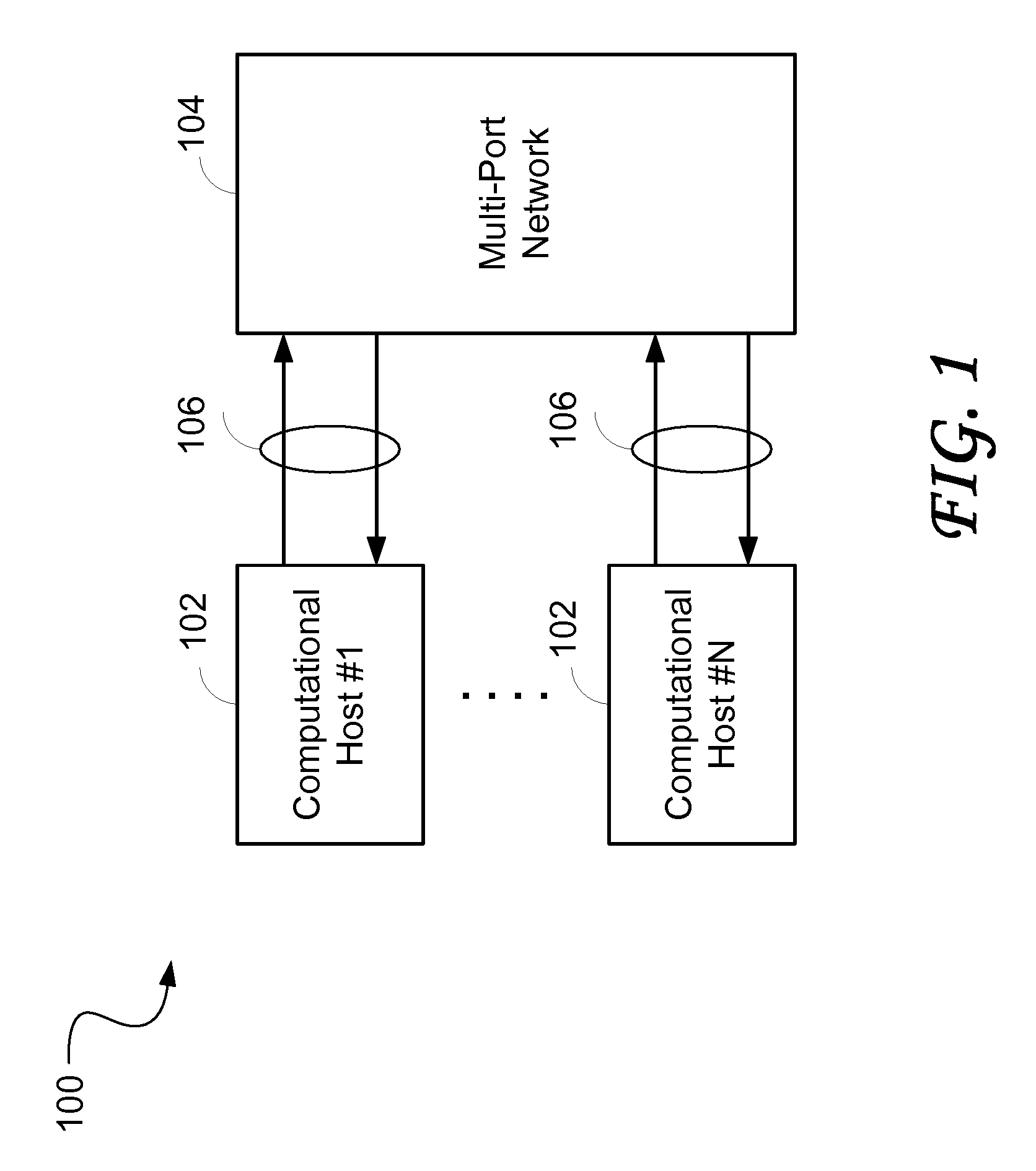

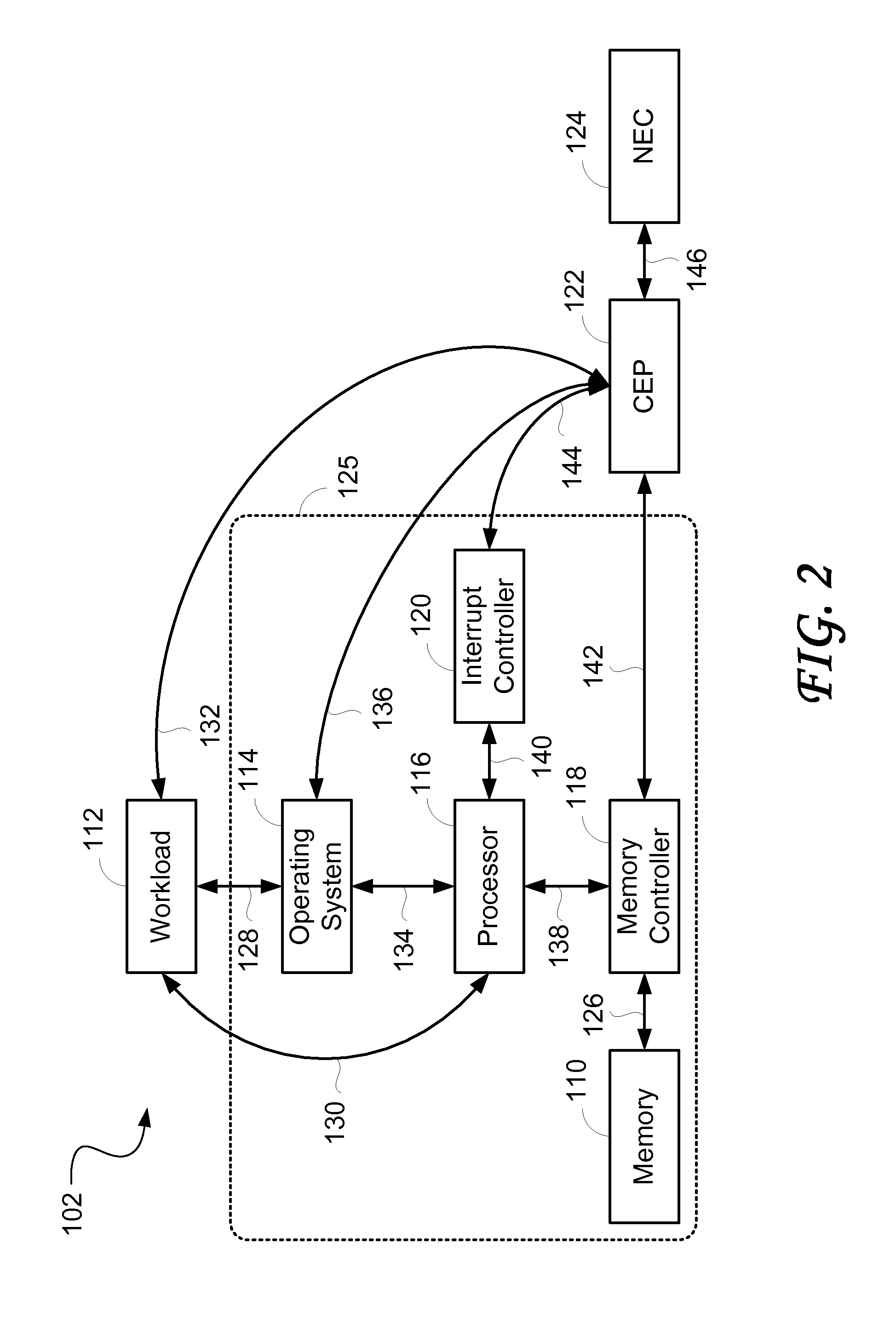

High performance memory based communications interface

Active · US20070223483A1 · Improve performance · Improve throughput · Time-division multiplex · Data switching by path configuration · Communication endpoint · Multiple frame

Embodiments of the present invention include enhanced functionalities and components within a Communication Endpoint Processor (CEP) that act as an interface between computational and communications domains. The embodiments disclosed herein deliver a complete memory mapped high performance interface that has the ability to support the simultaneous transmission of multiple frames of multiple sizes, and that has the ability to interrupt the transmission of lower priority frames in order to send higher priority frames.

Owner:III HLDG 1

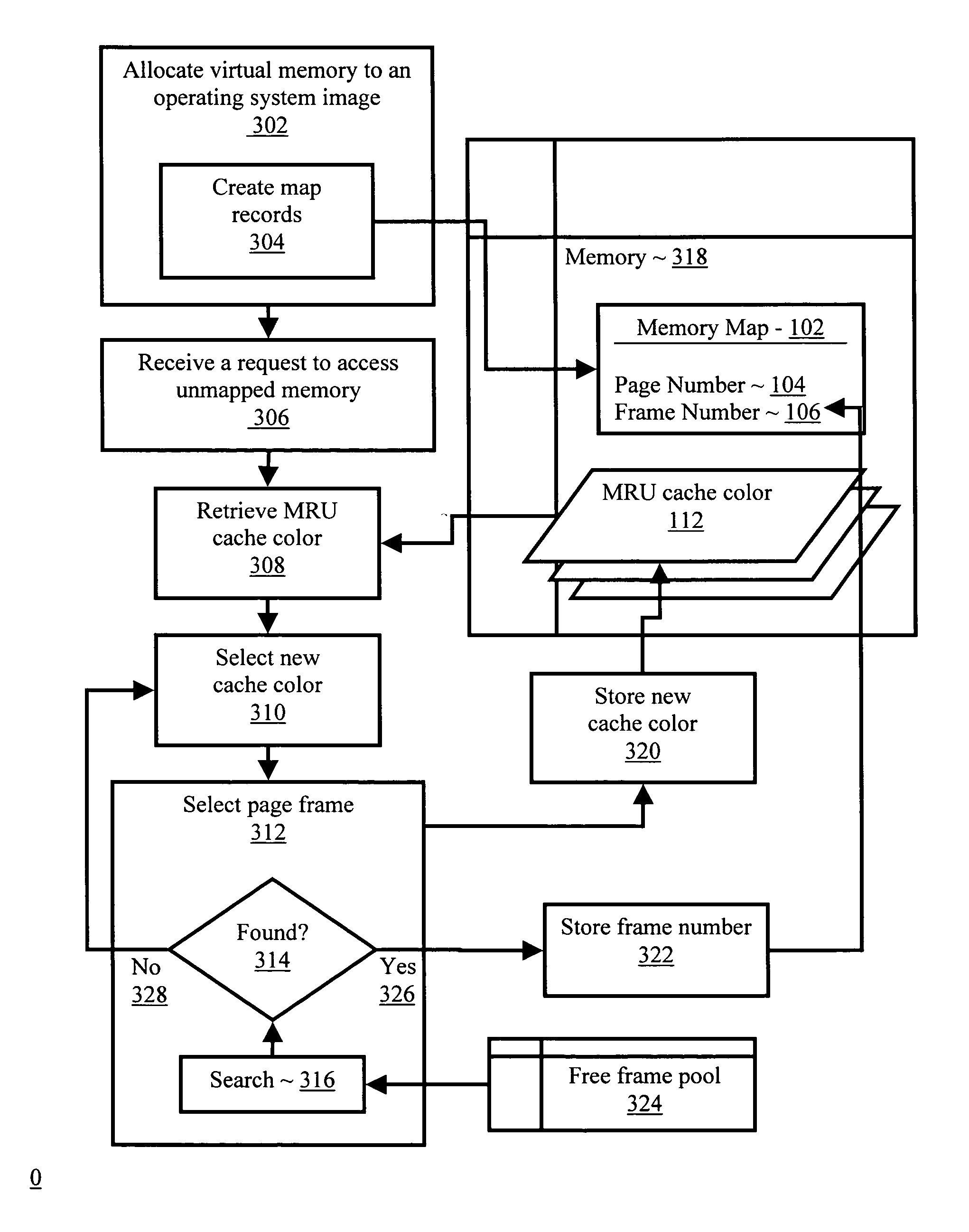

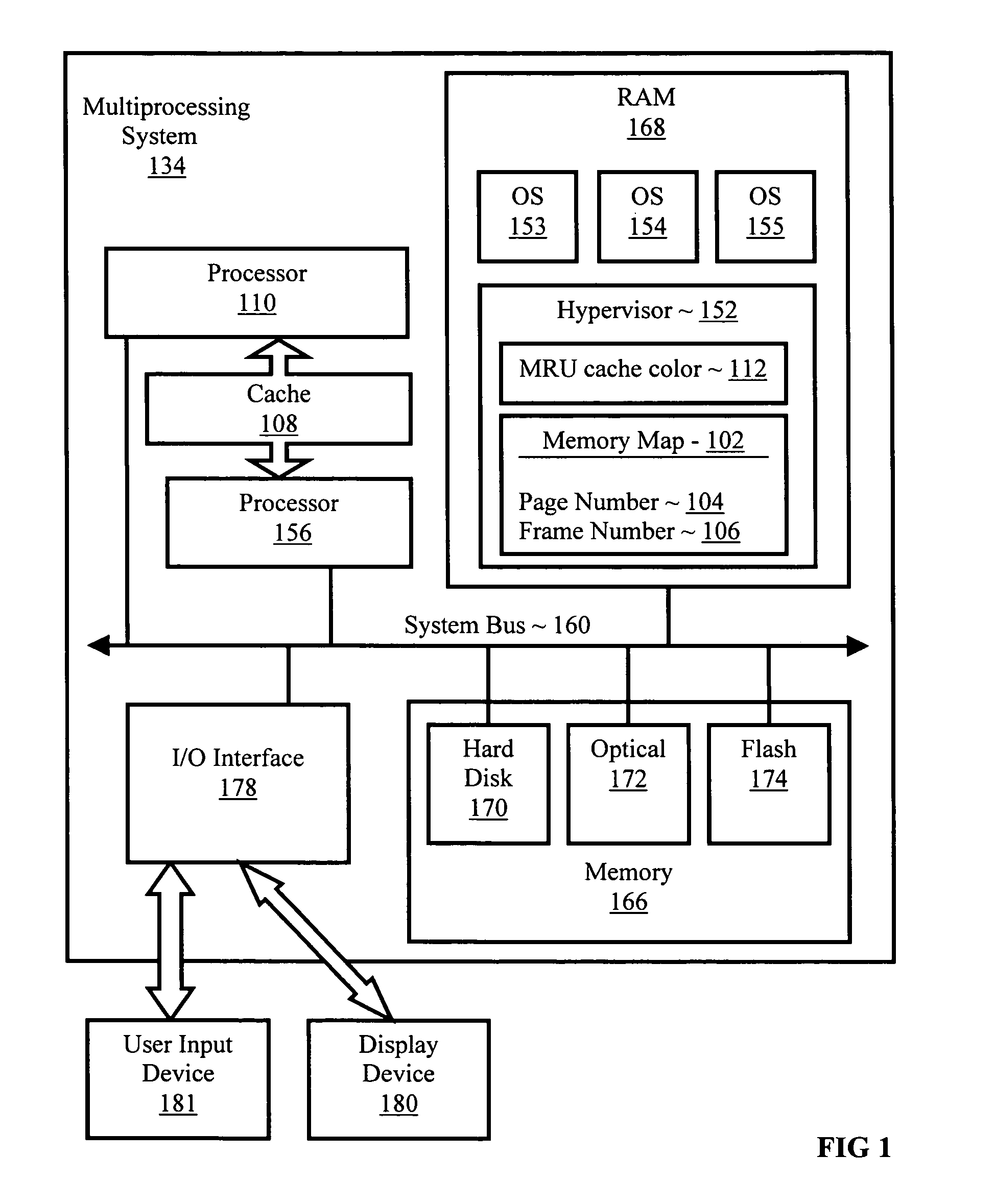

Memory mapping to reduce cache conflicts in multiprocessor systems

Inactive · US7085890B2 · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Virtual memory · Operational system

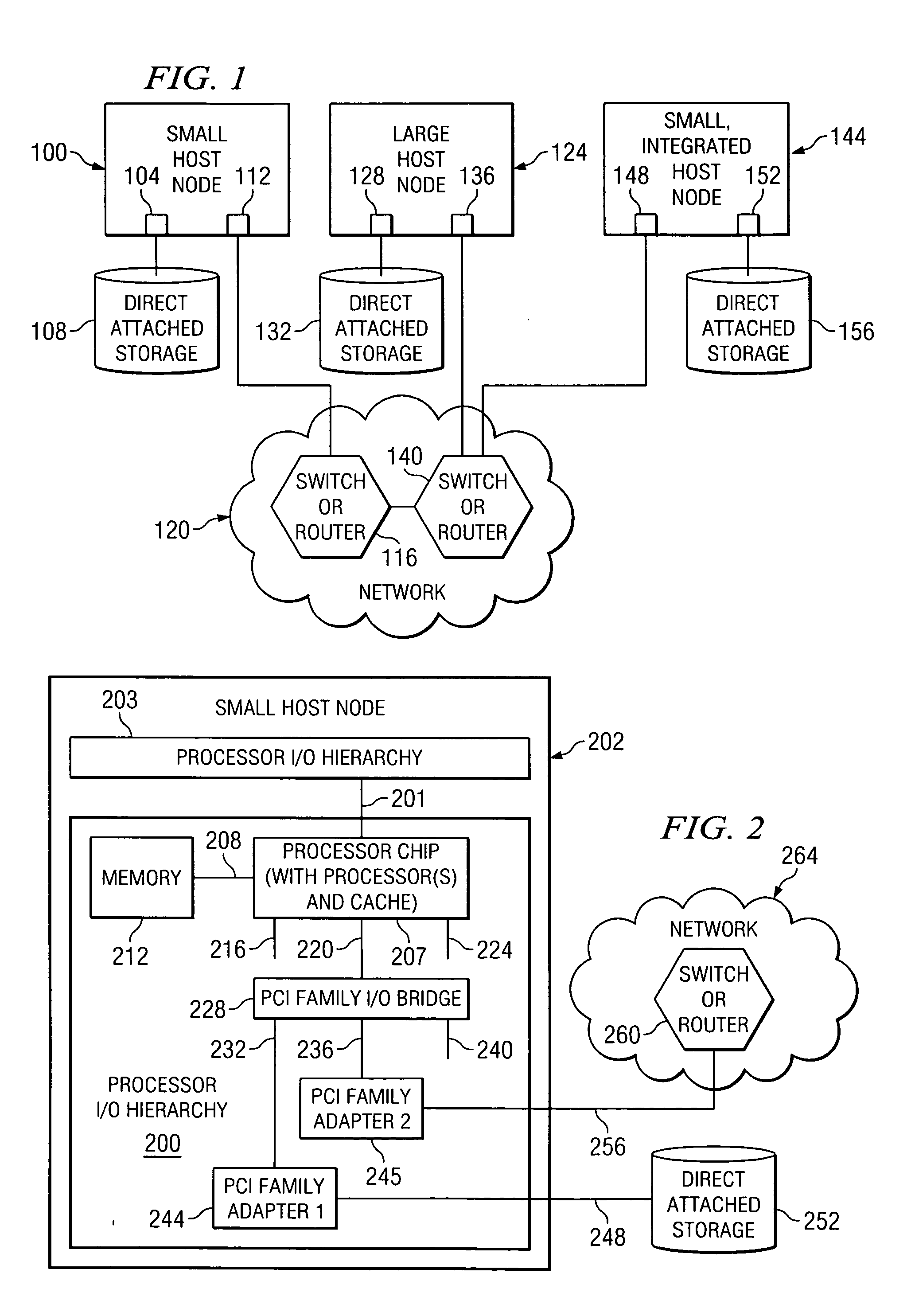

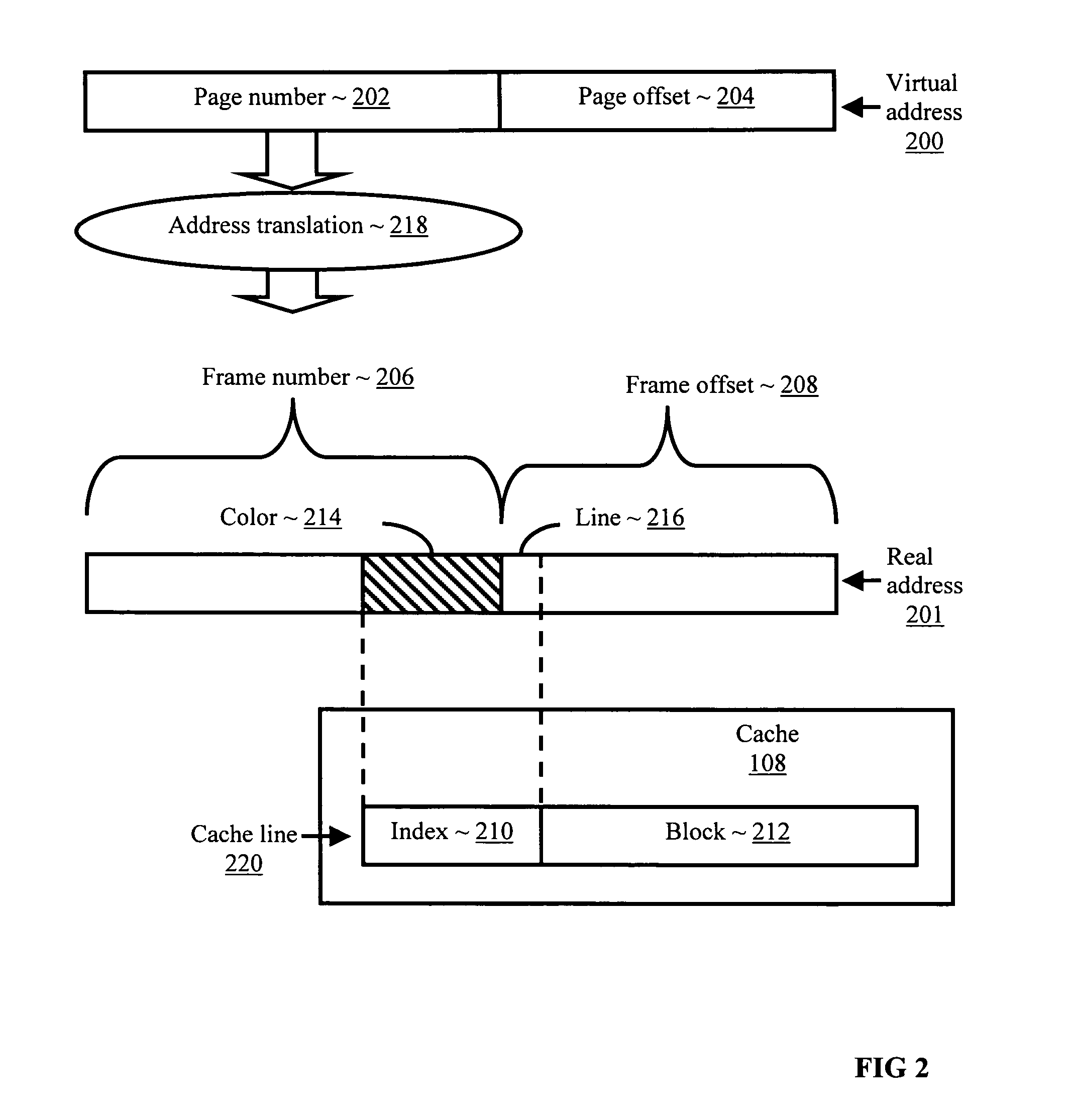

Methods, systems, and computer program products are disclosed for mapping a virtual memory page to a real memory page frame in a multiprocessing environment that supports a multiplicity of operating system images. Typical embodiments include retrieving into an operating system image, from memory accessible to a multiplicity of operating system images, a most recently used cache color for a cache, where the cache is shared by the operating system image with at least one other operating system image; selecting a new cache color in dependence upon the most recently used cache color; selecting in the operating system image a page frame in dependence upon the new cache color; and storing in the memory the new cache color as the most recently used cache color for the cache.

Owner:INT BUSINESS MASCH CORP
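The four steps in the abstract above (retrieve the most recently used cache color from shared memory, select a new color, select a page frame of that color, store the new color back) can be sketched as follows. The abstract only says the new color is chosen "in dependence upon" the most recent one. The round-robin rule, the `frame % num_colors` color function, and all names below are assumptions.

```python
def color_of(frame, num_colors):
    # Assumed color function: low-order bits of the page frame number.
    return frame % num_colors


def allocate_frame(shared, free_frames, num_colors):
    """Pick a free page frame whose cache color follows the last one used.

    shared: dict standing in for memory visible to all OS images,
            holding the most recently used color under "mru_color".
    """
    color = (shared["mru_color"] + 1) % num_colors  # assumed round-robin rule
    for _ in range(num_colors):
        for frame in free_frames:
            if color_of(frame, num_colors) == color:
                free_frames.remove(frame)
                shared["mru_color"] = color  # publish for the other images
                return frame
        color = (color + 1) % num_colors  # no free frame of this color
    raise MemoryError("no free page frames")


shared = {"mru_color": 2}
free_frames = [8, 9, 13, 14, 15]
allocated = [allocate_frame(shared, free_frames, num_colors=4) for _ in range(3)]
```

Successive allocations rotate through colors 3, 0 and 1, so pages mapped by different operating system images are spread across the shared cache rather than competing for the same cache sets.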

Memory system having NAND-based nor and NAND flashes and SRAM integrated in one chip for hybrid data, code and cache storage

Inactive · US20100329011A1 · Faster and improved read and write operation of memory · Easy to operate · Memory addressing/allocation/relocation · Read-only memories · Address bus · Memory map

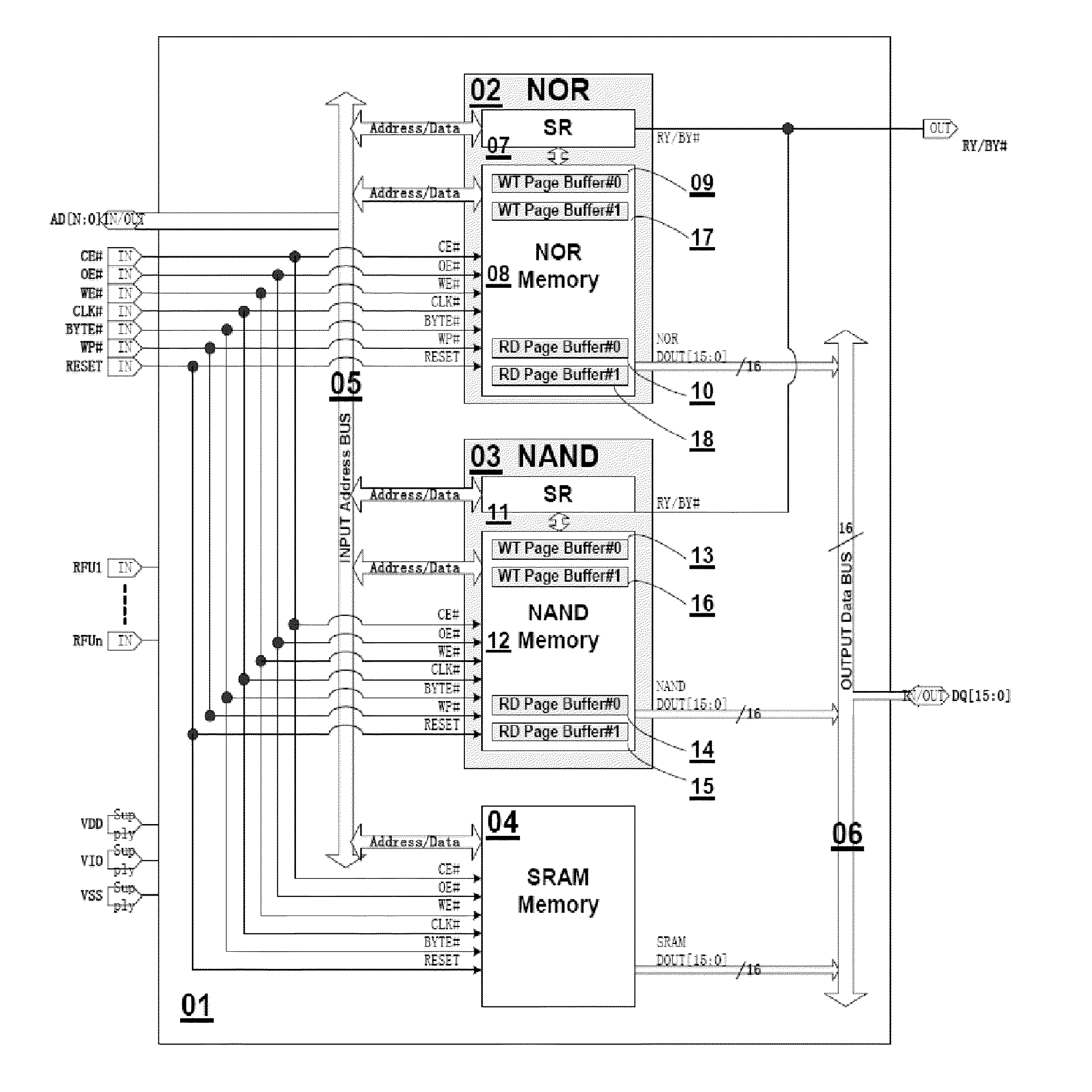

A memory system includes a NAND flash memory, a NOR flash memory and a SRAM manufactured on a single chip. Both NAND and NOR memories are manufactured by the same NAND manufacturing process and NAND cells. The three memories share the same address bus, data bus, and pins of the single chip. The address bus is bi-directional for receiving codes, data and addresses and transmitting output. The data bus is also bi-directional for receiving and transmitting data. One external chip enable pin and one external output enable pin are shared by the three memories to reduce the number of pins required for the single chip. Both NAND and NOR memories have dual read page buffers and dual write page buffers for Read-While-Load and Write-While-Program operations to accelerate the read and write operations respectively. A memory-mapped method is used to select different memories, status registers and dual read or write page buffers.

Owner:APLUS FLASH TECH

High performance memory based communications interface

Active · US7773630B2 · Quality of service · Provide quality · Time-division multiplex · Transmission · Communication endpoint · Multiple frame

Owner:III HLDG 1

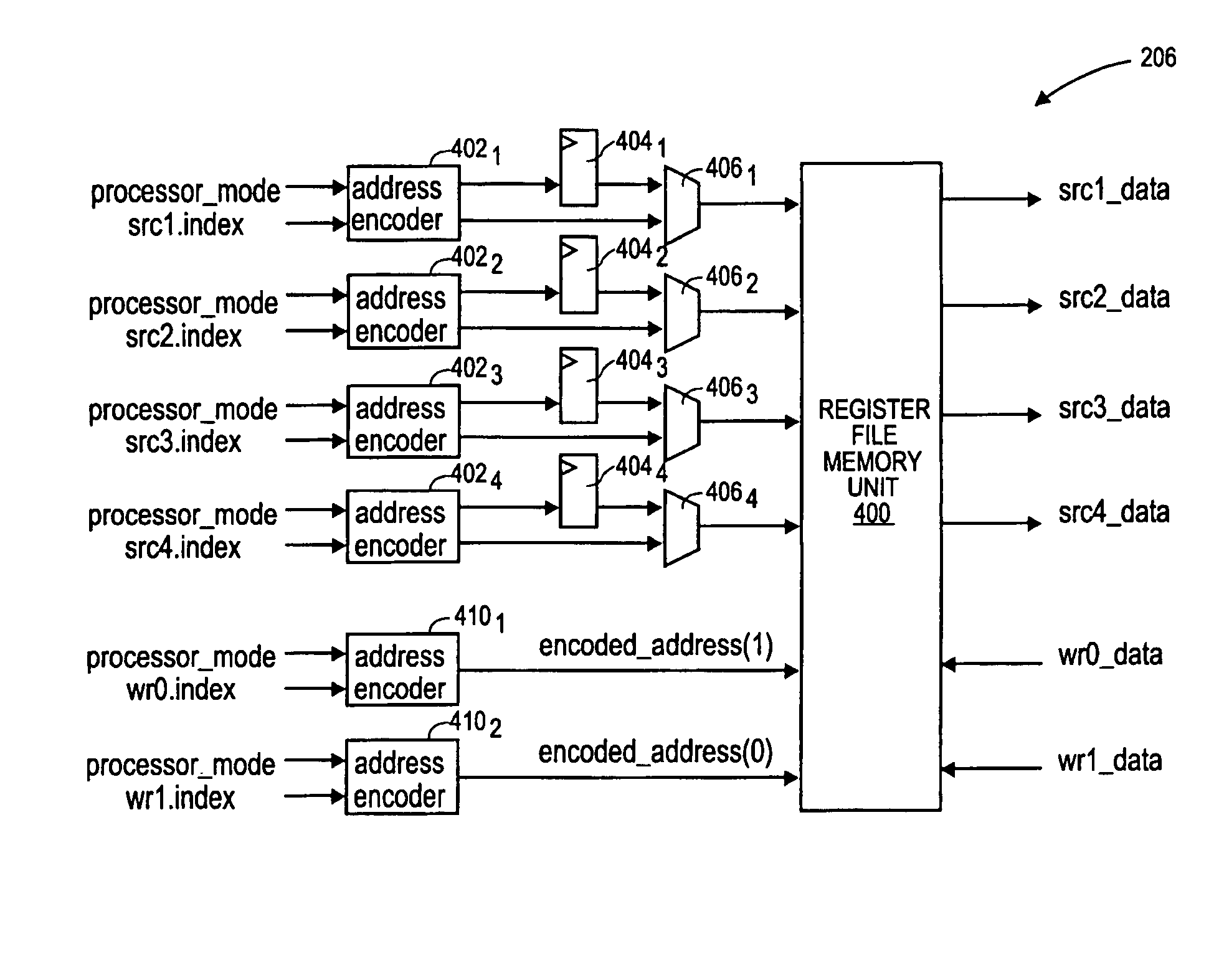

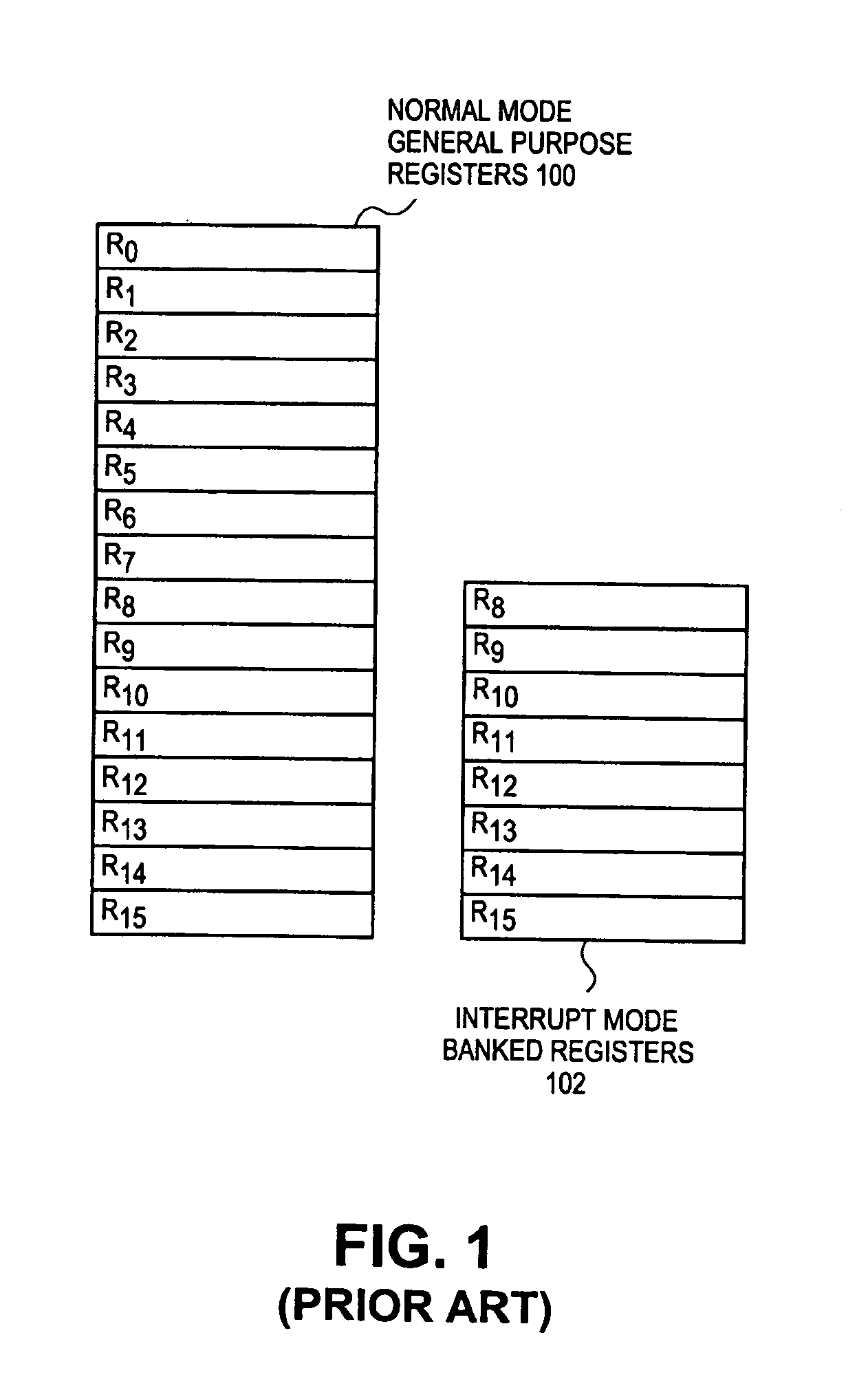

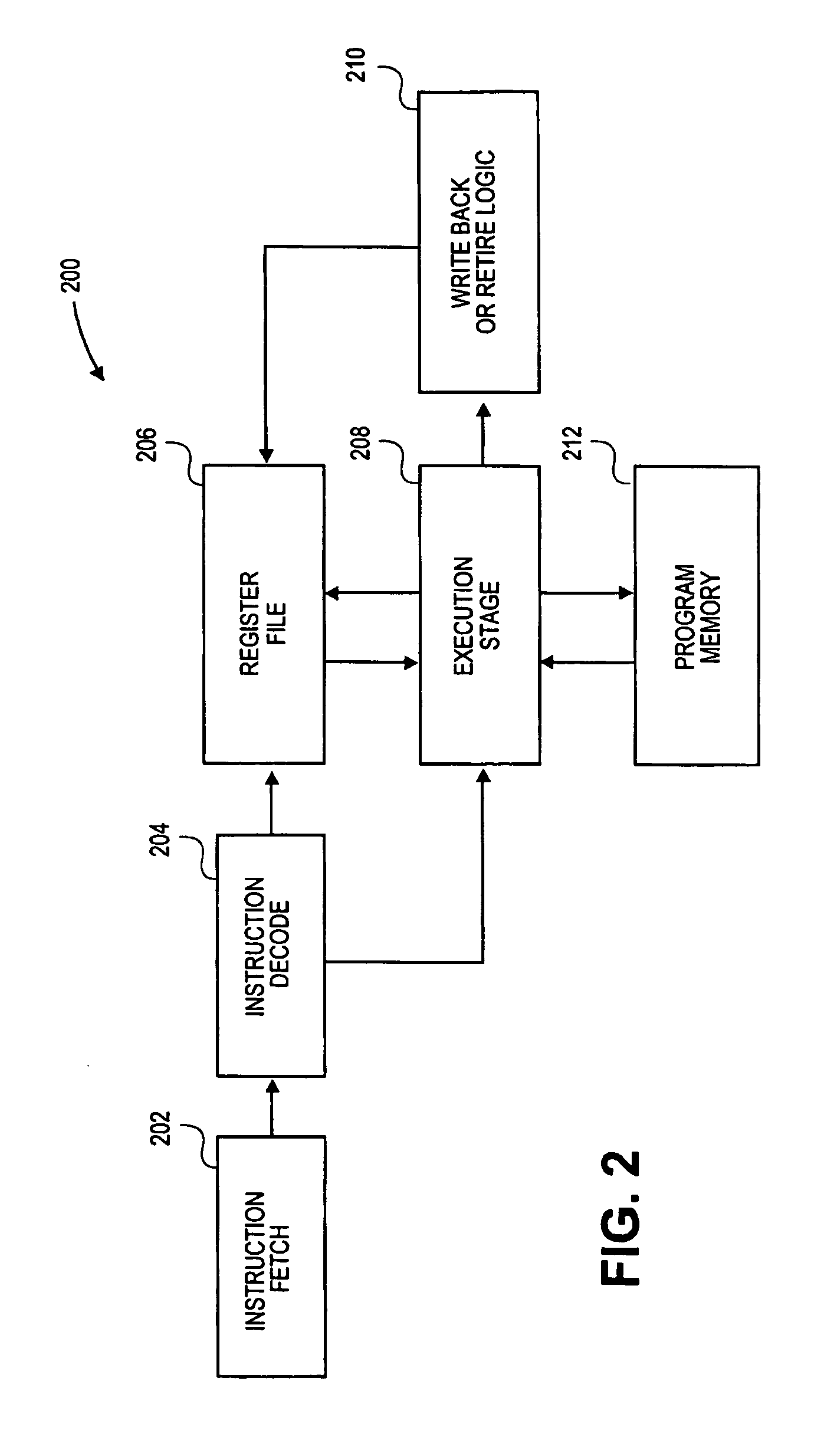

Memory mapped register file and method for accessing the same

Inactive · US7558942B1 · Register arrangements · General purpose stored program computer · Data processing system · Processing Instruction

A data processing system comprises a processor to process instructions. The processor has a plurality of pipeline stages to execute instructions, including a register file. The register file includes a memory unit having a plurality of memory locations, each memory location being addressable by an encoded address. The encoded address corresponds to at least one register and processing mode. Input ports receive inputs for addressing at least one of the memory locations using an encoded address. Output ports output data from at least one of the memory locations using an encoded address.

Owner:MARVELL ASIA PTE LTD

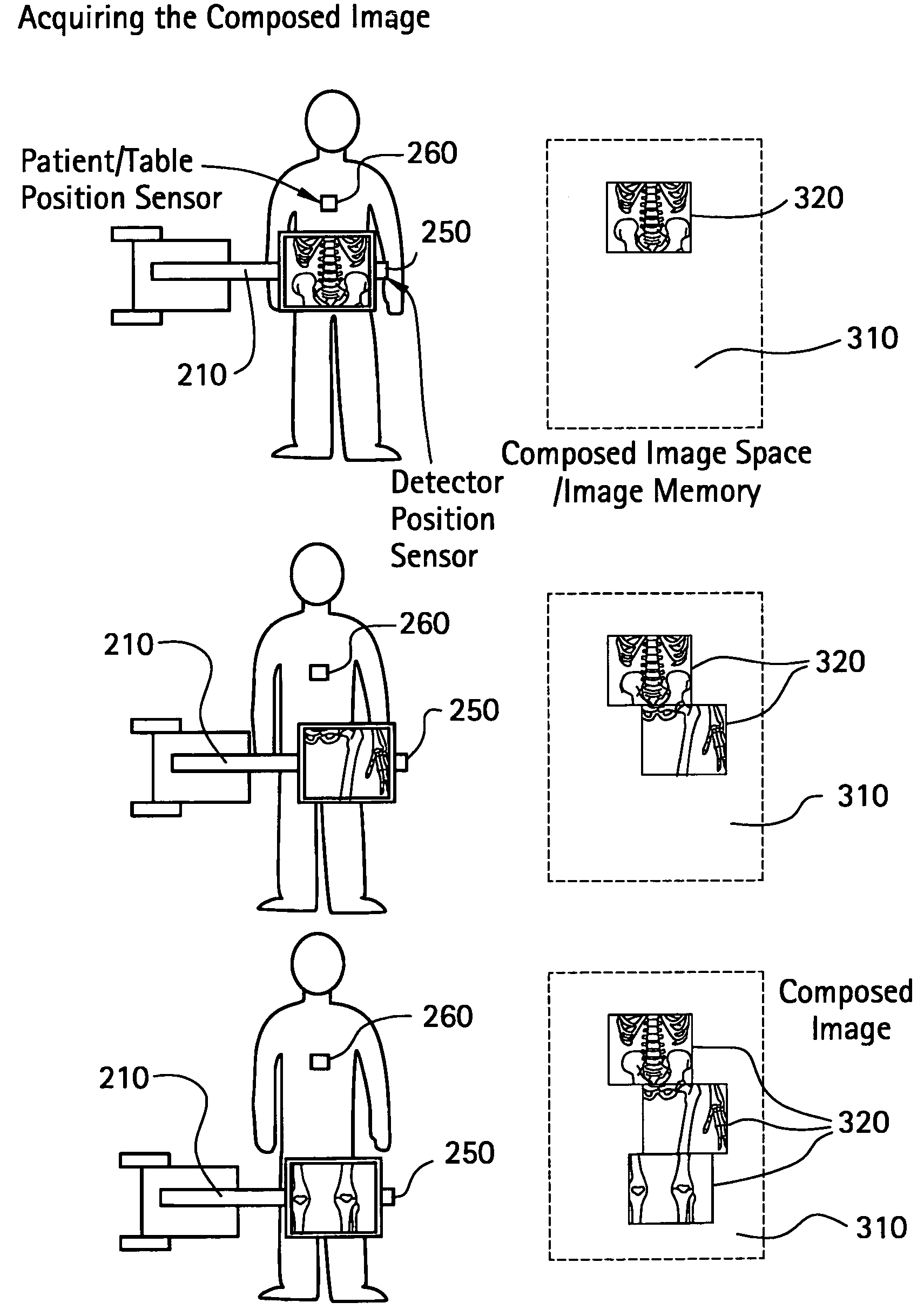

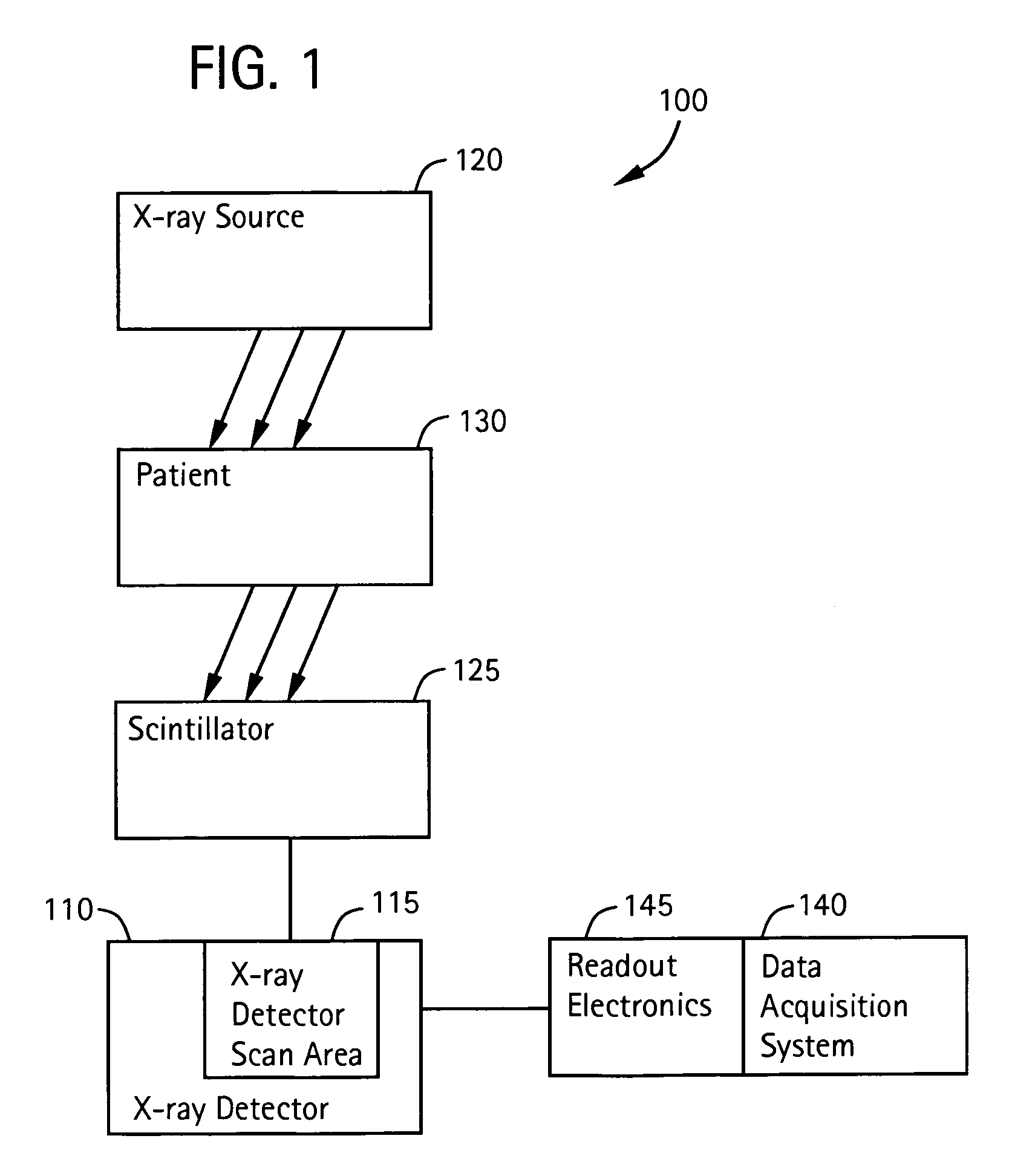

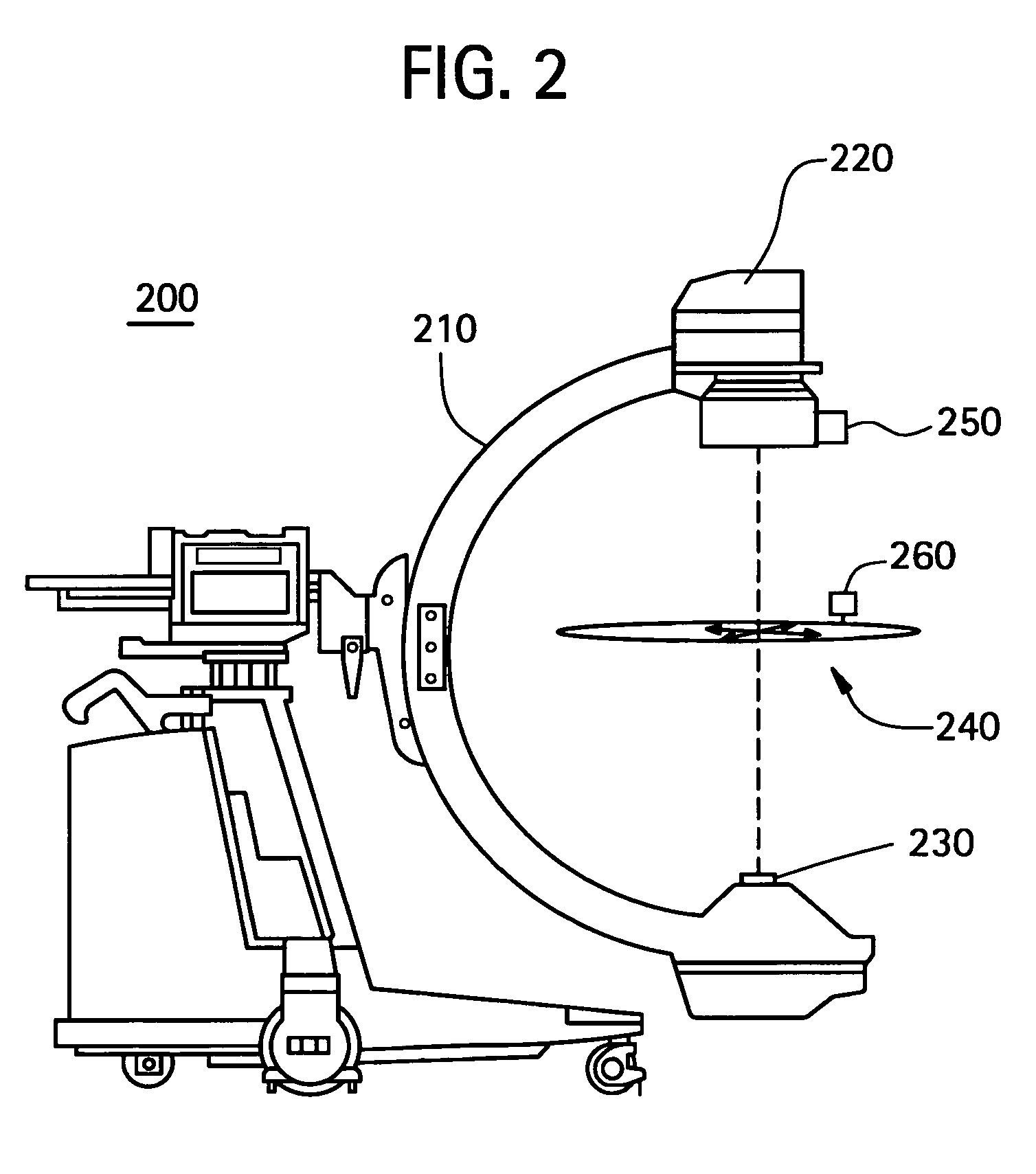

System and method for image composition using position sensors

Inactive · US7522701B2 · Facilitates digital subtraction angiography · Image enhancement · Image analysis · Memory map · Position sensor

Certain embodiments of the present invention provide systems and methods for image composition. Certain embodiments include acquiring a first image of an object using an imager, obtaining first positional information for the imager with respect to the object, acquiring a second image of the object using the imager, obtaining second positional information for the imager with respect to the object, and creating a composed image using the first and second images. A spatial relationship between the images is maintained using the positional information. A composed image may be created by constructing a memory map of image data related to the object and inserting the images into the memory map based on the positional information, for example. A composed image may be created by combining the images based on the positional information, for example.

Owner:GENERAL ELECTRIC CO

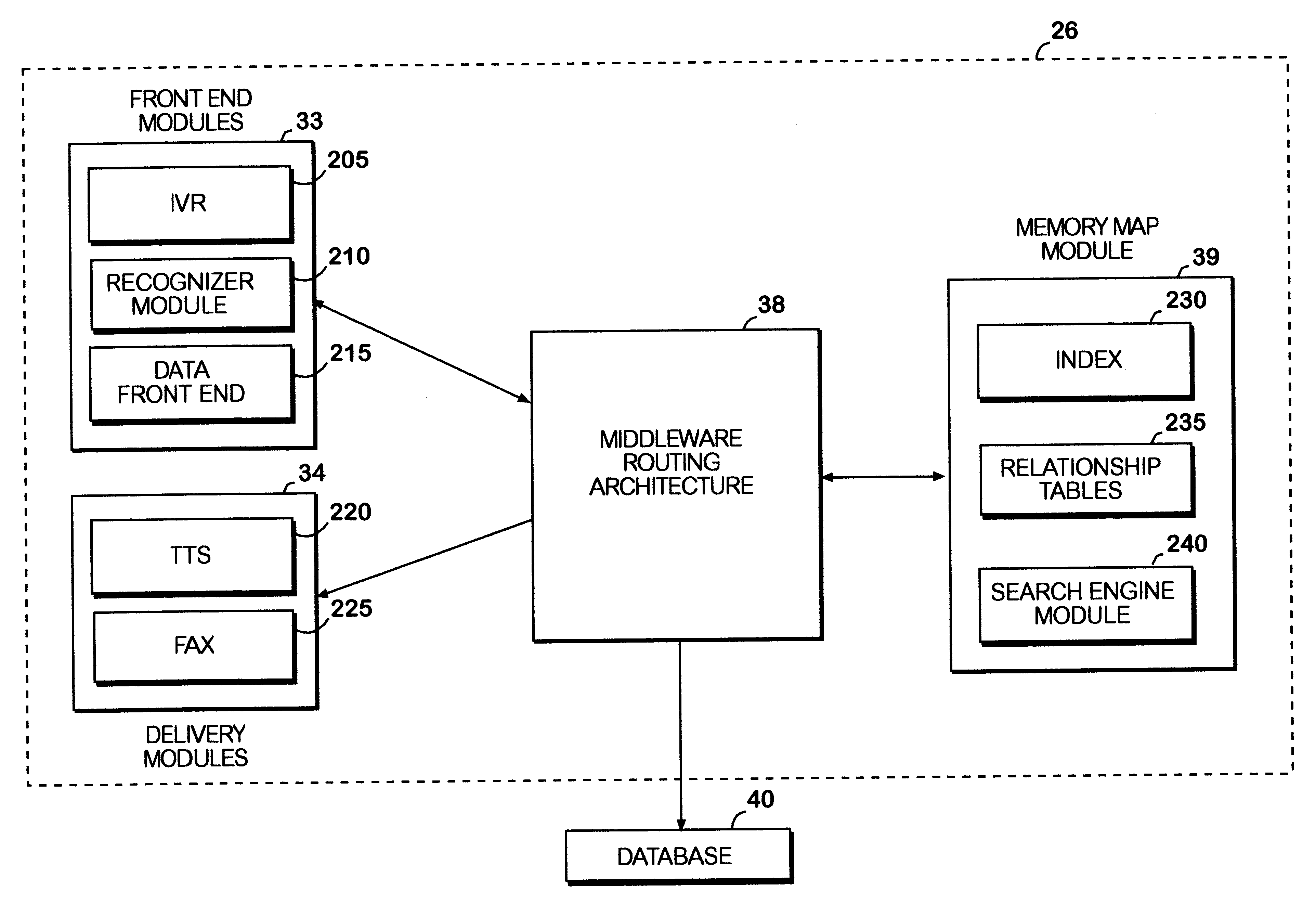

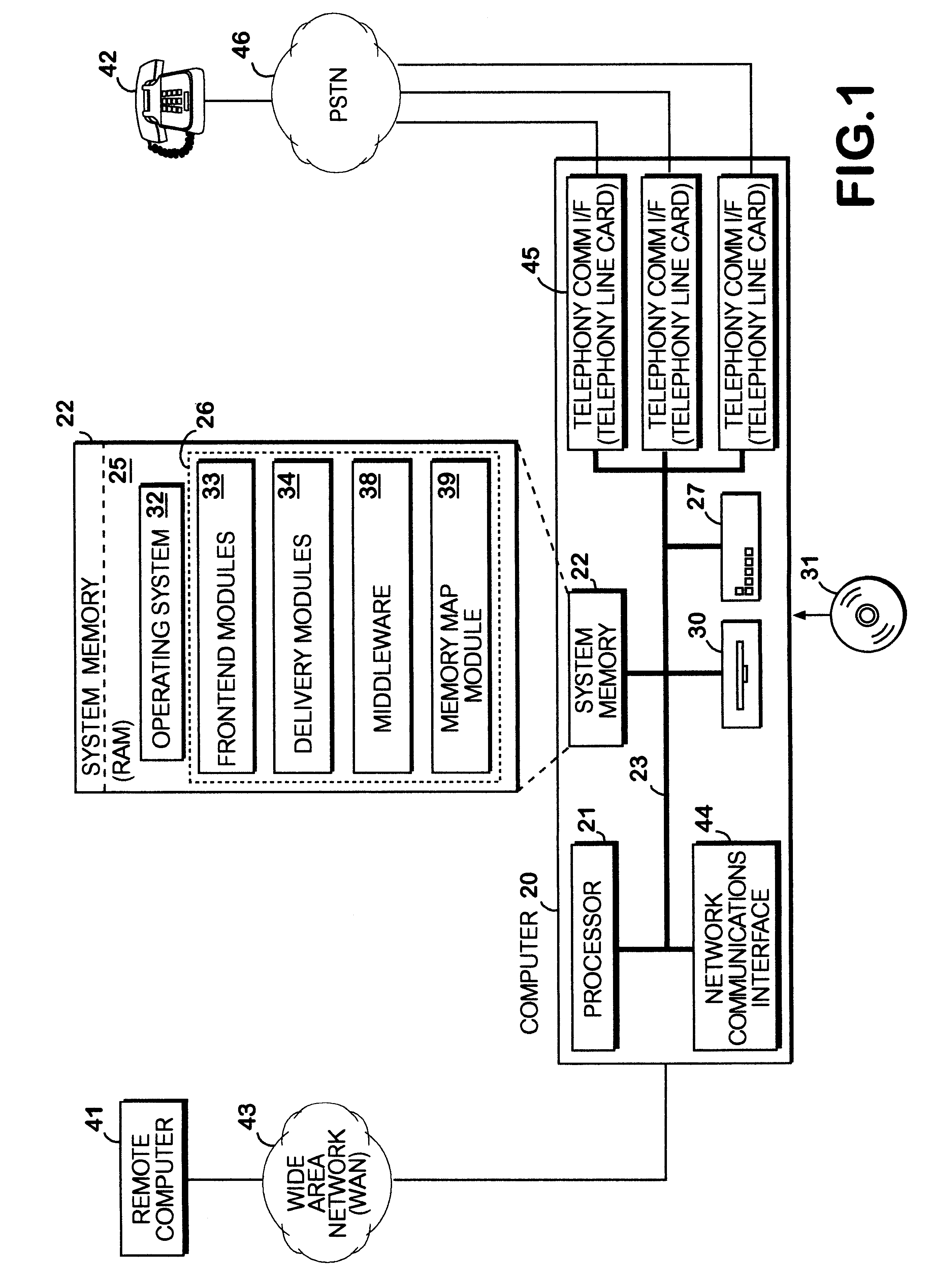

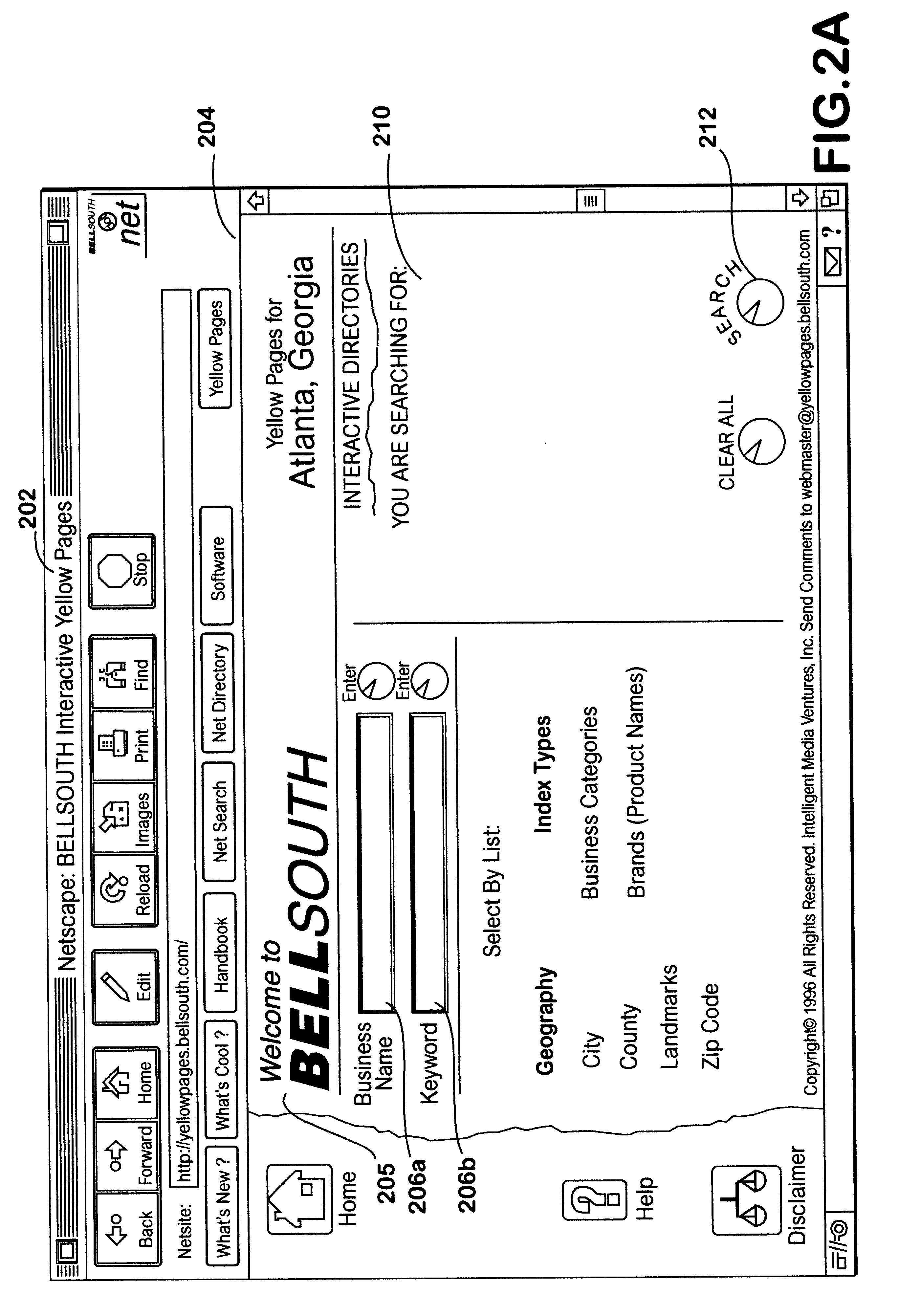

Method and system for processing a memory map to provide listing information representing data within a database

Inactive · US6295526B1 · Save memory space · Short response time · Data processing applications · Digital data processing details · Display Order · Memory map

Target elements of a search query are selected by referring to elements or listings within a data structure called a memory map module. Each target element is pre-validated against data available within a database (related to the memory map module). The memory map module has an index to the data as well as relationship information between the data. The memory map module is searched to find listings related to the target elements of the search query. In one embodiment, the memory map module is searched by determining a range reference using a first table of the relationship information. A range is determined using the range reference to a second table of the relationship information. The range is used to search the index to find the listings. In another embodiment, a string space of the relationship information is searched for each instance of the target element. An offset for the instance is determined and is mapped back to a matching listing in the index. A matching listing for each instance of the target elements is respectively added to the listings for each of the target elements. An intersection between the listings for each target element is determined. The listings within the intersection are arranged according to a display order. The display order can be a predetermined or randomized order. The listings within the intersection are displayed as listing information related to the search query. Additional information about a listing can be determined by narrowly and quickly searching the database.

Owner:INTELLECTUAL VENTURES I LLC +2

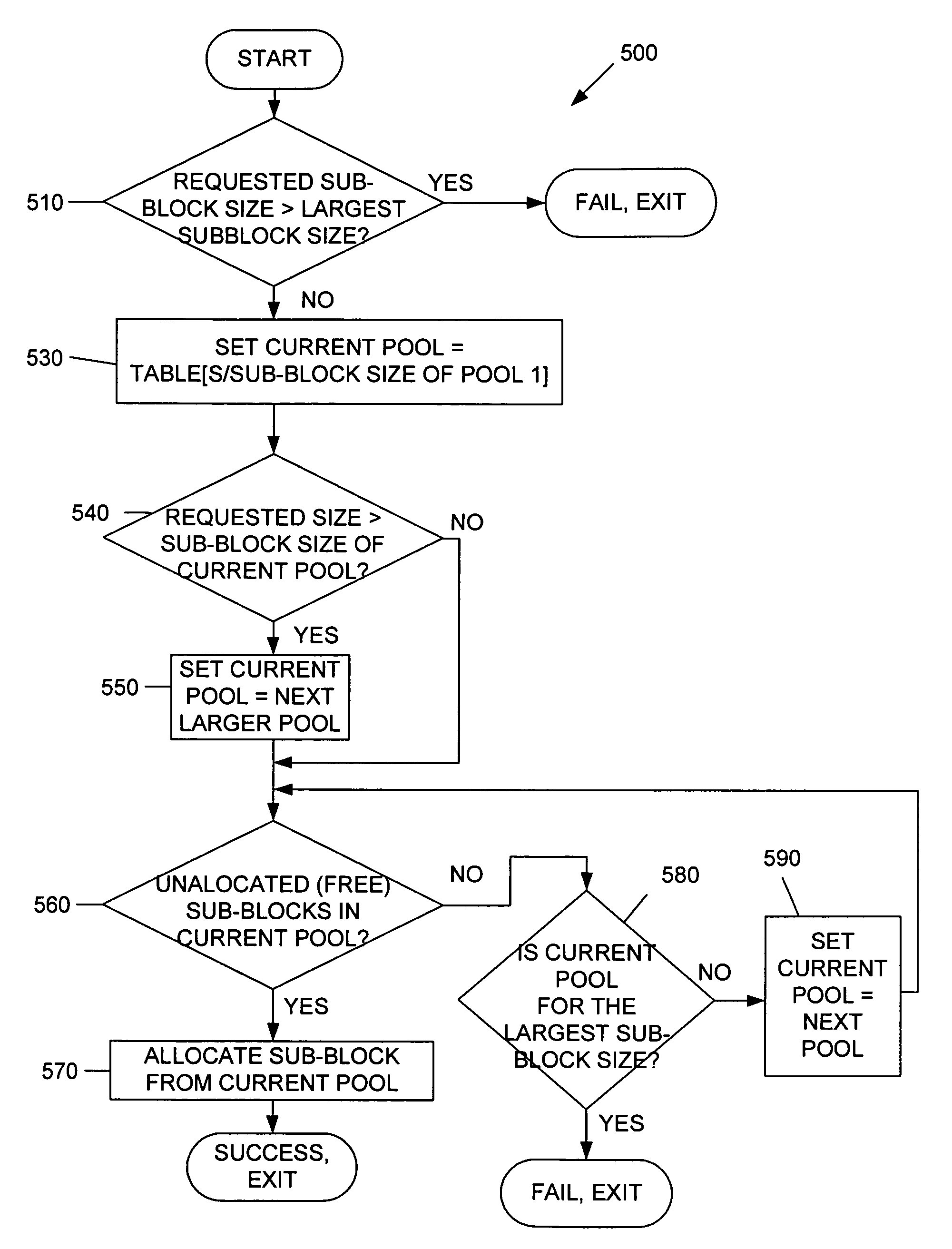

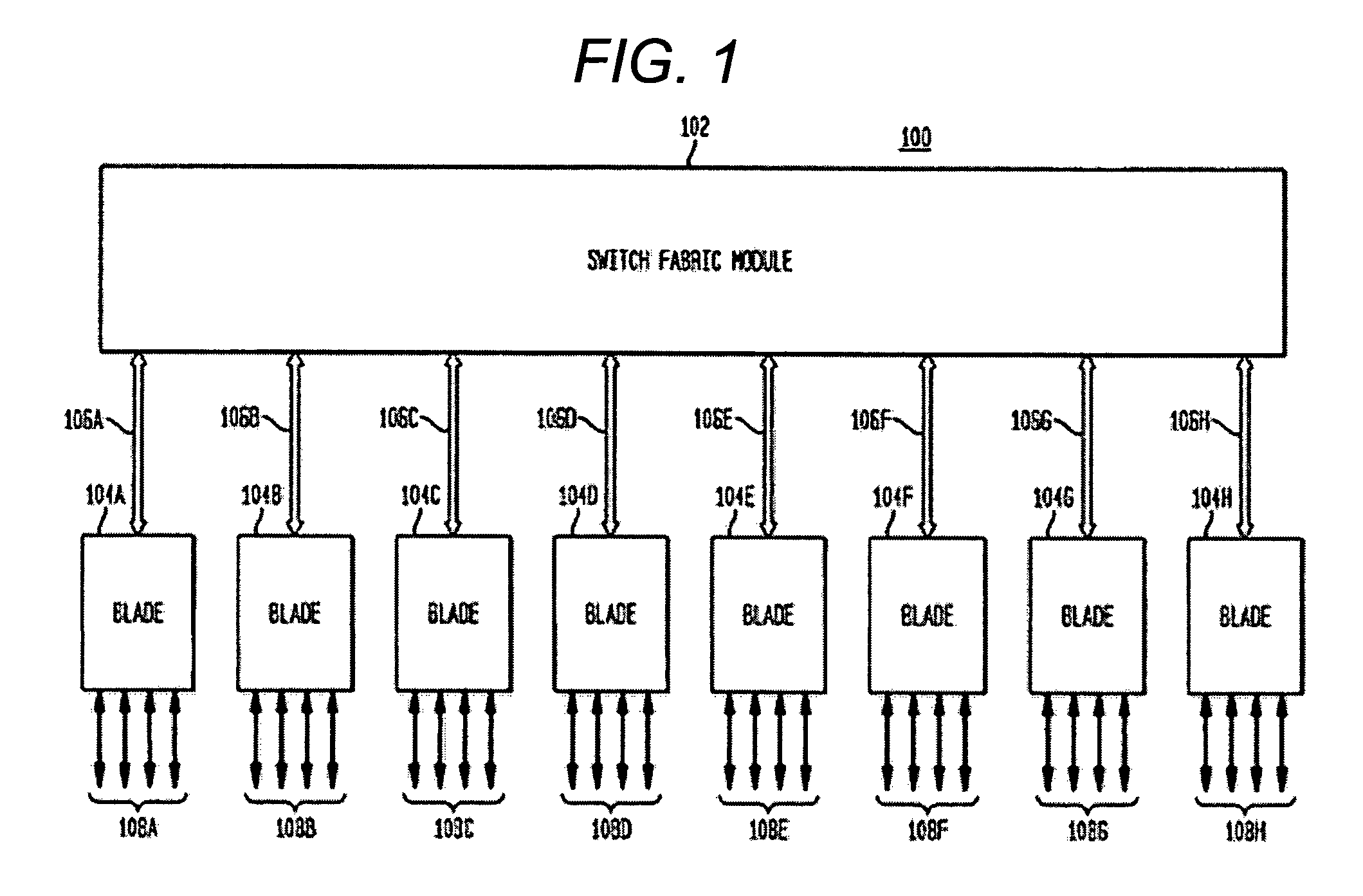

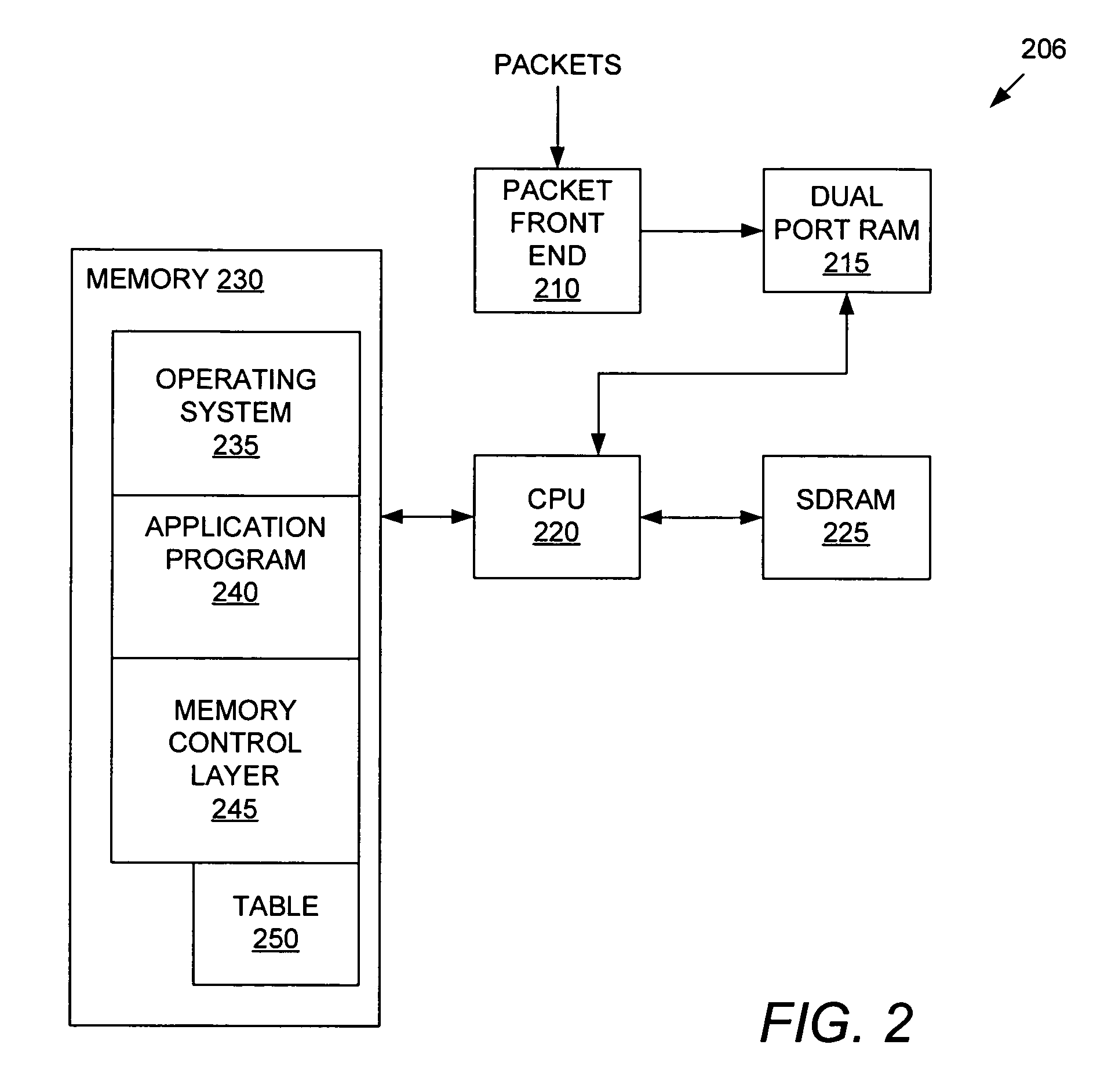

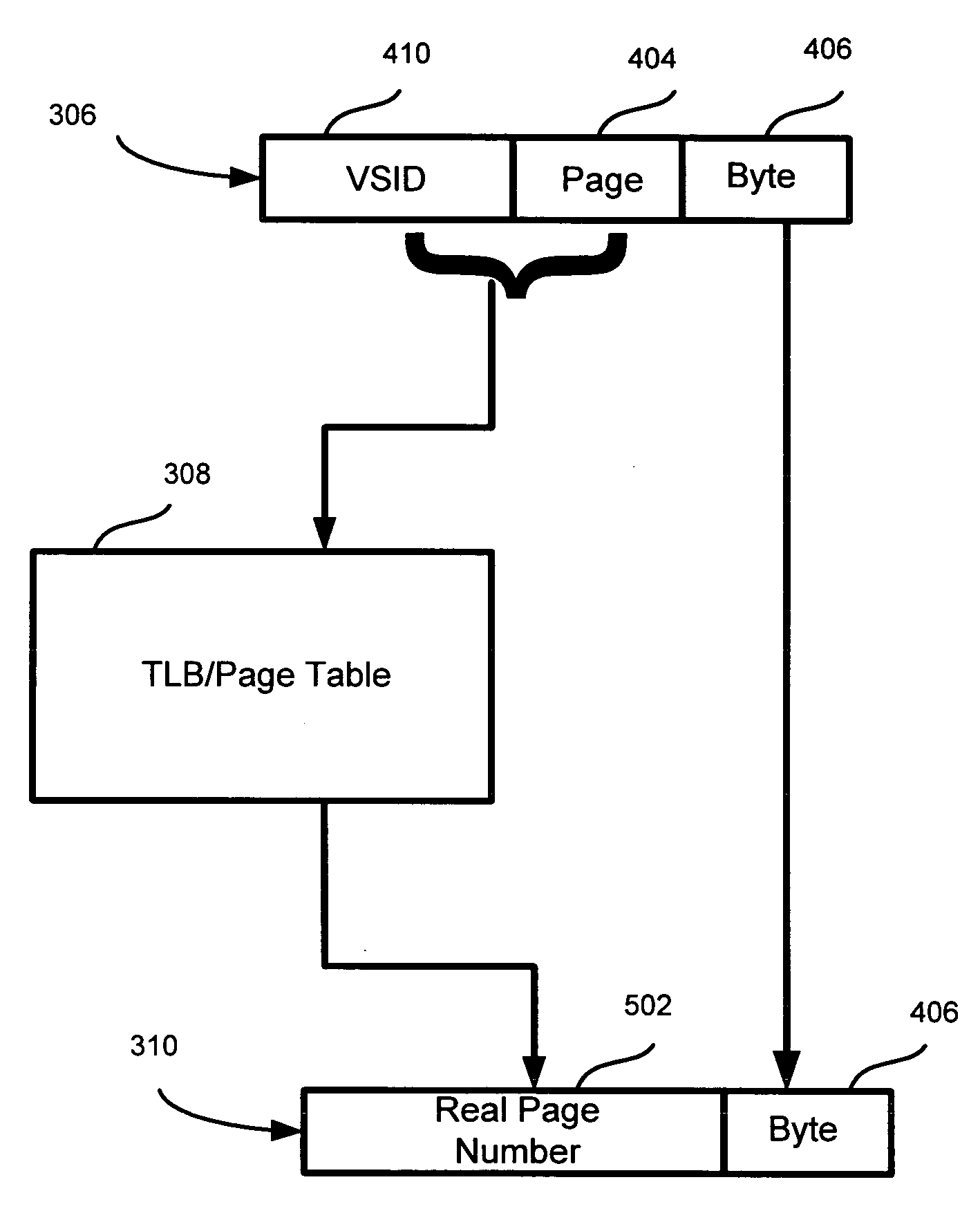

Virtual memory mapping for efficient memory usage

Active · US7594093B1 · Small size · Memory addressing/allocation/relocation · Micro-instruction address formation · Virtual memory · Operational system

A processor (e.g. utilizing an operating system and/or circuitry) may access physical memory by paging, where a page is the smallest partition of memory mapped by the processor from a virtual address to a physical address. An application program executing on the processor addresses a virtual address space so that the application program may be unaware of physical memory paging mechanisms. A memory control layer manages physical memory space in units of sub-blocks, wherein a sub-block is smaller than the page. Multiple virtual address blocks may be mapped to the same physical page in memory. A sub-block can be moved from a page (e.g. from one physical memory to a second physical memory) without moving other sub-blocks within the page in a manner that is transparent to the application program.

Owner:AVAGO TECH INT SALES PTE LTD

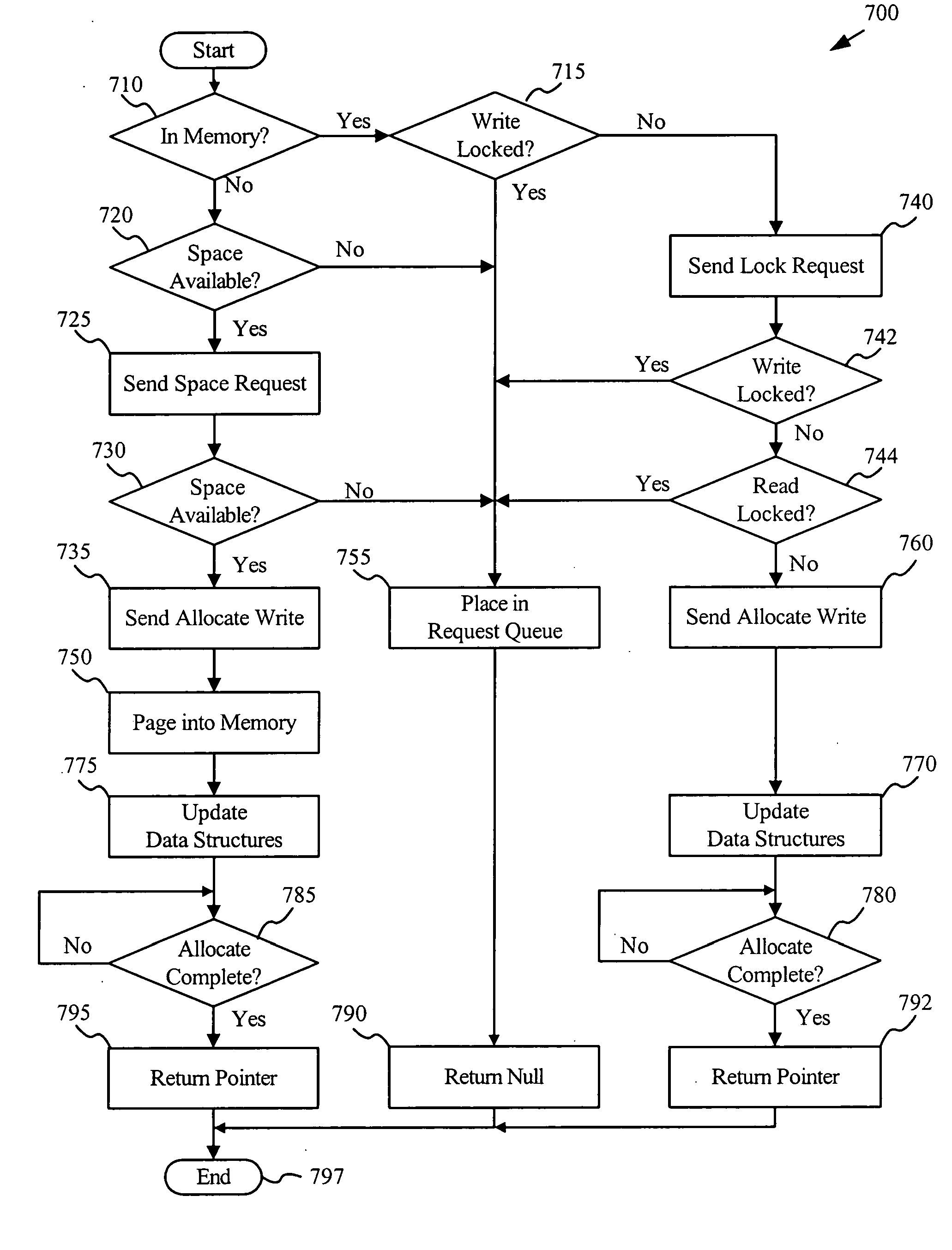

Apparatus method and system for fault tolerant virtual memory management

Inactive · US20050132249A1 · Facilitating fault tolerance · The process is fast and efficient · Memory loss protection · Error detection/correction · Memory map · Writing messages

A fault tolerant synchronized virtual memory manager for use in a load sharing environment manages memory allocation, memory mapping, and memory sharing in a first processor, while maintaining synchronization of the memory space of the first processor with the memory space of at least one partner processor. In one embodiment, synchronization is maintained via paging synchronization messages such as a space request message, an allocate memory message, a release memory message, a lock request message, a read header message, a write page message, a sense request message, an allocate read message, an allocate write message, and/or a release pointer message. Paging synchronization facilitates recovery operations without the cost and overhead of prior art fault tolerant systems.

Owner:IBM CORP

System, method, and computer program product for a fully trusted adapter validation of incoming memory mapped I/O operations on a physical adapter that supports virtual adapters or virtual resources

Inactive · US20060212608A1 · Memory addressing/allocation/relocation · Input/output processes for data processing · Data processing system · Term memory

A method, computer program product, and distributed data processing system that allows a single physical I/O adapter to validate that a memory mapped I/O address referenced by an incoming I/O operation is associated with a virtual host that initiated the incoming memory mapped I/O operation is provided. Specifically, the present invention is directed to a mechanism for sharing a PCI family I/O adapter and, in general, any I/O adapter that uses a memory mapped I/O interface for communications. A mechanism is provided that allows a single physical I/O adapter to validate that a memory mapped I/O address referenced by an incoming memory mapped I/O operation used to initiate an I/O transaction is associated with a virtual host that initiated the incoming memory mapped I/O operation.

Owner:IBM CORP

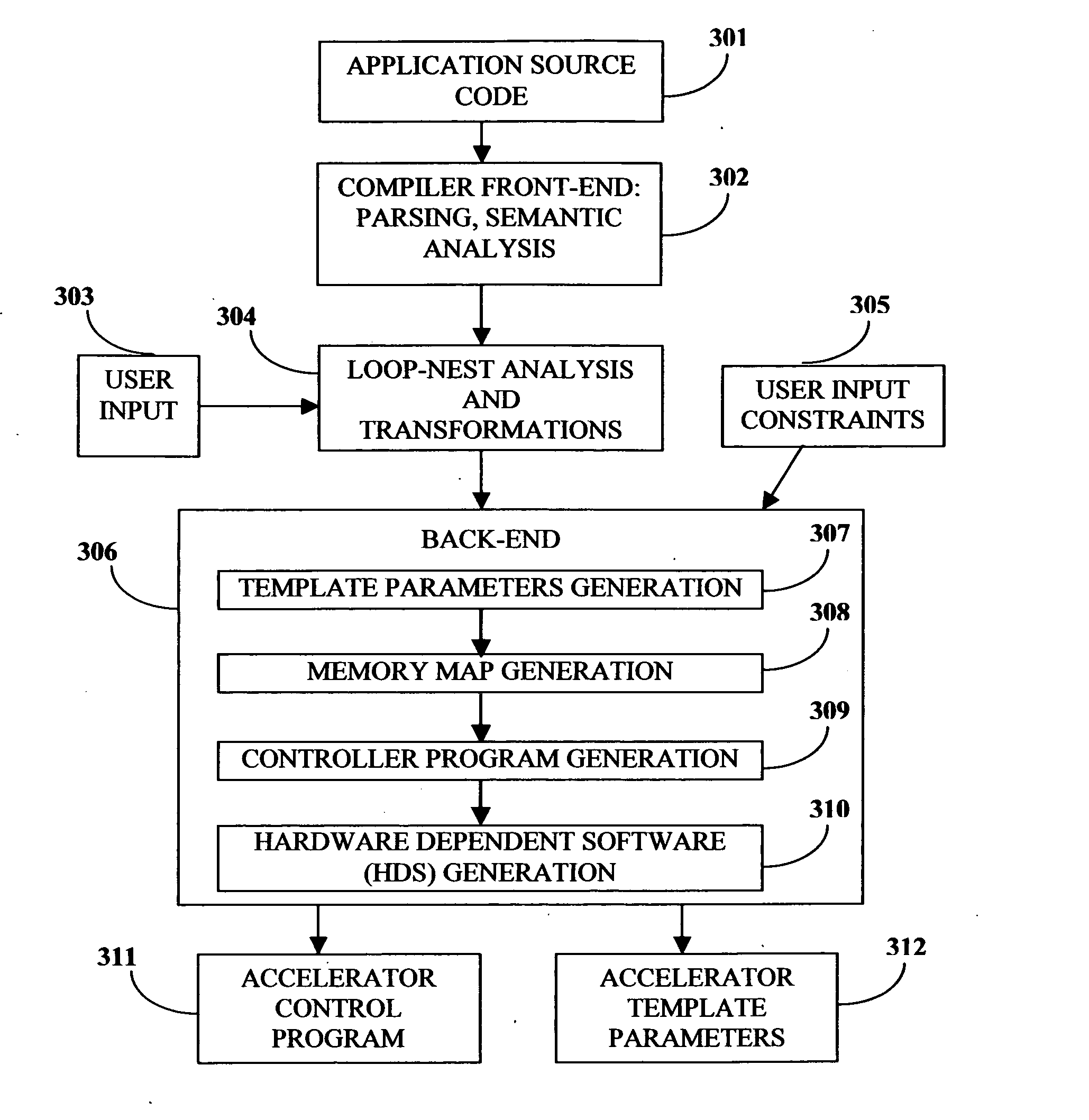

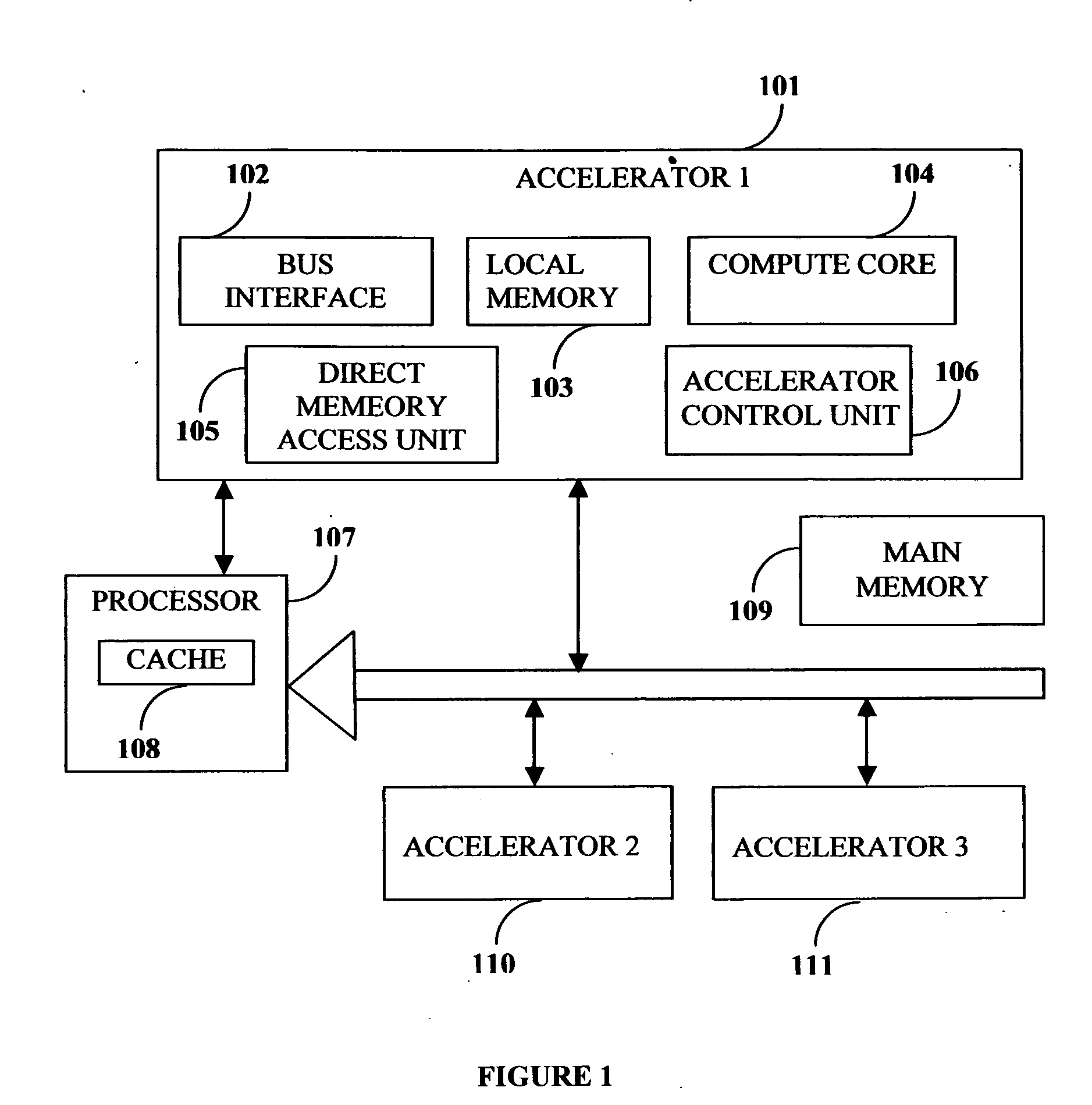

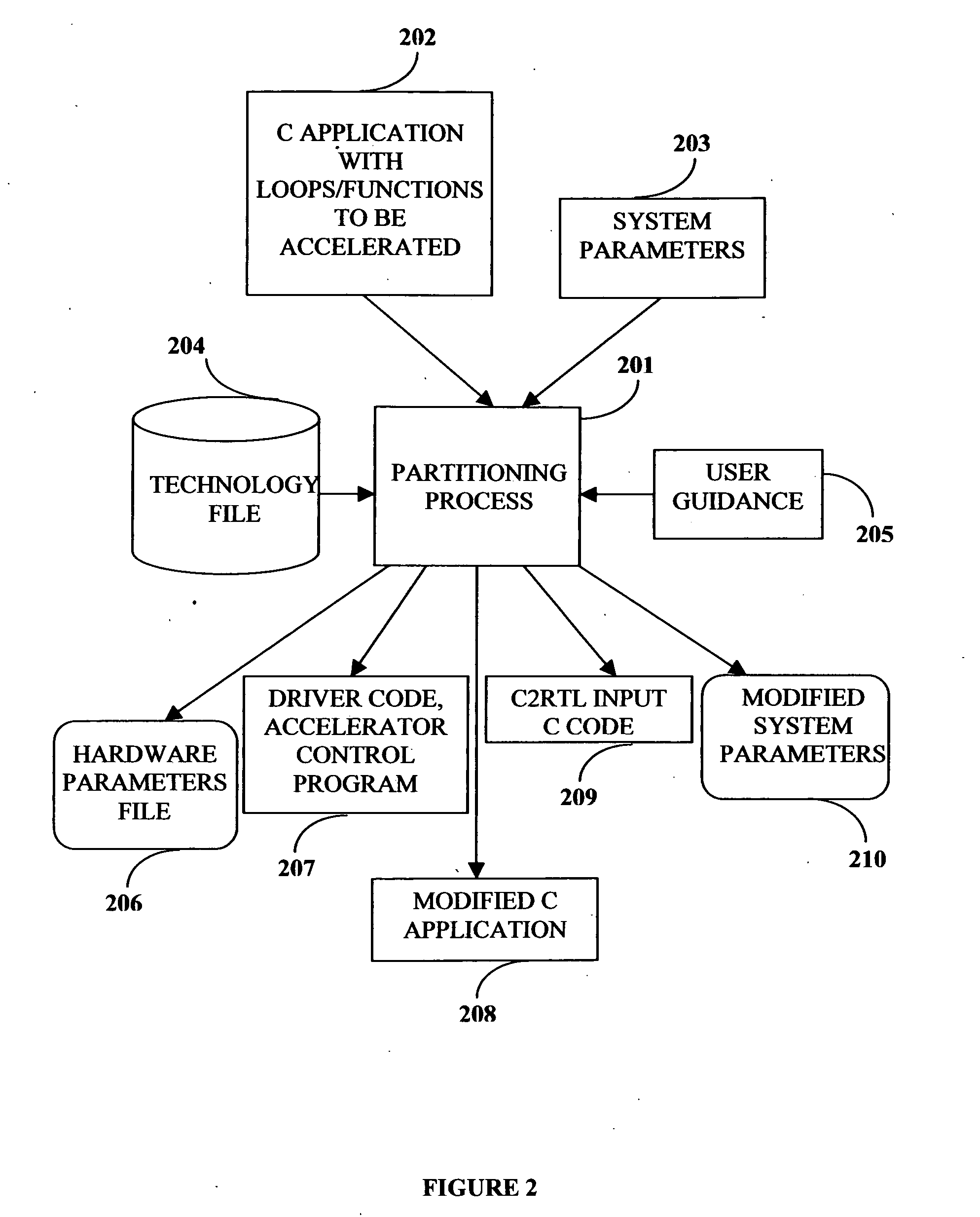

Compiler method for extracting and accelerator template program

Inactive · US20070169059A1 · Software engineering · Specific program execution arrangements · Semantic translation · Semantics

This invention describes a compilation method of extracting and implementing an accelerator control program from an application source code in a processor based system. The application source code comprises arrays and loops. The input application source code is sequential, with loop, branch and call control structures, while the generated output of this invention has parallel execution semantics. The compilation method comprises the step of performing loop nest analysis, transformations and backend processes. The step of loop nest analysis consists of dependence analysis and pointer analysis. Dependence analysis determines the conflicts between the various references to arrays in the loop, and pointer analysis determines if two pointer references in a loop are in conflict. Transformations convert the loops from their original sequential execution semantics to parallel execution semantics. The back-end process determines the parameters and memory map of the accelerator and the hardware dependent software.

Owner:POSEIDON DESIGN SYST

Optical transceiver and host adapter with memory mapped monitoring circuitry

Inactive · US20050031352A1 · Electromagnetic transmitters · Electromagnetic transceivers · Transceiver · Memory map

A host adaptor is configured to monitor operation of an optoelectronic transceiver. The host adapter includes a transceiver interface, memory, comparison logic and a host interface. The transceiver interface receives from the optoelectronic transceiver digital values corresponding to operating conditions of the optoelectronic transceiver. The memory includes one or more memory arrays for storing information related to the optoelectronic transceiver, including the digital values received from the optoelectronic transceiver. The comparison logic is configured to compare the digital values with limit values to generate flag values, wherein the flag values are stored in predefined flag storage locations within the memory during operation of the optoelectronic transceiver. The host interface enables a host device to read from host specified locations within the memory, including the predefined flag storage locations, in accordance with commands received from the host device.

Owner:II VI DELAWARE INC

Transparent sharing of memory pages using content comparison

Inactive · US7620766B1 · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Multiple context · Virtual memory

A computer system has one or more software contexts that share use of a memory that is divided into units such as pages. In the preferred embodiment of the invention, the contexts are, or include, virtual machines running on a common hardware platform. The contents, as opposed to merely the addresses or page numbers, of virtual memory pages that are accessible to one or more contexts are examined. If two or more context pages are identical, then their memory mappings are changed to point to a single, shared copy of the page in the hardware memory, thereby freeing the memory space taken up by the redundant copies. The shared copy is then preferably marked copy-on-write. Sharing is preferably dynamic, whereby the presence of redundant copies of pages is preferably determined by hashing page contents and performing full content comparisons only when two or more pages hash to the same key.

Owner:VMWARE INC

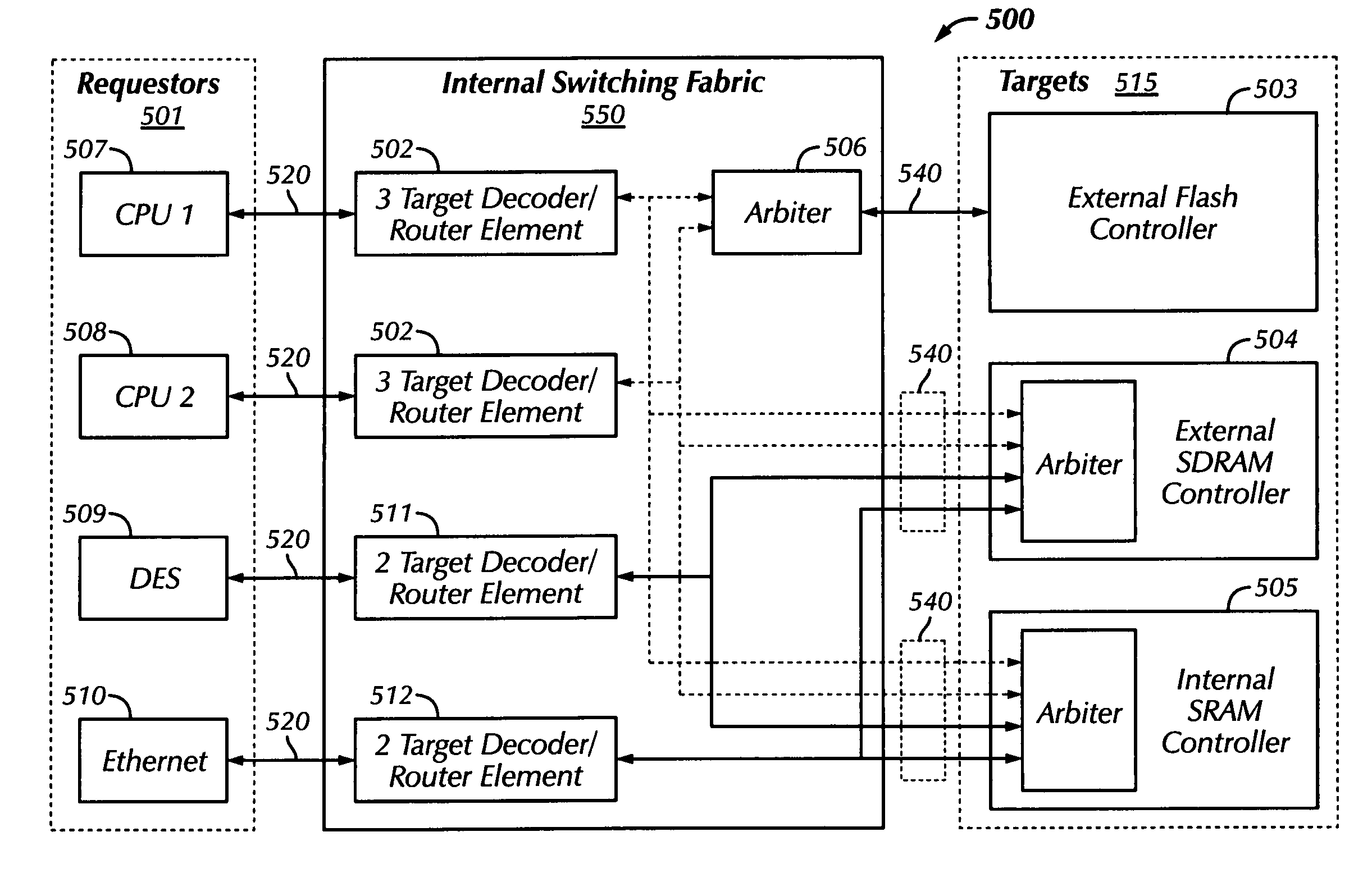

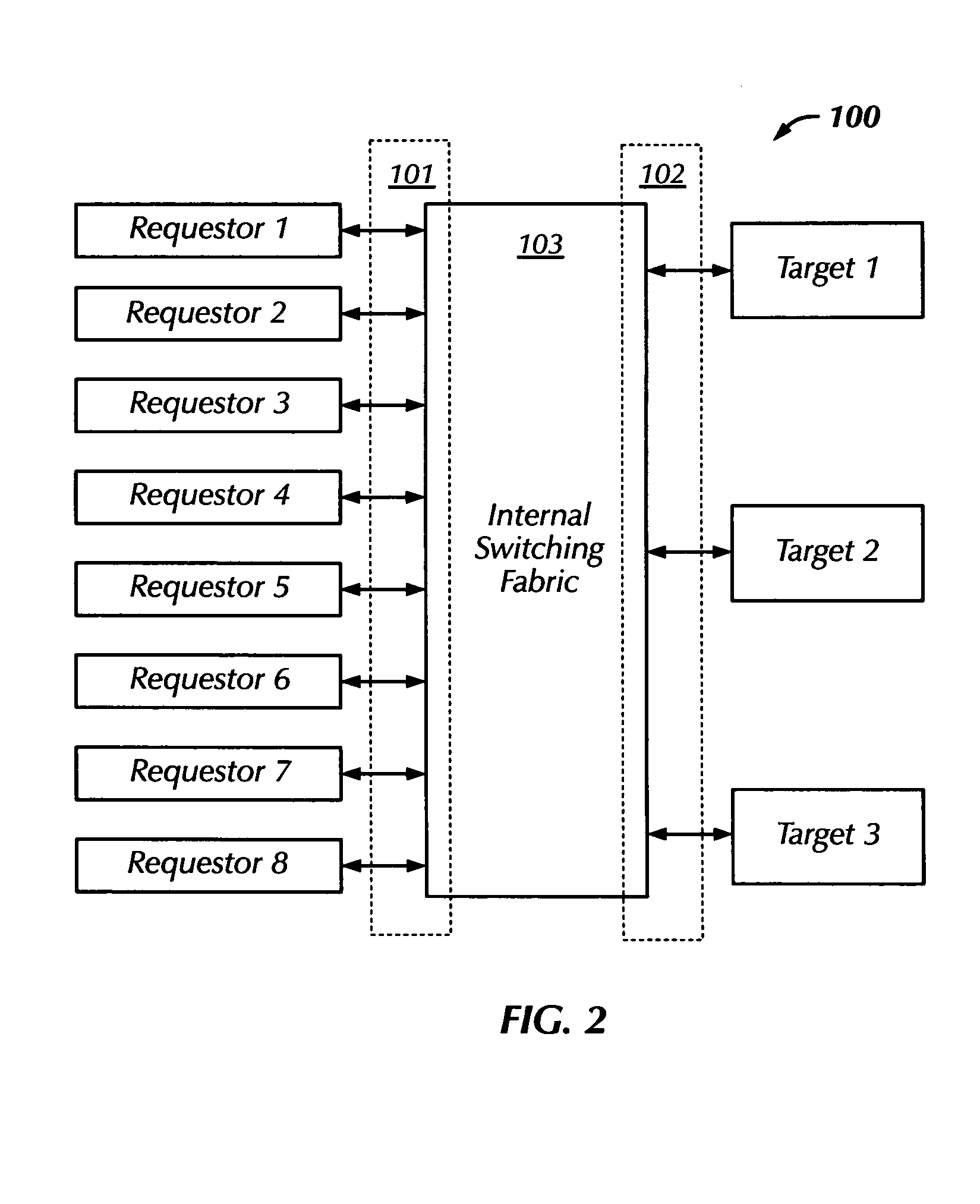

Flexible matrix fabric design framework for multiple requestors and targets in system-on-chip designs

The System-on-Chip (SOC) interconnection apparatus and system discloses an internal switching fabric that interconnects, via standard connection ports, one or more requestors and one or more addressable targets on a single semiconductor integrated circuit. Each target has a unique address space, may or may not have internal arbitration, and may be resident (i.e., on-chip) memory, a memory controller for resident or off-chip memory, an addressable bridge to a device, system, or subsystem, or any combination thereof. Targets and requestors are connected to the internal switching fabric using target and requestor connection ports. The internal switching fabric routes signals between requestors and targets using one or more decoder/router elements that determine which target is the designated target using an internal system memory map. Dedicated arbiters may be included for targets without internal arbitration.

Owner:PALMCHIP CORP
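The decoder/router role in this abstract, picking the designated target from a system memory map, can be sketched in software. This is a minimal illustration and not the patented hardware: the names `Region` and `MemoryMap`, and the example addresses and target names, are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Region:
    base: int    # first address of the target's address space
    size: int    # number of addresses the target occupies
    target: str  # hypothetical target name


class MemoryMap:
    """Maps an address to (target, offset within that target)."""

    def __init__(self, regions):
        self.regions = sorted(regions, key=lambda r: r.base)
        # Each target has a unique address space, so regions must not overlap.
        for prev, cur in zip(self.regions, self.regions[1:]):
            if prev.base + prev.size > cur.base:
                raise ValueError(f"{prev.target} overlaps {cur.target}")

    def decode(self, address):
        for region in self.regions:
            if region.base <= address < region.base + region.size:
                return region.target, address - region.base
        return None  # no target claims this address


system_map = MemoryMap([
    Region(0x0000_0000, 0x1000, "on_chip_sram"),
    Region(0x4000_0000, 0x0100, "uart_bridge"),
])
```

With this map, `system_map.decode(0x4000_0010)` selects the hypothetical `uart_bridge` target at offset `0x10`, and an address outside every region decodes to `None`.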

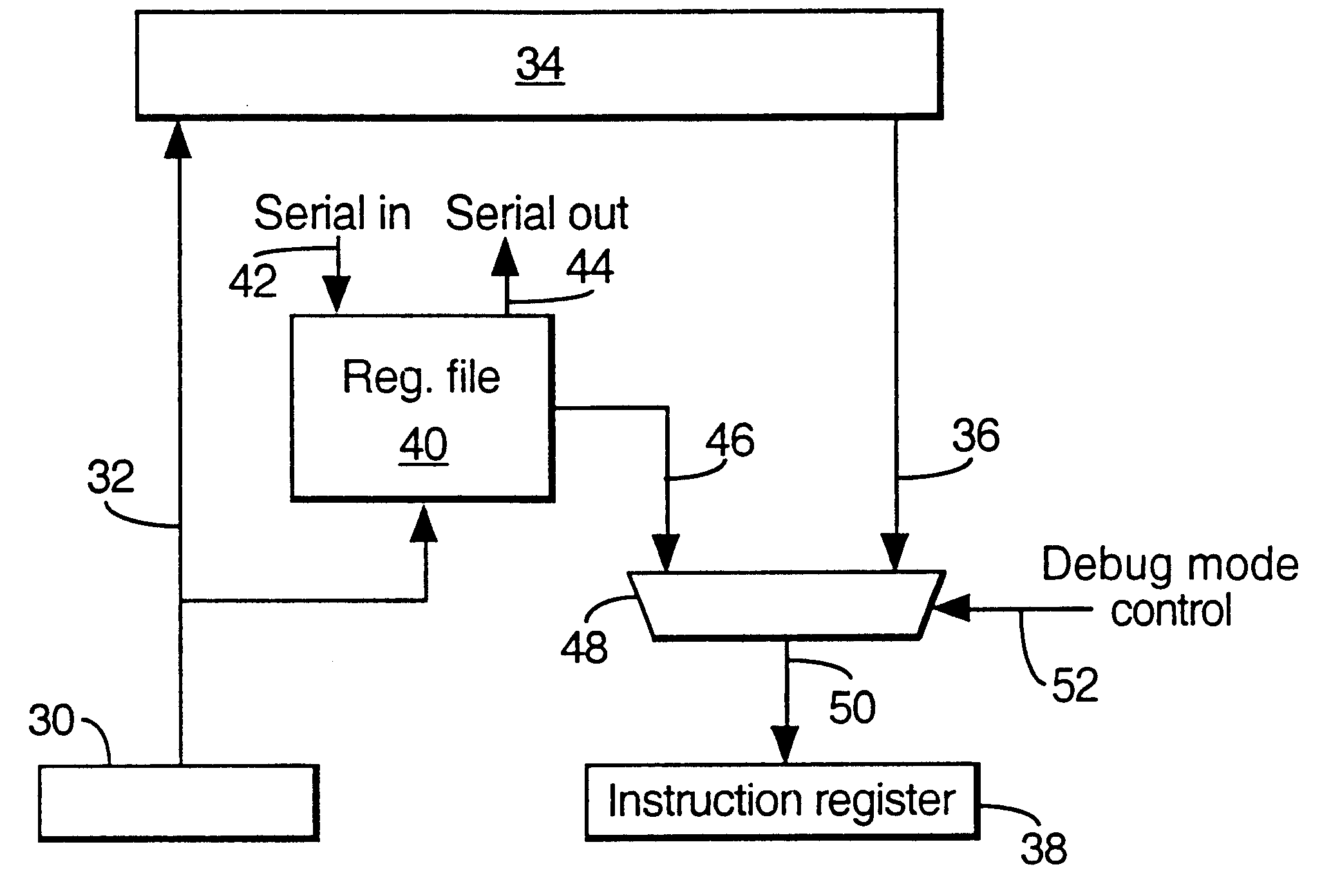

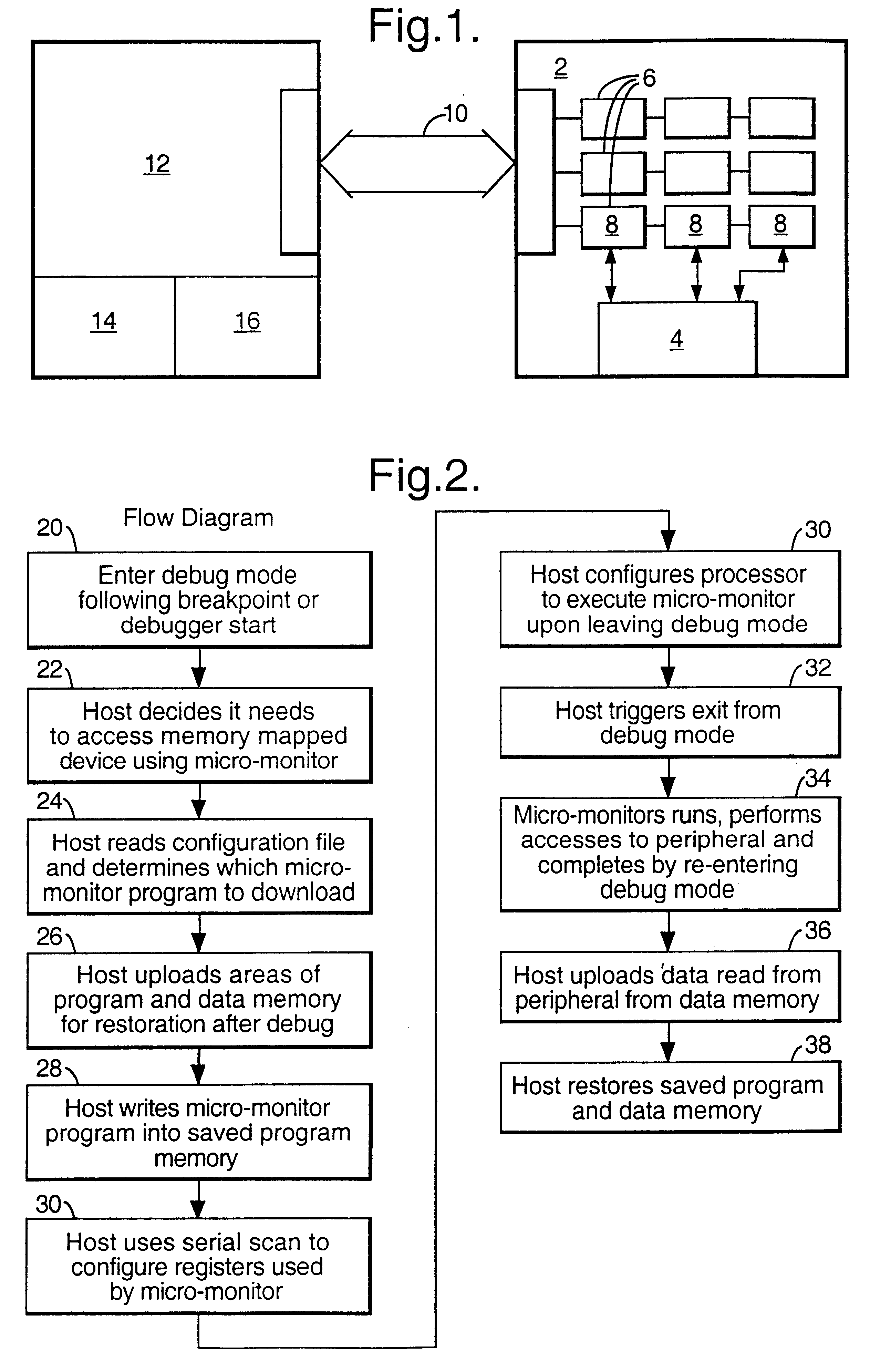

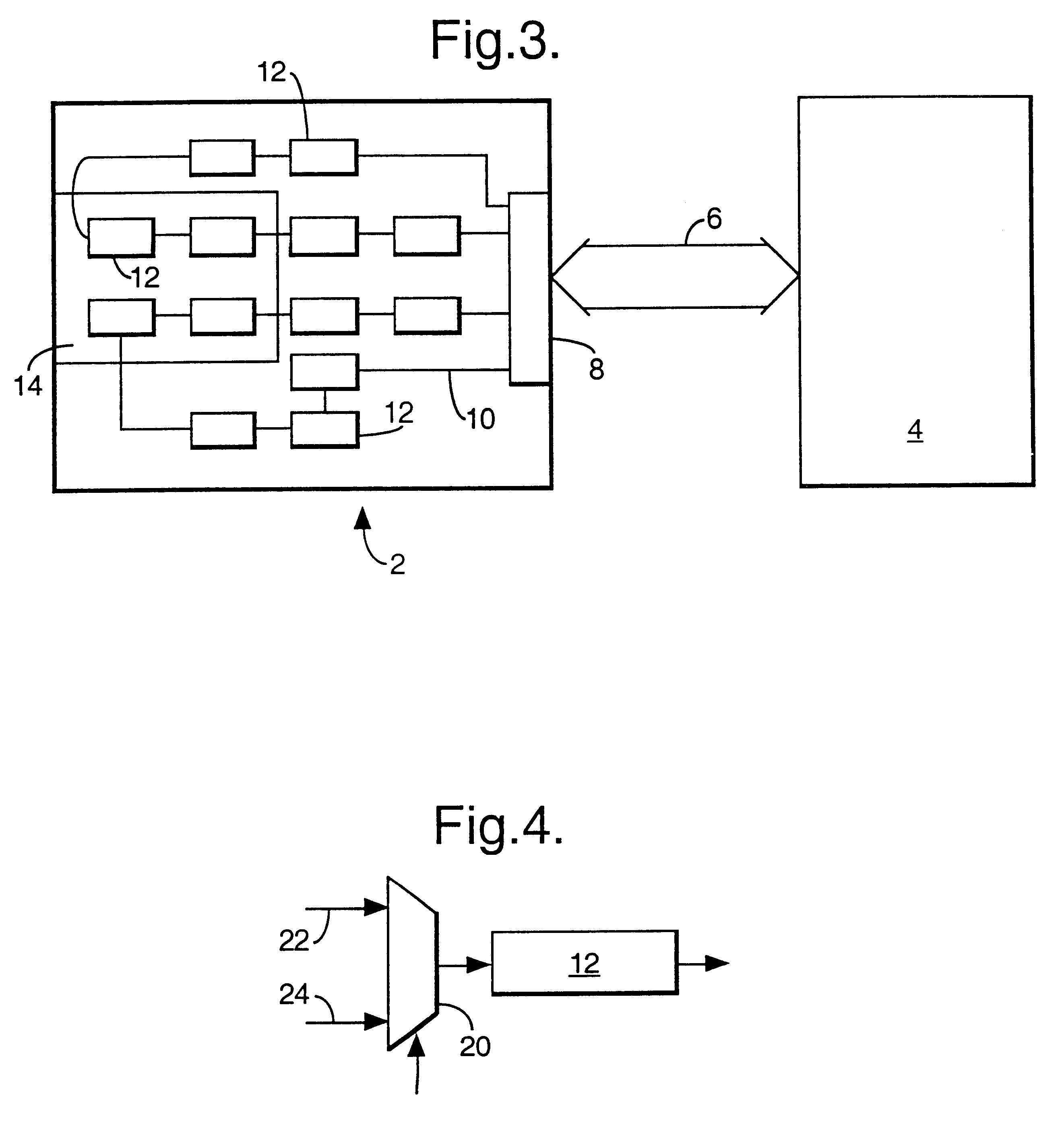

Microprocessor development systems

Inactive | US6574590B1 | Difficult to use | Simple memory access | Electronic circuit testing | Digital computer details | Embedded applications | Time delays

A procedure and processor are disclosed for avoiding lengthy delays in debug procedures during access by a memory mapped peripheral device. The processor includes in-circuit emulation means comprising one or more scan chains or serially connected registers for access by an external host computer system. The procedure comprises:

a) the host computer system carrying out a debug procedure via said scan chains, and selectively interrupting such debug procedure for access to a peripheral memory mapped device;

b) the host computer system writing into an area of memory of the processor a program for reading and/or writing data at a specified memory location; and

c) the host computer system causing said processor to run said program, and then to return to said debug procedure.

In another aspect, in order to permit small debugging programs to run, in serial scan in-circuit emulation processes, on a processor in a deeply embedded application where no program RAM is provided, the processor includes one or more chains of serially connected registers coupled to interface means for access by an external host to enable a serial scan procedure to be carried out, one such chain including a set of serially coupled registers for storing one or more processor instructions read into a set of registers through the interface means, and the processor including address means, for addressing program memory, coupled to said set of registers for addressing the set of registers, and means for reading the processor instructions in the set of registers to an instruction register of the processor.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

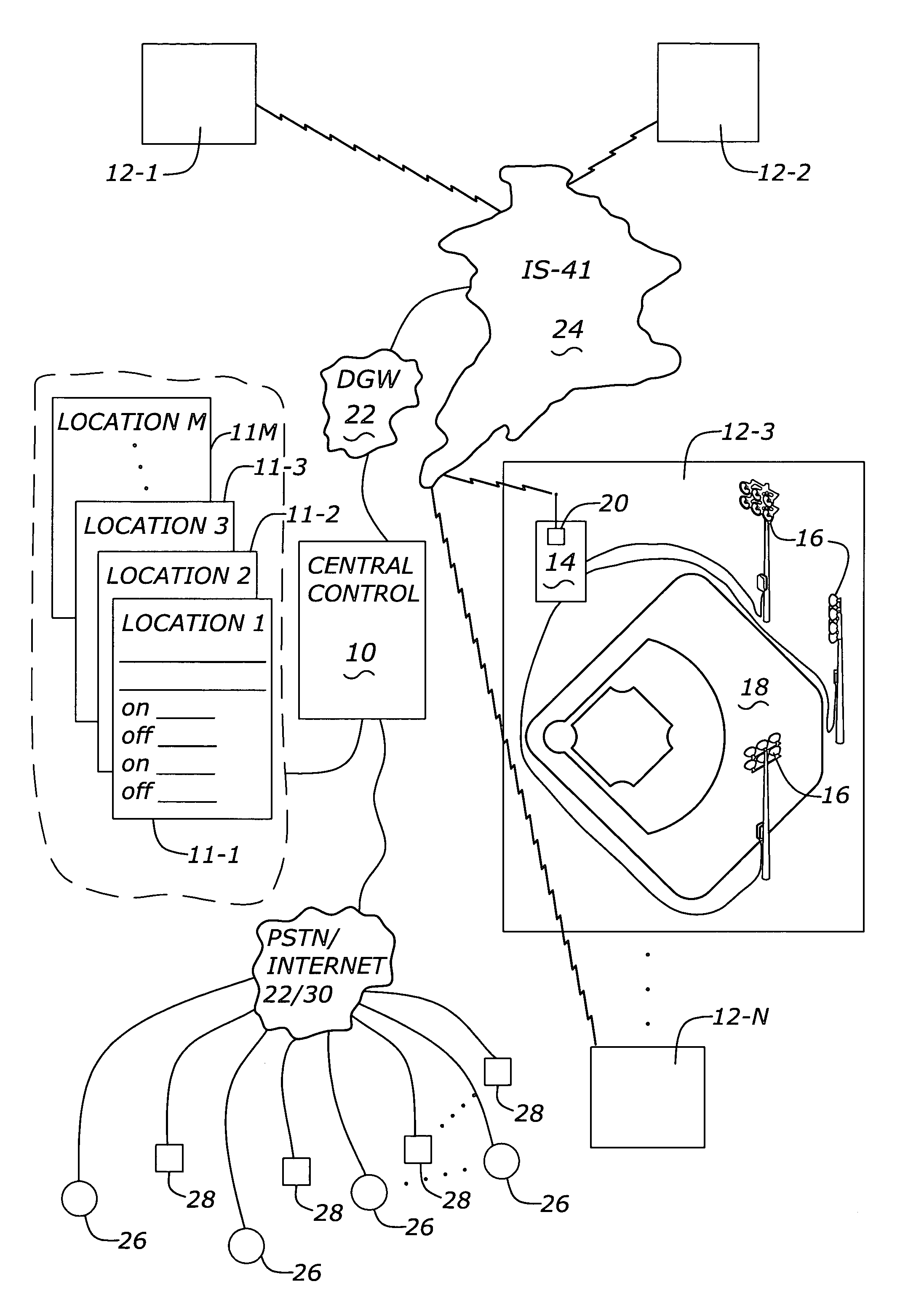

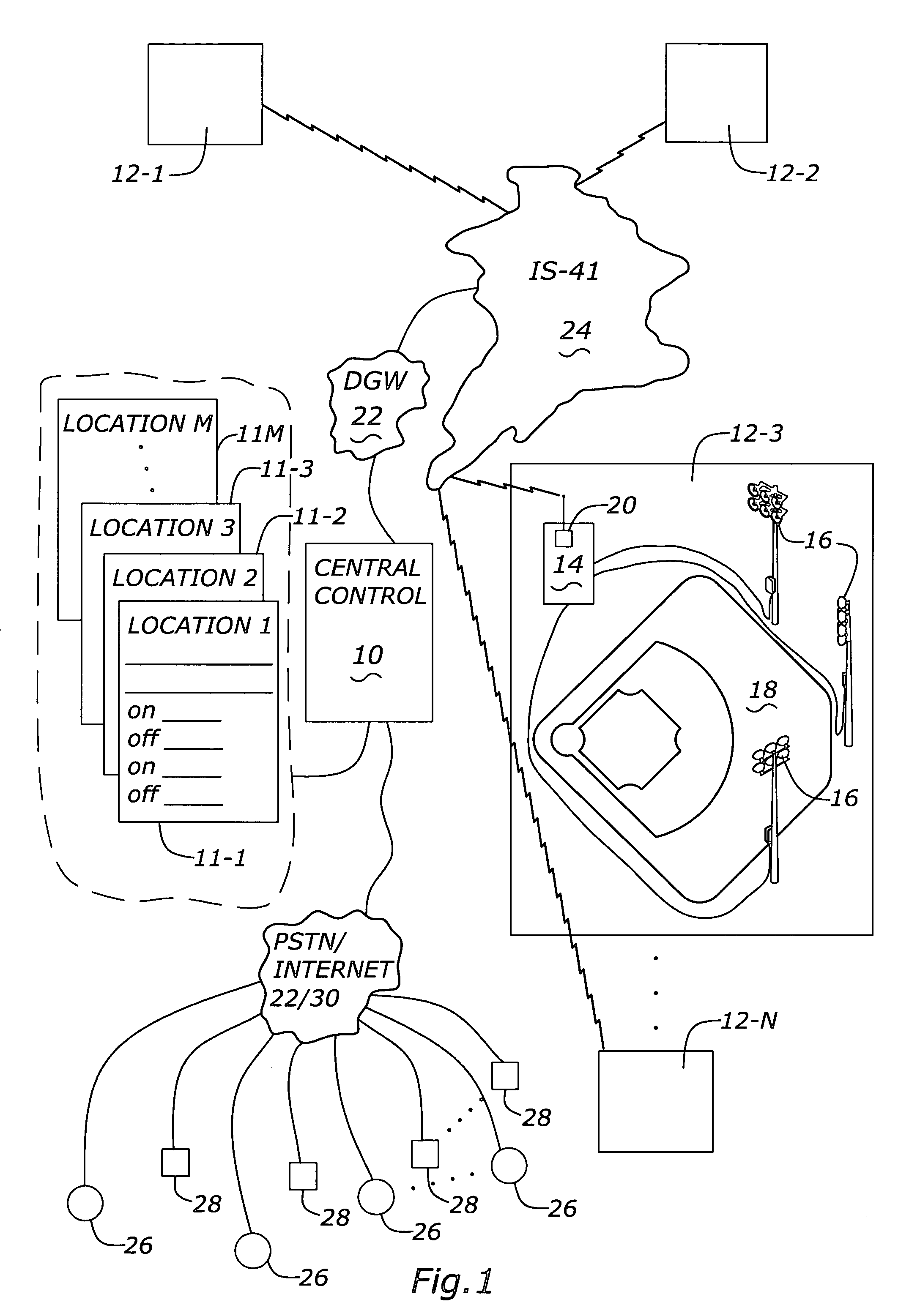

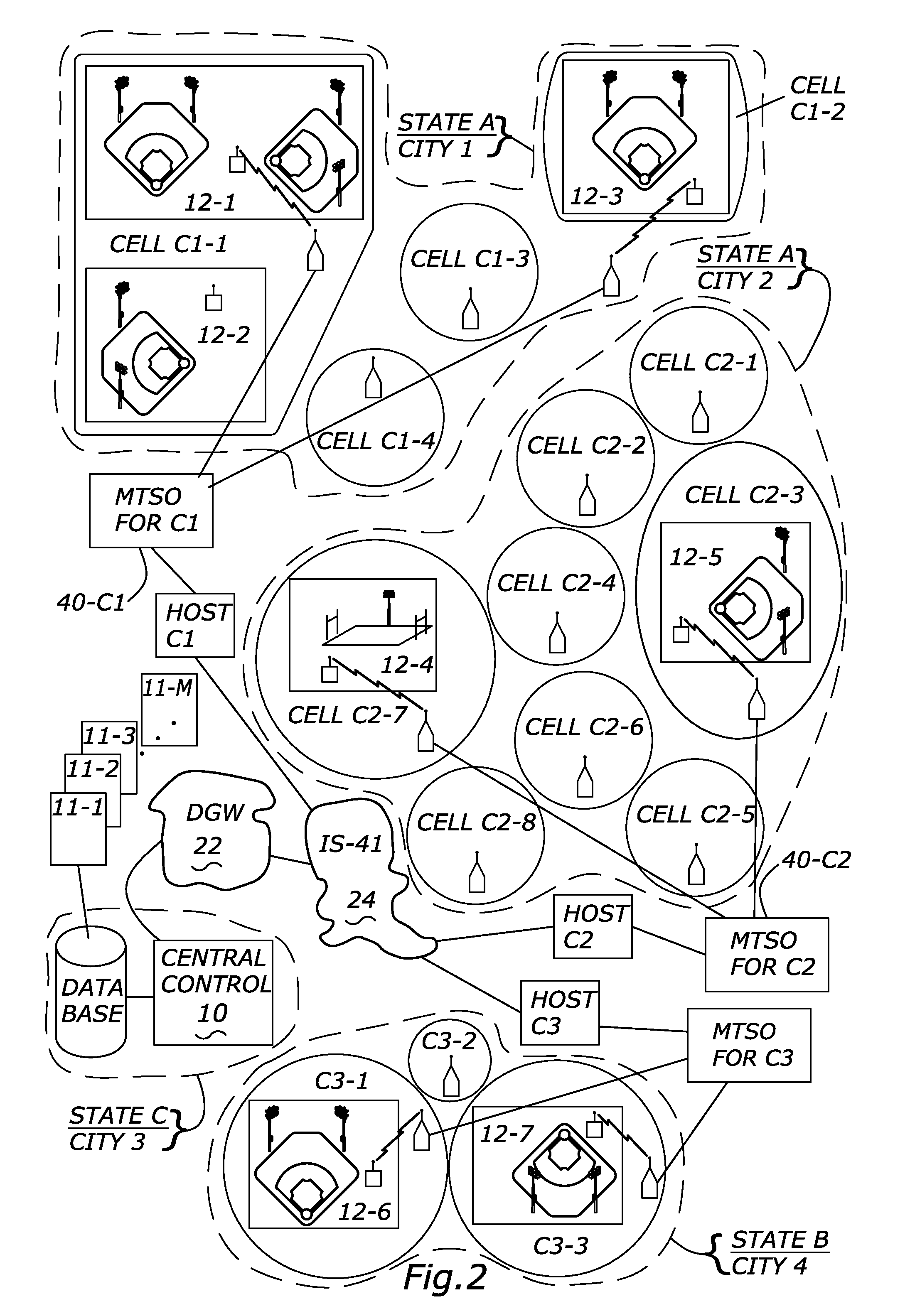

Means and apparatus for control of remote electronic devices

Inactive | US7778635B2 | Improve the problem | Expensive to install | Computer control | Simulator control | Specific function | Memory map

An apparatus to control remote devices or equipment via existing cellular telephone networks. The apparatus receives information from a customer concerning a specific function, which the customer wants to perform at the remote equipment. A Central Control receives this information from the customer and correlates the function to a specific cellular telephone MIN number, which has been pre-programmed in a computer at the Central Control. The MIN number is then sent to a cellular provider. The cellular provider then transmits the MIN number to an antenna, which transmits the MIN number to a remote equipment controller at the remotely located equipment. The remote equipment controller then takes a part of the MIN and gives these numbers to a PLC at the remote equipment controller. The PLC then memory maps the part of the MIN to a specific function to be carried out by the remote equipment controller. Then the remote equipment controller carries out the specified functions at the remote equipment.

Owner:MUSCO
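The final step in this abstract, mapping part of the MIN to a function, amounts to a table lookup. The sketch below is purely illustrative: the abstract does not say which digits are used or which functions exist, so the last-two-digits rule, the 10-digit length check, and the entries in `FUNCTION_TABLE` are all assumptions.

```python
# Hypothetical table: which codes exist and what they trigger is not stated
# in the abstract.
FUNCTION_TABLE = {
    "01": "lights_on",
    "02": "lights_off",
}


def function_for_min(min_number, code_digits=2):
    """Return the function mapped to the trailing digits of a MIN, or None.

    Assumes a 10-digit MIN and that the function code is carried in the
    last `code_digits` digits; both are assumptions for illustration.
    """
    digits = "".join(ch for ch in min_number if ch.isdigit())
    if len(digits) != 10:
        raise ValueError("expected a 10-digit MIN")
    return FUNCTION_TABLE.get(digits[-code_digits:])
```

A call such as `function_for_min("555-123-4501")` would look up the code `"01"` in the table; an unknown code returns `None` so the controller can ignore it.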

Scalable DMA remapping on a computer bus

Inactive | US20060277348A1 | Eliminate need | Memory architecture accessing/allocation | Memory systems | Memory address | Memory map

A system for addressing bus components comprises a bus controller component that controls access between a CPU and a memory address space. A plurality of bus components connected to said bus controller over a bus are addressable via a memory mapped address within the address space. An address translation table is stored on at least one of the plurality of bus components. The bus translation table stores a translation between a virtual address and a real address.

Owner:MICROSOFT TECH LICENSING LLC
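The translation table in this abstract stores a mapping between virtual and real addresses. A page-granular version can be sketched as follows; the class name `TranslationTable`, the 4 KiB page size, and the example addresses are assumptions, since the abstract does not specify a granularity or layout.

```python
PAGE_SHIFT = 12                      # assumed 4 KiB pages
PAGE_MASK = (1 << PAGE_SHIFT) - 1


class TranslationTable:
    """Toy virtual-to-real address table, as might live on a bus component."""

    def __init__(self):
        self._entries = {}  # virtual page number -> real page number

    def map(self, virtual, real):
        if (virtual | real) & PAGE_MASK:
            raise ValueError("addresses must be page aligned")
        self._entries[virtual >> PAGE_SHIFT] = real >> PAGE_SHIFT

    def translate(self, virtual):
        real_page = self._entries.get(virtual >> PAGE_SHIFT)
        if real_page is None:
            raise LookupError(f"no translation for {virtual:#x}")
        # Keep the offset within the page, swap the page number.
        return (real_page << PAGE_SHIFT) | (virtual & PAGE_MASK)


table = TranslationTable()
table.map(0x10000, 0x7F000)
```

Here `table.translate(0x10ABC)` yields `0x7FABC`: the page number is replaced through the table and the 12-bit offset is carried over unchanged.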
