Low latency device interconnect using remote memory access with segmented queues

A low-latency queuing technology, applied to communication between computing devices, that can address problems such as target failure.

Embodiment Construction

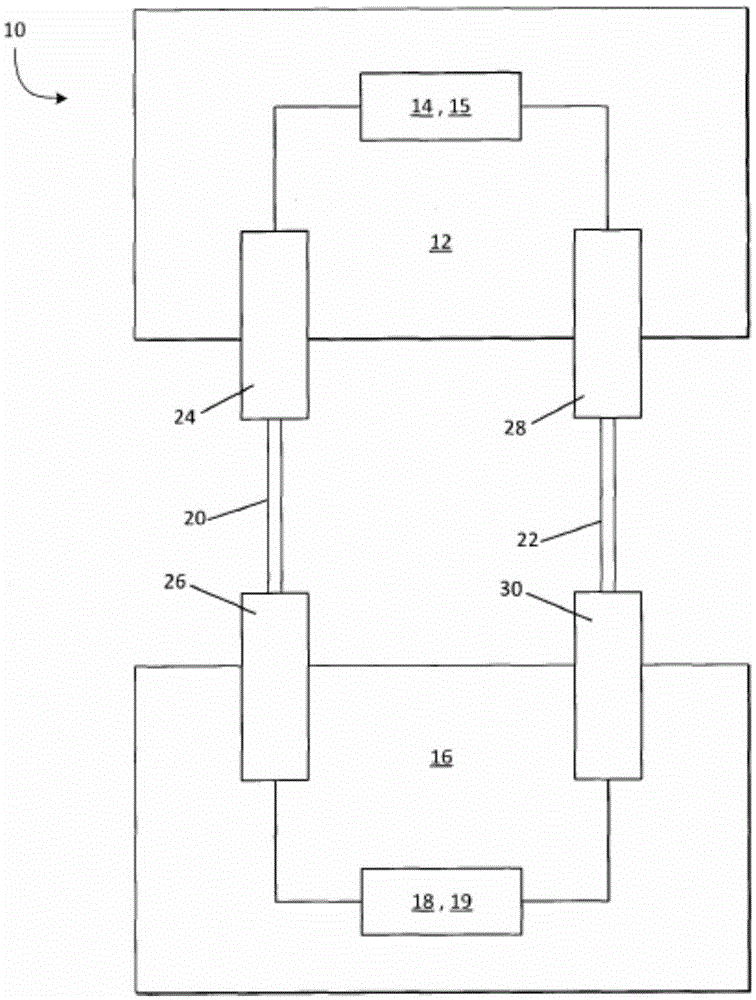

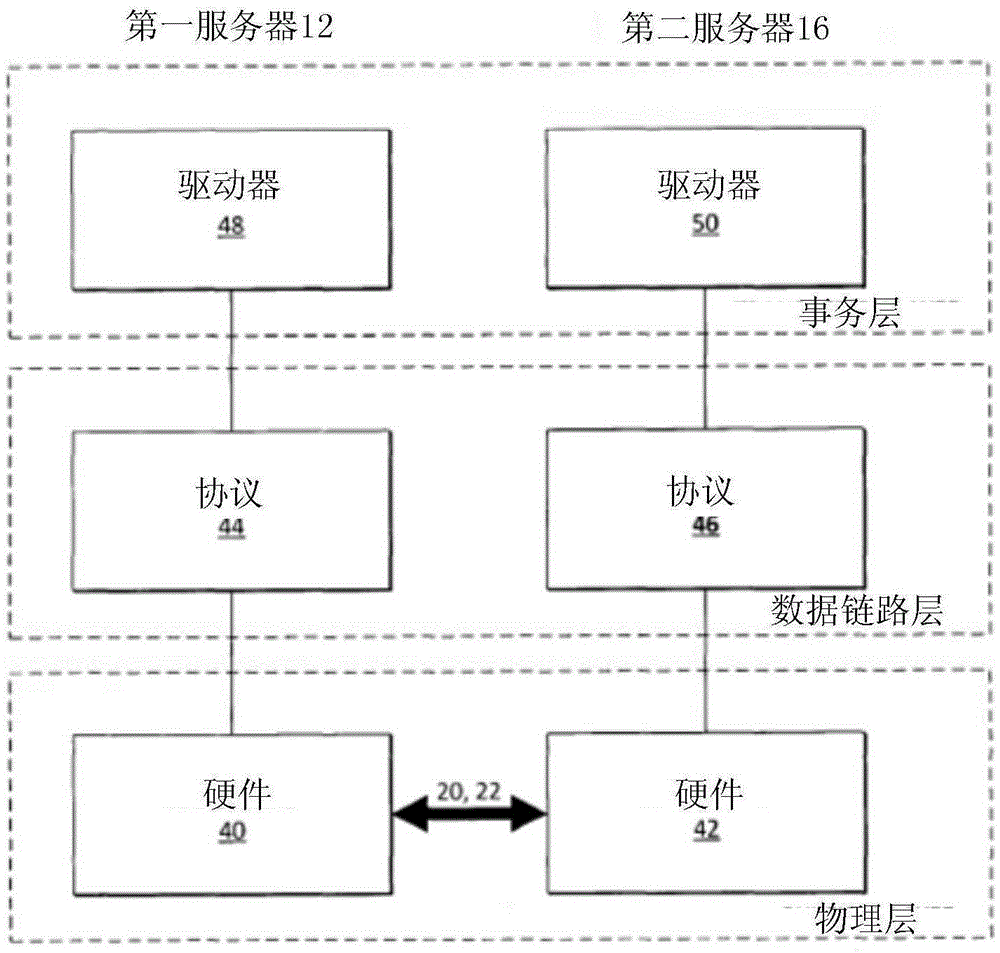

[0024] Referring now to Figure 1, a system for a low-latency data communication link between computing devices during normal operation is shown generally at 10. It should be understood that system 10 is an illustrative example and that various other systems for low-latency data communication links between computing devices will be apparent to those skilled in the art. System 10 includes a first computing device 12 having a first processor 14 and memory 15, and a second computing device 16 having a second processor 18 and memory 19. The computing devices 12, 16 are interconnected by a first link 20 and a second link 22.
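As a reading aid, the topology in paragraph [0024] can be modeled as a small data structure. This is only an illustrative sketch: the class and function names (`ComputingDevice`, `Link`, `System`, `build_system_10`) are hypothetical and do not come from the patent, and the integer fields simply reuse the reference numerals of Figure 1 as labels. The sketch makes no assumption about the direction or role of either link, since the text above does not state them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComputingDevice:
    """One endpoint: a processor plus local memory (numerals used as labels only)."""
    ref: int            # reference numeral of the device, e.g. 12 or 16
    processor_ref: int  # reference numeral of its processor, e.g. 14 or 18
    memory_ref: int     # reference numeral of its memory, e.g. 15 or 19


@dataclass(frozen=True)
class Link:
    """A link joining two computing devices (hypothetical model, no direction implied)."""
    ref: int            # reference numeral of the link, e.g. 20 or 22
    a: ComputingDevice
    b: ComputingDevice


@dataclass
class System:
    devices: list
    links: list

    def links_between(self, x, y):
        """Return every link whose two endpoints are exactly x and y."""
        return [l for l in self.links if {l.a.ref, l.b.ref} == {x.ref, y.ref}]


def build_system_10():
    """Assemble the example topology of Figure 1: two devices, two links."""
    first = ComputingDevice(ref=12, processor_ref=14, memory_ref=15)
    second = ComputingDevice(ref=16, processor_ref=18, memory_ref=19)
    links = [Link(20, first, second), Link(22, first, second)]
    return System([first, second], links)
```

Calling `build_system_10()` and then `links_between` on its two devices returns both links, reflecting that devices 12 and 16 are joined by links 20 and 22.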

[0025] The computing devices described herein may be computers, servers, or any similar devices. The term "computing device" is not intended to be limiting in scope.

[0026] More specifically, first computing device 12 also includes a first communication interface card 24 in communication with first processor 14, and second computing device 16 includes a...
