135 results about how to "Reduce memory bandwidth" patented technology

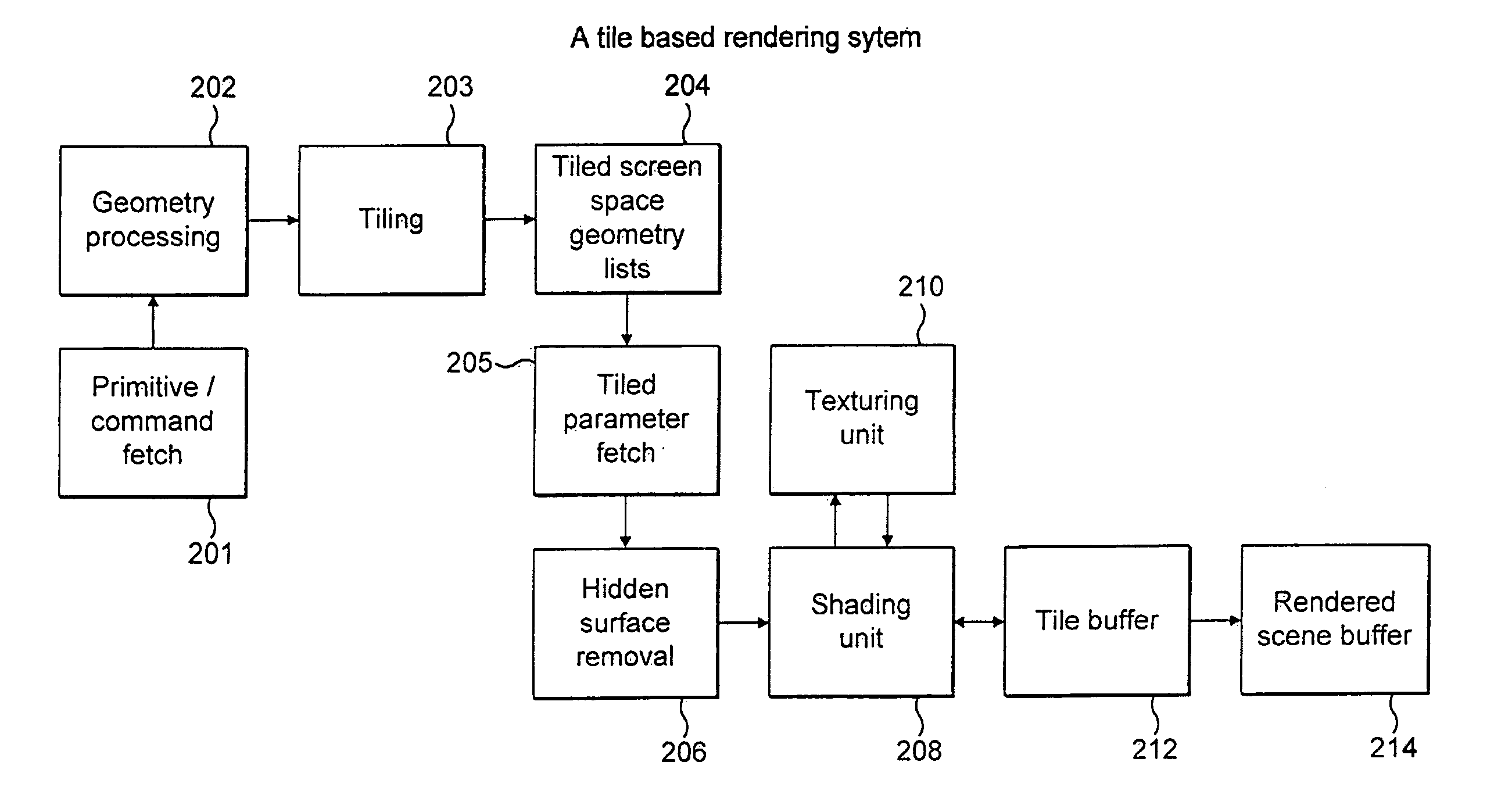

Rendering pipeline

Inactive | US7170515B1 | Efficiently | Reduce memory bandwidth | 3D-image rendering | 3D modelling | Computational science | Visibility

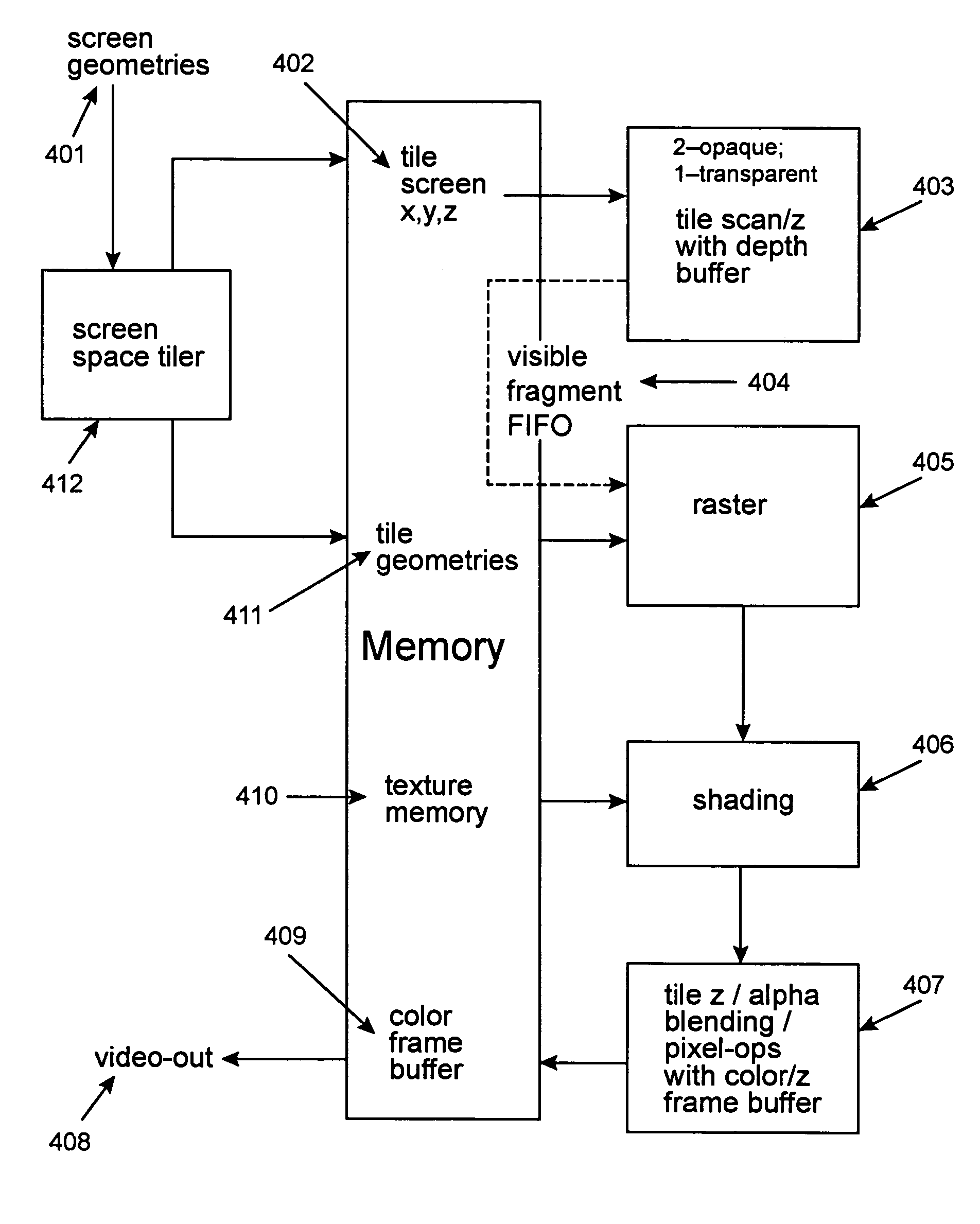

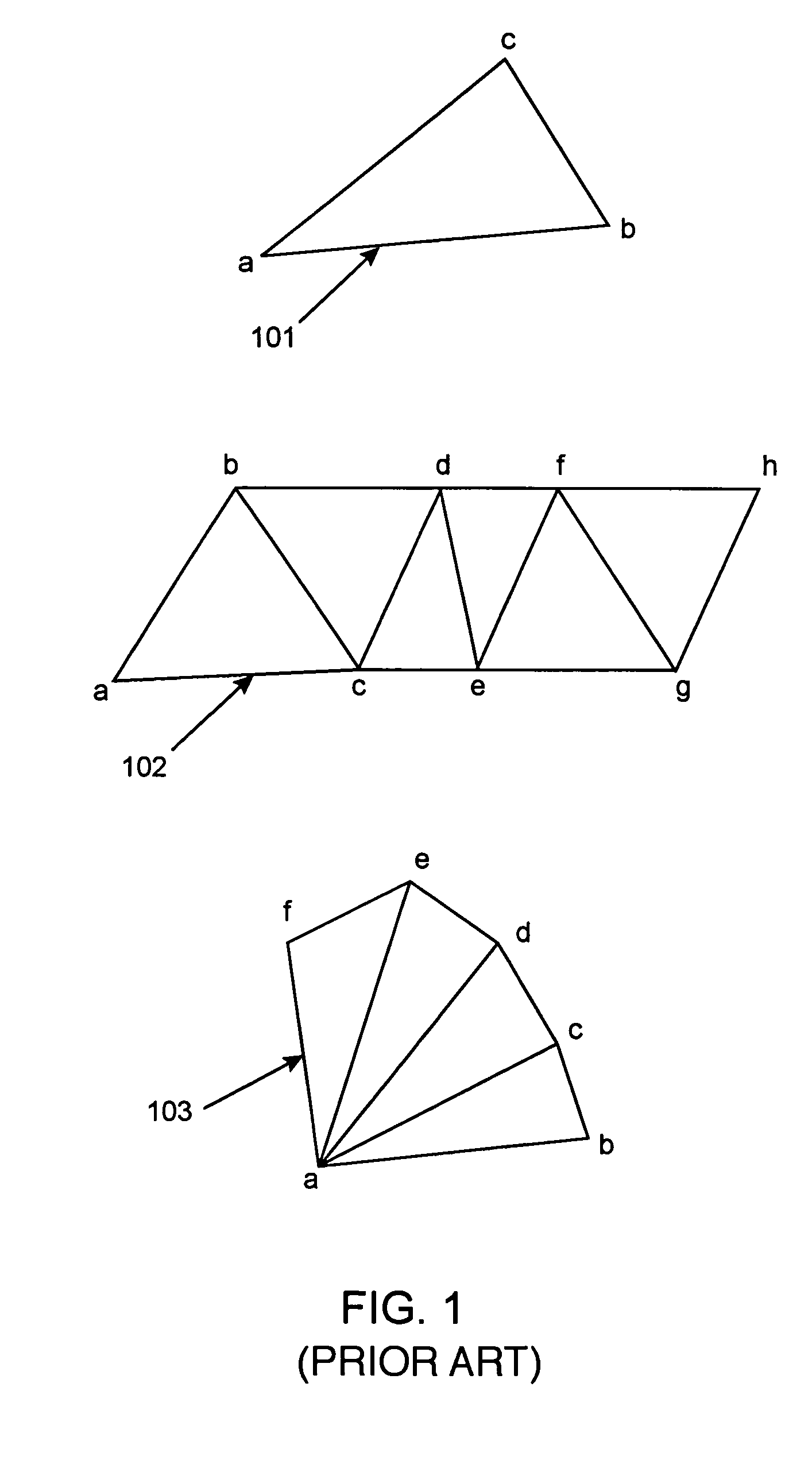

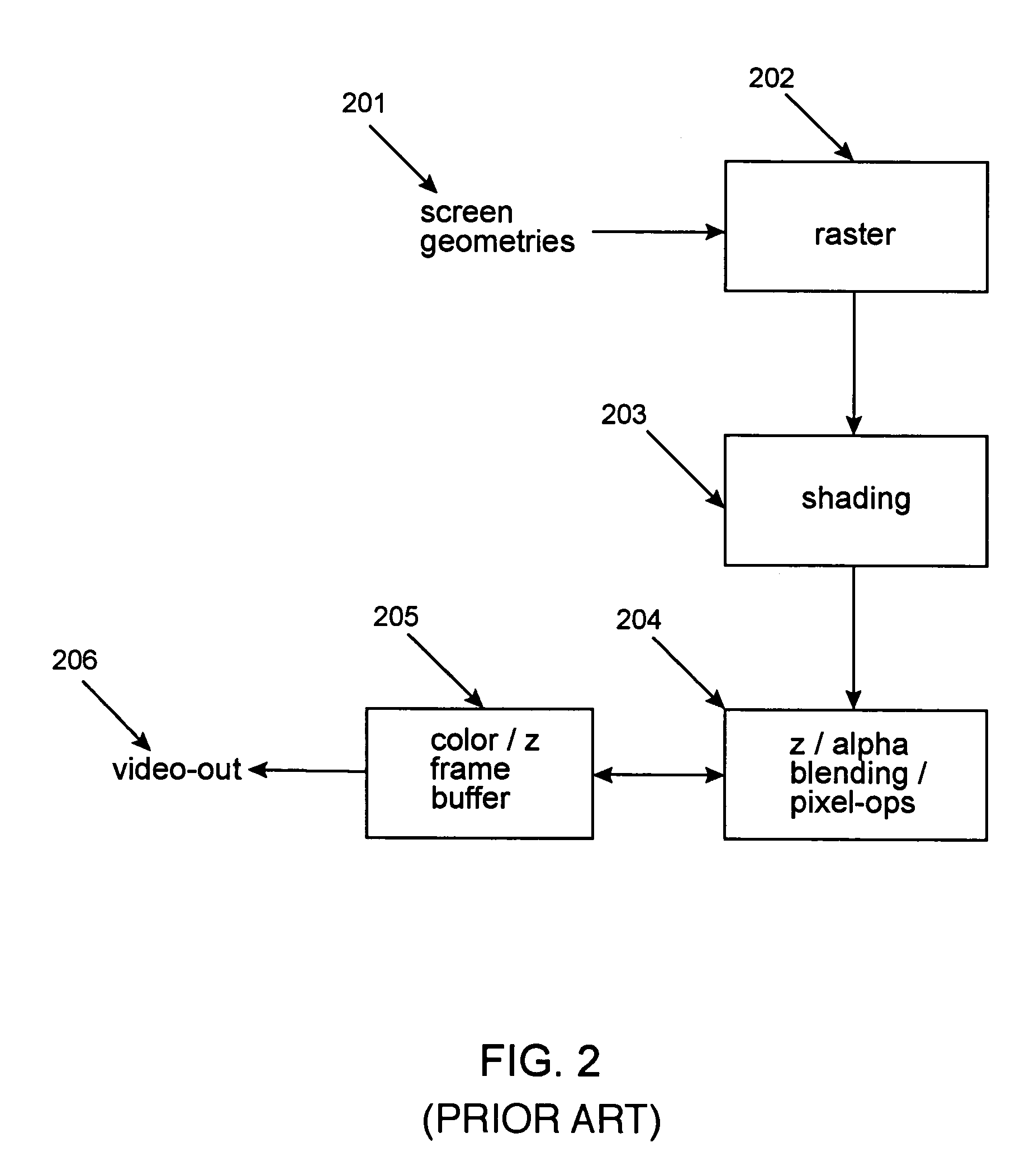

A rendering pipeline system for a computer environment uses screen space tiling (SST) to eliminate the memory bandwidth bottleneck due to frame buffer access and performs screen space tiling efficiently, while avoiding the breaking up of primitives. The system also reduces the buffering size required by SST. High quality, full-scene anti-aliasing is easily achieved because only the on-chip multi-sample memory corresponding to a single tile of the screen is needed. The invention uses a double-z scheme that decouples the scan conversion/depth-buffer processing from the more general rasterization and shading processing through a scan/z engine. The scan/z engine externally appears as a fragment generator but internally resolves visibility and allows the rest of the rendering pipeline to perform setup for only visible primitives and shade only visible fragments. The resulting reduced raster/shading requirements can lead to reduced hardware costs because one can process all parameters with generic parameter computing units instead of with dedicated parameter computing units. The invention processes both opaque and transparent geometries.

Owner:NVIDIA CORP
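
The "resolve visibility first, shade afterwards" idea in the abstract above can be illustrated with a small Python sketch. It is only a toy model under stated assumptions: the names `resolve_visibility` and `shade_visible` are hypothetical, only opaque fragments are handled, smaller depth means nearer, and tiling and multi-sampling are not modeled.

```python
def resolve_visibility(fragments):
    """First pass: keep only the nearest primitive at each pixel.

    fragments: iterable of (x, y, depth, primitive) tuples; smaller depth is nearer.
    Returns a dict mapping (x, y) -> primitive that wins the depth test.
    """
    nearest = {}
    for x, y, depth, prim in fragments:
        key = (x, y)
        if key not in nearest or depth < nearest[key][0]:
            nearest[key] = (depth, prim)
    return {key: prim for key, (depth, prim) in nearest.items()}


def shade_visible(fragments, shade):
    """Second pass: run the (expensive) shade function only on visible fragments."""
    visible = resolve_visibility(fragments)
    return {key: shade(prim) for key, prim in visible.items()}
```

With two fragments competing for one pixel, `shade` runs once for that pixel instead of twice, which is the saving the decoupled scheme is aiming for.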

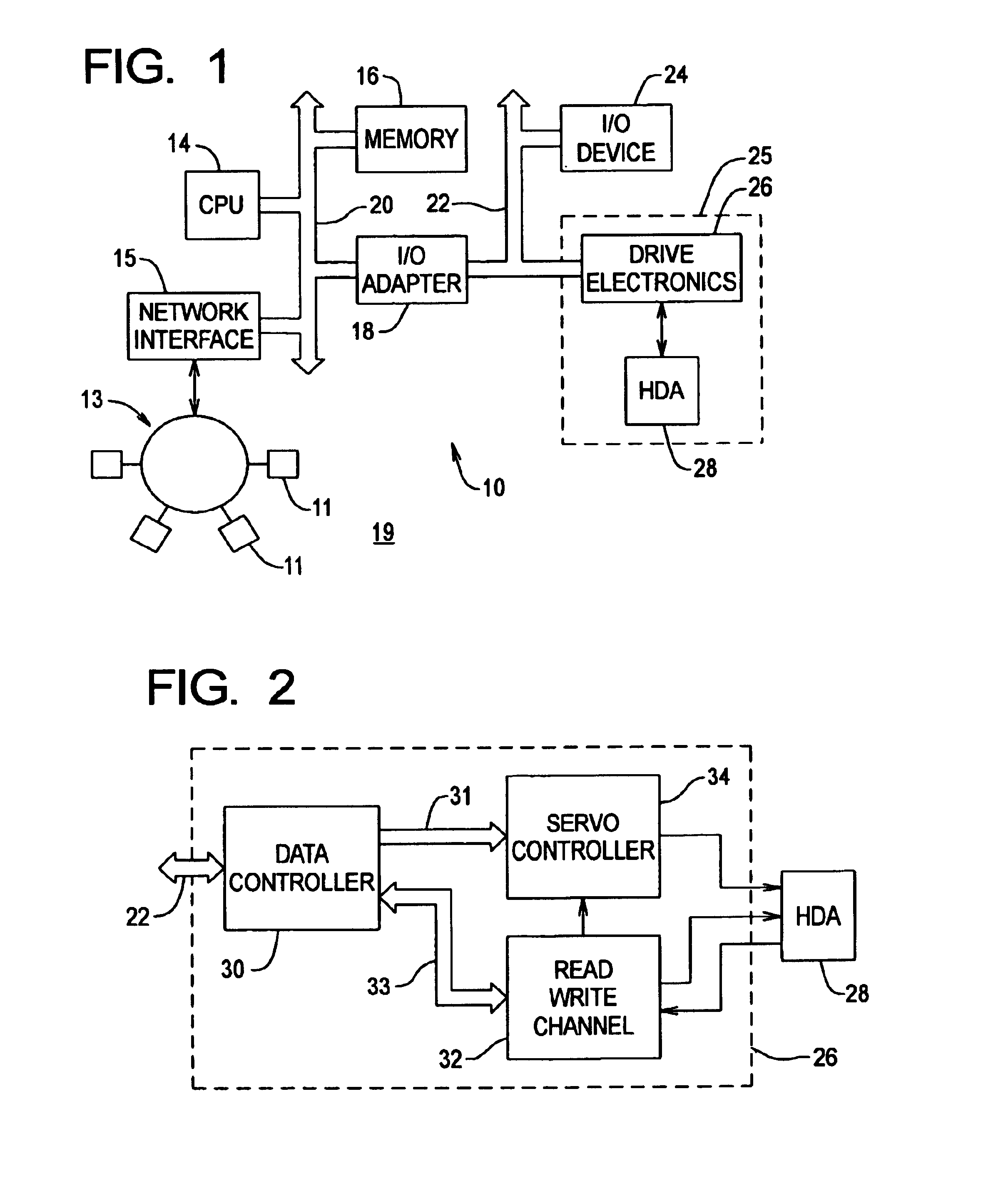

Data checksum method and apparatus

Inactive | US6964008B1 | Reduce memory bandwidth | Reduce data transfer latency | Code conversion | Coding details | Checksum | Data store

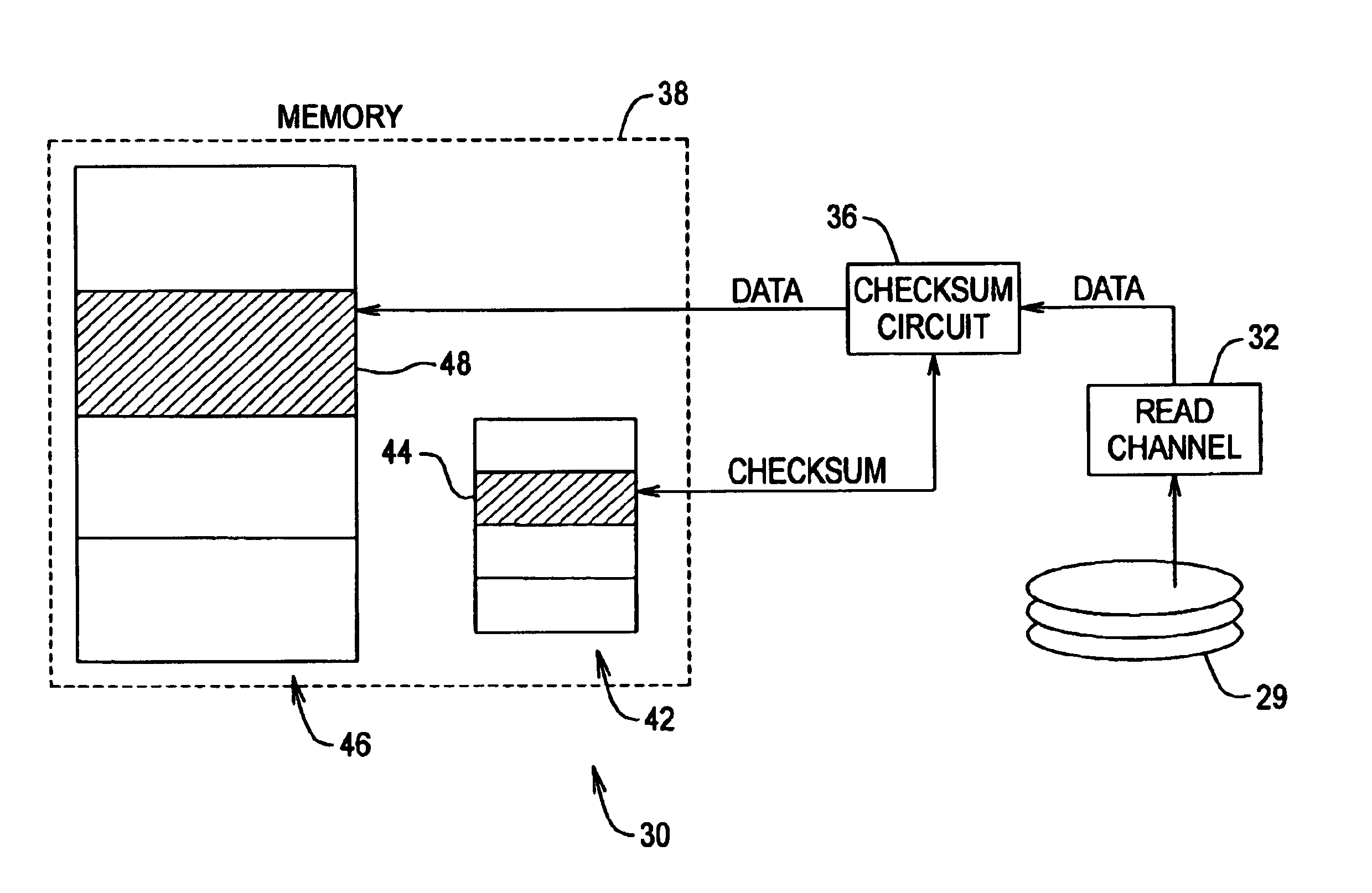

A method and apparatus are provided for generating checksum values for data segments retrieved from a data storage device for transfer into a buffer memory. A checksum list is maintained to contain checksum values, wherein the checksum list includes a plurality of entries corresponding to the data segments stored in the buffer memory, each entry for storing a checksum value for a corresponding data segment stored in the buffer memory. For each data segment retrieved from the storage device: a checksum value is calculated for that data segment using a checksum circuit; an entry in the checksum list corresponding to that data segment is selected; the checksum value is stored in the selected entry in the checksum list; and that data segment is stored in the buffer memory. Preferably, the checksum circuit calculates the checksum for each data segment as that data segment is transferred into the buffer memory.

Owner:MAXTOR
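
As a rough software analogue of the checksum list described in the abstract above, the sketch below computes a checksum for each segment in the same step that stores it in the buffer. The class and method names are hypothetical, CRC-32 is an assumed choice (the abstract does not name an algorithm), and `verify` is an illustrative addition that is not part of the abstract.

```python
import zlib


class BufferWithChecksums:
    """Buffer memory plus a checksum list with one entry per buffer slot."""

    def __init__(self, num_slots):
        self.segments = [None] * num_slots
        self.checksums = [None] * num_slots

    def store_segment(self, slot, data):
        # Compute the checksum as the segment is moved into the buffer,
        # so the data does not have to be read back later just to checksum it.
        self.checksums[slot] = zlib.crc32(data)
        self.segments[slot] = bytes(data)

    def verify(self, slot):
        # Illustrative check: recompute and compare against the list entry.
        return zlib.crc32(self.segments[slot]) == self.checksums[slot]
```

Computing the value during the transfer avoids a second pass over the buffered data, which is where the memory bandwidth saving would come from.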

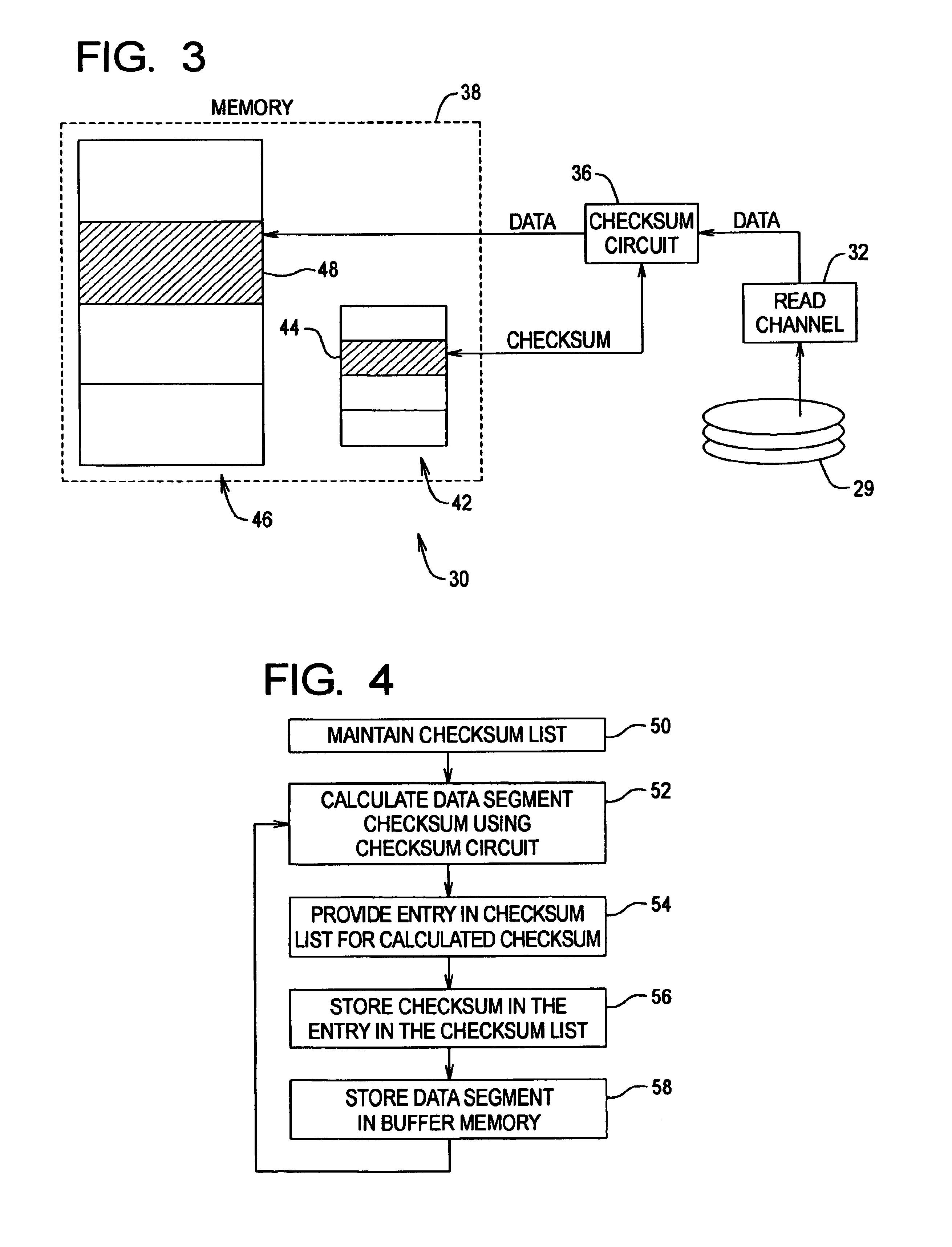

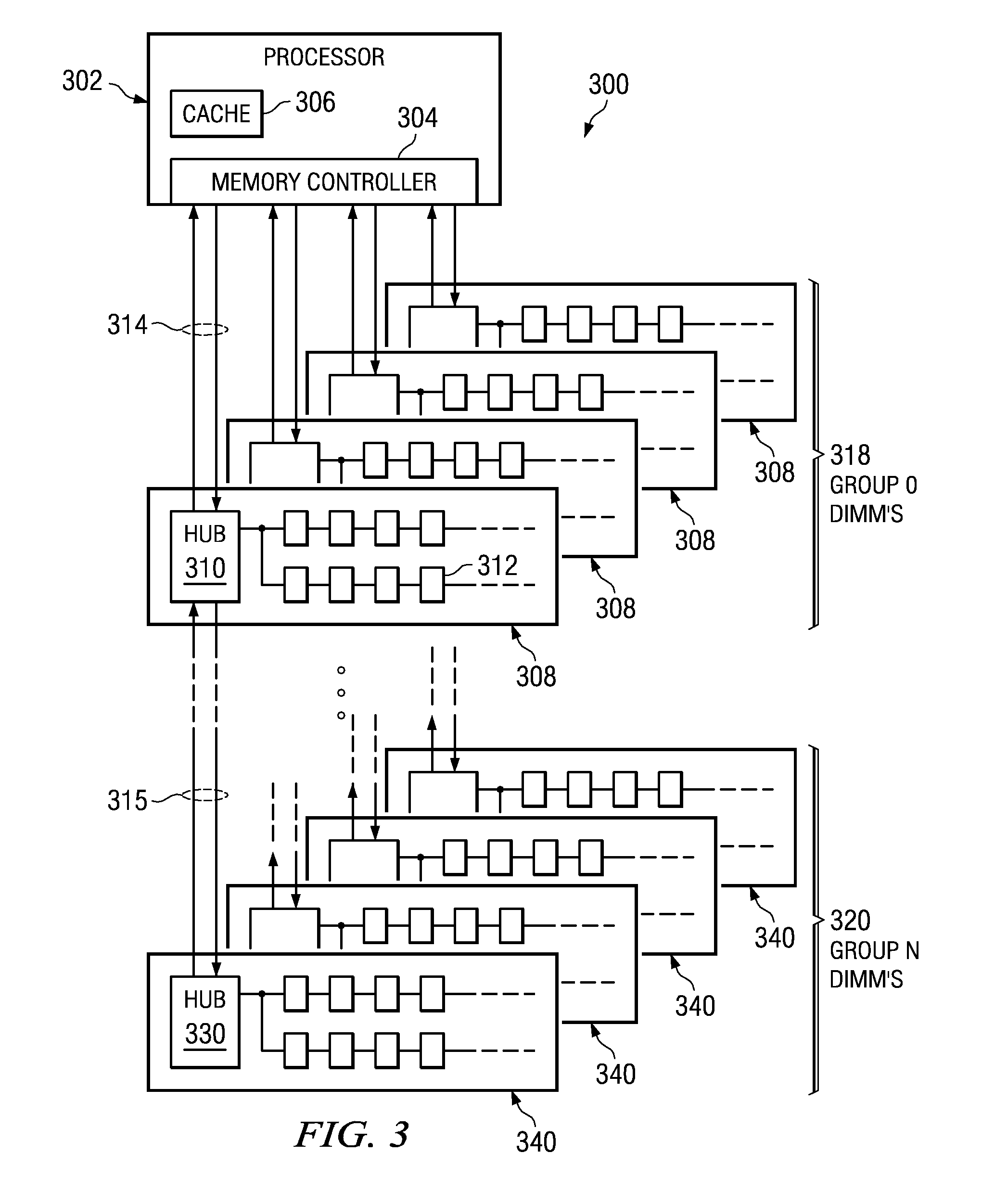

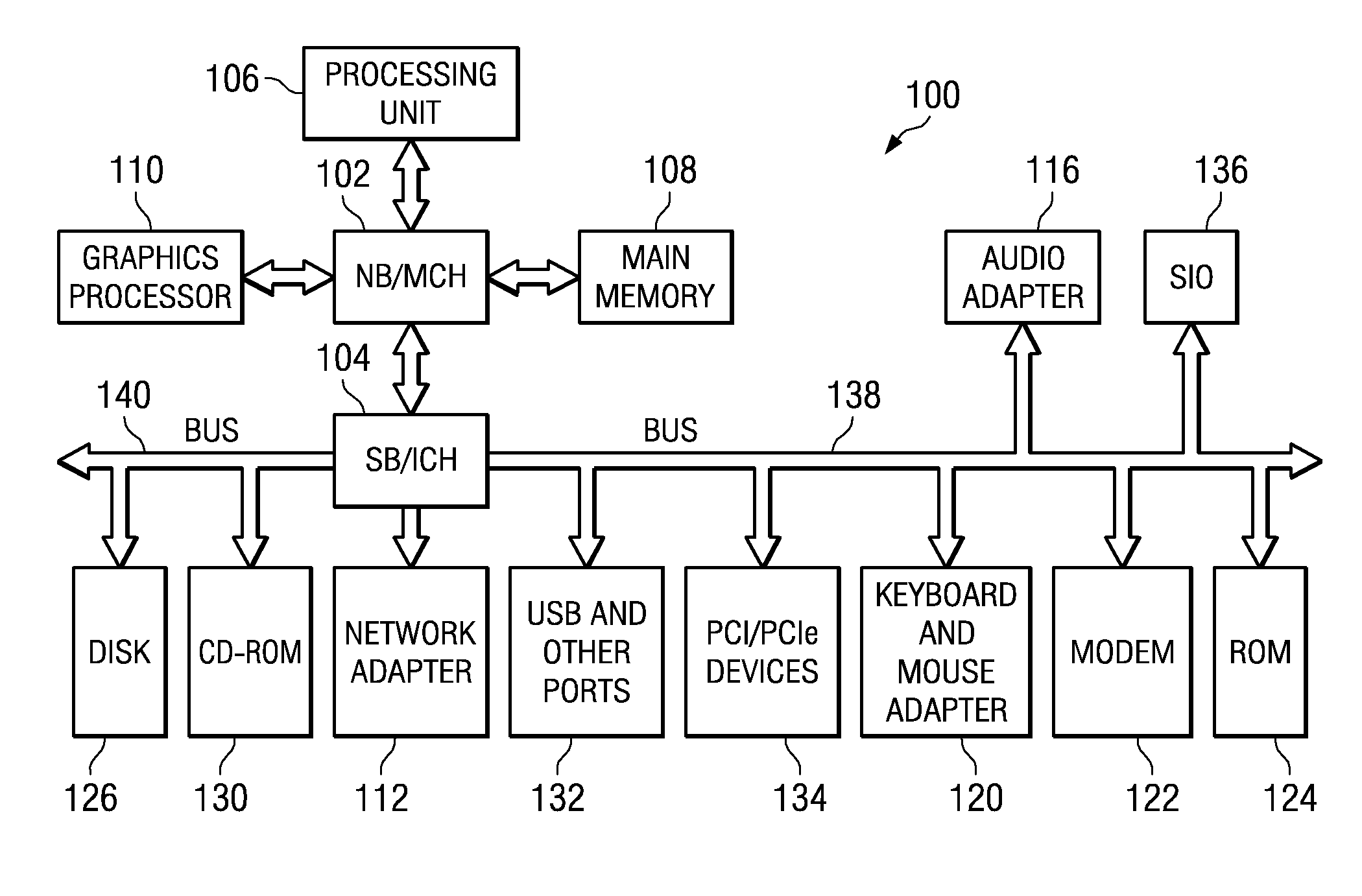

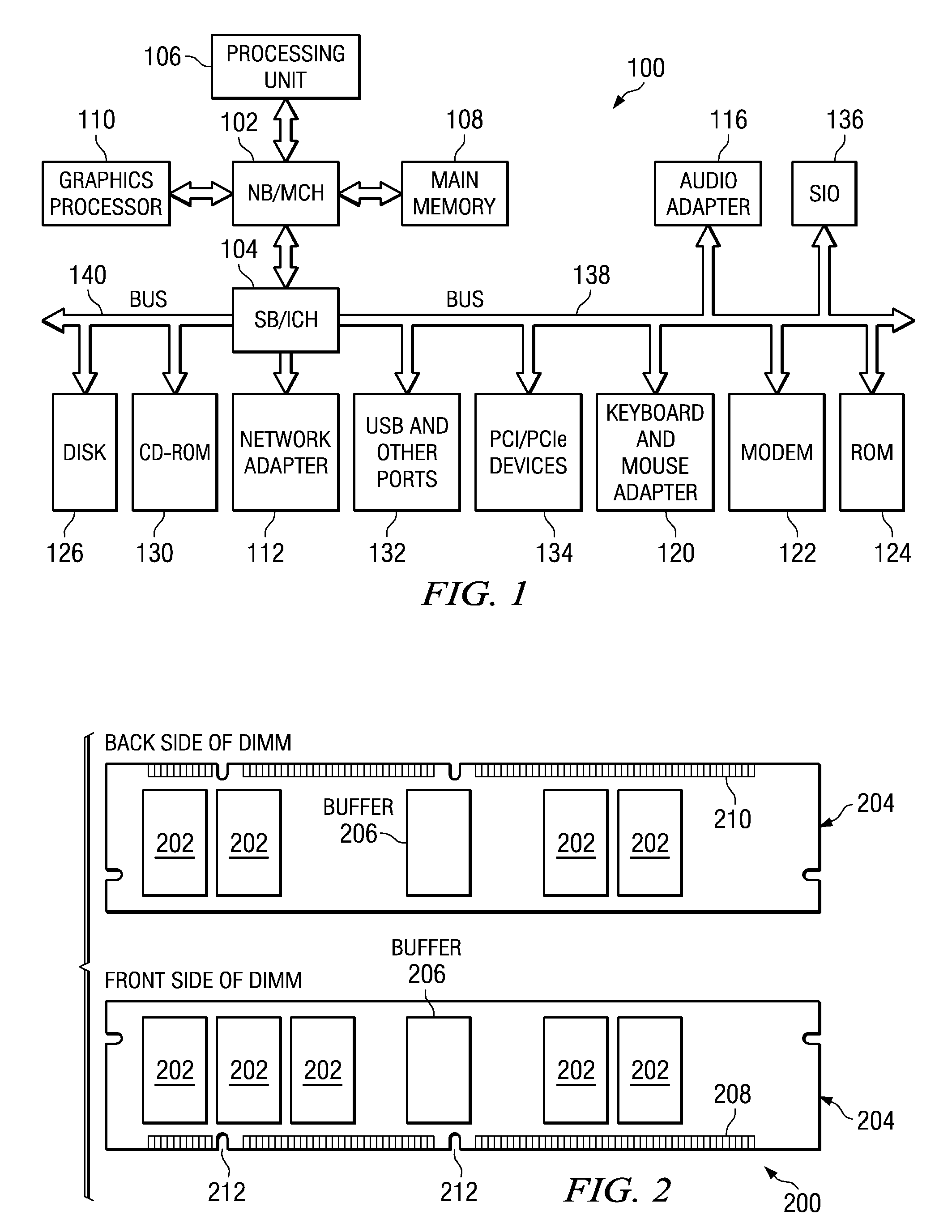

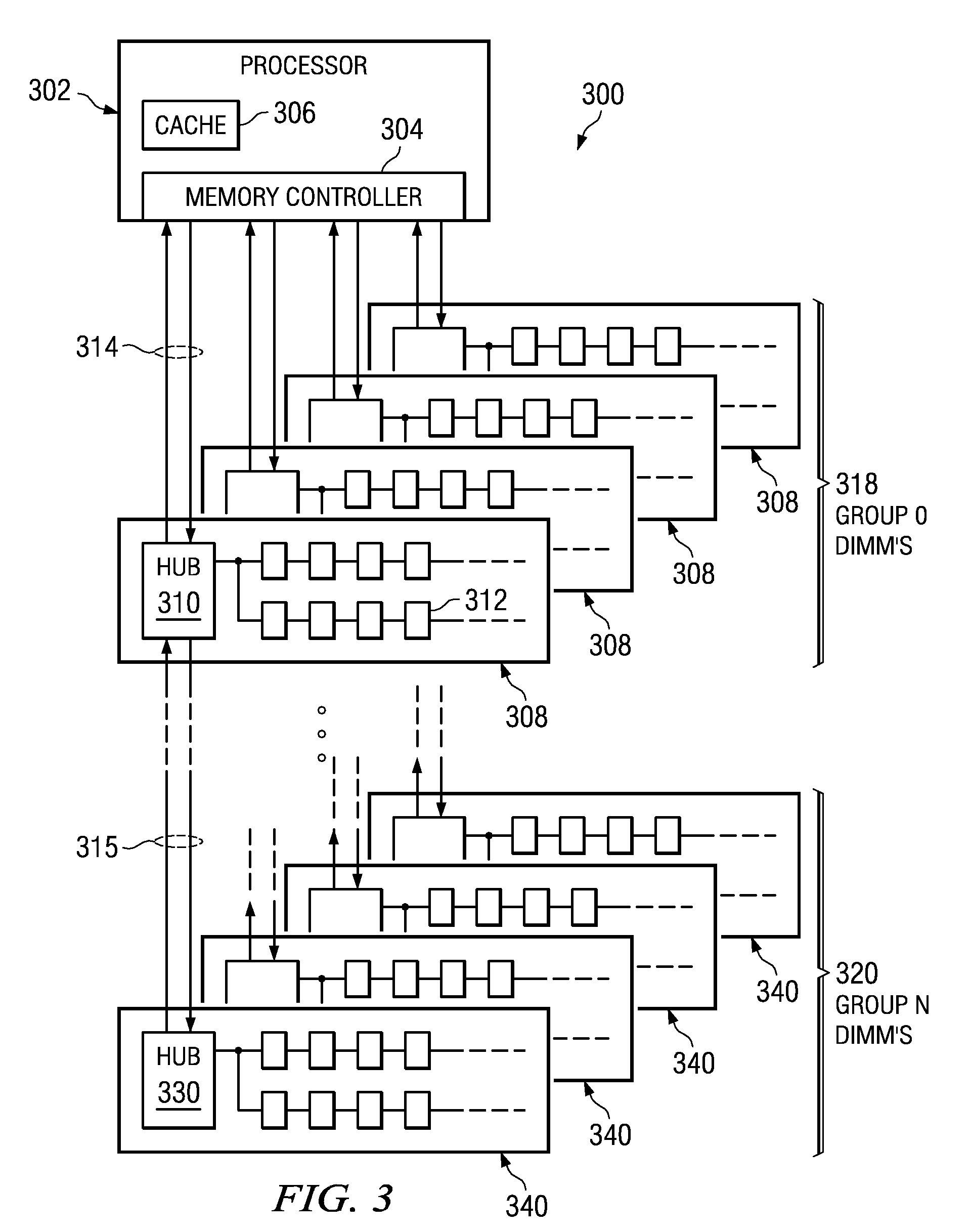

System to Increase the Overall Bandwidth of a Memory Channel By Allowing the Memory Channel to Operate at a Frequency Independent from a Memory Device Frequency

Inactive | US20090193201A1 | High bandwidth | Reduce operating frequency | Digital data processing details | Memory systems | External storage | Memory controller

A memory system is provided that increases the overall bandwidth of a memory channel by operating the memory channel at an independent frequency. The memory system comprises a memory hub device integrated in a memory module. The memory hub device comprises a command queue that receives a memory access command from an external memory controller via a memory channel at a first operating frequency. The memory system also comprises a memory hub controller integrated in the memory hub device. The memory hub controller reads the memory access command from the command queue at a second operating frequency. By receiving the memory access command at the first operating frequency and reading the memory access command at the second operating frequency, an asynchronous boundary is implemented. Using the asynchronous boundary, the memory channel operates at a maximum designed operating bandwidth, which is independent of the second operating frequency.

Owner:IBM CORP

System to Reduce Latency by Running a Memory Channel Frequency Fully Asynchronous from a Memory Device Frequency

Inactive | US20090193203A1 | High bandwidth | Reduce operating frequency | Digital data processing details | Memory systems | External storage | Memory controller

A memory system is provided that reduces latency by running a memory channel fully asynchronous from a memory device frequency. The memory system comprises a memory hub device integrated in a memory module. The memory hub device comprises a command queue that receives a memory access command from an external memory controller via a memory channel at a first operating frequency. The memory system also comprises a memory hub controller integrated in the memory hub device. The memory hub controller reads the memory access command from the command queue at a second operating frequency. By receiving the memory access command at the first operating frequency and reading the memory access command at the second operating frequency an asynchronous boundary is implemented. The first operating frequency is a maximum designed operating frequency of the memory channel and the first operating frequency is independent of the second operating frequency.

Owner:IBM CORP

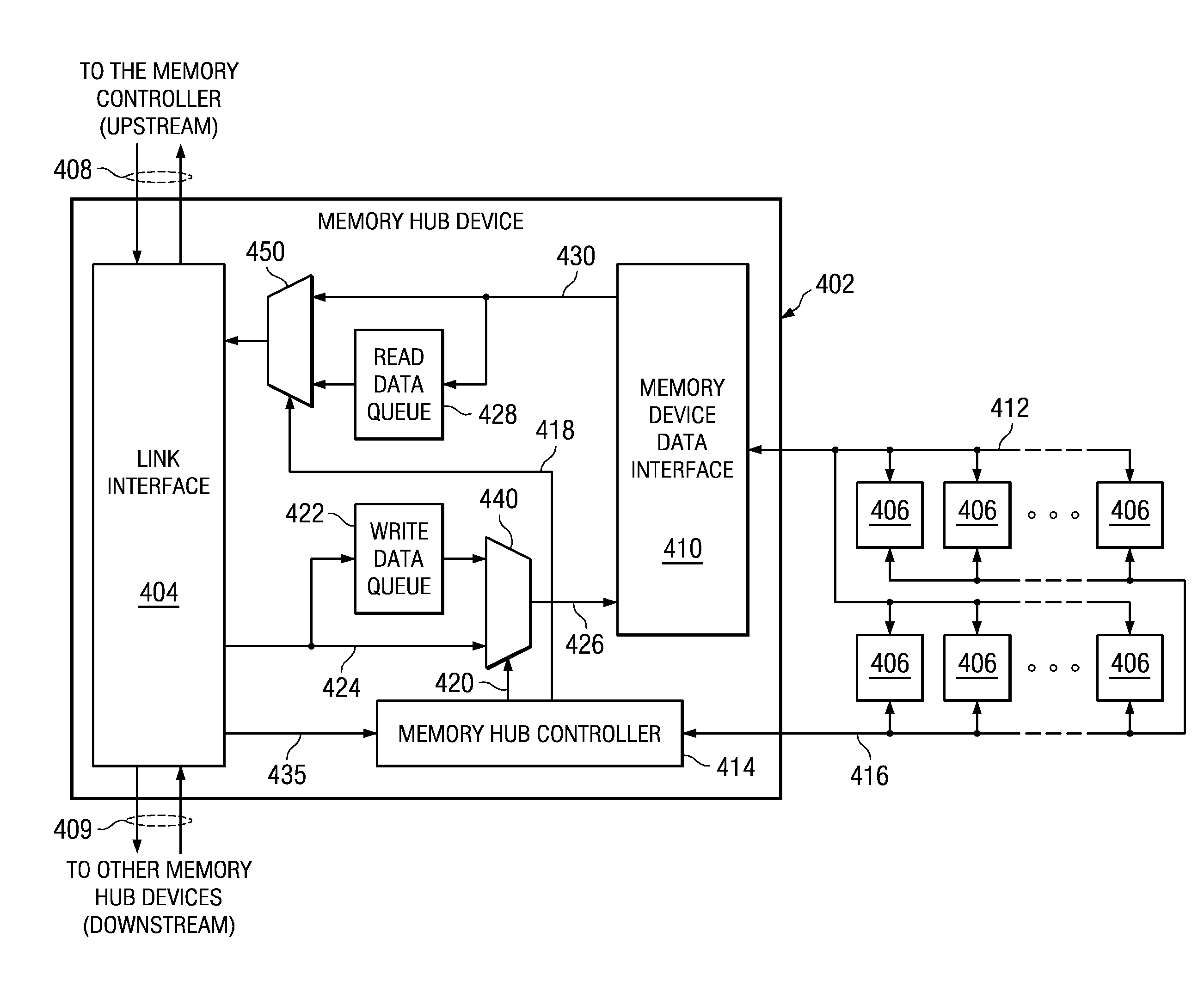

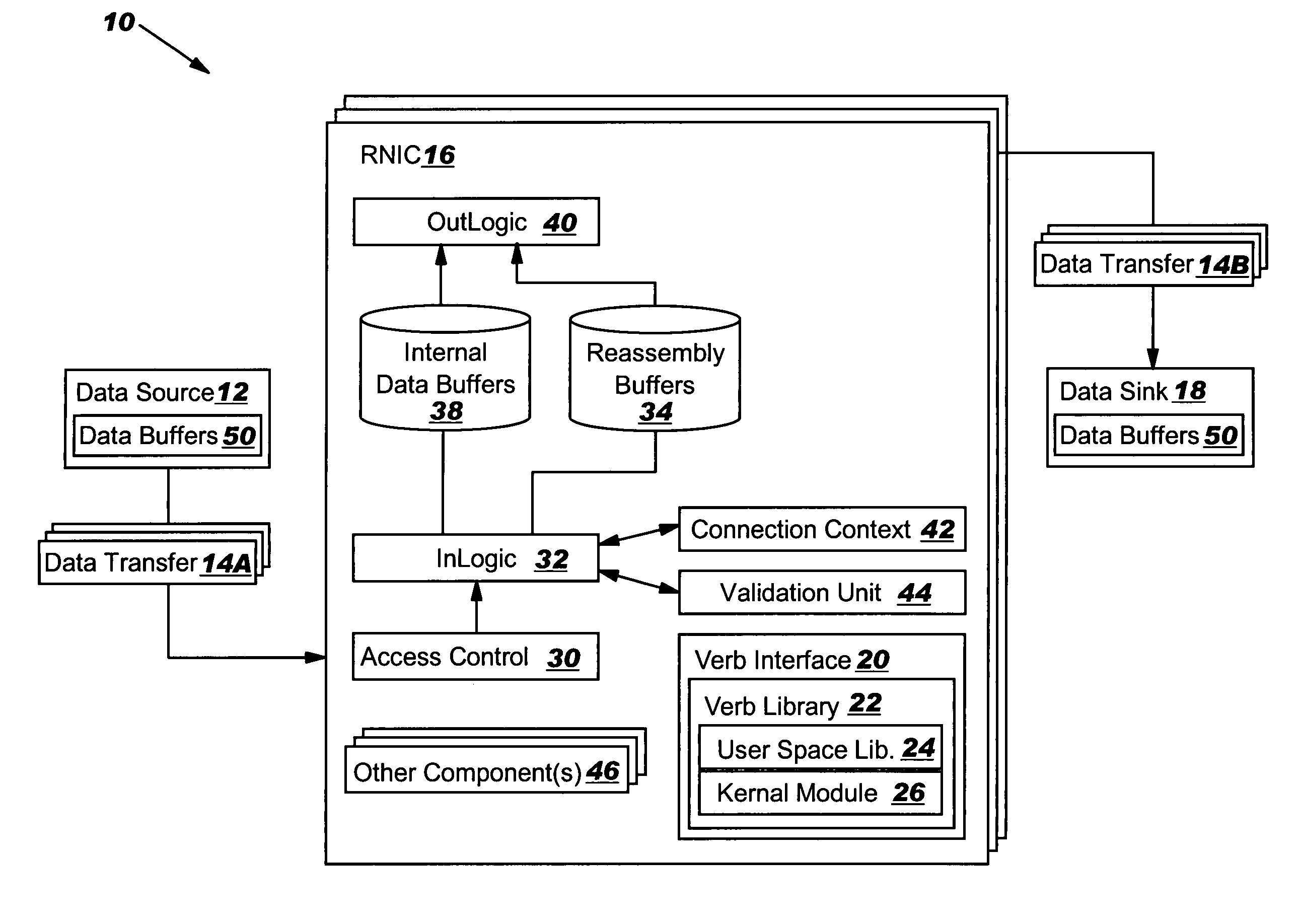

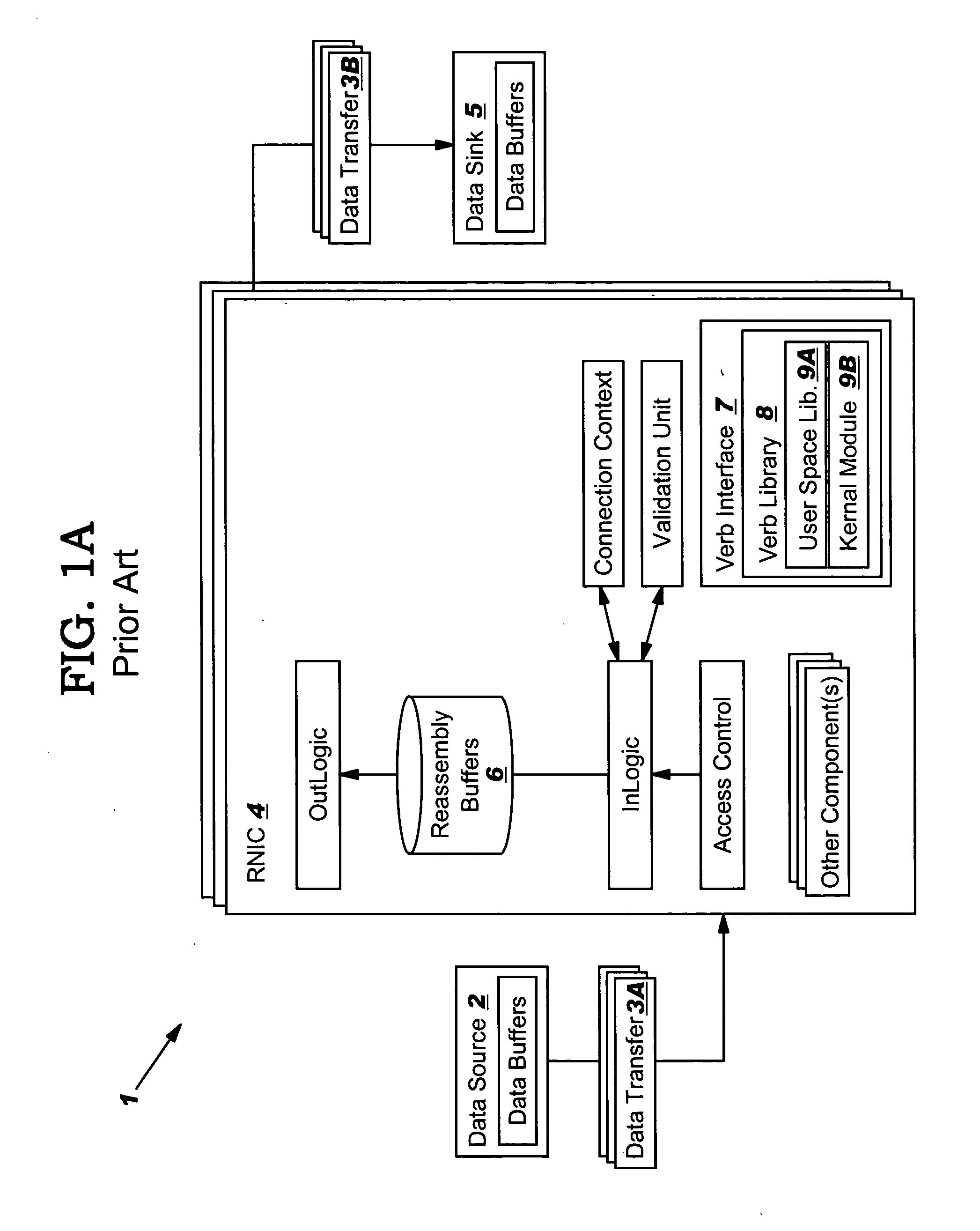

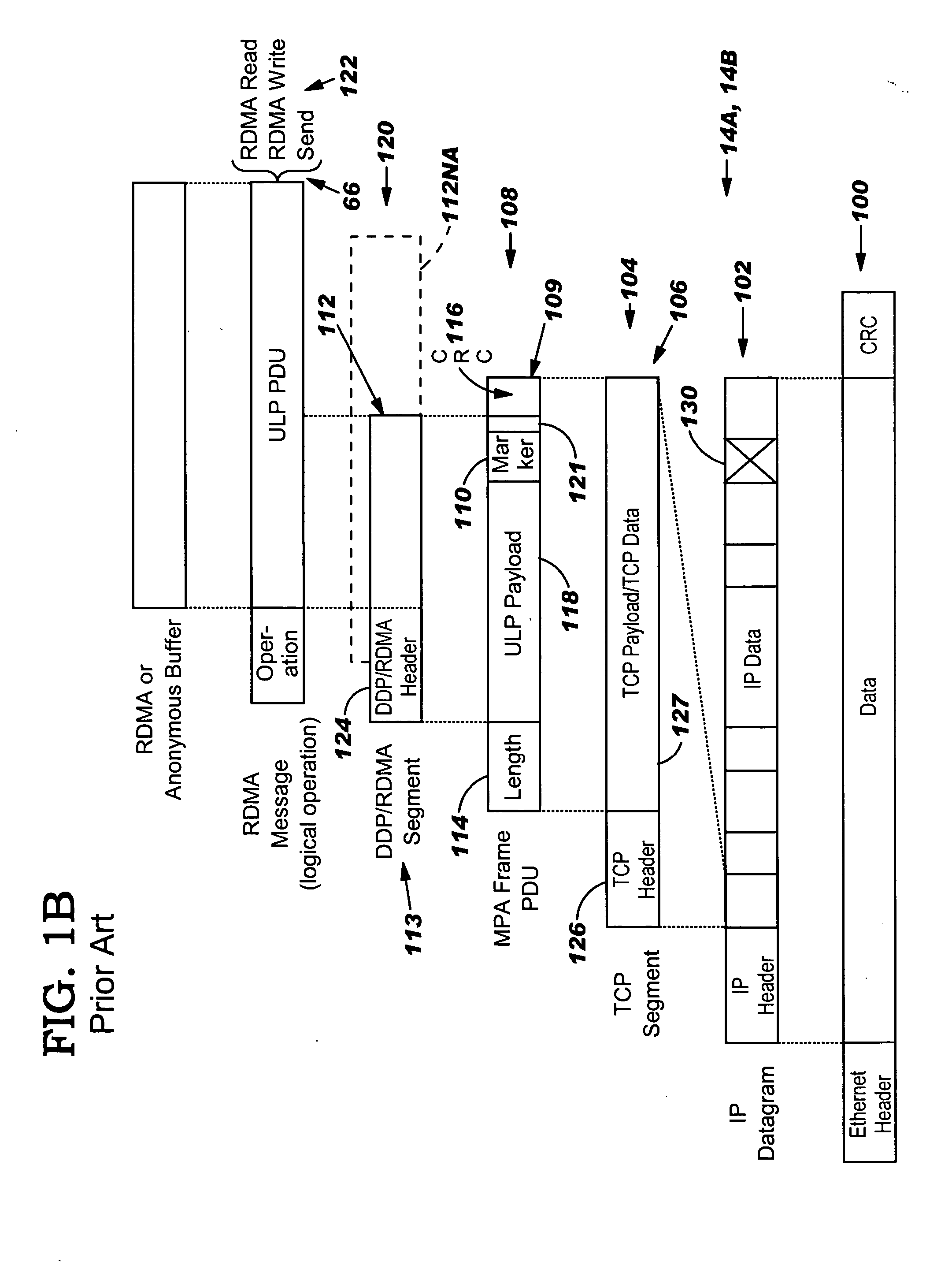

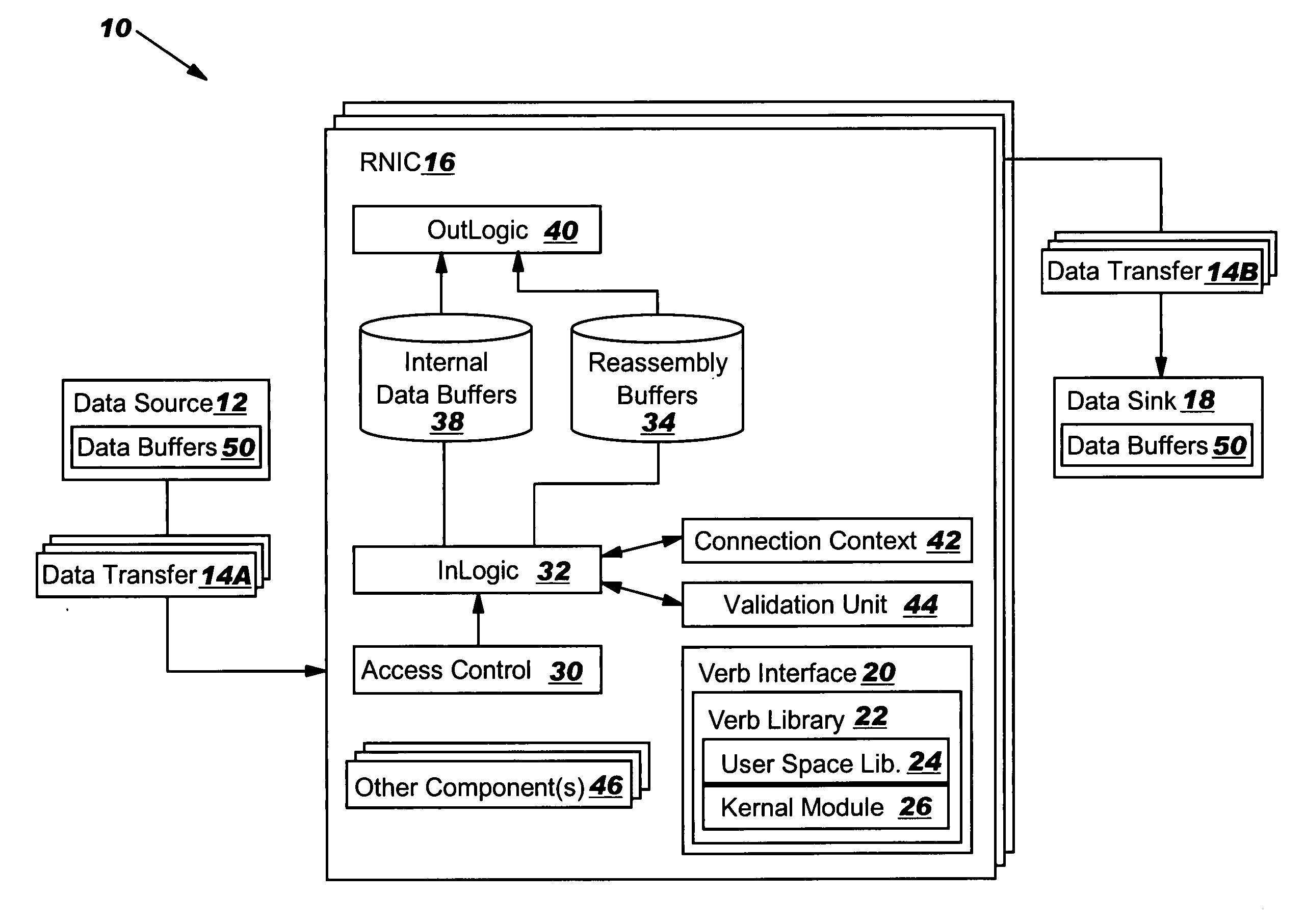

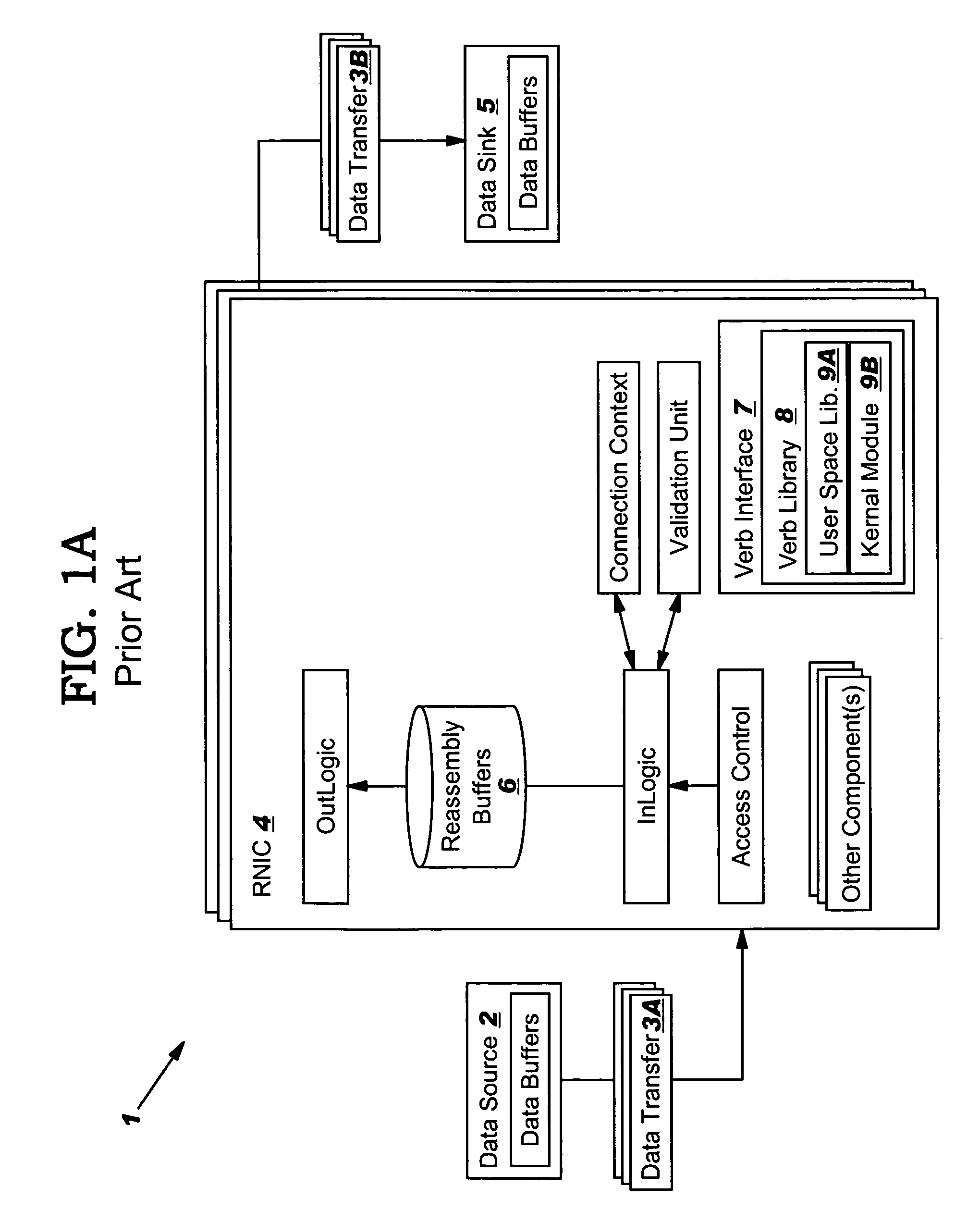

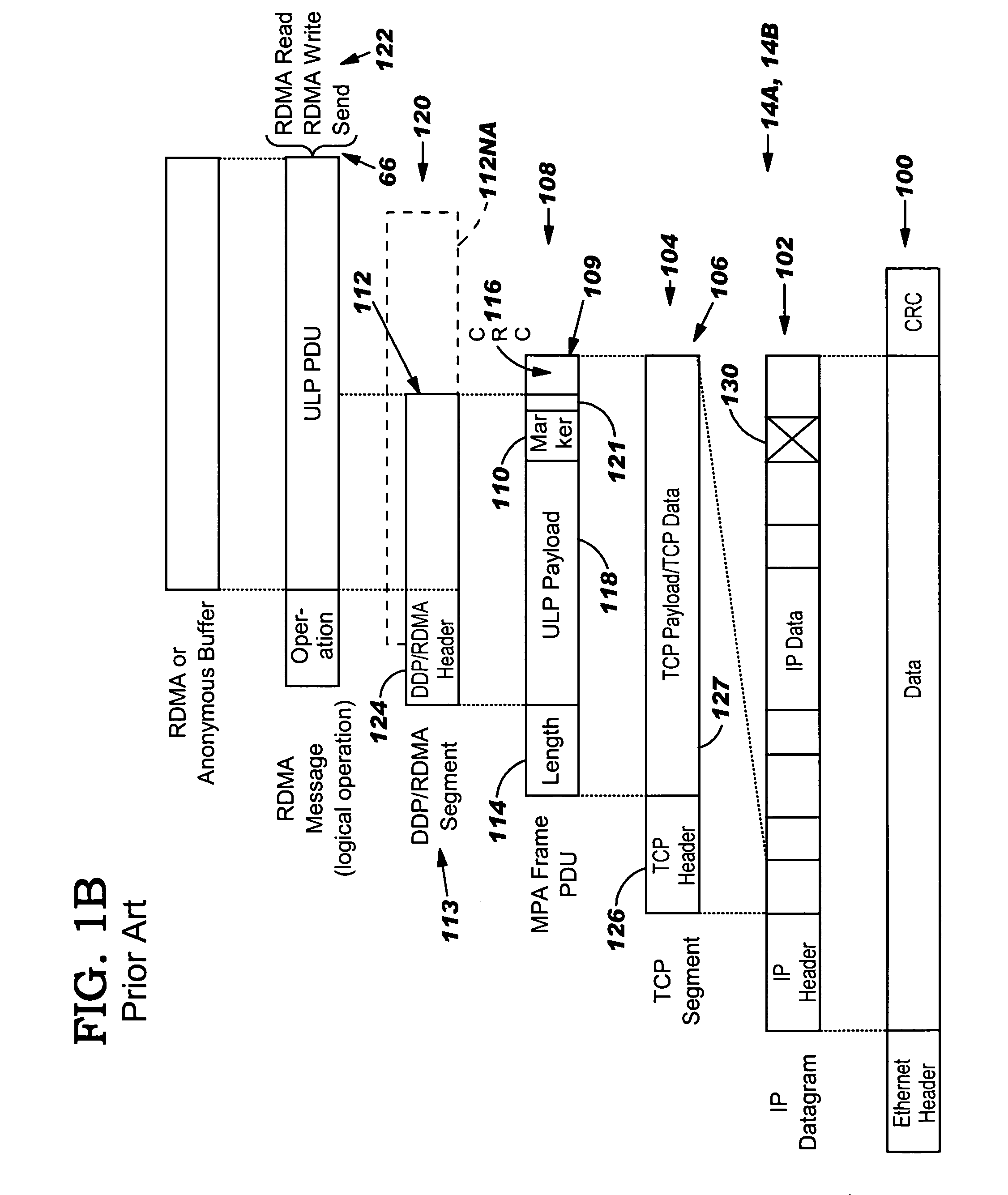

Data transfer error checking

Inactive | US20050149817A1 | Reduce memory bandwidth | Reduce latency | Code conversion | Error detection only | Connection type | Data placement

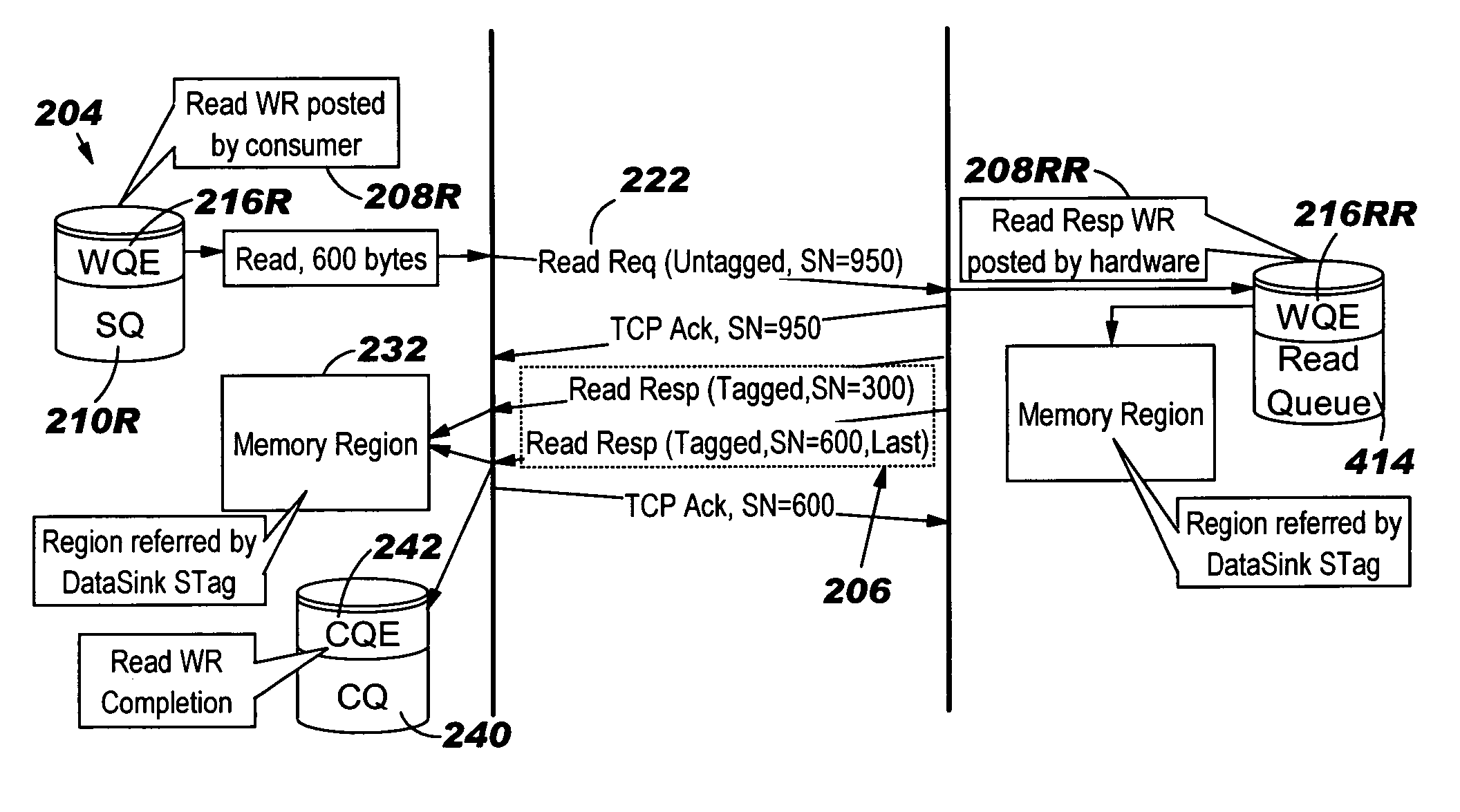

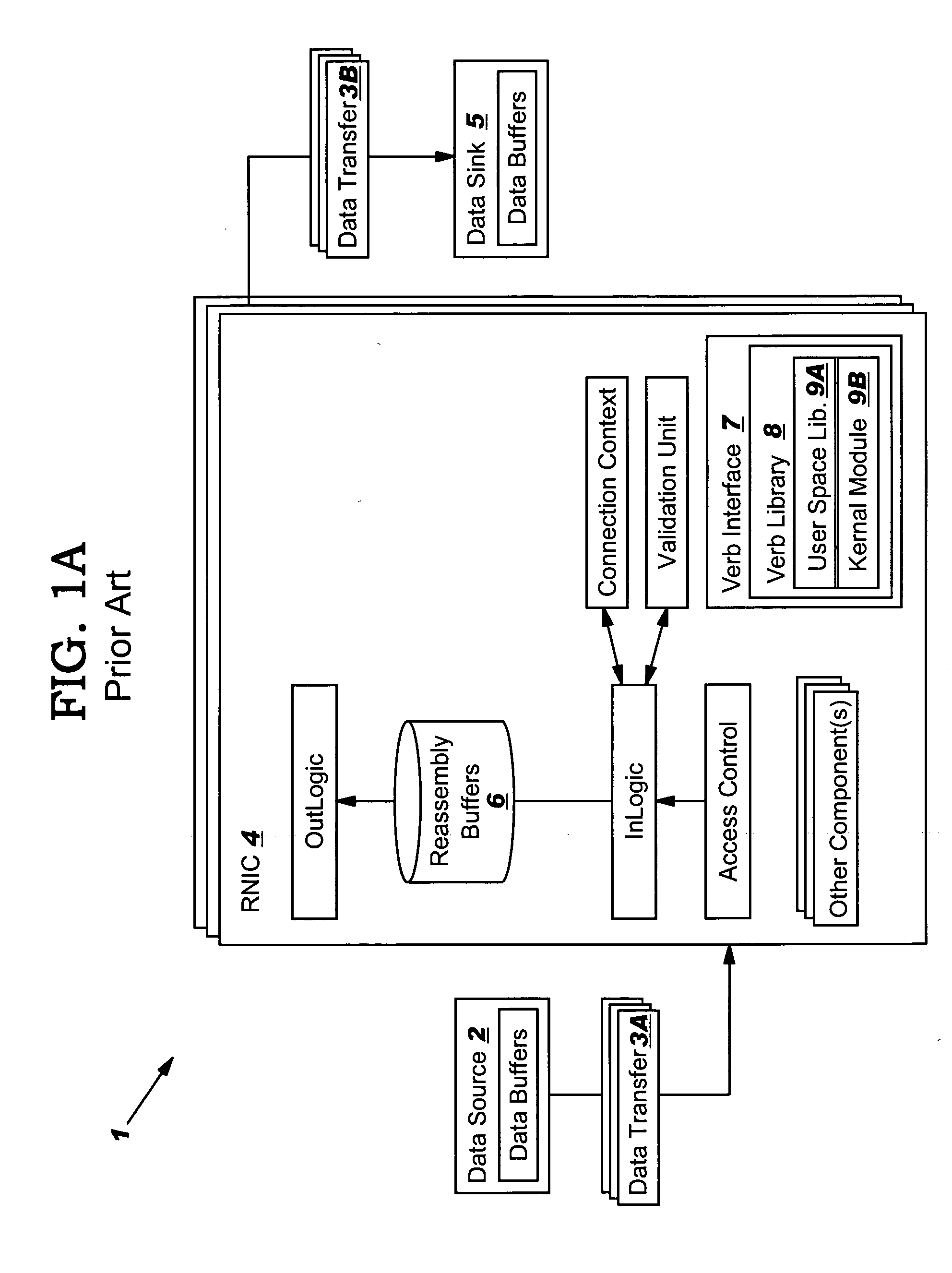

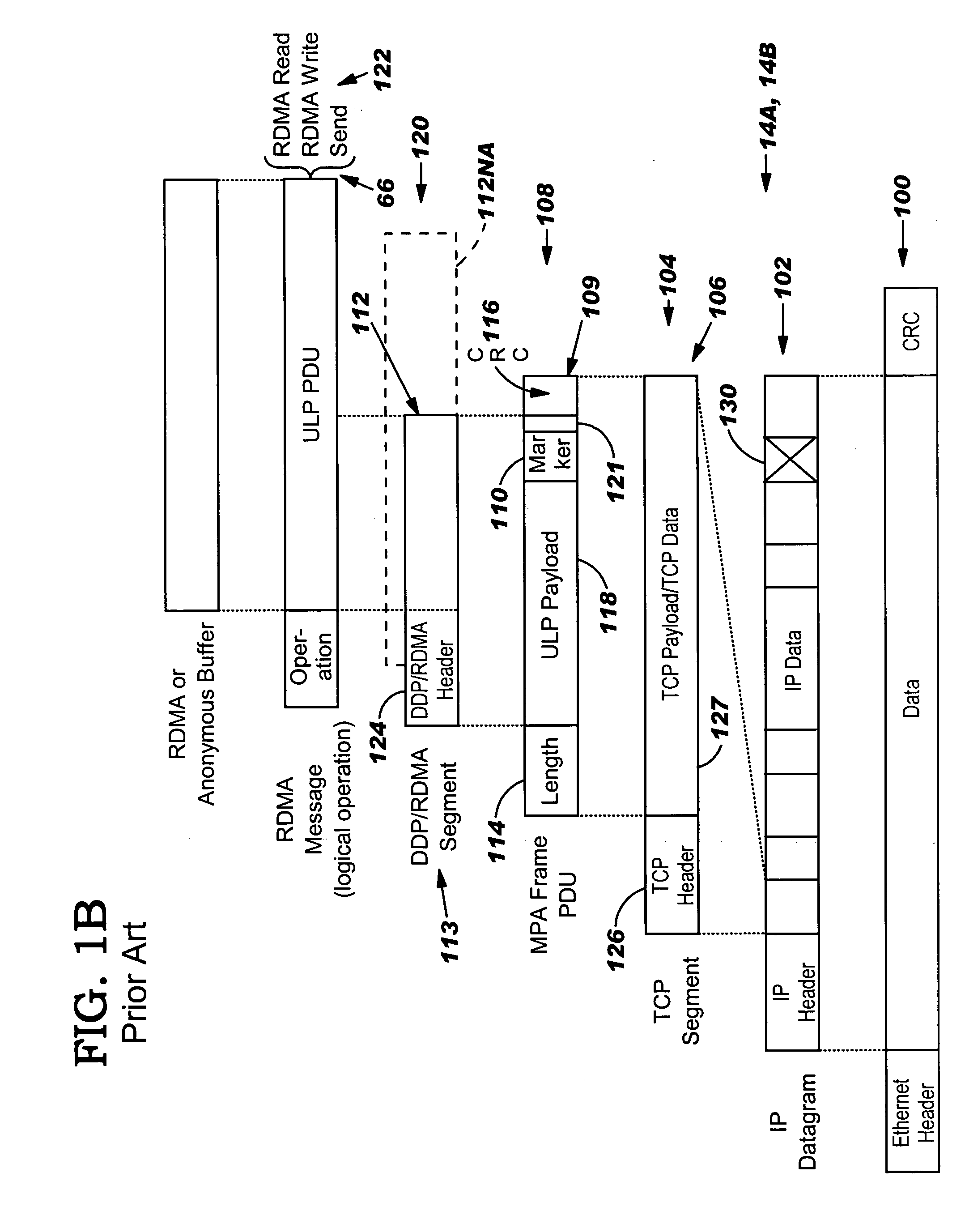

An RNIC implementation that performs direct data placement to memory where all segments of a particular connection are aligned, or moves data through reassembly buffers where all segments of a particular connection are non-aligned. The type of connection that cuts-through without accessing the reassembly buffers is referred to as a “Fast” connection because it is highly likely to be aligned, while the other type is referred to as a “Slow” connection. When a consumer establishes a connection, it specifies a connection type. The connection type can change from Fast to Slow and back. The invention reduces memory bandwidth, latency, error recovery using TCP retransmit and provides for a “graceful recovery” from an empty receive queue. The implementation also may conduct CRC validation for a majority of inbound DDP segments in the Fast connection before sending a TCP acknowledgement (Ack) confirming segment reception.

Owner:IBM CORP

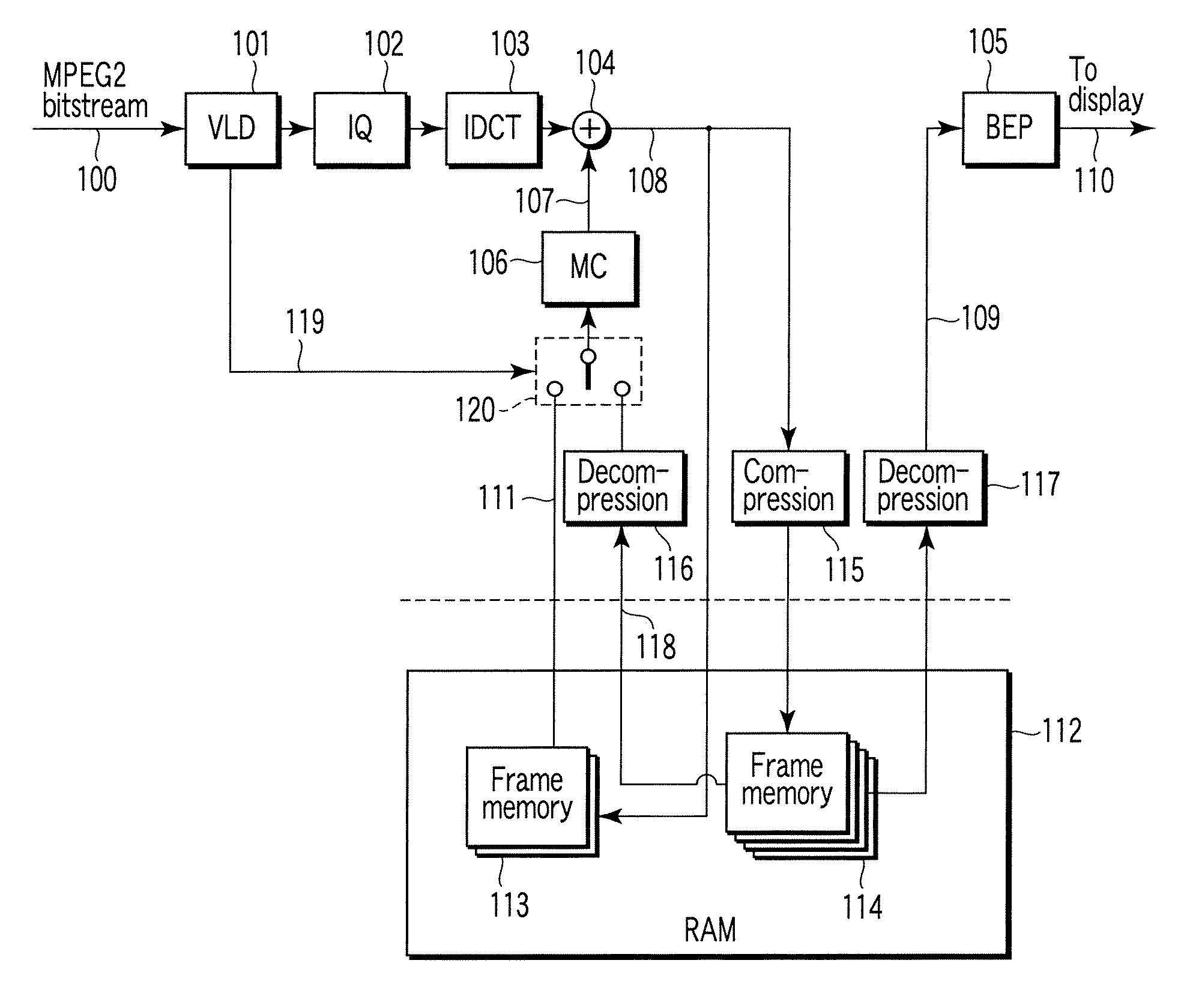

Video decoding method and apparatus

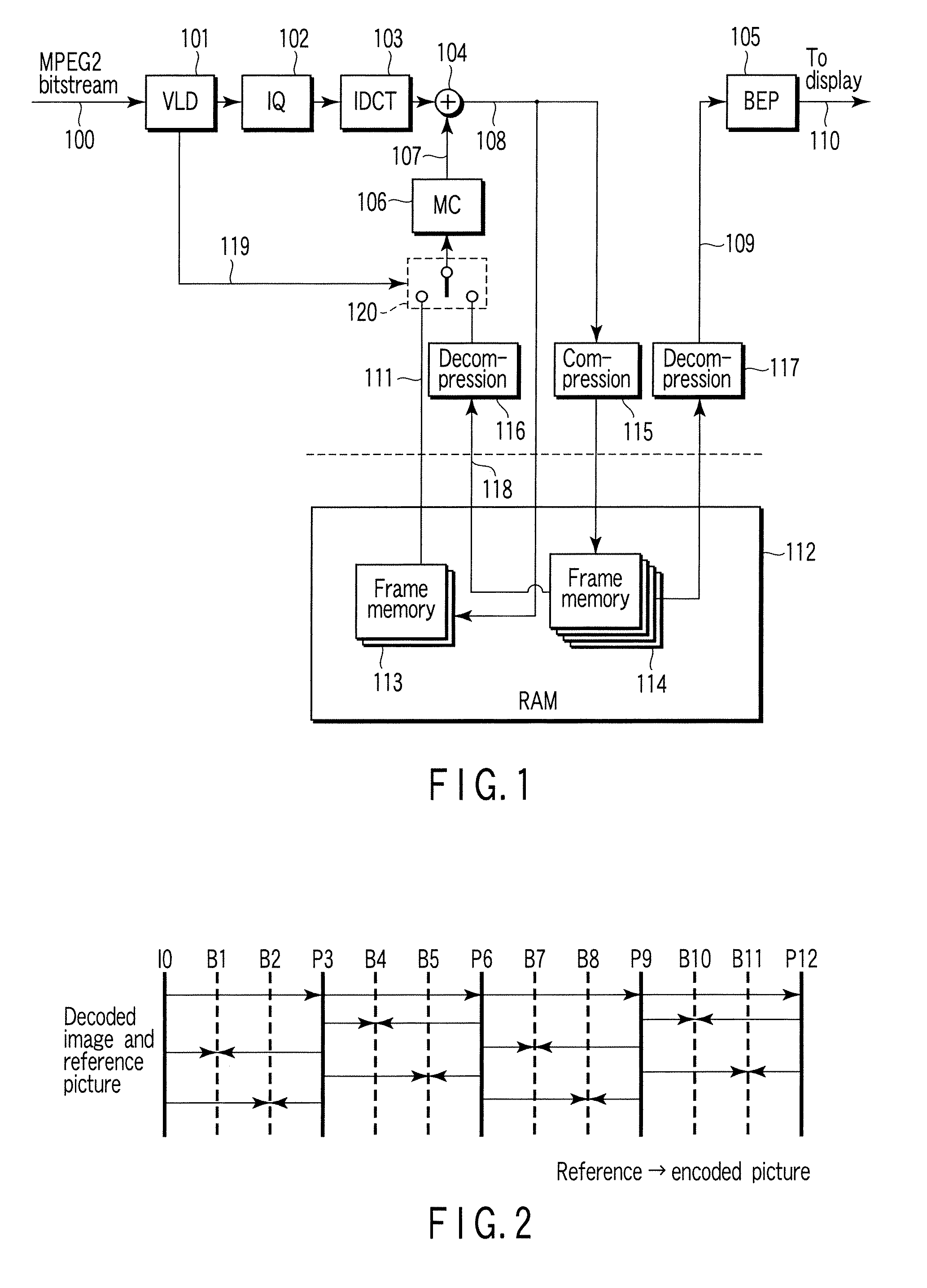

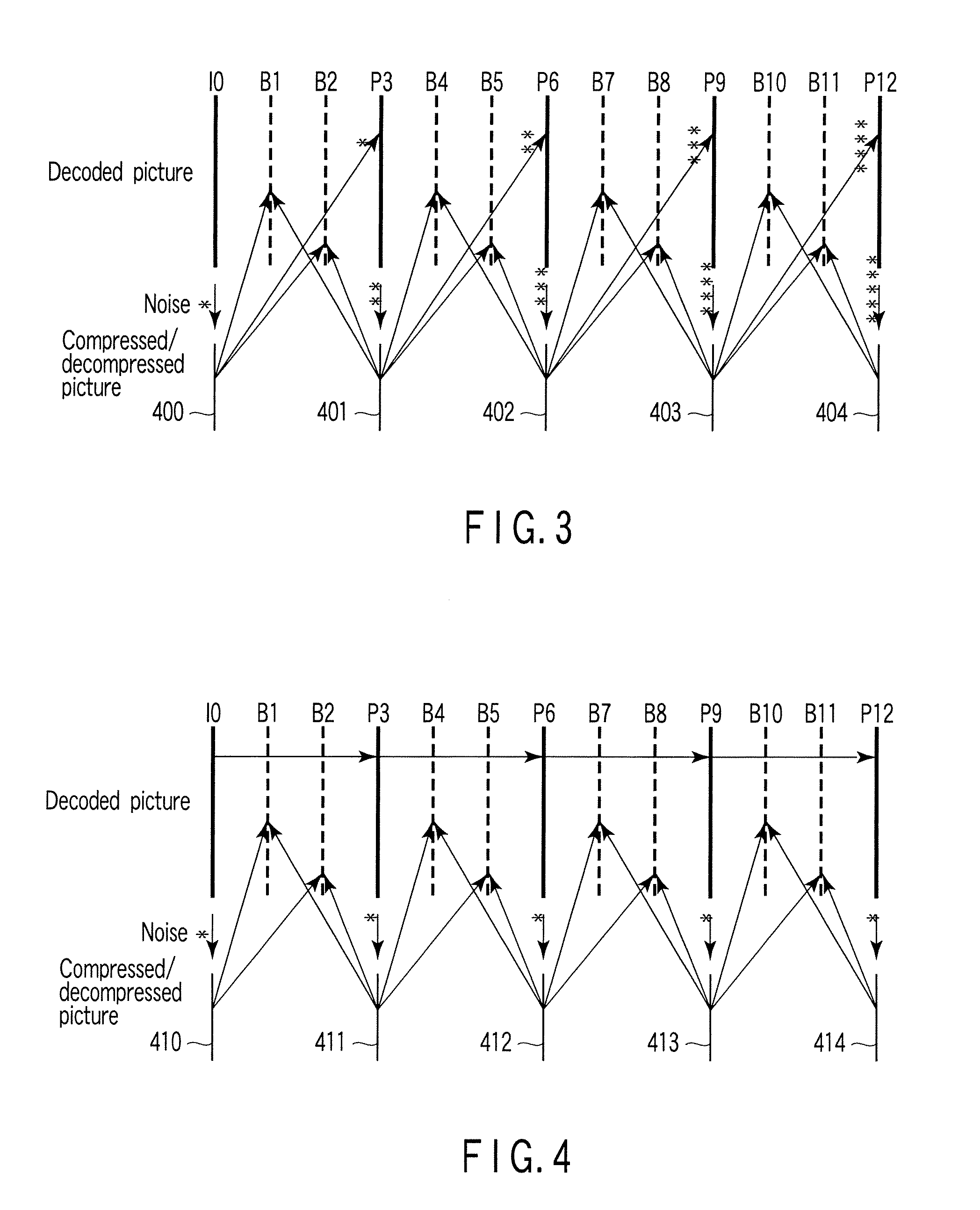

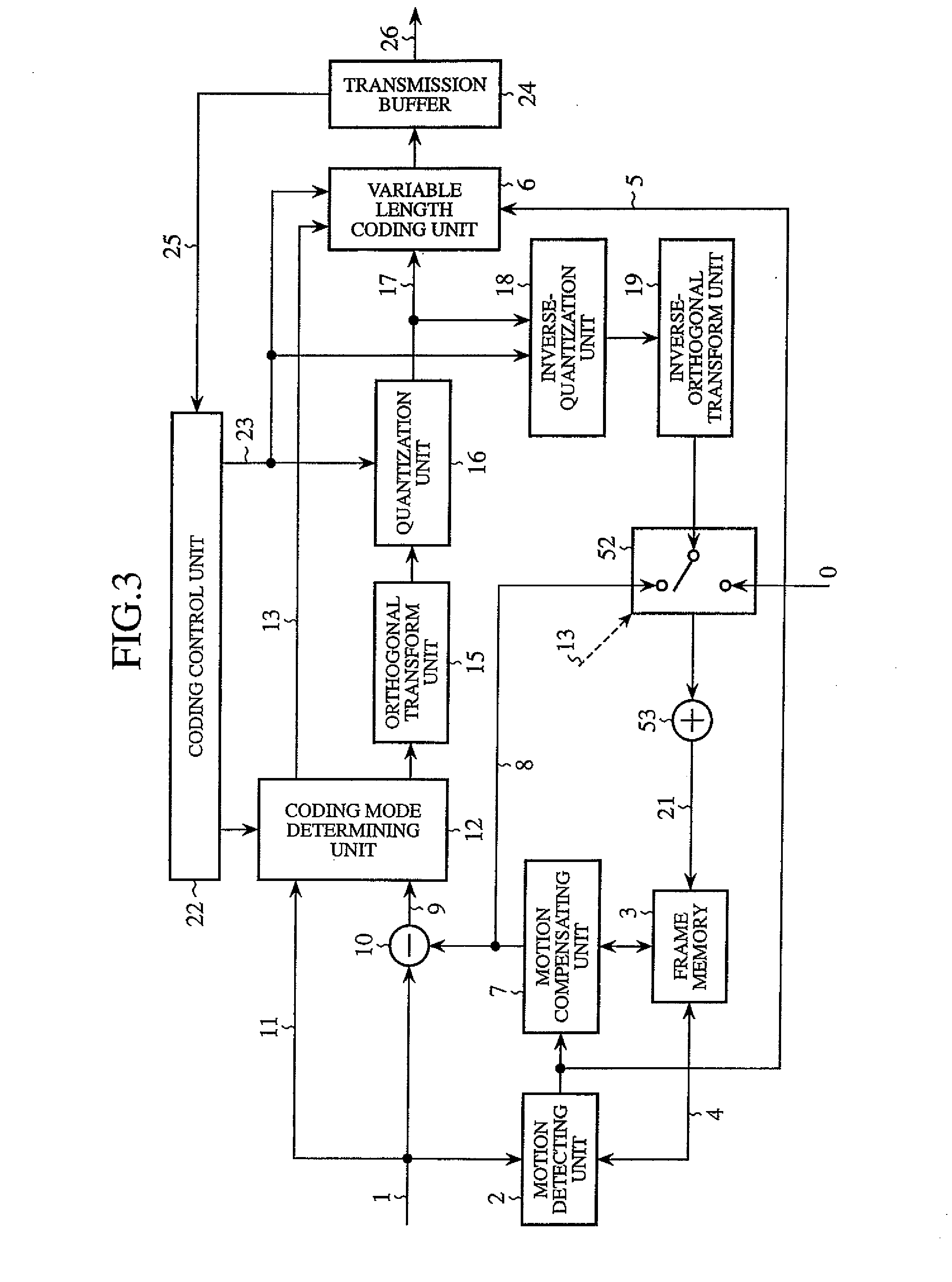

Inactive | US20070230572A1 | Reduce memory bandwidth | Color television with pulse code modulation | Color television with bandwidth reduction | Computer hardware | Compression device

A video decoding apparatus includes a decoder to decode video encoded data using a predictive picture signal for a video signal, a compression device compressing the decoded picture signal, a first memory storing the decoded picture signal, a second memory storing the compressed picture signal, a decompression device decompressing the compressed picture signal read from the second memory, a selector selecting one of a decoded picture signal read from the first memory and a compressed/decompressed picture signal from the compression device as a reference picture signal according to at least one of a coding type of the video encoded data in picture unit and a prediction mode in block unit, and a motion compensator performing motion compensation on the reference picture signal to generate a predictive picture signal.

Owner:KK TOSHIBA

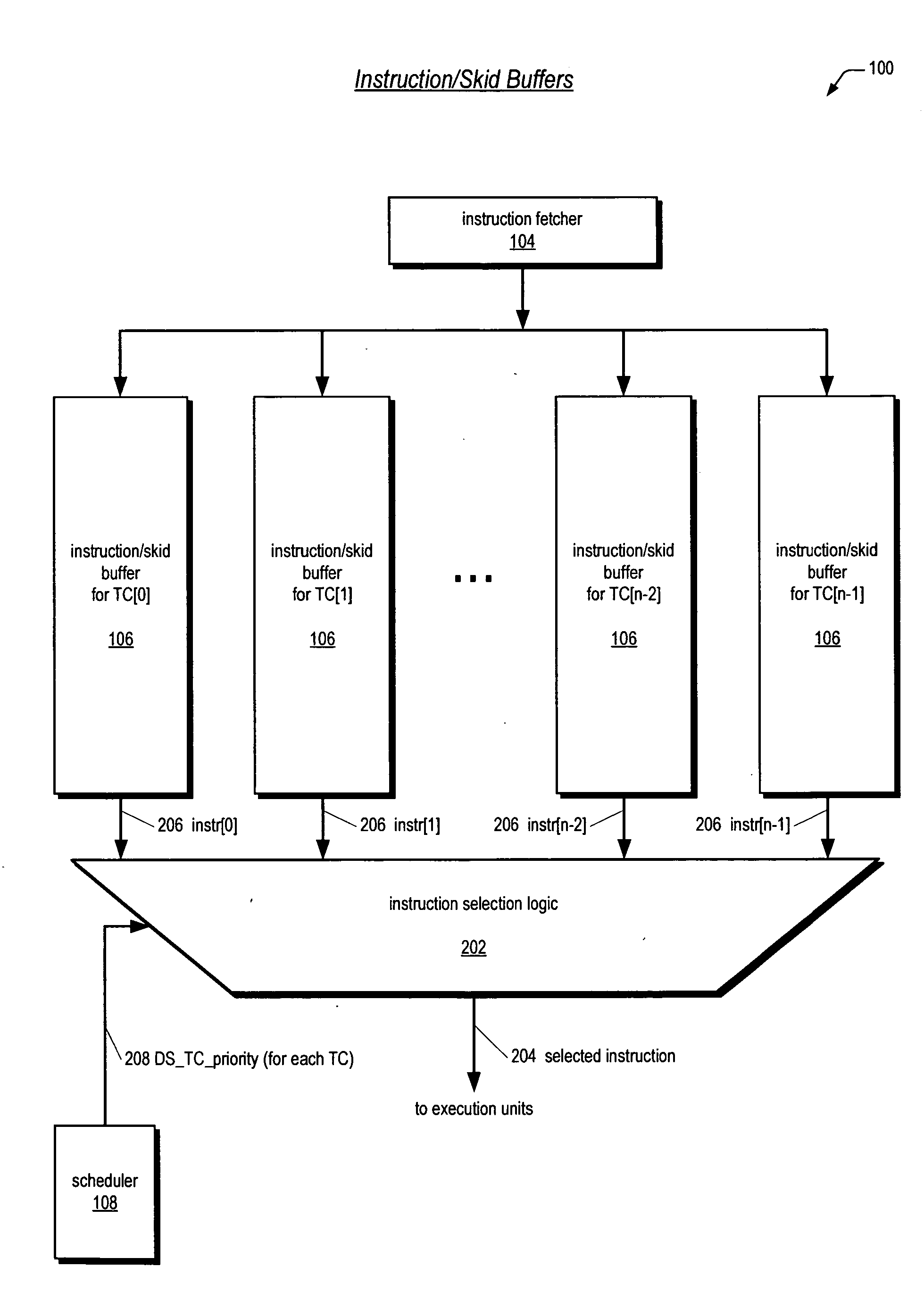

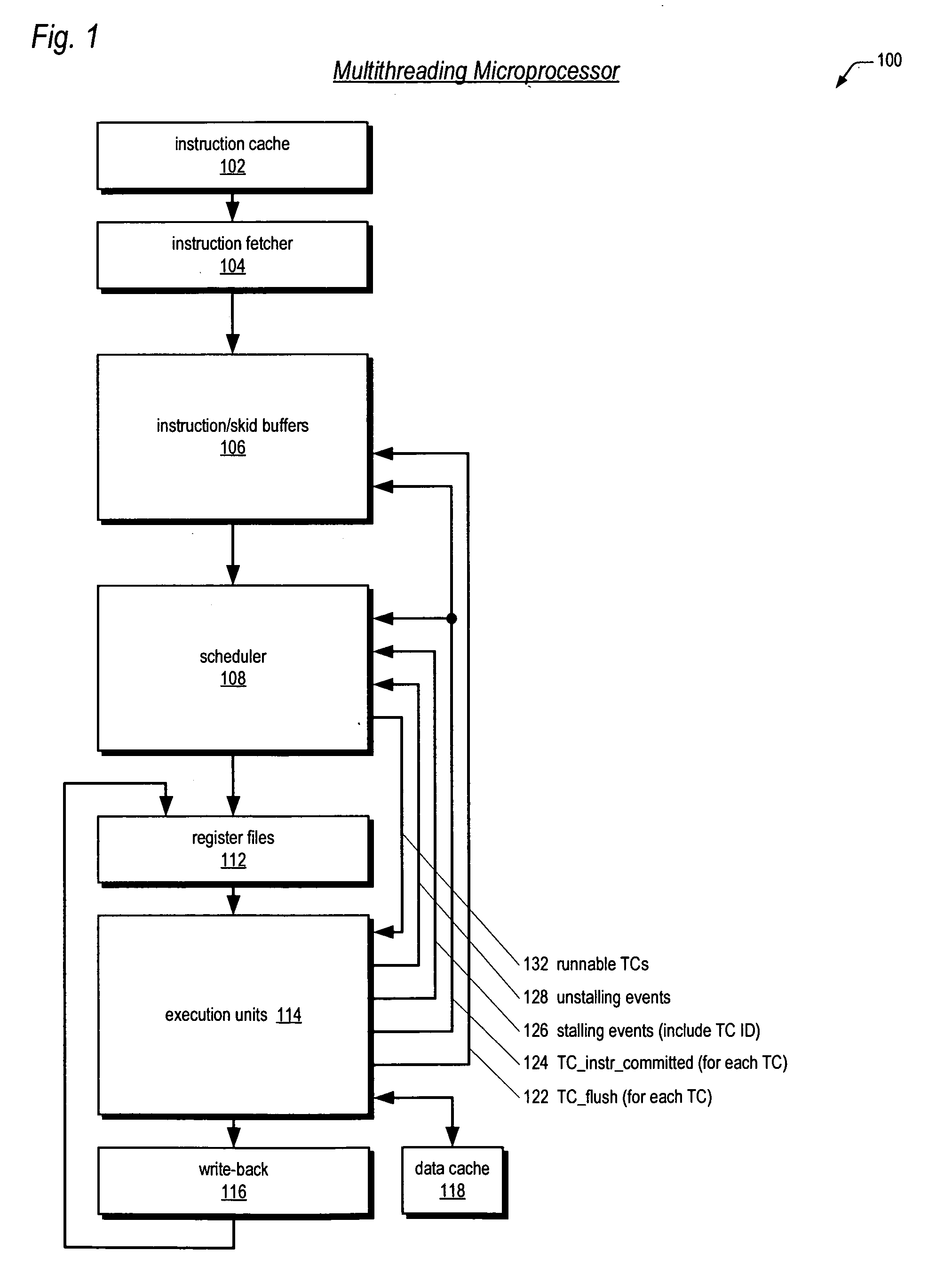

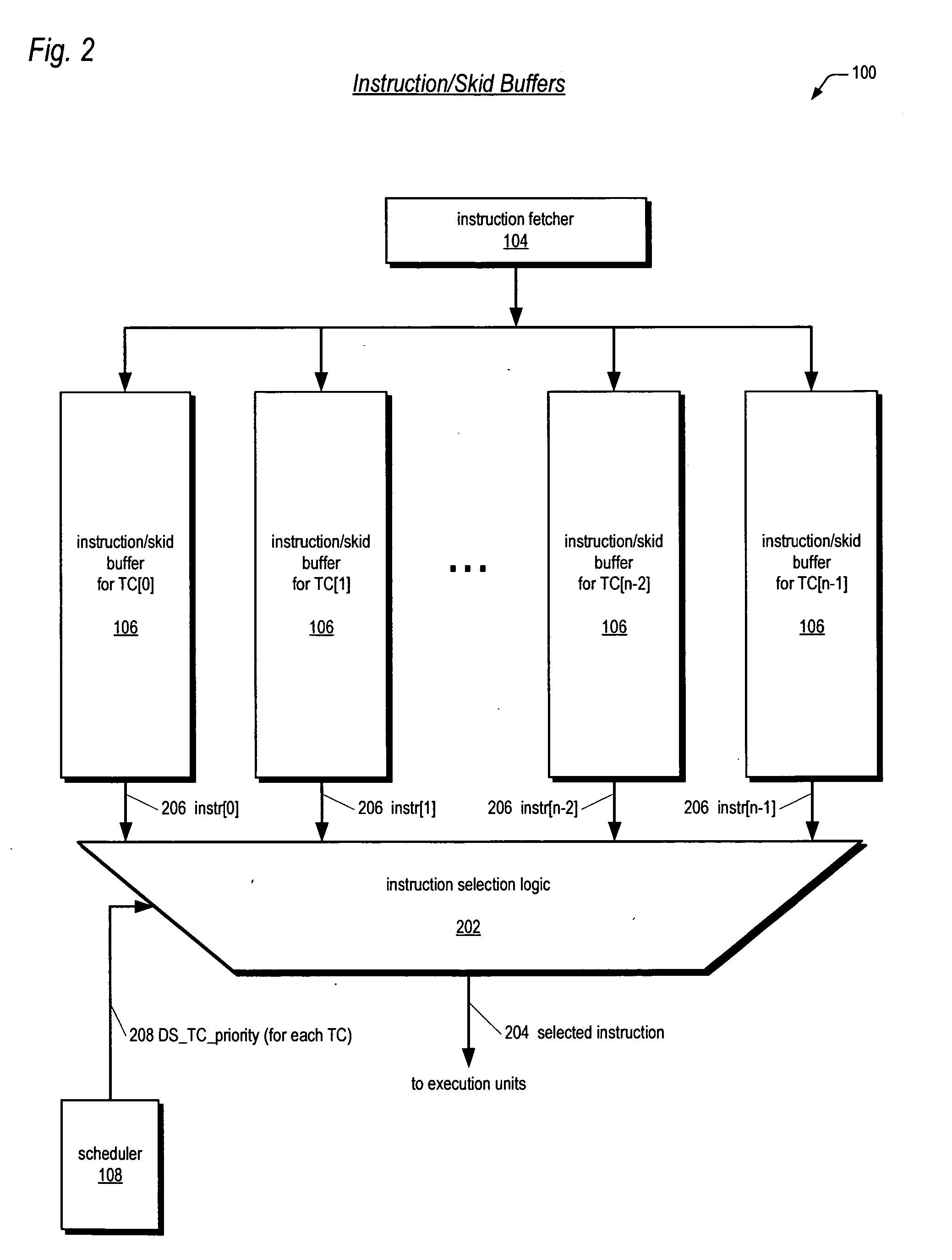

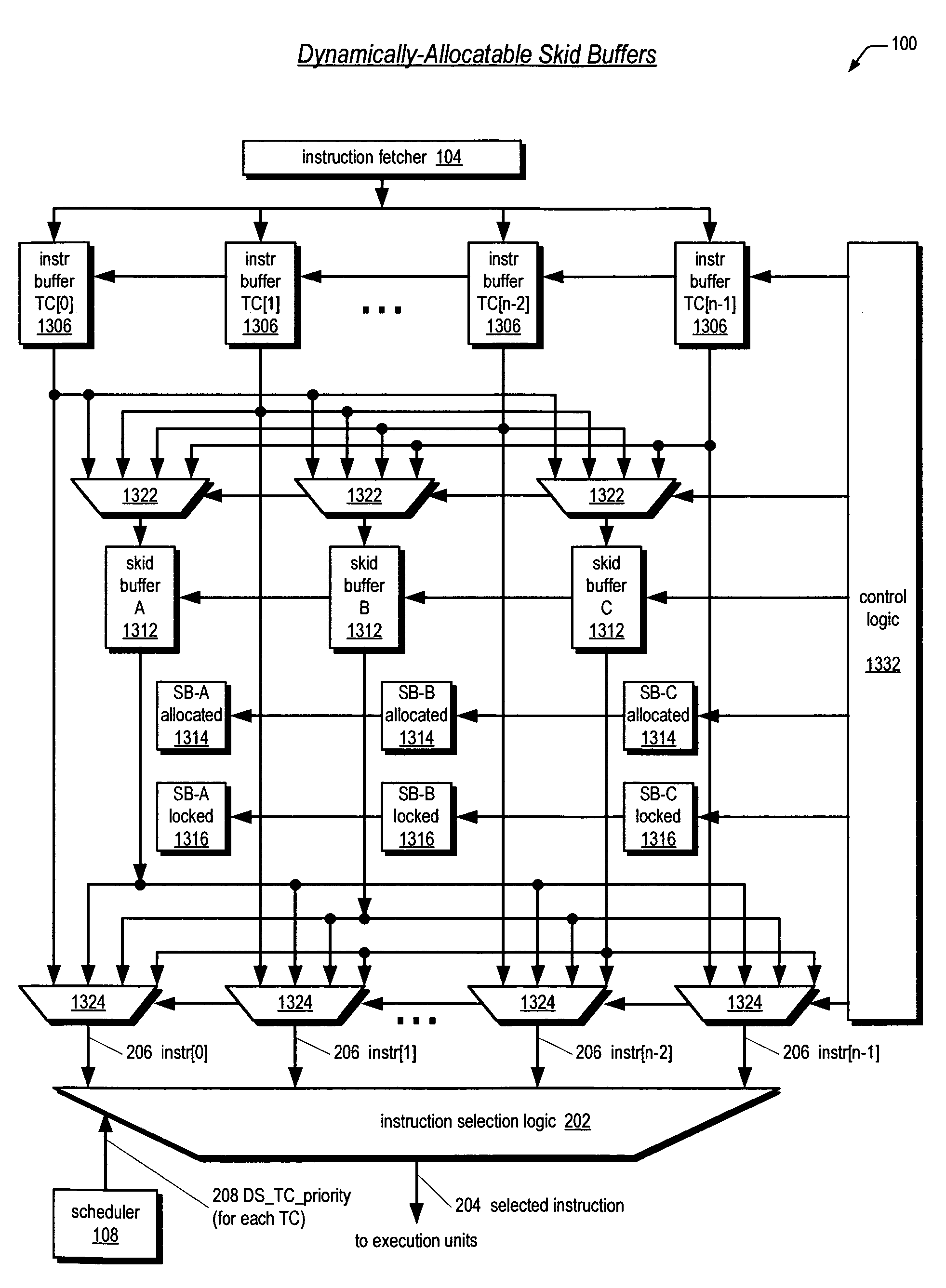

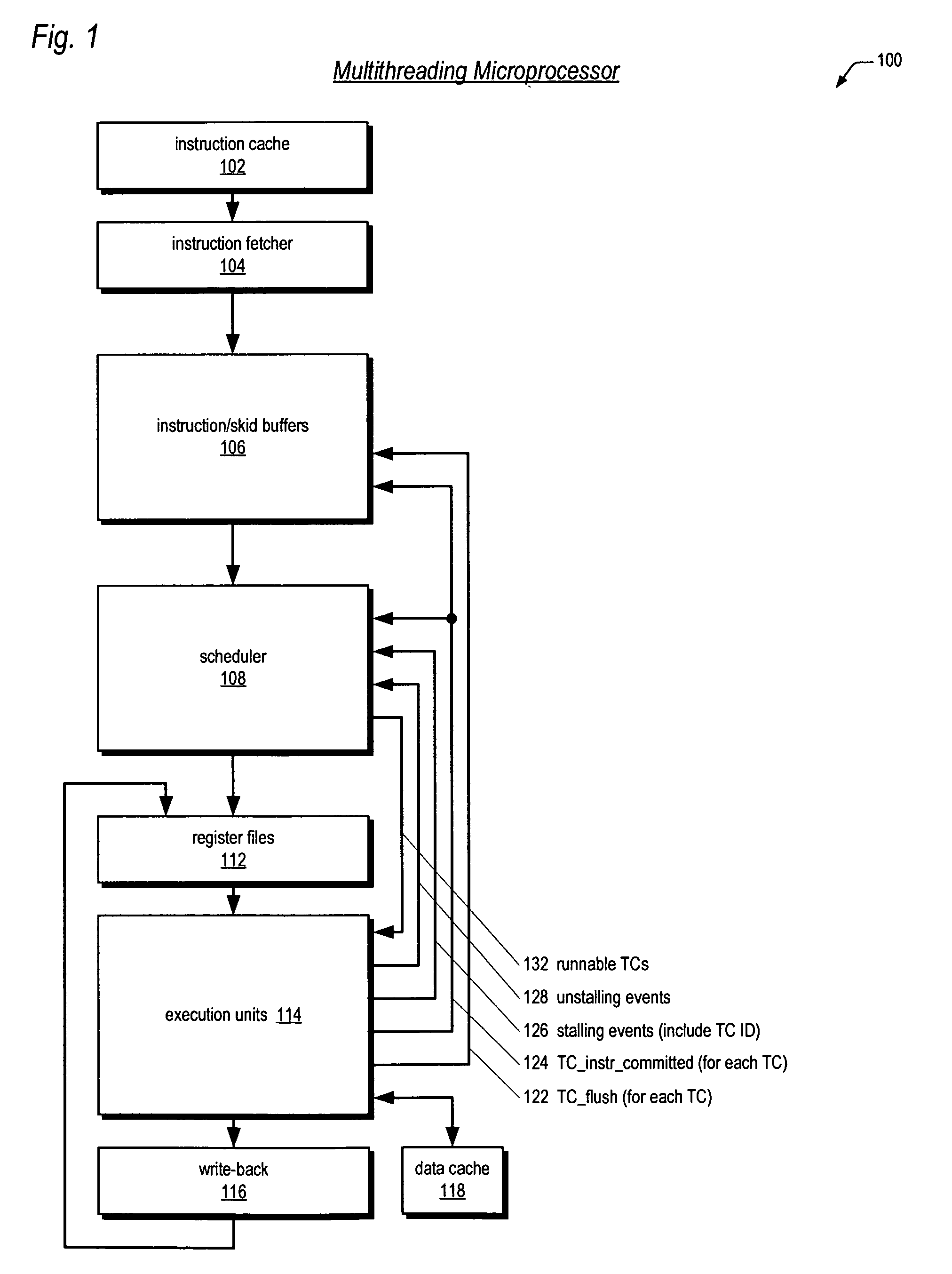

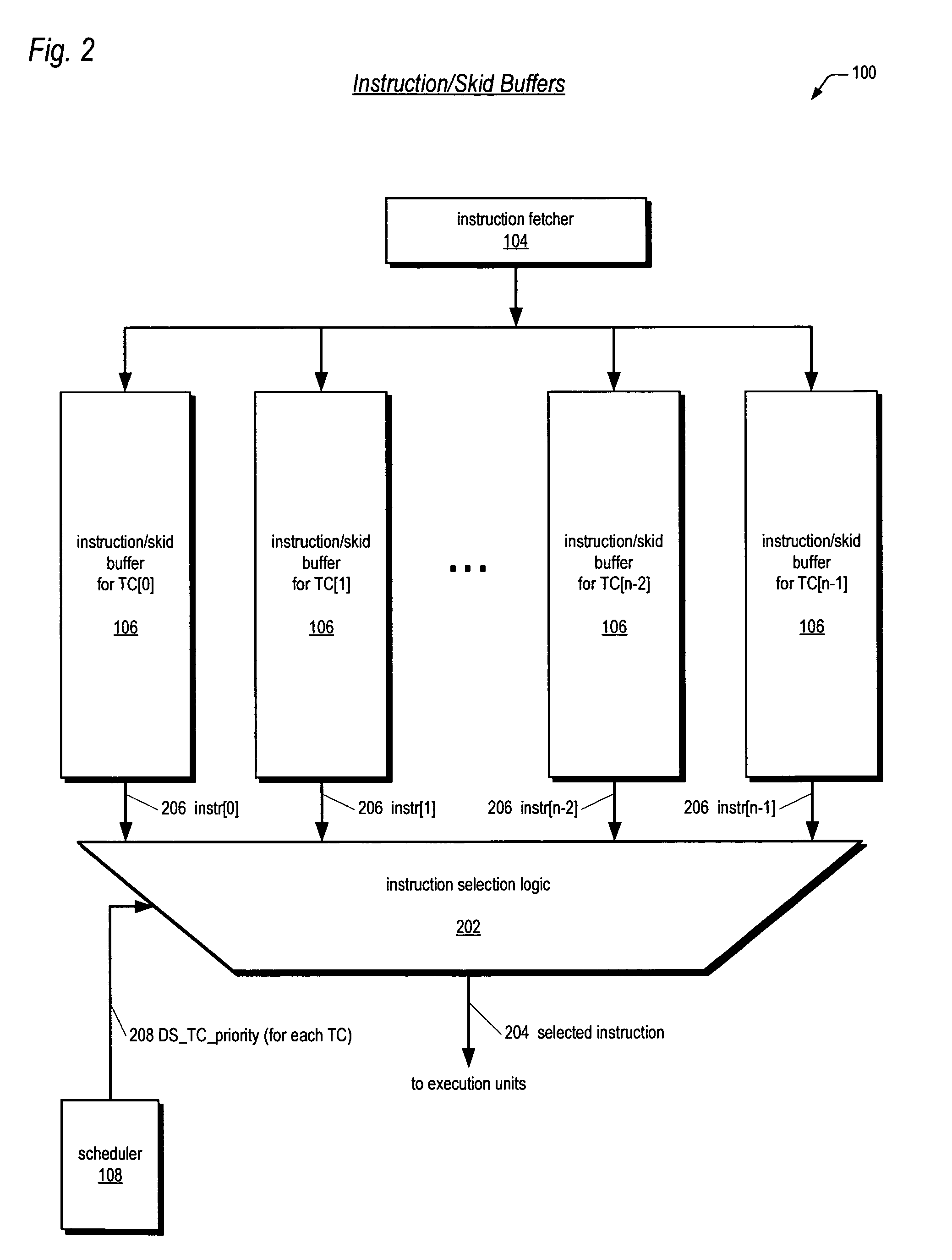

Instruction/skid buffers in a multithreading microprocessor

Active | US20060179274A1 | Reduce the amount required | Improve processor performance | Digital computer details | Memory systems | Parallel computing | Control logic

An apparatus for reducing instruction re-fetching in a multithreading processor configured to concurrently execute a plurality of threads is disclosed. The apparatus includes a buffer for each thread that stores fetched instructions of the thread, having an indicator for indicating which of the fetched instructions in the buffer have already been dispatched for execution. An input for each thread indicates that one or more of the already-dispatched instructions in the buffer has been flushed from execution. Control logic for each thread updates the indicator to indicate the flushed instructions are no longer already-dispatched, in response to the input. This enables the processor to re-dispatch the flushed instructions from the buffer to avoid re-fetching the flushed instructions. In one embodiment, there are fewer buffers than threads, and they are dynamically allocatable by the threads. In one embodiment, a single integrated buffer is shared by all the threads.

Owner:MIPS TECH INC
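
The per-thread buffer with a "dispatched" indicator described in the abstract above can be modeled in a few lines of Python. This is a simplified sketch under stated assumptions: the class `SkidBuffer` and its methods are hypothetical names, the indicator is modeled as a single index, and flushes are assumed to affect the most recently dispatched instructions.

```python
class SkidBuffer:
    """Per-thread buffer that keeps dispatched instructions so a flush can replay them."""

    def __init__(self):
        self.instructions = []  # fetched instructions in program order
        self.dispatched = 0     # indicator: entries before this index were dispatched
        self.fetch_count = 0    # how many fetches were needed (for illustration)

    def fetch(self, instr):
        self.instructions.append(instr)
        self.fetch_count += 1

    def dispatch(self):
        """Return the next not-yet-dispatched instruction, or None if there is none."""
        if self.dispatched == len(self.instructions):
            return None
        instr = self.instructions[self.dispatched]
        self.dispatched += 1
        return instr

    def flush(self, count):
        """Mark the last `count` dispatched instructions as no longer dispatched."""
        self.dispatched -= min(count, self.dispatched)
```

After a flush, the next `dispatch` call returns the flushed instruction straight from the buffer, and `fetch_count` does not increase, which mirrors the avoided re-fetch.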

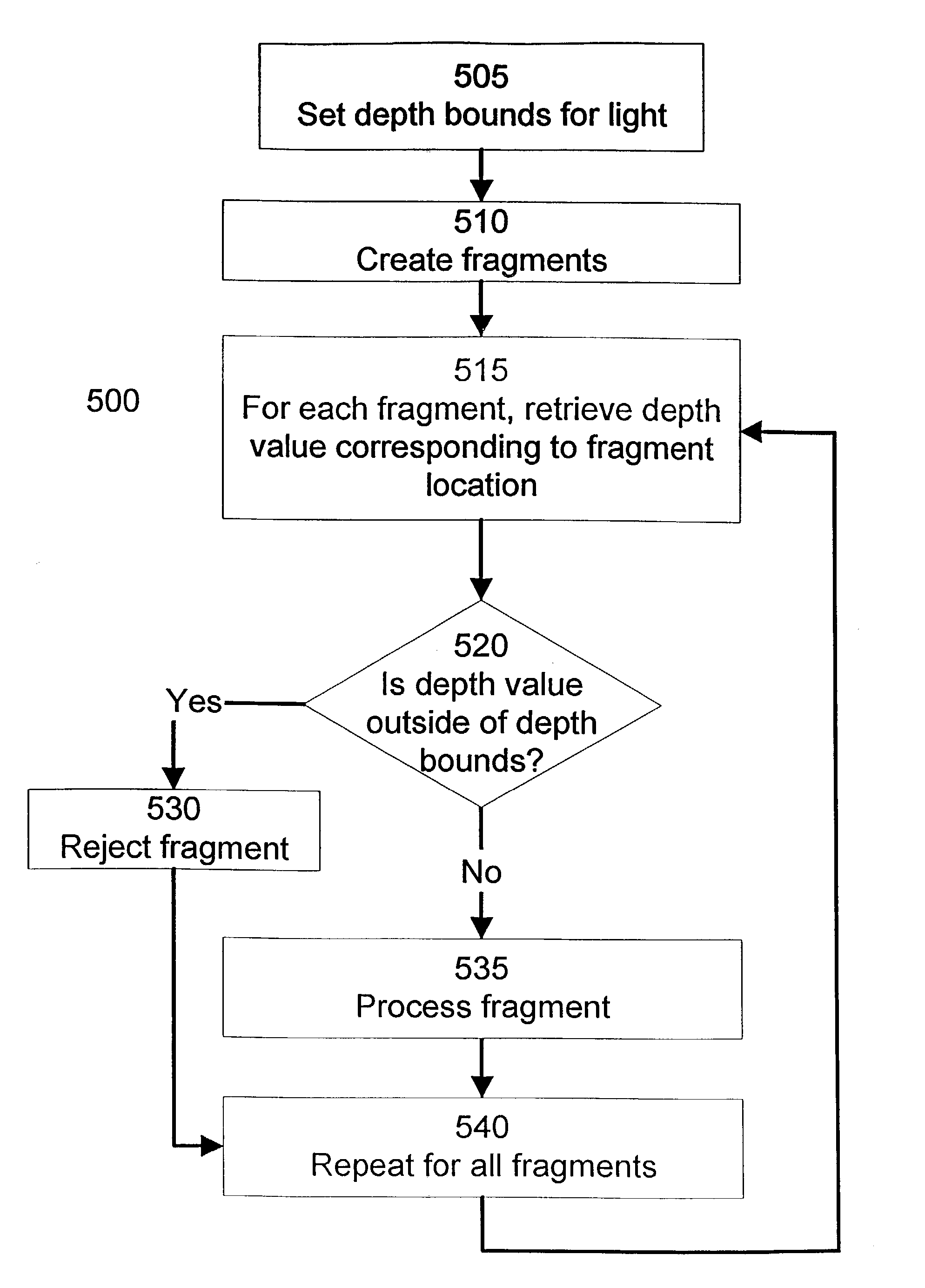

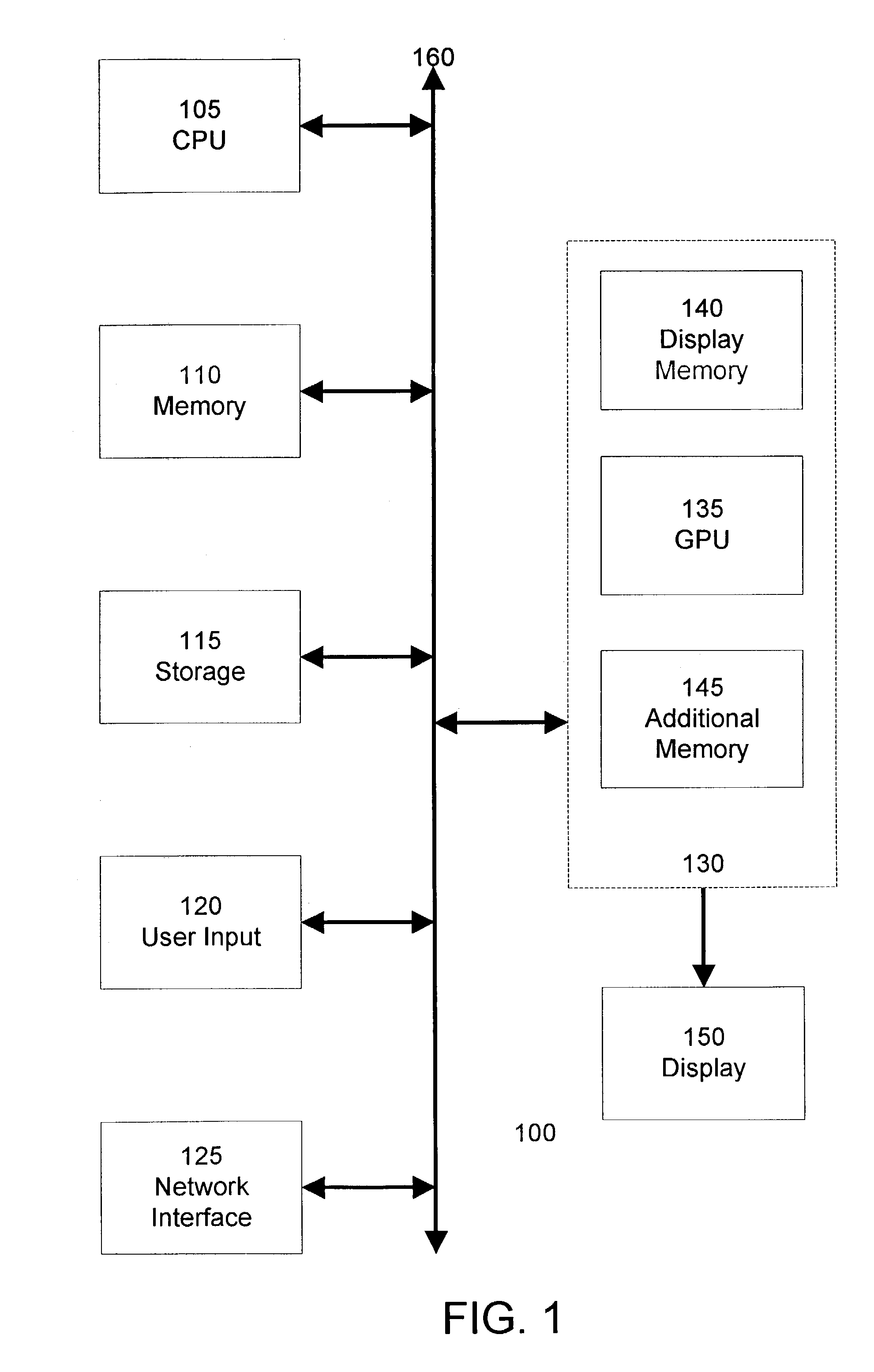

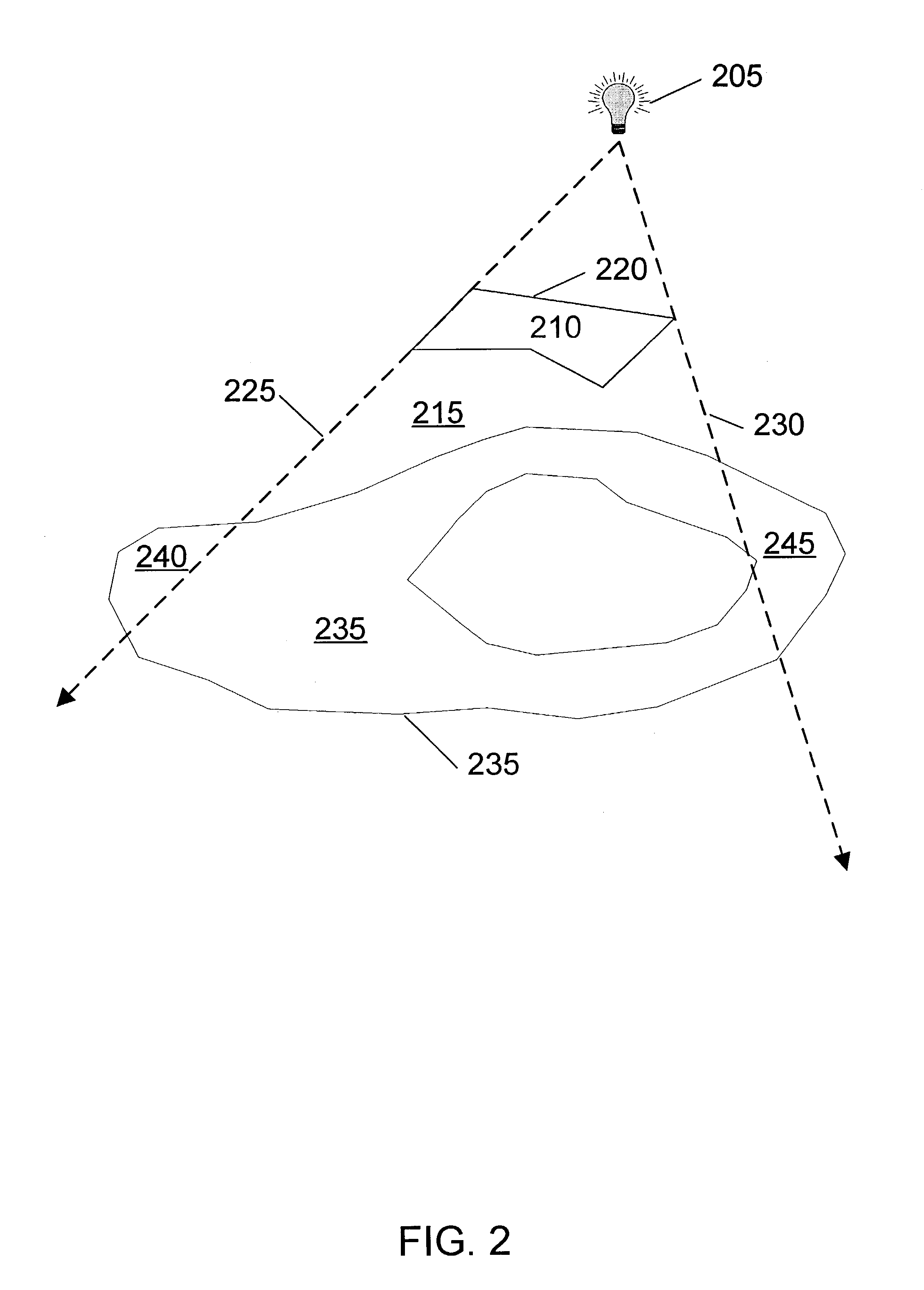

Depth bounds testing

Active | US7145565B2 | Reduce amount | Reducing memory bandwidth | 3D-image rendering | Graphics hardware | Stencil buffer

Lights can be conservatively bounded within a depth range. When image pixels are outside of a light's depth range, an associated volume fragment does not have to be rendered. Depth bounds registers can be used to store minimum and maximum depth values for a light. As graphics hardware processes volume fragments overlapping the image, the image's depth values are compared with the values in the depth bounds register. If the image's depth is outside of the depth range for the light, stencil buffer and illumination operations for this volume fragment are bypassed. This optimization can be performed on a per-pixel basis, or simultaneously on a group of adjacent pixels. The depth bounds are calculated from the light, or from the intersection of the volume with one or more other features. A rendering application uses API functions to set the depth bounds for each light and to activate depth bounds checking.

Owner:NVIDIA CORP
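
The per-pixel comparison described in the abstract above can be sketched as follows. The function names and the dictionary-based depth buffer are assumptions made for illustration; real hardware performs this test in fixed-function logic rather than in application code.

```python
def depth_bounds_pass(stored_depth, z_min, z_max):
    """True if the depth already in the image lies inside the light's depth range."""
    return z_min <= stored_depth <= z_max


def fragments_to_process(depth_buffer, fragments, z_min, z_max):
    """Return the light-volume fragments that still need stencil and lighting work.

    depth_buffer: dict mapping (x, y) -> depth value already stored for the image
    fragments: iterable of (x, y) pixel positions covered by the light volume
    """
    kept = []
    for xy in fragments:
        if depth_bounds_pass(depth_buffer[xy], z_min, z_max):
            kept.append(xy)
        # Fragments outside the range are skipped: no stencil or illumination work.
    return kept
```

Note that the test uses the depth value already stored in the image at that pixel, not the depth of the incoming volume fragment.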

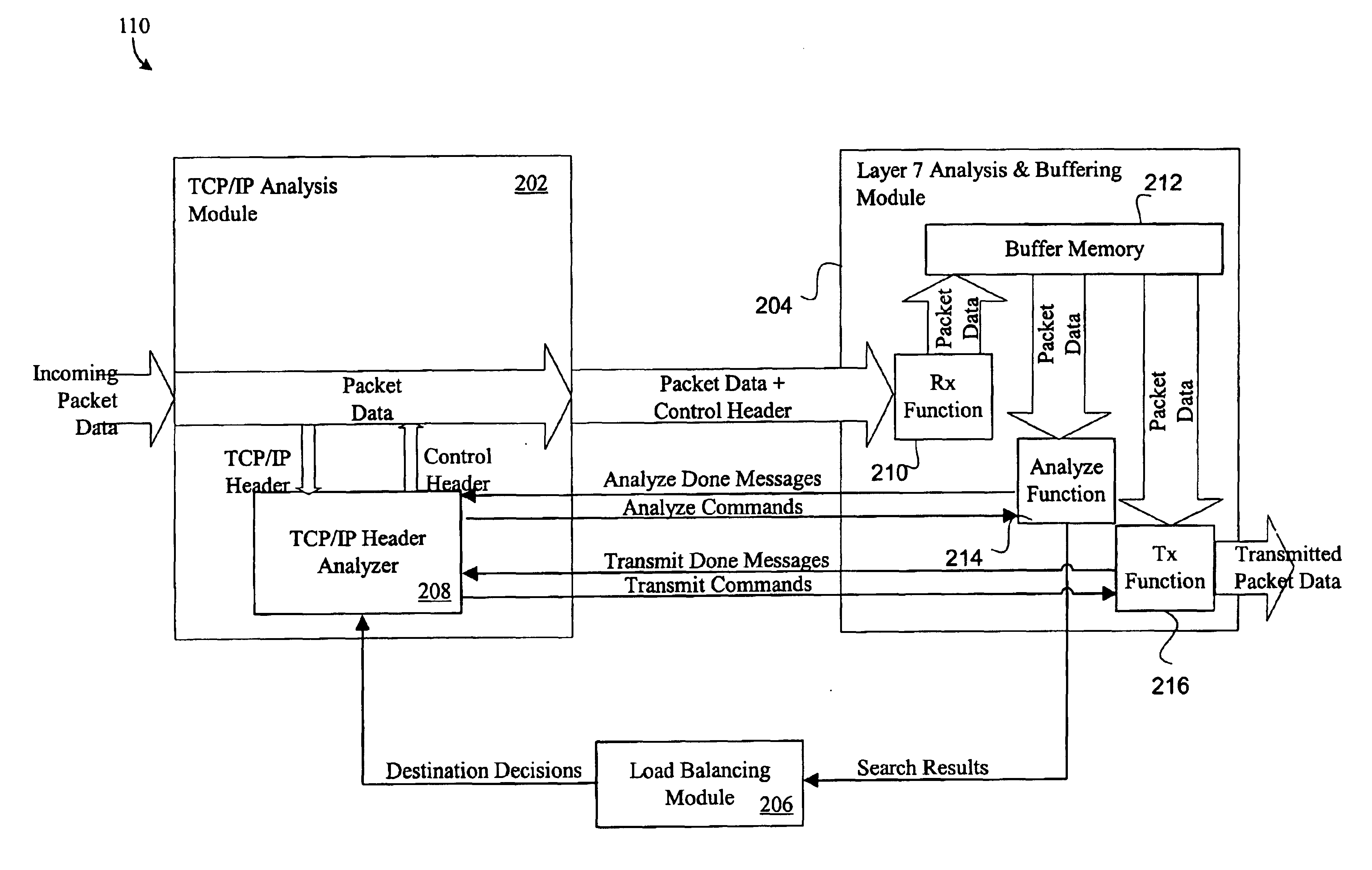

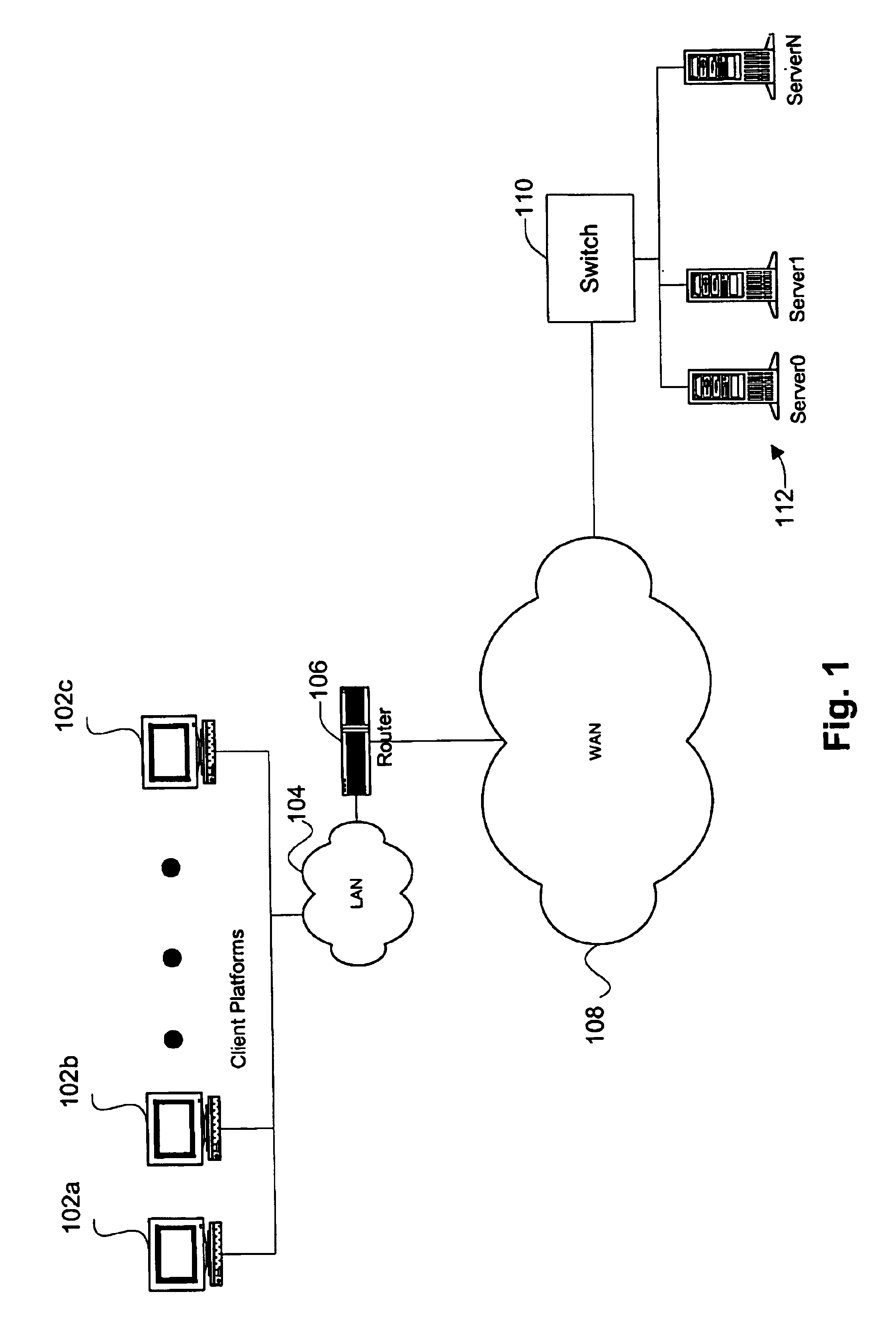

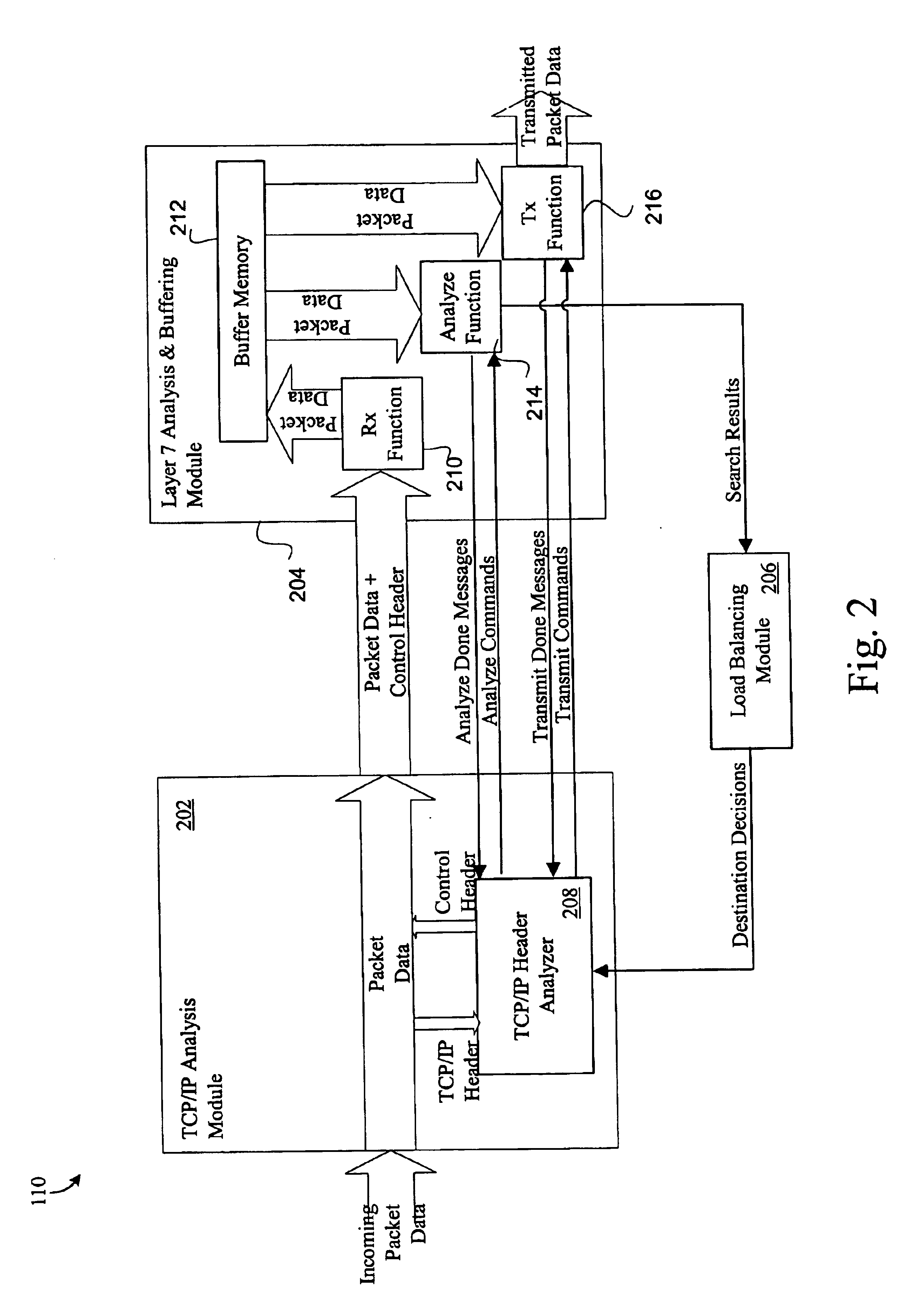

Packet data analysis with efficient buffering scheme

Inactive | US6882654B1 | Improve performance | Optimization mechanism | Time-division multiplex | Data switching by path configuration | Data buffer | Real-time computing

Disclosed is an apparatus employing an efficient buffering scheme for analyzing the Layer 7 content in packet data sent from a first node to a second node within a computer network. The apparatus includes a first device having a buffer and one or more first processors. The apparatus also includes a second device having one or more second processors. The first device is a physically separate device from the second device. The second processor of the second device is configured to manage the buffer of the first device, and the first processor is also configured to analyze packet data accessed from the buffer.

Owner:CISCO TECH INC

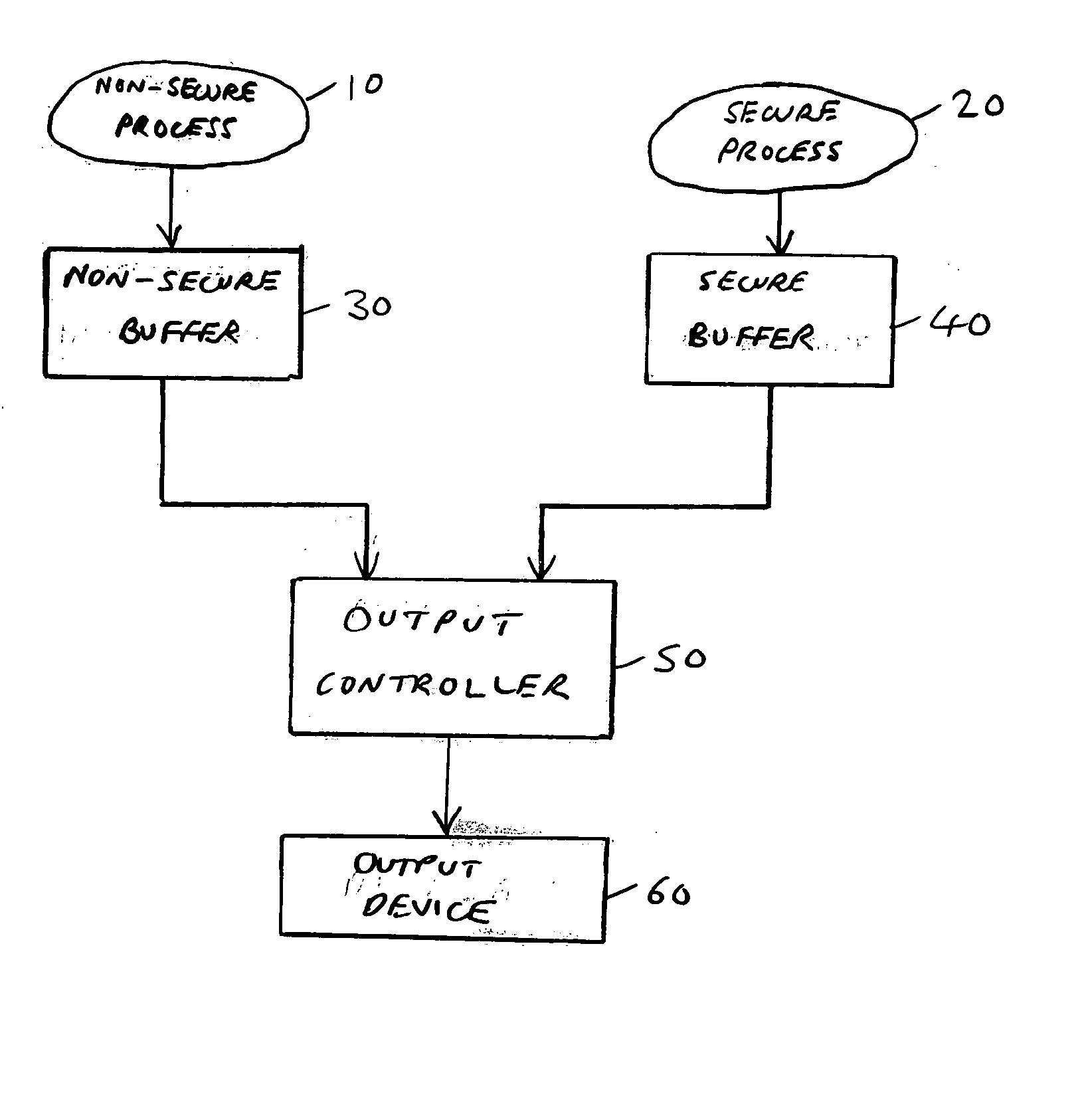

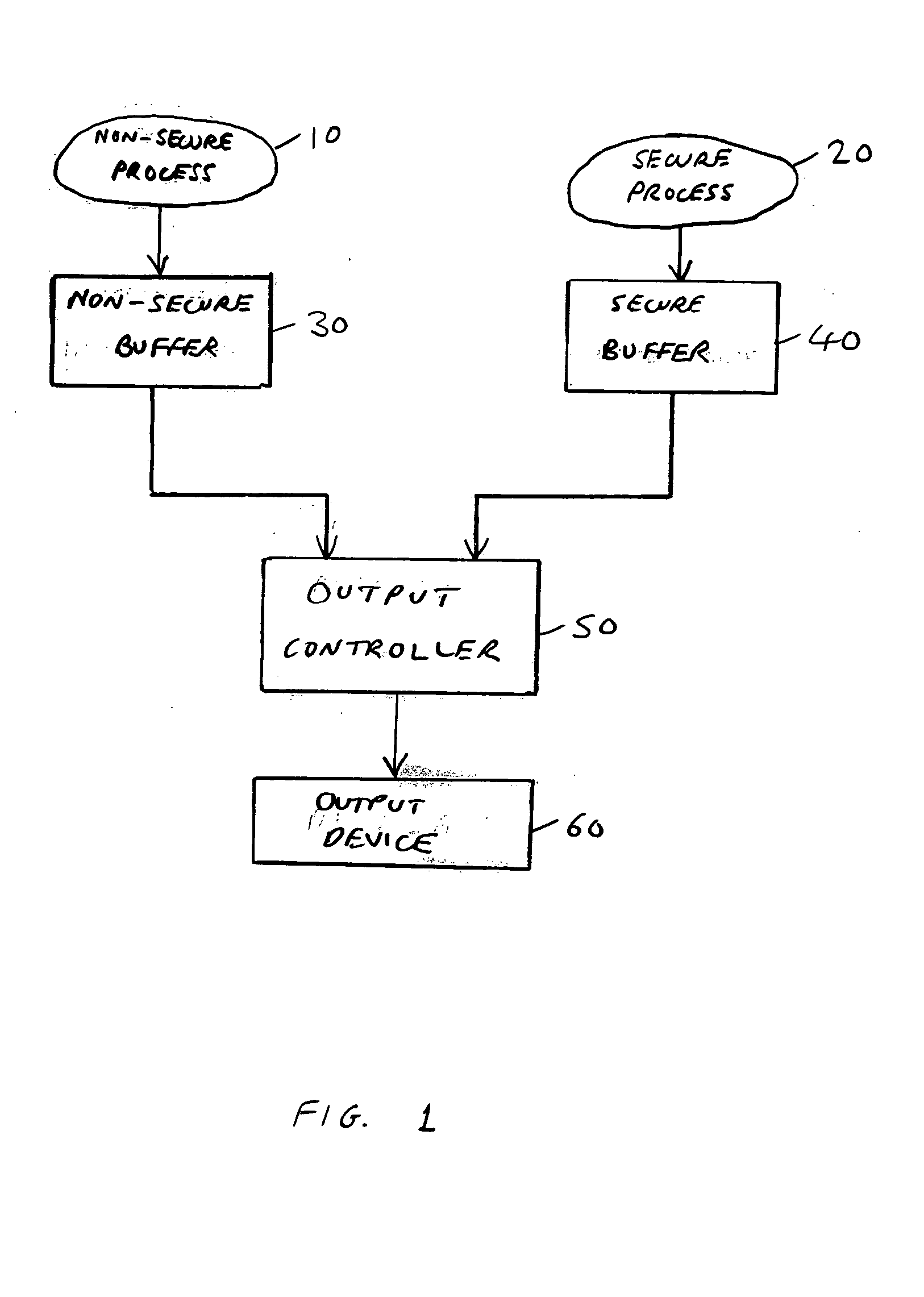

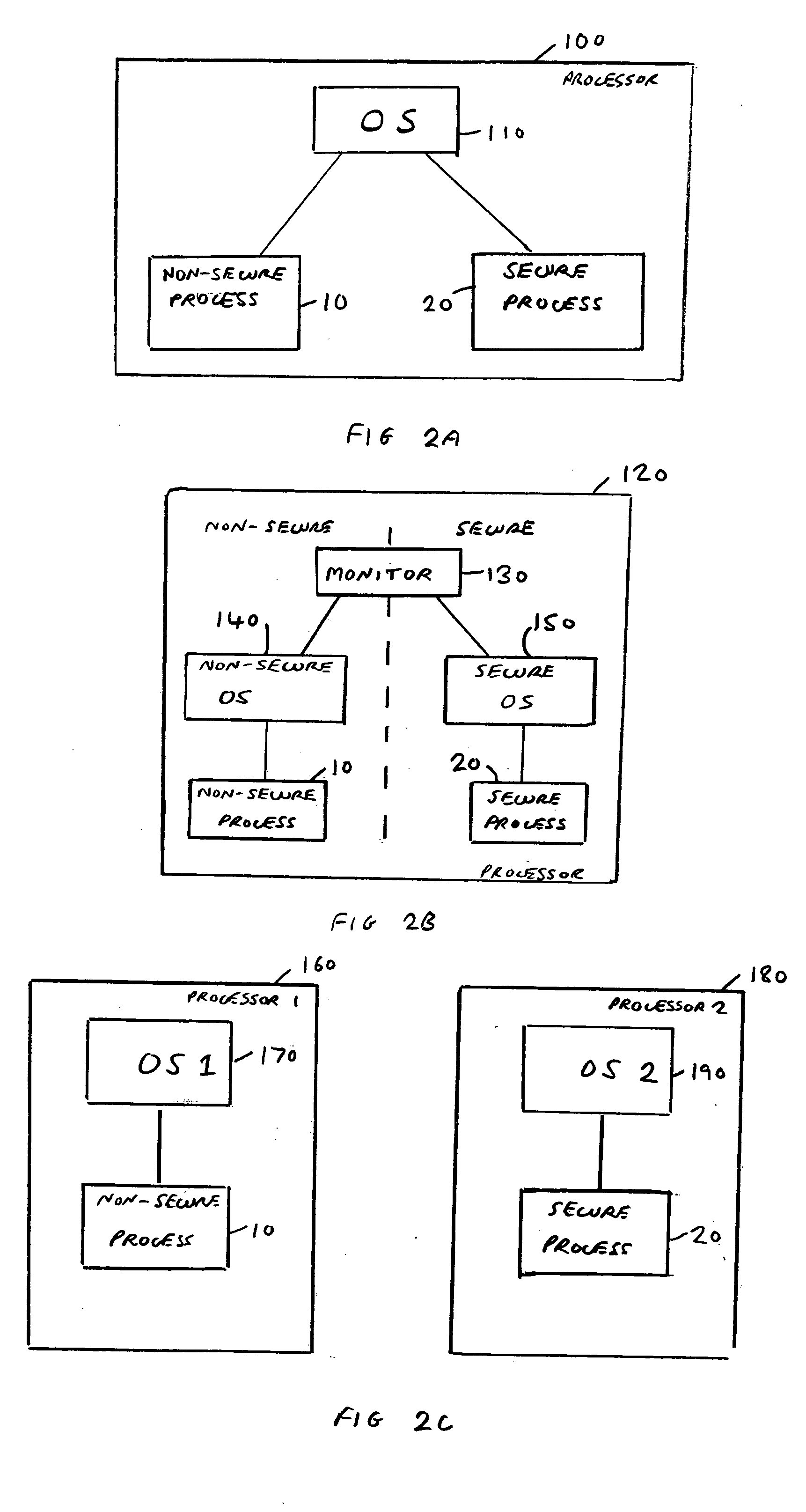

Data processing apparatus and method for merging secure and non-secure data into an output data stream

Active | US20050097341A1 | Reduce memory bandwidth | Reduce processing requirements | Digital data processing details | User identity/authority verification | Data stream | Memory bandwidth

The present invention provides a data processing apparatus and method for merging secure and non-secure data. The apparatus comprises at least one processor operable to execute a non-secure process to produce non-secure data to be included in an output data stream, and to execute a secure process to produce secure data to be included in the output data stream. A non-secure buffer is provided for receiving the non-secure data produced by the non-secure process, and in addition a secure buffer is provided for receiving the secure data produced by the secure process, the secure buffer not being accessible by the non-secure process. An output controller is then arranged to read the non-secure data from the non-secure buffer and the secure data from the secure buffer, and to merge the non-secure data and the secure data in order to produce a combined data stream, the output data stream then being derivable from the combined data stream. It has been found that such an approach assists in improving the security of the secure data, and in reducing memory bandwidth requirements and the processing requirements of the processor.

Owner:ARM LTD

Instruction/skid buffers in a multithreading microprocessor that store dispatched instructions to avoid re-fetching flushed instructions

Active | US7853777B2 | Reduce the amount required | Penalty associated with flushing instructions is reduced | Digital computer details | Memory systems | Operating system | Control logic

An apparatus for reducing instruction re-fetching in a multithreading processor configured to concurrently execute a plurality of threads is disclosed. The apparatus includes a buffer for each thread that stores fetched instructions of the thread, having an indicator for indicating which of the fetched instructions in the buffer have already been dispatched for execution. An input for each thread indicates that one or more of the already-dispatched instructions in the buffer has been flushed from execution. Control logic for each thread updates the indicator to indicate the flushed instructions are no longer already-dispatched, in response to the input. This enables the processor to re-dispatch the flushed instructions from the buffer to avoid re-fetching the flushed instructions. In one embodiment, there are fewer buffers than threads, and they are dynamically allocatable by the threads. In one embodiment, a single integrated buffer is shared by all the threads.

Owner:MIPS TECH INC

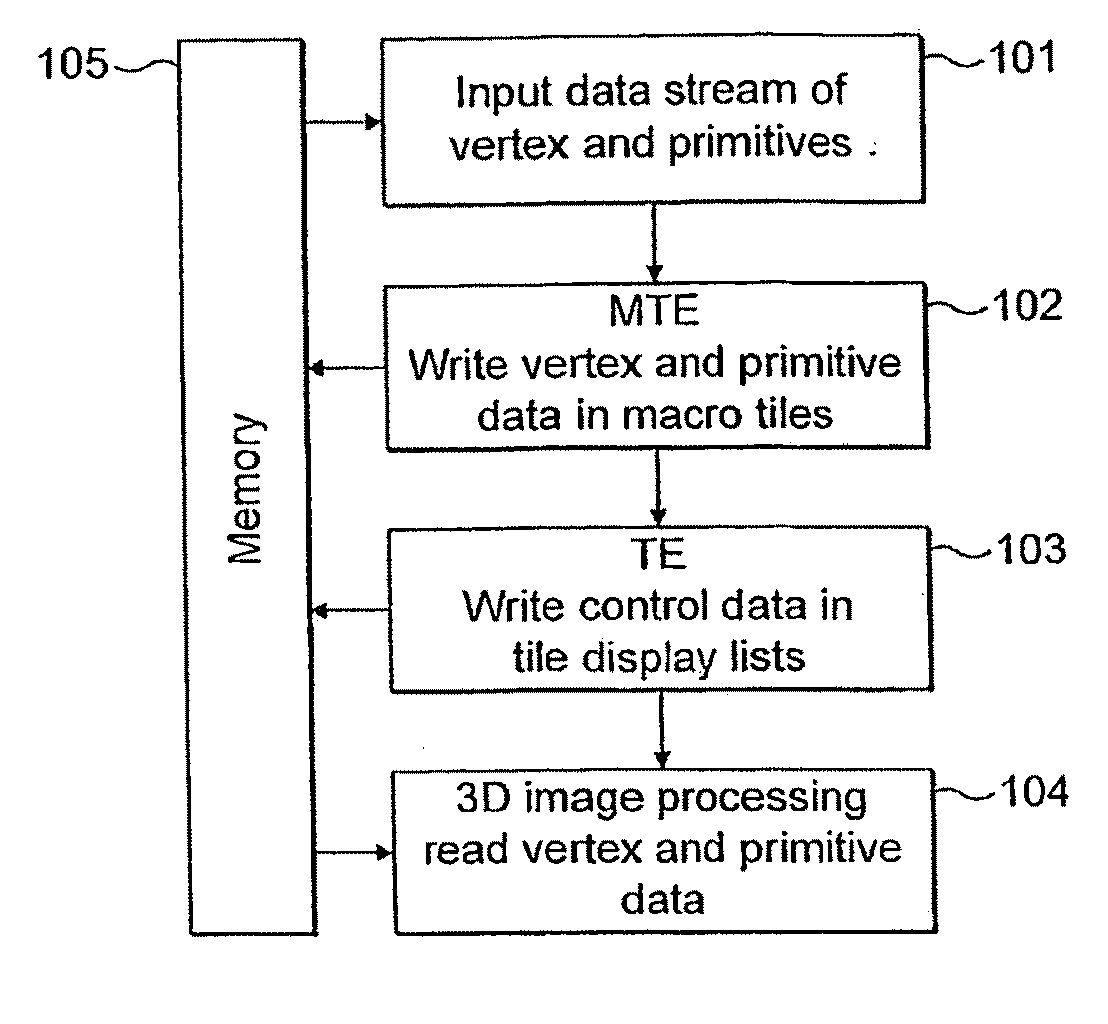

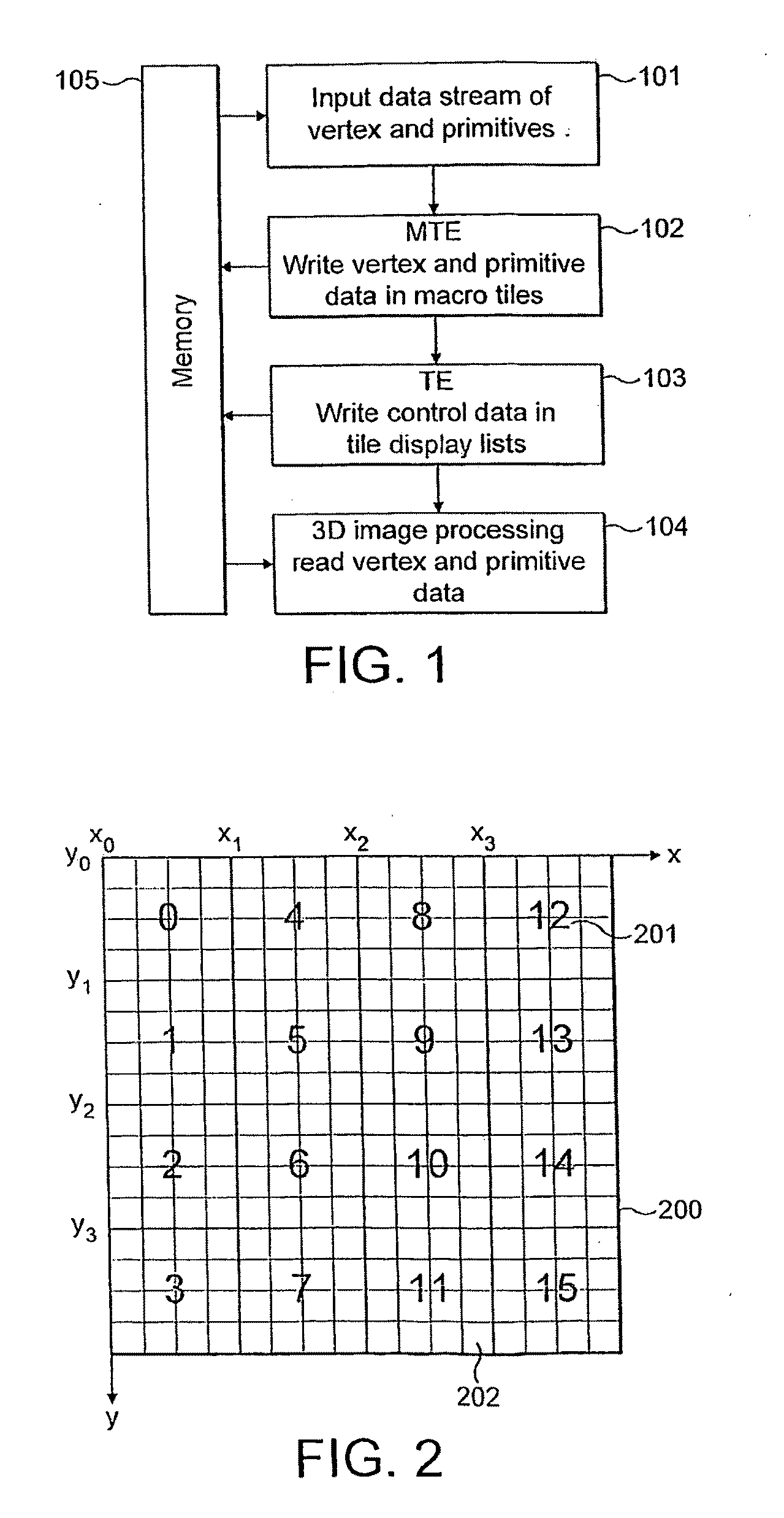

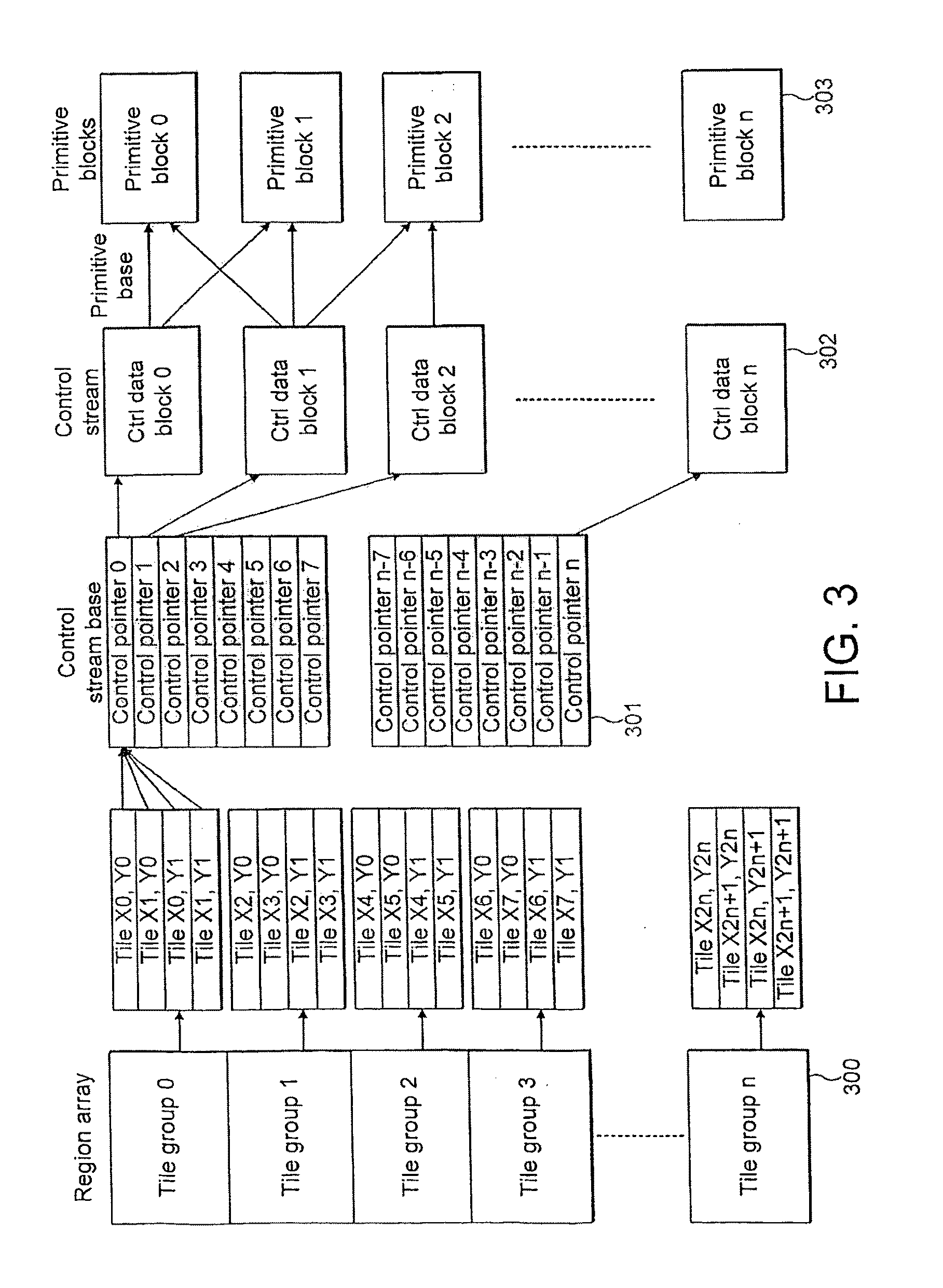

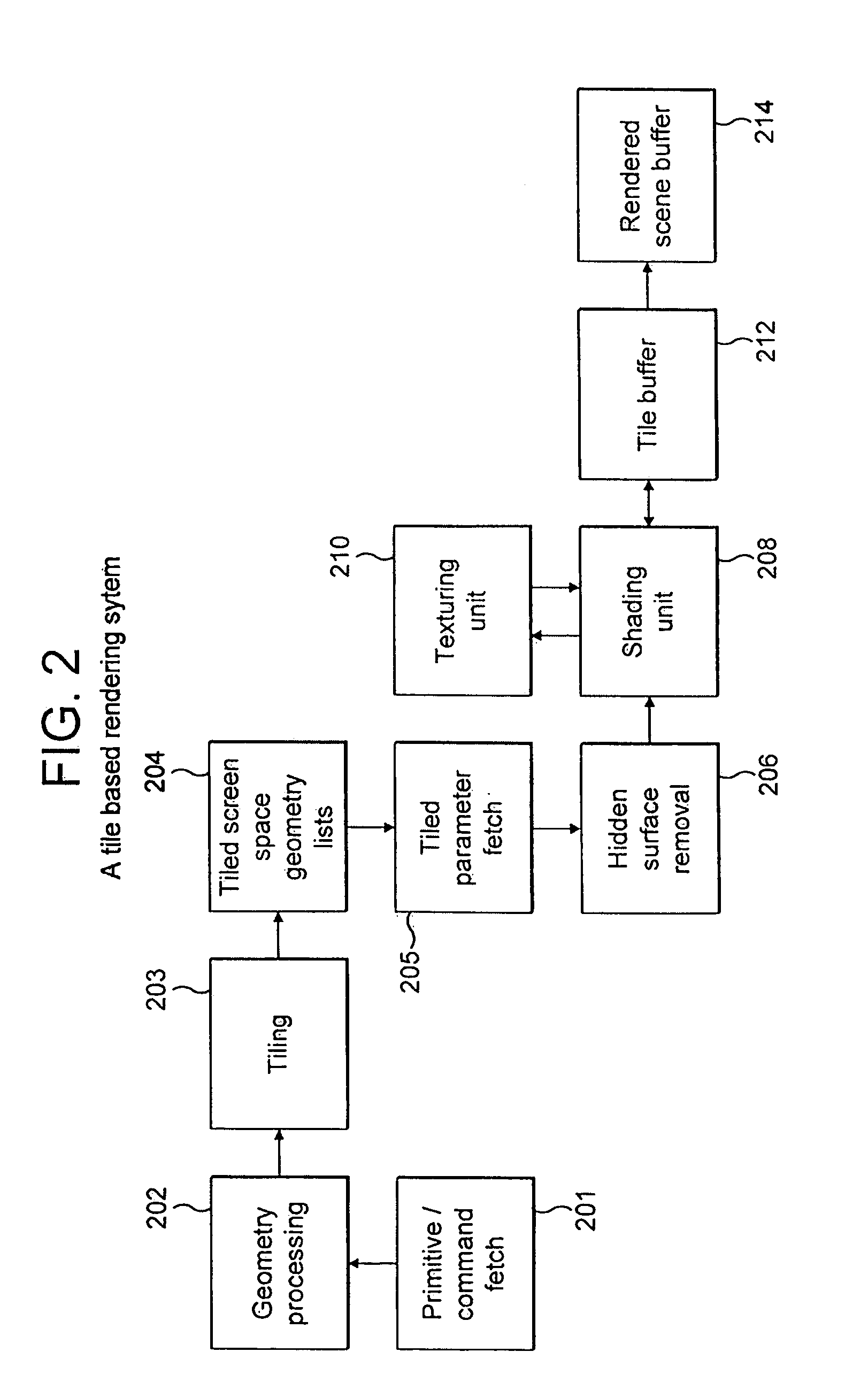

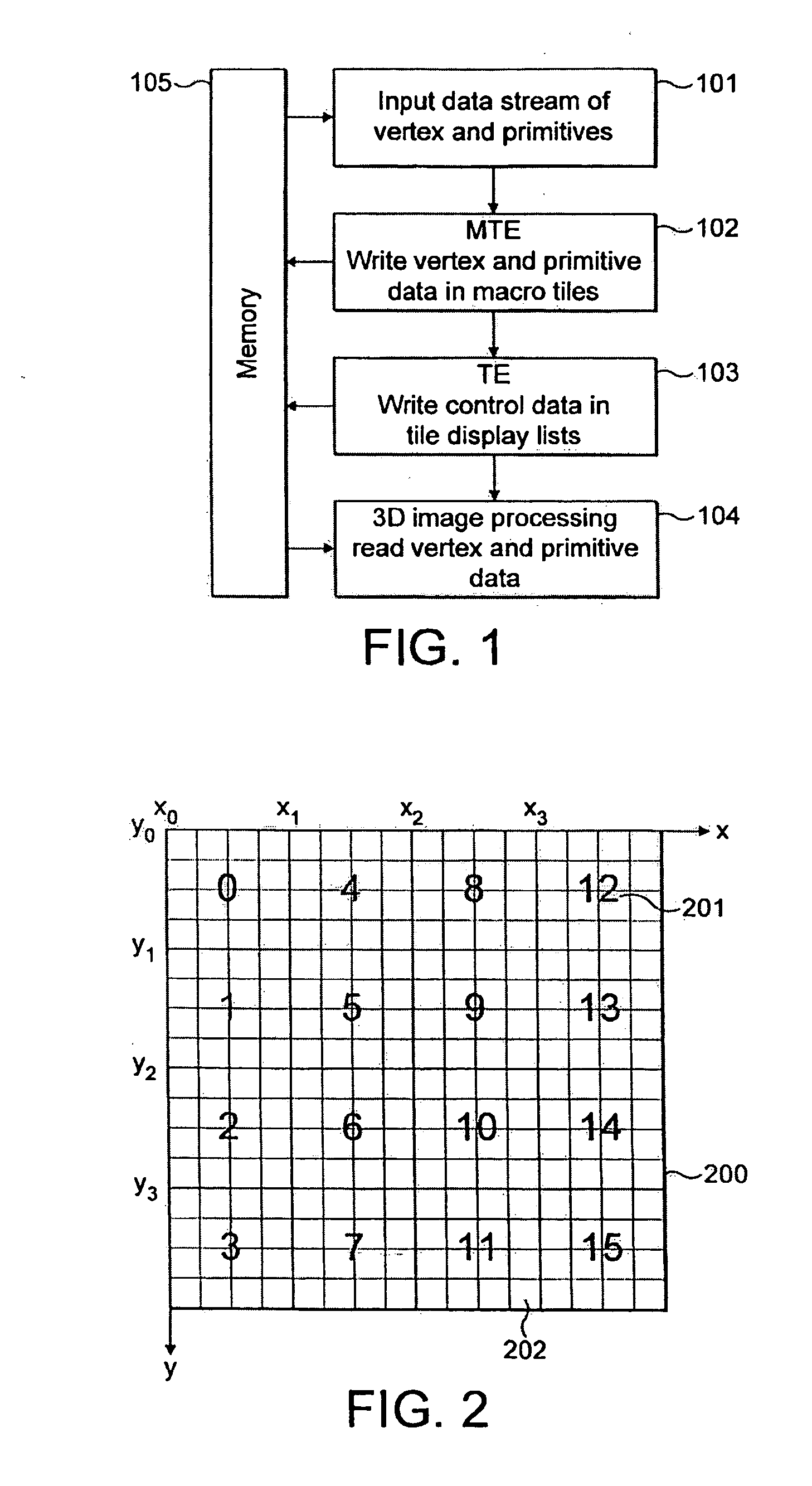

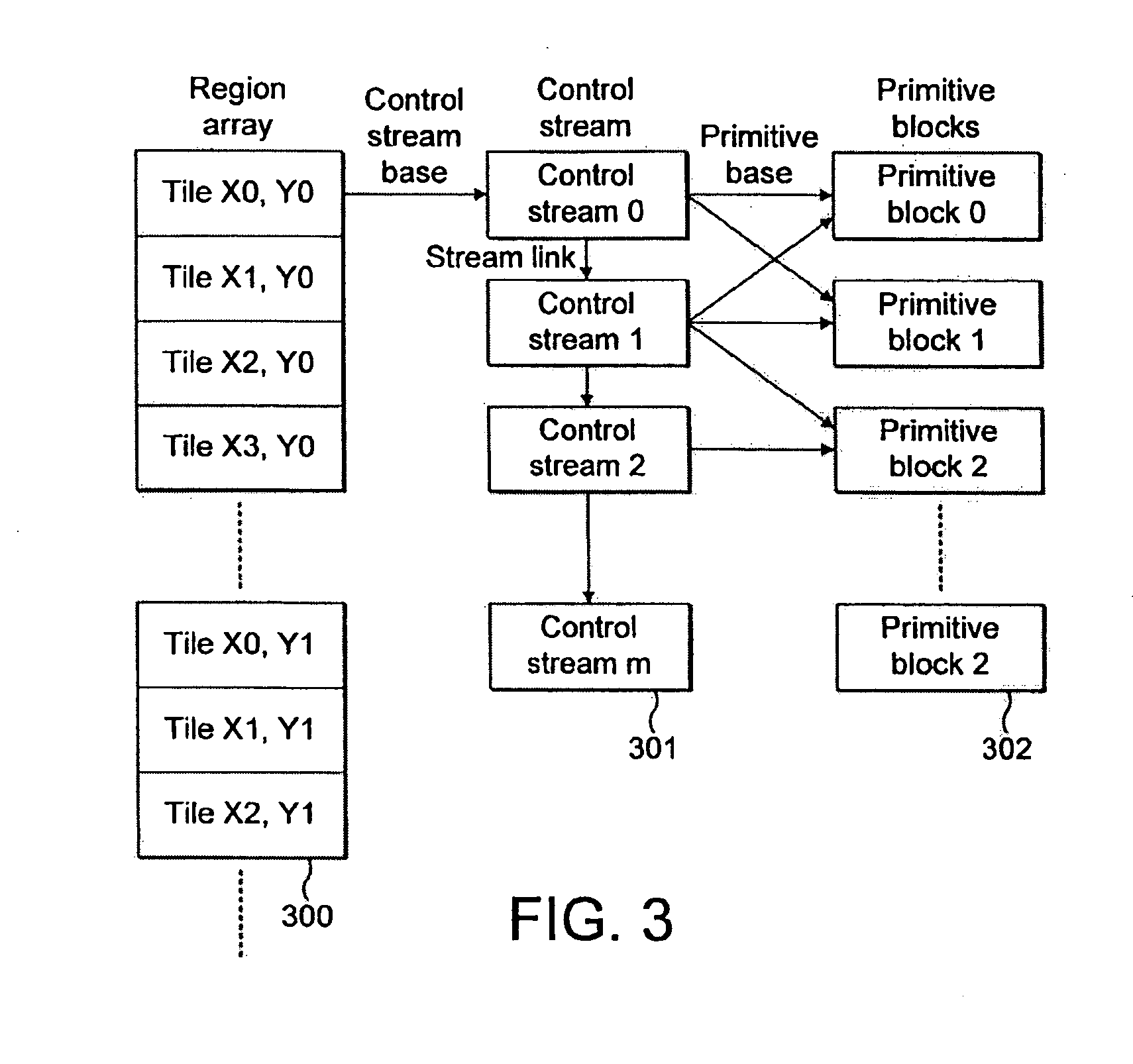

Display list control stream grouping in tile based 3D computer graphics system

Active | US20110304608A1 | Improve efficiency | Reduces internal parameter memory bandwidth | Image generation | Filling planer surface with attributes | Streaming data | Graphic system

A method and apparatus are provided for rendering a 3 dimensional computer graphics image. The image is subdivided into a plurality of rectangular areas and primitives which may be visible in the image are assigned to respective ones of a plurality of primitive blocks. A determination is made as to which primitive blocks contain primitives which intersect each rectangular area. The rectangular areas are then grouped into a plurality of fixed size groups and control stream data for each of the fixed size groups is derived, this control stream data including data which determines which primitive blocks are required to render the rectangular areas in each respective first fixed size group. The control stream data is then used to render the image for display.

Owner:IMAGINATION TECH LTD

RDMA network interface controller with cut-through implementation for aligned DDP segments

Inactive | US20050129039A1 | Reduce memory bandwidth | Reduce latency | Data switching by path configuration | Multiple digital computer combinations | Connection type | Data placement

An RNIC implementation that performs direct data placement to memory where all segments of a particular connection are aligned, or moves data through reassembly buffers where all segments of a particular connection are non-aligned. The type of connection that cuts-through without accessing the reassembly buffers is referred to as a “Fast” connection because it is highly likely to be aligned, while the other type is referred to as a “Slow” connection. When a consumer establishes a connection, it specifies a connection type. The connection type can change from Fast to Slow and back. The invention reduces memory bandwidth, latency, error recovery using TCP retransmit and provides for a “graceful recovery” from an empty receive queue. The implementation also may conduct CRC validation for a majority of inbound DDP segments in the Fast connection before sending a TCP acknowledgement (Ack) confirming segment reception.

Owner:IBM CORP

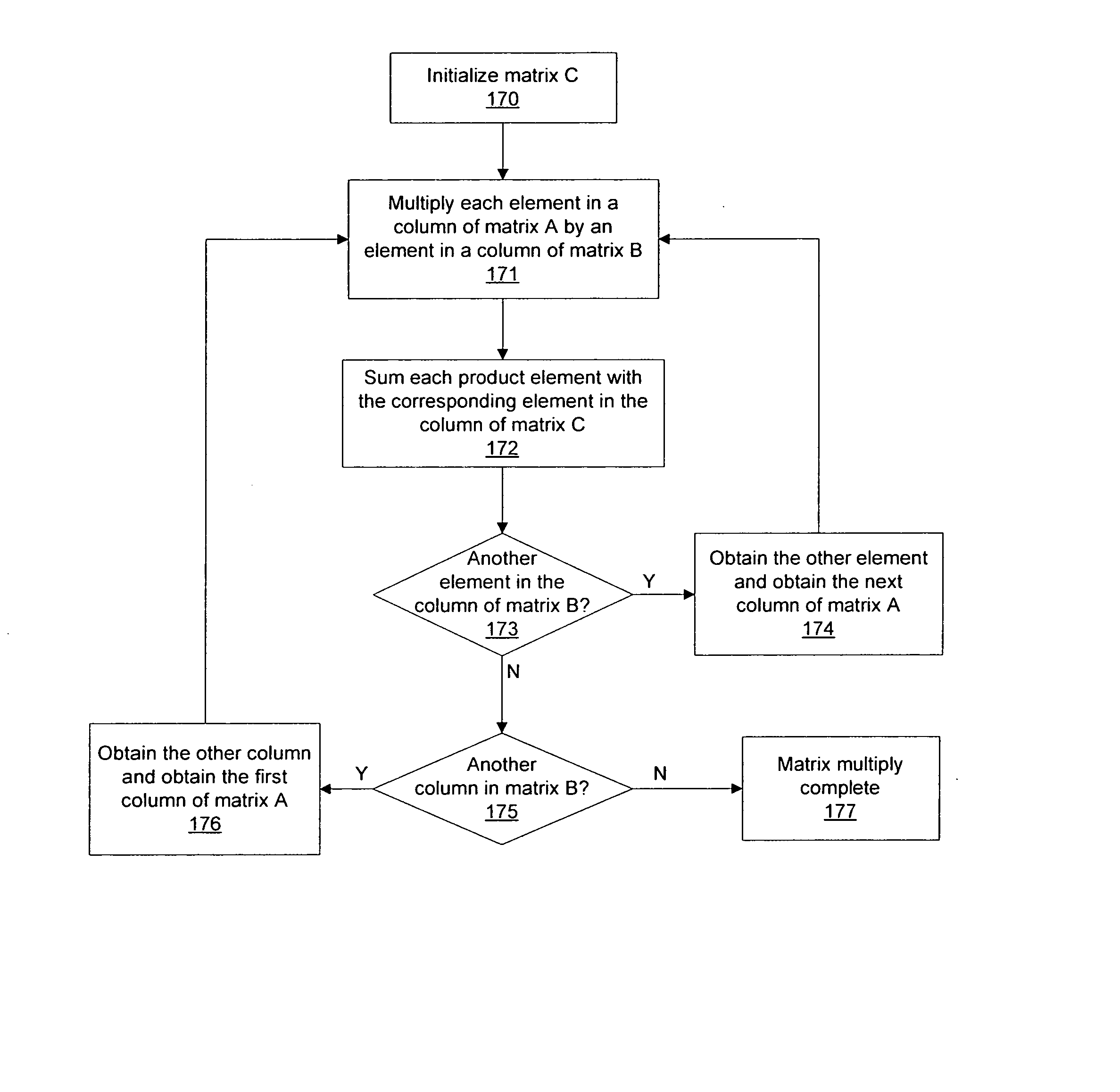

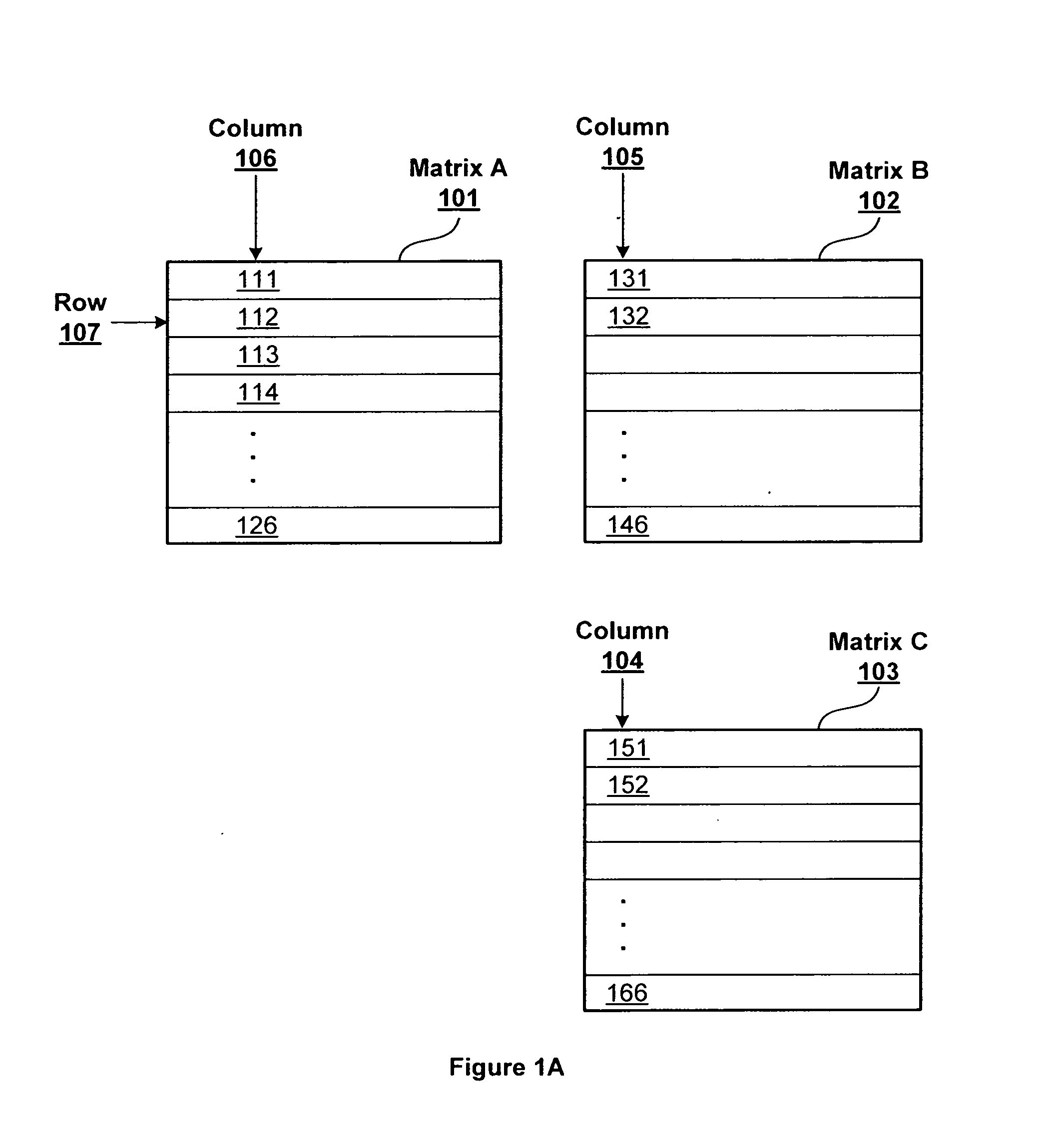

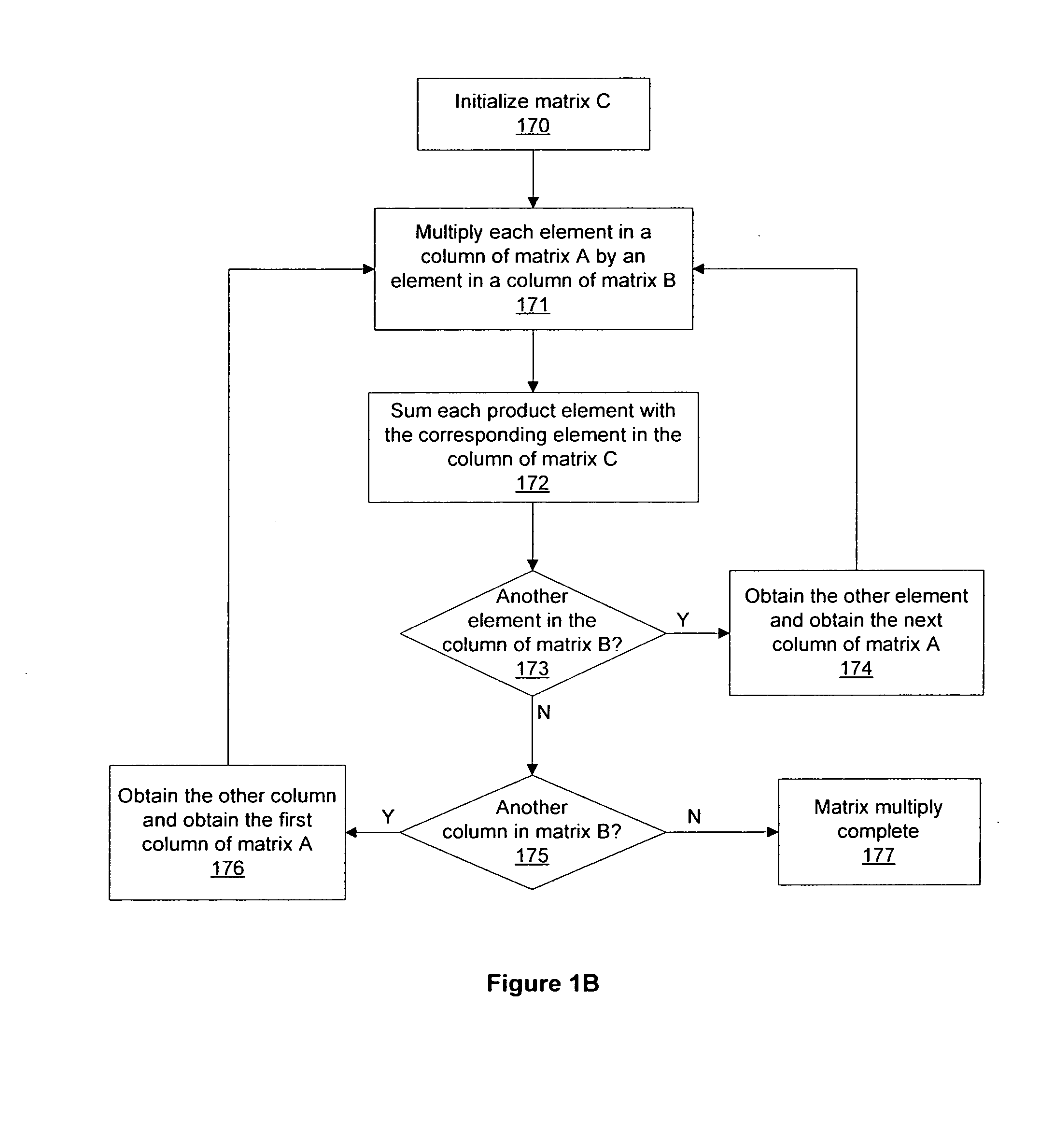

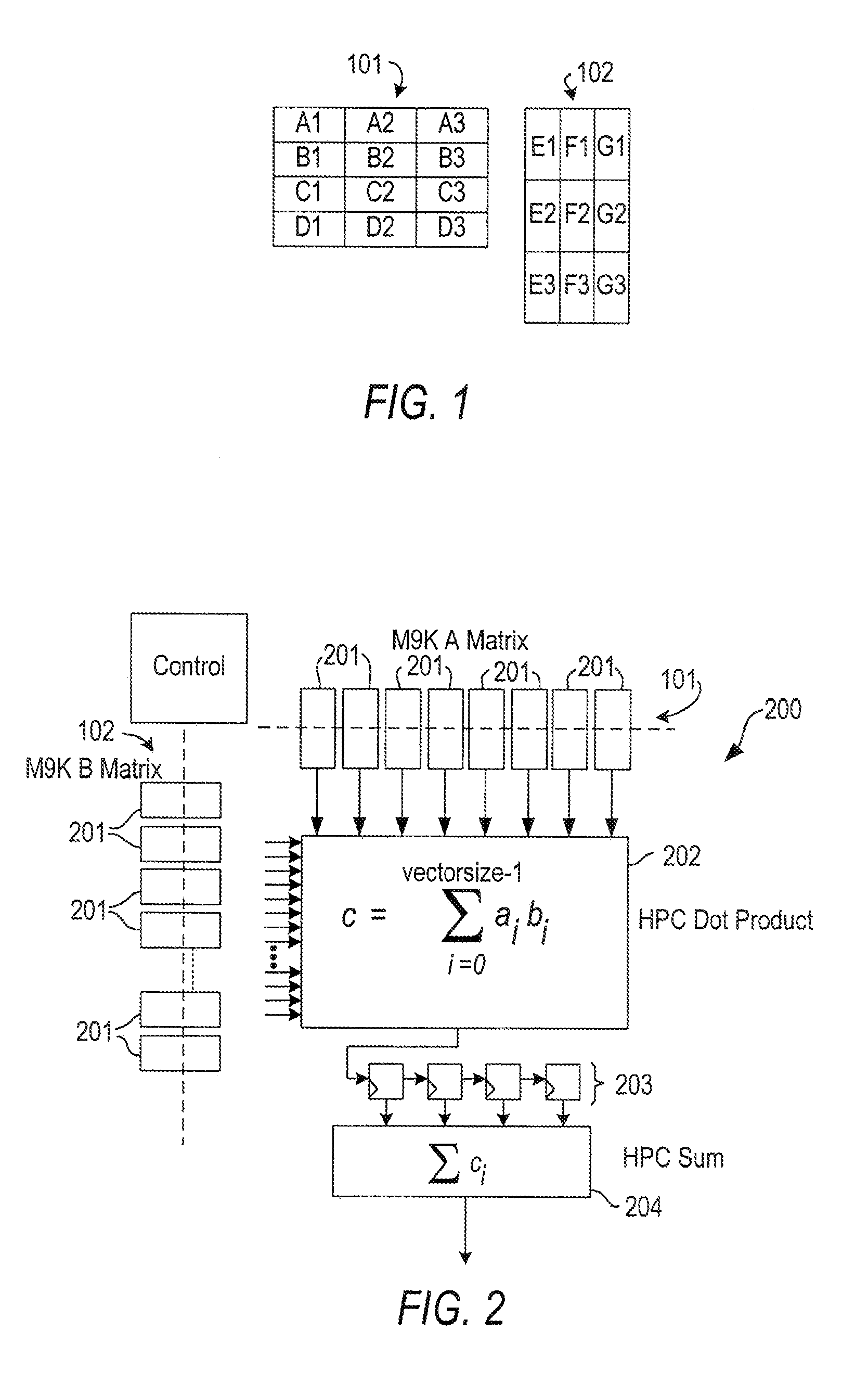

Matrix multiply with reduced bandwidth requirements

Inactive | US20070271325A1 | Reduce memory bandwidth requirement | Reduce memory bandwidth | Computation using non-contact making devices | Multiprogramming arrangements | Algorithm | Single element

Systems and methods for reducing the bandwidth needed to read the inputs to a matrix multiply operation may improve system performance. Rather than reading a row of a first input matrix and a column of a second input matrix to produce a column of a product matrix, a column of the first input matrix and a single element of the second input matrix are read to produce a column of partial dot products of the product matrix. Therefore, the number of input matrix elements read to produce each product matrix element is reduced from 2N to N+1, where N is the number of elements in a column of the product matrix.

Owner:NVIDIA CORP
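
The access pattern in the abstract above (one column of the first matrix combined with one element of the second matrix to update a column of partial dot products) can be written as a plain Python loop nest. This is a minimal sketch, assuming matrices stored as lists of rows; the function name `matmul_column_updates` is hypothetical and the code does not model the parallel hardware.

```python
def matmul_column_updates(a, b):
    """Multiply a (M x K) by b (K x P) using column-times-scalar updates.

    For each output column j and each k, a single element b[k][j] is read once
    and applied to the whole column k of a, accumulating partial dot products.
    """
    m, k_dim, p = len(a), len(b), len(b[0])
    c = [[0] * p for _ in range(m)]
    for j in range(p):
        for k in range(k_dim):
            scalar = b[k][j]                 # one element of the second matrix
            for i in range(m):               # one column of the first matrix
                c[i][j] += a[i][k] * scalar  # partial dot products for column j
    return c
```

The result is identical to the usual row-times-column formulation; only the order in which inputs are read changes.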

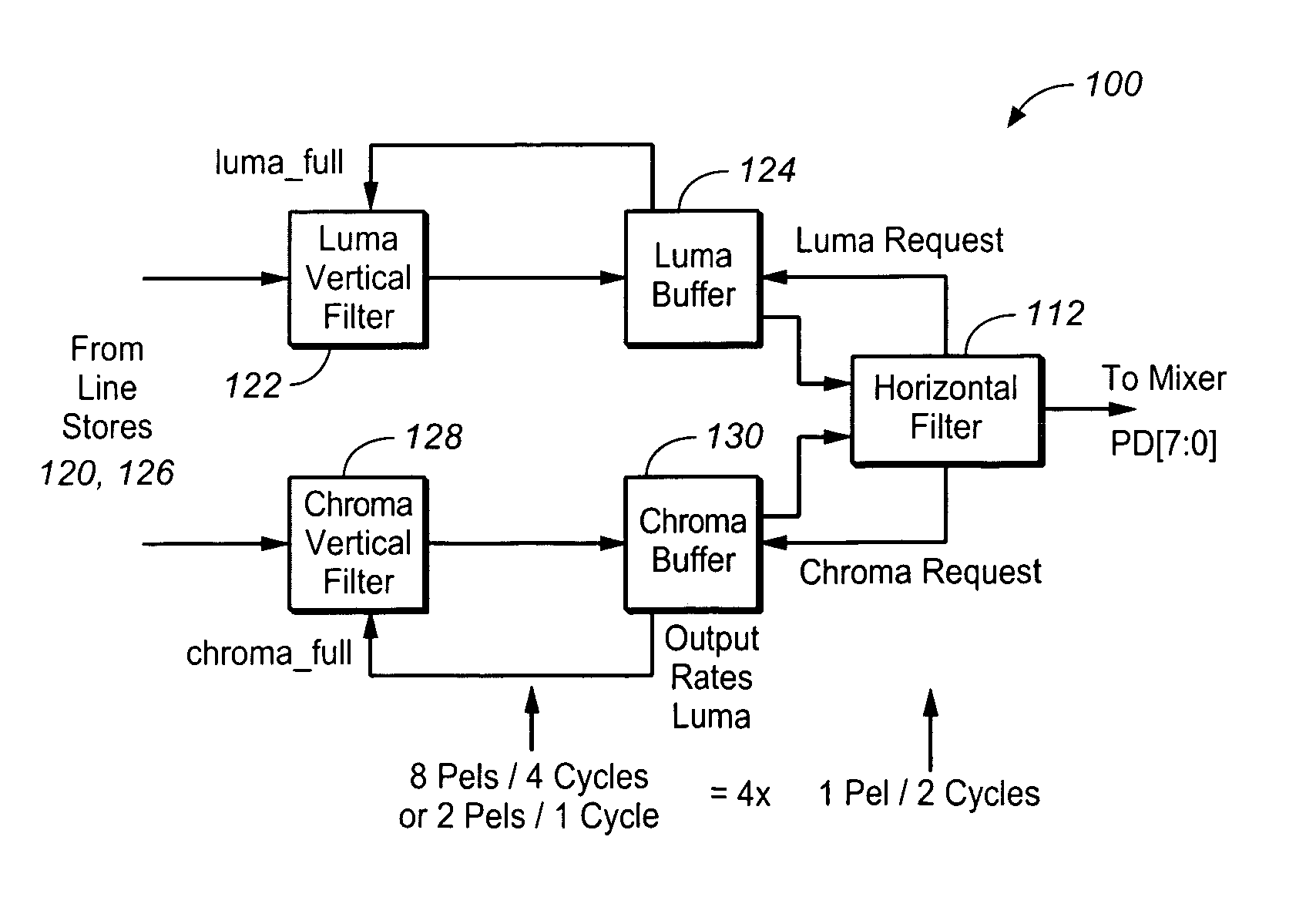

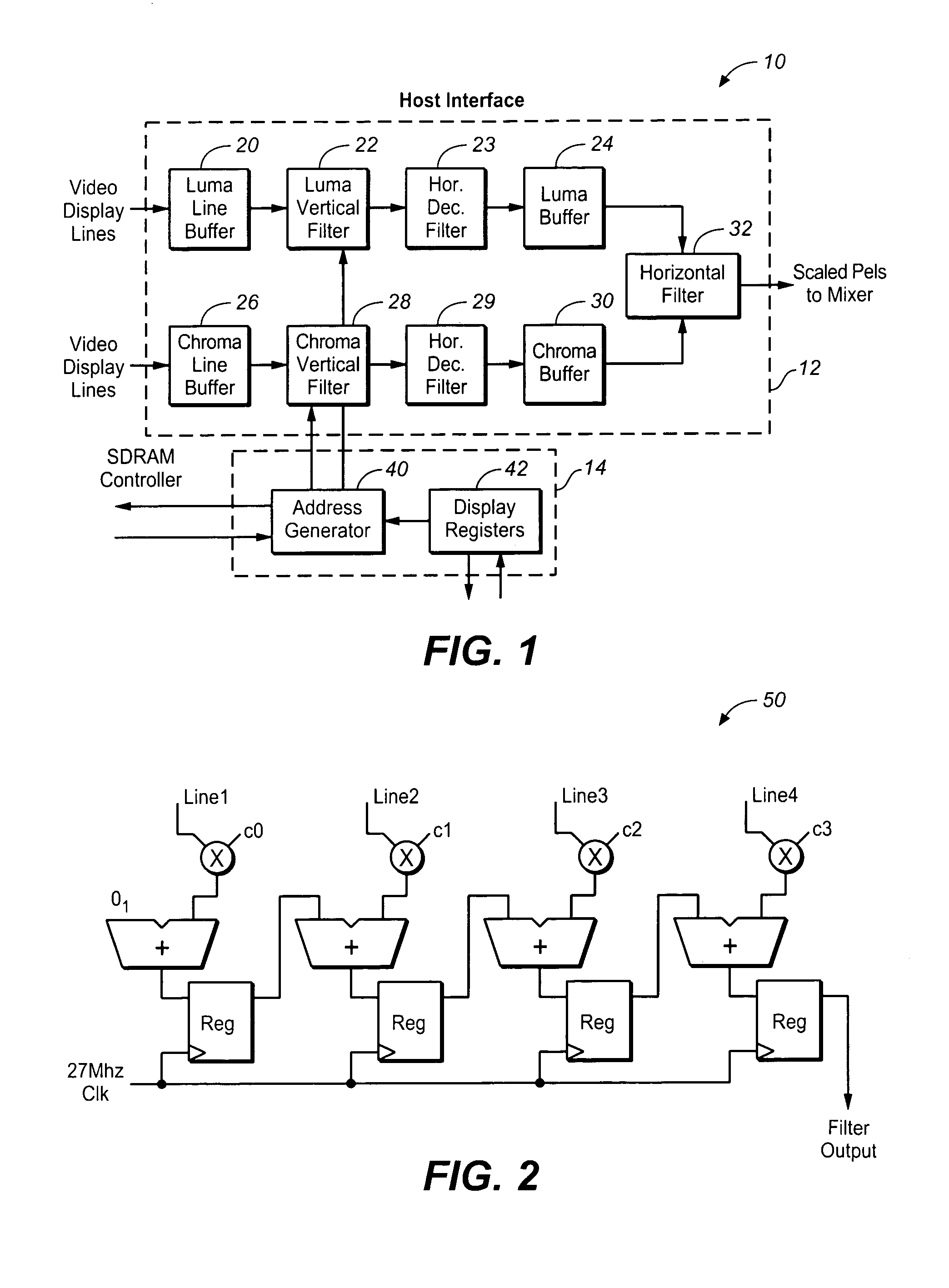

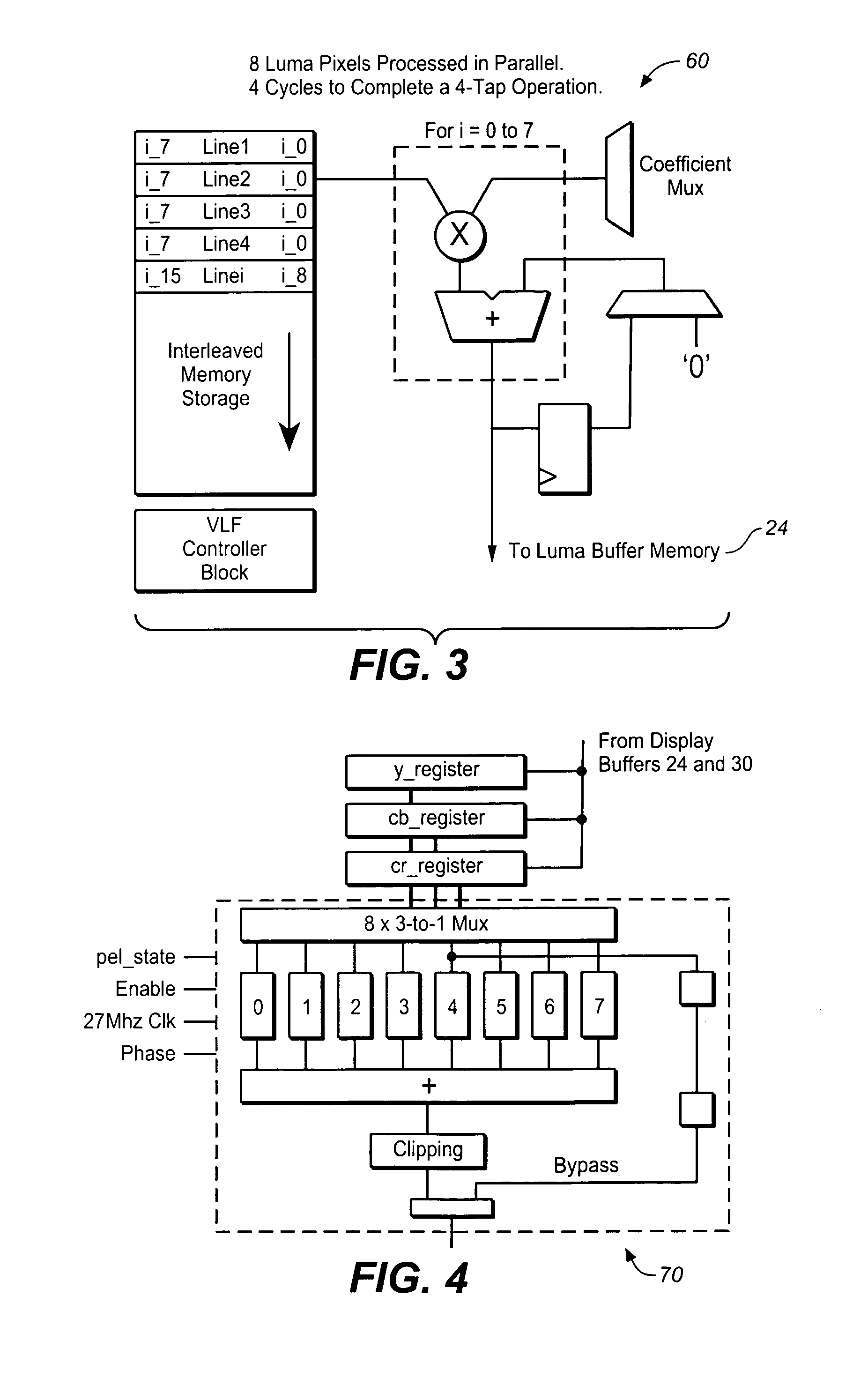

Video horizontal and vertical variable scaling filter

Active | US7197194B1 | Reduce memory bandwidth | Geometric image transformation | Character and pattern recognition | Control signal | Data signal

Owner:AVAGO TECH INT SALES PTE LTD

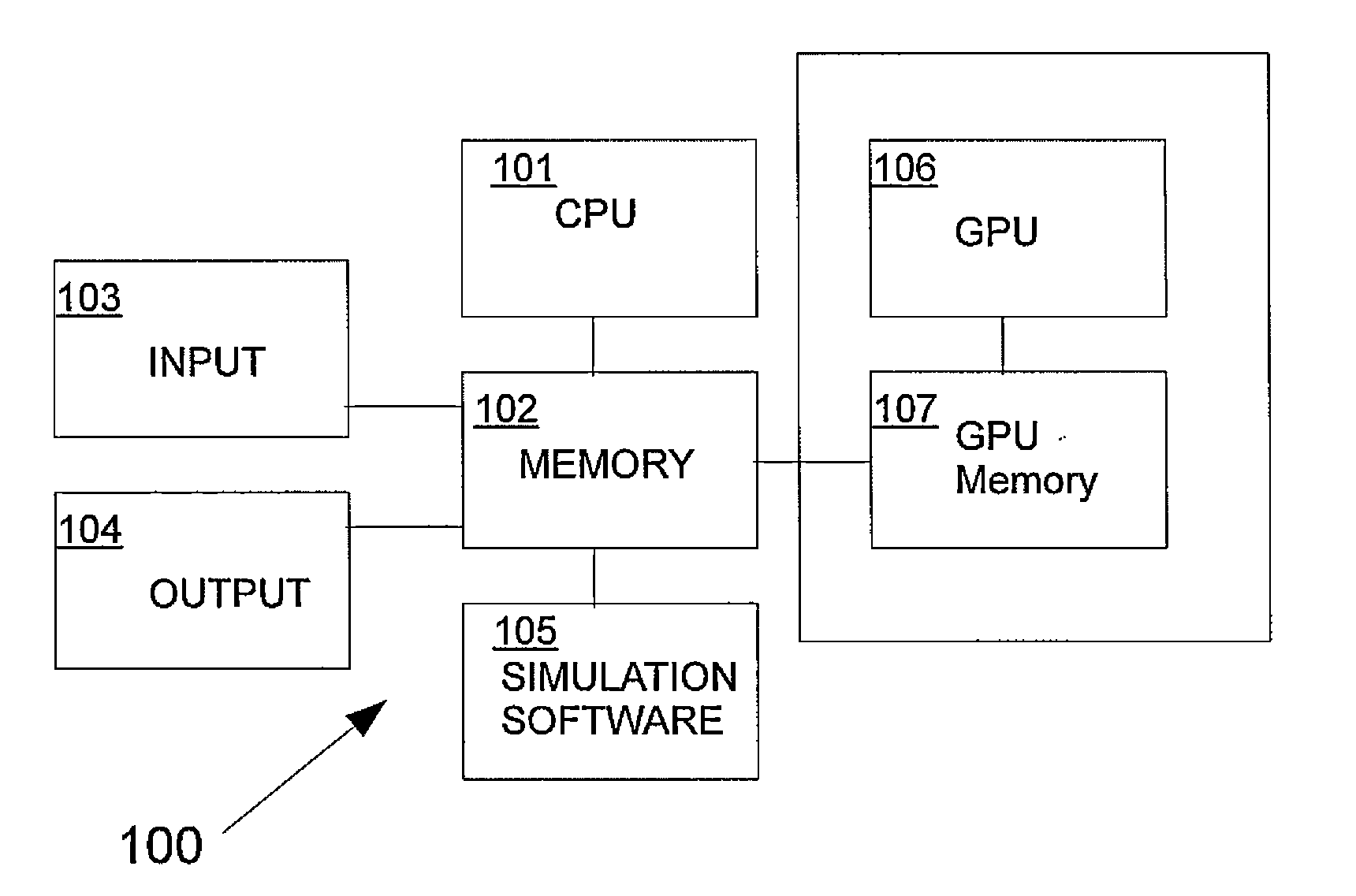

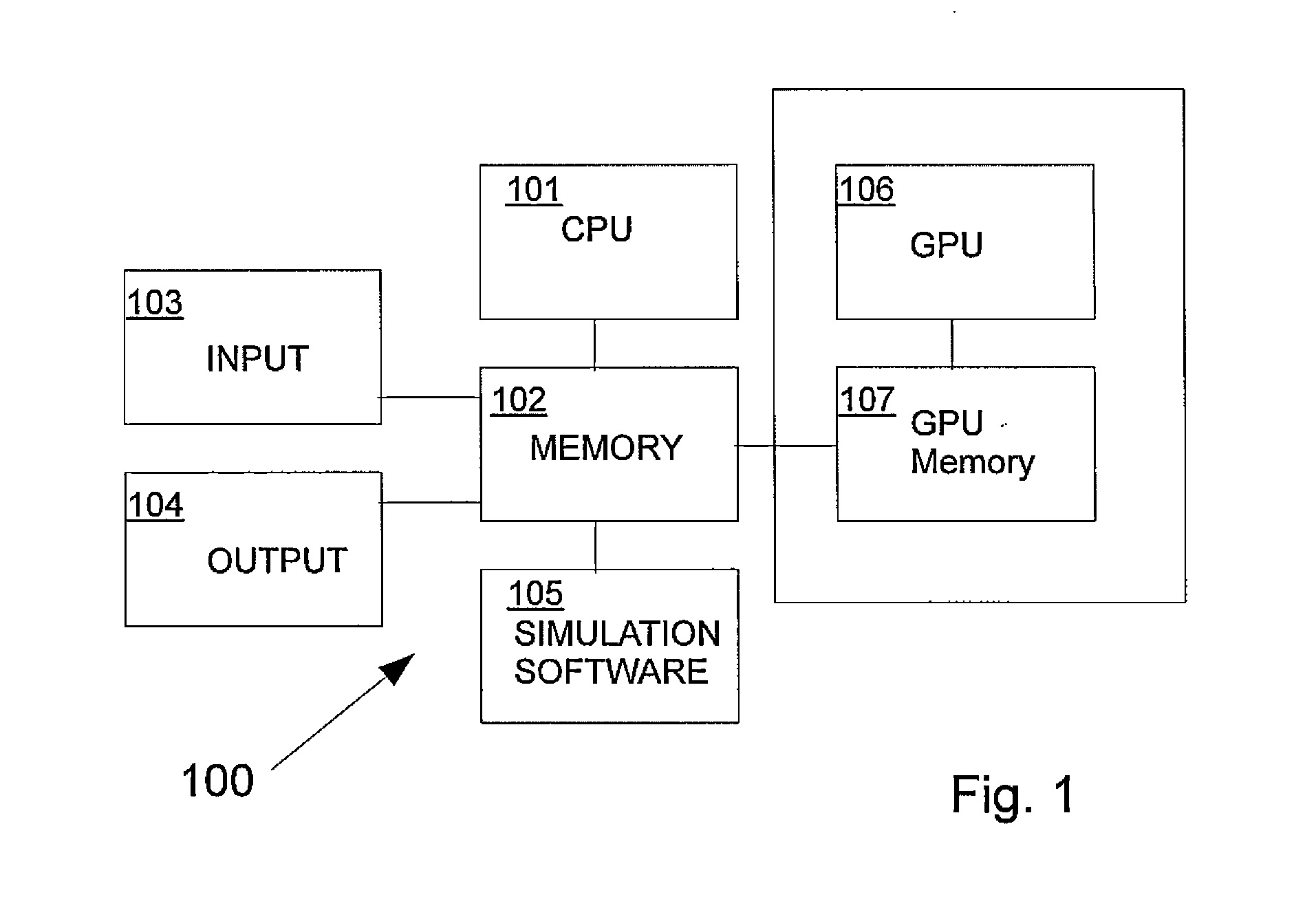

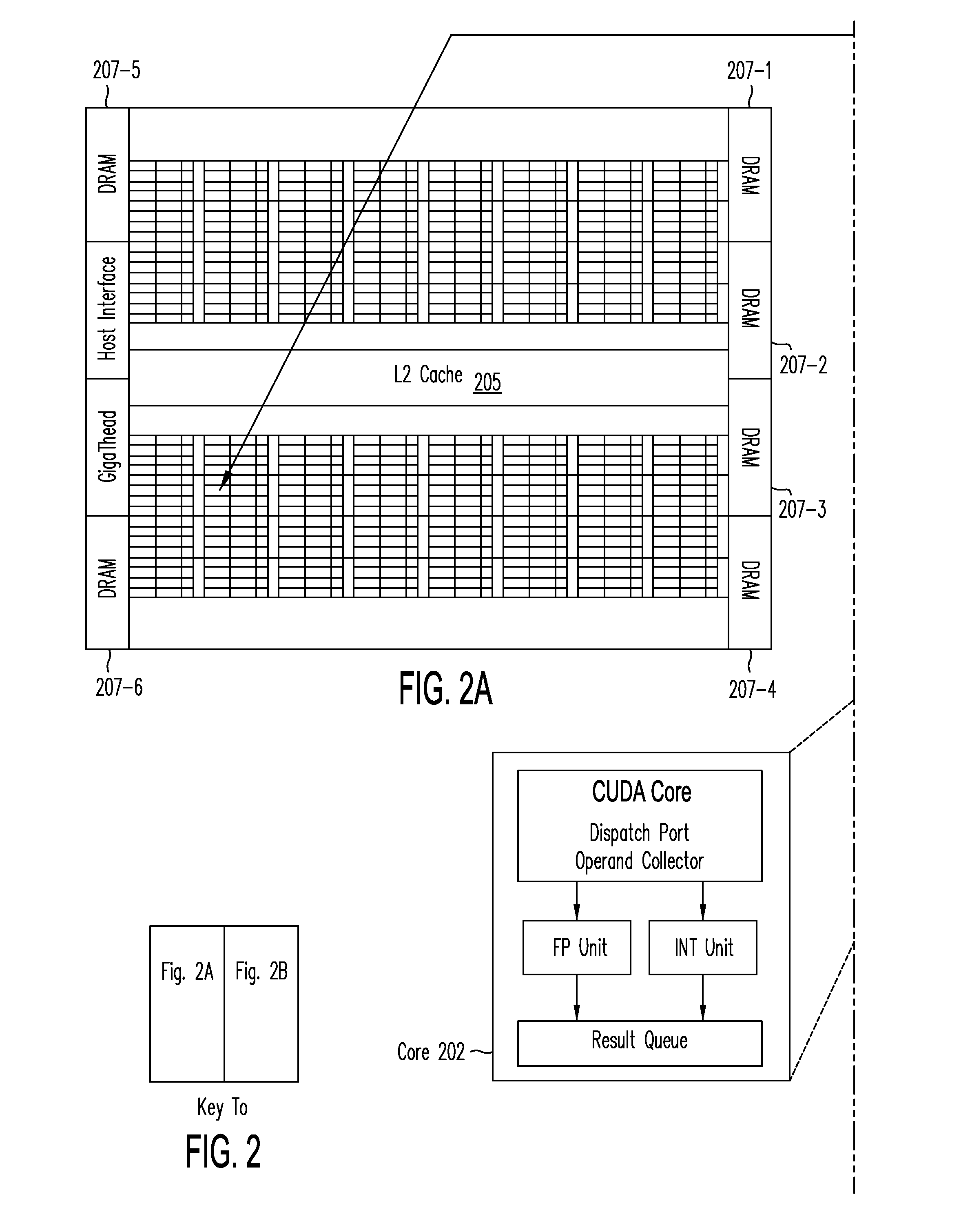

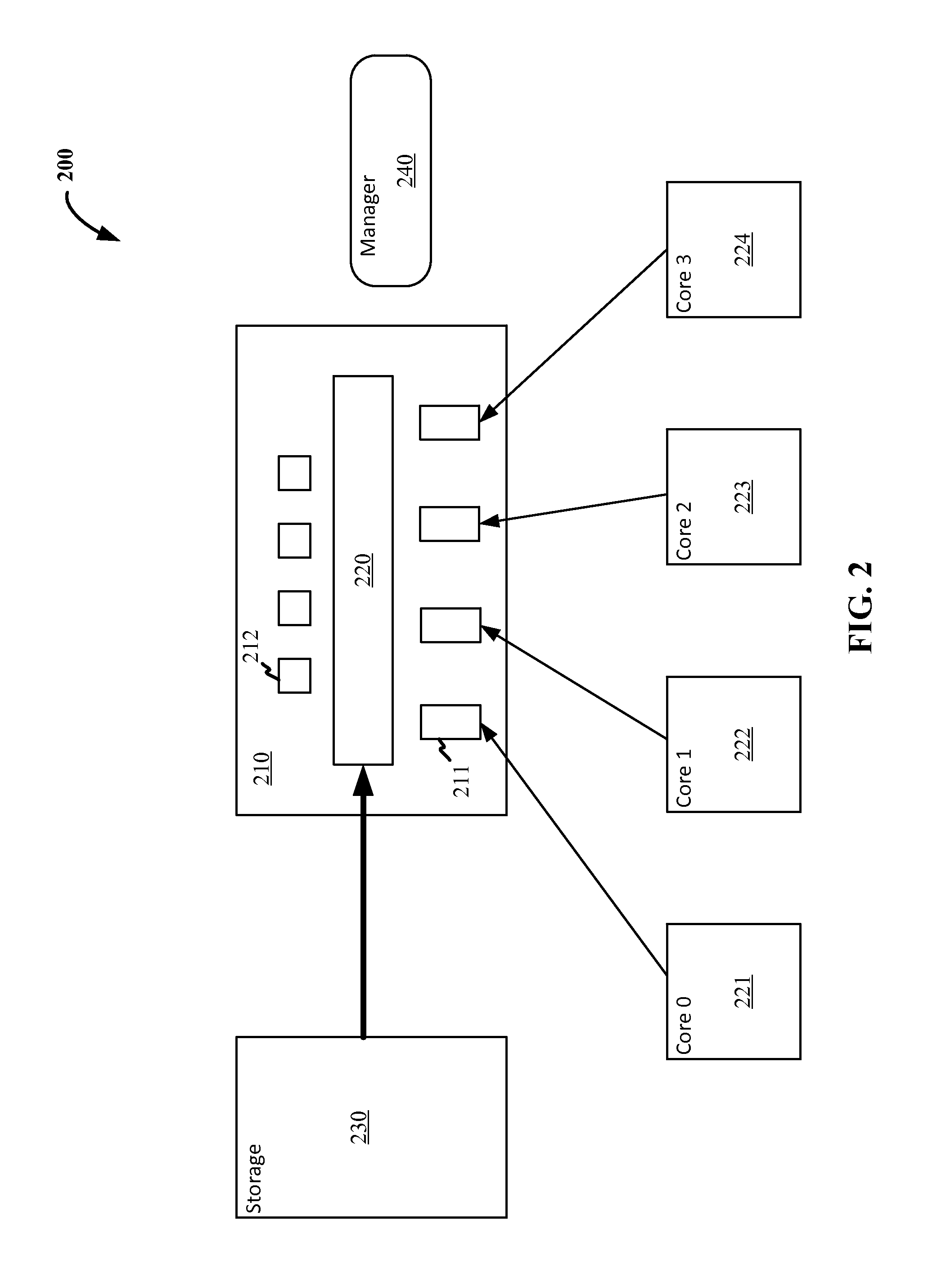

Concurrent simulation system using graphic processing units (GPU) and method thereof

Inactive | US20130226535A1 | High overall speed-up | Reduce memory bandwidth | Computation using non-denominational number representation | Computer aided design | General purpose | Graphics

A concurrent circuit simulation system simulates analog and mixed-mode circuits by exploiting parallel execution in one or more graphic processing units. In one implementation, the concurrent circuit simulation system includes a general purpose central processing unit (CPU), a main memory, simulation software and one or more graphic processing units (GPUs). Each GPU may contain hundreds of processor cores and several GPUs can be used together to provide thousands of processor cores. Software running on the CPU partitions the computation tasks into tens of thousands of smaller units and invokes the process threads in the GPU to carry out the computation tasks.

Owner:TUAN JEH FU

Increasing TCP re-transmission process speed

Active | US20050132077A1 | Reduce memory bandwidth | Reduce latency | Error prevention/detection by using return channel | Transmission systems | Connection type | Data placement

An RNIC implementation that performs direct data placement to memory where all segments of a particular connection are aligned, or moves data through reassembly buffers where all segments of a particular connection are non-aligned. The type of connection that cuts-through without accessing the reassembly buffers is referred to as a “Fast” connection because it is highly likely to be aligned, while the other type is referred to as a “Slow” connection. When a consumer establishes a connection, it specifies a connection type. The connection type can change from Fast to Slow and back. The invention reduces memory bandwidth, latency, error recovery using TCP retransmit and provides for a “graceful recovery” from an empty receive queue. The implementation also may conduct CRC validation for a majority of inbound DDP segments in the Fast connection before sending a TCP acknowledgement (Ack) confirming segment reception.

Owner:MELLANOX TECHNOLOGIES LTD

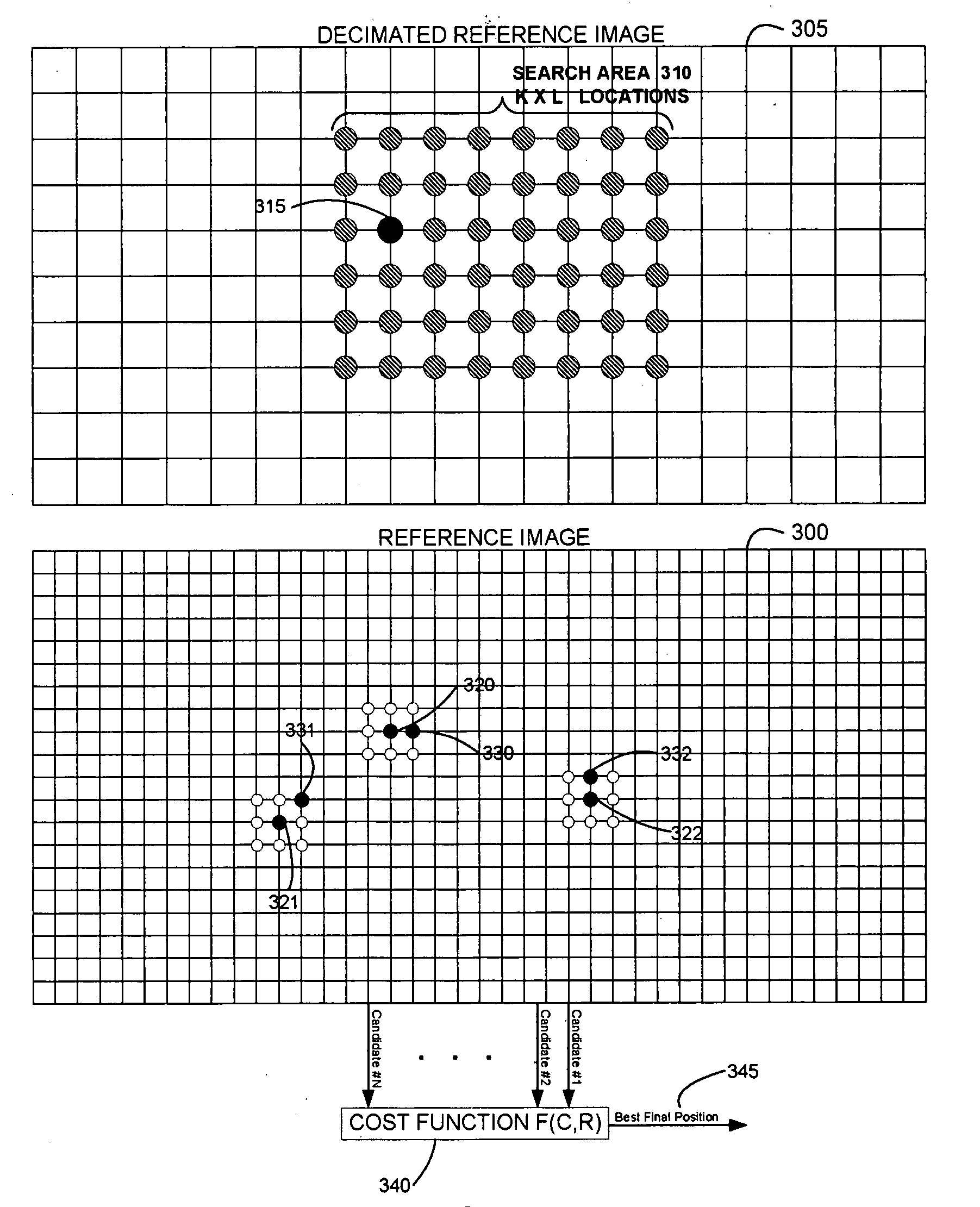

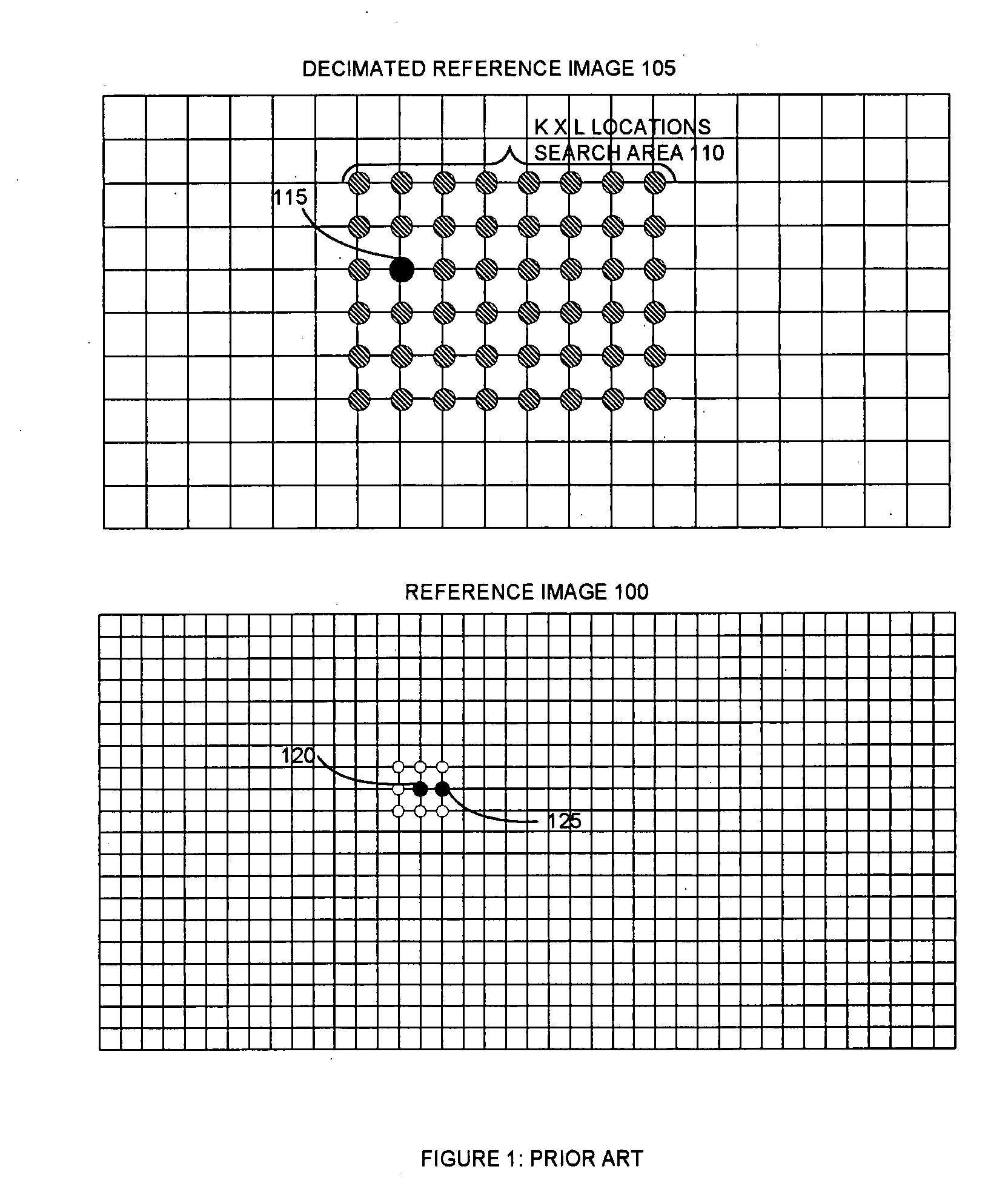

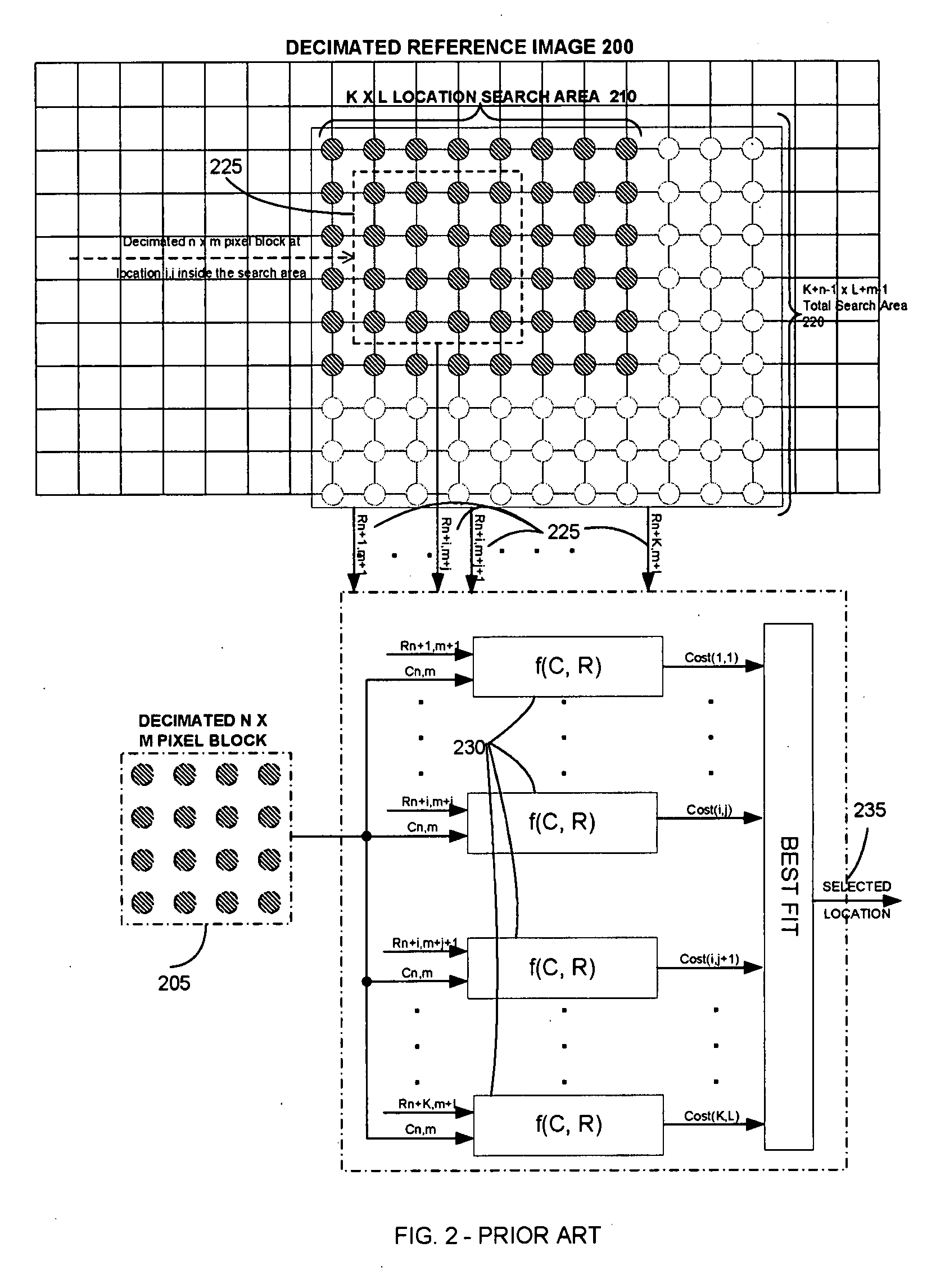

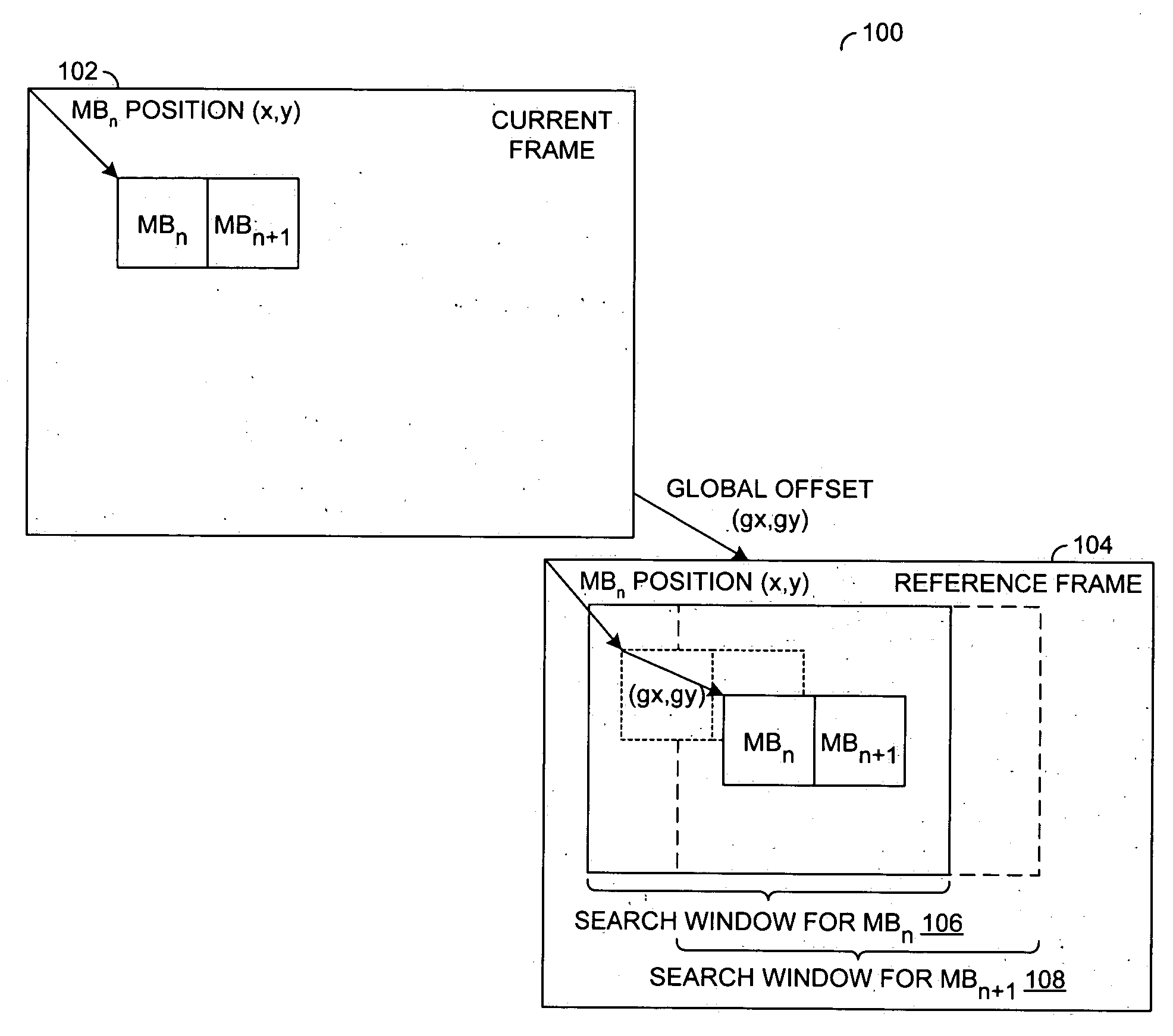

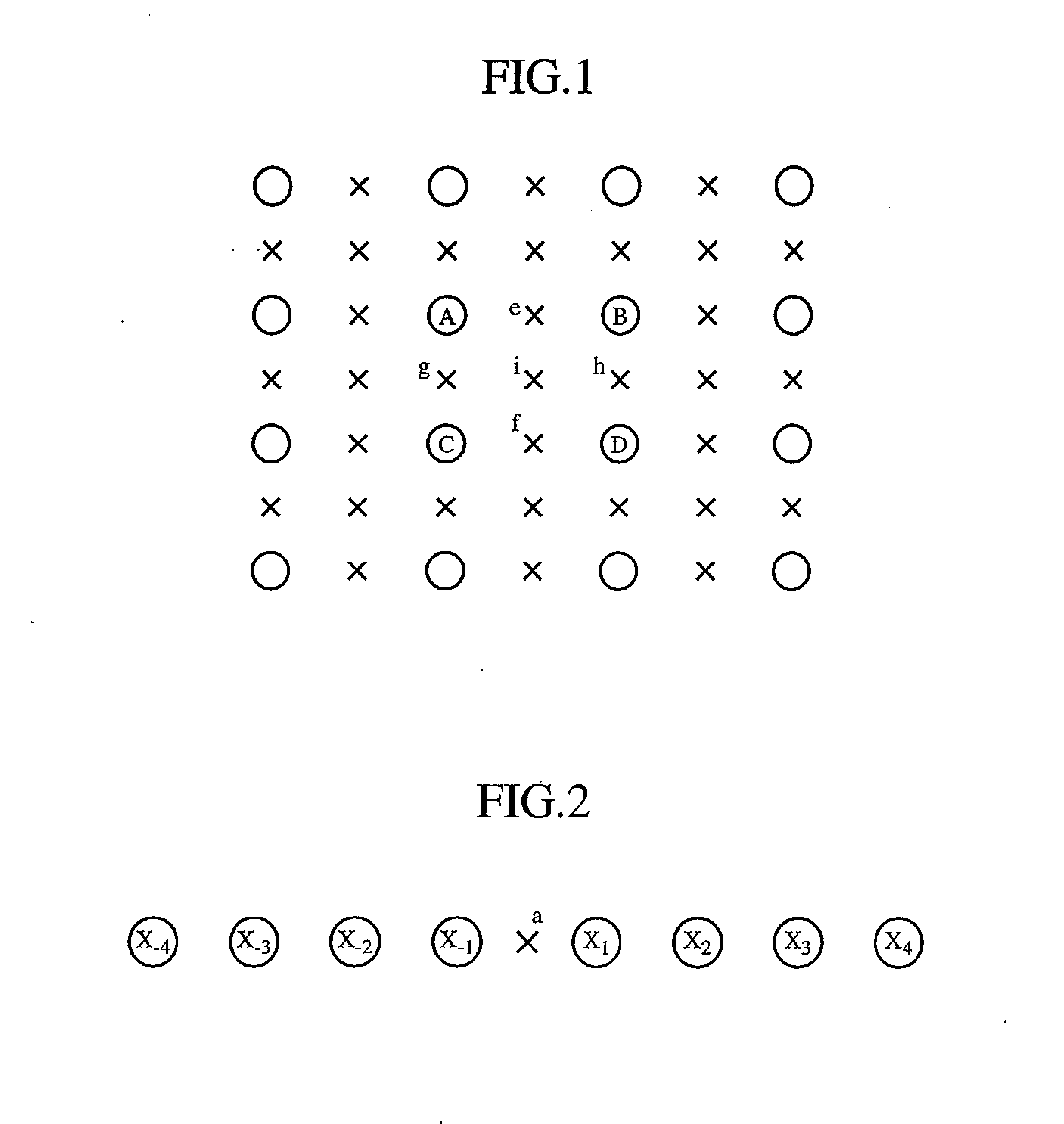

Hybrid hierarchical motion estimation for video streams

Inactive | US20080260033A1 | Reduce memory bandwidth | Color television with pulse code modulation | Color television with bandwidth reduction | Reference image | Image motion

A method for estimating image-to-image motion of a pixel block in a stream of images which includes a current image which includes the pixel block and a reference image, the method including performing a hierarchical search in a search area of the reference image, including producing a decimated reference image and a decimated pixel block, searching for a location in the search area of the decimated reference image which best fits the decimated pixel block, repeating the producing and the searching for more than one level of hierarchy, determining a first candidate location in the reference image which corresponds to the best fitting location, determining a second candidate location in the reference image by a method other than the hierarchical search, performing a search in the reference image for refined locations of the first and the second candidate locations, selecting one final location from the refined candidate locations, and using the final location for estimating the motion. Related apparatus and methods are also described.

Owner:TESSERA INC

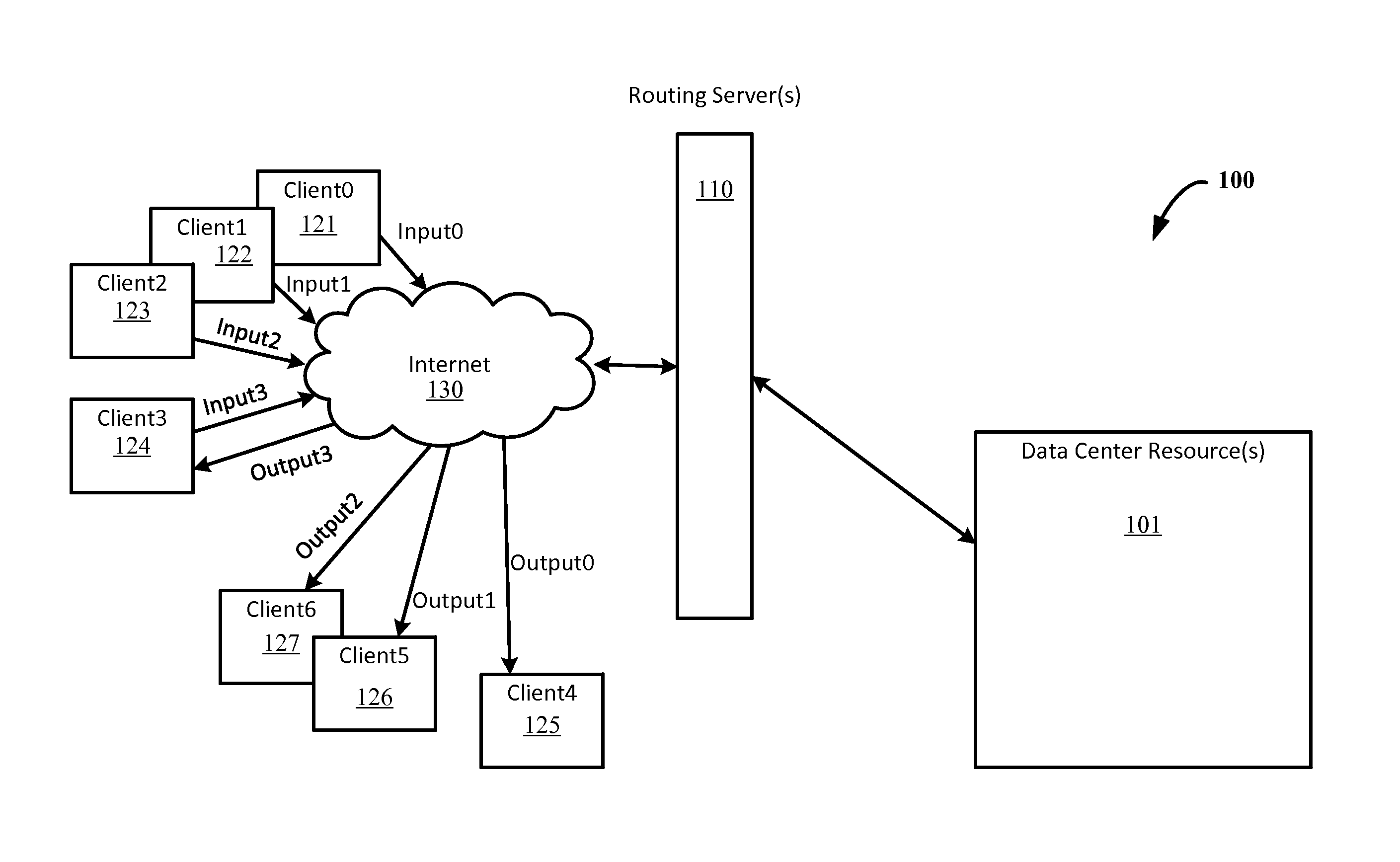

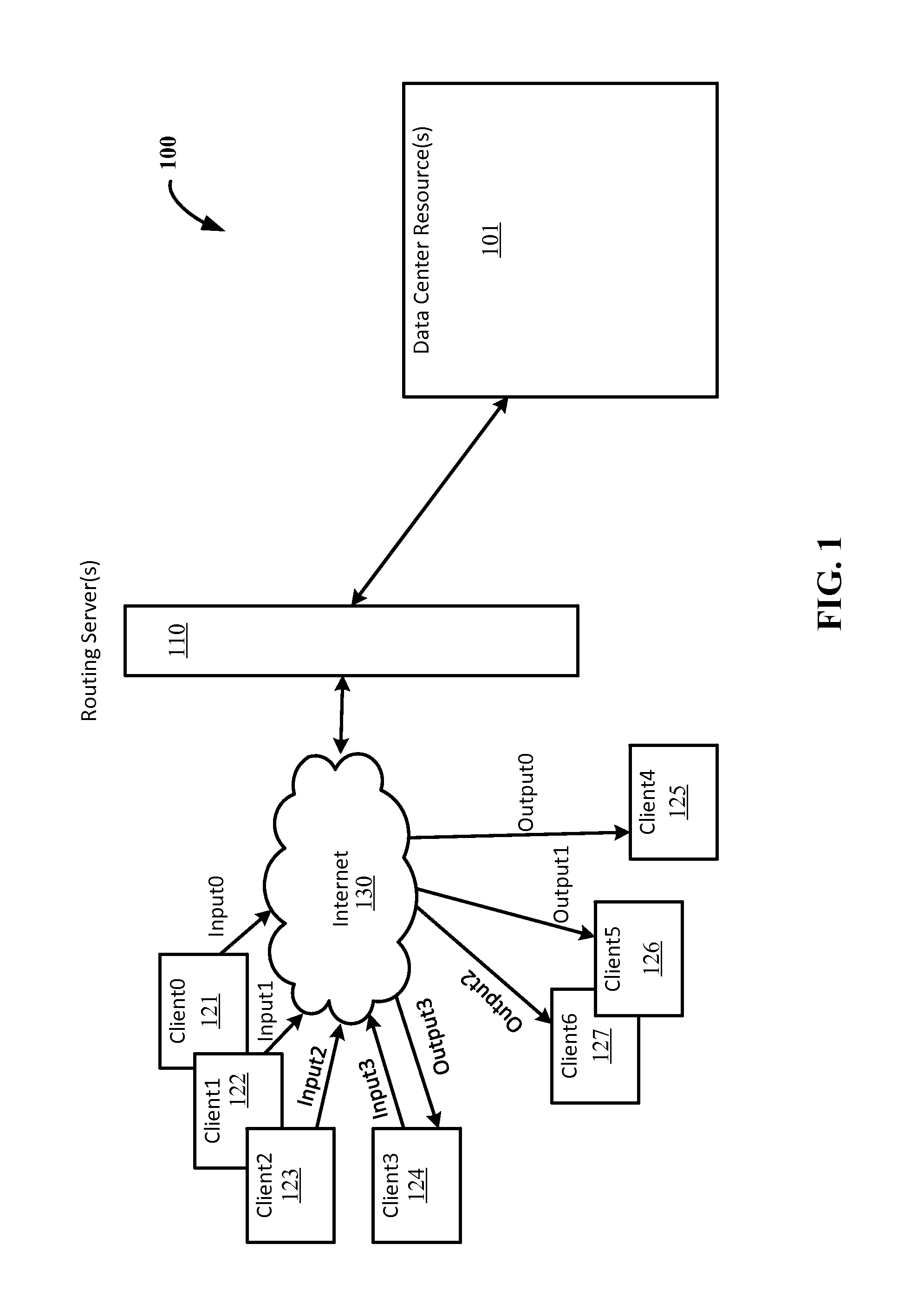

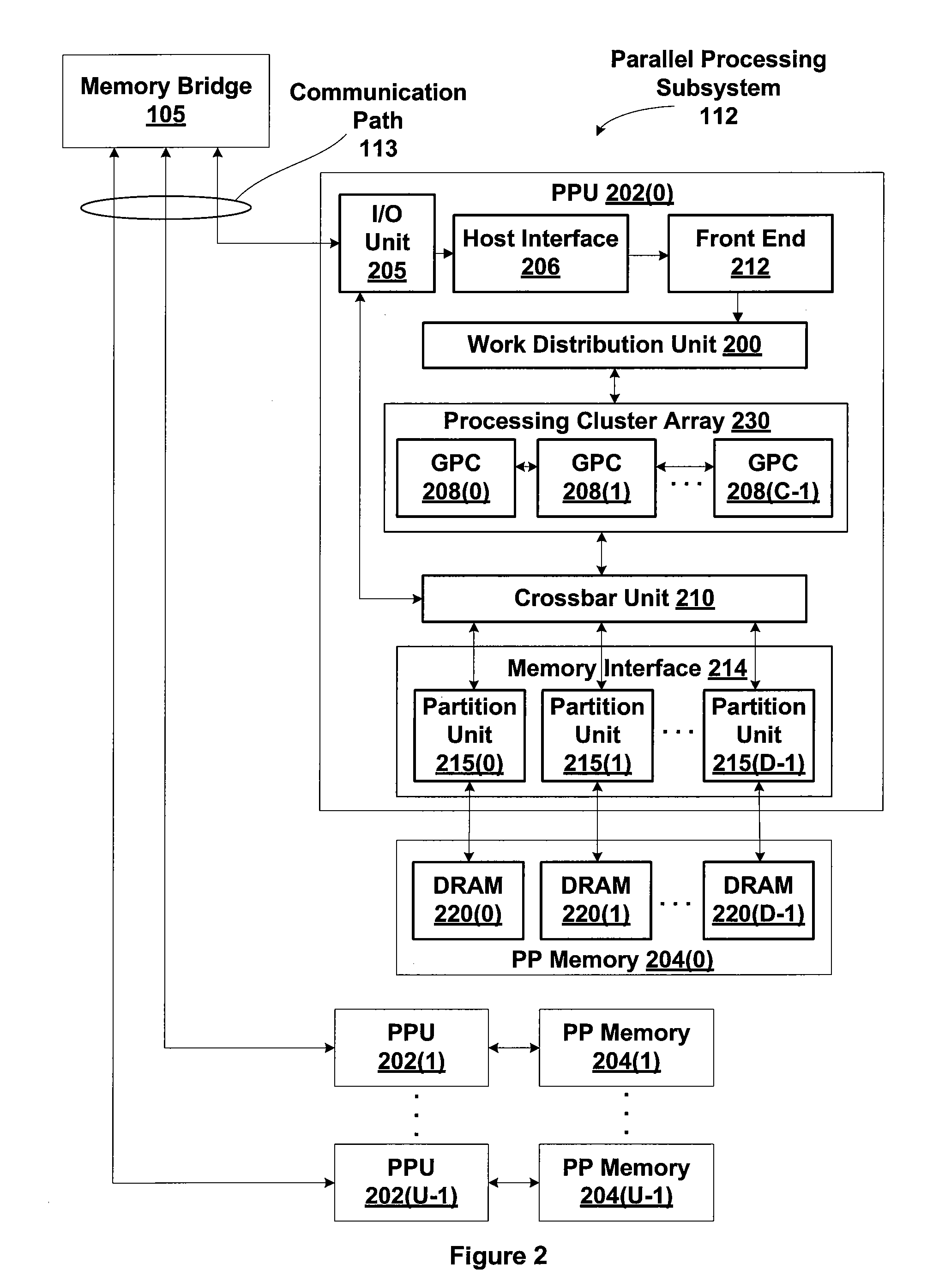

Memory bandwidth management for deep learning applications

Active | US20160379111A1 | Reduce memory bandwidth | Reduce memory bandwidth requirement | Digital computer details | Program control | Feature vector | Data stream

In a data center, neural network evaluations can be included for services involving image or speech recognition by using a field programmable gate array (FPGA) or other parallel processor. The memory bandwidth limitations of providing weighted data sets from an external memory to the FPGA (or other parallel processor) can be managed by queuing up input data from the plurality of cores executing the services at the FPGA (or other parallel processor) in batches of at least two feature vectors. The at least two feature vectors can be at least two observation vectors from a same data stream or from different data streams. The FPGA (or other parallel processor) can then act on the batch of data for each loading of the weighted datasets.

Owner:MICROSOFT TECH LICENSING LLC
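
The batching idea in the abstract above can be illustrated by counting how often the weights are loaded. The sketch below is a toy model: `evaluate_in_batches` is a hypothetical name, a single weight matrix stands in for the weighted data set, and the assignment to `loaded` stands in for one fetch from external memory.

```python
def evaluate_in_batches(weights, vectors, batch_size):
    """Apply a weight matrix to feature vectors, loading the weights once per batch.

    Returns (outputs, weight_loads), where weight_loads counts simulated fetches
    of the weights from external memory.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    outputs = []
    weight_loads = 0
    for start in range(0, len(vectors), batch_size):
        batch = vectors[start:start + batch_size]
        loaded = weights   # stands in for one load of the weights
        weight_loads += 1
        for vec in batch:  # every vector in the batch reuses the same load
            outputs.append([sum(w * x for w, x in zip(row, vec)) for row in loaded])
    return outputs, weight_loads
```

With four input vectors, a batch size of 2 needs two simulated weight loads where a batch size of 1 needs four, while the outputs are the same.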

Calculation of plane equations after determination of z-buffer visibility

Active | US20110080406A1 | Easy to process | Reduce memory bandwidth | 3D-image rendering | Computational science | Visibility

One embodiment of the present invention sets forth a technique for computing plane equations for primitive shading after non-visible pixels are removed by z culling operations and pixel coverage has been determined. The z plane equations are computed before the plane equations for non-z primitive attributes are computed. The z plane equations are then used to perform screen-space z culling of primitives during and following rasterization. Culling of primitives is also performed based on pixel sample coverage. Consequently, primitives that have visible pixels after z culling operations reach the primitive shading unit. The non-z plane equations are only computed for geometry that is visible after the z culling operations. The primitive shading unit does not need to fetch vertex attributes from memory and does not need to compute non-z plane equations for the culled primitives.

Owner:NVIDIA CORP

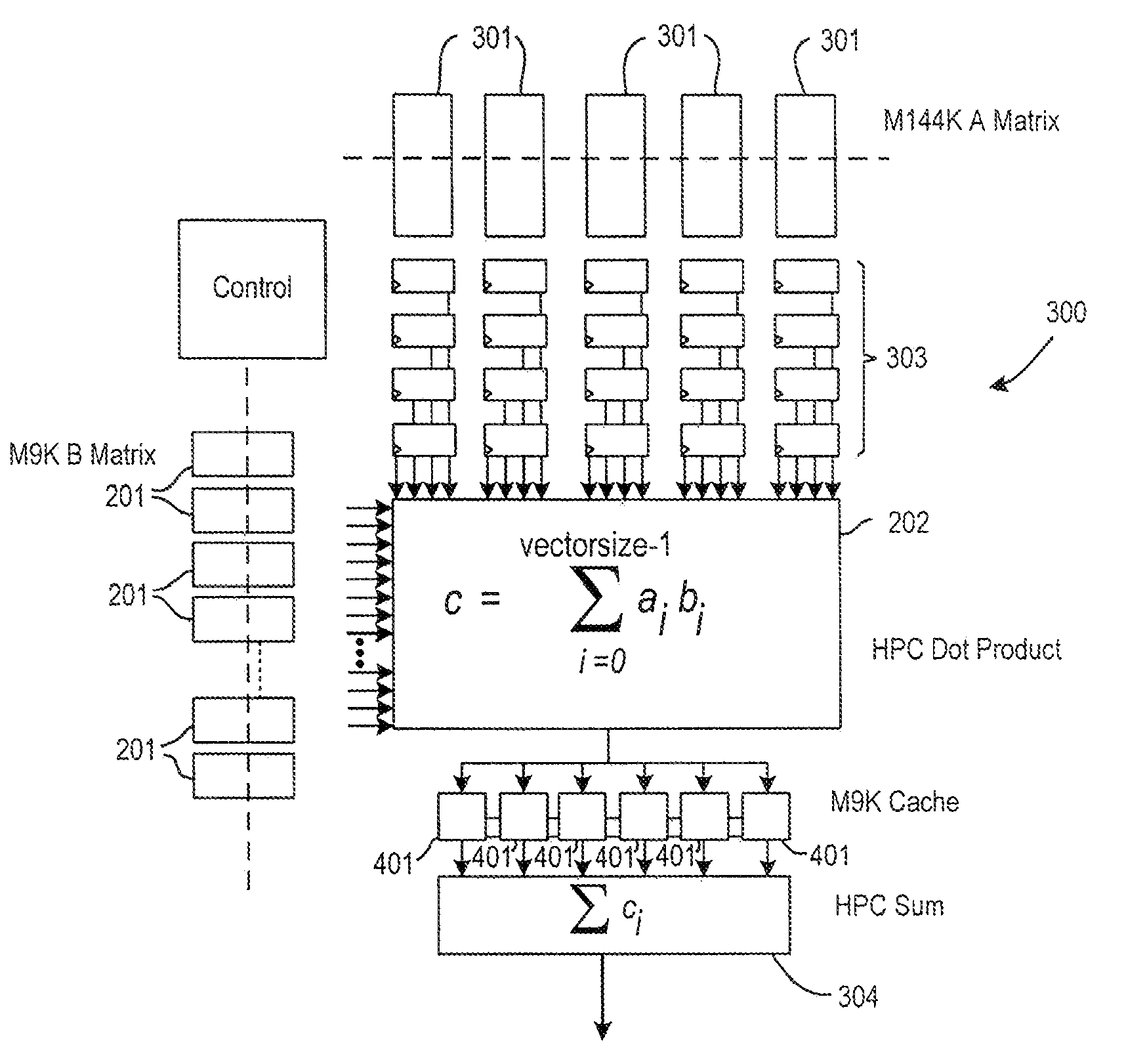

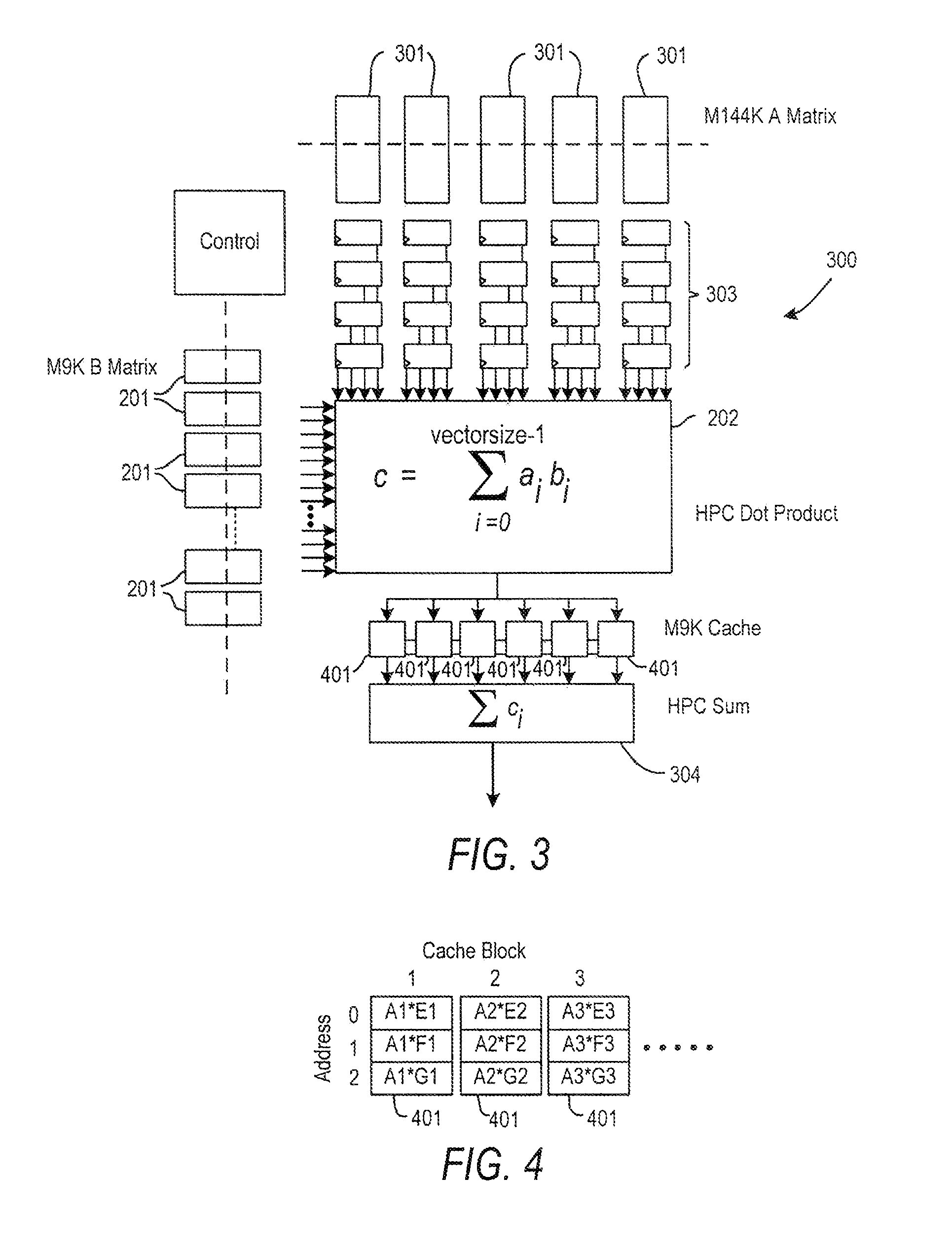

Configuring a programmable integrated circuit device to perform matrix multiplication

Inactive | US8626815B1 | Reduce power consumption | Reduce memory access | Computation using non-contact making devices | Data merging | Matrix multiplication | Computer science

In a matrix multiplication in which each element of the resultant matrix is the dot product of a row of a first matrix and a column of a second matrix, each row and column can be broken into manageable blocks, with each block loaded in turn to compute a smaller dot product, and then the results can be added together to obtain the desired row-column dot product. The earliest results for each dot product are saved for a number of clock cycles equal to the number of portions into which each row or column is divided. The results are then added to provide an element of the resultant matrix. To avoid repeated loading and unloading of the same data, all multiplications involving a particular row-block can be performed upon loading that row-block, with the results cached until other multiplications for the resultant elements that use the cached results are complete.

Owner:ALTERA CORP
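
The block decomposition of a row-column dot product described in the abstract above can be sketched in Python. The function name `blocked_dot` and the `block_size` parameter are assumptions for illustration; the caching of row-block results across multiple columns is not modeled here.

```python
def blocked_dot(row, col, block_size):
    """Compute a dot product as the sum of smaller per-block dot products."""
    if len(row) != len(col):
        raise ValueError("row and column must have the same length")
    if block_size < 1:
        raise ValueError("block_size must be at least 1")
    partials = []
    for start in range(0, len(row), block_size):
        r_block = row[start:start + block_size]  # one manageable block of the row
        c_block = col[start:start + block_size]  # matching block of the column
        partials.append(sum(x * y for x, y in zip(r_block, c_block)))
    # The saved partial results are added to give the full row-column dot product.
    return sum(partials)
```

For example, `blocked_dot([1, 2, 3, 4], [5, 6, 7, 8], 2)` adds the partial results 17 and 53.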

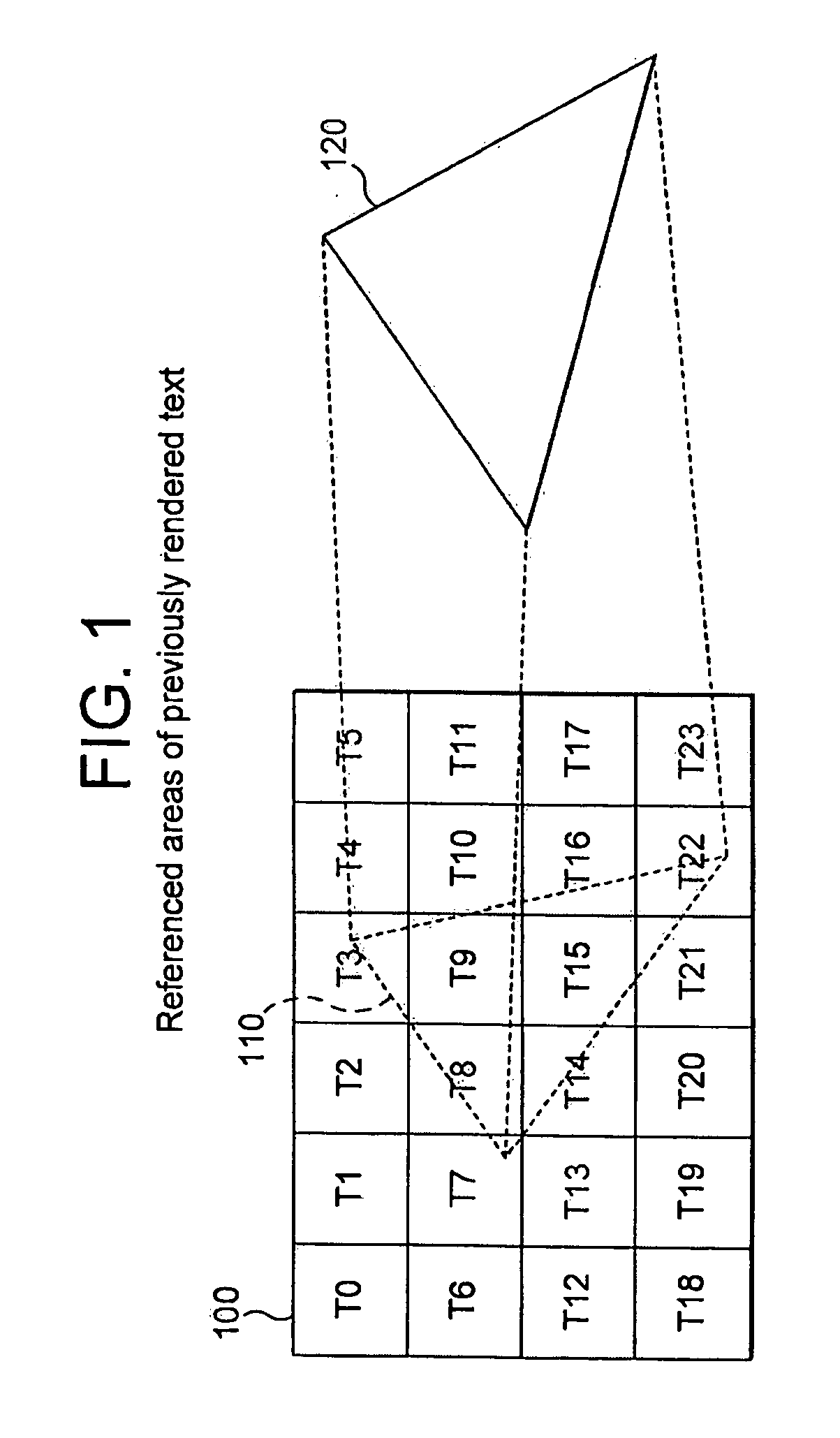

Demand based texture rendering in a tile based rendering system

Inactive | US20110254852A1 | Reduce memory bandwidth | Cathode-ray tube indicators | 3D-image rendering | Graphics | Texture rendering

A method and apparatus are provided for shading and texturing computer graphic images in a tile based rendering system using dynamically rendered textures. Scene space geometry is derived for a dynamically rendered texture and passed to a tiling unit which derives scene space geometry for a scene which references the textures. Scene space geometry for a scene that references the dynamically rendered texture is also derived and passed to the tiling unit. The tiling unit uses object data derived from the scene space geometry to detect reference to areas of dynamically rendered textures, as yet un-rendered. These are then dynamically rendered.

Owner:IMAGINATION TECH LTD

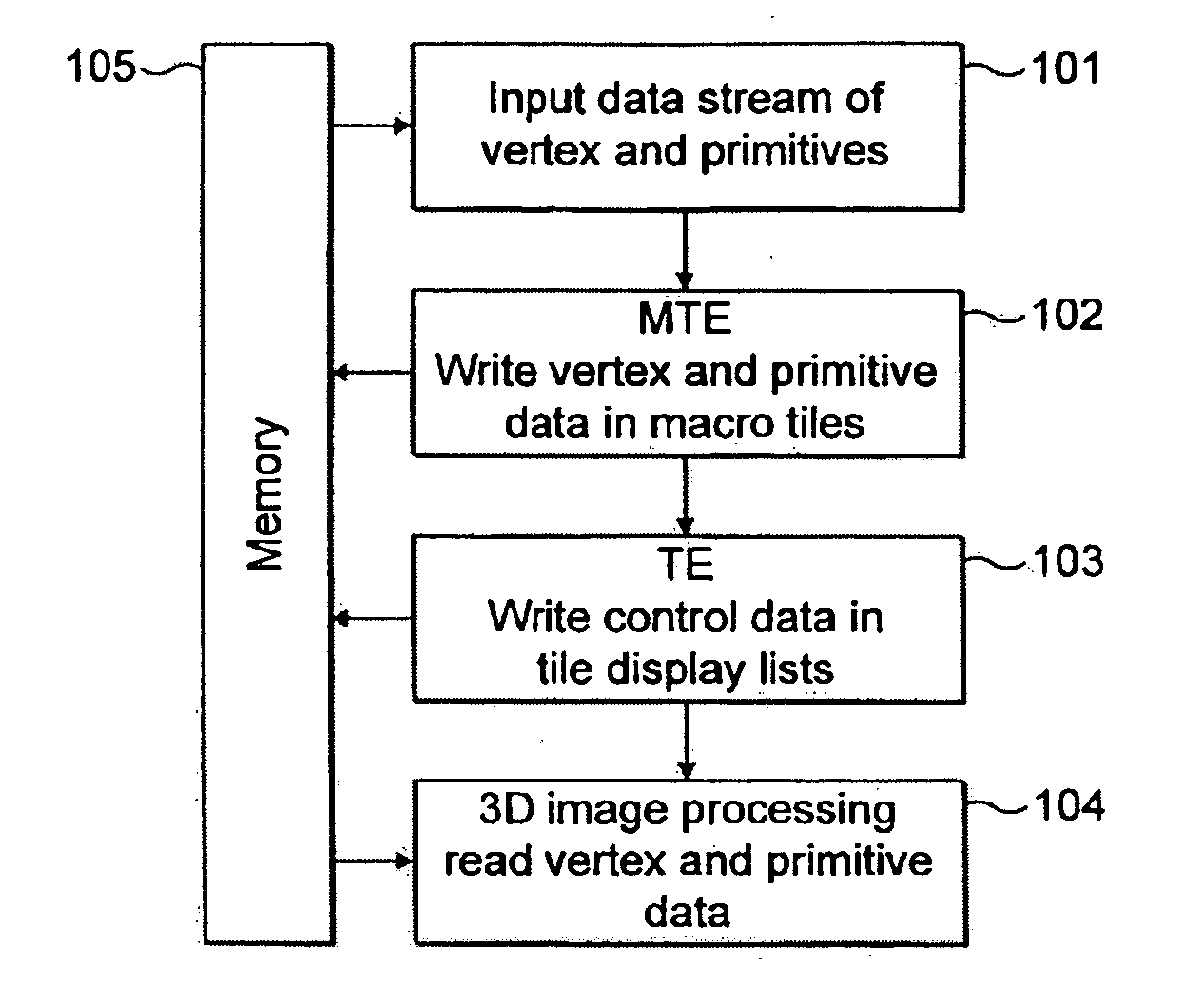

Multilevel display control list in tile based 3D computer graphics system

Active | US20110292032A1 | Reduce amount | Reduce memory bandwidth | Filling planer surface with attributes | 3D-image rendering | Imaging data | Stream data

A method and apparatus are provided for rendering a 3 dimensional computer graphics image. The image is divided into a plurality of rectangular tiles which are arranged in a multi level structure comprising a plurality of levels of progressively larger groupings of tiles. Image data is divided into a plurality of primitive blocks and these are assigned to groupings of tiles within the multi level structure in dependence on the groupings each one intersects. Control stream data is derived for rendering the image and this comprises references to primitive blocks for each grouping of tiles within each level of the multi level structure, the references corresponding to the primitive blocks assigned to each grouping and control stream data is used to render the primitive data into tiles within the groupings of tiles for display. This is done such that for primitive blocks which intersect a plurality of tiles within a grouping, control stream data is written for the grouping of tiles rather than for each tile within the grouping.

Owner:IMAGINATION TECH LTD

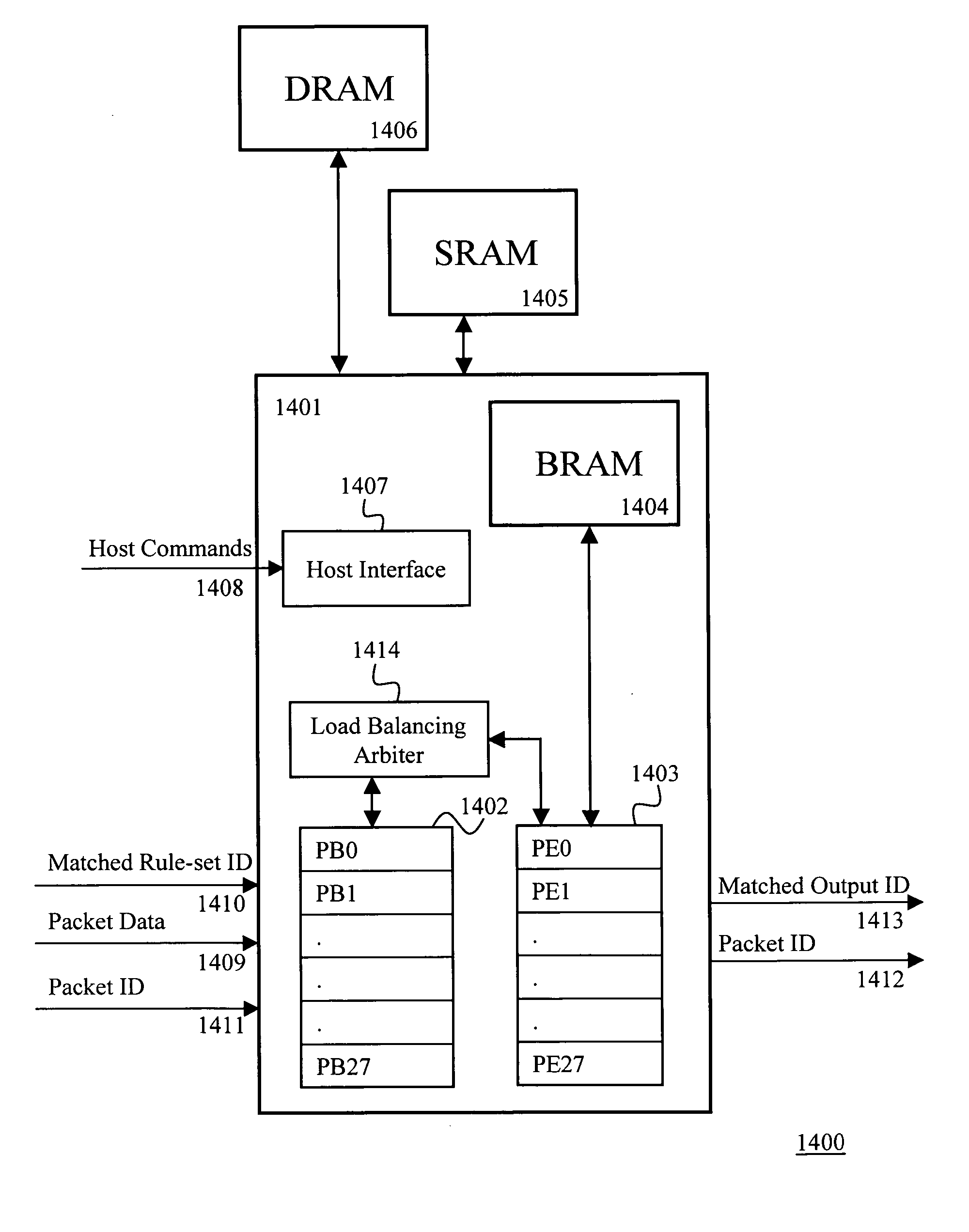

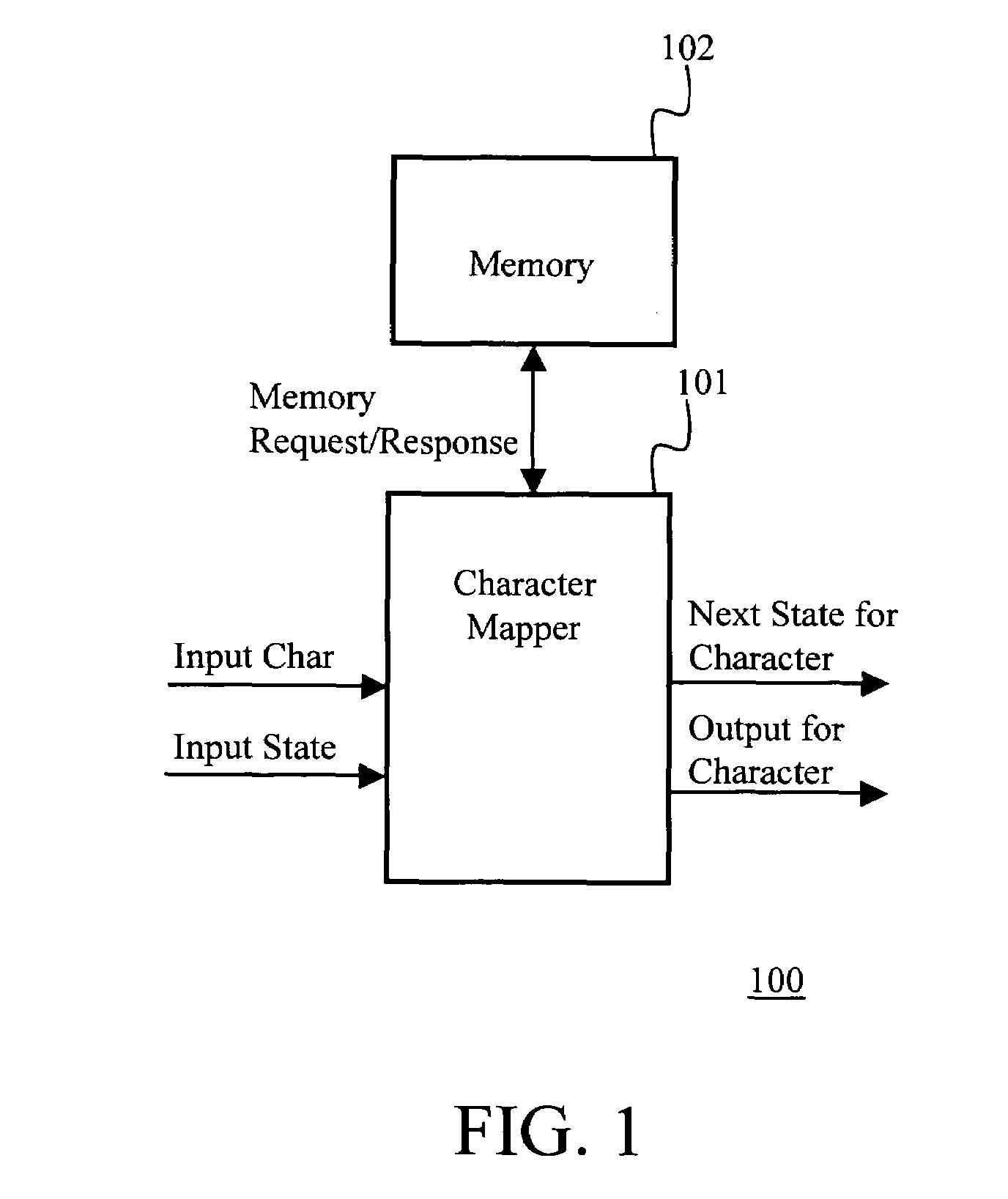

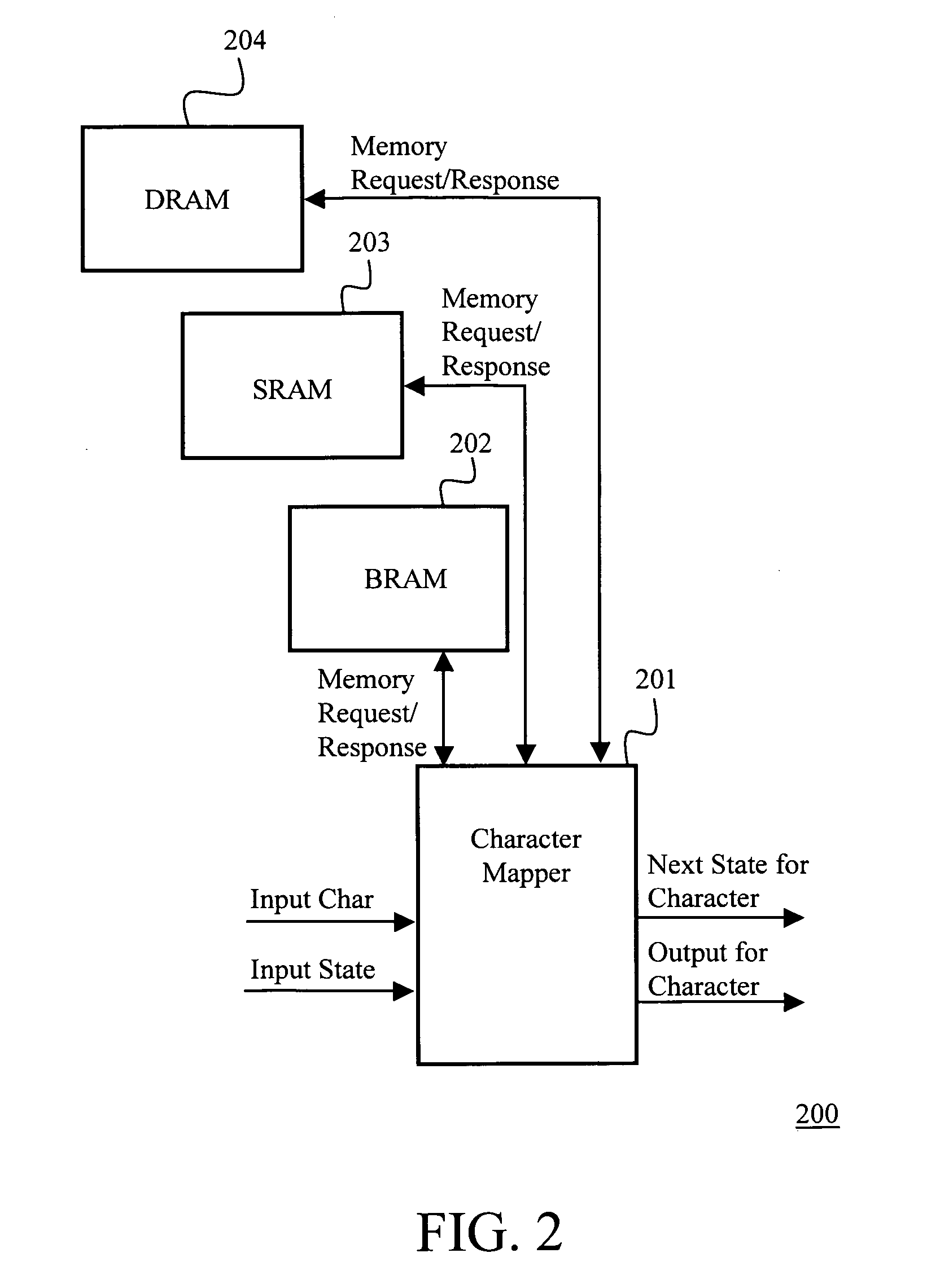

Layered memory architecture for deterministic finite automaton based string matching useful in network intrusion detection and prevention systems and apparatuses

Active | US7356663B2 | Reduce memory bandwidth | Minimize memory access | Transmission | Memory systems | Line rate | Deterministic finite automaton

The present invention provides a method and apparatus for searching multiple strings within a packet data using deterministic finite automata. The apparatus includes means for updating memory tables stored in a layered memory architecture comprising a BRAM, an SRAM and a DRAM; a mechanism to strategically store the relevant data structure in the three memories based on the characteristics of data, size/capacity of the data structure, and frequency of access. The apparatus intelligently and efficiently places the associated data in different memories based on the observed fact that density of most rule-sets is around 10% for common data in typical network intrusion prevention systems. The methodology and layered memory architecture enable the apparatus implementing the present invention to achieve data processing line rates over 2 Gbps.

Owner:FORTINET

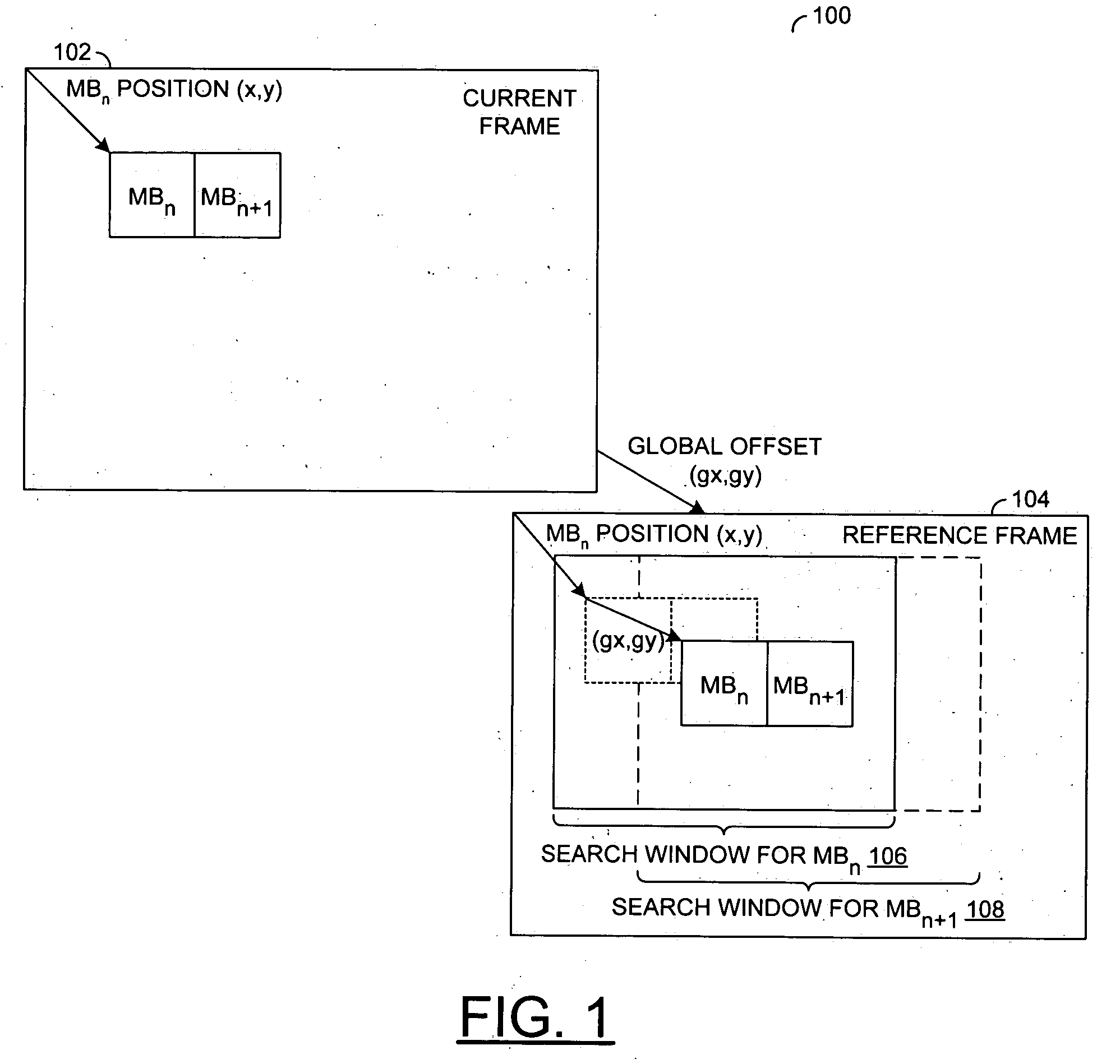

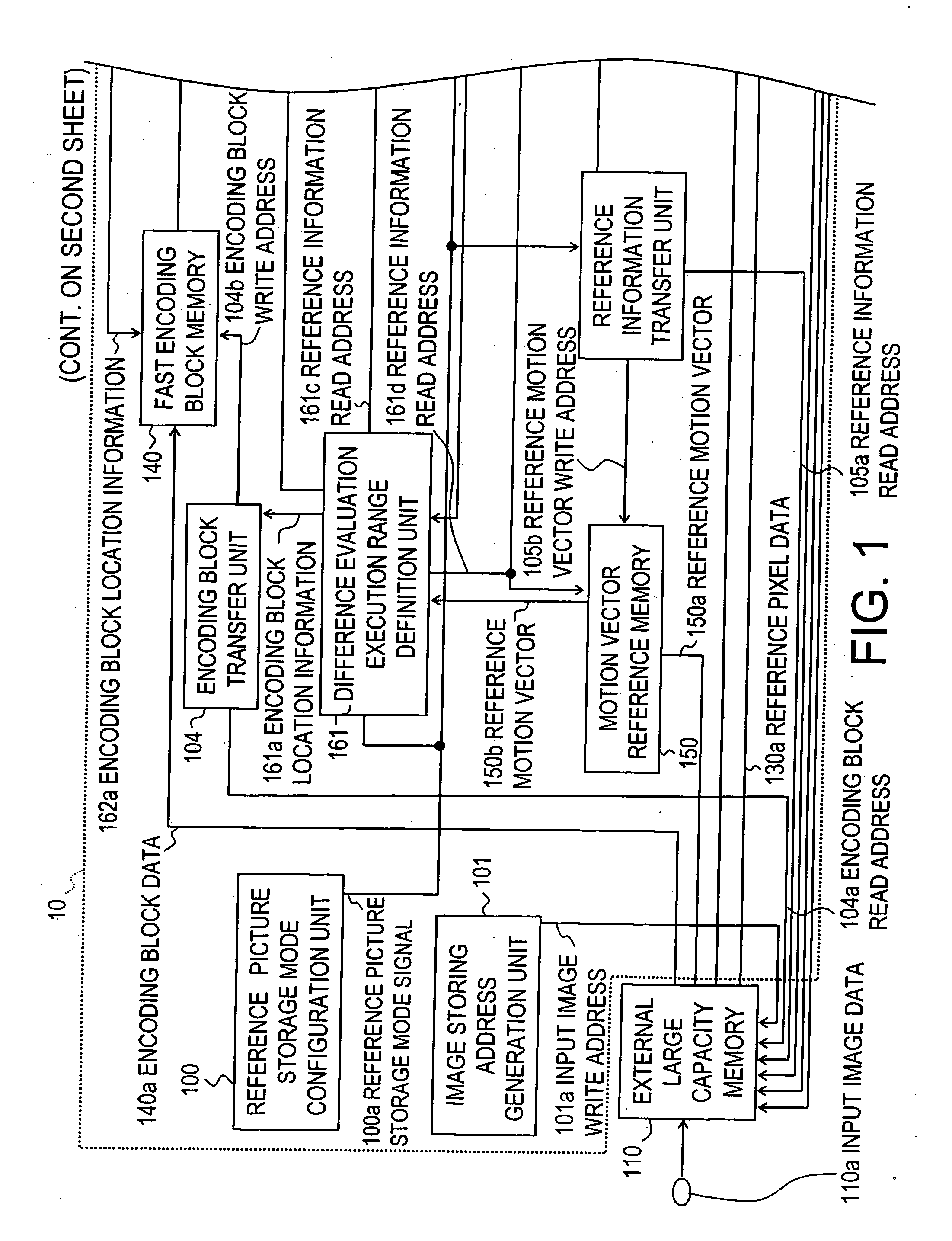

High quality, low memory bandwidth motion estimation processor

Inactive | US20050013368A1 | Quality improvement | Reduce memory bandwidth | Television system details | Color television with pulse code modulation | Reference sample | Motion vector

An apparatus for motion estimation generally including a memory and a circuit. The circuit may be configured to (i) search for a first motion vector for a first current block among a plurality of first reference samples, (ii) copy a plurality of second reference samples from the memory and (iii) search for a second motion vector for a second current block among the second reference samples copied from the memory and at least a portion of the first reference samples.

Owner:AVAGO TECH INT SALES PTE LTD

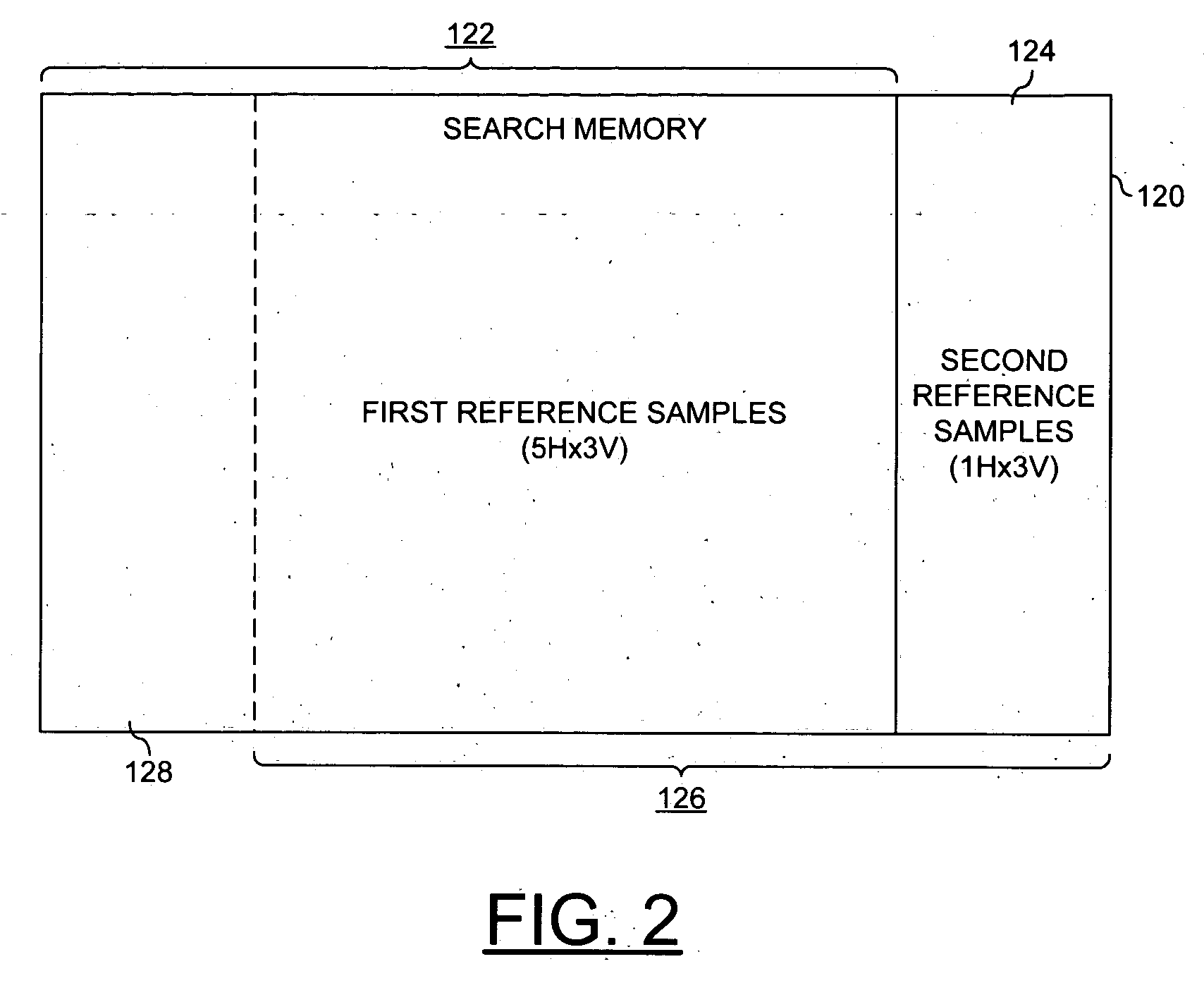

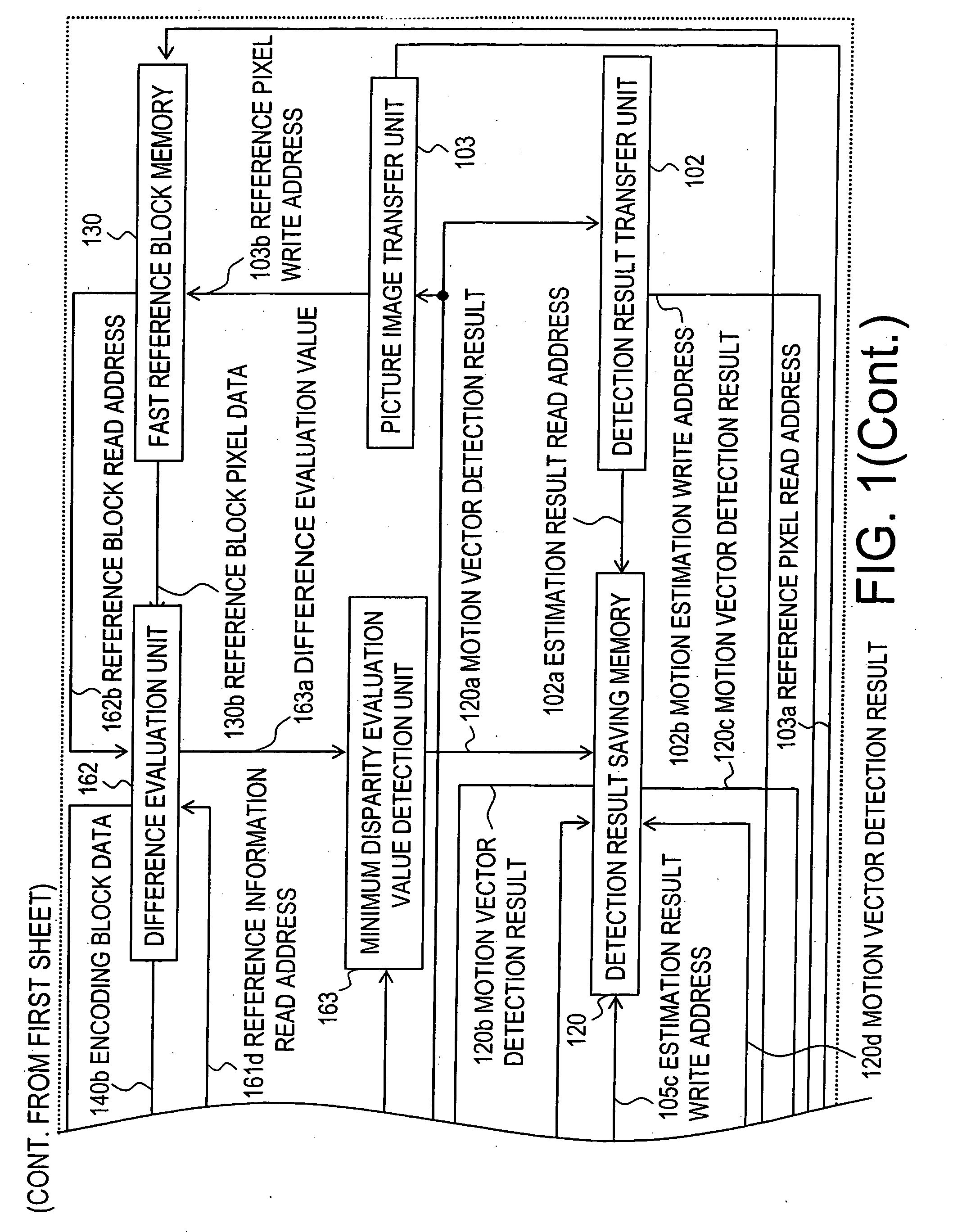

Motion vector detector, method of detecting motion vector and image recording equipment

Inactive | US20050226332A1 | Reduce capacity | Reduce memory bandwidth | Television system details | Color television with pulse code modulation | Motion vector | Image recording

A motion vector detector that divides a picture which is encoded into a plurality of encoding blocks and evaluates differences between each encoding block and a reference block in a motion estimation range defined in a reference picture to detect a motion vector between pictures of a moving image.

Owner:KK TOSHIBA

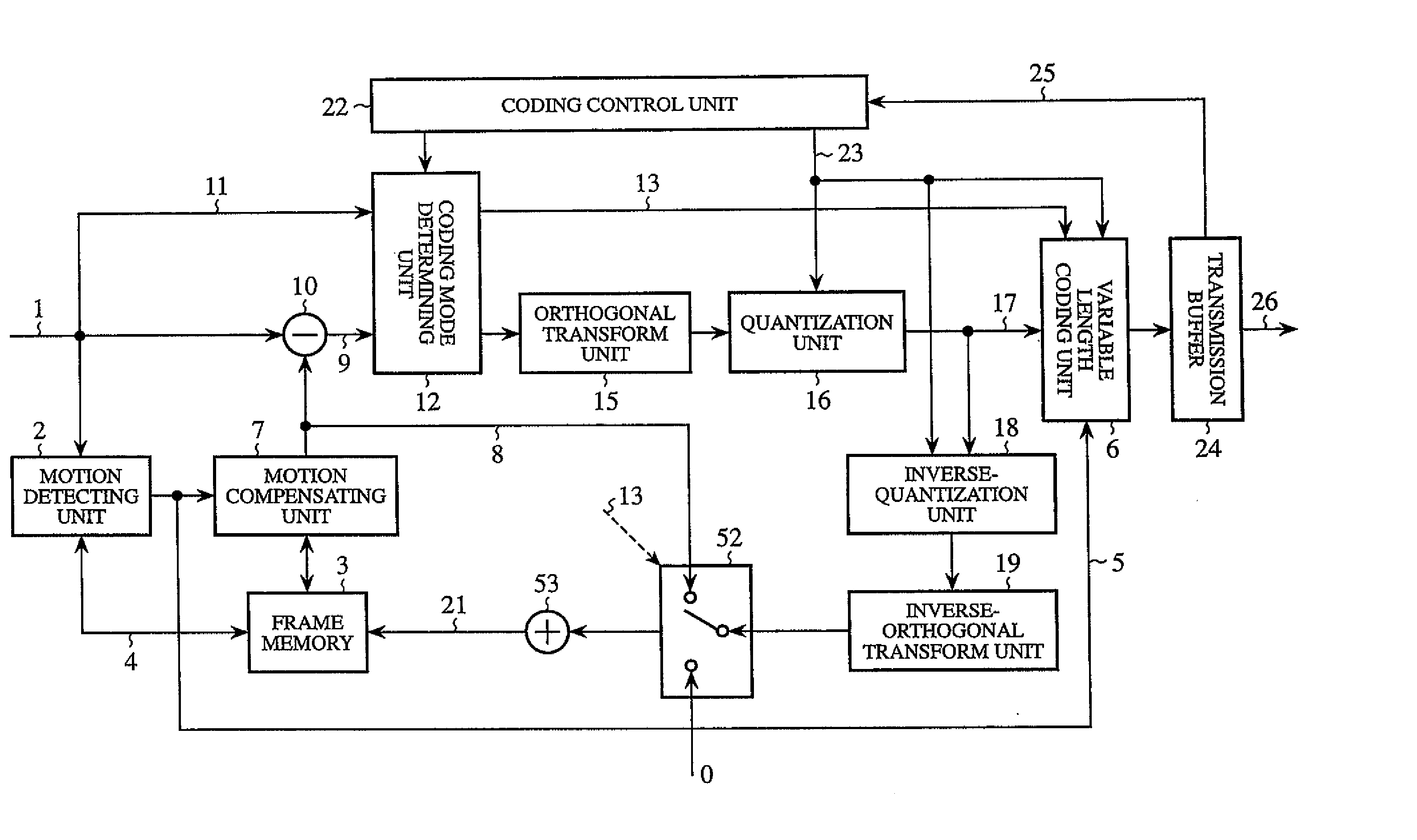

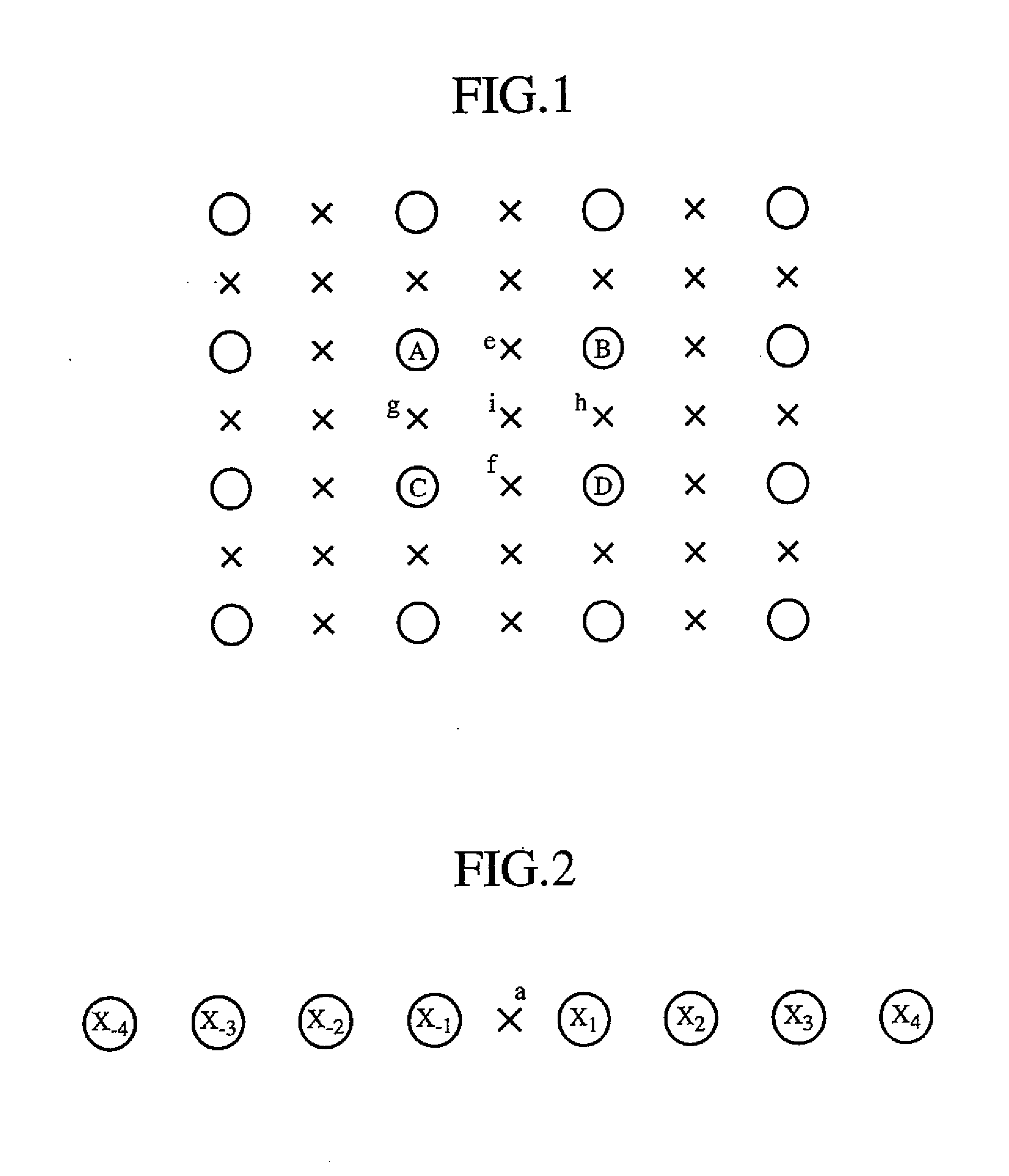

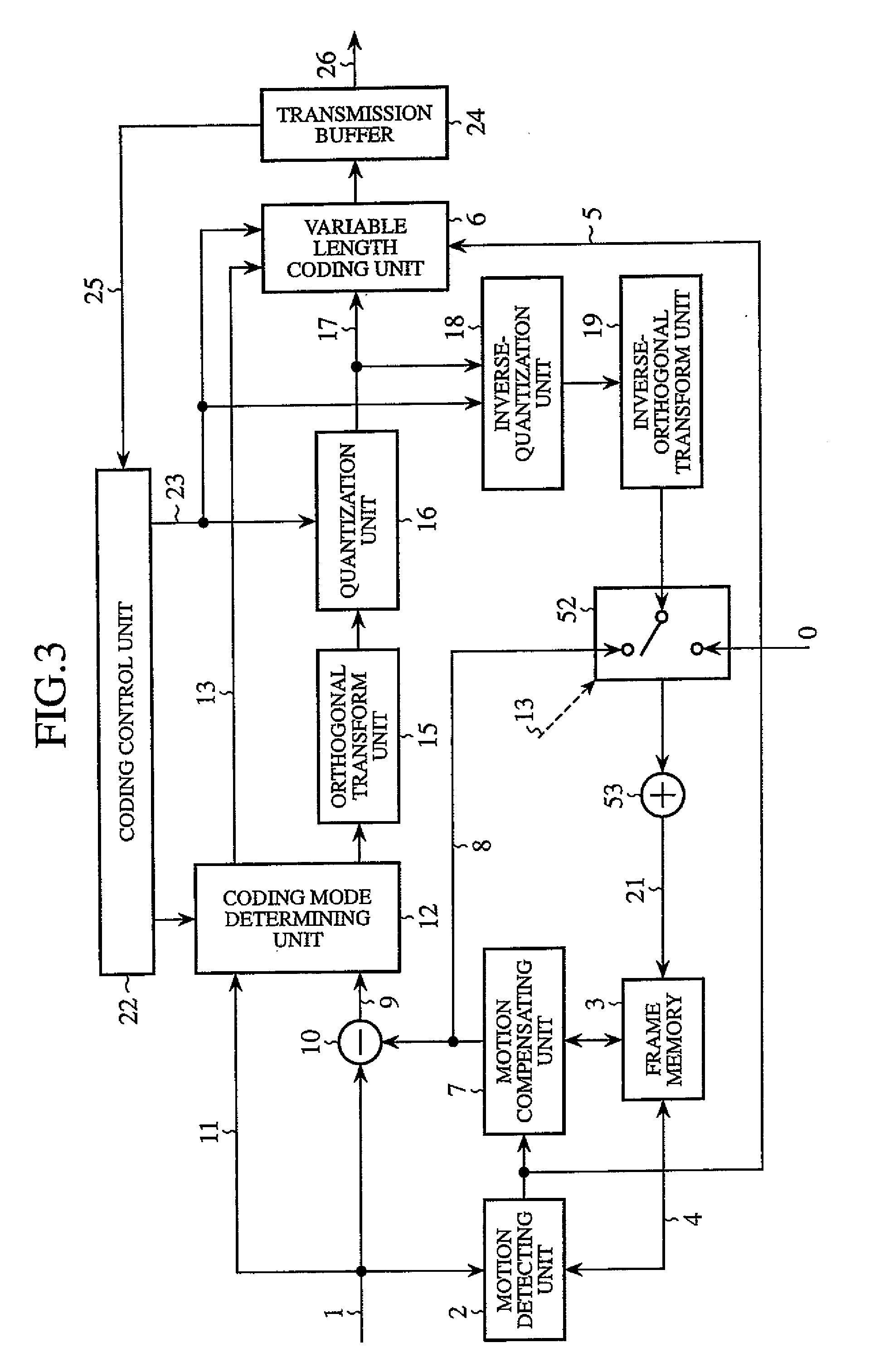

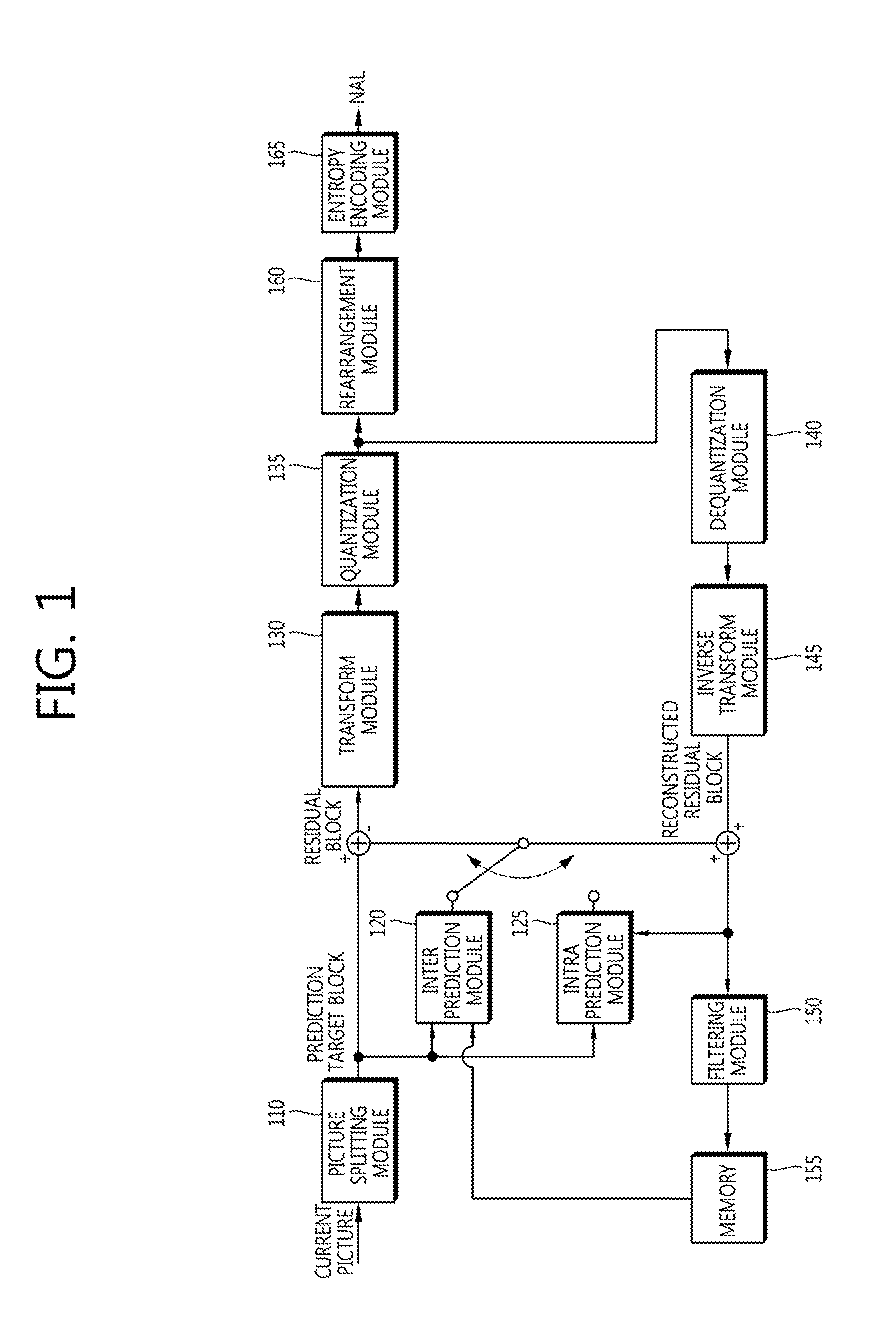

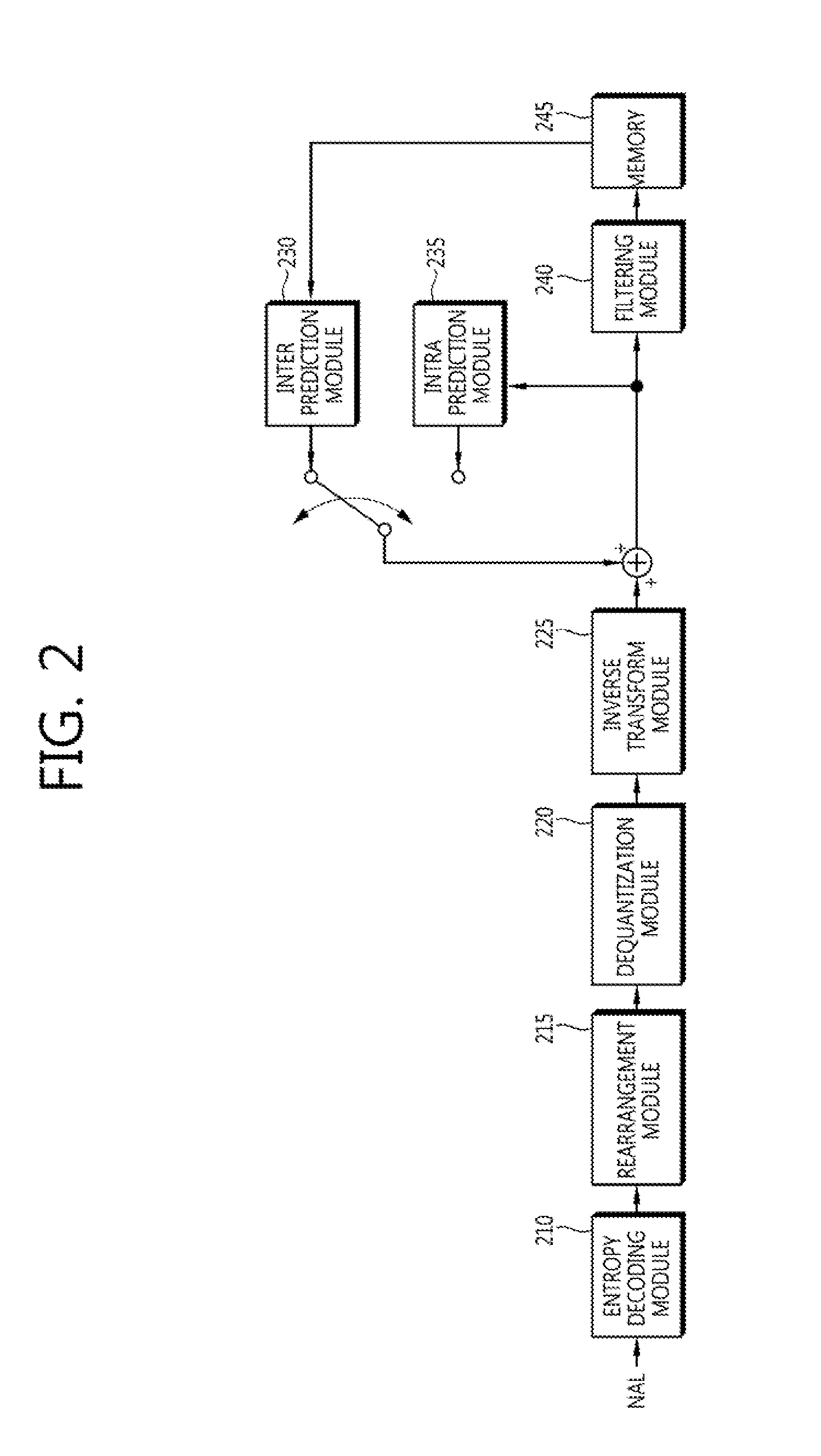

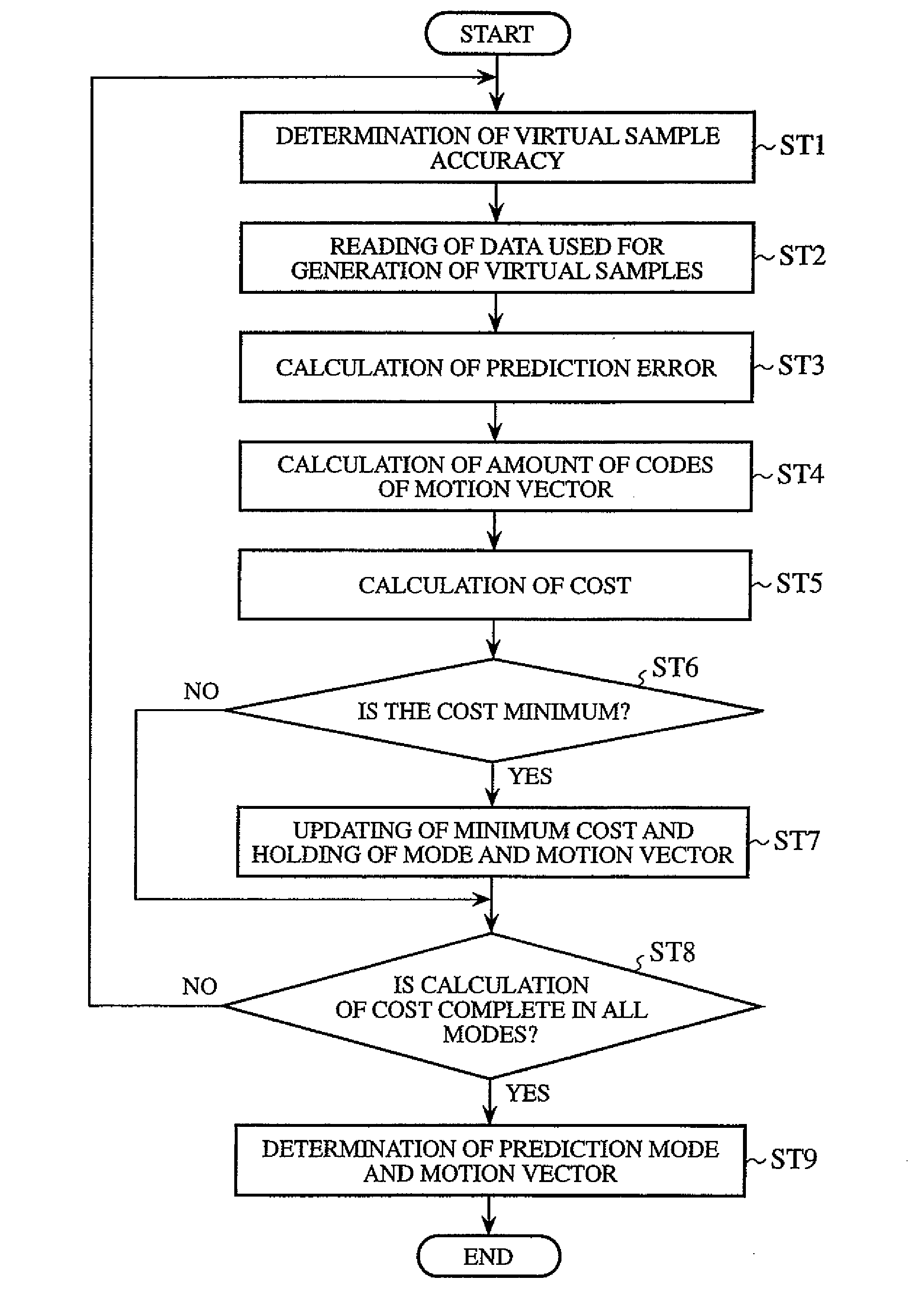

Image coding apparatus, image coding method, image decoding apparatus, image decoding method and communication apparatus

Inactive | US20080063069A1 | Improve efficiency | Reduce memory bandwidth | Color television with pulse code modulation | Color television with bandwidth reduction | Decoding methods | Motion vector

Each of an image coding apparatus and an image decoding apparatus uses a motion compensated prediction using virtual samples so as to detect a motion vector for each of regions of each frame of an input signal. Accuracy of virtual samples is locally determined while the accuracy of virtual samples is associated with the size of each region which is a motion vector detection unit in which a motion vector is detected. Virtual samples having half-pixel accuracy are used for motion vector detection unit regions having a size smaller than 8×8 MC, such as blocks of 8×4 size, blocks of 4×8 size, and blocks of 4×4 size, and virtual samples having ¼-pixel accuracy are used for motion vector detection unit regions that are equal to or larger than 8×8 MC in size.

Owner:MITSUBISHI ELECTRIC CORP
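
The size-dependent choice of virtual sample accuracy in the abstract above amounts to a small selection rule, sketched here in Python. The function name is hypothetical, and treating "smaller than 8×8" as "either dimension below 8" is an assumption chosen to match the 8×4, 4×8, and 4×4 examples in the abstract.

```python
def virtual_sample_accuracy(width, height):
    """Return the sub-pixel step used for motion vectors of a block of this size.

    0.5 means half-pixel accuracy, 0.25 means quarter-pixel accuracy.
    """
    if width < 8 or height < 8:
        return 0.5   # small blocks (e.g. 8x4, 4x8, 4x4): half-pixel virtual samples
    return 0.25      # blocks of 8x8 or larger: quarter-pixel virtual samples
```

Small blocks are the ones that multiply the number of reference fetches per frame, so restricting them to the coarser accuracy is a plausible way to limit the interpolation data that must be read.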

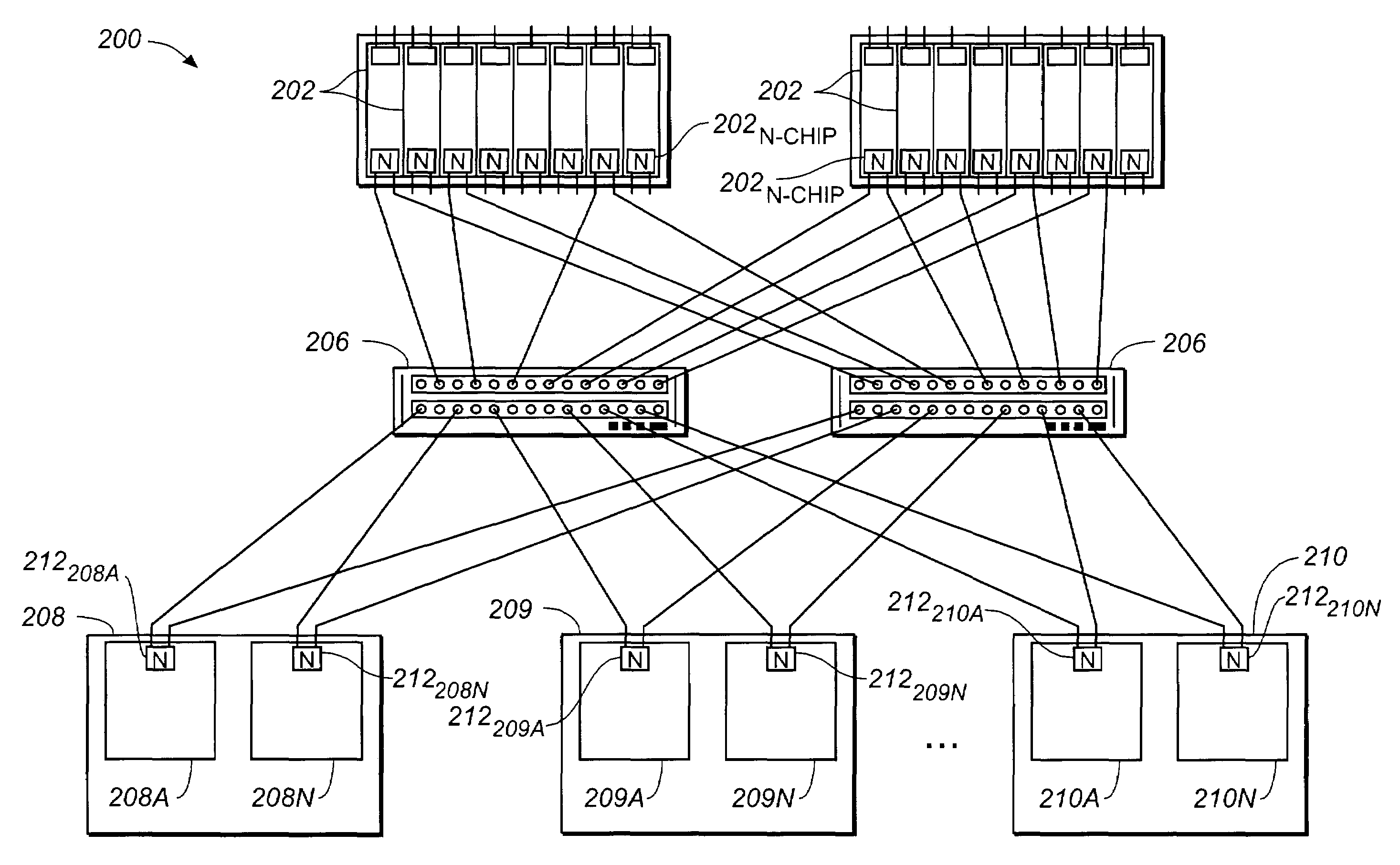

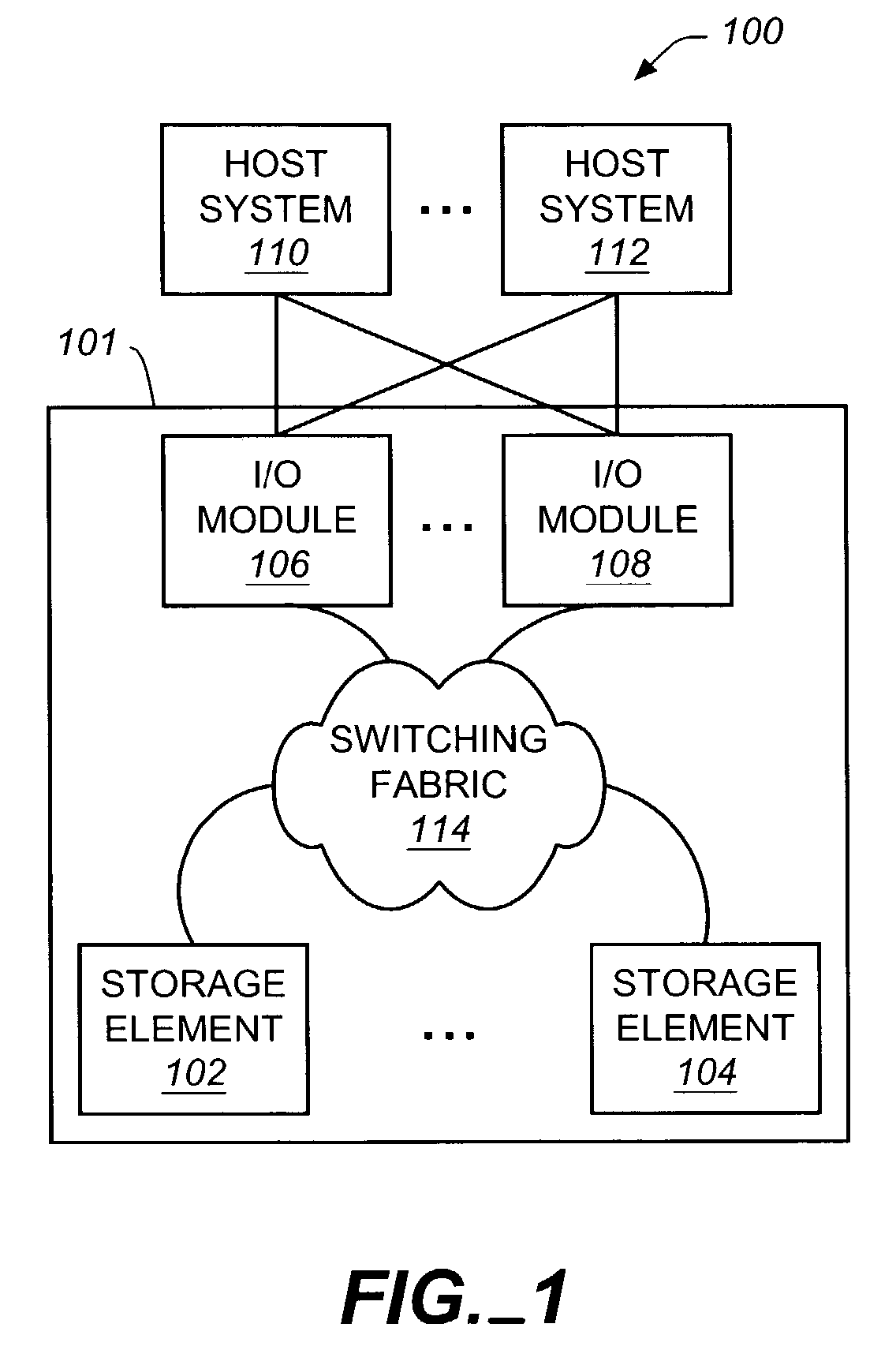

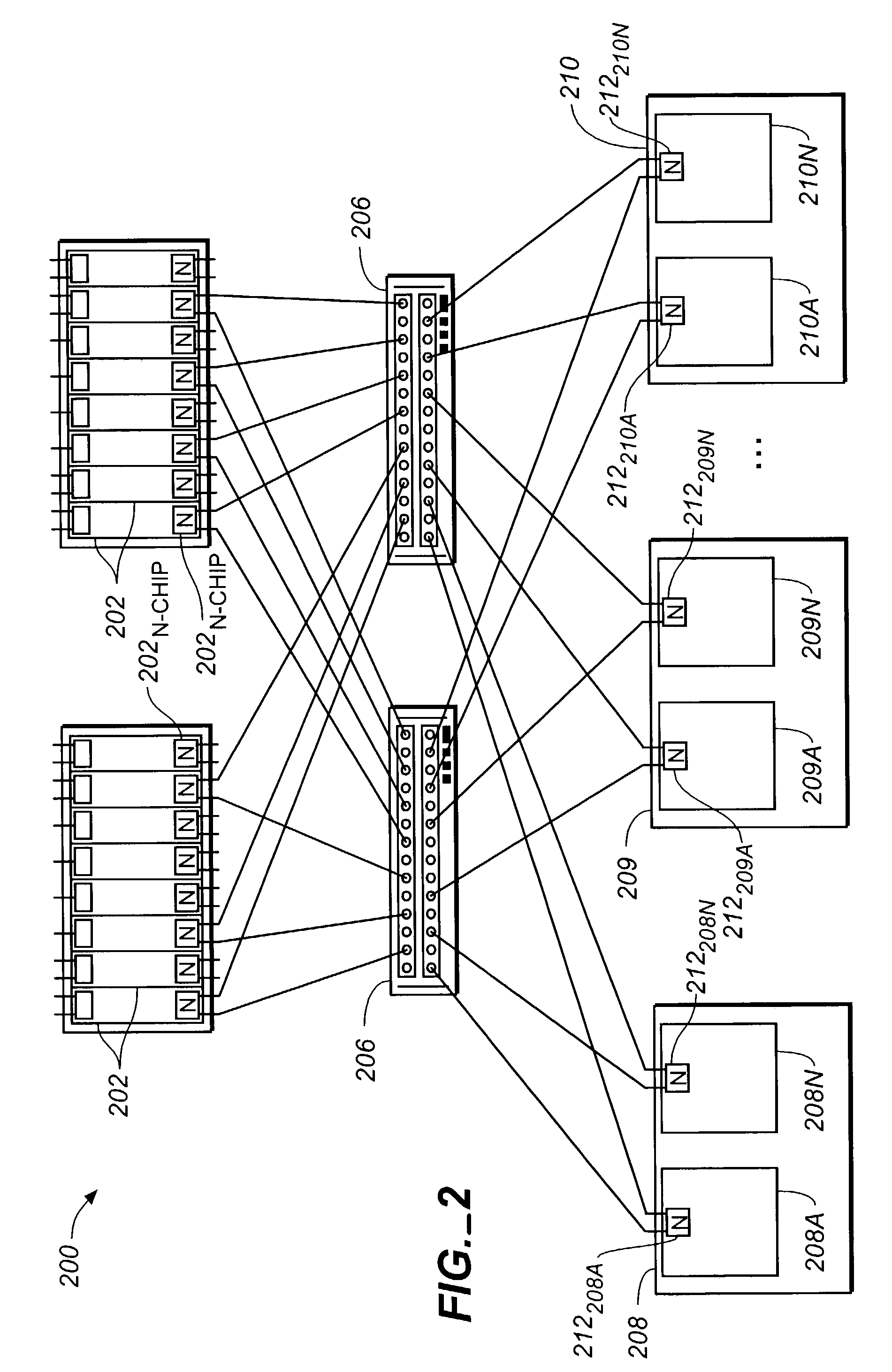

Method and apparatus for handling storage requests

Active | US7043622B2 | Reduce memory bandwidth | Improved request management | Input/output to record carriers | Computer security arrangements | Virtualization | Computer science

Systems and methods for handling I/O requests from a host system to a storage system. A system includes an I/O module for processing I/O requests from a host system, a virtualized storage element, and a communication medium coupling the I/O module to the virtualized storage elements. The virtualized storage element includes a mapping table for translating virtual storage locations into physical storage locations and a plurality of physical storage locations. The virtualized storage element generates base virtual addresses using the mapping table to communicate the base virtual addresses to the I/O module. The I/O module generates specific virtual addresses using the base virtual addresses and using information derived from the I/O requests. The I/O module uses the specific virtual addresses in communication with the virtualized storage element to identify the physical storage locations in the virtualized storage element.

Owner:NETWORK APPLIANCE INC

Method for deriving a temporal predictive motion vector, and apparatus using the method

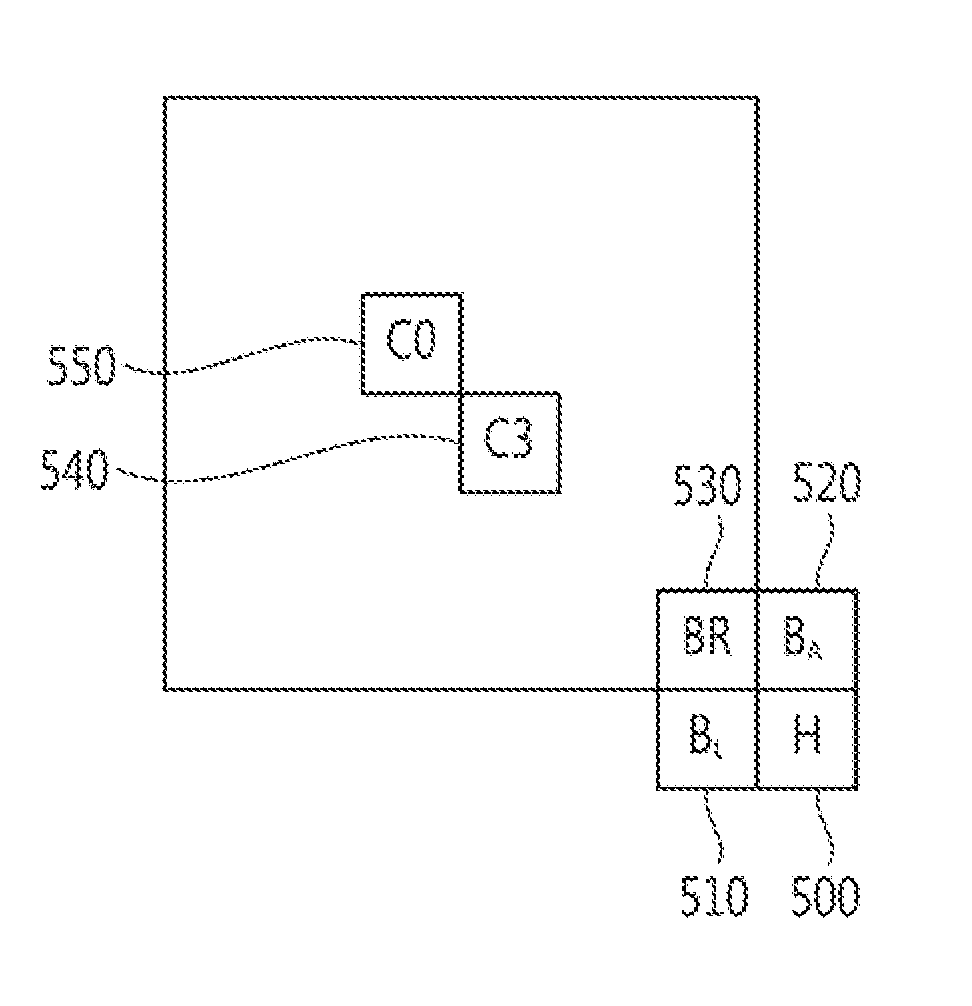

Active | US20130343461A1 | Reduce memory bandwidth | Minimize complexity | Color television with pulse code modulation | Color television with bandwidth reduction | Decoding methods | Motion vector

Disclosed are a method for deriving a temporal predictive motion vector, and an apparatus using the method. An image decoding method may comprise the steps of: determining whether or not a block to be predicted is brought into contact with a boundary of a largest coding unit (LCU); and determining whether or not a first call block is available according to whether or not the block to be predicted is brought into contact with the boundary of the LCU. Accordingly, unnecessary memory bandwidth may be reduced, and implementation complexity may also be reduced.

Owner:KT CORP

Image coding apparatus, image coding method, image decoding apparatus, image decoding method and communication apparatus

Inactive | US20080063068A1 | Improve efficiency | Reduce memory bandwidth | Color television with pulse code modulation | Color television with bandwidth reduction | Decoding methods | Motion vector

Owner:MITSUBISHI ELECTRIC CORP

© 2025 PatSnap. All rights reserved.