100 results about "Texture rendering" patented technology

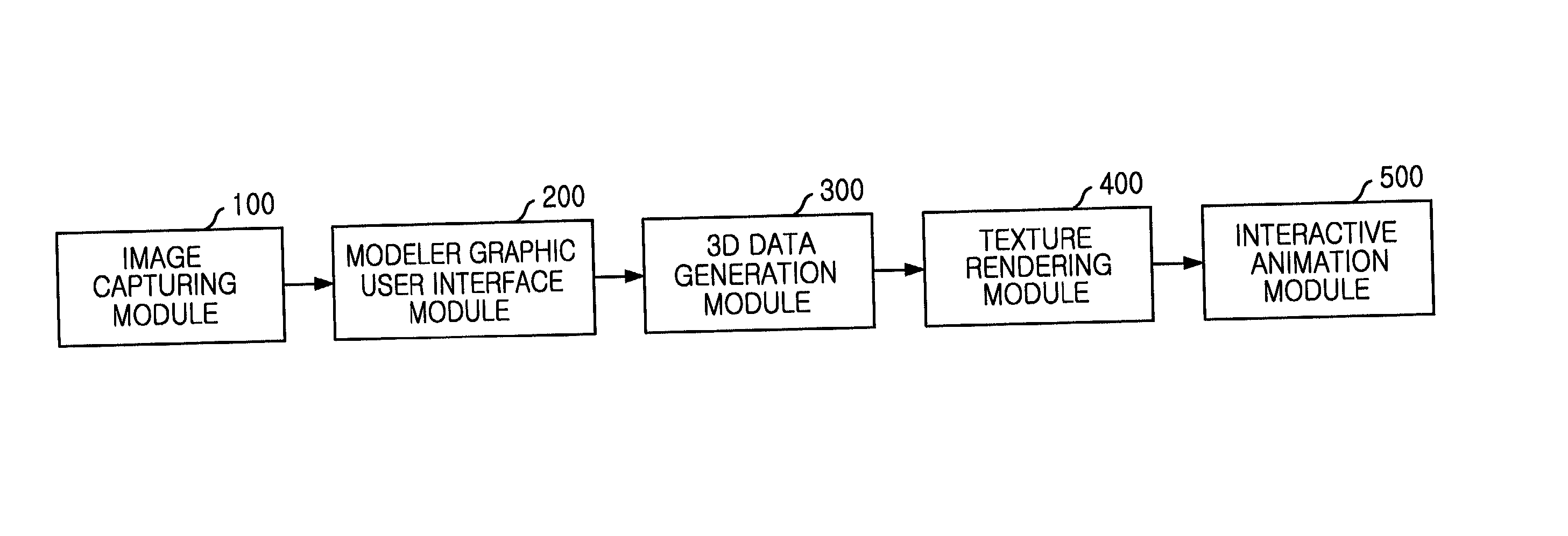

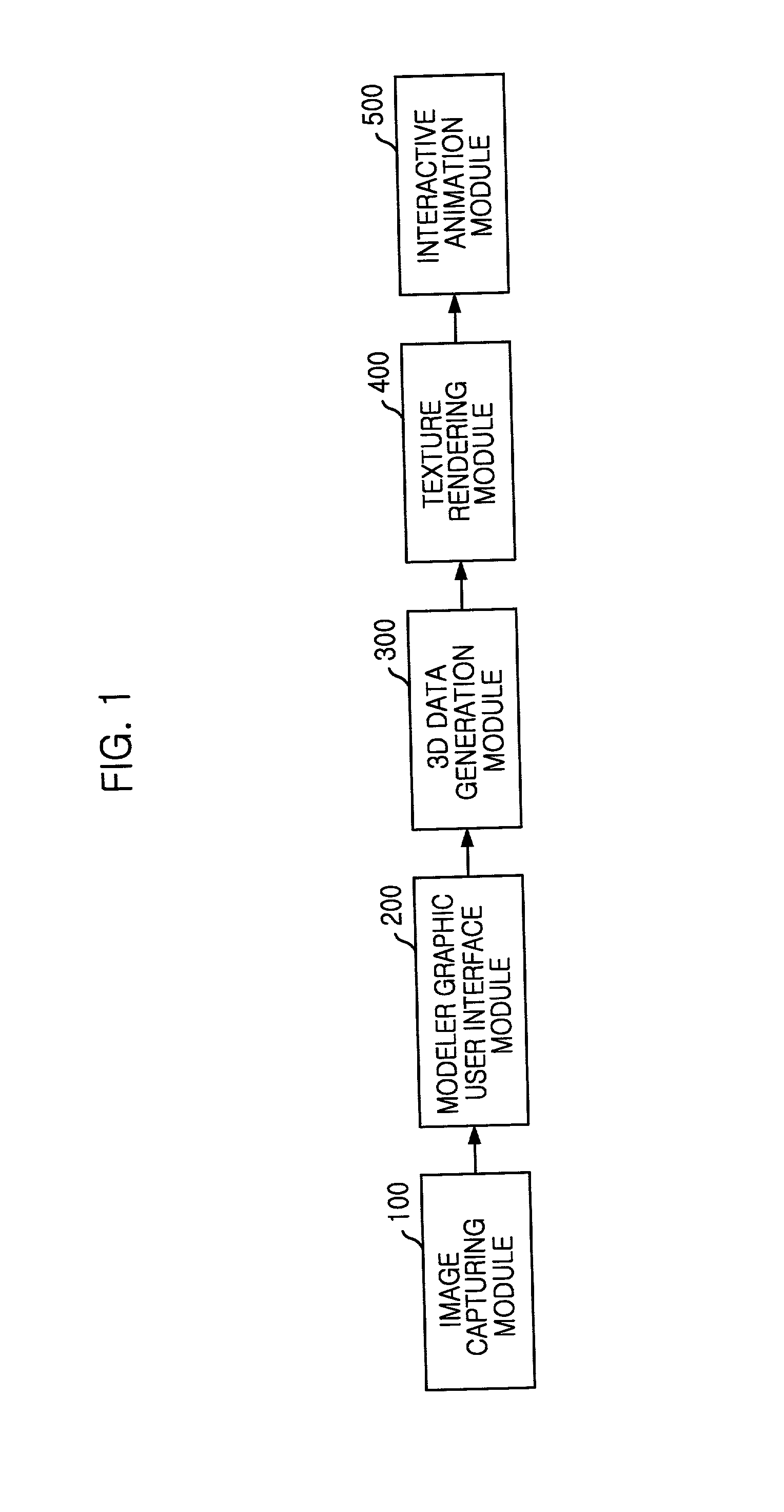

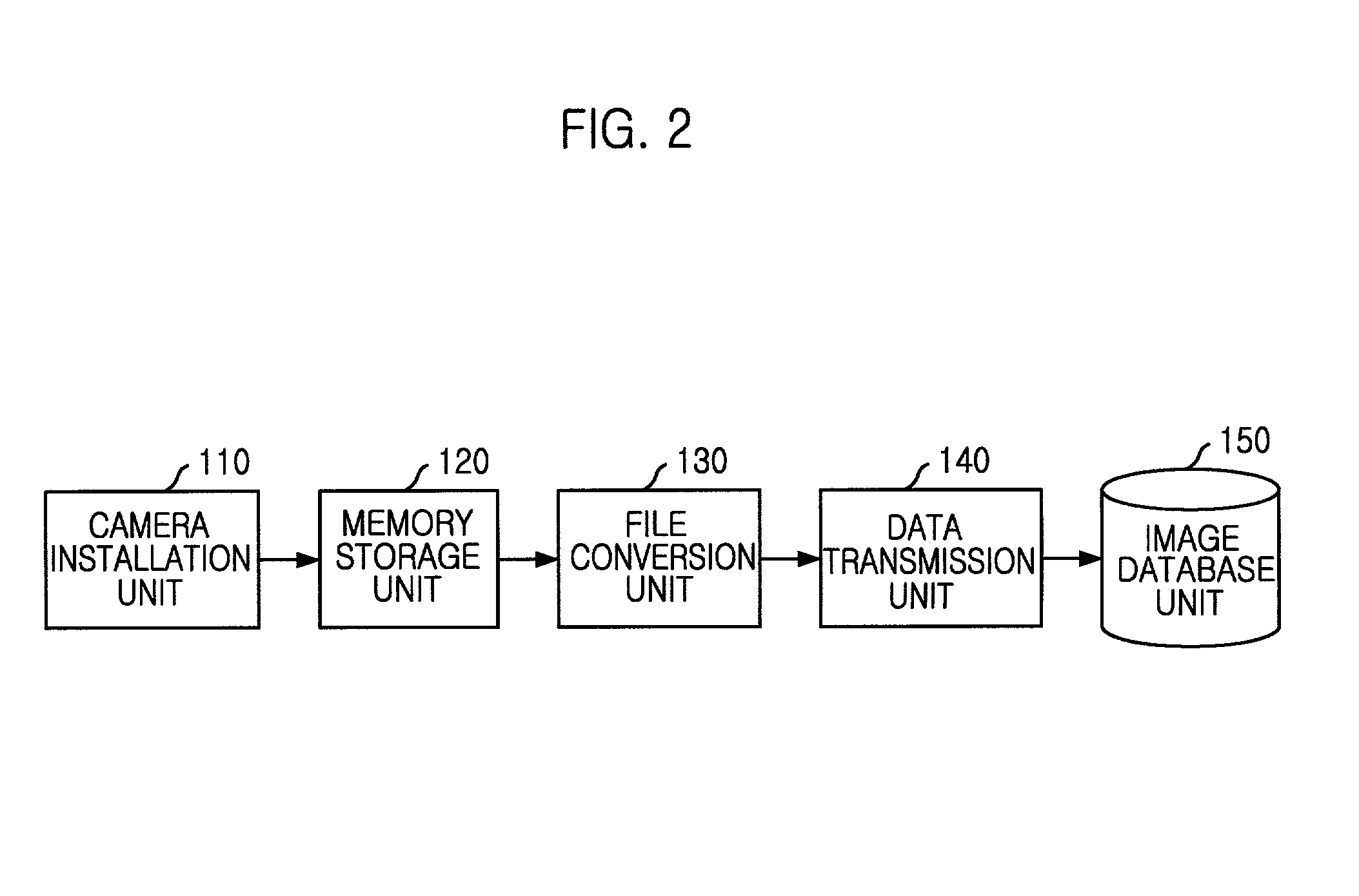

Apparatus and method of interactive model generation using multi-images

An apparatus for interactive model generation using multi-images includes an image capturing means for capturing an arbitrary object as a 2D image using a camera, a modeler graphic user interface means for providing a 3D primitive model granting interactive relation of data between 2D and 3D, a 3D model generation means for matching a predetermined 3D primitive model and the 2D image obtained from the image capturing means, a texture rendering means for correcting errors generated in capturing the image, and an interactive animation means for adding and editing animations of various types on the 3D model for the 2D images.

Owner:ELECTRONICS & TELECOMM RES INST

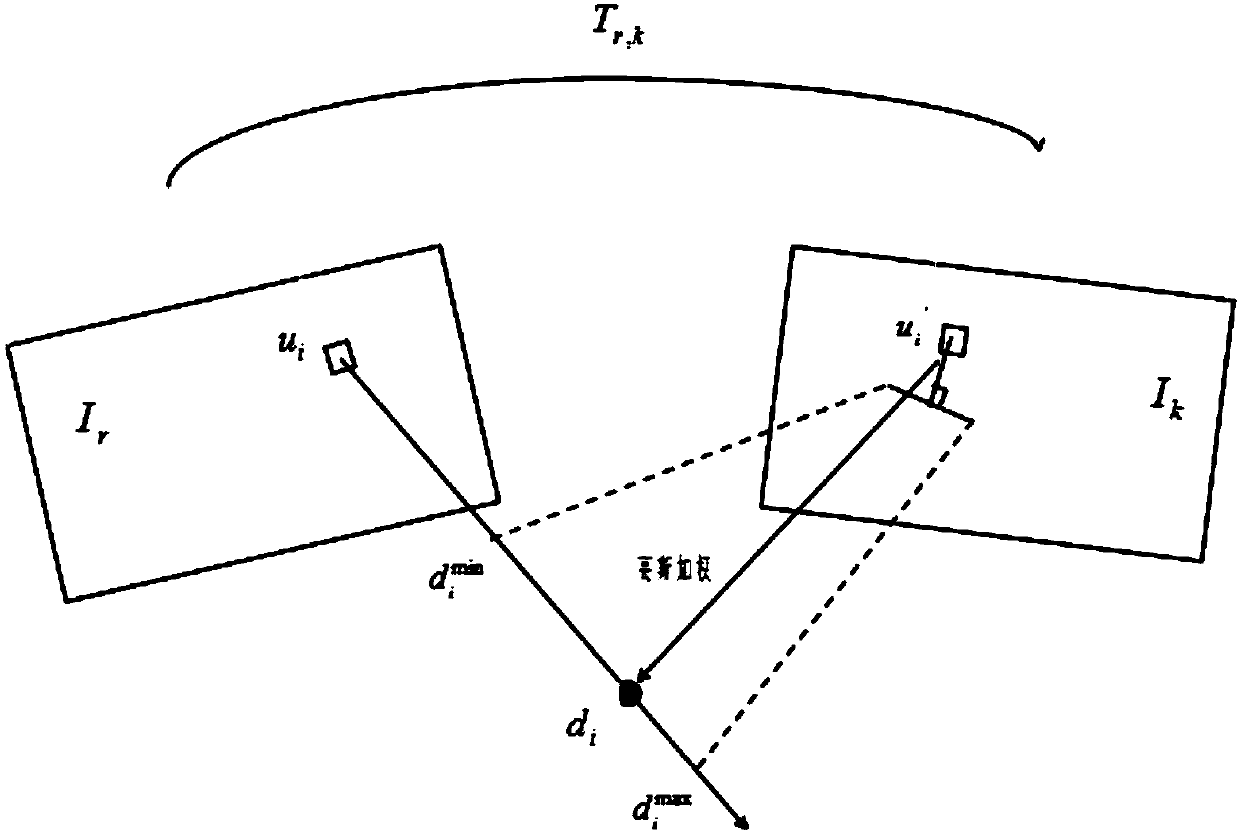

Real-time three-dimensional scene reconstruction method for UAV based on EG-SLAM

Active; CN108648270A; Requirements for Reducing Repetition Rates; Improve realism; Image enhancement; Image analysis; Point cloud; Texture rendering

The present invention provides a real-time three-dimensional scene reconstruction method for a UAV (unmanned aerial vehicle) based on EG-SLAM. Visual information is acquired by a camera mounted on the UAV to reconstruct a large-scale three-dimensional scene with texture details. Compared with multiple existing methods, the collected images are processed directly on the CPU, and positioning and reconstruction of a three-dimensional map can be performed quickly in real time. Rather than using the conventional PnP method to solve the pose of the UAV, the EG-SLAM method solves the pose directly from the feature point matching relationship between two frames, which reduces the requirement on the repetition rate of the collected images. In addition, the large amount of environmental information obtained gives the UAV a more detailed perception of the environment structure; texture rendering is performed on the large-scale three-dimensional point cloud map generated in real time, so that a large-scale three-dimensional map is reconstructed and a more intuitive and realistic three-dimensional scene is obtained.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

Method for preparing multi-channel 3D nano texture mold

A method for preparing a multi-channel 3D nano texture mold comprises the steps of: coating photoresist on a PET photoetching material, then drying it in a stainless steel dust-free furnace and setting it aside; arranging a mask pattern plate on the raw rubber surface of the PET photoetching material and vacuuming for photoetching; taking out the mask pattern plate after the photoetching is completed; putting the prepared developer into a cleaning box and putting the photoetched PET material into the cleaning box for cleaning, so that the pattern is displayed on the PET photoetching material; and dehydrating and spinning to obtain a first texture layer. A second texture layer is then produced by a photoetching process similar to the first, and the two layers are superposed to obtain the multi-channel 3D nano texture mold. The method has the advantages of a simple manufacturing process, low cost, reusability, multi-texture superposition, a better texture rendering effect and improved working efficiency.

Owner:唐鸿微迅新材料科技有限公司

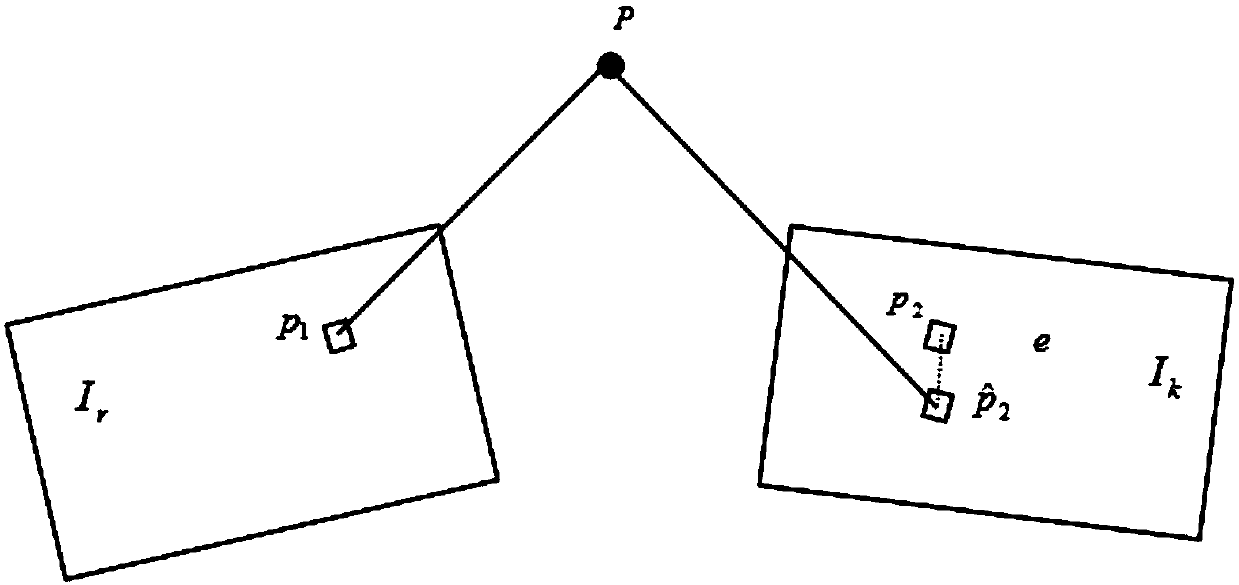

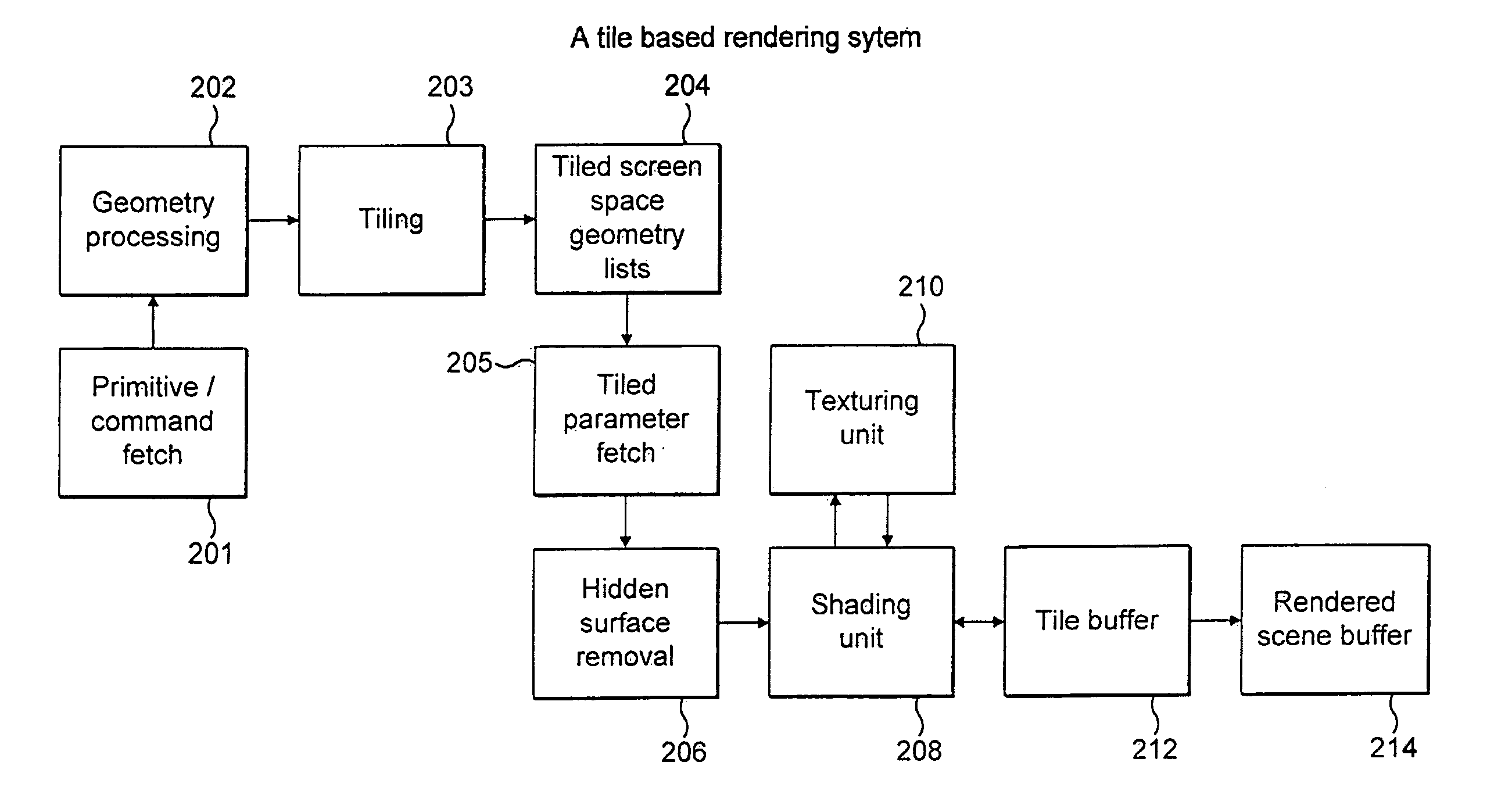

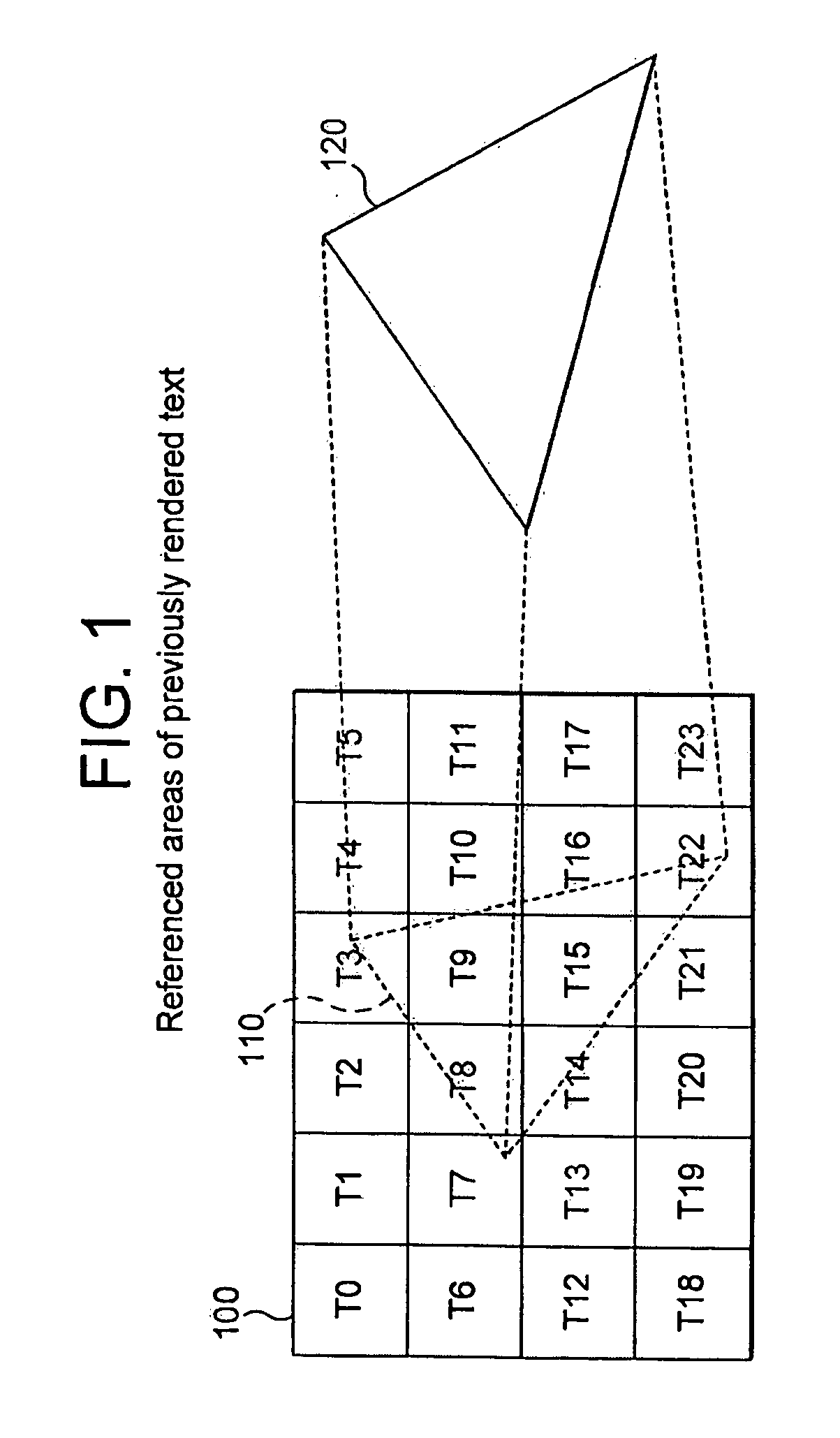

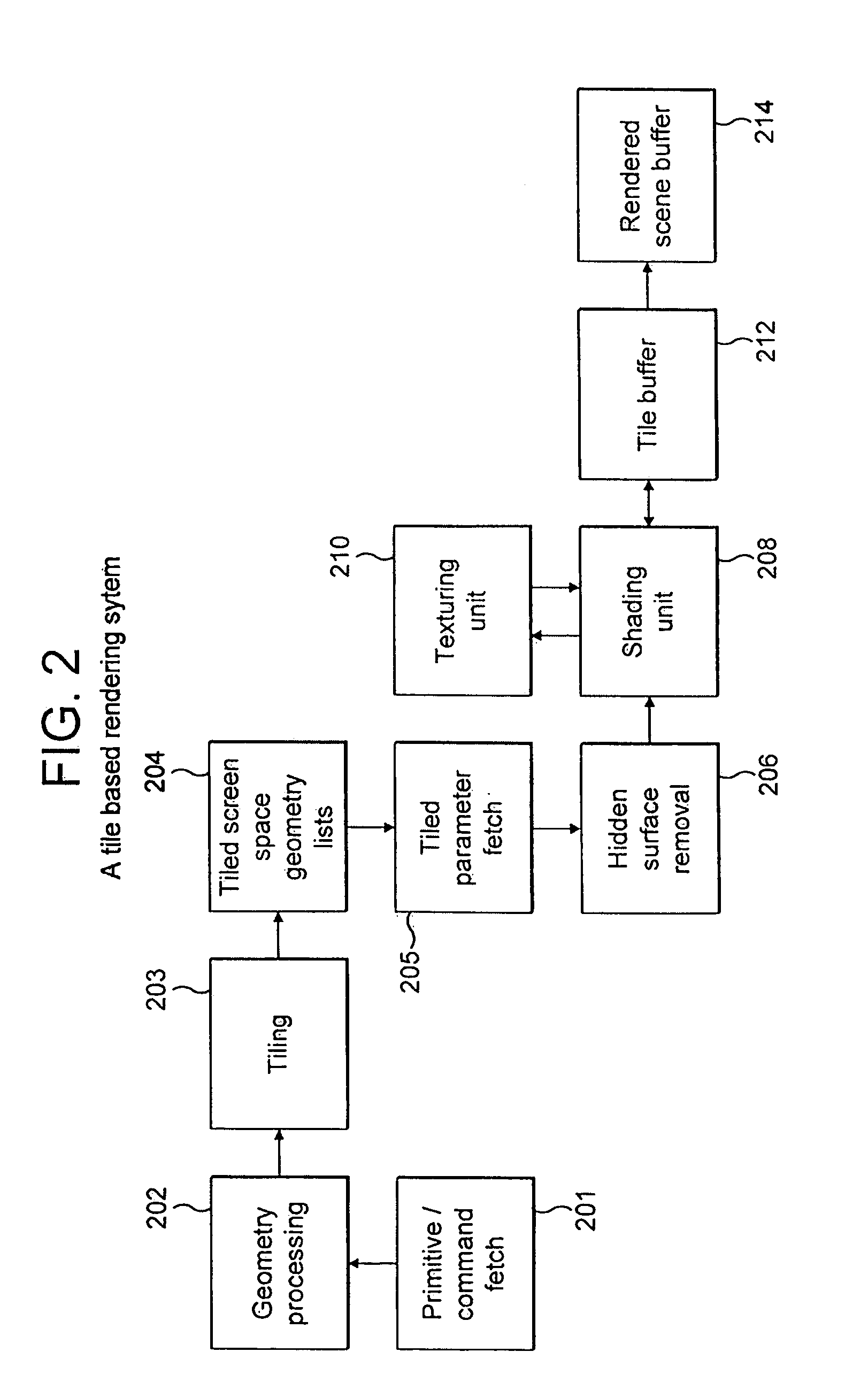

Demand based texture rendering in a tile based rendering system

Inactive; US20110254852A1; Reduce memory bandwidth; Cathode-ray tube indicators; 3D-image rendering; Graphics; Texture rendering

A method and apparatus are provided for shading and texturing computer graphic images in a tile based rendering system using dynamically rendered textures. Scene space geometry is derived for a dynamically rendered texture and passed to a tiling unit which derives scene space geometry for a scene which references the textures. Scene space geometry for a scene that references the dynamically rendered texture is also derived and passed to the tiling unit. The tiling unit uses object data derived from the scene space geometry to detect reference to areas of dynamically rendered textures, as yet un-rendered. These are then dynamically rendered.

Owner:IMAGINATION TECH LTD
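The detection step in the abstract above (finding referenced areas of dynamically rendered textures that have not been rendered yet) can be illustrated with a minimal sketch. The data layout is an assumption made for illustration: each screen tile is a list of hypothetical `(texture_id, texture_tile)` references, and `pending_texture_tiles` is not a name from the patent.

```python
def pending_texture_tiles(scene_tiles, dynamic_ids, rendered):
    """Collect referenced areas of dynamic textures that are not rendered yet.

    scene_tiles: list of screen tiles, each a list of (texture_id, texture_tile) references.
    dynamic_ids: set of ids of dynamically rendered textures.
    rendered:    set of (texture_id, texture_tile) areas already rendered.
    Returns the missing areas in first-seen order, without duplicates.
    """
    pending = []
    seen = set()
    for tile in scene_tiles:
        for ref in tile:
            texture_id, _ = ref
            if texture_id in dynamic_ids and ref not in rendered and ref not in seen:
                seen.add(ref)
                pending.append(ref)
    return pending
```

Only the areas returned here would need to be rendered on demand; static textures and areas that are already rendered are ignored.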

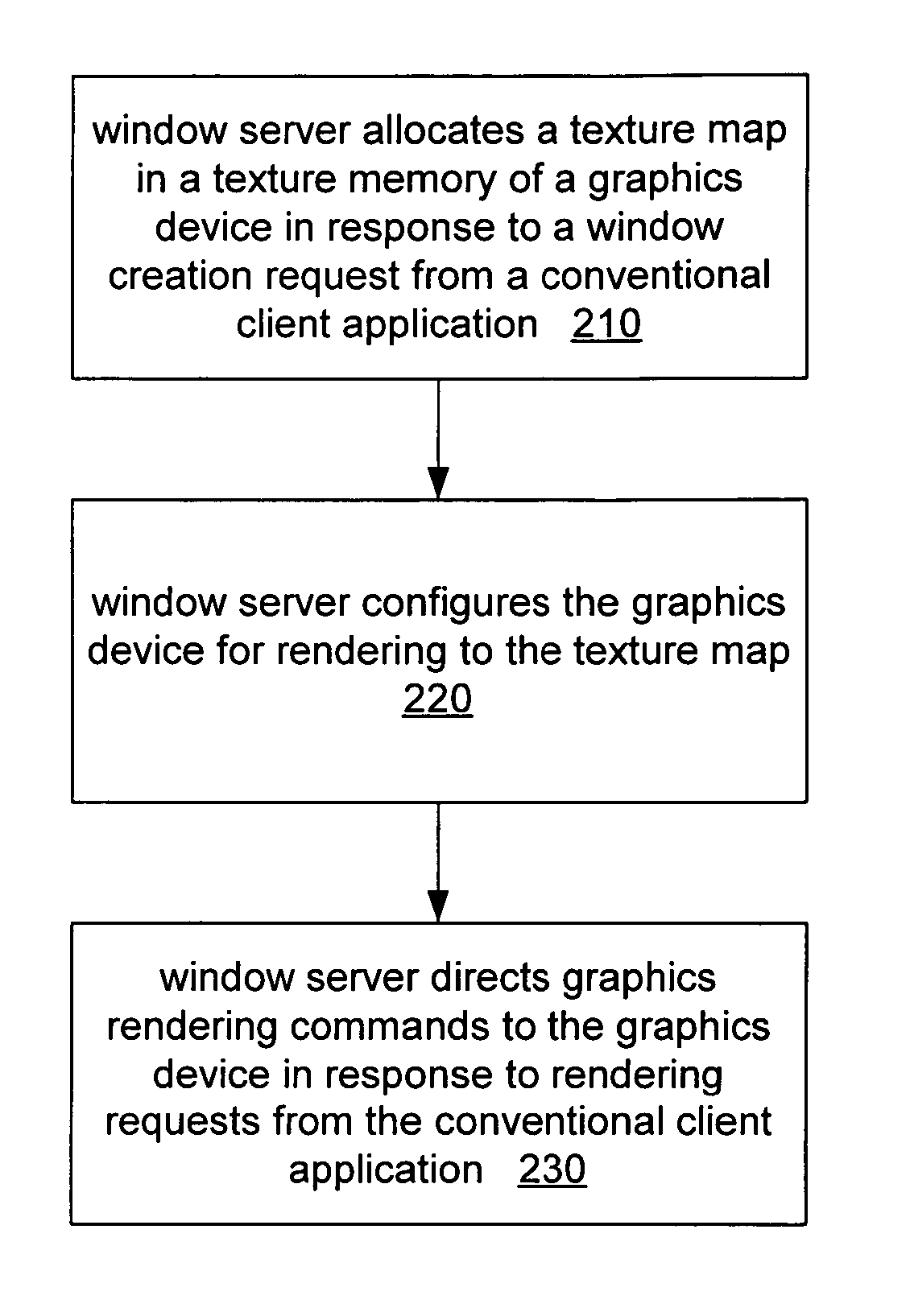

Window system 2D graphics redirection using direct texture rendering

Active; US20050179691A1; Increase ratings; Small memory footprint; Image memory management; Cathode-ray tube indicators; Graphics; Texture rendering

The 3D window system utilizes hardware accelerated window system rendering to eliminate the pixel copy step of 3D window system output redirection. The 3D window system includes a window server that directs the window system device driver graphics routines to render into the texture memory of a graphics device.

Owner:ORACLE INT CORP

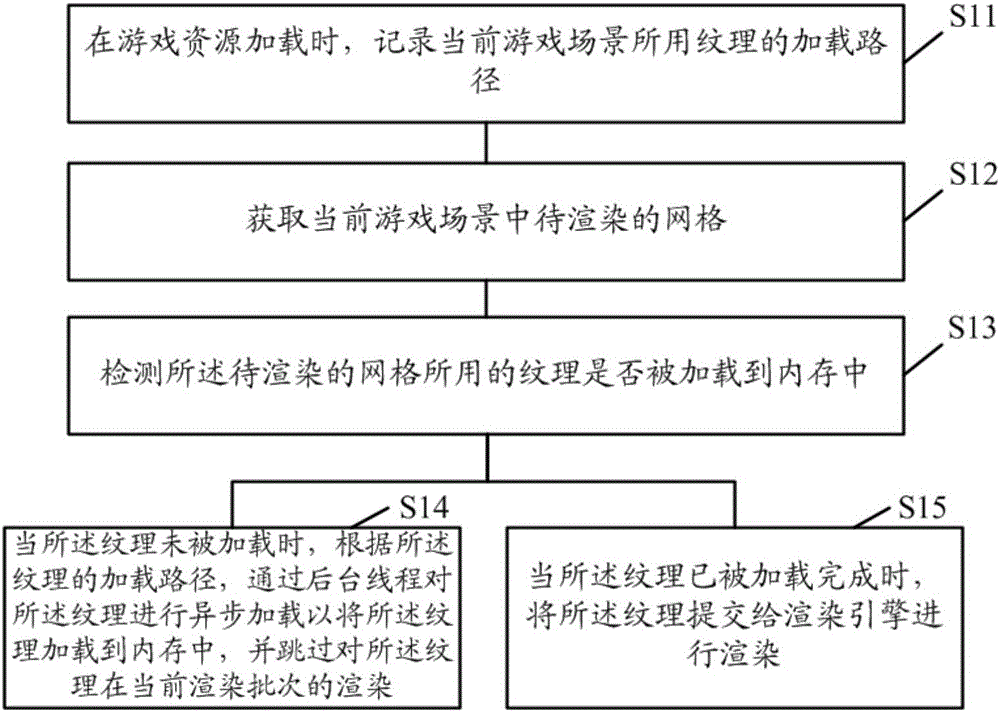

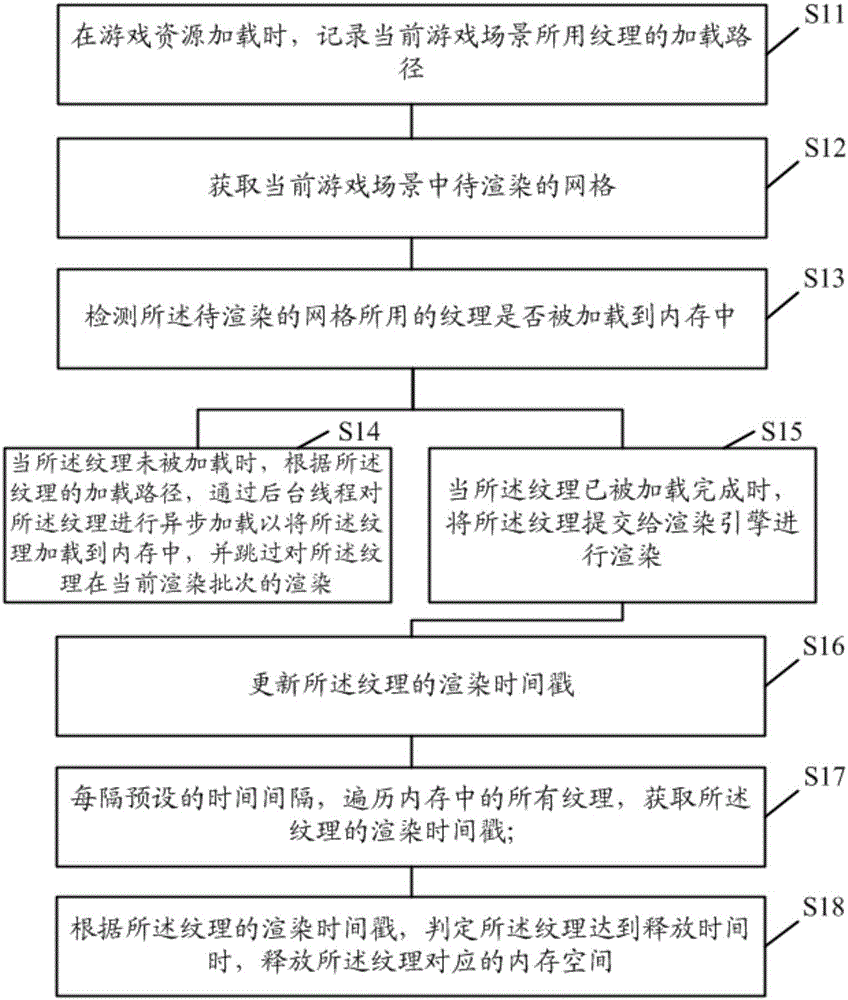

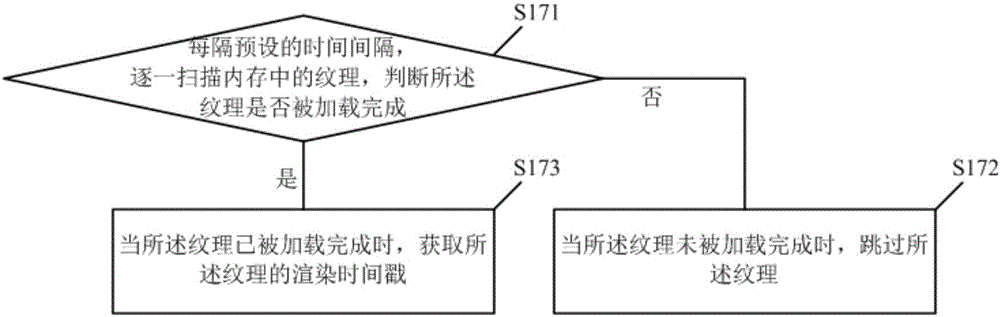

Game rendering method and device

Active; CN105094920A; Reduce occupancy; Solve the problem of running out of memory; Program loading/initiating; Special data processing applications; Texture rendering; Computer graphics (images)

The invention discloses a game rendering method and device. The game rendering method comprises the steps of: recording the loading path of each texture used in the current game scene when game resources are loaded; obtaining a mesh to be rendered in the current game scene; detecting whether the texture used by the mesh has been loaded into memory; when the texture has not been loaded, loading it asynchronously through a background thread according to its loading path, and skipping the rendering of that texture for the current rendering batch; and once the texture has finished loading, submitting it to the rendering engine for rendering. By controlling the texture rendering process in this way, the memory occupied by textures while the game is running is greatly reduced.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD
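The load-or-skip flow in the abstract above can be sketched with a background thread. This is a minimal illustration under stated assumptions, not the patented implementation: `TextureCache`, `render_batch` and the `loader` callback are hypothetical names, and "rendering" is reduced to returning the names of the meshes that would be submitted.

```python
import threading


class TextureCache:
    """Tracks textures by load path; missing ones are loaded on a background thread."""

    def __init__(self, loader):
        self._loader = loader   # hypothetical function: path -> texture data
        self._loaded = {}       # path -> texture data
        self._pending = {}      # path -> worker thread
        self._lock = threading.Lock()

    def get_or_request(self, path):
        """Return the texture if it is in memory; otherwise start an async load and return None."""
        with self._lock:
            if path in self._loaded:
                return self._loaded[path]
            if path not in self._pending:
                worker = threading.Thread(target=self._load, args=(path,), daemon=True)
                self._pending[path] = worker
                worker.start()
            return None

    def _load(self, path):
        data = self._loader(path)
        with self._lock:
            self._loaded[path] = data
            self._pending.pop(path, None)

    def wait_all(self):
        """Block until every pending load finishes (used only for the demonstration)."""
        with self._lock:
            workers = list(self._pending.values())
        for worker in workers:
            worker.join()


def render_batch(meshes, cache):
    """Return the meshes submitted in this batch; meshes whose texture is not ready are skipped."""
    submitted = []
    for mesh_name, texture_path in meshes:
        if cache.get_or_request(texture_path) is not None:
            submitted.append(mesh_name)
    return submitted
```

In the first batch that references a texture, the mesh is skipped and the load starts; in a later batch, after the load has finished, the mesh is submitted.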

Texture rendering method and system for real-time three-dimensional human body reconstruction, chip, equipment and medium

Inactive; CN111243071A; Quality improvement; Meet real-time rendering requirements; Animation; 3D-image rendering; Pattern recognition; Human body

The invention discloses a texture rendering method and system for real-time three-dimensional human body reconstruction, a chip, equipment and a medium. The method comprises the steps of: obtaining a current human body model and a depth image of a shooting object; selecting a current human body model as a standard model, reprojecting the vertices of the standard model onto the depth image, and extracting the color information and image coordinates corresponding to each vertex, wherein the color information is the initial color value and the image coordinates are converted into texture coordinates; calculating a weighted sum of the subsequent color information of the vertex of the human body model and the initial color value to serve as the new color of the vertex of the standard model; calculating sub-texture maps and sub-masks of the current human body model, and combining them into a complete texture map and mask; and performing rendering according to the texture map and the texture coordinates. The method can rapidly generate and optimize the required textures on the GPU, obtains a high-quality texture atlas, and eliminates color cracks caused by illumination changes. A human body model generated in a multi-camera system can be rendered with good visual realism.

Owner:PLEX VR DIGITAL TECH CO LTD
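Two steps of the abstract above lend themselves to a short worked sketch: the weighted sum of a vertex's initial and subsequent colors, and the conversion of image coordinates to texture coordinates. The weighting scheme and the half-pixel offset are assumptions chosen for illustration; the abstract does not specify either, and both function names are hypothetical.

```python
def fuse_vertex_color(initial, subsequent, weight):
    """Blend a stored RGB color with a newly observed one.

    ASSUMPTION: `weight` is the share given to the new observation, in [0, 1].
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be in [0, 1]")
    return tuple((1.0 - weight) * c0 + weight * c1 for c0, c1 in zip(initial, subsequent))


def image_to_texture_coords(x, y, width, height):
    """Convert pixel coordinates to normalized texture coordinates (u, v).

    ASSUMPTION: pixel centers sit at half-integer positions, hence the +0.5.
    """
    return (x + 0.5) / width, (y + 0.5) / height
```

With `weight=0.25`, a stored color of `(100, 100, 100)` and a new observation of `(200, 0, 100)` blend to `(125.0, 75.0, 100.0)`.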

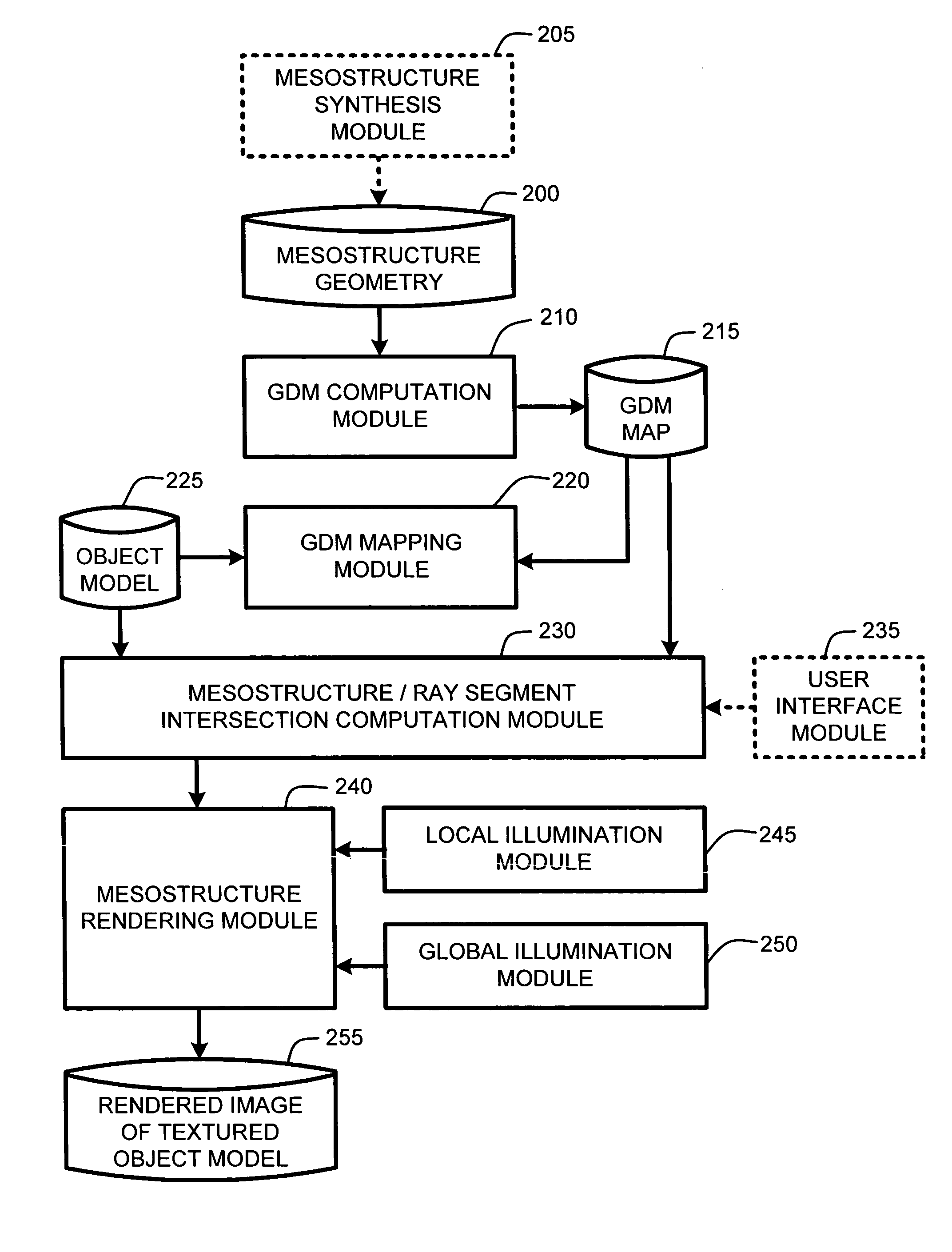

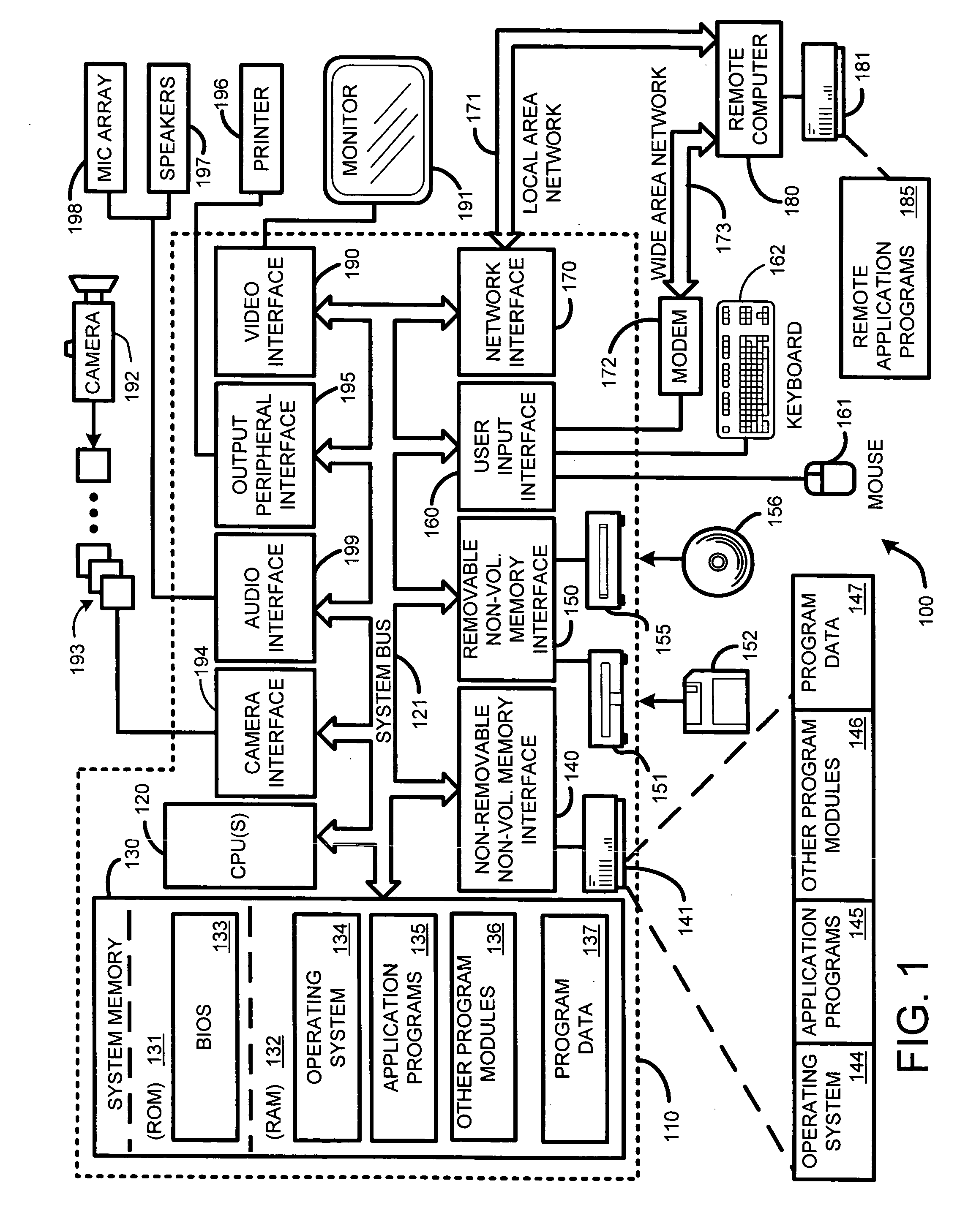

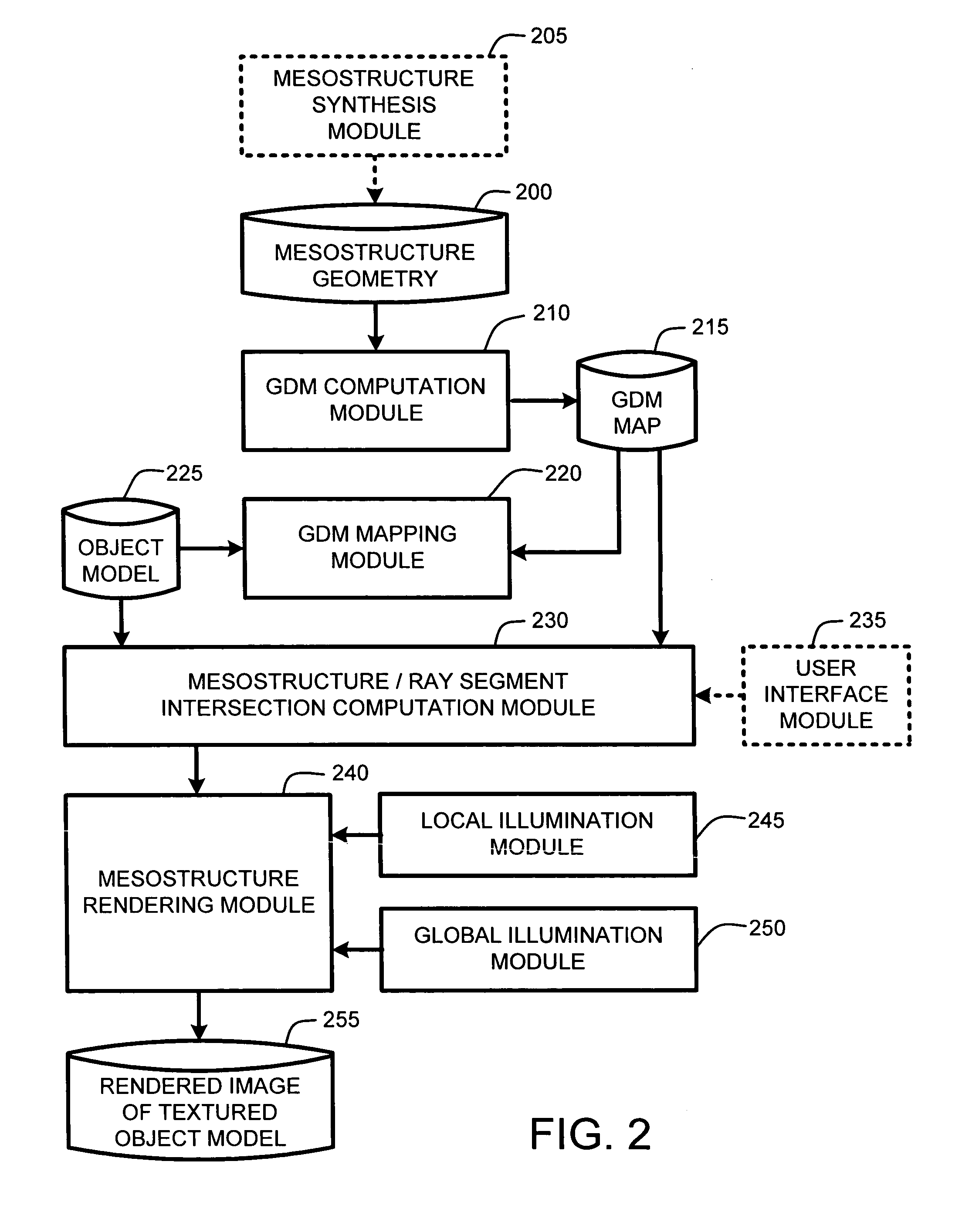

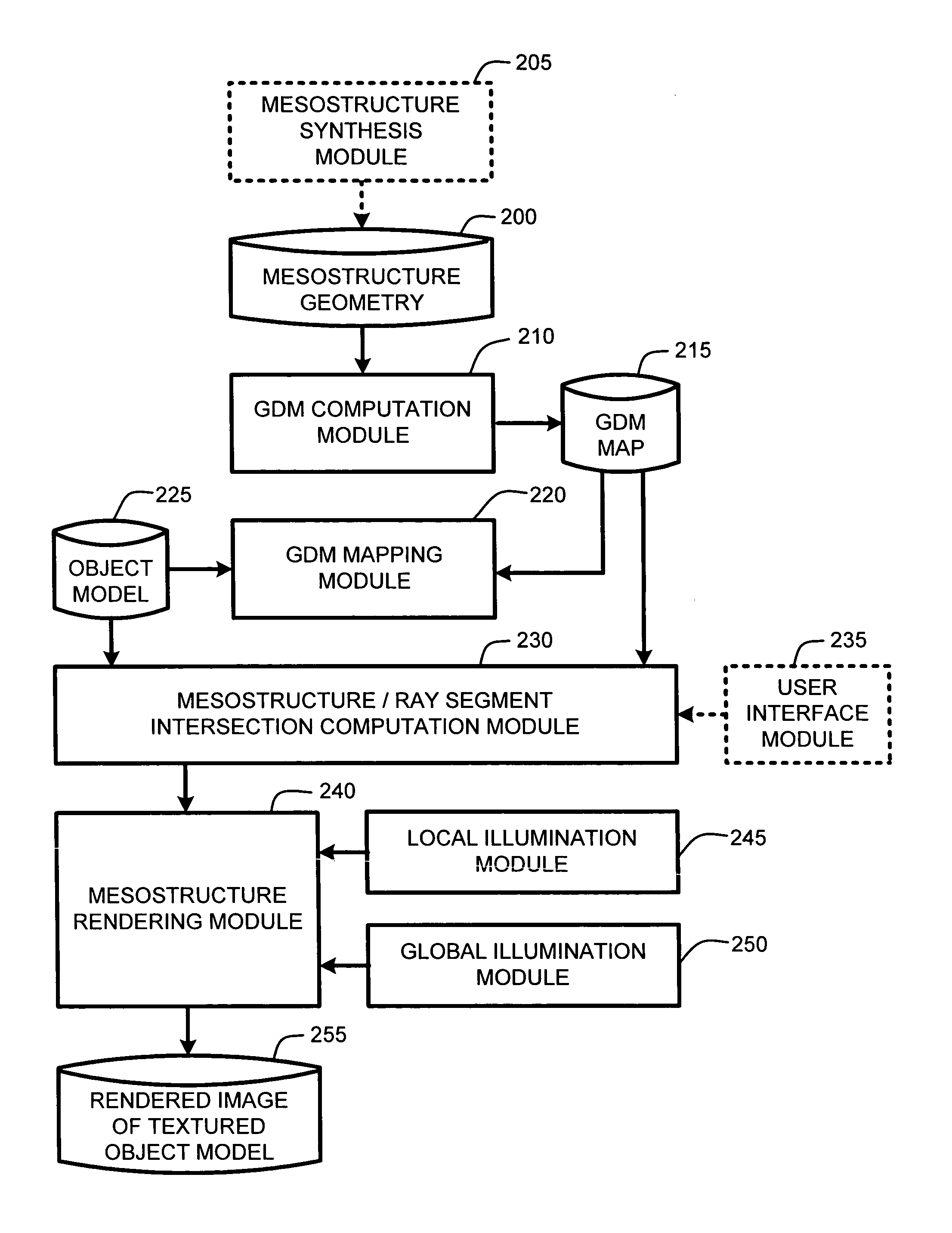

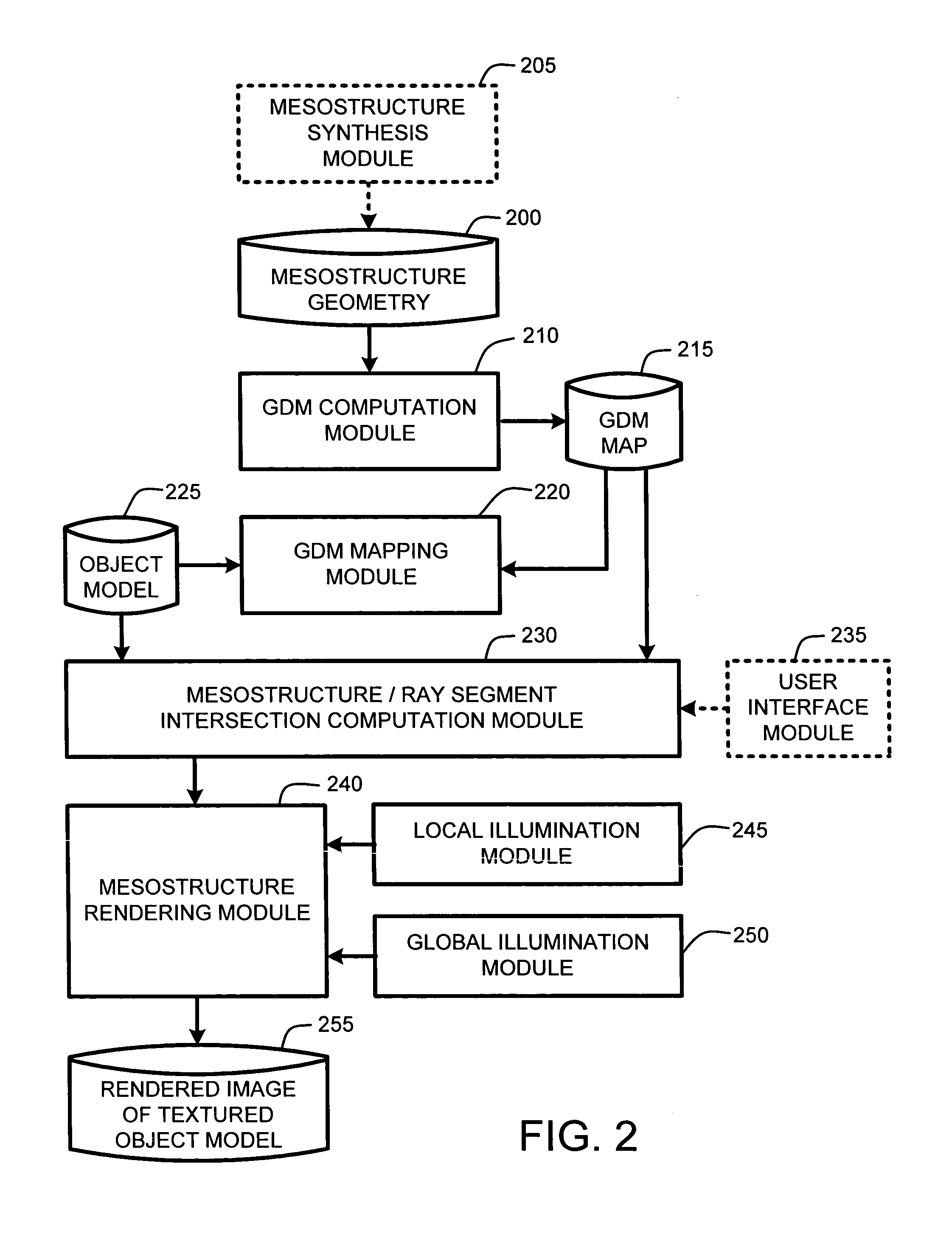

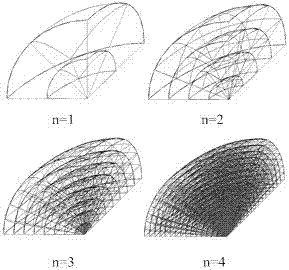

Real-time texture rendering using generalized displacement maps

Active; US20050280646A1; Facilitate texture coordinate computation; Accelerate mesostructure rendering; Cathode-ray tube indicators; 3D-image rendering; Graphics; Visibility

A “mesostructure renderer” uses pre-computed multi-dimensional “generalized displacement maps” (GDM) to provide real-time rendering of general non-height-field mesostructures on both open and closed surfaces of arbitrary geometry. In general, the GDM represents the distance to solid mesostructure along any ray cast from any point within a volumetric sample. Given the pre-computed GDM, the mesostructure renderer then computes mesostructure visibility jointly in object space and texture space, thereby enabling both control of texture distortion and efficient computation of texture coordinates and shadowing. Further, in one embodiment, the mesostructure renderer uses the GDM to render mesostructures with either local or global illumination as a per-pixel process using conventional computer graphics hardware to accelerate the real-time rendering of the mesostructures. Further acceleration of mesostructure rendering is achieved in another embodiment by automatically reducing the number of triangles in the rendering pipeline according to a user-specified threshold for acceptable texture distortion.

Owner:MICROSOFT TECH LICENSING LLC

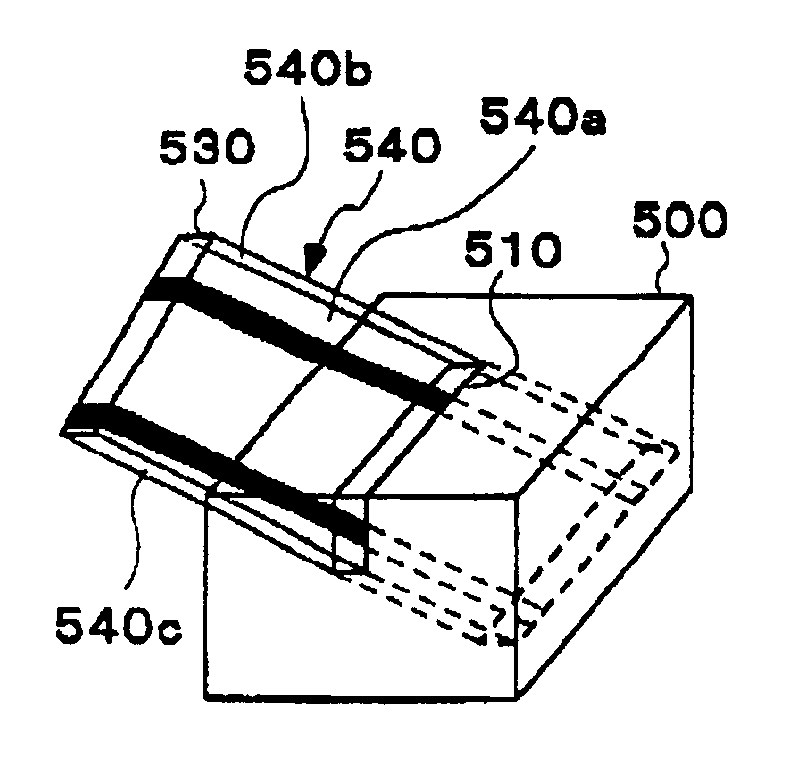

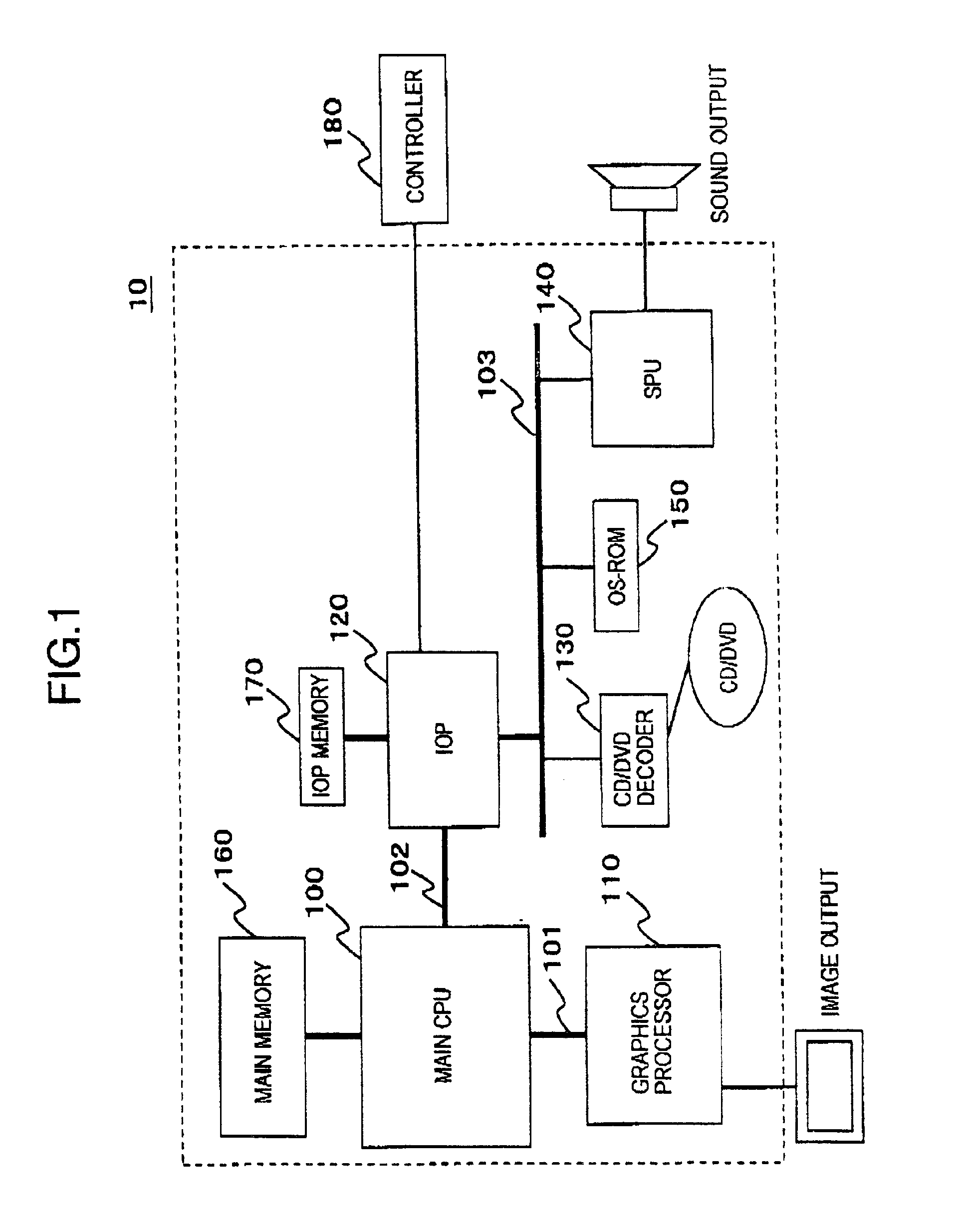

Texture rendering method, entertainment apparatus and storage medium

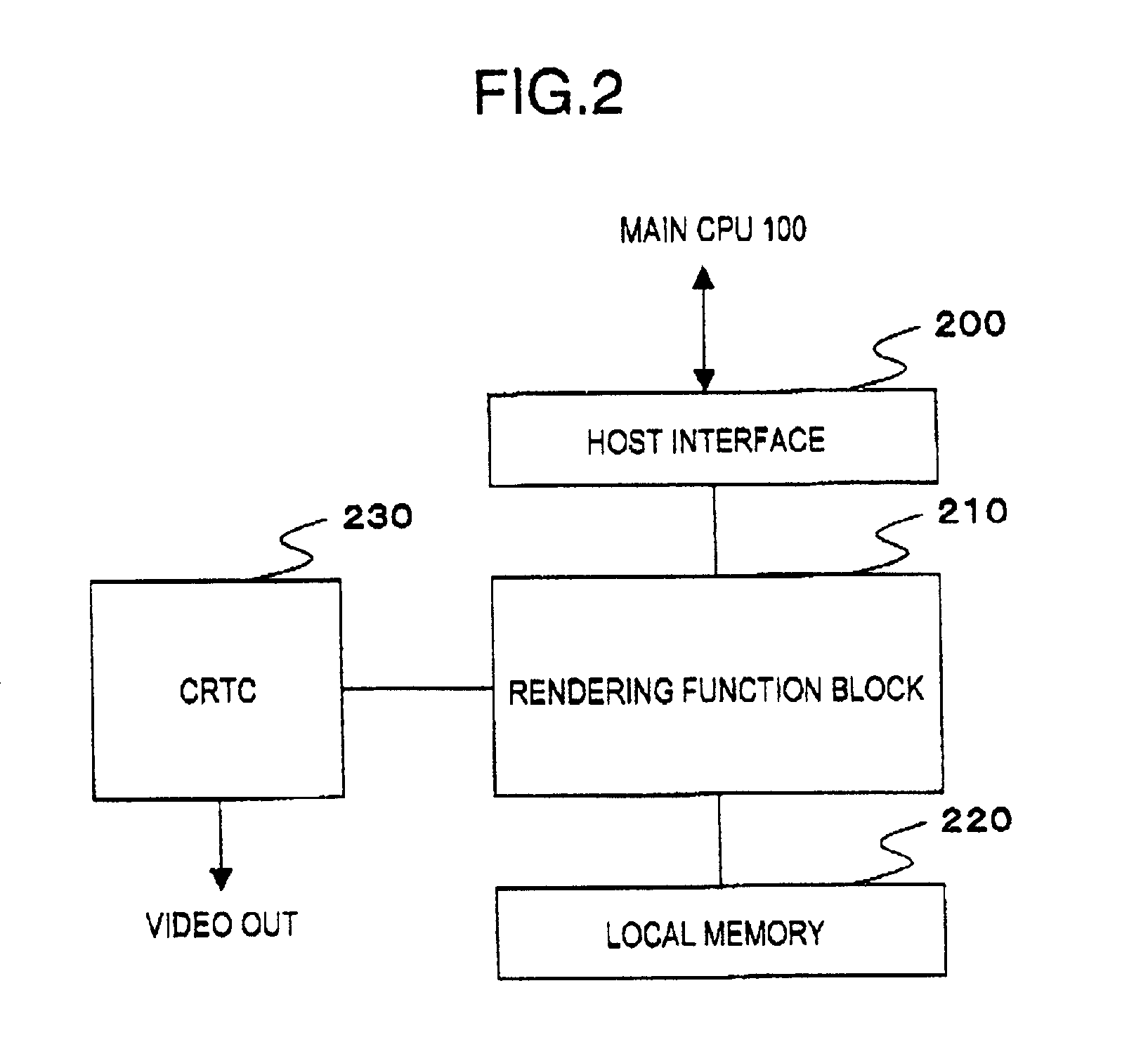

Inactive; US6856321B2; Cathode-ray tube indicators; 3D-image rendering; Texture rendering; Computer graphics (images)

In order to form an image by projecting a texture 520 at an arbitrary angle onto an arbitrary position on a surface of an object 500 represented as a 3D model, the texture data 520 is divided into texture lines 530, each having a width of one dot and a length equal to the number of dots on one side of the texture. For each texture line 530, a stereoscopic object 540 is then assumed: the texture line, keeping its color information, is extended in the light travel direction determined by the arrangement of the texture line 530, the object model 500 and a virtual light source in three-dimensional space. The intersecting part 510 between the stereoscopic object 540 and the surface of the object model 500 is defined as the region for rendering the texture line, and the stereoscopic object 540 is rendered onto that region.

Owner:SONY COMPUTER ENTERTAINMENT INC

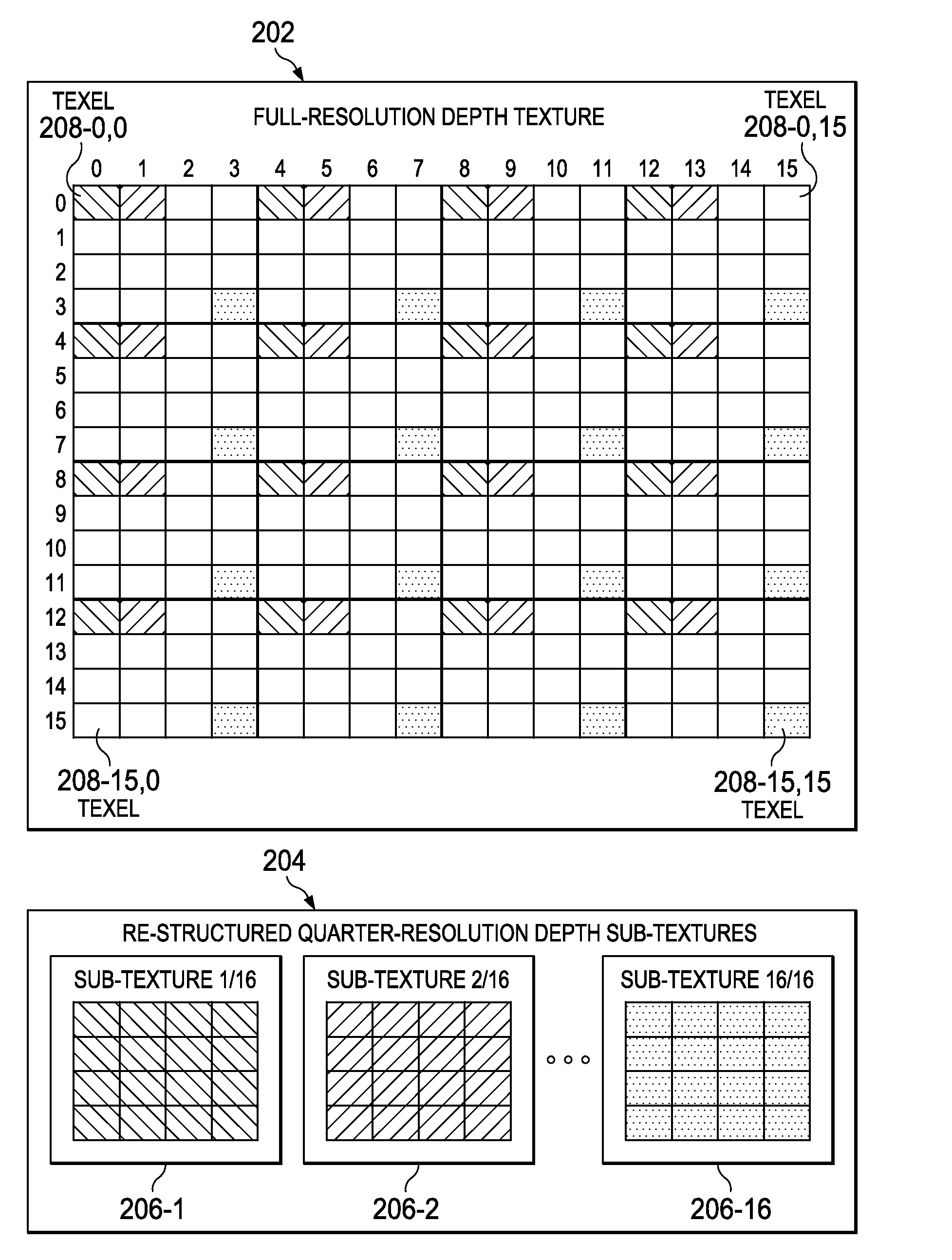

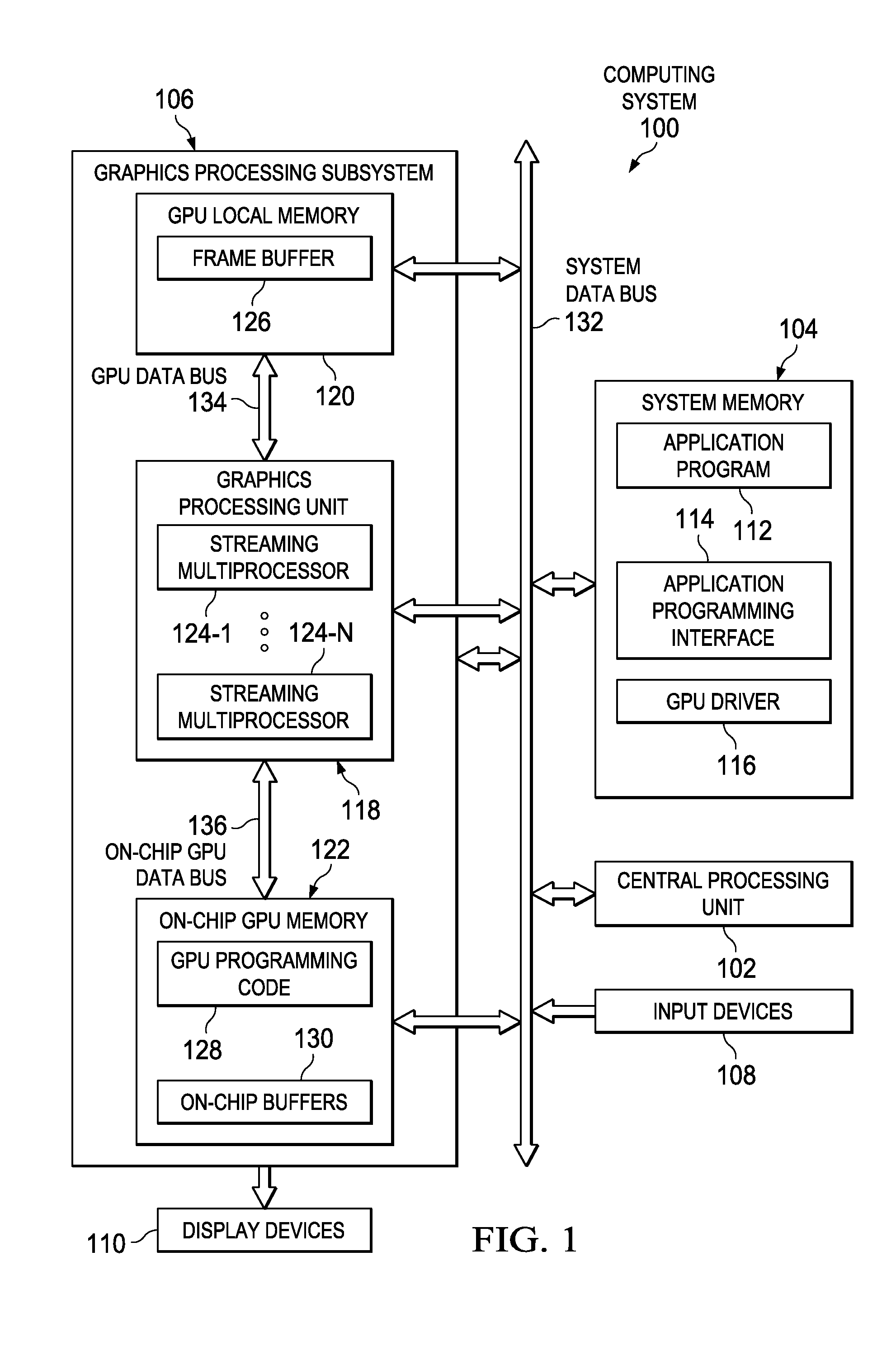

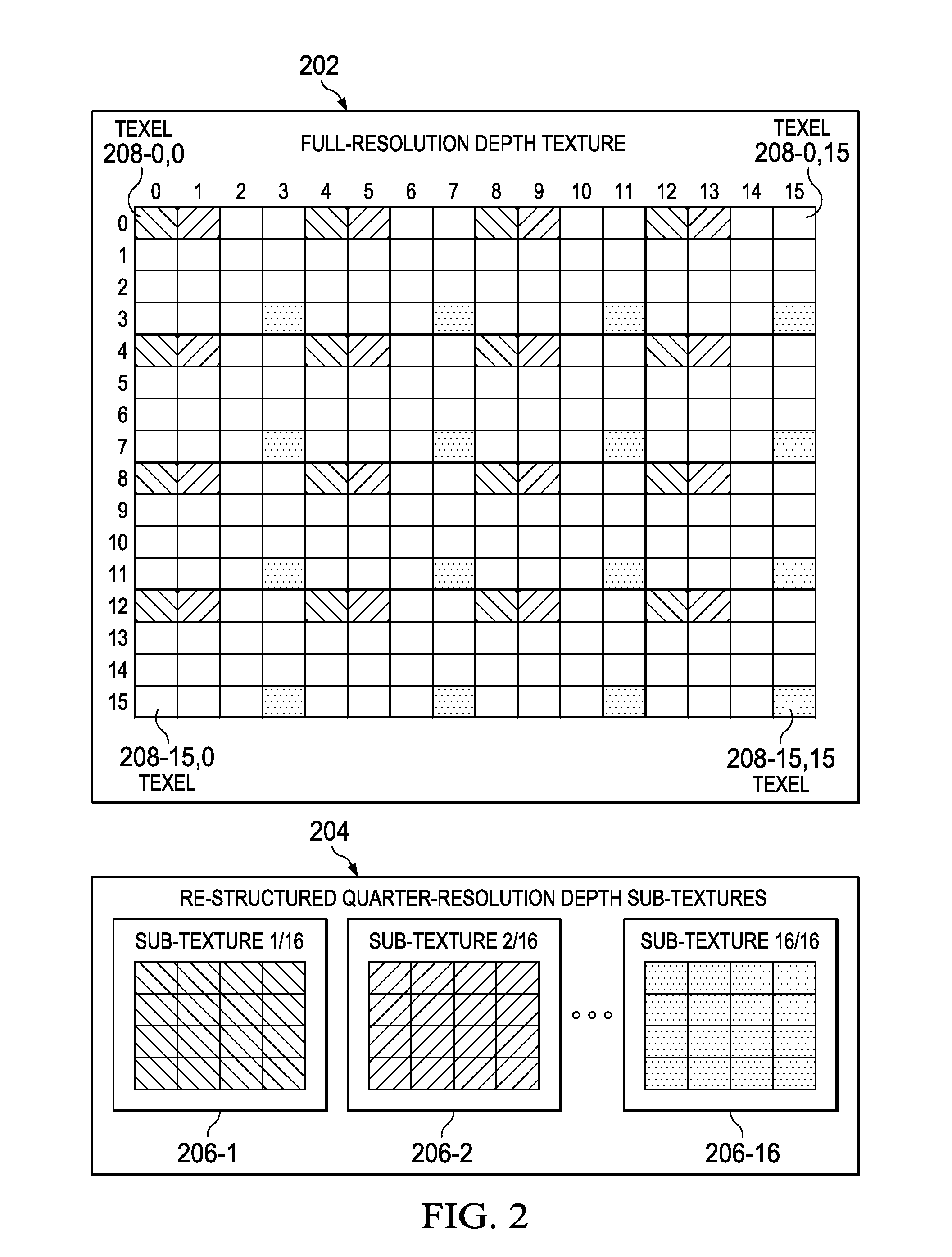

Depth texture data structure for rendering ambient occlusion and method of employment thereof

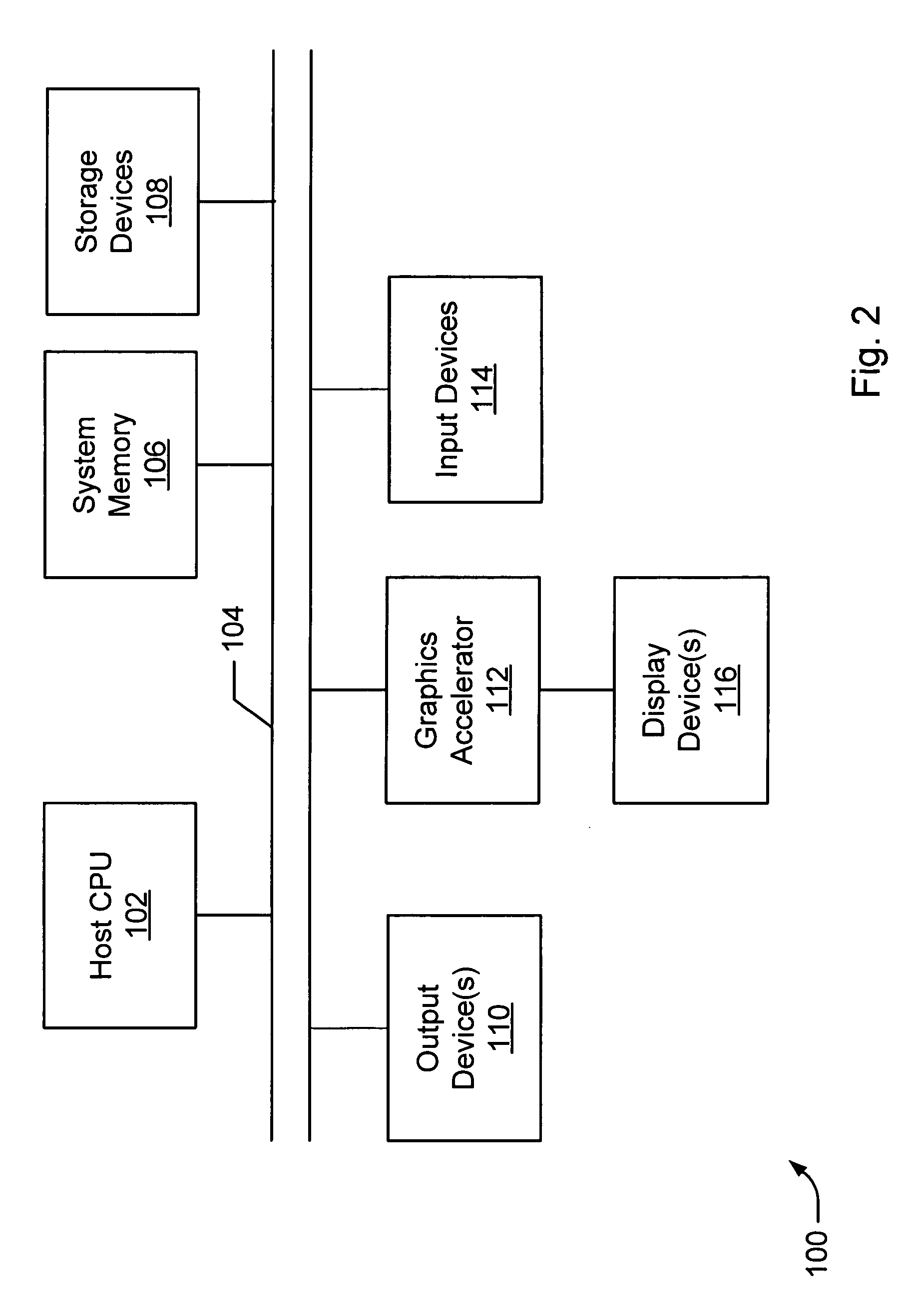

A graphics processing subsystem operable to efficiently render an ambient occlusion texture. In one embodiment, the graphics processing subsystem includes: (1) a memory configured to store a depth data structure according to which a full-resolution depth texture is represented by a plurality of unique reduced-resolution depth sub-textures, and (2) a graphics processing unit configured to communicate with the memory via a data bus, and, for a given pixel, execute a program to employ the plurality of unique reduced-resolution depth sub-textures to compute a plurality of coarse ambient occlusion textures, and to render the plurality of coarse ambient occlusion textures as a single full-resolution ambient occlusion texture for the given pixel.

Owner:NVIDIA CORP
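One way to represent a full-resolution depth texture as several unique reduced-resolution sub-textures is to deinterleave it, so that each sub-texture holds every second pixel at a different offset. The sketch below shows that split and its inverse. Deinterleaving is an assumption about the layout, since the abstract above does not say how the sub-textures are formed, and the function names are hypothetical.

```python
def deinterleave(depth, step=2):
    """Split a 2D grid into step*step sub-grids.

    Sub-grid (oy, ox) holds the pixels whose row index % step == oy
    and whose column index % step == ox.
    """
    return {
        (oy, ox): [row[ox::step] for row in depth[oy::step]]
        for oy in range(step)
        for ox in range(step)
    }


def interleave(subs, height, width, step=2):
    """Recombine the sub-grids produced by deinterleave into one full-resolution grid."""
    out = [[None] * width for _ in range(height)]
    for (oy, ox), sub in subs.items():
        for sy, row in enumerate(sub):
            for sx, value in enumerate(row):
                out[oy + sy * step][ox + sx * step] = value
    return out
```

In an ambient occlusion pass, each sub-grid would be processed separately into a coarse result, and the coarse results would be recombined with the same interleaving pattern.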

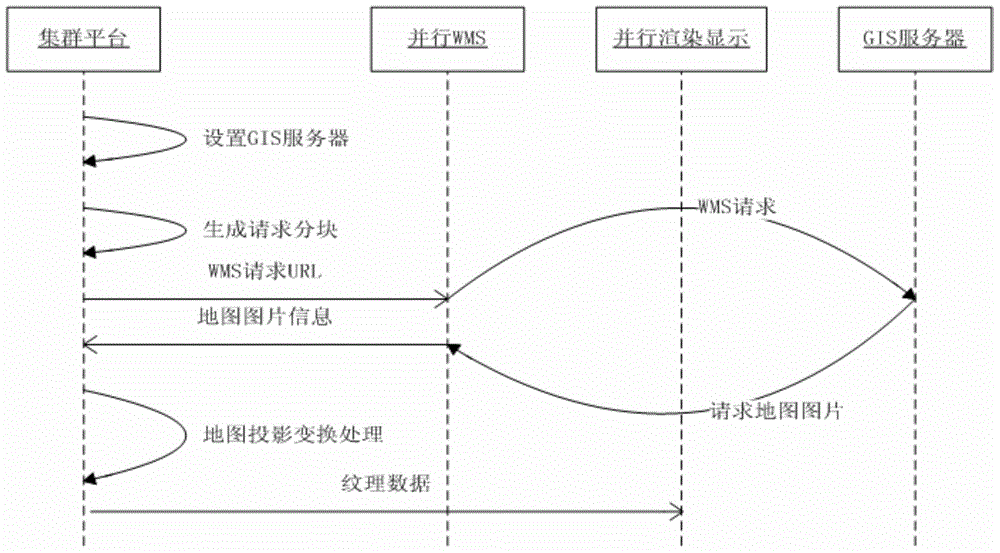

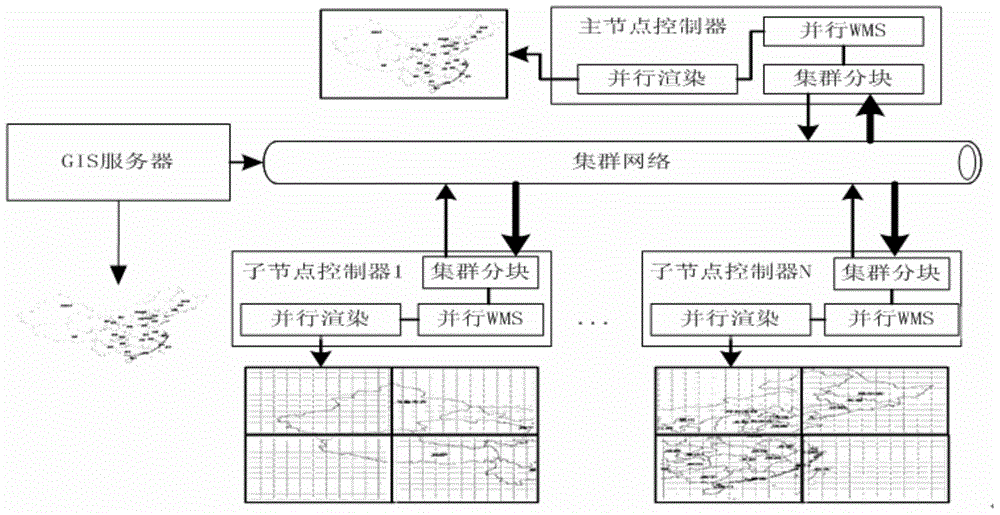

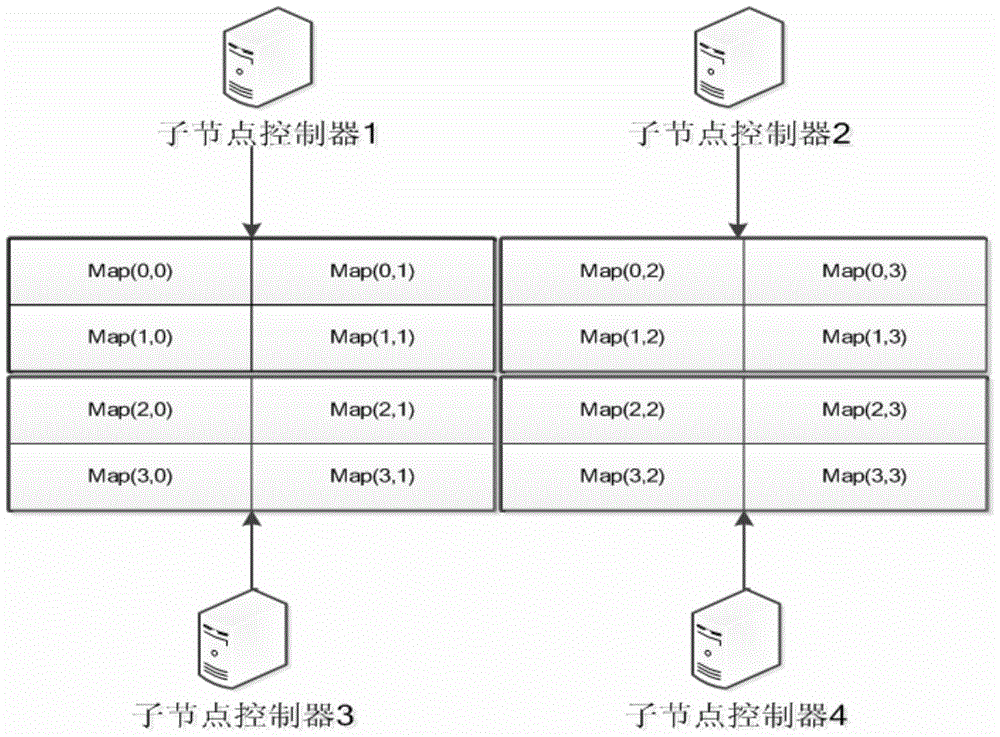

Massive geographic information system (GIS) information ultrahigh resolution displaying method

Active; CN103984513A; Increase the number of; High-resolution; Image data processing details; Transmission; Texture rendering; Computer cluster

The invention provides a geographic information system (GIS) information ultrahigh resolution displaying method. The method is a splicing displaying method which includes acquiring GIS map information through cluster map sub blocks and WMS service in parallel on the basis of the computer cluster parallel processing, utilizing map projection transformation distribution to process data and conducting parallel texture rendering displaying through a graphic processing unit (GPU). A computer cluster is utilized to acquire, process and display map information, and the massive GIS map processing and displaying problem can be effectively solved. According to a cluster map sub block technology, sub block processing is conducted on a high resolution map according to cluster node machines, the resolution of the displayed map can be expanded by increasing the number of the node machines and is not limited by the resolution of a display screen, and ultrahigh resolution GIS information displaying can be achieved.

Owner:广东瀚阳轨道信息科技有限公司
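The sub-block idea in the abstract above, where each cluster node handles one rectangle of the high-resolution map, can be sketched as a simple partition. The regular grid layout and the name `split_into_blocks` are assumptions for illustration; the abstract does not describe how the blocks are sized.

```python
def split_into_blocks(width, height, cols, rows):
    """Partition a width x height map into cols x rows pixel rectangles, one per node.

    Each block is (x0, y0, x1, y1) with half-open ranges. Integer boundaries are
    computed so that the blocks cover the map exactly, with no gaps or overlaps.
    """
    blocks = []
    for r in range(rows):
        for c in range(cols):
            x0, x1 = c * width // cols, (c + 1) * width // cols
            y0, y1 = r * height // rows, (r + 1) * height // rows
            blocks.append((x0, y0, x1, y1))
    return blocks
```

Adding node machines corresponds to raising `cols` or `rows`, which is how the displayed resolution can grow beyond that of a single screen.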

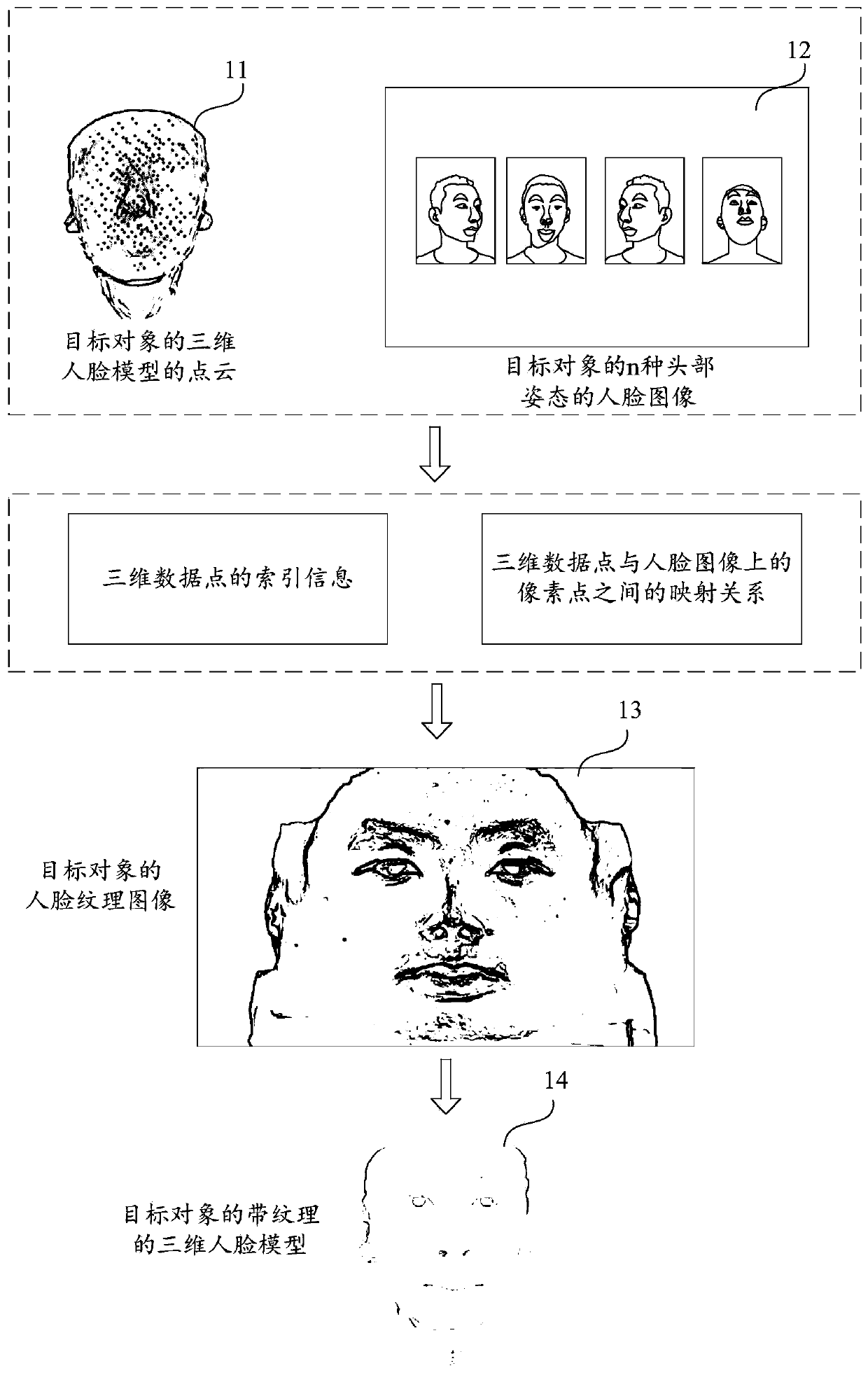

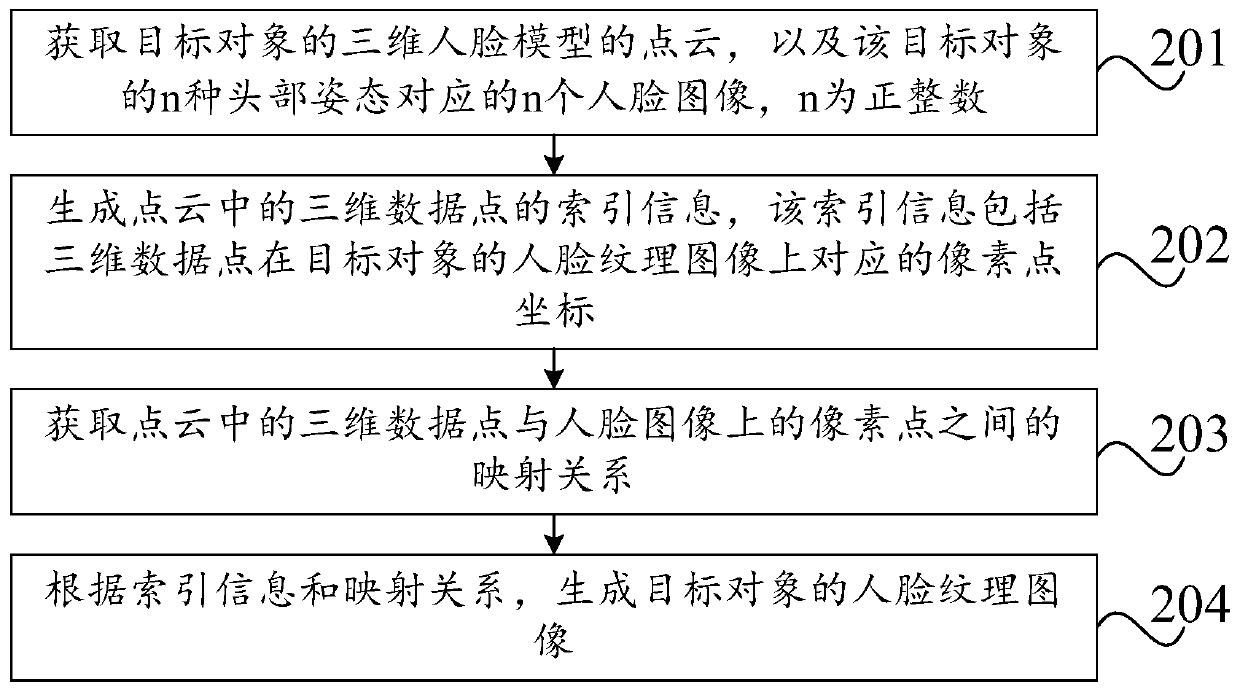

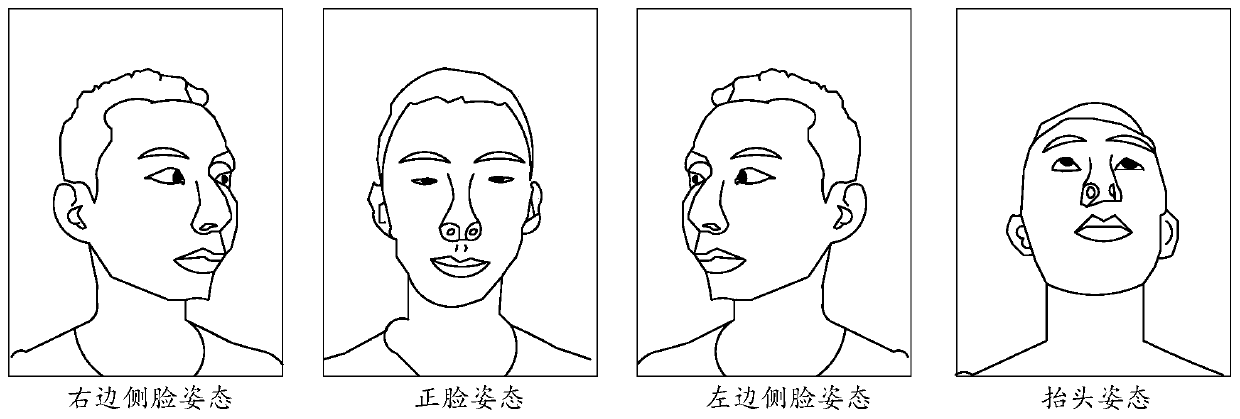

Face texture image acquisition method and device, equipment and storage medium

Active; CN111325823A; Improve texture rendering; Smooth and detailed texture rendering; Character and pattern recognition; 3D-image rendering; Pattern recognition; Point cloud

The invention provides a face texture image acquisition method and device, equipment and a storage medium. The method comprises the steps of: acquiring a point cloud of a three-dimensional face model of a target object and face images of the target object in n head postures; calculating index information of the three-dimensional data points in the point cloud through cylindrical expansion; obtaining a mapping relationship between the three-dimensional data points in the point cloud and pixel points on the face images; obtaining the image area corresponding to each head posture from the n face images to obtain n effective areas; generating region texture images corresponding to the n effective areas according to the index information and the mapping relationship; and performing image fusion on the n region texture images to generate a face texture image of the target object. With this method, a corresponding face texture image can be generated for a three-dimensional face model obtained through arbitrary reconstruction, which improves the texture rendering effect of the three-dimensional face model and the authenticity of the finally generated face texture image.

Owner:TENCENT TECH (SHENZHEN) CO LTD

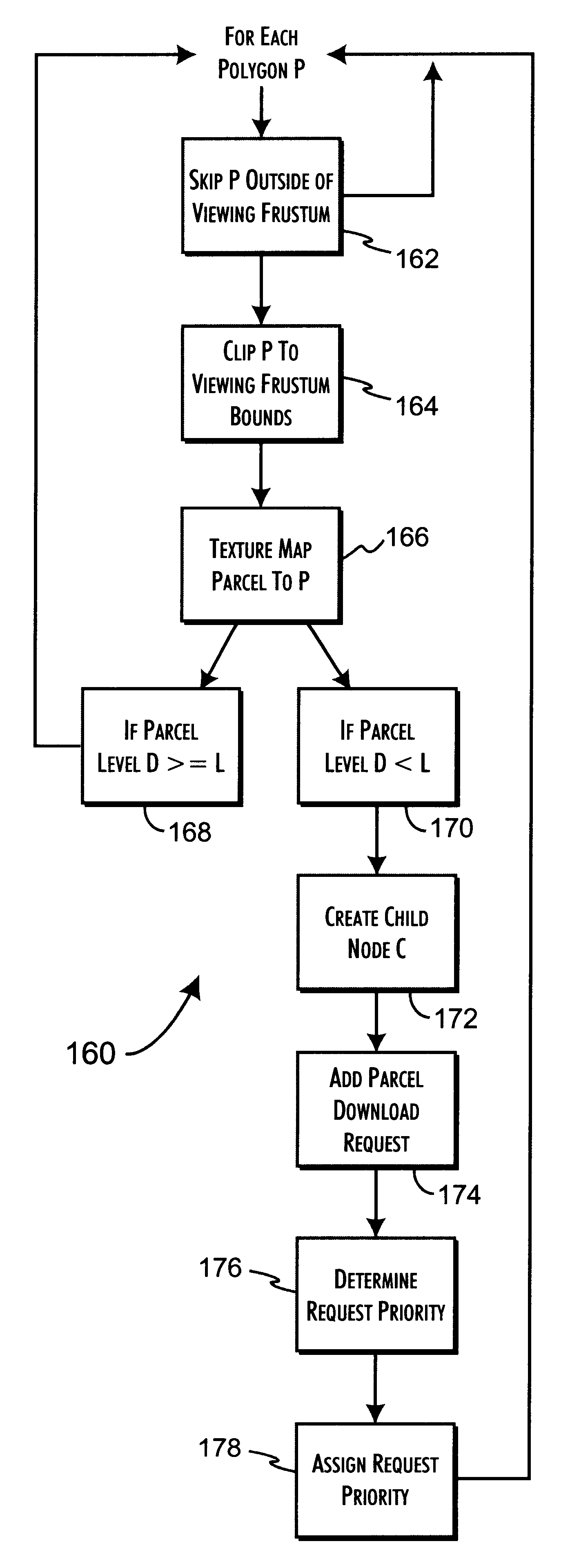

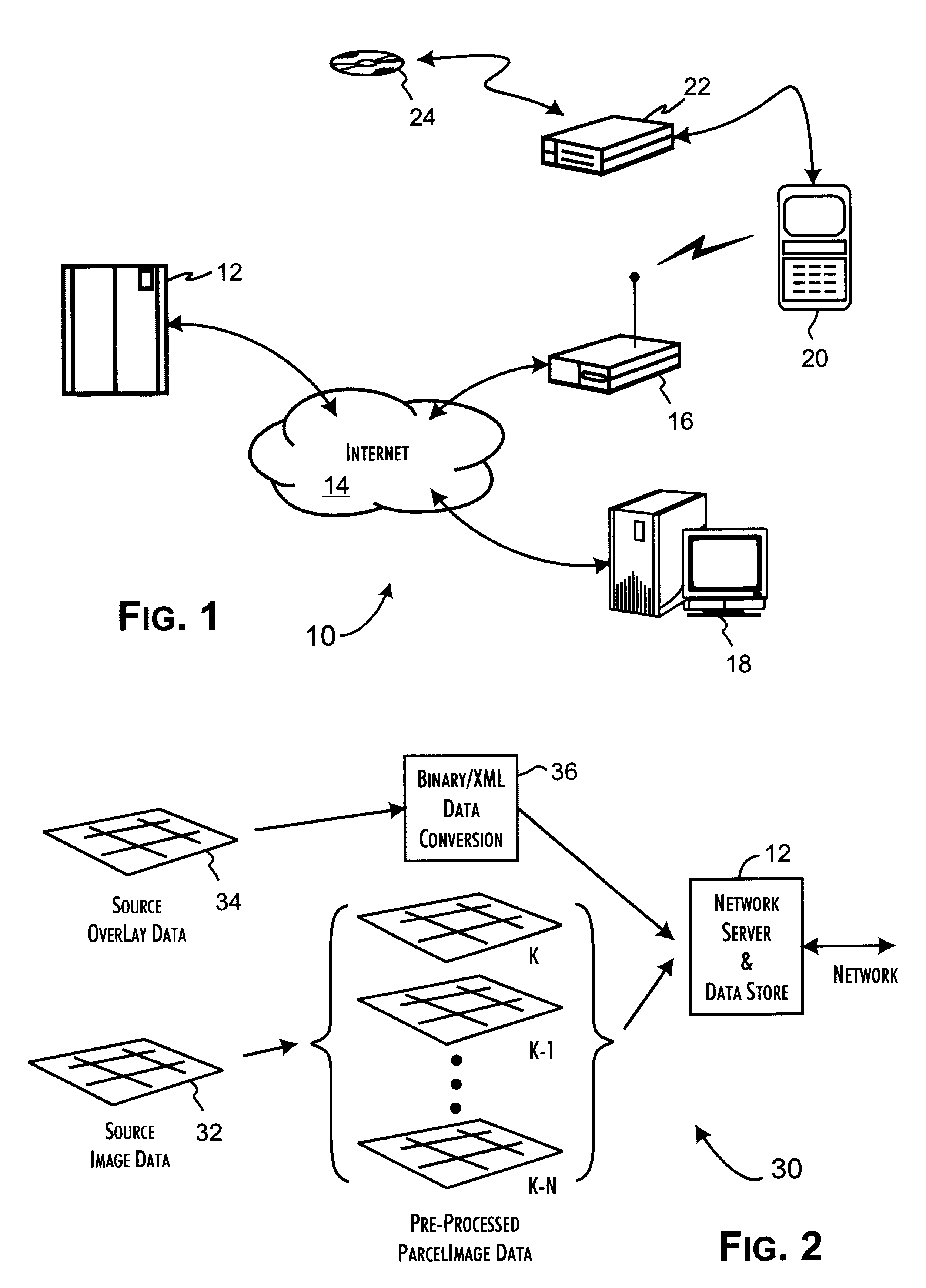

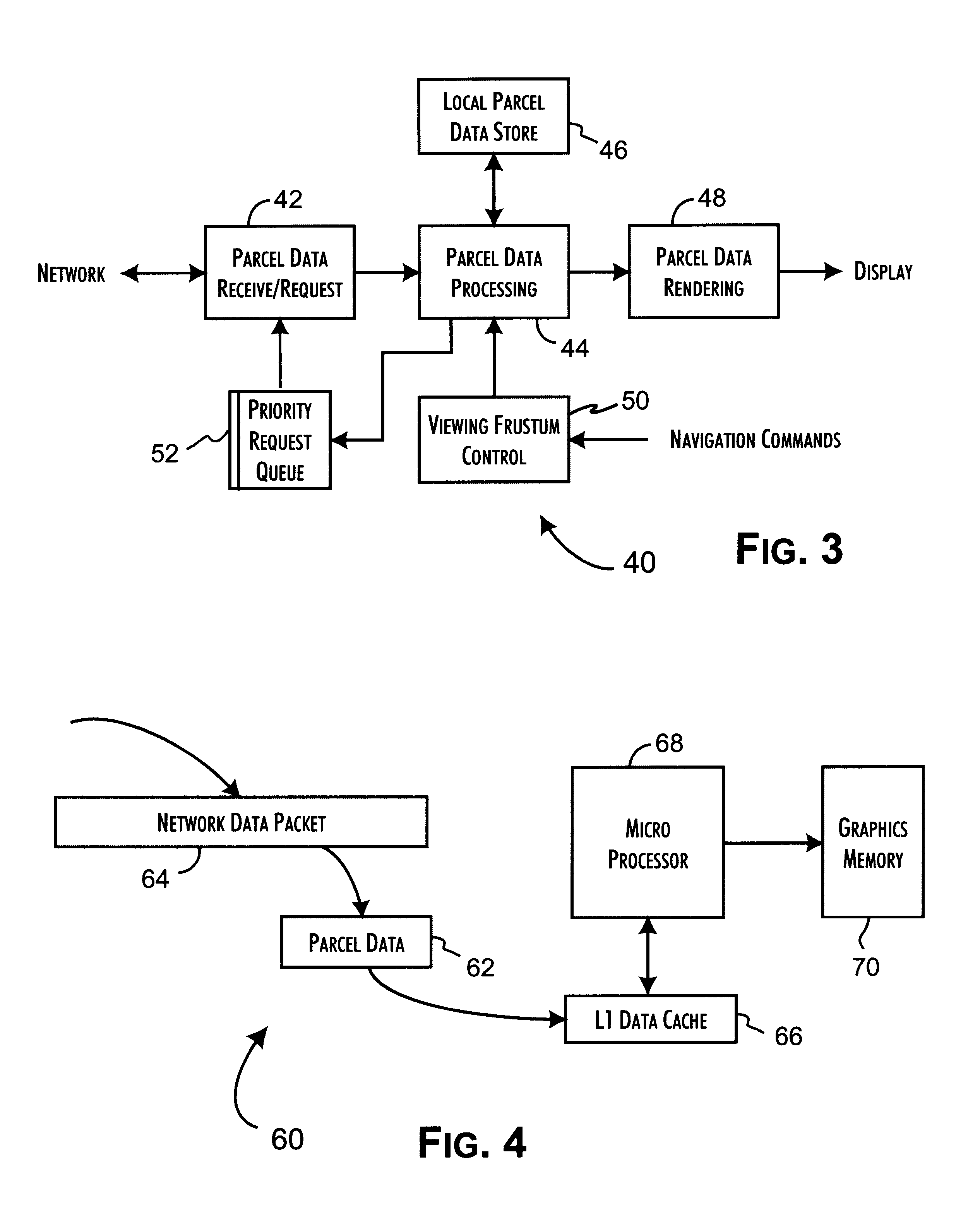

Efficient image parcel texture rendering with T-junction crack elimination

Inactive; US6850235B2; Easy to handle; Improve perceived quality; Static indicating devices; Geometric image transformation; Texture rendering; Polygon mesh

Owner:INOVO

Real-time texture rendering using generalized displacement maps

Active; US7184052B2; Facilitate texture coordinate computation; Accelerate mesostructure rendering; Cathode-ray tube indicators; 3D-image rendering; Graphics; Visibility

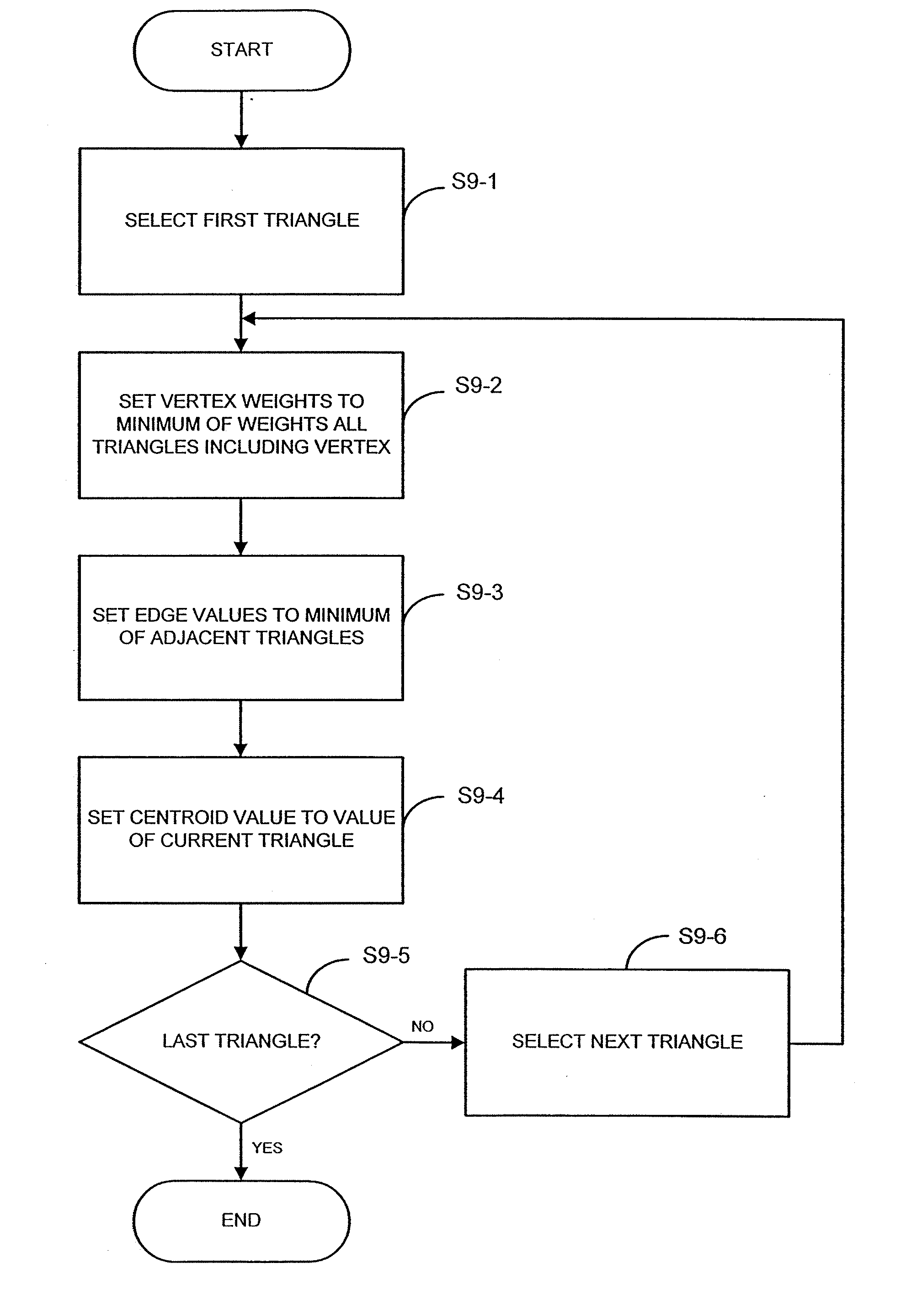

A “mesostructure renderer” uses pre-computed multi-dimensional “generalized displacement maps” (GDM) to provide real-time rendering of general non-height-field mesostructures on both open and closed surfaces of arbitrary geometry. In general, the GDM represents the distance to solid mesostructure along any ray cast from any point within a volumetric sample. Given the pre-computed GDM, the mesostructure renderer then computes mesostructure visibility jointly in object space and texture space, thereby enabling both control of texture distortion and efficient computation of texture coordinates and shadowing. Further, in one embodiment, the mesostructure renderer uses the GDM to render mesostructures with either local or global illumination as a per-pixel process using conventional computer graphics hardware to accelerate the real-time rendering of the mesostructures. Further acceleration of mesostructure rendering is achieved in another embodiment by automatically reducing the number of triangles in the rendering pipeline according to a user-specified threshold for acceptable texture distortion.

Owner:MICROSOFT TECH LICENSING LLC

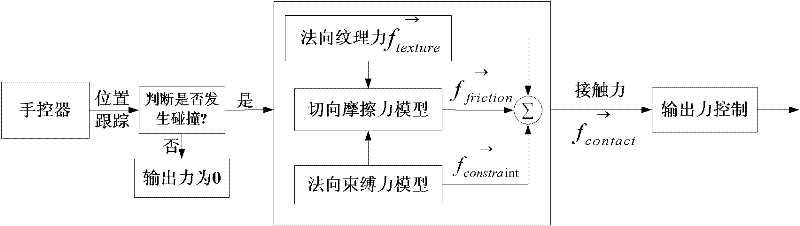

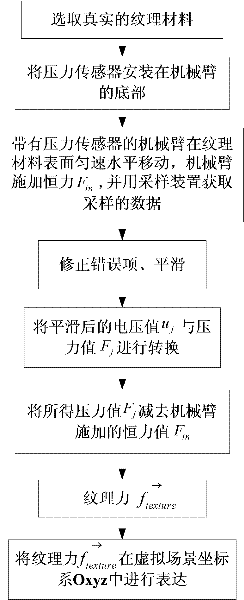

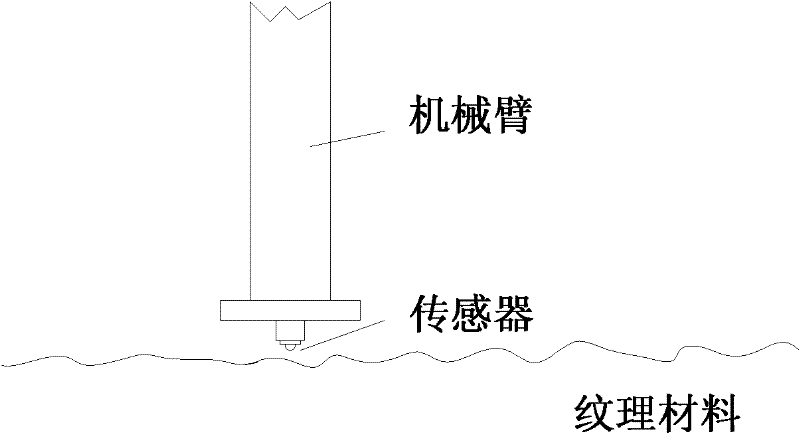

Haptic texture rendering method based on practical measurement

Inactive; CN102054122A; Constant force; The measurement method is scientific and simple; Measurement devices; Special data processing applications; Touch Perception; Static friction

The invention discloses a haptic texture rendering method based on practical measurement. After the operating handle of a haptic texture display device collides with the surface of a virtual texture, the resultant of the normal textural force, the normal binding force and the tangential friction is output to the operator as the contact force. The normal textural force is obtained by measuring the pressure produced when really scratching the surface of the texture: a mechanical arm that applies a constant force and carries a pressure sensor at its bottom scratches the surface of a textural material at a constant speed while data are collected, and after error correction, smoothing, voltage value conversion and subtraction of the mechanical arm's constant force, the data are converted into the normal textural force. The normal binding force is modeled with a spring damping model. The tangential friction model combines a static friction stage and a sliding friction stage: the static friction stage is modeled as the product of the maximal static friction and a sine function, and the coefficient of kinetic friction in the sliding friction stage is calculated from the normal textural force, which reflects the concave-convex degree of the texture. The naturalness of the texture rendering is thereby improved.

Owner:SOUTHEAST UNIV
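The force model in the abstract above can be sketched numerically. Several details are assumptions made for illustration, since the abstract does not give them: the argument of the sine term in the static friction stage, the treatment of the normal and tangential parts as perpendicular components, and all function and parameter names.

```python
import math


def normal_binding_force(depth, velocity, stiffness, damping):
    """Spring-damper model: penetration depth and penetration velocity give a restoring force."""
    if depth <= 0.0:
        return 0.0  # the handle is not in contact with the surface
    return stiffness * depth + damping * velocity


def static_friction(displacement, breakaway, max_static):
    """Static stage: maximal static friction times a sine term.

    ASSUMPTION: the sine argument ramps from 0 to pi/2 as the tangential
    displacement approaches the (positive) breakaway displacement.
    """
    ratio = min(abs(displacement) / breakaway, 1.0)
    return max_static * math.sin(0.5 * math.pi * ratio)


def contact_force(normal_texture, normal_binding, tangential_friction):
    """Magnitude of the resultant of the normal part (texture + binding) and the friction.

    ASSUMPTION: the normal and tangential parts are perpendicular components.
    """
    return math.hypot(normal_texture + normal_binding, tangential_friction)
```

In the method described, the normal textural force would come from the measured and processed sensor data rather than from a formula, so it appears here only as an input.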

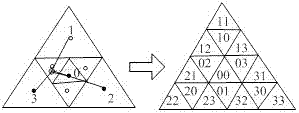

SGOG tile-based large region true three-dimensional geographic scene adaptive construction method

The invention discloses an SGOG tile-based large region true three-dimensional geographic scene adaptive construction method. The method comprises the steps of processing DEM source data by utilizing an IDL, taking OSG as a graphic engine, taking VS2010 as a platform, and taking standard C++ as a development language, so that an experimental system is constructed; and performing DEM elevation calculation and matching by calculating and storing SGOG tile grid point coordinates, drawing tiles based on grid point elevation by the obtained SGOG grid point coordinates to construct a true three-dimensional terrain framework, performing modeling on the framework, and performing layered color setting and texture rendering. According to the method, large region crust true three-dimensional visualization modeling in view of earth curvature is realized; the defects of projection space model-based deformation, cracks and the like are overcome; integrated seamless organization and modeling of earth surface big data are realized; and natural manifold properties of a geographic space are recovered.

Owner:ZHENGZHOU UNIV

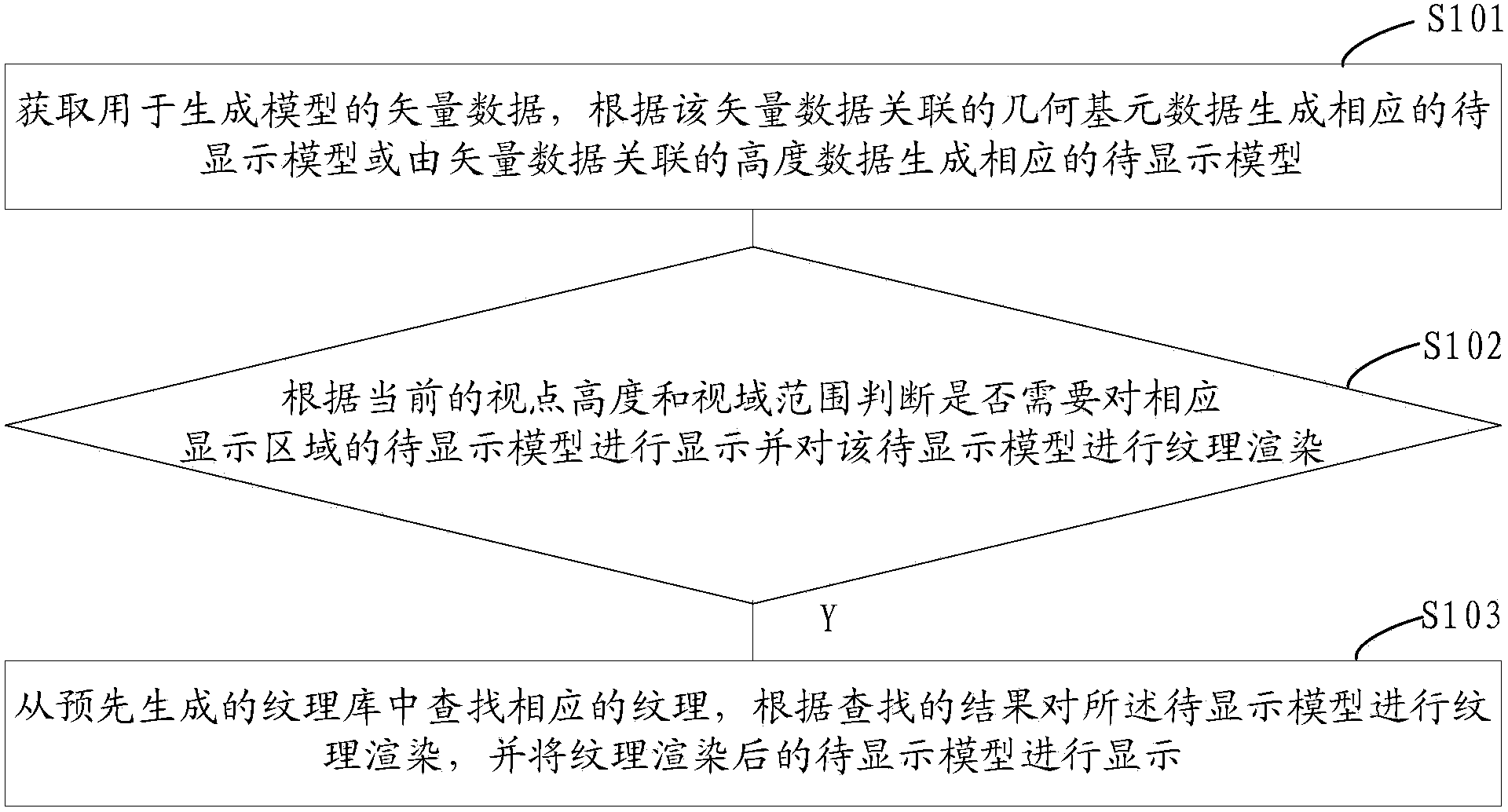

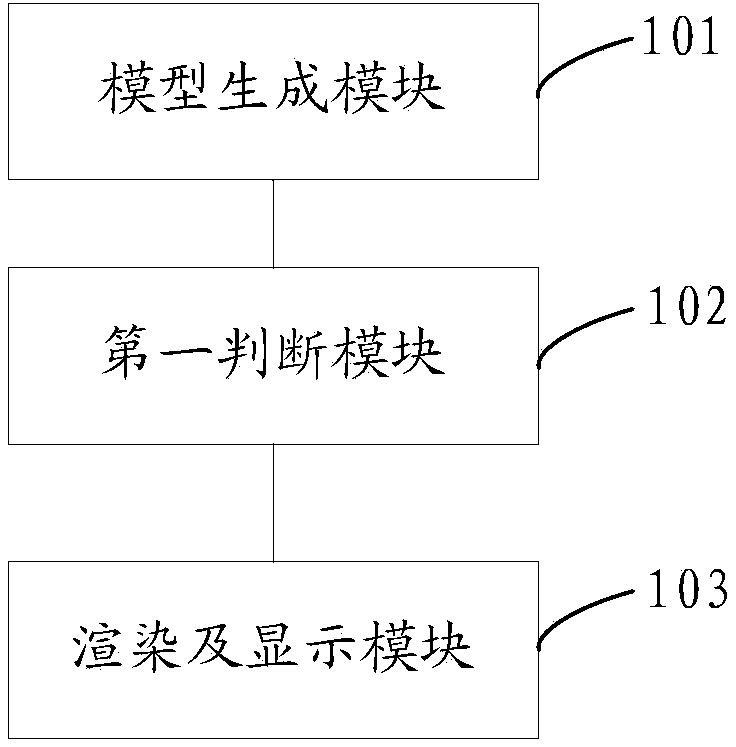

Method and device for displaying three-dimensional GIS (geographic information system) model

Inactive; CN104239431A; Realize refined display; Improve the efficiency of texture rendering; Geographical information databases; Special data processing applications; Geometric primitive; Texture rendering

The invention provides a method and a device for displaying a three-dimensional GIS (geographic information system) model. The method comprises the following steps of obtaining vector data for generating the model, and according to geometric primitive data correlated with the vector data, generating the corresponding to-be-displayed model, or according to the height data correlated with the vector data, generating the corresponding to-be-displayed model; according to the current vision point height and vision field range, judging if the to-be-displayed model of the corresponding display area needs to be displayed and the to-be-displayed model needs to be subjected to texture rendering or not; if so, finding the corresponding texture from a pre-generated texture base, performing the texture rendering on the to-be-displayed model according to the finding results, and displaying the to-be-displayed model subjected to the texture rendering. The method and the device for displaying the three-dimensional GIS model can be used for improving the efficiency of refining display of massive three-dimensional GIS models.

Owner:GUANGDONG VTRON TECH CO LTD
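The display decision in the abstract above (judge from the viewpoint height and field of view whether a model is shown and textured, then look the texture up in a pre-generated base) can be sketched as follows. The rectangle overlap test, the single height limit and the names `overlaps` and `display_plan` are assumptions for illustration only.

```python
def overlaps(a, b):
    """Overlap test for axis-aligned rectangles given as (min_x, min_y, max_x, max_y)."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def display_plan(model_bounds, view_bounds, eye_height, texture_height_limit,
                 texture_library, texture_key):
    """Return None if the model is not shown, otherwise the texture to apply.

    ASSUMPTIONS: a model is shown when its bounds overlap the field of view, and
    it is textured only when the viewpoint is at or below texture_height_limit.
    texture_library stands in for the pre-generated texture base.
    """
    if not overlaps(model_bounds, view_bounds):
        return None
    if eye_height > texture_height_limit:
        return "untextured"
    return texture_library.get(texture_key, "untextured")
```

Skipping the texture lookup for distant or off-screen models is where the efficiency gain for massive model sets would come from in a scheme like this.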

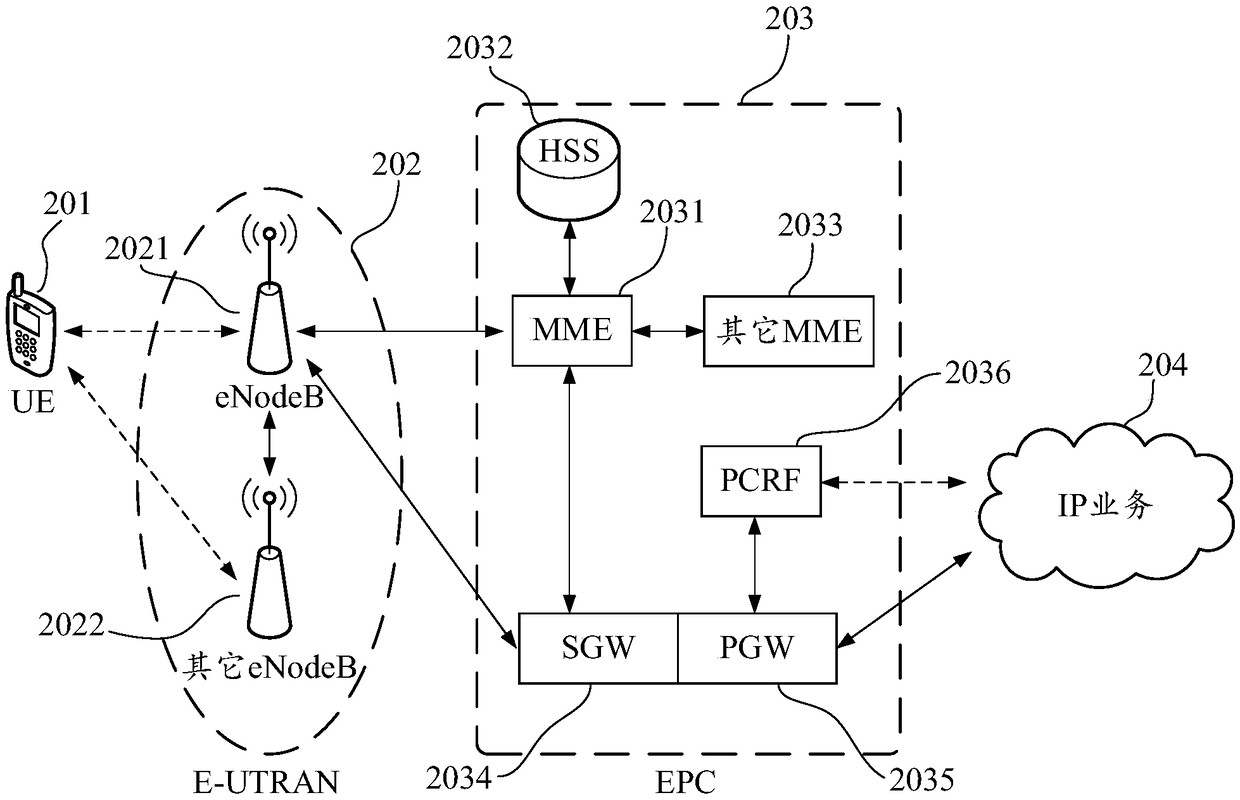

Video processing method and system

Active; CN110662090A; Improve video communication experience; Flexible configuration; Selective content distribution; Texture rendering; Computer graphics (images)

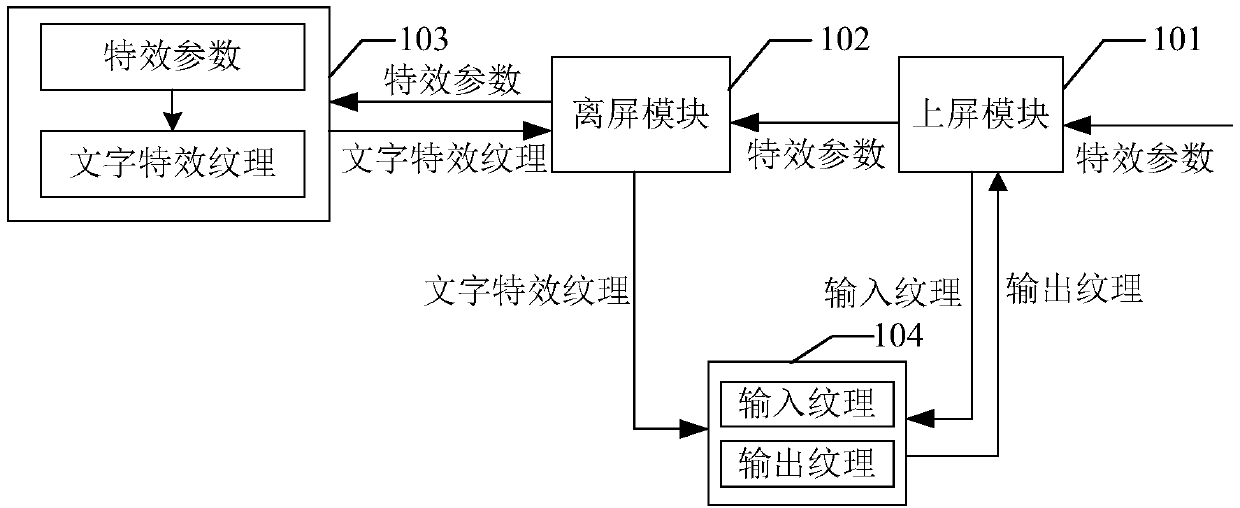

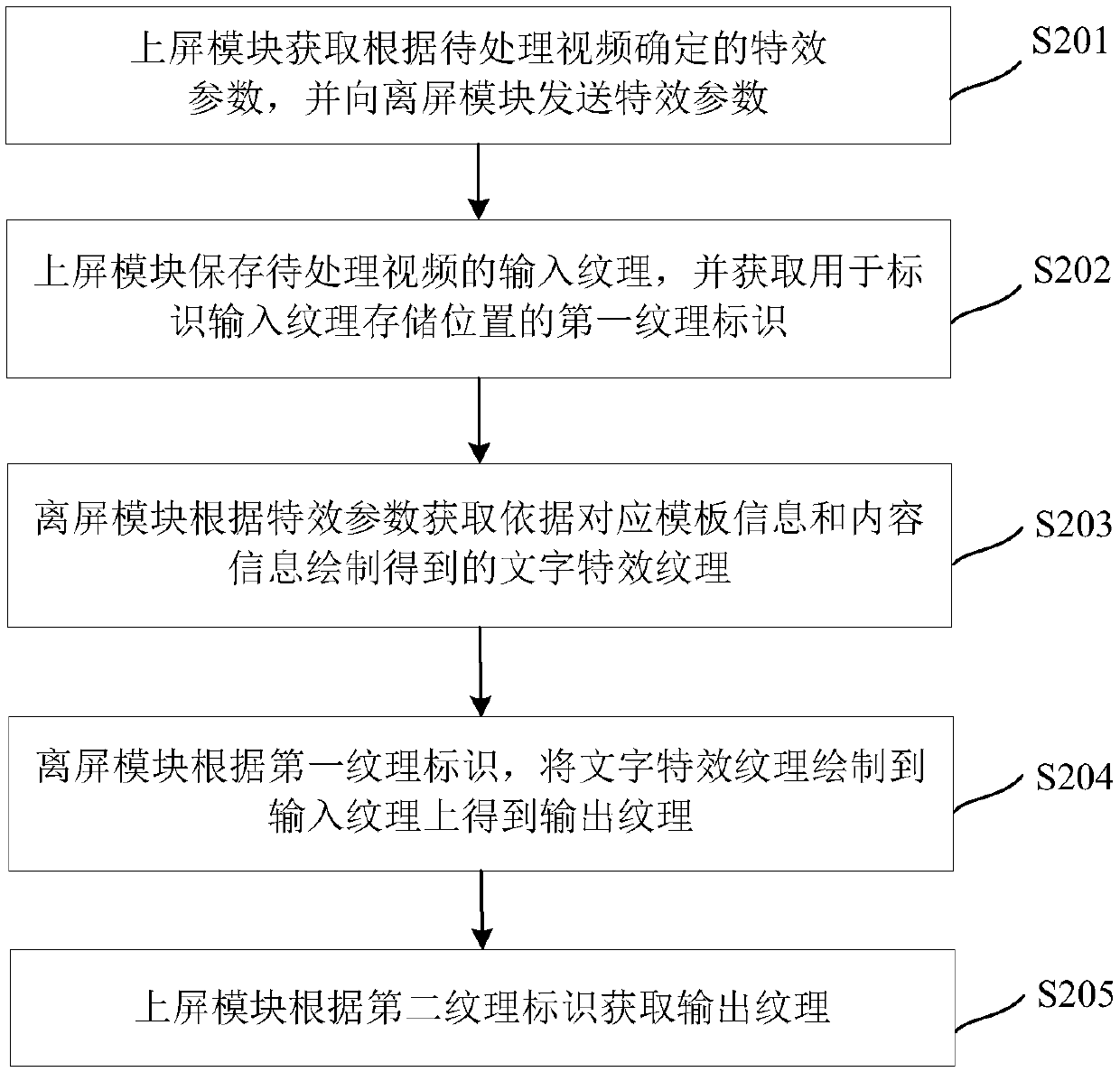

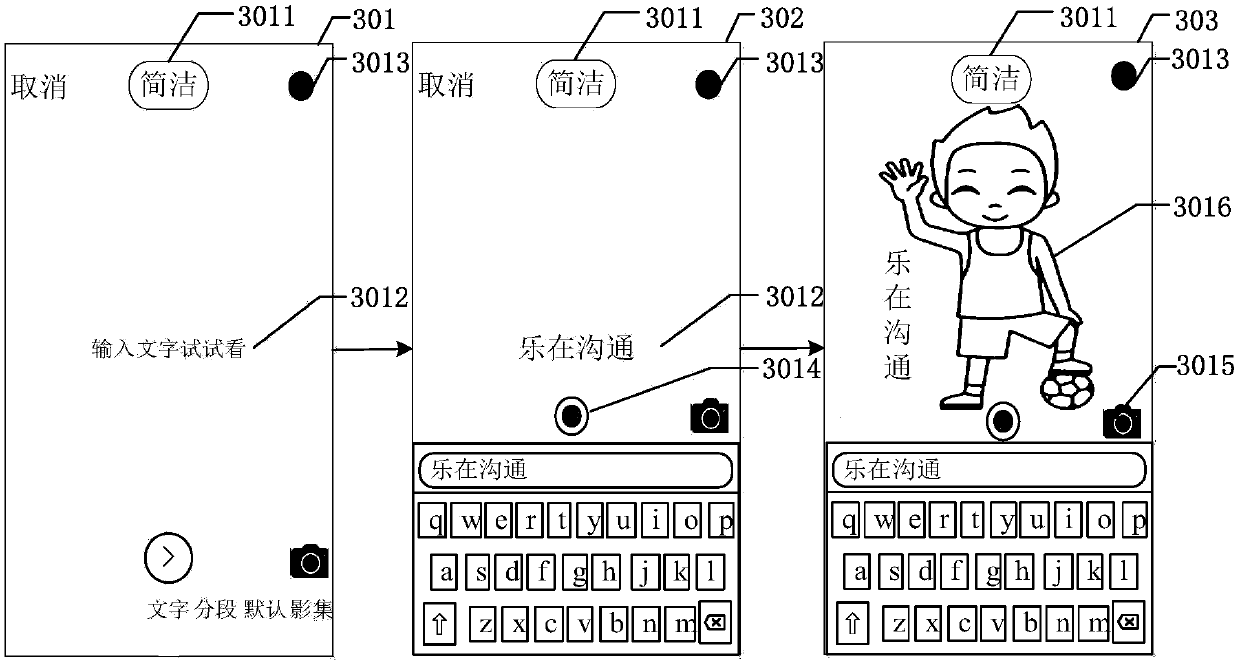

The embodiment of the invention discloses a video processing method applied to a video processing system that comprises an on-screen module and an off-screen module. The on-screen module obtains special effect parameters determined according to a to-be-processed video, wherein the special effect parameters comprise template information identifying the special effect type and text information identifying the special effect content. The on-screen module stores the input texture corresponding to the to-be-processed video and sends the special effect parameters to the off-screen module. The off-screen module completes texture rendering of the input texture in the background according to the special effect parameters to obtain an output texture. The on-screen module can then obtain the output texture and draw it on a display interface, so that the user sees a video with a text special effect. Because the video processing system completes the special effect processing, the user does not need video processing skills, and the user's video communication experience is improved. The embodiment of the invention further discloses a video processing system.

Owner:TENCENT TECH (SHENZHEN) CO LTD

Image generation method and apparatus

Inactive; US20070025624A1; Image analysis; Character and pattern recognition; Pattern recognition; Imaging processing

An image processing apparatus is disclosed in which input images are processed to generate texture map data for texture rendering a generated three-dimensional computer model of object(s) appearing in the images. In order to select the portions of the images utilised, confidence data is generated indicative of the extent portions of the surface of a model are visible in each of the images. The images are then combined utilising this confidence data, where image data representative of different spatial frequencies are blended in different ways utilising the confidence data.

Owner:CANON KK

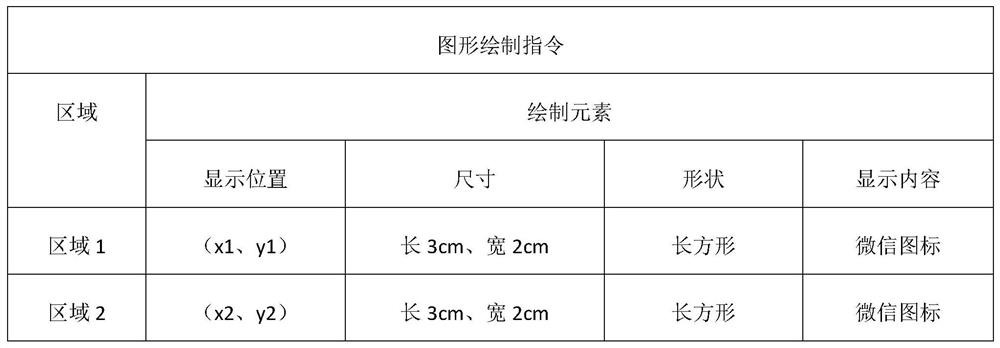

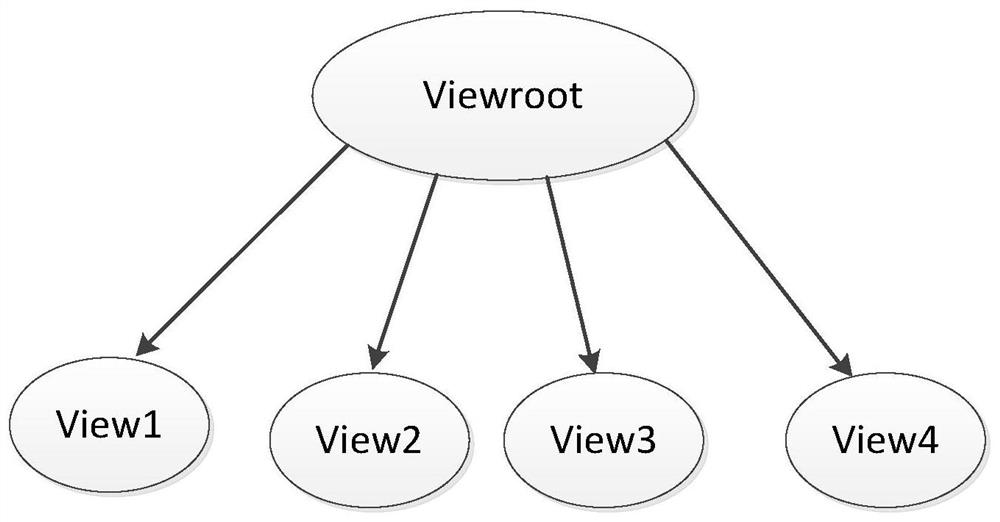

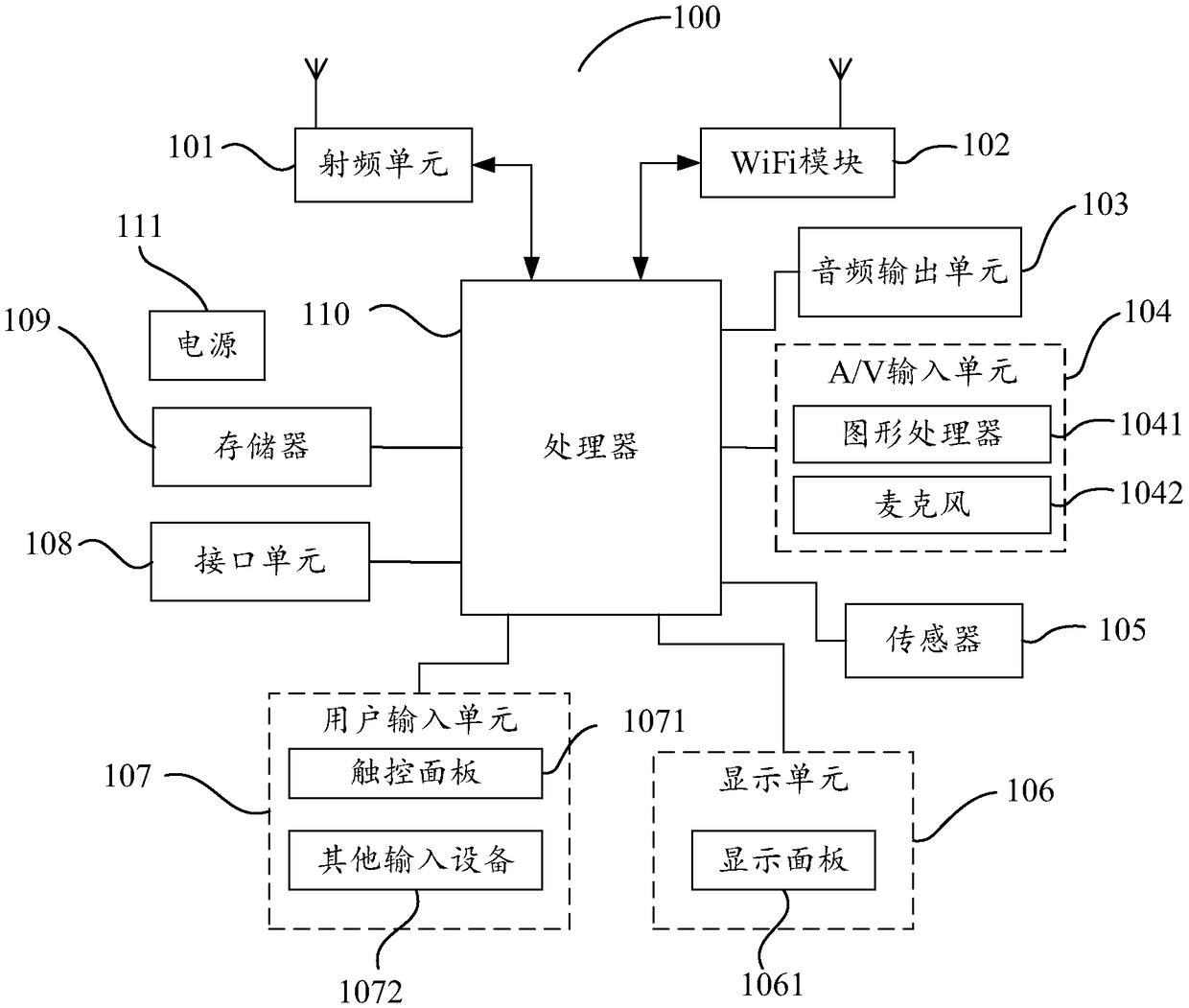

Graph drawing method and electronic device

Inactive; CN111611031A; Improve drawing efficiency; Reduce workload; Substation equipment; Execution for user interfaces; Graphics; Texture rendering

The invention discloses a graph drawing method and an electronic device. The method comprises: detecting a user input event while the electronic device displays a first graph; generating a graph drawing instruction based on the input event, wherein the graph drawing instruction comprises drawing elements of a target graph; when drawing the target graph based on those drawing elements, re-rendering only a first region of the first graph that needs to be re-rendered; synthesizing the re-rendered first region with a second region to obtain the target graph, wherein the second region is the region of the first graph that does not need to be rendered again; and displaying the target graph. Because the electronic device only re-renders the first region and does not render the second region again, power consumption is reduced and efficiency is improved.

Owner:HUAWEI TECH CO LTD
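The partial redraw in the abstract above can be sketched on a small pixel grid: only the region that needs re-rendering is recomputed, and the rest of the previous frame is reused. The rectangle representation and the `render_pixel` callback are hypothetical and chosen only for illustration.

```python
def redraw_dirty_region(frame, dirty, render_pixel):
    """Return (new_frame, render_calls) with only the dirty rectangle re-rendered.

    frame:        list of rows holding the previously displayed graph.
    dirty:        (x0, y0, x1, y1), half-open, the region that must be re-rendered.
    render_pixel: hypothetical callback (x, y) -> new pixel value.
    """
    x0, y0, x1, y1 = dirty
    out = [row[:] for row in frame]  # second region: copied unchanged
    calls = 0
    for y in range(y0, y1):
        for x in range(x0, x1):
            out[y][x] = render_pixel(x, y)  # first region: rendered again
            calls += 1
    return out, calls
```

The returned call count makes the saving visible: for a 4 x 3 frame with a 2 x 1 dirty rectangle, 2 pixels are rendered instead of 12.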

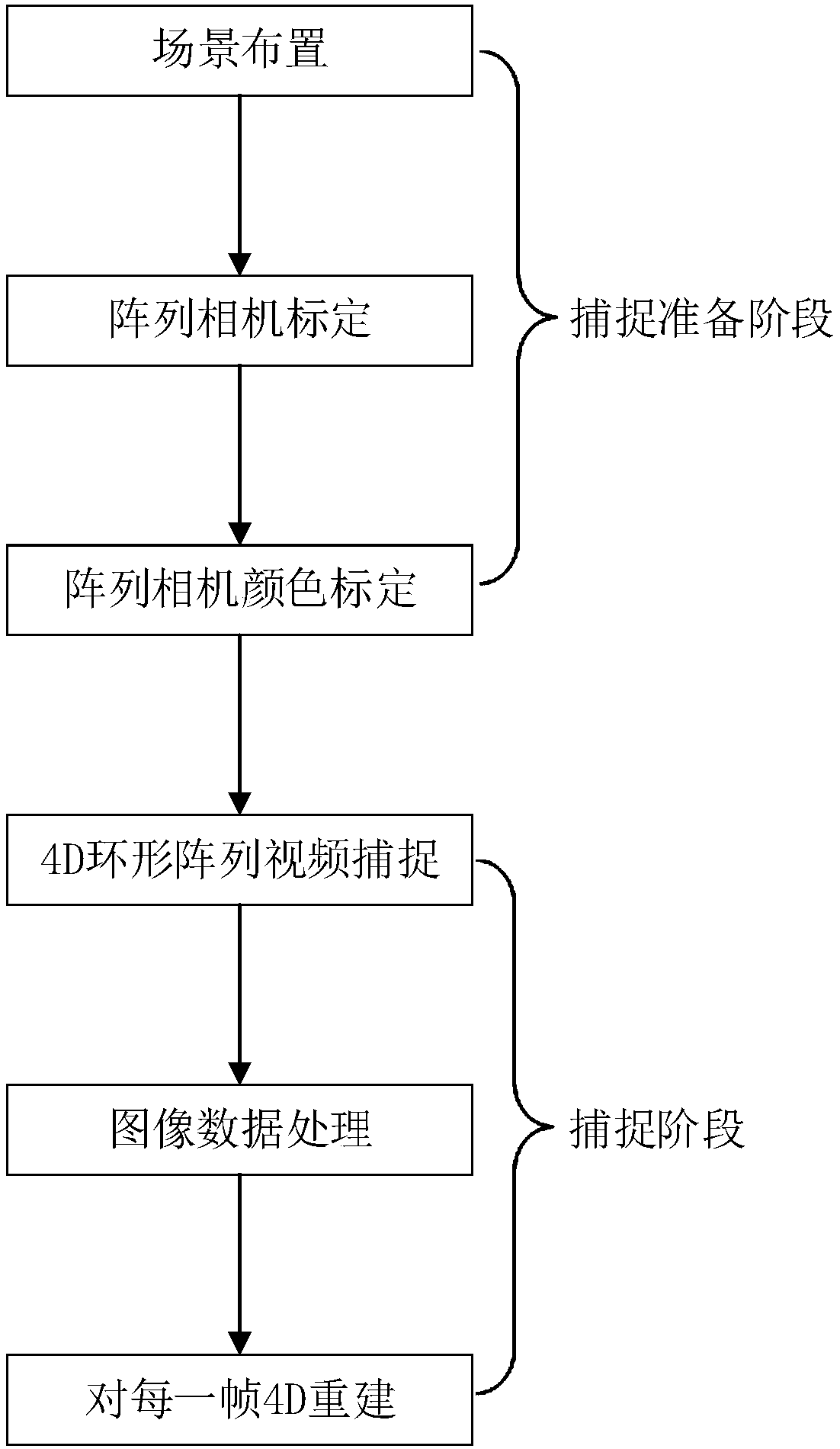

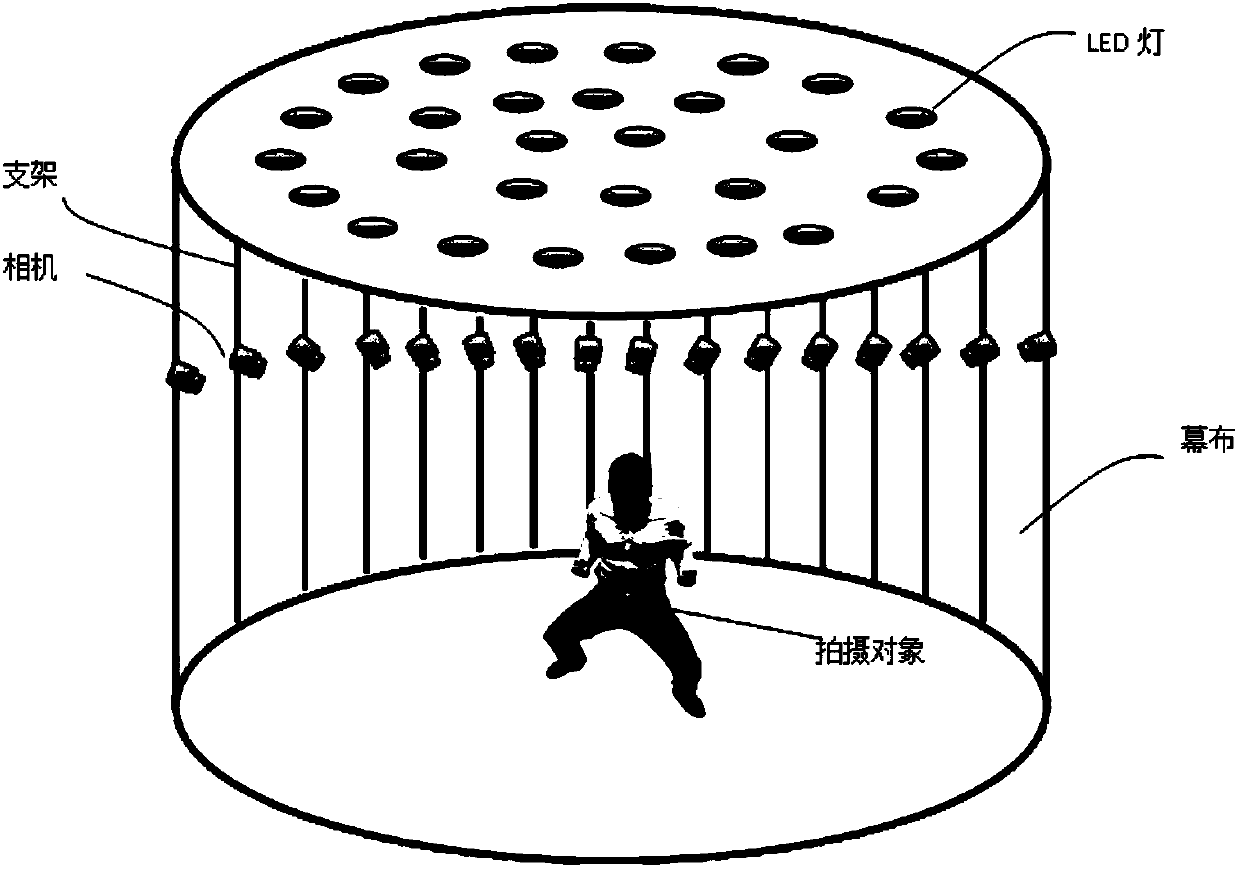

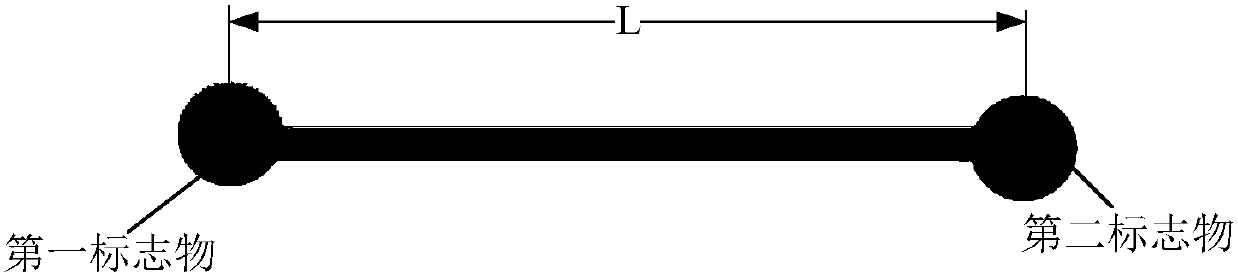

4D holographic video capturing method

Active; CN107862718A; To offer comfort; With automatic; Image enhancement; Image analysis; Vision processing; Imaging processing

The invention provides a 4D holographic video capturing method. Image information of a scene and an object is captured through a multi-camera image sequence synchronous acquisition system. Using image processing and computer vision methods, an accurate 3D object model corresponding to each frame of the photographed object is established from each frame of image data and the camera parameters. The texture of the photographed object is reconstructed through texture rendering, yielding a vivid three-dimensional reproduction of the photographed object and a three-dimensional model of which each frame can be viewed from different angles. A 4D holographic video is obtained by continuously playing the frames, thereby reproducing a static or dynamic three-dimensional object with high fidelity.

Owner:长沙立体视线科技有限公司

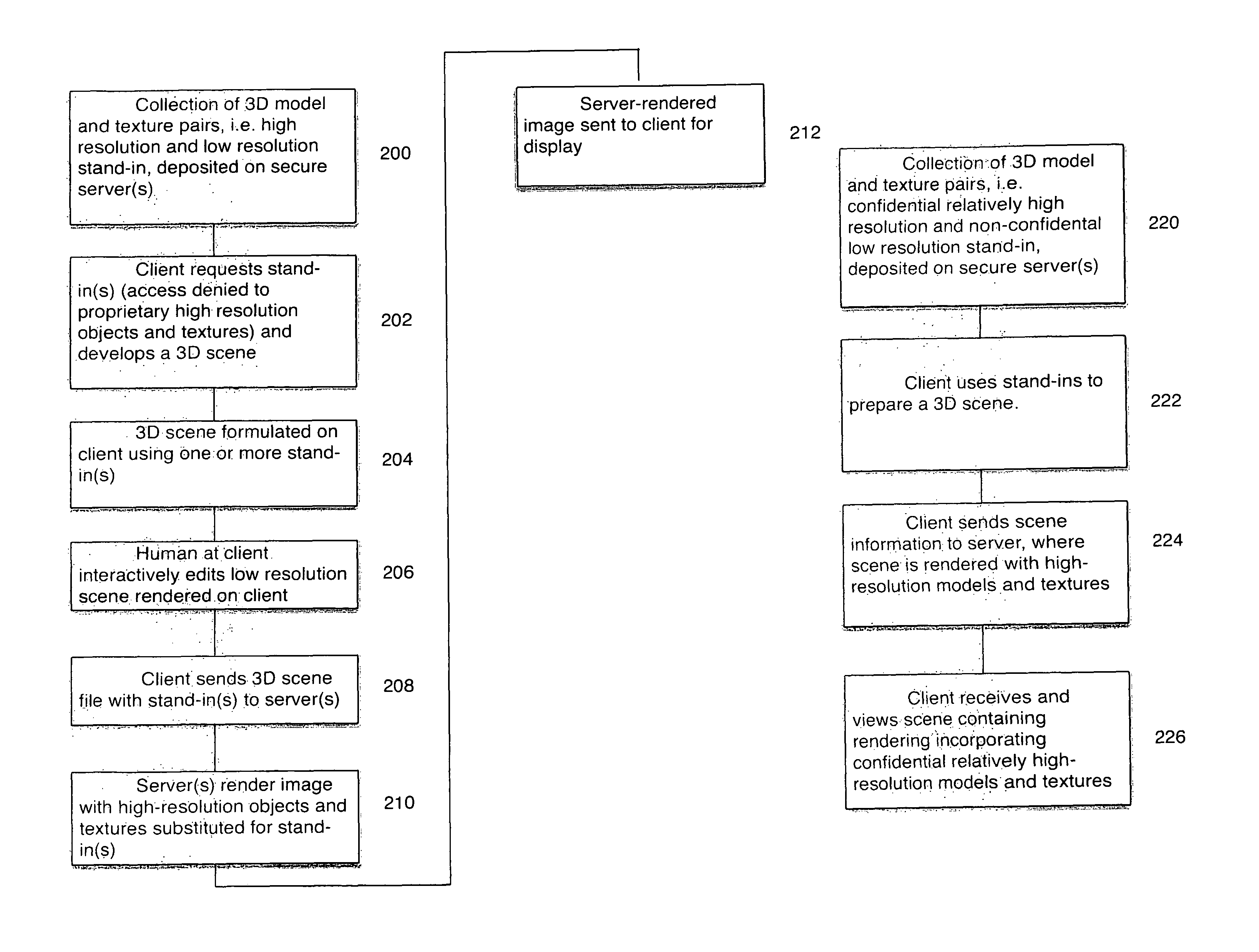

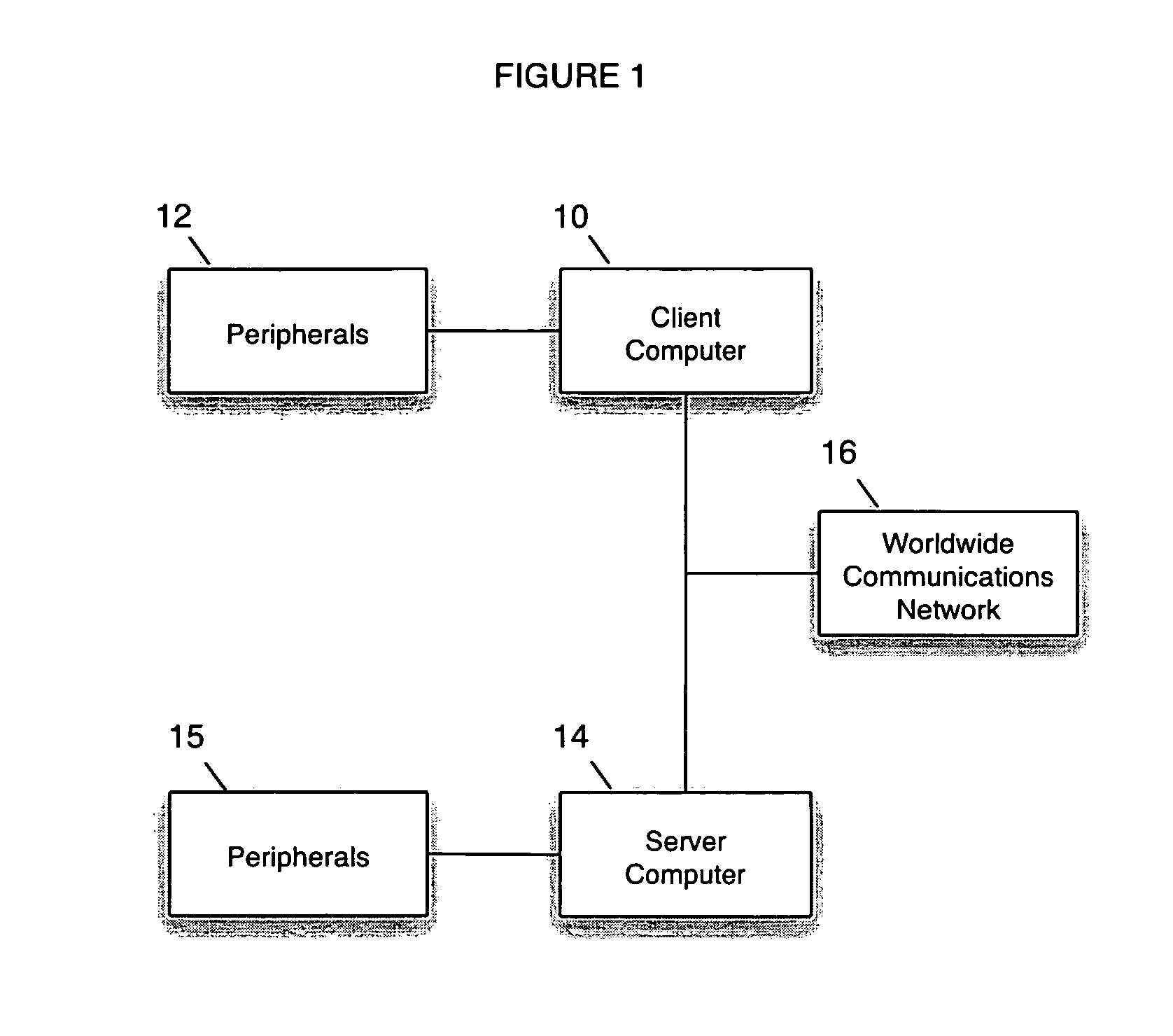

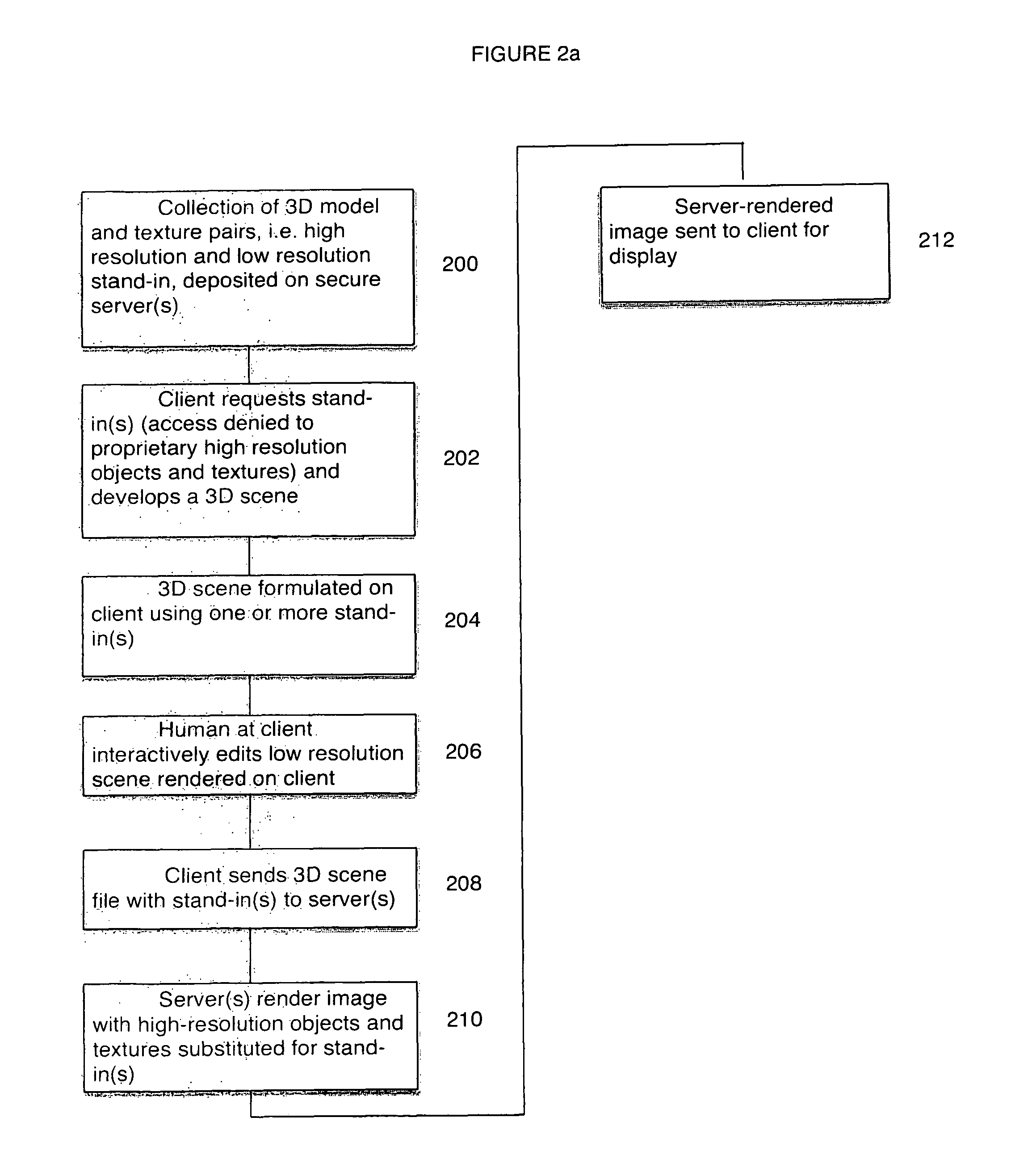

Network repository of digitalized 3D object models, and networked generation of photorealistic images based upon these models

Inactive; US8930844B2; Efficiencies in computer hardware and software; Maintenance of same; Digital data information retrieval; CAD network environment; Graphics; Network link

In a network-linked computer graphics image rendering system serving to render images of objects in scenes, these objects are so rendered from high-resolution 3D models and textures that are, in particular, stored and maintained on one or more server computers in one or more libraries that are secure. Using stand-in object models and textures, design professionals at client computers are able to “fine-tune” and preview designs that incorporate objects stored securely in the server's(s') models' library(ies). Yet the high-resolution, 3D, relatively expensive, and proprietary object models remain completely secure at one (i.e., centralized) or more (i.e., distributed) server computers. 2D perspective-view or stereo in-situ photorealistic images of scenes incorporating these objects are rendered at the one or more server computers, for subsequent remote viewing at the one or more client computers.

Owner:CARLIN BRUCE

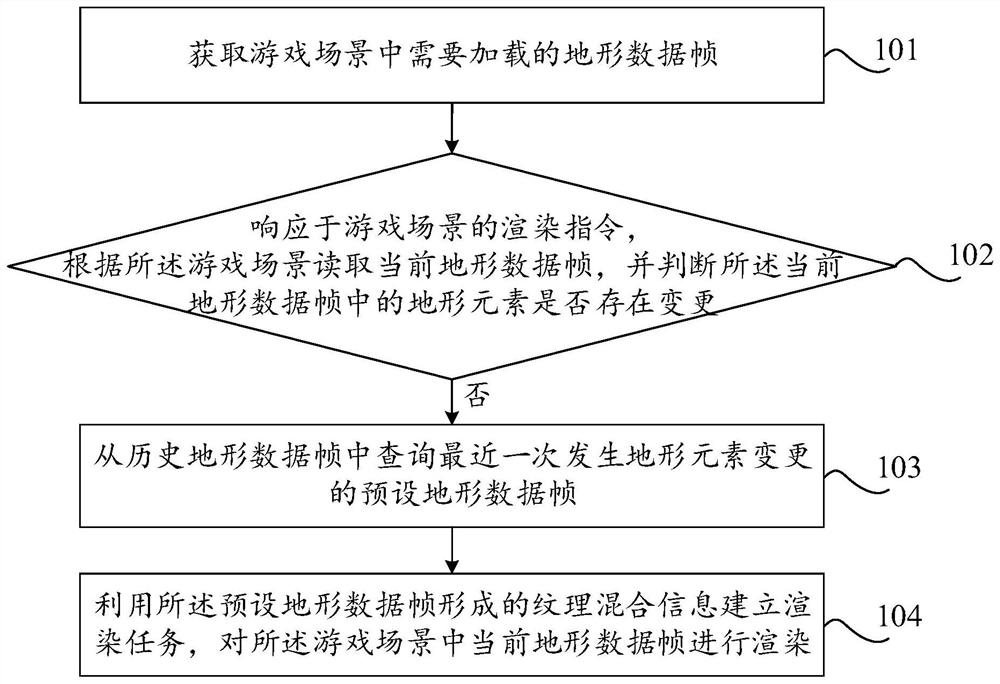

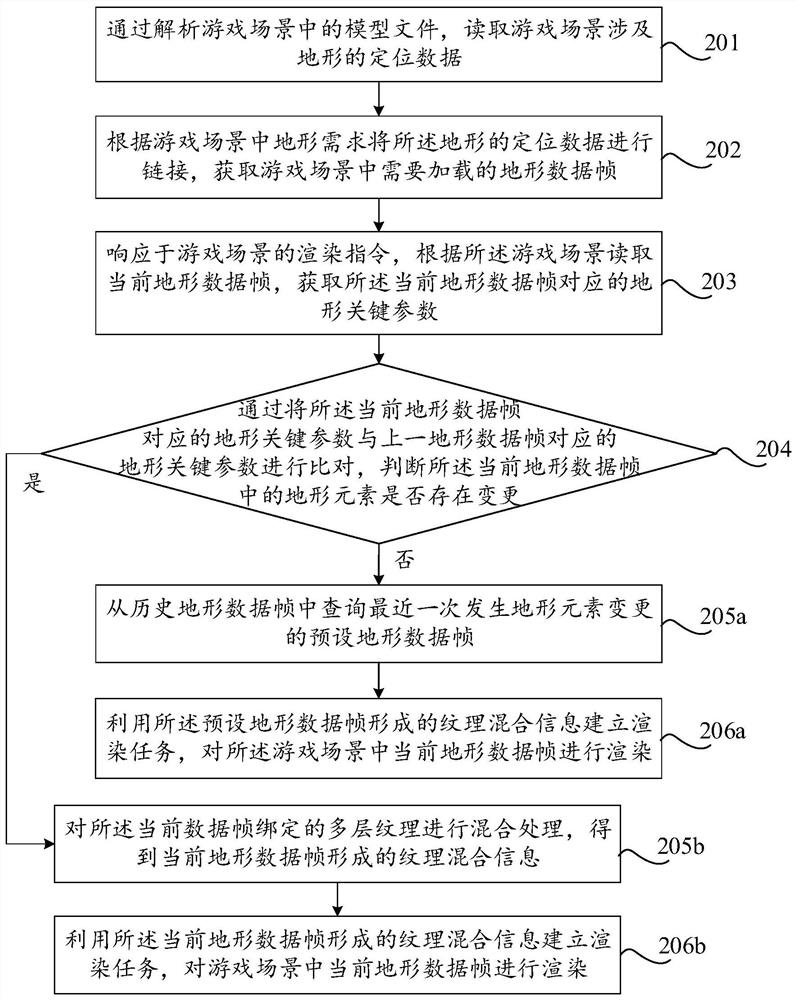

Game scene rendering method and device, and equipment

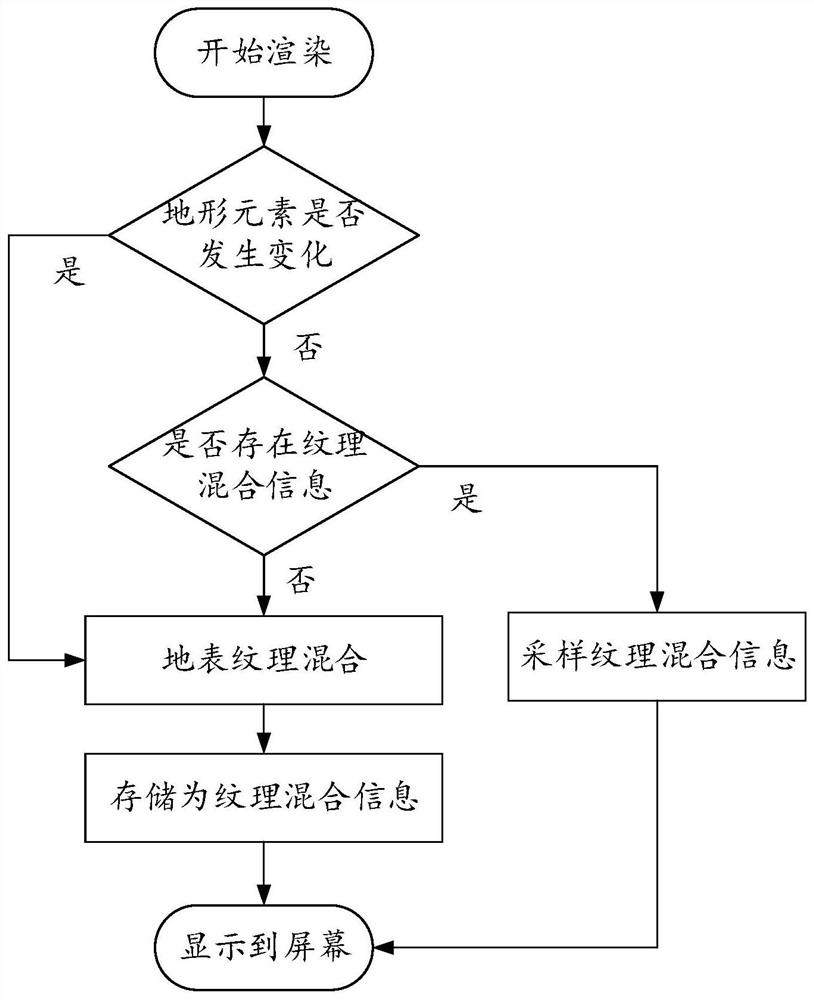

Pending; CN112169324A; High speed; Reduce time consumption; Image memory management; Video games; Terrain; Texture rendering

The invention discloses a game scene rendering method, device and equipment, and relates to the technical field of 3D rendering. A multi-texture blending operation does not need to be executed for every frame of the terrain scene, so the time taken by the texture rendering process is reduced and the rendering speed of the game scene is increased. The method comprises the steps of: acquiring terrain data frames that need to be loaded in a game scene; in response to a rendering instruction for the game scene, reading the current terrain data frame and judging whether terrain elements in the current terrain data frame have changed; if not, querying, from the historical terrain data frames, the preset terrain data frame in which a terrain element change most recently occurred; and establishing a rendering task using the texture blending information formed from the preset terrain data frame, and rendering the current terrain data frame in the game scene.

Owner:BEIJING PERFECT WORLD SOFTWARE TECH DEV CO LTD
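The reuse idea in the abstract above (blend textures only when the terrain elements change, otherwise reuse the result from the most recent changed frame) can be sketched as a small cache. The class name, the `blend` callback and the representation of terrain elements as a tuple are assumptions made for illustration.

```python
class TerrainBlendCache:
    """Reuses the blend result from the most recent frame whose terrain elements changed."""

    def __init__(self, blend):
        self._blend = blend          # hypothetical expensive multi-texture blend
        self._last_elements = None   # elements of the last frame that triggered a blend
        self._last_result = None
        self.blend_calls = 0         # counts how often the expensive blend actually ran

    def texture_for(self, elements):
        """Return the blended texture for a frame; `elements` is assumed to be a tuple."""
        if elements != self._last_elements:
            self._last_result = self._blend(elements)
            self._last_elements = elements
            self.blend_calls += 1
        return self._last_result
```

Over four frames in which the elements change once, `blend_calls` ends at 2 rather than 4, which is the saving the abstract describes.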

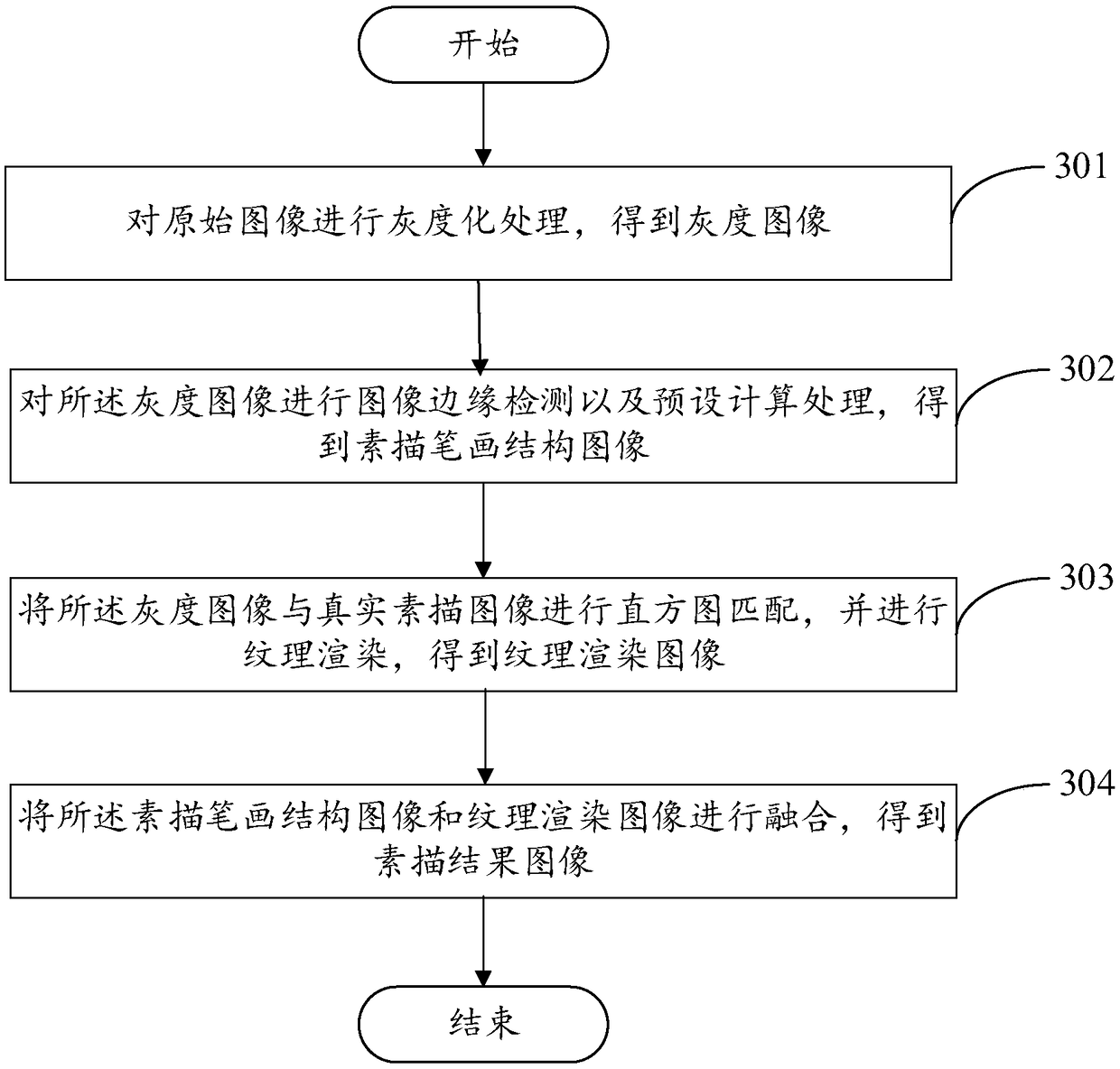

Image processing method, mobile terminal and computer-readable storage medium

Inactive; CN109300099A; Continuous edge profile; Has a sense of line; Image enhancement; Image analysis; Texture rendering; Imaging processing

The invention discloses an image processing method comprising the following steps: grayscale processing is carried out on an original image to obtain a grayscale image; image edge detection and a preset calculation are performed on the grayscale image to obtain a sketch stroke structure image; histogram matching is performed between the grayscale image and a real sketch image, followed by texture rendering, to obtain a texture rendering image; and the sketch stroke structure image and the texture rendering image are fused to obtain a sketch result image. The invention also discloses a mobile terminal and a computer-readable storage medium. The edge contours of the sketch effect image generated by this method are continuous and convey a sense of line, so the result is closer to a real sketch image.

Owner:NUBIA TECHNOLOGY CO LTD
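Three of the steps named in the abstract above (grayscale conversion, histogram matching against a reference sketch, and fusion) can be sketched on flat lists of pixel values. The luma weights and the multiplicative fusion rule are assumptions, since the abstract does not specify them, and the edge detection step is omitted here. All function names are hypothetical.

```python
def to_gray(rgb):
    """Convert one RGB pixel to a grey level (ASSUMPTION: standard luma weights)."""
    r, g, b = rgb
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def cdf(values, levels=256):
    """Cumulative distribution of integer grey levels in [0, levels)."""
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    total, running, out = len(values), 0, []
    for count in hist:
        running += count
        out.append(running / total)
    return out


def match_histogram(source, reference, levels=256):
    """Remap source grey levels so their distribution approximates the reference's."""
    src_cdf, ref_cdf = cdf(source, levels), cdf(reference, levels)
    mapping, j = [], 0
    for i in range(levels):
        # Advance to the first reference level whose CDF reaches the source CDF.
        while j < levels - 1 and ref_cdf[j] < src_cdf[i]:
            j += 1
        mapping.append(j)
    return [mapping[v] for v in source]


def fuse(stroke, texture):
    """ASSUMPTION: fuse by per-pixel multiplication of images scaled to [0, 1]."""
    return [round(s * t / 255) for s, t in zip(stroke, texture)]
```

Matching `[0, 0, 255, 255]` against a reference of `[10, 10, 200, 200]` remaps the dark half to 10 and the bright half to 200, so the tonal range of the photo follows that of the reference sketch.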

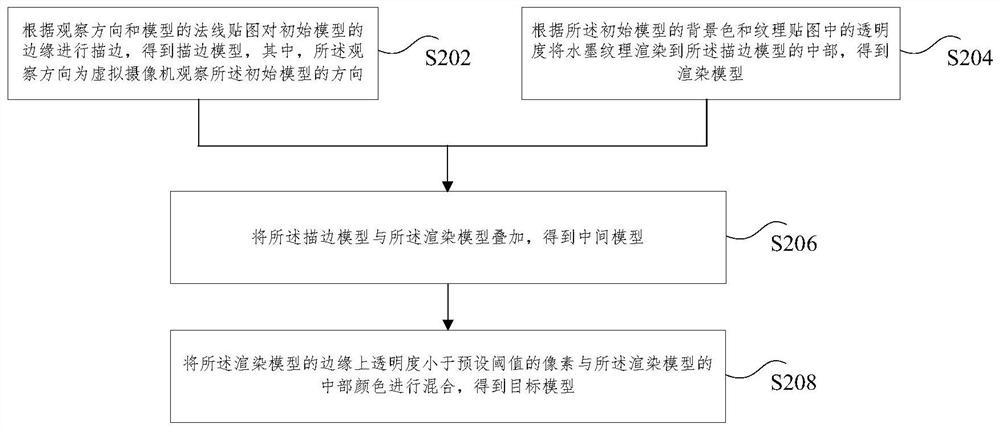

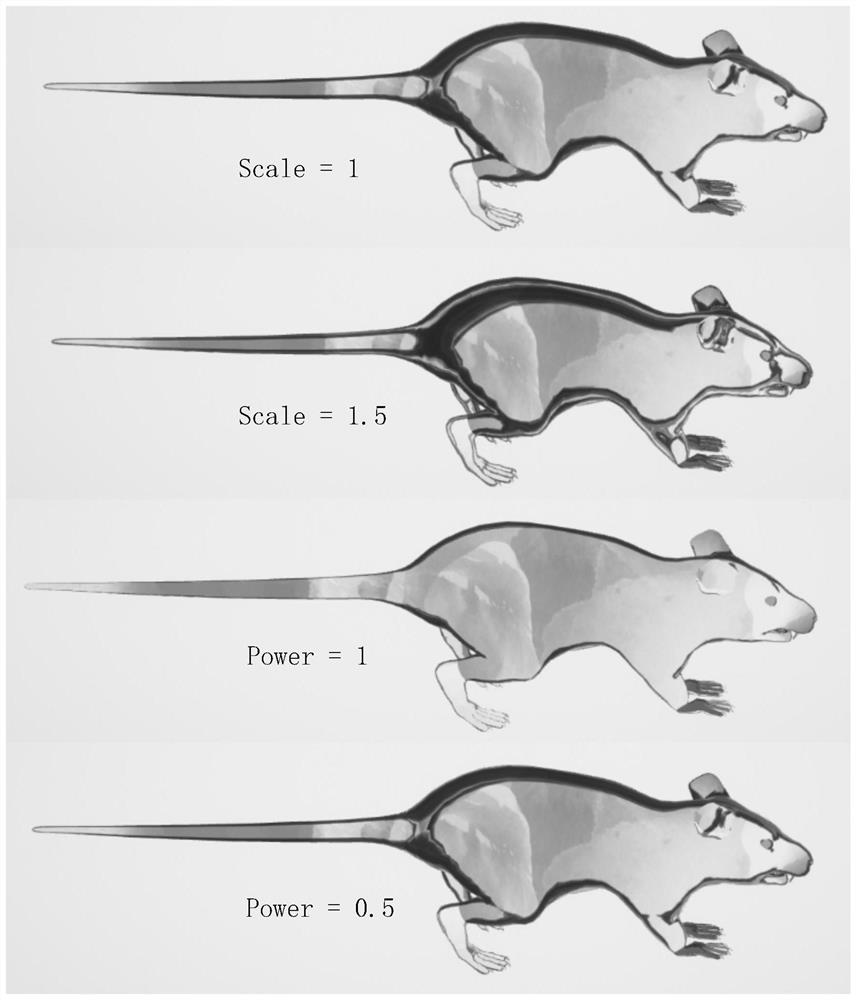

Model rendering method and device

Active; CN112070873A; Improve image quality; Troubleshoot technical issues with poor image quality; 3D-image rendering; Texture rendering; Algorithm

The invention relates to a model rendering method and device. The method comprises the steps of: stroking (outlining) the edge of an initial model according to an observation direction and a normal map of the initial model to obtain a stroking model, the observation direction being the direction in which a virtual camera observes the initial model; rendering an ink texture onto the middle part of the initial model according to the background color of the initial model and the transparency in the texture map to obtain a rendering model; superposing the stroking model and the rendering model to obtain an intermediate model; and mixing the pixels on the edge of the intermediate model whose transparency is less than a preset threshold with the middle color of the intermediate model to obtain a target model. The invention solves the technical problem that images obtained by rendering a model in an ink-wash style are of relatively poor quality.

Owner:BEIJING PERFECT WORLD SOFTWARE TECH DEV CO LTD
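A common way to find outline pixels from an observation direction and surface normals is to test how closely the normal faces the camera. The sketch below uses that test as a stand-in for the stroking step in the abstract above; the dot-product criterion, the threshold value and the function names are assumptions, since the abstract does not state how the edge is detected.

```python
import math


def normalize(v):
    """Scale a non-zero 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def is_outline(normal, view_dir, threshold=0.3):
    """Treat a surface point as outline when its normal is nearly perpendicular to the view.

    ASSUMPTION: |cos(angle between normal and view direction)| below `threshold`
    marks the edge; the threshold value is illustrative.
    """
    n, v = normalize(normal), normalize(view_dir)
    facing = abs(sum(a * b for a, b in zip(n, v)))
    return facing < threshold
```

A normal pointing sideways relative to the camera, such as `(1, 0, 0)` viewed along `(0, 0, 1)`, is classified as outline, while a normal pointing along the view direction is not.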

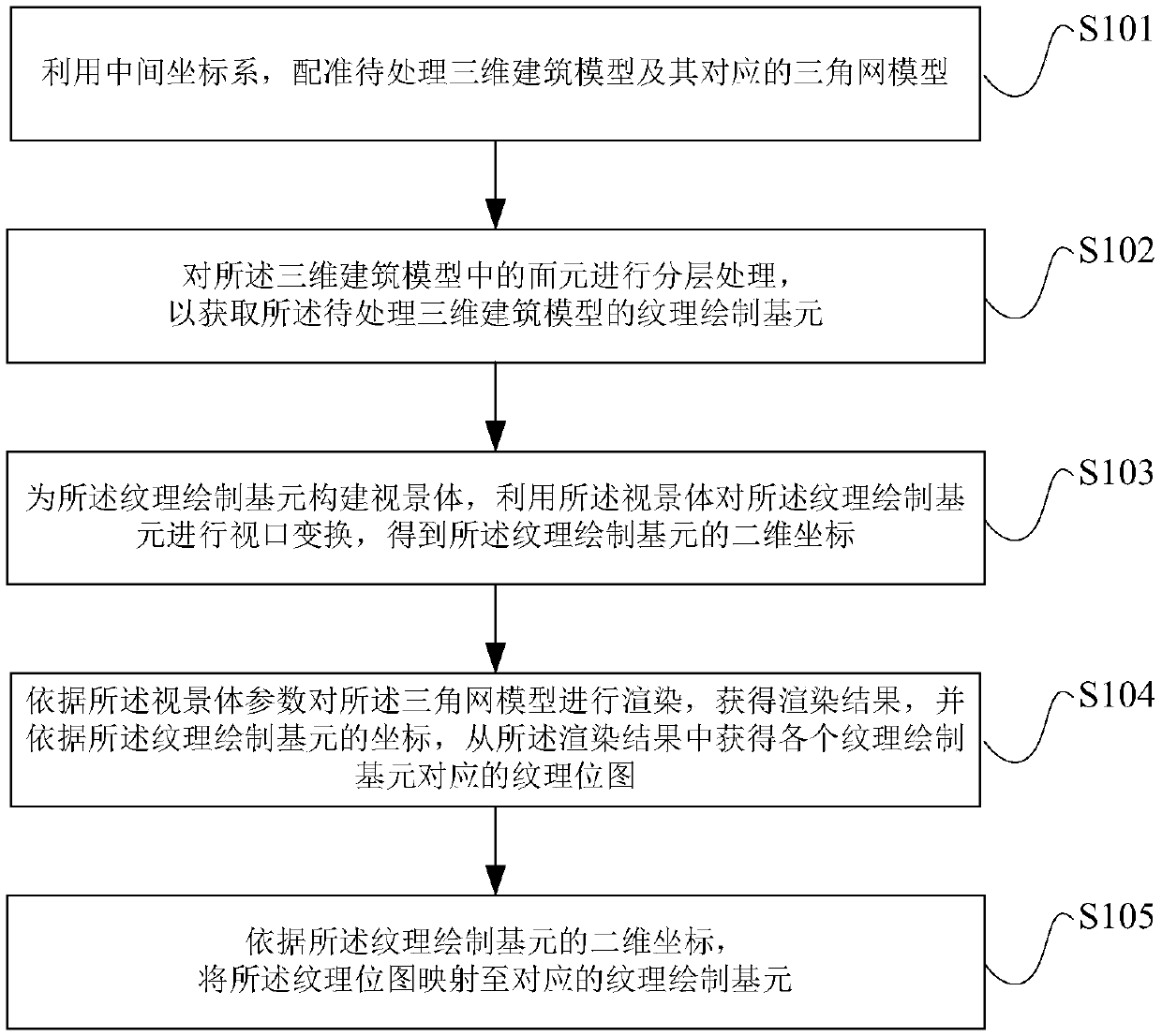

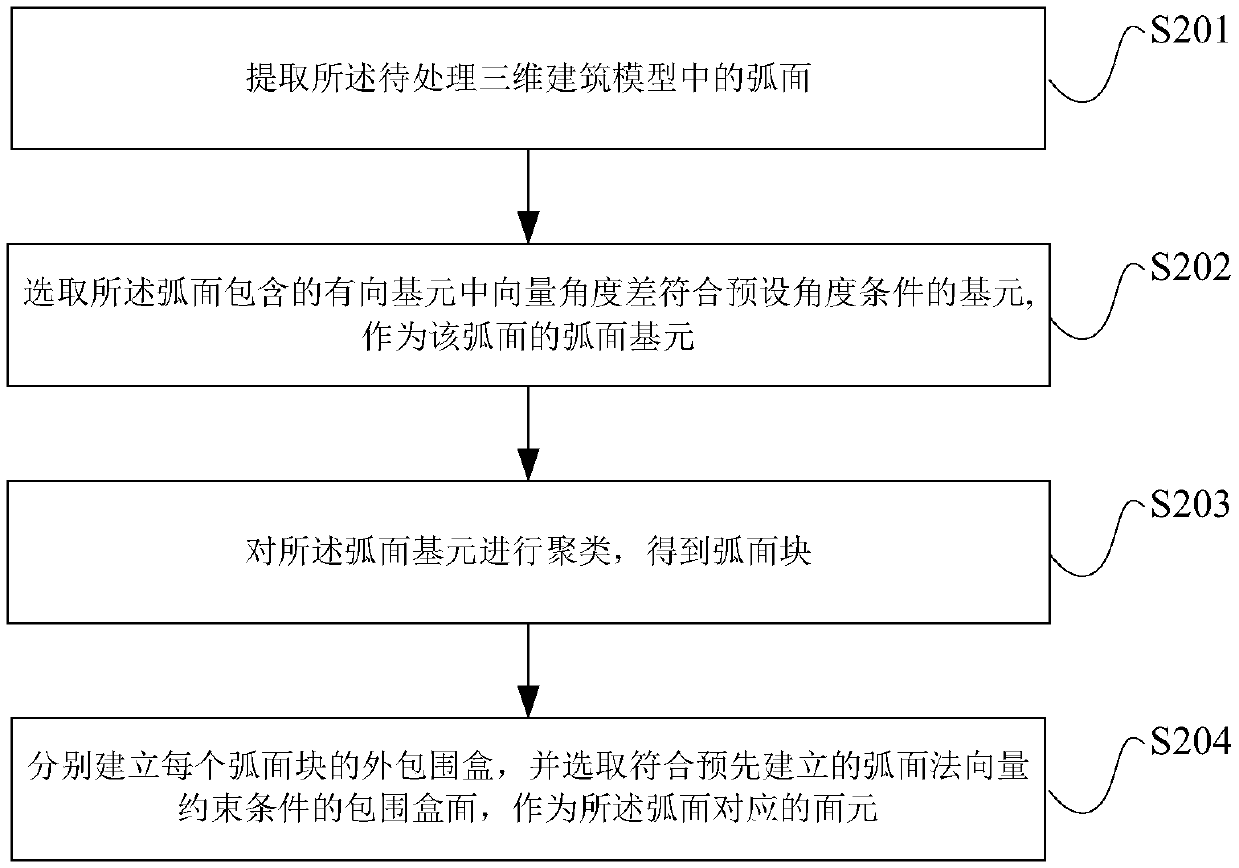

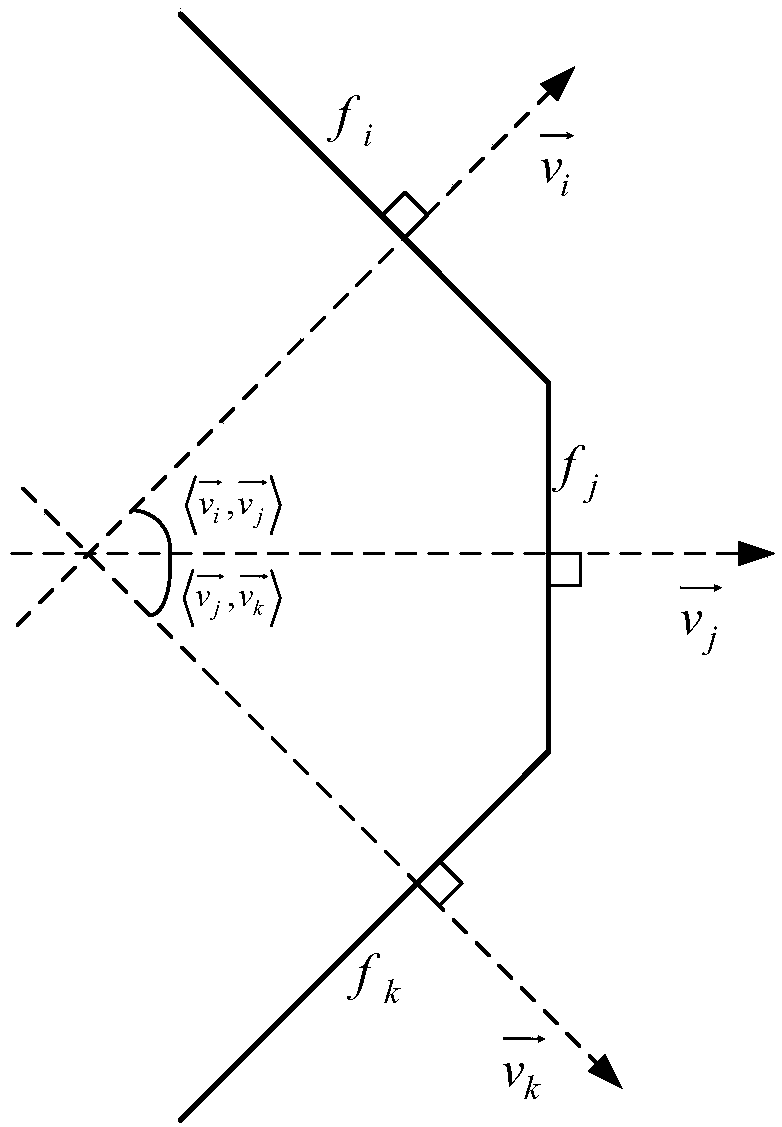

A method and apparatus for texture mapping of a three-dimensional building model

Pending; CN109544672A; High degree of automation; High precision; 3D-image rendering; Texture rendering; Computer graphics (images)

A method and apparatus for texture mapping of a three-dimensional building model are provided. In this method, the three-dimensional building model and a triangular network (triangulation) model are first registered; texture rendering primitives corresponding to the three-dimensional building model are then obtained through layered processing and converted into two-dimensional coordinates; texture information is extracted from the triangulation model; and the texture information is mapped onto the texture rendering primitives of the three-dimensional building model using the two-dimensional coordinates, thereby realizing texture mapping of the three-dimensional building model. In this process, more accurate texture information can be obtained from the triangulation model, and the texture rendering primitives obtained by layering the surface elements of the 3D building model avoid the poor texture mapping caused by occlusion between layers. The method can therefore significantly improve the degree of automation and the accuracy of texture mapping for fine 3D building models and can meet higher requirements.

Owner:胡翰
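The step of converting a rendering primitive into two-dimensional coordinates and then looking up texture information can be sketched as follows. This is a minimal illustration that assumes a planar, rectangular primitive described by an origin and two edge vectors; the names `to_uv` and `sample_nearest` and the nearest-neighbor lookup are hypothetical and not taken from the patent.

```python
def sub(a, b):
    """Component-wise difference of two 3D points."""
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    """Dot product of two 3D vectors."""
    return sum(x * y for x, y in zip(a, b))


def to_uv(point, origin, u_axis, v_axis):
    """Project a 3D point on a planar primitive to (u, v) in [0, 1].

    u_axis and v_axis are the primitive's edge vectors, assumed perpendicular.
    """
    d = sub(point, origin)
    return (dot(d, u_axis) / dot(u_axis, u_axis),
            dot(d, v_axis) / dot(v_axis, v_axis))


def sample_nearest(texture, uv):
    """Nearest-neighbor lookup in a row-major 2D grid of texels."""
    rows, cols = len(texture), len(texture[0])
    u = min(max(uv[0], 0.0), 1.0)
    v = min(max(uv[1], 0.0), 1.0)
    col = min(int(u * cols), cols - 1)
    row = min(int(v * rows), rows - 1)
    return texture[row][col]
```

For example, a facade 4 units wide and 2 units tall with its origin at the lower-left corner maps the point `(2, 0, 1)` to the center of the texture, `(0.5, 0.5)`.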

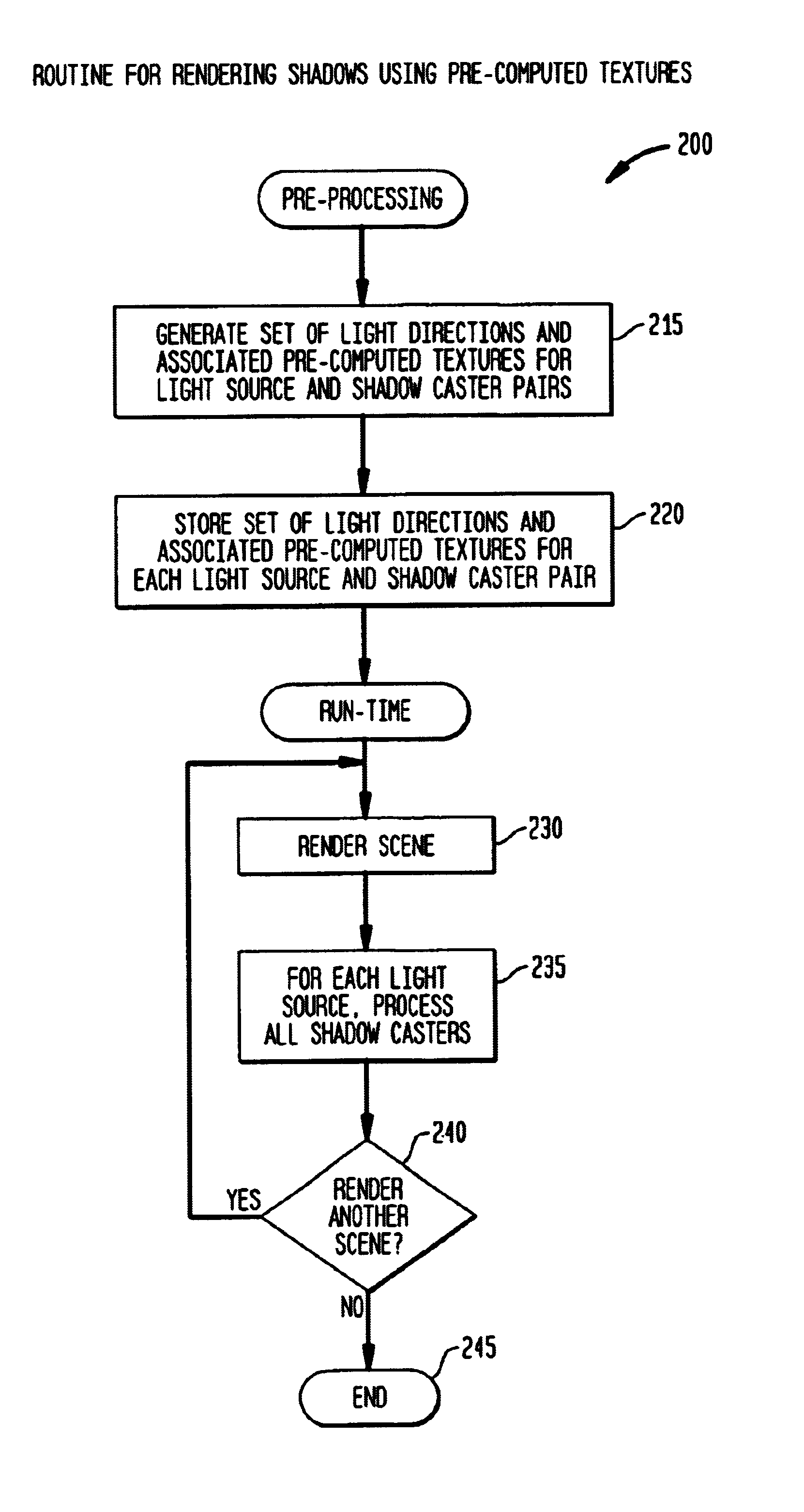

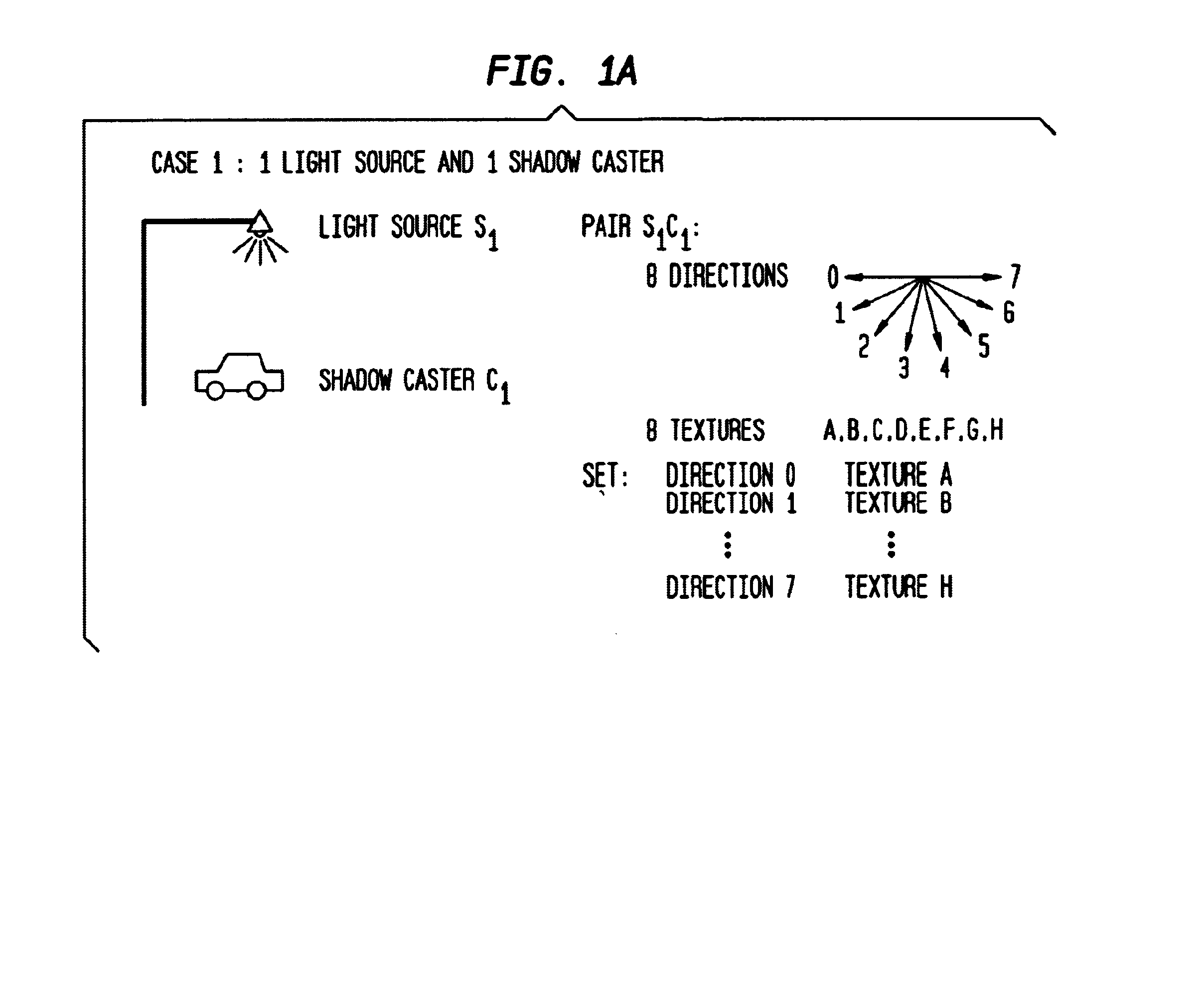

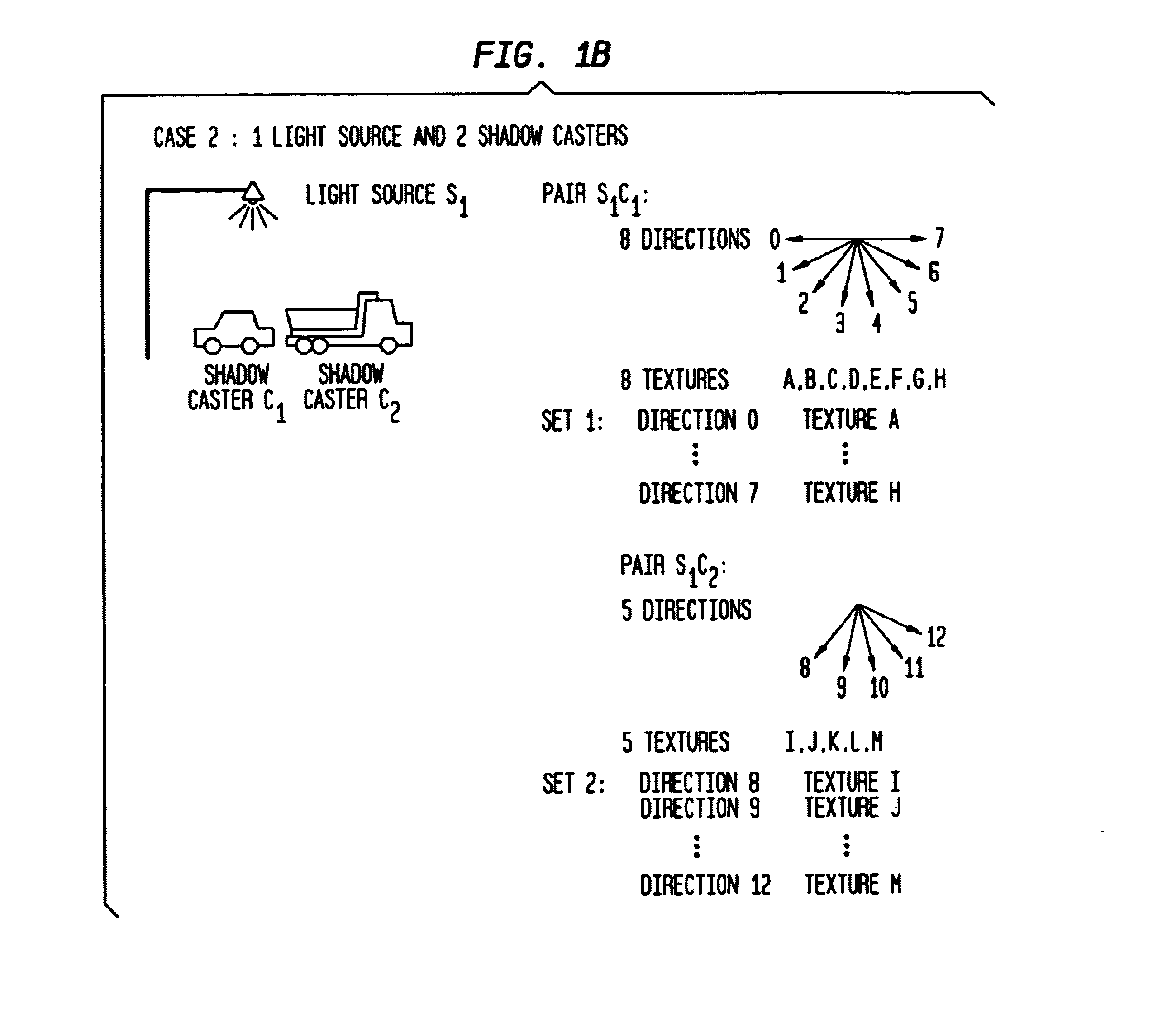

System and method for implementing shadows using pre-computed textures

The present invention provides an improved system and method for rendering shadows in a computer graphics system. Textures representing the area of influence resulting from a combination of light sources and shadow casters are pre-computed. Scenes are then rendered using the pre-computed textures. A first step entails generating sets of directions and associated pre-computed textures for each light source and shadow caster pair in a simulation frame. Next, a first scene in the simulation is rendered. During this step one or more of the pre-computed textures are used to darken the area of influence or shadow portion of the scene.

Owner:RPX CORP +1
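The two phases described above (pre-computing textures per light source and shadow caster pair, then using them to darken the scene) might be sketched like this. The `bake` callable, the dictionary cache keyed by `(light_id, caster_id)`, and the `strength` parameter are assumptions made for the example; the abstract does not specify how textures are stored or applied.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def precompute(pairs, directions, bake):
    """Build one texture per direction for every (light_id, caster_id) pair.

    bake is a hypothetical callable (pair, direction) -> texture.
    """
    return {pair: [bake(pair, d) for d in directions] for pair in pairs}


def nearest_direction(directions, query):
    """Index of the stored direction closest in angle to the query direction."""
    q = normalize(query)
    best_index, best_cos = 0, -2.0
    for i, d in enumerate(directions):
        cos = sum(a * b for a, b in zip(normalize(d), q))
        if cos > best_cos:
            best_index, best_cos = i, cos
    return best_index


def darken(color, shadow, strength=0.5):
    """Darken an RGB color by a shadow value in [0, 1] read from a texture."""
    factor = 1.0 - strength * shadow
    return tuple(c * factor for c in color)
```

At render time a frame would pick the stored direction nearest to the current light direction, read the shadow value from the matching texture, and pass it to `darken`.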

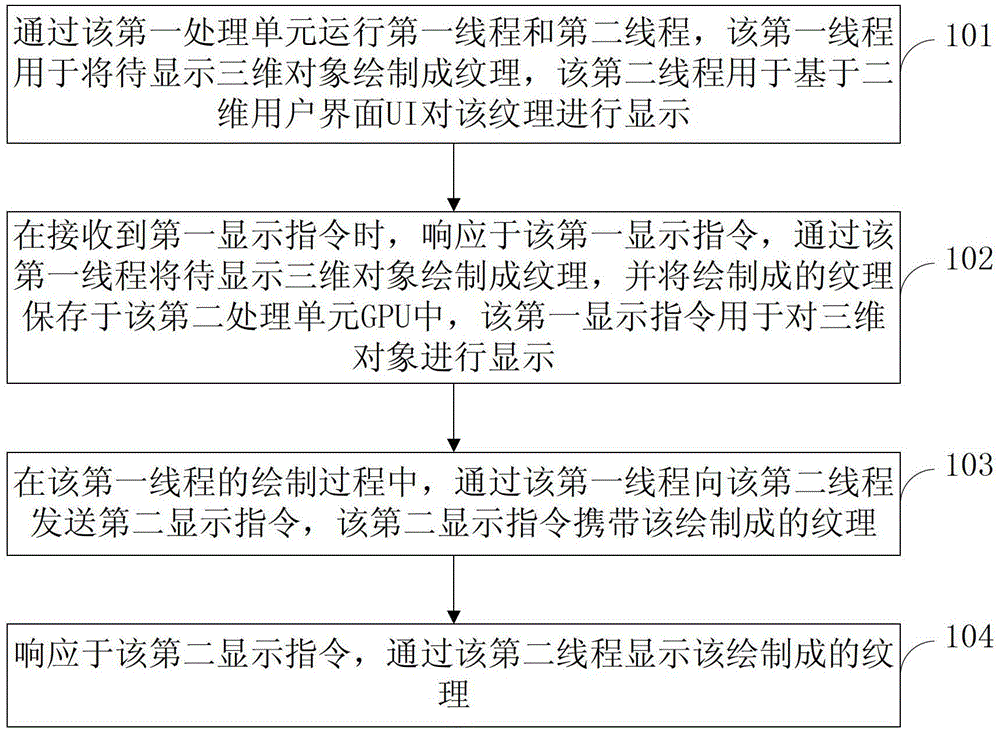

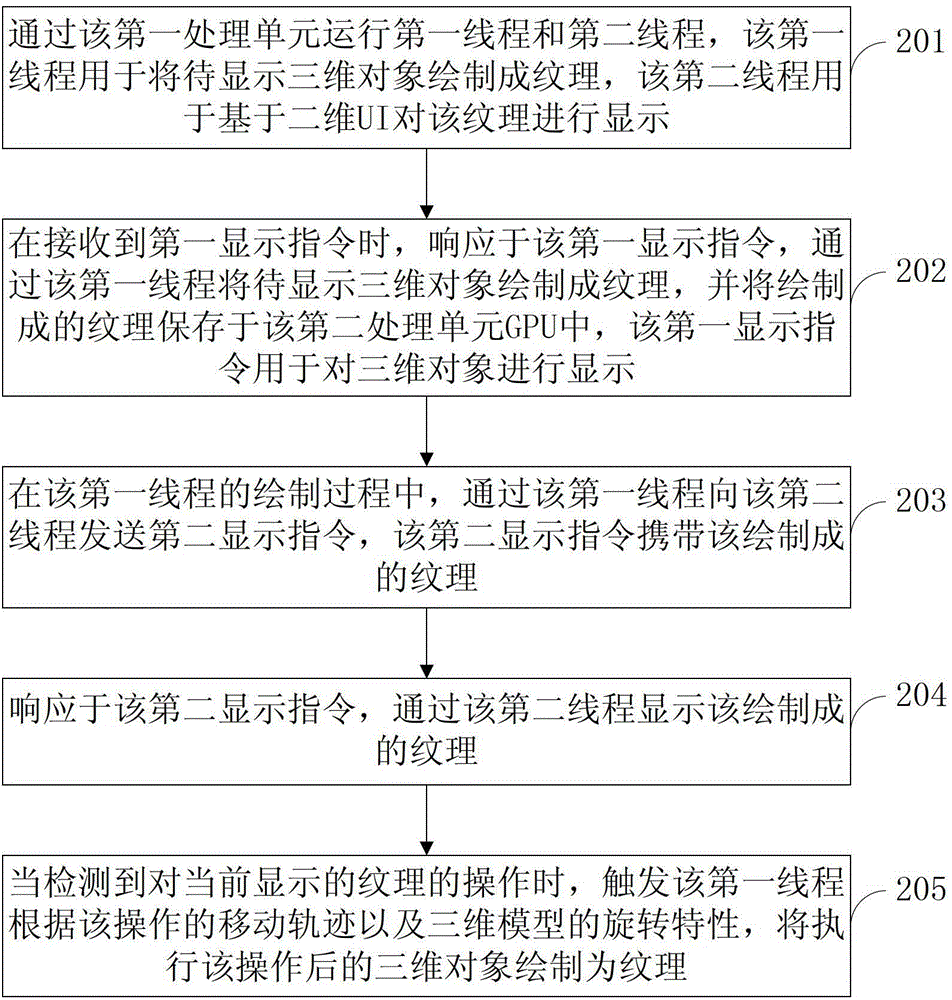

Three-dimensional object display method and device

Active | CN104424661A | Avoid occupying | Details involving 3D image data | Processor architectures/configuration | Texture rendering | Graphics processing unit

The invention discloses a three-dimensional object display method and device, belonging to the technical field of three-dimensional display. The method includes the following steps: a first thread and a second thread are run on a first processing unit; when a first display instruction is received, the first thread responds by rendering the three-dimensional object to be displayed into textures, and the rendered textures are saved in a second processing unit, a GPU (Graphics Processing Unit); during rendering, the first thread transmits a second display instruction, which carries the rendered textures, to the second thread; and the second thread responds to the second display instruction by displaying the rendered textures. Because the textures are used as a cache and the three-dimensional object is displayed in a two-dimensional UI (User Interface), texture rendering is 3 to 5 times faster than bitmap rendering; and because the GPU allocates the resources for the textures, occupation of memory resources can be avoided.

Owner:LENOVO (BEIJING) LTD
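The hand-off between the rendering thread and the display thread can be sketched as a producer and a consumer joined by a queue. This only illustrates the threading pattern: the string standing in for a GPU texture handle, the `None` sentinel, and all function names are assumptions, and no real graphics API is involved.

```python
import queue
import threading


def render_thread(objects, instructions):
    """First thread: render each object to a texture and send it onward."""
    for name in objects:
        texture = f"texture({name})"  # stand-in for a GPU texture handle
        instructions.put(texture)     # the "second display instruction"
    instructions.put(None)            # sentinel: nothing more to display


def display_thread(instructions, shown):
    """Second thread: display each texture carried by an instruction."""
    while True:
        texture = instructions.get()
        if texture is None:
            break
        shown.append(texture)         # stand-in for drawing into the 2D UI


def run(objects):
    """Run both threads to completion and return what was displayed."""
    instructions = queue.Queue()
    shown = []
    first = threading.Thread(target=render_thread, args=(objects, instructions))
    second = threading.Thread(target=display_thread, args=(instructions, shown))
    first.start()
    second.start()
    first.join()
    second.join()
    return shown
```

Because the queue is first-in, first-out and has a single producer, textures are displayed in the order they were rendered.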

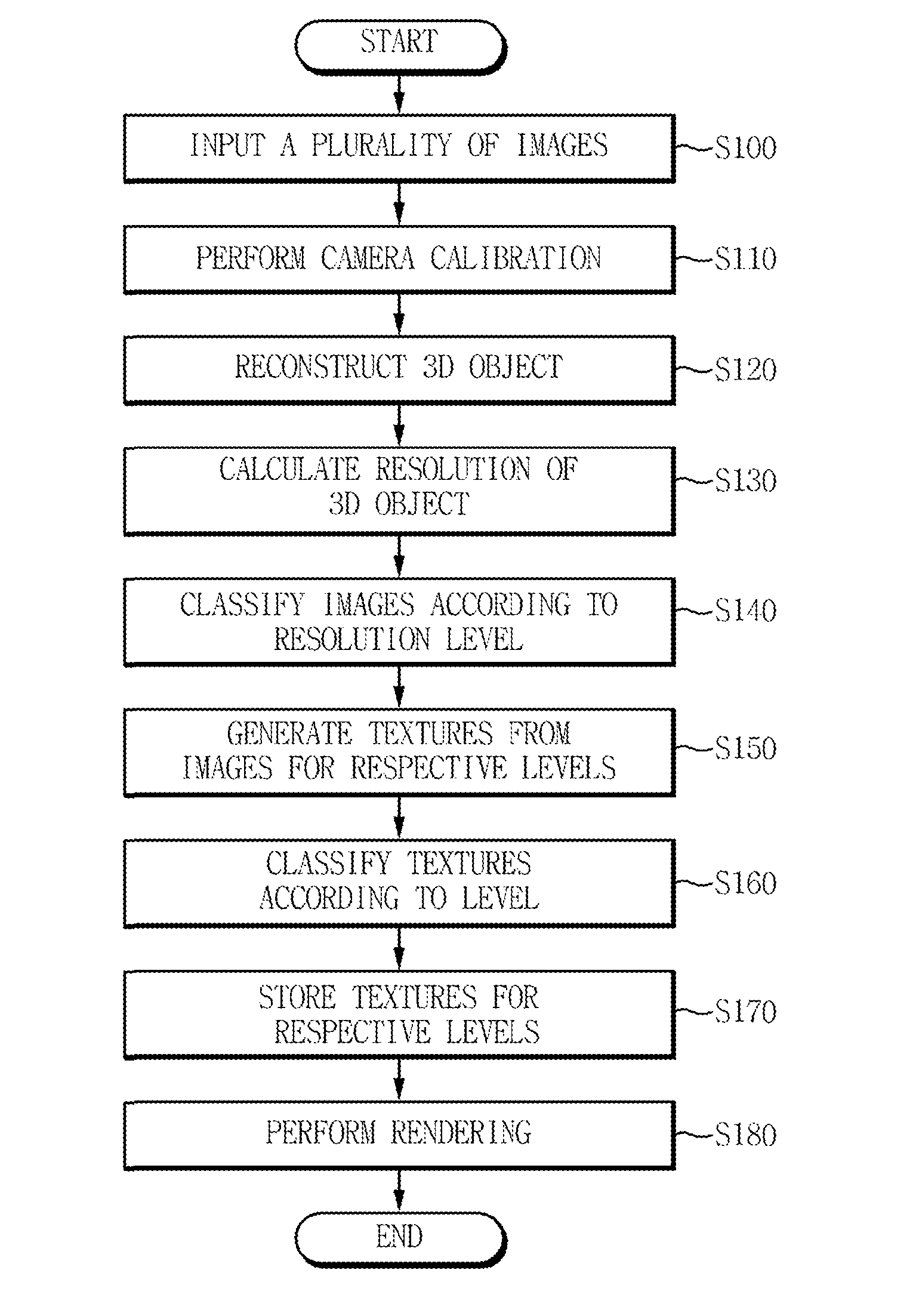

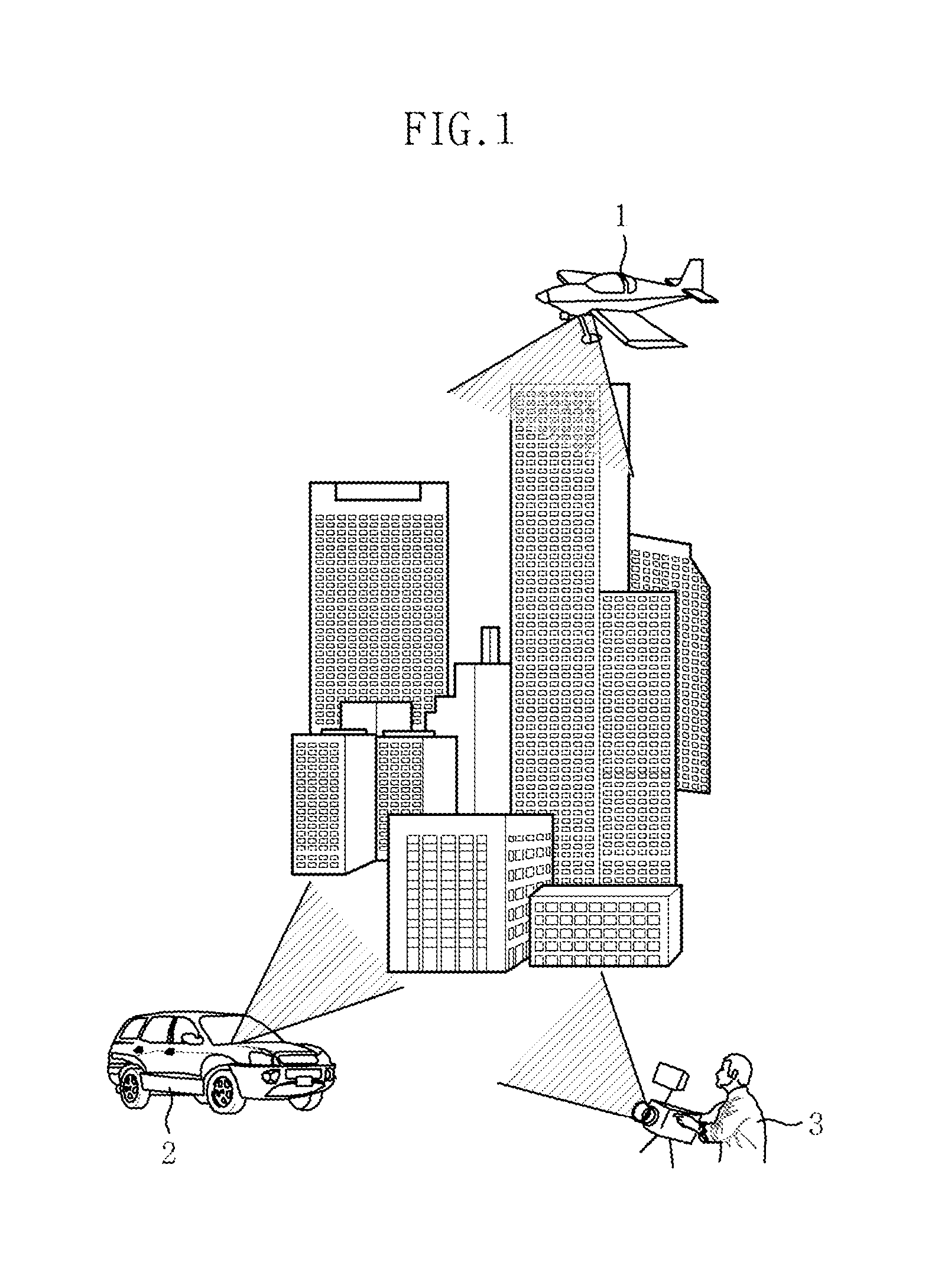

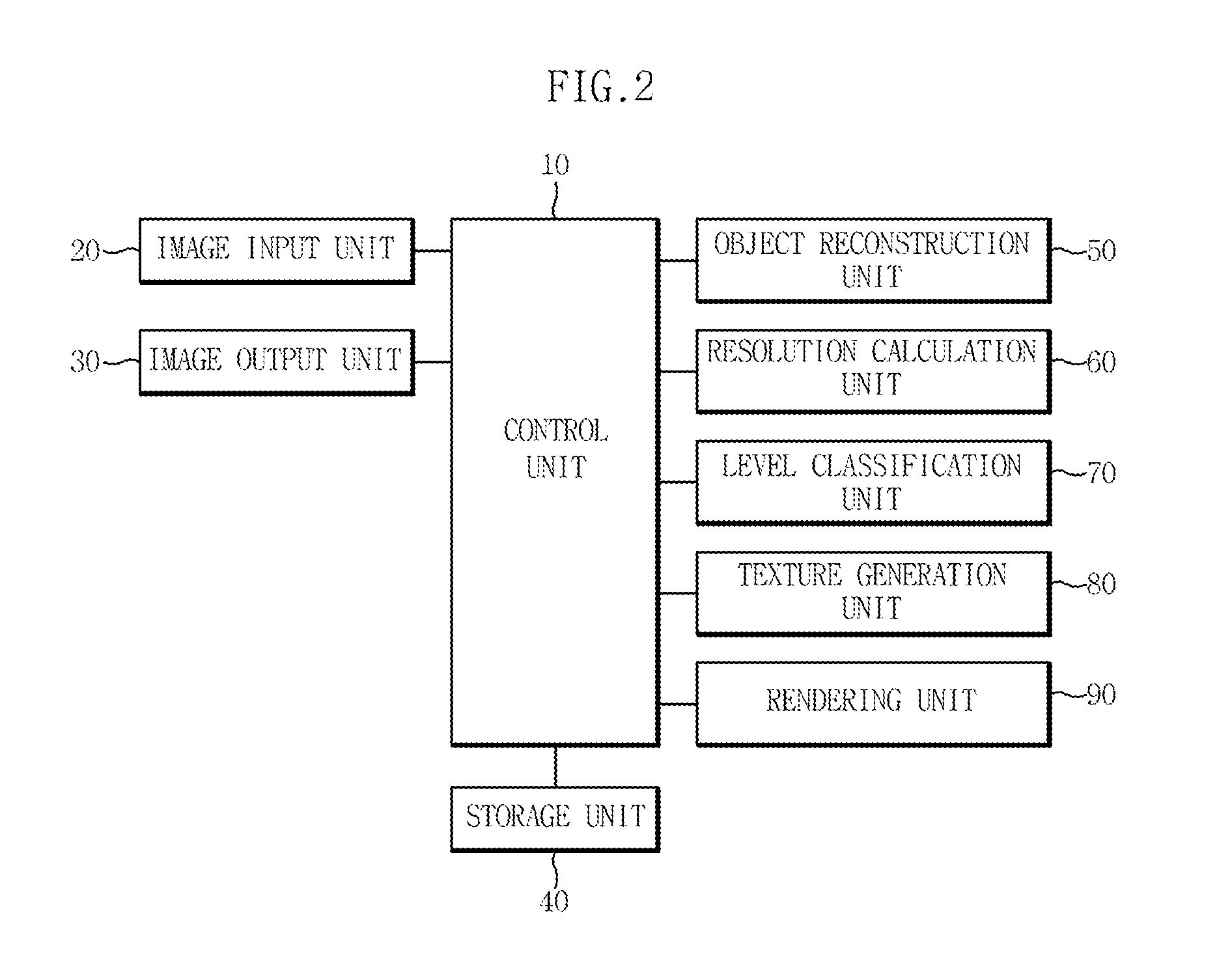

Apparatus and method for generating texture of three-dimensional reconstructed object depending on resolution level of two-dimensional image

Inactive | US20120162215A1 | Minimizes problem | Details involving processing steps | Texturing/coloring | Image resolution | 3D reconstruction

Owner:ELECTRONICS & TELECOMM RES INST

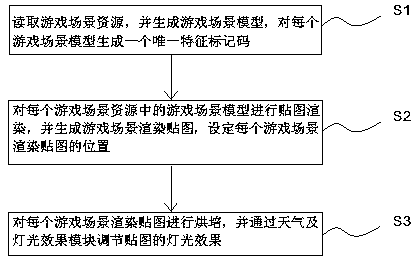

A game scene rendering method

Pending | CN108986194A | Improve lighting effects | Improve realism | Texturing/coloring | 3D-image rendering | Texture rendering | Computer graphics (images)

The invention discloses a game scene rendering method. The rendering method comprises the following steps: reading game scene resources, generating game scene models, and generating a unique characteristic mark code for each game scene model; performing texture rendering on the game scene model in each game scene resource, generating a game scene rendering texture, and setting the position of each game scene rendering texture; and baking each game scene rendering map and adjusting the lighting effect of the map through the weather and lighting effect module. The method divides and renders the map modules of the game scene and bakes the map of each scene, which enhances the lighting and weather effects of the game scene and the realism of the game experience.

Owner:HEFEI AIWAN CARTOON CO LTD
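The first two steps above (assigning each scene model a unique mark code and setting a position for each rendered texture) could look roughly like this. The abstract does not say how codes or positions are derived, so the truncated SHA-256 digest and the fixed-size grid layout are purely illustrative assumptions.

```python
import hashlib


def mark_code(resource_name, model_index):
    """Derive a short code from the resource name and model index.

    The hashing scheme is an assumption; the source only requires uniqueness.
    """
    key = f"{resource_name}#{model_index}".encode("utf-8")
    return hashlib.sha256(key).hexdigest()[:16]


def texture_positions(codes, tile_size, columns):
    """Place each model's rendered texture on a grid, row by row."""
    return {
        code: ((i % columns) * tile_size, (i // columns) * tile_size)
        for i, code in enumerate(codes)
    }
```

Keying the position table by mark code lets a later baking pass find the texture that belongs to a given model.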

© 2025 PatSnap. All rights reserved.