2993 results about "Graphics processing unit" patented technology

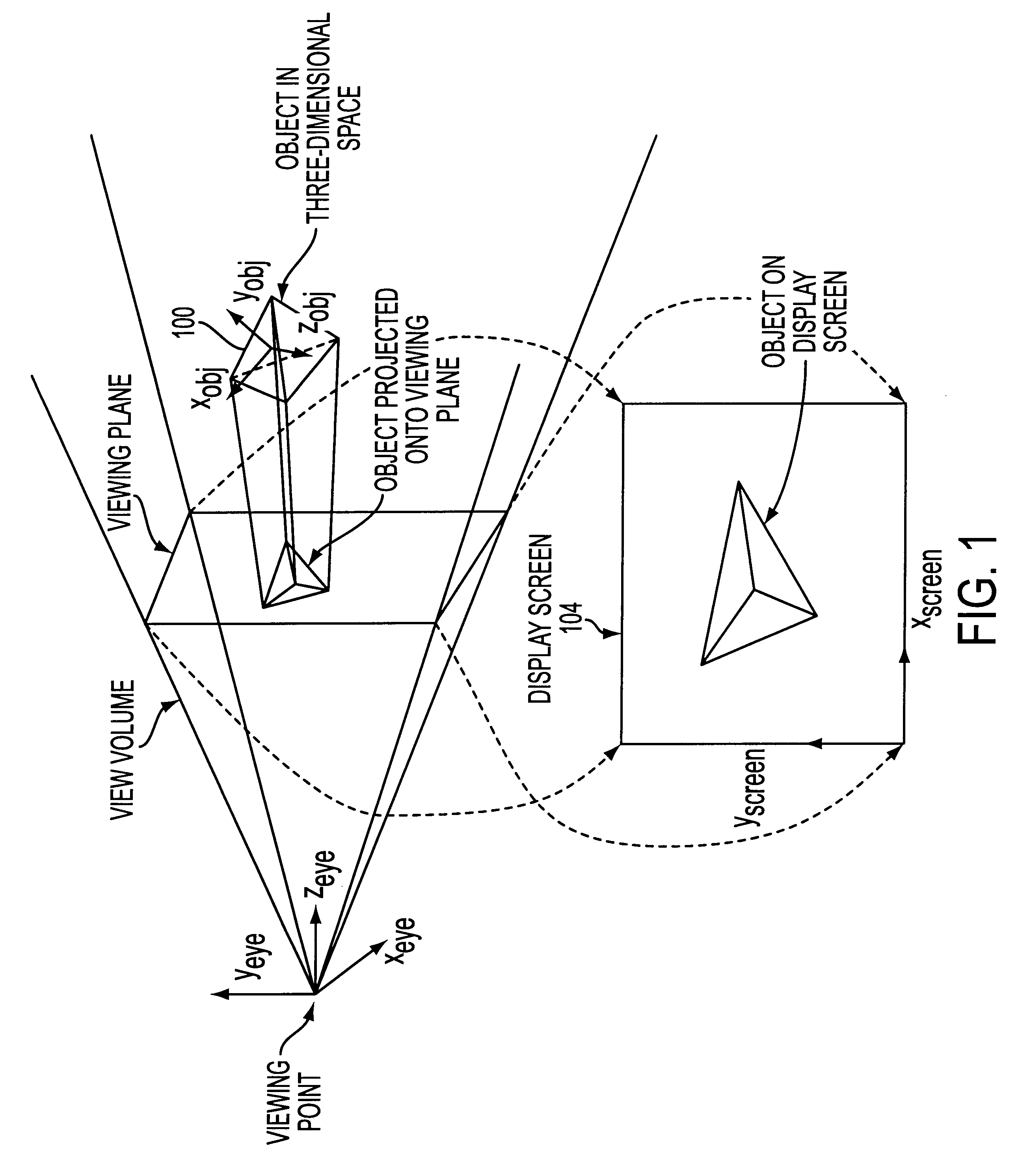

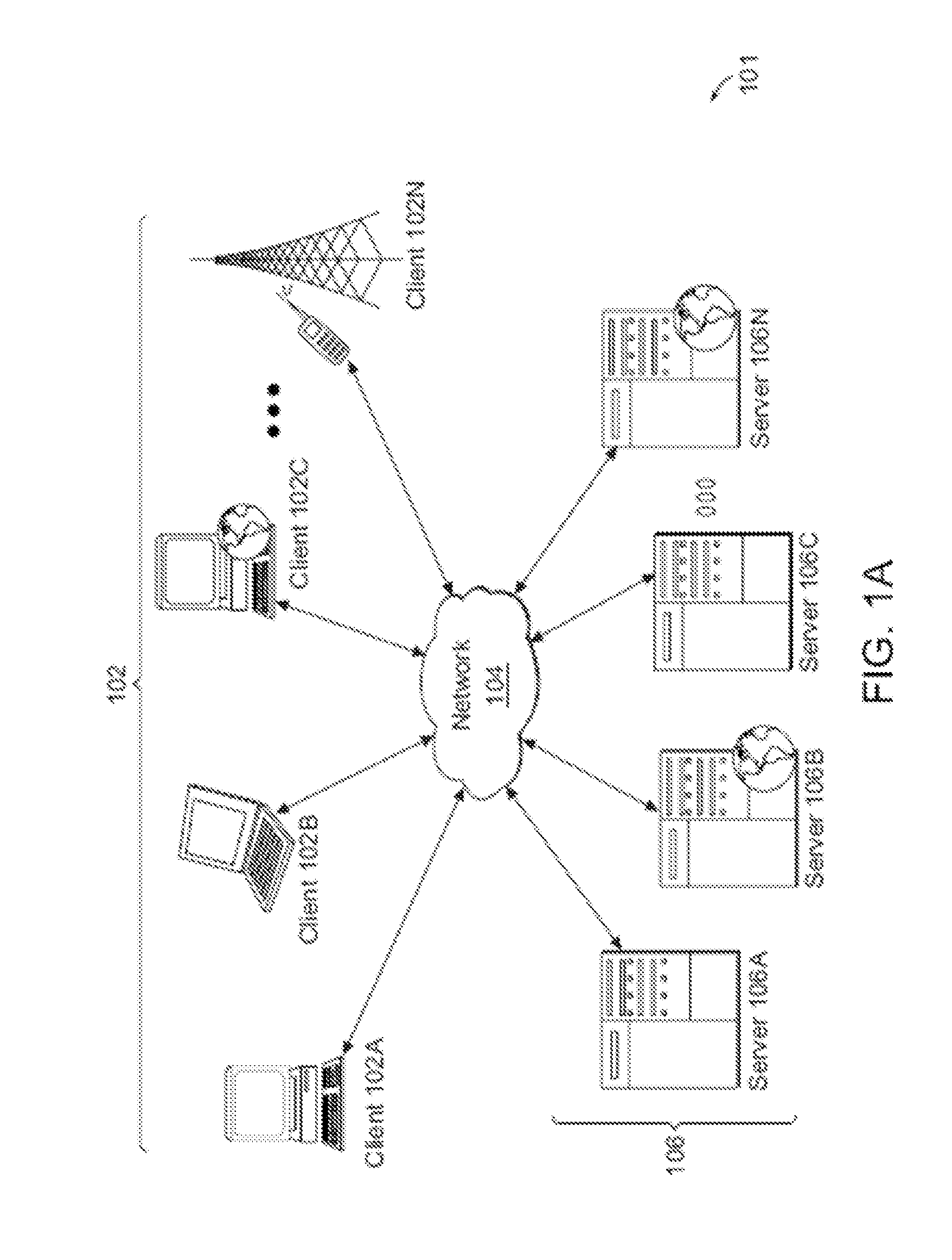

A graphics processing unit (GPU) is a specialized electronic circuit designed to rapidly manipulate and alter memory to accelerate the creation of images in a frame buffer intended for output to a display device. GPUs are used in embedded systems, mobile phones, personal computers, workstations, and game consoles. Modern GPUs are very efficient at manipulating computer graphics and image processing. Their highly parallel structure makes them more efficient than general-purpose central processing units (CPUs) for algorithms that process large blocks of data in parallel. In a personal computer, a GPU can be present on a video card or embedded on the motherboard. In certain CPUs, they are embedded on the CPU die.

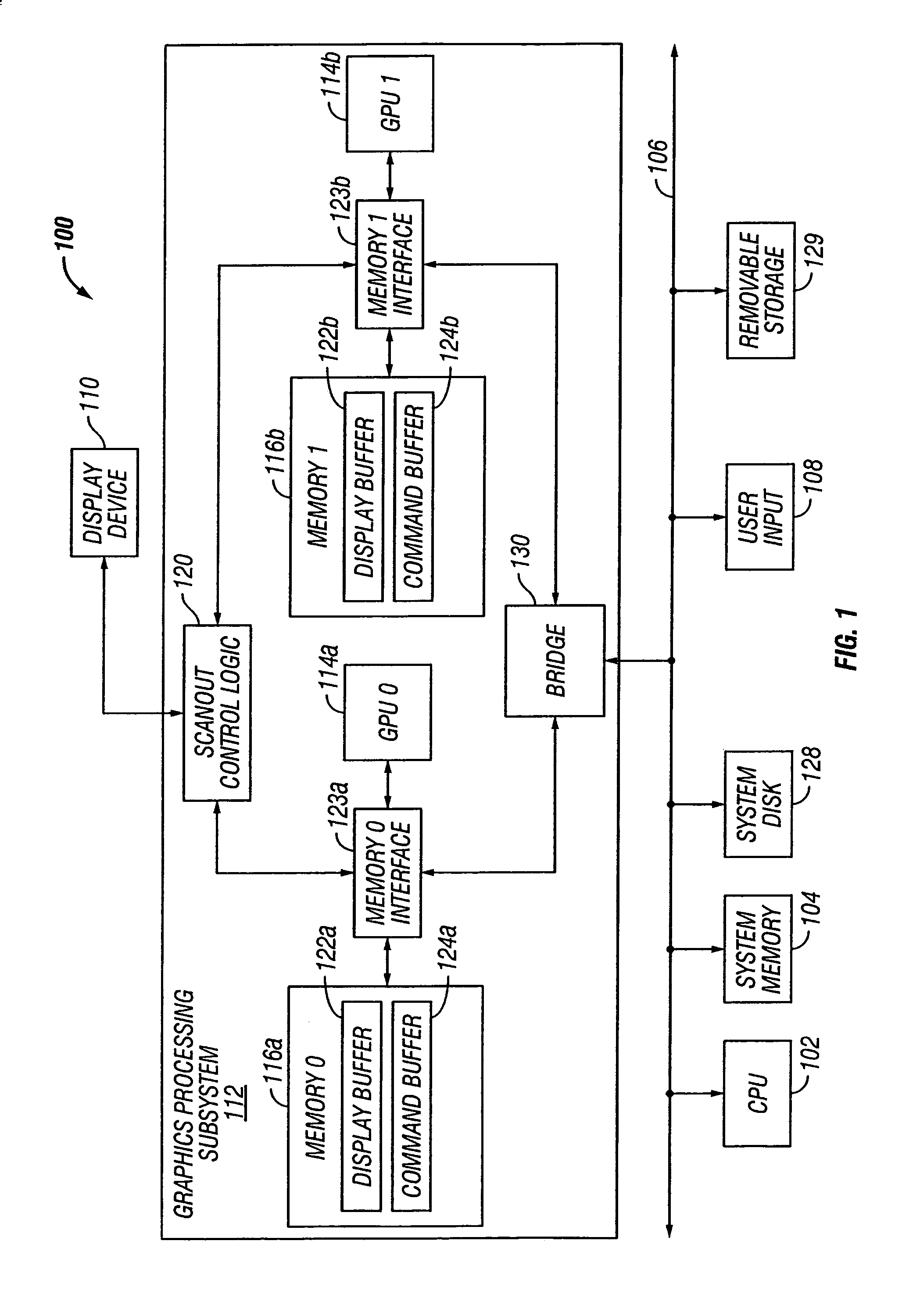

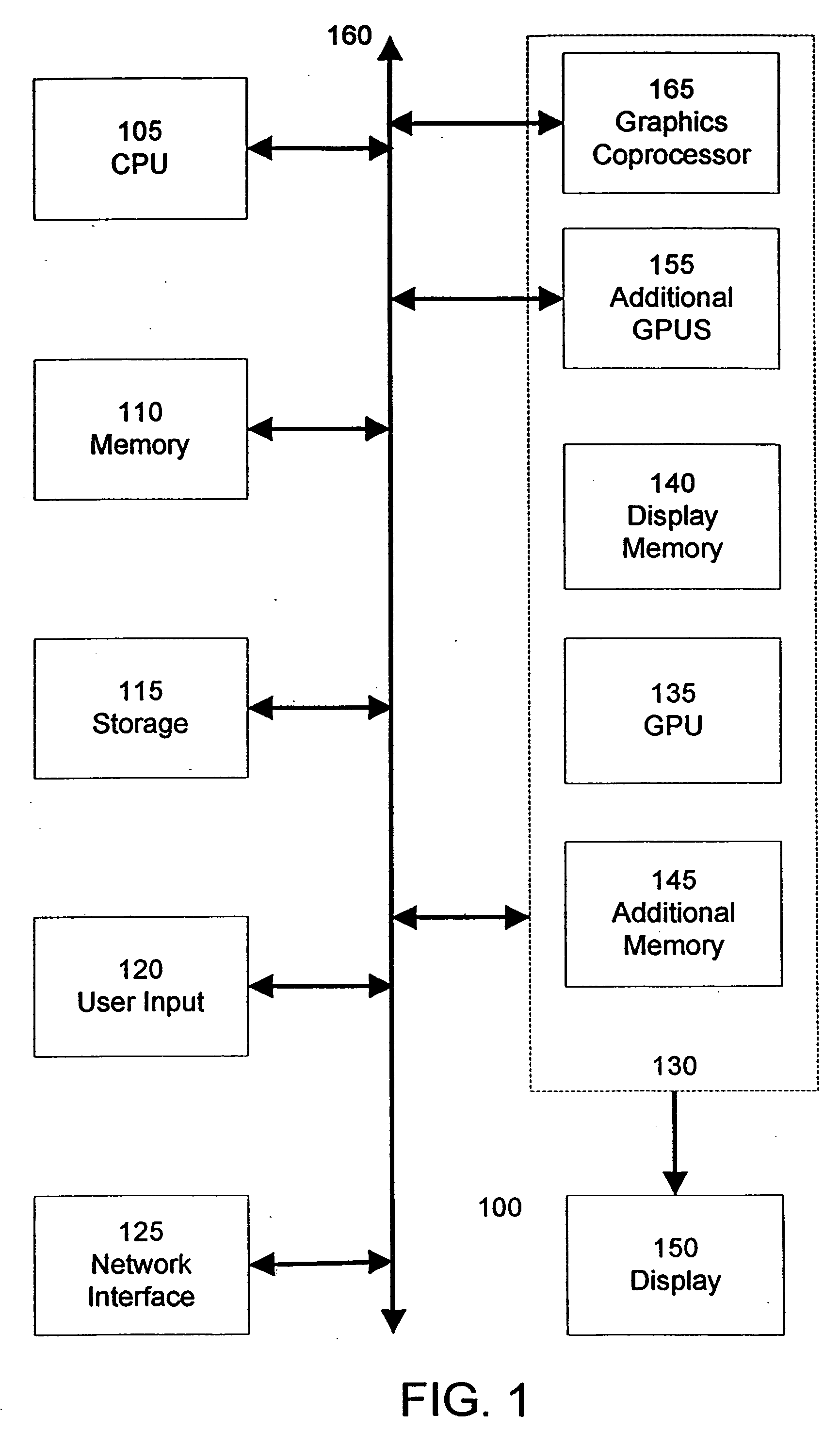

Systems and methods for virtualizing graphics subsystems

Active | US20060146057A1 | Program control using stored programs | Processor architectures/configuration | Virtualization | Operational system

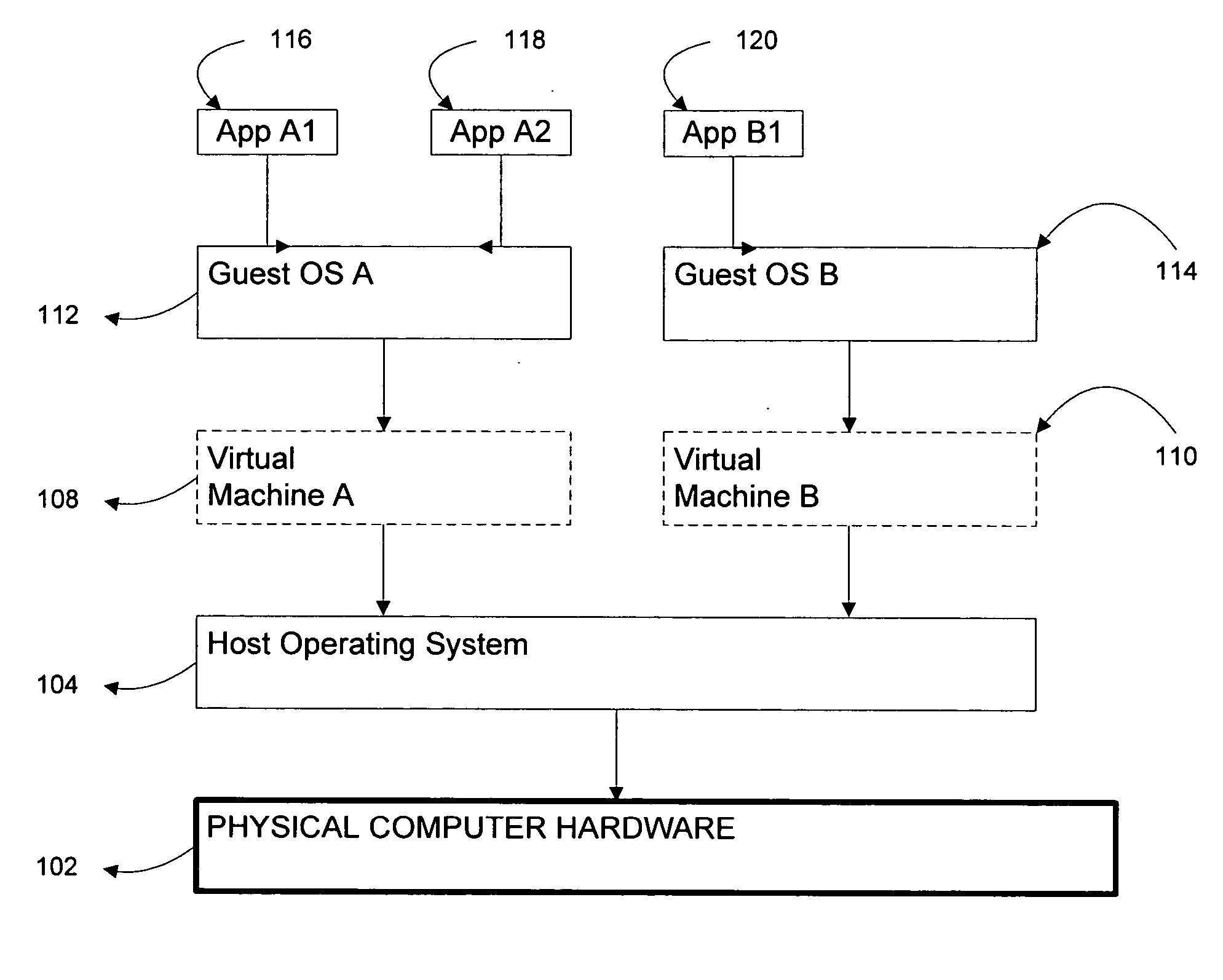

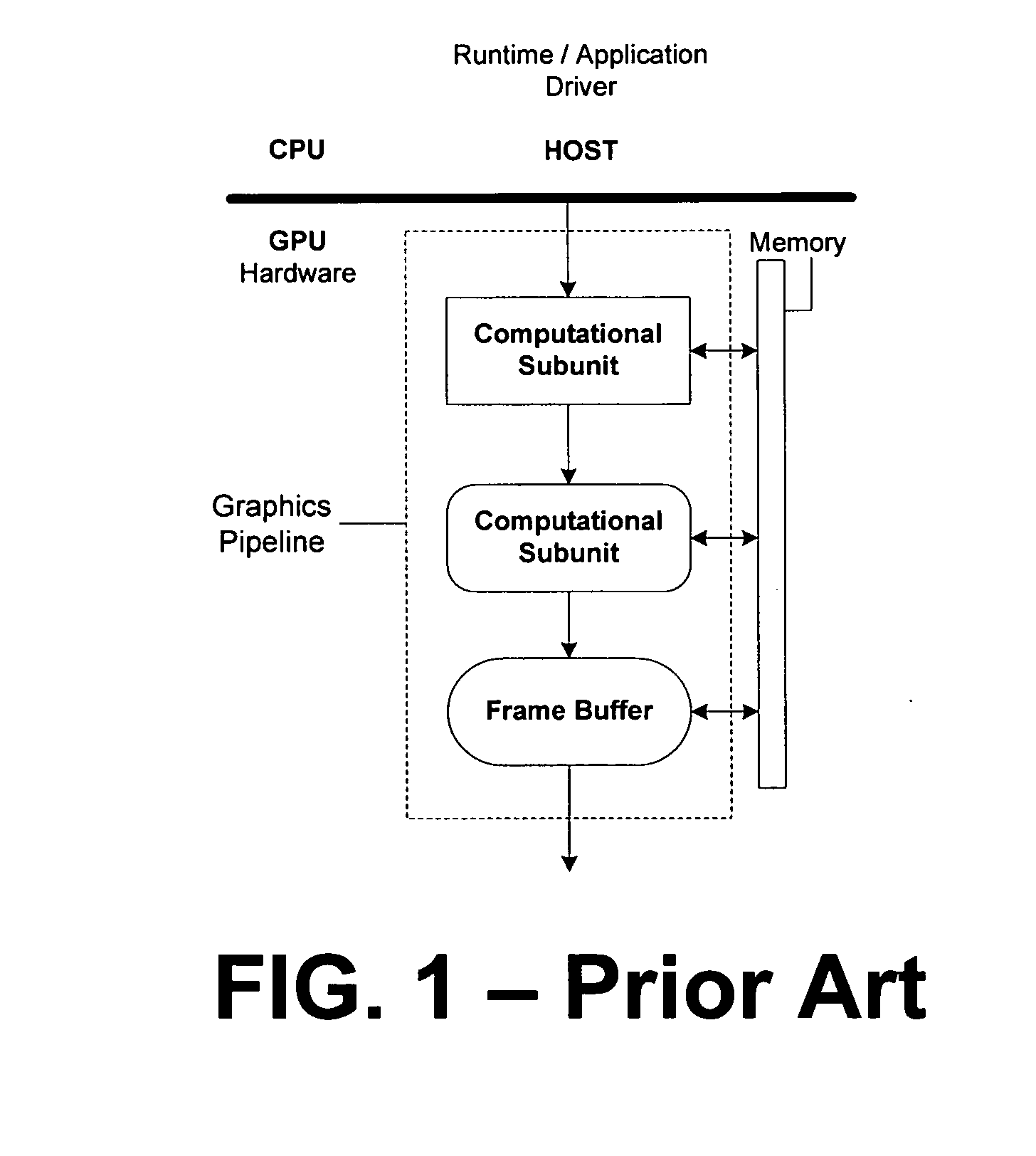

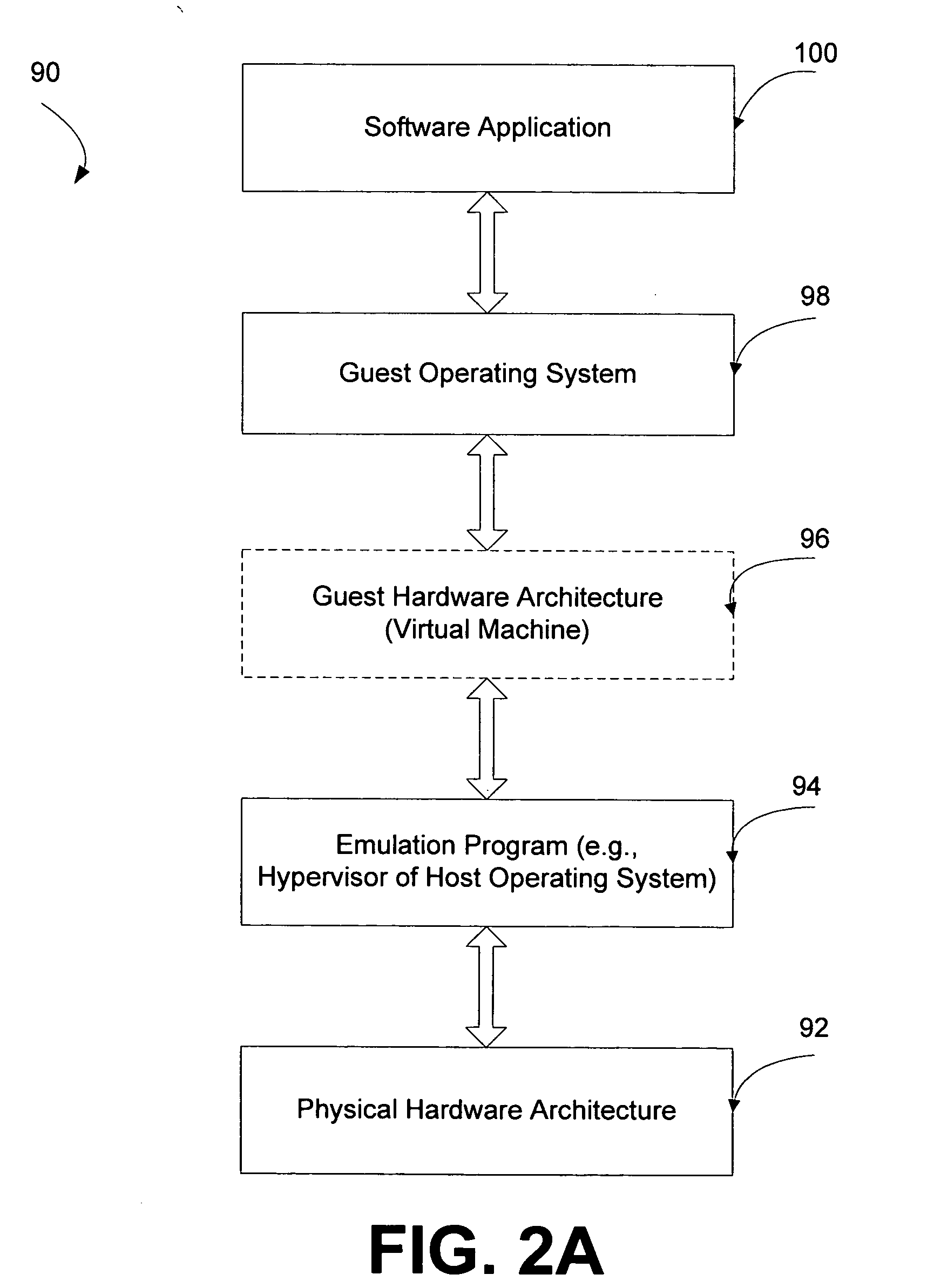

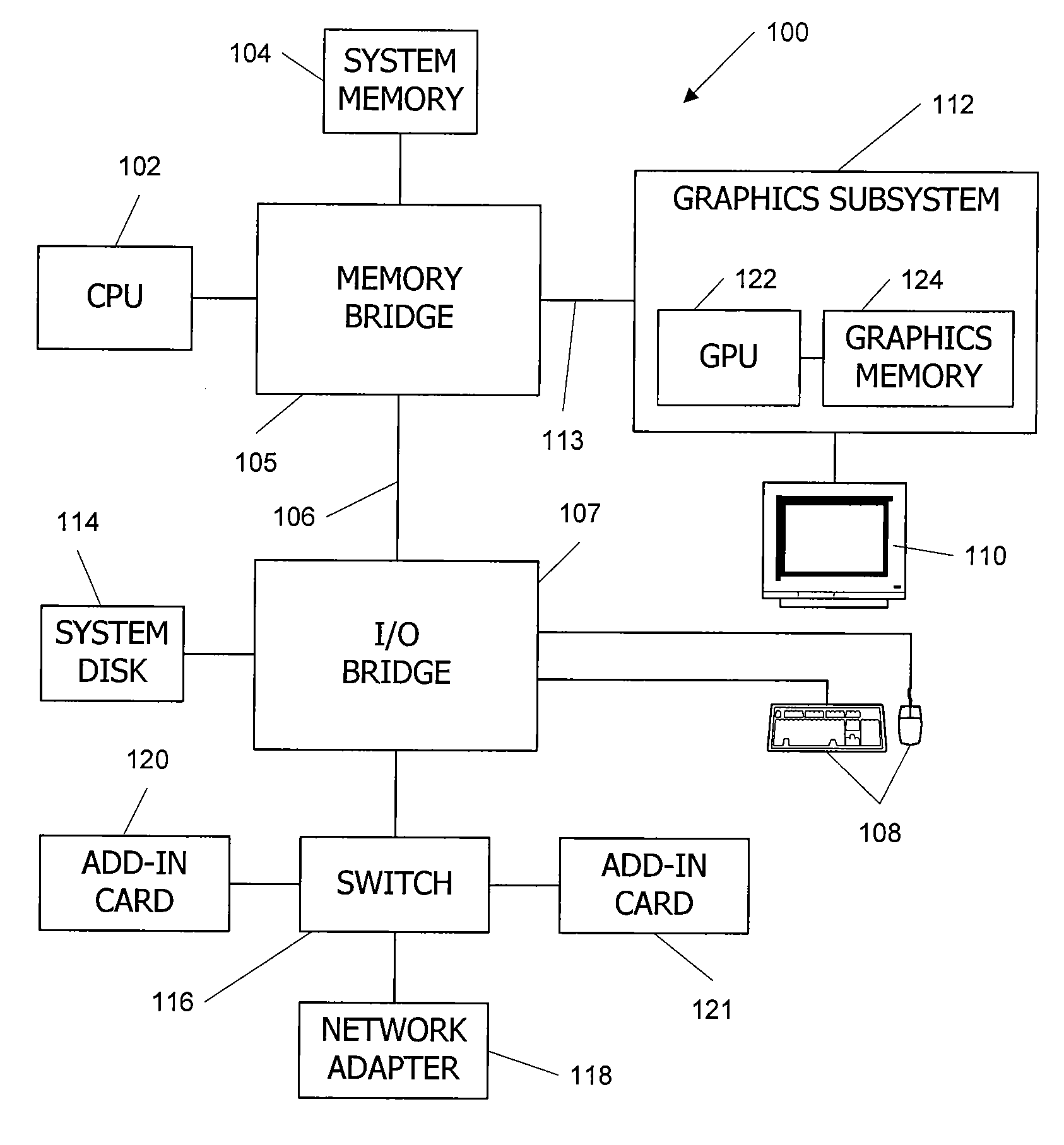

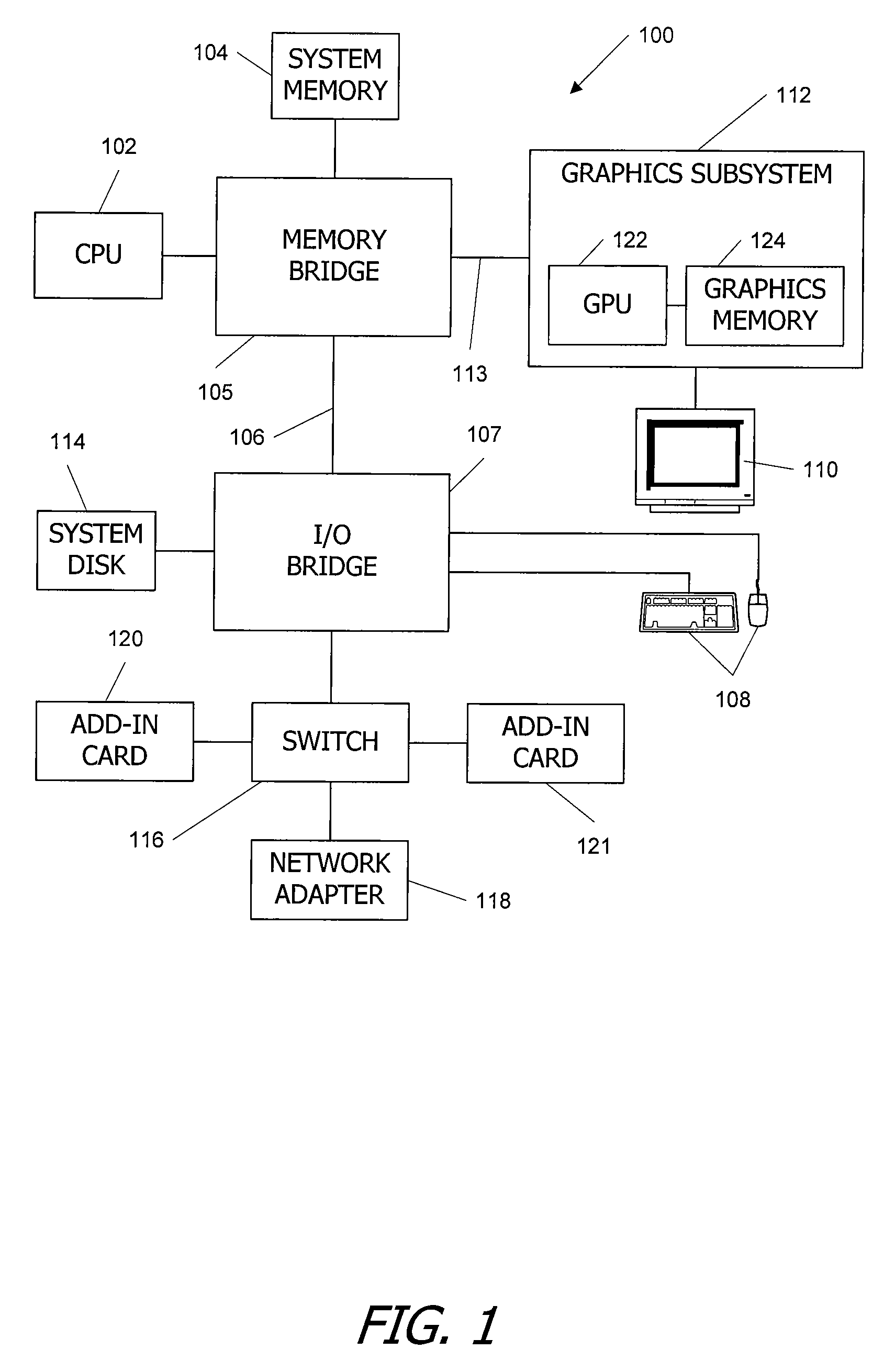

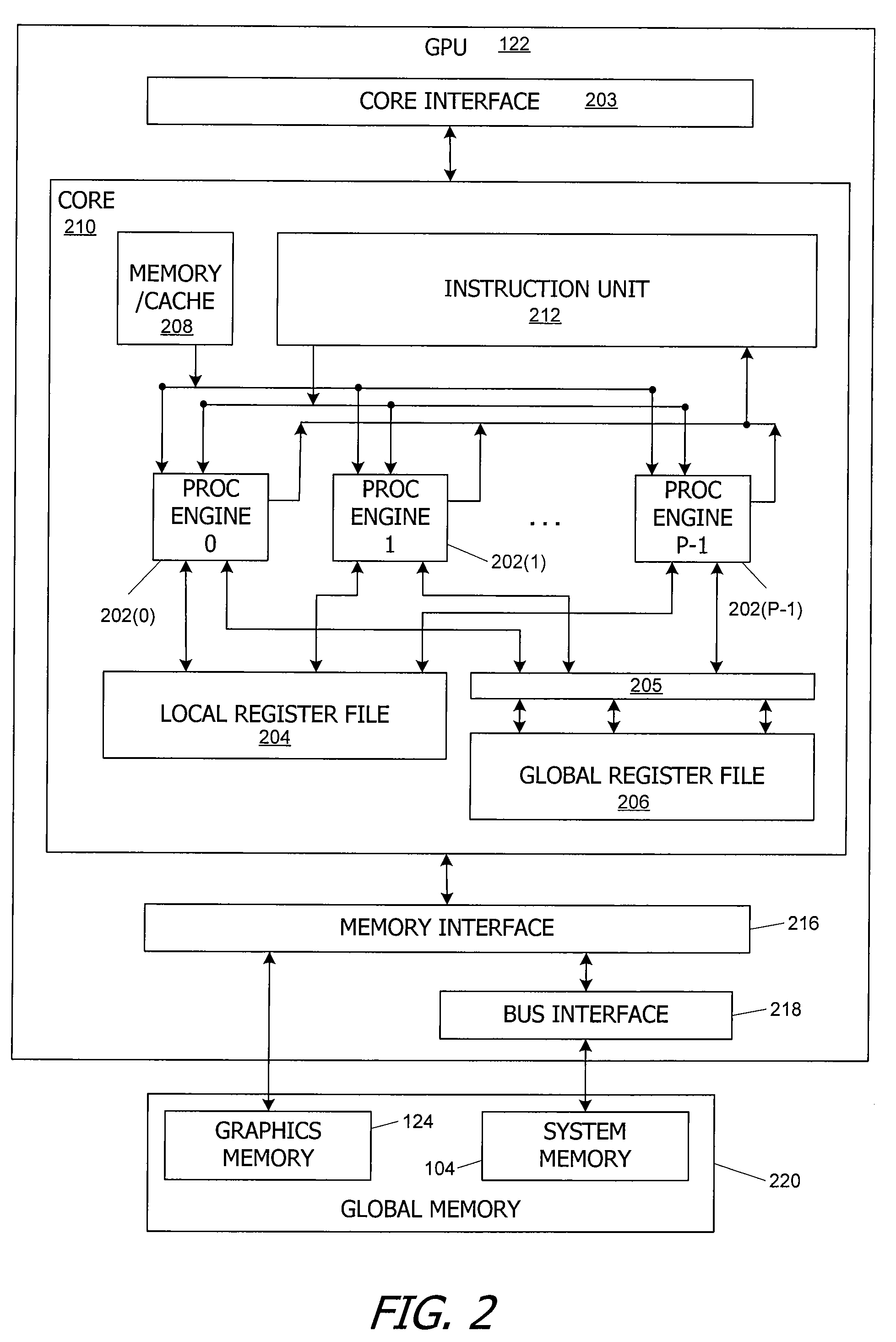

Systems and methods for applying virtual machines to graphics hardware are provided. In various embodiments of the invention, while supervisory code runs on the CPU, the actual graphics work items are run directly on the graphics hardware and the supervisory code is structured as a graphics virtual machine monitor. Application compatibility is retained using virtual machine monitor (VMM) technology to run a first operating system (OS), such as an original OS version, simultaneously with a second OS, such as a new version OS, in separate virtual machines (VMs). VMM technology applied to host processors is extended to graphics processing units (GPUs) to allow hardware access to graphics accelerators, ensuring that legacy applications operate at full performance. The invention also provides methods to make the user experience cosmetically seamless while running multiple applications in different VMs. In other aspects of the invention, by employing VMM technology, the virtualized graphics architecture of the invention is extended to provide trusted services and content protection.

Owner:MICROSOFT TECH LICENSING LLC

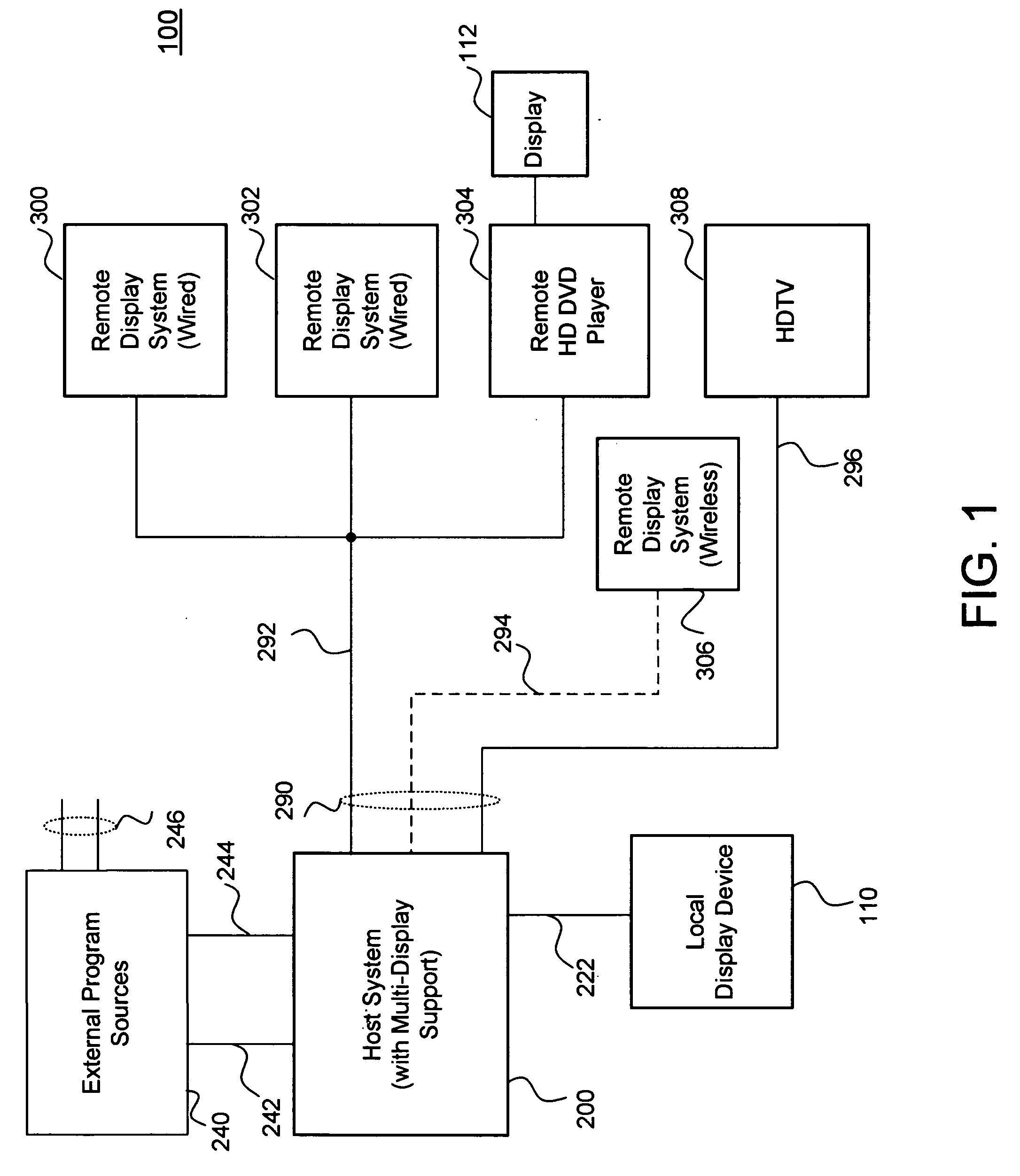

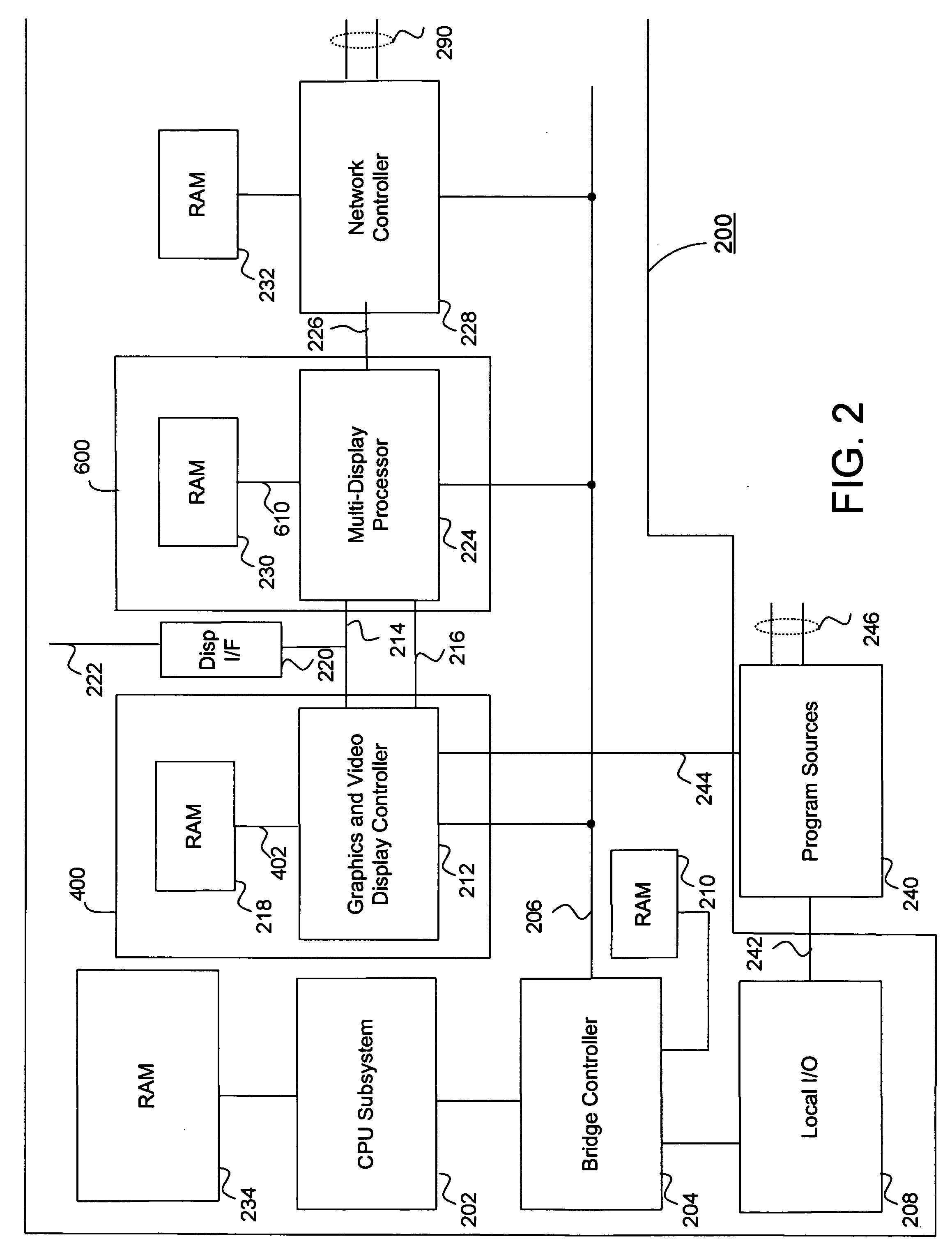

Multiple remote display system

Inactive | US20060282855A1 | Lower latency | Good frame rate | Television system details | Color television details | Graphics | Internet traffic

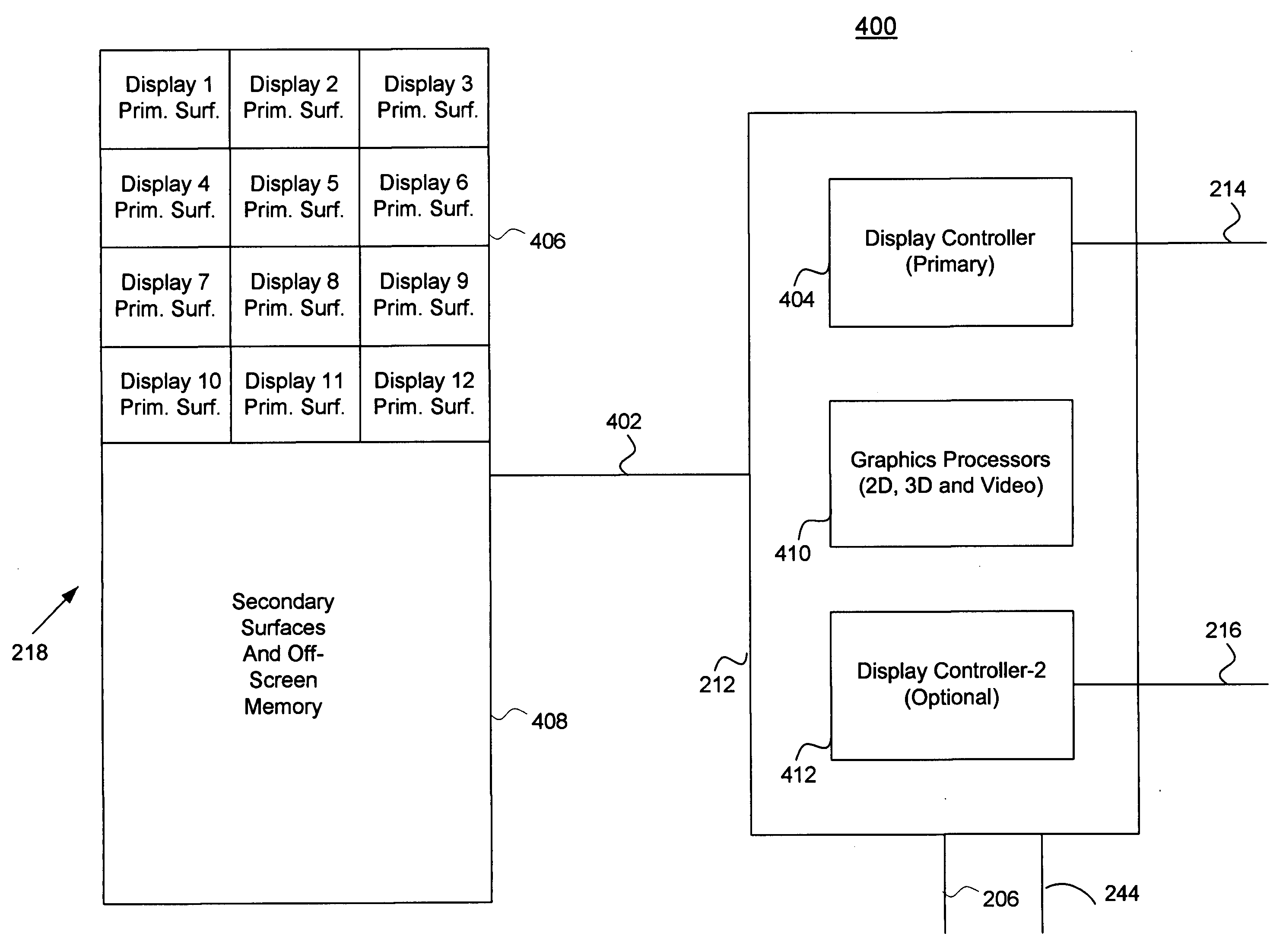

A multi-display system includes a host system that supports both graphics and video based frames for multiple remote displays, multiple users or a combination of the two. For each display and for each frame, a multi-display processor responsively manages each necessary aspect of the remote display frame. The necessary portions of the remote display frame are further processed, encoded and, where necessary, transmitted over a network to the remote display for each user. In some embodiments, the host system manages a remote desktop protocol and can still transmit encoded video or encoded frame information, where the encoded video may be generated within the host system or provided from an external program source. Embodiments integrate the multi-display processor with either the video decoder unit, graphics processing unit, network controller, main memory controller, or any combination thereof. The encoding process is optimized for network traffic, and attention is paid to ensuring that all users have low-latency interactive capabilities.

Owner:III HLDG 1

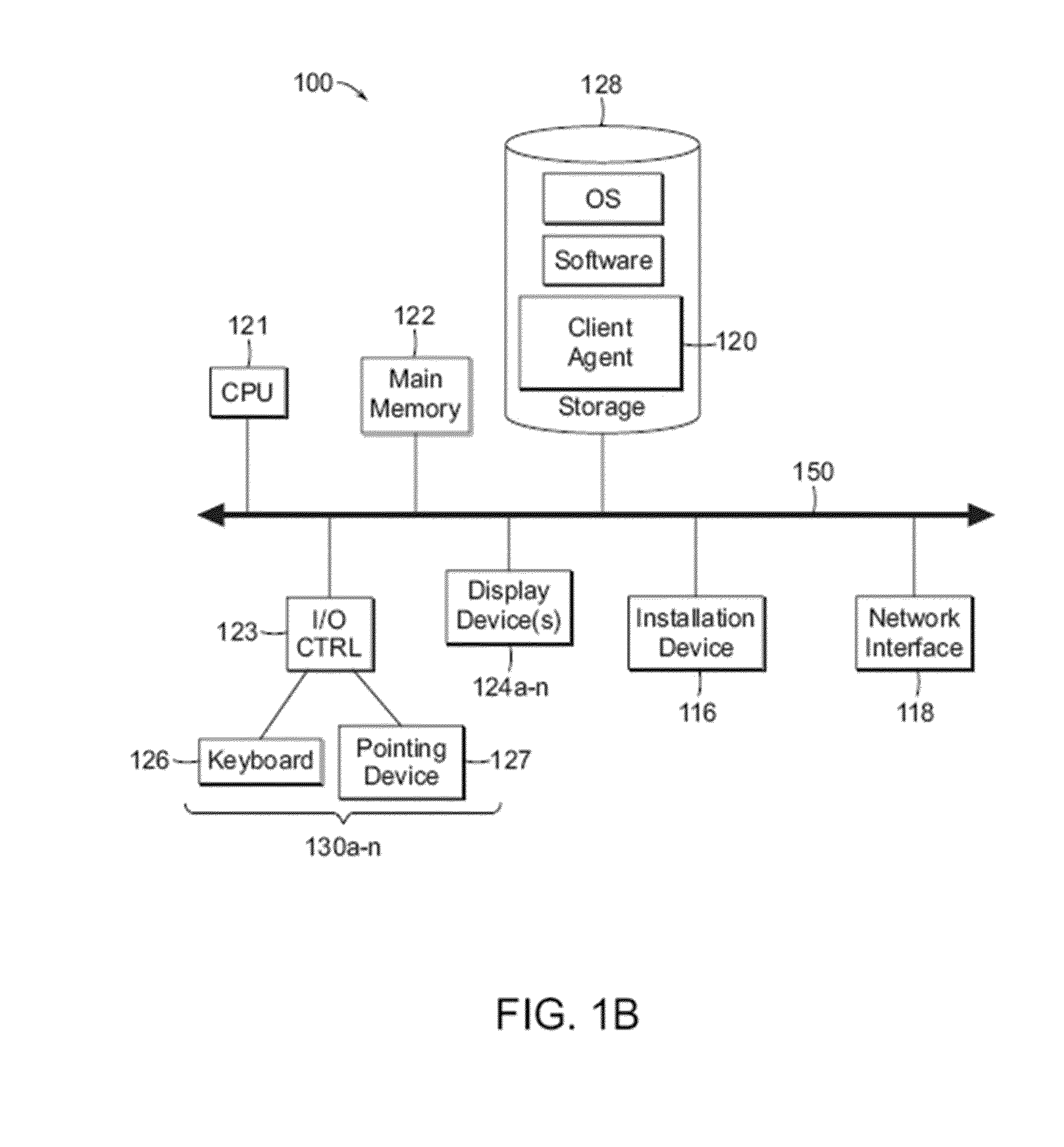

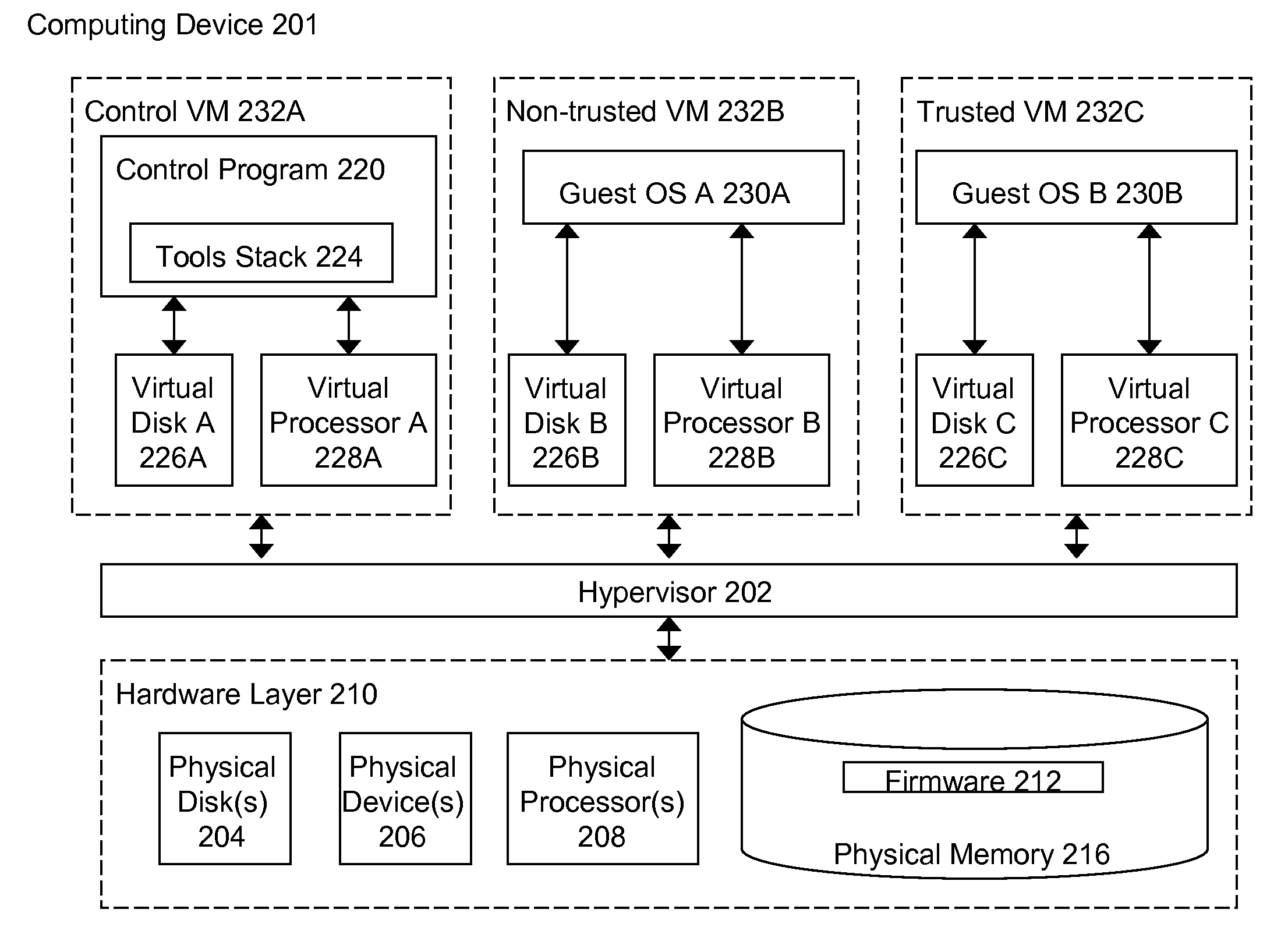

Methods and systems for securing sensitive information using a hypervisor-trusted client

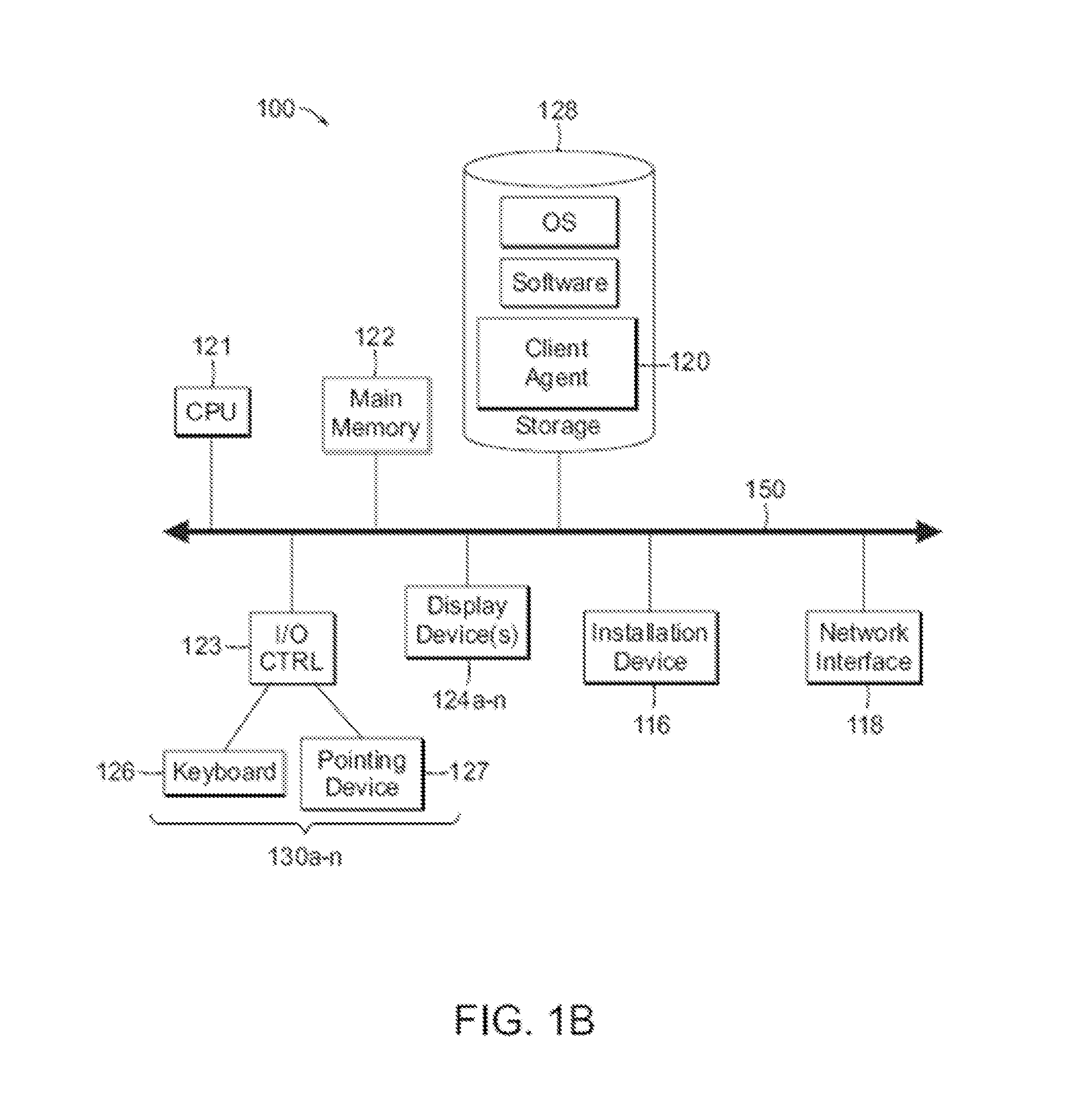

Active | US20110141124A1 | Internal/peripheral component protection | Digital data authentication | Client agent | Graphics

The methods and systems described herein provide for securing sensitive information using a hypervisor-trusted client, in a computing device executing a hypervisor hosting a control virtual machine and a non-trusted virtual machine. A user of a non-trusted virtual machine requests to establish a connection to a remote computing device. Responsive to the request, a control virtual machine launches a client agent. A graphics manager executed by the processor of the computing device assigns a secure section of a memory of a graphics processing unit of the computing device to the client agent. The graphics manager renders graphical data generated by the client agent to the secure section of the graphics processing unit memory.

Owner:CITRIX SYST INC

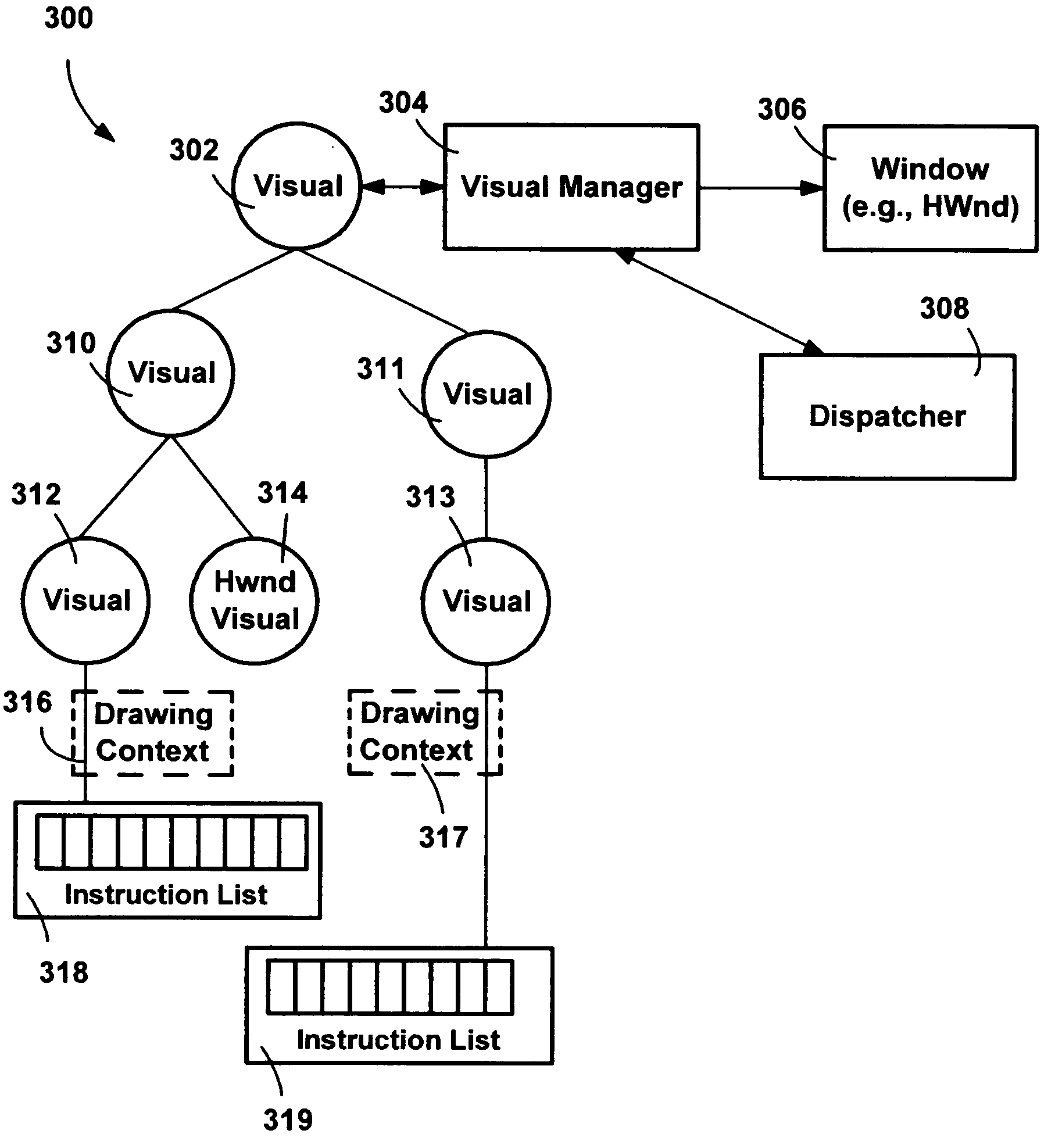

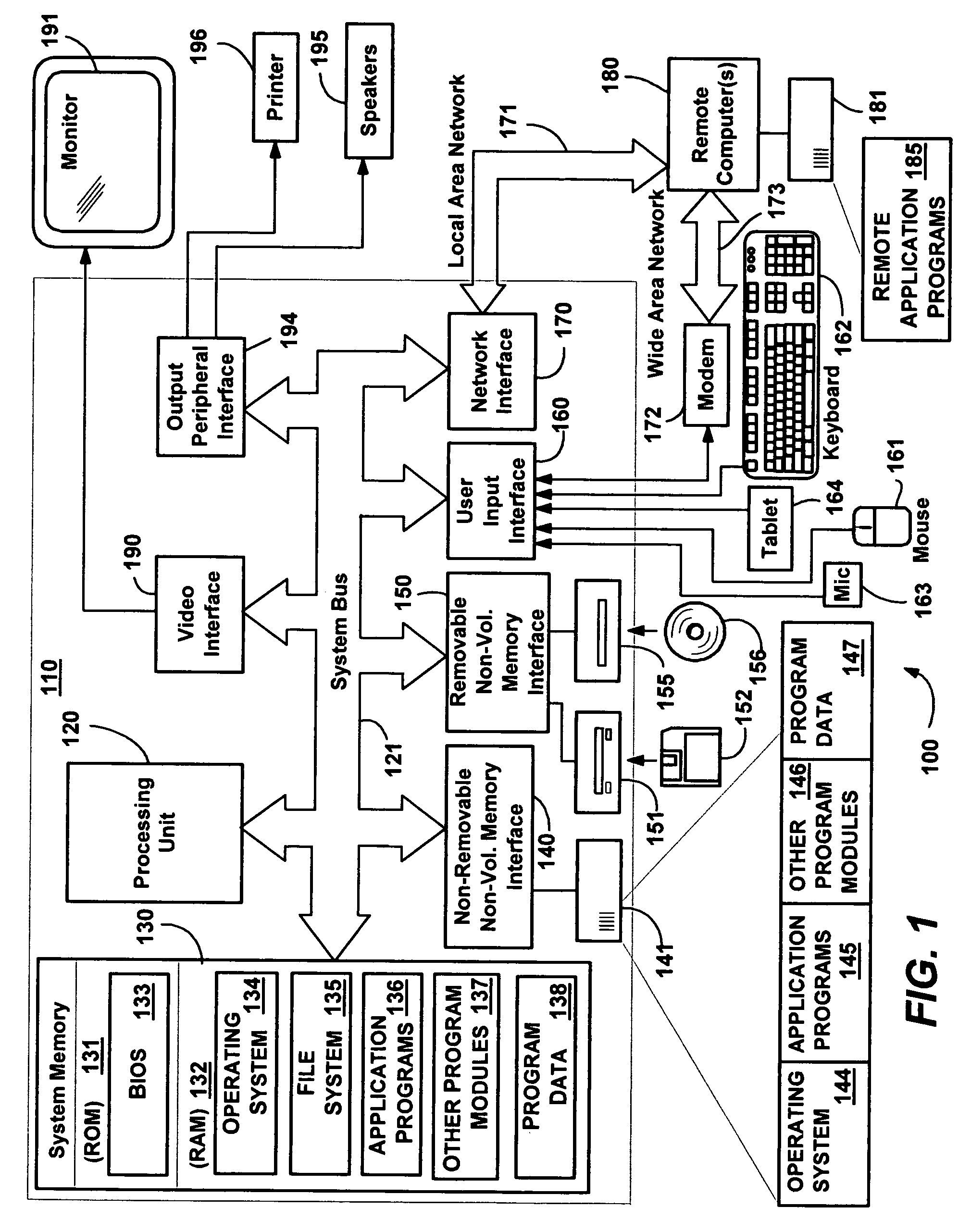

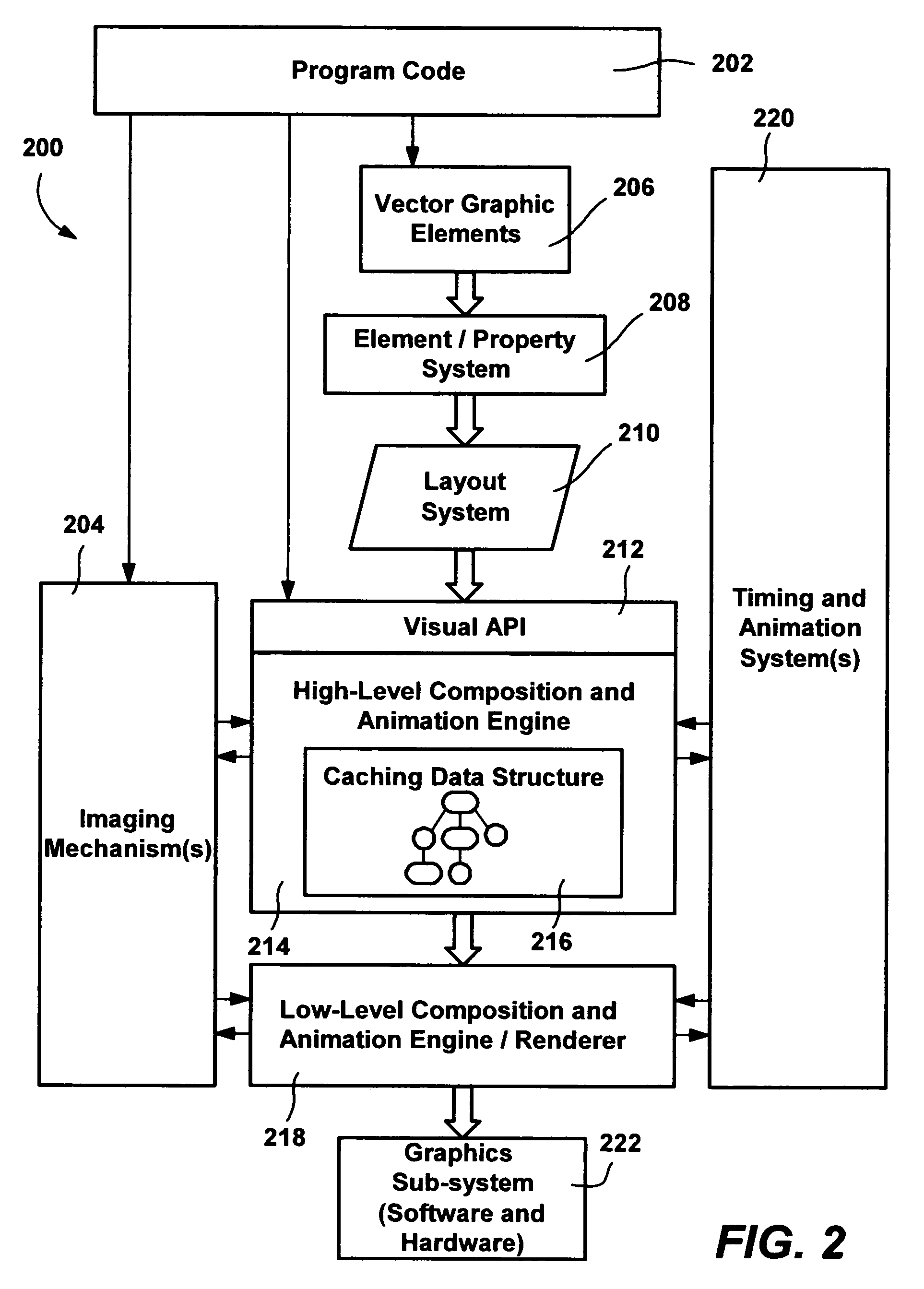

Media Integration Layer

Inactive | US20050140694A1 | Effect is complex | Not adversely impact normal application performance | Cathode-ray tube indicators | Animation | Media type | Media integration

A media integration layer including an application programming interface (API) and an object model allows program code developers to interface in a consistent manner with a scene graph data structure in order to output graphics. Via the interfaces, program code adds child visuals to other visuals to build up a hierarchical scene graph, writes Instruction Lists such as geometry data, image data, animation data and other data for output, and may specify transform, clipping and opacity properties on visuals. The media integration layer and API enable programmers to accomplish composition effects within their applications in a straightforward manner, while leveraging the graphics processing unit in a manner that does not adversely impact normal application performance. A multiple-level system includes the ability to combine different media types (such as 2D, 3D, Video, Audio, text and imaging) and animate them smoothly and seamlessly.

Owner:MICROSOFT TECH LICENSING LLC

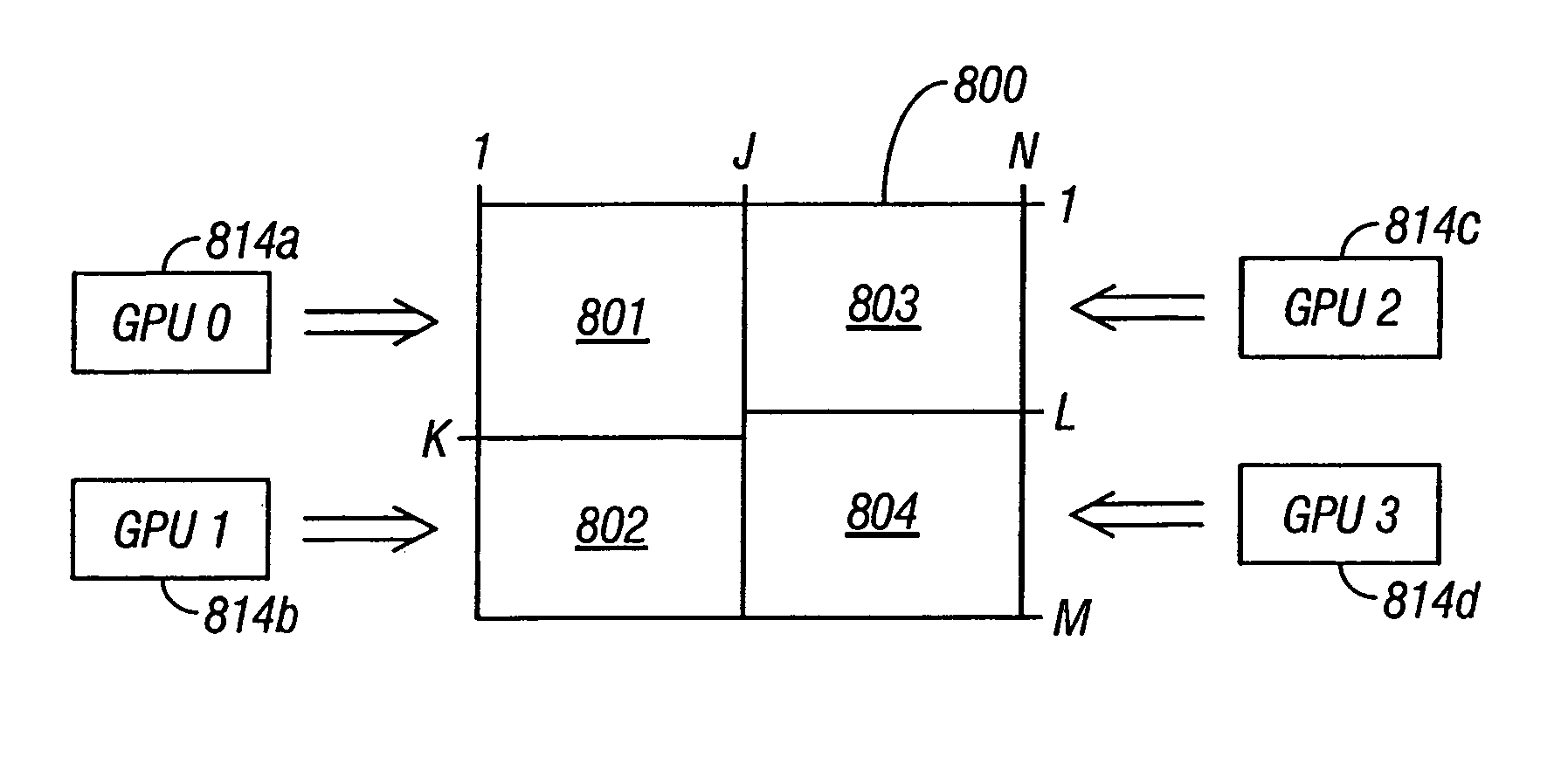

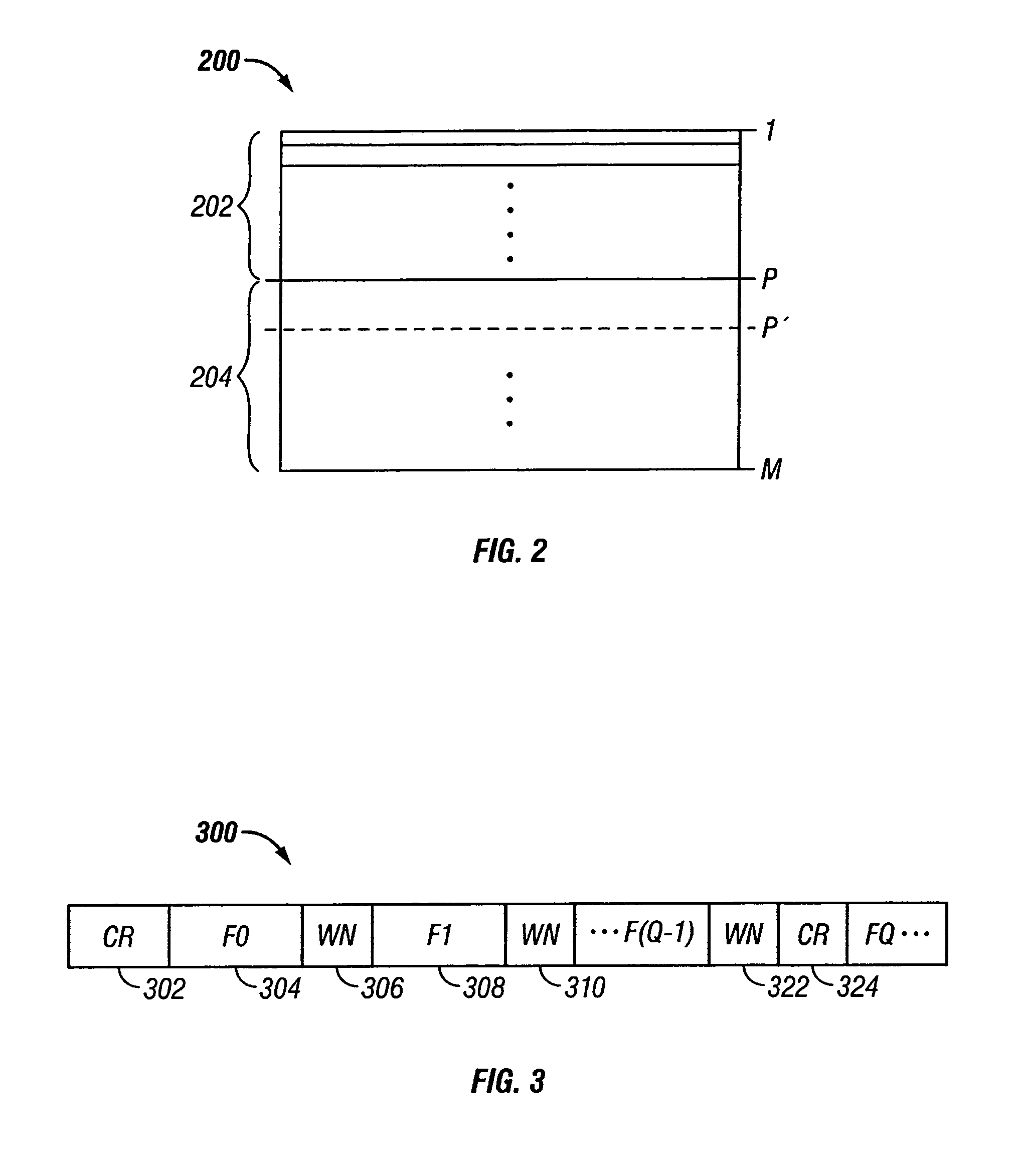

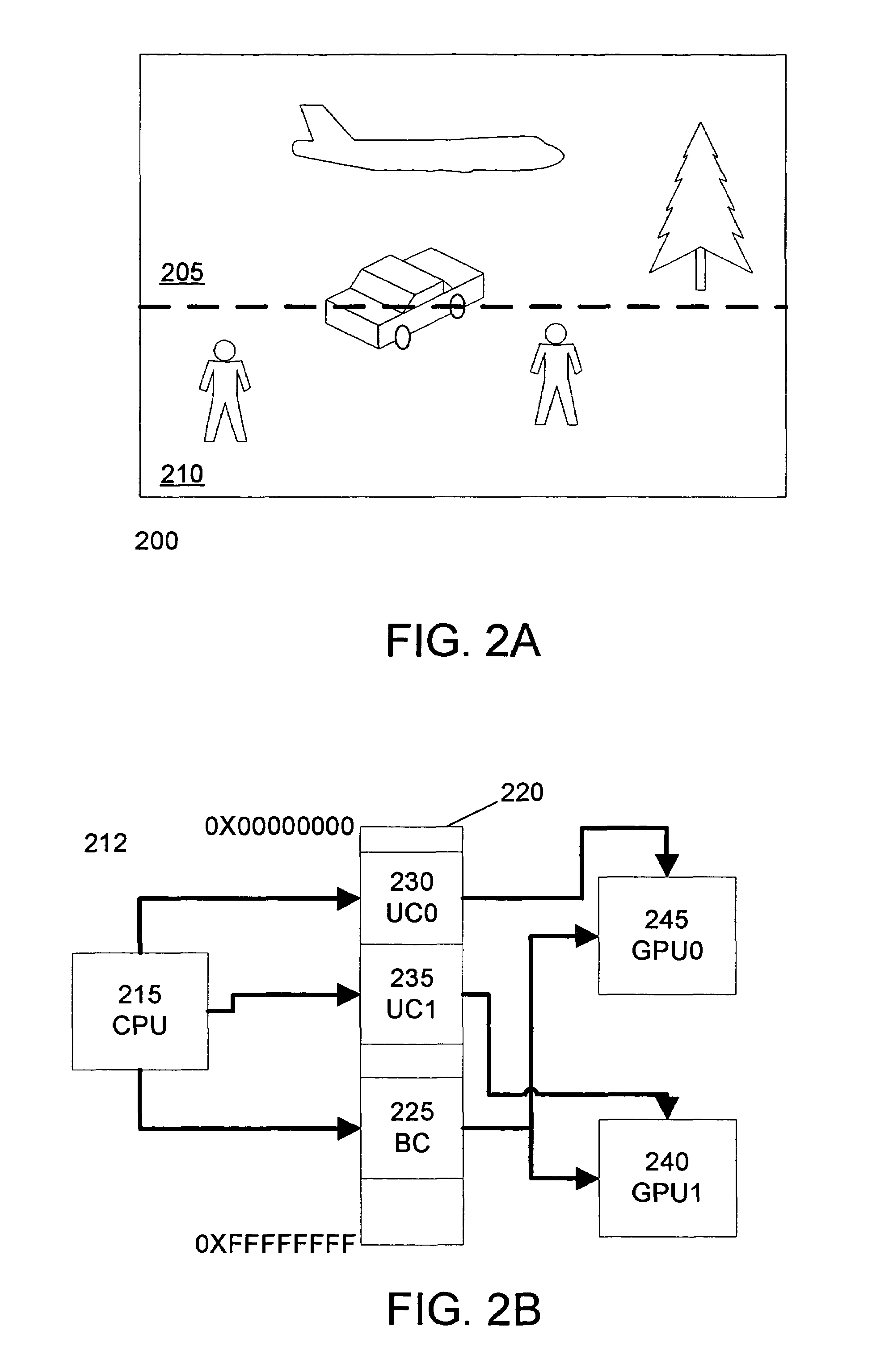

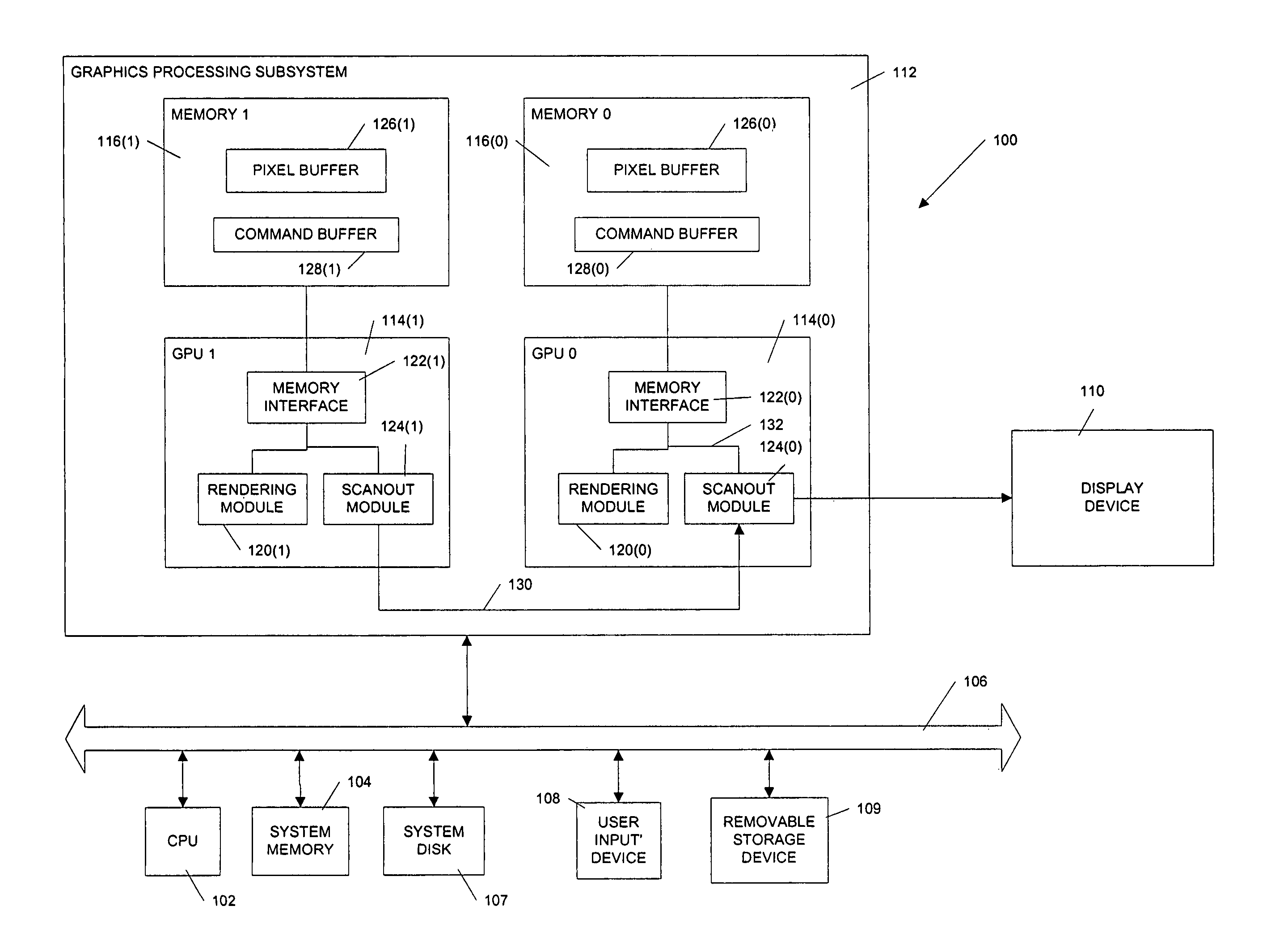

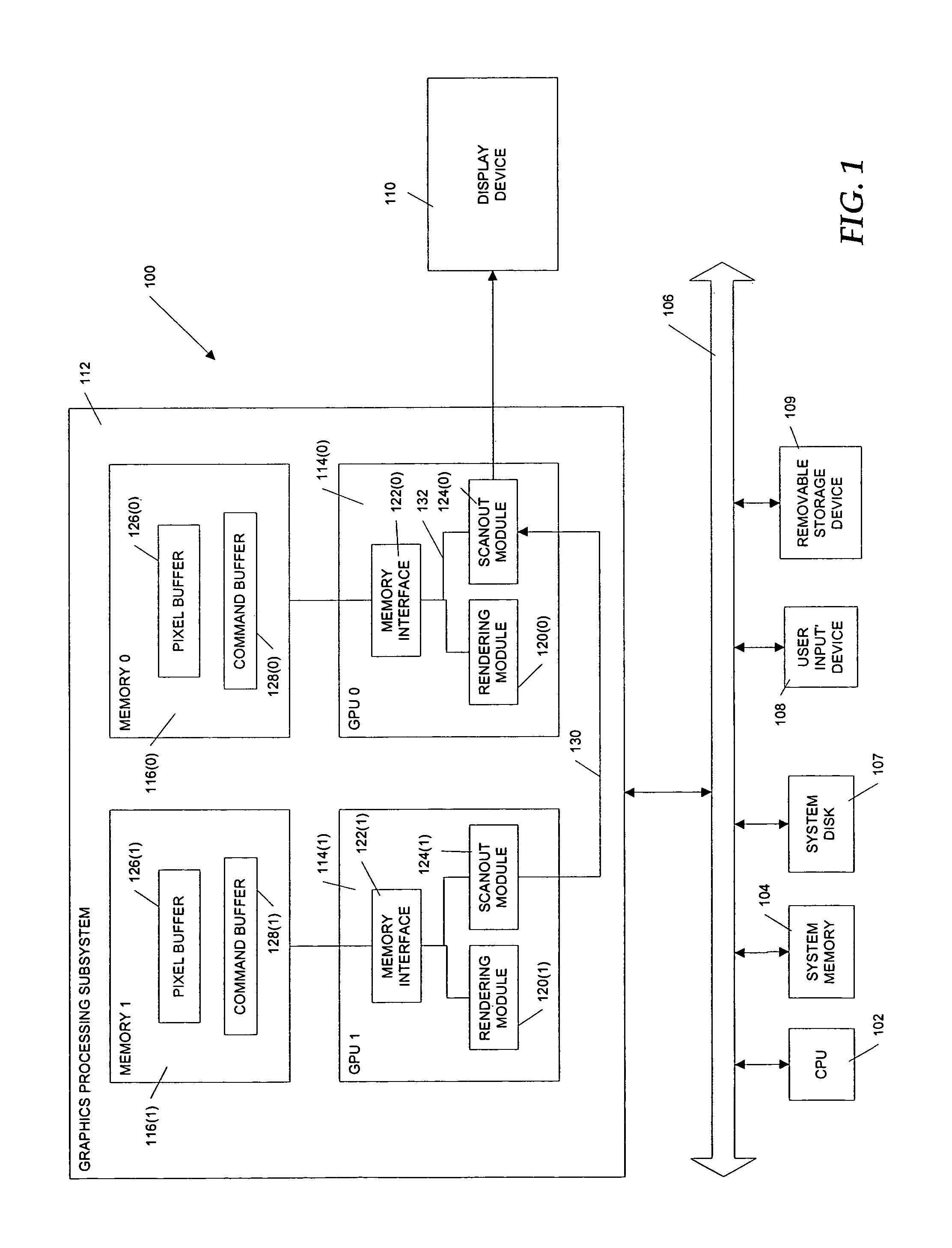

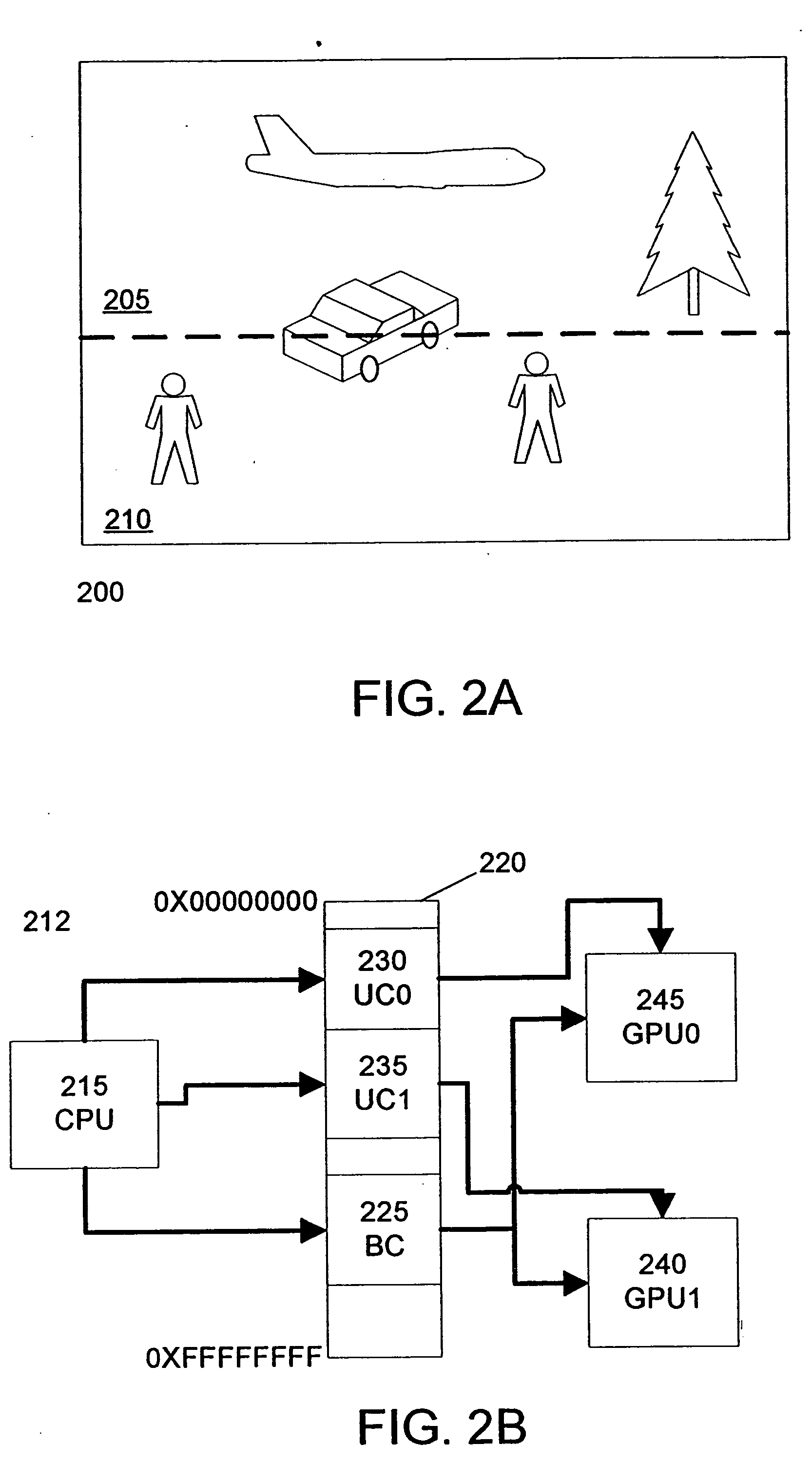

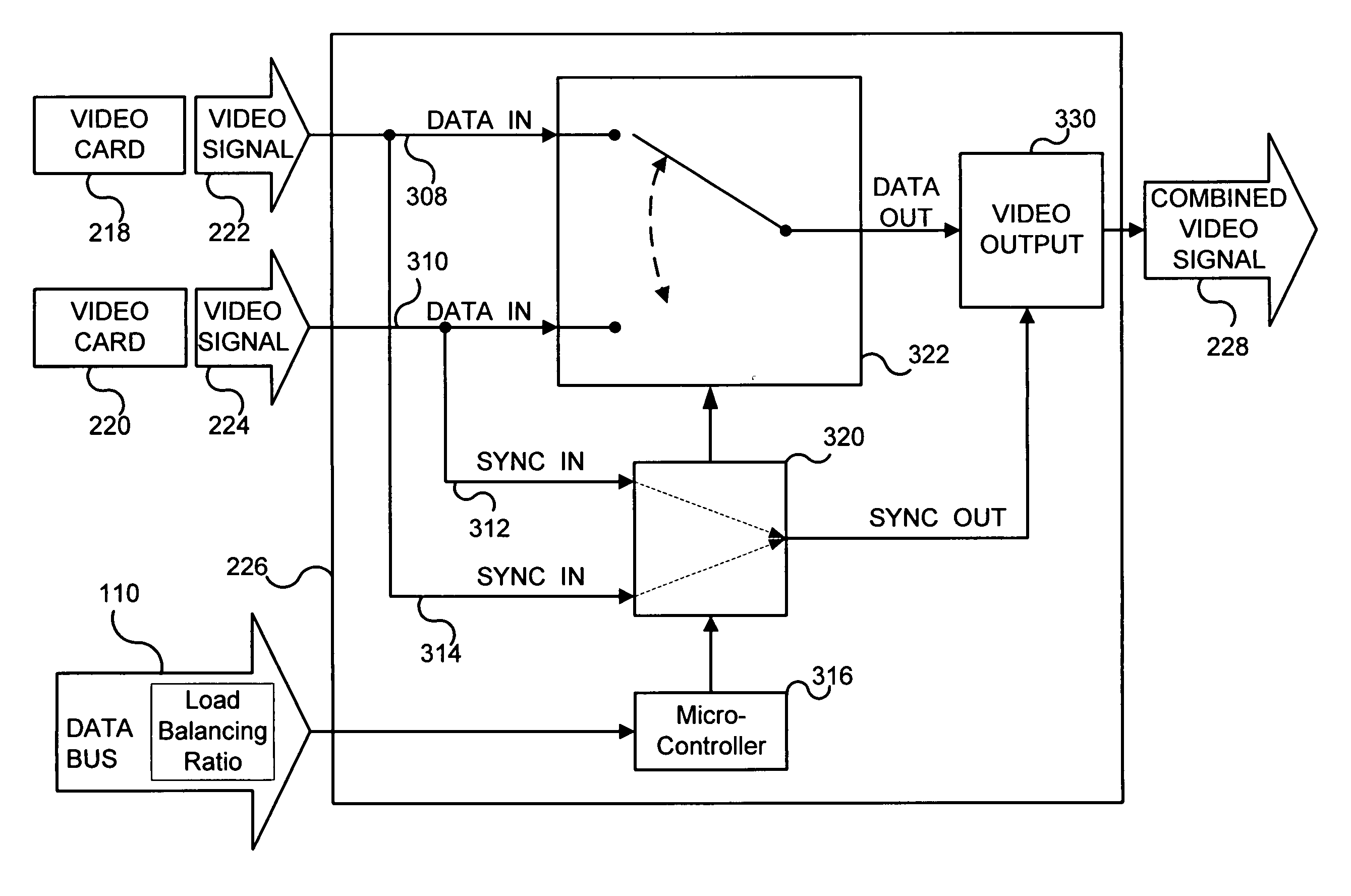

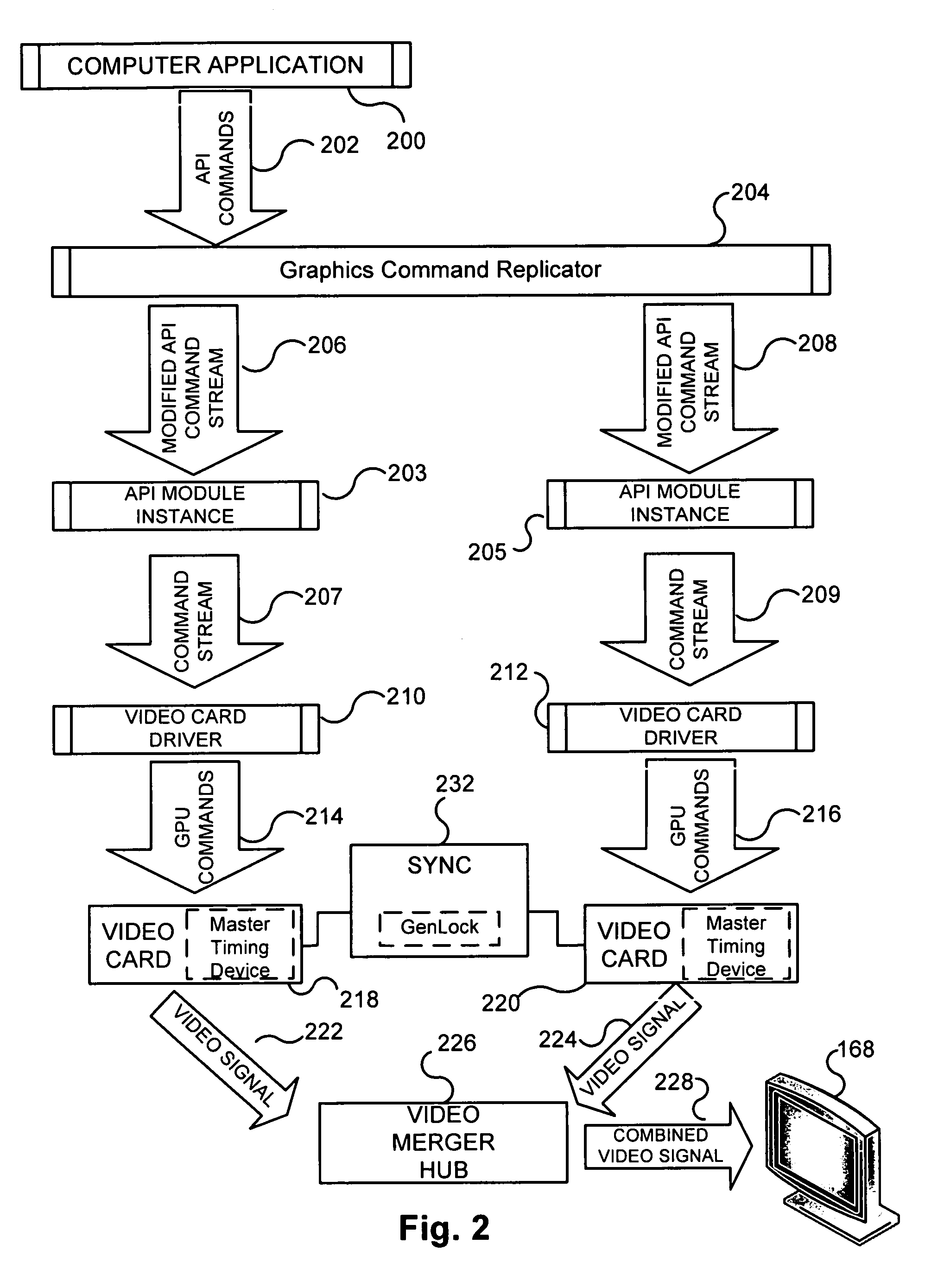

Adaptive load balancing in a multi-processor graphics processing system

Active | US7075541B2 | Increase in size | Small size | Cathode-ray tube indicators | Multiple digital computer combinations | Graphics | Multi processor

Systems and methods for balancing a load among multiple graphics processors that render different portions of a frame. A display area is partitioned into portions for each of two (or more) graphics processors. The graphics processors render their respective portions of a frame and return feedback data indicating completion of the rendering. Based on the feedback data, an imbalance can be detected between respective loads of two of the graphics processors. In the event that an imbalance exists, the display area is re-partitioned to increase a size of the portion assigned to the less heavily loaded processor and to decrease a size of the portion assigned to the more heavily loaded processor.

Owner:NVIDIA CORP
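The re-partitioning step in the abstract above can be illustrated with a minimal sketch. It assumes two processors that split the display at a horizontal boundary row and that the feedback data is each processor's rendering time; the function name `rebalance`, the fixed `step`, and the `tolerance` threshold are hypothetical and not taken from the patent.

```python
def rebalance(split_row, height, time_top, time_bottom, step=8, tolerance=0.05):
    """Return a new boundary row between the top and bottom portions.

    time_top / time_bottom are the (assumed) rendering times reported as
    feedback by the processors that own the top and bottom portions.
    """
    total = time_top + time_bottom
    if total <= 0:
        return split_row  # no usable feedback, keep the current partition
    imbalance = (time_top - time_bottom) / total
    if abs(imbalance) <= tolerance:
        return split_row  # loads are close enough, nothing to do
    if imbalance > 0:
        # Top processor is more heavily loaded: shrink its portion.
        new_split = split_row - step
    else:
        # Bottom processor is more heavily loaded: grow the top portion.
        new_split = split_row + step
    # Keep at least one row for each processor.
    return max(1, min(height - 1, new_split))
```

Calling `rebalance` once per frame moves the boundary gradually toward the more heavily loaded processor's side, which is one simple way to avoid oscillation; the patent may use a different adjustment rule.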

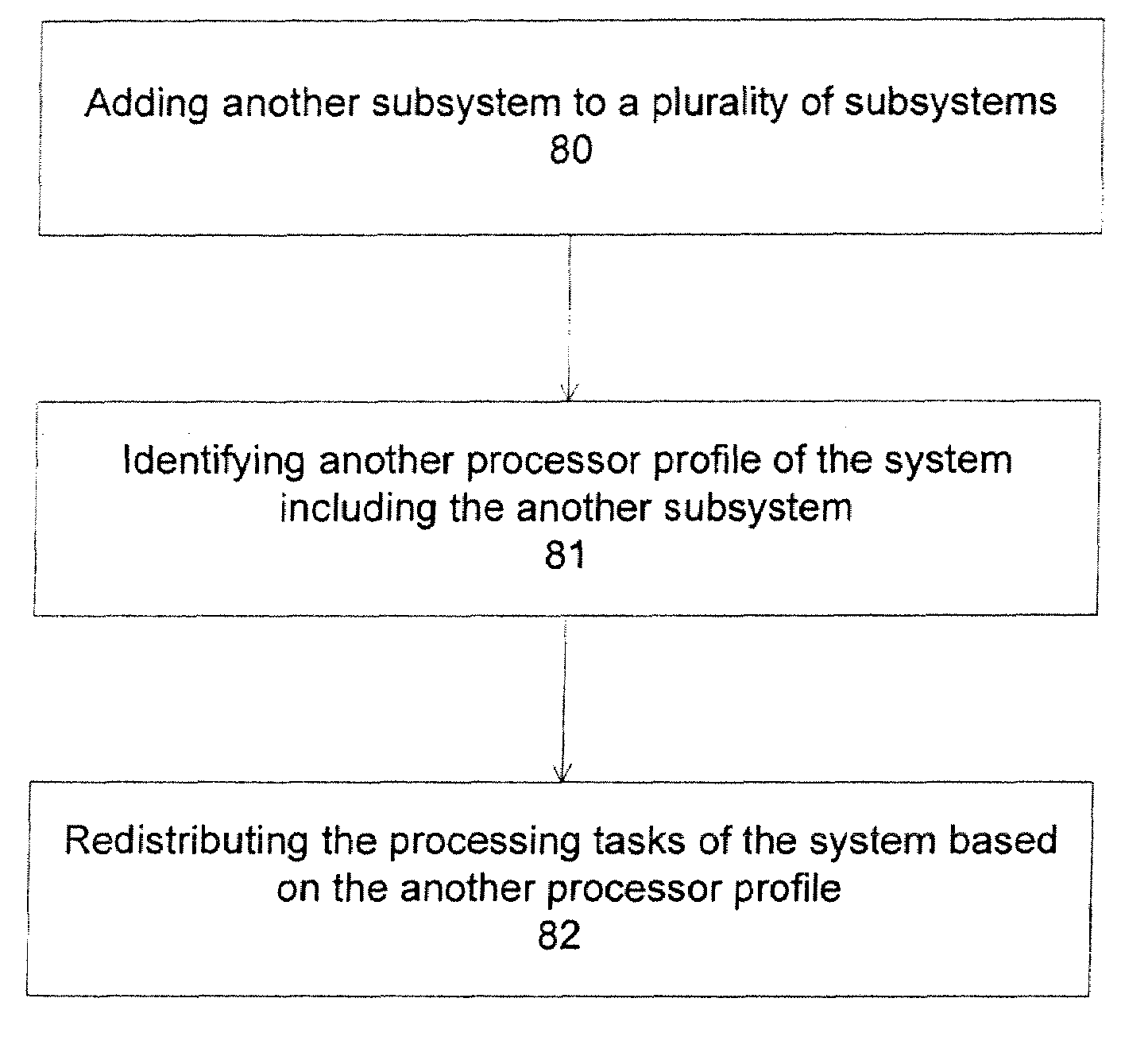

Methods and apparatuses for load balancing between multiple processing units

Active | US20090109230A1 | Performance | Processing | Energy efficient ICT | Digital data processing details | Digital signal processing | Graphics

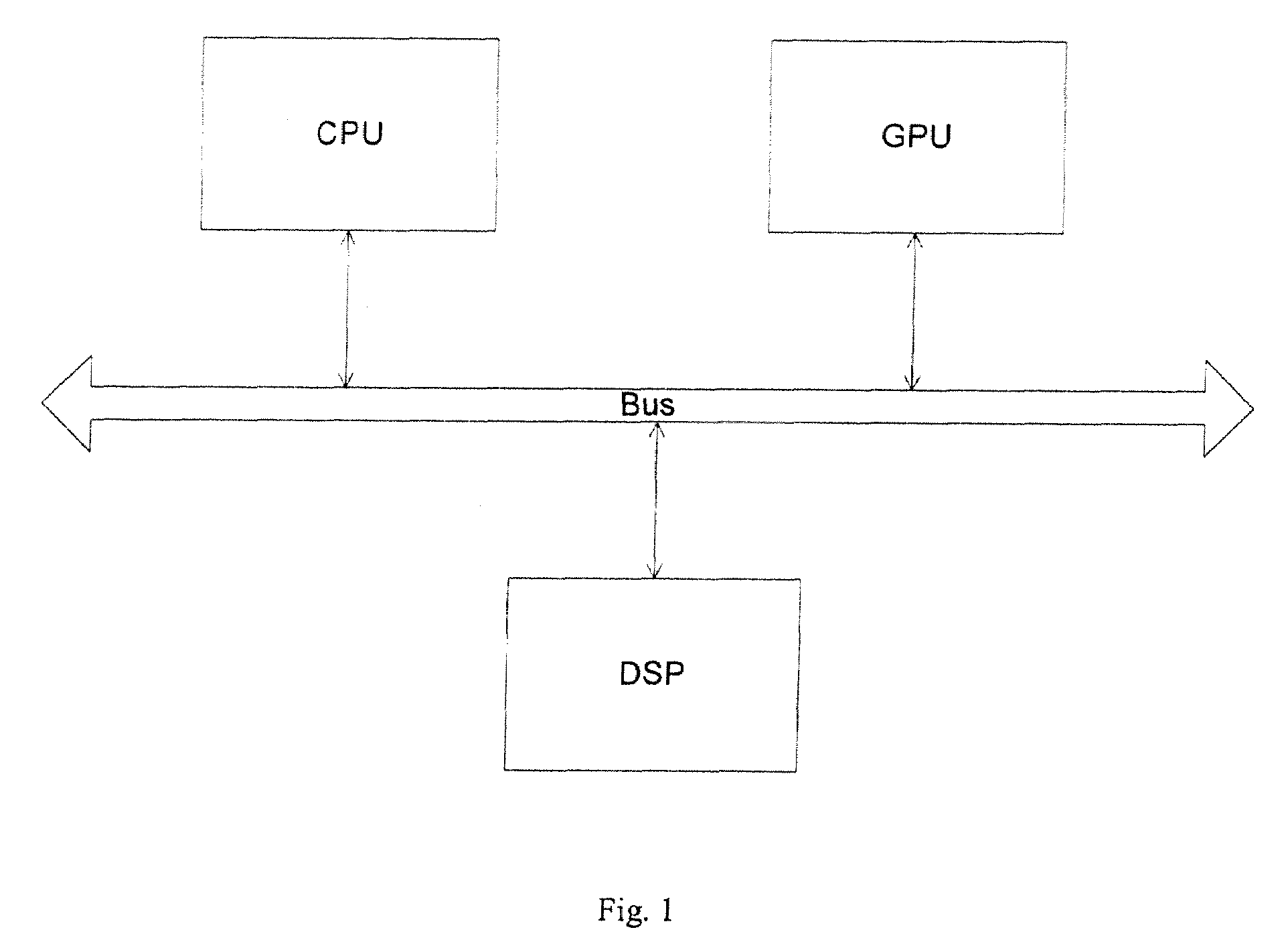

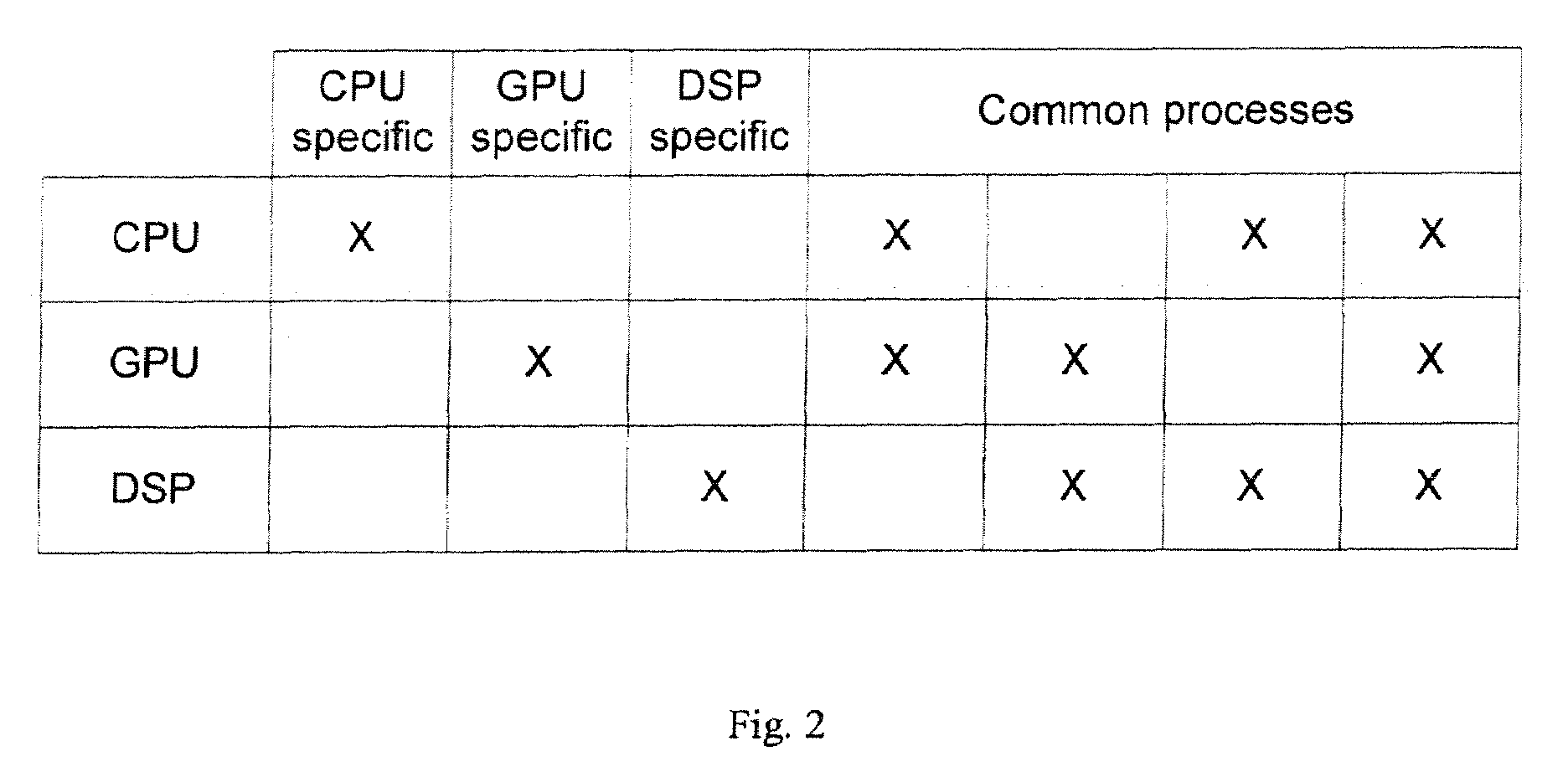

Exemplary embodiments of methods and apparatuses to dynamically redistribute computational processes in a system that includes a plurality of processing units are described. The power consumption, the performance, and the power/performance value are determined for various computational processes between a plurality of subsystems, where each of the subsystems is capable of performing the computational processes. The computational processes are, for example, a graphics rendering process, an image processing process, a signal processing process, a Bayer decoding process, or a video decoding process, which can be performed by a central processing unit, a graphics processing unit, or a digital signal processing unit. In one embodiment, the distribution of computational processes between capable subsystems is based on a power setting, a performance setting, a dynamic setting or a value setting.

Owner:APPLE INC
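As a rough illustration of choosing a subsystem by setting, the sketch below picks among capable subsystems using a power, performance, or value criterion. The function name `pick_subsystem`, the table layout, and the interpretation of "value" as performance divided by power are assumptions made for this example; the dynamic setting mentioned in the abstract is not modeled.

```python
def pick_subsystem(candidates, setting):
    """Choose a subsystem for one computational process.

    candidates maps a subsystem name to (power_watts, performance), where
    both numbers are assumed to have been measured for that process.
    """
    if setting == "power":
        # Lowest power consumption wins.
        return min(candidates, key=lambda name: candidates[name][0])
    if setting == "performance":
        # Highest performance wins.
        return max(candidates, key=lambda name: candidates[name][1])
    if setting == "value":
        # Best performance per watt wins (assumed meaning of "value").
        return max(candidates, key=lambda name: candidates[name][1] / candidates[name][0])
    raise ValueError(f"unsupported setting: {setting!r}")


# Hypothetical measurements for a single process.
measurements = {"cpu": (10.0, 80.0), "gpu": (25.0, 150.0), "dsp": (2.0, 10.0)}
```

With these made-up numbers, the three settings select three different subsystems, which shows why the choice of setting matters.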

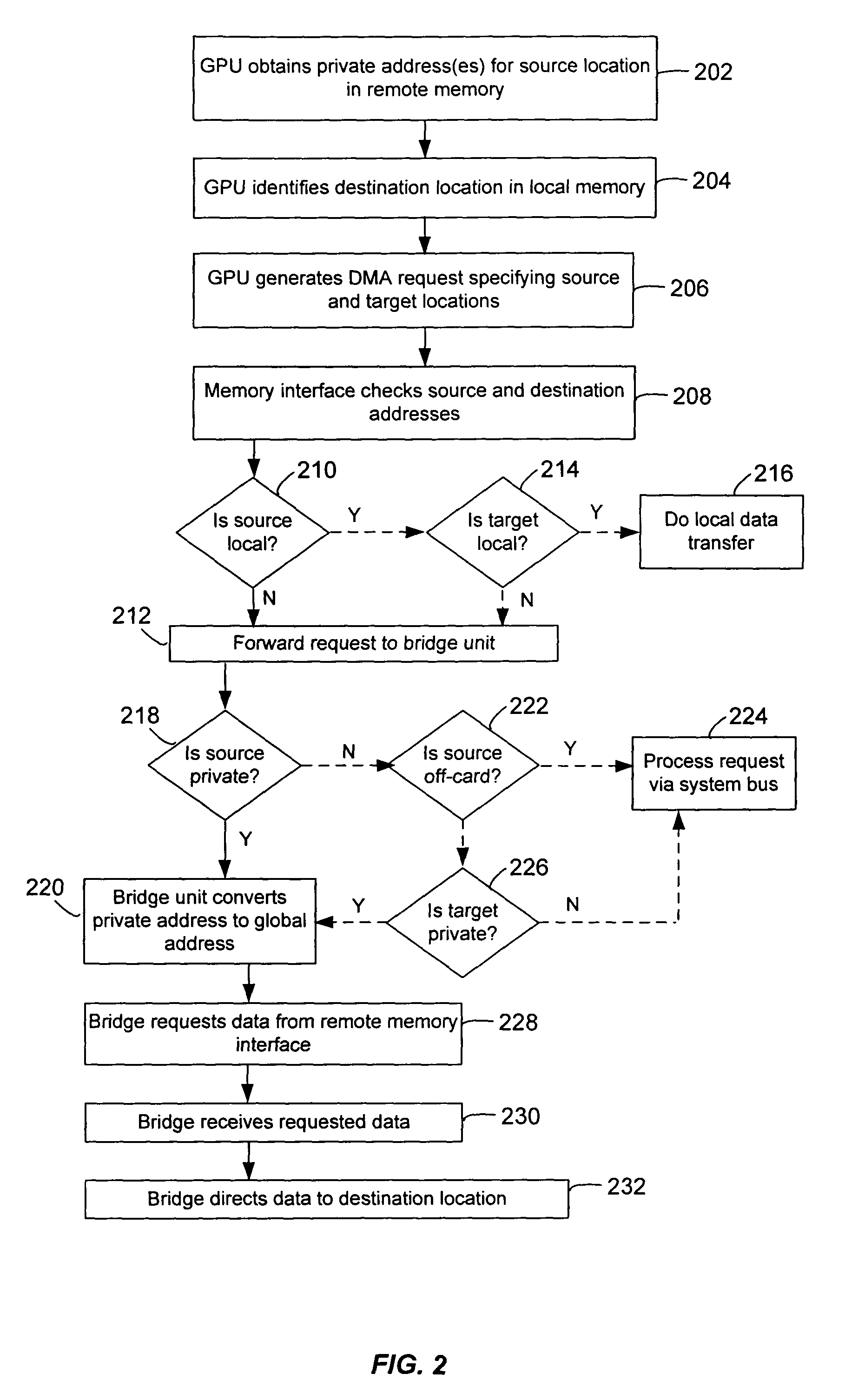

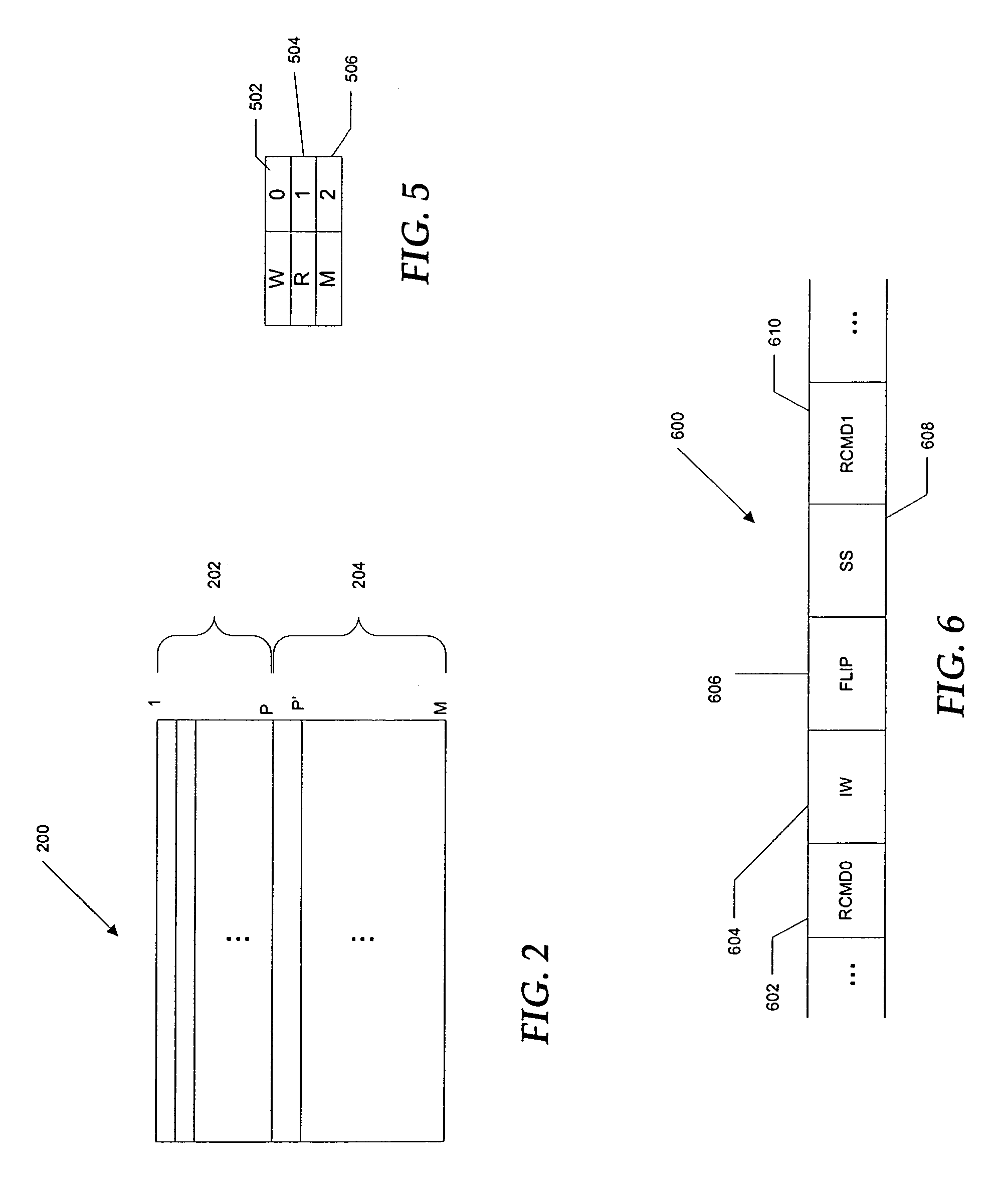

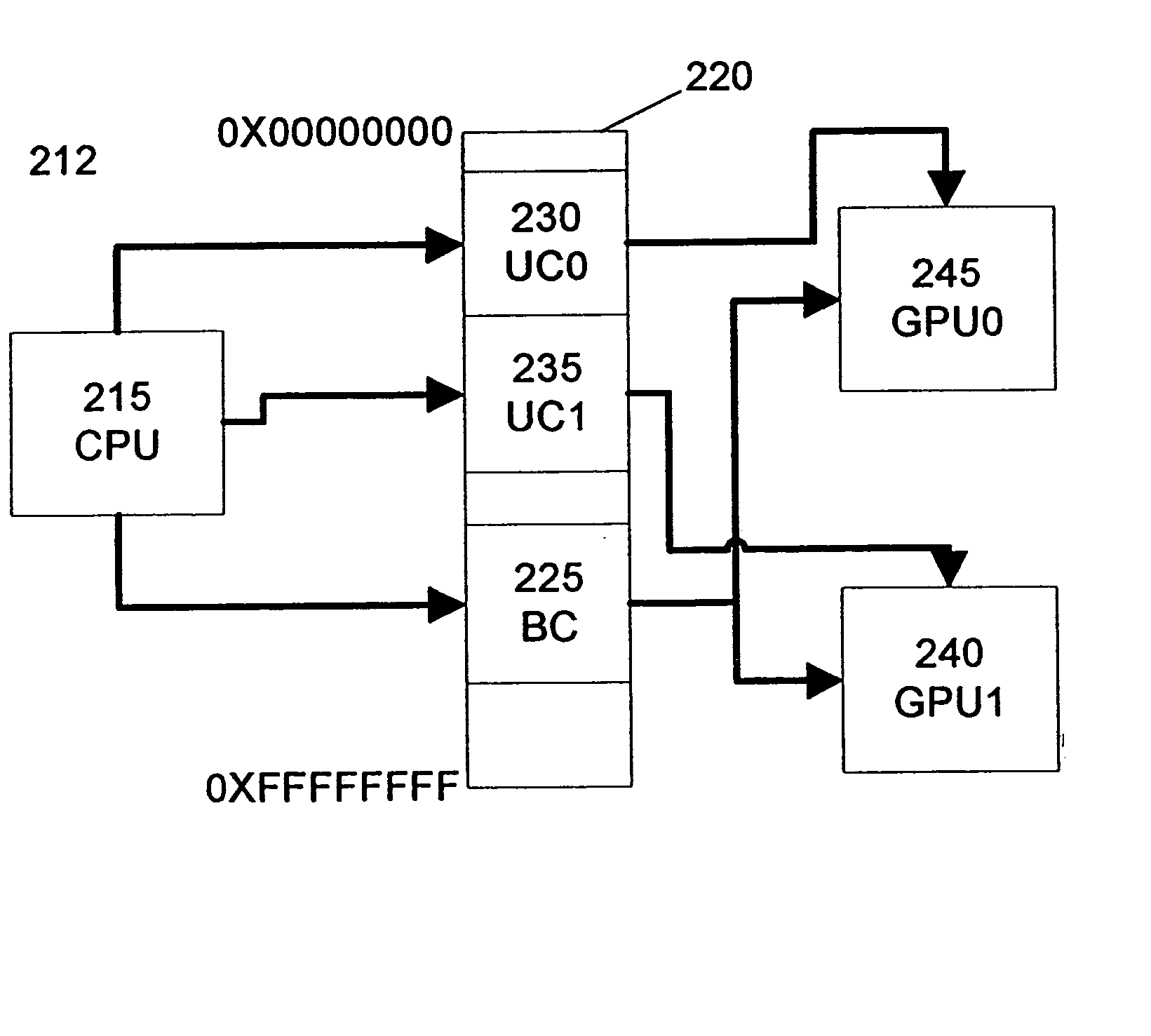

Private addressing in a multi-processor graphics processing system

Active | US6956579B1 | Memory addressing/allocation/relocation | Cathode-ray tube indicators | Graphics | Multi processor

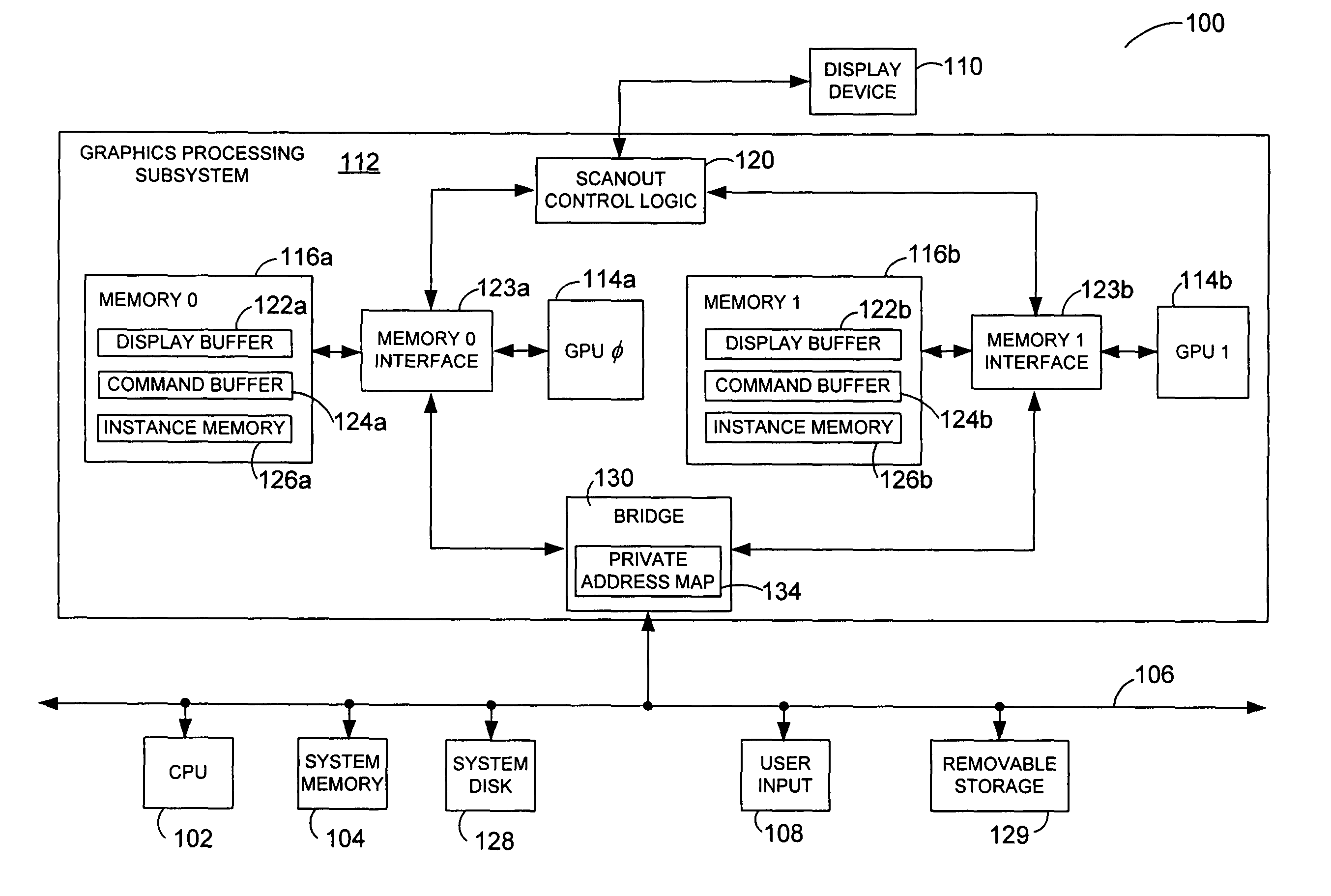

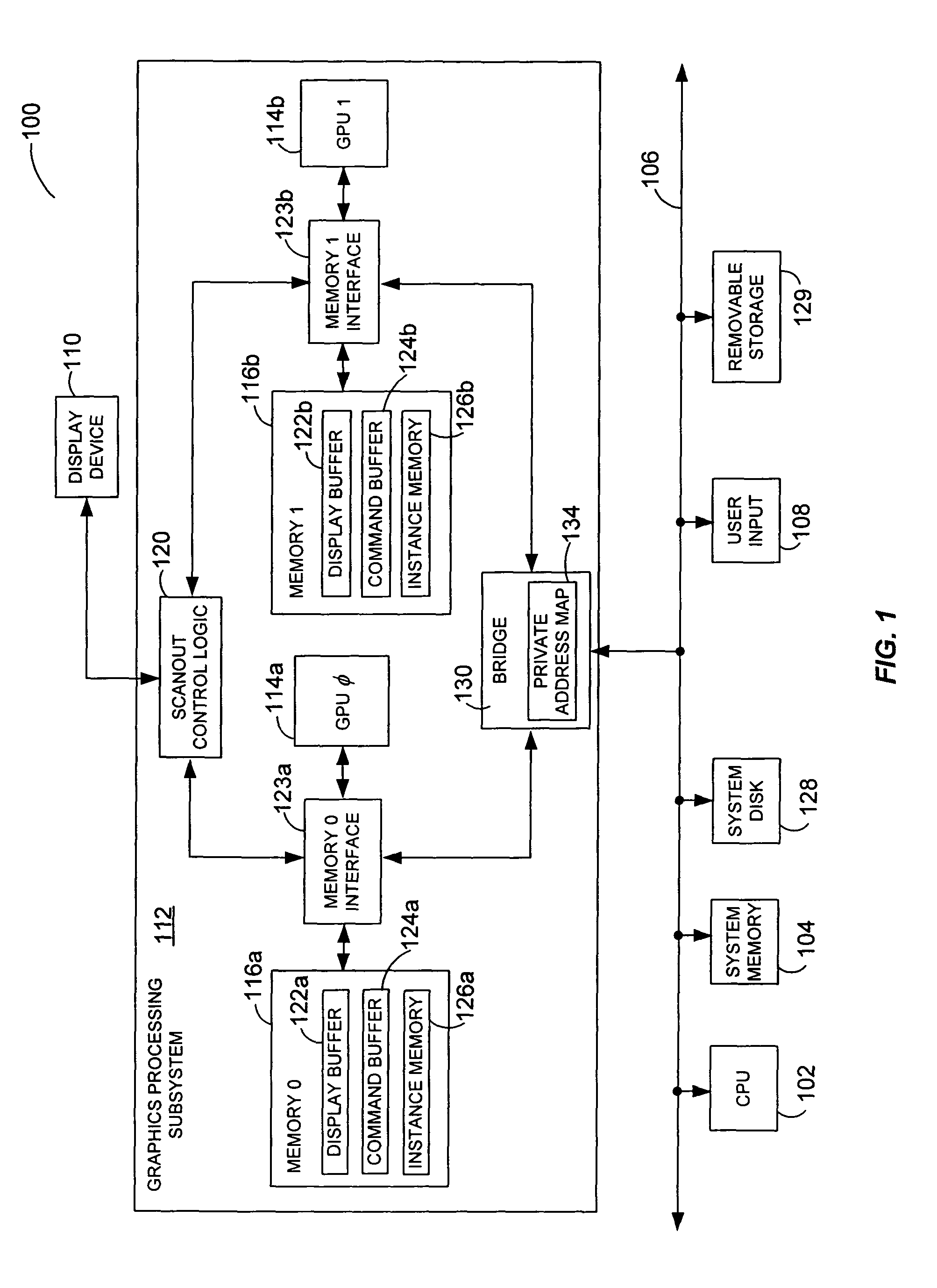

Systems and methods for private addressing in a multi-processor graphics processing subsystem having a number of memories and a number of graphics processors. Each of the memories includes a number of addressable storage locations, and storage locations in different memories may share a common global address. Storage locations are uniquely identifiable by private addresses internal to the graphics processing subsystem. One of the graphics processors is able to access a location in a particular memory by referencing its private address.

Owner:NVIDIA CORP
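The relationship between shared global addresses and unique private addresses can be sketched as follows. The mapping used here (memory index times memory size plus the global address) is only one possible encoding and is an assumption of this example, as are the class and method names.

```python
class GraphicsSubsystem:
    """Several equally sized memories whose locations share global addresses."""

    def __init__(self, num_memories, locations_per_memory):
        self.size = locations_per_memory
        self.memories = [[0] * locations_per_memory for _ in range(num_memories)]

    def private_address(self, memory_id, global_address):
        # The same global address exists in every memory; adding a
        # per-memory base makes the resulting private address unique.
        if not 0 <= memory_id < len(self.memories):
            raise IndexError("no such memory")
        if not 0 <= global_address < self.size:
            raise IndexError("global address out of range")
        return memory_id * self.size + global_address

    def _locate(self, private_address):
        memory_id, offset = divmod(private_address, self.size)
        if not 0 <= memory_id < len(self.memories):
            raise IndexError("private address out of range")
        return memory_id, offset

    def write(self, private_address, value):
        memory_id, offset = self._locate(private_address)
        self.memories[memory_id][offset] = value

    def read(self, private_address):
        memory_id, offset = self._locate(private_address)
        return self.memories[memory_id][offset]
```

Any processor holding a private address can reach exactly one location, even though the global address alone would be ambiguous.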

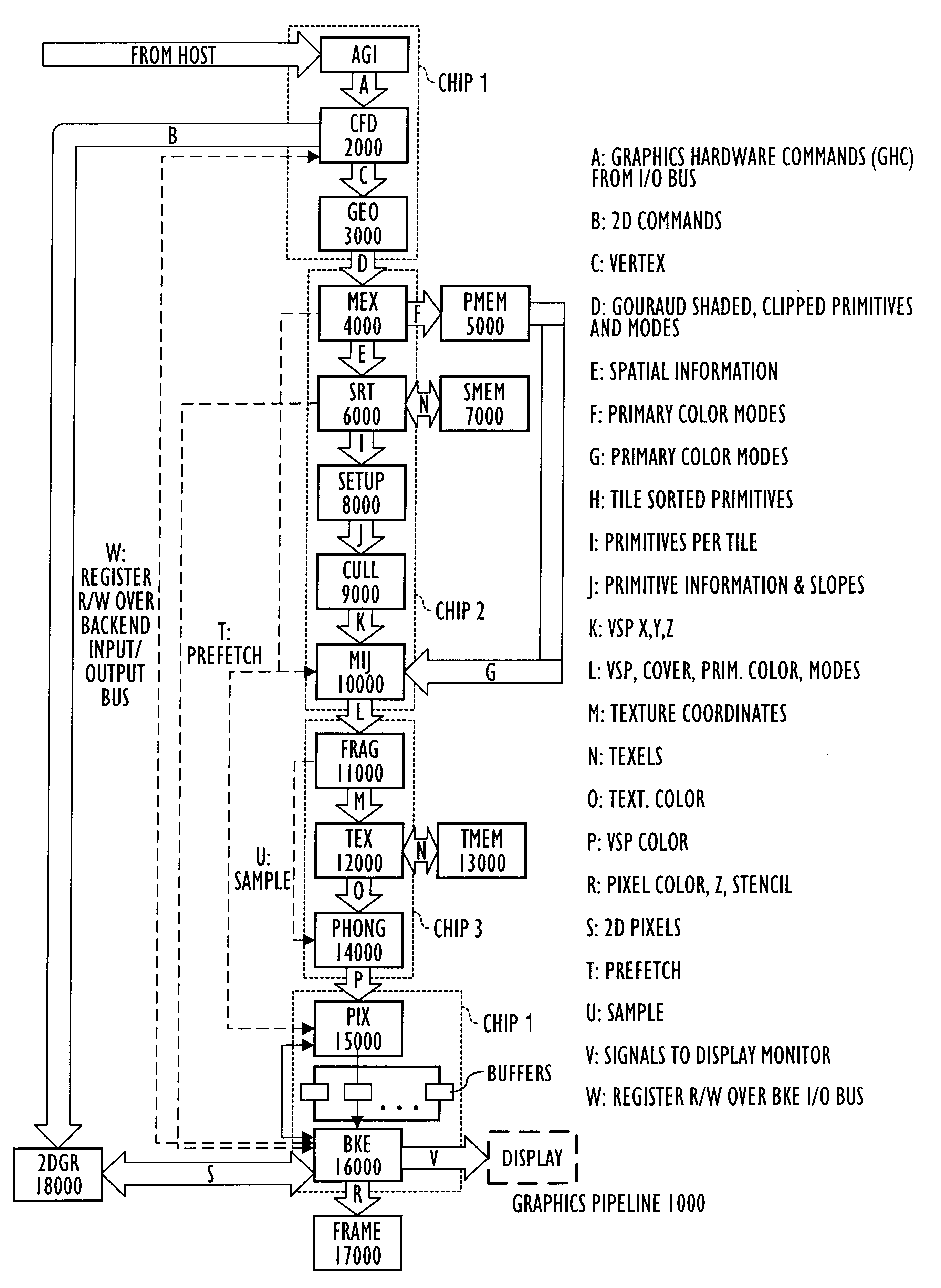

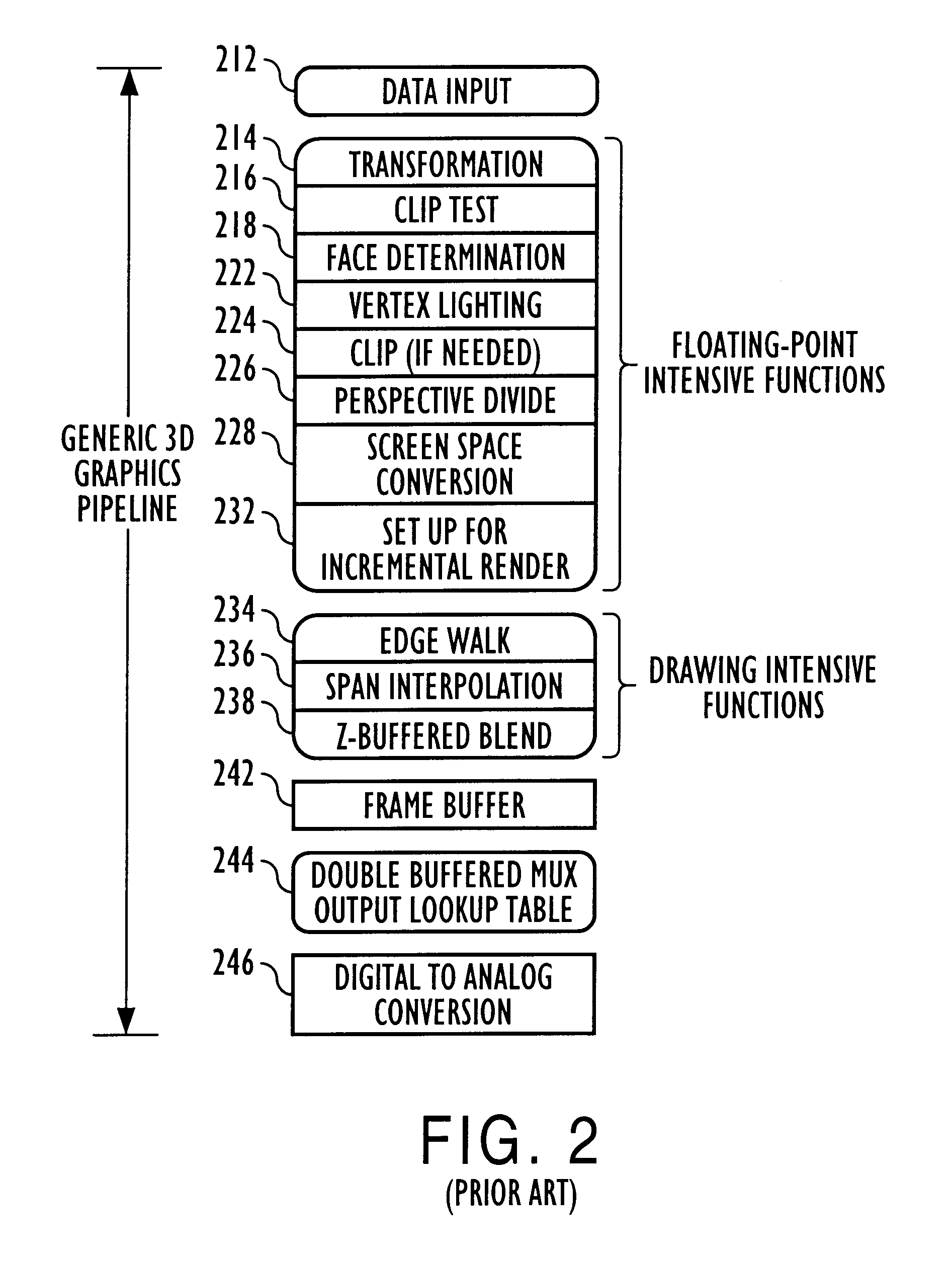

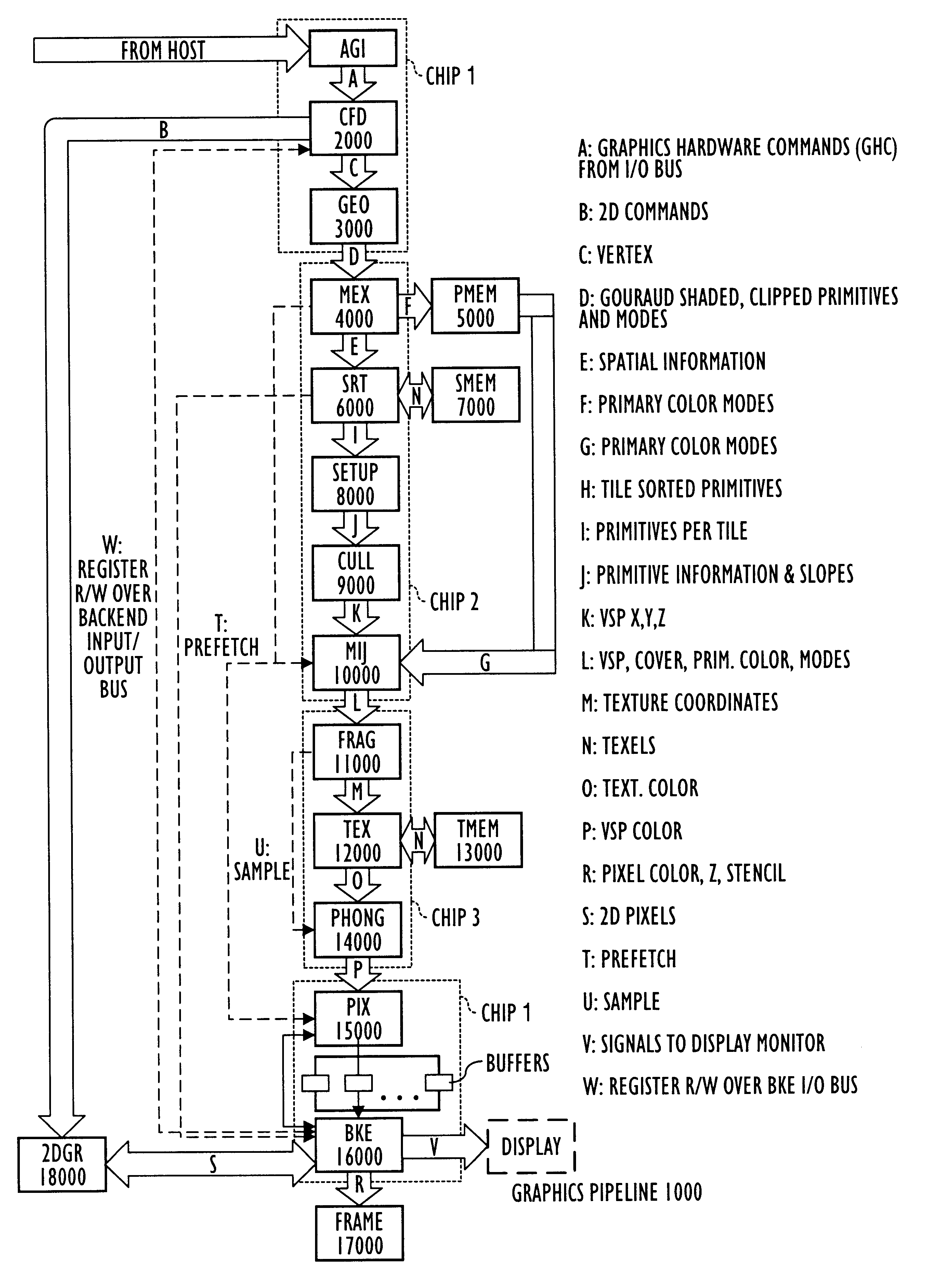

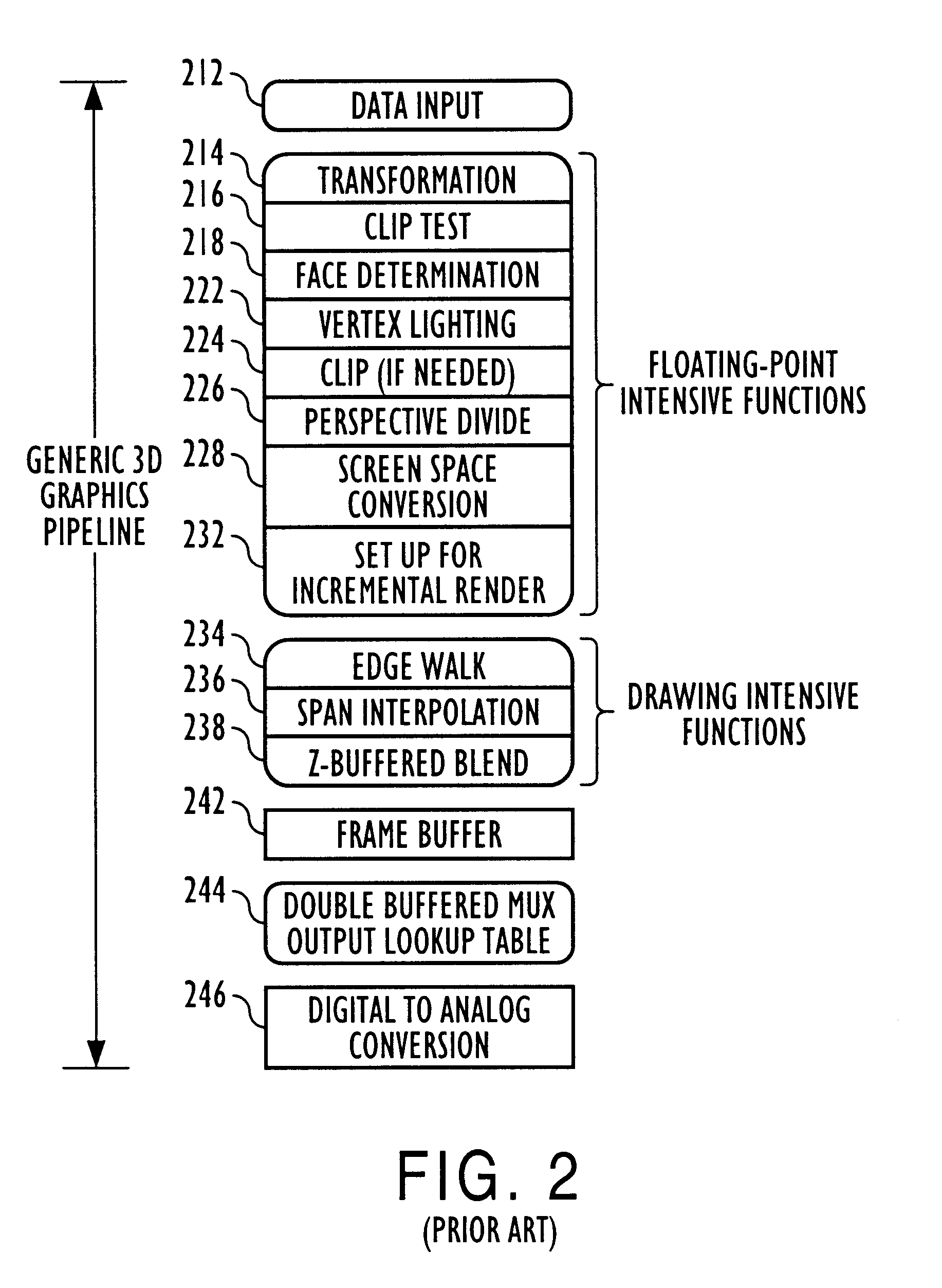

Deferred shading graphics pipeline processor

Inactive | US6229553B1 | Lower communication bandwidth | Attenuation bandwidth | Texturing/coloring | Image memory management | Phong shading | Deferred shading

Three-dimensional computer graphics systems and methods and more particularly to structure and method for a three-dimensional graphics processor and having other enhanced graphics processing features. In one embodiment the graphics processor is a Deferred Shading Graphics Processor (DSGP) comprising an AGP interface, a command fetch & decode (2000), a geometry unit (3000), a mode extraction (4000) and polygon memory (5000), a sort unit (6000) and sort memory (7000), a setup unit (8000), a cull unit (9000), a mode injection (10000), a fragment unit (11000), a texture (12000) and texture memory (13000), a phong shading (14000), a pixel unit (15000), a backend unit (1600) coupled to a frame buffer (17000). Other embodiments need not include all of these functional units, and the structures and methods of these units are applicable to other computational processes and systems as well as deferred and non-deferred shading graphical processors.

Owner:APPLE INC

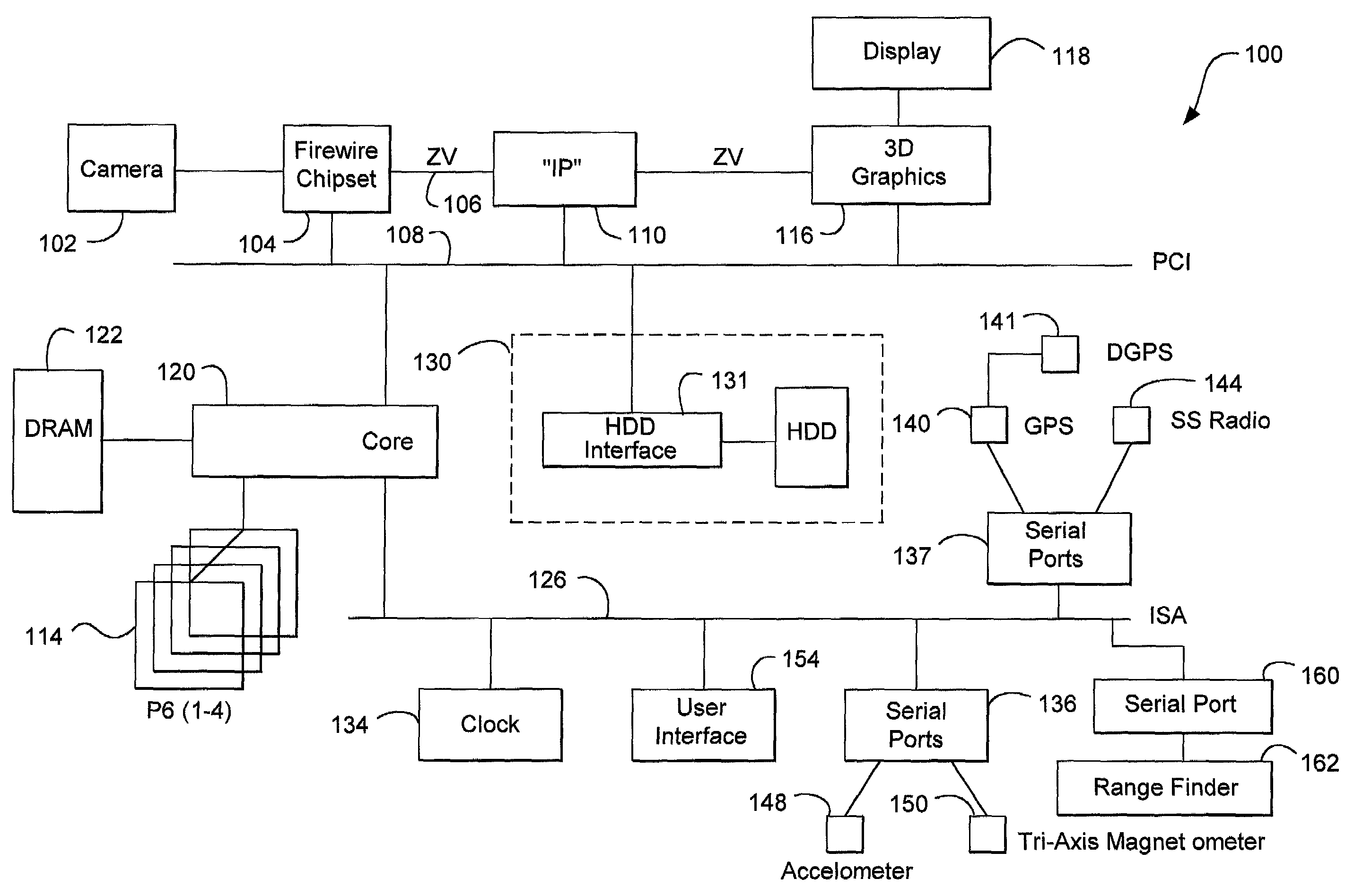

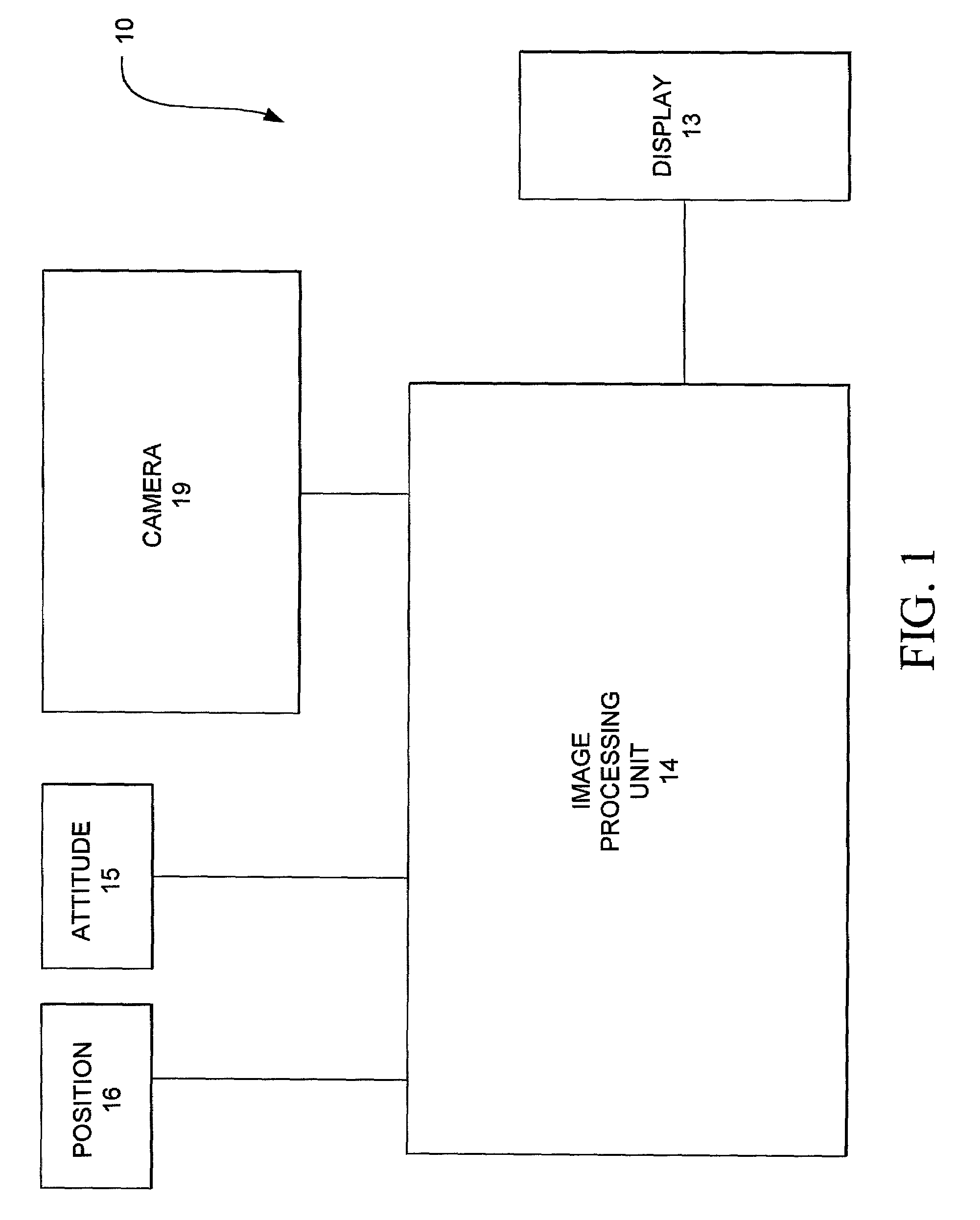

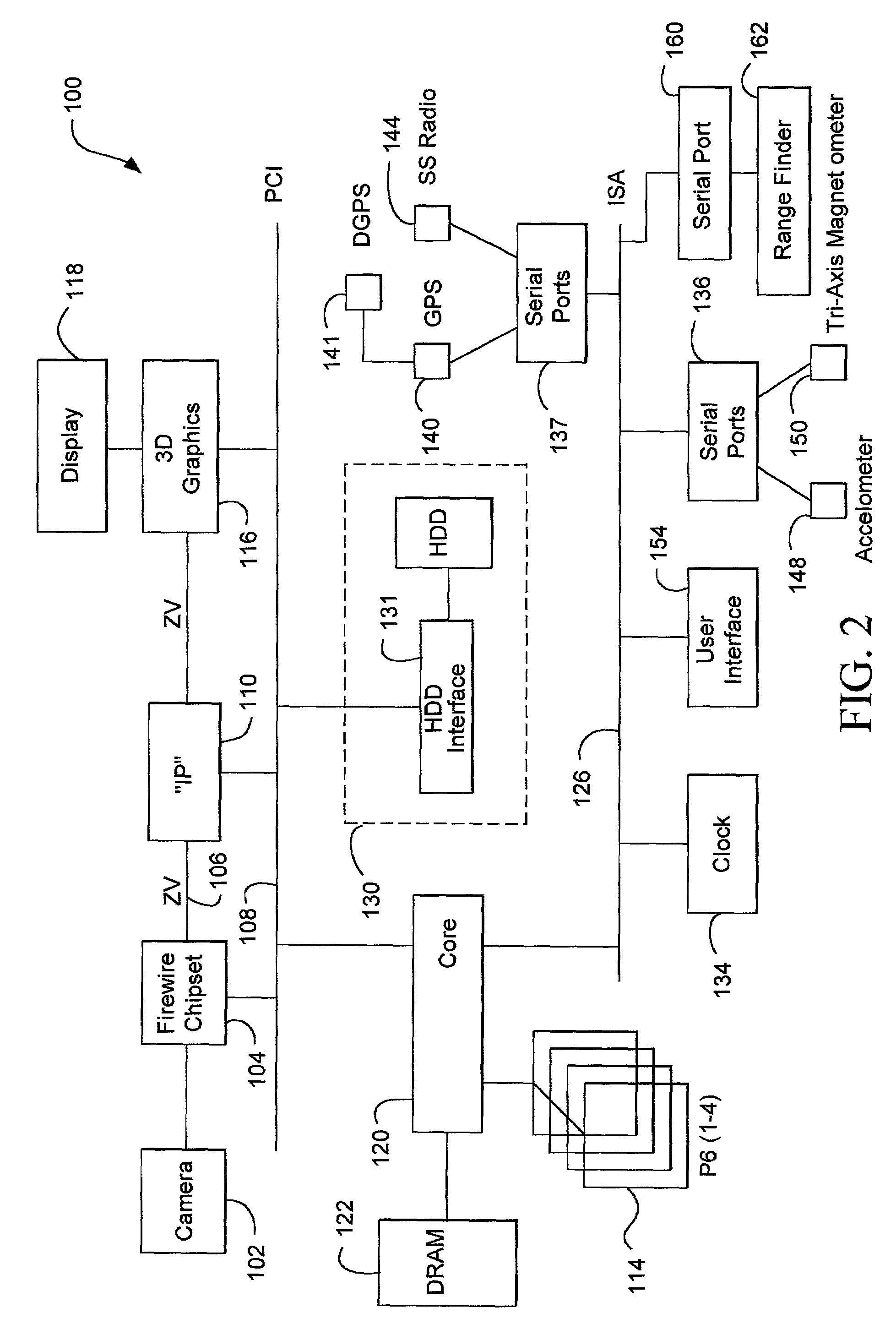

Electro-optic vision systems

Inactive | US7301536B2 | Programme control | Navigational calculation instruments | Graphics | Synthetic vision system

An image processing system for delivering real scene information to a data processor. The system includes the data processor, an image-delivery mechanism, an information delivery mechanism, and a graphic processor.

Owner:GEOVECTOR

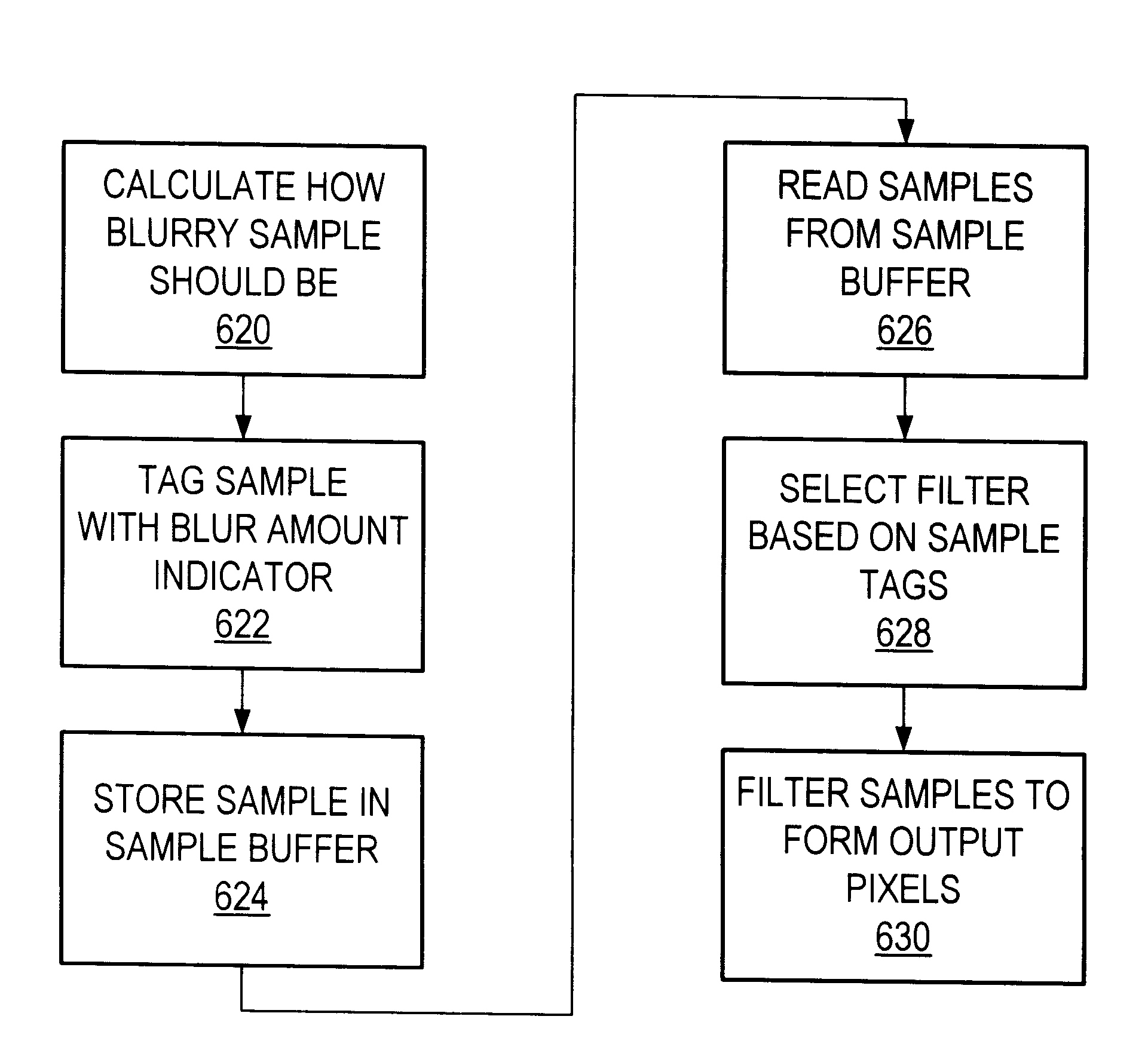

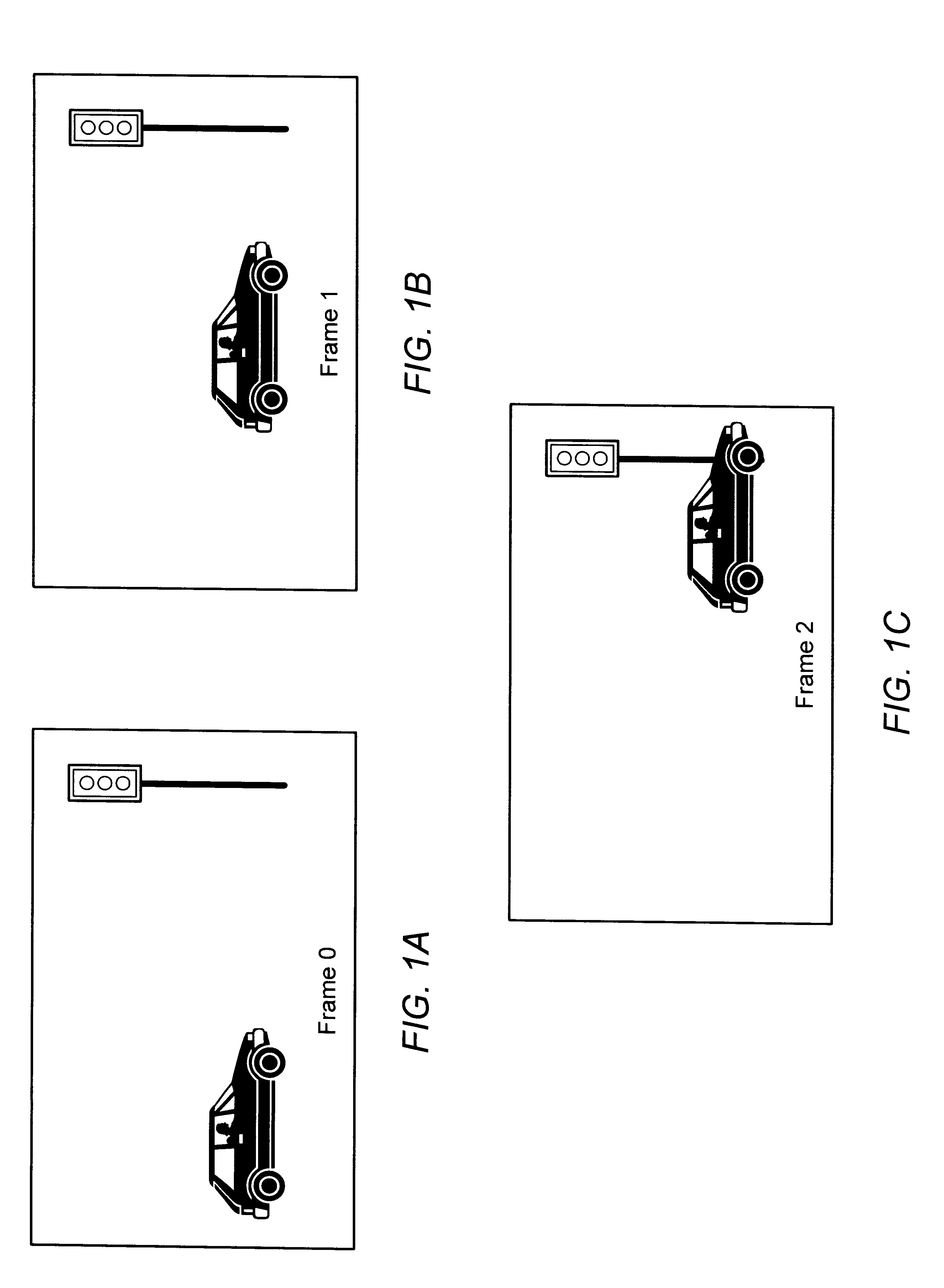

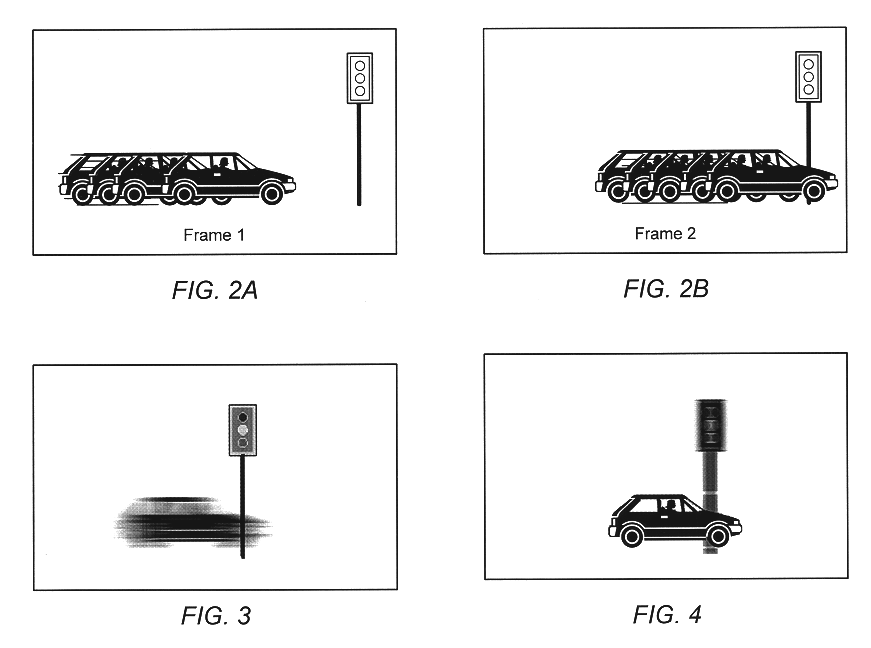

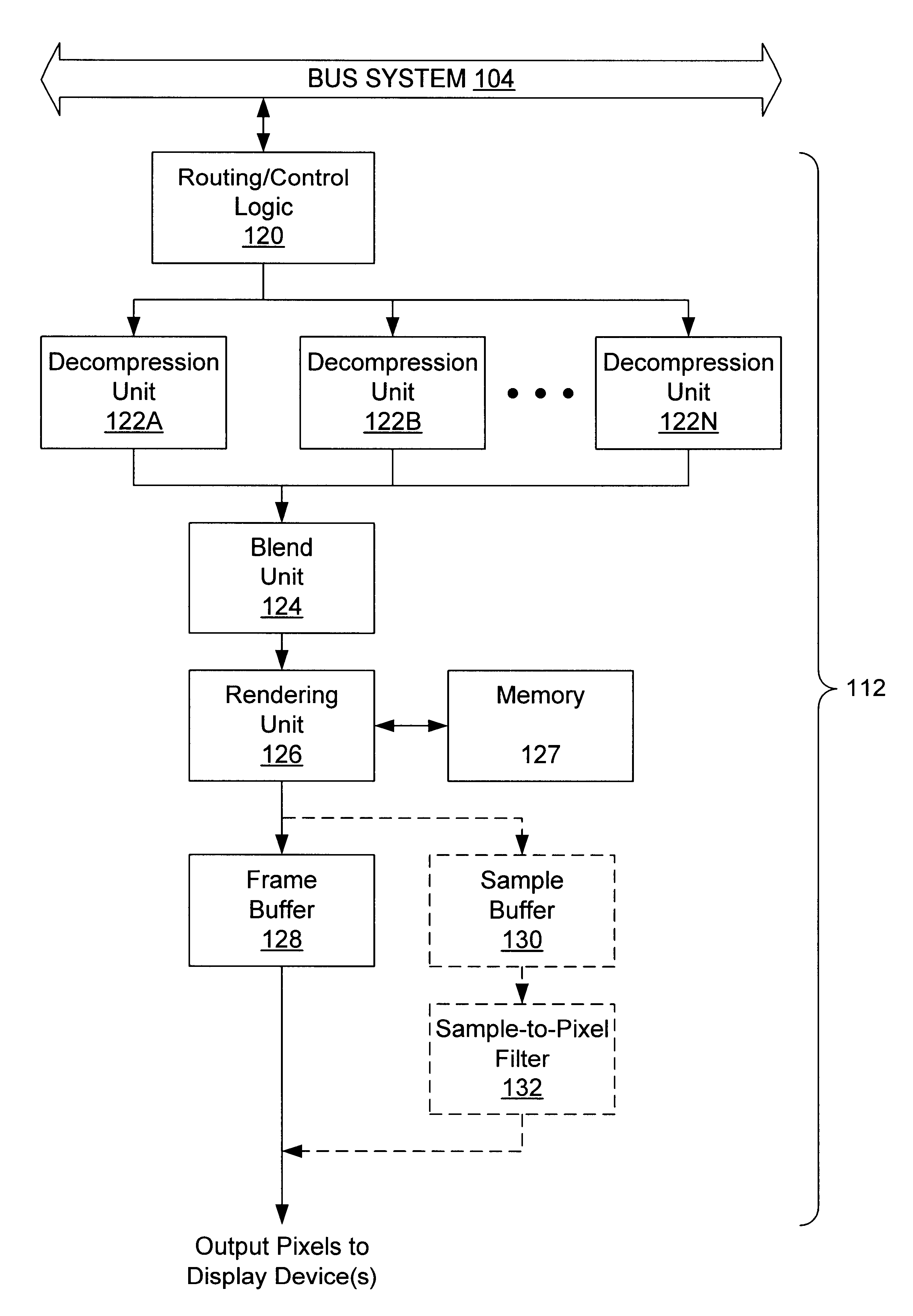

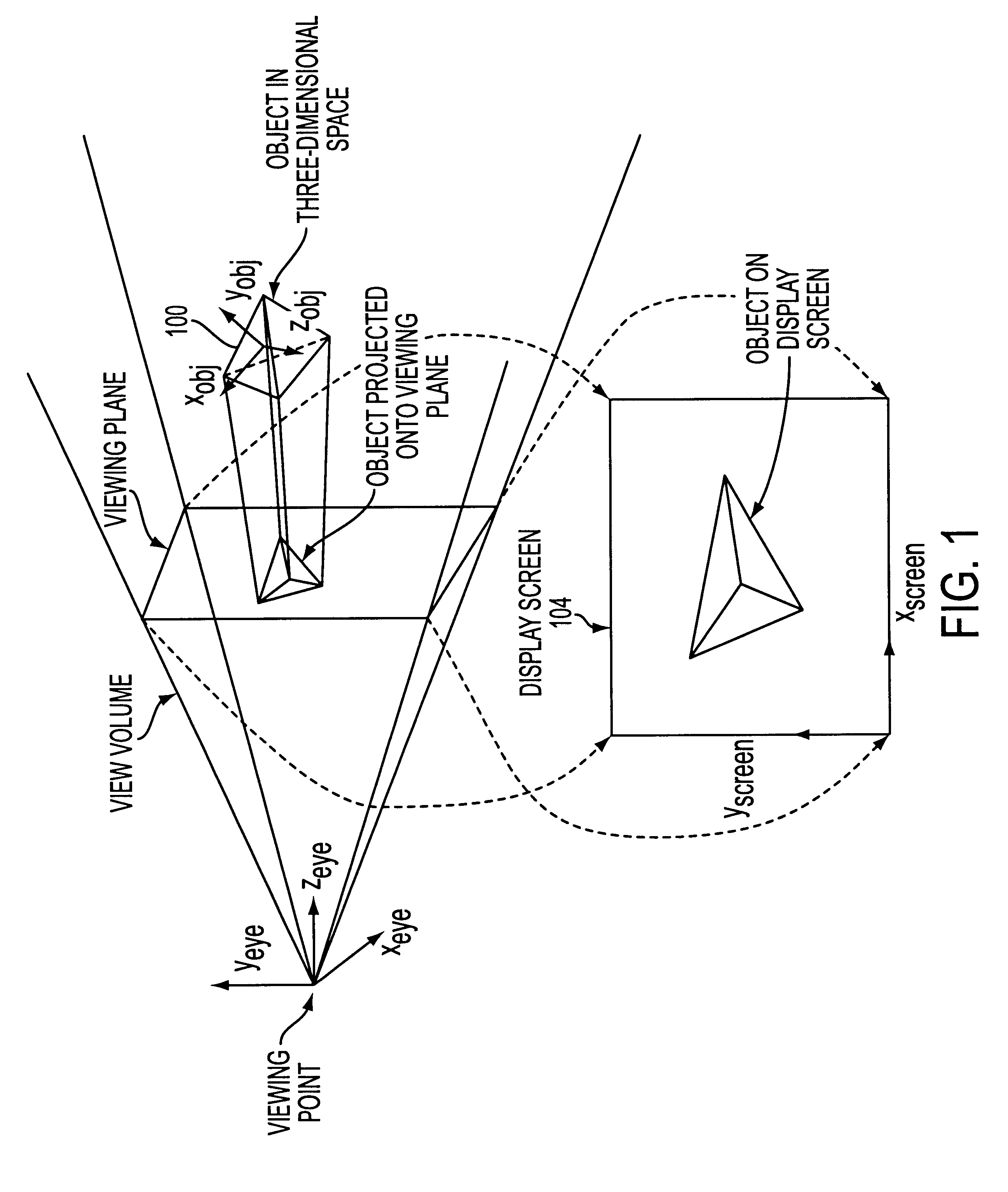

Graphics system using sample tags for blur

Inactive | US6426755B1 | Character and pattern recognition | Cathode-ray tube indicators | Graphics | Graphic system

A graphics system and method for performing blur effects, including motion blur and depth of field effects, are disclosed. In one embodiment the system comprises a graphics processor, a sample buffer, and a sample-to-pixel calculation unit. The graphics processor is configured to receive a set of three-dimensional (3D) graphics data and render a plurality of samples based on the set of 3D graphics data. The processor is also configured to generate sample tags for the samples, wherein the sample tags are indicative of whether or not the samples are to be blurred. The super-sampled sample buffer is coupled to receive and store the samples from the graphics processor. The sample-to-pixel calculation unit is coupled to receive and filter the samples from the super-sampled sample buffer to generate output pixels, which in turn are displayable to form an image on a display device. The sample-to-pixel calculation units are configured to select the filter attributes used to filter the samples into output pixels based on the sample tags.

Owner:ORACLE INT CORP
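To make the idea of tag-dependent filtering concrete, here is a small one-dimensional sketch. It assumes each sample carries a Boolean blur tag and that a tagged sample is filtered with a wider tent filter than an untagged one; the radii, the tent shape, and the function name `filter_pixel` are illustrative assumptions, not details from the patent.

```python
def filter_pixel(center, samples, sharp_radius=0.5, blur_radius=2.0):
    """Filter samples into one output pixel value.

    samples is a list of (position, value, blur_tag) tuples. The filter
    radius applied to each sample is selected by its tag.
    """
    total = 0.0
    weight_sum = 0.0
    for position, value, blur_tag in samples:
        radius = blur_radius if blur_tag else sharp_radius
        distance = abs(position - center)
        if distance <= radius:
            weight = 1.0 - distance / radius  # tent filter weight
            total += weight * value
            weight_sum += weight
    return total / weight_sum if weight_sum else 0.0
```

A blurred sample therefore contributes to pixels farther from its position than a sharp sample does, which is the effect the tags are meant to control.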

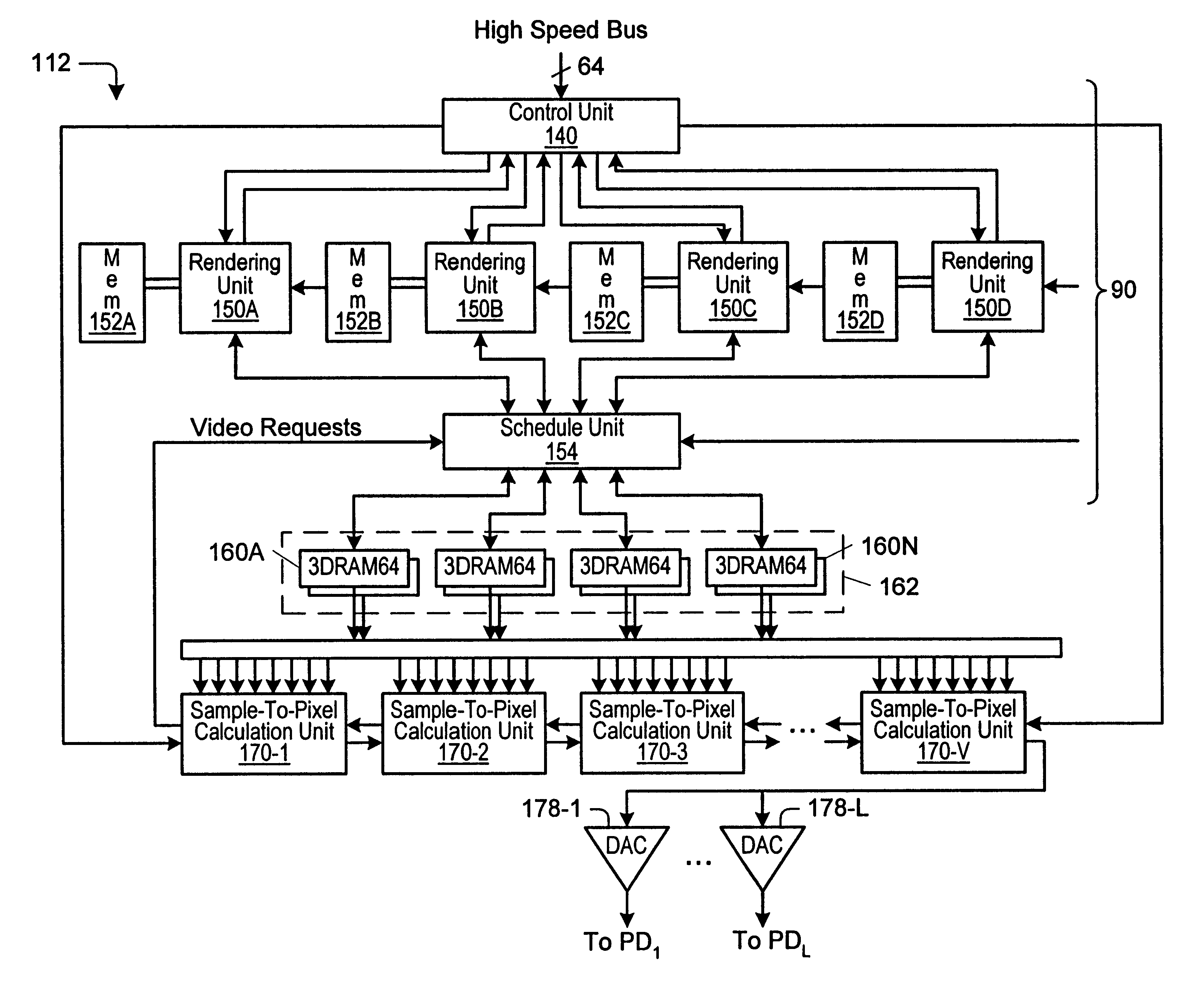

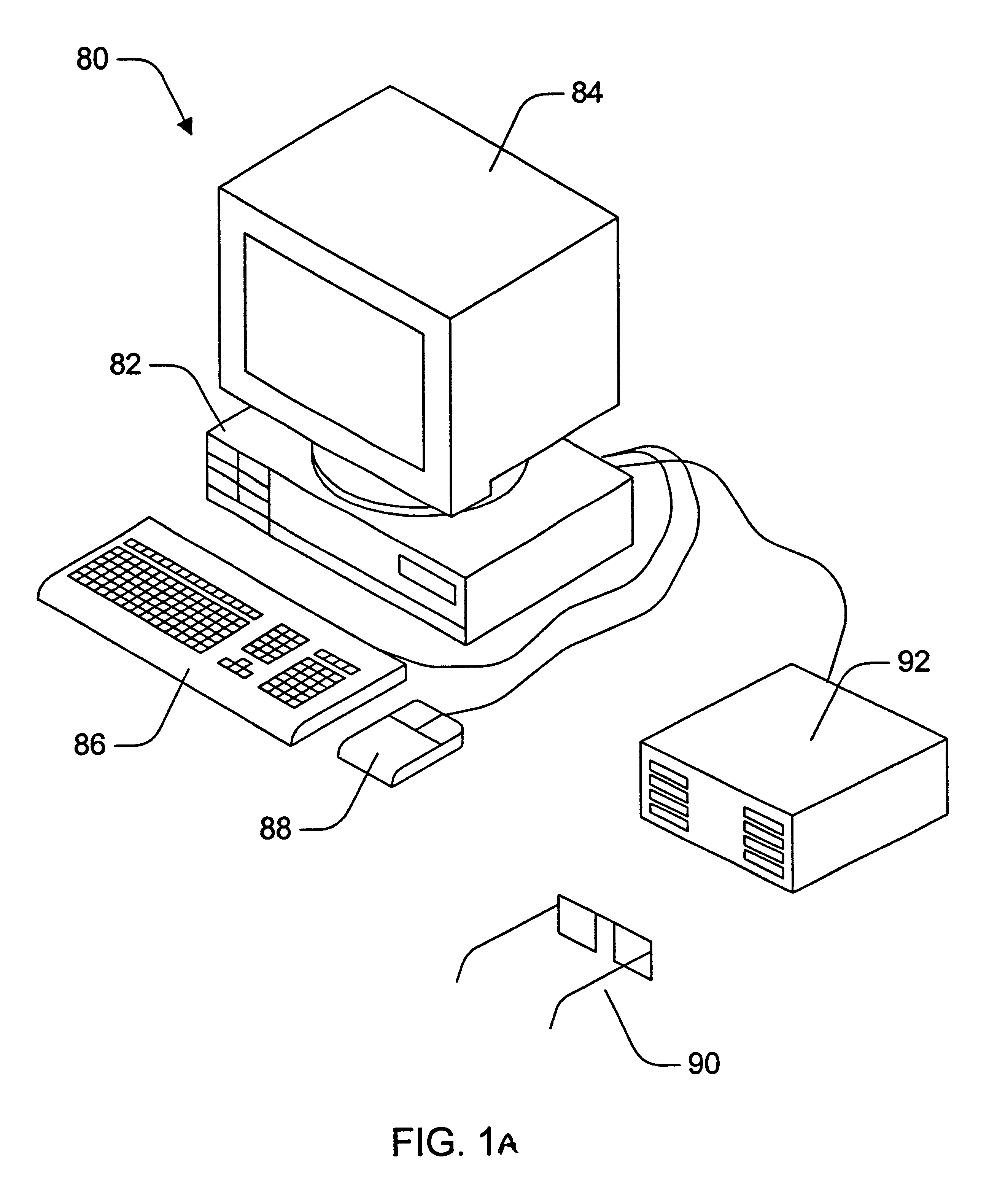

Graphics system configured to perform parallel sample to pixel calculation

A graphics system that is configured to utilize a sample buffer and a plurality of parallel sample-to-pixel calculation units, wherein the sample-to-pixel calculation units are configured to access different portions of the sample buffer in parallel. The graphics system may include a graphics processor, a sample buffer, and a plurality of sample-to-pixel calculation units. The graphics processor is configured to receive a set of three-dimensional graphics data and render a plurality of samples based on the graphics data. The sample buffer is configured to store the plurality of samples for the sample-to-pixel calculation units, which are configured to receive and filter samples from the sample buffer to create output pixels. Each of the sample-to-pixel calculation units is configured to generate pixels corresponding to a different region of the image. The region may be a vertical or horizontal stripe of the image, or a rectangular portion of the image. Each region may overlap the other regions of the image to prevent visual aberrations.

Owner:ORACLE INT CORP
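The stripe partitioning with overlap described above might look like the following sketch for vertical stripes. The function name `stripe_regions` and the choice to express overlap as a number of sample columns are assumptions for illustration.

```python
def stripe_regions(width, units, overlap):
    """Split an image of `width` columns into one vertical stripe per unit.

    Returns a list of ((pixel_start, pixel_end), (sample_start, sample_end))
    half-open ranges. Each unit outputs only its own pixel range but reads
    samples from a slightly wider range so that filters near a stripe edge
    see the same samples as a neighboring unit would.
    """
    regions = []
    for unit in range(units):
        pixel_start = unit * width // units
        pixel_end = (unit + 1) * width // units
        sample_start = max(0, pixel_start - overlap)
        sample_end = min(width, pixel_end + overlap)
        regions.append(((pixel_start, pixel_end), (sample_start, sample_end)))
    return regions
```

The pixel ranges tile the image exactly, while the sample ranges overlap at stripe boundaries.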

Systems and methods for recognizing objects in radar imagery

Active | US20160019458A1 | Low in size and weight and power requirement | Improve historical speed and accuracy performance limitation | Digital computer details | Digital data | Pattern recognition | Graphics

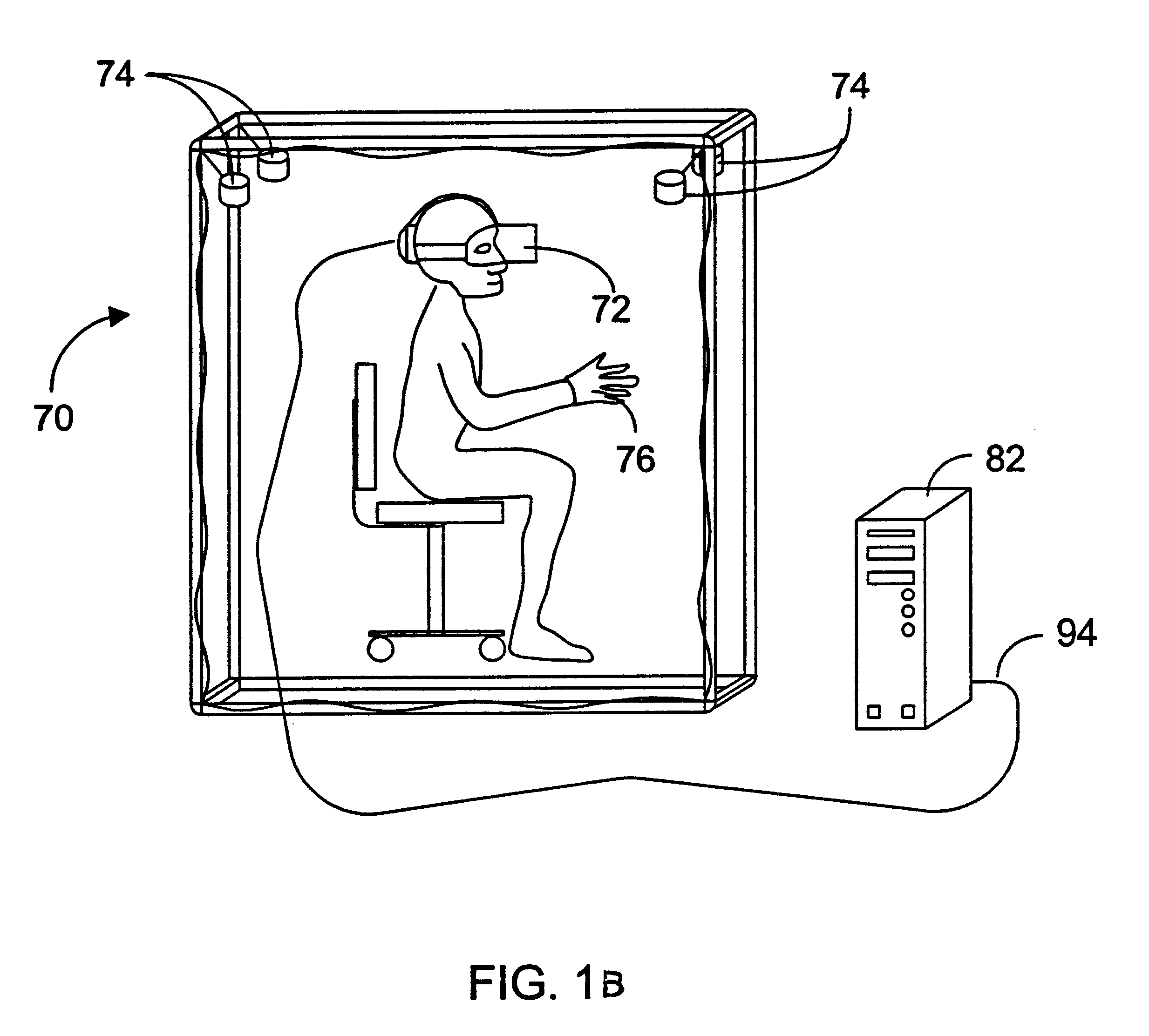

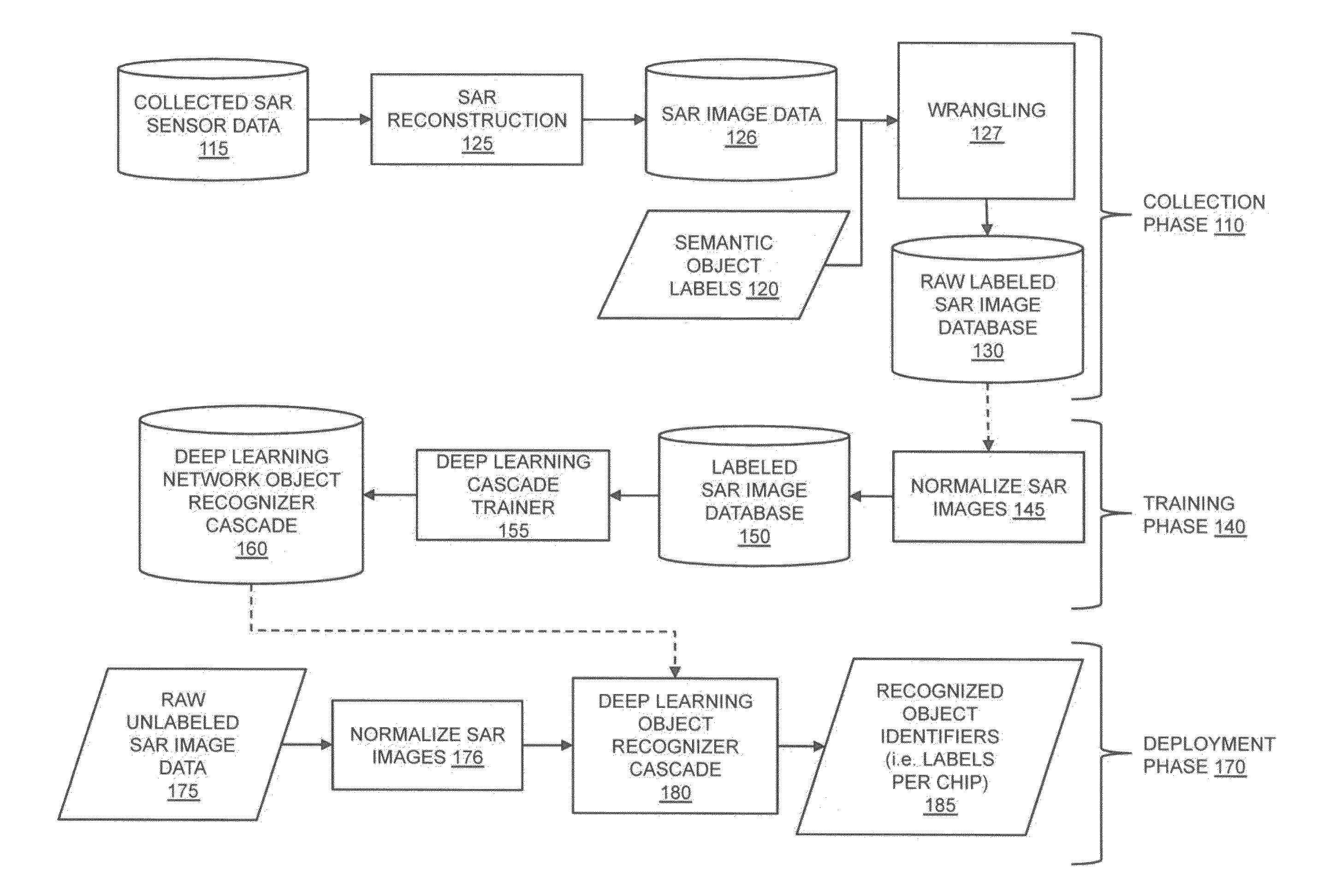

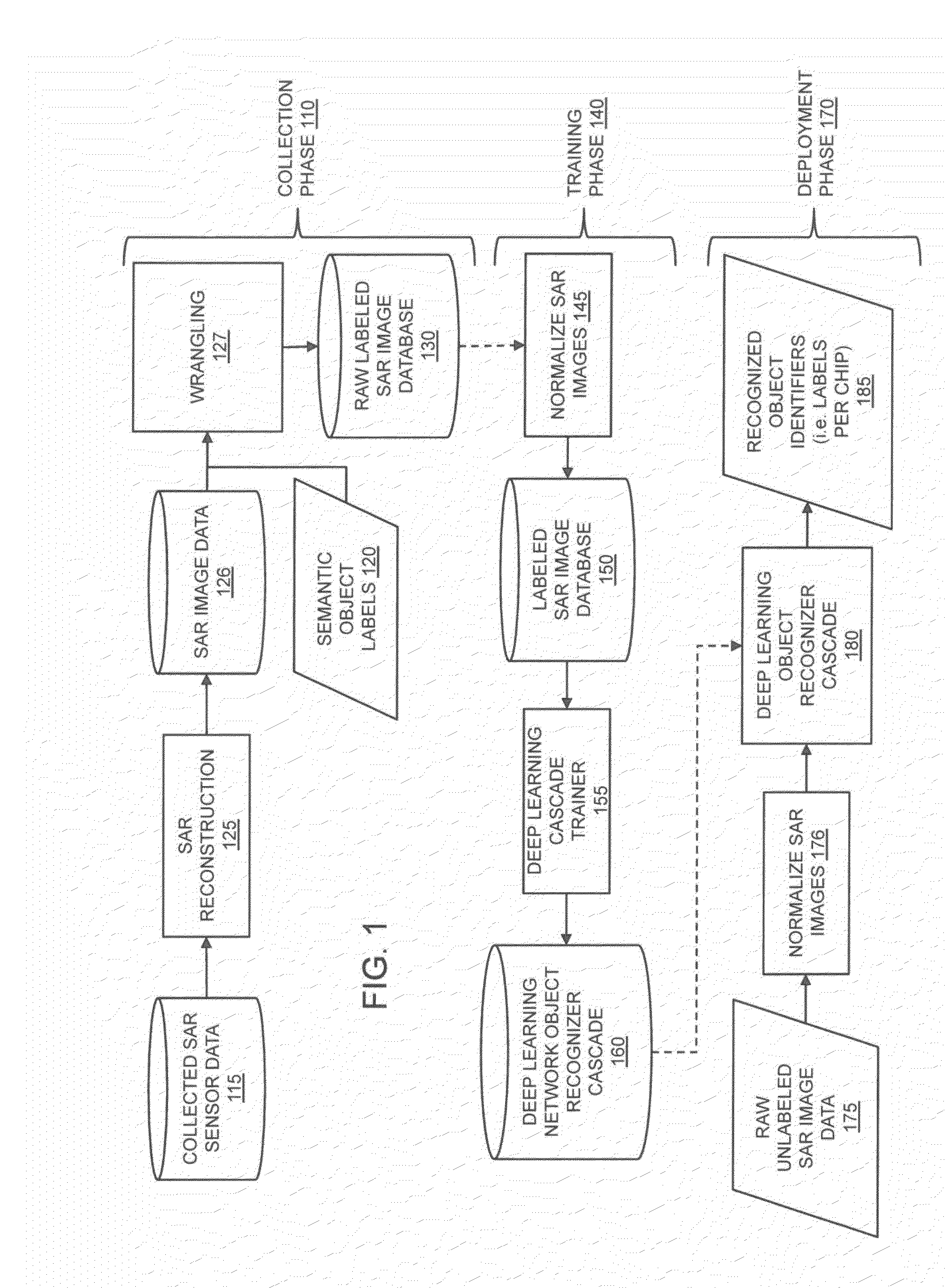

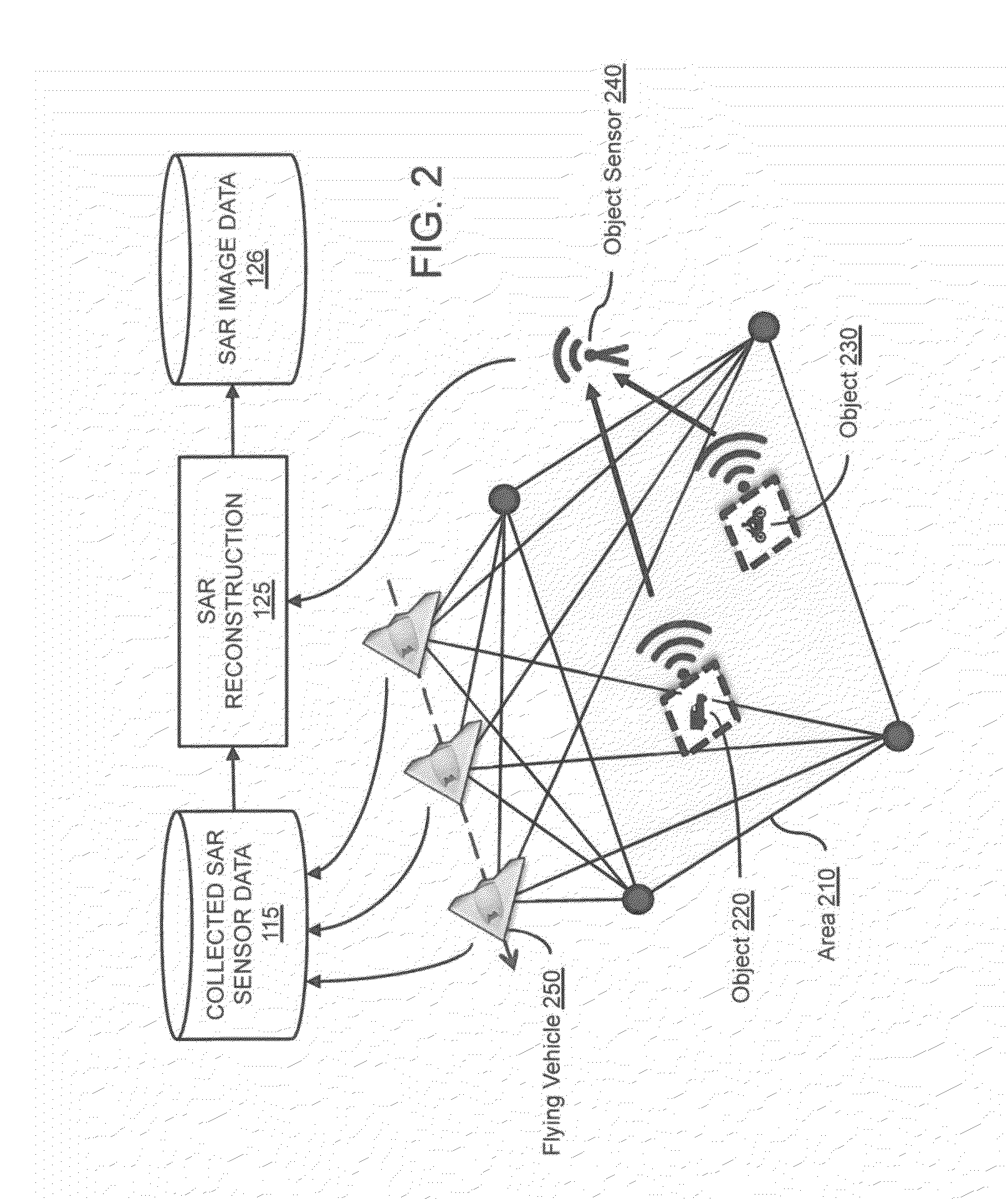

The present invention is directed to systems and methods for detecting objects in a radar image stream. Embodiments of the invention can receive a data stream from radar sensors and use a deep neural network to convert the received data stream into a set of semantic labels, where each semantic label corresponds to an object in the radar data stream that the deep neural network has identified. Processing units running the deep neural network may be collocated onboard an airborne vehicle along with the radar sensor(s). The processing units can be configured with powerful, high-speed graphics processing units or field-programmable gate arrays that are low in size, weight, and power requirements. Embodiments of the invention are also directed to providing innovative advances to object recognition training systems that utilize a detector and an object recognition cascade to analyze radar image streams in real time. The object recognition cascade can comprise at least one recognizer that receives a non-background stream of image patches from a detector and automatically assigns one or more semantic labels to each non-background image patch. In some embodiments, a separate recognizer for the background analysis of patches may also be incorporated. There may be multiple detectors and multiple recognizers, depending on the design of the cascade. Embodiments of the invention also include novel methods to tailor deep neural network algorithms to successfully process radar imagery, utilizing techniques such as normalization, sampling, data augmentation, foveation, cascade architectures, and label harmonization.

Owner:GENERAL DYNAMICS MISSION SYST INC

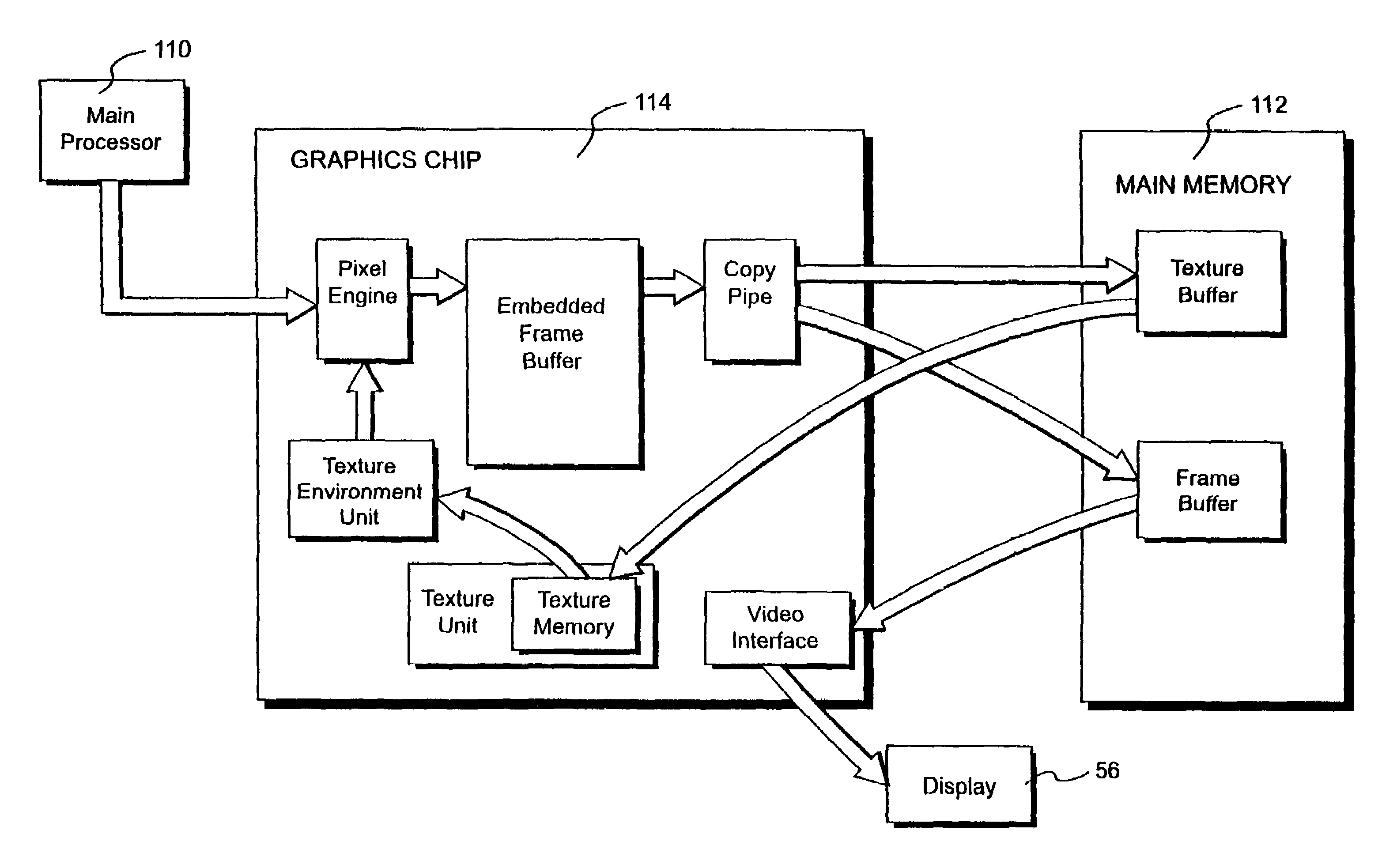

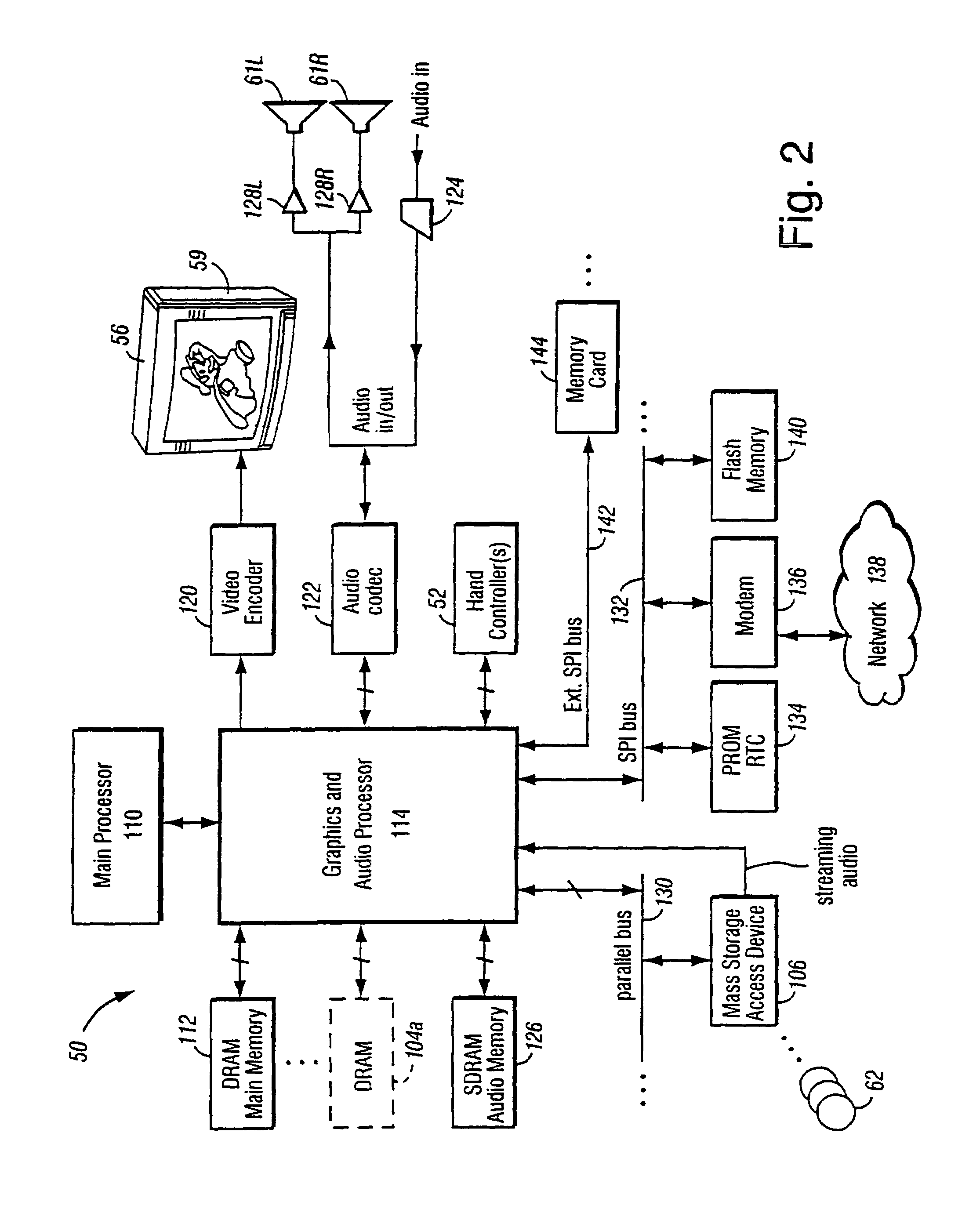

Graphics system with copy out conversions between embedded frame buffer and main memory

Inactive | US7184059B1 | Improve system flexibility | Easy to use | Image memory management | Cathode-ray tube indicators | Graphic system | Graphics processing unit

A graphics system including a custom graphics and audio processor produces exciting 2D and 3D graphics and surround sound. The system includes a graphics and audio processor including a 3D graphics pipeline and an audio digital signal processor. The graphics processor includes an embedded frame buffer for storing frame data prior to sending the frame data to an external location, such as main memory. A copy pipeline is provided which converts the data from one format to another format prior to writing the data to the external location. The conversion may be from one RGB color format to another RGB color format, from one YUV format to another YUV format, from an RGB color format to a YUV color format, or from a YUV color format to an RGB color format. The formatted data is either transferred to a display buffer, for use by the video interface, or to a texture buffer, for use as a texture by the graphics pipeline in a subsequent rendering process.

Owner:NINTENDO CO LTD
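One of the conversions named above, RGB to YUV during copy-out, can be sketched as below. The abstract does not state which conversion matrix the hardware uses; this example assumes the common full-range BT.601 coefficients (as used in JFIF), and the function names are hypothetical.

```python
def clamp8(x):
    """Round to the nearest integer and clamp to the 8-bit range 0..255."""
    return max(0, min(255, int(round(x))))


def rgb_to_yuv(r, g, b):
    # Full-range BT.601 coefficients: an assumption of this sketch.
    y = 0.299 * r + 0.587 * g + 0.114 * b
    u = -0.168736 * r - 0.331264 * g + 0.5 * b + 128
    v = 0.5 * r - 0.418688 * g - 0.081312 * b + 128
    return clamp8(y), clamp8(u), clamp8(v)


def copy_out(frame_buffer_pixels):
    """Convert every pixel while copying it to the external destination."""
    return [rgb_to_yuv(r, g, b) for (r, g, b) in frame_buffer_pixels]
```

Doing the conversion inside the copy step means the data arrives in main memory already in the format the display buffer or texture buffer expects.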

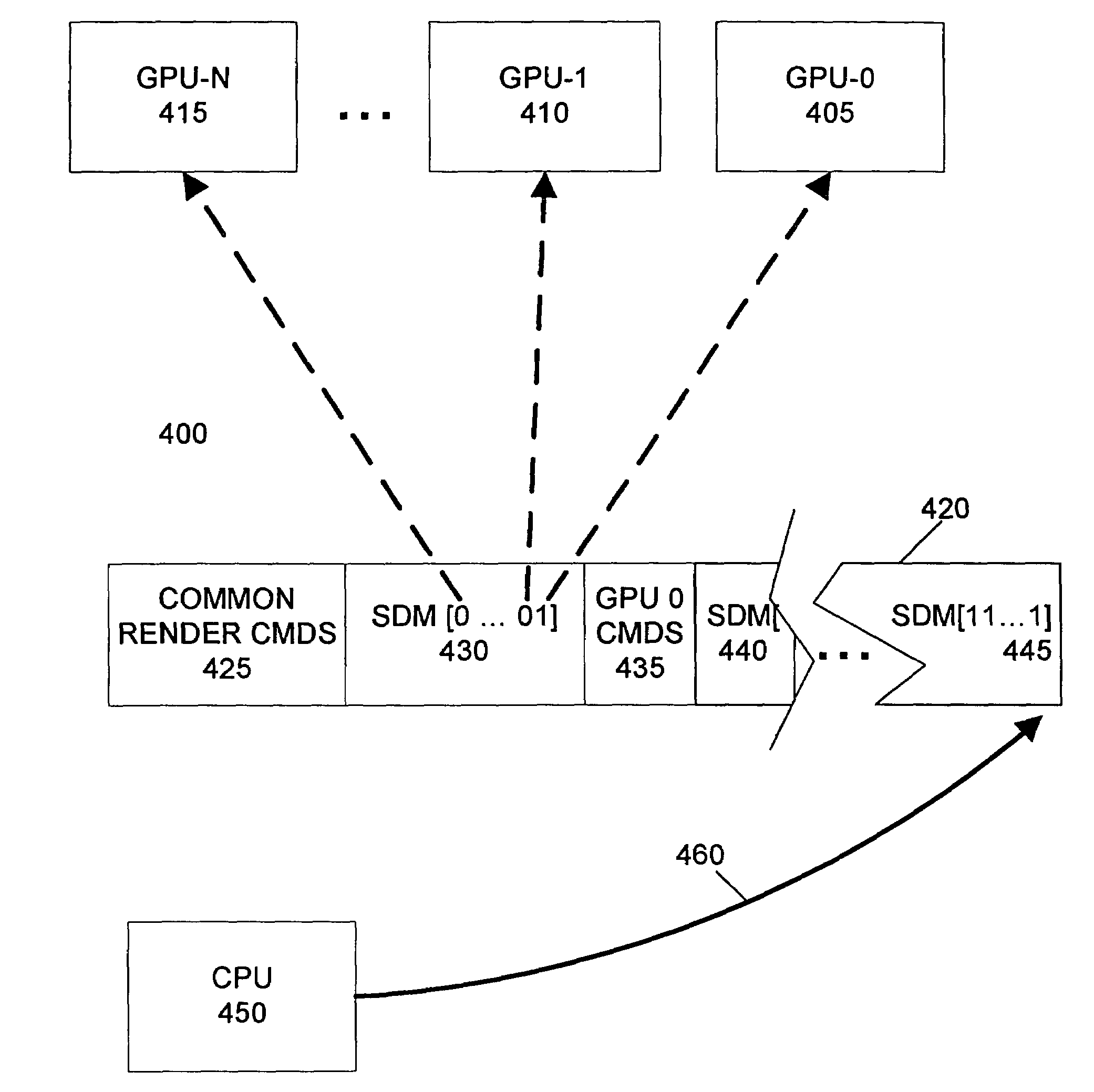

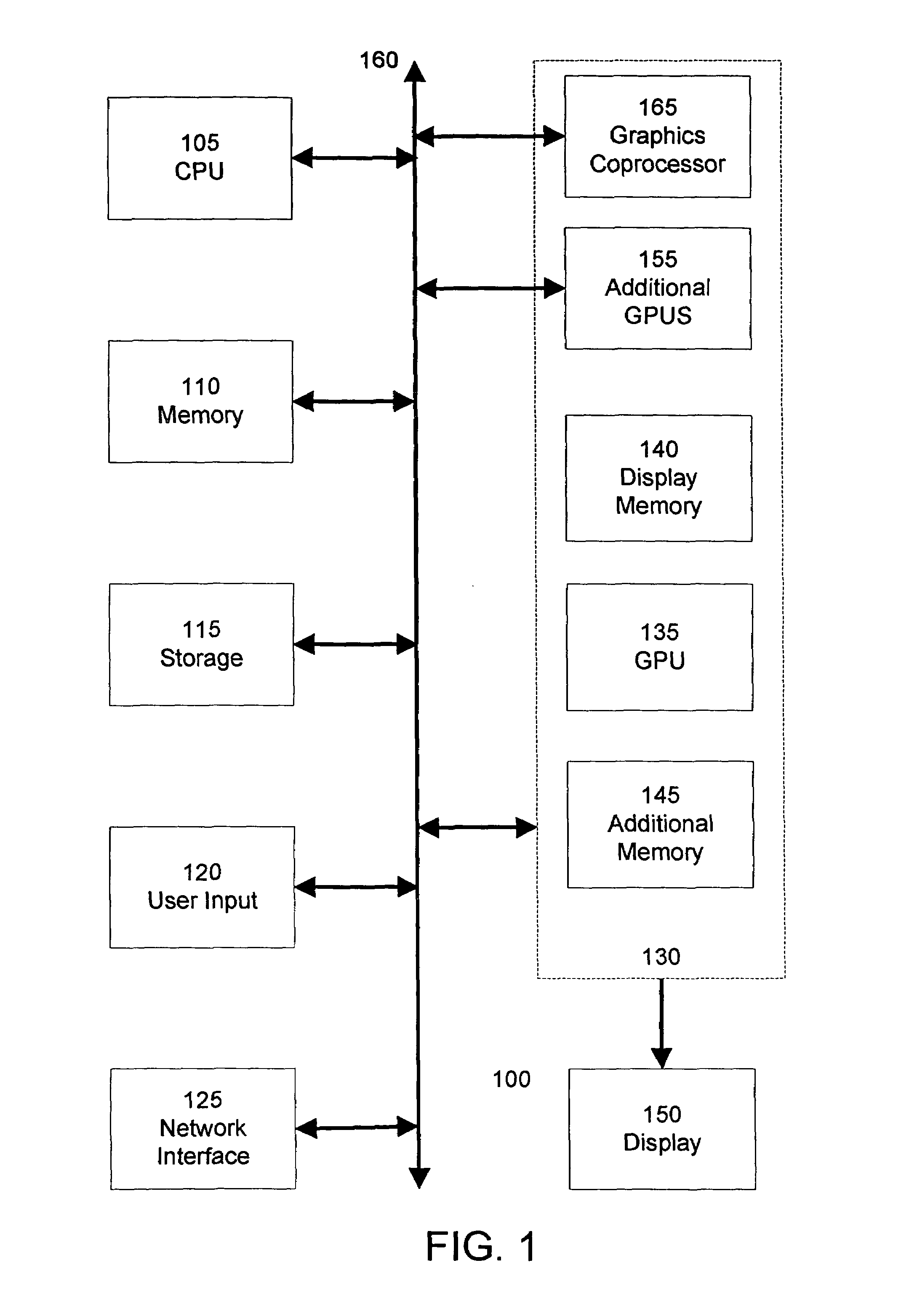

Programming multiple chips from a command buffer

Active | US7015915B1 | Keep in sync | Single instruction multiple data multiprocessors | Processor architectures/configuration | Graphics | Coprocessor

A CPU selectively programs one or more graphics devices by writing a control command to the command buffer that designates a subset of graphics devices to execute subsequent commands. Graphics devices not designated by the control command will ignore the subsequent commands until re-enabled by the CPU. The non-designated graphics devices will continue to read from the command buffer to maintain synchronization. Subsequent control commands can designate different subsets of graphics devices to execute further subsequent commands. Graphics devices include graphics processing units and graphics coprocessors. A unique identifier is associated with each of the graphics devices. The control command designates a subset of graphics devices according to their respective unique identifiers. The control command includes a number of bits. Each bit is associated with one of the unique identifiers and designates the inclusion of one of the graphics devices in the first subset of graphics devices.

Owner:NVIDIA CORP
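The masking scheme in this abstract can be modeled with a short sketch. It assumes a control command named `set_mask` whose argument is a bit mask with one bit per device identifier; the command encoding and class names are hypothetical.

```python
SET_MASK = "set_mask"  # hypothetical name for the control command


class GraphicsDevice:
    def __init__(self, device_id):
        self.bit = 1 << device_id  # the bit tied to this device's unique ID
        self.enabled = True
        self.executed = []
        self.commands_read = 0

    def consume(self, command):
        # Every device reads every command, even while disabled, so that
        # all devices stay at the same position in the command buffer.
        self.commands_read += 1
        op, arg = command
        if op == SET_MASK:
            self.enabled = bool(arg & self.bit)
        elif self.enabled:
            self.executed.append(command)


def run(command_buffer, devices):
    for command in command_buffer:
        for device in devices:
            device.consume(command)
```

For example, a buffer that sets mask `0b01`, draws A, sets mask `0b10`, draws B, sets mask `0b11`, and draws C makes device 0 execute A and C and device 1 execute B and C, while both devices read all six commands.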

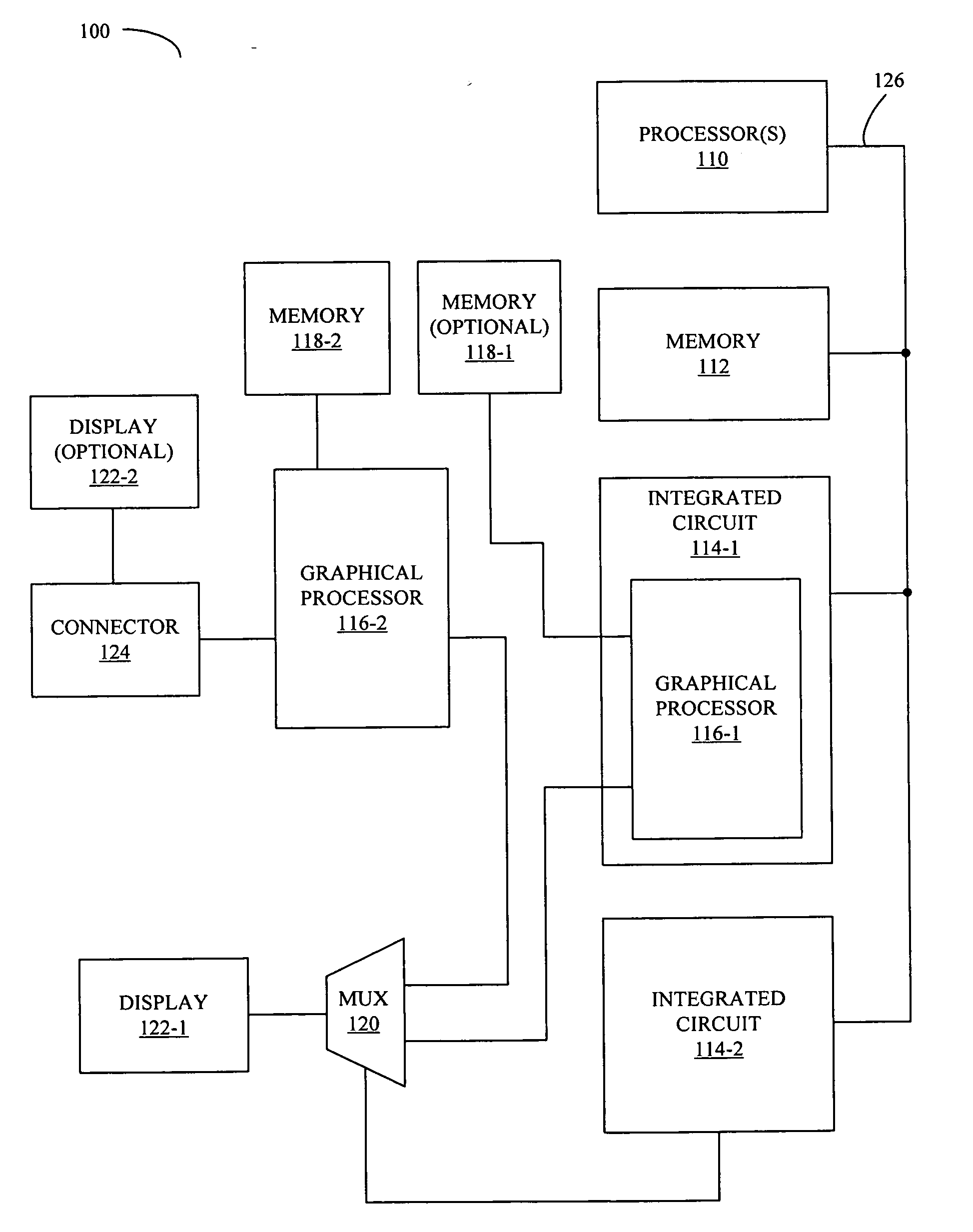

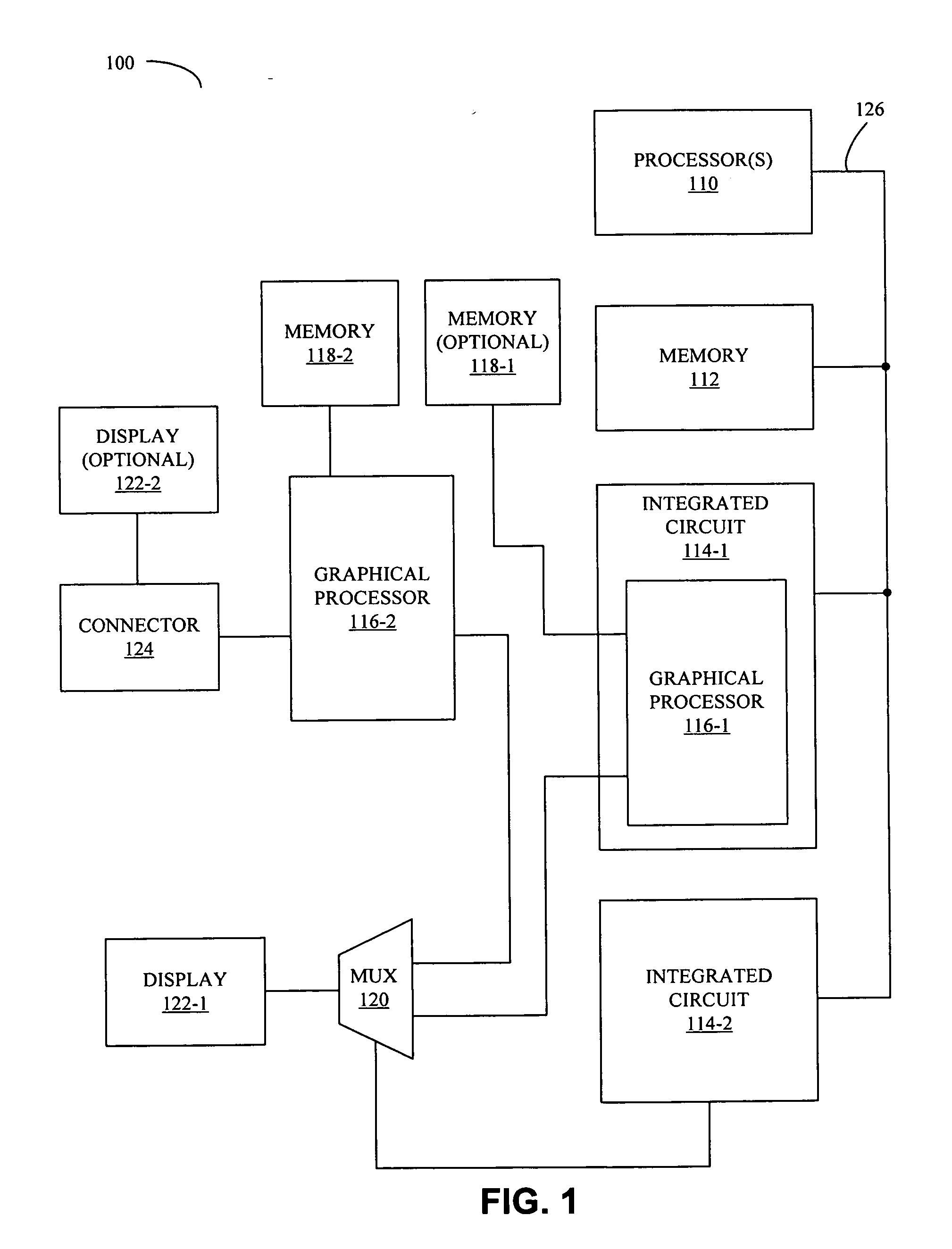

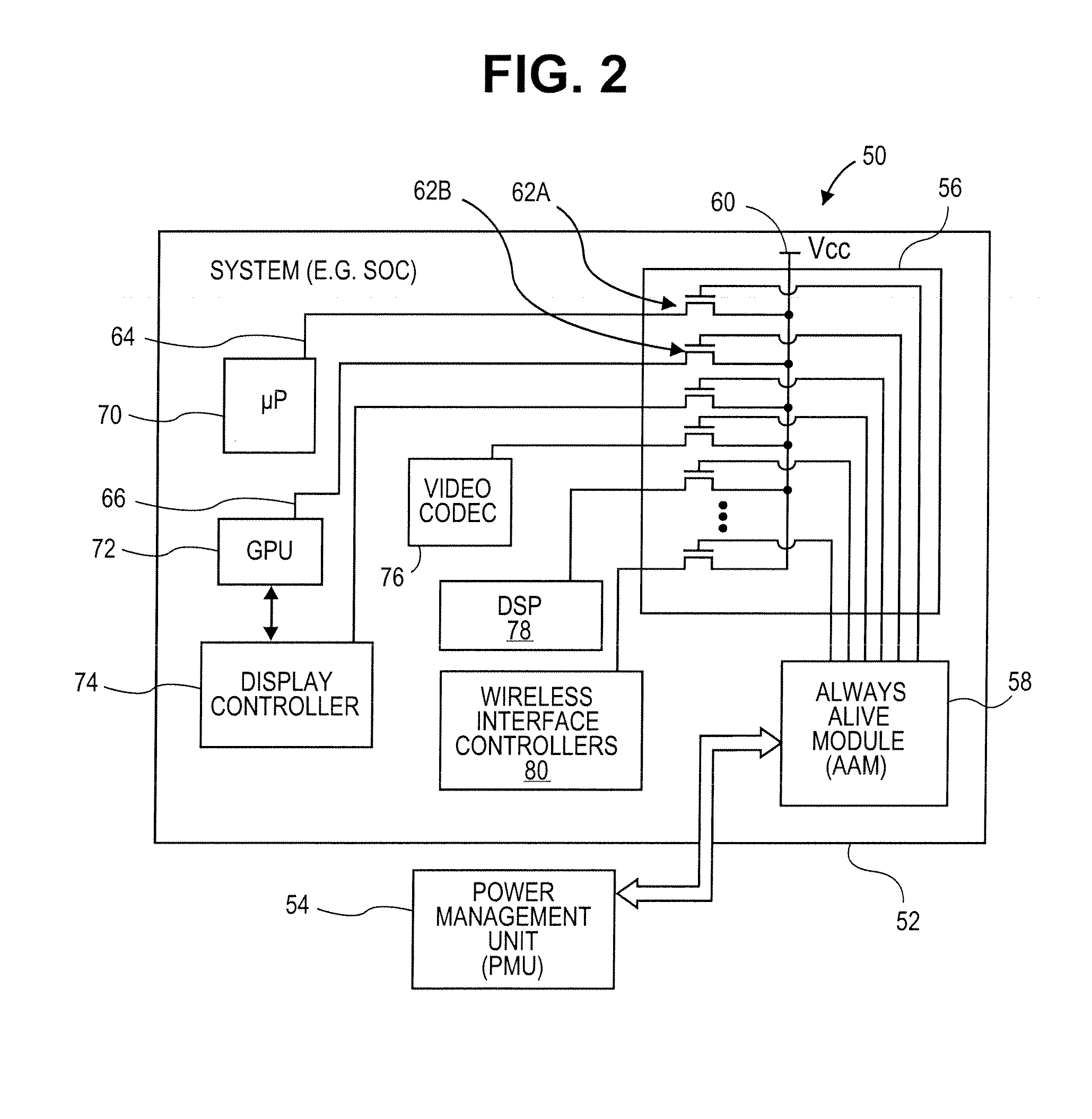

Multiplexed graphics architecture for graphics power management

Active | US20080034238A1 | Volume/mass flow measurement | Multiple digital computer combinations | Graphics | Operational system

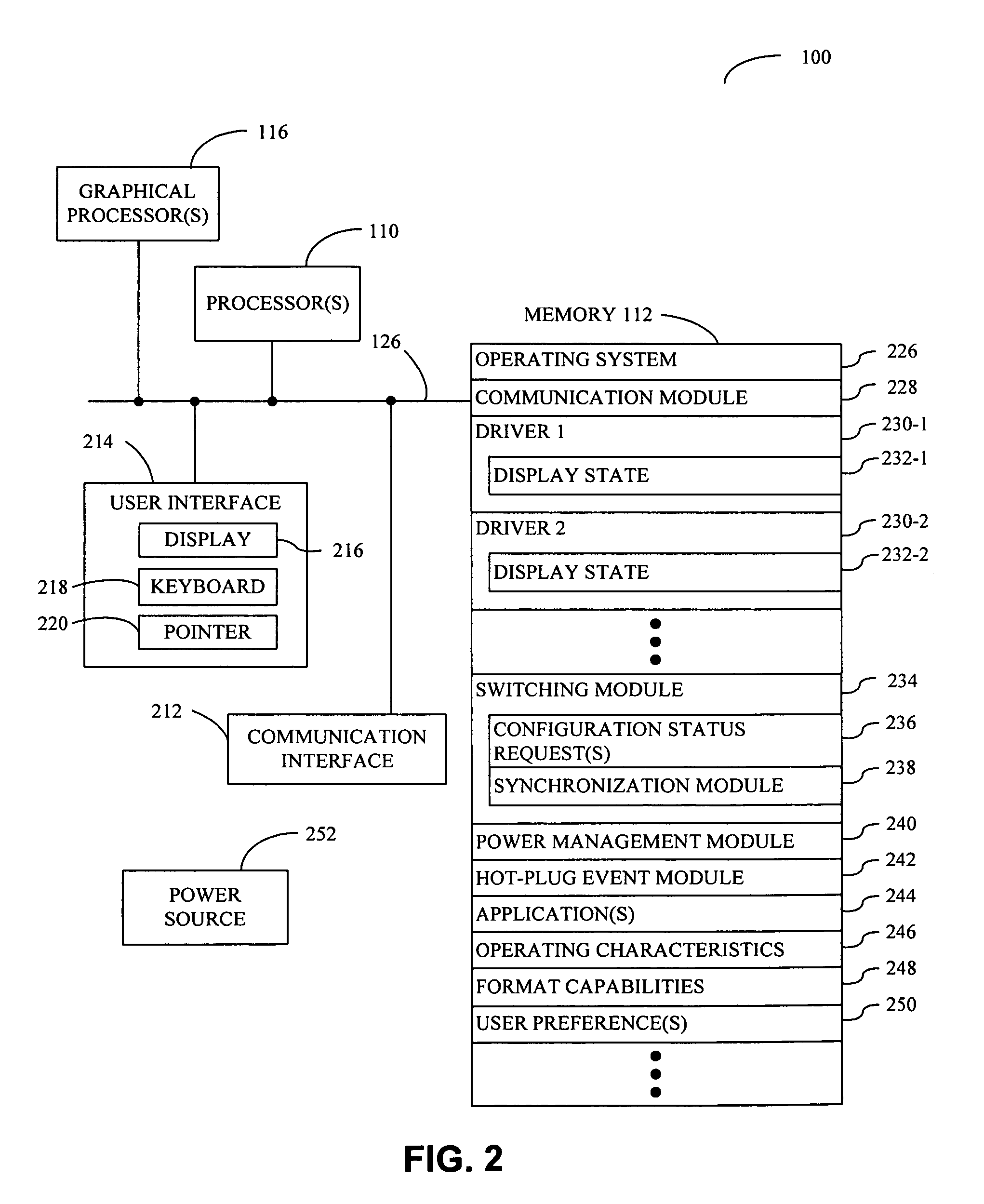

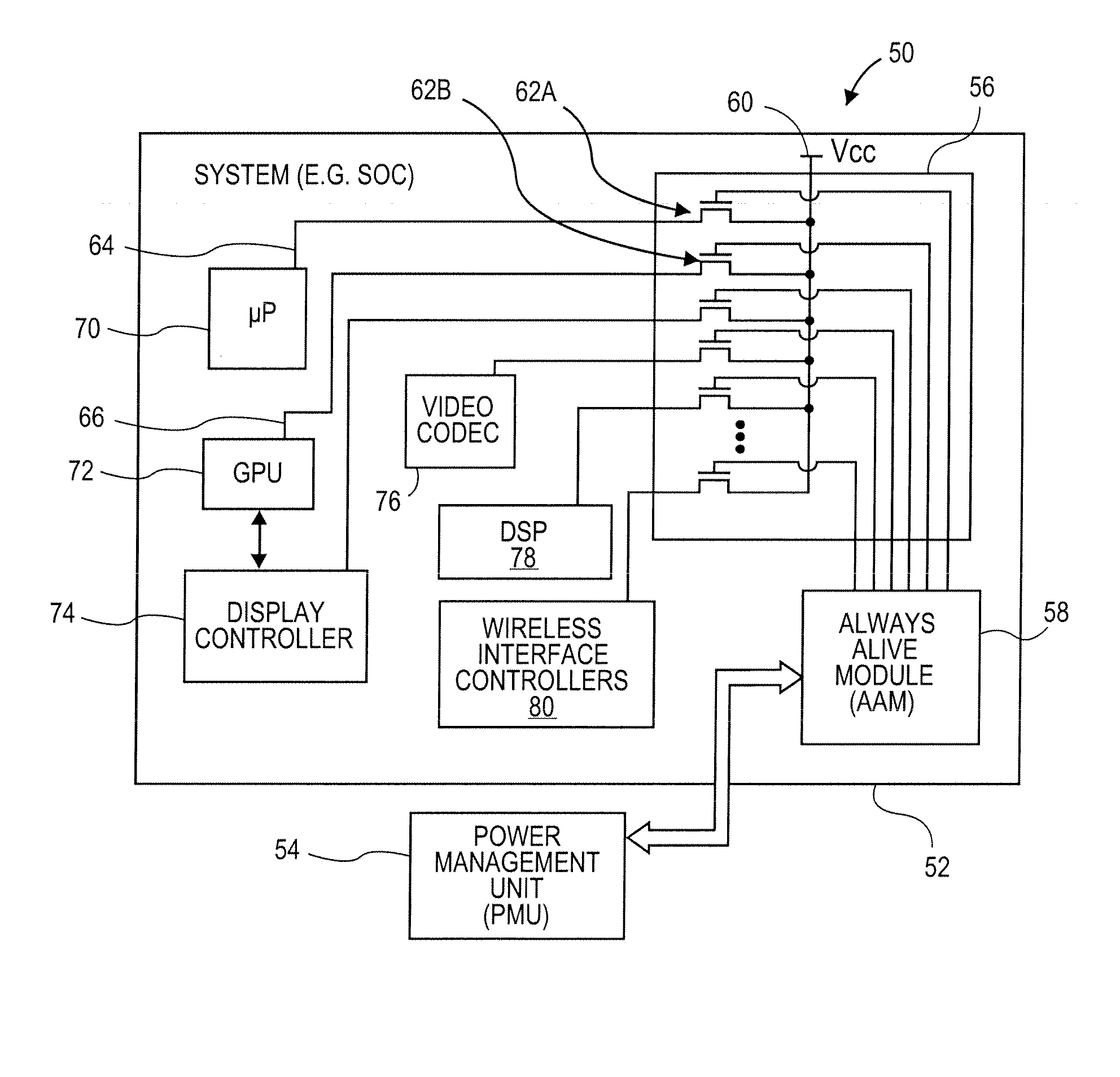

A computer system includes a processor, a memory, first and second graphical processors that have different operating characteristics, a switching mechanism coupled to the graphical processors, and a display coupled to the switching mechanism. The switching mechanism is configured to couple a given graphical processor to the display, and is initially configured to couple the first graphical processor to the display. Furthermore, a program module, which is stored in the memory and configured to be executed by the processor, is configured to change a configuration of the switching mechanism thereby decoupling the first graphical processor from the display and coupling the second graphical processor to the display. Note that the changing of the configuration and switching module operations are configured to occur while an operating system is running and are based on the operating condition of the computer system.

Owner:APPLE INC

Method and system for scalable, dataflow-based, programmable processing of graphics data

Active | US6980209B1 | Improve system performance | Less system performance | Cathode-ray tube indicators | Processor architectures/configuration | Array data structure | Network packet

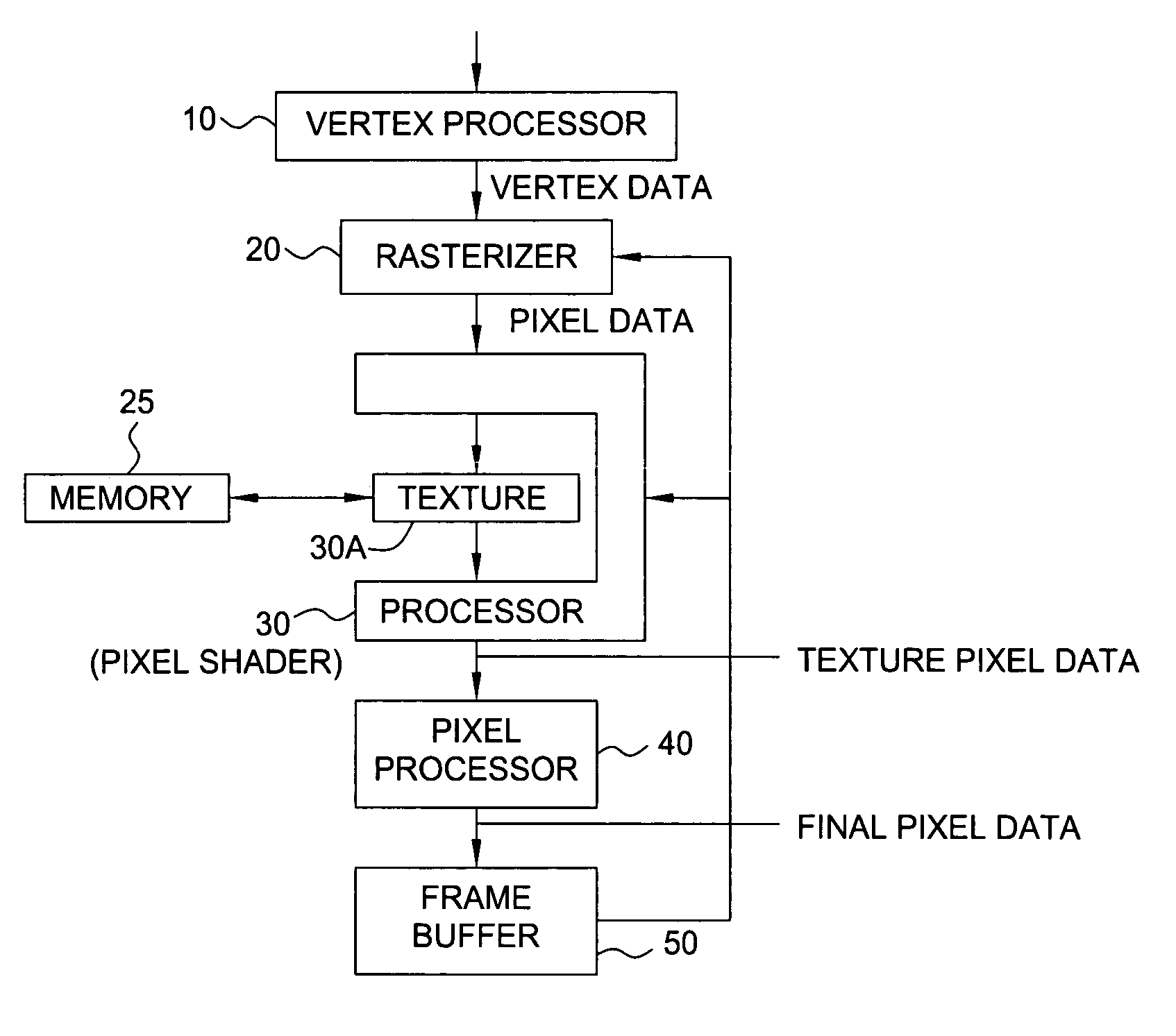

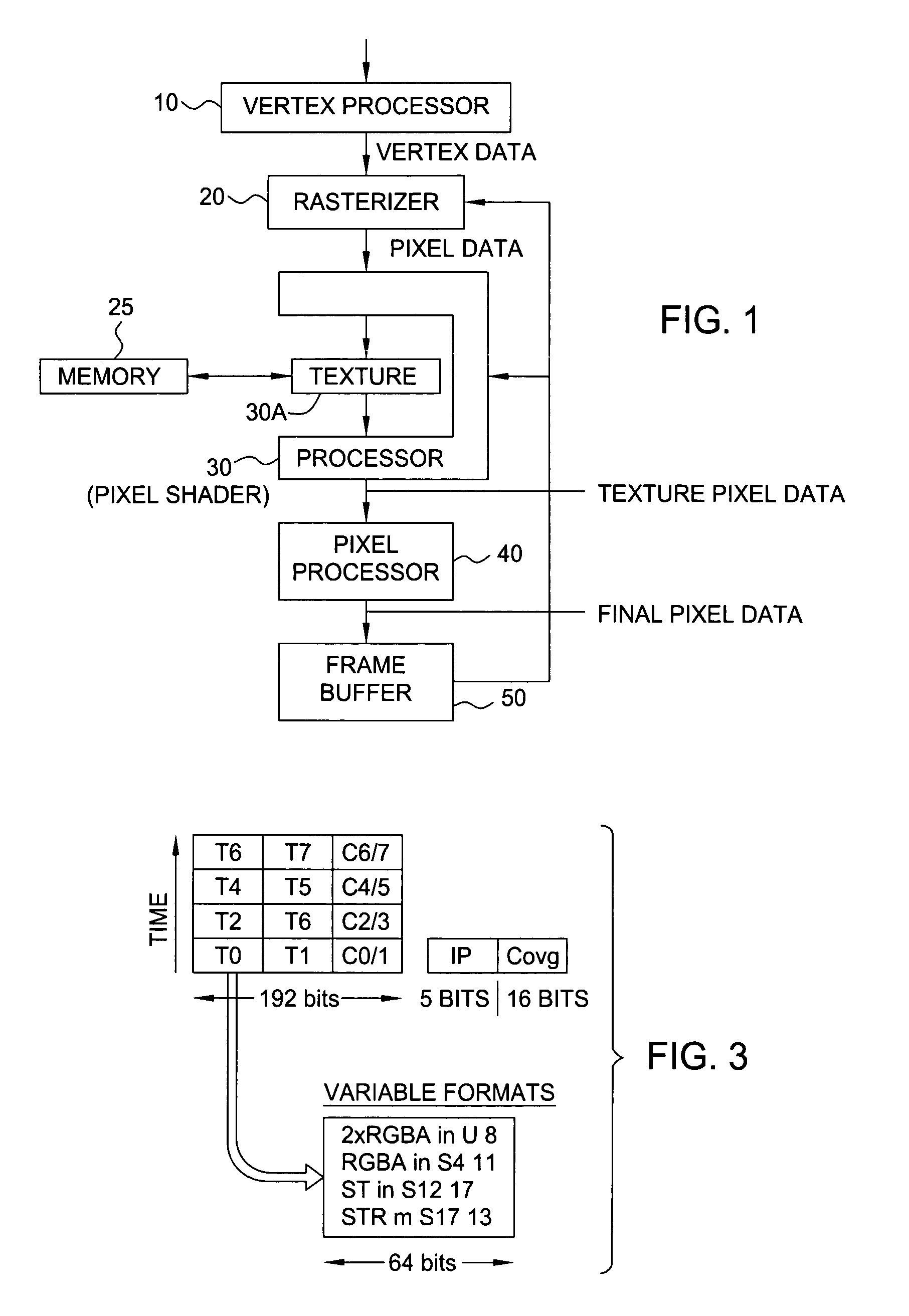

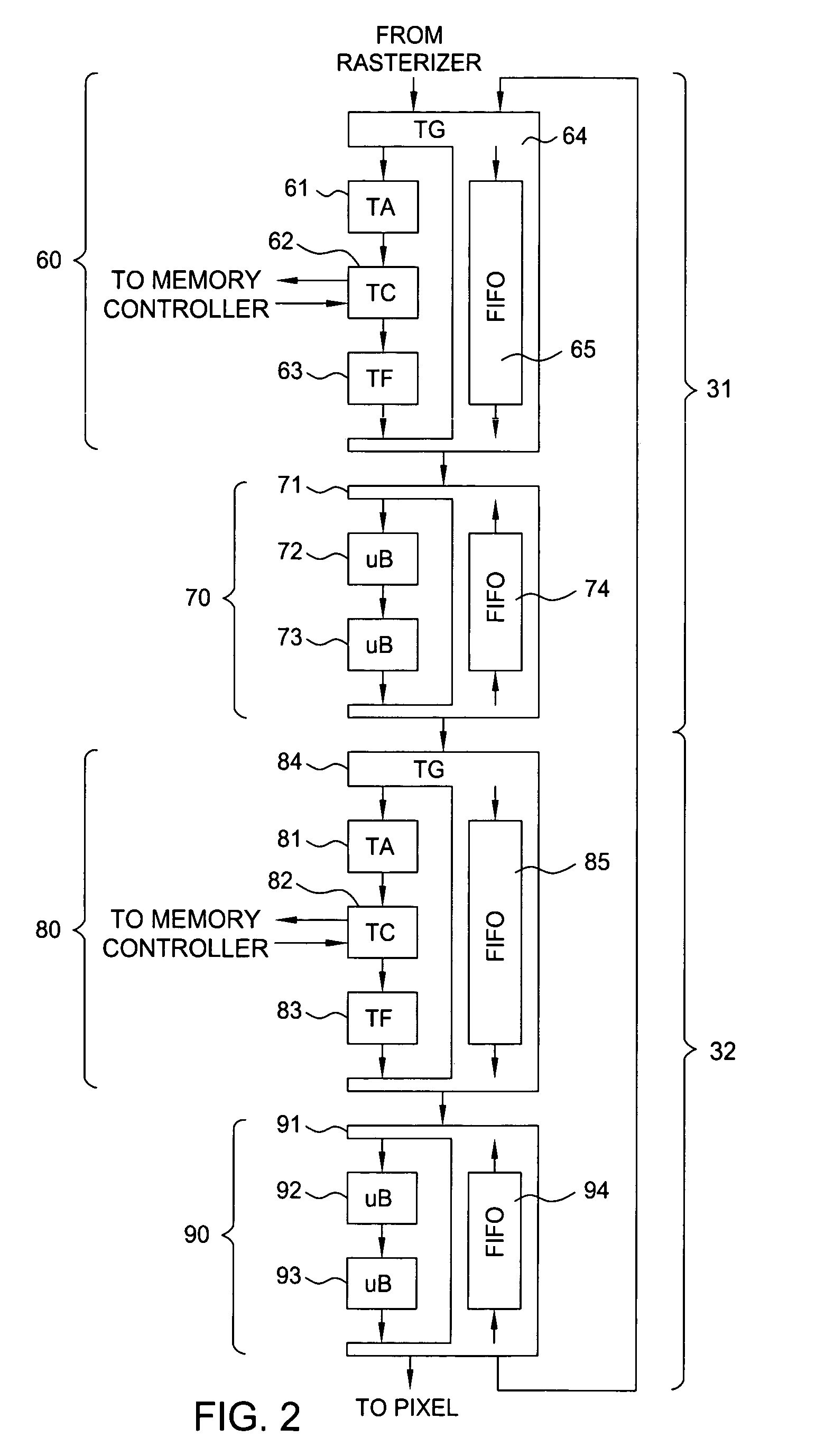

A scalable pipelined pixel shader that processes packets of data and preserves the format of each packet at each processing stage. Each packet is an ordered array of data values, at least one of which is an instruction pointer. Each member of the ordered array can be indicative of any type of data. As a packet progresses through the pixel shader during processing, each member of the ordered array can be replaced by a sequence of data values indicative of different types of data (e.g., an address of a texel, a texel, or a partially or fully processed color value). Information required for the pixel shader to process each packet is contained in the packet, and thus the pixel shader is scalable in the sense that it can be implemented in modular fashion to include any number of identical pipelined processing stages and can execute the same program regardless of the number of stages. Preferably, each processing stage is itself scalable, can be implemented to include an arbitrary number of identical pipelined instruction execution stages known as microblenders, and can execute the same program regardless of the number of microblenders. The current value of the instruction pointer (IP) in a packet determines the next instruction to be executed on the data contained in the packet. Any processing unit can change the instruction that will be executed by a subsequent processing unit by modifying the IP (and / or condition codes) of a packet that it asserts to the subsequent processing unit. Other aspects of the invention include graphics processors (each including a pixel shader configured in accordance with the invention), methods and systems for generating packets of data for processing in accordance with the invention, and methods for pipelined processing of packets of data.

Owner:PVC CONTAINER CORP +1
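The claim that the same program runs regardless of the number of stages can be illustrated with a toy model. Here a packet is a two-element list `[instruction_pointer, value]`, a program is a list of functions, and packets recirculate through the pipeline until the program ends; all of these representations are simplifying assumptions, and the real packets carry texels, addresses, and condition codes.

```python
def stage(packet, program):
    """One processing stage: execute the instruction the packet's IP selects.

    The packet keeps its format ([ip, value]) at every stage.
    """
    ip, value = packet
    if ip < len(program):
        value = program[ip](value)
        ip += 1
    return [ip, value]


def run(packet, program, num_stages):
    """Push a packet through `num_stages` stages until its program ends."""
    if num_stages < 1:
        raise ValueError("need at least one stage")
    while packet[0] < len(program):
        for _ in range(num_stages):
            packet = stage(packet, program)
    return packet


# Hypothetical three-instruction program.
program = [lambda v: v + 1, lambda v: v * 2, lambda v: v - 3]
```

Because everything needed to continue lives in the packet itself, `run([0, 5], program, 1)` and `run([0, 5], program, 4)` produce the same final packet.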

C/C++ language extensions for general-purpose graphics processing unit

A general-purpose programming environment allows users to program a GPU as a general-purpose computation engine using familiar C/C++ programming constructs. Users may use declaration specifiers to identify which portions of a program are to be compiled for a CPU or a GPU. Specifically, functions, objects and variables may be specified for GPU binary compilation using declaration specifiers. A compiler separates the GPU binary code and the CPU binary code in a source file using the declaration specifiers. The location of objects and variables in different memory locations in the system may be identified using the declaration specifiers. CTA threading information is also provided for the GPU to support parallel processing.

Owner:NVIDIA CORP

Methods and Systems for Power Management in a Data Processing System

Active | US20080168285A1 | Lower latency | Reduce total power | Energy efficient ICT | Volume/mass flow measurement | Data processing system | General purpose

Methods and systems for managing power consumption in data processing systems are described. In one embodiment, a data processing system includes a general purpose processing unit, a graphics processing unit (GPU), at least one peripheral interface controller, at least one bus coupled to the general purpose processing unit, and a power controller coupled to at least the general purpose processing unit and the GPU. The power controller is configured to turn power off for the general purpose processing unit in response to a first state of an instruction queue of the general purpose processing unit and is configured to turn power off for the GPU in response to a second state of an instruction queue of the GPU. The first state and the second state represent an instruction queue having either no instructions or instructions for only future events or actions.

Owner:APPLE INC
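The power-off condition described above (a queue with no instructions, or only instructions for future events) reduces to a simple predicate. The queue representation as `(due_time, instruction)` pairs and the function name are assumptions of this sketch.

```python
def should_power_off(instruction_queue, now):
    """Return True when a unit's queue allows its power to be turned off.

    instruction_queue is a list of (due_time, instruction) pairs. The unit
    may be powered off if the queue is empty or every entry is scheduled
    strictly in the future.
    """
    return all(due_time > now for due_time, _ in instruction_queue)


def units_to_power_off(queues, now):
    """Apply the same test to each unit, for example the CPU and the GPU."""
    return sorted(name for name, queue in queues.items() if should_power_off(queue, now))
```

A power controller could evaluate this per unit, so the GPU can be off while the general purpose processing unit still has runnable work, or the reverse.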

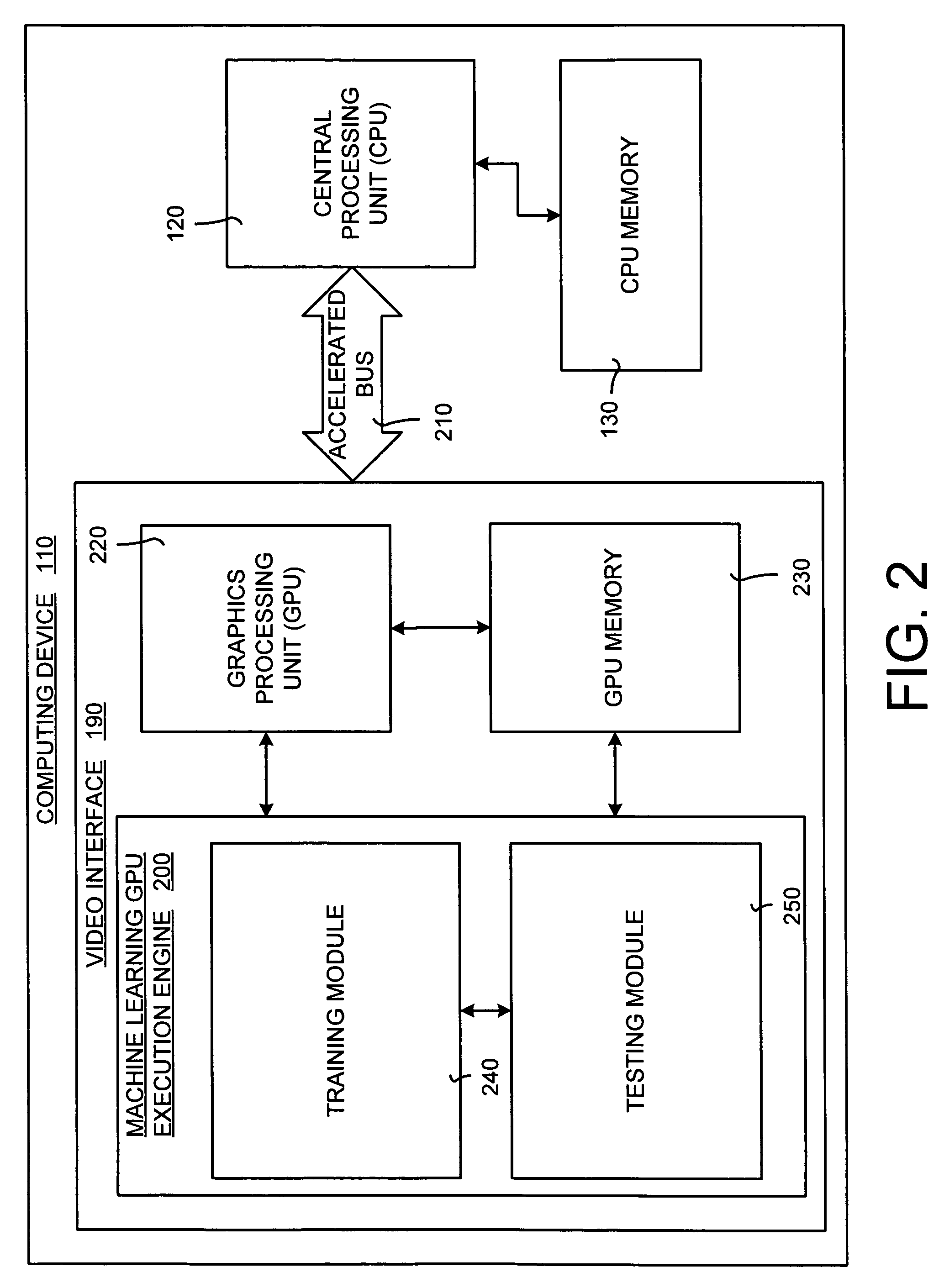

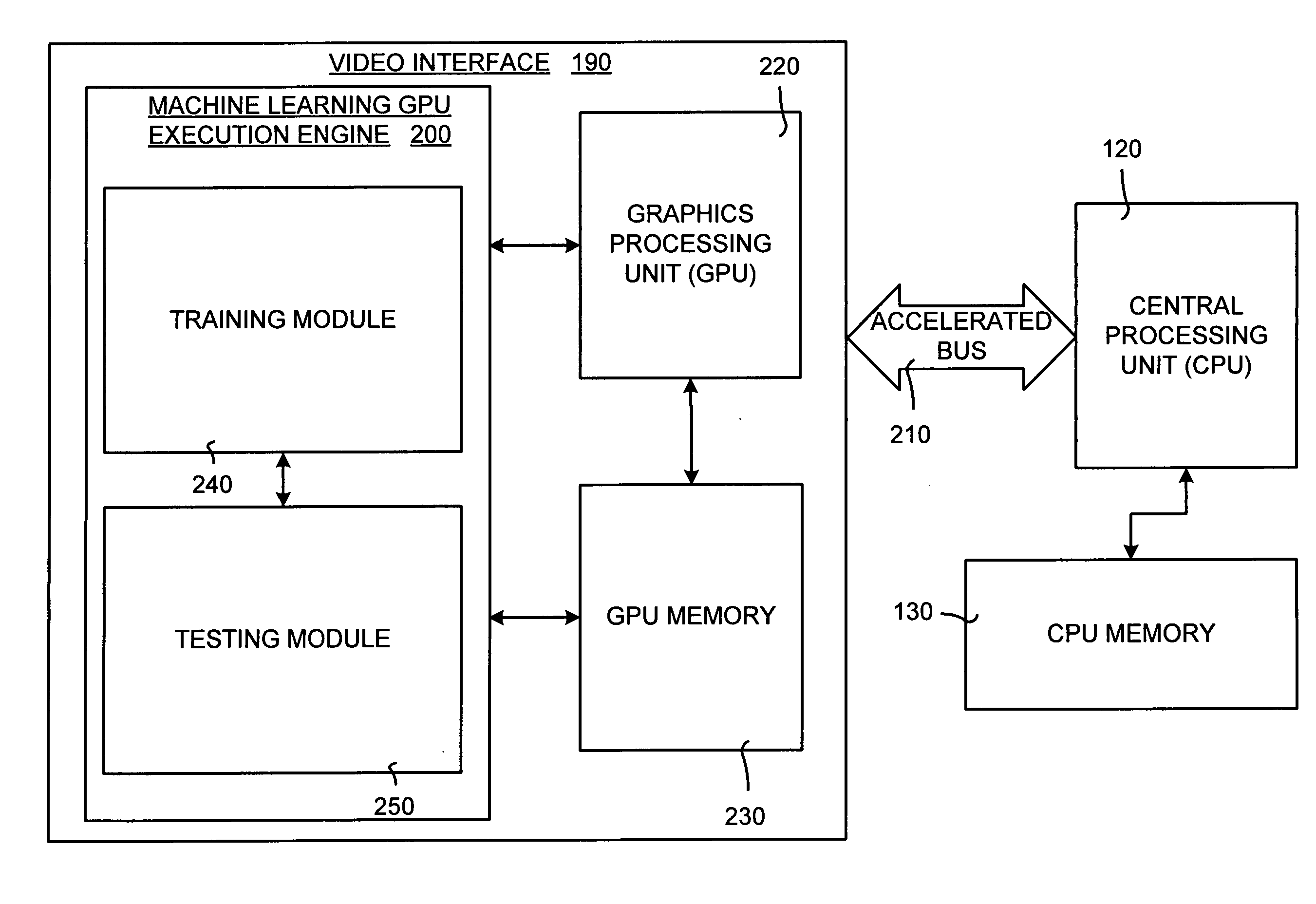

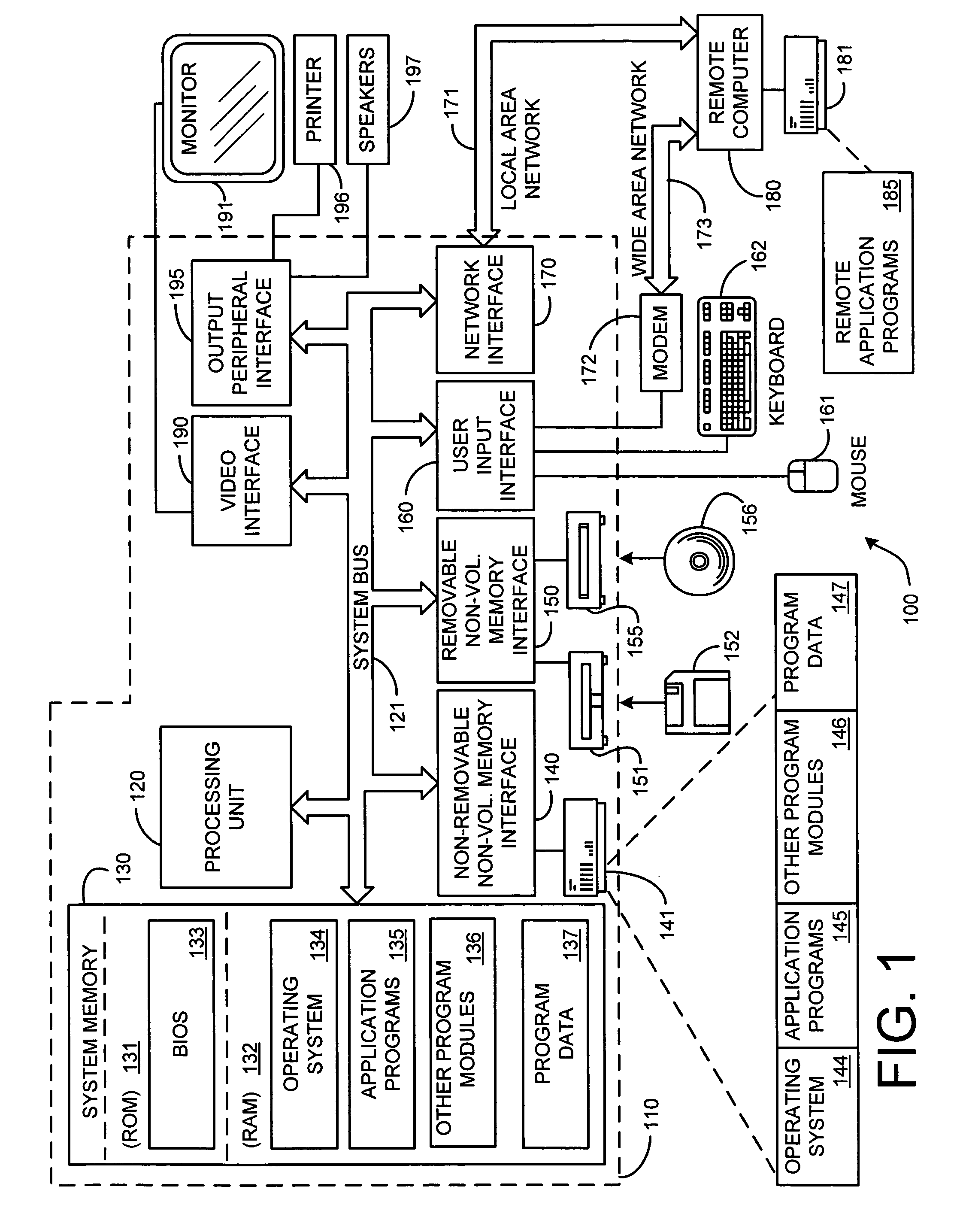

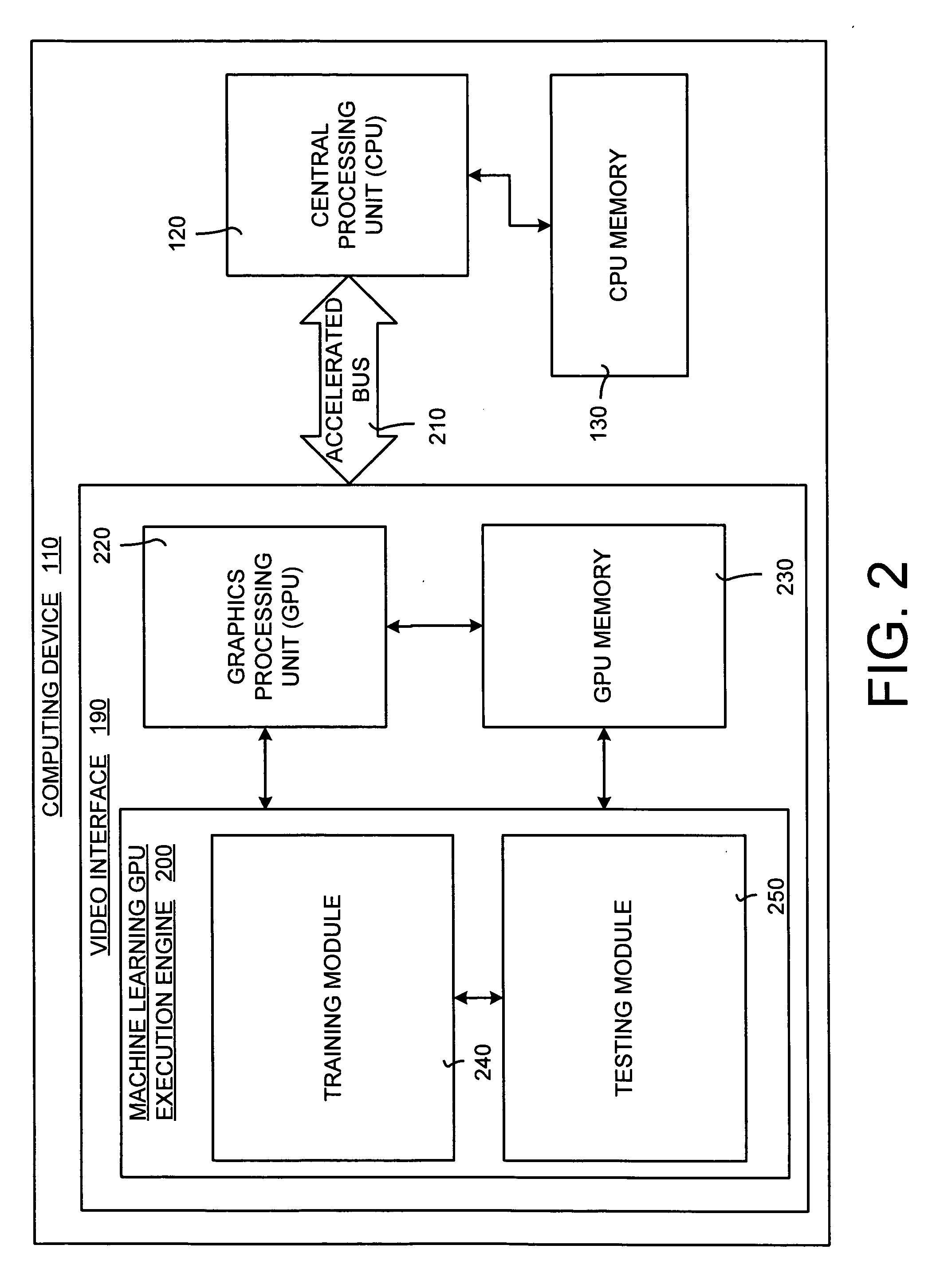

System and method for accelerating and optimizing the processing of machine learning techniques using a graphics processing unit

Active | US7219085B2 | Alleviates computational limitation | More computation | Character and pattern recognition | Knowledge representation | Graphics | Theoretical computer science

A system and method for processing machine learning techniques (such as neural networks) and other non-graphics applications using a graphics processing unit (GPU) to accelerate and optimize the processing. The system and method transfers an architecture that can be used for a wide variety of machine learning techniques from the CPU to the GPU. The transfer of processing to the GPU is accomplished using several novel techniques that overcome the limitations and work well within the framework of the GPU architecture. With these limitations overcome, machine learning techniques are particularly well suited for processing on the GPU because the GPU is typically much more powerful than the typical CPU. Moreover, similar to graphics processing, processing of machine learning techniques involves problems with solving non-trivial solutions and large amounts of data.

Owner:MICROSOFT TECH LICENSING LLC

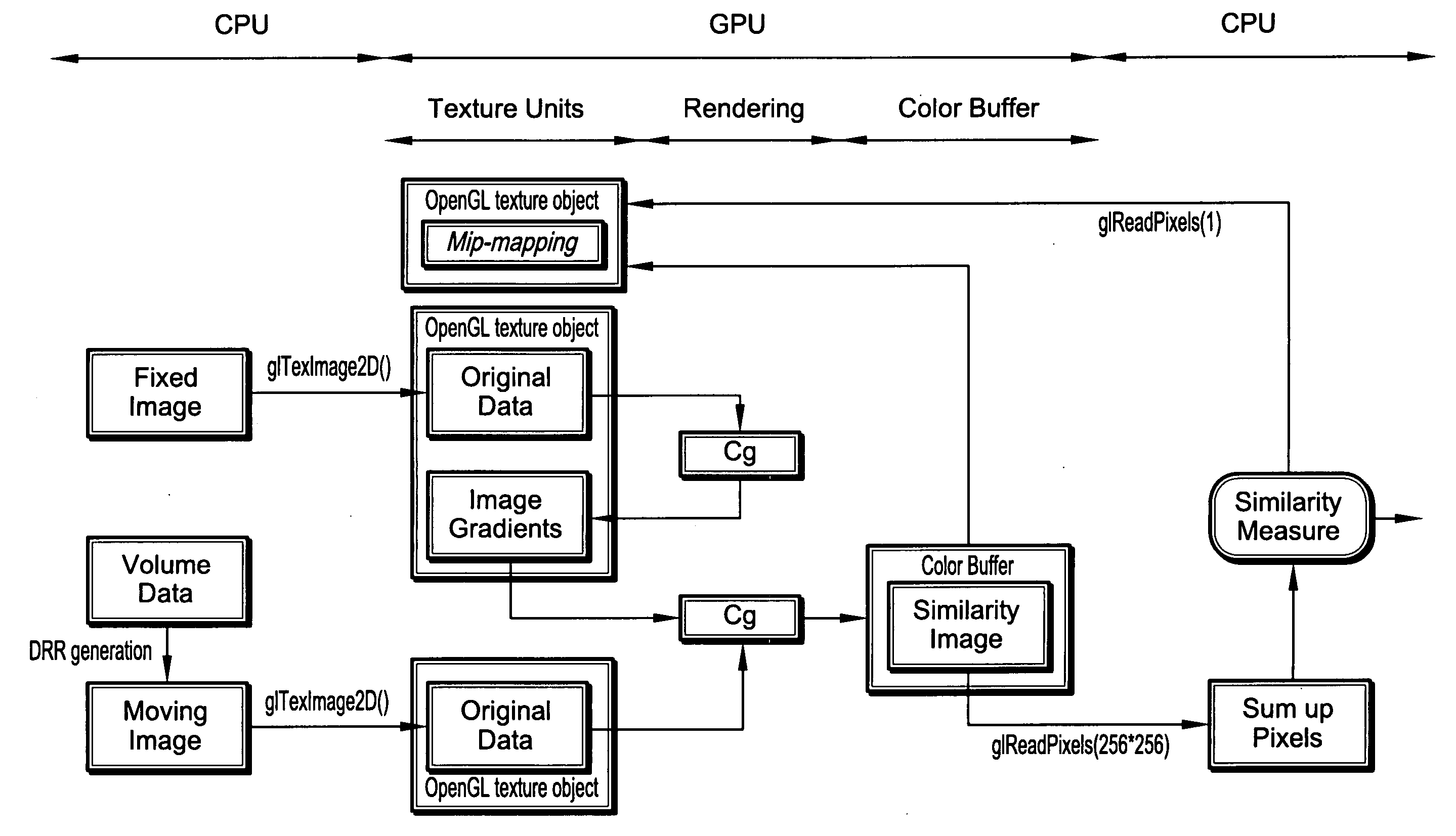

GPU-based image manipulation method for registration applications

Exemplary systems and methods for performing registration applications are provided. An exemplary system includes a central processing unit (CPU) for transferring a plurality of images to a graphics processing unit (GPU), wherein the GPU performs a registration application on the plurality of images to produce a registration result, and wherein the GPU returns the registration result to the CPU. An exemplary method includes the steps of transferring a plurality of images from a central processing unit (CPU) to a graphics processing unit (GPU); performing a registration application on the plurality of images using the GPU; and transferring the result of the step of performing from the GPU to the CPU.

Owner:SIEMENS MEDICAL SOLUTIONS USA INC

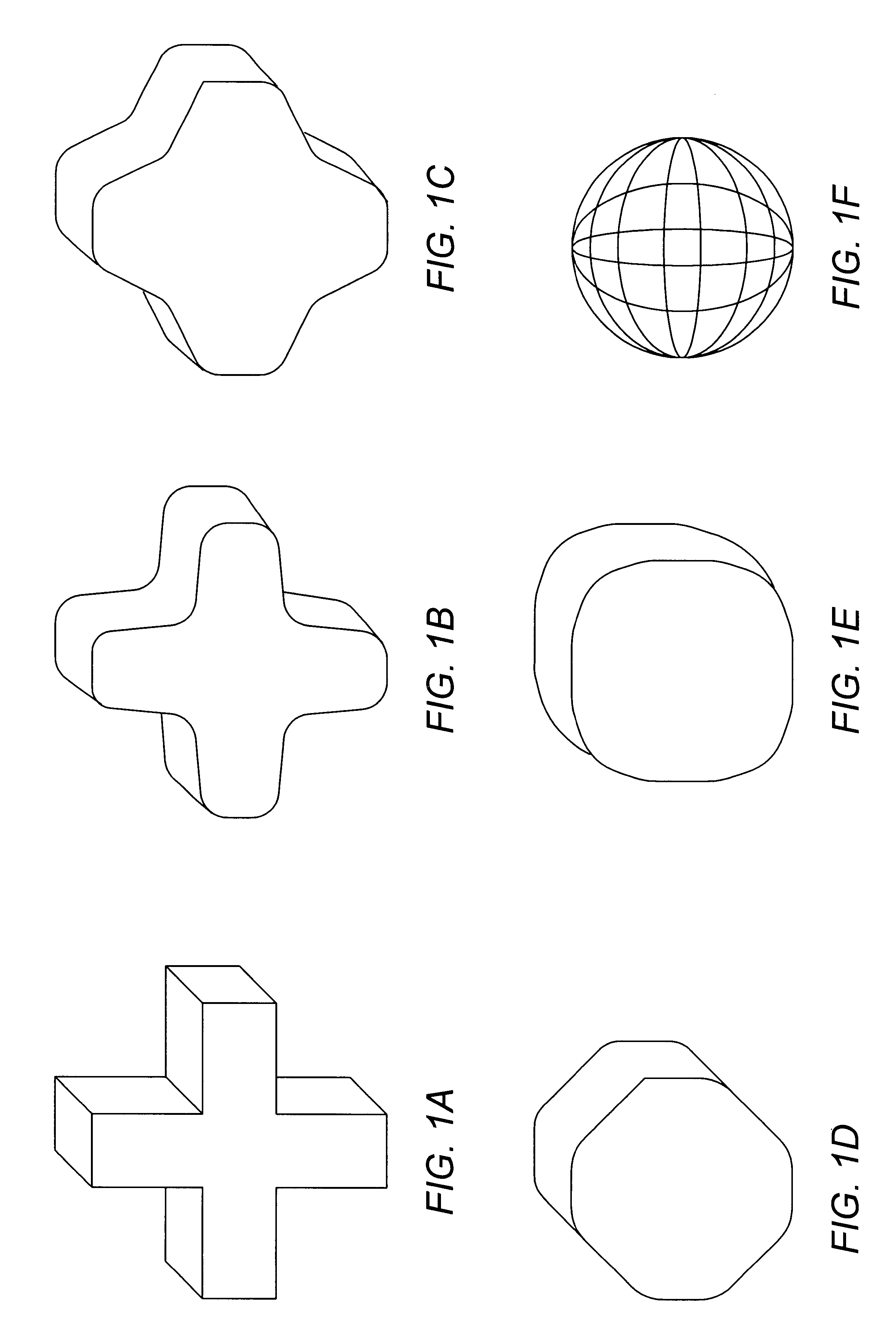

Morphing decompression in a graphics system

A method and graphics system configured to perform real-time morphing of three-dimensional (3D) objects that have been compressed into one or more streams of 3D graphics data using geometry compression techniques. In one embodiment, the graphics system has one or more decompression units, each configured to receive and decompress the graphics data. The decompression units are configured to convey the decompressed data corresponding to the morphs to a graphics processor that is configured to apply weighting factors to the graphics data. The weighted results are combined to yield a morphed object that is rendered to generate one or more frames of a morphing sequence. The weighting factors may be adjusted and reapplied to yield additional frames for the morphing sequence. A method for encoding 3D graphics data to allow morphing decompression is also disclosed.

Owner:ORACLE INT CORP
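The weighting step in the morphing abstract amounts to a weighted sum of corresponding vertices from each decompressed morph target. The sketch below assumes each target is a list of `(x, y, z)` tuples with matching vertex order; the function name `morph` is hypothetical.

```python
def morph(targets, weights):
    """Blend decompressed morph targets into one morphed object.

    targets: list of vertex lists, each vertex an (x, y, z) tuple.
    weights: one weighting factor per target.
    """
    if len(targets) != len(weights):
        raise ValueError("need exactly one weight per target")
    vertex_count = len(targets[0])
    if any(len(t) != vertex_count for t in targets):
        raise ValueError("targets must have the same number of vertices")
    morphed = []
    for i in range(vertex_count):
        morphed.append(tuple(
            sum(w * target[i][axis] for target, w in zip(targets, weights))
            for axis in range(3)
        ))
    return morphed
```

Adjusting the weights and calling `morph` again yields the next frame of the morphing sequence without decompressing the targets a second time.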

Methods and systems for preventing access to display graphics generated by a trusted virtual machine

Active | US20110145916A1 | Avoid access | Avoid reading | Digital data processing details | User identity/authority verification | Graphics | Software engineering

The methods and systems described herein provide for preventing a non-trusted virtual machine from reading the graphical output of a trusted virtual machine. A graphics manager receives a request from a trusted virtual machine to render graphical data using a graphics processing unit. The graphics manager assigns, to the trusted virtual machine, a secure section of a memory of the graphics processing unit. The graphics manager renders graphics from the trusted virtual machine graphical data to the secure section of the graphics processing unit memory. The graphics manager receives a request from a non-trusted virtual machine to read graphics rendered from the trusted virtual machine graphical data and stored in the secure section of the graphics processing unit memory, and prevents the non-trusted virtual machine from reading the trusted virtual machine rendered graphics stored in the secure section of the graphics processing unit memory.

Owner:CITRIX SYST INC

Deferred shading graphics pipeline processor

Inactive | US6268875B1 | Attenuation bandwidth | Texturing/coloring | Image memory management | Phong shading | Computer graphics (images)

Three-dimensional computer graphics systems and methods and more particularly to structure and method for a three-dimensional graphics processor and having other enhanced graphics processing features. In one embodiment the graphics processor is a Deferred Shading Graphics Processor (DSGP) comprising an AGP interface, a command fetch & decode (2000), a geometry unit (3000), a mode extraction (4000) and polygon memory (5000), a sort unit (6000) and sort memory (7000), a setup unit (8000), a cull unit (9000), a mode injection (10000), a fragment unit (11000), a texture (12000) and texture memory (13000), a phong shading (14000), a pixel unit (15000), a backend unit (1600) coupled to a frame buffer (17000). Other embodiments need not include all of these functional units, and the structures and methods of these units are applicable to other computational processes and systems as well as deferred and non-deferred shading graphical processors.

Owner:APPLE INC

Coherence of displayed images for split-frame rendering in multi-processor graphics system

Active | US7522167B1 | Cathode-ray tube indicators | Multiple digital computer combinations | Graphics | Multi processor

Coherence of displayed images is provided for a graphics processing system having multiple processors operating to render different portions of a current image in parallel. As each processor completes rendering of its portion of the current image, it generates a local ready event, then pauses its rendering operations. A synchronizing agent detects the local ready event and generates a global ready event after all of the graphics processors have generated local ready events. The global ready signal is transmitted to each graphics processor, which responds by resuming its rendering activity.

Owner:NVIDIA CORP
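The local-ready and global-ready handshake can be modeled with a small single-threaded sketch. The class name `SyncAgent` and its return-value convention are assumptions; a real implementation would signal the processors through hardware or driver events.

```python
class SyncAgent:
    """Collects local ready events and reports when all processors are ready."""

    def __init__(self, num_processors):
        self.num_processors = num_processors
        self.ready = set()

    def local_ready(self, processor_id):
        """Record one processor's local ready event.

        Returns True exactly when this event completes the set, which is
        the point where the global ready event would be sent to every
        processor so that rendering of the next image can resume.
        """
        self.ready.add(processor_id)
        if len(self.ready) == self.num_processors:
            self.ready.clear()  # start collecting for the next image
            return True
        return False
```

Because no processor resumes until the last one has finished, the displayed image never mixes portions from different frames.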

Programming multiple chips from a command buffer

Active | US20060114260A1 | Single instruction multiple data multiprocessors | Processor architectures/configuration | Computational science | Graphics

Owner:NVIDIA CORP

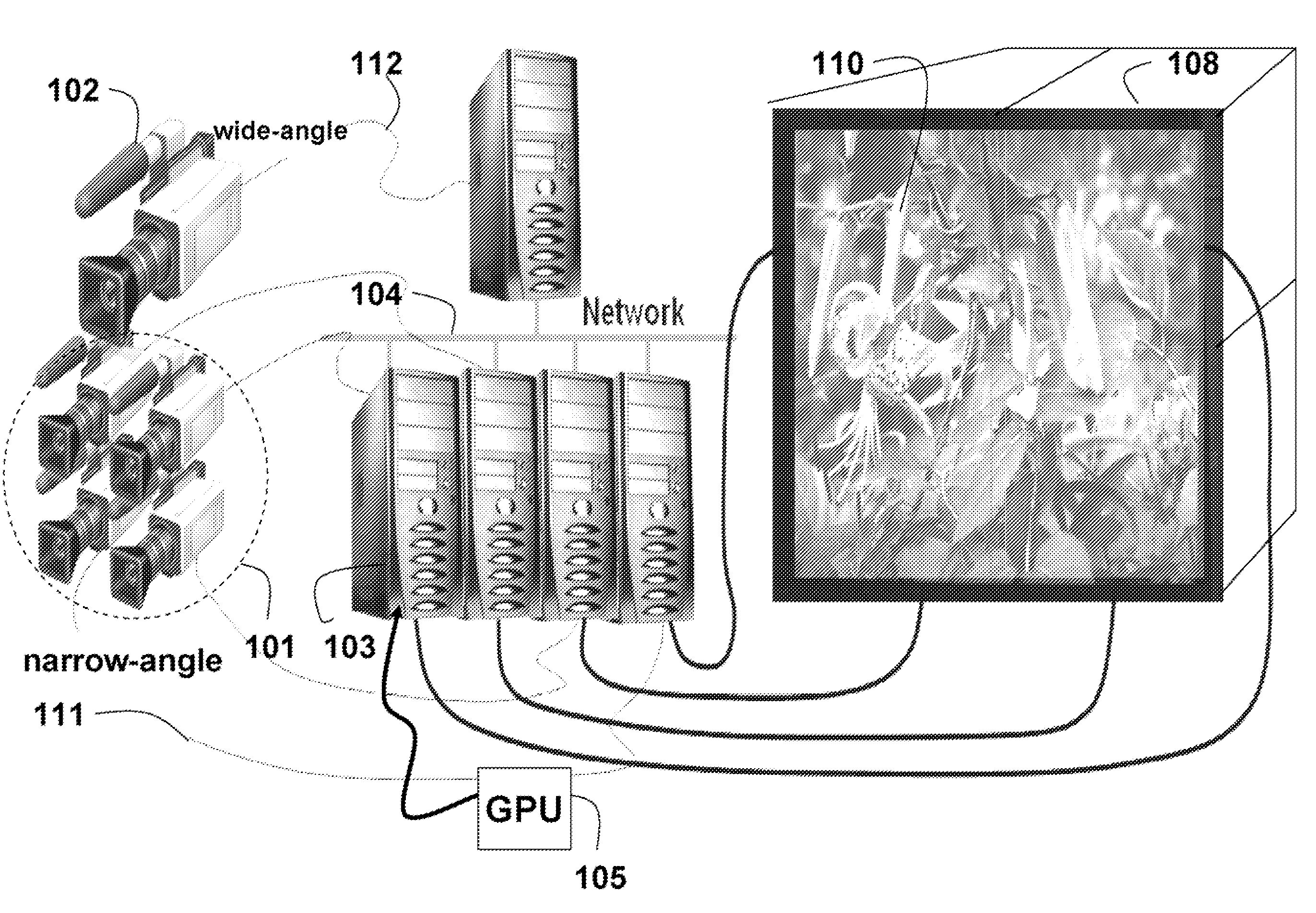

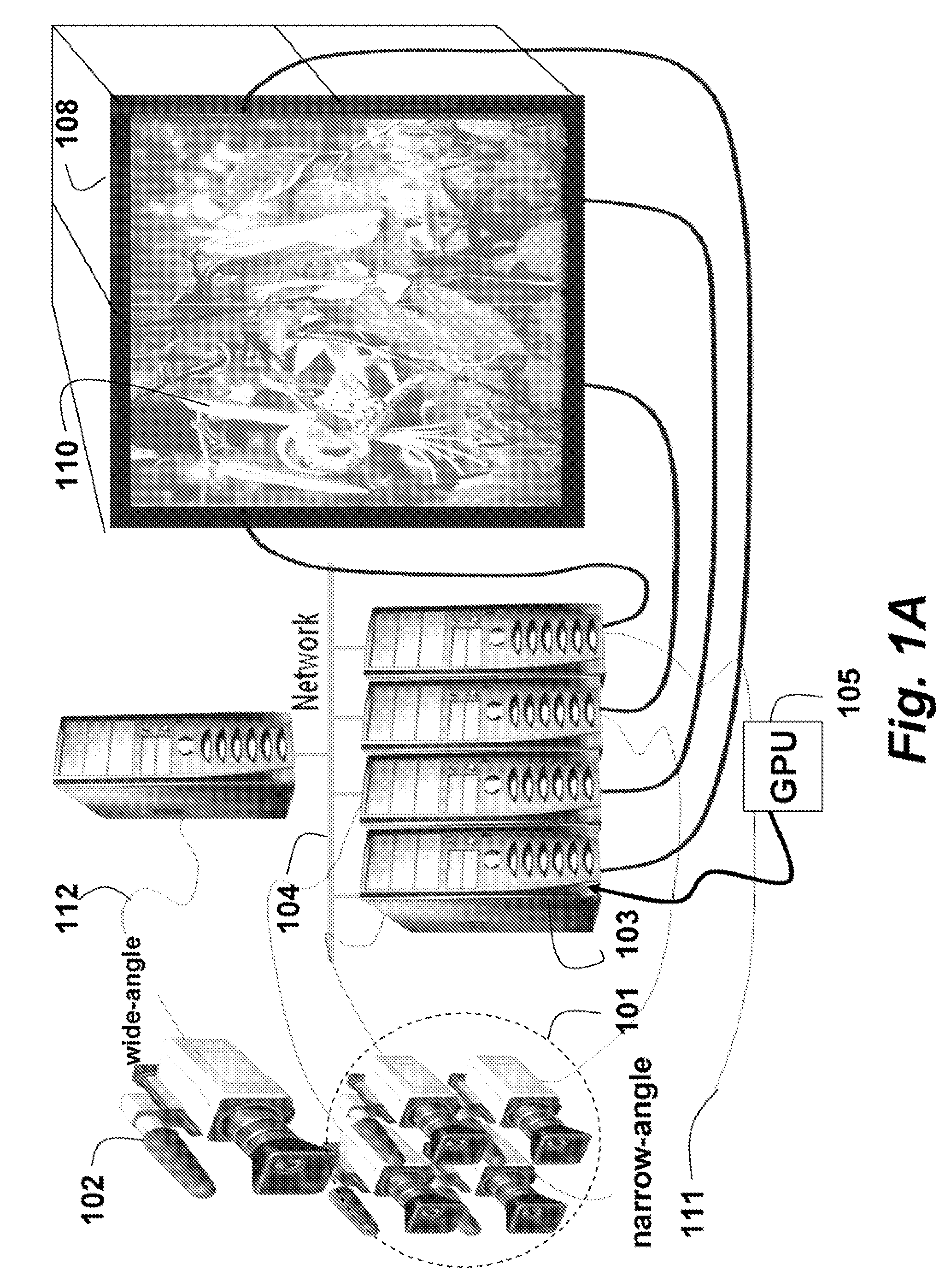

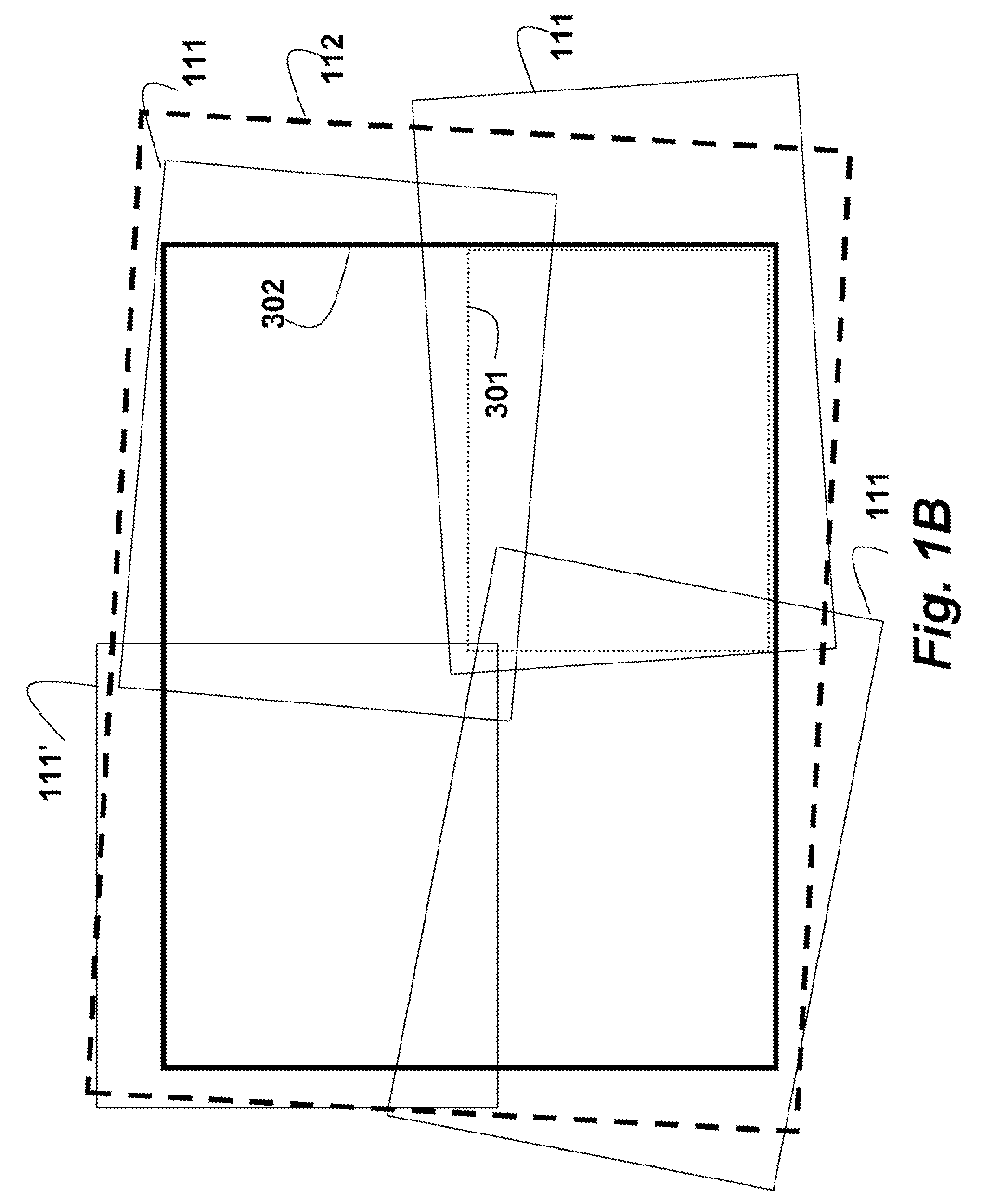

System and Method for Combining Image Sequences

Inactive | US20090122195A1 | Amount of overlap | Minimize amount of overlap | Television system details | Color signal processing circuits | Graphics | Output device

A system and method combines videos for display in real-time. A set of narrow-angle videos and a wide-angle video are acquired of the scene, in which a field of view in the wide-angle video substantially overlaps the fields of view in the narrow-angle videos. Homographies are determined among the narrow-angle videos using the wide-angle video. Temporally corresponding selected images of the narrow-angle videos are transformed and combined into a transformed image. Geometry of an output video is determined according to the transformed image and geometry of a display screen of an output device. The homographies and the geometry of the display screen are stored in a graphic processor unit, and subsequent images in the set of narrow-angle videos are transformed and combined by the graphic processor unit to produce an output video in real-time.

Owner:MITSUBISHI ELECTRIC RES LAB INC

System and method for accelerating and optimizing the processing of machine learning techniques using a graphics processing unit

Active | US20050125369A1 | Alleviates computational limitation | Improve data access | Character and pattern recognition | Knowledge representation | Graphics | Artificial intelligence

A system and method for processing machine learning techniques (such as neural networks) and other non-graphics applications using a graphics processing unit (GPU) to accelerate and optimize the processing. The system and method transfers an architecture that can be used for a wide variety of machine learning techniques from the CPU to the GPU. The transfer of processing to the GPU is accomplished using several novel techniques that overcome the limitations and work well within the framework of the GPU architecture. With these limitations overcome, machine learning techniques are particularly well suited for processing on the GPU because the GPU is typically much more powerful than the typical CPU. Moreover, similar to graphics processing, processing of machine learning techniques involves problems with solving non-trivial solutions and large amounts of data.

Owner:MICROSOFT TECH LICENSING LLC

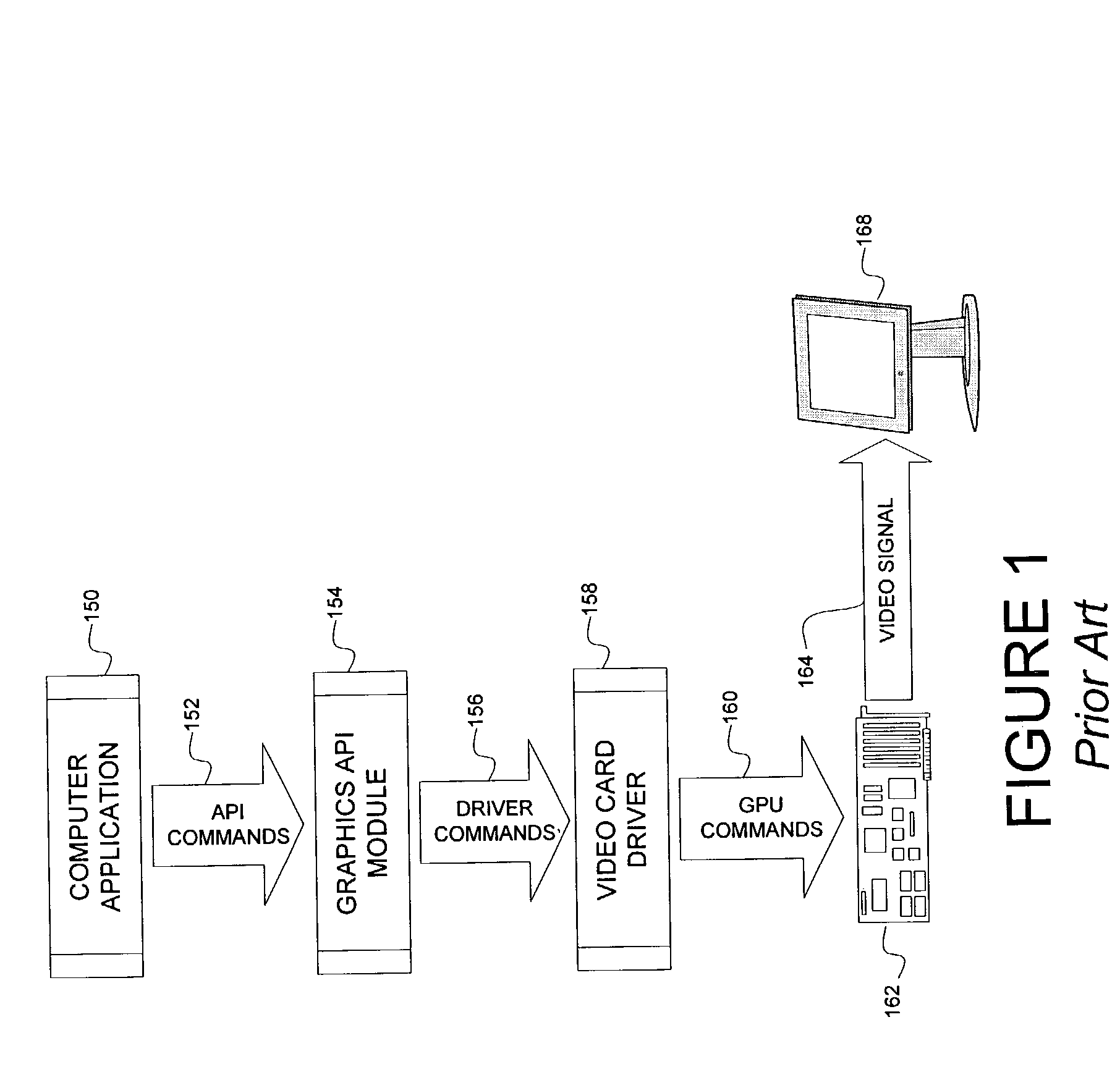

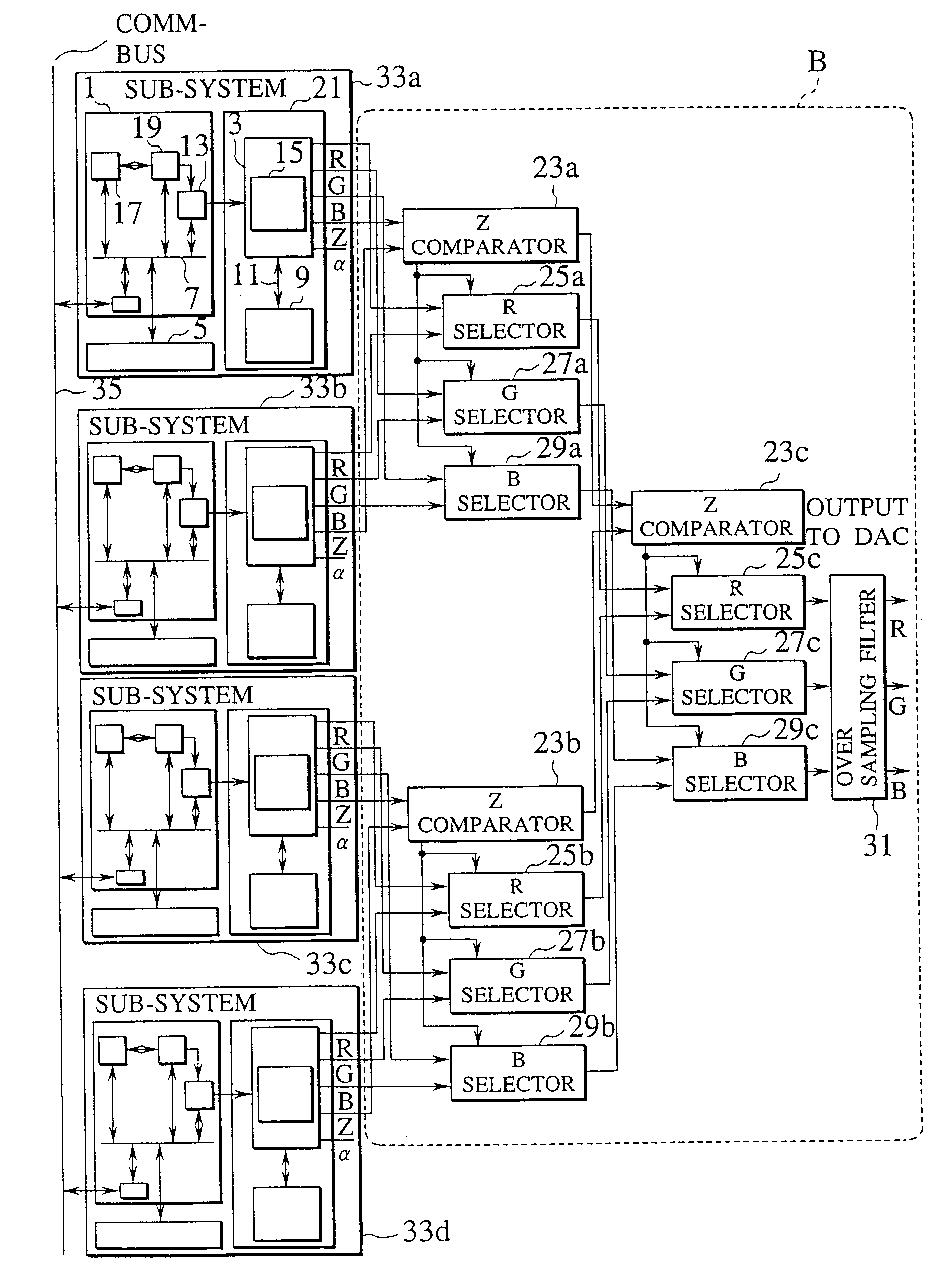

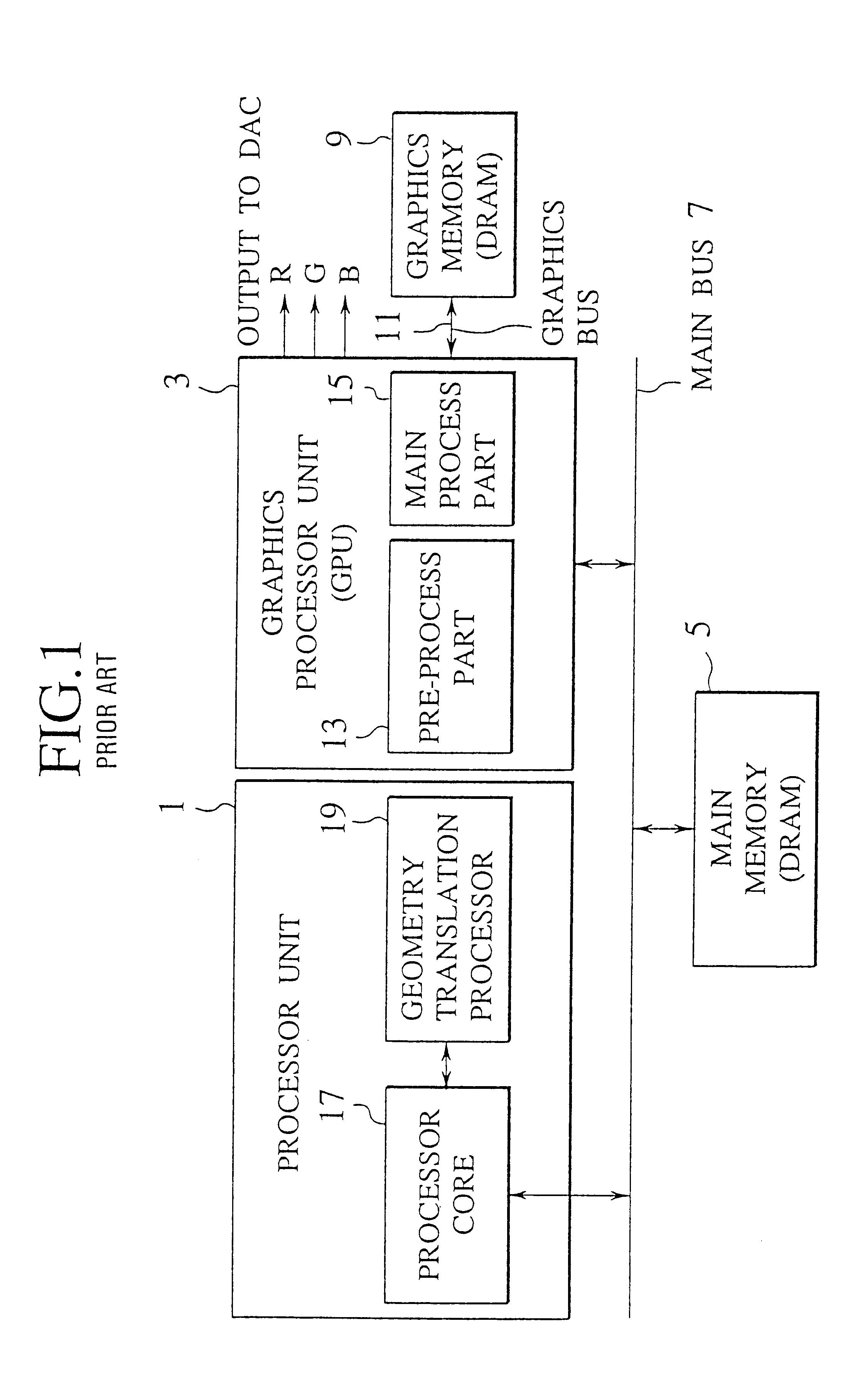

Multiple parallel processor computer graphics system

Inactive | US7119808B2 | Improve throughput | Minimal amount of processing | Cathode-ray tube indicators | Multiple digital computer combinations | Graphics | Off the shelf

An accelerated graphics processing subsystem that significantly increases the processing speed of computer graphics commands. The preferred embodiment of this invention presents a first-of-its-kind graphics processing subsystem that combines the processing power of multiple, off-the-shelf, video cards, each one having one or more graphic processor units. The video cards can be used without substantial modification. Under the preferred embodiment, each video card processes instructions for drawing a predetermined portion of the screen which is displayed to the user through a monitor or other visual output device. The invention harnesses the power of multiple video cards without suffering from the high bandwidth constraints affecting prior attempts at parallel graphics processing subsystems.

Owner:DELL MARKETING

Apparatus having graphic processor for high speed performance

Inactive | US6359624B1 | Cathode-ray tube indicators | Multiple digital computer combinations | Information processing | Graphics

An information processing apparatus secures a wide band width in a graphics bus and draws graphics at high speed and low cost. The apparatus employs graphics processing units connected in parallel. Each of the units is formed on a chip and has a graphics processor and a graphics memory, to provide color information and select information. The outputs of the units are selected through a tournament.

Owner:KK TOSHIBA
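Selecting among parallel unit outputs "through a tournament" can be pictured as repeated pairwise comparison. The sketch below assumes each unit outputs a `(color, select)` pair and that the smaller select value wins each match (for instance, if select information were a depth value); the abstract does not say how select information is compared, so that rule is purely an assumption.

```python
def tournament(outputs):
    """Pick one (color, select) pair from the units by pairwise rounds."""
    if not outputs:
        raise ValueError("need at least one unit output")
    current = list(outputs)
    while len(current) > 1:
        winners = []
        # Compare neighbors two at a time.
        for i in range(0, len(current) - 1, 2):
            a, b = current[i], current[i + 1]
            winners.append(a if a[1] <= b[1] else b)
        # With an odd count, the last entry advances without a match.
        if len(current) % 2:
            winners.append(current[-1])
        current = winners
    return current[0]
```

With N units the selection takes about log2(N) rounds, and the matches within a round are independent of each other, which suits units connected in parallel.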

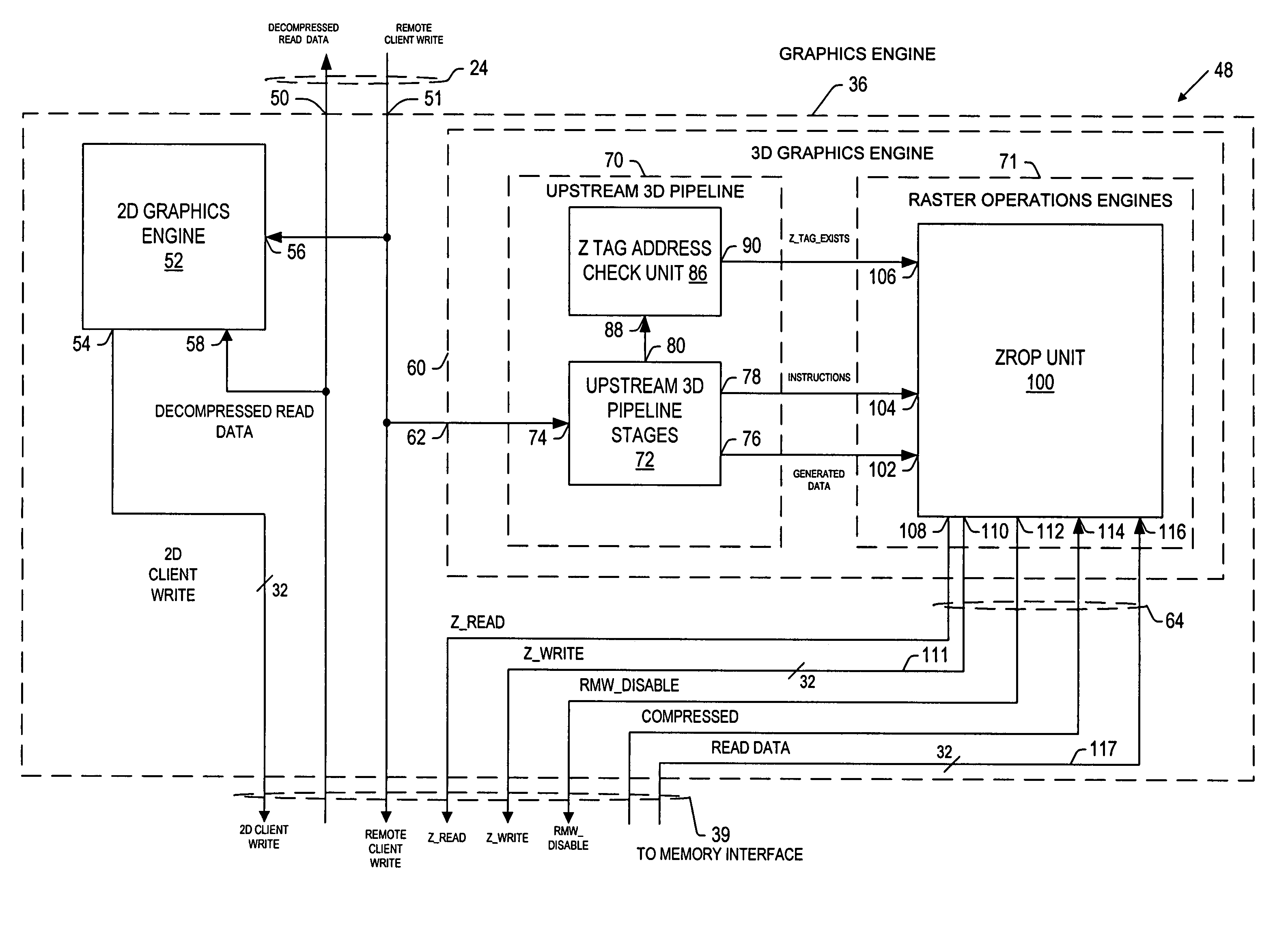

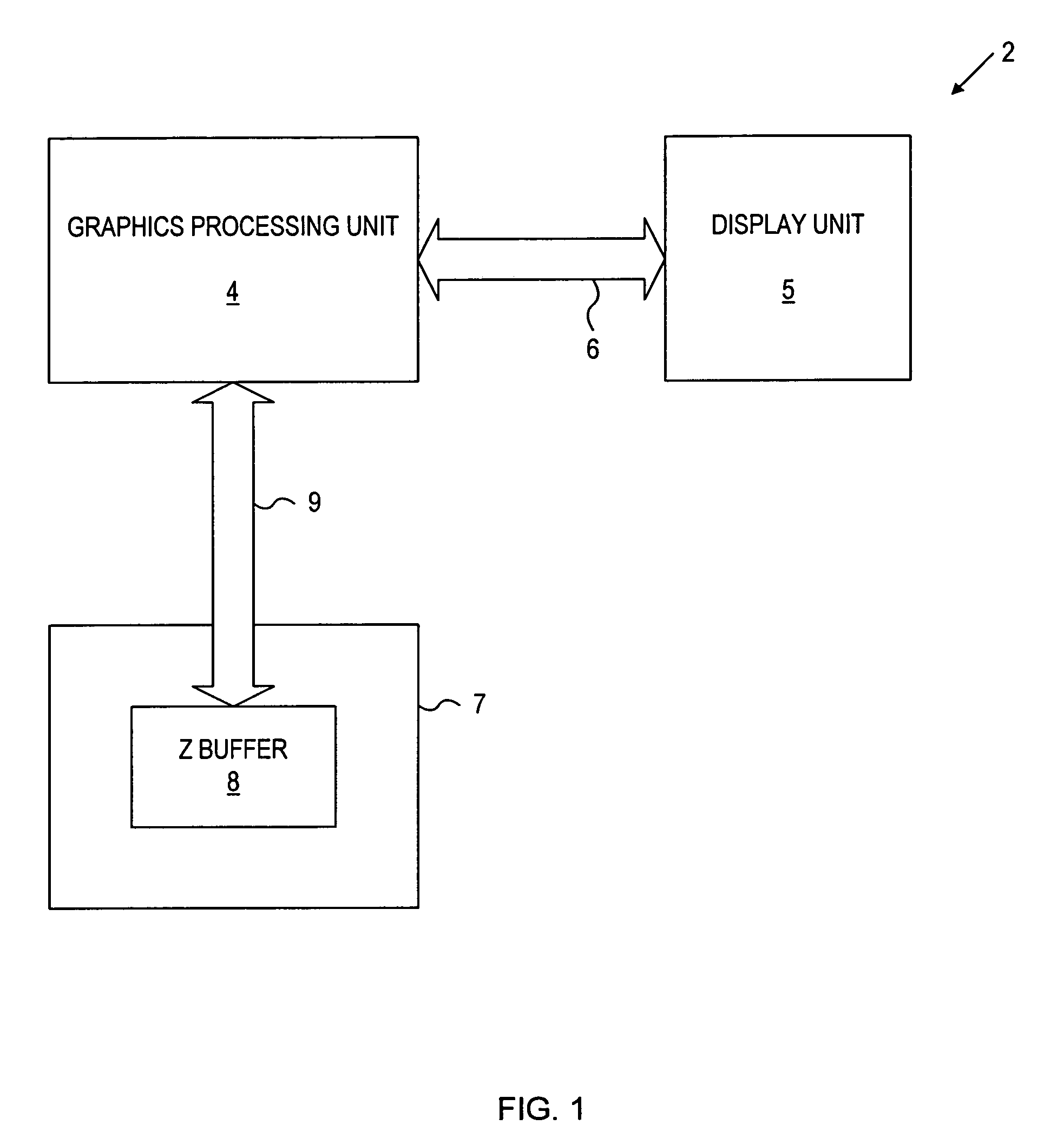

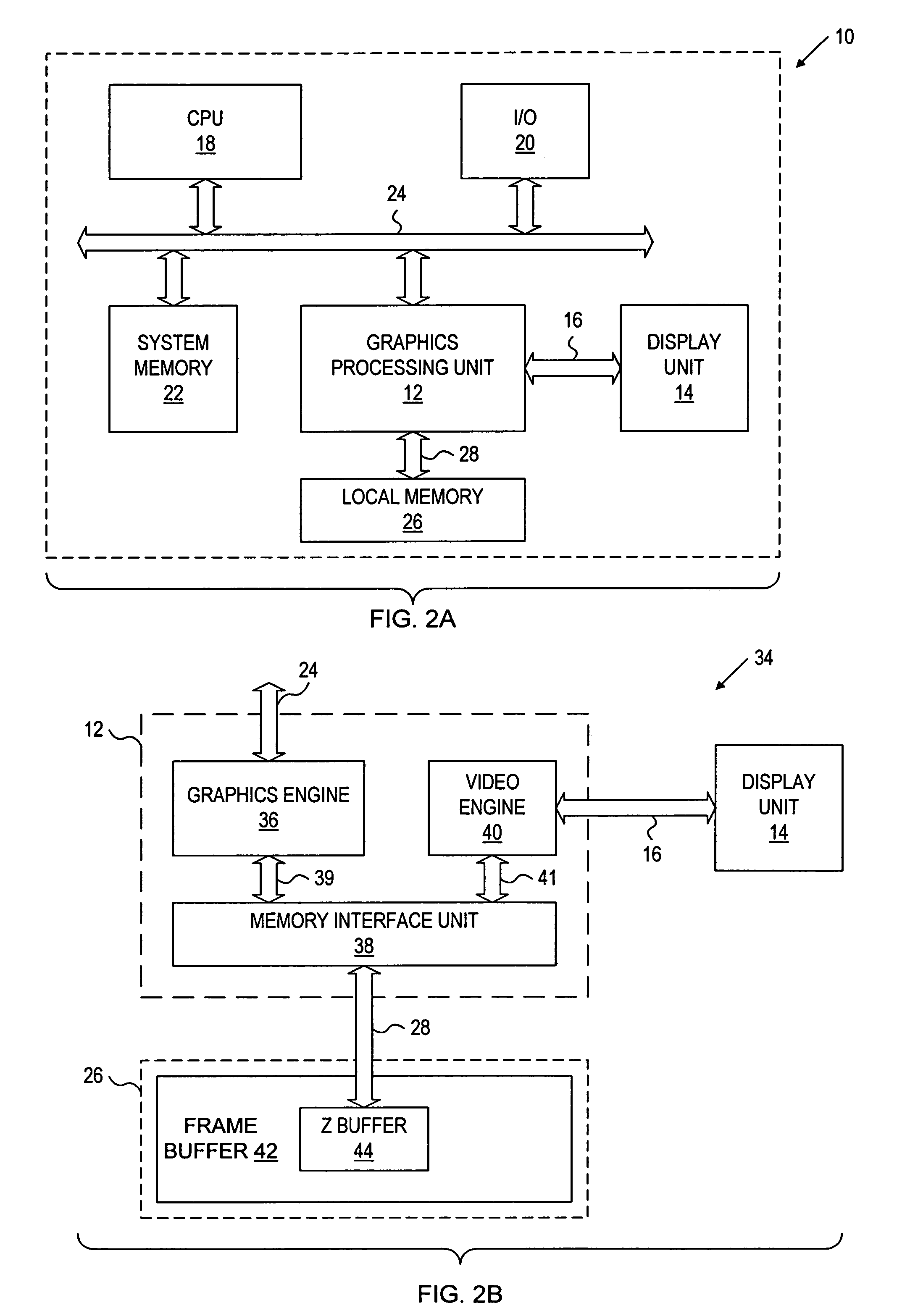

Method and apparatus for managing and accessing depth data in a computer graphics system

A computer graphics system provides for processing image data including Z data for use in displaying three-dimensional images on a display unit. The system includes: a depth buffer providing for temporary storage of Z data; and a graphics processing unit having a graphics engine for generating image data including Z data, and a memory interface unit communicatively coupled to the graphics engine and communicatively coupled to the depth buffer via a depth buffer interface. The graphics processing unit is operative to compress at least a portion of the generated Z data, to write the compressed portion of Z data to the depth buffer via the depth buffer interface in a compressed format, to read portions of compressed Z data from the depth buffer via the depth buffer interface, and to decompress the compressed Z data read from the buffer. An advantage of the present invention is that effective Z data bandwidth through the depth buffer interface is maximized in order to facilitate fast depth buffer access operations.

Owner:NVIDIA CORP
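The compress-on-write and decompress-on-read path can be illustrated with a deliberately simple scheme. The abstract does not describe the compression format; this sketch assumes a hypothetical tile encoding in which Z values that change by a constant step (as they would along a flat surface) are stored as a start value and a step, and everything else is stored raw.

```python
def compress_tile(z_values):
    """Compress one tile of integer Z values (hypothetical format)."""
    if len(z_values) >= 2:
        step = z_values[1] - z_values[0]
        if all(z_values[i + 1] - z_values[i] == step for i in range(len(z_values) - 1)):
            # Constant slope: three numbers describe the whole tile.
            return ("plane", z_values[0], step, len(z_values))
    return ("raw", list(z_values))


def decompress_tile(tile):
    """Reverse compress_tile after reading a tile back from the depth buffer."""
    if tile[0] == "plane":
        _, start, step, count = tile
        return [start + i * step for i in range(count)]
    return list(tile[1])
```

Whenever a tile compresses, fewer values cross the depth buffer interface for the same Z data, which is the bandwidth advantage the abstract describes.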
