733 results about "Point match" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

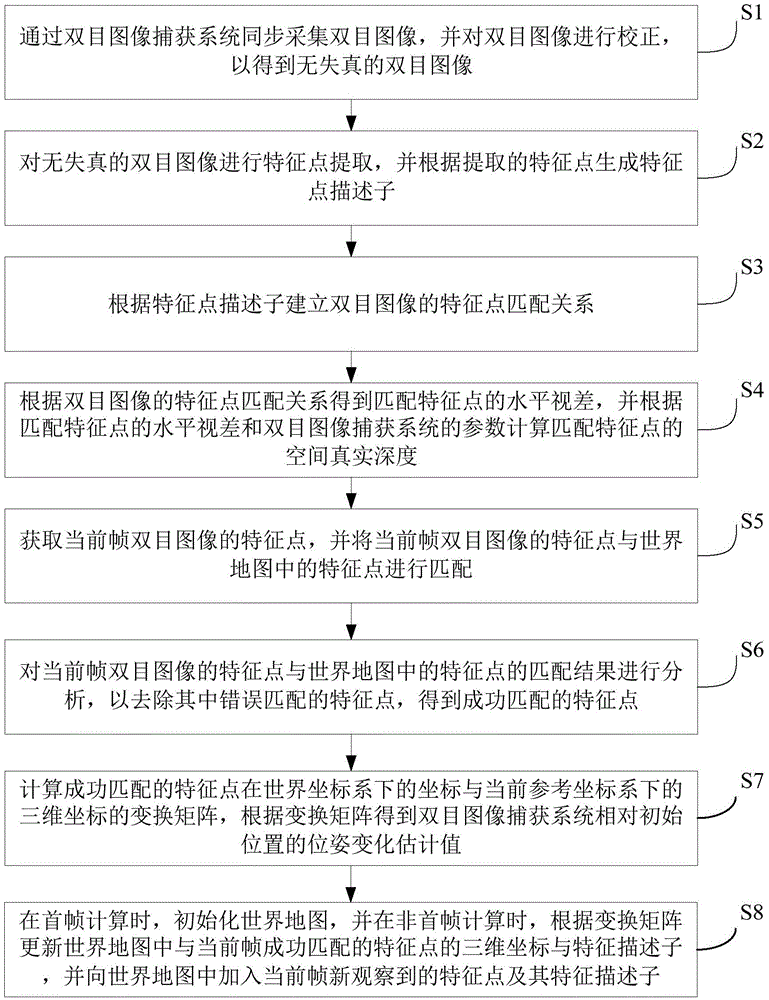

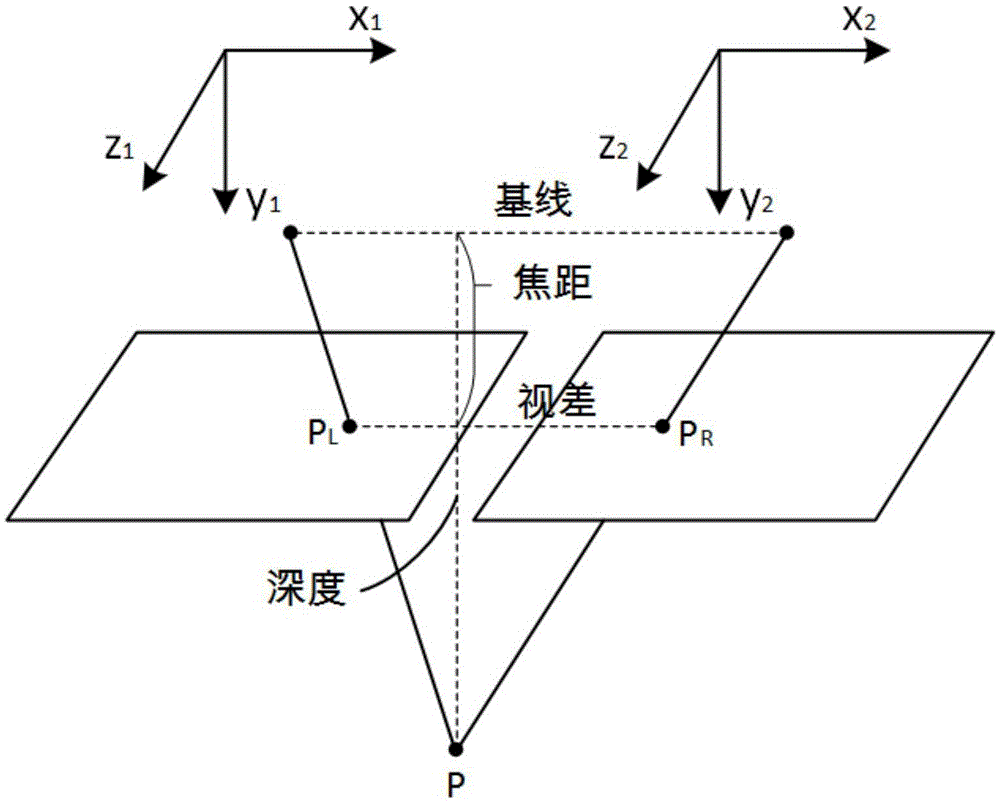

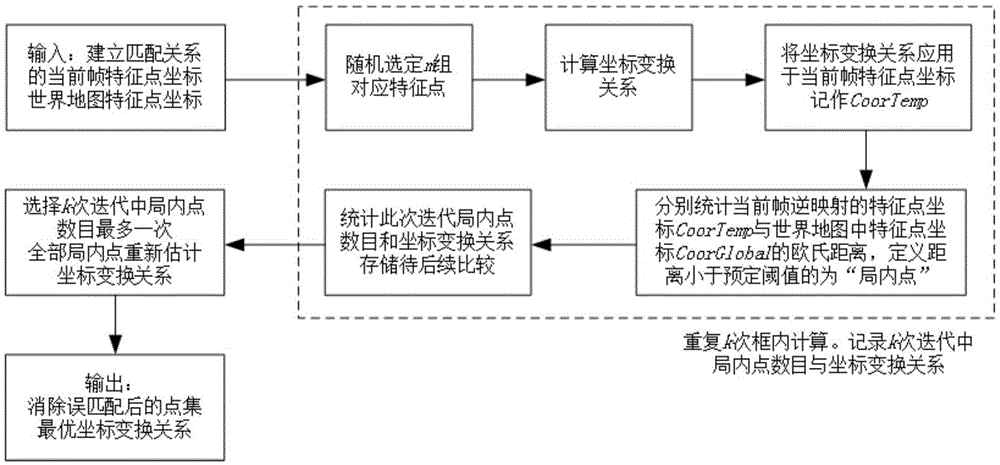

Visual ranging-based simultaneous localization and map construction method

Active · CN105469405A · Reduce computational complexity · Eliminate accumulation · Image enhancement · Image analysis · Simultaneous localization and mapping · Computation complexity

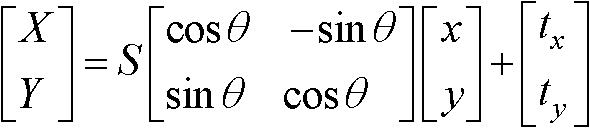

The invention provides a visual ranging-based simultaneous localization and map construction method. The method includes the following steps: a binocular image is acquired and corrected to obtain a distortion-free binocular image; features are extracted from the distortion-free binocular image to generate feature point descriptors; feature point matching relations between the two images of the binocular pair are established; the horizontal parallax of the matched feature points is obtained from the matching relations, and real-space depth is calculated from the parameters of the binocular image capture system; the feature points of the current frame are matched against the feature points in a world map; wrongly matched feature points are removed, leaving the successfully matched feature points; a transform matrix is calculated between the coordinates of the successfully matched feature points in the world coordinate system and their three-dimensional coordinates in the current reference coordinate system, and an estimate of the pose change of the binocular image capture system relative to its initial position is obtained from the transform matrix; and the world map is established and updated. The method has low computational complexity, centimeter-level positioning accuracy, and unbiased position estimation.

Owner:北京超星未来科技有限公司
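
As an illustration of the depth step in the abstract above, the following is a minimal Python sketch of recovering depth from the horizontal parallax of one matched point in a rectified stereo pair, using the standard pinhole relation Z = f·B/d. The function name, parameter names, and sample values are hypothetical and are not taken from the patent, which does not publish its formula in this abstract.

```python
def depth_from_disparity(x_left, x_right, focal_px, baseline_m):
    """Depth in metres of one matched feature in a rectified stereo pair.

    x_left, x_right: column (pixels) of the same feature in the left and
                     right images (hypothetical inputs).
    focal_px:        focal length in pixels (assumed known from calibration).
    baseline_m:      distance between the two camera centres in metres.
    """
    disparity = x_left - x_right  # horizontal parallax in pixels
    if disparity <= 0:
        # Zero or negative parallax cannot come from a point in front of
        # a rectified pair, so treat it as a bad match.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity


# A 20-pixel parallax with f = 700 px and B = 0.12 m gives roughly 4.2 m.
example_depth = depth_from_disparity(420.0, 400.0, focal_px=700.0, baseline_m=0.12)
```

Because depth is inversely proportional to parallax, a one-pixel matching error matters far more for distant points than for near ones, which is one reason wrongly matched points are removed before the pose is estimated.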

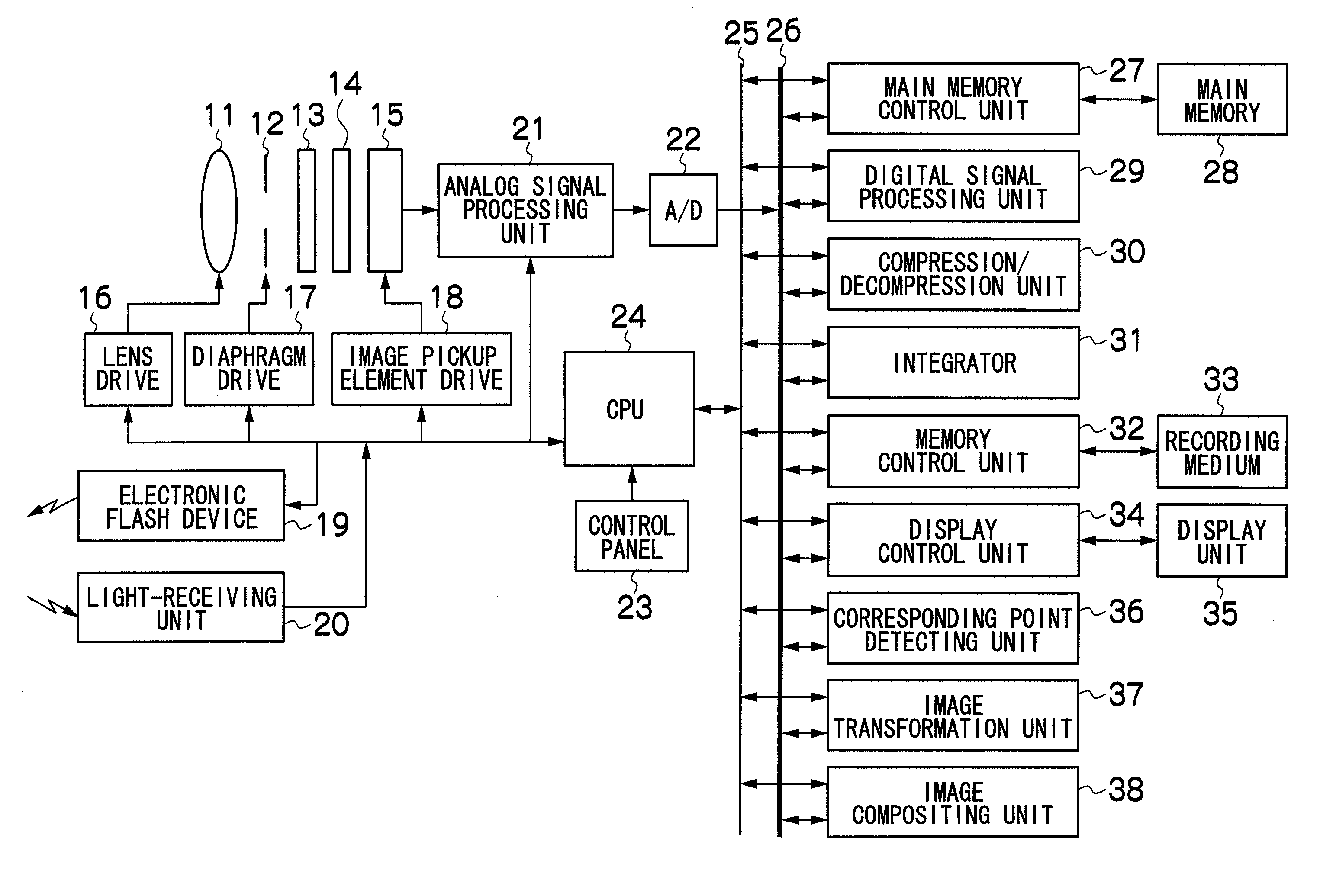

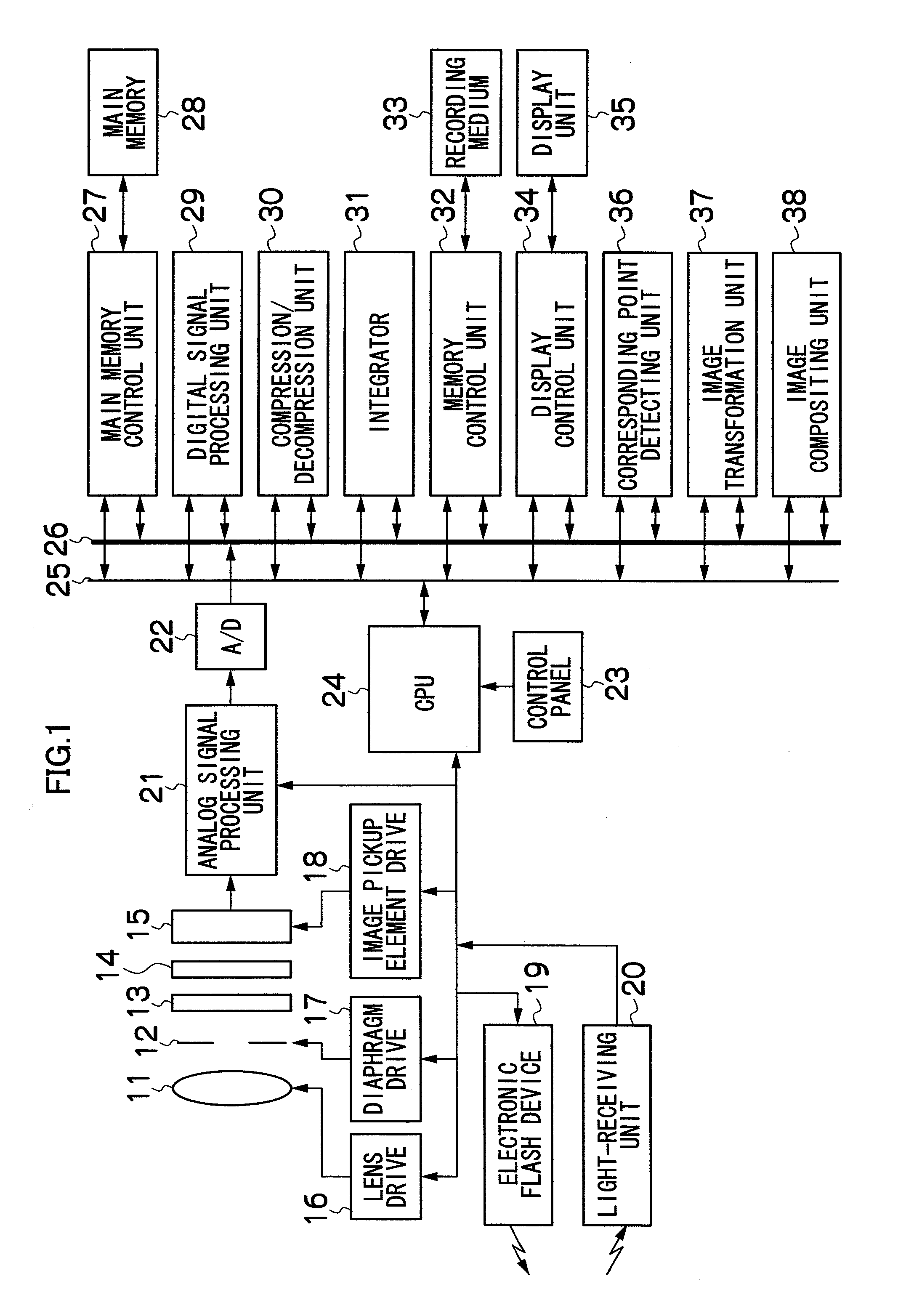

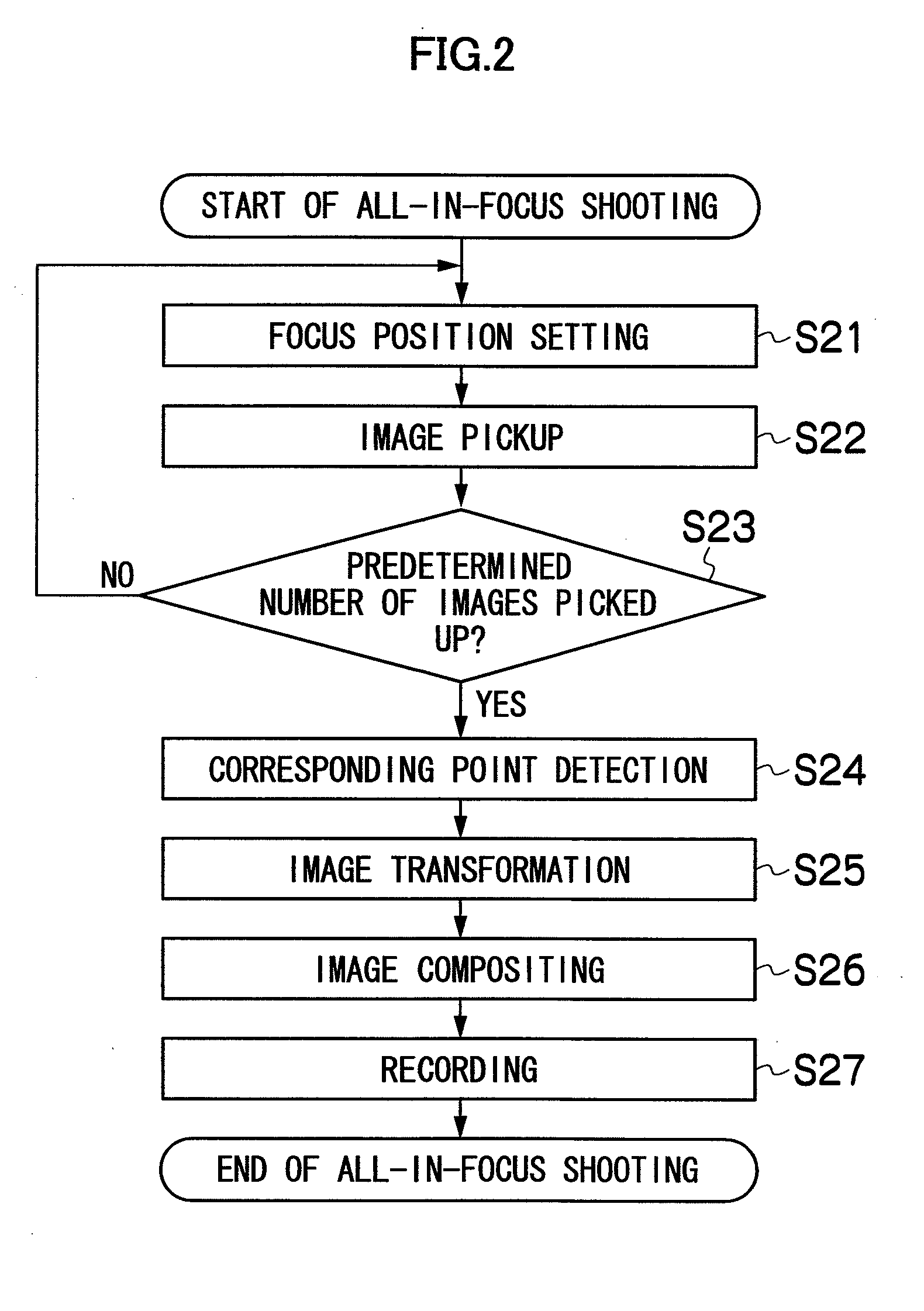

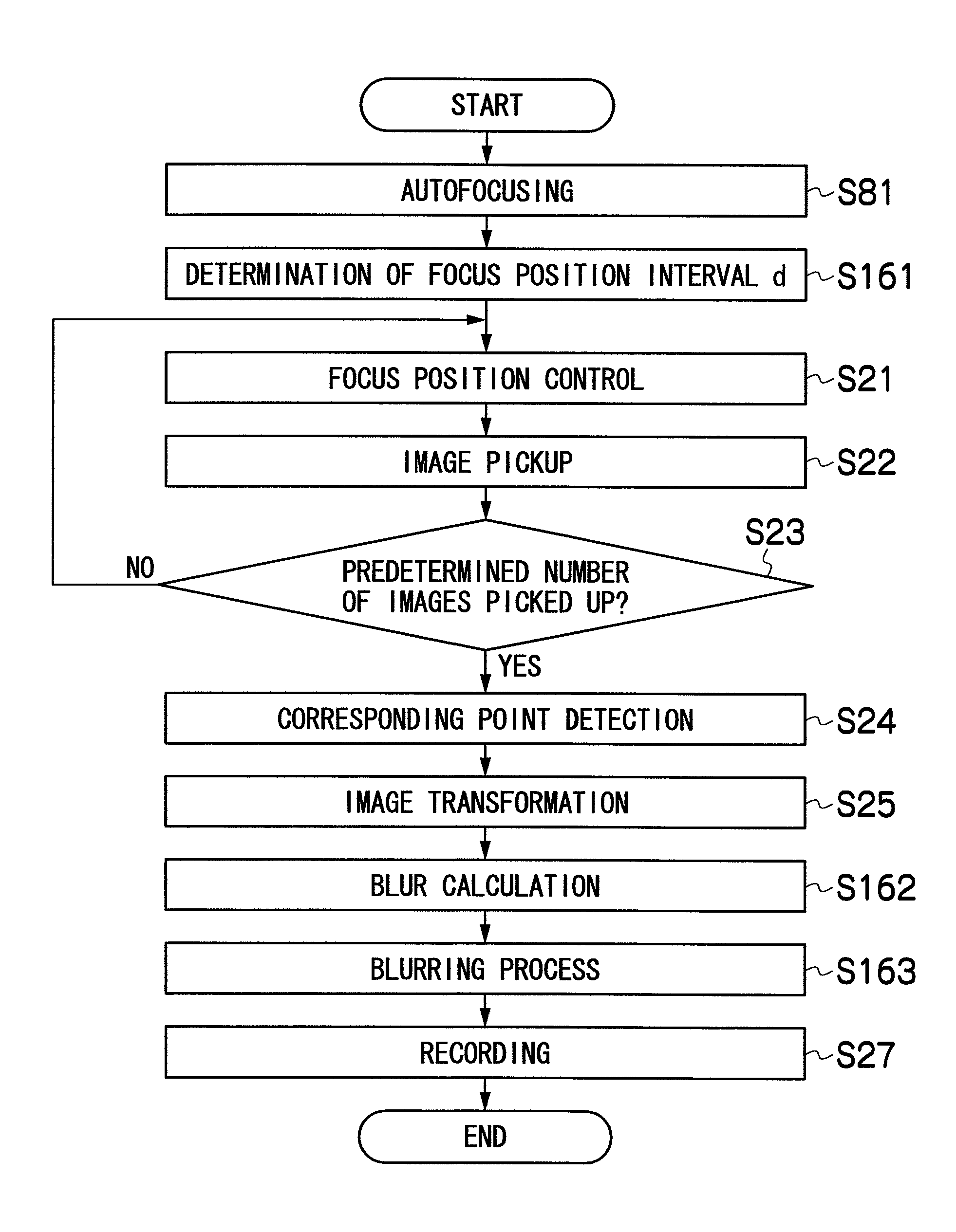

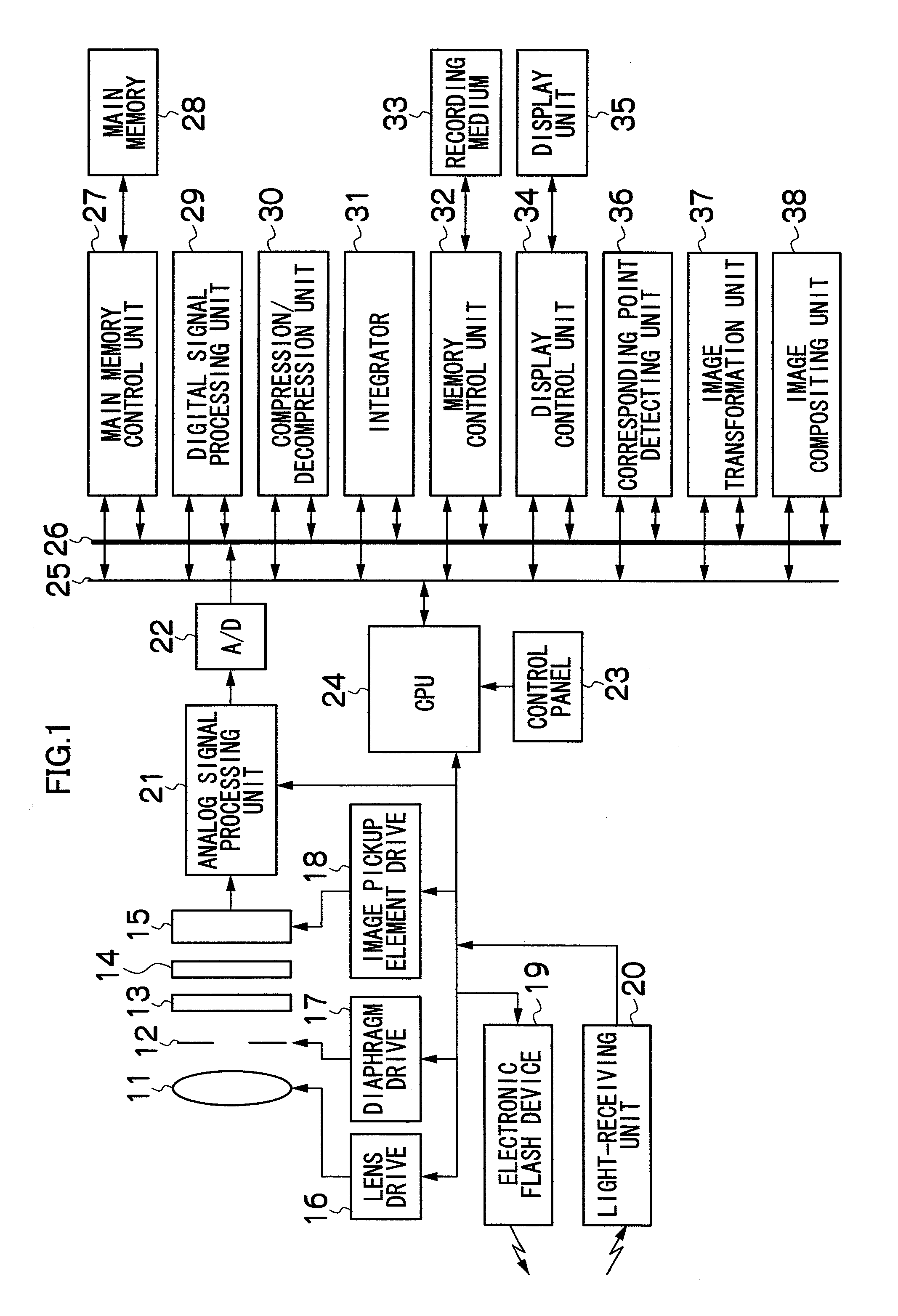

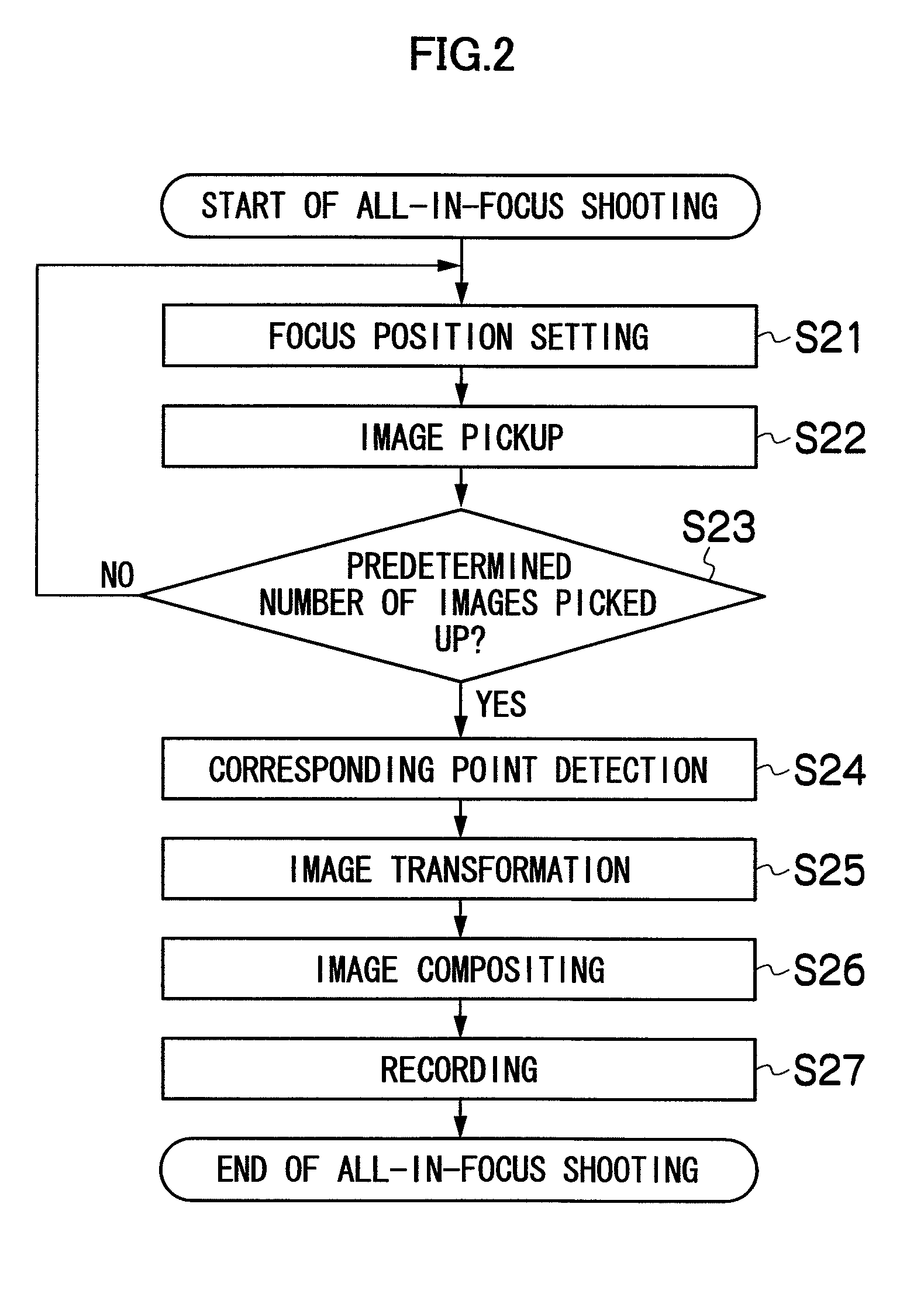

Image pickup apparatus, image processing apparatus, image pickup method, and image processing method

Active · US20080259176A1 · Minimize time · Possible to obtain · Television system details · Projector focusing arrangement · Imaging processing · Point match

The present invention, which transforms multiple images so that positions of corresponding points will coincide between the images and composites the images with the corresponding points matched, provides an image pickup apparatus, image processing apparatus, image pickup method, and image processing method which make it possible to obtain an intended all-in-focus image or blur-emphasized image even if there is camera shake or subject movement.

Owner:FUJIFILM CORP

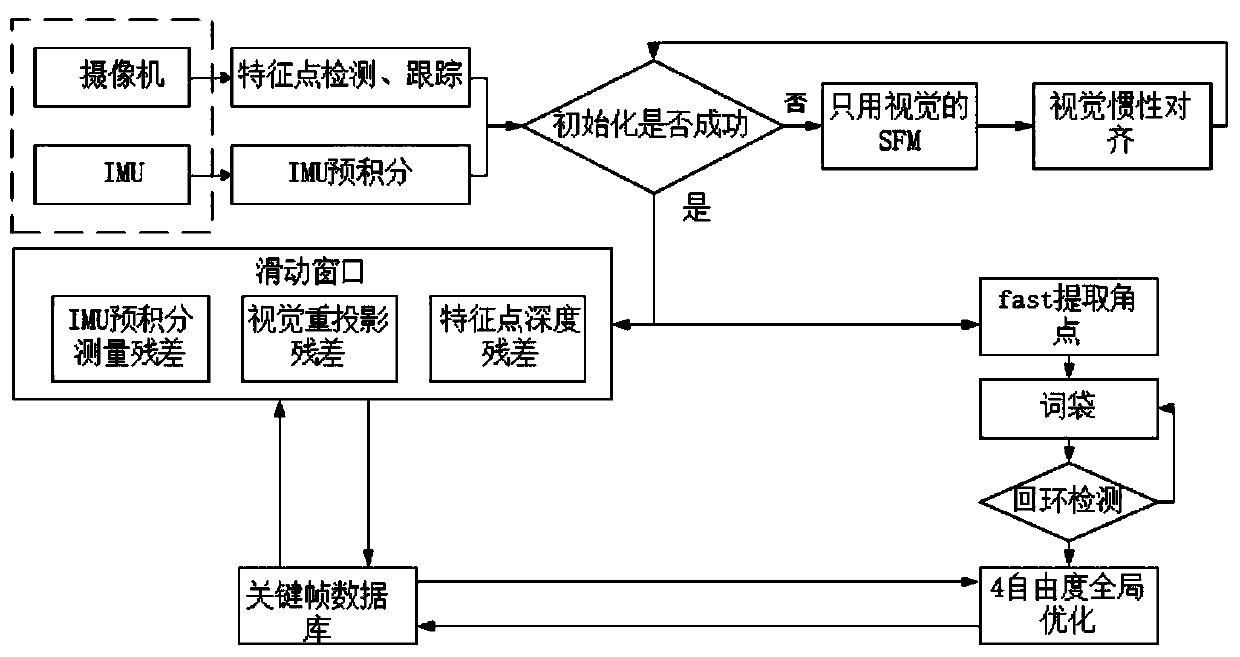

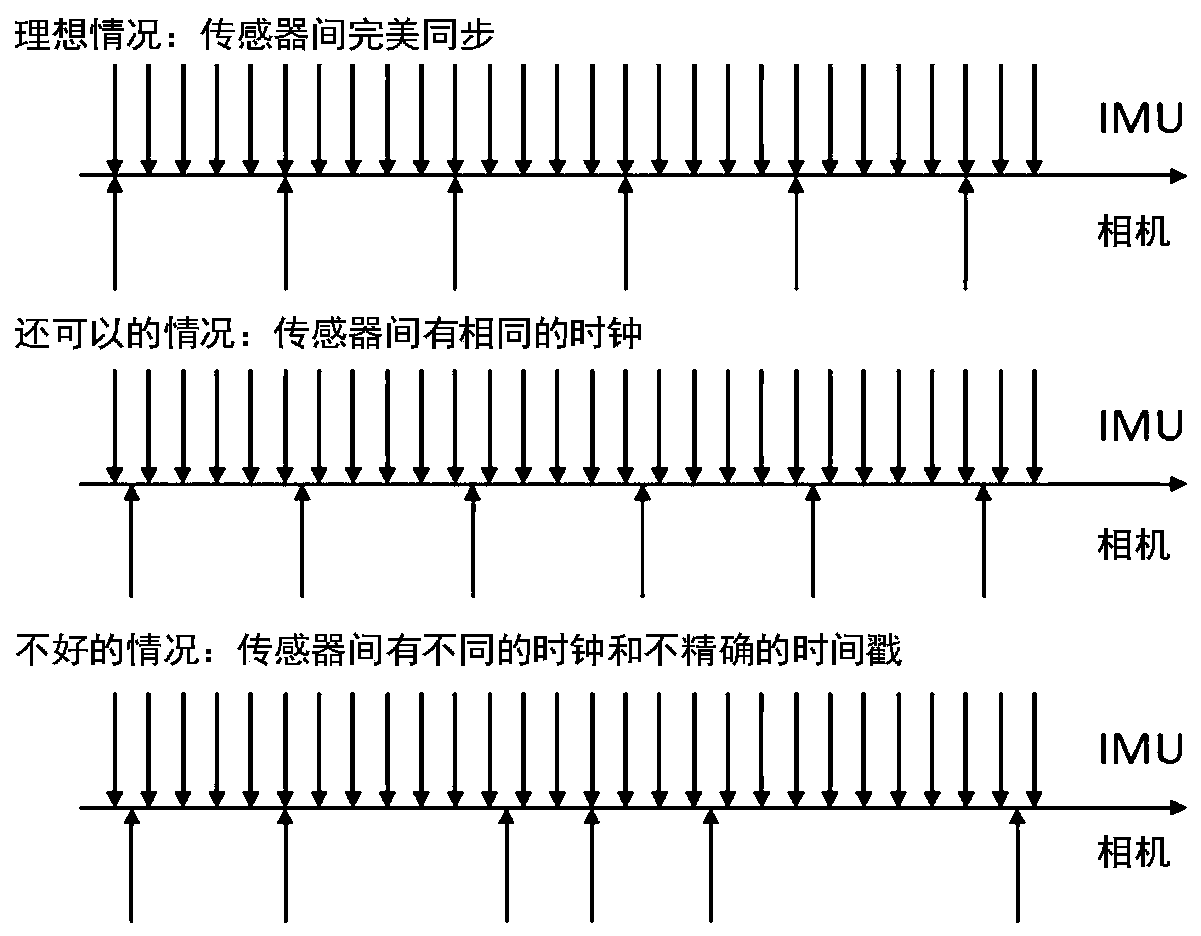

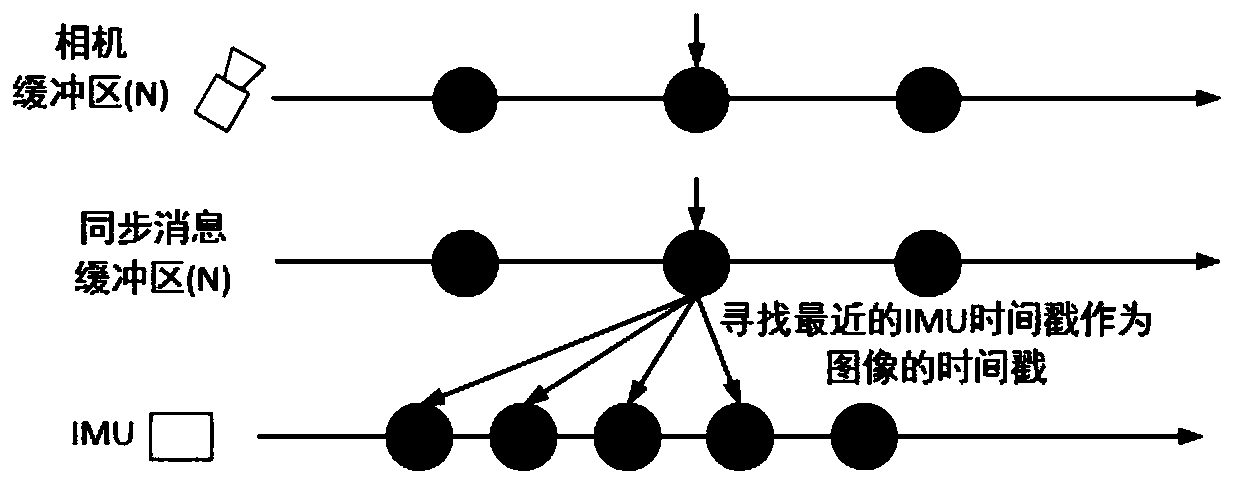

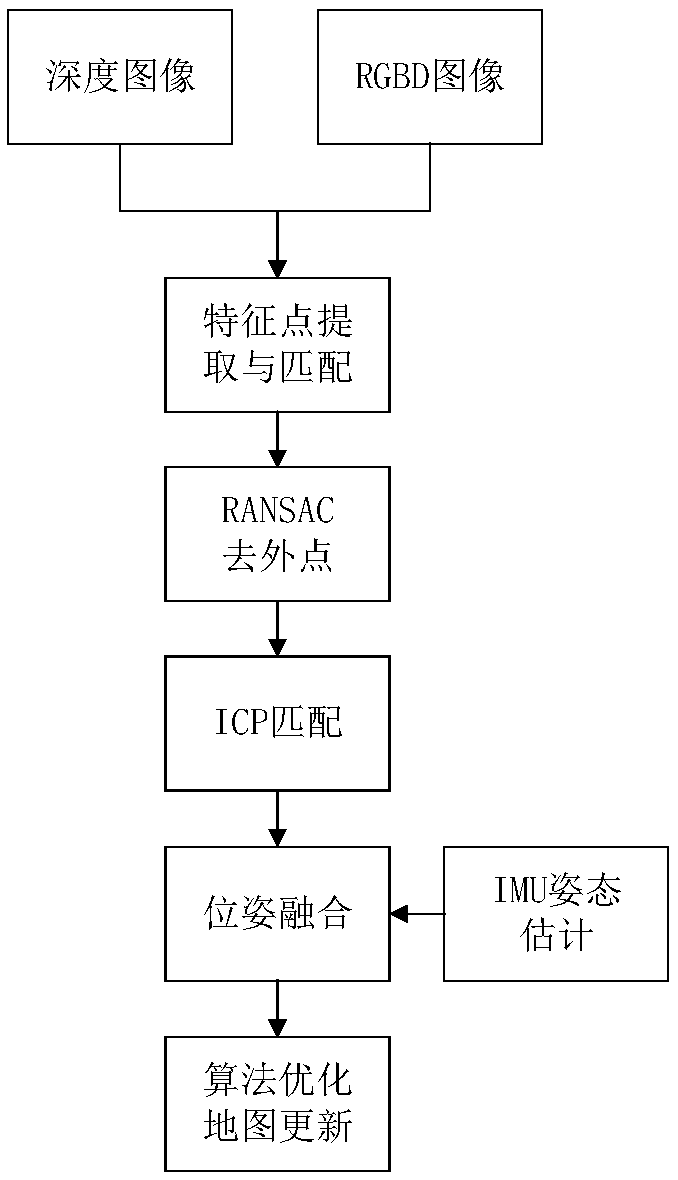

Pose estimation method based on RGB-D and IMU information fusion

Active · CN109993113A · Reliable input data · Improve tracking accuracy · Image enhancement · Image analysis · Pattern recognition · Estimation methods

The invention provides a pose estimation method based on RGB-D and IMU information fusion. The method comprises the following steps: S1, after time synchronization of the RGB-D camera data and the IMU data, the gray image and depth image acquired by the RGB-D camera and the acceleration and angular velocity information collected by the IMU are preprocessed to obtain the feature points matched between adjacent frames in the world coordinate system and the IMU state increment; S2, a visual inertial device in the system is initialized according to the system external parameters of the pose estimation system; S3, a least squares optimization function of the system is constructed according to the information of the initialized visual inertial device, the feature points matched between adjacent frames in the global coordinate system, and the IMU state increment; an optimization method is used to iteratively solve for the optimal solution of the least squares optimization function, and the optimal solution is used as the pose estimation state quantity; further, loop detection is performed to acquire a globally consistent pose estimation state quantity. Therefore, feature point depth estimation is more accurate, and the positioning precision of the system is improved.

Owner:NORTHEASTERN UNIV

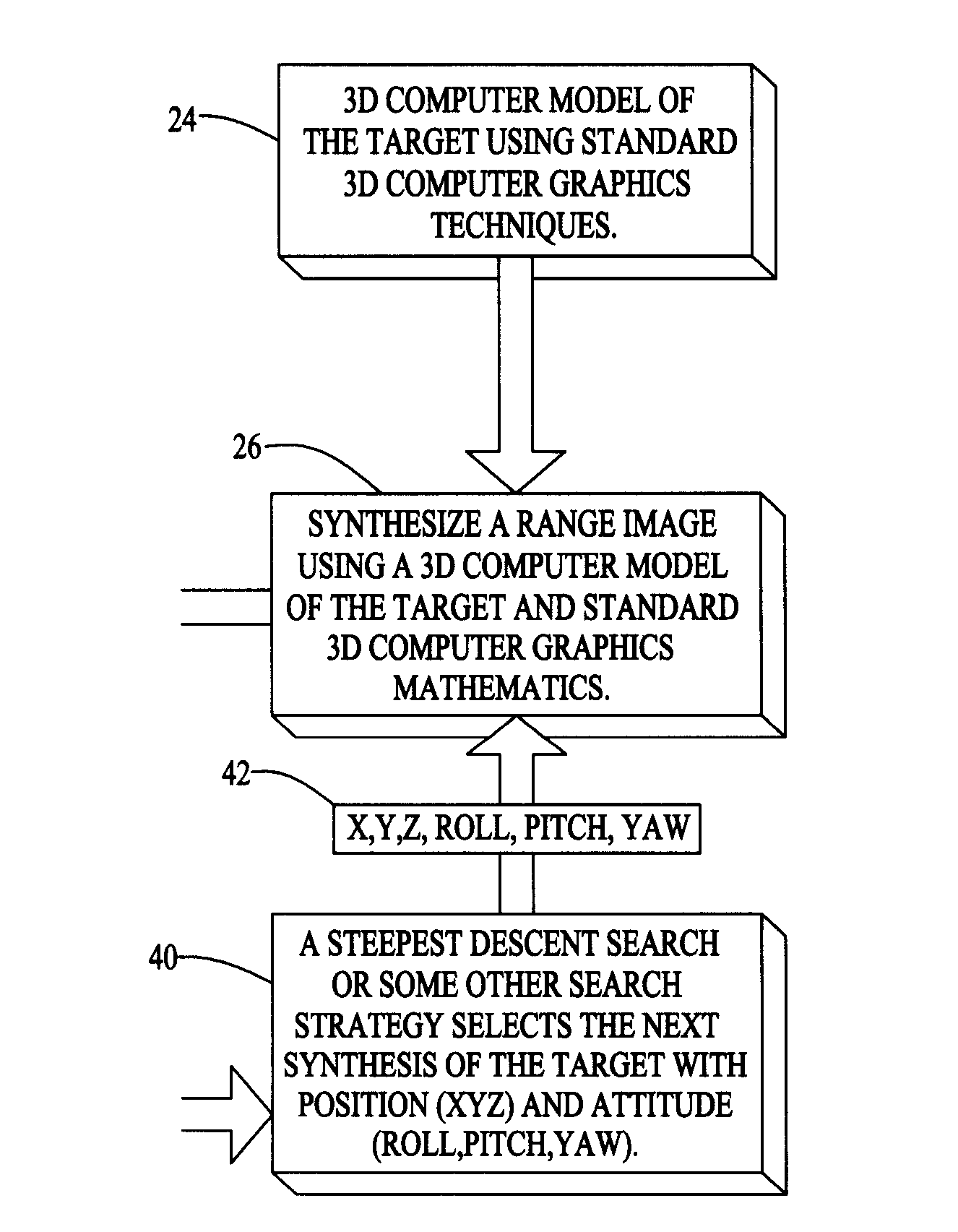

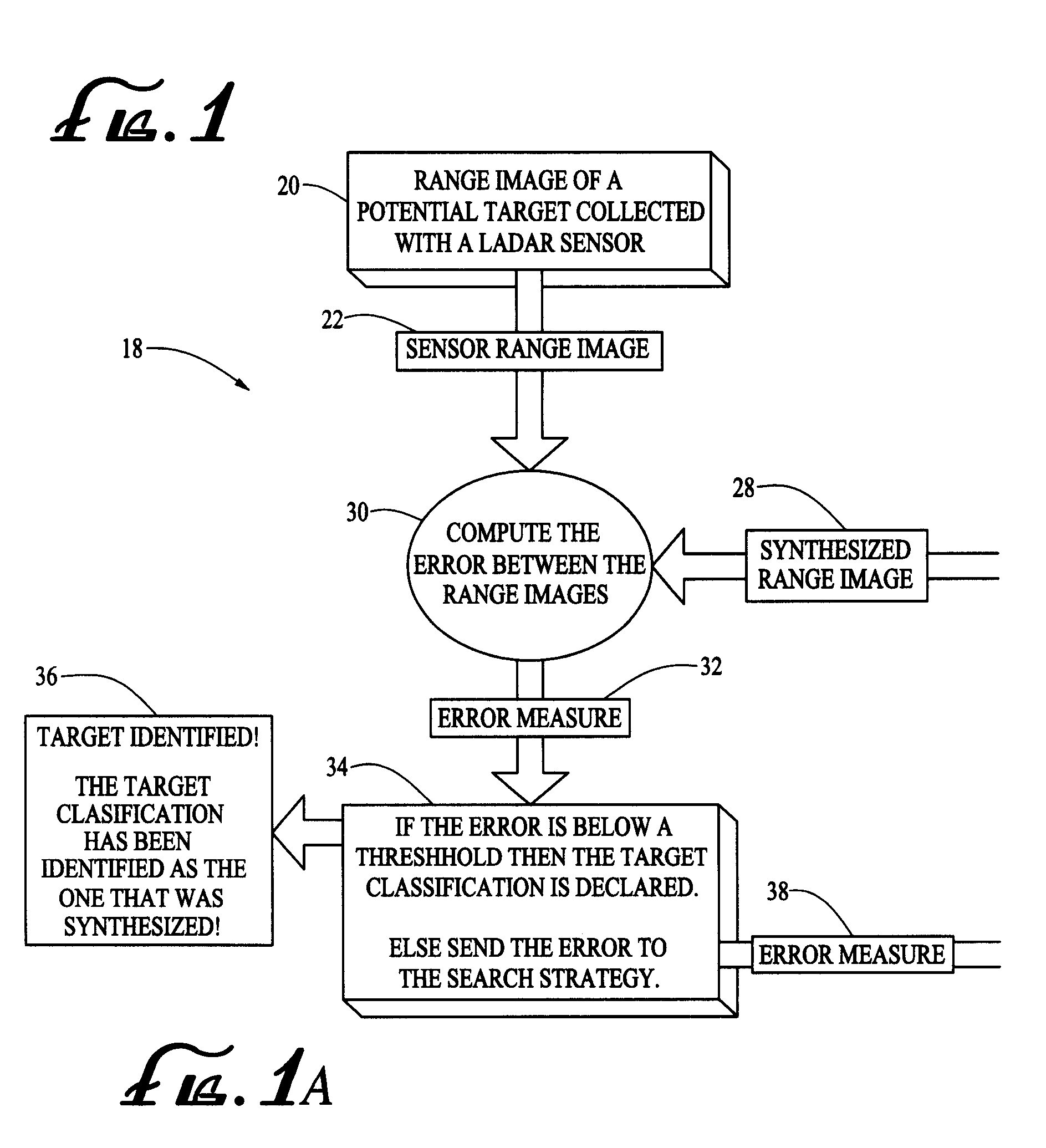

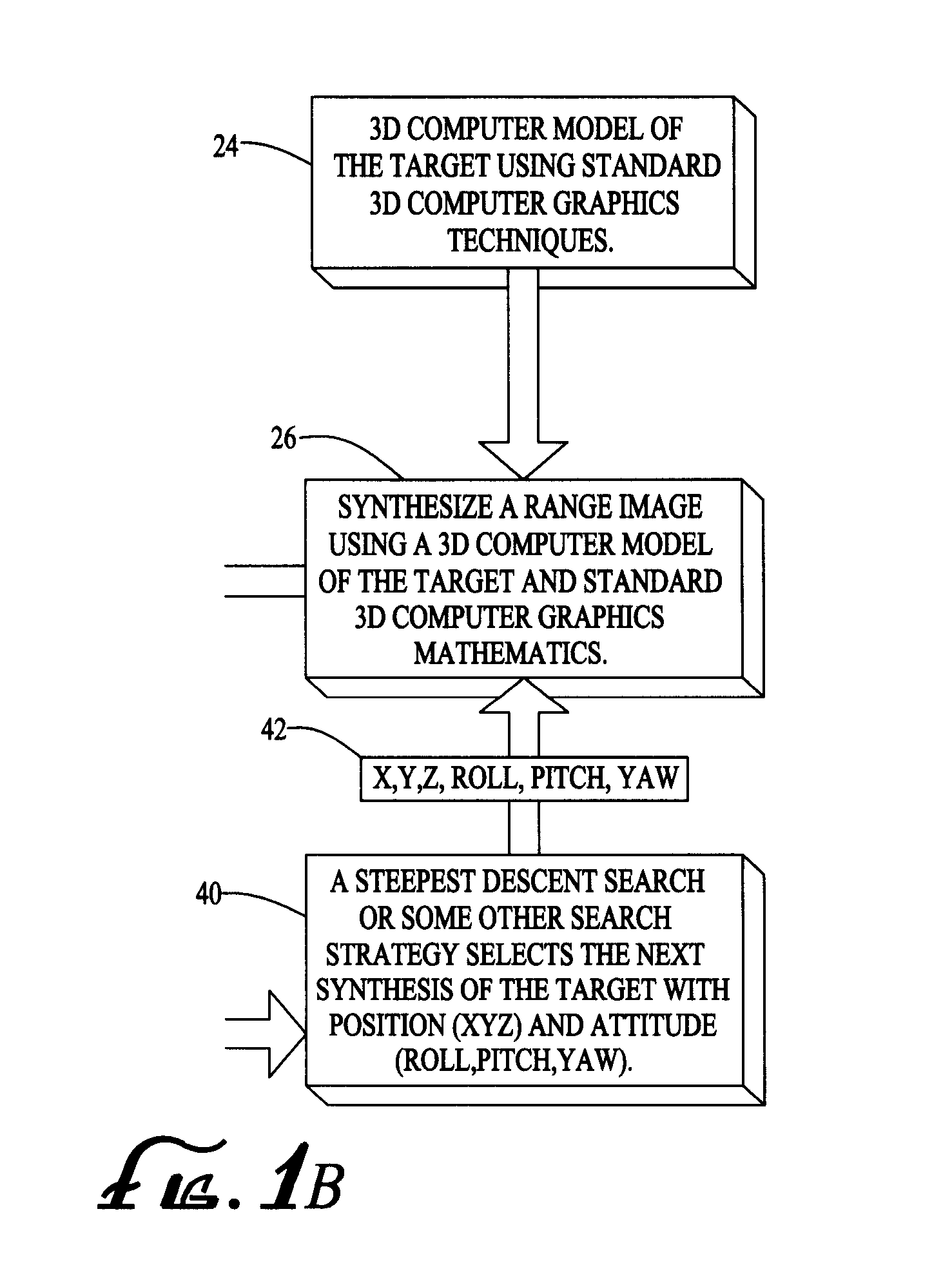

Three dimensional shape correlator

Inactive · US20080273210A1 · Fast and accurate and efficient target recognition · Highly effective · Using optical means · Electromagnetic wave reradiation · Radar · Goal recognition

A three dimensional shape correlation computer software program which uses laser radar data for target identification. The correlation program obtains a scan of laser radar data of a target from a Ladar sensor. The data includes a plurality of X,Y,Z coordinate detection points for the target. The software simulates the sensor scan using a 3D wire-frame model of the target. An X,Y,Z coordinate location is computed for every point in the computer model of the target. The software compares the X,Y,Z coordinate detection points for the target with the X,Y,Z coordinate points for the computer model to determine if the points match. When there is a match a target identification is declared.

Owner:U S OF A AS REPRESENTED BY THE SEC OF THE NAVY

Rapid generation method for facial animation

Inactive · CN101826217A · Improve descriptive power · Quick change · Image analysis · 3D-image rendering · Pattern recognition · Imaging processing

The invention relates to a rapid generation method for facial animation, belonging to the image processing technical field. The method comprises the following steps: firstly detecting coordinates of a plurality of feature points matched with a grid model face in the original face pictures by virtue of an improved active shape model algorithm; completing fast matching between a grid model and a facial photo according to information of the feature points; performing refinement treatment on the mouth area of the matched grid model; and describing a basic facial mouth shape and expression change via facial animation parameters and driving the grid model by a parameter flow, and deforming the refined grid model by a grid deformation method based on a thin plate spline interpolation so as to generate animation. The method can quickly realize replacement of animated characters to generate vivid and natural facial animation.

Owner:SHANGHAI JIAO TONG UNIV

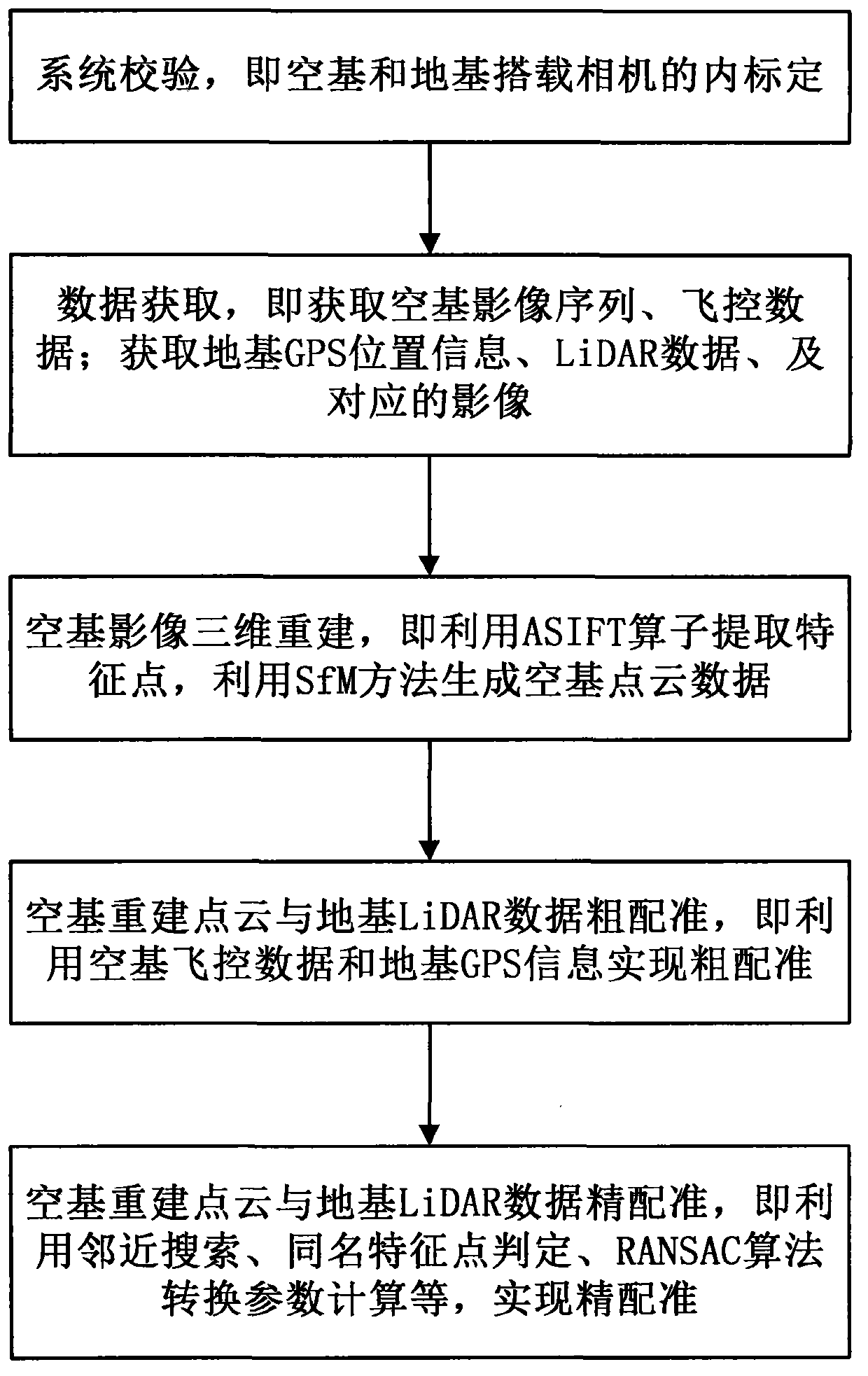

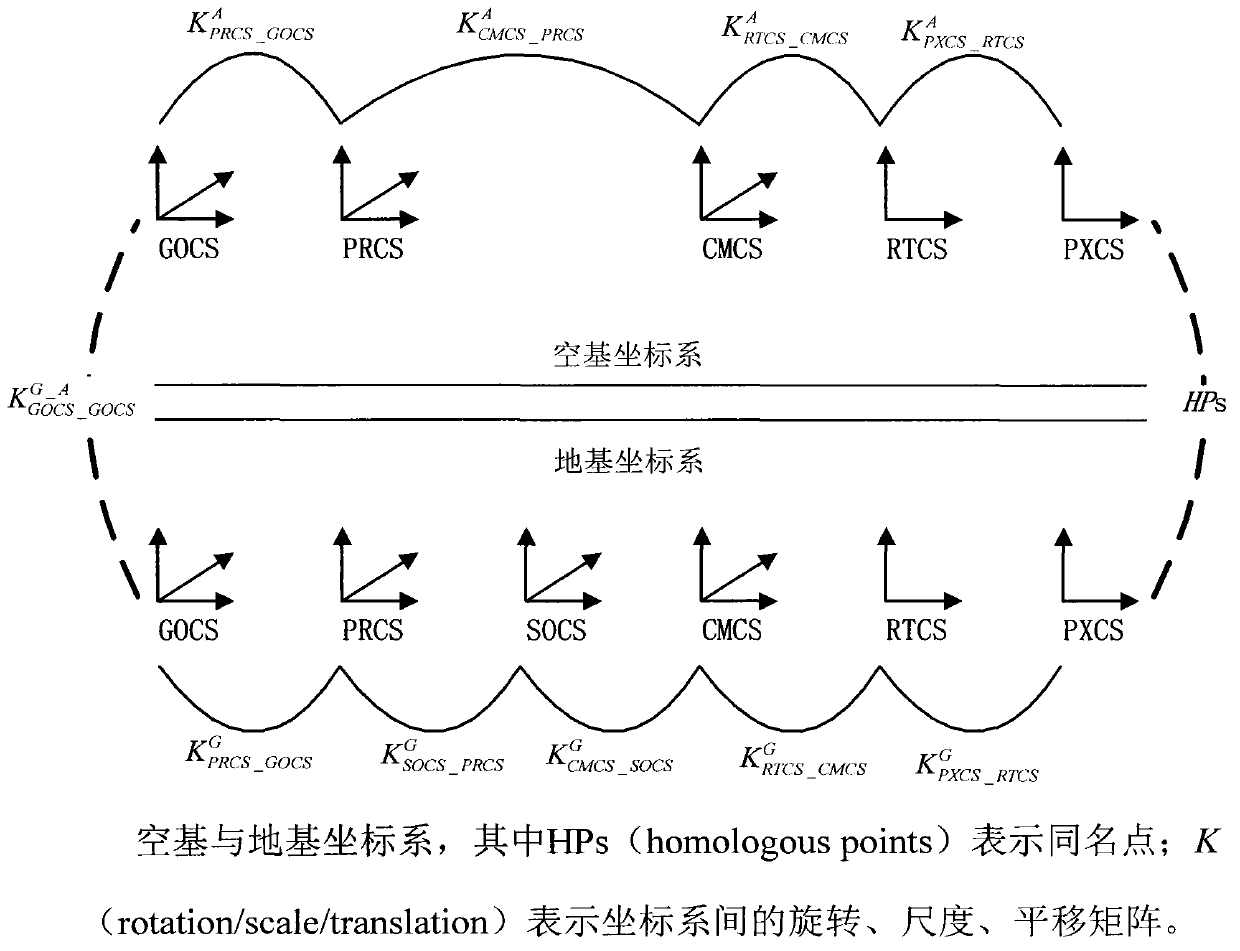

Precise registration method of ground laser-point clouds and unmanned aerial vehicle image reconstruction point clouds

Inactive · CN103426165A · Solve the problem of multi-angle observation · Reduce complexity · Image analysis · 3D modelling · Point cloud · Transformation parameter

The invention relates to a precise registration method of ground laser-point clouds (ground base) and unmanned aerial vehicle image reconstruction point clouds (aerial base). The method comprises generating overlapping areas of the ground laser-point clouds and the unmanned aerial vehicle image reconstruction point clouds on the basis of image three-dimensional reconstruction and point cloud rough registration; then traversing ground base images in the overlapping areas, extracting ground base image feature points through a feature point extraction algorithm, searching for aerial base point clouds in the neighborhood range of the ground base point clouds corresponding to the feature points, and obtaining the aerial base image feature points matched with the aerial base point clouds to establish same-name feature point sets; according to the extracted same-name feature point sets of the ground base images and the aerial base images and a transformation relation between coordinate systems, estimating out a coordinate transformation matrix of the two point clouds to achieve precise registration. According to the precise registration method of the ground laser-point clouds and the unmanned aerial vehicle image reconstruction point clouds, by extracting the same-name feature points of the images corresponding to the ground laser-point clouds and the images corresponding to the unmanned aerial vehicle images, the transformation parameters of the two point cloud data can be obtained indirectly to accordingly improve the precision and the reliability of point cloud registration.

Owner:吴立新 +1

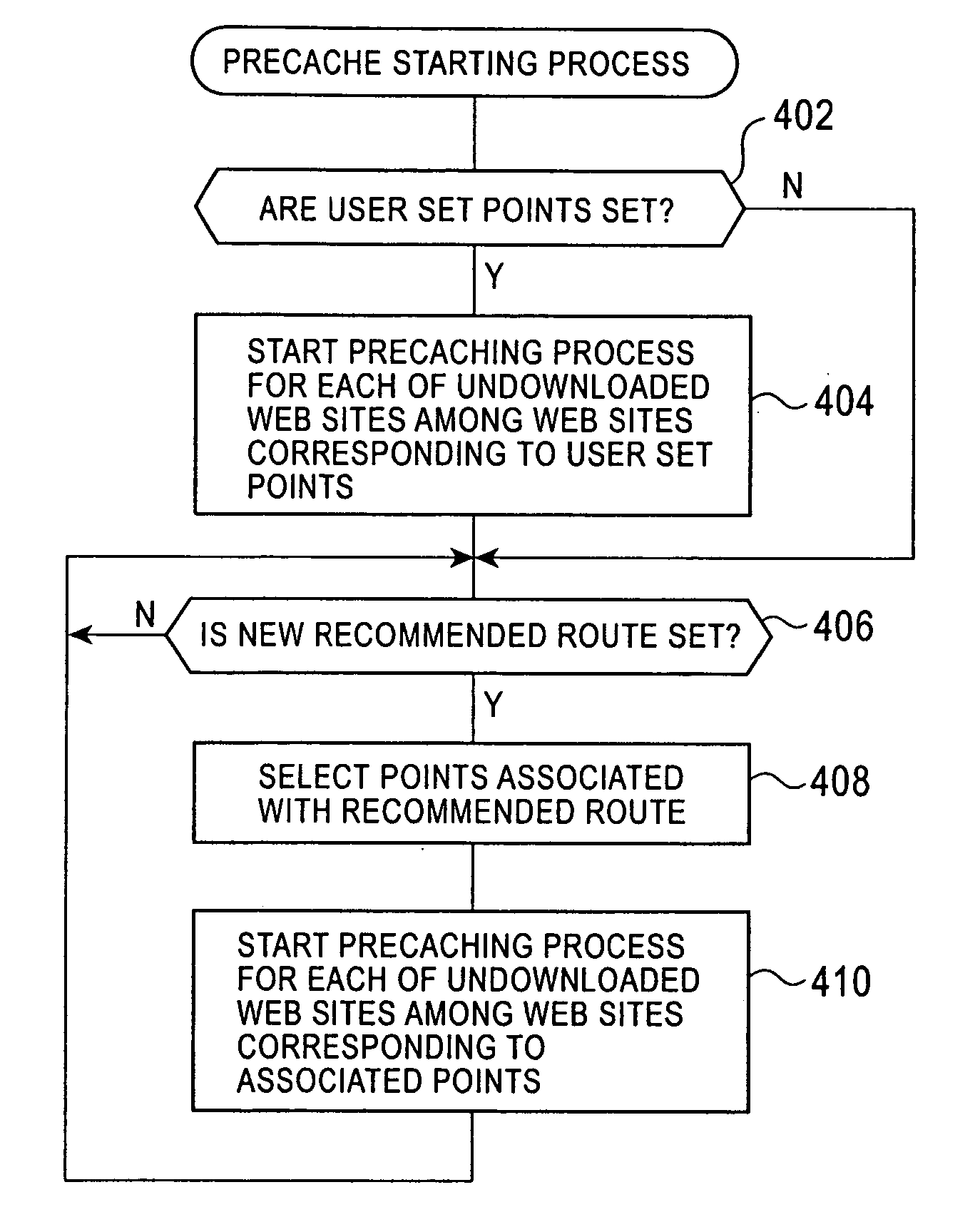

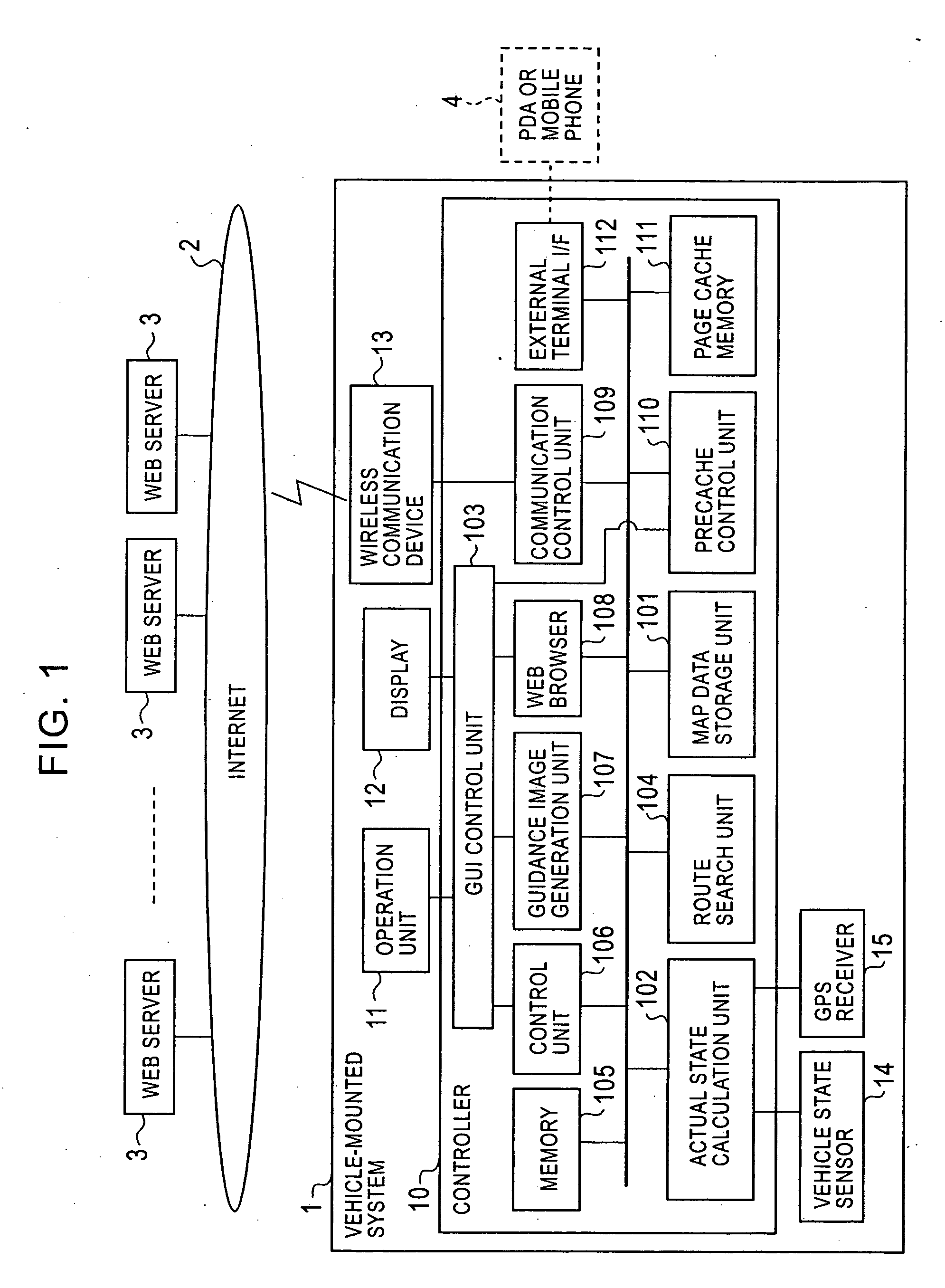

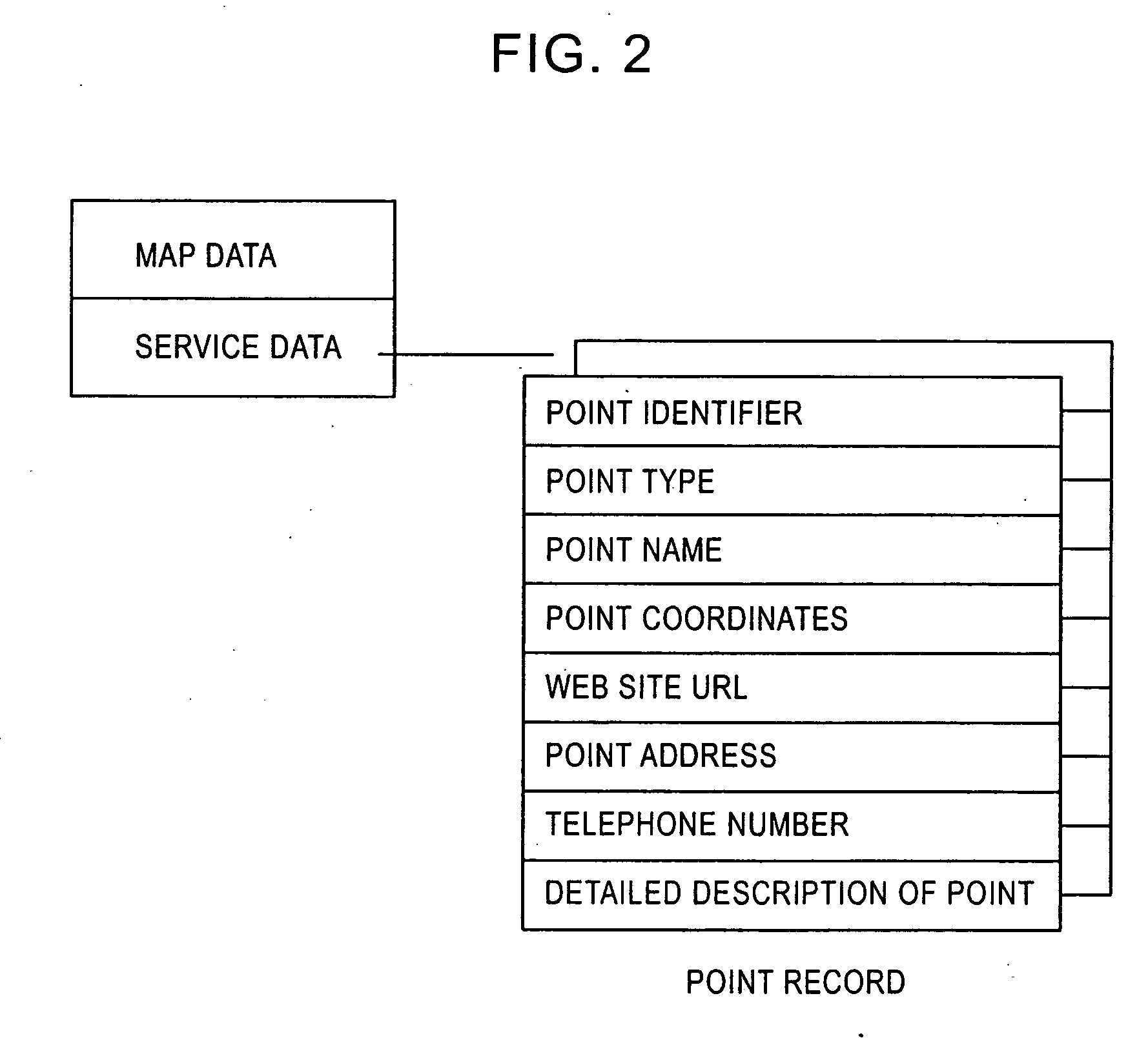

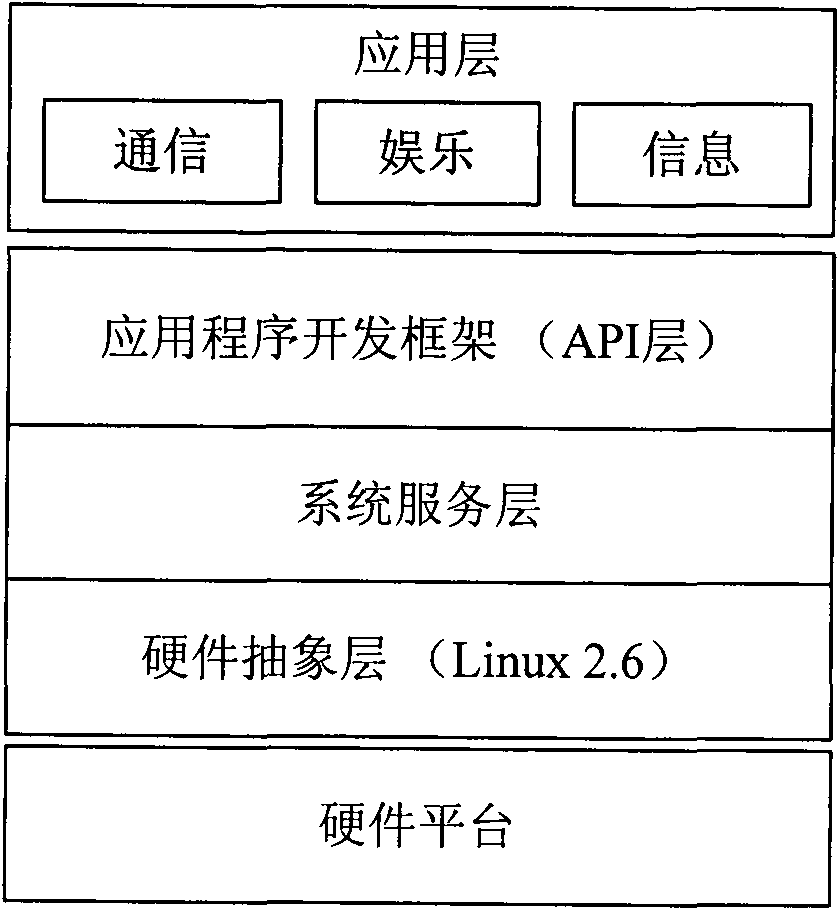

Vehicle-mounted apparatus

Active · US20060129636A1 · Easy selection · Easy to use · Instruments for road network navigation · Digital data information retrieval · Web site · Web browser

The present invention provides a vehicle-mounted apparatus whereby a user can easily select and use a Web site in accordance with a point associated with information provided by the Web site and a description of the information. According to the present invention, a precache control unit automatically downloads at least one Web page of a Web site associated with each point matching a predetermined condition to a page cache memory and displays site icons at respective points corresponding to the downloaded Web sites on a map image. In addition, the precache control unit displays the predetermined type of extracted information by analyzing the description of the Web page of each Web site in an information window that pops up from the corresponding site icon. When the user selects one of the site icons, a Web browser displays the Web page of the Web site corresponding to the selected site icon.

Owner:ALPINE ELECTRONICS INC

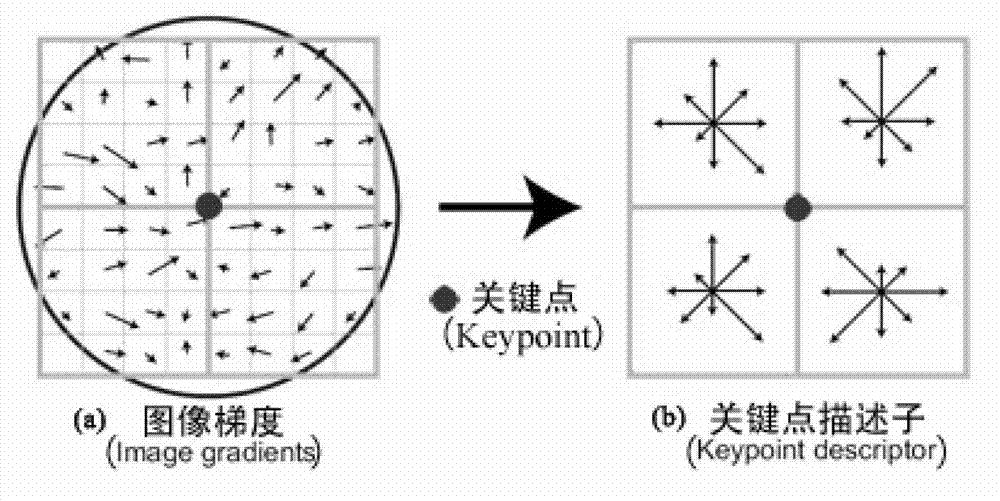

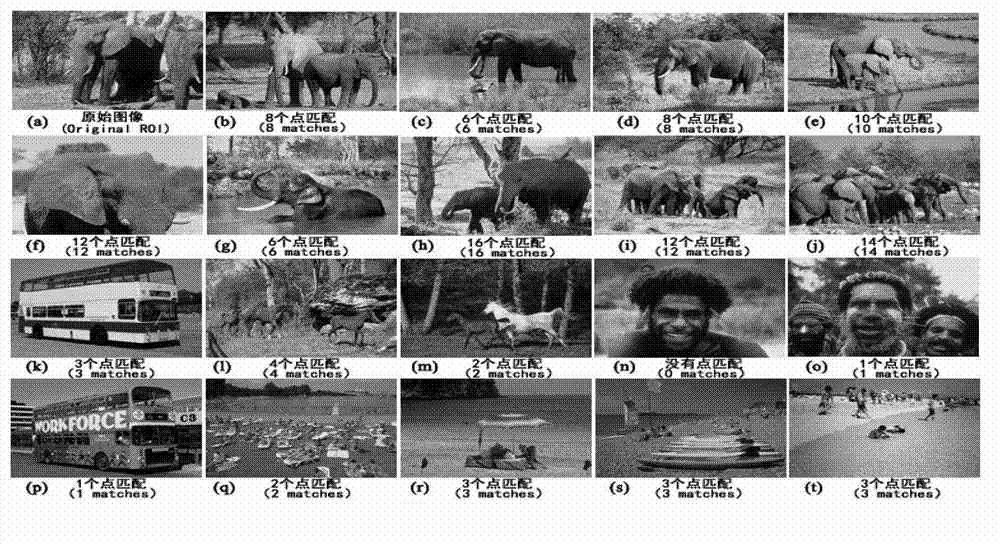

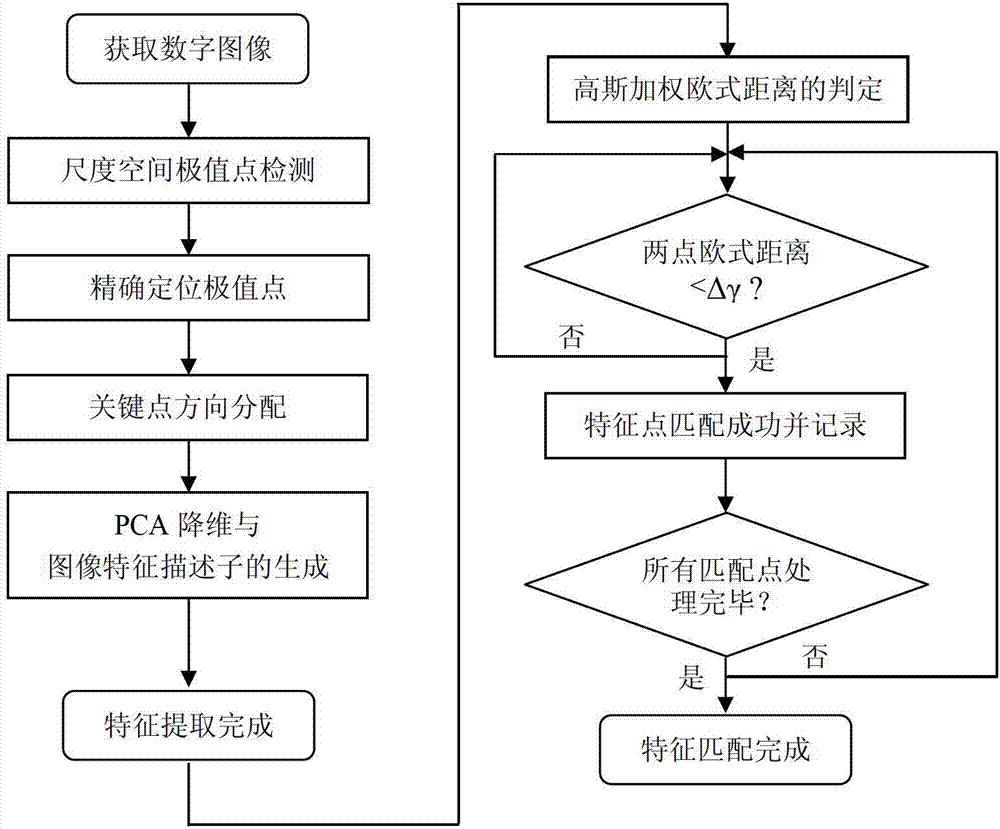

Feature extraction and matching method and device for digital image based on PCA (principal component analysis)

Active · CN103077512A · High precision · Improve matching speed · Image analysis · Digital video · Principal component analysis

The invention provides a feature extraction and matching method and device for a digital image based on PCA (principal component analysis), belonging to the technical field of image analysis. The method comprises the following steps: 1) detecting scale space extreme points; 2) locating the extreme points; 3) assigning directions to the extreme points; 4) reducing dimension by PCA and generating image feature descriptors; and 5) judging similarity measurement and feature matching. The device mainly comprises a numerical value preprocessing module, a feature point extraction module and a feature point matching module. Compared with the existing SIFT (Scale Invariant Feature Transform) feature extraction and matching algorithm, the feature extraction and matching method has higher accuracy and matching speed. The method and device provided by the invention can be directly applied to machine vision fields such as content-based digital image retrieval, content-based digital video retrieval, digital image fusion and super-resolution image reconstruction.

Owner:BEIJING UNIV OF TECH

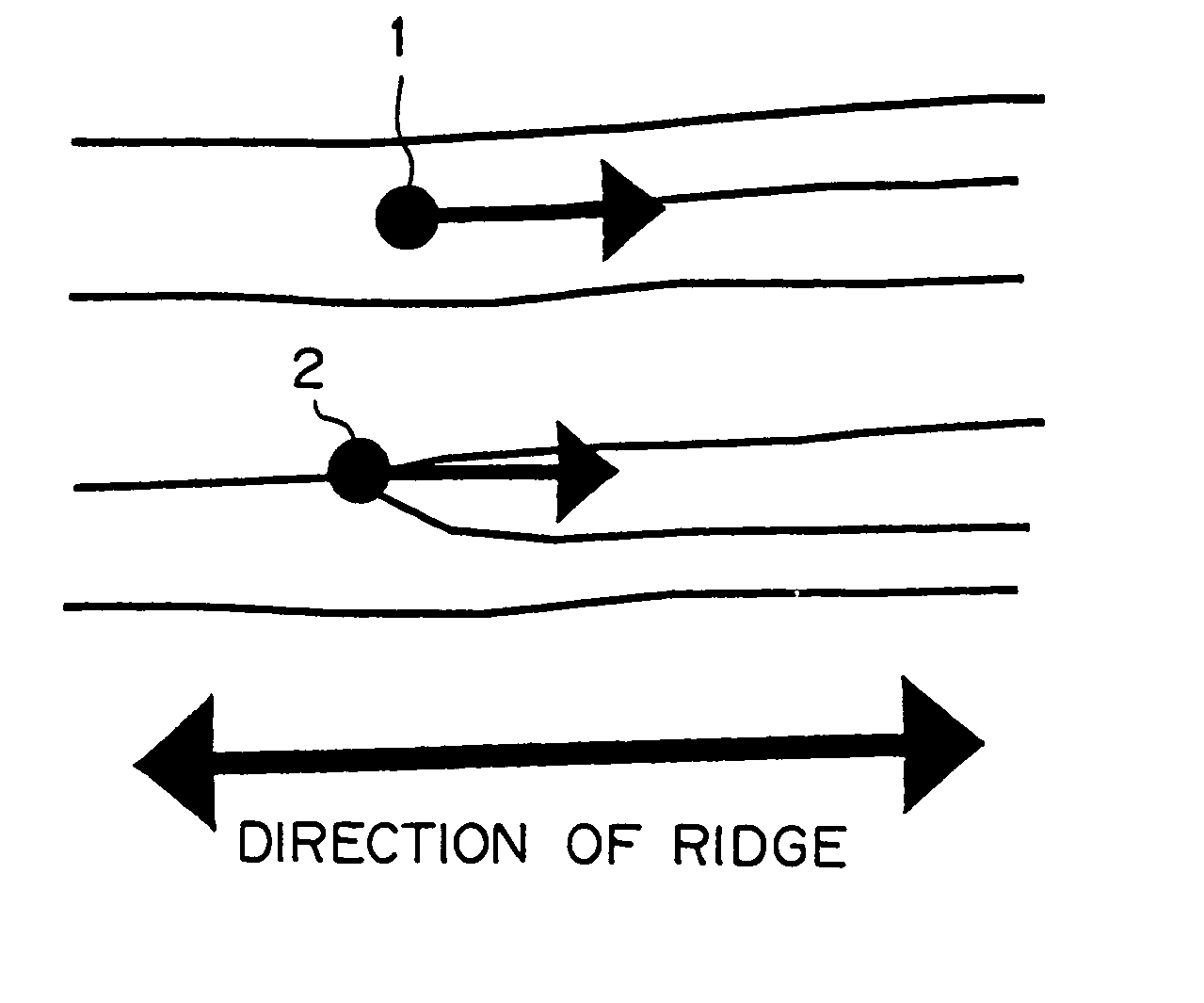

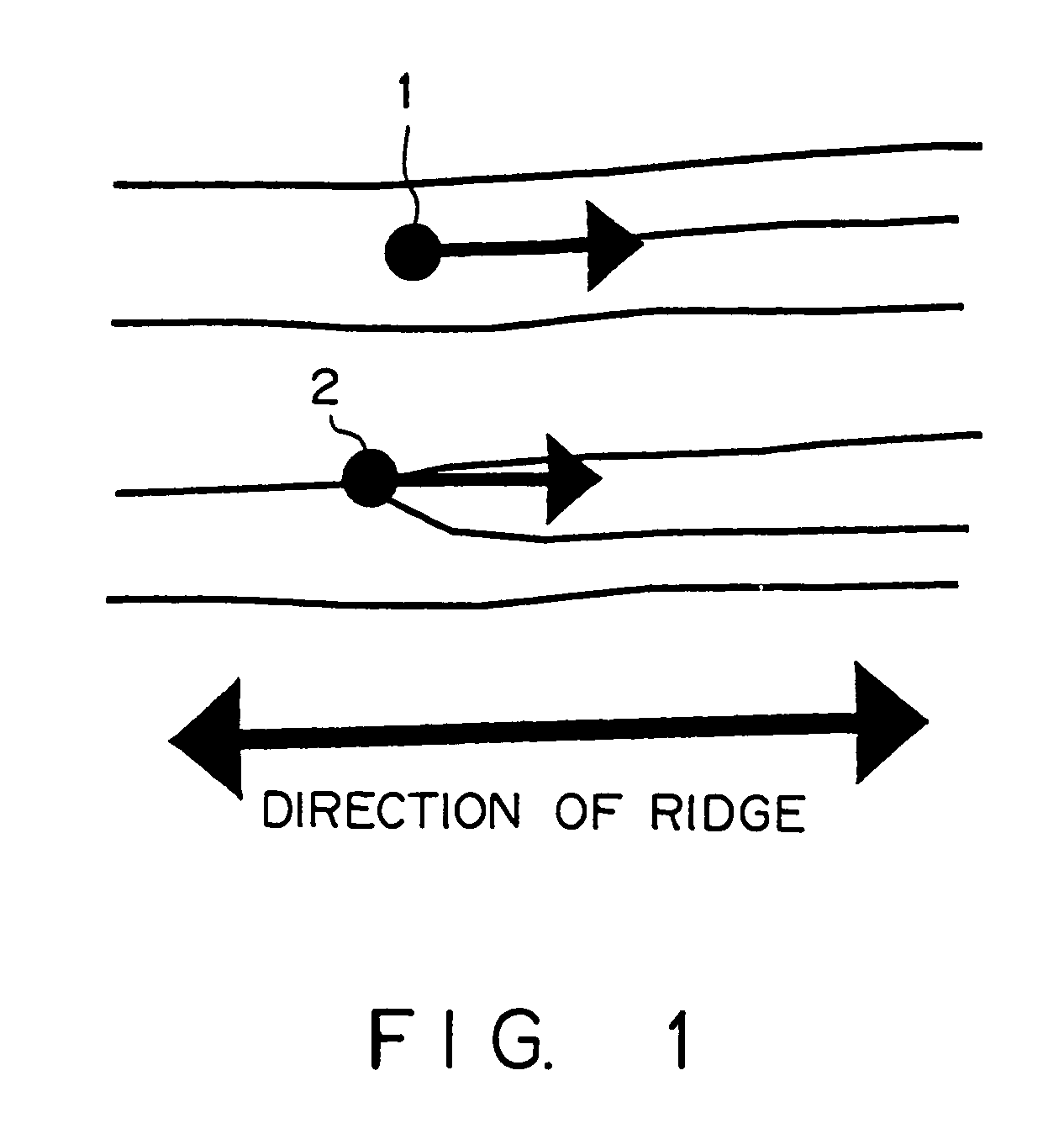

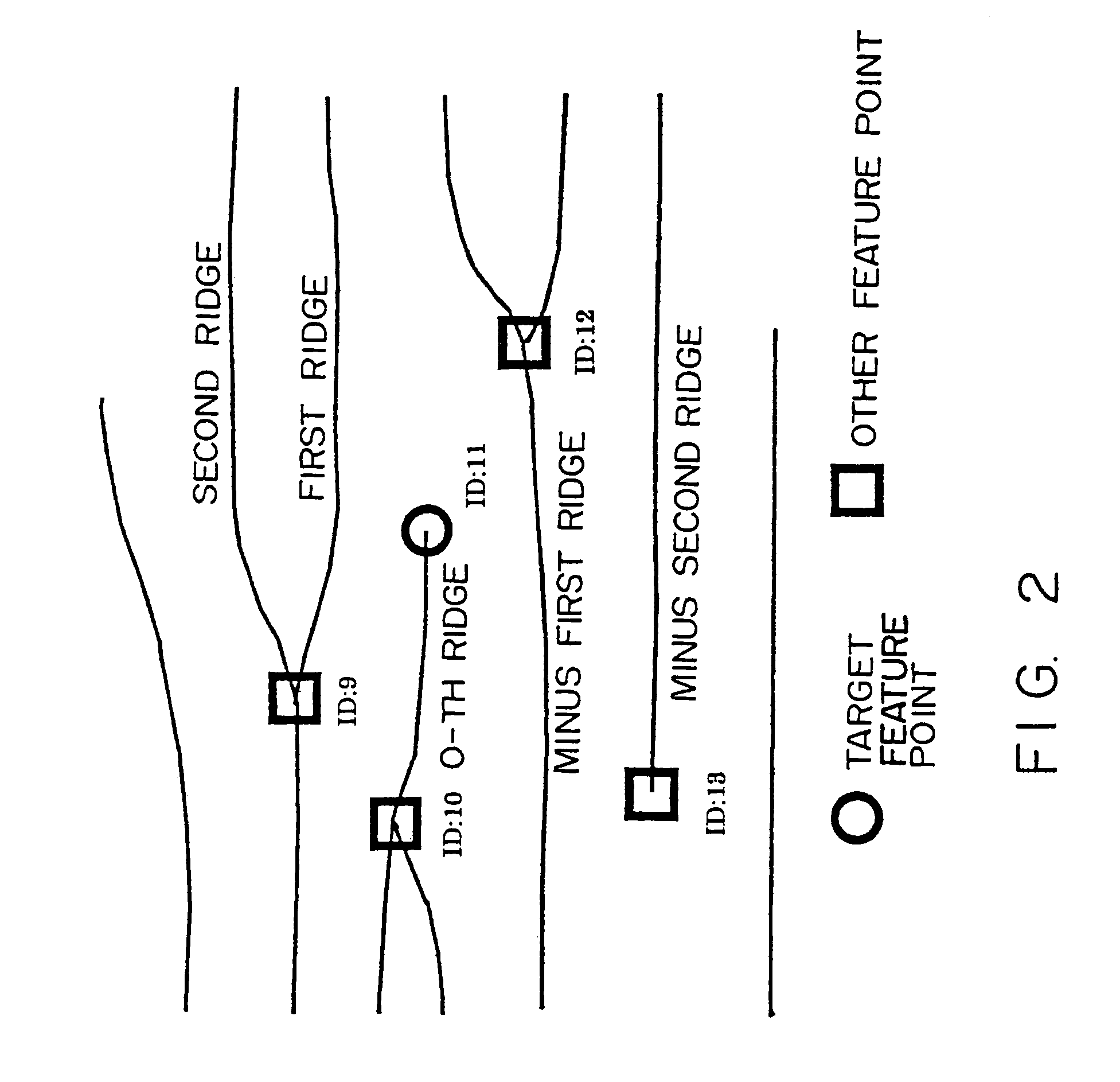

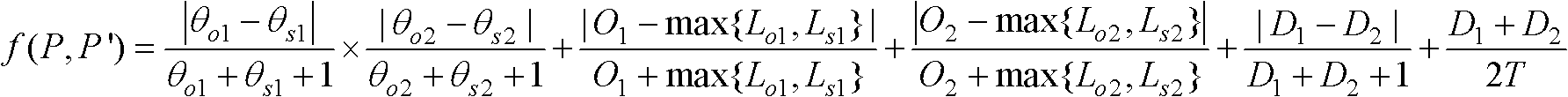

Apparatus and method for matching fingerprint

Inactive · US7151846B1 · High-reliable fingerprint · Image analysis · Matching and classification · Pattern recognition · Radiology

After processing, a target feature point is detected. Information about a target feature point includes the position of the ridge containing a vicinal feature point relative to the position of the ridge containing the target feature point in addition to the position, type, and direction of the target feature point. Then, the information is checked in a matching process, and the vicinal feature point is checked on a feature point contained in a ridge matching in position the ridge of the target feature point. When the target feature point and the vicinal feature point match in position and direction, and are different in type only, the mark has a value indicating a matching level. Then, a matching result of the vicinal feature points is obtained as a matching mark, and it is determined whether the target feature point is matching by determining whether the matching mark is equal to or larger than a threshold.

Owner:FUJITSU LTD

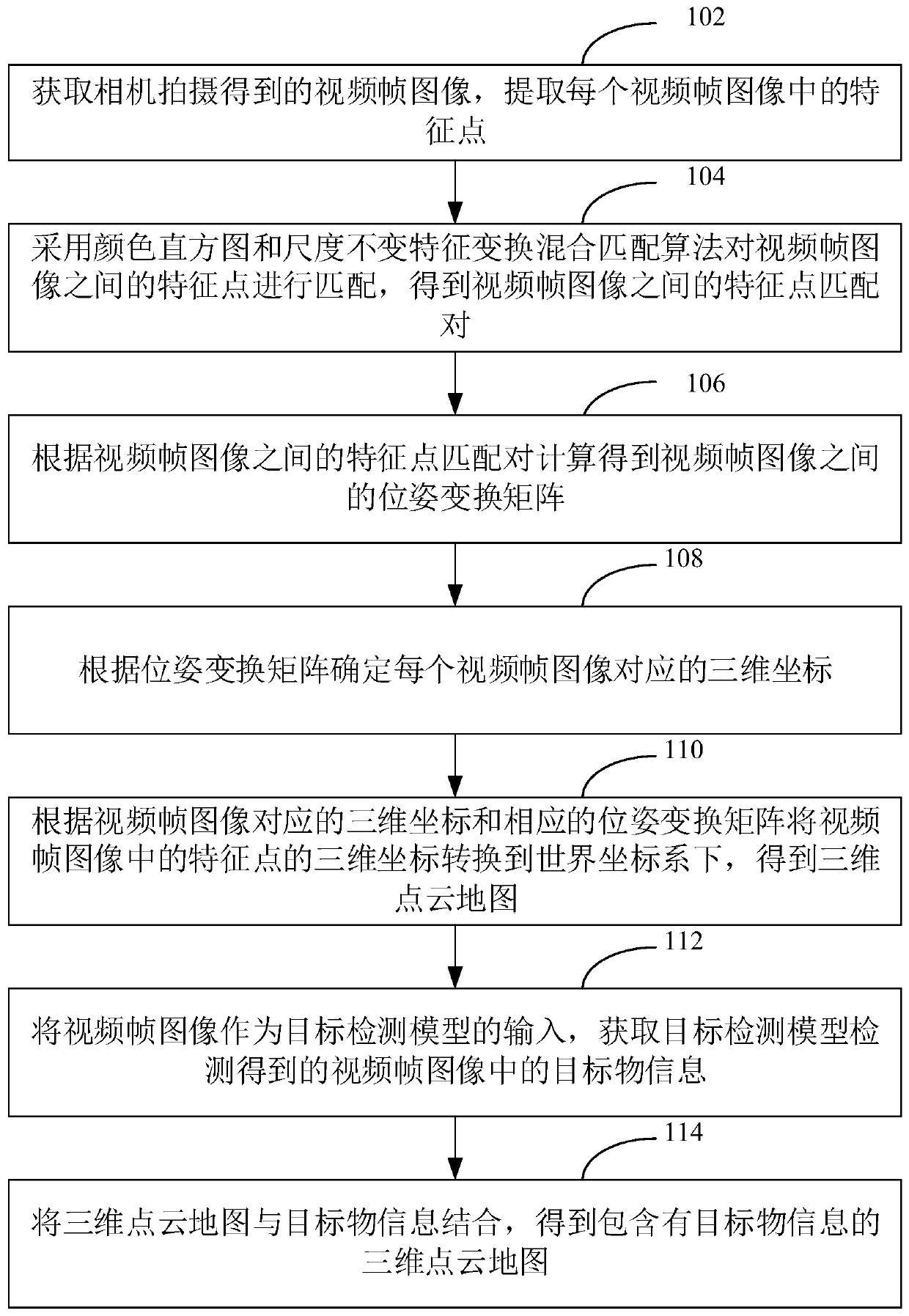

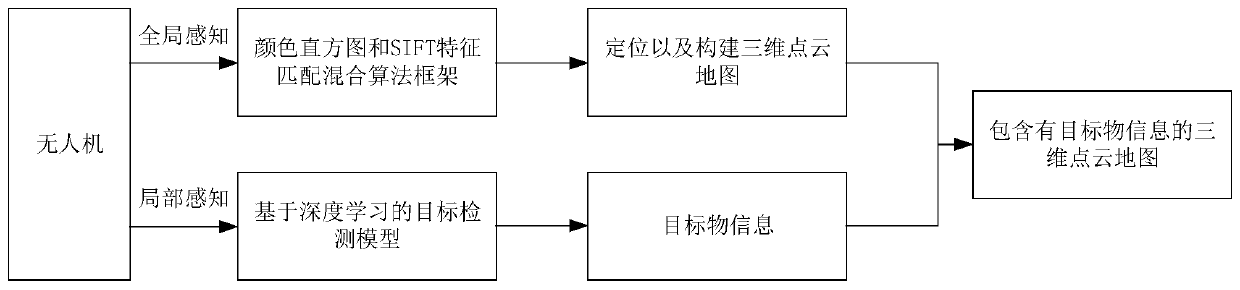

Unmanned aerial vehicle three-dimensional map construction method and device, computer equipment and storage medium

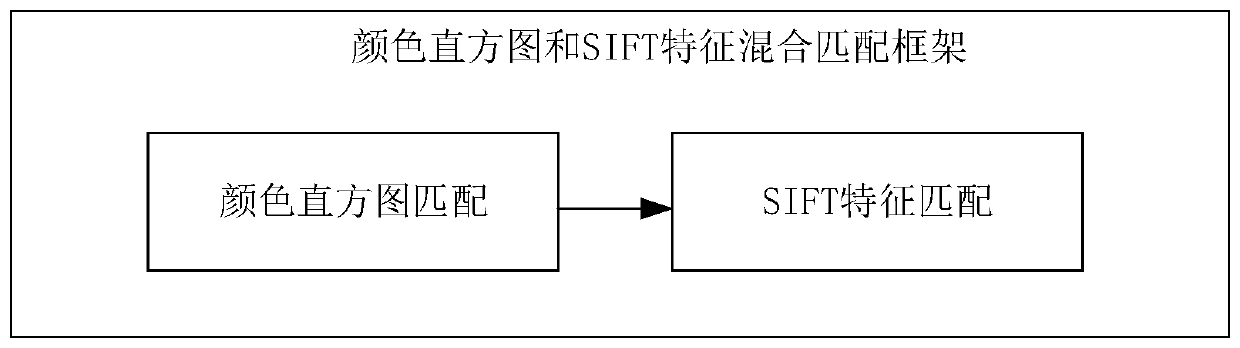

The invention relates to an unmanned aerial vehicle three-dimensional map construction method. The method comprises the following steps: obtaining video frame images shot by a camera and extracting feature points in each video frame image; matching the feature points by adopting a color histogram and scale invariant feature transformation hybrid matching algorithm to obtain feature point matching pairs; calculating according to the feature point matching pairs to obtain a pose transformation matrix; determining a three-dimensional coordinate corresponding to each video frame image according to the pose transformation matrix, and converting the three-dimensional coordinates of the feature points in the video frame image into a world coordinate system to obtain a three-dimensional point cloud map; taking the video frame image as the input of a target detection model to obtain target object information; and combining the three-dimensional point cloud map with the target object information to obtain the three-dimensional point cloud map containing the target object information. According to the method, the real-time performance and accuracy of three-dimensional point cloud map construction are improved, and rich information is contained. In addition, the invention further provides an unmanned aerial vehicle three-dimensional map construction device, computer equipment and a storage medium.

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI

Information processing apparatus, method, and program

Inactive · US20080205764A1 · Reliably recognize · Character and pattern recognition · Information processing · Pattern recognition

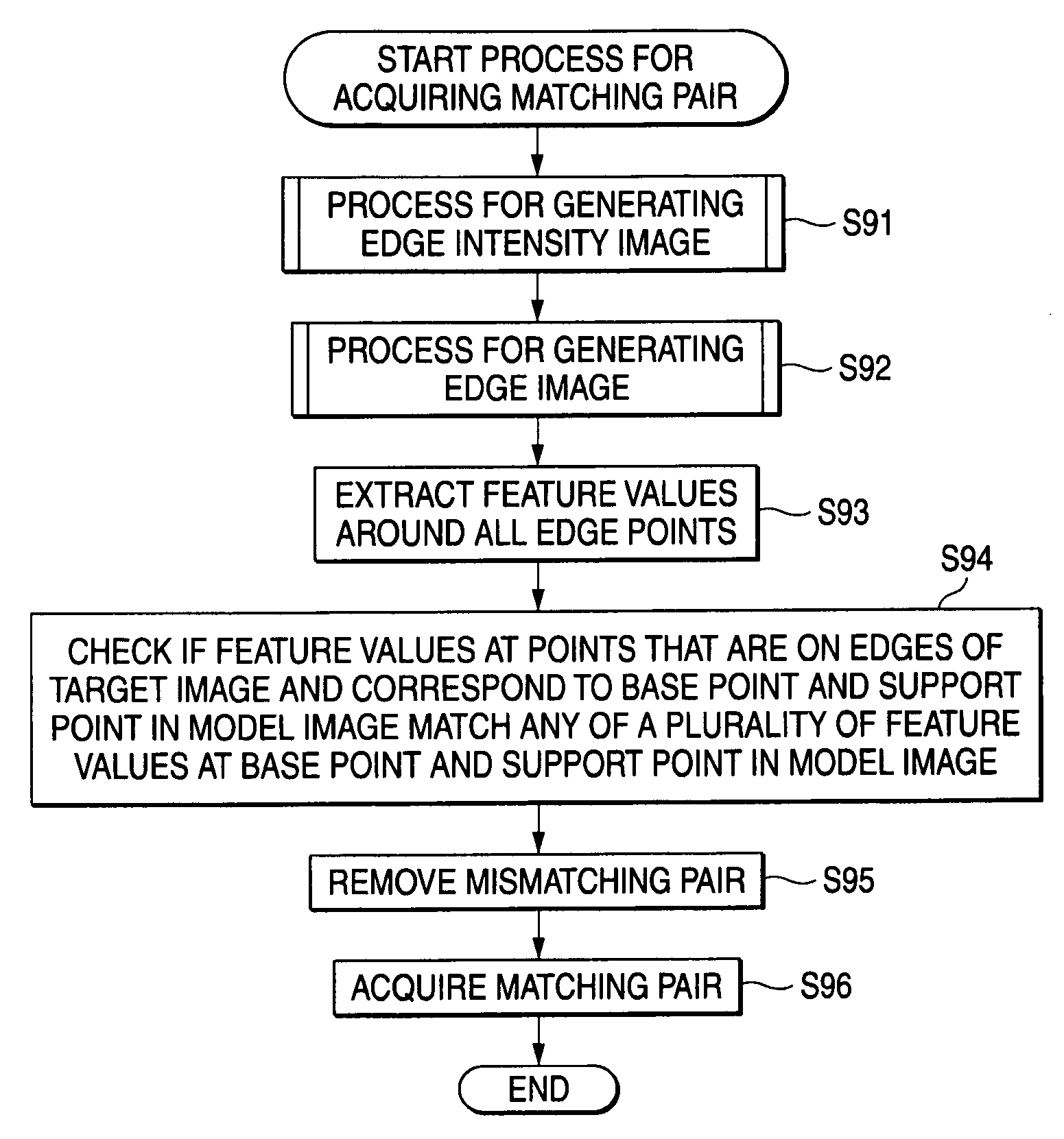

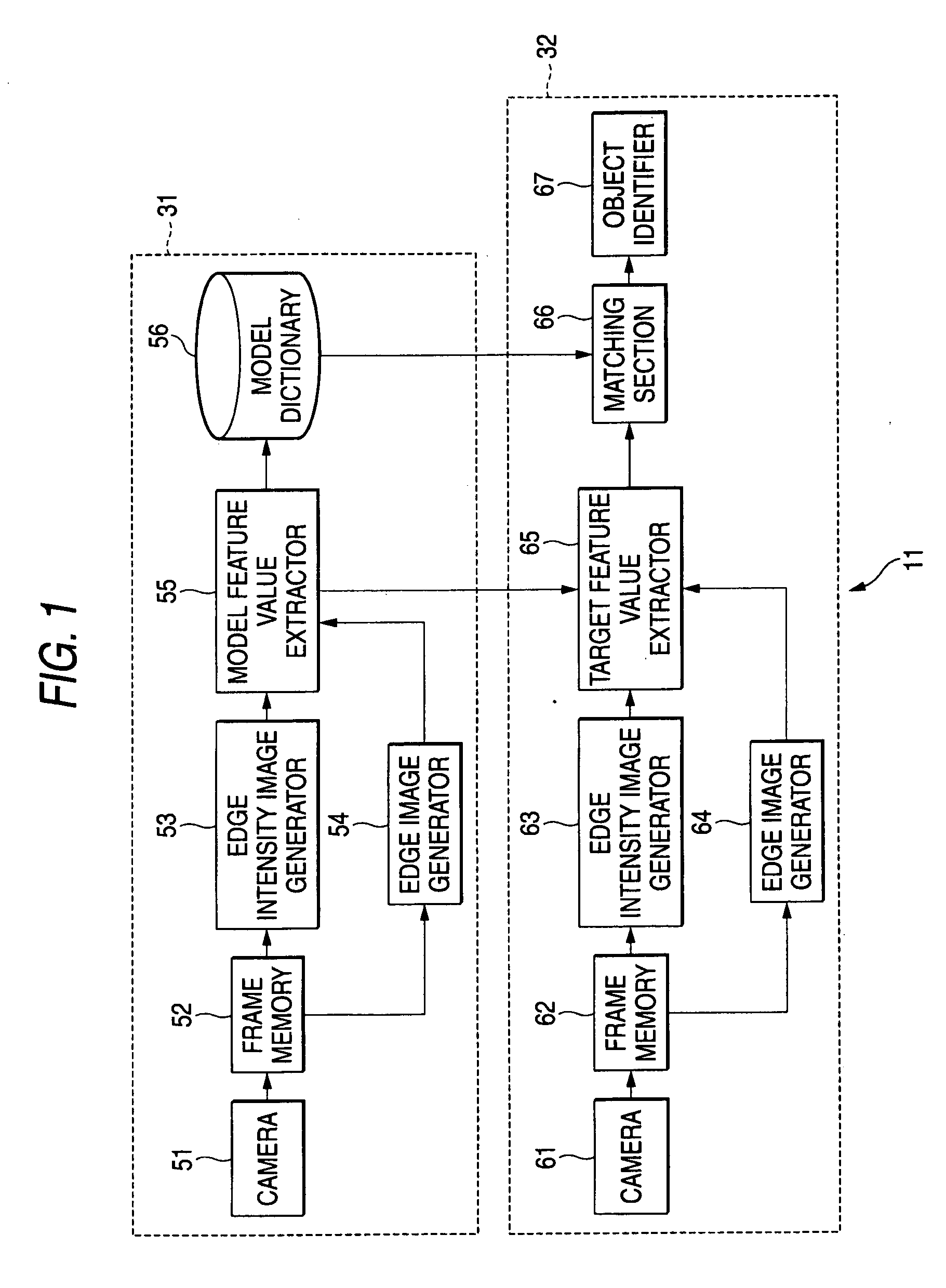

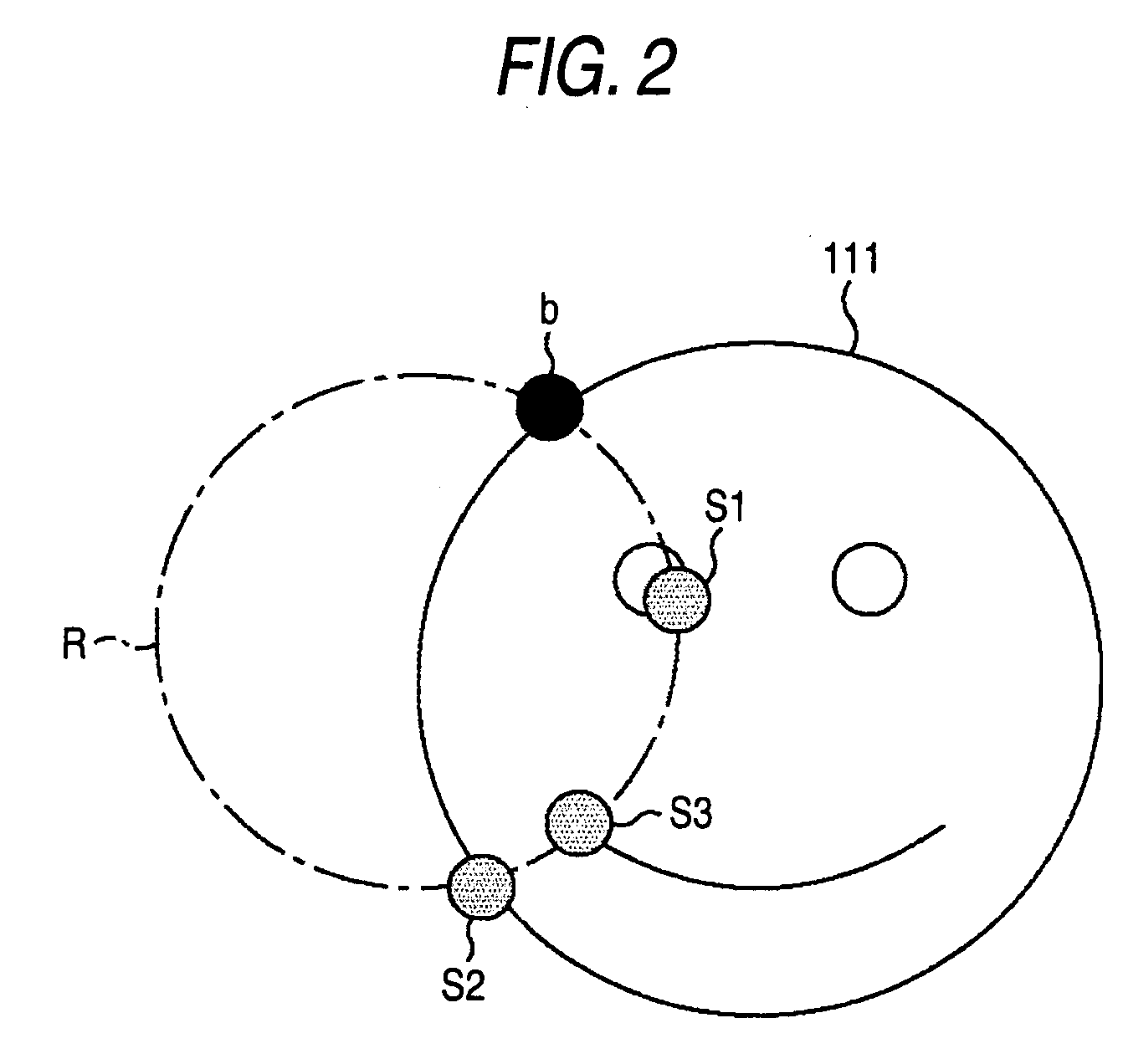

An information processing apparatus that compares an input image with a model image to identify the subject of the input image with the subject of the model image. The apparatus includes feature value extraction means for setting feature points, each of which is on an edge of the model image and provided to extract a model image feature value, which is the feature value of the model image, and extracting the model image feature value from each of a plurality of feature value extraction areas in the neighborhood of each of the feature points, and matching means for checking if an input image feature value, which is the feature value of the input image, at the point that is on an edge of the input image and corresponds to the feature point matches any of the plurality of model image feature values at the feature point.

Owner:SONY CORP

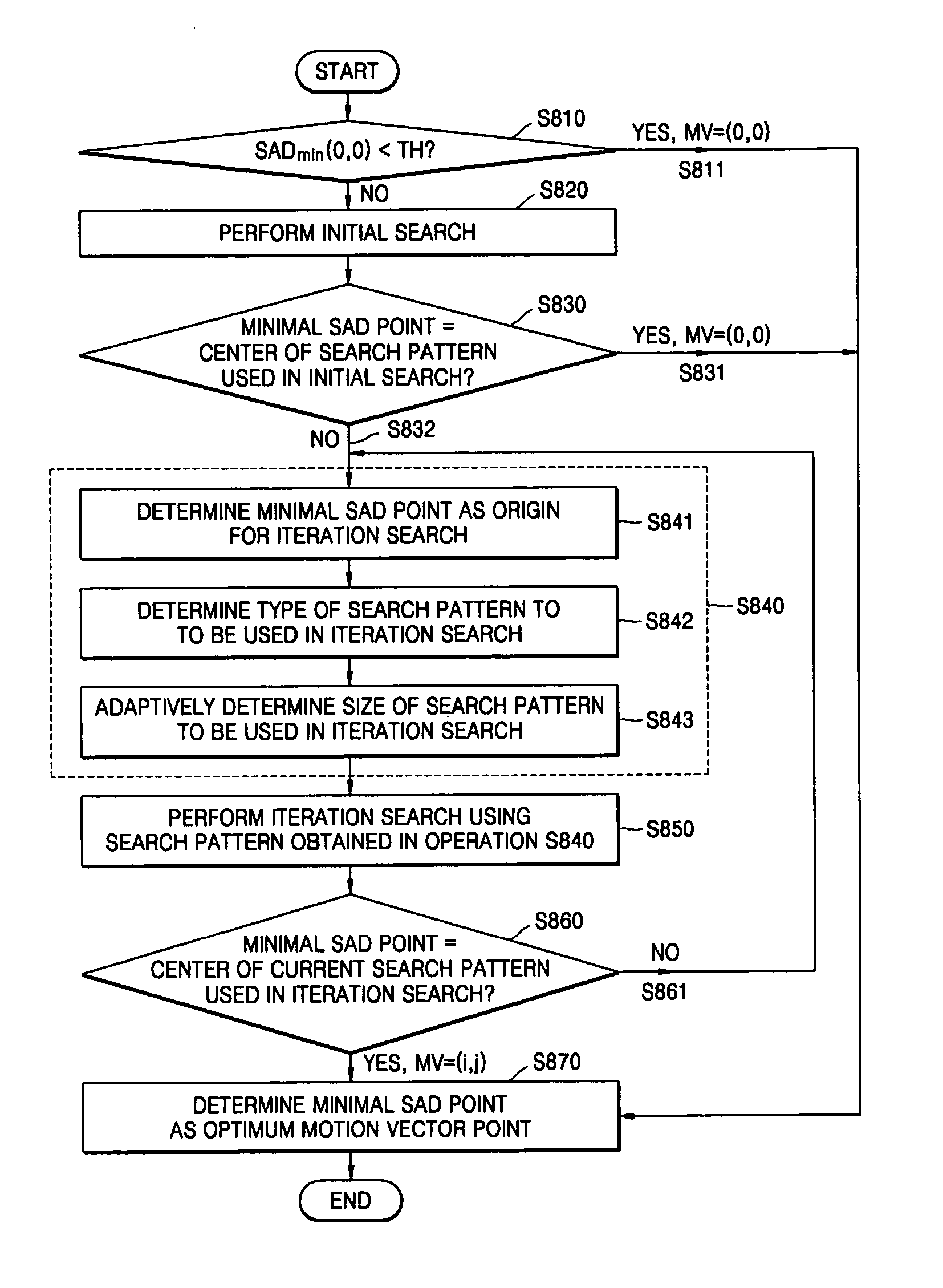

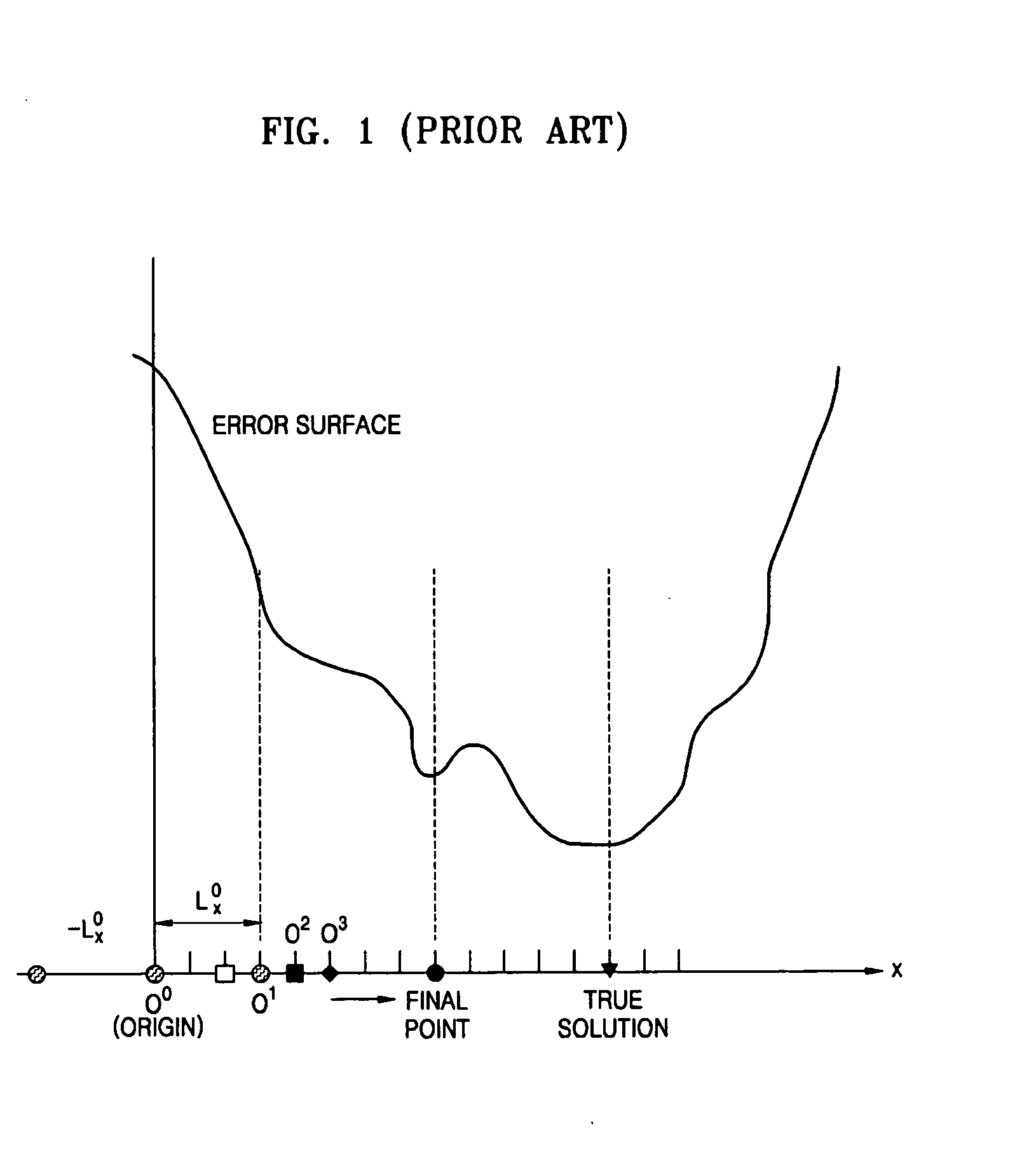

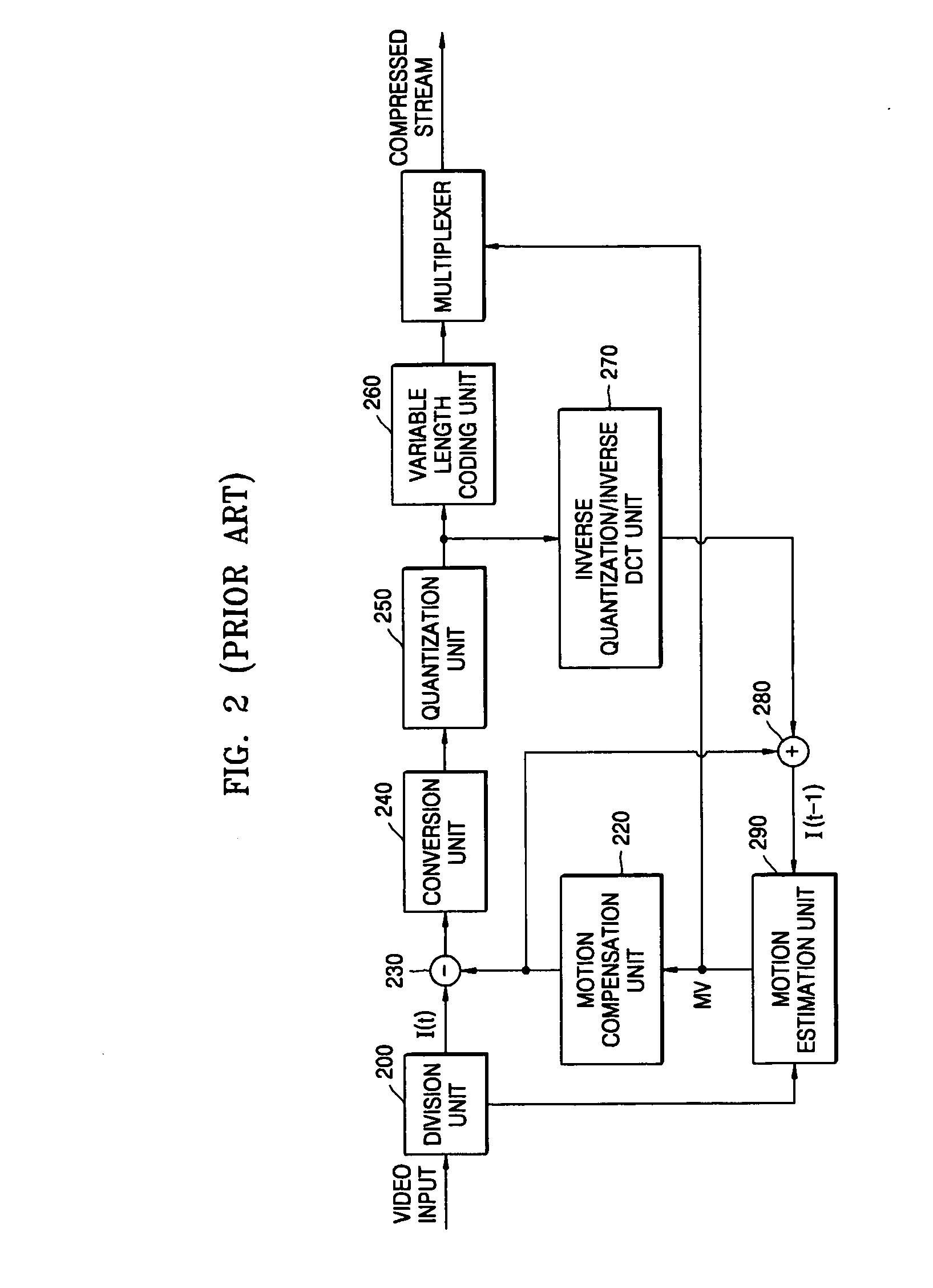

Fast motion estimation apparatus and method using block matching algorithm

Inactive · US20060280248A1 · Efficient reduction · Improve search speed · Television system details · Picture reproducers using cathode ray tubes · Pattern recognition · Block match

A fast motion estimation apparatus and method in which motion estimation is carried out on a current block of a current frame of video image data with reference to a corresponding matching block of a previous frame are provided. The fast motion estimation apparatus includes: a determination unit which determines whether an SAD between pixel values of a current block of a current frame and a corresponding matching block of a previous frame is greater than a predefined threshold; an initial search unit which performs an initial search when the SAD is greater than the predetermined threshold to find which search point of a current search pattern is the minimal SAD point and to determine whether the minimal SAD point matches the center of the current search pattern; and a repetitive search unit which performs an iteration search when the minimal SAD point differs from the center of the current search pattern to reset the minimal SAD point to be a center of the current search pattern and to set a number of search points and the size of a search pattern based on from current search pattern to a center of the search window of the previous frame.

Owner:ELECTRONICS & TELECOMM RES INST
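
The search loop described in the abstract above can be sketched in Python as follows. This is a generic centre-biased block-matching search under stated assumptions, not the patented apparatus: it uses a fixed five-point pattern, whereas the abstract adapts the number of search points and the pattern size. All names (`sad`, `get_block`, `estimate_motion`, `SMALL_DIAMOND`) are hypothetical.

```python
def sad(block_a, block_b):
    """Sum of absolute differences between two equally sized pixel blocks."""
    return sum(abs(a - b)
               for row_a, row_b in zip(block_a, block_b)
               for a, b in zip(row_a, row_b))


def get_block(frame, top, left, size):
    """Cut a size x size block out of a frame stored as a list of rows."""
    return [row[left:left + size] for row in frame[top:top + size]]


# The centre comes first, so it wins ties and the search stops there.
SMALL_DIAMOND = [(0, 0), (-1, 0), (1, 0), (0, -1), (0, 1)]


def estimate_motion(cur_block, prev_frame, start, size, threshold, max_iters=16):
    """Return the (dy, dx) displacement from start to the best match found."""
    height, width = len(prev_frame), len(prev_frame[0])
    center = start
    # Skip the search when the co-located block already matches well enough.
    if sad(cur_block, get_block(prev_frame, center[0], center[1], size)) <= threshold:
        return (0, 0)
    for _ in range(max_iters):
        best_offset, best_cost = (0, 0), None
        for dy, dx in SMALL_DIAMOND:
            top, left = center[0] + dy, center[1] + dx
            if top < 0 or left < 0 or top + size > height or left + size > width:
                continue  # search point falls outside the previous frame
            cost = sad(cur_block, get_block(prev_frame, top, left, size))
            if best_cost is None or cost < best_cost:
                best_offset, best_cost = (dy, dx), cost
        if best_offset == (0, 0):
            break  # minimal-SAD point is the pattern centre: done
        # Otherwise re-centre the pattern on the minimal-SAD point and repeat.
        center = (center[0] + best_offset[0], center[1] + best_offset[1])
    return (center[0] - start[0], center[1] - start[1])
```

Like any local search, this sketch can stop at a local SAD minimum on textured content; the iteration cap is only a safety guard, since each move strictly lowers the SAD.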

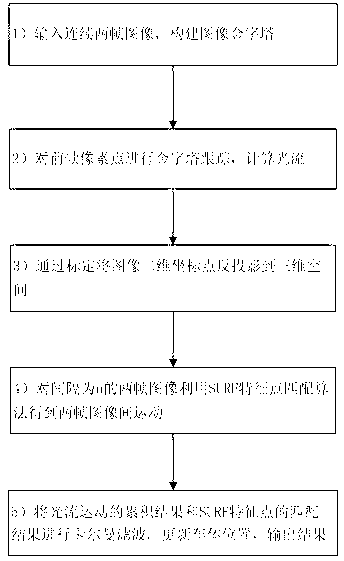

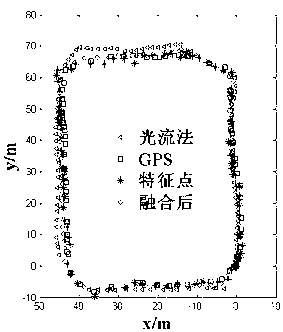

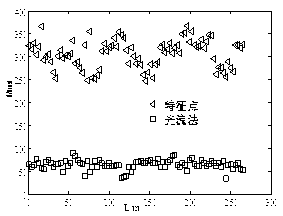

Method for designing monocular vision odometer with optical flow method and feature point matching method integrated

Inactive · CN103325108A · Processing time advantage · Real-time positioning · Image analysis · Autonomous Navigation System · Road surface

The invention discloses a method for designing a monocular vision odometer that integrates the optical flow method and the feature point matching method. Accurate real-time positioning is of great significance to an autonomous navigation system. Positioning based on the SURF feature point matching method is robust to illumination variations and has high positioning accuracy, but its processing speed is low and real-time positioning cannot be achieved. The optical flow tracking method has good real-time performance, but its positioning accuracy is poor. The method combines the advantages of the two, and a monocular vision odometer integrating the optical flow method and the feature point matching method is designed. Experimental results show that the integrated algorithm provides accurate real-time positioning output and remains robust under illumination variations and when road surface textures are few.

Owner:ZHEJIANG UNIV

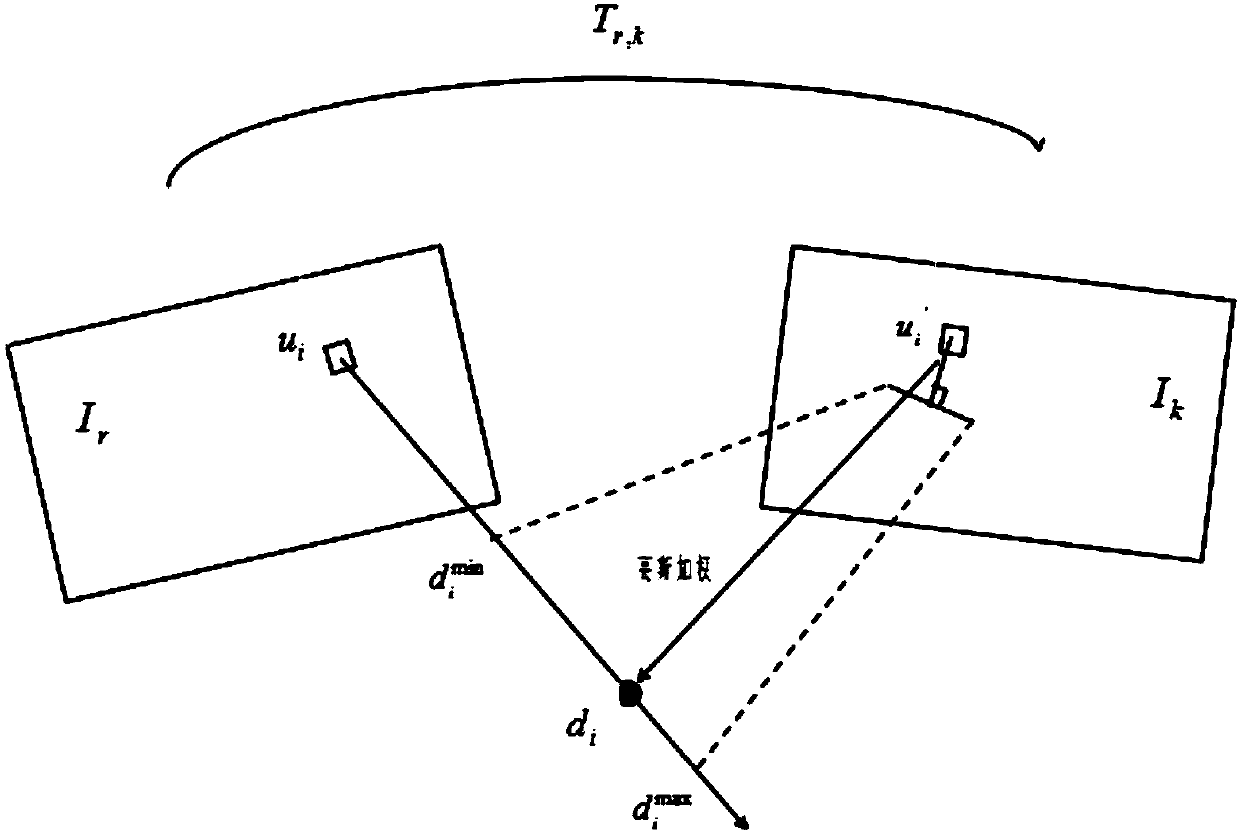

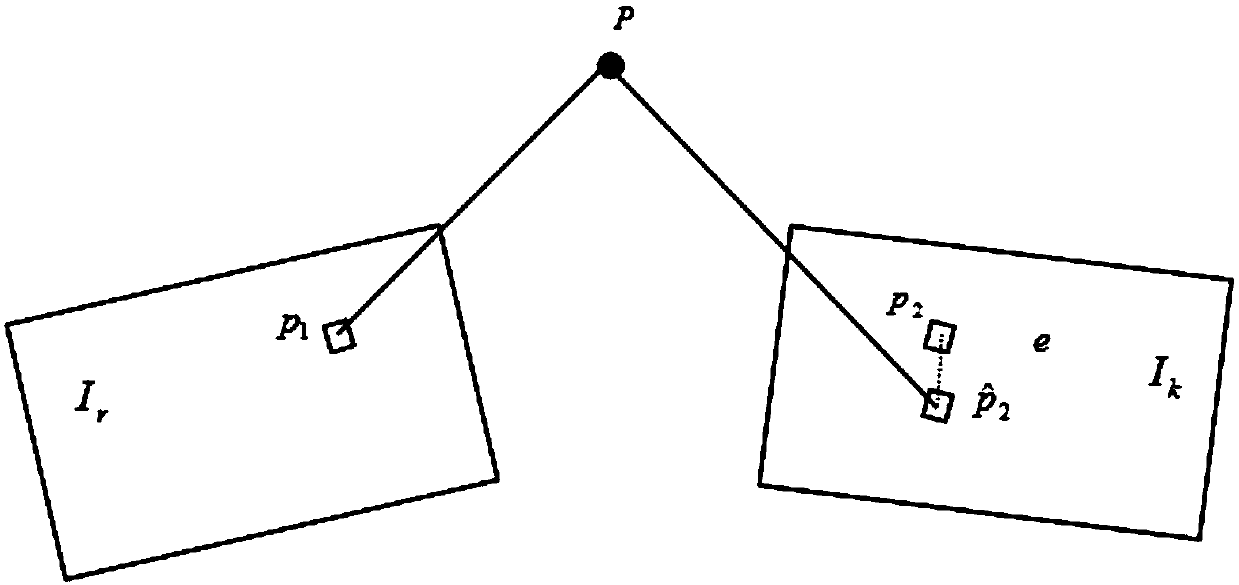

Real-time three-dimensional scene reconstruction method for UAV based on EG-SLAM

Active · CN108648270A · Requirements for Reducing Repetition Rates · Improve realism · Image enhancement · Image analysis · Point cloud · Texture rendering

The present invention provides a real-time three-dimensional scene reconstruction method for a UAV (unmanned aerial vehicle) based on EG-SLAM. The method is characterized in that visual information is acquired by using an unmanned aerial camera to reconstruct a large-scale three-dimensional scene with texture details. Compared with multiple existing methods, the method provided by the present invention processes the collected images directly on the CPU, so that positioning and reconstruction of a three-dimensional map can be implemented quickly and in real time. Rather than using the conventional PnP method to solve the pose of the UAV, the EG-SLAM method of the present invention uses the feature point matching relationship between two frames to solve the pose directly, so that the requirement for the repetition rate of the collected images is reduced. In addition, the large amount of environmental information obtained gives the UAV a more sophisticated and meticulous perception of the environment structure; texture rendering is performed on the large-scale three-dimensional point cloud map generated in real time, reconstruction of a large-scale three-dimensional map is realized, and a more intuitive and realistic three-dimensional scene is obtained.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

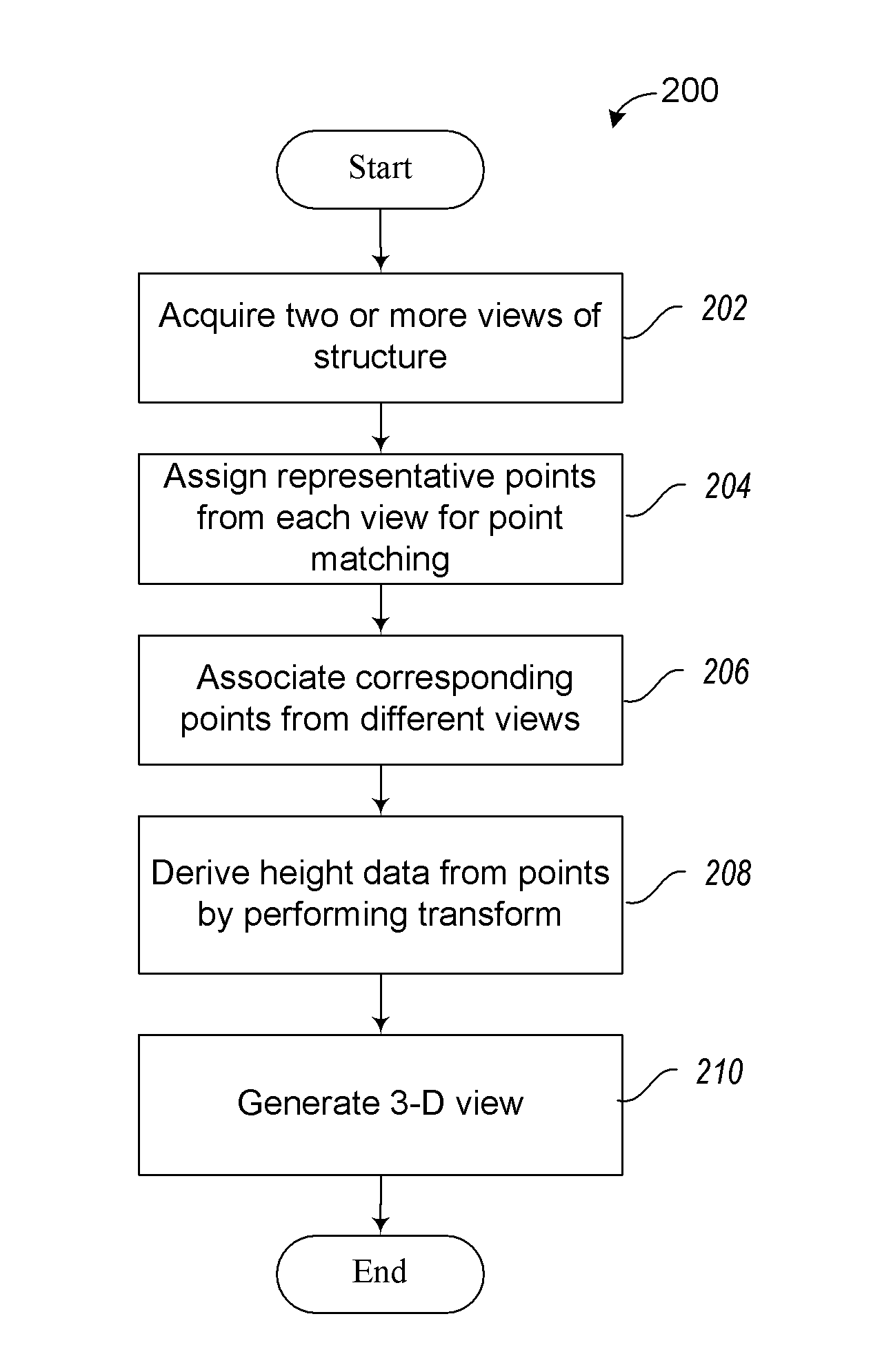

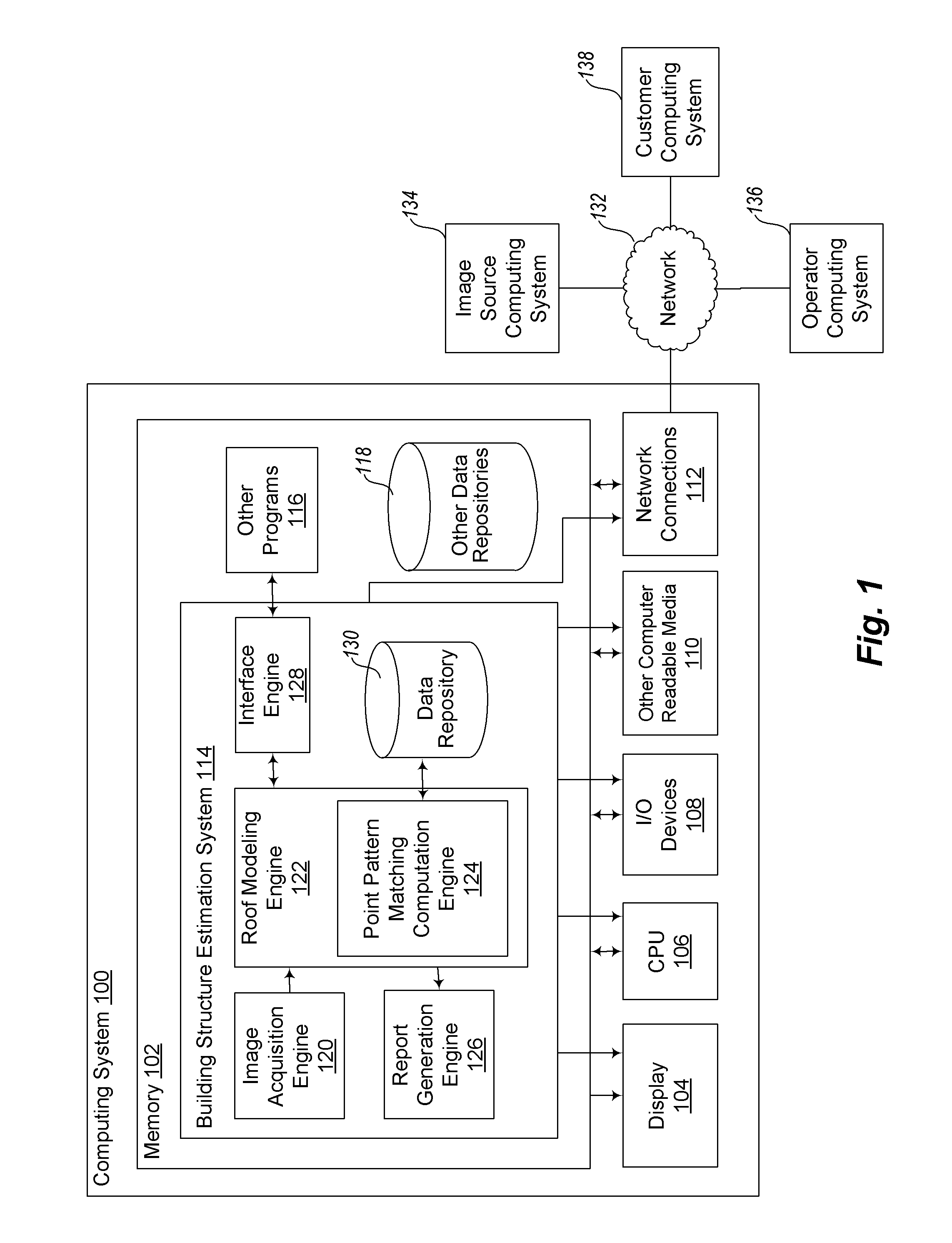

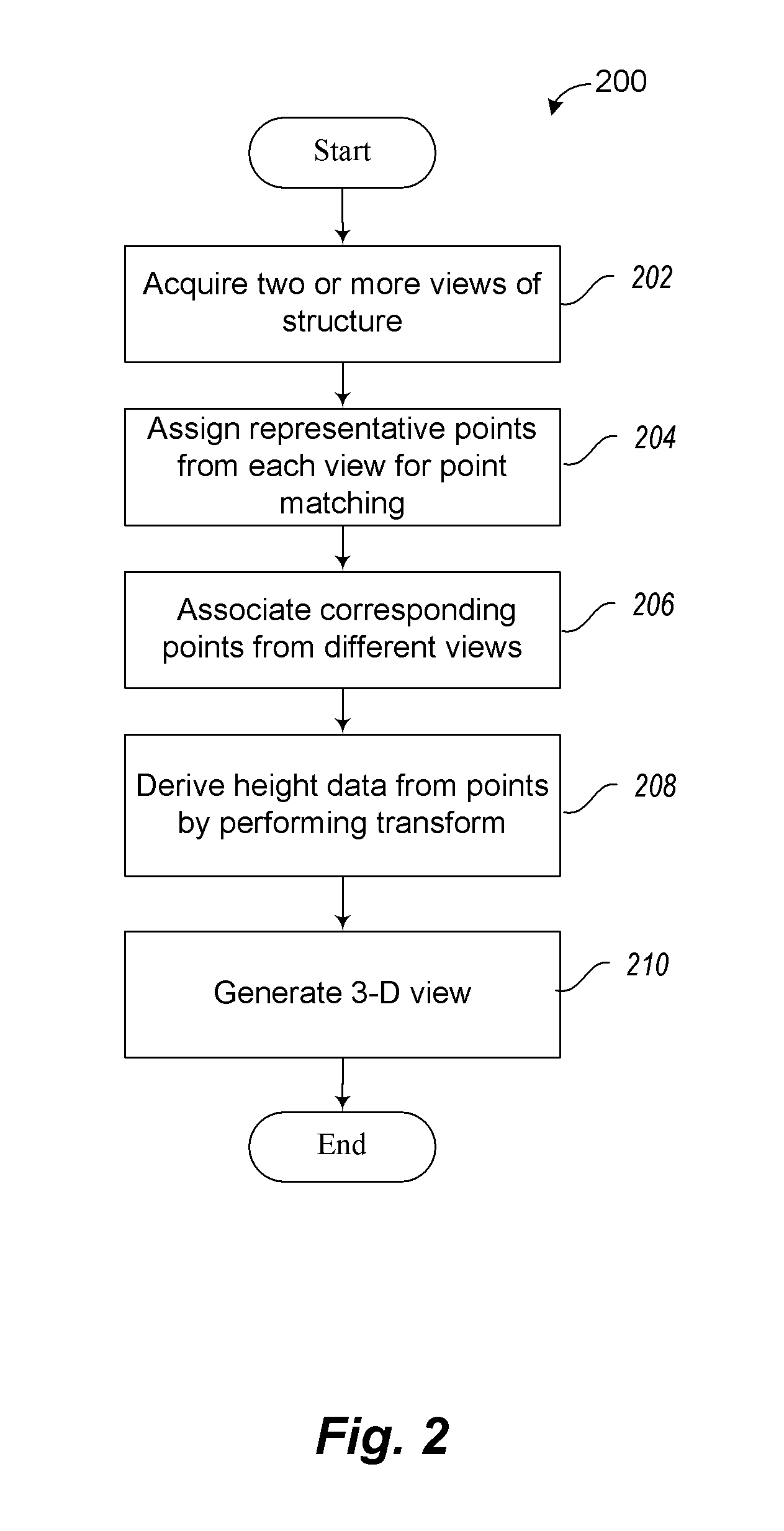

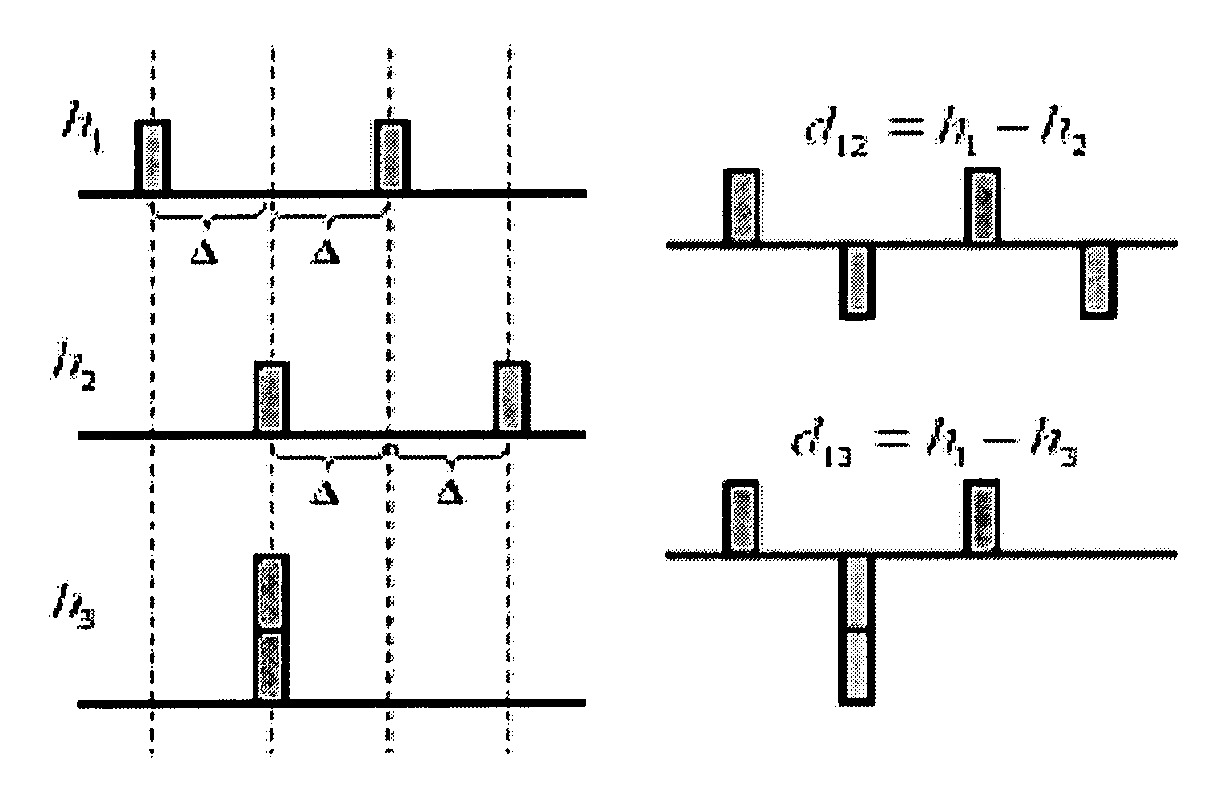

Statistical point pattern matching technique

Active · US20140212028A1 · Reduce in quantity · Image enhancement · Details involving processing steps · Pattern recognition · High probability

A statistical point pattern matching technique is used to match corresponding points selected from two or more views of a roof of a building. The technique entails statistically selecting points from each of orthogonal and oblique aerial views of a roof, generating radial point patterns for each aerial view, calculating the origin of each point pattern, representing the shape of the point pattern as a radial function, and Fourier-transforming the radial function to produce a feature space plot. A feature profile correlation function can then be computed to relate the point match sets. From the correlation results, a vote occupancy table can be generated to help evaluate the variance of the point match sets, indicating, with high probability, which sets of points are most likely to match one another.

Owner:EAGLEVIEW TECH

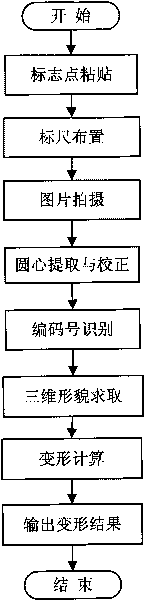

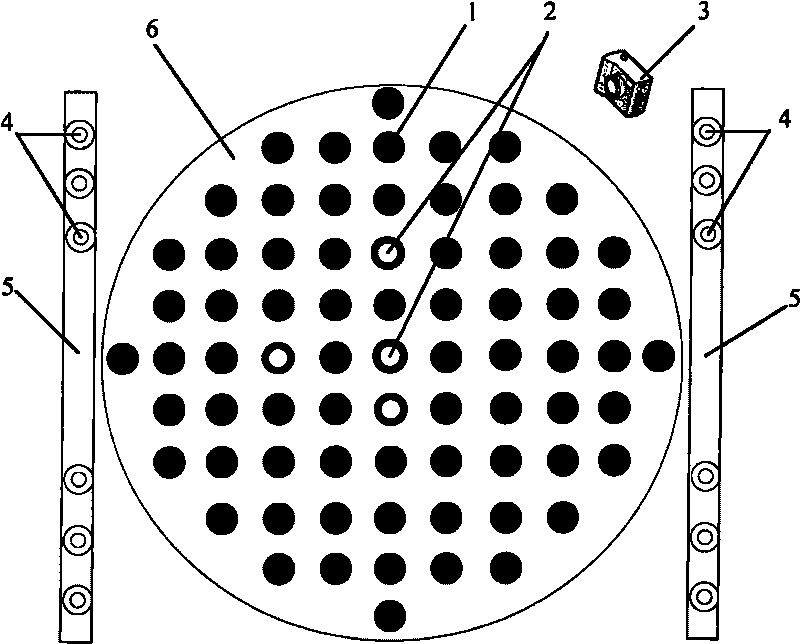

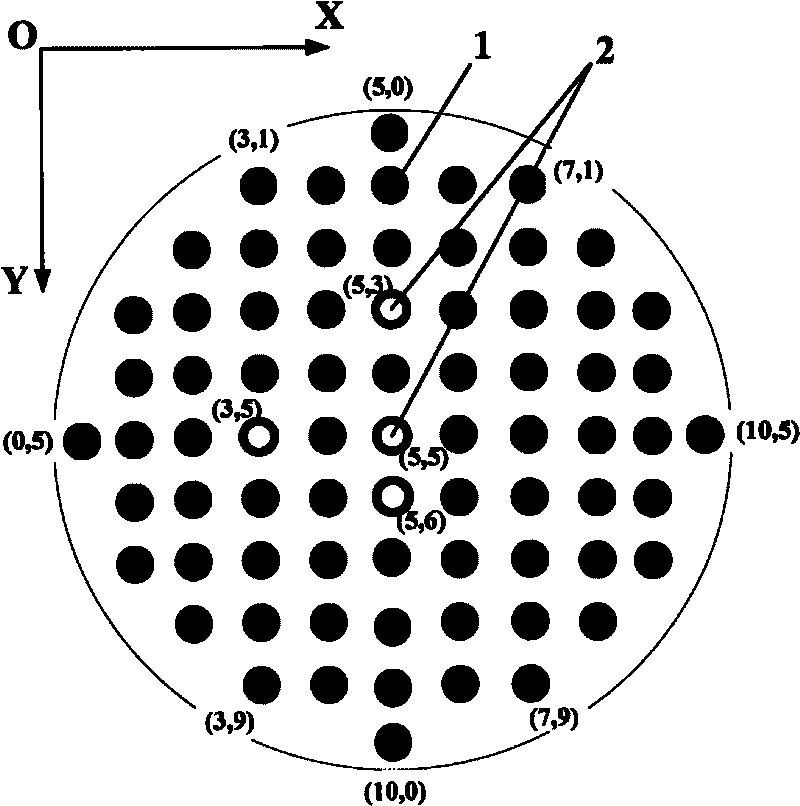

Antenna deformation measuring method

Inactive · CN101694373A · To achieve dense arrangement · Overcome the disadvantage of sparse pasting · Using optical means · Measurement precision · Point match

An antenna deformation measuring method comprises: first adhering circular mark points and circular ring mark points, arranged equidistantly in the transverse and longitudinal directions, to the surface of an antenna as measuring mark points; taking images of the marked antenna surface before and after the antenna deforms; realizing homonymous point matching through the arrangement relationship of the circular mark points and the circular ring mark points; recovering the three-dimensional coordinates of the mark points on the antenna before and after deformation according to the principles of photogrammetry; and finally placing the three-dimensional coordinates before and after deformation in the same coordinate system, using coded mark points on a staff gauge as fixed reference points for the coordinate conversion, thereby calculating the antenna deformation. The measuring method is reliable, flexible, and precise; it can be used for deformation measurement of the antenna under complicated conditions such as high and low temperatures, can also be used for measuring the antenna's shape, and has a measurement precision better than 13 μm.

Owner:BEIHANG UNIV +1

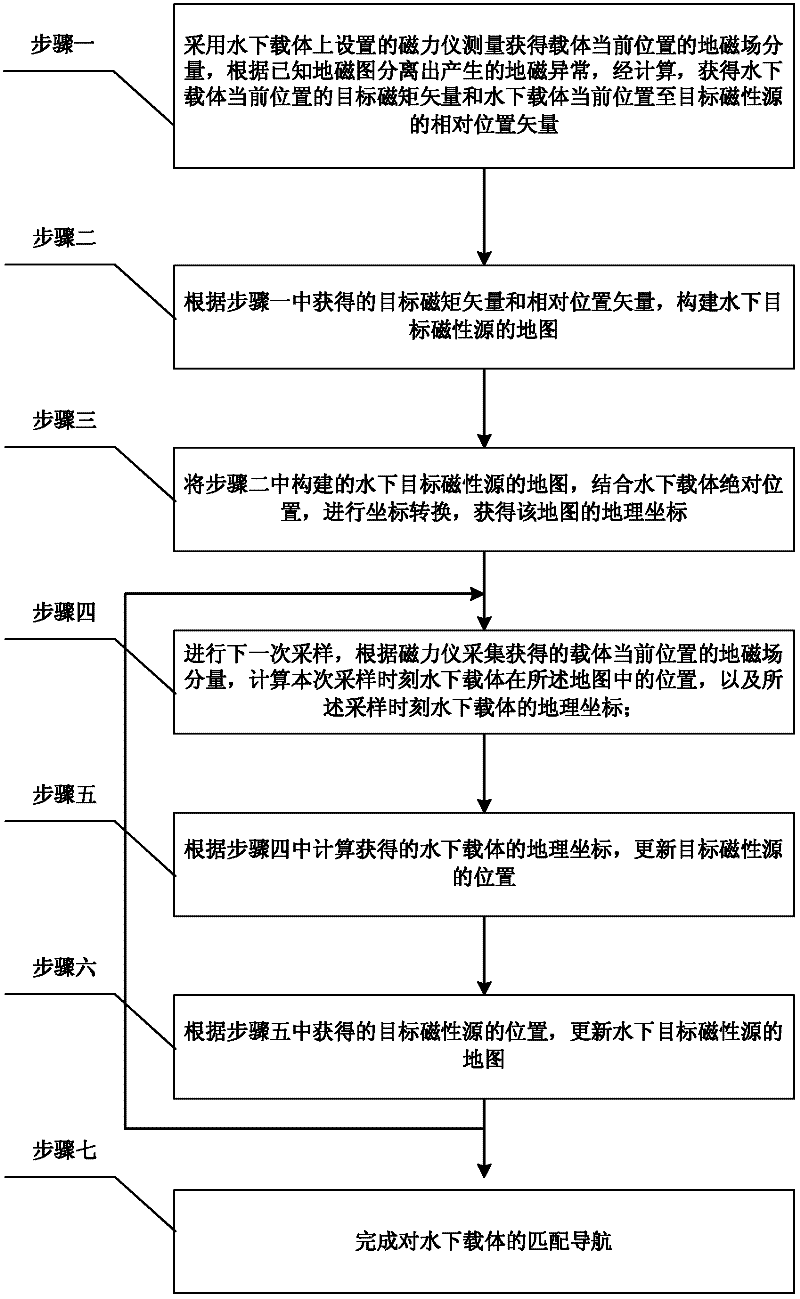

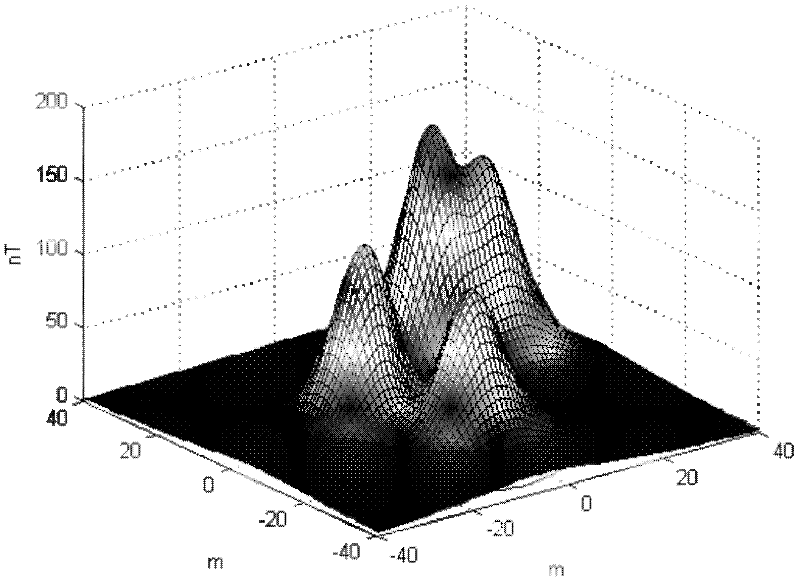

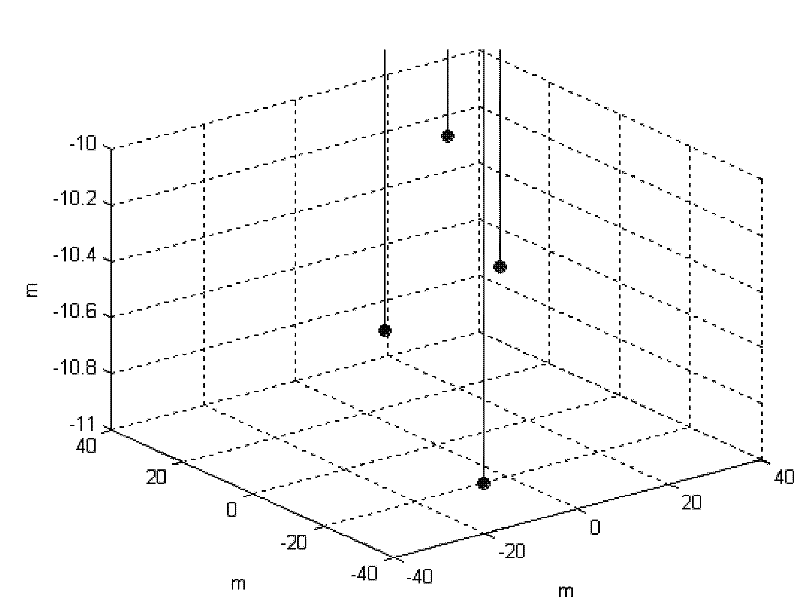

Underwater carrier geomagnetic anomaly feature points matching navigation method

Inactive · CN102445201A · Realize full navigation · Guaranteed trapping · Navigation by terrestrial means · Navigation by speed/acceleration measurements · Magnetic source · Underwater navigation

An underwater carrier geomagnetic anomaly feature points matching navigation method belongs to the technical field of underwater navigation and solves the problem in the prior art that the location of an underwater carrier can not be determined according to geomagnetic field information. The method provided by the invention comprises the following steps of: acquiring a target magnetic moment vector of present position of the underwater carrier and a relative position vector from the present position of the underwater carrier to a target magnetic source; constructing a map of the underwater target magnetic source; carrying out coordinate transformation based on the absolute position of the underwater carrier so as to obtain geographic coordinates of the map; calculating the position of the underwater carrier in the map at sampling time and the geographic coordinates of the underwater carrier at the sampling time; updating the position of the target magnetic source; updating the map of the underwater target magnetic source; and repeating the above relative processes to complete the matching navigation of the underwater carrier. The invention is suitable for underwater carrier navigation.

Owner:NORTHEAST FORESTRY UNIVERSITY

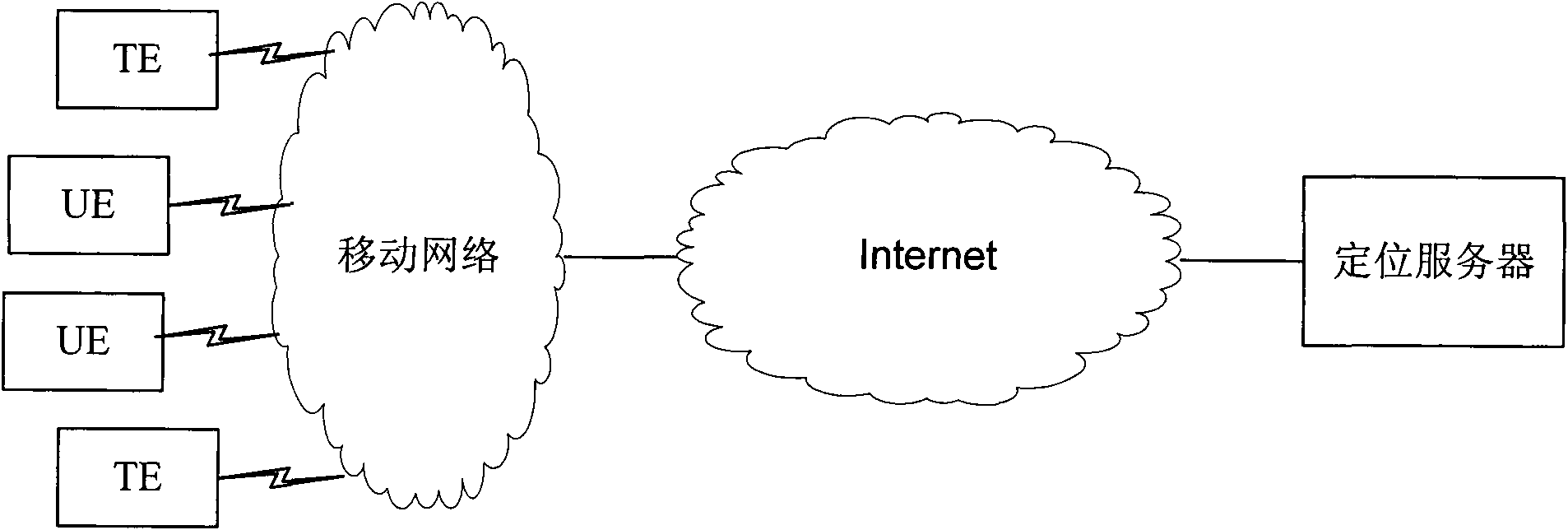

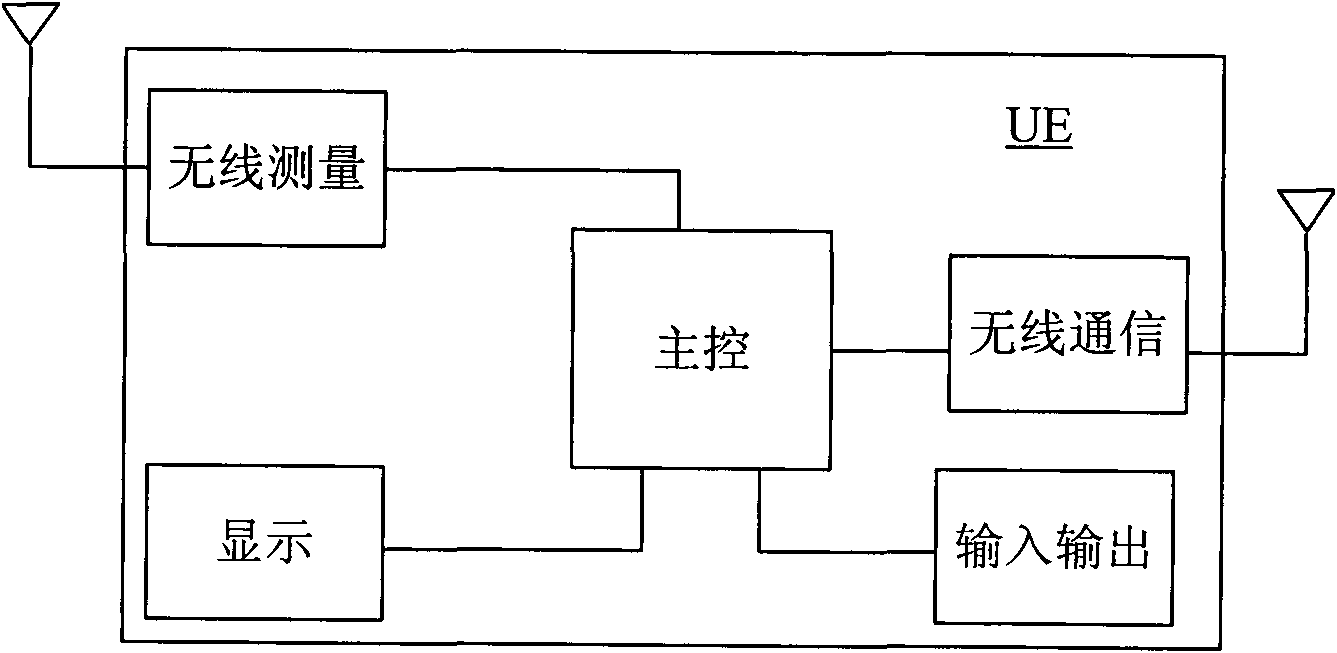

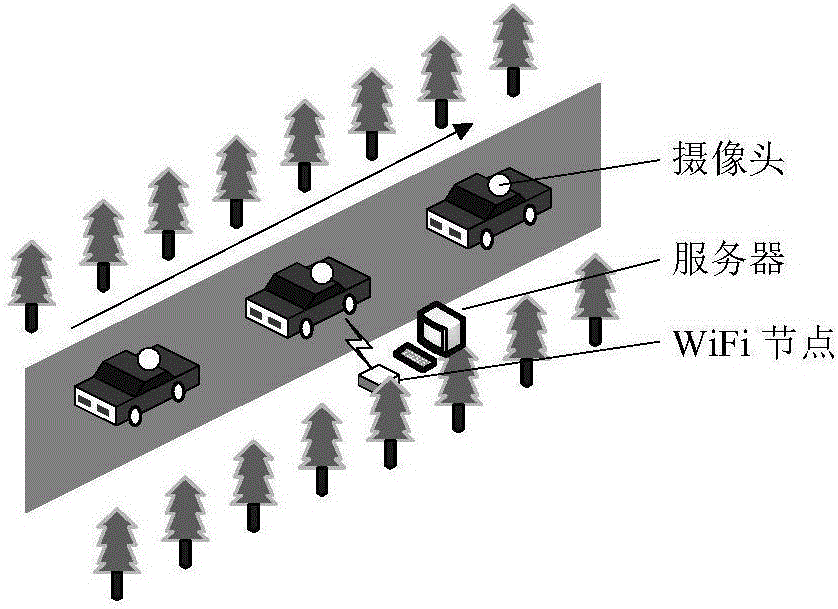

Mobile locating method and system

The invention provides a mobile locating method and system, and a mobile terminal device and a locating platform related to the method and the system. Multiple locating data acquisition terminals (TE) and user equipment (UE) are connected with the locating platform by accessing a wireless network so as to form the mobile locating system. The TE acquire locating data of a locating point and report the acquired data to the locating platform, and the locating platform normalizes the data and stores it in a database; the UE detects surrounding wireless signals and reports a measurement result to the locating platform; and the locating platform compares the measurement result of the UE with the locating data stored in the database, determines the locating point matched with the measurement result as the locating result of the UE, and carries out LBS (Location Based Service) according to the locating result. By implementing the method and the system provided by the invention, LBS can be carried out in indoor public places such as marketplaces, coffee houses, bars, office buildings and airports.

Owner:嘉兴高恒信息科技有限公司

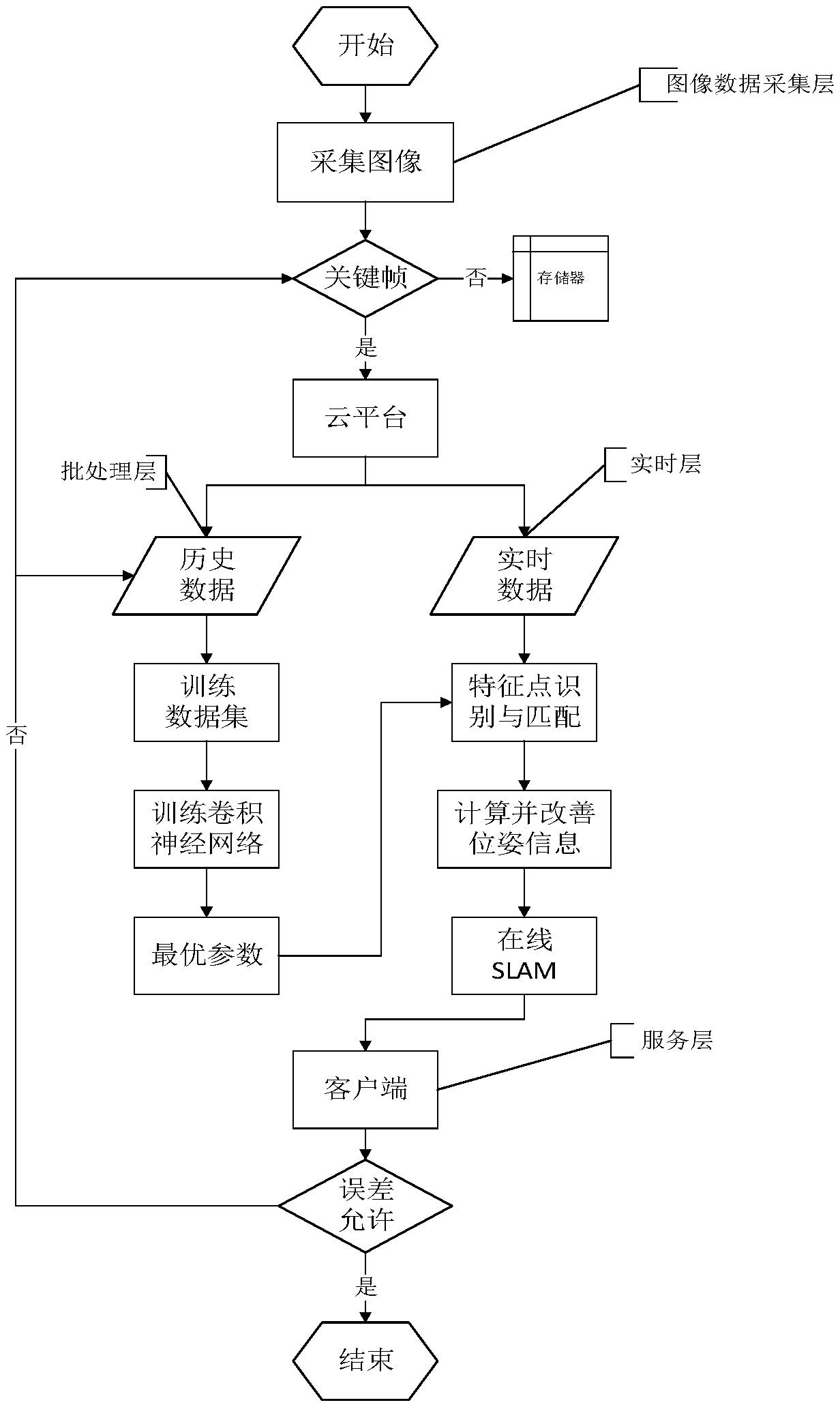

Online deep learning SLAM based image cloud computing method and system

Active · CN108921893A · Real-time update and feedback · Reduce training time · Image enhancement · Image analysis · Data set · Key frame

The invention discloses an online deep learning SLAM based image cloud computing method. The image cloud computing method comprises the following steps: acquiring image data and storing the image data; extracting a key frame and uploading the key frame; using the image data to construct a data set and training on the data set to obtain optimal convolutional neural network parameters; extracting real-time image feature points and performing recognition, and performing feature point matching on adjacent frame images; iterating over the image feature points to obtain the best matching transformation matrix, performing correction by using position and pose information, and obtaining the camera pose transformation; obtaining the optimal pose estimation through registration of point cloud data and the position and pose information; transforming the pose information into a coordinate system through matrix transformation, and obtaining map information; repeating the previous steps in regions with insufficient precision; and allowing a client to display the result and perform online adjustment at the same time. The invention parallelizes image processing, deep learning training and SLAM by using cloud computing technology to improve the efficiency and accuracy of image processing, positioning and mapping.

Owner:SOUTH CHINA UNIV OF TECH

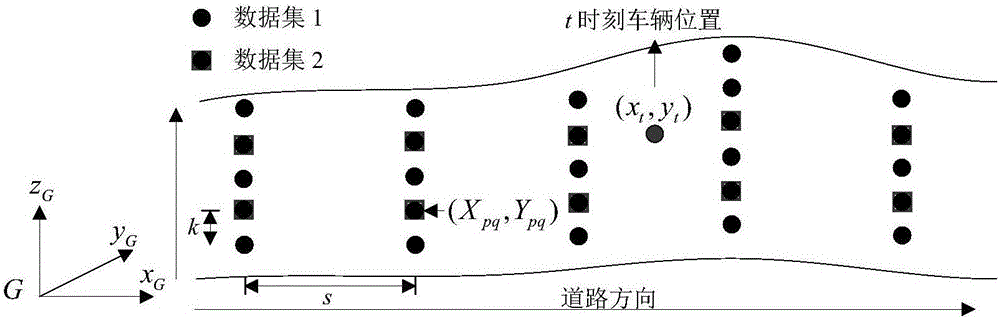

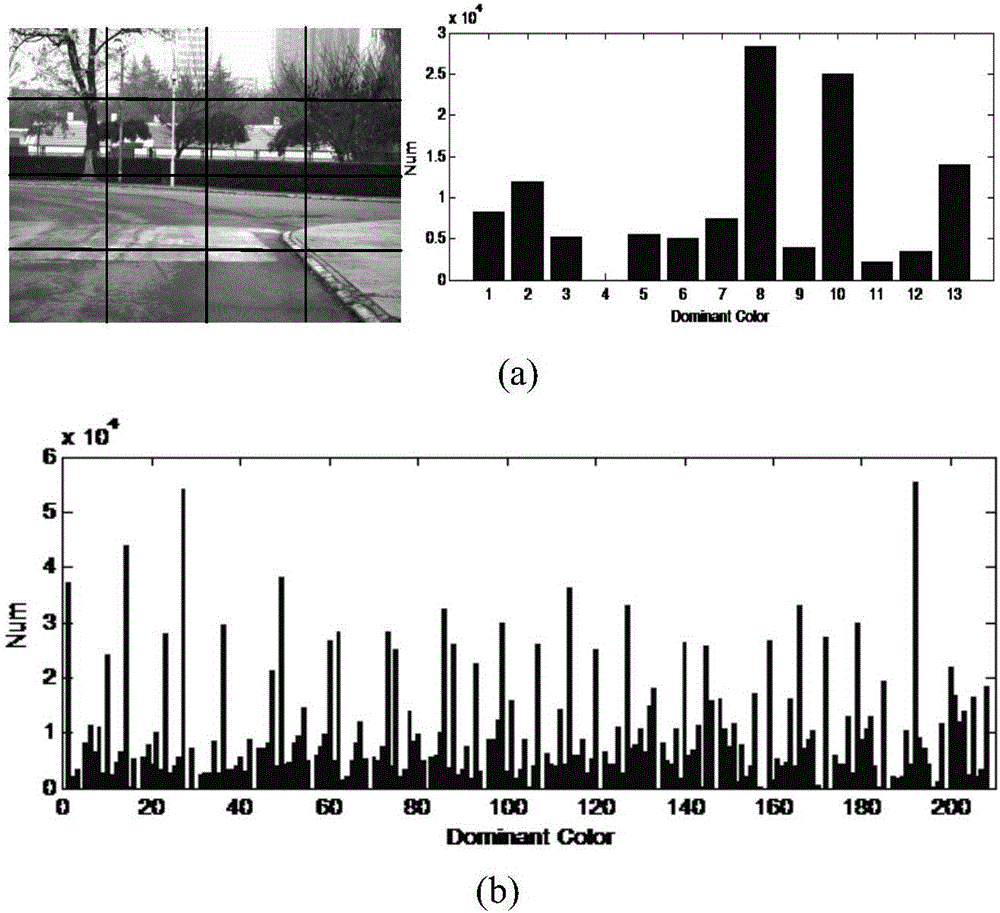

Vehicle self-positioning method based on street view image database

Active · CN106407315A · Shorten the time · Improve operational efficiency · Instruments for road network navigation · Character and pattern recognition · Feature vector · Vehicle driving

The invention discloses a vehicle self-positioning method based on a street view image database. The vehicle self-positioning method comprises the following steps of 1, collecting view images by a camera, and extracting main color feature vector information, SURF feature points and position information of the collected images, and storing the extracted information in the database; 2, taking the shot images in a vehicle driving process as to-be-matched images, extracting main color feature vectors of the to-be-matched images, obtaining an initial matched image by calculating the similarity of the main color feature vector of the to-be-matched images and the main color feature vector of the images in the original database, extracting the position information of the initial matched image, and preliminarily determining the position of the vehicle; and 3, extracting adjacent-region images of the initial matched image, forming a searching space, performing feature point matching on the to-be-matched images and the images in the searching space to obtain an optimal matched image, extracting the shooting position coordinate of the optimal matched image and position coordinates of other eight adjacent regions, calculating the weight of each coordinate, and then calculating the accurate coordinate of the vehicle position through a formula.

Owner:CHANGAN UNIV

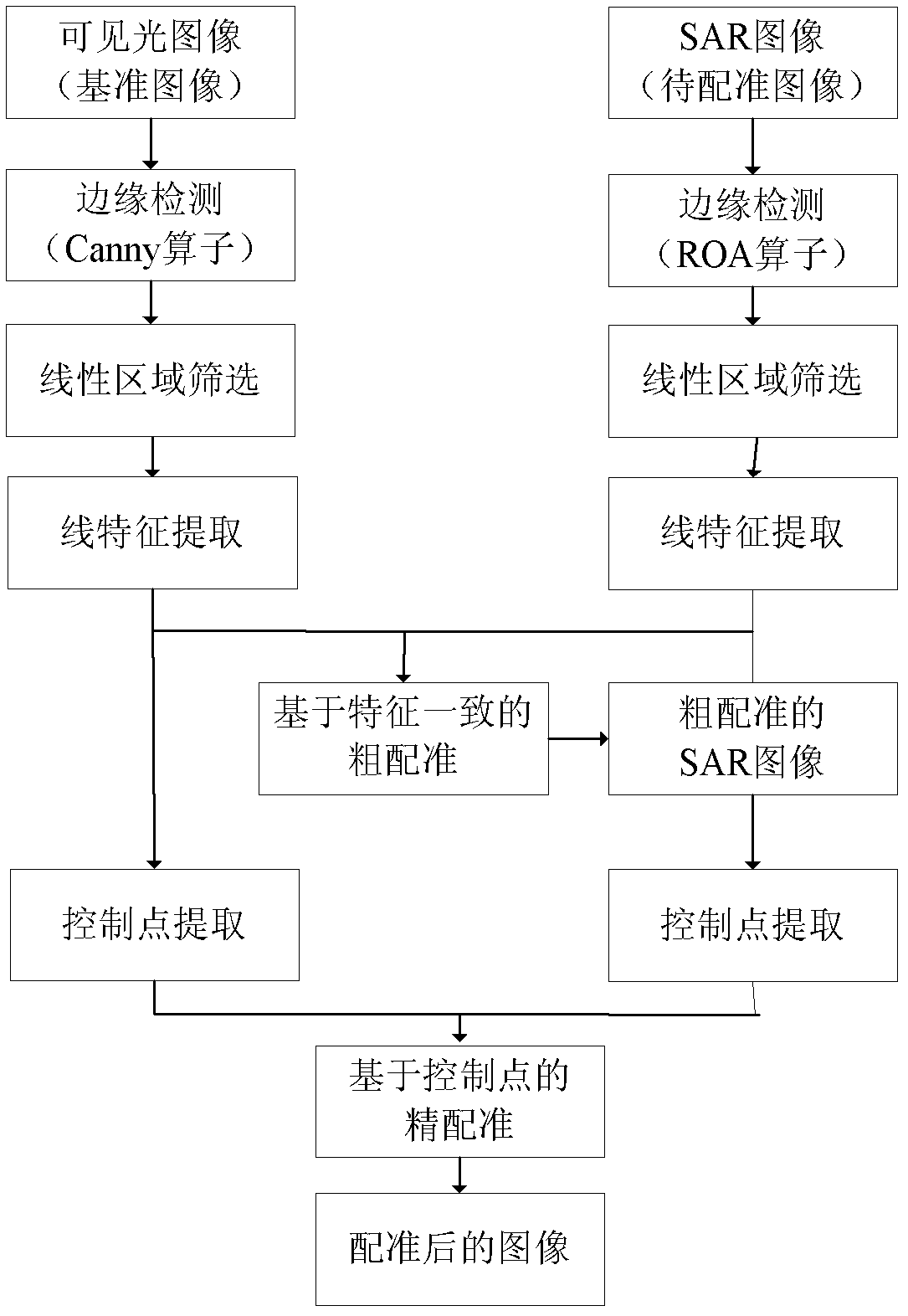

A visible light and SAR image registration method based on linear features and control points

Active · CN102663725A · Improve matching accuracy · Avoid mismatch · Image analysis · Canny edge detector · Point match

The present invention relates to a visible light and SAR image registration method based on linear features and control points. The method carries out edge detections respectively of visible light and an SAR image by using a Canny operator and an ROA (Ratio of Averages) operator according to features of visible light and the SAR image, extracts linear features by employing a multi-scale discrete fast Beamlet transform method, structures control points based on linear features, and realizes coarse-to-fine registration on images to be registered by using a coarse registration method based on consistent features and a fine registration method based on control points. The beneficial effect of the method lies in the fact that mismatching in single straight line matching caused by difference in length and location is prevented, point matching precision is improved, the method is suitable not only for SAR and visible light image registration, but also for registration of images with same edge features due to the employment of registration algorithm based on linear features and control points features.

Owner:陕西中科启航科技有限公司

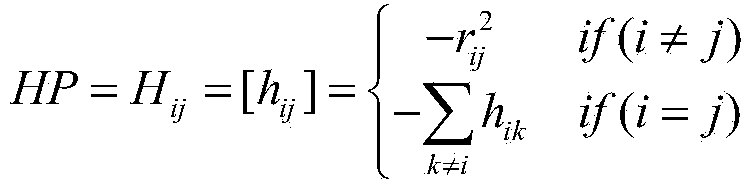

Diffusion distance for histogram comparison

A new measure to compare histogram-based descriptors, a diffusion distance, is disclosed. The difference between two histograms is defined to be a temperature field. The relationship between histogram similarity and the diffusion process is discussed, and it is shown how the diffusion handles deformation as well as quantization effects. As a result, the diffusion distance is derived as the sum of dissimilarities over scales. Being a cross-bin histogram distance, the diffusion distance is robust to deformation, lighting change and noise in histogram-based local descriptors. In addition, it has linear computational complexity, a significant improvement over previously proposed cross-bin distances with quadratic complexity or higher. The proposed approach is tested on both shape recognition and interest point matching tasks using several multi-dimensional histogram-based descriptors including shape context, SIFT and spin images. In all experiments, the diffusion distance performs excellently in both accuracy and efficiency in comparison with other state-of-the-art distance measures. In particular, it is as accurate as the Earth Mover's Distance with much greater efficiency.

Owner:SIEMENS MEDICAL SOLUTIONS USA INC
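
To make the "sum of dissimilarities over scales" in the abstract above concrete, here is a minimal one-dimensional Python sketch. It repeatedly smooths the difference of two histograms, halves its resolution, and accumulates the L1 norm at every scale. The three-tap kernel (0.25, 0.5, 0.25) is an assumed stand-in for the smoothing filter, the zero-padded borders are also an assumption, and the function names are hypothetical; the abstract does not give these details.

```python
def smooth(values, kernel):
    """Convolve a 1-D sequence with a small odd-length kernel (zero-padded)."""
    half = len(kernel) // 2
    n = len(values)
    out = []
    for i in range(n):
        acc = 0.0
        for k, weight in enumerate(kernel):
            j = i + k - half
            if 0 <= j < n:
                acc += weight * values[j]
        out.append(acc)
    return out


def diffusion_distance(h1, h2, kernel=(0.25, 0.5, 0.25)):
    """Sum of L1 norms of the histogram difference over a smoothing pyramid."""
    if len(h1) != len(h2):
        raise ValueError("histograms must have the same number of bins")
    diff = [a - b for a, b in zip(h1, h2)]
    total = sum(abs(v) for v in diff)      # finest scale
    while len(diff) > 1:
        diff = smooth(diff, kernel)[::2]   # diffuse, then halve the resolution
        total += sum(abs(v) for v in diff)
    return total


# Moving mass to a neighbouring bin costs less than moving it far away,
# which a plain bin-by-bin L1 distance cannot distinguish.
base = [1, 0, 0, 0, 0, 0, 0, 0]
near = [0, 1, 0, 0, 0, 0, 0, 0]
far = [0, 0, 0, 0, 0, 0, 0, 1]
near_cost = diffusion_distance(base, near)
far_cost = diffusion_distance(base, far)
```

Each level does work proportional to its length and the lengths halve, so the total cost stays linear in the number of bins, in line with the complexity claim in the abstract.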

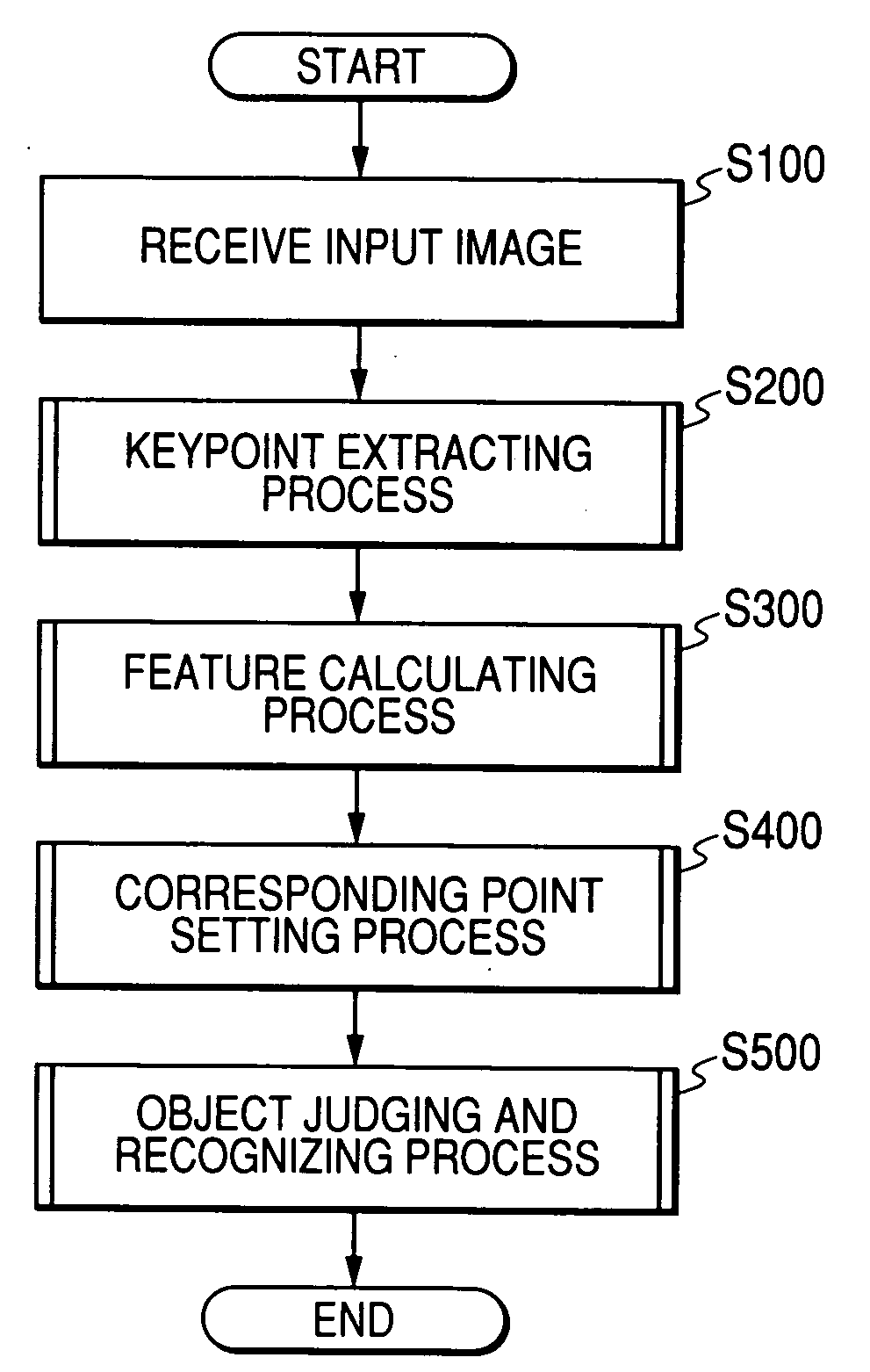

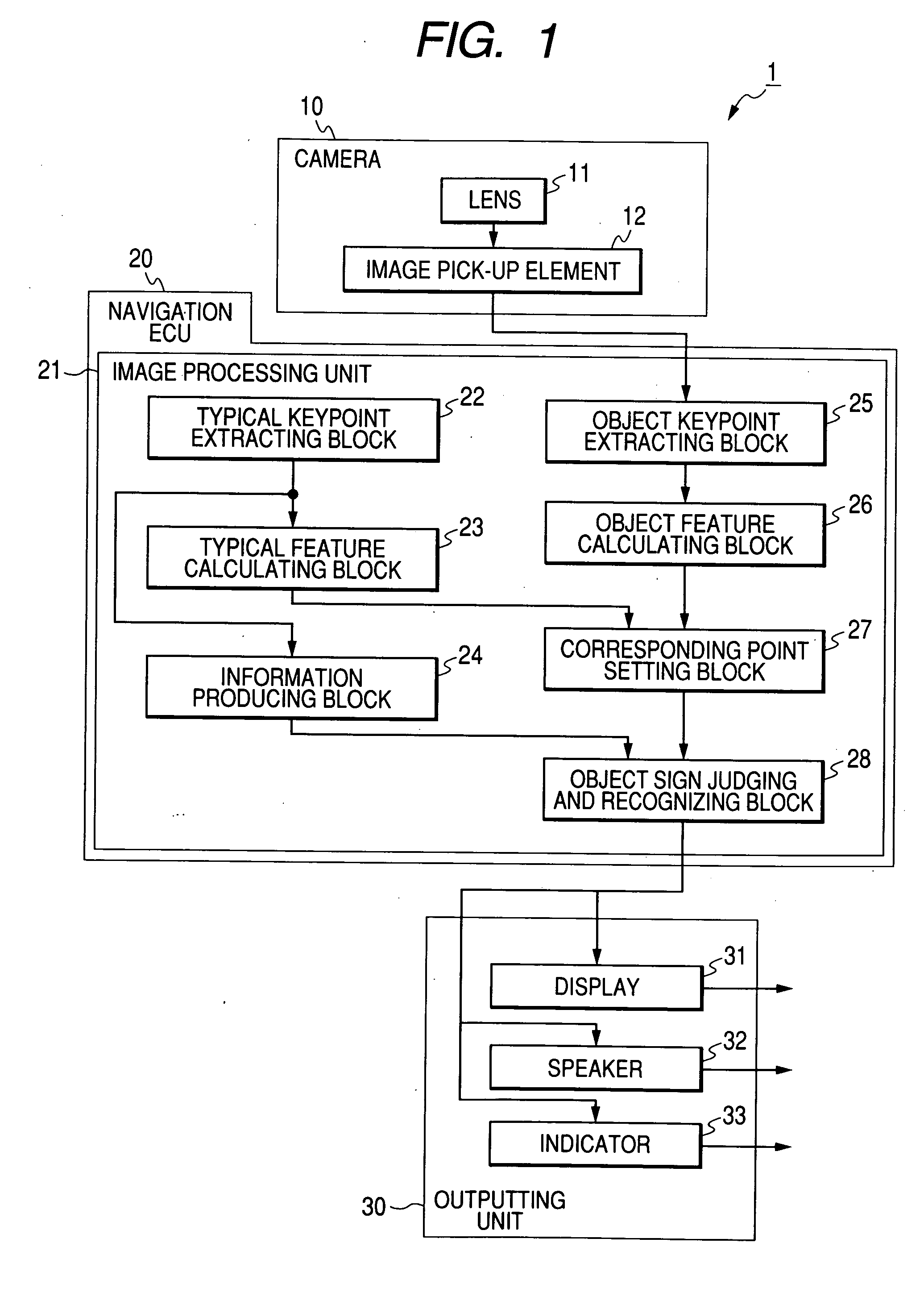

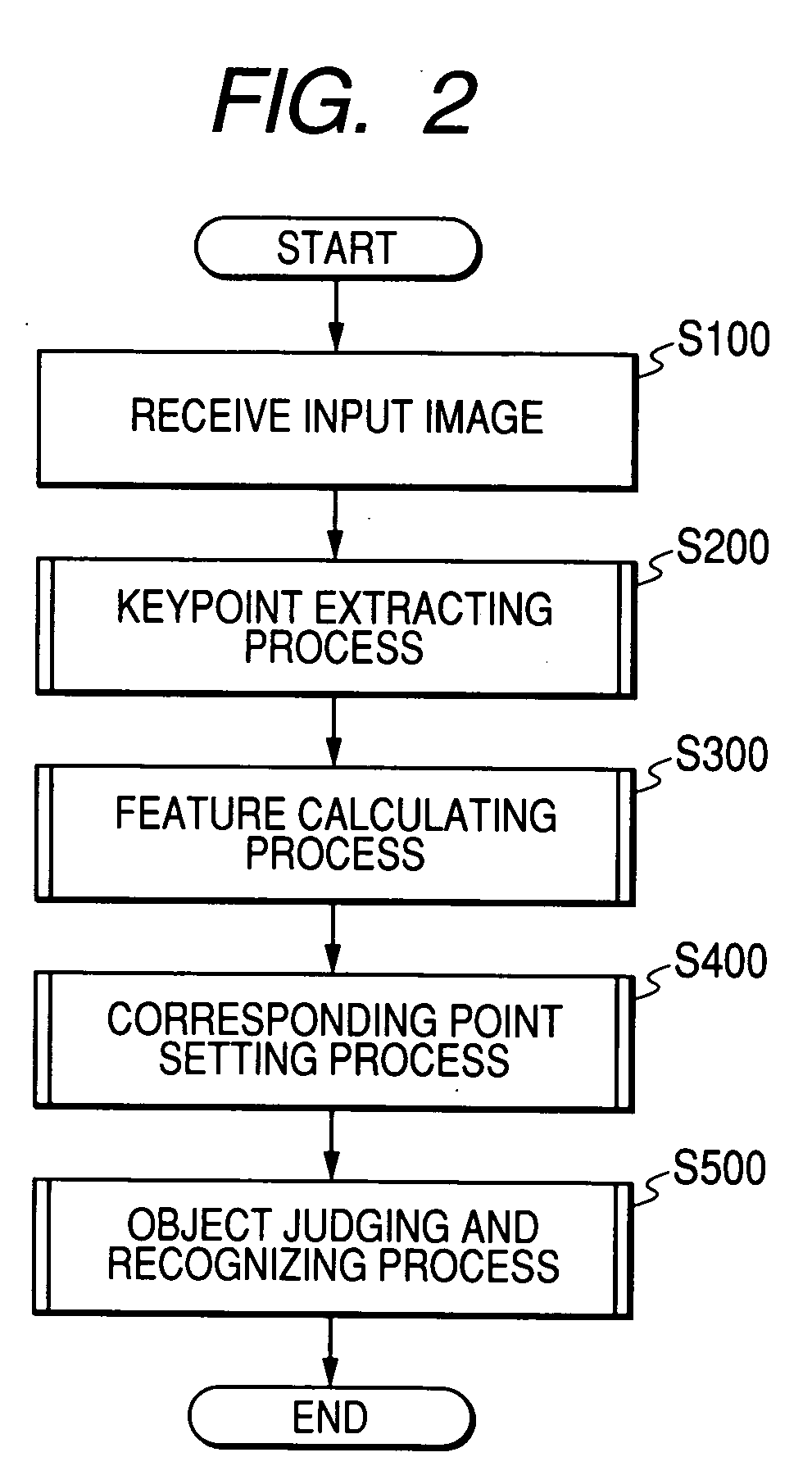

Apparatus for recognizing object in image

Active · US20080247651A1 · Reliably recognize · Reliable match · Character and pattern recognition · Image extraction · Identification device

Owner:DENSO CORP +1

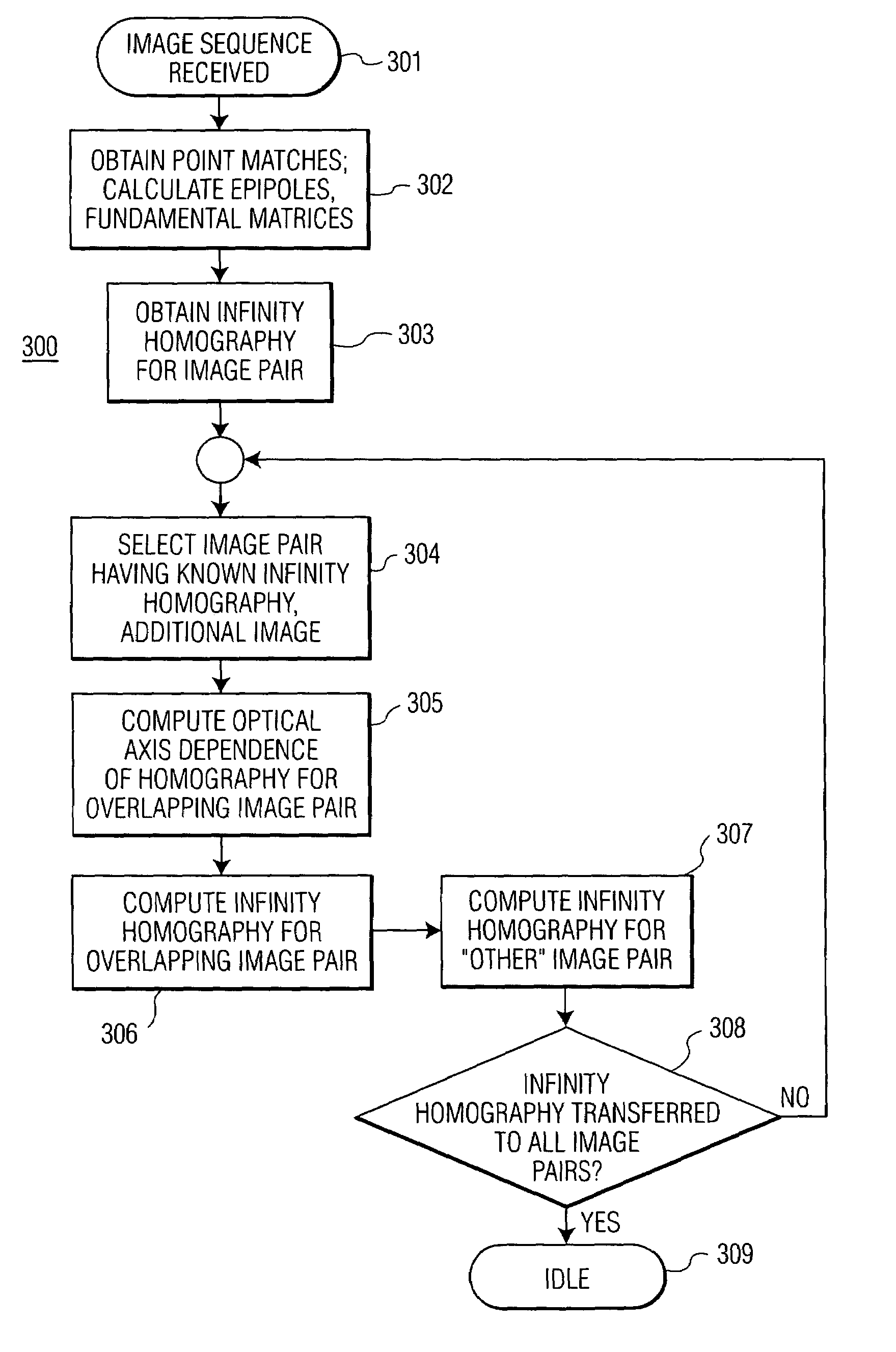

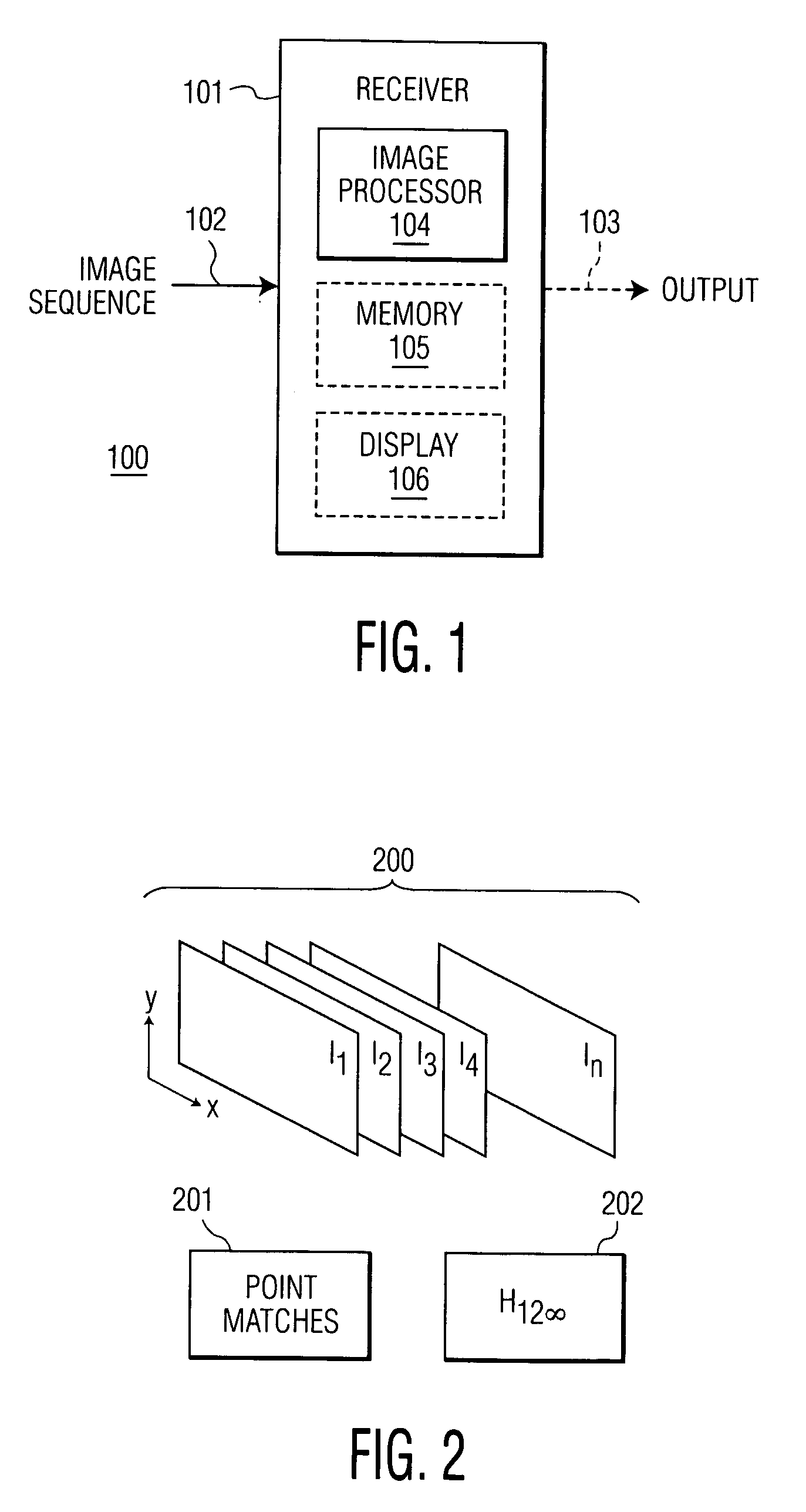

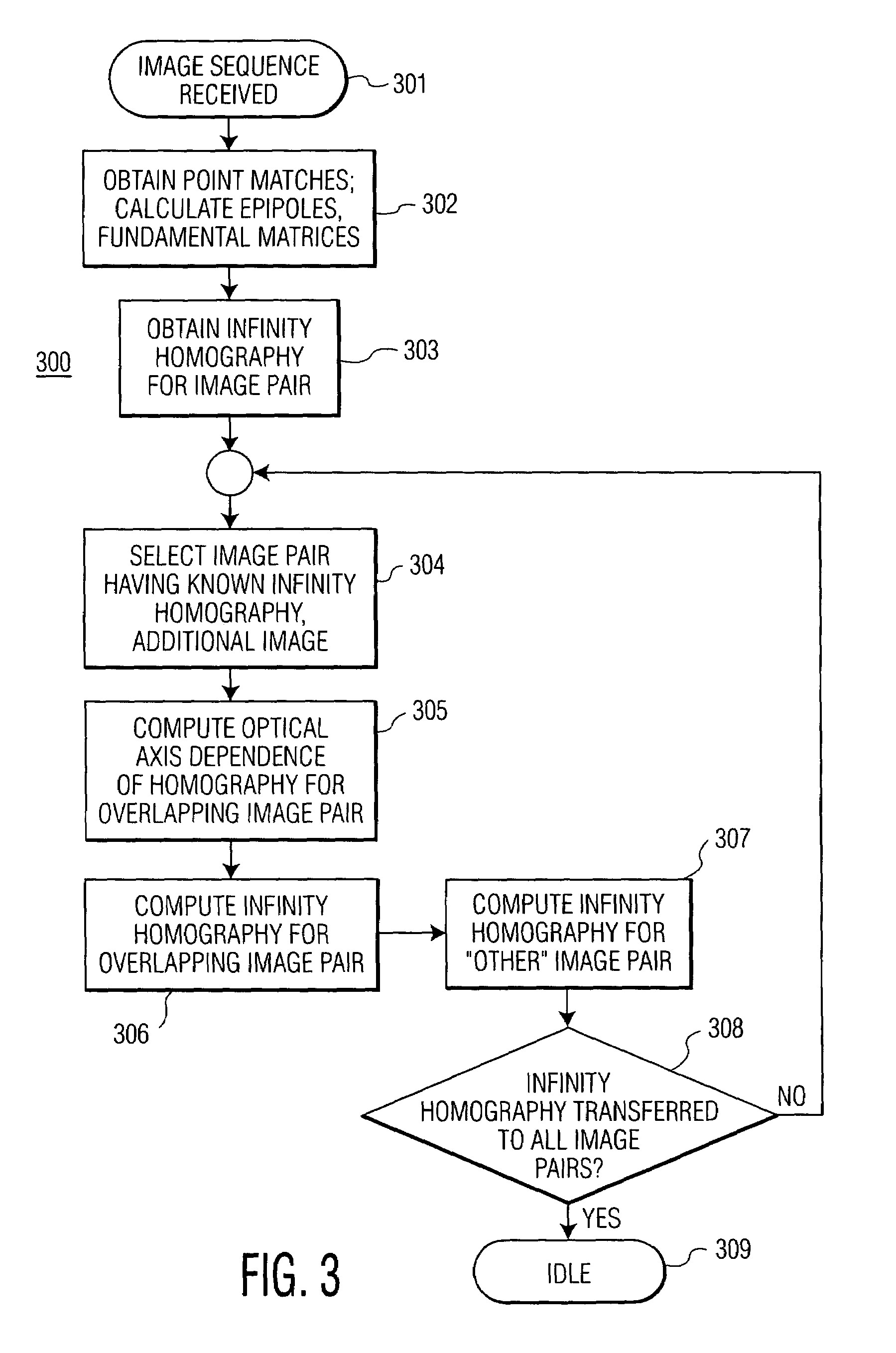

Homography transfer from point matches

Inactive · US7003150B2 · Image analysis · Character and pattern recognition · Pattern recognition · Essential matrix

An infinity homography for an image pair within an image sequence is transferred to other image pairs within the image sequence utilizing point matches for the subject image pairs. An image set including the image pair for which the infinity homography is known and a third image are selected. Then intermediate parameters for homography transfer for one image pair overlapping the known infinity homography image pair is computed from the known infinity homography and epipoles and fundamental matrices of the overlapping image pairs, to derive the infinity homography for the selected overlapping image pair. The process may then be repeated until infinity homographies for all image pairs of interest within the image sequence have been derived.

Owner:UNILOC 2017 LLC

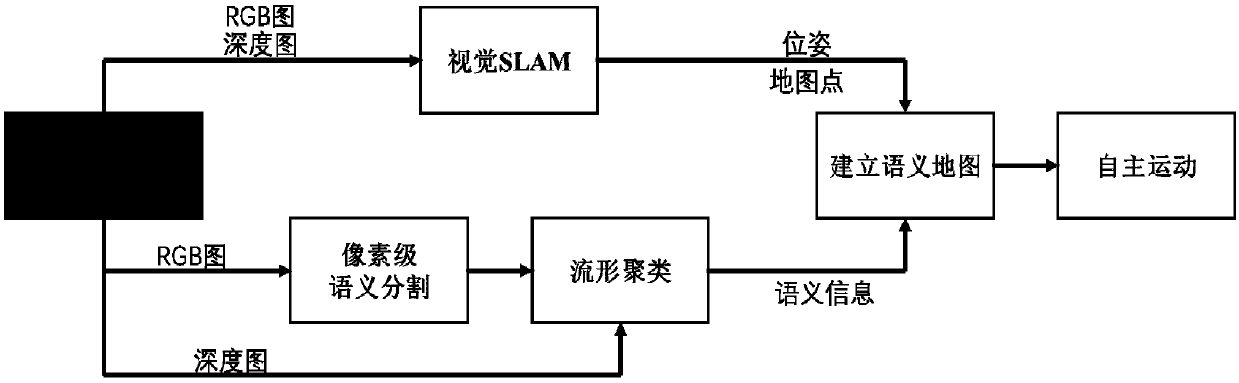

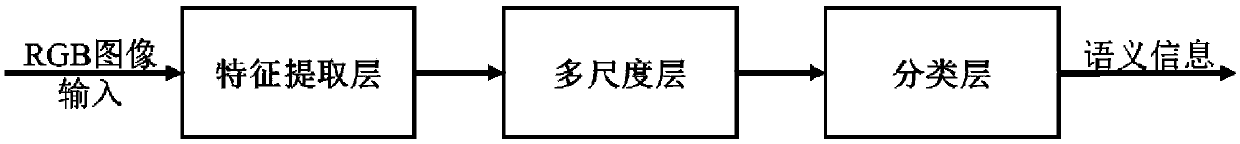

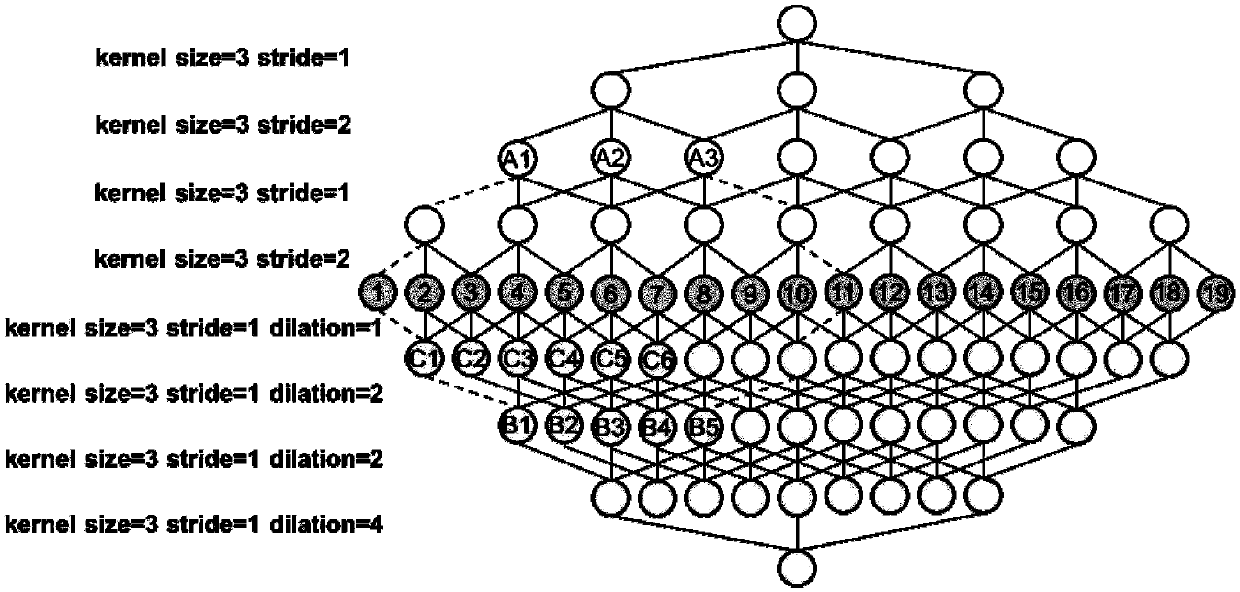

A method and system for realizing a visual SLAM semantic mapping function based on a cavity convolutional deep neural network

Active | CN109559320A | Accurate pose estimation | Eliminate accumulated errors | Image enhancement | Image analysis | Visual perception | Point match

The invention relates to a method for realizing a visual SLAM semantic mapping function based on a cavity convolutional deep neural network. The method comprises the following steps: (1) using an embedded development processor to obtain the color information and the depth information of the current environment via an RGB-D camera; (2) obtaining feature point matching pairs from the collected images, carrying out pose estimation, and obtaining scene space point cloud data; (3) carrying out pixel-level semantic segmentation on the image by means of deep learning, and giving the spatial points semantic annotation information through the mapping between the image coordinate system and the world coordinate system; (4) eliminating the errors caused by optimized semantic segmentation through manifold clustering; and (5) performing semantic mapping, and splicing the spatial point clouds to obtain a point cloud semantic map composed of dense discrete points. The invention also relates to a system for realizing the visual SLAM semantic mapping function based on the cavity convolutional deep neural network. With the method and the system, the spatial network map carries higher-level semantic information and better meets the requirements of real-time mapping.
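Step (3), in which spatial points receive semantic labels through the mapping between image and world coordinates, can be sketched as a pinhole back-projection followed by a pose transform. The function name `label_point_cloud`, the pinhole intrinsics `K` and the camera-to-world matrix `T_wc` are assumptions for illustration; the abstract does not state the camera model.

```python
import numpy as np

def label_point_cloud(depth, labels, K, T_wc):
    """Back-project valid depth pixels to world coordinates and attach labels.

    depth:  (H, W) float array of depths, 0 marks an invalid pixel.
    labels: (H, W) integer array from a per-pixel semantic segmentation.
    K:      3x3 pinhole intrinsic matrix (assumed camera model).
    T_wc:   4x4 camera-to-world pose from the pose-estimation step.
    """
    fx, fy, cx, cy = K[0, 0], K[1, 1], K[0, 2], K[1, 2]
    v, u = np.nonzero(depth > 0)      # row and column indices of valid pixels
    z = depth[v, u]
    x = (u - cx) * z / fx             # pinhole back-projection
    y = (v - cy) * z / fy
    pts_cam = np.stack([x, y, z, np.ones_like(z)], axis=1)  # homogeneous, (N, 4)
    pts_world = (T_wc @ pts_cam.T).T[:, :3]
    return pts_world, labels[v, u]
```

Concatenating the labelled points returned for successive frames gives a dense labelled point cloud of the kind described in step (5).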

Owner:EAST CHINA UNIV OF SCI & TECH

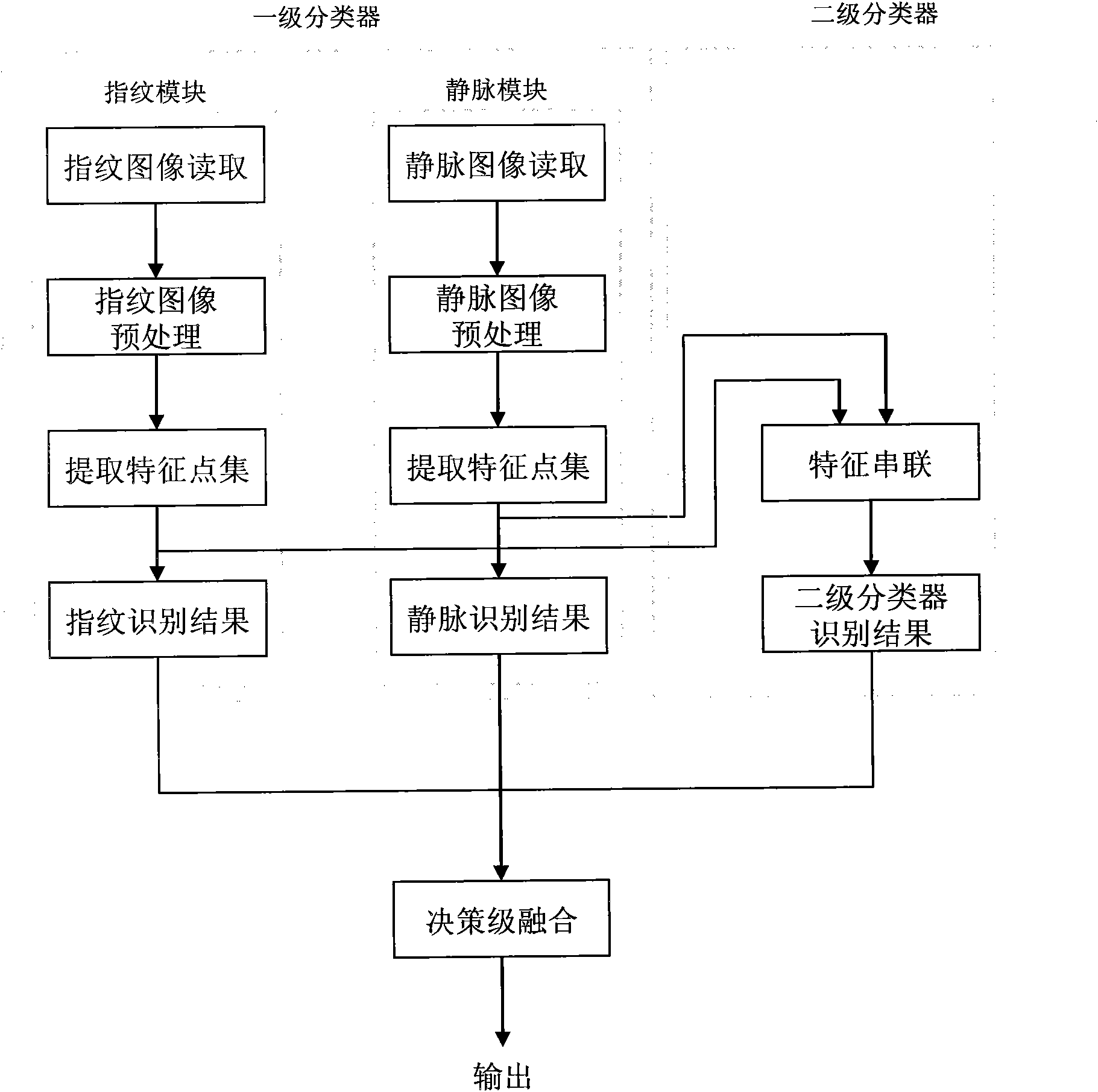

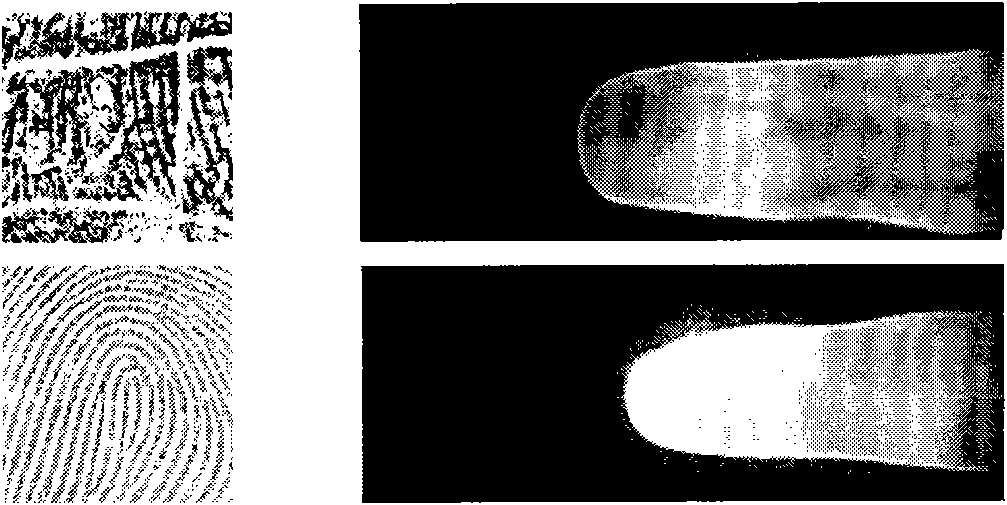

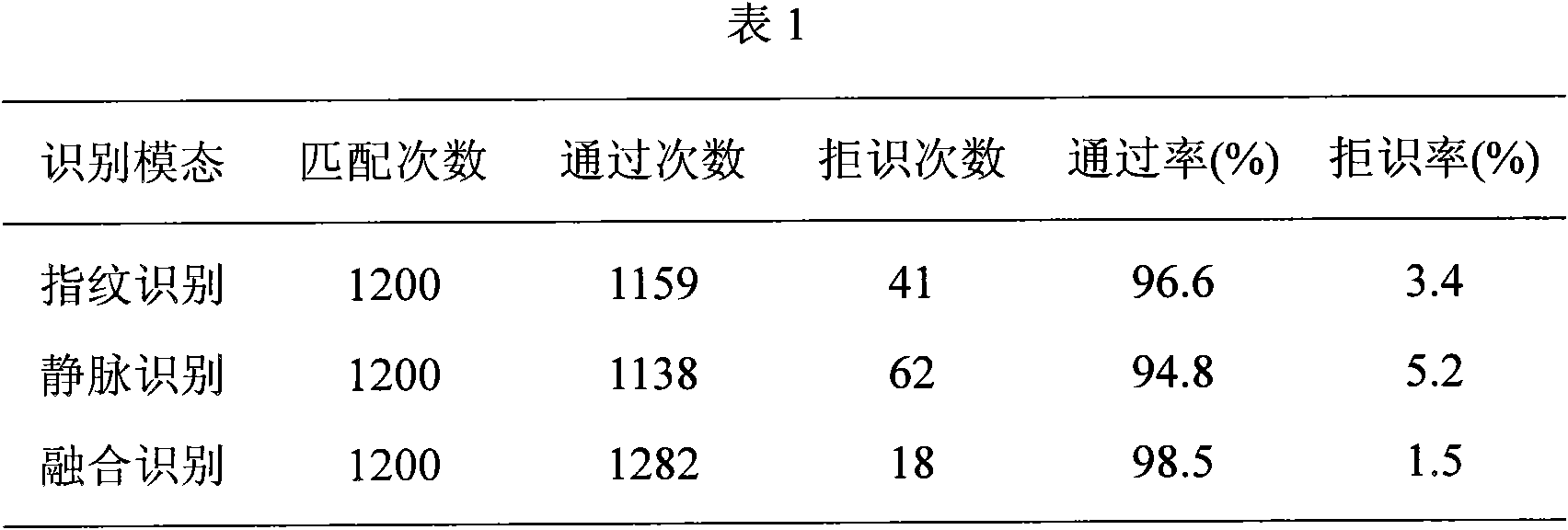

Secondary classification fusion identification method for fingerprint and finger vein bimodal identification

Inactive | CN101847208A | Identification helps | Improve accuracy | Character and pattern recognition | Vein | Point match

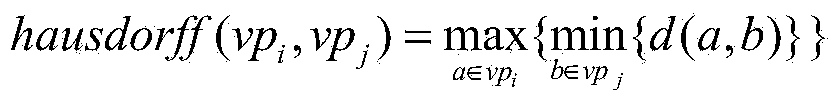

The invention provides a secondary classification fusion identification method for fingerprint and finger vein bimodal identification. A fingerprint module and a vein module are used as primary classifiers, and a secondary decision module is used as a secondary classifier. The method comprises the following steps: reading a fingerprint image and a vein image through the fingerprint module and the vein module; pre-processing the two images separately and extracting a characteristic point set from each; performing identification on each image to obtain its own identification result, wherein the fingerprint identification adopts a method based on detail point matching and the vein identification uses an improved Hausdorff distance; concatenating the extracted fingerprint and vein characteristic point sets into a new characteristic vector in the secondary decision module, which forms the secondary classifier and yields a third identification result; and finally, performing decision-level fusion on the three identification results. The method makes full use of the identification information of fingerprints and finger veins, effectively improves the accuracy of the identification system, and achieves a high identification rate.
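The abstract does not define its "improved Hausdorff distance" or its fusion rule. As a stand-in, the sketch below uses the well-known modified Hausdorff distance (the mean, rather than the maximum, of nearest-neighbour distances) and a simple majority vote over the three decisions. Both choices and all names are assumptions, not the patented method.

```python
import math

def directed_mean_distance(A, B):
    """Mean distance from each point in A to its nearest point in B."""
    return sum(min(math.dist(a, b) for b in B) for a in A) / len(A)

def modified_hausdorff(A, B):
    """Modified Hausdorff distance between two non-empty point sets."""
    return max(directed_mean_distance(A, B), directed_mean_distance(B, A))

def majority_vote(decisions):
    """Decision-level fusion: accept when more than half of the classifiers accept."""
    return 2 * sum(bool(d) for d in decisions) > len(decisions)
```

A vein match could, for example, be accepted when `modified_hausdorff(probe_points, template_points)` falls below a tuned threshold, with that decision then passed to `majority_vote` alongside the fingerprint and secondary-classifier decisions.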

Owner:HARBIN ENG UNIV

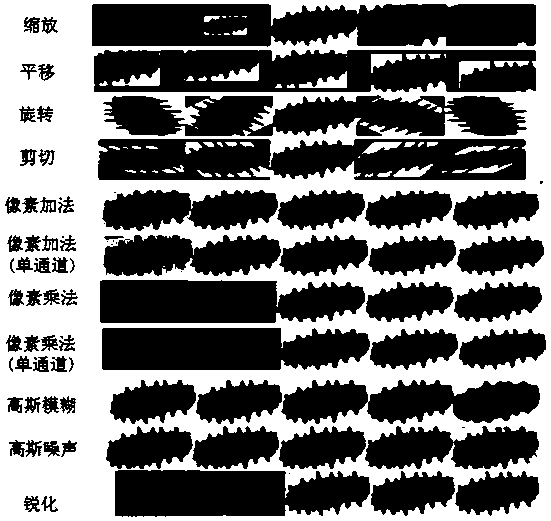

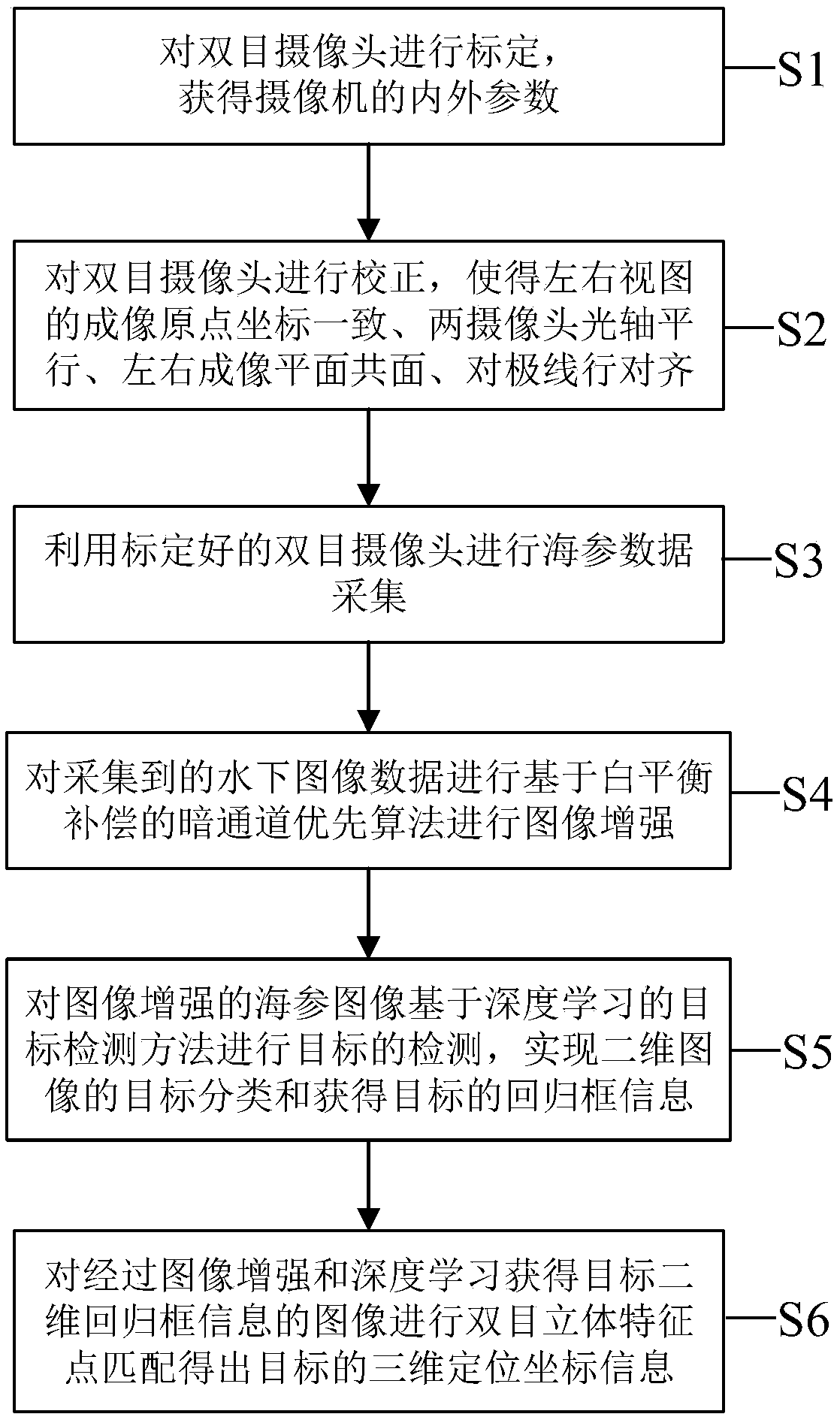

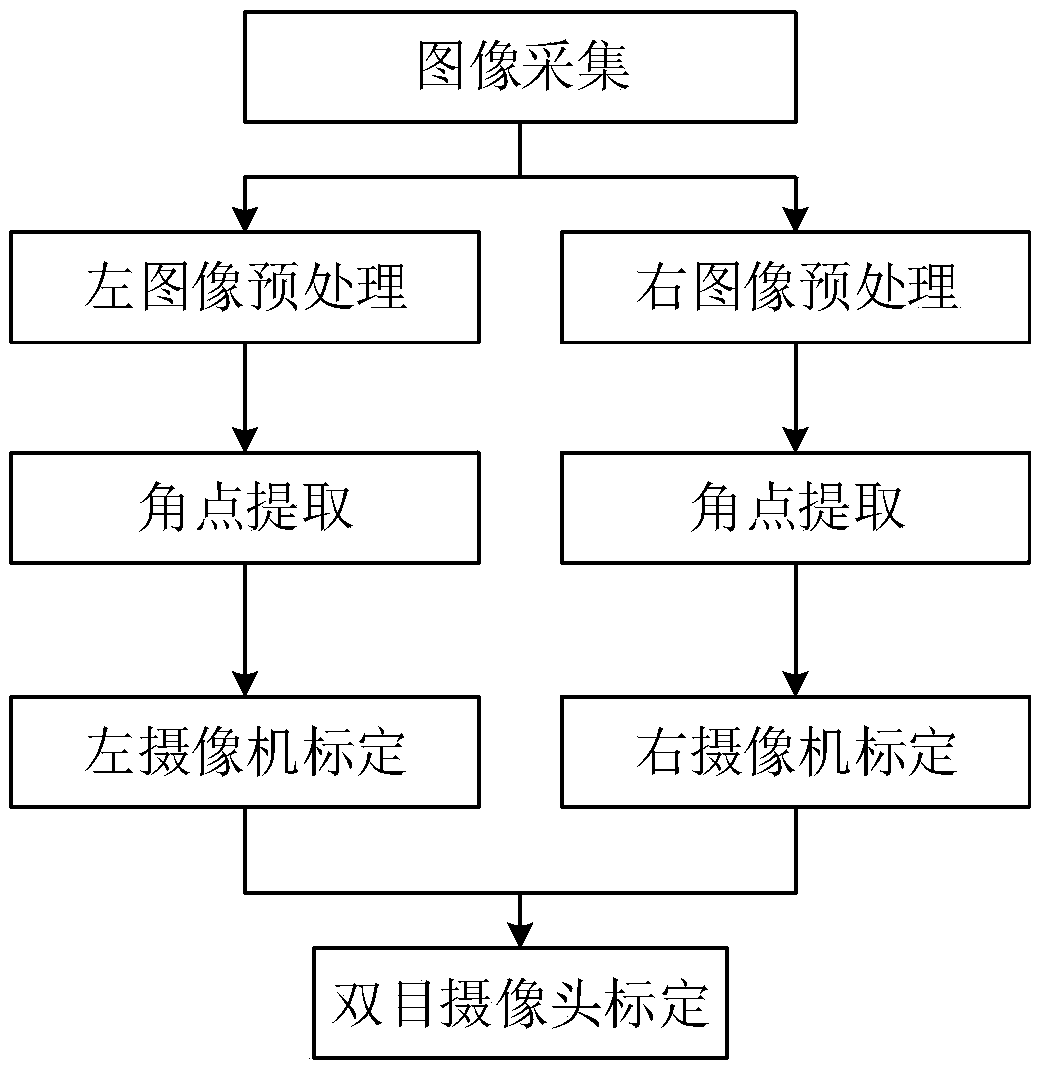

Sea cucumber detection and binocular visual positioning method based on deep learning

Inactive | CN108876855A | High precision | Narrow search | Image enhancement | Image analysis | Ocean bottom | Data acquisition

The invention provides a sea cucumber detection and binocular visual positioning method based on deep learning, suitable for the submarine sea cucumber fishing task of an underwater robot in an ocean pasture. The method mainly comprises the following steps: calibrating the binocular cameras to obtain their internal and external parameters; rectifying the binocular cameras so that the imaging origin coordinates of the left and right views are consistent, the optical axes of the two cameras are parallel, the left and right imaging planes are coplanar, and the epipolar lines are aligned; collecting submarine image data with the calibrated binocular cameras; enhancing the collected image data with a dark channel prior algorithm based on white balance compensation; performing deep learning-based sea cucumber target detection on the enhanced submarine image; and performing binocular stereo feature point matching on the image that has undergone image enhancement and deep learning to obtain two-dimensional regression frame information of a target, thereby obtaining three-dimensional positioning coordinate information of the target. According to the method, accurate positioning of underwater sea cucumber treasures can be realized, and manual participation is not needed.
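Once the views are rectified and a feature point has been matched between them, its three-dimensional position follows from the horizontal disparity. The sketch below assumes an ideal rectified pair with a shared focal length `f` in pixels, principal point `(cx, cy)` and baseline `baseline` in metres; these parameter names are illustrative and do not come from the patent.

```python
def triangulate_rectified(u_left, v, u_right, f, baseline, cx, cy):
    """3-D point in the left camera frame from one rectified stereo match.

    u_left, u_right: column of the matched point in the left and right image.
    v:               shared row of the match (rows coincide after rectification).
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = f * baseline / disparity   # depth from similar triangles
    x = (u_left - cx) * z / f      # back-project through the left camera
    y = (v - cy) * z / f
    return x, y, z
```

Restricting the matching to pixels inside the detected box is what narrows the search, and the triangulated points inside the box give the position of the target.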

Owner:HARBIN ENG UNIV

Image pickup apparatus, image processing apparatus, image pickup method, and image processing method

Active | US8023000B2 | Minimize time | Possible to obtain | Television system details | Projector focusing arrangement | Imaging processing | Point match

The present invention, which transforms multiple images so that positions of corresponding points will coincide between the images and composites the images with the corresponding points matched, provides an image pickup apparatus, image processing apparatus, image pickup method, and image processing method which make it possible to obtain an intended all-in-focus image or blur-emphasized image even if there is camera shake or subject movement.

Owner:FUJIFILM CORP

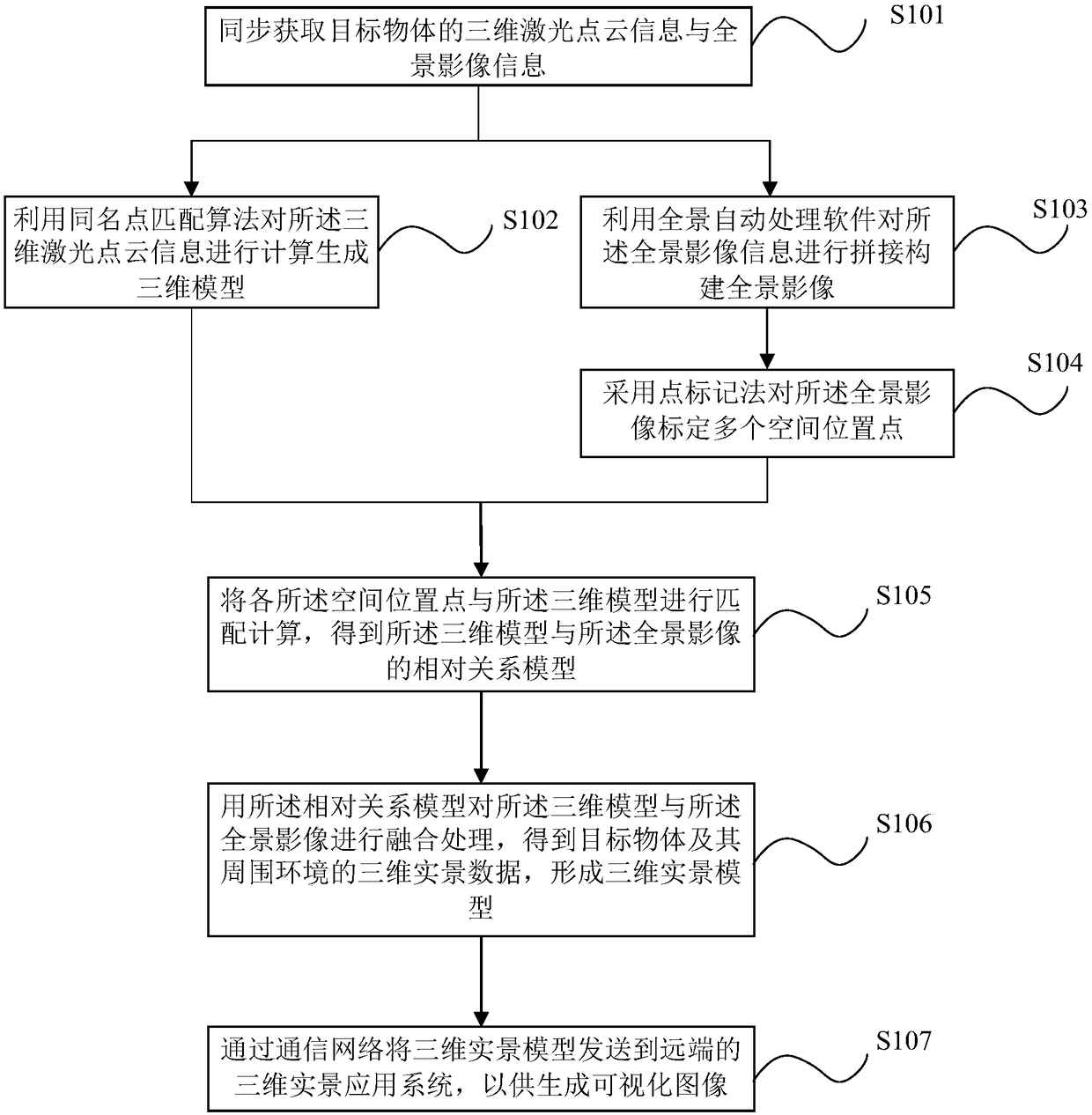

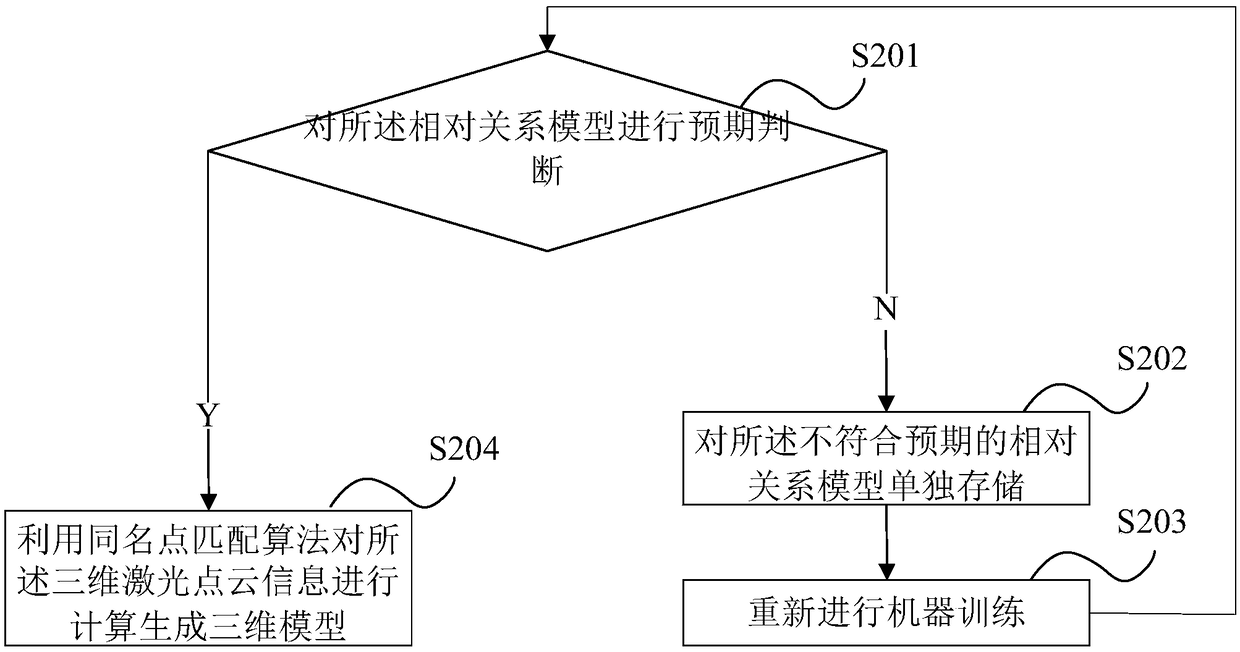

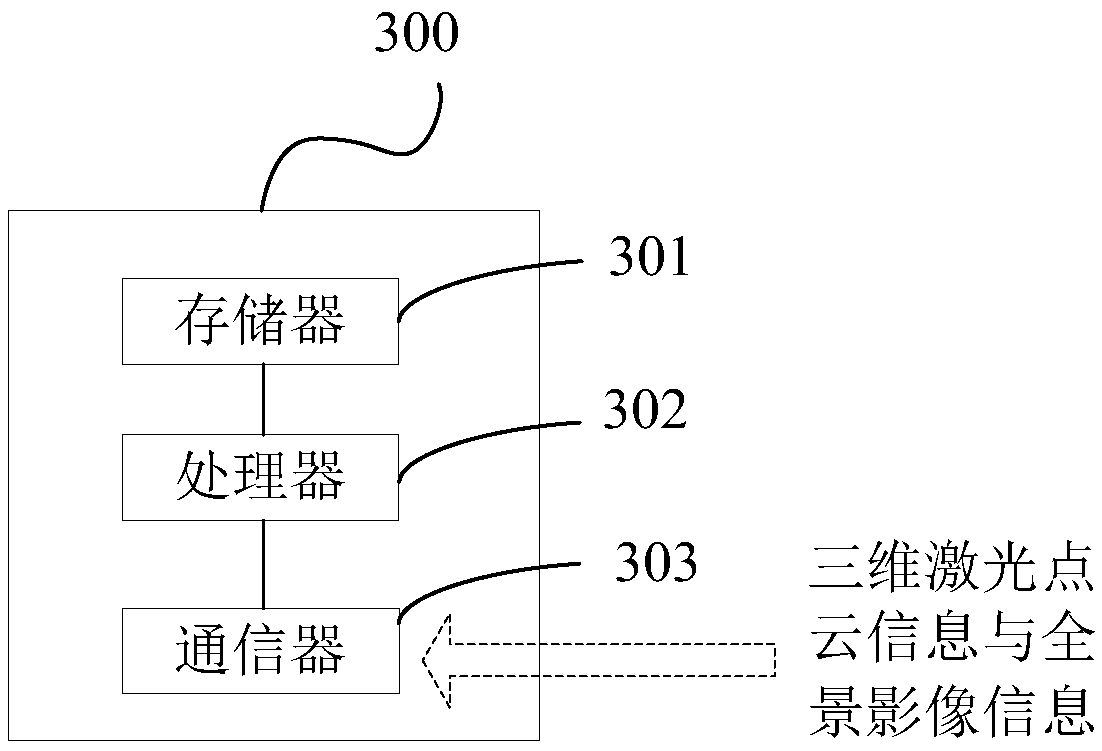

Three-dimensional real scene collection and modeling method and apparatus, and readable storage medium

Pending | CN108648272A | Guaranteed timeliness | Processing speed | Details involving processing steps | Geometric image transformation | Data synchronization | Point cloud

The invention provides a three-dimensional real scene collection and modeling method and apparatus, and a readable storage medium. A laser scanning part of a three-dimensional real scene collection apparatus collects a laser point cloud of a target in real time; a panoramic shooting part collects a real panoramic image of the target; and the point cloud data and panoramic image data are synchronously uploaded to a three-dimensional real scene modeling system for storage and processing. The laser point cloud is subjected to homonymy point matching calculation to build an overall three-dimensional model; the panoramic image is subjected to splicing and integration processing; the processed three-dimensional model and the panoramic image are fused to complete three-dimensional real scene modeling; and the modeling system pushes the three-dimensional real scene model to a three-dimensional real scene application system for use in various three-dimensional real scene applications. The collection and modeling processing speed is therefore greatly increased; in an emergency, the timeliness of the data can be ensured; the modeling efficiency is improved; and the modeling cycle is shortened.
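The abstract does not say how matched homonymous points are turned into an overall model. One common way to align two scans from such matches is a least-squares rigid fit (the Kabsch method), sketched below as an assumption; the function name and the choice of a rigid model are illustrative only.

```python
import numpy as np

def rigid_transform_from_matches(src, dst):
    """Least-squares rotation R and translation t with dst ≈ R @ src + t.

    src, dst: (N, 3) arrays of matched (homonymous) points, N >= 3.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    c_src, c_dst = src.mean(axis=0), dst.mean(axis=0)
    # Cross-covariance of the centred point sets.
    H = (src - c_src).T @ (dst - c_dst)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = c_dst - R @ c_src
    return R, t
```

Applying `R` and `t` to every point of the source scan brings it into the frame of the destination scan, after which the scans can be merged.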

Owner:上海激点信息科技有限公司

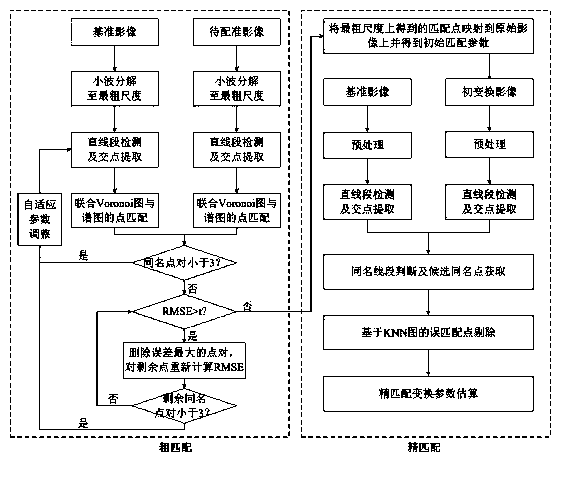

Heterology remote sensing image registration method

Active | CN103514606A | Improve robustness | Improve accuracy | Image analysis | As element | Synthetic aperture radar

The invention discloses a heterology remote sensing image registration method. The core idea is to take multi-scale matching as the basis, use straight line intersection points as elements, apply a point matching method that combines a Voronoi map with a spectrogram, and integrate iterative feature extraction with a matching policy, thereby overcoming the heavy dependence on feature extraction, poor reliability and low accuracy of existing methods. The method comprises the following steps: multi-scale analysis is carried out on the original images; straight lines are extracted and their intersection points acquired at the coarsest scale; the joint Voronoi map and spectrogram point matching method is applied to the intersection point sets to acquire homonymous point pairs; the matching result is checked, and if it is qualified the method proceeds to the next step, otherwise the parameters are adjusted adaptively and straight line extraction and point set matching are carried out again; original transformation is carried out on the images to be registered, and straight line features are extracted from each; homonymous straight line segments are searched for, and candidate homonymous point pairs are acquired; a KNN map is used to acquire accurately matched point pairs; and a transformation parameter is solved. The method is mainly used for the registration of visible light, infrared, synthetic aperture radar (SAR) and other heterology remote sensing images.
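Two of the steps above lend themselves to a short sketch: turning extracted straight lines into intersection points, and solving a transformation from matched point pairs. The homogeneous-coordinate formulation, the affine model and every function name are assumptions for illustration; the abstract does not state which transformation model is solved, and the Voronoi and spectrogram matching itself is not reproduced here.

```python
import numpy as np

def line_through(p, q):
    """Homogeneous line through two 2-D points."""
    return np.cross([p[0], p[1], 1.0], [q[0], q[1], 1.0])

def intersection(l1, l2, eps=1e-9):
    """Intersection point of two homogeneous lines, or None if they are parallel."""
    x = np.cross(l1, l2)
    if abs(x[2]) < eps:
        return None
    return x[:2] / x[2]

def fit_affine(src, dst):
    """Least-squares 2x3 affine matrix M with dst ≈ M @ [x, y, 1] for matched points."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    A = np.hstack([src, np.ones((len(src), 1))])        # (N, 3) design matrix
    params, *_ = np.linalg.lstsq(A, dst, rcond=None)    # (3, 2) solution
    return params.T
```

At least three non-collinear matched pairs are needed for the affine fit; additional pairs are averaged in the least-squares sense, which dampens the effect of small localisation errors.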

Owner:WUHAN UNIV


© 2025 PatSnap. All rights reserved.