Precise registration method for ground-based laser point clouds and unmanned aerial vehicle (UAV) image-reconstructed point clouds

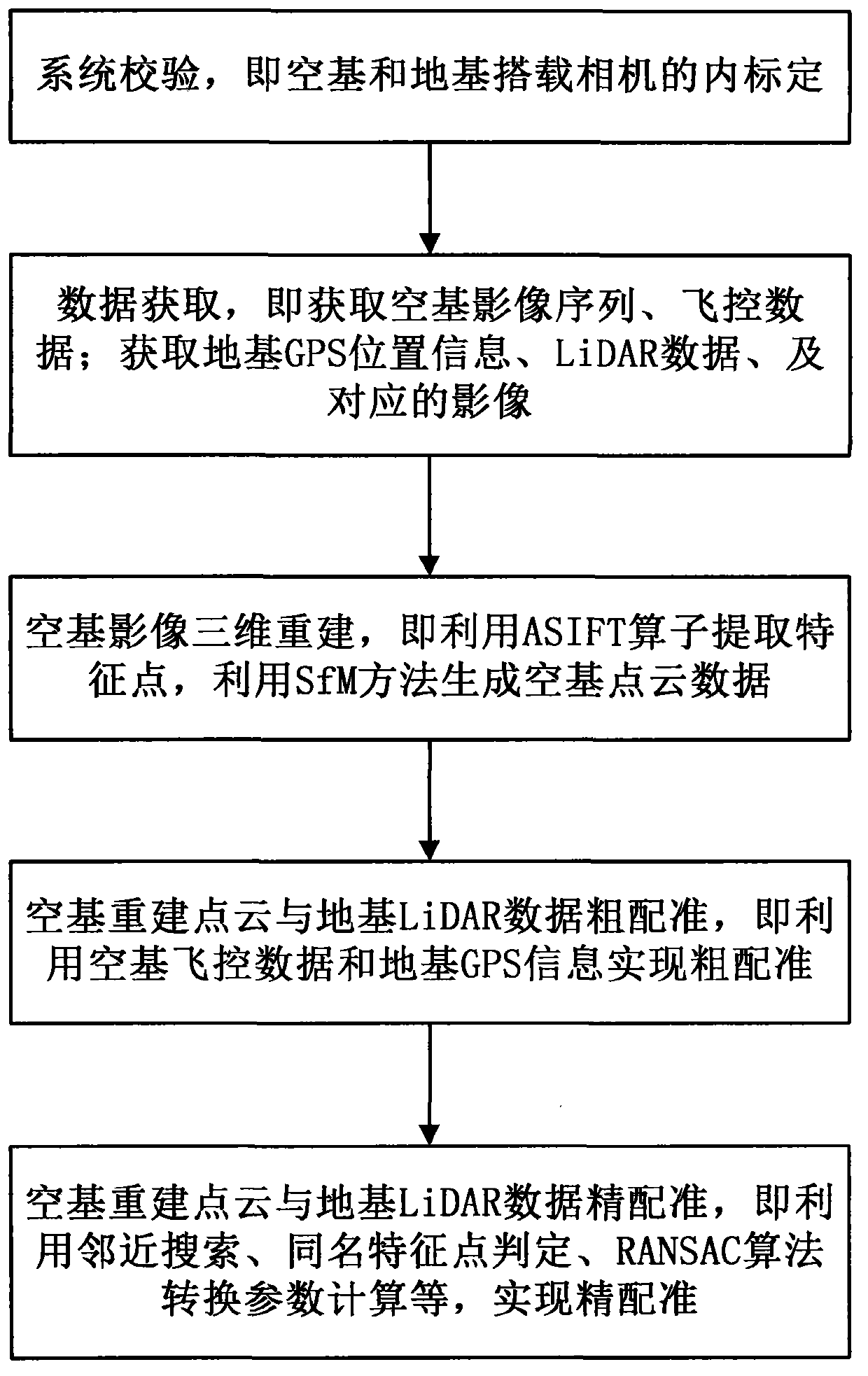

A method based on laser point cloud and UAV technology, applicable to 3D modeling, image analysis, and image data processing. It addresses the difficulty of registering image-reconstructed point clouds with laser point clouds: ground-based images and aerial images are captured from very different viewing angles, so feature points of the same name (homologous points) are hard to extract between them. The method resolves this multi-angle observation problem, reduces complexity, and improves registration accuracy and efficiency.


Detailed Description of the Embodiments

[0029] The following examples are used to illustrate the present invention, but are not intended to limit the scope of the present invention.

[0030] 1. Definitions

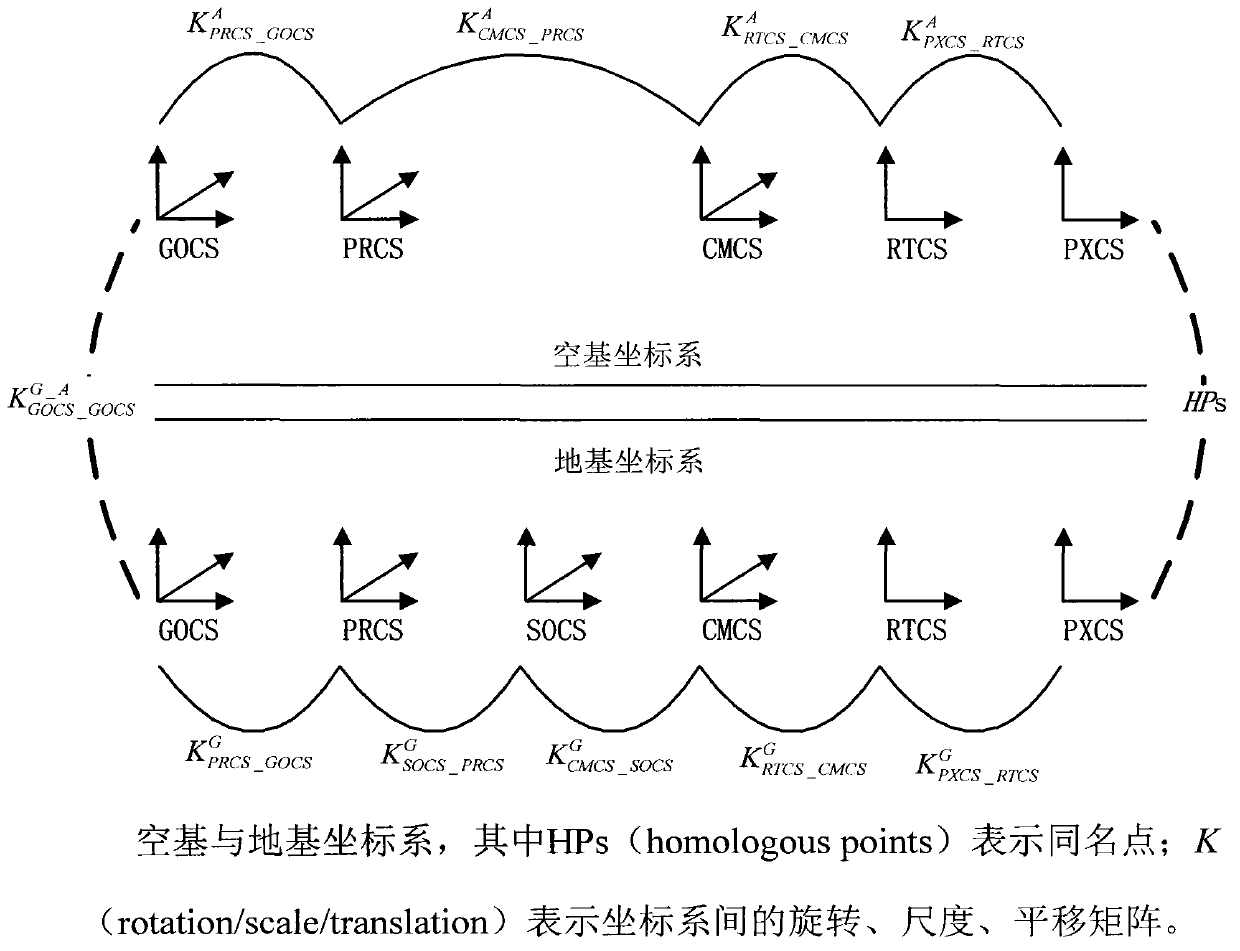

[0031] 1.1. Coordinate system definition

[0032] PXCS: image coordinate system (pixel coordinate system), a two-dimensional Cartesian coordinate system in units of image pixels;

[0033] RTCS: imaging plane coordinate system (retinal coordinate system), a two-dimensional Cartesian coordinate system on the imaging plane with the principal point of the image as the origin, measured in the camera's physical units rather than in pixels;

[0034] CMCS: camera coordinate system, a three-dimensional Cartesian coordinate system with the camera optical center as the origin, the camera optical axis as the Z axis, and the X-Y plane parallel to the imaging plane;

[0035] SOCS: scanner's own coordinate system, the laser scanner's own three-dimensional Cartesian coordinate system, with the laser as the origin, the rotation plane as ...
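The three camera-side systems defined above (CMCS, RTCS, PXCS) are normally chained through a pinhole projection. The sketch below illustrates that chain under stated assumptions and is not the patent's own formulation: it assumes an ideal pinhole camera with no lens distortion and no skew, RTCS axes aligned with the PXCS column/row axes, and hypothetical parameter names (`f`, `dx`, `dy`, `u0`, `v0`) for the focal length, pixel pitch, and principal point. The SOCS definition is truncated in the source, so the scanner frame is not modeled here.

```python
from dataclasses import dataclass


@dataclass
class PinholeCamera:
    """Hypothetical intrinsics for an ideal pinhole camera (no distortion, no skew)."""
    f: float   # focal length, in physical units (same units as dx, dy)
    dx: float  # physical width of one pixel
    dy: float  # physical height of one pixel
    u0: float  # principal point column, in pixels
    v0: float  # principal point row, in pixels

    def pxcs_to_rtcs(self, u, v):
        """Pixel coordinates -> imaging-plane coordinates (origin at the principal point)."""
        return (u - self.u0) * self.dx, (v - self.v0) * self.dy

    def rtcs_to_pxcs(self, x, y):
        """Imaging-plane coordinates -> pixel coordinates."""
        return x / self.dx + self.u0, y / self.dy + self.v0

    def cmcs_to_rtcs(self, X, Y, Z):
        """Central projection of a camera-frame point (Z along the optical axis)."""
        if Z <= 0:
            raise ValueError("point is not in front of the camera")
        return self.f * X / Z, self.f * Y / Z

    def cmcs_to_pxcs(self, X, Y, Z):
        """Camera frame -> imaging plane -> pixel grid."""
        return self.rtcs_to_pxcs(*self.cmcs_to_rtcs(X, Y, Z))

    def pxcs_to_ray(self, u, v):
        """Back-project a pixel to a viewing-ray direction in CMCS (depth stays unknown)."""
        x, y = self.pxcs_to_rtcs(u, v)
        return x, y, self.f


cam = PinholeCamera(f=35.0, dx=0.005, dy=0.005, u0=3000.0, v0=2000.0)
u, v = cam.cmcs_to_pxcs(1.0, 0.5, 10.0)
print(u, v)
```

With these illustrative intrinsics, the camera-frame point (1.0, 0.5, 10.0) lands at about pixel (3700, 2350). Going the other way, `pxcs_to_ray` recovers only a direction, because a single image fixes a ray and not a depth. That missing depth is why image-derived points must be registered against an independent 3D source such as the laser point cloud.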
