837 results about "Transformation parameter" patented technology

Transformation Parameters. Transformation parameters provide the value of a virtual attribute. This value can be either a default value or a rule that creates the value from other attribute values.
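The definition above can be illustrated with a minimal Python sketch. The function name `virtual_attribute`, the record fields, and the rule are all hypothetical and chosen only for illustration; the sketch assumes a rule, when present, takes precedence over the default.

```python
from typing import Any, Callable, Mapping, Optional


def virtual_attribute(
    record: Mapping[str, Any],
    default: Any = None,
    rule: Optional[Callable[[Mapping[str, Any]], Any]] = None,
) -> Any:
    """Return the value of a virtual attribute (hypothetical helper).

    If a rule is given, the value is built from the record's other
    attribute values; otherwise the default value is returned.
    """
    if rule is not None:
        return rule(record)
    return default


# Hypothetical record with two stored attributes.
record = {"first_name": "Ada", "last_name": "Lovelace"}

# Virtual attribute created by a rule from other attribute values.
full_name = virtual_attribute(
    record, rule=lambda r: f"{r['first_name']} {r['last_name']}"
)

# Virtual attribute that falls back to a default value.
country = virtual_attribute(record, default="unknown")
```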

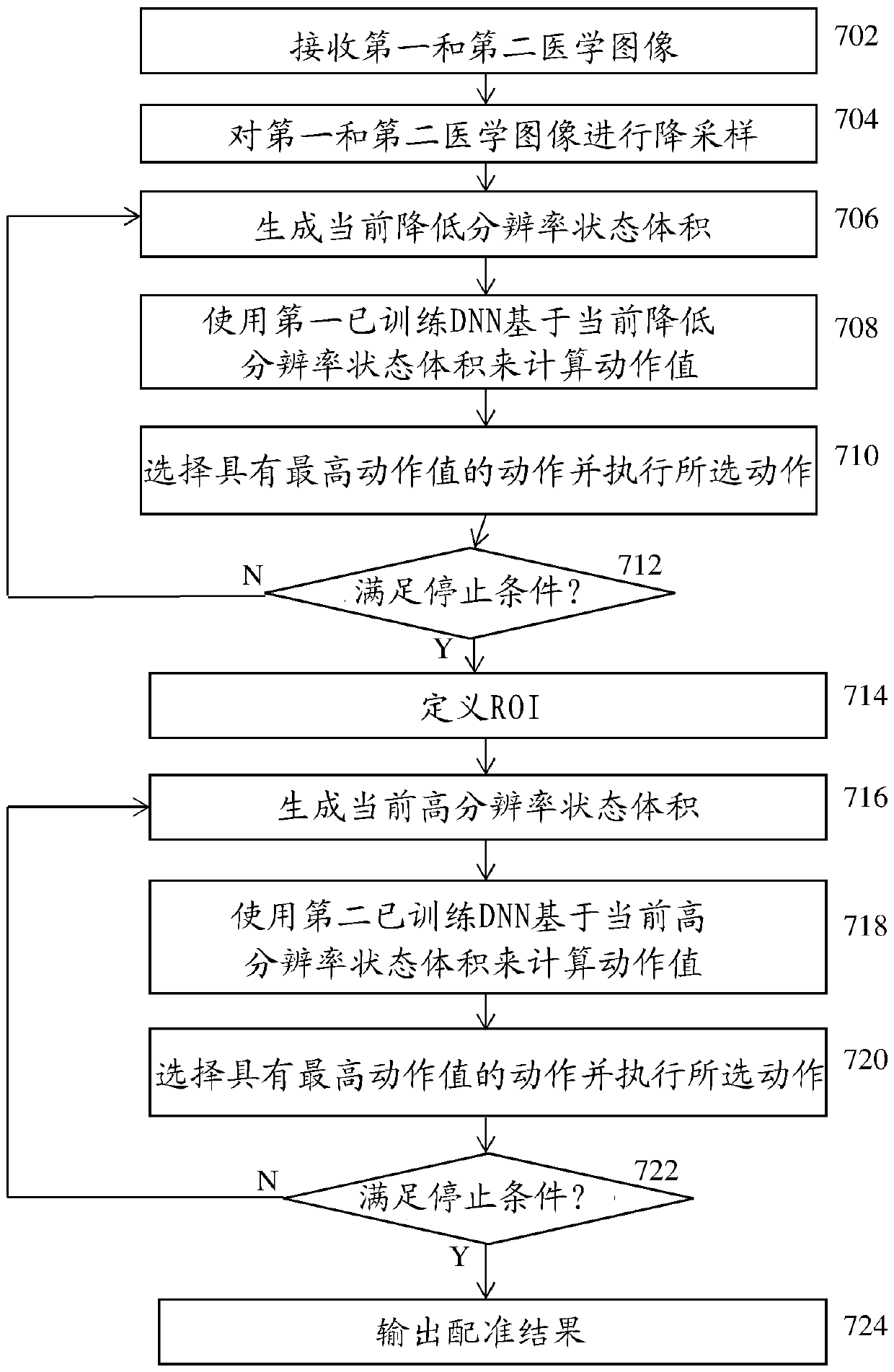

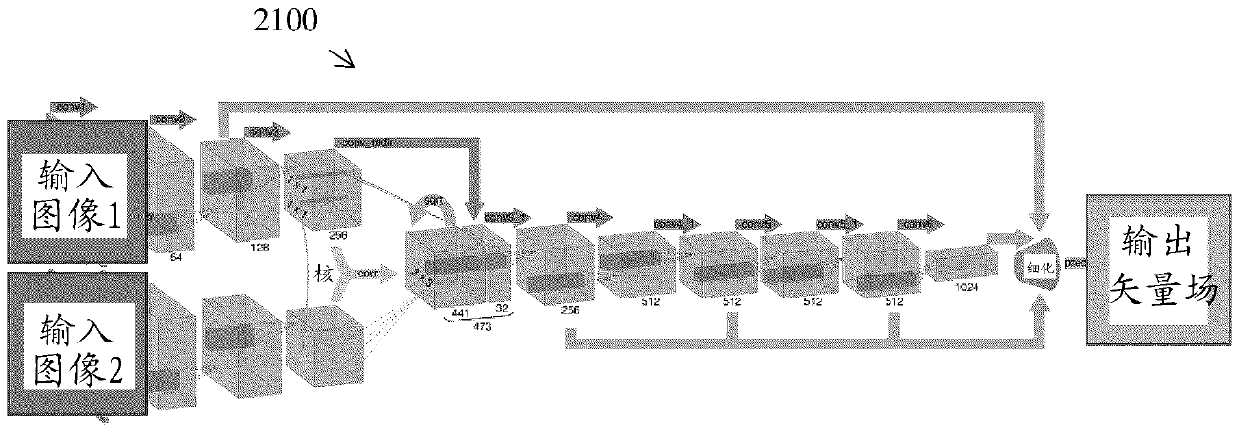

Method and System for Image Registration Using an Intelligent Artificial Agent

Inactive | US20170337682A1 | Good registration result | Better and better registration results | Image enhancement | Image analysis | Learning based | Pattern recognition

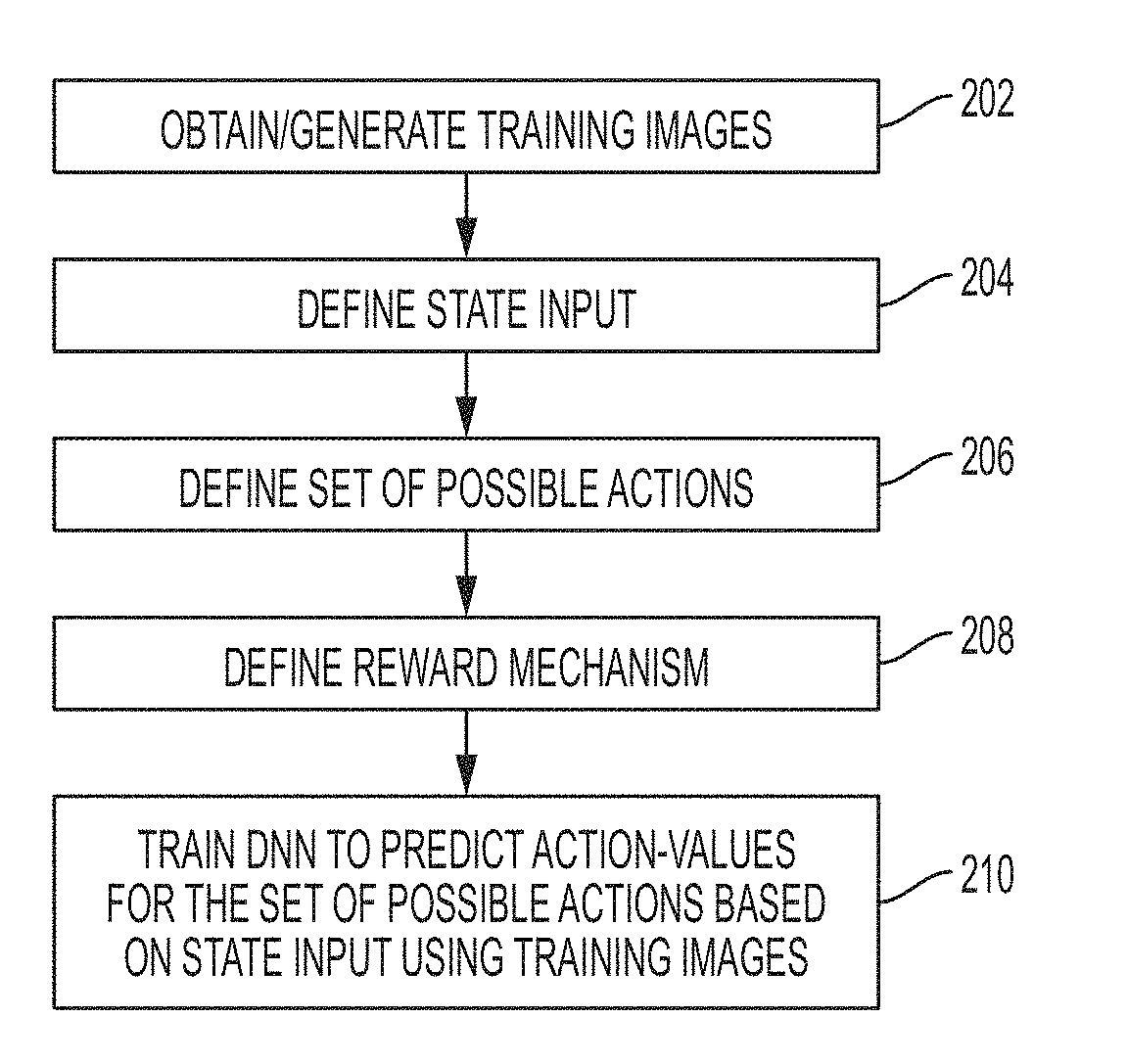

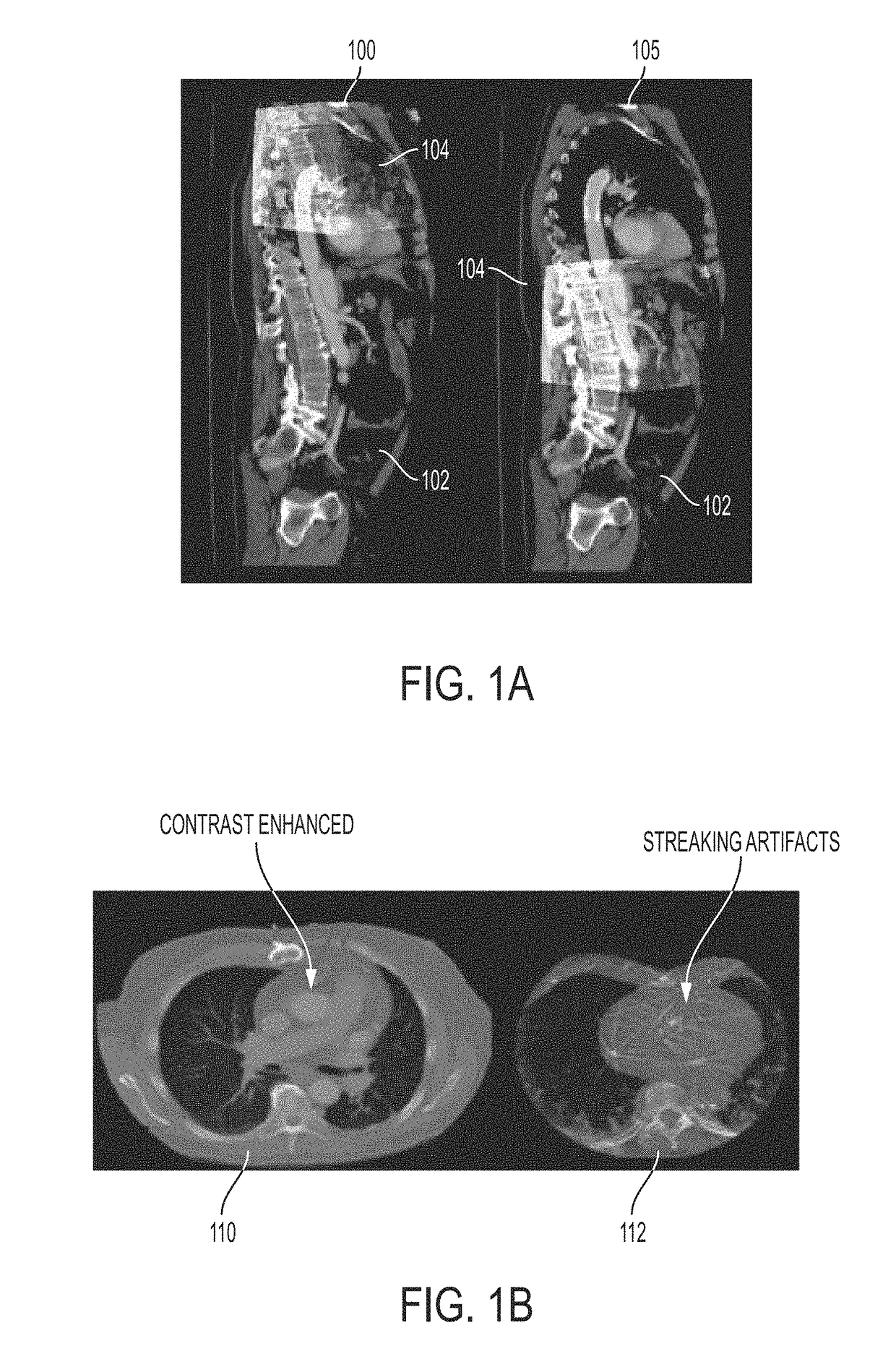

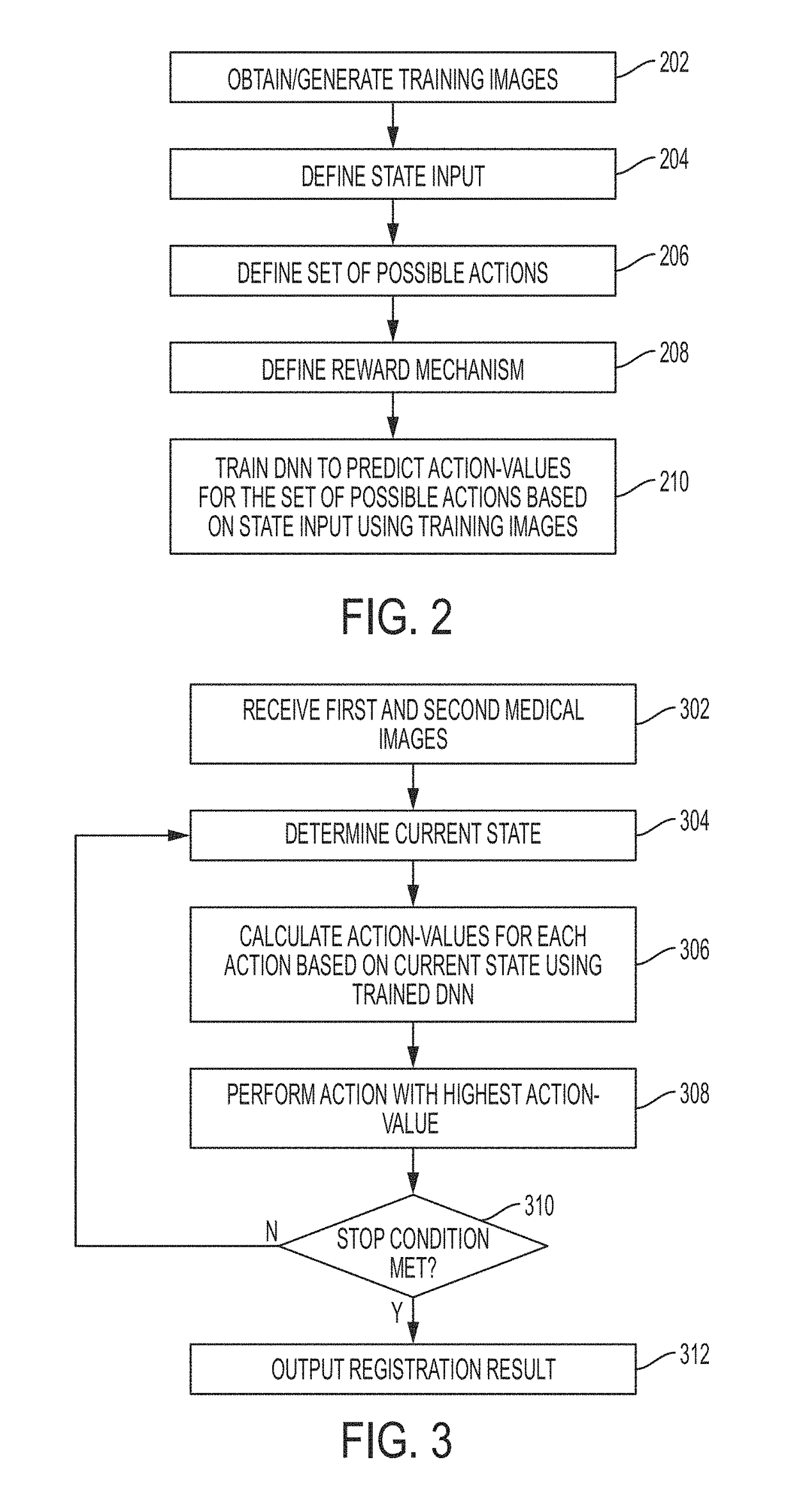

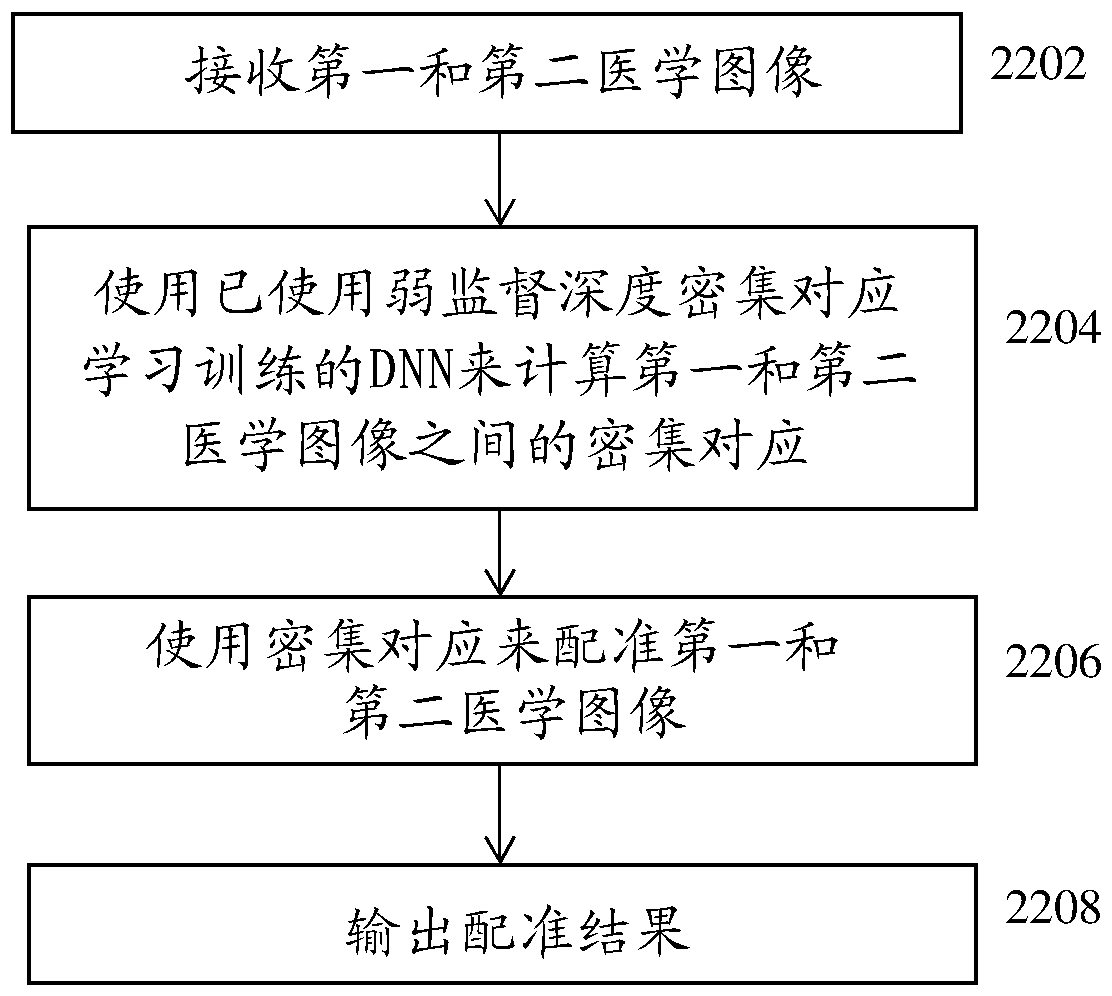

Methods and systems for image registration using an intelligent artificial agent are disclosed. In an intelligent artificial agent based registration method, a current state observation of an artificial agent is determined based on the medical images to be registered and current transformation parameters. Action-values are calculated for a plurality of actions available to the artificial agent based on the current state observation using a machine learning based model, such as a trained deep neural network (DNN). The actions correspond to predetermined adjustments of the transformation parameters. An action having a highest action-value is selected from the plurality of actions and the transformation parameters are adjusted by the predetermined adjustment corresponding to the selected action. The determining, calculating, and selecting steps are repeated for a plurality of iterations, and the medical images are registered using final transformation parameters resulting from the plurality of iterations.

Owner:SIEMENS HEALTHCARE GMBH
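The iterative loop in this abstract (score every action, pick the highest action-value, apply its predetermined adjustment, repeat) can be sketched in Python as follows. This is a toy under stated assumptions, not the patented method: the trained DNN is replaced by a hypothetical `action_value` function that peeks at a known target, the parameters are reduced to three values, and the early stop when no action improves the score is an added convenience that the abstract does not mention.

```python
# Toy ground-truth parameters (tx, ty, rotation in degrees). Purely illustrative.
TARGET = (3.0, -2.0, 10.0)
STEP = 1.0

# Each action is a predetermined adjustment: +STEP or -STEP on one parameter.
ACTIONS = [(index, sign * STEP) for index in range(3) for sign in (1, -1)]


def adjust(params, action):
    """Apply one predetermined adjustment to the transformation parameters."""
    index, delta = action
    updated = list(params)
    updated[index] += delta
    return tuple(updated)


def distance(params):
    """L1 distance between the current parameters and the toy target."""
    return sum(abs(p - t) for p, t in zip(params, TARGET))


def action_value(params, action):
    """Hypothetical stand-in for the trained model's action-value output."""
    return -distance(adjust(params, action))


def register(params, max_iterations=100):
    """Greedily apply the highest-valued action for a number of iterations."""
    for _ in range(max_iterations):
        best = max(ACTIONS, key=lambda a: action_value(params, a))
        # Assumed stop rule: quit when even the best action does not help.
        if action_value(params, best) <= -distance(params):
            break
        params = adjust(params, best)
    return params


final_params = register((0.0, 0.0, 0.0))
```

In a real system the action-values would come from a model evaluated on the current state observation (the images plus the current parameters), since the target is unknown at registration time.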

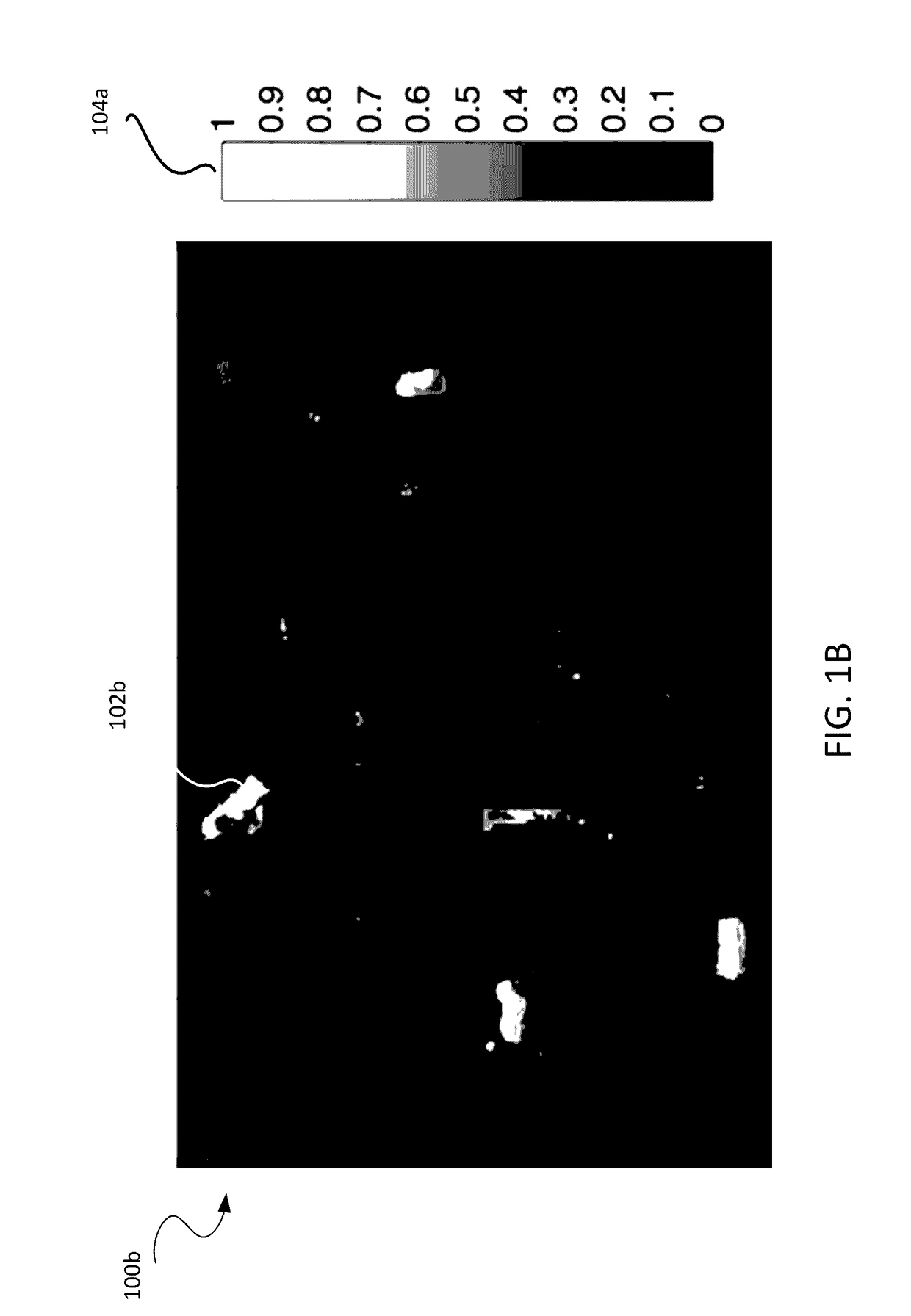

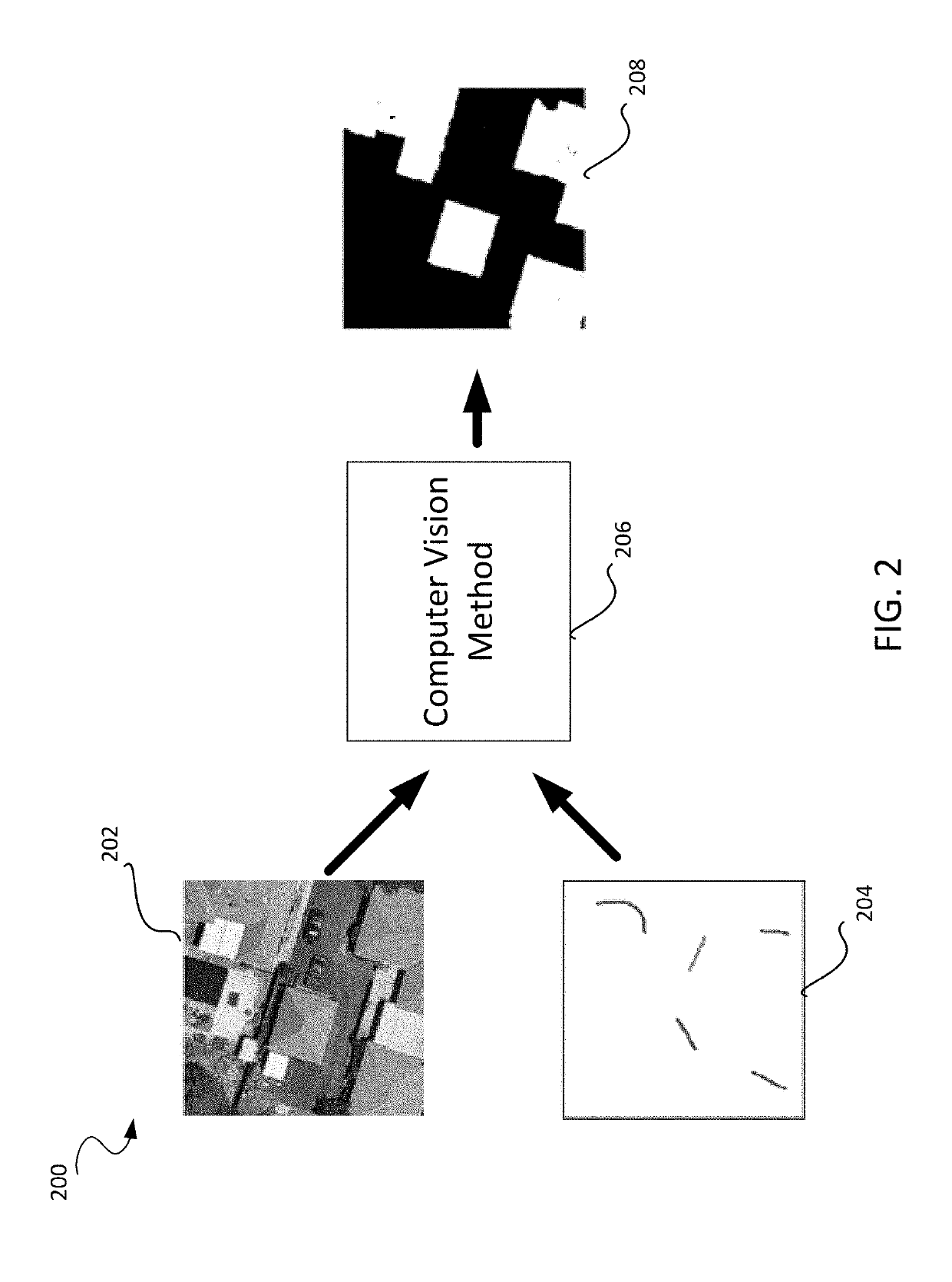

Systems and methods for analyzing remote sensing imagery

Active | US20170076438A1 | Image enhancement | Television system details | Transformation parameter | Remote sensing

Disclosed systems and methods relate to remote sensing, deep learning, and object detection. Some embodiments relate to machine learning for object detection, which includes, for example, identifying a class of pixel in a target image and generating a label image based on a parameter set. Other embodiments relate to machine learning for geometry extraction, which includes, for example, determining heights of one or more regions in a target image and determining a geometric object property in a target image. Yet other embodiments relate to machine learning for alignment, which includes, for example, aligning images via direct or indirect estimation of transformation parameters.

Owner:CAPE ANALYTICS INC

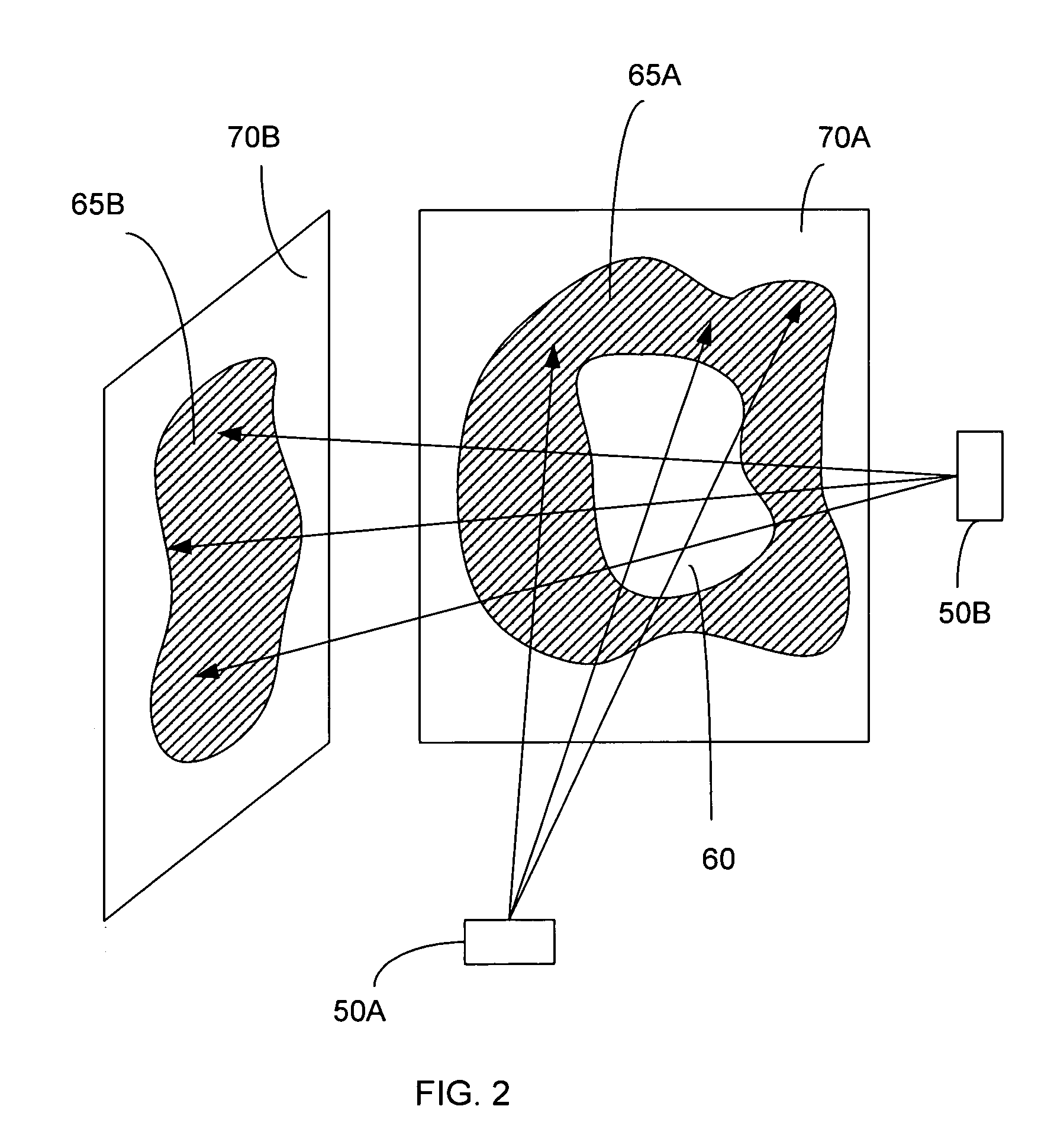

Image guided radiosurgery method and apparatus using registration of 2D radiographic images with digitally reconstructed radiographs of 3D scan data

Active | US20050049478A1 | Rapid and accurate method | The process is fast and accurate | Image enhancement | Image analysis | In plane | 3D image

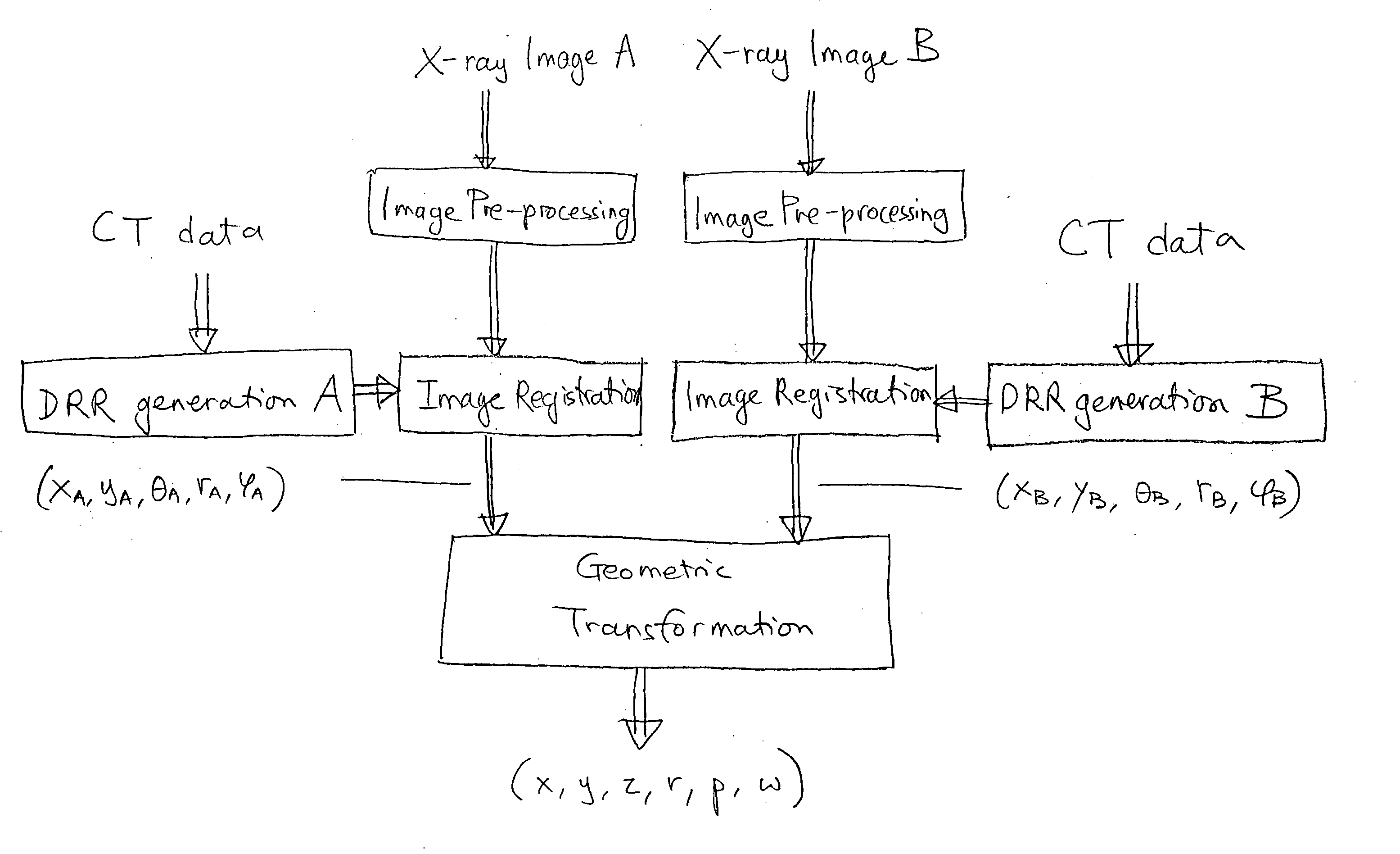

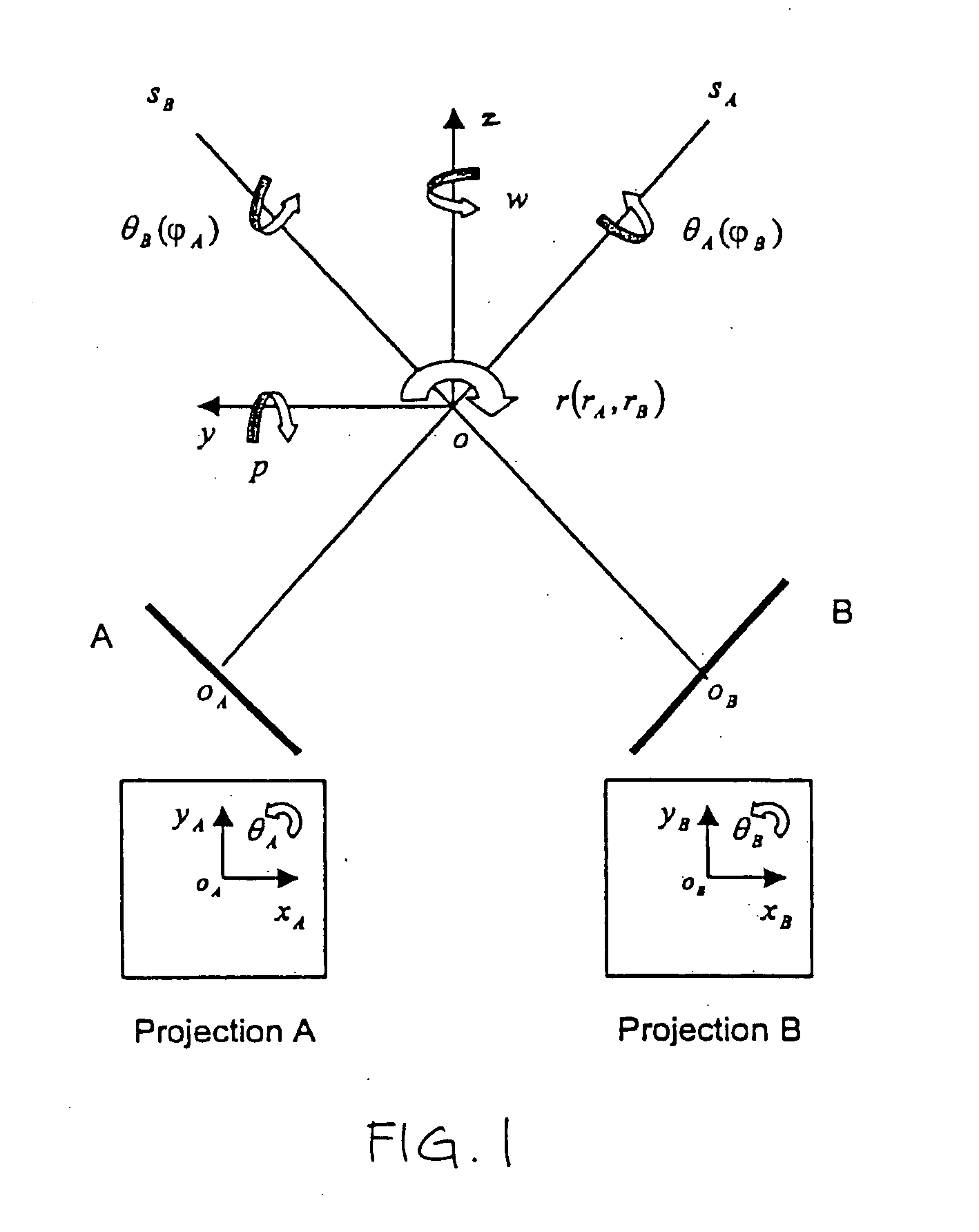

An image-guided radiosurgery method and system are presented that use 2D / 3D image registration to keep the radiosurgical beams properly focused onto a treatment target. A pre-treatment 3D scan of the target is generated at or near treatment planning time. A set of 2D DRRs are generated, based on the pre-treatment 3D scan. At least one 2D x-ray image of the target is generated in near real time during treatment. The DRRs are registered with the x-ray images, by computing a set of 3D transformation parameters that represent the change in target position between the 3D scan and the x-ray images. The relative position of the radiosurgical beams and the target is continuously adjusted in near real time in accordance with the 3D transformation parameters. A hierarchical and iterative 2D / 3D registration algorithm is used, in which the transformation parameters that are in-plane with respect to the image plane of the x-ray images are computed separately from the out-of-plane transformation parameters.

Owner:ACCURAY

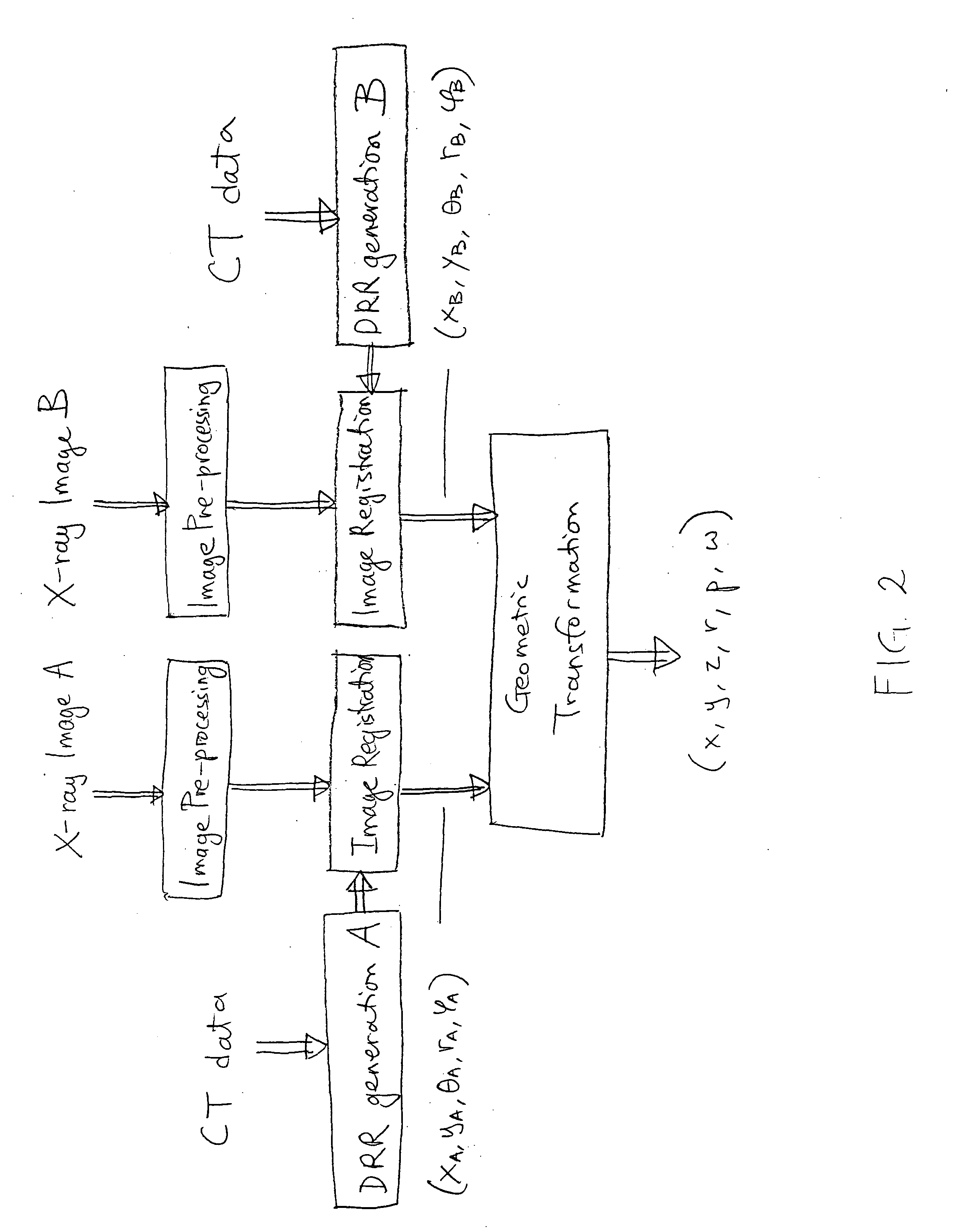

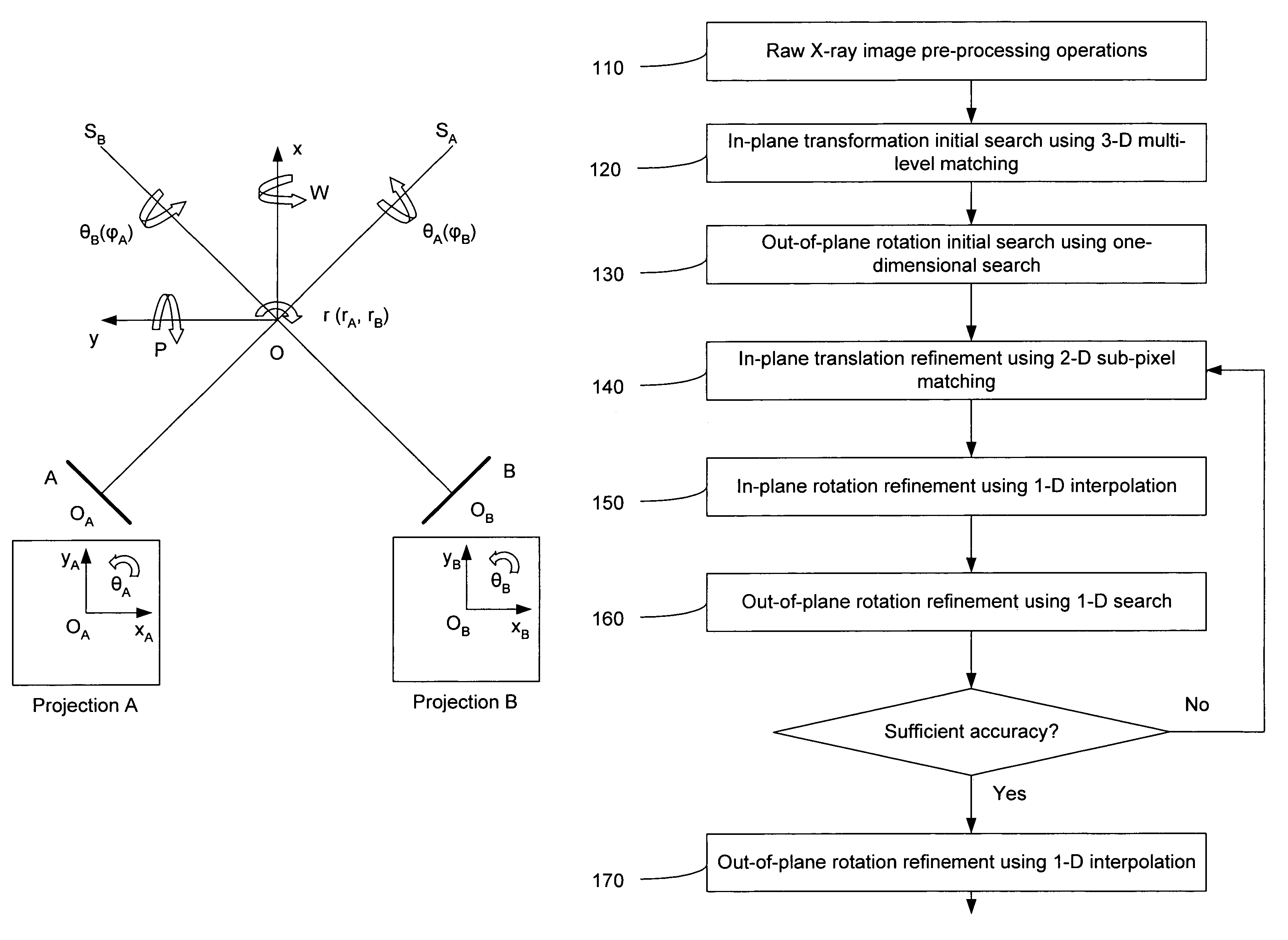

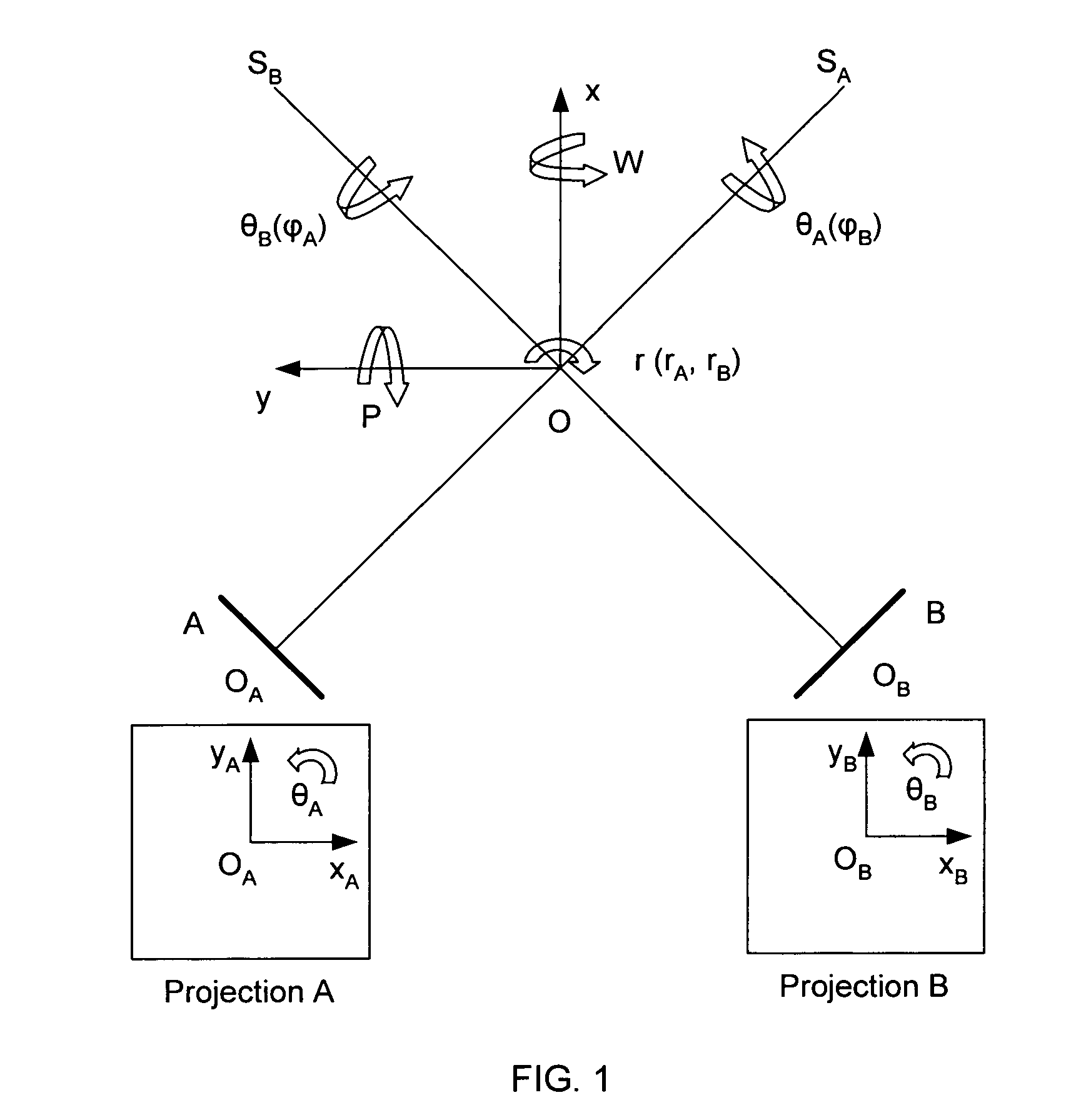

Apparatus and method for registering 2D radiographic images with images reconstructed from 3D scan data

Inactive | US7204640B2 | Precise and rapid | Improve accuracy | Image enhancement | Image analysis | In plane | Transformation parameter

A method and system is provided for registering a 2D radiographic image of a target with previously generated 3D scan data of the target. A reconstructed 2D image is generated from the 3D scan data. The radiographic 2D image is registered with the reconstructed 2D images to determine the values of in-plane transformation parameters (x, y, θ) and out-of-plane rotational parameters (r, Φ), where the parameters represent the difference in the position of the target in the radiographic image, as compared to the 2D reconstructed image. An initial estimate for the in-plane transformation parameters is made by a 3D multi-level matching process, using the sum-of-square differences similarity measure. Based on these estimated parameters, an initial 1-D search is performed for the out-of-plane rotation parameters (r, Φ), using a pattern intensity similarity measure. The in-plane parameters (x, y, θ) and out-of-plane parameters (r, Φ) are iteratively refined, until a desired accuracy is reached.

Owner:ACCURAY
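The sum-of-square differences similarity measure named in this abstract can be illustrated with a small Python sketch. It covers only an exhaustive search over integer in-plane translations (x, y) on tiny list-of-lists images; the rotation θ, the out-of-plane parameters (r, Φ), the multi-level matching, and the pattern intensity measure are not modelled. All function names are illustrative.

```python
def ssd(image_a, image_b):
    """Sum of squared pixel differences between two equally sized images."""
    return sum(
        (pa - pb) ** 2
        for row_a, row_b in zip(image_a, image_b)
        for pa, pb in zip(row_a, row_b)
    )


def shift(image, dx, dy, fill=0):
    """Translate an image by (dx, dy) pixels, padding with `fill`."""
    height, width = len(image), len(image[0])
    out = [[fill] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            src_x, src_y = x - dx, y - dy
            if 0 <= src_x < width and 0 <= src_y < height:
                out[y][x] = image[src_y][src_x]
    return out


def best_translation(reference, moving, max_shift=2):
    """Return the integer (dx, dy) that minimises SSD against the reference."""
    candidates = [
        (dx, dy)
        for dx in range(-max_shift, max_shift + 1)
        for dy in range(-max_shift, max_shift + 1)
    ]
    return min(candidates, key=lambda t: ssd(reference, shift(moving, *t)))


# Toy "reconstructed" image with a small asymmetric blob.
reconstructed = [[0] * 6 for _ in range(6)]
reconstructed[2][2], reconstructed[2][3], reconstructed[3][2] = 9, 5, 3

# Toy "radiographic" image: the same blob displaced by (1, -1).
radiograph = shift(reconstructed, 1, -1)

estimated = best_translation(radiograph, reconstructed)
```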

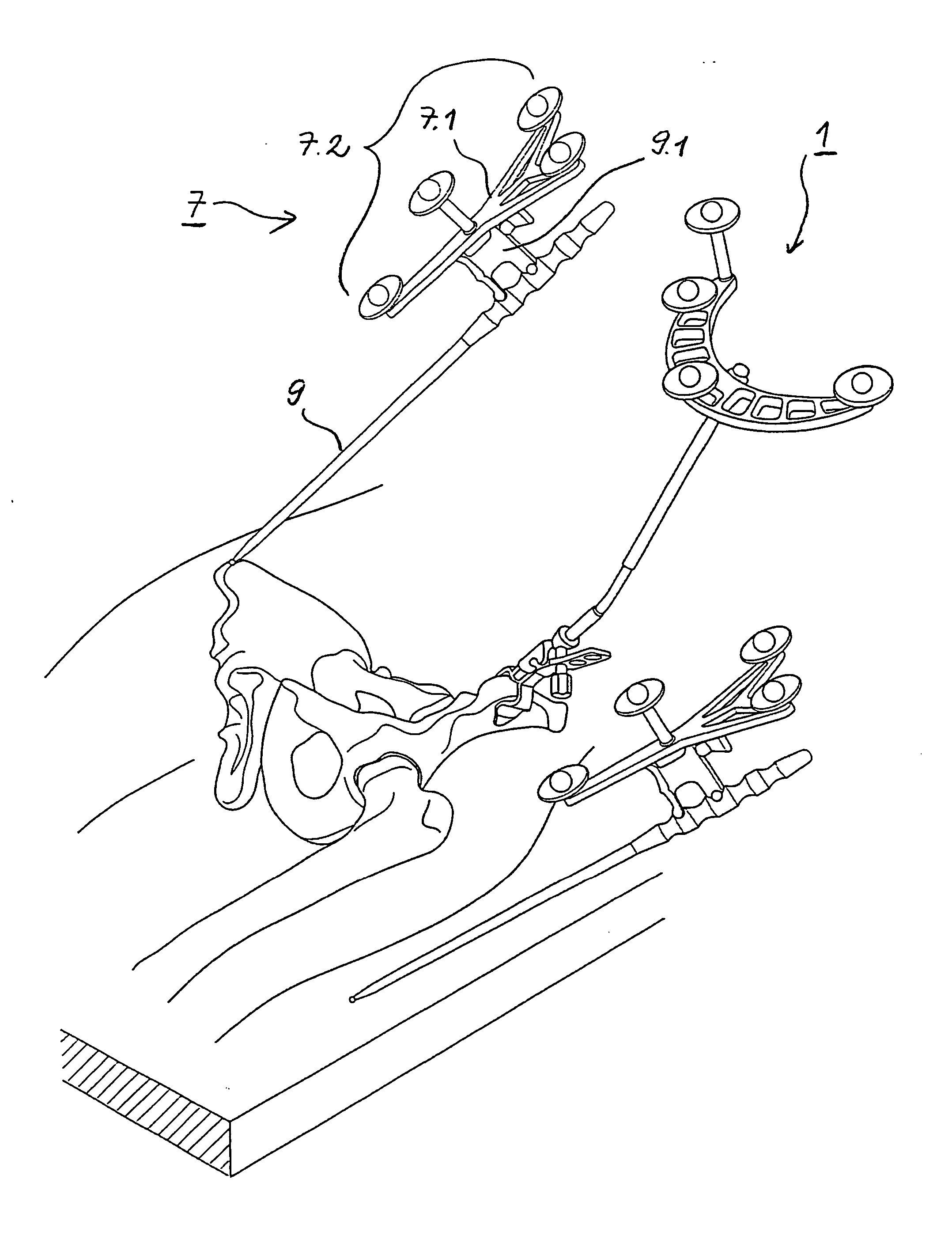

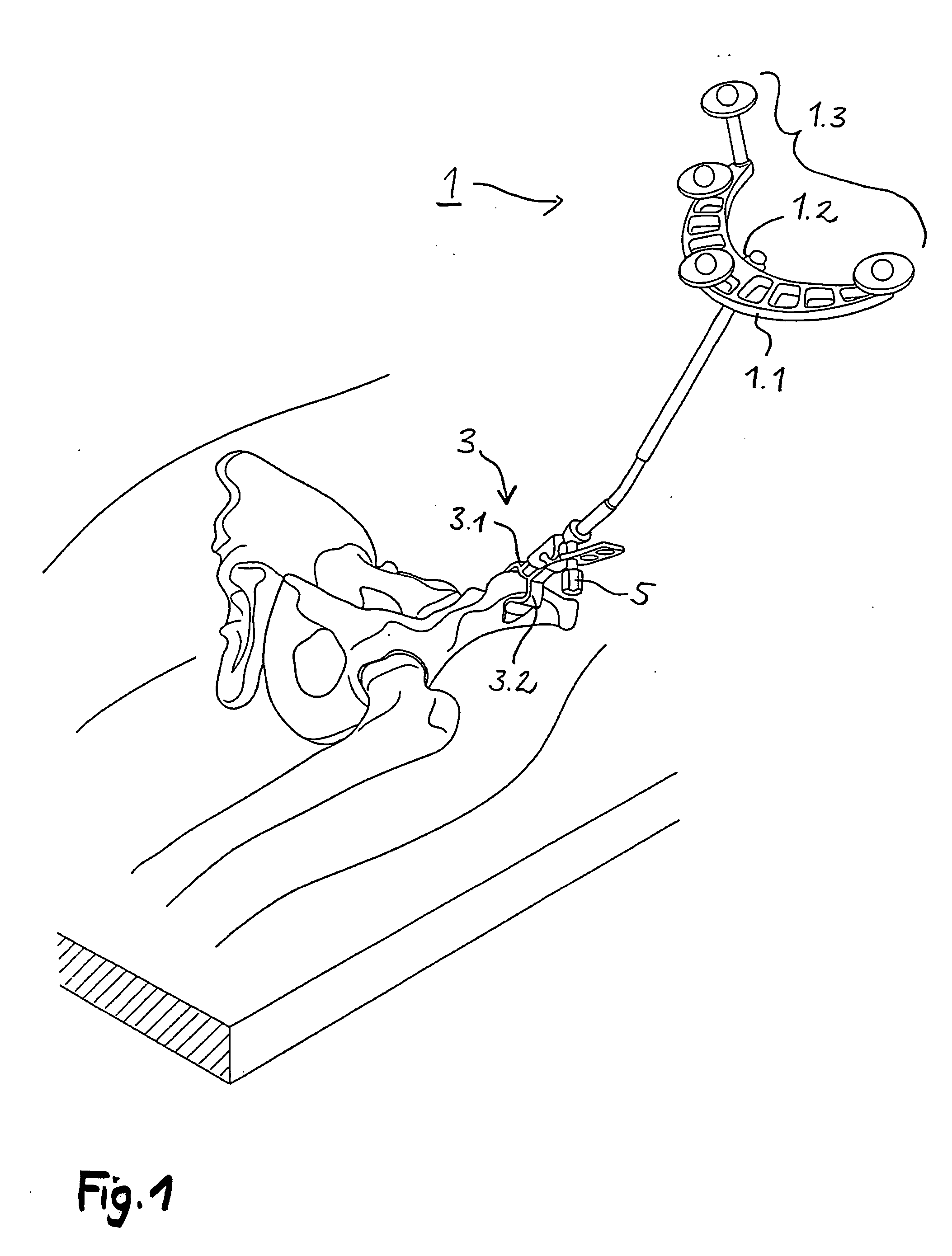

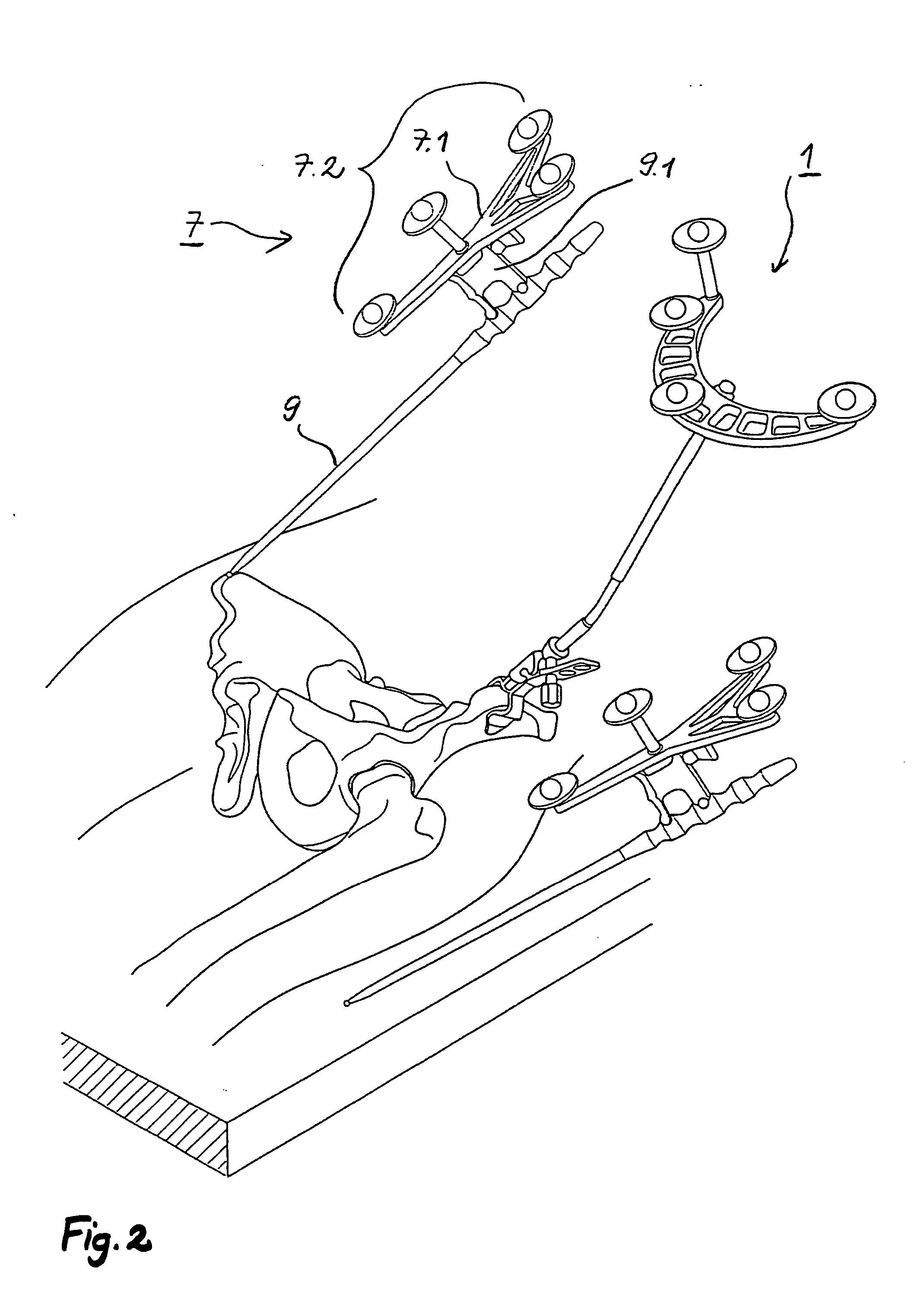

Arrangement and method for the intra-operative determination of the position of a joint replacement implant

Inactive | US20050149050A1 | Easy to operate | Low risk of error | Person identification | Joint implants | Reference vector | Measurement point

Arrangement for the intra-operative determination of the spatial position and angular position of a joint replacement implant, especially a hip socket or shoulder socket or an associated stem implant, or a vertebral replacement implant, especially a lumbar or cervical vertebral implant, using a computer tomography method, having: a computer tomography modeling device for generating and storing a three-dimensional image of a joint region or vertebral region to be provided with the joint replacement implant, an optical coordinate-measuring arrangement for providing real position coordinates of defined real or virtual points of the joint region or vertebral region and / or position reference vectors between such points within the joint region or vertebral region or from those points to joint-function-relevant points on an extremity outside the joint region or vertebral region, the coordinate-measuring arrangement comprising a stereocamera or stereocamera arrangement for the spatial recording of transducer signals, at least one multipoint transducer, which comprises a group of measurement points rigidly connected to one another, and an evaluation unit for evaluating sets of measurement point coordinates supplied by the multipoint transducer(s) and recorded by the stereocamera, and a matching-processing unit for real position matching of the image to the actual current spatial position of the joint region or vertebral region with reference to the real position coordinates of the defined points, the matching-processing unit being configured for calculating transformation parameters with minimization of the normal spacings.

Owner:SMITH & NEPHEW ORTHOPAEDICS

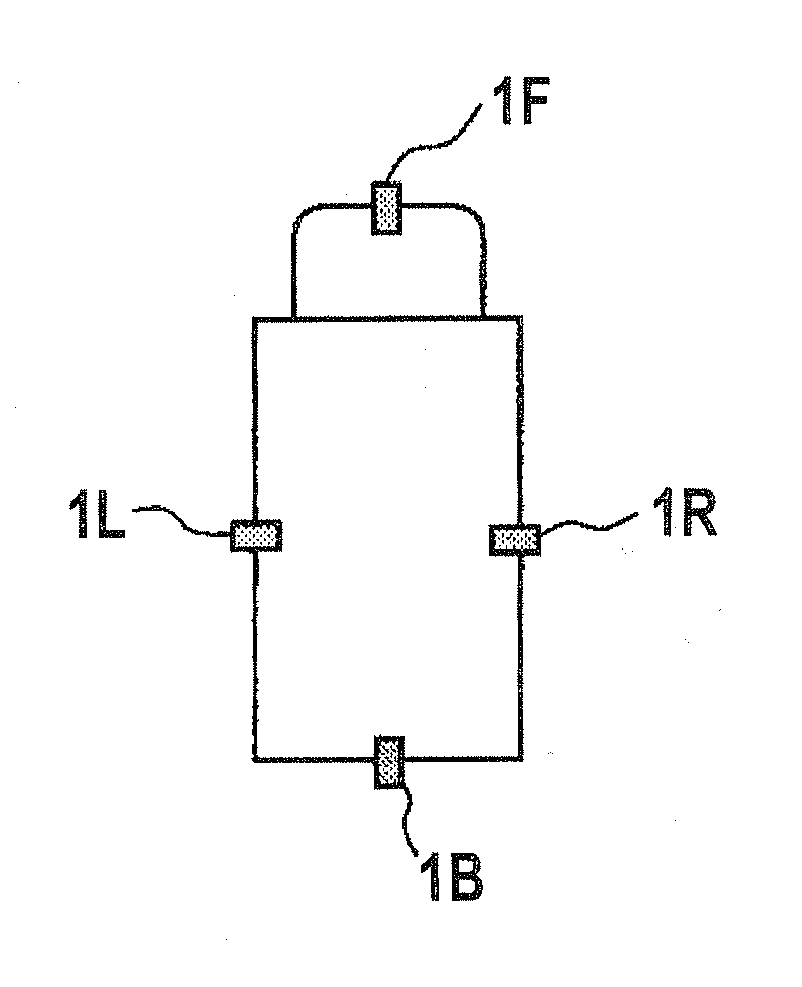

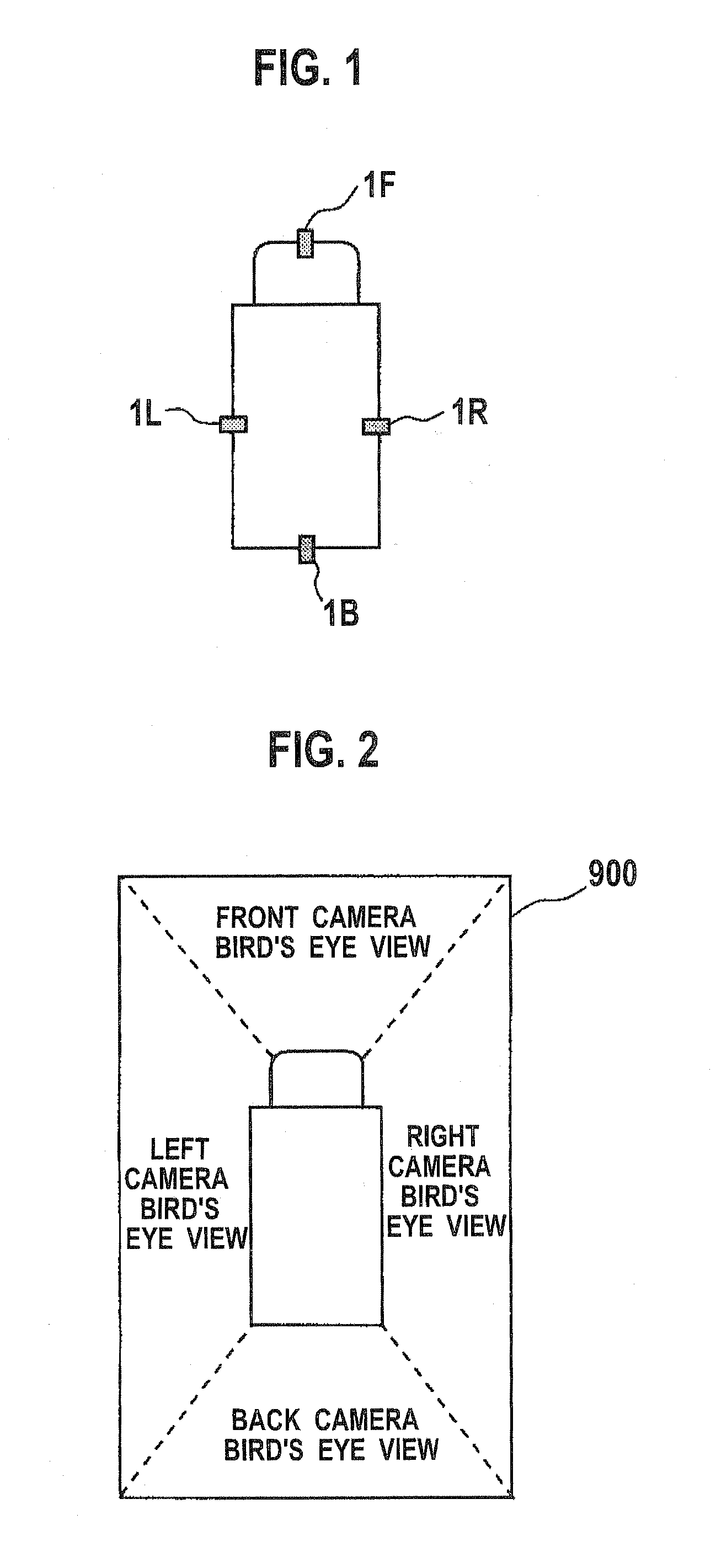

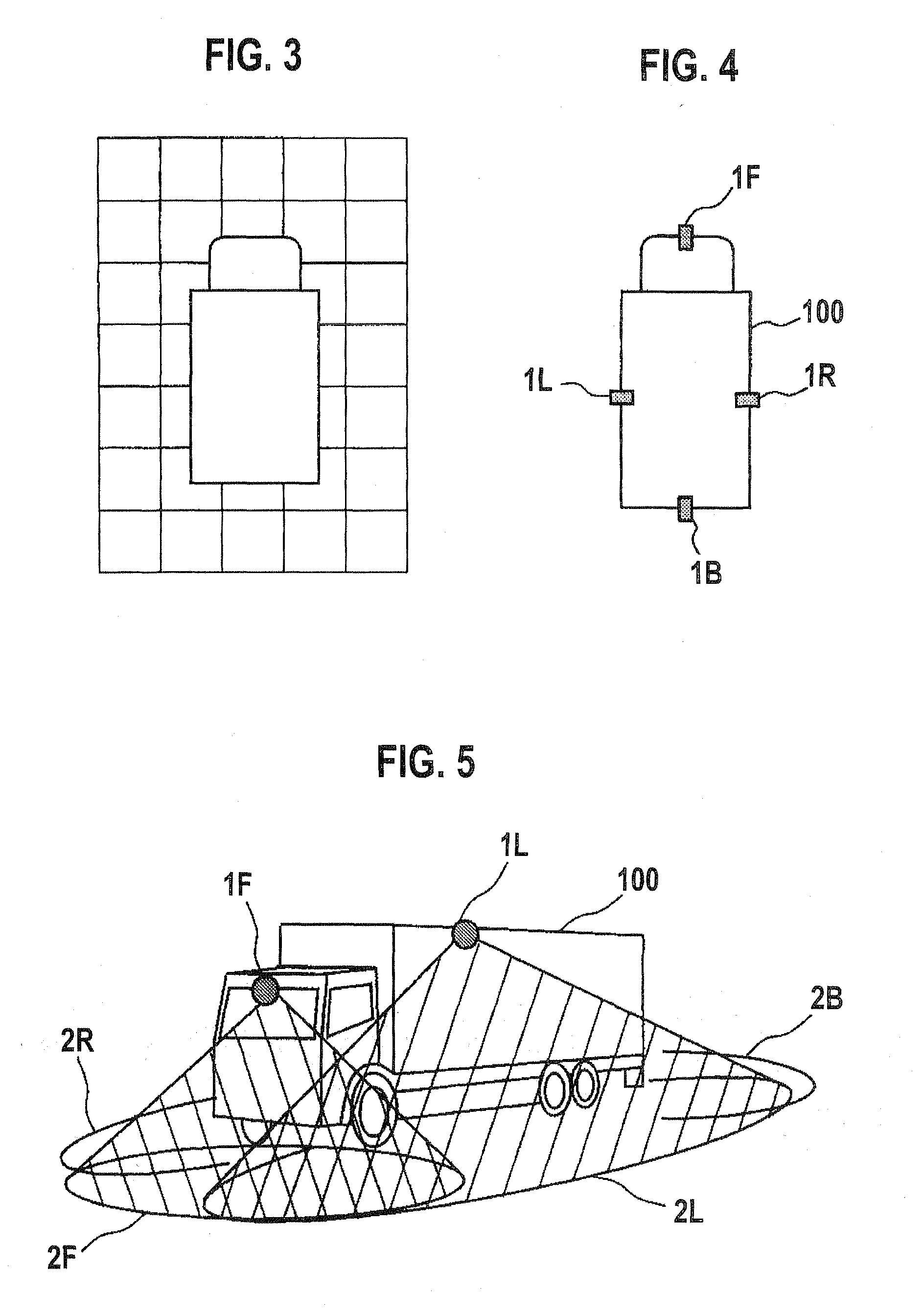

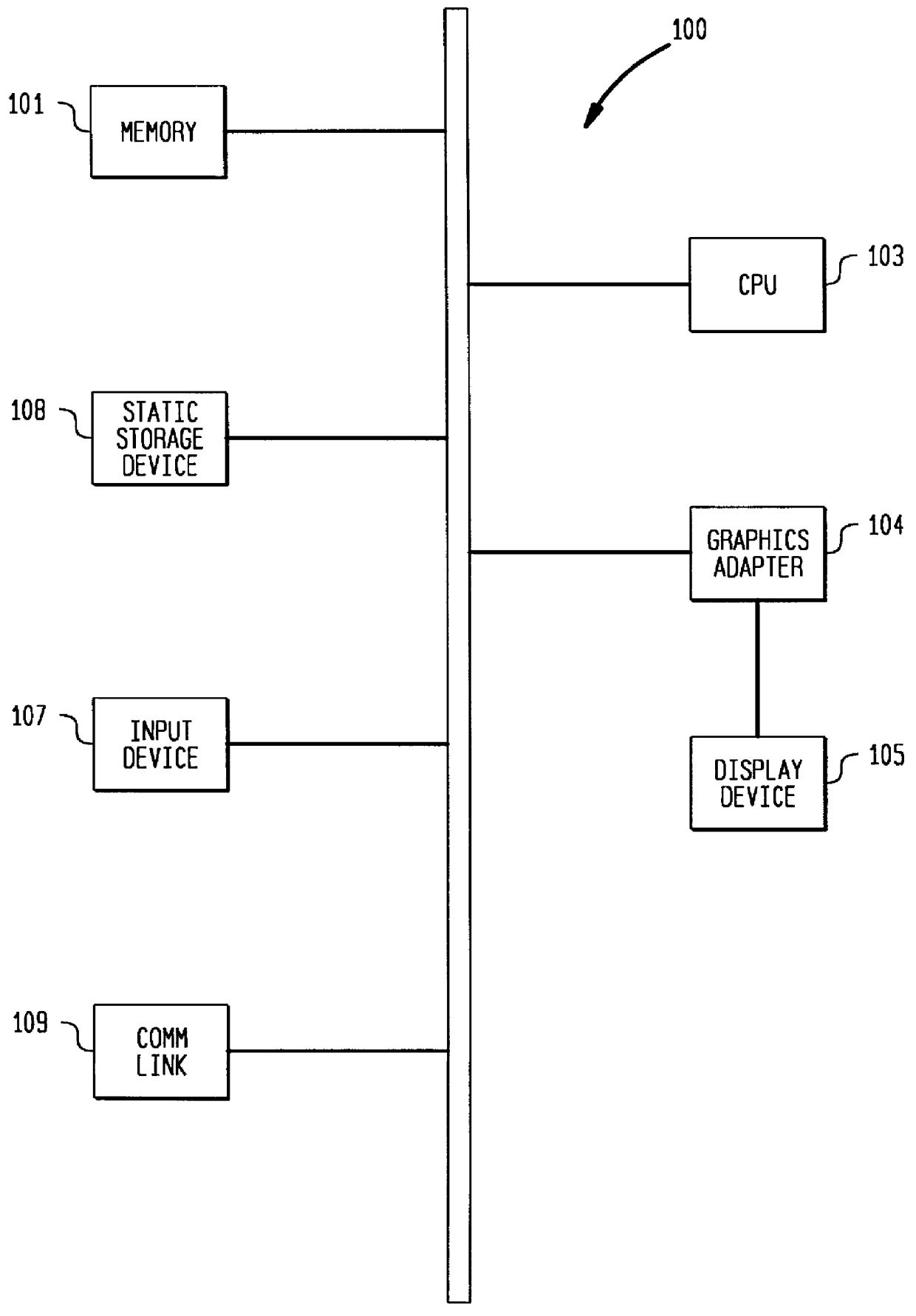

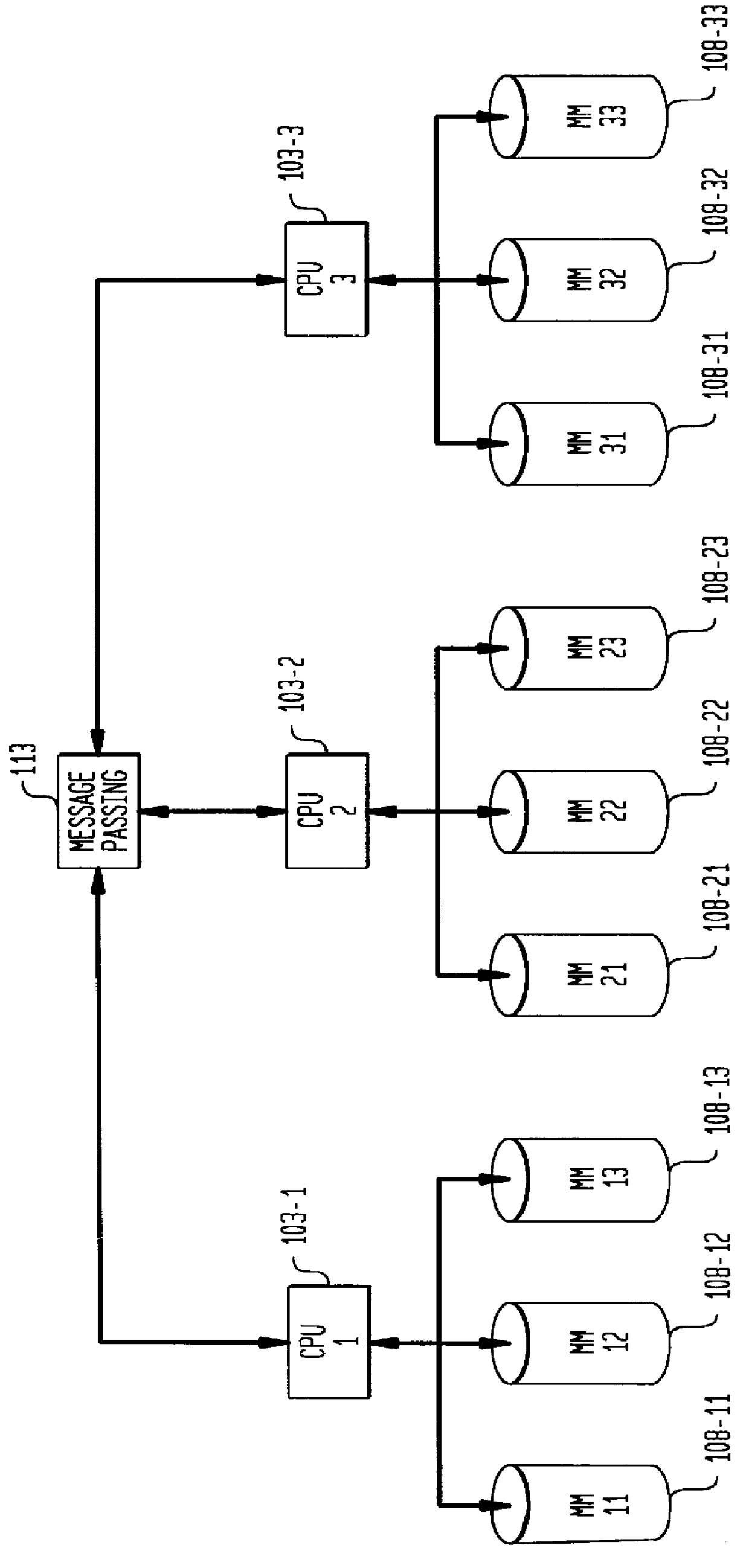

Camera calibration device, camera calibration method, and vehicle having the calibration device

Inactive | US20080181488A1 | Reduce image degradation | Easy maintenance | Character and pattern recognition | Optical viewing | Transformation parameter | Field of view

Cameras are installed at the front, right, left, and back side of a vehicle, and two feature points are located at each of the common field of view areas between the front-right cameras, front-left cameras, back-right cameras, and back-left cameras. A camera calibration device includes a parameter extraction unit for extracting transformation parameters for projecting each camera's captured image on the ground and synthesizing them. After transformation parameters for the left and right cameras are obtained by a perspective projection transformation, transformation parameters for the front and back cameras are obtained by a planar projective transformation so as to accommodate transformation parameters for the front and back cameras with the transformation parameters for the left and right cameras.

Owner:SANYO ELECTRIC CO LTD
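As background for the planar projective transformation mentioned in this abstract: such a transformation (a homography) has eight free parameters, so four point correspondences in general position determine it. The Python sketch below solves for those eight parameters with plain Gaussian elimination. It is a generic illustration with made-up coordinates, not the calibration procedure of the patent, and the function names are illustrative.

```python
def solve(matrix, rhs):
    """Solve a square linear system by Gaussian elimination with pivoting."""
    n = len(rhs)
    m = [list(map(float, row)) + [float(b)] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def homography(src, dst):
    """Estimate the 3x3 homography mapping four src points onto dst points."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # From u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), and likewise for v.
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rhs.append(u)
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.append(v)
    h = solve(rows, rhs)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def project(h, point):
    """Apply a homography to a 2D point."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    u = (h[0][0] * x + h[0][1] * y + h[0][2]) / w
    v = (h[1][0] * x + h[1][1] * y + h[1][2]) / w
    return (u, v)


# Made-up correspondences: image points and their ground-plane positions.
image_points = [(0, 0), (1, 0), (1, 1), (0, 1)]
ground_points = [(10, 20), (30, 22), (28, 45), (8, 40)]
H = homography(image_points, ground_points)
```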

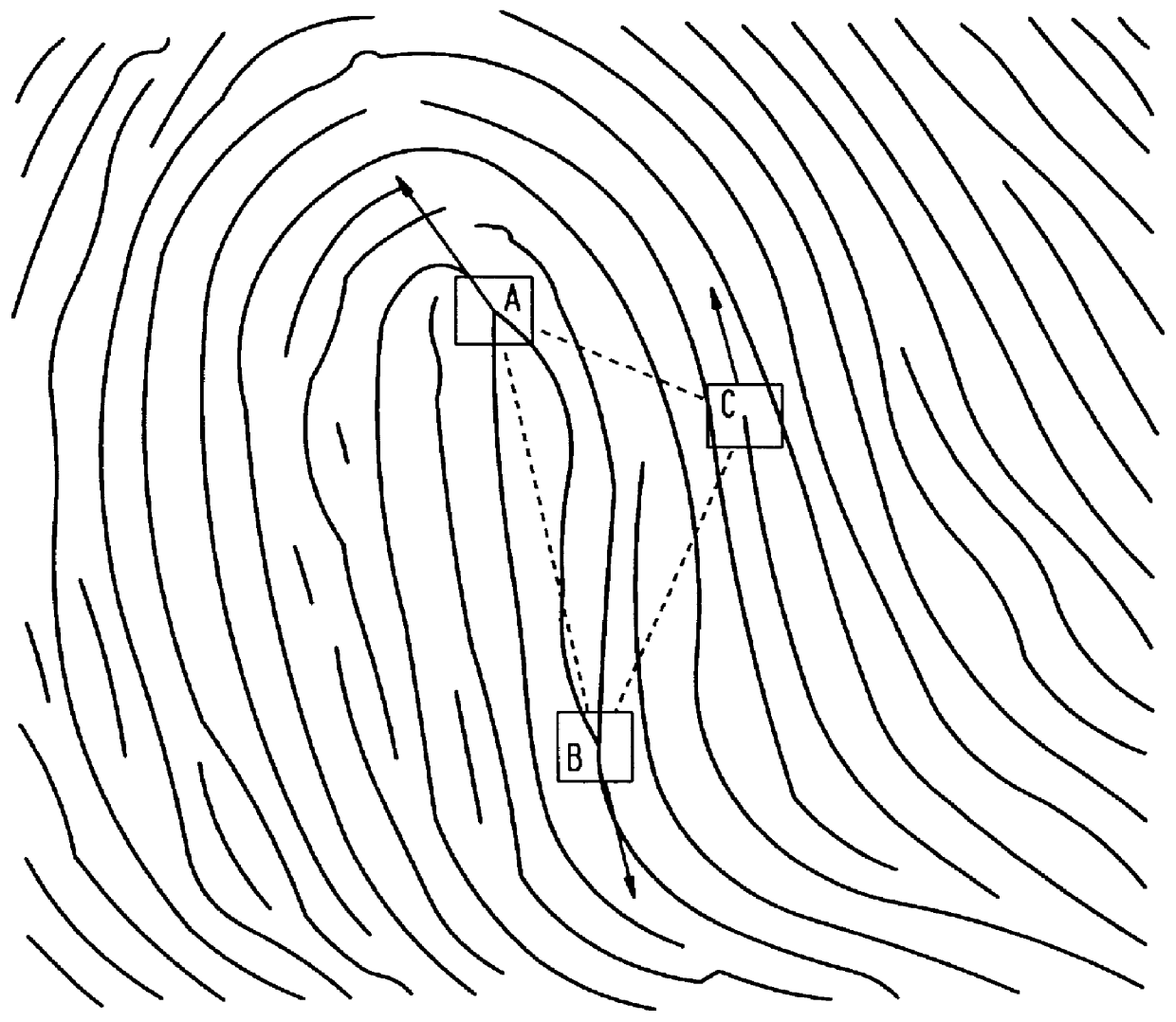

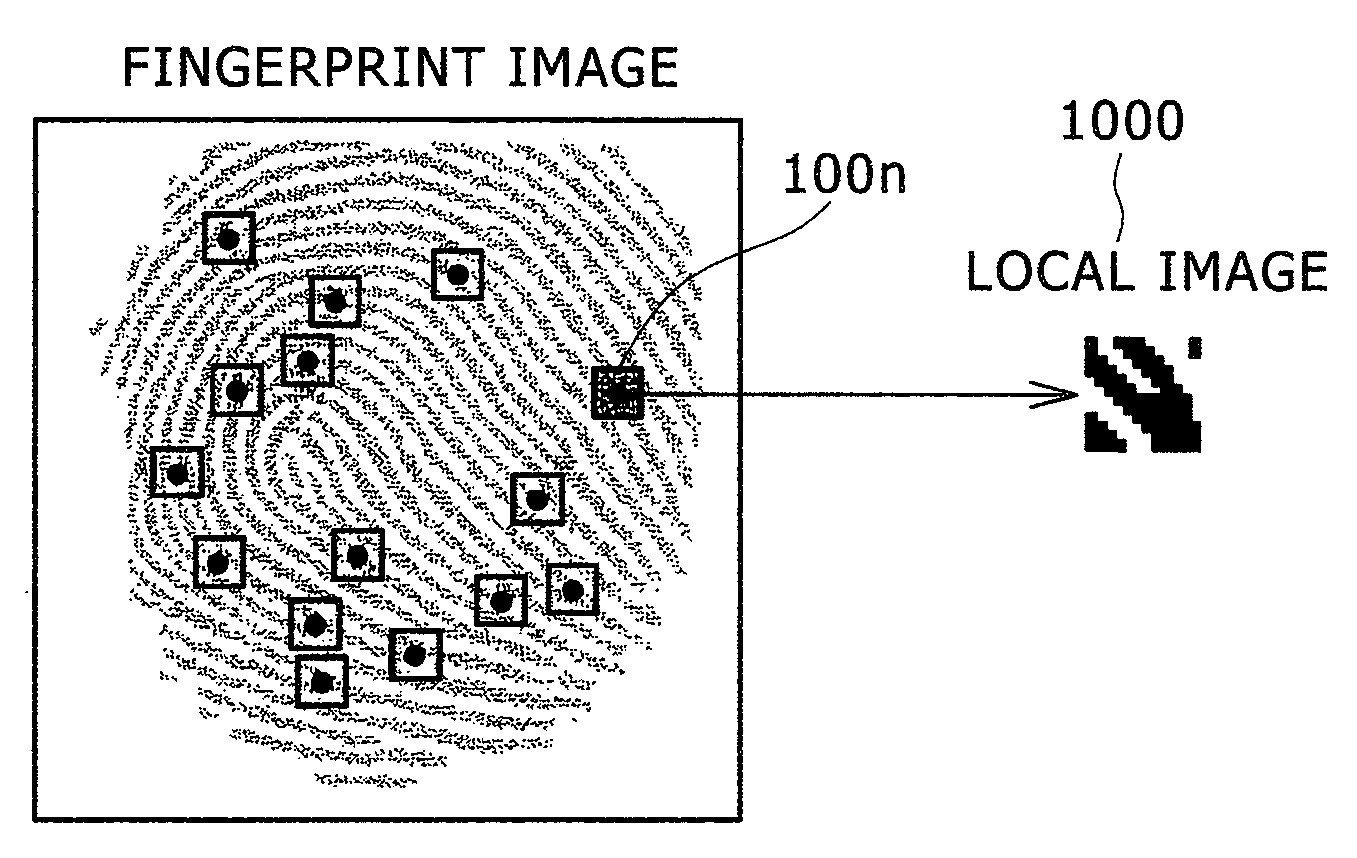

Method and apparatus for fingerprint matching using transformation parameter clustering based on local feature correspondences

The method and apparatus of the present invention provide for automatic recognition of fingerprint images. In an acquisition mode, subsets of the feature points for a given fingerprint image are generated in a deterministic fashion. One or more of the subsets of feature points for the given fingerprint image is selected. For each selected subset, a key is generated that characterizes the fingerprint in the vicinity of the selected subset. A multi-map entry corresponding to the selected subset of feature points is stored and labeled with the corresponding key. In the recognition mode, a query fingerprint image is supplied to the system. The processing of the acquisition mode is repeated in order to generate a plurality of keys associated with a plurality of subsets of feature points of the query fingerprint image. For each key generated in the recognition mode, all entries in the multi-map that are associated with this key are retrieved. For each item retrieved, a hypothesized match between the query fingerprint image and the reference fingerprint image is constructed. Hypothesized matches are accumulated in a vote table. This list of hypotheses and scores stored in the vote table are preferably used to determine whether a match to the query fingerprint image is stored by the system.

Owner:IBM CORP

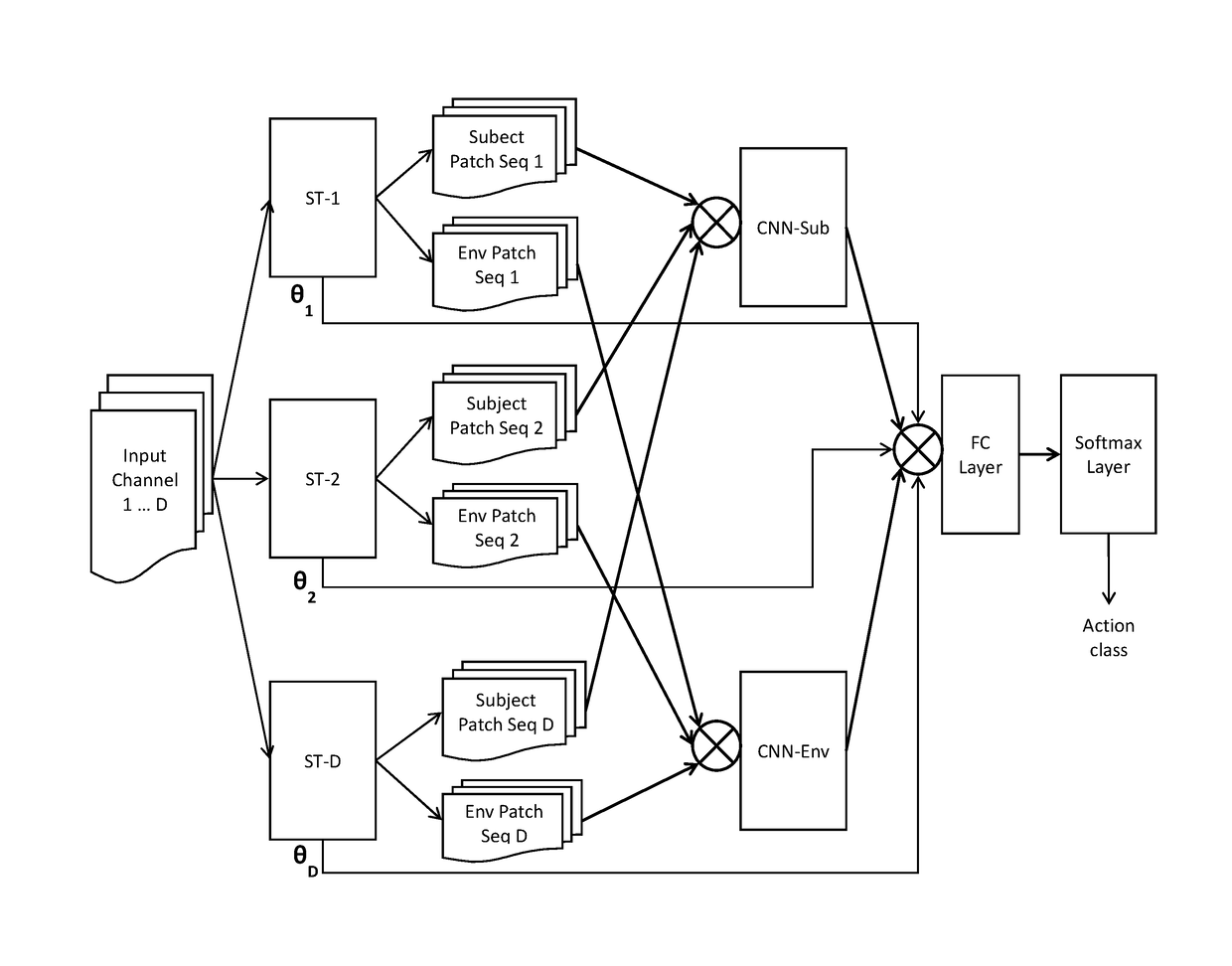

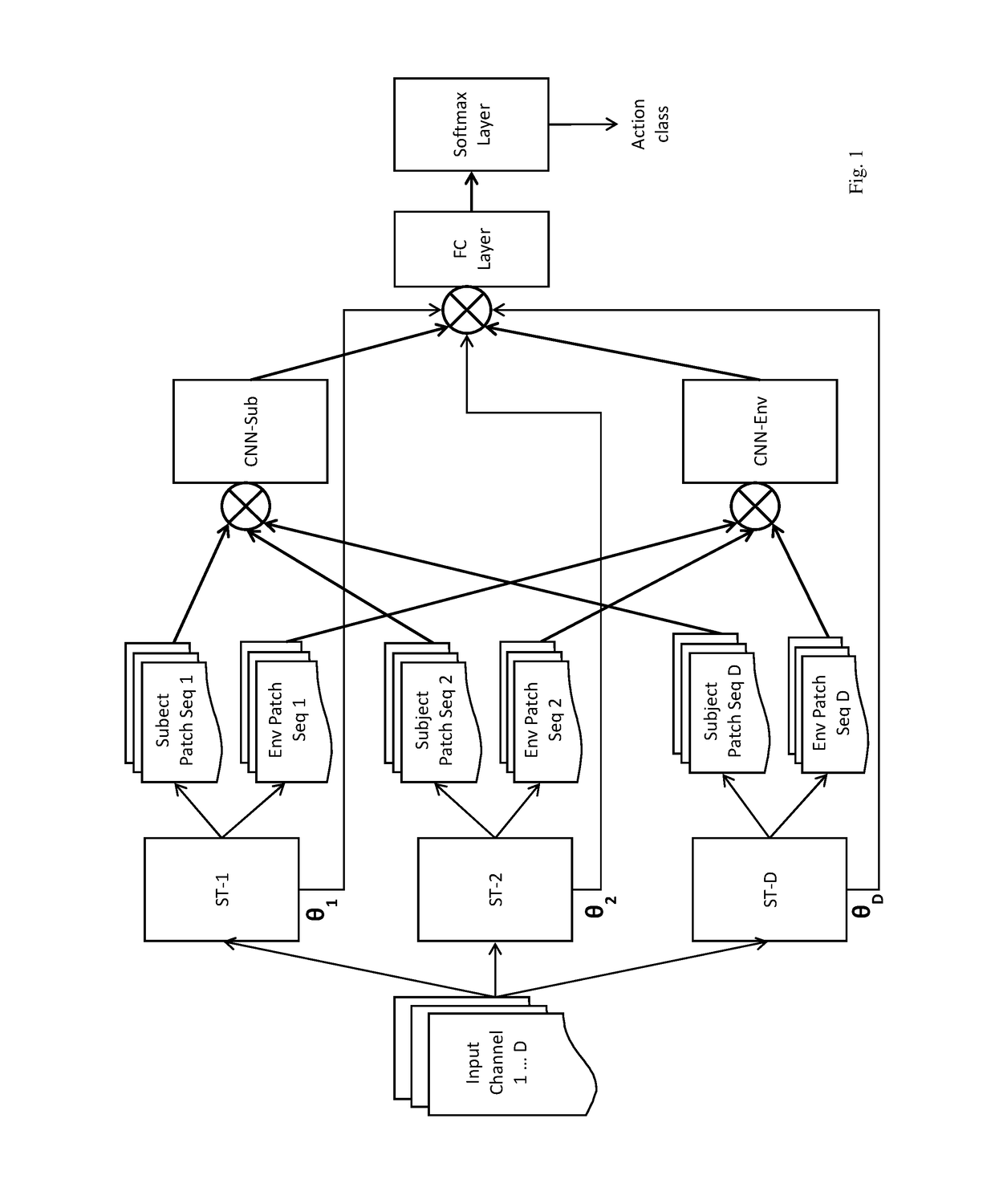

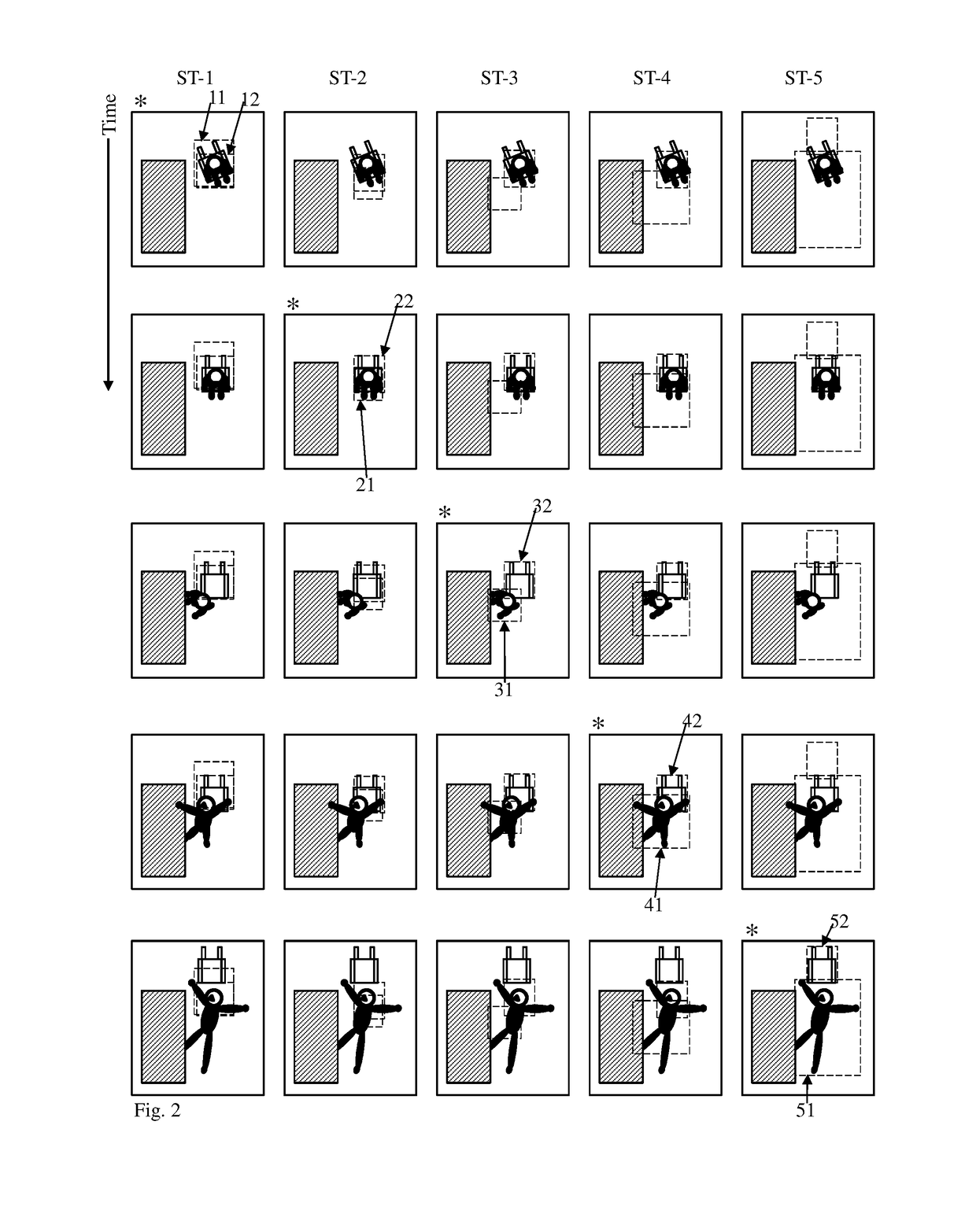

Self-attention deep neural network for action recognition in surveillance videos

An artificial neural network for analyzing input data, the input data being a 3D tensor having D channels, such as D frames of a video snippet, to recognize an action therein, including: D spatial transformer modules, each generating first and second spatial transformations and corresponding first and second attention windows using only one of the D channels, and transforming first and second regions of each of the D channels corresponding to the first and second attention windows to generate first and second patch sequences; first and second CNNs, respectively processing a concatenation of the D first patch sequences and a concatenation of the D second patch sequences; and a classification network receiving a concatenation of the outputs of the first and second CNNs and the D sets of transformation parameters of the first transformation outputted by the D spatial transformer modules, to generate a predicted action class.

Owner:KONICA MINOLTA LAB U S A INC

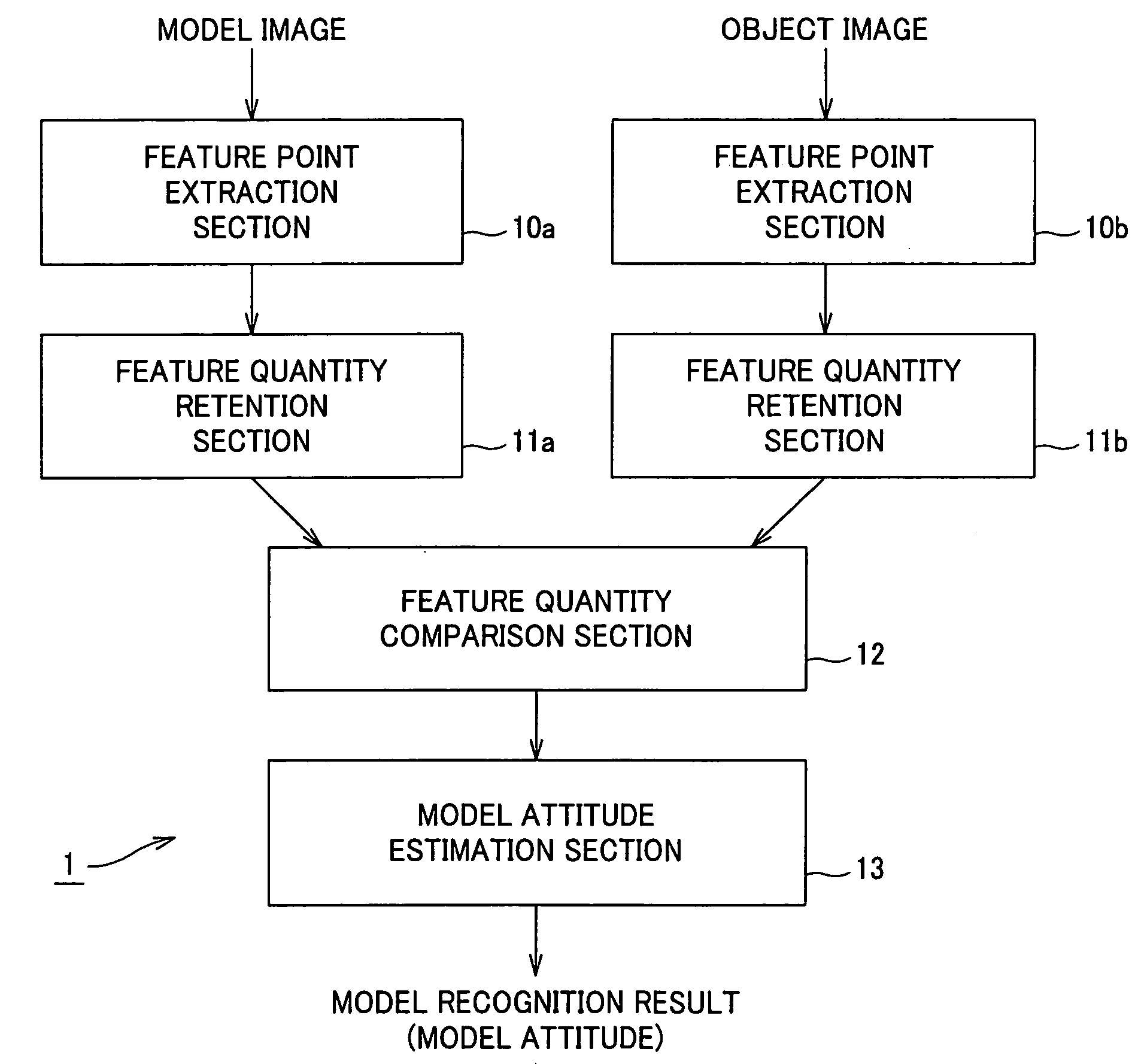

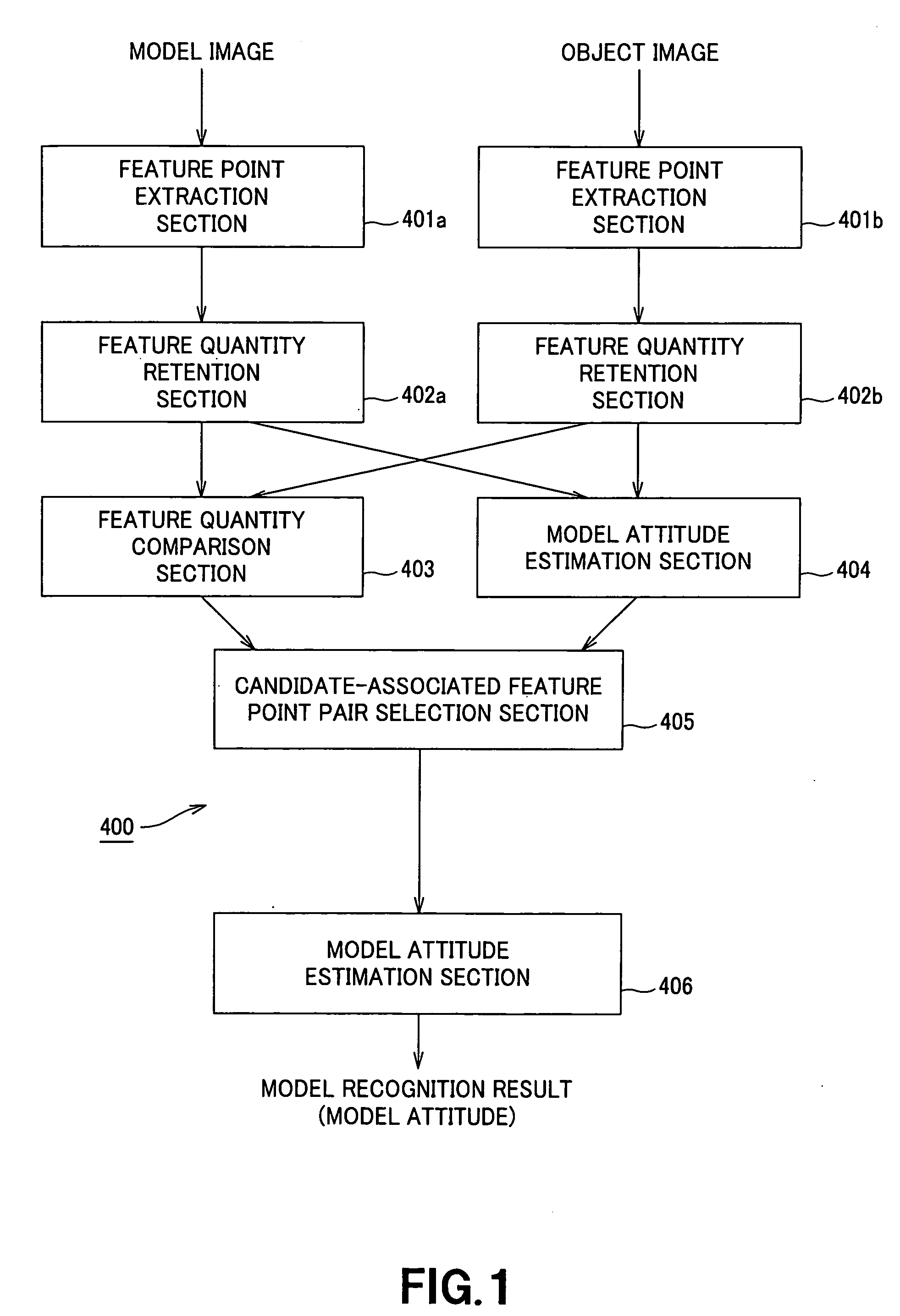

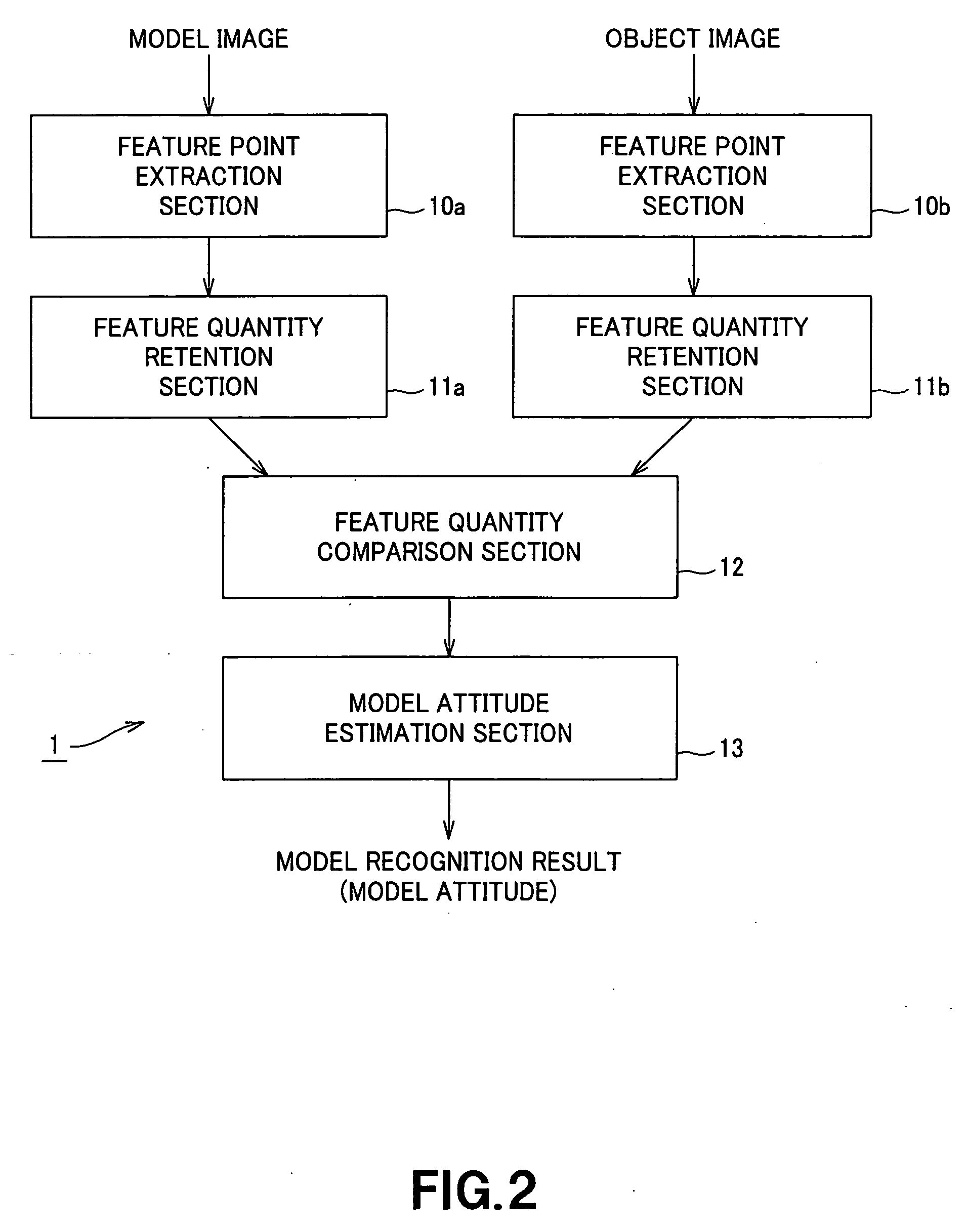

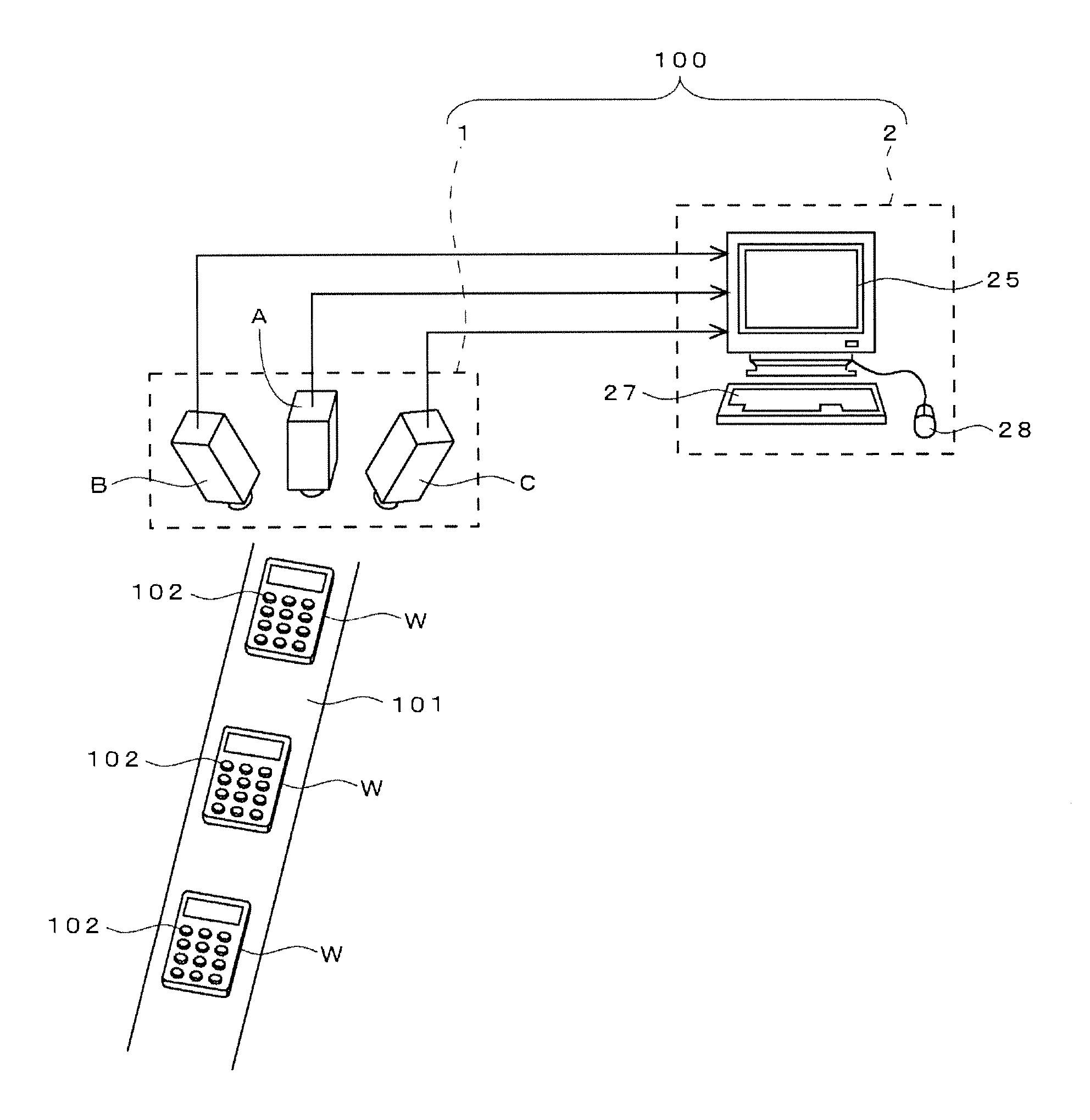

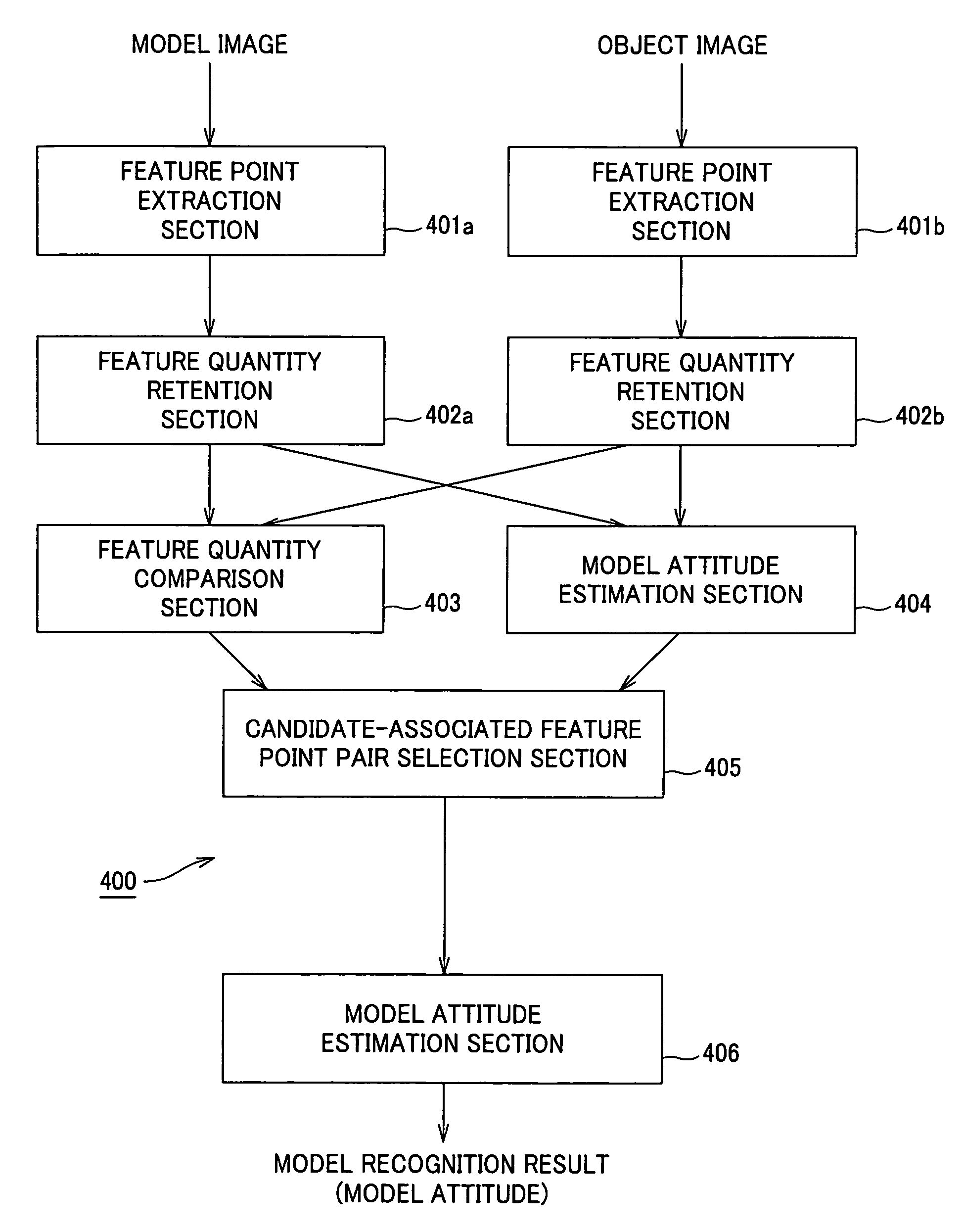

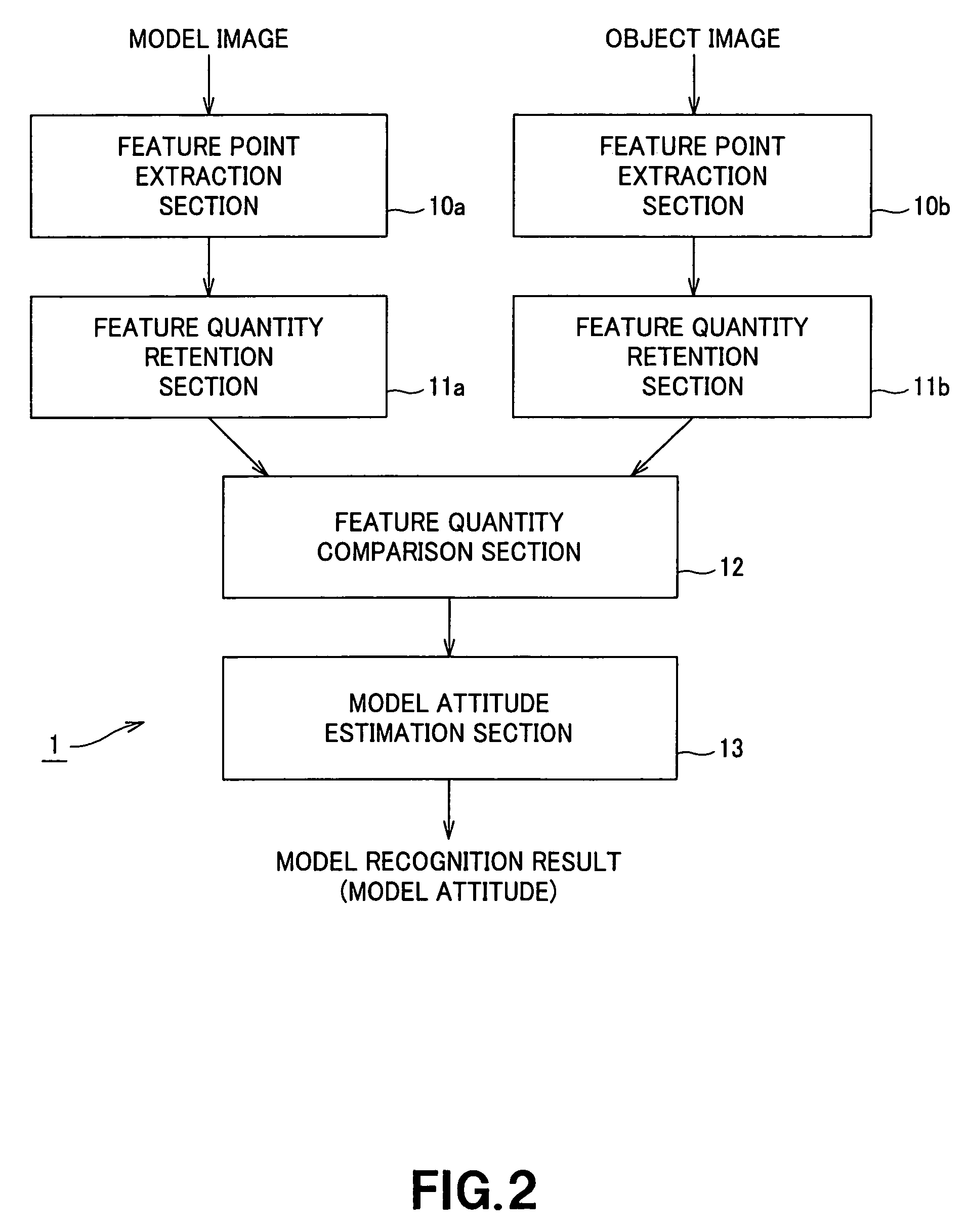

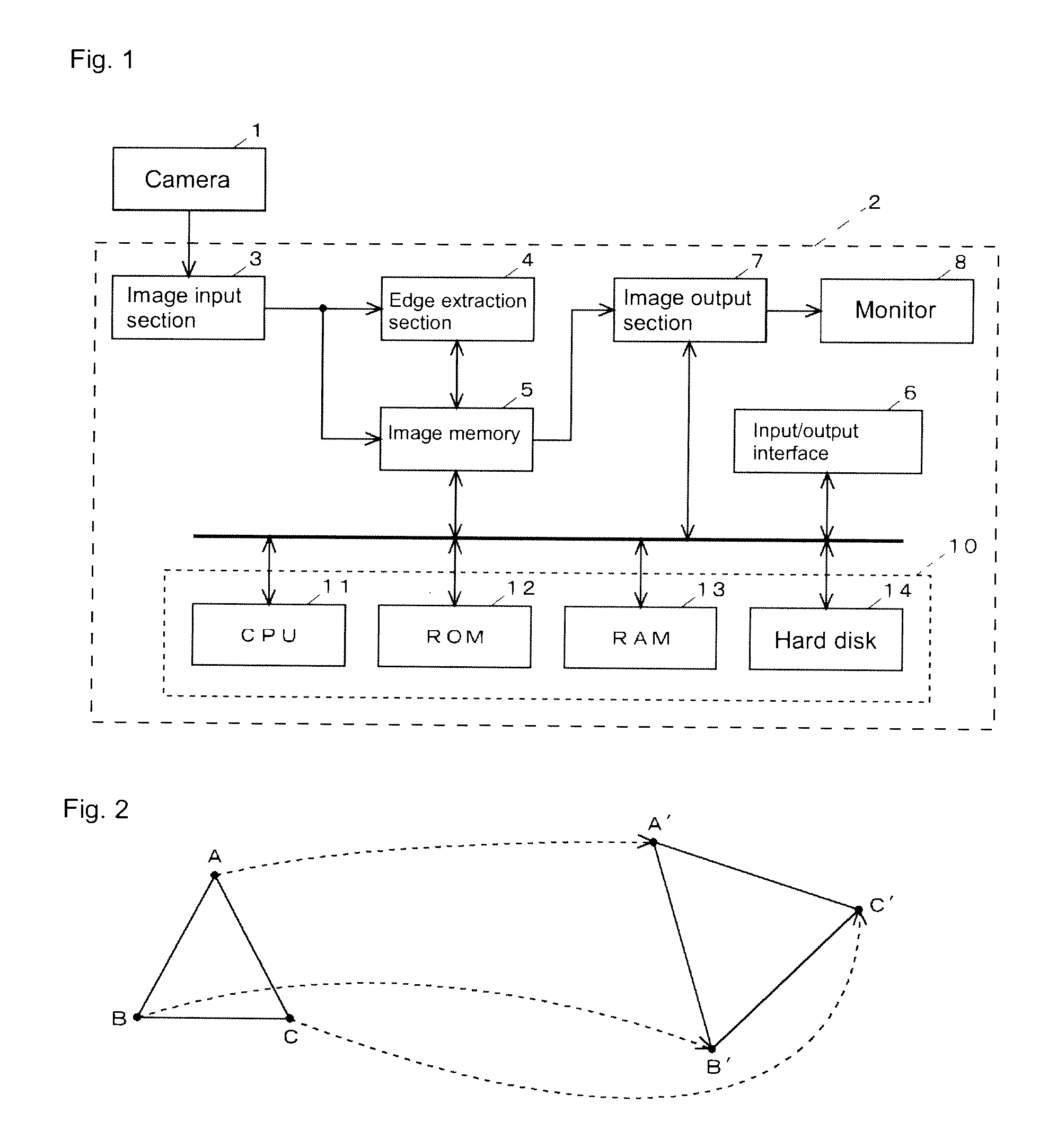

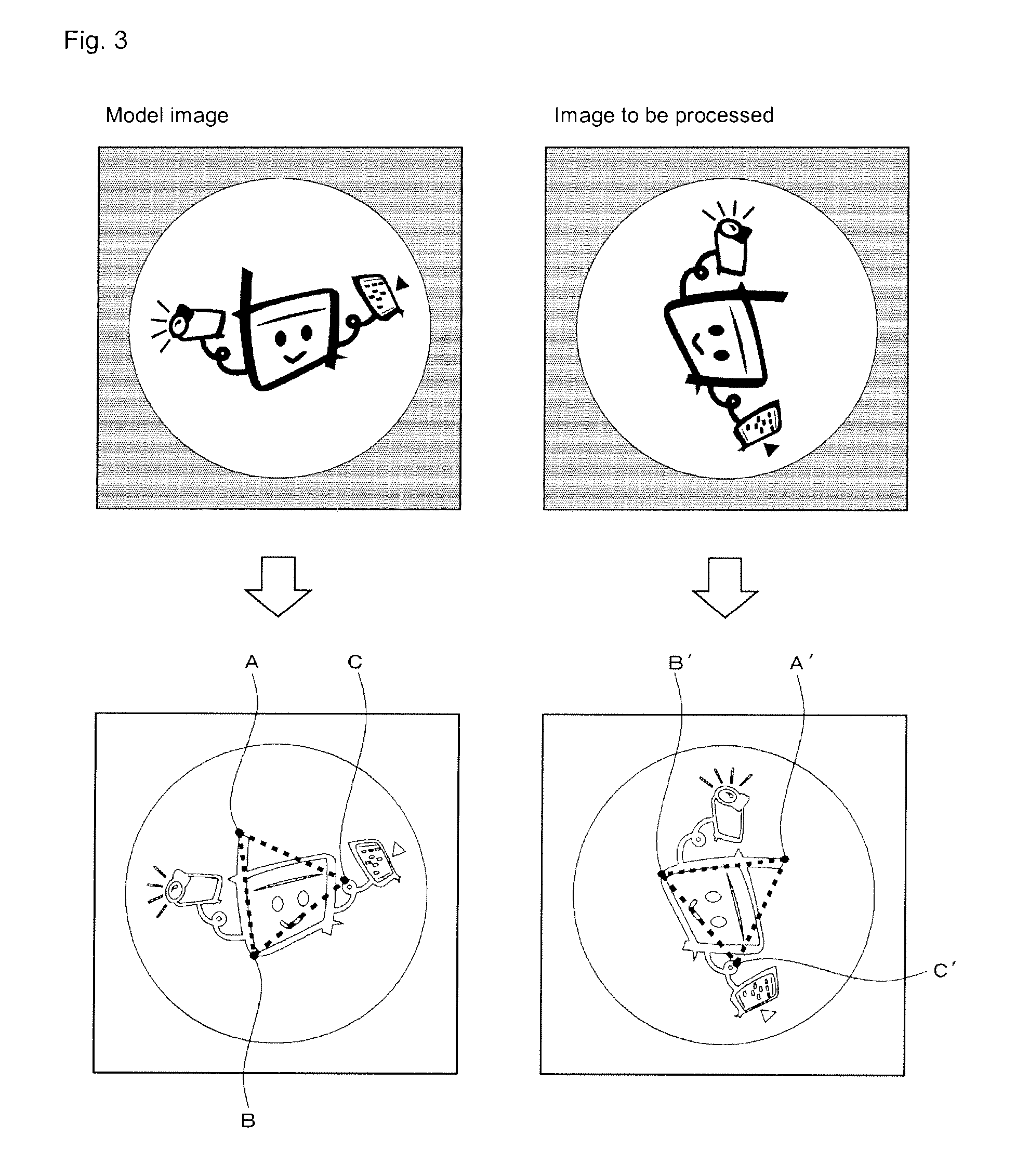

Image recognition device and method, and robot device

Inactive | US20050213818A1 | Image analysis | Character and pattern recognition | Transformation parameter | Relevant feature

In an image recognition apparatus (1), feature point extraction sections (10a) and (10b) extract feature points from a model image and an object image. Feature quantity retention sections (11a) and (11b) extract a feature quantity for each of the feature points and retain them along with positional information of the feature points. A feature quantity comparison section (12) compares the feature quantities with each other to calculate the similarity or the dissimilarity and generates a candidate-associated feature point pair having a high possibility of correspondence. A model attitude estimation section (13) repeats an operation of projecting an affine transformation parameter determined by three pairs randomly selected from the candidate-associated feature point pair group onto a parameter space. The model attitude estimation section (13) assumes each member in a cluster having the largest number of members formed in the parameter space to be an inlier. The model attitude estimation section (13) finds the affine transformation parameter according to the least squares estimation using the inlier and outputs a model attitude determined by this affine transformation parameter.

Owner:SONY CORP
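The final step of this abstract, finding the affine transformation parameter by least squares from the inlier pairs, can be sketched in Python as below. The random three-pair sampling and the clustering in parameter space are not shown. The sketch assumes the affine model u = a·x + b·y + c, v = d·x + e·y + f and solves the normal equations with Cramer's rule; the names and sample data are illustrative.

```python
def det3(m):
    """Determinant of a 3x3 matrix."""
    return (
        m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
        - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
        + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0])
    )


def solve3(m, rhs):
    """Solve a 3x3 linear system with Cramer's rule."""
    d = det3(m)
    solution = []
    for k in range(3):
        replaced = [row[:] for row in m]
        for r in range(3):
            replaced[r][k] = rhs[r]
        solution.append(det3(replaced) / d)
    return solution


def fit_affine(pairs):
    """Least-squares affine fit from ((x, y), (u, v)) inlier pairs.

    Returns ((a, b, c), (d, e, f)) for u = a*x + b*y + c, v = d*x + e*y + f.
    """
    normal = [[0.0] * 3 for _ in range(3)]
    rhs_u = [0.0] * 3
    rhs_v = [0.0] * 3
    for (x, y), (u, v) in pairs:
        p = (x, y, 1.0)
        for i in range(3):
            rhs_u[i] += p[i] * u
            rhs_v[i] += p[i] * v
            for j in range(3):
                normal[i][j] += p[i] * p[j]
    return tuple(solve3(normal, rhs_u)), tuple(solve3(normal, rhs_v))


# Illustrative inliers generated from a known affine transformation.
TRUE_U = (2.0, 0.5, 3.0)
TRUE_V = (-1.0, 1.5, -4.0)
model_points = [(0, 0), (1, 0), (0, 1), (2, 3), (4, 1)]
inliers = [
    (
        (x, y),
        (
            TRUE_U[0] * x + TRUE_U[1] * y + TRUE_U[2],
            TRUE_V[0] * x + TRUE_V[1] * y + TRUE_V[2],
        ),
    )
    for x, y in model_points
]
row_u, row_v = fit_affine(inliers)
```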

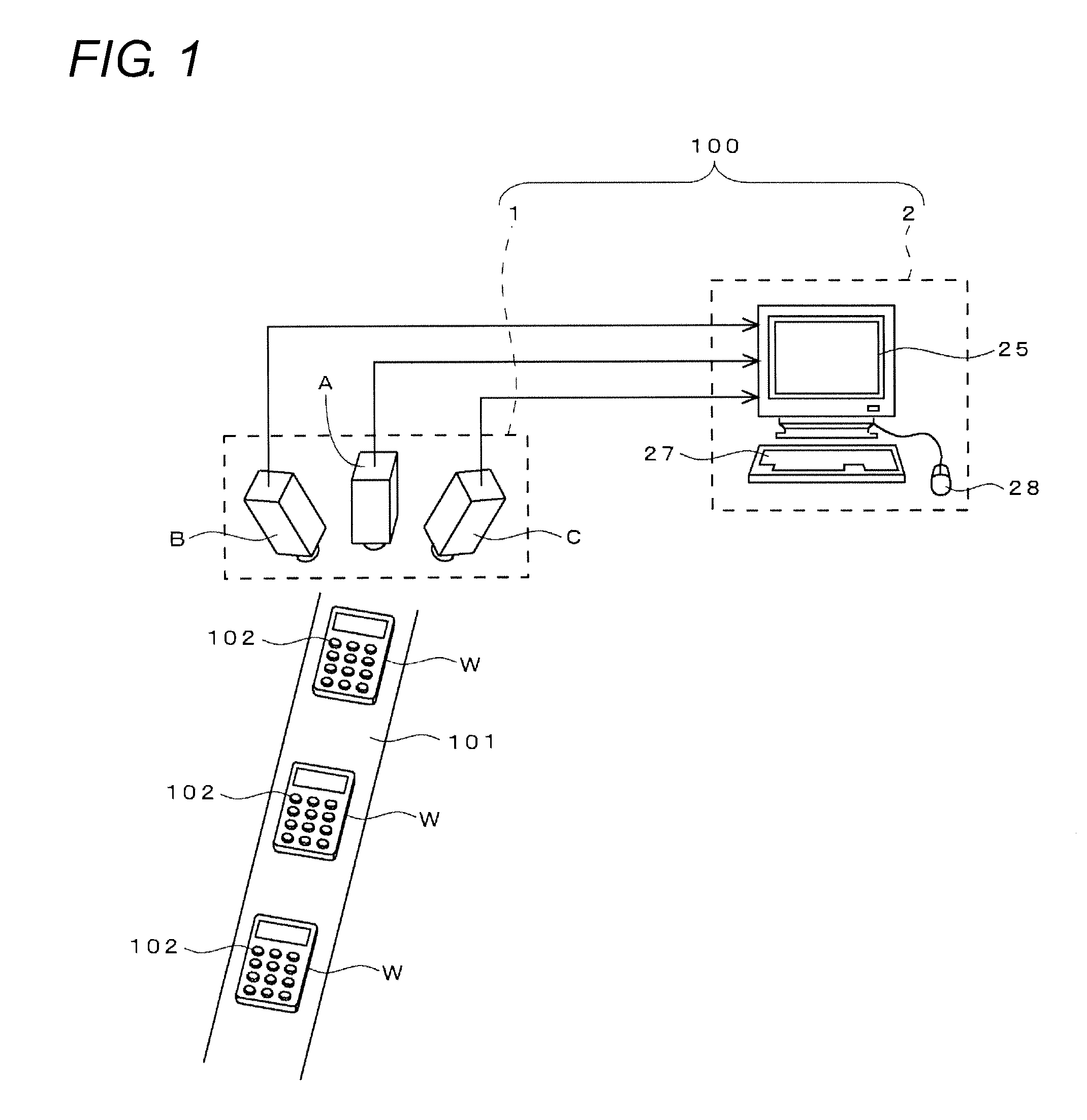

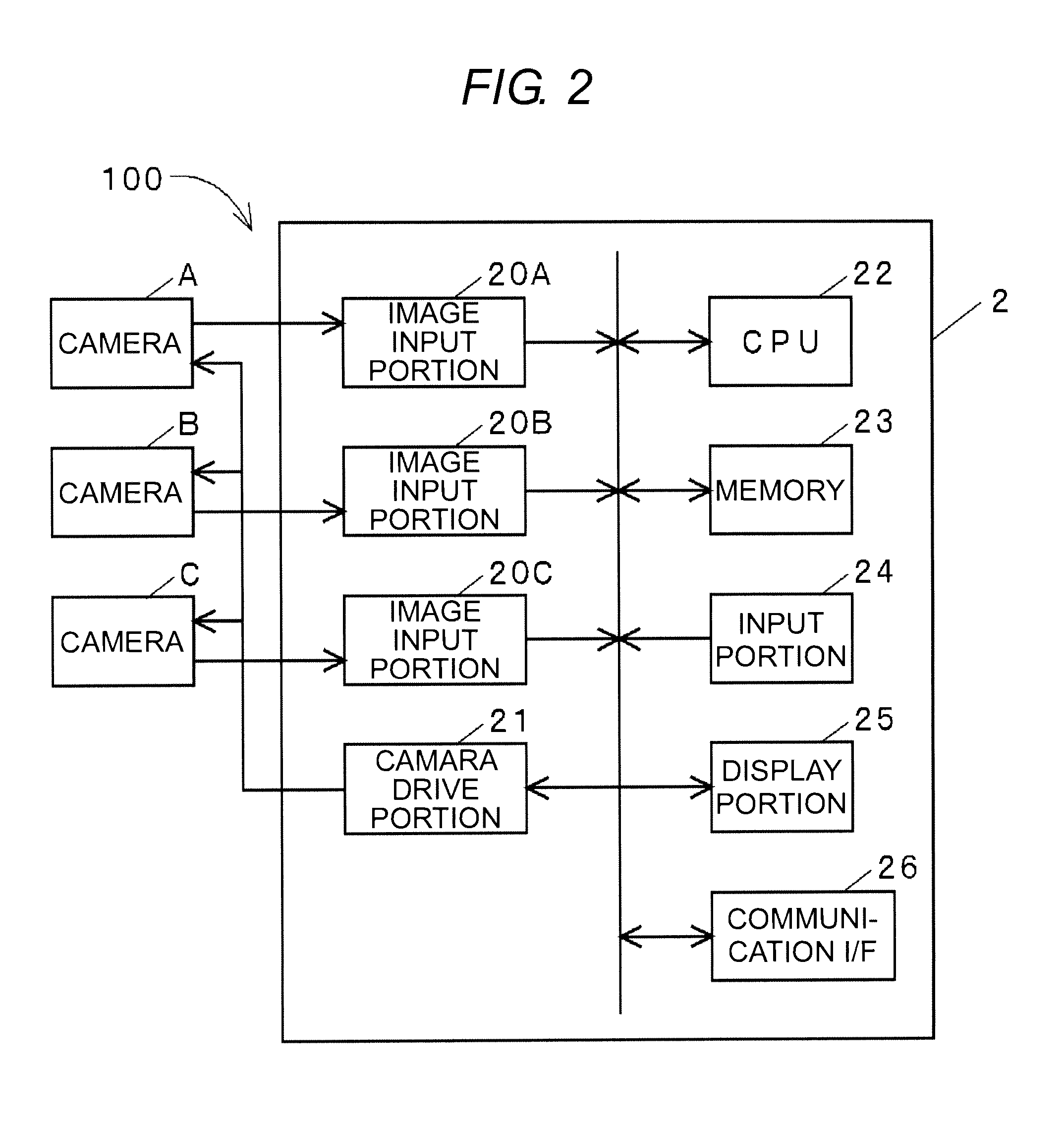

Three-dimensional vision sensor

Inactive | US20100232681A1 | Avoid performance | Cancel noise | Image enhancement | Image analysis | Transformation parameter | Three dimensional measurement

An object of the present invention is to enable performing height recognition processing by setting a height of an arbitrary plane to zero for convenience of the recognition processing. A parameter for three-dimensional measurement is calculated and registered through calibration and, thereafter, an image pickup with a stereo camera is performed on a plane desired to be recognized as having a height of zero in actual recognition processing. Further, three-dimensional measurement using the registered parameter is performed on characteristic patterns (marks m1, m2 and m3) included in this plane. Three or more three-dimensional coordinates are obtained through this measurement and, then, a calculation equation expressing a plane including these coordinates is derived. Further, based on a positional relationship between a plane defined as having a height of zero through the calibration and the plane expressed by the calculation equation, a transformation parameter (a homogeneous transformation matrix) for displacing points in the former plane into the latter plane is determined, and the registered parameter is changed using the transformation parameter.

Owner:OMRON CORP
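The geometric idea in this abstract (three measured marks define a plane, and a homogeneous transformation matrix carries the calibrated zero-height plane onto it) can be sketched in Python as follows. The choice of in-plane axes and of the first mark as origin are assumptions made for illustration, and the mark coordinates are made up; the patent does not specify these details in the abstract.

```python
import math


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def unit(a):
    norm = math.sqrt(dot(a, a))
    return tuple(x / norm for x in a)


def plane_transform(m1, m2, m3):
    """4x4 homogeneous matrix mapping the z = 0 plane onto the plane of the marks.

    Assumed convention: origin at m1, x axis along m1 -> m2, z axis along
    the plane normal.
    """
    ex = unit(sub(m2, m1))
    ez = unit(cross(sub(m2, m1), sub(m3, m1)))
    ey = cross(ez, ex)
    return [
        [ex[0], ey[0], ez[0], m1[0]],
        [ex[1], ey[1], ez[1], m1[1]],
        [ex[2], ey[2], ez[2], m1[2]],
        [0.0, 0.0, 0.0, 1.0],
    ]


def apply(t, point):
    """Apply the homogeneous matrix to a 3D point."""
    h = (point[0], point[1], point[2], 1.0)
    return tuple(sum(t[r][c] * h[c] for c in range(4)) for r in range(3))


def height_above(t, point):
    """Signed height of a point relative to the plane encoded in the matrix."""
    normal = (t[0][2], t[1][2], t[2][2])
    origin = (t[0][3], t[1][3], t[2][3])
    return dot(normal, sub(point, origin))


# Made-up 3D measurements of three marks on the plane to be treated as height zero.
m1, m2, m3 = (0.0, 0.0, 1.0), (1.0, 0.0, 1.0), (0.0, 1.0, 2.0)
T = plane_transform(m1, m2, m3)
on_plane = apply(T, (2.0, 3.0, 0.0))
raised = apply(T, (0.0, 0.0, 5.0))
```

Points with z = 0 in the calibrated frame land on the new plane, and `height_above` then reports heights relative to it.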

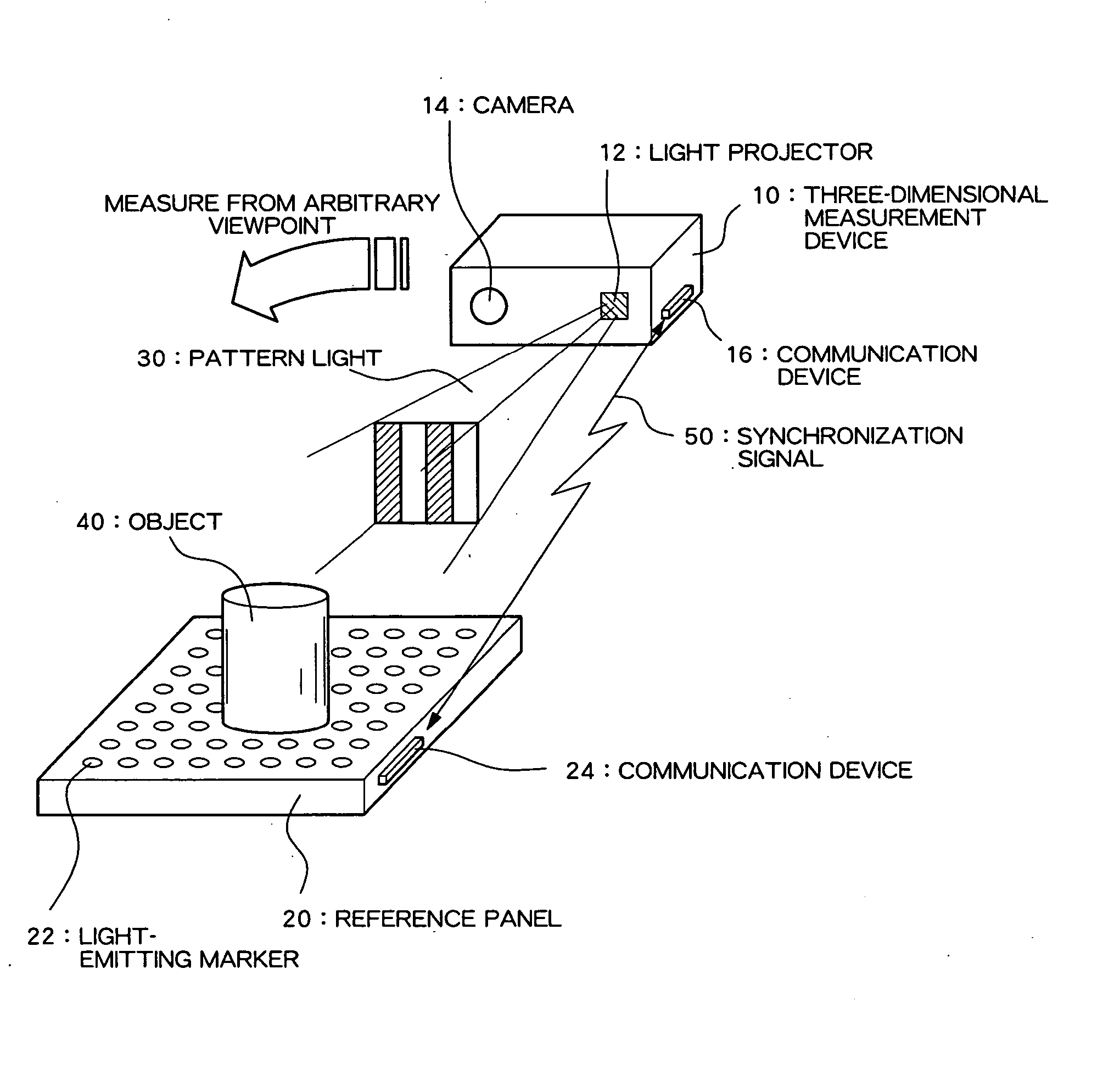

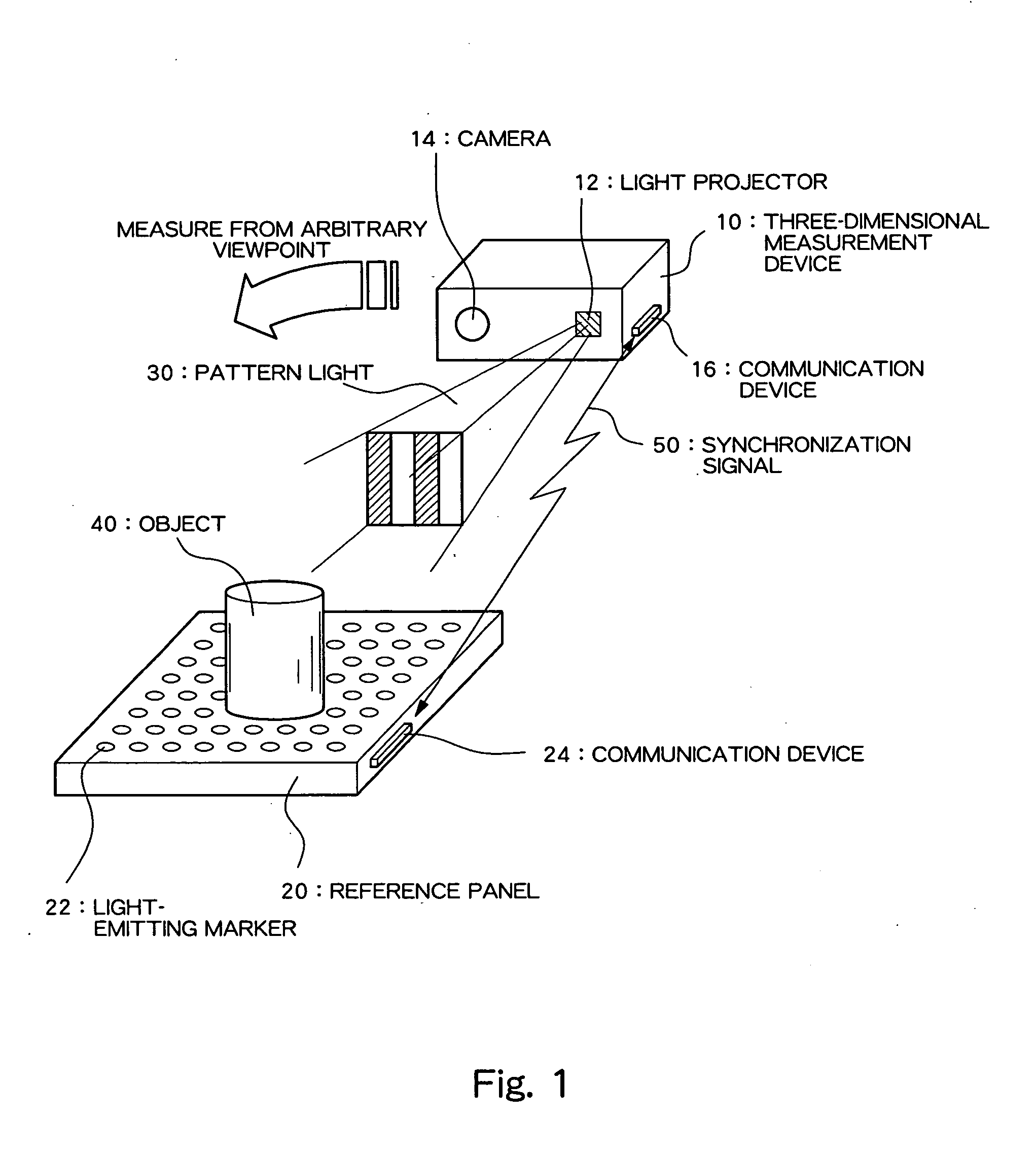

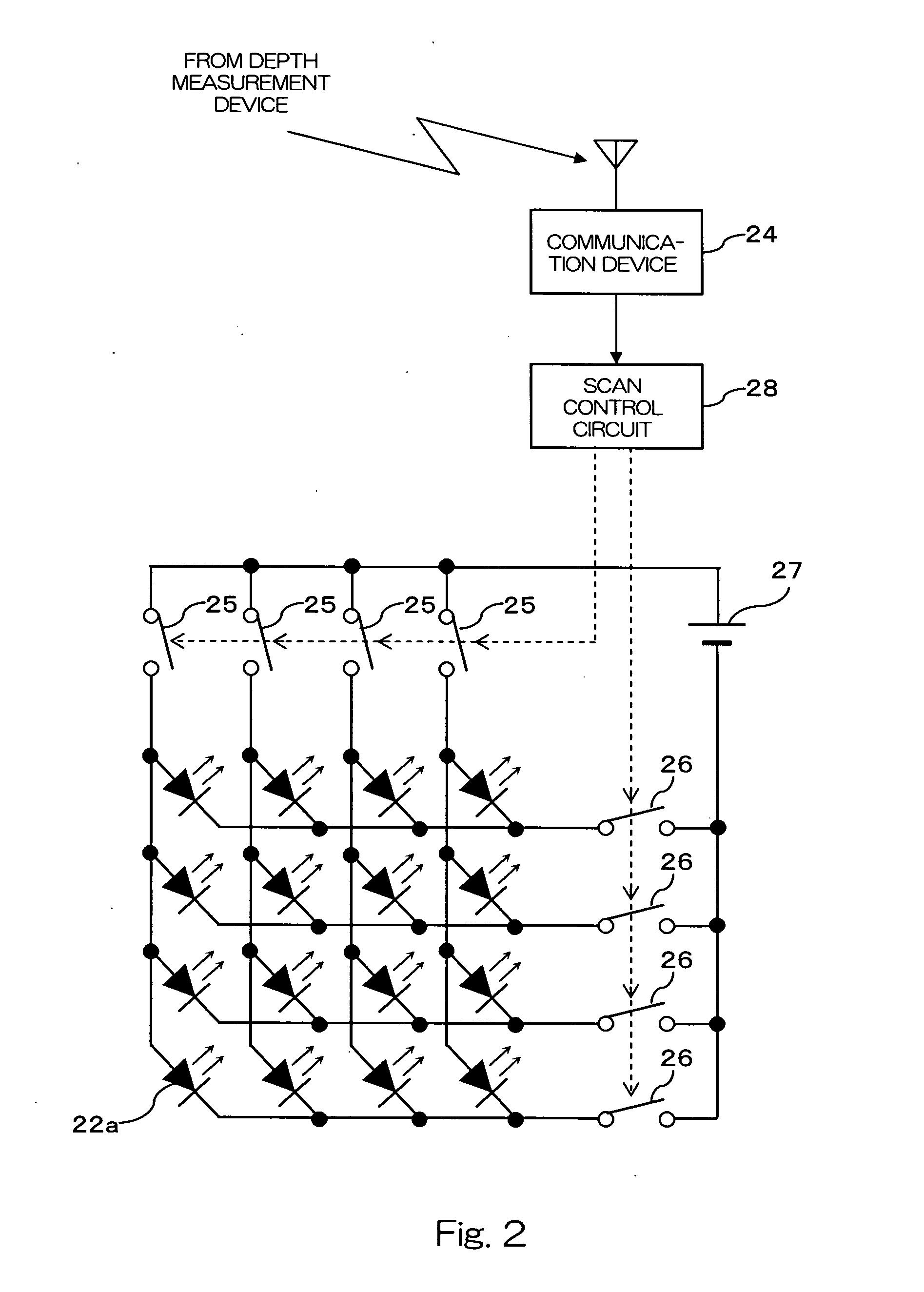

Camera Calibration System and Three-Dimensional Measuring System

Inactive | US20080075324A1 | Television system details | Image analysis | Measuring instrument | Transformation parameter

Camera calibration which is robust against noise factors such as variation in environmental lighting and obstructions is realized. Light-emitting markers, whose on / off states can be controlled, are arrayed in a matrix on a reference panel on which an object is placed. The light-emitting markers blink in accordance with respective switching patterns in synchronism with the frame of continuous imaging of a camera. A three-dimensional measuring instrument determines the time-varying bright-and-dark pattern of a blinking point from the image of each frame and identifies, on the basis of the pattern, to which light-emitting marker each point corresponds. By reference to the identification results, camera calibration is performed, and a coordinate transformation parameter is computed.

Owner:SPACEVISION
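The marker identification step in this abstract (matching a blinking point's bright-and-dark sequence to a marker's switching pattern) can be sketched in Python as below. The patterns, the brightness threshold, and the tolerance of one mismatched frame are hypothetical values, and the sketch assumes the observed frames are already aligned with the start of each pattern.

```python
# Hypothetical switching patterns: one on/off bit per camera frame.
PATTERNS = {
    "marker_a": (1, 0, 1, 1, 0, 0),
    "marker_b": (1, 1, 0, 0, 1, 0),
    "marker_c": (0, 1, 1, 0, 0, 1),
}


def to_bits(brightness, threshold=128):
    """Threshold per-frame brightness values into a bright/dark bit sequence."""
    return tuple(1 if value >= threshold else 0 for value in brightness)


def identify_marker(brightness, patterns=PATTERNS, max_errors=1):
    """Return the marker whose pattern best matches, or None if none is close."""
    observed = to_bits(brightness)

    def errors(pattern):
        # Number of frames where the observation disagrees with the pattern.
        return sum(o != p for o, p in zip(observed, pattern))

    best = min(patterns, key=lambda name: errors(patterns[name]))
    return best if errors(patterns[best]) <= max_errors else None


clean = identify_marker([200, 30, 220, 210, 40, 10])
noisy = identify_marker([200, 30, 220, 90, 40, 10])  # one frame misread
unknown = identify_marker([0, 0, 0, 0, 0, 0])
```

Tolerating a small number of mismatched frames is one simple way to cope with lighting variation or brief obstructions; the patterns then need to differ from each other in more frames than the tolerance allows.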

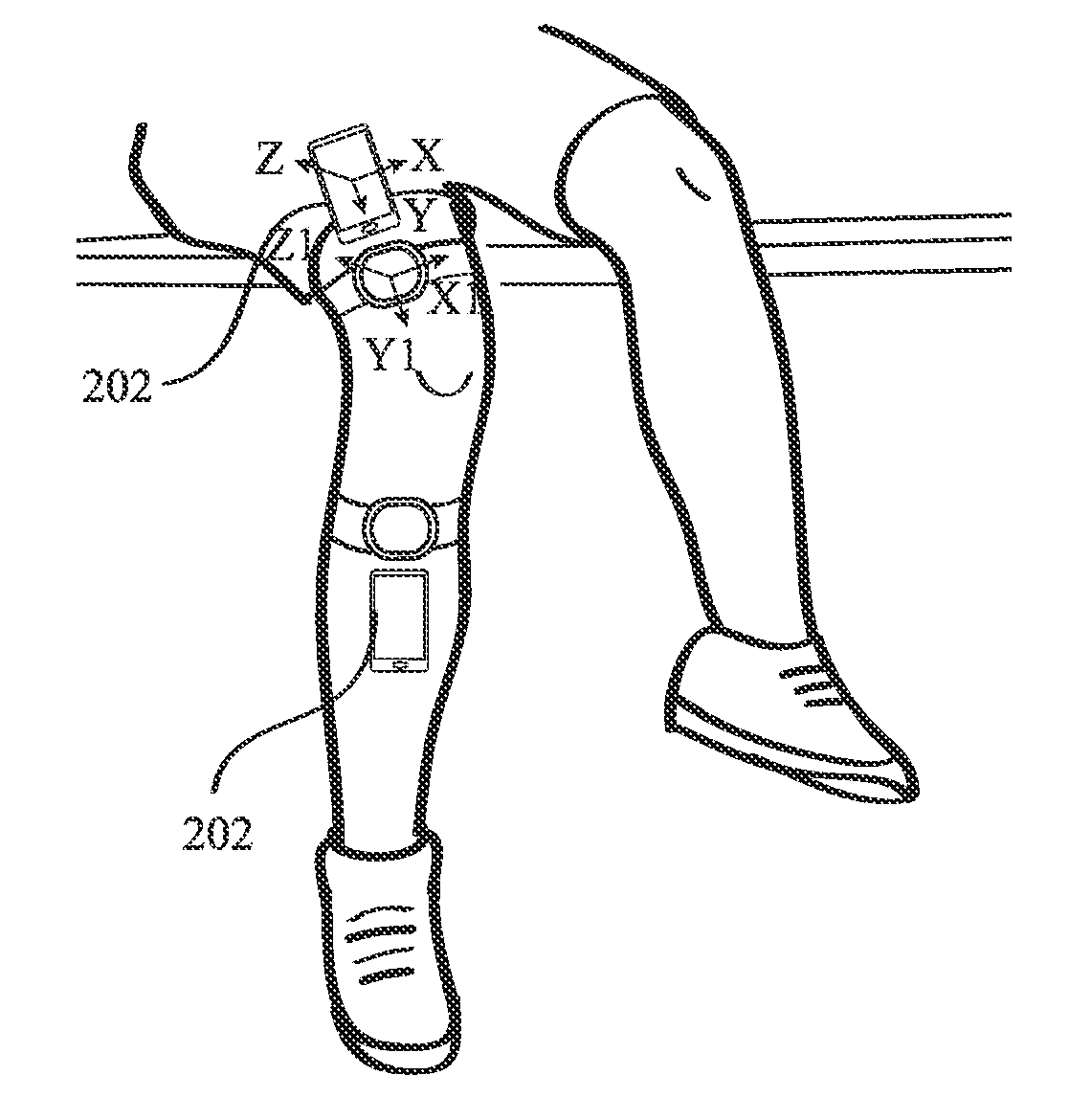

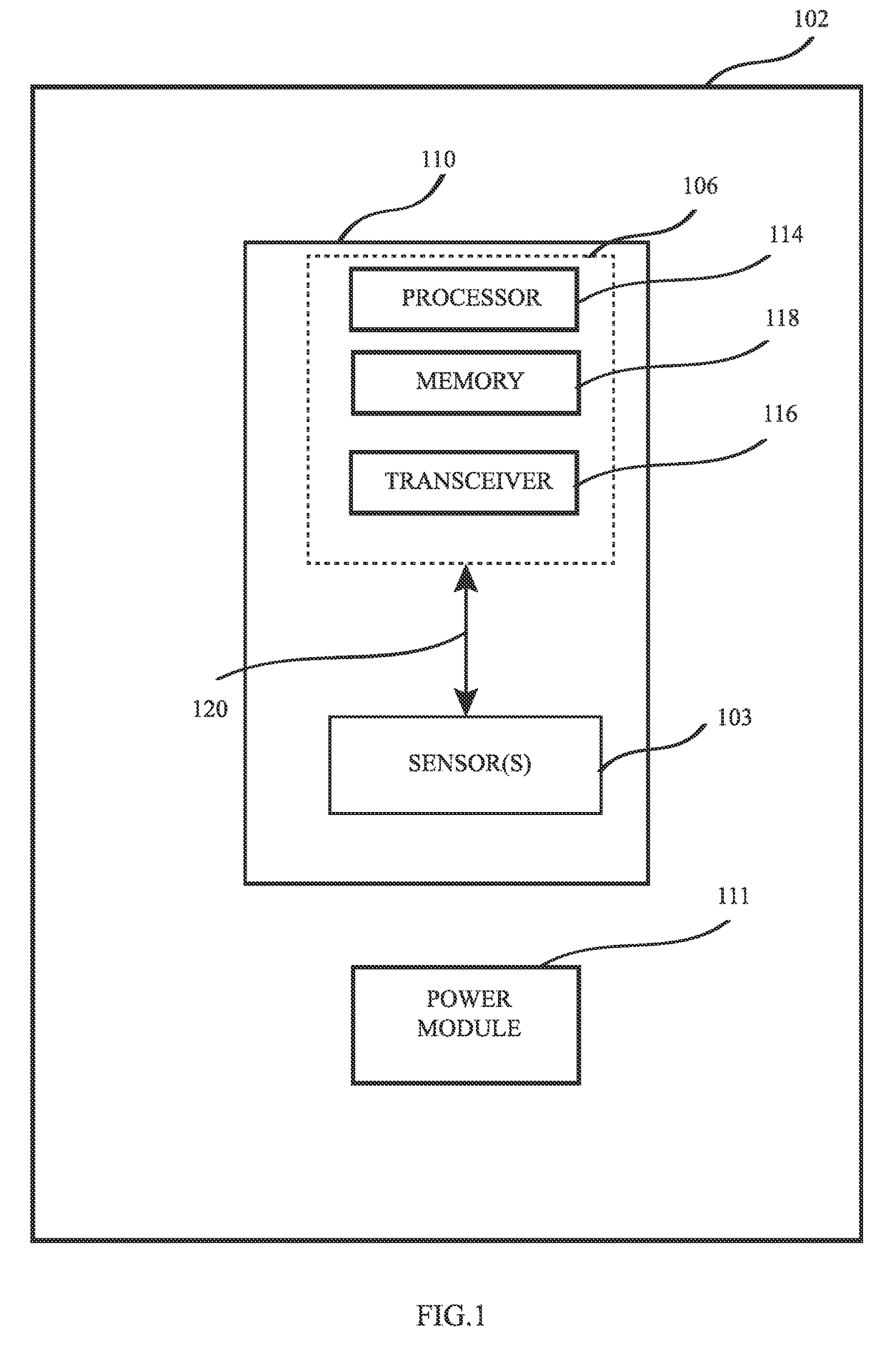

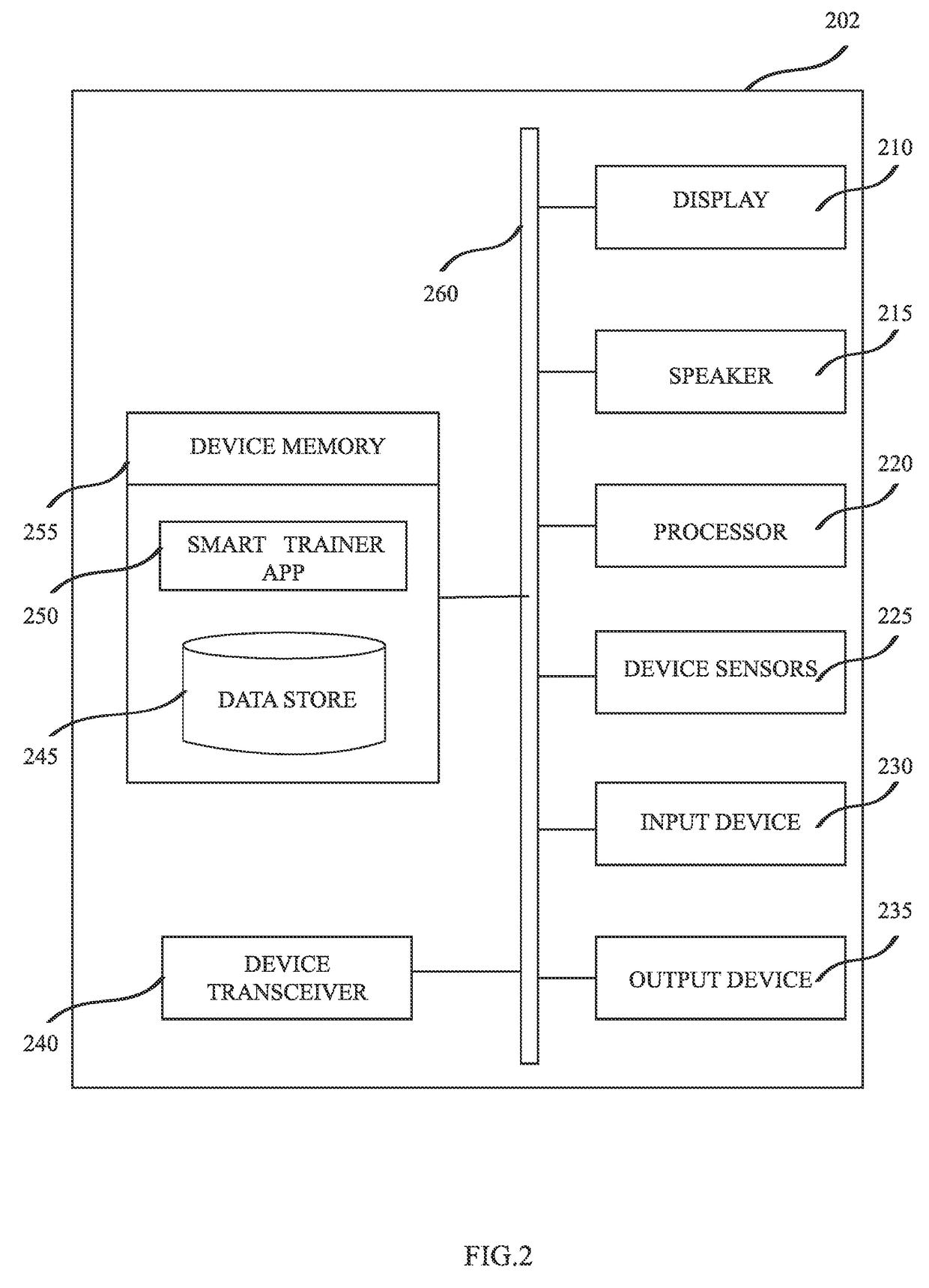

System and method for physical rehabilitation and motion training

Inactive | US20170136296A1 | Reduce concentration | Convenient registration | Physical therapies and activities | Telemetry/telecontrol selection arrangements | Anatomic Site | Transformation parameter

A system comprising wearable sensor modules and communicatively connected mobile computing devices for assisting a user in physical rehabilitation and exercising. The modules comprise sensors and the mobile computing device comprises device sensors. An application operably installed in memory of the mobile computing device provides a set of step-by-step instructions to a user for wearing the sensor modules in a particular way over an anatomical part depending on an exercise to be done by the user. The application further acquires a first set of data generated by the sensors and a second set of data generated by the device sensors. It then calculates a set of transformation parameters based on the first set of data relative to the second set of data to do a sensor-anatomy registration of sensors to the anatomical part while the mobile computing device is placed substantially aligned with the wearable sensors over the anatomical part.

Owner:BARRERA OSVALDO ANDRES +1

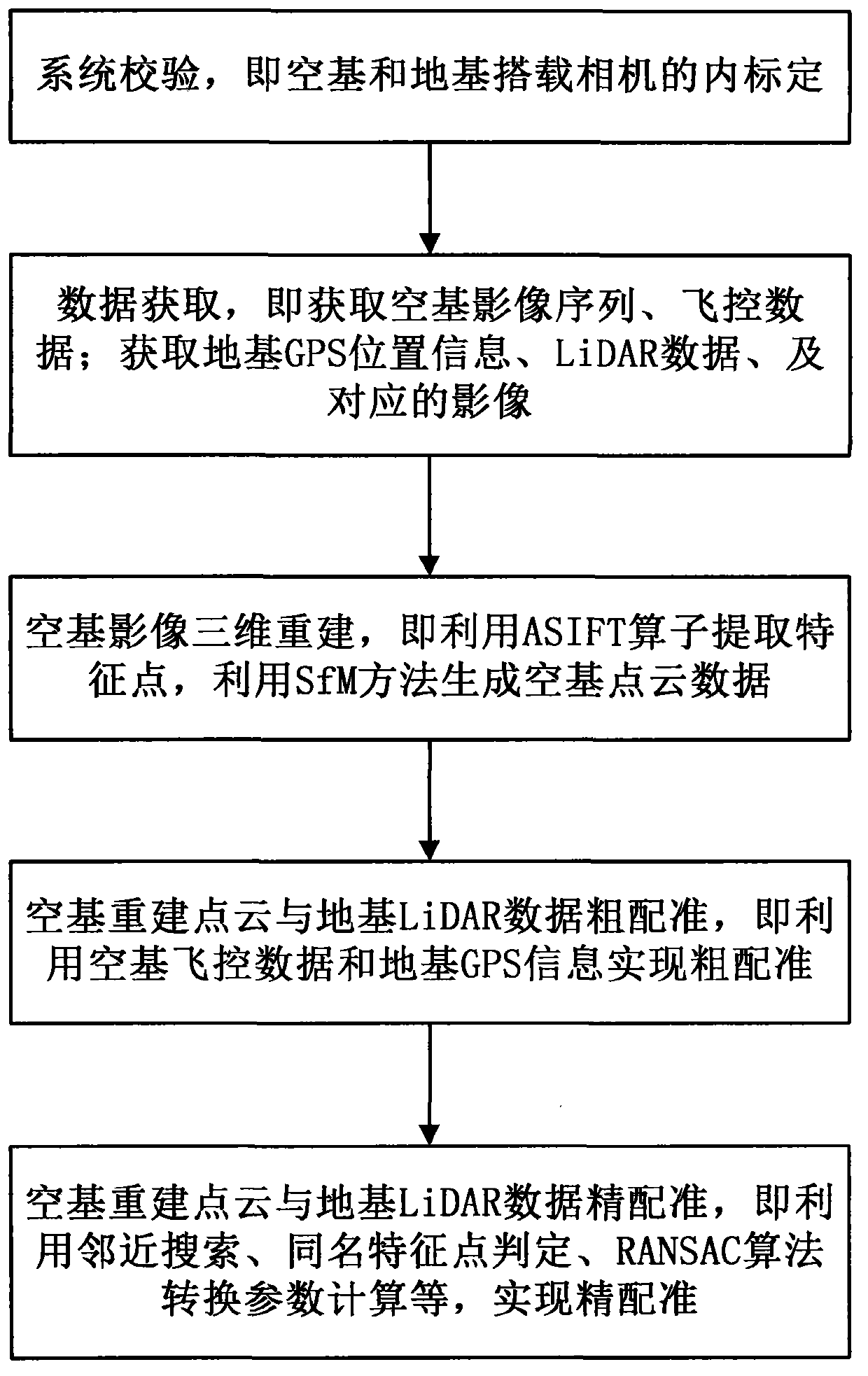

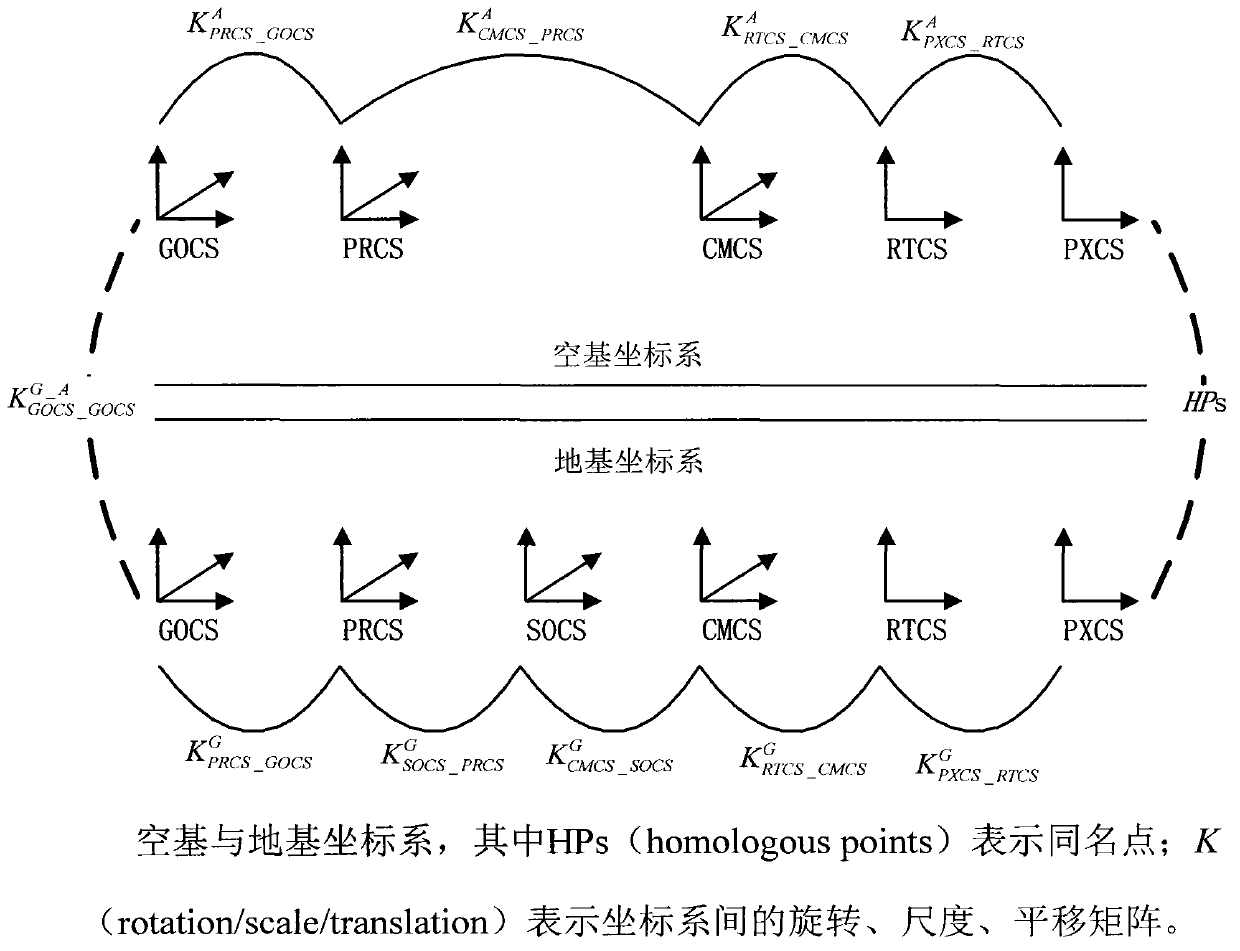

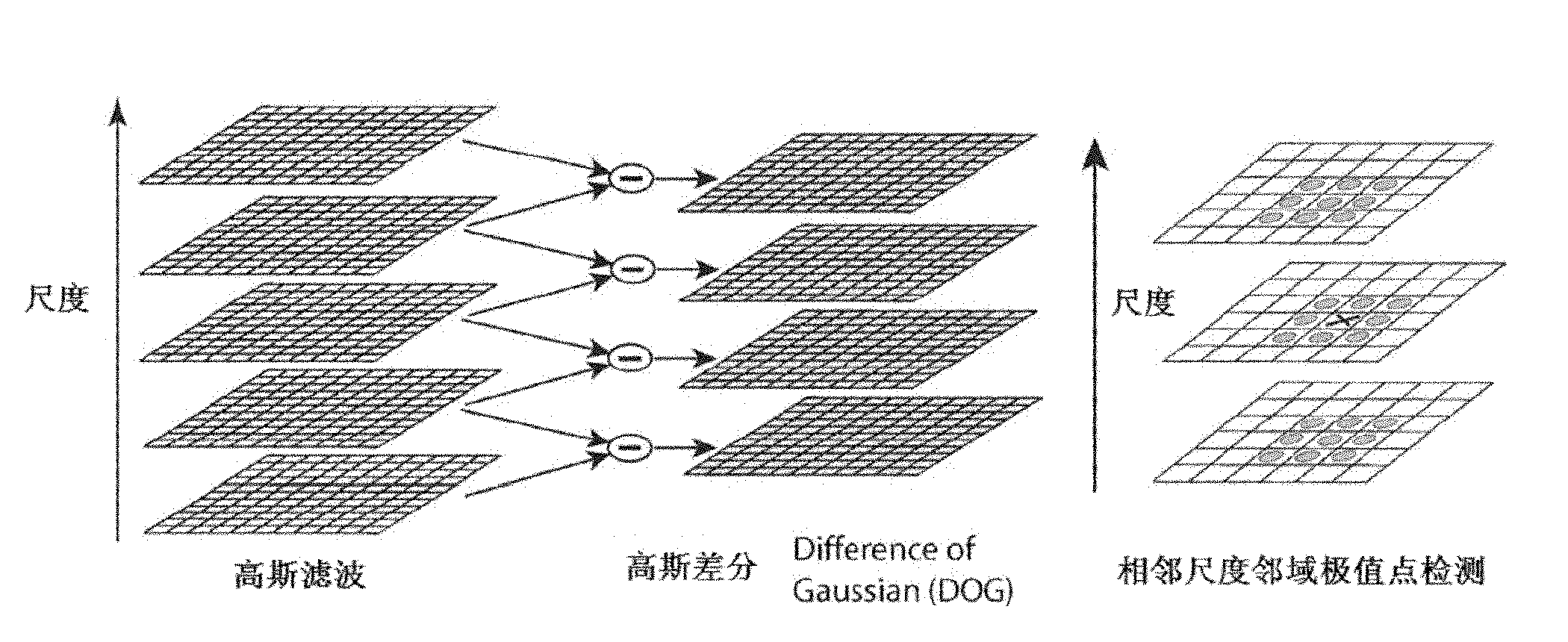

Precise registration method of ground laser-point clouds and unmanned aerial vehicle image reconstruction point clouds

Inactive | CN103426165A | Solve the problem of multi-angle observation | Reduce complexity | Image analysis | 3D modelling | Point cloud | Transformation parameter

The invention relates to a precise registration method of ground laser-point clouds (ground base) and unmanned aerial vehicle image reconstruction point clouds (aerial base). The method comprises generating overlapping areas of the ground laser-point clouds and the unmanned aerial vehicle image reconstruction point clouds on the basis of image three-dimensional reconstruction and point cloud rough registration; then traversing ground base images in the overlapping areas, extracting ground base image feature points through a feature point extraction algorithm, searching for aerial base point clouds in the neighborhood range of the ground base point clouds corresponding to the feature points, and obtaining the aerial base image feature points matched with the aerial base point clouds to establish same-name feature point sets; according to the extracted same-name feature point sets of the ground base images and the aerial base images and a transformation relation between coordinate systems, estimating out a coordinate transformation matrix of the two point clouds to achieve precise registration. According to the precise registration method of the ground laser-point clouds and the unmanned aerial vehicle image reconstruction point clouds, by extracting the same-name feature points of the images corresponding to the ground laser-point clouds and the images corresponding to the unmanned aerial vehicle images, the transformation parameters of the two point cloud data can be obtained indirectly to accordingly improve the precision and the reliability of point cloud registration.

Owner:吴立新 +1

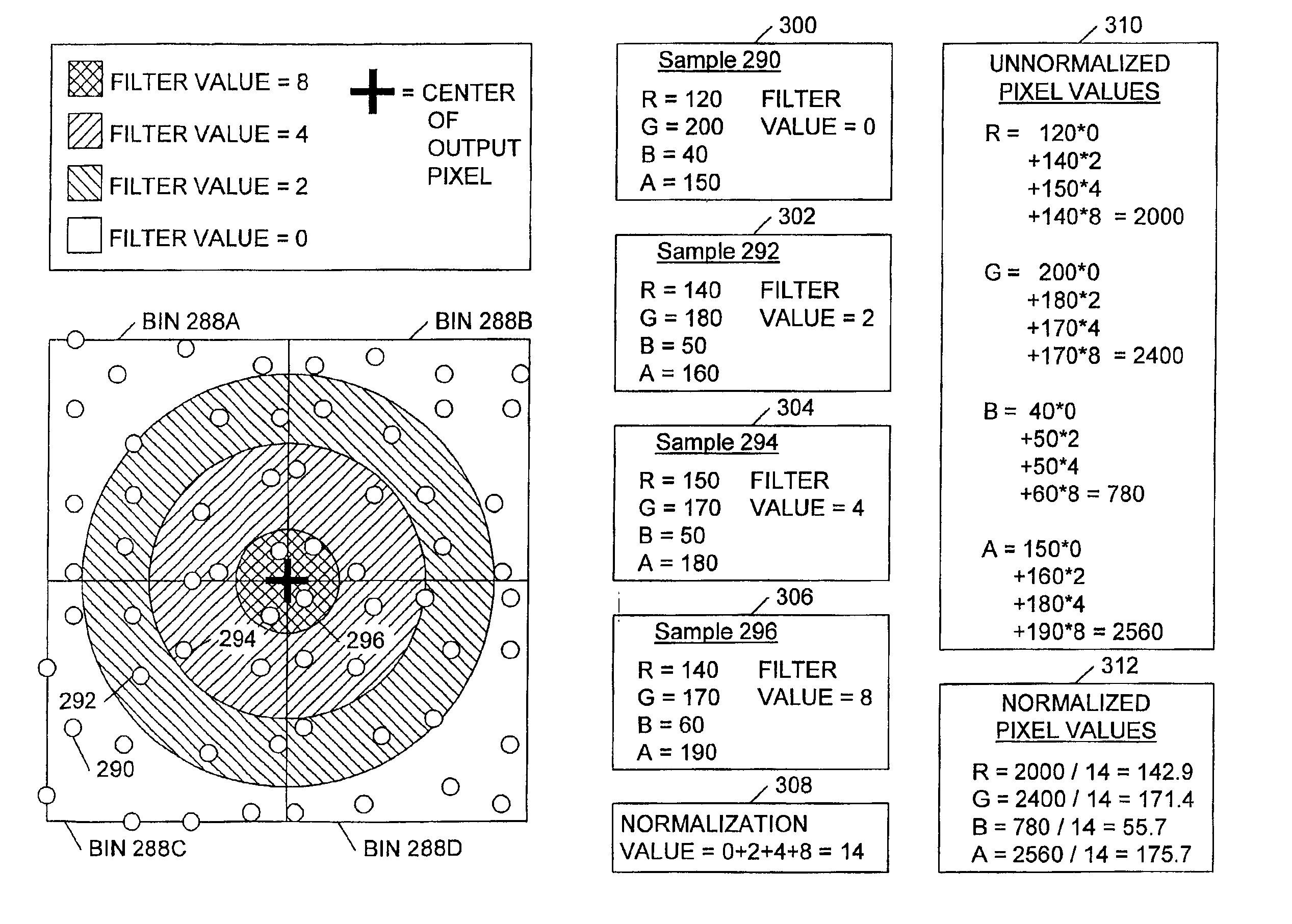

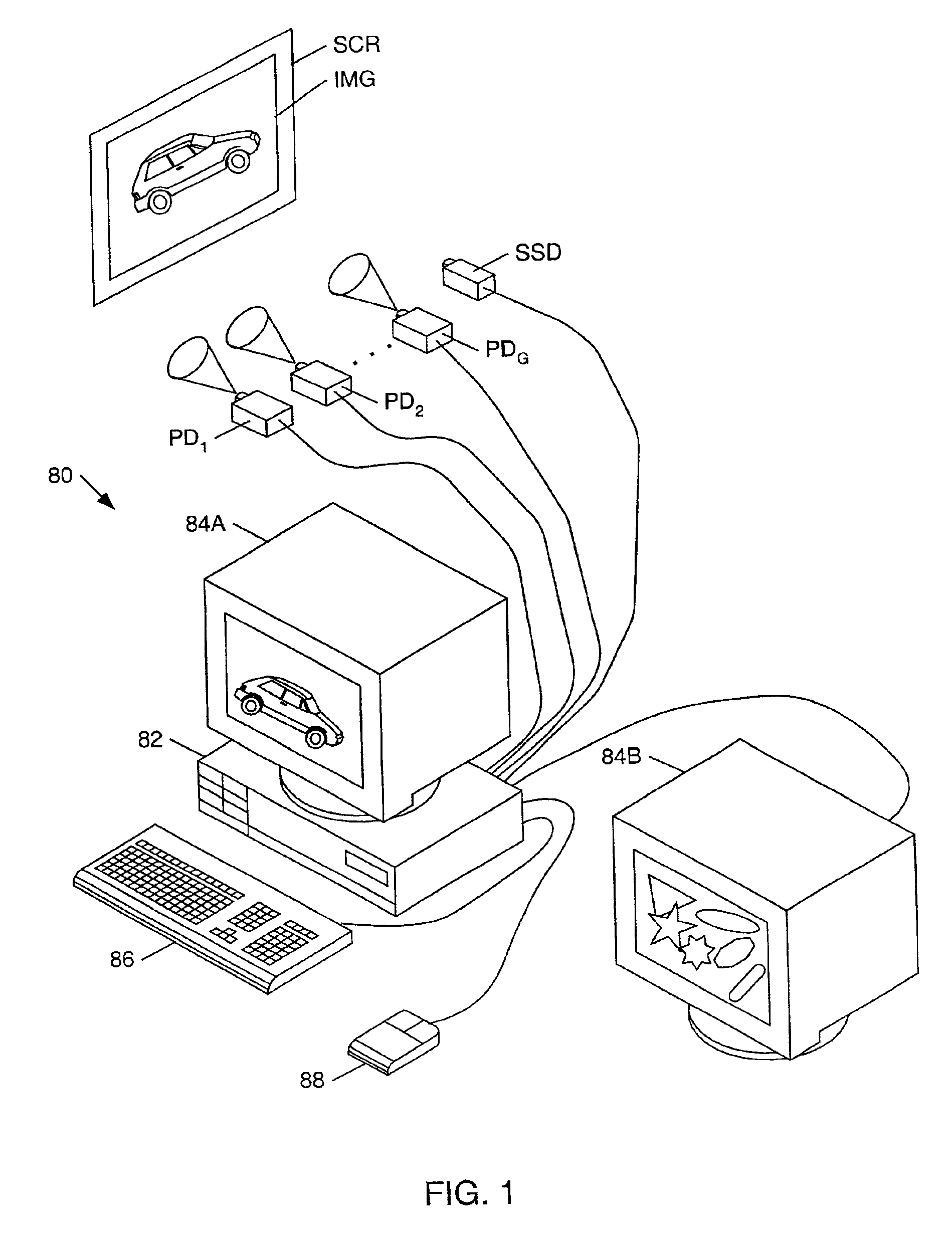

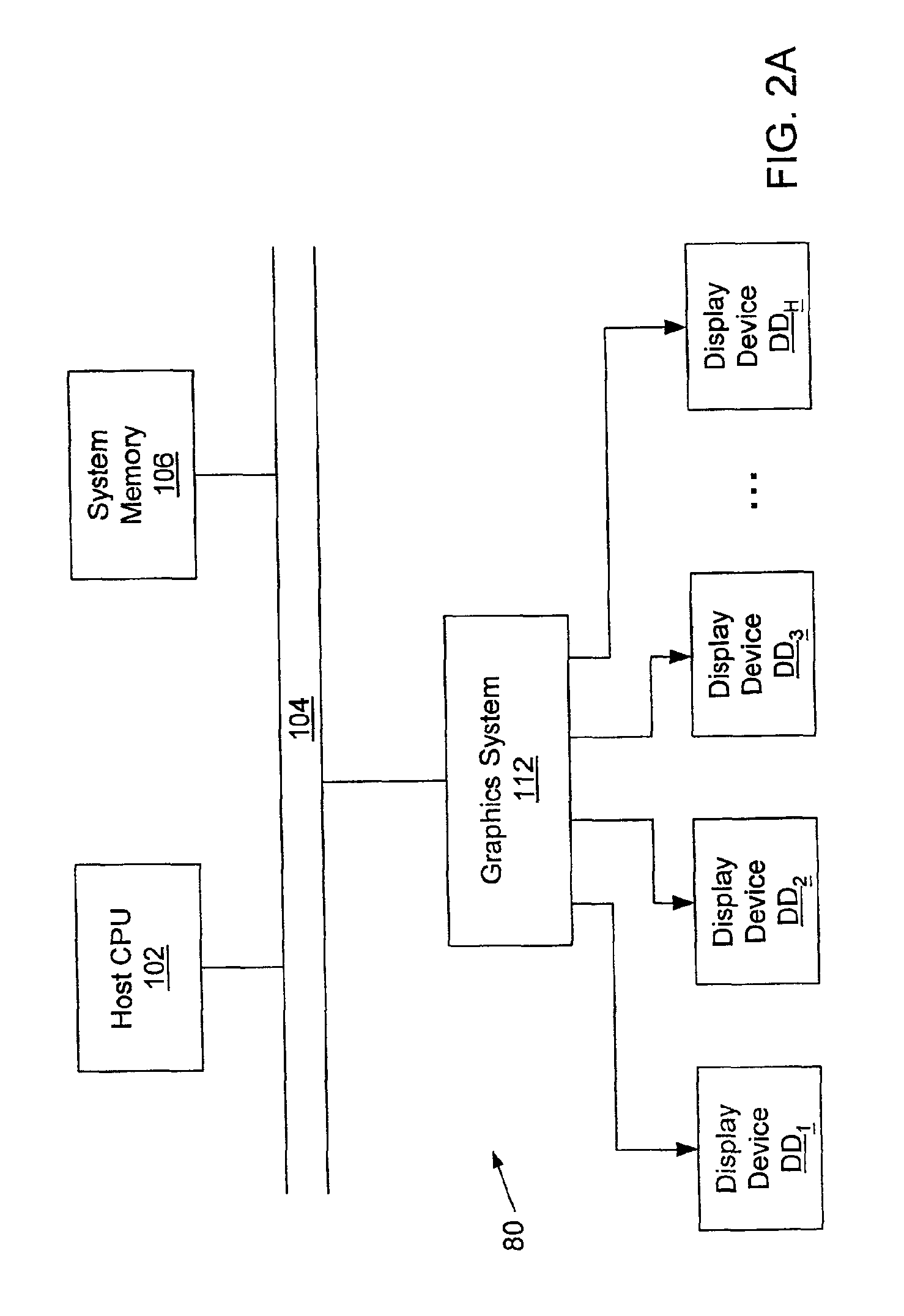

Multi-spectral color correction

Inactive | US6950109B2 | Effective color correction | Increase memory storage | Color signal processing circuits | Character and pattern recognition | Transformation parameter | Display device

A system and method for performing color correction based on physical measurements (or estimations) of color component spectra (e.g. red, green, blue color component spectra). A color correction system may comprise a spectrum sensing device, a color calibration processor, and a calculation unit. The spectrum sensing device may be configured to measure color component power spectra for pixels generated by one or more display devices on a display surface. The color calibration processor may receive power spectra for a given pixel from the spectrum sensing device and compute a set of transformation parameters in response to the power spectra. The transformation parameters characterize a color correction transformation for the given pixel. The color calibration processor may compute such a transformation parameter set for selected pixels in the pixel array. The calculation unit may be configured to (a) compute initial color values for an arbitrary pixel in the pixel array, (b) compute modified color values in response to the initial color values and one or more of the transformation parameter sets corresponding to one or more of the selected pixels, and (c) transmit the modified color values to the display device.

Owner:ORACLE INT CORP

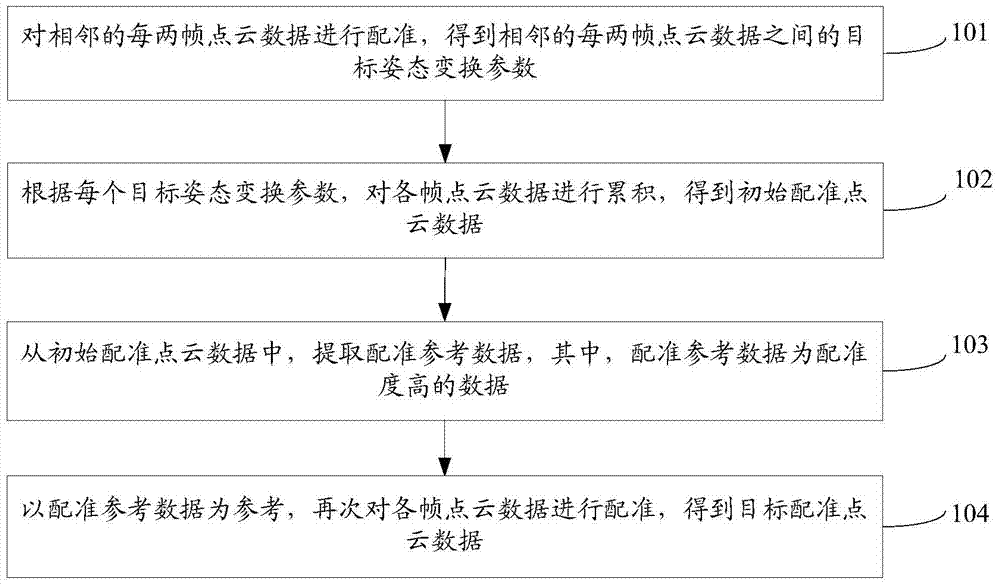

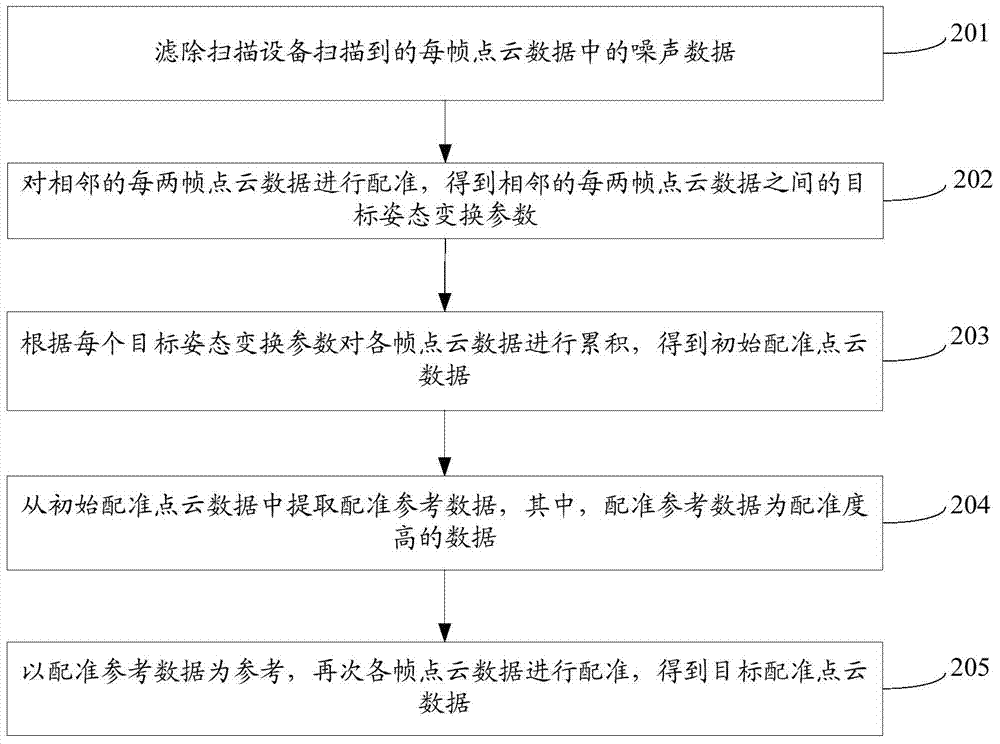

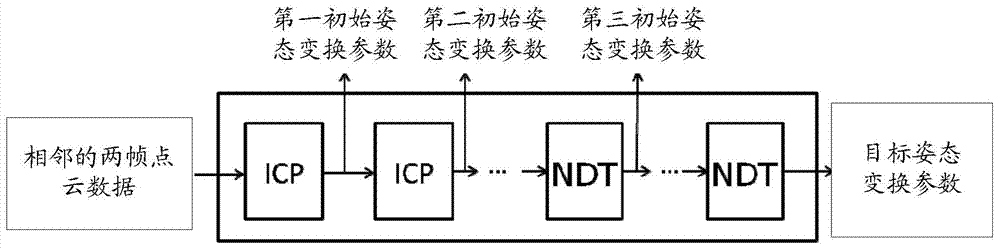

Method and device for registering point cloud data

Active | CN104778688A | Reduce cumulative error | The registration result is accurate | Image analysis | Point cloud | Transformation parameter

The invention discloses a method and a device for registering point cloud data, and belongs to the technical field of computers. The method comprises steps as follows: the point cloud data of every two adjacent frames are registered to obtain target attitude transformation parameters between the point cloud data of every two adjacent frames; the point cloud data of each frame are accumulated according to each target attitude transformation parameter to obtain initial registered point cloud data; registration reference data are extracted from the initial registered point cloud data and have the high registration degree; the point cloud data of each frame are registered again by referring to the registration reference data so as to obtain target registered point cloud data. According to the method and the device for registering the point cloud data, the point cloud data of each frame are accumulated according to each target attitude transformation parameter, after the initial registered point cloud data are obtained, the registration reference data with the higher registration degree are further extracted from the initial registered point cloud data, the point cloud data of each frame are registered again by referring to the registration reference data, and accumulated errors can be reduced, so that a more accurate registration result can be obtained.

Owner:HUAWEI TECH CO LTD
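The accumulation step in this abstract (chaining the pairwise attitude transformation parameters of adjacent frames to place every frame in a common coordinate system) can be sketched in Python as below. For brevity the sketch uses 2D rigid transforms as 3x3 homogeneous matrices and assumes each pairwise transform maps frame k+1 coordinates into frame k; the later re-registration against reference data is not shown, and the numbers are made up.

```python
import math


def rigid(theta_deg, tx, ty):
    """3x3 homogeneous matrix for a 2D rotation followed by a translation."""
    theta = math.radians(theta_deg)
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, tx], [s, c, ty], [0.0, 0.0, 1.0]]


def matmul(a, b):
    """Product of two 3x3 matrices."""
    return [
        [sum(a[r][k] * b[k][c] for k in range(3)) for c in range(3)]
        for r in range(3)
    ]


def accumulate(pairwise):
    """Chain pairwise transforms into poses relative to the first frame.

    pairwise[k] is assumed to map frame k+1 coordinates into frame k, so
    poses[k] maps frame k coordinates into frame 0.
    """
    poses = [rigid(0.0, 0.0, 0.0)]
    for transform in pairwise:
        poses.append(matmul(poses[-1], transform))
    return poses


def transform_point(pose, point):
    """Map a 2D point through a homogeneous pose matrix."""
    x, y = point
    return (
        pose[0][0] * x + pose[0][1] * y + pose[0][2],
        pose[1][0] * x + pose[1][1] * y + pose[1][2],
    )


# Made-up pairwise results: each frame is rotated 90 degrees and shifted by (1, 0).
pairwise = [rigid(90.0, 1.0, 0.0), rigid(90.0, 1.0, 0.0)]
poses = accumulate(pairwise)
origin_of_frame_2 = transform_point(poses[2], (0.0, 0.0))
```

Because each pose is a product of all earlier pairwise estimates, any error in one pair propagates to every later frame, which is the accumulated error the abstract sets out to reduce.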

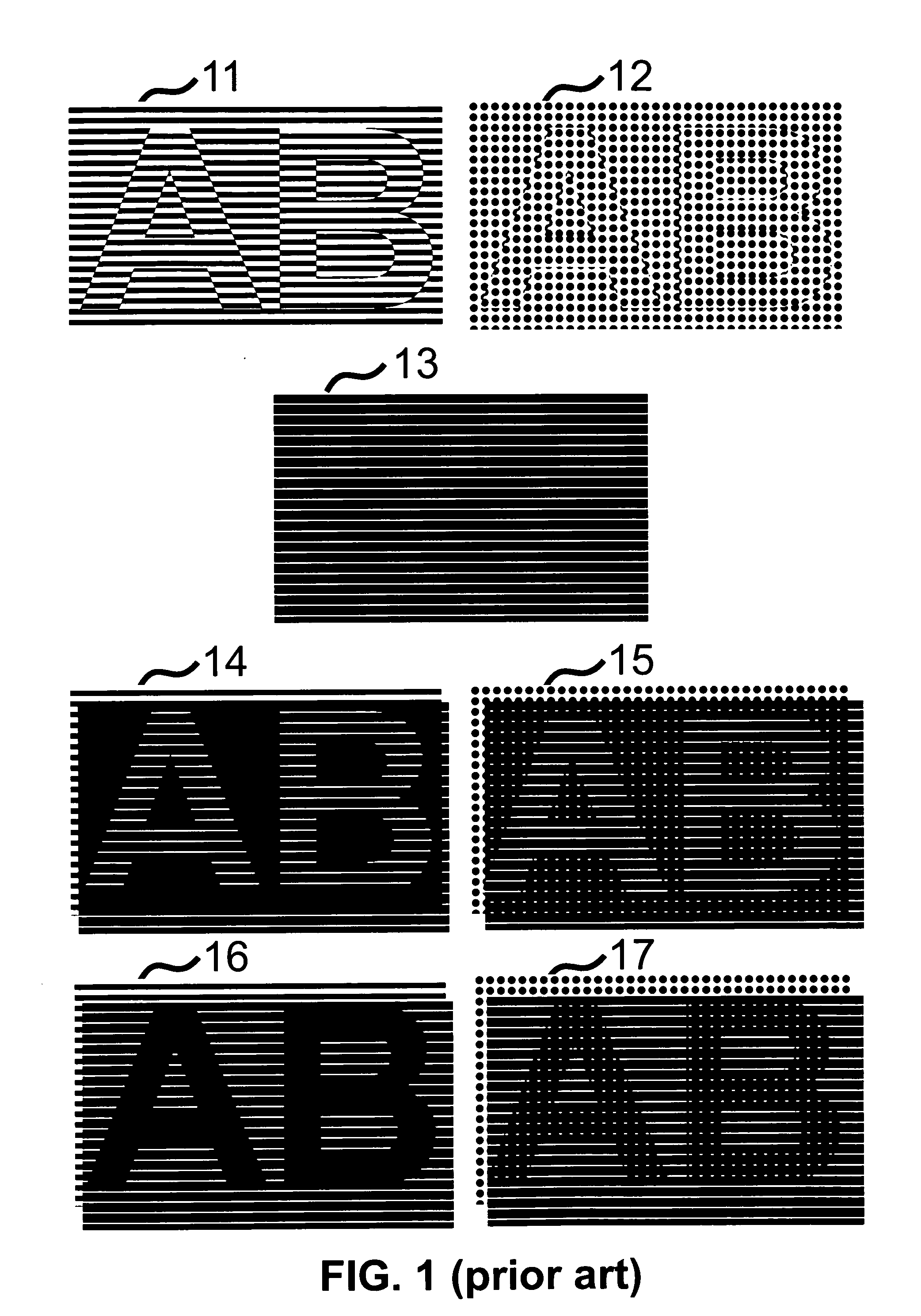

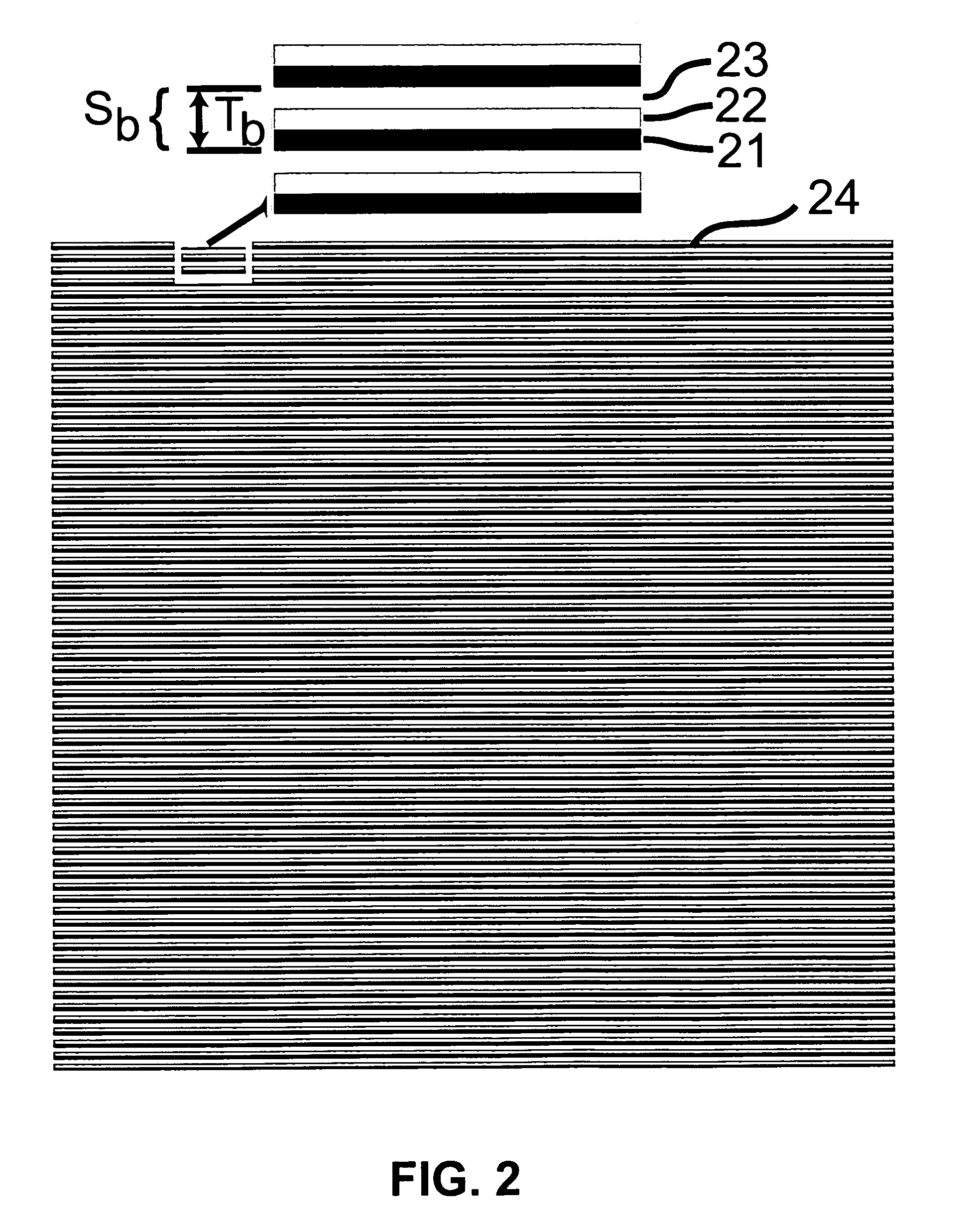

Authentication of secure items by shape level lines

Active | US20060280331A1 | Paper-money testing devices | User identity/authority verification | Personalization | Grating

The disclosed method and system may be used for creating advanced protection means for various categories of documents (e.g. bank notes, identity documents, certificates, checks, diplomas, travel documents, tickets) and valuable products (e.g. optical disks, CDs, DVDs, CD-ROMs, prescription drugs, products with affixed labels, watches) hereinafter called “secure items”. Secure items are authenticated by shape level lines. The shape level lines become apparent when superposing a base layer comprising sets of lines and a revealing layer comprising a line grating. One of the two layers is a modified layer which embeds a shape elevation profile generated from an initial, preferably bilevel, motif shape image (e.g. typographic characters, words of text, symbols, logo, ornament). In the case of an authentic document, the outlines of the revealed shape level lines are visual offset lines of the boundaries of the initial bilevel motif shape image. In addition, the intensities, respectively colors of the revealed shape level lines are the same as the intensities, respectively colors of the lines forming the base layer sets of lines. By modifying the relative superposition phase of the revealing layer on top of the base layer or vice-versa (e.g. by a translation or a rotation), one may observe shape level lines moving dynamically between the initial bilevel motif shape boundaries and shape foreground centers, respectively background centers, thereby growing and shrinking. In the case that these characteristic features are present, the secure item is accepted as authentic. Otherwise the item is rejected as suspect. Pairs of base and revealing layers may be individualized by applying to both the base and the revealing layer a geometric transformation. Thanks to the availability of a large number of geometric transformations and transformation parameters, one may create documents having their own individualized document protection.
The invention also proposes a computing and delivery system operable for delivering base and revealing layers according to security document or valuable product information content. The system may automatically generate upon request an individually protected secure item and its corresponding authentication means.

Owner:ECOLE POLYTECHNIQUE FEDERALE DE LAUSANNE (EPFL)

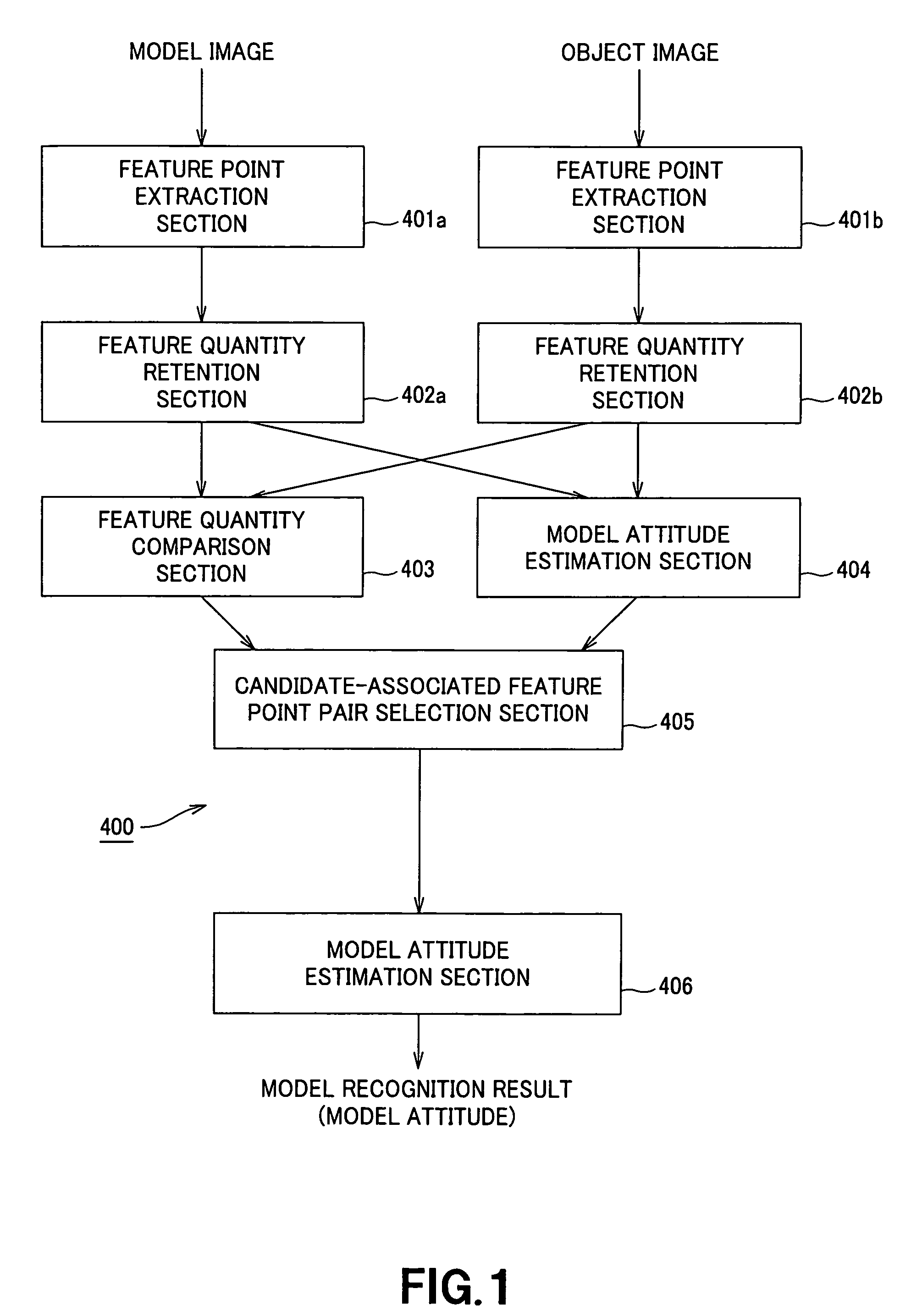

Image recognition device using feature points, method for recognizing images using feature points, and robot device which recognizes images using feature points

In an image recognition apparatus, feature point extraction sections extract feature points from a model image and an object image. Feature quantity retention sections extract a feature quantity for each of the feature points and retain them along with positional information of the feature points. A feature quantity comparison section compares the feature quantities with each other to calculate the similarity or the dissimilarity and generates a candidate-associated feature point pair having a high possibility of correspondence. A model attitude estimation section repeats an operation of projecting an affine transformation parameter determined by three pairs randomly selected from the candidate-associated feature point pair group onto a parameter space. The model attitude estimation section assumes each member in a cluster having the largest number of members formed in the parameter space to be an inlier. The model attitude estimation section finds the affine transformation parameter according to the least squares estimation using the inlier and outputs a model attitude determined by this affine transformation parameter.

Owner:SONY CORP

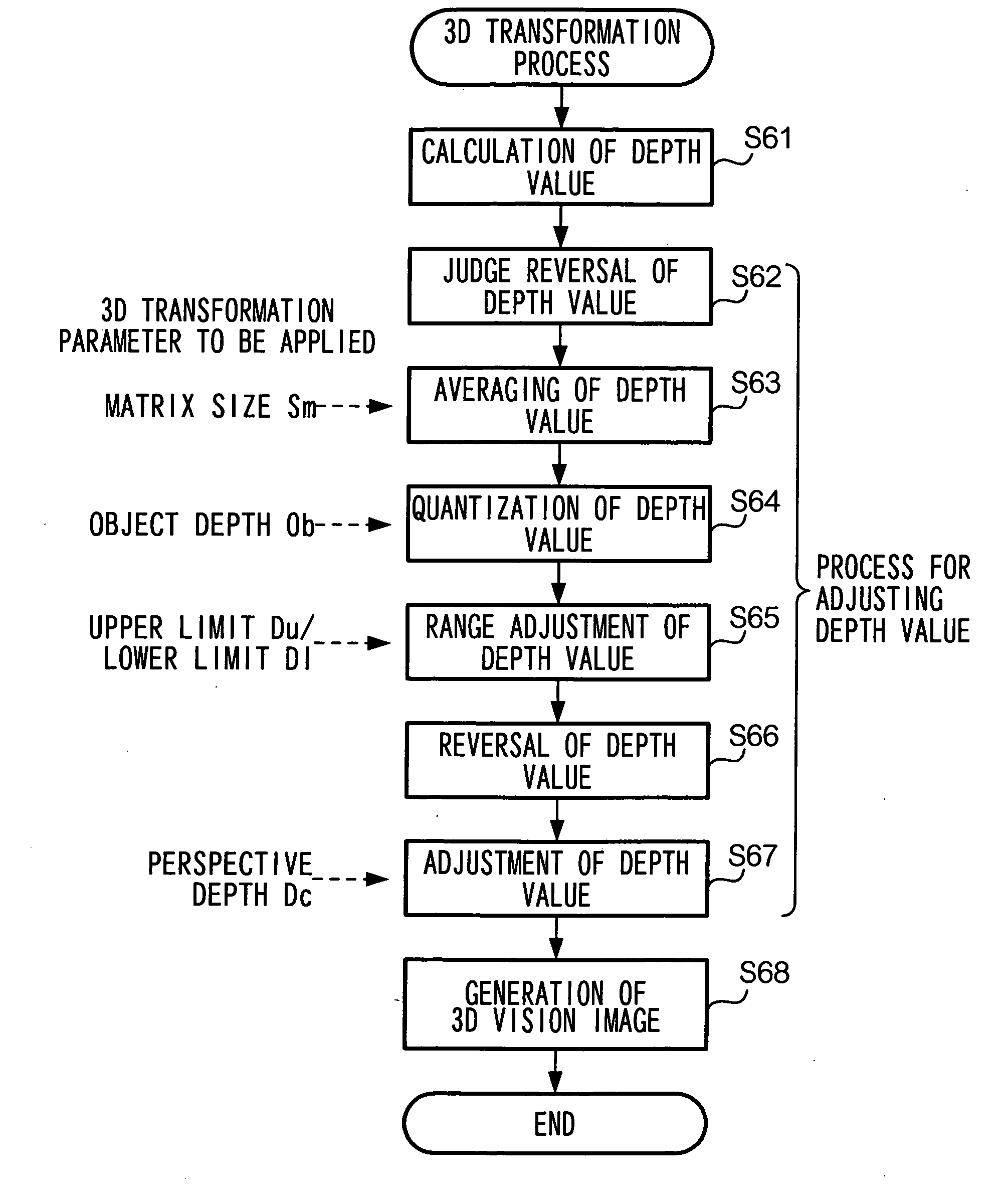

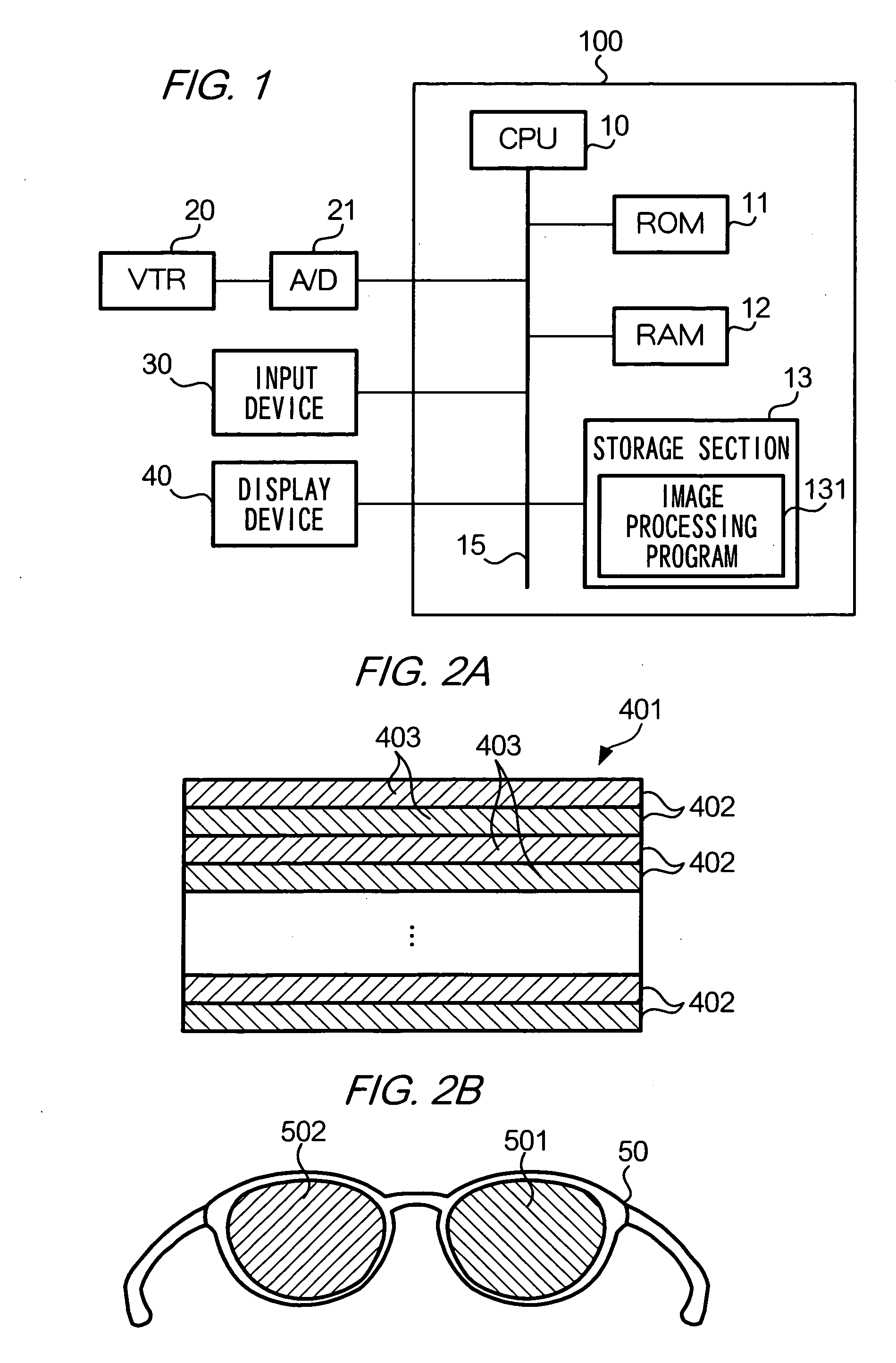

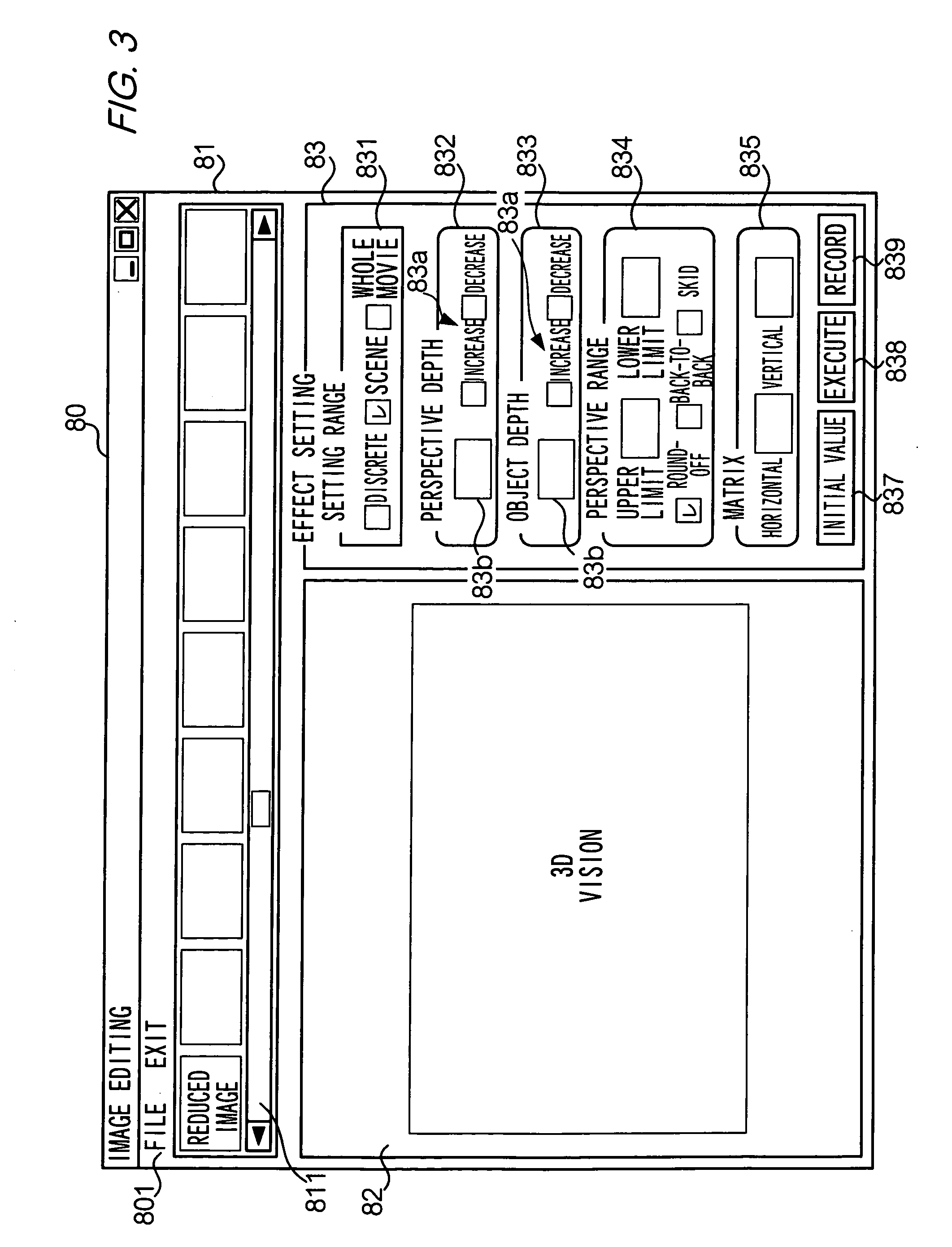

Image processing apparatus, image pickup device and program therefor

Inactive | US20060088206A1 | Reduce the burden on | Exact reproduction | Character and pattern recognition | Stereoscopic systems | Parallax | Imaging processing

CPU 10 generates from original image data representing an image, a right eye image and a left eye image mutually having a parallax. CPU 10 also specifies the 3D transformation parameter depending on the indication by a user, and stores the specified 3D transformation parameter in storage device 13 in association with original image data.

Owner:MERCURY SYSTEM CO LTD

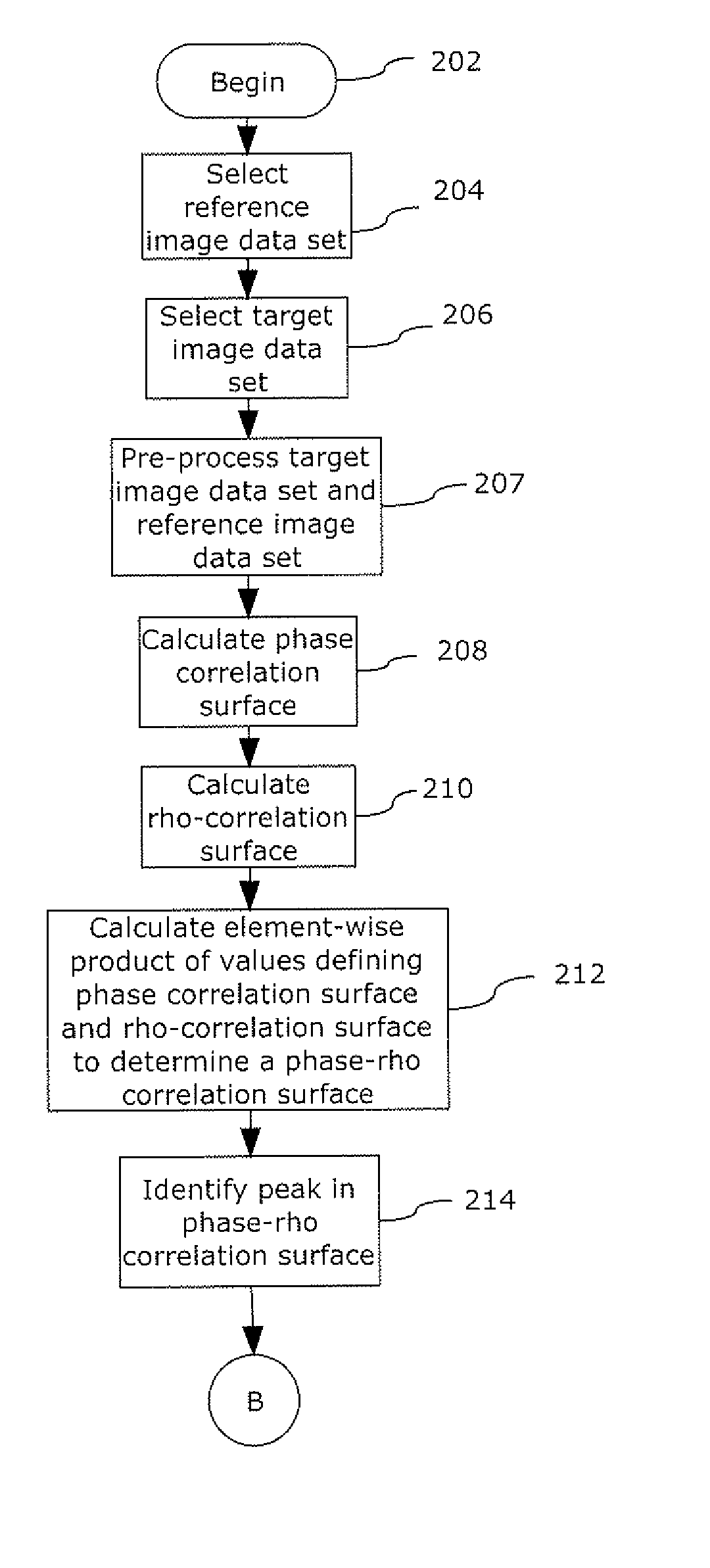

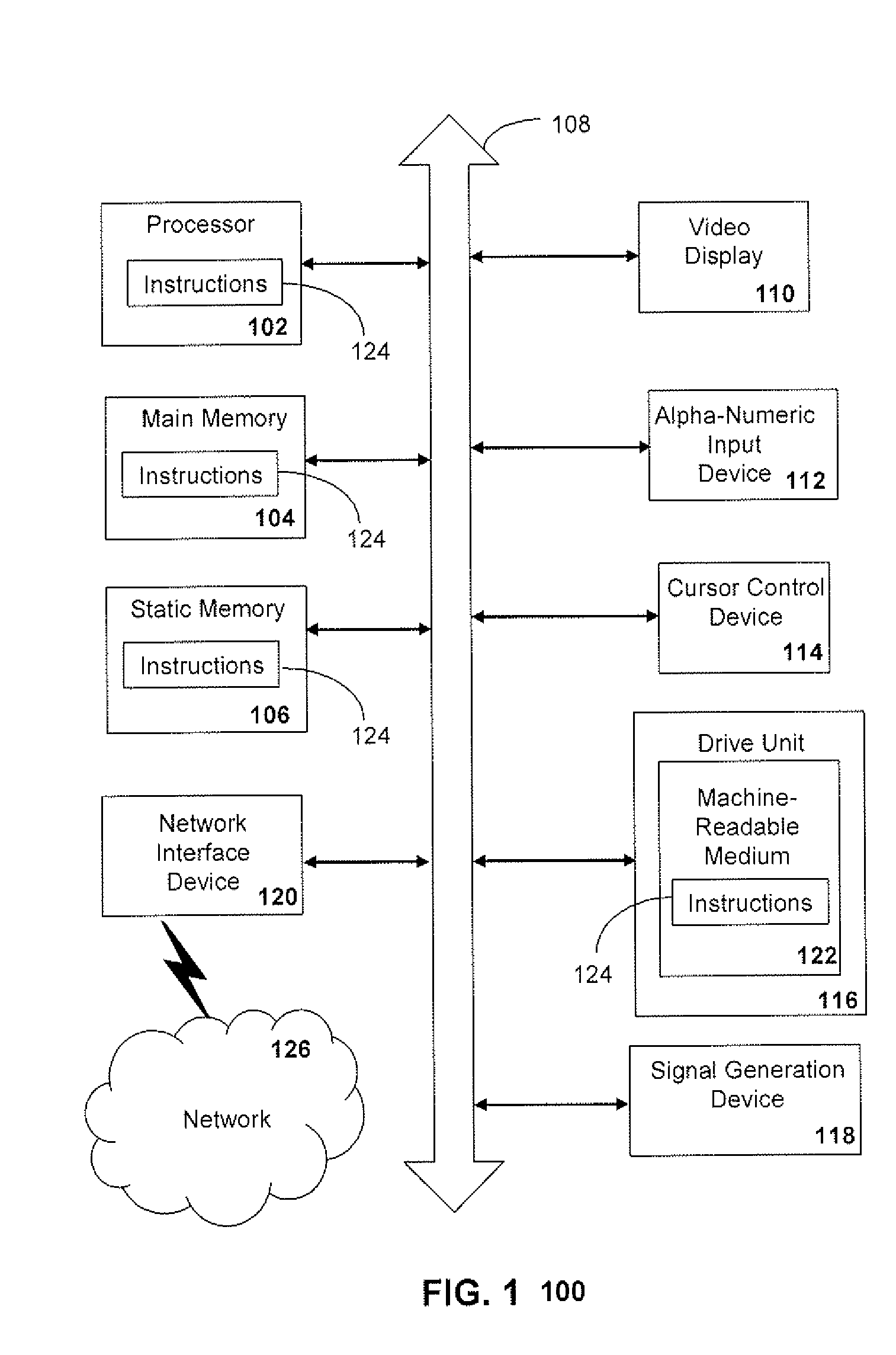

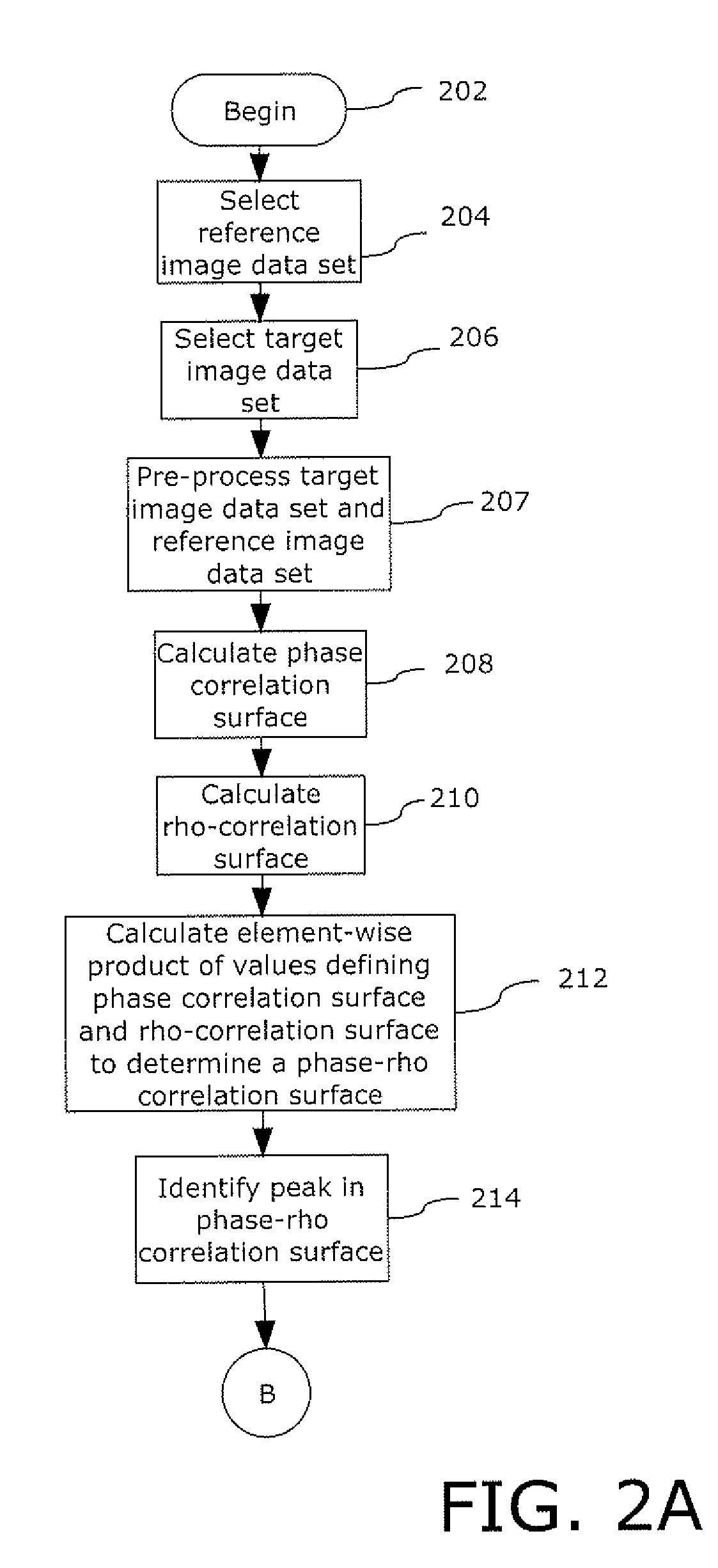

Image registration using rotation tolerant correlation method

A method for correlating or finding similarity between two data sets. The method can be used for correlating two images with common scene content in order to find correspondence points between the data sets. These correspondence points then can be used to find the transformation parameters which when applied to image 2 brings it into alignment with image 1. The correlation metric has been found to be invariant under image rotation and when applied to corresponding areas of a reference and target image, creates a correlation surface superior to phase and norm cross correlation with respect to the correlation peak to correlation surface ratio. The correlation metric was also found to be superior when correlating data from different sensor types such as from SAR and EO sensors. This correlation method can also be applied to data sets other than image data including signal data.

Owner:HARRIS CORP

Stereoscopic image processing device, method, recording medium and stereoscopic imaging apparatus

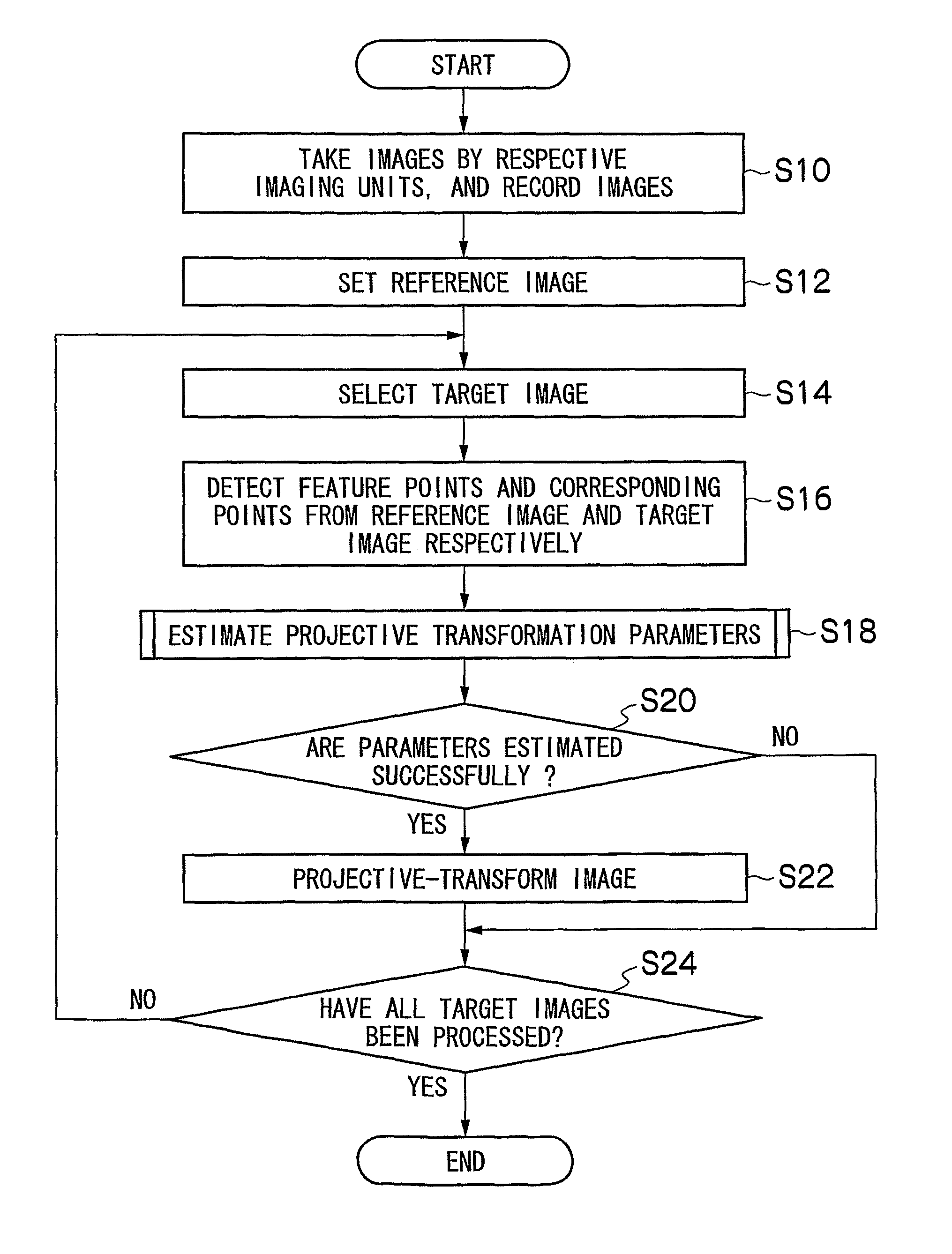

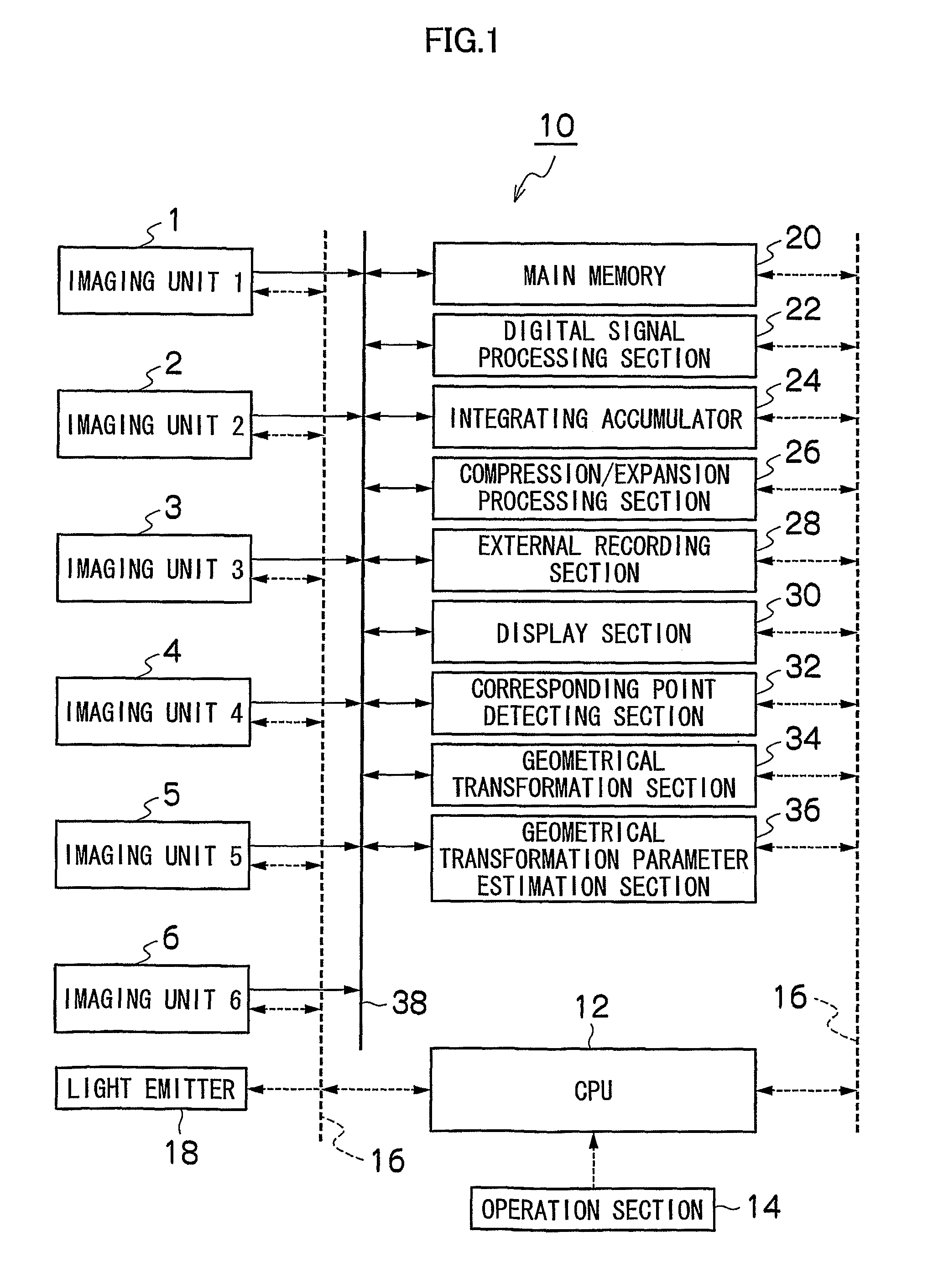

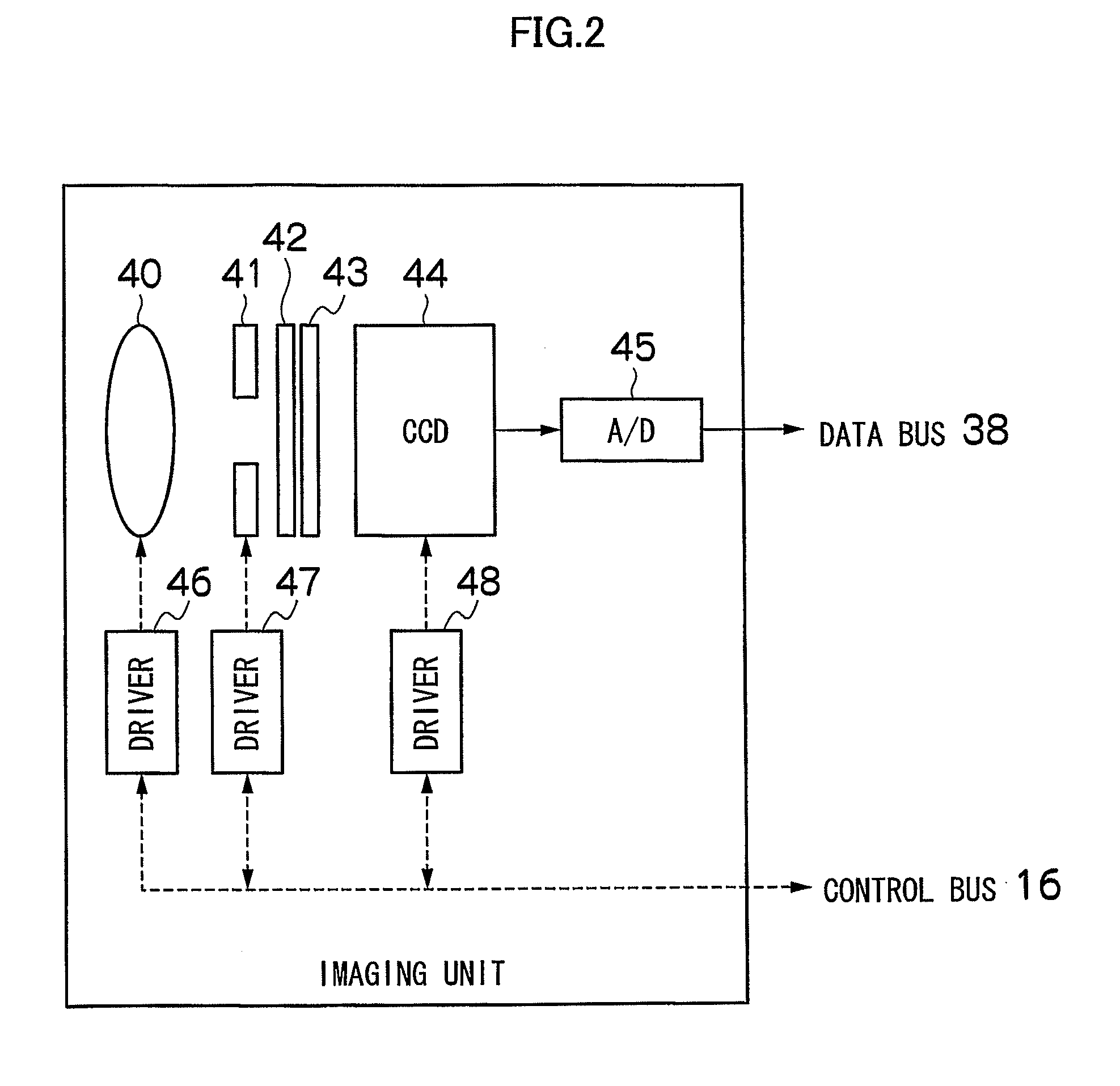

An apparatus (10) includes a device for acquiring a plurality of images of an identical subject taken from a plurality of viewpoints; a device for selecting a prescribed image as a reference image, selecting an image other than the reference image as a target image from among the images, and detecting feature points from the reference image and corresponding points from the target image to generate pairs of the feature point and corresponding point, wherein feature of the feature point and the corresponding point in the same pair are substantially identical; a device for estimating geometrical transformation parameters for geometrically-transforming the target image such that y-coordinate values of the feature point and the corresponding point included in the same pair are substantially identical, wherein y-direction is orthogonal to a parallax direction of the viewpoints; and a device for geometrically-transforming the target image based on the parameters.

Owner:FUJIFILM CORP

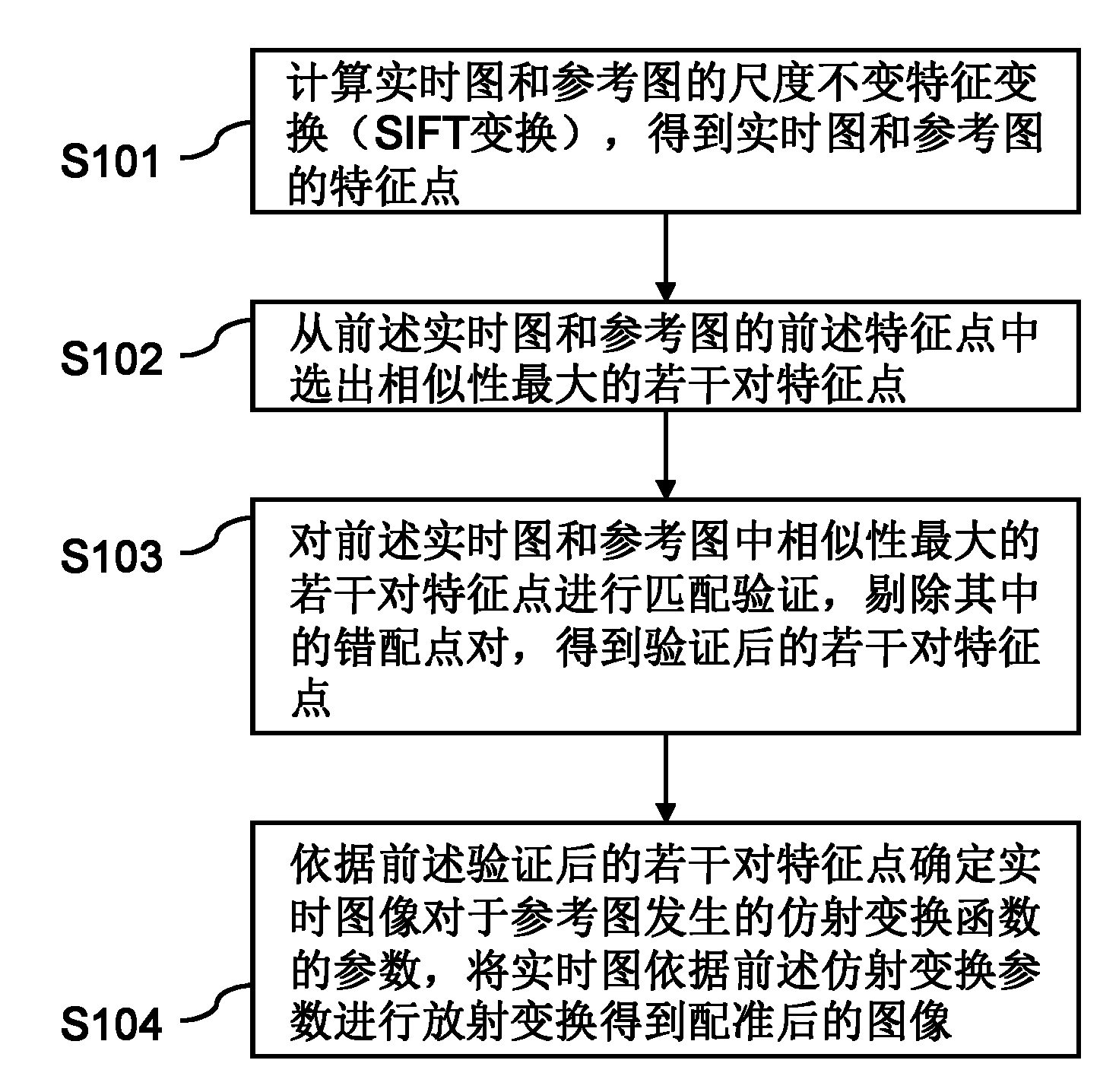

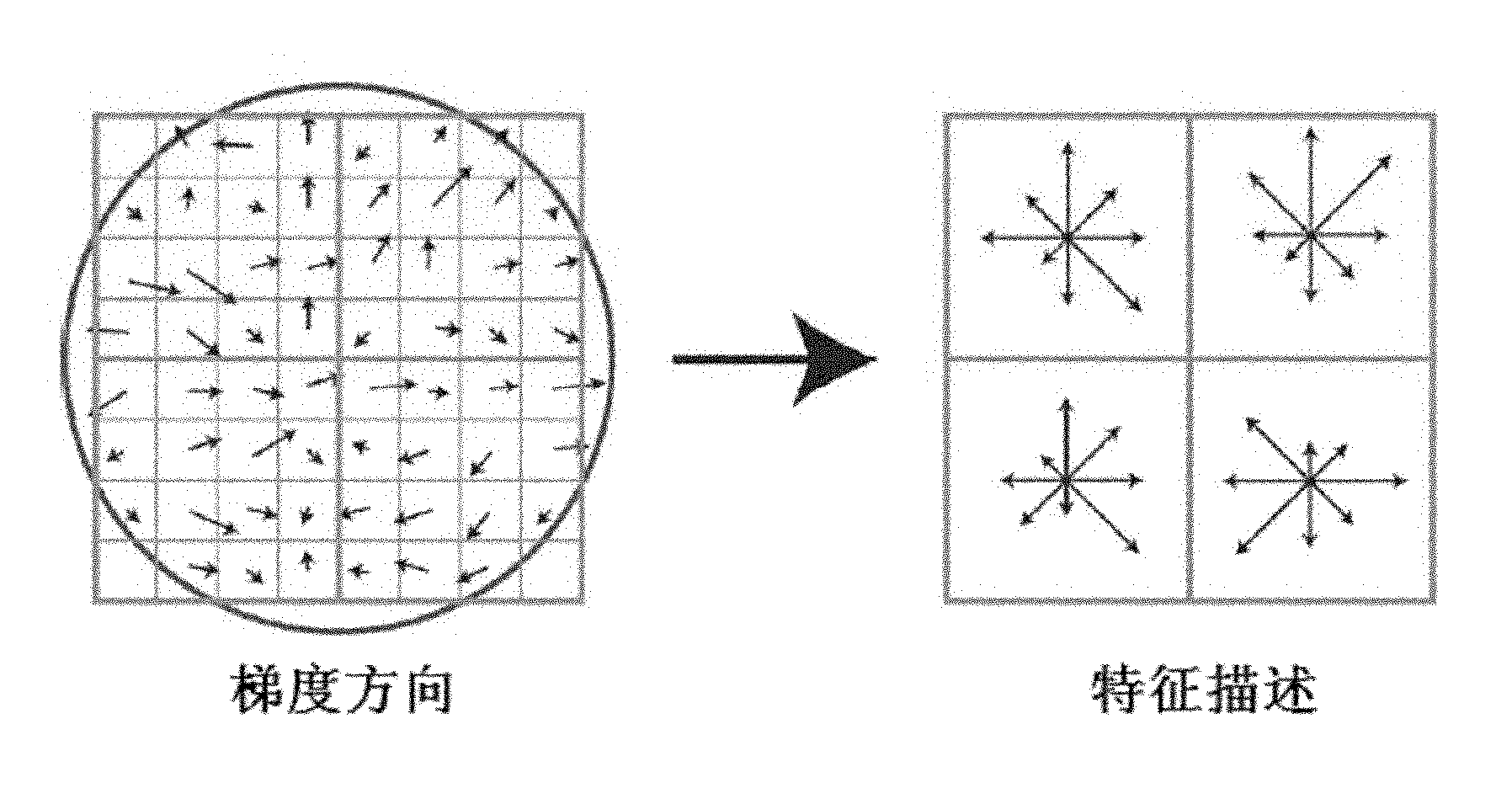

Image registration system and method thereof

Inactive | CN102005047A | Improve accuracy | Improve registration accuracy | Image analysis | Scale-invariant feature transform | Reference image

The invention provides an image registration system and a method thereof. The method comprises the following steps: first calculating the scale-invariant feature transform (SIFT) of a real-time image and a reference image to obtain feature points of the real-time image and the reference image; selecting a plurality of pairs of feature points with maximum similarity from the feature points of the real-time image and the reference image; carrying out matching verification of the selected pairs of feature points and rejecting mismatched point pairs to obtain a plurality of verified pairs of feature points; determining, from the verified pairs of feature points, the parameters of an affine transformation function of the real-time image relative to the reference image; and carrying out an affine transform of the real-time image according to the affine transformation parameters to obtain the registered image. The system and the method are robust against interference and achieve high registration accuracy.

Owner:江苏博悦物联网技术有限公司

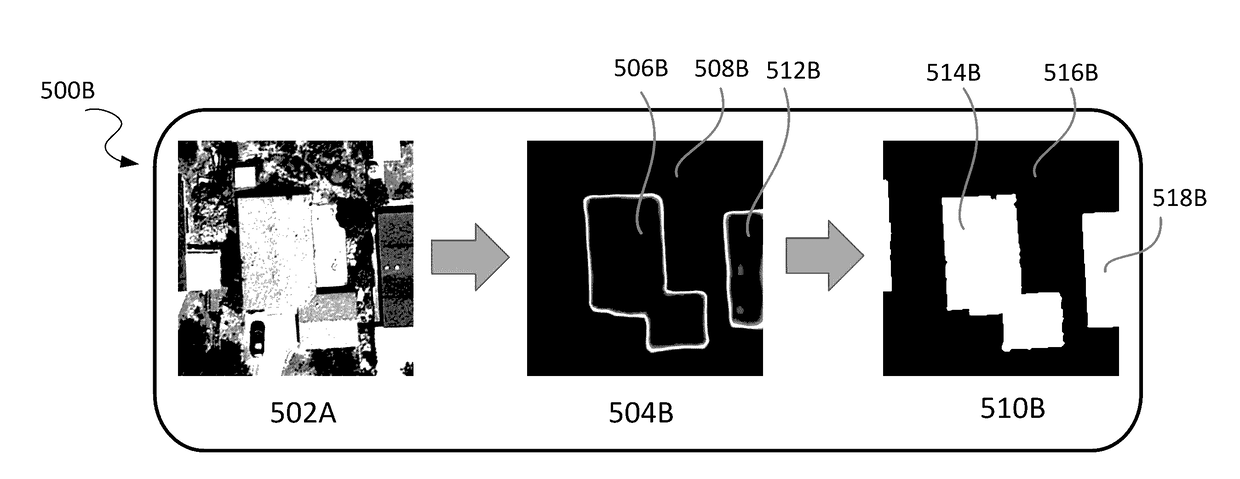

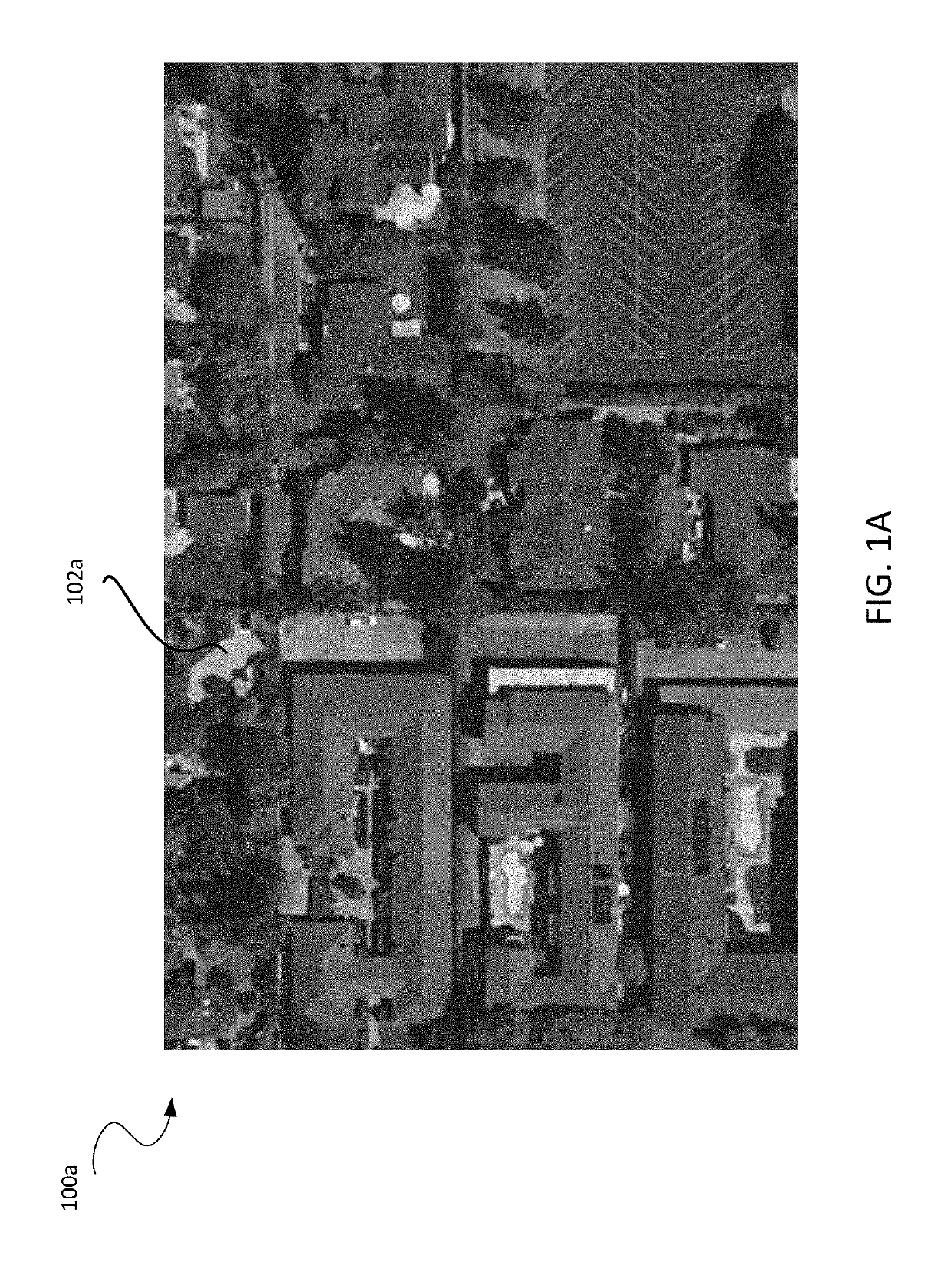

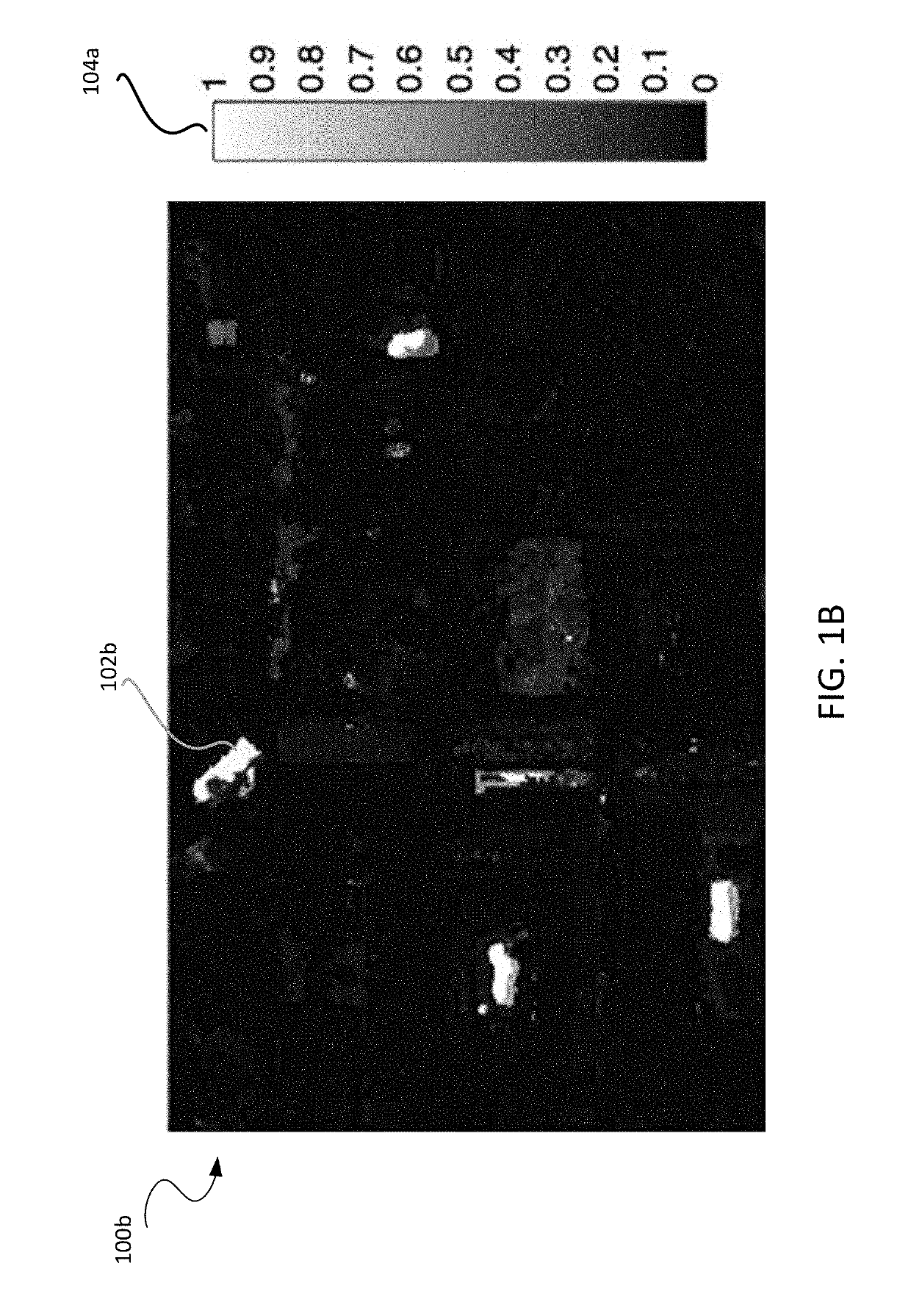

Systems and methods for analyzing remote sensing imagery

Active | US10311302B2 | Television system details | Character and pattern recognition | Transformation parameter | Identifying goals

Disclosed systems and methods relate to remote sensing, deep learning, and object detection. Some embodiments relate to machine learning for object detection, which includes, for example, identifying a class of pixel in a target image and generating a label image based on a parameter set. Other embodiments relate to machine learning for geometry extraction, which includes, for example, determining heights of one or more regions in a target image and determining a geometric object property in a target image. Yet other embodiments relate to machine learning for alignment, which includes, for example, aligning images via direct or indirect estimation of transformation parameters.

Owner:CAPE ANALYTICS INC

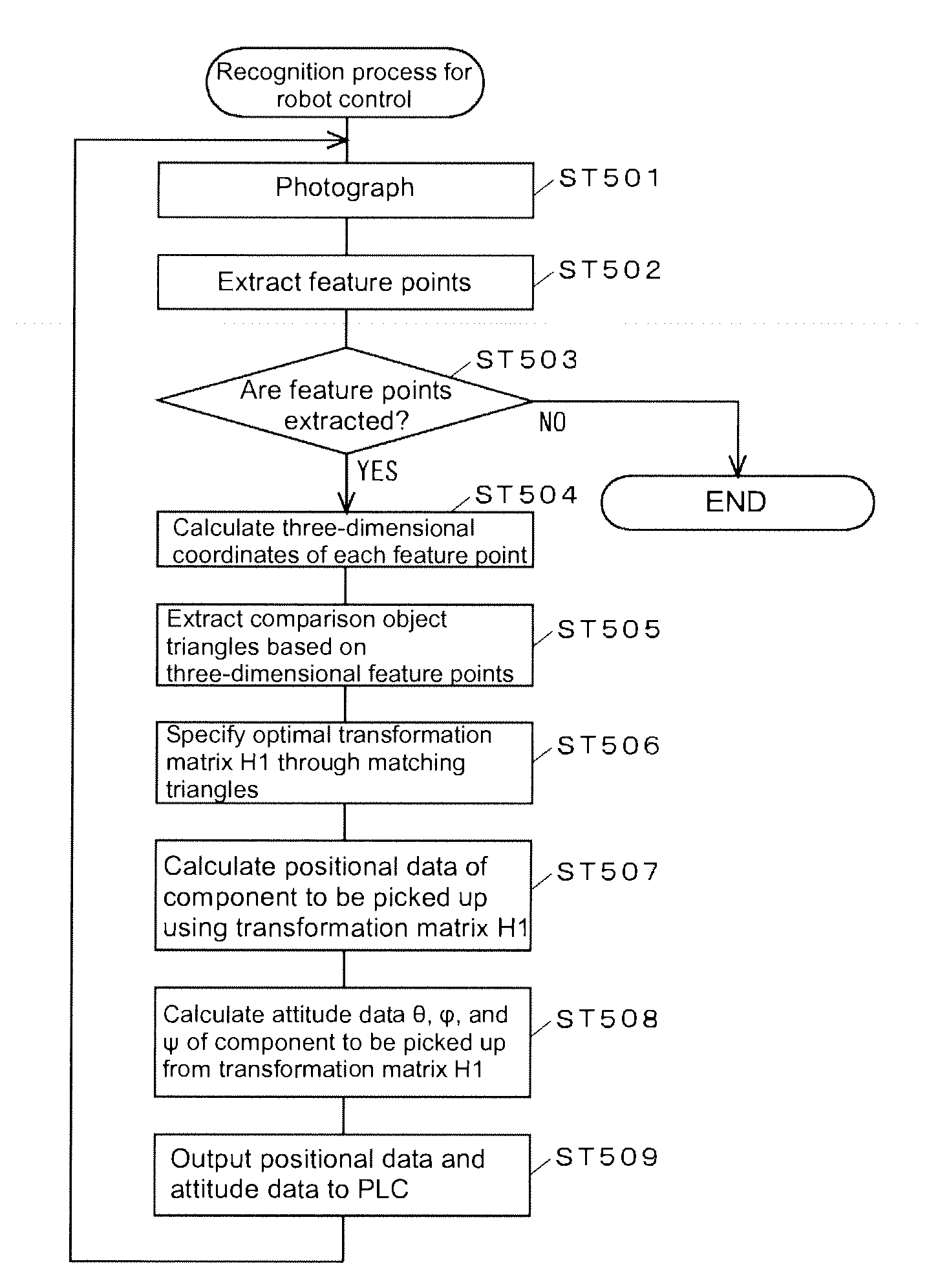

Recognition processing method and image processing device using the same

Active | US20100098324A1 | Recognized highly accurately | High degree | Image enhancement | Programme-controlled manipulator | Imaging processing | Algorithm

A recognition processing method and an image processing device complete recognition of an object within a predetermined time while maintaining recognition accuracy. The device extracts combinations of three points defining a triangle whose side lengths satisfy predetermined criterion values from the feature points of the model of a recognition object, registers the extracted combinations as model triangles, and similarly extracts combinations of three points defining a triangle whose side lengths satisfy predetermined criterion values from the feature points of the recognition object. These combinations are used as comparison object triangles and are associated with the respective model triangles. The device calculates a transformation parameter representing the correspondence relation between each comparison object triangle and the corresponding model triangle using the coordinates of the corresponding points (A and A′, B and B′, and C and C′), and determines the goodness of fit of the transformation parameter to the relation between the feature points of the model and those of the recognition object. The object is recognized by specifying the transformation parameter that represents the correspondence relation between the feature points of the model and those of the recognition object according to the goodness of fit determined for each association.

Owner:OMRON CORP
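The triangle association described in this abstract can be sketched as follows. This is a minimal illustration, assuming the transformation parameter is a 2-D affine map solved exactly from the three corresponding vertices and that goodness of fit is the number of model points landing near some object point. The abstract does not fix either choice, and the thresholds `lo`, `hi`, and `tol` are hypothetical.

```python
import math
from itertools import combinations, permutations

def side_lengths_ok(tri, lo, hi):
    """Keep only triangles whose three side lengths fall within [lo, hi]."""
    return all(lo <= math.hypot(p[0] - q[0], p[1] - q[1]) <= hi
               for p, q in combinations(tri, 2))

def affine_from_triangles(model_tri, object_tri):
    """Exact affine map taking A, B, C onto A', B', C' (Cramer's rule)."""
    (x1, y1), (x2, y2), (x3, y3) = model_tri
    det = x1 * (y2 - y3) - y1 * (x2 - x3) + (x2 * y3 - x3 * y2)
    if abs(det) < 1e-9:
        return None  # collinear model points, no unique map

    def solve(r1, r2, r3):
        a = (r1 * (y2 - y3) - y1 * (r2 - r3) + (r2 * y3 - r3 * y2)) / det
        b = (x1 * (r2 - r3) - r1 * (x2 - x3) + (x2 * r3 - x3 * r2)) / det
        c = (x1 * (y2 * r3 - y3 * r2) - y1 * (x2 * r3 - x3 * r2)
             + r1 * (x2 * y3 - x3 * y2)) / det
        return (a, b, c)

    (u1, v1), (u2, v2), (u3, v3) = object_tri
    return solve(u1, u2, u3) + solve(v1, v2, v3)

def goodness_of_fit(params, model_pts, object_pts, tol):
    """Count model points whose transformed position is near an object point."""
    a, b, c, d, e, f = params
    score = 0
    for x, y in model_pts:
        u, v = a * x + b * y + c, d * x + e * y + f
        if any(math.hypot(u - ox, v - oy) <= tol for ox, oy in object_pts):
            score += 1
    return score

def recognize(model_pts, object_pts, lo=2.0, hi=6.0, tol=0.5):
    model_tris = [t for t in combinations(model_pts, 3) if side_lengths_ok(t, lo, hi)]
    object_tris = [t for t in combinations(object_pts, 3) if side_lengths_ok(t, lo, hi)]
    best_params, best_score = None, -1
    for mt in model_tris:
        for ot in object_tris:
            for candidate in permutations(ot):  # try each vertex correspondence
                params = affine_from_triangles(mt, candidate)
                if params is None:
                    continue
                score = goodness_of_fit(params, model_pts, object_pts, tol)
                if score > best_score:
                    best_params, best_score = params, score
    return best_params, best_score

# The scene is the model rotated by 90 degrees and shifted, plus one clutter point.
model = [(0, 0), (4, 0), (4, 3), (0, 3), (1, 5)]
scene = [(10 - y, 2 + x) for x, y in model] + [(20, 20)]
best_params, best_score = recognize(model, scene)
```

Restricting side lengths bounds the number of triangles to compare, which is how the abstract's time limit would be met; the exhaustive loop here makes no attempt at that optimization.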

Method for 2-D/3-D registration based on hierarchical pose regression

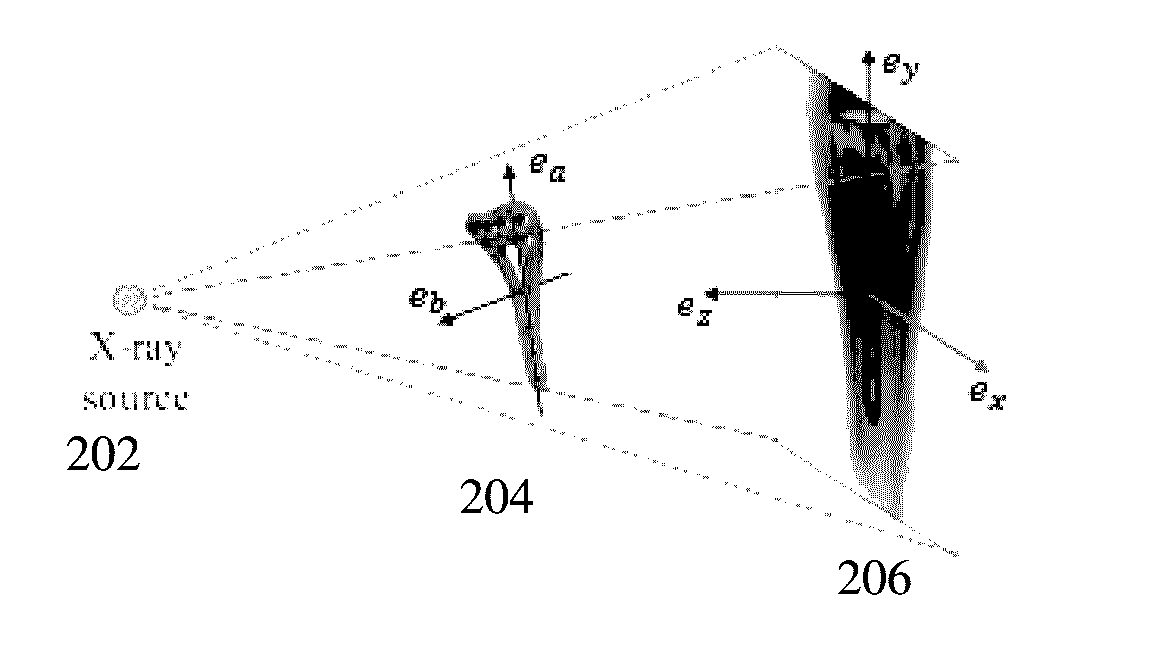

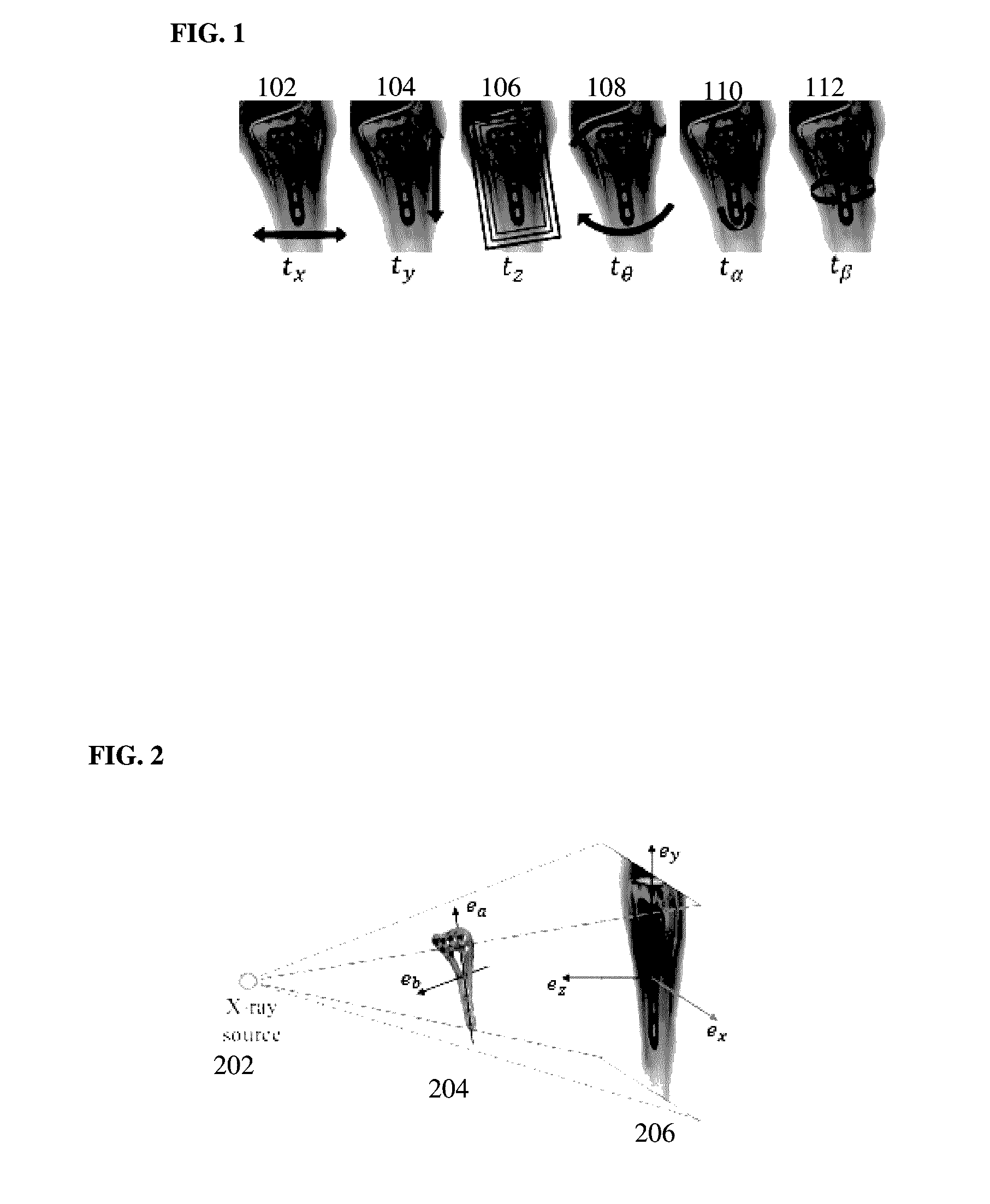

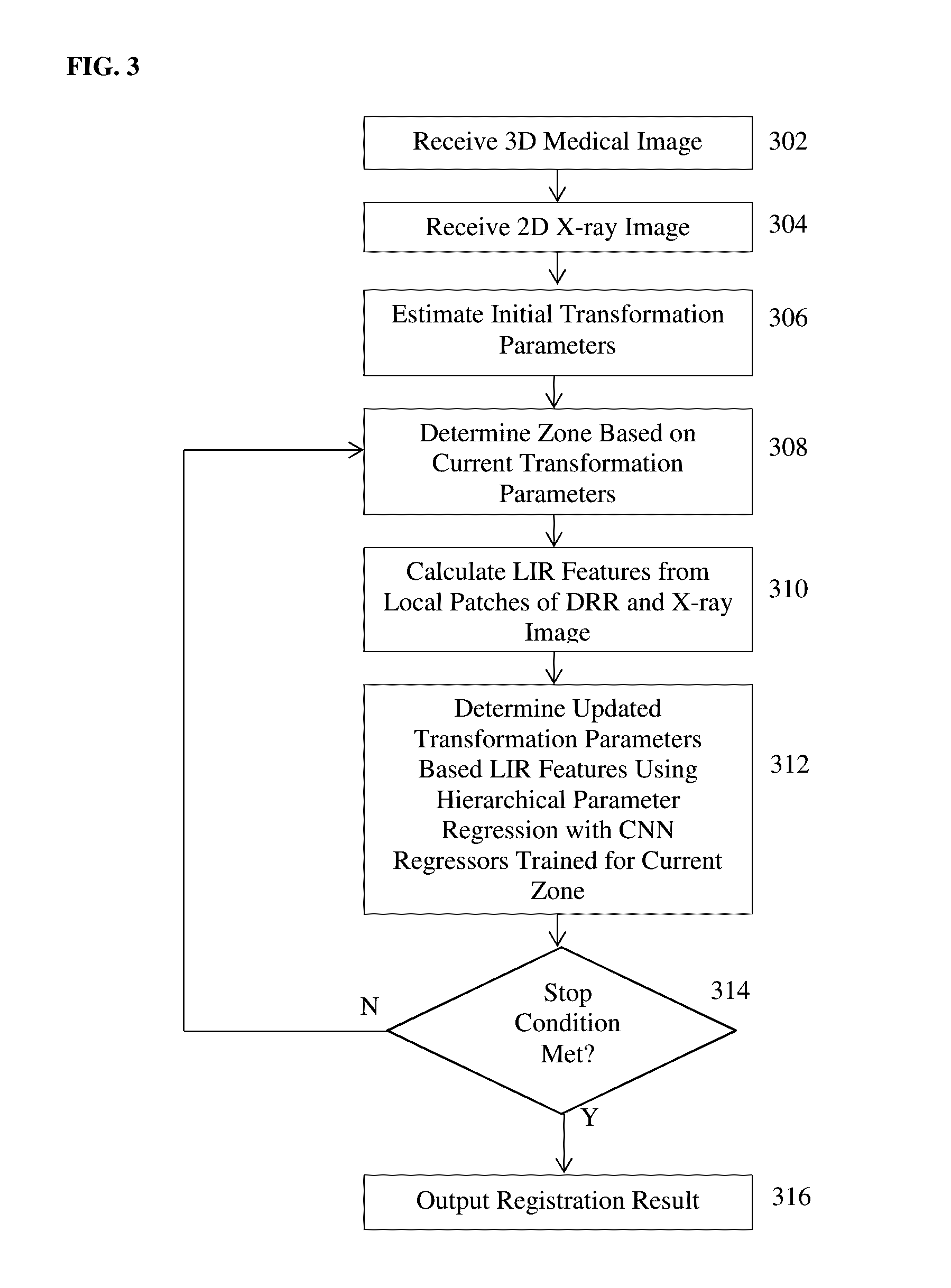

Active | US20170024634A1 | Large capture range | Improve accuracy | Image enhancement | Image analysis | Transformation parameter | X ray image

A method and apparatus for convolutional neural network (CNN) regression based 2D/3D registration of medical images are disclosed. A parameter space zone is determined based on transformation parameters corresponding to a digitally reconstructed radiograph (DRR) generated from the 3D medical image. Local image residual (LIR) features are calculated from local patches of the DRR and the X-ray image based on a set of 3D points in the 3D medical image extracted for the determined parameter space zone. Updated transformation parameters are calculated based on the LIR features using a hierarchical series of regressors trained for the determined parameter space zone. The hierarchical series of regressors includes a plurality of regressors, each of which calculates updates for a respective subset of the transformation parameters.

Owner:SIEMENS HEALTHCARE GMBH +1

Transforming A Submitted Image Of A Person Based On A Condition Of The Person

Active | US20110064331A1 | Reduce measurement error | Geometric image transformation | Character and pattern recognition | Transformation parameter

Apparatuses, computer media, and methods for altering a submitted image of a person. The submitted image is transformed in accordance with associated data regarding the person's condition. Global data may be processed by a statistical process to obtain cluster information, and the transformation parameter is then determined from cluster information. The transformation parameter is then applied to a portion of the submitted image to render a transformed image. A transformation parameter may include a texture alteration parameter, a hair descriptive parameter, or a reshaping parameter. An error measure may be determined that gauges a discrepancy between a transformed image and an actual image. A transformation model is subsequently reconfigured with a modified model in order to reduce the error measure. Also, the transformation model may be trained to reduce an error measure for the transformed image.

Owner:ACCENTURE GLOBAL SERVICES LTD

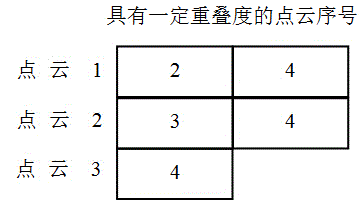

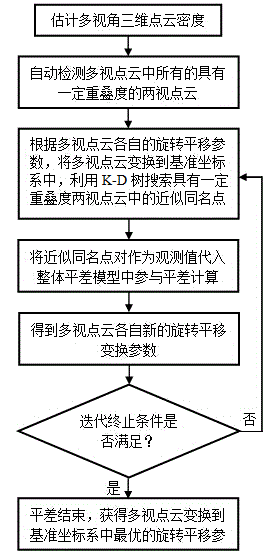

Overall registering method for global optimization of multi-view three-dimensional laser point clouds

Active | CN104463894A | Get the optimal transformation parameters | Improve registration accuracy | Image analysis | Point cloud | Transformation parameter

The invention provides an overall registration method that automatically performs global optimization on the initial registration values of existing multi-view laser point clouds. First, a global-optimization overall adjustment model for multi-view laser point cloud registration is established and derived in detail, and all pairs of views with a certain degree of overlap are automatically detected by estimating the point cloud density. A K-D tree is then used to search for approximate corresponding (homonymous) points in each overlapping pair of views. These points are substituted as observations into the global-optimization overall adjustment model, and the optimal rotation and translation transformation parameters of every view are obtained simultaneously through iterative adjustment calculation, achieving precise overall registration of the multi-view three-dimensional laser point clouds. The method focuses on improving overall registration precision and can also handle unordered multi-view three-dimensional laser scanning point clouds. Registration experiments on actual multi-view three-dimensional laser point cloud data show that overall registration precision is effectively improved while registration efficiency is maintained.

Owner:SHANDONG UNIV OF TECH
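As a rough illustration of the ingredients named in this abstract, the sketch below registers a single pair of overlapping views in 2-D: nearest-neighbour search yields approximate corresponding points, and a closed-form fit recovers rotation and translation, repeated for a few iterations. It is a simplification under stated assumptions. A brute-force search stands in for the K-D tree, only one pair of views is handled, and the patent's simultaneous global adjustment across all views is not reproduced. All function names are hypothetical.

```python
import math

def nearest(p, cloud):
    """Brute-force nearest neighbour (a K-D tree would replace this at scale)."""
    return min(cloud, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)

def rigid_fit(src, dst):
    """Closed-form 2-D rotation and translation mapping src[i] onto dst[i]."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    s_cos = s_sin = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - sx, py - sy          # centred source point
        bx, by = qx - dx, qy - dy          # centred target point
        s_cos += ax * bx + ay * by
        s_sin += ax * by - ay * bx
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)

def transform(p, theta, tx, ty):
    c, s = math.cos(theta), math.sin(theta)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)

def register(src, dst, iters=20):
    """Alternate correspondence search and parameter estimation."""
    theta, tx, ty = 0.0, 0.0, 0.0          # initial registration value
    for _ in range(iters):
        moved = [transform(p, theta, tx, ty) for p in src]
        matches = [nearest(m, dst) for m in moved]
        theta, tx, ty = rigid_fit(src, matches)
    return theta, tx, ty

# Two views of the same points, differing by a small rotation and shift.
view_a = [(0, 0), (4, 0), (4, 3), (0, 3), (1, 5), (6, 6)]
true_motion = (math.radians(3), 0.2, -0.1)
view_b = [transform(p, *true_motion) for p in view_a]
theta, tx, ty = register(view_a, view_b)
```

Like the method in the abstract, this loop refines an existing initial value; it assumes the views are already roughly aligned so that nearest neighbours are mostly true correspondences.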

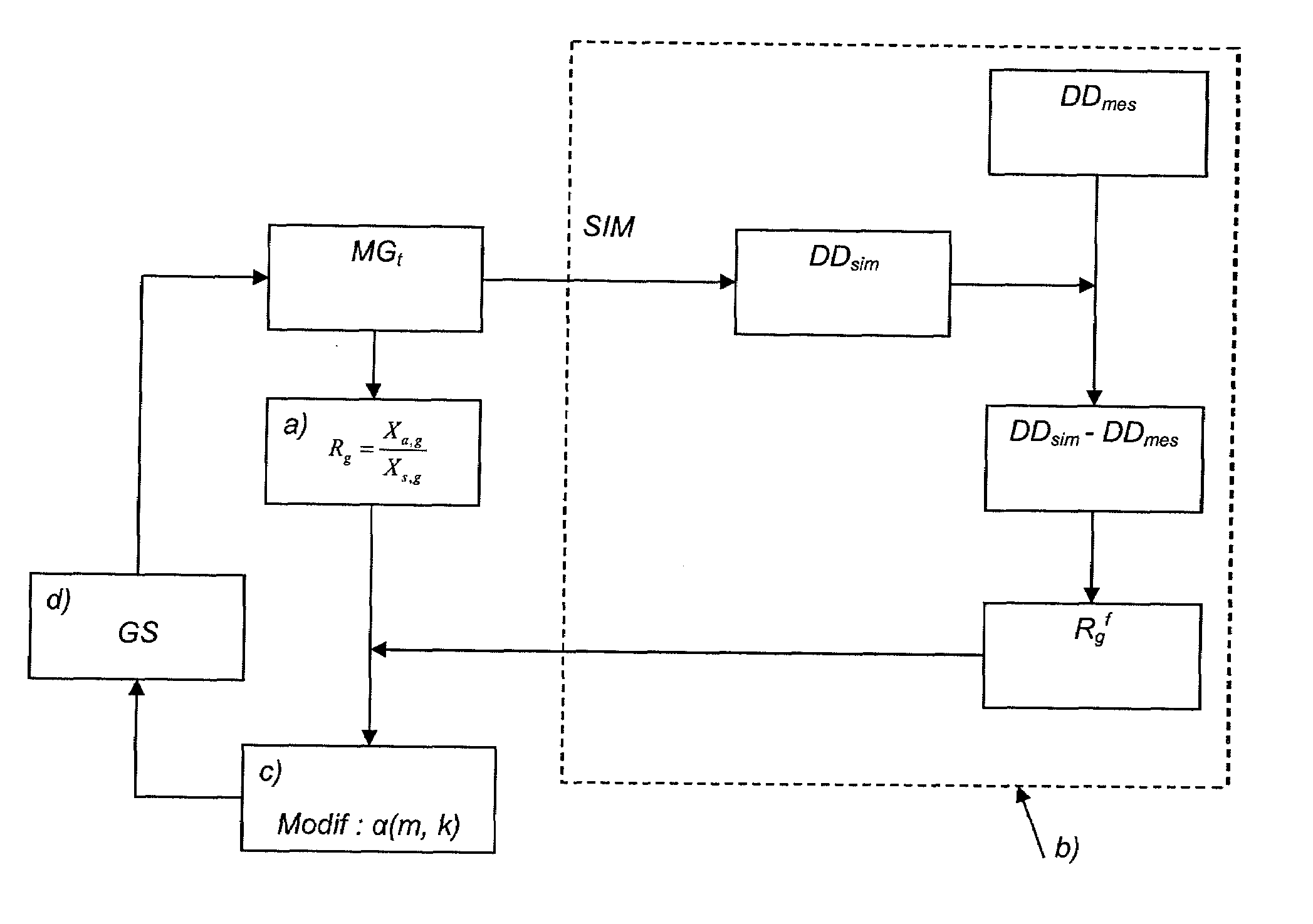

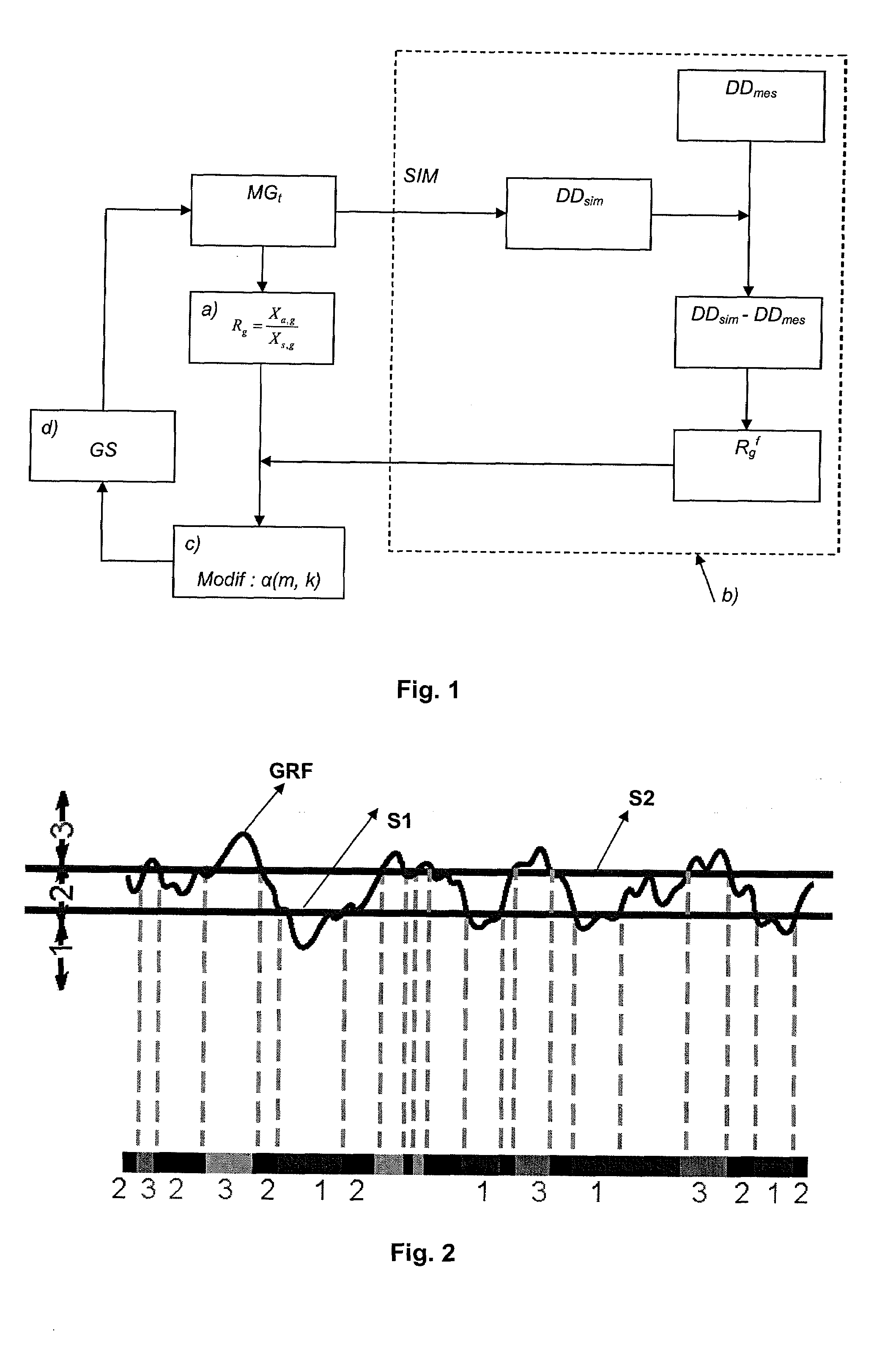

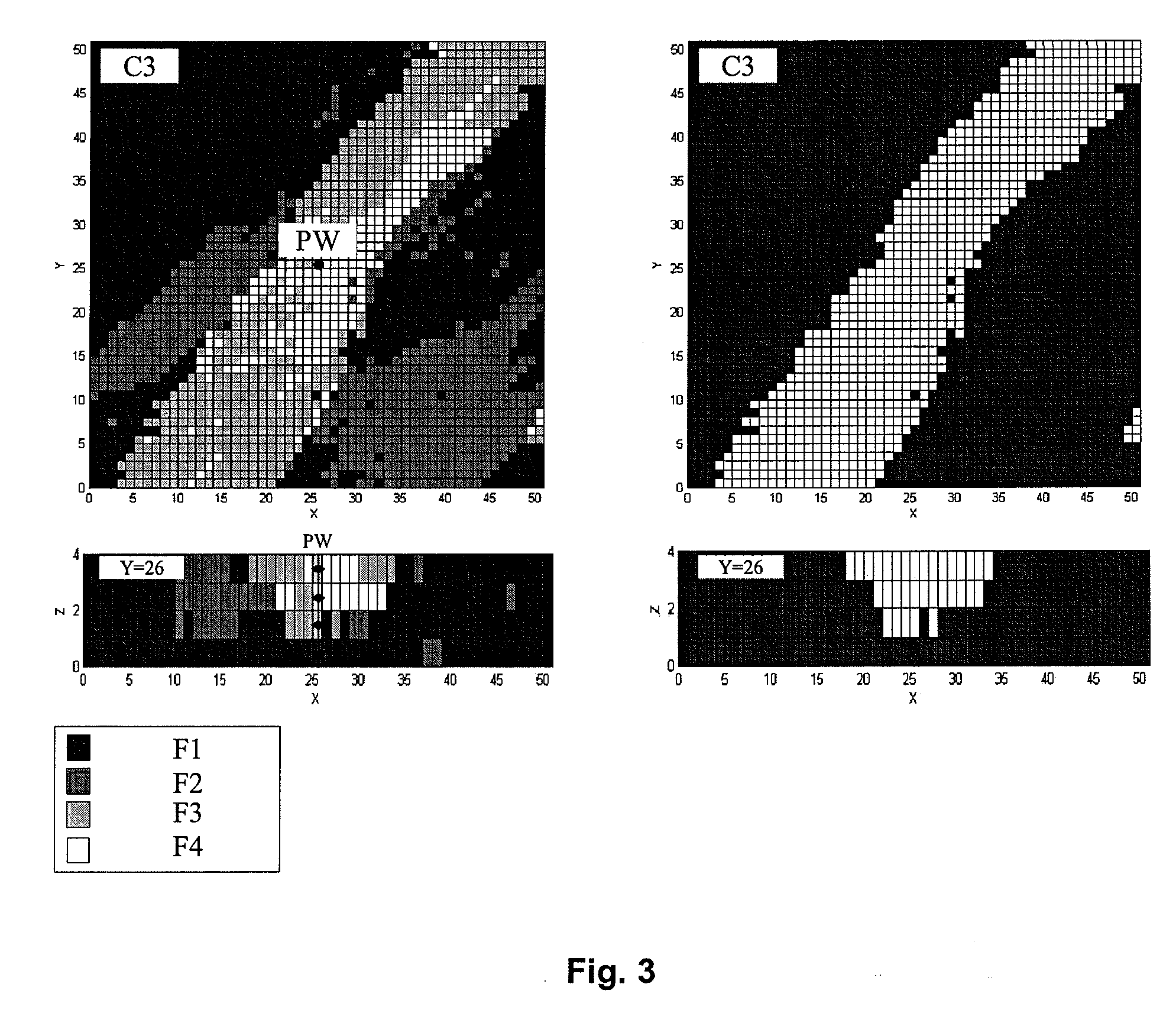

Method for Gradually Modifying Lithologic Facies Proportions of a Geological Model

Inactive | US20080243447A1 | Electric/magnetic detection for well-logging | Computation using non-denominational number representation | Lithology | Model method

Method for gradually modifying a geological model representative of an underground reservoir so as to respect fixed average proportions of its lithologic facies. A group of facies, referred to as the selection, and a subgroup of those facies, referred to as the association, are selected. A transformation parameter is defined by the ratio between the average proportions of the facies of the association and those of the selection. Within the context of history matching, a difference between measured dynamic data values and values calculated by means of a flow simulator from the geological model is measured. An optimization algorithm is used to deduce therefrom a new value for the transformation parameter that minimizes this difference, and this transformation is applied to the lithologic facies of the selection. The modification can be applied to the entire model or to a given zone.

Owner:INST FR DU PETROLE
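The ratio that defines the transformation parameter can be worked through on a small example. The sketch below assumes one plausible way of applying a new parameter value: facies in the association are rescaled by a common factor, the remaining facies of the selection by a complementary factor, so the total proportion of the selection is unchanged. The abstract does not spell out this rescaling, and the facies names and numbers are invented for illustration.

```python
def transformation_parameter(props, selection, association):
    """Ratio of the association's total proportion to the selection's."""
    return (sum(props[f] for f in association)
            / sum(props[f] for f in selection))

def apply_parameter(props, selection, association, new_ratio):
    """Rescale proportions inside the selection to reach new_ratio.

    Assumption: association facies share one scale factor and the other
    selected facies share the complementary one; facies outside the
    selection are left untouched.
    """
    if not set(association) <= set(selection):
        raise ValueError("association must be a subgroup of the selection")
    old_ratio = transformation_parameter(props, selection, association)
    if not 0.0 < old_ratio < 1.0:
        raise ValueError("current ratio must lie strictly between 0 and 1")
    out = dict(props)
    for facies in selection:
        if facies in association:
            out[facies] = props[facies] * new_ratio / old_ratio
        else:
            out[facies] = props[facies] * (1.0 - new_ratio) / (1.0 - old_ratio)
    return out

# Hypothetical average proportions of four facies.
proportions = {"sand": 0.3, "silt": 0.2, "shale": 0.4, "limestone": 0.1}
selection = ["sand", "silt", "shale"]
association = ["sand", "silt"]

old_ratio = transformation_parameter(proportions, selection, association)
updated = apply_parameter(proportions, selection, association, 0.7)
new_ratio = transformation_parameter(updated, selection, association)
```

In history matching, an optimizer would vary the value passed as `new_ratio` and rerun the flow simulator until the mismatch with measured dynamic data is minimized; that loop is outside this sketch.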

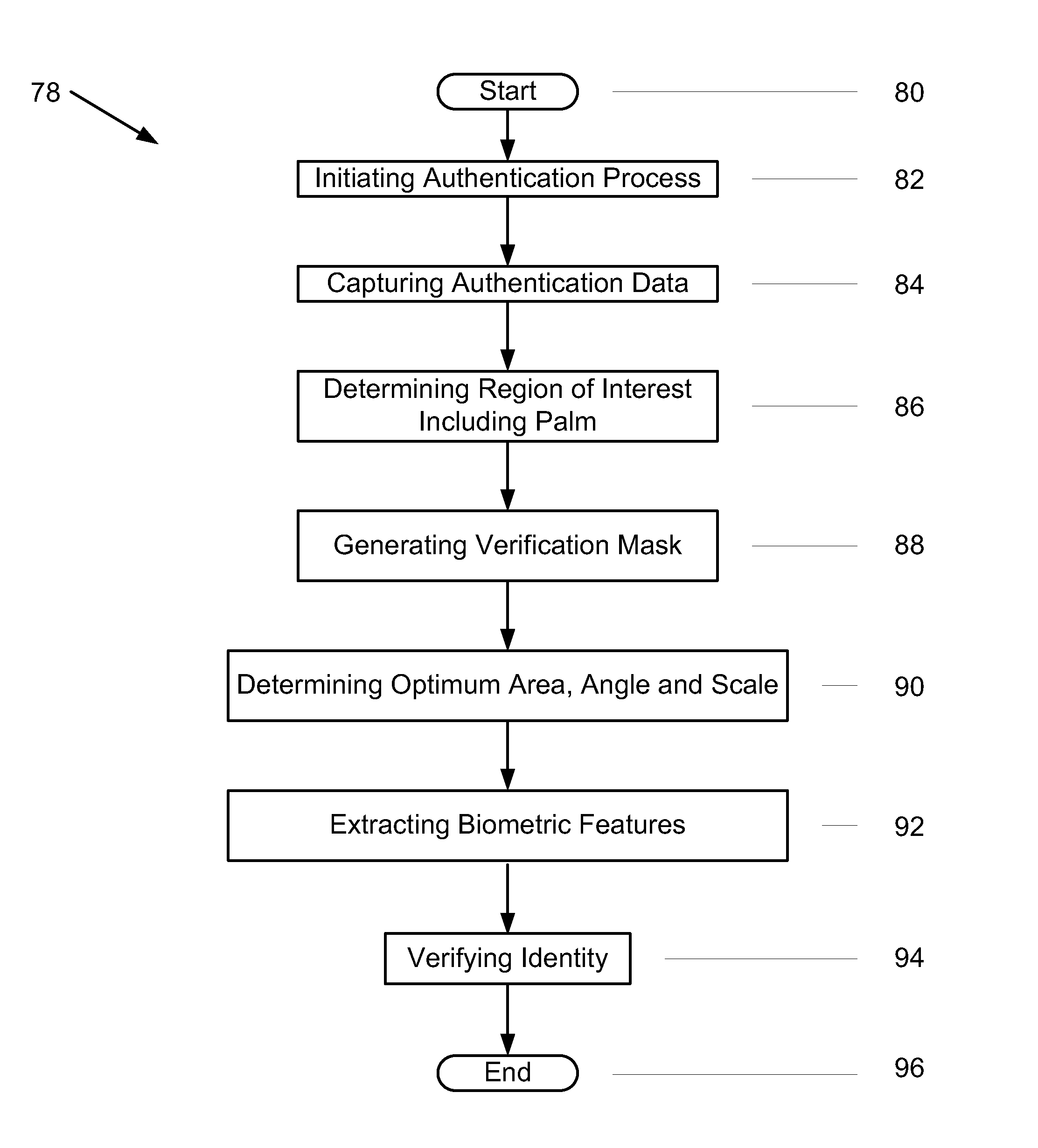

Methods and systems for authenticating users

A method of authenticating users is provided that includes capturing biometric authentication data of a user and processing the captured biometric data into an image. Moreover, the method includes determining a region of interest of the image and a gray scale image from the image, determining an optimum transformation parameter set within the region of interest, and aligning the gray scale image with an enrollment gray scale image generated during enrollment of the user using results of the optimum transformation parameter set determination. Furthermore, the method includes extracting biometric feature data from the gray scale image and verifying an identity of the user with extracted biometric feature data included in a region of agreement.

Owner:DAON TECH

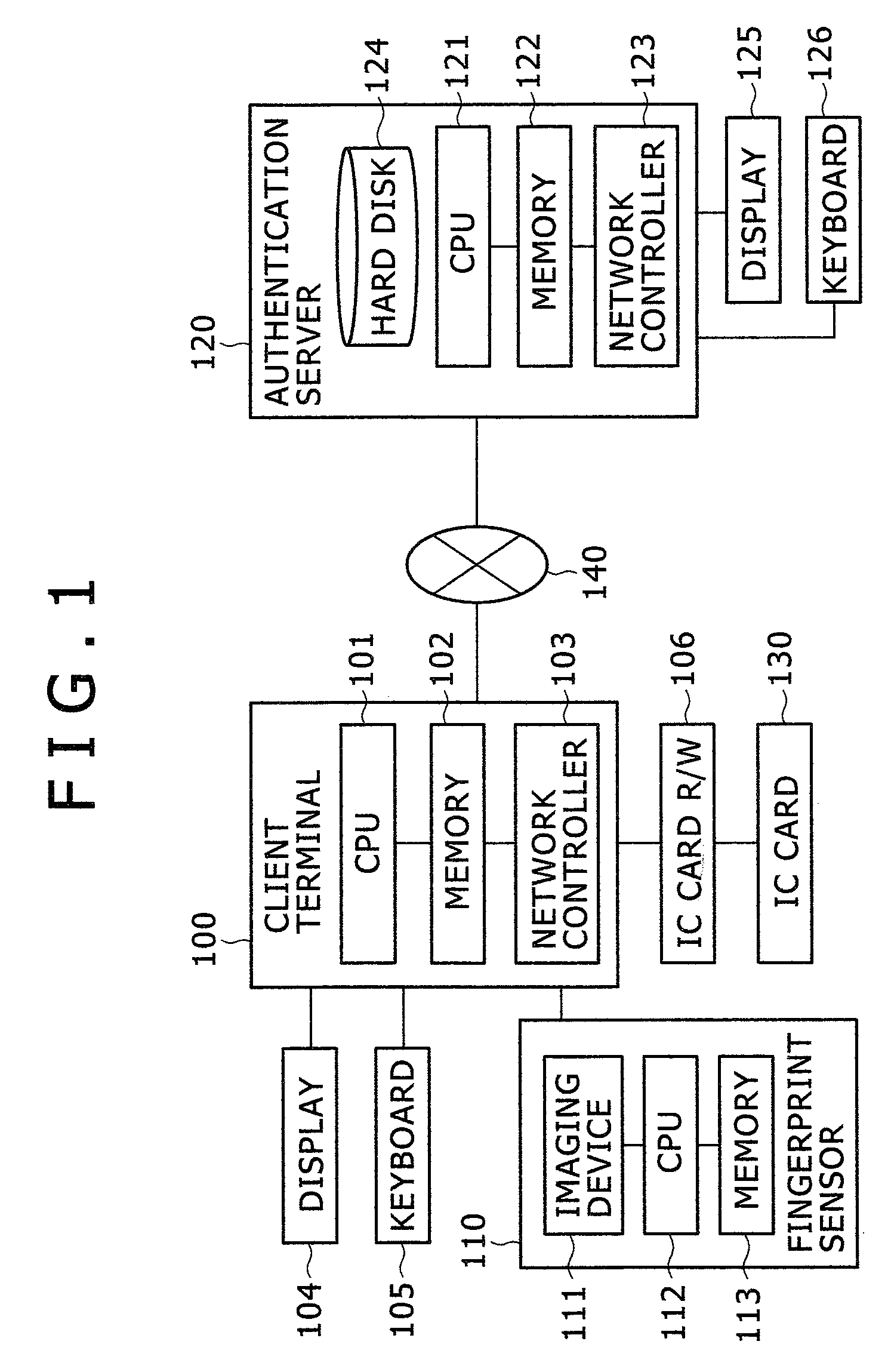

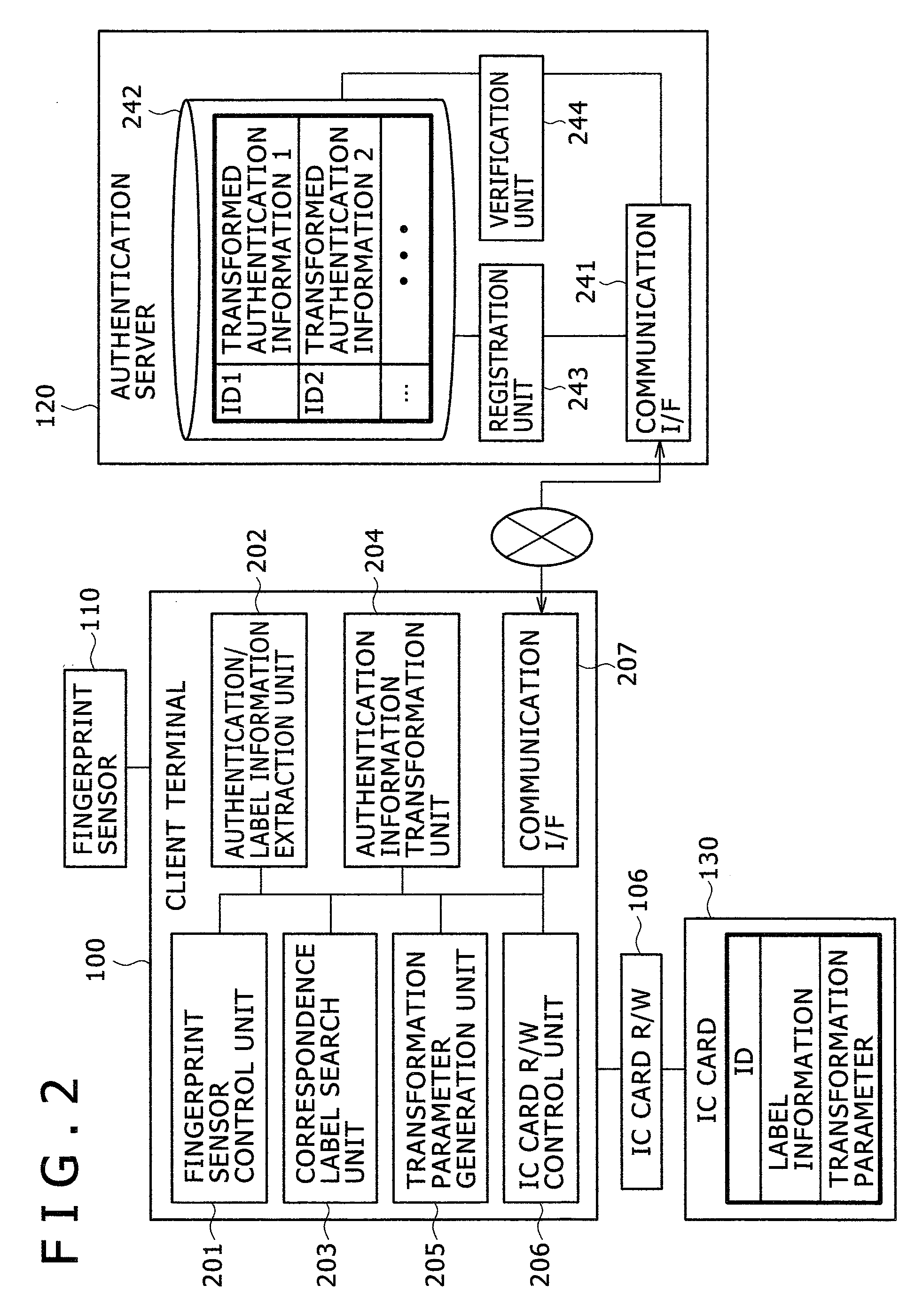

Method, system and program for authenticating a user by biometric information

Active | US20070286465A1 | Convenient and accurate | The degree of freedom becomes larger | Digital data authentication | Biometric pattern recognition | Transformation parameter | Authentication server

Upon registration, a client extracts plural feature points and information (identification information) by which each of the feature points can be identified, from a user's fingerprint, and randomly generates a transformation parameter for each feature point to transform the coordinates and direction. The transformed identification information (template) is transmitted to an authentication server and stored in a memory. The identification information and transformation parameters of the feature points are stored in a memory medium of the user. Upon authentication of the user, the client extracts feature points from the user's fingerprint, and identifies the feature points by the identification information in the memory medium. The client transforms the identified feature points by reading the corresponding transformation parameters from the memory medium, and transmits the transformed information to the authentication server. The server verifies the received identification information against the template registered in advance, to authenticate the user.

Owner:HITACHI LTD
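The registration and authentication flow in this abstract can be sketched as follows. It is a simplified illustration under several assumptions: each feature point is a tuple (x, y, direction), the identification information is reduced to a dictionary key, the transformation is a random offset added to coordinates and direction, and the server accepts when enough transformed points agree within hypothetical tolerances. None of these specifics come from the abstract.

```python
import math
import random

TWO_PI = 2 * math.pi

def make_parameters(feature_ids, rng):
    """Client, registration: one random (dx, dy, dtheta) per feature point."""
    return {fid: (rng.uniform(-50, 50), rng.uniform(-50, 50), rng.uniform(0, TWO_PI))
            for fid in feature_ids}

def transform(features, params):
    """Client: shift the coordinates and direction of each identified feature."""
    out = {}
    for fid, (x, y, theta) in features.items():
        dx, dy, dtheta = params[fid]
        out[fid] = (x + dx, y + dy, (theta + dtheta) % TWO_PI)
    return out

def verify(template, probe, pos_tol=3.0, ang_tol=0.2, min_fraction=0.75):
    """Server: compare transformed probe with the stored transformed template."""
    matches = 0
    for fid, (tx, ty, tt) in template.items():
        if fid not in probe:
            continue
        px, py, pt = probe[fid]
        diff = abs(tt - pt) % TWO_PI
        diff = min(diff, TWO_PI - diff)     # wrap-around angle difference
        if math.hypot(tx - px, ty - py) <= pos_tol and diff <= ang_tol:
            matches += 1
    return matches >= min_fraction * len(template)

rng = random.Random(42)

# Registration: the template goes to the server, the parameters stay with the user.
enrolled = {"m1": (10.0, 20.0, 0.5), "m2": (35.0, 12.0, 2.1),
            "m3": (22.0, 40.0, 4.0), "m4": (50.0, 33.0, 5.9)}
user_params = make_parameters(enrolled, rng)
server_template = transform(enrolled, user_params)

# Authentication: a fresh capture with small measurement noise.
fresh = {fid: (x + 0.5, y - 0.4, t + 0.05) for fid, (x, y, t) in enrolled.items()}
genuine_ok = verify(server_template, transform(fresh, user_params))

# A different finger presented with the same stored parameters.
other = {fid: (x + 30.0, y - 25.0, t + 1.0) for fid, (x, y, t) in enrolled.items()}
impostor_ok = verify(server_template, transform(other, user_params))
```

Because the server only ever sees transformed data, a compromised template could be revoked by generating new parameters and re-registering, which is the usual motivation for this kind of scheme.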
