124 results about "Self attention" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

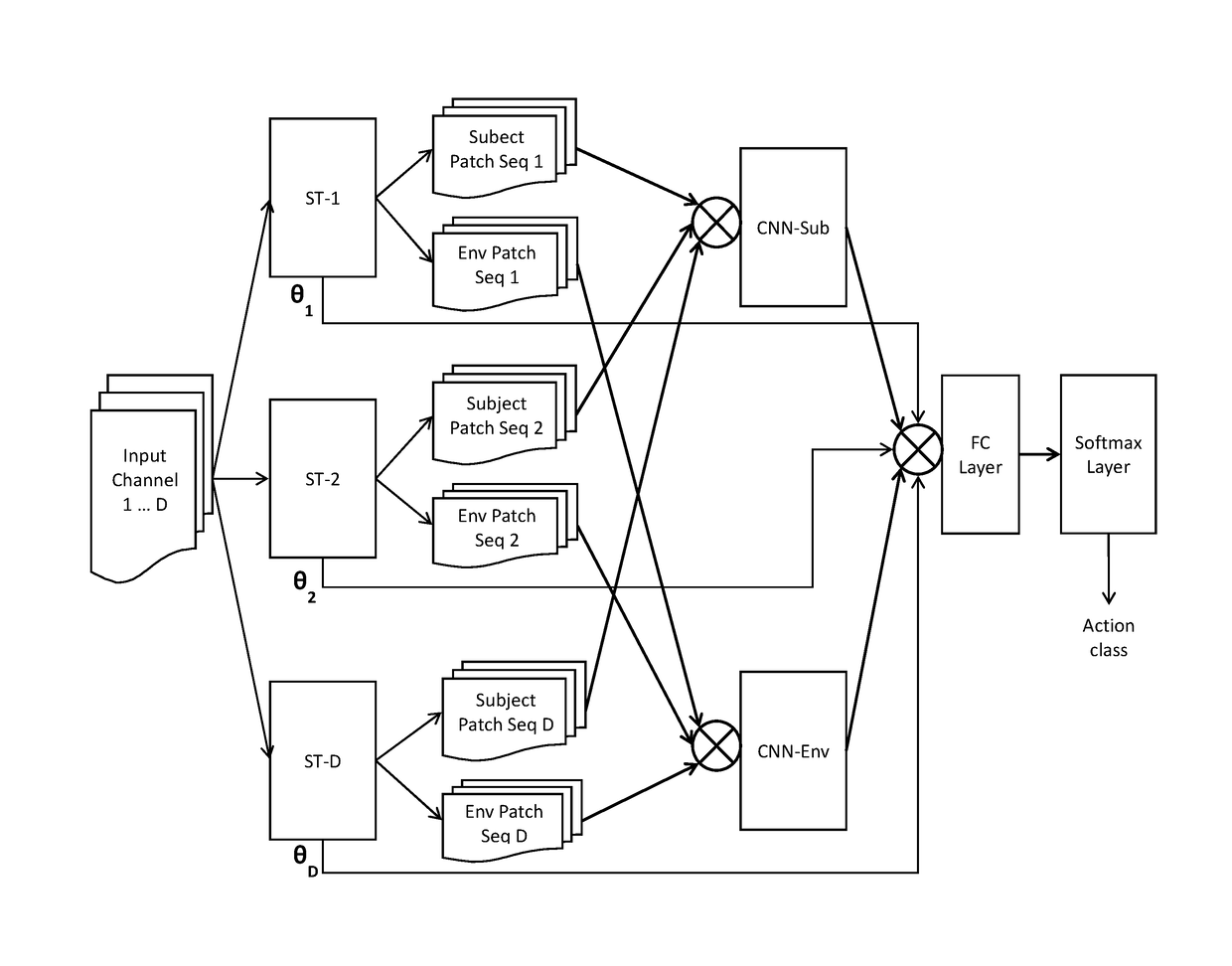

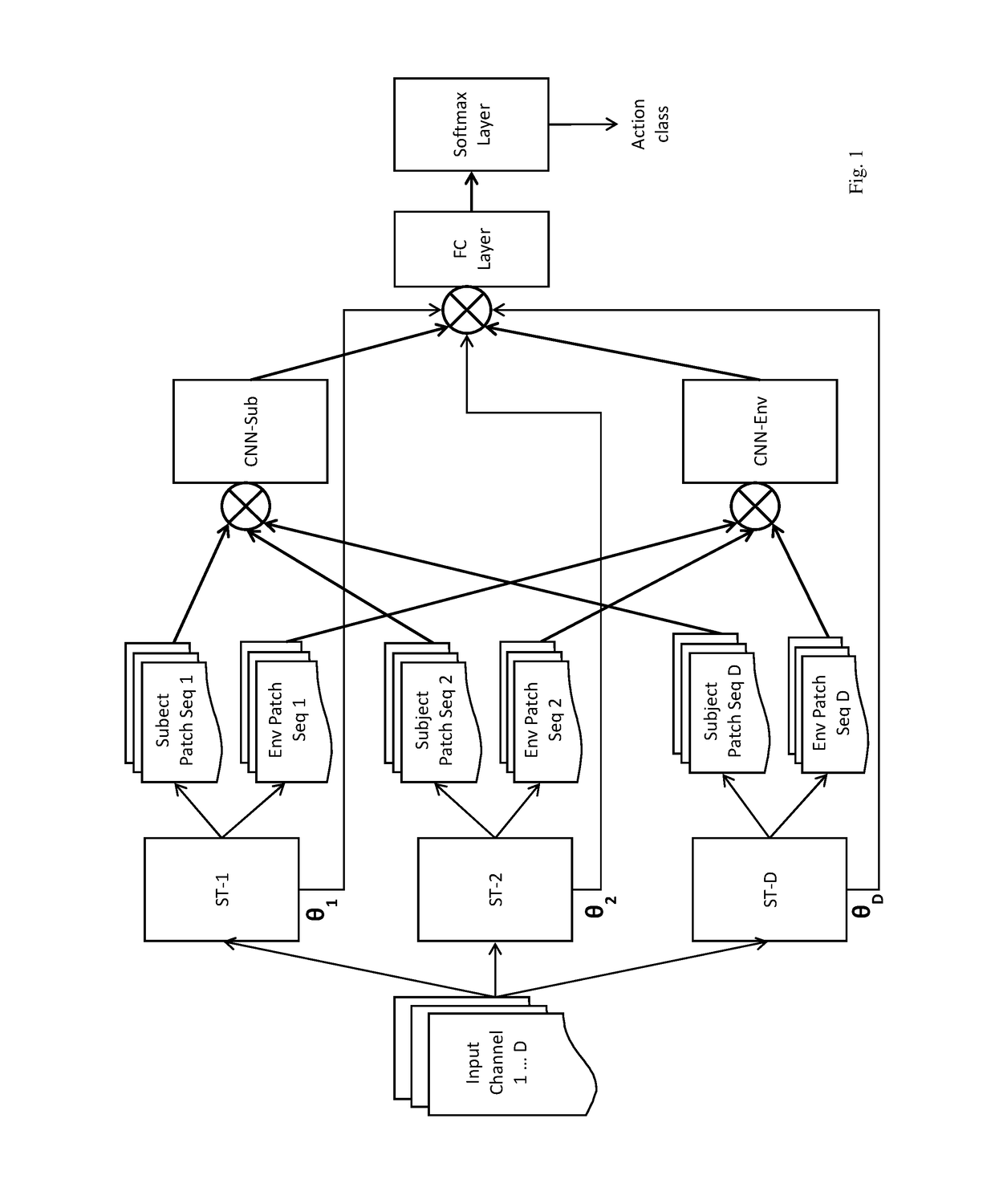

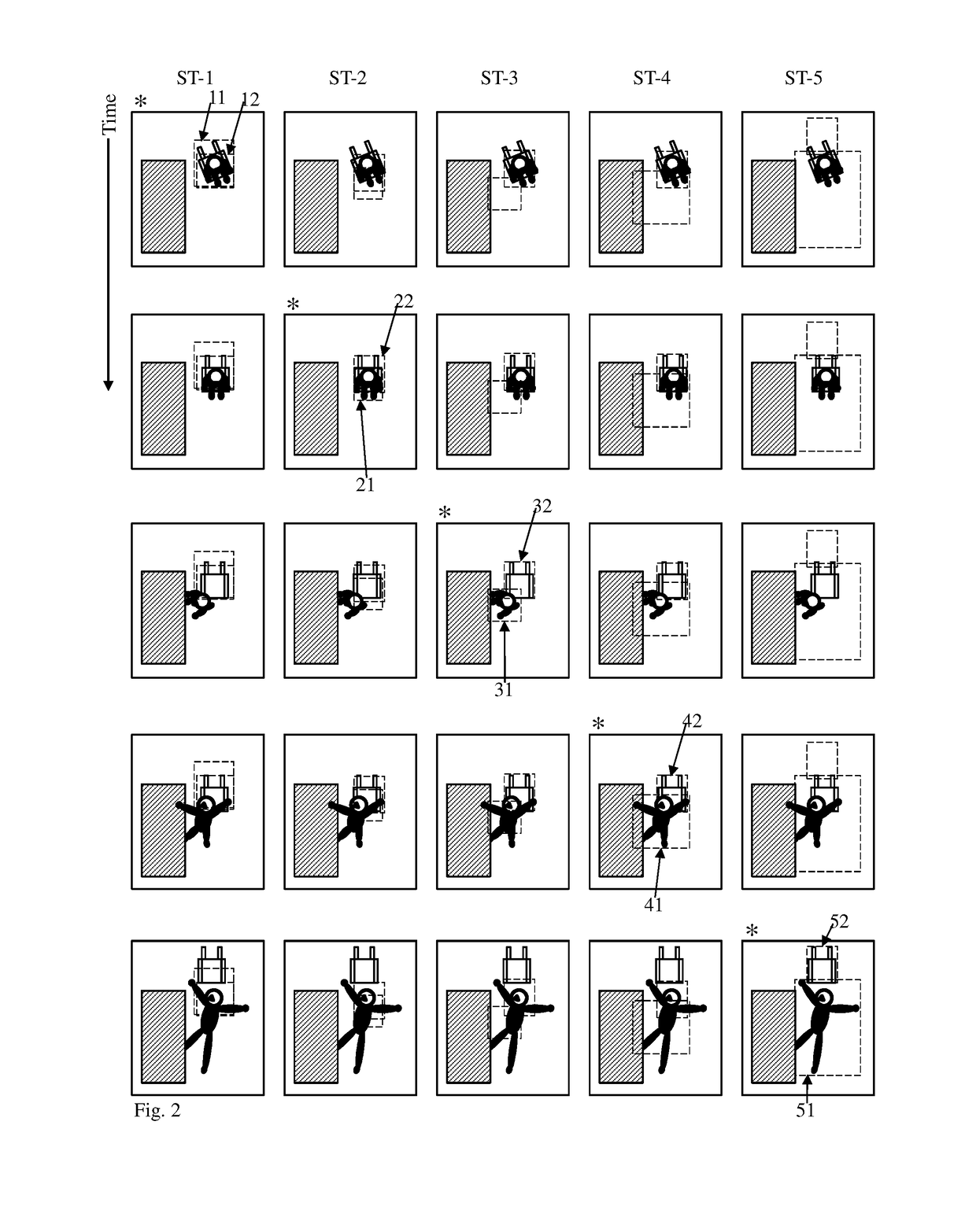

Self-attention deep neural network for action recognition in surveillance videos

An artificial neural network for analyzing input data, the input data being a 3D tensor having D channels, such as D frames of a video snippet, to recognize an action therein, including: D spatial transformer modules, each generating first and second spatial transformations and corresponding first and second attention windows using only one of the D channels, and transforming first and second regions of each of the D channels corresponding to the first and second attention windows to generate first and second patch sequences; first and second CNNs, respectively processing a concatenation of the D first patch sequences and a concatenation of the D second patch sequences; and a classification network receiving a concatenation of the outputs of the first and second CNNs and the D sets of transformation parameters of the first transformation outputted by the D spatial transformer modules, to generate a predicted action class.

Owner:KONICA MINOLTA LAB U S A INC
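
The attention-window step can be illustrated with a minimal Python sketch. It assumes an axis-aligned transform with one scale and two translation parameters in normalized [-1, 1] coordinates, sampled by nearest-neighbor lookup. The abstract does not state the parameterization, and `attention_window` is a hypothetical name. A trained spatial transformer would predict `scale`, `tx` and `ty` from the frame and would normally use differentiable (e.g. bilinear) sampling instead.

```python
from typing import List

Frame = List[List[float]]


def attention_window(frame: Frame, scale: float, tx: float, ty: float,
                     out_h: int, out_w: int) -> Frame:
    """Crop an out_h x out_w patch from a 2D frame.

    Hypothetical parameterization: (x_s, y_s) = (scale * x_t + tx, scale * y_t + ty)
    in normalized [-1, 1] coordinates, sampled with nearest-neighbor lookup.
    """
    h, w = len(frame), len(frame[0])

    def grid(n: int) -> List[float]:
        # Evenly spaced normalized target coordinates in [-1, 1].
        return [0.0] if n == 1 else [-1 + 2 * k / (n - 1) for k in range(n)]

    def to_index(coord: float, size: int) -> int:
        # Map a normalized source coordinate to a clamped pixel index.
        idx = round((coord + 1) * (size - 1) / 2)
        return min(max(idx, 0), size - 1)

    patch = []
    for yt in grid(out_h):
        r = to_index(scale * yt + ty, h)
        patch.append([frame[r][to_index(scale * xt + tx, w)] for xt in grid(out_w)])
    return patch
```

With `scale=1` and no translation the window covers the whole frame; `scale=0.5` zooms into the central region, which is how one transformer module could focus on a sub-region of each channel.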

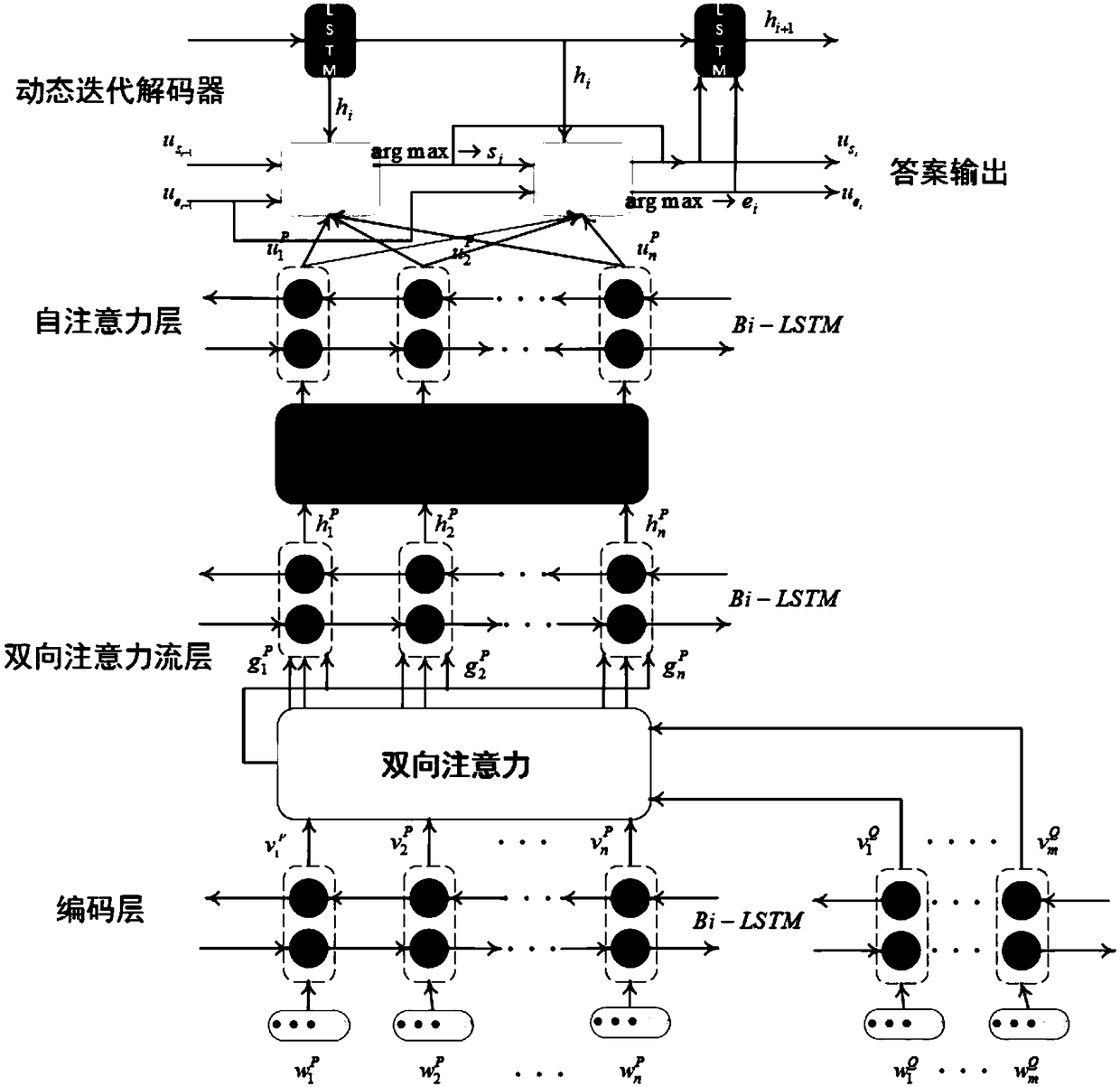

Machine reading understanding method based on a multi-head attention mechanism and dynamic iteration

Status: Inactive | Publication: CN109492227A | Tags: Efficient capture, Rich semantic information, Semantic analysis, Special data processing applications, Self attention, Algorithm

The invention provides a machine reading comprehension method based on a multi-head attention mechanism and dynamic iteration, in the field of natural language processing. Constructing the machine reading comprehension model comprises the following steps: constructing an article and question encoding layer; constructing a recurrent neural network based on bidirectional attention flow; constructing a self-attention layer; and predicting the answer output with a dynamic iterative decoder. The method can predict answers to questions over the text of a machine reading comprehension task; it establishes a new end-to-end neural network model and offers a new approach to the machine reading comprehension task.

Owner:DALIAN UNIV OF TECH
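
The self-attention layer in this pipeline can be sketched in plain Python as scaled dot-product attention. This is a simplification, not the patented model: queries, keys and values are the input rows themselves (no learned projections, a single head), so it only illustrates how each position becomes a softmax-weighted average of all positions.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    # Subtract the max for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix) -> Matrix:
    """Scaled dot-product self-attention with Q = K = V = x.

    Each output row is a softmax(q . k / sqrt(d))-weighted average of the input rows.
    """
    d = len(x[0])
    out = []
    for q in x:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, x)) for j in range(d)])
    return out
```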

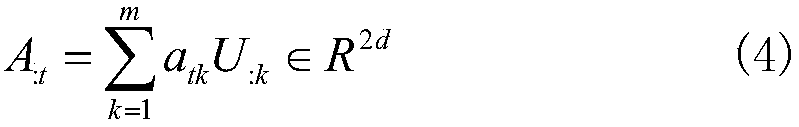

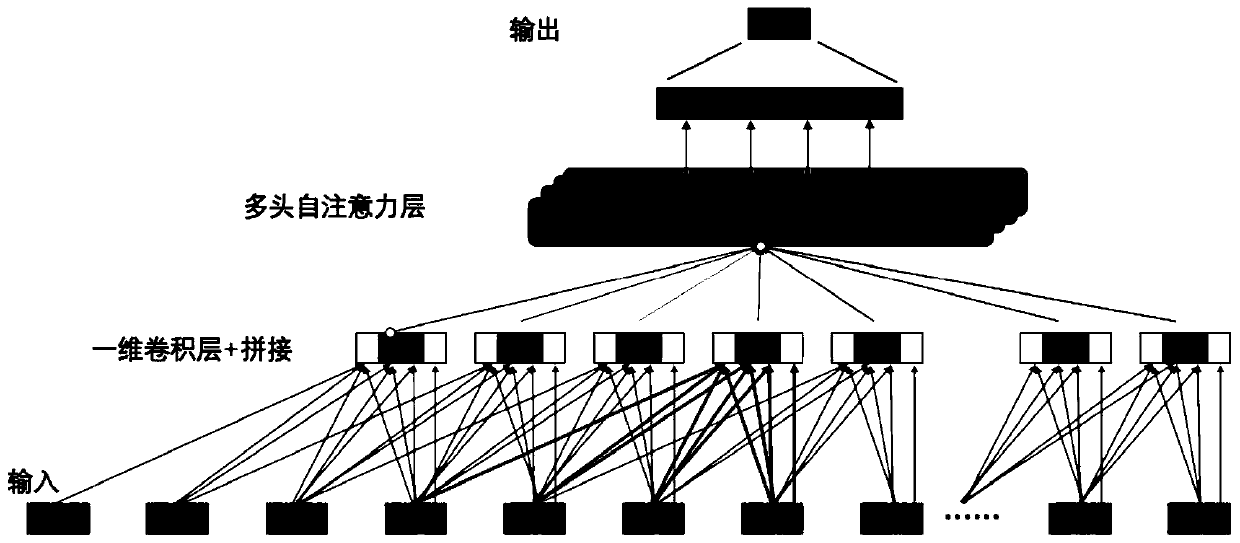

Personalized recommendation method based on deep learning

Status: Inactive | Publication: CN110196946A | Tags: Reduce serial operations, Improve training efficiency, Digital data information retrieval, Advertisements, Personalization, Recommendation model

The invention discloses a personalized recommendation method based on deep learning that predicts the next movie a user will watch from the user's time-ordered viewing behavior. It comprises three stages: preprocessing the historical behavior data of users watching movies, building a personalized recommendation model, and training and testing the model on the users' time-ordered behavior sequences. In the preprocessing stage, implicit feedback from user-movie interactions is used: each user's interactions with movies are sorted by timestamp to obtain that user's movie-watching sequence, and the movie data are then encoded. Building the personalized recommendation model covers the design of the embedding layer, a one-dimensional convolutional layer, a self-attention mechanism, a classification output layer and the loss function. Because the method combines a one-dimensional convolutional neural network with the self-attention mechanism, training is more efficient and the number of parameters is relatively small.

Owner:SOUTH CHINA UNIV OF TECH
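
The preprocessing stage (sorting each user's implicit-feedback interactions by timestamp) and the next-movie training target can be sketched as follows. The `(user_id, movie_id, timestamp)` record layout, the fixed `window` length and both function names are assumptions for illustration; the abstract does not specify them.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical record layout: (user_id, movie_id, unix_timestamp).
Interaction = Tuple[str, str, int]


def build_sequences(interactions: Iterable[Interaction]) -> Dict[str, List[str]]:
    """Group implicit-feedback records by user and order each user's movies
    by timestamp, oldest first (ties broken by movie id)."""
    per_user: Dict[str, List[Tuple[int, str]]] = defaultdict(list)
    for user, movie, ts in interactions:
        per_user[user].append((ts, movie))
    return {user: [movie for _, movie in sorted(events)]
            for user, events in per_user.items()}


def next_item_pairs(sequence: List[str], window: int) -> List[Tuple[List[str], str]]:
    """Turn one viewing sequence into (previous `window` movies, next movie) pairs."""
    return [(sequence[i - window:i], sequence[i]) for i in range(window, len(sequence))]
```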

Robustness code summary generation method based on self-attention mechanism

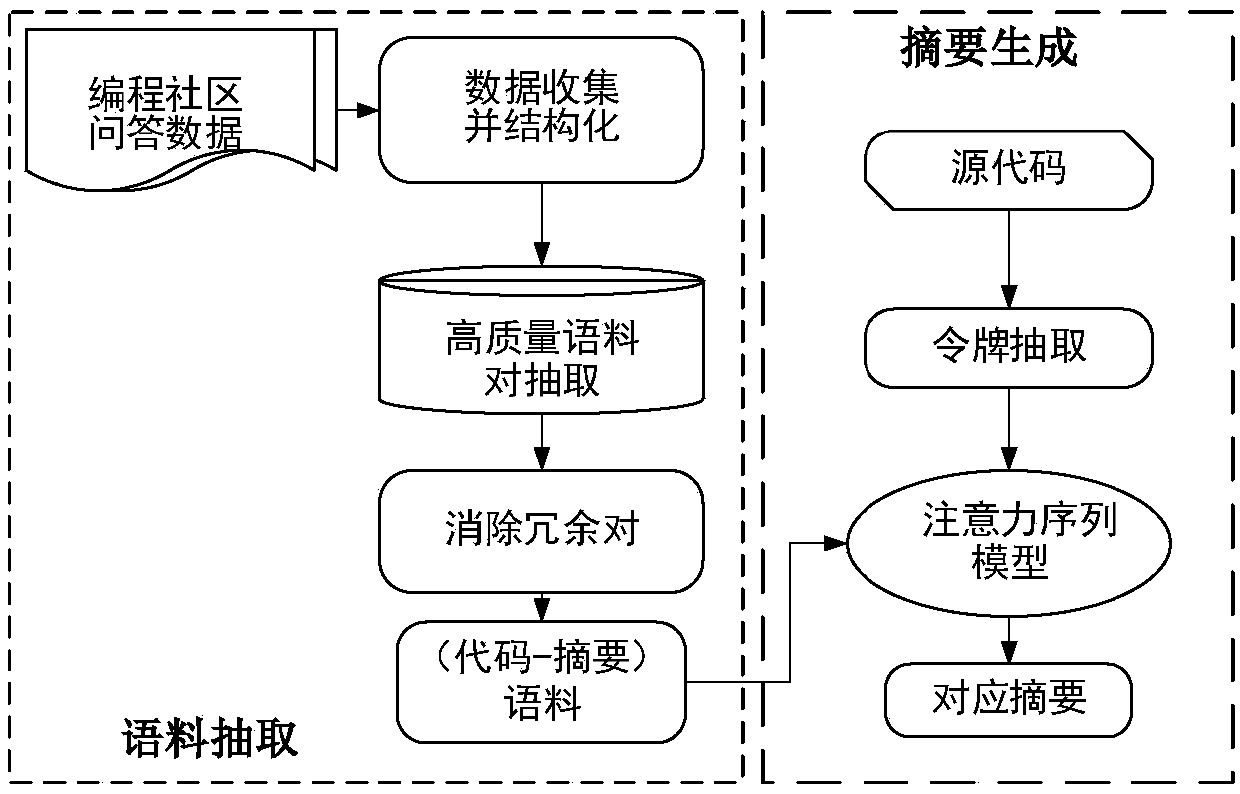

Status: Active | Publication: CN108519890A | Tags: Effective filtering, Excellent evaluation, Code refactoring, Evaluation result, Self attention

The invention discloses a robust code summary generation method based on a self-attention mechanism. First, high-quality code-description corpus pairs (query description texts and reply code texts) are extracted from a programming community; next, redundant information is filtered out of these pairs; then the query description texts corresponding to the code are converted into declarative statements; finally, a code summary is generated with a sequence model based on the self-attention mechanism. The method effectively removes redundant information and noise, improves the accuracy of the generated summaries under both automatic and human evaluation, and outperforms existing baseline methods.

Owner:WUHAN UNIV

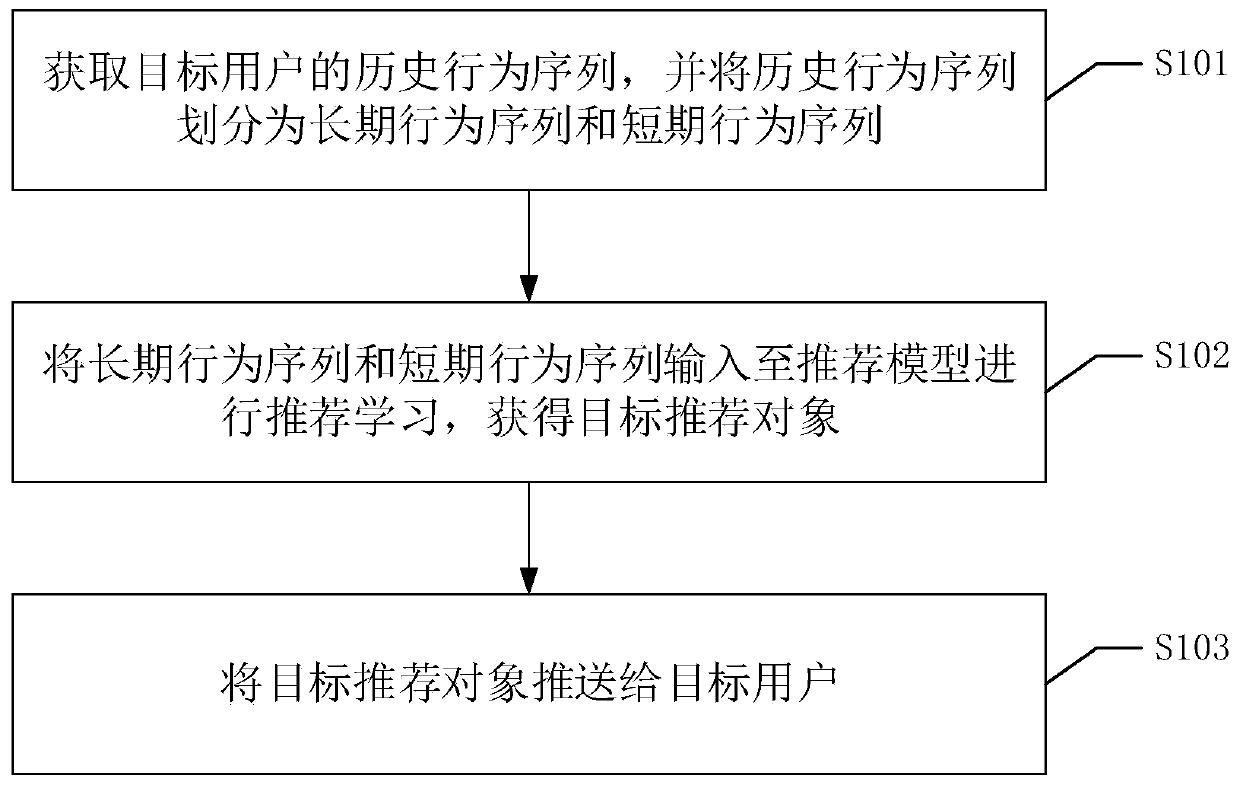

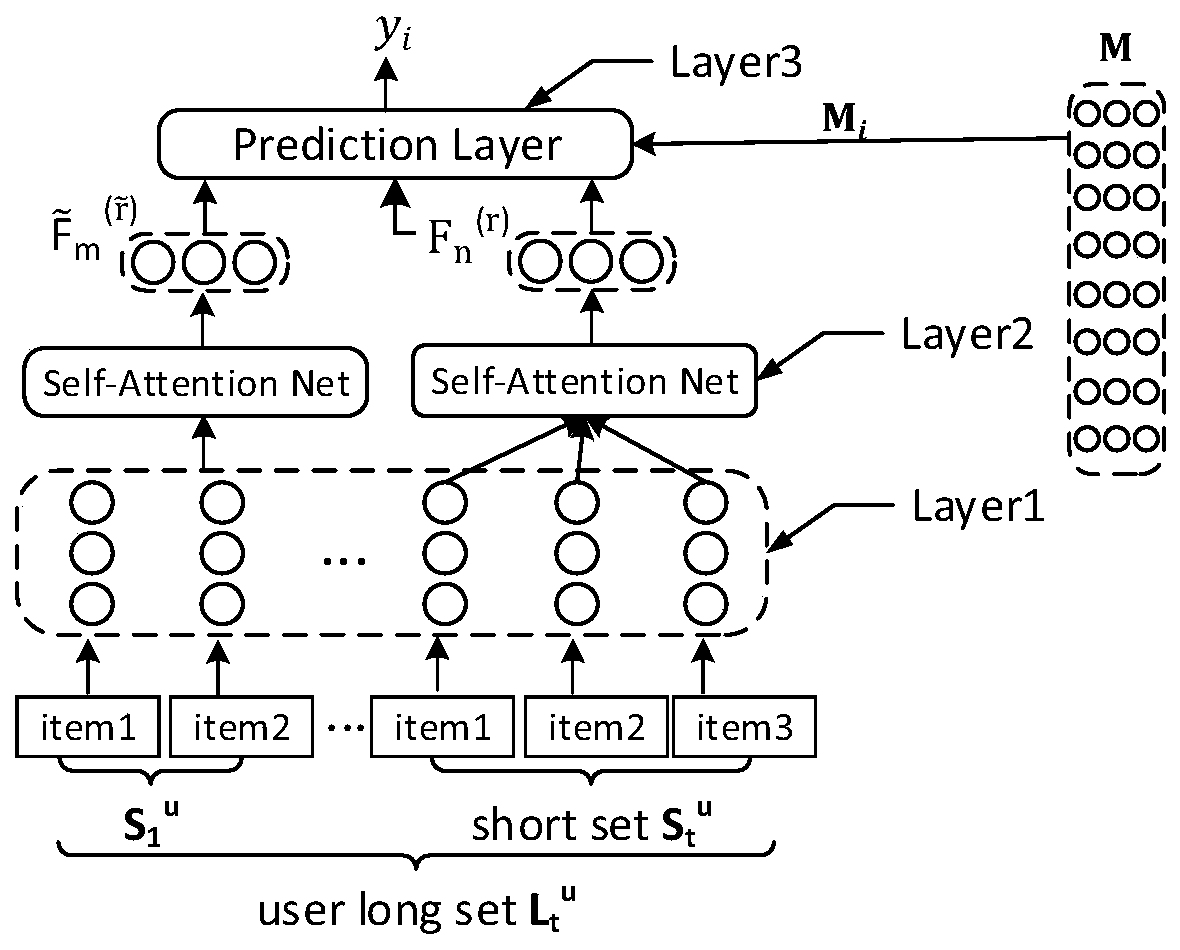

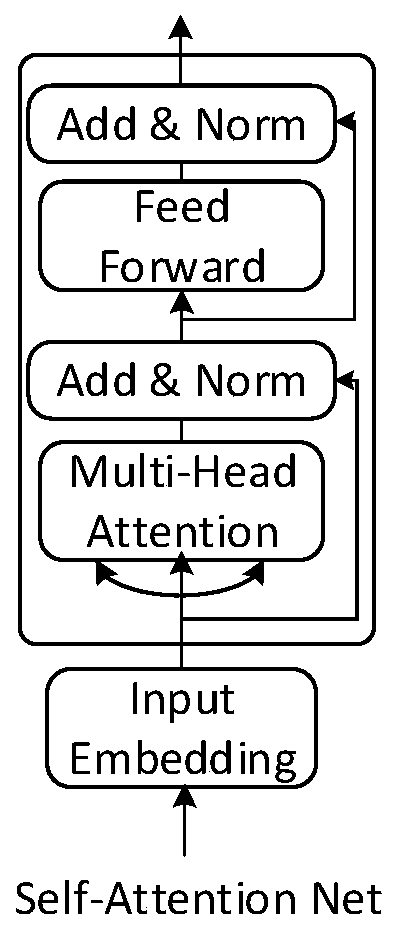

Sequence recommendation method, device and equipment based on self-attention mechanism

Status: Pending | Publication: CN110008409A | Tags: Digital data information retrieval, Special data processing applications, Recommendation model, Self attention

The invention discloses a sequence recommendation method based on a self-attention mechanism. The method comprises: obtaining a target user's historical behavior sequence and dividing it into a long-term behavior sequence and a short-term behavior sequence; inputting both sequences into a recommendation model for recommendation learning to obtain a target recommendation object, the recommendation model being a multi-layer self-attention network sequence recommendation model that integrates the user's long-term and short-term preferences; and pushing the target recommendation object to the target user. Because the method learns both the long-term preferences and the short-term needs of the target user, the recommended object better matches how the user's preferences change over time, which makes recommendations more accurate and improves the user experience. The invention further discloses a sequence recommendation device and equipment based on the self-attention mechanism, and a readable storage medium, with corresponding technical effects.

Owner:SUZHOU VOCATIONAL UNIV
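
The first step, dividing the history into long-term and short-term parts, can be sketched as below. Treating the most recent `short_len` items as the short-term sequence is an assumption; the abstract does not say how the boundary is chosen, and `split_history` is a hypothetical name.

```python
from typing import List, Sequence, Tuple


def split_history(history: Sequence[str], short_len: int) -> Tuple[List[str], List[str]]:
    """Split a chronologically ordered behavior sequence into a long-term part
    (everything except the most recent `short_len` items) and a short-term part
    (the most recent `short_len` items)."""
    if short_len < 0:
        raise ValueError("short_len must be non-negative")
    cut = max(len(history) - short_len, 0)
    return list(history[:cut]), list(history[cut:])
```

Each part would then be fed to its own self-attention stack so the model can weigh stable preferences against recent intent.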

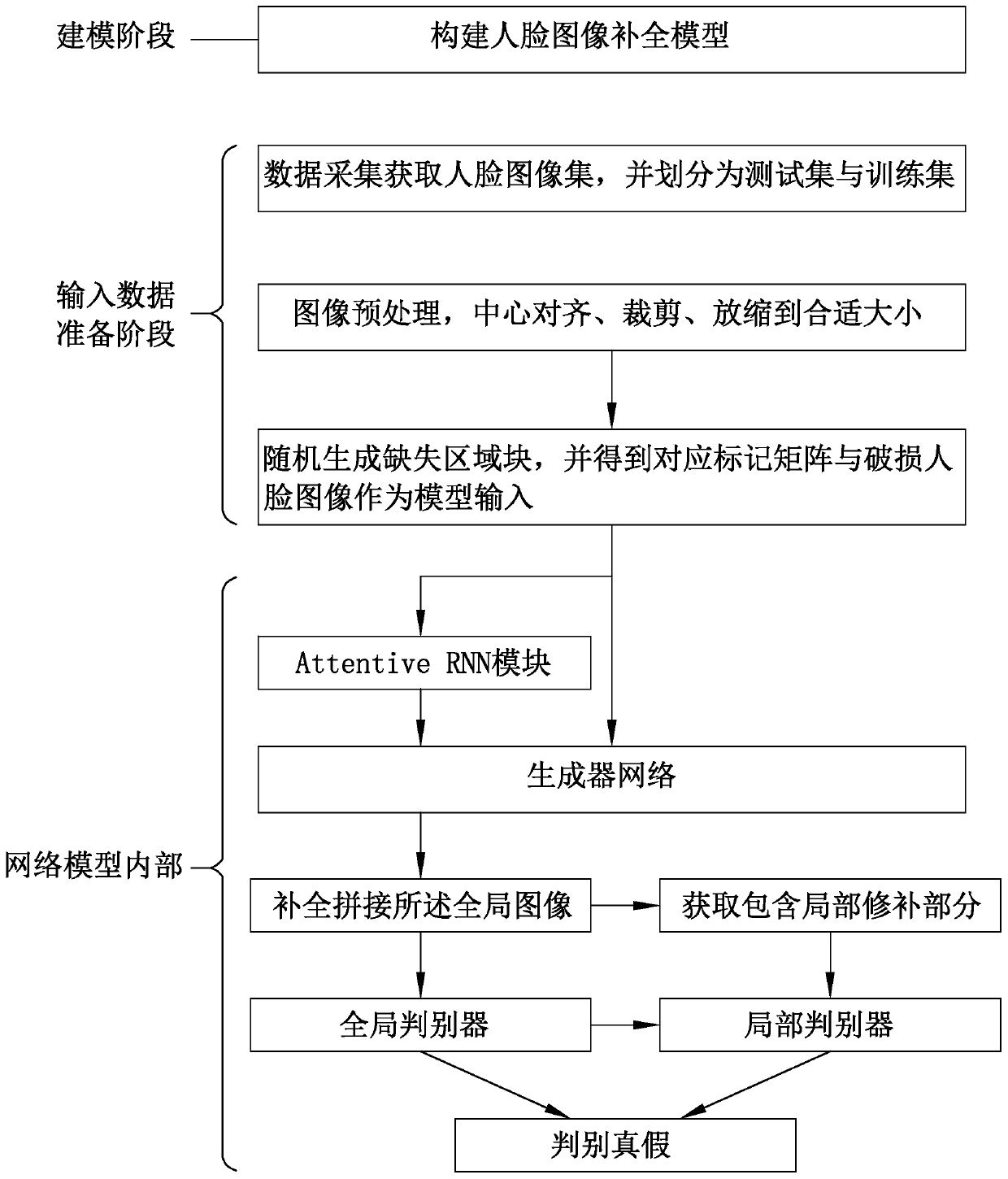

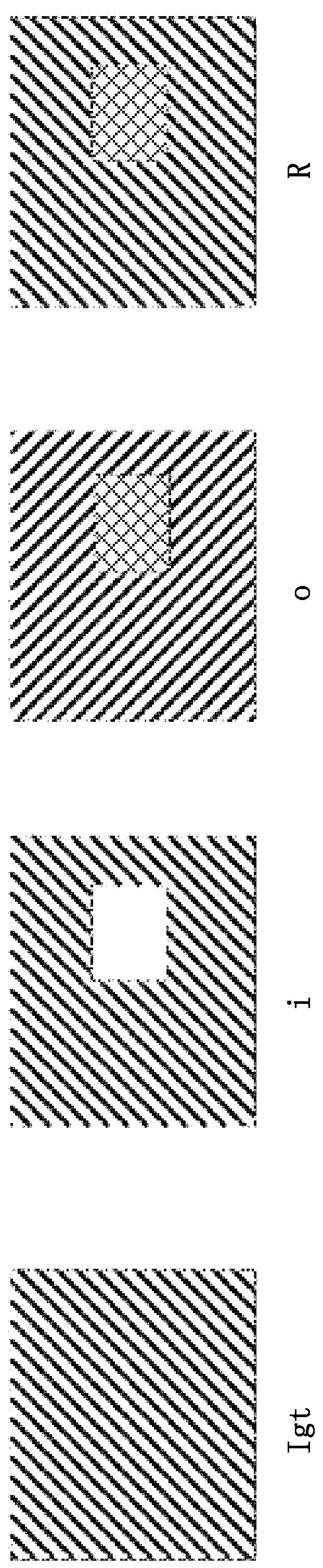

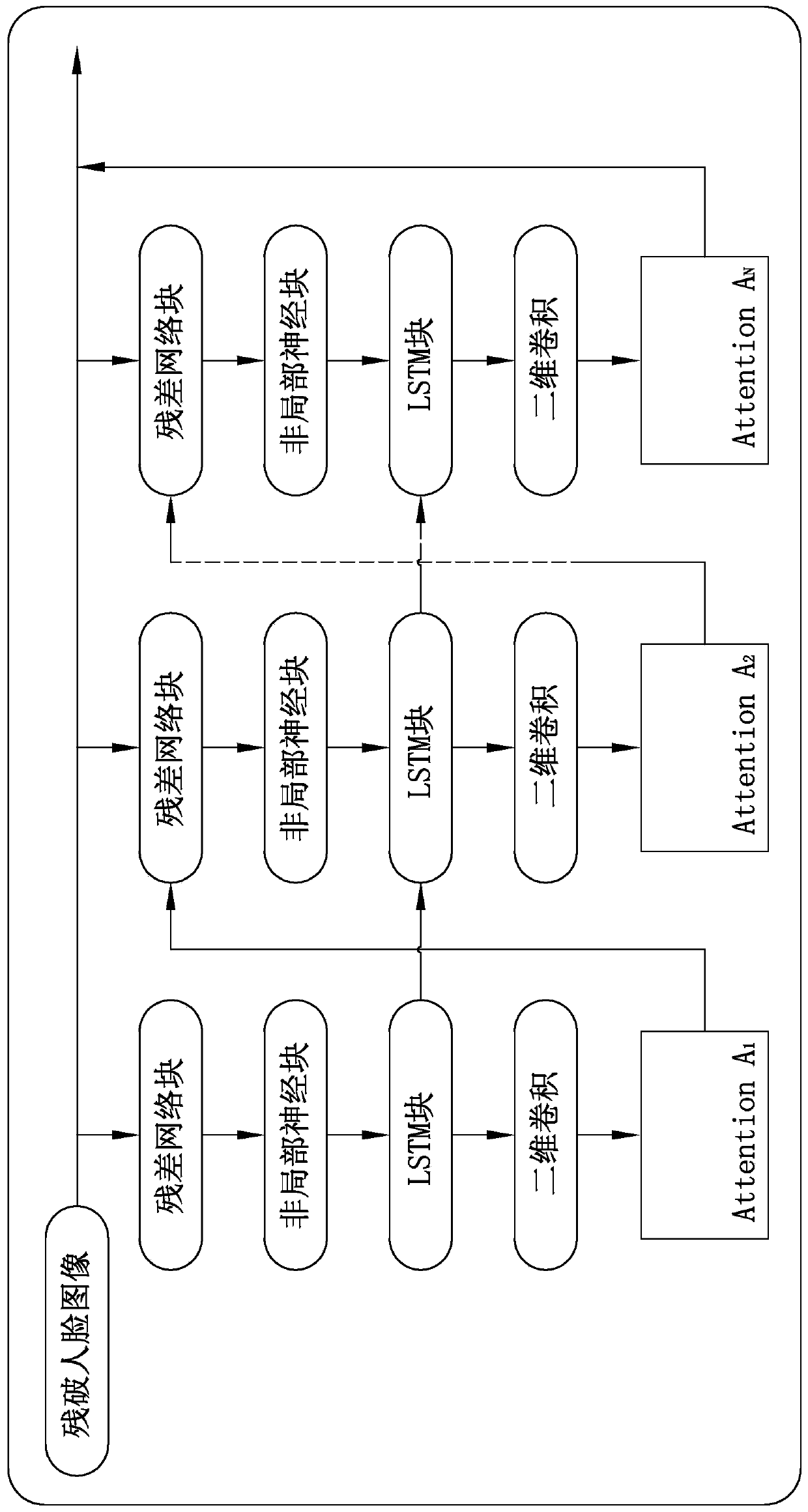

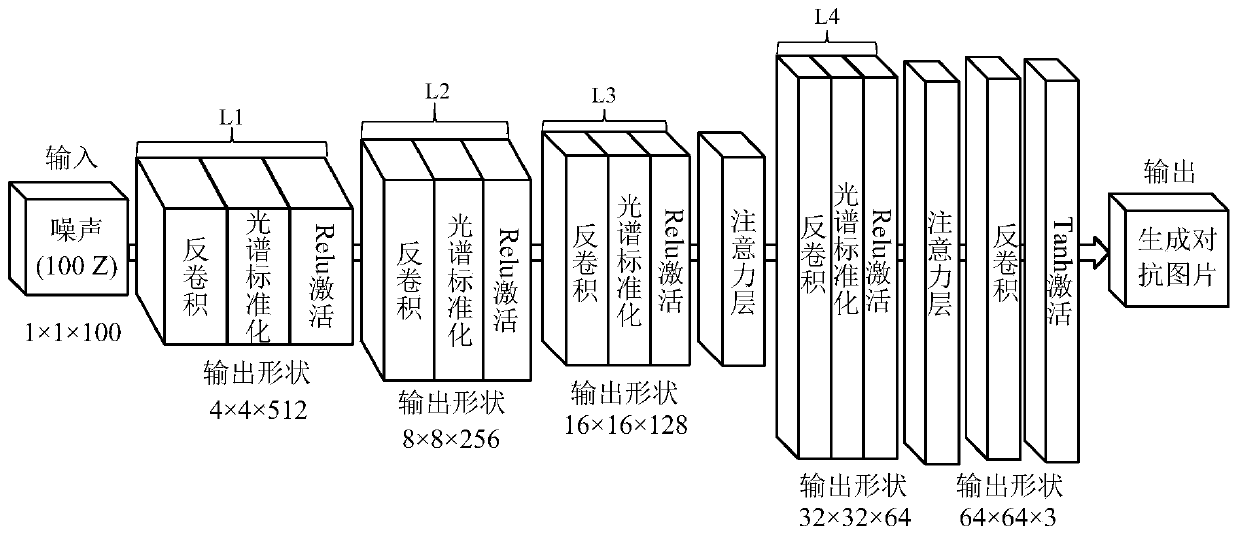

Face image completion method based on self-attention deep generative adversarial network

Status: Pending | Publication: CN110288537A | Tags: Overcoming completion inconsistencies, Overcome the problem of insufficient details, Image enhancement, Image analysis, Discriminator, Self attention

The invention provides a face image completion method based on a self-attention deep generative adversarial network. The method comprises: constructing a face image completion model comprising an attention recurrent neural network module, a generator network and a discriminator network; collecting a large number of face images into an image set and dividing it into a training set and a test set; preprocessing the images so that their size suits the deep learning network; constructing damaged face images to serve as model input; and training the model, using the GAN framework and various regularization techniques to train the generator and discriminator networks jointly and end to end, with training complete when the two networks reach the theoretical Nash equilibrium. Compared with the prior art, the method produces completed face images of better quality.

Owner:HUNAN UNIV
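
The step of constructing damaged face images as model input can be sketched as masking out a rectangle. A single rectangular hole filled with a constant value is an assumption made for illustration (the abstract does not describe the damage pattern), and `damage_image` is a hypothetical name.

```python
from typing import List, Tuple

Image = List[List[float]]


def damage_image(image: Image, top: int, left: int, height: int, width: int,
                 fill: float = 0.0) -> Tuple[Image, List[List[int]]]:
    """Blank out a rectangular hole to create a damaged training input.

    Returns a damaged copy (the original is untouched) and a binary mask
    where 1 marks a missing pixel. The hole is clipped to the image bounds.
    """
    damaged = [row[:] for row in image]
    mask = [[0] * len(row) for row in image]
    for r in range(top, min(top + height, len(image))):
        for c in range(left, min(left + width, len(image[r]))):
            damaged[r][c] = fill
            mask[r][c] = 1
    return damaged, mask
```

The mask is useful because the generator's reconstruction loss can be focused on the missing region while the discriminator judges the whole completed face.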

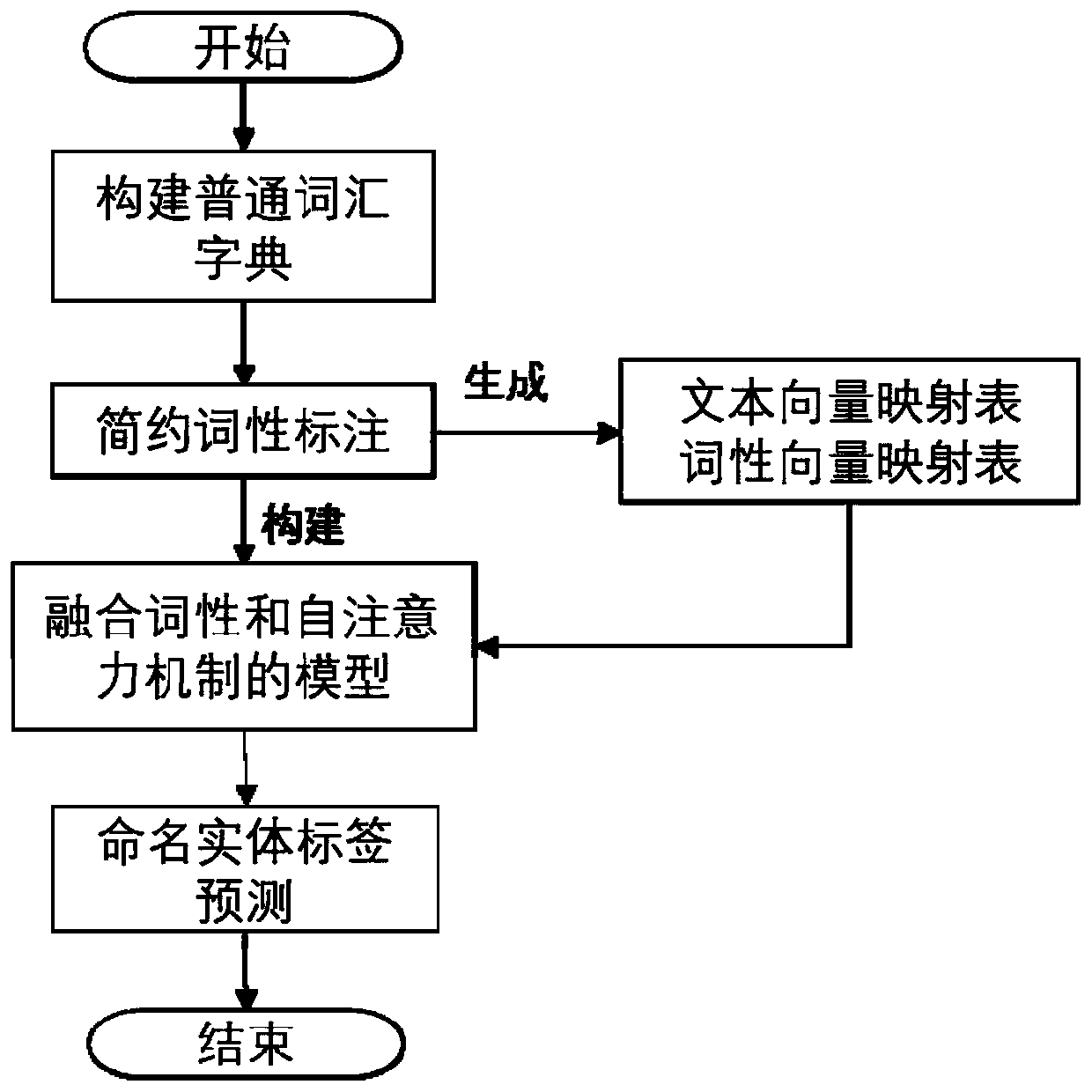

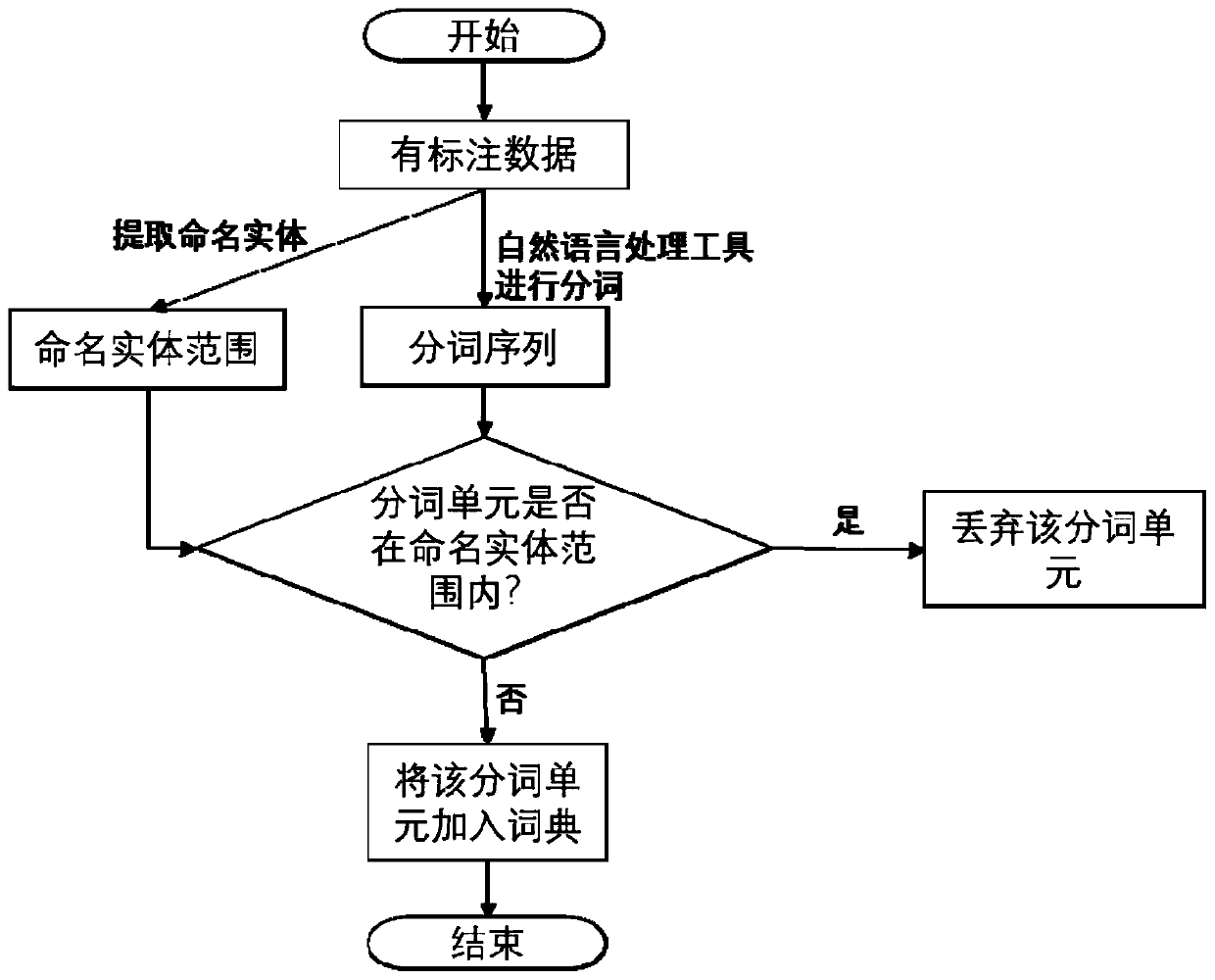

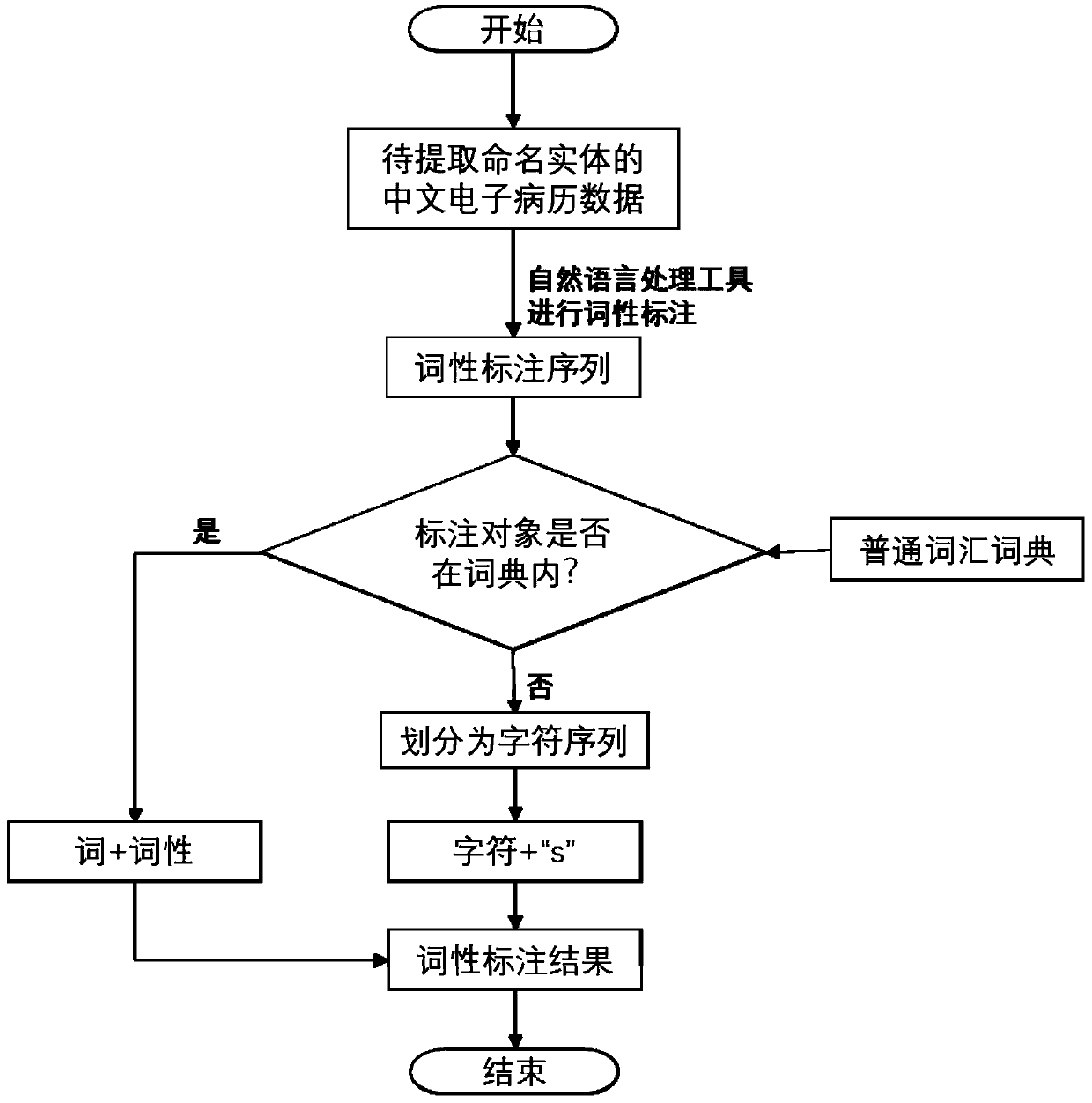

Chinese electronic medical record named entity recognition method

Status: Inactive | Publication: CN109871538A | Tags: Rich grammatical features, Reduce labeling errors, Special data processing applications, Medical record, Part of speech

The invention discloses a named entity recognition method for Chinese electronic medical records. The method comprises the following steps: 1) constructing a common-vocabulary dictionary; 2) simple part-of-speech tagging; 3) constructing a mapping table from text and part-of-speech tags to vectors; 4) training a named entity prediction model; and 5) predicting named entity labels. Adding part-of-speech features makes the boundaries between named entities and common vocabulary easier to distinguish, which improves the boundary accuracy of named entities. In addition, a self-attention mechanism is introduced into the bidirectional LSTM-CRF model to compute the relevance between the input at each time step and the other parts of the sentence, which alleviates the long-range dependency problem and improves named entity recognition accuracy.

Owner:SOUTH CHINA UNIV OF TECH
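
Step 3, mapping each character and its part-of-speech tag to vectors, can be sketched as a pair of table lookups whose results are concatenated into the BiLSTM input for that time step. The table contents, the `<unk>` fallback and the `embed` function are illustrative assumptions, not the patent's actual mapping.

```python
from typing import Dict, List, Sequence


def embed(chars: Sequence[str], tags: Sequence[str],
          char_table: Dict[str, List[float]], pos_table: Dict[str, List[float]],
          unk: str = "<unk>") -> List[List[float]]:
    """Look up a character vector and a part-of-speech vector for every position
    and concatenate them (list `+` builds a new list, so the tables are not mutated).

    Characters missing from `char_table` fall back to the `unk` entry.
    """
    return [char_table.get(c, char_table[unk]) + pos_table[t]
            for c, t in zip(chars, tags)]
```

Concatenation keeps the two feature sources separate in the input vector, so the downstream layers can learn how much weight the part-of-speech signal deserves at entity boundaries.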

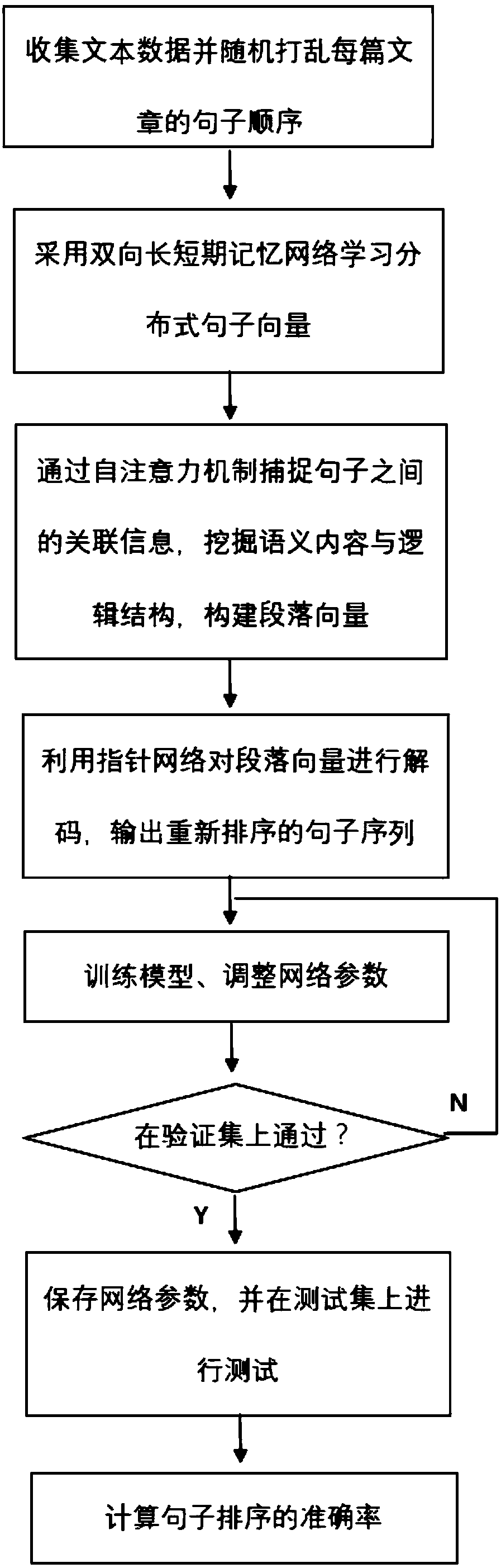

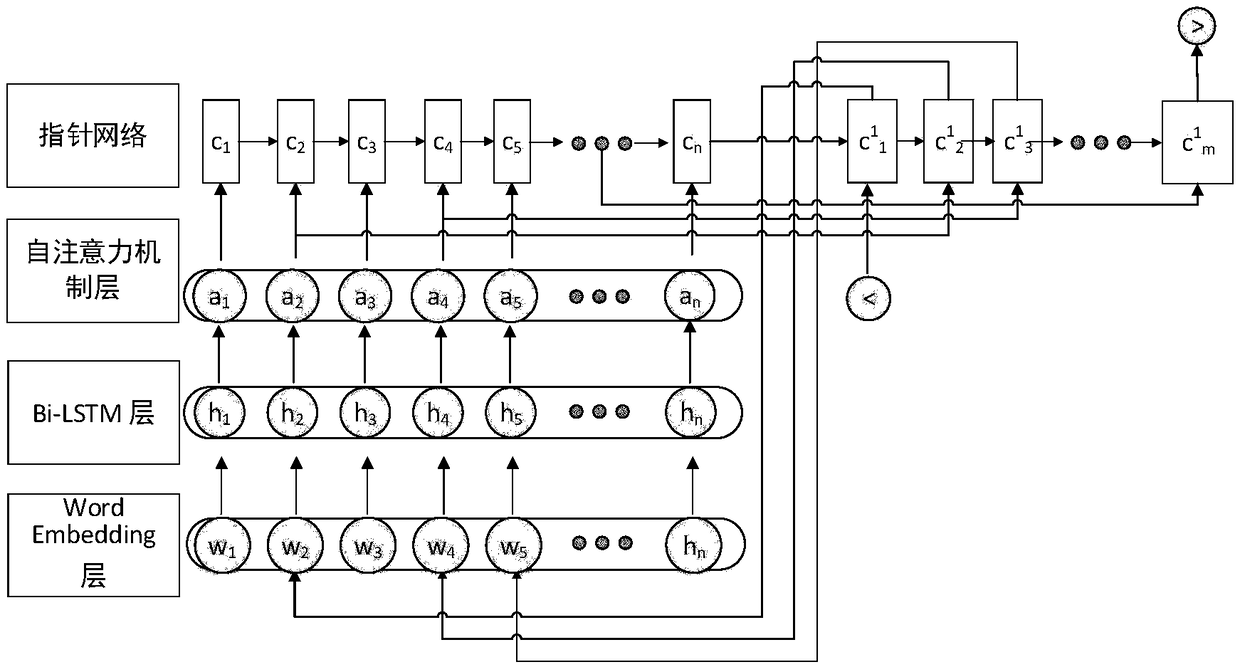

A sentence ordering method based on a deep learning self-attention mechanism

Status: Active | Publication: CN109241536A | Tags: Avoid interference, Avoid confusion, Natural language data processing, Neural architectures, Short-term memory, Self attention

The invention discloses a sentence ordering method based on a deep learning self-attention mechanism. After a text is input, each sentence is first encoded into a distributed vector by a long short-term memory (LSTM) network to capture its syntactic information. Next, the self-attention mechanism learns the semantic associations between sentences and explores their latent logical structure, retaining the important information in a high-level paragraph vector. The paragraph vector is then fed into a pointer network, which produces a new sentence order. The method is not affected by the order in which the sentences are input, avoids the problem of the LSTM network injecting incorrect ordering information while generating the paragraph vector, and can effectively analyze the relationships among all sentences. Compared with existing sentence ordering techniques, the method substantially improves accuracy and has good practical value.

Owner:ZHEJIANG UNIV
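
Why a self-attention paragraph encoder can be insensitive to input sentence order is easy to check in a small sketch. It assumes no positional encoding, no learned projections, and mean pooling to form the paragraph vector; the abstract does not specify the pooling, so `paragraph_vector` is a hypothetical helper.

```python
import math
from typing import List

Matrix = List[List[float]]


def attend(x: Matrix) -> Matrix:
    """Scaled dot-product self-attention with Q = K = V = x and no positional encoding."""
    d = len(x[0])
    out = []
    for q in x:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x]
        m = max(scores)
        w = [math.exp(s - m) for s in scores]
        z = sum(w)
        out.append([sum(wi * v[j] for wi, v in zip(w, x)) / z for j in range(d)])
    return out


def paragraph_vector(sentences: Matrix) -> List[float]:
    """Average-pool the attended sentence vectors into one paragraph vector."""
    attended = attend(sentences)
    n = len(attended)
    return [sum(row[j] for row in attended) / n for j in range(len(attended[0]))]
```

Permuting the input rows only permutes the attended rows, and averaging discards the permutation, so shuffled inputs yield the same paragraph vector up to floating-point rounding. An LSTM encoder, by contrast, would produce a different vector for each input order.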

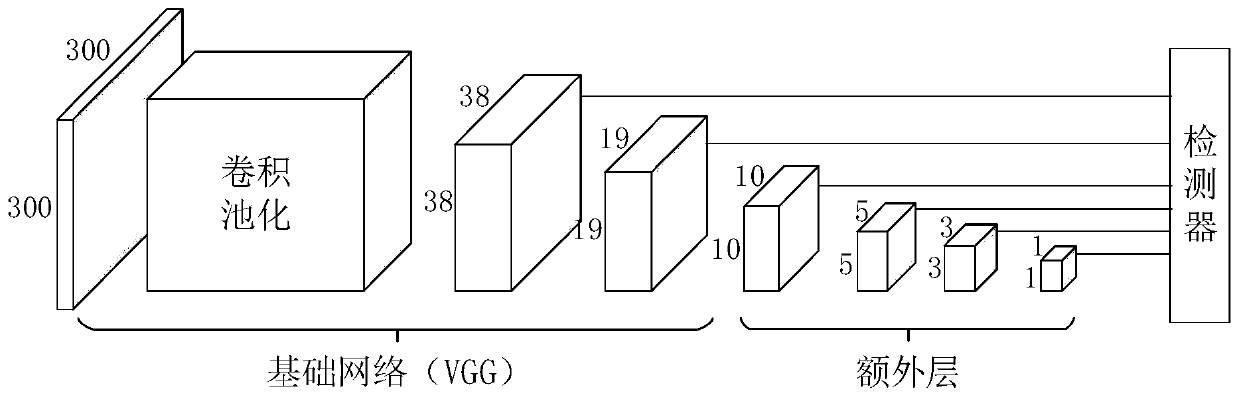

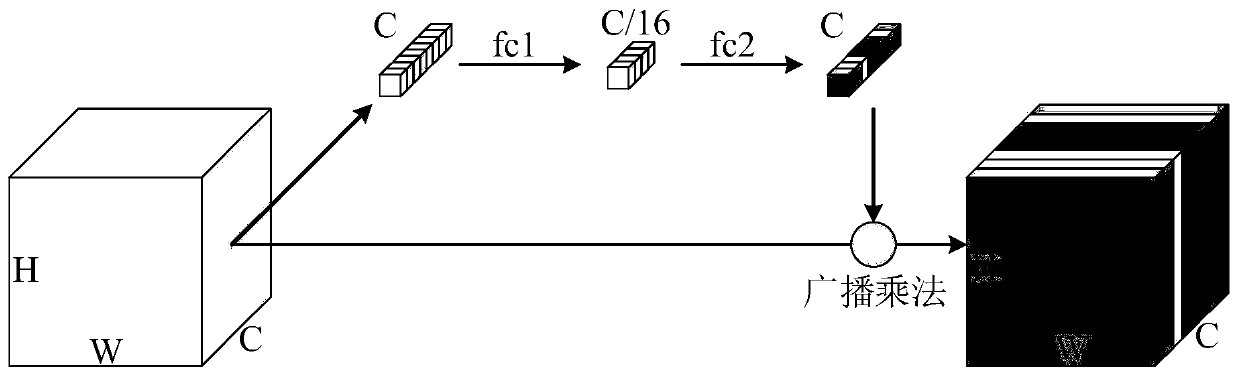

Multi-scale target detection method based on self-attention mechanism

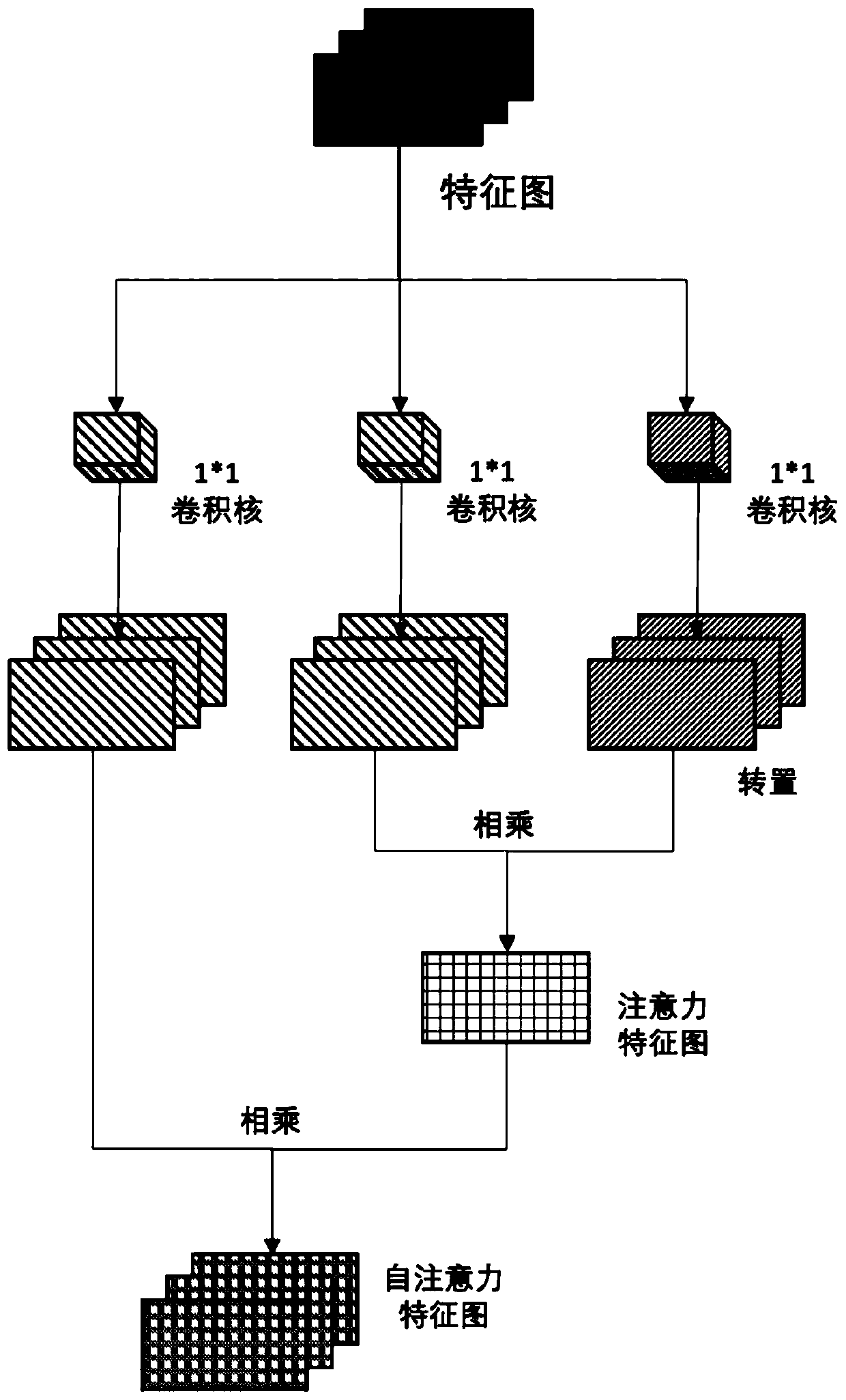

Status: Active | Publication: CN110533084A | Tags: Enhance expressive ability, Improve stability, Image enhancement, Image analysis, Self attention, Small target

The invention discloses a multi-scale target detection method based on a self-attention mechanism. By fusing multi-scale features bottom-up and top-down through a self-attention-based feature selection module, the method combines low-level and high-level features of a target, enhances the representational capacity of the feature maps and their ability to capture context information, and improves the stability and robustness of the detection stage. The self-attention module also recalibrates the features at a small computational cost, balancing detection accuracy and speed, which is important for detecting dense objects, small targets, occluded targets and the like.

Owner:CHANGAN UNIV
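
Feature recalibration of this kind can be illustrated with a squeeze-and-excitation-style channel gate. This is a swapped-in stand-in, not the patent's module, whose internals the abstract does not describe; the single linear layer (`weight`, `bias`) is an assumption chosen to keep the sketch short.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # [channel][row][col]


def recalibrate(features: FeatureMap, weight: List[List[float]],
                bias: List[float]) -> FeatureMap:
    """Squeeze-and-excitation-style channel re-weighting.

    1. Squeeze: global-average-pool each channel to one descriptor s_c.
    2. Excite: gate_c = sigmoid(sum_k weight[c][k] * s_k + bias[c]).
    3. Scale: multiply every value in channel c by gate_c.
    """
    squeezed = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in features]
    gates = [1 / (1 + math.exp(-(sum(w * s for w, s in zip(row, squeezed)) + b)))
             for row, b in zip(weight, bias)]
    return [[[g * v for v in r] for r in ch] for ch, g in zip(features, gates)]
```

The gating costs only one small matrix-vector product per feature map, which is consistent with the abstract's point that recalibration adds little computation.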

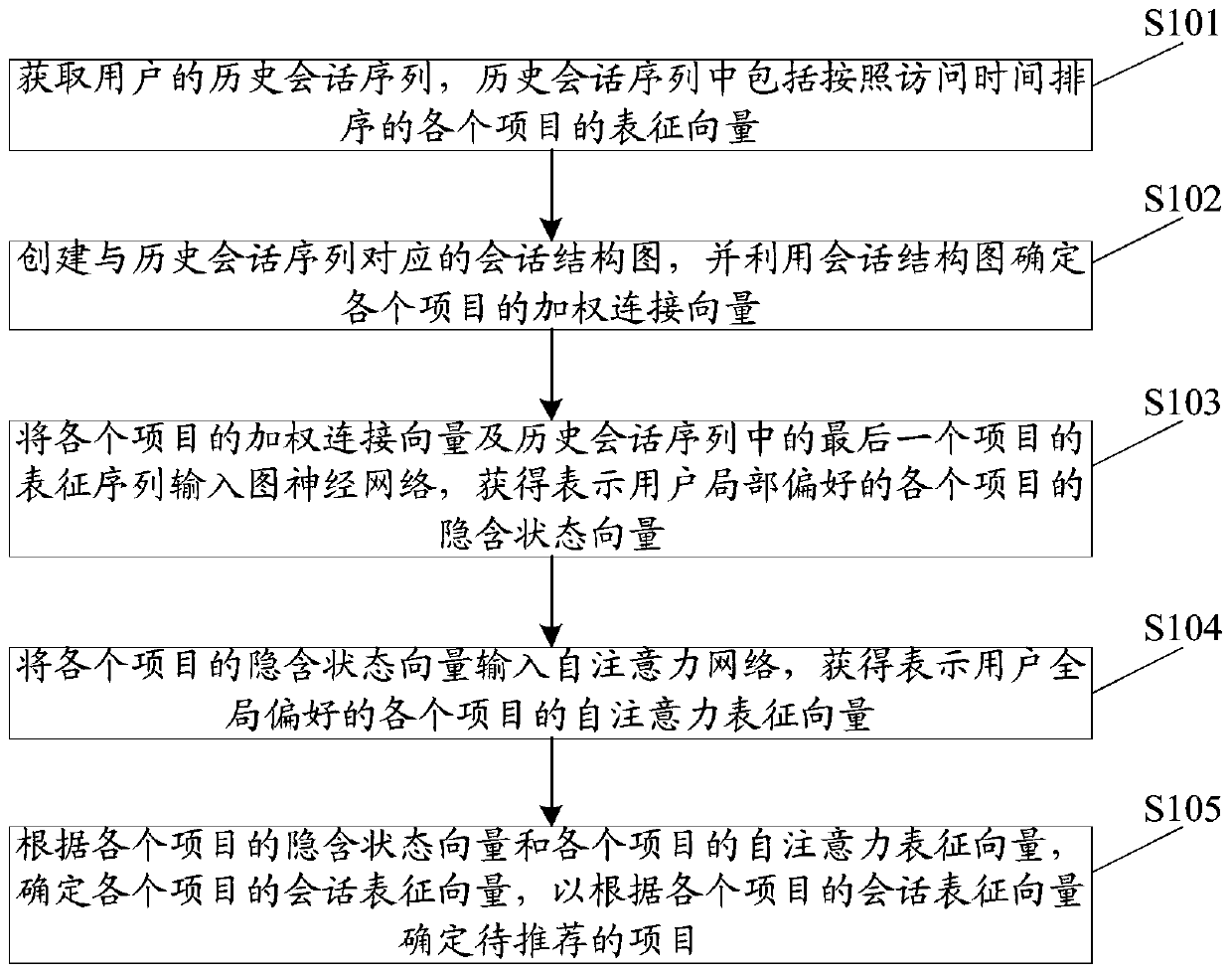

Session-based item recommendation method, device, apparatus and storage medium

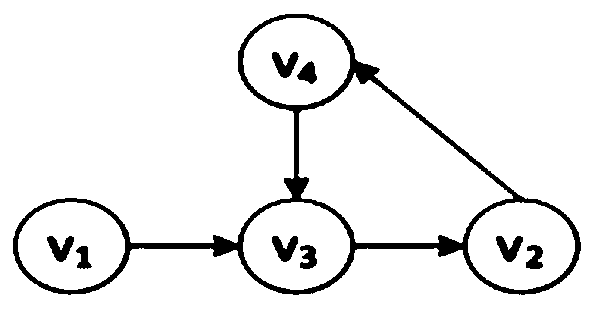

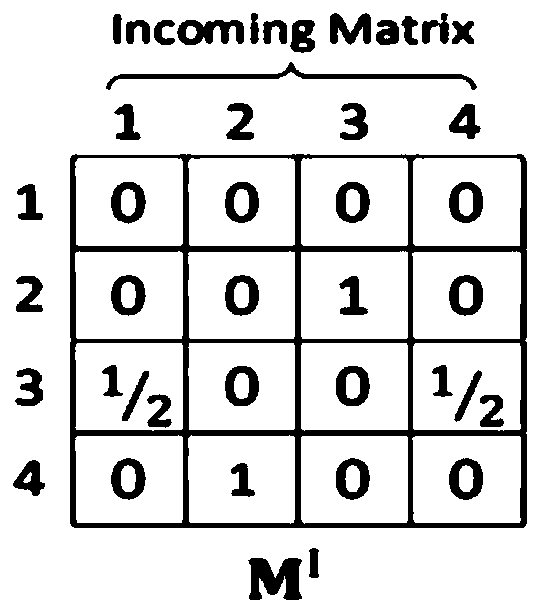

Status: Active | Publication: CN110119467A | Tags: Accurate recommendation, Web data indexing, Special data processing applications, Self attention, Algorithm

The invention discloses a session-based item recommendation method, device, apparatus and computer-readable storage medium. The method comprises: constructing a directed session graph from a user's historical session sequence; using a graph neural network on the session graph to capture transitions between adjacent items and to generate latent state vectors for all nodes in the graph; establishing long-range dependencies with a self-attention mechanism; and finally predicting the probability of the next click, taking a linear combination of the user's global preference and current local preference as the hidden vector of the current session. The method exploits the complementary strengths of the self-attention network and the graph neural network to recommend items accurately.

Owner:SUZHOU UNIV
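
The first step, building a directed session graph from a click sequence, can be sketched as follows. Nodes are the distinct items in first-click order and each consecutive click pair adds an edge. Normalizing each node's outgoing edges by its out-degree and incoming edges by its in-degree, as is common in session-graph models, is an assumption here; `session_graph` is a hypothetical name.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def session_graph(session: List[str]) -> Tuple[List[str], List[List[float]], List[List[float]]]:
    """Return (nodes, A_out, A_in) for one session.

    A_out[i][j]: share of node i's outgoing transitions that go to node j.
    A_in[j][i]: share of node j's incoming transitions that come from node i.
    Repeated transitions are counted.
    """
    nodes = list(dict.fromkeys(session))
    index = {item: i for i, item in enumerate(nodes)}
    n = len(nodes)
    counts: Dict[Tuple[int, int], int] = defaultdict(int)
    for a, b in zip(session, session[1:]):
        counts[(index[a], index[b])] += 1
    out_deg = [0] * n
    in_deg = [0] * n
    for (i, j), c in counts.items():
        out_deg[i] += c
        in_deg[j] += c
    a_out = [[0.0] * n for _ in range(n)]
    a_in = [[0.0] * n for _ in range(n)]
    for (i, j), c in counts.items():
        a_out[i][j] = c / out_deg[i]
        a_in[j][i] = c / in_deg[j]
    return nodes, a_out, a_in
```

A graph neural network would then propagate item embeddings along `A_out` and `A_in` to produce the node state vectors that the self-attention layer consumes.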

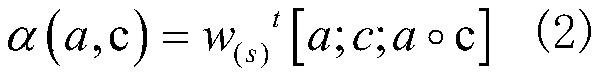

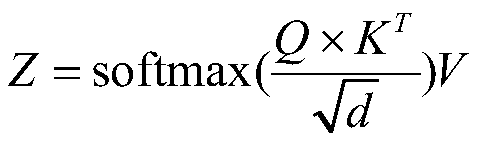

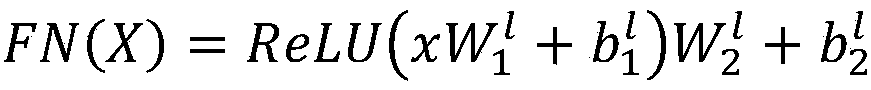

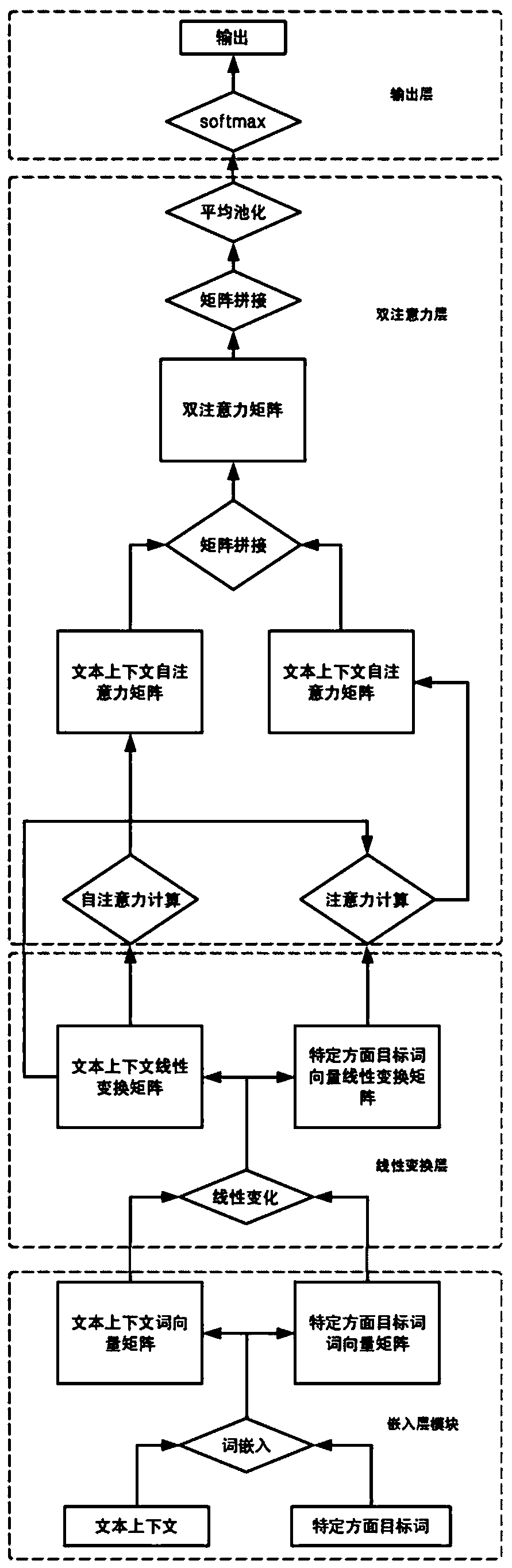

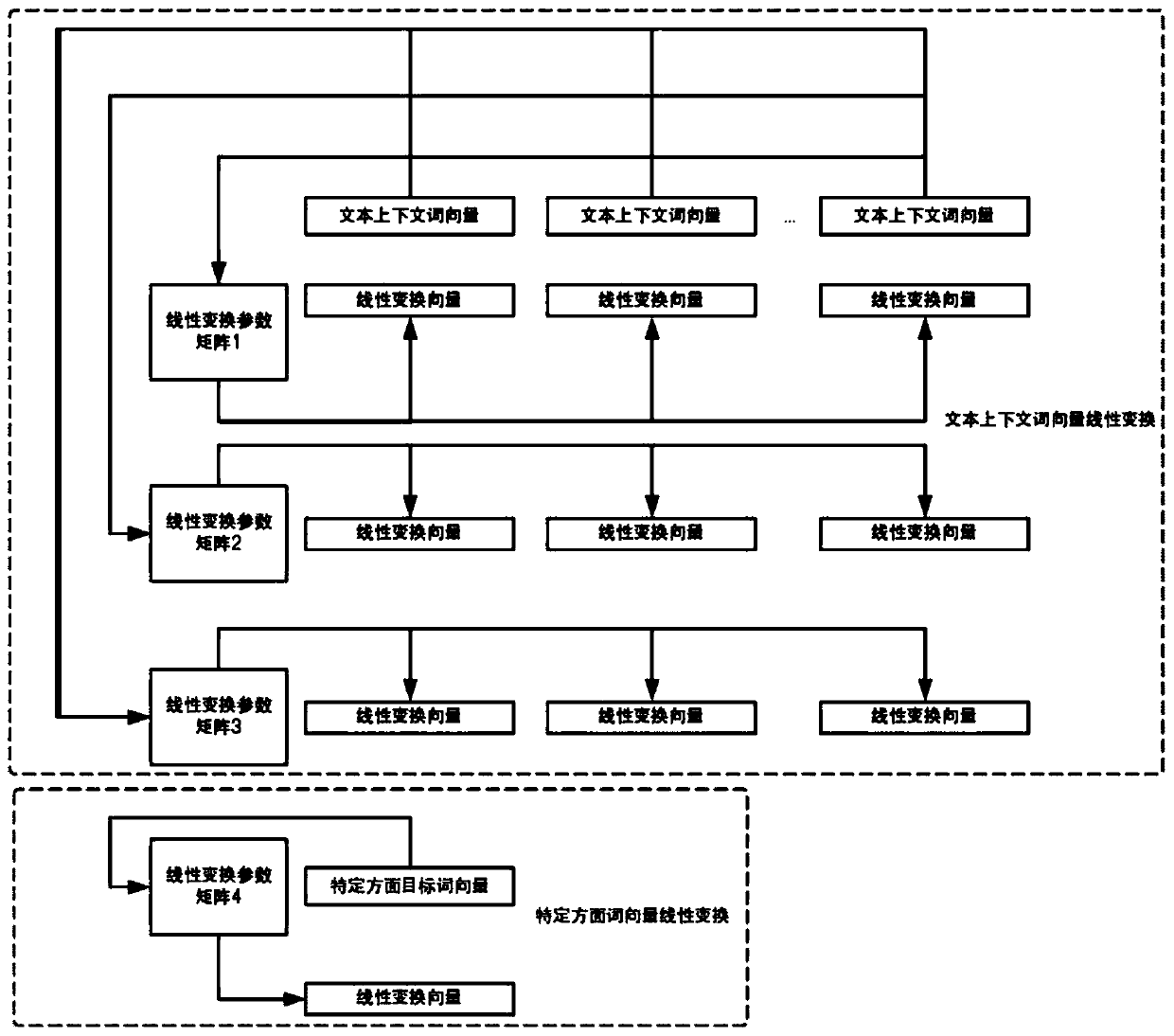

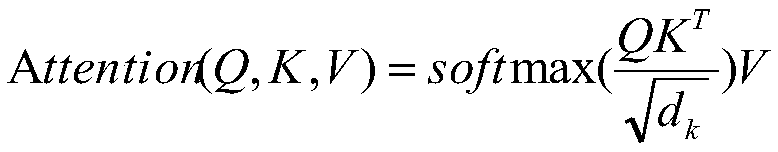

A fine-grained emotion polarity prediction method based on a hybrid attention network

Status: Active | Publication: CN109948165A | Tags: Accurate prediction, Make up for the shortcoming that it is difficult to obtain global structural information, Special data processing applications, Self attention, Algorithm

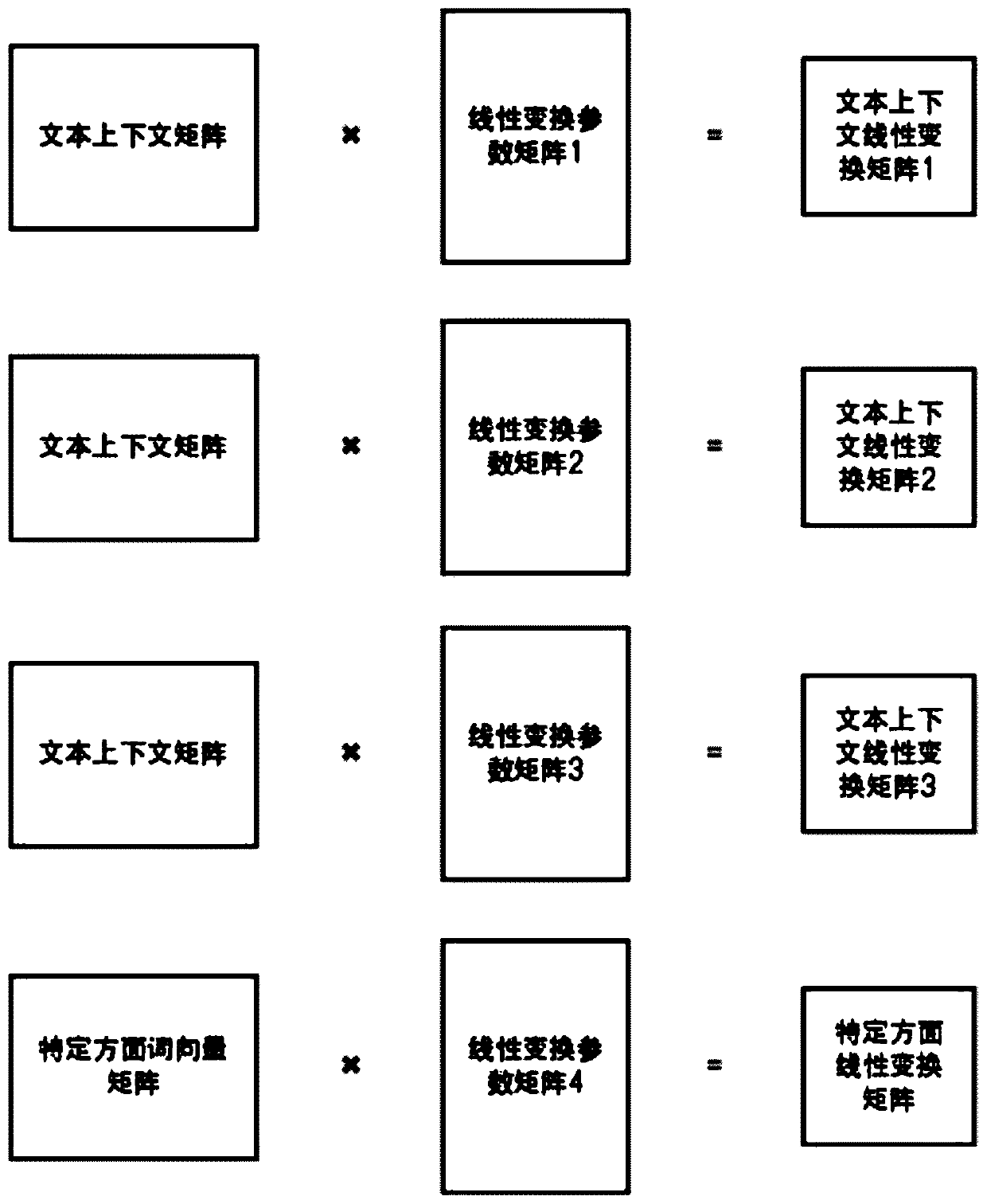

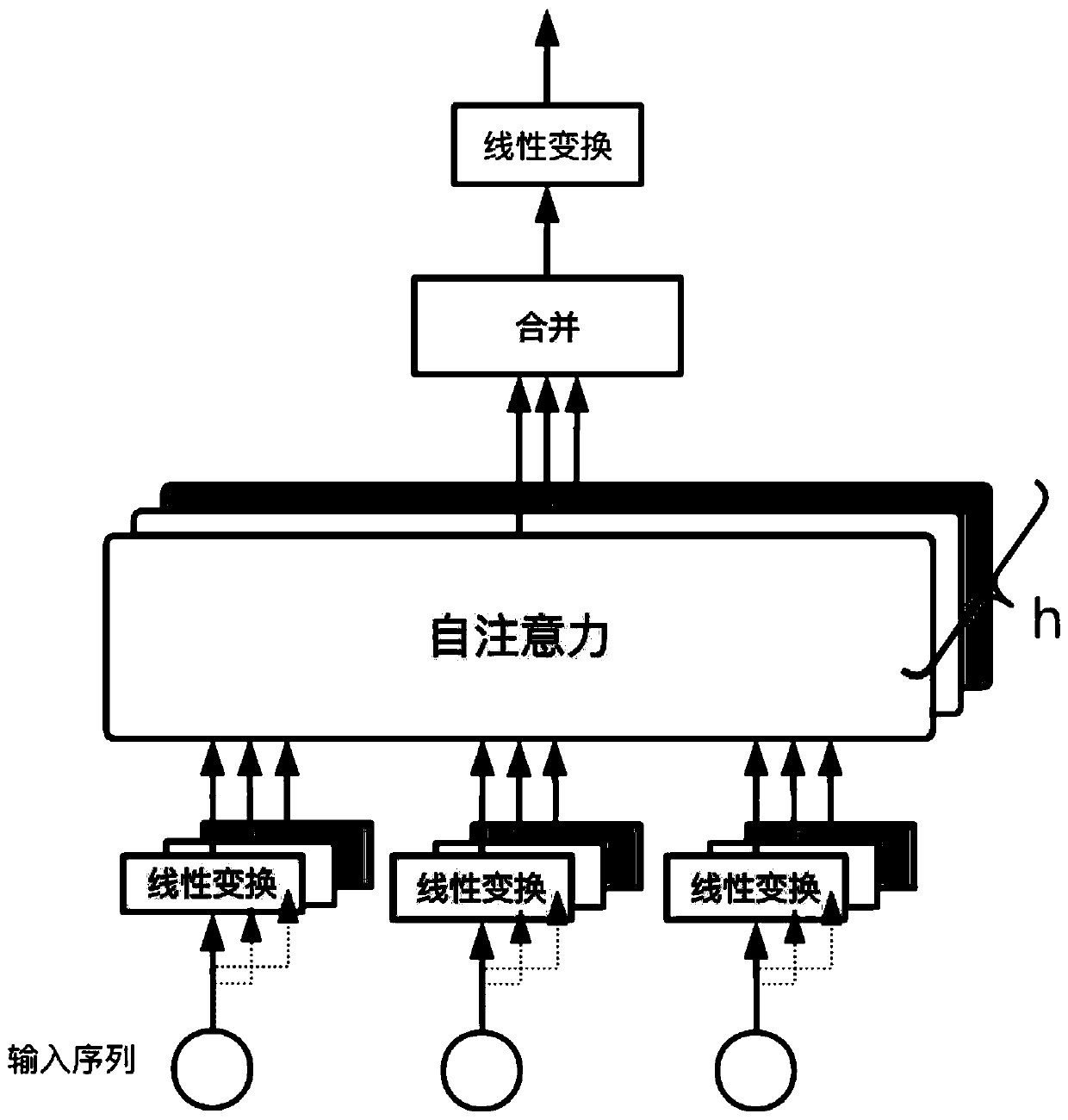

The invention discloses a fine-grained emotion polarity prediction method based on a hybrid attention network, aiming to overcome problems in the prior art such as lack of flexibility, insufficient precision, difficulty in obtaining global structural information, slow training and attention information drawn from a single source. The method comprises the following steps: 1, determining a text context sequence and an aspect-specific target word sequence from a review sentence; 2, mapping the two sequences into two multi-dimensional continuous word vector matrices through word embedding; 3, applying several different linear transformations to the two matrices to obtain the corresponding transformation matrices; 4, using the transformation matrices to compute a text context self-attention matrix and an aspect-specific target word attention matrix, and concatenating the two to obtain a double-attention matrix; 5, concatenating the double-attention matrices obtained under the different linear transformations and applying a further linear transformation to obtain the final attention representation matrix; and 6, applying average pooling and feeding the result through a fully connected layer into a softmax classifier to obtain the emotion polarity prediction.

Owner:JILIN UNIV
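
Steps 3 to 5 (several linear transformations, an attention computation per transformation, concatenation, and a final linear transformation) follow the familiar multi-head attention pattern. The sketch below assumes the per-head projection matrices and the output matrix `w_o` are given; it runs self-attention over one sequence, but `attention` accepts separate Q, K and V, so for the aspect branch the queries could come from the target words instead. All function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)) V, computed one query row at a time."""
    d_k = len(k[0])
    out = []
    for qi in q:
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        m = max(scores)
        w = [math.exp(s - m) for s in scores]
        z = sum(w)
        out.append([sum(wi * vj[c] for wi, vj in zip(w, v)) / z for c in range(len(v[0]))])
    return out


def multi_head(x: Matrix, heads: Sequence[Tuple[Matrix, Matrix, Matrix]], w_o: Matrix) -> Matrix:
    """One attention per (W_q, W_k, W_v) triple, outputs concatenated
    feature-wise, then a final linear map W_o."""
    outputs = [attention(matmul(x, wq), matmul(x, wk), matmul(x, wv)) for wq, wk, wv in heads]
    concat = [[val for head in outputs for val in head[i]] for i in range(len(x))]
    return matmul(concat, w_o)
```

Average pooling over the rows of the result, followed by a fully connected layer and softmax, would complete step 6.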

Text abstract automatic generation method based on self-attention network

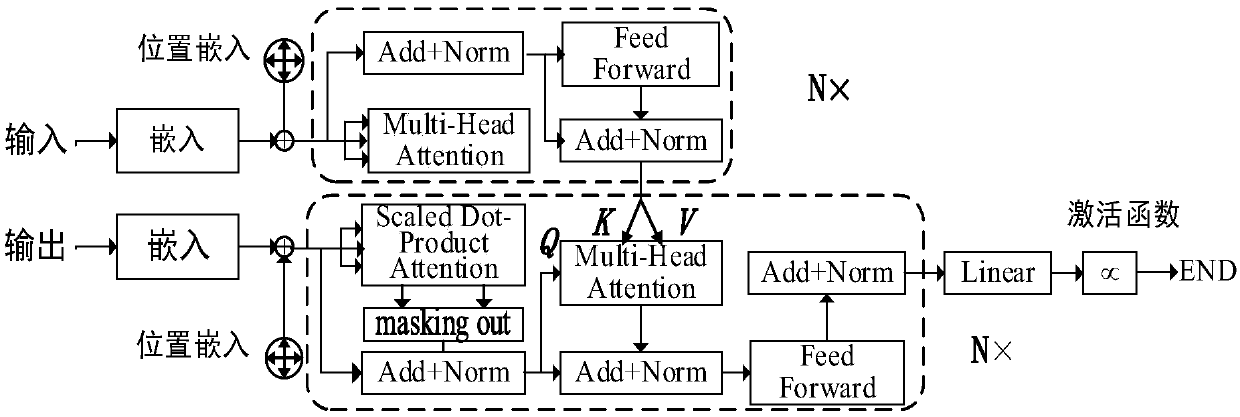

Status: Active | Publication: CN110209801A | Tags: Efficient extraction of long-distance dependencies, Effectively handle generation issues, Natural language data processing, Neural architectures, Self attention, Algorithm

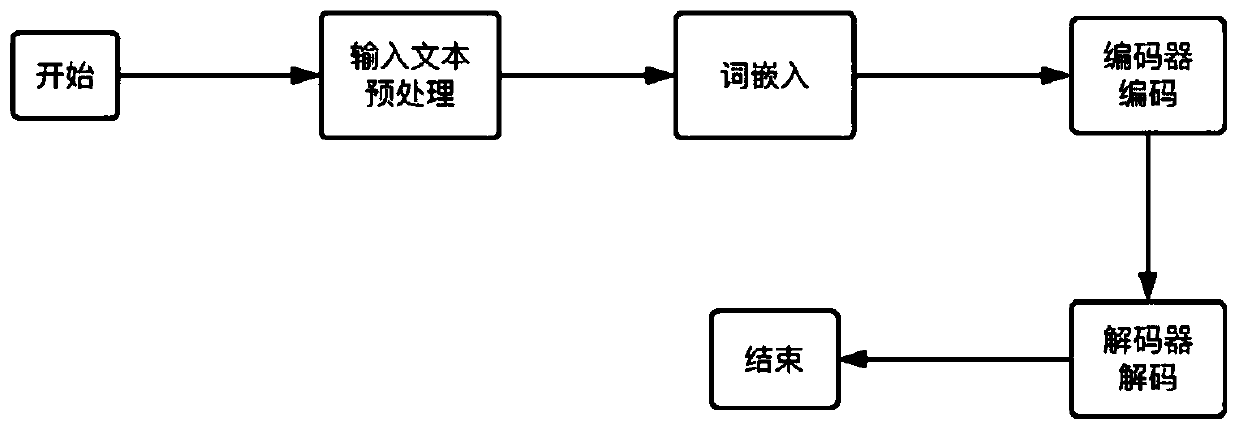

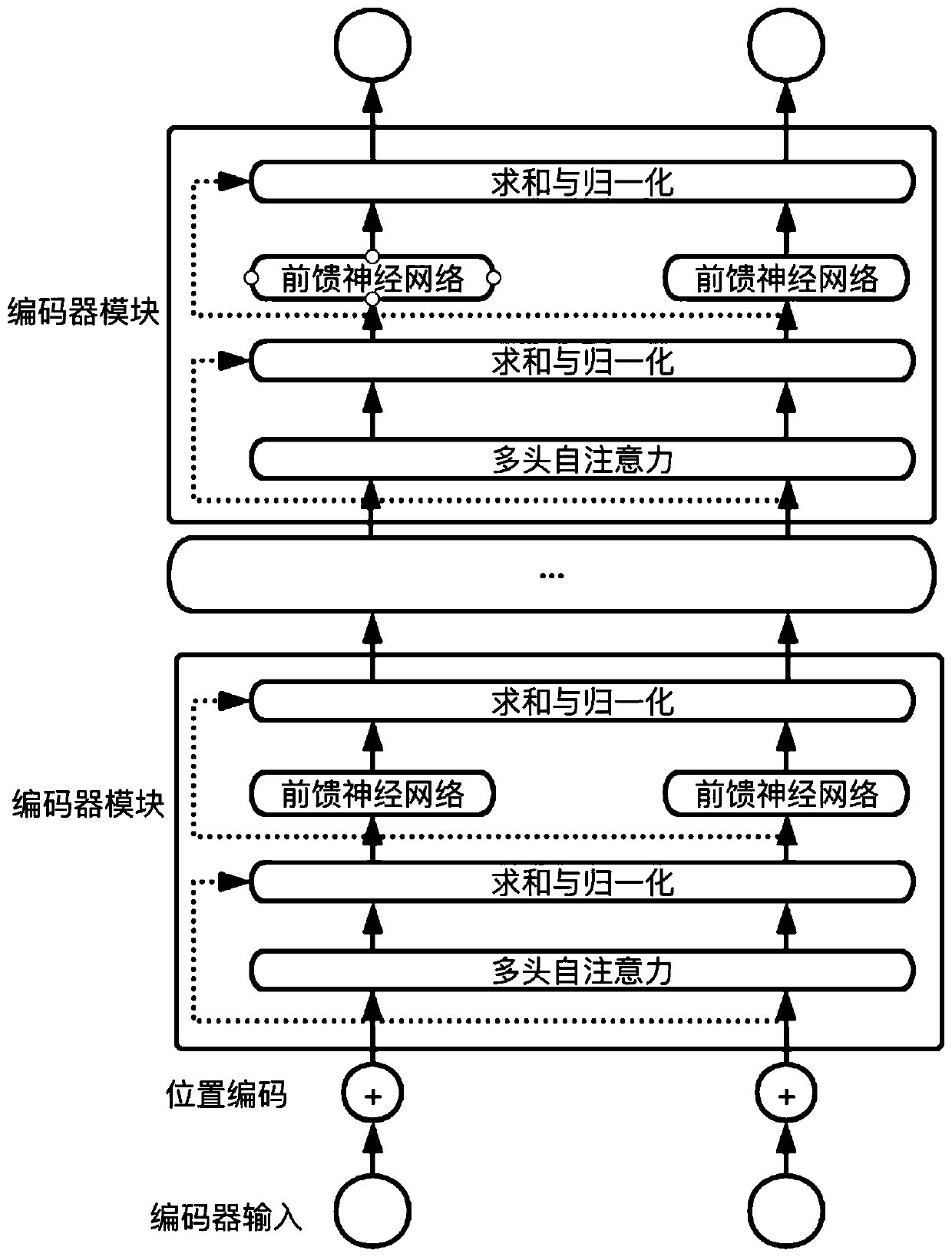

The invention discloses a method for automatically generating text abstracts based on a self-attention network. The method comprises the following steps: 1) performing word segmentation on the input text to obtain a word sequence; 2) performing word embedding on the word sequence to generate the corresponding word vector sequence; 3) encoding the word vector sequence with a self-attention network encoder; and 4) decoding the encoded input text with a self-attention network decoder to generate the text abstract. The method offers fast model computation, high training efficiency, high-quality generated abstracts and good generalization.

Owner:SOUTH CHINA UNIV OF TECH
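
What distinguishes the decoder in step 4 from the encoder is the causal mask: when generating the abstract, position i may attend only to positions 0..i. A minimal sketch, assuming Q = K = V = x and no learned projections (simplifications of this example, not details from the patent):

```python
import math
from typing import List

Matrix = List[List[float]]


def causal_self_attention(x: Matrix) -> Matrix:
    """Masked (causal) scaled dot-product self-attention with Q = K = V = x.

    Row i is a softmax-weighted average of rows 0..i only, so later tokens
    can never influence earlier outputs.
    """
    d = len(x[0])
    out = []
    for i, q in enumerate(x):
        visible = x[: i + 1]  # mask out future positions
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in visible]
        m = max(scores)
        w = [math.exp(s - m) for s in scores]
        z = sum(w)
        out.append([sum(wi * v[j] for wi, v in zip(w, visible)) / z for j in range(d)])
    return out
```

Changing the last input row leaves all earlier outputs unchanged, which is what allows the decoder to be trained on whole target sequences in parallel yet still generate token by token at inference time.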

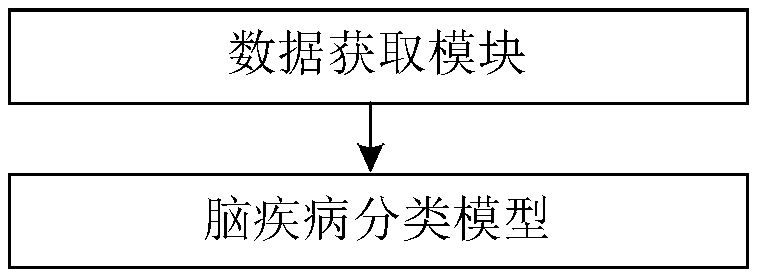

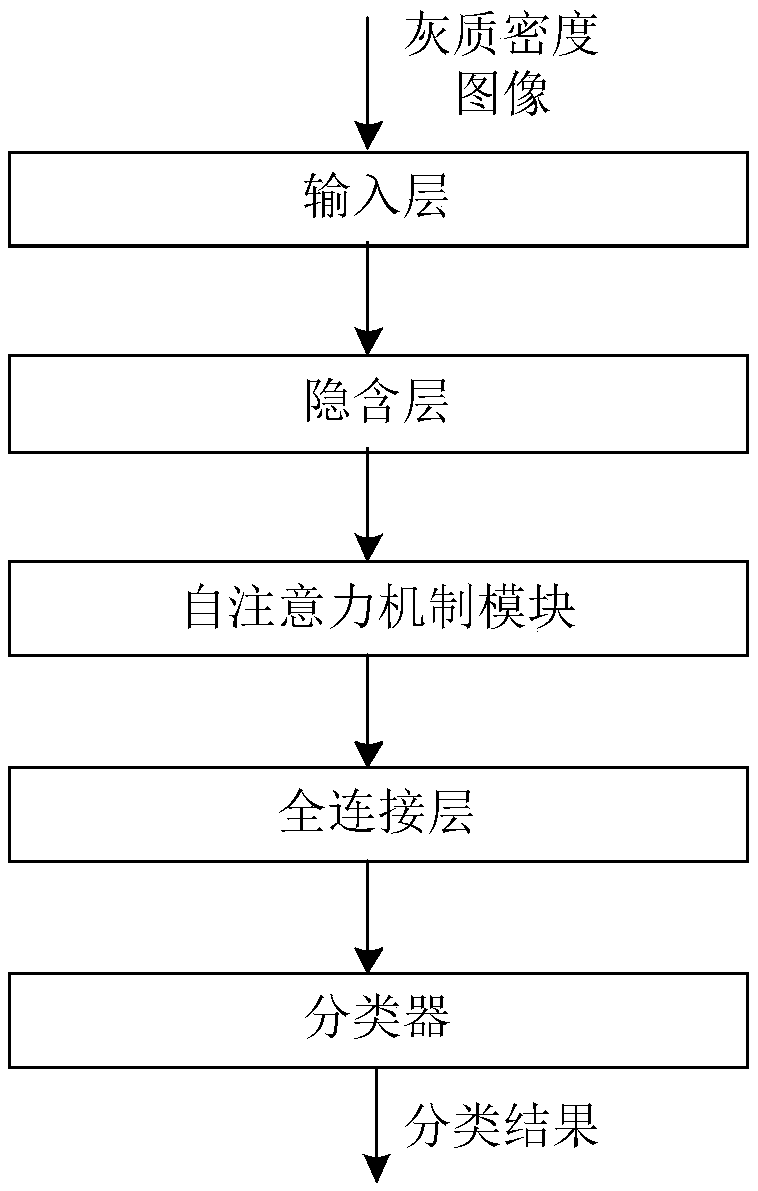

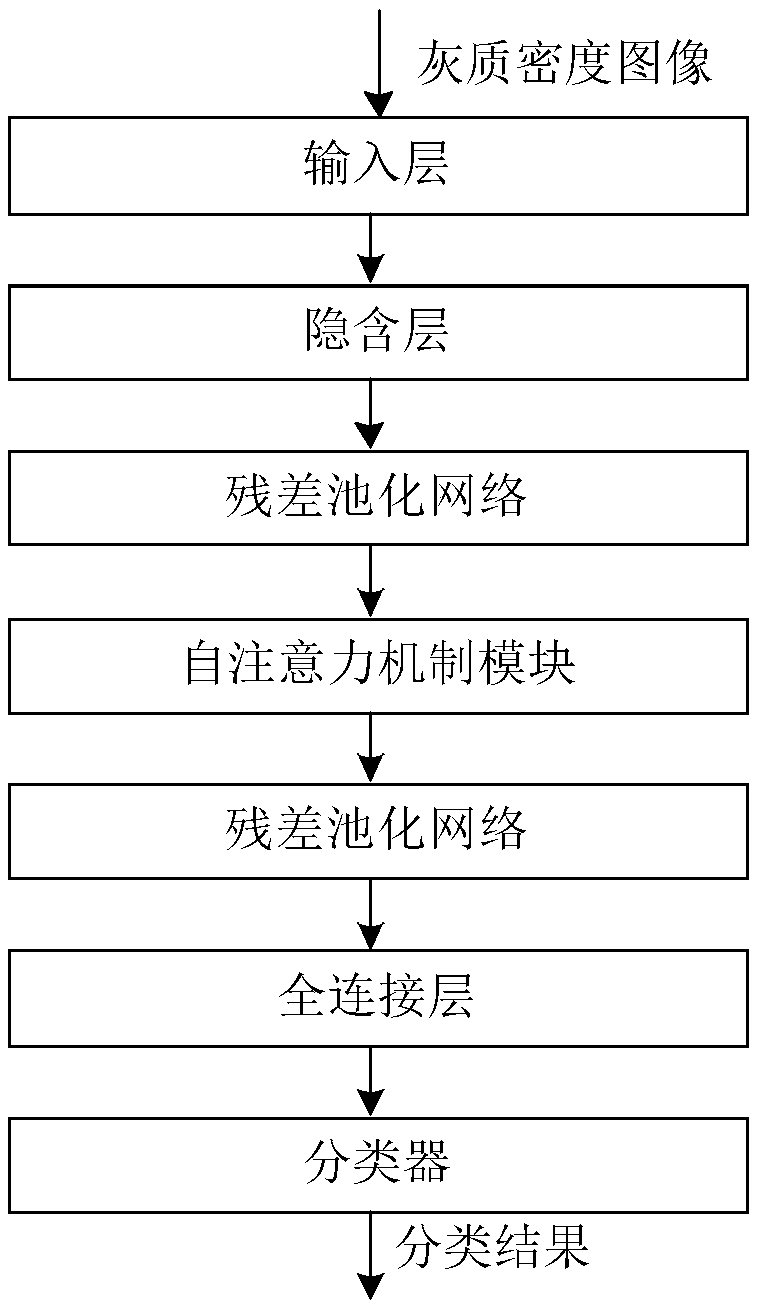

Brain disease classification system based on self-attention mechanism

Status: Active | Publication: CN109165667A | Tags: Improve classification performance, Increase weight, Medical automated diagnosis, Character and pattern recognition, Disease, Neural network

The invention relates to the technical field of image processing and proposes a brain disease classification system based on a self-attention mechanism, aiming to solve the low classification accuracy caused by the complex preprocessing, feature extraction and feature selection of magnetic resonance images required for classifying and diagnosing brain diseases. To this end, the system performs the following steps: preprocessing the acquired brain magnetic resonance images of brain disease patients to obtain gray matter density maps of the brain; and classifying the gray matter density map with a pre-constructed brain disease classification model to obtain the patient's brain disease category, where the pre-constructed model is a three-dimensional convolutional neural network based on the self-attention mechanism. The system described in the embodiments can classify brain disease categories quickly and accurately.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

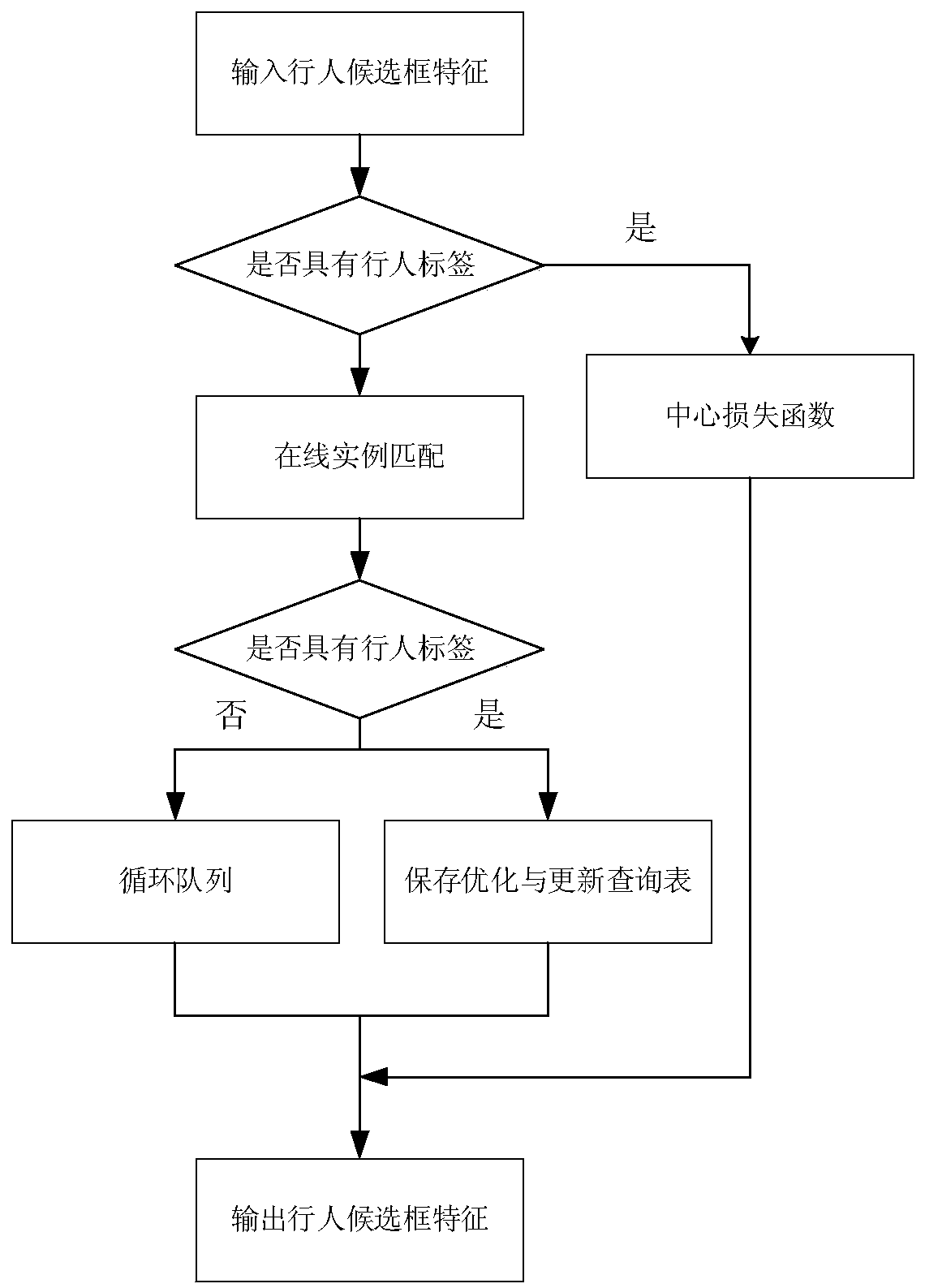

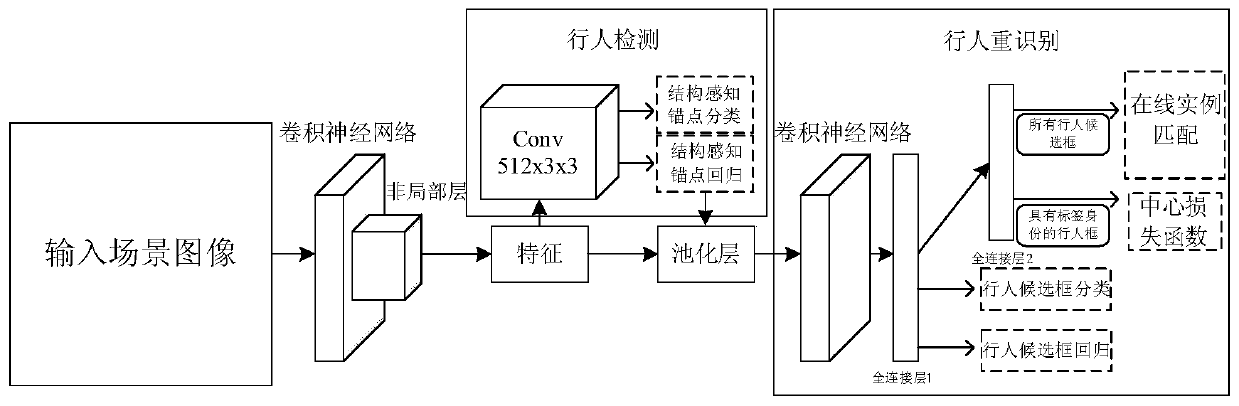

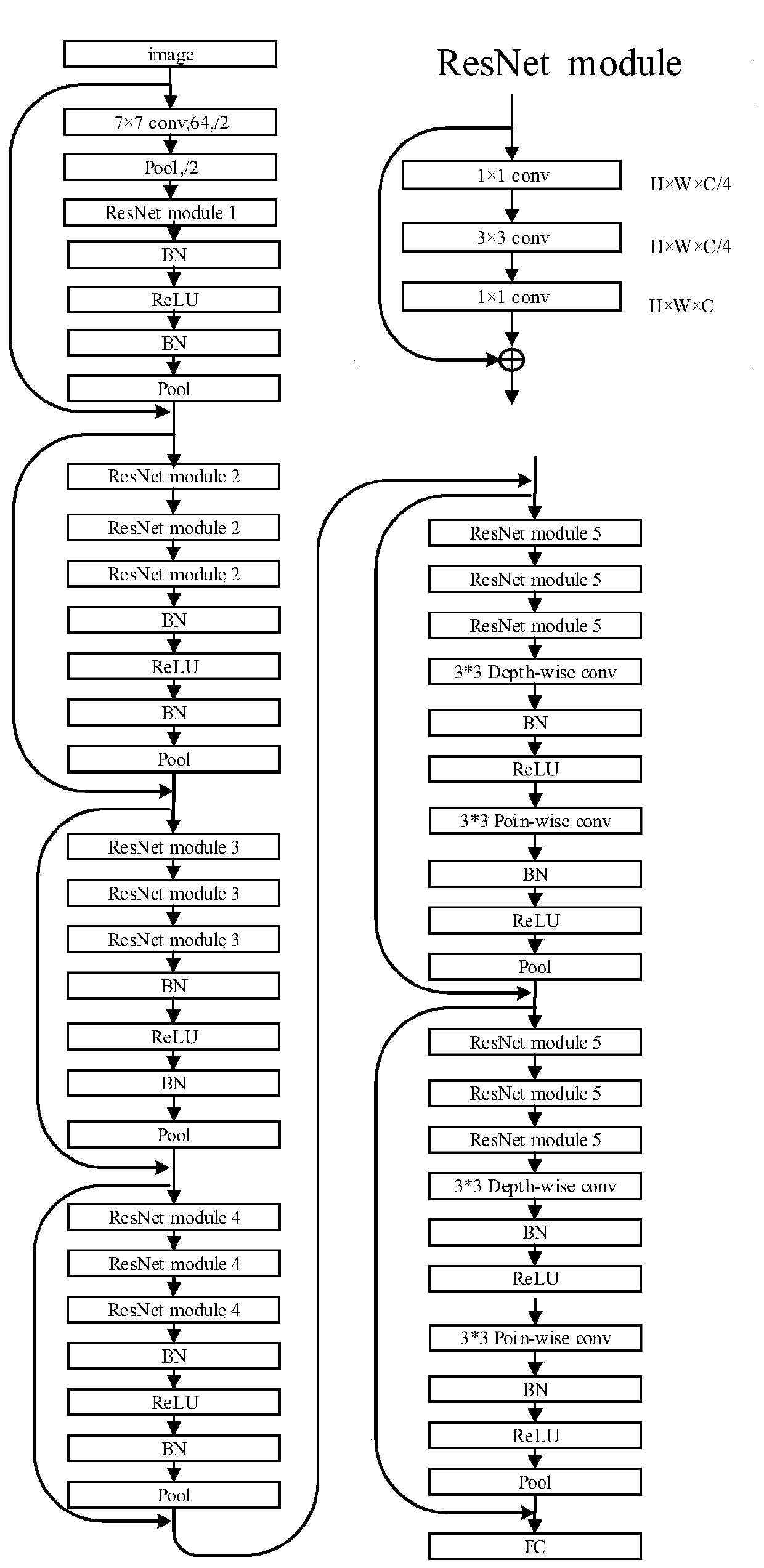

A pedestrian searching method and device based on structural perception self-attention and online instance aggregation matching

Status: Active | Publication: CN109948425A | Tags: High precision, Improve efficiency, Internal combustion piston engines, Character and pattern recognition, Neural network, Visual technology

The invention discloses a pedestrian search method and device based on structure-aware self-attention and online instance aggregation matching, in the technical field of computer vision. In the training phase, a convolutional neural network is combined with a non-local layer; features are extracted from the whole input scene image to obtain its feature representation; structure-aware anchors are designed specifically for pedestrians to improve the detection framework; detected pedestrians are resized to a common size and fed into a pedestrian re-identification network; and labeled pedestrian features are trained, stored, optimized and updated. In the testing phase, the trained non-local convolutional neural network detects pedestrians in an input scene image, and once pedestrian boxes are detected, the target pedestrian image is used for similarity matching, ranking and retrieval. The method performs pedestrian detection and re-identification jointly on large-scale real-world scene images and plays an important role in security applications such as urban surveillance.

Owner:CHINA UNIV OF MINING & TECH

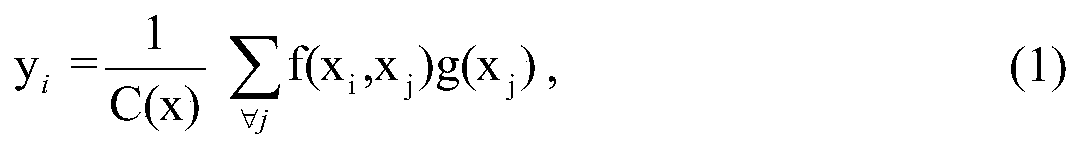

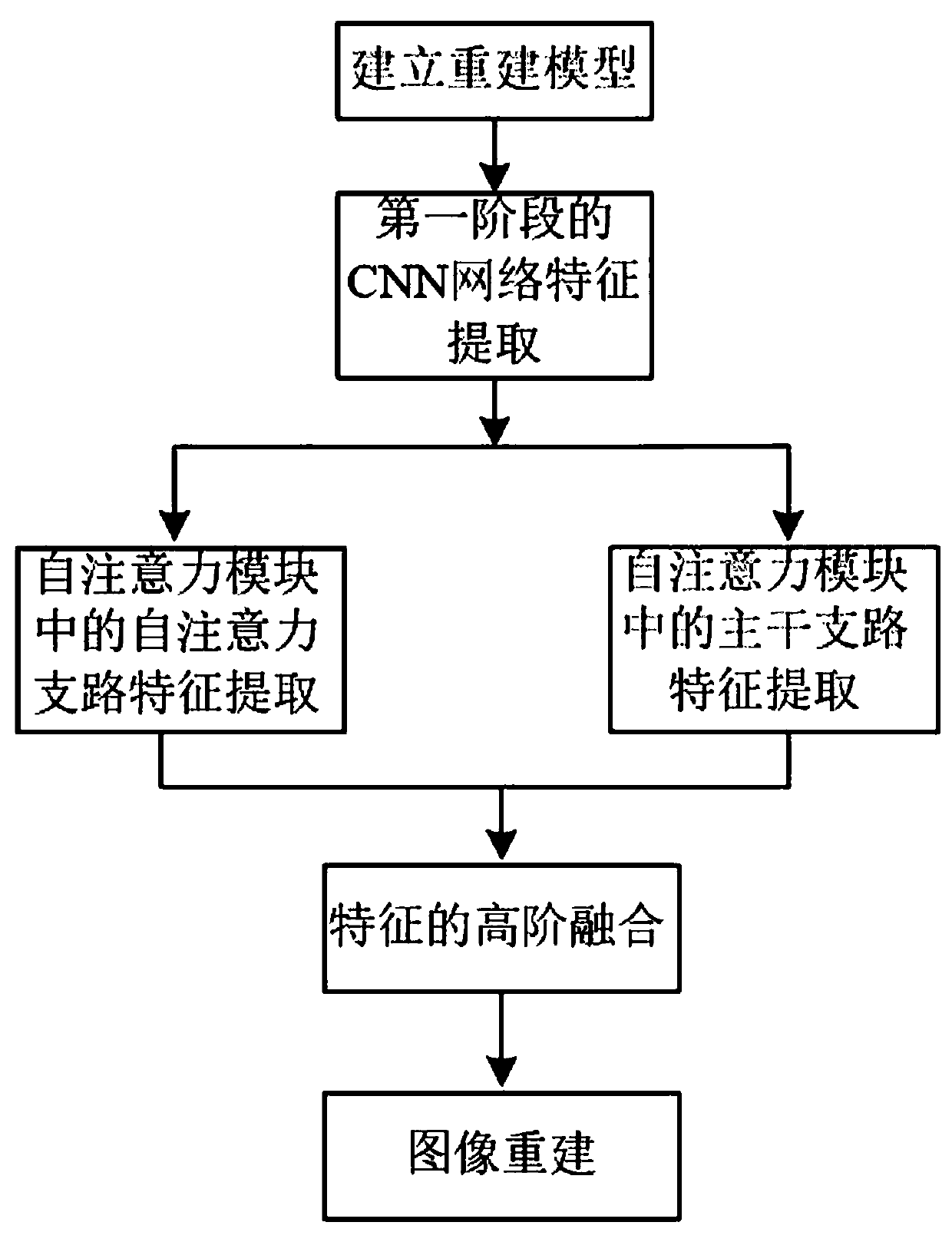

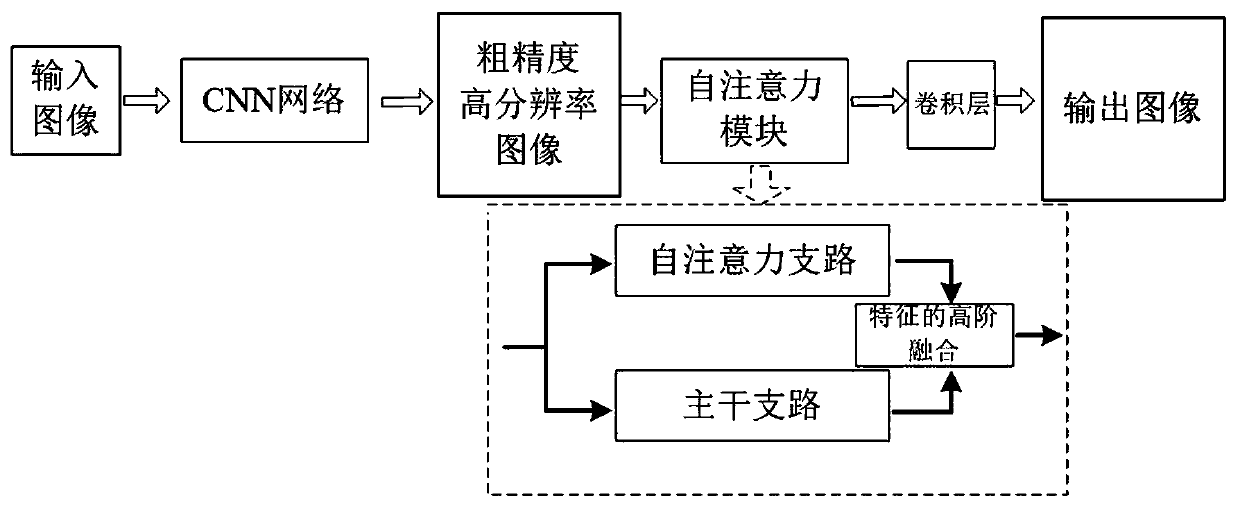

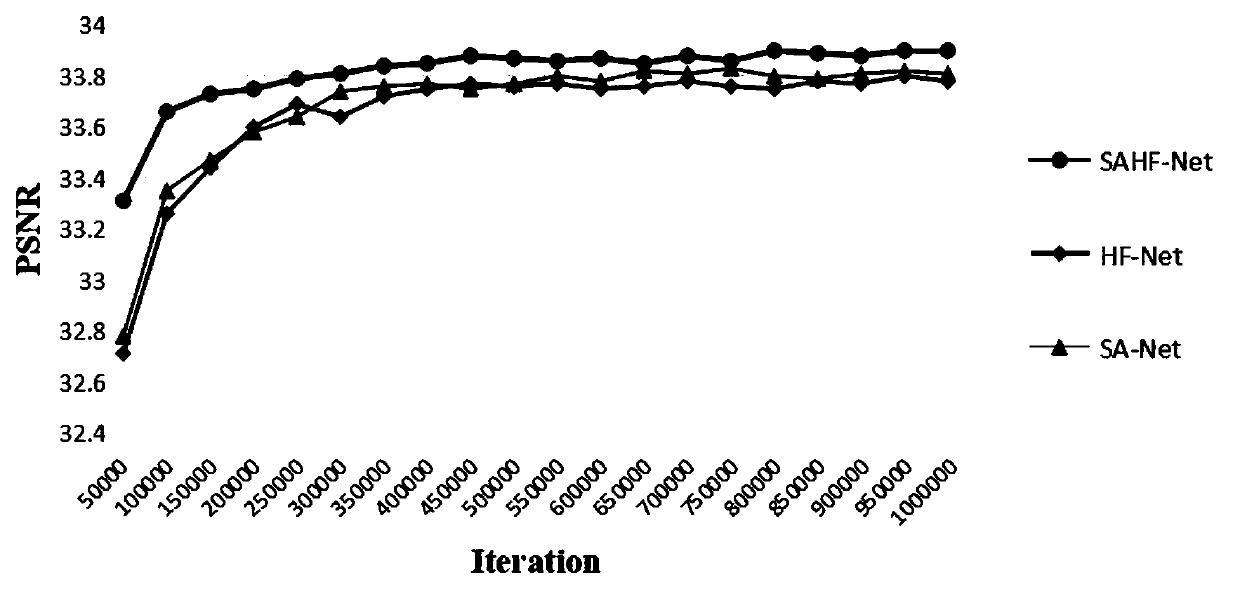

Image super-resolution reconstruction method based on self-attention high-order fusion network

Status: Active | Publication: CN109859106A | Tags: Enhance expressive ability, Restore texture details, Geometric image transformation, Character and pattern recognition, Feature extraction, Self attention

The invention discloses an image super-resolution reconstruction method based on a self-attention high-order fusion network, comprising the following steps: 1) building a reconstruction model; 2) extracting features with a CNN; 3) extracting self-attention branch features in a self-attention module; 4) extracting trunk branch features in the self-attention module; 5) fusing the features in a high-order manner; and 6) reconstructing the image. The method avoids the extra computation caused by preprocessing and recovers more texture detail, yielding a high-quality reconstructed image.

Owner:GUILIN UNIV OF ELECTRONIC TECH

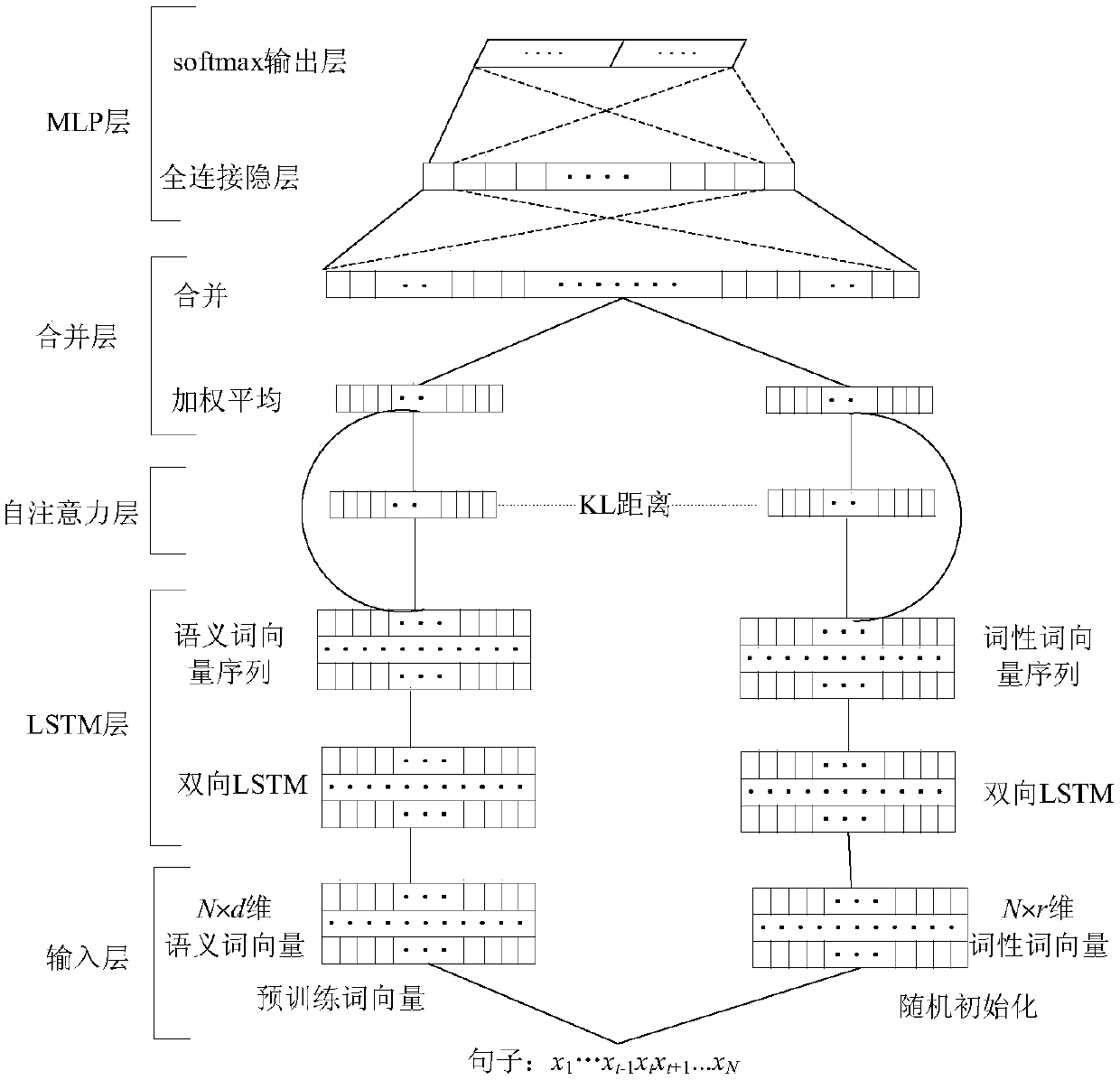

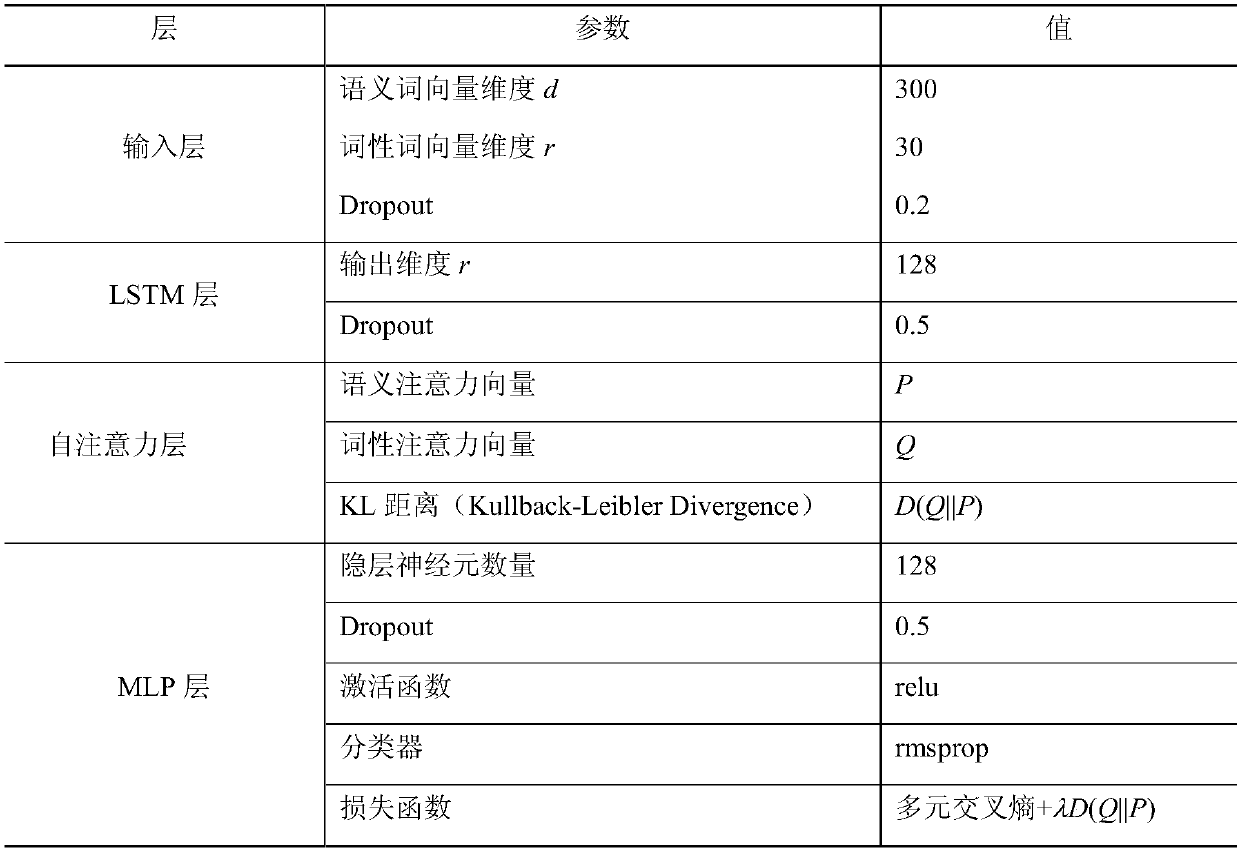

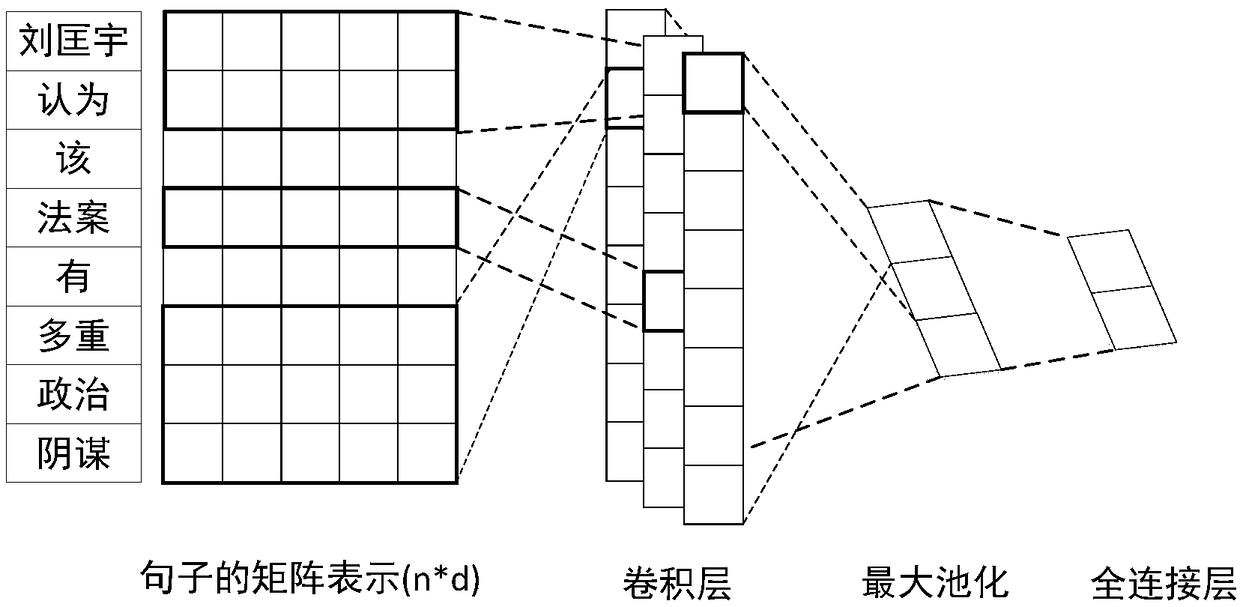

Sentence classification method based on LSTM and combining part-of-speech and multi-attention mechanism

Status: Active | Publication: CN109635109A | Tags: Improve accuracy, Improve general performance, Semantic analysis, Character and pattern recognition, Part of speech, Semantic representation

The invention discloses a sentence classification method based on LSTM that combines part-of-speech information with multiple attention mechanisms. In the input layer, each sentence is converted into a semantic word vector matrix and a part-of-speech vector matrix, both dense continuous representations. A shared bidirectional LSTM layer learns the context information of the words and of the part-of-speech tags separately, and the outputs of all time steps are concatenated and passed on. In the self-attention layer, a self-attention mechanism with a dot-product scoring function learns the important local features at every position of the sentence from the semantic word vector sequence and the part-of-speech vector sequence respectively, yielding a semantic attention vector and a part-of-speech attention vector, which are constrained against each other through the KL divergence. In the merging layer, the output sequence of the bidirectional LSTM layer is weighted and summed with the semantic attention vector and with the part-of-speech attention vector to obtain the semantic and part-of-speech representations of the sentence, which together give the final sentence representation. Finally, an MLP output layer performs prediction and outputs the class.

Owner:SOUTH CHINA UNIV OF TECH
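
Two operations in this abstract are easy to make concrete: the KL divergence used to constrain the semantic and part-of-speech attention vectors, and the merging layer's attention-weighted sum of BiLSTM outputs. The sketch assumes KL(p || q) in one direction; the abstract states neither the direction nor how the term is weighted in the training loss. Function names are hypothetical.

```python
import math
from typing import List, Sequence


def kl_divergence(p: Sequence[float], q: Sequence[float], eps: float = 1e-12) -> float:
    """KL(p || q) = sum_i p_i * log(p_i / q_i) between two attention
    distributions over the same sentence positions.

    Terms with p_i == 0 contribute nothing; eps guards against q_i == 0.
    """
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)


def attention_pool(states: List[List[float]], weights: Sequence[float]) -> List[float]:
    """Merging-layer step: weighted sum of BiLSTM outputs, sum_t a_t * h_t."""
    return [sum(a * h[j] for a, h in zip(weights, states)) for j in range(len(states[0]))]
```

Adding the KL term to the loss pushes the two attention distributions toward each other, so word-level and part-of-speech-level attention agree on which positions matter.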

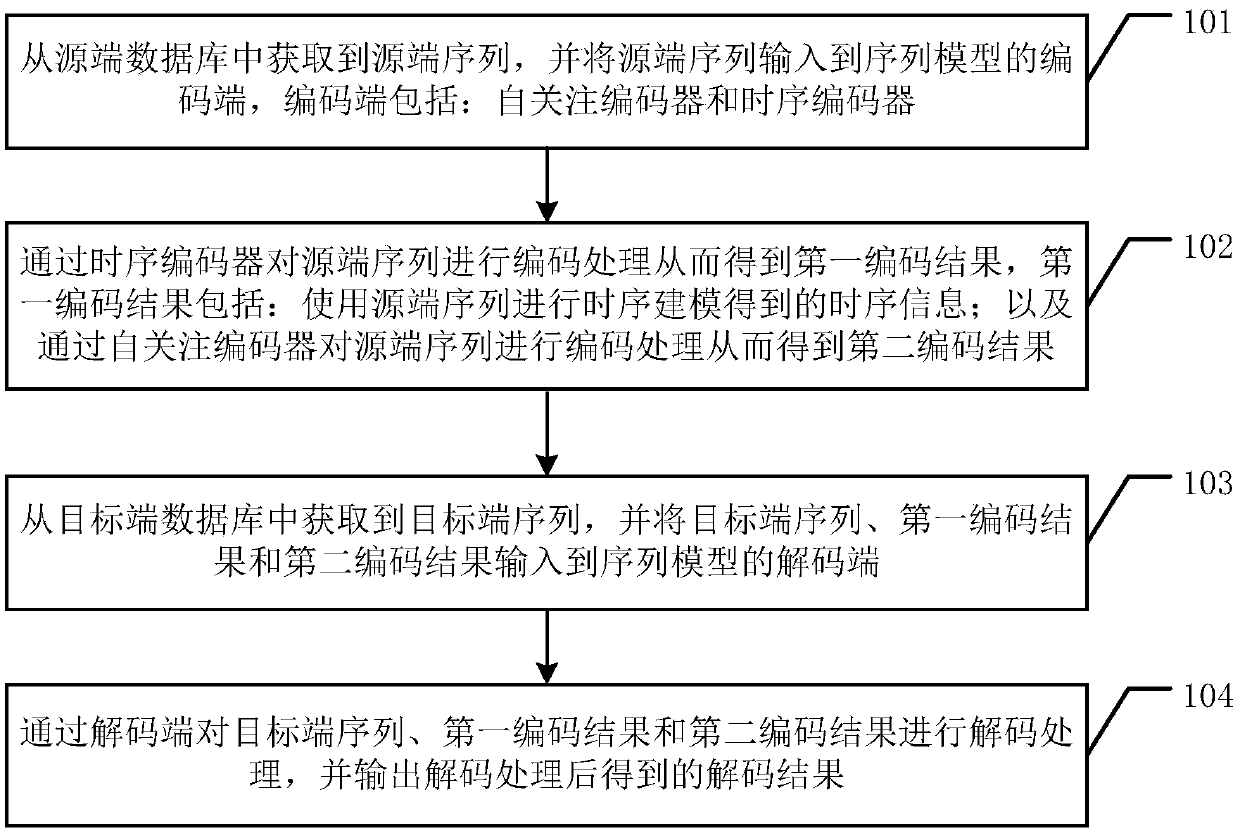

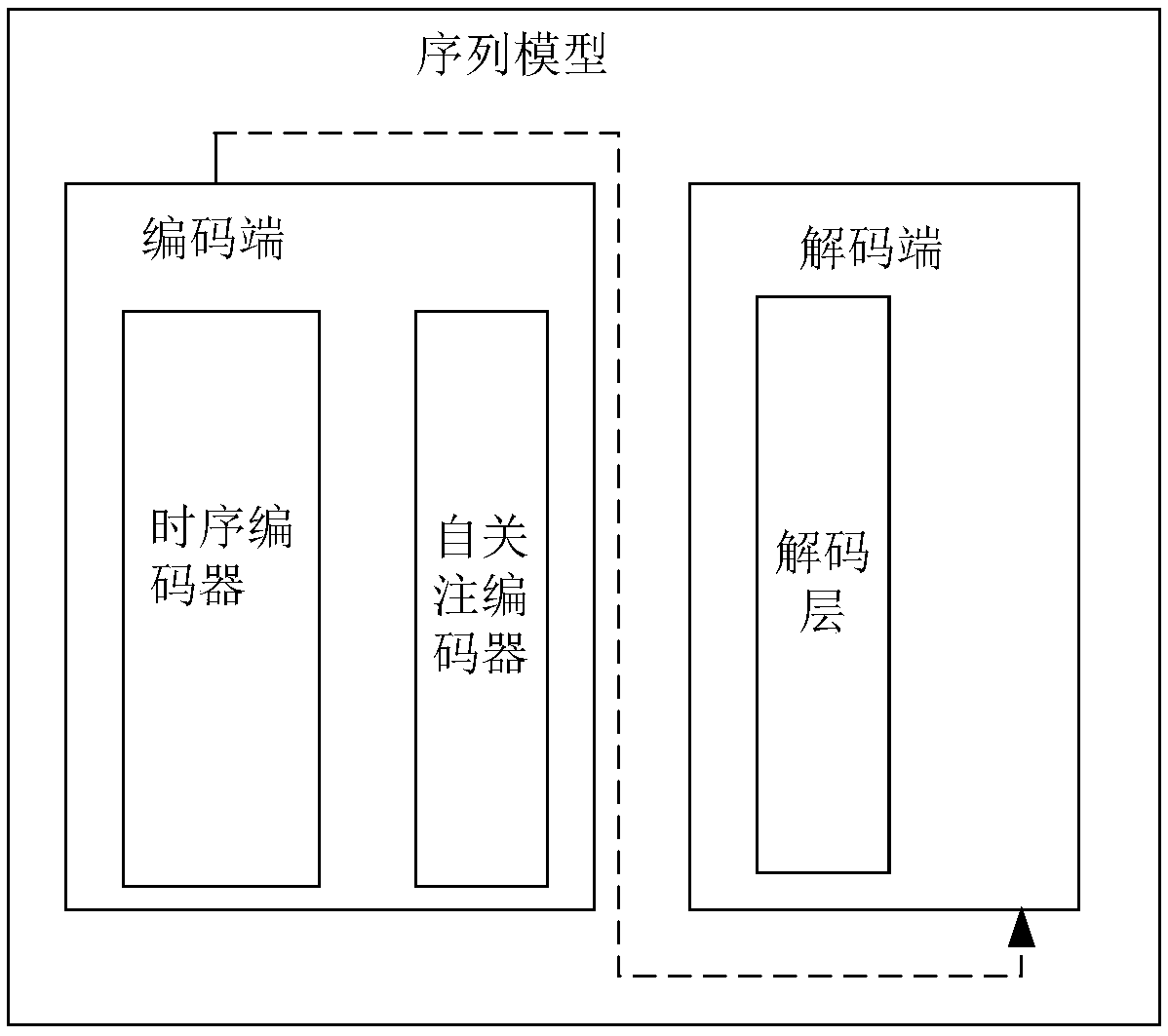

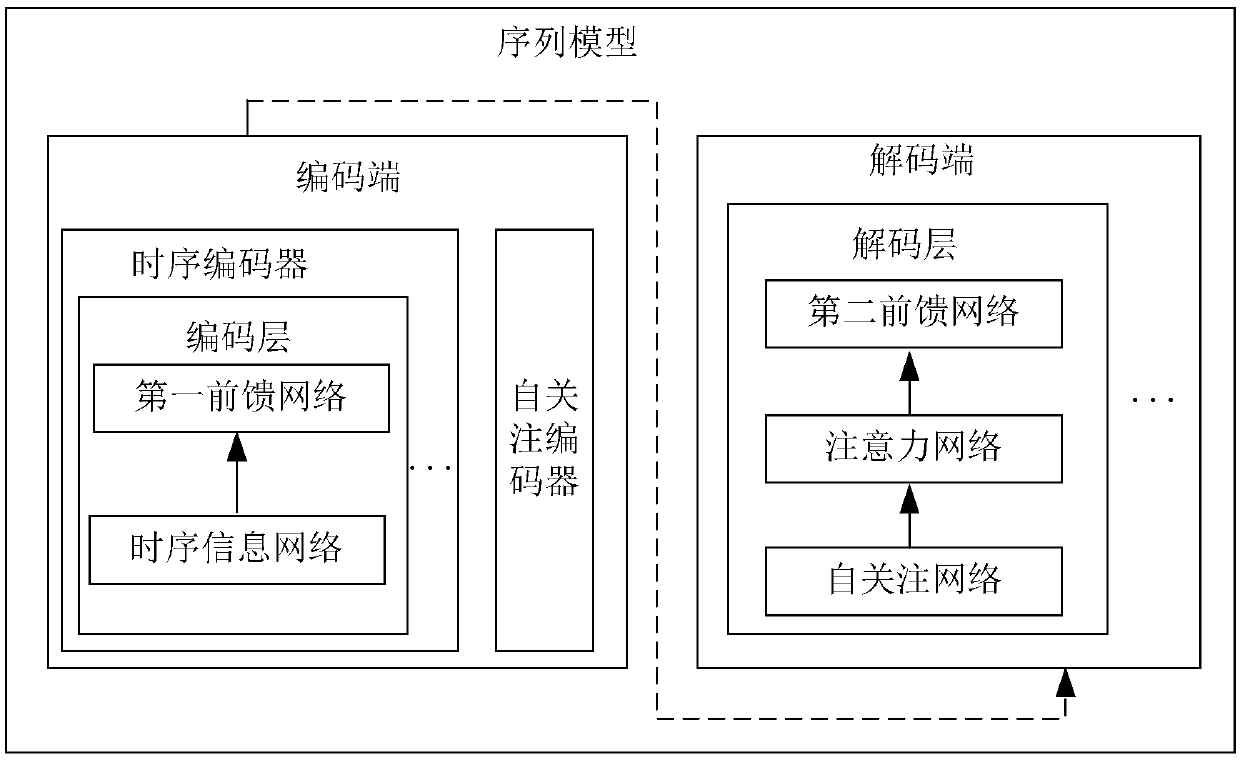

A method and apparatus for processing a sequence model

Status: Active | Publication: CN109543824A | Tags: Improve mission performance, Exact dependencies, Natural language translation, Neural architectures, Time information, Self attention

The embodiments of the invention disclose a method and apparatus for processing a sequence model, intended to improve how well the sequence model performs its task. The method comprises: obtaining a source sequence from a source database and inputting it to the encoder of the sequence model, where the encoder comprises a self-attention encoder and a temporal encoder; encoding the source sequence with the temporal encoder to obtain a first encoding result, which contains temporal information obtained by modeling the source sequence over time; encoding the source sequence with the self-attention encoder to obtain a second encoding result; obtaining a target sequence from a target database and inputting the target sequence, the first encoding result and the second encoding result to the decoder of the sequence model; and decoding the target sequence, the first encoding result and the second encoding result with the decoder, and outputting the resulting decoding result.

Owner:TENCENT TECH (SHENZHEN) CO LTD

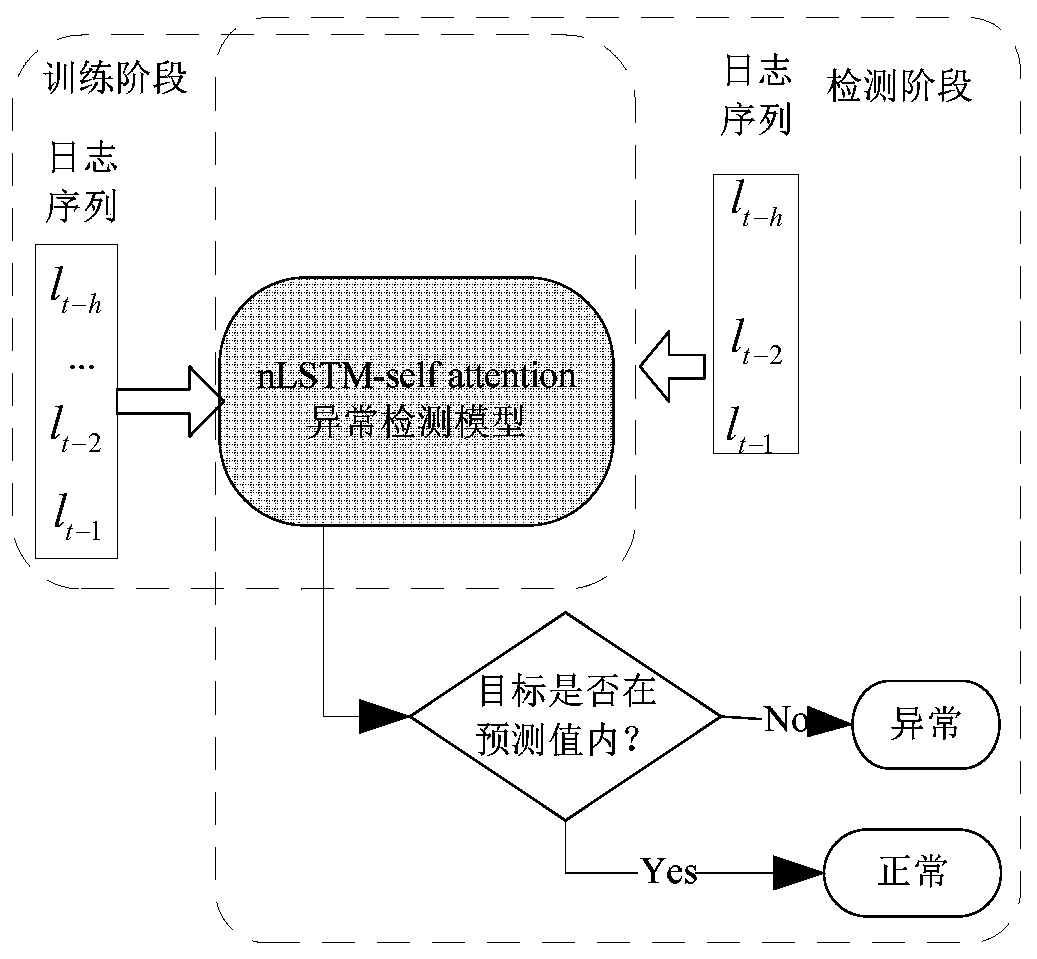

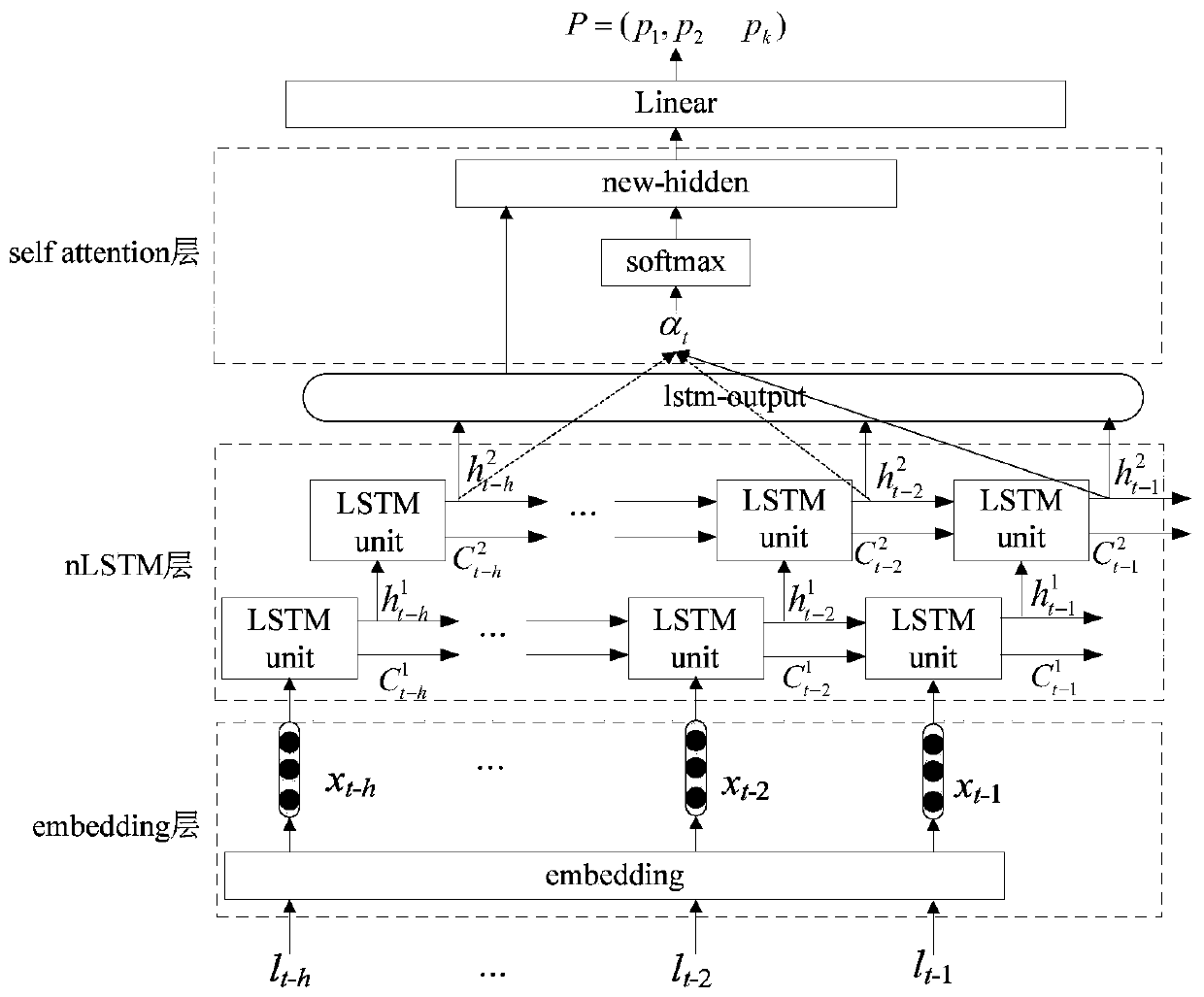

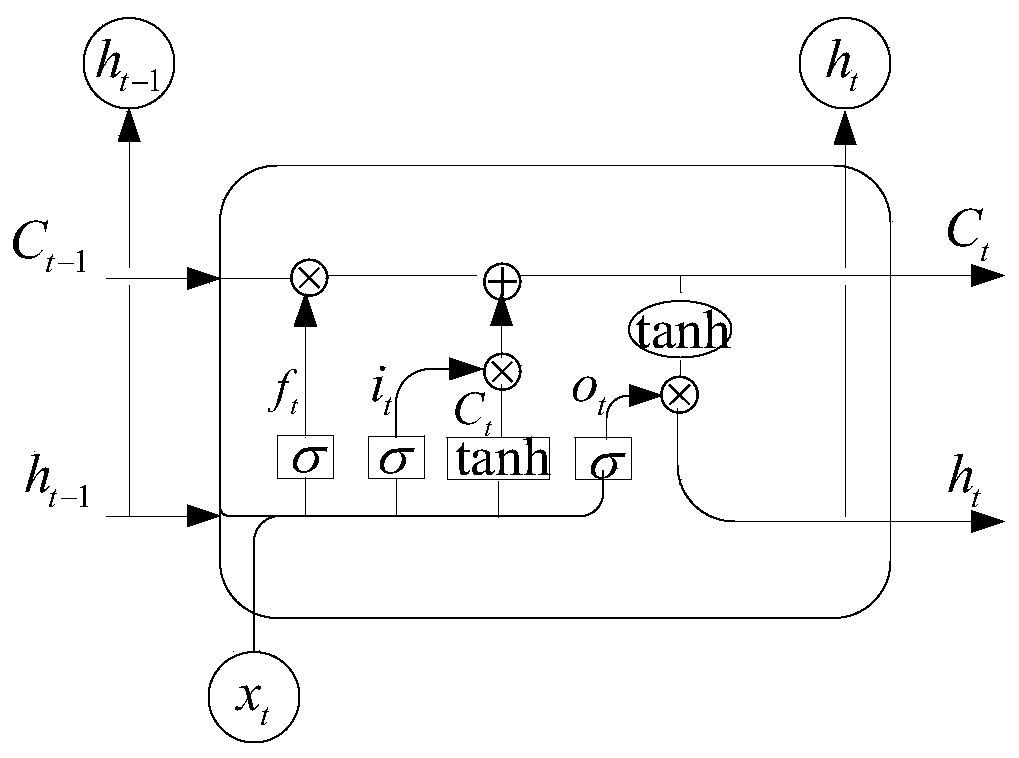

Log sequence anomaly detection framework based on nLSTM (Non-Log Sequence Transfer Module)-self attention

Status: Pending | Publication: CN111209168A | Tags: Avoid complex feature extraction steps, Preserve and control contextual information, Hardware monitoring, Neural architectures, Self attention, Algorithm

The invention relates to a log sequence anomaly detection framework based on nLSTM-self attention, comprising a training model and an anomaly detection model. Training: suppose a log file contains $k$ log templates $E = \{e_1, e_2, \dots, e_k\}$. The input to the training model is a sequence of log templates: a log sequence $l_{t-h}, \dots, l_{t-2}, l_{t-1}$ of length $h$, where $l_i \in E$ for $t-h \le i \le t-1$, and the number of distinct log templates in the sequence is $|\{l_{t-h}, \dots, l_{t-2}, l_{t-1}\}| = m \le h$. Each log template is assigned a template number to build a log template dictionary; input sequences are generated from normal log template sequences, and the input sequences together with their target data are fed to the anomaly detection model for training. Detection: input data are prepared exactly as in the training stage, and the model produced during training performs anomaly detection. The model outputs a probability vector $P = (p_1, p_2, \dots, p_k)$, where $p_i$ is the probability that the target log template is $e_i$. If the actual target is among the predicted values, the log sequence is judged normal; otherwise it is judged abnormal.

Owner:PLA ARMY ACADEMY OF ARTILLERY AND AIR DEFENSE ZHENGZHOU CAMPUS
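
The data preparation and the detection rule can be sketched without the neural model itself. Reading "the actual target is among the predicted values" as "the observed template is among the `top_g` most probable entries of P" is an assumption, similar to the top-g rule used by DeepLog; `top_g` and the function names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple


def build_dictionary(templates: Sequence[str]) -> Dict[str, int]:
    """Assign each distinct log template a template number (0..k-1) in first-seen order."""
    return {t: i for i, t in enumerate(dict.fromkeys(templates))}


def windows(seq: Sequence[int], h: int) -> List[Tuple[List[int], int]]:
    """Slide a length-h window over a template-number sequence, pairing each
    window l_{t-h}..l_{t-1} with its target l_t."""
    return [(list(seq[t - h:t]), seq[t]) for t in range(h, len(seq))]


def is_normal(probs: Sequence[float], target: int, top_g: int) -> bool:
    """Judge a window normal if the observed next template is among the
    top_g most probable predictions in P = (p_1, ..., p_k)."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return target in ranked[:top_g]
```

A larger `top_g` tolerates more variation in normal logs at the cost of missing some anomalies, so it is typically tuned on held-out normal data.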

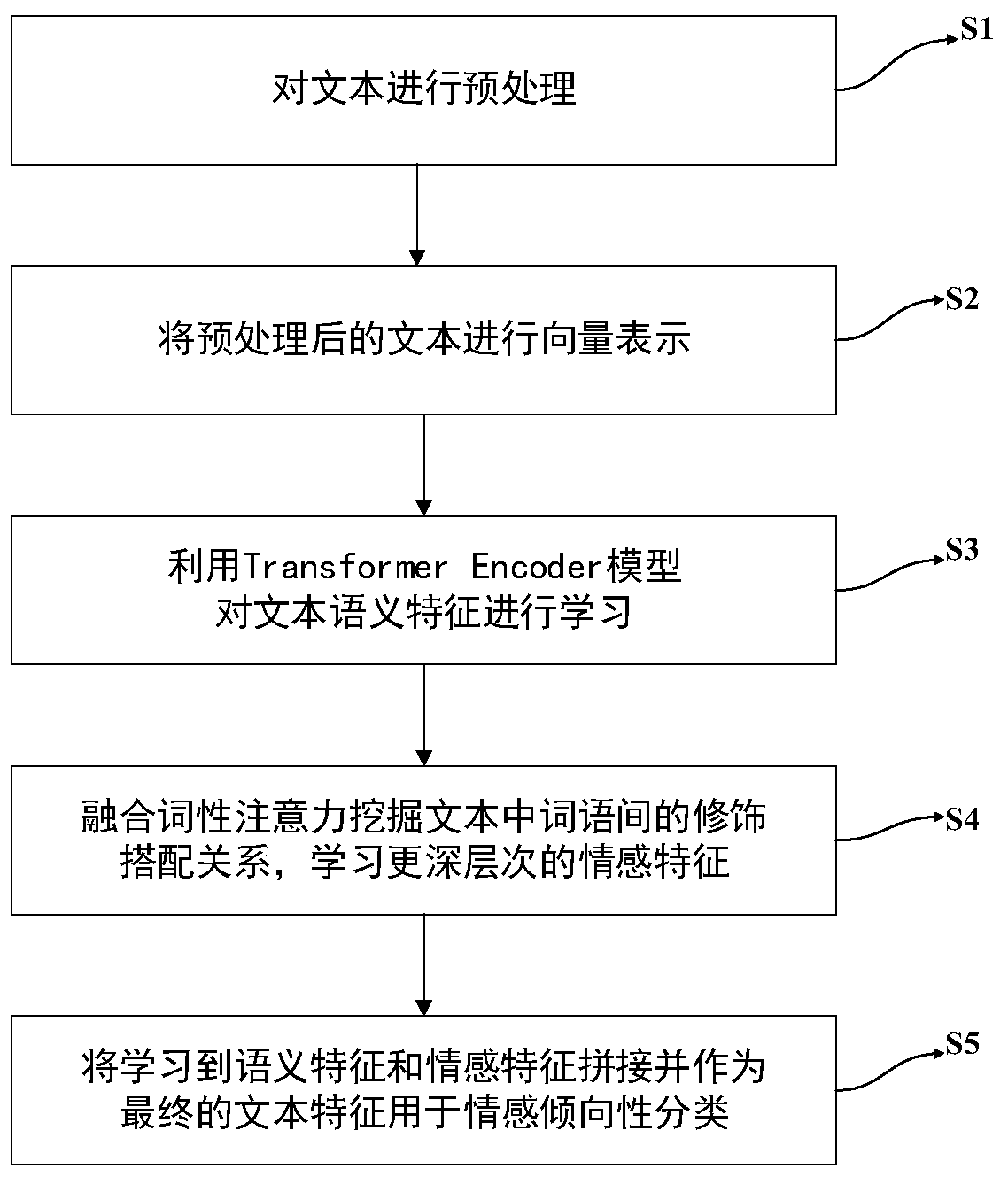

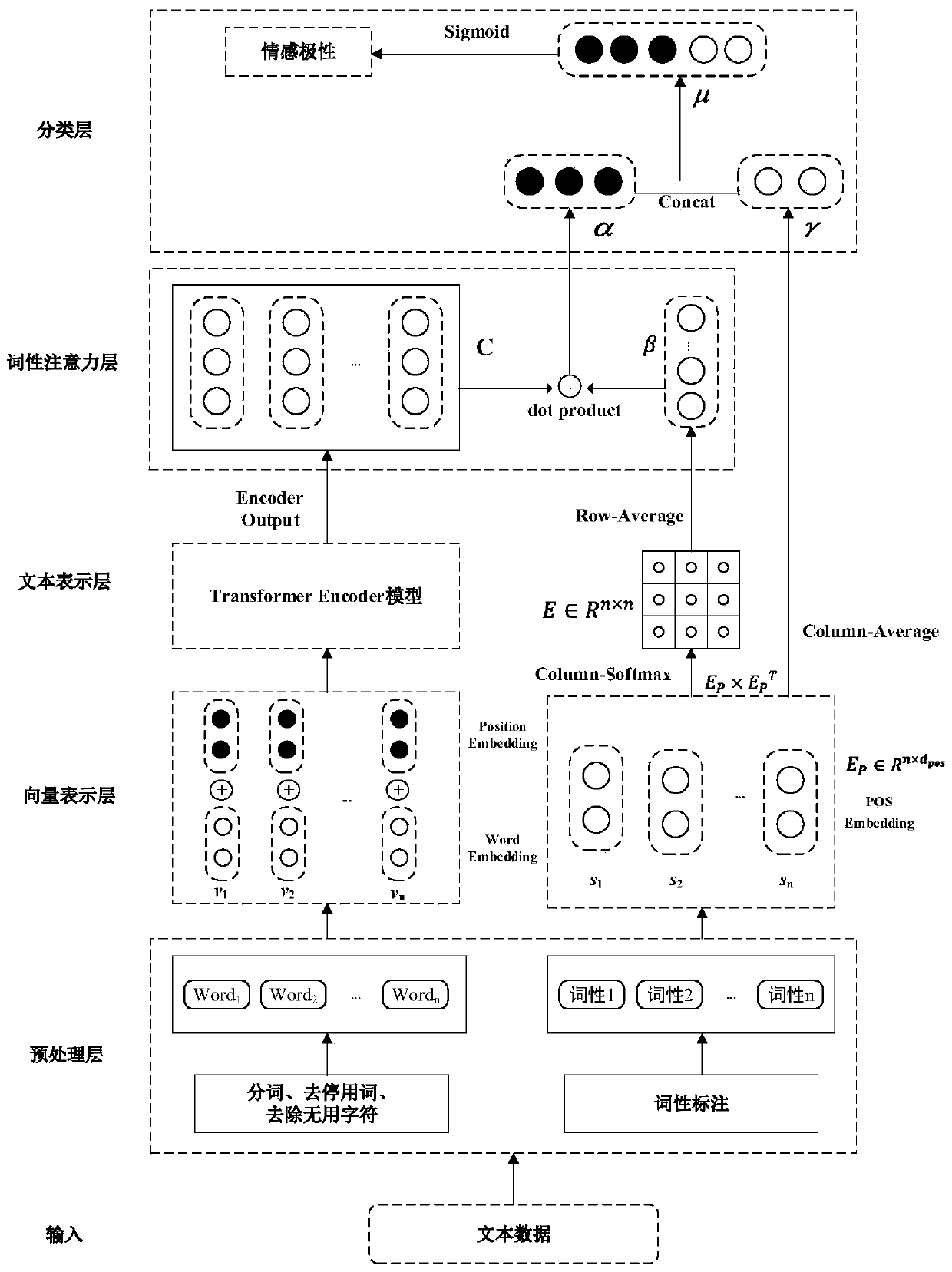

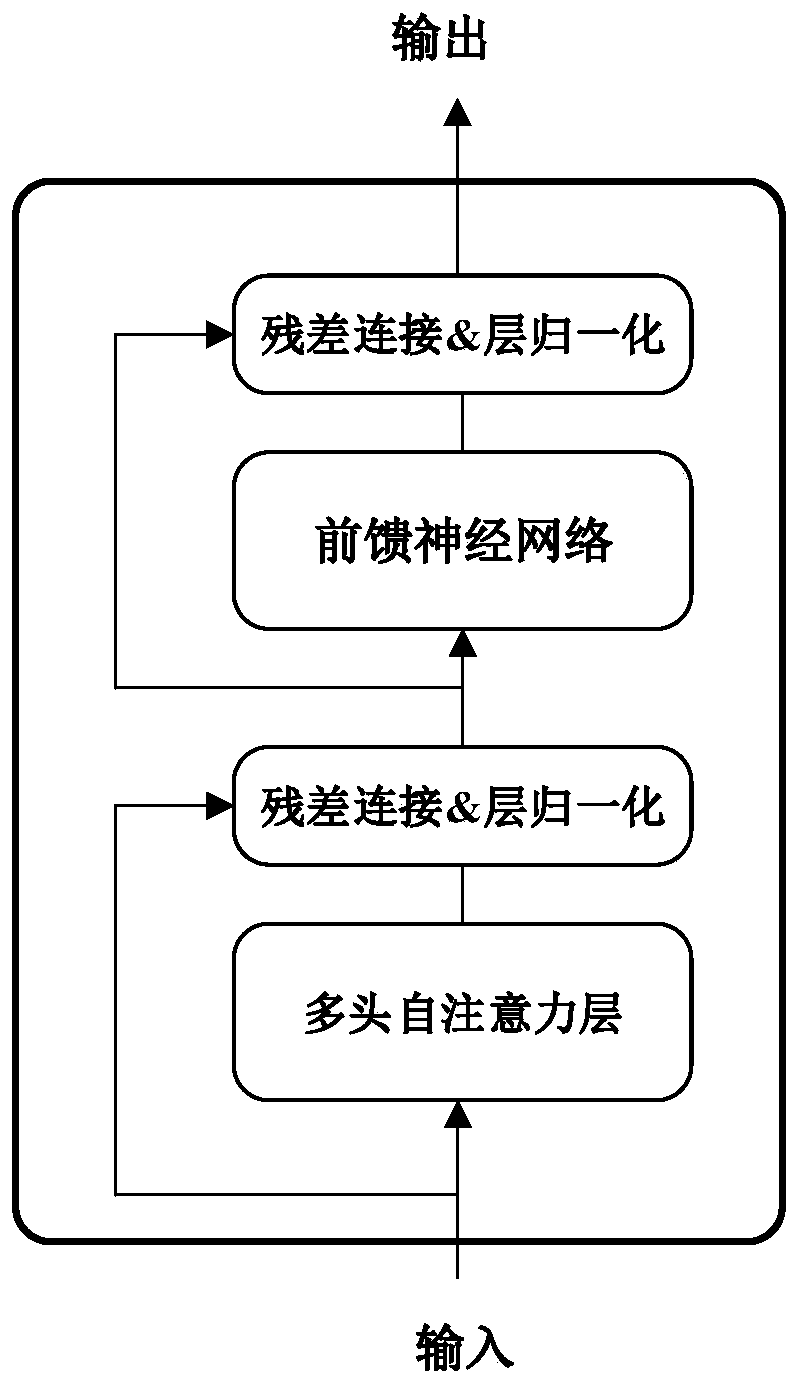

Part-of-speech and self-attention mechanism fused sentiment tendency classification method and system

Status: Pending | Publication: CN110569508A | Tags: Rich emotional characteristics, Easy to learn, Character and pattern recognition, Neural architectures, Part of speech, Learning based

The invention relates to an emotion tendency classification method and system that fuses part-of-speech information with a self-attention mechanism, in the technical field of natural language processing. The method comprises: S1, preprocessing the text by performing word segmentation together with part-of-speech tagging and removing stop words and useless characters; S2, representing the preprocessed text as vectors; S3, learning semantic features of the text with a Transformer encoder; S4, fusing part-of-speech attention to mine the modification and matching relationships between words in a sentence and learn deeper emotion features; and S5, concatenating the learned semantic features and emotion features as the final text features for sentiment tendency classification. Learning text features with self-attention and fusing part-of-speech attention expands the emotion information available and yields richer emotion features, which strengthens the expressive capacity of the features and effectively improves classification accuracy.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

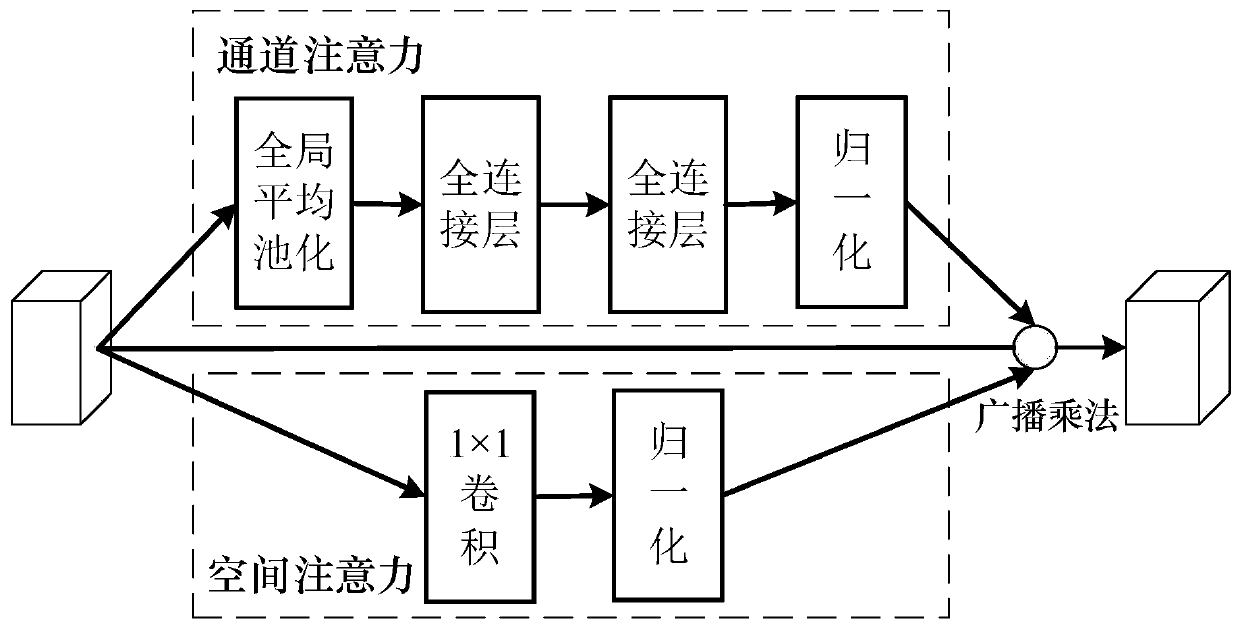

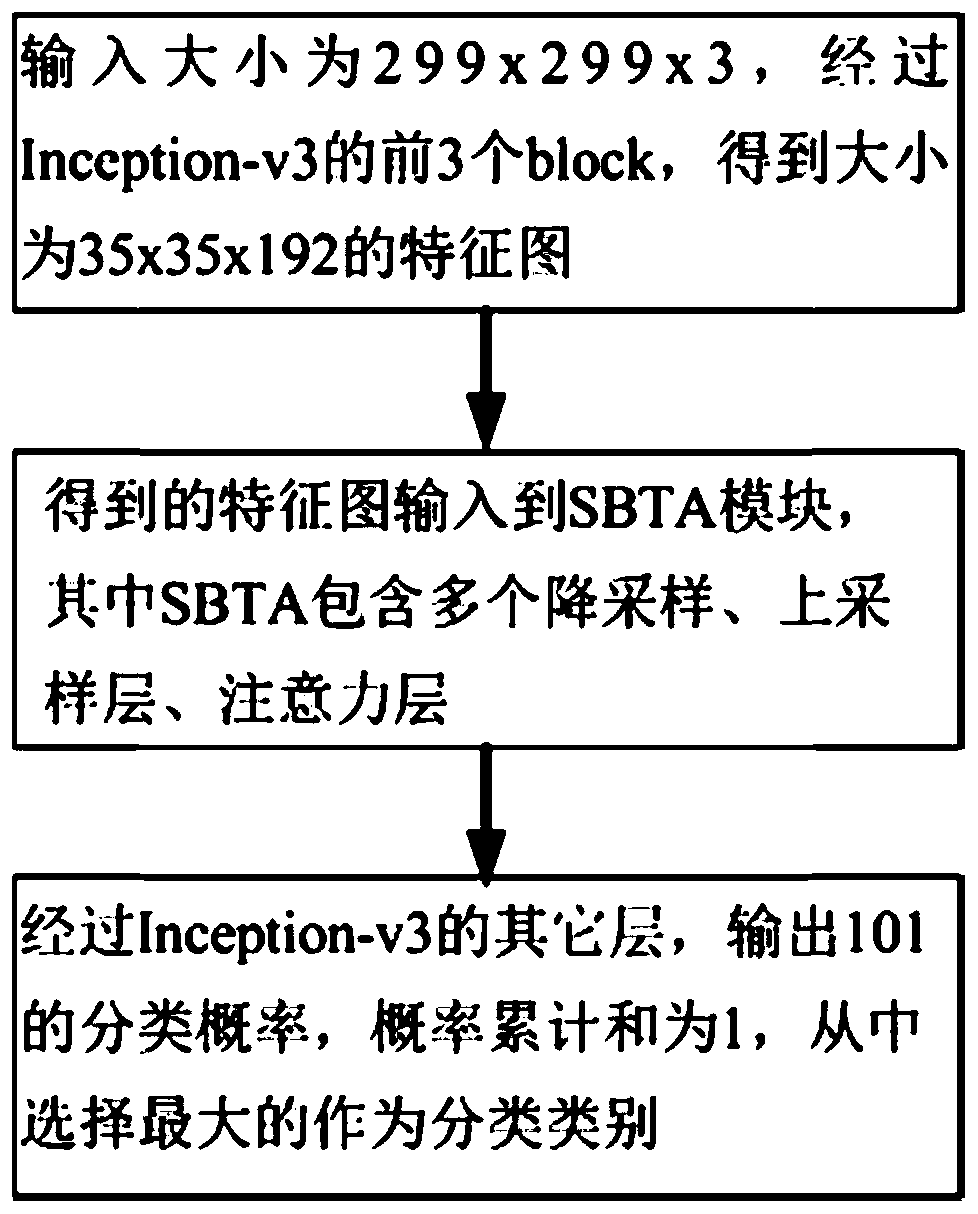

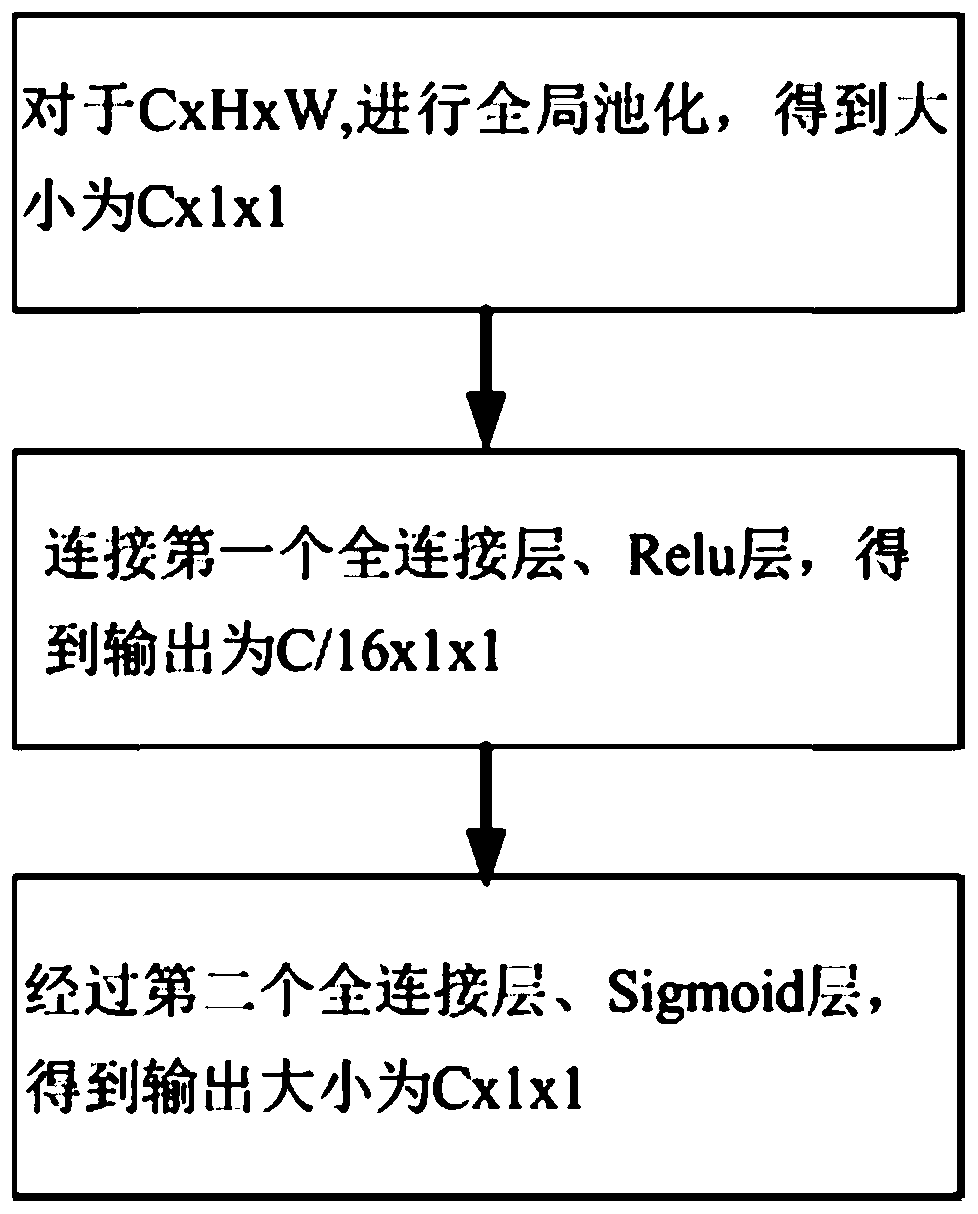

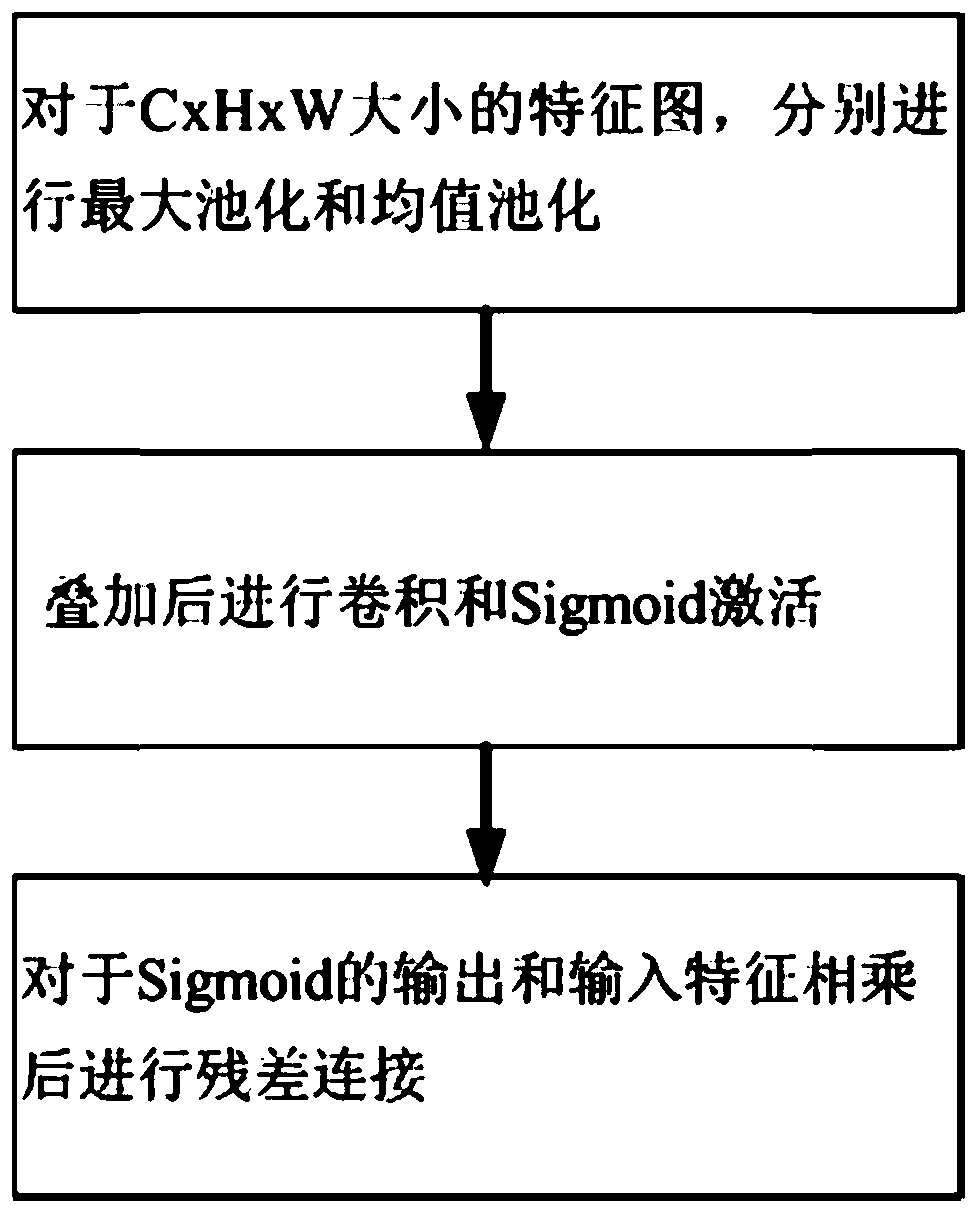

Behavior recognition system based on an attention mechanism

Status: Active | Publication: CN109871777A | Tags: Improve classification performance, Fast convergence, Character and pattern recognition, Neural architectures, Time information, Self attention

The invention discloses a behavior recognition system based on an attention mechanism, consisting of an input, intermediate blocks and an output. The overall network structure is based on Inception V3, and the two proposed attention modules are added to one block: the Channel Attention module extracts dependencies between channels, and the Spatial Attention module captures spatial dependencies. The invention aims to reduce the influence of incorrect labels and background information. Residual learning is used to combine channel attention with spatial attention, and self-attention is included as part of the network to capture longer-term temporal information. The model uses both spatial and channel attention, while the module design uses only two-dimensional channel attention.

Owner:GUANGZHOU INTELLIGENT CITY DEV INST +1

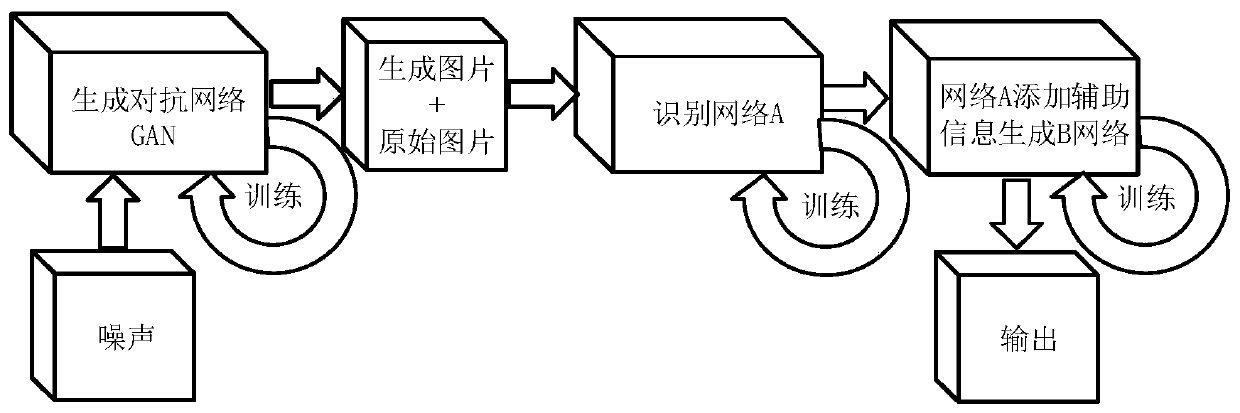

SAR target classification method based on SAGAN sample expansion and auxiliary information

Status: Active | Publication: CN109934282A | Tags: Big amount of data, Character and pattern recognition, Neural architectures, Self attention, Small sample

The invention belongs to the field of synthetic aperture radar (SAR) small-sample target recognition, and in particular relates to a SAR target classification method based on SAGAN sample expansion and auxiliary information. Guided by the characteristics of SAR sample images, the Inception structure is optimized and improved and suitable regularization conditions are added, and SAR small-sample targets are identified accurately by combining GAN-based small-sample generation with GAN-based small-sample super-resolution results. The invention provides a network better suited to SAR remote sensing images, so that it can learn the characteristics of different classes of target regions and generate new, more realistic target region images, which addresses the small data volume of SAR small-sample sets. The method targets the target regions in SAR remote sensing images. It is a SAR target classification method based on self-attention generative adversarial network (SAGAN) sample expansion and auxiliary information, mainly involving a generative adversarial network that expands SAR sample data, and it performs SAR small-sample target identification with a network based on the ResNet50 structure.

Owner:HARBIN ENG UNIV

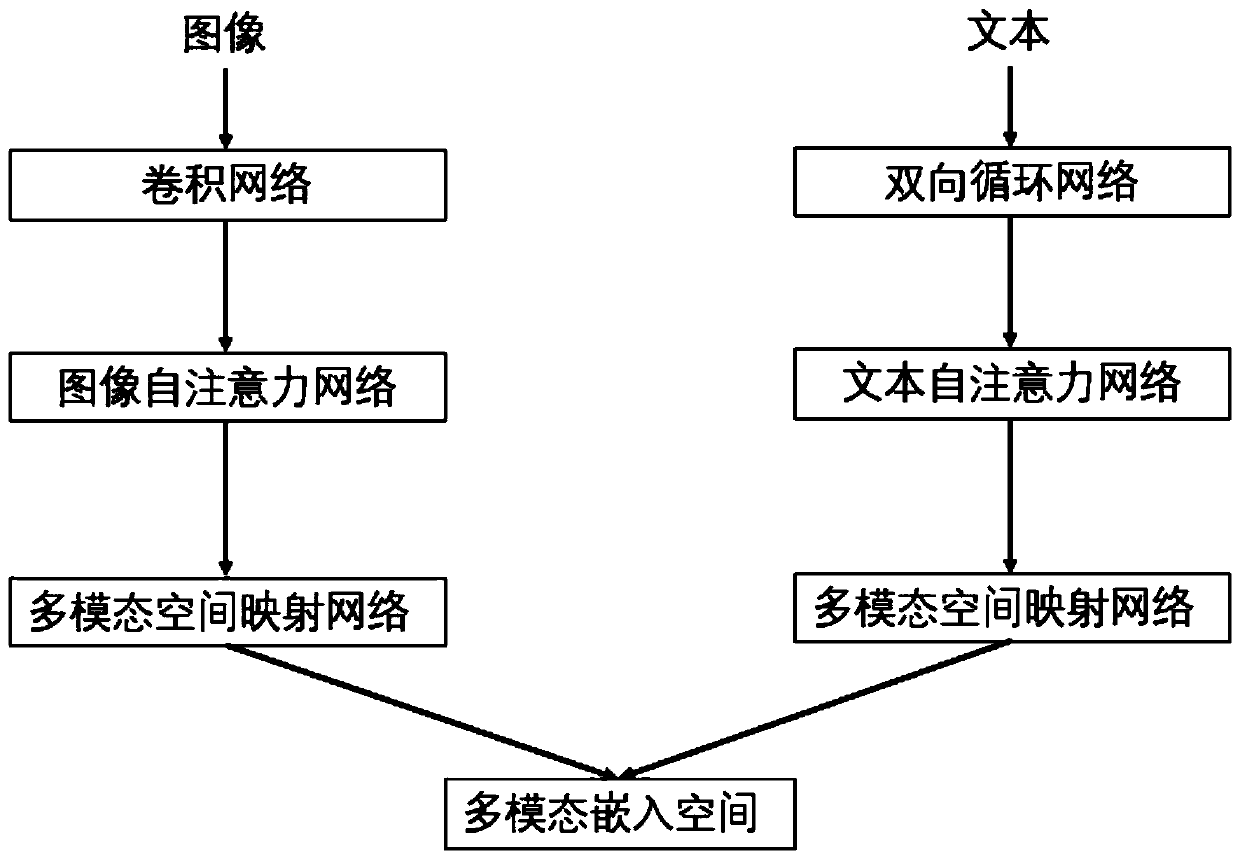

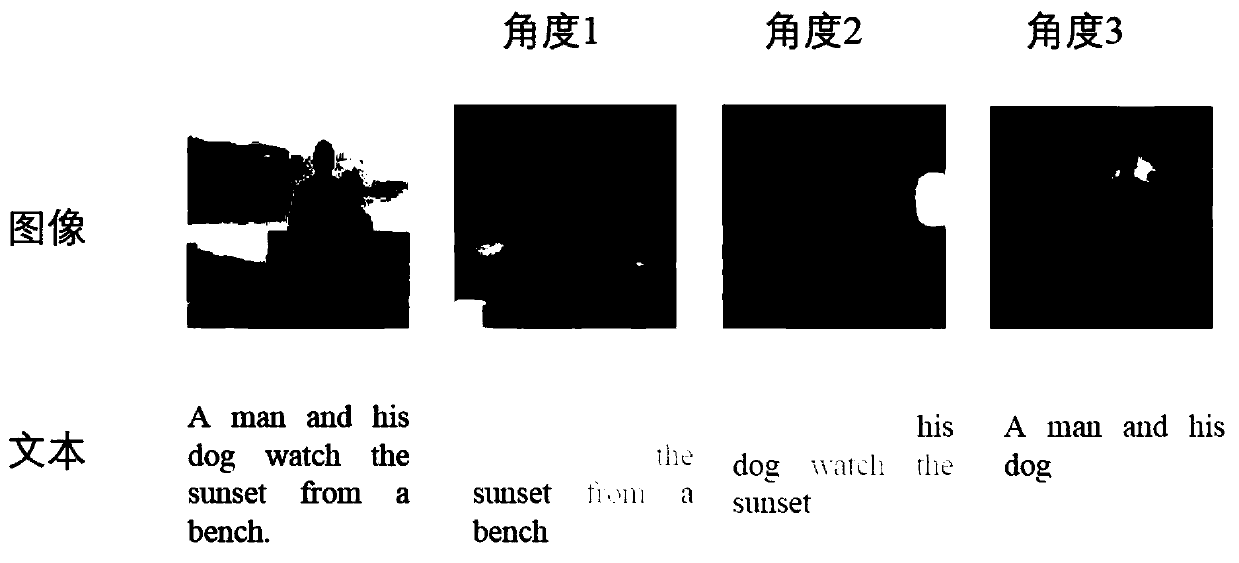

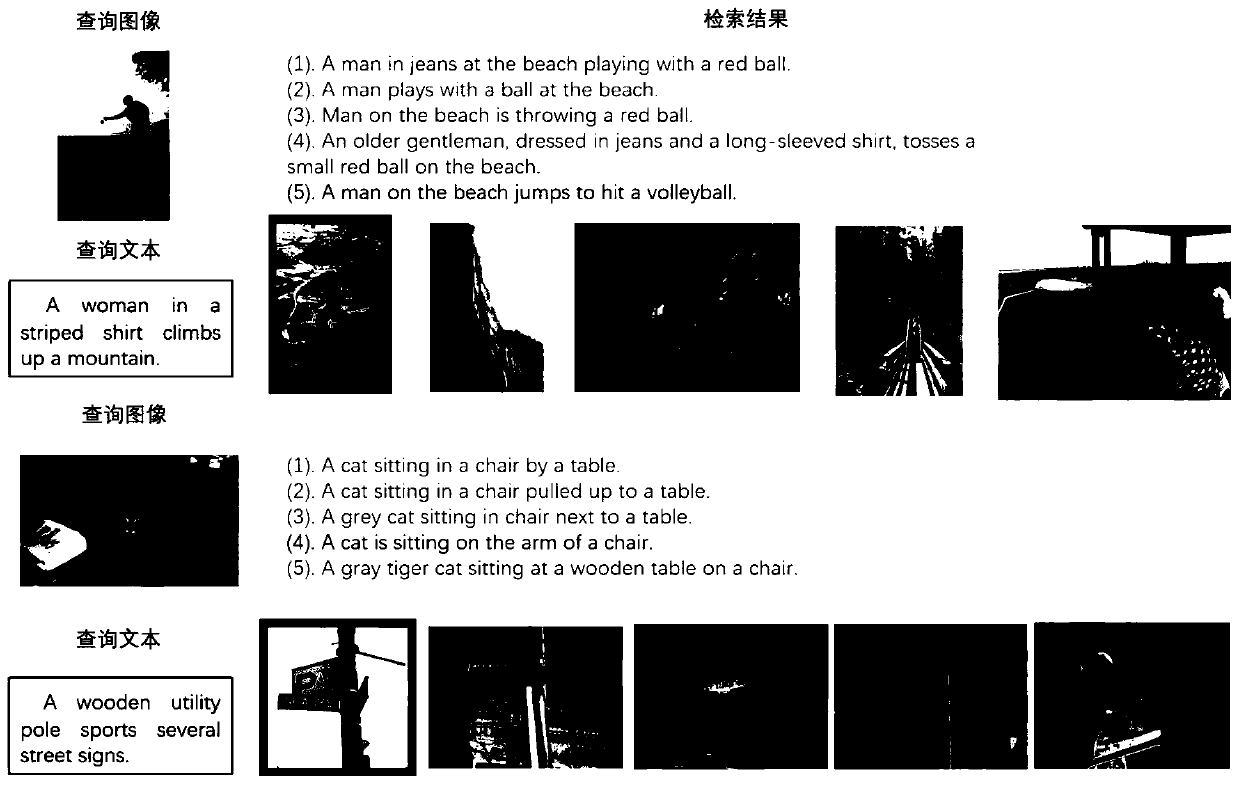

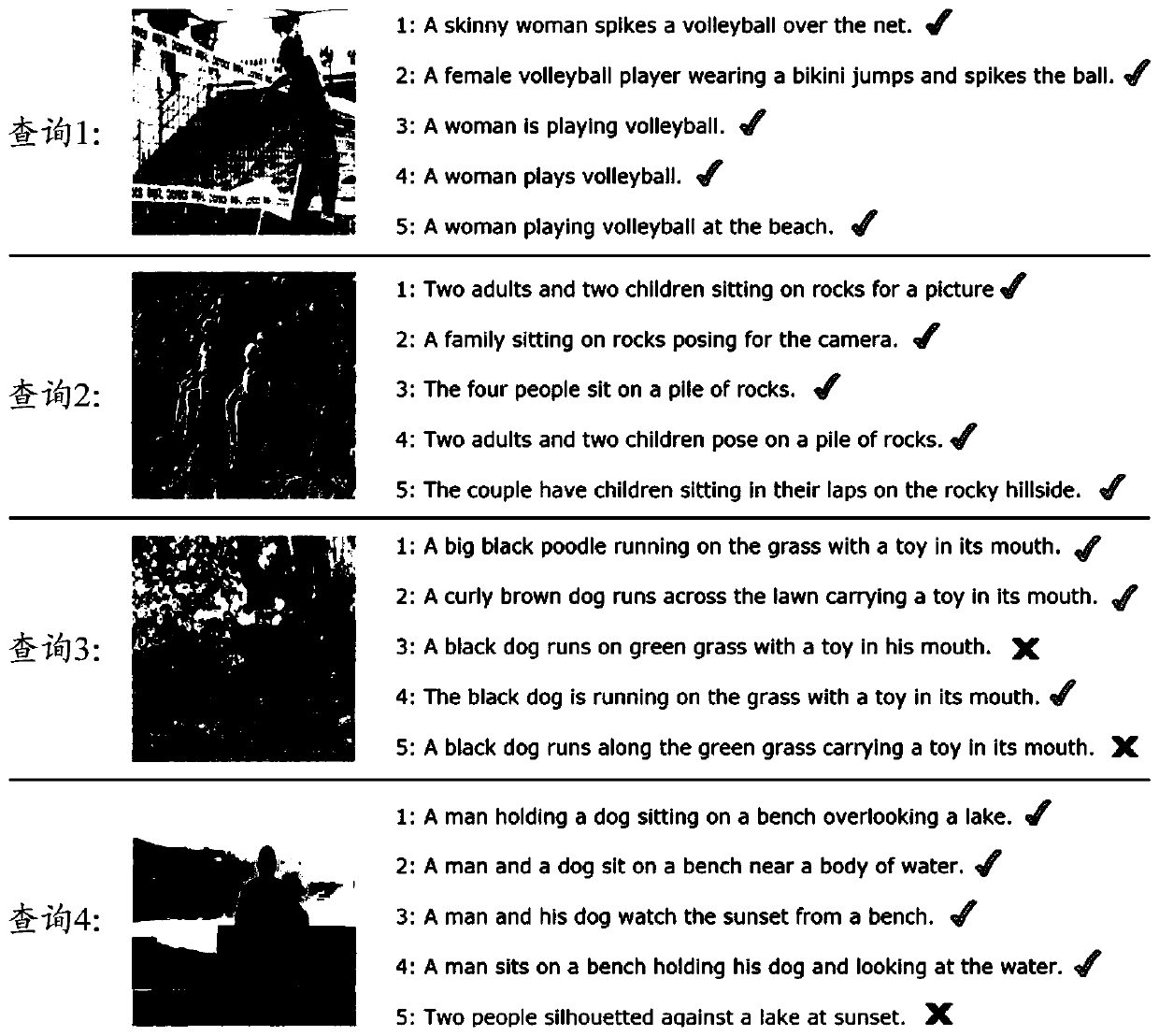

Image-text retrieval system and method based on multi-angle self-attention mechanism

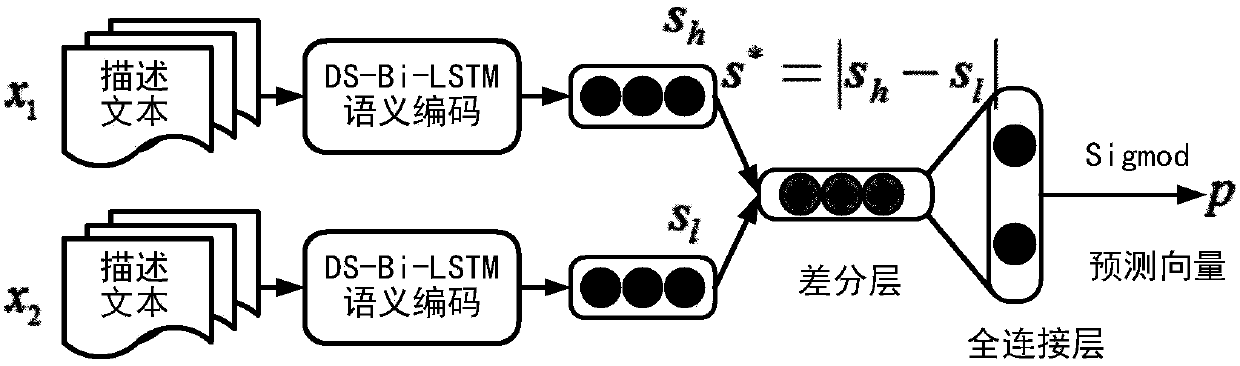

Status: Pending | Publication: CN109992686A | Tags: Convenience to follow, Optimization parameters, Still image data querying, Neural architectures, Pattern recognition, Two-vector

The invention belongs to the technical field of cross-modal retrieval, and in particular relates to an image-text retrieval system and method based on a multi-angle self-attention mechanism. The system comprises a deep convolutional network, a bidirectional recurrent neural network, image and text self-attention networks, a multi-modal space mapping network and a multi-stage training module. The deep convolutional network obtains embedding vectors of image region features in the image embedding space. The bidirectional recurrent neural network obtains embedding vectors of word features in the text space, and the two kinds of vectors are input to the image and text self-attention networks respectively. The image and text self-attention networks obtain embedded representations of the key image regions and of the key words in sentences. The multi-modal space mapping network obtains the embedded representations of images and texts in the multi-modal space. The multi-stage training module learns the network parameters. The system achieves good results on the public Flickr30k and MSCOCO datasets, with substantially improved performance.

Owner:FUDAN UNIV
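
Once images and texts are mapped into the shared multi-modal space, retrieval reduces to ranking by similarity. The sketch assumes cosine similarity and pre-computed embeddings; the abstract does not name the similarity function, and `rank_images` is a hypothetical helper. Inputs are assumed to be non-zero vectors.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)


def rank_images(text_vec: Sequence[float], image_vecs: Dict[str, Sequence[float]]) -> List[str]:
    """Return image ids ordered from most to least similar to the text embedding."""
    return sorted(image_vecs, key=lambda k: cosine(text_vec, image_vecs[k]), reverse=True)
```

Text-to-image and image-to-text retrieval are symmetric here: swapping the roles of the query and the candidate set gives the other direction.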

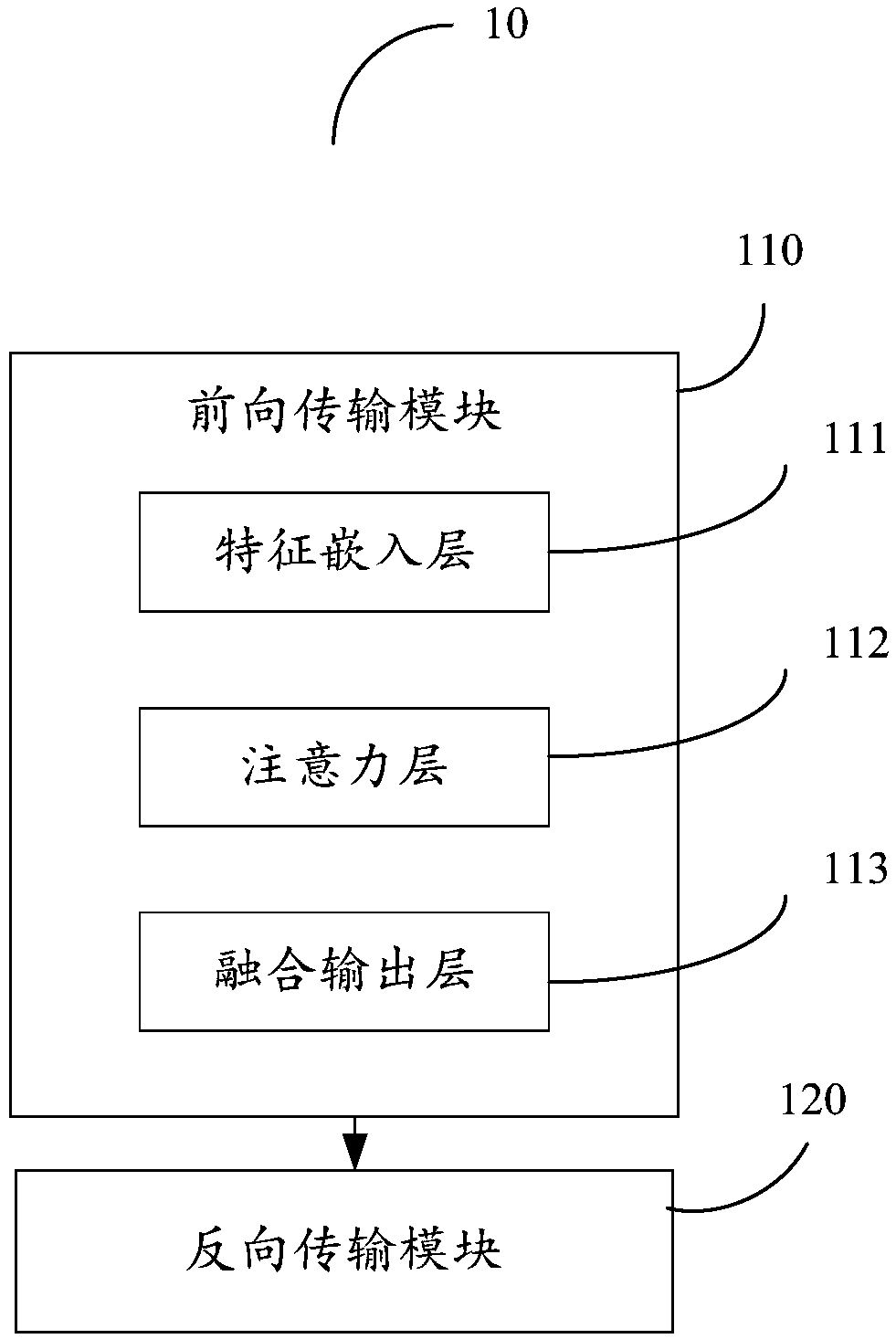

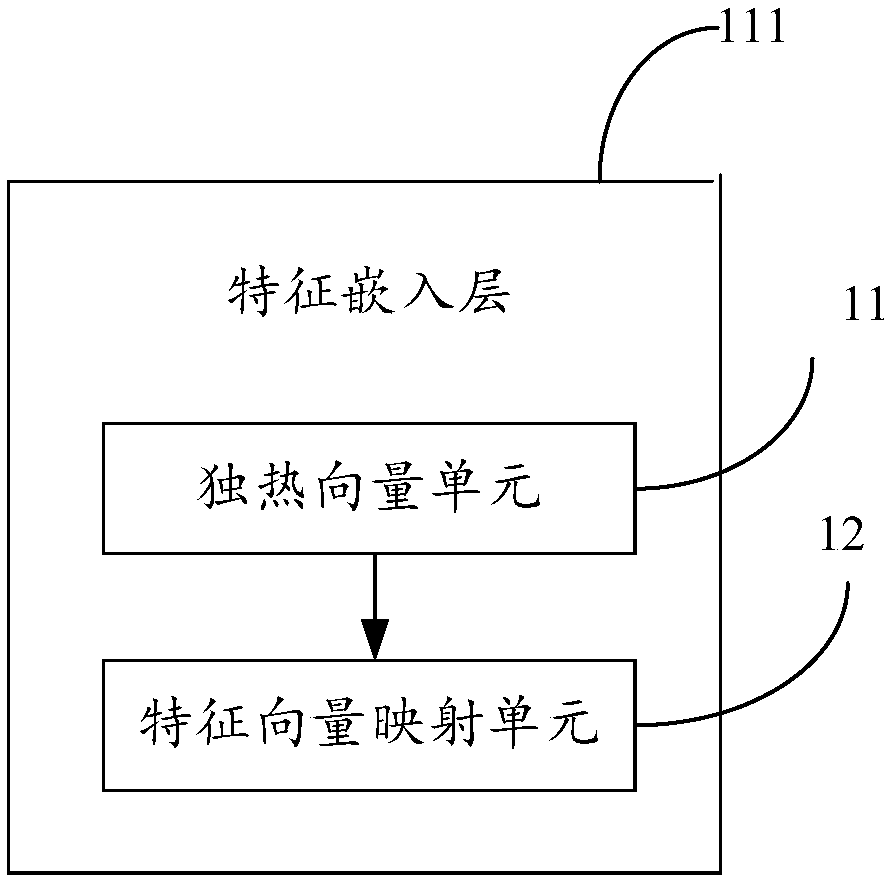

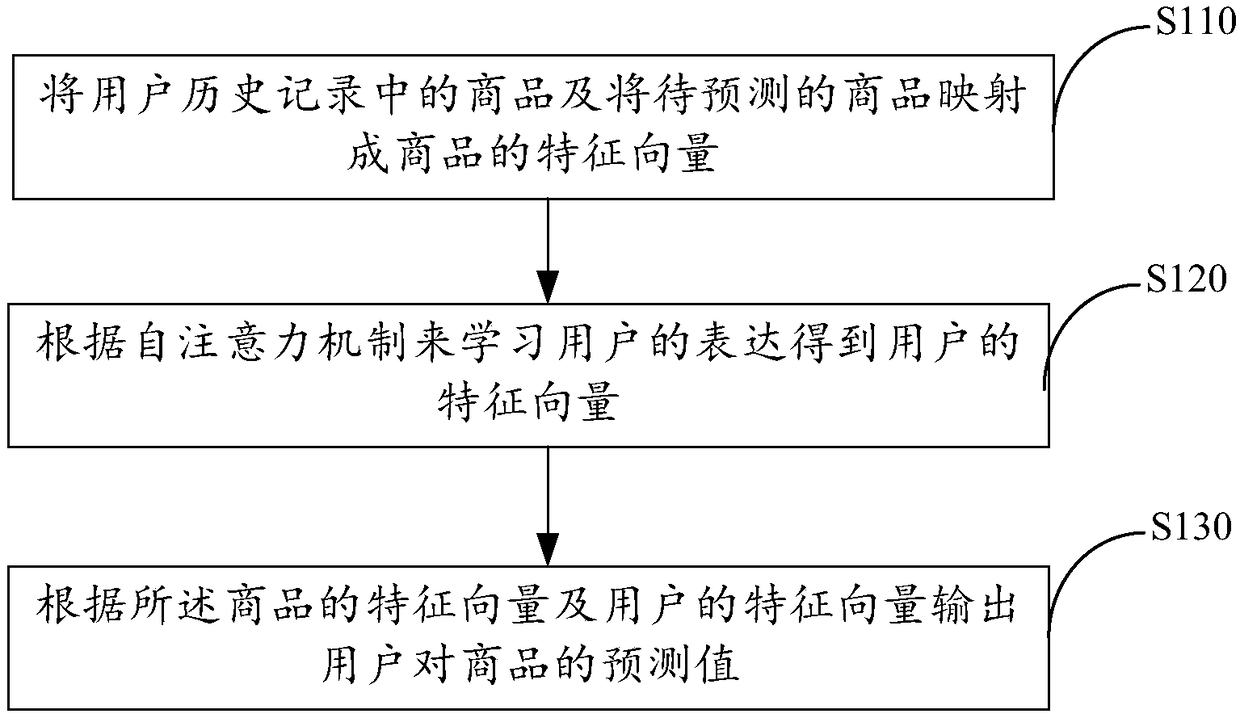

A recommendation system and a recommendation method based on an attention mechanism

Status: Inactive | Publication: CN109087130A | Tags: Improve interpretability, Improve recommendations, Marketing, Special data processing applications, Feature vector, Self attention

The invention provides a recommendation system and method based on an attention mechanism. A feature embedding layer maps the merchandise in the user's history and the merchandise to be predicted to merchandise feature vectors; an attention layer learns the user's representation according to the self-attention mechanism to obtain the user's feature vector; and a fusion output layer outputs the user's predicted value for the merchandise from the merchandise feature vector and the user feature vector. The system uses a neural attention mechanism to assign weights automatically to each item of merchandise in the user's history record, which enhances interpretability. The neural attention mechanism models user behavior more reasonably and yields finer-grained weights, giving better recommendations.

Owner:SHENZHEN INST OF ADVANCED TECH
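
The per-item weighting that makes this recommender interpretable can be sketched as attention pooling over the history. As an assumption, the minimal Python sketch below scores each history item by its dot product with the candidate item (a simple target-attention variant, not necessarily the patent's self-attention layer) and uses a sigmoid of the user-item dot product as the fusion output; all names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def predict(history, candidate):
    """Return (attention weight per history item, predicted preference in (0, 1)).

    history: feature vectors of the items in the user's history.
    candidate: feature vector of the item to be predicted.
    """
    # Assumption: each history item is scored by its similarity to the candidate.
    weights = softmax([dot(h, candidate) for h in history])
    # User feature vector: attention-weighted sum of the history item vectors.
    user = [sum(w * h[k] for w, h in zip(weights, history)) for k in range(len(candidate))]
    # Assumed fusion output layer: sigmoid of the user-item dot product.
    return weights, 1.0 / (1.0 + math.exp(-dot(user, candidate)))


weights, p = predict([[1.0, 0.0], [0.0, 1.0]], [2.0, 0.0])
```

The returned weights are what makes the prediction explainable: here the first history item, which resembles the candidate, receives most of the weight.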

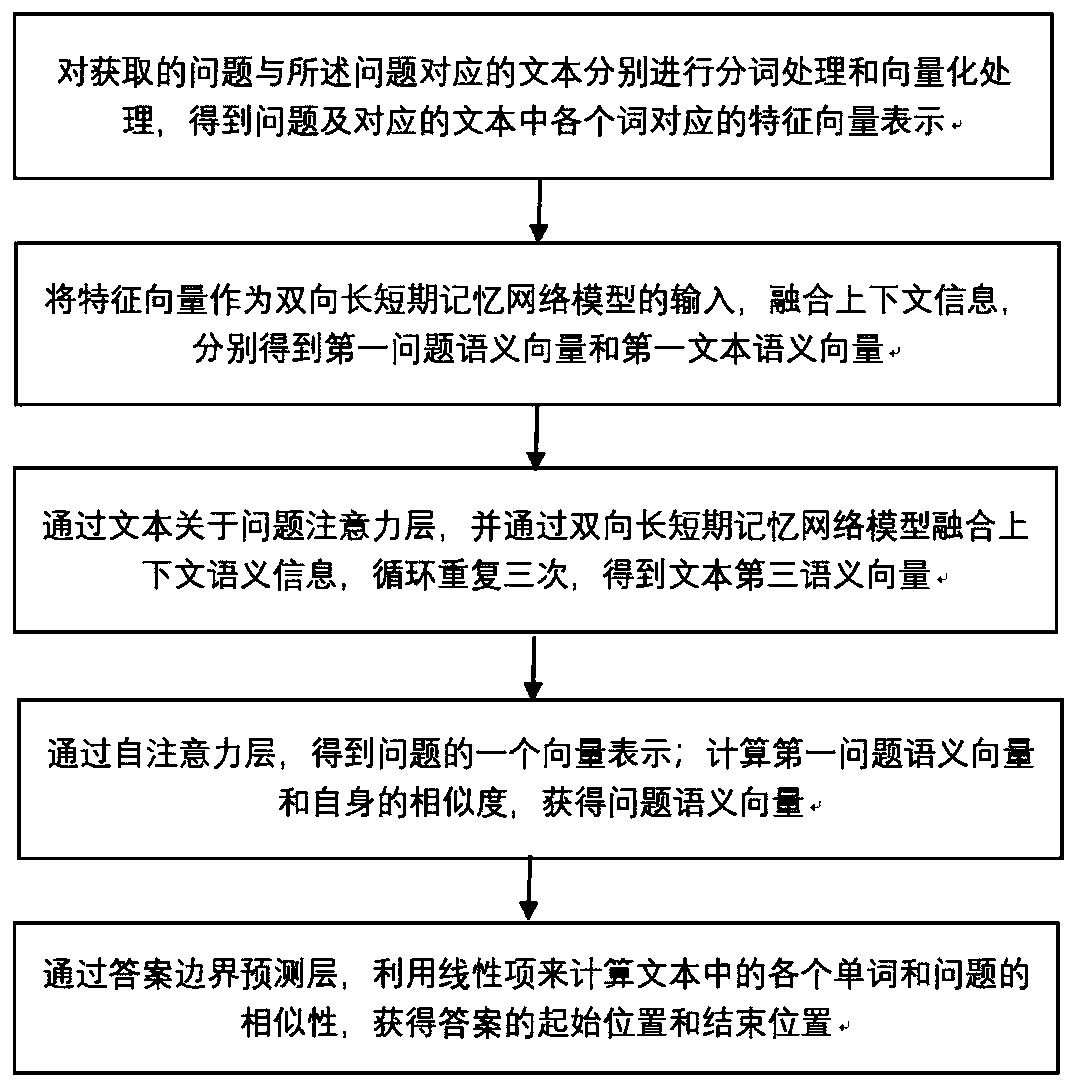

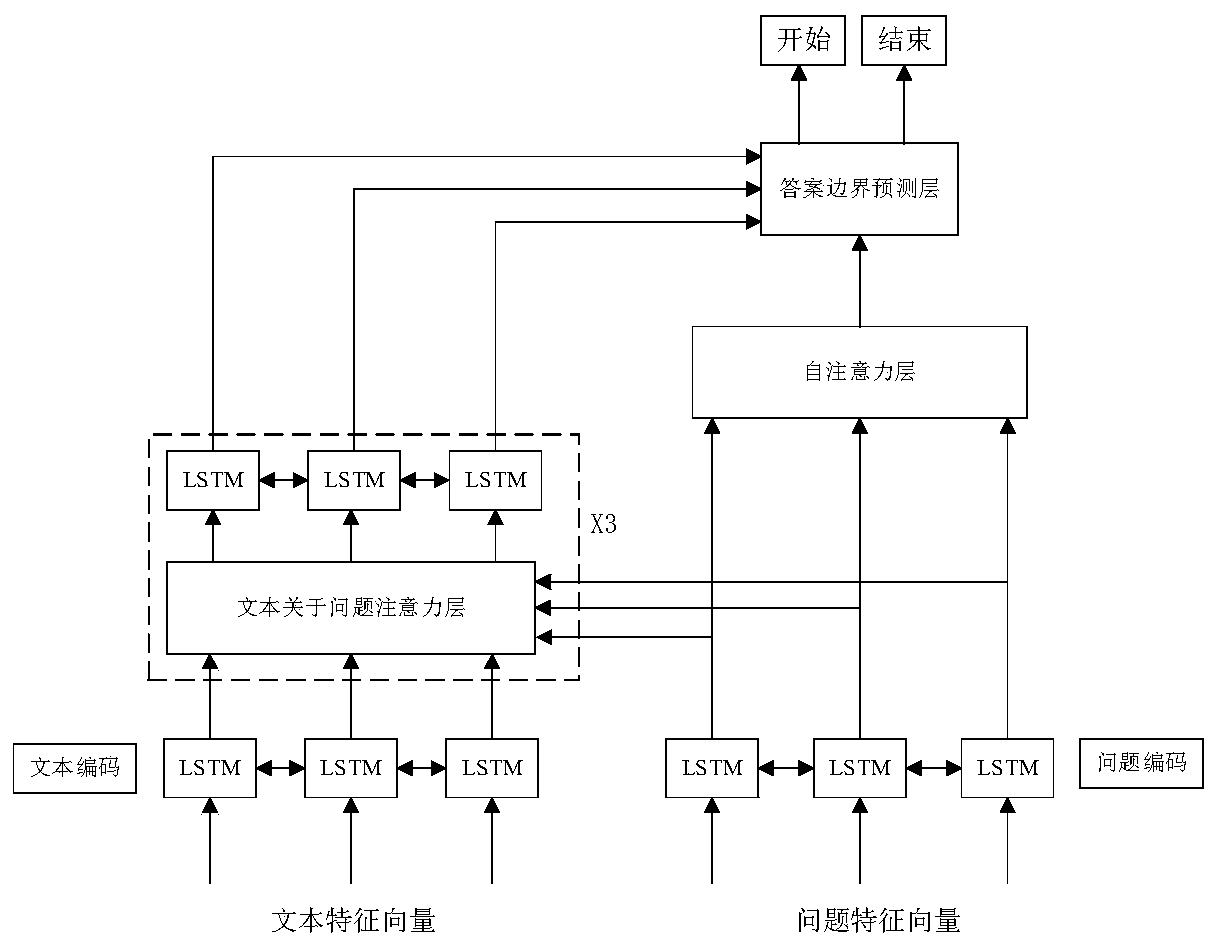

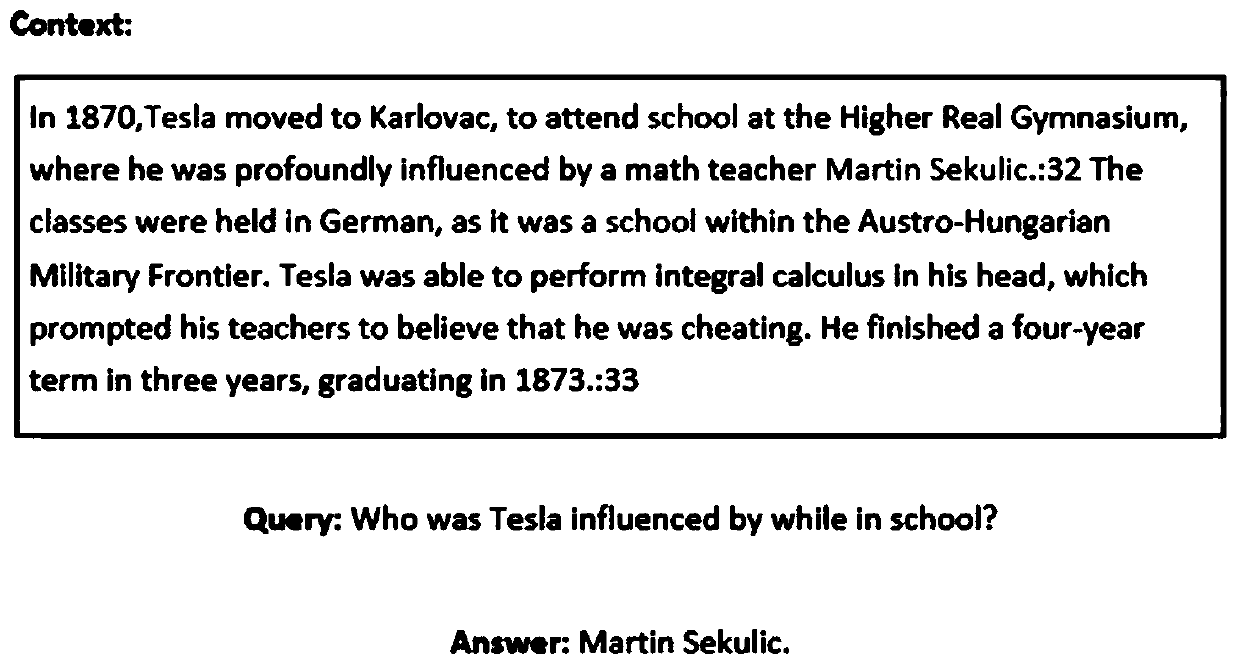

Machine reading comprehension answer obtaining method based on a multi-round attention mechanism

Active | CN110083682A | High precision | Capture interaction | Semantic analysis | Text database querying | Feature vector | Semantic vector

The invention discloses a method for obtaining machine reading comprehension answers based on a multi-round attention mechanism. Questions and their corresponding texts are each word-segmented and vectorized to obtain feature vectors; a bidirectional long short-term memory network (BLSTM) encodes the contextual semantic information of these feature vectors; and an attention mechanism models the question-text relationship to capture the information interaction between them. The attention of the article over the question is computed over multiple rounds: in each round the contextual semantic information is fused and then re-encoded with a BLSTM, and after repeating this process several times the nth text semantic vector is obtained. A Self-Attention mechanism produces a vector representation of the question. Computing the similarity between the question's semantic vector and the text semantic vectors (that is, a representation of each word of the article in the question space) effectively improves answer-prediction accuracy. By combining BLSTM and Attention, the method improves how well the answers extracted from the text match the questions.

Owner:XI AN JIAOTONG UNIV
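
The last two steps (a self-attention vector for the question, then similarity against each word of the article) can be sketched as follows. This minimal Python sketch omits the multi-round BLSTM encoding; the learned scoring vector `w` and all function names are hypothetical stand-ins, and dot-product similarity is an assumed choice.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def question_vector(question_states, w):
    """Self-attentive pooling: weight each question word state h_j by softmax(w . h_j)."""
    alphas = softmax([dot(w, h) for h in question_states])
    dim = len(question_states[0])
    return [sum(a * h[k] for a, h in zip(alphas, question_states)) for k in range(dim)]


def answer_distribution(passage_states, q):
    """Probability of each article word being the answer position, from its similarity to q."""
    return softmax([dot(p, q) for p in passage_states])


q = question_vector([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])  # equal weights -> [0.5, 0.5]
probs = answer_distribution([[0.0, 0.0], [2.0, 2.0], [1.0, 0.0]], q)
```

In a full model the same scoring would typically be applied twice, once for the answer start and once for the answer end.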

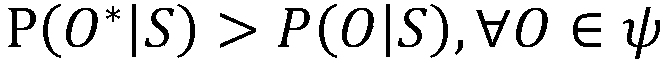

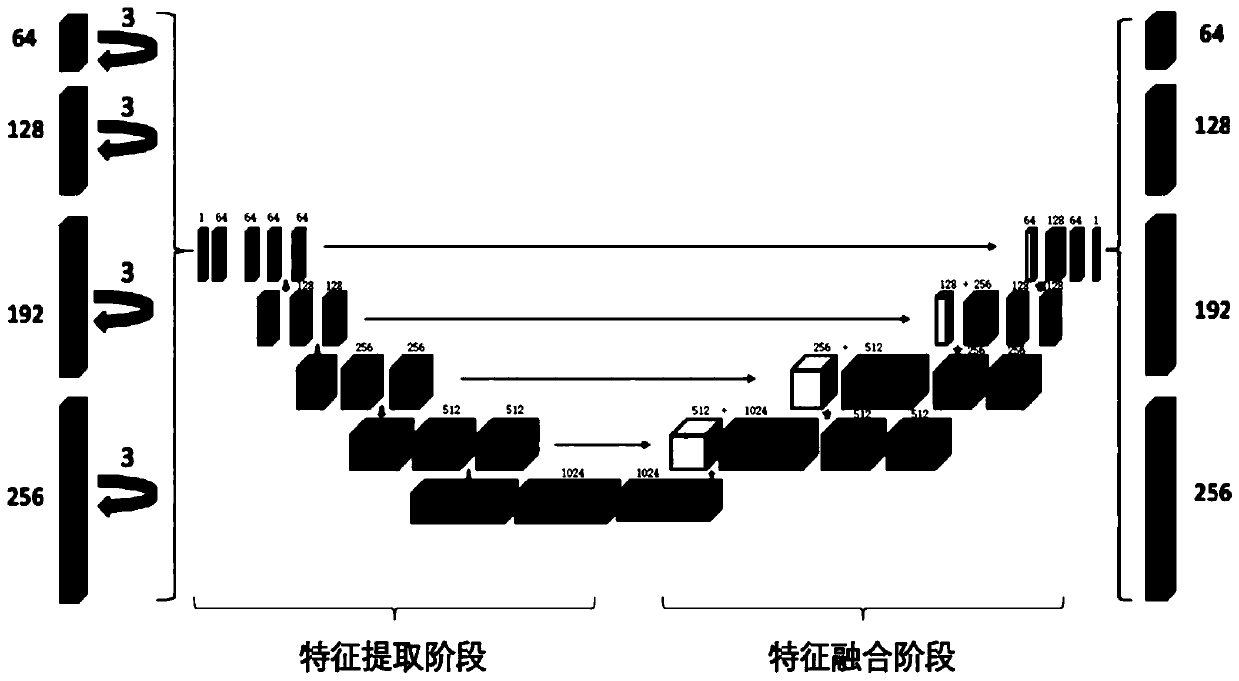

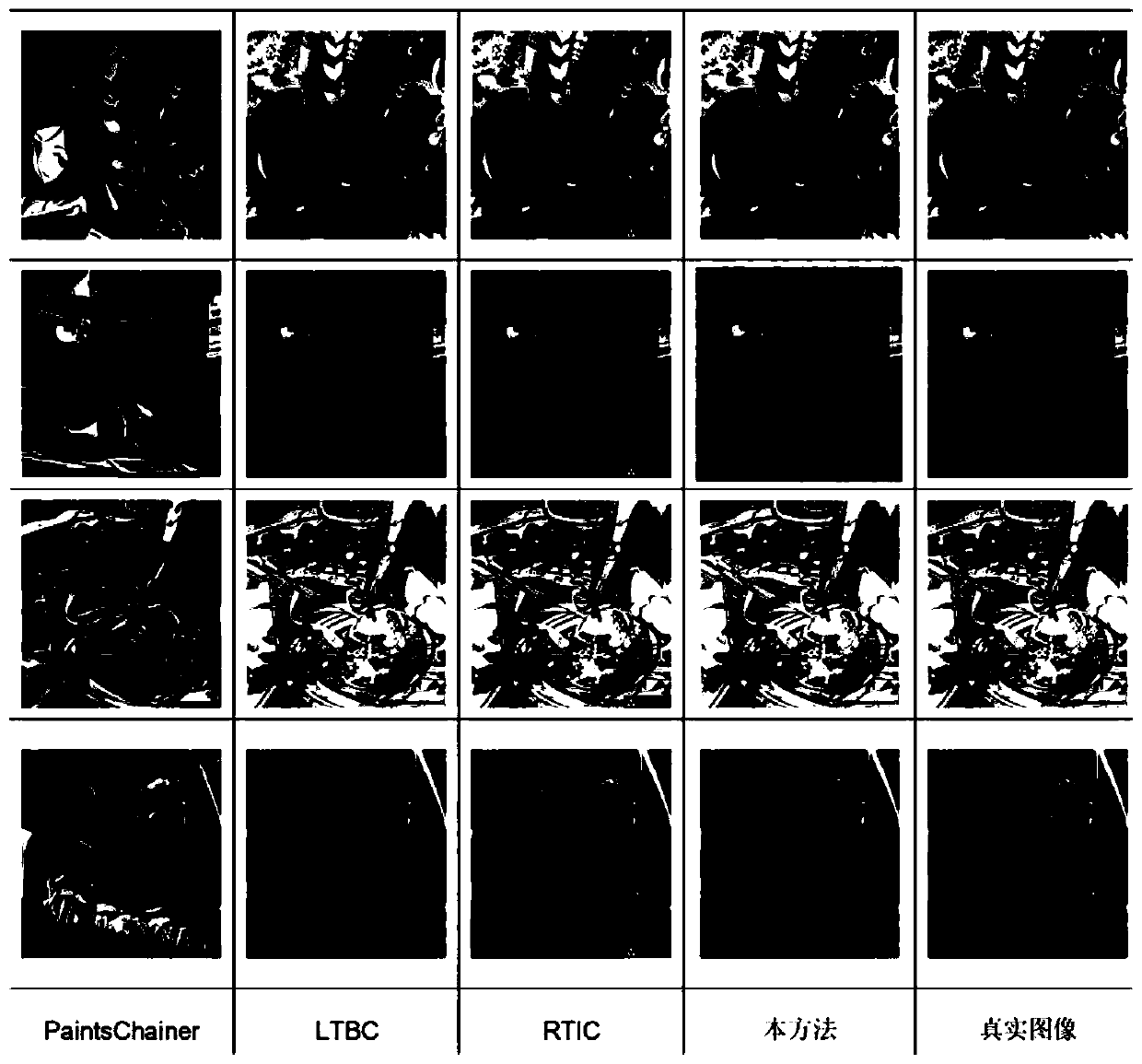

An image coloring method based on a self-attention generative adversarial network

Active | CN109712203A | High-resolution | Good coloring effect | Image analysis | Geometric image transformation | Color image | Training period

The invention discloses an image coloring method based on a self-attention generative adversarial network (GAN). The method comprises the following steps: step 1, training a grayscale image coloring model; step 2, feeding the grayscale images of the training set into the adversarial network, which runs a feature extraction stage, a feature fusion stage, a deconvolution stage and a self-attention learning stage to reconstruct the corresponding color images; step 3, comparing each color image reconstructed after self-attention learning with the corresponding original color image and computing a penalty function (the formula is given in the patent specification); step 4, computing a further penalty function according to a formula given in the specification and using the resulting loss function as the optimization loss of the GAN; and step 5, dividing training into several preset sub-training periods and training them in sequence with a step-by-step growth strategy to obtain the generator network. By using a generative adversarial network, the method reconstructs, from a black-and-white or grayscale image, a color image that conforms to human subjective visual preferences and therefore looks more realistic.

Owner:福建帝视信息科技有限公司
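
The abstract does not detail the self-attention learning stage. The minimal Python sketch below assumes a block in the style of the SAGAN (self-attention GAN) design: every spatial position of a flattened feature map attends to every other position, and the result is added back as `gamma * attention_output + input`. The per-position projection matrices stand in for 1x1 convolutions; `Wv` must map C channels to C channels so the residual sum works. Names and shapes are assumptions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, x):
    """Matrix-vector product; W is a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def self_attention_block(features, Wq, Wk, Wv, gamma):
    """features: N position vectors (a flattened H*W map with C channels each)."""
    queries = [matvec(Wq, x) for x in features]
    keys = [matvec(Wk, x) for x in features]
    values = [matvec(Wv, x) for x in features]
    out = []
    for i, q in enumerate(queries):
        # Attention of position i over all positions of the feature map.
        beta = softmax([sum(a * b for a, b in zip(q, k)) for k in keys])
        o = [sum(b * v[c] for b, v in zip(beta, values)) for c in range(len(values[0]))]
        # Residual connection scaled by the learnable gamma.
        out.append([gamma * oc + xc for oc, xc in zip(o, features[i])])
    return out
```

SAGAN initializes `gamma` to 0, so the block starts as an identity mapping and the network learns gradually how much non-local context to mix in.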

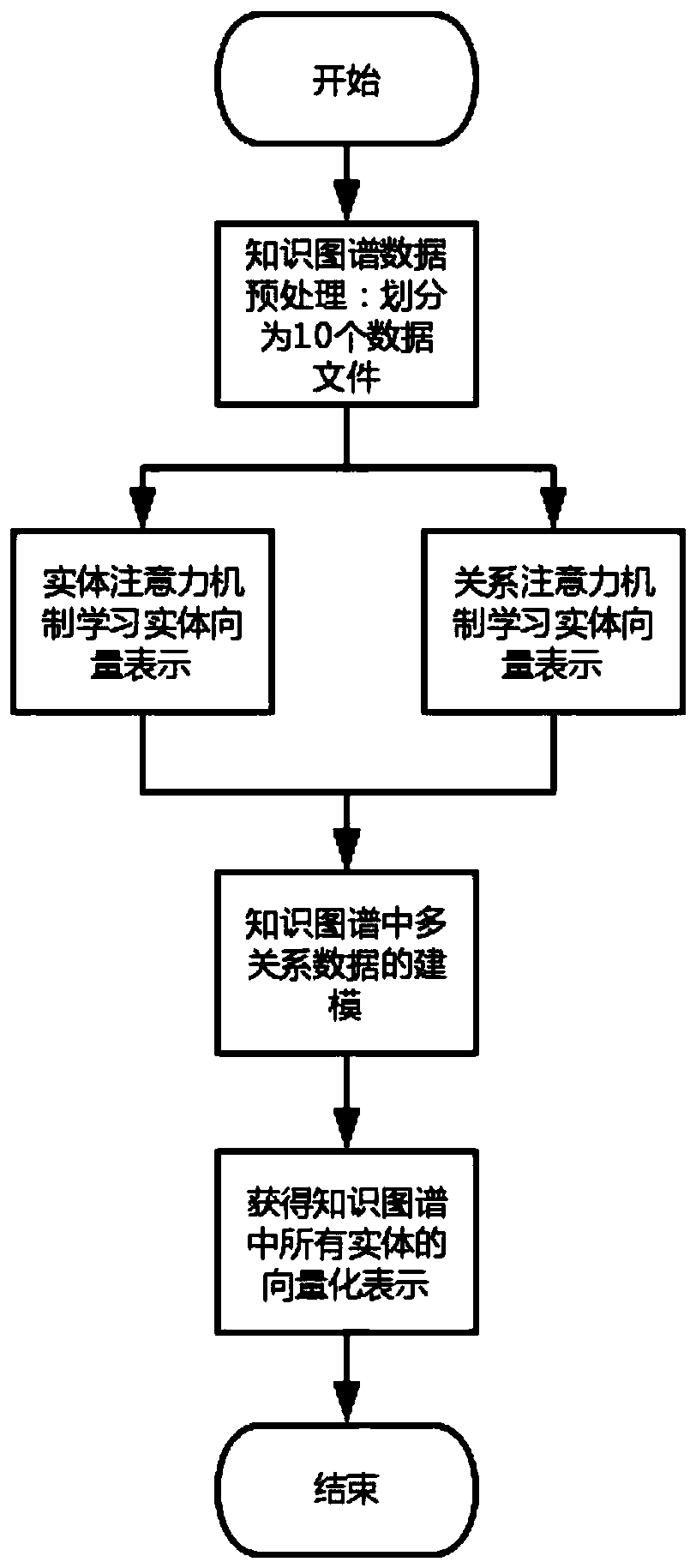

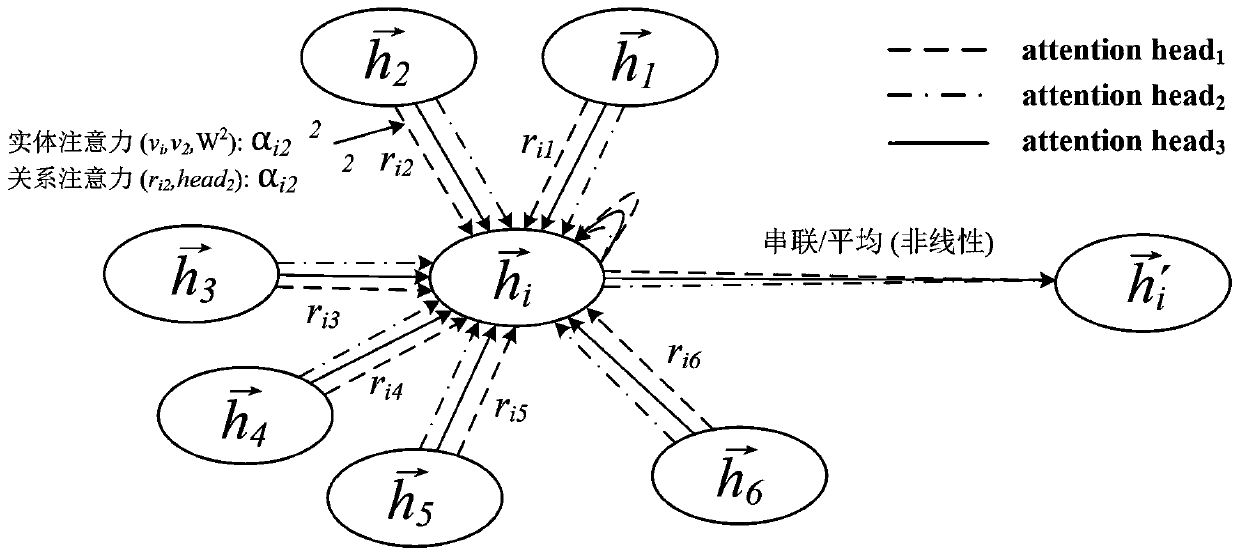

A knowledge graph embedding method based on a diverse graph attention mechanism

Active | CN109902183A | Achieve acquisition | Reasonable and Interpretable Embeddings | Semantic tool creation | Self attention | Traditional knowledge

The invention provides a knowledge graph embedding method based on a diverse graph attention mechanism, comprising the following steps: step 1, preprocessing the knowledge graph data, converting a traditional knowledge graph into structured data as the model requires; step 2, building an entity attention mechanism, in which the diverse graph attention mechanism uses n attention heads and self-attention to learn the vector representation of each entity on the adjacency graph; step 3, building a relation attention mechanism, in which the diverse graph attention mechanism uses n attention heads and self-attention to learn the vector representation of each entity on the relation graph; step 4, modeling the multi-relational data in the relation graph; and step 5, training the model to obtain vectorized representations of all relations in the knowledge graph.

Owner:BEIHANG UNIV
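
The "n attention heads" in steps 2 and 3 can be illustrated in the style of a graph attention network (GAT), which is an assumption, since the abstract does not give the scoring function. In this minimal Python sketch each head scores a neighbor j of entity i as `LeakyReLU(a . [W h_i || W h_j])`, normalizes the scores with softmax, and sums the projected neighbor vectors; the heads' outputs are concatenated. `W`, `a` and the function names are hypothetical, and the entity itself would be included in `neighbors` if a self-loop is wanted.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def gat_head(h_i, neighbors, W, a):
    """One head: attention-weighted sum of the projected neighbor vectors W h_j."""
    wh_i = matvec(W, h_i)
    wh_js = [matvec(W, h_j) for h_j in neighbors]
    # Score each neighbor from the concatenation [W h_i || W h_j].
    scores = [leaky_relu(sum(ak * zk for ak, zk in zip(a, wh_i + wh_j))) for wh_j in wh_js]
    alphas = softmax(scores)
    return [sum(al * wh_j[k] for al, wh_j in zip(alphas, wh_js)) for k in range(len(wh_i))]


def gat_multi_head(h_i, neighbors, heads):
    """heads: list of (W, a) pairs; the head outputs are concatenated."""
    out = []
    for W, a in heads:
        out.extend(gat_head(h_i, neighbors, W, a))
    return out
```

Concatenating heads lets each one attend to a different aspect of the neighborhood, which is one plausible reading of "diverse" attention.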

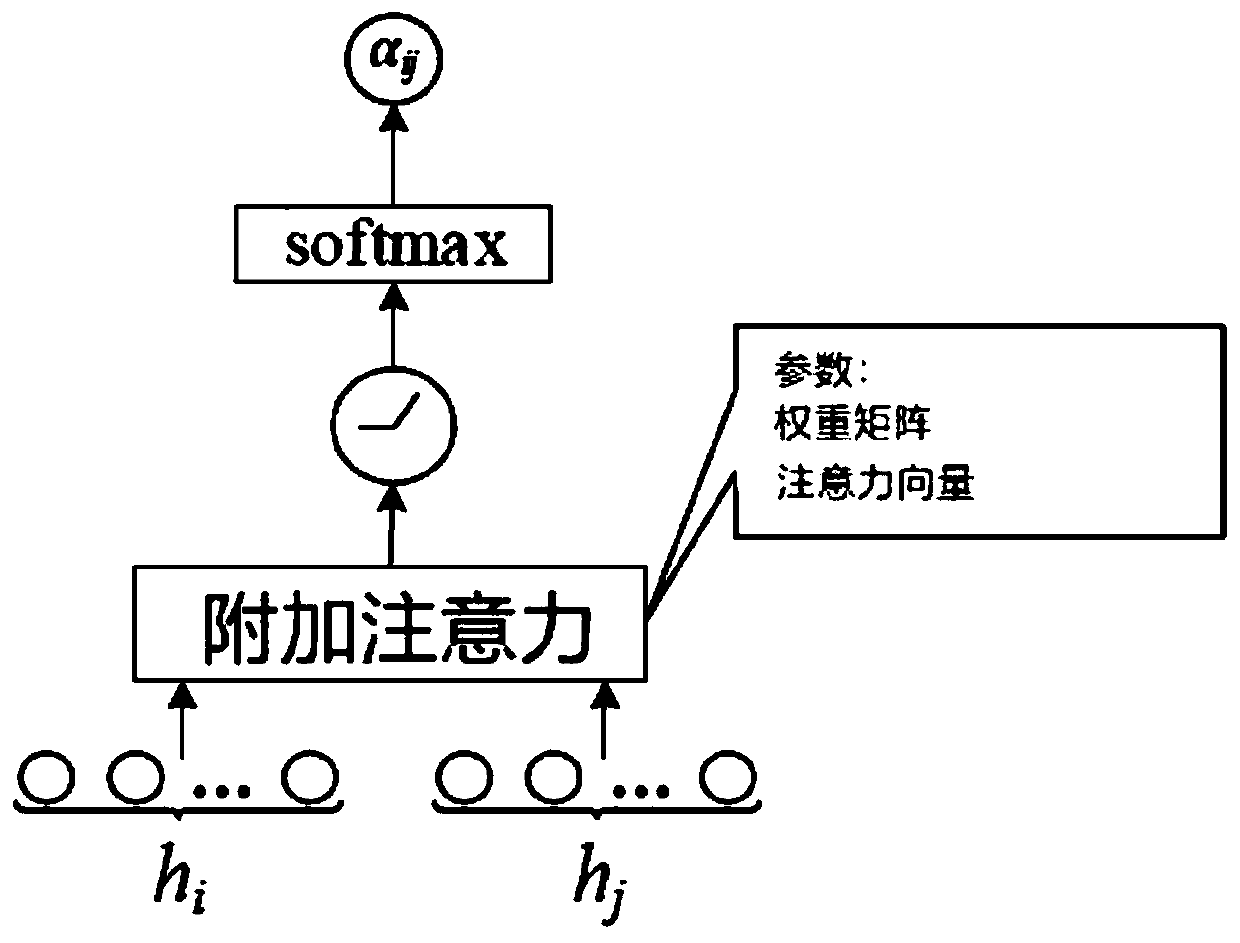

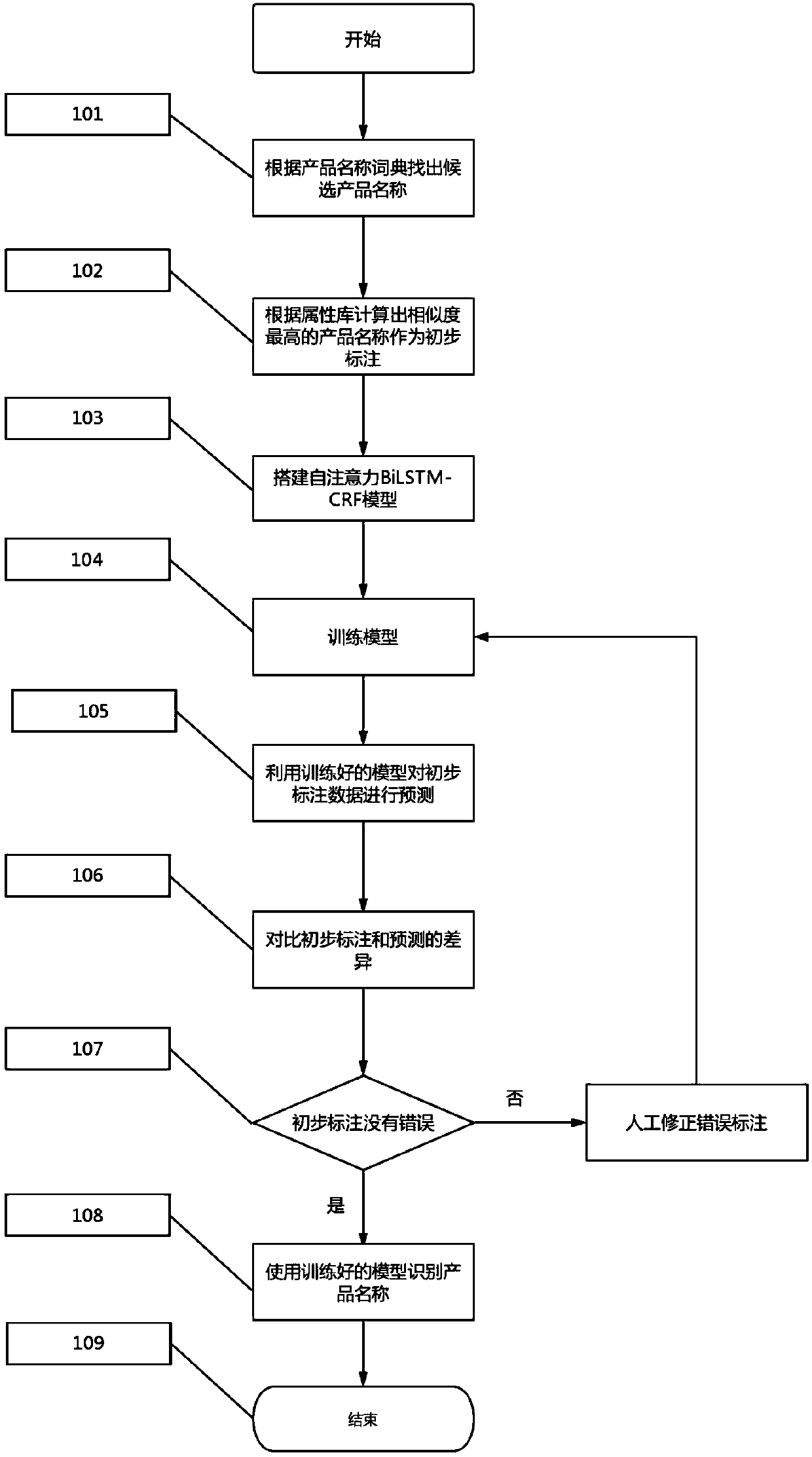

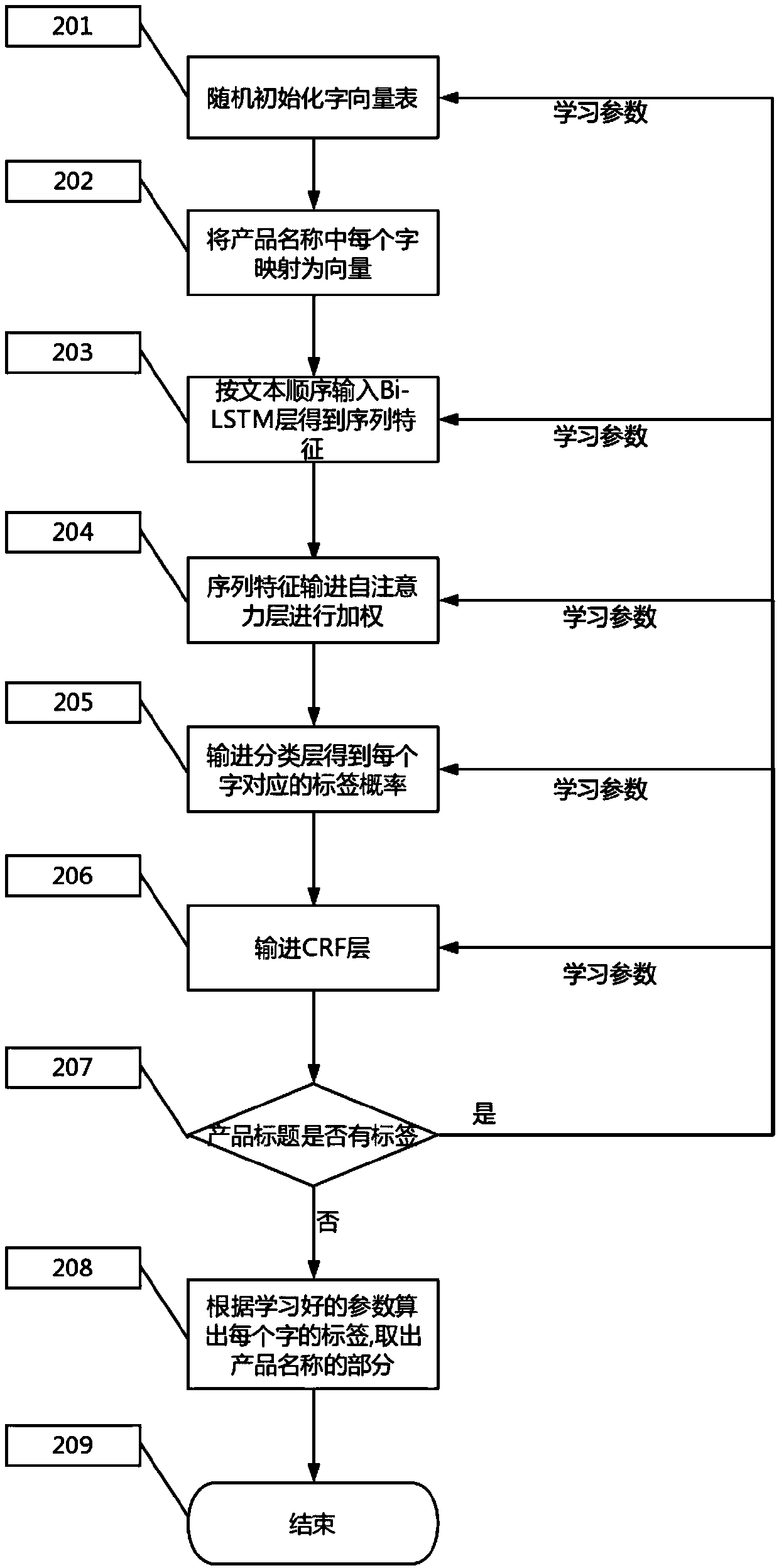

BILSTM-CRF product name identification method based on self-attention

Active | CN109614614A | Solve the situation that is not complete enough and the property library is incomplete | Reduce labor costs | Metadata text retrieval | Natural language data processing | Self attention | Semi automatic

The invention discloses a self-attention-based BiLSTM-CRF product name identification method comprising three parts: semi-automatic labeling of product title data, model construction and training, and model use. The semi-automatic labeling part sets up an iterative process of preliminary labeling, learning, label prediction, manual correction, learning and label prediction. The model construction and training part encodes each word as an N-dimensional dense vector, feeds these vectors into a BiLSTM layer to obtain text sequence features, obtains the label probabilities of each word with a Softmax classification layer, extracts local text features with a CRF layer, and trains the model. The model use part extracts text features, obtains the probabilities of all labels with the classification layer, and then obtains the corresponding tags with the Viterbi algorithm, thereby identifying the product name. The method greatly reduces labor cost and improves model accuracy and robustness.

Owner:FOCUS TECH
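
The final decoding step uses the Viterbi algorithm to pick the best tag sequence. A minimal Python sketch, assuming log-domain scores: `emissions[t][y]` would come from the BiLSTM/Softmax layers and `transitions[p][y]` and `start[y]` from the CRF layer. The BIO tag set in the example is hypothetical.

```python
def viterbi(emissions, transitions, start):
    """Highest-scoring tag sequence; all scores are log-domain and are added."""
    n_tags = len(start)
    score = [start[y] + emissions[0][y] for y in range(n_tags)]
    backpointers = []
    for t in range(1, len(emissions)):
        new_score, pointers = [], []
        for y in range(n_tags):
            # Best previous tag for ending at tag y at position t.
            best_prev = max(range(n_tags), key=lambda p: score[p] + transitions[p][y])
            pointers.append(best_prev)
            new_score.append(score[best_prev] + transitions[best_prev][y] + emissions[t][y])
        score = new_score
        backpointers.append(pointers)
    # Trace the best path backwards from the best final tag.
    path = [max(range(n_tags), key=lambda y: score[y])]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    return path[::-1]


# Hypothetical tags: 0 = O, 1 = B (begin product name), 2 = I (inside product name).
NEG = -100.0  # effectively forbids O -> I and starting with I
transitions = [[0.0, 0.0, NEG], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
emissions = [[0.0, 2.0, 0.0], [1.0, 0.0, 1.5], [2.0, 0.0, 0.0]]
print(viterbi(emissions, transitions, [0.0, 0.0, NEG]))  # [1, 2, 0], i.e. B I O
```

The transition scores are what let the CRF rule out invalid sequences such as an I tag that follows an O tag, which a per-word Softmax cannot enforce.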

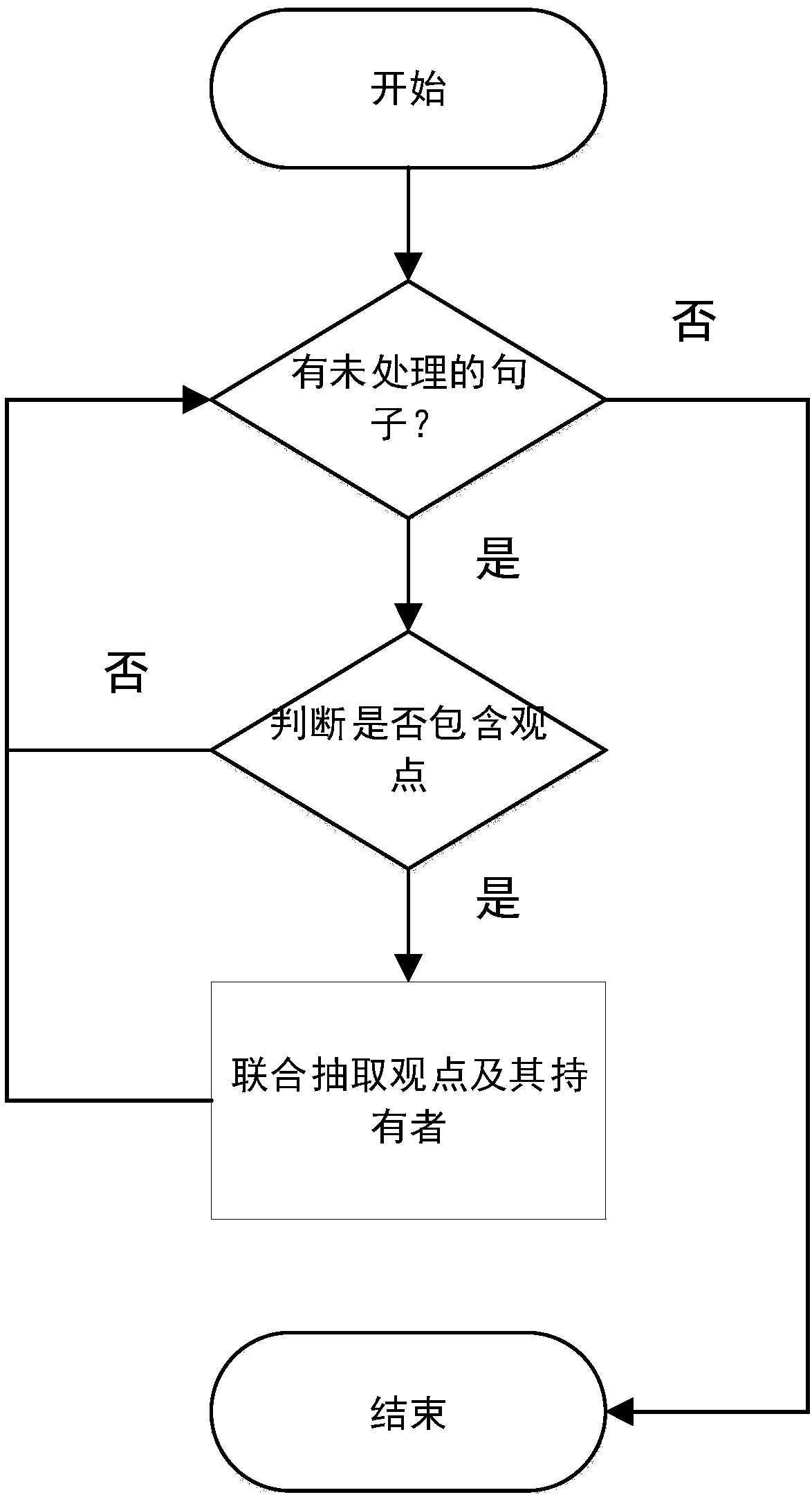

Joint extraction method for viewpoints and viewpoint holders based on self-attention

Active | CN108628828A | Avoid Situations That Don't Include Opinions | Avoid the influence of the extraction effect | Semantic analysis | Special data processing applications | Viewpoints | Self attention

The invention provides a self-attention-based method for jointly extracting viewpoints and viewpoint holders. The method comprises: S1, building a corpus for extracting viewpoints and viewpoint holders; S2, identifying statements that contain viewpoints; and S3, jointly extracting the viewpoints and the viewpoint holders. Its advantages are as follows. A text classification model avoids extracting sentences that contain no viewpoint. The joint extraction model does not depend on natural language processing steps such as part-of-speech tagging, named entity recognition and syntactic dependency parsing, so errors in those steps cannot degrade extraction, and the model is flexible and has high coverage. Applying self-attention on top of a bidirectional LSTM combines the strengths of both, gives the word-sequence representations richer semantics, and makes the trained model more accurate.

Owner:NAT COMP NETWORK & INFORMATION SECURITY MANAGEMENT CENT +1
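
"Self-attention on top of a bidirectional LSTM" can be sketched as scaled dot-product attention over the BiLSTM output states. In this minimal Python sketch the states serve directly as queries, keys and values (no learned projections, an assumption made for brevity), so each output vector is a softmax-weighted mix of all positions in the sentence.

```python
import math


def self_attention(states):
    """states: T vectors (e.g. BiLSTM outputs). Returns (T context vectors, T x T weights)."""
    d = len(states[0])
    weights, outputs = [], []
    for q in states:
        # Scaled dot-product scores of this position against every position.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in states]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        row = [e / total for e in exps]
        weights.append(row)
        # Context vector: weighted sum of the value vectors (here, the states).
        outputs.append([sum(w * v[c] for w, v in zip(row, states)) for c in range(d)])
    return outputs, weights


outputs, weights = self_attention([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
```

Each context vector can then be combined with the original BiLSTM state before the tagging layer, so that every word sees the whole sentence regardless of distance.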

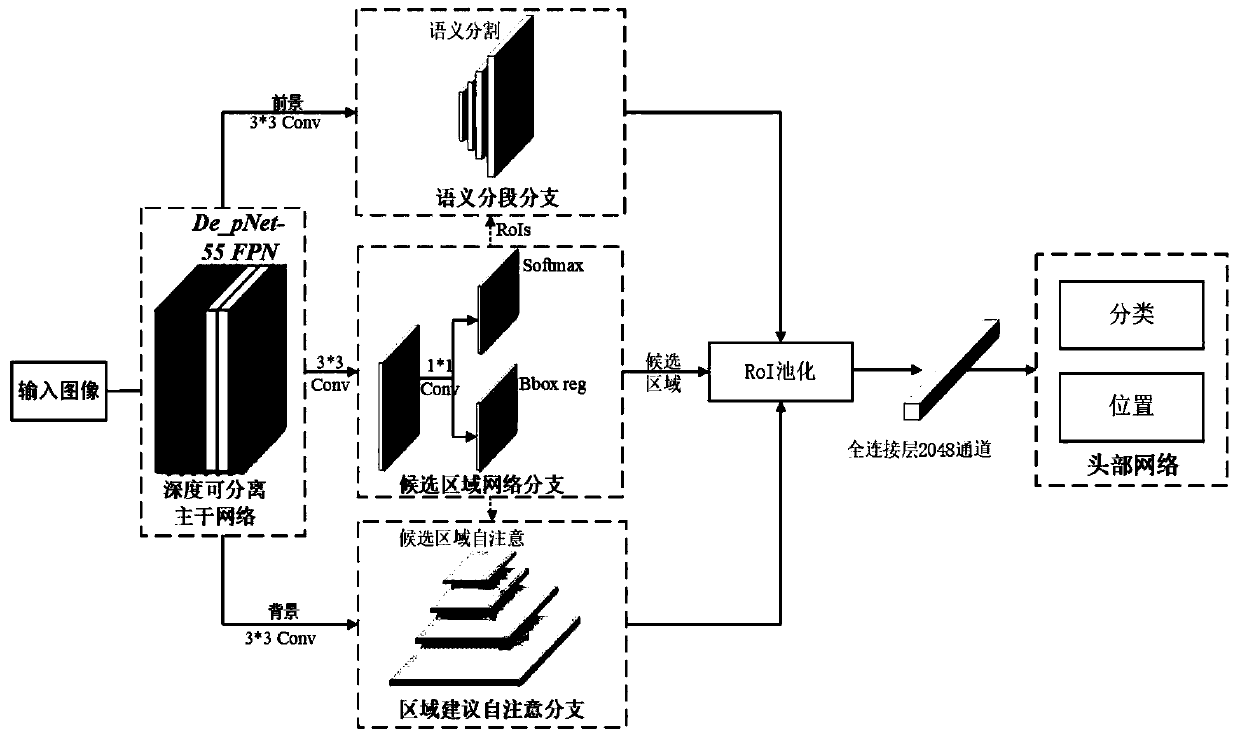

Target detection method based on scene level and region suggestion self-attention module

Active | CN110516670A | Improve accuracy | Character and pattern recognition | Neural architectures | Self attention | Feature extraction

The invention discloses a target detection method based on a scene-level and region-proposal self-attention module, which combines several advanced network structures and ideas and takes into account the importance of scene information and semantic information for visual recognition. The method comprises the following steps: first, building a target detection model consisting of a depthwise-separable shared network, a scene-level/region-proposal self-attention module and a lightweight head network; then, training the model on training images to obtain a trained target detection model; and finally, feeding the image to be detected into the trained model to obtain the position and category of each target in the image. Rather than relying only on the appearance features of the target objects, the method also models and extracts features from the scene information and the relationships between objects, and predicts objects from this structure, which substantially improves detection accuracy.

Owner:GUANGXI NORMAL UNIV

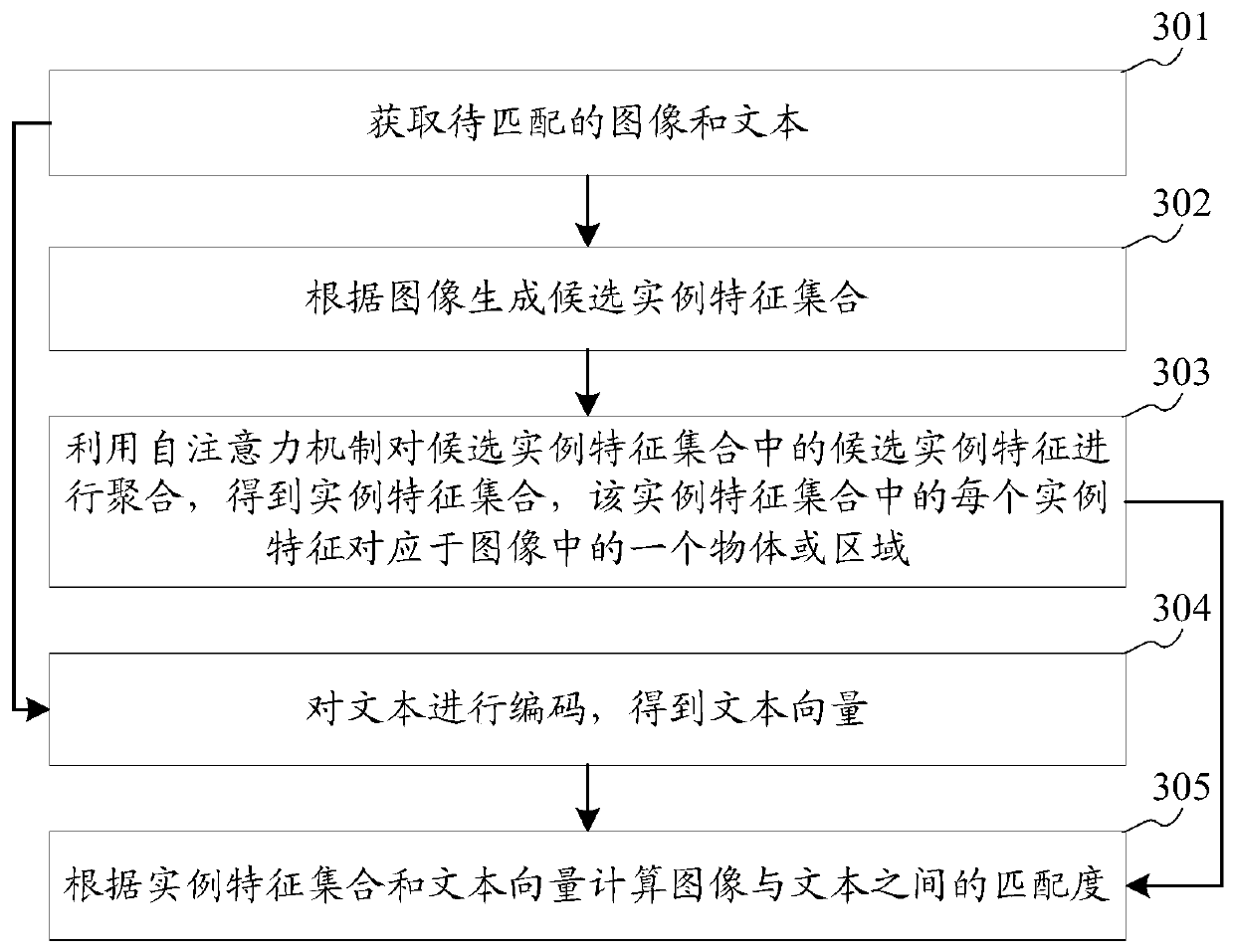

Image-text matching method and device, storage medium and equipment

Active | CN110147457A | Solve the problem of difficult training | Simplify implementation difficulty | Video data querying | Metadata video data retrieval | Pattern recognition | Self attention

The embodiment of the invention discloses an image-text matching method and device, a storage medium and equipment, in the technical field of computers. The image-text matching method comprises: obtaining an image and a text to be matched; generating a candidate instance feature set from the image; aggregating the candidate instance features with a self-attention mechanism to obtain an instance feature set, in which each instance feature corresponds to one object in the image; encoding the text to obtain a text vector; and computing the matching degree between the image and the text from the instance feature set and the text vector. The embodiment makes image-text matching simpler to implement and more accurate.

Owner:TENCENT TECH (SHENZHEN) CO LTD

© 2025 PatSnap. All rights reserved.