Machine reading comprehension method based on a multi-head attention mechanism and dynamic iteration

A reading comprehension and attention technology, applied in instruments, digital data processing, and special data processing applications. It addresses problems such as loss of semantic information, attention computed in only one direction, and the inability to fully integrate the semantic information of articles and questions, with the effect of enriching semantic information.

Experimental example

[0070] The present invention uses the SQuAD dataset to train and evaluate the model. A dropout ratio of 0.2 is applied between the character embedding, word embedding, and model layers. The model is optimized with the AdaDelta optimizer at an initial learning rate of 1.0, with ρ = 0.95 and ε = 1×10⁻⁶. The training batch size is 12.
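To make the optimizer settings above concrete, the following is a minimal pure-Python sketch of the standard AdaDelta update rule using the quoted hyperparameters. It is not the patent's training code: the helper names `adadelta_step` and `make_state` are hypothetical, and the toy objective f(x) = x² is an assumption chosen only to exercise the update.

```python
import math

# Hyperparameters quoted in the text.
LEARNING_RATE = 1.0
RHO = 0.95
EPSILON = 1e-6
DROPOUT = 0.2      # recorded for completeness; not used in this toy example
BATCH_SIZE = 12    # recorded for completeness; not used in this toy example


def make_state(n):
    """Hypothetical helper: zero-initialised running averages for n parameters."""
    return {"sq_grad": [0.0] * n, "sq_delta": [0.0] * n}


def adadelta_step(params, grads, state, lr=LEARNING_RATE, rho=RHO, eps=EPSILON):
    """Apply one AdaDelta update in place and return the updated params."""
    for i, g in enumerate(grads):
        # Running average of squared gradients.
        state["sq_grad"][i] = rho * state["sq_grad"][i] + (1 - rho) * g * g
        # Step scaled by RMS(previous updates) / RMS(gradients).
        delta = -(math.sqrt(state["sq_delta"][i] + eps)
                  / math.sqrt(state["sq_grad"][i] + eps)) * g
        # Running average of squared updates.
        state["sq_delta"][i] = rho * state["sq_delta"][i] + (1 - rho) * delta * delta
        params[i] += lr * delta
    return params


# Toy usage (assumption): minimise f(x) = x^2, whose gradient is 2x.
params = [1.0]
state = make_state(1)
for _ in range(100):
    grads = [2.0 * p for p in params]
    adadelta_step(params, grads, state)
print(params[0])  # smaller than the starting value of 1.0
```

With ε as small as 1×10⁻⁶ the first steps are tiny, because the numerator starts at √ε; the step size then grows as the running average of squared updates accumulates.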

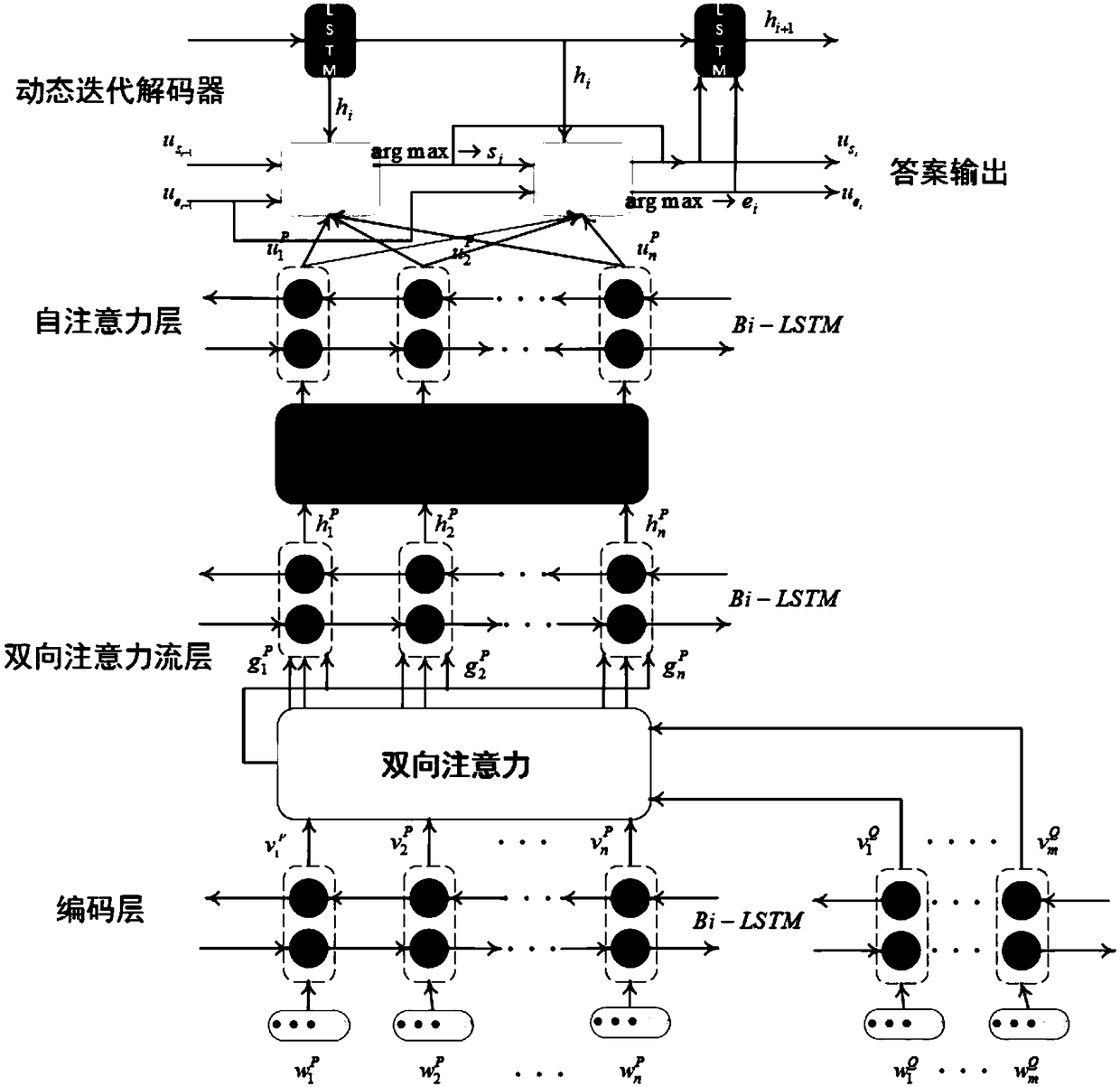

[0071] Model training relies on the encoding layer, recurrent neural network layer, self-attention layer, and output layer of the model working together, as follows:

[0072] (1) Encoding layer

[0073] First, the word segmentation tool spaCy is used to tokenize each article and question. The maximum number of words is set to 400 for the article and 50 for the question. Each sample is processed according to these limits: the portion of the text that exceeds the limit is discarded, with ...
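The length limits described above can be sketched as follows. This is a hedged illustration, not the patent's preprocessing code: the whitespace `tokenize` function is a stand-in for the spaCy tokenizer named in the text, the function names are hypothetical, and keeping the first tokens (rather than some other window) is an assumption.

```python
MAX_PASSAGE_TOKENS = 400   # article limit, from the text
MAX_QUESTION_TOKENS = 50   # question limit, from the text


def tokenize(text):
    # Stand-in tokenizer (assumption): plain whitespace splitting.
    # The text uses spaCy; replace this function to match it.
    return text.split()


def truncate(tokens, max_len):
    # Keep the first max_len tokens and discard the remainder (assumption).
    return tokens[:max_len]


def prepare_sample(passage, question):
    """Tokenize a passage/question pair and enforce the length limits."""
    passage_tokens = truncate(tokenize(passage), MAX_PASSAGE_TOKENS)
    question_tokens = truncate(tokenize(question), MAX_QUESTION_TOKENS)
    return passage_tokens, question_tokens


# Usage: a 450-word passage is cut to 400 tokens; the short question is unchanged.
p, q = prepare_sample("word " * 450, "Which layer comes first ?")
print(len(p), len(q))  # 400 5
```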
