928 results about "Word embedding" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

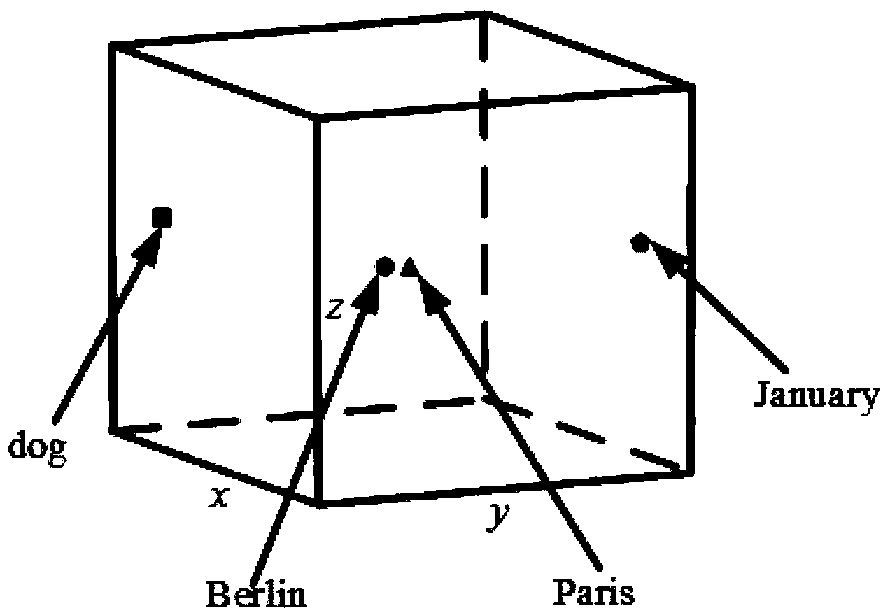

Word embedding is the collective name for a set of language modeling and feature learning techniques in natural language processing (NLP) where words or phrases from the vocabulary are mapped to vectors of real numbers. Conceptually it involves a mathematical embedding from a space with many dimensions per word to a continuous vector space with a much lower dimension.
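The mapping from words to real-valued vectors can be pictured as a lookup table. This minimal Python sketch uses a hypothetical four-word vocabulary and a 3-dimensional embedding. The embedding matrix holds one dense row per word, and looking up a word gives the same result as multiplying its sparse one-hot vector by that matrix. Random values stand in for weights a real model would learn.

```python
import random

# Hypothetical toy vocabulary and embedding size, for illustration only.
vocab = ["king", "queen", "apple", "orange"]
word_to_id = {w: i for i, w in enumerate(vocab)}
dim = 3  # dense dimension; real vocabularies have tens of thousands of words

random.seed(0)
# Embedding matrix E: one row of real numbers per vocabulary word
# (random here; a trained model would learn these values).
E = [[random.uniform(-1.0, 1.0) for _ in range(dim)] for _ in vocab]

def one_hot(word):
    """Sparse |V|-dimensional representation: a single 1.0 at the word's index."""
    v = [0.0] * len(vocab)
    v[word_to_id[word]] = 1.0
    return v

def embed(word):
    """Dense lookup: the word's row of E."""
    return E[word_to_id[word]]

def embed_via_matmul(word):
    """The same vector computed as one_hot(word) @ E."""
    x = one_hot(word)
    return [sum(x[i] * E[i][j] for i in range(len(vocab))) for j in range(dim)]

queen = embed("queen")
```

In practice the lookup form is used, because it avoids materializing the |V|-dimensional one-hot vector.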

Neural paraphrase generator

Active | US20180329883A1 | Increase generation; Reduce in quantity; Natural language translation; Digital data information retrieval; Paraphrase; Algorithm

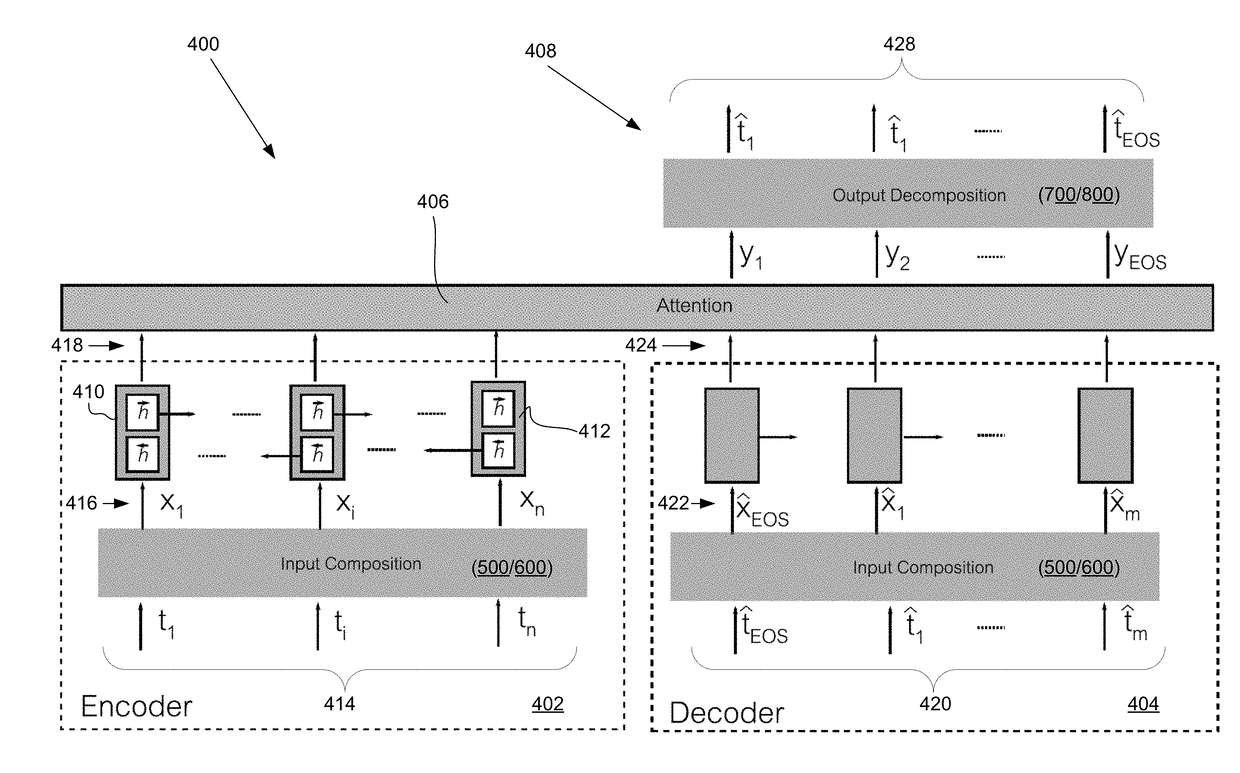

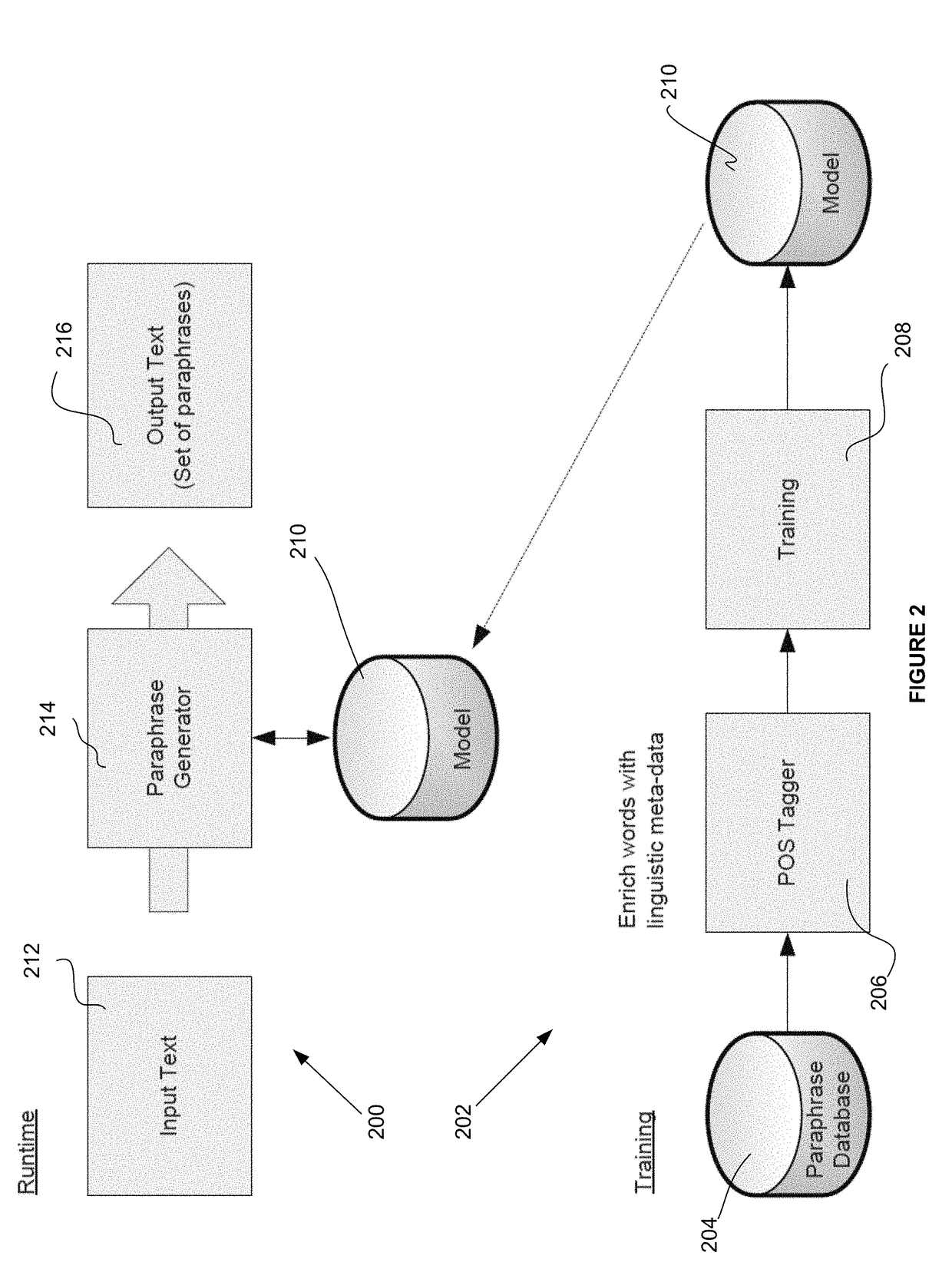

A neural paraphrase generator receives a sequence of tuples representing a source sequence of words, each tuple comprising a word data element and a structured tag element that represents a linguistic attribute of the word data element. An RNN encoder receives a sequence of vectors representing the source sequence of words, and an RNN decoder predicts the probability of a target sequence of words, representing a target output sentence, based on a recurrent state in the decoder. An input composition component includes a word embedding matrix and a tag embedding matrix, and transforms the input sequence of tuples into a sequence of vectors by 1) mapping word data elements to the word embedding matrix to generate word vectors, 2) mapping structured tag elements to the tag embedding matrix to generate tag vectors, and 3) concatenating the word vectors and tag vectors. An output decomposition component outputs a target sequence of tuples representing predicted words and structured tag elements; the probability of each tuple in the output is predicted based on a recurrent state of the decoder.

Owner:THOMSON REUTERS ENTERPRISE CENT GMBH
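The input composition step (look up the word vector, look up the tag vector, concatenate) can be sketched as below. The embedding sizes, the three-word vocabulary and the POS-style tag set are hypothetical, and the random initialization stands in for matrices that would be trained together with the encoder-decoder.

```python
import random

random.seed(1)
WORD_DIM, TAG_DIM = 4, 2  # assumed embedding sizes

# Hypothetical word and tag vocabularies; in a trained model these rows are learned.
word_emb = {w: [random.gauss(0.0, 1.0) for _ in range(WORD_DIM)] for w in ["the", "cat", "sat"]}
tag_emb = {t: [random.gauss(0.0, 1.0) for _ in range(TAG_DIM)] for t in ["DET", "NOUN", "VERB"]}

def compose_inputs(tuples):
    """Map each (word, tag) tuple to the concatenation [word vector ; tag vector]."""
    return [word_emb[w] + tag_emb[t] for w, t in tuples]

source = [("the", "DET"), ("cat", "NOUN"), ("sat", "VERB")]
encoder_inputs = compose_inputs(source)  # 3 vectors of size WORD_DIM + TAG_DIM
```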

Systems, methods, and computer readable media for visualization of semantic information and inference of temporal signals indicating salient associations between life science entities

Active | US20180082197A1 | Superior visualization system; Semantic analysis; Biological models; Data science; Semantic information

Disclosed systems, methods, and computer readable media can detect an association between semantic entities and generate semantic information between entities. For example, semantic entities and associated semantic collections present in knowledge bases can be identified. A time period can be determined and divided into time slices. For each time slice, word embeddings for the identified semantic entities can be generated; a first semantic association strength between a first semantic entity input and a second semantic entity input can be determined; and a second semantic association strength between the first semantic entity input and semantic entities associated with a semantic collection that is associated with the second semantic entity can be determined. An output can be provided based on the first and second semantic association strengths.

Owner:NFERENCE INC
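One way to read the two per-slice association strengths is as cosine similarities between embeddings trained on each time slice. The sketch below assumes that reading, and it also assumes that the second strength is the mean similarity between the first entity and each member of the second entity's collection. The entity names and 2-D vectors are hypothetical, and the patent's actual scoring may differ.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def association_strengths(slice_embeddings, entity_a, entity_b, collection_of_b):
    """For each time slice, return (strength a-b, mean strength of a vs. b's collection)."""
    results = []
    for emb in slice_embeddings:
        first = cosine(emb[entity_a], emb[entity_b])
        second = sum(cosine(emb[entity_a], emb[m]) for m in collection_of_b) / len(collection_of_b)
        results.append((first, second))
    return results

# Hypothetical 2-D embeddings trained separately on two time slices.
slices = [
    {"geneX": [1.0, 0.0], "diseaseY": [0.0, 1.0], "diseaseZ": [0.0, 1.0]},
    {"geneX": [1.0, 1.0], "diseaseY": [1.0, 1.0], "diseaseZ": [1.0, 0.0]},
]
strengths = association_strengths(slices, "geneX", "diseaseY", ["diseaseY", "diseaseZ"])
```

A rise in either strength from one slice to the next is the kind of temporal signal the abstract describes.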

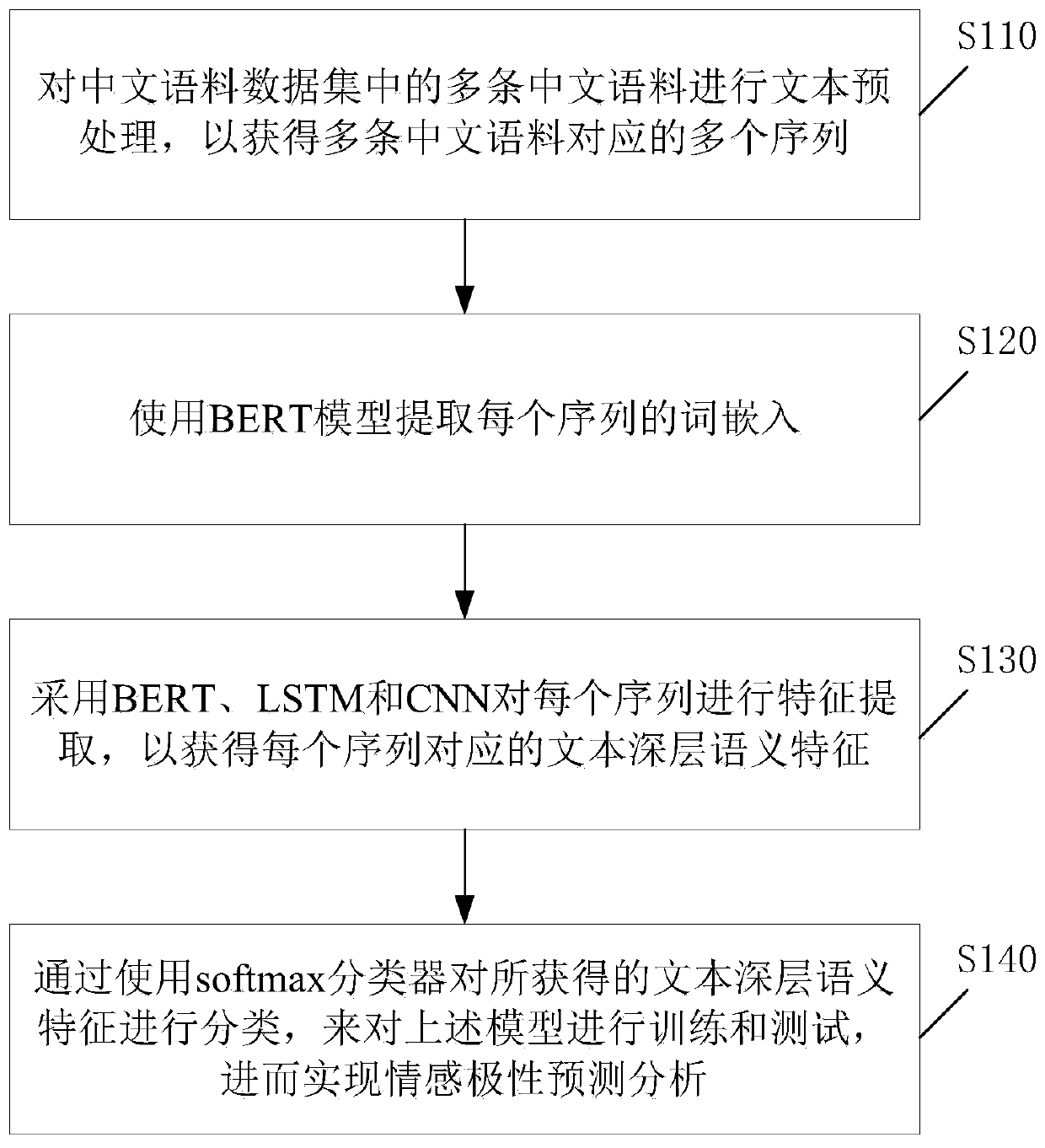

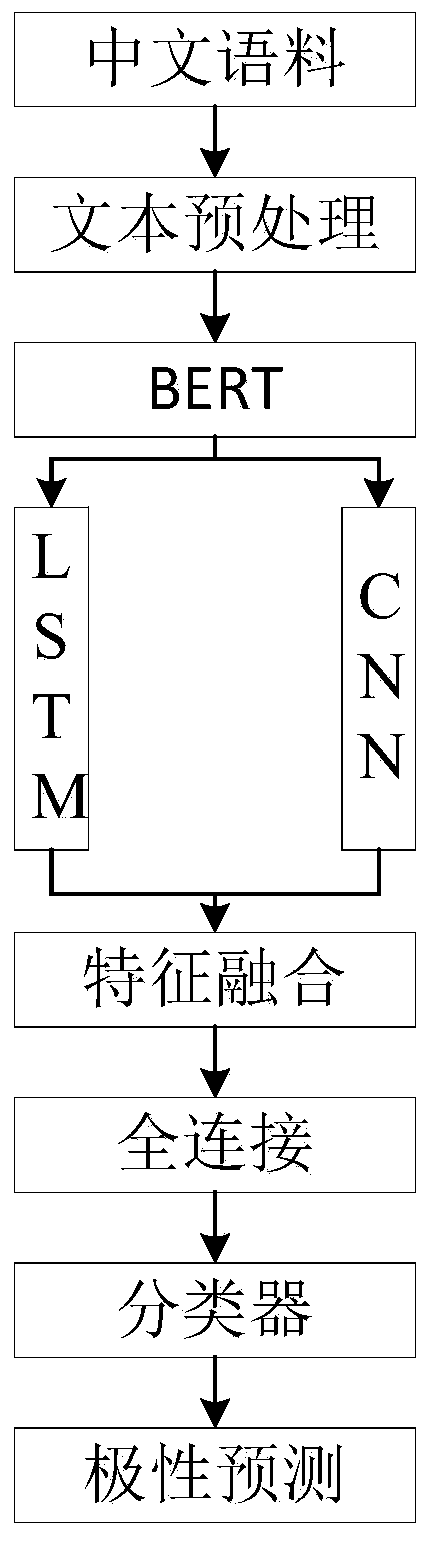

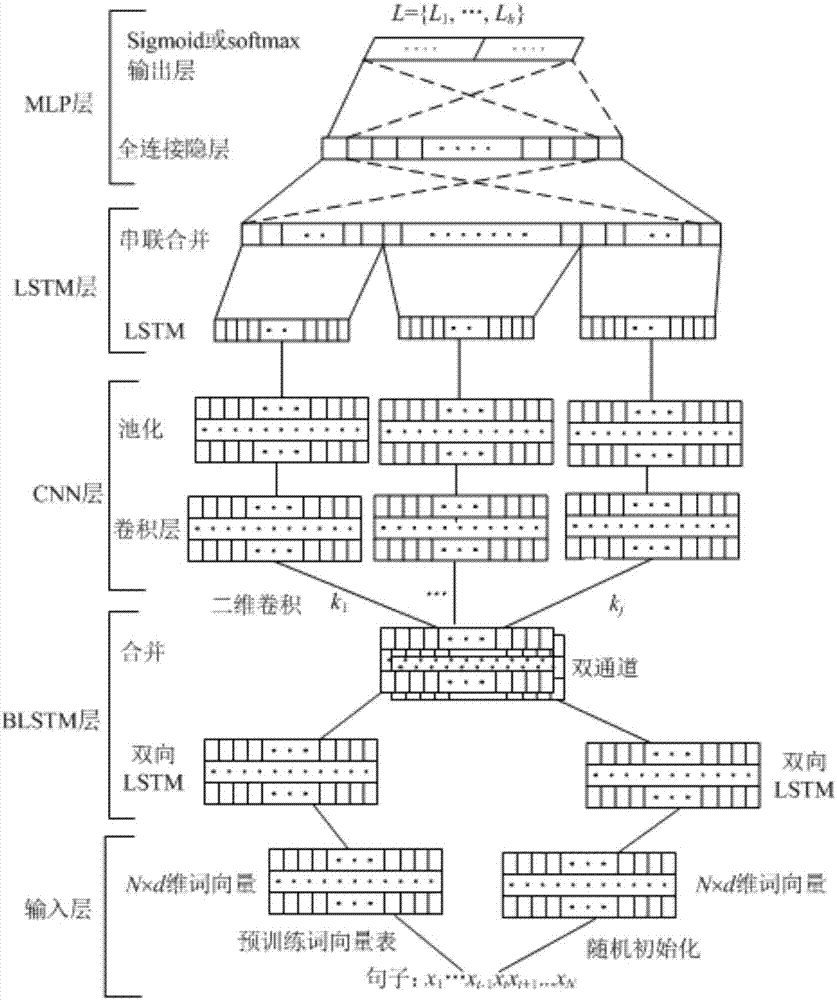

Chinese sentiment analysis method based on BERT, LSTM and CNN fusion

The invention provides a Chinese sentiment analysis method based on the fusion of BERT, LSTM and CNN. The method comprises the following steps: performing text preprocessing on the Chinese corpora in a Chinese corpus data set to obtain one sequence per corpus; extracting the word embeddings of each sequence with a BERT model; performing feature extraction on each sequence with BERT, LSTM and CNN to obtain the deep semantic features of each text; and classifying the resulting deep semantic features with a softmax classifier, training and testing the model to predict sentiment polarity. The method is stated to overcome shortcomings of the prior art and to improve the accuracy of Chinese text sentiment analysis.

Owner:HARBIN UNIV OF SCI & TECH
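The final step, a softmax classifier over the fused deep features, can be sketched as a linear layer followed by a softmax. The 3-dimensional feature vector, the weights and the two polarity labels are hypothetical placeholders. The BERT, LSTM and CNN feature extractors are not reproduced here.

```python
import math

def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify(features, weights, bias, labels):
    """Linear layer followed by softmax over sentiment polarities."""
    logits = [sum(w * x for w, x in zip(row, features)) + b for row, b in zip(weights, bias)]
    probs = softmax(logits)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs

# Hypothetical fused BERT/LSTM/CNN feature vector and untrained weights.
labels = ["negative", "positive"]
weights = [[0.5, -1.0, 0.2], [-0.3, 0.8, 0.1]]
bias = [0.0, 0.1]
label, probs = classify([0.2, 0.9, -0.4], weights, bias, labels)
```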

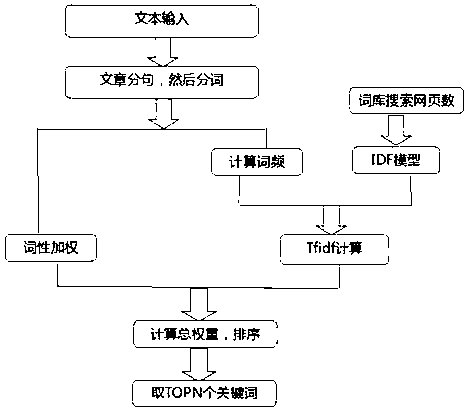

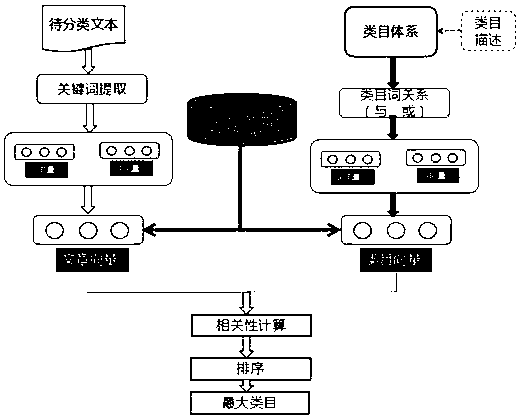

Natural language processing-based multi-language analysis method and device

Active | CN108197109A | Quality improvement; Enable multilingual analysis; Character and pattern recognition; Natural language data processing; Algorithm; Model selection

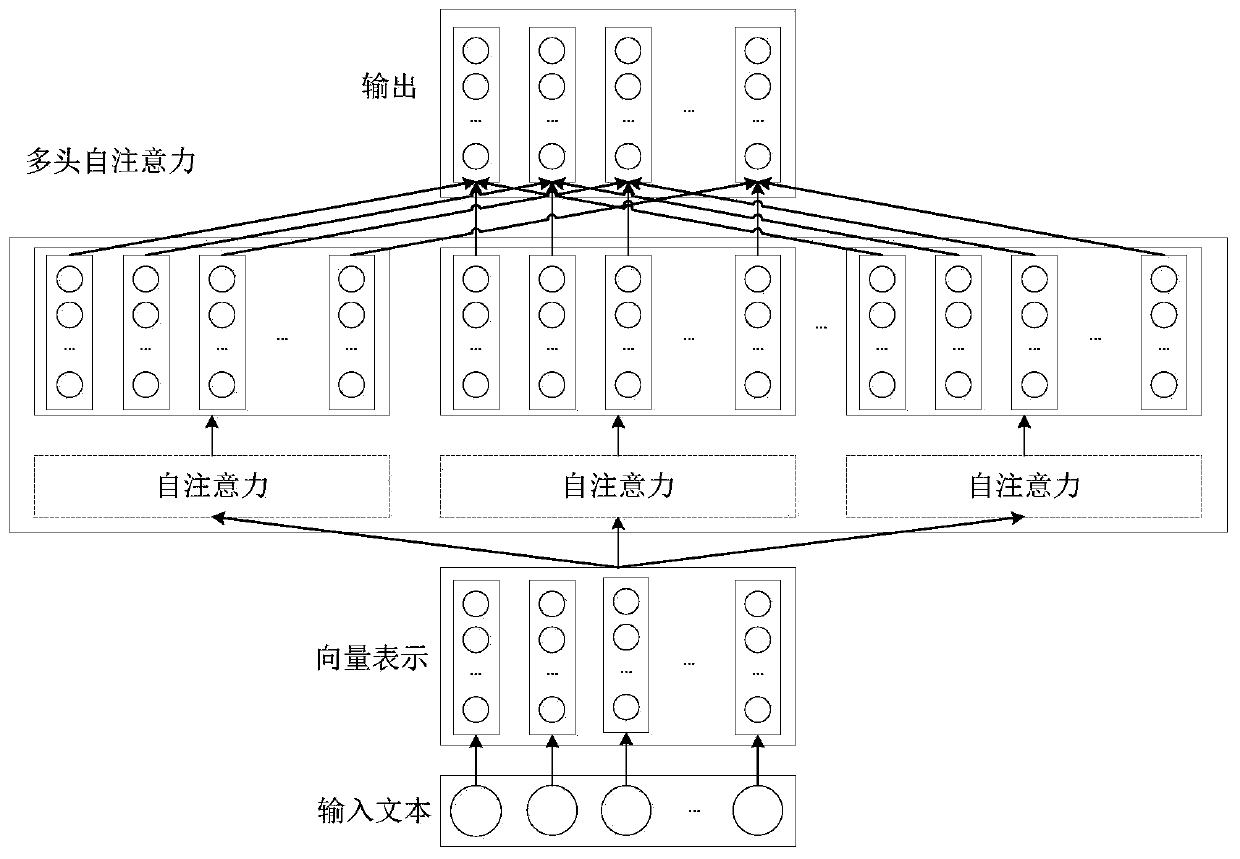

The invention discloses a natural language processing-based multi-language analysis method and device. The method comprises the following steps: identifying the language of the input natural language text with a trained language detection model; obtaining computer-readable word embedding representations of the words through a trained word vector model, and extracting keywords from these representations using TF-IDF; calculating an article vector and a category vector for each preset category from the keywords and their weights, and calculating the similarity between the article and each preset category to determine the text classification result; and feeding the word embedding representations of the text into a trained text sentiment analysis model that runs a convolutional neural network and a bidirectional gated recurrent unit (BiGRU) in parallel, from which a final sentiment tendency value is calculated. The method and device address the problem that traditional multi-language analysis requires domain knowledge of the relevant linguistics and considerable manual effort.

Owner:北京百分点科技集团股份有限公司
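The classification branch (TF-IDF keywords, a keyword-weighted article vector, cosine similarity to each category vector) might look like the sketch below. The toy embeddings, corpus and category seed words are hypothetical. Building each category vector from equally weighted seed words is also an assumption, since the abstract only says category vectors are computed from keywords and weights.

```python
import math
from collections import Counter

def tfidf_keywords(doc, corpus, k):
    """Return the k highest-weighted (word, tf-idf) pairs of a tokenized document."""
    tf = Counter(doc)
    scores = {}
    for word, count in tf.items():
        df = sum(1 for d in corpus if word in d)
        scores[word] = (count / len(doc)) * math.log(len(corpus) / df)
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:k]

def weighted_vector(weighted_words, emb):
    """Keyword-weighted sum of word embeddings."""
    vec = [0.0] * len(next(iter(emb.values())))
    for word, weight in weighted_words:
        for i, x in enumerate(emb[word]):
            vec[i] += weight * x
    return vec

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def classify(doc, corpus, emb, category_seeds, k=2):
    """Pick the preset category whose vector is most similar to the article vector."""
    article = weighted_vector(tfidf_keywords(doc, corpus, k), emb)
    sims = {c: cosine(article, weighted_vector([(w, 1.0) for w in seeds], emb))
            for c, seeds in category_seeds.items()}
    return max(sims, key=sims.get)

# Hypothetical toy embeddings, corpus and category seed words.
emb = {"stock": [1.0, 0.0], "market": [0.9, 0.1], "rises": [0.8, 0.2],
       "team": [0.0, 1.0], "wins": [0.1, 0.9], "match": [0.0, 1.0], "news": [0.5, 0.5]}
corpus = [["stock", "market", "rises"], ["team", "wins", "match"], ["market", "news"]]
categories = {"finance": ["stock", "market"], "sports": ["team", "match"]}
label = classify(corpus[0], corpus, emb, categories)
```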

Global semantic word embeddings using bi-directional recurrent neural networks

Active | US20190355346A1 | Improve performance; Less training data; Mathematical models; Natural language analysis; Data-driven learning; Data-driven

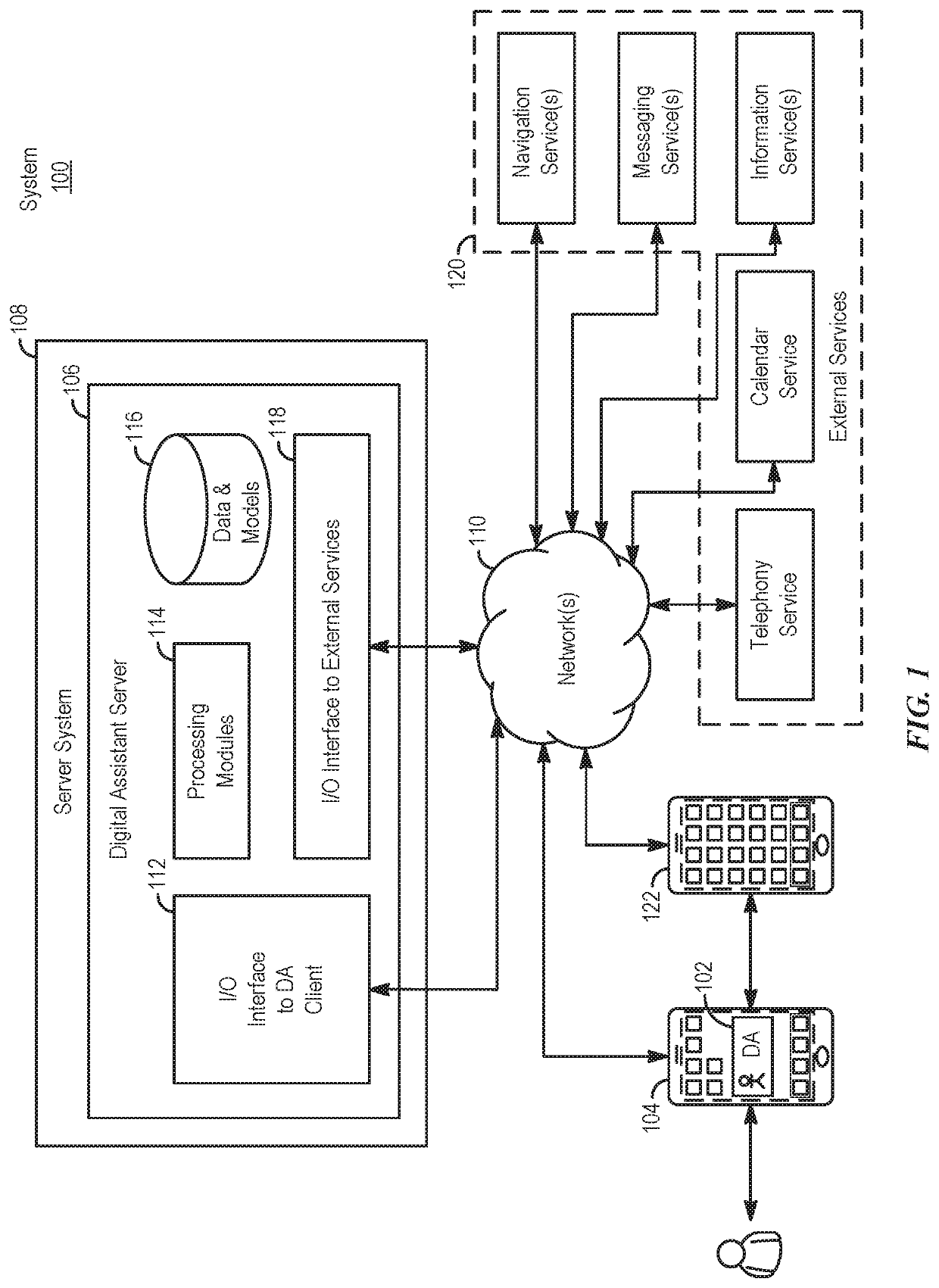

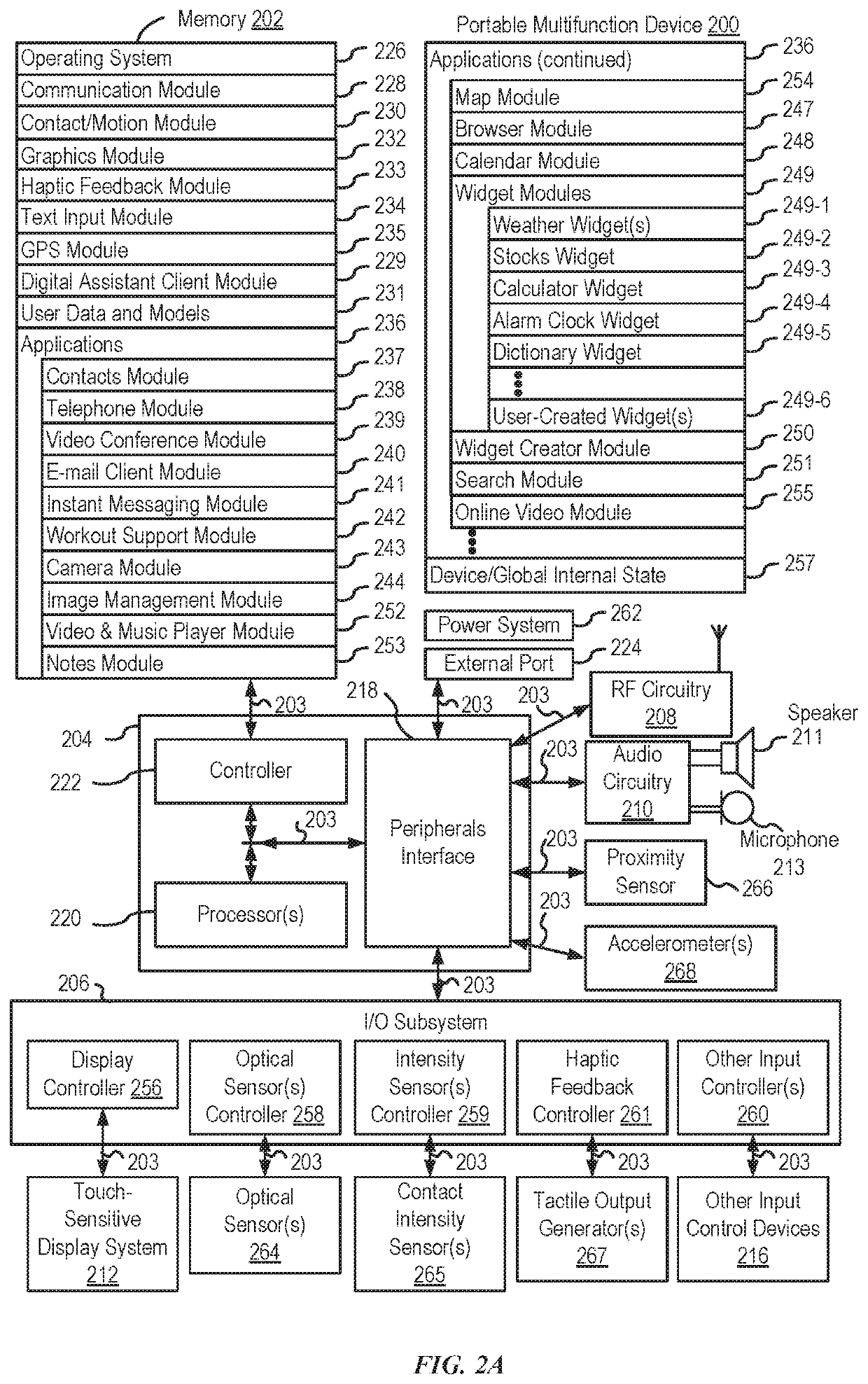

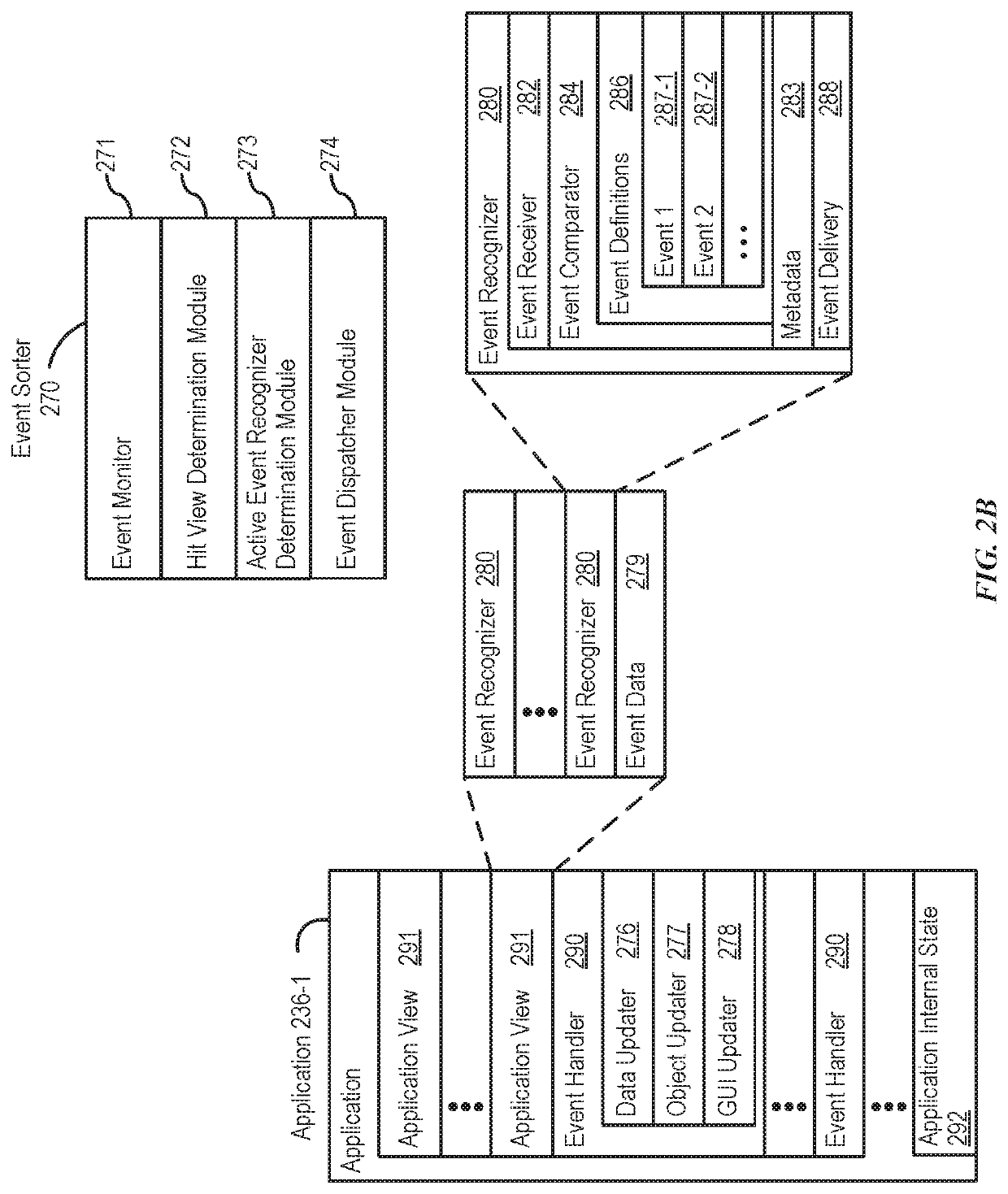

Systems and processes for operating a digital assistant are provided. In accordance with one or more examples, a method includes, receiving training data for a data-driven learning network. The training data include a plurality of word sequences. The method further includes obtaining representations of an initial set of semantic categories associated with the words included in the training data; and training the data-driven learning network based on the plurality of word sequences included in the training data and based on the representations of the initial set of semantic categories. The training is performed using the word sequences in their entirety. The method further includes obtaining, based on the trained data-driven learning network, representations of a set of semantic embeddings of the words included in the training data; and providing the representations of the set of semantic embeddings to at least one of a plurality of different natural language processing tasks.

Owner:APPLE INC
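The title refers to bidirectional recurrent networks, and the abstract says training uses whole word sequences. This sketch shows how a bidirectional pass gives every word a representation conditioned on the entire sequence: forward and backward hidden states are concatenated per position. The simple Elman cell, the 1-D word vectors and all weights are hypothetical stand-ins, not the patented network.

```python
import math

def rnn_pass(inputs, W_x, W_h):
    """Simple Elman RNN: h_t = tanh(W_x x_t + W_h h_{t-1}) with h_0 = 0."""
    h = [0.0] * len(W_h)
    states = []
    for x in inputs:
        h = [math.tanh(sum(wx * xj for wx, xj in zip(W_x[i], x))
                       + sum(wh * hk for wh, hk in zip(W_h[i], h)))
             for i in range(len(W_h))]
        states.append(h)
    return states

def birnn(inputs, fwd, bwd):
    """Concatenate forward states with backward states realigned to the original order."""
    forward = rnn_pass(inputs, *fwd)
    backward = rnn_pass(inputs[::-1], *bwd)[::-1]
    return [f + b for f, b in zip(forward, backward)]

# Hypothetical 1-D word vectors and 2-unit weights for each direction.
words = [[1.0], [0.0], [-1.0]]
fwd = ([[0.5], [1.0]], [[0.1, 0.0], [0.0, 0.1]])
bwd = ([[-0.5], [0.3]], [[0.2, 0.0], [0.0, 0.2]])
contextual = birnn(words, fwd, bwd)
```

The first word's backward half has already seen every later word, which is what makes the representation sequence-wide.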

Computer-implemented natural language understanding of medical reports

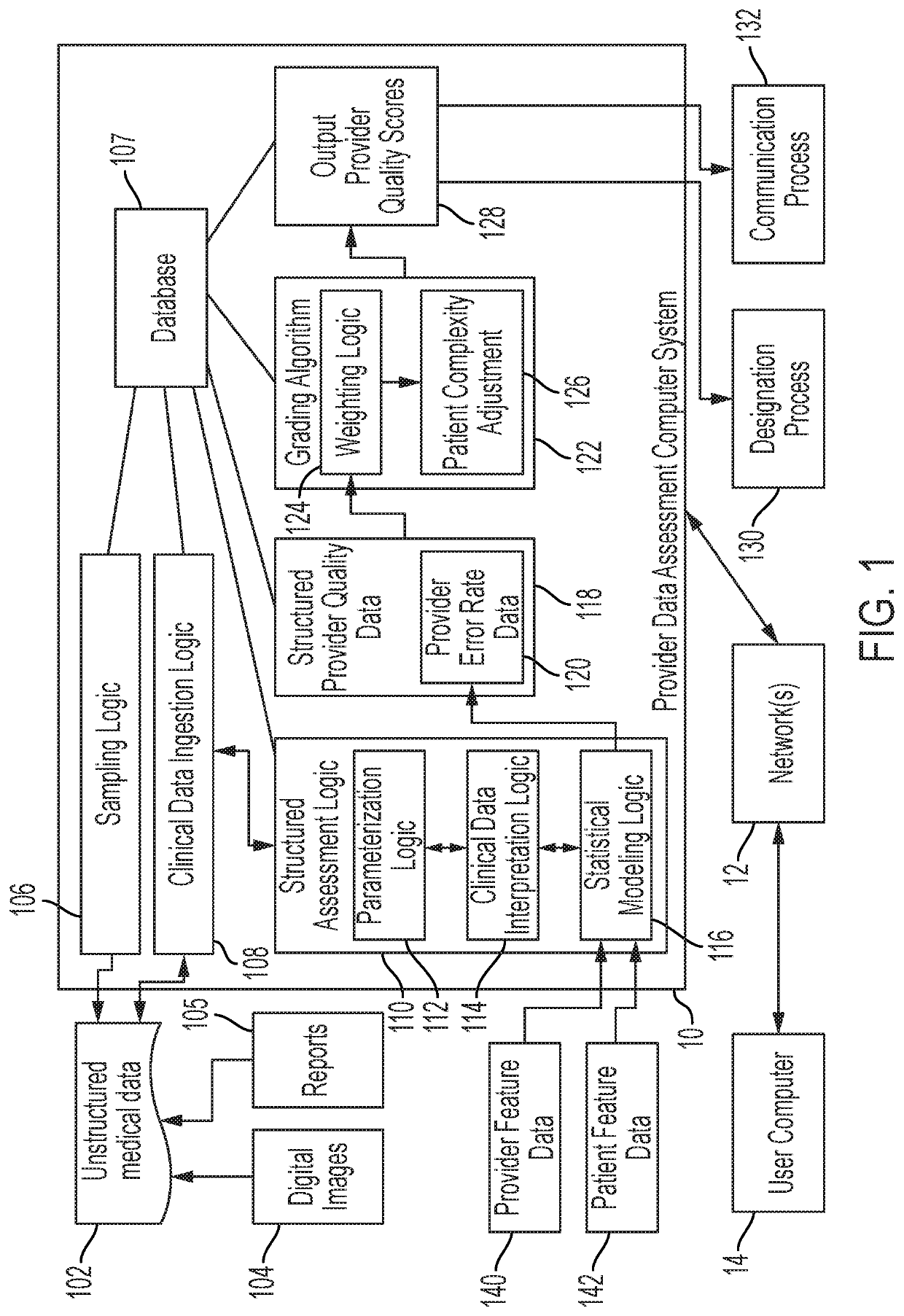

Active | US20200334416A1 | High feature weight; Low frequency of occurrence; Mathematical models; Medical data mining; Language understanding; Natural language understanding

A natural language understanding method begins with a radiological report text containing clinical findings. Errors in the text are corrected by analyzing character-level optical transformation costs weighted by a frequency analysis over a corpus corresponding to the report text. For each word in the report text, a word embedding is obtained and character-level embeddings are determined; the word and character-level embeddings are concatenated and fed to a neural network, which generates a plurality of NER-tagged spans for the report text. A set of linked relationships is calculated for the NER-tagged spans by generating masked text sequences based on the report text and on determined pairs of potentially linked NER spans. A dense adjacency matrix is calculated from attention weights obtained by providing the one or more masked text sequences to a Transformer deep learning network, and graph convolutions are then performed over the calculated dense adjacency matrix.

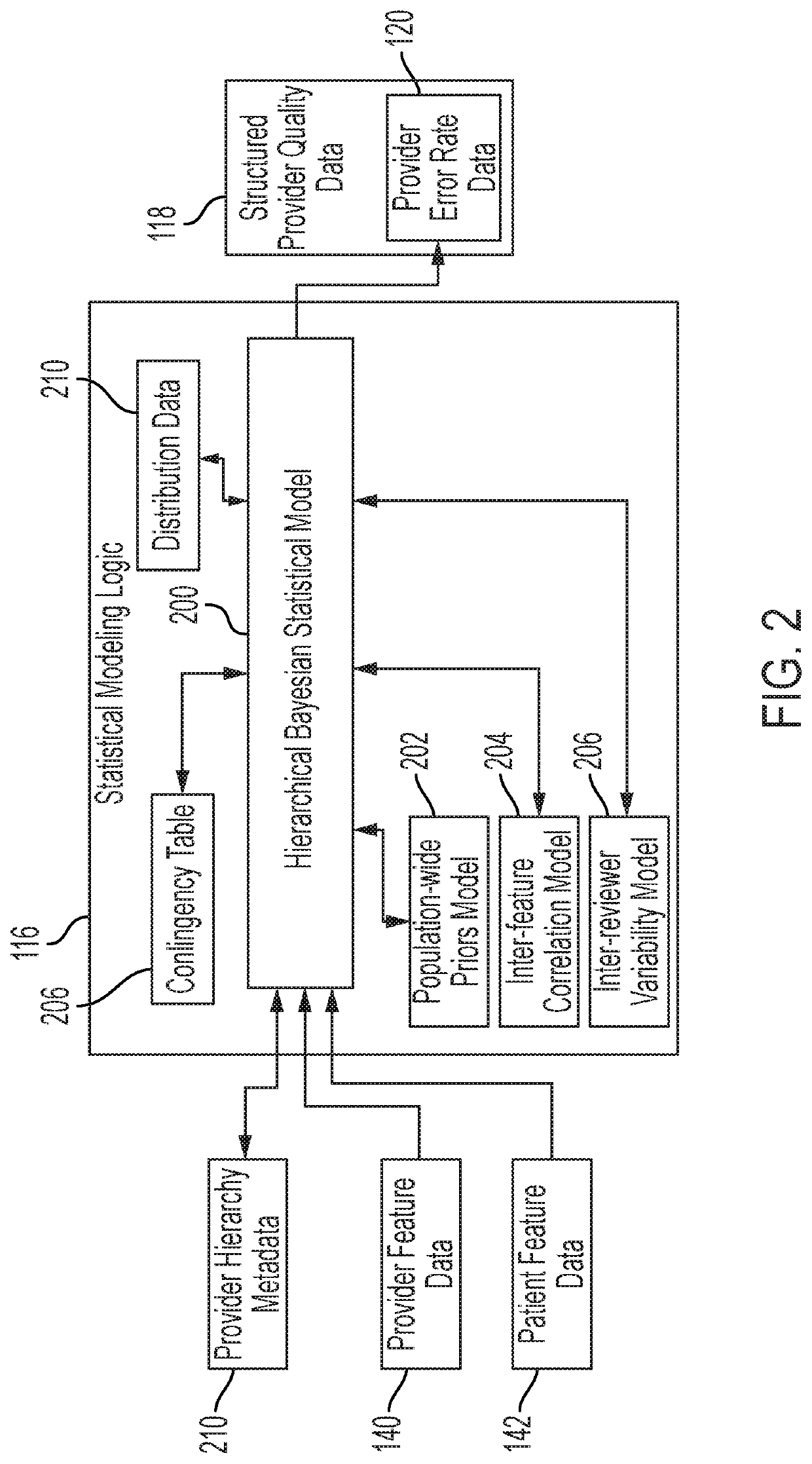

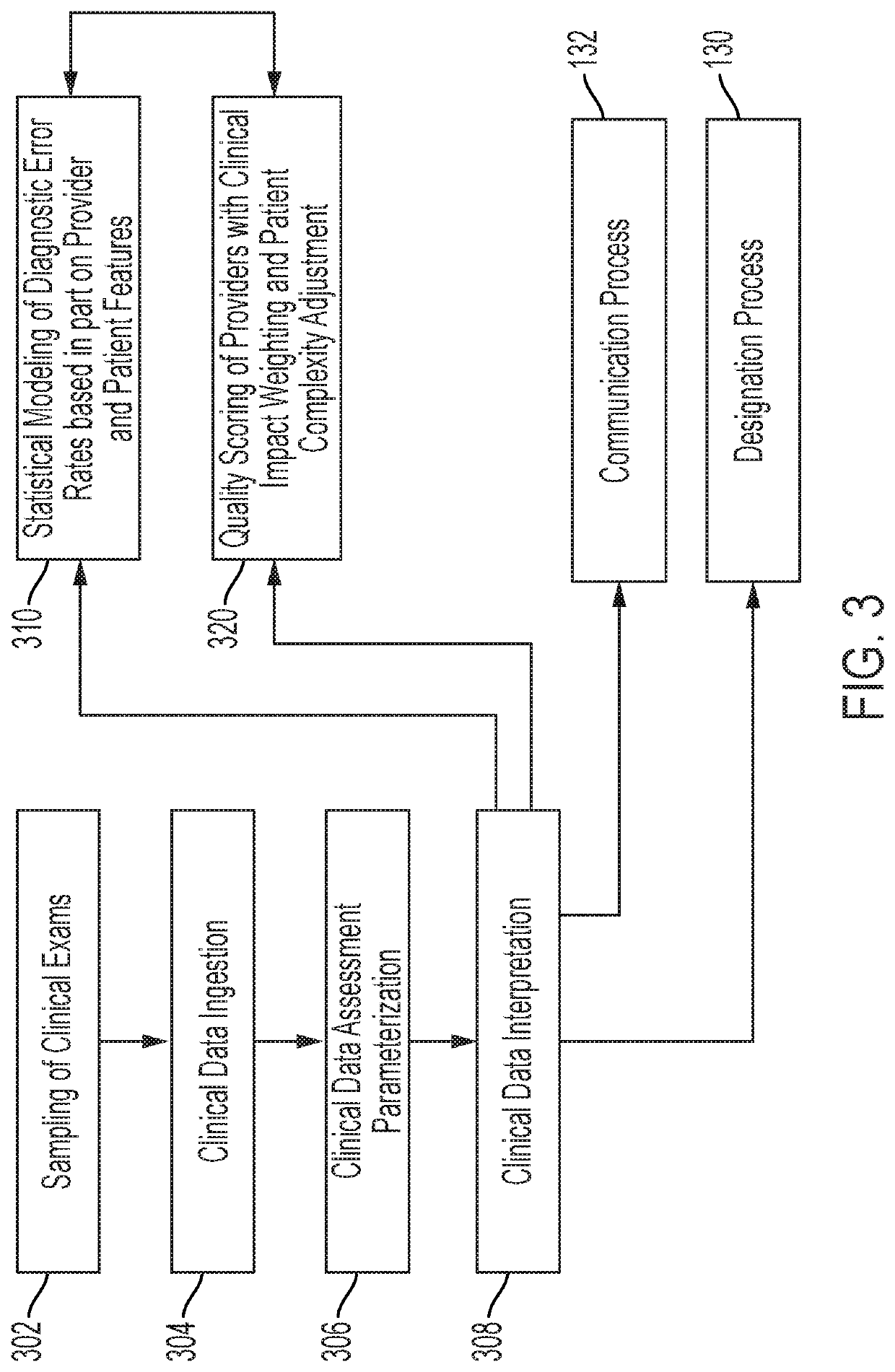

Owner:COVERA HEALTH
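The last two steps (a dense adjacency matrix from attention weights, then graph convolution over it) can be sketched as follows. Averaging the attention heads to form the adjacency matrix and using a ReLU graph-convolution layer are assumptions. The attention values, span features and identity weights are hypothetical.

```python
def average_heads(heads):
    """Dense adjacency: element-wise mean of per-head attention matrices (an assumption)."""
    n = len(heads[0])
    return [[sum(h[i][j] for h in heads) / len(heads) for j in range(n)] for i in range(n)]

def gcn_layer(A, H, W):
    """One graph convolution: ReLU(row_normalize(A) @ H @ W)."""
    A_hat = [[a / sum(row) for a in row] for row in A]
    AH = [[sum(A_hat[i][k] * H[k][j] for k in range(len(H))) for j in range(len(H[0]))]
          for i in range(len(A))]
    return [[max(0.0, sum(AH[i][j] * W[j][o] for j in range(len(W))))
             for o in range(len(W[0]))] for i in range(len(AH))]

# Hypothetical attention weights from two heads over three NER spans.
heads = [
    [[0.6, 0.3, 0.1], [0.2, 0.7, 0.1], [0.1, 0.1, 0.8]],
    [[0.4, 0.5, 0.1], [0.2, 0.5, 0.3], [0.3, 0.1, 0.6]],
]
H = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # hypothetical span features
W = [[1.0, 0.0], [0.0, 1.0]]               # identity weights for readability
out = gcn_layer(average_heads(heads), H, W)
```

Each span's output mixes the features of the spans it attends to, which is how link evidence propagates across the report.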

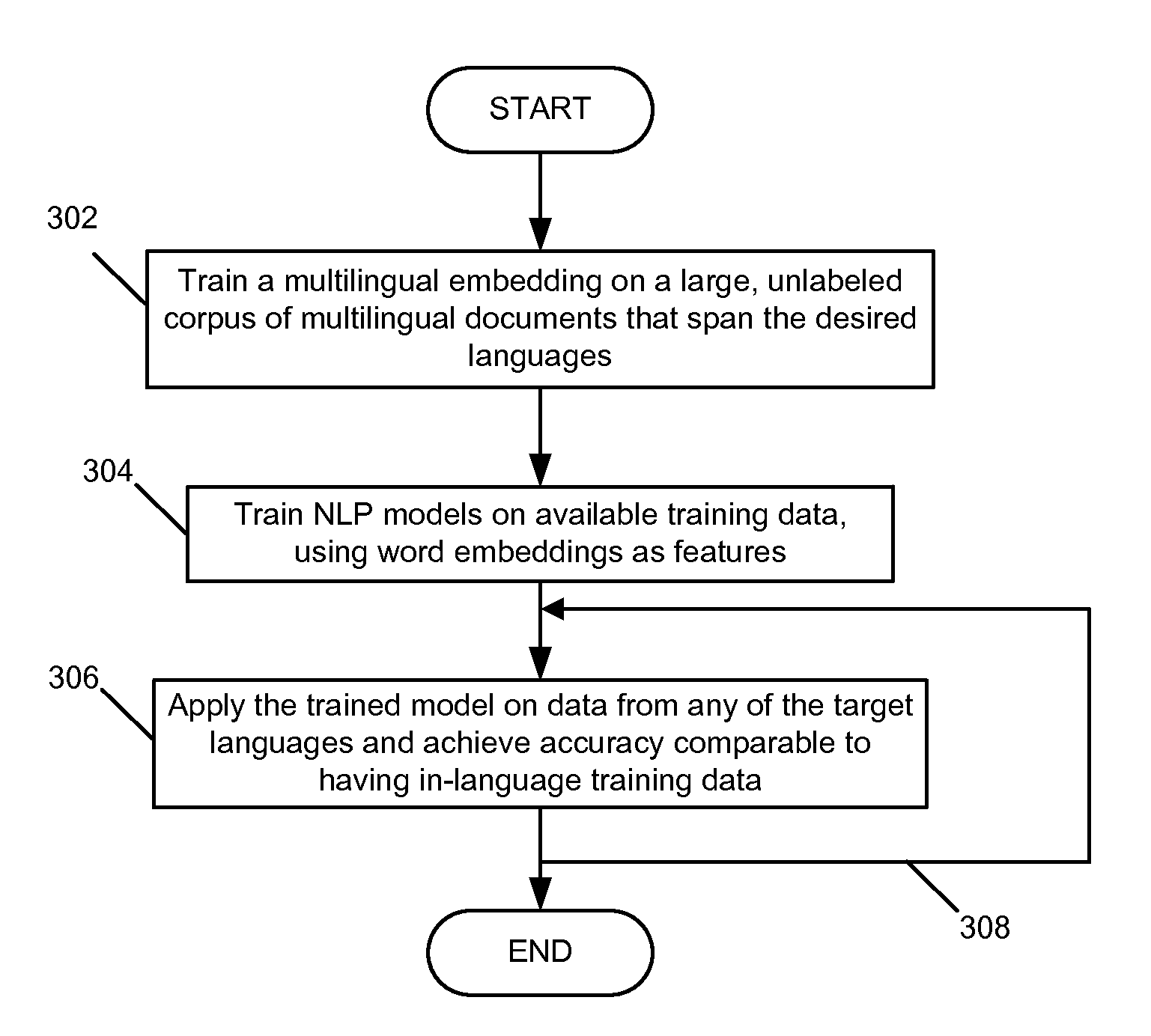

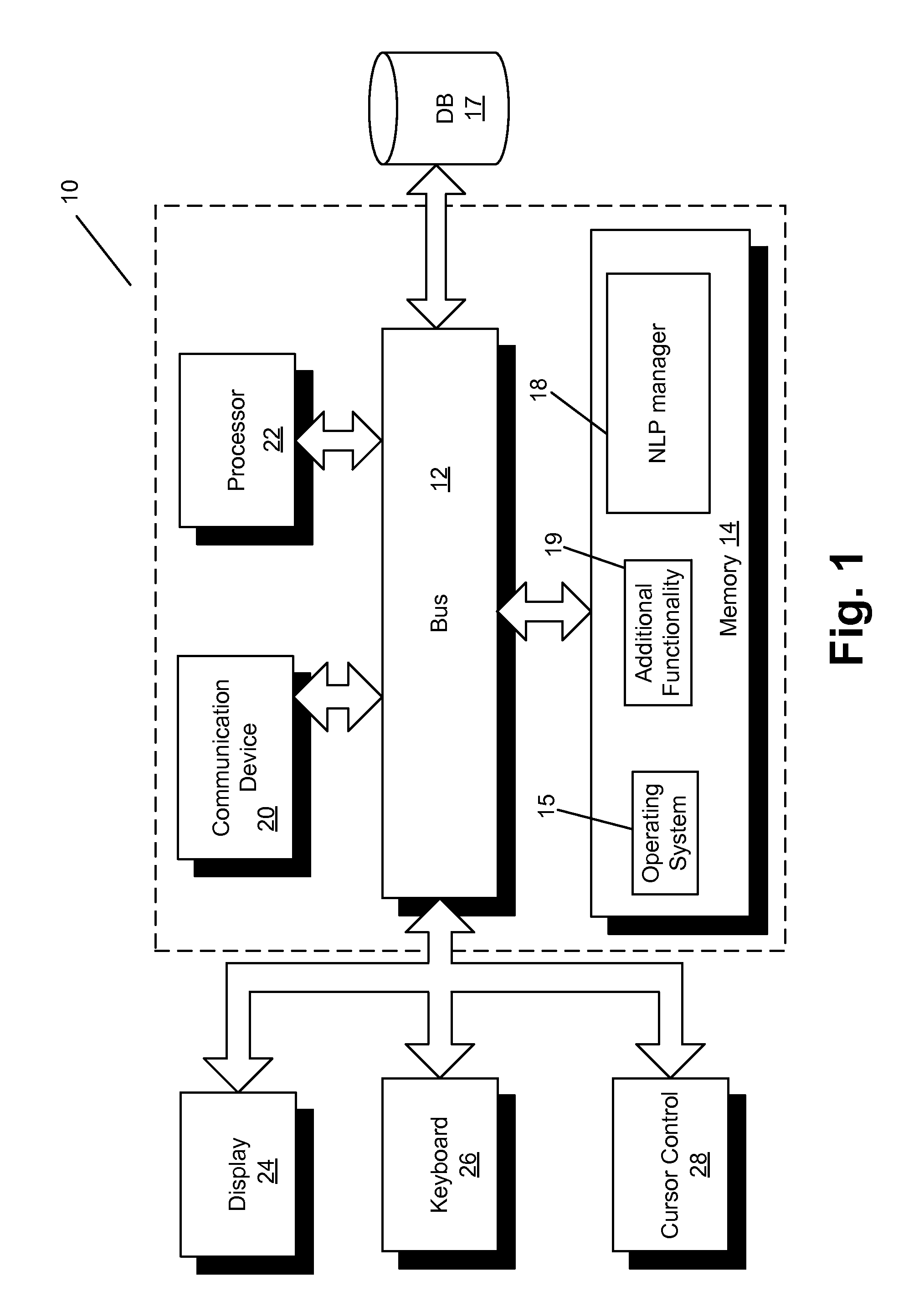

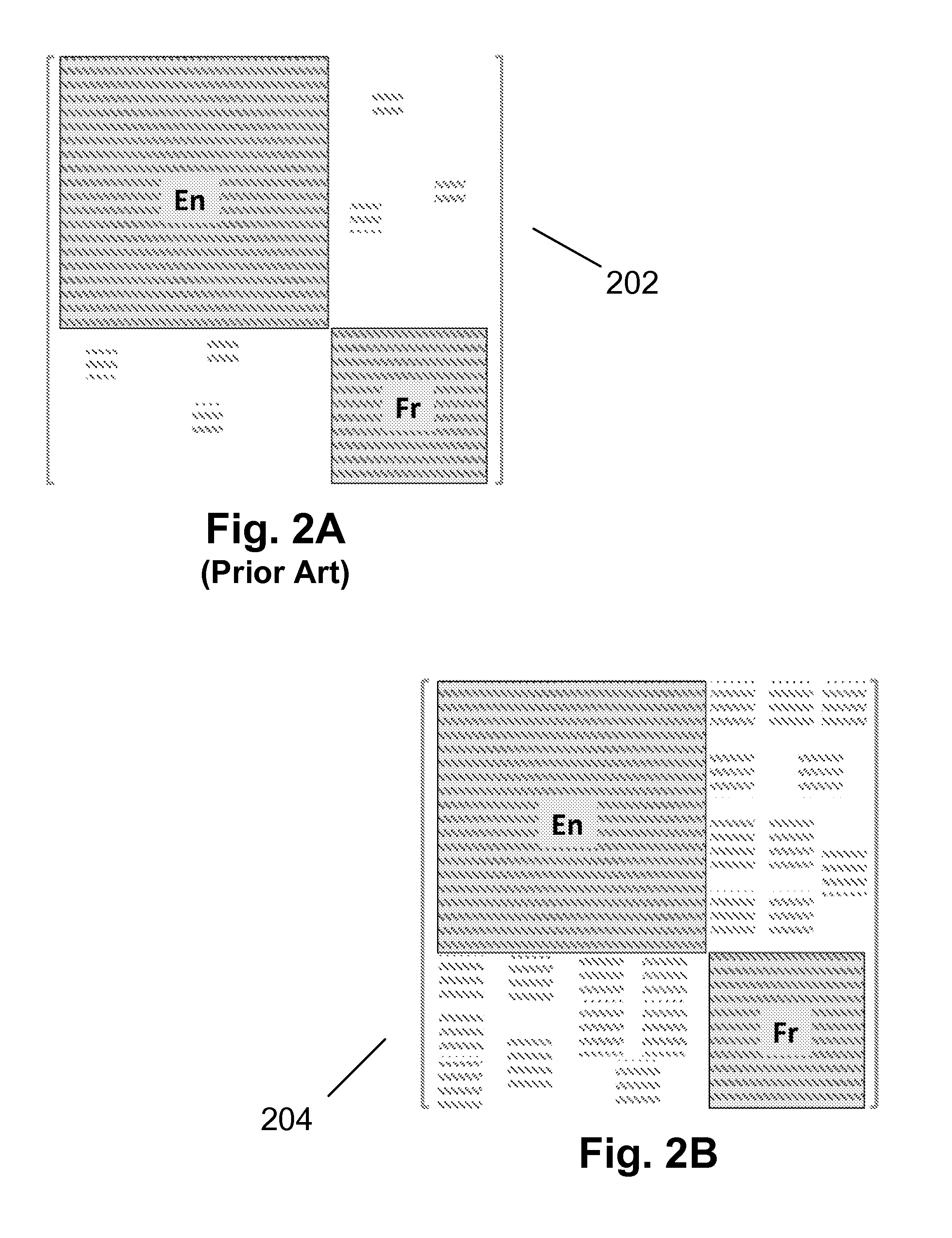

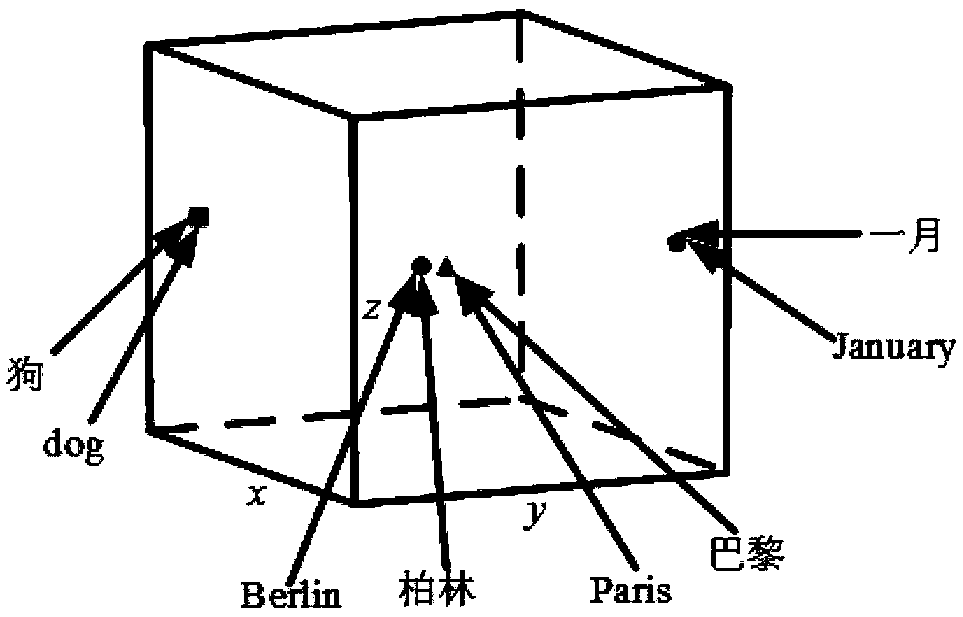

Multilingual embeddings for natural language processing

Active | US20160350288A1 | Natural language translation; Special data processing applications; Natural language programming; Document preparation

A natural language processing (“NLP”) manager is provided that manages NLP model training. An unlabeled corpus of multilingual documents spanning a plurality of target languages is provided. A multilingual embedding is trained using the corpus of multilingual documents as input training data; the multilingual embedding is generalized across the target languages by modifying the input training data and/or by transforming multilingual dictionaries into constraints in an underlying optimization problem. An NLP model is trained on training data for a first one of the target languages, using word embeddings of the trained multilingual embedding as features. The trained NLP model is then applied to data in a second target language that differs from the first.

Owner:ORACLE INT CORP
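Cross-lingual transfer works because translations land near each other in the shared embedding space, so a model fitted on one language's vectors can score another language's vectors. In this sketch a nearest-centroid classifier stands in for the NLP model, and the shared-space vectors are hypothetical. The sketch does not show how the multilingual embedding itself is trained.

```python
import math

# Hypothetical vectors in a shared multilingual space: translations lie close together.
shared = {
    "en:great": [0.9, 0.1], "en:good": [0.8, 0.2],
    "en:awful": [0.1, 0.9], "en:bad": [0.2, 0.8],
    "es:excelente": [0.85, 0.15], "es:terrible": [0.15, 0.85],
}

def train_centroids(examples):
    """Nearest-centroid 'model': average the embeddings of each label's training words."""
    groups = {}
    for word, label in examples:
        groups.setdefault(label, []).append(shared[word])
    return {label: [sum(col) / len(vecs) for col in zip(*vecs)] for label, vecs in groups.items()}

def predict(word, centroids):
    """Assign the label whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: math.dist(shared[word], centroids[label]))

# Train on English (first language) only...
centroids = train_centroids([("en:great", "pos"), ("en:good", "pos"),
                             ("en:awful", "neg"), ("en:bad", "neg")])
# ...then apply to Spanish (second language) words.
predictions = {w: predict(w, centroids) for w in ["es:excelente", "es:terrible"]}
```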

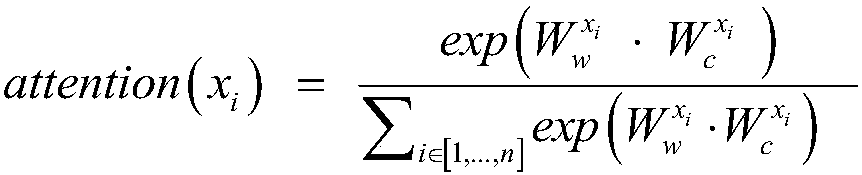

Text sentiment analysis method and system, and computer readable storage medium

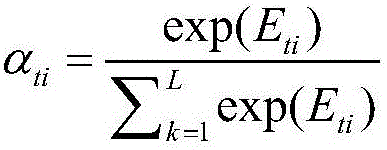

Inactive | CN108170681A | Improve accuracy; Improve generalization ability; Natural language data processing; Neural architectures; Part of speech; Algorithm

The invention relates to the technical field of artificial intelligence and discloses a text sentiment analysis method, a text sentiment analysis system and a computer readable storage medium for improving the accuracy of text sentiment analysis. The method comprises the steps of: inputting the word vectors of a sentence into a preset LSTM network model to obtain a hidden-layer vector for each word; tagging the parts of speech of an acquired text word set, training on the POS-tagged word set, and splitting the resulting part-of-speech vector matrix word by word to obtain the part-of-speech vector of each word; for each sentence, performing weighted-summation attention analysis over the hidden-layer vector and the part-of-speech vector of each word to obtain a sentence vector carrying attention information; and using that sentence vector as the input of a sentiment classification model to obtain a sentiment classification result for each sentence and/or for the original text.

Owner:CENT SOUTH UNIV
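The weighted-summation attention step can be sketched as follows. Each word's hidden vector is concatenated with its part-of-speech vector and scored against a query vector, and a softmax turns the scores into weights for a weighted sum. The query vector (normally a learned parameter), the dot-product scoring and all values are hypothetical assumptions.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention_sentence_vector(hidden, pos, query):
    """Score each [h_t ; p_t] against a query vector, then return the attention-weighted sum."""
    combined = [h + p for h, p in zip(hidden, pos)]
    weights = softmax([sum(q * x for q, x in zip(query, c)) for c in combined])
    sentence = [sum(w * c[i] for w, c in zip(weights, combined)) for i in range(len(combined[0]))]
    return sentence, weights

hidden = [[1.0, 0.0], [0.0, 1.0]]   # hypothetical LSTM hidden vectors for two words
pos = [[1.0], [0.0]]                # hypothetical 1-D part-of-speech vectors
sentence, weights = attention_sentence_vector(hidden, pos, query=[0.5, -0.5, 1.0])
```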

A reading comprehension model method based on intra-paragraph reasoning and joint question-answer matching

The invention discloses a reading comprehension model method based on intra-paragraph reasoning and joint question-answer matching. The method comprises the following steps: S1, constructing a vector for each candidate answer that represents the interaction of the paragraph with the question and the answer, and then using the vectors of all candidate answers to select an answer; S2, carrying out experiments. In the proposed model, paragraphs are first segmented into blocks at multiple granularities, and an encoder sums the word embedding vectors within each block using a neural bag-of-words representation. The relationship between the blocks of different granularities containing each word is then modeled with a two-layer feed-forward neural network to construct a gating function, so that the model has more context information and captures intra-paragraph reasoning at the same time. Compared with baseline neural network models such as the Stanford AR model and the GA Reader, the model improves accuracy by 9-10%. Compared with the recent model SurfaceLR, accuracy improves by at least 3%, and it is about 1% higher than a single TriAN model; in addition, the model's performance can be further improved by pre-training on the RACE data set.

Owner:SICHUAN UNIV
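The multi-granularity block encoding (sum the word vectors inside each block, then give every word its block's sum at each granularity) can be sketched as below. The block sizes and 2-D word vectors are hypothetical, and the feed-forward gating function from the abstract is not shown.

```python
def block_bow(embeddings, block_size):
    """Give every word the summed (bag-of-words) vector of its block."""
    dim = len(embeddings[0])
    out = []
    for start in range(0, len(embeddings), block_size):
        block = embeddings[start:start + block_size]
        total = [sum(v[i] for v in block) for i in range(dim)]
        out.extend(list(total) for _ in block)
    return out

def multi_granularity(embeddings, sizes=(1, 2, 4)):
    """Concatenate each word's block vectors across several block sizes."""
    per_size = [block_bow(embeddings, s) for s in sizes]
    return [sum((reps[t] for reps in per_size), []) for t in range(len(embeddings))]

words = [[1, 0], [0, 1], [1, 1], [2, 0], [0, 2]]   # hypothetical 2-D word embeddings
reps = multi_granularity(words, sizes=(1, 2))
```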

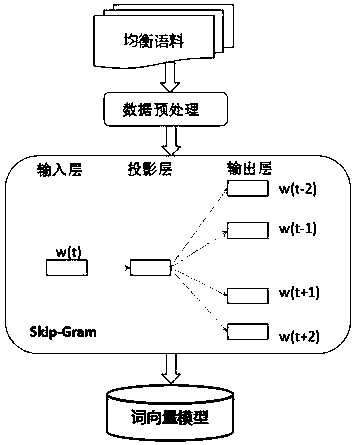

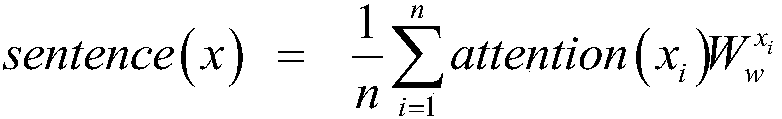

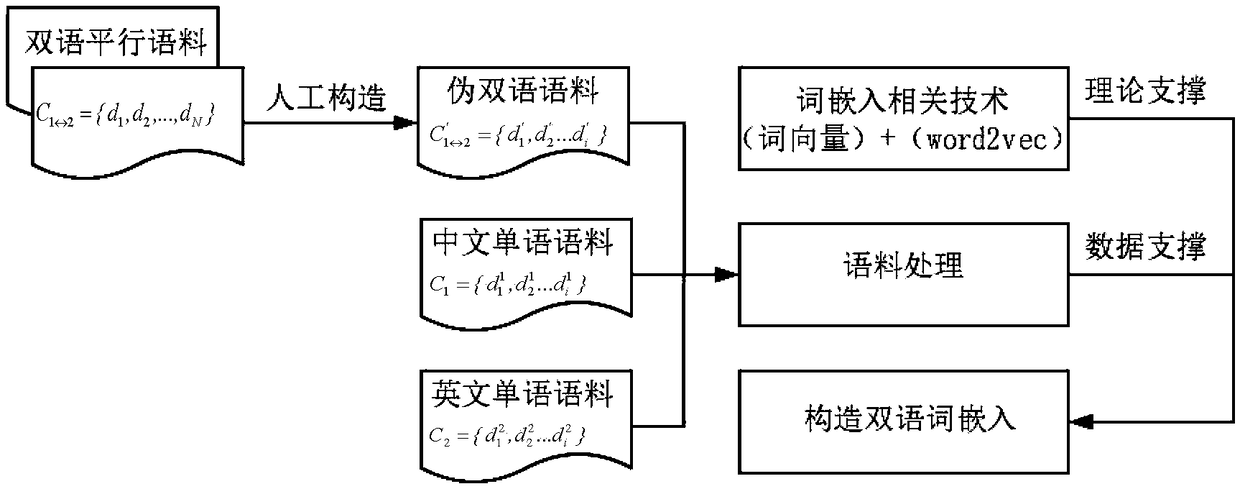

A bilingual word embedding-based cross-language text similarity assessment technique

The invention belongs to the field of language processing, and in particular relates to a cross-language text similarity evaluation technique based on bilingual word embedding. Its technical route and workflow can be divided into three stages: construction of a bilingual word embedding model, construction of a text similarity calculation framework based on multiple neural networks, and cross-language similarity calculation. The model generates a bilingual shared word embedding representation: based on word vector correlation theory, a Skip-Gram model is used to train word vectors on an artificially constructed pseudo-bilingual corpus. Secondly, to make the generated word embedding space as complete as possible, monolingual corpora are used as a supplement to learn additional word embedding knowledge. Sentence similarity scores are obtained by combining several neural network structures to learn semantic representations of sentences. By dividing short text into paragraphs and treating each paragraph as a long sentence in the input sequence, similarity can be computed iteratively at a larger scale.

Owner:HARBIN ENG UNIV
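A pseudo-bilingual corpus can be built by code-switching: some words are replaced by their dictionary translations so that both languages share contexts, and (center, context) Skip-Gram pairs are then extracted from the mixed text. This sketch shows only the corpus construction and pair extraction. The dictionary entries, replacement rate and window size are hypothetical, and training the Skip-Gram model is not shown.

```python
import random

def pseudo_bilingual(sentence, dictionary, rate, rng):
    """Randomly swap words for their dictionary translations (code-switching)."""
    return [dictionary[w] if w in dictionary and rng.random() < rate else w for w in sentence]

def skipgram_pairs(tokens, window):
    """(center, context) training pairs within +/- `window` positions."""
    pairs = []
    for i, center in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

rng = random.Random(0)
dictionary = {"cat": "猫", "sleeps": "睡觉"}   # hypothetical English-Chinese entries
mixed = pseudo_bilingual(["the", "cat", "sleeps"], dictionary, rate=1.0, rng=rng)
pairs = skipgram_pairs(mixed, window=1)
```

With `rate` below 1.0, each sentence can be sampled several times to yield different mixtures.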

Text classification method combining dynamic word embedding with part-of-speech tagging

Inactive | CN107291795A | Improve accuracy; Improve general performance; Natural language data processing; Special data processing applications; Part of speech; Text categorization

The invention discloses a text classification method based on a deep neural network that combines dynamic word embedding with part-of-speech tagging. The method takes advantage of the more accurate grammatical and semantic information that a large-scale corpus provides, while also adjusting the word embeddings to the features of the task corpus during model training, so that those features are learned better. Classification accuracy is further improved by incorporating the part-of-speech information of the words in each sentence. The method combines the strength of LSTM in learning the context of words and parts of speech in a sentence with the strength of CNN in learning local text features. The classification model is described as accurate and broadly applicable, and is reported to perform well on well-known public corpora including IMDB, Movie Review and TREC.

Owner:SOUTH CHINA UNIV OF TECH
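The CNN side, which learns local features over word vectors combined with part-of-speech information, can be sketched as a single 1-D convolution filter followed by ReLU and max-pooling over positions. The token vectors, the POS feature and the filter weights are hypothetical. A real model uses many filters and learns the embeddings jointly, which is what "dynamic" refers to.

```python
def conv1d_maxpool(seq, kernel, bias=0.0):
    """Slide a width-k filter over token vectors, apply ReLU, then max-pool over positions."""
    k = len(kernel)
    feats = []
    for t in range(len(seq) - k + 1):
        s = bias
        for off in range(k):
            s += sum(w * x for w, x in zip(kernel[off], seq[t + off]))
        feats.append(max(0.0, s))
    return max(feats)

# Hypothetical tokens: 2-D word embedding concatenated with a 1-D part-of-speech feature.
word_vecs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
pos_vecs = [[1.0], [0.0], [0.0], [1.0]]
seq = [w + p for w, p in zip(word_vecs, pos_vecs)]
kernel = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]   # responds to "x-feature then y-feature"
feature = conv1d_maxpool(seq, kernel)
```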

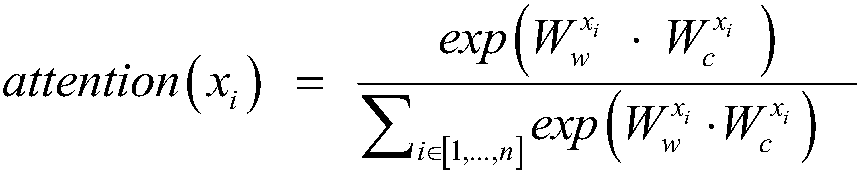

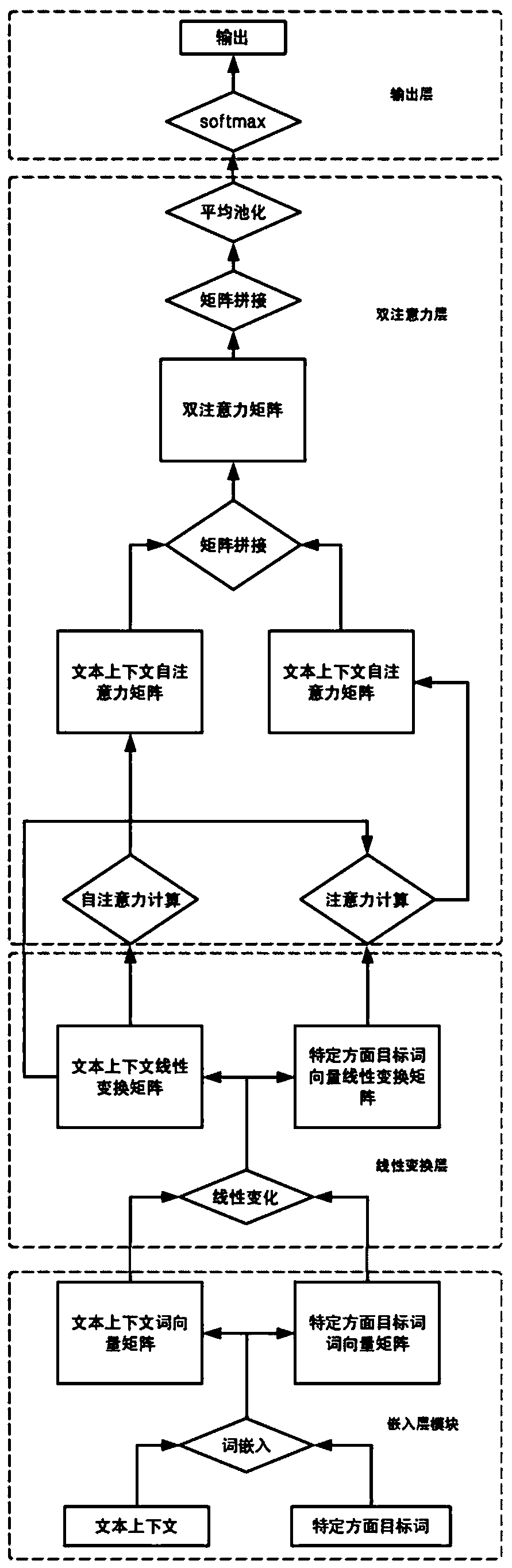

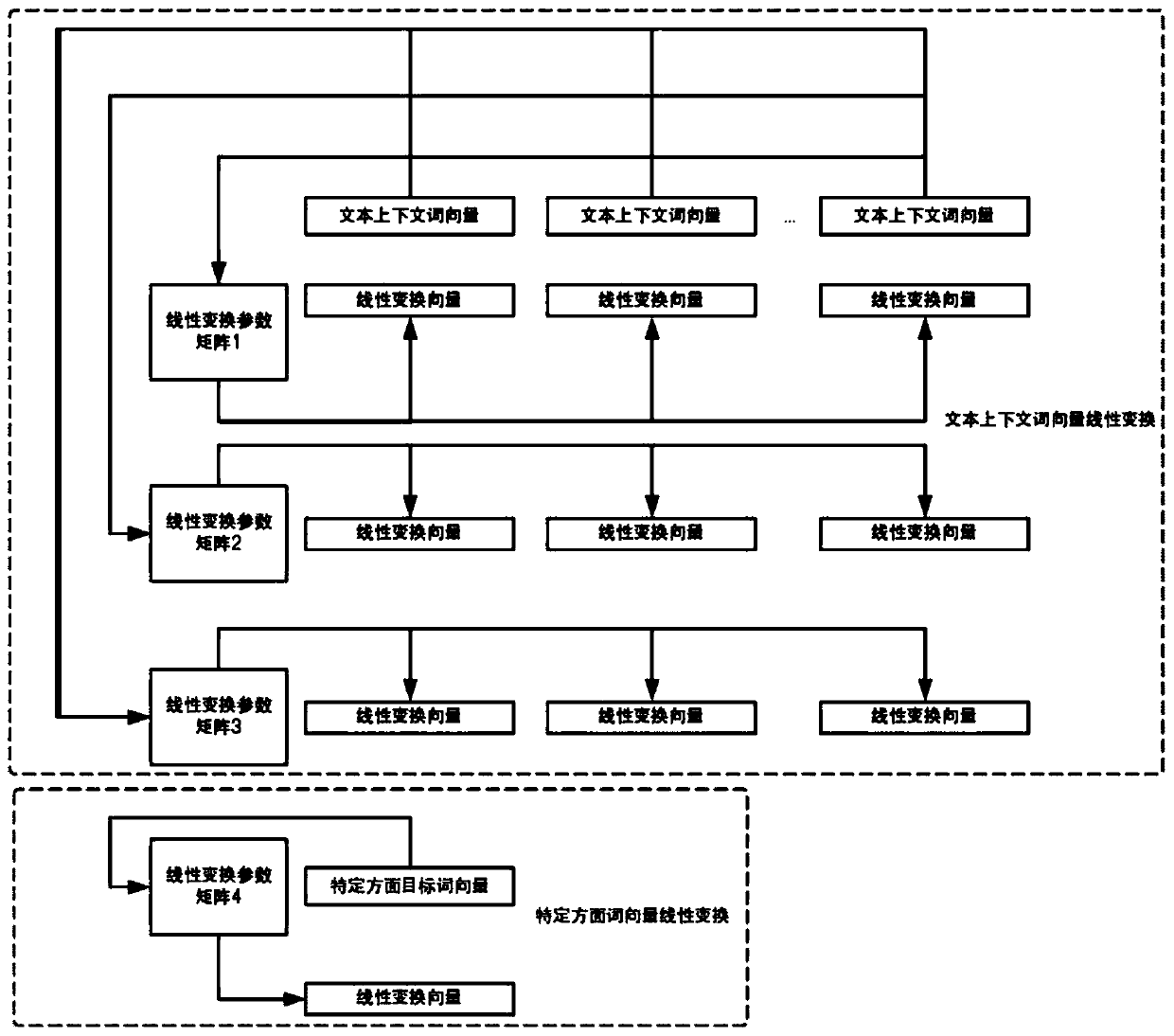

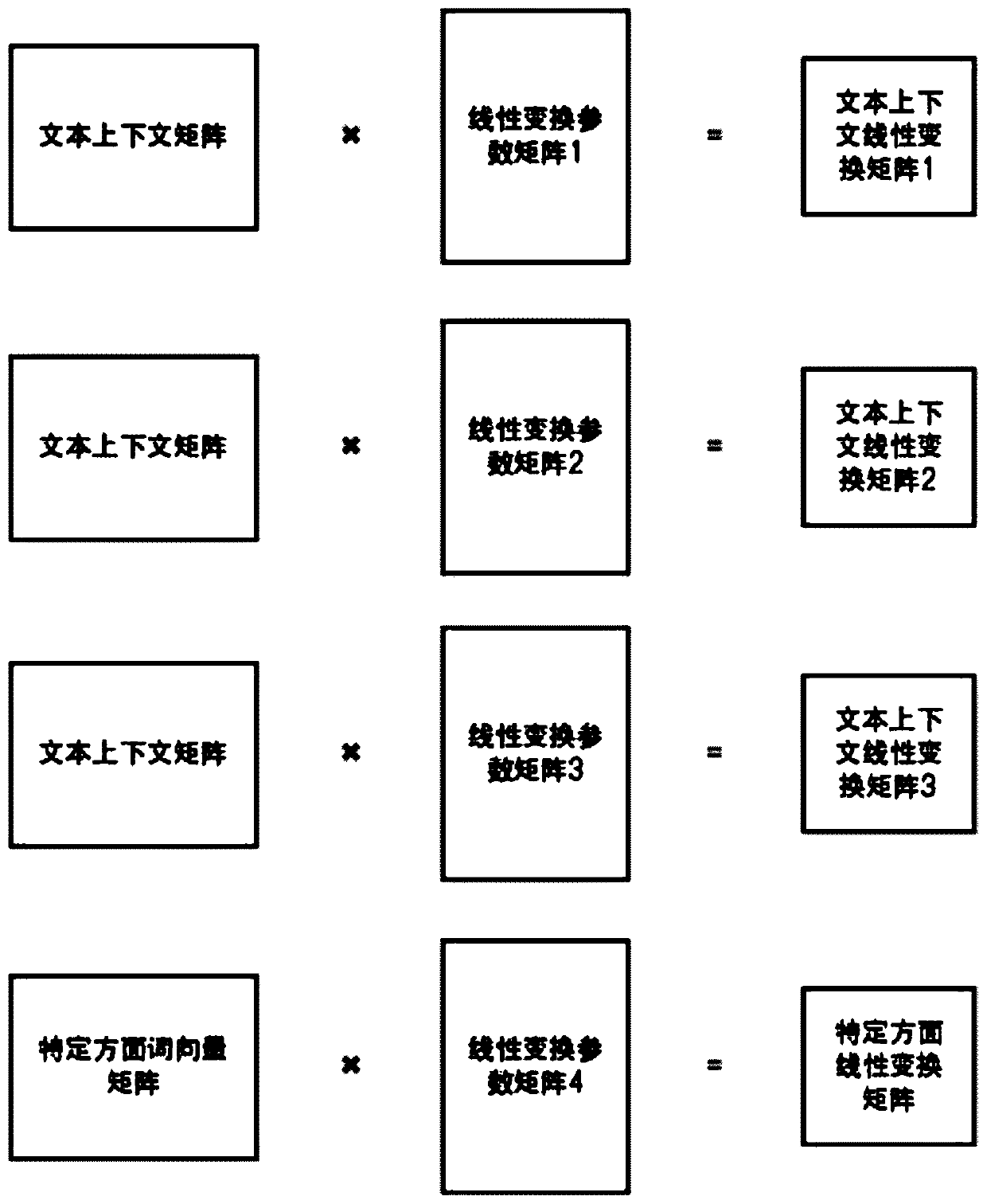

A fine-grained emotion polarity prediction method based on a hybrid attention network

Active | CN109948165A | Accurate prediction; Make up for the shortcoming that it is difficult to obtain global structural information; Special data processing applications; Self attention; Algorithm

The invention discloses a fine-grained emotion polarity prediction method based on a hybrid attention network, aiming to overcome problems of the prior art such as lack of flexibility, insufficient precision, difficulty in obtaining global structural information, slow training and single-source attention information. The method comprises the following steps: 1, determining a text context sequence and an aspect-specific target word sequence from a review sentence; 2, mapping the two sequences into two multi-dimensional continuous word vector matrices by word embedding lookup; 3, applying several different linear transformations to the two matrices to obtain the corresponding transformed matrices; 4, using the transformed matrices to calculate a text-context self-attention matrix and an aspect-target word vector attention matrix, and concatenating the two to obtain a dual-attention matrix; 5, concatenating the dual-attention matrices produced by the different linear transformations and applying a further linear transformation to obtain the final attention representation matrix; and 6, applying average pooling and passing the result through a fully connected layer into a softmax classifier to obtain the emotion polarity prediction.

Owner:JILIN UNIV
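Steps 3 to 5 follow the familiar multi-head pattern: project the inputs several times, compute scaled dot-product attention per projection, concatenate, and project again. The sketch below is generic Transformer-style multi-head self-attention, used as a stand-in rather than the patent's exact context/aspect dual attention. The inputs and identity projections are hypothetical.

```python
import math

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(K[0])
    weights = [softmax([sum(q * k for q, k in zip(qr, kr)) / math.sqrt(d) for kr in K]) for qr in Q]
    return matmul(weights, V)

def multi_head(X, heads, W_o):
    """Project X per head, attend, concatenate the head outputs, then apply W_o."""
    outs = [attention(matmul(X, Wq), matmul(X, Wk), matmul(X, Wv)) for Wq, Wk, Wv in heads]
    concat = [sum((o[i] for o in outs), []) for i in range(len(X))]
    return matmul(concat, W_o)

X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]        # hypothetical word vectors
I = [[1.0, 0.0], [0.0, 1.0]]                    # identity projections for readability
W_o = [[0.5, 0.0], [0.0, 0.5], [0.5, 0.0], [0.0, 0.5]]
out = multi_head(X, [(I, I, I), (I, I, I)], W_o)
```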

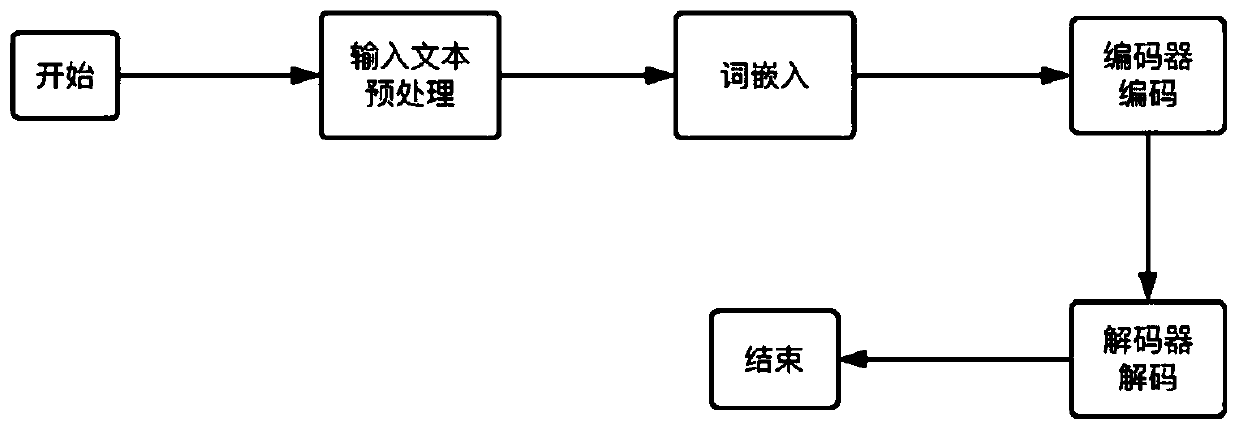

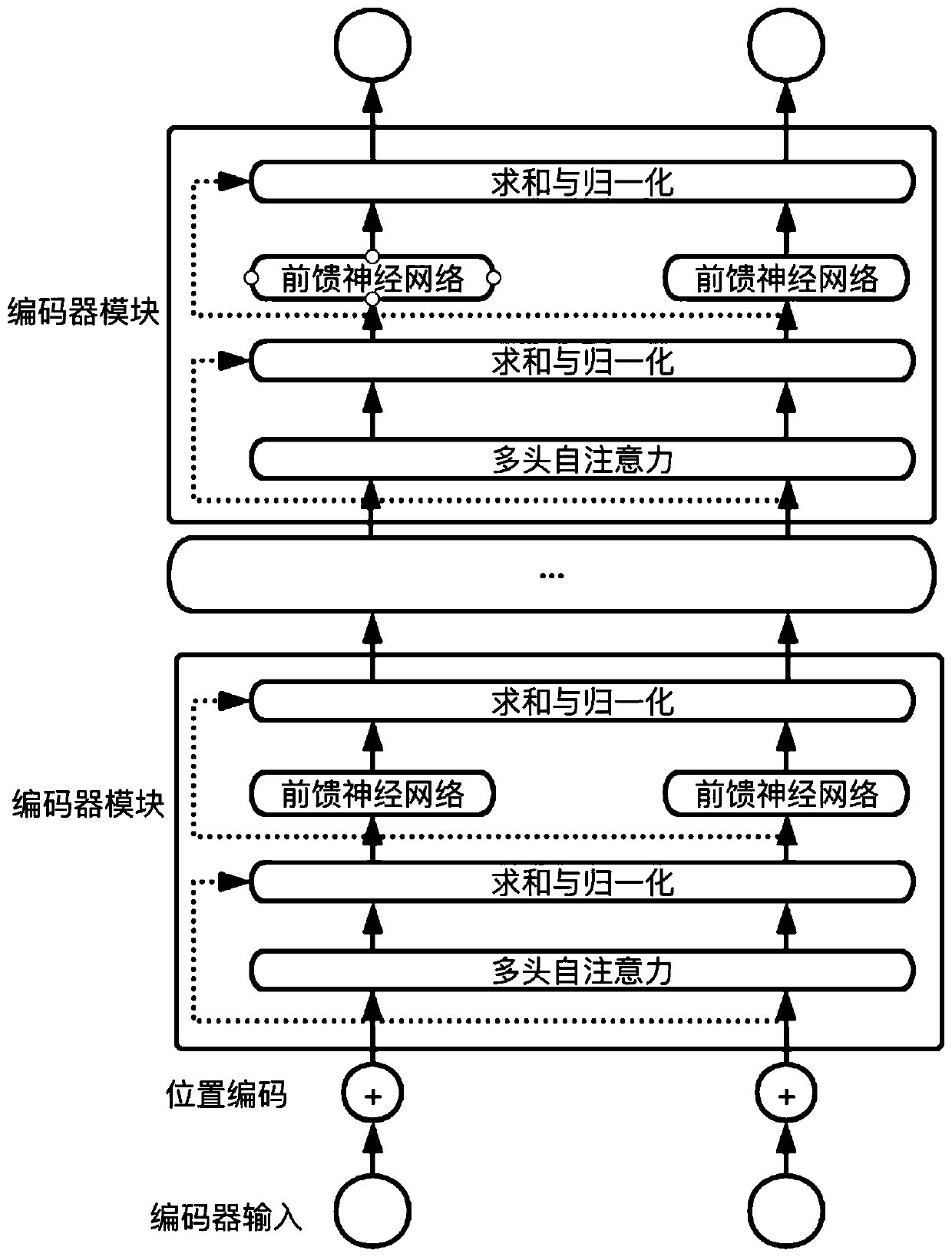

Text abstract automatic generation method based on self-attention network

Active | CN110209801A | Efficient extraction of long-distance dependencies; Effectively handle generation issues; Natural language data processing; Neural architectures; Self attention; Algorithm

The invention discloses an automatic text summary generation method based on a self-attention network, comprising the following steps: 1) performing word segmentation on an input text to obtain a word sequence; 2) performing word embedding on the word sequence to generate the corresponding word vector sequence; 3) encoding the word vector sequence with a self-attention network encoder; and 4) decoding the encoded input text vector with a self-attention network decoder to generate a text summary. The method is described as offering fast model computation, high training efficiency, high-quality generated summaries and good generalization.

Owner:SOUTH CHINA UNIV OF TECH
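A self-attention decoder generates the summary left to right, so each position may attend only to itself and earlier positions. The sketch shows the usual causal masking of attention scores before the softmax. The patent does not spell out this detail; it is an assumption based on standard Transformer decoders, and the scores are hypothetical.

```python
import math

def causal_attention_weights(scores):
    """Softmax over each row of scores, masking future positions (j > i) to zero weight."""
    weights = []
    for i, row in enumerate(scores):
        visible = row[: i + 1]                      # positions 0..i only
        m = max(visible)
        exps = [math.exp(s - m) for s in visible]
        total = sum(exps)
        weights.append([e / total for e in exps] + [0.0] * (len(row) - i - 1))
    return weights

# Hypothetical raw query-key scores for a 3-token partial summary.
scores = [[2.0, 1.0, 0.5], [0.3, 1.2, 0.9], [0.1, 0.4, 1.5]]
weights = causal_attention_weights(scores)
```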

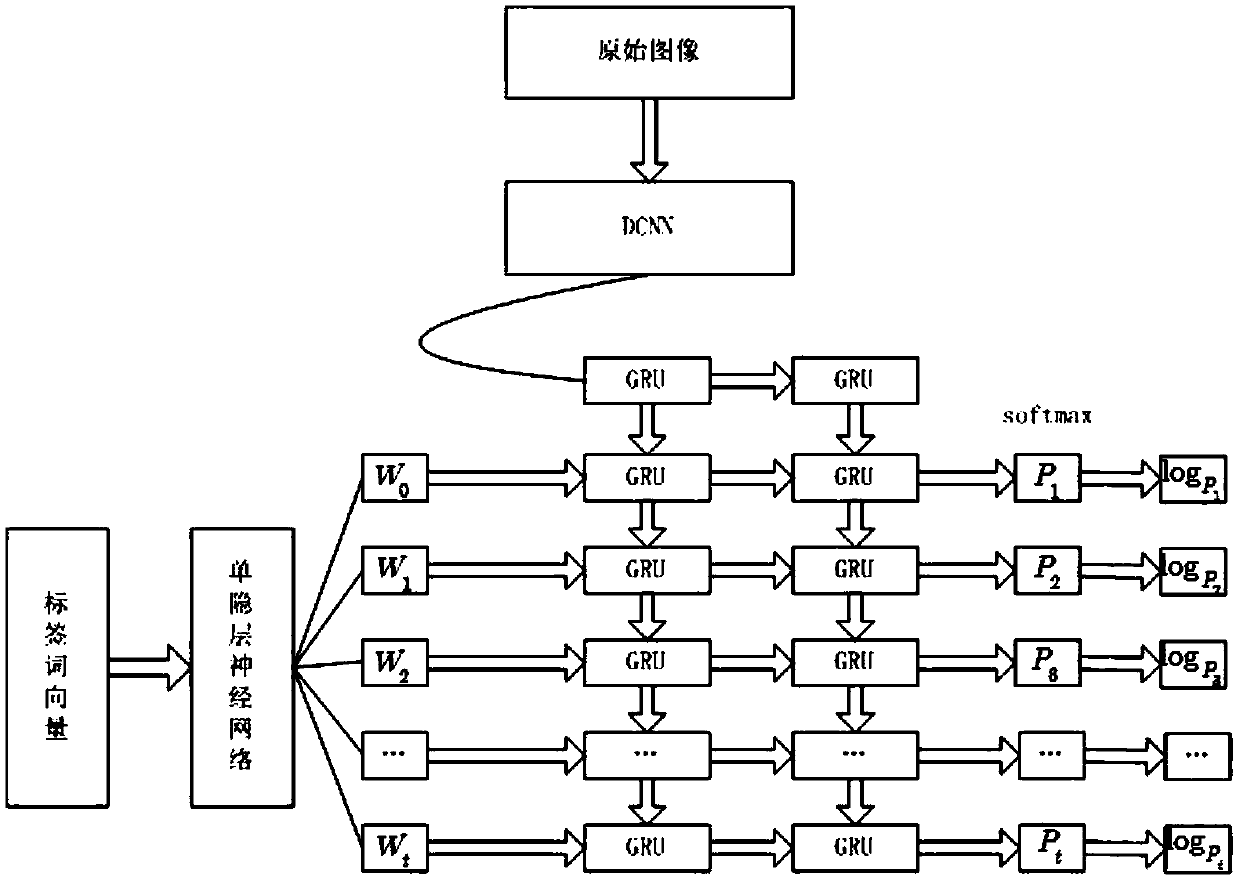

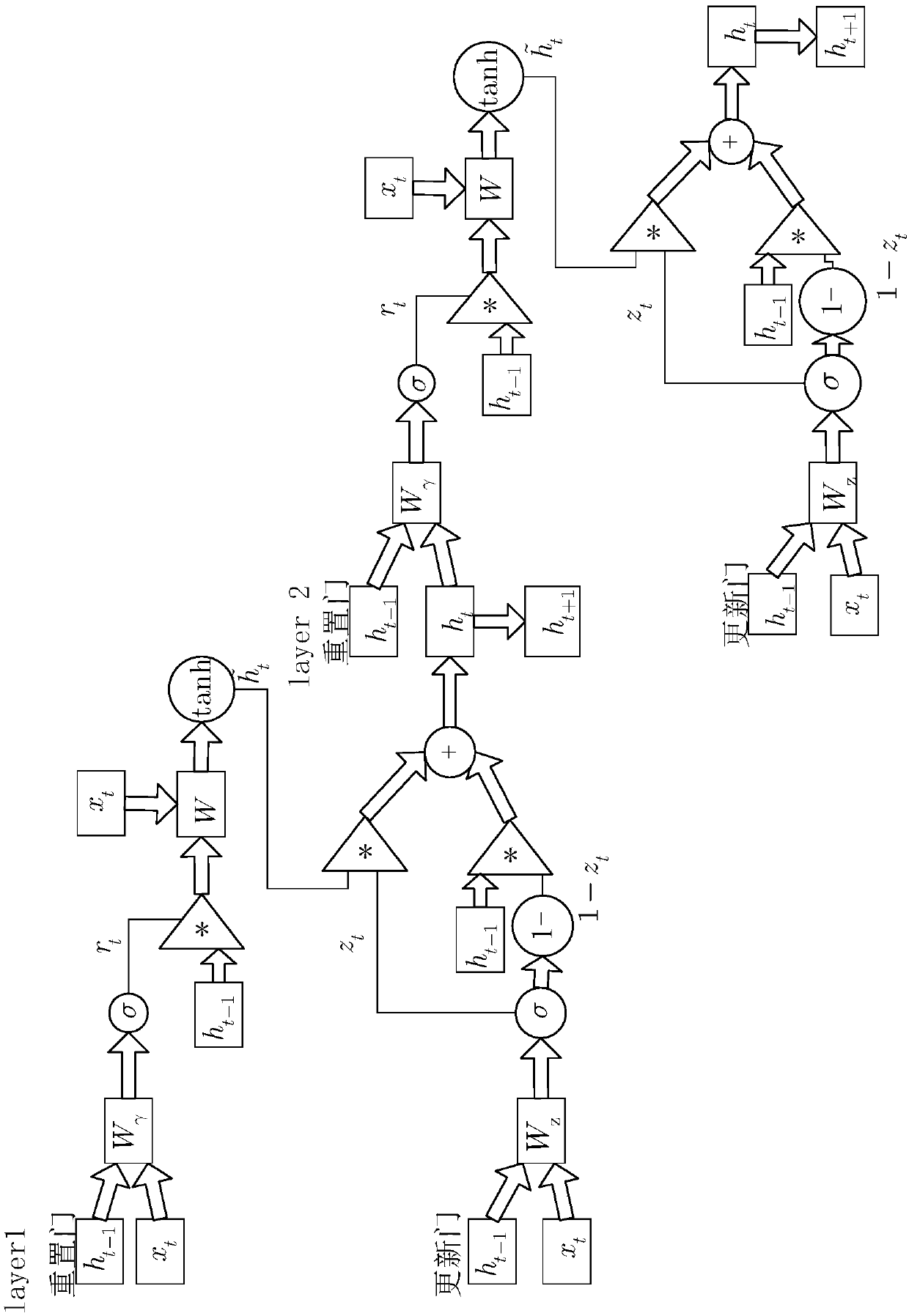

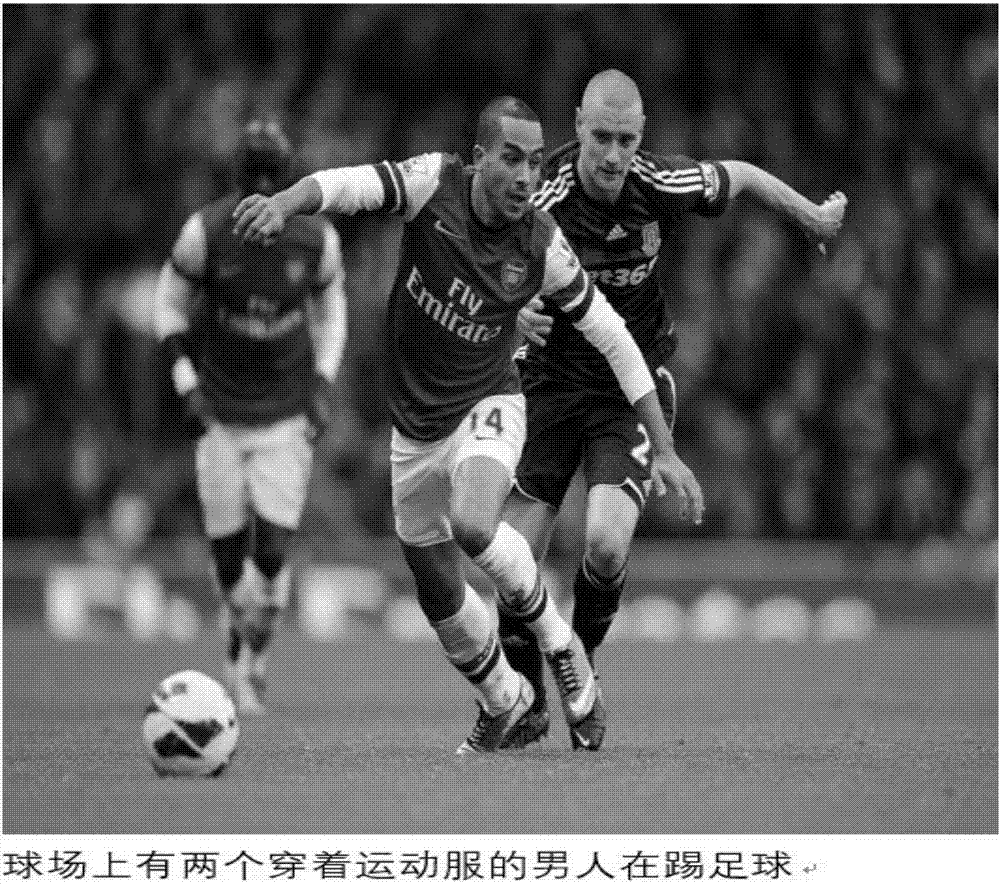

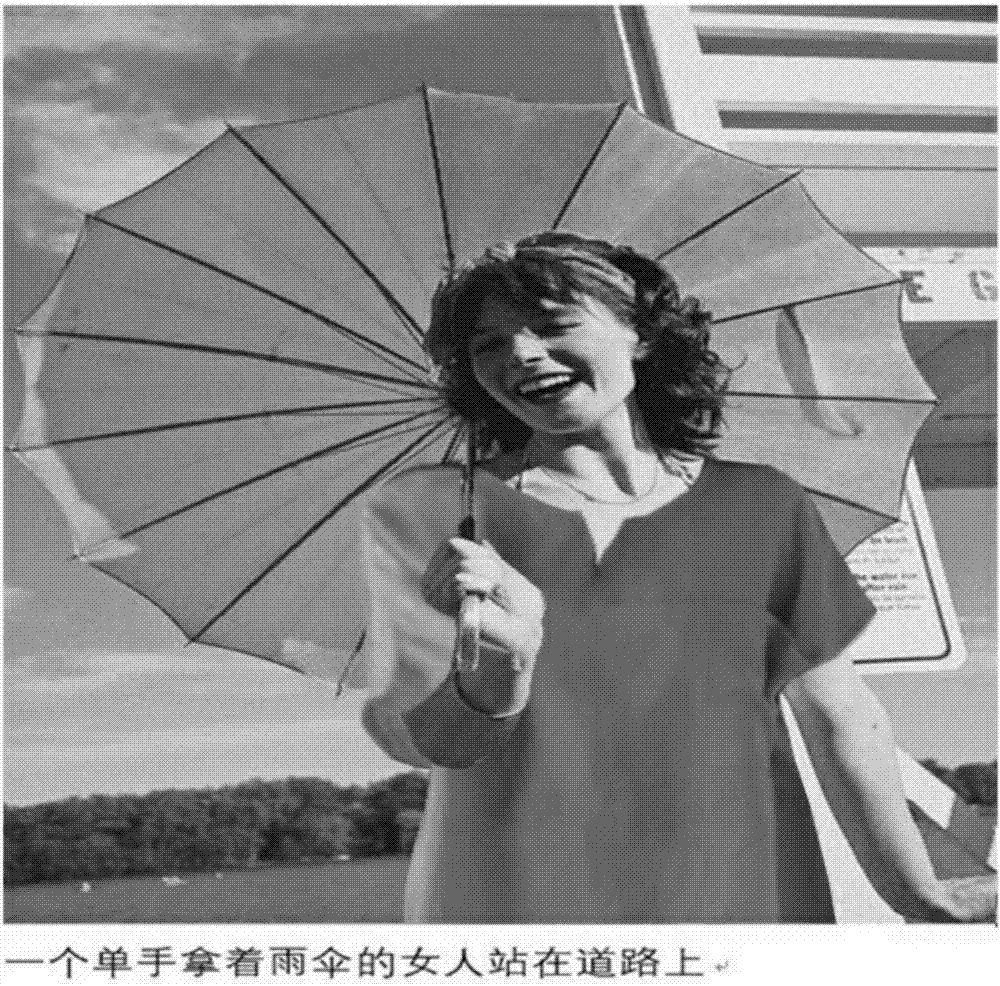

Chinese image semantic description method combining a multilayer GRU with a residual-connection Inception network

Inactive | CN108830287A | Improve accuracy; Improve relevance; Character and pattern recognition; Neural architectures; Data set; Network model

The invention discloses a Chinese image semantic description method that combines a multilayer GRU with a residual-connection Inception network, and belongs to the fields of computer vision and natural language processing. The method comprises the steps of: preprocessing the AI Challenger Chinese image description training set and evaluation set with the open-source TensorFlow framework to generate files in TFRecord format for training; pre-training an Inception_ResNet_v2 network on the ImageNet data set to obtain a pre-trained convolutional network model; loading the pre-trained parameters into the Inception_ResNet_v2 network and extracting image feature descriptors for the AI Challenger image set; building a single-hidden-layer neural network model that maps the image feature descriptors into the word embedding space; using the word embedding feature matrix and the image feature descriptors after this second feature mapping as the input of a two-layer GRU network; feeding an original image into the description model to generate a Chinese description sentence; and evaluating the trained model on the evaluation data set, using perplexity as the evaluation metric. The method solves the technical problem of describing images in Chinese and improves the coherence and readability of the generated sentences.

Owner:HARBIN UNIV OF SCI & TECH
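Perplexity, the evaluation metric named above, is the exponential of the mean negative log-probability that the model assigns to each reference token (equivalently, the geometric mean of the inverse probabilities). This sketch assumes the per-token probabilities have already been produced by the captioning model. The example values are hypothetical.

```python
import math

def perplexity(token_probs):
    """exp of the mean negative log-probability of the reference tokens; lower is better."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)

# Hypothetical probabilities the model assigned to each word of a reference caption.
ppl = perplexity([0.5, 0.125])
```

A model that spreads probability uniformly over 4 choices at every step has perplexity 4.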

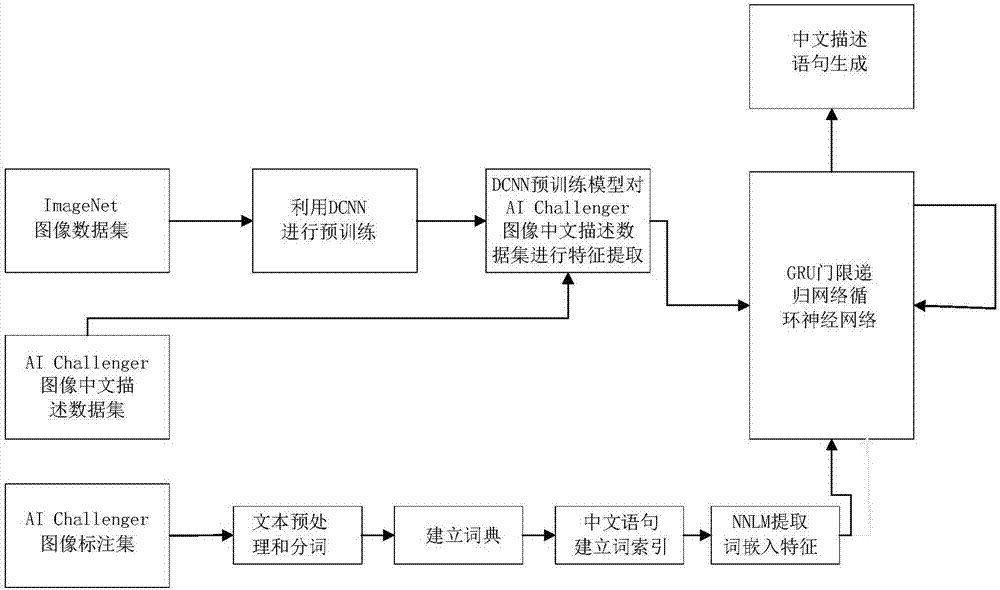

Deep learning model-based image Chinese description method

Active | CN108009154A | Improve readability; Simple model; Natural language data processing; Neural architectures; Data set; Feature mapping

The invention discloses a deep learning model-based method for describing images in Chinese, belonging to the fields of computer vision and natural language processing. The method comprises the steps of: preparing the ImageNet image data set and the AI Challenger Chinese image description data set; pre-training a DCNN on ImageNet to obtain a pre-trained DCNN model; performing image feature extraction and image feature mapping on the AI Challenger Chinese image description data set, and passing the image features to a gated recurrent unit (GRU) recurrent neural network; constructing a word encoding matrix for the AI Challenger image annotation set within that data set; extracting word embedding features with an NNLM to complete the text feature mapping; using the GRU recurrent neural network as the language generation model to complete the image description model; and generating a Chinese description sentence. The method fills a gap in Chinese image description, generates Chinese descriptions of images automatically, improves the accuracy of the description content, and lays a foundation for the development of Chinese NLP and computer vision.

Owner:HARBIN UNIV OF SCI & TECH
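The GRU language model advances one word at a time. This sketch shows a single GRU update, with a word embedding as input and a hidden state that could be initialized from mapped image features. It uses one common convention, h' = (1 - z) * h + z * h_candidate; some references swap the roles of z and 1 - z. The parameter names and dimensions are hypothetical.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def gru_step(x, h, p):
    """One GRU update: update gate z, reset gate r, candidate state, then interpolation."""
    z = [sigmoid(a + b + c) for a, b, c in zip(matvec(p["W_z"], x), matvec(p["U_z"], h), p["b_z"])]
    r = [sigmoid(a + b + c) for a, b, c in zip(matvec(p["W_r"], x), matvec(p["U_r"], h), p["b_r"])]
    reset_h = [ri * hi for ri, hi in zip(r, h)]
    cand = [math.tanh(a + b + c)
            for a, b, c in zip(matvec(p["W_h"], x), matvec(p["U_h"], reset_h), p["b_h"])]
    return [(1.0 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h, cand)]

def zero_params(input_dim, hidden_dim):
    """Hypothetical all-zero parameters, handy for checking the update rule."""
    p = {}
    for g in ("z", "r", "h"):
        p["W_" + g] = [[0.0] * input_dim for _ in range(hidden_dim)]
        p["U_" + g] = [[0.0] * hidden_dim for _ in range(hidden_dim)]
        p["b_" + g] = [0.0] * hidden_dim
    return p

# Initial state from a (hypothetical) mapped image feature; input is a word embedding.
h0 = [1.0, -2.0]
h1 = gru_step([0.3, 0.7, 0.1], h0, zero_params(3, 2))
```

With zero parameters both gates sit at 0.5 and the candidate is 0, so the state simply halves. This makes a quick sanity check of the wiring.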

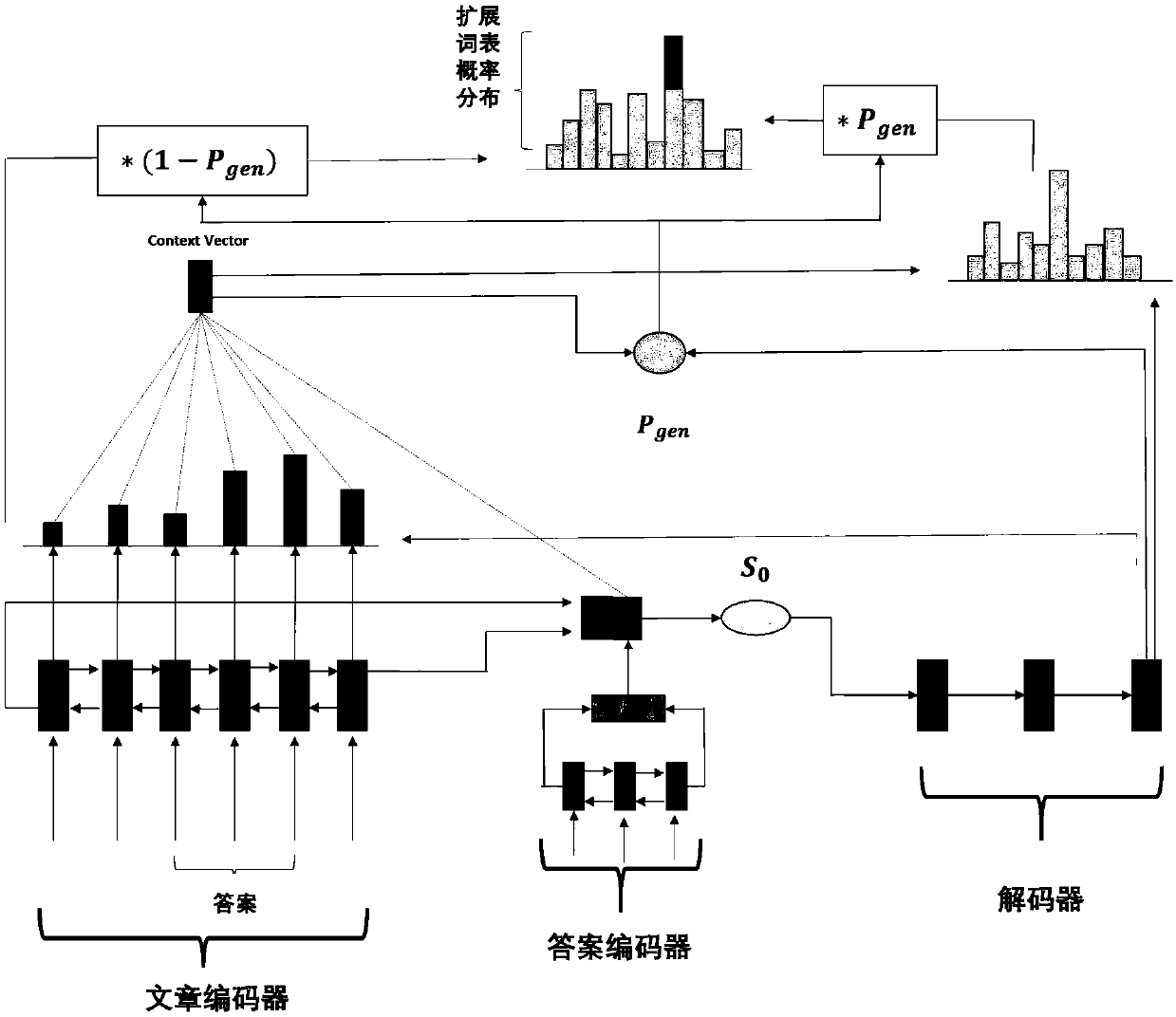

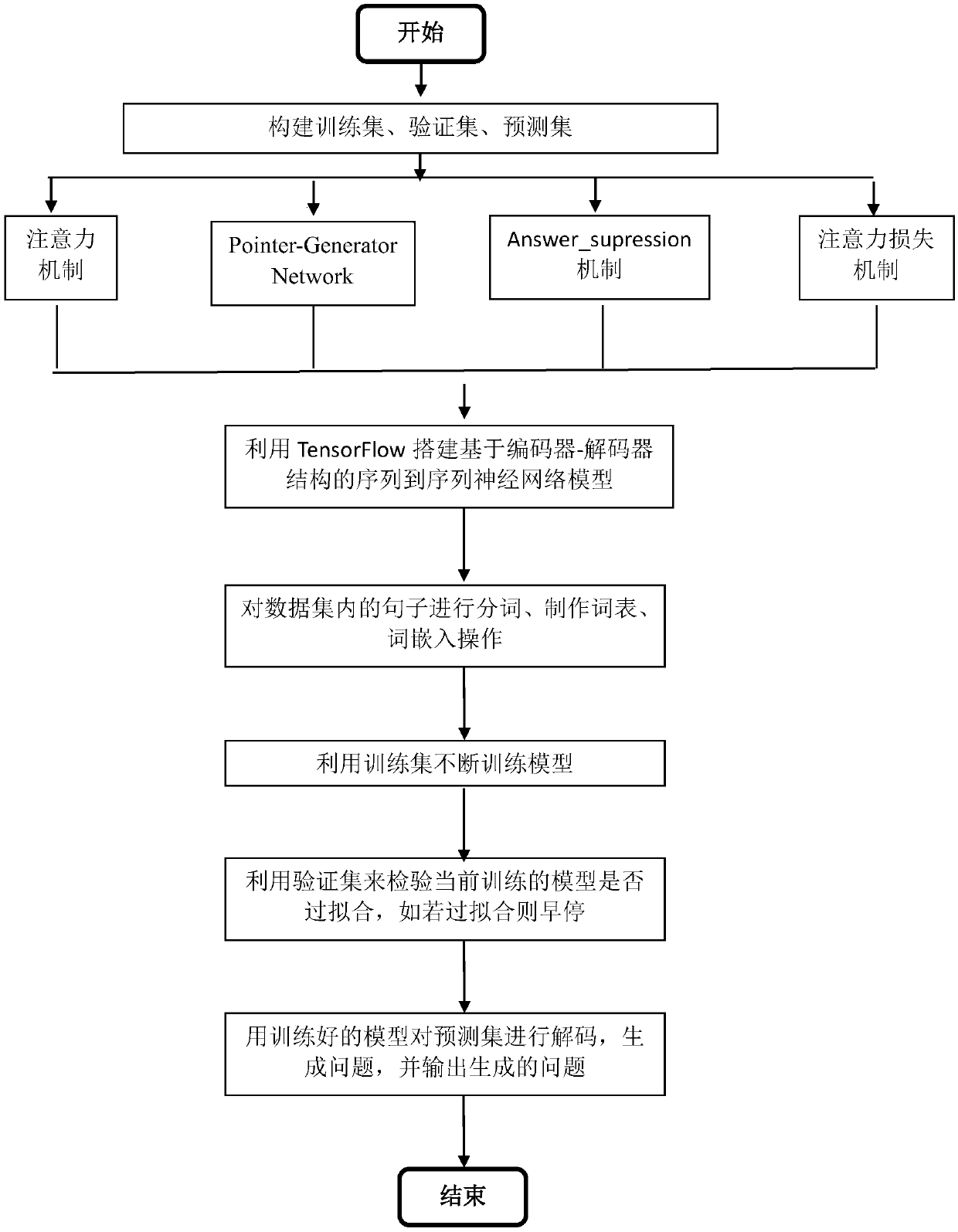

Automatic question generation method based on deep learning

Pending | CN109657041A | Reduce dependence; Digital data information retrieval; Neural architectures; Data set; Encoder decoder

The invention discloses an automatic question generation method based on deep learning. The method comprises the following steps: constructing a training set (articles, answers and questions), a validation set (articles, answers and questions) and a prediction set (articles and answers); building an encoder-decoder sequence-to-sequence neural network model with the deep learning framework TensorFlow; and performing word segmentation, vocabulary construction and word embedding on the sentences in the data set, which comprises the training, validation and prediction sets. The training set is used to train the model, and the validation set is used to detect whether the currently trained model is overfitting: if overfitting is detected, training stops; otherwise, training continues. The trained model then decodes the prediction set to generate questions. The method generalizes well and has low labor cost, the generated questions match the articles and answers better, and it can be widely applied to intelligent tutoring, intelligent question answering, knowledge quiz games and similar fields.

Owner:NANJING UNIV OF SCI & TECH
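The stop-on-overfitting rule can be sketched as patience-based early stopping. Training halts once validation loss has failed to improve for a set number of consecutive epochs. The callbacks, the patience value and the loss curve are hypothetical; the abstract does not say how overfitting is detected.

```python
def train_with_early_stopping(train_epoch, validate, max_epochs, patience):
    """Stop when validation loss has not improved for `patience` consecutive epochs."""
    best_loss, best_epoch, waited = float("inf"), -1, 0
    for epoch in range(max_epochs):
        train_epoch(epoch)                 # hypothetical callback: one pass over the training set
        loss = validate(epoch)             # hypothetical callback: loss on the validation set
        if loss < best_loss:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                break                      # treated as overfitting: stop training
    return best_epoch, best_loss

# Hypothetical validation curve that starts rising after epoch 2.
losses = [1.0, 0.8, 0.7, 0.75, 0.9, 0.6]
trained = []
best = train_with_early_stopping(trained.append, lambda e: losses[e], max_epochs=6, patience=2)
```

In practice the weights from `best_epoch` are kept for decoding the prediction set.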

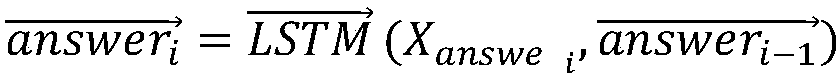

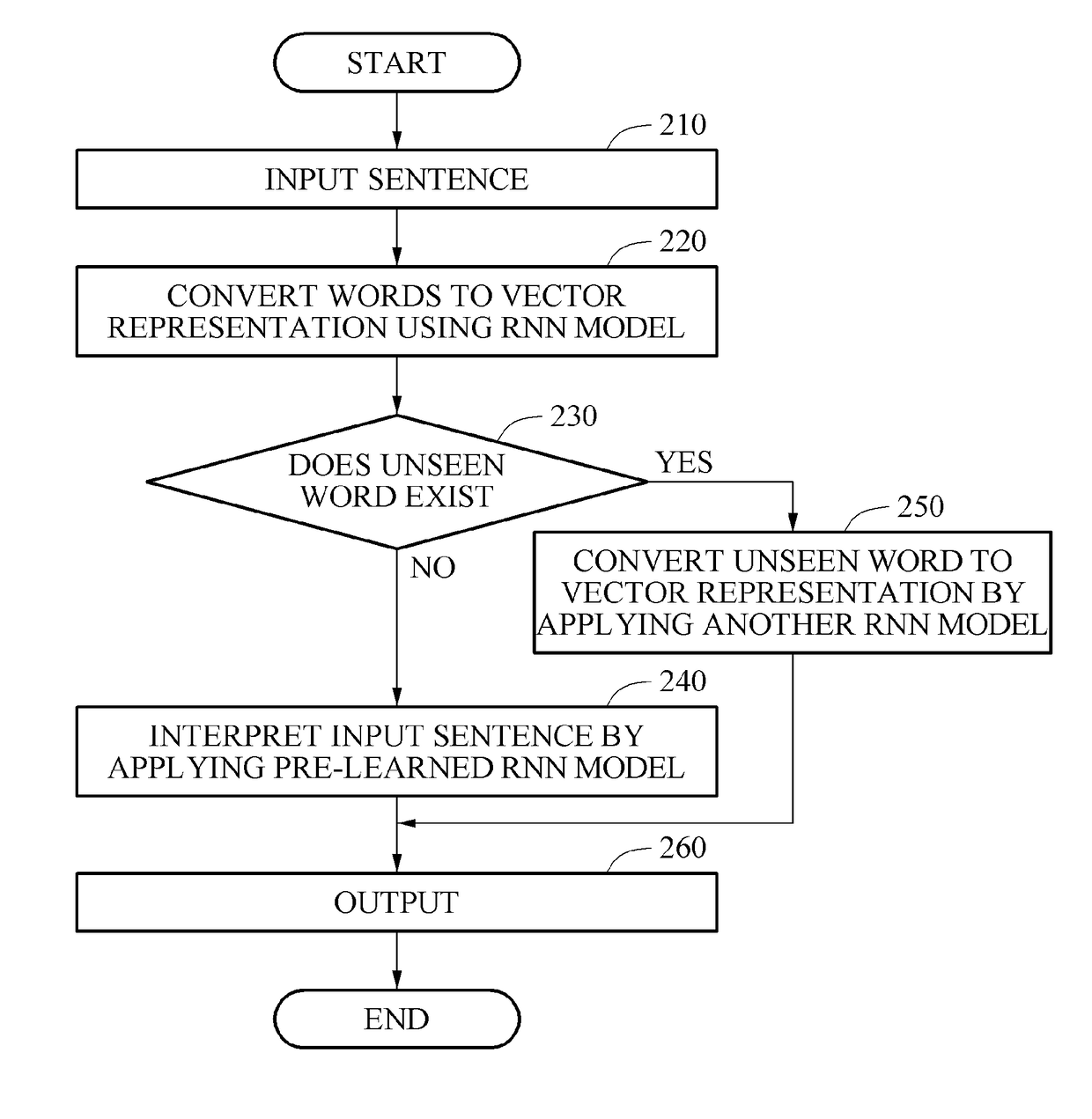

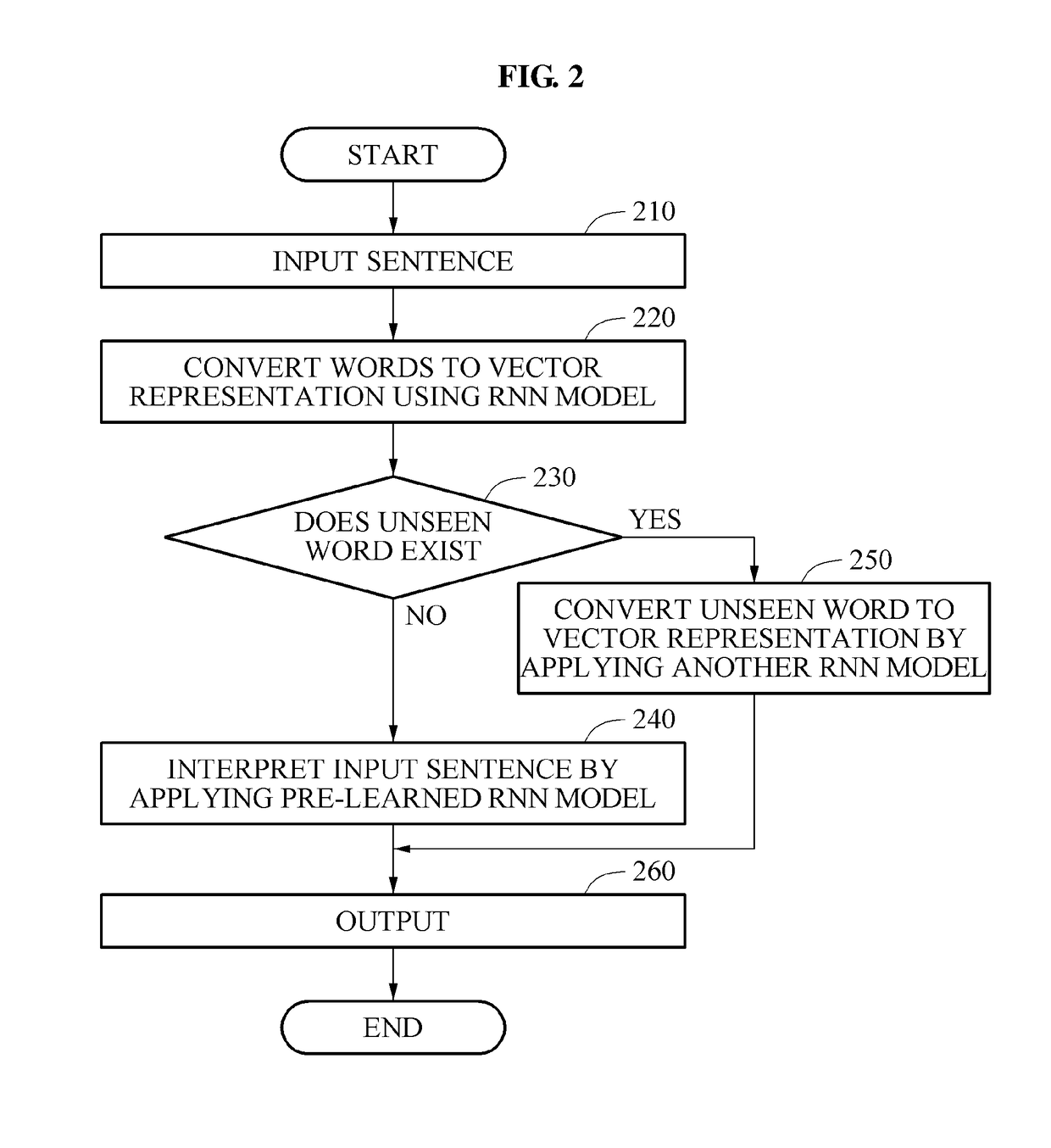

Word embedding method and apparatus, and voice recognizing method and apparatus

A word embedding method and a word embedding apparatus are provided. The word embedding method includes receiving an input sentence, detecting an unlabeled word in the input sentence, embedding the unlabeled word based on the labeled words included in the input sentence, and outputting a feature vector based on the embedding.

Owner:SAMSUNG ELECTRONICS CO LTD +1
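A minimal way to embed an unlabeled word "based on labeled words included in the input sentence" is to assign it the mean vector of the known words around it. The averaging rule, the toy vocabulary and the made-up word are all hypothetical; the patent may use a learned model instead.

```python
def embed_sentence(sentence, vocab_emb):
    """Known words keep their vectors; each unlabeled word gets the mean of the known words' vectors."""
    dim = len(next(iter(vocab_emb.values())))
    known = [vocab_emb[w] for w in sentence if w in vocab_emb]
    if known:
        context = [sum(v[i] for v in known) / len(known) for i in range(dim)]
    else:
        context = [0.0] * dim
    return [vocab_emb.get(w, context) for w in sentence]

vocab_emb = {"call": [1.0, 0.0], "mom": [0.0, 1.0]}             # hypothetical labeled words
vectors = embed_sentence(["call", "zorblat", "mom"], vocab_emb)  # "zorblat" is unlabeled
```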

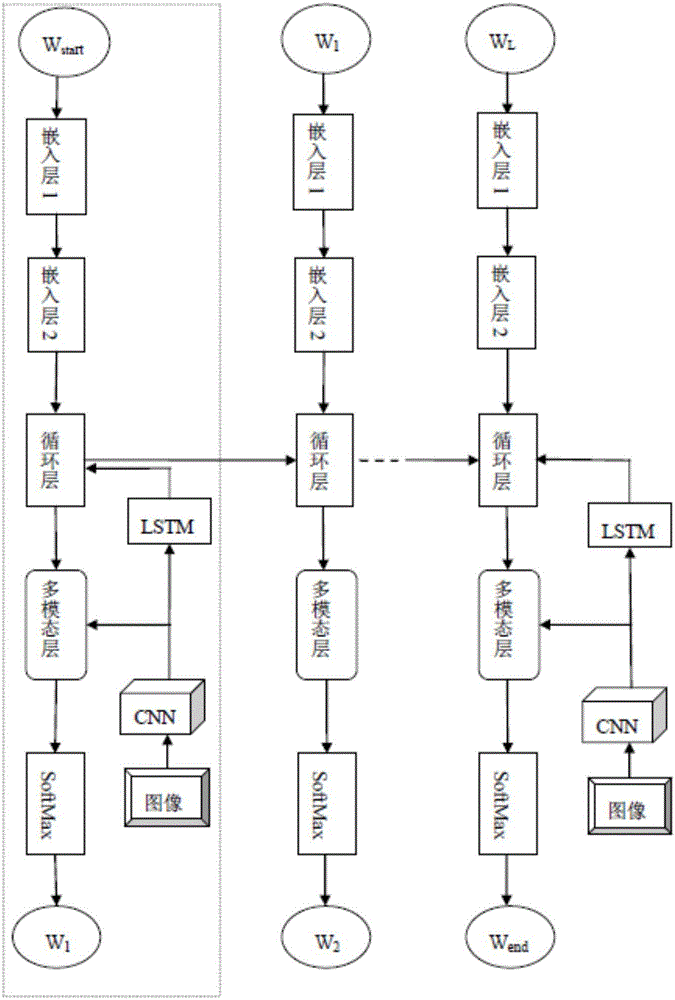

Image description generating method based on neural network and image attention focuses

Active | CN106777125A | Effective study; Still image data indexing; Special data processing applications; Nerve network; Image description

The invention provides an image description generation method based on a neural network and image attention focuses. The original one-layer word embedding structure is replaced by a two-layer word embedding structure, so that word representations can be learned effectively; the image feature representation is used directly as the input of an m-RNN model, so that the capacity of the recurrent layer is fully utilized and a low-dimensional recurrent layer can be used; and a deterministic soft attention mechanism captures attention to salient image regions, which serves as one input of the multimodal layer. The method effectively exploits the attention relationships between objects or scenes and describes the semantic features of the image in a targeted manner.

Owner:SYSU CMU SHUNDE INT JOINT RES INST +1
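In deterministic soft attention, the context vector is the expectation of the region features under the attention weights, as opposed to sampling a single region (hard attention). This sketch scores regions by a dot product with the recurrent state, a simplification of the learned scoring network such models usually use. The region features and state are hypothetical.

```python
import math

def soft_attention(regions, state):
    """Weight image-region features by softmax(region . state) and return the context vector."""
    scores = [sum(r * s for r, s in zip(region, state)) for region in regions]
    m = max(scores)
    exps = [math.exp(x - m) for x in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    context = [sum(a * region[i] for a, region in zip(alphas, regions))
               for i in range(len(regions[0]))]
    return context, alphas

regions = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]   # hypothetical features of 3 image regions
context, alphas = soft_attention(regions, state=[2.0, 0.0])
```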

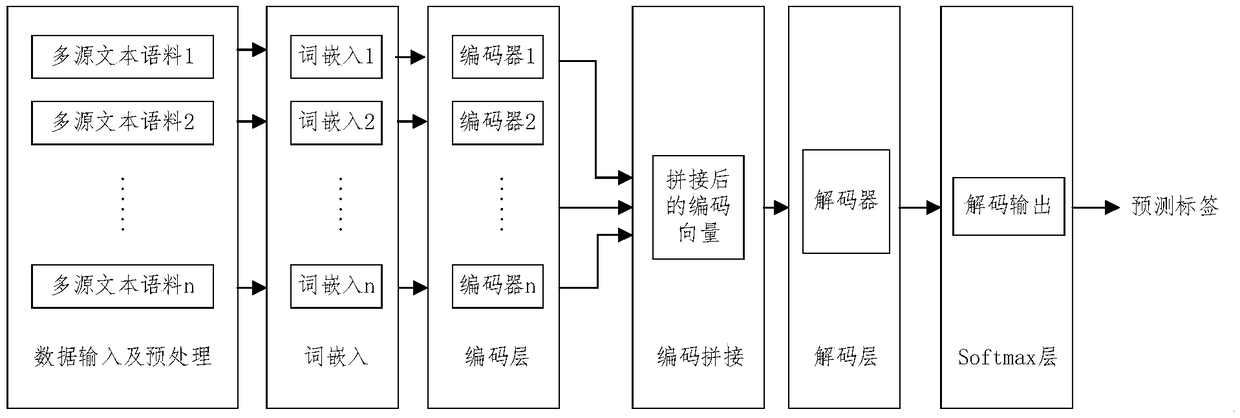

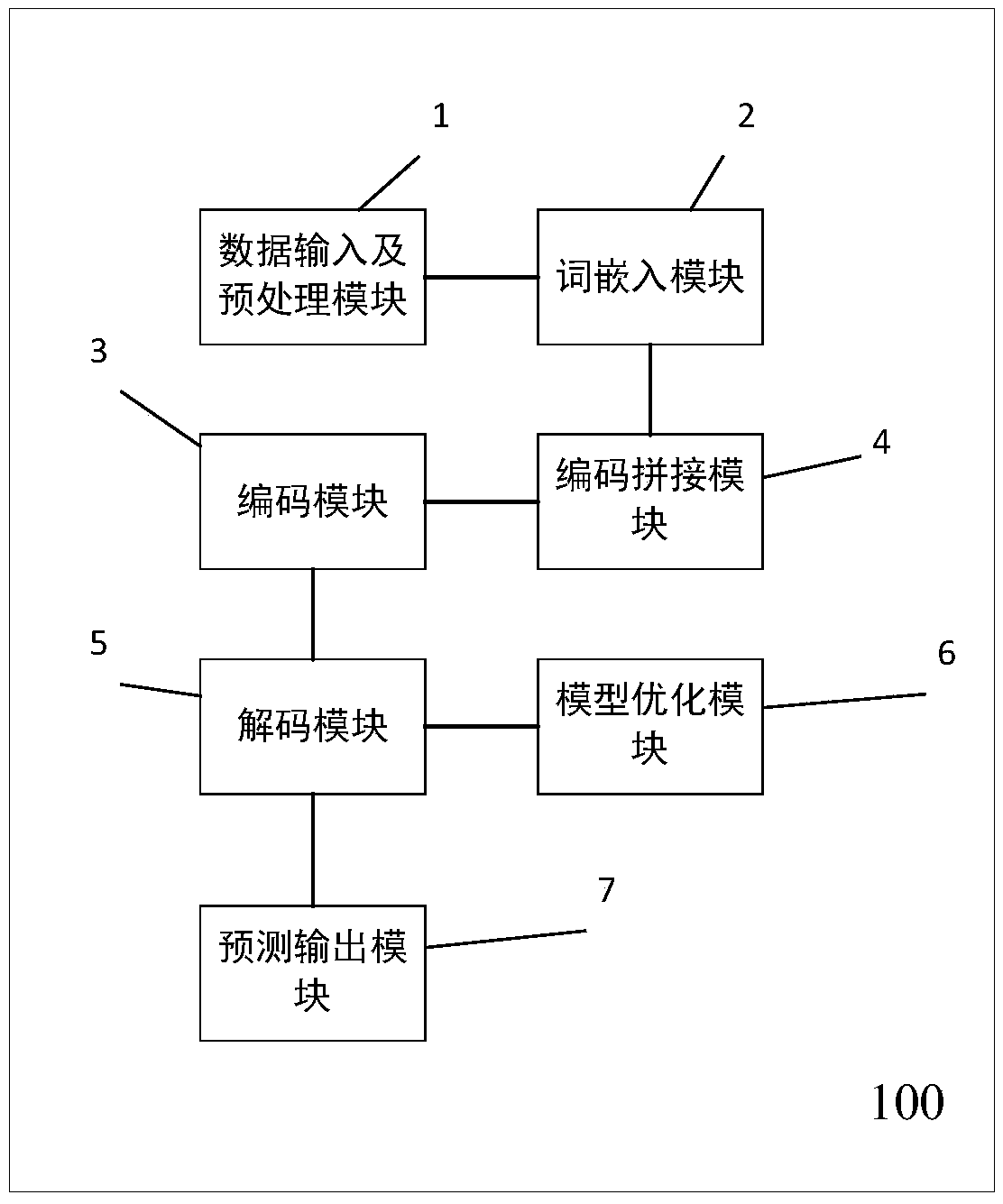

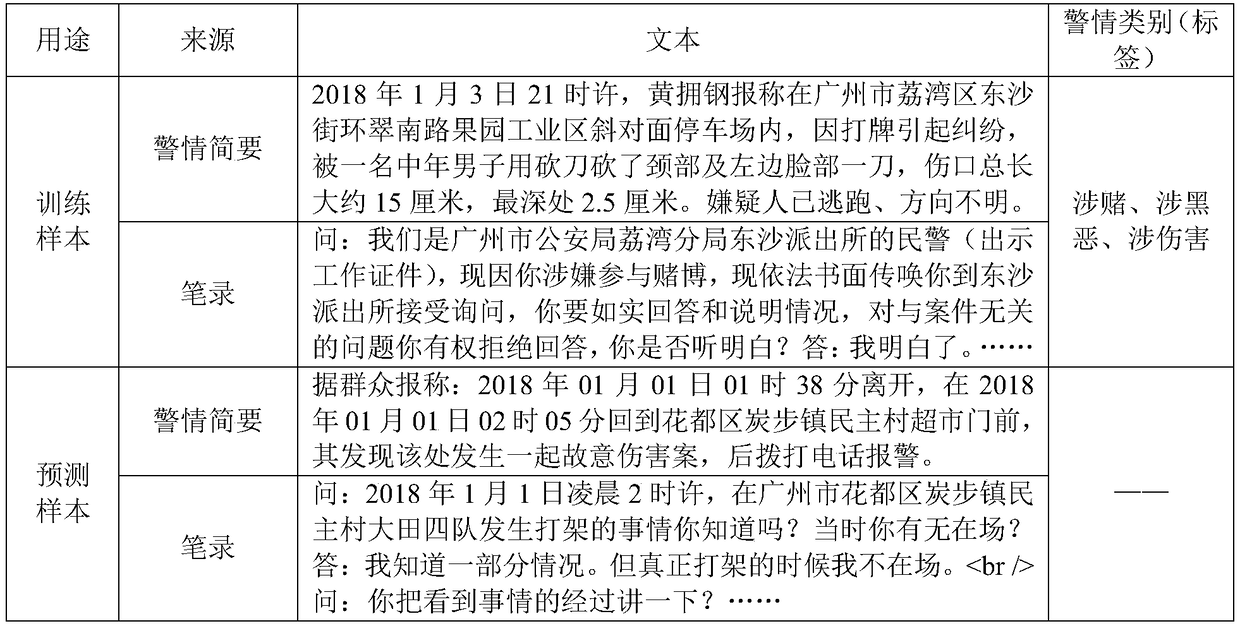

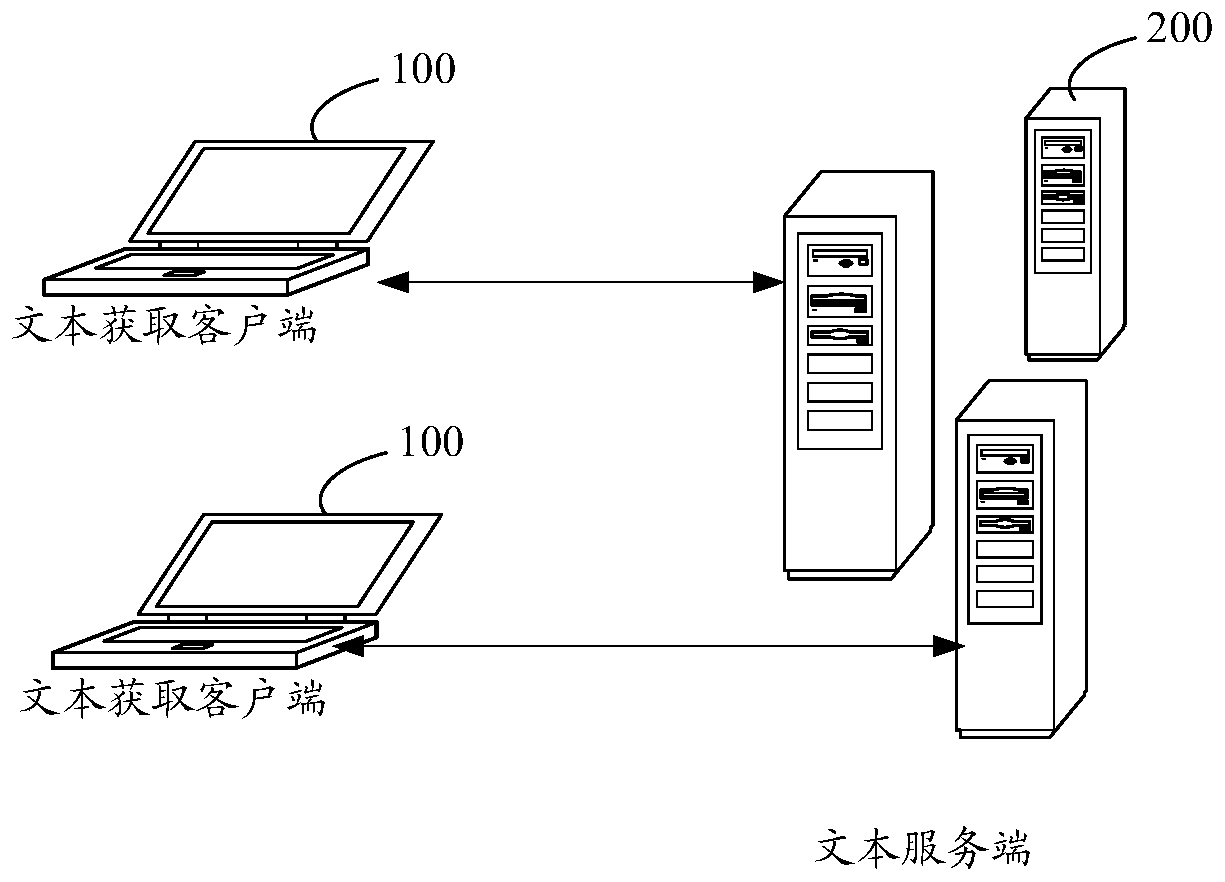

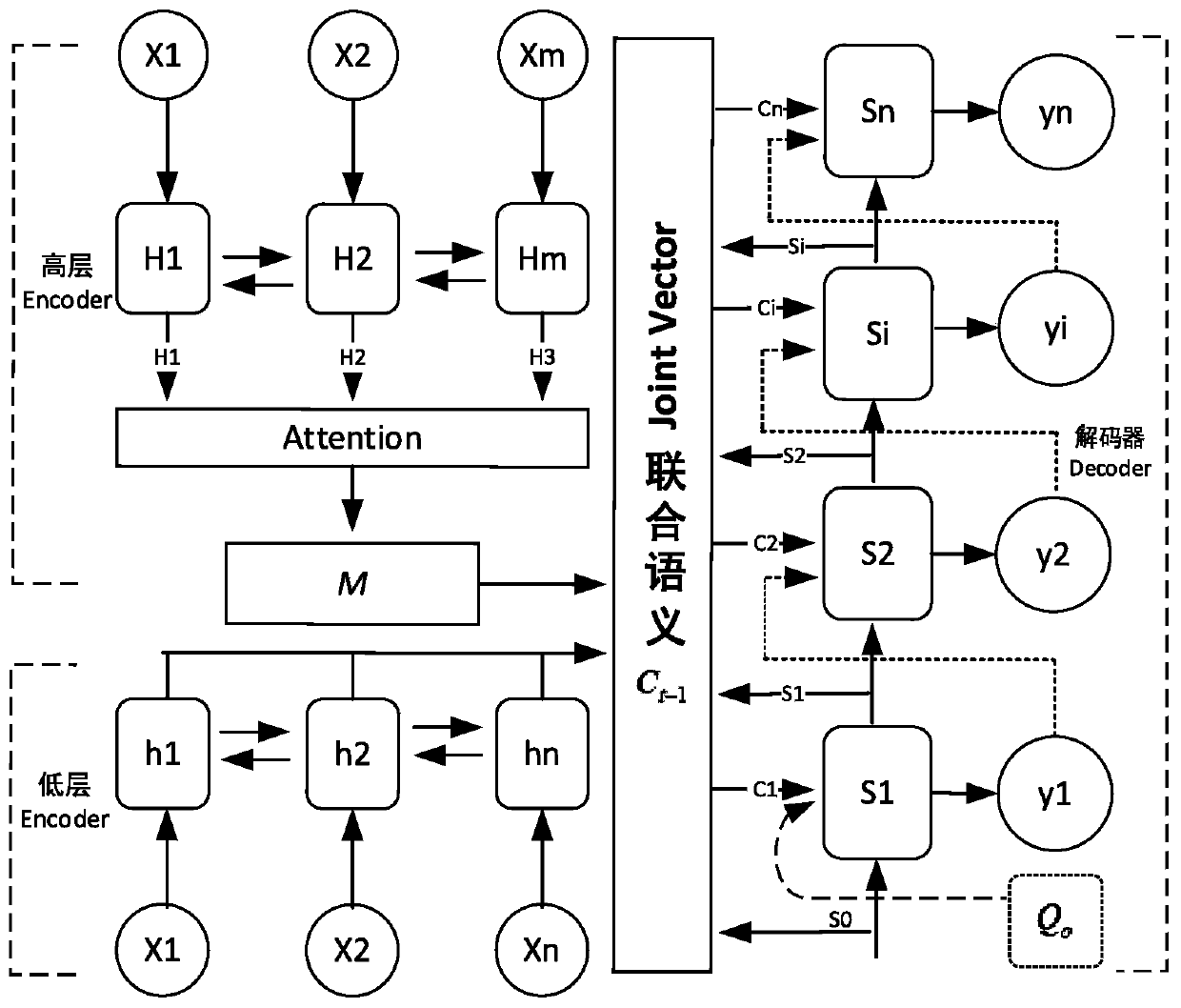

Multi-source and multi-label text classification method and system based on improved seq2seq model

Active | CN109299273A | Improve classification accuracy; Effective study; Natural language data processing; Neural architectures; Multi-label classification; Text categorization

The invention belongs to the technical field of text classification in natural language processing, and in particular relates to a multi-source, multi-label text classification method and system based on an improved seq2seq model. The method comprises the following steps: data input and preprocessing, word embedding, encoding, concatenation of the encodings, decoding, model optimization, and prediction output. The method has the following beneficial effects: it adopts a seq2seq deep learning framework, builds multiple encoders and combines them with an attention mechanism for the text classification task, making maximal use of multi-source corpus information and improving multi-label classification accuracy. In the error feedback of the decoding step, an intervention mechanism tailored to multi-label text is added to avoid the influence of label order, which better reflects the nature of the multi-label classification problem. The encoders use recurrent neural networks, which learn effectively over time steps, and the decoding layer uses a unidirectional recurrent neural network with an attention mechanism to highlight the learning focus.

Owner:广州语义科技有限公司
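When a seq2seq decoder emits labels one after another, a predicted sequence such as [B, A] should count as fully correct against the gold set {A, B}. This sketch shows one order-invariant comparison, set-based F1. It illustrates why label order should not matter; it is not the patent's intervention mechanism, whose details the abstract does not give.

```python
def set_f1(predicted_seq, gold_labels):
    """Order-invariant F1 between a generated label sequence and the gold label set."""
    pred, gold = set(predicted_seq), set(gold_labels)
    if not pred or not gold:
        return float(pred == gold)
    tp = len(pred & gold)
    if tp == 0:
        return 0.0
    precision, recall = tp / len(pred), tp / len(gold)
    return 2 * precision * recall / (precision + recall)

# The decoder emitted the labels in a different order from the annotation.
score = set_f1(["sports", "finance"], ["finance", "sports"])
```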

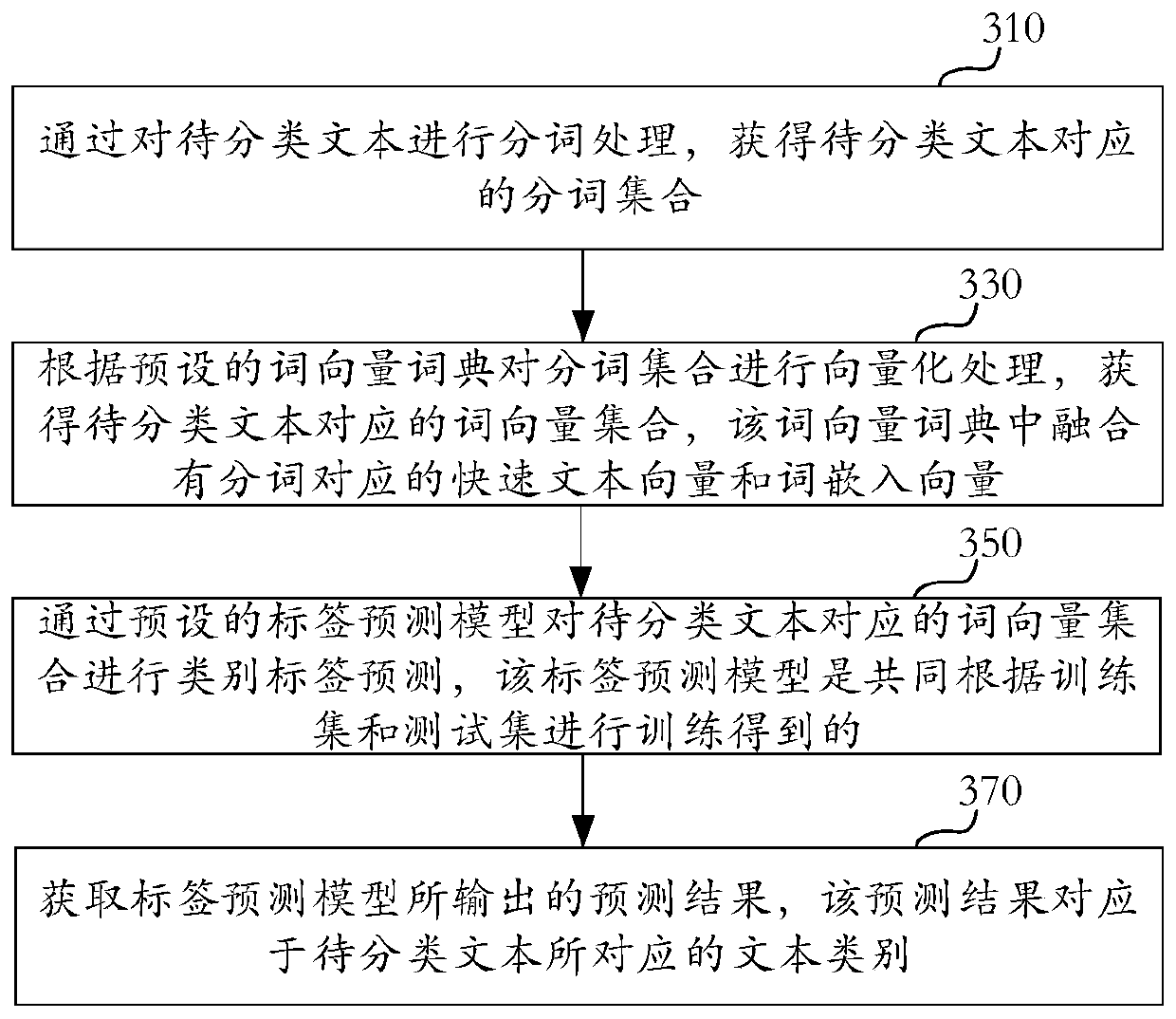

Text classification method and device, electronic equipment and computer readable storage medium

Pending | CN110717039A | The process of word segmentation and vectorization is accurate; Improve accuracy; Natural language data processing; Special data processing applications; Algorithm; Text categorization

The invention discloses a text classification method and device, and relates to the technical field of artificial intelligence. The method comprises the steps of: performing word segmentation on a text to be classified to obtain its word segmentation set; vectorizing the word segmentation set according to a preset word vector dictionary to obtain the word vector set of the text, the word vector dictionary fusing fastText vectors and word embedding vectors for the segmented words; predicting a category label from the word vector set with a preset label prediction model, which is obtained by training on a training set and a test set, the test set being used to correct erroneous data in the training set; and obtaining the prediction result output by the label prediction model, the prediction result being the text category of the text to be classified. The method is stated to greatly improve text classification accuracy.

Owner:PING AN TECH (SHENZHEN) CO LTD
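fastText represents a word partly through character n-grams of the word wrapped in boundary symbols `<` and `>`. The 3 to 6 range below mirrors fastText's usual unsupervised defaults. This sketch extracts such n-grams and then fuses two views of a word by concatenating a word-level embedding with the mean of its n-gram vectors. Concatenation as the fusion rule and all vectors are hypothetical assumptions, not the patent's stated procedure.

```python
def char_ngrams(word, n_min=3, n_max=6):
    """fastText-style subword units: n-grams of the word wrapped in '<' and '>'."""
    wrapped = "<" + word + ">"
    return [wrapped[i:i + n] for n in range(n_min, n_max + 1)
            for i in range(len(wrapped) - n + 1)]

def fused_vector(word, word_emb, ngram_emb):
    """Concatenate a word-level embedding with the mean of its known subword n-gram vectors."""
    grams = [g for g in char_ngrams(word) if g in ngram_emb]
    dim = len(next(iter(ngram_emb.values())))
    if grams:
        sub = [sum(ngram_emb[g][i] for g in grams) / len(grams) for i in range(dim)]
    else:
        sub = [0.0] * dim
    return word_emb[word] + sub

word_emb = {"cat": [1.0, 0.0]}                       # hypothetical word-level vector
ngram_emb = {"<ca": [0.2, 0.4], "at>": [0.4, 0.0]}   # hypothetical subword vectors
fused = fused_vector("cat", word_emb, ngram_emb)
```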

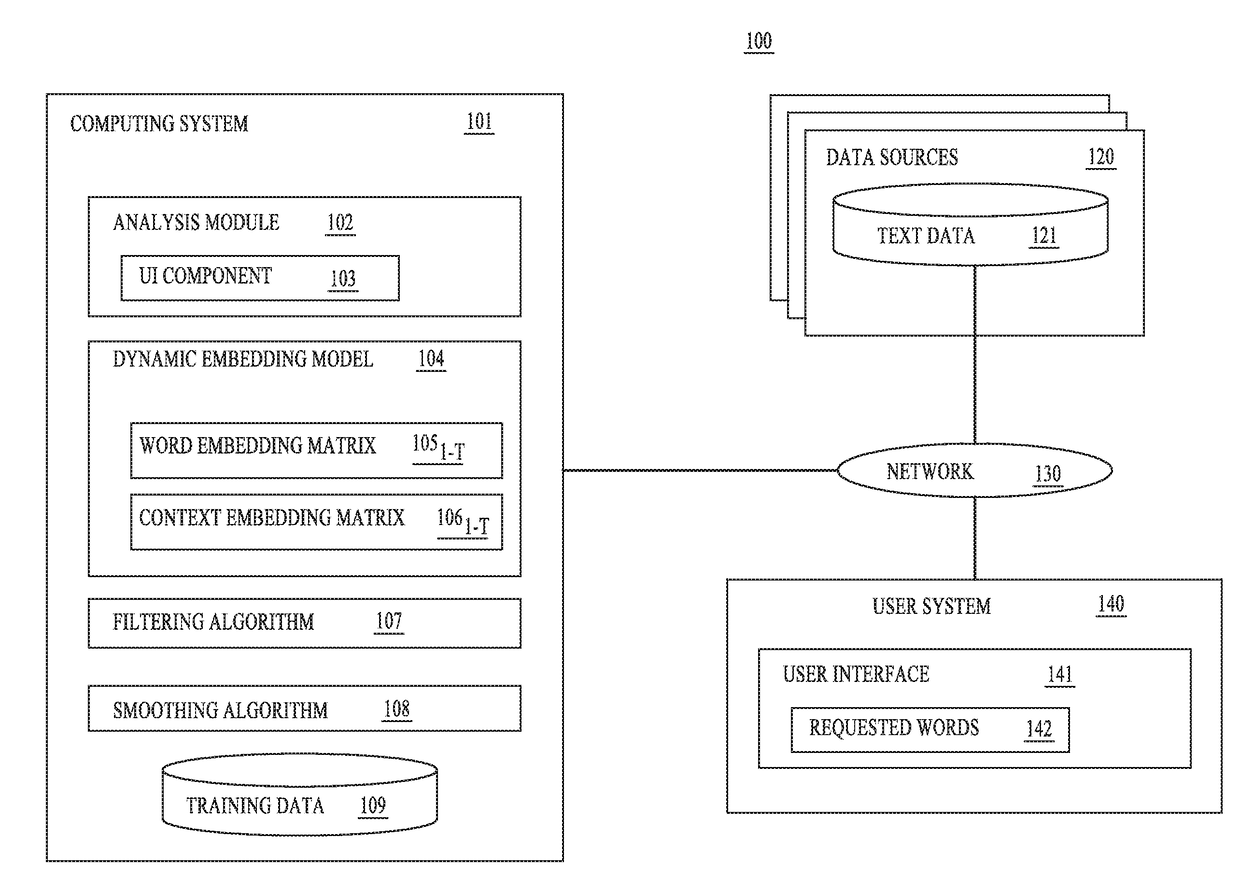

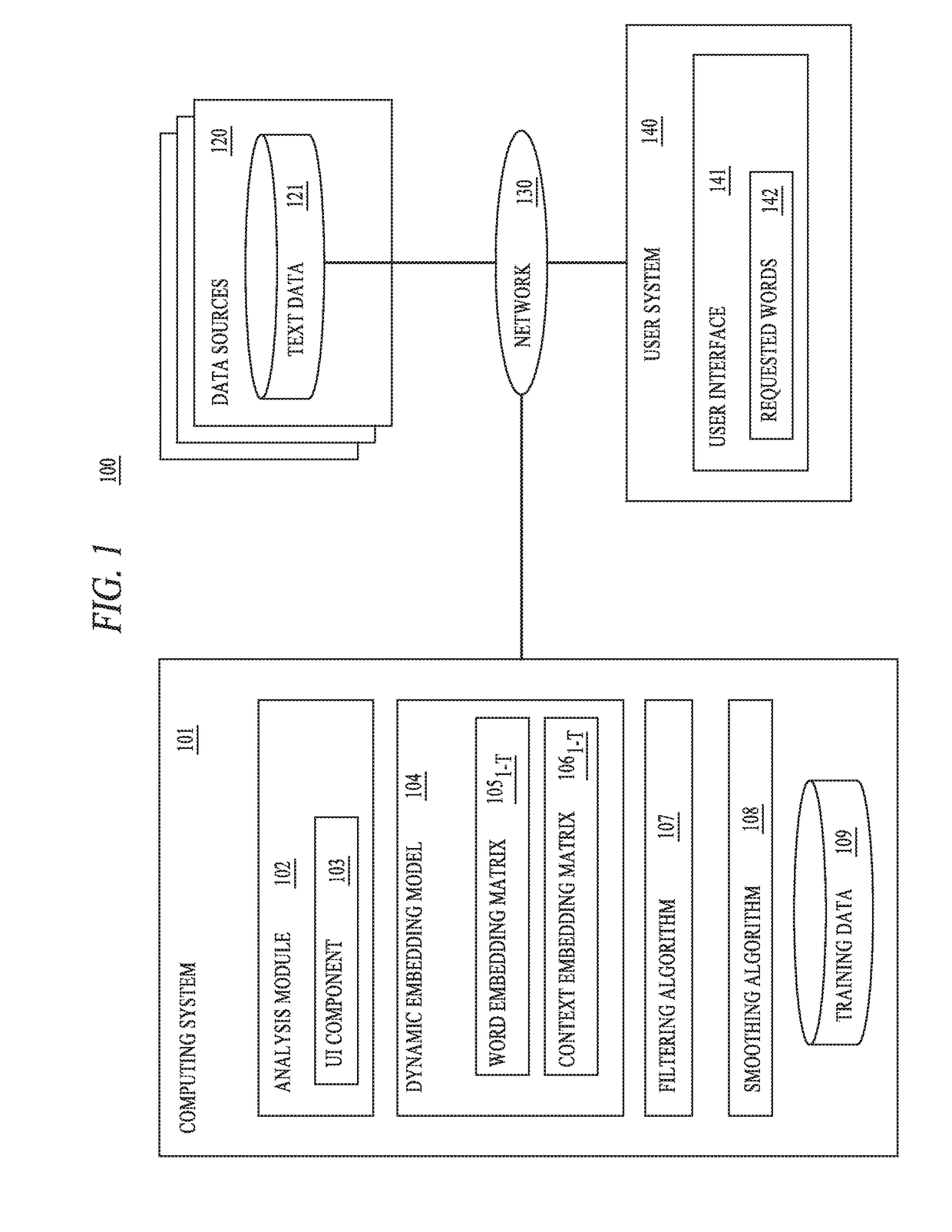

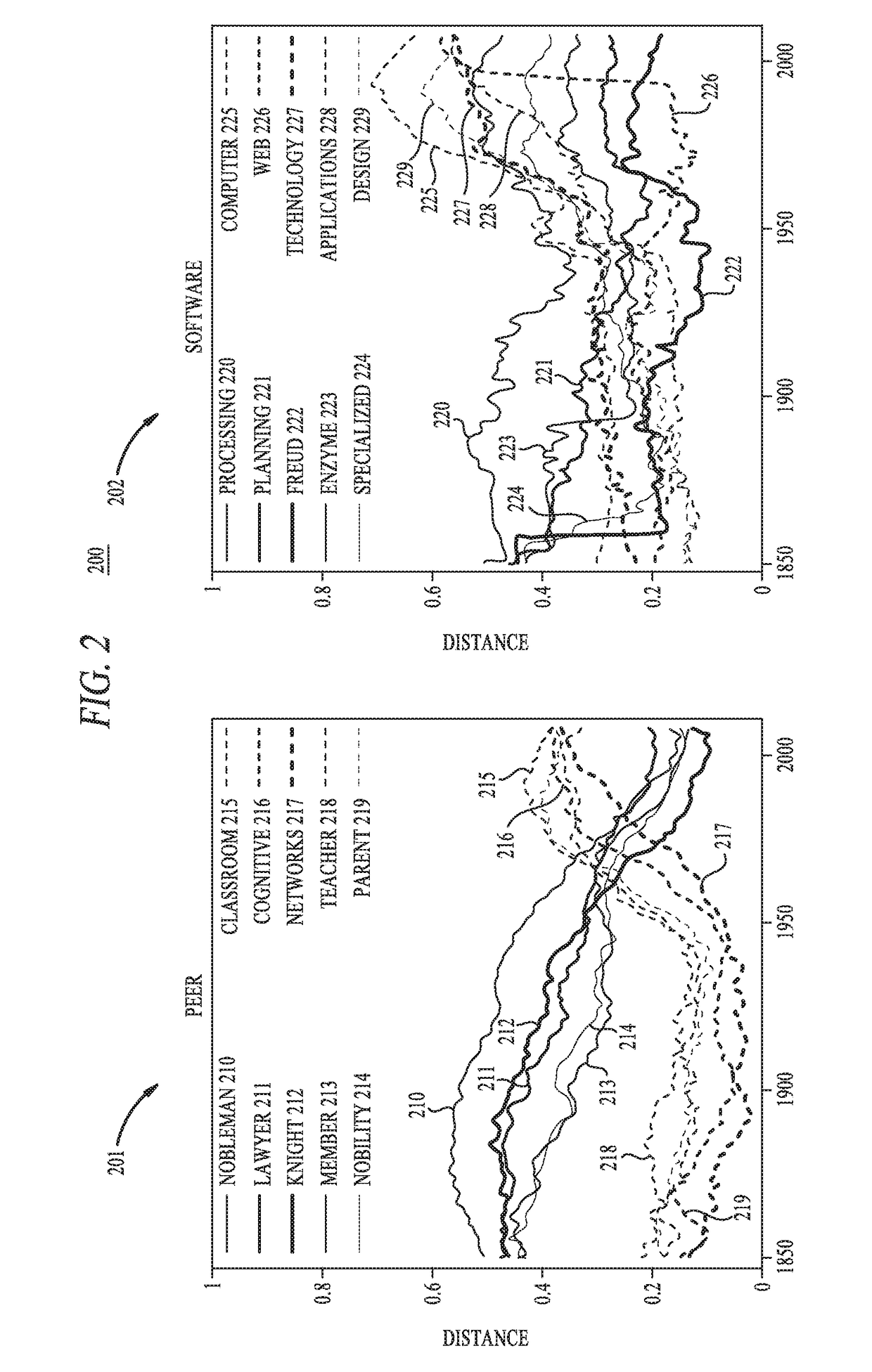

Dynamic word embeddings

Systems, methods, and articles of manufacture to perform an operation comprising deriving, based on a corpus of electronic text, a machine learning data model that associates words with corresponding usage contexts over a window of time, according to a diffusion process, wherein the machine learning data model comprises a plurality of skip-gram models, wherein each skip-gram model comprises a word embedding vector and a context embedding vector for a respective time step associated with the respective skip-gram model, generating a smoothed model by applying a variational inference operation over the machine learning data model, and identifying, based on the smoothed model and the corpus of electronic text, a change in a semantic use of a word over at least a portion of the window of time.

Owner:DISNEY ENTERPRISES INC
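Two ideas from the abstract can be made concrete. The first is a diffusion (Gaussian random-walk) prior that keeps a word's embedding close to its previous time step. The second is a change score read off the smoothed trajectory. This sketch computes the random-walk log-prior up to an additive constant and ranks words by cosine drift between the first and last time steps. The variational smoothing itself is not shown, and the trajectories are hypothetical.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def random_walk_log_prior(trajectory, sigma):
    """Gaussian random-walk (diffusion) log-prior, up to a constant: large jumps are penalized."""
    return -sum(sum((a - b) ** 2 for a, b in zip(cur, prev))
                for prev, cur in zip(trajectory, trajectory[1:])) / (2 * sigma ** 2)

def semantic_drift(trajectory):
    """Cosine distance between a word's first and last time-step embeddings."""
    return 1.0 - cosine(trajectory[0], trajectory[-1])

def most_changed(trajectories, k):
    """Rank words by drift over the whole window of time."""
    return sorted(trajectories, key=lambda w: semantic_drift(trajectories[w]), reverse=True)[:k]

# Hypothetical smoothed embeddings over three time steps.
trajectories = {
    "mouse": [[1.0, 0.0], [0.7, 0.7], [0.0, 1.0]],
    "apple": [[1.0, 0.0], [1.0, 0.1], [1.0, 0.05]],
}
```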

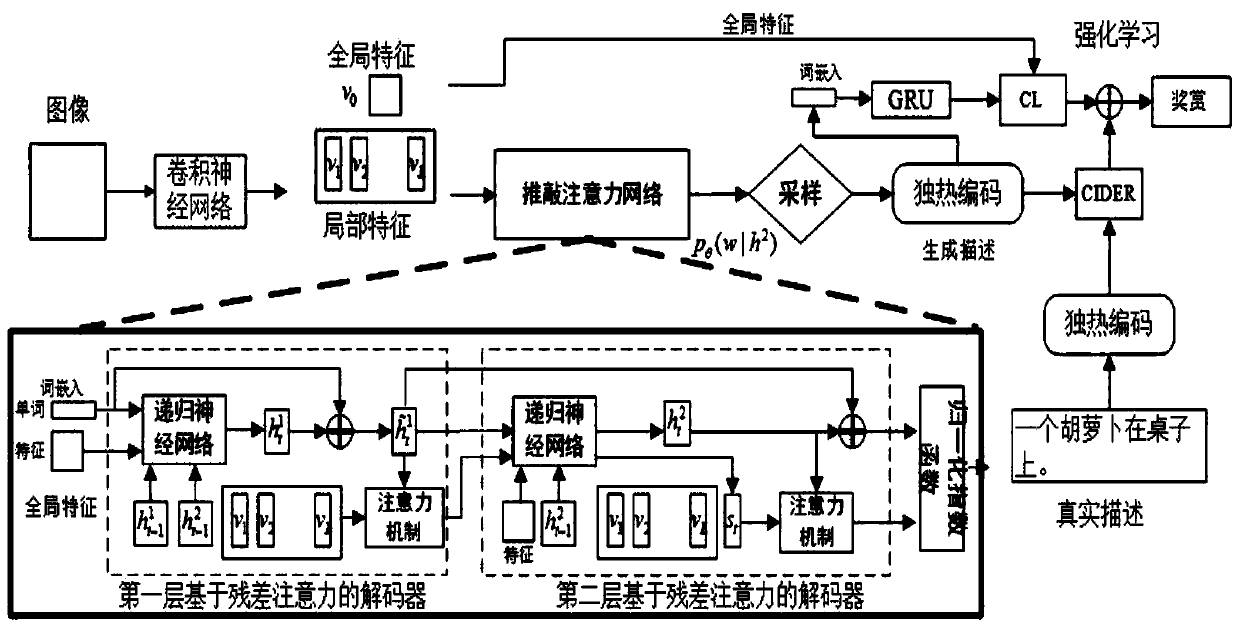

An image description generation system and method based on a weighing attention mechanism

Active | CN109726696A | Exact search; Recognition Concern; Character and pattern recognition; CIDEr; Data set

The invention relates to the field of image understanding and discloses an image description generation system and method based on a weighing attention mechanism. It addresses three problems of existing image description schemes: they lack a polishing process, their training and testing processes are inconsistent, and the generated descriptions are not sufficiently distinctive. The method comprises the following steps: a, processing the data set: extracting global and local features of each image, constructing the data set, marking the words in the data set, and generating the corresponding word embedding vectors; b, training the image description generation model: generating a rough image description with a first-layer decoder based on a residual attention mechanism, and polishing the generated description with a second-layer decoder based on the residual attention mechanism; and c, further training the model with reinforcement learning: simulating the model's test process during training, using the CIDEr score of the generated description to guide training, and adjusting the model through reinforcement learning.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA
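A common way to guide caption training with a CIDEr reward is REINFORCE with a baseline, for example the score of the greedily decoded caption, as in self-critical sequence training. The abstract does not name the exact algorithm, so this is an assumed stand-in. The log-probabilities and reward values are hypothetical, and CIDEr itself is not implemented.

```python
def self_critical_loss(sample_logprobs, sample_reward, baseline_reward):
    """REINFORCE loss with the greedy caption's reward as baseline.

    Minimizing it raises the log-probability of sampled captions that score higher
    (e.g. in CIDEr) than the greedy caption, and lowers it for those that score lower.
    """
    advantage = sample_reward - baseline_reward
    return -advantage * sum(sample_logprobs)

# Hypothetical per-token log-probabilities of a sampled caption and two CIDEr scores.
loss = self_critical_loss([-0.5, -1.0], sample_reward=0.9, baseline_reward=0.6)
```

Sampling during training and scoring the result the same way as at test time is what narrows the train/test inconsistency mentioned in the abstract.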

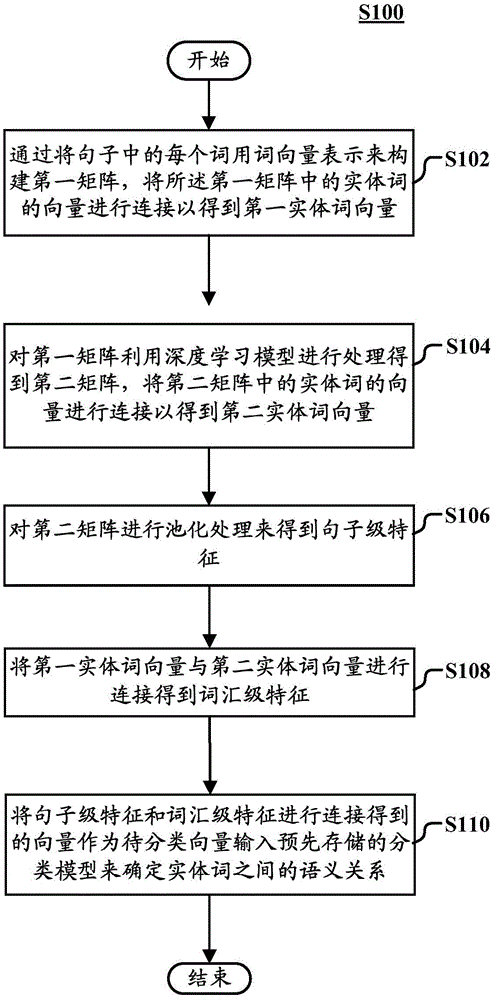

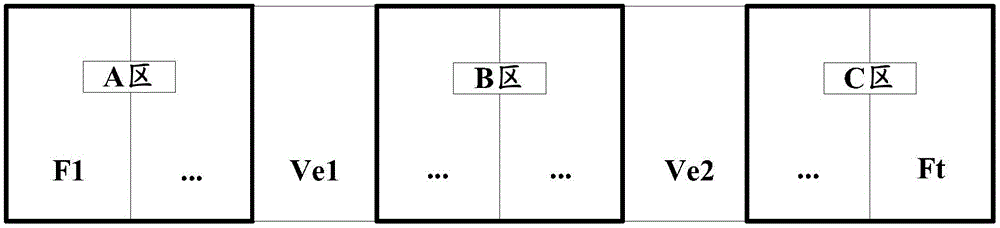

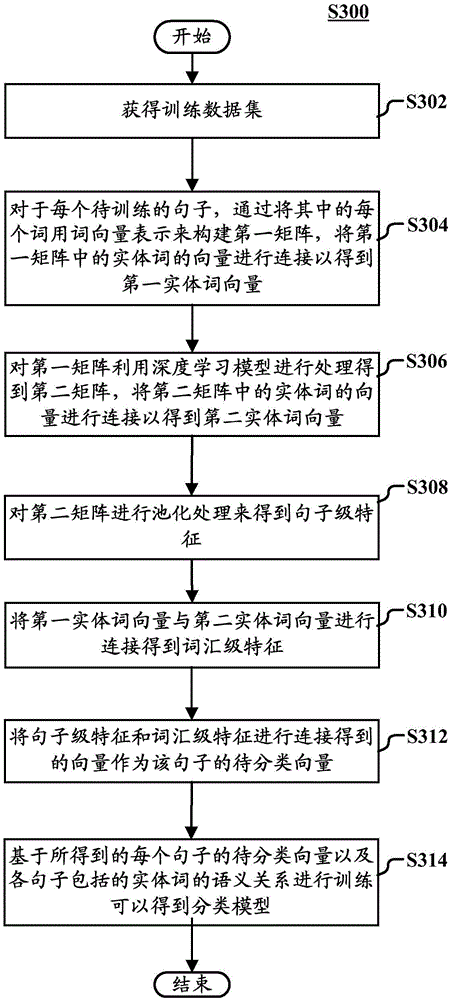

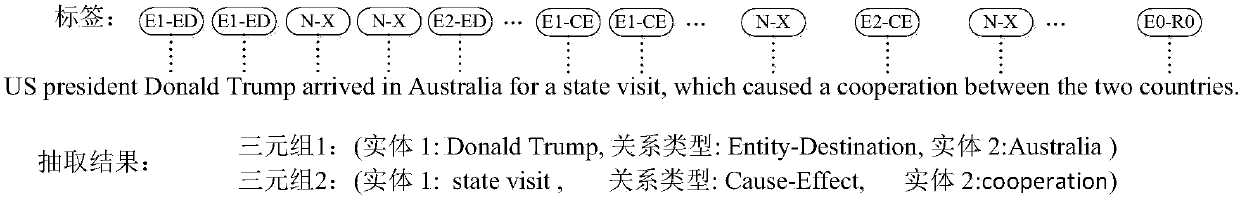

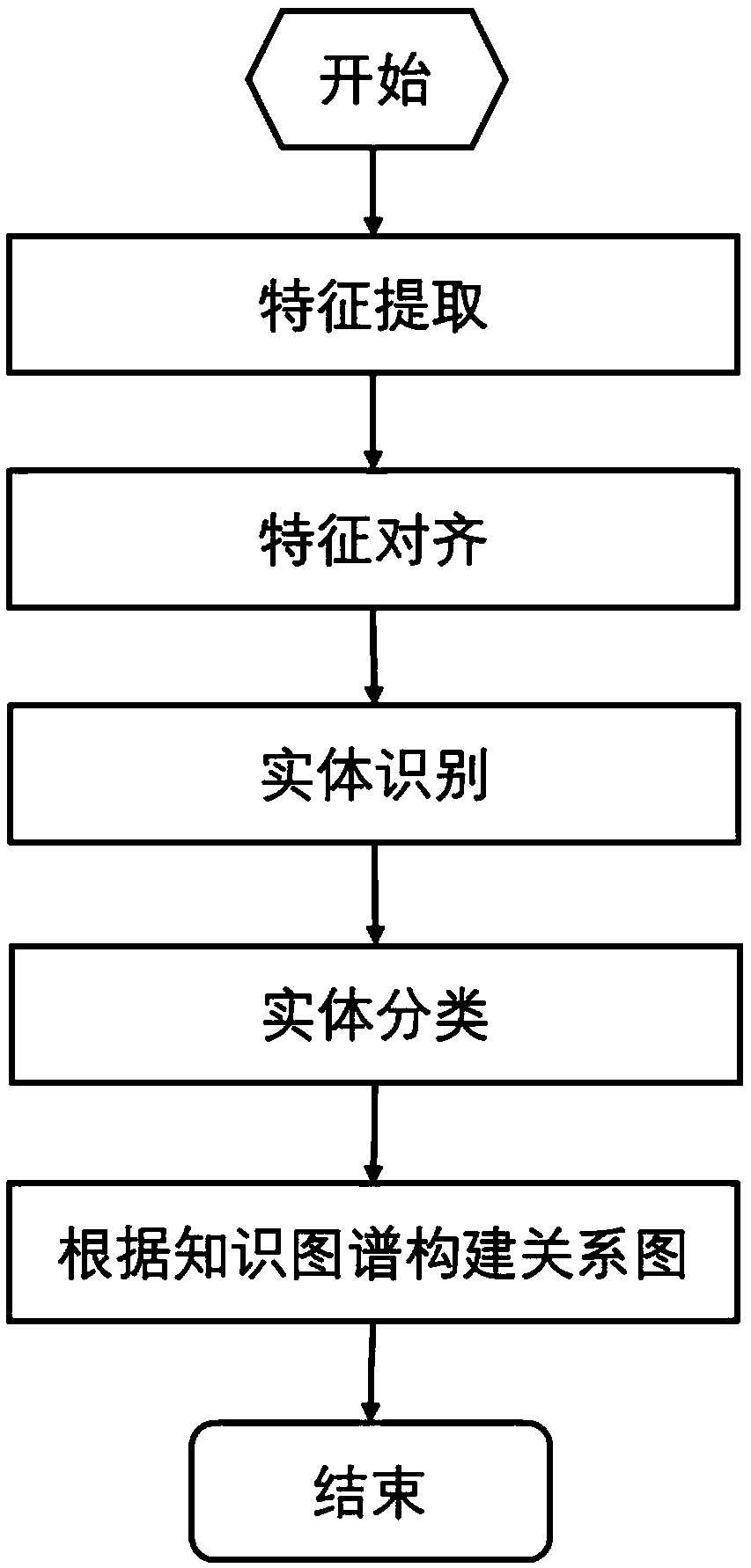

Method and device for classifying semantic relationships among entity words

Active | CN106407211A | Semantic analysis; Special data processing applications; Categorical models; Word embedding

The invention relates to a method and a device for classifying semantic relationships among entity words. The method comprises the following steps of: representing each word in a sentence by word embedding to construct a first matrix, and concatenating the word embedding of the entity word in the first matrix to obtain first entity word embedding; processing the first matrix by a deep learning model to obtain a second matrix, and concatenating the word embedding of the entity word in the second matrix to obtain second entity word embedding; carrying out pooling processing on the second matrix to obtain sentence level characteristics; concatenating the first entity word embedding with the second entity word embedding to obtain lexical level characteristics; and taking embedding obtained by concatenating the sentence level characteristics with the lexical level characteristics as embedding to be classified, inputting the embedding to be classified into a pre-stored classification model to determine the semantic relationships among the entity words. According to the invention, a more effective method and device for classifying the semantic relationships among the entity words is provided.

Owner:FUJITSU LTD
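Assembling the vector to classify follows directly from the abstract. The entity words' rows are concatenated from the embedding matrix and from the deep-model output, the output matrix is pooled into sentence-level features, and everything is concatenated. Max-pooling is an assumption, since the abstract only says "pooling". The matrices and entity positions are hypothetical.

```python
def max_pool(matrix):
    """Column-wise max over the token dimension."""
    return [max(col) for col in zip(*matrix)]

def relation_features(first, second, e1, e2):
    """Assemble the vector to classify from the two matrices and the entity positions e1, e2."""
    first_entity = first[e1] + first[e2]        # entity words in the embedding matrix
    second_entity = second[e1] + second[e2]     # entity words after the deep model
    lexical = first_entity + second_entity      # lexical-level features
    sentence = max_pool(second)                 # sentence-level features
    return sentence + lexical

first = [[1, 0], [0, 1], [2, 2]]                 # hypothetical word embeddings, 3 tokens
second = [[0.1, 0.5], [0.3, 0.2], [0.0, 0.9]]    # hypothetical deep-model outputs
features = relation_features(first, second, e1=0, e2=2)
```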

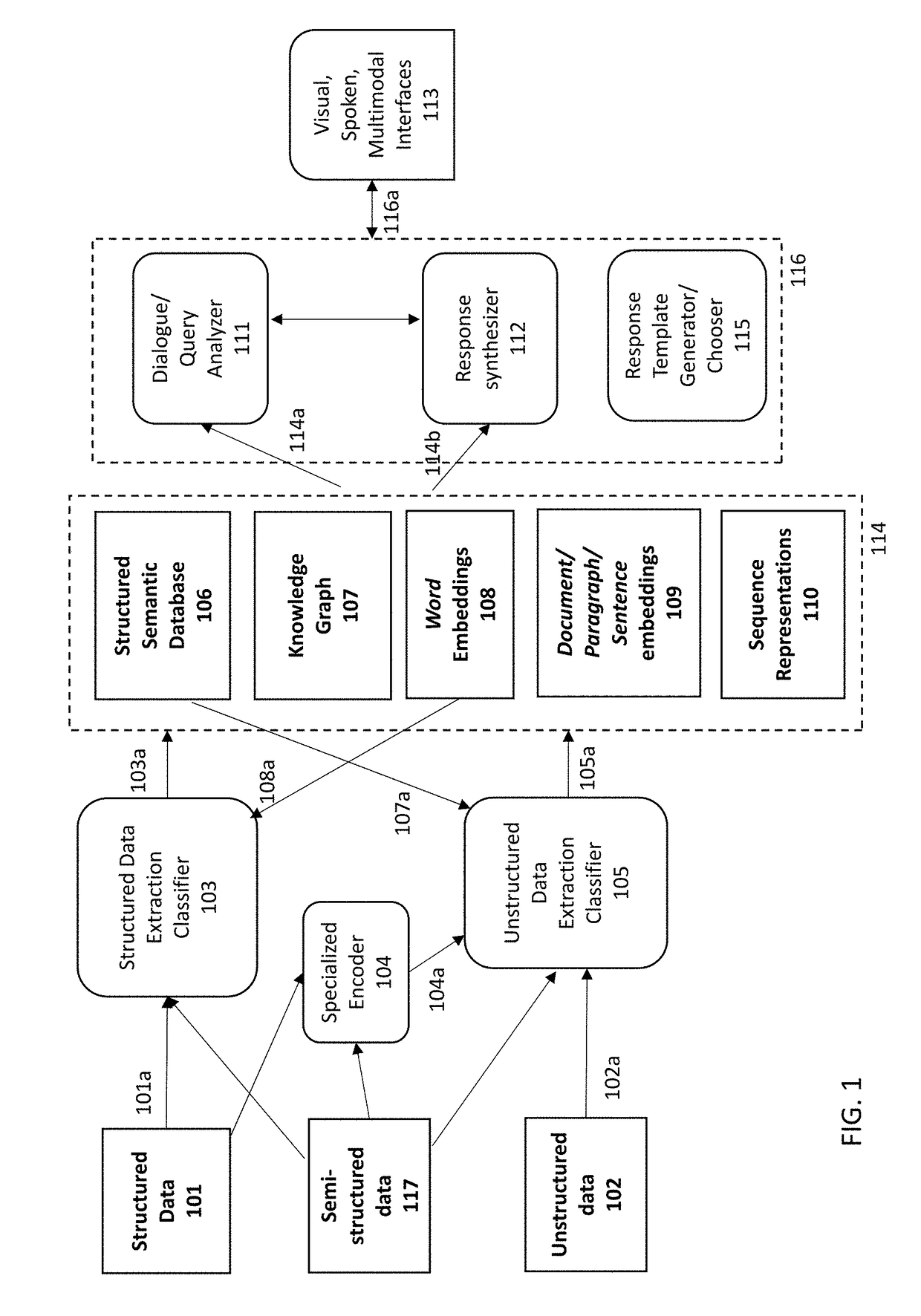

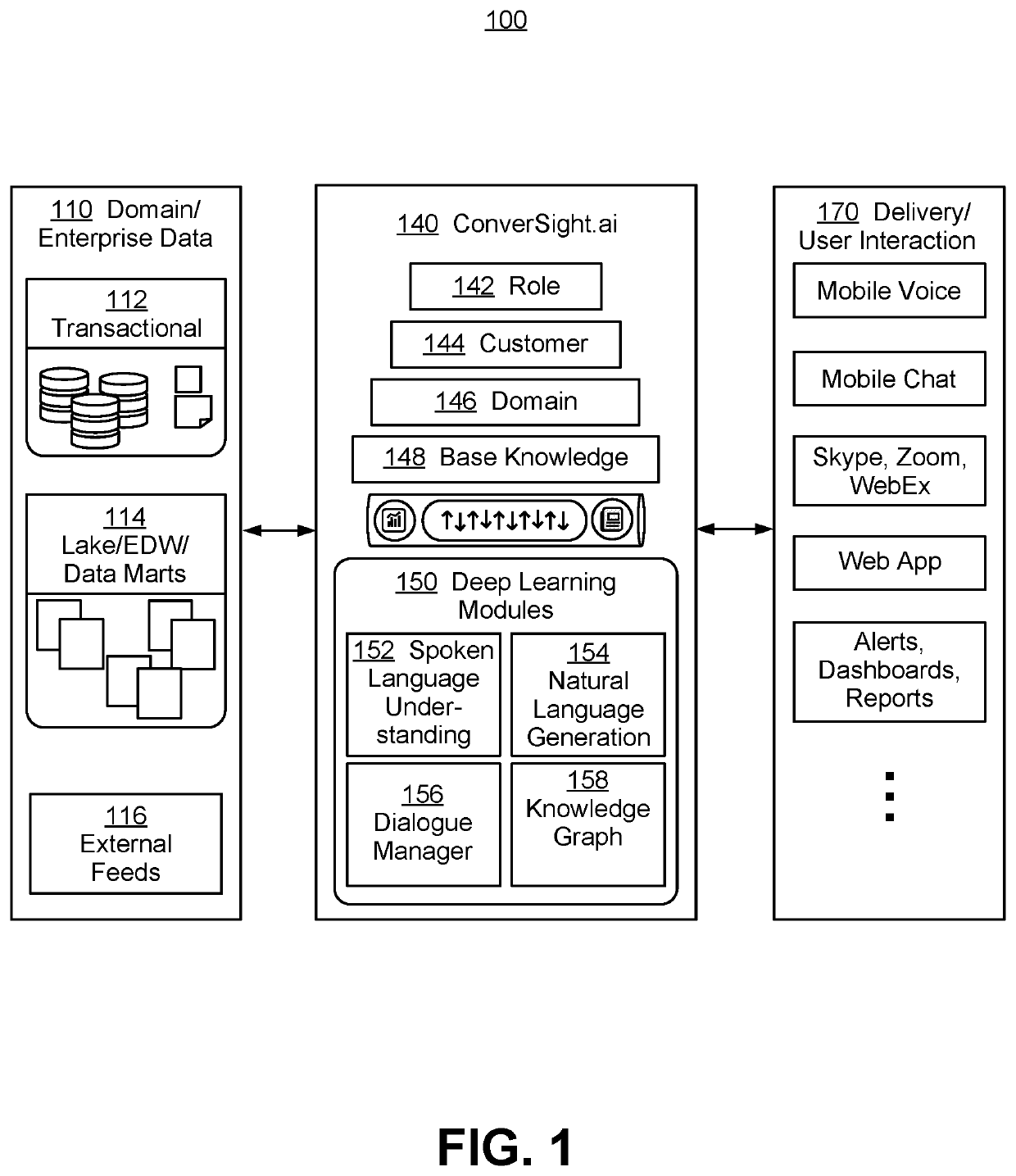

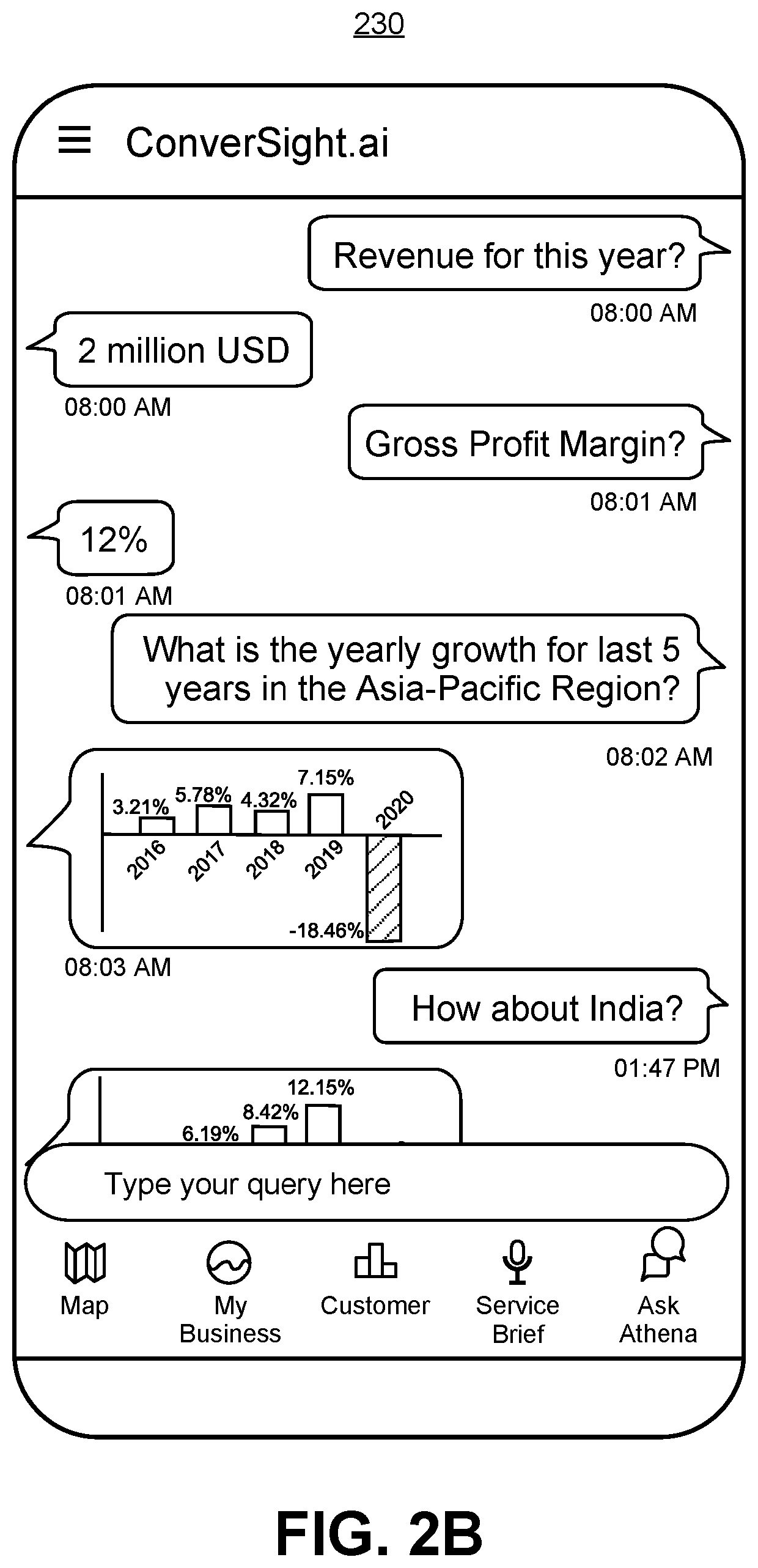

Contextual and intent based natural language processing system and method

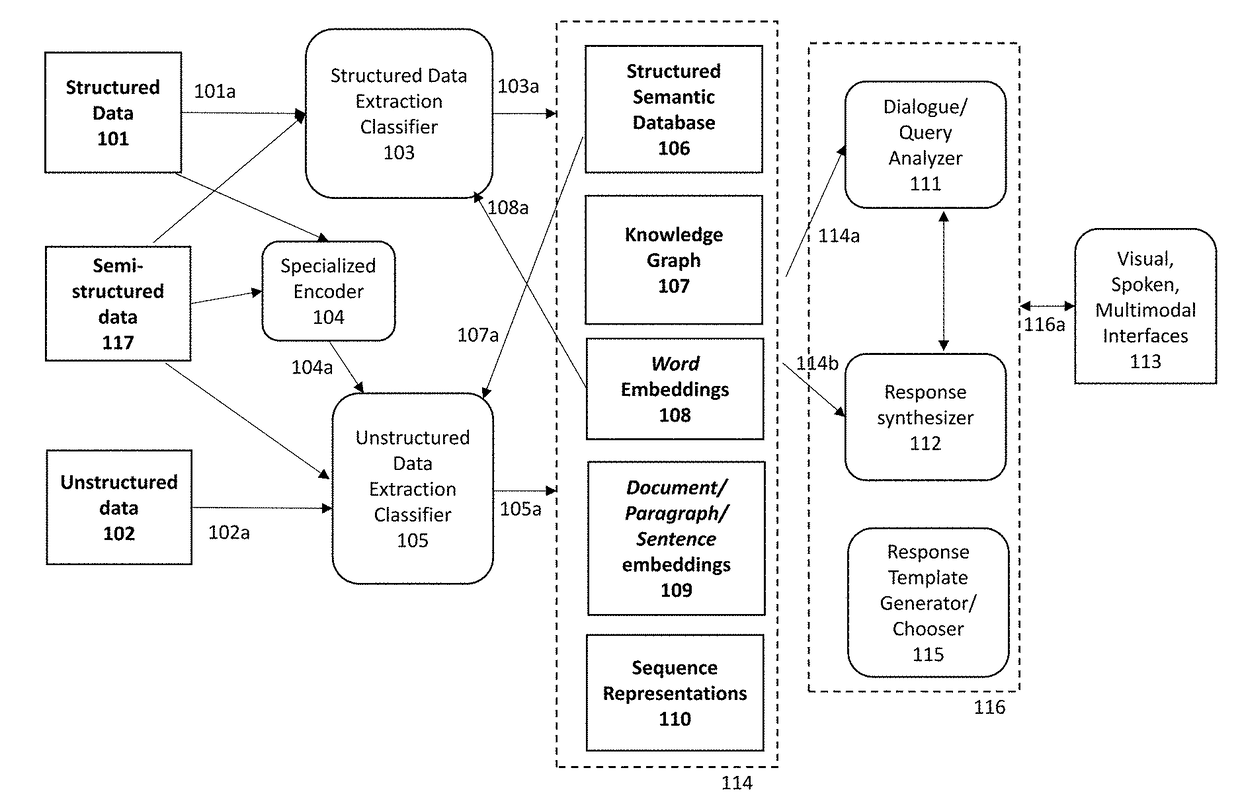

Methods and systems are disclosed for an artificial intelligence (AI)-based, conversational insight and action platform for user context and intent-based natural language processing and data insight generation. Using artificial intelligence and semantic analysis techniques, a knowledge graph is generated from structured data, and a word embedding is generated from unstructured data. A semantic meaning is extracted from a user request, and at least one user attribute and context are determined. One or more entities and relationships on the knowledge graph that match the semantic meaning are determined, based on the user attribute, context, and the word embedding. A sequence of analytical instructions is generated from the matching results, and applied to the structured data to generate a data insight response to the user request. If no matches are found, similar entities and relationships are presented to the user, and user selections are used to further train the system.
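
One way the word embedding can support the matching step is cosine similarity between the embedded user request and embeddings of knowledge-graph entities, falling back to the most similar entities when nothing clears a threshold. The sketch below assumes that design; the entity names, vectors, threshold, and the `match_entities` helper are all hypothetical, and user attributes and context are omitted.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def match_entities(query: Vector, entities: Dict[str, Vector],
                   threshold: float = 0.9, top_k: int = 3) -> Tuple[List[str], List[str]]:
    """Return (matches, suggestions); suggestions are used only when nothing matches."""
    scored = sorted(((cosine(query, vec), name) for name, vec in entities.items()),
                    reverse=True)
    matches = [name for score, name in scored if score >= threshold]
    if matches:
        return matches, []
    # No match: offer the closest entities so the user's choice can refine the system.
    return [], [name for _, name in scored[:top_k]]


# Hypothetical entity embeddings.
entities = {"revenue": [1.0, 0.0], "sales": [0.9, 0.1], "headcount": [0.0, 1.0]}
print(match_entities([1.0, 0.0], entities))
print(match_entities([0.5, 0.5], entities, threshold=0.99, top_k=2))
```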

Owner:CONVERSIGHT AI INC

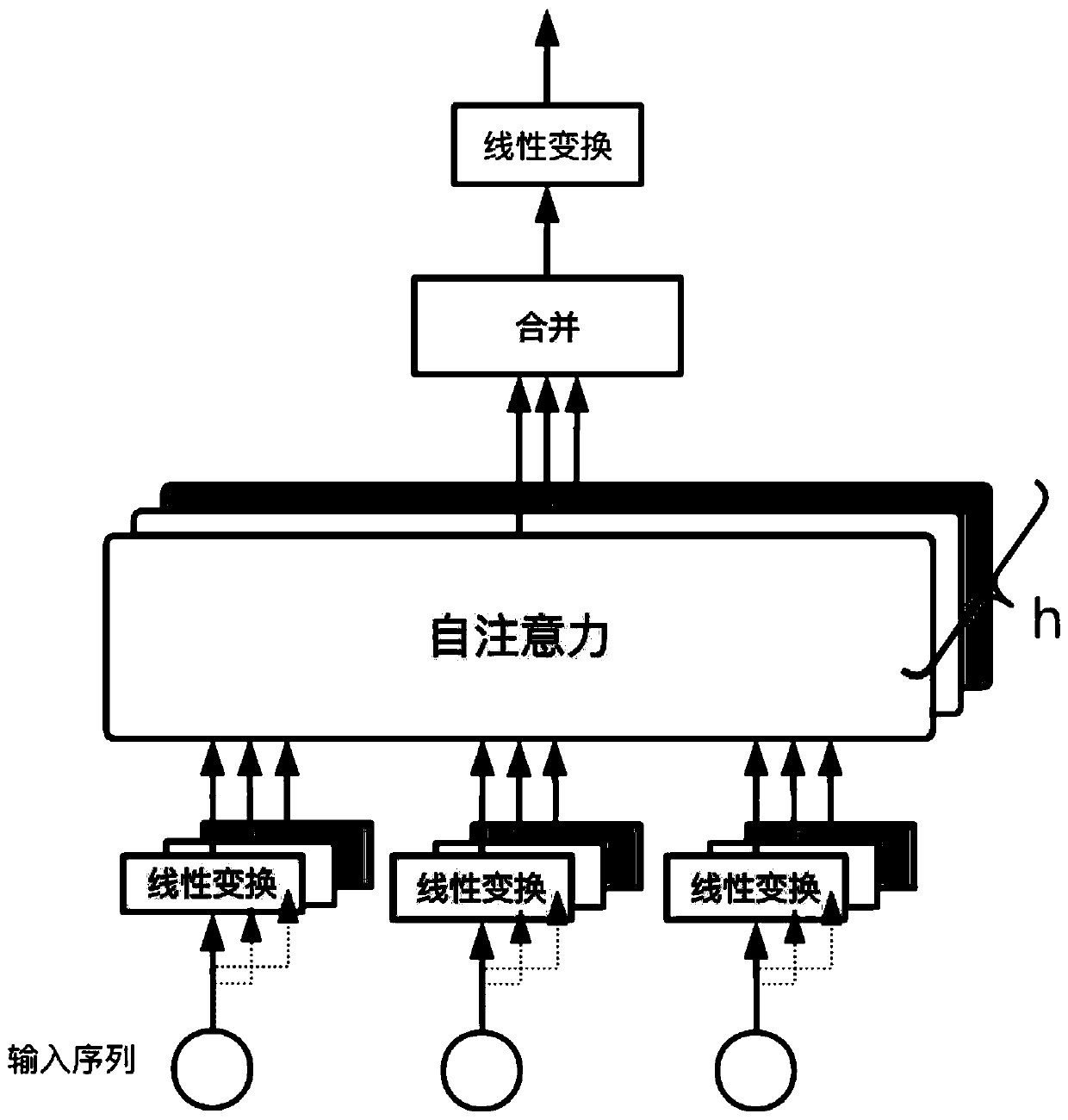

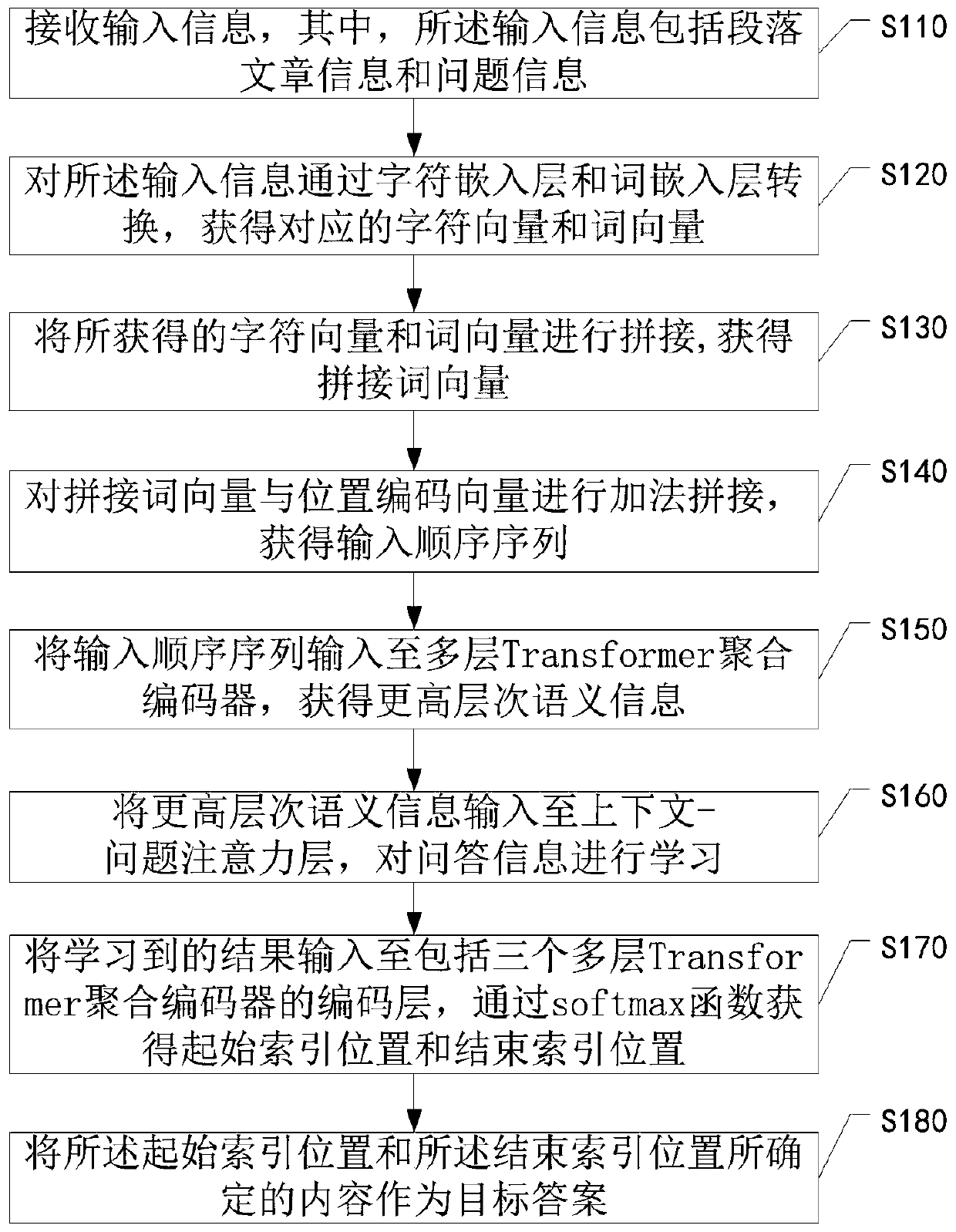

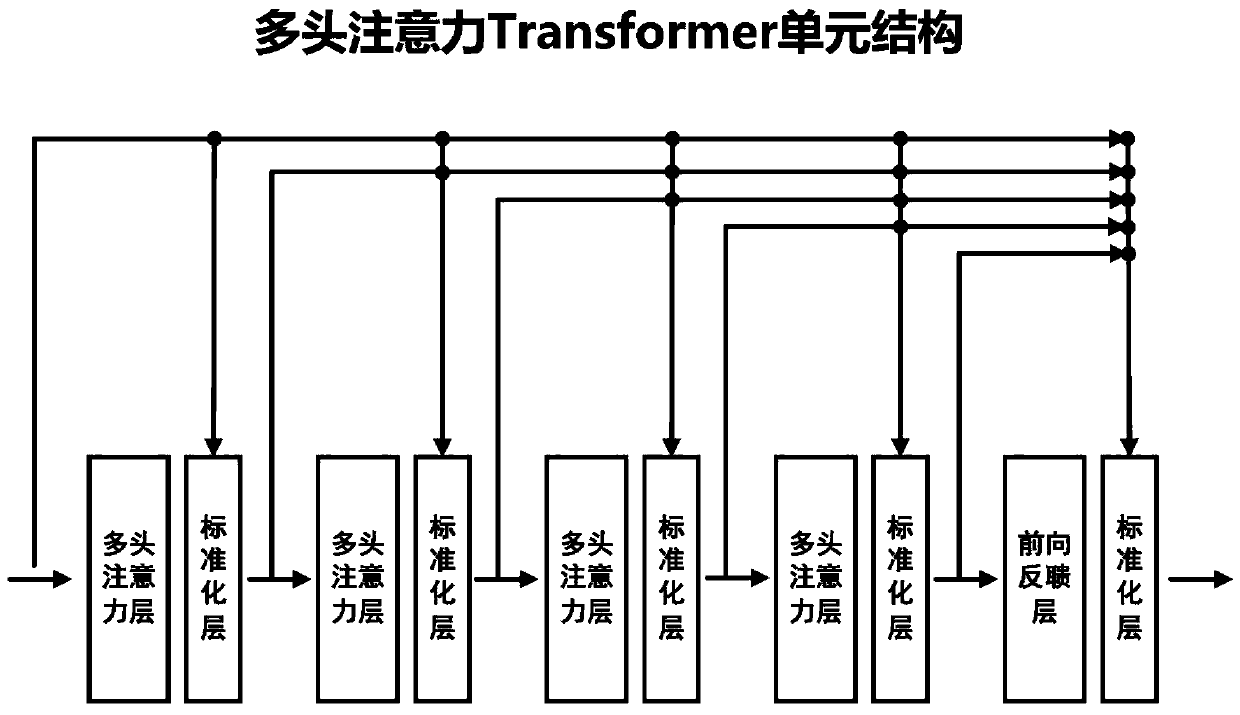

Answer generation method based on multi-layer Transformer aggregation encoder

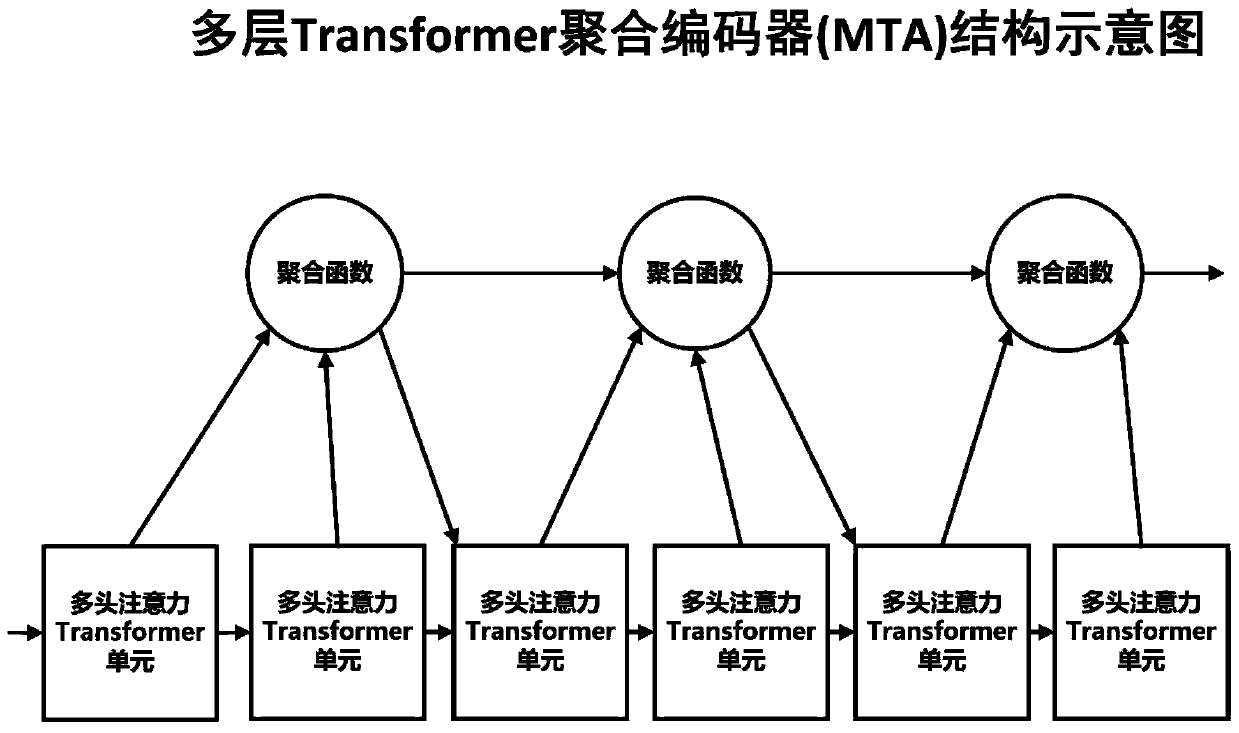

Inactive · CN110502627A · Solve the loss · Improve accuracy · Digital data information retrieval · Neural architectures · Algorithm · Transformer

The invention discloses an answer generation method based on a multi-layer Transformer aggregation encoder. The method comprises the following steps: receiving input information comprising paragraph (article) information and question information; converting the input information through a character embedding layer and a word embedding layer to obtain the corresponding character vectors and word vectors; splicing the character vector and the word vector to obtain a spliced word vector; adding the position encoding vector to the spliced word vector to obtain the input sequence; feeding the input sequence into a multi-layer Transformer aggregation encoder to obtain higher-level semantic information; feeding that information into a context-question attention layer to learn question and answer information; feeding the result into an encoding layer comprising three multi-layer Transformer aggregation encoders, and obtaining a start position and an end position through a softmax function; and taking the content delimited by the start and end positions as the target answer. According to the invention, its embodiments solve the problems of information loss and insufficient performance in the prior art.
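
Two concrete steps in this pipeline are building the input sequence (splice, then add a position encoding) and reading the answer span from softmax start/end scores. The sketch below illustrates both under assumptions: the sinusoidal encoding of the original Transformer stands in for the unspecified position encoding, the span search requires start <= end and caps span length, and the encoder stack is omitted. All names, tokens, and logits are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def positional_encoding(pos: int, dim: int) -> Vector:
    """Sinusoidal position encoding from the original Transformer (assumed)."""
    return [
        math.sin(pos / 10000 ** (i / dim)) if i % 2 == 0
        else math.cos(pos / 10000 ** ((i - 1) / dim))
        for i in range(dim)
    ]


def build_input(char_vecs: List[Vector], word_vecs: List[Vector]) -> List[Vector]:
    """Splice character and word vectors, then add the position encoding."""
    sequence = []
    for pos, (c, w) in enumerate(zip(char_vecs, word_vecs)):
        spliced = c + w  # list concatenation = vector splicing
        pe = positional_encoding(pos, len(spliced))
        sequence.append([x + p for x, p in zip(spliced, pe)])
    return sequence


def softmax(scores: Vector) -> Vector:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def best_span(start_logits: Vector, end_logits: Vector, max_len: int = 30) -> Tuple[int, int]:
    """Pick (start, end) with start <= end that maximises p_start * p_end."""
    p_start, p_end = softmax(start_logits), softmax(end_logits)
    best, best_score = (0, 0), -1.0
    for i, ps in enumerate(p_start):
        for j in range(i, min(i + max_len, len(p_end))):
            if ps * p_end[j] > best_score:
                best, best_score = (i, j), ps * p_end[j]
    return best


tokens = ["The", "ship", "left", "Shanghai", "in", "May"]
start_logits = [0.1, 0.2, 0.0, 3.0, 0.1, 0.5]
end_logits = [0.0, 0.1, 0.2, 2.5, 0.3, 1.0]
s, e = best_span(start_logits, end_logits)
print(" ".join(tokens[s:e + 1]))
```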

Owner:SHANGHAI MARITIME UNIVERSITY

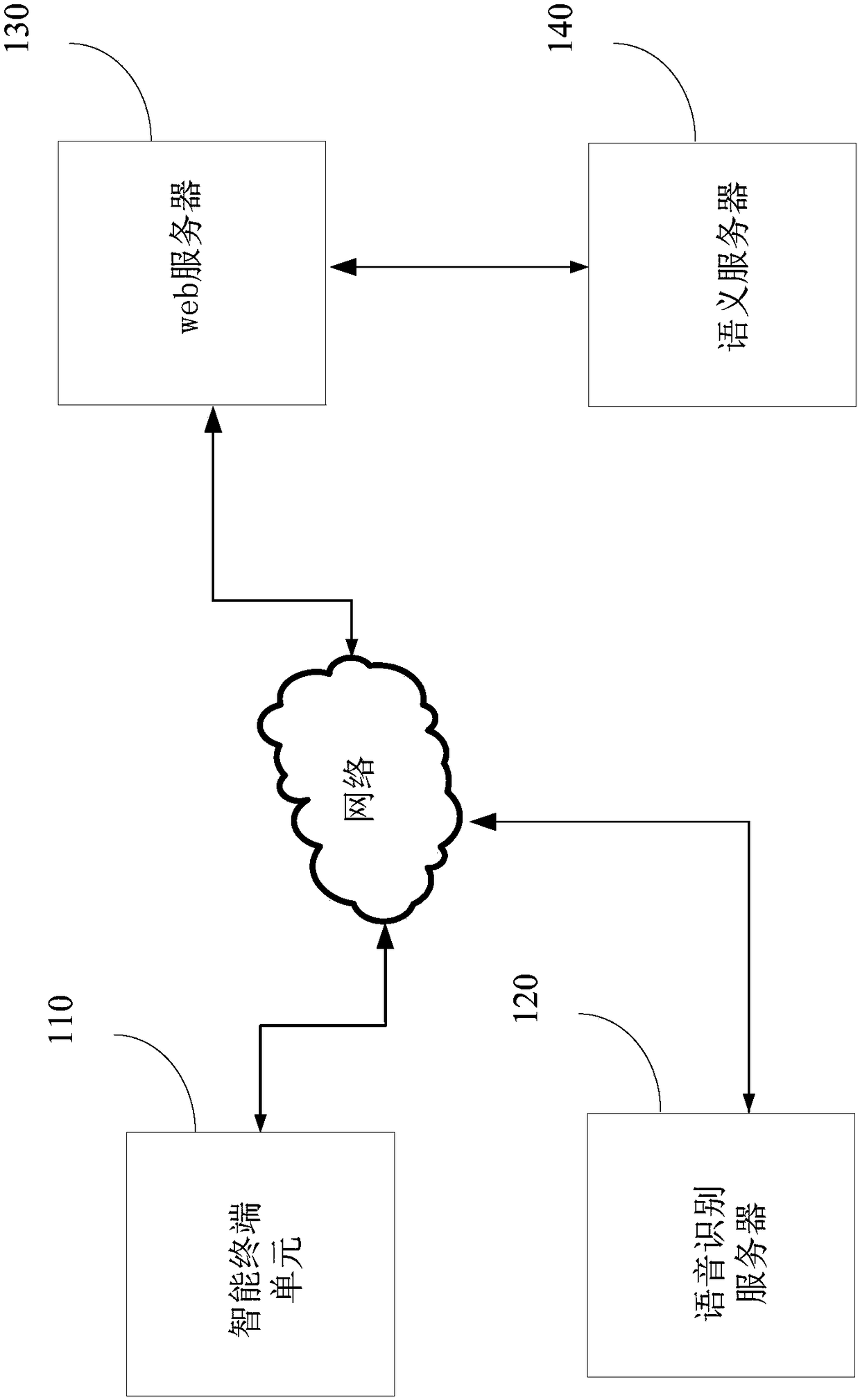

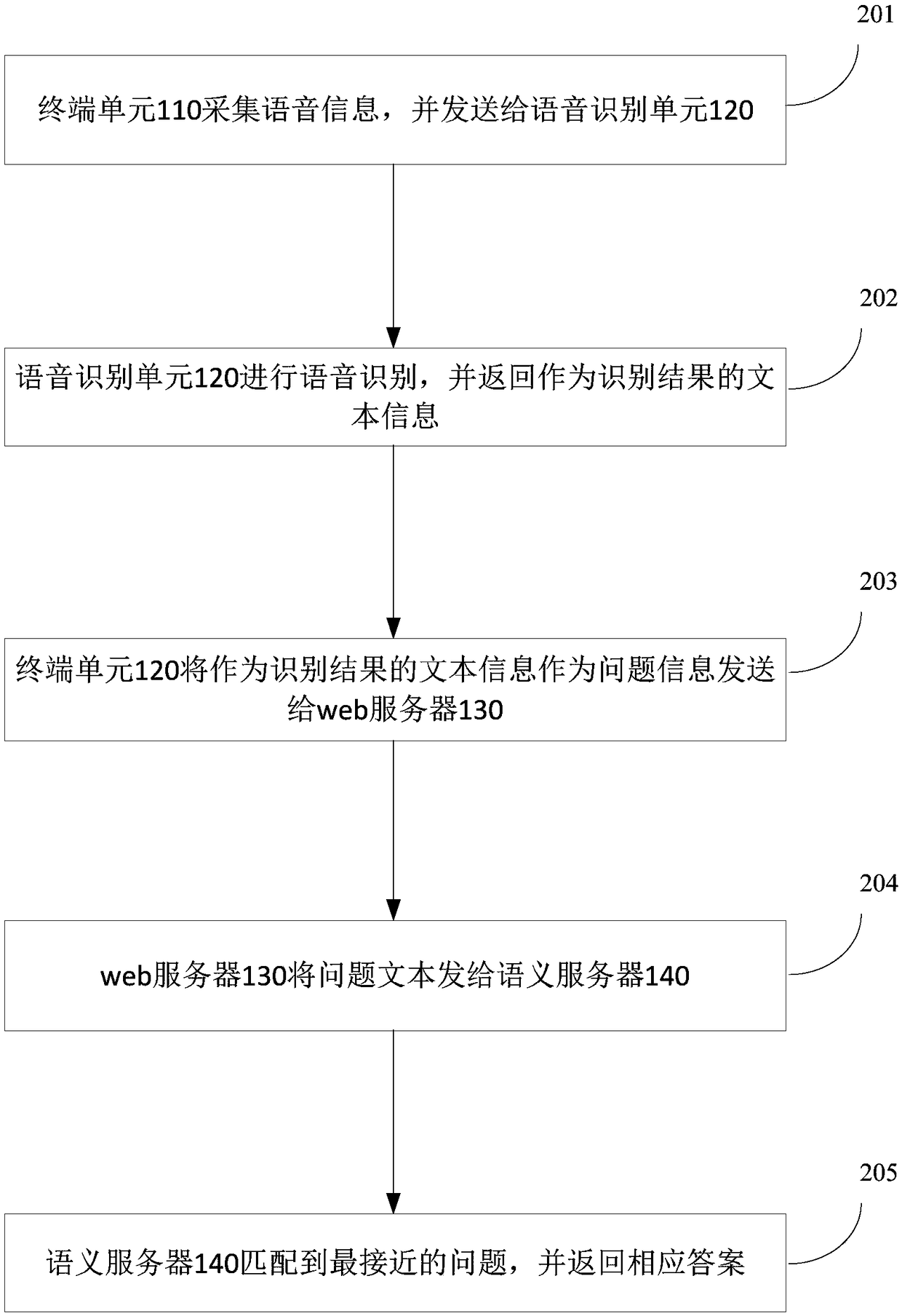

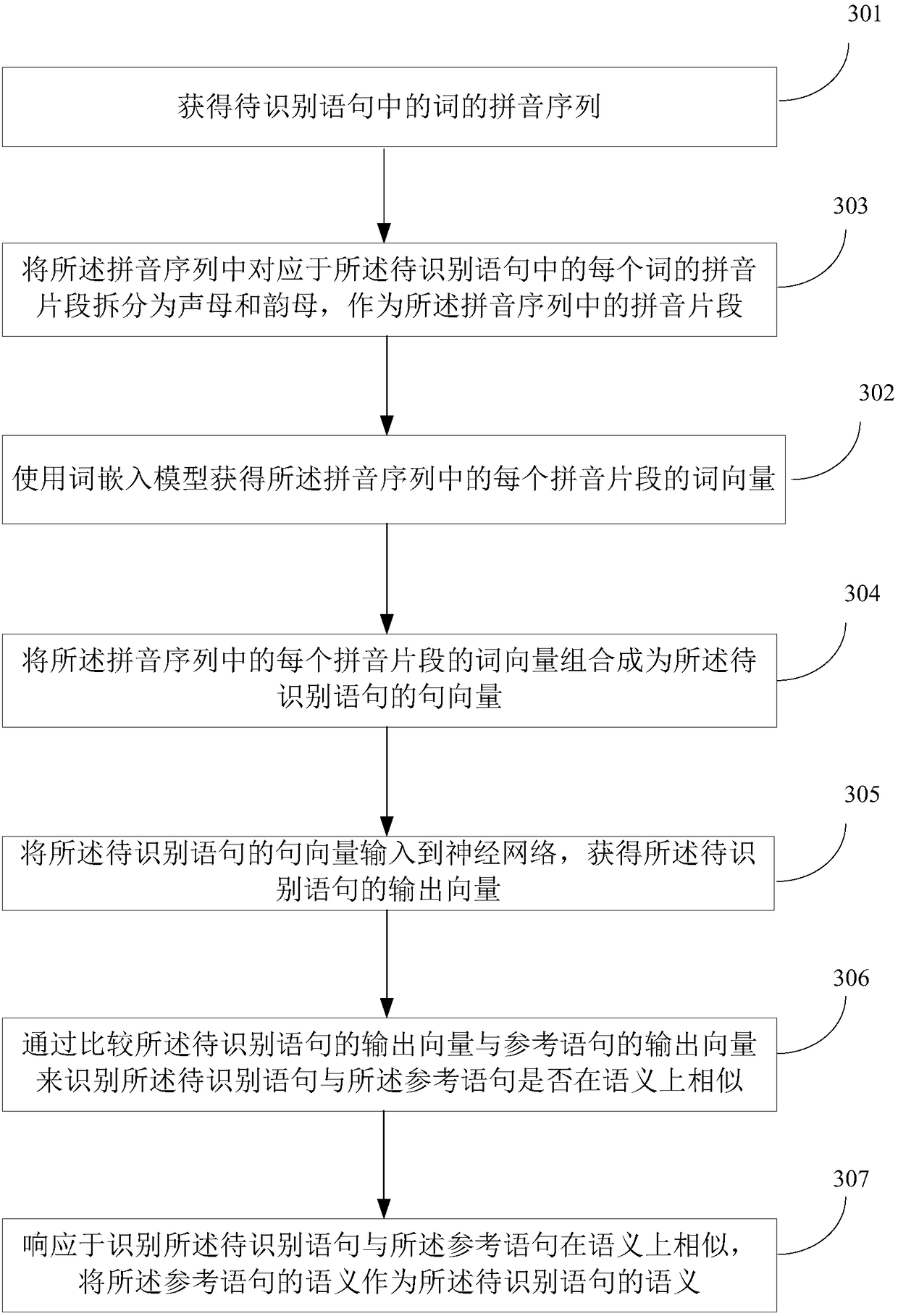

Pinyin-based semantic identification method and apparatus, and man-machine conversation system

Pending · CN108549637A · Eliminate distractions · Improve accuracy · Semantic analysis · Speech recognition · Dialog system · Algorithm

The invention provides a pinyin-based semantic identification method and apparatus, and a man-machine conversation system. The semantic identification method comprises the steps of: obtaining the pinyin sequence of the words in a statement to be identified; obtaining word vectors for the pinyin fragments in the pinyin sequence using a word embedding model; combining the word vectors of the pinyin fragments into a sentence vector for the statement; inputting the sentence vector into a neural network to obtain an output vector for the statement; determining whether the statement is semantically similar to a reference statement by comparing its output vector with the output vector of the reference statement; and, in response to identifying that the statement is semantically similar to the reference statement, taking the meaning of the reference statement as the meaning of the statement to be identified.
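
Working at the pinyin level lets homophones produced by a speech recognizer map to the same vectors. The sketch below shows that idea under heavy assumptions: the pinyin table and fragment embeddings are tiny hypothetical dictionaries, fragment vectors are combined by averaging, the neural network step is skipped (sentence vectors are compared directly), and similarity is cosine with a hypothetical threshold of 0.9.

```python
import math
from typing import Dict, List, Optional

Vector = List[float]

# Hypothetical lookup tables; a real system would use a pinyin converter and a
# word embedding model trained on pinyin fragments.
PINYIN: Dict[str, str] = {"打": "da", "开": "kai", "灯": "deng", "大": "da", "凯": "kai"}
EMBEDDINGS: Dict[str, Vector] = {
    "da": [1.0, 0.0, 0.0], "kai": [0.0, 1.0, 0.0], "deng": [0.0, 0.0, 1.0],
}


def sentence_vector(text: str) -> Vector:
    """Average the embeddings of the pinyin fragments (assumed combination rule)."""
    vecs = [EMBEDDINGS[PINYIN[ch]] for ch in text]
    return [sum(col) / len(vecs) for col in zip(*vecs)]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def identify(statement: str, references: Dict[str, str],
             threshold: float = 0.9) -> Optional[str]:
    """Return the meaning of the most similar reference statement, if similar enough."""
    vec = sentence_vector(statement)
    best_meaning, best_score = None, threshold
    for ref_text, meaning in references.items():
        score = cosine(vec, sentence_vector(ref_text))
        if score >= best_score:
            best_meaning, best_score = meaning, score
    return best_meaning


references = {"打开灯": "turn on the light"}
print(identify("大凯灯", references))  # a homophone mis-recognition of 打开灯
```

Because 大凯灯 and 打开灯 share the pinyin "da kai deng", their sentence vectors are identical, so the mis-recognized statement still resolves to the reference meaning.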

Owner:BOE TECH GRP CO LTD

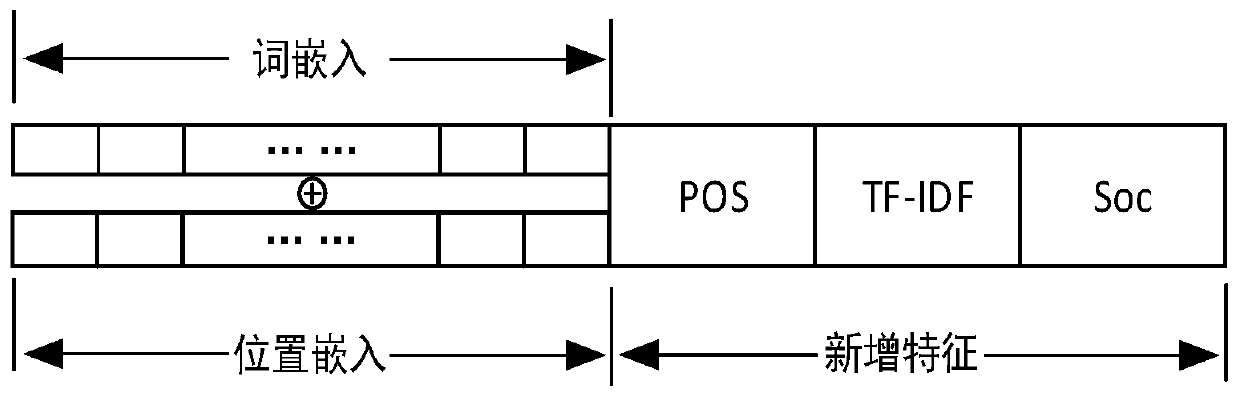

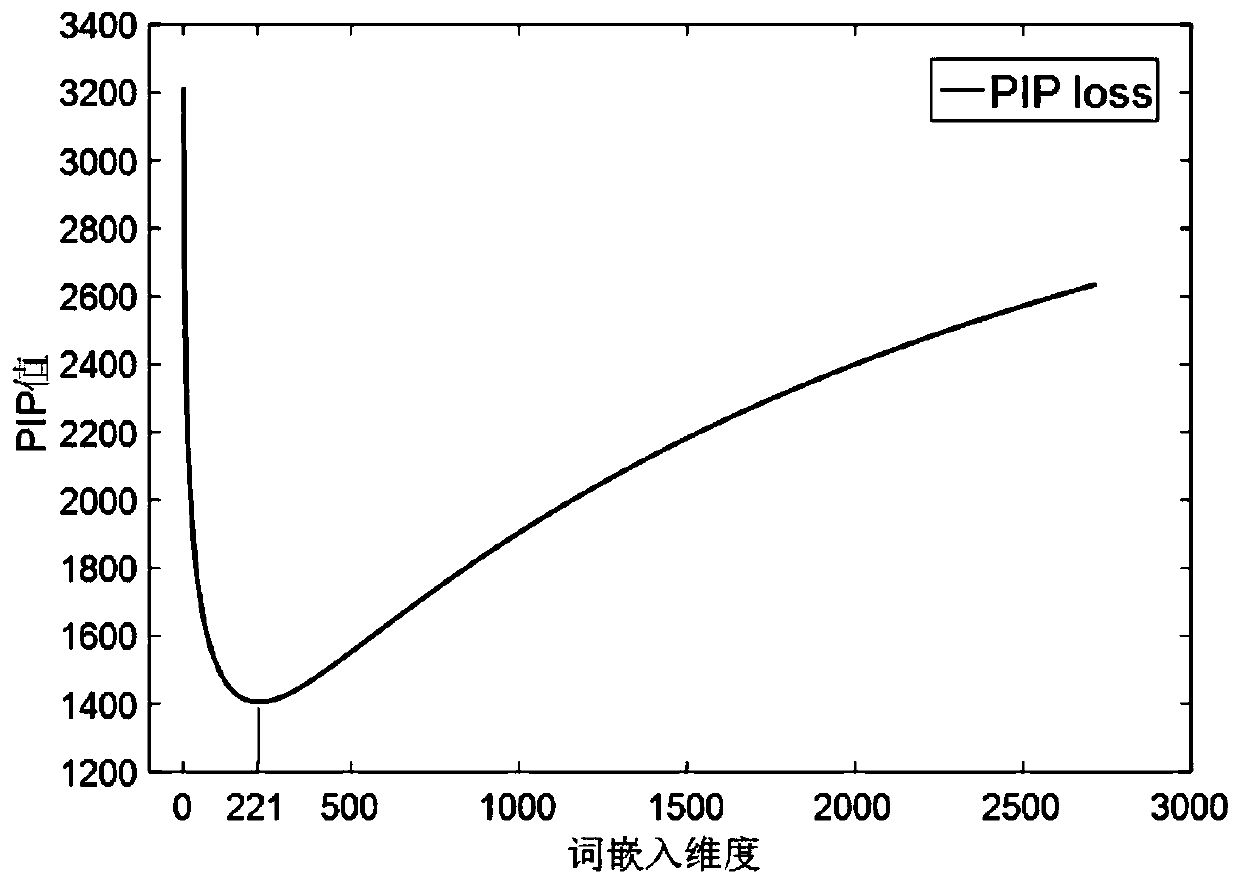

Short text automatic abstracting method and system based on double encoders

Active · CN110390103A · Fast convergence · Improve understanding · Semantic analysis · Neural architectures · Information processing · Algorithm

The invention discloses a short-text automatic abstracting method and system based on dual encoders, belonging to the technical field of information processing. The method comprises the following steps: 1, preprocessing the data; 2, designing a dual encoder with a bidirectional recurrent neural network; 3, providing an attention mechanism that fuses global and local semantics; 4, providing a decoder with an empirical probability distribution, designed as a two-layer unidirectional neural network; 5, adding word embedding features; 6, optimizing the word embedding dimension; and 7, preprocessing and testing the news corpus from the Sogou laboratory, feeding it into a Seq2Seq model with dual encoders and an accompanying empirical probability distribution, and evaluating the results with the text summary quality evaluation system ROUGE. By studying and optimizing the traditional encoder-decoder framework, the invention enables the model to fully understand text semantics and improves the fluency and precision of the generated abstracts.
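
Step 7 evaluates summaries with ROUGE. As a point of reference, the sketch below computes ROUGE-1 (unigram overlap) precision, recall, and F1 between a candidate summary and a reference. It assumes whitespace tokenization; Chinese text such as the Sogou corpus would first need word segmentation or character splitting. The `rouge_1` name is hypothetical, and official ROUGE toolkits add options (stemming, multiple references, ROUGE-2/L) not shown here.

```python
from collections import Counter
from typing import Dict


def rouge_1(candidate: str, reference: str) -> Dict[str, float]:
    """ROUGE-1 precision, recall and F1 on whitespace-separated tokens."""
    cand, ref = candidate.split(), reference.split()
    # Clipped overlap: each reference unigram can be matched at most as often as it occurs.
    overlap = sum((Counter(cand) & Counter(ref)).values())
    precision = overlap / len(cand) if cand else 0.0
    recall = overlap / len(ref) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


scores = rouge_1("the cat sat", "the cat sat down")
print(scores)
```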

Owner:CIVIL AVIATION UNIV OF CHINA

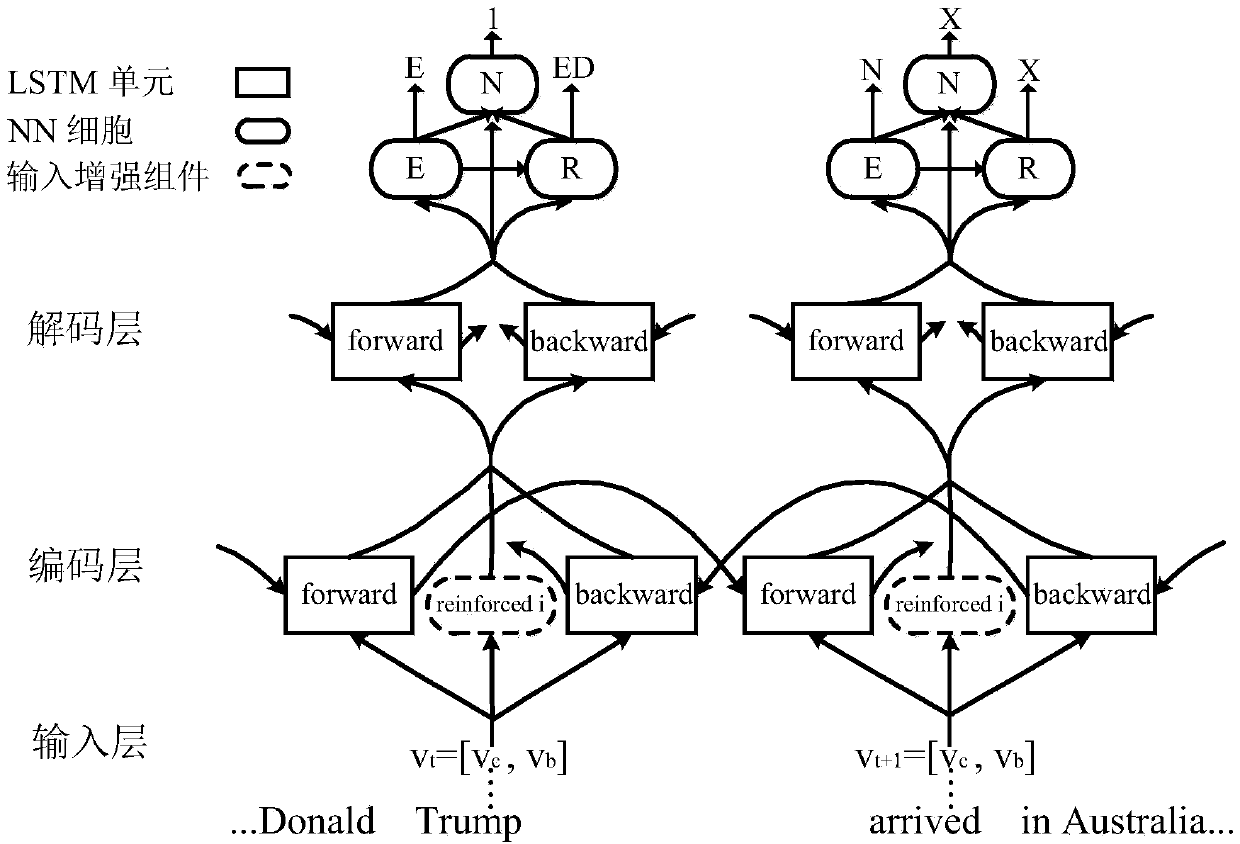

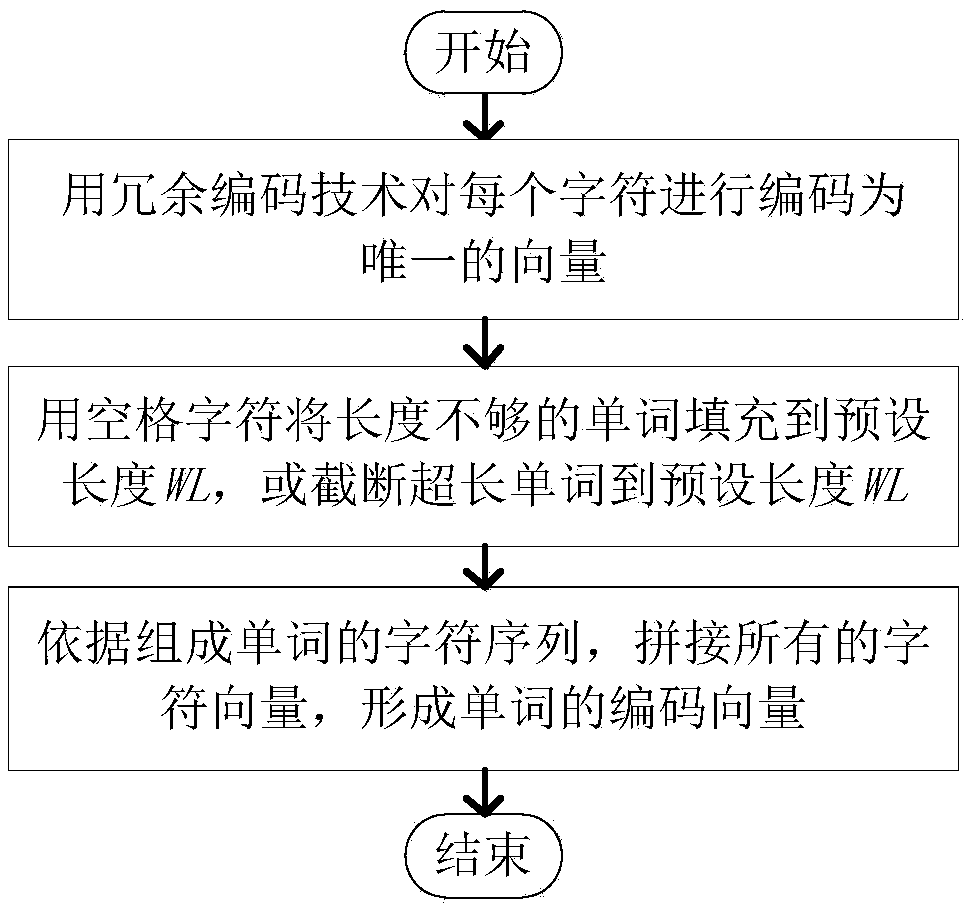

Relation extraction method based on Bi-LSTM input information enhancement

Active · CN108416058A · Improve robustness · Solve the dilution · Natural language data processing · Special data processing applications · Algorithm · Recall rate

The invention provides a relation extraction method based on Bi-LSTM input information enhancement and belongs to the field of computer natural language processing within artificial intelligence. The method proceeds as follows: the dataset is annotated with an indeterminate-label tagging strategy; a redundancy encoding technique performs character-level encoding on each word to generate a word-form encoding vector; the word-form encoding vector is spliced with a word embedding vector to produce a word vector that captures both word-form and word-meaning information; an input-information-enhanced Bi-LSTM serves as the model's encoding layer, taking the word vectors as input and outputting encoding vectors; the encoding vectors are fed into a decoding layer to obtain decoding vectors; three neural-network layers extract the entity labels, relation types, and entity number information from the decoding vectors; finally, gradients are computed, weights are updated, and the model is trained by maximizing the objective function. The method improves the robustness of the system, reduces interference from non-entity words, and effectively increases the accuracy and recall of relation extraction.
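
The splicing step can be illustrated with a small stand-in. The abstract does not describe its redundancy encoding, so the sketch below substitutes a different, clearly named technique: a hashed bag of character trigrams (in the spirit of subword features) as the word-form vector, concatenated with a word embedding lookup. The hash size of 8, the zero vector for unknown words, and all function names are hypothetical; `zlib.crc32` is used so hashing is deterministic across runs.

```python
import zlib
from typing import Dict, List

Vector = List[float]


def char_ngrams(word: str, n: int = 3) -> List[str]:
    """Character n-grams of the word padded with boundary markers."""
    padded = f"<{word}>"
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]


def word_form_vector(word: str, dim: int = 8) -> Vector:
    """Hashed bag of character trigrams (a substitute for the patent's encoding)."""
    vec = [0.0] * dim
    for gram in char_ngrams(word):
        vec[zlib.crc32(gram.encode("utf-8")) % dim] += 1.0
    return vec


def word_vector(word: str, embeddings: Dict[str, Vector], emb_dim: int,
                form_dim: int = 8) -> Vector:
    """Splice the word-form vector with the word embedding (zeros if unknown)."""
    meaning = embeddings.get(word, [0.0] * emb_dim)
    return word_form_vector(word, form_dim) + meaning


embeddings = {"cat": [0.1, 0.2]}  # hypothetical 2-d word embeddings
print(word_vector("cat", embeddings, emb_dim=2))
print(word_vector("dog", embeddings, emb_dim=2))  # out-of-vocabulary word
```

The design point the sketch captures is that an out-of-vocabulary word still carries word-form information even when its embedding is empty.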

Owner:BEIJING INSTITUTE OF TECHNOLOGY

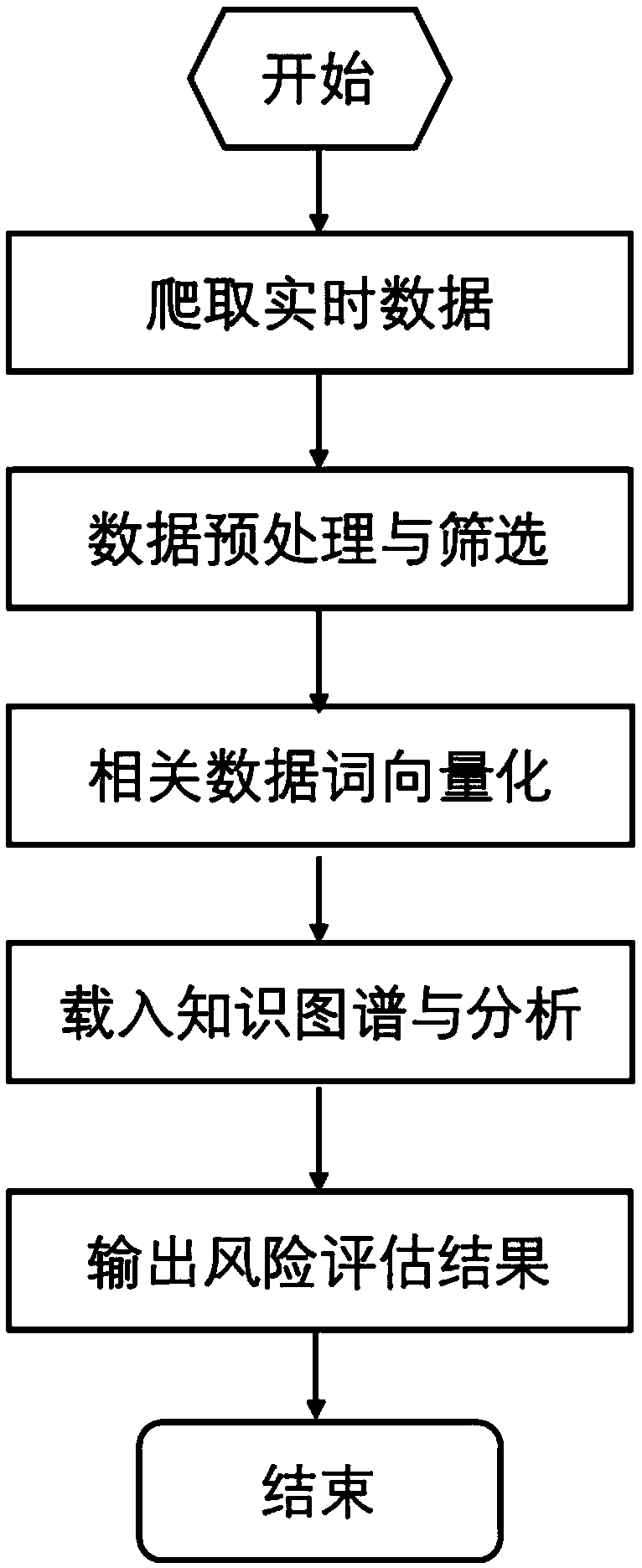

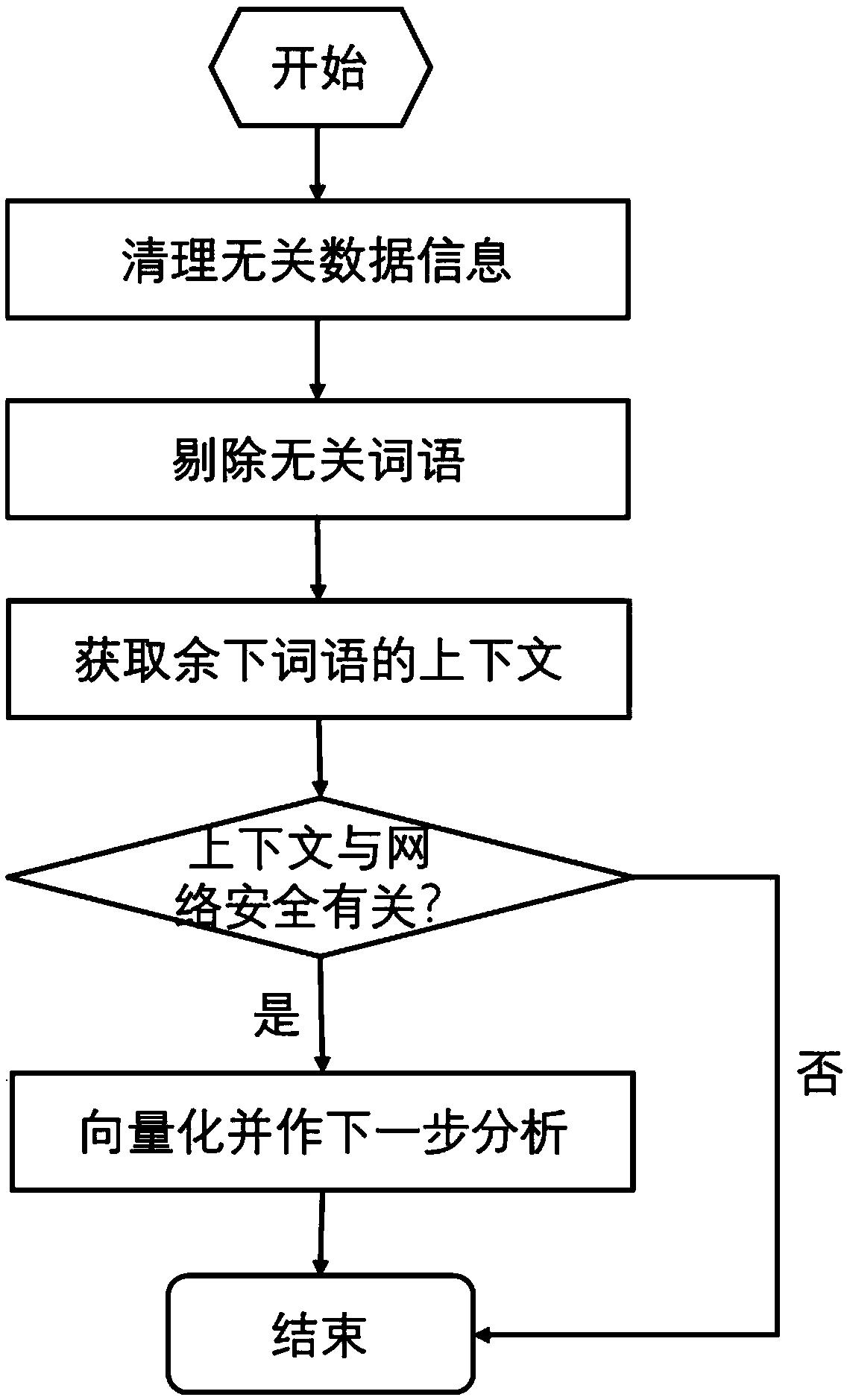

Vulnerability utilization risk evaluation method based on multi-source word embedding and knowledge map

Inactive · CN109347801A · Comprehensive assessment · Improve accuracy · Computer security arrangements · Transmission · Data set · Data source

Owner:WUHAN UNIV

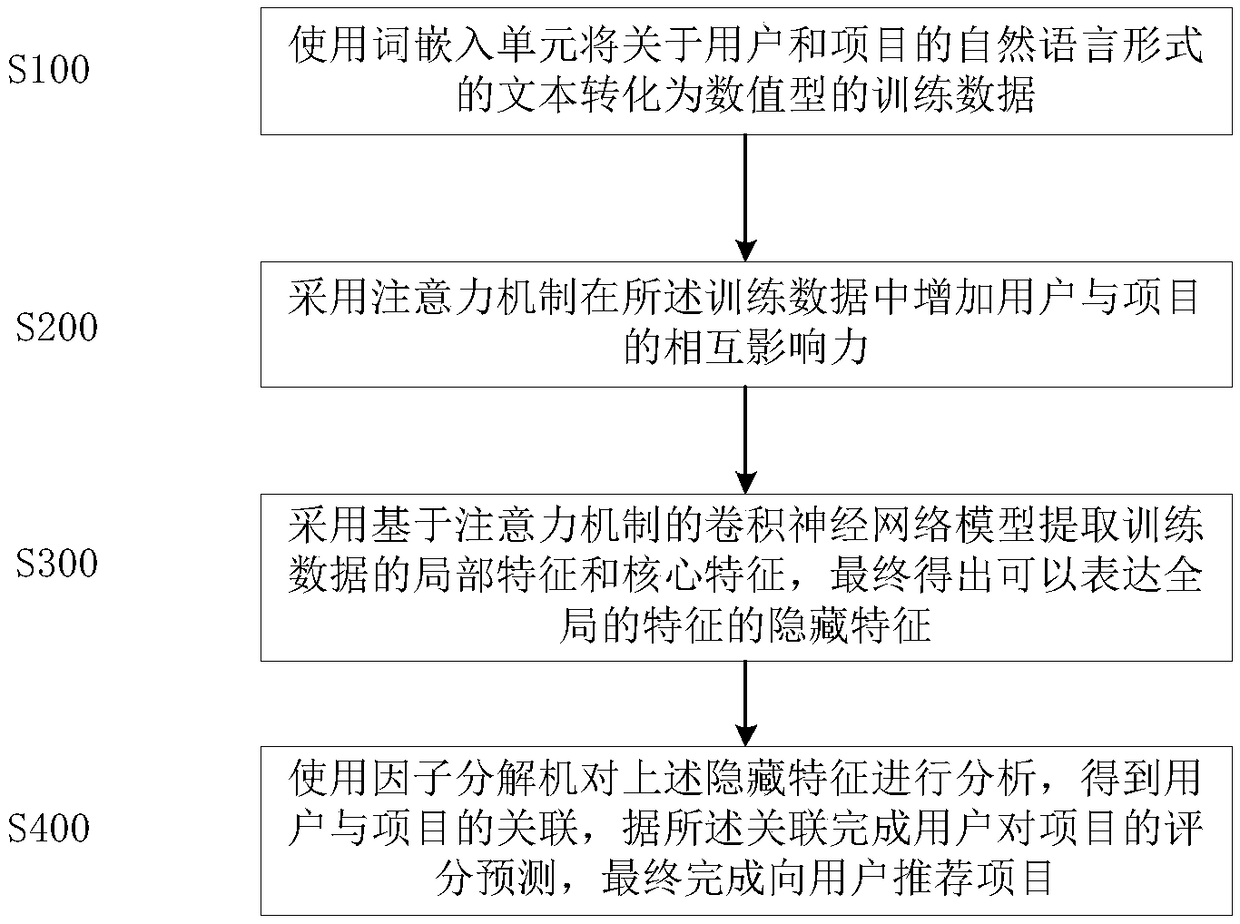

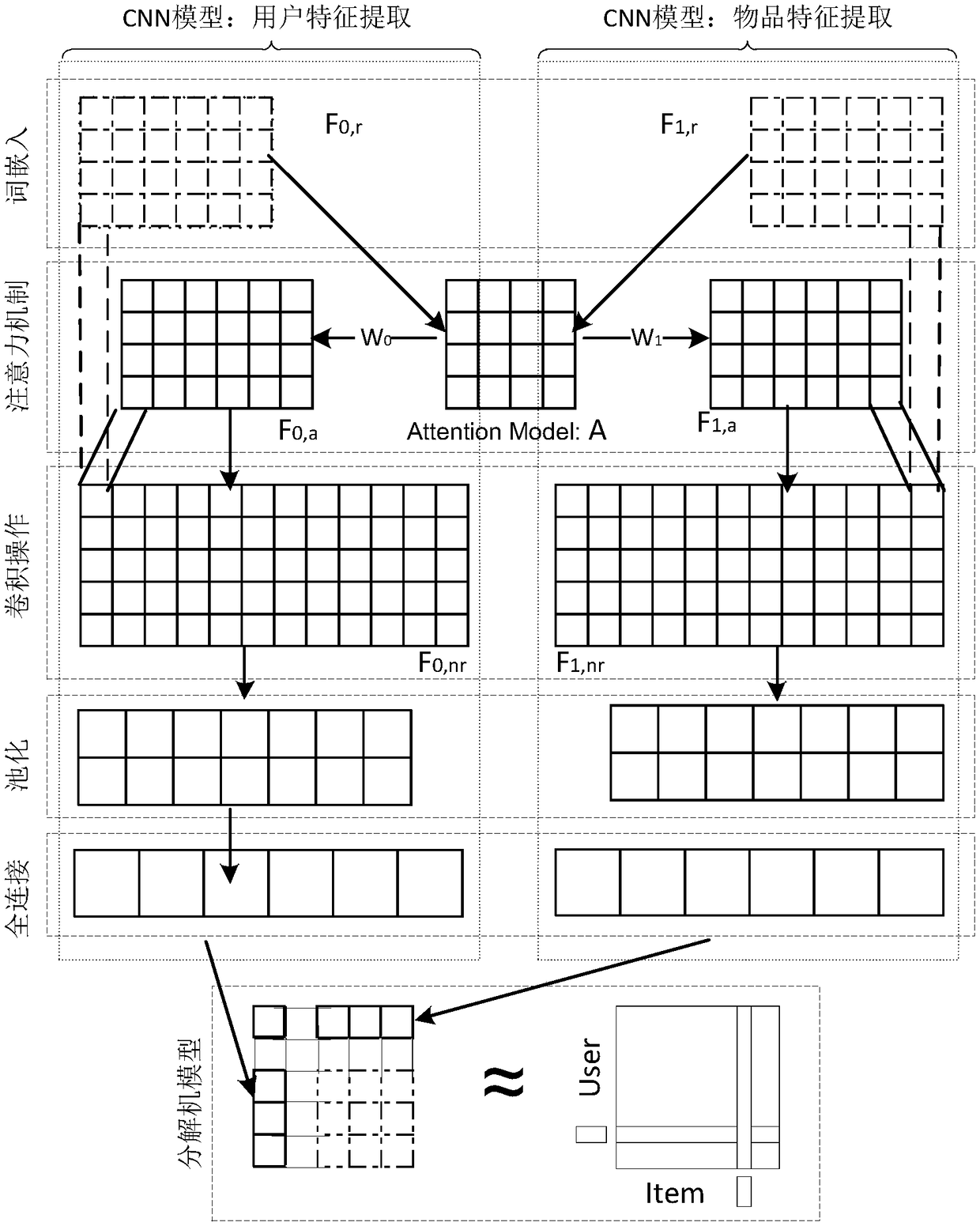

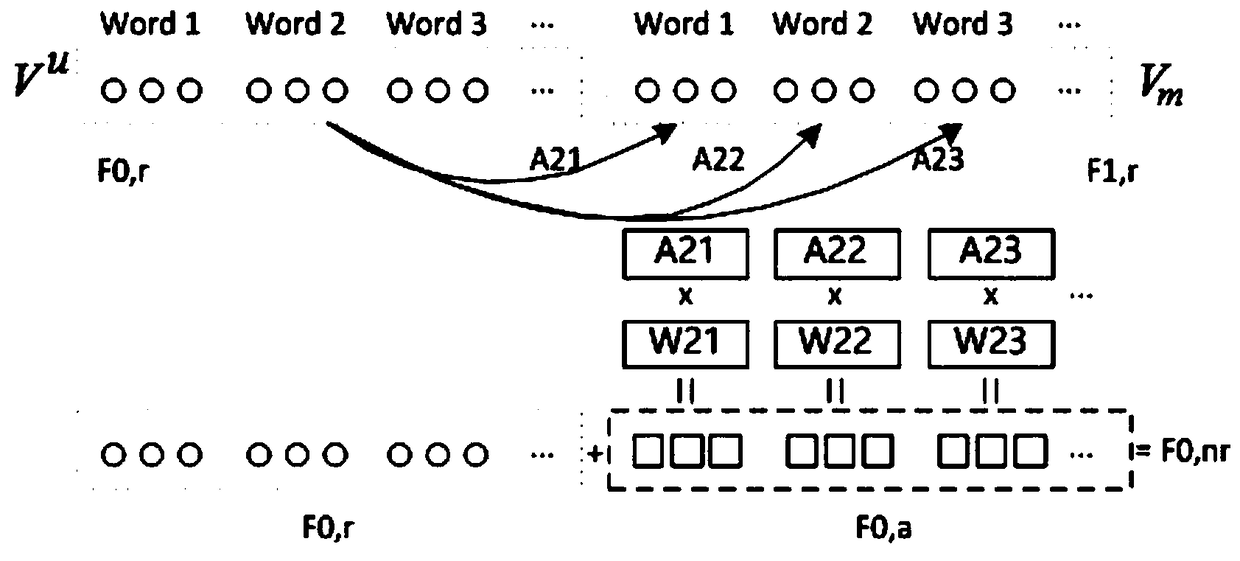

A recommendation method

Active · CN109241424A · Improve feature extraction · Solve the problem of lost memory · Digital data information retrieval · Neural architectures · Network model · Mutual influence

A recommendation method includes the following steps: S100: converting natural-language text about the user and the item into numeric training data using a word embedding unit; S200: applying an attention mechanism to increase the mutual influence between the user and the item in the training data; S300: using a convolutional neural network model based on the attention mechanism to extract the local features and core features of the training data, finally obtaining hidden features that express the global features; S400: analyzing the hidden features with a factorization machine to obtain the association between the user and the item, predicting the user's rating of the item from that association, and finally recommending the item to the user. Compared with existing methods, this method improves recommendation accuracy and data utilization.
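
Step S400 relies on a factorization machine. The sketch below shows a standard second-order factorization machine, y = w0 + sum_i w_i x_i + sum_{i<j} <v_i, v_j> x_i x_j, using the usual identity that computes the pairwise term in O(k n) instead of O(k n^2); a naive version is included as a cross-check. Using a toy one-hot input instead of the CNN's hidden features, and all weights shown, are assumptions for illustration.

```python
from itertools import combinations
from typing import List


def fm_predict(x: List[float], w0: float, w: List[float], v: List[List[float]]) -> float:
    """Second-order factorization machine in O(k * n) time."""
    linear = w0 + sum(wi * xi for wi, xi in zip(w, x))
    pairwise = 0.0
    for f in range(len(v[0])):  # loop over the k latent factors
        s = sum(v[i][f] * x[i] for i in range(len(x)))
        s_sq = sum((v[i][f] * x[i]) ** 2 for i in range(len(x)))
        pairwise += 0.5 * (s * s - s_sq)
    return linear + pairwise


def fm_predict_naive(x: List[float], w0: float, w: List[float], v: List[List[float]]) -> float:
    """Same model with the explicit pairwise sum over i < j, for checking."""
    linear = w0 + sum(wi * xi for wi, xi in zip(w, x))
    pairwise = sum(
        sum(a * b for a, b in zip(v[i], v[j])) * x[i] * x[j]
        for i, j in combinations(range(len(x)), 2)
    )
    return linear + pairwise


# Toy features [user_A, user_B, item_1]; x encodes user_A rating item_1.
x = [1.0, 0.0, 1.0]
w0, w = 0.5, [0.1, 0.2, 0.3]
v = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # one k=2 latent vector per feature
print(fm_predict(x, w0, w, v), fm_predict_naive(x, w0, w, v))
```

The latent vectors let the model estimate an interaction weight for every user-item pair, even pairs never observed together in training, which is what makes factorization machines suited to sparse rating data.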

Owner:SHAANXI NORMAL UNIV

© 2025 PatSnap. All rights reserved.