51 results about "Perplexity" patented technology

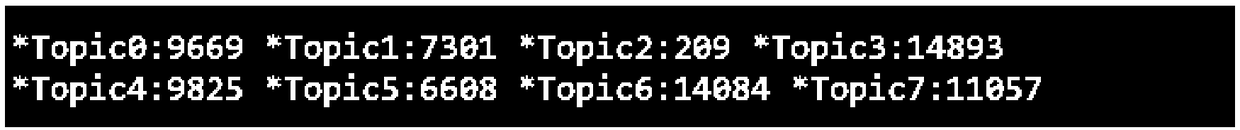

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

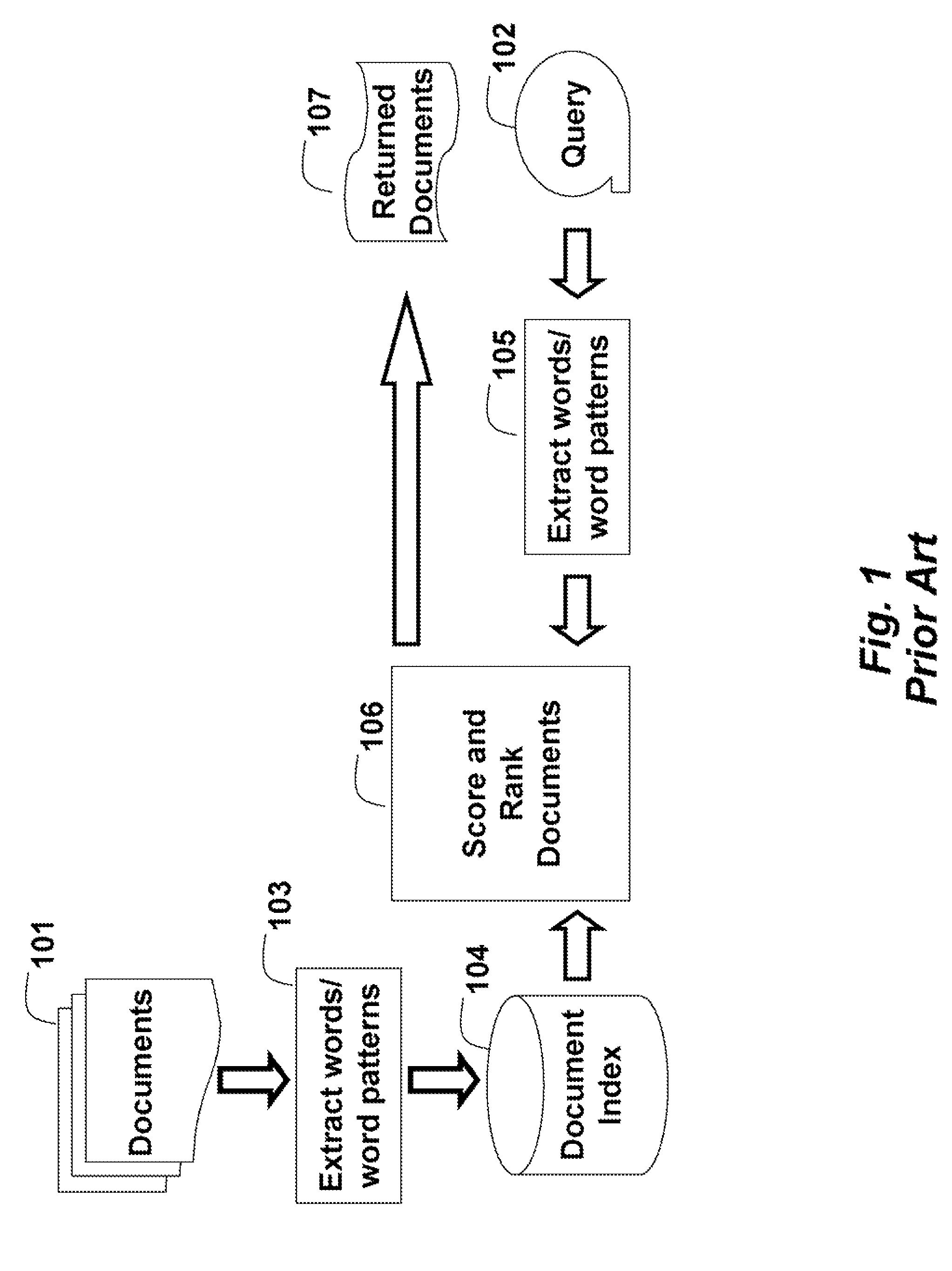

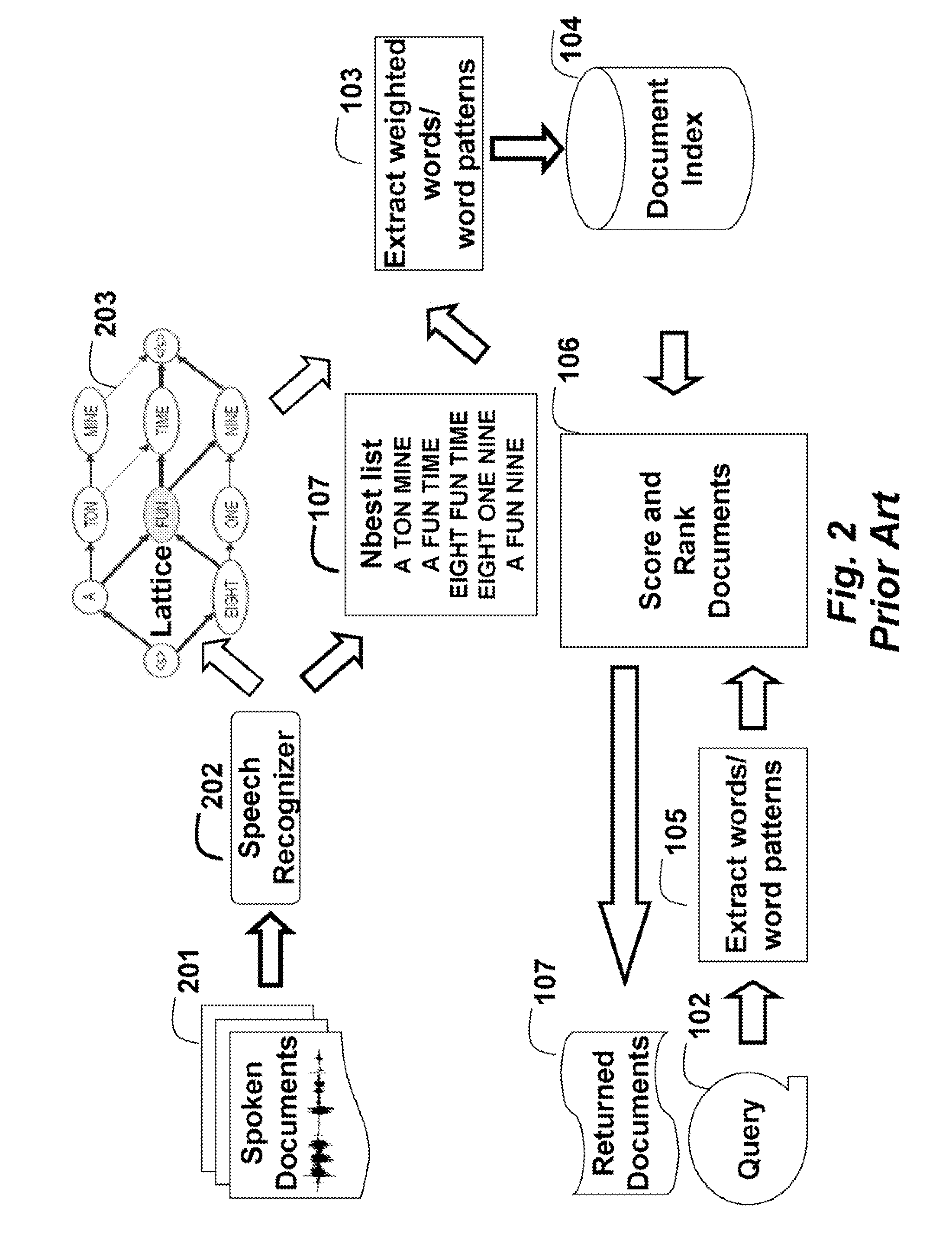

In information theory, perplexity is a measurement of how well a probability distribution or probability model predicts a sample. It may be used to compare probability models. A low perplexity indicates the probability distribution is good at predicting the sample.
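
To make the definition concrete: if a model assigns probabilities p_1, ..., p_N to the N tokens of a sample, its perplexity is exp(-(1/N) * sum(log p_i)), the exponential of the average negative log-likelihood (equivalently, 2 raised to the cross-entropy in bits). The Python sketch below is a minimal illustration of that formula; the function name is ours and is not taken from any of the patents listed here.

```python
import math
from typing import Sequence


def perplexity(probs: Sequence[float]) -> float:
    """Perplexity of a model on a sample, given the probability the model
    assigned to each of the N observed tokens: exp(-(1/N) * sum(log p_i))."""
    if not probs:
        raise ValueError("need at least one probability")
    if any(p <= 0.0 for p in probs):
        return math.inf  # a zero-probability token makes perplexity infinite
    avg_neg_log = -sum(math.log(p) for p in probs) / len(probs)
    return math.exp(avg_neg_log)


# A model that gives every token probability 1/4 is as uncertain as a fair 4-way choice.
print(perplexity([0.25, 0.25, 0.25]))  # approximately 4.0
```

This is why perplexity is often read as an effective branching factor: lower values mean the model spreads its probability over fewer plausible next tokens.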

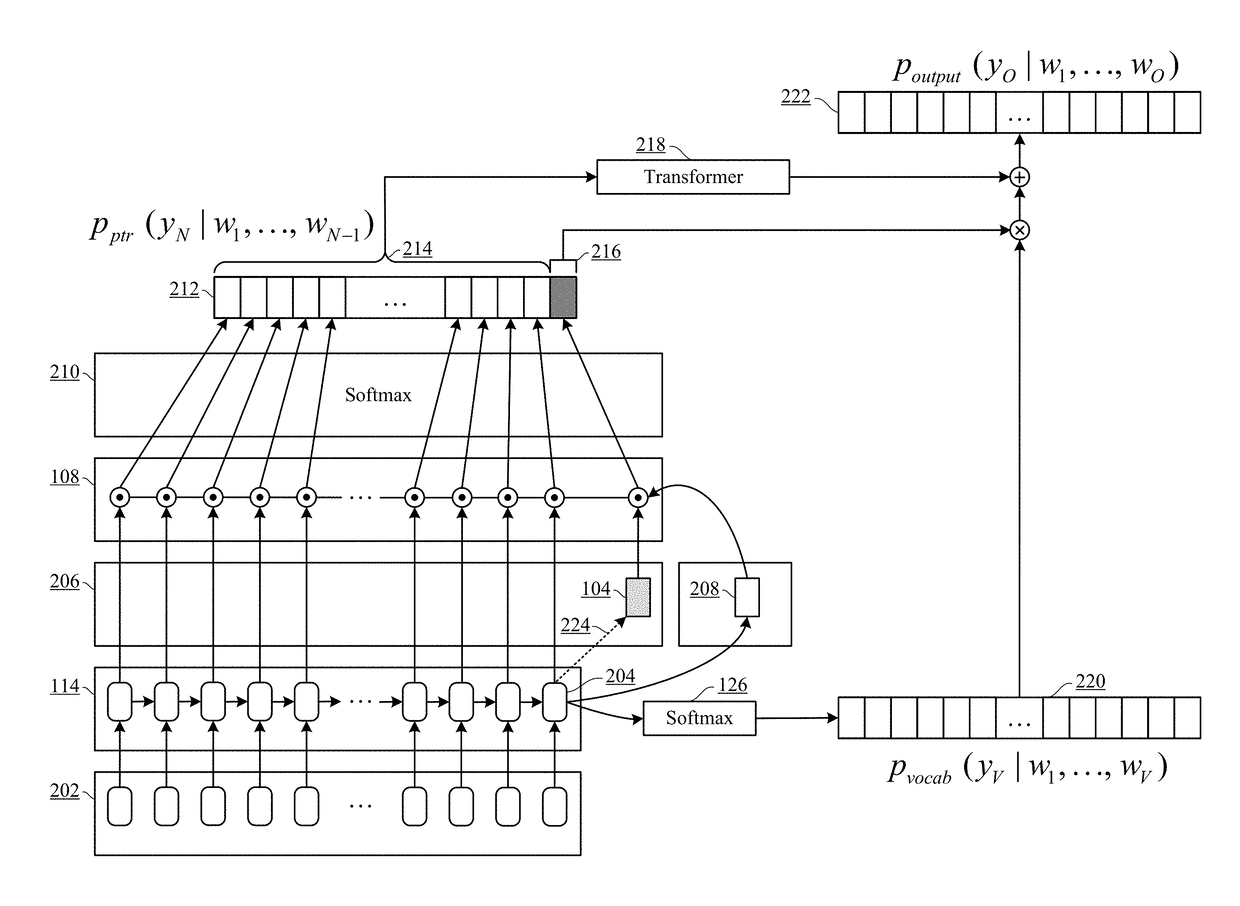

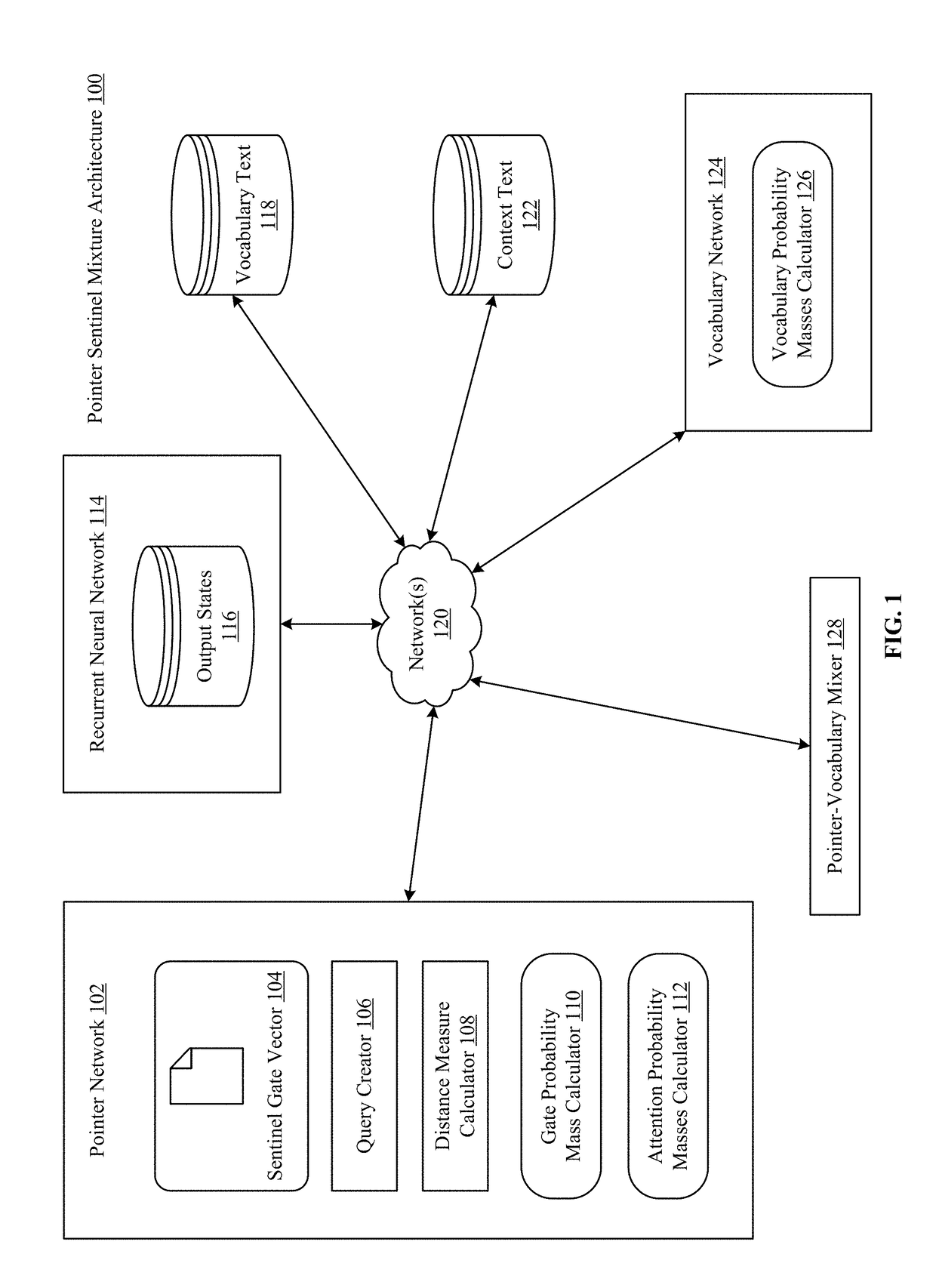

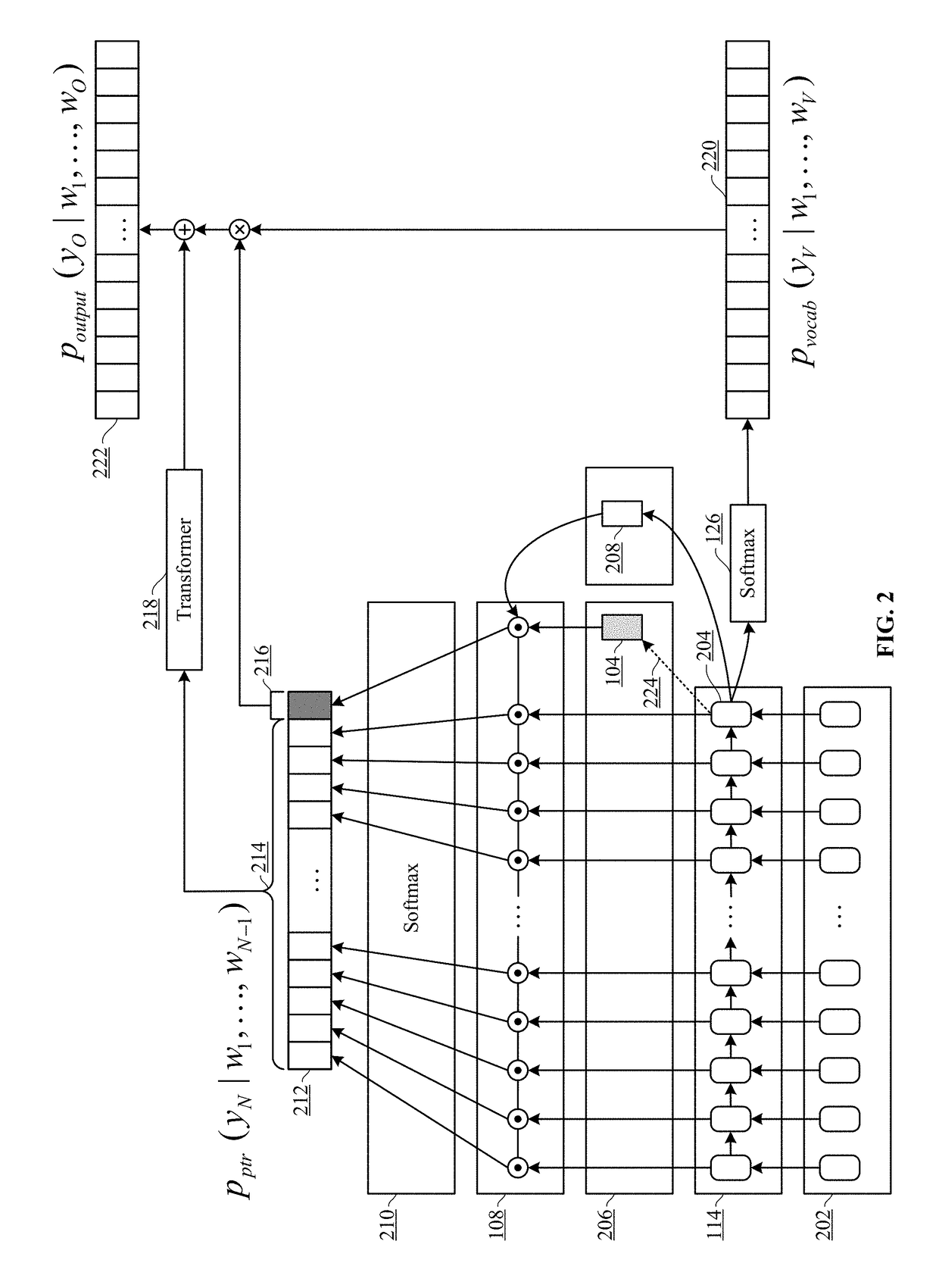

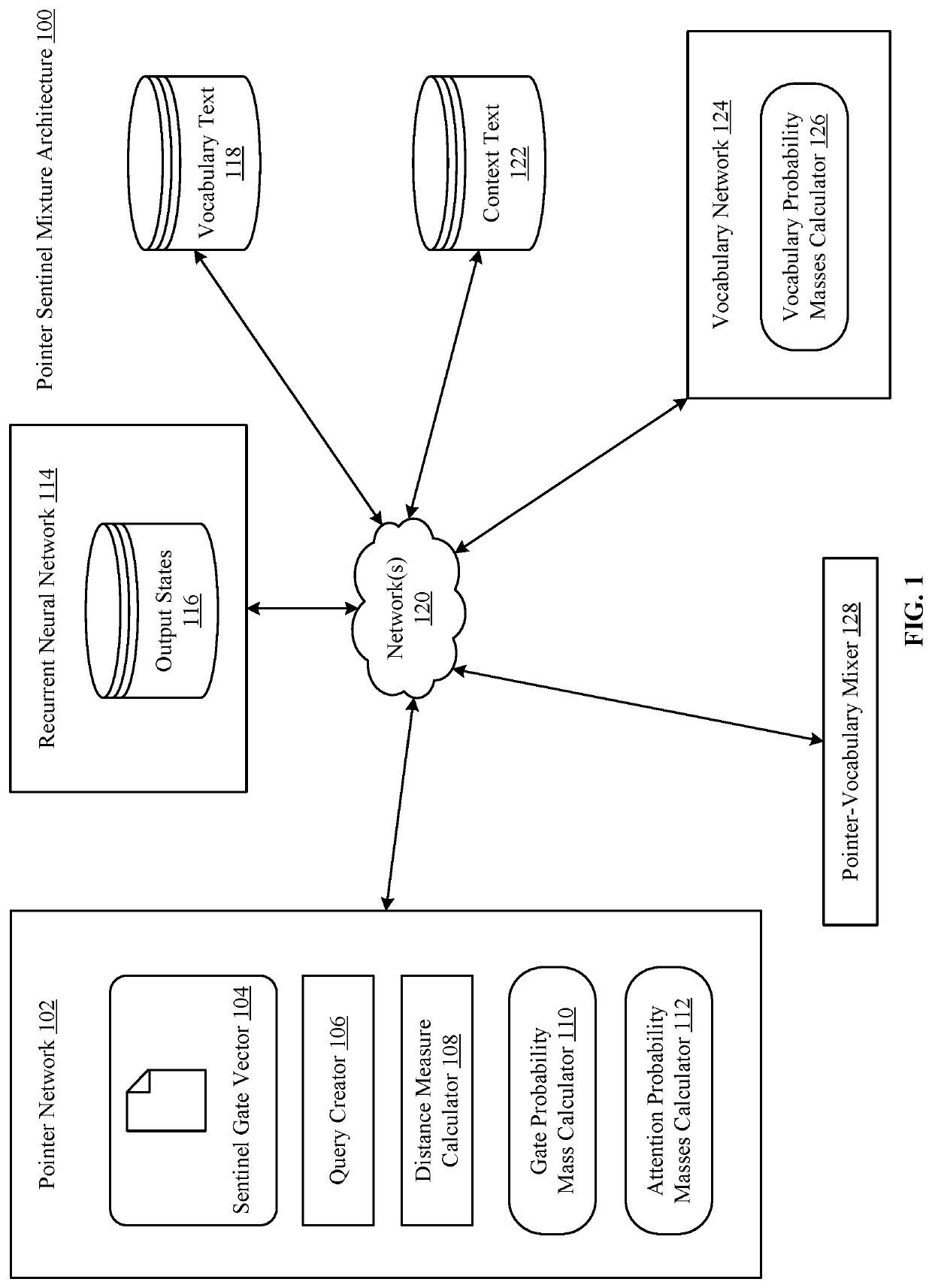

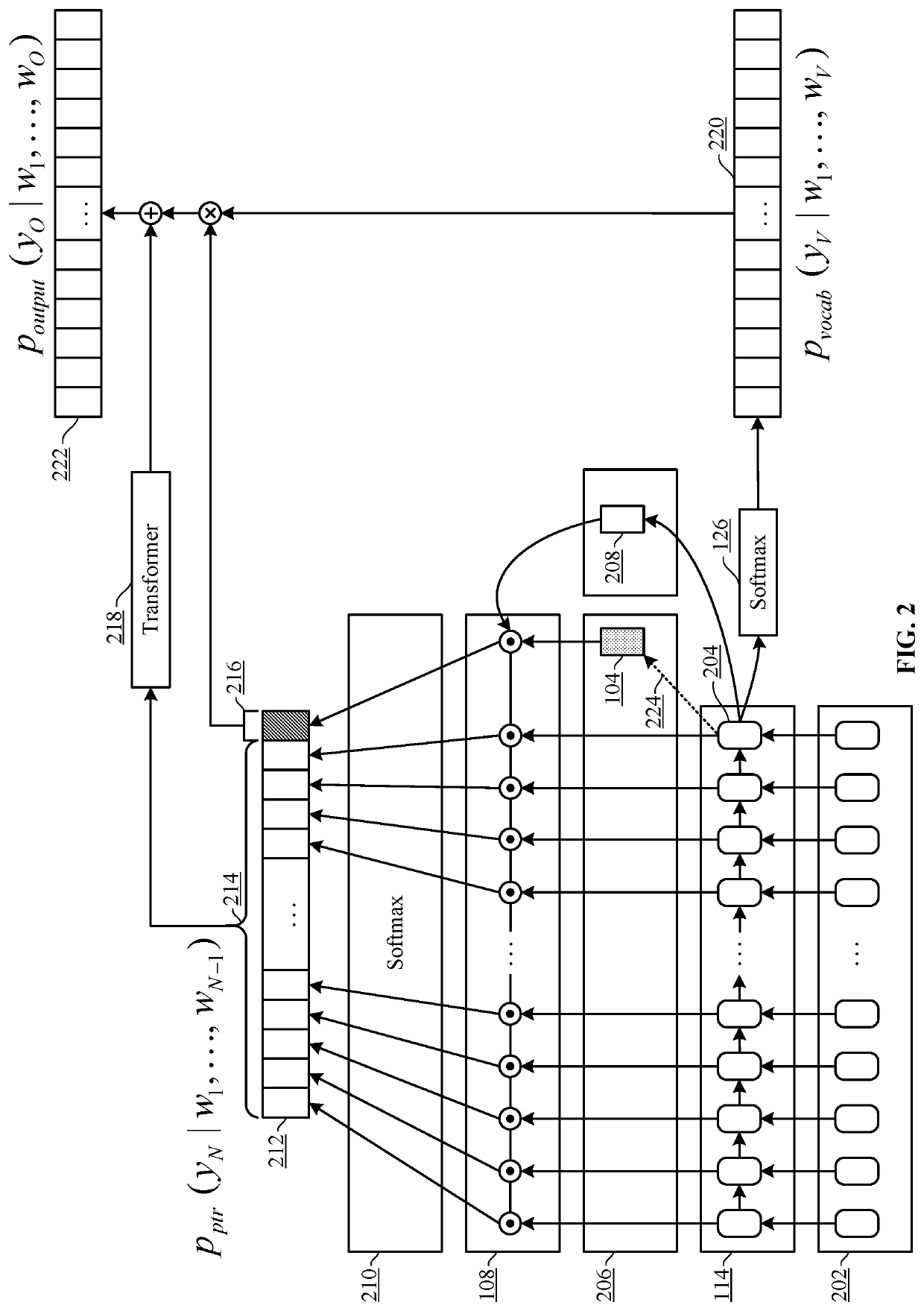

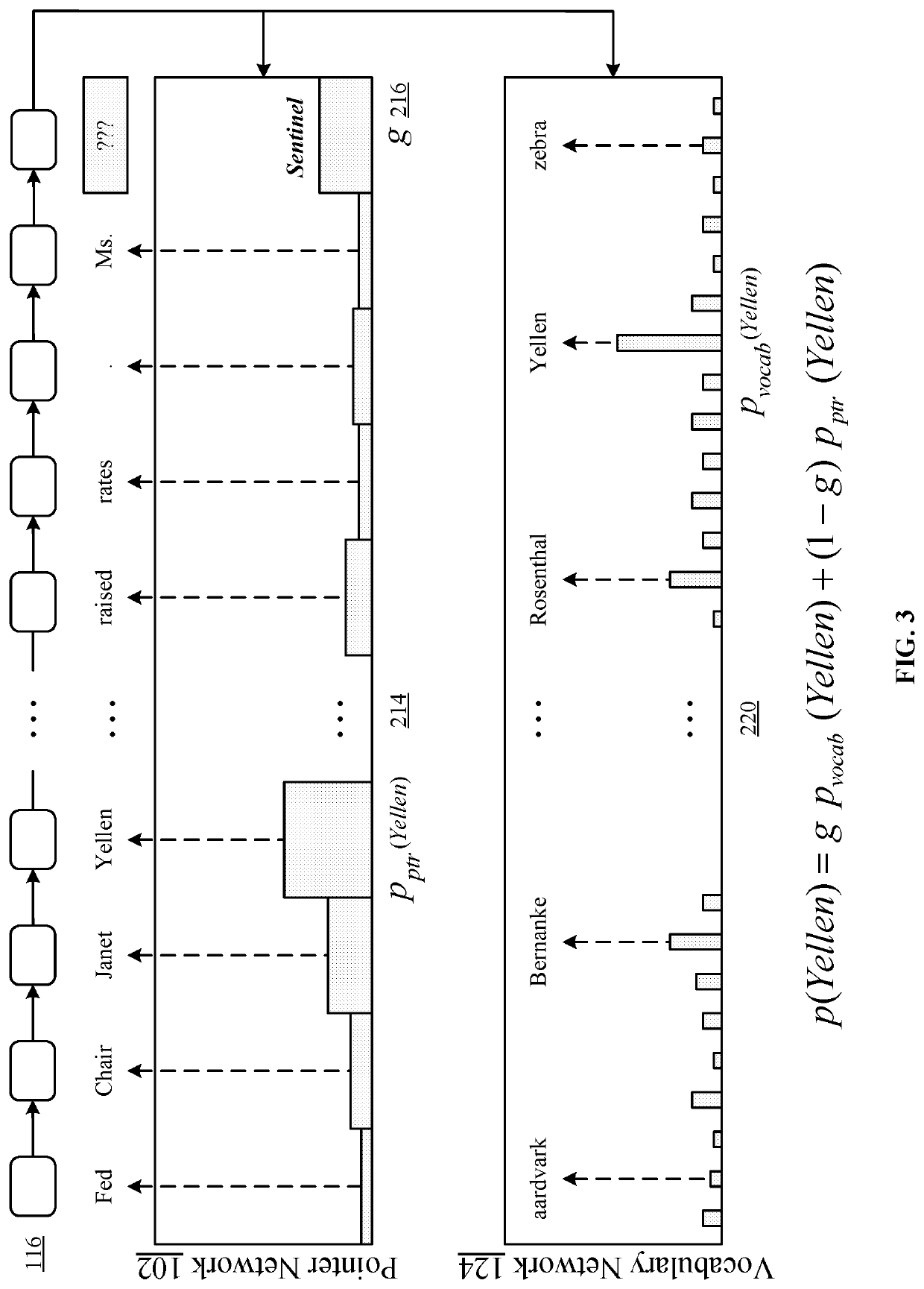

Pointer sentinel mixture architecture

The technology disclosed provides a so-called "pointer sentinel mixture architecture" for neural network sequence models that can either reproduce a token from the recent context or produce a token from a predefined vocabulary. In one implementation, a pointer sentinel-LSTM architecture achieves state-of-the-art language modeling performance of 70.9 perplexity on the Penn Treebank dataset while using far fewer parameters than a standard softmax LSTM.

Owner:SALESFORCE COM INC
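
The abstract does not spell out the mixing rule. The sketch below follows the published pointer sentinel formulation as we understand it, and is not presented as the patented implementation: a softmax is taken over one attention score per recent context position plus one extra "sentinel" score; the sentinel's share becomes a gate g that scales the vocabulary distribution, and the remaining mass goes to tokens in the context, so p(w) = g * p_vocab(w) + sum of attention a_i over positions i holding w. The scores are plain inputs standing in for what an LSTM would compute; all names are hypothetical.

```python
import math
from collections import defaultdict


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def pointer_sentinel_mixture(p_vocab, context_tokens, pointer_scores, sentinel_score):
    """Mix a vocabulary distribution with a pointer over recent context.

    p_vocab: dict token -> probability from the softmax over the fixed vocabulary.
    context_tokens: recent tokens the pointer may copy from.
    pointer_scores: one attention score per context position.
    sentinel_score: extra score whose softmax weight becomes the gate g.
    """
    attn = softmax(list(pointer_scores) + [sentinel_score])
    gate = attn[-1]  # mass handed to the vocabulary softmax
    mixed = defaultdict(float)
    for tok, p in p_vocab.items():
        mixed[tok] += gate * p
    # Pointer mass: add the attention of every position holding the same token.
    for tok, a in zip(context_tokens, attn[:-1]):
        mixed[tok] += a
    return dict(mixed)


probs = pointer_sentinel_mixture({"the": 0.6, "a": 0.4}, ["Zelda", "said"], [2.0, 0.0], 1.0)
print(round(sum(probs.values()), 6))  # 1.0
```

Because the pointer can copy "Zelda" from the context, that rare word receives probability even though it is absent from the vocabulary distribution.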

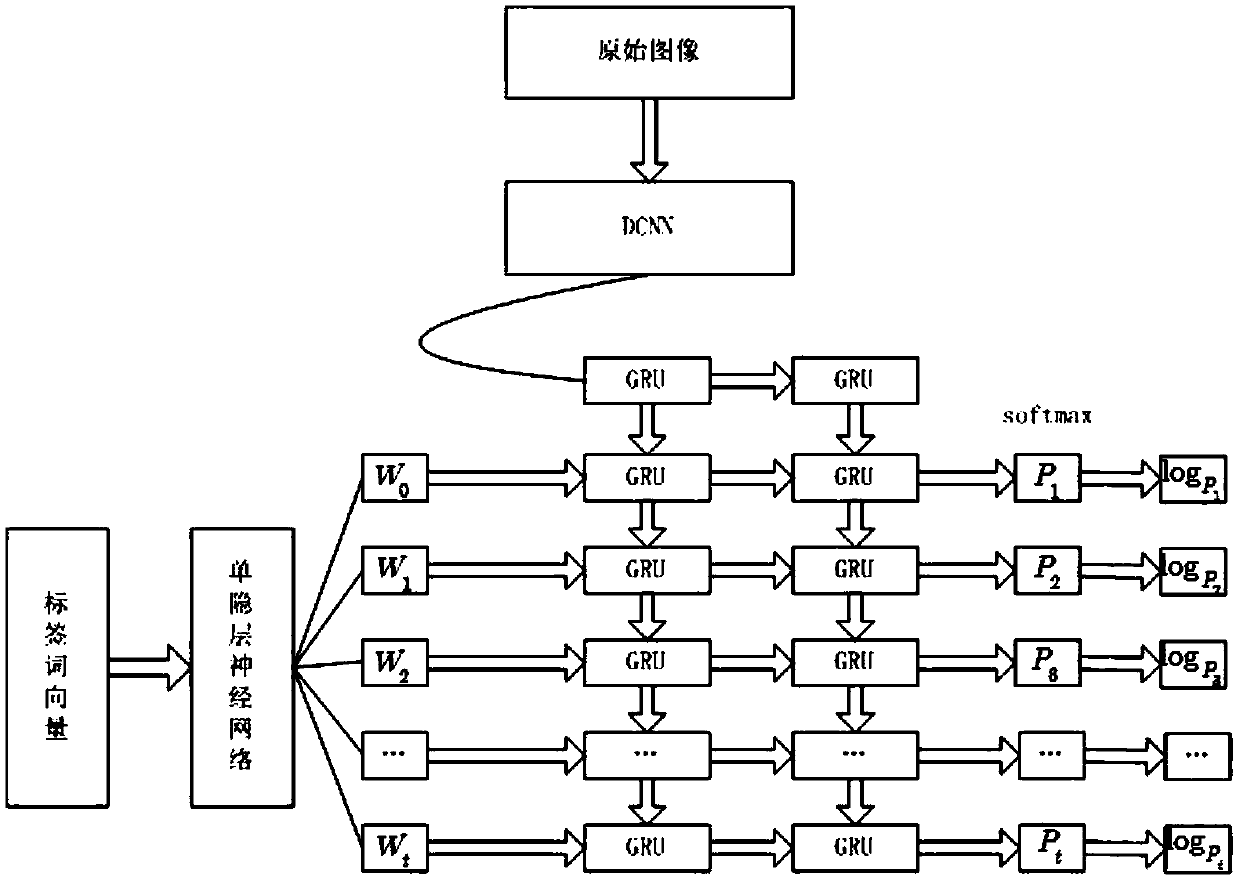

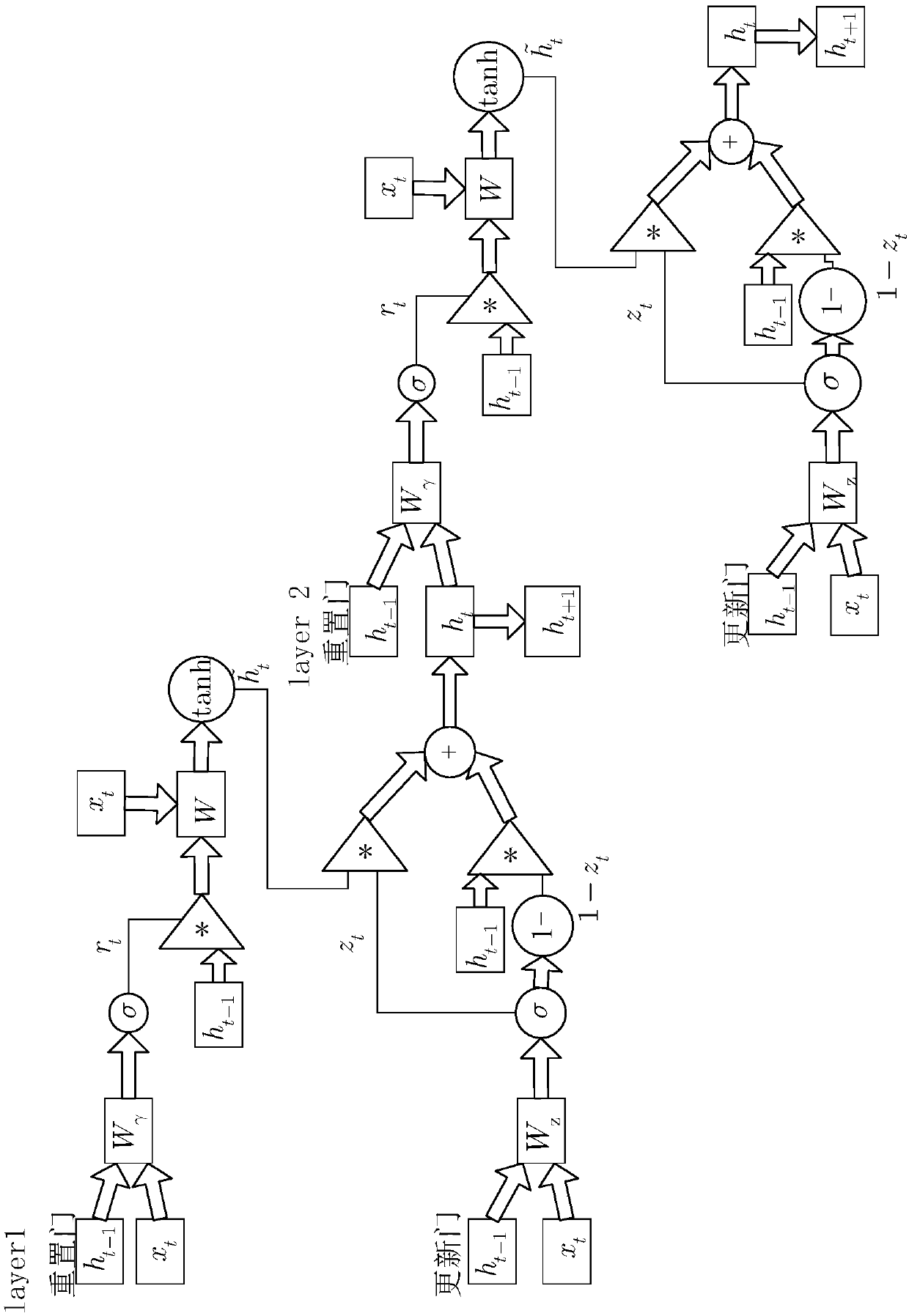

Chinese image semantic description method combined with multilayer GRU based on residual error connection Inception network

Inactive | CN108830287A | Improve accuracy, Improve relevance, Character and pattern recognition, Neural architectures, Data set, Network model

The invention discloses a Chinese image semantic description method that combines a multilayer GRU with an Inception network using residual connections, and belongs to the fields of computer vision and natural language processing. The method comprises the following steps: preprocessing the AI Challenger Chinese image-description training set and evaluation set with the open-source TensorFlow library to generate training files in TFRecord format; pre-training an Inception_ResNet_v2 network on the ImageNet dataset to obtain a pre-trained convolutional network model; loading the pre-trained parameters into the Inception_ResNet_v2 network and extracting image feature descriptors from the AI Challenger image set; building a single-hidden-layer neural network that maps the image feature descriptors into the word embedding space; feeding the word embedding matrix and the image feature descriptors after the second feature mapping into a two-layer GRU network; inputting an original image into the description model to generate a Chinese description sentence; and evaluating the trained model on the evaluation dataset using perplexity as the evaluation metric. The method solves the technical problem of describing an image in Chinese and improves the coherence and readability of the generated sentences.

Owner:HARBIN UNIV OF SCI & TECH

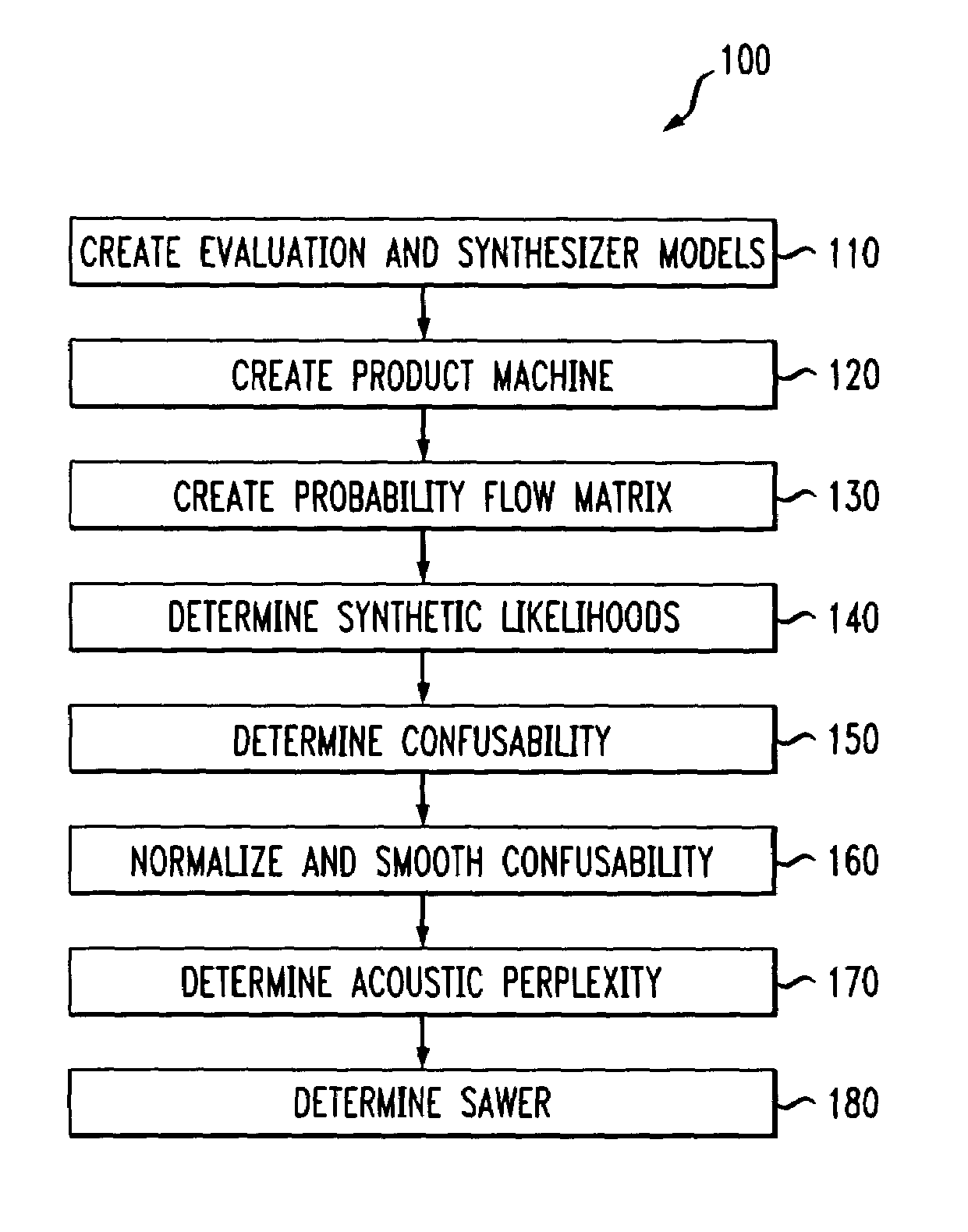

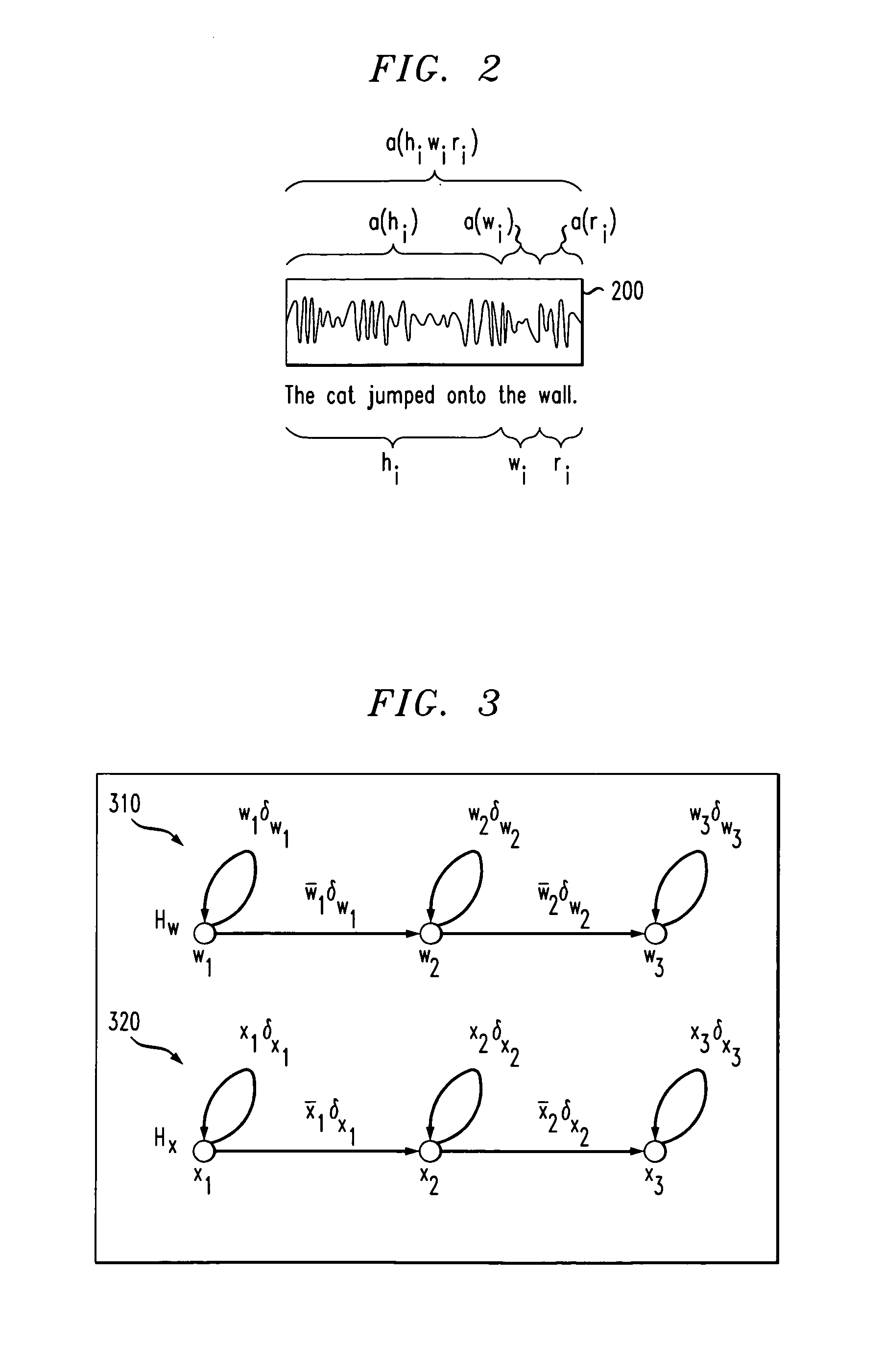

Determining and using acoustic confusability, acoustic perplexity and synthetic acoustic word error rate

Inactive | US7219056B2 | Improve effectiveness, Computation using non-denominational number representation, Speech recognition, Hidden Markov model, Algorithm

Two statistics are disclosed for determining the quality of language models. These statistics are called acoustic perplexity and the synthetic acoustic word error rate (SAWER), and they depend upon methods for computing the acoustic confusability of words. It is possible to substitute models of acoustic data in place of real acoustic data in order to determine acoustic confusability. An evaluation model is created, a synthesizer model is created, and a matrix is determined from the evaluation and synthesizer models. Each of the evaluation and synthesizer models is a hidden Markov model. Once the matrix is determined, a confusability calculation may be performed. Different methods are used to determine synthetic likelihoods. The confusability may be normalized and smoothed and methods are disclosed that increase the speed of performing the matrix inversion and the confusability calculation. A method for caching and reusing computations for similar words is disclosed. Acoustic perplexity and SAWER are determined and applied.

Owner:NUANCE COMM INC

Pointer sentinel mixture architecture

Owner:SALESFORCE COM INC

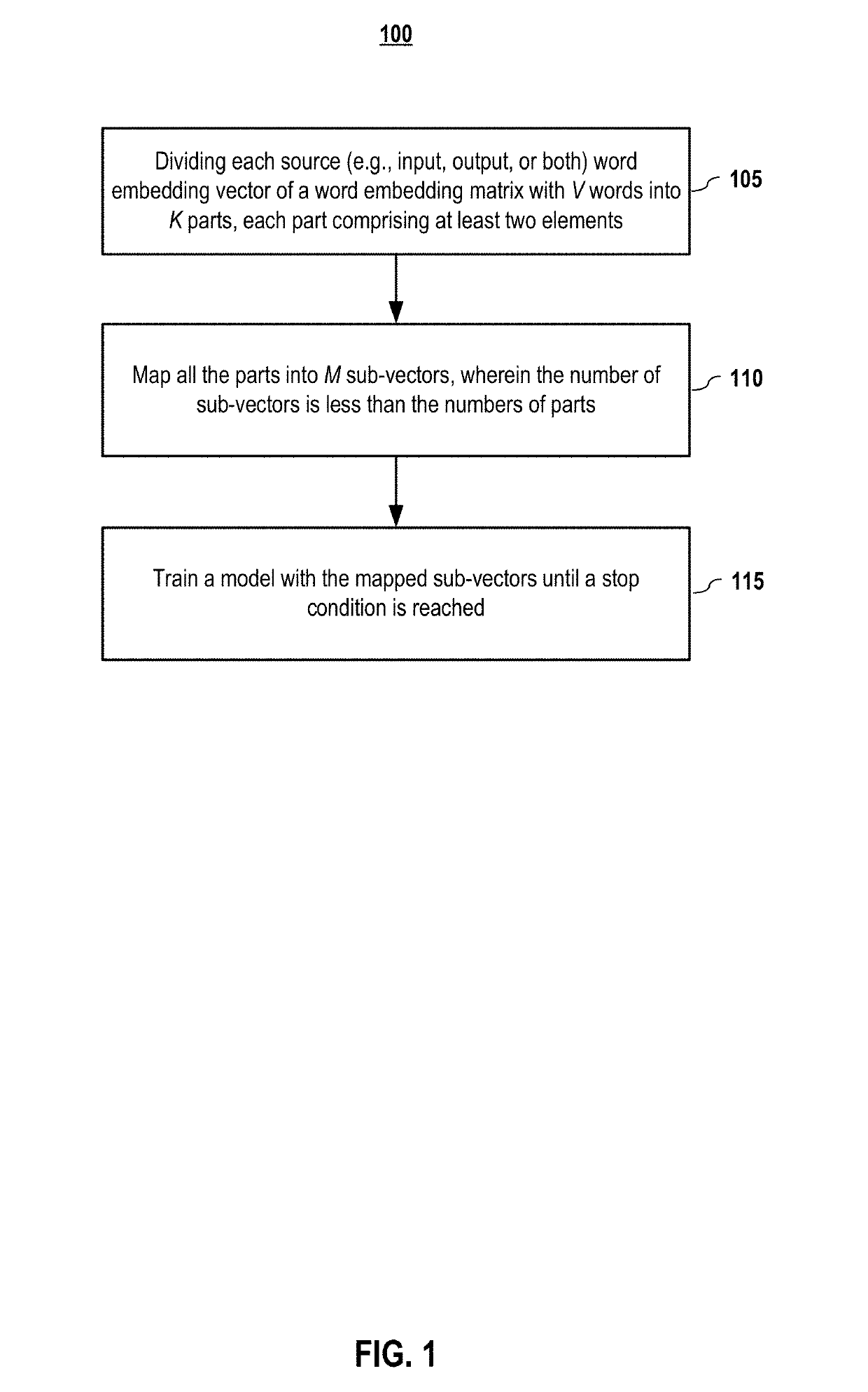

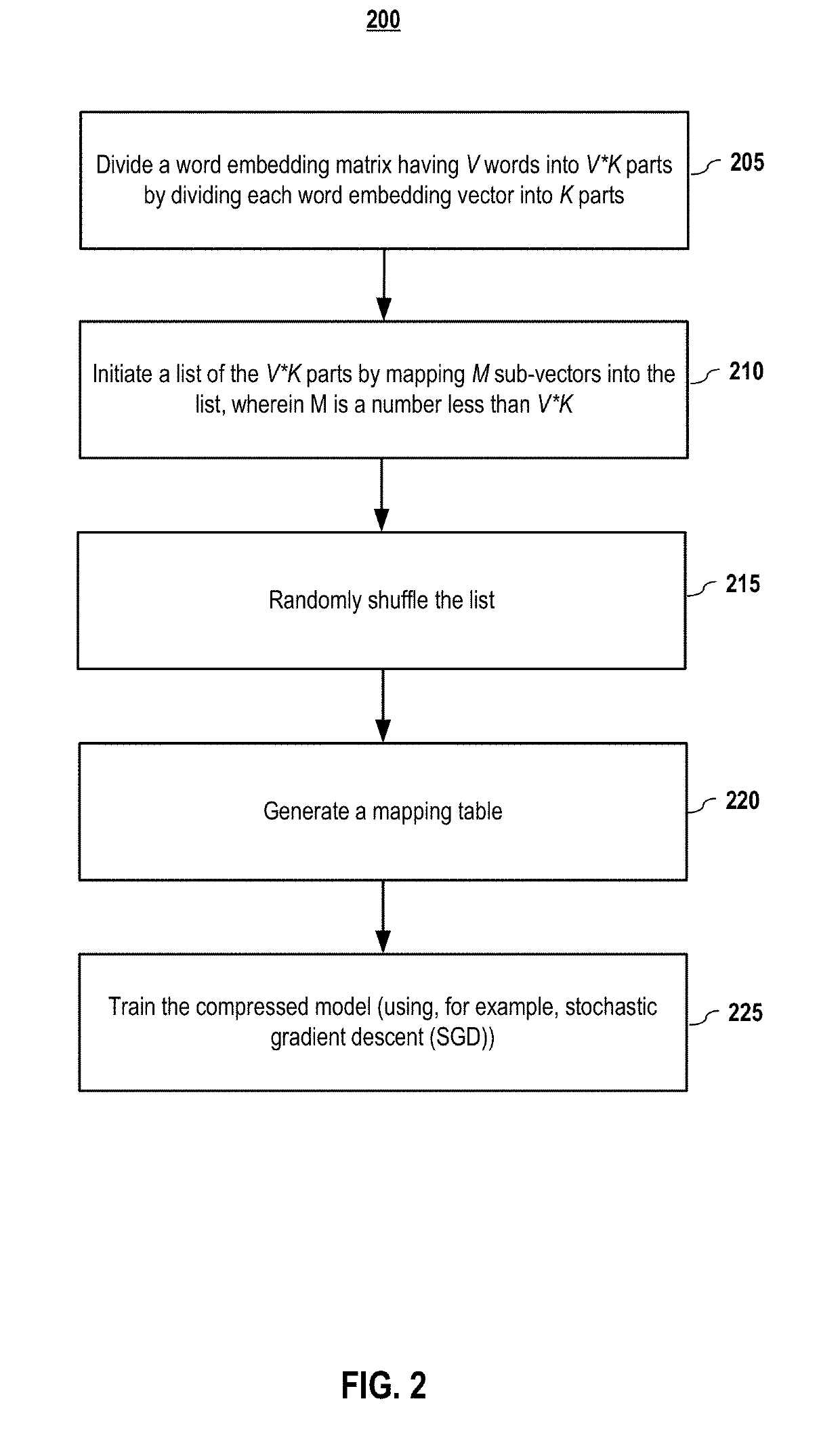

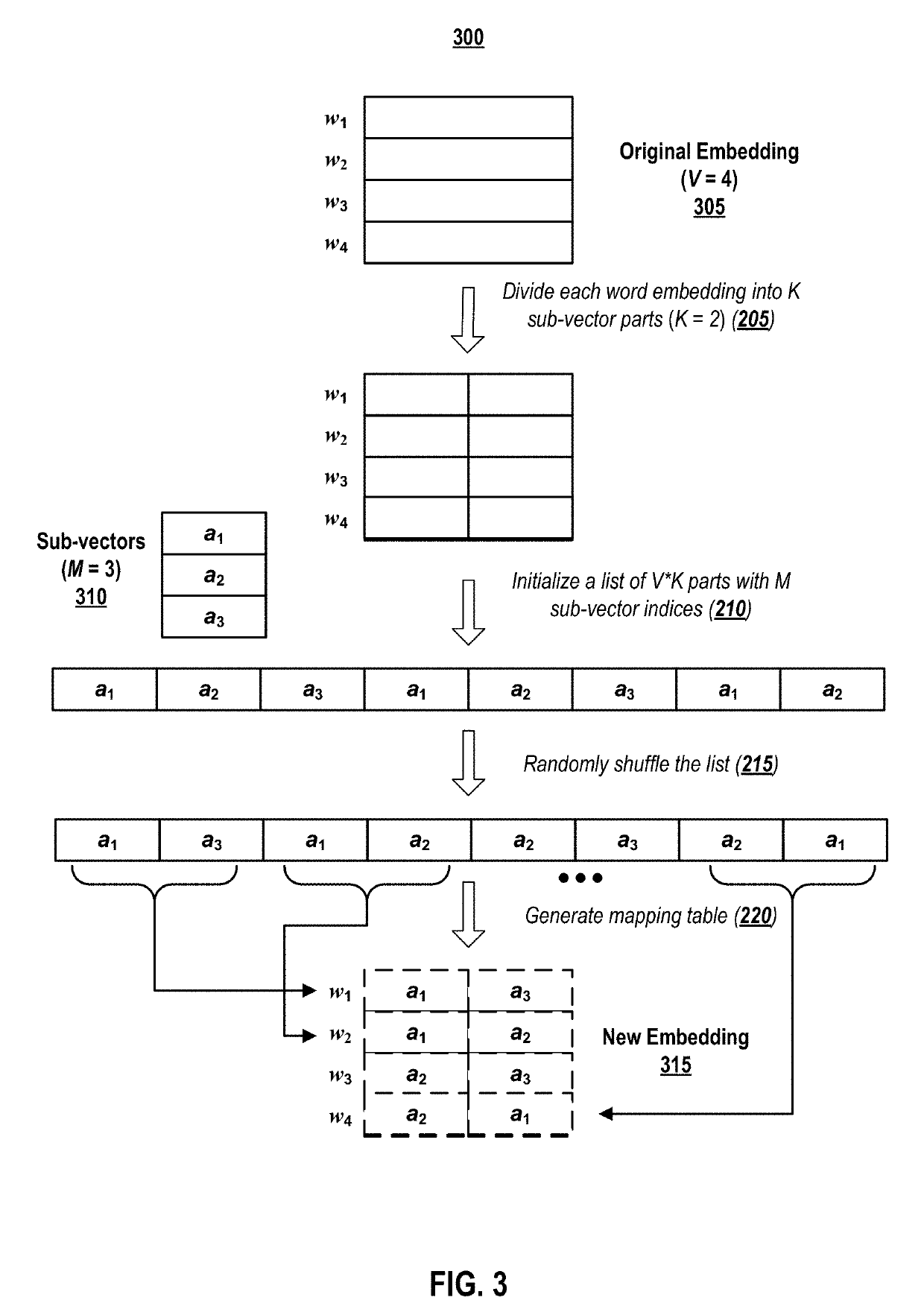

Slim embedding layers for recurrent neural language models

Described herein are systems and methods for compressing or otherwise reducing the memory requirements for storing and computing the model parameters in recurrent neural language models. Embodiments include space compression methodologies that share the structured parameters at the input embedding layer, the output embedding layers, or both of a recurrent neural language model to significantly reduce the size of model parameters, but still compactly represent the original input and output embedding layers. Embodiments of the methodology are easy to implement and tune. Experiments on several data sets show that embodiments achieved similar perplexity and BLEU score results while only using a fraction of the parameters.

Owner:BAIDU USA LLC
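
The abstract only says that structured parameters are shared. One way such sharing can work, assumed here rather than taken from the patent, is to split each word vector into equal sub-vectors and fill every slot from a small shared pool through a fixed random assignment, so that only the pool is trained. The sketch builds such a lookup and reports how many trainable floats it needs; the function name and sizes are hypothetical.

```python
import random


def build_slim_embedding(vocab_size, dim, num_parts, pool_size, seed=0):
    """Word vector = concatenation of num_parts sub-vectors, each picked (by a
    fixed random assignment) from one shared pool of pool_size sub-vectors."""
    if dim % num_parts:
        raise ValueError("dim must be divisible by num_parts")
    rng = random.Random(seed)
    sub_dim = dim // num_parts
    # The shared pool is the only trainable state.
    pool = [[rng.gauss(0.0, 0.1) for _ in range(sub_dim)] for _ in range(pool_size)]
    # Fixed (non-trainable) mapping from each word and slot to a pool entry.
    assignment = [[rng.randrange(pool_size) for _ in range(num_parts)]
                  for _ in range(vocab_size)]

    def lookup(word_id):
        vec = []
        for idx in assignment[word_id]:
            vec.extend(pool[idx])
        return vec

    num_params = pool_size * sub_dim
    return lookup, num_params


lookup, n_params = build_slim_embedding(10000, 256, 8, 512)
print(len(lookup(0)), n_params)  # 256 16384
```

For a 10,000-word vocabulary with 256-dimensional vectors, a full table stores 2,560,000 floats, whereas 8 slots drawn from a pool of 512 sub-vectors of size 32 need 16,384.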

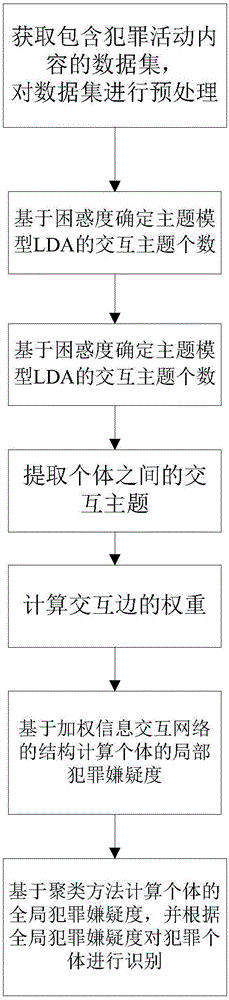

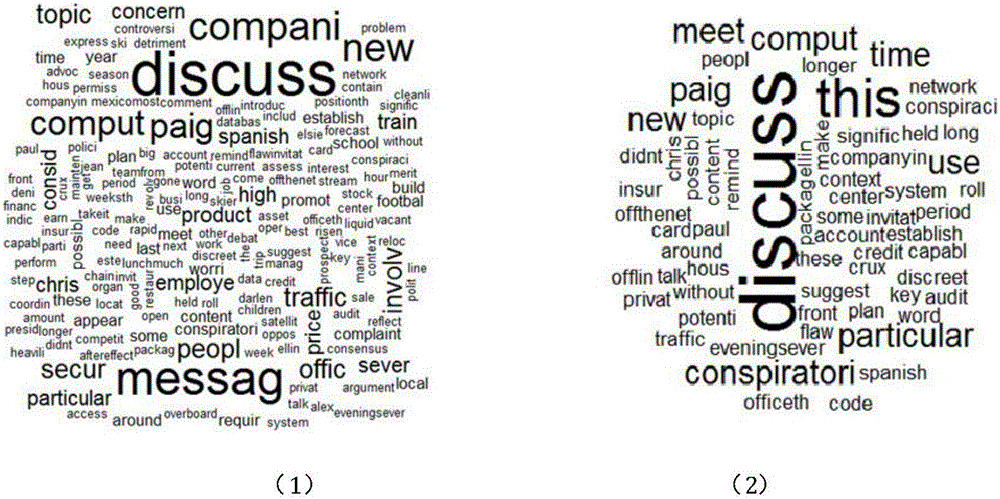

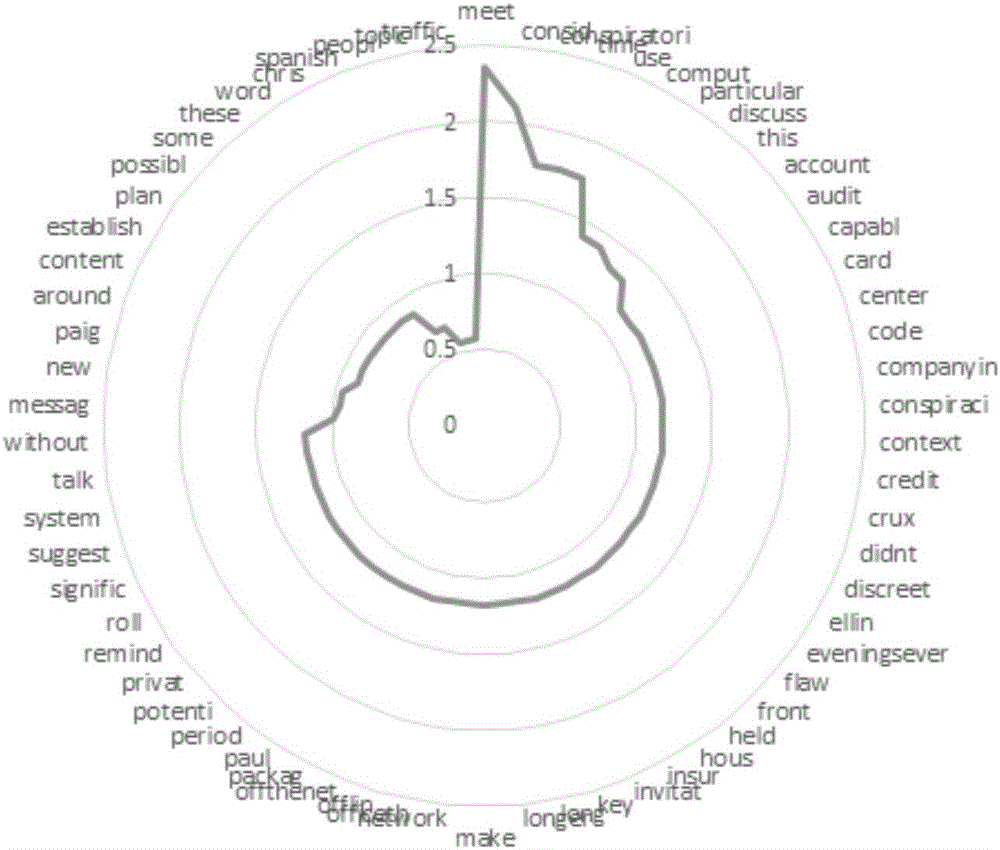

Information interactive network-based criminal individual recognition method

Inactive | CN106127231A | Efficient use of, Good explainability, Data processing applications, Character and pattern recognition, Prior information, Data set

The invention belongs to the field of data mining and relates to a criminal-individual recognition method based on an information interaction network. The method comprises the following steps: (1) obtaining a dataset containing criminal-activity content and preprocessing it; (2) extracting keyword descriptions of criminal topics; (3) determining the number of topics of an LDA topic model on the basis of perplexity; (4) using LDA to extract the interaction topics between individuals in the preprocessed dataset, namely an association probability matrix between interaction topics and keywords and an association probability matrix between interaction topics and interaction edges; (5) calculating the weights of the interaction edges; (6) calculating each individual's local criminal-suspicion score from the structure of the weighted information interaction network; and (7) calculating each individual's global criminal-suspicion score with a method combining fuzzy K-means clustering and distance-density clustering, and recognizing the criminal individuals. The method does not depend on prior information and can identify the most likely suspect from communication content, which improves case-handling efficiency.

Owner:NAT UNIV OF DEFENSE TECH
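
Step (3), choosing the number of LDA topics by perplexity, recurs in several entries in this list. A common recipe, sketched below on the assumption that each candidate model's total log-likelihood on held-out documents is already available, is perplexity = exp(-log-likelihood / token count), keeping the K with the lowest value. Model fitting is not shown, the function names are ours, and the numbers in the example are made up for illustration.

```python
import math


def held_out_perplexity(total_log_likelihood, total_tokens):
    """Perplexity of a topic model on held-out text: exp(-log-likelihood / token count)."""
    return math.exp(-total_log_likelihood / total_tokens)


def choose_topic_count(log_likelihood_by_k, total_tokens):
    """Return (best K, perplexity per K), where best K has the lowest perplexity."""
    ppl = {k: held_out_perplexity(ll, total_tokens)
           for k, ll in log_likelihood_by_k.items()}
    best_k = min(ppl, key=ppl.get)
    return best_k, ppl


# Illustrative (made-up) held-out log-likelihoods for three candidate K values.
best_k, table = choose_topic_count({5: -52000.0, 10: -50500.0, 20: -51000.0},
                                   total_tokens=10000)
print(best_k)  # 10
```

Held-out perplexity can keep falling as K grows, so in practice people often look for where the curve flattens rather than insisting on the strict minimum.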

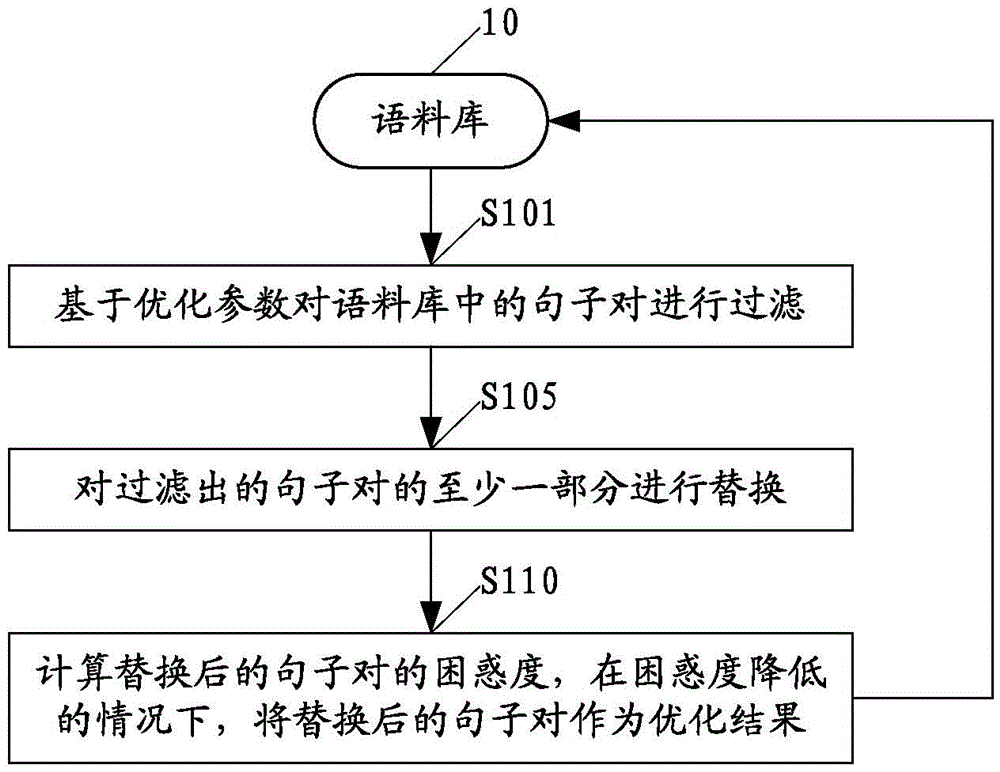

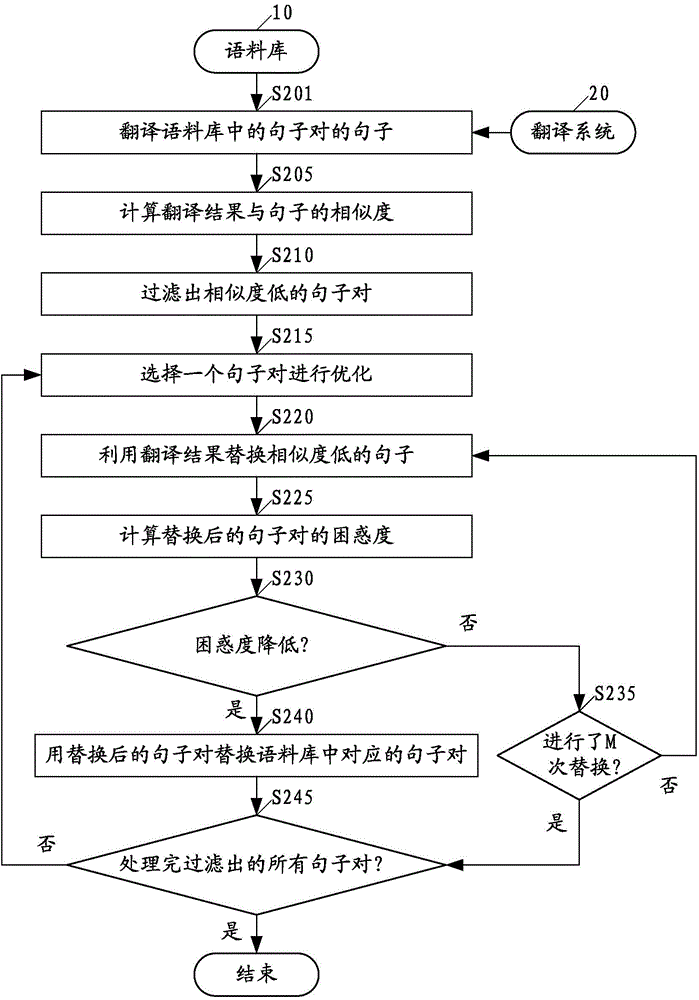

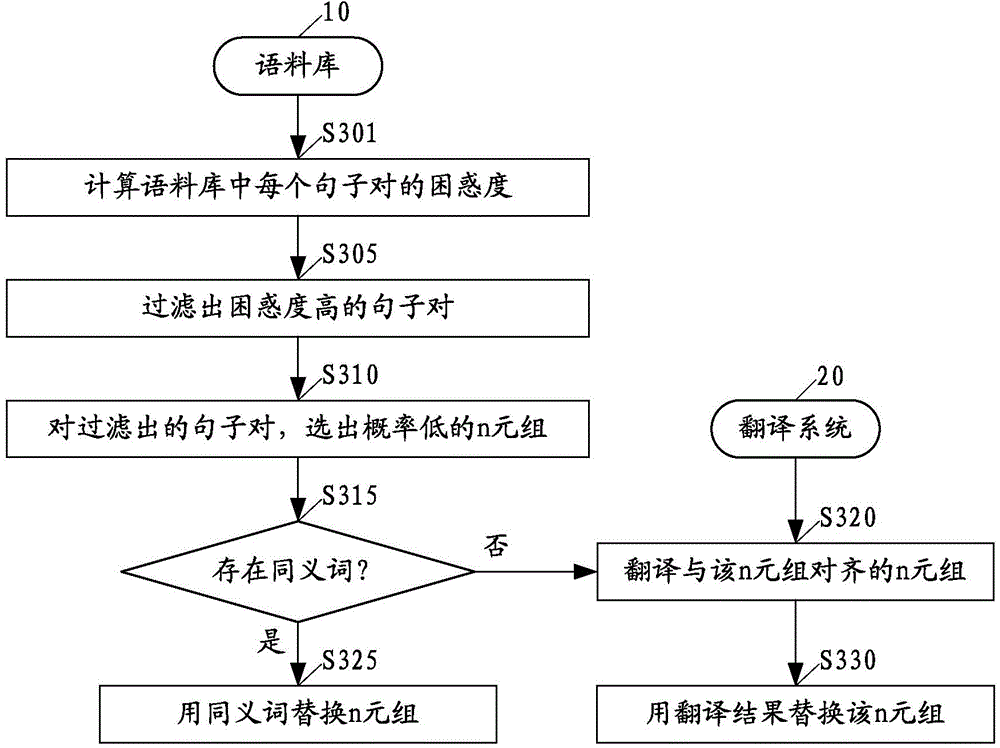

Method and device for optimizing corpus

Active | CN104951469A | Perplexity reduction, No loss, Special data processing applications, Sentence pair, Filtration

The invention provides a method and a device for optimizing a corpus. The device comprises a filter unit, a replacement unit and a perplexity calculation unit. The filter unit filters sentences in the corpus on the basis of an optimization parameter to obtain sentence pairs to be optimized; the replacement unit performs replacements on at least some of those sentence pairs; and the perplexity calculation unit calculates the perplexity of the replaced sentence pairs. A replaced sentence pair is adopted as the optimization result for the original pair when its perplexity is lower than that of the original pair.

Owner:KK TOSHIBA
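
The acceptance rule above (keep a replaced sentence only if its perplexity drops) can be illustrated with a toy add-one-smoothed unigram model. The model, the tokenization and the function names are assumptions for illustration; the patent does not say which language model computes the perplexity.

```python
import math
from collections import Counter


def unigram_model(corpus_tokens):
    """Toy add-one-smoothed unigram model: P(w) = (count(w) + 1) / (N + V + 1)."""
    counts = Counter(corpus_tokens)
    denom = sum(counts.values()) + len(counts) + 1  # +1 reserves mass for unseen words
    return lambda word: (counts[word] + 1) / denom


def sentence_perplexity(model, tokens):
    return math.exp(-sum(math.log(model(t)) for t in tokens) / len(tokens))


def maybe_replace(model, original, replacement):
    """Adopt the replacement only if it lowers perplexity; otherwise keep the original."""
    if sentence_perplexity(model, replacement) < sentence_perplexity(model, original):
        return replacement
    return original


model = unigram_model("the cat sat on the mat".split())
print(maybe_replace(model, ["teh", "cat"], ["the", "cat"]))  # ['the', 'cat']
```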

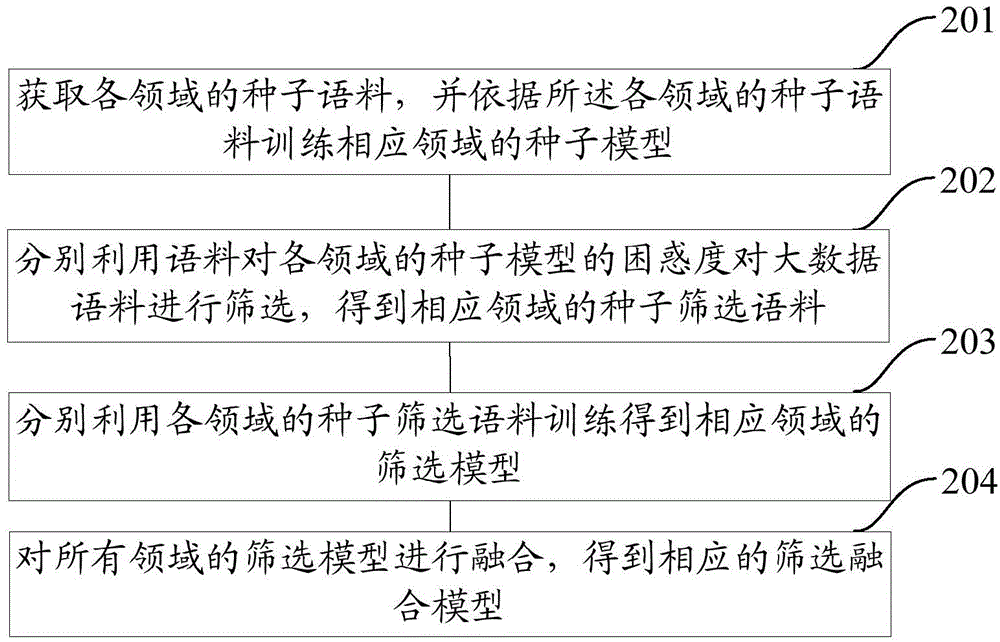

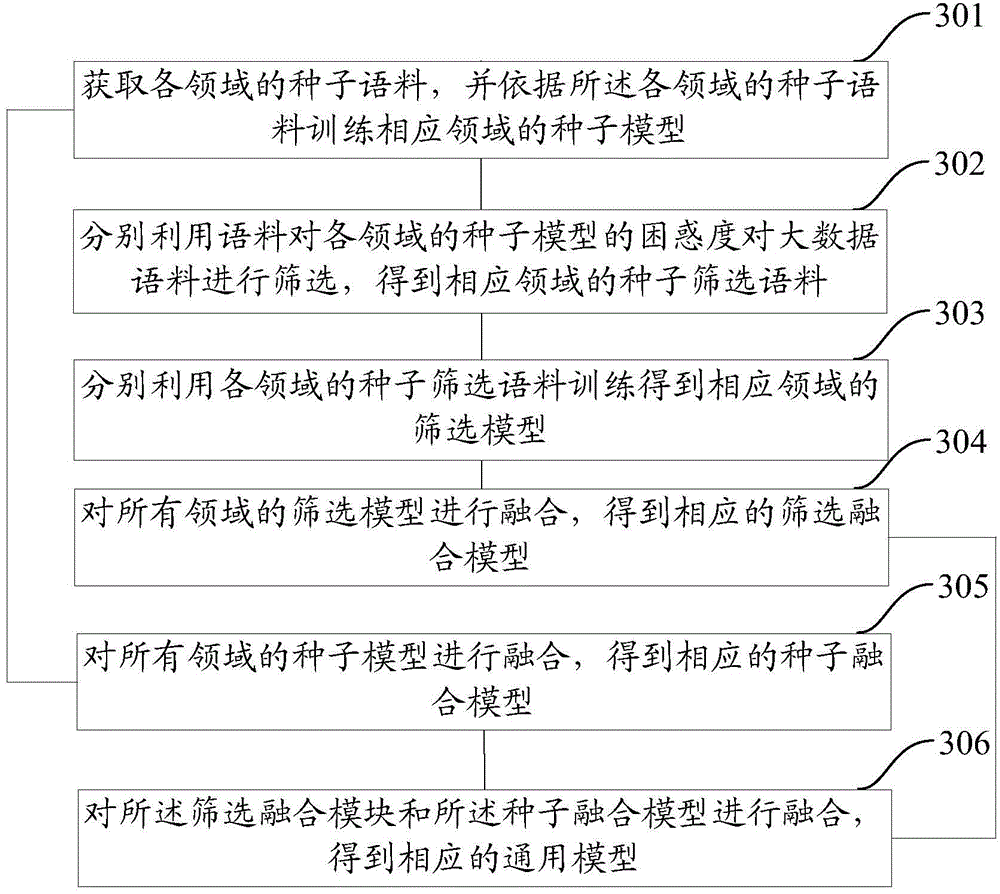

Training method and system for language model

Inactive | CN104572614A | Reduce data size, Reduce the amount of computing resources, Special data processing applications, Algorithm, Perplexity

The embodiment of the invention provides a training method and system for a language model. The method comprises the following steps: acquiring seed corpora for each domain and training a seed model for each domain on its seed corpus; screening a large-scale corpus using the perplexity of its text under each domain's seed model to obtain screened seed corpora for the corresponding domains; training a screening model for each domain on its screened corpus; and fusing the screening models of all domains into a single screening fusion model. According to the disclosed training method and system, the parameters of the language model become more reasonable while the computational burden is reduced and time is saved.

Owner:BEIJING SINOVOICE TECH CO LTD
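
The screening step can be sketched as follows, assuming each domain's seed model can score a sentence's perplexity, and that a sentence is kept for the domain where its perplexity is lowest provided it is at or below a threshold. The routing rule, the threshold and the toy unigram seed models are assumptions; the abstract does not state them.

```python
import math
from collections import Counter


def train_unigram(tokens):
    """Toy add-one-smoothed unigram model standing in for a domain seed model."""
    counts = Counter(tokens)
    denom = sum(counts.values()) + len(counts) + 1  # +1 leaves mass for unseen words
    return lambda word: (counts[word] + 1) / denom


def perplexity(model, tokens):
    return math.exp(-sum(math.log(model(t)) for t in tokens) / len(tokens))


def screen_corpus(sentences, seed_models, threshold):
    """Route each sentence to the domain whose seed model gives it the lowest
    perplexity; drop sentences that no seed model scores at or below threshold."""
    selected = {domain: [] for domain in seed_models}
    for sentence in sentences:
        scores = {d: perplexity(m, sentence) for d, m in seed_models.items()}
        best = min(scores, key=scores.get)
        if scores[best] <= threshold:
            selected[best].append(sentence)
    return selected


seeds = {
    "finance": train_unigram("stock price market stock".split()),
    "sport": train_unigram("goal match team goal".split()),
}
kept = screen_corpus([["stock", "market"], ["team", "goal"], ["xyz", "qqq"]],
                     seeds, threshold=5.0)
print(kept)  # {'finance': [['stock', 'market']], 'sport': [['team', 'goal']]}
```

Each domain's kept sentences would then be used to train that domain's screening model.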

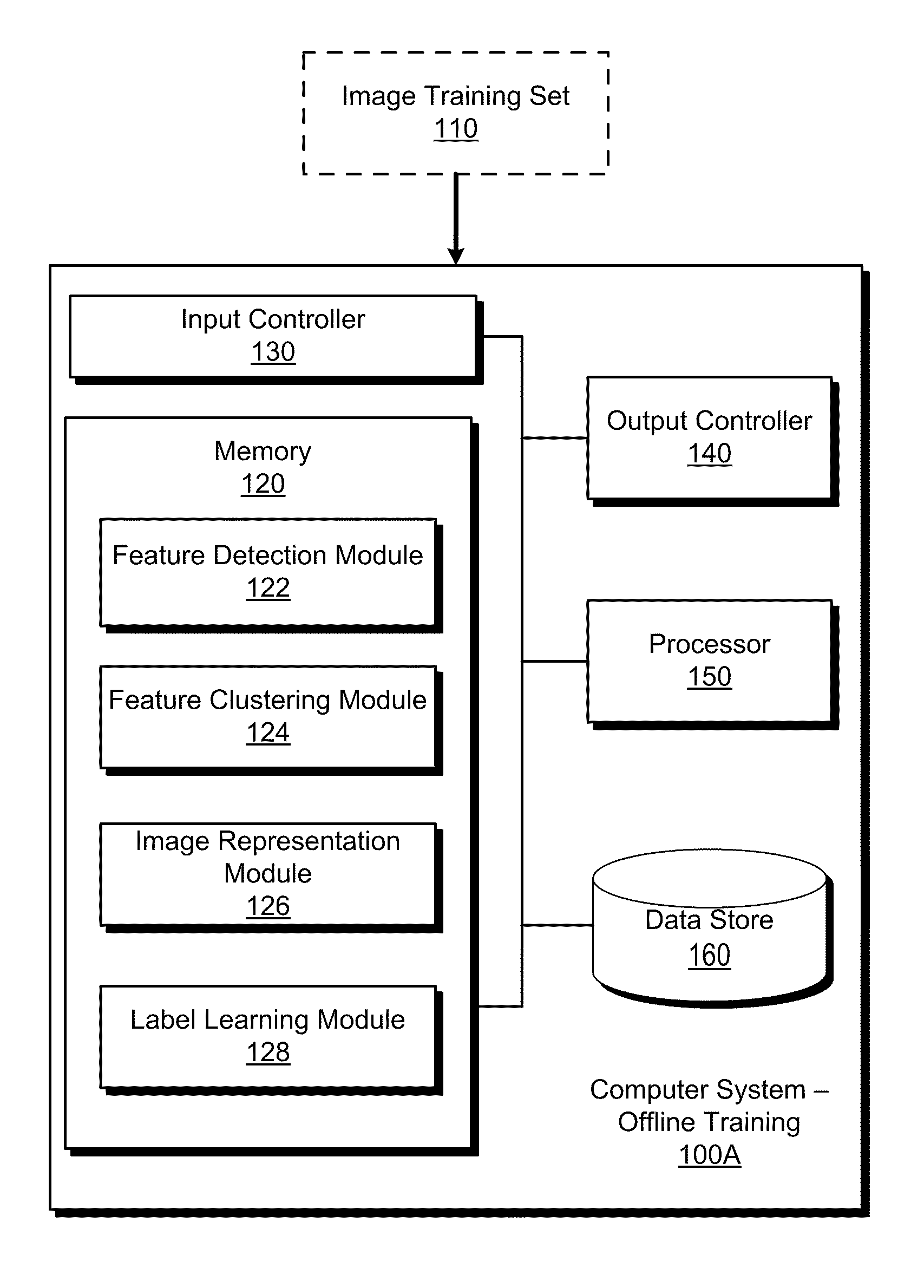

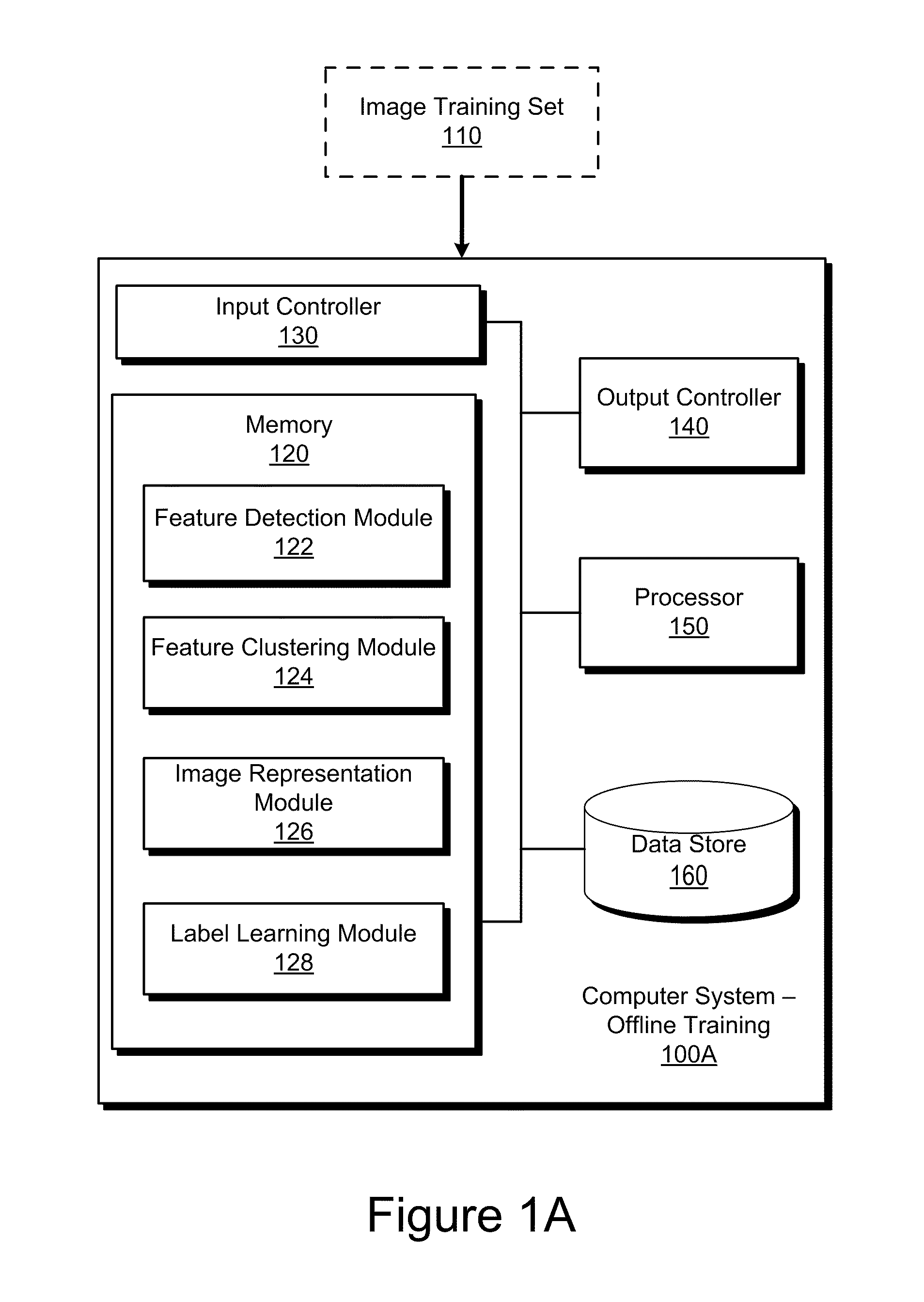

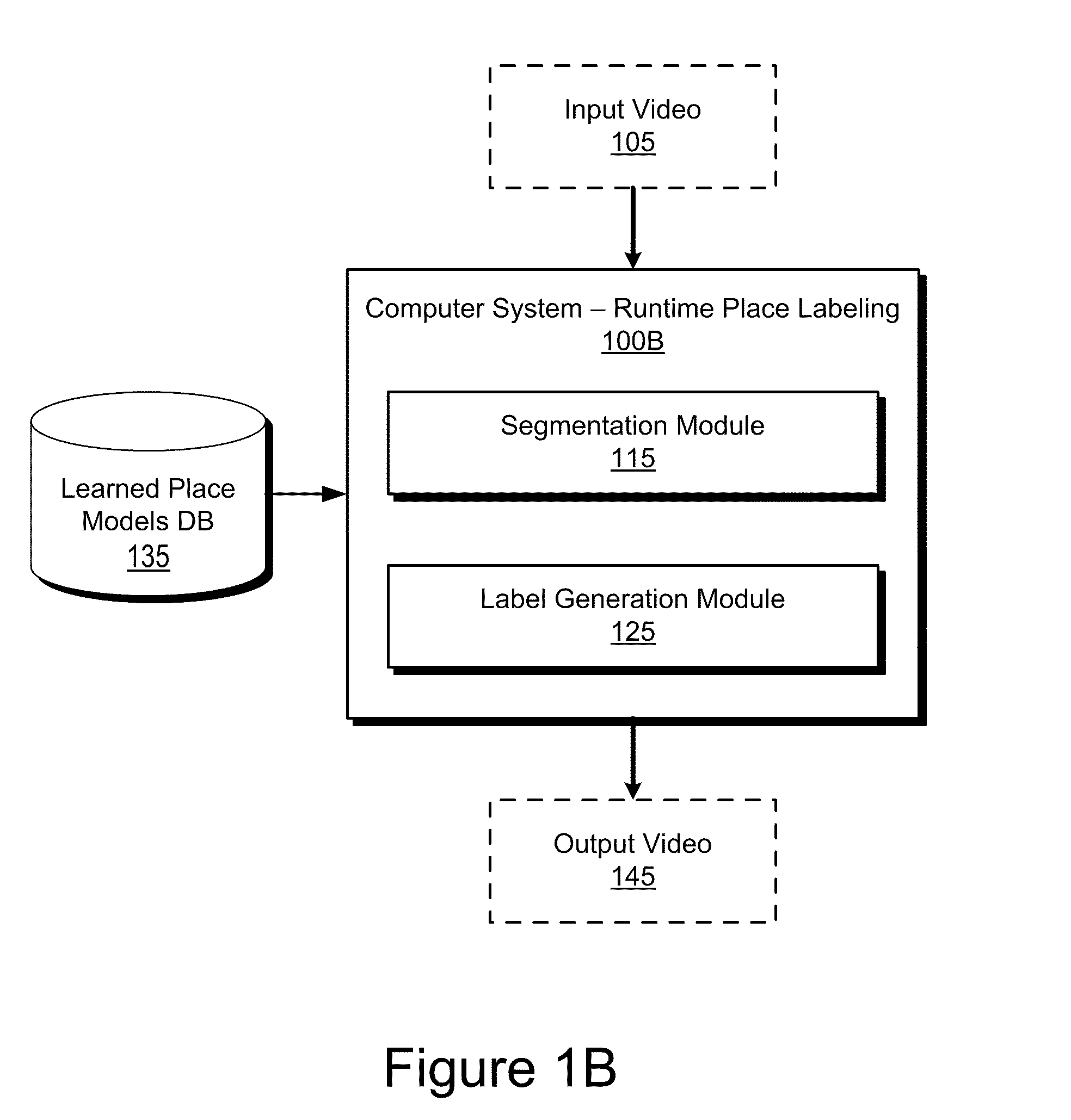

Detecting and labeling places using runtime change-point detection and place labeling classifiers

Active | US20110229031A1 | Television system details, Character and pattern recognition, Pattern recognition, Perplexity

A system and method are disclosed for detecting and labeling places in a video stream using change-point detection. The system comprises a place label generation module configured to assign place labels probabilistically to places in the video stream based on the measurements of a measurement stream representing the video. For each measurement in a segment, the module classifies the measurement by computing its class probability under a learned Gaussian Process classifier. Based on the probabilities computed for all the measurements in the segment, the module determines the place label for the segment. When the Gaussian Process classifier cannot positively classify a segment, the module determines whether the segment corresponds to an unknown place based on the perplexity statistics of the classification and a threshold value.

Owner:HONDA MOTOR CO LTD
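
One way to turn classifier probabilities into a "perplexity statistic", assumed here because the abstract does not define it, is the perplexity of the class distribution itself, exp(entropy): it is 1 when the classifier is certain and equals the number of classes when it guesses uniformly. The sketch averages the per-measurement probabilities over a segment (also an assumption) and reports an unknown place when that perplexity exceeds a threshold. The Gaussian Process classifier is not shown; its outputs are plain lists, and the labels are hypothetical.

```python
import math


def distribution_perplexity(probs):
    """exp(Shannon entropy): 1.0 for a certain prediction, K for a uniform guess over K classes."""
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return math.exp(entropy)


def label_segment(class_probs, labels, threshold):
    """Average the per-measurement class probabilities of a segment, then return the
    most probable label, or "unknown" if the averaged distribution is too uncertain."""
    n = len(class_probs)
    avg = [sum(row[j] for row in class_probs) / n for j in range(len(labels))]
    if distribution_perplexity(avg) > threshold:
        return "unknown"
    return labels[max(range(len(labels)), key=lambda j: avg[j])]


labels = ["kitchen", "office", "corridor"]
print(label_segment([[0.9, 0.05, 0.05], [0.8, 0.1, 0.1]], labels, threshold=2.0))  # kitchen
print(label_segment([[0.34, 0.33, 0.33]], labels, threshold=2.0))  # unknown
```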

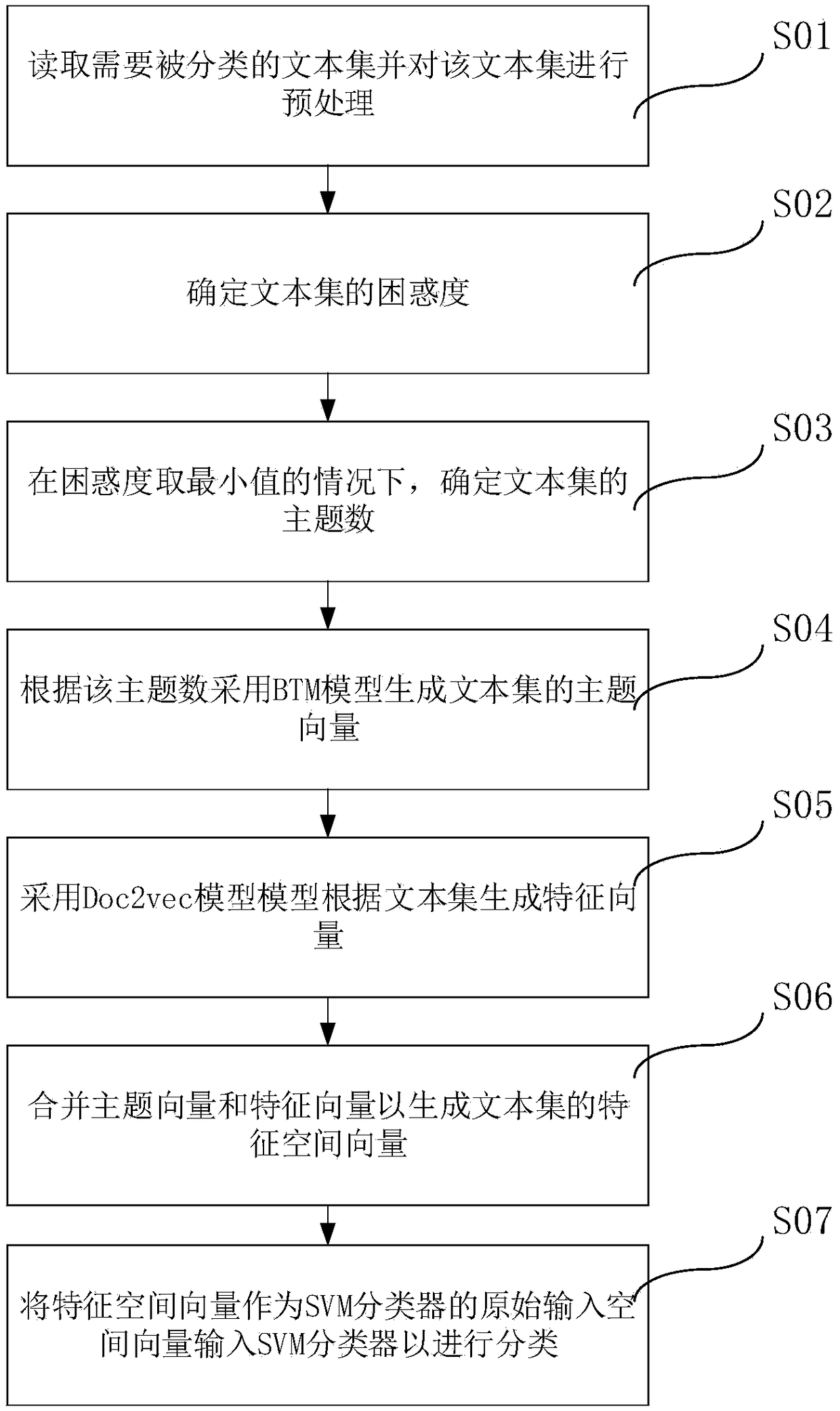

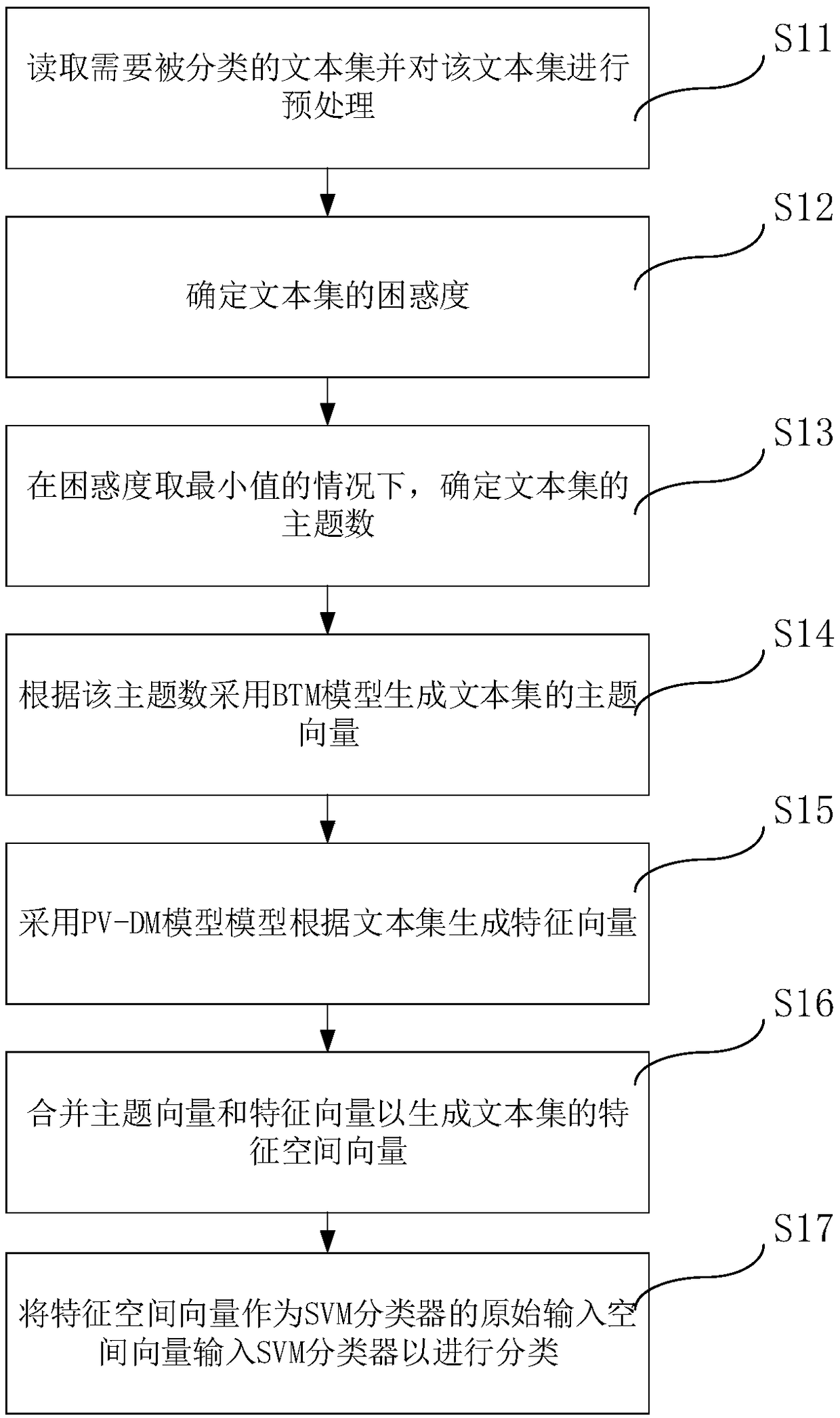

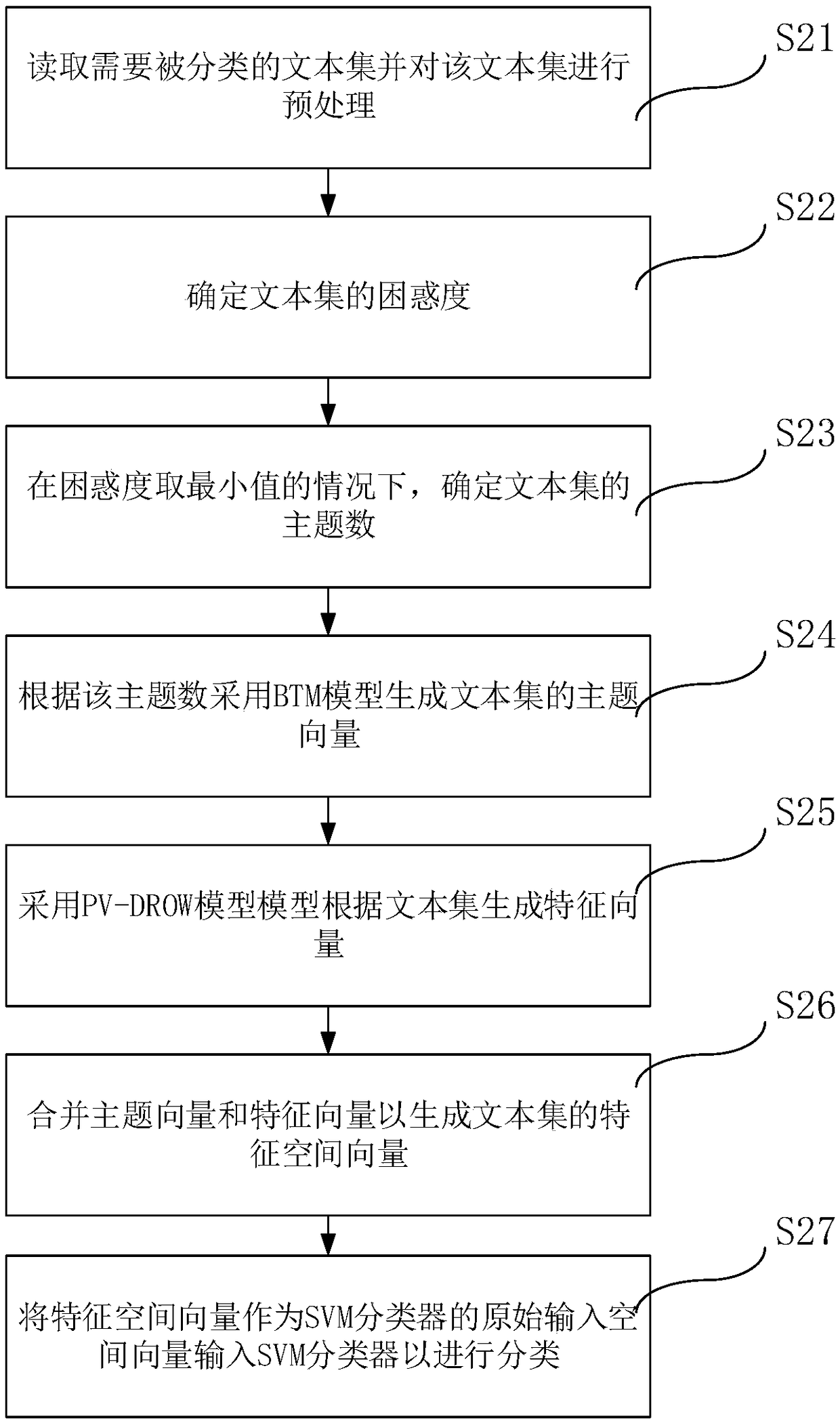

Method, system and storage medium for classifying text set

Inactive | CN108846120A | Preserve semantics, Preserve word order information, Character and pattern recognition, Special data processing applications, Feature vector, Text categorization

The invention provides a method, system and storage medium for classifying a text set, and belongs to the technical field of text classification algorithms. The method comprises the following steps: reading and preprocessing the text set to be classified; determining the perplexity of the text set; taking as the topic number the number of topics at which the perplexity reaches its minimum; generating topic vectors for the text set with a BTM (biterm topic model) using that topic number; generating feature vectors for the text set with the Doc2vec model; combining the topic vectors and the feature vectors into feature space vectors for the text set; and feeding these feature space vectors into an SVM classifier as its input space vectors, thereby performing the classification. The disclosed method, system and storage medium improve the efficiency of the text classification algorithm.

Owner:HEFEI UNIV OF TECH

Method for screening out content of music player

Inactive | CN102411971A | Carrier indexing/addressing/timing/synchronising, Specific program execution arrangements, Music player, Netizen

The invention discloses a method for screening out the content of a music player. On some music-player websites, netizens upload large amounts of music and songs, which greatly enriches the player's content but confuses users during playback because of the many duplicated items. In the invention, a small plug-in program screens the content of a playlist, and any duplicates found can be deleted.

Owner:SIYANG TIANQIN SOFTWARE TECH

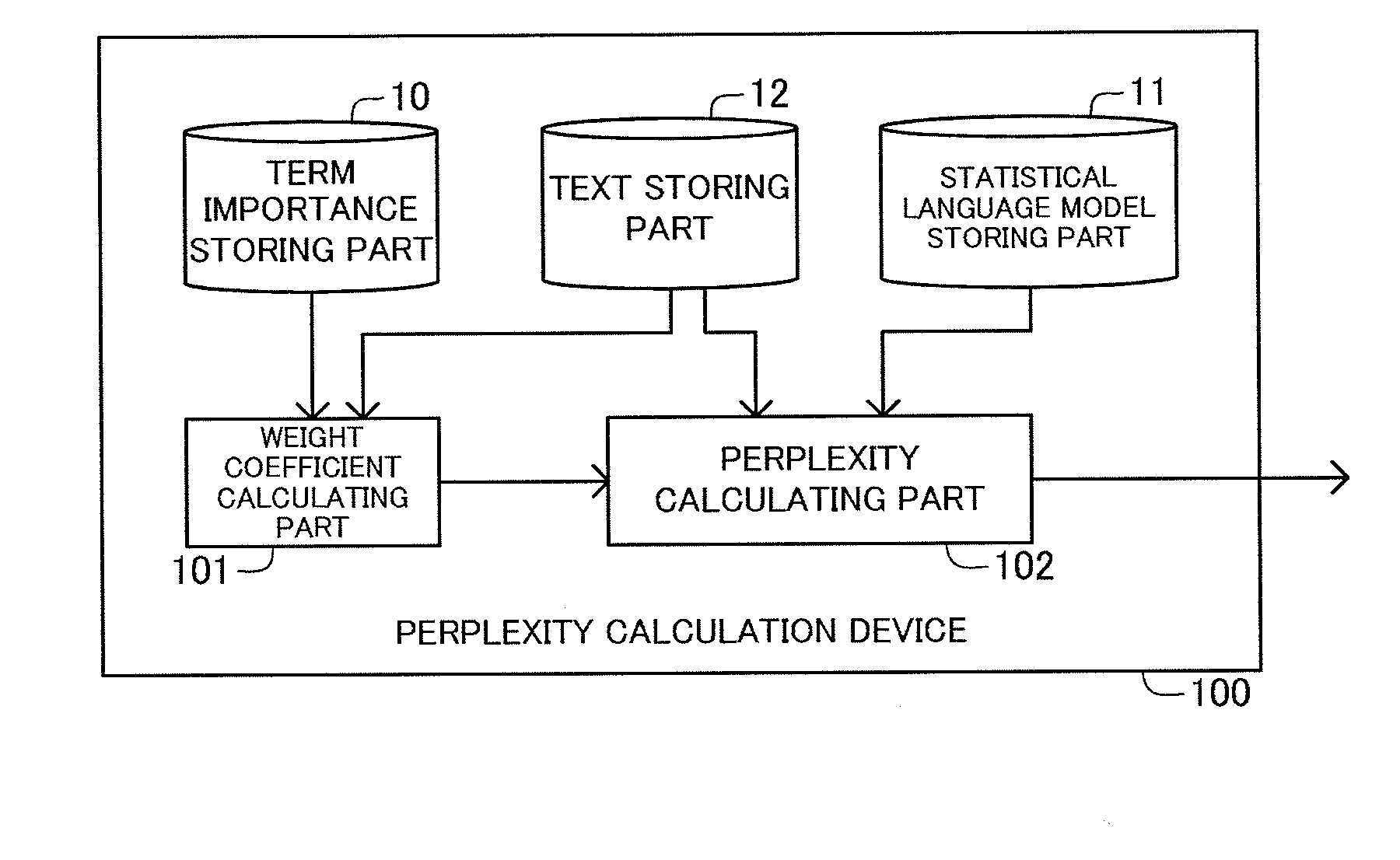

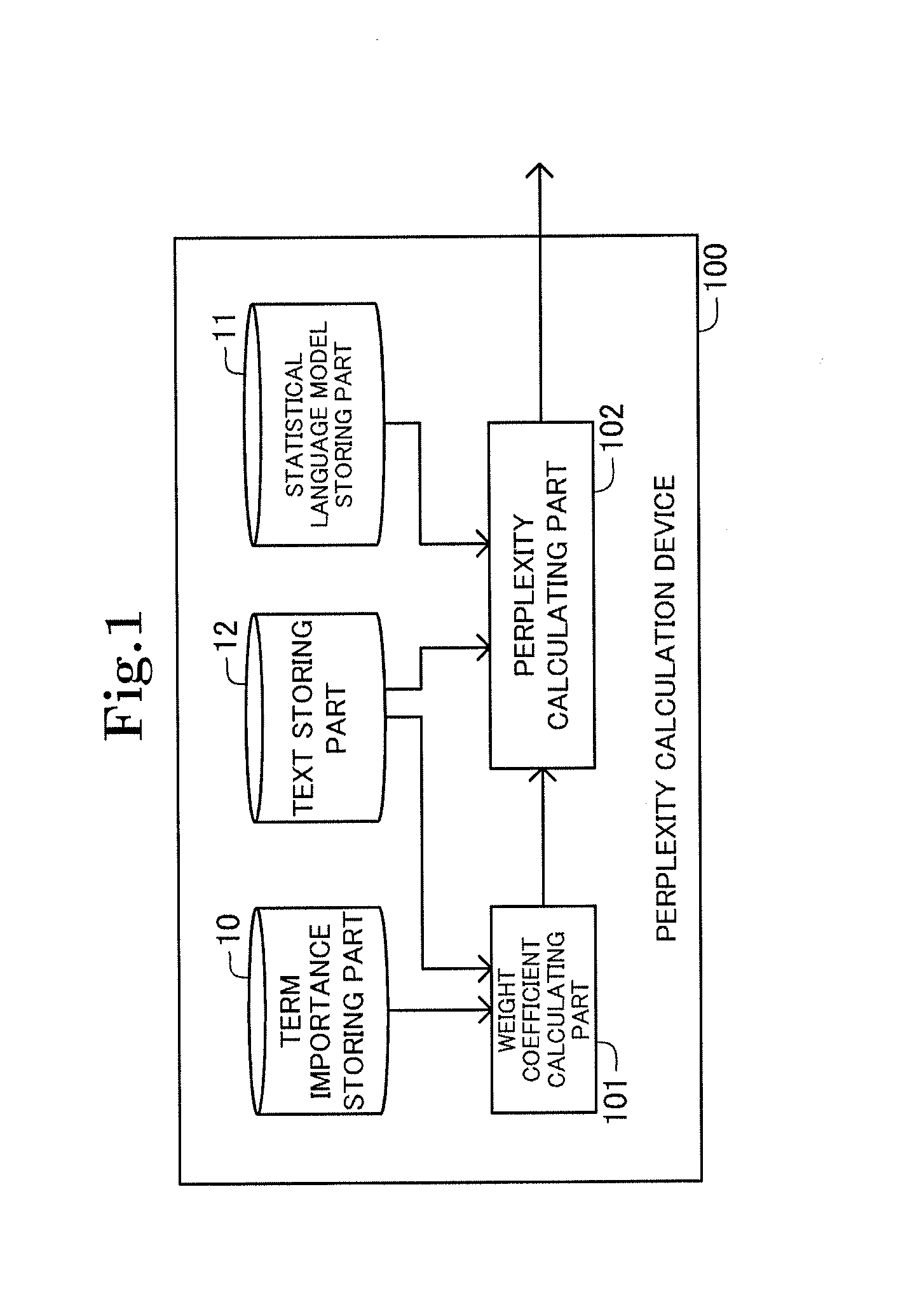

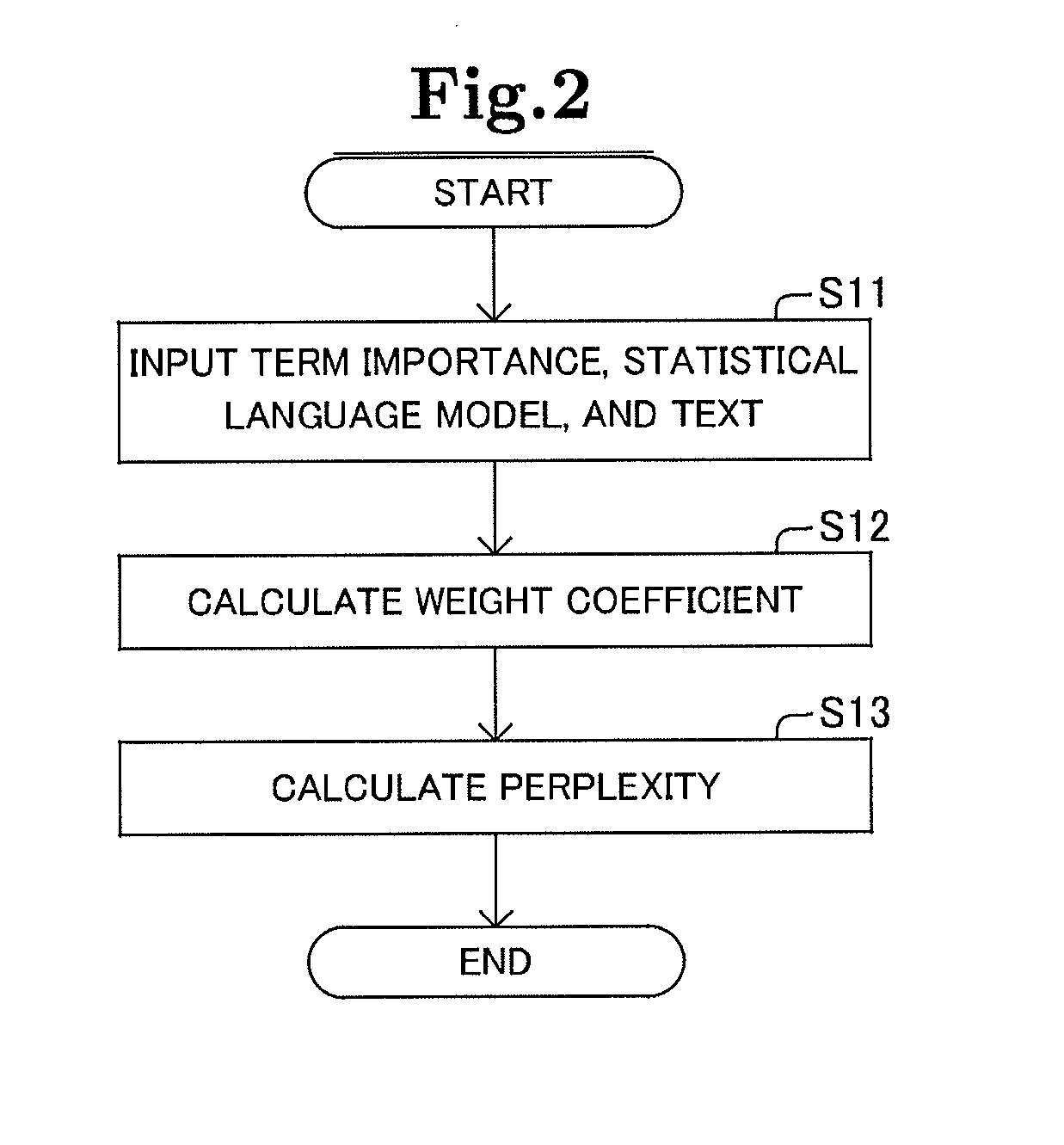

Perplexity calculation device

A perplexity calculation device 500 includes a weight coefficient calculating part 501 and a perplexity calculating part 502. For each of the constituent words of a text, the weight coefficient calculating part 501 calculates, based on the word's importance, a weight coefficient for correcting the word's degree of ease of appearance, a value that becomes larger as the word's probability of appearance under a statistical language model becomes higher. The perplexity calculating part 502 calculates the perplexity of the statistical language model with respect to the text based on the calculated weight coefficients and the degrees of ease of word appearance.

Owner:NEC CORP
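
The abstract leaves the exact correction formula open. A plausible reading, assumed here, is an importance-weighted average of log-probabilities, so that important words count more: PP_w = exp(-sum(w_i * log p_i) / sum(w_i)). With equal weights this reduces to ordinary perplexity. The weights and names below are illustrative only.

```python
import math


def weighted_perplexity(word_probs, importances):
    """Importance-weighted perplexity: exp(-sum(w_i * log p_i) / sum(w_i)).

    word_probs: model probability of each word in the text.
    importances: non-negative weight per word (hypothetical importance scores).
    """
    if not word_probs or len(word_probs) != len(importances):
        raise ValueError("need one importance weight per word")
    total_weight = sum(importances)
    if total_weight <= 0:
        raise ValueError("importance weights must not all be zero")
    weighted_log = sum(w * math.log(p) for p, w in zip(word_probs, importances))
    return math.exp(-weighted_log / total_weight)


# "the" (p=0.5) and a rare content word (p=0.01): up-weighting the content word
# lets the model's difficulty with it dominate the score.
print(weighted_perplexity([0.5, 0.01], [1.0, 1.0]))  # about 14.1
print(weighted_perplexity([0.5, 0.01], [1.0, 3.0]))  # about 37.6
```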

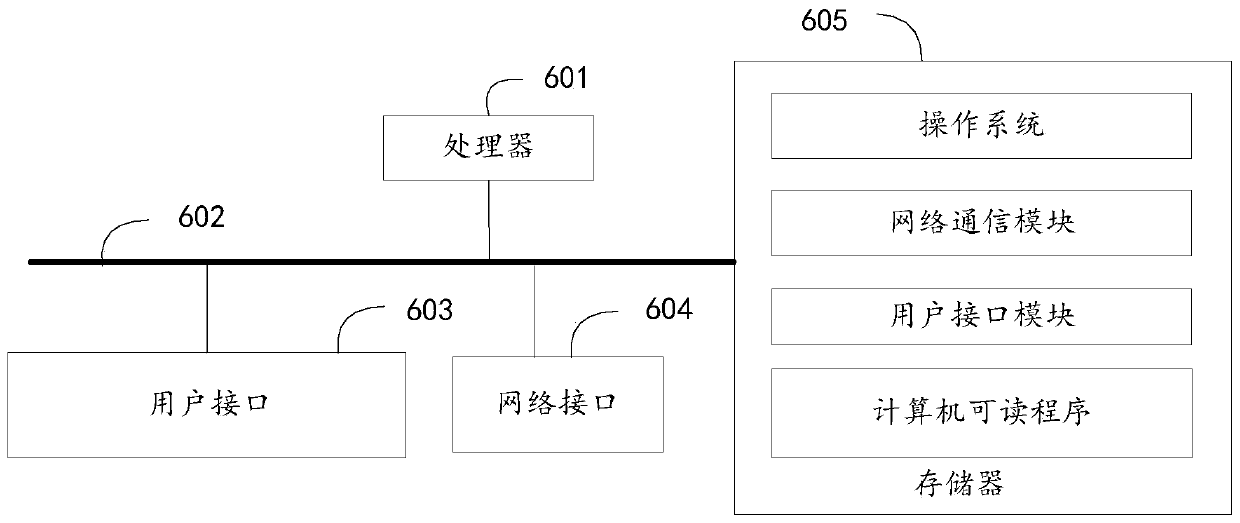

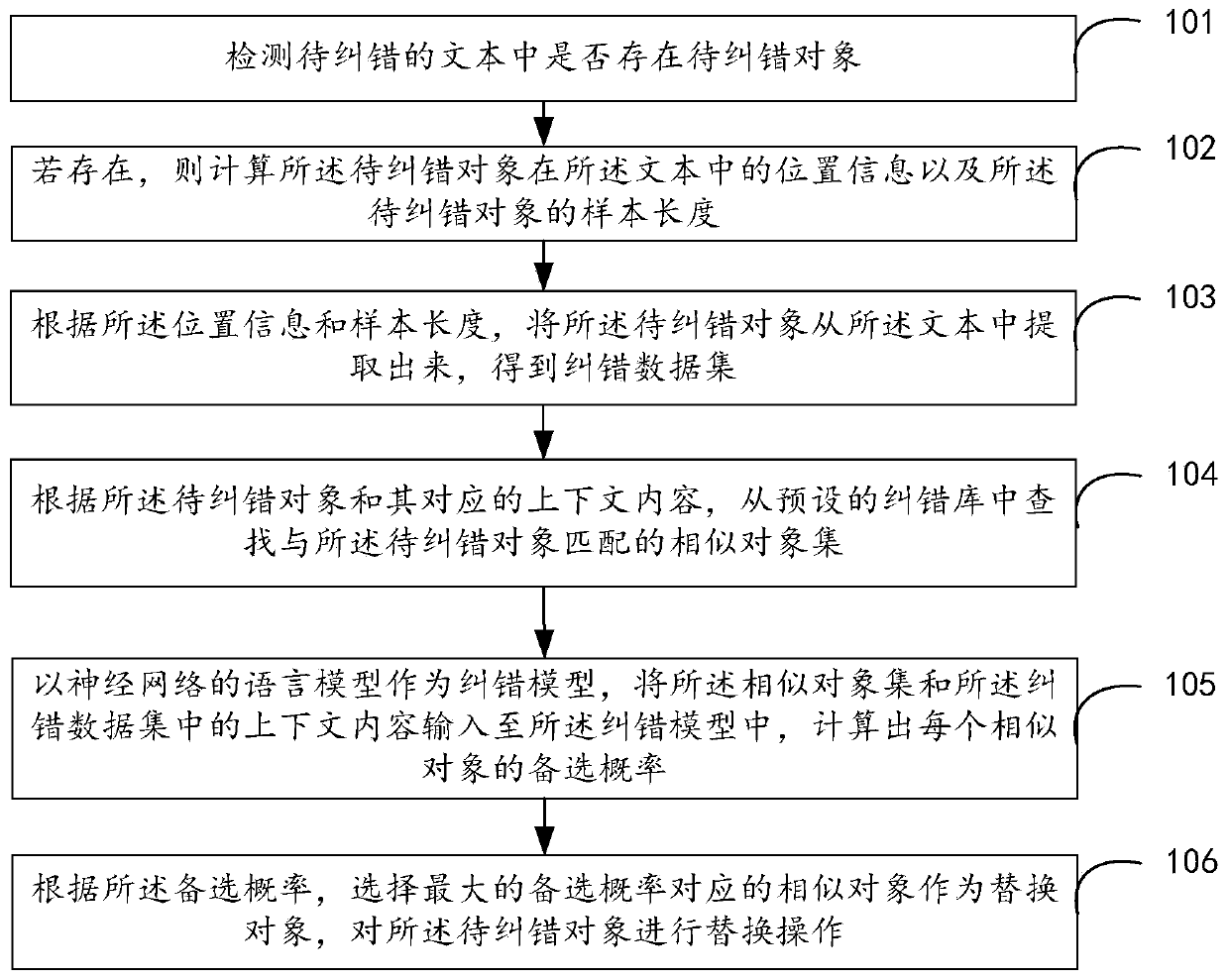

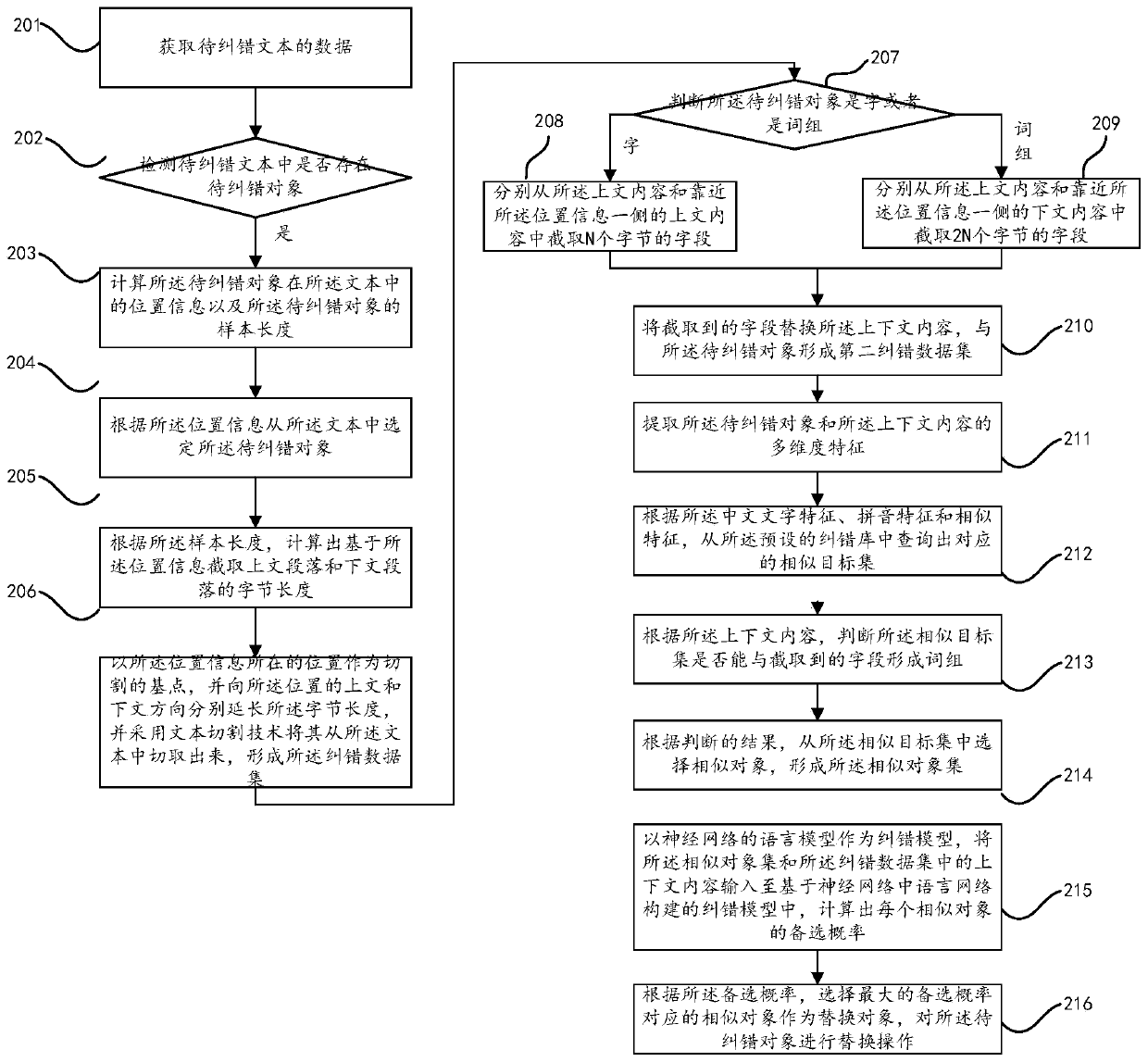

Error correction method, device and equipment and storage medium

The invention relates to the technical field of artificial intelligence and discloses an error correction method. The method comprises the following steps: detecting that an object to be corrected exists in a text; extracting the context of the object based on its position; inputting the corresponding similar objects, together with the context, into an error correction model to obtain a candidate probability for each similar object; and, based on these candidate probabilities, selecting one object to replace the object to be corrected. The invention further provides an error correction device, equipment and a storage medium. Because the object to be corrected is predicted from both the object itself and its context, the perplexity of the language model during semantic recognition can be reduced, so that more accurate similar objects are extracted. The candidate probability of each similar object is then calculated by the error correction model in combination with the context, and the object with the highest probability is selected, which raises the probability of each character or word and improves the final error correction accuracy.

Owner:PING AN TECH (SHENZHEN) CO LTD
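
The candidate-selection step can be illustrated with a toy add-one-smoothed bigram model standing in for the patent's error correction model (an assumption): each similar candidate is scored by how well it fits its left and right neighbors, and the highest-scoring one replaces the suspect token. The candidate list is taken as given, since the abstract does not say how similar objects are found; all names are hypothetical.

```python
import math
from collections import Counter


def train_bigram(sentences):
    """Toy add-one-smoothed bigram model with sentence boundary markers."""
    unigrams, bigrams = Counter(), Counter()
    for s in sentences:
        padded = ["<s>"] + s + ["</s>"]
        unigrams.update(padded[:-1])
        bigrams.update(zip(padded[:-1], padded[1:]))
    vocab_size = len({w for s in sentences for w in s}) + 2  # + boundary markers

    def prob(prev, word):
        """Smoothed P(word | prev)."""
        return (bigrams[(prev, word)] + 1) / (unigrams[prev] + vocab_size)

    return prob


def correct(tokens, pos, candidates, prob):
    """Replace tokens[pos] with the candidate that best fits its neighbors."""
    padded = ["<s>"] + tokens + ["</s>"]
    left, right = padded[pos], padded[pos + 2]

    def score(candidate):
        return math.log(prob(left, candidate)) + math.log(prob(candidate, right))

    best = max(candidates, key=score)
    return tokens[:pos] + [best] + tokens[pos + 1:]


prob = train_bigram([["I", "want", "to", "go"], ["we", "want", "to", "eat"]])
print(correct(["I", "want", "too", "go"], 2, ["to", "too", "two"], prob))  # ['I', 'want', 'to', 'go']
```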

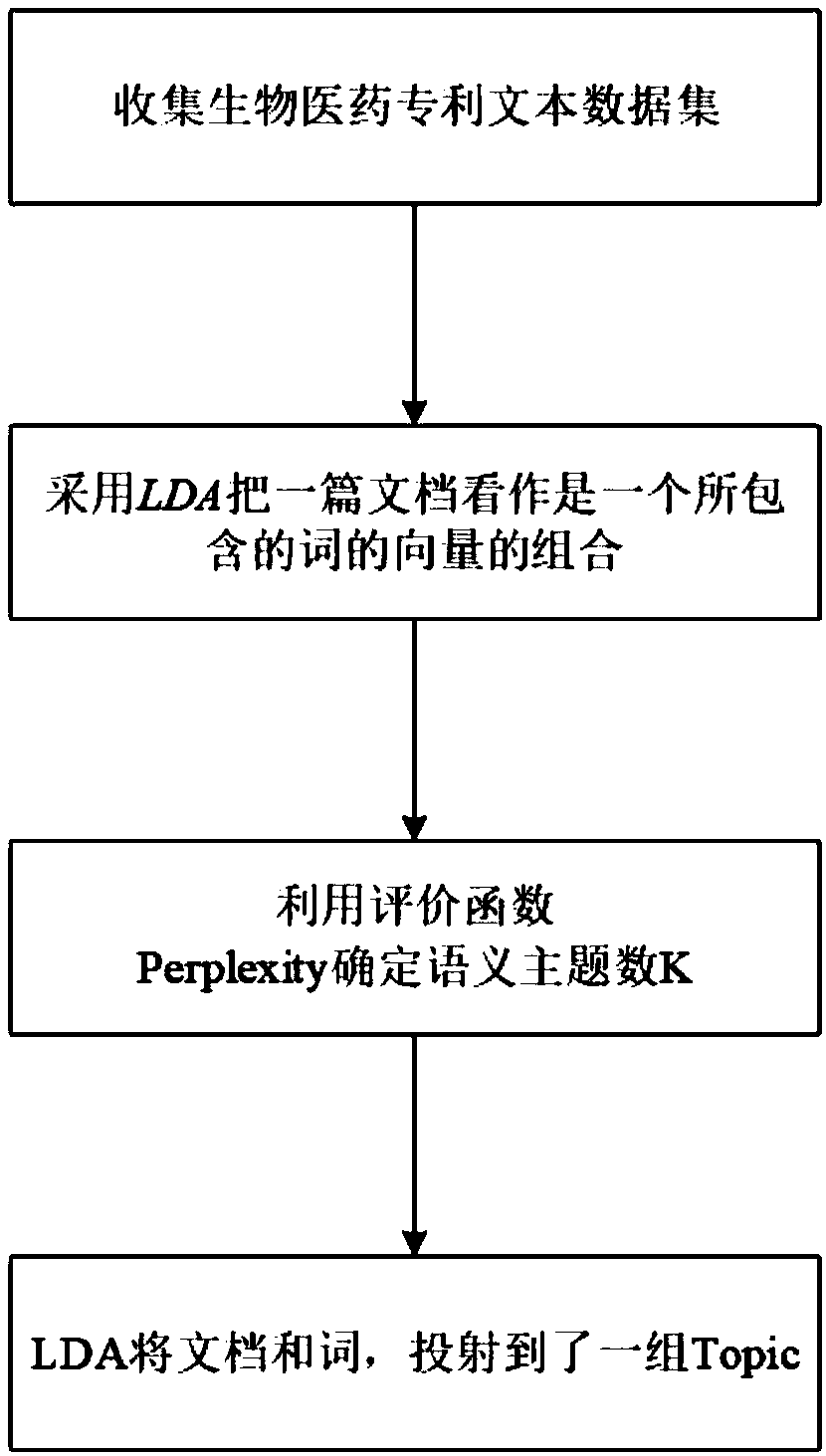

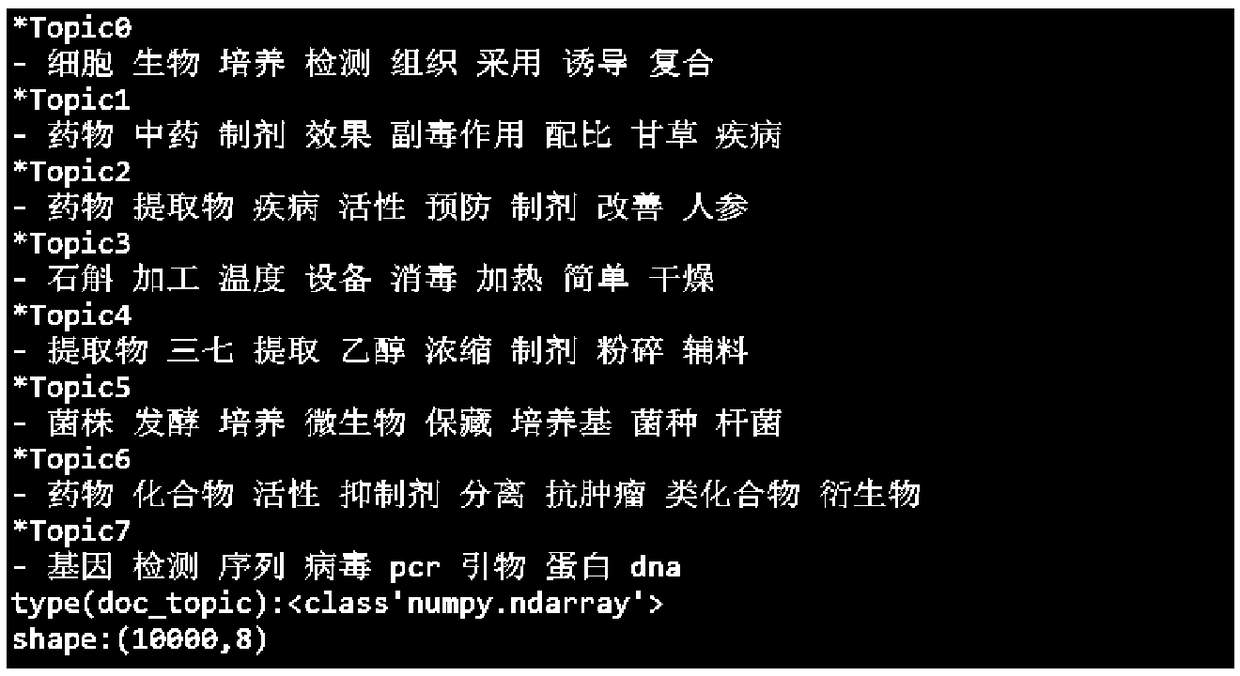

A biomedicine technology subject mining method based on LDA

Inactive | CN109446320A | Reduce sparsity, Achieving identifiability, Semantic analysis, Special data processing applications, High probability, Document preparation

The invention relates to a biomedicine technology topic mining method based on LDA, in the technical field of information retrieval. The method first uses LDA to treat each document as a combination of vectors of the words it contains; the number of semantic topics K is then determined using perplexity as the evaluation function; finally, the probability p of each document d_i over all topics is calculated, yielding two matrices, a document-topic matrix and a word-topic matrix. LDA thus projects documents and words onto a set of topics, trying to uncover the latent relationships between documents and words, between documents, and between words. LDA is an unsupervised algorithm, and no topic requires a pre-specified condition. After clustering, the probability distribution of words over each topic is calculated, and the high-probability words of a topic describe its meaning well.

Owner:KUNMING UNIV OF SCI & TECH

Label classification method and device of corpora, computer equipment and storage medium

Pending | CN112084334A | Improve accuracy, Good explanatory, Natural language data processing, Special data processing applications, Algorithm, Classification methods

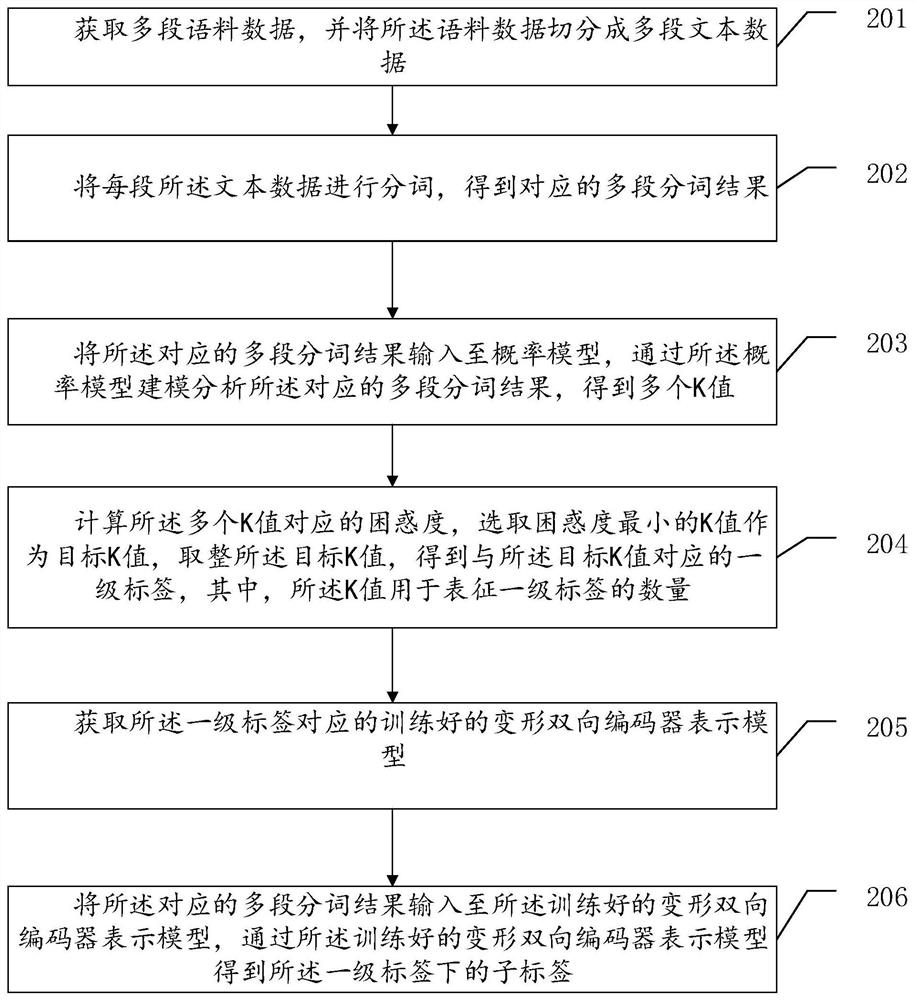

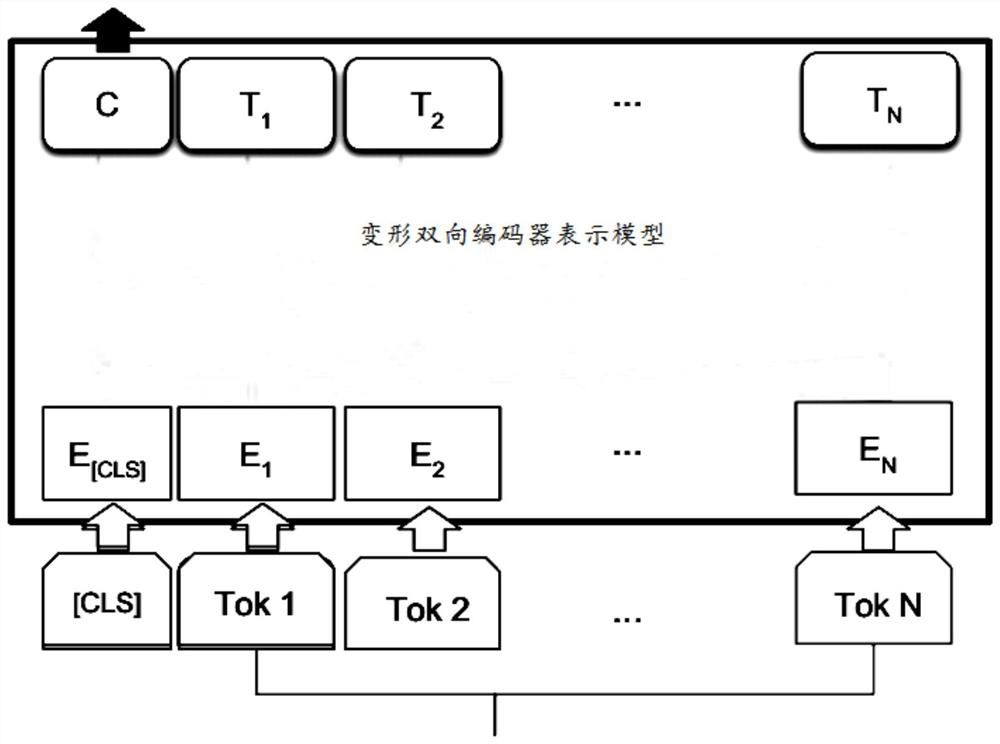

The embodiment of the invention relates to the field of artificial intelligence and provides a label classification method for corpora, comprising the following steps: performing word segmentation on multiple segments of text data from multiple segments of corpus data to obtain the corresponding word segmentation results; inputting the word segmentation results into a probability model and analyzing them through the model to obtain a plurality of K values; calculating the perplexity for each K value and taking the K value with the lowest perplexity to obtain the corresponding first-level label; and inputting the corresponding word segmentation results into a modified bidirectional encoder representation model for that first-level label, which outputs the sub-labels under the first-level label. The invention also relates to blockchain technology: the text data can be stored in a blockchain. The invention further provides a label classification device for corpora, computer equipment and a storage medium. The accuracy of corpus label classification is improved.

Owner:CHINA PING AN PROPERTY INSURANCE CO LTD

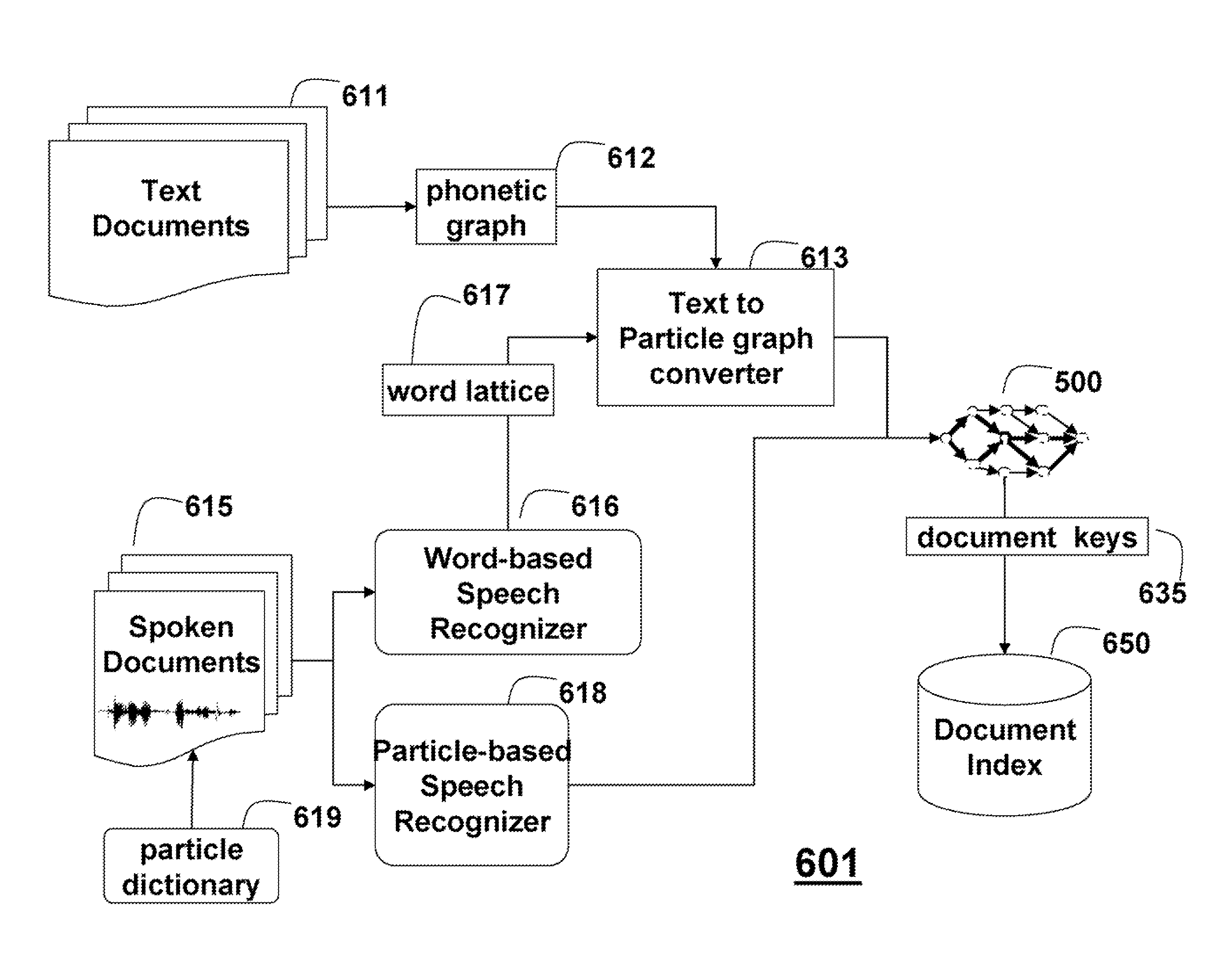

Method for indexing for retrieving documents using particles

Inactive | US8229921B2 | Avoid restrictions, Small memory, Digital data information retrieval, Digital data processing details, Perplexity, Documentation

An information retrieval system stores and retrieves documents using particles and a particle-based language model. A set of particles for a collection of documents in a particular language is constructed from training documents such that a perplexity of the particle-based language model is substantially lower than the perplexity of a word-based language model constructed from the same training documents. The documents can then be converted to document particle graphs from which particle-based keys are extracted to form an index to the documents. Users can then retrieve relevant documents using queries also in the form of particle graphs.

Owner:MITSUBISHI ELECTRIC RES LAB INC

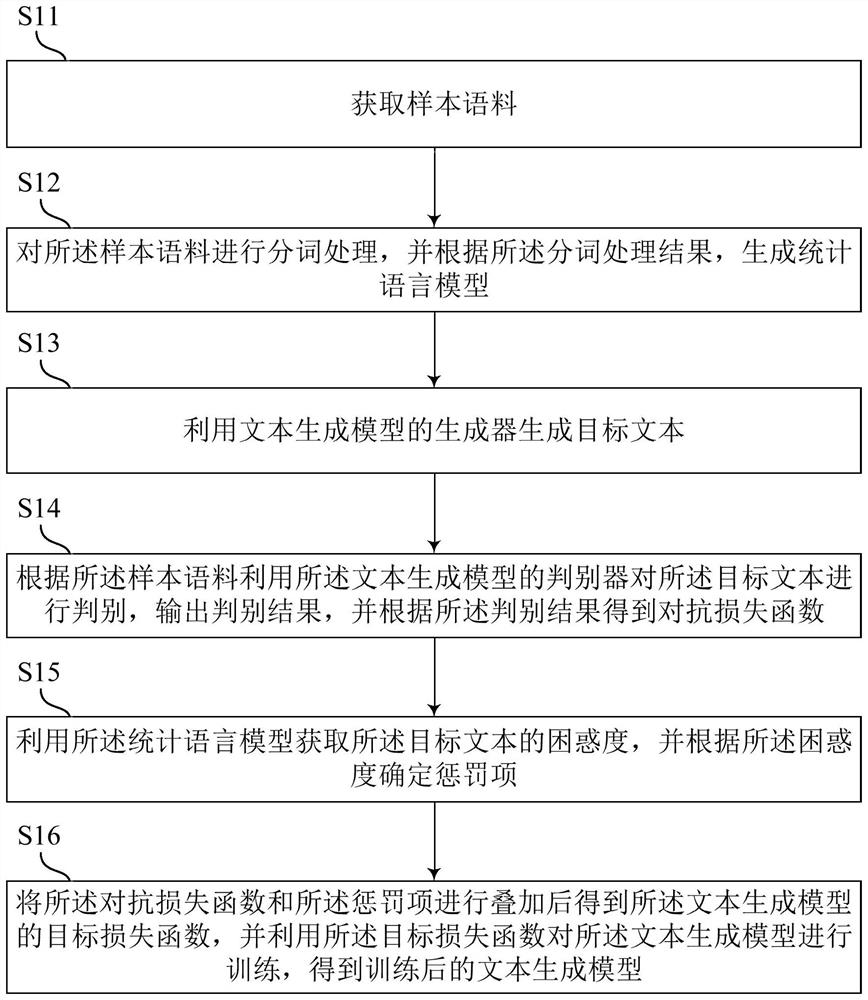

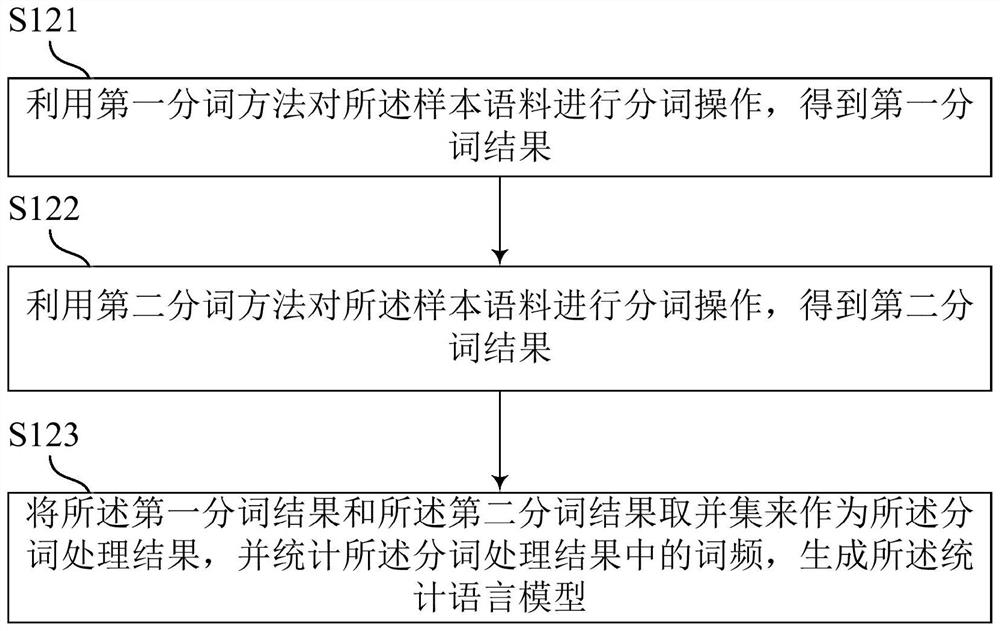

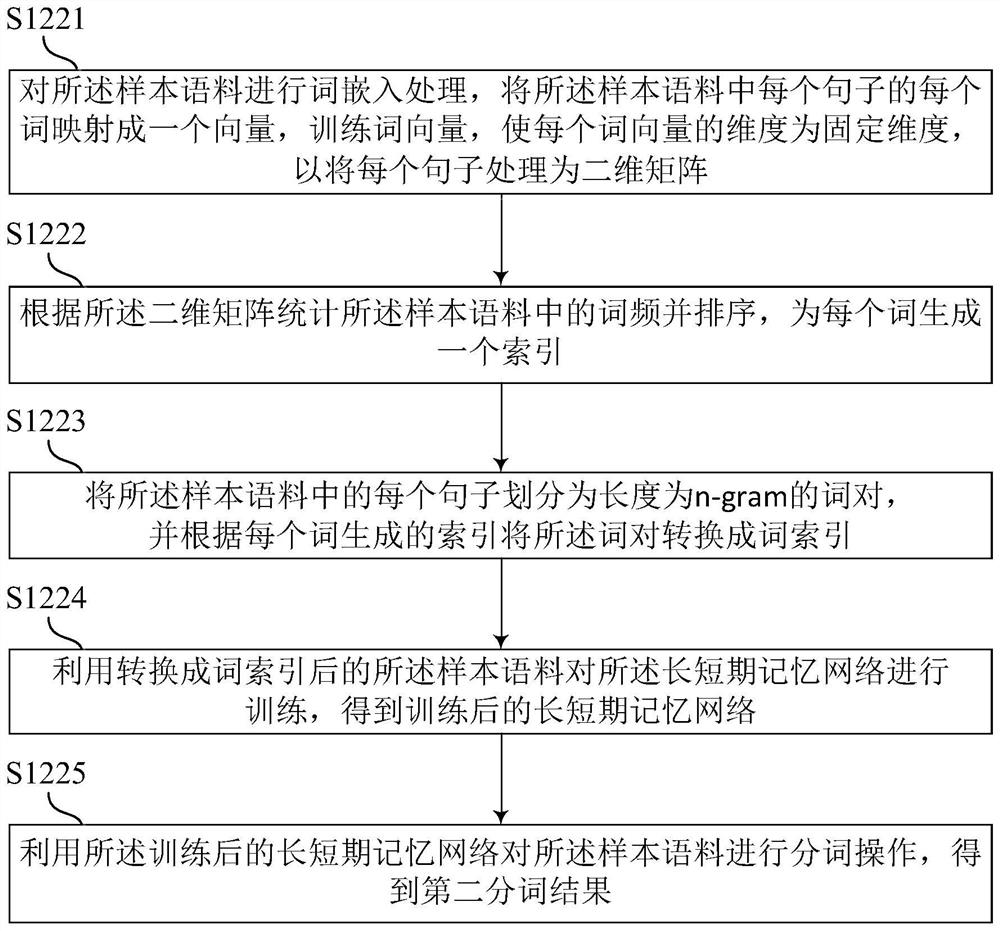

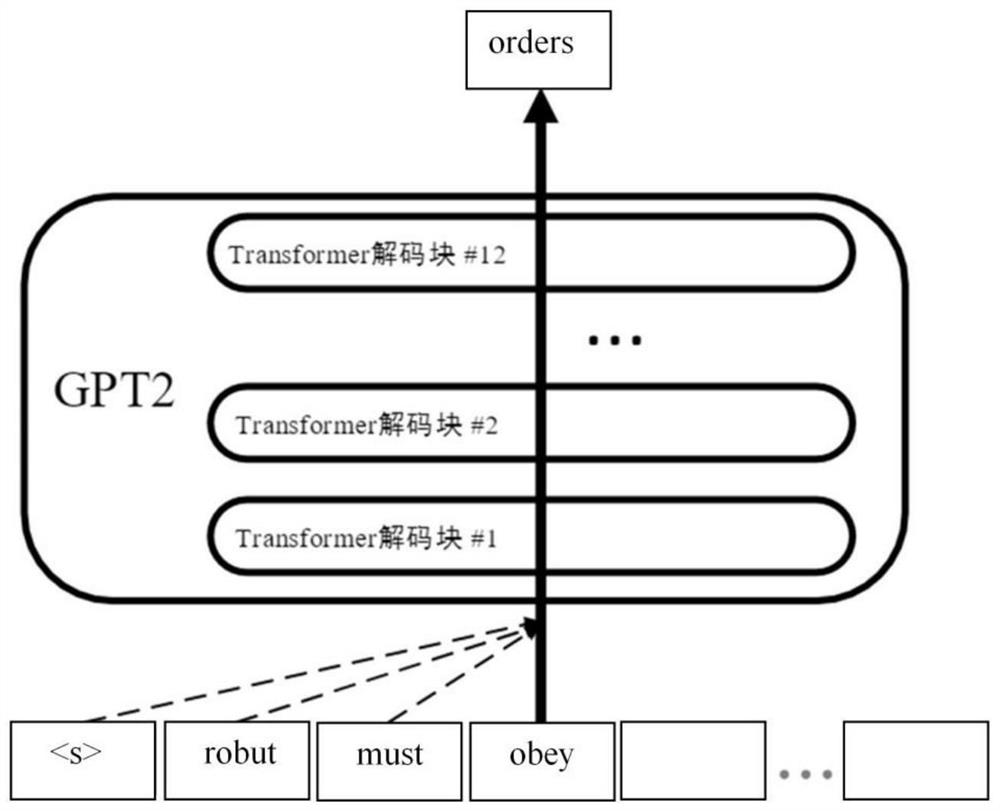

Text generation model training method, target corpus expansion method and related device

Pending | CN114462570A | Improve performance, Natural language data processing, Neural architectures, Pattern recognition, Algorithm

The invention discloses a text generation model training method, a target corpus expansion method and a related device. The training method comprises the following steps: acquiring a sample corpus; performing word segmentation on the sample corpus and generating a statistical language model from the segmentation result; generating a target text with the generator of the text generation model; using the discriminator of the text generation model to discriminate the target text against the sample corpus, outputting a discrimination result, and obtaining an adversarial loss function from it; obtaining the perplexity of the target text with the statistical language model and determining a penalty term from that perplexity; and adding the adversarial loss function and the penalty term to obtain the target loss function of the text generation model, which is then used to train the model. The scheme uses the existing corpus to guide the training of the text generation model and improves its performance.

Owner:ZHEJIANG DAHUA TECH CO LTD
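
The loss composition described above, adversarial loss plus a perplexity-based penalty, can be sketched as below. Using the log-perplexity (the average negative log-probability per token under the statistical language model) scaled by a weight as the penalty is an assumption, as is the default weight; how the penalty is propagated back into a generator of discrete text (for example via policy-gradient methods) is outside this sketch.

```python
import math


def log_perplexity(token_probs):
    """Average negative log-probability per token; perplexity = exp(this value)."""
    return -sum(math.log(p) for p in token_probs) / len(token_probs)


def target_loss(adversarial_loss, token_probs, penalty_weight=0.1):
    """Adversarial loss plus a penalty that grows as the statistical language
    model finds the generated text less likely (higher perplexity)."""
    return adversarial_loss + penalty_weight * log_perplexity(token_probs)


print(target_loss(0.7, [0.2, 0.05, 0.1]) > 0.7)  # True
```

Working in log-perplexity keeps the penalty on the same additive scale as a typical cross-entropy-style adversarial loss.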

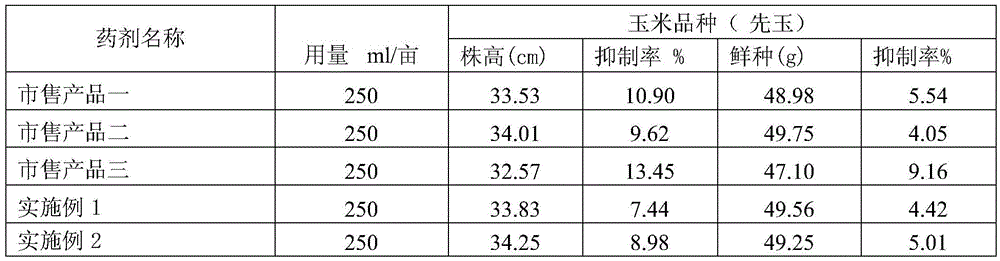

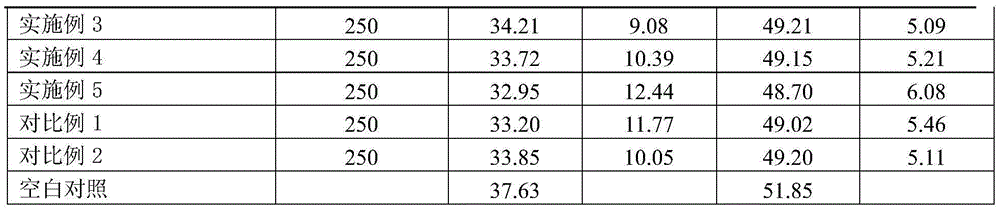

Oil suspended agent

Inactive | CN105454227A | Quality improvement, Less oil separation, Biocide, Dead animal preservation, Suspending Agents, BULK ACTIVE INGREDIENT

The invention relates to an oil suspended agent prepared from an agrochemical active ingredient and a solvent oil, wherein the active ingredient is one or a combination of more of sulfonylurea compounds, triketone compounds and triazine compounds, and the solvent oil contains a compound of general formula (I), R-(CH2)n-R′ (I), in which R is an alkyl group with 5 to 10 carbon atoms, n is an integer from 6 to 20, and R′ is phenyl, benzyl or naphthyl. The oil suspended agent has good efficacy and raises no biosafety concerns; after long-term storage, little oil separates out and no sediment forms at the bottom. The product is stable in quality, which solves the problem of poor stability during storage and makes it convenient for farmers to use.

Owner:FMC CHINA INVESTMENT

Unsupervised online public opinion junk long text recognition method

Active | CN111737475A | Low cost, Special data processing applications, Text database clustering/classification, Text recognition, Linguistic model

The invention discloses an unsupervised method for recognizing long junk texts in online public opinion. The method comprises the following steps: obtaining labeled public-opinion junk texts and normal texts from an existing internal system; constructing two models, namely a language model trained on online public-opinion text and a BERT next-sentence prediction model based on online public-opinion text, and feeding each online public-opinion long text to be predicted into both models; using the language model's perplexity to evaluate whether the content within a sentence is junk text; using BERT's next-sentence prediction model to evaluate the contextual coherence between the sentences of the text; and combining the junk-text information with the supervision data to complete junk-text recognition for long texts. Junk text can thus be recognized automatically while the cost of obtaining supervision data is greatly reduced, so that a system without supervision data can recognize junk text from the start.

Owner:南京擎盾信息科技有限公司

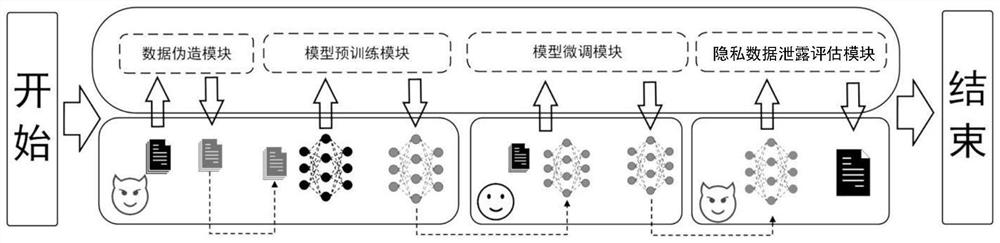

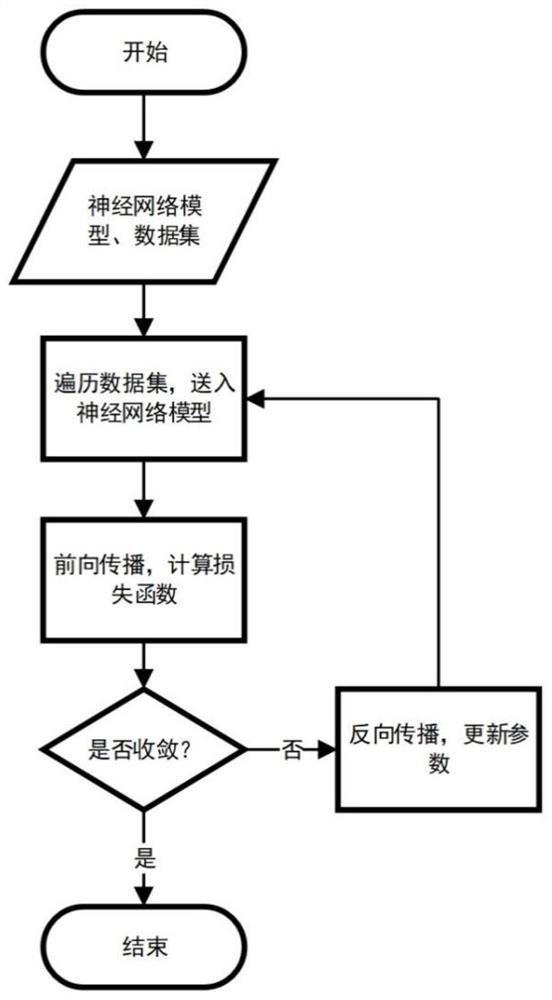

Pre-training language model-oriented privacy disclosure risk assessment method and system

Pending | CN114676458A | Improve accuracy, Improve versatility, Character and pattern recognition, Digital data protection, Data set, Feature extraction

The invention relates to the field of privacy security and provides a privacy-disclosure risk assessment method and system for pre-trained language models, comprising the following steps: adding forged data to a pre-training dataset; inputting the pre-training dataset into an initialized neural network model and calculating the loss according to a set pre-training task and loss function; continuously updating the model parameters during training, which increases the model's privacy-leakage risk; inputting a fine-tuning dataset into the pre-trained neural network model to fine-tune its feature extraction capability; inputting privacy prefix content into the model and outputting text as the prediction result; and calculating, counting and sorting the perplexity of the output text, and evaluating the risk of private-data leakage by comparing the proportion of generated private information. The method effectively improves the accuracy of assessing private-data leakage risk, exposes the leakage risk present in pre-trained language models, and offers a direction for the subsequent development of related defense methods.

Owner:ZHEJIANG UNIV
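
The final assessment step (rank outputs by perplexity, then measure how much planted private information they reproduce) might look like the sketch below. It assumes each generated text already comes with its perplexity under the model and that the forged records are known strings; the top-k cutoff and all names are hypothetical. Low-perplexity outputs that contain planted secrets suggest memorization.

```python
def leakage_rate(generations, planted_secrets, top_k):
    """Share of the top_k lowest-perplexity generations that reproduce a planted secret.

    generations: list of (generated_text, perplexity) pairs.
    planted_secrets: the forged private strings inserted into the pre-training data.
    """
    ranked = sorted(generations, key=lambda item: item[1])[:top_k]
    if not ranked:
        return 0.0
    hits = sum(1 for text, _ in ranked if any(s in text for s in planted_secrets))
    return hits / len(ranked)


outputs = [("call 555-0142", 3.1), ("call the office", 12.0),
           ("id 555-0142", 2.5), ("no idea", 20.0)]
print(leakage_rate(outputs, ["555-0142"], top_k=2))  # 1.0
print(leakage_rate(outputs, ["555-0142"], top_k=4))  # 0.5
```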

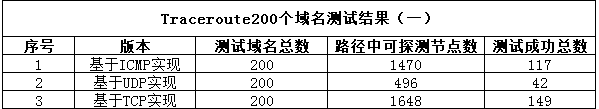

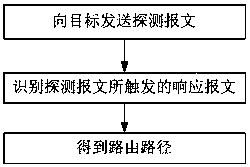

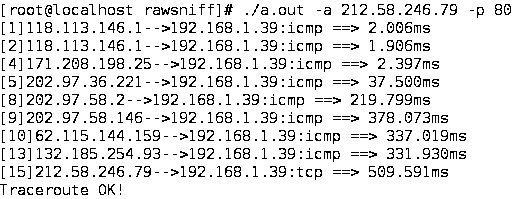

New-type network path detection method realized based on TCP protocol

Active | CN108924000A | Avoid trouble, Improve effectiveness, Data switching networks, Perplexity, Protocol for Carrying Authentication for Network Access

The invention discloses a new network path detection method based on the TCP protocol. Probe messages with different TTL values are sent to a target, and the response messages they trigger are identified, yielding the routing path from the local host to the target. The probe messages can trigger both ICMP response messages and TCP response messages. The method effectively avoids the problems that disabling PING causes for path detection and improves the validity of the detected network path to the target node.

Owner:CHENGDU WANGDING SCI & TECH
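
The path-assembly logic can be sketched independently of packet crafting: probes are sent with TTL 1, 2, 3 and so on; a router where the TTL expires answers with ICMP Time Exceeded, while the target answers the TCP probe directly (for example with SYN-ACK or RST), which ends the trace. The sketch assumes replies have already been collected into a dictionary keyed by TTL; sending raw TCP probes usually needs elevated privileges and is not shown. Names and addresses are illustrative.

```python
def reconstruct_path(responses, max_ttl=30):
    """Rebuild a route from probe replies keyed by TTL.

    responses: mapping TTL -> (responder_ip, kind), where kind is "icmp" for an
    ICMP Time Exceeded reply from an intermediate router, or "tcp" when the
    target itself answered the TCP probe. TTLs with no reply appear as "*".
    """
    path = []
    for ttl in range(1, max_ttl + 1):
        reply = responses.get(ttl)
        if reply is None:
            path.append("*")
            continue
        ip, kind = reply
        path.append(ip)
        if kind == "tcp":  # reached the target: stop probing
            break
    return path


replies = {1: ("10.0.0.1", "icmp"), 3: ("192.0.2.9", "icmp"), 4: ("198.51.100.7", "tcp")}
print(reconstruct_path(replies))  # ['10.0.0.1', '*', '192.0.2.9', '198.51.100.7']
```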

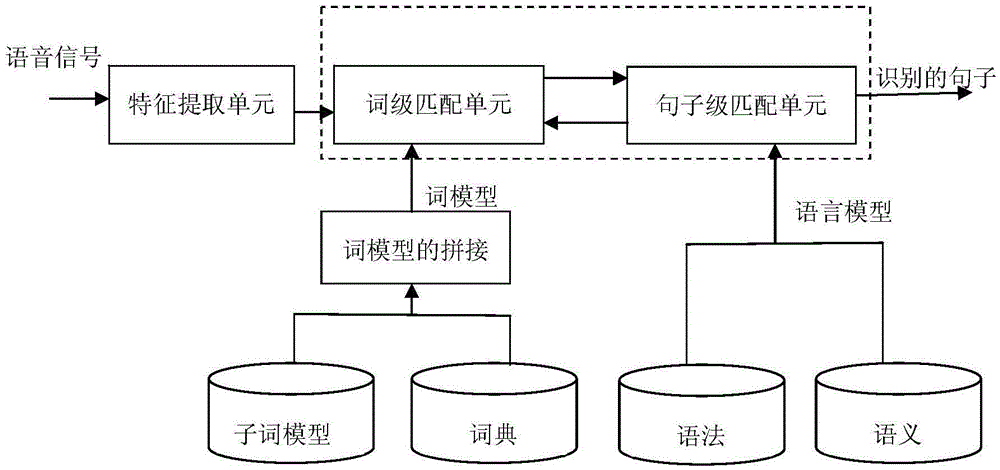

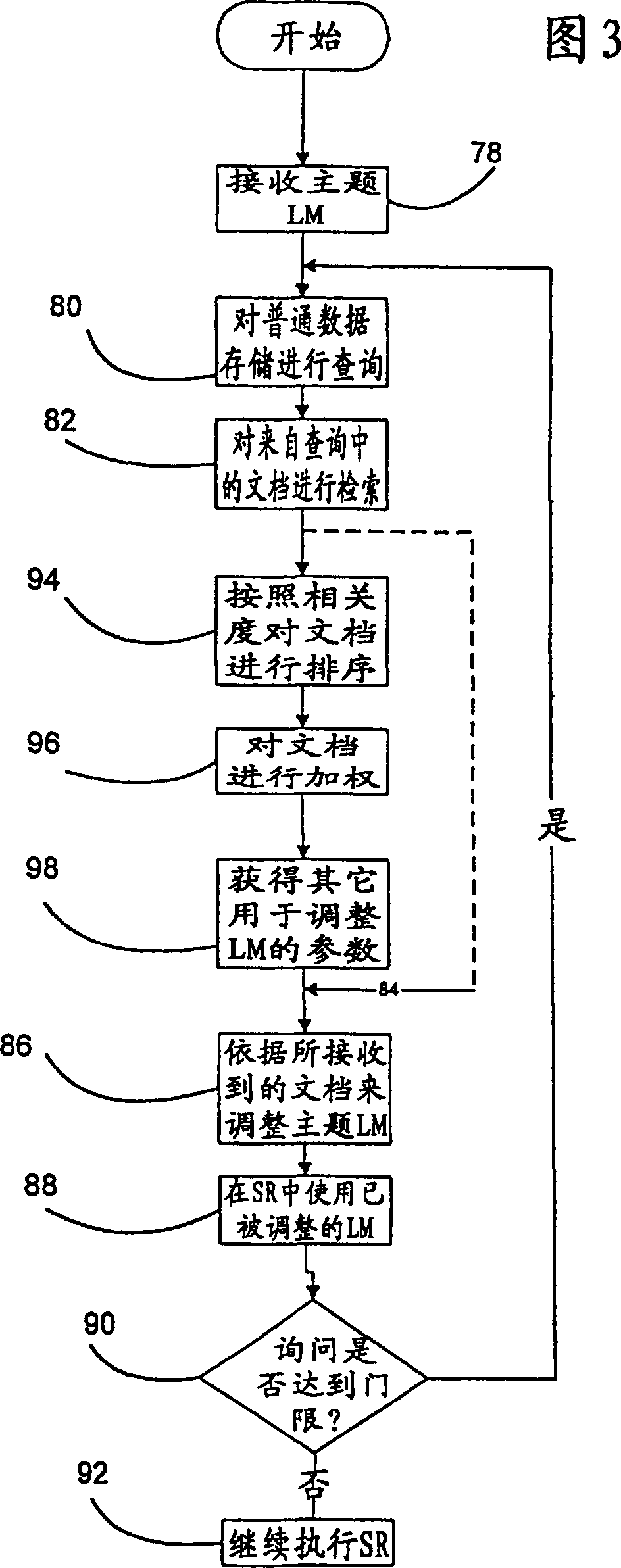

Information retrieval and speech recognition based on language models

Inactive | CN1171199C | Data processing applications, Digital data information retrieval, Data memory, Perplexity

A language model is used in a speech recognition system which has access to a first, smaller data store and a second, larger data store. The language model is adapted by formulating an information retrieval query based on information contained in the first data store and querying the second data store. Information retrieved from the second data store is used in adapting the language model. Also, language models are used in retrieving information from the second data store. Language models are built based on information in the first data store, and based on information in the second data store. The perplexity of a document in the second data store is determined, given the first language model, and given the second language model. Relevancy of the document is determined based upon the first and second perplexities. Documents are retrieved which have a relevancy measure that exceeds a threshold level.

Owner:MICROSOFT TECH LICENSING LLC
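
The abstract says relevance is derived from a document's perplexity under the two language models but does not give the formula. A simple assumed choice is the ratio of the two perplexities: a document that the model built from the first (smaller) data store predicts much better than the model built from the second store scores high. The threshold and names are illustrative.

```python
def relevance(ppl_first, ppl_second):
    """Ratio above 1: the first-store model predicts the document better than the second."""
    return ppl_second / ppl_first


def retrieve(doc_perplexities, threshold):
    """doc_perplexities maps doc_id -> (perplexity under first model, perplexity under second)."""
    return [doc for doc, (p1, p2) in doc_perplexities.items()
            if relevance(p1, p2) > threshold]


print(retrieve({"a": (50.0, 200.0), "b": (300.0, 150.0)}, threshold=1.5))  # ['a']
```

A ratio is insensitive to how intrinsically predictable a document is, since easy and hard documents are judged by how much better the first model does relative to the second.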

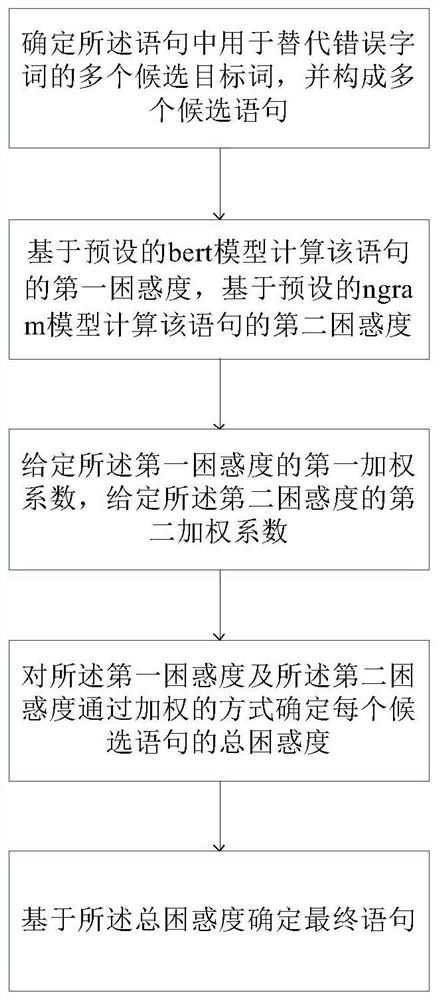

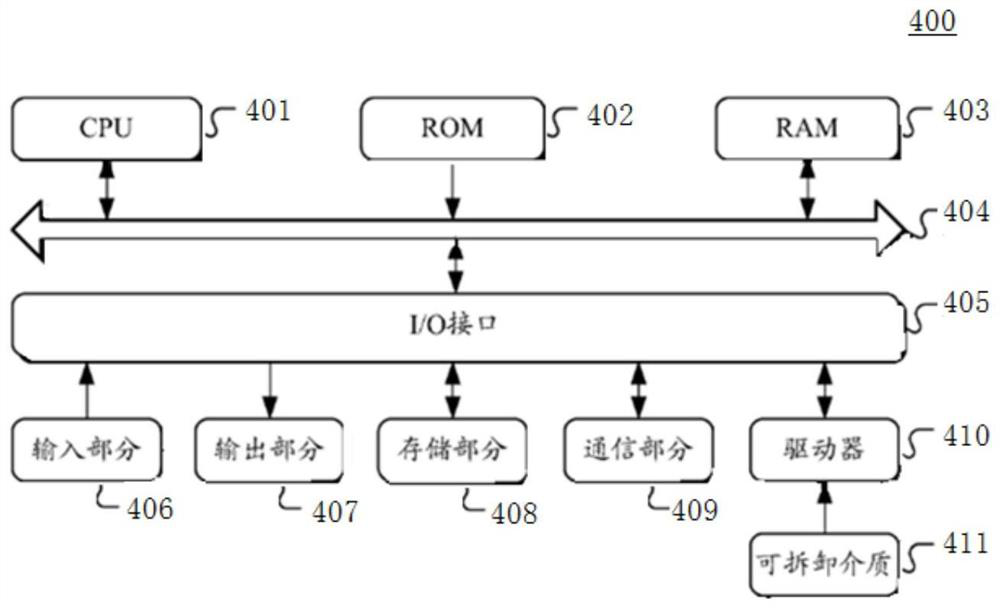

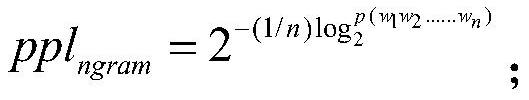

Statement correction method and device based on bert model and ngram model

Pending | CN114282523A | Improve accuracy, Comprehensive smoothing method, Natural language data processing, Algorithm, Engineering

Owner:北京方寸无忧科技发展有限公司

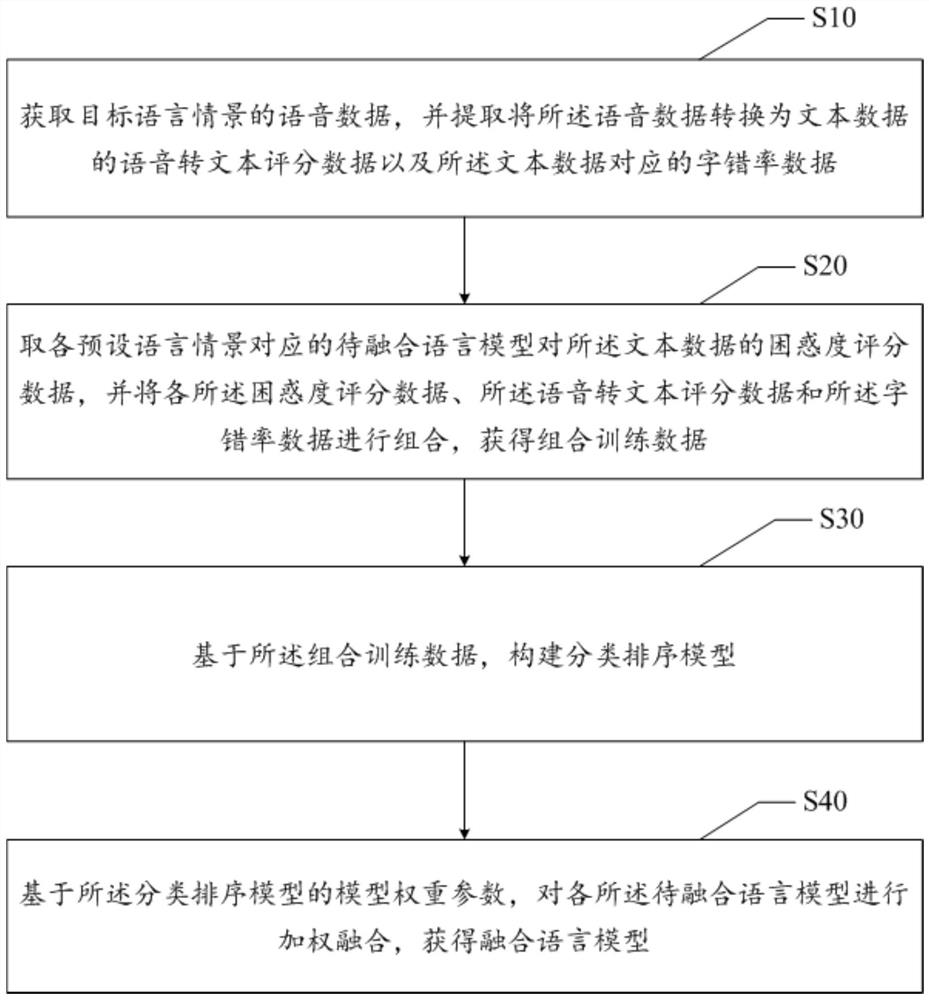

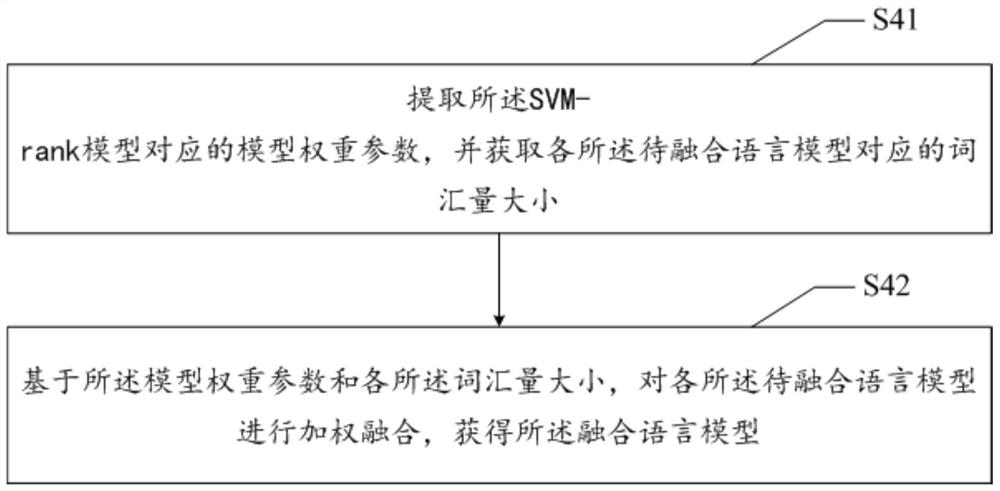

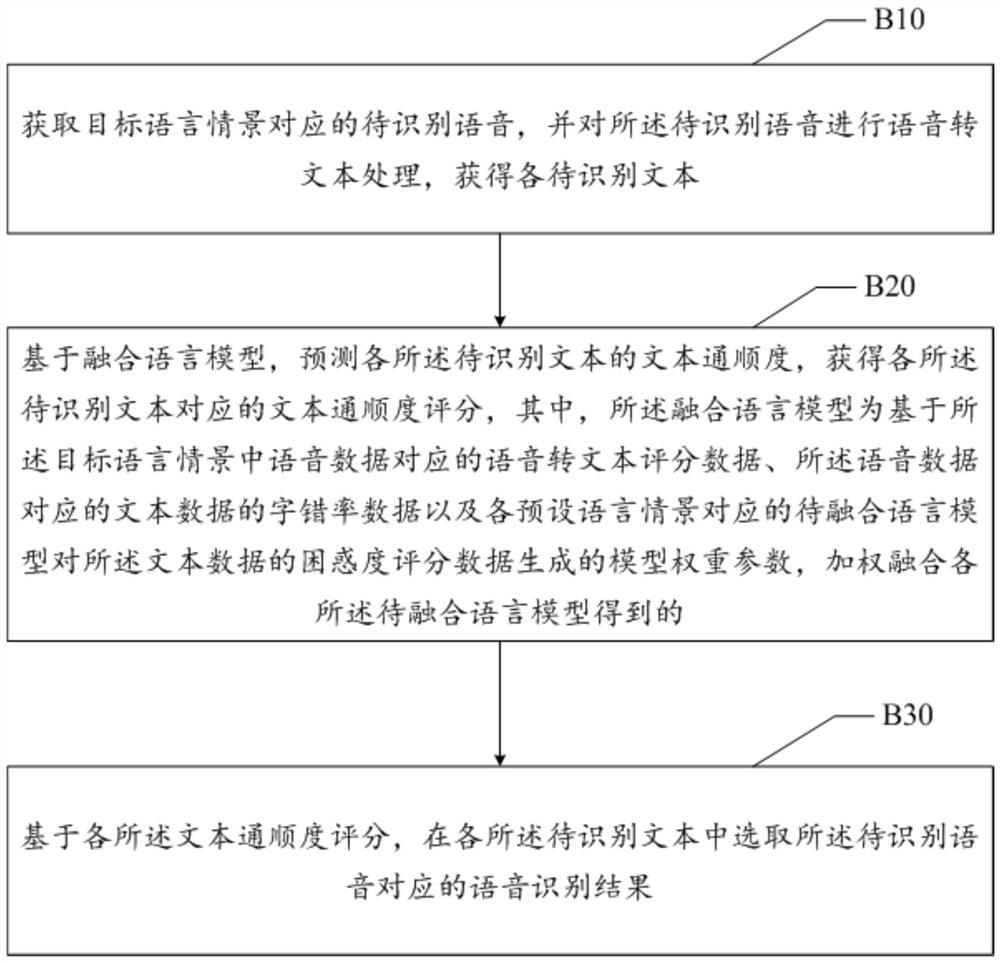

Language model fusion method and device, medium and computer program product

Pending | CN113140221A | Enhance integration rights, Improve fusion effect, Speech recognition, Engineering, Data mining

The invention discloses a language model fusion method and device, a medium and a computer program product. The method comprises the following steps: obtaining voice data for a target language scene, and extracting the speech-to-text scores for converting the voice data into text data and the word error rate of that text data; obtaining, for each preset language scene, the perplexity score of the corresponding to-be-fused language model on the text data, and combining these perplexity scores, the speech-to-text scores and the word error rates into combined training data; constructing a classification-ranking model from the combined training data; and performing weighted fusion of the to-be-fused language models based on the model weight parameters of the classification-ranking model to obtain a fused language model. This solves the technical problem of poor language model fusion.

Owner:WEBANK (CHINA)

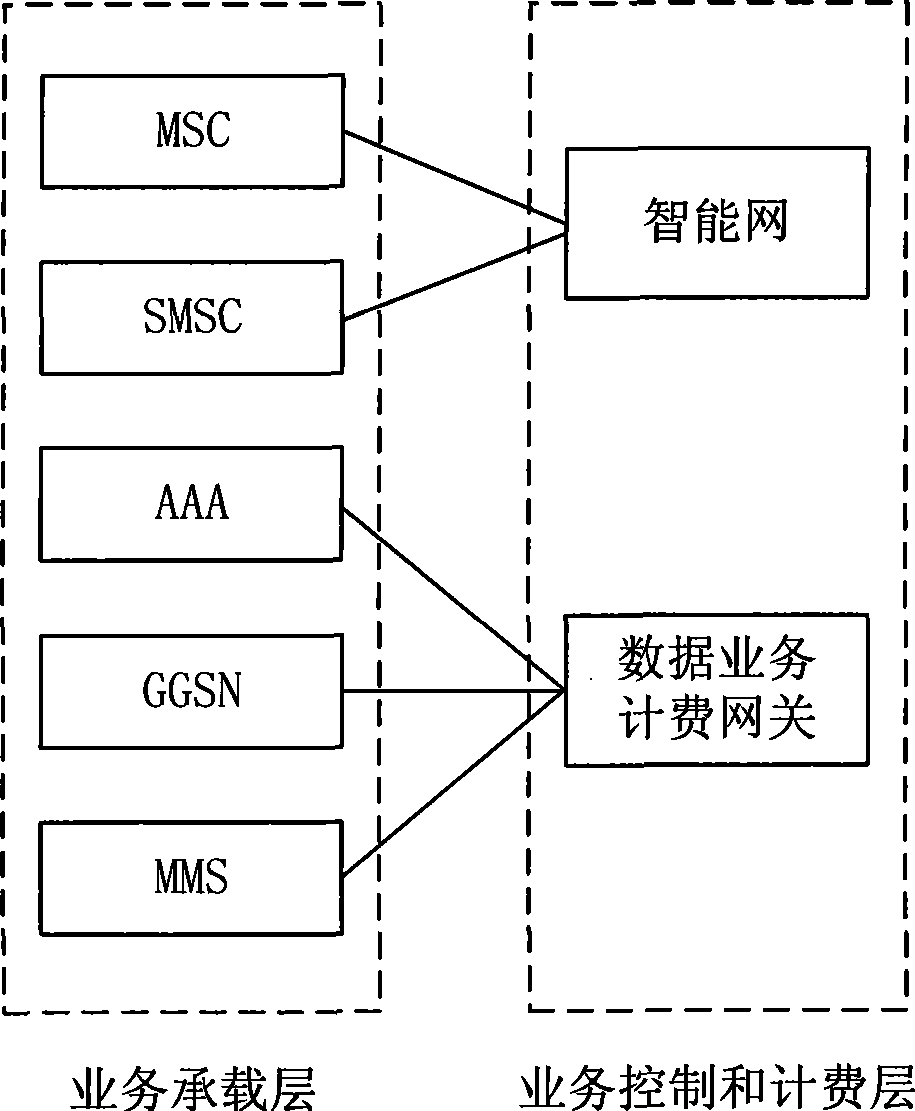

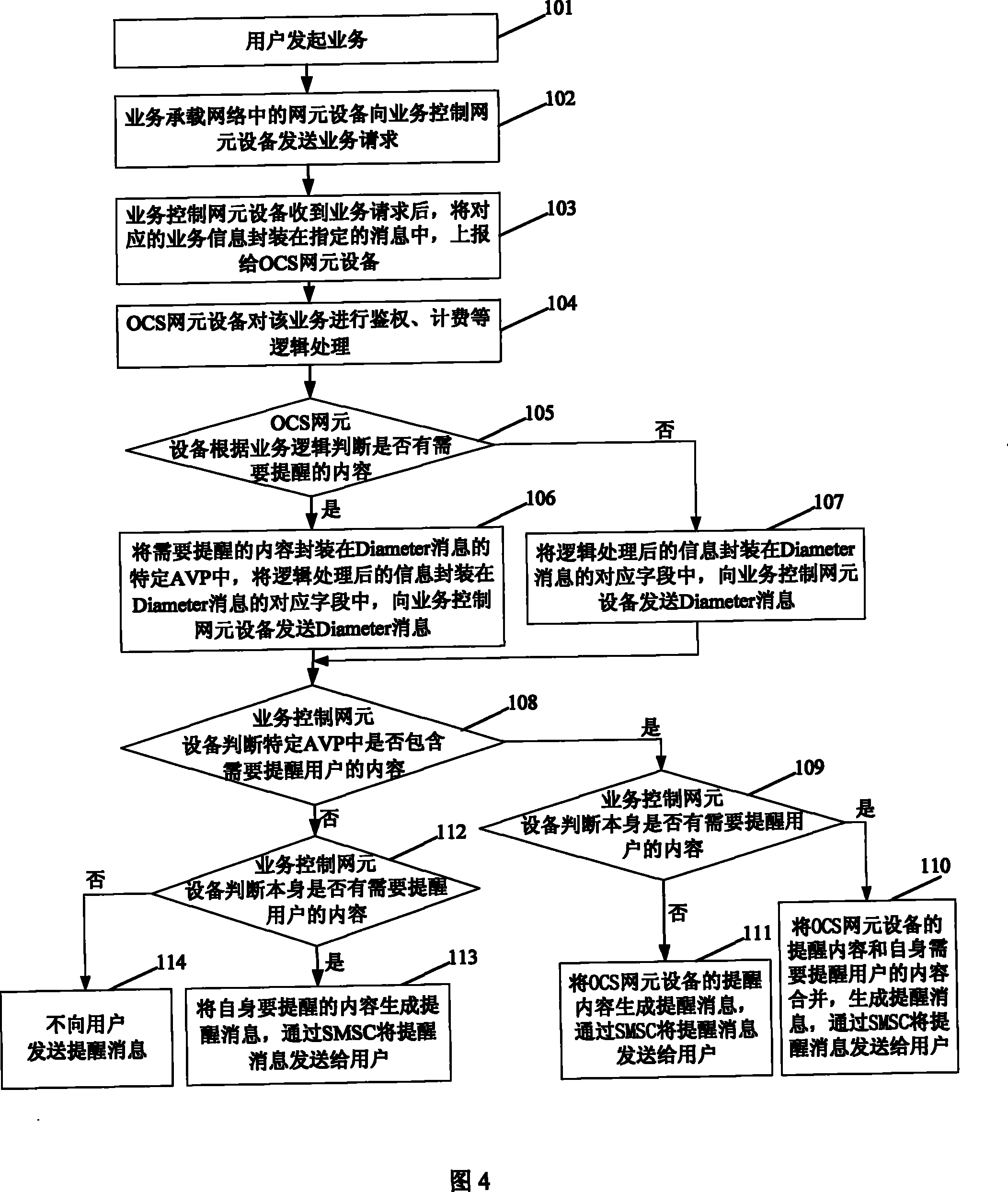

Method, system and equipment for prompting message

Inactive | CN101442592A | Avoid confusion, Reach the purpose of reminding users, Metering/charging/billing arrangements, Accounting/billing services, Service control, Perplexity

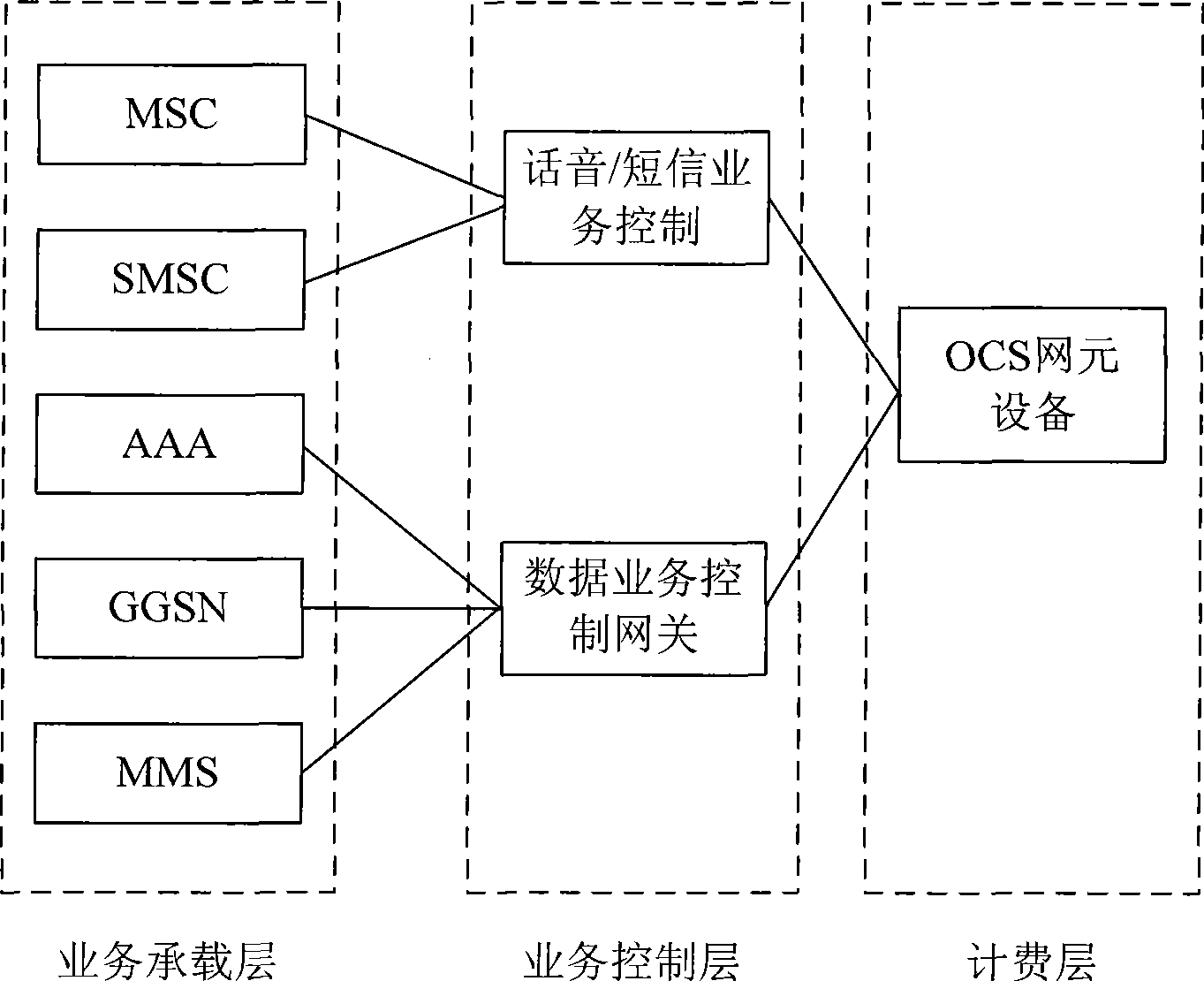

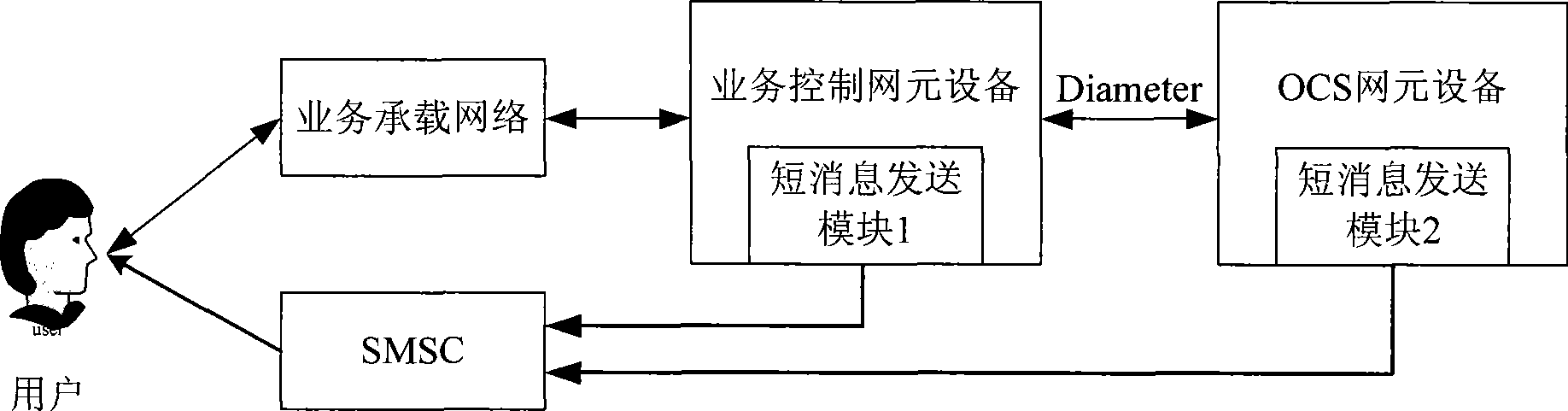

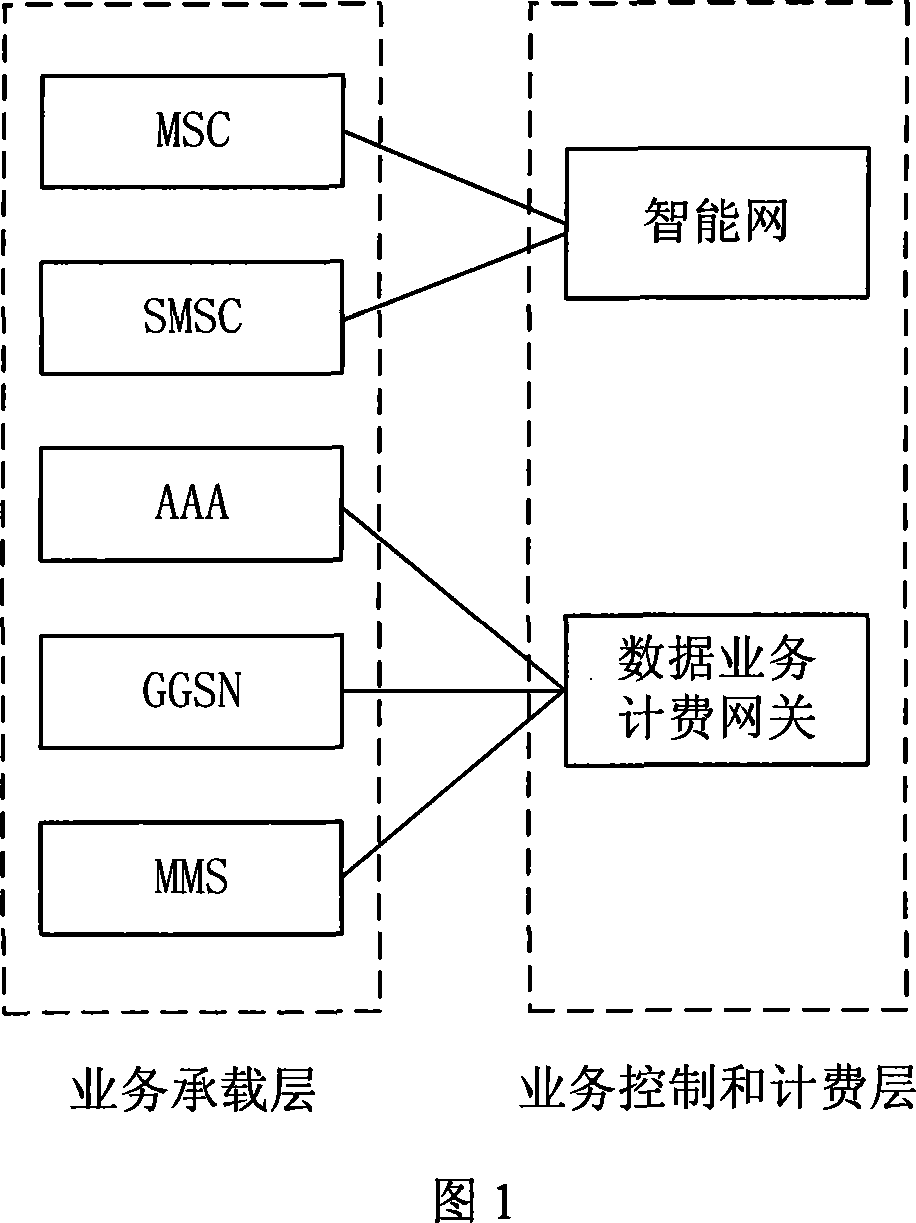

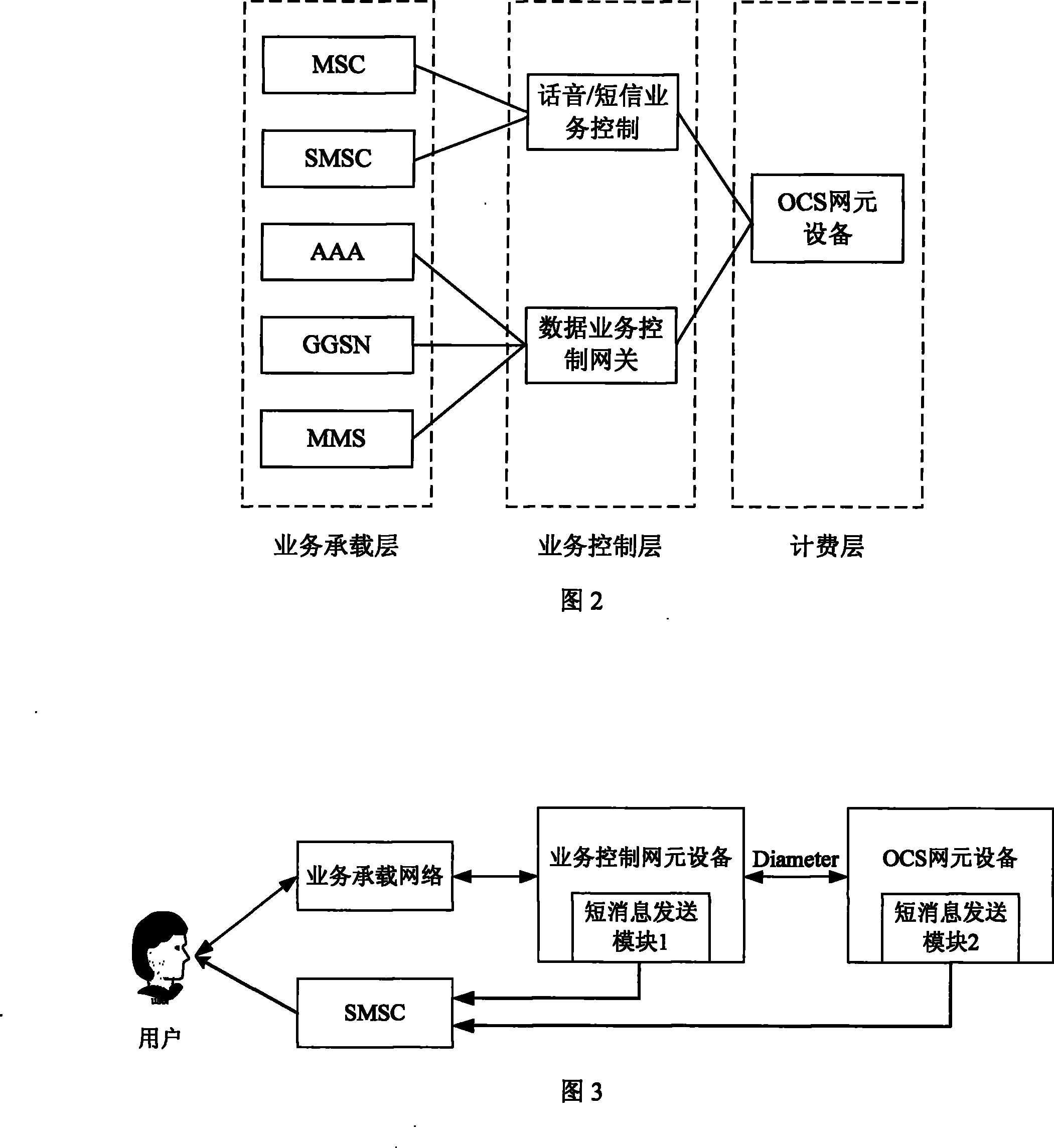

The invention discloses a message reminding method, system and device in the field of communications. The method comprises the following steps: a charging network element device encapsulates the content to be brought to the user's attention in a specified field of a preset message and sends the encapsulated message to a service control network element device; after receiving it, the service control network element device extracts the content in the specified field, generates a reminder message from the extracted content, and sends the reminder message to a client. The system comprises the charging network element device and the service control network element device. The charging network element device comprises a judging module, an encapsulating module and a sending module. The service control network element device comprises a receiving module, an extracting module, a message generating module and a message sending module. The method, system and device achieve the aim of reminding the user while avoiding the confusion caused when the existing charging network element device and the existing service control network element device each send reminder messages to the user separately.

Owner:HUAWEI TECH CO LTD

Method, system and equipment for prompting message

Inactive | CN101442592B | Avoid confusion, Reach the purpose of reminding users, Metering/charging/billing arrangements, Accounting/billing services, Service control, Perplexity

The invention discloses a message reminding method, system and device in the field of communications. The method comprises the following steps: a charging network element device encapsulates the content to be brought to the user's attention in a specified field of a preset message and sends the encapsulated message to a service control network element device; after receiving it, the service control network element device extracts the content in the specified field, generates a reminder message from the extracted content, and sends the reminder message to a client. The system comprises the charging network element device and the service control network element device. The charging network element device comprises a judging module, an encapsulating module and a sending module. The service control network element device comprises a receiving module, an extracting module, a message generating module and a message sending module. The method, system and device achieve the aim of reminding the user while avoiding the confusion caused when the existing charging network element device and the existing service control network element device each send reminder messages to the user separately.

Owner:HUAWEI TECH CO LTD

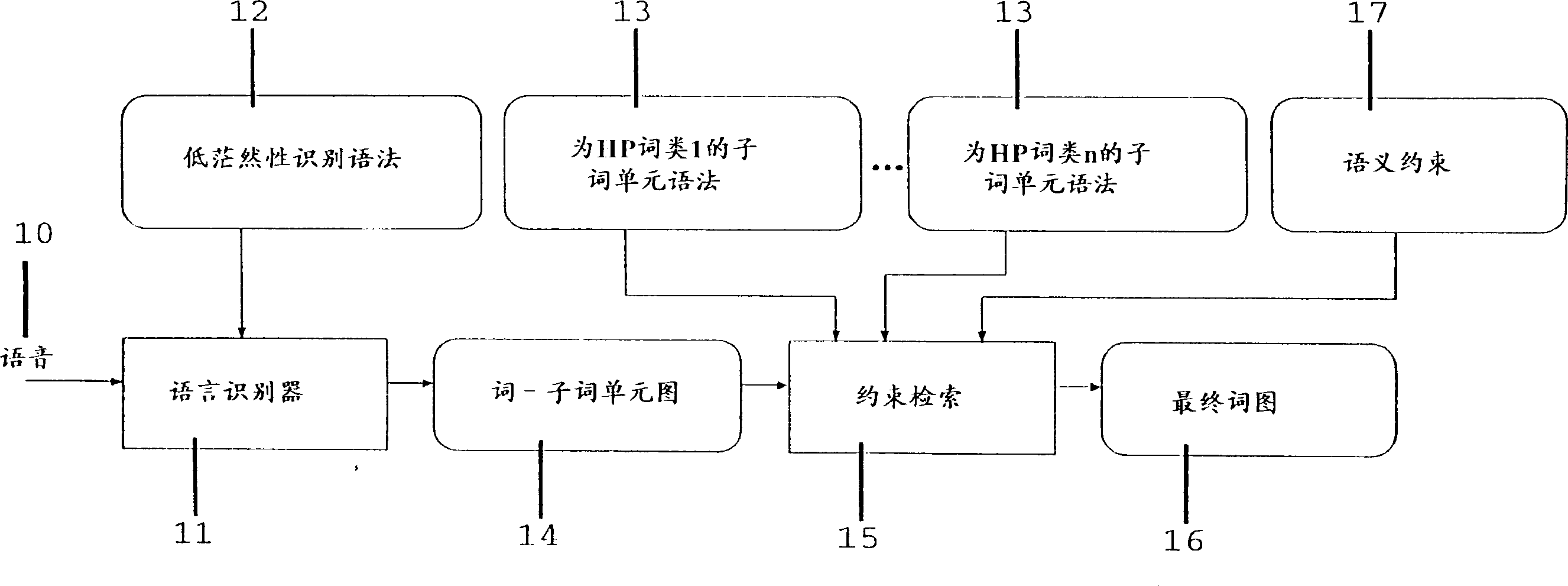

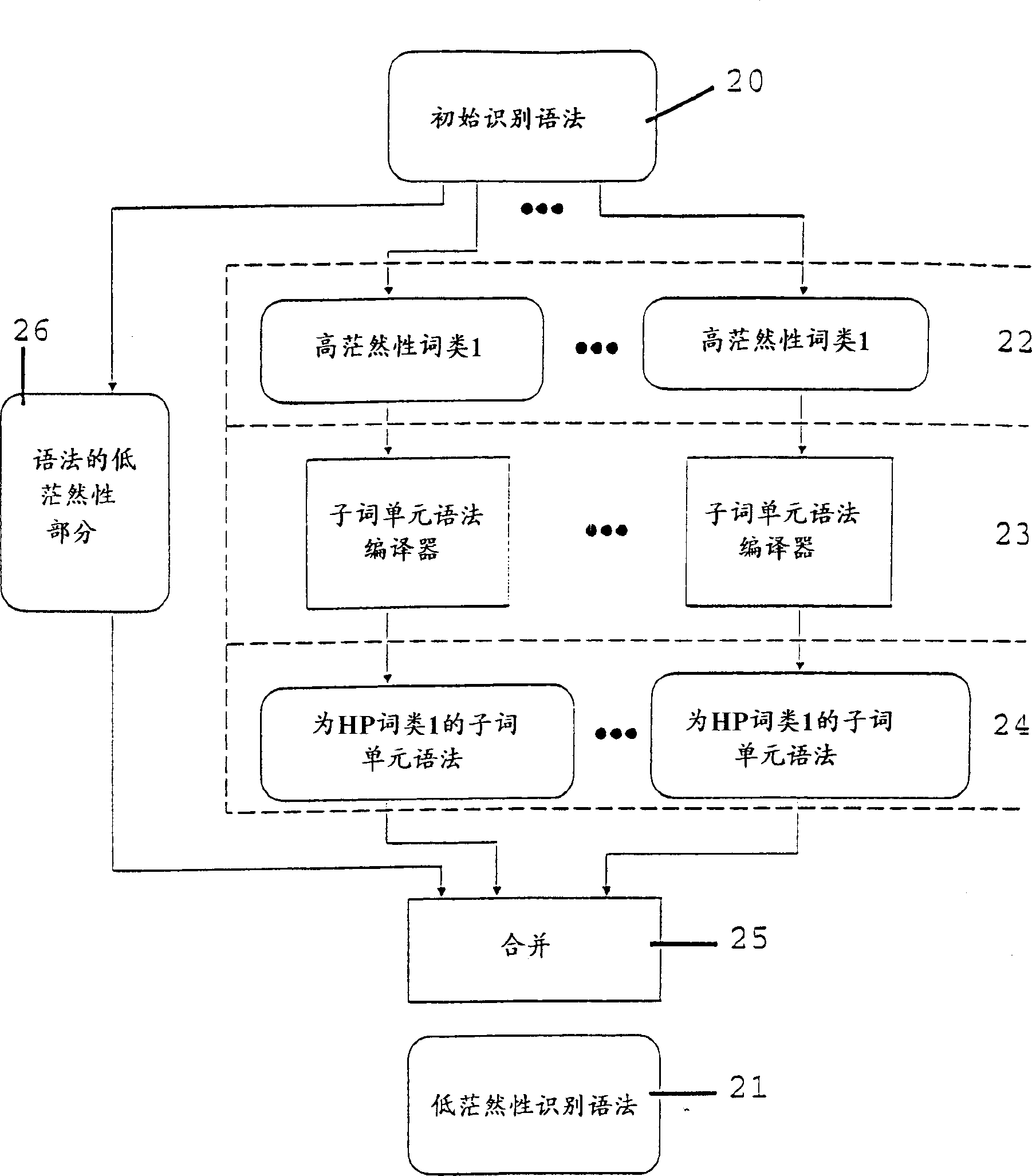

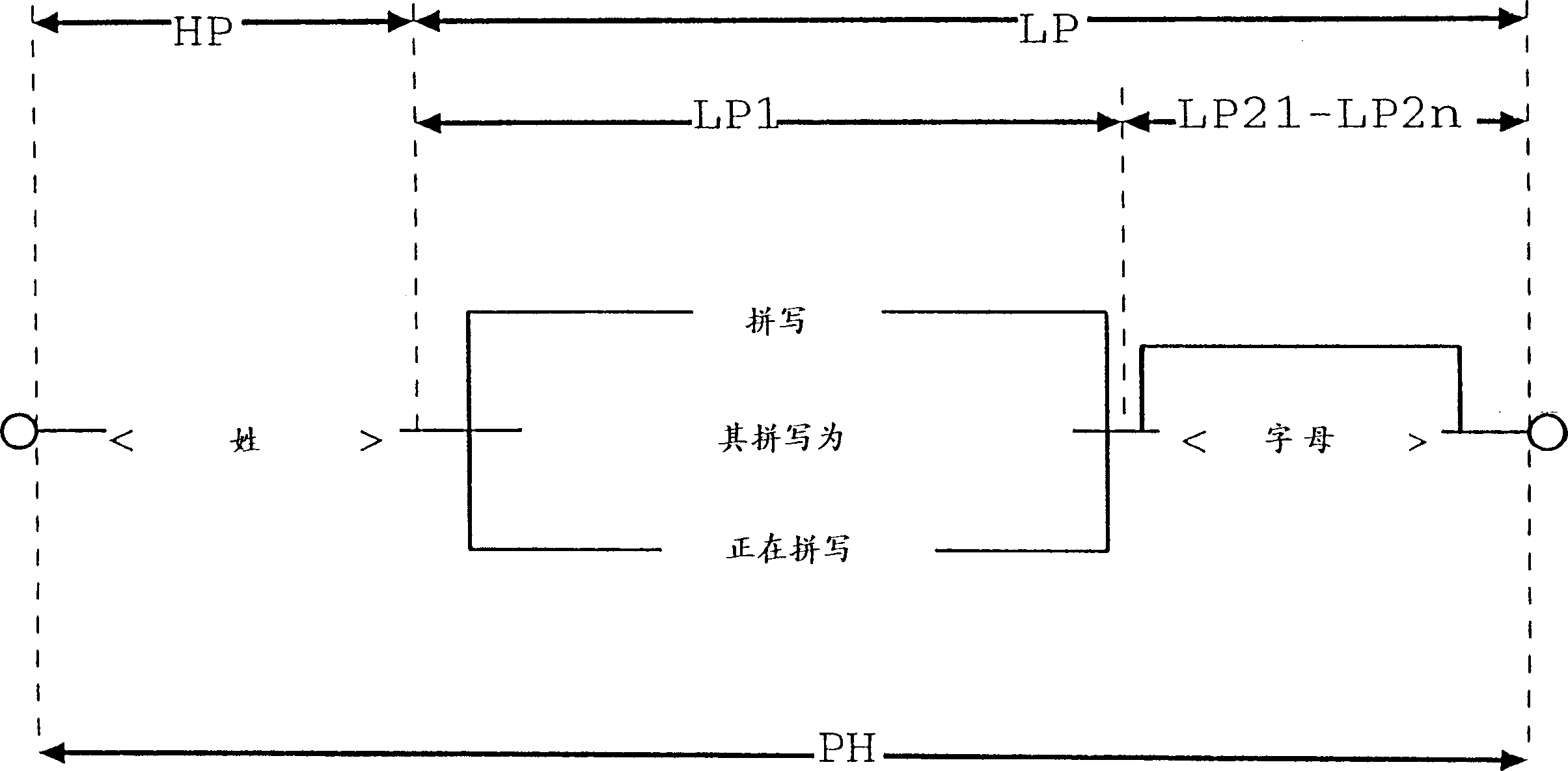

Speech sound identification method

One objective of the inventive method is to reduce the search burden within the set of possible candidates for a speech phrase to be recognized. This is achieved by pairing regions of high complexity in a recognition grammar with regions of lower perplexity through additional constraints. The search then evaluates the low-perplexity region of the grammar first, finding word candidates that are used to limit the search effort needed to recognize the portions of speech corresponding to the higher-perplexity part of the recognition grammar.

Owner:SONY INT (EURO) GMBH

An unsupervised method for long-text recognition of Internet public opinion spam

Active | CN111737475B | Low cost | Special data processing applications | Text database clustering/classification | Text recognition | Linguistic model

The invention discloses an unsupervised method for identifying long texts of Internet public-opinion spam. The method includes the following steps: obtaining labeled public-opinion spam texts and normal texts from an existing internal system; constructing two models, namely a language model trained on online public-opinion text and a BERT next-sentence prediction model trained on online public-opinion text; and feeding the long public-opinion text to be classified into both models. The invention uses the language model's perplexity to evaluate whether the content within a sentence is junk text, uses the BERT next-sentence prediction model to evaluate the contextual coherence between the sentences of the text, and combines the two to recognize spam in long texts. It identifies spam text automatically while greatly reducing the cost of obtaining supervised data, so that a system without supervised data can identify spam text from the outset.
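A minimal sketch of combining the two signals, assuming the language model has already produced per-token natural-log probabilities for each sentence and the BERT next-sentence model has produced an IsNext probability for each adjacent sentence pair. The thresholds and the "either signal dominates" combination rule are hypothetical; the abstract does not state how the scores are combined.

```python
import math
from typing import List, Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Sentence perplexity: PPL = exp(-(1/N) * sum_i log p(w_i | w_<i))."""
    if not token_logprobs:
        raise ValueError("sentence has no tokens")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def is_spam(
    sentence_logprobs: List[Sequence[float]],
    nsp_probs: Sequence[float],
    ppl_threshold: float = 200.0,       # hypothetical cut-off for "junk" wording
    coherence_threshold: float = 0.5,   # hypothetical cut-off for P(IsNext)
    max_bad_fraction: float = 0.5,      # hypothetical share of bad units tolerated
) -> bool:
    """Flag a long text as spam from intra-sentence and inter-sentence signals."""
    if not sentence_logprobs:
        return False
    # Signal 1: sentences whose internal wording is improbable (high perplexity).
    high_ppl = [perplexity(lp) > ppl_threshold for lp in sentence_logprobs]
    # Signal 2: adjacent sentence pairs the NSP model does not see as continuations.
    incoherent = [p < coherence_threshold for p in nsp_probs]
    bad_sentence_frac = sum(high_ppl) / len(high_ppl)
    bad_pair_frac = sum(incoherent) / len(incoherent) if incoherent else 0.0
    # Combine: spam if either signal dominates the text.
    return bad_sentence_frac > max_bad_fraction or bad_pair_frac > max_bad_fraction
```

Because neither model needs spam labels at inference time, the thresholds are the only values that would need tuning, which matches the abstract's emphasis on low supervision cost.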

Owner:南京擎盾信息科技有限公司

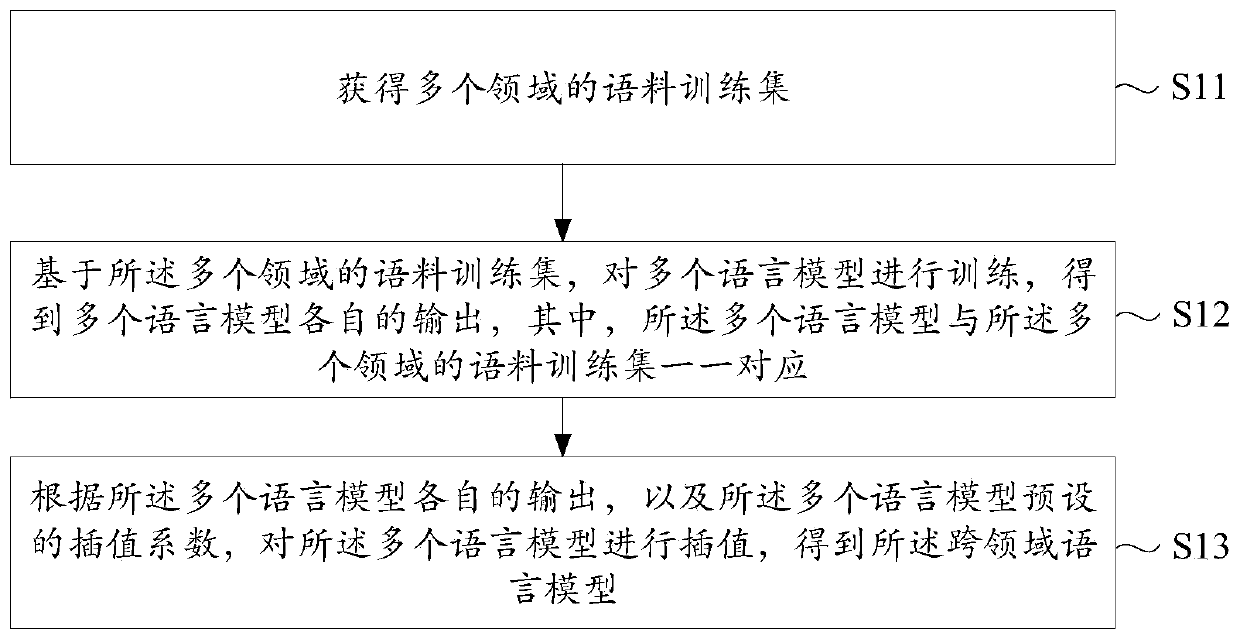

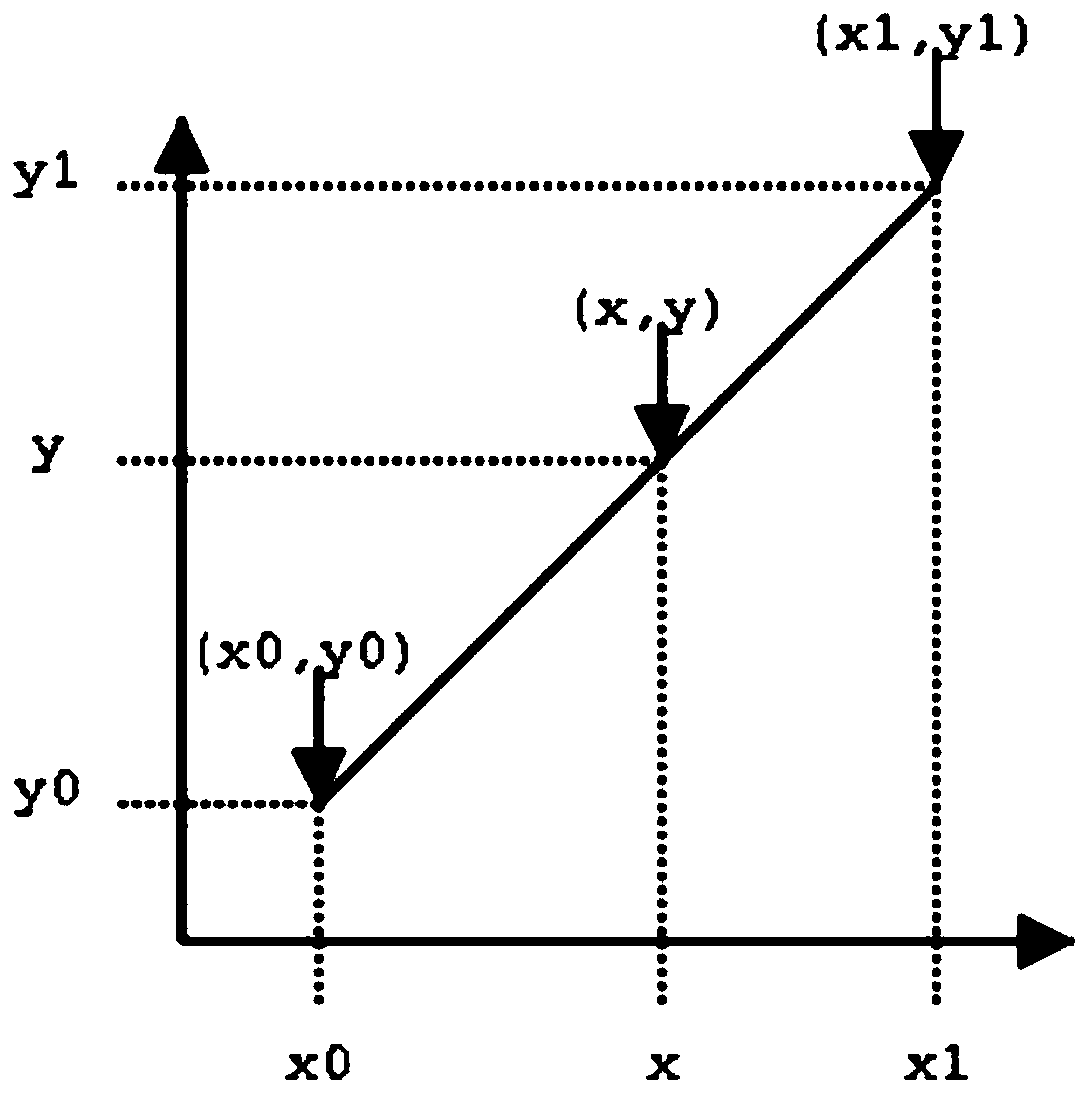

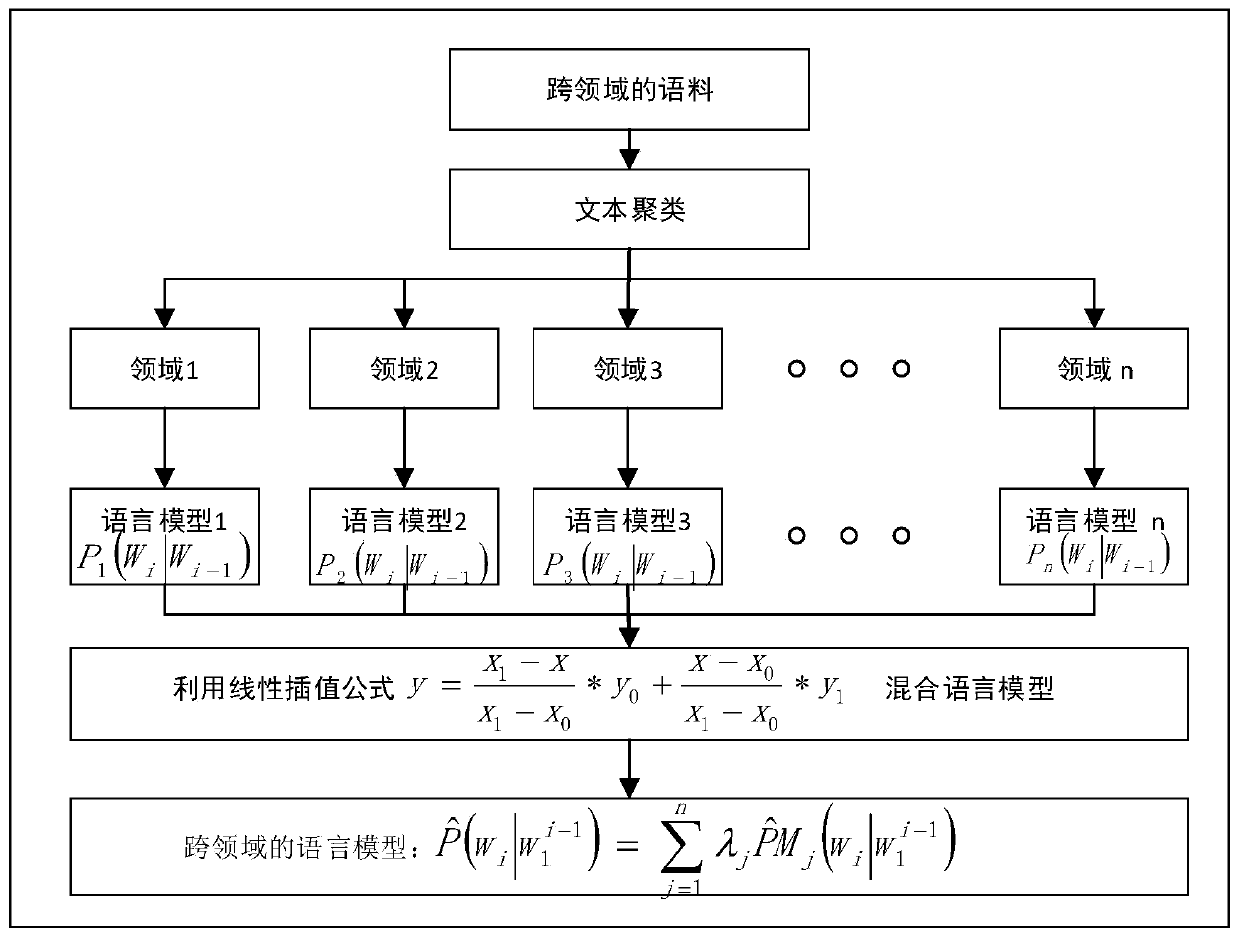

Cross-domain language model training method and device, electronic equipment and storage medium

Active | CN111143518A | Addressing vulnerability | Improve performance indicators | Text database querying | Special data processing applications | Engineering | Perplexity

The invention provides a cross-domain language model training method and device, electronic equipment, and a storage medium. The method comprises the steps of: obtaining corpus training sets for multiple domains; training a plurality of language models on these corpus training sets to obtain the respective outputs of the language models, with the language models in one-to-one correspondence with the domain corpus training sets; and interpolating the language models according to their respective outputs and preset interpolation coefficients to obtain a cross-domain language model. By mixing the language models of multiple domains into a single model through linear interpolation, the method effectively addresses the weakness of language models across domains, improves the language model's performance metrics, and reduces its perplexity.
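Linear interpolation mixes the domain models as $P(w \mid h) = \sum_i \lambda_i P_i(w \mid h)$ with $\lambda_i \ge 0$ and $\sum_i \lambda_i = 1$. The sketch below illustrates this with add-one-smoothed unigram models standing in for the per-domain language models (a simplification; the patent does not say what model type is used). The coefficients are fixed up front, matching the abstract's "preset" coefficients, and the toy corpora are invented for illustration.

```python
import math
from collections import Counter
from typing import Dict, List, Sequence


def unigram_model(corpus: List[str], vocab: Sequence[str]) -> Dict[str, float]:
    """Add-one smoothed unigram model over a shared vocabulary (toy domain LM)."""
    counts = Counter(corpus)
    total = len(corpus) + len(vocab)
    return {w: (counts[w] + 1) / total for w in vocab}


def interpolate(models: List[Dict[str, float]], weights: Sequence[float]) -> Dict[str, float]:
    """Linear interpolation: P(w) = sum_i lambda_i * P_i(w), with sum_i lambda_i = 1."""
    if abs(sum(weights) - 1.0) > 1e-9 or any(lam < 0 for lam in weights):
        raise ValueError("interpolation weights must be non-negative and sum to 1")
    vocab = models[0].keys()
    return {w: sum(lam * m[w] for lam, m in zip(weights, models)) for w in vocab}


def perplexity(model: Dict[str, float], test: List[str]) -> float:
    """PPL = exp(-(1/N) * sum_i log P(w_i)) over the test tokens."""
    return math.exp(-sum(math.log(model[w]) for w in test) / len(test))


# Toy corpora for two domains and a mixed-domain test sequence.
legal = "contract clause party contract".split()
medical = "patient dose patient symptom".split()
vocab = sorted(set(legal + medical))
test = "contract patient clause dose".split()

legal_lm = unigram_model(legal, vocab)
medical_lm = unigram_model(medical, vocab)
mixed_lm = interpolate([legal_lm, medical_lm], [0.5, 0.5])
```

On this toy mixed-domain test sequence, each single-domain model has a perplexity of about 6.39, while the 50/50 interpolated model scores about 5.77, illustrating how mixing reduces perplexity on text that spans domains.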

Owner:北京明朝万达科技股份有限公司

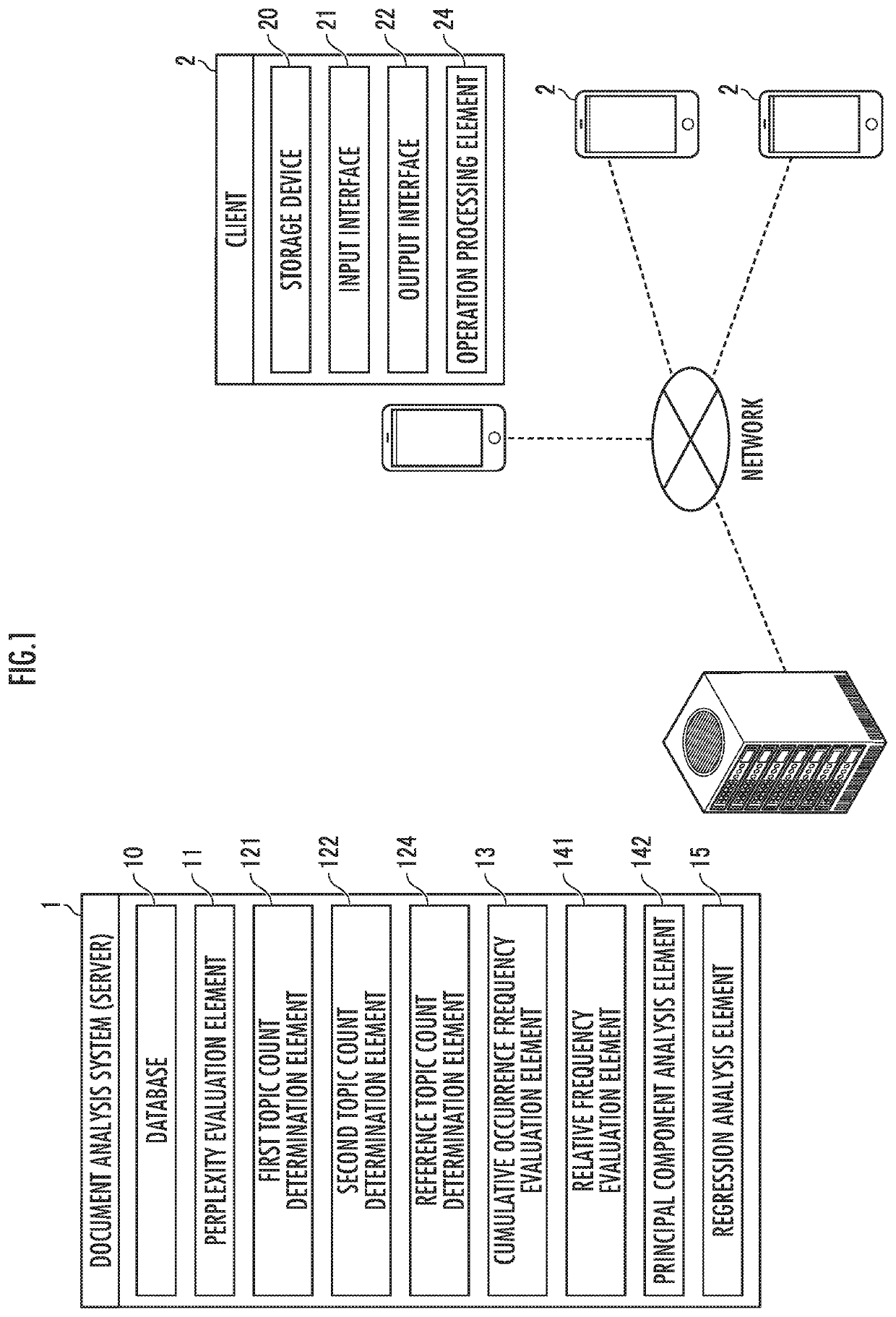

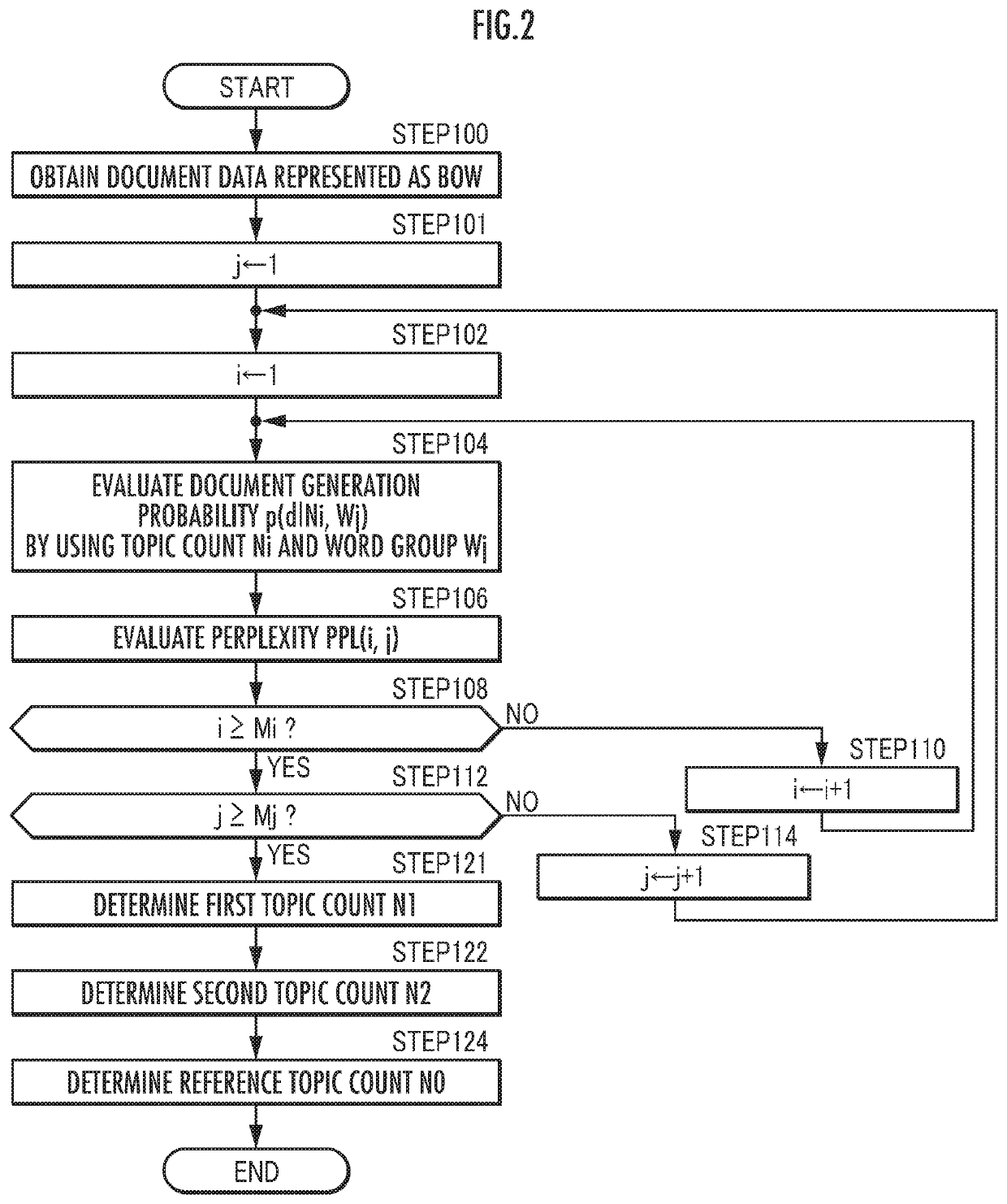

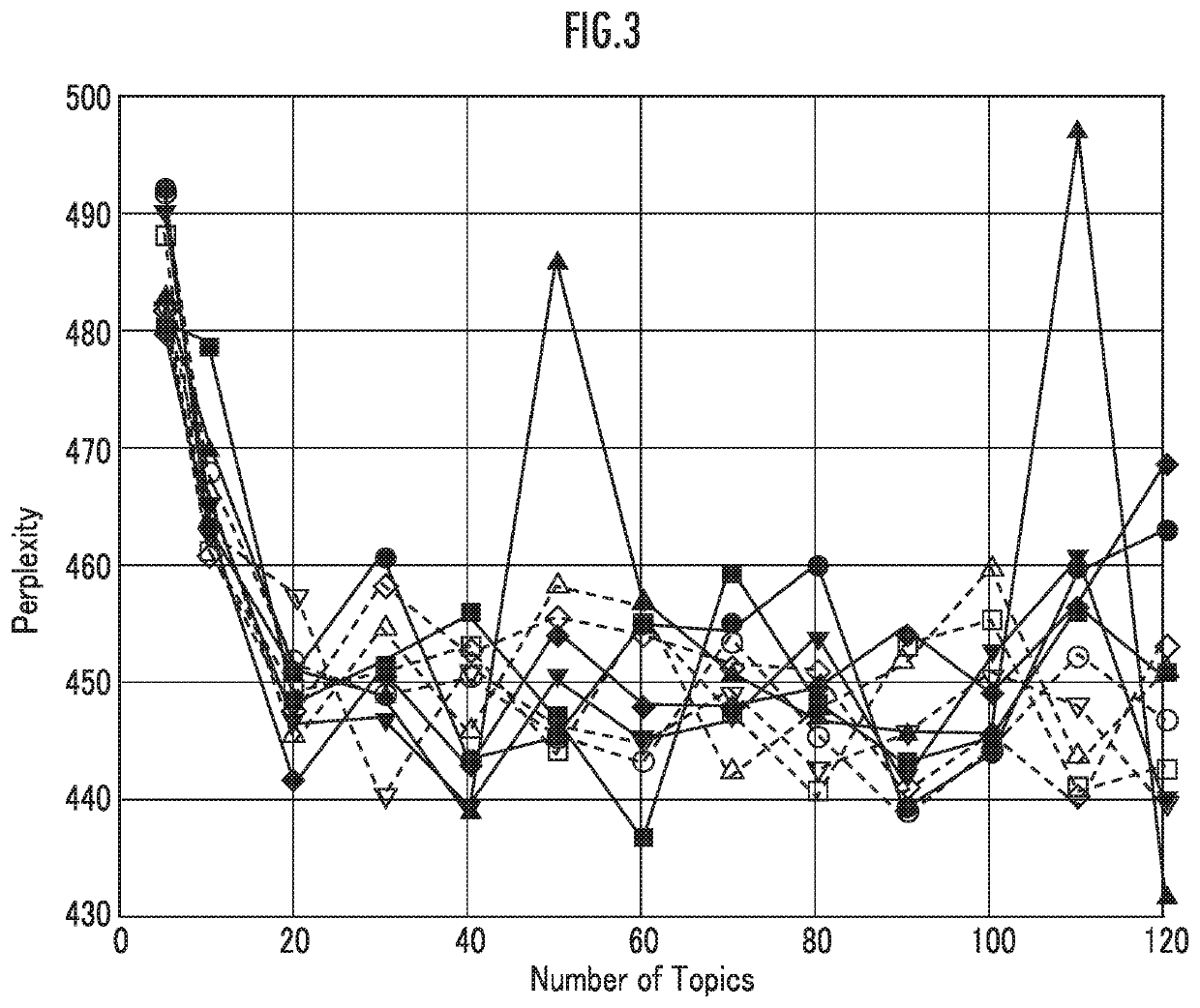

Document analysis system

Pending | US20210263953A1 | Mark increase | Estimate the latent meaning of the document | Market predictions | Semantic analysis | Document analysis | Counting number

A system is provided that determines an appropriate topic count for LDA (latent Dirichlet allocation) in order to estimate the latent meanings of documents. For a plurality of documents d, the perplexity PPL of each document d is evaluated from the probability that the document d is generated, when the topic count N of an LDA topic model (used as a document generation model) is hypothetically set to different values and word groups are specified by different random numbers. The topic model is defined by a reference topic count N0, determined by combining a first topic count N1 (the topic count with the highest cumulative frequency of being the point at which the perplexity PPL first reaches a minimum) and a second topic count N2 (the topic count with the highest cumulative frequency of being the point at which the perplexity PPL reaches its smallest value).
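A minimal sketch of the topic-count selection, under the reading that each random seed yields one perplexity curve over candidate topic counts. The curves are taken as input (they could, for instance, come from scikit-learn's `LatentDirichletAllocation.perplexity`). The abstract does not say how N1 and N2 are combined into N0, so the rounded-down average used here is purely an assumption, as is breaking ties toward the smaller topic count.

```python
from collections import Counter
from typing import Dict, List


def first_local_min(curve: Dict[int, float]) -> int:
    """Topic count at which the perplexity curve first stops decreasing."""
    ns = sorted(curve)
    for prev, cur in zip(ns, ns[1:]):
        if curve[cur] > curve[prev]:
            return prev
    return ns[-1]  # monotonically non-increasing: the last point is the first minimum


def global_min(curve: Dict[int, float]) -> int:
    """Topic count with the smallest perplexity."""
    return min(curve, key=curve.get)


def most_frequent(values: List[int]) -> int:
    """Most frequent per-run choice; ties go to the smaller topic count (assumption)."""
    counts = Counter(values)
    best = max(counts.values())
    return min(v for v, c in counts.items() if c == best)


def reference_topic_count(curves: List[Dict[int, float]]) -> int:
    """curves: one {topic count N: perplexity PPL} mapping per random seed."""
    n1 = most_frequent([first_local_min(c) for c in curves])
    n2 = most_frequent([global_min(c) for c in curves])
    # Hypothetical combination rule: the abstract only says N1 and N2 are combined.
    return (n1 + n2) // 2
```

Counting the most frequent choice across seeds is what makes the selection robust: a single run's perplexity curve can be noisy, but the topic count that repeatedly emerges as a minimum is less likely to be an artifact of one random initialization.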

Owner:HONDA MOTOR CO LTD

© 2025 PatSnap. All rights reserved.