Cross-domain language model training method and device, electronic equipment and storage medium

A technology relating to language models and their training methods, applied in the fields of cross-domain language model training methods and devices, electronic equipment, and storage media, which can solve problems such as intractability.


Embodiment Construction

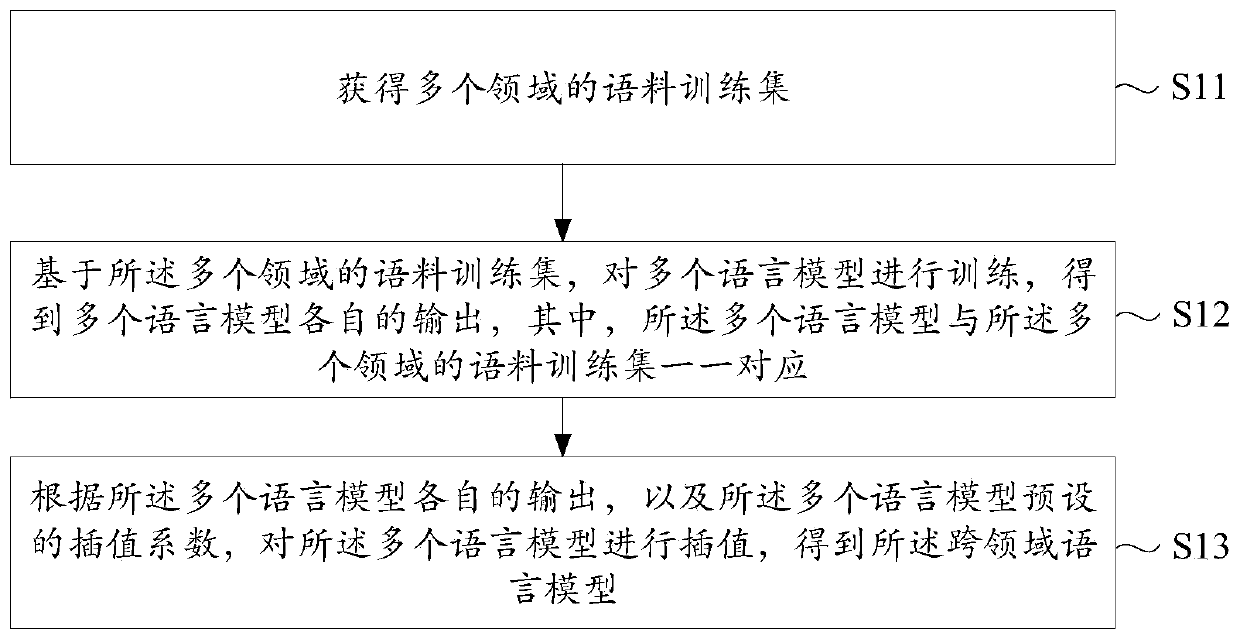

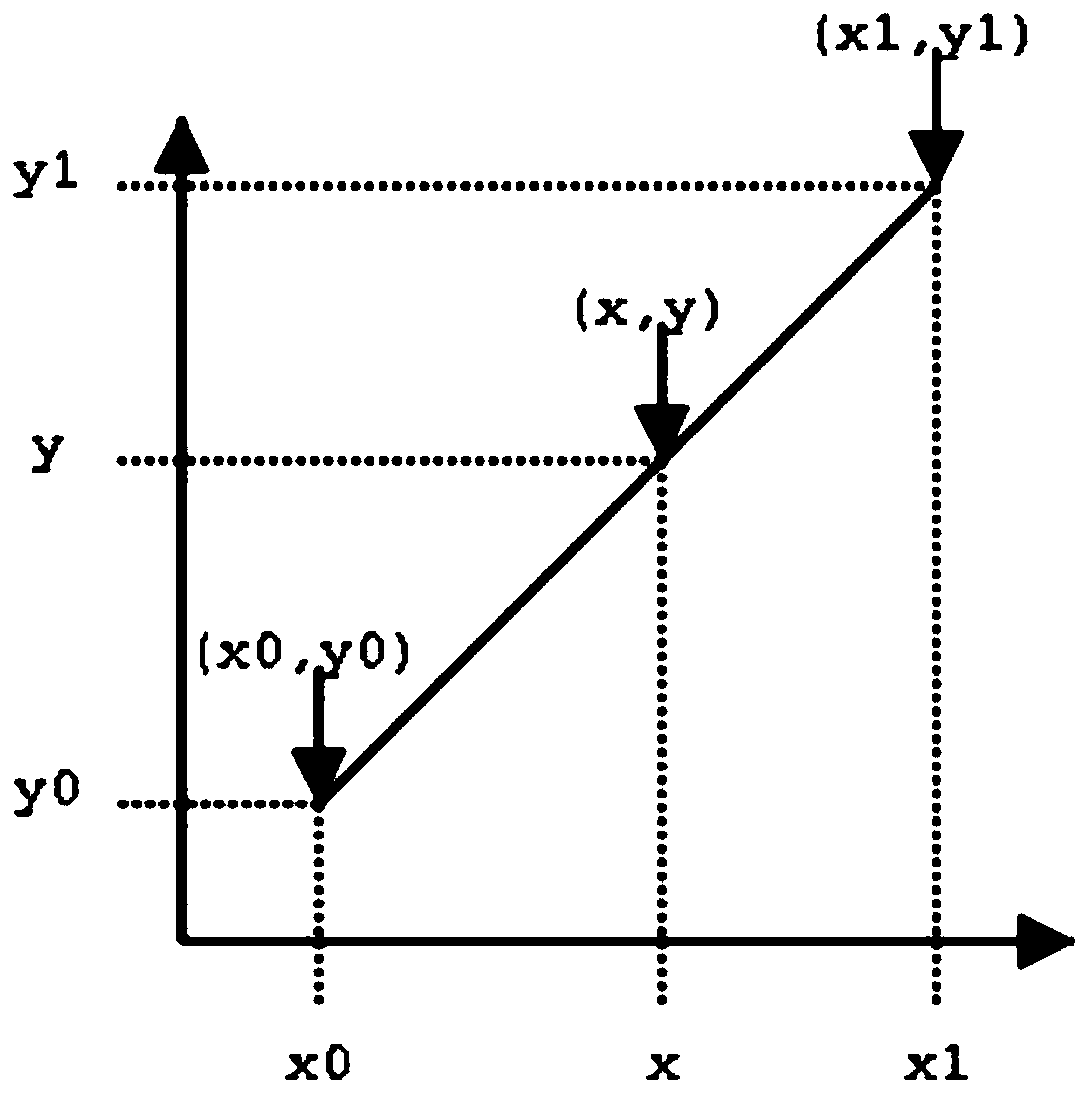

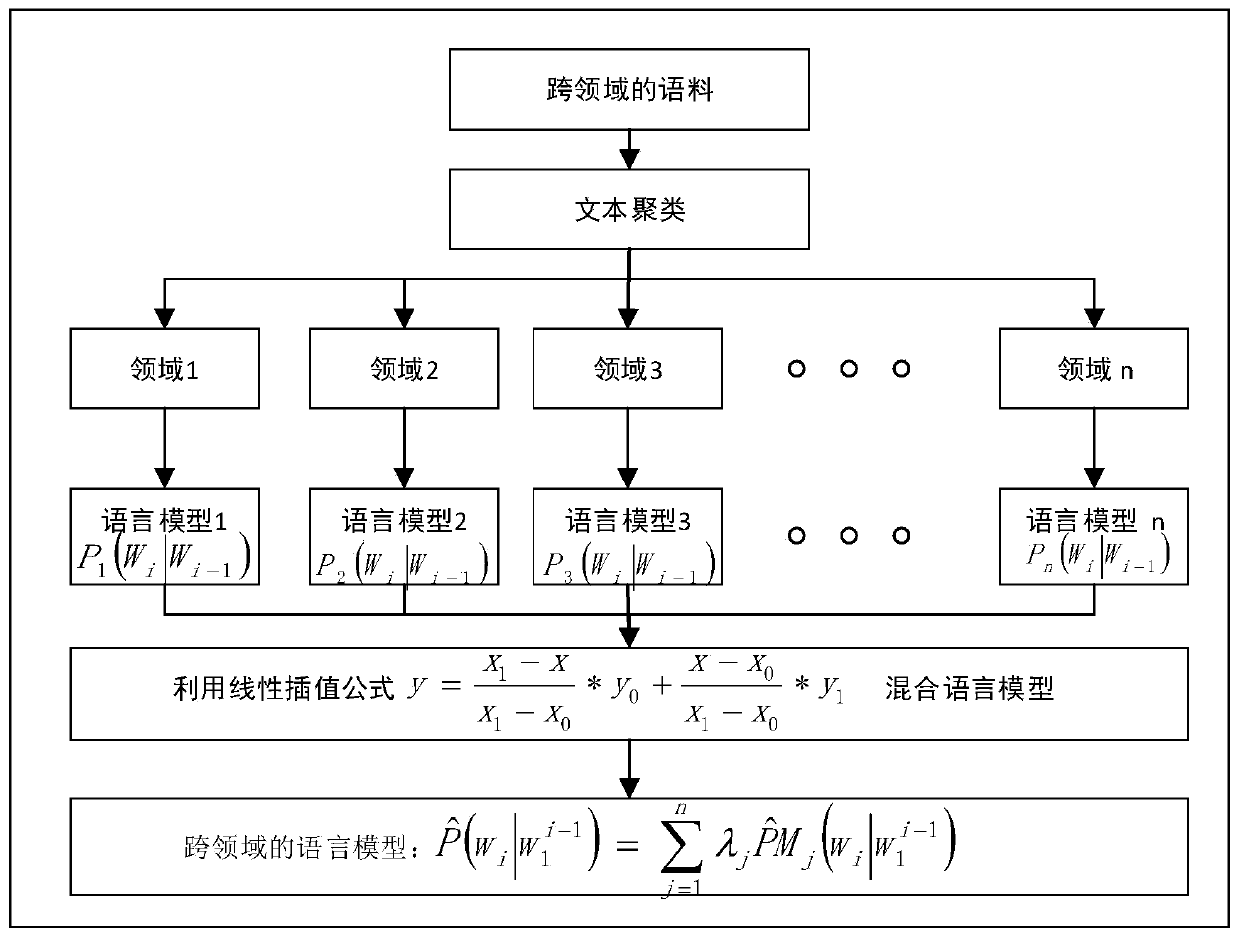

[0064] The technical solutions in the embodiments of the present application are described clearly and completely below with reference to the accompanying drawings. Evidently, the described embodiments are only some, not all, of the embodiments of the present application. All other embodiments obtained by those of ordinary skill in the art based on the embodiments of the present application without creative effort fall within the protection scope of the present application.

[0065] Before the cross-domain language model training method of this application is explained, the technical principle of the N-Gram Chinese statistical language model is first briefly described:

[0066] Let $S$ denote a meaningful sentence composed of a sequence of words $w_1, w_2, \ldots, w_n$ arranged in a particular order, where $n$ is the length of the sentence. If the probability of the sentence $S$ appearing in ...
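The description above is cut off, so the following is only a sketch of the textbook N-Gram formulation, not the application's own method. By the chain rule, $P(S) = P(w_1)\,P(w_2 \mid w_1)\cdots P(w_n \mid w_1, \ldots, w_{n-1})$, and an N-Gram model approximates each factor by conditioning only on the previous $N-1$ words, $P(w_i \mid w_{i-N+1}, \ldots, w_{i-1})$, estimated from corpus counts. The code assumes Chinese text has already been segmented into words, pads sentences with hypothetical boundary markers `<s>` and `</s>`, and uses plain maximum-likelihood estimates with no smoothing; the names `train_ngram` and `sentence_probability` are illustrative.

```python
from collections import Counter
from typing import Iterable, List, Tuple

# Hypothetical sentence-boundary markers used only in this sketch.
BOS, EOS = "<s>", "</s>"


def _padded(words: List[str], n: int) -> List[str]:
    """Prepend N-1 start markers and append one end marker."""
    return [BOS] * (n - 1) + list(words) + [EOS]


def train_ngram(corpus: Iterable[List[str]], n: int) -> Tuple[Counter, Counter]:
    """Count (N-1)-word contexts and full N-grams over word-segmented sentences."""
    if n < 1:
        raise ValueError("n must be at least 1")
    context_counts: Counter = Counter()
    ngram_counts: Counter = Counter()
    for words in corpus:
        tokens = _padded(words, n)
        for i in range(n - 1, len(tokens)):
            context = tuple(tokens[i - n + 1:i])  # the previous N-1 words
            context_counts[context] += 1
            ngram_counts[context + (tokens[i],)] += 1
    return context_counts, ngram_counts


def sentence_probability(words: List[str], n: int,
                         context_counts: Counter, ngram_counts: Counter) -> float:
    """P(S) ~= product of P(w_i | previous N-1 words), using unsmoothed MLE."""
    tokens = _padded(words, n)
    prob = 1.0
    for i in range(n - 1, len(tokens)):
        context = tuple(tokens[i - n + 1:i])
        if context_counts[context] == 0:
            return 0.0  # unseen context: MLE is undefined, treat as zero
        prob *= ngram_counts[context + (tokens[i],)] / context_counts[context]
    return prob


if __name__ == "__main__":
    corpus = [["I", "like", "tea"], ["I", "like", "coffee"], ["you", "like", "tea"]]
    ctx, grams = train_ngram(corpus, 2)
    print(sentence_probability(["I", "like", "tea"], 2, ctx, grams))
```

On this toy corpus the bigram estimate for "I like tea" is $\tfrac{2}{3} \cdot 1 \cdot \tfrac{2}{3} \cdot 1 \approx 0.444$, while any sentence containing an unseen word pair gets probability zero. That zero-probability problem is why practical N-Gram models add smoothing, which this sketch deliberately omits.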
