711 results about "Training phase" patented technology

Training Phases. Officer candidate training is divided into five distinct phases: In-processing (Phase I), Transition Training (Phase II), Adaptation (Phase III), Decision Making and Execution (Phase IV), and Out-processing (Phase V). Each of the various OCS programs progresses through these training phases.

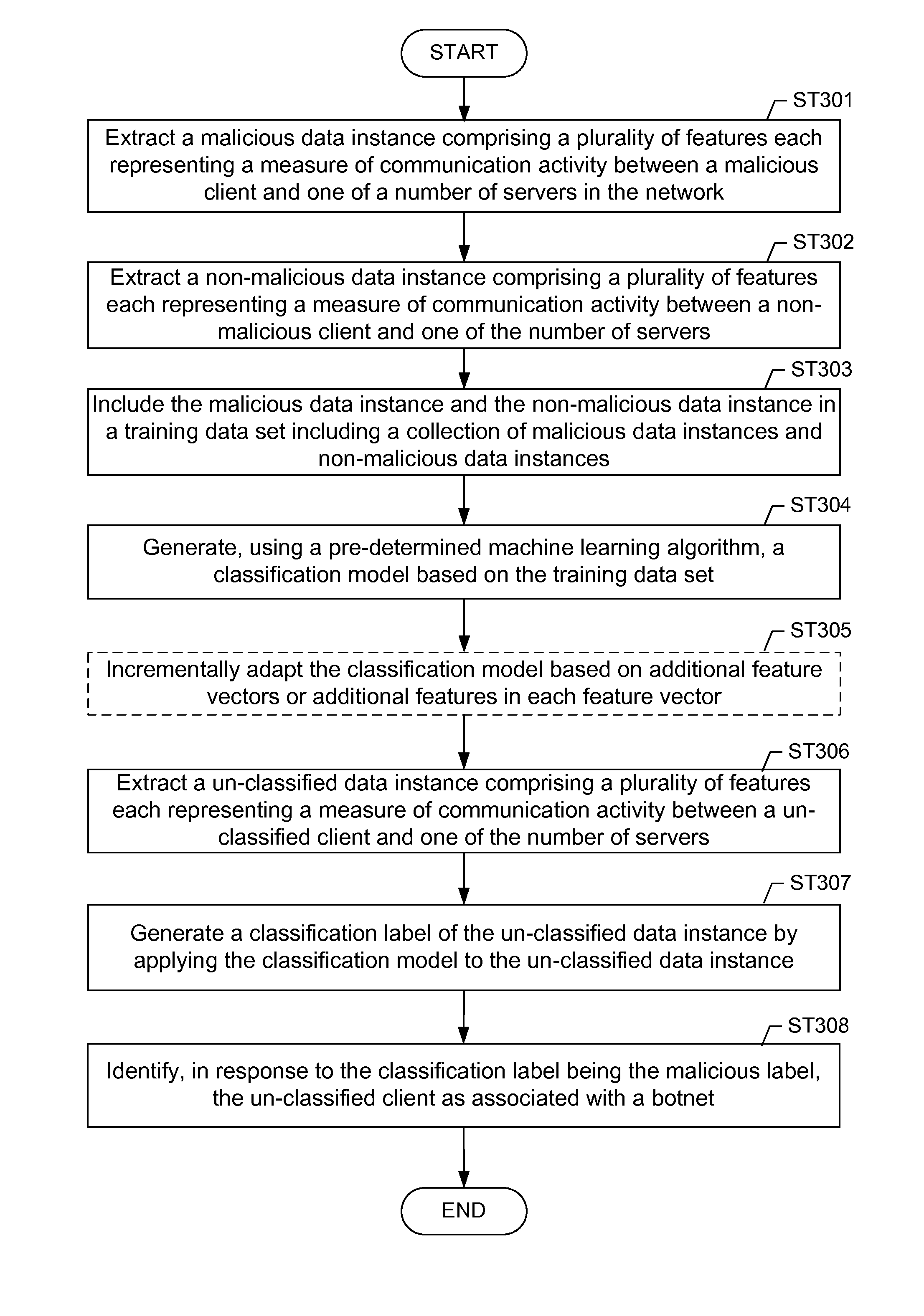

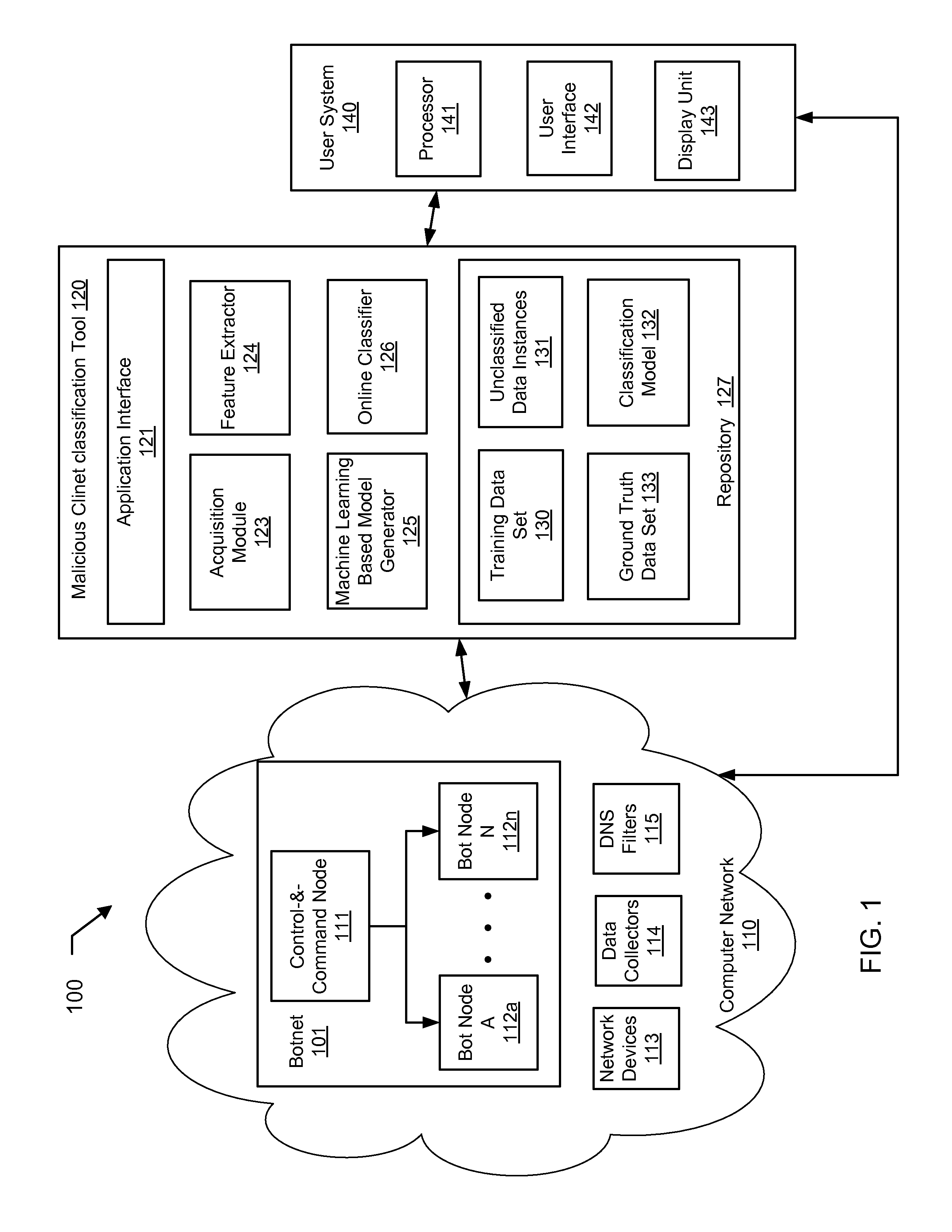

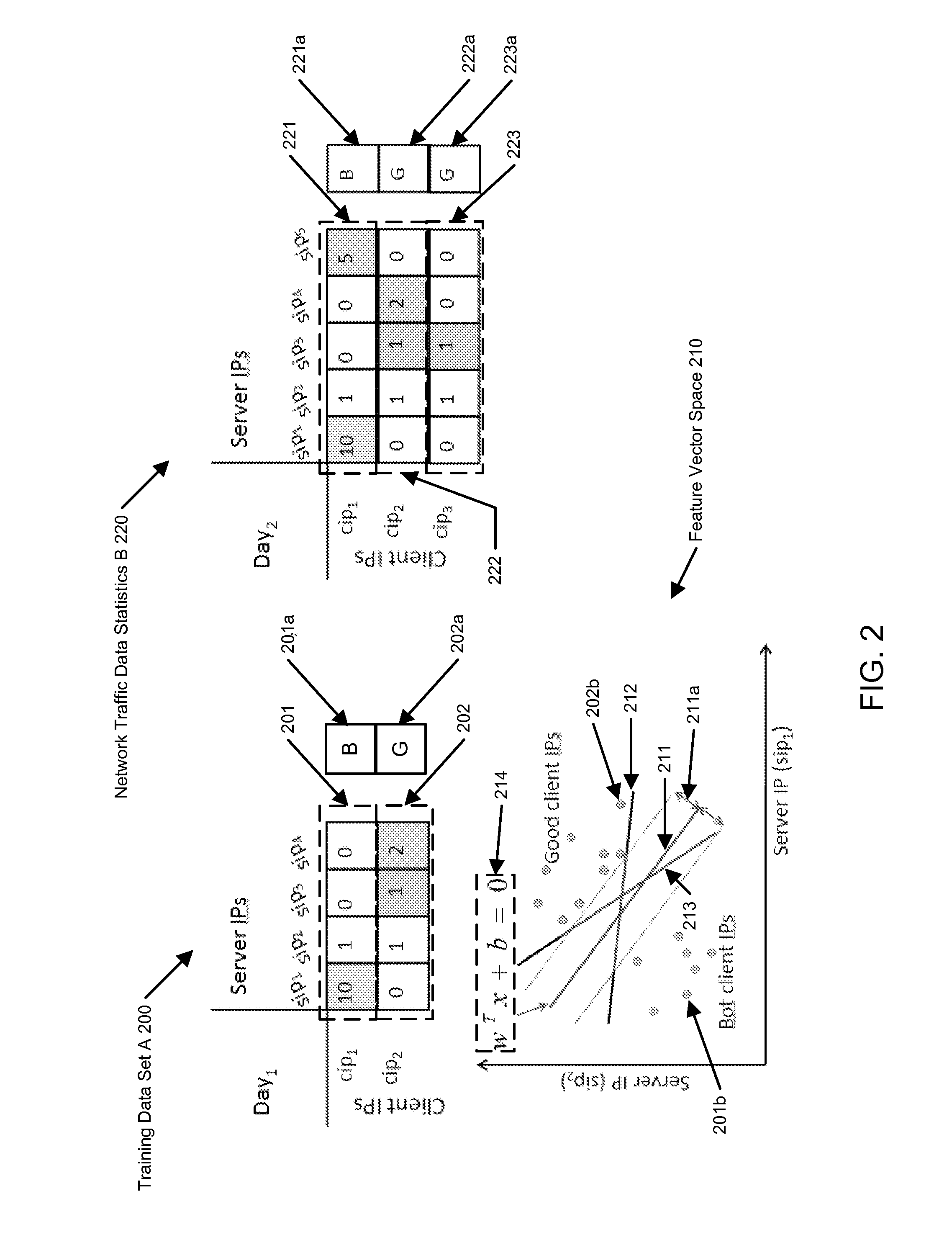

Machine learning based botnet detection with dynamic adaptation

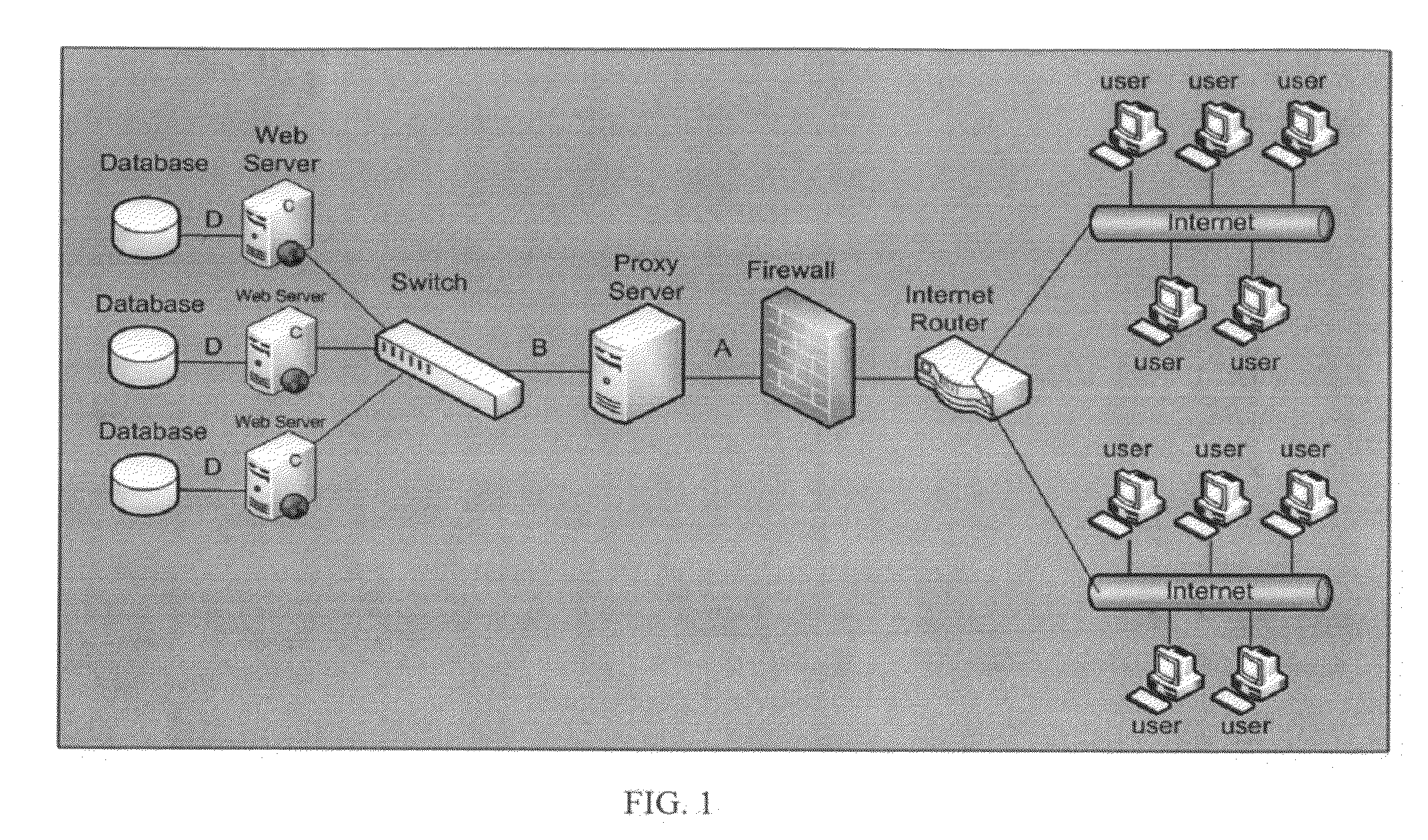

Embodiments of the invention address the problem of detecting bots in network traffic. During a training phase, machine learning algorithms learn a classification model from features extracted from network data associated with known malicious or known non-malicious clients; the learned model is then applied to features extracted in real time from current network data. The features represent communication activities between each known malicious or known non-malicious client and a number of servers in the network.

Owner:THE BOEING CO
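A minimal sketch of the two-phase flow in the botnet abstract above, under stated assumptions: flow records are hypothetical `(client, server)` pairs, the two features (distinct servers contacted, total flows) are illustrative, and a nearest-centroid classifier stands in for whatever learning algorithm the embodiments actually use.

```python
from collections import defaultdict
from math import dist


def client_features(flows):
    """Per-client features from (client, server) flow records.

    Hypothetical feature set: number of distinct servers contacted and
    total number of flows initiated by the client.
    """
    servers = defaultdict(set)
    flow_counts = defaultdict(int)
    for client, server in flows:
        servers[client].add(server)
        flow_counts[client] += 1
    return {c: (len(servers[c]), flow_counts[c]) for c in flow_counts}


def train_centroids(features, labels):
    """Training phase: mean feature vector for each label (nearest-centroid model)."""
    sums, counts = {}, defaultdict(int)
    for client, label in labels.items():
        vec = features[client]
        prev = sums.get(label, [0.0] * len(vec))
        sums[label] = [a + b for a, b in zip(prev, vec)]
        counts[label] += 1
    return {lab: [s / counts[lab] for s in vec] for lab, vec in sums.items()}


def classify(model, vec):
    """Detection phase: return the label whose centroid is closest to vec."""
    return min(model, key=lambda lab: dist(model[lab], vec))


# Usage: one known bot and one known benign host, then a live client "x".
train = [("bot", f"srv{i}") for i in range(6)] + [("host", "srv0")] * 3
model = train_centroids(client_features(train), {"bot": "malicious", "host": "benign"})
live = client_features([("x", f"srv{i}") for i in range(5)])
print(classify(model, live["x"]))  # malicious
```

In practice the features would need scaling before a distance-based classifier is applied; the toy values here are already on comparable scales.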

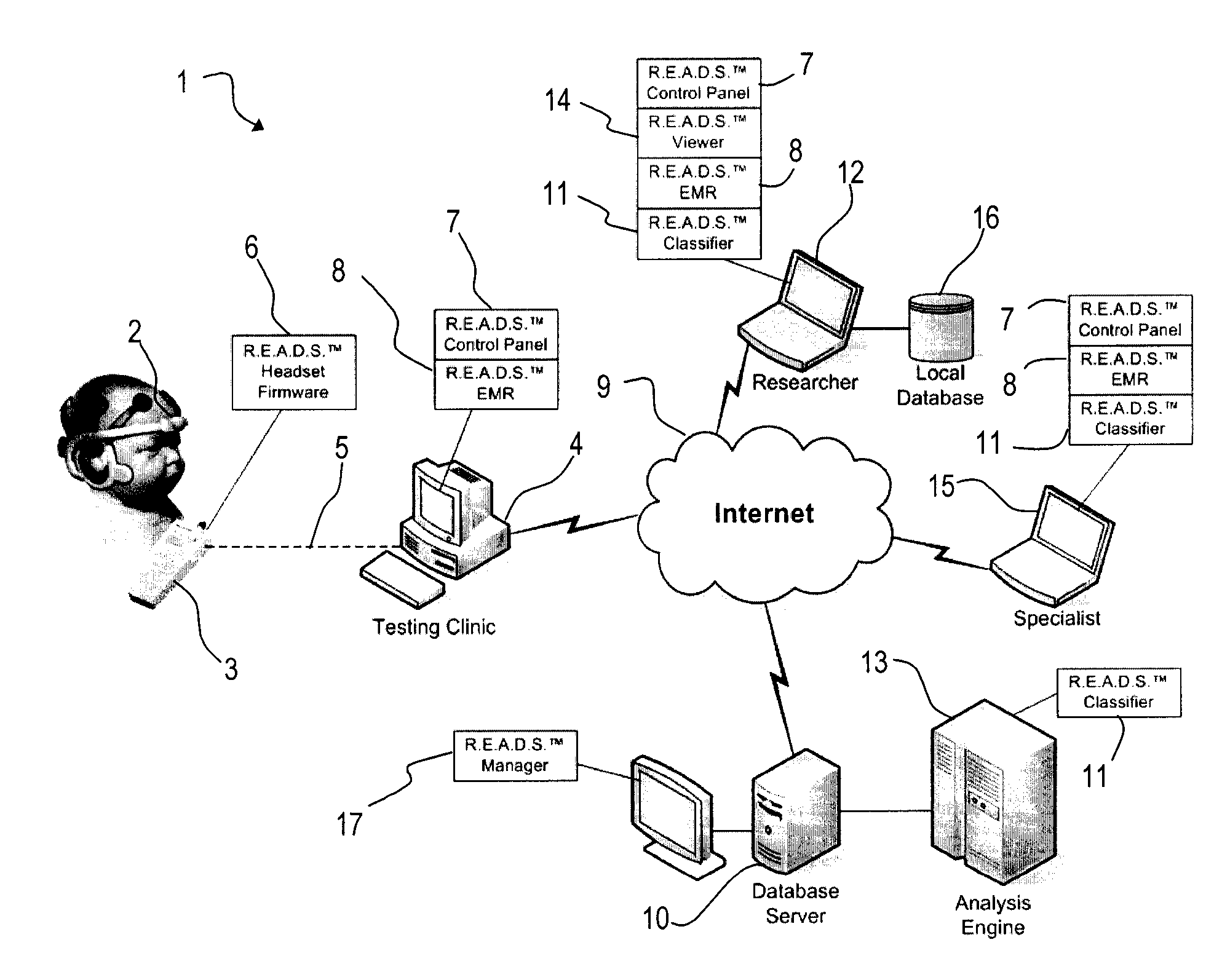

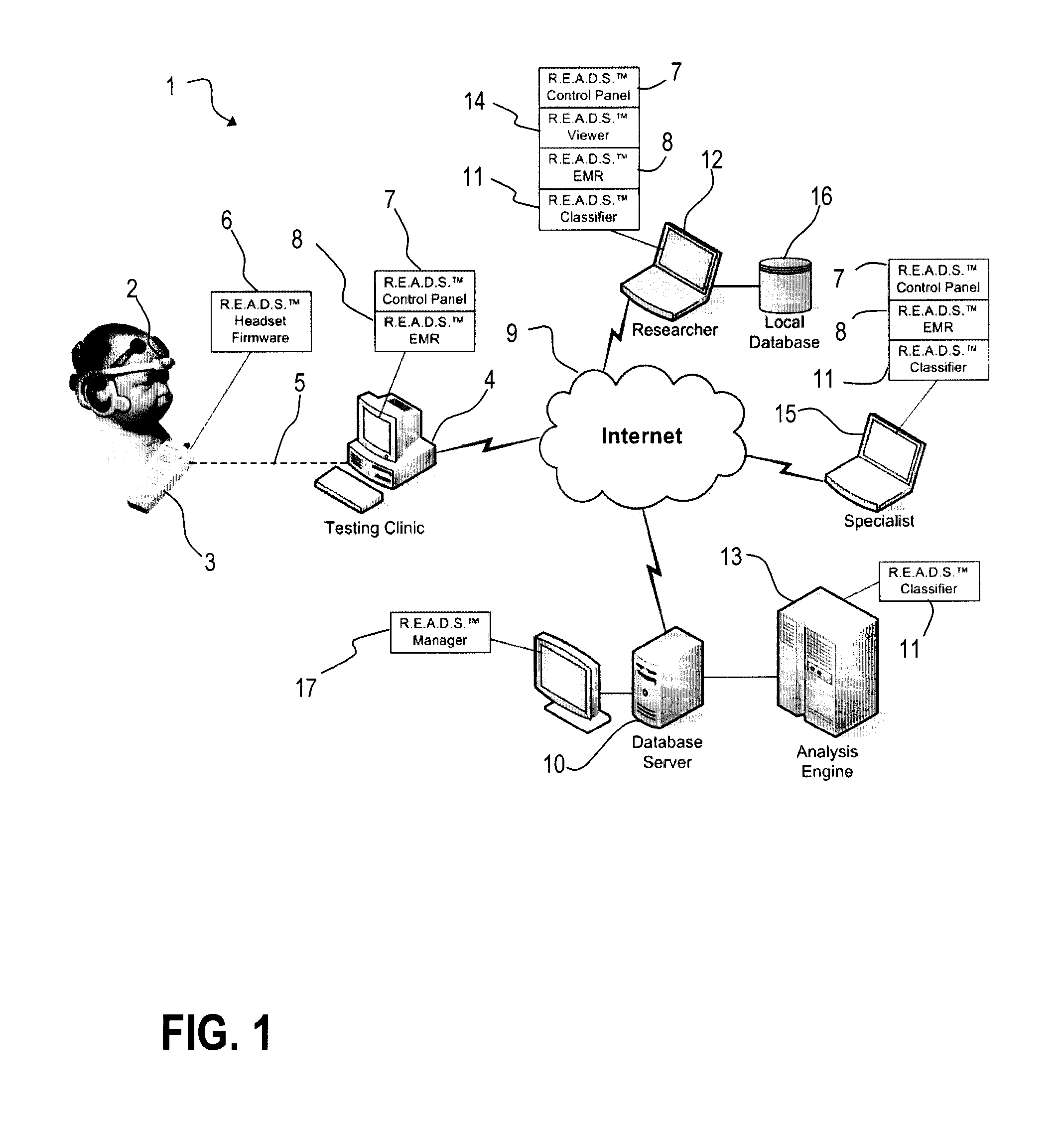

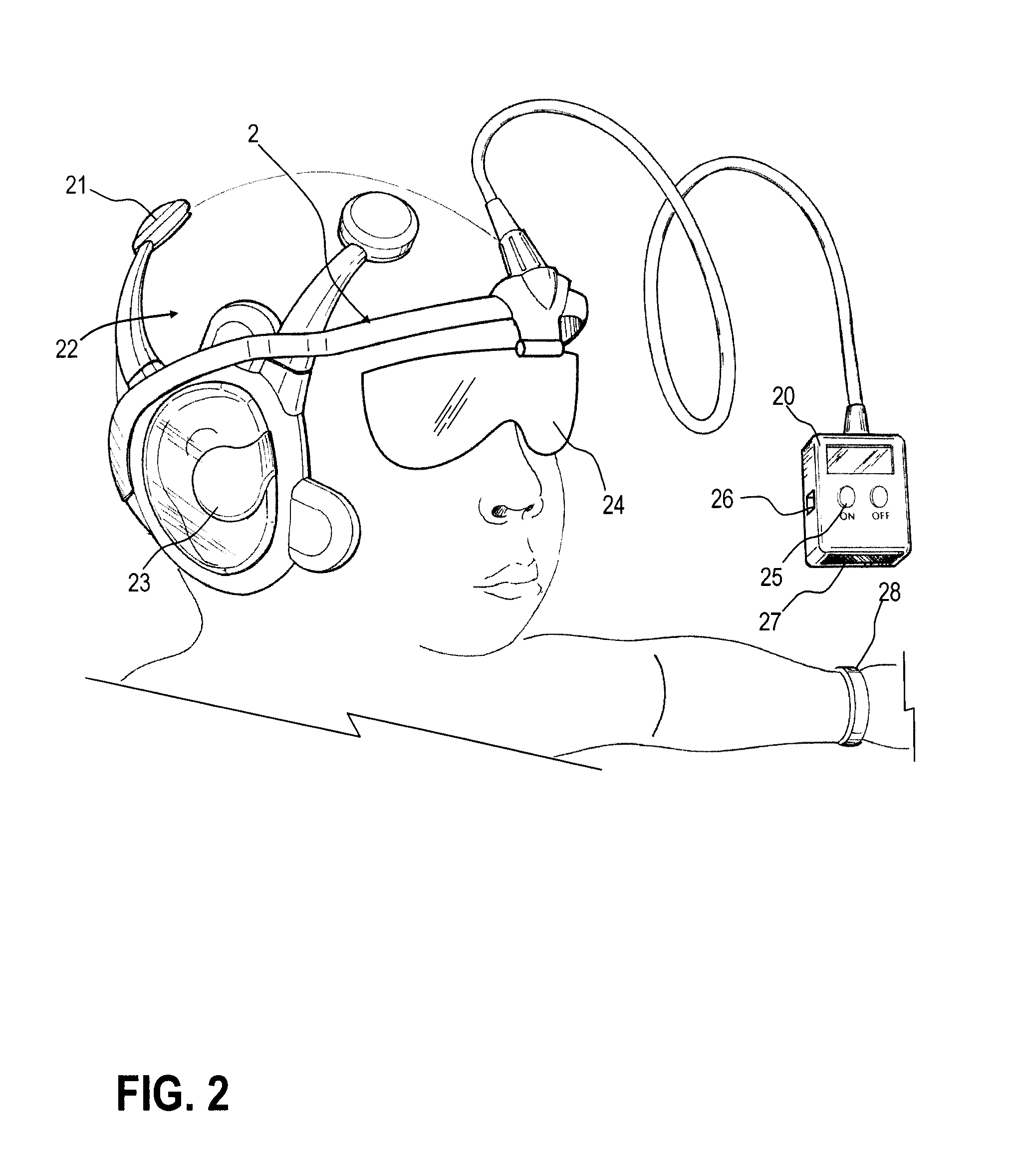

Biopotential Waveform Data Fusion Analysis and Classification Method

Inactive | US20080208072A1 | Improve classification accuracy | Electroencephalography | Medical data mining | Training phase | Data source

Biopotential waveforms such as ERPs, EEGs, ECGs, or EMGs are classified accurately by dynamically fusing classification information from multiple electrodes, tests, or other data sources. These data sources, or “channels”, are ranked at different time instants according to their respective univariate classification accuracies. The channel rankings are determined during a training phase in which the classification accuracy of each channel at each time instant is measured. The classifiers are simple univariate classifiers that require only univariate parameter estimation. Using this classification information, a rule is formulated to dynamically select different channels at different time instants during the testing phase. The independent decisions of the selected channels at the different time instants are fused into a decision fusion vector, which is then optimally classified using a discrete Bayes classifier. The resulting dynamic decision fusion system provides high classification accuracy, is flexible in operation, and overcomes major limitations of the classifiers currently applied in biopotential waveform studies and clinical applications.

Owner:NEURONETRIX SOLUTIONS
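The channel-ranking and decision-fusion steps of the biopotential method above can be sketched as follows. This assumes the univariate classifiers have already produced a 0/1 decision for every (channel, time instant) source; the top-k selection rule, the Laplace smoothing, and the electrode names are illustrative choices, not details taken from the patent.

```python
from collections import Counter


def rank_sources(decisions, labels, k):
    """Training phase: rank (channel, time) sources by accuracy and keep the top k.

    decisions[i][src] is the 0/1 output of a univariate classifier for
    source src on training trial i (hypothetical input format).
    """
    sources = list(decisions[0])
    accuracy = {
        s: sum(d[s] == y for d, y in zip(decisions, labels)) / len(labels)
        for s in sources
    }
    return sorted(sources, key=lambda s: -accuracy[s])[:k]


def train_discrete_bayes(decisions, labels, selected):
    """Count (decision fusion vector, class) pairs for a discrete Bayes classifier."""
    joint = Counter((tuple(d[s] for s in selected), y) for d, y in zip(decisions, labels))
    return joint, Counter(labels)


def classify(joint, prior, vector):
    """Testing phase: argmax over classes of P(class) * P(vector | class).

    P(vector | class) uses Laplace smoothing over the 2**k possible binary vectors.
    """
    k = len(vector)
    total = sum(prior.values())

    def posterior_score(c):
        likelihood = (joint[(vector, c)] + 1) / (prior[c] + 2 ** k)
        return likelihood * prior[c] / total

    return max(prior, key=posterior_score)


# Usage: three hypothetical sources, four labelled training trials.
trials = [
    {("Fz", 100): 1, ("Cz", 100): 0, ("Pz", 300): 1},
    {("Fz", 100): 1, ("Cz", 100): 1, ("Pz", 300): 1},
    {("Fz", 100): 0, ("Cz", 100): 1, ("Pz", 300): 0},
    {("Fz", 100): 0, ("Cz", 100): 0, ("Pz", 300): 0},
]
labels = [1, 1, 0, 0]
selected = rank_sources(trials, labels, k=2)
joint, prior = train_discrete_bayes(trials, labels, selected)
print(selected, classify(joint, prior, (1, 1)))  # [('Fz', 100), ('Pz', 300)] 1
```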

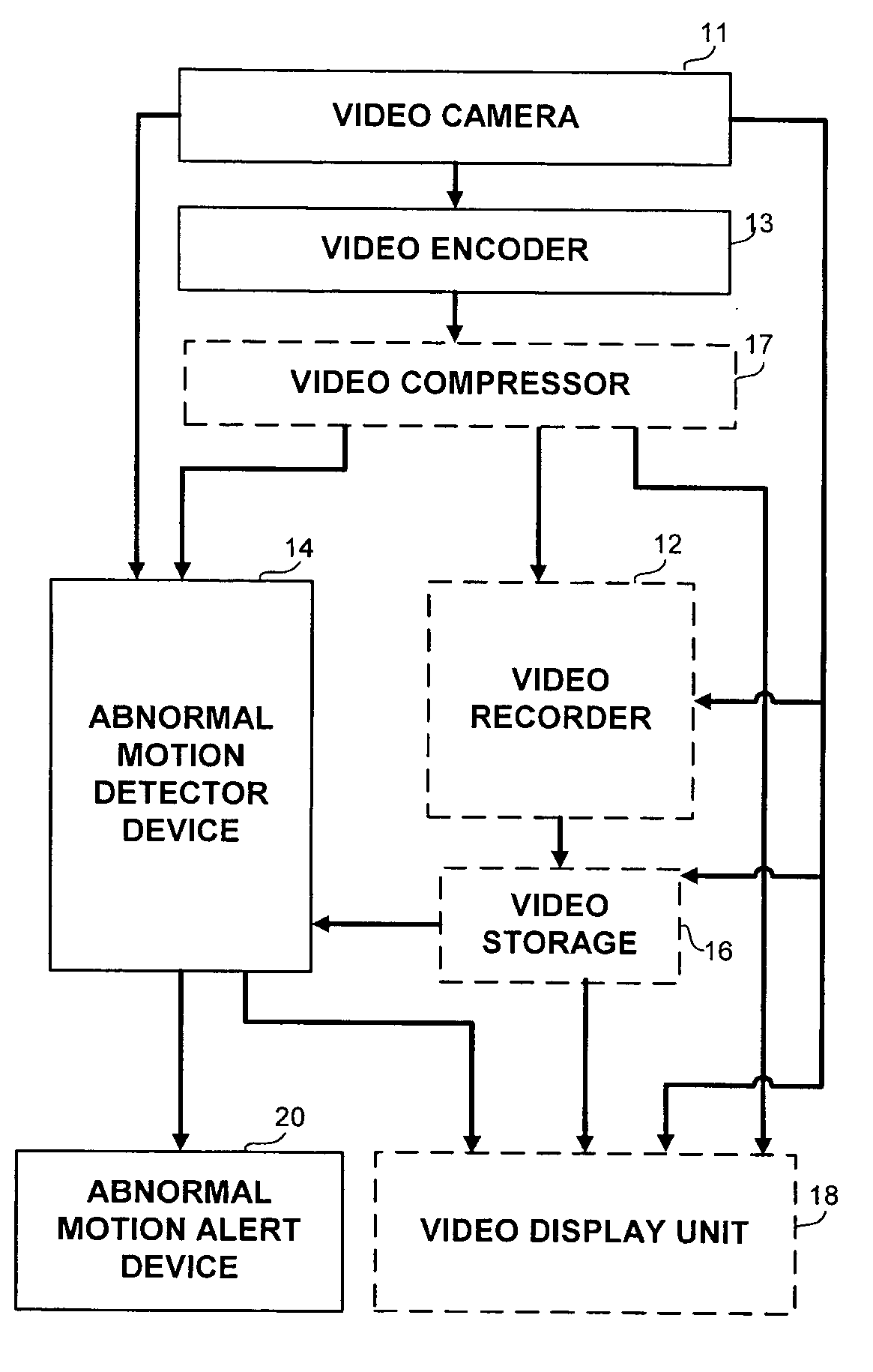

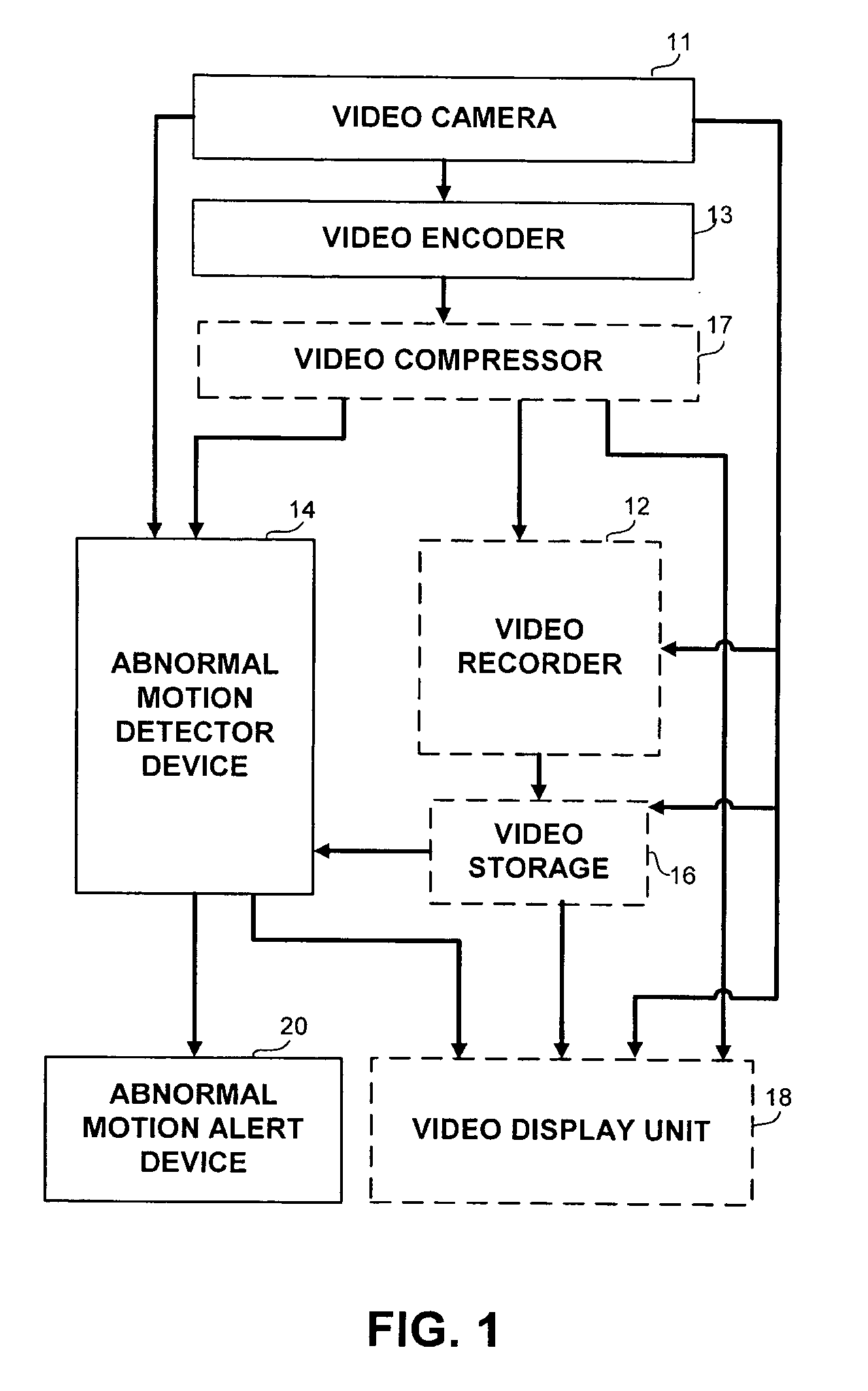

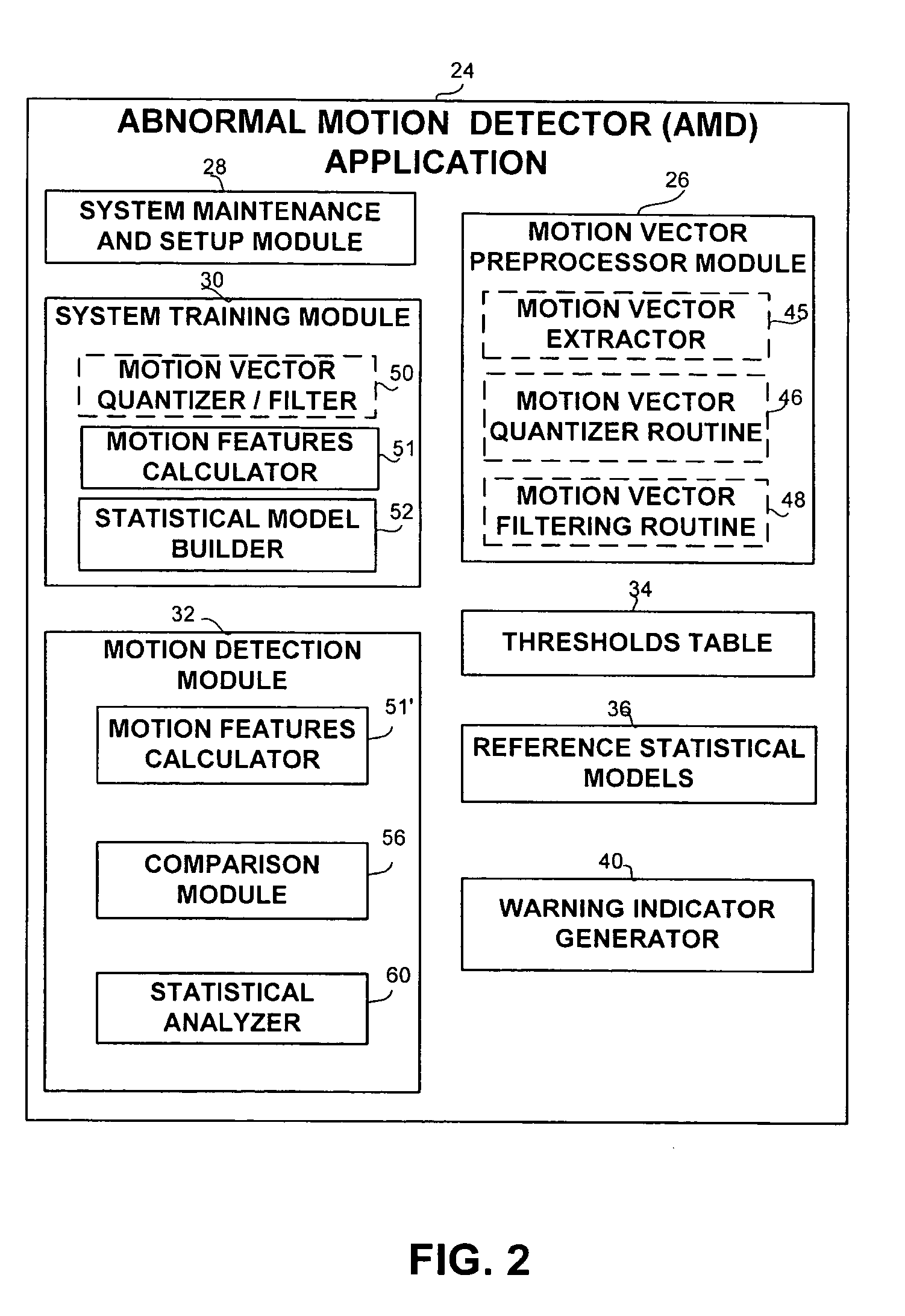

Apparatus and methods for the detection of abnormal motion in a video stream

An apparatus and method for detecting abnormal motion in a video stream, comprising a training phase that defines normal motion and a detection phase that detects abnormal motion in the video stream. Motion is detected from motion vectors and motion features extracted from the video frames.

Owner:MONROE CAPITAL MANAGEMENT ADVISORS +1
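A minimal sketch of the training/detection split for abnormal motion, assuming motion has already been reduced to one motion-vector magnitude per hypothetical grid cell. Modelling normal motion as a per-cell mean and standard deviation, and the `k = 3` threshold, are stand-in choices rather than the patented method.

```python
from statistics import mean, pstdev


def train_normal_motion(frames):
    """Training phase: per-cell mean and standard deviation of motion magnitude.

    Each frame is a dict mapping a hypothetical grid-cell id to the magnitude
    of the motion vector measured in that cell.
    """
    model = {}
    for cell in frames[0]:
        values = [f[cell] for f in frames]
        model[cell] = (mean(values), pstdev(values))
    return model


def detect_abnormal(model, frame, k=3.0, min_std=1e-3):
    """Detection phase: cells whose motion deviates by more than k standard deviations."""
    return [cell for cell, (mu, sd) in model.items()
            if abs(frame[cell] - mu) > k * max(sd, min_std)]


normal = [{"A": 1.0, "B": 0.0}, {"A": 1.2, "B": 0.1},
          {"A": 0.8, "B": 0.0}, {"A": 1.0, "B": 0.1}]
model = train_normal_motion(normal)
print(detect_abnormal(model, {"A": 1.1, "B": 2.0}))  # ['B']
```

The `min_std` floor keeps a perfectly static cell from flagging every tiny fluctuation as abnormal.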

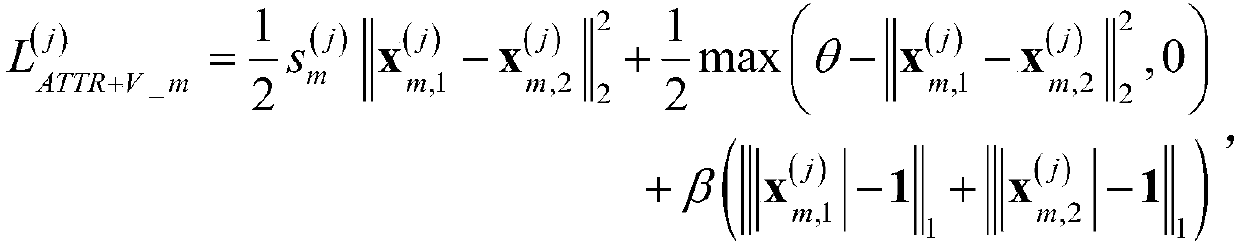

Pedestrian re-identification method based on multi-attribute and multi-strategy fusion learning

Active | CN107330396A | Robust | Shorten the time | Biometric pattern recognition | Neural learning methods | Image extraction | Training phase

The invention discloses a pedestrian re-identification method based on multi-attribute and multi-strategy fusion learning. In an offline training phase, the method first selects pedestrian attributes that are easy to judge and sufficiently discriminative, and trains a pedestrian attribute recognizer on an attribute data set. The attribute recognizer is then used to label the pedestrian re-identification data set with attribute tags. Finally, the attributes are combined with the pedestrian identity tags to train a pedestrian re-identification model, using a strategy that fuses pedestrian classification with a novel constrained comparison verification. In an online query phase, the re-identification model extracts features from the query image and from the images in the database, and the Euclidean distance between the query feature and each database feature is computed; the image with the shortest distance is taken as the re-identification result. In terms of performance, the extracted features are discriminative and high accuracy is obtained; in terms of efficiency, the method can quickly find the pedestrian shown in the query image in the pedestrian image database.

Owner:HUAZHONG UNIV OF SCI & TECH
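The online query step of the re-identification method above (nearest neighbour by Euclidean distance) is simple enough to show directly. The 2-D vectors below are hypothetical placeholders for the features the trained re-identification model would extract.

```python
from math import dist


def rank_gallery(query_feature, gallery):
    """Online query phase: gallery ids sorted by Euclidean distance to the query."""
    return sorted(gallery, key=lambda pid: dist(query_feature, gallery[pid]))


# Hypothetical 2-D features standing in for the re-identification model's output.
gallery = {"p1": [0.0, 1.0], "p2": [1.0, 0.0], "p3": [0.9, 0.9]}
ranking = rank_gallery([1.0, 0.1], gallery)
print(ranking[0], ranking)  # p2 ['p2', 'p3', 'p1']
```

Returning the full ranking rather than only the top match also supports rank-k evaluation of a re-identification system.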

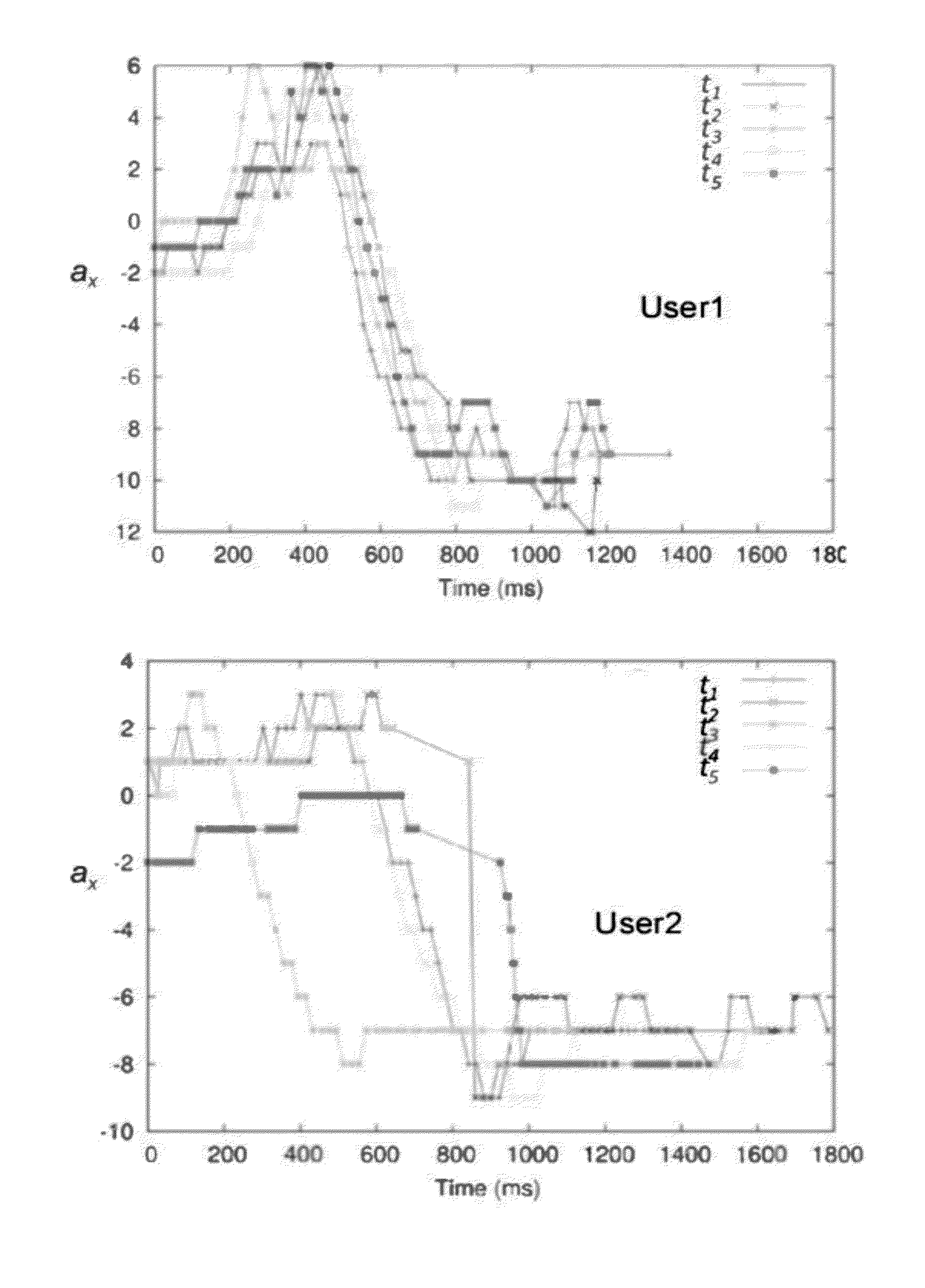

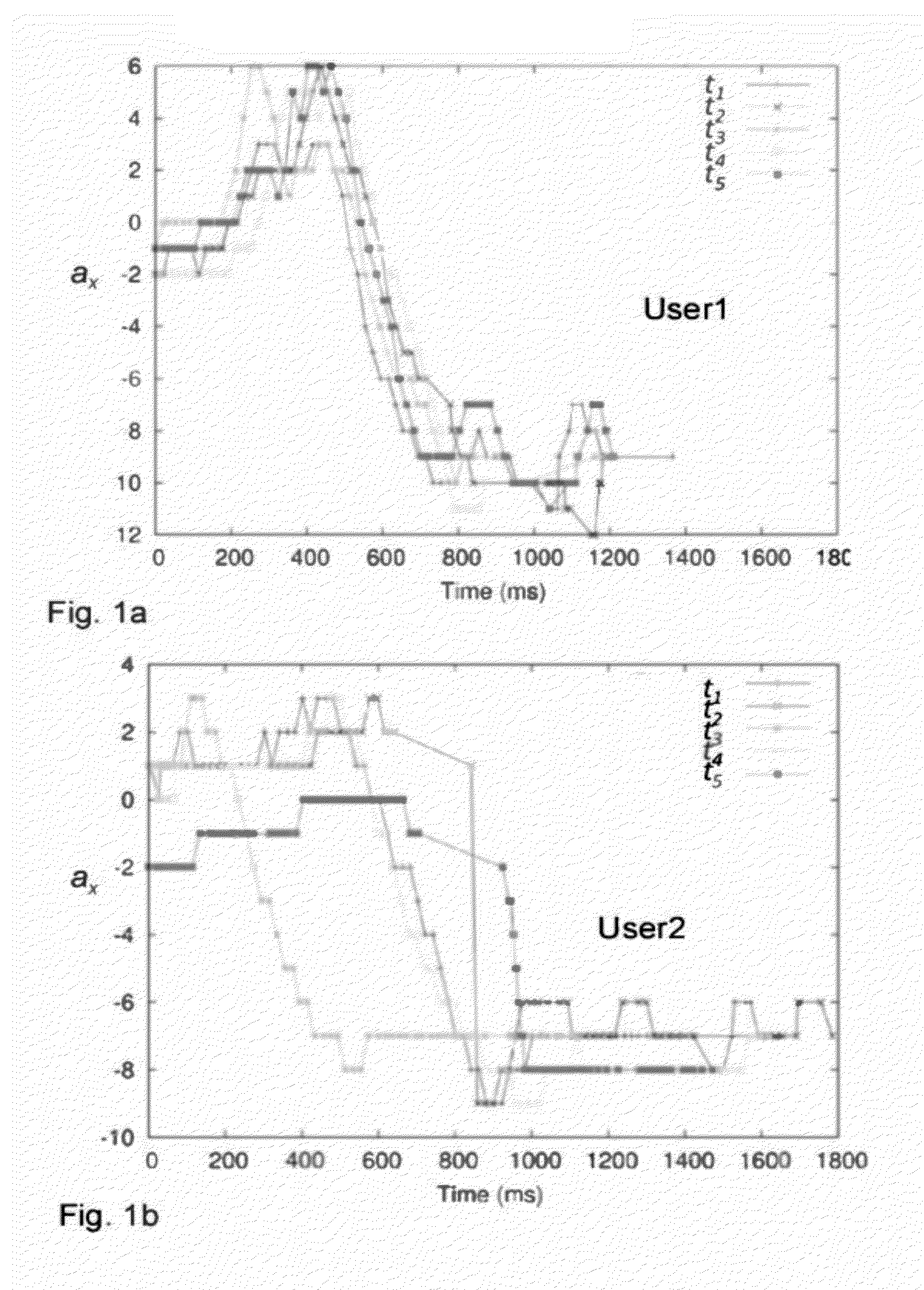

User authentication method for access to a mobile user terminal and corresponding mobile user terminal

Active | US20120164978A1 | Difficult to intercept | Unauthorised/fraudulent call prevention | Eavesdropping prevention circuits | Training phase | User authentication

A user authentication method for access to a mobile user terminal that includes one or more movement sensors supplying information on the movements of the terminal authenticates a user on the basis of a movement performed while handling the terminal. A test pattern of values is sensed by the one or more movement sensors during a movement performed under a predetermined condition corresponding to an action for operating the terminal. In a biometric recognition phase, the acquired test pattern is compared with a stored pattern of values obtained from the movement sensors during a training phase in which an accepted user performs the same action for operating the terminal. The comparison includes measuring the similarity of the acquired test pattern to the stored pattern, and the authentication result is obtained by comparing the measured similarity to a threshold.

Owner:CONTI MAURO
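A minimal sketch of the threshold-based comparison in the authentication method above, assuming the movement sensor yields a 1-D sequence (for example, acceleration magnitude). The abstract does not specify the similarity measure; dynamic time warping, the `1 / (1 + distance)` similarity mapping, and the `0.5` threshold are assumptions.

```python
def dtw_distance(a, b):
    """Dynamic time warping distance between two 1-D sensor sequences."""
    inf = float("inf")
    n, m = len(a), len(b)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(a[i - 1] - b[j - 1])
            cost[i][j] = step + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def authenticate(test_pattern, stored_pattern, threshold=0.5):
    """Accept the user if the similarity to the enrolled pattern reaches the threshold."""
    similarity = 1.0 / (1.0 + dtw_distance(test_pattern, stored_pattern))
    return similarity >= threshold


stored = [0, 1, 2, 1, 0]  # pattern enrolled during the training phase
print(authenticate([0, 1, 1, 2, 1, 0], stored), authenticate([3, 3, 3, 3, 3], stored))  # True False
```

DTW is used here because the same gesture is rarely performed at exactly the same speed twice; it tolerates the extra sample in the genuine attempt.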

Intelligent system and method for detecting and diagnosing faults in heating, ventilating and air conditioning (HVAC) equipment

Inactive | US20120072029A1 | Lower performance requirements | Temperature control | Static/dynamic balance measurement | Training phase | Computer module

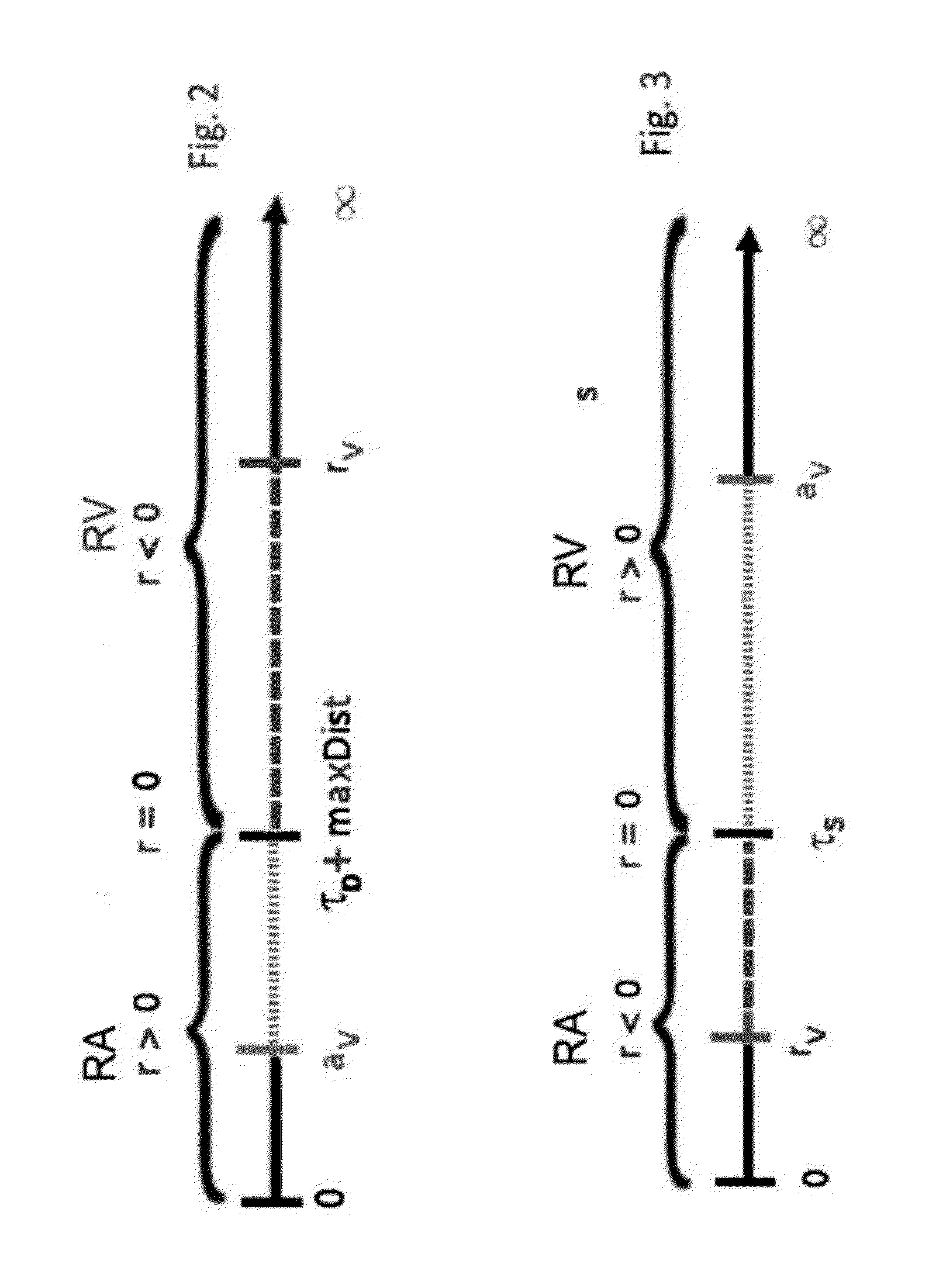

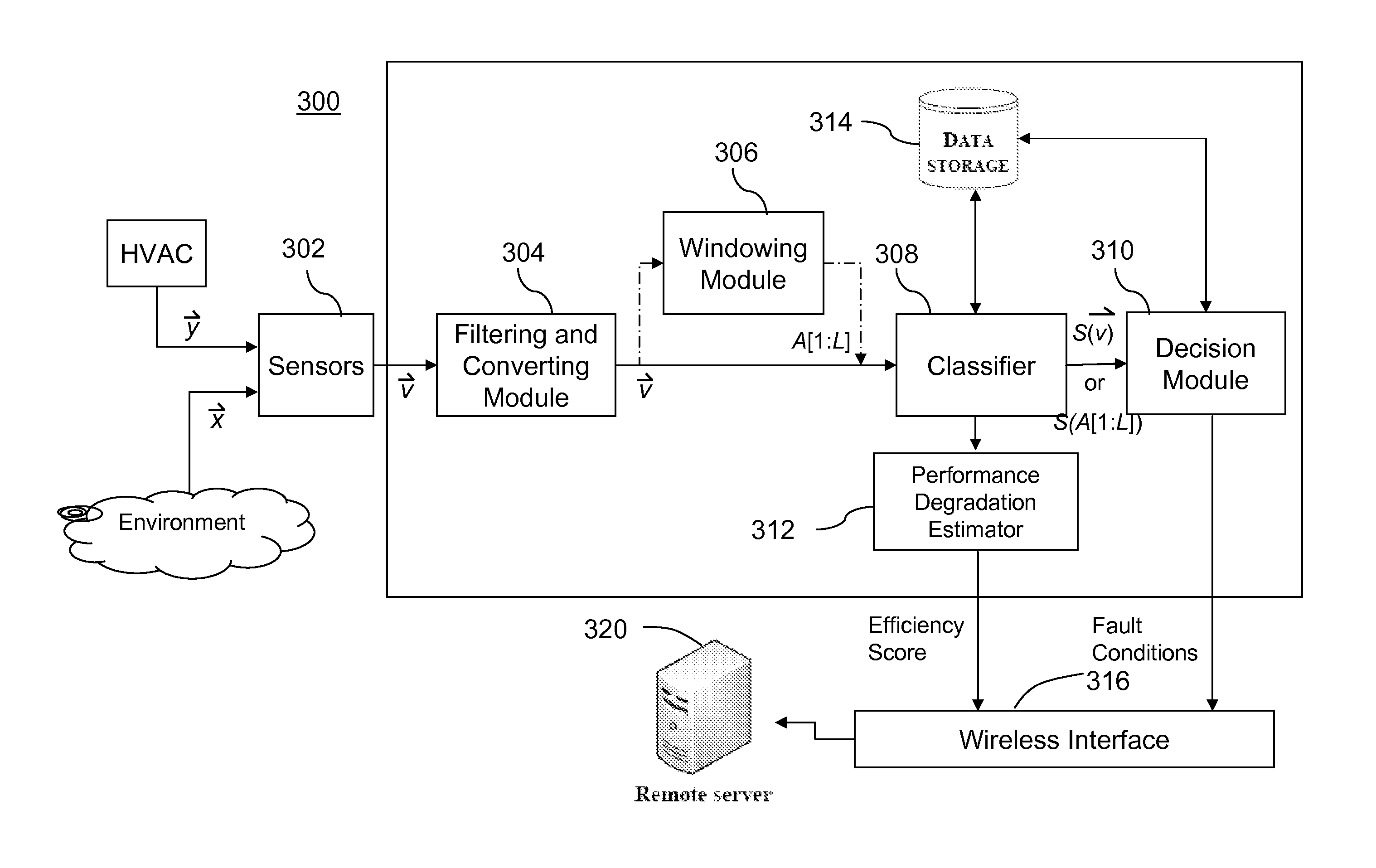

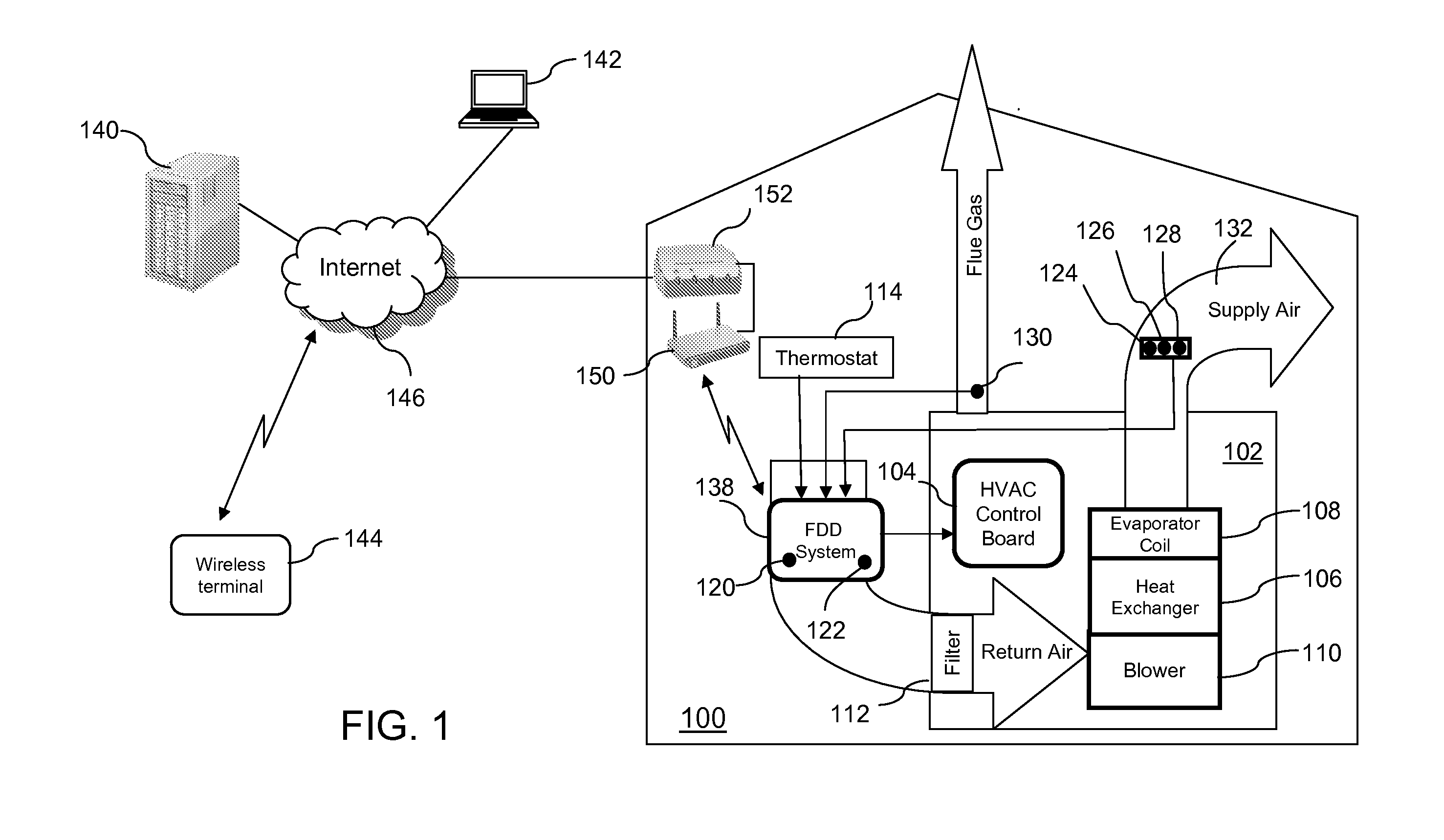

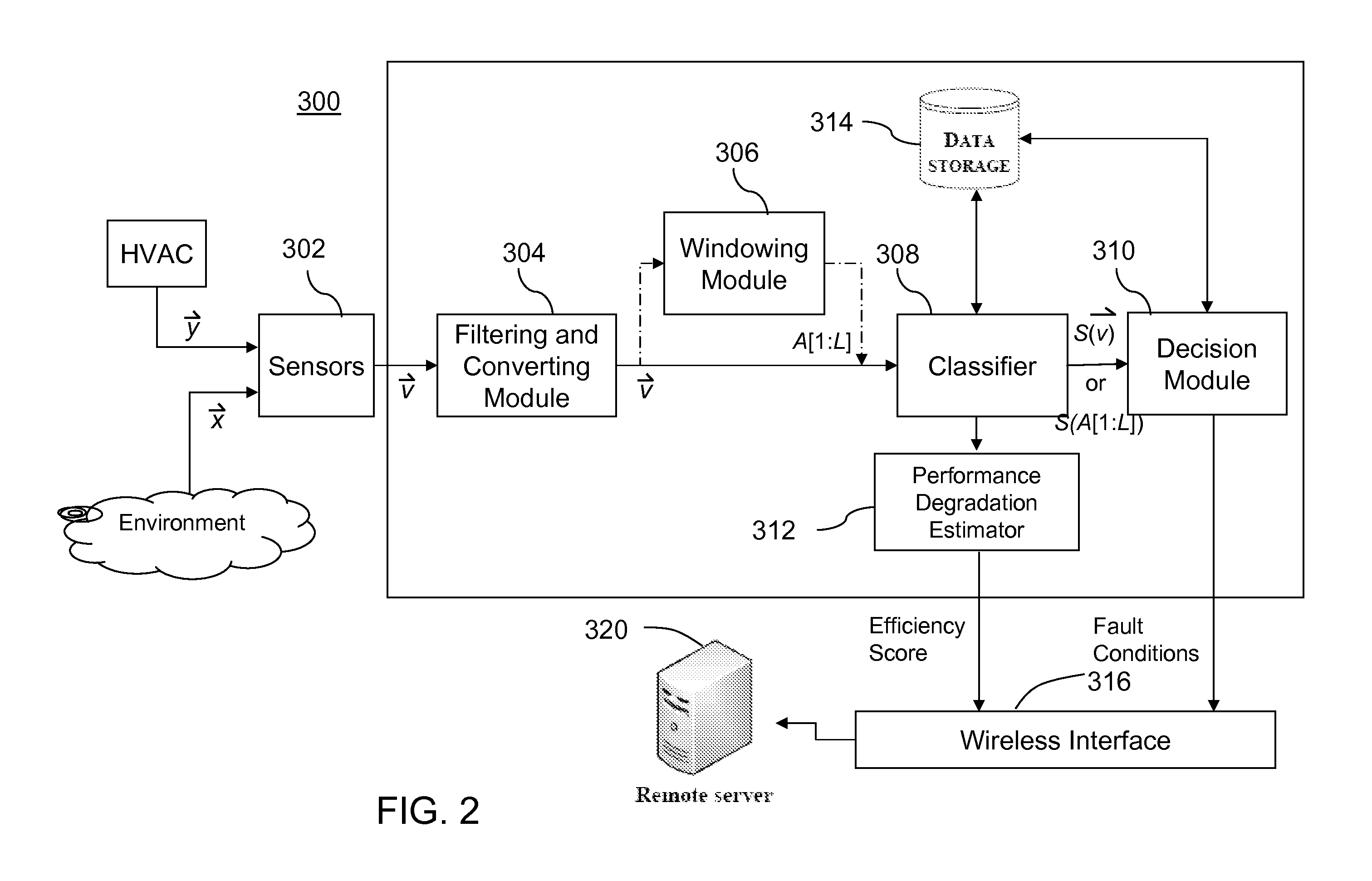

A system and method for detecting and diagnosing faults in heating, ventilating and air conditioning (HVAC) equipment are described. The system comprises a sensor; a classifier that models the normal behaviour of the HVAC equipment in situ, in its installed operating environment; and a decision module. The classifier has a plurality of classifier parameters for computing a classifier score from input data based on values measured by the sensor; these parameters are created from the input data collected during a training phase of the system. The decision module compares the classifier score with a decision threshold, which is also set during the training phase.

Owner:HEATVU
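A sketch of an HVAC classifier whose parameters and decision threshold are both fixed during the training phase, as in the abstract above. The diagonal-Gaussian model, the z-score norm used as the classifier score, the `margin` factor, and the sensor readings are all hypothetical; the abstract does not name a classifier type.

```python
from math import sqrt
from statistics import mean, pstdev


def score(params, sample):
    """Classifier score: norm of per-feature z-scores (a diagonal Mahalanobis distance)."""
    return sqrt(sum(((x - mu) / sd) ** 2 for x, (mu, sd) in zip(sample, params)))


def train(samples, margin=1.2):
    """Training phase: fit per-feature mean/std, then set the decision threshold.

    The threshold is the largest training score times a hypothetical safety margin.
    """
    params = [(mean(col), pstdev(col) or 1.0) for col in zip(*samples)]
    threshold = margin * max(score(params, s) for s in samples)
    return params, threshold


def is_fault(params, threshold, sample):
    """Decision module: flag a fault when the score exceeds the threshold."""
    return score(params, sample) > threshold


# Hypothetical (supply temperature, return temperature) readings in deg C.
normal = [(12.0, 22.0), (13.0, 23.0), (12.5, 22.5), (12.0, 23.0), (13.0, 22.0)]
params, threshold = train(normal)
print(is_fault(params, threshold, (12.6, 22.4)), is_fault(params, threshold, (18.0, 22.5)))  # False True
```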

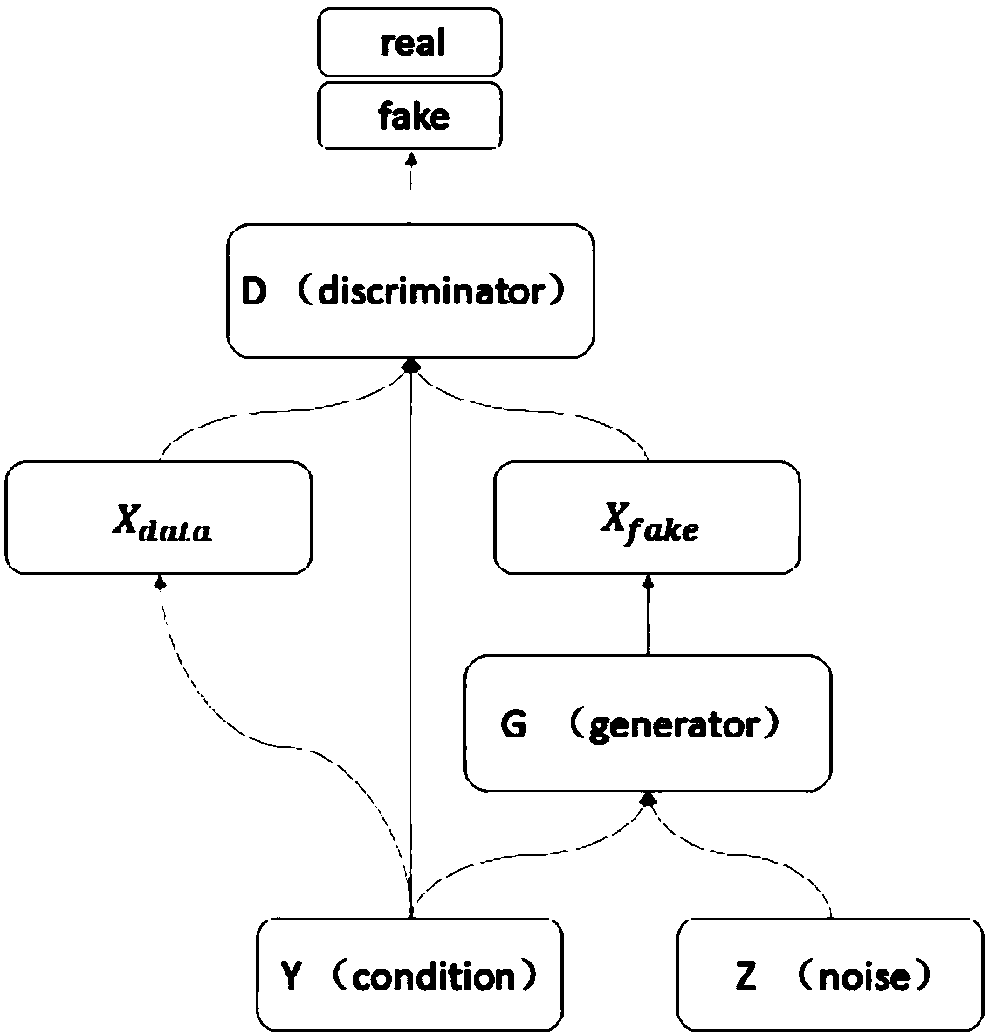

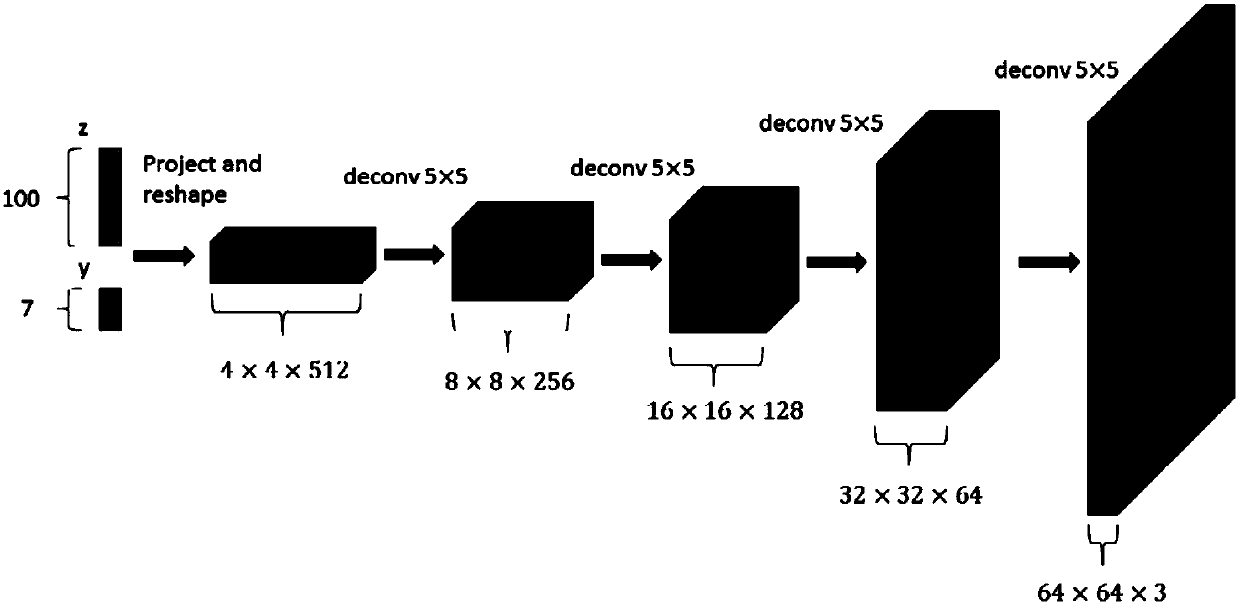

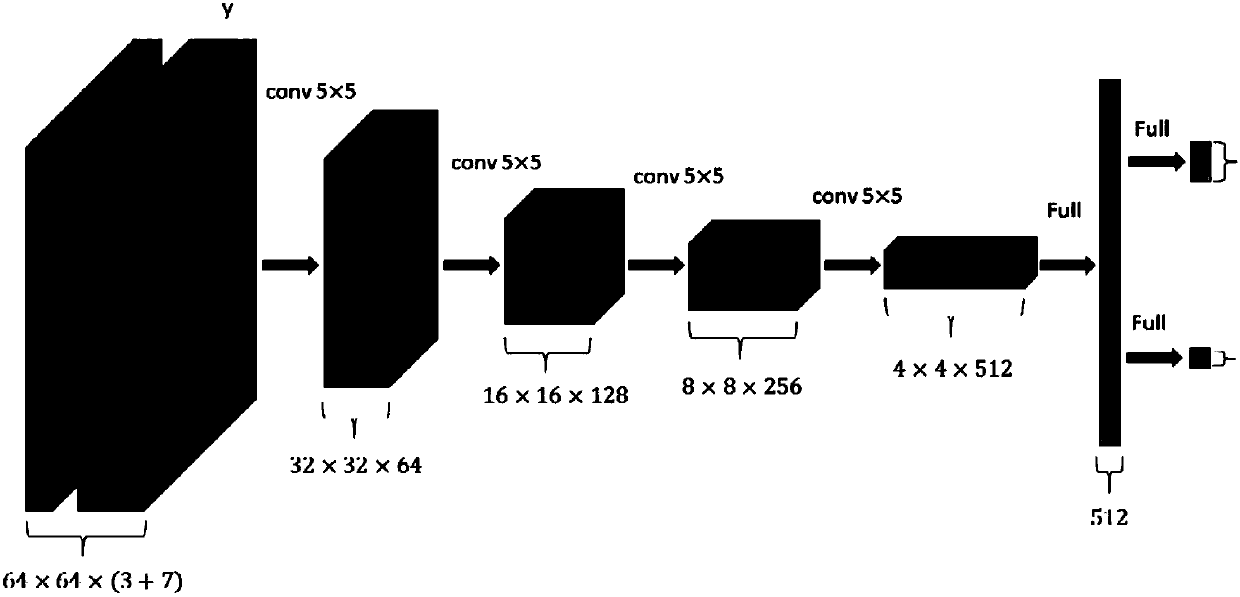

Generative adversarial network-based multi-pose face generation method

Active | CN107292813A | Improve recognition rate | Improve the problem of lack of large-scale data | Geometric image transformation | Character and pattern recognition | Training phase | Attitude control

The present invention discloses a multi-pose face generation method based on a generative adversarial network (GAN). In a training phase, face data in various poses are collected, and two deep neural networks, a generator G and a discriminator D, are trained as a GAN. After training, random samples and pose-control parameters are fed to the generator G to obtain face images in various poses. The method can generate a large number of different face images in multiple poses, alleviating the shortage of data in the multi-pose face recognition field. The newly generated multi-pose face images are used as training data to train an encoder that extracts the identity information of an image. In the final testing process, an image in an arbitrary pose is input, its identity features are obtained through the trained encoder, and face images of the same person in various poses are obtained through the trained generator.

Owner:ZHEJIANG UNIV

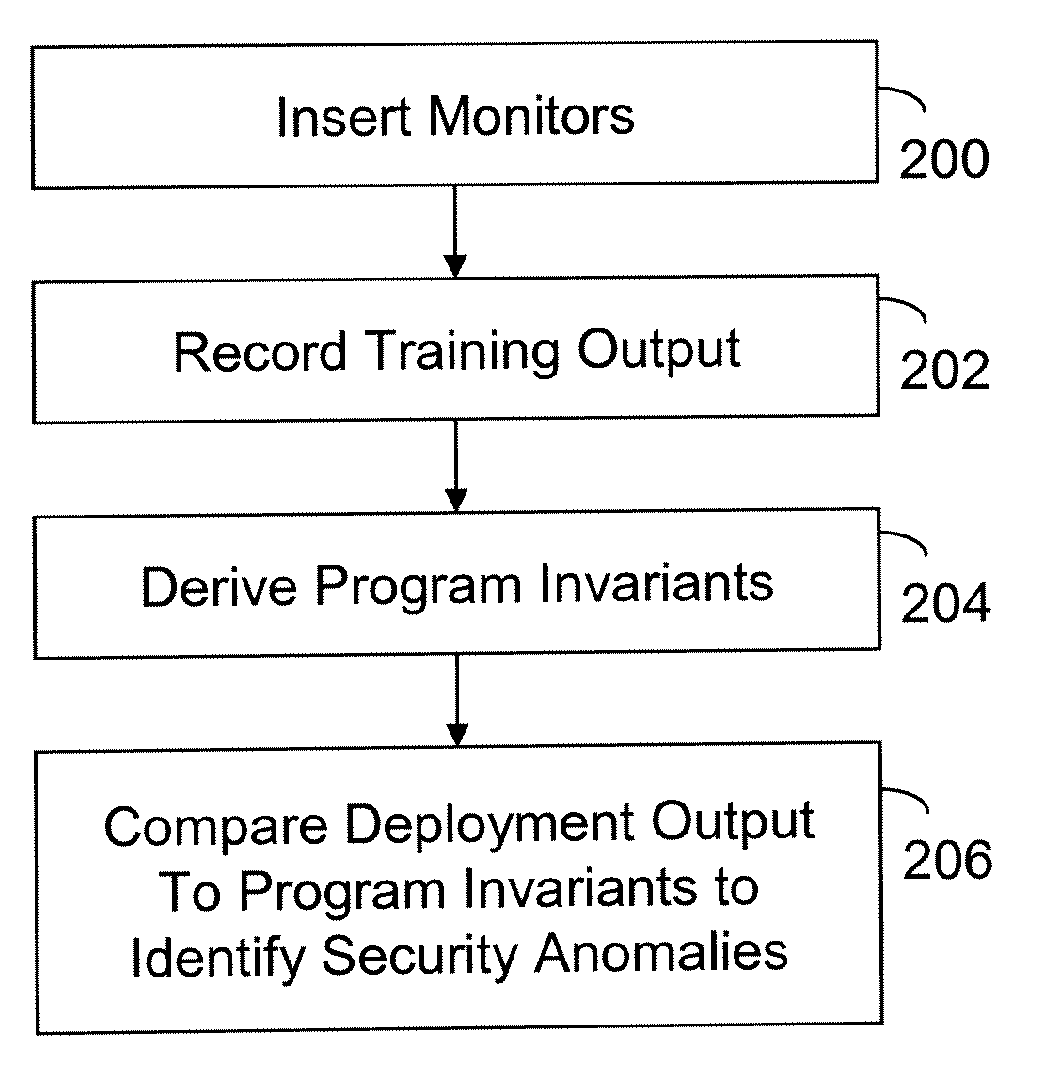

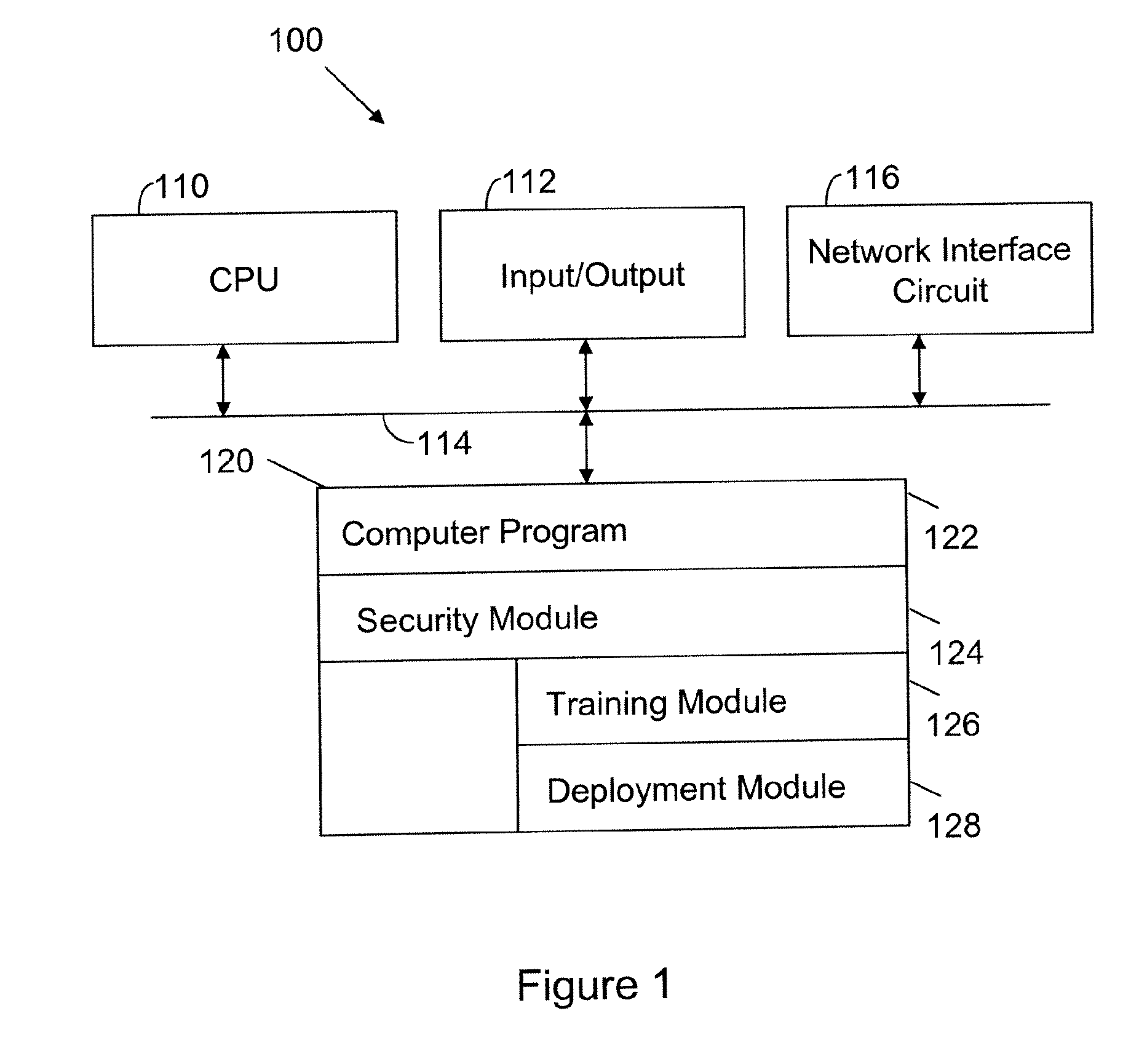

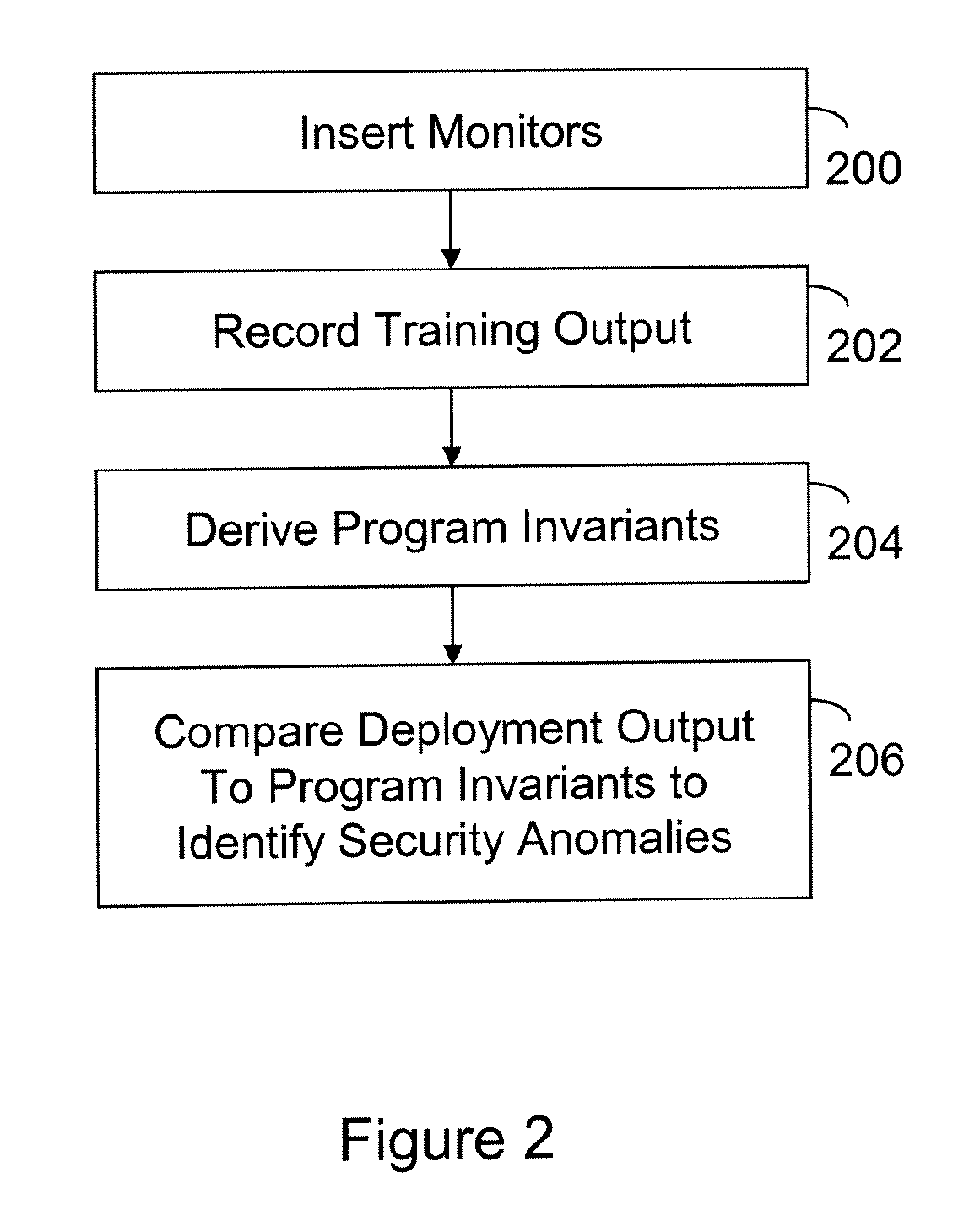

Apparatus and Method for Monitoring Program Invariants to Identify Security Anomalies

Inactive | US20090282480A1 | Memory loss protection | Error detection/correction | Training phase | Computer engineering

A computer readable storage medium includes executable instructions to insert monitors at selected locations within a computer program. Training output from the monitors is recorded during a training phase of the computer program. Program invariants are derived from the training output. During a deployment phase of the computer program, deployment output from the monitors is compared to the program invariants to identify security anomalies.

Owner:HEWLETT PACKARD DEV CO LP
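A minimal sketch of the invariant-monitoring method above: invariants are derived from training output and deployment output is checked against them. Only simple range invariants (min/max per monitor) are shown, which is one of many possible invariant forms, and the monitor names are hypothetical.

```python
from collections import defaultdict


def derive_invariants(training_output):
    """Training phase: derive a (min, max) range invariant for each monitor.

    training_output is an iterable of (monitor_id, value) pairs recorded
    by the inserted monitors.
    """
    observed = defaultdict(list)
    for monitor, value in training_output:
        observed[monitor].append(value)
    return {m: (min(vals), max(vals)) for m, vals in observed.items()}


def find_anomalies(invariants, deployment_output):
    """Deployment phase: observations outside the learned range, or from unseen monitors."""
    anomalies = []
    for monitor, value in deployment_output:
        bounds = invariants.get(monitor)
        if bounds is None or not bounds[0] <= value <= bounds[1]:
            anomalies.append((monitor, value))
    return anomalies


invariants = derive_invariants([("buf_len", 16), ("buf_len", 64), ("ret_code", 0)])
print(find_anomalies(invariants, [("buf_len", 32), ("buf_len", 4096), ("ret_code", 0)]))
# [('buf_len', 4096)]
```

Treating a monitor that never fired during training as anomalous is a conservative design choice; it trades false positives for coverage.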

Time to event data analysis method and system

Inactive | US20120066163A1 | Increases difference and “contrast” | Improve efficiency | Bioreactor/fermenter combinations | Particle separator tubes | Analysis data | Training phase

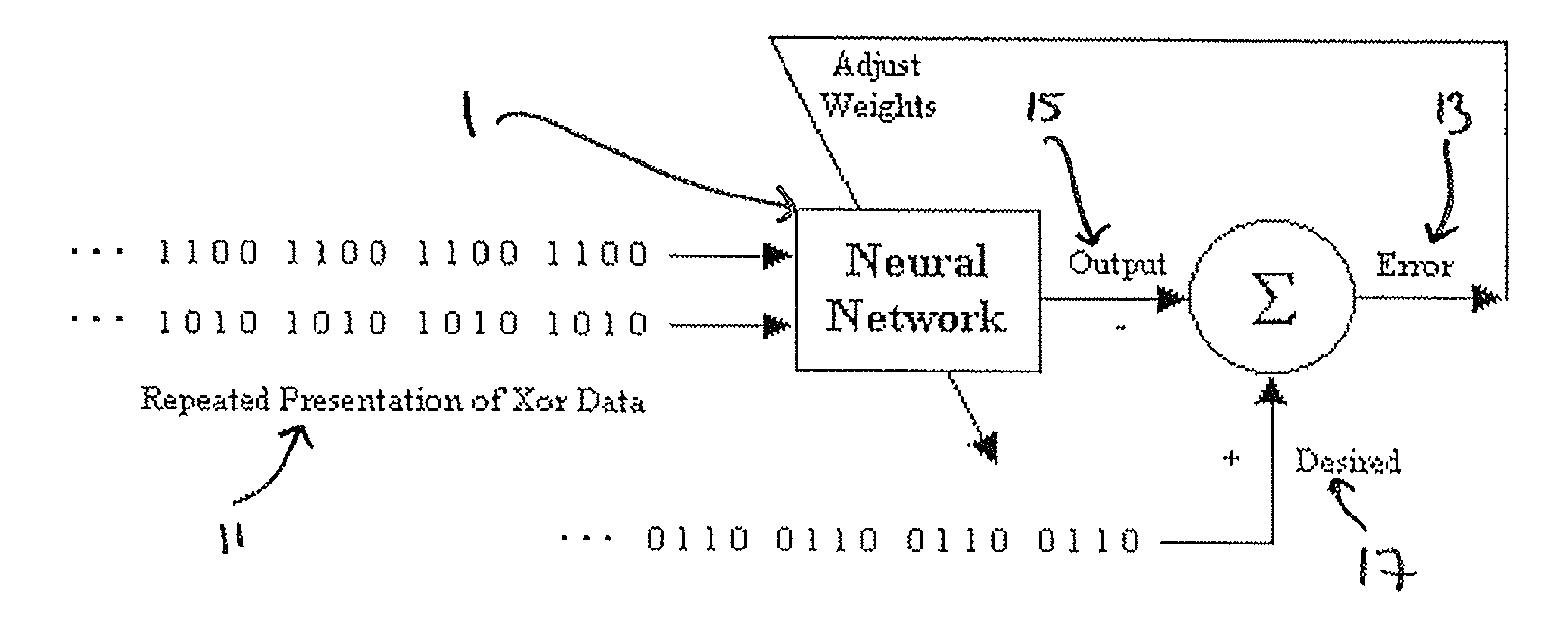

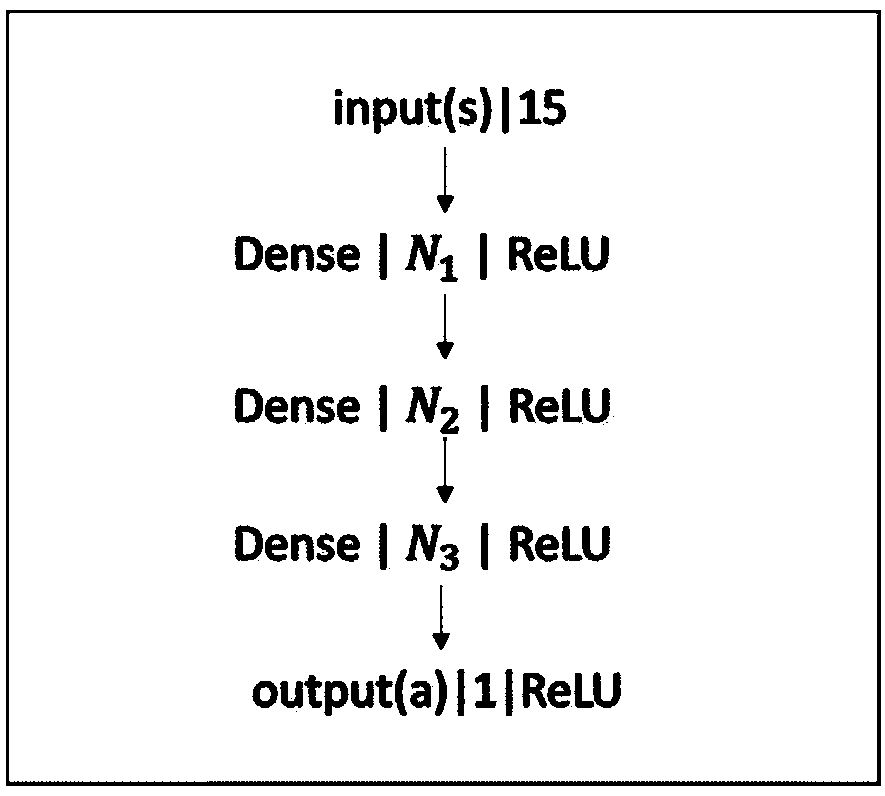

A time to event data analysis method and system. The present invention relates to the analysis of data to identify relationships between the input data and one or more conditions. One method of analysing such data is by the use of neural networks which are non-linear statistical data modelling tools, the structure of which may be changed based on information that is passed through the network during a training phase. A known problem that affects neural networks is the issue of overtraining which arises in overcomplex or overspecified systems when the capacity of the network significantly exceeds the needed parameters. The present invention provides a method of analysing data, such as bioinformatics or pathology data, using a neural network with a constrained architecture and providing a continuous output that can be used in various contexts and systems including prediction of time to an event, such as a specified clinical event.

Owner:NOTTINGHAM TRENT UNIVERSITY

Method and Apparatus of Processing Data Using Deep Belief Networks Employing Low-Rank Matrix Factorization

Inactive | US20140156575A1 | Mathematical models | Digital computer details | Deep belief network | Activation function

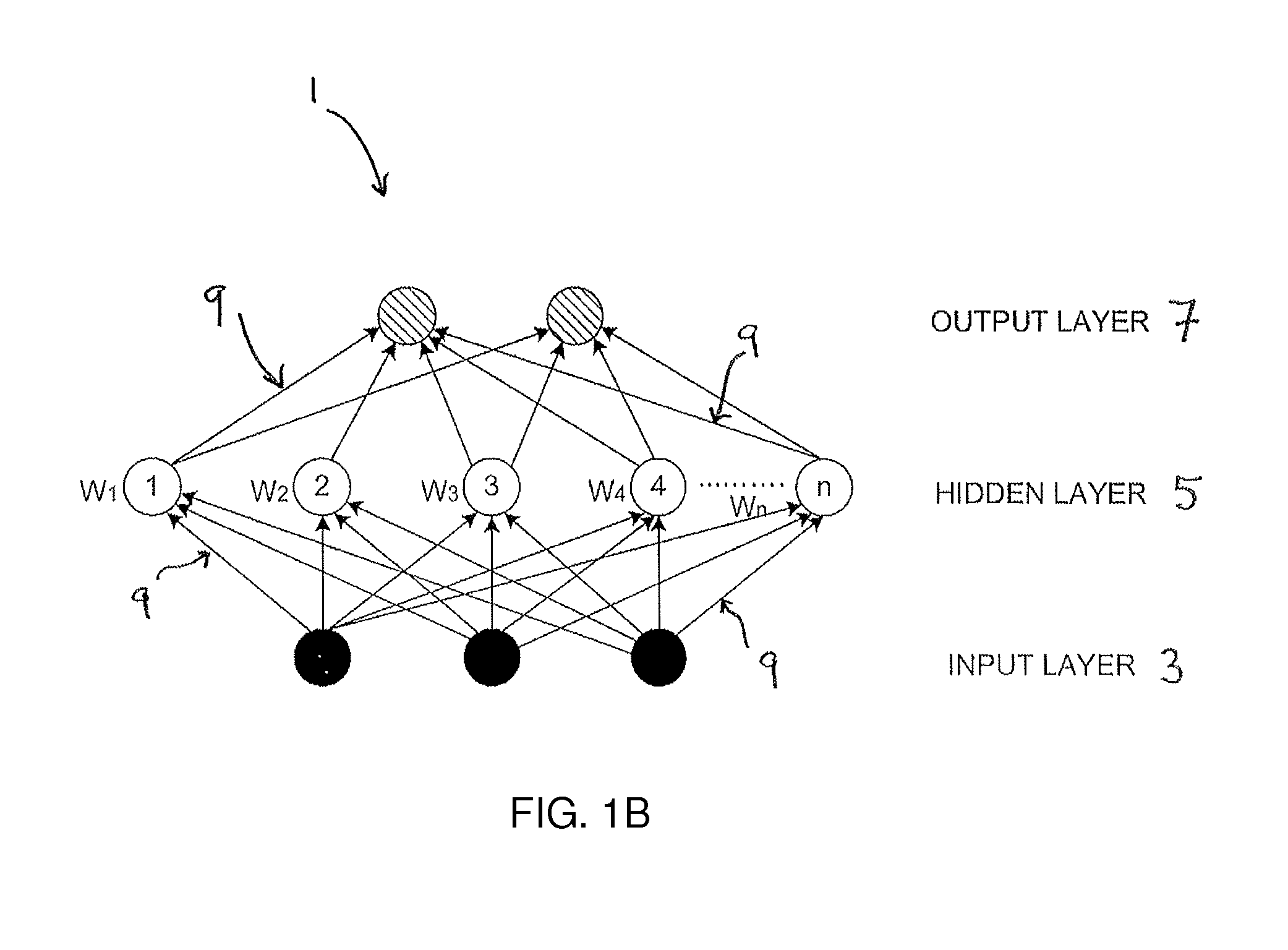

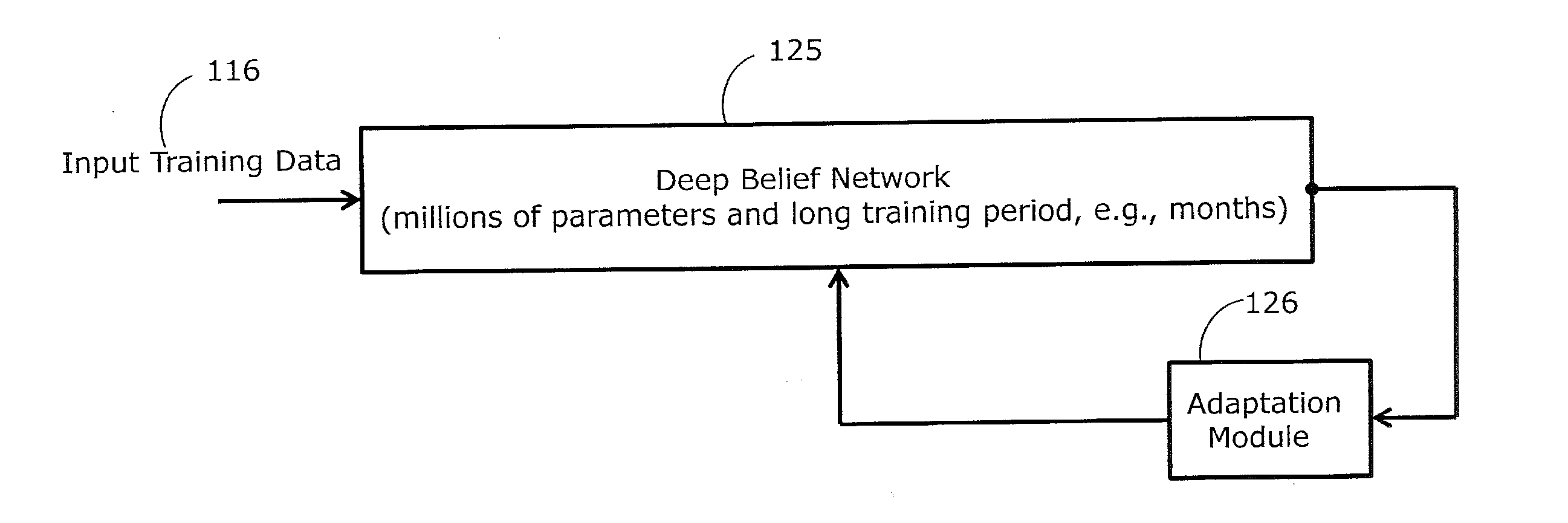

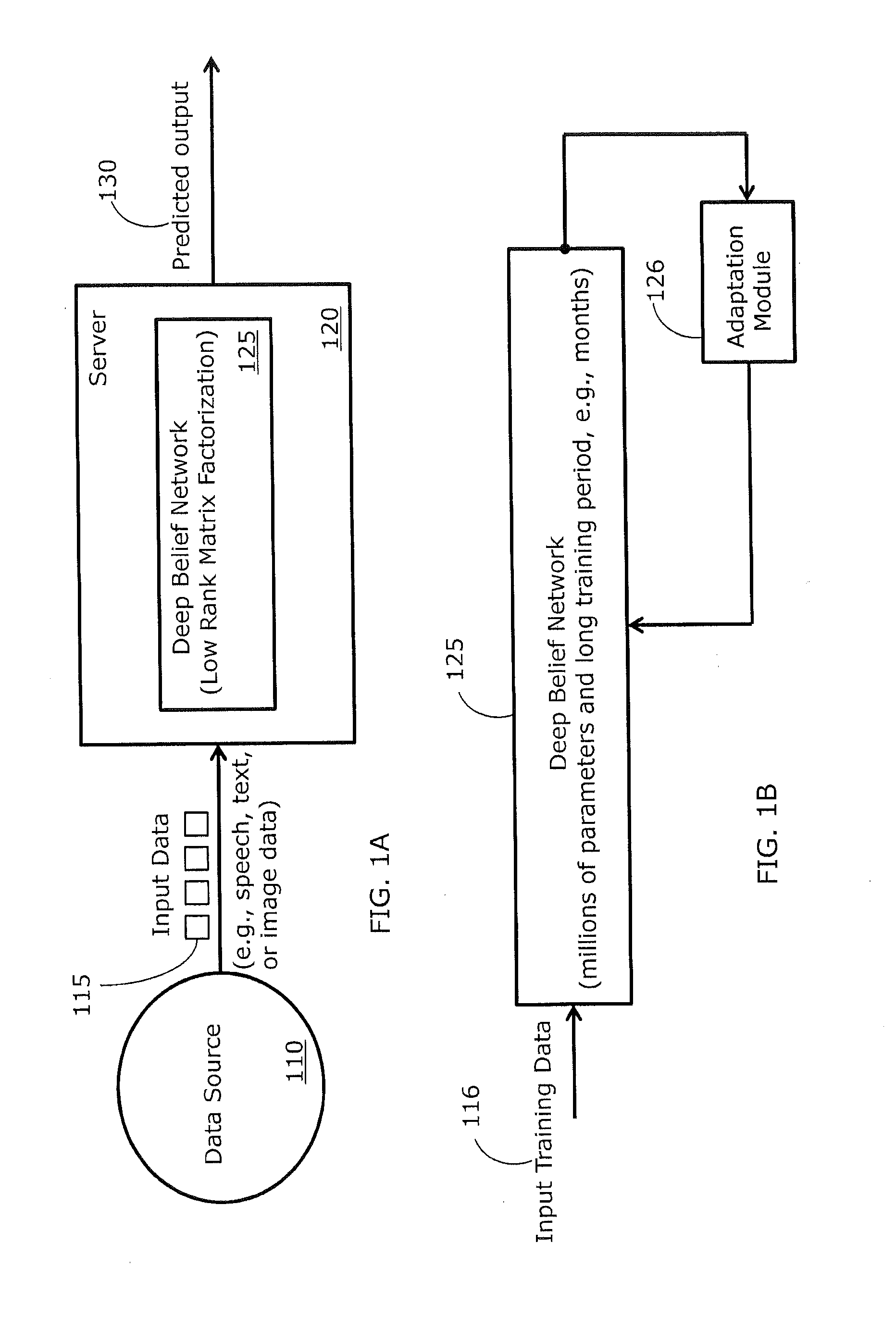

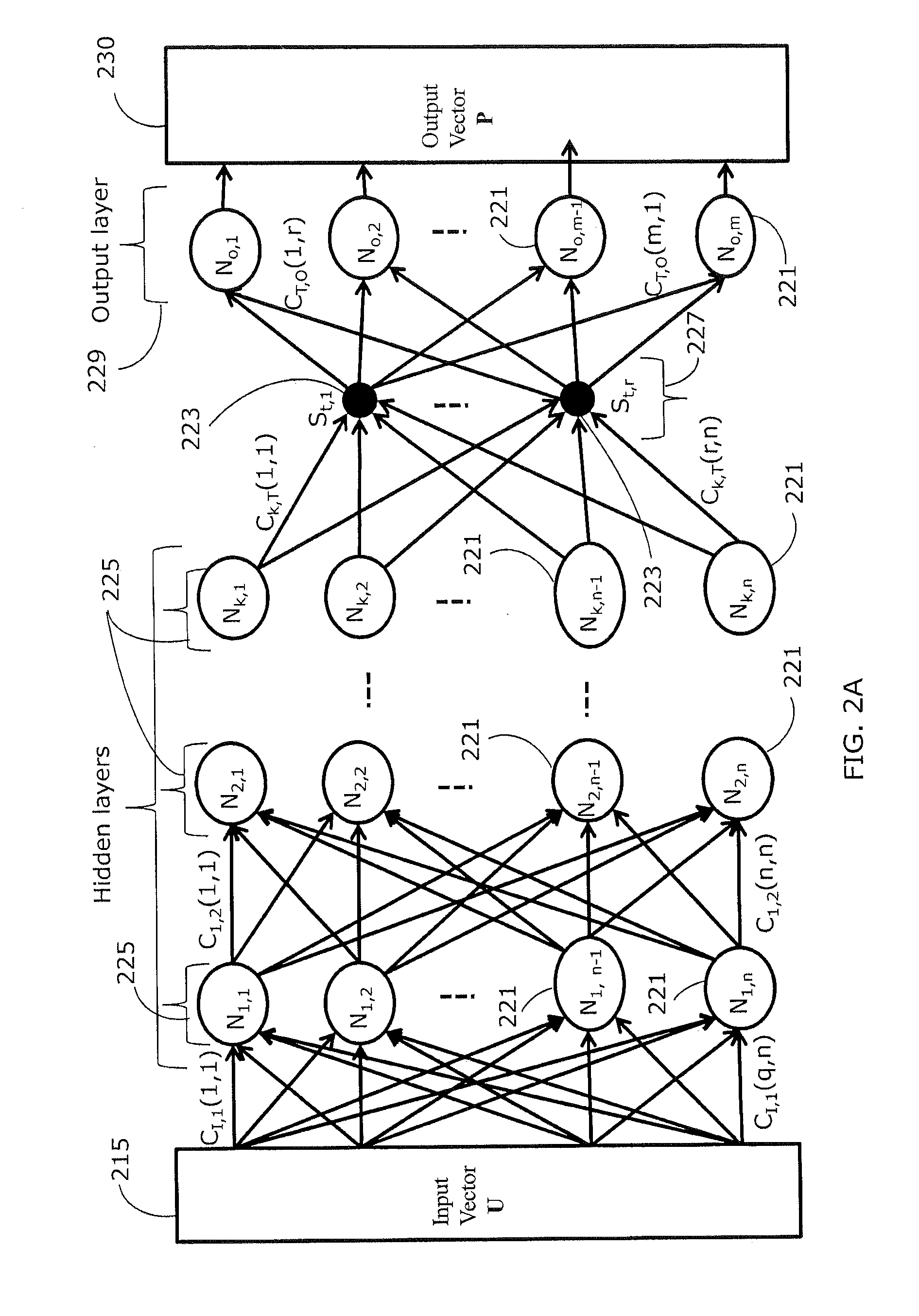

Deep belief networks usually have a large number of parameters and high computational complexity, which makes the training phase long and computationally expensive. According to at least one example embodiment, low-rank matrix factorization is used to approximate at least a first set of parameters, associated with an output layer, with a second and a third set of parameters. The total number of parameters in the second and third sets is smaller than the number of parameters in the first set. The architecture of the resulting artificial neural network can be characterized by a low-rank layer that does not employ an activation function and is defined by a relatively small number of nodes and the second set of parameters. With low-rank matrix factorization, training is faster, allowing the respective system to be deployed rapidly.

Owner:NUANCE COMM INC
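The parameter saving from the low-rank factorization described above is easy to check numerically. Factoring an `n_out x n_in` weight matrix W into B (`n_out x r`) and A (`r x n_in`) replaces `n_out * n_in` weights with `r * (n_out + n_in)`. The layer sizes and rank below are hypothetical, chosen only to illustrate the arithmetic.

```python
def dense_params(n_out, n_in):
    """Weights in a full n_out x n_in output-layer matrix W."""
    return n_out * n_in


def low_rank_params(n_out, n_in, rank):
    """Weights after factoring W as B (n_out x rank) times A (rank x n_in)."""
    return rank * (n_out + n_in)


def matvec(M, x):
    """Matrix-vector product for lists of rows."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def matmul(B, A):
    """Matrix-matrix product for lists of rows."""
    return [[sum(B[i][k] * A[k][j] for k in range(len(A))) for j in range(len(A[0]))]
            for i in range(len(B))]


# Hypothetical sizes: 1024 hidden units, 10000 output targets, rank 128.
print(dense_params(10000, 1024), low_rank_params(10000, 1024, 128))  # 10240000 1411072

# The low-rank layer is linear (no activation), so B @ (A @ x) == (B @ A) @ x.
B = [[1, 2], [3, 4], [5, 6]]
A = [[1, 0, 1], [0, 1, 1]]
x = [1, 2, 3]
print(matvec(B, matvec(A, x)), matvec(matmul(B, A), x))  # [14, 32, 50] [14, 32, 50]
```

The second check shows why the low-rank layer needs no activation function: without one, the two factored layers compute exactly the same linear map as a single rank-limited matrix.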

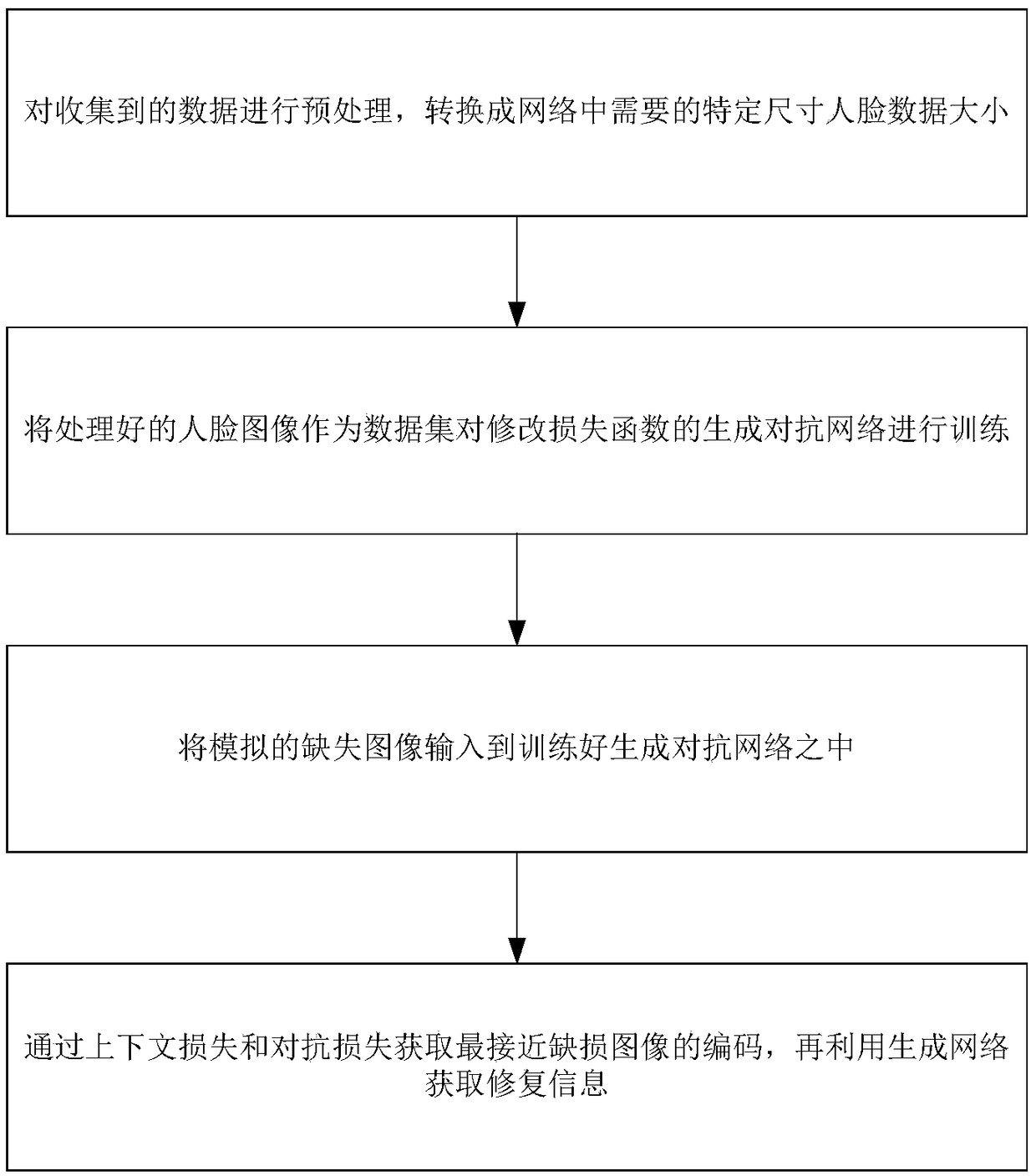

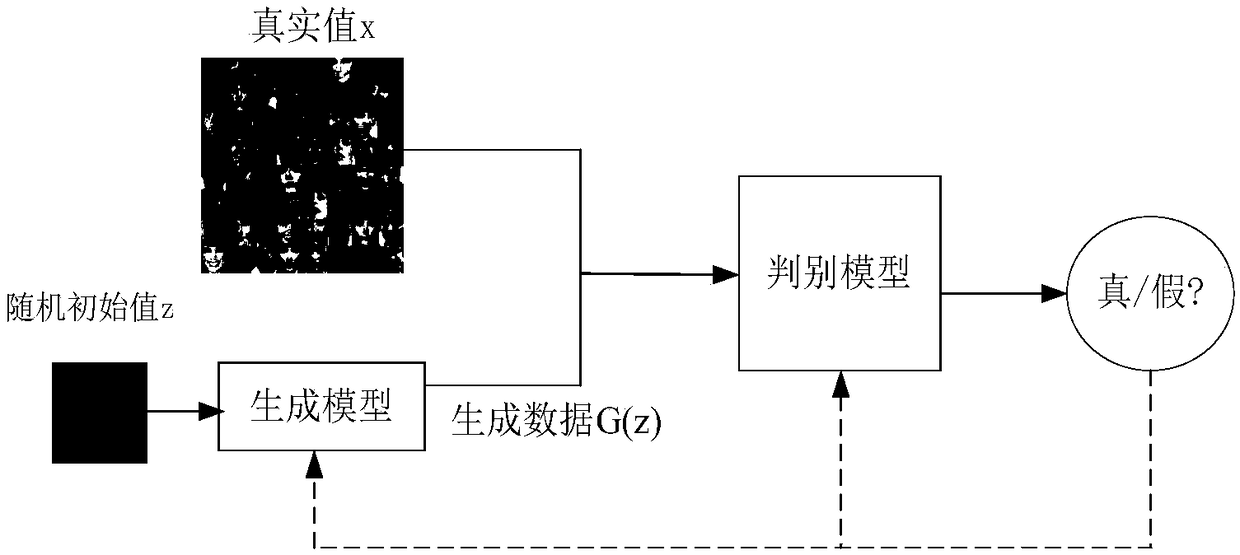

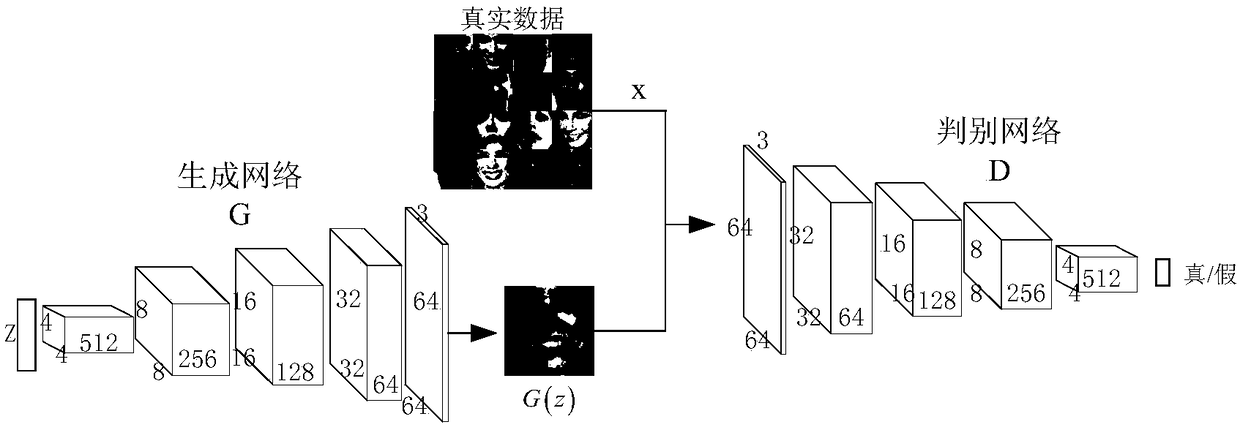

A face image restoration method based on a generative adversarial network

Active | CN109377448A | Improve stability | Guarantee authenticity | Image enhancement | Image analysis | Data set | Training phase

The invention discloses a face image restoration method based on a generative adversarial network. First, a face data set is preprocessed: face recognition is applied to the collected images to obtain face images of a specific size. In the training phase, the collected face images are used as the data set to train the generator network and the discriminator network, with the aim of obtaining more realistic images from the generator. To address training instability and mode collapse, the least-squares loss is used as the loss function of the discriminator. In the restoration phase, a special mask is automatically added to the original image to simulate a real missing region, and the masked face image is input into the optimized deep convolutional generative adversarial network. The relevant random parameters are obtained through a context loss and two adversarial losses, and the restoration content is then produced by the generator. The invention can restore face images with severely missing information and produces restored faces that are more consistent with human visual perception.

Owner:BEIJING UNIV OF TECH
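The least-squares discriminator loss named in the face restoration abstract above, together with a masked context loss of the kind used in GAN-based inpainting, can be written as plain functions over discriminator outputs and flattened pixel values. The L1 form of the context loss and the mask convention (1 marks a missing pixel) are assumptions; the abstract does not give the exact loss definitions.

```python
def lsgan_discriminator_loss(d_real, d_fake):
    """Least-squares discriminator loss: push D(real) toward 1 and D(fake) toward 0."""
    real_term = sum((d - 1.0) ** 2 for d in d_real) / len(d_real)
    fake_term = sum(d ** 2 for d in d_fake) / len(d_fake)
    return 0.5 * (real_term + fake_term)


def lsgan_generator_loss(d_fake):
    """Least-squares generator loss: push D(G(z)) toward 1."""
    return 0.5 * sum((d - 1.0) ** 2 for d in d_fake) / len(d_fake)


def context_loss(generated, original, mask):
    """L1 distance over known pixels only; mask[i] == 1 marks a missing pixel."""
    return sum(abs(g - o) for g, o, m in zip(generated, original, mask) if m == 0)


print(lsgan_discriminator_loss([0.9, 1.0], [0.1, 0.0]))
print(context_loss([0.5, 0.9, 0.1], [0.5, 0.2, 0.1], [0, 1, 0]))  # 0.0
```

Unlike the log-based loss of the original GAN formulation, the squared-error terms keep penalizing samples that are classified correctly but lie far from the decision boundary, which is the usual argument for their stabilizing effect.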

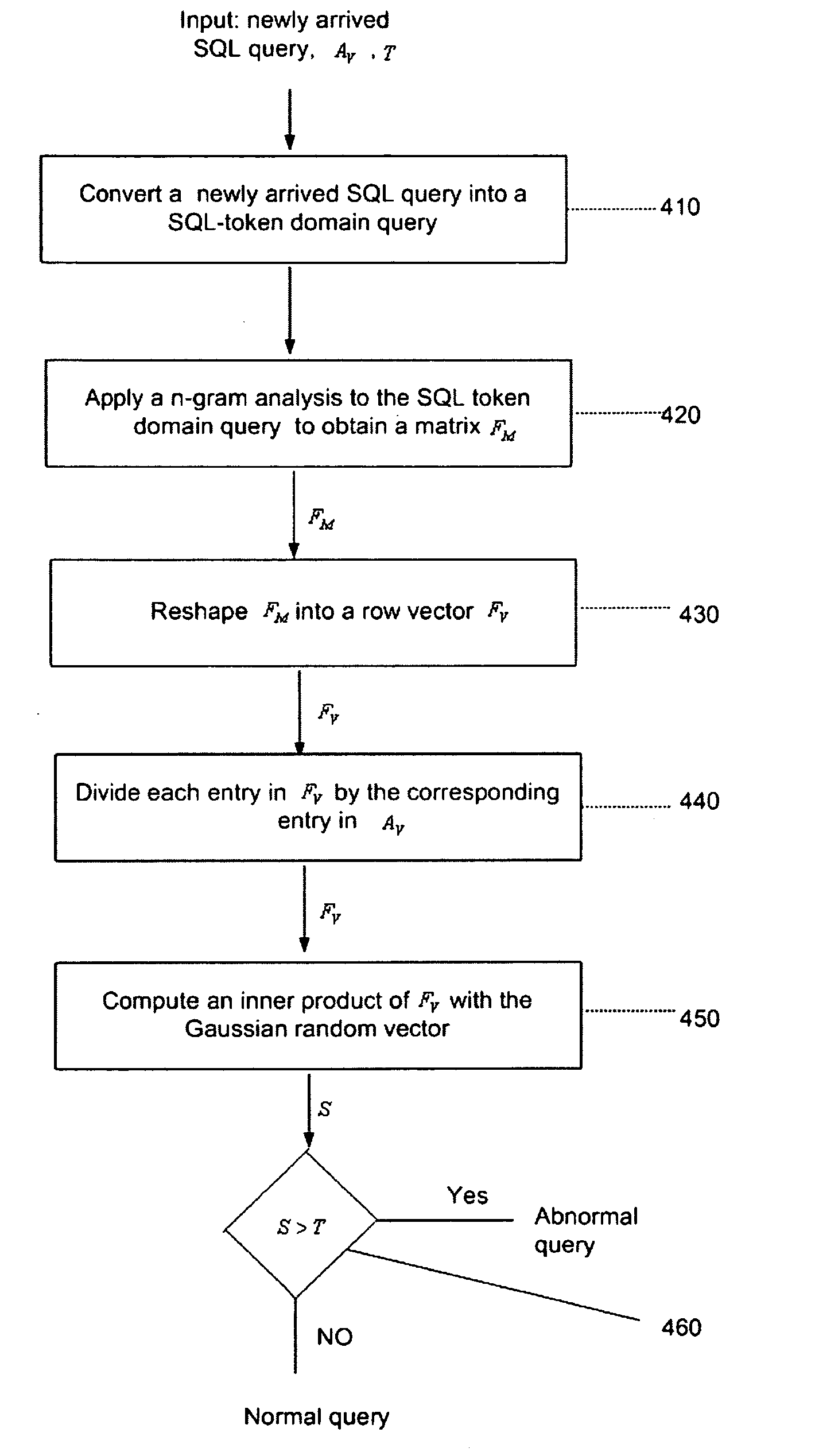

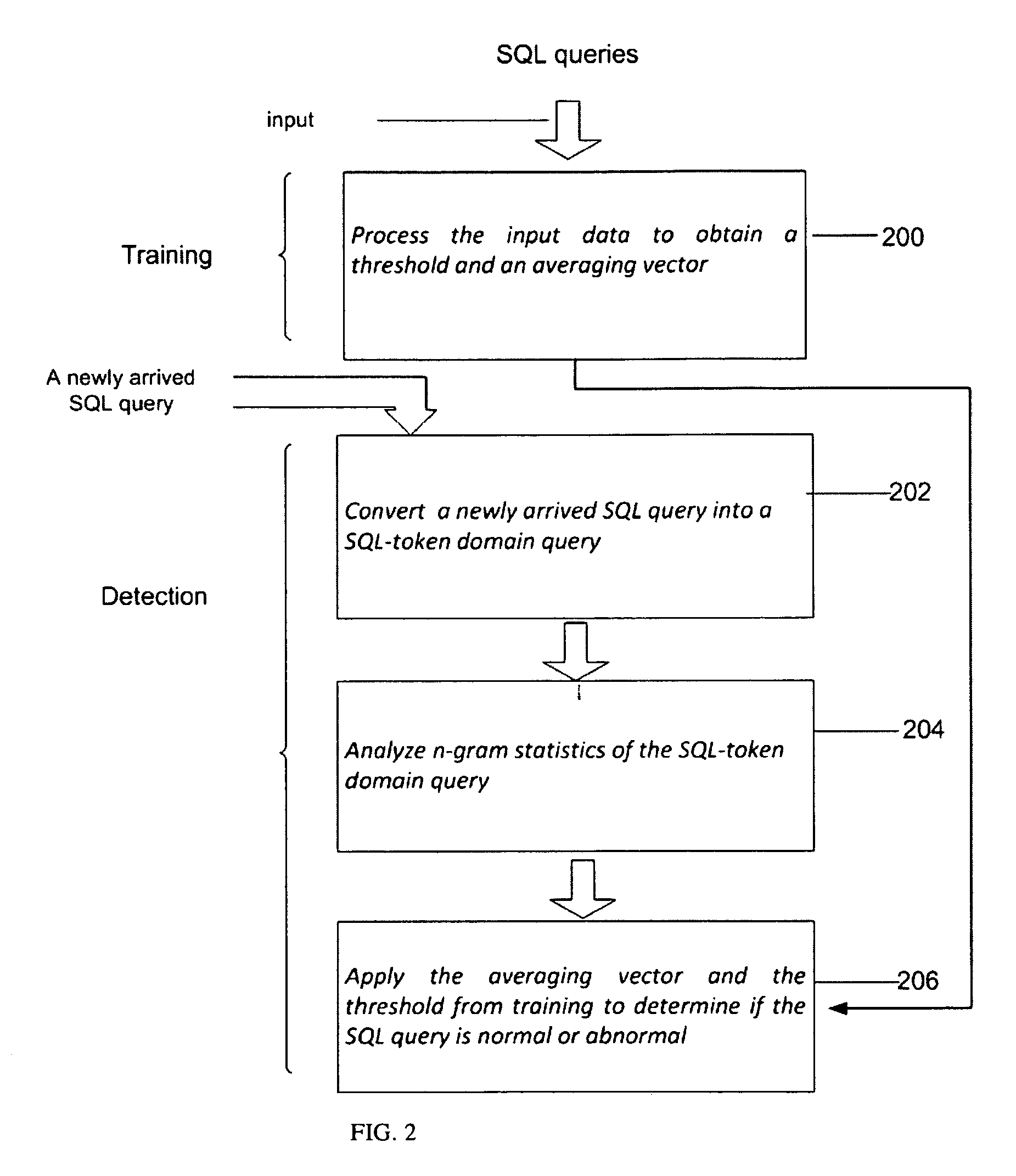

Anomaly-based detection of SQL injection attacks

Inactive | US8225402B1 | Reduce dimensionality | Failure can not be detected | Memory loss protection | Error detection/correction | Training phase | SQL injection

Owner:AVERBUCH AMIR +2

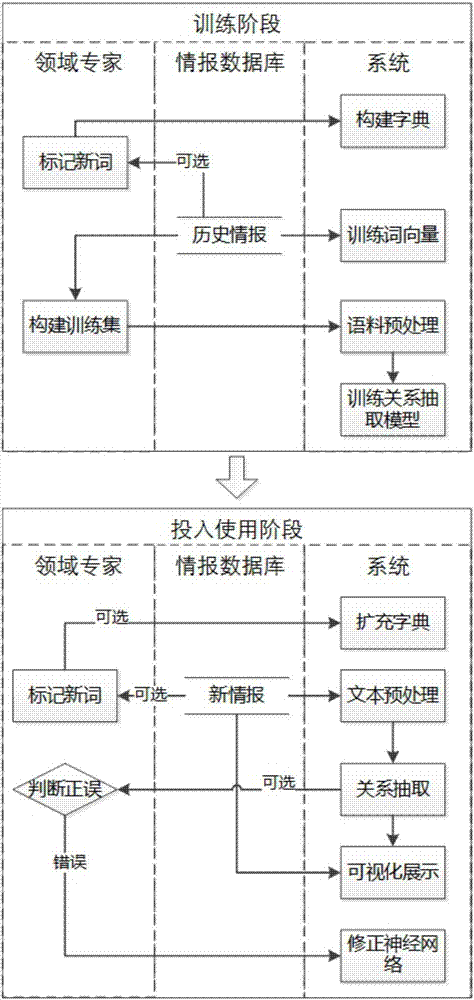

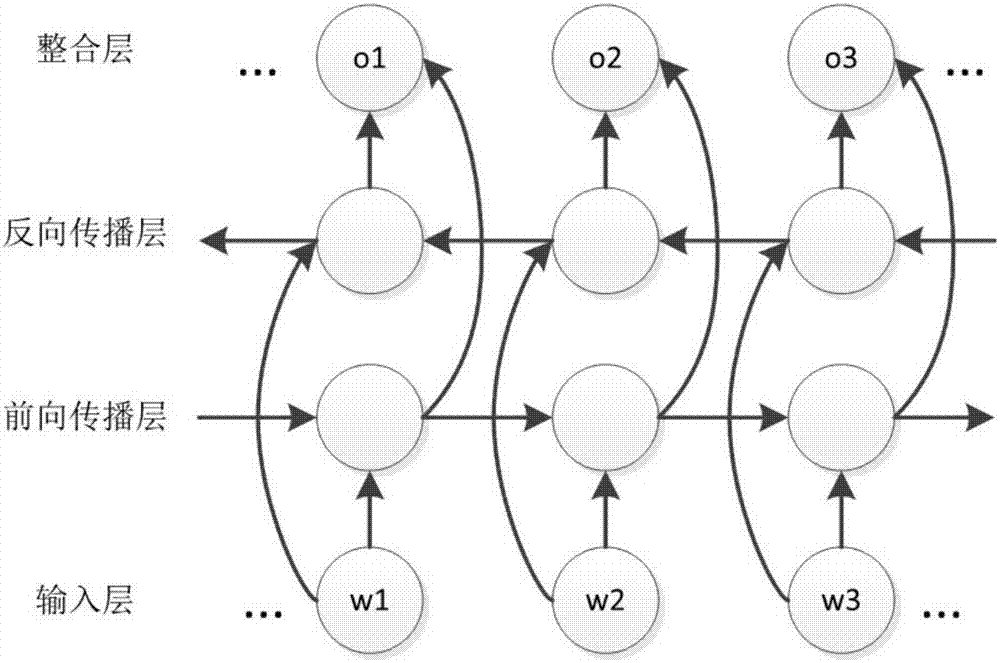

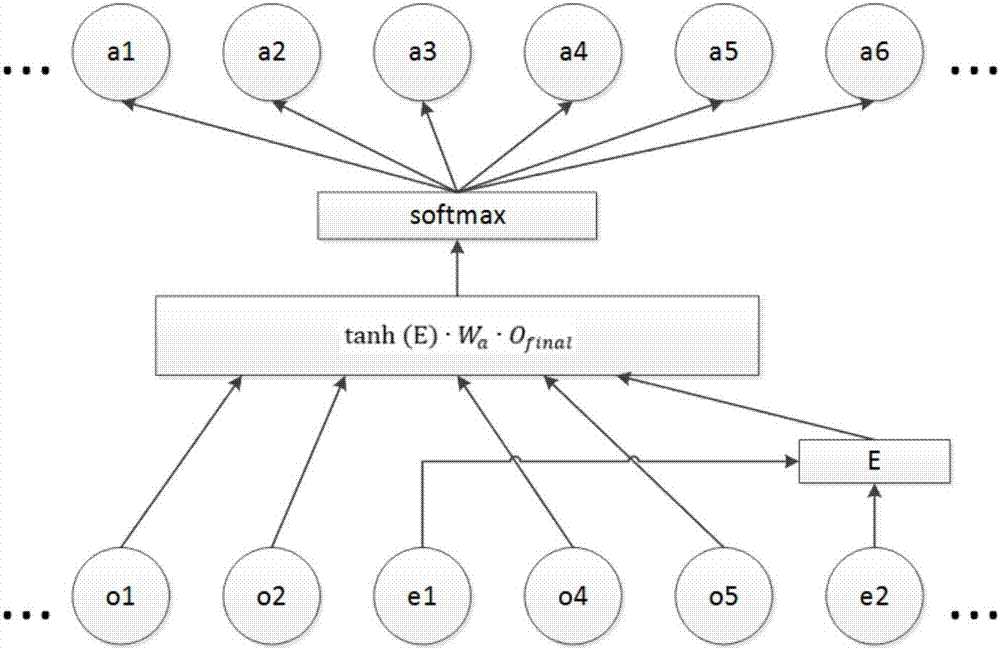

Intelligence relation extraction method based on neural network and attention mechanism

Active | CN107239446A | Strong feature extraction ability | Overcome the problem of heavy workload of manual feature extraction | Biological neural network models | Natural language data processing | Network model | Machine learning

The invention discloses an intelligence relation extraction method based on a neural network and an attention mechanism, relating to recurrent neural networks, natural language processing, and intelligence analysis combined with an attention mechanism. The method addresses the heavy workload and poor generalization of existing intelligence analysis systems built on manually constructed knowledge bases. The method comprises a training phase and an application phase. In the training phase, a user dictionary is first constructed and word vectors are trained; a training set is then built from a historical intelligence database, the corpus is preprocessed, and the neural network model is trained. In the application phase, intelligence is acquired and preprocessed, and the relation extraction task is completed automatically; expansion of the user dictionary and correction of judgments are also supported, and the neural network model can be incrementally retrained on the enlarged training set. The method can discover relationships between items of intelligence, provides a basis for integrating event context and for decision making, and has a wide range of practical value.

Owner:CHINA UNIV OF MINING & TECH
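The relation extraction abstract above does not detail its attention mechanism. A common form, shown here as an assumption, scores each recurrent hidden state against a query vector, normalizes the scores with a softmax, and returns the weighted sum as a sentence representation.

```python
from math import exp


def attention_pool(hidden_states, query):
    """Attention-weighted sum of recurrent hidden states.

    Scores are dot products with a query vector (learned in practice; fixed here);
    a numerically stable softmax turns them into weights that sum to 1.
    """
    scores = [sum(h_i * q_i for h_i, q_i in zip(h, query)) for h in hidden_states]
    top = max(scores)
    exps = [exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(hidden_states[0])
    pooled = [sum(w * h[d] for w, h in zip(weights, hidden_states)) for d in range(dim)]
    return weights, pooled


# Three hypothetical 2-D hidden states, one per token of a sentence.
states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, sentence_vector = attention_pool(states, query=[2.0, 0.0])
print([round(w, 3) for w in weights], sentence_vector)
```

Subtracting the maximum score before exponentiating does not change the softmax result but avoids overflow for large scores.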

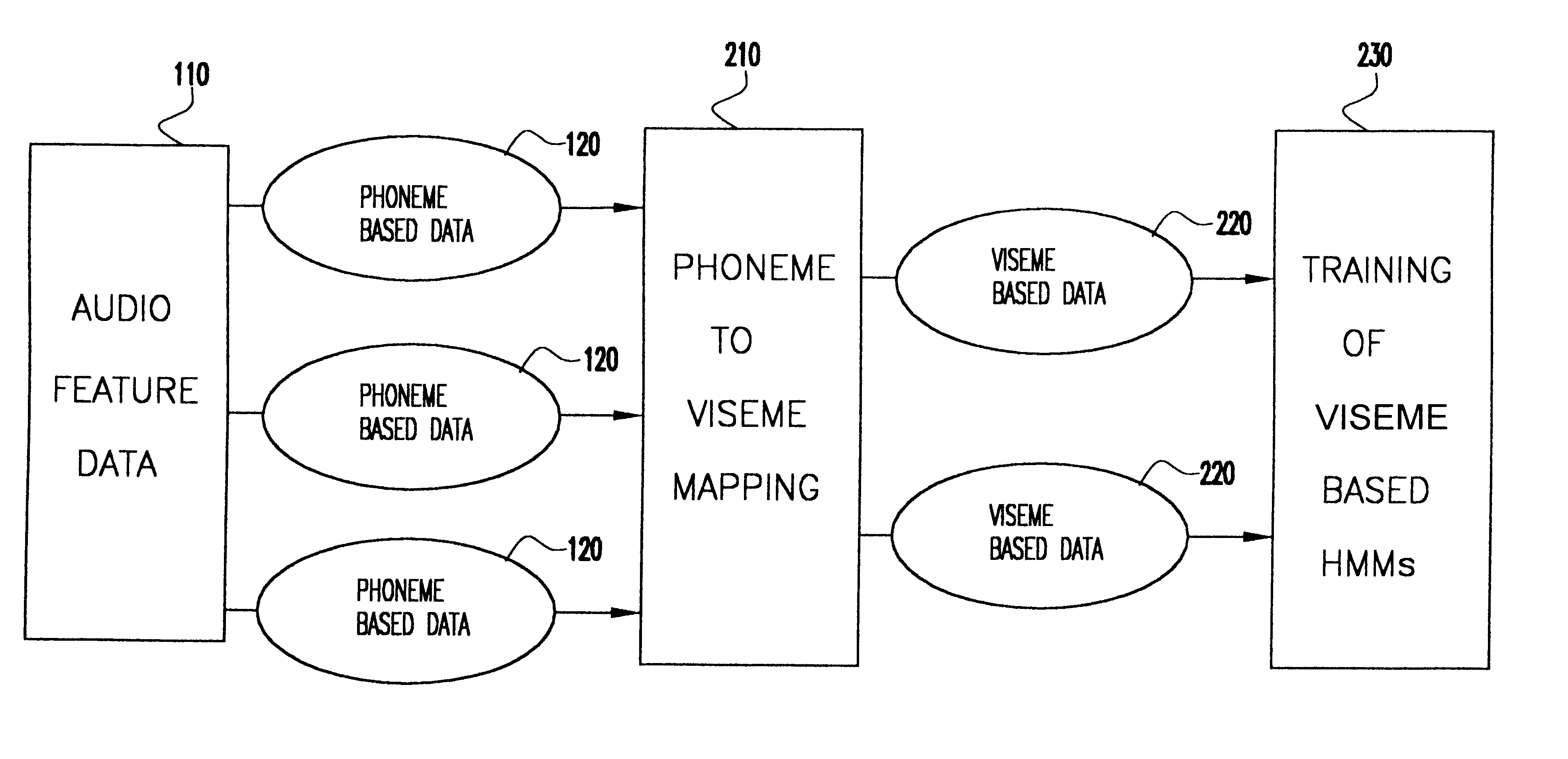

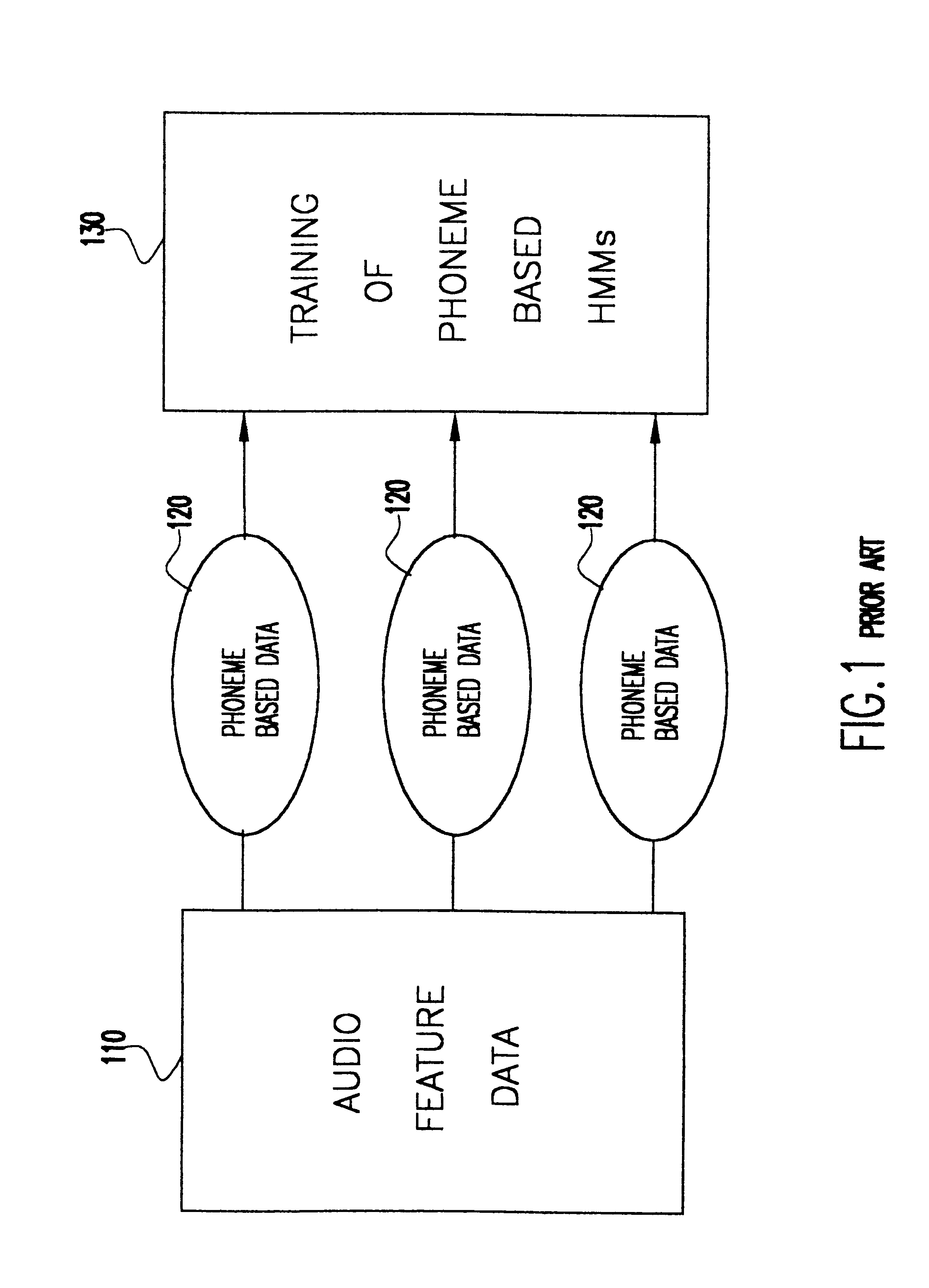

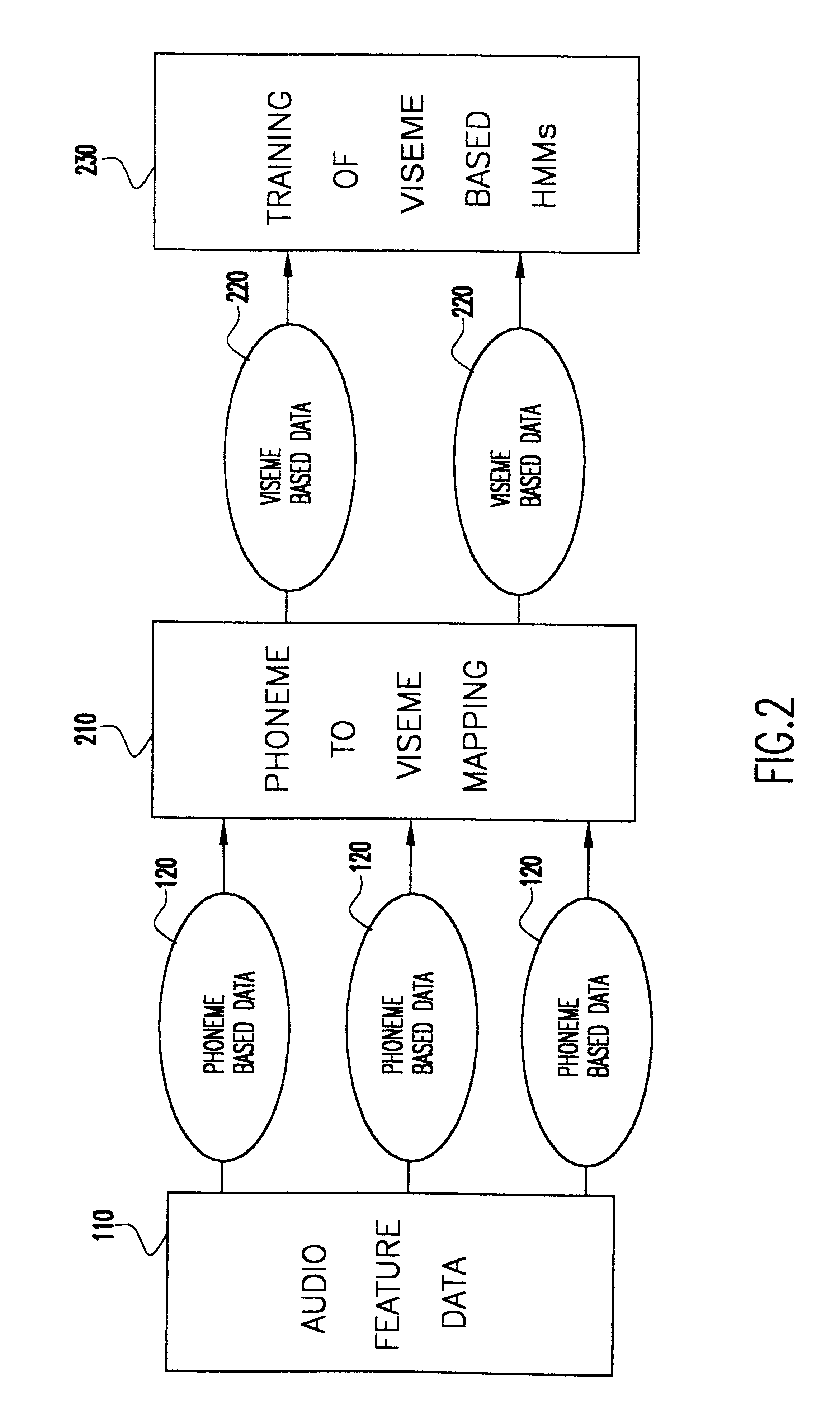

Speech driven lip synthesis using viseme based hidden markov models

Inactive | US6366885B1 | Shorten the time | Small size | Electronic editing digitised analogue information signals | Record information storage | NODAL | Training phase

A method of speech-driven lip synthesis that applies viseme-based training models to units of visual speech. The audio data is grouped into a smaller number of visually distinct visemes rather than the larger number of phonemes. These visemes form the basis of a Hidden Markov Model (HMM) state sequence or of the output nodes of a neural network. During the training phase, audio and visual features are extracted from input speech, which is aligned according to the apparent viseme sequence, and the corresponding audio features are used to calculate the HMM state output probabilities or the outputs of the neural network. During the synthesis phase, the acoustic input is aligned with the most likely viseme HMM sequence (for an HMM-based model) or with the nodes of the network (for a neural network based system), and the result is used for animation.

Owner:UNILOC 2017 LLC
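In the HMM variant of the lip synthesis method above, aligning acoustic input with the most likely viseme sequence is a Viterbi decoding problem. The sketch assumes acoustic features have already been quantized into discrete symbols; the two visemes, the symbols, and the probabilities are hypothetical.

```python
from math import log


def viterbi(obs, states, start_p, trans_p, emit_p):
    """Most likely viseme state sequence for a sequence of discrete acoustic symbols.

    Works in log space to avoid underflow, so every probability must be > 0.
    """
    best = [{s: log(start_p[s]) + log(emit_p[s][obs[0]]) for s in states}]
    back = [{}]
    for t in range(1, len(obs)):
        best.append({})
        back.append({})
        for s in states:
            prev = max(states, key=lambda p: best[t - 1][p] + log(trans_p[p][s]))
            best[t][s] = best[t - 1][prev] + log(trans_p[prev][s]) + log(emit_p[s][obs[t]])
            back[t][s] = prev
    path = [max(states, key=lambda s: best[-1][s])]
    for t in range(len(obs) - 1, 0, -1):
        path.append(back[t][path[-1]])
    return path[::-1]


states = ["closed", "open"]
start = {"closed": 0.6, "open": 0.4}
trans = {"closed": {"closed": 0.7, "open": 0.3}, "open": {"closed": 0.3, "open": 0.7}}
emit = {"closed": {"lo": 0.9, "hi": 0.1}, "open": {"lo": 0.2, "hi": 0.8}}
print(viterbi(["lo", "lo", "hi", "hi"], states, start, trans, emit))
# ['closed', 'closed', 'open', 'open']
```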

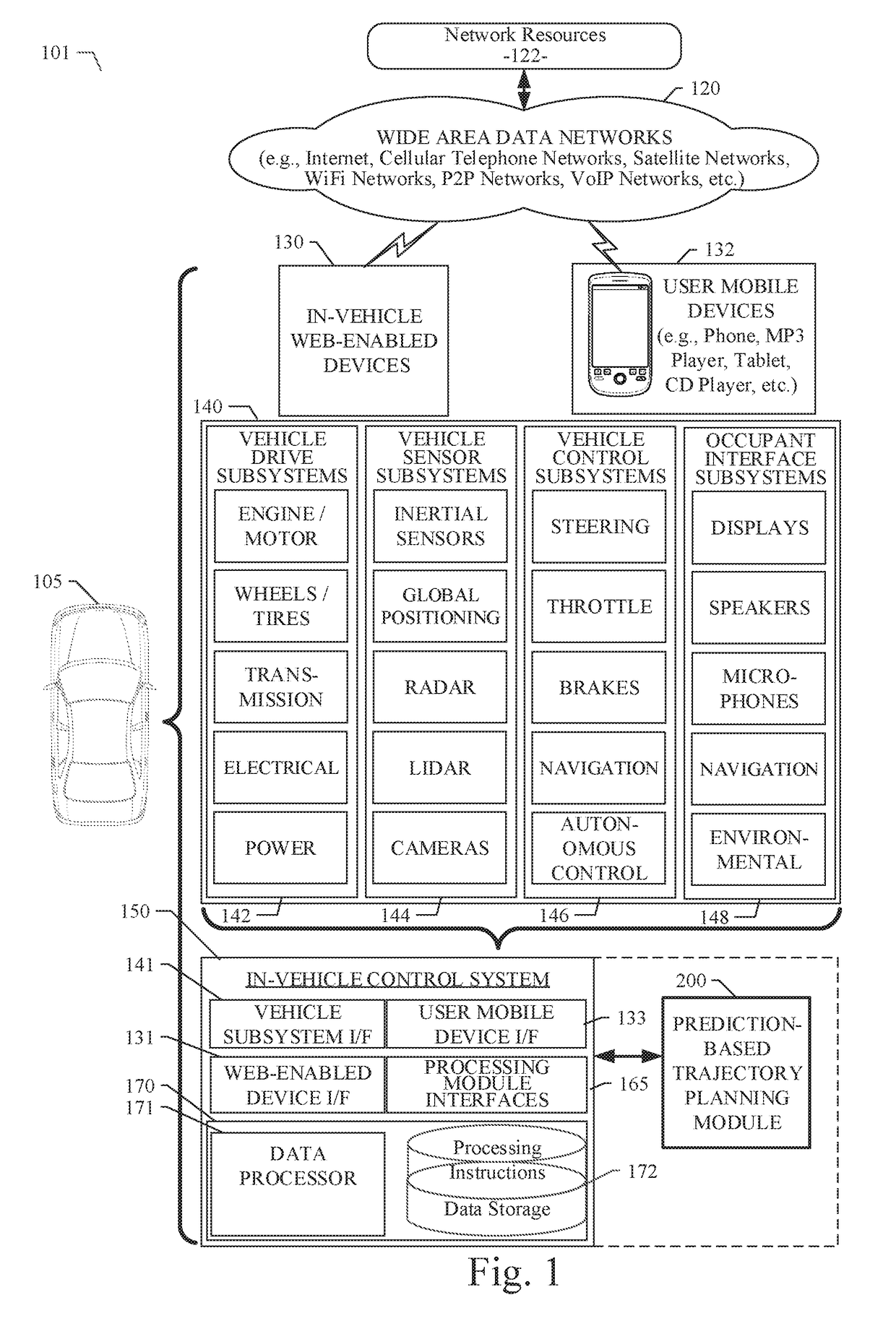

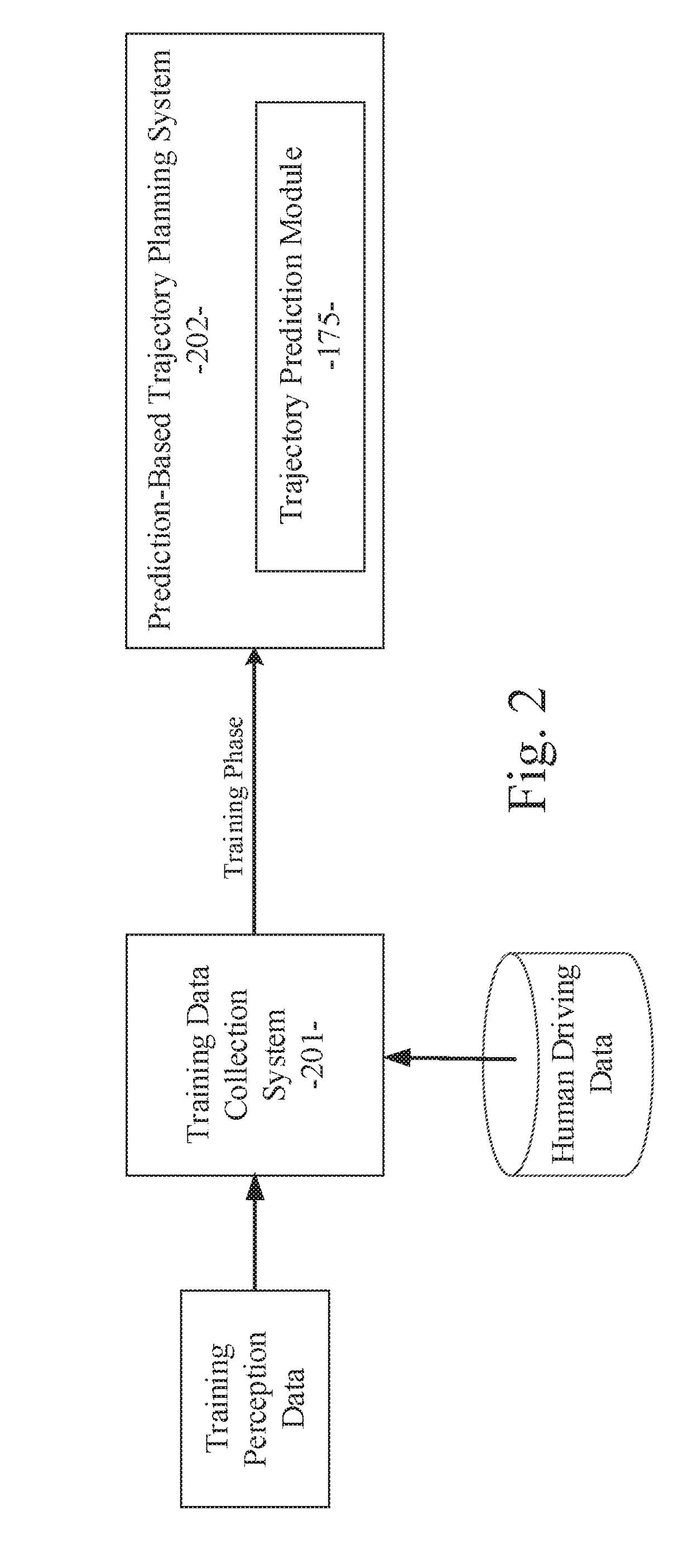

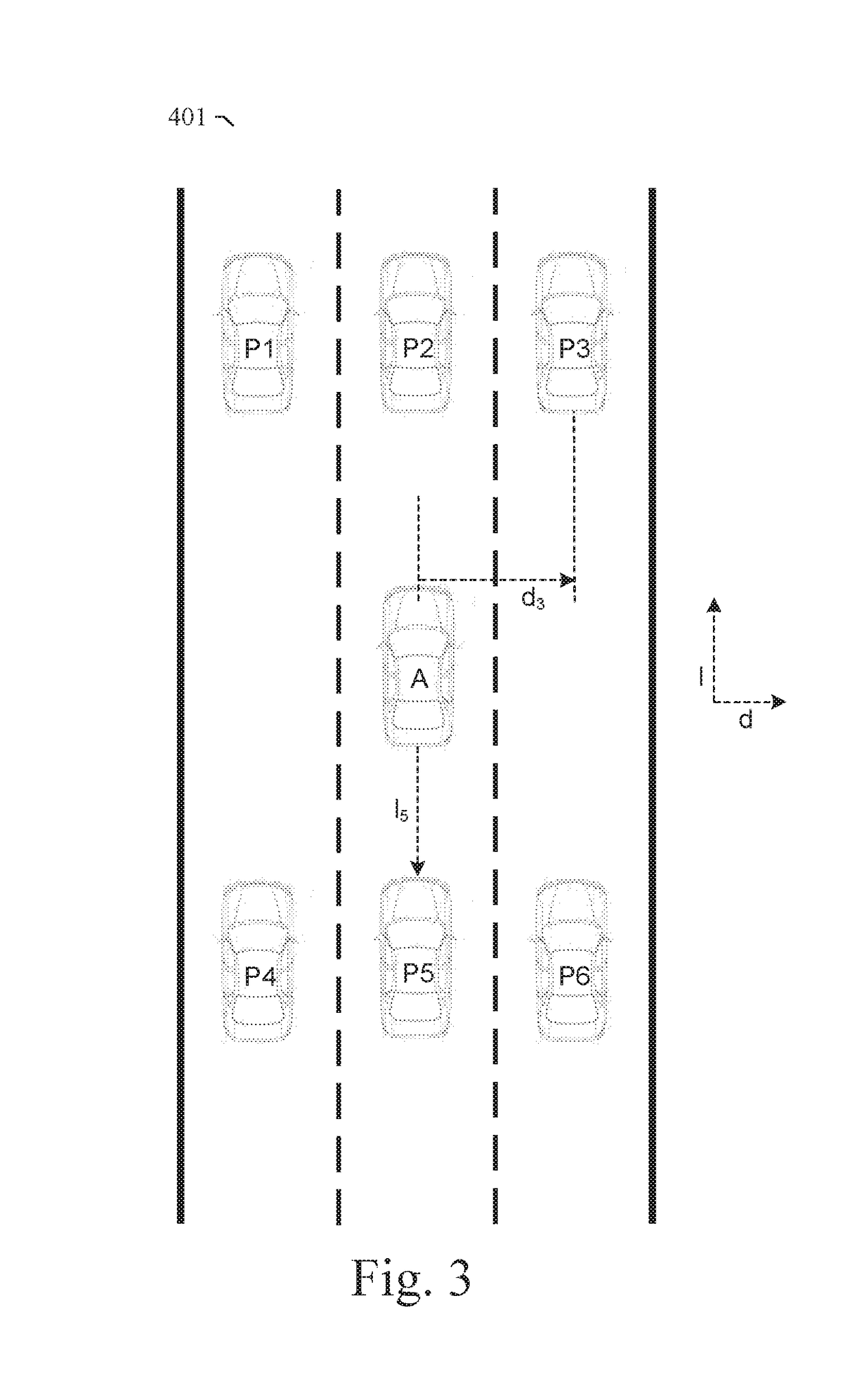

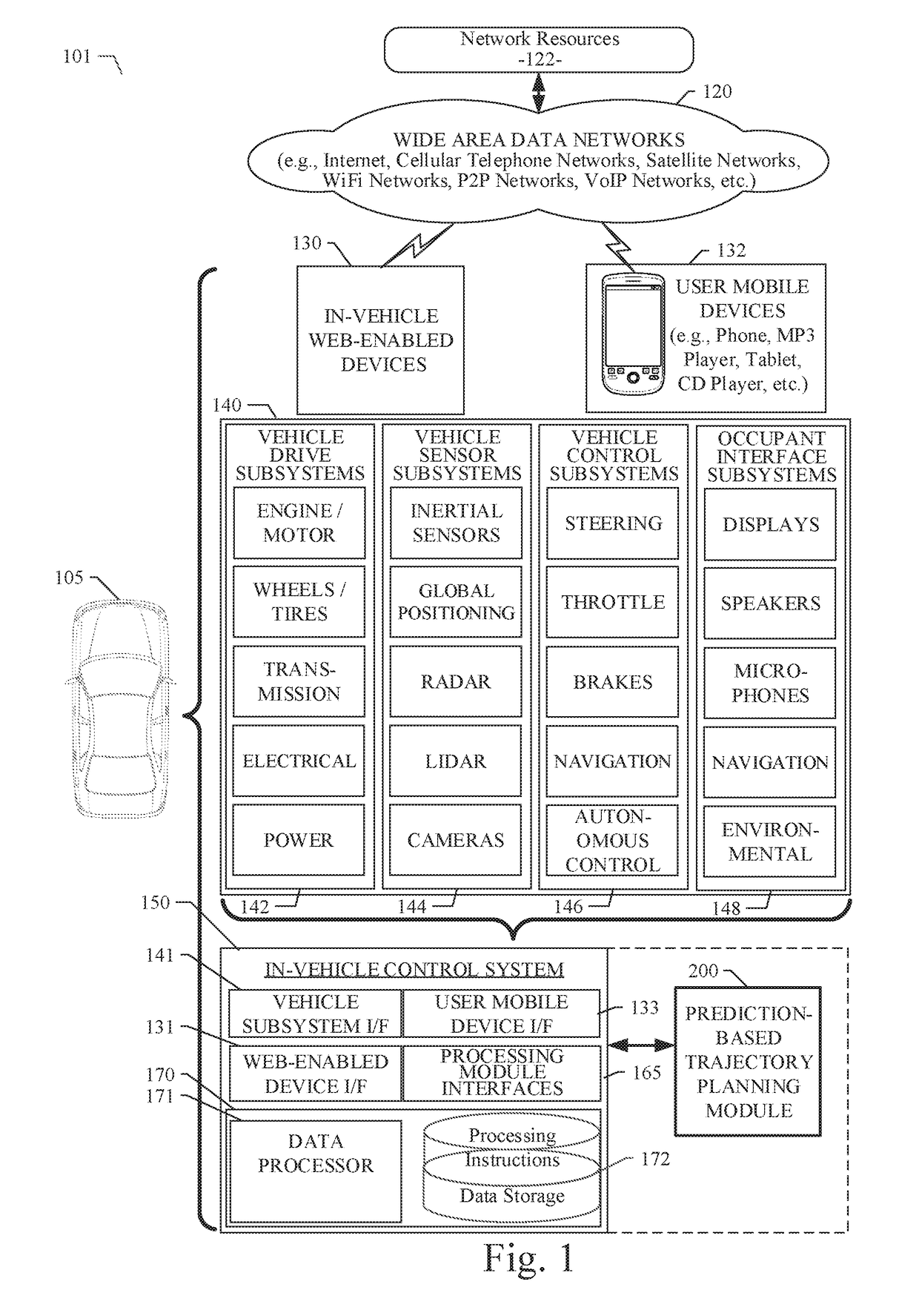

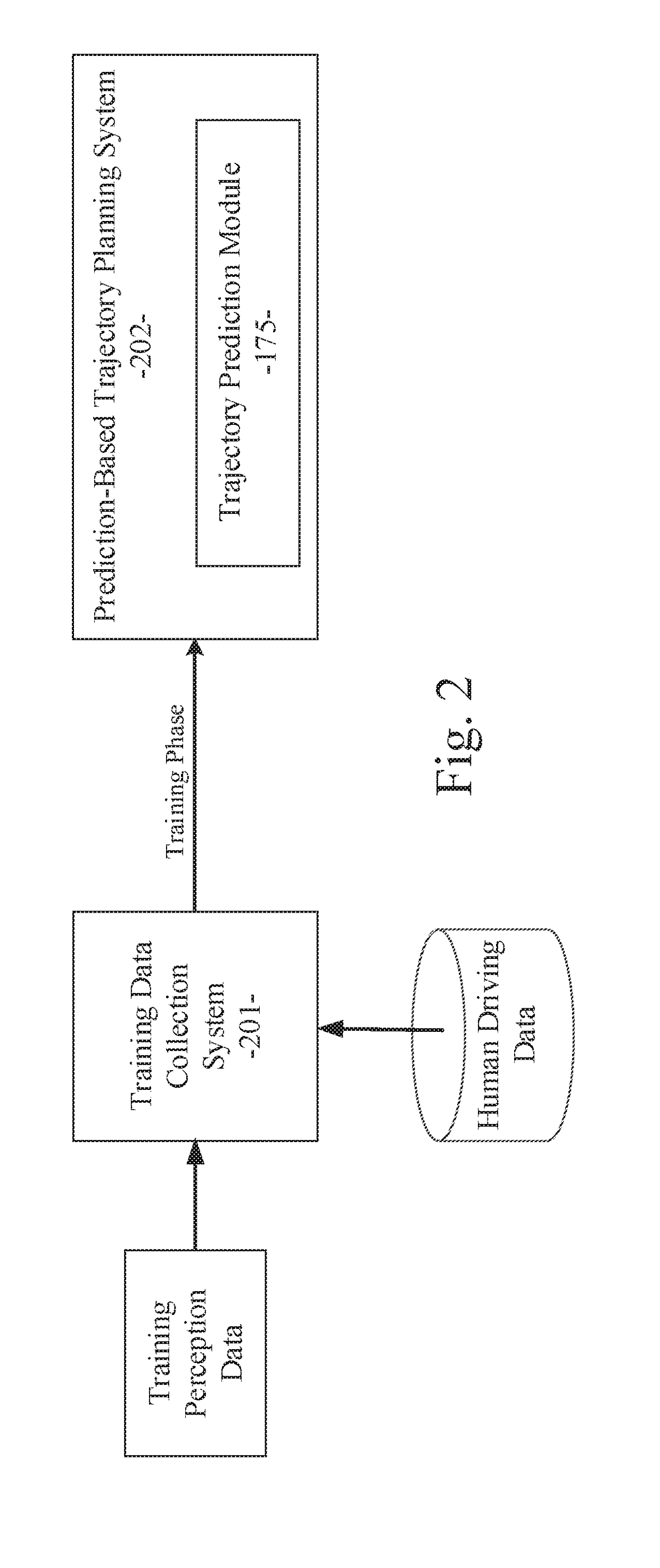

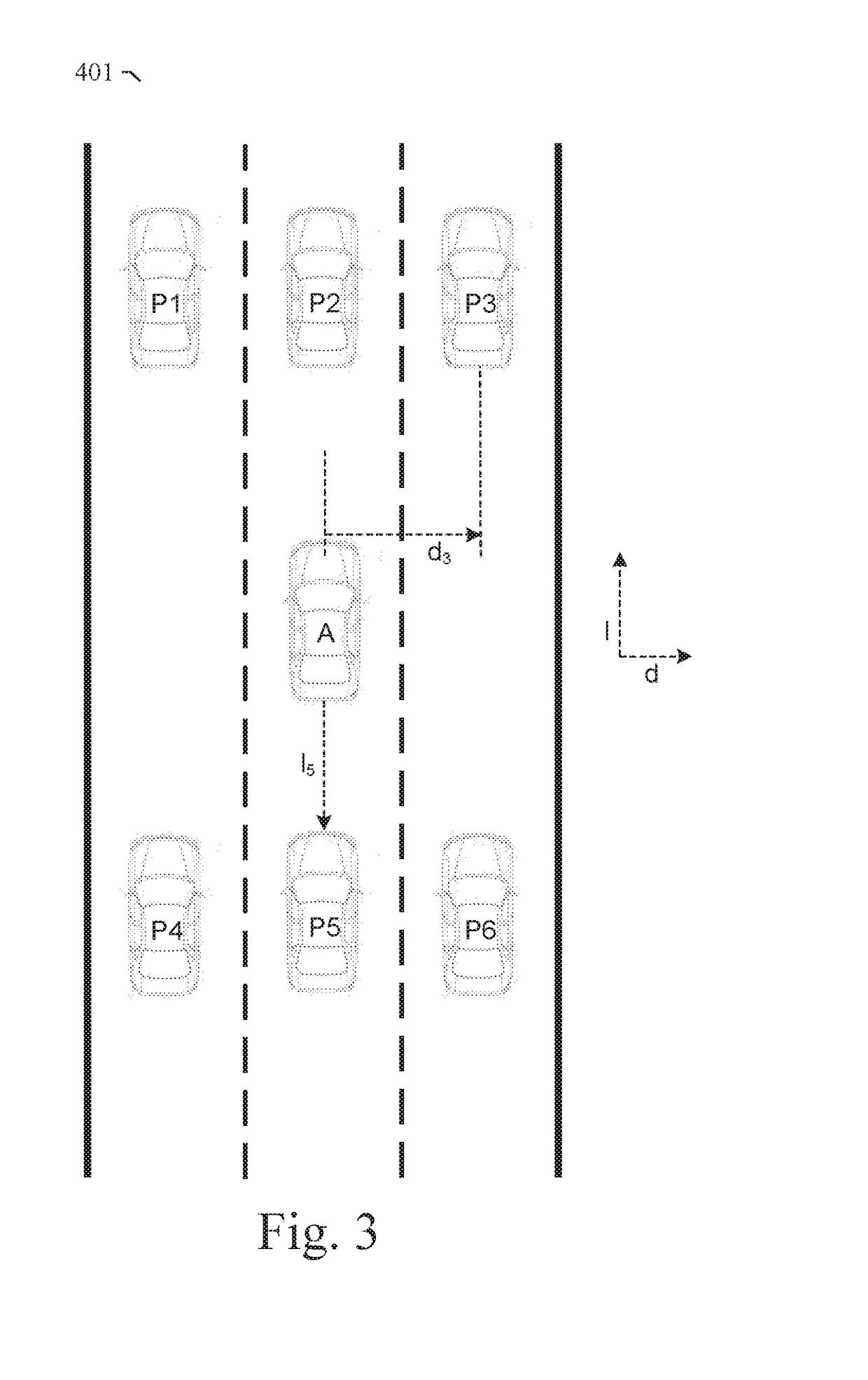

Prediction-based system and method for trajectory planning of autonomous vehicles

Active | US20190072965A1 | Autonomous decision making process | Anti-collision systems | Ground truth | Training phase

A prediction-based system and method for trajectory planning of autonomous vehicles are disclosed. A particular embodiment is configured to: receive training data and ground truth data from a training data collection system, the training data including perception data and context data corresponding to human driving behaviors; perform a training phase for training a trajectory prediction module using the training data; receive perception data associated with a host vehicle; and perform an operational phase for extracting host vehicle feature data and proximate vehicle context data from the perception data, using the trained trajectory prediction module to generate predicted trajectories for each of one or more proximate vehicles near the host vehicle, generating a proposed trajectory for the host vehicle, determining if the proposed trajectory for the host vehicle will conflict with any of the predicted trajectories of the proximate vehicles, and modifying the proposed trajectory for the host vehicle until conflicts are eliminated.

Owner:TUSIMPLE INC
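The conflict check and iterative modification in the operational phase of the trajectory-planning method above can be sketched with trajectories as lists of `(x, y)` positions, one per time step. The safety radius and the modification strategy (delaying the host by one step per iteration) are hypothetical; the predicted trajectories are assumed to come from the trained prediction module.

```python
from math import dist


def conflicts(proposed, predicted, safety_radius):
    """True if the host comes within safety_radius of any predicted vehicle at the same step."""
    return any(dist(p, q) < safety_radius
               for trajectory in predicted
               for p, q in zip(proposed, trajectory))


def plan(proposed, predicted, safety_radius=2.0, max_iters=10):
    """Modify the proposed trajectory until no conflicts remain.

    The modification used here (waiting one time step at the start) is a
    hypothetical stand-in for whatever adjustment a real planner would make.
    """
    for _ in range(max_iters):
        if not conflicts(proposed, predicted, safety_radius):
            return proposed
        proposed = [proposed[0]] + proposed[:-1]
    raise RuntimeError("no conflict-free trajectory found")


host = [(0, 0), (5, 0), (10, 0), (15, 0)]          # proposed host path, one point per step
crossing = [(10, -10), (10, -5), (10, 0), (10, 5)]  # predicted path of a proximate vehicle
print(plan(host, [crossing]))  # [(0, 0), (0, 0), (5, 0), (10, 0)]
```

Bounding the loop with `max_iters` makes the "modify until conflicts are eliminated" step terminate even when no acceptable modification exists.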

Prediction-based system and method for trajectory planning of autonomous vehicles

Active | US20190072966A1 | Autonomous decision making process | Anti-collision systems | Ground truth | Training phase

A prediction-based system and method for trajectory planning of autonomous vehicles are disclosed. A particular embodiment is configured to: receive training data and ground truth data from a training data collection system, the training data including perception data and context data corresponding to human driving behaviors; perform a training phase for training a trajectory prediction module using the training data; receive perception data associated with a host vehicle; and perform an operational phase for extracting host vehicle feature data and proximate vehicle context data from the perception data, generating a proposed trajectory for the host vehicle, using the trained trajectory prediction module to generate predicted trajectories for each of one or more proximate vehicles near the host vehicle based on the proposed host vehicle trajectory, determining if the proposed trajectory for the host vehicle will conflict with any of the predicted trajectories of the proximate vehicles, and modifying the proposed trajectory for the host vehicle until conflicts are eliminated.

Owner:TUSIMPLE INC

Method and system for identifying sentence boundaries

Inactive | US20070192309A1 | Efficient storage | Effectively storing information | Digital data information retrieval | Special data processing applications | Natural language processing | Training phase

The present invention is directed to systems and methods for isolating the boundaries between sentences in text. Sentences in normalized document feeds or source text are separated by a Bayesian algorithm that determines the boundaries between individual sentences. The algorithm is seeded with rule frequencies developed in a previous training phase, which used a text whose sentence boundaries were marked.

Owner:JILES

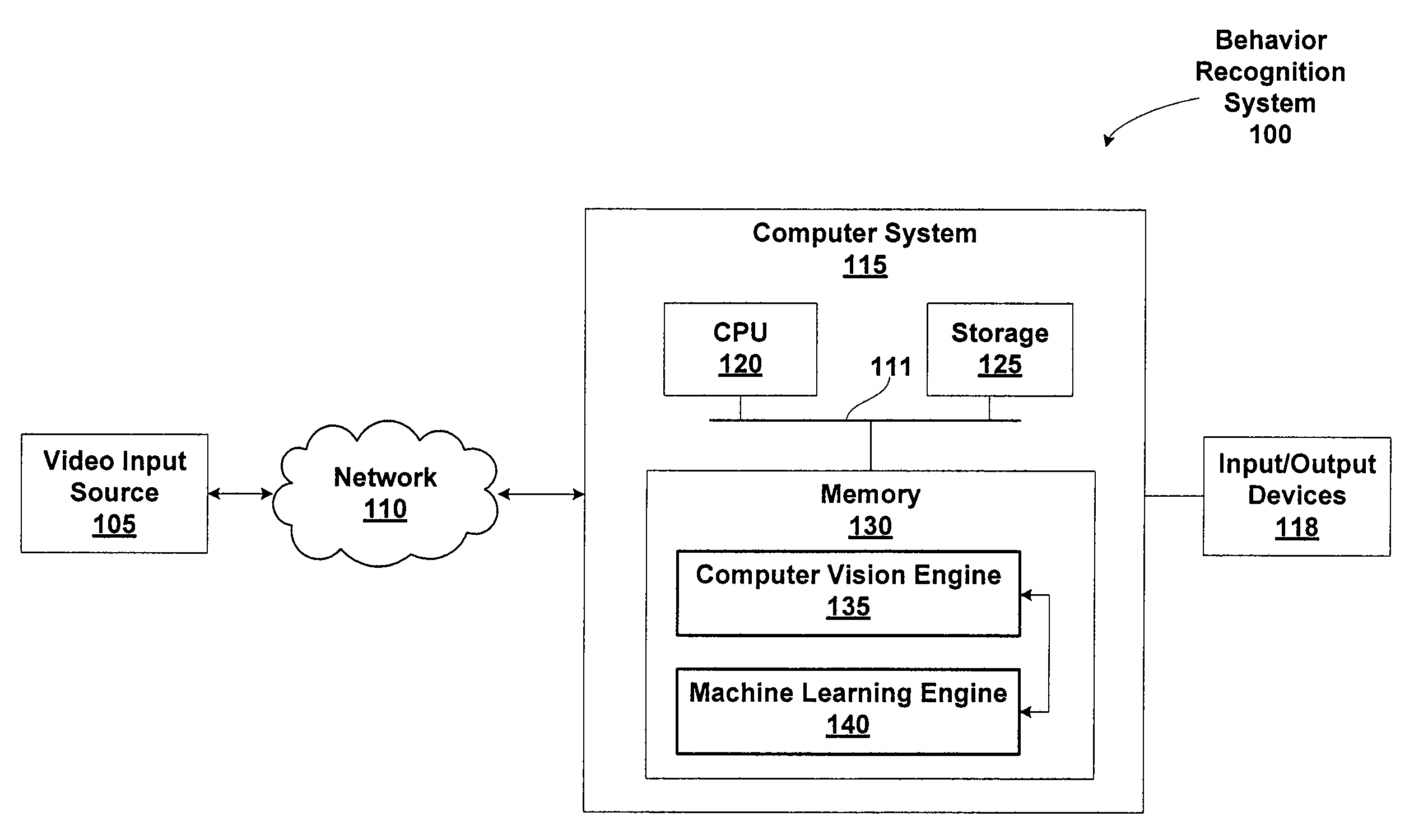

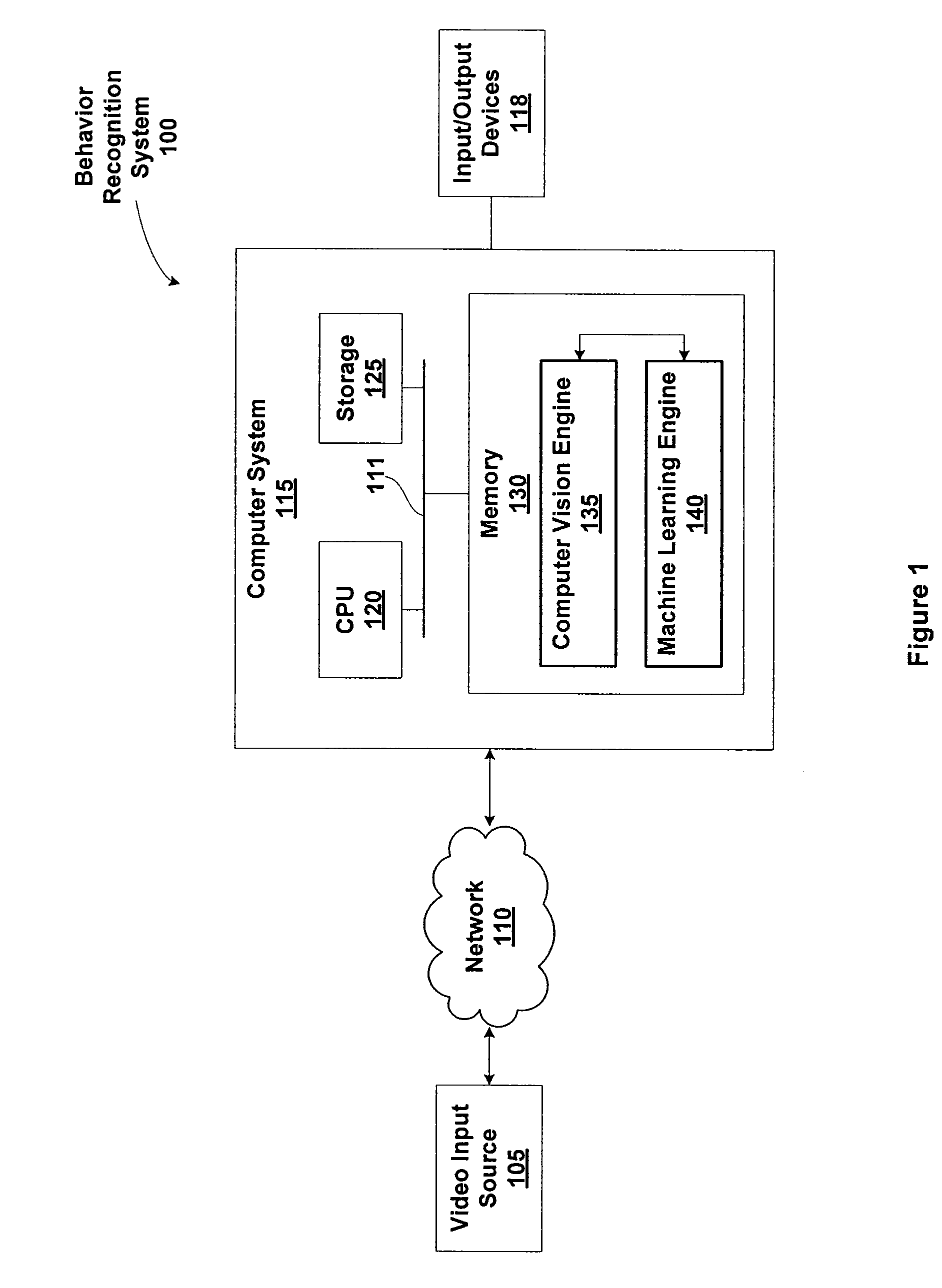

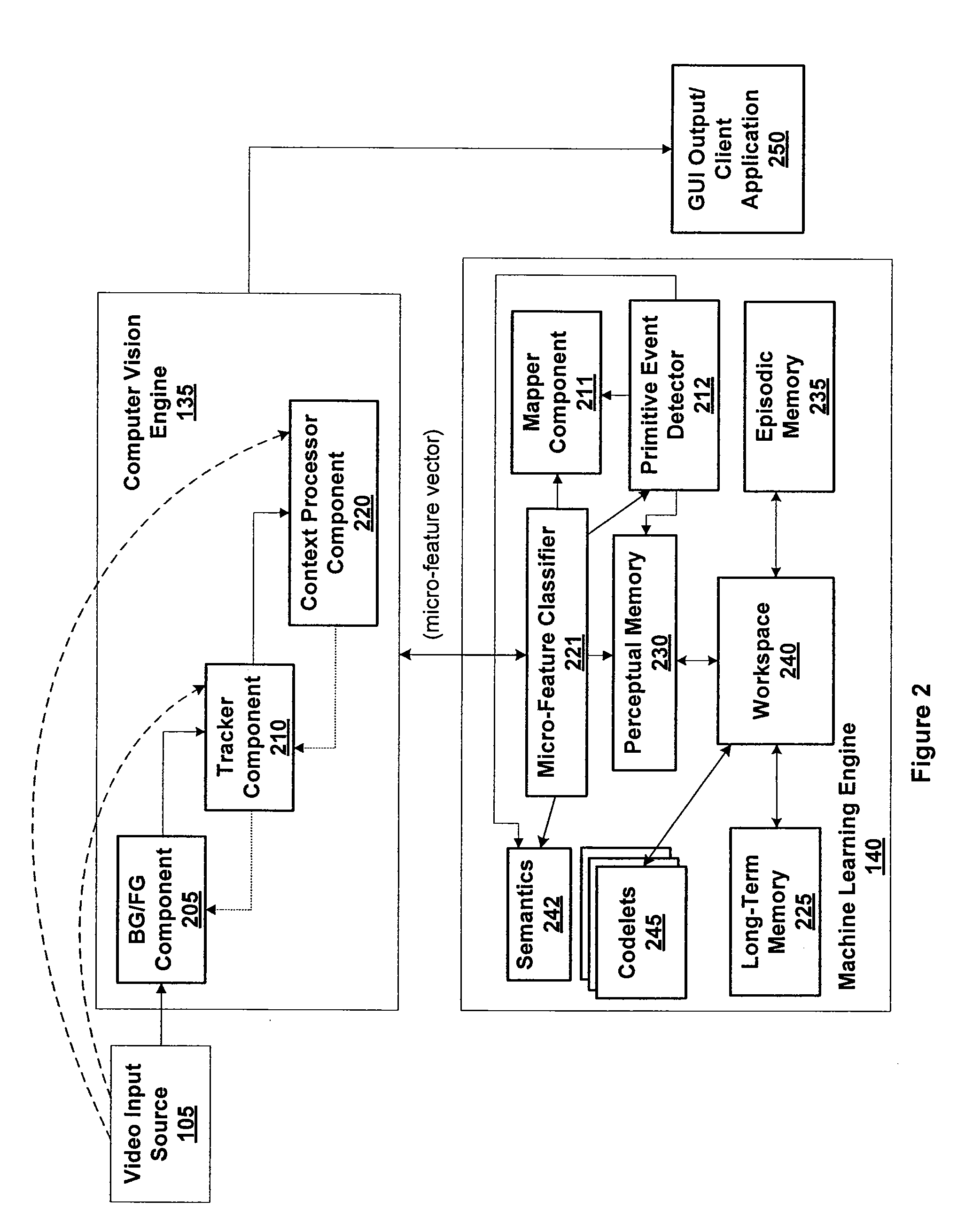

Identifying anomalous object types during classification

Active | US20110052068A1 | Character and pattern recognition | Electric/magnetic detection | Training phase | Object definition

Techniques are disclosed for identifying anomalous object types during classification of foreground objects extracted from image data. A self-organizing map and adaptive resonance theory (SOM-ART) network is used to discover object type clusters and to classify objects depicted in the image data based on pixel-level micro-features extracted from the image data. Importantly, the discovery of object type clusters is unsupervised, i.e., performed without any training data that defines particular objects. This allows a behavior-recognition system to forgo a training phase and lets object classification proceed without being constrained by specific object definitions. The SOM-ART network is adaptive and can learn while it discovers object type clusters, classifies objects, and identifies anomalous object types.

Owner:MOTOROLA SOLUTIONS INC

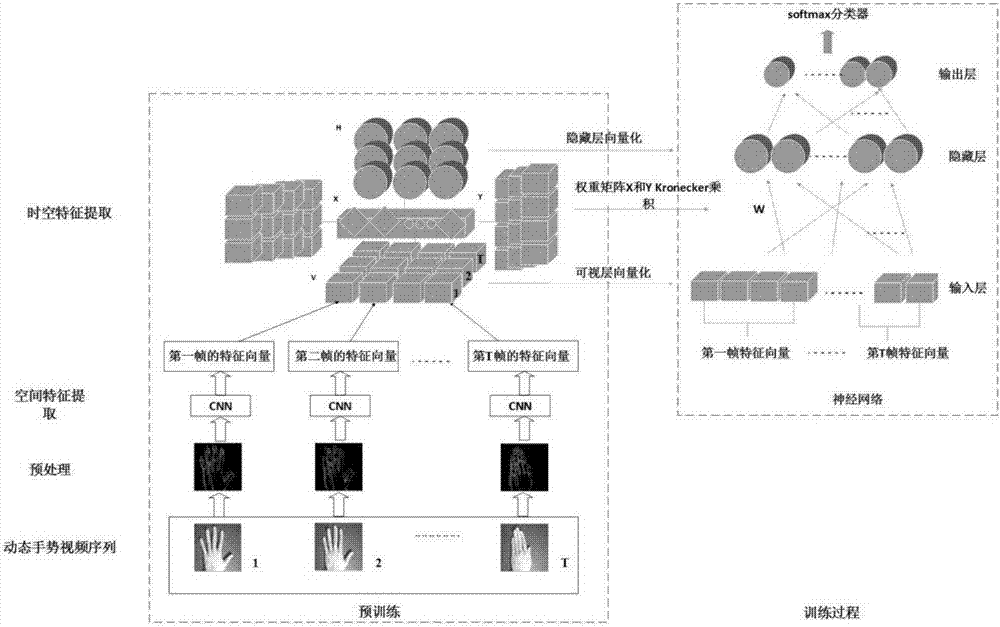

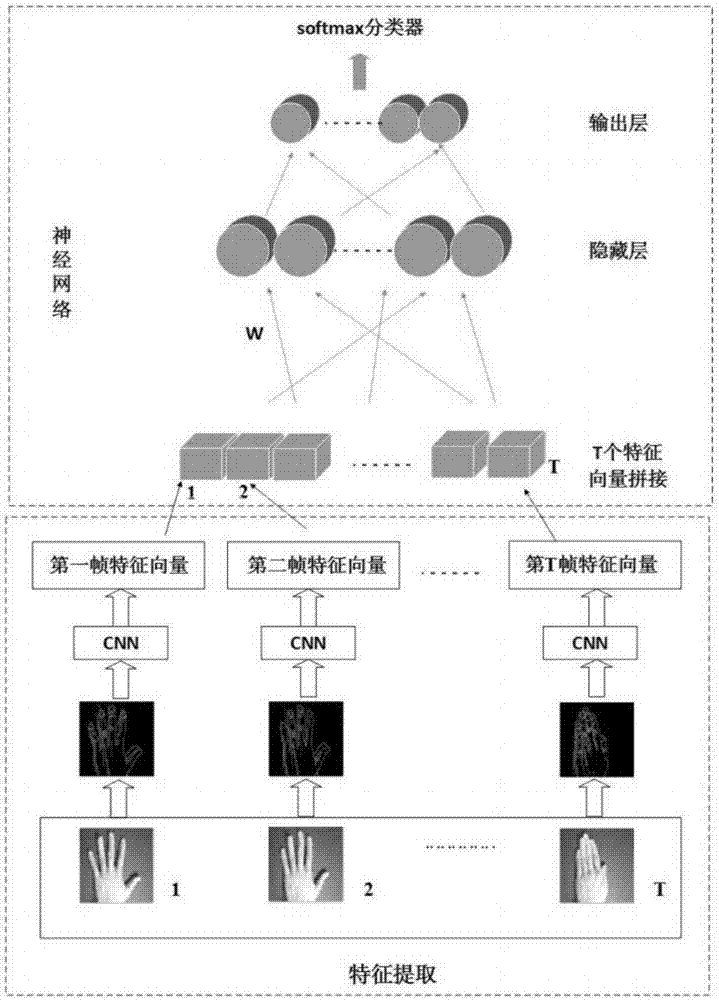

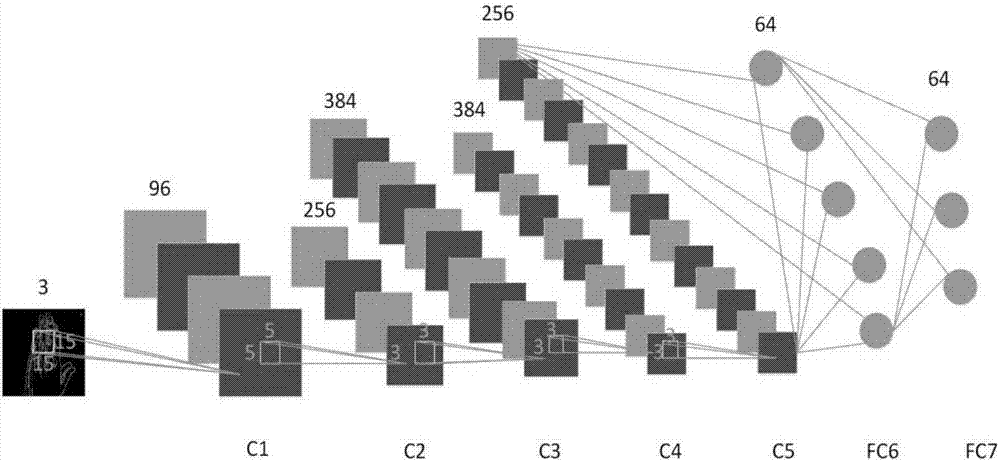

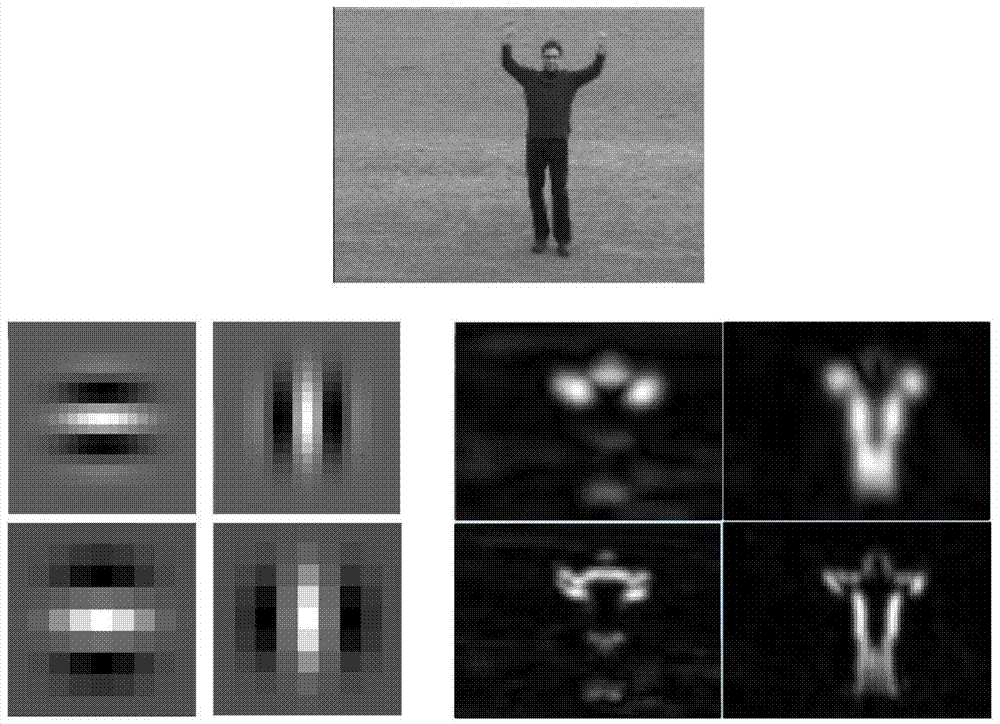

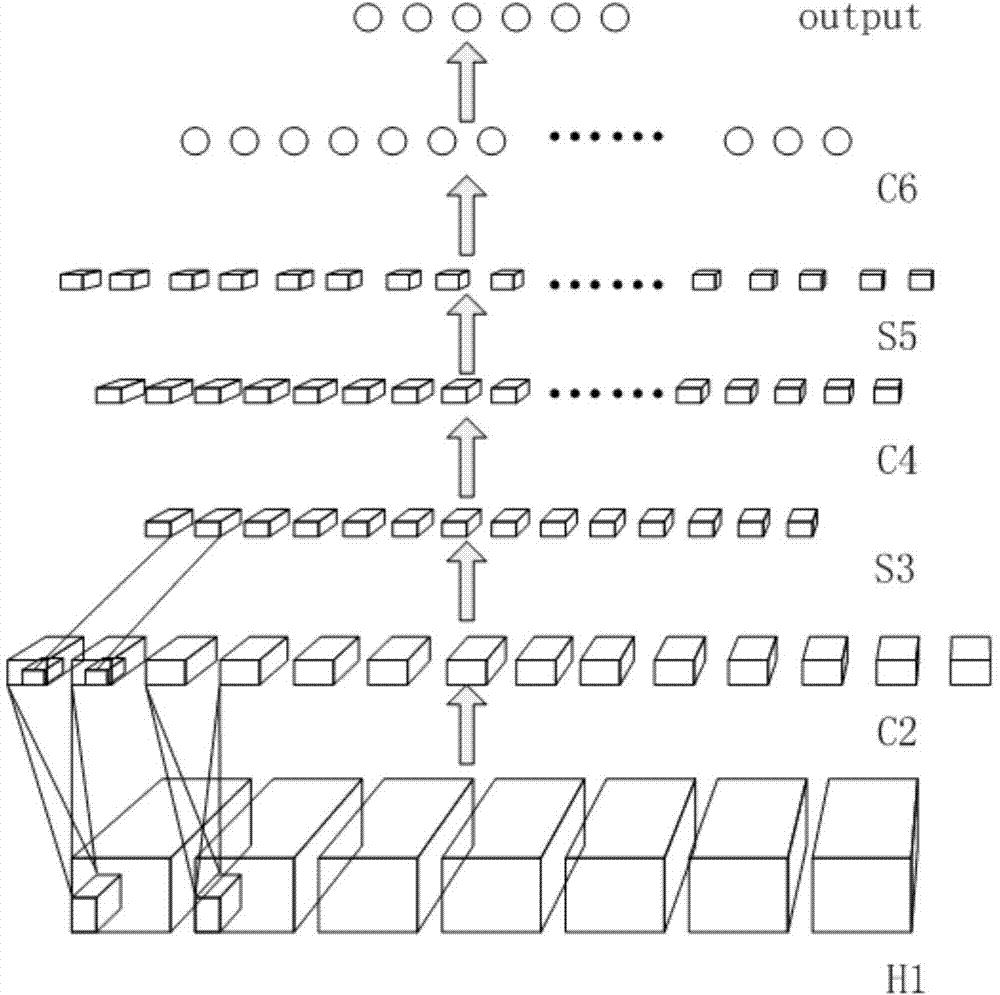

Dynamic gesture recognition method based on hybrid deep learning model

Active | CN106991372A | Achieving an efficient space-time representation | Easy to identify | Character and pattern recognition | Frame based | Model parameters

The invention discloses a dynamic gesture recognition method based on a hybrid deep learning model, comprising a training phase and a test phase. In the training phase, a CNN is first trained on the image set that makes up the gesture videos, and the trained CNN is used to extract the spatial features of each frame of a dynamic gesture video sequence. For each gesture video sequence, the frame-level features learned by the CNN are organized into a matrix in chronological order. The matrix is input to a matrix-variate restricted Boltzmann machine (MVRBM), which learns gesture action features that fuse spatial and temporal attributes. A discriminative neural network (NN) is then introduced: the MVRBM serves as a pre-training stage, the weights and biases it learns are used as the initial weights and biases of the NN, and the NN is fine-tuned by back-propagation. In the test phase, the features of each frame of the dynamic gesture video sequence are extracted frame by frame with the CNN, concatenated, and input into the trained NN for gesture recognition. The method achieves an effective spatiotemporal representation of 3D dynamic gesture video sequences.

Owner:BEIJING UNIV OF TECH

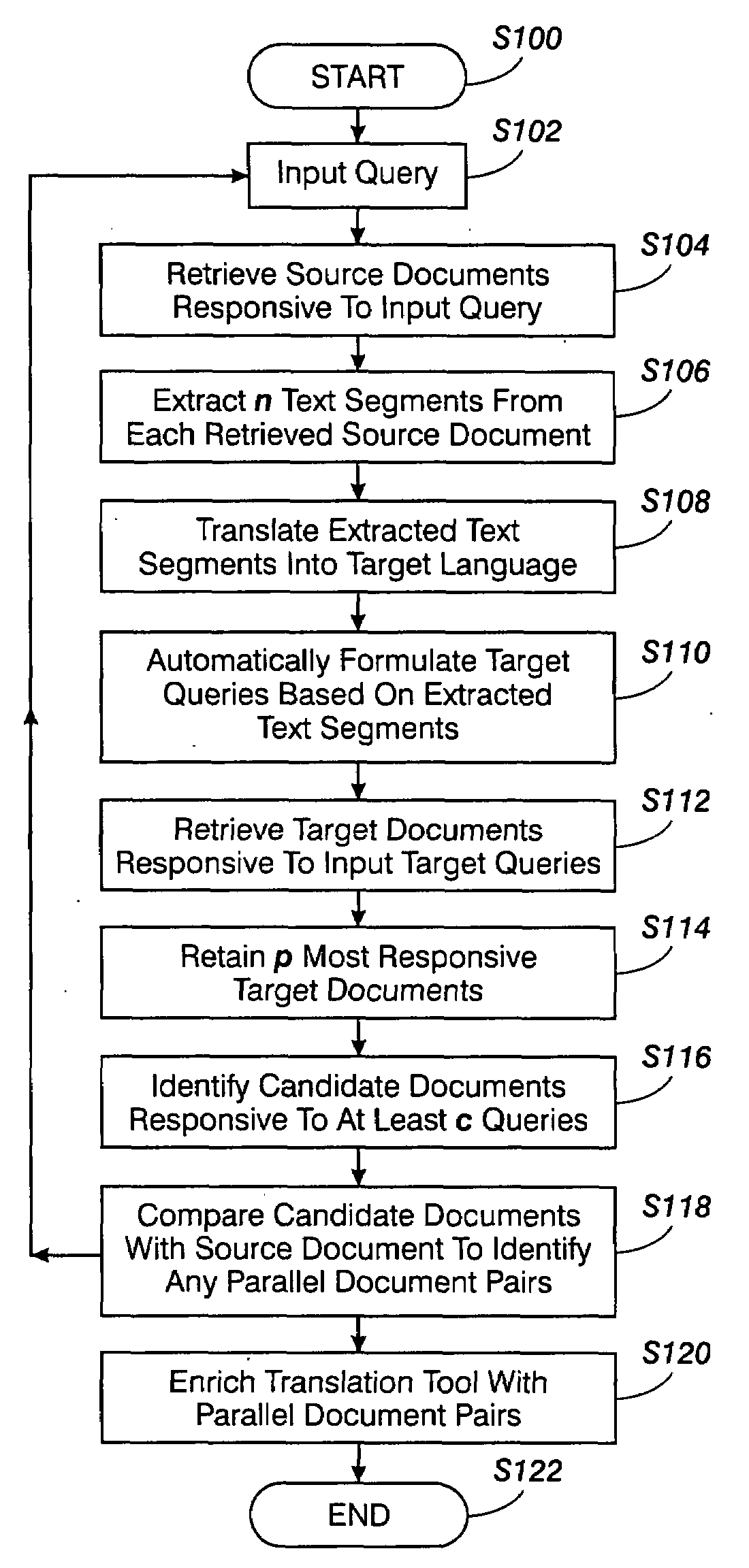

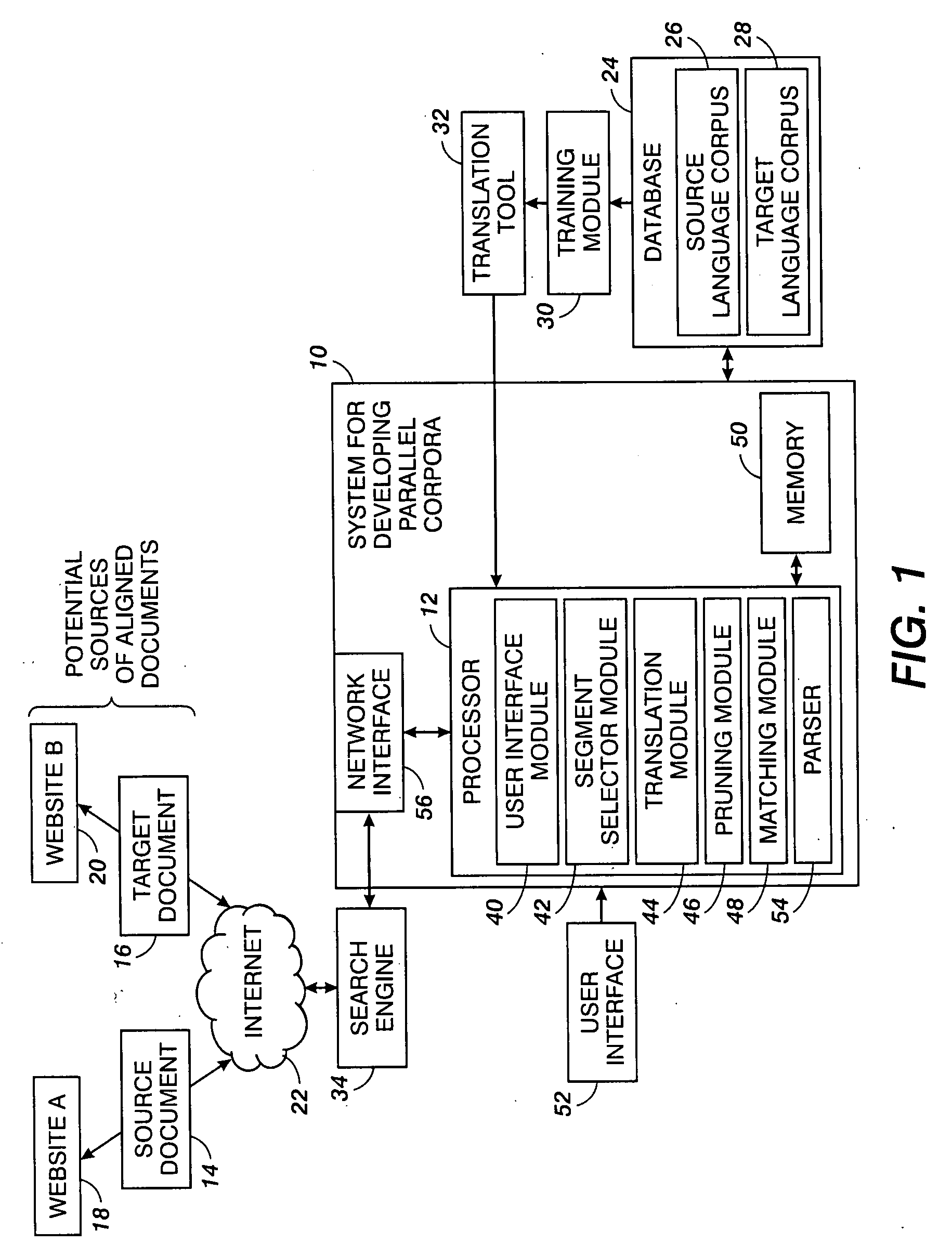

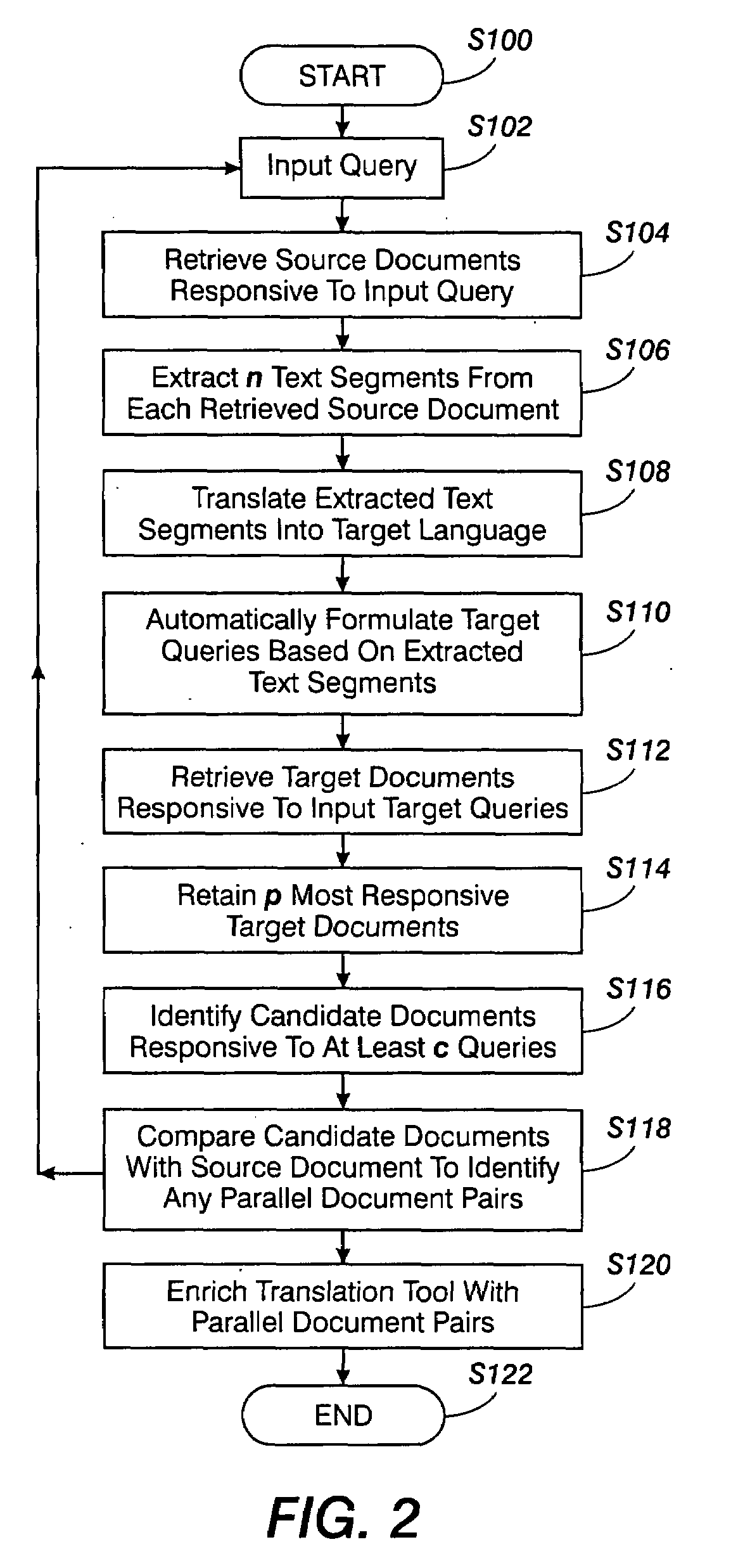

Method for building parallel corpora

Inactive | US20080262826A1 | Natural language translation | Special data processing applications | Training phase | Co-occurrence

A method for identifying documents for enriching a statistical translation tool includes retrieving a source document that is responsive to a source language query, which may be specific to a selected domain. A set of text segments is extracted from the retrieved source document and translated into corresponding target language segments with the statistical translation tool to be enriched. Target language queries are formulated from the target language segments, and the sets of target documents responsive to these queries are retrieved. The retrieved sets are filtered, which includes identifying any candidate documents that meet a selection criterion based on the co-occurrence of a document in a plurality of the sets. Any candidate documents found are compared with the retrieved source document to determine whether they match it. Matching documents can then be stored and used in turn in a training phase for enriching the translation tool.

Owner:XEROX CORP
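The co-occurrence filter in the parallel-corpus method above can be sketched as counting how many result sets each retrieved target document appears in. The `min_sets=2` criterion and the document ids are assumptions.

```python
from collections import Counter


def candidate_documents(result_sets, min_sets=2):
    """Keep documents retrieved by at least min_sets of the target-language queries."""
    counts = Counter(doc for results in result_sets for doc in set(results))
    return sorted(doc for doc, n in counts.items() if n >= min_sets)


# Hypothetical document ids returned by three target-language queries.
results = [["d1", "d2"], ["d2", "d3"], ["d2", "d1", "d1"]]
print(candidate_documents(results))  # ['d1', 'd2']
```

Converting each result list to a set first means a document retrieved twice by the same query still counts as appearing in only one set.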

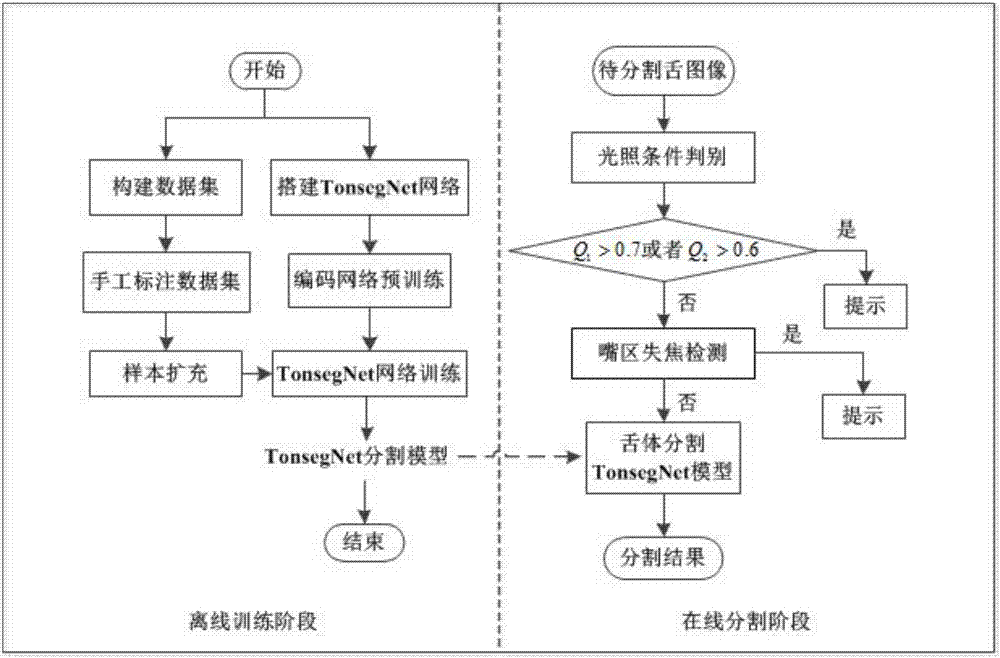

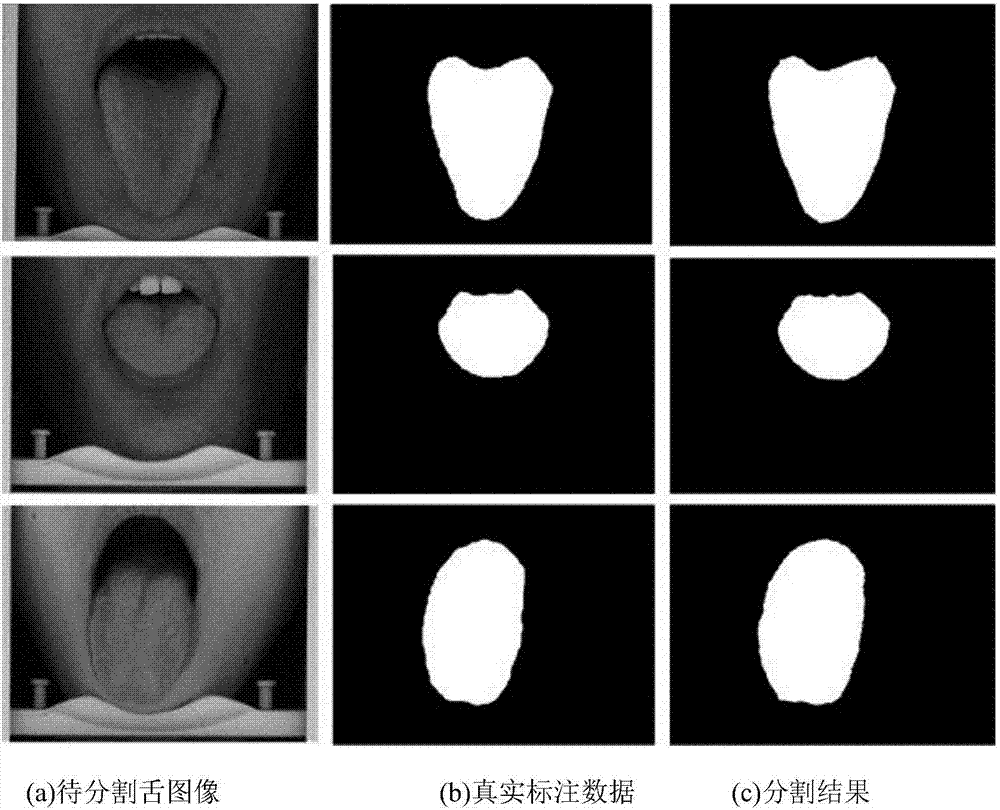

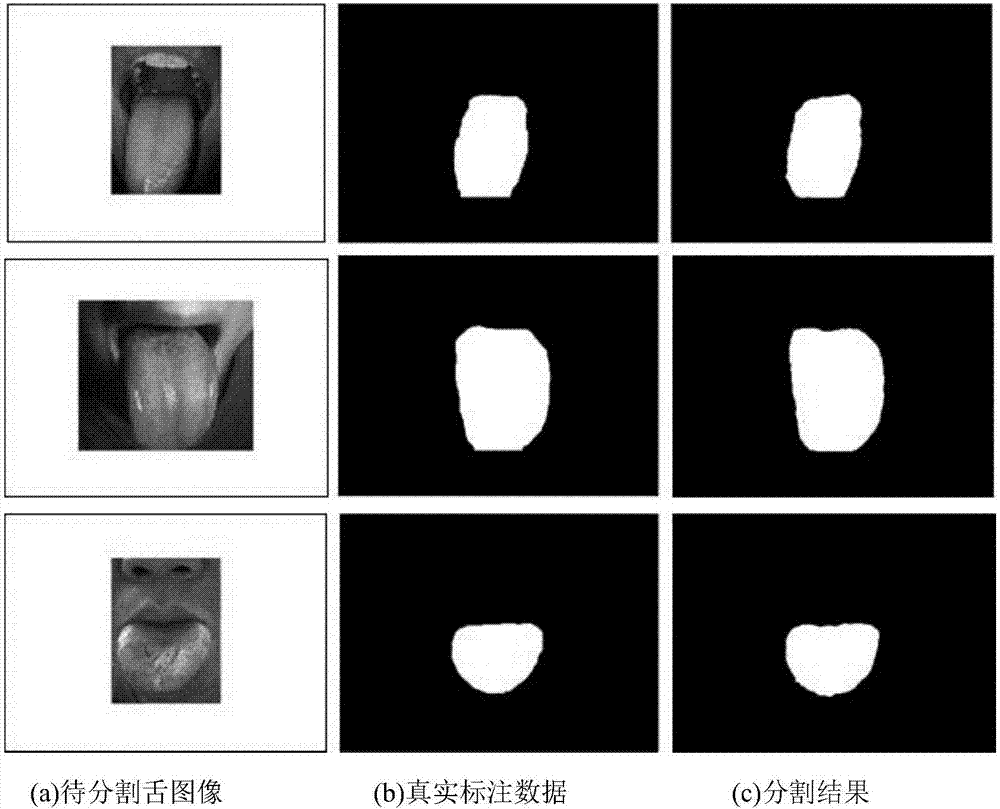

Deep convolutional neural network-based traditional Chinese medicine tongue image automatic segmentation method

Active | CN107316307A | High precision | Meet practical application needs | Image analysis | Neural architectures | Automatic segmentation | Training phase

The invention relates to a method for automatically segmenting traditional Chinese medicine (TCM) tongue images based on a deep convolutional neural network, and belongs to the fields of computer vision and TCM tongue diagnosis. A convolutional neural network structure is designed, the network is trained on collected sample data to obtain a network model, and the model is used to segment TCM tongue images automatically. The method comprises an offline training phase and an online segmentation phase. It can be applied in both closed and open tongue image acquisition environments and can effectively improve the accuracy and robustness of automatic TCM tongue image segmentation. The method draws specifically on deep learning, semantic segmentation, image processing and related technologies.

Owner:BEIJING UNIV OF TECH

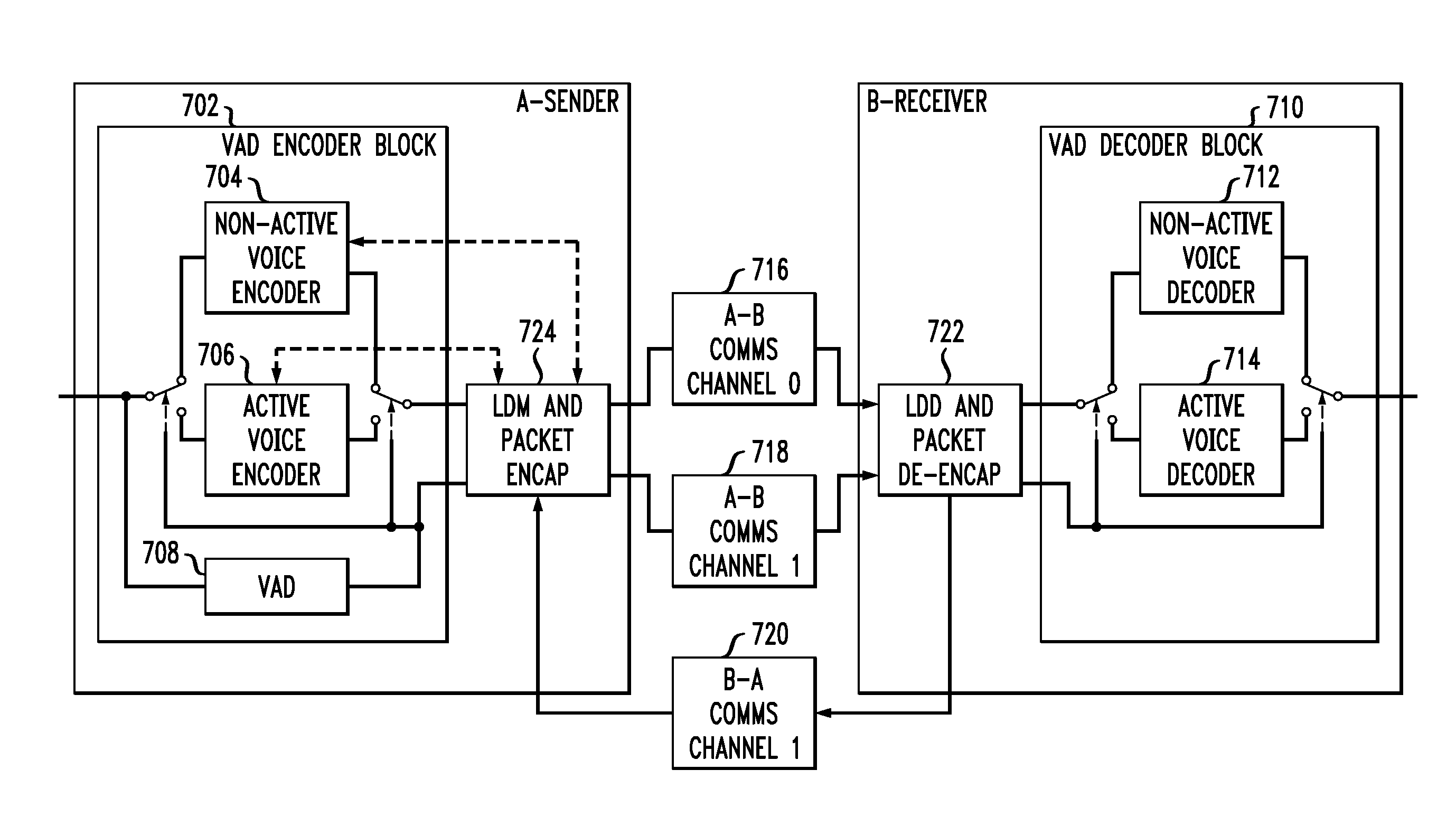

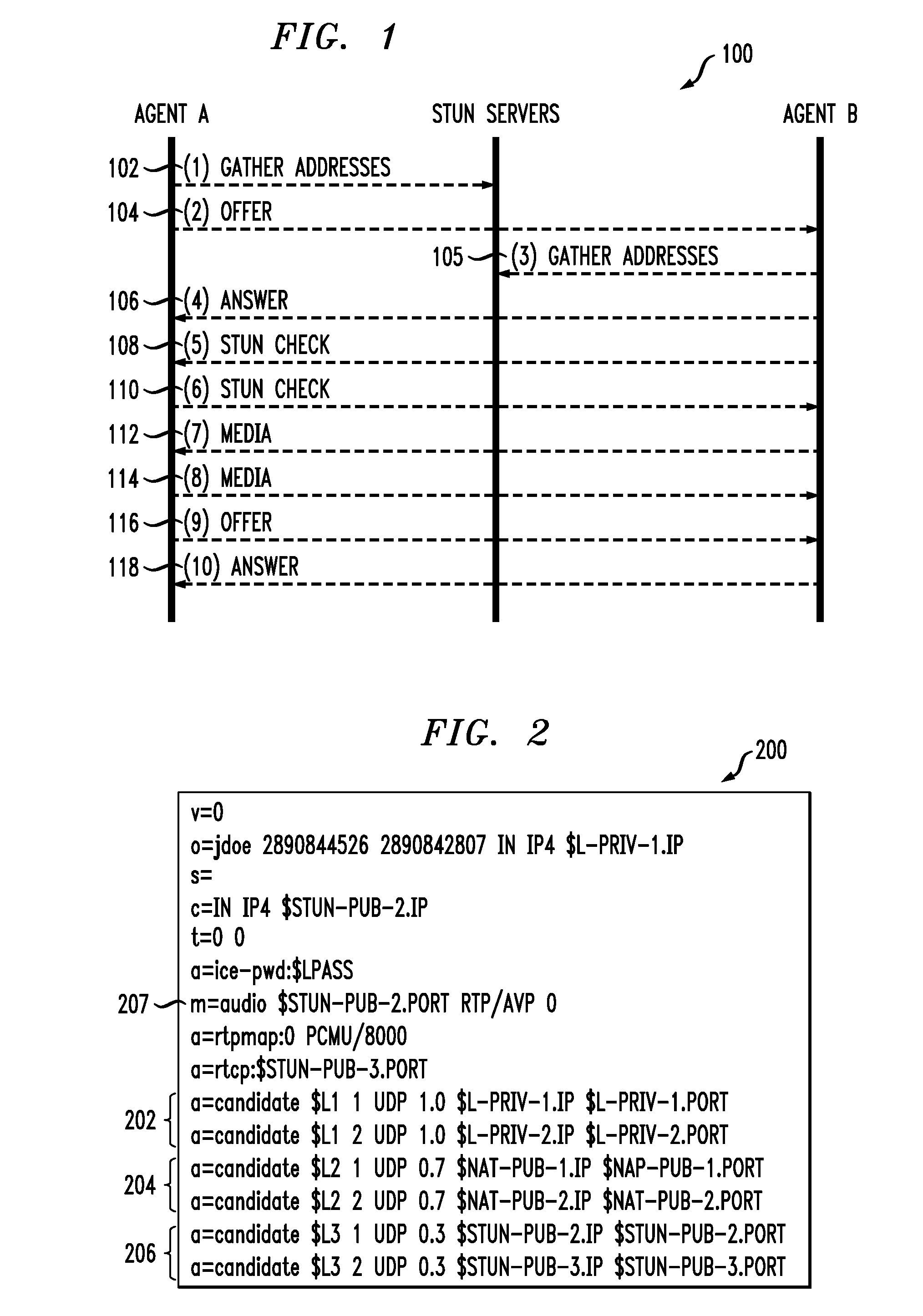

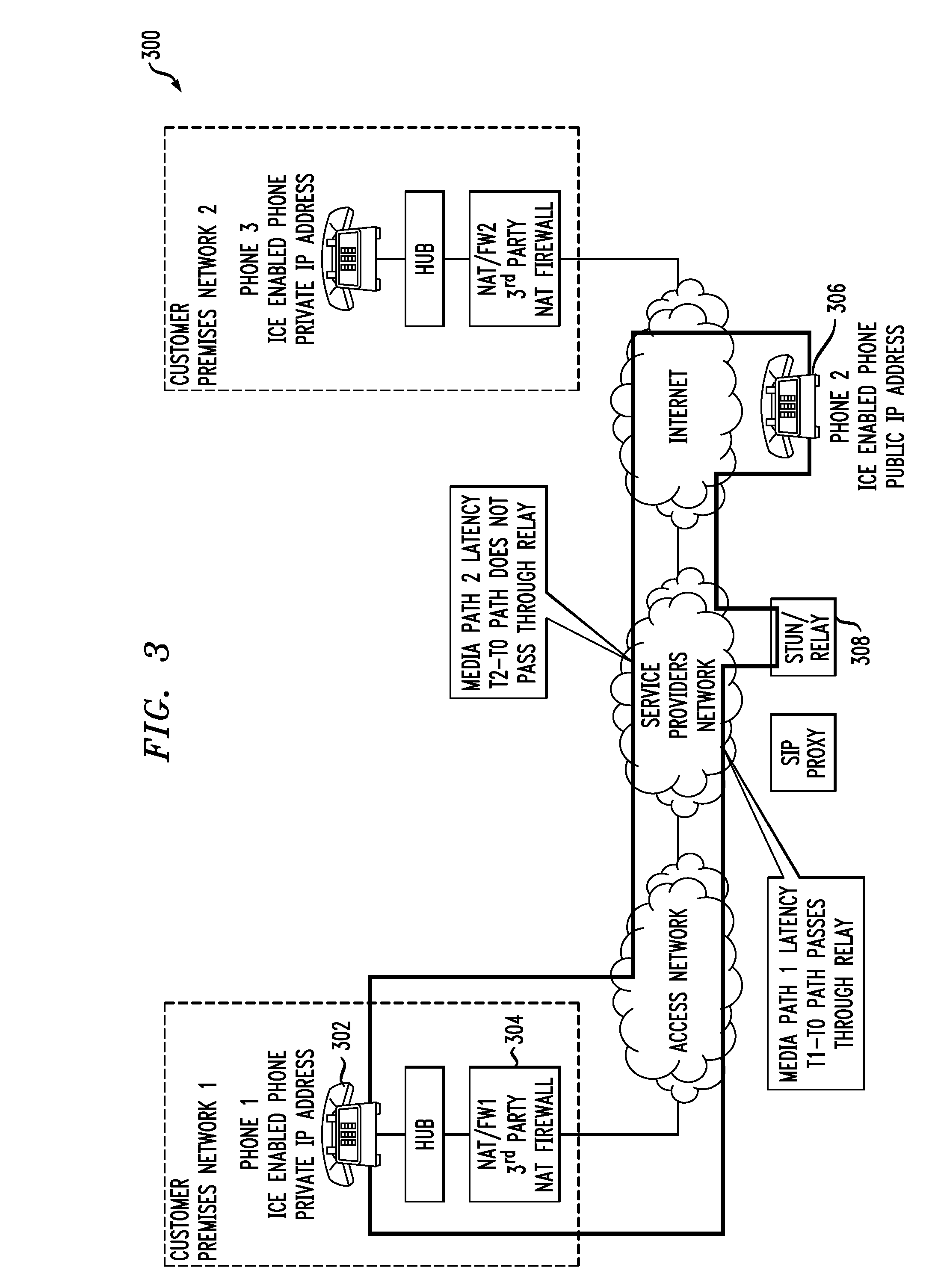

Latency differential mitigation for real time data streams

Active | US20080080568A1 | Reduce the impact | Improve service quality | Time-division multiplex | Transmission | Training phase | Real-time data

Techniques for mitigating effects of differing latencies associated with real time data streams in multimedia communication networks. For example, a technique for mitigating a latency differential between a first media path and a second media path, over which a first device and a second device are able to communicate, includes the following steps. A training phase is performed to determine a latency differential between the first media path and the second media path. Prior to the first device switching a media stream, being communicated to the second device, from the first media path to the second media path, the first device synchronizes the media stream based on the determined latency differential such that a latency associated with the switched media stream is made to be substantially consistent with a latency of the second media path.

Owner:AVAYA ECS
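One plausible reading of the synchronization step in the latency-mitigation abstract above, sketched under assumptions: the training phase estimates each path's one-way latency as half the median probe round-trip time, and the sender holds the stream for the latency differential when switching to a faster path. The function name and probe values are hypothetical.

```python
from statistics import median


def hold_time_ms(rtt_old_ms, rtt_new_ms):
    """How long to hold the stream before switching paths.

    Training phase: one-way latency on each path is approximated as half the
    median probe round-trip time. If the new path is faster, delaying the
    stream by the difference keeps its packets from overtaking packets still
    in flight on the old path; if it is slower, no hold is applied.
    """
    differential = median(rtt_old_ms) / 2 - median(rtt_new_ms) / 2
    return max(0.0, differential)


print(hold_time_ms([80, 82, 78], [40, 42, 38]))  # 20.0
```

The median is used instead of the mean so that a single delayed probe during the training phase does not skew the estimate.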

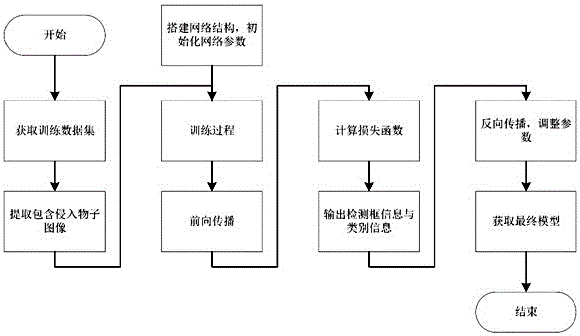

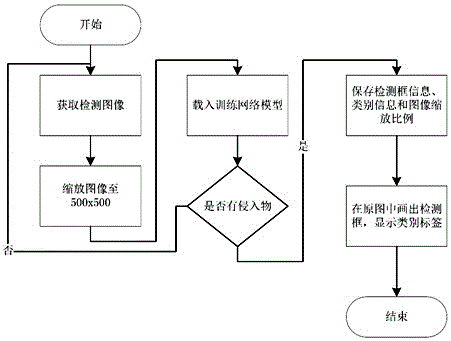

Common invader object detection and identification method of power transmission corridor based on deep learning

Inactive | CN106778472A | Improve efficiency | Easy to judge | Character and pattern recognition | Foreign matter | Training phase

The invention provides a deep-learning-based method for detecting and identifying common intruding objects in a power transmission corridor. In a training phase, a deep learning method learns from pictures of foreign-object intrusions collected by a video collector, producing the required network model. In the usage phase, pictures obtained from actual monitoring are fed into the network model to detect and identify intruders. Different types of intruders can thus be detected and identified with high accuracy and robustness at a relatively high processing speed, providing a reliable safety guarantee for power transmission lines.

Owner:CHENGDU TOPPLUSVISION TECH CO LTD

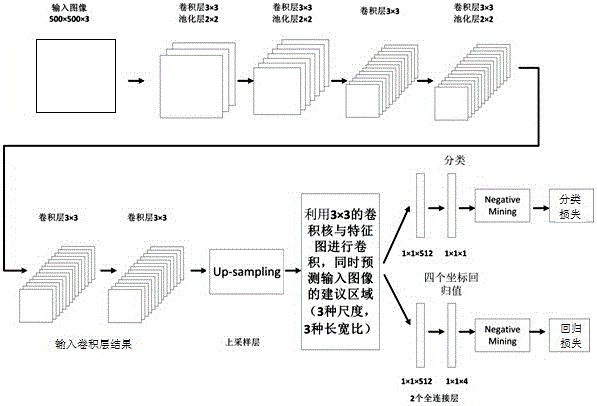

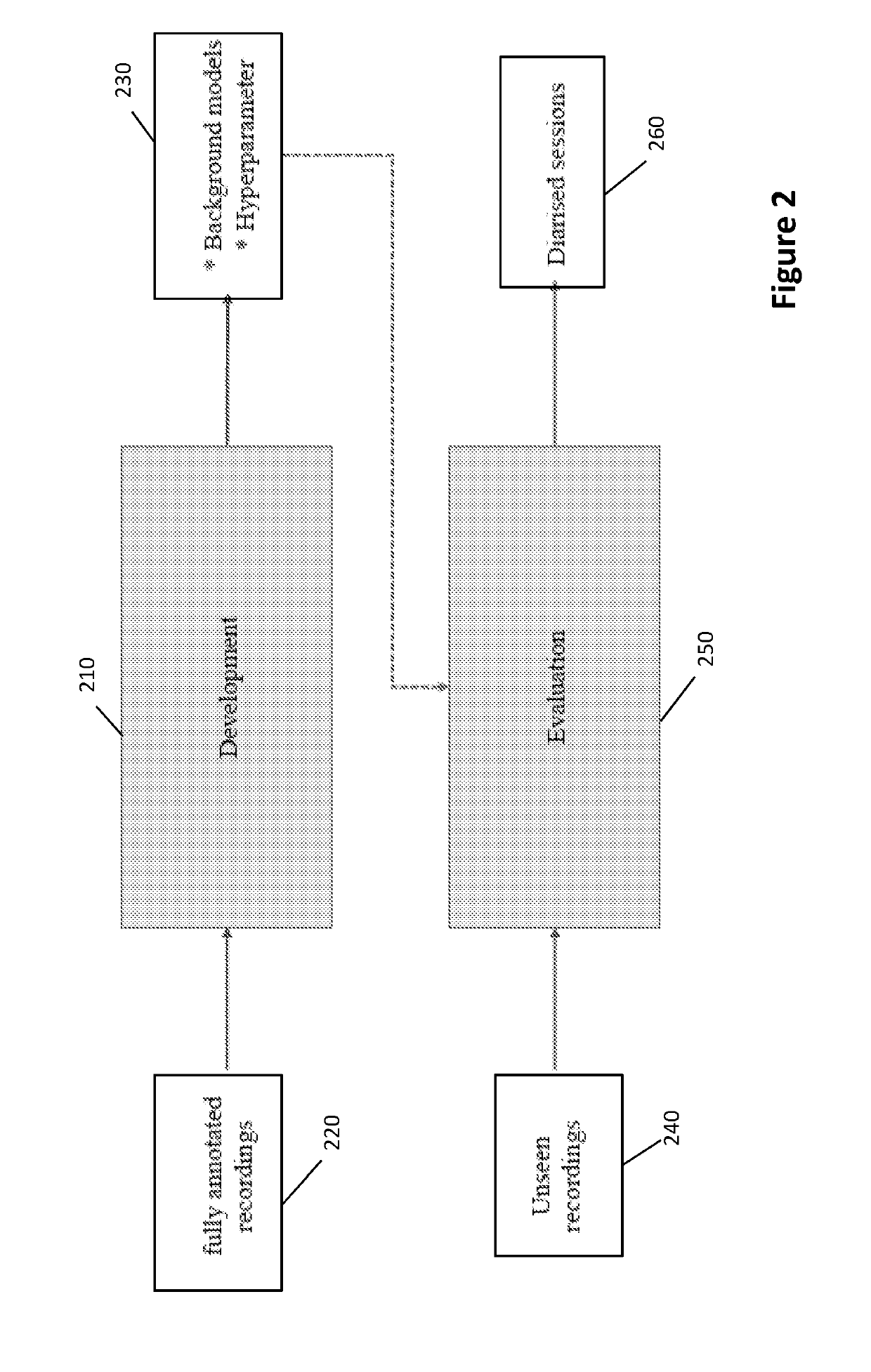

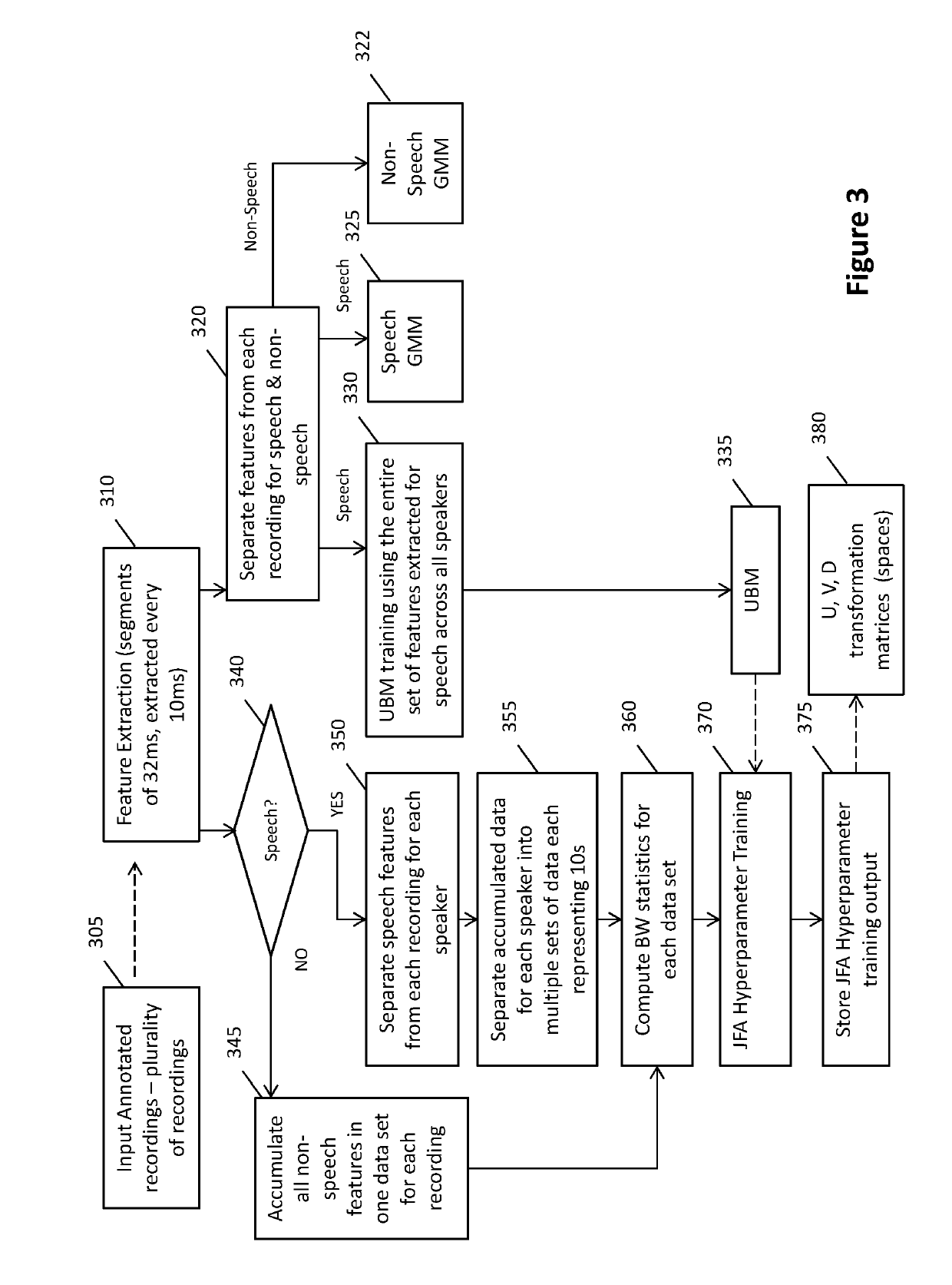

Method and system for automatically diarising a sound recording

Embodiments of the present invention provide methods and systems for performing automatic diarisation of sound recordings containing speech from one or more speakers. The automatic diarisation has a development or training phase and a utilisation or evaluation phase. In the development or training phase, background models and hyperparameters are generated from already annotated sound recordings. These models and hyperparameters are then applied during the evaluation or utilisation phase to diarise new recordings, or recordings that have not previously been diarised or annotated.

Owner:FTR LABS PTY LTD

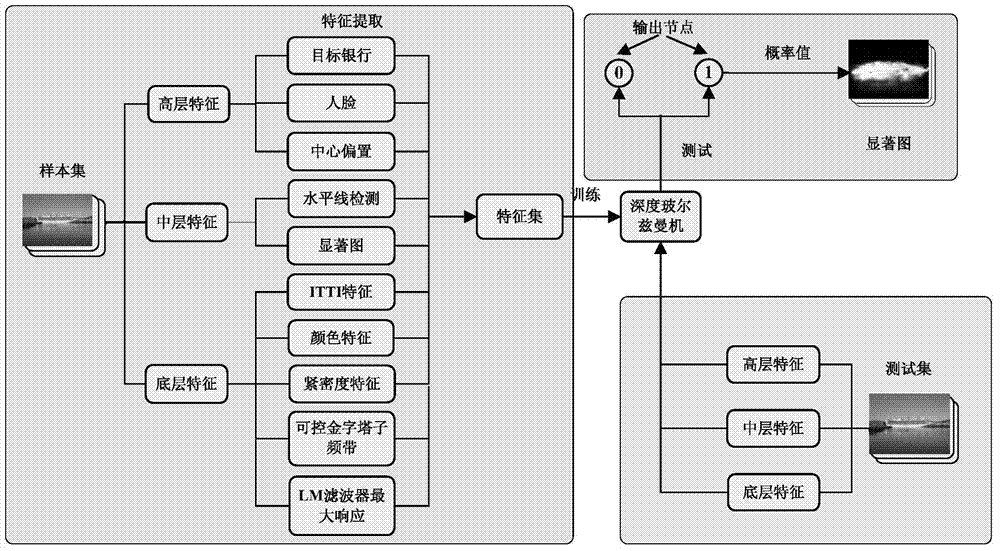

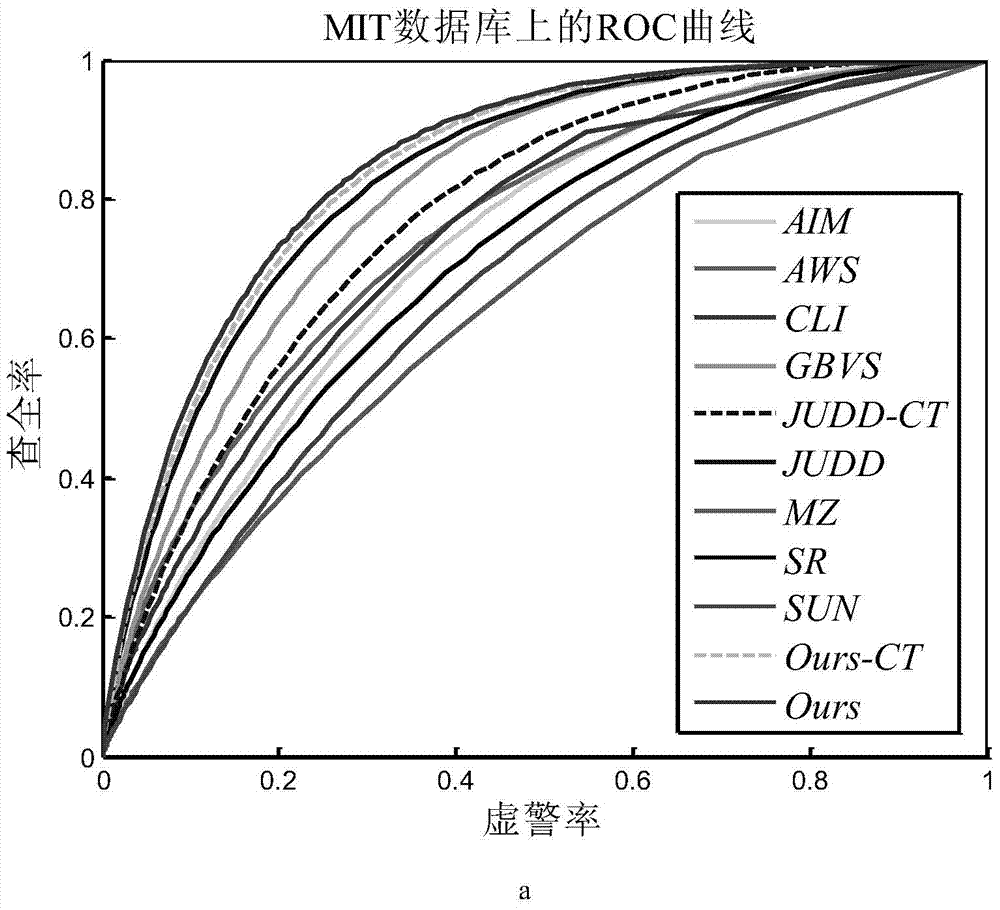

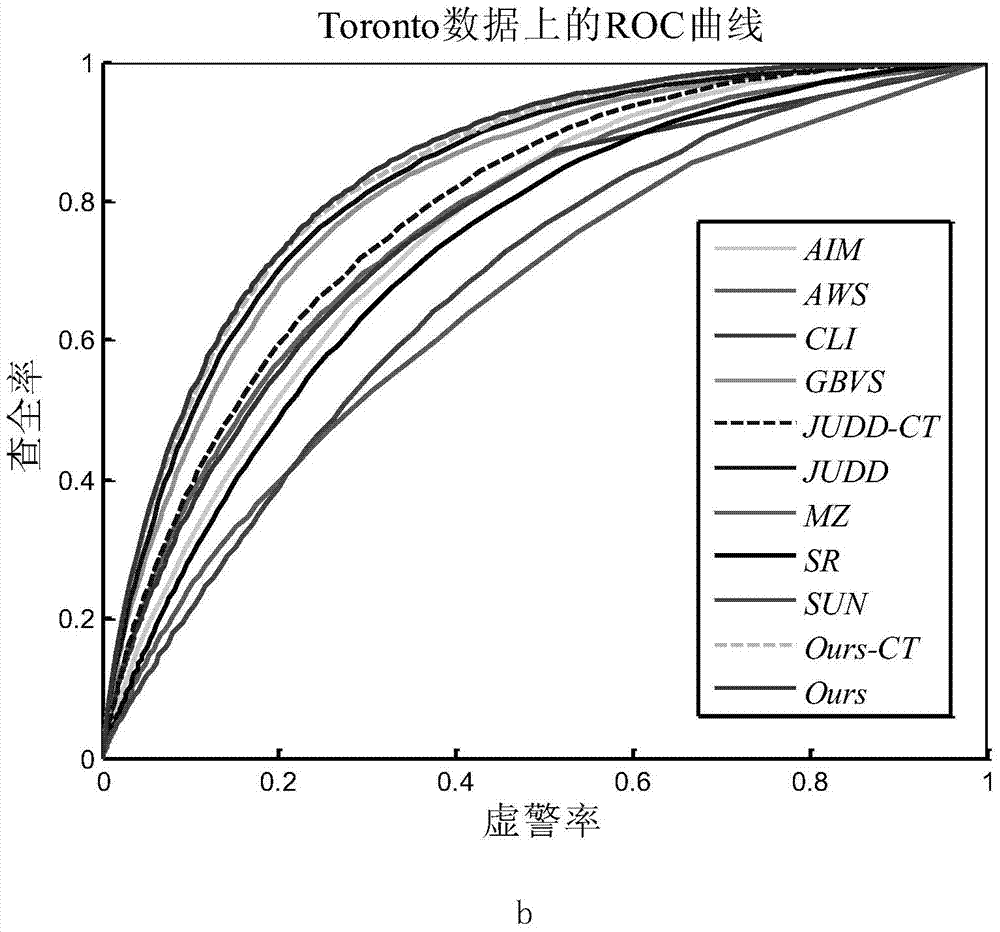

Deep learning based method for detecting salient regions in natural images

Active | CN103810503A | Improve the ability to distinguish | Improve robustness | Character and pattern recognition | Training phase | Test phase

The invention relates to a deep learning method for detecting salient regions in a natural image. During a training phase, a number of pictures are selected from a natural image database and their basic features are extracted to form training samples. The extracted features are then learned by a deep learning model to obtain enhanced high-level features that are more abstract and more discriminative, and finally a classifier is trained on the learned features. During a testing phase, the basic features of a test image are first extracted, the enhanced high-level features are then extracted with the trained deep model, and finally saliency is predicted with the classifier, the predicted value of each pixel serving as that pixel's saliency value. In this way a saliency map of the whole image is obtained; the higher a pixel's saliency value, the more salient that pixel is.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

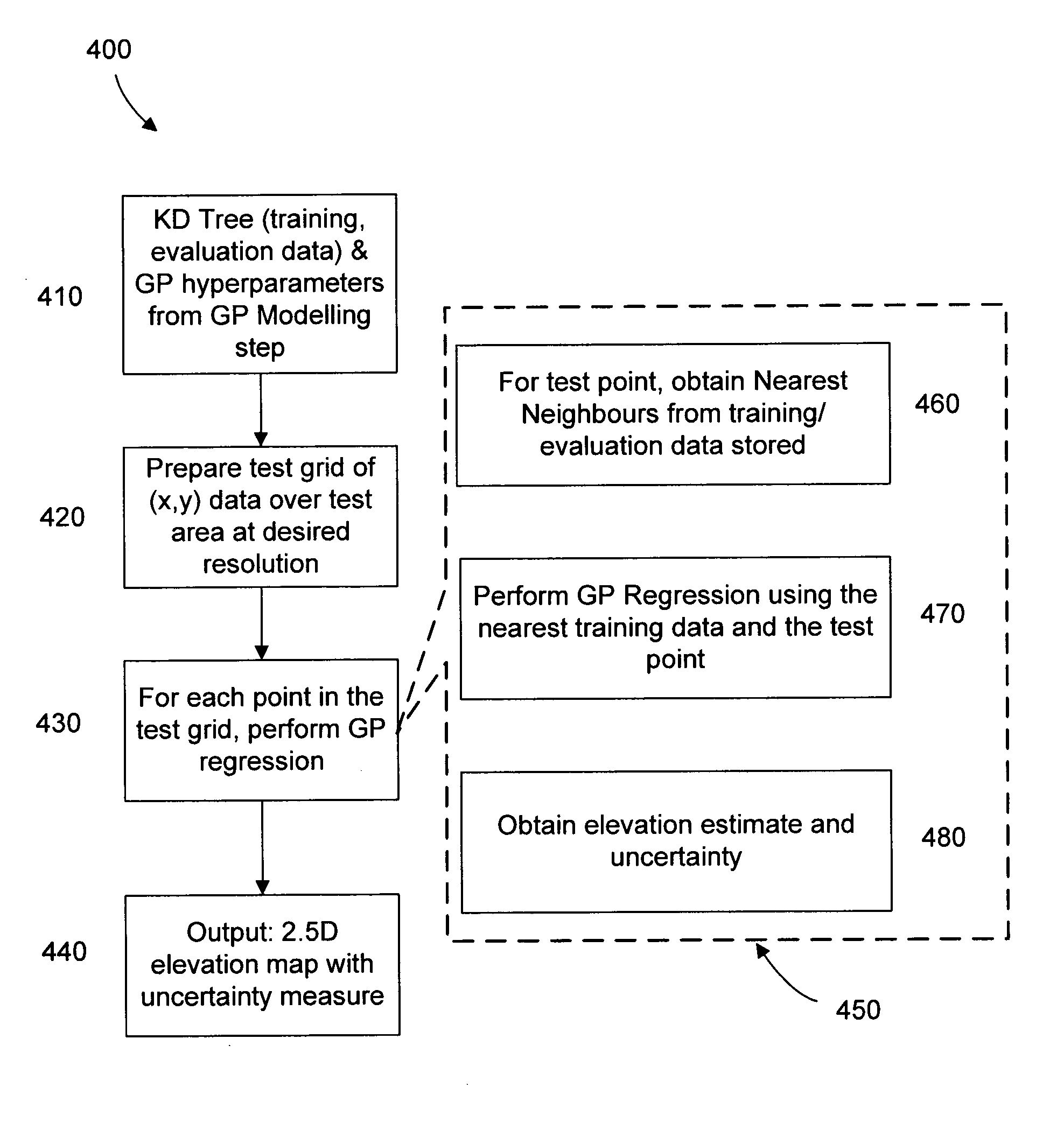

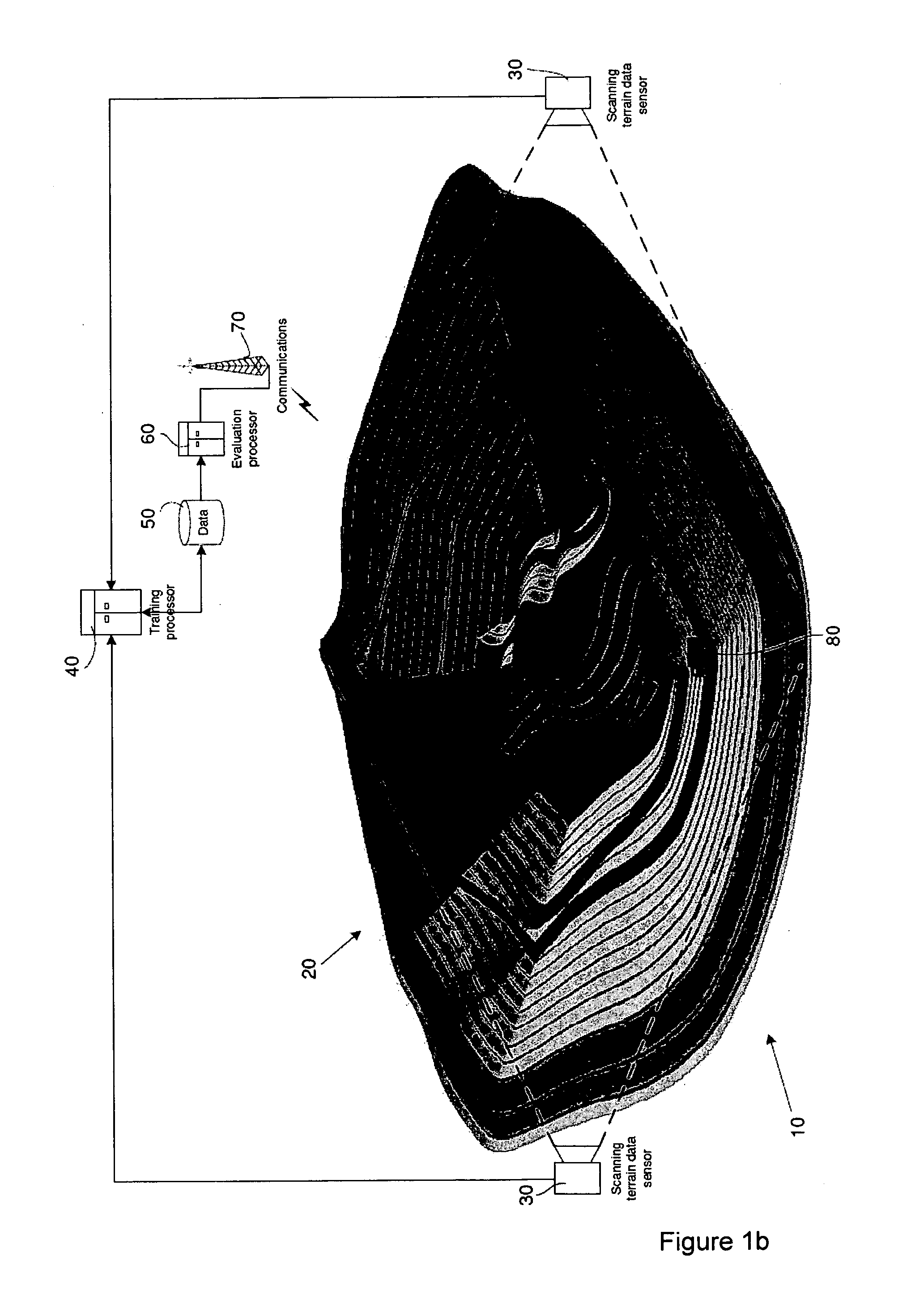

Method and system of data modelling

Inactive | US20110257949A1 | Kernel methods | Computation using non-denominational number representation | Data set | Training phase

A method for modelling a dataset includes a training phase, wherein the dataset is applied to a non-stationary Gaussian process kernel in order to optimize the values of a set of hyperparameters associated with the Gaussian process kernel, and an evaluation phase in which the dataset and Gaussian process kernel with optimized hyperparameters are used to generate model data. The evaluation phase includes a nearest neighbour selection step. The method may include generating a model at a selected resolution.

Owner:THE UNIV OF SYDNEY

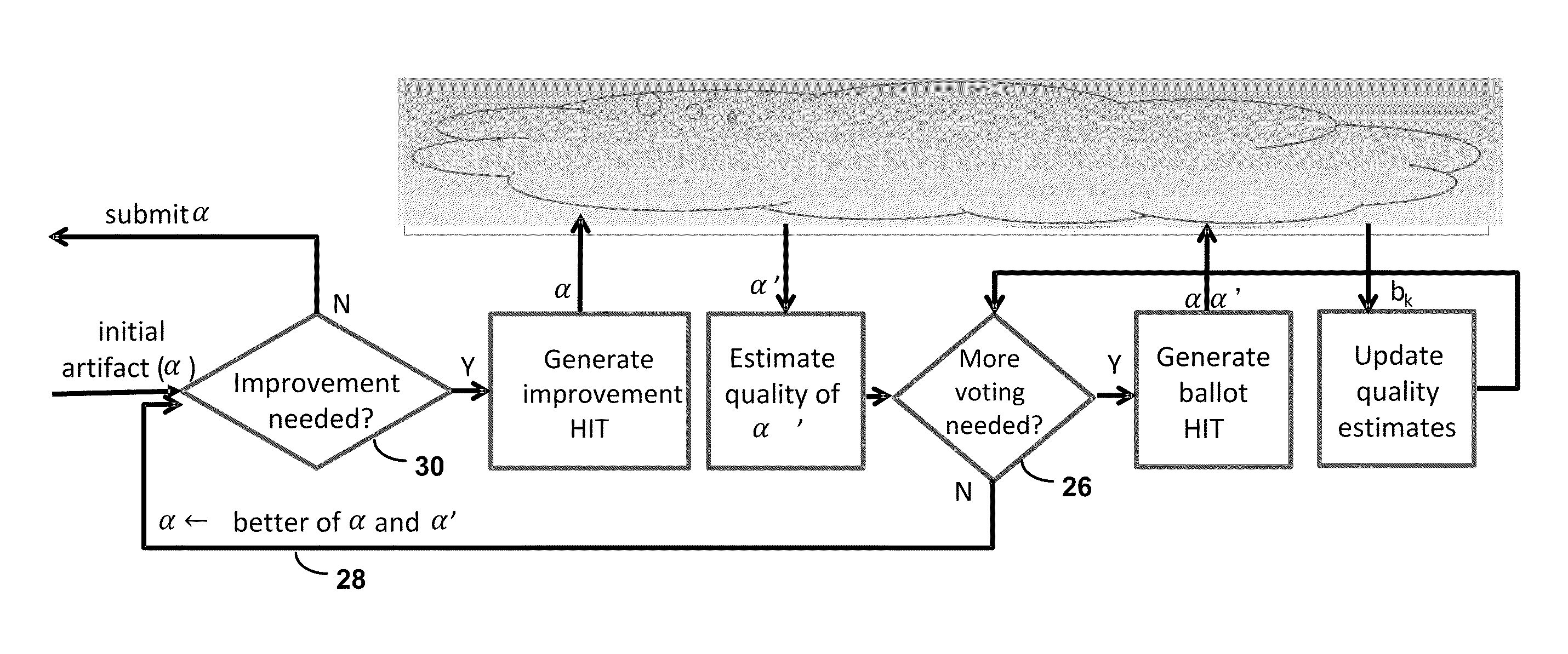

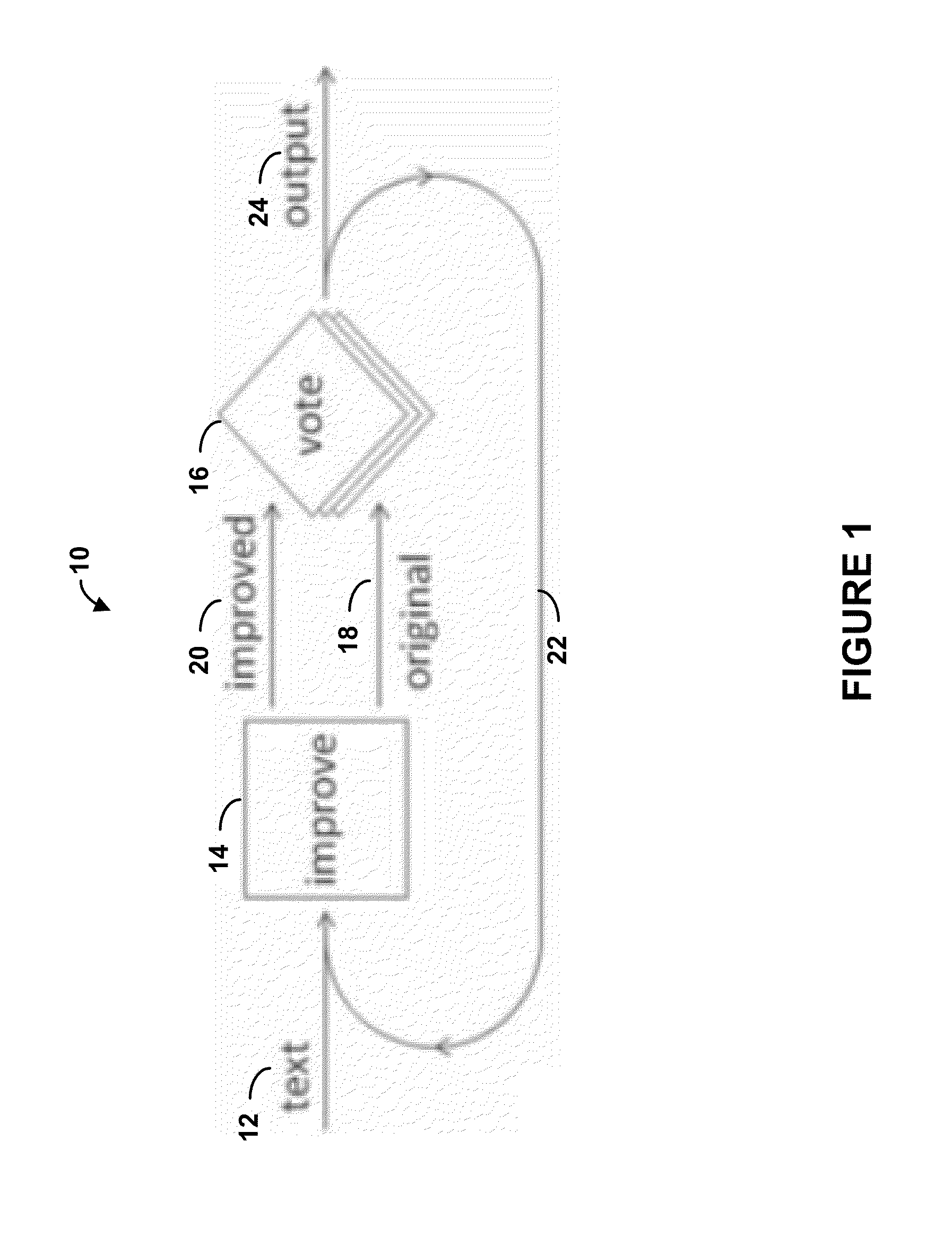

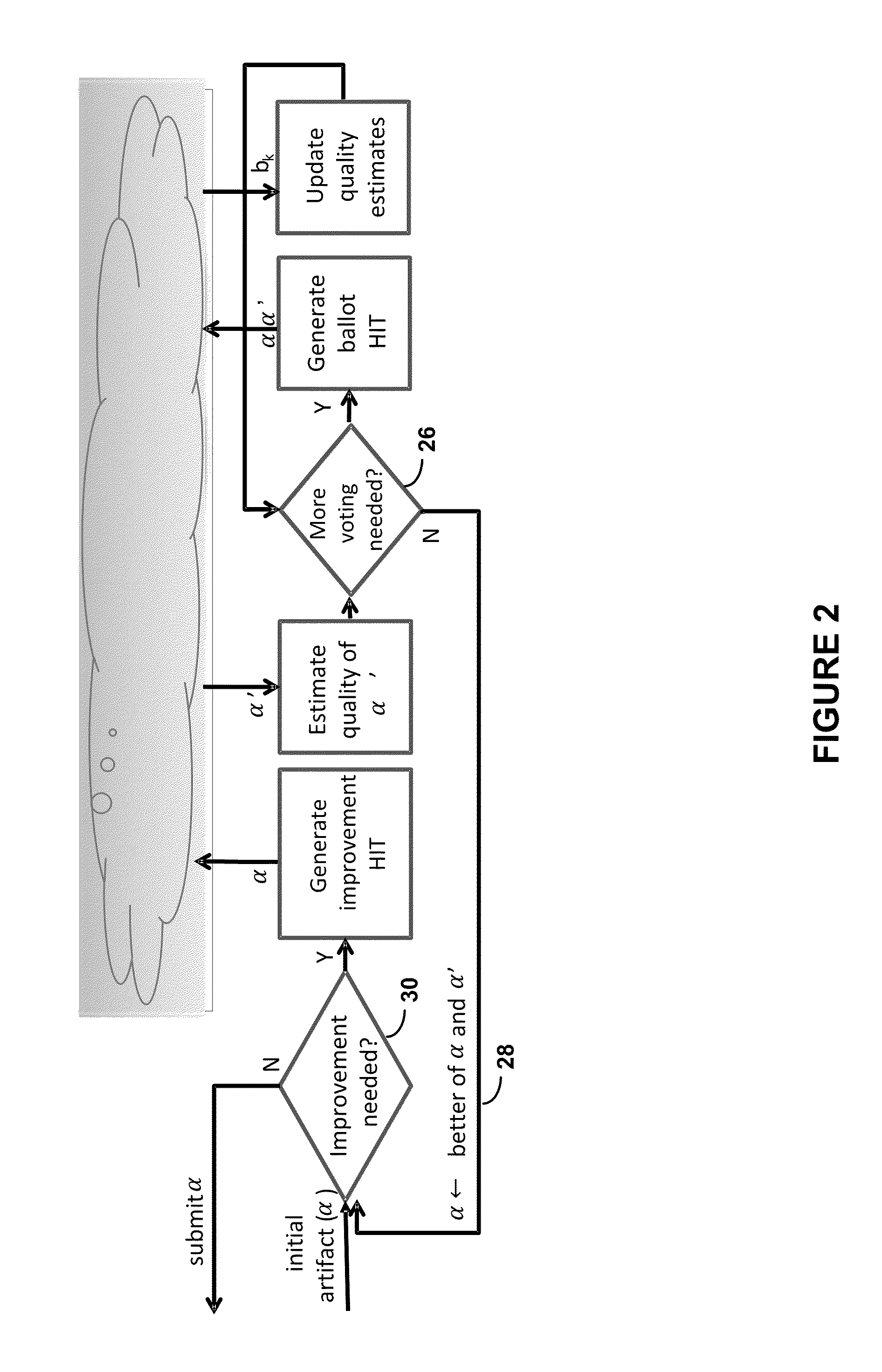

Decision-Theoretic Control of Crowd-Sourced Workflows

Systems and methods for the decision-theoretic control and optimization of crowd-sourced workflows use a computing device to map a workflow for completing a directive. The directive includes a utility function, and the workflow comprises an ordered task set. Decision points precede and follow each task in the task set, and each decision point may require (a) posting a call for workers to complete instances of tasks in the task set; (b) adjusting parameters of tasks in the task set; or (c) submitting an artifact generated by a worker as output. The computing device accesses a plurality of workers whose capability parameters describe their respective abilities to complete tasks. It implements the workflow by optimizing and/or selecting user-preferred choices at decision points according to the utility function, and submits an artifact as output. The computing device may also implement a training phase to ascertain worker capability parameters.

Owner:UNIV OF WASHINGTON CENT FOR COMMERICIALIZATION

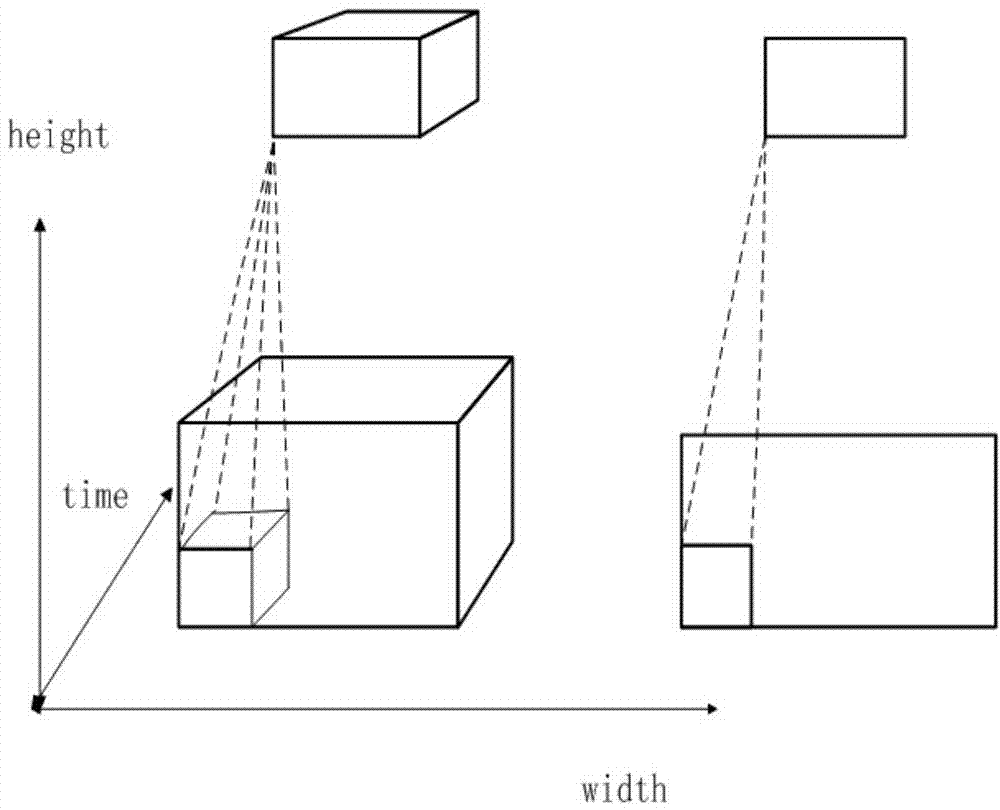

Behavior identification method based on 3D convolutional neural network

Inactive | CN104281853A | Prevent overfitting | Reduce complexity | Biological neural network models | Character and pattern recognition | Training phase | Video processing

The invention discloses a behavior identification method based on a 3D convolutional neural network, relating to machine learning, feature matching, pattern recognition and video image processing. The method is divided into an offline training phase and an online identification phase. In the offline training phase, sample videos of various behaviors are input and outputs are computed, each output corresponding to one type of behavior. The parameters of the computation are adjusted according to the error between the output vector and the label vector so as to reduce the output errors, and once the errors meet the requirements, each output is labeled with the behavior name of its corresponding sample video. In the online identification phase, videos requiring behavior identification are input and processed in the same way as in the training phase to obtain outputs; each output is matched against the labeled sample vectors, and the label of the best-matching sample vector is taken as the behavior name of the input video. The method has low complexity, a small computational load, good real-time performance and high accuracy.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

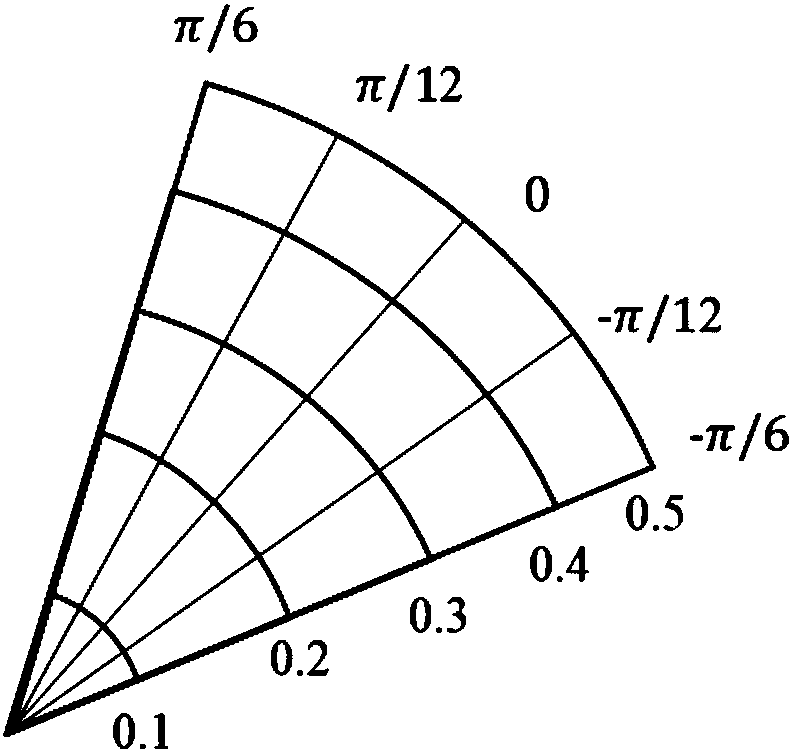

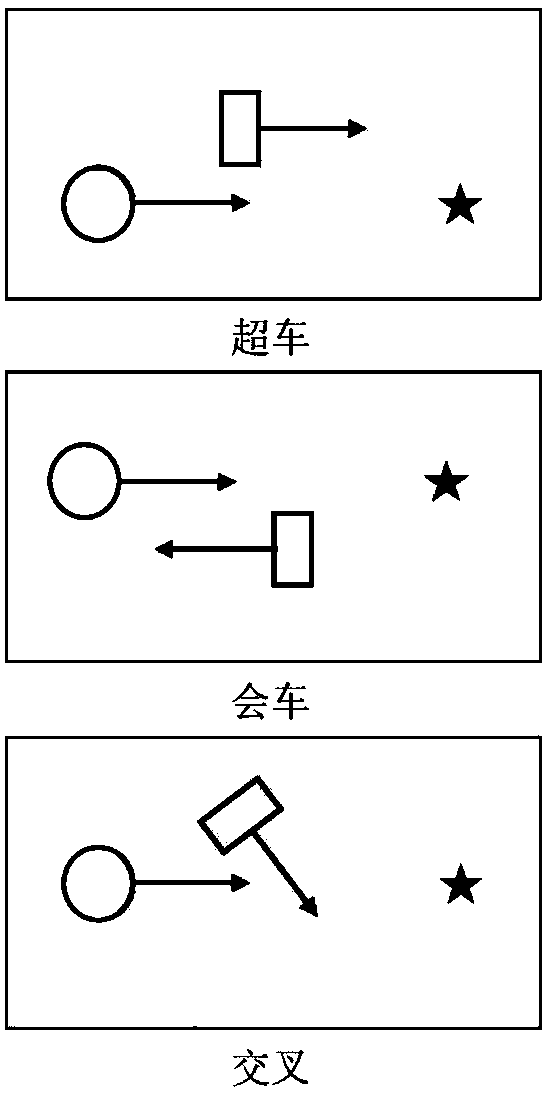

Pedestrian-sensing obstacle avoidance method for service robot based on deep reinforcement learning

Active | CN108255182A | Improve intelligence | Increase sociability | Neural architectures | Position/course control in two dimensions | Training phase | Radar

The invention discloses a pedestrian-sensing obstacle avoidance method for a service robot based on deep reinforcement learning, and relates to the fields of deep learning and service robot obstacle avoidance. In the training phase, training data is first generated with the ORCA algorithm. Next, experimental scenes are randomly generated, and an initialized reinforcement learning model interacts with the environment to generate new training data, which is merged into the original training data. Finally, the network is trained on the resulting training data with the SGD algorithm to obtain the final network model. In the execution stage, the states of surrounding pedestrians are obtained by a laser radar, predicted states are calculated from the trained model and the reward function, and the action that yields the maximum reward is selected as the output and executed. The method has strong real-time performance and adaptability: in pedestrian environments, the robot follows the pedestrian convention of keeping to the right and plans efficient, safe, and natural paths, improving the intelligence and sociability of the service robot.
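The execution-stage action selection can be sketched as a one-step lookahead. Each candidate velocity is scored by the immediate reward plus the discounted value of the predicted next state, and the best-scoring candidate is kept. All specifics are assumptions for illustration: constant-velocity pedestrian prediction, the hypothetical constants `DT`, `GAMMA`, `COLLISION_DIST`, and `GOAL_TOL`, a simple collision/goal reward, and a distance-to-goal `value` function standing in for the trained network. The patent does not disclose these details.

```python
import math

DT = 0.25             # hypothetical control period (s)
GAMMA = 0.9           # hypothetical discount factor
COLLISION_DIST = 0.6  # hypothetical robot-pedestrian collision distance (m)
GOAL_TOL = 0.3        # hypothetical goal tolerance (m)


def propagate(pos, vel, dt=DT):
    """Constant-velocity prediction of a position after dt seconds."""
    return (pos[0] + vel[0] * dt, pos[1] + vel[1] * dt)


def reward(robot_pos, goal, pedestrians):
    """Hypothetical reward: -1 on collision, +1 at the goal, 0 otherwise."""
    if any(math.dist(robot_pos, p) < COLLISION_DIST for p in pedestrians):
        return -1.0
    if math.dist(robot_pos, goal) < GOAL_TOL:
        return 1.0
    return 0.0


def value(robot_pos, goal):
    """Stand-in for the trained value network: closer to the goal is better."""
    return -math.dist(robot_pos, goal)


def select_action(robot_pos, goal, peds, actions):
    """peds: (position, velocity) pairs, e.g. from the laser radar.
    Returns the candidate velocity with the highest one-step lookahead score."""
    next_peds = [propagate(p, v) for p, v in peds]
    best, best_score = None, -math.inf
    for a in actions:
        nxt = propagate(robot_pos, a)
        score = reward(nxt, goal, next_peds) + GAMMA * value(nxt, goal)
        if score > best_score:
            best, best_score = a, score
    return best
```

With no pedestrians, the robot heads straight for the goal. If a pedestrian is approaching head-on, the collision penalty makes a sidestep score higher than driving forward.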

Owner:SHANGHAI JIAO TONG UNIV

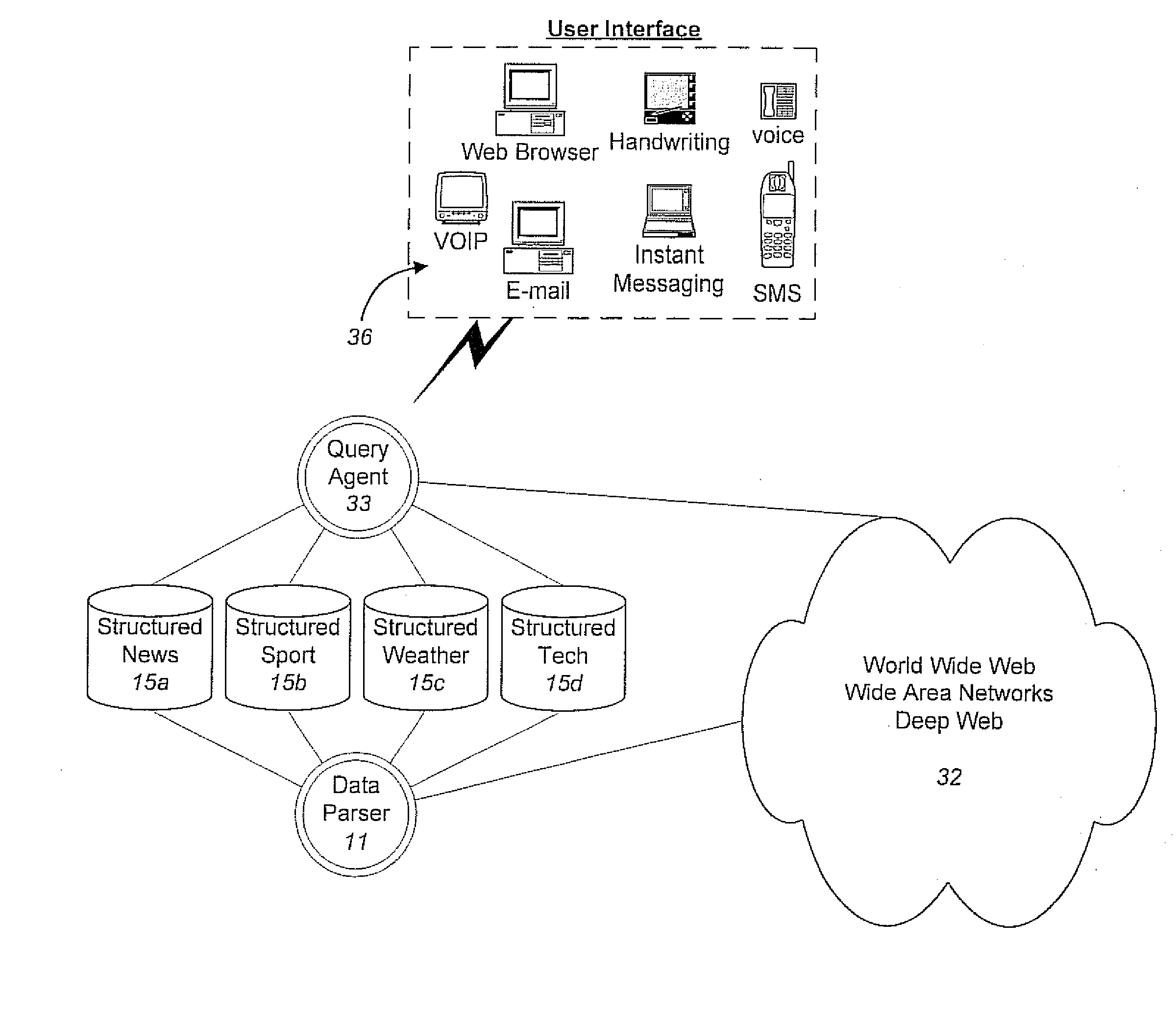

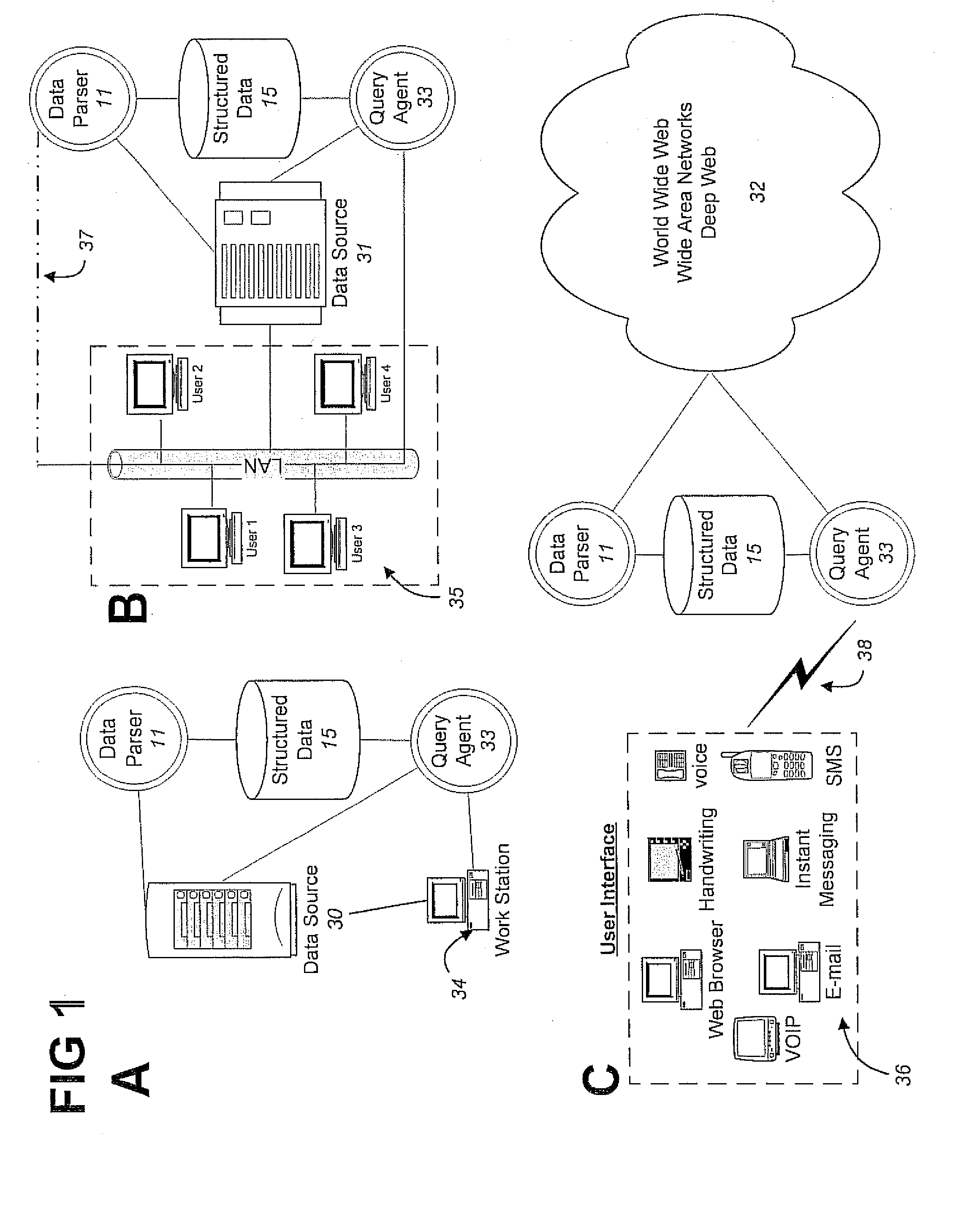

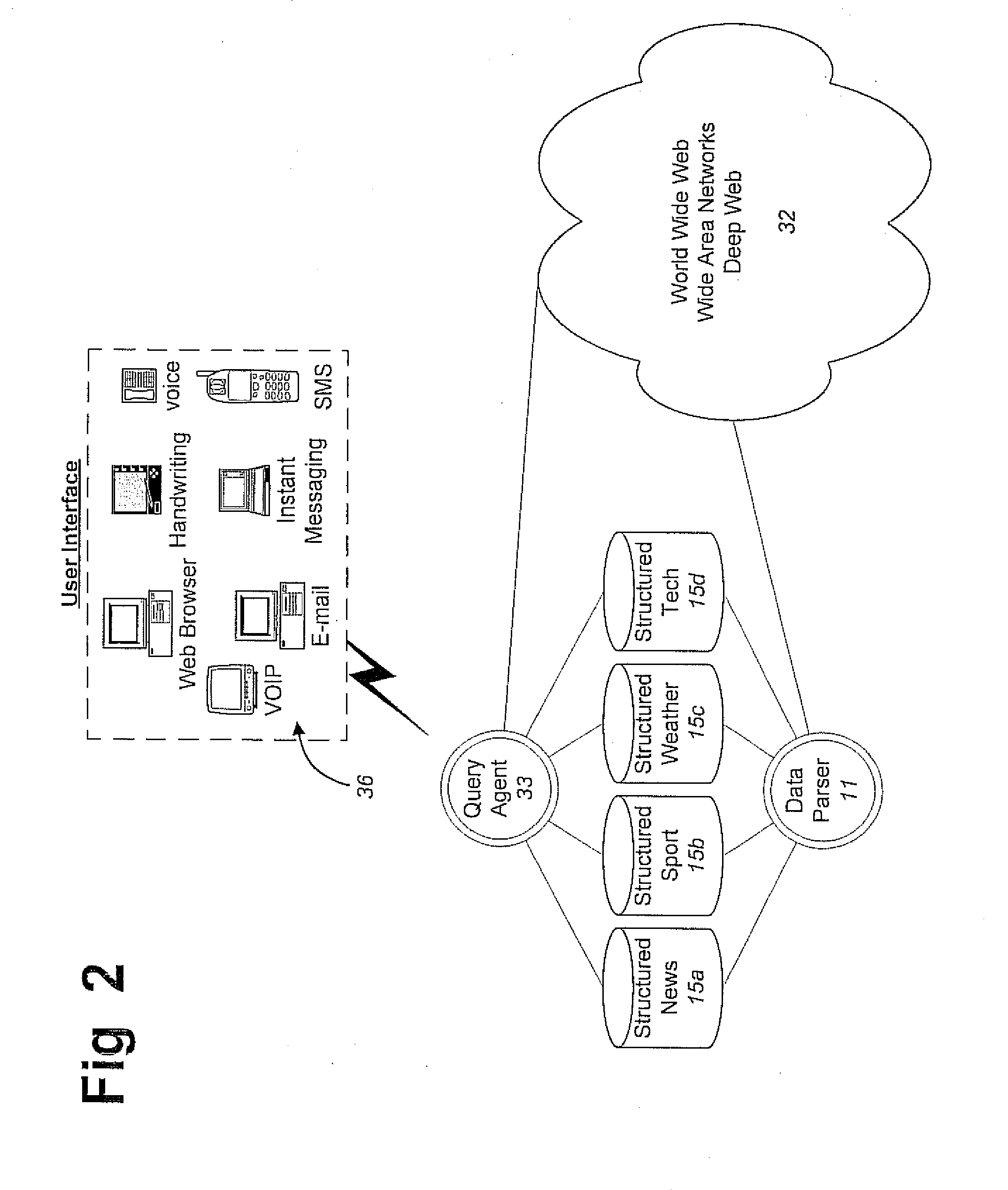

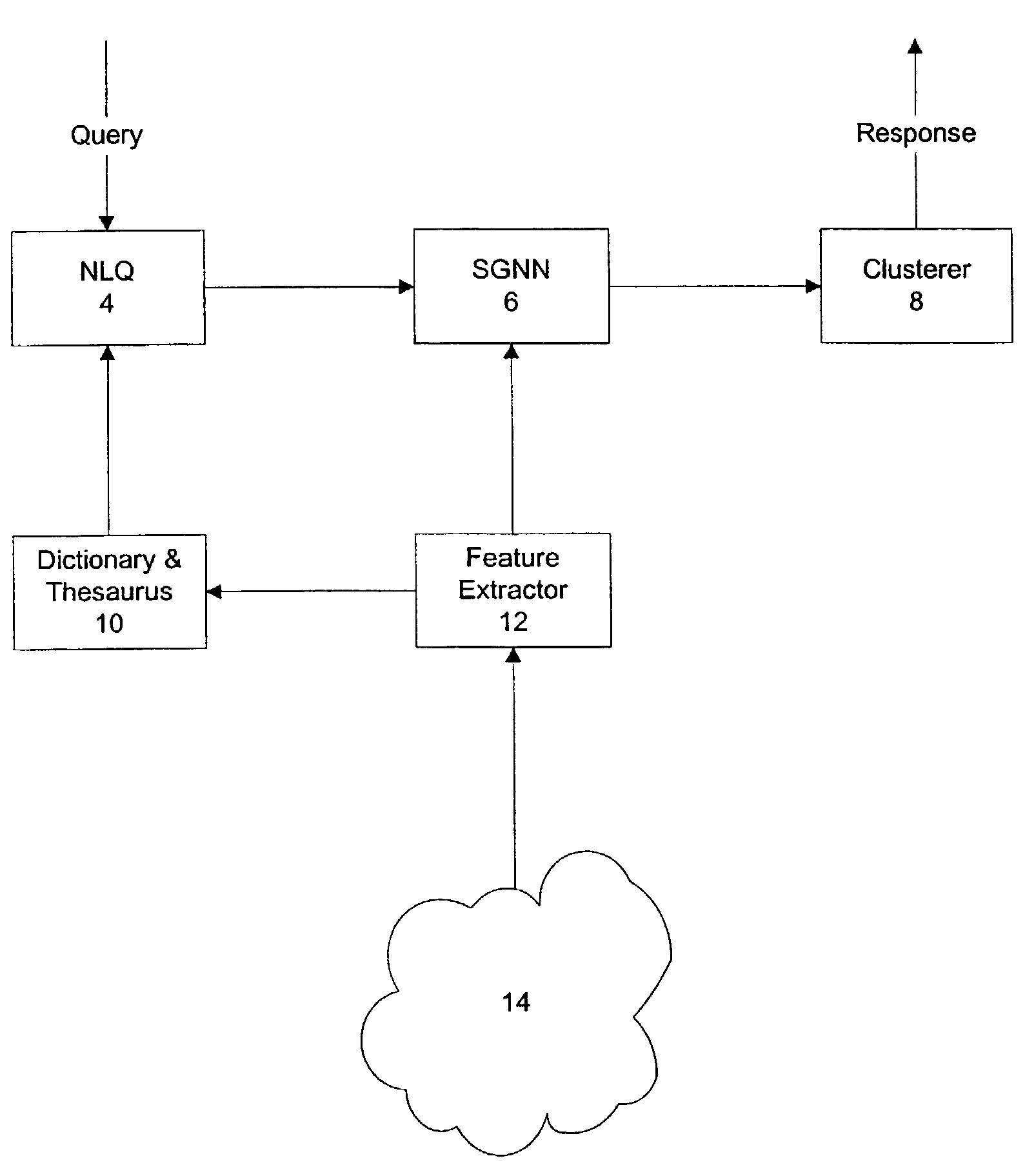

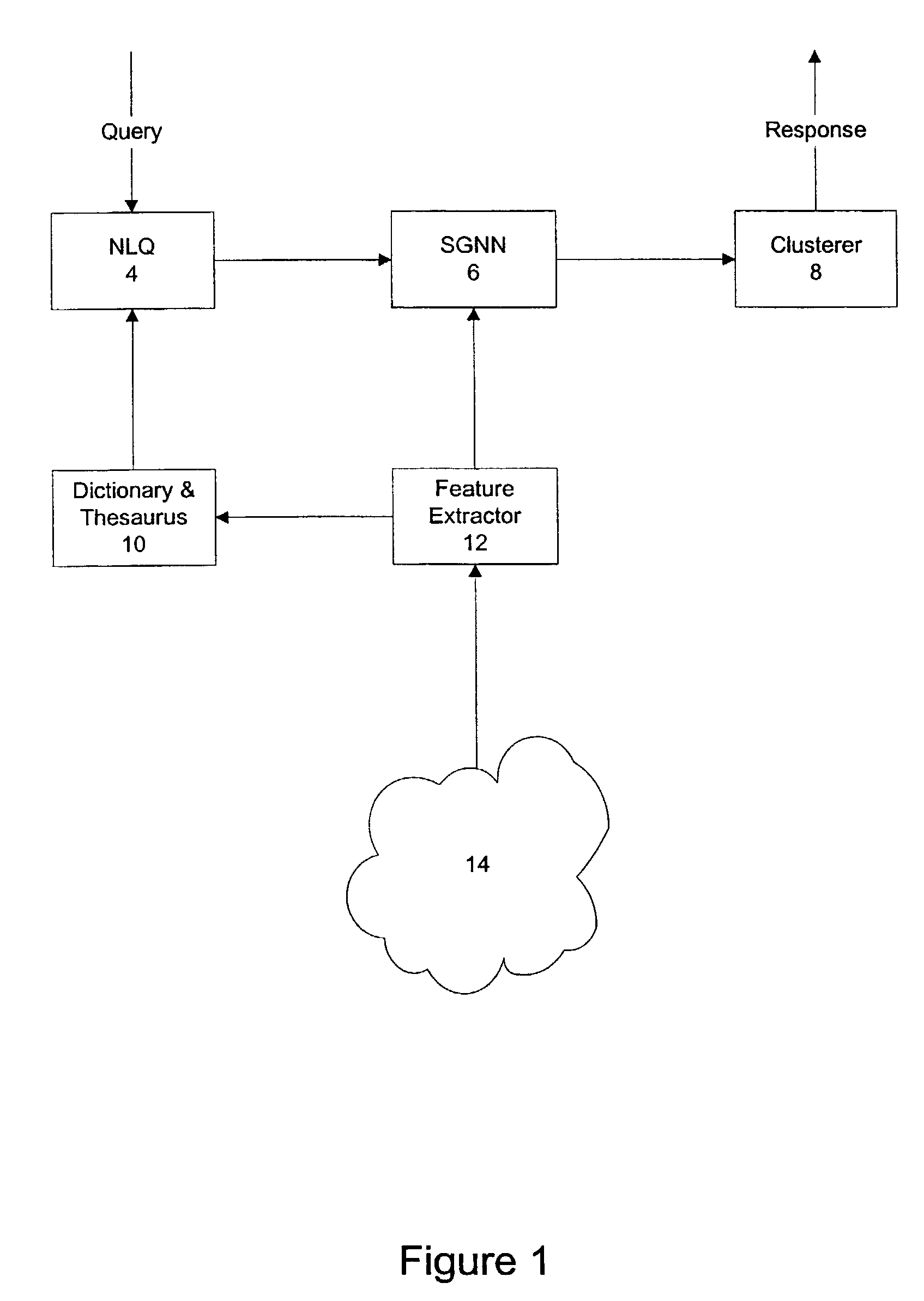

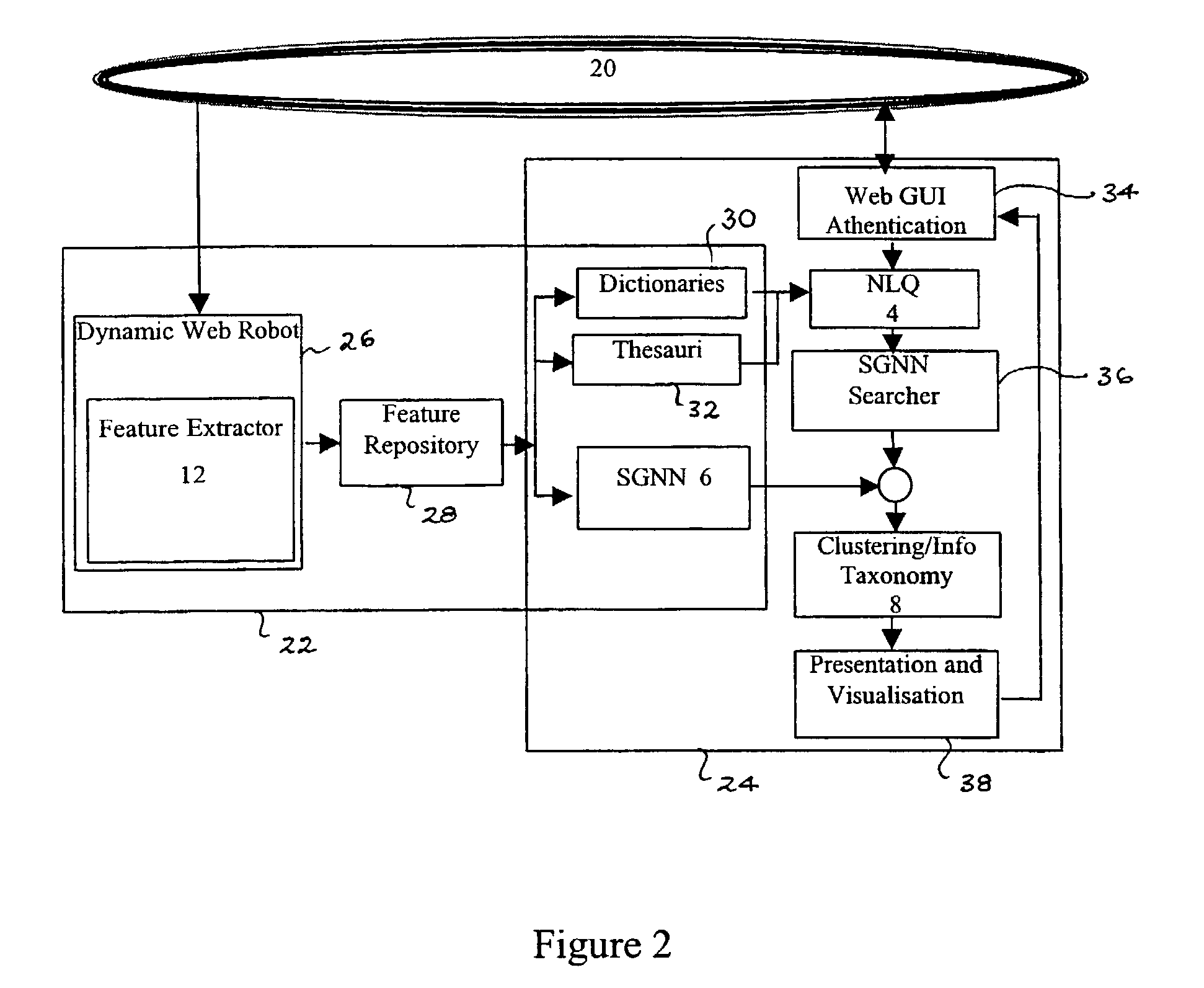

Search system

Inactive | US7296009B1 | Digital data information retrieval | Data processing applications | Feature extraction | Training phase

A search engine and system for data, such as Internet web pages, including a query analyser that processes a query to assign respective weights to the query terms and generate a query vector containing those weights, and an index network that responds to the query vector by outputting at least one index to data matching the query. The index network is a self-generating neural network built from training examples derived from a feature extractor. The feature extractor is used during both the search and training phases. A clusterer is used to group search results.
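The query-analyser step (weighting query terms and building a query vector) can be illustrated with TF-IDF weighting. This is a minimal sketch: the patent does not state its weighting scheme, so TF-IDF is an assumption. Cosine-similarity ranking is swapped in purely as a placeholder for the self-generating neural index network, which is not reproduced here. All function names are hypothetical.

```python
import math
from collections import Counter


def idf_table(docs):
    """Smoothed inverse document frequency for every term in a small corpus."""
    n = len(docs)
    df = Counter(term for doc in docs for term in set(doc.lower().split()))
    return {t: math.log(n / c) + 1.0 for t, c in df.items()}


def query_vector(text, vocab, idf):
    """Weight each term by term frequency * IDF; terms outside vocab are ignored."""
    tf = Counter(text.lower().split())
    return [tf[t] * idf.get(t, 0.0) for t in vocab]


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def search(query, docs):
    """Return indices of matching documents, best match first.
    Cosine ranking is a stand-in for the patent's neural index network."""
    idf = idf_table(docs)
    vocab = sorted(idf)
    q = query_vector(query, vocab, idf)
    scored = [(cosine(q, query_vector(d, vocab, idf)), i) for i, d in enumerate(docs)]
    return [i for s, i in sorted(scored, reverse=True) if s > 0]
```

For instance, given the documents `"neural network training"`, `"web page search engine"`, and `"search query weights"`, the query `"search engine"` ranks the second document first. The rarer term "engine" carries a higher weight than "search", which appears in two documents.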

Owner:TELSTRA CORPORATION LIMITD

© 2025 PatSnap. All rights reserved.