Behavior identification method based on a 3D convolutional neural network

A convolutional neural network and recognition method technology applied in the fields of feature matching, machine learning, pattern recognition, and video image processing. It addresses the lack of classification ability for short-term simple actions, with the effects of high accuracy, avoidance of over-fitting, and low complexity.


Embodiment Construction

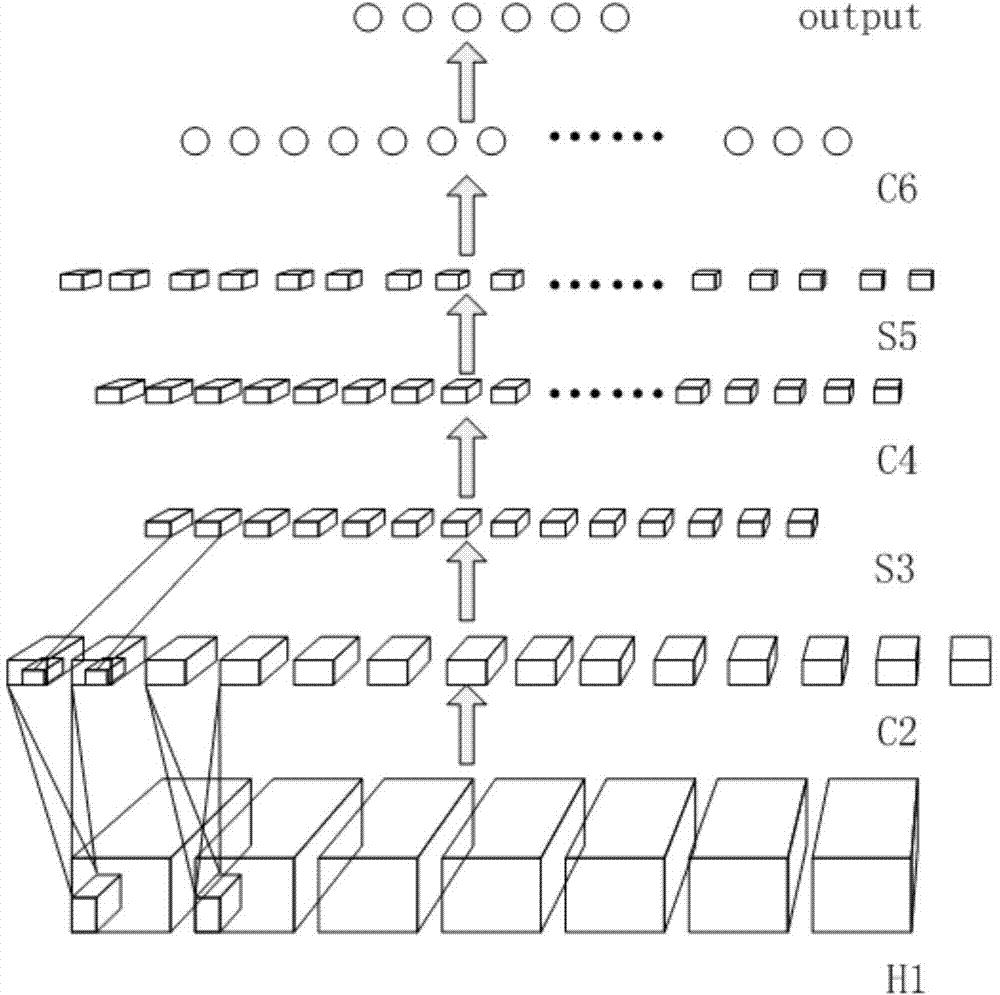

[0030] Training uses the back-propagation (BP) algorithm. However, the structure of a CNN differs considerably from that of a traditional neural network, so the BP algorithm used by a CNN also differs from the traditional one. Because a CNN consists mainly of alternating convolutional and down-sampling layers, each of these layer types has its own formula for propagating the backward error δ.
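To illustrate why the two layer types need different δ formulas, here is a minimal pure-Python sketch. It assumes (none of these choices come from the patent) a 2×2 mean-pooling down-sampling layer with no trainable parameters, a "valid" convolution written as a correlation, a sigmoid activation on the convolutional layer, and a linear (identity) down-sampling layer. The function names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)


def backprop_through_mean_pool(delta_next, u_conv, size=2):
    """Delta of a convolutional layer whose output feeds a size x size mean-pooling layer.

    Each pooled value is the mean of size*size inputs, so its error is copied to
    every position of its block and divided by size*size (up-sampling), then
    multiplied element-wise by f'(u) of the convolutional layer (assumed sigmoid).
    """
    rows, cols = len(u_conv), len(u_conv[0])
    return [[sigmoid_prime(u_conv[i][j]) * delta_next[i // size][j // size] / (size * size)
             for j in range(cols)]
            for i in range(rows)]


def backprop_through_conv(delta_next, kernel, in_rows, in_cols):
    """Delta of a linear down-sampling layer whose output feeds a 'valid' convolution.

    Forward pass assumed: out[i][j] = sum_{a,b} x[i+a][j+b] * kernel[a][b].
    Each output error is scattered back onto the inputs it touched, weighted by
    the kernel; this equals a 'full' correlation of delta_next with the kernel
    rotated by 180 degrees.
    """
    k = len(kernel)
    delta = [[0.0] * in_cols for _ in range(in_rows)]
    for i in range(len(delta_next)):
        for j in range(len(delta_next[0])):
            for a in range(k):
                for b in range(k):
                    delta[i + a][j + b] += kernel[a][b] * delta_next[i][j]
    return delta
```

The down-sampling rule only spreads and rescales the error, whereas the convolution rule mixes neighboring errors through the kernel weights, which is why the two layer types are handled by separate formulas.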

[0031] Using the squared-error cost function, the error δ of the output layer is calculated as:

[0032] $$\delta^{L} = f'(u^{L}) \circ (y - t)$$

[0033] Here, y is the actual output vector of the network and t is the expected label vector, each with n components; f is the sigmoid function; and $\circ$ denotes the Schur product, i.e., the corresponding elements of the two vectors are multiplied. u is the weighted sum of the outputs of the nodes in the previous layer, calculated as follows:

[0034] $$u^{l} = W^{l} x^{l-1} + b^{l}$$

[0035] That is, the output $x^{l-1}$ of layer $l-1$ is multiplied by the weight matrix $W^{l}$ of layer $l$, and the bias $b^{l}$ is added.
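A minimal pure-Python sketch of the weighted sum $u^{l} = W^{l} x^{l-1} + b^{l}$ and of the output-layer error, assuming a fully connected output layer, a sigmoid f, and the squared-error cost $E = \tfrac{1}{2}\sum_i (y_i - t_i)^2$ with $y = f(u)$. Under these assumptions the chain rule gives $\partial E / \partial u_i = f'(u_i)\,(y_i - t_i)$, and for the sigmoid $f'(u) = f(u)\,(1 - f(u))$. The function names and the sample numbers are hypothetical, not taken from the patent.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def weighted_sum(W, x, b):
    """u^l = W^l x^{l-1} + b^l for a fully connected layer (W is a list of rows)."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
            for row, b_i in zip(W, b)]


def output_delta(u, t):
    """Output-layer error f'(u) o (y - t), with f = sigmoid and o the element-wise product."""
    y = [sigmoid(u_i) for u_i in u]
    return [y_i * (1.0 - y_i) * (y_i - t_i) for y_i, t_i in zip(y, t)]


def squared_error(u, t):
    """E = 1/2 * sum_i (f(u_i) - t_i)^2, used to check output_delta numerically."""
    return 0.5 * sum((sigmoid(u_i) - t_i) ** 2 for u_i, t_i in zip(u, t))


if __name__ == "__main__":
    # Hypothetical 2-input, 2-output layer.
    W = [[0.5, -0.2], [0.1, 0.3]]
    x = [1.0, 2.0]
    b = [0.0, -0.5]
    u = weighted_sum(W, x, b)
    print("u =", u)
    print("delta =", output_delta(u, [1.0, 0.0]))
```

Comparing `output_delta` with a central finite difference of `squared_error` is a quick way to check such a δ formula before using it in the full back-propagation pass.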

[0036] The...
